SLAM In Healthcare Robotics For Assisted Surgery

SEP 12, 2025 · 9 MIN READ

SLAM Technology Evolution in Surgical Robotics

SLAM technology in surgical robotics has undergone significant evolution since its initial adaptation from autonomous navigation systems. The journey began in the early 2000s with rudimentary implementations focused primarily on basic spatial mapping of operating rooms, with limited real-time capabilities and accuracy constraints that prevented clinical adoption.

Between 2010 and 2015, a second generation of surgical SLAM systems emerged, characterized by improved computational efficiency and the integration of stereo vision techniques. These advances allowed more precise tracking of surgical instruments and anatomical structures, although the systems still struggled with tissue deformation and the dynamic environments typical of surgical settings.

The period from 2015 to 2018 marked a transformative phase with the incorporation of deep learning algorithms into SLAM frameworks. This integration enabled more robust feature extraction and tracking in visually complex surgical environments. Simultaneously, sensor fusion techniques began combining visual data with force feedback and electromagnetic tracking, creating more resilient systems capable of maintaining spatial awareness even during occlusion events.

From 2019 onwards, real-time deformable SLAM emerged as a breakthrough technology, addressing one of the most significant challenges in surgical applications—the non-rigid nature of biological tissues. These systems incorporated biomechanical models to predict and compensate for tissue deformation during surgical manipulation, dramatically improving tracking accuracy during actual procedures.

The most recent evolution (2021-present) has seen the development of context-aware SLAM systems that incorporate procedural knowledge and anatomical constraints. These systems can differentiate between various tissue types and surgical phases, adapting their mapping and localization strategies accordingly. Edge computing architectures have also been implemented to reduce latency, a critical factor in surgical applications where milliseconds matter.

Miniaturization represents another significant evolutionary trend, with SLAM sensors and processing units becoming increasingly compact, enabling integration into smaller surgical tools and robotic end-effectors without compromising the surgical workflow or requiring additional equipment in the already crowded operating theater.

Looking forward, the convergence of SLAM with augmented reality interfaces is creating intuitive visualization systems that overlay critical information directly onto the surgeon's view, while cloud-based collaborative SLAM frameworks are beginning to enable knowledge sharing across procedures and institutions, creating continuously improving surgical navigation systems.

Market Analysis for SLAM-Enabled Surgical Systems

The global market for SLAM-enabled surgical systems is growing significantly, driven by increasing demand for minimally invasive procedures and precision surgery. Valued at approximately $4.2 billion in 2023, the segment is projected to reach $9.7 billion by 2028, a compound annual growth rate (CAGR) of 18.3%. Several key market factors support this trajectory and are reshaping the landscape of surgical robotics.
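The growth rate follows from the two endpoint values: CAGR = (V_2028 / V_2023)^(1/5) - 1. The short Python check below (function names are illustrative) recomputes it from the quoted figures and projects the 2023 value forward at the quoted rate.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached by compounding start_value at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


# Market size in billions of USD; 2023 -> 2028 spans five compounding years.
print(f"Implied CAGR: {cagr(4.2, 9.7, 5):.1%}")                # Implied CAGR: 18.2%
print(f"2028 value at 18.3%: {project(4.2, 0.183, 5):.2f} B")  # 2028 value at 18.3%: 9.73 B
```

The small gap between the implied 18.2% and the quoted 18.3% is consistent with rounding of the endpoint values.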

North America dominates the market with a 42% share, followed by Europe (31%) and Asia-Pacific (21%). The remaining 6% is distributed across other regions. The United States leads in adoption rates due to its advanced healthcare infrastructure and substantial investment in medical technology innovation. However, China and India are emerging as the fastest-growing markets, with annual growth rates exceeding 25%, primarily driven by healthcare modernization initiatives and increasing access to advanced surgical technologies.

From a demand perspective, hospitals and ambulatory surgical centers constitute the primary customer base, accounting for 78% of total market revenue. Academic and research institutions represent 15%, while the remaining 7% comes from specialty clinics and other healthcare facilities. Large teaching hospitals and academic medical centers are typically early adopters, while community hospitals follow as technologies mature and costs decrease.

The market segmentation by surgical specialty reveals that neurosurgery (28%), orthopedic surgery (24%), and general surgery (22%) are the leading application areas for SLAM-enabled systems. Gynecological procedures (12%), urological surgeries (9%), and other specialties (5%) constitute the remainder of the market. Neurosurgical applications command premium pricing due to the critical nature of procedures and the high precision requirements.

Key market drivers include increasing prevalence of chronic diseases requiring surgical intervention, growing patient preference for minimally invasive procedures, and rising healthcare expenditure globally. Additionally, the aging population in developed economies is creating sustained demand for surgical interventions where precision is paramount.

Market barriers include high initial investment costs for SLAM-enabled surgical systems, which typically range from $1.5 million to $3 million per unit, plus annual maintenance costs of $100,000 to $150,000. Regulatory hurdles and the need for specialized training for surgical teams also present significant challenges to market expansion, particularly in emerging economies.
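To see how the purchase and maintenance ranges combine, the sketch below computes an undiscounted cost per unit over a service life. The five-year service life is a hypothetical assumption, not a figure from the text, and training, disposables, and staffing are left out.

```python
def total_cost_of_ownership(purchase_usd: float, annual_maintenance_usd: float, years: int) -> float:
    """Undiscounted purchase price plus maintenance over a service life."""
    return purchase_usd + annual_maintenance_usd * years


SERVICE_YEARS = 5  # hypothetical service life, not a figure from the text

# Low and high ends of the purchase and maintenance ranges quoted above.
low = total_cost_of_ownership(1_500_000, 100_000, SERVICE_YEARS)
high = total_cost_of_ownership(3_000_000, 150_000, SERVICE_YEARS)
print(f"{SERVICE_YEARS}-year cost per unit: ${low:,.0f} to ${high:,.0f}")
# 5-year cost per unit: $2,000,000 to $3,750,000
```

Adding discounting or the excluded cost items would change both figures, but the purchase price remains the dominant term over this horizon.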

Current SLAM Challenges in Operating Rooms

Operating rooms present unique challenges for SLAM (Simultaneous Localization and Mapping) systems in surgical robotics. The dynamic nature of these environments creates significant obstacles for accurate spatial mapping and navigation. Surgical staff movement, equipment repositioning, and the constant reconfiguration of the operating theater introduce unpredictable changes that conventional SLAM algorithms struggle to process in real-time.

Lighting conditions in operating rooms pose another substantial challenge. The combination of bright focused surgical lights, shadows, and reflective surfaces creates complex illumination patterns that can confuse vision-based SLAM systems. These lighting variations often lead to feature detection errors and tracking failures, compromising the reliability of spatial mapping during critical procedures.

The presence of soft tissues and deformable objects further complicates SLAM implementation. Unlike industrial environments with rigid structures, biological tissues change shape during surgery, creating non-static reference points. Current SLAM algorithms predominantly assume environmental rigidity, making them ill-suited for tracking and mapping these dynamic biological surfaces that deform, pulsate, and shift during surgical manipulation.
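Because a rigid motion (rotation plus translation) preserves every pairwise distance between points, one simple way to detect where the rigidity assumption breaks is to compare pairwise distances between tracked points across two frames. The sketch below assumes point correspondences are already provided by the tracker and that fewer than half of a point's neighbours are deforming. The function names and the 0.5 mm threshold are hypothetical. It only flags violations of rigidity; it does not model deformation the way deformable SLAM does.

```python
import math
import statistics
from itertools import combinations


def deformation_scores(frame_a, frame_b):
    """Per-point median change in pairwise distance between two frames.

    frame_a and frame_b hold the same tracked 3-D points (same order) in two
    frames. A rigid motion preserves every pairwise distance, so a score near
    zero is consistent with rigidity; a large score marks a point on deforming
    tissue. The median keeps one deforming neighbour from inflating the score
    of a point that is itself rigid.
    """
    changes = [[] for _ in frame_a]
    for i, j in combinations(range(len(frame_a)), 2):
        delta = abs(math.dist(frame_a[i], frame_a[j]) - math.dist(frame_b[i], frame_b[j]))
        changes[i].append(delta)
        changes[j].append(delta)
    return [statistics.median(c) for c in changes]


def flag_non_rigid(frame_a, frame_b, tol_mm=0.5):
    """Indices of points whose score exceeds tol_mm (hypothetical threshold)."""
    return [i for i, s in enumerate(deformation_scores(frame_a, frame_b)) if s > tol_mm]


# Four tissue points (mm). The whole patch shifts 5 mm in x; point 3 also bulges 4 mm in z.
before = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (10, 10, 0)]
after = [(5, 0, 0), (15, 0, 0), (5, 10, 0), (15, 10, 4)]
print(flag_non_rigid(before, after))  # [3]
```

The global 5 mm shift leaves all scores at zero except for the bulging point, which is what a rigid-world SLAM back end cannot explain.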

Sterility requirements impose severe constraints on sensor deployment and design. SLAM systems must function without compromising the sterile field, limiting the types of sensors that can be employed and their placement. This restriction often forces compromises in sensor coverage and quality, directly impacting mapping accuracy and completeness.

Real-time performance demands present another significant hurdle. Surgical SLAM systems must operate with minimal latency to support precise instrument control and augmented reality guidance. The computational complexity of processing high-resolution visual data while maintaining sub-millimeter accuracy creates substantial processing bottlenecks that current hardware struggles to overcome.

Occlusion management remains particularly problematic in the crowded operating room environment. Surgical instruments, hands, and equipment frequently obstruct camera views, creating discontinuities in visual tracking. These occlusions can lead to catastrophic tracking failures if not properly managed, requiring sophisticated prediction and recovery mechanisms that exceed the capabilities of standard SLAM implementations.
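One standard way to bridge short occlusions is to let a motion model predict through frames with no measurement and to declare the track lost once the predicted uncertainty grows too large. The sketch below uses a one-axis constant-velocity Kalman filter. It is an illustration of the general technique, not a method taken from the text; the noise values and the `max_std` limit are hypothetical tuning parameters, and a full tracker would run one filter per axis or use a 3-D state.

```python
from dataclasses import dataclass


@dataclass
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter; run one instance per axis."""
    pos: float
    vel: float = 0.0
    p_pp: float = 1.0    # position variance
    p_pv: float = 0.0    # position-velocity covariance
    p_vv: float = 100.0  # velocity variance (large: velocity unknown at start)
    q: float = 0.01      # process-noise intensity (hypothetical tuning value)
    r: float = 0.25      # measurement-noise variance (hypothetical)

    def predict(self, dt: float) -> None:
        # State: x <- F x with F = [[1, dt], [0, 1]].
        self.pos += self.vel * dt
        # Covariance: P <- F P F^T + Q, with white-acceleration noise Q.
        p_pp = self.p_pp + 2 * dt * self.p_pv + dt * dt * self.p_vv
        p_pv = self.p_pv + dt * self.p_vv
        self.p_pp = p_pp + self.q * dt**3 / 3
        self.p_pv = p_pv + self.q * dt**2 / 2
        self.p_vv = self.p_vv + self.q * dt

    def update(self, z: float) -> None:
        # Position-only measurement, H = [1, 0].
        s = self.p_pp + self.r                   # innovation variance
        k_p, k_v = self.p_pp / s, self.p_pv / s  # Kalman gain
        innovation = z - self.pos
        self.pos += k_p * innovation
        self.vel += k_v * innovation
        # P <- (I - K H) P, written out for the symmetric 2x2 case.
        p_pp, p_pv = self.p_pp, self.p_pv
        self.p_pp = (1 - k_p) * p_pp
        self.p_pv = (1 - k_p) * p_pv
        self.p_vv = self.p_vv - k_v * p_pv


def track(measurements, dt, max_std=2.0):
    """Filter a stream in which None marks an occluded frame.

    The first measurement must not be None. Returns (estimates, lost). During
    occlusion the filter only predicts, so its uncertainty grows; once the
    position standard deviation exceeds max_std (hypothetical limit) the track
    is declared lost so that a relocalization step can take over.
    """
    kf = ConstantVelocityKF(pos=measurements[0])
    estimates = []
    for z in measurements:
        kf.predict(dt)
        if z is not None:
            kf.update(z)
        estimates.append(kf.pos)
        if kf.p_pp ** 0.5 > max_std:
            return estimates, True
    return estimates, False


# Instrument tip moving 1 mm per frame, occluded for the last 3 frames.
estimates, lost = track(list(range(20)) + [None] * 3, dt=1.0)
print(estimates[-1], lost)  # prediction close to 22; track not lost
```

A short occlusion is bridged by extrapolation, while a long one drives the position variance past the limit and hands control to recovery instead of silently drifting.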

Integration with existing surgical workflows represents a final major challenge. SLAM systems must operate seamlessly alongside established surgical protocols without adding complexity or time to procedures. The technology must be intuitive enough for surgical teams to adopt without extensive training, while still providing the precision and reliability necessary for clinical applications.

Existing SLAM Solutions for Surgical Navigation

01 Visual SLAM techniques for autonomous navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real time. Advanced algorithms handle feature extraction, matching, and optimization to ensure accurate localization even in dynamic environments. This approach is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- Visual SLAM techniques for autonomous navigation: Advanced visual SLAM implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or scenes with repetitive patterns.

- SLAM for robotics and autonomous vehicles: SLAM technology enables robots and autonomous vehicles to navigate unknown environments by building maps while simultaneously determining their position. These systems often combine multiple sensor inputs including LiDAR, cameras, and inertial measurement units to achieve accurate localization and mapping. The technology supports path planning, obstacle avoidance, and efficient navigation in dynamic environments without requiring pre-existing maps.

- Machine learning approaches to SLAM: Machine learning algorithms are increasingly integrated into SLAM systems to enhance performance and reliability. These approaches use neural networks for feature extraction, scene understanding, and loop closure detection. By learning from data, these systems can better handle environmental variations, improve mapping accuracy, and reduce computational requirements. Deep learning models help in identifying objects and understanding semantic information within the environment.

- AR/VR applications of SLAM technology: SLAM technology is crucial for augmented and virtual reality applications, enabling precise alignment of virtual content with the real world. These systems track the user's device in 3D space while mapping the surrounding environment, allowing for immersive and interactive experiences. SLAM for AR/VR must operate with minimal latency and high accuracy to maintain the illusion of virtual objects existing in physical space.

- Optimization techniques for real-time SLAM: Various optimization methods are employed to make SLAM systems operate efficiently in real-time on devices with limited computational resources. These include sparse mapping techniques, keyframe selection algorithms, and parallel processing approaches. Advanced optimization strategies focus on reducing memory usage, minimizing power consumption, and maintaining accuracy while processing sensor data at high frame rates.
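Keyframe selection, mentioned in the last item above, decides which frames are kept for mapping so that the optimizer does not process every frame. A common family of heuristics inserts a keyframe when the camera has moved or rotated enough, or when too few of the last keyframe's features are still tracked. The sketch below assumes a planar pose for brevity; the thresholds and names are hypothetical defaults, not values from the text.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar pose used for brevity; a real system would use full 6-DoF poses."""
    x: float      # metres
    y: float      # metres
    theta: float  # radians


def angle_diff(a: float, b: float) -> float:
    """Smallest signed difference a - b, wrapped to [-pi, pi]."""
    return math.atan2(math.sin(a - b), math.cos(a - b))


def is_new_keyframe(last_kf: Pose2D, current: Pose2D,
                    shared_features: int, keyframe_features: int,
                    min_translation: float = 0.05,
                    min_rotation: float = math.radians(10),
                    min_overlap: float = 0.6) -> bool:
    """Insert a keyframe when the camera has moved or turned enough, or when
    too few of the last keyframe's features are still tracked.
    All thresholds are hypothetical defaults."""
    moved = math.hypot(current.x - last_kf.x, current.y - last_kf.y) > min_translation
    turned = abs(angle_diff(current.theta, last_kf.theta)) > min_rotation
    overlap = shared_features / keyframe_features if keyframe_features else 0.0
    return moved or turned or overlap < min_overlap


kf = Pose2D(0.0, 0.0, 0.0)
print(is_new_keyframe(kf, Pose2D(0.01, 0.0, 0.02), 180, 200))  # False: small motion, 90% overlap
print(is_new_keyframe(kf, Pose2D(0.01, 0.0, 0.02), 100, 200))  # True: overlap fell to 50%
```

Wrapping the angle difference matters: headings of 3.1 and -3.1 rad are only about 5 degrees apart and should not trigger a keyframe.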

02 Machine learning approaches for SLAM optimization

Machine learning algorithms are increasingly integrated into SLAM systems to improve accuracy and efficiency. Neural networks can enhance feature detection, predict motion patterns, and filter sensor noise. Deep learning models help with scene understanding and object recognition within mapped environments. These AI-enhanced SLAM systems can adapt to changing conditions and learn from previous mapping experiences, making them more robust for real-world applications.

03 Sensor fusion for robust SLAM implementation

Combining data from multiple sensors enhances SLAM performance across diverse environments. Sensor fusion techniques integrate information from cameras, LiDAR, IMUs, and other sensors to overcome the limitations of any single sensor type. This approach improves mapping accuracy in challenging conditions such as low light, reflective surfaces, or featureless areas. Advanced fusion algorithms synchronize data streams and weight inputs based on reliability to create consistent environmental representations.

04 Loop closure and map optimization techniques

Loop closure algorithms detect when a system revisits previously mapped areas, allowing accumulated errors to be corrected. These techniques use feature matching and geometric consistency checks to identify revisited locations. Once loops are detected, global optimization methods adjust the entire map to maintain consistency. Advanced approaches incorporate probabilistic frameworks to handle uncertainty and improve overall map quality, which is essential for long-term autonomous operation.

05 Real-time SLAM for AR/VR applications

Real-time SLAM systems designed specifically for augmented and virtual reality applications focus on low-latency, high-precision tracking. These implementations often operate under strict computational constraints while maintaining accurate spatial awareness. Specialized algorithms prioritize tracking stability and quick initialization to provide seamless user experiences. The systems must handle rapid camera movements and varying lighting conditions while enabling realistic placement of virtual objects in the physical world.
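The loop-closure correction described above, where global optimization adjusts the whole map once a revisit is detected, can be illustrated with a deliberately tiny example: a one-dimensional pose graph solved by linear least squares. Real systems optimize 6-DoF poses with nonlinear solvers (g2o, GTSAM, and Ceres Solver are common choices); this sketch assumes NumPy, and the function name and numbers are illustrative.

```python
import numpy as np


def optimize_1d_pose_graph(num_poses, edges):
    """Linear least-squares solution of a 1-D pose graph.

    edges: (i, j, measured x_j - x_i, weight) tuples, covering both odometry
    and loop-closure constraints. Pose 0 is fixed at the origin, which removes
    the graph's translational gauge freedom.
    """
    A = np.zeros((len(edges), num_poses))
    b = np.zeros(len(edges))
    for k, (i, j, measured, weight) in enumerate(edges):
        s = np.sqrt(weight)  # square-root weighting of each residual
        A[k, i], A[k, j] = -s, s
        b[k] = s * measured
    # With x_0 = 0 its column contributes nothing, so solve for x_1..x_{n-1} only.
    x_rest, *_ = np.linalg.lstsq(A[:, 1:], b, rcond=None)
    return np.concatenate(([0.0], x_rest))


# The probe moves +1, +1, -1, -1 (true net displacement 0), but every
# odometry reading is biased by +0.05.
odometry = [(0, 1, 1.05, 1.0), (1, 2, 1.05, 1.0), (2, 3, -0.95, 1.0), (3, 4, -0.95, 1.0)]
loop_closure = [(0, 4, 0.0, 1.0)]  # place recognition: pose 4 revisits pose 0

print(optimize_1d_pose_graph(5, odometry))                 # dead reckoning ends 0.2 from the start
print(optimize_1d_pose_graph(5, odometry + loop_closure))  # drift spread out: ends at 0.04
```

With equal weights, the 0.2 inconsistency around the loop is shared equally (0.04) by the five constraints; giving the loop-closure edge a larger weight would pull the final pose closer to the origin at the expense of the odometry edges.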

Leading Companies in Surgical SLAM Technology

SLAM in healthcare robotics for assisted surgery is in the early growth phase, with the market expected to expand significantly due to increasing demand for precision surgical procedures. Key players include established medical technology companies like Intuitive Surgical Operations, Medtronic (Covidien), and Johnson & Johnson (DePuy Synthes), alongside emerging specialists such as Auris Health and Mazor Robotics. The technology is approaching maturity in certain applications but continues to evolve, with companies like Brainlab and XtalPi advancing AI integration. Major electronics corporations including Samsung, LG, and Mitsubishi Electric are also entering this space, leveraging their expertise in robotics and imaging technologies to develop next-generation surgical navigation systems.

Auris Health, Inc.

Technical Solution: Auris Health has developed a bronchoscopy-focused SLAM system for their Monarch Platform that specializes in navigating the complex branching structure of the lungs. Their approach combines electromagnetic tracking with computer vision algorithms to create and maintain accurate maps of the bronchial tree during diagnostic and therapeutic procedures. The system employs a unique "memory-augmented" SLAM technique that leverages preoperative CT scans as a baseline map, then continuously updates this model using real-time visual and electromagnetic data from the bronchoscope. This hybrid approach allows for robust navigation even when direct visualization is compromised by blood, mucus, or tissue contact. Their proprietary feature-tracking algorithms are specifically optimized for the textural and structural characteristics of bronchial passages, enabling reliable localization even in visually challenging environments. Recent advancements include automated path planning that identifies optimal routes to peripheral lung nodules while avoiding critical structures.

Strengths: Specialized optimization for bronchoscopic procedures; robust performance in challenging visual conditions; seamless integration of preoperative and intraoperative data. Weaknesses: Limited application outside pulmonary procedures; requires specialized training; dependent on initial CT imaging quality.
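Combining electromagnetic tracking with vision is a sensor-fusion problem of the kind described under "Sensor fusion for robust SLAM implementation", where inputs are weighted by reliability. A minimal form of that weighting is inverse-variance fusion. The sketch below is not Auris Health's algorithm; it assumes independent, unbiased Gaussian errors on one coordinate, and the readings and variances are made up for illustration.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent scalar estimates.

    estimates: iterable of (value, variance) pairs, e.g. one coordinate of the
    scope tip from electromagnetic tracking and from vision. Returns the fused
    value and its variance; lower-variance (more reliable) inputs weigh more.
    """
    pairs = list(estimates)
    weights = [1.0 / variance for _, variance in pairs]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, pairs)) / total
    return value, 1.0 / total


# Hypothetical readings in mm: EM tracking (variance 1.0) and vision (variance 0.25).
print(fuse([(10.0, 1.0), (12.0, 0.25)]))  # (11.6, 0.2)
# When blood or mucus obscures the view, inflating the vision variance
# shifts the fused estimate back toward the EM reading (about 10.08 mm).
print(fuse([(10.0, 1.0), (12.0, 25.0)]))
```

The fused variance (0.2) is smaller than either input's, which is why a second modality helps even when it is less precise than the first.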

Mindmaze Group SA

Technical Solution: Mindmaze has developed a neural-enhanced SLAM system for surgical navigation that uniquely integrates brain-computer interfaces with spatial mapping technologies. Their platform combines conventional visual SLAM techniques with neural signal processing to create what they term "cognitive maps" of the surgical environment. This approach allows surgeons to interact with the navigation system through a combination of physical gestures, voice commands, and even direct neural inputs via their proprietary headsets. The system employs distributed computing architecture where initial mapping is performed on high-performance hardware, while real-time updates and tracking are handled by edge computing devices within the surgical field. Their SLAM implementation features adaptive resolution mapping that allocates computational resources to areas of current surgical focus while maintaining lower-resolution awareness of the broader anatomical context. Recent innovations include predictive movement compensation that anticipates surgeon actions based on neural signals detected milliseconds before physical movement occurs.

Strengths: Revolutionary neural interface integration; intuitive surgeon-system interaction; reduced cognitive load through intelligent assistance. Weaknesses: Emerging technology with limited clinical validation; requires specialized training; additional equipment in already crowded operating rooms.

Key Patents in Medical SLAM Implementation

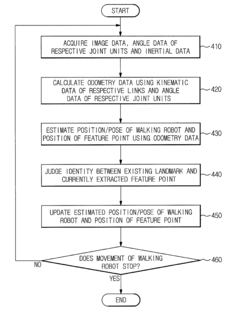

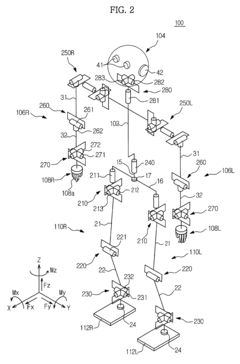

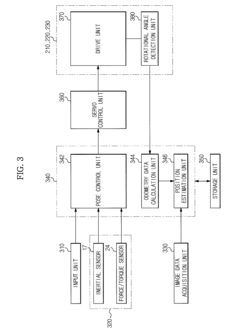

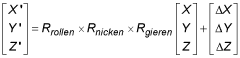

Walking robot and simultaneous localization and mapping method thereof

Patent · Active · US8873831B2

Innovation

- Odometry data, derived from the robot's kinematic and joint rotation-angle data, is integrated with image-based SLAM to improve the accuracy and convergence of localization, and inertial data is fused with the odometry data to further improve position estimation and mapping.

Method for simultaneous localization and mapping

Patent · Active · DE102019220616A1

Innovation

- A SLAM method that continuously detects the environment, classifies surrounding objects as moving or static, and filters out information related to moving objects using a SLAM algorithm to enhance positioning precision.
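The core idea of separating moving from static content can be sketched at the level of individual landmarks: given an estimate of the sensor's own motion, a static landmark should reappear where that motion predicts, and a large residual marks it as moving. This is not the patented method, which classifies whole objects; it assumes the sensor motion (R, t) has already been estimated robustly, for example with RANSAC over mostly static points, and the names and threshold are hypothetical.

```python
import math


def apply_pose(R, t, p):
    """Apply a rigid transform: R (3x3 nested lists) times p, plus t."""
    return tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))


def split_static_dynamic(prev_points, curr_points, R, t, threshold):
    """Classify landmarks by how well the estimated sensor motion explains them.

    A static landmark observed at p in the previous frame should reappear near
    R p + t; a residual above `threshold` marks it as moving, and it is left
    out of the map and pose update. Returns (static indices, dynamic indices).
    """
    static, dynamic = [], []
    for idx, (p, q) in enumerate(zip(prev_points, curr_points)):
        residual = math.dist(apply_pose(R, t, p), q)
        (dynamic if residual > threshold else static).append(idx)
    return static, dynamic


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
prev = [(0, 0, 0), (1, 1, 0), (2, 0, 1)]
curr = [(1, 0, 0), (2, 1, 0), (3, 2, 1)]  # landmark 2 also moved 2 units in y
print(split_static_dynamic(prev, curr, IDENTITY, (1, 0, 0), threshold=0.5))  # ([0, 1], [2])
```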

Clinical Validation Requirements for Surgical SLAM

The clinical validation of surgical SLAM systems requires testing protocols more rigorous than those used in standard robotics applications because of the high stakes of the surgical environment. Regulators such as the FDA in the United States, and notified bodies assessing conformity under the EU Medical Device Regulation (MDR) in Europe, require comprehensive validation evidence before SLAM-enabled surgical robots can be marketed for clinical use. These validation requirements typically follow a multi-phase approach, beginning with laboratory testing on phantoms and cadavers, followed by animal studies, and culminating in carefully designed human clinical trials.

For initial validation, SLAM systems must demonstrate sub-millimeter accuracy in controlled environments, with particular emphasis on maintaining this precision during tissue deformation and instrument movement. Validation metrics must include not only spatial accuracy but also temporal consistency, system latency, and robustness under varying lighting conditions typical in operating rooms.
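Spatial accuracy in phantom or cadaver studies is usually summarized from point-wise errors between SLAM estimates and an independent ground truth. The sketch below reports RMSE, a 95th percentile, and the worst case. The choice of metrics, the function name, and the use of the worst case against a 1 mm limit are assumptions for illustration, not a prescribed protocol.

```python
import math
import statistics


def accuracy_report(measured, reference, limit_mm=1.0):
    """Summarize point-wise localization error against ground truth.

    measured/reference: matching lists of 3-D positions in mm, e.g. SLAM
    estimates of phantom fiducials and their positions from an independent
    reference tracker (at least two points). `limit_mm` is a hypothetical
    acceptance limit applied to the worst case.
    """
    errors = [math.dist(m, r) for m, r in zip(measured, reference)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    # 'inclusive' keeps the percentile within the observed range for small samples.
    p95 = statistics.quantiles(errors, n=20, method="inclusive")[-1]
    worst = max(errors)
    return {"rmse": rmse, "p95": p95, "max": worst, "pass": worst < limit_mm}


measured = [(0.0, 0.0, 0.3), (10.0, 0.0, 0.4), (0.0, 10.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
print(accuracy_report(measured, reference))  # rmse about 0.29 mm, max 0.4 mm, pass True
```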

Safety validation represents a critical component, requiring fail-safe mechanisms that can detect when tracking quality deteriorates below acceptable thresholds. Systems must demonstrate graceful degradation rather than catastrophic failure when faced with challenging conditions such as sudden lighting changes, smoke from electrocautery, or blood obscuring visual features.
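Graceful degradation can be expressed as a small state machine that maps per-frame tracking-quality metrics to operating modes. The sketch below assumes two such metrics (feature inlier ratio and reprojection error); the mode names, thresholds, and recovery window are hypothetical and would in practice come from validation data.

```python
from enum import IntEnum


class TrackingMode(IntEnum):
    """Ordered by severity so modes can be compared directly."""
    NORMAL = 0     # full guidance available
    DEGRADED = 1   # warn the team; restrict automated motion
    SUSPENDED = 2  # stop relying on SLAM until relocalization succeeds


def classify(inlier_ratio: float, reprojection_error_px: float) -> TrackingMode:
    """Map per-frame tracking-quality metrics to a mode (hypothetical thresholds)."""
    if inlier_ratio >= 0.6 and reprojection_error_px <= 1.5:
        return TrackingMode.NORMAL
    if inlier_ratio >= 0.3 and reprojection_error_px <= 4.0:
        return TrackingMode.DEGRADED
    return TrackingMode.SUSPENDED


class TrackingMonitor:
    """Degrade immediately; recover only after a run of better frames."""

    def __init__(self, recovery_frames: int = 10):
        self.mode = TrackingMode.NORMAL
        self.recovery_frames = recovery_frames
        self._better_streak = 0

    def update(self, inlier_ratio: float, reprojection_error_px: float) -> TrackingMode:
        proposed = classify(inlier_ratio, reprojection_error_px)
        if proposed > self.mode:    # worse quality: step down at once
            self.mode = proposed
            self._better_streak = 0
        elif proposed < self.mode:  # better quality: require persistence
            self._better_streak += 1
            if self._better_streak >= self.recovery_frames:
                self.mode = proposed
                self._better_streak = 0
        else:
            self._better_streak = 0
        return self.mode
```

The asymmetry is deliberate: a single bad frame (smoke, a splash of blood) is enough to withdraw trust, but trust is restored only after quality has stayed good for several consecutive frames, which prevents the guidance from flickering on and off.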

Clinical validation protocols must address the diversity of surgical procedures, with specialized testing for different anatomical regions and surgical approaches. For instance, SLAM systems intended for neurosurgery must be validated against stricter precision standards than those intended for abdominal procedures, where tissue deformation presents different challenges.

Real-time performance validation is essential. Requirements typically specify a maximum acceptable latency (usually under 100 ms) and a minimum frame rate (commonly 25-30 fps) to ensure surgeon comfort and safety. These performance metrics must be maintained even during computationally intensive operations such as registration with pre-operative imaging.
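Checking a recorded run against latency and frame-rate limits is straightforward once per-frame timestamps and latencies are logged. The sketch below uses the 100 ms and 25 fps figures above as defaults. Judging the frame rate by the longest inter-frame gap, rather than the average, is a design assumption that makes a single stall fail the run; the function name is illustrative.

```python
import statistics


def check_realtime(frame_times_s, latencies_ms, max_latency_ms=100.0, min_fps=25.0):
    """Compare a recorded run with latency and frame-rate limits.

    frame_times_s: frame timestamps in seconds; latencies_ms: per-frame
    capture-to-display latency. The frame-rate test uses the longest
    inter-frame gap, so a single stall fails the run.
    """
    gaps = [b - a for a, b in zip(frame_times_s, frame_times_s[1:])]
    worst_fps = 1.0 / max(gaps)
    worst_latency = max(latencies_ms)
    return {
        "mean_fps": 1.0 / statistics.mean(gaps),
        "worst_fps": worst_fps,
        "worst_latency_ms": worst_latency,
        "ok": worst_latency <= max_latency_ms and worst_fps >= min_fps,
    }


# A steady 30 fps run leaves a per-frame budget of 1000 / 30, about 33.3 ms.
steady = [i / 30 for i in range(31)]
print(check_realtime(steady, [45.0] * 31)["ok"])   # True
# One 100 ms stall drops the worst-case rate to 10 fps.
stalled = steady[:15] + [t + 0.1 - 1 / 30 for t in steady[15:]]
print(check_realtime(stalled, [45.0] * 31)["ok"])  # False
```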

Usability testing with surgical teams forms another crucial validation component, assessing how effectively surgeons can interpret and utilize the spatial information provided by SLAM systems during procedures. This includes evaluation of visualization interfaces and integration with existing surgical workflows.

Long-term validation studies are increasingly required to assess system drift over extended procedures, with some regulatory bodies now requesting data on performance during surgeries lasting several hours. Additionally, validation protocols must include stress testing under worst-case scenarios such as partial occlusion of cameras, unexpected instrument movements, and rapid patient position changes.
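Drift over a long procedure is often summarized as the rate at which tracking error grows with elapsed time. The sketch below fits a least-squares line to periodic error checks and extrapolates it to a longer procedure. The linear-drift model is an assumption, and the error values are illustrative numbers, not measurements.

```python
def drift_rate(times_min, errors_mm):
    """Least-squares slope of tracking error against elapsed time (mm/min)."""
    n = len(times_min)
    mean_t = sum(times_min) / n
    mean_e = sum(errors_mm) / n
    cov = sum((t - mean_t) * (e - mean_e) for t, e in zip(times_min, errors_mm))
    var = sum((t - mean_t) ** 2 for t in times_min)
    return cov / var


def projected_error(times_min, errors_mm, at_min):
    """Linear extrapolation of error to a later time (assumes linear drift)."""
    slope = drift_rate(times_min, errors_mm)
    mean_t = sum(times_min) / len(times_min)
    mean_e = sum(errors_mm) / len(errors_mm)
    return mean_e + slope * (at_min - mean_t)


# Illustrative error checks every 30 minutes during a 2-hour phantom run.
times = [0, 30, 60, 90, 120]
errors = [0.20, 0.26, 0.32, 0.38, 0.44]
print(f"{drift_rate(times, errors):.4f} mm/min")              # 0.0020 mm/min
print(f"{projected_error(times, errors, 240):.2f} mm at 4 h")  # 0.68 mm at 4 h
```

A drift that looks negligible over a two-hour test can still exceed a sub-millimeter budget in a longer surgery, which is why extended-duration data is requested.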

Safety Standards for SLAM-Based Surgical Robotics

The development of safety standards for SLAM-based surgical robotics represents a critical frontier in healthcare technology regulation. Current standards primarily derive from general medical device frameworks such as ISO 13485 for quality management systems and IEC 60601 for electrical safety, which provide foundational requirements but lack specificity for autonomous navigation in surgical environments.

Regulators including the FDA in the United States, European authorities and notified bodies operating under the EU Medical Device Regulation (MDR), and the PMDA in Japan have begun developing specialized guidelines addressing the unique challenges of SLAM technology in surgical applications. These emerging standards focus on three critical domains: system reliability, real-time performance validation, and failure mode management.

System reliability standards emphasize robust localization accuracy within sub-millimeter tolerances, which is particularly crucial during critical surgical procedures. A 2022 FDA guidance document is reported to specify that SLAM systems must maintain a spatial accuracy of 0.5 mm or better in 99.99% of operational scenarios, supported by comprehensive validation protocols across diverse anatomical environments.
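A requirement stated as a percentage of scenarios, such as the 99.99% figure above, has a direct statistical cost. If n independent, representative trials all succeed, the one-sided upper confidence bound on the failure probability p solves (1 - p)^n = 1 - confidence, which for small p gives the familiar "rule of three", n ≈ 3 / p at 95% confidence. The sketch below assumes independent trials; the function name is illustrative.

```python
import math


def trials_needed(max_failure_rate: float, confidence: float = 0.95) -> int:
    """Failure-free trials needed to show the failure rate is below a bound.

    With zero failures in n independent trials, the one-sided upper confidence
    bound on the failure probability p solves (1 - p)**n = 1 - confidence.
    """
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-max_failure_rate))


# "0.5 mm or better in 99.99% of scenarios" means a failure rate of at most 1e-4.
print(trials_needed(1e-4))  # 29956, close to the rule-of-three estimate 3 / 1e-4
```

Demonstrating such a figure therefore calls for tens of thousands of failure-free test scenarios, which in practice pushes much of the evidence toward simulation and bench testing.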

Real-time performance validation requirements address the computational demands of SLAM algorithms in dynamic surgical settings. Standards now mandate maximum latency thresholds of 100 ms for visual feedback and 50 ms for robotic control loops. Additionally, systems must demonstrate consistent performance under varying lighting conditions, tissue deformation scenarios, and in the presence of surgical smoke or fluids.

Failure mode management is perhaps the most stringent aspect of safety standards for surgical SLAM systems. Requirements include mandatory redundant sensing modalities, graceful degradation protocols, and comprehensive fault detection mechanisms. IEC 80601-2-77, the particular standard for robotically assisted surgical equipment, is described as requiring SLAM-based surgical systems to implement three-tier safety architectures with independent verification channels.
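
One way to read "redundant sensing with graceful degradation" is as a supervisor. It cross-checks the SLAM estimate against an independent sensing channel and steps down through operating modes as agreement worsens. The sketch below is purely illustrative. It assumes an electromagnetic (EM) tracker as the second channel. The mode names, the 1 mm and 3 mm disagreement thresholds, and the latching behaviour are hypothetical choices, not values taken from IEC 80601-2-77 or any other standard.

```python
import math
from enum import Enum
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class Mode(Enum):
    NOMINAL = "nominal"      # full assisted motion
    DEGRADED = "degraded"    # reduced speed, surgeon alerted
    SAFE_STOP = "safe_stop"  # assisted motion halted


def classify(slam_mm: Optional[Vec3], em_mm: Optional[Vec3],
             warn_mm: float = 1.0, stop_mm: float = 3.0) -> Mode:
    """Compare two independent estimates of the same instrument-tip position (mm)."""
    if slam_mm is None and em_mm is None:
        return Mode.SAFE_STOP  # no localization at all
    if slam_mm is None or em_mm is None:
        return Mode.DEGRADED   # one channel left, so no independent cross-check
    disagreement = math.dist(slam_mm, em_mm)
    if disagreement >= stop_mm:
        return Mode.SAFE_STOP
    if disagreement >= warn_mm:
        return Mode.DEGRADED
    return Mode.NOMINAL


class Supervisor:
    """Latches SAFE_STOP so the system cannot silently resume after a serious fault."""

    def __init__(self, warn_mm: float = 1.0, stop_mm: float = 3.0) -> None:
        self.warn_mm, self.stop_mm = warn_mm, stop_mm
        self.mode = Mode.NOMINAL

    def update(self, slam_mm: Optional[Vec3], em_mm: Optional[Vec3]) -> Mode:
        if self.mode is not Mode.SAFE_STOP:
            self.mode = classify(slam_mm, em_mm, self.warn_mm, self.stop_mm)
        return self.mode

    def reset(self) -> None:
        # Explicit operator acknowledgement; the next update() re-evaluates both channels.
        self.mode = Mode.NOMINAL


if __name__ == "__main__":
    sup = Supervisor()
    origin = (0.0, 0.0, 0.0)
    for em in [(0.3, 0.0, 0.0), (1.5, 0.0, 0.0), (4.0, 0.0, 0.0), (0.1, 0.0, 0.0)]:
        print(sup.update(origin, em).value)  # nominal, degraded, safe_stop, safe_stop
```

The latch reflects a common safety design choice: recovery from a severe fault requires a deliberate human action instead of happening automatically when the sensors agree again.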

Certification processes have evolved to include specialized testing protocols for SLAM-based surgical robots. These include simulated surgical scenarios with anatomical phantoms, deliberate sensor occlusion tests, and electromagnetic interference challenges. Manufacturers must demonstrate system resilience through extensive documentation of edge cases and recovery mechanisms.
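
A deliberate occlusion test can be scripted in three steps. First, blank the measurement stream for a window of frames while the instrument does something the tracker cannot predict. Then measure the peak error and how long the tracker takes to re-converge. Because a full SLAM pipeline is out of scope here, the sketch swaps in a one-dimensional alpha-beta filter as a stand-in tracker. The trajectory, occlusion window, gains (alpha = 0.5, beta = 0.1), and 0.5 mm tolerance are illustrative assumptions, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def alpha_beta_track(measurements: Sequence[Optional[float]], dt: float = 1.0,
                     alpha: float = 0.5, beta: float = 0.1) -> List[float]:
    """1-D alpha-beta tracker; None marks an occluded frame, where the filter coasts on its velocity."""
    if not measurements or measurements[0] is None:
        raise ValueError("first frame must be visible to initialise the tracker")
    x, v = measurements[0], 0.0
    estimates = [x]
    for z in measurements[1:]:
        x_pred = x + v * dt            # constant-velocity prediction
        if z is None:
            x = x_pred                 # no measurement: keep the prediction
        else:
            r = z - x_pred             # innovation (residual)
            x = x_pred + alpha * r
            v = v + (beta / dt) * r
        estimates.append(x)
    return estimates


def frames_to_recover(errors: Sequence[float], start: int, tolerance: float) -> Optional[int]:
    """Frames after `start` until the error falls within tolerance and stays there."""
    for k in range(start, len(errors)):
        if all(e <= tolerance for e in errors[k:]):
            return k - start
    return None


if __name__ == "__main__":
    # Instrument advances 1 mm/frame, then unexpectedly reverses at frame 100.
    truth = [float(k) if k <= 100 else float(200 - k) for k in range(200)]
    occluded = range(95, 115)  # camera blocked for 20 frames around the reversal
    meas = [None if k in occluded else p for k, p in enumerate(truth)]
    clear_err = [abs(e - t) for e, t in zip(alpha_beta_track(truth), truth)]
    occ_err = [abs(e - t) for e, t in zip(alpha_beta_track(meas), truth)]
    print(f"peak error, clear view: {max(clear_err):.2f} mm")  # 1.46 mm
    print(f"peak error, occluded:   {max(occ_err):.2f} mm")    # 28.00 mm
    print("frames to recover:", frames_to_recover(occ_err, occluded.stop, 0.5))
```

The same harness structure (inject the fault, record peak error, measure time to re-converge) carries over to a real tracker. In that case, the documented recovery time becomes part of the edge-case evidence the text describes.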

Looking forward, standards bodies are actively developing frameworks for AI-enhanced SLAM systems that incorporate machine learning for improved feature recognition and tracking. These emerging standards will likely address model validation, continuous learning safeguards, and explainability requirements to ensure that autonomous navigation decisions remain transparent to surgical teams.