SLAM Techniques For Micro Aerial Vehicles

SEP 12, 2025 · 9 MIN READ

MAV SLAM Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) for Micro Aerial Vehicles (MAVs) has evolved significantly over the past two decades, transforming from theoretical concepts to practical implementations that enable autonomous navigation in complex environments. The evolution began in the early 2000s with ground-based SLAM algorithms being adapted for aerial platforms, facing unique challenges due to the three-dimensional movement capabilities and limited payload capacity of MAVs.

The initial SLAM approaches for MAVs relied heavily on external infrastructure such as motion capture systems or GPS, limiting their operational environments. By the mid-2000s, researchers began developing visual SLAM techniques specifically tailored for aerial vehicles, marking a significant milestone in MAV autonomy. These early systems typically utilized monocular cameras due to weight constraints, though they struggled with scale ambiguity issues inherent in monocular vision.

Between 2010 and 2015, the field advanced substantially with the introduction of RGB-D sensors and lightweight LiDAR units that larger MAVs could carry. During this period, filter-based approaches such as Extended Kalman Filter (EKF) SLAM and optimization-based methods such as graph SLAM were also adapted to aerial platforms, improving accuracy and robustness in dynamic environments.

The current technological landscape features a diverse array of SLAM techniques for MAVs, including visual-inertial odometry (VIO), which fuses camera data with inertial measurements to achieve more reliable state estimation. Event-based cameras have emerged as promising sensors for high-speed MAV navigation due to their high temporal resolution and dynamic range. Additionally, semantic SLAM approaches that incorporate object recognition are enhancing environmental understanding capabilities.

The primary objectives of MAV SLAM research focus on several key areas: reducing computational requirements to enable real-time processing on resource-constrained platforms; improving robustness against environmental challenges such as varying lighting conditions, textureless surfaces, and dynamic obstacles; enhancing accuracy for precise navigation in confined spaces; and extending operational duration through energy-efficient algorithms.

Future directions in MAV SLAM technology aim to achieve fully autonomous navigation in GPS-denied environments without prior mapping, enable collaborative SLAM among multiple MAVs for faster exploration and mapping of large areas, and develop systems capable of long-term operation with minimal human intervention. These advancements will be crucial for applications including search and rescue operations, infrastructure inspection, precision agriculture, and urban air mobility.

Market Analysis for MAV SLAM Applications

The global market for Micro Aerial Vehicle (MAV) SLAM applications is experiencing robust growth, driven by increasing adoption across diverse sectors. The current market size is estimated at $2.3 billion, with projections indicating a compound annual growth rate of 18.7% through 2028. This growth trajectory is supported by the expanding application landscape and technological advancements in miniaturized SLAM systems.

Commercial drone applications represent the largest market segment, accounting for approximately 42% of the total MAV SLAM market. Within this segment, aerial photography, mapping, and surveying services dominate, particularly in construction, real estate, and infrastructure inspection industries. The precision and efficiency offered by SLAM-equipped MAVs have significantly reduced operational costs and improved data quality for these applications.

The industrial inspection sector has emerged as the fastest-growing application area, with a 23.5% annual growth rate. Oil and gas companies, utility providers, and manufacturing facilities are increasingly deploying SLAM-enabled MAVs for infrastructure monitoring, reducing human risk exposure and operational downtime. The ability to navigate complex indoor environments without GPS has proven particularly valuable in these settings.

Defense and security applications constitute another significant market segment, valued at $580 million. Military organizations worldwide are investing in MAV SLAM technologies for reconnaissance, surveillance, and tactical operations in GPS-denied environments. The demand for autonomous navigation capabilities in urban warfare scenarios has accelerated research funding in this domain.

Regional analysis reveals North America as the dominant market, holding 38% of the global share, followed by Europe (29%) and Asia-Pacific (24%). However, the Asia-Pacific region is expected to witness the highest growth rate, driven by increasing industrial automation and infrastructure development in China, Japan, and South Korea.

Consumer applications represent an emerging opportunity, particularly in the prosumer drone segment. As SLAM technologies become more affordable and compact, their integration into consumer-grade MAVs is creating new market possibilities for indoor navigation and obstacle avoidance features.

Key market challenges include price sensitivity, as high-performance SLAM systems remain costly for mass-market applications. Additionally, regulatory constraints regarding autonomous drone operations in various countries continue to impact market expansion. Despite these challenges, the convergence of decreasing sensor costs, improved computational efficiency, and expanding use cases indicates strong long-term market potential for MAV SLAM technologies.

SLAM Challenges in Micro Aerial Vehicles

Simultaneous Localization and Mapping (SLAM) for Micro Aerial Vehicles (MAVs) presents unique challenges compared to ground-based robotic systems. The miniaturized nature of MAVs imposes severe constraints on computational resources, power consumption, and payload capacity, significantly limiting the complexity and weight of onboard SLAM systems.

Weight constraints represent one of the most critical challenges, as MAVs typically have payload capacities ranging from a few grams to several hundred grams. This restricts the types of sensors that can be deployed, often necessitating the use of lightweight cameras instead of heavier LiDAR systems, which in turn creates challenges for accurate depth perception and environmental mapping.

Power limitations further compound these difficulties, as energy-intensive sensors and processors can dramatically reduce flight time. Most commercial MAVs operate with flight durations between 10-30 minutes, requiring SLAM algorithms to be highly efficient to maximize operational capabilities within these constraints.

Computational resources on MAVs are typically limited to embedded processors with restricted memory and processing power. This necessitates algorithmic optimizations and potential offloading of computationally intensive tasks to ground stations, introducing latency concerns for real-time navigation applications.

The dynamic nature of MAV movement presents additional challenges for SLAM implementation. Unlike ground robots, MAVs operate in three-dimensional space with six degrees of freedom, experiencing rapid movements, vibrations, and rotations that can cause motion blur in visual sensors and complicate feature tracking. These dynamics require specialized motion models and sensor fusion approaches to maintain accurate state estimation.
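A back-of-the-envelope estimate shows how quickly rotation turns into motion blur. The sketch below uses the pinhole approximation that a feature near the image centre moves by roughly the focal length (in pixels) times the tangent of the angle swept during the exposure. The focal length, rotation rate, and exposure times are illustrative assumptions, not measurements from any particular MAV.

```python
import math


def rotational_blur_px(focal_px: float, rate_deg_s: float, exposure_s: float) -> float:
    """Approximate blur streak length in pixels near the image centre.

    Assumes a pinhole camera rotating about an axis perpendicular to the
    optical axis; translation and rolling-shutter effects are ignored.
    """
    swept_angle_rad = math.radians(rate_deg_s) * exposure_s
    return focal_px * math.tan(swept_angle_rad)


if __name__ == "__main__":
    # Hypothetical camera: 400 px focal length, MAV rotating at 300 deg/s.
    for exposure_ms in (1.0, 5.0, 10.0):
        blur = rotational_blur_px(400.0, 300.0, exposure_ms / 1000.0)
        print(f"{exposure_ms:4.1f} ms exposure -> about {blur:.1f} px of blur")
```

Under these assumptions a 5 ms exposure already smears a feature over roughly ten pixels, which is enough to degrade corner detection and descriptor matching.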

Environmental factors also pose significant challenges. MAVs often operate in GPS-denied environments such as indoor spaces, forests, or urban canyons, requiring SLAM systems to function reliably without external positioning references. Variable lighting conditions, reflective surfaces, and textureless areas further complicate visual SLAM implementations.

Scale ambiguity is another fundamental challenge for the monocular vision-based SLAM systems that weight constraints often force onto MAVs. A single camera constrains the scene and the trajectory only up to an unknown scale factor, so absolute scale cannot be recovered without additional sensors (such as an IMU or a rangefinder) or known reference objects, and the estimated scale can drift over time.
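The ambiguity is easy to verify numerically: if every landmark and every camera position is multiplied by the same factor, the projected pixels do not change. The sketch below assumes an idealized pinhole camera without rotation or distortion; the landmark coordinates, camera positions, and focal length are made-up values for illustration.

```python
def project(point, cam_pos, focal_px=400.0):
    """Pinhole projection for a camera at cam_pos looking along +z (no rotation)."""
    x, y, z = (p - c for p, c in zip(point, cam_pos))
    return (focal_px * x / z, focal_px * y / z)


def scaled(vec, s):
    """Multiply a 3D vector by the scalar s."""
    return tuple(s * v for v in vec)


# Hypothetical scene: three landmarks observed from two camera positions.
landmarks = [(1.0, 0.5, 4.0), (-0.8, 0.2, 6.0), (0.3, -0.4, 5.0)]
cam_positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.2)]


def all_pixels(scale):
    """Project the whole scene after scaling landmarks and camera positions alike."""
    return [project(scaled(p, scale), scaled(c, scale))
            for c in cam_positions for p in landmarks]


reference = all_pixels(1.0)
doubled = all_pixels(2.0)
max_diff = max(abs(a - b) for r, d in zip(reference, doubled) for a, b in zip(r, d))
print(f"largest pixel difference between scale 1 and scale 2: {max_diff:.2e}")
```

Because the images are identical at both scales, no amount of image data alone can tell the two reconstructions apart. This is why metric information from an IMU, a rangefinder, or an object of known size is needed.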

Real-time performance requirements add another layer of complexity, as MAVs need immediate feedback for stable flight control and obstacle avoidance. This necessitates careful balancing between accuracy and computational efficiency, often requiring algorithmic compromises that may impact mapping quality or localization precision.

Current SLAM Solutions for MAVs

01 Visual SLAM for Augmented Reality

Visual Simultaneous Localization and Mapping (SLAM) techniques are used in augmented reality applications to track the position and orientation of devices in real-time while building a map of the surrounding environment. These techniques enable accurate overlay of virtual content onto the real world by using camera images to simultaneously estimate device motion and create a 3D representation of the environment. Advanced visual SLAM systems incorporate feature detection, tracking, and mapping algorithms to provide seamless AR experiences even in challenging environments.

- Visual SLAM for Augmented Reality: Visual Simultaneous Localization and Mapping (SLAM) techniques are used in augmented reality applications to track the position and orientation of devices in real-time while building a map of the surrounding environment. These techniques enable accurate overlay of virtual content onto the real world by using camera images to detect features and estimate motion. Advanced visual SLAM systems can handle challenging conditions such as rapid movement and varying lighting conditions.

- SLAM for Autonomous Navigation: SLAM techniques are crucial for autonomous navigation systems in robotics and vehicles. These methods allow devices to simultaneously create maps of unknown environments while keeping track of their location within these maps. The integration of sensors such as LiDAR, cameras, and IMUs enhances the accuracy and robustness of navigation. These systems can detect obstacles, plan paths, and make real-time decisions for safe and efficient movement.

- LiDAR-based SLAM Systems: LiDAR-based SLAM systems utilize laser scanning technology to create precise 3D maps of environments. These systems offer advantages in accuracy and range compared to camera-only solutions, particularly in challenging lighting conditions or feature-poor environments. The point cloud data generated by LiDAR sensors enables detailed environmental mapping and robust localization, making these systems suitable for applications requiring high precision such as autonomous vehicles and industrial robotics.

- Sensor Fusion in SLAM: Sensor fusion approaches in SLAM combine data from multiple sensor types to improve accuracy and reliability. By integrating information from cameras, LiDAR, radar, IMUs, and other sensors, these systems can overcome the limitations of individual sensors. Fusion algorithms typically employ probabilistic methods such as Kalman filters or particle filters to combine sensor data optimally. This approach enhances performance in challenging environments and increases robustness against sensor failures.

- SLAM for Indoor Positioning: SLAM techniques specialized for indoor environments address the challenges of GPS-denied locations. These systems often rely on visual features, Wi-Fi signals, magnetic field variations, or architectural elements to establish position. Indoor SLAM solutions are designed to handle the unique challenges of enclosed spaces, such as reflective surfaces, repetitive features, and dynamic obstacles. Applications include indoor navigation, facility management, and location-based services in buildings.
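The Kalman-filter fusion mentioned in the sensor fusion item above can be sketched in one dimension: IMU acceleration drives the prediction step, and an occasional position fix (for example from a visual front end) corrects it. This is a minimal illustration rather than a production estimator. The class name, noise levels, and simulated trajectory are all assumptions, and a real MAV filter would track full 3D pose and sensor biases.

```python
import random
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Position/velocity filter along one axis; the 2x2 covariance is stored by entry."""
    p: float = 0.0    # position estimate (m)
    v: float = 0.0    # velocity estimate (m/s)
    P00: float = 1.0  # position variance
    P01: float = 0.0  # position/velocity covariance
    P11: float = 1.0  # velocity variance

    def predict(self, accel: float, dt: float, accel_var: float) -> None:
        """Propagate the state with a measured acceleration (IMU step)."""
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = accel_var * g g^T
        g0, g1 = 0.5 * dt * dt, dt
        P00 = self.P00 + 2.0 * dt * self.P01 + dt * dt * self.P11
        P01 = self.P01 + dt * self.P11
        self.P00 = P00 + accel_var * g0 * g0
        self.P01 = P01 + accel_var * g0 * g1
        self.P11 = self.P11 + accel_var * g1 * g1

    def update(self, z: float, meas_var: float) -> None:
        """Correct the state with a position measurement (H = [1, 0])."""
        S = self.P00 + meas_var                  # innovation variance
        k0, k1 = self.P00 / S, self.P01 / S      # Kalman gain
        innovation = z - self.p
        self.p += k0 * innovation
        self.v += k1 * innovation
        P00, P01, P11 = self.P00, self.P01, self.P11
        self.P00 = (1.0 - k0) * P00
        self.P01 = (1.0 - k0) * P01
        self.P11 = P11 - k1 * P01


def run_demo(seed: int = 0):
    """Simulate 5 s of constant acceleration with a 100 Hz IMU and 10 Hz position fixes."""
    rng = random.Random(seed)
    kf = Kalman1D()
    dt, true_accel = 0.01, 0.5
    accel_std, pos_std = 0.2, 0.05   # assumed sensor noise levels
    true_p = 0.0
    for k in range(1, 501):
        t = k * dt
        true_p = 0.5 * true_accel * t * t
        kf.predict(true_accel + rng.gauss(0.0, accel_std), dt, accel_std ** 2)
        if k % 10 == 0:
            kf.update(true_p + rng.gauss(0.0, pos_std), pos_std ** 2)
    return true_p, kf


if __name__ == "__main__":
    truth, kf = run_demo()
    print(f"true position {truth:.3f} m, estimate {kf.p:.3f} m")
```

The prediction step runs at the IMU rate and keeps the estimate smooth between the slower position fixes, which is the basic division of labour in visual-inertial fusion.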

02 SLAM for Autonomous Navigation

SLAM techniques are fundamental for autonomous navigation systems, allowing vehicles and robots to navigate unknown environments without external positioning infrastructure. These systems combine sensor data from cameras, LiDAR, and other sensors to build maps while simultaneously determining their position within those maps. The technology enables path planning, obstacle avoidance, and efficient navigation in dynamic environments, making it essential for self-driving cars, drones, and mobile robots.

03 LiDAR-based SLAM Systems

LiDAR-based SLAM techniques utilize laser scanning technology to create precise 3D maps of environments while tracking position. These systems offer advantages in accuracy and reliability, particularly in environments where visual features may be limited. LiDAR SLAM algorithms process point cloud data to identify distinctive features, estimate motion, and construct detailed environmental models. The technology is particularly valuable in applications requiring high-precision mapping such as autonomous vehicles, robotics, and industrial automation.

04 Multi-sensor Fusion SLAM

Multi-sensor fusion SLAM techniques integrate data from multiple sensor types to improve mapping accuracy and robustness. By combining complementary sensors such as cameras, LiDAR, IMUs, and radar, these systems overcome the limitations of single-sensor approaches. Sensor fusion algorithms synchronize and integrate heterogeneous data streams to provide more complete environmental understanding and more reliable position estimation, particularly in challenging conditions like poor lighting or featureless environments.

05 SLAM for Indoor Positioning

SLAM techniques specialized for indoor environments enable precise positioning and mapping where GPS signals are unavailable. These systems often rely on visual features, Wi-Fi signals, magnetic field variations, or specialized indoor sensors to create maps and track position. Indoor SLAM solutions address unique challenges such as repetitive structures, dynamic objects, and limited sensing range. Applications include indoor navigation assistance, retail analytics, facility management, and augmented reality experiences in interior spaces.

Leading Companies in MAV SLAM Development

The market for Simultaneous Localization and Mapping (SLAM) techniques for Micro Aerial Vehicles (MAVs) is currently in a growth phase, with an estimated size of $2-3 billion and projected annual growth of 15-20%. The technology is approaching maturity but still faces challenges in real-time processing and power efficiency on small aerial platforms. Academic institutions such as Northwestern Polytechnical University, Shanghai Jiao Tong University, and Zhejiang University are leading fundamental research, while companies such as UISEE Technologies, Samsung Electronics, and Ford Global Technologies are commercializing applications. The competitive landscape is split fairly evenly among specialized MAV manufacturers, technology giants investing in autonomous systems, and research institutions developing core algorithms, and growing industry-academia collaboration is accelerating innovation.

Northwestern Polytechnical University

Technical Solution: Northwestern Polytechnical University has developed an innovative event-based SLAM system specifically designed for high-speed MAV operations. Unlike conventional frame-based approaches, their system utilizes neuromorphic event cameras that capture pixel-level brightness changes with microsecond resolution. This enables robust tracking during rapid rotations and in high dynamic range environments where traditional cameras would suffer from motion blur or over/under-exposure. Their implementation features a hybrid mapping approach that combines sparse point features for global localization with semi-dense depth maps for local navigation. A key innovation is their asynchronous processing pipeline that handles event data streams without enforcing artificial frames, allowing for ultra-low latency state estimation critical for agile flight. The system incorporates an efficient direct event alignment method that minimizes computational overhead while maximizing information extraction from the event stream. Extensive flight tests have demonstrated the system's ability to maintain accurate localization during aggressive maneuvers exceeding 300 degrees/second in rotation and 3m/s in translation.

Strengths: Exceptional performance during high-speed maneuvers and challenging lighting conditions; ultra-low latency suitable for agile flight control; lower power consumption compared to traditional vision systems. Weaknesses: Requires specialized event camera hardware not commonly available on commercial MAVs; event processing algorithms are computationally intensive and require careful optimization.
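The event-camera principle behind this kind of system can be illustrated with the usual idealized pixel model: a pixel emits an event whenever its log intensity has changed by more than a contrast threshold since its last event. The sketch below is a generic illustration of that model, not the university's pipeline. The function name, threshold, and sample values are assumptions.

```python
import math


def events_for_pixel(samples, threshold=0.2):
    """Generate (timestamp_s, polarity) events for a single pixel.

    samples: list of (timestamp_s, intensity) pairs with intensity > 0.
    An event fires each time the log intensity moves by `threshold`
    away from the reference level stored at the previous event.
    """
    events = []
    _, first_intensity = samples[0]
    reference = math.log(first_intensity)
    for timestamp, intensity in samples[1:]:
        delta = math.log(intensity) - reference
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((timestamp, polarity))
            reference += polarity * threshold   # move the reference one step
            delta = math.log(intensity) - reference
    return events


if __name__ == "__main__":
    # Hypothetical pixel: steady, then brighter, then much darker.
    trace = [(0.000, 100.0), (0.001, 100.0), (0.002, 150.0),
             (0.003, 150.0), (0.004, 80.0)]
    for event in events_for_pixel(trace):
        print(event)
```

A static pixel produces no output at all, which is where the data-rate and power advantages come from, while a fast brightness change produces several events in quick succession.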

Beijing Yihang Yuanzhi Technology Co., Ltd.

Technical Solution: Beijing Yihang Yuanzhi Technology (commonly known as EHang in international markets) has developed a comprehensive SLAM solution tailored for their autonomous aerial vehicle fleet. Their approach integrates visual-inertial odometry with LiDAR point cloud registration to achieve robust localization in diverse environments. The system features a multi-layered mapping framework that maintains different resolution maps for various operational needs: high-definition maps for precise landing, medium-resolution maps for navigation, and sparse maps for global positioning. A key innovation is their cloud-distributed SLAM architecture that enables individual vehicles to contribute to and benefit from collectively built maps, significantly enhancing operation in previously visited areas. Their implementation includes specialized algorithms for detecting and tracking dynamic objects, crucial for safe urban air mobility operations. The system employs advanced sensor fusion techniques that adaptively weight different sensor inputs based on environmental conditions and flight phases. EHang has extensively tested this SLAM solution across their autonomous aerial vehicle fleet, demonstrating reliable performance in urban environments with challenging features like glass facades and repetitive structures that typically confound traditional SLAM approaches.

Strengths: Comprehensive solution addressing the full spectrum of autonomous flight requirements; robust performance in urban environments; innovative cloud-distributed architecture enabling fleet-wide map sharing and updates. Weaknesses: Heavy reliance on high-quality sensors increases platform cost; complex system architecture requires significant computational resources, potentially limiting deployment on smaller MAVs.

Key SLAM Algorithms and Sensor Fusion Techniques

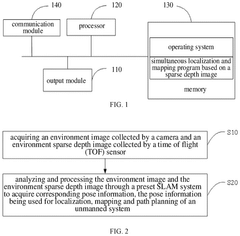

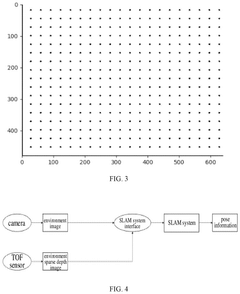

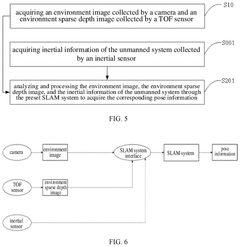

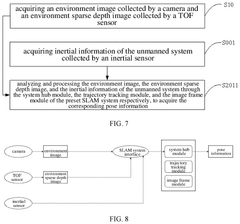

SLAM method and device based on sparse depth image, terminal equipment and medium

Patent (pending): US20240346682A1

Innovation

- The proposed method utilizes sparse depth images acquired by a TOF sensor, combined with environment images from a camera, and inertial information, processed through a preset SLAM system to provide necessary depth information for localization, mapping, and path planning, thereby reducing the time required for depth information preparation and enhancing system performance.

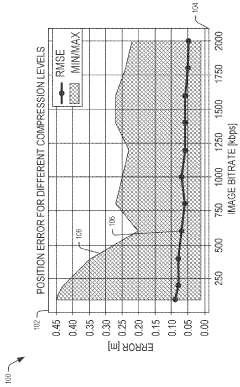

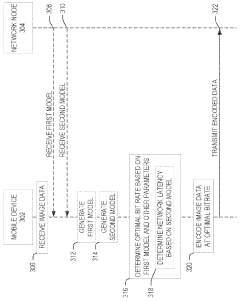

Bitrate adaptation for edge-assisted localization given network availability for mobile devices

Patent: WO2024136880A1

Innovation

- A dynamic bitrate adaptation method for edge-assisted SLAM, where a model determines an optimal image data bitrate based on network latency, map presence, and environment bitrate, allowing for compression and transmission at an improved bitrate to enhance localization performance.

Power Efficiency Considerations for MAV SLAM

Power efficiency represents a critical constraint in the development and deployment of SLAM systems for Micro Aerial Vehicles (MAVs). The limited payload capacity of MAVs restricts battery size, creating a fundamental tension between flight time and computational capabilities. Most commercial MAVs currently achieve only 20-30 minutes of flight time under optimal conditions, with this duration significantly reduced when running power-intensive SLAM algorithms.

The power consumption profile of MAV SLAM systems can be divided into three primary components: sensing hardware, onboard computation, and communication overhead. Vision sensors typically consume between 0.5 W and 2 W depending on resolution and frame rate, while LiDAR systems demand substantially more power, ranging from 4 W to 15 W for units suitable for MAVs. Computational units are another significant power drain, with embedded GPUs consuming 5-20 W during intensive SLAM processing.
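A rough budget shows how these loads eat into flight time. The sketch below divides battery energy by total average power draw. The 40 Wh battery and 100 W propulsion figures are hypothetical placeholders, while the sensor and compute loads are picked from within the ranges quoted above.

```python
def flight_time_min(battery_wh: float, propulsion_w: float, payload_w: float = 0.0) -> float:
    """Estimated flight time in minutes for a constant average power draw."""
    return 60.0 * battery_wh / (propulsion_w + payload_w)


if __name__ == "__main__":
    battery_wh, propulsion_w = 40.0, 100.0   # hypothetical airframe
    configs = {
        "no SLAM payload": 0.0,
        "camera (1 W) + embedded GPU (10 W)": 11.0,
        "camera + GPU + LiDAR (8 W)": 19.0,
    }
    for name, payload_w in configs.items():
        minutes = flight_time_min(battery_wh, propulsion_w, payload_w)
        print(f"{name:36s} {minutes:5.1f} min")
```

This ignores the extra propulsion power needed to carry heavier sensors, so real losses would be somewhat larger than the electrical load alone suggests.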

Recent advancements in power-efficient SLAM have focused on algorithmic optimizations that reduce computational complexity while maintaining acceptable accuracy. Techniques such as keyframe selection, map point culling, and adaptive feature extraction have demonstrated power reductions of 30-45% with minimal impact on localization accuracy. The emergence of event-based cameras presents a promising direction, as they capture only brightness changes rather than full frames, potentially reducing sensor power requirements by up to 70% in dynamic environments.
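Keyframe selection saves computation by running the expensive mapping steps only on frames that add new information. One common family of heuristics promotes a frame to a keyframe when the vehicle has moved or turned enough since the last keyframe, or when too few features are still being tracked. The sketch below illustrates that idea. The `Pose` type, the yaw-only rotation check, and all thresholds are simplifying assumptions rather than values from any specific SLAM system.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    z: float
    yaw_deg: float


def is_new_keyframe(last_kf: Pose, current: Pose, tracked_ratio: float,
                    min_translation_m: float = 0.25,
                    min_rotation_deg: float = 15.0,
                    min_tracked_ratio: float = 0.6) -> bool:
    """Decide whether `current` should become a keyframe.

    tracked_ratio is the fraction of the last keyframe's features
    that are still tracked in the current frame.
    """
    translation = math.dist((last_kf.x, last_kf.y, last_kf.z),
                            (current.x, current.y, current.z))
    # Wrap the yaw difference into [-180, 180) before taking its magnitude.
    rotation = abs((current.yaw_deg - last_kf.yaw_deg + 180.0) % 360.0 - 180.0)
    return (translation >= min_translation_m
            or rotation >= min_rotation_deg
            or tracked_ratio < min_tracked_ratio)


def select_keyframes(poses, tracked_ratios):
    """Return the indices of the frames chosen as keyframes (frame 0 always is)."""
    keyframes = [0]
    for i in range(1, len(poses)):
        if is_new_keyframe(poses[keyframes[-1]], poses[i], tracked_ratios[i]):
            keyframes.append(i)
    return keyframes


if __name__ == "__main__":
    # Hypothetical straight-line flight, 0.125 m between frames, good tracking.
    poses = [Pose(0.125 * i, 0.0, 0.0, 0.0) for i in range(7)]
    print(select_keyframes(poses, [0.9] * 7))
```

In this straight-line example only every second frame becomes a keyframe, so the mapping back end processes about half of the frames.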

Hardware-software co-design approaches have shown particular promise in the MAV domain. Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) tailored for SLAM workloads can achieve 5-10x better performance-per-watt compared to general-purpose processors. Commercial low-power platforms such as Intel's Movidius Neural Compute Stick (a vision processing unit) and NVIDIA's Jetson Nano (an embedded GPU module) follow a related approach: they target edge AI workloads in general rather than SLAM specifically, but they offer energy-efficient hardware on which visual SLAM pipelines can run.

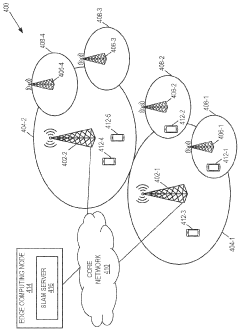

Distributed SLAM architectures present another avenue for power optimization, offloading computationally intensive tasks to ground stations or cloud resources. However, this approach introduces communication overhead and latency concerns that must be carefully balanced against power savings. Hybrid approaches that dynamically allocate processing between onboard and offboard resources based on battery status and mission requirements show particular promise for extending effective operational time.
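A hybrid allocation policy of this kind can be sketched as a small decision function for latency-tolerant tasks such as loop closure or global map optimization, with flight-critical state estimation assumed to stay onboard. Everything here is hypothetical: the `LinkStatus` fields, the battery threshold, and the timing figures are illustrative, and a real scheduler would also account for bandwidth and radio power.

```python
from dataclasses import dataclass


@dataclass
class LinkStatus:
    battery_fraction: float  # remaining battery, 0.0 to 1.0
    link_up: bool            # is the ground-station link available?
    link_rtt_ms: float       # measured round-trip time to the ground station


def choose_backend(status: LinkStatus, task_deadline_ms: float,
                   offboard_compute_ms: float, low_battery: float = 0.4) -> str:
    """Return "onboard" or "offboard" for a non-flight-critical SLAM task."""
    if not status.link_up:
        return "onboard"                      # no link, nothing to offload to
    if status.link_rtt_ms + offboard_compute_ms > task_deadline_ms:
        return "onboard"                      # offloading would miss the deadline
    # The link is good enough: offload only when energy is the scarcer resource.
    return "offboard" if status.battery_fraction < low_battery else "onboard"


if __name__ == "__main__":
    low_battery_good_link = LinkStatus(battery_fraction=0.3, link_up=True, link_rtt_ms=40.0)
    print(choose_backend(low_battery_good_link, task_deadline_ms=500.0, offboard_compute_ms=120.0))
```

The deadline check comes before the battery check on purpose: saving energy is only worthwhile if the result still arrives in time to be useful.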

The trade-off between accuracy and power consumption remains a fundamental challenge in MAV SLAM design. Research indicates that accepting a 5-10% reduction in localization precision can yield power savings of up to 60% through simplified algorithms and reduced sensor sampling rates, a compromise that may be acceptable for many applications where centimeter-level precision is not critical.

Safety and Regulatory Framework for Autonomous MAVs

The integration of SLAM techniques in Micro Aerial Vehicles (MAVs) necessitates a robust safety and regulatory framework to ensure responsible deployment. Current regulations governing autonomous MAVs vary significantly across jurisdictions, creating a complex landscape for developers and operators. In the United States, the Federal Aviation Administration (FAA) has established Part 107 rules for small unmanned aircraft systems, which require visual line-of-sight operations and impose altitude restrictions; both requirements present challenges for fully autonomous SLAM-enabled MAVs. Similarly, the European Union Aviation Safety Agency (EASA) has implemented a risk-based regulatory approach that sorts drone operations into open, specific, and certified categories according to risk.

Safety certification for SLAM-enabled MAVs requires comprehensive validation of perception systems, with particular emphasis on failure mode analysis and redundancy mechanisms. The dynamic nature of SLAM algorithms introduces unique challenges in safety certification, as traditional deterministic safety assessment methodologies may not adequately address the probabilistic nature of these systems. Industry standards such as DO-178C for software and ARP4754A for system development provide partial frameworks, but specific standards for autonomous navigation systems in MAVs remain underdeveloped.

Collision avoidance represents a critical safety component for autonomous MAVs, with regulatory bodies increasingly requiring detect-and-avoid capabilities. Current regulations typically mandate minimum separation distances from people, vehicles, and structures, while emerging standards are beginning to address dynamic obstacle avoidance requirements. The integration of SLAM-based navigation with dedicated collision avoidance systems presents both technical and regulatory challenges that must be addressed through comprehensive testing protocols.

Privacy and data security considerations also factor significantly into the regulatory framework. SLAM systems continuously capture and process environmental data, raising concerns about inadvertent surveillance and data protection. Regulations such as the EU's General Data Protection Regulation (GDPR) impose requirements on data collection and processing that directly impact SLAM implementation in commercial MAVs. Technical solutions including automatic data anonymization and selective mapping are emerging to address these concerns.

Looking forward, regulatory harmonization efforts are underway through organizations like the International Civil Aviation Organization (ICAO) and Joint Authorities for Rulemaking on Unmanned Systems (JARUS). These initiatives aim to develop performance-based standards that can accommodate rapid technological advancement while maintaining safety. The establishment of dedicated test sites and regulatory sandboxes provides opportunities for accelerated validation of SLAM-enabled autonomous MAV technologies under controlled conditions, potentially expediting the path to certification and widespread deployment.
