Why Robust SLAM Matters In Long-Term Autonomy?

SEP 12, 2025 · 9 MIN READ

SLAM Evolution and Autonomy Goals

Simultaneous Localization and Mapping (SLAM) has evolved significantly since its inception in the 1980s, transitioning from theoretical concepts to practical implementations across various autonomous systems. The evolution of SLAM technology has been characterized by increasing sophistication in algorithms, sensor integration capabilities, and computational efficiency, all aimed at enhancing the autonomy of robotic systems in dynamic environments.

Early SLAM implementations relied heavily on extended Kalman filters and were limited to controlled, static environments. The 2000s saw a paradigm shift as particle filters and graph-based optimization techniques came into wide use, enabling more robust performance in semi-dynamic settings. Recent advancements have focused on deep learning integration, multi-sensor fusion architectures, and semantic understanding, pushing SLAM capabilities toward operation in highly dynamic, unstructured environments.

The primary goal of robust SLAM development is to achieve persistent autonomy—enabling systems to operate continuously over extended periods without human intervention. This requires not only accurate localization and mapping but also adaptation to environmental changes, handling of perceptual ambiguities, and recovery from failures. Long-term autonomy demands SLAM systems that can maintain performance despite seasonal variations, lighting changes, and structural modifications to the environment.

Another critical objective is achieving operational resilience across diverse conditions. Modern autonomous systems are expected to function reliably in challenging scenarios including low-light conditions, adverse weather, GPS-denied environments, and areas with minimal distinguishing features. The evolution of SLAM technology has been increasingly focused on addressing these edge cases that traditionally caused system failures.

Resource efficiency represents another significant goal in SLAM development. As autonomous systems become more widespread in commercial applications, SLAM solutions must balance computational requirements with power constraints, particularly for mobile and embedded platforms. The trend toward efficient algorithms and hardware-accelerated implementations reflects this priority.

The convergence of SLAM with other technologies such as artificial intelligence, cloud computing, and digital twin frameworks is shaping new autonomy paradigms. These integrations aim to create systems capable of not only navigating environments but also understanding them contextually, predicting changes, and making informed decisions based on spatial awareness and historical data.

As SLAM technology continues to mature, the focus has shifted from solving fundamental technical challenges to addressing practical deployment considerations that enable true long-term autonomy across diverse application domains including autonomous vehicles, service robotics, industrial automation, and augmented reality systems.

Market Demand for Long-Term Autonomous Systems

The market for long-term autonomous systems is experiencing unprecedented growth across multiple sectors, driven by technological advancements and increasing demand for automation solutions that can operate reliably over extended periods without human intervention. According to recent market analyses, the global autonomous systems market is projected to reach $623 billion by 2030, with a compound annual growth rate of 16.8% from 2023 to 2030.

In the industrial sector, manufacturing facilities are increasingly adopting autonomous mobile robots (AMRs) for material handling, inventory management, and quality control. These systems require persistent operation in dynamic environments where layouts may change and obstacles are unpredictable. The manufacturing robotics market segment alone is expected to grow at 19.3% CAGR through 2028, with robust SLAM capabilities cited as a critical enabler for this growth.

Logistics and warehousing represent another significant market driver, with companies like Amazon, DHL, and Alibaba deploying thousands of autonomous vehicles in their fulfillment centers. These environments demand systems that can operate continuously for 18+ hours daily while maintaining precise localization accuracy despite constant changes in inventory placement and human traffic patterns.

The autonomous vehicle industry presents perhaps the most visible market need for robust SLAM technologies. With major automotive manufacturers and technology companies investing billions in self-driving capabilities, the requirement for vehicles that can navigate reliably in all weather conditions, across seasons, and through infrastructure changes has become paramount. Market adoption hinges directly on the ability of these systems to maintain safety and reliability over years of operation.

Urban service robotics represents an emerging market segment with significant growth potential. From autonomous cleaning robots in commercial spaces to security patrol robots and last-mile delivery systems, these applications require robots to navigate complex, people-filled environments that undergo frequent changes. Market research indicates this segment could reach $95 billion by 2027, with SLAM robustness being a key technical differentiator among competing solutions.

Agriculture and mining sectors are increasingly adopting autonomous systems for field operations, crop monitoring, and resource extraction. These harsh outdoor environments present some of the most challenging conditions for SLAM systems, with dramatic lighting changes, weather effects, and seasonal variations. The agricultural robotics market alone is projected to grow at 22.5% CAGR through 2026, driven by labor shortages and efficiency demands.

Military and defense applications represent another significant market driver, with requirements for autonomous systems that can operate in GPS-denied environments for reconnaissance, logistics support, and other mission-critical functions. These applications demand SLAM systems that maintain reliability under extreme conditions and potential adversarial interference.

Robust SLAM Challenges and Limitations

Despite significant advancements in SLAM (Simultaneous Localization and Mapping) technology, robust implementation for long-term autonomy faces numerous challenges. Environmental dynamics present a fundamental obstacle, as real-world settings constantly change through seasonal variations, weather conditions, lighting fluctuations, and human activities. These changes can render previously mapped features unrecognizable, causing localization failures in systems that rely on static environment assumptions.

Perceptual aliasing compounds these difficulties, particularly in repetitive environments like corridors, highways, or uniform urban landscapes where similar-looking features can trigger false loop closures. This phenomenon significantly degrades mapping accuracy and can lead to catastrophic localization failures when autonomous systems cannot distinguish between visually similar but spatially distinct locations.
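To make the failure mode concrete, here is a minimal sketch of why appearance similarity alone cannot separate aliased places. The descriptors, positions, thresholds, and the function name `is_loop_closure` are all hypothetical values chosen for illustration. The odometry gate shown is one common guard, not a complete verification pipeline.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def is_loop_closure(desc_a, desc_b, pos_a, pos_b,
                    sim_threshold=0.95, max_distance=5.0):
    """Accept a candidate only if it looks alike AND is spatially plausible.

    sim_threshold and max_distance are assumed values; in practice the
    distance gate would be derived from the current pose uncertainty.
    """
    looks_alike = cosine_similarity(desc_a, desc_b) >= sim_threshold
    plausible = math.dist(pos_a, pos_b) <= max_distance
    return looks_alike and plausible

# Two different corridors that produce nearly identical descriptors.
corridor_a = [0.90, 0.10, 0.40, 0.20]
corridor_b = [0.88, 0.12, 0.41, 0.19]

# Appearance alone would accept the match even though the places differ.
appearance_only = cosine_similarity(corridor_a, corridor_b) >= 0.95

# The estimated poses are 40 m apart, so the gated check rejects it.
gated_far = is_loop_closure(corridor_a, corridor_b, (2.0, 0.0), (42.0, 0.0))

# A genuine revisit close to the stored pose is still accepted.
gated_near = is_loop_closure(corridor_a, corridor_b, (2.0, 0.0), (3.0, 0.5))
```

The gate only helps while pose drift stays well below the spacing between look-alike places. Once drift exceeds that spacing, the distance check can no longer rule out the aliased candidate.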

Computational resource constraints pose another significant limitation, especially for mobile robots with restricted processing power, memory, and energy reserves. Real-time performance requirements often necessitate algorithmic compromises that impact robustness, creating a challenging balance between efficiency and reliability that becomes increasingly difficult to maintain over extended operational periods.

Sensor degradation and failure represent critical vulnerabilities in long-term deployments. Environmental factors like dust, moisture, and temperature fluctuations gradually degrade sensor performance, while physical impacts or component aging can cause complete failures. Most current SLAM systems lack sophisticated fault detection and recovery mechanisms to handle these inevitable hardware issues.

Scale and drift problems emerge prominently in extended operations, as small estimation errors accumulate over time and distance. Without external reference corrections, these errors can grow unbounded, particularly in visual or LiDAR-only SLAM systems that lack absolute positioning references like GPS.
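The accumulation effect can be illustrated with a small dead-reckoning sketch. It assumes a robot driving in a straight line while its heading estimate picks up a constant bias at each step. The bias value, step length, and function name are illustrative assumptions and not figures from any specific system.

```python
import math

def dead_reckoning_error(steps, step_length=1.0, heading_bias=1e-4):
    """Position error (m) after integrating odometry with a constant heading bias.

    The true robot drives straight along the x axis; the estimate integrates
    a heading that drifts by `heading_bias` radians at every step.
    """
    x_est = y_est = heading_est = 0.0
    for _ in range(steps):
        heading_est += heading_bias  # small per-step error accumulates
        x_est += step_length * math.cos(heading_est)
        y_est += step_length * math.sin(heading_est)
    x_true = steps * step_length  # ground truth: straight line, y = 0
    return math.hypot(x_est - x_true, y_est)

errors = {n: dead_reckoning_error(n) for n in (100, 1000)}
for n, err in errors.items():
    print(f"{n:5d} steps: position error {err:.2f} m")
```

Under these assumptions, travelling ten times farther produces roughly a hundred times the position error. The heading error grows linearly with distance, and integrating it into position makes the position error grow about quadratically. This is why loop closures or absolute references are needed to bound drift.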

Multi-session mapping presents unique challenges for maintaining consistent representations across separate operational periods. Current systems struggle with efficient map merging, update strategies, and managing the growing computational demands of expanding maps while preserving historical data integrity.

Semantic understanding limitations restrict many SLAM systems to geometric representations without meaningful object recognition or scene comprehension. This deficiency hampers adaptation to environmental changes, as systems cannot differentiate between temporary obstacles and permanent structural modifications, leading to unnecessary map updates or missed critical changes.

Cross-domain robustness remains elusive, with most SLAM solutions optimized for specific environments and struggling when deployed in different settings. The lack of generalized approaches that perform consistently across diverse operational domains significantly limits the versatility of autonomous systems in real-world applications.

Current Robust SLAM Solutions

01 Error handling and uncertainty management in SLAM

Robust SLAM systems incorporate advanced error handling mechanisms and uncertainty management techniques to maintain accuracy in challenging environments. These systems use probabilistic approaches to model sensor uncertainties, detect and filter outliers, and implement recovery mechanisms when localization fails. By explicitly representing and propagating uncertainty through the mapping process, these systems can make more reliable decisions and maintain consistency even when faced with noisy or ambiguous sensor data.

- Error handling and uncertainty management in SLAM: Robust SLAM systems incorporate advanced error handling mechanisms and uncertainty management techniques to maintain accuracy in challenging environments. These systems can detect and correct errors in sensor data, handle outliers, and manage uncertainty in position estimates. By implementing probabilistic approaches and statistical filters, these systems can maintain reliable mapping and localization even when faced with noisy or incomplete sensor data.

- Multi-sensor fusion for robust SLAM: Integrating data from multiple sensors enhances the robustness of SLAM systems by providing complementary information and redundancy. These systems typically combine data from cameras, LiDAR, IMUs, GPS, and other sensors to create more accurate environmental maps and position estimates. Sensor fusion algorithms help overcome limitations of individual sensors, such as camera failures in low light or GPS signal loss in indoor environments, ensuring continuous and reliable operation across diverse conditions.

- Loop closure and global optimization techniques: Loop closure detection and global optimization are critical for maintaining long-term consistency in SLAM systems. These techniques identify when a robot revisits a previously mapped area and adjust the entire map to reduce accumulated errors. Advanced algorithms can recognize places despite appearance changes, handle perceptual aliasing, and efficiently optimize large-scale maps. These capabilities are essential for creating consistent maps during extended operations and enabling reliable navigation in complex environments.

- Dynamic environment adaptation: Robust SLAM systems can adapt to dynamic and changing environments by distinguishing between static and moving objects. These systems employ specialized algorithms to identify and filter out dynamic elements, focus mapping on stable features, and update maps when permanent changes occur. This capability allows SLAM systems to maintain accurate localization in crowded spaces, adapt to seasonal changes, and handle environments where objects are frequently moved or rearranged.

- Computational efficiency and real-time performance: Maintaining real-time performance while ensuring robustness is crucial for practical SLAM applications. Advanced systems employ efficient algorithms, parallel processing, and hardware acceleration to reduce computational load while preserving accuracy. These approaches include selective feature extraction, hierarchical mapping structures, and adaptive processing that adjusts computational resources based on situational demands. Such optimizations enable robust SLAM to operate on platforms with limited computational resources, such as mobile robots and augmented reality devices.
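The outlier filtering mentioned under error handling is often done by gating measurements on their Mahalanobis distance from the predicted value. The sketch below assumes 2D measurements, a known 2x2 innovation covariance, and a 95% chi-square threshold. The sample numbers and function names are hypothetical.

```python
# 95% chi-square quantile for 2 degrees of freedom (standard table value).
CHI2_95_2DOF = 5.991

def mahalanobis_sq(z, z_pred, S):
    """Squared Mahalanobis distance of a 2D innovation under 2x2 covariance S."""
    dx, dy = z[0] - z_pred[0], z[1] - z_pred[1]
    (a, b), (c, d) = S
    det = a * d - b * c
    # Closed-form inverse of the 2x2 covariance applied to the innovation.
    ix = (d * dx - b * dy) / det
    iy = (-c * dx + a * dy) / det
    return dx * ix + dy * iy

def gate_measurements(measurements, z_pred, S, threshold=CHI2_95_2DOF):
    """Split measurements into inliers and outliers using a chi-square gate."""
    inliers, outliers = [], []
    for z in measurements:
        if mahalanobis_sq(z, z_pred, S) <= threshold:
            inliers.append(z)
        else:
            outliers.append(z)
    return inliers, outliers

z_pred = (10.0, 5.0)                  # predicted landmark observation (m)
S = ((0.25, 0.0), (0.0, 0.25))        # assumed covariance: 0.5 m std per axis
measurements = [(10.3, 4.8), (9.6, 5.5), (13.0, 5.0)]

inliers, outliers = gate_measurements(measurements, z_pred, S)
```

Here the third measurement lies six standard deviations from the prediction along x and is rejected, while the other two pass the gate. A tighter threshold rejects more bad data but also discards more valid measurements, so the quantile is a tuning choice.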

02 Multi-sensor fusion for robust SLAM

Integrating data from multiple sensor types enhances SLAM robustness by compensating for individual sensor limitations. These systems combine inputs from cameras, LiDAR, IMUs, GPS, and other sensors to create redundant measurement pathways. When one sensor encounters difficulties (such as cameras in low-light conditions or GPS in indoor environments), other sensors can maintain localization accuracy. Advanced fusion algorithms weight sensor contributions based on estimated reliability in different contexts, ensuring consistent performance across diverse environments.

03 Loop closure and global optimization techniques

Robust SLAM implementations employ sophisticated loop closure detection and global optimization to correct accumulated drift and maintain map consistency. These systems use appearance-based or geometric feature matching to identify when a robot revisits previously mapped areas, then apply graph optimization or bundle adjustment to globally refine the map and trajectory estimates. Advanced loop closure techniques are designed to be resilient against perceptual aliasing and can function even with significant viewpoint or lighting changes.

04 Environment-adaptive SLAM frameworks

Adaptive SLAM systems automatically adjust their parameters and processing pipelines based on environmental conditions. These frameworks can detect challenging scenarios such as dynamic objects, featureless areas, or extreme lighting conditions, and modify their feature extraction, matching criteria, or motion models accordingly. Some implementations incorporate machine learning techniques to classify environments and select optimal algorithms for each context, ensuring robust performance across diverse operational settings without manual reconfiguration.

05 Semantic and object-aware SLAM

Incorporating semantic understanding and object recognition into SLAM systems significantly enhances robustness. By identifying and tracking stable landmarks and distinguishing between static and dynamic elements in the environment, these systems can filter out moving objects that would otherwise corrupt the map. Semantic SLAM approaches leverage deep learning to extract meaningful features that are more persistent across different conditions than traditional geometric features, leading to more reliable localization in challenging and changing environments.
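The filtering step can be sketched as follows, assuming an upstream detector or segmentation network has already attached a class label to each image feature. The `Feature` type, the label names, and the set of dynamic classes are hypothetical choices for illustration.

```python
from dataclasses import dataclass

# Assumed set of classes treated as potentially moving; a real system
# would choose this list per deployment.
DYNAMIC_CLASSES = frozenset({"person", "car", "bicycle"})

@dataclass(frozen=True)
class Feature:
    u: float      # pixel column
    v: float      # pixel row
    label: str    # semantic class from the (assumed) upstream detector

def keep_static(features, dynamic_classes=DYNAMIC_CLASSES):
    """Return only features whose class is not considered dynamic."""
    return [f for f in features if f.label not in dynamic_classes]

features = [
    Feature(120.0, 80.0, "building"),
    Feature(300.5, 210.0, "person"),
    Feature(50.0, 400.0, "pole"),
    Feature(410.0, 220.0, "car"),
]

static_features = keep_static(features)
print([f.label for f in static_features])
```

Filtering by class alone is conservative. It also discards features on parked cars, which are static at the moment of observation, so some systems combine the label with a motion check before rejecting a feature.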

Key Industry Players in Robust SLAM

Robust SLAM (Simultaneous Localization and Mapping) has become a critical technology in the evolving autonomous systems landscape, with the market currently in a growth phase characterized by increasing adoption across industries. The global market size for SLAM technologies is expanding rapidly, driven by applications in robotics, autonomous vehicles, and AR/VR. Companies like Intel, Samsung, and Honda are leading commercial applications, while academic institutions such as Northwestern Polytechnical University and Southeast University are advancing fundamental research. The technology maturity varies across sectors, with companies like TRX Systems specializing in GPS-denied environments, Softbank Robotics focusing on consumer applications, and Metoak Technology developing vision-based solutions. The integration of SLAM with AI and sensor fusion represents the next frontier, with collaborations between industry and academia accelerating development toward more reliable long-term autonomy solutions.

Intel Corp.

Technical Solution: Intel has developed RealSense technology that integrates with their robust SLAM solutions for long-term autonomy. Their approach combines visual-inertial odometry with loop closure detection to maintain accurate localization over extended periods. Intel's RealSense Tracking Camera T265 specifically implements a V-SLAM system that operates with extremely low latency (under 6ms) and low power consumption (1.5W). The system employs proprietary algorithms that can handle challenging scenarios like feature-poor environments and dynamic objects. Intel's robust SLAM implementation includes redundant sensor fusion (combining camera data with IMU measurements) and advanced error recovery mechanisms that allow autonomous systems to recover from tracking failures without human intervention. Their technology also incorporates machine learning techniques to improve feature detection reliability in changing environmental conditions, addressing one of the key challenges in long-term autonomy.

Strengths: Low power consumption and latency make it ideal for mobile robots and drones. Robust recovery mechanisms enhance reliability in real-world deployments.

Weaknesses: Primarily vision-based approach may struggle in extreme lighting conditions or highly reflective environments. Requires significant computational resources for optimal performance.

Honda Motor Co., Ltd.

Technical Solution: Honda has developed a multi-layered robust SLAM system specifically designed for long-term autonomy in their autonomous vehicle and robotics platforms. Their approach integrates visual, LiDAR, and radar data through a sophisticated sensor fusion framework that maintains localization accuracy even when individual sensors fail or environmental conditions change. Honda's system implements a hierarchical mapping structure that separates permanent landmarks from temporary features, allowing vehicles to maintain accurate positioning despite seasonal changes, construction, or other environmental modifications. Their SLAM solution incorporates semantic understanding of environments, enabling the system to recognize and adapt to structural changes while maintaining localization. Honda has also implemented a distributed mapping system where vehicles can share map updates, allowing the entire fleet to benefit from individual experiences and adapt to environmental changes collectively. This collaborative approach significantly enhances long-term autonomy by creating a continuously updated and refined environmental model.

Strengths: Multi-sensor fusion provides redundancy and robustness across various environmental conditions. Hierarchical mapping structure effectively handles environmental changes over time.

Weaknesses: High hardware requirements increase system cost. Complex integration may require significant calibration and maintenance.

Core SLAM Algorithms and Innovations

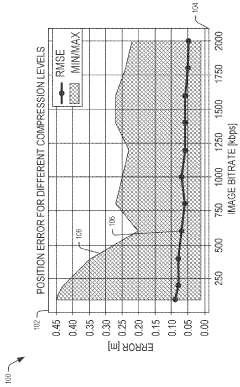

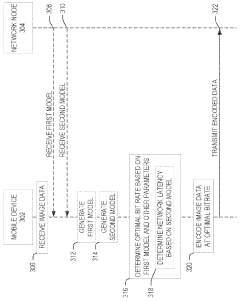

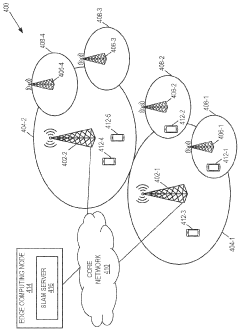

Bitrate adaptation for edge-assisted localization given network availability for mobile devices

Patent WO2024136880A1

Innovation

- A dynamic bitrate adaptation method for edge-assisted SLAM, where a model determines an optimal image data bitrate based on network latency, map presence, and environment bitrate, allowing for compression and transmission at an improved bitrate to enhance localization performance.

Intelligent mobile device brain-like SLAM method based on multi-source information fusion

Patent Pending CN118031934A

Innovation

- A brain-inspired SLAM method for intelligent mobile devices that fuses IMU, encoder, and camera data through an extended Kalman filter model and a grid cell model, building an experience map to improve navigation accuracy and robustness.

Environmental Factors Affecting SLAM Performance

The performance of SLAM (Simultaneous Localization and Mapping) systems is significantly influenced by various environmental factors, which pose substantial challenges for long-term autonomous operation. Dynamic environments represent one of the most significant challenges, as moving objects such as pedestrians, vehicles, and even furniture rearrangements can invalidate previously mapped features, leading to localization failures and map inconsistencies.

Lighting conditions dramatically affect vision-based SLAM systems. Variations in illumination throughout the day, sudden changes from indoor to outdoor environments, or even artificial lighting fluctuations can alter the appearance of visual features. These appearance changes can cause feature mismatches and subsequent mapping errors, particularly in systems relying heavily on visual odometry. This is a different problem from perceptual aliasing, in which distinct places look alike: here the same place looks different from one visit to the next.

Weather conditions introduce another layer of complexity for outdoor SLAM applications. Rain, snow, and fog can obscure sensors, reduce visibility, and create noise in sensor readings. LiDAR systems, for instance, may produce scattered points when laser beams interact with raindrops or snowflakes, while cameras struggle with reduced contrast in foggy conditions.

Seasonal changes present long-term challenges as environments transform over months. Vegetation growth, snow cover, or construction activities can significantly alter the appearance and structure of environments. A robust SLAM system must adapt to these gradual yet substantial changes to maintain accurate localization capabilities throughout the year.

Sensor degradation and environmental interference also impact SLAM performance. Dust accumulation on optical sensors, temperature fluctuations affecting electronic components, and electromagnetic interference in urban environments can all degrade sensor readings. Additionally, reflective surfaces like glass facades or water bodies can cause specular reflections in LiDAR data or confuse visual feature extraction algorithms.

Geometric ambiguity in certain environments, such as long corridors, open spaces, or repetitive structures, can lead to perceptual aliasing, where the system cannot distinguish between similar-looking locations. It also makes global relocalization (the "kidnapped robot problem") harder, because several places match the current observation equally well. This ambiguity often results in loop closure failures or incorrect path estimations, compromising the overall mapping accuracy.

Understanding these environmental factors is crucial for developing robust SLAM solutions capable of supporting long-term autonomy across diverse operational conditions. The ability to anticipate, detect, and adapt to these environmental challenges represents a frontier in advancing SLAM technology toward truly resilient autonomous systems.

Lighting conditions dramatically affect vision-based SLAM systems. Variations in illumination throughout the day, sudden changes from indoor to outdoor environments, or even artificial lighting fluctuations can alter the appearance of visual features. This phenomenon, known as perceptual aliasing, can cause feature mismatches and subsequent mapping errors, particularly in systems relying heavily on visual odometry.

Weather conditions introduce another layer of complexity for outdoor SLAM applications. Rain, snow, and fog can obscure sensors, reduce visibility, and create noise in sensor readings. LiDAR systems, for instance, may produce scattered points when laser beams interact with raindrops or snowflakes, while cameras struggle with reduced contrast in foggy conditions.

Seasonal changes present long-term challenges as environments transform over months. Vegetation growth, snow cover, or construction activities can significantly alter the appearance and structure of environments. A robust SLAM system must adapt to these gradual yet substantial changes to maintain accurate localization capabilities throughout the year.

Safety and Reliability Standards for Autonomous Systems

The integration of autonomous systems into various sectors necessitates comprehensive safety and reliability standards to ensure public trust and operational effectiveness. For robust SLAM systems supporting long-term autonomy, these standards are particularly critical because localization and mapping errors directly affect navigation accuracy and downstream decision-making in dynamic environments.

Current regulatory frameworks for autonomous systems vary significantly across regions and industries. In the automotive sector, ISO 26262 covers the functional safety of electrical and electronic systems in road vehicles, while ISO 21448 addresses the safety of the intended functionality (SOTIF). However, these standards do not fully address the specific challenges of SLAM systems operating over extended periods in changing environments.

For robust SLAM implementation, standards must address both hardware reliability and algorithmic robustness. Key performance indicators include localization accuracy, mapping precision, recovery capabilities after failures, and adaptability to environmental changes. Standards should establish minimum thresholds for these metrics under various operational conditions, including adverse weather, lighting variations, and seasonal changes that affect sensor readings.
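As an illustration of how a localization-accuracy threshold might be checked per operating condition, the sketch below computes a root-mean-square trajectory error and compares it against a limit. It assumes the estimated and reference trajectories are already time-synchronized and expressed in the same frame. The function names, condition labels, and the 0.10 m limit are hypothetical and do not come from any standard.

```python
import math
from typing import Dict, Sequence, Tuple

Position = Tuple[float, float]


def ate_rmse(estimated: Sequence[Position], reference: Sequence[Position]) -> float:
    """Root-mean-square position error between two pre-aligned trajectories."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [math.dist(e, r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))


def meets_threshold(rmse_by_condition: Dict[str, float], limit_m: float) -> Dict[str, bool]:
    """Report, per operating condition, whether the error stays within the limit."""
    return {name: rmse <= limit_m for name, rmse in rmse_by_condition.items()}


# Hypothetical per-condition results checked against an assumed 0.10 m limit.
results = {"clear_day": 0.05, "heavy_rain": 0.30}
print(meets_threshold(results, limit_m=0.10))
```

Reporting the metric separately for each condition, rather than as one pooled number, keeps a weak result in rain or at night from being averaged away by good daytime performance.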

Certification processes for SLAM-enabled autonomous systems require rigorous testing protocols that simulate long-term operation. These should include accelerated aging tests for sensors, stress testing for computational components, and simulation of environmental variations. Validation methodologies must verify that systems maintain performance within acceptable parameters throughout their operational lifespan.

Fault tolerance represents another critical dimension of safety standards. Robust SLAM systems must incorporate redundancy mechanisms, graceful degradation capabilities, and fail-safe protocols. Standards should mandate minimum requirements for system behavior during partial failures, ensuring that autonomous systems can safely manage unexpected situations even when SLAM functionality is compromised.
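Graceful degradation can be pictured as a small decision rule that maps subsystem health to an operating mode. The following sketch is one possible arrangement, not a prescribed design. The mode names, the health flags, and the 10-second dead-reckoning budget are all assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"      # full SLAM-based navigation
    DEGRADED = "degraded"    # reduced speed, odometry-only dead reckoning
    SAFE_STOP = "safe_stop"  # controlled stop until recovery or operator help


def select_mode(
    slam_healthy: bool,
    odometry_healthy: bool,
    seconds_since_last_fix: float,
    max_dead_reckoning_s: float = 10.0,  # assumed drift budget
) -> Mode:
    """Pick the least restrictive mode that the current health state allows."""
    if slam_healthy:
        return Mode.NOMINAL
    # Without SLAM, odometry drift grows with time, so dead reckoning is
    # only tolerated for a bounded interval after the last good pose fix.
    if odometry_healthy and seconds_since_last_fix <= max_dead_reckoning_s:
        return Mode.DEGRADED
    return Mode.SAFE_STOP


print(select_mode(slam_healthy=False, odometry_healthy=True, seconds_since_last_fix=3.0))
```

Bounding the degraded mode by time since the last fix is a simple proxy for accumulated pose uncertainty. A covariance-based bound would be a natural replacement where the estimator exposes one.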

Data integrity and security standards are increasingly important as SLAM systems often rely on stored environmental maps and real-time sensor data. Requirements for data encryption, protection against tampering, and secure update mechanisms must be established to prevent malicious exploitation of navigation systems.
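Tamper detection for a stored map can be sketched with a keyed hash from the Python standard library. The example covers integrity checking only, not encryption or key management. The function names, the key, and the map contents are placeholders chosen for illustration.

```python
import hashlib
import hmac


def sign_map(map_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the serialized map."""
    return hmac.new(key, map_bytes, hashlib.sha256).hexdigest()


def verify_map(map_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    """Return True only if the map matches the tag stored alongside it."""
    actual = sign_map(map_bytes, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(actual, expected_tag)


# Placeholder key and payload; a real key would come from secure storage.
key = b"demo-key"
stored_map = b"occupancy-grid-v1"
tag = sign_map(stored_map, key)
print(verify_map(stored_map, key, tag))
print(verify_map(stored_map + b"tampered", key, tag))
```

A system would run the check before loading a map for localization and treat a failed verification like any other SLAM fault, for example by refusing the map and falling back to a safe mode.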

International harmonization of these standards remains a significant challenge. Organizations such as IEEE, ISO, and industry consortia are working toward unified frameworks, but considerable fragmentation persists. This creates compliance challenges for manufacturers developing autonomous systems for global markets.
