How Deep Learning Enhances Simultaneous Localization And Mapping?

SEP 5, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Deep Learning in SLAM: Background and Objectives

Simultaneous Localization and Mapping (SLAM) has evolved significantly since its origins in the 1980s, moving from early probabilistic filtering approaches to increasingly sophisticated optimization- and learning-based methods. The integration of deep learning with SLAM is a pivotal advance in robotics and autonomous systems, enabling machines to perceive, understand, and navigate complex environments more robustly than hand-engineered pipelines alone.

The historical trajectory of SLAM technology reveals a steady progression from filter-based methods like Extended Kalman Filters (EKF) to graph-based optimization techniques. Traditional SLAM approaches relied heavily on hand-crafted features and geometric constraints, which often struggled with dynamic environments, varying lighting conditions, and perceptual aliasing (distinct places that look alike). These limitations put a ceiling on how reliably autonomous systems could navigate outside controlled settings.

Deep learning has emerged as a transformative force in addressing these fundamental challenges. Neural networks, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have demonstrated remarkable capabilities in feature extraction, pattern recognition, and sequential data processing—all critical components of effective SLAM systems. The convergence of these technologies marks a paradigm shift from purely geometric approaches to learning-based methods that can adapt to environmental complexities.

The primary objective of integrating deep learning with SLAM is to enhance robustness, accuracy, and generalization across diverse operational scenarios. Specifically, deep learning aims to improve feature detection and matching, loop closure recognition, semantic understanding, and dynamic object handling within SLAM frameworks. These enhancements directly address the persistent challenges that have limited traditional approaches.

Current technological trends indicate an accelerating adoption of end-to-end learning systems that can perform multiple SLAM components simultaneously. Research is increasingly focused on developing architectures that combine the interpretability and geometric consistency of traditional methods with the adaptive power of neural networks. This hybrid approach seeks to leverage the complementary strengths of both paradigms.

The evolution toward deep learning-enhanced SLAM is driven by expanding application demands across autonomous vehicles, drones, augmented reality, and service robotics. Each domain presents unique environmental challenges that conventional methods struggle to address consistently. The ultimate technical goal is to develop SLAM systems that can operate reliably in unstructured, dynamic environments with minimal prior knowledge—effectively mimicking human-like spatial cognition and navigation capabilities.

Market Analysis for DL-Enhanced SLAM Applications

The global market for deep learning-enhanced SLAM technologies is growing rapidly, driven by increasing adoption across multiple sectors. According to current market estimates, the DL-enhanced SLAM market reached approximately 4.3 billion USD in 2022 and is projected to grow at a CAGR of 25.7% through 2028, significantly outpacing traditional SLAM solutions.

Autonomous vehicles represent the largest market segment, accounting for nearly 38% of the total market share. Major automotive manufacturers and tech companies are investing heavily in DL-SLAM technologies to improve navigation accuracy and safety in self-driving vehicles. The integration of deep learning with SLAM has reduced localization errors by up to 60% compared to conventional methods, making it increasingly attractive for commercial deployment.

Robotics applications form the second-largest market segment at 27%, with warehouse automation and logistics showing particularly strong demand. Companies like Amazon and Alibaba have implemented DL-SLAM solutions in their fulfillment centers, reporting efficiency improvements of 30-40% in navigation and object handling tasks. The industrial robotics sector is expected to grow at 32% annually as manufacturers seek more adaptive and precise navigation systems.

Consumer electronics, particularly AR/VR devices and domestic robots, constitute a rapidly expanding market segment (18% share) with the highest projected growth rate of 35% annually. Apple's investment in LiDAR-equipped devices and Meta's focus on spatial computing indicate strong future demand for consumer-facing DL-SLAM applications.

Regionally, North America leads with 42% market share, followed by Asia-Pacific (31%) and Europe (22%). China is emerging as the fastest-growing market with substantial investments in autonomous systems and smart city infrastructure that leverage DL-SLAM technologies.

Key market drivers include decreasing costs of sensor hardware, increasing computational efficiency of deep learning models, and growing demand for automation across industries. The integration of edge computing with DL-SLAM is creating new market opportunities by enabling real-time processing capabilities in resource-constrained environments.

Market challenges include high implementation costs for small and medium enterprises, technical barriers to deployment in unstructured environments, and concerns regarding data privacy and security. Despite these challenges, the market outlook remains highly positive as technological advancements continue to address existing limitations and expand potential applications.

Current SLAM Technologies and Deep Learning Integration Challenges

Traditional SLAM systems have evolved significantly over the past decades, from early filter-based approaches to modern graph-based optimization methods. Current mainstream SLAM technologies fall into three categories: visual SLAM, LiDAR SLAM, and multi-sensor fusion. Visual SLAM systems track camera motion and build sparse or semi-dense maps using either extracted features, as in ORB-SLAM, or direct methods that operate on pixel intensities, as in DSO (Direct Sparse Odometry). LiDAR-based systems such as LOAM (LiDAR Odometry and Mapping) and LeGO-LOAM work on point clouds, whose precise range measurements make them robust across a wide variety of environments.
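
Graph-based optimization can be illustrated with a deliberately tiny example. The Python sketch below (all function names are hypothetical) treats each pose as a single number along a corridor and each constraint as a relative measurement from odometry or a loop closure, then solves the weighted least-squares problem that a pose graph represents. Real systems optimize full 6-DoF poses with iterative nonlinear solvers; in 1D the residuals are linear, so a single solve of the normal equations reaches the optimum.

```python
def solve_linear(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("pose graph is under-constrained")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_pose_graph(num_poses, constraints):
    """Minimize sum of w * (x[j] - x[i] - z)**2 over 1D poses, with x[0] fixed at 0.

    constraints: iterable of (i, j, z, w) where z is the measured offset from
    pose i to pose j (odometry or loop closure) and w its inverse variance.
    """
    n = num_poses - 1                  # unknowns are x[1] .. x[num_poses - 1]
    h = [[0.0] * n for _ in range(n)]  # information matrix J^T W J
    g = [0.0] * n                      # right-hand side J^T W z
    for i, j, z, w in constraints:
        # Residual x[j] - x[i] - z has Jacobian -1 w.r.t. x[i] and +1 w.r.t. x[j];
        # the fixed pose 0 contributes no unknown, so it is skipped.
        terms = [(k - 1, s) for k, s in ((i, -1.0), (j, 1.0)) if k > 0]
        for a, sa in terms:
            g[a] += w * sa * z
            for b, sb in terms:
                h[a][b] += w * sa * sb
    return [0.0] + solve_linear(h, g)
```

With three odometry steps of 1.0 and a loop closure claiming the last pose sits at 2.7, `optimize_pose_graph(4, [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0), (0, 3, 2.7, 1.0)])` returns approximately `[0.0, 0.925, 1.85, 2.775]`: the 0.3 discrepancy is shared across all four equally weighted constraints (0.075 each) instead of being dumped on the final step.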

Despite these advancements, conventional SLAM systems face significant challenges in dynamic environments, low-texture scenes, and changing lighting conditions. They often struggle with perceptual aliasing, loop closure detection in large-scale environments, and semantic understanding of surroundings. These limitations have prompted researchers to explore deep learning integration as a potential solution pathway.

The integration of deep learning with SLAM introduces new technical challenges. First, there exists a fundamental paradigm mismatch between traditional geometric SLAM methods (which rely on explicit mathematical models) and learning-based approaches (which learn implicit representations from data). Bridging this gap requires novel architectural designs and training methodologies that can preserve the best aspects of both worlds.

Computational efficiency presents another major hurdle. Deep learning models, particularly those based on convolutional neural networks, demand substantial computational resources that may not be available on resource-constrained platforms like mobile robots or AR/VR devices. This necessitates model compression techniques, efficient network architectures, and hardware-aware algorithm design.
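
Quantization is one of the model compression techniques mentioned above. As a minimal sketch, assuming symmetric per-tensor int8 quantization (one common scheme among several), the code maps float weights to 8-bit integers with a single scale factor, cutting storage from 4 bytes to 1 byte per weight. The function names are illustrative; frameworks such as PyTorch and TensorFlow Lite provide their own quantization toolchains.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q.

    Returns (q, scale) with integer codes q clamped to [-127, 127].
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate float weights."""
    return [scale * v for v in q]
```

For weights `[0.5, -1.27, 0.0, 0.9]` the scale is about 0.01 and the integer codes are `[50, -127, 0, 90]`; each dequantized value lies within half a scale step of the original.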

Data dependency and generalization capability also pose significant challenges. Deep learning models require large amounts of labeled training data to perform effectively, and they often struggle to generalize to unseen environments. Creating diverse, high-quality datasets for SLAM is labor-intensive and expensive, while ensuring model robustness across varied operational conditions remains an open research question.

The integration of temporal consistency into deep learning frameworks represents another technical obstacle. Traditional SLAM systems maintain coherent maps over time through probabilistic frameworks, while deep learning models typically process individual frames independently. Developing architectures that can effectively incorporate temporal information and maintain global consistency is crucial for successful integration.
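
One way to recover temporal consistency from a per-frame network is to treat its outputs as noisy measurements and fuse them with a recursive filter, the same probabilistic machinery traditional SLAM already uses. The sketch below assumes a scalar quantity (for example, the depth of one static landmark) with a random-walk motion model; the per-frame variance `r` could come from a network that predicts its own uncertainty, which is an assumption rather than something every model provides. The class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1D Kalman filter with a random-walk model, fusing per-frame estimates."""
    x: float  # current fused estimate
    p: float  # variance of the fused estimate
    q: float  # process noise added each frame (how much the value may drift)

    def update(self, z: float, r: float) -> float:
        """Fuse one per-frame measurement z with variance r; return the new estimate."""
        self.p += self.q              # predict: uncertainty grows between frames
        k = self.p / (self.p + r)     # Kalman gain: trust placed in the new frame
        self.x += k * (z - self.x)    # correct toward the measurement
        self.p *= 1.0 - k             # fused estimate becomes more certain
        return self.x
```

Feeding per-frame predictions `[2.1, 1.9, 2.05, 3.0, 1.95]` with `r = 0.04` into `ScalarKalman(x=2.0, p=1.0, q=0.001)` keeps the fused estimate from jumping to the spurious 3.0; it moves only part of the way toward it. The process noise `q` controls how quickly the filter favors new frames over its accumulated history.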

Finally, interpretability and reliability concerns arise when deploying deep learning in safety-critical SLAM applications. The "black box" nature of neural networks makes it difficult to provide performance guarantees or understand failure modes, which is particularly problematic for autonomous navigation systems where errors could have serious consequences.

State-of-the-Art Deep Learning SLAM Architectures

01 Neural Network Architectures for SLAM

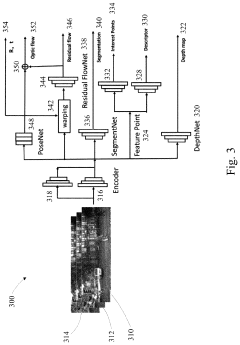

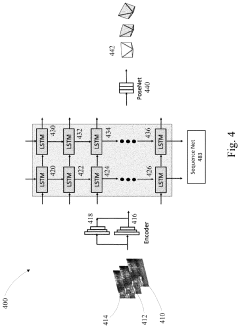

Various neural network architectures can be employed to enhance SLAM systems. These include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer-based models that can process visual and spatial data. These architectures help in feature extraction, pattern recognition, and sequential data processing, which are crucial for accurate localization and mapping in dynamic environments.

- Neural networks for feature extraction and mapping in SLAM: Deep learning techniques, particularly neural networks, can be used to extract features from sensor data and create more accurate maps in SLAM systems. These networks can identify and track distinctive environmental features across frames, improving localization accuracy. The neural networks can process raw sensor data such as images or point clouds to generate robust feature representations that are less affected by environmental variations like lighting changes or viewpoint differences.

- Deep learning for pose estimation and trajectory optimization: Deep learning models can enhance pose estimation accuracy in SLAM systems by predicting camera or robot positions and orientations from sequential sensor inputs. These models can learn to estimate relative motion between frames and optimize trajectory paths, reducing drift and improving overall system performance. By incorporating recurrent neural networks or transformer architectures, the system can maintain temporal consistency and better handle dynamic environments.

- Integration of semantic understanding in SLAM systems: Deep learning enables semantic understanding of environments by classifying objects and scenes within SLAM frameworks. This semantic information can be used to improve mapping quality by distinguishing between static and dynamic objects, identifying loop closures, and creating more meaningful maps. Semantic SLAM approaches allow robots to reason about their environment at a higher level, enabling more intelligent navigation and interaction capabilities.

- End-to-end deep learning SLAM architectures: End-to-end deep learning approaches for SLAM integrate multiple components (feature extraction, mapping, localization) into unified neural network architectures. These systems can be trained jointly to optimize overall performance rather than individual components. End-to-end architectures can reduce the need for hand-engineered features and traditional geometric algorithms, potentially offering more robust performance in challenging environments where classical methods struggle.

- Sensor fusion and multi-modal learning for SLAM: Deep learning techniques can effectively fuse data from multiple sensors (cameras, LiDAR, IMU, etc.) to enhance SLAM performance. Neural networks can learn optimal ways to combine complementary information from different sensor modalities, making the system more robust to sensor failures or environmental challenges. This approach allows SLAM systems to operate reliably in diverse conditions such as low light, featureless environments, or areas with dynamic objects.
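
Tracking features across frames ultimately comes down to matching descriptors, whether they come from a hand-crafted extractor or a neural network. A minimal sketch, assuming descriptors are plain float vectors already produced by some learned feature extractor (not shown), pairs them with two standard filters: Lowe's ratio test rejects ambiguous matches, and a mutual nearest-neighbor check keeps only pairs that choose each other. Real learned descriptors are much higher-dimensional and are matched with vectorized or approximate nearest-neighbor search; the brute-force loop and the function names here are for illustration only.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs that pass the ratio test and are mutual nearest neighbors."""
    if len(desc_b) < 2:
        return []  # the ratio test needs a second-best candidate
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((l2(da, db), j) for j, db in enumerate(desc_b))
        (d1, j), (d2, _) = dists[0], dists[1]
        if d1 > ratio * d2:
            continue  # ambiguous: best match is not clearly better than the runner-up
        # Mutual check: desc_a[i] must also be the nearest neighbor of desc_b[j].
        back = min(range(len(desc_a)), key=lambda k: l2(desc_a[k], desc_b[j]))
        if back == i:
            matches.append((i, j))
    return matches
```

With `a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]` and `b = [[0.9, 0.1], [0.1, 0.9], [5.0, 5.0]]`, `match_descriptors(a, b)` returns `[(0, 0), (1, 1)]`; the third descriptor in `a` is equidistant from two candidates and is rejected by the ratio test.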

02 Deep Learning for Visual SLAM Enhancement

Deep learning techniques can significantly improve visual SLAM systems by enhancing feature detection, tracking, and loop closure. Neural networks trained on large datasets can recognize visual landmarks more robustly, handle varying lighting conditions, and maintain tracking even in challenging environments. This approach combines traditional geometric methods with learning-based components to achieve more reliable mapping and localization.

03 Semantic Understanding in SLAM Systems

Incorporating semantic understanding into SLAM systems allows for object recognition and scene comprehension. Deep learning models can classify objects in the environment, creating semantically enriched maps that distinguish between different types of structures and obstacles. This semantic information improves navigation decision-making and enables more intelligent interaction with the environment.

04 Real-time Performance Optimization for Deep Learning SLAM

Techniques for optimizing the computational efficiency of deep learning SLAM systems enable real-time performance on resource-constrained devices. These include model compression, quantization, pruning, and hardware-specific optimizations. Such approaches balance the trade-off between accuracy and speed, making deep learning SLAM practical for applications like autonomous vehicles, drones, and mobile robots.

05 Multi-sensor Fusion with Deep Learning

Deep learning approaches can effectively fuse data from multiple sensors such as cameras, LiDAR, radar, and IMUs to enhance SLAM performance. Neural networks learn optimal ways to combine these different data sources, handling their varying characteristics and noise profiles. This sensor fusion improves robustness in challenging conditions like poor lighting, featureless environments, or sensor failures.
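
A simple form of learned fusion is a gate: a network scores how reliable each modality is for the current input, and a softmax turns those scores into weights for combining per-modality features. In the sketch below the feature vectors and confidence logits are hard-coded stand-ins for what trained encoders and a gating head would produce; this architecture and the function names are assumptions for illustration, not a description of any specific system in this article.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(features, confidence_logits):
    """Fuse per-modality feature vectors with softmax gate weights.

    features: one equal-length vector per modality (e.g. camera, LiDAR).
    confidence_logits: one score per modality, as a gating head might output.
    Returns (fused_vector, weights).
    """
    weights = softmax(confidence_logits)
    dim = len(features[0])
    fused = [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]
    return fused, weights
```

With camera and LiDAR features `[1.0, 0.0]` and `[0.0, 1.0]`, logits `[2.0, 0.0]` weight the camera more heavily, while logits `[-3.0, 0.0]`, as a gate might produce in darkness, shift more than 90% of the weight to LiDAR.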

Leading Companies and Research Institutions in DL-SLAM

Deep learning is revolutionizing Simultaneous Localization And Mapping (SLAM) technology, with the market currently in a growth phase characterized by increasing adoption across autonomous vehicles, robotics, and AR applications. The global market size is projected to expand significantly, driven by advancements in neural network architectures that enhance mapping accuracy and real-time performance. Leading academic institutions like Tsinghua University and Zhejiang University are collaborating with industry giants such as Samsung, Intel, and Baidu to advance the technology. Automotive companies including Ford, GM, and Bosch are integrating deep learning SLAM into autonomous driving systems, while specialized firms like Niantic Spatial and Aurora Operations are developing novel applications. The technology is approaching maturity in controlled environments but still faces challenges in complex, dynamic scenarios.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered deep learning-enhanced SLAM solutions primarily targeted at mobile and AR/VR applications. Their approach integrates neural networks with visual-inertial SLAM to achieve accurate localization on consumer devices. Samsung's deep learning SLAM framework employs lightweight neural networks designed specifically for mobile processors, enabling real-time performance while minimizing battery consumption. Their system uses CNNs to extract robust features from camera inputs and predict depth information, which is then fused with inertial measurement unit (IMU) data through a custom deep fusion network[5]. Samsung has implemented unsupervised learning techniques to reduce the dependency on labeled training data, allowing their system to adapt to new environments more effectively. Their SLAM solution incorporates semantic understanding of scenes, enabling object-level mapping and recognition that enhances AR applications. Samsung has demonstrated their technology in their AR products, achieving sub-centimeter accuracy in indoor environments while running efficiently on their Exynos processors with neural processing units[6].

Strengths: Highly optimized for mobile devices with efficient power consumption; seamless integration with consumer electronics; strong performance in AR applications. Weaknesses: May sacrifice some accuracy for power efficiency; primarily focused on consumer rather than industrial applications; performance can vary across different lighting conditions.

Intel Corp.

Technical Solution: Intel has developed a comprehensive deep learning-enhanced SLAM framework that leverages their hardware acceleration capabilities. Their approach combines traditional geometric SLAM methods with deep neural networks to improve robustness and accuracy. Intel's RealSense technology incorporates deep learning for depth estimation and feature matching, enabling more reliable SLAM in complex environments. Their neural network architecture uses a combination of CNNs for spatial feature extraction and LSTMs for temporal consistency, running efficiently on Intel's neural compute hardware[3]. Intel's system particularly excels at indoor navigation scenarios, where traditional SLAM often struggles with repetitive structures and texture-poor surfaces. Their deep learning models are trained to recognize semantic features in environments, allowing for more meaningful map representations that distinguish between different types of objects and surfaces[4]. Intel has also developed optimization techniques that allow their deep learning SLAM solutions to run efficiently on edge devices with limited computational resources.

Strengths: Hardware-optimized implementation providing efficient processing; strong performance in texture-poor environments; semantic understanding of scenes enhancing mapping quality. Weaknesses: Some solutions remain tied to Intel's hardware ecosystem; indoor performance generally stronger than outdoor applications; requires careful calibration for optimal results.

Key Algorithms and Neural Network Models for SLAM

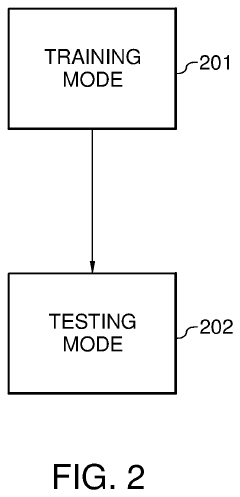

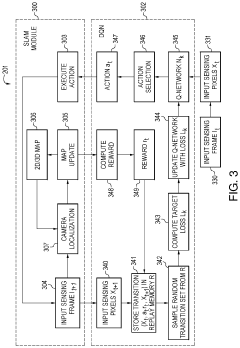

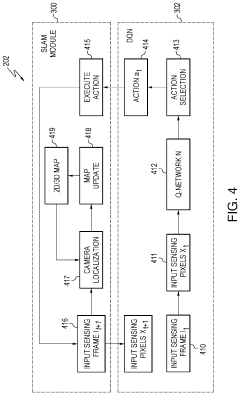

Simultaneous localization and mapping with reinforcement learning

Patent US10748061B2 (Active)

Innovation

- Integration of deep reinforcement learning with the SLAM framework using a deep Q-Network (DQN) framework, which employs rewards such as map coverage, quality, and traversability to determine optimal robotic movement and map updates, improving path planning and obstacle avoidance in unknown dynamic environments.
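
The reward design described for this patent can be sketched under stated assumptions. The code below combines map coverage, map quality, and traversability into one scalar reward and computes the standard DQN temporal-difference target r + γ·max Q(s′, a′). The weights, the collision penalty, and treating a non-traversable move as a fixed penalty are all hypothetical choices, not details taken from US10748061B2; the function names are illustrative.

```python
def slam_reward(coverage_gain, map_quality, traversable,
                w_coverage=1.0, w_quality=0.5, collision_penalty=-10.0):
    """Hypothetical composite reward for an exploring SLAM agent.

    coverage_gain: fraction of newly mapped area from this action.
    map_quality: quality score of the updated map, assumed in [0, 1].
    traversable: whether the chosen move was traversable.
    All weights and the penalty are illustrative, not taken from the patent.
    """
    if not traversable:
        return collision_penalty
    return w_coverage * coverage_gain + w_quality * map_quality


def td_target(reward, next_q_values, gamma=0.99, done=False):
    """Standard DQN target: r + gamma * max_a' Q_target(s', a'), or r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)
```

`slam_reward(0.2, 0.8, True)` gives 0.6 (up to floating-point rounding), and with next-state Q-values `[1.0, 2.0, 0.5]` and γ = 0.9 the TD target is 0.6 + 0.9 × 2.0 = 2.4.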

Deep learning based visual simultaneous localization and mapping

Patent US11983627B2 (Active)

Innovation

- A joint training network architecture that combines a feature network, depth network, flow network, segmentation network, and pose network, utilizing convolutional neural networks and long short-term memory (LSTM) frameworks, to jointly learn and predict interest points, depth maps, optical flow, and camera pose, enhancing feature extraction and pose estimation.
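
Jointly training several networks usually means minimizing one scalar built from every task's loss, so gradients from each head flow into whatever layers the networks share. The minimal sketch below assumes a fixed weighted sum; the patent summary above does not say how the losses are balanced, so the task names simply mirror the listed networks and the weights are hypothetical.

```python
def joint_loss(task_losses, task_weights):
    """Weighted sum of per-task losses for joint (multi-task) training.

    task_losses / task_weights: dicts keyed by task name. The weights are
    hypothetical; in practice they are tuned or learned.
    """
    missing = set(task_losses) - set(task_weights)
    if missing:
        raise KeyError(f"no weight for tasks: {sorted(missing)}")
    return sum(task_weights[name] * loss for name, loss in task_losses.items())
```

For losses `{"feature": 0.3, "depth": 0.5, "flow": 0.2, "segmentation": 0.4, "pose": 0.1}` and weights `{"feature": 1.0, "depth": 1.0, "flow": 0.5, "segmentation": 0.5, "pose": 2.0}`, the joint loss is 1.3.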

Real-time Performance Benchmarks and Optimization

Real-time performance represents a critical benchmark for evaluating the practical applicability of deep learning enhanced Simultaneous Localization and Mapping (SLAM) systems. Traditional SLAM algorithms typically operate at 30-60 frames per second on standard computing hardware, establishing a baseline performance standard that deep learning implementations must meet or exceed to be considered viable alternatives.

Current benchmarks indicate that pure deep learning SLAM approaches often struggle with real-time performance, with many research implementations operating at 5-15 frames per second on high-end GPUs. This performance gap presents a significant challenge for deployment in resource-constrained environments such as mobile robots, drones, and augmented reality devices where computational resources are limited and power consumption is a concern.
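
The gap between 30-60 fps and 5-15 fps is easiest to reason about as a per-frame time budget: 30 fps leaves about 33 ms per frame, while 10 fps means each frame takes about 100 ms. The helper functions below are a minimal sketch (names are illustrative) that checks measured per-frame latencies against a target frame rate using a tail percentile rather than the mean, since a pipeline that is fast on average can still drop frames when some of them run long.

```python
import math


def frame_budget_ms(target_fps):
    """Milliseconds available per frame at the target frame rate."""
    return 1000.0 / target_fps


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def meets_realtime(latencies_ms, target_fps, pct=95):
    """True if the pct-th percentile frame latency fits within the frame budget."""
    return percentile(latencies_ms, pct) <= frame_budget_ms(target_fps)
```

`meets_realtime([20.0, 25.0, 30.0, 31.0], target_fps=30)` is `True`, whereas latencies of 70-100 ms fail the 30 fps budget.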

Several optimization techniques have emerged to address these performance limitations. Model compression methods, including pruning, quantization, and knowledge distillation, have demonstrated the ability to reduce computational requirements by 40-70% with minimal accuracy degradation. For instance, ORB-SLAM integrated with lightweight CNN feature extractors has achieved processing speeds of 25 frames per second on consumer-grade hardware, approaching the performance of classical methods.
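
Pruning, another of the compression methods listed, can be sketched as unstructured magnitude pruning: zero out the weights with the smallest absolute values. This is a minimal illustration assuming a flat list of weights (the function name is hypothetical). In practice pruning is usually followed by fine-tuning, and zeroed weights only save compute when the runtime or hardware exploits sparsity, which is one reason structured pruning of whole channels is also common.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    pruned = list(weights)
    if k == 0:
        return pruned
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned
```

`magnitude_prune([0.9, -0.05, 0.3, 0.01, -0.7, 0.2], 0.5)` returns `[0.9, 0.0, 0.3, 0.0, -0.7, 0.0]`.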

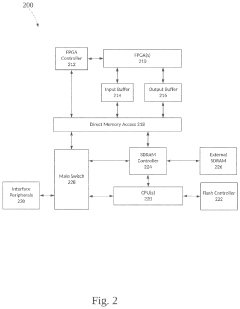

Hardware acceleration represents another critical optimization pathway. Specialized neural processing units (NPUs) and field-programmable gate arrays (FPGAs) have been employed to accelerate specific deep learning operations within the SLAM pipeline. Google's Edge TPU and NVIDIA's Jetson platforms have shown particular promise, enabling up to 3x performance improvements for certain deep learning SLAM components compared to CPU implementations.

Algorithmic optimizations have also yielded significant performance gains. Techniques such as sparse convolutions, conditional computation, and early-exit networks allow systems to dynamically allocate computational resources based on scene complexity. These approaches have enabled some hybrid systems to maintain consistent frame rates even in challenging environments by intelligently managing the trade-off between classical and learning-based components.
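
Early-exit networks attach lightweight prediction heads to intermediate stages and stop as soon as one is confident enough, so easy scenes use less computation than hard ones. The sketch below assumes each stage is a callable returning a prediction, a confidence in [0, 1], and features for the next stage; the stage interface, the toy stages, and the 0.9 threshold are illustrative.

```python
def early_exit_inference(x, stages, threshold=0.9):
    """Run stages in order and stop at the first confident prediction.

    Each stage is a callable taking the previous stage's features and returning
    (prediction, confidence, features). Returns (prediction, stages_used).
    """
    features, prediction = x, None
    for used, stage in enumerate(stages, start=1):
        prediction, confidence, features = stage(features)
        if confidence >= threshold:
            return prediction, used  # confident enough: skip remaining stages
    return prediction, len(stages)   # fell through: keep the last stage's answer


if __name__ == "__main__":
    # Toy stages: confidence falls with a scalar "scene difficulty" d.
    stages = [
        lambda d: ("coarse", 1.0 - d, d * 0.5),
        lambda d: ("refined", 1.0 - d, d * 0.5),
        lambda d: ("full", 1.0, d),
    ]
    print(early_exit_inference(0.05, stages))  # ('coarse', 1)
    print(early_exit_inference(0.4, stages))   # ('full', 3)
```

An easy frame exits after the first stage, while a harder one runs all three; the threshold trades accuracy against average latency.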

Benchmark datasets remain central to judging whether a system is viable for real-world deployment. TUM RGB-D, KITTI, and EuRoC are the most widely used; they are primarily accuracy benchmarks built around trajectory error, and the KITTI odometry leaderboard additionally lists each method's reported runtime and computing environment. Because timing results depend heavily on the hardware used, runtime across platforms needs to be reported alongside accuracy to give a realistic picture of deployment performance.
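
The accuracy side of these benchmarks is commonly summarized as absolute trajectory error (ATE): the root-mean-square distance between estimated and ground-truth positions after the two trajectories are aligned. The sketch below is a simplification that assumes the trajectories are already associated by timestamp and aligns them by translation only; the standard ATE evaluation also solves for rotation (and, for monocular systems, scale) with a closed-form method such as Horn's. The function name is illustrative.

```python
import math


def ate_rmse_translation_aligned(estimated, ground_truth):
    """RMSE of position error after removing the mean offset between trajectories.

    Simplification: full ATE also aligns rotation (and scale for monocular);
    only translation is aligned here. Inputs are equal-length lists of
    position tuples, already associated by timestamp.
    """
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and associated")
    n, dims = len(estimated), len(estimated[0])
    # Mean offset that best aligns the estimate to ground truth (translation only).
    offset = [sum(g[d] - e[d] for e, g in zip(estimated, ground_truth)) / n
              for d in range(dims)]
    sq = 0.0
    for e, g in zip(estimated, ground_truth):
        sq += sum((e[d] + offset[d] - g[d]) ** 2 for d in range(dims))
    return math.sqrt(sq / n)
```

An estimate that matches the ground truth up to a constant offset scores 0.0, while a single 0.3 lateral deviation in a three-pose trajectory yields an RMSE of about 0.14.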

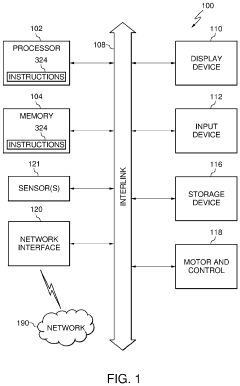

Hardware Requirements for Deep Learning SLAM Deployment

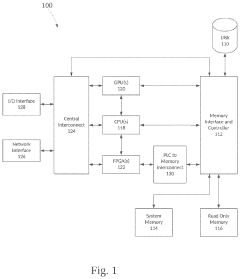

The deployment of Deep Learning SLAM systems requires substantial computational resources that significantly exceed those needed for traditional SLAM approaches. Modern deep learning-based SLAM solutions typically demand high-performance GPUs with at least 8GB VRAM, with NVIDIA's RTX series or Tesla units being preferred choices for research and industrial applications. For real-time operation, CUDA cores ranging from 2,000 to 5,000 are generally necessary to handle parallel processing of neural network operations.

CPU requirements are equally demanding, with multi-core processors (minimum 4 cores, recommended 8+ cores) needed to manage the simultaneous processing of sensor data acquisition, feature extraction, and mapping functions. Memory requirements typically start at 16GB RAM for basic implementations, while production systems often utilize 32GB or more to accommodate larger neural network models and extensive map data.
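Multiple cores matter because acquisition, feature extraction, and mapping run as concurrent stages that hand data to each other. The sketch below shows that structure with threads and bounded queues from the standard library. The stage bodies are stand-ins for real processing, and the names are hypothetical. In CPython the global interpreter lock limits parallel speedup for pure-Python work, so real systems implement these stages in native code. The pipeline pattern is the same.

```python
import queue
import threading

SENTINEL = None  # signals end of stream to the next stage


def acquisition(out_q, num_frames):
    """Stand-in for a camera driver producing frames."""
    for i in range(num_frames):
        out_q.put({"id": i, "pixels": [i] * 4})
    out_q.put(SENTINEL)


def feature_extraction(in_q, out_q):
    """Stand-in for a feature extractor (here: a trivial sum)."""
    while True:
        frame = in_q.get()
        if frame is SENTINEL:
            out_q.put(SENTINEL)
            break
        out_q.put({"id": frame["id"], "features": sum(frame["pixels"])})


def mapping(in_q, map_store):
    """Stand-in for the mapping back end: appends processed frames to the map."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            break
        map_store.append(item)


def run_pipeline(num_frames, max_queue=8):
    # Bounded queues apply back-pressure so a fast producer cannot exhaust memory.
    q1 = queue.Queue(maxsize=max_queue)
    q2 = queue.Queue(maxsize=max_queue)
    map_store = []
    threads = [
        threading.Thread(target=acquisition, args=(q1, num_frames)),
        threading.Thread(target=feature_extraction, args=(q1, q2)),
        threading.Thread(target=mapping, args=(q2, map_store)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return map_store


result = run_pipeline(10)
```

With one consumer per stage and FIFO queues, frames arrive at the map in acquisition order. Adding parallel workers to one stage would require explicit reordering.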

Storage considerations are two-fold: high-speed SSDs (preferably NVMe) with at least 500GB capacity are essential for rapid data access during operation, while additional storage is needed for training datasets and map persistence. The I/O bandwidth between storage, memory, and processing units represents a potential bottleneck that must be carefully addressed in system design.
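The I/O concern is easy to quantify for raw sensor logging. The sketch below computes the uncompressed data rate of the image streams and how long a drive lasts at that rate. The stream settings are illustrative assumptions: 8-bit 1080p RGB and 16-bit 640×480 depth, both at 30 fps. Compression would lower the rate considerably.

```python
def stream_rate_bytes_per_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw (uncompressed) data rate of one image stream."""
    return width * height * bytes_per_pixel * fps


def recording_minutes(capacity_bytes: float, rate_bytes_per_s: float) -> float:
    """How long a drive of the given capacity lasts when logging at a fixed rate."""
    return capacity_bytes / rate_bytes_per_s / 60.0


rgb = stream_rate_bytes_per_s(1920, 1080, 3, 30)    # 8-bit RGB at 30 fps
depth = stream_rate_bytes_per_s(640, 480, 2, 30)    # 16-bit depth at 30 fps
total = rgb + depth
minutes = recording_minutes(500e9, total)           # 500 GB drive
```

Under these assumptions the two streams need about 205 MB/s of sustained write bandwidth, and a 500 GB drive fills in roughly 40 minutes. This is why NVMe-class throughput and separate bulk storage both matter.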

For edge deployment scenarios such as autonomous vehicles or drones, specialized hardware accelerators like NVIDIA Jetson AGX Xavier, Google Edge TPU, or Intel Movidius Neural Compute Stick provide optimized platforms for neural network inference while maintaining power efficiency. Their performance spans a wide range. USB and M.2 accelerators such as the Edge TPU deliver a few TOPS (Trillion Operations Per Second) or less at a few watts. The Jetson AGX Xavier offers up to about 32 TOPS within a configurable 10-30W power envelope.
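Peak TOPS ratings translate into a floor on per-frame inference latency. The sketch below computes that floor. The network cost (10 G operations per frame), the 10 TOPS rating, and the 30% sustained utilization are hypothetical numbers chosen for illustration. Vendor peaks are typically quoted for low-precision arithmetic and are rarely sustained in practice.

```python
def min_latency_ms(ops_per_inference: float, peak_tops: float, utilization: float = 1.0) -> float:
    """Lower bound on inference latency in milliseconds.

    peak_tops is the vendor peak in tera-operations per second (1e12 ops/s);
    utilization is the assumed fraction of that peak actually sustained.
    """
    if peak_tops <= 0 or not 0.0 < utilization <= 1.0:
        raise ValueError("peak_tops must be positive and utilization in (0, 1]")
    return ops_per_inference / (peak_tops * 1e12 * utilization) * 1e3


def max_fps(latency_ms: float) -> float:
    """Frame-rate ceiling if the network ran back to back with nothing else."""
    return 1e3 / latency_ms


# Hypothetical network needing 10 G operations per frame on a 10 TOPS accelerator.
ideal = min_latency_ms(10e9, 10)            # perfect utilization: 1 ms
realistic = min_latency_ms(10e9, 10, 0.3)   # assumed 30% utilization: about 3.3 ms
```

Real latency also includes memory transfers, pre- and post-processing, and contention with the rest of the SLAM pipeline, so these figures are optimistic bounds.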

Power consumption presents a significant challenge, particularly for mobile robotics applications. Deep learning SLAM systems can consume between 50 and 300W depending on hardware configuration, which calls for robust power management strategies and can limit the operating time of battery-powered devices.
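The effect on operating time follows from dividing usable battery energy by average power draw. The sketch below assumes a hypothetical 100 Wh battery. It lets the compute load be combined with other platform loads, such as motors, and with a usable-capacity fraction.

```python
def runtime_hours(battery_wh: float, compute_w: float, platform_w: float = 0.0,
                  usable_fraction: float = 1.0) -> float:
    """Estimated operating time: usable energy divided by average total draw."""
    total_w = compute_w + platform_w
    if total_w <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh * usable_fraction / total_w


# Hypothetical 100 Wh battery powering only the SLAM compute stack.
low_end = runtime_hours(100, 50)    # 2 hours at 50 W
high_end = runtime_hours(100, 300)  # about 20 minutes at 300 W
```

At the upper end of the power range, compute alone can drain a small battery in minutes. This is one reason edge accelerators with much lower power envelopes are attractive for mobile platforms.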

Thermal management systems must be designed to dissipate heat effectively, especially in compact deployments where thermal throttling could severely impact performance. Active cooling solutions are typically required for sustained operation of high-performance computing components.

Sensor integration hardware is another critical requirement. Cameras (often 1080p or higher, although widely used benchmarks such as TUM RGB-D and EuRoC are recorded at VGA-class resolutions), depth sensors, and IMUs must be tightly synchronized, ideally to microsecond-level precision. This often requires dedicated hardware timing circuits and high-bandwidth data buses to ensure consistent data flow to the processing units.
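Even with hardware synchronization, software still has to pair measurements from different streams by timestamp. The sketch below matches each camera frame to the nearest IMU sample and rejects pairs whose offset exceeds a tolerance. The timestamps and the 2 ms tolerance are hypothetical. Both timestamp lists are assumed to be sorted and on a common clock.

```python
import bisect
from typing import List, Tuple


def associate(frame_stamps: List[float], imu_stamps: List[float],
              max_offset: float) -> List[Tuple[float, float]]:
    """Pair each frame timestamp with the nearest IMU timestamp (seconds, sorted).

    Frames whose nearest IMU sample is farther than max_offset are dropped.
    """
    matches = []
    for t in frame_stamps:
        i = bisect.bisect_left(imu_stamps, t)
        # The nearest sample is either just before or just after the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(imu_stamps)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(imu_stamps[k] - t))
        if abs(imu_stamps[j] - t) <= max_offset:
            matches.append((t, imu_stamps[j]))
    return matches


frames = [0.0, 0.05, 0.1]
imu = [0.0, 0.049, 0.2]
pairs = associate(frames, imu, max_offset=0.002)  # the frame at 0.1 s has no close IMU sample
```

A real visual-inertial system usually integrates all IMU samples between frames rather than picking one. The tolerance check shown here is still a useful guard against dropped or delayed data.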
