SLAM Algorithms For Next-Generation Mobile Devices

SEP 12, 2025 · 9 MIN READ

SLAM Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, transforming from theoretical concepts to practical applications across various domains. The evolution of SLAM algorithms has been driven by the increasing demand for autonomous navigation capabilities in mobile devices, robots, and vehicles. Initially developed for robotics applications, SLAM has expanded its reach to consumer electronics, particularly mobile devices, where it enables augmented reality experiences, indoor navigation, and context-aware computing.

The historical trajectory of SLAM development can be traced through several distinct phases. Early SLAM implementations relied heavily on extended Kalman filters and particle filters, which were computationally intensive and limited in scalability. The introduction of visual SLAM in the early 2000s marked a significant advancement, enabling systems to use camera inputs for mapping and localization. Subsequently, the development of RGB-D SLAM leveraged depth sensors to enhance mapping accuracy, while visual-inertial SLAM integrated camera data with inertial measurement units to improve robustness in dynamic environments.

Recent years have witnessed the emergence of semantic SLAM, which incorporates object recognition and scene understanding capabilities, allowing systems to create more meaningful maps with identified objects and spaces. This evolution reflects the broader trend toward more intelligent and context-aware SLAM systems that can understand and interact with their environments in increasingly sophisticated ways.

For next-generation mobile devices, SLAM technology aims to achieve several critical objectives. First, computational efficiency remains paramount, as mobile devices have limited processing power and energy resources compared to dedicated computing systems. Algorithms must be optimized to run in real-time on mobile processors while minimizing battery consumption. Second, robustness across diverse environments presents a significant challenge, requiring SLAM systems to maintain accuracy in varying lighting conditions, dynamic scenes, and environments with limited distinctive features.

Accuracy and precision constitute a third objective, with applications like AR demanding sub-centimeter positioning accuracy to ensure proper alignment of virtual and physical elements. Seamless integration with other mobile sensors and systems represents a fourth objective, enabling SLAM to leverage data from cameras, IMUs, GPS, and other sensors for improved performance. Finally, privacy and security considerations are increasingly important, as SLAM systems capture and process potentially sensitive spatial data about users' environments.

The technological trajectory suggests that future SLAM algorithms will increasingly leverage machine learning approaches, particularly deep learning, to enhance feature extraction, loop closure detection, and scene understanding capabilities. Edge AI processing will enable more sophisticated SLAM implementations on mobile devices without requiring cloud connectivity, addressing both performance and privacy concerns simultaneously.

Market Demand for Mobile SLAM Applications

The mobile SLAM (Simultaneous Localization and Mapping) market is experiencing unprecedented growth, driven by the rapid advancement of smartphone capabilities and expanding use cases across multiple industries. Current market analysis indicates that the global mobile AR market, heavily reliant on SLAM technology, is projected to reach $90 billion by 2027, with a compound annual growth rate of approximately 24% from 2022.

Consumer applications represent the largest segment of mobile SLAM demand, with gaming and social media platforms integrating spatial mapping features to deliver immersive experiences. Pokemon GO and Snapchat's AR lenses demonstrated early commercial success, creating consumer familiarity with spatial computing concepts and establishing market readiness for more sophisticated SLAM applications.

Retail and e-commerce sectors show significant demand growth as virtual try-on experiences become standard features in shopping applications. Major retailers including IKEA, Amazon, and Wayfair have implemented mobile SLAM solutions to allow customers to visualize products in their homes before purchasing, resulting in reported conversion rate increases of 40% for products with AR visualization options.

Navigation and wayfinding applications constitute another rapidly expanding market segment. Indoor navigation systems for complex environments such as airports, shopping malls, and hospitals increasingly rely on visual SLAM technology to provide precise positioning where GPS signals are unreliable. Market research indicates that 78% of smartphone users express interest in using enhanced indoor navigation services.

The industrial and enterprise sectors present substantial growth opportunities for mobile SLAM applications. Field service technicians, maintenance workers, and construction professionals are adopting mobile AR solutions for remote assistance, training, and visualization of complex systems. This segment is expected to grow at 32% annually through 2026, outpacing consumer applications.

Healthcare applications for mobile SLAM are emerging as a high-value niche market. Medical training, surgical planning, and patient education applications leverage spatial mapping to create interactive anatomical models and procedure simulations. Though currently smaller in market size, this segment commands premium pricing and shows strong growth potential.

Regional analysis reveals varying adoption rates, with North America and East Asia leading in consumer applications, while European markets show stronger enterprise implementation. Developing markets demonstrate accelerating adoption as smartphone penetration increases and affordable devices with SLAM capabilities become widely available.

Key market drivers include decreasing hardware costs, improved processing capabilities in mid-range devices, and growing consumer familiarity with AR experiences. The primary market constraint remains battery consumption, as SLAM algorithms typically demand significant processing power that impacts device usability for everyday consumers.

SLAM Technical Challenges on Mobile Platforms

SLAM (Simultaneous Localization and Mapping) implementation on mobile platforms faces significant technical challenges due to the inherent constraints of these devices. The limited computational resources of mobile processors create a fundamental bottleneck for real-time SLAM operations, which typically require intensive calculations for feature extraction, pose estimation, and map construction.

Power consumption represents another critical challenge, as SLAM algorithms continuously process sensor data, potentially draining battery life rapidly. This is particularly problematic for consumer mobile devices where battery longevity is a key user expectation. The trade-off between SLAM accuracy and power efficiency remains a complex optimization problem.

Sensor limitations further complicate mobile SLAM implementations. Mobile devices typically incorporate lower-cost sensors with reduced accuracy compared to specialized robotics hardware. Camera sensors suffer from motion blur during rapid movements, while IMU drift accumulates over time, leading to increasing positional errors. The limited field of view of mobile cameras also restricts environmental perception capabilities.
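To make the IMU drift point concrete, the following minimal sketch shows how a small constant accelerometer bias turns into a position error that grows with the square of elapsed time when the signal is integrated twice. The bias value and the function names are illustrative assumptions, not measurements from any particular device.

```python
def drift_closed_form(bias_mps2: float, seconds: float) -> float:
    """Position error (m) from double-integrating a constant bias: 0.5 * b * t^2."""
    return 0.5 * bias_mps2 * seconds ** 2


def drift_simulated(bias_mps2: float, seconds: float, dt: float = 0.01) -> float:
    """Same error obtained by naive dead reckoning with Euler integration."""
    velocity = 0.0
    position = 0.0
    for _ in range(int(round(seconds / dt))):
        velocity += bias_mps2 * dt   # bias integrates into a velocity error
        position += velocity * dt    # velocity error integrates into position
    return position


if __name__ == "__main__":
    bias = 0.05  # m/s^2, hypothetical uncorrected bias
    for t in (1.0, 5.0, 10.0):
        print(f"{t:5.1f} s -> {drift_closed_form(bias, t):.3f} m of drift")
```

The quadratic growth is why inertial data alone is usually only trusted over short intervals and is corrected by camera observations in visual-inertial systems.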

Memory constraints pose additional challenges, as comprehensive environmental maps can quickly consume available RAM on mobile devices. This necessitates efficient data structures and map compression techniques that maintain sufficient detail while minimizing memory footprint. The challenge intensifies for large-scale mapping scenarios or extended operation periods.
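A rough back-of-the-envelope estimate can illustrate how quickly a sparse feature map grows. The sketch below assumes each map point stores a 3D position as three 32-bit floats and one or more 32-byte binary descriptors (the size of an ORB descriptor); the per-point layout and the overhead parameter are assumptions for illustration, since real systems store additional bookkeeping.

```python
ORB_DESCRIPTOR_BYTES = 32   # an ORB descriptor is a 256-bit binary string
POSITION_BYTES = 3 * 4      # x, y, z as 32-bit floats (assumed layout)


def sparse_map_bytes(num_points: int,
                     descriptors_per_point: int = 1,
                     overhead_bytes: int = 0) -> int:
    """Estimate the memory footprint of a sparse landmark map in bytes."""
    per_point = (POSITION_BYTES
                 + ORB_DESCRIPTOR_BYTES * descriptors_per_point
                 + overhead_bytes)
    return num_points * per_point


def to_mebibytes(num_bytes: int) -> float:
    return num_bytes / (1024 * 1024)


if __name__ == "__main__":
    for n in (10_000, 100_000, 1_000_000):
        size = sparse_map_bytes(n, descriptors_per_point=3)
        print(f"{n:>9} points -> {to_mebibytes(size):8.1f} MiB")
```

Estimates like this help decide when to prune landmarks or compress descriptors before the map competes with the rest of the application for RAM.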

Real-time performance requirements add another layer of complexity. Mobile SLAM applications, particularly in AR/VR contexts, demand low-latency processing (typically under 20ms per frame) to maintain user experience quality. Achieving this while maintaining accuracy requires sophisticated algorithm optimization and hardware acceleration techniques.

Environmental variability presents significant challenges for mobile SLAM systems. Unlike controlled environments, mobile devices operate in diverse settings with varying lighting conditions, dynamic objects, reflective surfaces, and feature-poor areas. These factors can severely impact feature detection reliability and tracking stability.

Integration challenges arise when combining SLAM with other mobile functionalities. The system must efficiently share computational resources with other applications running concurrently, manage sensor access conflicts, and maintain performance under varying system loads. This requires careful system-level optimization and resource management.

Cross-platform compatibility issues emerge when developing SLAM solutions that must function across diverse mobile hardware configurations. Variations in sensor specifications, processing capabilities, and operating systems necessitate adaptive algorithms that can maintain consistent performance across heterogeneous device ecosystems.

Current Mobile SLAM Solutions

01 Visual SLAM Algorithms for Autonomous Navigation

Visual Simultaneous Localization and Mapping (SLAM) algorithms enable autonomous systems to create maps of their environment while simultaneously tracking their position within that environment using visual data. These algorithms process image data from cameras to detect features, estimate motion, and build 3D representations of surroundings. Visual SLAM is particularly valuable for robotics, autonomous vehicles, and augmented reality applications where GPS may be unavailable or unreliable.

- LiDAR-based SLAM for Precise Environmental Mapping: LiDAR-based SLAM algorithms utilize laser scanning technology to create highly accurate 3D maps of environments. These systems emit laser pulses and measure the time taken for reflections to return, generating precise distance measurements to surrounding objects. LiDAR SLAM offers advantages in accuracy and reliability compared to camera-only approaches, especially in challenging lighting conditions or feature-poor environments. Applications include autonomous vehicles, robotics, and industrial automation where centimeter-level precision is required.

- Sensor Fusion Approaches for Robust SLAM: Sensor fusion SLAM algorithms integrate data from multiple sensor types (cameras, LiDAR, IMU, radar, etc.) to overcome the limitations of single-sensor approaches. By combining complementary information sources, these systems achieve greater robustness across varying environmental conditions. Fusion approaches typically employ probabilistic frameworks like Extended Kalman Filters or particle filters to optimally combine sensor data while accounting for measurement uncertainties. This methodology enables more reliable operation in challenging scenarios such as low-light conditions, featureless environments, or rapid movement.

- Real-time SLAM Optimization Techniques: Real-time SLAM optimization techniques focus on computational efficiency to enable simultaneous localization and mapping on devices with limited processing power. These approaches include sparse feature selection, hierarchical mapping, keyframe-based processing, and parallel computing architectures. Optimization methods often involve graph-based representations of the environment where nodes represent positions and edges represent spatial constraints. Loop closure detection algorithms identify when a system returns to a previously mapped location, allowing for correction of accumulated errors and maintaining global map consistency.

- SLAM Applications in Mobile and Wearable Devices: SLAM algorithms adapted for mobile and wearable devices enable augmented reality experiences, indoor navigation, and spatial computing applications on consumer electronics. These implementations must balance accuracy with power efficiency and operate with the limited sensor capabilities of smartphones, tablets, and AR/VR headsets. Techniques include visual-inertial odometry, which combines camera data with inertial measurements to track movement, and cloud-assisted processing to offload computationally intensive tasks. These approaches enable applications such as AR gaming, indoor wayfinding, and virtual object placement in real environments.
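The sensor fusion and visual-inertial odometry items in the list above can be illustrated with a deliberately small example. The sketch below is a plain one-dimensional linear Kalman filter, not an Extended Kalman Filter and not any vendor's implementation: an IMU-derived displacement drives the prediction step, and a camera-derived position corrects it. The class name, noise values, and the 1D state are all assumptions chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # position estimate (m)
    p: float  # variance of the estimate (m^2)
    q: float  # process noise added per prediction (assumed IMU uncertainty)
    r: float  # measurement noise variance (assumed camera uncertainty)

    def predict(self, imu_displacement: float) -> None:
        """Dead-reckon with the IMU; uncertainty grows."""
        self.x += imu_displacement
        self.p += self.q

    def update(self, camera_position: float) -> float:
        """Blend in a camera measurement; uncertainty shrinks. Returns the gain."""
        gain = self.p / (self.p + self.r)
        self.x += gain * (camera_position - self.x)
        self.p *= (1.0 - gain)
        return gain


if __name__ == "__main__":
    kf = Kalman1D(x=0.0, p=1.0, q=0.1, r=0.1)
    for imu_step, cam_pos in [(1.0, 1.2), (1.0, 2.1), (1.0, 3.0)]:
        kf.predict(imu_step)
        gain = kf.update(cam_pos)
        print(f"x={kf.x:.3f} m  variance={kf.p:.4f}  gain={gain:.3f}")
```

The gain shows the weighting at work: when the predicted variance is large relative to the camera noise, the filter leans on the camera; as the estimate becomes more certain, new measurements move it less.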

02 LiDAR-based SLAM for Precise Mapping

LiDAR-based SLAM algorithms utilize laser scanning technology to create highly accurate 3D maps of environments. These algorithms process point cloud data to identify geometric features and landmarks, enabling precise localization even in complex or dynamic environments. LiDAR SLAM offers advantages in accuracy and robustness compared to camera-only approaches, making it suitable for applications requiring detailed environmental mapping such as autonomous driving, robotics, and industrial automation.

03 Sensor Fusion SLAM Techniques

Sensor fusion SLAM algorithms integrate data from multiple sensor types (cameras, LiDAR, IMU, radar, etc.) to overcome the limitations of single-sensor approaches. By combining complementary information sources, these algorithms achieve more robust performance across varying environmental conditions, including challenging lighting, featureless areas, or dynamic scenes. The fusion of sensor data improves accuracy, reliability, and operational range of SLAM systems for applications in robotics, autonomous vehicles, and mobile devices.

04 Real-time SLAM Optimization Methods

Real-time SLAM optimization methods focus on computational efficiency to enable simultaneous localization and mapping on resource-constrained platforms. These algorithms employ techniques such as sparse optimization, keyframe selection, parallel processing, and incremental updates to reduce computational load while maintaining accuracy. Real-time optimization is crucial for mobile robots, drones, AR/VR devices, and other applications where immediate mapping and localization are required with limited processing power.

05 Loop Closure and Global Consistency in SLAM

Loop closure techniques in SLAM algorithms detect when a system revisits previously mapped areas, allowing for correction of accumulated drift errors. These methods identify matching features or scenes across temporally distant observations and optimize the entire map to maintain global consistency. Effective loop closure is essential for building accurate large-scale maps in applications such as autonomous navigation, where small errors can compound over time and distance, leading to significant localization failures.
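The detection half of loop closure can be sketched as a similarity search over past keyframes. The example below assumes each keyframe has already been summarized as a fixed-length vector (for instance a bag-of-visual-words histogram) and flags earlier keyframes that look similar but are far enough back in time to count as a revisit. The function names, the similarity threshold, and the minimum gap are hypothetical choices; production systems add geometric verification before accepting a match.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_loop_candidates(keyframes: List[Sequence[float]],
                         query_index: int,
                         min_gap: int = 30,
                         threshold: float = 0.9) -> List[Tuple[int, float]]:
    """Return (index, score) pairs of earlier keyframes resembling the query.

    Keyframes closer than min_gap to the query are skipped, because recent
    frames look similar simply due to continuous motion.
    """
    query = keyframes[query_index]
    candidates = []
    for i in range(0, query_index - min_gap + 1):
        score = cosine_similarity(query, keyframes[i])
        if score >= threshold:
            candidates.append((i, score))
    return sorted(candidates, key=lambda c: c[1], reverse=True)


if __name__ == "__main__":
    # Toy trajectory: the last keyframe resembles the first one.
    frames = [[1.0, 0.0, 0.0]] + [[0.0, 1.0, 0.0]] * 5 + [[0.99, 0.05, 0.0]]
    print(find_loop_candidates(frames, query_index=6, min_gap=3))
```

Accepted candidates would then become extra constraints in the map optimization, which is the step that actually redistributes the accumulated drift.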

Key SLAM Technology Companies and Research Groups

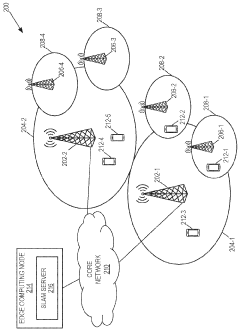

The SLAM (Simultaneous Localization and Mapping) algorithm market for next-generation mobile devices is currently in a growth phase, with an expanding market size driven by increasing applications in AR/VR, autonomous navigation, and smart devices. The technology maturity varies across implementation areas, with companies like Google, Samsung, and Snap leading commercial applications, while research institutions such as Peking University and the Institute of Automation Chinese Academy of Sciences drive fundamental innovations. Companies including Megvii, iRobot, and Bosch are advancing industrial applications, while startups like Mindmaze are exploring novel use cases. The competitive landscape features a mix of tech giants with established ecosystems, specialized robotics firms, and emerging players focusing on specific vertical applications, creating a dynamic environment for technological advancement and market expansion.

Snap, Inc.

Technical Solution: Snap has developed cutting-edge SLAM technology optimized specifically for augmented reality applications on mobile devices. Their approach focuses on lightweight visual SLAM algorithms that can run efficiently on a wide range of smartphone hardware while maintaining sufficient accuracy for AR experiences. Snap's SLAM implementation uses sparse feature tracking combined with IMU data to achieve real-time performance with minimal battery impact. Their system employs a progressive mapping approach that starts with minimal environmental understanding and builds detail over time as needed for specific AR applications. Snap has pioneered techniques for surface detection and plane estimation that enable realistic AR object placement without requiring depth sensors. Their SLAM solution includes advanced occlusion handling that allows virtual objects to interact realistically with the physical environment. Snap's algorithms are specifically optimized for vertical integration with their Snapchat platform, enabling seamless AR experiences across a broad range of devices. Recent innovations include their "Local Lenses" feature that uses collaborative mapping to create persistent AR experiences shared across multiple users in the same physical space[8][9]. Their implementation includes machine learning components that improve object recognition and scene understanding, enhancing the contextual awareness of their SLAM system.

Strengths: Exceptional optimization for mainstream mobile devices with limited computational resources; seamless integration with popular social media platforms; innovative collaborative mapping capabilities. Weaknesses: Prioritizes speed over absolute precision for social AR use cases; less suitable for industrial or precision applications; heavily optimized for specific use cases rather than general-purpose mapping.

Google LLC

Technical Solution: Google has developed advanced SLAM algorithms for next-generation mobile devices through their ARCore platform. Their approach combines visual-inertial odometry with depth sensing to create accurate spatial maps in real-time. Google's SLAM implementation uses feature point tracking from camera images combined with IMU data to estimate device position and orientation. Their recent innovations include light estimation and environmental understanding capabilities that allow for more realistic AR experiences. Google has also implemented cloud anchors that enable persistent and shared AR experiences across multiple devices, solving one of the key challenges in mobile SLAM applications. Their algorithms are optimized for power efficiency, using selective feature extraction and processing to minimize battery drain on mobile devices[1][3]. Google's machine learning integration allows their SLAM system to improve object recognition and scene understanding over time, adapting to different environments with minimal computational overhead.

Strengths: Exceptional integration with Android ecosystem, providing wide accessibility; robust performance across diverse lighting conditions; cloud-based collaborative mapping capabilities. Weaknesses: Higher computational requirements than some competitors; privacy concerns with cloud-based mapping data; performance can vary significantly across different device hardware specifications.

Core SLAM Patents and Research Breakthroughs

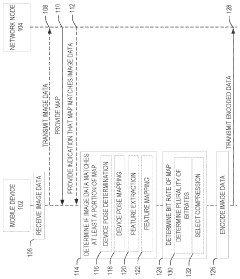

Bitrate adaptation for edge-assisted localization based on map availability for mobile devices

Patent WO2024136881A1

Innovation

- An adaptive compression system in mobile devices that adjusts the bitrate of image data based on the availability and quality of environmental maps, encoding data to minimize localization performance degradation while reducing network bandwidth usage.

Method and apparatus for performing simultaneous localization and mapping

Patent EP3734388A1 (Active)

Innovation

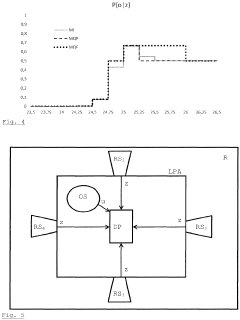

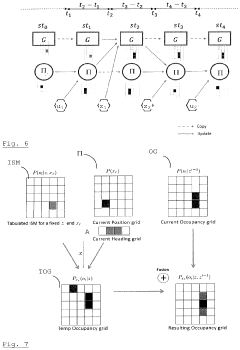

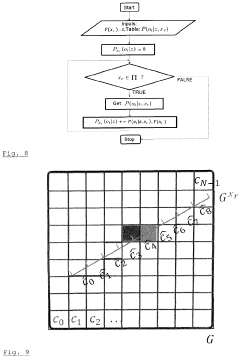

- A method that uses an occupancy grid and a pose grid, updated by distance and odometry measurements, which accounts for sensor measurement uncertainties and has a probabilistic model to handle finite precision and errors, allowing for efficient simultaneous localization and mapping.
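For readers unfamiliar with occupancy grids, the following sketch shows the standard log-odds update commonly used to fold uncertain distance measurements into a grid. It is a generic textbook-style illustration, not the method claimed in the patent, and it omits the pose grid entirely. The one-dimensional grid, the inverse sensor model probabilities (0.7 and 0.3), and the clamp value are assumptions made to keep the example short.

```python
import math
from typing import List

L_OCC = math.log(0.7 / 0.3)    # evidence added when a cell returns a hit (assumed)
L_FREE = math.log(0.3 / 0.7)   # evidence added when a beam passes through (assumed)


def update_along_ray(log_odds: List[float],
                     sensor_cell: int,
                     measured_range_cells: int,
                     clamp: float = 5.0) -> None:
    """Update a 1D grid in place for one range reading taken at sensor_cell."""
    hit = sensor_cell + measured_range_cells
    # Cells between the sensor and the hit were traversed, so they are likely free.
    for c in range(sensor_cell + 1, min(hit, len(log_odds))):
        log_odds[c] = max(-clamp, log_odds[c] + L_FREE)
    # The cell where the beam ended is likely occupied.
    if 0 <= hit < len(log_odds):
        log_odds[hit] = min(clamp, log_odds[hit] + L_OCC)


def probability(log_odds_value: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds_value))


if __name__ == "__main__":
    grid = [0.0] * 10               # 0.0 log-odds means probability 0.5 (unknown)
    for _ in range(2):              # two consistent readings of a wall 5 cells away
        update_along_ray(grid, sensor_cell=0, measured_range_cells=5)
    print([round(probability(v), 2) for v in grid])
```

Working in log-odds turns repeated Bayesian updates into simple additions, and clamping keeps a cell from becoming so certain that later contradicting measurements cannot change it.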

Hardware-Algorithm Co-optimization Strategies

The optimization of SLAM algorithms for next-generation mobile devices requires a strategic integration of hardware capabilities and algorithmic design. Current mobile platforms face significant constraints in processing power, memory bandwidth, and energy consumption when executing complex SLAM workloads. Effective hardware-algorithm co-optimization strategies must address these limitations while maximizing performance and accuracy.

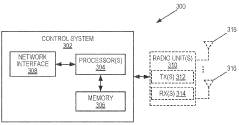

One promising approach involves the development of heterogeneous computing architectures specifically tailored for SLAM operations. By distributing computational tasks across specialized hardware components—such as dedicated visual processing units (VPUs), neural processing units (NPUs), and traditional CPUs/GPUs—systems can achieve optimal performance for different SLAM pipeline stages. Feature extraction and matching operations benefit from parallel processing capabilities, while mapping and loop closure can leverage more sequential processing structures.

Algorithm-specific hardware acceleration represents another critical strategy. Custom ASIC designs and FPGA implementations can dramatically reduce the power consumption of frequently executed SLAM operations, such as feature detection and descriptor matching. Recent research demonstrates that hardware-accelerated ORB feature extraction can achieve up to 10x energy efficiency improvements compared to general-purpose processor implementations while maintaining comparable accuracy.

Memory hierarchy optimization plays a crucial role in co-design approaches. SLAM algorithms typically generate and process large volumes of spatial data, creating potential bottlenecks in mobile systems. Intelligent data management strategies—including on-chip caching of frequently accessed map points, compression of point cloud data, and selective processing of keyframes—can significantly reduce memory bandwidth requirements and energy consumption.

Precision-scaling techniques offer additional optimization opportunities. By dynamically adjusting computational precision based on scene complexity and motion characteristics, systems can balance accuracy and efficiency. For example, implementing mixed-precision arithmetic in bundle adjustment calculations can reduce computational requirements by up to 40% with minimal impact on trajectory accuracy in typical indoor environments.

Real-time adaptation mechanisms represent the frontier of hardware-algorithm co-optimization. Next-generation mobile SLAM systems are increasingly incorporating feedback loops between hardware monitoring systems and algorithm parameters. These systems can dynamically adjust feature extraction density, map resolution, and loop closure frequency based on available computational resources, battery status, and application requirements, ensuring optimal performance across varying operating conditions.
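A feedback loop of this kind can be sketched as a small rule-based controller. The example below is only an illustration under stated assumptions: the `SlamBudget` structure, the thresholds, and the scaling rules are hypothetical, and the 20 ms frame target is borrowed from the latency figure quoted earlier in this article. A real system would read these signals from platform power and performance APIs.

```python
from dataclasses import dataclass


@dataclass
class SlamBudget:
    max_features: int            # features extracted per frame
    loop_closure_interval: int   # run loop closure every N keyframes


def adapt_budget(current: SlamBudget,
                 battery_fraction: float,
                 frame_time_ms: float,
                 target_frame_ms: float = 20.0,
                 min_features: int = 200,
                 max_features: int = 2000) -> SlamBudget:
    """Return a new budget based on measured frame time and battery level."""
    scale = 1.0
    if frame_time_ms > target_frame_ms:
        # Over budget: shrink the workload in proportion to the overrun.
        scale *= target_frame_ms / frame_time_ms
    elif frame_time_ms < 0.8 * target_frame_ms:
        # Comfortable headroom: grow slowly to regain accuracy.
        scale *= 1.1
    low_battery = battery_fraction < 0.2
    if low_battery:
        scale *= 0.5
    features = int(current.max_features * scale)
    features = max(min_features, min(max_features, features))
    # Run the expensive loop closure step less often when power is scarce.
    interval = 20 if low_battery else 10
    return SlamBudget(features, interval)


if __name__ == "__main__":
    budget = SlamBudget(max_features=1000, loop_closure_interval=10)
    print(adapt_budget(budget, battery_fraction=0.9, frame_time_ms=40.0))
    print(adapt_budget(budget, battery_fraction=0.1, frame_time_ms=10.0))
```

Shrinking quickly and growing slowly is a deliberate asymmetry: missing the frame deadline is visible to the user immediately, while a temporarily sparser feature set usually is not.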

Power Efficiency Considerations for Mobile SLAM

Power efficiency has emerged as a critical constraint in the deployment of SLAM algorithms on mobile devices. Even as these devices gain more powerful processors and sensors, the energy consumption of computationally intensive tasks like SLAM remains a limiting factor for widespread adoption. Current mobile SLAM implementations typically draw 2-4 W during continuous operation, which can reduce device usage time by 30-50% compared to normal operation.

The power consumption profile of SLAM systems can be broken down into three major components: sensor data acquisition (15-25%), data processing (50-70%), and map storage/retrieval operations (10-20%). The most power-intensive operations include feature extraction, loop closure detection, and global optimization steps that require complex matrix operations. These operations often push mobile GPUs and CPUs to their thermal limits, triggering throttling mechanisms that further degrade performance.
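The breakdown also bounds how much any single optimization can save overall. The short calculation below assumes a 3 W total (the midpoint of the 2-4 W range) and one hypothetical split chosen from within the quoted ranges. The 40% processing saving is likewise only an example input.

```python
def component_watts(total_w, shares):
    """Split total power draw into per-component watts."""
    return {name: total_w * frac for name, frac in shares.items()}

def total_after_saving(total_w, shares, component, saving):
    """Total power after cutting one component's draw by `saving` (0..1)."""
    return total_w * (1.0 - shares[component] * saving)

# Hypothetical split within the quoted ranges; fractions sum to 1.0.
shares = {"sensing": 0.20, "processing": 0.65, "map_io": 0.15}
total_w = 3.0  # assumed midpoint of the 2-4 W range

watts = component_watts(total_w, shares)
new_total = total_after_saving(total_w, shares, "processing", 0.40)
```

With these inputs, a 40% cut in processing power lowers the total from 3 W to about 2.22 W, a 26% reduction overall, because sensing and map I/O are unaffected.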

Recent advancements in power-efficient SLAM have focused on algorithmic optimizations that reduce computational complexity while maintaining acceptable accuracy. Keyframe selection strategies can reduce processing requirements by 40-60% by performing full SLAM operations only on selected frames. Similarly, adaptive feature extraction methods that adjust detection thresholds based on scene complexity have shown power savings of 25-35% with minimal impact on tracking quality.
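A common form of keyframe selection promotes a frame only when the camera has moved or rotated enough since the last keyframe. The sketch below uses planar poses for brevity. The `Pose2D` type and the thresholds (0.25 m, 15 degrees) are assumptions for illustration, and real systems often add criteria such as tracked-feature overlap.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    x: float    # metres
    y: float    # metres
    yaw: float  # radians

def is_keyframe(last_kf, pose, min_dist_m=0.25, min_yaw_rad=math.radians(15)):
    """True if `pose` differs enough from the last keyframe pose."""
    dist = math.hypot(pose.x - last_kf.x, pose.y - last_kf.y)
    d = pose.yaw - last_kf.yaw
    dyaw = abs(math.atan2(math.sin(d), math.cos(d)))  # wrap to [-pi, pi]
    return dist >= min_dist_m or dyaw >= min_yaw_rad

def select_keyframes(poses):
    """Return indices of frames that would receive full SLAM processing."""
    if not poses:
        return []
    kept = [0]  # the first frame is always a keyframe
    for i in range(1, len(poses)):
        if is_keyframe(poses[kept[-1]], poses[i]):
            kept.append(i)
    return kept

# Ten frames moving 0.1 m per frame along x, with no rotation.
poses = [Pose2D(0.1 * i, 0.0, 0.0) for i in range(10)]
kept = select_keyframes(poses)
```

In this synthetic run only frames 0, 3, 6, and 9 are kept, so the expensive pipeline runs on 4 of 10 frames. The actual reduction depends on motion and on the thresholds chosen.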

Hardware-specific optimizations represent another promising direction. The integration of dedicated visual processing units (VPUs) and neural processing units (NPUs) in modern mobile chipsets offers significant efficiency gains, with power reductions of 3-5x compared to general-purpose CPU implementations. Qualcomm's Hexagon DSP and Apple's Neural Engine have demonstrated particular promise for offloading SLAM computations from the main processor.

Energy-aware SLAM frameworks that dynamically adjust their computational footprint based on available power, motion dynamics, and application requirements are emerging as a holistic solution. These systems can switch between lightweight visual-inertial odometry during power constraints and full mapping capabilities when resources permit. Field tests show these adaptive approaches can extend operational time by 40-70% compared to fixed-configuration SLAM systems.
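The mode switch described here can be sketched with hysteresis, so the system does not flip back and forth when the battery level hovers near a single threshold. The `Mode` names and the 20%/30% thresholds are hypothetical, and a real framework would also consider motion dynamics and application requirements.

```python
from enum import Enum

class Mode(Enum):
    VIO_ONLY = "vio_only"    # lightweight visual-inertial odometry
    FULL_SLAM = "full_slam"  # odometry plus mapping and loop closure

class ModeSwitcher:
    """Switch modes on battery level, with separate enter/exit thresholds."""

    def __init__(self, low=0.20, high=0.30):
        self.low = low    # drop to VIO_ONLY below this fraction
        self.high = high  # return to FULL_SLAM above this fraction
        self.mode = Mode.FULL_SLAM

    def update(self, battery_frac: float) -> Mode:
        if self.mode is Mode.FULL_SLAM and battery_frac < self.low:
            self.mode = Mode.VIO_ONLY
        elif self.mode is Mode.VIO_ONLY and battery_frac > self.high:
            self.mode = Mode.FULL_SLAM
        return self.mode

switcher = ModeSwitcher()
history = [switcher.update(b) for b in (0.50, 0.19, 0.25, 0.31)]
```

In the sample sequence the reading of 0.25 falls between the two thresholds, so the switcher stays in `VIO_ONLY` until the level rises above 0.30.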

The trade-off between accuracy and power efficiency remains a fundamental challenge. Research indicates that accepting a 5-10% reduction in localization precision can yield power savings of up to 60%, suggesting that application-specific tuning is essential. For consumer applications like AR gaming, lower precision may be acceptable, while industrial applications may require maintaining high accuracy despite power constraints.
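Application-specific tuning can be framed as picking the lowest-power configuration that still meets an error budget. The configurations and their power and error figures below are invented for illustration only. They are not measurements.

```python
from typing import NamedTuple, Optional, Sequence

class Config(NamedTuple):
    name: str
    power_w: float   # average power draw (hypothetical)
    error_cm: float  # typical localization error (hypothetical)

def pick_config(configs: Sequence[Config], max_error_cm: float) -> Optional[Config]:
    """Lowest-power configuration whose error is within the budget."""
    feasible = [c for c in configs if c.error_cm <= max_error_cm]
    return min(feasible, key=lambda c: c.power_w) if feasible else None

configs = [
    Config("full", power_w=3.5, error_cm=2.0),
    Config("balanced", power_w=2.4, error_cm=3.0),
    Config("lite", power_w=1.4, error_cm=6.0),
]

ar_game = pick_config(configs, max_error_cm=8.0)     # loose budget
industrial = pick_config(configs, max_error_cm=2.5)  # tight budget
```

With these made-up numbers the loose budget selects `lite` and the tight budget selects `full`, which mirrors the consumer versus industrial contrast above.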
