SLAM With LiDAR And Camera Fusion For Autonomous Vehicles

SEP 12, 2025 | 9 MIN READ

LiDAR-Camera Fusion SLAM Background and Objectives

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly over the past two decades, transitioning from theoretical research to practical applications in autonomous vehicles. The fusion of LiDAR (Light Detection and Ranging) and camera sensors represents a pivotal advancement in this evolution, combining the precise depth measurement capabilities of LiDAR with the rich visual information provided by cameras. This technological convergence addresses fundamental limitations inherent in single-sensor approaches.

The historical trajectory of SLAM development began with filter-based methods in the early 2000s, progressing through optimization-based techniques in the 2010s, to the current era of deep learning integration. Early SLAM systems relied predominantly on either visual information (Visual SLAM) or LiDAR data (LiDAR SLAM), each with distinct advantages and limitations. Visual SLAM offers rich feature extraction but struggles with scale ambiguity and environmental variations, while LiDAR SLAM provides accurate depth information but lacks texture recognition capabilities.

The technological trend clearly points toward sensor fusion as the optimal path forward. This approach leverages complementary sensor characteristics to enhance robustness, accuracy, and reliability in diverse operational environments. The integration of these technologies has been accelerated by advancements in computational hardware, particularly GPUs and specialized AI processors, enabling real-time processing of multi-modal sensor data.

Current research focuses on developing algorithms that can seamlessly integrate data from heterogeneous sensors while maintaining computational efficiency. The objective is to create SLAM systems capable of operating reliably across varying environmental conditions, including challenging scenarios such as low-light environments, adverse weather conditions, and complex urban landscapes with dynamic objects.

The primary technical goals for LiDAR-Camera fusion SLAM systems include achieving centimeter-level localization accuracy, real-time performance on embedded platforms, resilience to sensor degradation, and adaptability to diverse environmental conditions. Additionally, there is a growing emphasis on developing systems that can function effectively with reduced computational resources, making them viable for commercial deployment in mass-market autonomous vehicles.

Looking forward, the field is moving toward end-to-end learning approaches that can optimize the entire SLAM pipeline, from sensor data processing to map construction. This evolution aims to overcome the traditional trade-offs between accuracy, robustness, and computational efficiency, ultimately enabling autonomous vehicles to navigate complex environments with human-like perception capabilities but machine-level precision and reliability.

Market Analysis for Autonomous Vehicle Perception Systems

The autonomous vehicle perception systems market is experiencing robust growth, driven by increasing demand for advanced driver assistance systems (ADAS) and fully autonomous driving capabilities. Current market valuations place the global autonomous vehicle perception system market at approximately 21 billion USD in 2023, with projections indicating a compound annual growth rate (CAGR) of 14.5% through 2030, potentially reaching 53 billion USD by the end of the decade.
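
As a quick sanity check on the projection above, the quoted starting value and growth rate can be compounded forward. This is only an arithmetic sketch; the function name `project_market` is illustrative and not part of the source analysis.

```python
def project_market(value_start, cagr, years):
    """Compound a starting value forward at a constant annual growth rate."""
    return value_start * (1.0 + cagr) ** years

# Figures quoted in the text: 21 billion USD in 2023, 14.5% CAGR, through 2030.
projected_2030 = project_market(21.0, 0.145, 2030 - 2023)
print(f"Projected 2030 market: {projected_2030:.1f} billion USD")
```

Compounding over seven years gives a value of roughly 54 billion USD, which is in line with the approximate 53 billion USD quoted.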

LiDAR and camera fusion-based SLAM systems represent a significant segment within this market, accounting for roughly 30% of the total perception systems market share. This segment is expected to grow at an accelerated rate of 17% annually as automotive manufacturers increasingly recognize the complementary benefits of sensor fusion approaches.

Regional analysis reveals North America currently leads the market with approximately 40% share, followed by Europe (30%) and Asia-Pacific (25%). However, the Asia-Pacific region, particularly China, Japan, and South Korea, is demonstrating the fastest growth trajectory, with annual expansion rates exceeding 20% as domestic automakers and technology companies intensify their autonomous driving development efforts.

Consumer demand patterns indicate a clear preference for vehicles equipped with Level 2 and Level 3 autonomous capabilities, with safety features being the primary purchasing consideration. Market surveys show that 68% of new vehicle buyers consider advanced perception systems as "important" or "very important" in their purchasing decisions, representing a 15% increase from just three years ago.

The competitive landscape features traditional automotive suppliers like Bosch, Continental, and Aptiv alongside technology companies such as Mobileye, Nvidia, and specialized startups. These companies are increasingly focusing on integrated perception solutions that combine multiple sensor modalities, with LiDAR-camera fusion emerging as the preferred approach for achieving both accuracy and redundancy.

Supply chain analysis reveals potential bottlenecks in the production of high-quality LiDAR sensors, with current global production capacity struggling to meet projected demand. This supply constraint is expected to influence pricing strategies and technology adoption rates over the next 3-5 years.

Customer segmentation shows three distinct market tiers: premium automotive manufacturers implementing comprehensive perception systems, mid-market manufacturers adopting selective advanced features, and mass-market producers beginning to incorporate basic perception capabilities as costs decrease. The premium segment currently generates 55% of market revenue despite representing only 15% of vehicle production volume.

Current Challenges in Sensor Fusion for SLAM

Despite significant advancements in SLAM technology utilizing LiDAR and camera fusion for autonomous vehicles, several critical challenges persist that impede optimal performance. One fundamental issue is sensor synchronization, as LiDARs and cameras operate at different sampling rates and processing times. Even millisecond-level discrepancies can lead to significant errors in high-speed scenarios, causing misalignment between point clouds and images that deteriorates mapping accuracy.
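
To make the synchronization problem concrete, the sketch below pairs each LiDAR scan with the nearest camera frame by timestamp and estimates how far the vehicle moves during a given time offset. The sensor rates, the 5 ms tolerance, and all function names are assumptions chosen for illustration, not values from any specific system.

```python
from bisect import bisect_left

def nearest_timestamp(sorted_stamps, t):
    """Return the index of the timestamp in sorted_stamps closest to t."""
    i = bisect_left(sorted_stamps, t)
    if i == 0:
        return 0
    if i == len(sorted_stamps):
        return len(sorted_stamps) - 1
    before, after = sorted_stamps[i - 1], sorted_stamps[i]
    return i if (after - t) < (t - before) else i - 1

def pair_scans_with_images(lidar_stamps, camera_stamps, max_offset_s):
    """Pair each LiDAR scan with its closest camera frame, dropping pairs
    whose time offset exceeds max_offset_s."""
    pairs = []
    for li, t in enumerate(lidar_stamps):
        ci = nearest_timestamp(camera_stamps, t)
        offset = abs(camera_stamps[ci] - t)
        if offset <= max_offset_s:
            pairs.append((li, ci, offset))
    return pairs

def misalignment_m(speed_mps, offset_s):
    """Distance the vehicle travels during an uncompensated time offset."""
    return speed_mps * offset_s

# Assumed rates: 10 Hz LiDAR and roughly 30 Hz camera, timestamps in seconds.
lidar_stamps = [0.00, 0.10, 0.20]
camera_stamps = [0.000, 0.033, 0.066, 0.099, 0.132, 0.165, 0.198]
pairs = pair_scans_with_images(lidar_stamps, camera_stamps, max_offset_s=0.005)
print(pairs)
print(misalignment_m(30.0, 0.005))  # 30 m/s with a 5 ms offset
```

At 30 m/s, a 5 ms offset already corresponds to about 15 cm of vehicle motion, which is why millisecond-level discrepancies matter at highway speeds. Production systems typically go further, for example with hardware triggering or motion compensation, which this sketch does not attempt.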

Calibration complexity represents another major hurdle, requiring precise extrinsic parameter determination between sensors. Environmental factors like temperature fluctuations and vehicle vibrations can gradually invalidate initial calibrations, necessitating robust online recalibration mechanisms that don't currently exist in production-ready form.
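
The role of the extrinsic parameters can be illustrated with a minimal pinhole projection of a LiDAR point into the image. The axis conventions (LiDAR: x forward, y left, z up; camera: x right, y down, z forward), the zero translation, and the intrinsic values are all assumptions made for this sketch.

```python
import math

def transform_point(R, t, p):
    """Apply the extrinsic transform p_cam = R * p_lidar + t."""
    return tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))

def project_to_image(p_cam, fx, fy, cx, cy):
    """Pinhole projection; returns None for points behind the camera."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def pixel_shift_for_angular_error(fx, error_deg):
    """Approximate pixel shift near the optical axis caused by a small
    rotational calibration error."""
    return fx * math.tan(math.radians(error_deg))

# Assumed extrinsics: pure axis permutation from LiDAR to camera frame.
R_LIDAR_TO_CAM = [[0.0, -1.0, 0.0],
                  [0.0, 0.0, -1.0],
                  [1.0, 0.0, 0.0]]
T_LIDAR_TO_CAM = (0.0, 0.0, 0.0)

p_cam = transform_point(R_LIDAR_TO_CAM, T_LIDAR_TO_CAM, (10.0, 1.0, 0.5))
uv = project_to_image(p_cam, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(uv)
print(pixel_shift_for_angular_error(1000.0, 0.5))
```

With a focal length of 1000 pixels, a rotational error of only 0.5 degrees shifts projected points by several pixels, which gives a sense of why calibration drift from vibration or temperature degrades fusion.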

Data association across heterogeneous sensors presents unique difficulties, as features detected in camera images must be correctly matched with corresponding LiDAR points. This challenge intensifies in dynamic environments with moving objects or when dealing with reflective surfaces that create ambiguous LiDAR returns. Current algorithms struggle with reliable feature matching across these modalities, especially in adverse conditions.
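
One simple way to picture cross-modal data association is to attach a LiDAR depth to each image feature by finding the nearest projected LiDAR point in pixel space. The brute-force search, the 3-pixel gate, and the function name below are illustrative assumptions; real systems usually add spatial indexing and outlier checks.

```python
import math

def associate_depth(features_uv, lidar_uvd, max_pixel_dist=3.0):
    """Map feature index -> depth of the nearest projected LiDAR point,
    skipping features with no LiDAR point within max_pixel_dist."""
    matches = {}
    for fi, (fu, fv) in enumerate(features_uv):
        best_depth = None
        best_dist = max_pixel_dist
        for (lu, lv, depth) in lidar_uvd:
            d = math.hypot(fu - lu, fv - lv)
            if d <= best_dist:
                best_dist = d
                best_depth = depth
        if best_depth is not None:
            matches[fi] = best_depth
    return matches

# Image features as (u, v); LiDAR points already projected as (u, v, depth_m).
features = [(100.0, 100.0), (400.0, 300.0)]
lidar_points = [(101.0, 100.0, 12.5), (103.0, 100.0, 30.0), (250.0, 250.0, 8.0)]
matches = associate_depth(features, lidar_points)
print(matches)
```

The second feature receives no depth because no LiDAR return projects near it, which mirrors the sparsity problem that makes association difficult in practice.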

Computational resource constraints significantly limit real-time performance. Fusion algorithms require substantial processing power, creating a trade-off between accuracy and latency that is particularly problematic for autonomous driving applications where split-second decisions are critical. Edge computing solutions remain insufficient for handling the full complexity of sensor fusion SLAM at highway speeds.

Environmental robustness represents perhaps the most significant challenge. Camera performance degrades in low-light conditions, direct sunlight, or adverse weather, while LiDAR effectiveness diminishes in rain, fog, or snow due to signal scattering. Current fusion approaches lack sophisticated adaptation mechanisms to dynamically adjust sensor weighting based on environmental conditions.
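
As a minimal sketch of what dynamic sensor weighting could look like, the example below fuses two scalar position estimates by inverse-variance weighting and inflates the LiDAR variance to mimic degraded conditions. The variance values and the inflation factor are assumptions for illustration only.

```python
def fuse_estimates(x_lidar, var_lidar, x_camera, var_camera):
    """Inverse-variance weighted fusion of two independent estimates.
    Returns the fused value and its variance."""
    w_l = 1.0 / var_lidar
    w_c = 1.0 / var_camera
    fused = (w_l * x_lidar + w_c * x_camera) / (w_l + w_c)
    return fused, 1.0 / (w_l + w_c)

# Clear conditions: both sensors equally trusted (assumed variances in m^2).
clear, clear_var = fuse_estimates(10.0, 0.04, 10.4, 0.04)

# Assumed fog scenario: LiDAR variance inflated ninefold, so the fused
# estimate moves toward the camera measurement.
foggy, foggy_var = fuse_estimates(10.0, 0.36, 10.4, 0.04)
print(clear, clear_var)
print(foggy, foggy_var)
```

The hard part in practice is estimating those variances online from the environment and sensor health, which is the adaptation gap the paragraph above describes.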

Scale inconsistency between monocular cameras (which provide relative scale) and LiDAR (which provides absolute measurements) creates integration difficulties. Although theoretical solutions exist, practical implementations often suffer from drift and accumulated errors over extended operation periods.
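
One common idea for resolving the scale ambiguity is to compare up-to-scale monocular depths with metric LiDAR depths at matched points and take a robust average of their ratio. The sketch below uses the median; the function name and sample values are hypothetical.

```python
from statistics import median

def estimate_scale(mono_depths, lidar_depths):
    """Estimate the metric scale factor as the median ratio of LiDAR depth
    to monocular (up-to-scale) depth over matched points."""
    ratios = [l / m for m, l in zip(mono_depths, lidar_depths)
              if m > 0.0 and l > 0.0]
    if not ratios:
        raise ValueError("no valid depth pairs")
    return median(ratios)

# Matched depths; the last pair is a deliberate outlier (bad association).
mono = [1.0, 2.0, 4.0, 1.5]
lidar = [2.5, 5.0, 10.0, 9.0]
scale = estimate_scale(mono, lidar)
print(scale)
```

The median keeps a single mismatched pair from corrupting the estimate, but it does nothing about slow drift, so the scale still has to be re-estimated over time.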

Finally, semantic understanding limitations hinder high-level scene interpretation. While cameras excel at object classification, and LiDARs provide precise spatial information, fusing these capabilities for comprehensive semantic mapping remains challenging. Current systems struggle to maintain consistent object identification and tracking across sensor modalities, particularly for partially occluded objects or those at long distances.

State-of-the-Art LiDAR-Camera Fusion Approaches

01 Sensor fusion techniques for SLAM systems

Fusion of LiDAR and camera data enhances SLAM performance by combining the strengths of both sensors. LiDAR provides accurate depth measurements while cameras offer rich visual information. Various fusion algorithms integrate these complementary data sources to improve localization accuracy, feature extraction, and mapping quality. These techniques often involve point cloud registration with image features to create more robust environmental representations.

- Sensor fusion techniques for SLAM systems: Sensor fusion techniques combine data from LiDAR and cameras to enhance SLAM performance. By integrating complementary sensor data, these systems can overcome the limitations of individual sensors. LiDAR provides precise depth measurements while cameras contribute rich visual features, resulting in more robust localization and mapping. Advanced fusion algorithms synchronize and calibrate these sensors to create comprehensive environmental representations with improved accuracy.

- Feature extraction and matching algorithms: Feature extraction and matching algorithms are crucial for SLAM systems using LiDAR and camera fusion. These algorithms identify distinctive environmental features from both sensor modalities and establish correspondences between them. Advanced techniques include point cloud segmentation from LiDAR data and visual feature detection from camera images. By effectively matching these features across different sensor frames, SLAM systems can achieve more accurate pose estimation and mapping capabilities.

- Loop closure and drift correction methods: Loop closure and drift correction methods are essential for maintaining accuracy in SLAM systems. These techniques identify when a system revisits previously mapped areas and correct accumulated errors. By recognizing familiar locations through sensor data comparison, the system can adjust its trajectory estimation and map representation. Advanced algorithms use both visual cues from cameras and geometric information from LiDAR to detect loops reliably, significantly improving long-term mapping accuracy.

- Real-time optimization and computational efficiency: Real-time optimization and computational efficiency are critical for practical SLAM applications. These approaches focus on balancing accuracy with processing speed to enable operation on platforms with limited computational resources. Techniques include parallel processing of sensor data, selective feature processing, and efficient map representation methods. By optimizing algorithms for speed while maintaining sufficient accuracy, these systems can perform reliable localization and mapping in dynamic environments without significant latency.

- Environmental adaptation and robustness: Environmental adaptation and robustness features enable SLAM systems to function reliably across diverse conditions. These approaches include adaptive sensor weighting based on environmental factors, handling dynamic objects in the scene, and maintaining performance during sensor degradation. By intelligently adjusting fusion parameters based on context and implementing redundancy mechanisms, these systems can maintain accurate localization and mapping in challenging scenarios such as poor lighting, reflective surfaces, or adverse weather conditions.
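
The loop closure and drift correction idea from the list above can be sketched in a heavily simplified form: once a revisit is detected and the true end position is known, the accumulated error is spread linearly along the trajectory. This is a crude stand-in for pose-graph optimization, shown only to convey the intuition; the function name and the 2D positions are assumptions.

```python
def distribute_drift(poses, corrected_end):
    """Spread the end-point error linearly over a list of (x, y) positions
    so that the last pose lands on corrected_end and the first is unchanged."""
    n = len(poses)
    if n < 2:
        return list(poses)
    err_x = poses[-1][0] - corrected_end[0]
    err_y = poses[-1][1] - corrected_end[1]
    corrected = []
    for i, (x, y) in enumerate(poses):
        frac = i / (n - 1)  # 0 at the start, 1 at the loop-closing pose
        corrected.append((x - frac * err_x, y - frac * err_y))
    return corrected

# A square loop whose final pose has drifted away from the start at (0, 0).
trajectory = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0), (0.4, 0.8)]
corrected = distribute_drift(trajectory, corrected_end=(0.0, 0.0))
print(corrected)
```

A real back end would instead solve a nonlinear least-squares problem over all poses and constraints, including orientation, rather than interpolating positions.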

02 Real-time mapping and localization optimization

Advanced algorithms optimize the real-time performance of LiDAR-camera fusion SLAM systems. These methods include efficient data processing pipelines, parallel computing architectures, and optimization techniques that reduce computational overhead. By minimizing latency while maintaining accuracy, these approaches enable practical applications in autonomous navigation, robotics, and augmented reality where immediate environmental understanding is critical.

03 Feature extraction and matching for improved accuracy

Enhanced feature extraction and matching techniques significantly improve SLAM accuracy when fusing LiDAR and camera data. These methods identify distinctive environmental landmarks from both sensor modalities and establish correspondences between them. Advanced algorithms extract geometric features from LiDAR point clouds and visual features from camera images, then use robust matching techniques to align these features across different sensor frames, reducing drift and improving mapping precision.

04 Loop closure and global optimization

Loop closure detection and global optimization techniques are essential for maintaining long-term accuracy in LiDAR-camera fusion SLAM systems. These methods identify when a system revisits previously mapped areas and correct accumulated errors. By recognizing familiar scenes through both visual and geometric signatures, the system can adjust the entire trajectory and map to maintain global consistency, significantly reducing drift in extended operations.

05 Environmental adaptation and robustness

Adaptive SLAM systems using LiDAR-camera fusion can maintain accuracy across diverse and challenging environments. These systems dynamically adjust sensor weighting based on environmental conditions, compensating for scenarios where either sensor might be compromised. For example, the system can rely more on LiDAR in low-light conditions, or more on camera data when LiDAR performance is affected by reflective surfaces. This adaptability ensures consistent mapping and localization performance across varying operational contexts.

Leading Companies in Autonomous Driving Perception

SLAM with LiDAR and camera fusion for autonomous vehicles is currently in a growth phase, with the market expanding rapidly as autonomous driving technologies mature. The global market size is projected to reach significant volumes by 2025, driven by increasing adoption in commercial and passenger vehicles. Technologically, the field shows varying maturity levels across companies. Leaders like Velodyne Lidar and QUALCOMM have established robust sensor fusion platforms, while automotive manufacturers including Hyundai, Kia, and Ford are integrating these systems into production vehicles. Research institutions such as Peking University, UESTC, and Northwestern Polytechnical University are advancing fundamental algorithms. Regional players like Delu Technology and Xi'an Inno Aviation are developing specialized applications, creating a competitive landscape balanced between established corporations and innovative startups.

Hyundai Motor Co., Ltd.

Technical Solution: Hyundai has developed an advanced SLAM system for autonomous vehicles that integrates LiDAR and camera fusion. Their approach employs a multi-stage fusion architecture that begins with sensor-specific processing before combining data at feature and decision levels. The system utilizes high-definition LiDAR sensors for precise distance measurements and stereo camera arrays for rich visual information. Hyundai's implementation features adaptive temporal synchronization that accounts for varying sensor latencies and processing times. Their proprietary mapping algorithm creates multi-layered environmental representations, separating static infrastructure from dynamic objects to improve localization stability. The system incorporates confidence-based sensor weighting that dynamically adjusts the influence of each sensor modality based on current environmental conditions and sensor health metrics. Hyundai has implemented specialized processing for challenging scenarios such as tunnels (where cameras may struggle with lighting transitions) and open highways (where LiDAR features may be sparse). Their solution includes predictive sensor degradation models that anticipate performance issues in adverse weather conditions, proactively adjusting fusion parameters to maintain system reliability.

Strengths: Robust performance across diverse Asian and European driving environments demonstrates versatility. The multi-layered mapping approach enables efficient updates of dynamic elements without rebuilding entire maps.

Weaknesses: The system requires extensive calibration procedures when deployed on new vehicle platforms. Performance in extremely dense urban environments with numerous moving objects can lead to increased computational load and potential processing delays.

Mitsubishi Electric Corp.

Technical Solution: Mitsubishi Electric has developed a comprehensive SLAM solution for autonomous vehicles that fuses LiDAR and camera data through their proprietary Multi-Modal Fusion Framework. Their approach implements a tightly-coupled optimization strategy where measurements from both sensors contribute to a unified state estimation problem. The system employs specialized front-end processing for each sensor type - extracting geometric features from LiDAR point clouds and visual features from camera images - before combining them in a joint back-end optimization. Mitsubishi's implementation features adaptive voxelization of LiDAR data that adjusts resolution based on distance and region importance, optimizing computational efficiency. Their solution incorporates illumination-invariant visual descriptors that maintain performance across different lighting conditions, addressing a common challenge in camera-based SLAM. The system utilizes a factor graph representation for the fusion problem, allowing efficient incorporation of constraints from both sensor modalities and smooth integration of GPS/IMU data when available. Mitsubishi has implemented specialized handling for dynamic objects, using semantic segmentation from camera data to identify and filter non-static elements from the mapping process, improving localization stability in busy environments.

Strengths: Excellent performance in challenging weather conditions common in Japanese environments, including heavy rain and fog. The system's computational efficiency enables deployment on production-grade automotive hardware.

Weaknesses: The solution requires precise initial calibration between sensors to achieve optimal fusion results. The system's performance can degrade in environments with limited distinctive features for either sensor modality.
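
The general idea of distance-dependent voxelization mentioned in the technical solution above can be sketched as follows. This is not the vendor's implementation, which is not public in the source; the range bands, voxel sizes, and function names are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical range bands: (maximum range in m, voxel edge length in m).
RANGE_BANDS = [(10.0, 0.1), (30.0, 0.25), (float("inf"), 0.5)]

def voxel_size_for_range(r):
    """Pick a voxel edge length from the band containing range r."""
    for max_range, size in RANGE_BANDS:
        if r <= max_range:
            return size
    return RANGE_BANDS[-1][1]

def adaptive_voxel_downsample(points):
    """Replace all points falling in the same voxel by their centroid,
    using finer voxels near the sensor and coarser voxels far away."""
    buckets = defaultdict(list)
    for p in points:
        size = voxel_size_for_range(math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2))
        key = (size,) + tuple(math.floor(c / size) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in buckets.values()]

cloud = [(1.01, 1.01, 0.0), (1.04, 1.03, 0.0),                    # near, same 0.1 m voxel
         (40.1, 0.1, 0.0), (40.3, 0.3, 0.0), (41.2, 0.1, 0.0)]    # far, 0.5 m voxels
reduced = adaptive_voxel_downsample(cloud)
print(len(cloud), "->", len(reduced))
```

Coarser voxels at long range cut the point count where individual returns carry less geometric detail, which is one way such a scheme can trade resolution for computation.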

Key Patents and Research in Sensor Fusion SLAM

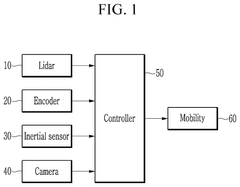

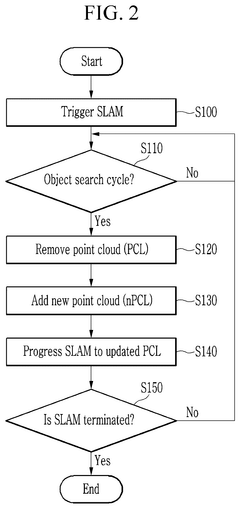

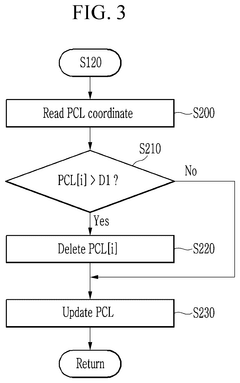

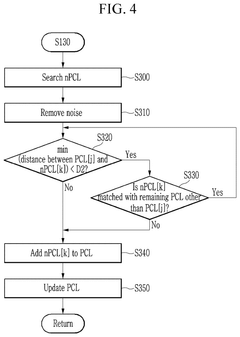

Method and system for simultaneous localization and mapping based on 2d lidar and a camera with different viewing ranges

Patent pending: US20250209652A1

Innovation

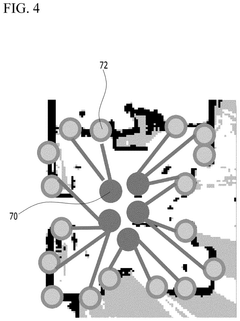

- A method and system that maintains feature points detected by a camera with a narrow viewing angle in conjunction with a Lidar sensor's wide viewing angle, involving a controller to remove feature points exceeding a set distance and add newly searched points, ensuring robustness and reducing memory usage.
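
The maintenance step summarized above (dropping feature points beyond a set distance and adding newly found ones) might look roughly like the following. This is a hypothetical illustration of the general idea, not the patented method; the data layout, names, and threshold are assumptions.

```python
import math

def update_feature_map(features, vehicle_xy, new_features, max_distance):
    """Keep features within max_distance of the vehicle and add new ones.

    features and new_features map a feature id to an (x, y) position.
    Existing ids are not overwritten by new detections."""
    kept = {fid: xy for fid, xy in features.items()
            if math.dist(xy, vehicle_xy) <= max_distance}
    for fid, xy in new_features.items():
        kept.setdefault(fid, xy)
    return kept

tracked = {1: (0.0, 0.0), 2: (50.0, 0.0)}
updated = update_feature_map(tracked, vehicle_xy=(5.0, 0.0),
                             new_features={3: (8.0, 1.0)}, max_distance=20.0)
print(sorted(updated))
```

Bounding the retained set by distance keeps memory use roughly constant as the vehicle moves, which matches the memory-reduction goal stated in the summary.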

Method and system of simultaneous localization and mapping based on 2d lidar and camera

Patent pending: US20250138192A1

Innovation

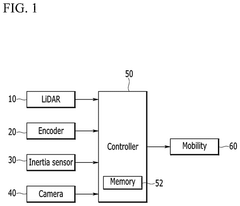

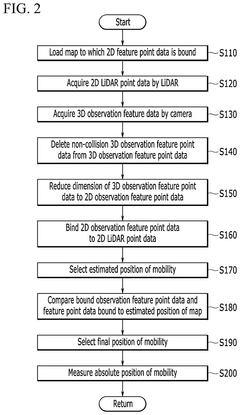

- A method and system that bind observation feature points from a camera to 2D LiDAR points and compare them with a map to accurately localize the mobility and create a map, using a controller to load maps, acquire LiDAR and camera data, reduce dimensionality, and detect absolute positions.

Safety and Reliability Standards for AV Perception

The integration of SLAM systems with LiDAR and camera fusion in autonomous vehicles necessitates adherence to rigorous safety and reliability standards. These standards are critical for ensuring that perception systems can operate dependably across diverse environmental conditions and scenarios.

ISO 26262, the international standard for functional safety in automotive systems, provides a comprehensive framework for evaluating and certifying perception systems in autonomous vehicles. It mandates a systematic approach to risk assessment through Automotive Safety Integrity Levels (ASIL), with perception systems typically requiring ASIL C or D classification due to their critical role in vehicle operation.
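
For readers unfamiliar with how an ASIL is assigned, the sketch below encodes the widely used shorthand for the ISO 26262 determination table: with severity S1-S3, exposure E1-E4, and controllability C1-C3, the sum of the three class numbers maps to the level. It is an illustrative helper, not a substitute for the standard's hazard analysis, and the function name is an assumption.

```python
def determine_asil(severity, exposure, controllability):
    """Return the ASIL for classes S1-S3, E1-E4, C1-C3 given as integers.

    Shorthand for the determination table: a class sum of 10 maps to D,
    9 to C, 8 to B, 7 to A, and anything lower to QM."""
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S in 1..3, E in 1..4, C in 1..3")
    total = severity + exposure + controllability
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(total, "QM")

print(determine_asil(3, 4, 3))  # life-threatening, high exposure, hard to control
print(determine_asil(3, 4, 2))
print(determine_asil(1, 2, 2))
```

Only the combination of the highest severity, exposure, and controllability classes yields ASIL D, which is why perception functions tied to collision avoidance tend to land at the upper levels.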

For sensor fusion applications specifically, the IEEE P2020 standard addresses image quality and reliability metrics for camera-based systems, while ISO 21448 (SOTIF, Safety Of The Intended Functionality; originally issued as ISO/PAS 21448) covers scenarios where traditional functional safety approaches may be insufficient for complex perception algorithms.

Reliability testing for LiDAR-camera fusion systems must include extensive validation across operational design domains (ODDs). This encompasses performance verification in adverse weather conditions, varying lighting scenarios, and challenging environments such as tunnels or areas with reflective surfaces. A reliability target of 99.9999% for critical perception functions, often referred to as the "six nines" standard, is frequently cited in this context, although it is better read as an industry aspiration than as a threshold fixed by NHTSA guidance.

Redundancy requirements constitute another crucial aspect of these standards. Perception systems must implement fail-operational or fail-safe mechanisms to maintain minimum safety functionality even when primary sensors or processing units experience failures. This typically involves architectural designs with independent sensing modalities that can cross-validate each other's outputs.

Real-time performance requirements set maximum latency thresholds for perception systems, typically requiring processing cycles to complete within 50-100 ms to ensure timely response to dynamic environments. Additionally, standards such as ISO/SAE 21434, together with the security modules specified in AUTOSAR, address cybersecurity concerns, ensuring that perception systems are protected against potential attacks that could compromise their reliability.

Certification processes for these systems involve rigorous testing methodologies including hardware-in-the-loop simulation, closed-course testing, and limited public road testing under controlled conditions. The evidence gathered must demonstrate compliance with predetermined safety performance indicators (SPIs) and key performance indicators (KPIs) specific to perception accuracy, precision, and reliability.

ISO 26262, the international standard for functional safety in automotive systems, provides a comprehensive framework for evaluating and certifying perception systems in autonomous vehicles. It mandates a systematic approach to risk assessment through Automotive Safety Integrity Levels (ASIL), with perception systems typically requiring ASIL C or D classification due to their critical role in vehicle operation.

For sensor fusion applications specifically, the IEEE P2020 standard addresses image quality and reliability metrics for camera-based systems, while ISO/PAS 21448 (SOTIF - Safety Of The Intended Functionality) covers scenarios where traditional functional safety approaches may be insufficient for complex perception algorithms.

Reliability testing for LiDAR-camera fusion systems must include extensive validation across operational design domains (ODDs). This encompasses performance verification in adverse weather conditions, varying lighting scenarios, and challenging environments such as tunnels or areas with reflective surfaces. The NHTSA guidelines recommend a minimum of 99.9999% reliability for critical perception functions, often referred to as the "six nines" standard.

Redundancy requirements constitute another crucial aspect of these standards. Perception systems must implement fail-operational or fail-safe mechanisms to maintain minimum safety functionality even when primary sensors or processing units experience failures. This typically involves architectural designs with independent sensing modalities that can cross-validate each other's outputs.

Real-time performance standards mandate maximum latency thresholds for perception systems, typically requiring processing cycles to complete within 50-100ms to ensure timely response to dynamic environments. Additionally, standards like AUTOSAR and ISO 21434 address cybersecurity concerns, ensuring that perception systems are protected against potential attacks that could compromise their reliability.

Real-Time Processing Requirements and Solutions

Real-time processing is one of the most critical challenges for autonomous-vehicle SLAM systems that fuse LiDAR and camera data. These systems must process massive amounts of sensor data while keeping latency low enough to ensure safe vehicle operation. Current autonomous driving platforms typically require update rates of 10-30 Hz (roughly 33-100 ms per cycle) to achieve acceptable performance in dynamic environments.
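
The relationship between update rate and per-cycle time budget is simple arithmetic, and a pipeline can check each cycle against it. The sketch below is a minimal illustration; the function names are hypothetical, and the `step` callable stands in for whatever fusion or SLAM update a real system would run.

```python
import time


def cycle_budget_ms(rate_hz: float) -> float:
    """Time available per processing cycle at the given update rate."""
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return 1000.0 / rate_hz


def run_with_deadline(step, rate_hz: float):
    """Run one pipeline step and report whether it met its cycle budget.

    Returns (result, elapsed_ms, met_deadline).
    """
    budget_ms = cycle_budget_ms(rate_hz)
    start = time.perf_counter()
    result = step()
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms, elapsed_ms <= budget_ms


if __name__ == "__main__":
    for rate in (10.0, 30.0):
        print(f"{rate:.0f} Hz -> {cycle_budget_ms(rate):.1f} ms per cycle")
    # Placeholder workload standing in for a real SLAM update.
    _, elapsed, ok = run_with_deadline(lambda: sum(range(100_000)), 10.0)
    print(f"elapsed {elapsed:.2f} ms, deadline met: {ok}")
```

A real system would act on a missed deadline, for example by dropping a frame or falling back to a cheaper processing path, rather than only reporting it.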

The computational demands of LiDAR-camera fusion SLAM are particularly intensive due to several factors. Point cloud registration from LiDAR data can involve billions of calculations per second, while feature extraction from high-resolution camera images adds another layer of computational complexity. When these processes run simultaneously with data fusion algorithms, even powerful computing platforms can struggle to maintain real-time performance.
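
A back-of-the-envelope estimate shows how registration workloads reach this scale. The sketch below counts point-to-point distance evaluations for an iterative registration scheme such as ICP; the scan size, map size, iteration count, and scan rate are illustrative assumptions, and a k-d tree lookup is crudely approximated as log2(map size) comparisons.

```python
import math


def distance_evals_per_second(scan_points: int, map_points: int,
                              iterations: int, scan_rate_hz: float,
                              use_kd_tree: bool) -> float:
    """Rough count of distance evaluations per second for registration.

    Brute-force matching compares every scan point with every map point.
    With a k-d tree, each nearest-neighbour query is approximated as
    log2(map_points) comparisons (an idealised, balanced-tree figure).
    """
    per_query = math.log2(map_points) if use_kd_tree else float(map_points)
    return scan_points * per_query * iterations * scan_rate_hz


if __name__ == "__main__":
    # All four values below are assumed for illustration only.
    args = dict(scan_points=100_000, map_points=2 ** 20,
                iterations=20, scan_rate_hz=10.0)
    tree = distance_evals_per_second(use_kd_tree=True, **args)
    brute = distance_evals_per_second(use_kd_tree=False, **args)
    print(f"k-d tree:    {tree:.2e} evaluations/s")
    print(f"brute force: {brute:.2e} evaluations/s")
```

Each distance evaluation costs several arithmetic operations, so even the tree-based estimate lands in the billions of operations per second under these assumptions, while brute-force matching is several orders of magnitude more expensive.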

Hardware acceleration solutions have emerged as essential components for meeting these requirements. NVIDIA's Drive AGX platform, featuring specialized GPUs and AI accelerators, has been widely adopted across the industry and is advertised at up to 2000 TOPS (trillion operations per second) of processing power. Similarly, Tesla's custom-designed FSD (Full Self-Driving) computer delivers 144 TOPS across its two FSD chips while optimizing for power efficiency. Intel's Mobileye EyeQ5 provides another specialized solution with dedicated vision processing units.

Software optimization techniques play an equally crucial role in achieving real-time performance. Parallel processing frameworks like CUDA and OpenCL enable efficient utilization of GPU resources, while algorithmic optimizations such as adaptive voxel grid filtering and sparse feature selection reduce computational load with little loss of accuracy. Companies like Waymo and Cruise have developed proprietary software stacks that implement these optimizations alongside custom scheduling algorithms to prioritize critical processing tasks.
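
Voxel grid filtering can be sketched in a few lines. The version below uses a fixed voxel size and replaces all points in a voxel with their centroid; it is a minimal illustration, not any vendor's implementation, and the function name is hypothetical. An adaptive variant would vary `voxel_size` with range or local point density, which is not shown here.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel with their centroid.

    points: iterable of (x, y, z) tuples in metres.
    voxel_size: edge length of each cubic voxel in metres.
    Returns one centroid per occupied voxel, in first-seen order.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Per-voxel accumulator: [sum_x, sum_y, sum_z, point_count].
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = sums[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums.values()]


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (1.5, 0.0, 0.0)]
    reduced = voxel_downsample(cloud, voxel_size=1.0)
    print(f"{len(cloud)} points reduced to {len(reduced)}")
```

Larger voxels remove more points and cut registration cost further, at the price of coarser geometry, so the voxel size is a direct accuracy-versus-speed trade-off.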

Edge computing architectures are increasingly being deployed to distribute computational loads. By performing initial sensor data processing at the edge (within the vehicle), only relevant information needs to be transmitted to more powerful centralized systems. This approach reduces latency and bandwidth requirements while improving system reliability. Qualcomm's Snapdragon Ride platform exemplifies this trend, offering scalable edge computing solutions specifically designed for autonomous driving applications.

Reported benchmarks indicate that state-of-the-art LiDAR-camera fusion SLAM systems can achieve processing times of 20-50ms per frame on high-end hardware, which fits within the cycle budgets of most autonomous driving scenarios. However, challenging conditions such as dense urban environments or adverse weather can still push these systems to their limits, highlighting the need for continued advancement in both hardware and software solutions.
