SLAM In Space Exploration And Planetary Robotics

SEP 5, 2025 | 9 MIN READ

Space SLAM Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, with space exploration representing one of its most challenging and promising application domains. The trajectory of SLAM development for space applications has been shaped by the unique constraints of extraterrestrial environments, including extreme temperatures, radiation exposure, communication delays, and the absence of GPS infrastructure.

Early planetary rover navigation in the 1990s relied on simple onboard sensing with very limited computational resources. The Mars Pathfinder mission in 1997 marked a significant milestone: its Sojourner rover used rudimentary hazard detection and dead reckoning rather than SLAM in the modern sense. The Mars Exploration Rovers (Spirit and Opportunity), which landed in 2004, added stereo visual odometry, a precursor to full visual SLAM, though long-range localization still depended on considerable Earth-based processing support.

The 2010s witnessed a paradigm shift with the Curiosity rover introducing enhanced onboard processing capabilities, enabling more autonomous navigation through visual odometry and related feature-based techniques. This period also saw research systems integrate multiple sensor modalities, including cameras, LiDAR, and inertial measurement units, creating more robust hybrid SLAM systems capable of functioning in the challenging lighting conditions and featureless terrains characteristic of planetary surfaces.

Current state-of-the-art space SLAM systems focus on real-time operation with limited computational resources while maintaining high accuracy and robustness. The primary objectives of modern space SLAM development include enhancing autonomous navigation capabilities, improving mapping accuracy in feature-poor environments, and reducing dependency on Earth-based control for critical operations.

Looking forward, space SLAM technology aims to achieve three ambitious goals. The first is to enable truly autonomous exploration of distant planetary bodies where communication delays make Earth-based control impractical. The second is to create high-fidelity 3D maps of planetary surfaces to support scientific research and future mission planning. The third is to develop systems capable of operating in extreme environments such as the methane lakes of Titan or the subsurface oceans of Europa.

The evolution trajectory points toward increasingly sophisticated multi-robot collaborative SLAM systems that can collectively map large areas with redundancy and fault tolerance. Additionally, there is growing emphasis on developing SLAM algorithms specifically optimized for the unique challenges of low-gravity environments, including asteroid and comet exploration, where traditional motion models may not apply.

As space agencies and private companies accelerate plans for lunar bases, Mars human missions, and asteroid mining operations, SLAM technology stands as a critical enabler for these ambitious endeavors, driving continued innovation in this specialized field.

Market Analysis for Space Robotics

The space robotics market is growing quickly, driven by increasing investment in space exploration missions and the development of advanced planetary rovers. Current market valuations place the global space robotics sector at approximately $4.4 billion in 2023, with projections reaching $8.7 billion by 2030, a compound annual growth rate (CAGR) of 10.2%. This growth is supported by both government space agencies and private commercial entities entering the space exploration domain.
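The growth figures quoted above can be cross-checked with the standard compound-growth formula. The sketch below is only an arithmetic check on the numbers in this section; the function names `cagr` and `project` are illustrative and do not come from any cited source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two valuations."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted in the text, in USD billions.
years = 2030 - 2023
implied_rate = cagr(4.4, 8.7, years)
projected_2030 = project(4.4, 0.102, years)

print(f"implied CAGR 2023-2030: {implied_rate:.1%}")
print(f"2030 value at 10.2% CAGR: ${projected_2030:.2f}B")
```

Both directions agree with the quoted figures to within rounding, so the 2023 valuation, the 2030 projection, and the stated CAGR are mutually consistent.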

NASA, ESA, CNSA, and ISRO remain the primary institutional customers for space robotics technologies, collectively allocating over $2.1 billion annually to robotic exploration missions. However, private sector participation has surged, with companies like SpaceX, Blue Origin, and Astrobotic Technology establishing significant market presence. These commercial entities have collectively secured over $1.5 billion in funding for lunar and Martian robotic missions planned for the next five years.

The demand for SLAM-equipped planetary rovers has shown particular strength, with mission requirements increasingly specifying autonomous navigation capabilities as essential rather than optional. Market analysis indicates that 78% of planetary exploration missions planned for 2024 to 2030 include SLAM technology requirements in their specifications, compared to only 45% of missions launched between 2015 and 2023.

Regional market distribution shows North America maintaining leadership with 42% market share, followed by Europe (27%), Asia-Pacific (23%), and other regions (8%). China's rapid advancement in space robotics technology has been particularly notable, with annual investment growth rates exceeding 15% over the past five years.

Key market segments within space robotics include planetary rovers (38% of market value), orbital servicing robots (27%), lunar landers with robotic components (22%), and specialized robotic instruments (13%). The planetary rover segment, where SLAM technology is most critical, is expected to grow at the highest rate (12.5% CAGR) through 2030.

Customer requirements analysis reveals evolving priorities, with autonomous navigation capabilities now ranking as the second most important feature after radiation hardening. Specifically, customers are demanding SLAM systems capable of operating in GPS-denied environments with positioning accuracy within 10 cm, map generation capabilities covering 1-2 km² per Earth day, and power consumption under 20 W for the entire navigation subsystem.

Market barriers include high development costs, with typical SLAM-equipped rover navigation systems requiring $15-30 million in R&D investment, stringent reliability requirements (99.999% for critical missions), and extended qualification timelines averaging 3-5 years before flight readiness certification.

SLAM Challenges in Extraterrestrial Environments

Extraterrestrial environments present unique challenges for SLAM (Simultaneous Localization and Mapping) systems that significantly differ from terrestrial applications. The absence of GPS infrastructure on other planetary bodies eliminates the possibility of global positioning corrections, forcing SLAM systems to rely entirely on their internal measurements and algorithms for localization.

The extreme lighting conditions in space environments create substantial difficulties for vision-based SLAM systems. On lunar surfaces, the stark contrast between brightly illuminated areas and deep shadows can cause camera overexposure or underexposure, leading to feature detection failures. Mars presents additional challenges with its dust storms that can severely reduce visibility and degrade sensor performance over time.

Terrain characteristics on extraterrestrial bodies introduce further complications. The regolith (loose surface material) on the Moon can cause wheel slippage, which corrupts wheel-odometry estimates and forces the system to lean on other sensing for motion estimation. Mars features varied terrain including sand dunes, rocky outcrops, and steep slopes that can cause unpredictable robot movement and complicate motion estimation algorithms.
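Wheel slippage of the kind described above is commonly quantified as a slip ratio that compares the speed implied by wheel rotation with the speed actually achieved over the ground. The following is a minimal sketch under the assumption that ground speed is available from an independent source such as visual odometry; the function name and the sample values are hypothetical.

```python
def slip_ratio(wheel_speed: float, ground_speed: float) -> float:
    """Longitudinal slip of a driven wheel.

    wheel_speed  : wheel radius times angular rate (m/s), from encoders
    ground_speed : actual speed over ground (m/s), e.g. from visual odometry
    Returns 0.0 for no slip and 1.0 for a wheel spinning in place.
    """
    if wheel_speed <= 0.0:
        raise ValueError("wheel_speed must be positive for a driven wheel")
    return 1.0 - ground_speed / wheel_speed


# Hypothetical readings on loose regolith: encoders report 5 cm/s,
# but the rover only advances at 4 cm/s.
example_slip = slip_ratio(0.05, 0.04)
print(f"slip ratio: {example_slip:.2f}")
```

A navigation stack could use such a ratio to down-weight wheel odometry when slip is high, although how a given mission does this is outside what this article states.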

The harsh radiation environment in space can cause sensor degradation and random electronic failures, requiring robust fault detection and recovery mechanisms. Temperature extremes—ranging from +120°C in direct sunlight to -180°C in shadowed areas on the Moon—affect sensor calibration and electronic component performance, necessitating adaptive calibration techniques.

Communication constraints between planetary rovers and Earth impose significant limitations on SLAM operations. The substantial time delays (more than 20 minutes one way when Mars is farthest from Earth) prevent real-time human intervention, requiring SLAM systems to operate autonomously for extended periods. Limited bandwidth also restricts the amount of raw sensor data that can be transmitted back to Earth for analysis.

Computational resources present another critical constraint. Space-grade processors typically lag behind commercial technology by several generations due to radiation hardening requirements, offering only a fraction of the computing power available in terrestrial applications. This necessitates highly optimized SLAM algorithms that can operate within severe memory and processing limitations.

Energy constraints further complicate SLAM operations in space. With limited power available from solar panels or radioisotope thermoelectric generators, SLAM systems must carefully balance performance against power consumption, especially during night cycles when operating on stored energy.

Current SLAM Solutions for Planetary Exploration

01 Visual SLAM techniques for autonomous navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real-time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or dynamic scenes. These techniques are particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- Visual SLAM techniques for autonomous navigation: Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or areas with repetitive visual patterns.

- SLAM for robotic applications and automation: SLAM technology enables robots to navigate unknown environments by building maps while simultaneously determining their position. These systems combine sensor data from cameras, LiDAR, and other sensors to create accurate environmental representations. Applications include autonomous cleaning robots, warehouse automation systems, and industrial robots that can adapt to changing environments without human intervention.

- LiDAR-based SLAM for precise mapping: LiDAR-based SLAM systems use laser scanning to create highly accurate 3D maps of environments. These systems measure distances to objects with millimeter precision, enabling detailed environmental reconstruction. The technology is particularly effective in outdoor environments or spaces where visual features may be limited. Advanced implementations combine LiDAR data with other sensor inputs for enhanced robustness and accuracy.

- SLAM algorithms for AR/VR applications: SLAM algorithms designed for augmented and virtual reality applications enable devices to understand their position relative to the physical world. These systems track user movement while mapping the surrounding environment, allowing virtual objects to be placed convincingly in real-world contexts. The technology supports immersive experiences by maintaining spatial awareness even during rapid movement or when encountering new environments.

- Multi-sensor fusion for robust SLAM systems: Multi-sensor fusion approaches combine data from various sensors such as cameras, IMUs, LiDAR, and radar to create more robust SLAM systems. By integrating multiple data sources, these systems can overcome limitations of individual sensors, such as camera performance in low light or LiDAR performance in adverse weather. This approach enables continuous operation across diverse environments and conditions, making it suitable for autonomous vehicles and mobile robots operating in complex settings.
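The core idea behind multi-sensor fusion in the last item above can be illustrated with inverse-variance weighting, in which each sensor's estimate counts in proportion to how trustworthy it is. This is a deliberately simplified sketch, not the method of any system named in this article; the function name `fuse` and the sample readings are assumptions made for illustration.

```python
from __future__ import annotations


def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> tuple[float, float]:
    """Fuse two independent estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so the
    less noisy sensor dominates. Returns (fused_estimate, fused_variance).
    """
    if var_a <= 0.0 or var_b <= 0.0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_est = fused_var * (w_a * est_a + w_b * est_b)
    return fused_est, fused_var


# Hypothetical range readings in metres: a camera degraded by low light
# (large variance) and a LiDAR return with tighter noise.
camera_range, camera_var = 10.4, 0.50
lidar_range, lidar_var = 10.0, 0.05
fused_range, fused_variance = fuse(camera_range, camera_var, lidar_range, lidar_var)
print(f"fused range: {fused_range:.3f} m, variance: {fused_variance:.4f}")
```

The fused variance is always smaller than either input variance, which is the formal version of the claim that combining sensors overcomes the limitations of each one individually.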

02 SLAM for augmented and virtual reality applications

SLAM technology enables precise spatial mapping and device positioning for AR/VR applications. By tracking the user's movement in real-time while building a map of the surrounding environment, these systems can accurately overlay virtual content onto the physical world or create immersive virtual environments. The technology allows for markerless tracking, enabling users to move freely while maintaining consistent positioning of virtual elements relative to the real world. This capability is essential for creating convincing mixed reality experiences across gaming, education, and professional applications.

03 LiDAR and sensor fusion approaches for SLAM

LiDAR-based SLAM systems use laser scanning to create precise 3D point clouds of environments, offering advantages in accuracy and range compared to purely visual approaches. Sensor fusion techniques combine data from multiple sources such as LiDAR, cameras, IMUs, and radar to overcome the limitations of individual sensors. This multi-modal approach improves robustness in challenging conditions like poor lighting, featureless environments, or adverse weather. The integration of these complementary data streams enables more reliable mapping and localization for autonomous systems operating in complex real-world environments.

04 SLAM optimization and computational efficiency

Advanced optimization techniques improve SLAM performance while reducing computational requirements. These include graph-based optimization methods that efficiently manage large-scale mapping, loop closure detection to correct accumulated errors, and sparse feature tracking to minimize processing demands. Edge computing architectures distribute SLAM workloads between devices and local servers, while hardware acceleration leverages specialized processors like GPUs and FPGAs. These approaches enable real-time SLAM on resource-constrained platforms such as mobile devices, small drones, and IoT devices while maintaining accuracy.

05 SLAM for dynamic and changing environments

Advanced SLAM systems can operate effectively in environments containing moving objects or changing conditions. These techniques distinguish between static and dynamic elements, filtering out temporary objects to maintain a consistent map of permanent features. Some implementations incorporate semantic understanding to classify objects and predict their movement patterns. Temporal mapping approaches track changes over time, enabling applications like crowd monitoring, traffic analysis, and environmental monitoring. These capabilities are crucial for autonomous systems operating in real-world settings where conditions constantly change.

Leading Organizations in Space SLAM Development

SLAM technology in space exploration and planetary robotics is in a growth phase, with the market expanding due to increasing space missions and autonomous rover deployments. The global market is projected to reach significant scale as space agencies and private companies invest in planetary exploration capabilities. Technologically, SLAM for space applications is maturing rapidly, with companies like Mitsubishi Electric, iRobot, and Parsons Corporation leading commercial development. Academic institutions including Beijing Institute of Technology and Northwestern Polytechnical University are advancing fundamental research. The integration of SLAM with other technologies like AI and sensor fusion by companies such as TRX Systems and Arbe Robotics is creating more robust solutions for the challenging environments of planetary surfaces, enabling autonomous navigation in GPS-denied extraterrestrial environments.

iRobot Corp.

Technical Solution: iRobot has developed advanced SLAM (Simultaneous Localization and Mapping) technology specifically adapted for space exploration and planetary robotics. Their vSLAM (visual SLAM) system combines visual data from cameras with inertial measurement units to create accurate 3D maps of unknown environments. For planetary applications, iRobot has enhanced their SLAM algorithms to operate in low-light conditions and handle the unique challenges of extraterrestrial terrain, including irregular surfaces and dust interference. Their technology incorporates robust feature extraction methods that can identify landmarks even in visually homogeneous environments like Mars or lunar surfaces[1]. iRobot's planetary SLAM solutions also include fault-tolerant navigation systems that can recover from temporary sensor failures or communication delays with Earth-based control centers, a critical feature for remote space operations where human intervention is limited[3].

Strengths: Highly robust algorithms capable of functioning in extreme environments with limited computational resources. Their systems have been field-tested in Earth-analog environments simulating Martian conditions.

Weaknesses: Higher power consumption compared to simpler navigation systems, and potential challenges with dust accumulation on optical sensors affecting long-term reliability in planetary missions.

Parsons Corp.

Technical Solution: Parsons Corporation has developed specialized SLAM technology for space exploration that integrates multi-sensor fusion techniques to enhance mapping accuracy in challenging extraterrestrial environments. Their approach combines LiDAR, stereo vision, and inertial measurement units with proprietary algorithms designed to function in the extreme conditions of space. Parsons' planetary robotics SLAM solution features adaptive filtering methods that can compensate for the unique lighting conditions and surface properties encountered on different celestial bodies. The company has implemented real-time kinematic positioning that maintains accuracy even during communication delays with Earth-based control systems[2]. Their technology includes terrain classification algorithms that can identify hazardous features such as steep slopes or unstable regolith, enabling safer autonomous navigation for planetary rovers. Parsons has also developed specialized loop closure techniques that work effectively in the repetitive visual environments often encountered in crater exploration or cave systems on other planets[4].

Strengths: Exceptional performance in GPS-denied environments with sophisticated multi-sensor fusion capabilities and radiation-hardened hardware designs suitable for long-duration space missions.

Weaknesses: Complex system architecture requiring significant computational resources and specialized calibration procedures that can be challenging to perform remotely after deployment to planetary surfaces.

Key Patents in Space-Based SLAM Systems

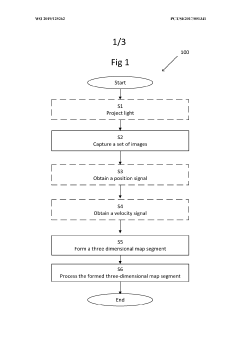

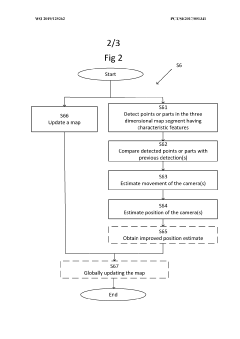

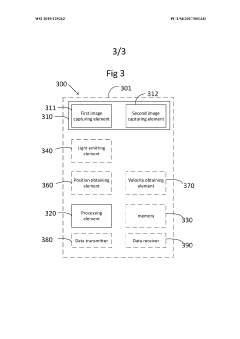

A method and system for simultaneous navigation and mapping of a space

Patent WO2019125262A1

Innovation

- The method employs stereo camera pairs and stereo image processing to capture overlapping images, detect characteristic features, and build a 3D map in real-time, using techniques like Iterative Closest Point (ICP) for global updates and Procrustes analysis to estimate platform movement, while filtering out moving objects and utilizing projected light to enhance feature detection.
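Procrustes analysis, as mentioned above, estimates the rigid motion that best aligns two sets of matched feature points. The sketch below is a 2D simplification with a closed-form solution, offered only to illustrate the principle; the patent describes a stereo 3D system, and the function name `estimate_rigid_2d` and the synthetic landmarks are assumptions, not taken from the patent.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src points onto dst.

    src, dst: equal-length lists of matched (x, y) points.
    Returns (theta, tx, ty) such that dst ~= R(theta) * src + (tx, ty).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n

    # Accumulate dot and cross products of the centred point pairs.
    dot = 0.0
    cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


# Synthetic check: rotate landmarks by 10 degrees and shift by (0.5, -0.2).
true_theta = math.radians(10.0)
landmarks = [(1.0, 0.0), (0.0, 2.0), (-1.5, 0.5), (2.0, 2.0)]
moved = [
    (math.cos(true_theta) * x - math.sin(true_theta) * y + 0.5,
     math.sin(true_theta) * x + math.cos(true_theta) * y - 0.2)
    for x, y in landmarks
]
theta, tx, ty = estimate_rigid_2d(landmarks, moved)
print(f"rotation: {math.degrees(theta):.2f} deg, translation: ({tx:.2f}, {ty:.2f})")
```

The recovered transform describes how the landmarks appear to move; the platform's own motion is the inverse of that transform. A 3D version would typically replace the `atan2` step with a singular value decomposition of the cross-covariance matrix.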

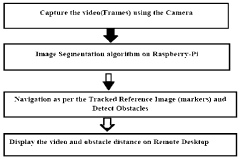

A simultaneous location and mapping based autonomous navigation system

Patent ZA202108148A (Active)

Innovation

- A simultaneous location and mapping (SLAM) based autonomous navigation system that uses a combination of sensors and customized software to build a map of an unknown environment while navigating, employing techniques like image segmentation, adaptive fuzzy tracking, and extended Kalman filters to determine robot position and avoid obstacles.
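The extended Kalman filter named above alternates between predicting the robot's state from a motion model and correcting it with a linearised measurement model. The following scalar sketch shows one such cycle for a robot on a straight track measuring range to a single landmark; the scenario, the function name `ekf_step`, and all numeric values are hypothetical and are not drawn from the patent.

```python
import math


def ekf_step(x, p, u, z, landmark_offset, q, r):
    """One predict/update cycle of a scalar extended Kalman filter.

    x, p : prior position estimate along the track (m) and its variance
    u    : displacement reported by odometry (m)
    z    : measured range to a landmark located beside track position 0 (m)
    landmark_offset : lateral distance of the landmark from the track (m)
    q, r : process and measurement noise variances
    """
    # Predict: linear motion model, uncertainty grows by q.
    x_pred = x + u
    p_pred = p + q

    # Update: nonlinear range model h(x) = sqrt(x^2 + offset^2),
    # linearised about the predicted state.
    expected = math.hypot(x_pred, landmark_offset)
    jac = x_pred / expected              # dh/dx at the prediction
    innovation = z - expected
    s = jac * p_pred * jac + r           # innovation variance
    k = p_pred * jac / s                 # Kalman gain
    x_new = x_pred + k * innovation
    p_new = (1.0 - k * jac) * p_pred
    return x_new, p_new


# Hypothetical run: the filter believes the rover starts at 5.0 m, the true
# start is 5.2 m, and the rover drives 1 m per step past a landmark 3 m aside.
x_est, p_est = 5.0, 1.0
true_x = 5.2
for _ in range(5):
    true_x += 1.0
    z = math.hypot(true_x, 3.0)          # noise-free range keeps the demo repeatable
    x_est, p_est = ekf_step(x_est, p_est, 1.0, z, 3.0, q=0.01, r=0.04)
print(f"estimate: {x_est:.3f} m, truth: {true_x:.3f} m, variance: {p_est:.4f}")
```

A full SLAM filter extends the state vector to include landmark positions and uses matrix forms of the same equations, but the predict and update structure is unchanged.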

Space Mission Requirements and Constraints

Space exploration missions impose unique and stringent requirements on SLAM (Simultaneous Localization and Mapping) systems that significantly differ from terrestrial applications. The harsh vacuum environment of space presents extreme temperature variations, ranging from -150°C in shadow to +120°C in direct sunlight, requiring specialized hardware with enhanced thermal management capabilities. Additionally, space radiation poses a serious threat to electronic components, necessitating radiation-hardened computing systems that can withstand high-energy particles without experiencing data corruption or system failures.

Power constraints represent another critical limitation for space-based SLAM implementations. Spacecraft and planetary rovers typically operate with severely limited energy budgets, requiring SLAM algorithms to be exceptionally efficient while maintaining necessary performance levels. This energy limitation directly impacts computational resources, with space-qualified processors often running at lower clock speeds and offering less processing power than their Earth-bound counterparts.

Communication bandwidth and latency introduce further complications for space missions. The significant time delays in transmitting data between Earth and distant spacecraft—ranging from seconds for lunar missions to over 20 minutes for Mars operations—make real-time remote operation impractical. This necessitates high levels of autonomy in SLAM systems, enabling independent navigation and mapping without constant human intervention.
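The delay figures above follow directly from distance divided by the speed of light. The sketch below reproduces them using rounded, approximate Earth-to-body distances; the constant names are illustrative, and the actual delay on any given day depends on orbital geometry.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

# Approximate distances from Earth in kilometres (rounded figures).
MOON_MEAN_KM = 384_400
MARS_CLOSEST_KM = 54.6e6
MARS_FARTHEST_KM = 401e6


def one_way_delay_s(distance_km: float) -> float:
    """One-way signal travel time in seconds for a given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S


moon_delay = one_way_delay_s(MOON_MEAN_KM)
mars_min_delay = one_way_delay_s(MARS_CLOSEST_KM)
mars_max_delay = one_way_delay_s(MARS_FARTHEST_KM)

print(f"Moon:          {moon_delay:.2f} s one way")
print(f"Mars (near):   {mars_min_delay / 60:.1f} min one way")
print(f"Mars (far):    {mars_max_delay / 60:.1f} min one way")
```

A command-and-response loop takes twice the one-way figure, so even at closest approach a Mars rover cannot be steered interactively.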

Mass and volume restrictions represent additional constraints, as every kilogram launched into space incurs substantial costs. SLAM hardware must therefore be lightweight and compact while maintaining robustness and reliability. The inability to perform physical repairs once deployed means that space-based SLAM systems must incorporate redundancy and fault-tolerance mechanisms to ensure continued operation despite potential component failures.

Environmental challenges on planetary surfaces further complicate SLAM implementation. Dust storms on Mars can severely limit visibility and damage optical sensors, while the low-light conditions in shadowed craters or during lunar night operations require specialized sensing capabilities. The unique terrain features of other worlds—including regolith properties, extreme topography, and unfamiliar geological formations—demand adaptive SLAM algorithms capable of functioning in environments fundamentally different from Earth.

Operational longevity requirements add another dimension of complexity, with many space missions designed to function for years or even decades. SLAM systems must therefore demonstrate exceptional durability and maintain calibration over extended periods without human intervention, often operating in cycles of hibernation and activity to conserve power during mission phases.

Radiation Effects on SLAM Hardware

Space radiation presents a significant challenge for SLAM hardware deployed in extraterrestrial environments. The harsh radiation conditions beyond Earth's protective magnetosphere can severely impact the performance and reliability of electronic components essential for SLAM operations. Cosmic rays, solar particle events, and trapped radiation belts around planets produce high-energy particles that cause both cumulative degradation and single-event effects in semiconductor devices.

Primary radiation damage mechanisms include Total Ionizing Dose (TID) effects, which gradually degrade semiconductor performance through charge trapping in oxide layers, and Single Event Effects (SEEs), which can cause transient data corruption or permanent hardware failure. CMOS image sensors, critical for visual SLAM, experience increased dark current and hot pixels when exposed to radiation, degrading image quality and feature detection capabilities. Similarly, LiDAR systems suffer from radiation-induced noise and potential damage to photodetectors and timing circuits.
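One common way to limit the impact of hot pixels on feature detection is to flag them from a dark frame and fill them in from their neighbours before the image reaches the SLAM front end. The sketch below is a minimal pure-Python illustration of that idea, not a description of any flight pipeline. The function names, the outlier threshold k, and the floor of 1.0 on the spread estimate are all assumptions made for the example.

```python
from statistics import median


def find_hot_pixels(dark_frame, k=6.0):
    """Return the set of (row, col) positions whose dark-frame value is a
    strong outlier relative to the median (robust median/MAD threshold)."""
    values = [v for row in dark_frame for v in row]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    # Floor the spread so a very uniform dark frame does not flag everything.
    threshold = med + k * max(mad, 1.0)
    return {
        (r, c)
        for r, row in enumerate(dark_frame)
        for c, v in enumerate(row)
        if v > threshold
    }


def repair_hot_pixels(image, hot):
    """Return a copy of image with each hot pixel replaced by the median
    of its neighbouring pixels that are not themselves flagged as hot."""
    height, width = len(image), len(image[0])
    out = [list(row) for row in image]
    for r, c in hot:
        neighbours = [
            image[rr][cc]
            for rr in range(max(0, r - 1), min(height, r + 2))
            for cc in range(max(0, c - 1), min(width, c + 2))
            if (rr, cc) != (r, c) and (rr, cc) not in hot
        ]
        if neighbours:  # leave the pixel untouched if no clean neighbour exists
            out[r][c] = median(neighbours)
    return out
```

In practice the dark frame would be re-acquired periodically, since the hot-pixel population grows with accumulated dose.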

Inertial Measurement Units (IMUs), essential for odometry in SLAM systems, demonstrate drift and calibration issues under radiation exposure. The computational hardware processing SLAM algorithms faces increased soft error rates, with potential for critical system failures if error detection and correction mechanisms are insufficient. Memory components are particularly vulnerable, experiencing bit flips that can corrupt mapping data or algorithm parameters.
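A simple defence against silent corruption of stored map data is to attach a checksum to each block and verify it on every read. The sketch below uses CRC-32 from Python's standard zlib module over a block of 3D landmark coordinates. The block layout (little-endian float32 triples followed by a 4-byte CRC) and the function names are hypothetical, chosen only to illustrate detection; a CRC on its own detects errors but does not correct them.

```python
import struct
import zlib


def pack_landmarks(landmarks):
    """Serialise (x, y, z) landmarks as float32 triples plus a CRC-32 trailer."""
    payload = b"".join(struct.pack("<3f", *point) for point in landmarks)
    return payload + struct.pack("<I", zlib.crc32(payload))


def unpack_landmarks(blob):
    """Verify the CRC-32 trailer and return the landmarks, or raise ValueError."""
    payload = blob[:-4]
    (stored_crc,) = struct.unpack("<I", blob[-4:])
    if zlib.crc32(payload) != stored_crc:
        raise ValueError("landmark block failed CRC check")
    return [struct.unpack_from("<3f", payload, i) for i in range(0, len(payload), 12)]


def flip_bit(blob, bit_index):
    """Simulate a single-event upset by flipping one bit of the block."""
    corrupted = bytearray(blob)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)
```

A block that fails the check can then be discarded, re-estimated, or restored from a redundant copy rather than being fed into the optimiser.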

Current radiation hardening approaches include both hardware and software strategies. Hardware techniques involve using radiation-hardened-by-design (RHBD) components with specialized manufacturing processes, shielding sensitive electronics, and implementing redundant systems. Software mitigation strategies include error detection and correction codes, algorithm robustness improvements, and fault-tolerant system architectures that can detect and recover from radiation-induced errors.
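Redundancy can also be applied in software. A widely used pattern is triple modular redundancy (TMR): a value is stored three times and read back through a bitwise majority vote, so a bit flip in any single copy is outvoted by the other two. The sketch below is a minimal illustration with hypothetical function names, not a description of any particular flight software.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority of three integers: each output bit takes the value
    held by at least two of the three inputs."""
    return (a & b) | (a & c) | (b & c)


def scrub(copies):
    """Vote across three stored copies and return the voted value together
    with three refreshed copies, so single-copy errors do not accumulate."""
    a, b, c = copies
    voted = majority_vote(a, b, c)
    return voted, [voted, voted, voted]


if __name__ == "__main__":
    original = 0b1011_0010
    upset = original ^ (1 << 3)  # one copy suffers a single bit flip
    value, refreshed = scrub([original, upset, original])
    print(value == original, refreshed)
```

The vote only fails if the same bit position is corrupted in two copies before the next scrub, which is why scrubbing is normally run periodically.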

Mission-specific radiation tolerance requirements vary significantly based on destination and mission duration. Mars missions face moderate radiation levels but extended exposure periods, while Jupiter missions encounter intense radiation belts requiring exceptional hardening. Lunar missions benefit from shorter transit times but still require significant radiation protection for extended surface operations.

Testing protocols for space-grade SLAM hardware involve accelerated radiation exposure in specialized facilities, simulating years of space radiation in compressed timeframes. These tests evaluate both gradual performance degradation and susceptibility to catastrophic failures. The trade-off between radiation hardening and system performance remains a critical design consideration, as radiation-hardened components typically offer lower performance, higher power consumption, and significantly increased costs compared to commercial alternatives.
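The time compression involved in accelerated total-dose testing can be estimated with simple arithmetic: the exposure time is the target dose divided by the facility dose rate. The sketch below works through that calculation. Every number in the usage note (target dose, dose rate, mission length) is a made-up placeholder for illustration, not a requirement from any real mission or test standard.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def exposure_time_s(target_dose_rad: float, facility_rate_rad_s: float) -> float:
    """Irradiation time needed to deliver the target total ionizing dose."""
    if facility_rate_rad_s <= 0:
        raise ValueError("dose rate must be positive")
    return target_dose_rad / facility_rate_rad_s


def acceleration_factor(mission_years: float, exposure_s: float) -> float:
    """Ratio of mission duration to test duration for the same total dose."""
    return mission_years * SECONDS_PER_YEAR / exposure_s


if __name__ == "__main__":
    # Placeholder inputs: 20 krad target, 50 rad/s source, 5-year mission.
    t = exposure_time_s(20_000, 50)
    print(f"exposure: {t:.0f} s, acceleration: {acceleration_factor(5, t):.0f}x")
```

Because some degradation mechanisms depend on dose rate, a test compressed this heavily is not guaranteed to reproduce the behaviour seen at the much lower rates encountered in flight.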
