Why Real-Time SLAM Matters in High-Speed Robotics

SEP 12, 2025 | 9 MIN READ

SLAM Technology Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its conceptual inception in the 1980s. Initially developed for autonomous robot navigation in controlled environments, SLAM has transformed into a critical technology enabling machines to understand and interact with their surroundings in real-time. The evolution trajectory shows a clear progression from theoretical frameworks to practical implementations across diverse robotic platforms.

Early SLAM systems were computationally intensive and primarily focused on accuracy rather than speed, making them suitable only for slow-moving robots in static environments. The introduction of probabilistic methods in the 1990s, particularly Extended Kalman Filters (EKF) and particle filters, marked a significant advancement in handling sensor uncertainties and environmental noise, though still limited by computational constraints.

The 2000s witnessed the emergence of visual SLAM techniques, leveraging camera data alongside traditional sensors like LiDAR and radar. This period also saw the development of graph-based optimization methods that improved mapping accuracy while reducing computational demands. By 2010, real-time SLAM implementations began appearing on consumer devices, demonstrating the technology's growing maturity and accessibility.

Recent advancements have been driven by the integration of deep learning techniques with traditional SLAM algorithms, creating hybrid systems that combine the robustness of geometric methods with the adaptability of learning-based approaches. These developments have significantly enhanced performance in challenging scenarios such as dynamic environments, varying lighting conditions, and high-speed motion.

The primary objective of modern real-time SLAM research is to achieve reliable performance at increasingly higher speeds without sacrificing accuracy or robustness. This is particularly crucial for high-speed robotics applications where delayed mapping or localization errors can lead to catastrophic failures. The technical goals include reducing computational latency, improving feature extraction and tracking at high velocities, and developing more efficient algorithms for loop closure detection.

Another key objective is enhancing SLAM's resilience to the motion blur, rapid scene changes, and increased sensor noise that characterize high-speed operations. Researchers aim to develop systems capable of maintaining centimeter-level accuracy even when robots are moving at several meters per second, a requirement that pushes the boundaries of current sensor technology and algorithmic approaches.

The evolution of SLAM technology is increasingly focused on edge computing implementations that minimize dependence on external processing resources, enabling truly autonomous operation in diverse environments. This trend aligns with the growing demand for high-speed robotic applications in logistics, delivery services, search and rescue operations, and competitive robotics where real-time environmental understanding is non-negotiable.

Market Demand for High-Speed Robotic Navigation

The market for high-speed robotic navigation systems is experiencing unprecedented growth, driven by increasing automation across multiple industries. According to recent market analyses, the global SLAM technology market is projected to reach $1.2 billion by 2025, with a compound annual growth rate of approximately 37%. This rapid expansion reflects the critical need for robots capable of navigating complex environments at high speeds while maintaining operational safety and efficiency.

Manufacturing and logistics sectors represent the largest current demand segments for high-speed robotic navigation. Warehouse automation has become essential as e-commerce continues its explosive growth, with companies seeking to optimize fulfillment operations through faster autonomous mobile robots (AMRs). These environments require robots to navigate dynamic spaces at speeds exceeding 2 meters per second while avoiding collisions with humans, infrastructure, and other robots.

The automotive industry presents another significant market opportunity, particularly in the development of autonomous vehicles. Real-time SLAM capabilities are fundamental to enabling vehicles to navigate at highway speeds while maintaining precise localization and mapping. Market research indicates that automotive applications of SLAM technology will grow at a rate of 42% annually through 2027, outpacing most other application segments.

Consumer robotics represents an emerging but rapidly growing market segment. High-speed navigation capabilities are increasingly demanded in premium robotic vacuum cleaners, lawn mowers, and other household robots. Consumers expect these devices to complete tasks efficiently while navigating complex home environments without collisions or mapping errors.

Agricultural robotics presents a particularly promising growth area, with autonomous harvesting and field management robots requiring precise navigation at commercially viable speeds. The agricultural robotics market is expected to reach $20.6 billion by 2025, with navigation systems representing a critical component of this growth.

Security and surveillance applications are driving demand for high-speed robotic navigation in both commercial and government sectors. Autonomous security robots must patrol large areas efficiently, requiring both speed and precise environmental awareness. This market segment is growing at approximately 25% annually, with particular emphasis on solutions that can operate in GPS-denied environments.

The healthcare sector is also emerging as a significant market for high-speed robotic navigation, particularly in large hospital environments where autonomous delivery robots must efficiently transport medications, supplies, and equipment through crowded corridors. The healthcare robotics market is projected to reach $16.5 billion by 2025, with navigation capabilities representing a key differentiator among competing solutions.

Real-Time SLAM Challenges and Constraints

Real-time SLAM (Simultaneous Localization and Mapping) implementation faces significant challenges when deployed in high-speed robotic applications. Computational demands rise with velocity, because the robot must process more environmental data per unit time while the time available for each update shrinks. This creates a fundamental tension between processing speed and accuracy that engineers must carefully balance.

Processing latency becomes critical at high speeds, where even millisecond delays can result in substantial positioning errors. For instance, a robot moving at 10 m/s experiences a 1-centimeter displacement every millisecond, making real-time performance not just desirable but essential for operational safety and functionality.
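The arithmetic behind that figure is simple enough to check directly. The following is a minimal sketch; the function name and the list of latencies are illustrative assumptions, not values taken from any particular SLAM system.

```python
def displacement_during_latency(speed_m_s: float, latency_ms: float) -> float:
    """Distance in metres covered while one SLAM update is still being computed."""
    return speed_m_s * (latency_ms / 1000.0)


if __name__ == "__main__":
    # Illustrative latencies only; real pipelines should be measured.
    for latency_ms in (1, 5, 20, 50):
        d = displacement_during_latency(10.0, latency_ms)
        print(f"{latency_ms:>3} ms at 10 m/s -> {d * 100:.1f} cm")
```

The relationship is linear, so halving the pipeline latency halves the distance the robot travels on a stale pose estimate.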

Hardware constraints present another major hurdle. While powerful computing platforms exist, they often conflict with the size, weight, and power (SWaP) limitations of mobile robots. Edge computing solutions must be optimized for parallel processing of sensor data while maintaining energy efficiency. The thermal management of these compact yet powerful systems adds another layer of complexity.

Sensor limitations further complicate real-time SLAM implementation. High-speed movement can introduce motion blur in visual sensors, while LiDAR scan rates may become insufficient to capture adequate environmental detail. Sensor fusion approaches that combine multiple data sources help mitigate these issues but introduce additional computational overhead and synchronization challenges.
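Both effects can be estimated with back-of-the-envelope formulas. The sketch below assumes a pinhole camera observing a point at a known depth while the robot moves parallel to the image plane, and a LiDAR that produces one full sweep per scan period. The function names and the sample numbers are hypothetical and chosen only for illustration.

```python
def motion_blur_px(speed_m_s: float, exposure_s: float,
                   focal_px: float, depth_m: float) -> float:
    """Approximate blur streak length in pixels for lateral motion.

    Assumes a pinhole model: a point at depth Z moves across the image
    at focal_px * speed / Z pixels per second.
    """
    return focal_px * speed_m_s * exposure_s / depth_m


def travel_per_scan_m(speed_m_s: float, scan_rate_hz: float) -> float:
    """Distance the robot covers between two consecutive LiDAR sweeps."""
    return speed_m_s / scan_rate_hz


if __name__ == "__main__":
    # Assumed values: 10 m/s, 5 ms exposure, 600 px focal length, 5 m depth.
    print(f"blur: {motion_blur_px(10.0, 0.005, 600.0, 5.0):.1f} px")
    # Assumed 10 Hz spinning LiDAR.
    print(f"travel per sweep: {travel_per_scan_m(10.0, 10.0):.2f} m")
```

Under these assumptions, blur grows with speed and exposure time and shrinks with scene depth, which is one reason nearby features tend to be lost first at high velocity.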

Algorithm optimization represents perhaps the most active area of research in addressing these constraints. Traditional SLAM algorithms often prioritize accuracy over speed, employing computationally expensive optimization techniques. Recent innovations focus on sparse feature extraction, efficient loop closure detection, and incremental mapping approaches that maintain acceptable accuracy while reducing computational load.

Memory management becomes increasingly important as map sizes grow. High-speed robots cover more ground quickly, requiring efficient data structures to store and access spatial information. Hierarchical mapping approaches and dynamic memory allocation strategies help manage these growing datasets without compromising real-time performance.
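One way to picture a hierarchical, memory-bounded map is a two-level sparse voxel grid: coarse blocks are allocated only where measurements exist, and blocks far from the robot can be evicted as it moves on. The following is a minimal sketch of that idea; the class name `SparseVoxelMap`, the voxel and block sizes, and the eviction rule are all assumptions made for illustration, not a description of any specific SLAM library.

```python
import math
from collections import defaultdict


class SparseVoxelMap:
    """Two-level sparse occupancy map: coarse blocks hold sets of fine voxels."""

    def __init__(self, voxel_size_m: float = 0.1, block_size_voxels: int = 8):
        self.voxel_size_m = voxel_size_m
        self.block_size_voxels = block_size_voxels
        self._blocks = defaultdict(set)  # block index -> occupied voxel indices

    def _voxel_index(self, point):
        return tuple(math.floor(c / self.voxel_size_m) for c in point)

    def _block_index(self, voxel):
        return tuple(v // self.block_size_voxels for v in voxel)

    def insert(self, point):
        """Mark the voxel containing a 3-D point as occupied."""
        voxel = self._voxel_index(point)
        self._blocks[self._block_index(voxel)].add(voxel)

    def is_occupied(self, point) -> bool:
        voxel = self._voxel_index(point)
        block = self._blocks.get(self._block_index(voxel))
        return block is not None and voxel in block

    def drop_far_blocks(self, center, radius_m: float) -> int:
        """Evict blocks whose centre lies beyond radius_m; return how many."""
        block_len = self.voxel_size_m * self.block_size_voxels
        far = [b for b in self._blocks
               if math.dist([(i + 0.5) * block_len for i in b], center) > radius_m]
        for b in far:
            del self._blocks[b]
        return len(far)
```

Memory use scales with the observed surface rather than with the bounding volume, and eviction works at block granularity, so a fast robot can discard whole regions cheaply. A production system would more likely page evicted blocks to disk than delete them.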

The robustness of SLAM systems under varying environmental conditions presents additional challenges. High-speed robots encounter rapidly changing lighting conditions, dynamic obstacles, and potentially GPS-denied environments. Algorithms must adapt to these variations while maintaining consistent performance, often requiring sophisticated failure detection and recovery mechanisms.

These technical constraints collectively define the research frontier for real-time SLAM in high-speed robotics, driving innovation toward more efficient algorithms, specialized hardware solutions, and novel sensor configurations designed specifically for high-velocity applications.

Current Real-Time SLAM Implementations

01 Hardware acceleration for real-time SLAM

Hardware acceleration techniques are employed to enhance the real-time performance of SLAM systems. These include utilizing specialized processors, GPUs, FPGAs, and dedicated hardware architectures to parallelize computations and reduce processing time. By offloading intensive calculations to dedicated hardware, these approaches significantly improve the speed and efficiency of SLAM algorithms, enabling real-time operation even in resource-constrained environments.

- Optimization techniques for real-time SLAM performance: Various optimization techniques can be employed to enhance the real-time performance of SLAM systems. These include algorithmic optimizations, parallel processing, and efficient data structures that reduce computational complexity. By implementing these optimizations, SLAM systems can achieve faster processing speeds and lower latency, which are crucial for applications requiring real-time response such as autonomous navigation and augmented reality.

- Hardware acceleration for SLAM systems: Hardware acceleration technologies, such as GPUs, FPGAs, and specialized processors, can significantly improve the real-time performance of SLAM algorithms. These hardware solutions offload computationally intensive tasks from the main processor, enabling parallel processing of SLAM operations like feature extraction, mapping, and localization. This approach reduces processing time and enables SLAM systems to operate in real-time even with limited computational resources.

- Memory management and data structures for real-time SLAM: Efficient memory management and optimized data structures are essential for real-time SLAM performance. Techniques such as sparse representations, hierarchical data structures, and memory pooling can reduce memory usage and access times. These approaches minimize the computational overhead associated with data handling, allowing SLAM algorithms to process information more quickly and maintain real-time operation even with large-scale environments or limited memory resources.

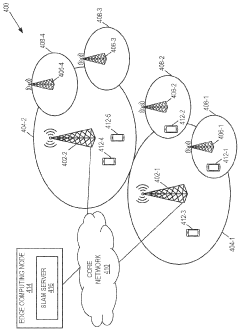

- Distributed and cloud-based SLAM processing: Distributed computing architectures and cloud-based processing can enhance real-time SLAM performance by offloading computationally intensive tasks to remote servers. This approach allows resource-constrained devices to perform complex SLAM operations by distributing the computational load across multiple systems. Edge computing techniques can also be employed to balance between local processing for time-critical operations and remote processing for more complex tasks, ensuring real-time performance while maintaining accuracy.

- Lightweight SLAM algorithms for real-time applications: Lightweight SLAM algorithms are specifically designed to operate in real-time on devices with limited computational resources. These algorithms employ techniques such as feature selection, keyframe culling, and simplified mathematical models to reduce computational complexity while maintaining acceptable accuracy. By focusing on essential information and discarding redundant data, these approaches enable real-time SLAM performance on mobile devices, drones, and other platforms where processing power and energy consumption are constrained.
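To make the keyframe idea in the last item concrete, the sketch below shows a simple keyframe selection heuristic: a frame is promoted to a keyframe only when the robot has moved or turned enough, or when tracking quality drops. Selecting sparingly in this way reduces how much culling is needed later. The `Pose2D` type, the thresholds, and the `tracked_ratio` input are assumptions for illustration; real systems tune these values and often use richer criteria.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw_rad: float


def is_new_keyframe(last_kf: Pose2D, current: Pose2D, tracked_ratio: float,
                    min_translation_m: float = 0.5,
                    min_rotation_rad: float = math.radians(15),
                    min_tracked_ratio: float = 0.6) -> bool:
    """Decide whether the current frame should become a keyframe."""
    translation = math.hypot(current.x - last_kf.x, current.y - last_kf.y)
    dyaw = current.yaw_rad - last_kf.yaw_rad
    # Wrap the heading difference into [-pi, pi] before taking its magnitude.
    rotation = abs(math.atan2(math.sin(dyaw), math.cos(dyaw)))
    return (translation >= min_translation_m
            or rotation >= min_rotation_rad
            or tracked_ratio < min_tracked_ratio)


def select_keyframes(poses, tracked_ratios):
    """Return indices of frames kept as keyframes; frame 0 is always kept."""
    keyframes = [0]
    for i in range(1, len(poses)):
        if is_new_keyframe(poses[keyframes[-1]], poses[i], tracked_ratios[i]):
            keyframes.append(i)
    return keyframes
```

At higher speeds the translation threshold is reached after fewer frames, so thresholds of this kind are often scaled with velocity to keep the keyframe rate, and therefore the optimization load, roughly constant.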

02 Optimization algorithms for real-time SLAM processing

Various optimization algorithms are implemented to improve the real-time performance of SLAM systems. These include efficient feature extraction, sparse mapping techniques, keyframe selection strategies, and loop closure detection methods. By reducing computational complexity and focusing on the most informative data, these algorithms enable faster processing while maintaining accuracy, which is crucial for real-time applications in robotics and augmented reality.

03 Distributed computing approaches for SLAM

Distributed computing architectures are utilized to enhance the real-time performance of SLAM systems. These approaches involve dividing the SLAM workload across multiple processing units or devices, implementing client-server architectures, and utilizing cloud computing resources. By distributing computational tasks, these systems can process data more efficiently, enabling real-time operation even for complex environments or when using limited local resources.

04 Memory management techniques for real-time SLAM

Efficient memory management techniques are implemented to improve the real-time performance of SLAM systems. These include optimized data structures, cache-friendly algorithms, dynamic memory allocation strategies, and memory compression methods. By reducing memory access times and optimizing storage requirements, these techniques minimize processing delays and enable faster execution of SLAM algorithms, which is essential for real-time applications.

05 Lightweight SLAM algorithms for mobile devices

Lightweight SLAM algorithms are designed specifically for mobile and embedded devices with limited computational resources. These approaches include simplified feature detection, reduced map complexity, efficient pose estimation techniques, and adaptive processing based on available resources. By balancing accuracy with computational efficiency, these algorithms enable real-time SLAM performance on smartphones, drones, and other resource-constrained platforms.

Leading Companies in Real-Time SLAM Solutions

Real-time SLAM (Simultaneous Localization and Mapping) technology is currently in a growth phase, with the market expanding rapidly due to increasing applications in high-speed robotics. The global market size is projected to reach significant volumes as industries adopt autonomous navigation solutions. From a technical maturity perspective, while core algorithms are established, real-time performance at high speeds remains challenging. Leading academic institutions like Peking University, Huazhong University of Science & Technology, and Southeast University are advancing theoretical frameworks, while companies such as Intel, TRX Systems, and Tencent are developing commercial implementations. The integration of SLAM with sensor fusion and edge computing by players like Yujin Robot and Ericsson is pushing the technology toward broader industrial adoption in high-velocity robotic applications.

Intel Corp.

Technical Solution: Intel has developed RealSense technology specifically addressing real-time SLAM challenges in high-speed robotics. Their solution combines depth cameras with specialized processors to achieve low-latency visual SLAM. Intel's T265 Tracking Camera integrates two fisheye lenses with an IMU and a dedicated vision processing unit (VPU) to deliver accurate real-time tracking with 6 degrees of freedom. The system runs all SLAM algorithms on-device, requiring minimal computational resources from the host system. Intel's approach emphasizes edge computing for SLAM, enabling robots to navigate at speeds up to 1.5 m/s with position accuracy within 1% of distance traveled. Their RealSense SDK provides developers with ready-to-use SLAM capabilities that can be deployed across various robotic platforms, from autonomous drones to warehouse robots, supporting velocities that would overwhelm traditional SLAM implementations.

Strengths: Intel's solution offers exceptional processing efficiency by offloading SLAM computations to dedicated hardware, reducing latency to under 5ms. The integration of visual and inertial data provides robust tracking even in challenging lighting conditions. Weaknesses: The reliance on specialized hardware increases implementation costs compared to software-only solutions, and performance may degrade in extremely dynamic environments with numerous moving objects.

Beijing Institute of Technology

Technical Solution: Beijing Institute of Technology has developed a multi-sensor fusion approach to real-time SLAM specifically addressing high-speed robotics challenges. Their system, known as FastSLAM-X, integrates visual, inertial, and LiDAR data through a novel hierarchical optimization framework that prioritizes computational resources based on robot velocity. At speeds exceeding 5 m/s, the system automatically shifts to a streamlined processing pipeline that maintains localization accuracy while deferring detailed mapping to background processes. BIT researchers have implemented a predictive trajectory modeling component that anticipates sensor data needs based on current velocity vectors, pre-allocating computational resources to critical path planning functions. Their implementation achieves processing rates of 100Hz on embedded GPU platforms, with demonstrated performance on autonomous vehicles moving at highway speeds. The system incorporates dynamic feature selection algorithms that adapt to motion blur at high velocities, maintaining tracking when traditional feature detectors would fail. BIT has successfully deployed this technology on experimental autonomous vehicles navigating complex urban environments at speeds up to 60 km/h while maintaining centimeter-level positioning accuracy.

Strengths: The multi-sensor fusion approach provides exceptional robustness across varied environments and lighting conditions. The adaptive computational allocation ensures consistent performance across a wide range of speeds. Weaknesses: The system requires significant hardware resources including specialized GPUs for full functionality, and initial calibration procedures are complex and time-consuming.

Key Patents and Research in High-Speed SLAM

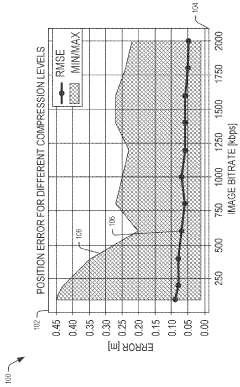

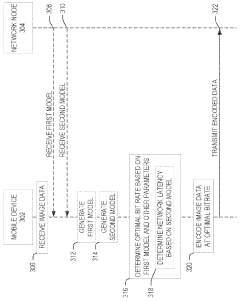

Bitrate adaptation for edge-assisted localization given network availability for mobile devices

Patent WO2024136880A1

Innovation

- A dynamic bitrate adaptation method for edge-assisted SLAM, where a model determines an optimal image data bitrate based on network latency, map presence, and environment bitrate, allowing for compression and transmission at an improved bitrate to enhance localization performance.

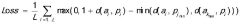

SLAM method based on improved ORB feature extraction and matching

Patent (Inactive) CN117292257A

Innovation

- Feature extraction uses an adaptive-threshold algorithm built on FAST feature detection. Descriptors are produced by a trained HardNet deep learning network, and feature correspondences are established with brute-force matching under the SIFT matching criterion. The random sample consensus (RANSAC) algorithm then removes false matches, improving the accuracy and robustness of feature extraction and matching.
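The matching and outlier-rejection stages of such a pipeline can be illustrated in a few lines. This is a hedged sketch, not the patented method: it assumes the "SIFT matching" criterion refers to the nearest/second-nearest distance ratio test, it takes descriptors as plain numeric tuples rather than HardNet outputs, and it swaps in a deliberately simple 2-D translation model for RANSAC where a real system would estimate a homography or essential matrix. All function names and thresholds are hypothetical.

```python
import math
import random


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Brute-force nearest-neighbour matching with a distance ratio test."""
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        # Accept only if the best match is clearly better than the runner-up.
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((i, dists[0][1]))
    return matches


def ransac_translation(pts_a, pts_b, matches, tol=2.0, iters=100, seed=0):
    """Keep the largest set of matches that agree on a single 2-D translation."""
    if not matches:
        return []
    rng = random.Random(seed)
    best = []
    for _ in range(iters):
        i, j = rng.choice(matches)  # one match fully defines a translation
        dx, dy = pts_b[j][0] - pts_a[i][0], pts_b[j][1] - pts_a[i][1]
        inliers = [
            (a, b) for a, b in matches
            if math.hypot(pts_b[b][0] - pts_a[a][0] - dx,
                          pts_b[b][1] - pts_a[a][1] - dy) <= tol
        ]
        if len(inliers) > len(best):
            best = inliers
    return best
```

The brute-force search costs time proportional to the product of the two descriptor counts, which is one reason high-speed pipelines cap the number of features per frame.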

Hardware Acceleration for SLAM Processing

The computational demands of real-time SLAM in high-speed robotics necessitate specialized hardware acceleration solutions. Traditional CPU-based processing often fails to meet the stringent latency requirements when robots operate at high velocities, where environmental data must be processed within milliseconds to enable safe navigation and operation.

Field-Programmable Gate Arrays (FPGAs) represent a significant advancement in SLAM acceleration, offering reconfigurable hardware that can be optimized for specific SLAM algorithms. These devices excel at parallel processing tasks such as feature extraction and point cloud registration, reducing processing time by up to 70% compared to general-purpose processors. Companies like Intel and Xilinx have developed FPGA solutions specifically targeting robotics applications, with the Xilinx Zynq UltraScale+ MPSoC emerging as a popular platform for integrating SLAM acceleration.

Graphics Processing Units (GPUs) have become increasingly prevalent in SLAM implementations due to their massive parallel processing capabilities. NVIDIA's CUDA platform has enabled significant acceleration of computationally intensive SLAM components, particularly in dense mapping and visual odometry. The NVIDIA Jetson series, especially the Xavier and Orin models, provides an optimal balance of power efficiency and processing capability for mobile robotics platforms, delivering up to 30 frames per second processing for complex visual SLAM algorithms.

Application-Specific Integrated Circuits (ASICs) represent the frontier of SLAM acceleration, offering custom silicon solutions designed exclusively for SLAM workloads. Google's Visual Positioning System utilizes custom ASIC hardware to achieve sub-centimeter localization accuracy with minimal power consumption. Similarly, Boston Dynamics has developed proprietary ASIC solutions that enable their robots to perform real-time SLAM while executing dynamic movements at high speeds.

Heterogeneous computing architectures that combine multiple acceleration technologies are emerging as the most promising approach. These systems typically integrate CPUs for general control, GPUs for parallel processing, and specialized accelerators for specific SLAM components. Qualcomm's Robotics RB5 platform exemplifies this approach, combining a Kryo CPU, Adreno GPU, and Hexagon DSP to distribute SLAM workloads optimally across different processing elements.

Edge AI processors represent the latest evolution in SLAM acceleration, with companies like Hailo, Horizon Robotics, and Kneron developing neural processing units specifically optimized for spatial AI tasks. These processors can achieve up to 10x improvement in performance-per-watt compared to traditional GPU solutions, enabling sophisticated SLAM capabilities on battery-powered robots with limited thermal budgets.

Field-Programmable Gate Arrays (FPGAs) represent a significant advancement in SLAM acceleration, offering reconfigurable hardware that can be optimized for specific SLAM algorithms. These devices excel at parallel processing tasks such as feature extraction and point cloud registration, reducing processing time by up to 70% compared to general-purpose processors. Companies like Intel and Xilinx have developed FPGA solutions specifically targeting robotics applications, with the Xilinx Zynq UltraScale+ MPSoC emerging as a popular platform for integrating SLAM acceleration.

Graphics Processing Units (GPUs) have become increasingly prevalent in SLAM implementations due to their massive parallel processing capabilities. NVIDIA's CUDA platform has enabled significant acceleration of computationally intensive SLAM components, particularly in dense mapping and visual odometry. The NVIDIA Jetson series, especially the Xavier and Orin models, provides an optimal balance of power efficiency and processing capability for mobile robotics platforms, delivering up to 30 frames per second processing for complex visual SLAM algorithms.

Application-Specific Integrated Circuits (ASICs) represent the frontier of SLAM acceleration, offering custom silicon solutions designed exclusively for SLAM workloads. Google's Visual Positioning System utilizes custom ASIC hardware to achieve sub-centimeter localization accuracy with minimal power consumption. Similarly, Boston Dynamics has developed proprietary ASIC solutions that enable their robots to perform real-time SLAM while executing dynamic movements at high speeds.

Heterogeneous computing architectures that combine multiple acceleration technologies are emerging as the most promising approach. These systems typically integrate CPUs for general control, GPUs for parallel processing, and specialized accelerators for specific SLAM components. Qualcomm's Robotics RB5 platform exemplifies this approach, combining a Kryo CPU, Adreno GPU, and Hexagon DSP to distribute SLAM workloads optimally across different processing elements.
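The idea of distributing SLAM stages across processing elements can be illustrated with a minimal sketch. The stage names and per-device latency figures below are hypothetical placeholders, not measurements from the RB5 or any other platform, and the greedy rule (each stage goes to the device with the lowest estimated latency) ignores contention and data-transfer cost.

```python
# Hypothetical per-stage latency estimates in milliseconds for each processing
# element. These numbers are illustrative assumptions, not benchmark results.
STAGE_COST_MS = {
    "feature_extraction": {"cpu": 12.0, "gpu": 3.0, "dsp": 5.0},
    "pose_tracking": {"cpu": 2.0, "gpu": 4.0, "dsp": 3.0},
    "dense_mapping": {"cpu": 40.0, "gpu": 8.0, "dsp": 25.0},
    "loop_closure": {"cpu": 6.0, "gpu": 9.0, "dsp": 10.0},
}


def assign_stages(stage_cost_ms):
    """Map each stage to the device with the lowest estimated latency.

    This is a greedy, per-stage choice: it does not model devices being
    shared between stages or the cost of moving data between them.
    """
    return {
        stage: min(costs, key=costs.get)
        for stage, costs in stage_cost_ms.items()
    }


if __name__ == "__main__":
    for stage, device in assign_stages(STAGE_COST_MS).items():
        print(f"{stage:20s} -> {device}")
```

A production scheduler would also need to account for memory transfers between devices and for stages competing for the same accelerator, which this sketch deliberately leaves out.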

Safety Standards for High-Speed Autonomous Systems

The integration of high-speed robotics into various industries necessitates robust safety standards to mitigate risks associated with rapid autonomous operations. Current safety frameworks for high-speed autonomous systems primarily focus on three critical areas: collision avoidance, system reliability, and human-robot interaction safety protocols. ISO/TS 15066, specifically designed for collaborative robots, provides guidelines that must be adapted for high-velocity operations where reaction times are significantly compressed.

Real-time SLAM systems introduce unique safety considerations due to their computational demands and potential for mapping errors at high speeds. Safety standards must address the increased uncertainty in environmental perception when robots operate at velocities exceeding 5 m/s. At that speed the robot travels 5 mm for every millisecond of SLAM processing delay, so a pose estimate that is 10 ms old is already about 5 cm out of date.
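The relationship between speed, processing delay, and positioning error is simple arithmetic: the distance travelled during the delay is speed multiplied by latency. The short sketch below tabulates it for a few speeds and delays; the function name and the chosen values are illustrative assumptions.

```python
def latency_displacement(speed_mps: float, latency_s: float) -> float:
    """Distance in meters travelled while a pose estimate is latency_s old."""
    if speed_mps < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    return speed_mps * latency_s


if __name__ == "__main__":
    # Illustrative speeds (m/s) and SLAM processing delays (ms).
    for speed in (1.0, 5.0, 10.0):
        for latency_ms in (1, 10, 50):
            drift_cm = latency_displacement(speed, latency_ms / 1000.0) * 100.0
            print(f"{speed:4.1f} m/s, {latency_ms:2d} ms -> {drift_cm:5.1f} cm")
```

This counts only the travel during the delay; estimation error in the SLAM solution itself adds to it.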

Industry-specific standards address particular applications of high-speed robotics. For instance, the automotive industry relies on ISO 26262 for the functional safety of electrical and electronic systems in road vehicles, which covers the hardware and software on which autonomous driving functions, including SLAM-based localization, depend. Similarly, the aerospace sector employs DO-178C for software considerations in airborne systems, whose verification objectives extend to timing behavior relevant to real-time processing.

Performance metrics for safety certification of high-speed SLAM implementations typically include maximum allowable latency (generally under 10 ms for systems operating above 10 m/s, which limits travel during the delay to roughly 10 cm at 10 m/s), minimum detection reliability (99.9% for critical obstacles), and fault tolerance specifications. These metrics must be verified through standardized testing procedures that simulate worst-case scenarios and edge cases.
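As a rough illustration of how such metrics might be checked in a test harness, the sketch below encodes the latency and detection figures quoted above as default thresholds. The class and function names are hypothetical, and the thresholds are taken from this article rather than from any specific certification standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SlamSafetyTargets:
    """Thresholds quoted in the text; assumed defaults, not a standard."""
    max_latency_ms: float = 10.0            # latency must stay below this
    min_detection_rate: float = 0.999       # for critical obstacles
    high_speed_threshold_mps: float = 10.0  # latency limit applies above this


def check_safety_targets(latency_ms, detection_rate, speed_mps,
                         targets=SlamSafetyTargets()):
    """Return a list of human-readable failures; an empty list means pass."""
    failures = []
    if (speed_mps > targets.high_speed_threshold_mps
            and latency_ms >= targets.max_latency_ms):
        failures.append(
            f"latency {latency_ms} ms is not under {targets.max_latency_ms} ms"
        )
    if detection_rate < targets.min_detection_rate:
        failures.append(
            f"detection rate {detection_rate} is below "
            f"{targets.min_detection_rate}"
        )
    return failures


if __name__ == "__main__":
    print(check_safety_targets(latency_ms=8.0, detection_rate=0.9995,
                               speed_mps=12.0))
    print(check_safety_targets(latency_ms=12.0, detection_rate=0.99,
                               speed_mps=12.0))
```

Fault tolerance is left out because the text does not give a numeric target for it.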

Regulatory bodies worldwide are actively developing frameworks to address the safety implications of high-speed autonomous systems. The European Union's Machinery Directive 2006/42/EC is being replaced by the Machinery Regulation (EU) 2023/1230, which applies from January 2027 and is intended to better accommodate advanced robotics, while the U.S. National Institute of Standards and Technology (NIST) has established performance test methods for response robots that cover mobility and navigation capabilities.

Future safety standards will likely incorporate adaptive safety margins that dynamically adjust based on operational velocity and environmental complexity. This approach recognizes that fixed safety parameters are insufficient for systems that may operate across varying speed profiles. Additionally, emerging standards are beginning to address the ethical dimensions of autonomous decision-making in high-speed scenarios where traditional human oversight becomes impractical due to time constraints.
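One way to picture a velocity-dependent safety margin is as a stopping-distance estimate: the distance covered during the perception delay plus the braking distance, scaled by a factor for environmental complexity. The sketch below is an assumption-laden illustration of that idea, not a formula from any published standard; the parameter names, the complexity multiplier, and the fixed base margin are all hypothetical.

```python
def adaptive_safety_margin(speed_mps: float,
                           latency_s: float,
                           max_decel_mps2: float,
                           complexity: float = 1.0,
                           base_margin_m: float = 0.2) -> float:
    """Illustrative clearance (m) that grows with speed and complexity.

    reaction distance = speed * latency          (travel before reacting)
    braking distance  = speed^2 / (2 * decel)    (constant deceleration)
    complexity >= 1.0 inflates both terms for cluttered or dynamic scenes.
    """
    if speed_mps < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    if max_decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    if complexity < 1.0:
        raise ValueError("complexity factor must be at least 1.0")
    reaction_m = speed_mps * latency_s
    braking_m = speed_mps ** 2 / (2.0 * max_decel_mps2)
    return base_margin_m + complexity * (reaction_m + braking_m)


if __name__ == "__main__":
    # Assumed values: 10 ms perception delay, 5 m/s^2 maximum deceleration.
    for speed in (1.0, 5.0, 10.0):
        margin = adaptive_safety_margin(speed, 0.010, 5.0, complexity=1.5)
        print(f"{speed:4.1f} m/s -> keep {margin:5.2f} m clear")
```

Because the braking term grows with the square of speed, a margin tuned for low-speed operation quickly becomes inadequate at higher speeds, which is the motivation for adjusting it dynamically.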
