How Simultaneous Localization And Mapping Improves Autonomous Navigation?

SEP 5, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

SLAM Technology Evolution and Objectives

Simultaneous Localization and Mapping (SLAM) has evolved significantly since its conceptual inception in the 1980s, transforming from a theoretical framework into a cornerstone technology for autonomous navigation systems. The evolution of SLAM technology has been driven by the fundamental need for mobile robots and autonomous vehicles to navigate unknown environments without prior maps while simultaneously building those maps.

Early SLAM approaches in the 1990s relied heavily on probabilistic methods, particularly the Extended Kalman Filter (EKF), which provided a mathematical foundation for fusing sensor data and jointly estimating the robot pose and landmark positions. Because EKF-based SLAM maintains a full covariance matrix over all landmarks, its update cost grows quadratically with map size. Together with the processing constraints of the era, this limited these early implementations to small-scale environments.

The 2000s marked a significant advance with the introduction of particle filters and graph-based optimization techniques, which improved accuracy and enabled operation in larger environments. The FastSLAM algorithm, introduced in 2002, was a breakthrough in handling the high-dimensional state space of SLAM problems: it uses a particle filter over the robot's path and, within each particle, a separate small EKF for each landmark.

Visual SLAM gained prominence in the 2000s, using camera data to create more detailed environmental representations. This period saw the development of influential algorithms such as MonoSLAM and PTAM (Parallel Tracking and Mapping), which demonstrated real-time performance on standard computing hardware.

The 2010s witnessed the integration of deep learning techniques with traditional SLAM approaches, giving rise to semantic SLAM systems capable of not only mapping geometric structures but also understanding and classifying objects within the environment. This period also saw the development of robust commercial SLAM solutions for various applications including autonomous vehicles, drones, and service robots.

Current SLAM technology objectives focus on several key areas: improving robustness in dynamic and challenging environments, reducing computational requirements for deployment on resource-constrained platforms, enhancing long-term mapping capabilities for persistent autonomy, and integrating multi-sensor fusion for more comprehensive environmental understanding.

The ultimate goal of modern SLAM development is to create systems that can operate reliably in any environment, regardless of lighting conditions, weather, or the presence of moving objects. This includes addressing edge cases that remain challenging, such as featureless environments, highly reflective surfaces, and scenarios with significant environmental changes over time.

Looking forward, SLAM technology aims to achieve human-like spatial understanding capabilities, enabling autonomous systems to navigate and interact with their surroundings with minimal supervision while adapting to environmental changes. This evolution continues to push the boundaries of what's possible in autonomous navigation, supporting applications from household robots to self-driving vehicles and beyond.

Market Demand for Autonomous Navigation Systems

The autonomous navigation systems market is experiencing unprecedented growth, driven by advancements in robotics, artificial intelligence, and sensor technologies. Current market valuations indicate that the global autonomous navigation market reached approximately 2.3 billion USD in 2022 and is projected to grow at a compound annual growth rate of 16.7% through 2030. This robust growth trajectory is fueled by increasing demand across multiple sectors including automotive, logistics, healthcare, agriculture, and consumer electronics.
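For a sense of scale, the arithmetic implied by the two figures above (not an additional forecast) is to compound the 2022 value at the stated rate over the eight years to 2030:

```latex
V_{2030} = V_{2022}\,(1 + r)^{n} = 2.3 \times (1.167)^{8} \approx 2.3 \times 3.44 \approx 7.9\ \text{billion USD}
```

In other words, sustaining a 16.7% CAGR over that period would more than triple the market's 2022 size.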

In the automotive sector, demand for advanced driver assistance systems (ADAS) and fully autonomous vehicles continues to accelerate as manufacturers race to achieve higher levels of autonomy. Major automakers and technology companies are investing billions in developing reliable navigation systems, with SLAM technology becoming a critical component for achieving Level 4 and Level 5 autonomy. Consumer expectations for safer, more efficient transportation options are further driving this market segment.

The logistics and warehousing industry represents another significant market for autonomous navigation systems. E-commerce growth has created unprecedented demand for efficient warehouse operations and last-mile delivery solutions. Companies like Amazon, DHL, and Alibaba are deploying autonomous mobile robots (AMRs) equipped with SLAM technology to optimize operations, reduce costs, and address labor shortages. Industry reports indicate that warehouses implementing SLAM-based navigation systems have experienced productivity improvements of up to 30%.

Healthcare applications present a rapidly expanding market segment, with autonomous navigation systems being integrated into medical robots for surgery assistance, patient care, and hospital logistics. The COVID-19 pandemic accelerated adoption as hospitals sought solutions for contactless delivery and disinfection, creating a sustained demand that continues post-pandemic.

Consumer robotics represents another high-growth segment, with household robots like autonomous vacuum cleaners and lawn mowers becoming increasingly sophisticated. The global household robot market is expected to reach 21.9 billion USD by 2027, with SLAM technology enabling more efficient cleaning patterns and improved navigation capabilities in complex home environments.

Military and defense applications constitute a significant market driver, with governments worldwide investing in autonomous navigation systems for reconnaissance, surveillance, and combat operations. These applications demand highly reliable SLAM solutions capable of operating in GPS-denied environments and adverse conditions.

Market analysis reveals that customers across all segments increasingly prioritize navigation accuracy, operational reliability, and adaptability to dynamic environments—all capabilities that advanced SLAM technologies directly enhance. This alignment between market demands and SLAM's core benefits indicates substantial growth potential for companies developing innovative SLAM solutions.

SLAM Implementation Challenges and Limitations

Despite the significant advancements in SLAM technology, implementing robust SLAM systems for autonomous navigation faces numerous challenges and limitations. One of the primary obstacles is computational complexity, as SLAM algorithms require substantial processing power to handle real-time mapping and localization simultaneously. This becomes particularly problematic for resource-constrained platforms such as small drones or mobile robots where power consumption and processing capabilities are limited.

Environmental factors pose another significant challenge for SLAM implementation. Dynamic environments with moving objects can confuse SLAM systems, as most algorithms assume static surroundings. When objects move or environments change rapidly, the system may generate inconsistent maps or lose tracking altogether. Similarly, feature-poor environments like empty hallways or repetitive structures can lead to localization failures due to insufficient distinguishable landmarks.

Sensor limitations represent a critical constraint on SLAM performance. Sensor noise, biases, and calibration errors accumulate over time, so the estimated trajectory and map gradually diverge from reality. This accumulated error, known as drift, becomes particularly pronounced in large-scale environments or during extended operation. Additionally, different sensors have inherent limitations: cameras struggle in low-light conditions, LiDAR performance degrades in adverse weather, and IMU-based dead reckoning accumulates integration errors over time.
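The following minimal sketch (plain Python, illustrative parameter values) shows how integration error turns into drift. A simulated robot drives in a straight line, but its heading estimate comes from integrating a gyro reading with a small constant bias plus Gaussian noise. The function name `dead_reckon` and the scenario are assumptions made for illustration, not taken from any particular system.

```python
import math
import random


def dead_reckon(steps: int, dt: float, speed: float, gyro_bias: float,
                noise_std: float, seed: int = 0) -> list[float]:
    """Return the estimated-vs-true position error after each step.

    Simplified scenario (an assumption for illustration): the true robot
    drives straight along +x at constant speed with zero yaw rate. The
    estimator integrates a gyro reading corrupted by a constant bias and
    Gaussian noise, so heading error accumulates and bends the estimated
    path away from the true one.
    """
    rng = random.Random(seed)
    x_true = 0.0
    x_est, y_est, heading_est = 0.0, 0.0, 0.0
    errors = []
    for _ in range(steps):
        measured_rate = gyro_bias + rng.gauss(0.0, noise_std)
        heading_est += measured_rate * dt  # integration error accumulates here
        x_true += speed * dt
        x_est += speed * math.cos(heading_est) * dt
        y_est += speed * math.sin(heading_est) * dt
        errors.append(math.hypot(x_est - x_true, y_est))  # true y is always 0
    return errors


if __name__ == "__main__":
    # Illustrative values: 100 Hz updates, 1 m/s, 0.001 rad/s gyro bias.
    errs = dead_reckon(steps=6000, dt=0.01, speed=1.0,
                       gyro_bias=0.001, noise_std=0.01)
    for seconds in (10, 30, 60):
        print(f"after {seconds:2d} s: position error {errs[seconds * 100 - 1]:.2f} m")
```

For small angles, a constant heading bias b makes lateral error grow roughly as ½·b·v·t², so even a tiny bias eventually dominates. This is why SLAM systems rely on loop closures or absolute measurements to bound drift.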

Scale and loop closure present technical challenges that affect SLAM reliability. As mapping areas expand, the number of poses and landmarks grows, and computational and memory requirements grow with it (quadratically in the number of landmarks for a naive EKF-based approach). This makes it difficult to maintain real-time performance. Loop closure, the ability to recognize previously visited locations, remains algorithmically complex, especially in environments with similar-looking features or when a familiar area is approached from a different angle.
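To show what a loop closure buys once it has been detected, here is a toy, linear version of pose graph optimization using NumPy. Poses are 1D positions, odometry and loop closures are relative constraints, and a weighted least-squares solve spreads the accumulated error over the whole trajectory. The function name, noise values, and scenario are illustrative assumptions. Real systems optimize full SE(2)/SE(3) poses with nonlinear solvers such as g2o, GTSAM, or Ceres Solver. Recognizing the revisit in the first place, which is the hard part described above, is assumed to have already happened.

```python
import numpy as np


def optimize_pose_graph_1d(odometry, loop_closures, odom_sigma=0.1, loop_sigma=0.01):
    """Weighted linear least squares over 1D poses x_0..x_n.

    odometry: measured displacements u_i, each a constraint x_{i+1} - x_i = u_i.
    loop_closures: (i, j, d) tuples, each a constraint x_j - x_i = d.
    A strong prior pins x_0 = 0 to fix the otherwise free global offset.
    """
    n = len(odometry) + 1
    rows, rhs, weights = [], [], []

    def add_constraint(i, j, d, sigma):
        row = np.zeros(n)
        row[i], row[j] = -1.0, 1.0
        rows.append(row)
        rhs.append(d)
        weights.append(1.0 / sigma)

    anchor = np.zeros(n)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(0.0)
    weights.append(1e6)

    for i, u in enumerate(odometry):
        add_constraint(i, i + 1, u, odom_sigma)
    for i, j, d in loop_closures:
        add_constraint(i, j, d, loop_sigma)

    w = np.array(weights)
    A = np.array(rows) * w[:, None]  # whiten: scale each residual by 1/sigma
    b = np.array(rhs) * w
    poses, *_ = np.linalg.lstsq(A, b, rcond=None)
    return poses


if __name__ == "__main__":
    # Robot drives 5 m out and 5 m back; odometry over-reads outbound, under-reads return.
    odom = [1.1] * 5 + [-0.9] * 5
    dead_reckoned = np.concatenate([[0.0], np.cumsum(odom)])
    optimized = optimize_pose_graph_1d(odom, loop_closures=[(0, 10, 0.0)])
    print("dead-reckoned final pose:", round(float(dead_reckoned[-1]), 3))
    print("optimized final pose:    ", round(float(optimized[-1]), 3))
```

Without the loop-closure constraint, the solution simply reproduces dead reckoning. With it, the 1 m end-point error is distributed across all odometry edges in proportion to their assumed uncertainty.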

Robustness across diverse environments remains an unsolved problem. Most SLAM systems are optimized for specific conditions and struggle when deployed in unfamiliar settings. Indoor SLAM solutions often fail outdoors, while systems designed for structured environments may collapse in natural, unstructured terrains. This lack of generalizability limits widespread adoption across different autonomous navigation applications.

Integration challenges arise when combining SLAM with other autonomous navigation components. Synchronizing SLAM outputs with path planning, obstacle avoidance, and decision-making systems requires careful engineering and introduces additional complexity. Furthermore, maintaining consistent performance during transitions between different environments (indoor to outdoor, well-lit to dark areas) remains problematic for many implementations.

Current SLAM Methodologies and Frameworks

01 Visual SLAM techniques for navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These methods process visual features from images to build 3D maps while tracking the camera's position in real time. Advanced algorithms extract distinctive points from camera frames, track them across sequential images, and use geometric calculations to estimate movement and build environmental models. Many implementations also add visual odometry and loop closure detection to improve accuracy and robustness. This approach is particularly valuable for autonomous navigation in GPS-denied environments.
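The "geometric calculations" at the core of feature-based visual SLAM can be illustrated with reprojection error. The sketch projects known 3D landmarks through a pinhole camera model and measures how far the projections land from the observed feature locations. Estimating camera motion amounts to finding the pose that minimizes this error. It assumes known landmark positions, an ideal pinhole camera with made-up intrinsics `K`, and perfect feature matches. For readability, it also replaces the nonlinear optimization real systems use (for example, Gauss-Newton or Levenberg-Marquardt over a full 6-DoF pose) with a brute-force search over a single translation axis. Function names are hypothetical.

```python
import numpy as np


def project(points_world, R, t, K):
    """Project N x 3 world points to N x 2 pixels with a pinhole model.

    R and t map world coordinates into the camera frame (X_c = R X_w + t);
    K is the 3 x 3 intrinsic matrix. Points must lie in front of the camera.
    """
    pts_cam = points_world @ R.T + t   # N x 3, camera frame
    uvw = pts_cam @ K.T                # N x 3, homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]


def reprojection_rmse(points_world, observed_px, R, t, K):
    """Root-mean-square pixel distance between projected and observed features."""
    residuals = project(points_world, R, t, K) - observed_px
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


if __name__ == "__main__":
    K = np.array([[500.0, 0.0, 320.0],
                  [0.0, 500.0, 240.0],
                  [0.0, 0.0, 1.0]])   # illustrative intrinsics
    landmarks = np.array([[0.0, 0.0, 5.0], [1.0, 1.0, 6.0],
                          [-1.0, 0.5, 4.0], [0.5, -1.0, 7.0]])
    R = np.eye(3)
    true_t = np.array([0.1, 0.0, 0.0])
    observed = project(landmarks, R, true_t, K)   # noise-free "matched features"

    # Brute-force 1-DoF search standing in for Gauss-Newton over a full 6-DoF pose.
    candidates = np.linspace(-0.3, 0.3, 61)
    errors = [reprojection_rmse(landmarks, observed, R, np.array([tx, 0.0, 0.0]), K)
              for tx in candidates]
    best = candidates[int(np.argmin(errors))]
    print(f"estimated x-translation: {best:.2f} m")
```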

- Sensor fusion approaches for SLAM navigation: Sensor fusion combines data from multiple sensors such as cameras, LiDAR, IMUs, and radar to enhance SLAM performance. By integrating complementary sensor data, these systems can overcome limitations of individual sensors, providing more robust navigation in challenging conditions like low light or feature-poor environments. Sensor fusion algorithms typically employ probabilistic methods like Kalman filters or particle filters to optimally combine measurements and reduce uncertainty in position and mapping estimates.

- SLAM for augmented and virtual reality applications: SLAM technology enables precise spatial tracking for AR/VR applications, allowing virtual content to be accurately positioned in the real world. These implementations focus on low-latency processing and high accuracy to maintain immersive experiences. The systems typically use inside-out tracking with onboard cameras to detect environmental features and determine device position and orientation in real-time, enabling seamless integration of virtual objects with the physical environment.

- AI and machine learning enhanced SLAM: Machine learning and AI techniques are being integrated into SLAM systems to improve performance in complex environments. Deep learning approaches can enhance feature detection, scene understanding, and object recognition within SLAM frameworks. Neural networks may be used to predict motion, identify dynamic objects, or improve loop closure detection. These AI-enhanced systems can better handle challenging scenarios like changing lighting conditions or dynamic environments where traditional SLAM algorithms might struggle.

- SLAM optimization for resource-constrained devices: Specialized SLAM implementations focus on optimizing performance for devices with limited computational resources, such as mobile robots, drones, or AR headsets. These approaches include algorithmic optimizations, hardware acceleration, and efficient data structures to reduce memory usage and processing requirements while maintaining acceptable accuracy. Some implementations may use simplified map representations or selective feature processing to balance computational demands with navigation performance.
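The Kalman-filter fusion mentioned in the sensor fusion item above can be illustrated with a scalar filter. This is a minimal one-dimensional sketch that assumes Gaussian noise and a position-only state. It uses odometry as the prediction input and an absolute position fix (for instance, from matching LiDAR scans against a map) as the measurement. Real SLAM filters track multi-dimensional poses and landmarks with matrix-valued covariances. The class name and variances here are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """One-dimensional Kalman filter over a single position coordinate."""
    x: float  # position estimate
    p: float  # variance of the estimate

    def predict(self, u: float, q: float) -> None:
        """Apply an odometry displacement u whose noise variance is q."""
        self.x += u
        self.p += q  # uncertainty grows with every dead-reckoned step

    def update(self, z: float, r: float) -> None:
        """Fuse an absolute position measurement z with noise variance r."""
        k = self.p / (self.p + r)  # Kalman gain: weight toward the less uncertain source
        self.x += k * (z - self.x)
        self.p *= 1.0 - k          # fused variance is below both prior p and r


if __name__ == "__main__":
    kf = ScalarKalman(x=0.0, p=0.0)
    kf.predict(u=1.0, q=0.04)   # odometry says we moved 1.0 m
    kf.update(z=1.2, r=0.04)    # map-based fix says we are at 1.2 m
    print(f"fused position {kf.x:.2f} m, variance {kf.p:.3f}")
    # prints: fused position 1.10 m, variance 0.020
```

With equal variances the filter splits the difference. The fused variance (0.020) is half that of either source, which is the quantitative sense in which fusion "reduces uncertainty".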

02 Sensor fusion for improved SLAM accuracy

Combining multiple sensor inputs enhances SLAM performance by leveraging the strengths of different sensor types. This approach typically integrates data from cameras, LiDAR, IMUs, and other sensors to create more robust mapping and localization. Sensor fusion algorithms compensate for individual sensor limitations, reduce drift, and improve accuracy in challenging environments. This multi-modal approach enables more reliable navigation across diverse conditions, including low-light environments and areas with limited visual features.

03 Real-time SLAM for autonomous vehicles and robots

Real-time SLAM implementations enable autonomous vehicles and robots to navigate dynamically changing environments. These systems process sensor data on the fly to continuously update environmental maps and position estimates. Optimized algorithms balance computational efficiency with accuracy to meet the demands of mobile platforms with limited processing resources. This capability is essential for applications like self-driving cars, delivery robots, and industrial autonomous vehicles that must make immediate navigation decisions.

04 Loop closure and map optimization techniques

Loop closure algorithms detect when a system revisits previously mapped areas, allowing accumulated errors in SLAM systems to be corrected. These techniques identify matching features between current and past observations to create consistent global maps. Map optimization methods then refine the entire trajectory and environmental model using techniques like pose graph optimization and bundle adjustment. These approaches significantly reduce drift and improve the long-term reliability of SLAM-based navigation systems.

05 SLAM for augmented reality and mixed reality applications

SLAM technology enables precise spatial anchoring of virtual content in augmented and mixed reality applications. These implementations track the user's device position and orientation while mapping the surrounding environment to create convincing integration between digital and physical worlds. Specialized algorithms optimize for low latency and high accuracy to maintain immersion. This approach allows virtual objects to interact realistically with physical surfaces and maintain proper perspective as users move through space.

Leading Companies and Research Institutions in SLAM

Simultaneous Localization And Mapping (SLAM) technology is currently in a growth phase within autonomous navigation, and its market is expected to expand significantly as autonomous vehicles gain traction. The competitive landscape features established industrial players such as Honeywell, Bosch, and Continental Automotive, which are developing robust SLAM solutions alongside specialized autonomous vehicle companies such as Aurora, Motional, and Zoox. Technology maturity varies across applications. Companies like Mobileye and TomTom focus on high-definition mapping capabilities, while newer entrants like DeepMap and Nexar are advancing real-time localization technologies. Major technology corporations, including Microsoft and Samsung, are also investing in SLAM research. This indicates the technology's strategic importance beyond automotive, in areas such as robotics and augmented reality.

Robert Bosch GmbH

Technical Solution: Bosch has developed a comprehensive SLAM solution for autonomous navigation that integrates with their broader automotive systems portfolio. Their approach utilizes a sensor fusion architecture combining radar, camera, and ultrasonic sensors with selective LiDAR deployment for cost-effective implementation across various vehicle segments. Bosch's SLAM technology incorporates proprietary visual-inertial odometry algorithms that maintain accurate localization even during GPS signal loss. Their system features a distributed computing architecture where edge processing handles immediate navigation needs while cloud infrastructure manages map updates and global optimization. Bosch's implementation includes specialized lane-level mapping capabilities with semantic understanding of road features and traffic regulations. Their SLAM solution is designed for scalability across different automation levels (L2-L4) and vehicle types, with configurable sensor packages based on the specific application requirements and price points.

Strengths: Integration with Bosch's extensive automotive systems portfolio enables seamless deployment; scalable approach allows implementation across various vehicle segments and automation levels; established supplier relationships with major automakers facilitates adoption. Weaknesses: More conservative approach may lag behind specialized autonomous vehicle companies in cutting-edge capabilities; balancing cost constraints with performance requirements may limit capabilities in entry-level implementations.

Mobileye Vision Technologies Ltd.

Technical Solution: Mobileye's Road Experience Management (REM) platform leverages SLAM technology to create and maintain high-definition maps for autonomous driving. Their approach uses camera-based systems with proprietary visual SLAM algorithms that process real-time visual data from multiple vehicles to build collective mapping intelligence. Mobileye's SLAM implementation incorporates semantic understanding, allowing their systems to identify and classify objects while simultaneously mapping environments. Their technology employs a distributed architecture where lightweight processing occurs on-vehicle while complex map fusion happens in cloud infrastructure. This enables centimeter-level localization accuracy while requiring significantly less data transmission than traditional mapping approaches. Mobileye's SLAM system also features self-healing map capabilities, automatically detecting and updating environmental changes through continuous crowd-sourced data collection from their extensive vehicle fleet.

Strengths: Camera-based approach reduces hardware costs compared to LiDAR-dependent systems; massive deployment across multiple OEMs provides unparalleled data collection scale; semantic understanding enables higher-level reasoning. Weaknesses: Visual-only approach may face challenges in adverse weather or lighting conditions; higher computational requirements for processing complex visual data.

Key SLAM Patents and Technical Innovations

A simultaneous location and mapping based autonomous navigation system

Patent | Active | ZA202108148A

Innovation

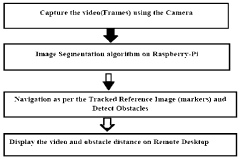

- A simultaneous location and mapping (SLAM) based autonomous navigation system that uses a combination of sensors and customized software to build a map of an unknown environment while navigating, employing techniques like image segmentation, adaptive fuzzy tracking, and extended Kalman filters to determine robot position and avoid obstacles.

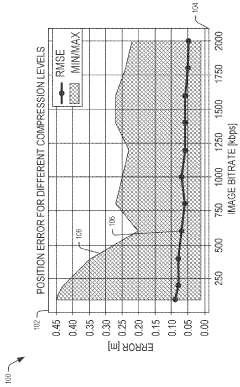

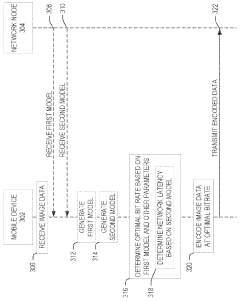

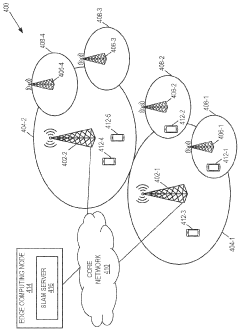

Bitrate adaptation for edge-assisted localization given network availability for mobile devices

Patent | WO2024136880A1

Innovation

- A dynamic bitrate adaptation method for edge-assisted SLAM, where a model determines an optimal image data bitrate based on network latency, map presence, and environment bitrate, allowing for compression and transmission at an improved bitrate to enhance localization performance.

Hardware-Software Integration for SLAM Systems

Effective SLAM systems require seamless integration between hardware components and software algorithms to achieve optimal performance in autonomous navigation. The hardware foundation typically consists of sensors such as LiDAR, cameras, IMUs, and wheel encoders that provide complementary data streams. These sensors must be carefully selected based on the operational environment, with considerations for range, resolution, update frequency, and power consumption requirements.

Sensor fusion represents a critical aspect of hardware-software integration, where data from multiple sensors is combined to overcome individual sensor limitations. This process requires precise temporal synchronization and spatial calibration between sensors to ensure accurate data alignment. Modern SLAM implementations often utilize hardware acceleration through GPUs, FPGAs, or dedicated SLAM processors to handle computationally intensive operations in real-time.
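Temporal synchronization often comes down to resampling one sensor's stream at another sensor's timestamps. For example, an IMU reading may need to be estimated at the exact moment a camera frame was captured. The sketch below linearly interpolates a scalar signal. It assumes both sensors already share a common clock (for instance, via hardware triggering or a time-sync protocol such as PTP), and the function name is hypothetical. Orientations would normally be interpolated with slerp rather than component-wise.

```python
from bisect import bisect_left


def interpolate_at(timestamps, values, t_query):
    """Linearly interpolate a scalar sensor signal at time t_query.

    timestamps must be strictly increasing and on the same clock as t_query.
    Raises ValueError if t_query lies outside the recorded interval, since
    extrapolating sensor data is usually unsafe.
    """
    if not timestamps[0] <= t_query <= timestamps[-1]:
        raise ValueError("query time outside sensor data range")
    i = bisect_left(timestamps, t_query)  # first index with timestamps[i] >= t_query
    if timestamps[i] == t_query:
        return values[i]
    t0, t1 = timestamps[i - 1], timestamps[i]
    v0, v1 = values[i - 1], values[i]
    alpha = (t_query - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


if __name__ == "__main__":
    imu_t = [0.000, 0.005, 0.010]        # 200 Hz yaw-rate samples (illustrative)
    imu_yaw_rate = [0.10, 0.12, 0.14]
    camera_t = 0.0075                    # camera exposure timestamp
    print(interpolate_at(imu_t, imu_yaw_rate, camera_t))
```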

Resource management presents significant challenges in SLAM system design, particularly for mobile robots with limited computational capabilities and power constraints. Software architectures must efficiently allocate processing resources, implementing techniques such as dynamic resolution adjustment, selective feature extraction, and adaptive processing based on environmental complexity. Edge computing approaches are increasingly being adopted to distribute computational loads between onboard processors and external systems.
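One common way to realize the "selective feature extraction" mentioned above is grid bucketing. Detected keypoints are distributed over image cells, and only the strongest few in each cell are kept. This caps per-frame work while keeping features spread across the image. The sketch assumes keypoints have already been detected by any detector that reports a response score. The function name and parameters are illustrative, and this is a simplified version of a general practice rather than the method of any specific product.

```python
from collections import defaultdict


def select_features_by_grid(keypoints, image_w, image_h, cols, rows, per_cell):
    """Keep at most `per_cell` strongest keypoints in each grid cell.

    keypoints: iterable of (x, y, response) tuples in pixel coordinates.
    Spreading the budget over the image avoids clustering all features
    in one highly textured region, while bounding total work per frame.
    """
    cell_w = image_w / cols
    cell_h = image_h / rows
    cells = defaultdict(list)
    for x, y, response in keypoints:
        cx = min(int(x // cell_w), cols - 1)  # clamp points on the right/bottom edge
        cy = min(int(y // cell_h), rows - 1)
        cells[(cx, cy)].append((x, y, response))

    selected = []
    for bucket in cells.values():
        bucket.sort(key=lambda kp: kp[2], reverse=True)  # strongest response first
        selected.extend(bucket[:per_cell])
    return selected


if __name__ == "__main__":
    kps = [(10, 10, 0.9), (20, 20, 0.5), (80, 10, 0.3), (60, 70, 0.7), (90, 90, 0.8)]
    print(select_features_by_grid(kps, 100, 100, cols=2, rows=2, per_cell=1))
```

Lowering `per_cell` when frame processing runs over budget, and raising it when there is headroom, is one simple way to get the complexity-dependent adaptive processing described above.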

Middleware frameworks like ROS (Robot Operating System), together with SLAM packages commonly run on them such as RTAB-Map, have become standard platforms for hardware-software integration. They provide standardized interfaces, communication protocols, and development tools. These frameworks enable modular system design where hardware components and software algorithms can be interchanged with minimal reconfiguration, accelerating development cycles and promoting code reusability across different robotic platforms.

The integration process must address latency considerations throughout the system pipeline. Sensor data acquisition, processing algorithms, and control outputs must maintain minimal delays to ensure responsive navigation, particularly in dynamic environments. This requires careful optimization of data transfer protocols, processing queues, and execution scheduling to maintain real-time performance.
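One processing-queue choice that bounds latency is to keep only the newest sensor frame, so that a slower consumer never works through a backlog of stale data. The sketch below is an assumed design for illustration, and the class name is hypothetical. Dropping frames trades completeness for freshness and does not suit every sensor. IMU samples, for example, are usually all retained because they are integrated.

```python
import threading
from collections import deque


class LatestFrameBuffer:
    """Thread-safe buffer that keeps only the newest item.

    A producer (e.g., a camera driver thread) calls put(); a slower
    consumer (e.g., the SLAM front end) calls get_latest(). Frames that
    were never consumed are overwritten, so processing always starts from
    the most recent data instead of an accumulating queue.
    """

    def __init__(self):
        self._items = deque(maxlen=1)  # maxlen=1 discards the older item on append
        self._lock = threading.Lock()
        self.dropped = 0               # count of frames overwritten before use

    def put(self, item):
        with self._lock:
            if self._items:
                self.dropped += 1
            self._items.append(item)

    def get_latest(self):
        """Return and remove the newest item, or None if nothing is pending."""
        with self._lock:
            return self._items.pop() if self._items else None


if __name__ == "__main__":
    buf = LatestFrameBuffer()
    for frame_id in range(3):
        buf.put(frame_id)              # producer outpaces the consumer
    print(buf.get_latest(), buf.dropped)
```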

Testing and validation methodologies for integrated SLAM systems have evolved to include hardware-in-the-loop simulations, where virtual environments interact with physical sensors and processors. These approaches enable comprehensive evaluation of system performance under controlled conditions before deployment in real-world scenarios, reducing development risks and accelerating iteration cycles.

Sensor fusion represents a critical aspect of hardware-software integration, where data from multiple sensors is combined to overcome individual sensor limitations. This process requires precise temporal synchronization and spatial calibration between sensors to ensure accurate data alignment. Modern SLAM implementations often utilize hardware acceleration through GPUs, FPGAs, or dedicated SLAM processors to handle computationally intensive operations in real-time.

Resource management presents significant challenges in SLAM system design, particularly for mobile robots with limited computational capabilities and power constraints. Software architectures must efficiently allocate processing resources, implementing techniques such as dynamic resolution adjustment, selective feature extraction, and adaptive processing based on environmental complexity. Edge computing approaches are increasingly being adopted to distribute computational loads between onboard processors and external systems.

Middleware frameworks like ROS (Robot Operating System) and RTAB-Map have emerged as standard platforms that facilitate hardware-software integration by providing standardized interfaces, communication protocols, and development tools. These frameworks enable modular system design where hardware components and software algorithms can be interchanged with minimal reconfiguration, accelerating development cycles and promoting code reusability across different robotic platforms.

The integration process must address latency considerations throughout the system pipeline. Sensor data acquisition, processing algorithms, and control outputs must maintain minimal delays to ensure responsive navigation, particularly in dynamic environments. This requires careful optimization of data transfer protocols, processing queues, and execution scheduling to maintain real-time performance.

Real-world Performance Metrics and Benchmarking

Evaluating SLAM systems in real-world environments requires comprehensive performance metrics that go beyond laboratory conditions. The most fundamental metric is localization accuracy, typically reported as the Root Mean Square Error (RMSE) between estimated and ground-truth positions, often called the absolute trajectory error (ATE). For autonomous navigation, trajectory tracking error, which measures how closely the vehicle follows its intended path, is equally critical. Both metrics should be evaluated across diverse environmental conditions, including varying lighting, weather, and terrain.
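
Both accuracy metrics above can be computed in a few lines. The sketch below assumes the estimated and ground-truth trajectories have already been time-associated and expressed in the same frame (a rigid-body alignment such as Umeyama's method is commonly applied first). It measures trajectory tracking error as the cross-track distance from each driven position to a reference polyline. The function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def ate_rmse(estimated: Sequence[Vec3], ground_truth: Sequence[Vec3]) -> float:
    """RMSE of position error between time-associated, already-aligned trajectories."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and the same length")
    squared = 0.0
    for e, g in zip(estimated, ground_truth):
        squared += sum((ei - gi) ** 2 for ei, gi in zip(e, g))
    return math.sqrt(squared / len(estimated))


def point_to_segment(p: Vec2, a: Vec2, b: Vec2) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(p[0] - a[0], p[1] - a[1])
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def cross_track_rmse(positions: Sequence[Vec2], path: Sequence[Vec2]) -> float:
    """RMSE of each position's distance to the nearest segment of the intended path."""
    if len(path) < 2 or not positions:
        raise ValueError("need at least one position and a path of two or more points")
    errors = [min(point_to_segment(p, path[i], path[i + 1]) for i in range(len(path) - 1))
              for p in positions]
    return math.sqrt(sum(e * e for e in errors) / len(errors))
```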

Processing time is another crucial benchmark: real-time operation requires SLAM algorithms to complete mapping and localization within strict time constraints, typically under 100 ms per frame for vehicular applications and under 30 ms for drone navigation. Memory consumption and computational efficiency metrics help determine hardware requirements and energy consumption profiles, which matter most on resource-constrained platforms such as mobile robots.
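
Checking a pipeline against a per-frame budget such as the 100 ms figure above is usually done on the distribution of frame times rather than on the mean alone, because occasional slow frames are what cause missed deadlines. Below is a minimal sketch, in which `process_frame` stands in for a hypothetical SLAM update function and the 95th percentile uses the nearest-rank definition.

```python
import math
import statistics
import time
from typing import Callable, Dict, Iterable, List, Sequence


def time_frames(process_frame: Callable[[object], object], frames: Iterable[object]) -> List[float]:
    """Run `process_frame` on each frame and return wall-clock durations in milliseconds."""
    durations = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations


def summarize(times_ms: Sequence[float], budget_ms: float) -> Dict[str, float]:
    """Mean, nearest-rank 95th percentile, and fraction of frames over budget."""
    if not times_ms:
        raise ValueError("no frame times recorded")
    ordered = sorted(times_ms)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "mean_ms": statistics.fmean(times_ms),
        "p95_ms": ordered[p95_index],
        "miss_rate": sum(t > budget_ms for t in times_ms) / len(times_ms),
    }


if __name__ == "__main__":
    # Dummy workload standing in for a SLAM update.
    durations = time_frames(lambda n: sum(range(n)), [10_000] * 50)
    print(summarize(durations, budget_ms=100.0))
```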

Map quality assessment involves evaluating feature density, landmark persistence, and loop closure effectiveness. Fair comparisons between SLAM implementations rely on public benchmarks and shared tooling. The KITTI Vision Benchmark Suite provides challenging autonomous driving sequences with GPS/IMU-derived ground truth and a standardized evaluation protocol, while the open-source evo toolkit computes common trajectory error metrics consistently across datasets.
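
Alongside absolute error, evaluation toolkits such as evo also report relative pose error (RPE), which compares motion over fixed intervals and therefore reflects local drift independently of any global offset. The sketch below computes the translational part of RPE for planar (x, y, yaw) poses. Restricting to 2D and measuring the interval `delta` in frames are simplifying assumptions made for illustration.

```python
import math
from typing import Sequence, Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw) in metres and radians


def relative(a: Pose, b: Pose) -> Pose:
    """Return a^-1 * b: pose b expressed in the coordinate frame of pose a."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(a[2]), math.sin(a[2])
    dyaw = math.atan2(math.sin(b[2] - a[2]), math.cos(b[2] - a[2]))  # wrap to (-pi, pi]
    return (c * dx + s * dy, -s * dx + c * dy, dyaw)


def rpe_translation_rmse(estimated: Sequence[Pose], ground_truth: Sequence[Pose],
                         delta: int = 1) -> float:
    """Translational RMSE of relative motion error over pose pairs `delta` frames apart."""
    if len(estimated) != len(ground_truth) or delta < 1:
        raise ValueError("trajectories must match in length and delta must be >= 1")
    squared = []
    for i in range(len(estimated) - delta):
        motion_est = relative(estimated[i], estimated[i + delta])
        motion_gt = relative(ground_truth[i], ground_truth[i + delta])
        err = relative(motion_gt, motion_est)  # residual motion after undoing the true motion
        squared.append(err[0] ** 2 + err[1] ** 2)
    if not squared:
        raise ValueError("trajectory is shorter than delta")
    return math.sqrt(sum(squared) / len(squared))
```

A trajectory offset from ground truth by a constant translation has zero RPE but a non-zero absolute error. This is one reason the two metrics are often reported together.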

Robustness metrics quantify a SLAM system's ability to maintain performance during challenging scenarios such as rapid movements, feature-poor environments, or dynamic obstacles. Mean time between failures (MTBF) and recovery time after localization loss provide practical insights into operational reliability. For commercial applications, the percentage of successful missions completed without human intervention serves as a key performance indicator.
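
These reliability indicators reduce to simple arithmetic over an event log. The sketch assumes a hypothetical log of localization-loss events with loss and recovery times. It treats each loss as one failure and excludes time spent lost from the uptime used for MTBF. All names are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class LossEvent:
    lost_at_s: float       # time localization was lost
    recovered_at_s: float  # time localization was re-established


def reliability_summary(operating_time_s: float, events: Sequence[LossEvent],
                        missions_total: int, missions_unassisted: int) -> Dict[str, float]:
    """MTBF, mean recovery time, and share of missions finished without intervention."""
    if operating_time_s <= 0 or missions_total <= 0:
        raise ValueError("operating time and mission count must be positive")
    if any(e.recovered_at_s < e.lost_at_s for e in events):
        raise ValueError("recovery cannot precede loss")
    downtime = sum(e.recovered_at_s - e.lost_at_s for e in events)
    uptime = operating_time_s - downtime  # time spent localized
    n = len(events)
    return {
        "mtbf_s": uptime / n if n else math.inf,
        "mean_recovery_s": downtime / n if n else 0.0,
        "autonomy_rate": missions_unassisted / missions_total,
    }
```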

Energy efficiency benchmarks are increasingly important for battery-powered autonomous systems, measuring energy consumed per distance traveled or per unit of mapped area. This metric directly determines operational range and mission duration. Finally, integration benchmarks assess how effectively SLAM systems interface with other navigation components such as path planning and obstacle avoidance, measuring end-to-end performance rather than isolated SLAM capabilities.
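
Energy per distance can be estimated from logged power samples and the driven path. The sketch below integrates power over time with the trapezoidal rule and divides the result by path length. The sampling layout and units (seconds, watts, metres) are assumptions about the logging setup, and the same energy total could be divided by mapped area instead.

```python
import math
from typing import Sequence, Tuple


def energy_wh(times_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Trapezoidal integral of power over time, converted from joules to watt-hours."""
    if len(times_s) != len(power_w) or len(times_s) < 2:
        raise ValueError("need at least two matching time/power samples")
    joules = sum(0.5 * (power_w[i] + power_w[i + 1]) * (times_s[i + 1] - times_s[i])
                 for i in range(len(times_s) - 1))
    return joules / 3600.0


def path_length_m(positions: Sequence[Tuple[float, float]]) -> float:
    """Sum of straight-line distances between consecutive logged positions."""
    return sum(math.dist(positions[i], positions[i + 1]) for i in range(len(positions) - 1))


def wh_per_km(times_s: Sequence[float], power_w: Sequence[float],
              positions: Sequence[Tuple[float, float]]) -> float:
    """Energy consumed per kilometre driven."""
    distance_km = path_length_m(positions) / 1000.0
    if distance_km == 0:
        raise ValueError("vehicle did not move")
    return energy_wh(times_s, power_w) / distance_km
```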

Cross-platform performance comparison remains challenging due to hardware variations, but standardized compute units and normalized metrics are emerging to address this issue. As SLAM technology matures, these benchmarking methodologies continue to evolve, with increasing emphasis on real-world performance under uncertainty rather than idealized testing conditions.