The Future Of SLAM In Consumer AR/VR Devices

SEP 5, 2025 · 9 MIN READ

SLAM Technology Evolution and AR/VR Integration Goals

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, transforming from theoretical robotics concepts to practical applications across multiple industries. The evolution of SLAM has been characterized by increasing accuracy, reduced computational requirements, and enhanced real-time performance—all critical factors for consumer AR/VR integration.

The early development of SLAM focused primarily on robotic navigation using laser scanners and sonar. By the early 2000s, visual SLAM emerged, utilizing camera inputs to create environmental maps. The introduction of RGB-D cameras around 2010 marked a significant advancement, enabling depth perception alongside visual data. Recent years have witnessed the rise of AI-enhanced SLAM systems that leverage deep learning for improved feature detection and environmental understanding.

For consumer AR/VR devices, SLAM technology aims to achieve seamless integration of virtual content with the physical world, creating immersive experiences that respond naturally to user movements. The primary technical goals include sub-millimeter tracking accuracy, minimal latency (under 20ms), reduced power consumption, and robust performance across diverse environments—from well-lit indoor spaces to challenging outdoor conditions.

Current consumer AR/VR integration goals focus on miniaturization of SLAM hardware components to fit sleek, lightweight form factors that consumers will find appealing and comfortable for extended use. This includes the development of specialized chips for SLAM processing that minimize battery drain while maintaining high performance.

Another critical objective is achieving persistent spatial mapping, allowing AR/VR devices to remember environments between sessions and share spatial maps between users. This capability enables collaborative experiences and persistent virtual content placement—fundamental features for mainstream adoption of AR/VR technologies.

The convergence of SLAM with other sensing technologies represents another important evolutionary direction. Integration with inertial measurement units (IMUs), time-of-flight sensors, and even neuromorphic vision systems is creating more robust tracking solutions that can operate under challenging conditions such as rapid movement or low lighting.
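One simple way to picture camera/IMU fusion is a complementary filter. The sketch below is illustrative only and is not taken from any shipping tracker: the function names, the blend factor `alpha`, the gyro bias, and the camera cadence are all assumed values. It integrates a biased gyro for short-term motion and pulls the estimate toward a visual heading whenever a camera measurement is available.

```python
def fuse_yaw(prev_yaw, gyro_rate, dt, visual_yaw=None, alpha=0.9):
    """Complementary filter for heading (radians); ignores angle wrap-around."""
    predicted = prev_yaw + gyro_rate * dt      # propagate with the IMU
    if visual_yaw is None:                     # no usable camera frame this step
        return predicted
    # Trust the gyro for short-term motion, the camera for long-term stability.
    return alpha * predicted + (1.0 - alpha) * visual_yaw


def simulate(steps=1000, dt=0.01, true_rate=0.5, gyro_bias=0.05, camera_every=10):
    """Return (gyro_only_error, fused_error) after a constant-rate turn.

    All parameters are hypothetical and chosen only to make drift visible.
    """
    true_yaw = gyro_only = fused = 0.0
    for i in range(1, steps + 1):
        true_yaw += true_rate * dt
        measured_rate = true_rate + gyro_bias   # biased gyro reading
        gyro_only += measured_rate * dt         # pure dead reckoning
        visual = true_yaw if i % camera_every == 0 else None
        fused = fuse_yaw(fused, measured_rate, dt, visual)
    return abs(gyro_only - true_yaw), abs(fused - true_yaw)


if __name__ == "__main__":
    gyro_err, fused_err = simulate()
    print(f"gyro-only error: {gyro_err:.3f} rad, fused error: {fused_err:.3f} rad")
```

Production visual-inertial systems typically use a Kalman filter or sliding-window optimization over full 6-DoF poses. The one-dimensional filter here only illustrates why the two sensors complement each other.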

Looking forward, the industry is moving toward edge-based SLAM solutions that reduce dependency on cloud processing, enabling AR/VR experiences in areas with limited connectivity while addressing privacy concerns related to environmental mapping. The ultimate goal is to develop SLAM systems that approach human-level spatial understanding, capable of recognizing objects, predicting movement patterns, and adapting to dynamic environments in real-time.

Market Analysis for SLAM-Enabled AR/VR Devices

The global market for SLAM-enabled AR/VR devices is experiencing robust growth, driven by increasing consumer adoption and technological advancements. Current market projections indicate that the AR/VR market will reach approximately $300 billion by 2025, with SLAM technology serving as a critical enabler for premium device functionality. Consumer demand for immersive experiences continues to fuel this expansion, with particular growth observed in gaming, education, and remote collaboration applications.

Market segmentation reveals distinct consumer preferences across different regions. North American and European markets show stronger demand for high-fidelity tracking and spatial mapping capabilities, prioritizing premium experiences over cost considerations. In contrast, emerging markets in Asia-Pacific regions demonstrate greater price sensitivity while still valuing basic SLAM functionality for entry-level devices.

The consumer AR/VR device market can be categorized into three primary segments: high-end tethered headsets, standalone mid-range devices, and smartphone-based solutions. SLAM technology adoption varies significantly across these segments, with the most sophisticated implementations appearing in premium standalone devices that require precise environmental mapping without external sensors.

Industry analysis indicates that consumer expectations are evolving rapidly, with increasing demand for devices that offer seamless integration between virtual and physical environments. This shift is evidenced by the growing popularity of mixed reality applications that rely heavily on accurate SLAM capabilities to blend digital content with real-world surroundings.

Market research suggests that consumer purchasing decisions are increasingly influenced by tracking accuracy, latency performance, and power efficiency—all factors directly impacted by SLAM implementation quality. Devices offering superior spatial awareness and persistent mapping capabilities command premium pricing, with consumers demonstrating willingness to pay 30-40% more for enhanced immersion.

Competition in this space is intensifying as technology companies recognize SLAM's strategic importance in delivering next-generation AR/VR experiences. Market consolidation has accelerated, with major players acquiring specialized SLAM startups to secure competitive advantages in tracking technology.

Consumer feedback indicates growing sophistication in user expectations, with early adopters specifically evaluating devices based on tracking stability in challenging environments such as low-light conditions or highly dynamic scenes. This represents a significant shift from earlier market phases when basic functionality was sufficient to drive adoption.

The market trajectory suggests that SLAM capabilities will increasingly become a standard feature rather than a premium differentiator, with competition shifting toward implementation quality, power efficiency, and integration with other sensing technologies. This evolution presents both opportunities and challenges for device manufacturers seeking to establish market leadership in consumer AR/VR.

Current SLAM Implementations and Technical Barriers

Current SLAM implementations in consumer AR/VR devices face significant technical challenges despite notable advancements. Visual-inertial SLAM systems dominate the market, combining camera data with IMU readings to achieve six-degrees-of-freedom (6-DoF) tracking. Apple's ARKit employs visual-inertial odometry with plane detection and scene understanding capabilities, while Google's ARCore utilizes feature points and motion sensors for environmental mapping. Meta's Quest (formerly Oculus) headsets implement a hybrid approach with inside-out tracking using onboard cameras and IMUs.

Despite these implementations, several technical barriers persist. Power consumption remains a critical limitation, with sophisticated SLAM algorithms demanding substantial computational resources that drain battery life in mobile AR/VR devices. This creates a fundamental tension between tracking accuracy and device longevity that manufacturers continue to struggle with.

Processing constraints present another significant challenge. Consumer devices have limited computational capabilities compared to research-grade systems, necessitating algorithm optimization that often sacrifices accuracy or feature richness. Edge computing solutions are emerging but introduce latency concerns that can disrupt immersive experiences.

Environmental robustness represents a persistent barrier, as current SLAM systems perform inconsistently across varying lighting conditions, reflective surfaces, and textureless environments. Dynamic scenes with moving objects further complicate mapping and localization processes, leading to tracking failures that break immersion.

Scale and drift issues continue to plague consumer SLAM implementations. Without absolute positioning references, small errors accumulate into drift over time, which is particularly problematic for extended AR/VR sessions. Loop closure techniques help mitigate this, but they add computational overhead, and the map correction applied when a loop is detected can appear to the user as a jarring jump in virtual content.

Map persistence and sharing capabilities remain underdeveloped in most consumer implementations. The ability to save, reload, and share spatial maps between sessions and devices is crucial for persistent AR experiences but requires solving complex problems of map compression, updating, and alignment across different hardware configurations.

Initialization speed presents a user experience challenge, with many systems requiring a "scanning" period before full functionality. This creates friction in consumer adoption, as users expect immediate responsiveness when launching AR/VR applications. Balancing quick initialization with accurate environment understanding remains an unsolved optimization problem in consumer SLAM implementations.

Mainstream SLAM Algorithms and Frameworks

01 Visual SLAM techniques for autonomous navigation

Visual SLAM systems use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced implementations incorporate machine learning algorithms to improve feature detection, matching, and environmental understanding, enabling more robust navigation in dynamic environments. This technology is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without pre-existing maps.

- Visual SLAM techniques for autonomous navigation: Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or areas with repetitive visual patterns. These technologies are particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- SLAM for augmented and virtual reality applications: SLAM technology enables precise spatial mapping for AR/VR applications, allowing virtual objects to interact realistically with the physical environment. These systems track user movement while building a detailed environmental model, creating immersive experiences where digital content appears anchored to the real world. The technology processes sensor data to identify distinctive features and track their positions across frames, maintaining spatial consistency even during rapid movement. This approach supports applications ranging from interactive gaming to industrial training simulations and architectural visualization.

- Integration of multiple sensors for robust SLAM: Multi-sensor SLAM systems combine data from various sources such as cameras, LiDAR, IMUs, and depth sensors to improve mapping accuracy and robustness. These integrated approaches leverage the complementary strengths of different sensors to overcome individual limitations, such as camera performance in low light or LiDAR range constraints. Sensor fusion algorithms synchronize and combine heterogeneous data streams to create more complete environmental models while reducing uncertainty in localization. This multi-modal approach enables SLAM systems to function reliably across diverse environments and operating conditions.

- Loop closure and optimization techniques in SLAM: Loop closure algorithms detect when a system revisits previously mapped areas, allowing for correction of accumulated errors in SLAM systems. These techniques identify matching features between current and past observations to recognize revisited locations, then optimize the entire map to maintain global consistency. Graph-based optimization methods represent locations as nodes and sensor measurements as edges, minimizing errors across the complete trajectory. Advanced implementations use appearance-based recognition and geometric verification to handle perceptual aliasing, where different locations appear visually similar.

- SLAM for resource-constrained devices: Lightweight SLAM implementations enable localization and mapping on devices with limited computational resources, such as mobile phones or small robots. These systems employ efficient algorithms that reduce processing requirements while maintaining acceptable accuracy, often using sparse feature tracking or simplified map representations. Some approaches offload intensive computations to cloud services or employ model compression techniques to reduce memory footprint. Hardware-accelerated implementations leverage specialized processors like GPUs or neural processing units to achieve real-time performance despite resource limitations.
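The graph-based optimization described in the loop-closure item above can be sketched in one dimension. This is a toy, not a production solver: the function name, edge format, weights, and measurements are hypothetical, and real systems optimize 6-DoF poses with libraries built for that purpose. Poses are nodes, each relative measurement is an edge, and a Gauss-Seidel relaxation spreads the loop-closure correction over the whole trajectory.

```python
def optimize_pose_graph(initial, edges, iterations=200):
    """Least-squares 1D pose graph via Gauss-Seidel relaxation.

    initial: list of initial pose estimates; pose 0 is held fixed as the anchor.
    edges:   list of (i, j, offset, weight), meaning x[j] - x[i] should equal offset.
    """
    x = list(initial)
    for _ in range(iterations):
        for k in range(1, len(x)):              # never move the anchor pose
            num = den = 0.0
            for i, j, z, w in edges:
                if j == k:                      # edge predicts x[k] = x[i] + z
                    num += w * (x[i] + z)
                    den += w
                elif i == k:                    # edge predicts x[k] = x[j] - z
                    num += w * (x[j] - z)
                    den += w
            if den > 0.0:
                x[k] = num / den                # weighted mean of all predictions
    return x


if __name__ == "__main__":
    # Walk out two steps and back two steps; odometry over-reports the outbound leg.
    odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, -1.0, 1.0), (3, 4, -1.0, 1.0)]
    loop_closure = [(0, 4, 0.0, 1.0)]           # pose 4 is recognised as the start
    dead_reckoning = [0.0, 1.1, 2.2, 1.2, 0.2]  # chained odometry, 0.2 of drift
    print(optimize_pose_graph(dead_reckoning, odometry + loop_closure))
```

With equal weights, the 0.2 discrepancy around the loop is shared evenly by the five edges, so the final pose ends up much closer to the start than dead reckoning alone would place it.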

02 SLAM with sensor fusion for improved accuracy

Combining multiple sensor types enhances SLAM performance by leveraging the strengths of different data sources. Systems that integrate cameras, LiDAR, IMUs, and other sensors can overcome limitations of single-sensor approaches. Sensor fusion algorithms synchronize and weight data from various sources to create more accurate environmental maps and position estimates. This approach is particularly effective in challenging conditions such as low light, featureless environments, or when rapid movement causes motion blur in visual data.

03 Deep learning approaches for SLAM optimization

Neural networks and deep learning techniques are revolutionizing SLAM by improving feature extraction, loop closure detection, and map representation. These approaches can learn complex patterns from training data to enhance localization accuracy and mapping efficiency. Deep learning models help SLAM systems better handle challenging scenarios like changing lighting conditions, dynamic objects, and repetitive environments. The integration of AI enables more adaptive and robust SLAM solutions that can generalize across different environments with minimal manual tuning.

04 Real-time SLAM for AR/VR applications

Specialized SLAM implementations for augmented and virtual reality focus on low latency and high precision to create immersive experiences. These systems must track user movement with minimal delay while maintaining accurate spatial mapping to properly anchor virtual content in the physical world. Optimizations include efficient algorithms for feature tracking, predictive motion models, and lightweight map representations that can run on mobile devices. AR/VR SLAM systems often incorporate user interaction data to improve mapping of areas that receive the most attention.

05 SLAM for GPS-denied environments

SLAM techniques designed for GPS-denied environments enable navigation in indoor spaces, underground locations, or areas with poor satellite coverage. These systems rely entirely on onboard sensors and processing to maintain positional awareness without external reference signals. Key innovations include drift correction methods, loop closure techniques for error reduction, and efficient map storage for extended operations. Applications include indoor robots, mining equipment, underwater vehicles, and emergency response systems that must operate in signal-blocked environments.

Leading Companies in SLAM and AR/VR Ecosystem

SLAM (Simultaneous Localization and Mapping) technology in consumer AR/VR is evolving rapidly and is currently moving from the early-adoption phase into a growth phase. The market is projected to expand significantly as AR/VR devices become mainstream, with an estimated value exceeding $20 billion by 2025. Technologically, industry leaders demonstrate varying maturity levels: Sony, Qualcomm, and Meta have established robust SLAM implementations in commercial products, while Huawei, MediaTek, and BOE are making significant R&D investments. Academic institutions like Peking University and ShanghaiTech University are advancing fundamental research, while specialized players such as Auki Labs focus on solving specific challenges like instant calibration and cross-app interoperability. The ecosystem is becoming increasingly competitive as hardware manufacturers partner with software developers to create integrated solutions.

Sony Group Corp.

Technical Solution: Sony has developed a hybrid SLAM system for their PlayStation VR platform that combines marker-based and markerless tracking techniques. Their approach uses a dual camera system with infrared markers for precise controller tracking while implementing visual SLAM for headset positioning. Sony's SLAM technology incorporates depth sensing capabilities that create detailed environmental meshes, enabling realistic physics interactions between virtual objects and real-world surfaces. Their solution emphasizes temporal consistency in tracking, using predictive algorithms to maintain smooth motion even during partial occlusion or rapid movement. Sony has recently enhanced their SLAM implementation with eye-tracking integration, allowing for foveated rendering that concentrates processing resources where the user is looking[4]. This approach optimizes performance on consumer hardware while maintaining high visual fidelity. Sony's SLAM system also features adaptive mapping that adjusts detail levels based on available processing power and scene complexity, ensuring consistent performance across their consumer device ecosystem.

Strengths: Highly accurate tracking through hybrid marker/markerless approach; excellent integration with gaming applications; robust performance in home environments. Weaknesses: More complex setup than fully inside-out tracking solutions; higher dependency on controlled lighting conditions; less portable than some competing systems.

Auki Labs Ltd.

Technical Solution: Auki Labs has developed a decentralized SLAM technology called Spatial Web Protocol that focuses on creating persistent AR experiences across multiple devices and sessions. Their approach differs from traditional SLAM implementations by emphasizing spatial anchoring that works across heterogeneous devices and platforms. Auki's SLAM solution incorporates blockchain technology to create verifiable spatial maps that can be shared securely between users without centralized control. Their system uses a combination of visual features, depth information, and inertial data to create robust environmental maps that persist over time and can be accessed by multiple users simultaneously. Auki Labs has pioneered techniques for compressing spatial maps to minimize data transfer requirements while maintaining sufficient detail for accurate localization[6]. Their SLAM implementation includes privacy-preserving features that allow users to control which spatial data is shared and with whom. The company has focused on solving the "AR cloud" problem by creating infrastructure for persistent, multi-user AR experiences that maintain consistent positioning of virtual content across sessions and devices.

Strengths: Innovative decentralized approach to persistent AR; strong privacy controls; excellent cross-platform compatibility. Weaknesses: Less established than major competitors; higher dependency on network connectivity for multi-user features; relatively new technology with fewer real-world deployments.

Key Patents and Research Breakthroughs in SLAM

System and method for spatially mapping smart objects within augmented reality scenes

Patent WO2019168886A1

Innovation

- A method and system that use a mobile device equipped with a camera and wireless transceivers to capture images, generate a three-dimensional map, measure distances between the device and IoT devices, and determine the IoT devices' locations within the map, enabling spatially aware interactions and a unified interface for IoT device management.

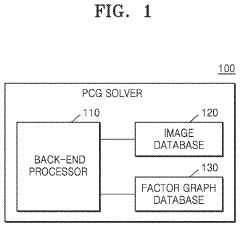

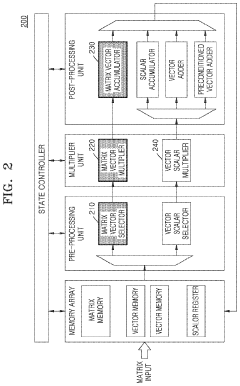

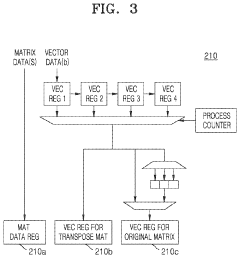

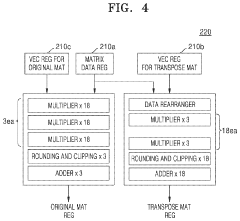
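To make the idea of locating an IoT device from distance measurements concrete, here is a generic 2D trilateration sketch. It is not the patented method: the function name and the sample coordinates are hypothetical, and it assumes the mobile device's own positions are already known from its SLAM map. Subtracting the first range equation from the others yields a linear system, which is solved here by least squares.

```python
def locate_device(observer_positions, distances):
    """Estimate a 2D position from ranges measured at known observer positions.

    observer_positions: list of (x, y) where the mobile device took a measurement.
    distances:          measured range to the target from each of those positions.
    """
    (x0, y0), d0 = observer_positions[0], distances[0]
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), di in zip(observer_positions[1:], distances[1:]):
        # Linearised equation: a * px + b * py = c
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = (xi * xi + yi * yi - x0 * x0 - y0 * y0) - (di * di - d0 * d0)
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c
    det = s_aa * s_bb - s_ab * s_ab             # determinant of the normal matrix
    if abs(det) < 1e-12:
        raise ValueError("observer positions are collinear or too few")
    px = (s_ac * s_bb - s_ab * s_bc) / det
    py = (s_aa * s_bc - s_ab * s_ac) / det
    return px, py


if __name__ == "__main__":
    observers = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
    ranges = [5 ** 0.5, 13 ** 0.5, 2 ** 0.5, 10 ** 0.5]   # target placed at (1, 2)
    print(locate_device(observers, ranges))
```

At least three non-collinear measurement positions are needed in 2D. Real radio ranging is noisy, so more measurements and a weighted solver would normally be used.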

Method of accelerating simultaneous localization and mapping (SLAM) and device using same

Patent US11886491B2 (Active)

Innovation

- A preconditioned conjugate gradient (PCG) solver is implemented within an electronic device, utilizing an image database and a factor graph database to perform re-localization and calculate six degrees of freedom-related components, optimizing matrix operations by calculating diagonal elements and reusing block elements, and using a shift register for data shifting and accumulation.
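For readers unfamiliar with the solver named above, the following is a textbook preconditioned conjugate gradient routine with a diagonal (Jacobi) preconditioner, written in plain Python. It is a generic sketch, not the patent's hardware design: the function names and the small test matrix are assumptions, and the block-reuse and shift-register optimizations the patent describes are not modelled.

```python
import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _matvec(A, v):
    return [_dot(row, v) for row in A]


def pcg(A, b, tol=1e-10, max_iter=100):
    """Solve A x = b for a symmetric positive-definite A (list of rows)."""
    n = len(b)
    inv_diag = [1.0 / A[i][i] for i in range(n)]    # Jacobi preconditioner M^-1
    x = [0.0] * n
    r = list(b)                                     # residual b - A x with x = 0
    z = [inv_diag[i] * r[i] for i in range(n)]      # preconditioned residual
    p = list(z)                                     # first search direction
    rz = _dot(r, z)
    for _ in range(max_iter):
        if rz == 0.0:                               # already solved exactly
            break
        Ap = _matvec(A, p)
        alpha = rz / _dot(p, Ap)                    # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        if math.sqrt(_dot(r, r)) < tol:
            break
        z = [inv_diag[i] * r[i] for i in range(n)]
        rz_new = _dot(r, z)
        beta = rz_new / rz                          # keeps directions A-conjugate
        p = [zi + beta * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x


if __name__ == "__main__":
    A = [[4.0, 1.0, 0.0], [1.0, 3.0, 1.0], [0.0, 1.0, 2.0]]
    b = [6.0, 10.0, 8.0]                            # chosen so that x = [1, 2, 3]
    print(pcg(A, b))
```

In SLAM back ends, systems of this kind typically arise from the normal equations of bundle adjustment or factor-graph optimization, where the matrix is large, sparse, and block-structured.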

Power Efficiency Challenges in Mobile SLAM Processing

Power efficiency remains one of the most critical challenges for implementing SLAM (Simultaneous Localization and Mapping) technology in consumer AR/VR devices. As these devices continue to shrink in form factor while demanding higher performance, the computational burden of SLAM algorithms creates significant power constraints that must be addressed for mainstream adoption.

Current mobile SLAM implementations typically consume between 2 and 5 watts when running at full capacity, which is prohibitively high for all-day wearable devices that target 1-2 watts for the entire system. This power consumption stems primarily from three components: sensor data acquisition, feature extraction, and map maintenance. The continuous nature of SLAM, which requires real-time processing at 60-90 Hz to maintain tracking stability, further exacerbates these power demands.

Hardware acceleration has emerged as a promising approach to mitigate power concerns. Dedicated Vision Processing Units (VPUs) and SLAM-specific ASICs have demonstrated 5-10x improvements in power efficiency compared to general-purpose CPU implementations. Companies like Qualcomm and MediaTek have integrated specialized SLAM cores into their XR-focused chipsets, achieving sub-watt SLAM processing for limited environments.

Software optimization techniques also play a crucial role in power reduction. Adaptive SLAM approaches that dynamically adjust processing frequency based on user movement have shown 30-40% power savings in real-world testing. Similarly, keyframe selection algorithms that reduce redundant processing of similar frames can decrease computational load by up to 50% in static environments without significantly impacting accuracy.
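A common form of keyframe selection is a motion threshold: a frame is promoted to a keyframe only when the device has moved or turned enough since the last one. The sketch below assumes 2D poses of the form `(x, y, yaw)`. The function name and the thresholds (10 cm, 10 degrees) are hypothetical and not taken from any particular SLAM system.

```python
import math


def select_keyframes(poses, min_translation=0.10, min_rotation=math.radians(10)):
    """Return indices of frames kept as keyframes.

    poses: list of (x, y, yaw) with metres and radians, one entry per camera frame.
    """
    if not poses:
        return []
    keyframes = [0]                                  # always keep the first frame
    for idx in range(1, len(poses)):
        kx, ky, kyaw = poses[keyframes[-1]]
        x, y, yaw = poses[idx]
        moved = math.hypot(x - kx, y - ky)
        # Smallest signed angle between the two headings, then its magnitude.
        turned = abs(math.atan2(math.sin(yaw - kyaw), math.cos(yaw - kyaw)))
        if moved >= min_translation or turned >= min_rotation:
            keyframes.append(idx)
    return keyframes


if __name__ == "__main__":
    # 50 stationary frames, then 50 frames moving 3 cm per frame along x.
    poses = [(0.0, 0.0, 0.0)] * 50 + [(0.03 * k, 0.0, 0.0) for k in range(1, 51)]
    kept = select_keyframes(poses)
    print(f"kept {len(kept)} of {len(poses)} frames")
```

While the user is stationary no new keyframes are created, which is where the savings in static scenes come from. The same motion signal could also drive the adaptive processing frequency mentioned above.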

The industry is exploring several promising directions to further improve power efficiency. Event-based cameras, which only transmit pixel changes rather than full frames, could reduce sensor data bandwidth by 90% in typical use cases. Neuromorphic computing approaches mimicking brain function show potential for ultra-low-power SLAM implementations, with research prototypes demonstrating sub-100mW operation for limited environments.

Cloud-assisted SLAM represents another frontier, where devices offload heavy computational tasks to edge servers. This hybrid approach can reduce on-device power consumption by 60-70%, though it introduces latency and connectivity dependencies that limit applicability for many consumer scenarios. The optimal balance between on-device processing and cloud offloading remains an active research area.

For consumer AR/VR to achieve the desired form factors and all-day battery life, power efficiency in SLAM processing must improve by approximately 5-10x from current levels. This challenge represents one of the most significant barriers to mainstream adoption of sophisticated spatial computing in truly wearable form factors.
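The size of that gap can be checked with simple arithmetic. The helper below is a back-of-the-envelope sketch; the 25% share of the system budget allotted to SLAM is purely an assumption for illustration and is not a figure from this report.

```python
def required_improvement(slam_watts, system_budget_watts, slam_share):
    """Factor by which SLAM power must shrink to fit its share of the system budget."""
    return slam_watts / (system_budget_watts * slam_share)


if __name__ == "__main__":
    SLAM_SHARE = 0.25   # hypothetical: SLAM may use a quarter of the system budget
    for slam_watts in (2.0, 5.0):           # current range quoted in this section
        factor = required_improvement(slam_watts, 2.0, SLAM_SHARE)
        print(f"{slam_watts} W today vs a 2 W system budget: {factor:.0f}x reduction needed")
```

Under that assumed share and a 2 W system budget, the quoted 2-5 W range corresponds to a 4x to 10x reduction, roughly in line with the 5-10x estimate. A 1 W system budget would double the required factor.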

Current mobile SLAM implementations typically consume between 2-5 watts of power when running at full capacity, which is prohibitively high for all-day wearable devices targeting 1-2 watts for the entire system. This power consumption primarily stems from three components: sensor data acquisition, feature extraction processing, and map maintenance operations. The continuous nature of SLAM, requiring real-time processing at 60-90Hz to maintain tracking stability, further exacerbates these power demands.

Hardware acceleration has emerged as a promising approach to mitigate power concerns. Dedicated Visual Processing Units (VPUs) and SLAM-specific ASICs have demonstrated 5-10x improvements in power efficiency compared to general-purpose CPU implementations. Companies like Qualcomm and MediaTek have integrated specialized SLAM cores into their XR-focused chipsets, achieving sub-watt SLAM processing for limited environments.

Software optimization techniques also play a crucial role in power reduction. Adaptive SLAM approaches that dynamically adjust processing frequency based on user movement have shown 30-40% power savings in real-world testing. Similarly, keyframe selection algorithms that reduce redundant processing of similar frames can decrease computational load by up to 50% in static environments without significantly impacting accuracy.

The industry is exploring several promising directions to further improve power efficiency. Event-based cameras, which transmit only pixel changes rather than full frames, could reduce sensor data bandwidth by 90% in typical use cases. Neuromorphic computing approaches that mimic brain function show potential for ultra-low-power SLAM implementations, with research prototypes demonstrating sub-100 mW operation for limited environments.
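The bandwidth argument for event-based sensing can be illustrated with a simplified frame-differencing sketch: only pixels whose brightness changes by more than a threshold produce an output. This is an approximation for illustration only. Real event cameras report changes asynchronously per pixel rather than by comparing full frames, and the function name and `threshold` value below are assumptions.

```python
def frame_to_events(prev_frame, curr_frame, threshold=15):
    """Emit (x, y, polarity) for pixels whose intensity changed enough.

    Frames are lists of rows of integer intensities. This frame-based
    differencing only approximates what an event sensor does in hardware.
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (p, c) in enumerate(zip(prev_row, curr_row)):
            diff = c - p
            if abs(diff) >= threshold:
                events.append((x, y, 1 if diff > 0 else -1))
    return events


# A 4x4 scene in which a single pixel brightens between frames.
prev = [[10] * 4 for _ in range(4)]
curr = [row[:] for row in prev]
curr[1][2] = 60

events = frame_to_events(prev, curr)
total_pixels = len(curr) * len(curr[0])
print(events)
print(f"{len(events)} event(s) instead of {total_pixels} pixel values")
```

In this toy scene one event replaces sixteen pixel values. How large the reduction is in practice depends on how much of the scene is changing at any moment.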

Privacy and Security Implications of Spatial Mapping

As spatial mapping technologies become more sophisticated in SLAM-based AR/VR devices, significant privacy and security concerns emerge that require careful consideration. Consumer devices continuously scan, map, and store detailed information about users' private spaces, creating digital twins of homes, offices, and other personal environments. This spatial data contains highly sensitive information that could reveal lifestyle patterns, valuable possessions, security vulnerabilities, and even biometric identifiers embedded in spatial contexts.

The primary privacy risk stems from the comprehensive nature of spatial mapping data. Unlike discrete data points, spatial maps provide contextual information that can be used to infer activities, habits, and personal details about users. For instance, the layout of furniture, presence of medical devices, or children's toys can reveal sensitive personal information that users may not intend to share. When this data is transmitted to cloud services for processing or storage, it becomes vulnerable to unauthorized access or potential breaches.

Security vulnerabilities in SLAM systems present additional concerns. Adversarial attacks could potentially manipulate spatial maps to create safety hazards or compromise the integrity of AR experiences. Research has demonstrated that carefully crafted visual inputs can deceive SLAM algorithms, potentially causing navigation errors or system malfunctions. In shared AR experiences, malicious actors might exploit access to spatial data to inject deceptive or harmful virtual content into users' environments.

Data governance frameworks for spatial information remain underdeveloped compared to the rapid advancement of the technology itself. Questions about ownership, consent, and control of spatial data are particularly complex when maps include shared spaces or when multiple users contribute to collaborative mapping. The boundary between public and private space becomes blurred in mixed reality environments, creating novel legal and ethical challenges.

Technical solutions being explored include on-device processing to minimize data transmission, differential privacy techniques for spatial data, and encryption methods specifically designed for 3D environmental information. Some researchers propose abstraction approaches that retain functional utility while removing personally identifiable details from spatial maps. Edge computing architectures that keep sensitive spatial data local while enabling necessary cloud functionality represent a promising direction.
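Two of the ideas above, abstraction and differential privacy, can be sketched together. The first function coarsens a point cloud into occupied grid cells, which discards fine geometric detail. The second applies the Laplace mechanism to a count before it leaves the device. This is a minimal sketch under stated assumptions: the function names, the 0.5 m cell size, and the sensitivity of 1 for the count query are all hypothetical, and choosing a meaningful sensitivity for real spatial data is an open design question.

```python
import math
import random


def coarsen_points(points, cell_size_m=0.5):
    """Abstraction: keep only which grid cells are occupied, not exact points."""
    cells = {tuple(math.floor(c / cell_size_m) for c in p) for p in points}
    return sorted(cells)


def private_count(true_count, epsilon, rng):
    """Laplace mechanism for a count query, assuming sensitivity 1.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    scale = 1.0 / epsilon
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical scan: two nearby points fall into the same cell.
scan = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.4), (1.2, 0.0, 0.0)]
cells = coarsen_points(scan)
print(cells)

rng = random.Random(0)  # seeded only to make the example repeatable
print(private_count(len(cells), epsilon=1.0, rng=rng))
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy. The coarsening step trades map fidelity for privacy in a similar way through the cell size.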

Regulatory frameworks are beginning to address these issues, with some jurisdictions considering spatial data as a special category requiring enhanced protection. Industry standards for spatial data handling are emerging, though consensus remains elusive on best practices for consent mechanisms and transparency in spatial computing applications.
