SLAM For Agricultural Robots In Dynamic Fields

SEP 12, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Agricultural SLAM Technology Background and Objectives

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly since its inception in the 1980s, transitioning from theoretical concepts to practical applications across various domains. In agricultural settings, SLAM represents a critical enabling technology for autonomous field operations, allowing robots to navigate complex and changing environments while building accurate maps of their surroundings. The agricultural sector's increasing labor shortages and productivity demands have accelerated interest in robotic solutions, with SLAM serving as a foundational component for effective deployment.

Traditional SLAM algorithms were developed primarily for static, structured indoor environments, presenting significant limitations when applied to agricultural fields characterized by dynamic vegetation, varying lighting conditions, and uneven terrain. The evolution of agricultural SLAM has been marked by adaptations to address these unique challenges, incorporating multi-sensor fusion approaches and specialized algorithms capable of distinguishing between permanent landmarks and temporary or changing features in crop fields.

The technical objectives for agricultural SLAM development focus on creating robust systems that maintain localization accuracy despite the highly dynamic nature of growing crops, changing weather conditions, and seasonal variations. These systems must achieve centimeter-level precision to enable precise operations such as selective harvesting, targeted spraying, and row-following in diverse crop types and growth stages.

Recent advancements in computer vision, LiDAR technology, and machine learning have opened new possibilities for agricultural SLAM implementations. Deep learning approaches now enable better feature extraction and classification in vegetative environments, while improved sensor technologies provide higher resolution data even in challenging outdoor conditions. The integration of RTK-GPS with visual-inertial SLAM systems has emerged as a promising direction for maintaining global consistency while preserving local mapping accuracy.
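
As a rough illustration of how RTK-GPS and visual-inertial odometry can complement each other, the sketch below uses a one-dimensional Kalman filter. Relative displacements from visual-inertial odometry (VIO) drive the prediction step, and RTK fixes correct the accumulated drift when they pass an innovation gate. This is a minimal sketch under stated assumptions, not a description of any particular system. The class name `PositionFilter1D`, the noise values `q` and `r`, and the 3-sigma gate are hypothetical, and a real system would estimate a full 3D pose.

```python
from dataclasses import dataclass


@dataclass
class PositionFilter1D:
    """Hypothetical 1-D Kalman filter: VIO increments predict, RTK fixes correct."""
    x: float = 0.0        # position estimate along the row (m)
    p: float = 1.0        # estimate variance (m^2); loose initial uncertainty
    q: float = 0.01 ** 2  # assumed VIO noise added per prediction step (m^2)
    r: float = 0.02 ** 2  # assumed RTK fix noise (m^2)

    def predict(self, vio_delta: float) -> None:
        """Dead-reckon with the VIO displacement; uncertainty grows."""
        self.x += vio_delta
        self.p += self.q

    def update(self, rtk_fix: float, gate_sigma: float = 3.0) -> bool:
        """Blend in an RTK fix; return False if it fails the innovation gate."""
        innovation = rtk_fix - self.x
        s = self.p + self.r  # innovation variance
        if innovation ** 2 > gate_sigma ** 2 * s:
            return False  # implausible fix, e.g. multipath under canopy
        k = self.p / s  # Kalman gain
        self.x += k * innovation
        self.p *= 1.0 - k
        return True


# Usage: VIO over-reports each 0.10 m step by 5 mm; an RTK fix arrives at 1.0 m.
f = PositionFilter1D()
for _ in range(10):
    f.predict(0.105)
accepted = f.update(1.0)   # drift corrected, estimate returns close to 1.0 m
rejected = f.update(5.0)   # a wildly inconsistent fix is gated out
```

The gate is what lets the filter fall back on VIO when RTK degrades: fixes that disagree with the prediction by more than a few standard deviations are ignored rather than allowed to pull the estimate off the row.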

The agricultural robotics industry has established several key performance indicators for effective SLAM systems, including localization accuracy under 5cm, real-time operation capabilities, resilience to environmental variations, and power efficiency for extended field operations. Meeting these objectives requires overcoming significant technical hurdles related to feature persistence in growing crops, computational efficiency on mobile platforms, and robustness to dust, moisture, and vibration.
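
The 5 cm localization target is usually checked against a reference trajectory, for example one surveyed with RTK-GPS. A common metric is the absolute trajectory error (ATE), the root-mean-square position error between estimated and reference poses. The sketch below assumes the two trajectories are already time-synchronized and expressed in the same frame (the usual alignment step is omitted), and the helper names are illustrative.

```python
import numpy as np


def ate_rmse(estimated: np.ndarray, reference: np.ndarray) -> float:
    """RMSE of position error (m) between two time-aligned N x 2 trajectories."""
    errors = np.linalg.norm(estimated - reference, axis=1)
    return float(np.sqrt(np.mean(errors ** 2)))


def meets_accuracy_kpi(estimated: np.ndarray, reference: np.ndarray,
                       threshold_m: float = 0.05) -> bool:
    """Hypothetical pass/fail check against the 'under 5 cm' target."""
    return ate_rmse(estimated, reference) < threshold_m


reference = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]])
estimated = reference + np.array([[0.0, 0.03], [0.04, 0.0], [0.0, -0.03], [-0.04, 0.0]])
rmse = ate_rmse(estimated, reference)  # about 0.035 m, so the target is met
```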

Looking forward, agricultural SLAM technology aims to evolve beyond simple navigation to incorporate semantic understanding of field conditions, enabling robots to make autonomous decisions based on crop health, growth stage, and field conditions. This represents a shift from purely geometric mapping to agricultural intelligence systems that can interpret the environment in agronomically meaningful ways, supporting precision agriculture practices and sustainable farming operations.

Market Analysis for Agricultural Robotics Solutions

The agricultural robotics market is experiencing significant growth, driven by increasing labor shortages, rising food demand, and the need for sustainable farming practices. The global market for agricultural robots was valued at approximately $7.4 billion in 2022 and is projected to reach $20.6 billion by 2030, representing a compound annual growth rate (CAGR) of 13.5%. SLAM-enabled agricultural robots specifically are emerging as a crucial segment within this broader market.
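
The quoted figures can be sanity-checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. Taking the 2022 and 2030 values at face value, the implied rate is about 13.7%, slightly above the stated 13.5%; compounding $7.4 billion at 13.5% for eight years gives about $20.4 billion. The small gap may reflect rounding or a different base year in the underlying estimate.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `start_value` at `rate` for `years`."""
    return start_value * (1.0 + rate) ** years


implied = cagr(7.4, 20.6, 2030 - 2022)         # about 0.137
projected = project(7.4, 0.135, 2030 - 2022)   # about 20.4 ($ billion)
```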

North America currently leads the agricultural robotics market, accounting for about 35% of global market share, followed by Europe at 28% and Asia-Pacific at 25%. The remaining 12% is distributed across other regions. Countries with advanced agricultural sectors such as the United States, Germany, Netherlands, Japan, and China are showing the highest adoption rates of SLAM-based agricultural robotics solutions.

Demand for SLAM-equipped agricultural robots is particularly strong in high-value crop production, including fruits, vegetables, and vineyards, where precision operations are critical. These sectors represent approximately 45% of the current market for agricultural SLAM solutions. Field crops such as corn, wheat, and soybeans account for roughly 30% of the market, while specialty applications like greenhouse operations comprise the remaining 25%.

Key market drivers include labor cost inflation, which has increased by 4-6% annually in major agricultural regions, creating economic incentives for automation. Additionally, precision agriculture initiatives aimed at reducing chemical inputs by 15-20% are pushing farmers toward technology-enabled solutions that can deliver targeted applications.

Customer segments for SLAM-based agricultural robots include large commercial farms (40% of the market), mid-sized operations (35%), agricultural service providers (15%), and research institutions (10%). The willingness to invest in these technologies varies significantly across segments, with large operations showing the highest adoption rates due to their greater financial resources and economies of scale.

Market barriers include the high initial investment costs, with sophisticated SLAM-equipped agricultural robots ranging from $50,000 to $250,000 depending on capabilities. Technical challenges related to SLAM performance in dynamic agricultural environments also limit market penetration, particularly in regions with smaller farm sizes or challenging terrain conditions.

Subscription and service-based business models are gaining traction, with approximately 30% of new deployments using robotics-as-a-service (RaaS) approaches rather than outright purchases. This share is expected to grow, potentially reaching 50% of the market by 2027, because RaaS reduces initial capital requirements and aligns with farmers' seasonal cash flow patterns.

Current SLAM Challenges in Dynamic Agricultural Environments

Agricultural SLAM systems face significant challenges in dynamic field environments that differ substantially from traditional indoor or urban settings. The primary challenge stems from the constantly changing nature of agricultural fields, where crops grow and change appearance over time, creating a dynamic environment that traditional SLAM algorithms struggle to handle. Vegetation growth can alter reference points within days or weeks, rendering previously created maps obsolete and complicating localization tasks.

Environmental factors present another layer of complexity. Varying lighting conditions throughout the day and seasons create inconsistent visual features, while weather conditions like rain, fog, or dust can severely degrade sensor performance. Agricultural robots must operate in these adverse conditions, requiring robust SLAM solutions that can adapt to rapidly changing environmental parameters.

The terrain itself poses unique challenges, with uneven surfaces, muddy conditions, and varying soil compaction affecting odometry measurements. Unlike structured indoor environments, agricultural fields lack distinct geometric features like walls or corners that traditional SLAM systems rely on for mapping and localization. Instead, they present repetitive patterns of similar-looking plants that create perceptual aliasing problems, where different locations appear nearly identical.
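
A common safeguard against perceptual aliasing, adapted from Lowe's ratio test for feature matching, is to accept a place-recognition match only when the best candidate is clearly closer than the runner-up. The sketch below assumes each previously visited place is summarized by a global descriptor vector (how descriptors are computed is left open); the function name and the `max_distance` and `ratio` thresholds are hypothetical.

```python
import numpy as np


def select_place_match(query: np.ndarray, database: np.ndarray,
                       max_distance: float = 0.5, ratio: float = 0.8):
    """Return the index of the matching place, or None if no safe match exists.

    query: 1-D descriptor of the current view.
    database: M x D descriptors of previously visited places.
    In repetitive crop rows several places can look almost identical, so the
    best candidate must be clearly better than the second-best one.
    """
    if len(database) == 0:
        return None
    distances = np.linalg.norm(database - query, axis=1)
    order = np.argsort(distances)
    best = distances[order[0]]
    second = distances[order[1]] if len(distances) > 1 else np.inf
    if best > max_distance or best > ratio * second:
        return None  # too far, or ambiguous (perceptual aliasing)
    return int(order[0])


database = np.array([[1.0, 0.0], [0.0, 1.0], [0.98, 0.02]])
ambiguous = select_place_match(np.array([0.99, 0.01]), database)  # None
distinct = select_place_match(np.array([0.0, 0.95]), database)    # 1
```

The trade-off is that a place stored twice in the database also looks ambiguous, so in practice a check like this would need to be paired with deduplication of stored places or a geometric verification step.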

Sensor limitations compound these difficulties. Conventional visual sensors struggle with the homogeneous appearance of crop rows, while LiDAR systems face occlusion issues from dense vegetation. Standard GPS, often used as a fallback, suffers from signal degradation under canopy cover and, without RTK correction, lacks the precision required for row-level navigation tasks.

Computational constraints represent another significant challenge. Agricultural robots typically have limited onboard processing capabilities due to power constraints, yet must process substantial sensor data in real-time while operating autonomously for extended periods. This creates a difficult balance between SLAM accuracy and computational efficiency.

Scale and coverage requirements further complicate agricultural SLAM implementation. Modern farms span vast areas that must be mapped efficiently, requiring solutions that can handle large-scale mapping while maintaining precision at the plant level for tasks like selective harvesting or targeted spraying.
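
One common way to reconcile farm-scale coverage with plant-level precision is to split the map into tiles (submaps) and keep only the tiles around the robot in memory. The sketch below is a hypothetical minimal version: the class name, the 50 m tile size, and the storage of points in tile-local coordinates are illustrative choices. Local coordinates stay small, which matters if positions are stored in single precision while global coordinates are large, such as UTM eastings and northings.

```python
import math
from collections import defaultdict


class TiledFieldMap:
    """Hypothetical field map split into square tiles of tile_size_m metres."""

    def __init__(self, tile_size_m: float = 50.0):
        self.tile_size = tile_size_m
        # (ix, iy) tile index -> list of points in tile-local coordinates
        self.tiles = defaultdict(list)

    def tile_key(self, x: float, y: float) -> tuple:
        """Integer index of the tile containing global point (x, y)."""
        return (math.floor(x / self.tile_size), math.floor(y / self.tile_size))

    def insert(self, x: float, y: float) -> None:
        """Store a global point relative to the origin of its tile."""
        ix, iy = self.tile_key(x, y)
        self.tiles[(ix, iy)].append((x - ix * self.tile_size, y - iy * self.tile_size))

    def active_tiles(self, x: float, y: float, radius_tiles: int = 1) -> list:
        """Existing tiles within radius_tiles of the robot; only these stay loaded."""
        cx, cy = self.tile_key(x, y)
        return [(cx + dx, cy + dy)
                for dx in range(-radius_tiles, radius_tiles + 1)
                for dy in range(-radius_tiles, radius_tiles + 1)
                if (cx + dx, cy + dy) in self.tiles]


field_map = TiledFieldMap(tile_size_m=50.0)
field_map.insert(1203.4, 87.2)  # stored in tile (24, 1) as roughly (3.4, 37.2)
field_map.insert(10.0, 10.0)    # stored in tile (0, 0)
nearby = field_map.active_tiles(1210.0, 60.0)  # [(24, 1)]
```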

Motion dynamics of agricultural machinery add another dimension of complexity. Heavy equipment causes significant vibration that can affect sensor readings, while the slow movement speeds typical in precision agriculture operations can paradoxically make feature tracking more difficult for visual SLAM systems designed for more dynamic environments.

Current SLAM Implementations in Agricultural Robotics

01 Visual SLAM techniques for autonomous navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real-time. Advanced algorithms handle feature extraction, matching, and optimization to ensure accurate localization even in dynamic environments. This approach is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- Visual SLAM techniques for autonomous navigation: Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or scenes with repetitive patterns.

- SLAM for augmented and virtual reality applications: SLAM technology enables precise spatial mapping for AR/VR applications, allowing virtual objects to interact realistically with the physical environment. These systems track user movement while building a detailed environmental model, supporting features like occlusion, surface detection, and persistent AR content placement. The technology combines sensor fusion from cameras, IMUs, and depth sensors to achieve low-latency tracking essential for immersive experiences.

- Machine learning enhancements for SLAM systems: Machine learning approaches significantly improve SLAM performance by enhancing feature extraction, loop closure detection, and map optimization. Neural networks can identify semantic information in environments, enabling object recognition and scene understanding alongside traditional mapping. These AI-enhanced systems demonstrate greater resilience to environmental changes and can operate more effectively in dynamic settings where traditional geometric approaches struggle.

- Sensor fusion approaches for robust SLAM: Sensor fusion techniques combine data from multiple sensors such as cameras, LiDAR, radar, IMUs, and GPS to create more accurate and reliable SLAM systems. By leveraging the complementary strengths of different sensor types, these approaches can overcome limitations of single-sensor systems, such as visual SLAM's difficulty in featureless environments or LiDAR's challenges in adverse weather. The integration of heterogeneous sensor data improves localization precision and mapping completeness across diverse operating conditions.

- Optimization methods for real-time SLAM performance: Computational optimization techniques enable SLAM systems to operate efficiently on resource-constrained devices while maintaining accuracy. These methods include sparse mapping approaches that selectively process key features, hierarchical processing structures, and parallel computing architectures. Advanced algorithms reduce computational complexity through techniques like keyframe selection, map compression, and incremental updates, allowing SLAM to function effectively on mobile devices, drones, and other platforms with limited processing power.
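
Keyframe selection can be as simple as a few thresholds: a frame becomes a keyframe only when the robot has moved or turned enough since the last keyframe, or when too few features are still being tracked. This is particularly relevant to slow-moving field robots, where most consecutive frames add little new information. The function name and thresholds below are hypothetical placeholders that would be tuned per platform.

```python
import math


def is_new_keyframe(translation_m: float, rotation_rad: float,
                    tracked_ratio: float,
                    min_translation: float = 0.3,
                    min_rotation: float = math.radians(10),
                    min_tracked_ratio: float = 0.6) -> bool:
    """Decide whether the current frame should become a keyframe.

    translation_m, rotation_rad: motion since the last keyframe.
    tracked_ratio: fraction of the last keyframe's features still tracked.
    """
    moved_enough = translation_m >= min_translation or rotation_rad >= min_rotation
    losing_track = tracked_ratio < min_tracked_ratio
    return moved_enough or losing_track


creeping = is_new_keyframe(0.05, 0.0, 0.9)   # False: skip, little new information
advanced = is_new_keyframe(0.5, 0.0, 0.9)    # True: enough baseline
occluded = is_new_keyframe(0.05, 0.0, 0.4)   # True: tracking is degrading
```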

02 Machine learning integration with SLAM systems

Machine learning algorithms are being integrated with SLAM systems to enhance mapping accuracy and object recognition capabilities. Neural networks can improve feature detection, classification of environmental elements, and prediction of movement patterns. These ML-enhanced SLAM systems can better handle challenging scenarios like changing lighting conditions, moving objects, and complex environments. The combination results in more robust localization and mapping performance across diverse real-world applications.

03 SLAM for augmented and virtual reality applications

SLAM technology enables precise tracking for augmented and virtual reality experiences by mapping physical spaces and determining device position within them. This allows virtual objects to be placed accurately in the real world and remain stable as users move. The technology processes sensor data from cameras and IMUs to create persistent spatial maps that enhance immersion and interaction. These systems must operate with minimal latency and high accuracy to provide convincing mixed reality experiences.

04 LiDAR and sensor fusion approaches for SLAM

LiDAR sensors combined with other sensing modalities create robust SLAM systems that function across various environmental conditions. By fusing data from LiDAR, cameras, IMUs, and other sensors, these systems can generate accurate 3D maps with improved depth perception and feature recognition. Sensor fusion techniques help overcome the limitations of individual sensors, providing redundancy and enhancing performance in challenging scenarios like low light, reflective surfaces, or featureless environments. This approach is particularly valuable for autonomous vehicles and outdoor robotics applications.

05 Optimization algorithms for real-time SLAM performance

Advanced optimization algorithms improve the computational efficiency and accuracy of SLAM systems for real-time applications. These include loop closure detection to correct accumulated errors, graph-based optimization to maintain global map consistency, and parallel processing techniques to handle large-scale environments. Efficient data structures and algorithms reduce memory requirements and processing time while maintaining mapping precision. These optimizations enable SLAM to function on devices with limited computational resources and in time-critical applications.

Leading Agricultural Robotics and SLAM Solution Providers

SLAM for agricultural robots in dynamic fields is currently in an early growth phase, with the market expected to expand significantly as precision agriculture adoption increases. The global market for agricultural robotics is projected to reach roughly $20 billion by 2030, with SLAM technologies representing a crucial component. Technical maturity varies across players: established companies like Samsung Electronics, Siemens AG, and Midea Group possess advanced capabilities in sensor fusion and real-time mapping, while specialized firms like UBTECH Robotics and Yujin Robot focus on agricultural-specific implementations. Academic institutions including China Agricultural University, South China Agricultural University, and Chongqing University are driving innovation through field testing and algorithm development for complex crop environments. The technology faces challenges in handling dynamic vegetation and varying field conditions but is rapidly evolving.

South China Agricultural University

Technical Solution: South China Agricultural University has developed "AgriDynaSLAM," a specialized SLAM system designed specifically for agricultural robots operating in dynamic field environments. Their approach combines traditional visual SLAM techniques with agricultural-specific innovations to address the unique challenges of farm environments. The system employs a multi-layer mapping framework that separates static elements (field boundaries, permanent structures) from dynamic elements (growing crops, temporary equipment) to maintain consistent localization despite environmental changes[7]. Their solution incorporates crop growth models to predict and account for changes in visual features over time, allowing robots to anticipate how landmarks will evolve throughout growing seasons. The university's research team has also developed specialized feature descriptors that are robust to the repetitive patterns often found in crop rows, addressing one of the key challenges in agricultural SLAM. Additionally, their system includes a weather-adaptive parameter adjustment mechanism that modifies SLAM algorithms based on current environmental conditions, ensuring consistent performance across varying lighting and visibility scenarios[8].

Strengths: Highly specialized for agricultural applications with excellent handling of crop-specific challenges; innovative integration of agronomic knowledge into SLAM algorithms; good performance in repetitive field environments. Weaknesses: Currently limited to specific crop types where growth models have been developed; requires initial calibration for each new field environment.
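
The description above includes no code. The sketch below only illustrates the general idea of a layered map whose crop layer is down-weighted by a growth model, so that localization leans on landmarks expected to look unchanged. The layer names, the logistic growth curve and its parameters (`h_max`, `k`, `t_mid`), and the exponential weighting are all hypothetical choices, not details of AgriDynaSLAM.

```python
import math
from dataclasses import dataclass


@dataclass
class Landmark:
    x: float
    y: float
    layer: str           # "static" (posts, boundaries) or "crop" (plants)
    observed_day: float  # days after planting when last observed


def crop_height(day: float, h_max: float = 2.5, k: float = 0.08,
                t_mid: float = 60.0) -> float:
    """Hypothetical logistic growth curve: canopy height (m) vs. days after planting."""
    return h_max / (1.0 + math.exp(-k * (day - t_mid)))


def landmark_weight(lm: Landmark, query_day: float, scale_m: float = 0.3) -> float:
    """Weight a landmark for localization on query_day.

    Static-layer landmarks keep full weight. Crop-layer landmarks are
    down-weighted by how much the growth model predicts they have changed
    since they were last observed.
    """
    if lm.layer == "static":
        return 1.0
    predicted_change = abs(crop_height(query_day) - crop_height(lm.observed_day))
    return math.exp(-predicted_change / scale_m)


post = Landmark(0.0, 0.0, "static", observed_day=30)
plant = Landmark(0.5, 0.75, "crop", observed_day=60)
```

For example, `landmark_weight(plant, 90)` is far below 1 because the model predicts about a metre of growth between day 60 and day 90, while `landmark_weight(post, 90)` stays at 1.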

Siemens AG

Technical Solution: Siemens has developed an advanced SLAM solution for agricultural robotics called "AgriMap+" that addresses the unique challenges of dynamic field environments. Their system employs a multi-modal sensing approach combining visual, LiDAR, and radar technologies to maintain reliable localization even when visual features are obscured by dust, varying lighting conditions, or changing crop appearances[4]. The solution incorporates a proprietary dynamic object classification algorithm that can differentiate between permanent landmarks, slowly changing elements (growing crops), and rapidly moving objects (workers, animals, other machinery). Siemens' approach includes a hierarchical mapping framework that maintains both detailed local maps for immediate navigation and global reference maps that track field evolution over time. Their system also features adaptive parameter tuning that automatically adjusts SLAM parameters based on field conditions, crop types, and growth stages, optimizing performance across diverse agricultural scenarios[6]. Additionally, Siemens has implemented edge computing capabilities that allow for real-time processing of sensor data directly on the agricultural robots, reducing latency in navigation decisions.

Strengths: Exceptional reliability in harsh agricultural conditions; sophisticated handling of multi-timescale dynamics; strong integration capabilities with existing farm management systems. Weaknesses: Higher initial cost compared to simpler solutions; requires periodic maintenance and calibration to maintain optimal performance.
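
The description above does not say how the dynamic-object classifier works. As a hypothetical, rule-based stand-in, the sketch below assigns each observed element to one of the three time scales using its observed speed and its rate of appearance change; the enum names and thresholds are illustrative only.

```python
from enum import Enum


class Dynamics(Enum):
    PERMANENT = "permanent"    # posts, buildings, field boundaries
    SLOWLY_CHANGING = "slow"   # growing crops
    MOVING = "moving"          # workers, animals, other machinery


def classify_element(displacement_m: float, dt_s: float,
                     appearance_change_per_day: float,
                     moving_speed_mps: float = 0.05,
                     slow_change_per_day: float = 0.01) -> Dynamics:
    """Assign an observed map element to a time scale (hypothetical thresholds).

    displacement_m, dt_s: position change between two observations and the
    time between them. appearance_change_per_day: e.g. relative change in
    size or descriptor distance per day.
    """
    if dt_s > 0 and displacement_m / dt_s > moving_speed_mps:
        return Dynamics.MOVING
    if appearance_change_per_day > slow_change_per_day:
        return Dynamics.SLOWLY_CHANGING
    return Dynamics.PERMANENT


worker = classify_element(2.0, 2.0, 0.0)        # MOVING
crop = classify_element(0.0, 86400.0, 0.05)     # SLOWLY_CHANGING
post = classify_element(0.01, 86400.0, 0.0)     # PERMANENT
```

One plausible policy for a hierarchical map of the kind described would be to exclude `MOVING` elements from both maps, keep `SLOWLY_CHANGING` elements in the local map only, and reserve the global reference map for `PERMANENT` elements.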

Key Technical Innovations in Field-Ready SLAM Systems

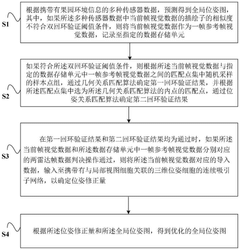

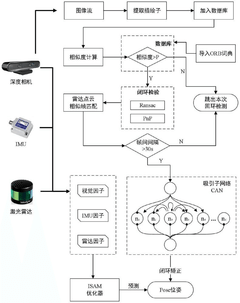

Pose map optimization method and device, orchard robot and orchard operation system

Patent (pending): CN119313731A

Innovation

- Uses a pose-map (pose-graph) optimization method based on multi-source SLAM loop closure: a dual loop-closure verification step and a radar-frame judgment process, combined with a continuous attractor network of three-dimensional pose cells, optimize the global pose map, which reduces the number of sensors required and improves interference resistance and stability.
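
The patent is summarized only at a high level. As a hypothetical illustration of two generic ingredients it names, loop-closure verification and global pose-graph optimization, the sketch below accepts a loop closure only when two independent sources (labelled visual and LiDAR here) agree, then solves a one-dimensional linear pose graph by least squares. It does not model the patent's pose-cell attractor network, and the function names, tolerance, and weights are assumptions.

```python
import numpy as np


def verify_loop_closure(visual_offset, lidar_offset, tol_m=0.2):
    """Accept a loop closure only if both sources found it and agree within tol_m.

    Returns the fused relative offset, or None if the candidate is rejected.
    """
    if visual_offset is None or lidar_offset is None:
        return None
    if abs(visual_offset - lidar_offset) > tol_m:
        return None
    return 0.5 * (visual_offset + lidar_offset)


def optimize_pose_graph_1d(n_poses, edges, prior_weight=1e3):
    """Linear least-squares pose graph in one dimension.

    edges: (i, j, offset, weight) constraints meaning x[j] - x[i] ~= offset.
    Pose 0 is pinned at the origin with a strong prior to fix the gauge.
    """
    rows, rhs = [], []
    for i, j, offset, weight in edges:
        row = np.zeros(n_poses)
        row[i], row[j] = -1.0, 1.0
        rows.append(np.sqrt(weight) * row)
        rhs.append(np.sqrt(weight) * offset)
    anchor = np.zeros(n_poses)
    anchor[0] = prior_weight
    rows.append(anchor)
    rhs.append(0.0)
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x


# Drive 2 m out along a row and 2 m back; odometry drifts +5 cm per step,
# so dead reckoning ends 0.2 m from the start.
edges = [(0, 1, 1.05, 1.0), (1, 2, 1.05, 1.0), (2, 3, -0.95, 1.0), (3, 4, -0.95, 1.0)]
loop = verify_loop_closure(visual_offset=0.02, lidar_offset=-0.01)
if loop is not None:
    edges.append((0, 4, loop, 10.0))  # loop closure trusted more than odometry
poses = optimize_pose_graph_1d(5, edges)
```

After optimization the final pose lies within about 1 cm of the start instead of 20 cm, because the verified loop closure redistributes the accumulated drift across the odometry edges.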

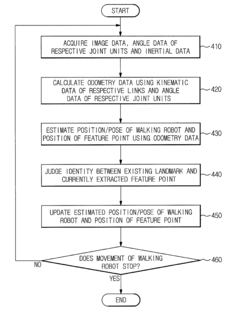

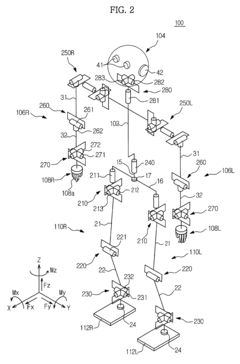

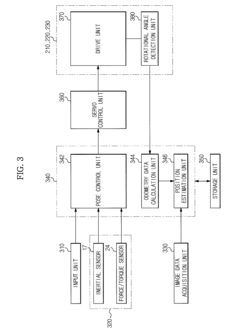

Walking robot and simultaneous localization and mapping method thereof

Patent (active): US8873831B2

Innovation

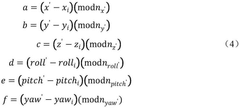

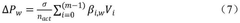

- Integrates odometry data, derived from kinematic and rotation-angle data, with image-based SLAM to improve the accuracy and convergence of localization, and fuses inertial data with the odometry data to improve the precision of position estimation and mapping.
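
The summary does not specify how the inertial and odometry data are fused. A generic illustration is a complementary filter on heading: the integrated gyro rate is trusted over short intervals, while the odometry-derived heading, assumed here to be unbiased, slowly pulls the estimate back. This is a sketch, not the patented method; the function names and the gain `alpha` are hypothetical.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def fuse_heading(prev_heading: float, gyro_rate: float, dt: float,
                 odom_heading: float, alpha: float = 0.98) -> float:
    """Complementary filter step for heading (radians)."""
    gyro_heading = prev_heading + gyro_rate * dt
    # Correct via the wrapped difference so headings near +/-pi do not jump.
    correction = wrap_angle(odom_heading - gyro_heading)
    return wrap_angle(gyro_heading + (1.0 - alpha) * correction)


# Usage: the robot drives straight (odometry heading 0) while the gyro has a
# 0.01 rad/s bias. Pure gyro integration would drift to 1.0 rad in 100 s.
heading = 0.0
for _ in range(1000):  # 100 s at dt = 0.1 s
    heading = fuse_heading(heading, gyro_rate=0.01, dt=0.1, odom_heading=0.0)
# heading settles near 0.049 rad instead of growing without bound
```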

Environmental Factors Affecting Agricultural SLAM Performance

Agricultural SLAM systems face unique environmental challenges that significantly impact their performance in dynamic field conditions. Weather variations constitute a primary factor, with rain, fog, and snow reducing sensor visibility and accuracy. Precipitation can obscure camera lenses and interfere with LiDAR signals, while extreme temperatures affect electronic component reliability and battery efficiency. Seasonal changes in agricultural environments present additional complexities, as vegetation growth cycles dramatically alter field appearances, challenging feature recognition algorithms that rely on environmental consistency.

Light conditions in agricultural settings fluctuate dramatically, from direct sunlight causing sensor oversaturation to low-light situations reducing feature detection capabilities. These variations necessitate robust sensor fusion approaches and adaptive exposure control mechanisms. Dust and particulate matter, especially prevalent during tilling, harvesting, and dry conditions, can compromise sensor functionality by coating optical surfaces and scattering laser beams, degrading measurement accuracy.
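
Adaptive exposure control can be sketched as a feedback loop that rescales the camera exposure time toward a target mean brightness. The version below is a minimal proportional controller working in log space; the function name, target brightness, damping gain, and exposure limits are hypothetical, and a field system might meter on a region of interest or a histogram percentile rather than the global mean.

```python
import numpy as np


def next_exposure(image: np.ndarray, exposure_us: float,
                  target_mean: float = 0.45, gain: float = 0.5,
                  min_us: float = 20.0, max_us: float = 20000.0) -> float:
    """One step of a proportional auto-exposure loop.

    image: grayscale frame scaled to [0, 1]. The exposure is scaled toward the
    value that would bring the mean brightness to target_mean; gain < 1 damps
    the update to avoid oscillation between frames.
    """
    mean = float(np.clip(image.mean(), 1e-3, 1.0))
    ratio = target_mean / mean
    return float(np.clip(exposure_us * ratio ** gain, min_us, max_us))


bright = np.full((480, 640), 0.9)  # near-saturated frame in direct sun
dark = np.full((480, 640), 0.1)    # underexposed frame at dusk or under canopy
shorter = next_exposure(bright, exposure_us=1000.0)  # about 707 us
longer = next_exposure(dark, exposure_us=1000.0)     # about 2121 us
```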

Terrain irregularity presents another significant challenge, with uneven surfaces, furrows, and irrigation channels creating unpredictable robot movements that complicate odometry calculations. These physical disturbances generate sensor noise and motion artifacts that standard SLAM algorithms struggle to compensate for. Additionally, the dynamic nature of agricultural environments, including moving machinery, workers, and animals, introduces non-static elements that violate fundamental SLAM assumptions about environmental stability.
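
A simple way to keep moving machinery, workers, and animals out of the map is to reject tracked features whose image motion disagrees with the motion predicted for a static scene. The sketch below assumes that prediction is already available (for example from odometry plus feature depth, or from a fitted homography, both left open here); the function name and the 3-pixel threshold are hypothetical.

```python
import numpy as np


def static_feature_mask(prev_pts: np.ndarray, curr_pts: np.ndarray,
                        predicted_shift: np.ndarray,
                        max_residual_px: float = 3.0) -> np.ndarray:
    """Return True for features whose motion matches the static-scene prediction.

    prev_pts, curr_pts: N x 2 pixel positions of the same tracked features.
    predicted_shift: N x 2 (or a single 2-vector) image motion expected if the
    feature belongs to the static scene. Larger residuals suggest the feature
    is on a moving object and is marked False.
    """
    residual = np.linalg.norm(curr_pts - (prev_pts + predicted_shift), axis=1)
    return residual <= max_residual_px


prev_pts = np.array([[100.0, 100.0], [200.0, 120.0], [300.0, 150.0]])
curr_pts = np.array([[105.0, 100.0], [205.0, 120.5], [330.0, 160.0]])  # third is a walking worker
mask = static_feature_mask(prev_pts, curr_pts, np.array([5.0, 0.0]))   # [True, True, False]
```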

Electromagnetic interference from nearby agricultural equipment and communication systems can disrupt GPS signals and sensor communications, particularly affecting precision agriculture applications that rely on centimeter-level accuracy. Vibrations from rough terrain and equipment operation further compound these issues by introducing mechanical noise to sensor readings and potentially causing hardware misalignment over time.

Water bodies and reflective surfaces in agricultural settings create particular challenges for vision and LiDAR-based systems. Irrigation equipment, wet soil, and standing water produce specular reflections that confuse depth perception algorithms and generate false readings. These environmental factors collectively necessitate specialized SLAM approaches for agricultural applications, including robust sensor fusion techniques, adaptive filtering methods, and context-aware mapping algorithms designed specifically for dynamic field conditions.

ROI Analysis for SLAM-Enabled Agricultural Automation

Implementing SLAM technology in agricultural robots represents a significant investment for farming operations. This ROI analysis examines the financial implications and potential returns of adopting SLAM-enabled agricultural automation systems in dynamic field environments.

Initial implementation costs for SLAM-equipped agricultural robots typically range from $50,000 to $150,000 per unit, depending on sophistication and capabilities. This includes hardware components (sensors, processors, navigation systems), software licensing, and integration expenses. Additionally, farms must budget for infrastructure modifications, including potential field mapping services ($2,000-$5,000) and communication networks ($5,000-$15,000).

Labor savings constitute the primary financial benefit, with SLAM-enabled automation reducing manual labor requirements by 30-60% for tasks like planting, weeding, and harvesting. For a mid-sized farm operation (500 acres), this translates to approximately $45,000-$90,000 annual savings in labor costs. Precision improvements enabled by SLAM technology further enhance ROI through resource optimization.

Input efficiency gains represent another significant return factor. SLAM-guided precision application systems reduce seed usage by 10-15%, fertilizer by 15-20%, and pesticides by 20-30%. For the average farm, this optimization translates to $15,000-$40,000 in annual savings while simultaneously reducing environmental impact and promoting sustainability goals.

Yield improvements of 7-12% are consistently reported following SLAM implementation, primarily through more precise planting patterns, optimized resource distribution, and reduced crop damage during field operations. For commodity crops, this yield increase represents $35,000-$60,000 in additional annual revenue per 500 acres.

Maintenance costs must also be factored into ROI calculations; they typically run $5,000-$12,000 per robot per year, covering software updates, sensor calibration, and mechanical servicing. These costs tend to decrease as systems mature and farm personnel develop maintenance expertise.

The payback period for SLAM-enabled agricultural automation systems typically ranges from 2 to 4 years, depending on farm size, crop value, and implementation scope. High-value specialty crop operations often break even in under 24 months, while commodity crop operations may require 36-48 months.
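
The payback arithmetic can be made explicit with a short calculation. The sketch below computes a simple, undiscounted payback period: upfront cost divided by net annual benefit. The `FleetEconomics` class and the example figures are hypothetical. The figures are picked from within the ranges quoted above but assume a two-robot fleet, because the number of robots a given acreage requires, and how much of each benefit is attributable to SLAM rather than to the machine as a whole, vary by operation.

```python
from dataclasses import dataclass


@dataclass
class FleetEconomics:
    """Hypothetical inputs for a simple, undiscounted payback estimate (USD)."""
    robots: int                   # number of SLAM-equipped robots (assumption)
    unit_cost: float              # purchase price per robot
    infrastructure_cost: float    # one-off field mapping + communications
    annual_benefit: float         # labor + input savings + extra revenue per year
    maintenance_per_robot: float  # servicing and calibration per robot per year

    def upfront_cost(self) -> float:
        return self.robots * self.unit_cost + self.infrastructure_cost

    def net_annual_benefit(self) -> float:
        return self.annual_benefit - self.robots * self.maintenance_per_robot

    def payback_years(self) -> float:
        net = self.net_annual_benefit()
        if net <= 0:
            return float("inf")  # maintenance eats the benefit: never pays back
        return self.upfront_cost() / net


fleet = FleetEconomics(robots=2, unit_cost=100_000, infrastructure_cost=10_000,
                       annual_benefit=100_000, maintenance_per_robot=8_000)
print(f"{fleet.payback_years():.1f} years")  # 2.5 years
```

Simple payback ignores the time value of money; discounting each year's net benefit before accumulating it would lengthen the estimate somewhat.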

Long-term financial benefits extend beyond direct cost savings to include improved product quality, better market access through sustainability certification, and data-driven decision-making capabilities that continue to compound returns over time.
