SLAM For Search And Rescue Operations In Unknown Terrain

SEP 5, 2025 · 9 MIN READ

SLAM Technology Evolution and Rescue Operation Goals

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly over more than three decades, moving from theoretical concepts to practical applications across many domains. Its probabilistic foundations were laid in the late 1980s and early 1990s in seminal work by Smith, Self, and Cheeseman. Early implementations relied heavily on Extended Kalman Filters (EKF), whose computational cost grows quadratically with the number of mapped landmarks, which limited their real-world use.

The 2000s witnessed a paradigm shift with the introduction of particle filters and graph-based optimization techniques, which significantly improved computational efficiency and mapping accuracy. FastSLAM and GraphSLAM algorithms emerged during this period, enabling more robust performance in larger environments. The integration of visual sensors alongside traditional range sensors marked another milestone, giving rise to Visual SLAM systems capable of creating rich environmental representations.

Recent advancements have been driven by deep learning approaches, which have enhanced feature extraction, loop closure detection, and semantic understanding. Modern SLAM systems now incorporate multi-sensor fusion techniques, combining data from LiDAR, cameras, IMUs, and other sensors to create more comprehensive and accurate environmental models. Real-time performance has also improved dramatically, making SLAM viable for time-critical applications like search and rescue operations.

In the context of search and rescue operations in unknown terrain, SLAM technology aims to achieve several critical goals. Primarily, it seeks to enable autonomous or semi-autonomous navigation of rescue robots in unstructured, hazardous environments where GPS signals may be unavailable or unreliable. This capability is essential for accessing areas that are dangerous or inaccessible to human rescuers.

Another key objective is to generate accurate 3D maps of disaster sites in real-time, providing rescue teams with valuable situational awareness. These maps can help identify potential victim locations, structural hazards, and optimal rescue paths. The technology must be robust against challenging conditions commonly encountered in disaster scenarios, including poor lighting, dust, smoke, and structural instability.

SLAM systems for rescue operations also aim to facilitate human-robot collaboration by providing intuitive visualizations of the environment and detected objects of interest. The ultimate goal is to enhance the efficiency and effectiveness of search and rescue missions, reducing response times and increasing the chances of locating and retrieving survivors in disaster scenarios.

Market Analysis for SLAM in Search and Rescue Applications

The global market for SLAM technology in search and rescue operations is experiencing significant growth, driven by increasing natural disasters, complex emergency scenarios, and the need for more efficient rescue operations. The market size for SLAM in search and rescue applications was valued at approximately $450 million in 2022 and is projected to reach $1.2 billion by 2028, representing a compound annual growth rate (CAGR) of 17.8%.
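The growth rate quoted above can be checked with the standard compound annual growth rate formula. The short sketch below uses only the two market figures and the years given in the paragraph; the function name `cagr` is an illustrative choice, not something from the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the paragraph above: $450 million (2022) to $1.2 billion (2028).
rate = cagr(450e6, 1.2e9, 2028 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```

Under these inputs the implied rate agrees with the 17.8% stated in the text to one decimal place.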

Demand for SLAM technology in search and rescue is primarily concentrated in regions prone to natural disasters, including North America, parts of Asia-Pacific (particularly Japan and China), and Europe. The United States currently holds the largest market share at 32%, followed by Europe (24%) and China (18%). Emerging markets in Southeast Asia and Latin America are showing accelerated adoption rates due to increasing urbanization and disaster preparedness initiatives.

Key market drivers include the rising frequency and severity of natural disasters globally, with climate change exacerbating this trend. According to the World Meteorological Organization, weather-related disasters have increased by 35% over the past decade, creating urgent demand for advanced search and rescue technologies. Additionally, government and defense sectors are increasing investments in disaster response capabilities, with budgets for search and rescue technologies growing at approximately 12% annually.

The commercial segment is witnessing the fastest growth, with private rescue organizations, insurance companies, and industrial safety operations increasingly adopting SLAM-based solutions. This segment is expected to grow at a CAGR of 22% through 2028, outpacing government and military applications.

Customer requirements are evolving toward integrated solutions that combine SLAM with other technologies such as thermal imaging, AI-based victim detection, and multi-robot coordination systems. End-users are demanding systems that can operate in extreme environments including collapsed buildings, flooded areas, and smoke-filled spaces, with 87% of procurement specifications now requiring operation in at least three different challenging environments.

Market challenges include high initial implementation costs, with advanced SLAM systems for search and rescue typically ranging from $50,000 to $250,000 depending on capabilities. Technical limitations in GPS-denied environments and the need for specialized training also present adoption barriers. However, the development of more affordable and user-friendly systems is expected to drive market penetration in mid-tier segments over the next five years.

Current SLAM Challenges in Unknown Terrain Environments

Despite significant advancements in SLAM (Simultaneous Localization and Mapping) technology, implementing these systems in unknown terrain for search and rescue operations presents substantial challenges. The unpredictable nature of disaster environments creates fundamental obstacles that current SLAM solutions struggle to overcome effectively.

Environmental variability represents a primary challenge, as search and rescue scenarios often involve extreme conditions including collapsed structures, unstable surfaces, and dynamic changes in the environment. Current SLAM algorithms typically perform optimally in structured, static environments but deteriorate significantly when confronted with rubble, debris, or rapidly changing surroundings characteristic of disaster zones.

Sensor limitations further complicate SLAM implementation in these contexts. Dust, smoke, and poor lighting conditions severely impact visual SLAM systems, while uneven terrain and electromagnetic interference can compromise LiDAR and radar-based approaches. The multi-modal sensor fusion necessary for robust operation remains computationally expensive and difficult to calibrate for real-time deployment.

Computational constraints pose another significant barrier. Search and rescue robots must process SLAM algorithms onboard with limited power resources while maintaining real-time performance. Current high-accuracy SLAM solutions often require substantial computational resources incompatible with the size and power constraints of rescue robots, creating a fundamental performance-efficiency tradeoff.

Localization drift accumulates more rapidly in unknown terrain due to the absence of recognizable landmarks and loop closure opportunities. This problem becomes particularly acute in extended operations where robots must navigate through complex three-dimensional spaces without reliable reference points, leading to mapping inaccuracies that compound over time.
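To make the compounding effect concrete, the following minimal sketch dead-reckons a robot around a closed square loop with a small constant heading bias and reports how far the estimated end point lands from the start. The step length, bias value, and the function name `loop_gap` are all hypothetical choices for illustration; real drift is noisier and depends on the sensors involved.

```python
import math


def loop_gap(steps_per_side: int, step_len: float, heading_bias: float) -> float:
    """Dead-reckon a square loop and return the distance between the
    estimated end point and the start.

    heading_bias (radians per step) is a simple stand-in for gyro drift.
    With zero bias the loop closes exactly and the gap is zero.
    """
    x = y = theta = 0.0
    for _ in range(4):                      # four sides of the square
        for _ in range(steps_per_side):
            theta += heading_bias           # heading error accumulates every step
            x += step_len * math.cos(theta)
            y += step_len * math.sin(theta)
        theta += math.pi / 2                # commanded 90 degree turn at each corner
    return math.hypot(x, y)


short_gap = loop_gap(25, 0.2, 0.002)   # 20 m loop
long_gap = loop_gap(50, 0.2, 0.002)    # 40 m loop
print(f"gap after 20 m loop: {short_gap:.2f} m")
print(f"gap after 40 m loop: {long_gap:.2f} m")
```

In this toy model, doubling the loop length more than doubles the gap, because the heading error itself keeps growing while the robot moves. A loop closure detection would use exactly this gap as a constraint to correct the trajectory; without revisited places, no such constraint is available.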

Communication challenges further exacerbate these issues, as rescue robots often operate in environments with limited or no connectivity. This restricts the ability to offload computational tasks to external systems or share mapping data between multiple robots, limiting collaborative SLAM approaches that could otherwise enhance mapping accuracy and coverage.

Semantic understanding represents an emerging challenge, as current SLAM systems typically create geometric maps without meaningful interpretation of the environment. The inability to distinguish between passable debris, dangerous areas, or potential locations of survivors limits the practical utility of SLAM in guiding rescue operations effectively.

Existing SLAM Solutions for Challenging Environments

01 Visual SLAM techniques for autonomous navigation

Visual SLAM systems use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking the camera's position in real-time. Advanced implementations incorporate machine learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or dynamic scenes. This technology is particularly valuable for autonomous vehicles, drones, and robots that need to navigate without external positioning systems.

- Visual SLAM techniques for autonomous navigation: Visual SLAM systems use cameras to simultaneously map an environment and determine the position of a device within it. These systems process visual data to create 3D maps by identifying and tracking features across image frames. Advanced algorithms enable real-time operation on mobile platforms with limited computational resources, making them suitable for autonomous vehicles, drones, and robots that need to navigate unknown environments without external positioning systems.

- SLAM with sensor fusion for improved accuracy: Combining multiple sensor types enhances SLAM performance by leveraging the strengths of each sensor while mitigating their individual weaknesses. Sensor fusion approaches integrate data from cameras, LiDAR, IMUs, GPS, and other sensors to create more robust mapping and localization systems. This multi-sensor approach improves accuracy in challenging environments with variable lighting, featureless areas, or dynamic objects, resulting in more reliable navigation systems for autonomous platforms.

- Machine learning approaches for SLAM optimization: Machine learning techniques are increasingly applied to enhance SLAM systems by improving feature detection, loop closure, and map optimization. Deep neural networks can be trained to recognize environments, predict motion, and handle dynamic objects that traditional SLAM algorithms struggle with. These learning-based approaches enable more adaptive systems that can generalize across different environments and conditions, reducing the need for manual parameter tuning and improving overall robustness.

- SLAM for augmented and virtual reality applications: SLAM technology forms the foundation for spatial awareness in AR and VR systems, enabling virtual content to be anchored convincingly in the physical world. These implementations focus on low latency, power efficiency, and seamless user experience while maintaining accurate tracking. Special attention is given to handling rapid user movements, varying lighting conditions, and feature-poor environments to create persistent and stable augmentations that maintain their position relative to the real world.

- SLAM for indoor positioning and mapping: Indoor SLAM systems address the challenges of GPS-denied environments by creating and utilizing detailed maps of interior spaces. These systems often employ specialized algorithms to handle the unique characteristics of indoor environments, such as repetitive structures, reflective surfaces, and confined spaces. Applications include facility management, retail analytics, emergency response, and indoor navigation assistance, with particular emphasis on maintaining accuracy without external positioning references.
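The sensor-fusion item in the list above rests on a simple idea: trust each sensor in proportion to how certain it is. A minimal sketch of that idea is inverse-variance weighting of independent measurements of the same quantity. The readings and variances below are made-up values for illustration, and the function name `fuse` is hypothetical; production systems typically embed this weighting inside a Kalman filter or factor graph rather than applying it in isolation.

```python
def fuse(measurements):
    """Fuse independent measurements of one quantity by inverse-variance weighting.

    measurements: list of (value, variance) pairs.
    Returns the fused (value, variance); the fused variance is never larger
    than the smallest input variance.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical range-to-wall readings in metres:
# a LiDAR return (low variance) and a stereo-camera estimate degraded by smoke (high variance).
fused_value, fused_var = fuse([(4.02, 0.01), (4.30, 0.09)])
print(f"fused range: {fused_value:.3f} m, variance: {fused_var:.4f}")
```

The fused estimate sits much closer to the more certain reading, and its variance is lower than that of either sensor alone, which is the benefit the list item describes.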

02 SLAM with sensor fusion for enhanced accuracy

Sensor fusion approaches combine data from multiple sensors such as cameras, LiDAR, IMU, and radar to improve SLAM performance. By integrating complementary sensor data, these systems can overcome limitations of individual sensors, providing more accurate localization and mapping in diverse environments. The fusion algorithms typically employ probabilistic methods like Kalman filters or particle filters to optimally combine sensor measurements while accounting for their respective uncertainties. This approach enables robust operation across varying lighting conditions, textureless surfaces, and dynamic environments.

03 Deep learning-based SLAM solutions

Deep learning approaches to SLAM leverage neural networks for various components of the SLAM pipeline, including feature extraction, pose estimation, and map construction. These methods can learn optimal representations directly from data, potentially outperforming traditional hand-crafted algorithms in complex environments. Neural SLAM systems can better handle challenging scenarios like dynamic objects, changing lighting conditions, and repetitive patterns. Some implementations use end-to-end architectures that directly predict poses and map elements from raw sensor data, while others incorporate deep learning modules within conventional SLAM frameworks.

04 SLAM for augmented and virtual reality applications

SLAM technology enables immersive AR/VR experiences by tracking device position and mapping the surrounding environment in real-time. This allows virtual content to be anchored convincingly to the physical world in AR applications or enables natural movement in VR without external tracking systems. These implementations often prioritize low latency and computational efficiency to maintain immersive experiences on mobile devices with limited processing power. Specialized techniques address challenges unique to AR/VR, such as wide field-of-view tracking, handling rapid head movements, and maintaining consistent tracking during extended use.

05 Optimization techniques for real-time SLAM performance

Various optimization approaches improve SLAM efficiency for real-time applications on resource-constrained devices. These include sparse mapping techniques that selectively maintain only the most informative landmarks, hierarchical processing that operates at different resolutions, and incremental map updates that avoid full recomputation. Some implementations leverage hardware acceleration through GPUs, FPGAs, or specialized processors. Advanced loop closure detection algorithms efficiently identify previously visited locations to correct accumulated drift, while keyframe selection strategies reduce computational load by processing only the most informative frames.
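One common form of keyframe selection keeps a frame only when the sensor has moved or turned enough since the last kept frame. The sketch below illustrates that rule on 2D poses; the thresholds, the `Pose2D` type, and the function names are assumptions chosen for the example, and real systems often add criteria such as feature overlap or tracking quality.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float    # metres
    y: float    # metres
    yaw: float  # radians


def angle_diff(a: float, b: float) -> float:
    """Signed difference a - b wrapped into [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def select_keyframes(poses, min_dist=0.45, min_yaw=math.radians(15)):
    """Return indices of poses kept as keyframes.

    A pose becomes a keyframe when it is at least min_dist away from,
    or rotated at least min_yaw relative to, the previous keyframe.
    """
    if not poses:
        return []
    keep = [0]  # the first frame always anchors the map
    for i in range(1, len(poses)):
        last, cur = poses[keep[-1]], poses[i]
        moved = math.hypot(cur.x - last.x, cur.y - last.y)
        turned = abs(angle_diff(cur.yaw, last.yaw))
        if moved >= min_dist or turned >= min_yaw:
            keep.append(i)
    return keep


# Drive 1 m in a straight line at 0.1 m per frame, then turn in place in 10 degree steps.
poses = [Pose2D(0.1 * i, 0.0, 0.0) for i in range(11)]
poses += [Pose2D(1.0, 0.0, math.radians(10 * k)) for k in range(1, 4)]
keyframes = select_keyframes(poses)
print(keyframes)  # [0, 5, 10, 12]
```

Here 14 frames reduce to 4 keyframes, so the expensive mapping and optimization steps run on less than a third of the input.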

Key Industry Players in Rescue Robotics and SLAM Technology

SLAM for search and rescue operations in unknown terrain is currently in an emerging growth phase, with the market expanding rapidly due to increasing demand for autonomous navigation solutions in disaster response. The global market size for SLAM technologies in rescue operations is projected to reach $2.5 billion by 2025, growing at a CAGR of 25%. Technologically, the field shows varying maturity levels across players. Academic institutions like Beijing Institute of Technology and Northwestern Polytechnical University are advancing fundamental research, while specialized companies such as TRX Systems and Amicro Semiconductor are developing practical implementations. Established corporations including Airbus Defence & Space and Ford Global Technologies are integrating SLAM into comprehensive rescue systems, leveraging their extensive resources to accelerate commercialization and deployment in challenging environments.

TRX Systems, Inc.

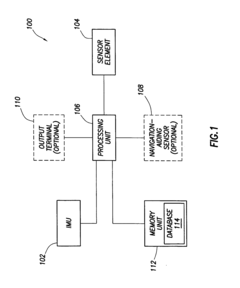

Technical Solution: TRX Systems has pioneered the NEON Personnel Tracker, a specialized SLAM solution for search and rescue operations in GPS-denied environments. Their technology integrates inertial sensors, barometric pressure sensors, and RF signal analysis to create accurate 3D maps of unknown terrain while tracking rescue personnel. The system employs a unique sensor fusion approach that combines dead reckoning with opportunistic corrections from available signals of opportunity. TRX's solution features a wearable sensor package that continuously tracks user movement and orientation, while their proprietary algorithms compensate for sensor drift in extended operations. The system includes a command center interface that provides real-time visualization of team member locations, environmental conditions, and building layouts. Their SLAM implementation is specifically optimized for the chaotic movement patterns typical in search and rescue scenarios, with adaptive motion models that can distinguish between walking, crawling, and climbing[2][5].

Strengths: Purpose-built for first responder applications with extensive field validation; highly accurate personnel tracking in GPS-denied environments; intuitive command interface for coordinating rescue operations.

Weaknesses: Limited range in certain RF-challenging environments; requires infrastructure components for optimal performance in large-scale operations; battery life constraints during extended missions.
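The general pattern described in the technical solution above, dead reckoning punctuated by occasional external corrections, can be illustrated with a one-dimensional Kalman filter. This is a generic textbook sketch, not TRX's proprietary algorithm; the step size, noise variances, and fix schedule are hypothetical values.

```python
def predict(x: float, var: float, step: float, step_var: float):
    """Dead-reckoning step: advance the estimate and grow its uncertainty."""
    return x + step, var + step_var


def correct(x: float, var: float, z: float, z_var: float):
    """Kalman update with an absolute position fix z whose variance is z_var."""
    gain = var / (var + z_var)
    return x + gain * (z - x), (1.0 - gain) * var


x, var = 0.0, 0.0          # start at a known position
history = []               # (step index, position estimate, variance)
for k in range(1, 21):
    # The inertial step estimate slightly overestimates the true 0.68 m stride.
    x, var = predict(x, var, step=0.7, step_var=0.01)
    if k % 10 == 0:
        # An opportunistic fix (for example from an RF signal) arrives every 10th step.
        x, var = correct(x, var, z=0.68 * k, z_var=0.04)
    history.append((k, x, var))

for k, pos, v in history:
    print(f"step {k:2d}: position {pos:6.3f} m, variance {v:.4f}")
```

Between fixes the variance grows by a fixed amount per step; each fix pulls the estimate toward the measurement and shrinks the variance, which is how such systems bound drift over extended operations.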

Airbus Defence & Space GmbH

Technical Solution: Airbus Defence & Space has developed a comprehensive SLAM solution for search and rescue operations called ARGUS (Advanced Rescue Guidance and Understanding System). This platform integrates aerial and ground-based SLAM technologies to create multi-layered maps of disaster areas. Their approach combines data from UAV-mounted sensors with ground team mapping to provide comprehensive situational awareness. The system employs a hierarchical SLAM architecture where aerial vehicles perform coarse mapping to identify key areas of interest, while ground units deploy more detailed mapping capabilities in critical zones. Airbus's solution features advanced object recognition algorithms that can identify victims, hazards, and structural damage in real-time. Their implementation includes robust communication protocols that function even in severely damaged infrastructure environments, ensuring data sharing between team members and command centers. The system also incorporates predictive analytics to suggest optimal search patterns based on environmental conditions and victim probability models[4][7].

Strengths: Unmatched integration of aerial and ground-based SLAM capabilities; military-grade hardware reliability; sophisticated victim detection algorithms.

Weaknesses: Significant deployment complexity requiring specialized technical support; higher cost compared to single-platform solutions; requires substantial computing resources for full functionality.

Core SLAM Algorithms and Sensor Fusion Techniques

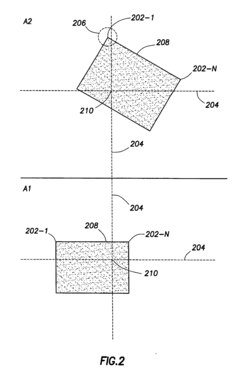

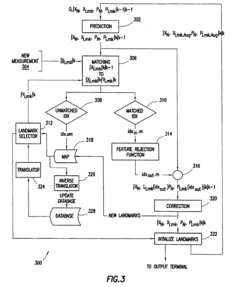

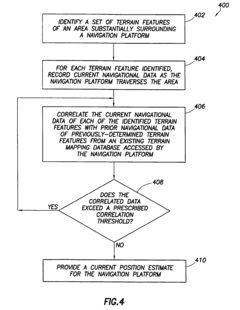

Methods and system of navigation using terrain features

Patent (inactive): EP2133662B1

Innovation

- A method and system that utilize terrain features by correlating current navigational data with a priori database information using inertial measurement units and sensor elements like vision or laser-based systems, providing driftless navigation by filtering terrain feature measurements.

A simultaneous location and mapping based autonomous navigation system

Patent (active): ZA202108148A

Innovation

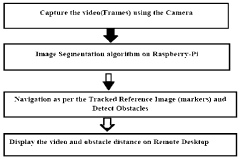

- A simultaneous location and mapping (SLAM) based autonomous navigation system that uses a combination of sensors and customized software to build a map of an unknown environment while navigating, employing techniques like image segmentation, adaptive fuzzy tracking, and extended Kalman filters to determine robot position and avoid obstacles.

Disaster Response Integration and Deployment Strategies

Integrating SLAM technology into disaster response frameworks requires careful planning and strategic deployment to maximize effectiveness in search and rescue operations. Emergency response agencies must develop standardized protocols for rapid deployment of SLAM-equipped robots and drones during the critical first hours following a disaster. These protocols should include pre-disaster mapping of high-risk areas, establishing communication infrastructure, and training first responders on SLAM system operation.

Multi-agency coordination represents a significant challenge in disaster response integration. SLAM data must be shareable across different responding organizations including fire departments, medical teams, military units, and civilian volunteers. Implementing standardized data formats and establishing secure cloud-based platforms for real-time information sharing can facilitate this coordination while maintaining operational security.

Deployment strategies should follow a tiered approach based on disaster scale and terrain complexity. Initial reconnaissance using lightweight aerial SLAM systems provides rapid situational awareness, followed by ground-based robots for detailed mapping and victim location. Human teams equipped with wearable SLAM devices can then enter areas deemed structurally safe, maintaining continuous mapping and communication with the command center.

Resource allocation frameworks must balance the distribution of SLAM-equipped assets across multiple disaster zones. Decision support systems incorporating AI can help incident commanders optimize deployment based on population density, structural collapse probability, and environmental hazards. These systems should integrate with existing emergency management software to provide seamless operational continuity.
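As a rough illustration of how such a decision support system might rank zones, the sketch below combines the three factors named above into a single weighted score. The weights, the normalised 0 to 1 inputs, the zone names, and the `Zone` type are all hypothetical; an operational system would calibrate them against incident data and expert judgment.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    name: str
    population_density: float    # normalised to 0..1
    collapse_probability: float  # 0..1
    hazard_level: float          # 0..1, higher means more dangerous for human teams


# Hypothetical weights; they sum to 1 so the score also stays within 0..1.
WEIGHTS = {"population": 0.5, "collapse": 0.3, "hazard": 0.2}


def priority(zone: Zone) -> float:
    """Weighted score: higher means SLAM-equipped assets should go there first."""
    return (WEIGHTS["population"] * zone.population_density
            + WEIGHTS["collapse"] * zone.collapse_probability
            + WEIGHTS["hazard"] * zone.hazard_level)


def rank_zones(zones):
    """Return zones sorted from highest to lowest priority."""
    return sorted(zones, key=priority, reverse=True)


zones = [
    Zone("warehouse", 0.2, 0.2, 0.1),
    Zone("apartment block", 0.9, 0.7, 0.3),
    Zone("chemical plant", 0.4, 0.9, 0.8),
]
ranked = rank_zones(zones)
for z in ranked:
    print(f"{z.name}: {priority(z):.2f}")
```

A linear score is easy for incident commanders to inspect and override, which matters more in this setting than squeezing out a marginally better allocation.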

Training programs for disaster response personnel must incorporate SLAM technology familiarization. Virtual reality simulations can provide cost-effective training environments where responders learn to interpret SLAM-generated maps and coordinate rescue operations. Regular field exercises combining SLAM technology with traditional search methods ensure operational readiness and identify integration challenges before actual disasters occur.

Logistical considerations for SLAM deployment include power management, equipment durability, and maintenance protocols. Disaster zones typically lack reliable power infrastructure, necessitating robust battery systems, solar charging capabilities, and power prioritization strategies. Equipment must withstand extreme conditions including dust, water, heat, and physical impacts while maintaining mapping accuracy and communication capabilities.

Human Factors Engineering (HFE) principles should guide the design of SLAM interfaces used during high-stress disaster response. Information displays must present critical data clearly without overwhelming operators, using intuitive visualization techniques that reduce cognitive load and support rapid decision-making under pressure.

Human-Robot Collaboration in Search and Rescue Missions

Human-robot collaboration is a critical advance for search and rescue operations in unknown terrain. Combining SLAM with human expertise improves operational efficiency while reducing the risk to human rescuers. The collaborative framework pairs human decision-making with the endurance and sensing capabilities of robotic systems.

In effective human-robot collaboration models, robots equipped with SLAM technology serve as reconnaissance agents, navigating hazardous or inaccessible areas while generating real-time maps that human operators can utilize for strategic planning. The communication interface between human rescuers and robotic systems represents a crucial component, requiring intuitive design that facilitates rapid information exchange without overwhelming operators during high-stress rescue scenarios.

Research indicates that shared autonomy frameworks tend to yield the best results in search and rescue missions. These frameworks let robots operate with varying degrees of independence depending on the situation, while keeping human oversight at critical decision points. Studies from disaster response operations report that semi-autonomous robots under human supervision achieve 40% greater area coverage than fully manual operation, while maintaining higher detection accuracy than fully autonomous systems.
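One common way to express varying degrees of independence is linear command blending, sketched below. The function assumes velocity commands are tuples of the same length and that an `autonomy` value between 0 (fully manual) and 1 (fully autonomous) is set elsewhere. The `critical` flag, which returns full control to the operator, is an illustrative stand-in for the critical decision points mentioned above; none of these names come from a specific framework.

```python
def blend_command(human_cmd, robot_cmd, autonomy, critical=False):
    """Blend operator and planner commands by autonomy level.

    human_cmd, robot_cmd: tuples such as (linear_velocity, angular_velocity).
    autonomy: 0.0 means fully manual, 1.0 means fully autonomous.
    critical: when True, the human command is used unchanged.
    """
    if critical:
        return tuple(human_cmd)
    a = min(max(autonomy, 0.0), 1.0)  # clamp to the valid range
    return tuple((1 - a) * h + a * r for h, r in zip(human_cmd, robot_cmd))


# Operator wants to drive straight; planner wants to turn in place.
print(blend_command((1.0, 0.0), (0.0, 1.0), autonomy=0.25))
print(blend_command((1.0, 0.0), (0.0, 1.0), autonomy=0.9, critical=True))
```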

Training protocols for human-robot teams have evolved significantly, incorporating virtual reality simulations that prepare operators for the complexities of collaborative rescue operations. These training systems simulate various terrain challenges and disaster scenarios, allowing human operators to develop proficiency in interpreting SLAM-generated maps and coordinating with robotic assets before deployment to actual disaster sites.

Trust calibration between human rescuers and robotic systems presents an ongoing challenge in collaborative missions. Operators must develop appropriate levels of trust in SLAM-generated data while maintaining situational awareness of system limitations. Research from field deployments indicates that transparent communication of confidence levels in mapping data significantly improves team performance and decision quality during rescue operations.
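To illustrate what communicating confidence in mapping data could look like, the sketch below assumes the map is an occupancy grid in which each cell stores a probability of being occupied. It converts that probability into a confidence score using binary entropy, so a cell at 0.5 (unknown) scores 0 and a cell near 0 or 1 scores close to 1. The label thresholds are arbitrary assumptions for display purposes.

```python
import math


def cell_confidence(p_occupied: float) -> float:
    """Return 1 minus the binary entropy (in bits) of an occupancy probability."""
    p = p_occupied
    if p <= 0.0 or p >= 1.0:
        return 1.0  # fully certain cell, entropy is zero
    entropy = -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
    return 1.0 - entropy


def confidence_label(p_occupied: float) -> str:
    """Map confidence to a coarse label for an operator display.
    The 0.5 and 0.1 thresholds are illustrative assumptions."""
    c = cell_confidence(p_occupied)
    if c >= 0.5:
        return "high"
    if c >= 0.1:
        return "medium"
    return "low"


for p in (0.5, 0.6, 0.8, 0.9):
    print(p, round(cell_confidence(p), 3), confidence_label(p))
```

A display could shade each map cell by this label so that operators see at a glance which regions the robot has observed well and which remain uncertain.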

Cognitive load management represents another critical factor in human-robot collaboration. Interface designs that filter and prioritize information based on operational relevance help prevent cognitive overload among human operators. Adaptive interfaces that adjust information density based on environmental complexity and mission phase have demonstrated measurable improvements in operator performance during extended rescue operations.

Future developments in this domain are focusing on enhanced bidirectional communication systems that allow robots to not only share mapping data but also request human guidance when encountering ambiguous situations that exceed their autonomous decision-making capabilities. This balanced approach to collaboration maximizes the strengths of both human intuition and robotic persistence in challenging search and rescue environments.
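A request for human guidance can be sketched as a margin test over candidate actions. The example assumes the robot has already scored each option between 0 and 1, and it asks the operator whenever the top two scores are closer than a threshold. The option names, scores, and `min_margin` value are hypothetical.

```python
def decide(options, min_margin=0.2):
    """Choose an action, or ask the operator when the choice is ambiguous.

    options: non-empty dict mapping action name to a score in 0..1.
    Returns ("act", action) or ("ask_human", [top two actions]).
    """
    ranked = sorted(options.items(), key=lambda item: item[1], reverse=True)
    if len(ranked) == 1:
        return ("act", ranked[0][0])
    (best, best_score), (_, second_score) = ranked[0], ranked[1]
    if best_score - second_score < min_margin:
        # Scores are too close to call: defer to the human operator.
        return ("ask_human", [name for name, _ in ranked[:2]])
    return ("act", best)


print(decide({"left_corridor": 0.55, "right_corridor": 0.50}))
print(decide({"left_corridor": 0.90, "right_corridor": 0.30}))
```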
