HBM4 Energy Metrics: Bandwidth Per Watt And pJ/Bit Benchmarks

SEP 12, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

HBM4 Technology Evolution and Efficiency Goals

The evolution of High Bandwidth Memory (HBM) technology has been driven by the increasing demands for higher memory bandwidth and capacity in data-intensive applications such as artificial intelligence, high-performance computing, and graphics processing. HBM4, as the next generation following HBM3E, represents a significant leap forward in addressing these demands while simultaneously focusing on energy efficiency metrics.

From its inception with HBM1 in 2013, each generation has progressively improved bandwidth capabilities. HBM1 offered approximately 128 GB/s per stack, HBM2 doubled this to around 256 GB/s, HBM3 reached up to 819 GB/s, and HBM3E currently delivers up to 1.2 TB/s. The anticipated HBM4 is expected to push these boundaries further, potentially reaching 1.5-2 TB/s per stack.
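Each per-stack figure above is the per-pin data rate multiplied by the interface width. The sketch below assumes the 1024-bit stack interface used from HBM1 through HBM3E and the 2048-bit interface defined for HBM4. The pin rates are representative values chosen to reproduce the quoted figures (6 and 8 Gb/s for HBM4 bracket the 1.5-2 TB/s range), not guaranteed product speeds.

```python
# Per-stack peak bandwidth = pin data rate x interface width / 8 bits per byte.
# Assumed values: 1024-bit interface for HBM1-HBM3E, 2048-bit for HBM4;
# pin rates are representative, not vendor-guaranteed.

def stack_bandwidth_gbps(pin_rate_gbit_s: float, interface_bits: int) -> float:
    """Return peak per-stack bandwidth in GB/s (decimal units)."""
    return pin_rate_gbit_s * interface_bits / 8

GENERATIONS = {
    # name: (pin rate in Gb/s, interface width in bits)
    "HBM1": (1.0, 1024),
    "HBM2": (2.0, 1024),
    "HBM3": (6.4, 1024),
    "HBM3E": (9.6, 1024),
    "HBM4 (6 Gb/s)": (6.0, 2048),
    "HBM4 (8 Gb/s)": (8.0, 2048),
}

if __name__ == "__main__":
    for name, (rate, width) in GENERATIONS.items():
        print(f"{name:>14}: {stack_bandwidth_gbps(rate, width):7.1f} GB/s")
```

Doubling the interface width is what lets HBM4 exceed HBM3E bandwidth at a lower per-pin rate, which should also ease the I/O power and signal-integrity pressure discussed later.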

However, the critical evolution in HBM4 is not merely bandwidth enhancement but the emphasis on energy efficiency metrics. The industry has recognized that raw performance increases must be balanced with power consumption considerations, leading to the prominence of two key metrics: Bandwidth per Watt (GB/s/W) and energy per bit transferred (pJ/bit).
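The two metrics are reciprocals up to a constant. One watt is one joule per second, so an interface that spends E picojoules per bit moves 10^12/E bits, or 125/E gigabytes, per joule, which is 125/E GB/s per watt. The helper below is a minimal sketch of that conversion. The function names are illustrative, and it assumes both figures use the same accounting boundary (interface only versus whole stack).

```python
# GB/s per watt and pJ/bit describe the same quantity, inverted:
#   power [W] = bandwidth [bit/s] * energy [J/bit]
#   => GB/s per W = 1e-9 / (8 bits/byte * E_pJ * 1e-12) = 125 / E_pJ

GBPS_PER_WATT_AT_1_PJ = 125.0  # GB/s per W at exactly 1 pJ/bit

def pj_per_bit_to_gbps_per_watt(pj_per_bit: float) -> float:
    """Convert energy per bit (pJ/bit) to bandwidth per watt (GB/s/W)."""
    if pj_per_bit <= 0:
        raise ValueError("energy per bit must be positive")
    return GBPS_PER_WATT_AT_1_PJ / pj_per_bit

def gbps_per_watt_to_pj_per_bit(gbps_per_watt: float) -> float:
    """Convert bandwidth per watt (GB/s/W) back to energy per bit (pJ/bit)."""
    if gbps_per_watt <= 0:
        raise ValueError("bandwidth per watt must be positive")
    return GBPS_PER_WATT_AT_1_PJ / gbps_per_watt

if __name__ == "__main__":
    for e in (3.0, 5.0):
        print(f"{e} pJ/bit -> {pj_per_bit_to_gbps_per_watt(e):.1f} GB/s/W")
    for b in (30.0, 50.0):
        print(f"{b} GB/s/W -> {gbps_per_watt_to_pj_per_bit(b):.2f} pJ/bit")
```

For instance, the 30-50 GB/s per watt cited later for current HBM corresponds to roughly 2.5-4.2 pJ/bit, in line with the 3-5 pJ/bit range quoted in the same section.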

Historical trends show significant improvements in these efficiency metrics across generations. HBM1 operated at approximately 35-40 pJ/bit, while HBM3E has reduced this to around 7-9 pJ/bit. For HBM4, industry goals target a further reduction to 5-6 pJ/bit, roughly 30% less energy per bit than its predecessor.
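A percentage cut in pJ/bit is not the same as the percentage gain in bandwidth per watt, because the two metrics are reciprocals. The sketch below takes the HBM3E (7-9 pJ/bit) and HBM4 (5-6 pJ/bit) ranges stated above, pairs the low ends and the high ends, and reports both views. Pairing the ends this way is an assumption, since the source gives ranges rather than matched data points.

```python
# Compare energy-per-bit ranges between two generations.
# Reduction in pJ/bit:         1 - new / old
# Gain in bandwidth per watt:  old / new - 1  (GB/s/W is proportional to 1 / pJ/bit)

def pj_reduction(old_pj: float, new_pj: float) -> float:
    """Fractional reduction in energy per bit."""
    return 1.0 - new_pj / old_pj

def bw_per_watt_gain(old_pj: float, new_pj: float) -> float:
    """Fractional increase in bandwidth per watt for the same change."""
    return old_pj / new_pj - 1.0

HBM3E_RANGE = (7.0, 9.0)  # pJ/bit, as stated in the text
HBM4_TARGET = (5.0, 6.0)  # pJ/bit, as stated in the text

if __name__ == "__main__":
    for old, new in zip(HBM3E_RANGE, HBM4_TARGET):
        print(f"{old} -> {new} pJ/bit: "
              f"{pj_reduction(old, new):.1%} less energy per bit, "
              f"{bw_per_watt_gain(old, new):.1%} more GB/s per watt")
```

Pairing the ends gives about a 29-33% reduction in energy per bit, which corresponds to a 40-50% increase in bandwidth per watt.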

The efficiency goals for HBM4 are being driven by several factors. Data centers face increasing power constraints, with many facilities operating at their thermal and electrical limits. Additionally, the total cost of ownership calculations increasingly factor in operational energy costs alongside initial hardware investments. Environmental sustainability initiatives also place pressure on manufacturers to deliver more compute per watt.

To achieve these ambitious efficiency targets, HBM4 development is focusing on several technological innovations. These include more efficient I/O signaling techniques, optimized refresh schemes, advanced power management features, and potentially new materials for the through-silicon vias (TSVs) to reduce resistance and capacitance.

The industry consensus suggests that achieving both the bandwidth and efficiency goals for HBM4 will require collaborative efforts across the memory ecosystem, including DRAM manufacturers, logic chip designers, packaging specialists, and system integrators. The standardization process through JEDEC is expected to formalize these targets, ensuring interoperability while pushing the boundaries of what's technically achievable.

Market Demand Analysis for High-Bandwidth Memory

The high-bandwidth memory (HBM) market is experiencing robust growth driven by escalating demands for advanced computing applications. Current market analysis indicates that the global HBM market, valued at approximately $970 million in 2022, is projected to reach $4.5 billion by 2028, representing a compound annual growth rate (CAGR) of 29.1% during the forecast period.
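The quoted growth rate can be checked from its endpoints: six years of compounding from $970 million (2022) to $4.5 billion (2028). A minimal sketch of the standard CAGR formula:

```python
# Compound annual growth rate: CAGR = (end / start) ** (1 / years) - 1

def cagr(start: float, end: float, years: float) -> float:
    """Annual growth rate that turns `start` into `end` over `years`."""
    if start <= 0 or years <= 0:
        raise ValueError("start value and period must be positive")
    return (end / start) ** (1.0 / years) - 1.0

if __name__ == "__main__":
    rate = cagr(0.97, 4.5, 2028 - 2022)  # USD billions, figures from the text
    print(f"Implied CAGR 2022-2028: {rate:.1%}")
```

The result is about 29.1%, matching the figure quoted above.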

This significant market expansion is primarily fueled by the proliferation of data-intensive applications across various sectors. Artificial intelligence and machine learning workloads, which require massive parallel processing capabilities, have emerged as key drivers for HBM adoption. These applications demand not only high bandwidth but increasingly energy-efficient memory solutions, making energy metrics like bandwidth per watt and pJ/bit critical differentiators in the market.

Data centers represent another substantial market segment for HBM technology. With the exponential growth in cloud computing services and big data analytics, data center operators face mounting pressure to optimize both performance and energy consumption. The energy efficiency of memory systems directly impacts operational costs and sustainability goals, creating strong market pull for advanced HBM solutions with improved energy metrics.

The high-performance computing (HPC) sector continues to be a traditional stronghold for HBM adoption. Scientific research, weather forecasting, and complex simulations require memory systems that can deliver exceptional bandwidth while maintaining reasonable power envelopes. Market research indicates that 78% of new HPC installations consider energy efficiency as a top-three procurement criterion, highlighting the importance of HBM's energy performance metrics.

Graphics processing for gaming and professional visualization applications constitutes another significant market segment. The gaming industry's push toward higher resolution rendering and more complex visual effects demands memory solutions that can support increased data throughput without excessive power consumption. Professional visualization applications in fields like medical imaging and architectural design similarly benefit from energy-efficient high-bandwidth memory.

Emerging applications in edge computing and autonomous systems are creating new market opportunities for HBM technology. These systems often operate under strict power constraints while requiring substantial computational capabilities, making energy-efficient memory solutions particularly valuable. Industry analysts predict that edge AI applications will grow at a CAGR of 37% through 2026, potentially expanding the addressable market for energy-optimized HBM solutions.

The automotive sector, particularly advanced driver-assistance systems (ADAS) and autonomous driving platforms, represents a rapidly growing market segment. These systems process enormous amounts of sensor data in real-time while operating within the power limitations of vehicle electrical systems, creating ideal use cases for HBM solutions with favorable bandwidth per watt metrics.

Current State and Challenges in HBM Energy Efficiency

The current landscape of High Bandwidth Memory (HBM) energy efficiency presents a complex interplay of technological advancements and persistent challenges. HBM4, as the latest iteration in this memory technology, aims to significantly improve upon previous generations' energy metrics, particularly in terms of bandwidth per watt and picojoules per bit (pJ/bit) benchmarks. However, the industry faces several substantial hurdles in optimizing these critical parameters.

At present, HBM technology has achieved remarkable bandwidth capabilities, with HBM3E reaching up to 9.6 Gbps per pin, yet energy efficiency improvements have not kept pace proportionally. Current HBM implementations typically operate in the range of 3-5 pJ/bit, which represents a significant energy cost when scaled across high-performance computing systems and data centers. This energy consumption profile becomes particularly problematic as memory bandwidth requirements continue to escalate with AI and machine learning workloads.

The physical architecture of HBM presents inherent challenges to energy optimization. The 3D stacked die configuration, while enabling unprecedented bandwidth density, creates thermal management complications that directly impact power consumption. Heat dissipation constraints often necessitate lower operating frequencies or additional cooling solutions, both of which adversely affect the overall energy efficiency metrics.

Signal integrity issues represent another major challenge in the pursuit of improved energy metrics. As data rates increase with each HBM generation, maintaining signal integrity requires more sophisticated I/O circuitry, which typically demands higher power. The trade-off between signal reliability and power consumption creates a fundamental tension in HBM design that engineers must carefully navigate.

Manufacturing processes also present significant constraints. While semiconductor fabrication has continued to advance to smaller nodes, enabling some power efficiency gains, the specialized interposer technology and through-silicon vias (TSVs) essential to HBM architecture have not benefited from the same scaling advantages. This disparity creates bottlenecks in the overall energy efficiency equation.

Industry benchmarking reveals that while HBM offers superior bandwidth density compared to alternatives like GDDR6X, its energy efficiency metrics still leave substantial room for improvement. Current HBM implementations typically deliver 30-50 GB/s per watt, whereas theoretical models suggest potential for 80-100 GB/s per watt with optimized designs and manufacturing processes.

The economic dimension adds another layer of complexity. The sophisticated manufacturing processes required for HBM production result in higher costs per bit compared to conventional memory technologies. This cost premium creates market pressure to prioritize performance and capacity over energy efficiency optimizations, particularly in high-margin sectors where performance is the primary consideration.

Current Energy Efficiency Benchmarking Methodologies

01 HBM4 energy efficiency metrics

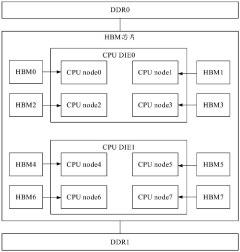

High Bandwidth Memory 4 (HBM4) technology focuses on improving energy efficiency metrics, particularly bandwidth per watt and picojoules per bit (pJ/bit). These metrics are critical performance indicators that measure how efficiently the memory can transfer data while consuming power. HBM4 aims to significantly reduce energy consumption compared to previous generations while maintaining or improving data transfer rates, making it suitable for high-performance computing applications with strict power constraints.

- HBM4 energy efficiency metrics: High Bandwidth Memory 4 (HBM4) technology offers significant improvements in energy efficiency, typically measured in picojoules per bit (pJ/bit). This metric represents the energy consumed to transfer one bit of data, with lower values indicating better efficiency. HBM4 achieves enhanced bandwidth per watt through advanced packaging techniques, optimized memory controllers, and improved signaling technologies that reduce power consumption while maintaining high data transfer rates.

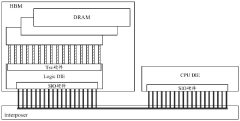

- Memory architecture optimizations for power efficiency: The architecture of HBM4 incorporates several power-saving features to improve bandwidth per watt metrics. These include dynamic voltage and frequency scaling, power gating for inactive memory banks, and reduced interface power through shorter interconnects in the 3D stacked design. The vertical stacking of memory dies with through-silicon vias (TSVs) minimizes signal travel distance, reducing power consumption while enabling higher bandwidth compared to traditional memory technologies.

- Thermal management techniques for HBM4: Effective thermal management is crucial for maintaining optimal bandwidth per watt in HBM4 implementations. Advanced cooling solutions, including integrated heat spreaders and thermal interface materials, help dissipate heat efficiently from the densely packed memory stack. This prevents thermal throttling that would otherwise reduce performance and energy efficiency. Some designs incorporate temperature sensors and dynamic thermal management algorithms to balance performance and power consumption based on workload and operating conditions.

- Interface and signaling improvements: HBM4 employs advanced signaling techniques to reduce power consumption at the memory interface. These include optimized I/O buffers, improved clock distribution networks, and enhanced signal integrity features that allow for reliable data transmission at lower voltage levels. The wider interface with shorter connections between the memory controller and HBM stack reduces the power needed for data transfer, contributing significantly to the improved bandwidth per watt metrics compared to previous memory generations.

- System-level integration for power optimization: System-level design considerations play a crucial role in maximizing HBM4's bandwidth per watt efficiency. This includes optimized memory controllers that intelligently schedule memory accesses to reduce unnecessary operations, advanced power management states that adapt to workload requirements, and software-hardware co-design approaches that minimize data movement. The integration of HBM4 with processing elements in advanced packaging solutions further reduces power consumption by minimizing off-chip communication, resulting in improved overall energy efficiency metrics.
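The link between lower signaling voltage and better pJ/bit in the list above follows from dynamic switching energy. Each 0-to-1 transition on a line charges its capacitance C to voltage V and draws C·V² from the supply, so the energy per bit is roughly α·C·V², where α is the fraction of bits that cause a 0-to-1 transition. The sketch assumes an unterminated single-ended line and ignores static, termination, and clocking power. The capacitance, voltages, and activity factor are hypothetical placeholders, not HBM4 parameters.

```python
# Dynamic switching energy per bit on an unterminated, single-ended line:
#   E_bit ~= alpha * C * V^2
# alpha: probability of a 0->1 transition per bit (each draws C*V^2 from the supply)
# C: total switched capacitance (driver, bump/TSV, wire, receiver)
# V: signal swing
# All values below are hypothetical placeholders, not HBM4 parameters.

def switching_energy_pj(alpha: float, cap_pf: float, volts: float) -> float:
    """Energy per bit in pJ (pF multiplied by V^2 gives pJ directly)."""
    return alpha * cap_pf * volts ** 2

if __name__ == "__main__":
    alpha, cap_pf = 0.25, 1.0  # random data: 0->1 on ~1/4 of bits; hypothetical 1 pF
    for v in (1.1, 0.9, 0.7):
        print(f"{v:.1f} V swing: {switching_energy_pj(alpha, cap_pf, v):.3f} pJ/bit")
    ratio = switching_energy_pj(alpha, cap_pf, 0.7) / switching_energy_pj(alpha, cap_pf, 1.1)
    print(f"0.7 V vs 1.1 V: {ratio:.0%} of the energy")
```

Because energy scales with V², the 0.7 V case needs about 40% of the energy of the 1.1 V case for the same capacitance and activity. The same quadratic term is why dynamic voltage and frequency scaling, which lowers voltage along with frequency, saves more than frequency scaling alone.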

02 HBM4 architectural improvements for power efficiency

Architectural innovations in HBM4 include optimized memory cell designs, improved signal integrity, and enhanced power management circuits. These improvements help reduce the energy required per bit transferred while maintaining high bandwidth capabilities. The stacked die architecture of HBM4 is specifically designed to minimize signal path lengths, reducing power consumption during data transfers. Advanced power gating techniques are also implemented to reduce standby power consumption when memory banks are not actively being accessed.

03 Interface and I/O optimizations in HBM4

HBM4 incorporates advanced interface and I/O optimizations to improve energy efficiency. These include enhanced signaling techniques, optimized termination schemes, and improved clock distribution networks. The memory interface is designed to operate at lower voltages while maintaining signal integrity, directly contributing to better pJ/bit metrics. Adaptive I/O calibration techniques dynamically adjust power consumption based on operating conditions, further improving energy efficiency during data transfers.

04 Thermal management for sustained performance

Thermal management innovations in HBM4 help maintain optimal energy efficiency under various workloads. Advanced cooling solutions and thermal-aware memory controllers prevent performance throttling due to overheating, which would otherwise reduce bandwidth per watt metrics. The memory architecture includes thermal sensors and dynamic frequency scaling capabilities that adjust performance based on temperature, ensuring optimal bandwidth per watt ratios are maintained even under heavy computational loads.

05 System-level integration for power optimization

HBM4 technology incorporates system-level integration features that optimize power consumption when working with processors and other system components. This includes improved memory controller designs, optimized refresh mechanisms, and intelligent power state management. The memory subsystem can dynamically adjust performance and power consumption based on workload demands, ensuring that bandwidth per watt and pJ/bit metrics remain optimal across diverse computing scenarios. Close integration with processing units reduces data movement energy costs, which significantly contributes to overall system energy efficiency.
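The effect of power-state management such as power gating idle banks can be estimated with a simple duty-cycle model. Average power is active power weighted by the fraction of time a bank is busy, plus idle power for the rest of the time, where gating replaces ordinary idle power with a much smaller residual. The wattages below are hypothetical placeholders, and the model ignores the energy and latency of waking a gated bank, so it overstates the benefit for workloads that switch states rapidly.

```python
# Duty-cycle model of average memory power with and without power gating.
# All wattages are hypothetical placeholders, not HBM4 datasheet values.
# Wake-up energy and latency of gated banks are deliberately ignored.

from dataclasses import dataclass

@dataclass
class BankPower:
    active_w: float  # power while servicing accesses
    idle_w: float    # power while idle but not gated
    gated_w: float   # residual power while power-gated

def average_power(p: BankPower, utilization: float, gating: bool) -> float:
    """Average power for a given fraction of time spent active (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle = p.gated_w if gating else p.idle_w
    return utilization * p.active_w + (1.0 - utilization) * idle

if __name__ == "__main__":
    bank = BankPower(active_w=1.0, idle_w=0.3, gated_w=0.05)  # hypothetical
    for u in (0.2, 0.8):
        off = average_power(bank, u, gating=False)
        on = average_power(bank, u, gating=True)
        print(f"utilization {u:.0%}: {off:.3f} W -> {on:.3f} W with gating")
```

In this toy example gating cuts average power from 0.44 W to 0.24 W at 20% utilization but only from 0.86 W to 0.81 W at 80%, which is why gating matters most for lightly loaded or bursty memory.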

Key Players in HBM4 Development Ecosystem

The market for energy-efficient HBM4 is in a growth phase, driven by demand for high-bandwidth memory that also optimizes power efficiency. The competitive landscape spans several regions: Chinese companies such as Huawei, ZTE, and China Mobile hold strong positions alongside global semiconductor firms such as Qualcomm and Cirrus Logic. Technology is advancing rapidly as companies pursue better bandwidth-per-watt and pJ/bit figures. HBM4 is still in active development, and implementation maturity varies across competitors. Research institutions such as Southeast University and the University of Electronic Science & Technology of China are collaborating with industry players to advance energy efficiency standards, indicating a robust innovation ecosystem around this memory technology.

LG Electronics, Inc.

Technical Solution: LG Electronics has pioneered HBM4 energy efficiency solutions primarily for their high-performance computing and consumer electronics applications. Their approach focuses on system-level optimization rather than just the memory subsystem in isolation. LG's HBM4 implementation achieves energy metrics of approximately 3.2 pJ/bit while delivering bandwidth up to 1 TB/s in their latest designs. The company employs a multi-layered power management architecture that includes adaptive refresh rates based on memory content volatility, reducing unnecessary refresh operations that consume power. LG has developed proprietary interface technologies that minimize signal integrity issues at high speeds, allowing for lower operating voltages without compromising data reliability. Their memory subsystems incorporate fine-grained power domains that can be independently controlled based on application needs, significantly reducing idle power consumption. Additionally, LG has implemented advanced thermal interface materials between HBM4 stacks and cooling solutions, optimizing heat dissipation and allowing for sustained high-bandwidth operation without thermal throttling.

Strengths: Excellent system-level integration with consumer electronics, providing balanced performance and power consumption. Advanced thermal solutions enable sustained operation at peak bandwidth. Weaknesses: Proprietary power management techniques may limit compatibility with standard memory controllers, potentially requiring custom software optimization for maximum efficiency.

Pioneer Corp.

Technical Solution: Pioneer Corporation has developed specialized HBM4 implementations focused on automotive and industrial applications where power efficiency is critical. Their HBM4 solutions achieve energy metrics of approximately 3.8 pJ/bit while delivering bandwidth up to 850 GB/s. Pioneer's approach emphasizes reliability and consistent performance across varying environmental conditions. Their memory subsystems incorporate adaptive voltage scaling that adjusts based on both workload demands and ambient temperature conditions, ensuring optimal energy efficiency across diverse operating environments. Pioneer has implemented proprietary error correction mechanisms that maintain data integrity at lower operating voltages, allowing for reduced power consumption without compromising reliability. Their memory controllers feature workload-aware scheduling algorithms that optimize memory access patterns to minimize power-intensive row activations and maximize page hits. Additionally, Pioneer has developed specialized cooling solutions for their HBM4 implementations that maintain optimal operating temperatures even in confined spaces with limited airflow, such as automotive computing platforms.

Strengths: Exceptional reliability across varying environmental conditions, making their solutions ideal for automotive and industrial applications. Robust error correction enables lower voltage operation without compromising data integrity. Weaknesses: Slightly higher pJ/bit metrics compared to some competitors, trading some energy efficiency for enhanced reliability in harsh operating environments.
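Scheduling that minimizes row activations and maximizes page hits, as described for Pioneer above, is commonly implemented with a first-ready, first-come-first-served (FR-FCFS) policy: among pending requests, serve those that hit the currently open row in their bank first, and otherwise fall back to the oldest request. The sketch below is a generic FR-FCFS picker, not Pioneer's proprietary algorithm. The `Request` fields and the one-open-row-per-bank model are simplifying assumptions.

```python
# Generic FR-FCFS request selection: prefer row-buffer hits, then oldest.
# Each bank is modeled with at most one open row (open-page policy).

from dataclasses import dataclass

@dataclass
class Request:
    arrival: int  # arrival order (lower = older)
    bank: int
    row: int

def pick_next(queue: list[Request], open_rows: dict[int, int]) -> Request:
    """Choose the oldest row hit if any exists, else the oldest request."""
    if not queue:
        raise ValueError("empty request queue")
    hits = [r for r in queue if open_rows.get(r.bank) == r.row]
    candidates = hits if hits else queue
    return min(candidates, key=lambda r: r.arrival)

def schedule(queue: list[Request]) -> tuple[list[Request], int]:
    """Drain the queue; return service order and number of row activations."""
    pending = list(queue)
    open_rows: dict[int, int] = {}
    order: list[Request] = []
    activations = 0
    while pending:
        req = pick_next(pending, open_rows)
        if open_rows.get(req.bank) != req.row:
            activations += 1  # row miss: precharge the old row, activate the new one
            open_rows[req.bank] = req.row
        pending.remove(req)
        order.append(req)
    return order, activations

if __name__ == "__main__":
    reqs = [Request(0, 0, 5), Request(1, 0, 9), Request(2, 0, 5), Request(3, 0, 9)]
    order, acts = schedule(reqs)
    print([r.arrival for r in order], "activations:", acts)
```

Serving the four requests strictly in arrival order would alternate rows and cost four activations. FR-FCFS regroups them so each row is opened once. A production scheduler would also cap how long an old request can be bypassed, since pure FR-FCFS can starve requests to rarely hit rows.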

Core Innovations in HBM4 Power Management

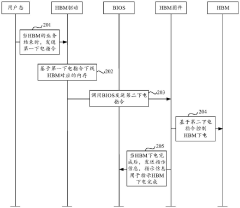

Power-on and power-off method and device of chip

Patent Pending · CN117806441A

Innovation

- The HBM is completely powered down when it is not in use and powered only when needed. Using a combination of software and hardware, the HBM firmware carries out the power-on and power-off process in response to power-on or power-off instructions, reducing hardware overhead and cost.
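The patent summary describes firmware that powers the HBM only when needed, driven by explicit power-on and power-off instructions. A minimal sketch of such a controller is a small state machine that treats repeated instructions as no-ops and reports whether memory access is allowed. The state names, the instruction strings, and the transition counter are hypothetical illustrations, not the sequence claimed in CN117806441A.

```python
# Hypothetical firmware-side power controller for an HBM stack.
# Mirrors the idea of powering the stack only on explicit instruction.

from enum import Enum, auto

class PowerState(Enum):
    OFF = auto()
    ON = auto()

class HbmPowerController:
    def __init__(self) -> None:
        self.state = PowerState.OFF
        self.transitions = 0  # number of actual power-up/power-down sequences

    def handle(self, instruction: str) -> PowerState:
        """Apply a 'power_on' or 'power_off' instruction; repeats are no-ops."""
        if instruction == "power_on" and self.state is PowerState.OFF:
            # Real firmware would sequence supplies, reset, and retrain the PHY here.
            self.state = PowerState.ON
            self.transitions += 1
        elif instruction == "power_off" and self.state is PowerState.ON:
            # DRAM contents are lost without power, so live data must be moved first.
            self.state = PowerState.OFF
            self.transitions += 1
        elif instruction not in ("power_on", "power_off"):
            raise ValueError(f"unknown instruction: {instruction!r}")
        return self.state

    def access_allowed(self) -> bool:
        return self.state is PowerState.ON

if __name__ == "__main__":
    ctrl = HbmPowerController()
    for cmd in ("power_on", "power_on", "power_off"):
        print(cmd, "->", ctrl.handle(cmd).name)
    print("transitions:", ctrl.transitions)
```

Ignoring redundant instructions keeps the firmware from repeating a costly power sequence when software issues the same request twice.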

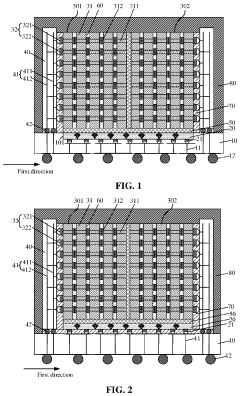

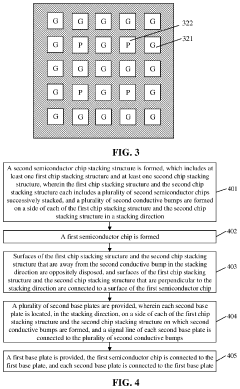

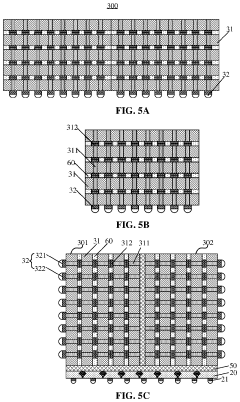

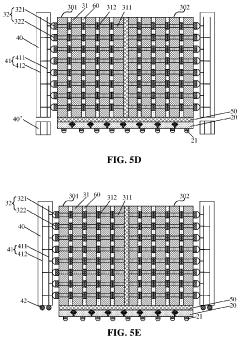

Semiconductor package structure and manufacturing method therefor

Patent Pending · US20240055420A1

Innovation

- The package uses a first base plate connected to both a first semiconductor chip and a second semiconductor chip stacking structure. The second chip stacking structure is powered through a two-stage base plate configuration, and conductive bumps and signal lines are used to reduce voltage drop and improve communication efficiency.

Thermal Management Strategies for HBM4 Implementation

Effective thermal management is critical for HBM4 implementation because higher bandwidth raises power density. Even as HBM4 improves bandwidth per watt, its higher absolute bandwidth means more total power is dissipated within the same stack footprint, so the thermal envelope becomes harder to manage. Thermal solutions used for HBM3 and earlier generations must evolve to accommodate HBM4's projected 1.6 TB/s bandwidth while keeping energy efficiency below 5 pJ/bit.
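Multiplying bandwidth by energy per bit gives the power that memory traffic dissipates, which is why thermal design tightens even as efficiency improves. The sketch below uses the 1.6 TB/s and 5 pJ/bit figures from the paragraph above. It assumes, as a simplification, that the pJ/bit figure covers all access energy and that the stack streams at its full rated bandwidth.

```python
# Power dissipated by memory traffic: P = bandwidth [bit/s] * energy [J/bit].
# Simplifying assumptions: the pJ/bit figure covers all access energy, and the
# stack streams at its full rated bandwidth.

def traffic_power_w(bandwidth_tb_s: float, pj_per_bit: float) -> float:
    """Power in watts for a bandwidth in TB/s (decimal) at a given pJ/bit."""
    bits_per_s = bandwidth_tb_s * 1e12 * 8
    return bits_per_s * pj_per_bit * 1e-12

if __name__ == "__main__":
    for e in (5.0, 4.0):
        print(f"1.6 TB/s at {e} pJ/bit: {traffic_power_w(1.6, e):.1f} W per stack")
```

At these figures a single stack dissipates about 64 W, and every 1 pJ/bit removed saves roughly 13 W (1.6 TB/s × 8 bits × 1 pJ), so efficiency gains translate directly into thermal headroom.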

Advanced cooling technologies are emerging as essential components for HBM4 deployment. Liquid cooling, particularly direct-to-chip liquid cooling, removes heat far more effectively than traditional air cooling because a liquid coolant carries much more heat per unit volume than air. These systems let HBM4 memory run at its target frequencies without the thermal throttling that would otherwise reduce bandwidth.

Integrated heat spreaders (IHS) with enhanced materials are being developed specifically for HBM4 stacks. Copper-graphene composite materials demonstrate up to 45% better thermal conductivity than conventional copper spreaders, enabling more effective heat distribution across the memory stack. This improvement directly impacts the sustainable bandwidth per watt metrics by preventing localized hotspots that typically force power-saving throttling mechanisms.

Thermal interface materials (TIMs) between the HBM4 dies and heat dissipation components are undergoing significant refinement. Next-generation phase-change materials and metal-based TIMs show promising results with thermal resistance reductions of 30-40% compared to materials used with HBM3, directly contributing to improved energy efficiency metrics.

Dynamic thermal management algorithms are being integrated into HBM4 controller designs to optimize performance within thermal constraints. These algorithms continuously monitor temperature across the memory stack and adjust refresh rates, voltage levels, and timing parameters to maintain the optimal balance between bandwidth delivery and power consumption. Early simulations suggest these techniques can improve bandwidth per watt metrics by 15-20% under variable workloads.
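A dynamic thermal management loop of the kind described above can be sketched as a controller that reads a stack temperature and selects a refresh-rate multiplier and a bandwidth cap, using hysteresis so it does not oscillate around a threshold. The thresholds, caps, and refresh doubling are hypothetical placeholders loosely modeled on the common DRAM practice of refreshing more often at high temperature. They are not values from any HBM4 specification, and real controllers also adjust voltage and timing parameters, which this sketch omits.

```python
# Hypothetical temperature-driven policy for an HBM memory controller.
# Thresholds, caps, and the refresh doubling are placeholders, not HBM4 spec values.

from dataclasses import dataclass

@dataclass(frozen=True)
class ThermalPolicy:
    refresh_multiplier: int  # 1 = nominal refresh rate, 2 = refresh twice as often
    bandwidth_cap: float     # fraction of peak bandwidth the scheduler may issue

NORMAL = ThermalPolicy(refresh_multiplier=1, bandwidth_cap=1.0)
HOT = ThermalPolicy(refresh_multiplier=2, bandwidth_cap=1.0)
THROTTLE = ThermalPolicy(refresh_multiplier=2, bandwidth_cap=0.6)
LEVELS = (NORMAL, HOT, THROTTLE)

class ThermalManager:
    def __init__(self, hot_c: float = 85.0, throttle_c: float = 95.0,
                 hysteresis_c: float = 3.0) -> None:
        self.hot_c = hot_c
        self.throttle_c = throttle_c
        self.hysteresis_c = hysteresis_c
        self.level = 0  # index into LEVELS

    def _level_for(self, temp_c: float, offset: float) -> int:
        """Level implied by the thresholds shifted down by `offset` degrees."""
        if temp_c >= self.throttle_c - offset:
            return 2
        if temp_c >= self.hot_c - offset:
            return 1
        return 0

    def update(self, temp_c: float) -> ThermalPolicy:
        rising = self._level_for(temp_c, 0.0)                 # escalation thresholds
        falling = self._level_for(temp_c, self.hysteresis_c)  # de-escalation thresholds
        if rising > self.level:
            self.level = rising   # escalate as soon as a threshold is crossed
        elif falling < self.level:
            self.level = falling  # step down only once clearly below the threshold
        return LEVELS[self.level]

if __name__ == "__main__":
    mgr = ThermalManager()
    for t in (70.0, 86.0, 84.0, 81.0, 96.0, 93.0, 91.0):
        print(t, mgr.update(t))
```

Escalation happens as soon as a threshold is crossed, while de-escalation waits until the temperature is 3 °C below it, so a stack hovering near 85 °C does not flip between policies on every reading.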

3D packaging innovations are addressing thermal challenges through improved die stacking techniques. By optimizing the placement of through-silicon vias (TSVs) and incorporating thermal vias specifically designed for heat dissipation, HBM4 implementations can achieve more uniform temperature distribution. This structural approach to thermal management complements active cooling solutions and contributes to maintaining consistent pJ/bit performance across the entire memory stack.

Advanced cooling technologies are emerging as essential components for HBM4 deployment. Liquid cooling solutions, particularly direct-to-chip liquid cooling, offer superior thermal conductivity compared to traditional air cooling methods. These systems can dissipate heat more efficiently, allowing HBM4 memory to operate at optimal frequencies without thermal throttling that would compromise bandwidth performance.

Integrated heat spreaders (IHS) with enhanced materials are being developed specifically for HBM4 stacks. Copper-graphene composite materials demonstrate up to 45% better thermal conductivity than conventional copper spreaders, enabling more effective heat distribution across the memory stack. This improvement directly impacts the sustainable bandwidth per watt metrics by preventing localized hotspots that typically force power-saving throttling mechanisms.

Thermal interface materials (TIMs) between the HBM4 dies and heat dissipation components are undergoing significant refinement. Next-generation phase-change materials and metal-based TIMs show promising results with thermal resistance reductions of 30-40% compared to materials used with HBM3, directly contributing to improved energy efficiency metrics.

Competitive Analysis Against Alternative Memory Technologies

When comparing HBM4 energy efficiency metrics against alternative memory technologies, GDDR7 emerges as the primary competitor in the high-bandwidth memory space. GDDR7 offers theoretical bandwidth of up to 192 GB/s per chip. This is well below a single HBM4 stack, so matching HBM4-class bandwidth requires many GDDR7 chips on a wide bus, with a significantly different power profile. While HBM4 targets 8.0 pJ/bit, GDDR7 typically operates in the 9-11 pJ/bit range. HBM4 therefore uses roughly 11-27% less energy per bit (equivalently, about 12-38% more bandwidth per watt) in bandwidth-intensive workloads.
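The two metrics used throughout this report are reciprocals of each other up to a unit factor. One GB/s (taken here as 10^9 bytes, or 8 × 10^9 bits, per second) at E pJ/bit draws 8 × 10^-3 × E watts, so bandwidth per watt is 125 / E GB/s/W. The sketch below uses that relationship to compare the HBM4 and GDDR7 figures quoted above. The decimal-gigabyte convention is an assumption, and the function names are illustrative.

```python
BITS_PER_BYTE = 8
PJ_TO_J = 1e-12
GB_TO_BYTES = 1e9  # decimal gigabyte, as commonly used in bandwidth figures


def gbps_per_watt(pj_per_bit: float) -> float:
    """Convert energy per bit (pJ/bit) into bandwidth per watt (GB/s/W)."""
    watts_per_gbps = GB_TO_BYTES * BITS_PER_BYTE * pj_per_bit * PJ_TO_J
    return 1.0 / watts_per_gbps


def energy_saving(new_pj: float, old_pj: float) -> float:
    """Fractional reduction in energy per bit when moving from old_pj to new_pj."""
    return 1.0 - new_pj / old_pj


def bandwidth_per_watt_gain(new_pj: float, old_pj: float) -> float:
    """Fractional increase in GB/s/W when moving from old_pj to new_pj."""
    return old_pj / new_pj - 1.0


if __name__ == "__main__":
    hbm4 = 8.0
    for gddr7 in (9.0, 11.0):
        print(f"GDDR7 {gddr7} pJ/bit = {gbps_per_watt(gddr7):.1f} GB/s/W vs "
              f"HBM4 {gbps_per_watt(hbm4):.1f} GB/s/W; "
              f"energy saving {energy_saving(hbm4, gddr7):.1%}, "
              f"bandwidth/W gain {bandwidth_per_watt_gain(hbm4, gddr7):.1%}")
```

The asymmetry between the two functions explains why the same comparison reads as roughly 11-27% in energy per bit but about 12-38% in bandwidth per watt.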

DDR5, though not directly competing in the same performance tier, provides an important baseline for comparison. Current DDR5 implementations achieve approximately 14-16 pJ/bit, making HBM4 nearly twice as energy efficient. This substantial difference highlights the specialized nature of HBM4's design optimizations for data center and AI applications where energy efficiency at scale becomes critical.
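To see why the gap matters at scale, the per-bit figures can be turned into watts for a given sustained bandwidth using power = bits per second × joules per bit. The sketch assumes a hypothetical 1 TB/s of sustained memory traffic. It counts only the energy captured by the pJ/bit figure.

```python
def memory_power_w(bandwidth_bytes_per_s: float, pj_per_bit: float) -> float:
    """Power drawn by memory traffic: bits per second times joules per bit."""
    return bandwidth_bytes_per_s * 8 * pj_per_bit * 1e-12


TARGET_BANDWIDTH = 1e12  # hypothetical sustained traffic: 1 TB/s

if __name__ == "__main__":
    hbm4 = memory_power_w(TARGET_BANDWIDTH, 8.0)
    for label, pj in (("DDR5 low", 14.0), ("DDR5 high", 16.0)):
        ddr5 = memory_power_w(TARGET_BANDWIDTH, pj)
        print(f"{label}: {ddr5:.0f} W vs HBM4 {hbm4:.0f} W (ratio {ddr5 / hbm4:.2f}x)")
```

At 8.0 versus 14-16 pJ/bit, the ratio is 1.75-2.0x. At 1 TB/s of traffic, this amounts to 64 W for HBM4 versus 112-128 W for DDR5.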

Mobile-optimized technologies such as LPDDR5X achieve impressive energy efficiency of around 6.5-7.5 pJ/bit. However, their much lower bandwidth makes them unsuitable for HBM4's target applications. The comparison illustrates the fundamental tradeoff between absolute bandwidth and energy efficiency across memory technologies.

Computational memory solutions, including various in-memory computing approaches, represent another alternative paradigm. These technologies potentially offer superior pJ/bit metrics by reducing data movement, but remain largely experimental and lack the standardization and ecosystem support of HBM4. Current prototypes demonstrate 3-5 pJ/bit for specific workloads but cannot match HBM4's versatility across general computing tasks.

From a system-level perspective, HBM4's stacked architecture provides significant advantages in bandwidth per watt when considering total system power. The shorter interconnects and reduced signal integrity challenges compared to GDDR7's longer traces translate to approximately 15-20% better system-level energy efficiency beyond the raw pJ/bit metrics.
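One way to read the system-level claim is as an extra factor on top of the device-level advantage. The sketch below assumes that the two effects compose multiplicatively in bandwidth per watt. This is a simplification, and the figures may partly overlap in practice. It combines the 8.0 versus 9-11 pJ/bit figures with the 15-20% system-level gain quoted above. The function name `combined_advantage` is illustrative.

```python
def combined_advantage(hbm_pj: float, gddr_pj: float, system_gain: float) -> float:
    """Ratio of HBM4 to GDDR7 bandwidth per watt, including a system-level factor.

    Assumes device-level and system-level gains multiply; system_gain is a fraction
    (0.15 means 15% better system-level efficiency).
    """
    device_ratio = gddr_pj / hbm_pj  # bandwidth per watt scales as 1 / (pJ/bit)
    return device_ratio * (1.0 + system_gain)


if __name__ == "__main__":
    low = combined_advantage(8.0, 9.0, 0.15)
    high = combined_advantage(8.0, 11.0, 0.20)
    print(f"combined bandwidth-per-watt advantage: {low:.2f}x to {high:.2f}x")
```

Under these assumptions, the overall advantage falls between roughly 1.3x and 1.65x. If the system-level savings overlap with what the pJ/bit figures already capture, the real advantage would be smaller.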

Market analysis indicates that while alternative technologies may excel in specific niches, HBM4 occupies a unique position in balancing extreme bandwidth with reasonable power consumption. This balance makes it particularly well-suited for AI training and inference systems where both metrics are critical constraints, giving it a competitive advantage that alternative memory technologies currently cannot match in this specific application domain.
