How to Validate GDI Engine Compression Across Cylinders

AUG 28, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

GDI Engine Compression Testing Background and Objectives

Gasoline Direct Injection (GDI) engine technology has evolved significantly over the past three decades, revolutionizing internal combustion engine efficiency and performance. The validation of compression across cylinders represents a critical aspect of GDI engine development and maintenance, directly impacting engine performance, fuel efficiency, and emissions control. This technical exploration aims to establish comprehensive methodologies for accurately measuring and validating compression consistency across multiple cylinders in modern GDI engines.

The historical progression of compression testing techniques has transitioned from basic mechanical gauges to sophisticated electronic measurement systems. Early methods relied primarily on manual cranking and analog pressure readings, while contemporary approaches incorporate real-time data acquisition systems with high-precision sensors. Understanding this evolution provides valuable context for current validation challenges and opportunities.

GDI engines present unique compression validation requirements compared to port fuel injection systems due to their higher compression ratios, direct fuel delivery into the combustion chamber, and more precise timing requirements. These characteristics necessitate specialized testing protocols that can accurately measure compression under various operating conditions while accounting for GDI-specific variables such as fuel stratification effects and injector timing.

The primary technical objectives of this investigation include developing standardized procedures for measuring compression across cylinders with minimal variance, identifying key indicators of compression irregularities specific to GDI systems, and establishing threshold parameters that differentiate between acceptable variations and problematic compression discrepancies requiring intervention.

Market demands for improved engine efficiency, reduced emissions, and extended service intervals have intensified the need for precise compression validation methods. Regulatory pressures worldwide continue to drive manufacturers toward engines with higher compression ratios and more sophisticated combustion control, further emphasizing the importance of accurate compression testing methodologies.

Current technical challenges in GDI compression validation include accounting for the effects of carbon buildup on valves and pistons, which can significantly alter compression characteristics over time. Additionally, the integration of variable valve timing and cylinder deactivation technologies introduces complexity to compression testing procedures, requiring dynamic measurement approaches rather than static testing alone.

This investigation aims to bridge existing knowledge gaps by synthesizing best practices from automotive manufacturing, service industries, and research institutions to create a comprehensive framework for GDI engine compression validation that balances precision with practical implementation in both production and maintenance environments.

Market Demand for Advanced Compression Validation Methods

The automotive industry is witnessing a significant shift towards more efficient and environmentally friendly engine technologies, with Gasoline Direct Injection (GDI) engines at the forefront of this evolution. This market transition has created substantial demand for advanced compression validation methods across engine cylinders. According to recent industry reports, the global automotive engine testing market is projected to reach $4.3 billion by 2025, growing at a CAGR of 5.9% from 2020, with compression testing equipment representing a significant segment.

The demand for sophisticated compression validation methods is primarily driven by increasingly stringent emission regulations worldwide. The European Union's Euro 7 standards, the United States EPA's Tier 3 regulations, and China's National VI (China 6) standards all tighten limits on tailpipe emissions. This pushes manufacturers toward more efficient combustion and tighter emission control, and it makes precise compression validation increasingly important for compliance.

Vehicle manufacturers are facing mounting pressure to extend warranty periods while simultaneously reducing warranty costs. These competing requirements call for more accurate diagnostic tools in production quality control and service departments. Advanced compression validation methods that can detect subtle variations across cylinders help predict potential failures before they occur; industry estimates suggest this can reduce warranty claims by up to 15%.

Consumer expectations for vehicle performance and reliability continue to rise, with market research indicating that engine performance issues remain among the top three concerns for new vehicle buyers. This consumer sentiment has pushed manufacturers to implement more thorough validation processes, particularly for premium vehicle segments where customer satisfaction metrics directly impact brand value and market share.

The aftermarket service sector represents another significant demand driver, with over 280 million vehicles in operation in the United States alone requiring regular maintenance. Professional service centers are increasingly investing in advanced diagnostic equipment to improve service accuracy and efficiency, with compression testing being a fundamental procedure in engine performance evaluation.

The emergence of connected vehicle technologies and predictive maintenance models has created new opportunities for real-time compression monitoring systems. These technologies enable continuous assessment of engine health, allowing for preventive maintenance before catastrophic failures occur. Market analysis suggests that the predictive maintenance segment for automotive applications is growing at nearly 25% annually, significantly outpacing traditional diagnostic methods.

As manufacturers continue to push the boundaries of engine design with higher compression ratios, variable compression technologies, and multi-stage injection systems, accurately validating compression across cylinders has become considerably more complex, further driving demand for more sophisticated validation methodologies and equipment.

Current Challenges in Cylinder Compression Testing

Despite significant advancements in GDI (Gasoline Direct Injection) engine technology, cylinder compression testing continues to present numerous challenges for automotive engineers and technicians. The traditional methods of compression testing face limitations when applied to modern GDI engines, which feature complex combustion systems and higher compression ratios than their port fuel injection predecessors.

One primary challenge is the high-pressure fuel system. GDI engines operate with fuel rail pressures commonly in the range of 2,000 to 3,000 psi (roughly 140 to 200 bar), far higher than port fuel injection systems. If fuel delivery is not disabled, the injectors can spray fuel directly into the cylinders during cranking, washing oil from the cylinder walls and distorting compression readings, and opening the system without proper isolation can damage testing equipment or injure the technician. Technicians must therefore disable fuel delivery and fully relieve fuel pressure before testing, which adds complexity and time to the diagnostic process.

The presence of carbon deposits presents another significant obstacle. GDI engines are particularly prone to carbon buildup on the intake valves because, unlike port injection engines, they lack the washing effect of fuel sprayed over the valves; deposits also form on piston crowns and in the combustion chamber. These deposits can distort compression test results, either by reducing the clearance volume and so raising the effective compression ratio, or by preventing valves from seating fully and creating leakage paths that would not exist in clean cylinders.
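To make the volume effect of deposits concrete, the sketch computes the geometric compression ratio, (swept volume + clearance volume) / clearance volume, and shows how deposits occupying part of the clearance volume raise it. The 86 mm bore and stroke, the 12.0:1 clean ratio, and the 2 cm³ deposit volume are hypothetical illustration values, and the model deliberately ignores the leakage effect of deposits.

```python
import math


def swept_volume_cc(bore_mm: float, stroke_mm: float) -> float:
    """Swept (displacement) volume of one cylinder in cm^3."""
    bore_cm, stroke_cm = bore_mm / 10.0, stroke_mm / 10.0
    return math.pi / 4.0 * bore_cm ** 2 * stroke_cm


def geometric_cr(swept_cc: float, clearance_cc: float) -> float:
    """Geometric compression ratio = (swept + clearance) / clearance."""
    return (swept_cc + clearance_cc) / clearance_cc


def cr_with_deposits(swept_cc: float, clean_clearance_cc: float,
                     deposit_cc: float) -> float:
    """Ratio after deposits fill part of the clearance volume.

    Only the volume effect is modelled; leakage past deposit-fouled
    valve seats (which lowers readings) is not.
    """
    if not 0.0 <= deposit_cc < clean_clearance_cc:
        raise ValueError("deposit volume must lie within the clearance volume")
    return geometric_cr(swept_cc, clean_clearance_cc - deposit_cc)


# Hypothetical engine: 86 mm bore x 86 mm stroke, 12.0:1 when clean.
swept = swept_volume_cc(86.0, 86.0)        # about 499.6 cm^3
clean_clearance = swept / (12.0 - 1.0)     # clearance volume giving 12.0:1
print(f"clean:  {geometric_cr(swept, clean_clearance):.2f}:1")
print(f"fouled: {cr_with_deposits(swept, clean_clearance, 2.0):.2f}:1")
```

With these assumed numbers, 2 cm³ of deposits raises the ratio from 12.0:1 to roughly 12.5:1, which is enough to shift a cranking compression reading upward and mask leakage elsewhere in the same cylinder.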

Timing chain stretch and variable valve timing mechanisms further complicate accurate compression testing. Modern GDI engines often employ sophisticated variable valve timing systems that can significantly alter compression characteristics depending on their position during testing. Without proper control or understanding of these systems' states, compression readings may vary widely and lead to misdiagnosis.

The integration of turbochargers and superchargers with GDI technology introduces additional variables. These forced induction systems create dynamic compression scenarios that static testing methods struggle to accurately evaluate. The compression characteristics under load differ substantially from those at idle, making traditional testing less representative of real-world operating conditions.

Temperature sensitivity represents another critical challenge. GDI engines often run at higher operating temperatures, and compression values can vary significantly between cold and hot states. This temperature dependence makes standardization of test conditions difficult and can lead to inconsistent results when comparing measurements taken under different thermal conditions.

Finally, the interpretation of results requires increasingly sophisticated analysis. With tighter engineering tolerances in modern GDI engines, the acceptable range for compression values has narrowed. Technicians must now consider not only absolute compression values but also the relative differences between cylinders, rate of pressure buildup, and other dynamic characteristics to accurately diagnose engine health.

Modern Approaches to Cross-Cylinder Compression Validation

01 Compression ratio optimization in GDI engines

Gasoline Direct Injection (GDI) engines can achieve higher compression ratios compared to conventional port fuel injection engines, which improves thermal efficiency and power output. Various designs focus on optimizing the compression ratio while preventing knocking and maintaining fuel efficiency. These innovations include specialized piston designs, combustion chamber geometries, and variable compression ratio mechanisms that adapt to different operating conditions.

- Compression ratio optimization in GDI engines: Gasoline Direct Injection (GDI) engines can achieve higher compression ratios compared to conventional port fuel injection engines, which improves thermal efficiency and performance. Various technologies are employed to optimize the compression ratio while preventing knock and maintaining reliability. These include variable compression ratio mechanisms, piston design modifications, and combustion chamber geometry optimization to accommodate the direct fuel injection process.

- Fuel injection strategies for GDI compression control: Advanced fuel injection strategies are crucial for controlling compression characteristics in GDI engines. These include multiple injection events (pre-injection, main injection, post-injection), variable injection timing, and pressure modulation techniques. Such strategies help manage combustion phasing, reduce knock tendency at high compression ratios, and optimize the air-fuel mixture formation for improved combustion efficiency and emissions control.

- Piston and combustion chamber design for GDI compression: Specialized piston and combustion chamber designs are developed for GDI engines to manage compression characteristics. These designs include piston crown geometries that create specific tumble or swirl patterns, bowl-in-piston configurations that direct the fuel spray, and combustion chamber shapes that enhance mixture formation. Such designs help maintain stable combustion at higher compression ratios while accommodating the direct injection spray pattern.

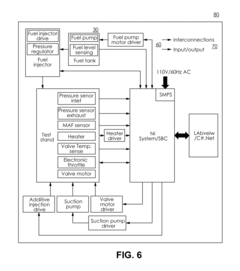

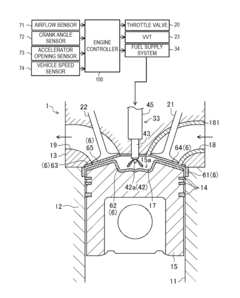

- Compression monitoring and control systems for GDI engines: Advanced monitoring and control systems are implemented in GDI engines to optimize compression-related parameters. These systems include in-cylinder pressure sensors, knock detection algorithms, and adaptive control strategies that adjust injection timing, spark timing, and other parameters based on real-time combustion feedback. Such systems help maintain optimal compression characteristics across various operating conditions while preventing damaging knock events.

- Thermal management for GDI compression optimization: Thermal management strategies are employed in GDI engines to optimize compression performance. These include advanced cooling systems, thermal barrier coatings, and heat recovery technologies that help maintain optimal cylinder and intake air temperatures. Effective thermal management prevents pre-ignition and knock at high compression ratios, extends engine durability, and ensures consistent performance across varying operating conditions.

02 Fuel injection strategies for GDI compression control

Advanced fuel injection strategies are employed in GDI engines to control compression characteristics and combustion efficiency. These include multiple injection events per cycle, precise timing control, and spray pattern optimization. By controlling the fuel injection parameters, engineers can manage compression-related issues such as pre-ignition and knocking while maximizing power output and reducing emissions under various load conditions.

03 Combustion chamber design for improved compression

Specialized combustion chamber designs enhance compression characteristics in GDI engines. These designs include optimized piston crown shapes, strategic placement of spark plugs relative to injectors, and engineered flow patterns that promote efficient air-fuel mixing. The combustion chamber geometry significantly impacts the compression behavior, flame propagation, and overall thermal efficiency of the engine.

04 Compression monitoring and diagnostic systems

Advanced monitoring systems are developed to measure and analyze compression characteristics in GDI engines during operation. These systems use sensors, optical diagnostics, and computational models to provide real-time data on compression parameters. The diagnostic capabilities help in early detection of compression-related issues, enabling preventive maintenance and ensuring optimal engine performance throughout its lifecycle.

05 Thermal management for compression optimization

Thermal management systems are crucial for maintaining optimal compression conditions in GDI engines. These systems control the temperature of various engine components to prevent overheating and ensure consistent compression performance. Innovations include advanced cooling strategies, heat recovery systems, and thermal barrier coatings that help maintain ideal operating temperatures under varying load conditions, contributing to improved compression efficiency and engine durability.

Leading Manufacturers and Service Providers in Compression Testing

The GDI engine compression validation market is in a growth phase, with increasing demand driven by stricter emissions regulations and fuel efficiency requirements. Major automotive OEMs like Toyota, Ford, BMW, and Hyundai are competing alongside specialized powertrain technology providers such as Robert Bosch GmbH and AVL List GmbH. The market is characterized by moderate technological maturity, with established players like Bosch leading in diagnostic equipment development while automotive manufacturers focus on integrating advanced compression testing into their engine development processes. Emerging players from China, including Geely's powertrain subsidiaries, are rapidly advancing their capabilities, particularly in electrification-compatible GDI systems, creating a dynamic competitive landscape that balances innovation with standardization efforts.

Robert Bosch GmbH

Technical Solution: Bosch has developed comprehensive GDI engine compression validation systems that integrate multiple sensing technologies. Their approach combines in-cylinder pressure sensors with advanced signal processing algorithms to detect compression anomalies across cylinders. The system utilizes high-precision piezoelectric pressure transducers installed in each cylinder that can withstand high temperatures and pressures while providing accurate measurements. Bosch's validation methodology includes both static compression testing (measuring compression pressure at cranking speed) and dynamic compression analysis during actual engine operation. Their system can detect variations in compression ratio between cylinders as small as 0.2 (for example, 12.0:1 versus 11.8:1), allowing for early identification of potential issues such as valve sealing problems, piston ring wear, or head gasket failures. The data acquisition system samples at rates exceeding 1 MHz to capture the rapid pressure changes during the compression stroke, with sophisticated filtering to reduce noise.

Strengths: Exceptional measurement precision with industry-leading sensor technology; comprehensive diagnostic capabilities that can differentiate between various compression-related issues; integration with existing Bosch engine management systems. Weaknesses: Higher implementation cost compared to simpler testing methods; requires specialized training for proper interpretation of results; sensor durability in extreme operating conditions remains challenging.
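To put a 0.2 difference in compression ratio into perspective, an idealized polytropic model of compression relates compression pressure $p_c$ to intake pressure $p_0$ and compression ratio $\varepsilon$. The exponent $n \approx 1.3$ and the 12:1 example ratio are assumptions for illustration; real cranking pressures are lower than this model predicts because of valve timing, heat loss, and blow-by, so only the relative sensitivity is meaningful here.

$$p_c \approx p_0\,\varepsilon^{\,n} \quad\Rightarrow\quad \ln p_c \approx \ln p_0 + n \ln \varepsilon \quad\Rightarrow\quad \frac{\Delta p_c}{p_c} \approx n\,\frac{\Delta \varepsilon}{\varepsilon}$$

$$\frac{\Delta p_c}{p_c} \approx 1.3 \times \frac{0.2}{12} \approx 0.022 \quad (\text{about } 2.2\,\%)$$

Under these assumptions, a 0.2 difference in compression ratio corresponds to a cross-cylinder pressure difference of only about 2%, the same order as the 2% repeatability target given in the calibration standards section, which suggests that resolving it requires measurement repeatability well below that level.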

Toyota Motor Corp.

Technical Solution: Toyota has developed a sophisticated GDI engine compression validation system that integrates multiple testing methodologies. Their approach combines traditional compression testing with advanced electronic monitoring systems that analyze combustion characteristics. Toyota's system utilizes high-precision in-cylinder pressure sensors during development and production validation, while implementing non-intrusive monitoring techniques for in-vehicle diagnostics. Their methodology includes both static compression testing and dynamic analysis during engine operation across various load conditions. Toyota's validation process incorporates a unique "pressure wave analysis" technique that can detect subtle anomalies in compression characteristics by analyzing the acoustic signature of each cylinder during operation. The system can identify compression variations as small as 4% between cylinders, enabling precise quality control during manufacturing and effective diagnostics during service. Toyota has implemented this technology across their global manufacturing facilities, ensuring consistent compression validation across all production engines. Their approach also includes adaptive learning algorithms that establish baseline compression signatures for each engine and monitor deviations over time.

Strengths: Exceptional balance between precision and implementation cost; robust validation methodology that works effectively across diverse engine designs; excellent integration with Toyota's overall quality control systems. Weaknesses: Requires specific test conditions for most accurate results; some diagnostic ambiguity between compression and injection-related issues; implementation complexity for aftermarket service facilities.
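The baseline-and-deviation idea described for Toyota's adaptive learning can be illustrated with a deliberately simple sketch. This is a hypothetical illustration, not Toyota's algorithm: the baseline is just the mean of each cylinder's first few readings, the class and method names are invented, and the 4% limit reuses the detection figure quoted above as an illustrative threshold.

```python
from statistics import mean
from typing import Optional


class CompressionBaseline:
    """Hypothetical per-cylinder baseline learner (not Toyota's algorithm).

    The first `learn_count` readings of each cylinder are averaged into a
    baseline; later readings are reported as a percentage deviation from it.
    """

    def __init__(self, learn_count: int = 5, limit_pct: float = 4.0):
        self.learn_count = learn_count
        self.limit_pct = limit_pct
        self._pending: dict[int, list[float]] = {}
        self.baseline: dict[int, float] = {}

    def update(self, cylinder: int, value: float) -> Optional[float]:
        """Add one reading; return % deviation, or None while still learning."""
        if cylinder not in self.baseline:
            samples = self._pending.setdefault(cylinder, [])
            samples.append(value)
            if len(samples) == self.learn_count:
                self.baseline[cylinder] = mean(samples)
                del self._pending[cylinder]
            return None
        ref = self.baseline[cylinder]
        return 100.0 * (value - ref) / ref

    def out_of_limit(self, deviation_pct: Optional[float]) -> bool:
        """True when a deviation exists and exceeds the configured limit."""
        return deviation_pct is not None and abs(deviation_pct) > self.limit_pct


monitor = CompressionBaseline(learn_count=3)
for reading in (13.0, 13.2, 13.1, 12.4):
    dev = monitor.update(1, reading)
    status = "learning" if dev is None else f"{dev:+.1f}%"
    print(f"{reading:.1f} bar: {status}, alert={monitor.out_of_limit(dev)}")
```

A production system would need a more robust baseline (for example, one that tolerates outliers during learning), but the structure of learning first and then monitoring drift per cylinder is the same.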

Key Technologies in Precision Compression Measurement

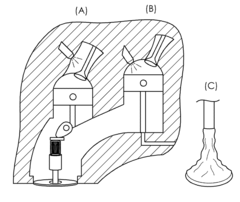

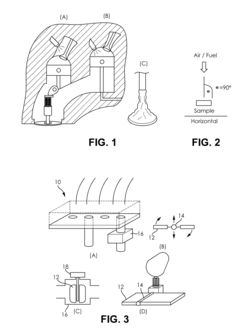

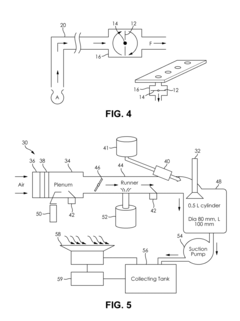

Evaluation of the delivery and effectiveness of engine performance chemicals and products

Patent · Active · US20170114716A1

Innovation

- A method and system for evaluating the delivery and effectiveness of engine performance chemicals and products for reducing intake valve deposits, utilizing a controlled environment with simulated engine conditions to quantify improvements, including adjustable parameters like air-fuel ratio, temperature, and oscillation frequency, and employing three approaches to introduce cleaners: airstream addition, suction-based distribution, and fuel additive application.

Gasoline direct-injection engine

Patent · Active · US20150144095A1

Innovation

- A gasoline direct-injection engine with a geometric compression ratio of 15:1 or higher employs a pre-injection to maintain in-cylinder temperature within a predetermined range through partial oxidative reactions, allowing for extended retardation of self-ignition combustion timing while reducing pressure increase rates and preventing excessive temperature increases.

Equipment Calibration and Measurement Standards

Accurate validation of GDI engine compression across cylinders requires adherence to stringent equipment calibration and measurement standards. Compression testing equipment should be calibrated by a laboratory accredited to ISO/IEC 17025, the standard that sets requirements for the competence of testing and calibration laboratories. This ensures measurement traceability and consistency across different testing environments. Calibration should be performed at regular intervals, typically every 6 to 12 months depending on usage frequency and manufacturer recommendations.

Pressure transducers used in compression testing require calibration against NIST-traceable standards with an accuracy of at least ±0.5% of full scale. Temperature compensation is essential for these devices, as GDI engine compression measurements are sensitive to ambient temperature variations. Calibration certificates should document the measurement uncertainty, calibration date, and environmental conditions during calibration.

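Where engine power is also measured, SAE J1349 governs the rating of net engine power and its correction to reference atmospheric conditions; it does not specifically address cylinder-pressure acquisition, so sampling requirements must be derived from the measurement itself. For cranking-speed compression tests that record the peak pressure of each stroke, sampling rates of at least 1 kHz are recommended, while crank-angle-resolved measurement on a running engine requires much higher rates, because the required rate scales with engine speed and the desired angular resolution. Signal conditioning equipment should have a bandwidth of at least 10 kHz to prevent signal distortion, with anti-aliasing filtering matched to the chosen sampling rate.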
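The dependence of sampling rate on engine speed follows from simple arithmetic: one sample every Δθ degrees of crank rotation at N rpm requires (N / 60) × (360 / Δθ) samples per second. The sketch evaluates this; the 250 rpm cranking speed and the chosen resolutions are assumed example values.

```python
def required_sample_rate_hz(rpm: float, resolution_deg: float) -> float:
    """Samples per second for one sample every `resolution_deg` of crank
    rotation at a constant engine speed of `rpm`."""
    if rpm <= 0 or resolution_deg <= 0:
        raise ValueError("rpm and resolution must be positive")
    revs_per_second = rpm / 60.0
    samples_per_rev = 360.0 / resolution_deg
    return revs_per_second * samples_per_rev


# Assumed cranking speed of 250 rpm at 1.5 degree resolution
print(round(required_sample_rate_hz(250.0, 1.5)))    # 1000
# 0.1 degree resolution at 6,000 rpm
print(round(required_sample_rate_hz(6000.0, 0.1)))   # 360000
```

At an assumed cranking speed of 250 rpm, 1 kHz yields about one sample per 1.5° of rotation, whereas 0.1° resolution at 6,000 rpm needs hundreds of kHz, which is in line with the acquisition rates above 1 MHz quoted for in-cylinder systems.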

Cylinder pressure measurement tools should be calibrated using dead-weight testers that provide a known reference pressure. The calibration process must verify linearity across the entire measurement range, typically from 0 to 70 bar for GDI engines. Hysteresis effects should be quantified and documented as part of the calibration procedure.
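The linearity and hysteresis checks can be sketched from a dead-weight run taken upscale and then downscale at the same reference points. This sketch assumes nonlinearity is expressed as the largest deviation from a least-squares best-fit straight line and hysteresis as the largest upscale/downscale difference, both as a percentage of full scale; other definitions (such as terminal-based linearity) are also used, and the readings are invented for illustration.

```python
def best_fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept of y against x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def calibration_errors(ref_bar: list[float], up_bar: list[float],
                       down_bar: list[float]) -> tuple[float, float]:
    """Return (nonlinearity %FS, hysteresis %FS) for a dead-weight run."""
    full_scale = max(ref_bar) - min(ref_bar)
    xs = list(ref_bar) * 2                  # each reference point is visited twice
    ys = list(up_bar) + list(down_bar)
    slope, intercept = best_fit_line(xs, ys)
    nonlinearity = max(abs(y - (slope * x + intercept)) for x, y in zip(xs, ys))
    hysteresis = max(abs(u - d) for u, d in zip(up_bar, down_bar))
    return 100.0 * nonlinearity / full_scale, 100.0 * hysteresis / full_scale


# Hypothetical 0-70 bar run: reference, upscale and downscale readings (bar)
ref = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0]
up = [0.00, 10.02, 20.05, 30.06, 40.05, 50.03, 60.02, 70.00]
down = [0.05, 10.10, 20.12, 30.12, 40.10, 50.08, 60.05, 70.00]
nonlin_pct, hyst_pct = calibration_errors(ref, up, down)
print(f"nonlinearity {nonlin_pct:.3f} %FS, hysteresis {hyst_pct:.3f} %FS")
```

Both figures can then be compared against the ±0.5% of full scale accuracy requirement stated for pressure transducers.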

Reference standards for timing measurements must be synchronized with crankshaft position sensors with an accuracy of ±0.1 degrees of crank angle. This precision is critical for accurate determination of peak compression pressure and its timing relative to top dead center (TDC). Calibration of TDC markers should be performed using capacitive or optical sensors with resolution better than 0.05 degrees.

Measurement system validation should include cross-verification with multiple measurement methods. For example, compression test results can be validated against in-cylinder pressure traces obtained from piezoelectric pressure transducers. Statistical analysis of measurement repeatability should demonstrate a coefficient of variation less than 2% across multiple test cycles to ensure reliable cylinder-to-cylinder comparison.
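The repeatability criterion can be checked per cylinder by computing the coefficient of variation (standard deviation divided by the mean) of peak pressure over repeated test cycles. The readings are invented for illustration, and using the sample rather than the population standard deviation is a choice made in this sketch, not something the text specifies.

```python
from statistics import mean, stdev


def cov_pct(values: list[float]) -> float:
    """Coefficient of variation in percent, using the sample standard deviation."""
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    return 100.0 * stdev(values) / mean(values)


def repeatability(cycles: dict[int, list[float]],
                  limit_pct: float = 2.0) -> dict[int, tuple[float, bool]]:
    """Map cylinder -> (CoV %, passes) against the 2% limit from the text."""
    report = {}
    for cyl, values in cycles.items():
        cov = cov_pct(values)
        report[cyl] = (cov, cov < limit_pct)
    return report


# Hypothetical peak pressures (bar) over five cycles for two cylinders
cycles = {1: [13.1, 13.2, 13.0, 13.1, 13.2],
          2: [12.0, 12.9, 12.2, 13.1, 11.8]}
for cyl, (cov, ok) in repeatability(cycles).items():
    print(f"cylinder {cyl}: CoV {cov:.2f}% -> {'pass' if ok else 'fail'}")
```

In this made-up data, cylinder 1 is repeatable well within the limit, while cylinder 2 scatters enough that its readings should not be used for cylinder-to-cylinder comparison.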

Documentation of calibration procedures must include verification of measurement system response time, which should not exceed 1 millisecond for accurate capture of compression events. All calibration records should be maintained according to IATF 16949 quality management system requirements, ensuring traceability throughout the measurement system's lifecycle.
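The 1 millisecond response-time limit can be translated into crank angle to see where it is and is not adequate: at N rpm the crankshaft turns N × 360 / 60,000 degrees per millisecond. The two engine speeds in the sketch are assumed examples of cranking and high-speed running.

```python
def response_lag_deg(response_time_ms: float, rpm: float) -> float:
    """Crank angle (degrees) swept while the measurement chain responds."""
    degrees_per_ms = rpm * 360.0 / 60_000.0
    return response_time_ms * degrees_per_ms


for rpm in (250.0, 6000.0):  # assumed cranking and high-speed running speeds
    print(f"{rpm:>6.0f} rpm: 1 ms = {response_lag_deg(1.0, rpm):.1f} deg")
```

Under these assumptions, a 1 ms response corresponds to about 1.5° at cranking speed but 36° at 6,000 rpm, which suggests the limit suits cranking compression tests, while crank-angle-resolved measurement on a running engine needs a much faster measurement chain.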

Pressure transducers used in compression testing require calibration against NIST-traceable standards with an accuracy of at least ±0.5% of full scale. Temperature compensation is essential for these devices, as GDI engine compression measurements are sensitive to ambient temperature variations. Calibration certificates should document the measurement uncertainty, calibration date, and environmental conditions during calibration.

Digital measurement systems must comply with SAE J1349 standards for engine power testing, which include specific requirements for data acquisition rates. For GDI engine compression testing, sampling rates of at least 1 kHz are recommended to capture the rapid pressure changes during the compression stroke. Signal conditioning equipment should have a bandwidth of at least 10 kHz to prevent signal distortion.

Cylinder pressure measurement tools should be calibrated using dead-weight testers that provide a known reference pressure. The calibration process must verify linearity across the entire measurement range, typically from 0 to 70 bar for GDI engines. Hysteresis effects should be quantified and documented as part of the calibration procedure.

Environmental Impact of Compression Testing Procedures

The environmental implications of compression testing procedures for GDI engines deserve careful consideration as automotive manufacturers face increasing regulatory pressure to reduce their ecological footprint. Traditional compression testing methods often require engines to run for extended periods, consuming fuel and generating emissions during the diagnostic process. These emissions include not only carbon dioxide but also nitrogen oxides, particulate matter, and unburned hydrocarbons, which contribute to air pollution and climate change.

Modern compression testing equipment has evolved to minimize these environmental impacts. Advanced electronic compression testers can now perform diagnostics more efficiently, reducing the engine runtime required for accurate measurements. Some systems can complete comprehensive cylinder compression analysis in less than half the time of conventional methods, significantly decreasing the carbon footprint of each testing procedure.

The disposal of materials used during compression testing also presents environmental challenges. Oil and fuel contaminated during testing must be properly handled as hazardous waste. Progressive service centers have implemented closed-loop systems that capture and recycle these fluids, preventing soil and water contamination while reducing resource consumption.

Manufacturers are increasingly adopting non-invasive testing technologies that minimize environmental impact. Ultrasonic and vibration analysis techniques can detect compression issues without requiring the engine to operate at full capacity, thereby reducing emissions during the diagnostic phase. These methods represent a significant advancement in environmentally responsible maintenance practices.

The shift toward electric vehicles will eventually reduce the need for traditional compression testing, but during the transition period, hybrid vehicles present unique challenges. Testing procedures for these vehicles must account for both conventional engine components and electric systems, requiring specialized equipment that can accurately diagnose compression issues while minimizing environmental impact.

Regulatory frameworks in various regions now include provisions for emissions generated during maintenance procedures, including compression testing. Service centers must comply with increasingly stringent standards regarding the capture and treatment of exhaust gases produced during diagnostics. This regulatory landscape is driving innovation in testing methodologies that prioritize environmental protection without compromising diagnostic accuracy.