SLAM In Next-Generation Urban Air Mobility Systems

SEP 12, 2025 · 9 MIN READ

SLAM Technology Background and Objectives in UAM

Simultaneous Localization and Mapping (SLAM) technology has evolved significantly over the past several decades, transitioning from theoretical research to practical applications across various domains. In the context of Urban Air Mobility (UAM) systems, SLAM is a critical enabling technology for autonomous aerial vehicles operating in complex urban environments. Its roots lie in robotics research of the late 1980s, when Smith, Self, and Cheeseman established the probabilistic foundations that continue to underpin modern approaches.

Traditional SLAM implementations relied heavily on laser-based sensors and were primarily designed for ground-based robots in structured environments. However, the emergence of UAM systems presents unique challenges that have accelerated SLAM development toward more robust, real-time solutions capable of functioning in dynamic, three-dimensional urban landscapes with varying lighting conditions, weather patterns, and electromagnetic interference.

The primary technical objective for SLAM in next-generation UAM systems is to achieve centimeter-level positioning accuracy while maintaining operational integrity across the full range of expected operating conditions. This goes well beyond current capabilities, which degrade in adverse weather, GPS-denied environments, and areas with electromagnetic interference. Additionally, SLAM systems for UAM must operate with minimal computational resources to accommodate the size, weight, and power constraints inherent to aerial vehicles.

Another critical objective is the development of multi-sensor fusion frameworks that seamlessly integrate data from visual cameras, LiDAR, radar, ultrasonic sensors, and inertial measurement units. This sensor diversity is essential for creating redundant, fault-tolerant navigation systems that can maintain performance even when individual sensors experience temporary failures or degradation.
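As a rough illustration of why redundant sensors help, the sketch below fuses independent estimates of one quantity by inverse-variance weighting, a standard textbook rule rather than any particular vendor's method. The function name `fuse`, the altitude values, and the variances are all hypothetical.

```python
def fuse(estimates):
    """Inverse-variance fusion of independent (value, variance) estimates.

    A failed sensor is represented by None and is simply skipped.
    """
    usable = [e for e in estimates if e is not None]
    if not usable:
        raise ValueError("no usable sensor estimates")
    weights = [1.0 / variance for _, variance in usable]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, usable)) / total
    return value, 1.0 / total


# Hypothetical altitude estimates in metres: (value, variance).
lidar, radar, camera = (50.0, 0.01), (50.4, 0.25), (49.8, 0.04)

all_sensors = fuse([lidar, radar, camera])
lidar_lost = fuse([None, radar, camera])
print(f"all sensors: {all_sensors[0]:.2f} m, variance {all_sensors[1]:.4f}")
print(f"lidar lost:  {lidar_lost[0]:.2f} m, variance {lidar_lost[1]:.4f}")
```

With the most precise sensor missing, the fused estimate is still available but its variance grows. That is the graceful degradation a fault-tolerant design aims for.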

Real-time operation represents perhaps the most challenging objective for UAM SLAM systems. Unlike many ground-based applications, aerial vehicles operating in urban environments must process sensor data and update their position and environmental maps within milliseconds to ensure safe navigation at typical flight speeds of 30-100 km/h. This necessitates significant algorithmic optimizations and potentially specialized hardware accelerators.
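To make the timing pressure concrete, the short calculation below shows how far a vehicle travels during one processing interval at the flight speeds quoted above. The latency values of 10 ms and 100 ms are illustrative assumptions, and `distance_per_update` is a hypothetical helper name.

```python
def distance_per_update(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while one sensor-to-pose update is being computed."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


for speed in (30, 100):        # flight speeds from the text, in km/h
    for latency in (10, 100):  # assumed processing latencies, in ms
        travelled = distance_per_update(speed, latency)
        print(f"{speed} km/h, {latency} ms latency -> {travelled:.2f} m")
```

At 100 km/h, a 100 ms update already corresponds to nearly three metres of unobserved travel, while 10 ms keeps it under 30 cm.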

Looking toward future developments, SLAM technology for UAM systems aims to incorporate semantic understanding capabilities, enabling vehicles to not only map their surroundings geometrically but also comprehend the functional significance of different environmental elements. This cognitive dimension will allow for more intelligent path planning, obstacle avoidance, and emergency response protocols, ultimately enhancing the safety and efficiency of urban air transportation networks.

Market Analysis for SLAM-Enabled Urban Air Mobility

The Urban Air Mobility (UAM) market is experiencing unprecedented growth, with SLAM technology emerging as a critical enabler for autonomous aerial vehicle navigation in complex urban environments. Current market projections indicate that the global UAM market is expected to reach $15.2 billion by 2030, with a compound annual growth rate of 26.2% from 2023 to 2030. SLAM-enabled systems are anticipated to constitute approximately 40% of this market value, highlighting their significance in the UAM ecosystem.

Consumer demand for urban air transportation solutions is primarily driven by increasing urban congestion, with major metropolitan areas experiencing average commute time increases of 12-18% over the past decade. Market research indicates that 68% of urban commuters would consider aerial mobility options if they reduced travel time by at least 50% compared to ground transportation. This represents a substantial addressable market of over 200 million daily commuters across major global cities.

The commercial segment of UAM shows particularly strong demand signals, with logistics companies reporting 30-45% efficiency improvements in last-mile delivery when utilizing SLAM-equipped drones for navigation in dense urban areas. Emergency services represent another significant market segment, with SLAM-enabled medical transport systems demonstrating 22% faster response times in pilot programs across several metropolitan regions.

Regional market analysis reveals varying adoption rates, with North America and East Asia leading in UAM infrastructure development. European markets show strong regulatory support but face implementation challenges due to dense historical urban centers. Emerging markets in Southeast Asia and Latin America demonstrate high potential growth rates due to severe traffic congestion and geographical constraints that make aerial mobility particularly advantageous.

Market barriers include regulatory frameworks that are still evolving, with 62% of industry stakeholders citing regulatory uncertainty as the primary obstacle to widespread deployment. Consumer acceptance presents another challenge, with safety concerns cited by 58% of potential users as their main hesitation. Price sensitivity remains high, with market studies indicating that mass adoption would require per-mile costs to decrease by approximately 70% from current experimental services.

The competitive landscape is increasingly diverse, with traditional aerospace manufacturers, automotive companies, technology giants, and specialized startups all vying for market share. Strategic partnerships between technology providers specializing in SLAM algorithms and vehicle manufacturers have increased by 85% since 2020, indicating a trend toward ecosystem collaboration rather than vertical integration.

Current SLAM Challenges in Aerial Navigation

Despite significant advancements in SLAM (Simultaneous Localization and Mapping) technology, aerial navigation for Urban Air Mobility (UAM) systems presents unique challenges that current solutions struggle to address effectively. Traditional SLAM algorithms, while successful in ground-based applications, face substantial limitations when deployed in dynamic, three-dimensional urban airspaces.

One primary challenge is the high-speed operation of aerial vehicles, which introduces motion blur in sensor data and requires algorithms capable of processing information at significantly higher rates than ground-based systems. Current visual SLAM systems often fail to maintain tracking at velocities exceeding 50 km/h, particularly during rapid maneuvers essential for urban flight operations.
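A first-order estimate of motion blur helps explain the speed sensitivity. For a camera translating sideways relative to a scene at a given depth, the image smear in pixels is approximately focal length (in pixels) times speed times exposure time divided by depth. The sketch below applies that pinhole-camera approximation. The exposure time, focal length, and scene depth are assumed values chosen only for illustration.

```python
def blur_pixels(speed_kmh, exposure_ms, focal_px, depth_m):
    """Approximate image smear for lateral motion under a pinhole model."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    exposure_s = exposure_ms / 1000.0
    return focal_px * speed_m_per_s * exposure_s / depth_m


# Assumed camera: 800 px focal length, 5 ms exposure, facade 20 m away.
for speed in (30, 50, 100):
    print(f"{speed} km/h -> {blur_pixels(speed, 5, 800, 20):.1f} px of blur")
```

Blur grows linearly with speed and shrinks with distance, so close passes between buildings are the hardest case for feature tracking.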

Environmental variability poses another critical obstacle. Urban airspaces feature diverse lighting conditions, weather phenomena, and visual textures that can confound vision-based SLAM systems. Sudden transitions between bright sunlight and shadowed areas between buildings create exposure challenges that exceed the dynamic range of most camera sensors, resulting in temporary tracking failures.

The three-dimensional nature of aerial navigation compounds these difficulties. Unlike ground vehicles, whose motion is largely confined to a plane (position plus heading), UAM systems require full 6-DoF (six degrees of freedom) mapping and localization: three translational and three rotational. Current algorithms struggle with the computational complexity of maintaining consistent 3D maps over large urban areas while operating within the power and weight constraints of aerial platforms.
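The following minimal sketch shows what a 6-DoF pose involves in practice: three translations plus yaw, pitch, and roll, used to map a point from the vehicle frame into the world frame. The Z-Y-X (yaw, pitch, roll) rotation order and the helper names `rotation_zyx` and `transform` are assumptions made for illustration. Production systems often use quaternions or Lie-group representations instead.

```python
import math


def rotation_zyx(yaw, pitch, roll):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def transform(pose, point):
    """Map a body-frame point into the world frame using a 6-DoF pose."""
    x, y, z, yaw, pitch, roll = pose
    r = rotation_zyx(yaw, pitch, roll)
    return tuple(
        sum(r[i][j] * point[j] for j in range(3)) + t
        for i, t in enumerate((x, y, z))
    )


# Hypothetical vehicle at (10, 5, 120) m, yawed 90 degrees, level flight.
pose = (10.0, 5.0, 120.0, math.pi / 2, 0.0, 0.0)
obstacle_in_body_frame = (1.0, 0.0, 0.0)  # one metre straight ahead
print(transform(pose, obstacle_in_body_frame))
```

A planar ground robot needs only `x`, `y`, and `yaw`. The extra three parameters enlarge both the state to estimate and the map volume to maintain.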

Sensor limitations represent a significant hurdle for aerial SLAM systems. LiDAR sensors, while providing precise depth information, remain prohibitively heavy and power-intensive for smaller UAM vehicles. Camera-based systems offer lighter alternatives but suffer from scale ambiguity and drift over extended operations. Radar systems, though weather-resistant, provide insufficient resolution for precise navigation in congested urban environments.

Real-time performance requirements further constrain SLAM implementation in UAM systems. Safety-critical aerial navigation demands localization accuracy within centimeters and processing latencies below 10 ms, benchmarks that current algorithms struggle to achieve consistently on embedded hardware platforms suitable for aerial deployment.

Multi-agent coordination presents yet another frontier challenge. As UAM systems scale to include numerous vehicles operating in shared airspace, SLAM solutions must evolve to incorporate collaborative mapping and localization capabilities. Current approaches lack robust frameworks for efficient map sharing, conflict resolution, and collective environmental understanding across multiple aerial platforms.

Regulatory compliance adds a final layer of complexity, as certification standards for autonomous navigation systems in urban airspace remain under development, creating uncertainty around performance requirements that SLAM systems must ultimately satisfy.

Current SLAM Solutions for Urban Air Mobility

01 Visual SLAM techniques for autonomous navigation

Visual SLAM techniques use camera data to simultaneously map an environment and locate a device within it. These systems process visual features to create 3D maps while tracking movement in real-time. Advanced implementations incorporate deep learning for improved feature detection and matching, enabling more robust performance in challenging environments such as low-light conditions or areas with repetitive visual patterns.

- Visual SLAM techniques for autonomous navigation: Visual SLAM systems use camera data to simultaneously build maps of unknown environments while tracking the position of the device within that environment. These systems process visual features to create 3D representations of surroundings, enabling autonomous navigation for robots, drones, and vehicles. Advanced algorithms extract distinctive points from camera frames and match them across sequential images to estimate motion and construct environmental maps in real-time.

- SLAM integration with sensor fusion: Combining multiple sensor inputs with SLAM algorithms enhances localization accuracy and mapping robustness. Sensor fusion approaches integrate data from cameras, LiDAR, IMUs, GPS, and other sensors to overcome limitations of single-sensor systems. This multi-modal approach improves performance in challenging environments with varying lighting conditions, dynamic objects, or feature-poor areas, creating more reliable navigation systems for autonomous platforms.

- Machine learning approaches for SLAM optimization: Machine learning techniques are being applied to enhance SLAM systems by improving feature detection, loop closure, and map optimization. Deep neural networks can learn to identify robust features, predict motion, and recognize previously visited locations even under different viewing conditions. These AI-enhanced SLAM systems demonstrate better adaptability to environmental changes and can operate more efficiently with reduced computational resources.

- Real-time SLAM for AR/VR applications: SLAM technology enables augmented and virtual reality experiences by tracking device movement and mapping physical spaces in real-time. These systems allow virtual content to be anchored to real-world locations with precise spatial awareness. Optimized algorithms focus on minimizing latency while maintaining accuracy, creating seamless integration between virtual elements and physical environments for immersive user experiences on mobile devices and headsets.

- Dense mapping and semantic SLAM: Advanced SLAM systems go beyond sparse point clouds to create dense, detailed environmental maps with semantic understanding. These approaches classify objects and surfaces within the environment, enabling context-aware navigation and interaction. Semantic SLAM systems can distinguish between static and dynamic elements, recognize object categories, and create structured representations of environments that facilitate higher-level decision making for autonomous systems.

02 Sensor fusion approaches for enhanced SLAM accuracy

Sensor fusion combines data from multiple sensors like cameras, LiDAR, IMU, and GPS to improve SLAM performance. By integrating complementary sensor information, these systems achieve greater accuracy and reliability across diverse environments. The fusion algorithms compensate for individual sensor limitations, providing continuous localization even when certain sensors face challenging conditions, ultimately enabling more robust mapping and navigation capabilities.

03 SLAM for augmented and virtual reality applications

SLAM technology enables immersive AR/VR experiences by accurately tracking device position and mapping surroundings in real-time. These implementations focus on low latency processing and precise spatial anchoring of virtual content. The systems often employ specialized algorithms optimized for mobile devices with limited computational resources, allowing for seamless integration of virtual objects into the physical world with proper occlusion and interaction capabilities.

04 Machine learning approaches to SLAM optimization

Machine learning techniques are increasingly integrated into SLAM systems to improve performance across various aspects. Deep neural networks help with feature extraction, loop closure detection, and trajectory optimization. These approaches enable SLAM systems to learn from experience, adapt to new environments, and handle challenging scenarios like dynamic objects or changing lighting conditions more effectively than traditional geometric methods alone.

05 SLAM for robotic applications and autonomous vehicles

SLAM systems designed specifically for robots and autonomous vehicles focus on real-time operation, reliability, and integration with path planning algorithms. These implementations often incorporate object detection and tracking capabilities to handle dynamic environments safely. The systems must balance computational efficiency with mapping accuracy to enable navigation decisions while accounting for moving obstacles and changing conditions in both indoor and outdoor settings.

Leading SLAM and UAM Industry Players

SLAM (Simultaneous Localization and Mapping) technology for Urban Air Mobility (UAM) systems is currently in an early growth phase, and its market is expected to expand as UAM adoption increases and autonomous aerial vehicles become more prevalent in urban environments. Technologically, SLAM for UAM demands higher precision and reliability than ground-based applications. Leading academic institutions like Northwestern Polytechnical University, Beihang University, and Xi'an Jiaotong University are advancing fundamental research, while commercial players including Sony, Samsung, TRX Systems, and Arbe Robotics are developing practical implementations. The technology is only moderately mature: significant innovations are still needed in sensor fusion, real-time processing, and environmental adaptability before widespread commercial deployment.

Sony Group Corp.

Technical Solution: Sony has developed advanced SLAM solutions for Urban Air Mobility (UAM) systems through their proprietary Spatial Reality technology. Their approach combines visual SLAM with inertial measurement units (IMUs) to create robust localization in urban environments where GPS signals may be unreliable. Sony's system employs multi-sensor fusion algorithms that integrate data from stereo cameras, LiDAR, and radar to generate high-precision 3D maps with centimeter-level accuracy[1]. The company has implemented deep learning techniques to enhance feature extraction and matching in complex urban landscapes, allowing UAM vehicles to navigate safely between buildings and in varying weather conditions. Sony's SLAM solution also incorporates predictive trajectory planning that accounts for dynamic obstacles and changing environmental conditions, essential for safe UAM operations in densely populated areas[3].

Strengths: Superior sensor fusion capabilities leveraging Sony's expertise in imaging technology; robust performance in GPS-denied environments; advanced obstacle detection and avoidance.

Weaknesses: Higher computational requirements compared to simpler SLAM implementations; potentially higher cost due to premium hardware components; limited public deployment data in actual UAM scenarios.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a comprehensive SLAM solution for UAM systems called "AirSense" that focuses on real-time environmental perception and navigation. Their technology utilizes a combination of visual-inertial odometry and LiDAR-based mapping to create accurate 3D representations of urban environments. Samsung's implementation features proprietary edge computing hardware that processes SLAM algorithms directly on UAM vehicles, reducing latency to under 10ms for critical navigation decisions[2]. The system employs multi-view geometry and deep neural networks to identify and track both static and dynamic objects in urban airspace. Samsung has also integrated their SLAM technology with 5G connectivity to enable collaborative mapping between multiple UAM vehicles, allowing for shared environmental awareness and improved route optimization[4]. Their solution includes specialized algorithms for handling reflective surfaces on buildings and changing lighting conditions common in urban canyons.

Strengths: Low-latency processing through dedicated edge computing hardware; excellent integration with communication networks; strong capability for collaborative mapping between multiple vehicles.

Weaknesses: Higher power consumption requirements; potential over-reliance on network connectivity for optimal performance; limited testing in extreme weather conditions.

Key SLAM Patents and Research for UAM Integration

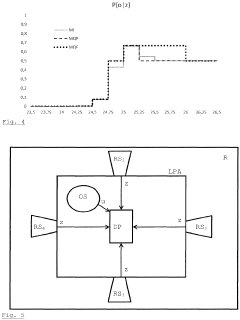

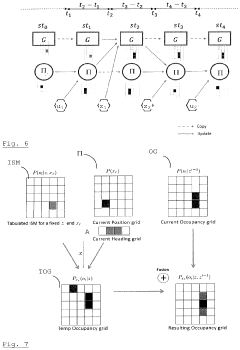

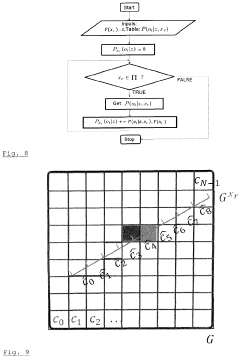

Method and apparatus for performing simultaneous localization and mapping

Patent · Active · EP3734388A1

Innovation

- A method that uses an occupancy grid and a pose grid, updated by distance and odometry measurements, which accounts for sensor measurement uncertainties and has a probabilistic model to handle finite precision and errors, allowing for efficient simultaneous localization and mapping.
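For readers unfamiliar with occupancy grids, the sketch below shows the generic textbook log-odds update for a single range measurement along one ray. It is not the patented method, and it does not model the pose grid or odometry described above. The sensor-model probabilities (0.7 and 0.3), the cell size, and the function names are assumptions for illustration.

```python
import math

# Assumed inverse sensor model: a hit raises occupancy belief to 0.7,
# a pass-through lowers it to 0.3.
L_OCC = math.log(0.7 / 0.3)
L_FREE = math.log(0.3 / 0.7)


def update_ray(log_odds, measured_range, cell_size):
    """Update cells along one ray: free up to the hit cell, occupied at it."""
    hit = int(measured_range / cell_size)
    for i in range(min(hit, len(log_odds))):
        log_odds[i] += L_FREE
    if hit < len(log_odds):
        log_odds[hit] += L_OCC
    return log_odds


def probability(log_odds_value):
    """Convert a log-odds value back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds_value))


grid = [0.0] * 10  # log-odds 0.0 means probability 0.5 (unknown)
for _ in range(2):  # two consistent range readings of 2.6 m
    update_ray(grid, measured_range=2.6, cell_size=0.5)

print([round(probability(value), 2) for value in grid])
```

Because each measurement only adds a constant to the affected cells, repeated noisy readings accumulate evidence gradually instead of overwriting the map. This is one common way to account for measurement uncertainty.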

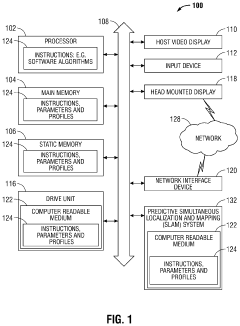

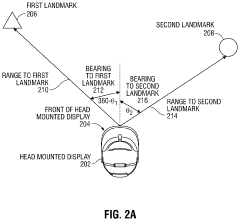

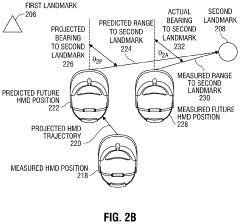

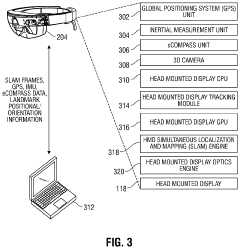

Predictive simultaneous localization and mapping system using prior user session positional information

Patent · Active · US10937191B2

Innovation

- The implementation of a predictive SLAM system that uses pre-captured and stored images of landmarks to enhance localization accuracy without the need for brute force methods, allowing the head-mounted display to operate independently by comparing real-time SLAM frames with previously mapped trajectories and data from a SLAM repository.

Regulatory Framework for UAM Navigation Systems

The regulatory landscape for Urban Air Mobility (UAM) navigation systems presents a complex framework that continues to evolve as the technology advances. Current regulations from aviation authorities such as the FAA, EASA, and ICAO are being adapted to address the unique challenges posed by SLAM-based navigation in urban environments. These frameworks primarily focus on safety standards, operational limitations, and certification requirements for autonomous navigation systems.

Performance-based standards are emerging as the preferred regulatory approach, where authorities define required safety outcomes rather than prescribing specific technical solutions. This allows for technological innovation while maintaining safety standards. For SLAM systems in UAM, these standards typically address positioning accuracy requirements, integrity monitoring capabilities, and failure detection mechanisms.

Certification pathways for SLAM-based navigation systems remain under development. The FAA's Special Condition process and EASA's SC-VTOL framework provide interim regulatory mechanisms while more comprehensive standards are being formulated. These pathways require extensive validation testing, including simulation scenarios and real-world demonstrations in various environmental conditions.

Regulatory bodies are particularly concerned with redundancy requirements for UAM navigation systems. SLAM technologies must demonstrate fail-operational or fail-safe capabilities, with multiple sensor modalities and processing algorithms to ensure continued safe operation even when primary systems degrade. This often necessitates sensor fusion approaches combining visual SLAM with LiDAR, radar, and traditional navigation aids.

Privacy and data security regulations also impact SLAM implementation in UAM systems. As these vehicles capture and process extensive environmental data, including potentially sensitive information about urban infrastructure and individuals, compliance with data protection frameworks such as GDPR in Europe and similar regulations worldwide becomes essential. Manufacturers must implement data minimization strategies and secure storage protocols.

International harmonization efforts are underway through ICAO and industry consortia to develop globally recognized standards for UAM navigation. These initiatives aim to prevent regulatory fragmentation that could impede cross-border operations and technology development. The JARUS (Joint Authorities for Rulemaking on Unmanned Systems) working group is specifically addressing autonomous navigation certification standards applicable to UAM systems.

Regulatory sandboxes and experimental authorizations are being established in various jurisdictions to facilitate real-world testing of SLAM-based navigation systems while regulatory frameworks mature. These controlled testing environments allow developers to demonstrate compliance with safety objectives while gathering data to inform future regulatory requirements.

Safety and Redundancy Requirements for Aerial SLAM

Implementing SLAM in UAM systems presents safety challenges beyond those faced by ground-based autonomous vehicles. UAM operations occur in three-dimensional space with minimal margin for error, which calls for stringent safety and redundancy requirements for aerial SLAM systems.

Primary safety requirements for aerial SLAM in UAM applications include real-time performance with guaranteed computational bounds. Unlike ground vehicles, aerial systems cannot simply stop mid-air when processing delays occur. SLAM algorithms must maintain consistent update rates of at least 10 Hz, with critical navigation functions operating at 50 to 100 Hz to ensure flight stability and obstacle avoidance capabilities.
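As a rough illustration of the update-rate requirement, the sketch below checks a stream of timestamps against a minimum rate and reports where the deadline was missed. The `RateMonitor` class and its method names are hypothetical and not drawn from any avionics library; a certified system would enforce such bounds in the scheduler rather than after the fact.

```python
from dataclasses import dataclass


@dataclass
class RateMonitor:
    """Flags samples that arrive later than a minimum update rate allows (illustrative only)."""
    min_rate_hz: float

    def max_interval_s(self) -> float:
        # A 10 Hz minimum rate means consecutive updates may be at most 0.1 s apart.
        return 1.0 / self.min_rate_hz

    def violations(self, timestamps_s):
        """Return indices i where the gap between sample i-1 and sample i exceeds the deadline."""
        limit = self.max_interval_s()
        return [
            i
            for i in range(1, len(timestamps_s))
            if timestamps_s[i] - timestamps_s[i - 1] > limit
        ]


# Hypothetical SLAM pose timestamps in seconds; the third gap (0.24 s) misses the 10 Hz deadline.
slam_monitor = RateMonitor(min_rate_hz=10.0)
late = slam_monitor.violations([0.00, 0.08, 0.16, 0.40, 0.48])
```

The same check with `min_rate_hz=50.0` would apply to the faster navigation loop described above.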

Sensor redundancy is another critical safety requirement. UAM platforms should incorporate multiple heterogeneous sensing modalities, including visual cameras, LiDAR, radar, and inertial measurement units. This multi-modal approach makes the system resilient to individual sensor failures and to environmental challenges such as varying lighting conditions, precipitation, or electromagnetic interference. Research indicates that tri-modal redundancy (three independent sensing systems) provides the minimum acceptable safety margin for commercial aerial operations.
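One simple way to exploit three independent sources is median voting: a single faulty source cannot move the median, and any source far from it can be flagged. The sketch below assumes each modality has already been reduced to a scalar estimate of the same quantity (altitude in meters here); the function name, sensor labels, and tolerance are illustrative assumptions, not values from the source.

```python
from statistics import median


def vote(estimates, tolerance):
    """Median-vote across redundant estimates.

    estimates: mapping of source name -> scalar estimate of the same quantity.
    Returns (consensus value, sorted list of sources that disagree with it).
    """
    if len(estimates) < 3:
        raise ValueError("median voting needs at least three independent sources")
    consensus = median(estimates.values())
    outliers = sorted(
        name for name, value in estimates.items()
        if abs(value - consensus) > tolerance
    )
    return consensus, outliers


# Hypothetical altitude estimates in meters; the radar reading is the faulty one.
consensus, outliers = vote(
    {"camera": 120.4, "lidar": 120.1, "radar": 135.0},
    tolerance=2.0,
)
```

With only two sources a disagreement can be detected but not attributed, which is one reason three independent modalities are commonly treated as the floor.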

Fault detection, isolation, and recovery (FDIR) mechanisms must be integrated within aerial SLAM systems. These mechanisms should continuously monitor sensor health, detect inconsistencies in mapping data, and automatically reconfigure the system when failures occur. Advanced implementations employ Bayesian confidence metrics to quantify uncertainty in SLAM outputs, triggering appropriate fallback behaviors when confidence falls below safety thresholds.
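The source does not specify which confidence metric is meant. One widely used consistency check for filter-based estimators is the normalized innovation squared (NIS), which compares the measurement residual against its predicted variance and tests it against a chi-square threshold. The sketch below assumes a diagonal innovation covariance and a three-dimensional position measurement; the mode names and function names are hypothetical.

```python
# 95th percentile of the chi-square distribution with 3 degrees of freedom.
CHI2_95_3DOF = 7.815


def nis(innovation, innovation_var):
    """Normalized innovation squared, assuming a diagonal innovation covariance.

    innovation: measurement minus predicted measurement, one entry per axis.
    innovation_var: predicted variance of each innovation component.
    """
    return sum(r * r / s for r, s in zip(innovation, innovation_var))


def select_mode(innovation, innovation_var, threshold=CHI2_95_3DOF):
    """Stay in NOMINAL while the residual is statistically consistent, else request FALLBACK."""
    if nis(innovation, innovation_var) <= threshold:
        return "NOMINAL"
    return "FALLBACK"


# Hypothetical position residuals in meters with 0.04 m^2 variance per axis.
consistent = select_mode([0.1, -0.2, 0.05], [0.04, 0.04, 0.04])
inconsistent = select_mode([0.5, 0.4, 0.3], [0.04, 0.04, 0.04])
```

A practical implementation would typically average NIS over a window before switching modes, so that a single noisy sample does not trigger a fallback.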

Certification standards for aerial SLAM systems are emerging but remain incomplete. Current frameworks draw from DO-178C (Software Considerations in Airborne Systems and Equipment Certification) and ARP4754A (Guidelines for Development of Civil Aircraft and Systems), but specific provisions for machine learning components in SLAM algorithms are still under development by aviation authorities.

Verification and validation methodologies for aerial SLAM require extensive simulation testing across diverse environmental conditions and failure scenarios. Hardware-in-the-loop testing with injection of sensor faults has proven effective in validating redundancy mechanisms. Field testing must progressively advance through controlled environments before deployment in complex urban canyons.
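Sensor fault injection can be prototyped in pure software before moving to hardware-in-the-loop rigs. The sketch below adds a constant bias to one recorded stream and checks how quickly a simple median-deviation detector notices it. The detector, stream values, and function names are illustrative assumptions standing in for whatever redundancy logic is actually under test.

```python
from statistics import median


def inject_bias(stream, start, bias):
    """Return a copy of stream with a constant bias added from index start onward."""
    return [v + bias if i >= start else v for i, v in enumerate(stream)]


def first_detection(streams, tolerance):
    """Index of the first sample where any source deviates from the median by more than tolerance.

    streams: mapping of source name -> list of samples, all the same length.
    Returns None if no deviation is found.
    """
    for i, samples in enumerate(zip(*streams.values())):
        m = median(samples)
        if any(abs(s - m) > tolerance for s in samples):
            return i
    return None


# Hypothetical recorded altitude streams in meters, six samples each.
nominal = {
    "camera": [100.0] * 6,
    "lidar": [100.2] * 6,
    "radar": [99.9] * 6,
}
# Inject a 5 m radar bias starting at sample 3 and leave the other streams untouched.
faulty = dict(nominal, radar=inject_bias(nominal["radar"], start=3, bias=5.0))

clean_result = first_detection(nominal, tolerance=1.0)
fault_result = first_detection(faulty, tolerance=1.0)
```

Comparing the injection index with the detection index gives a detection latency, which can be tracked across fault types such as bias, dropout, or frozen output.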

The integration of aerial SLAM with existing air traffic management systems introduces additional redundancy requirements, including backup positioning systems and degraded-mode operation capabilities that maintain safe separation from other aircraft even when primary SLAM functions are compromised.
