Why Sensor Calibration Is Critical For Accurate SLAM?

SEP 12, 2025 · 10 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

SLAM Sensor Calibration Background and Objectives

Simultaneous Localization and Mapping (SLAM) has evolved significantly since its conceptual introduction in the 1980s, with major theoretical breakthroughs occurring in the 1990s. The technology has transitioned from academic research to practical applications across robotics, autonomous vehicles, augmented reality, and industrial automation. This evolution has been driven by advances in sensor technology, computational capabilities, and algorithmic innovations, creating a rich ecosystem of SLAM approaches tailored to different operational environments and requirements.

The fundamental objective of sensor calibration in SLAM systems is to ensure accurate spatial perception by establishing precise mathematical relationships between sensor measurements and the physical world. As SLAM systems increasingly rely on multi-sensor fusion architectures, proper calibration becomes essential for maintaining spatial consistency across different sensing modalities. The goal is to minimize systematic errors that can propagate through the SLAM pipeline, ultimately affecting localization accuracy and map quality.

Current technological trends indicate a growing complexity in SLAM sensor configurations, with systems commonly integrating LiDAR, cameras, IMUs, wheel encoders, and other specialized sensors. Each sensor type introduces unique calibration challenges related to intrinsic parameters (internal characteristics) and extrinsic parameters (spatial relationships between sensors). The industry is moving toward more robust, automated calibration procedures that can adapt to environmental changes and sensor degradation over time.
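The split between intrinsic and extrinsic parameters can be made concrete with a minimal sketch of projecting a LiDAR point into a camera image, assuming an ideal pinhole camera with no lens distortion. The function name and the convention that `R_cl`, `t_cl` map LiDAR-frame points into the camera frame are illustrative choices, not taken from any particular toolkit.

```python
def project_lidar_point(p_lidar, R_cl, t_cl, fx, fy, cx, cy):
    """Project a 3-D LiDAR point (meters) to camera pixel coordinates.

    Extrinsics: R_cl (3x3 rotation as nested lists) and t_cl (translation, m)
    map a point from the LiDAR frame into the camera frame.
    Intrinsics: focal lengths fx, fy and principal point cx, cy (pixels) of an
    ideal pinhole camera; lens distortion is ignored in this sketch.
    Returns (u, v), or None if the point lies behind the camera.
    """
    # Extrinsic step: p_cam = R_cl * p_lidar + t_cl
    p_cam = [sum(R_cl[i][j] * p_lidar[j] for j in range(3)) + t_cl[i] for i in range(3)]
    x, y, z = p_cam
    if z <= 0:
        return None
    # Intrinsic step: perspective division, then scaling and offset by K
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Illustrative usage: LiDAR frame assumed aligned with the camera optical frame
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(project_lidar_point([0, 0, 10], identity, [0.10, 0, 0], 500, 500, 320, 240))
print(project_lidar_point([0, 0, 10], identity, [0.12, 0, 0], 500, 500, 320, 240))
```

With focal lengths of 500 px and principal point (320, 240), the point (0, 0, 10) m lands at u = 325 px; shifting the extrinsic translation by just 2 cm moves it to u = 326 px, a full pixel, which is how small extrinsic errors turn into misaligned features.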

Market demands are pushing SLAM technology toward higher precision requirements, with applications such as autonomous driving and precision manufacturing requiring sub-centimeter accuracy. This precision cannot be achieved without sophisticated calibration techniques that account for various error sources including sensor non-linearities, temperature effects, and temporal synchronization issues. The technical objective is to develop calibration methodologies that are both highly accurate and operationally practical.
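Temporal synchronization alone can exceed a sub-centimeter budget. As a worked example with assumed numbers, consider a vehicle moving at v = 20 m/s whose sensors carry an unmodeled timestamp offset δt:

```latex
\Delta p \approx v\,\delta t =
\begin{cases}
20\ \mathrm{m/s} \times 10\ \mathrm{ms} = 0.20\ \mathrm{m}\\
20\ \mathrm{m/s} \times 1\ \mathrm{ms} = 0.02\ \mathrm{m}
\end{cases}
```

Even a 1 ms offset displaces fused measurements by about 2 cm at this speed, so for 1 cm accuracy the offset would have to stay below roughly 0.5 ms or be estimated and compensated.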

Looking forward, the field is evolving toward self-calibrating SLAM systems capable of continuous parameter adjustment during operation. This represents a significant shift from traditional pre-deployment calibration approaches. Research objectives include developing online calibration algorithms that can detect and compensate for sensor drift, environmental influences, and mechanical wear without requiring system downtime or specialized calibration environments.
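Drift detection of this kind is often framed as monitoring a calibration residual over time. The sketch is hypothetical: `DriftMonitor`, the exponential-moving-average (EMA) smoothing, and the fixed threshold are illustrative assumptions rather than an established algorithm, and the residual could be any per-frame quality signal such as mean reprojection error in pixels.

```python
class DriftMonitor:
    """Flag possible calibration drift from a stream of per-frame residuals.

    An exponential moving average smooths frame-to-frame noise; a
    recalibration is requested while the smoothed value exceeds `threshold`.
    """

    def __init__(self, threshold, alpha=0.1):
        self.threshold = threshold
        self.alpha = alpha  # weight given to the newest residual
        self.ema = None

    def update(self, residual):
        """Add one residual; return True if recalibration should be triggered."""
        if self.ema is None:
            self.ema = residual
        else:
            self.ema = self.alpha * residual + (1 - self.alpha) * self.ema
        return self.ema > self.threshold


# Illustrative usage with synthetic residuals (pixels)
monitor = DriftMonitor(threshold=1.0)
healthy = [monitor.update(0.5) for _ in range(50)]  # stable residuals
drifted = [monitor.update(2.0) for _ in range(50)]  # residual jumps, e.g. after a knock
print(any(healthy), drifted.index(True))  # prints: False 3
```

Here the smoothed residual crosses the 1.0 px threshold on the fourth frame after the jump; the weight `alpha` trades detection delay against robustness to single noisy frames.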

The intersection of machine learning with sensor calibration presents promising research directions, with data-driven approaches potentially overcoming limitations of traditional geometric calibration methods. The goal is to create learning-based calibration frameworks that can generalize across different operational conditions while maintaining computational efficiency suitable for resource-constrained platforms.

Market Demand Analysis for Precise SLAM Systems

The global market for SLAM (Simultaneous Localization and Mapping) technology is growing rapidly, driven by increasing applications across multiple industries. Market projections indicate that the SLAM market will reach approximately $4.5 billion by 2025, with a compound annual growth rate exceeding 35% from 2020 to 2025. This growth trajectory underscores the importance of precise SLAM systems in modern technological applications.

The automotive sector represents one of the largest demand drivers for accurate SLAM systems. With autonomous vehicles transitioning from research projects to commercial reality, the need for highly precise localization and mapping capabilities has become paramount. Vehicle manufacturers and technology companies are investing heavily in SLAM technologies that can deliver centimeter-level accuracy even in challenging environmental conditions.

Robotics applications constitute another significant market segment. The industrial robotics sector, valued at over $18 billion globally, increasingly relies on precise SLAM for navigation in dynamic manufacturing environments. Similarly, the service robotics market—spanning from professional cleaning robots to delivery systems—demands SLAM solutions that can operate reliably in unstructured environments with minimal calibration requirements.

Consumer electronics represents an emerging but rapidly growing market for SLAM technology. Augmented reality applications in smartphones and headsets require accurate spatial mapping to deliver convincing user experiences. This market segment is particularly sensitive to cost constraints while still demanding high performance, creating unique challenges for sensor calibration solutions.

The healthcare sector has also begun adopting SLAM technology for applications ranging from autonomous hospital delivery systems to surgical robots. These applications demand exceptional precision and reliability, with error tolerances often measured in sub-millimeter ranges. The market for medical robotics utilizing SLAM is projected to grow at over 20% annually through 2025.

Geographic distribution of market demand shows concentration in North America, Europe, and East Asia, with North America currently representing approximately 40% of global demand. However, the fastest growth is occurring in Asian markets, particularly China, South Korea, and Japan, where robotics adoption is accelerating rapidly across multiple industries.

End-user requirements consistently emphasize several key factors: accuracy under varying environmental conditions, real-time performance, power efficiency, and increasingly, ease of calibration. Market research indicates that calibration complexity represents a significant barrier to adoption for many potential users, with over 65% of surveyed companies citing calibration challenges as a major concern when implementing SLAM-based systems.

AI-enhanced calibration solutions are emerging as a high-demand segment, with users seeking systems that can self-calibrate or assist technicians through automated procedures. This trend aligns with the broader industry movement toward more accessible and user-friendly robotic systems.

Current Calibration Techniques and Challenges

Sensor calibration in SLAM systems encompasses a variety of techniques that have evolved significantly over the past decade. Current calibration approaches can be broadly categorized into factory calibration, field calibration, and online calibration methods. Factory calibration occurs during manufacturing and provides baseline parameters, but these often drift over time due to environmental factors and mechanical stress. Field calibration requires specialized equipment and controlled environments, making it precise but impractical for many real-world applications.

Online calibration has emerged as a critical advancement, allowing systems to self-calibrate during operation. Optimization-based variants treat calibration parameters as additional variables and minimize reprojection errors or feature misalignments across sensor data streams. Filter-based variants, particularly Extended Kalman Filter (EKF) frameworks that augment the state vector with calibration parameters, have proven effective for tracking those parameters in dynamic environments, although their reliance on linearization makes them struggle with highly non-linear sensor behavior.
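State augmentation, the idea behind EKF-based online calibration, can be shown in one dimension. In this toy sketch (not any specific published estimator), wheel odometry reports a speed `u` whose true value is `s * u` for an unknown scale factor `s`, and absolute position fixes are available. The motion model p' = p + s·u·dt couples the calibration parameter to position through the Jacobian F = [[1, u·dt], [0, 1]], which makes `s` observable. In this toy the model is linear in the state, so the EKF reduces to a standard Kalman filter, but the predict/update structure is the one an EKF uses for non-linear models. The noise values `q_pos`, `q_scale`, and `r_pos` are arbitrary assumptions.

```python
import random


def ekf_scale_calibration(odom_speeds, position_fixes, dt,
                          q_pos=1e-6, q_scale=1e-8, r_pos=0.05 ** 2):
    """Jointly estimate 1-D position p and odometry scale factor s.

    State x = [p, s]; true speed = s * reported speed u. Returns (p, s).
    """
    p, s = 0.0, 1.0                 # start from the nominal (uncalibrated) scale
    P = [[0.01, 0.0], [0.0, 0.01]]  # initial state covariance
    for u, z in zip(odom_speeds, position_fixes):
        # Predict: p' = p + s*u*dt, s' = s (slow random walk via q_scale)
        a = u * dt
        p = p + s * a
        # P = F P F^T + Q with F = [[1, a], [0, 1]]
        P00 = P[0][0] + a * (P[1][0] + P[0][1]) + a * a * P[1][1] + q_pos
        P01 = P[0][1] + a * P[1][1]
        P10 = P[1][0] + a * P[1][1]
        P11 = P[1][1] + q_scale
        P = [[P00, P01], [P10, P11]]
        # Update with a position fix z = p + noise, H = [1, 0]
        S = P[0][0] + r_pos
        K0, K1 = P[0][0] / S, P[1][0] / S
        innovation = z - p
        p += K0 * innovation
        s += K1 * innovation
        # P = (I - K H) P
        P = [[P[0][0] - K0 * P[0][0], P[0][1] - K0 * P[0][1]],
             [P[1][0] - K1 * P[0][0], P[1][1] - K1 * P[0][1]]]
    return p, s


# Simulated data: odometry under-reports speed by an assumed 5%
rng = random.Random(0)
true_scale, dt = 1.05, 0.1
speeds = [1.0] * 500
true_p, fixes = 0.0, []
for u in speeds:
    true_p += true_scale * u * dt
    fixes.append(true_p + rng.gauss(0.0, 0.05))  # noisy absolute position fix
p_est, s_est = ekf_scale_calibration(speeds, fixes, dt)
print(round(s_est, 3))
```

The scale estimate converges close to the simulated 1.05. Raising `q_scale` lets the filter follow a scale factor that changes over time (for example with tire wear) at the cost of a noisier estimate.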

For visual-inertial systems, current techniques focus on camera-IMU calibration through motion-based approaches. These methods require sufficiently exciting motion (rotation and acceleration about multiple axes) to make all calibration parameters observable, which is difficult in constrained environments. Time synchronization between sensors remains particularly problematic; current solutions correlate timestamps across sensor streams or estimate the inter-sensor time offset as an additional, continuously adjusted parameter.
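Timestamp correlation can be sketched as a search for the lag that maximizes the normalized cross-correlation between two equally sampled signals that reflect the same motion, for example gyroscope angular-rate magnitude and the rotation rate recovered from camera frames. This is a generic, integer-sample illustration with a hypothetical function name; some dedicated camera-IMU calibration tools instead estimate the offset with sub-sample precision, jointly with the other parameters.

```python
def estimate_time_offset(ref, other, max_lag):
    """Return the integer lag (samples) that best aligns `other` to `ref`.

    A positive result means `other` lags `ref`, i.e. other[k] ~ ref[k - lag].
    """
    def mean_removed(x):
        m = sum(x) / len(x)
        return [v - m for v in x]

    a, b = mean_removed(ref), mean_removed(other)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Normalized correlation over the samples that overlap at this lag
        pairs = [(a[k - lag], b[k]) for k in range(len(b)) if 0 <= k - lag < len(a)]
        num = sum(x * y for x, y in pairs)
        den = (sum(x * x for x, _ in pairs) * sum(y * y for _, y in pairs)) ** 0.5
        score = num / den if den > 0 else float("-inf")
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

Dividing the returned lag by the common sampling rate converts it to seconds; a 3-sample lag at 200 Hz, for instance, corresponds to 15 ms.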

LiDAR-based SLAM systems face unique calibration challenges due to their scanning mechanisms: a spinning LiDAR running at 10 Hz needs about 100 ms per sweep, so points in one scan are captured from different sensor poses while the platform moves. Point cloud distortion correction and scan registration techniques have improved significantly, but still struggle in dynamic environments and on surfaces of varying reflectivity. Multi-sensor fusion calibration is perhaps the most challenging aspect, requiring precise spatial and temporal alignment between heterogeneous sensors such as cameras, LiDARs, radars, and IMUs.
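A minimal 2-D deskewing sketch shows how motion distortion is corrected. It assumes per-point timestamps measured from the start of the sweep and a constant velocity (`vx`, `vy`, expressed in the scan-start frame) and yaw rate over the sweep, and re-expresses each point in the sensor frame at scan start. In practice the motion would come from an IMU or odometry; the function name is hypothetical.

```python
import math


def deskew_scan(points, timestamps, vx, vy, yaw_rate):
    """Express each 2-D point of a sweeping scan in the sensor frame at scan start.

    points: (x, y) in the sensor frame at the moment each point was measured.
    timestamps: seconds since scan start for each point.
    vx, vy: sensor velocity (m/s) in the scan-start frame; yaw_rate in rad/s.
    """
    out = []
    for (x, y), t in zip(points, timestamps):
        theta = yaw_rate * t          # heading change since scan start
        c, s = math.cos(theta), math.sin(theta)
        tx, ty = vx * t, vy * t       # sensor displacement since scan start
        # Rotate into the start orientation, then add the displacement
        out.append((c * x - s * y + tx, s * x + c * y + ty))
    return out
```

A static point at (10, 0) m, observed 0.1 s into the sweep while the sensor moves at 1 m/s and turns at 0.5 rad/s, appears at about (9.888, -0.495) m in the raw scan and is mapped back to (10, 0) m.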

Deep learning approaches have recently emerged as promising alternatives to traditional calibration methods. These techniques can learn sensor characteristics directly from data, potentially overcoming limitations of model-based approaches. However, they typically require extensive training data and may not generalize well to novel environments or sensor configurations.

Despite these advances, significant challenges persist. Sensor degradation over time necessitates continuous recalibration, which current methods handle inadequately. Environmental factors like temperature fluctuations and vibration introduce unpredictable parameter shifts that are difficult to model. Computational efficiency remains a bottleneck, particularly for resource-constrained platforms like mobile robots and drones, where calibration must occur alongside other critical SLAM processes.
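One inexpensive form of continuous recalibration is re-estimating a gyroscope's bias, which drifts with temperature and age, whenever the platform is at rest. This sketch assumes single-axis angular-rate samples and treats a low-variance window as stationary; a constant-rate rotation also has low variance, so real systems usually confirm stillness with accelerometer or wheel-odometry cues. The function name and thresholds are illustrative.

```python
def estimate_gyro_bias(rates, window, max_std):
    """Estimate a gyroscope bias (rad/s) from the first sufficiently still window.

    A window of `window` samples whose standard deviation is below `max_std`
    is treated as stationary, so its mean is taken as the bias.
    Returns None if no such window exists.
    """
    for start in range(0, len(rates) - window + 1):
        seg = rates[start:start + window]
        mean = sum(seg) / window
        std = (sum((r - mean) ** 2 for r in seg) / window) ** 0.5
        if std < max_std:
            return mean
    return None
```

The returned bias is then subtracted from subsequent samples, `[r - bias for r in rates]`, until the next still period provides a fresh estimate.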

Cross-manufacturer sensor integration presents additional difficulties, as proprietary formats and undocumented sensor characteristics complicate standardized calibration approaches. The industry currently lacks robust validation metrics for calibration quality, making it difficult to quantitatively assess calibration performance across different systems and environments.

Existing Calibration Solutions and Implementation Approaches

01 Multi-sensor calibration techniques for SLAM systems

Calibration methods involving multiple sensors such as cameras, LiDAR, and IMUs to ensure accurate spatial relationships between different sensing modalities. These techniques include intrinsic and extrinsic parameter optimization, temporal synchronization between sensors, and automated calibration procedures that minimize geometric errors. Proper multi-sensor calibration significantly improves the accuracy of feature extraction and mapping in SLAM applications.

- Multi-sensor calibration techniques for SLAM systems: Calibration methods for multiple sensors in SLAM systems to ensure accurate spatial alignment and data fusion. These techniques involve calibrating various sensors such as cameras, LiDAR, IMU, and radar to establish precise spatial relationships between them. The calibration process typically includes parameter optimization to minimize errors in sensor fusion, resulting in improved localization and mapping accuracy for autonomous navigation systems.

- IMU-camera calibration for visual SLAM: Specialized calibration methods for integrating inertial measurement units (IMUs) with camera systems in visual SLAM applications. These approaches focus on temporal and spatial alignment between IMU and camera data, addressing issues like time synchronization and coordinate transformation. The calibration processes often involve motion-based estimation of extrinsic parameters and bias correction to enhance the accuracy of visual-inertial SLAM systems used in robotics and augmented reality.

- LiDAR calibration for point cloud accuracy: Methods for calibrating LiDAR sensors to improve point cloud quality and accuracy in SLAM applications. These techniques address issues such as distortion correction, intensity calibration, and geometric alignment of point cloud data. The calibration processes often involve reference targets, optimization algorithms, and environmental feature matching to enhance the precision of 3D mapping and object detection capabilities in autonomous systems.

- Online calibration and self-calibration methods: Dynamic calibration approaches that allow SLAM systems to continuously adjust sensor parameters during operation. These methods enable real-time adaptation to environmental changes and sensor drift without requiring manual recalibration. The techniques typically involve error monitoring, parameter optimization during normal operation, and automatic adjustment mechanisms that maintain SLAM accuracy over extended periods of use in varying conditions.

- Calibration validation and error compensation: Techniques for validating calibration quality and compensating for residual errors in SLAM systems. These approaches include error modeling, uncertainty estimation, and adaptive compensation mechanisms to mitigate the effects of imperfect calibration. The methods often incorporate statistical analysis, machine learning algorithms, and feedback loops to detect calibration drift and apply corrections that maintain SLAM accuracy despite sensor imperfections.
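Target-based extrinsic calibration reduces, once features are matched, to fitting a rigid transform between corresponding points seen by two sensors. This sketch works in 2-D for brevity and assumes correspondences are already known (for example, calibration-target corners detected by both sensors); the 3-D case is usually solved with an SVD-based method such as Kabsch/Umeyama. The residual RMS doubles as a simple calibration-validation metric. Function names are hypothetical.

```python
import math


def fit_extrinsic_2d(pts_a, pts_b):
    """Least-squares rigid transform (theta, tx, ty) with b ~= R(theta) a + t."""
    n = len(pts_a)
    ax = sum(x for x, _ in pts_a) / n
    ay = sum(y for _, y in pts_a) / n
    bx = sum(x for x, _ in pts_b) / n
    by = sum(y for _, y in pts_b) / n
    # Accumulate dot and cross products of the centred point pairs
    dot = cross = 0.0
    for (xa, ya), (xb, yb) in zip(pts_a, pts_b):
        xa, ya, xb, yb = xa - ax, ya - ay, xb - bx, yb - by
        dot += xa * xb + ya * yb
        cross += xa * yb - ya * xb
    theta = math.atan2(cross, dot)  # rotation that best aligns the two sets
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated centroid of A onto the centroid of B
    tx = bx - (c * ax - s * ay)
    ty = by - (s * ax + c * ay)
    return theta, tx, ty


def residual_rms(pts_a, pts_b, theta, tx, ty):
    """Root-mean-square distance between transformed A points and B points."""
    c, s = math.cos(theta), math.sin(theta)
    sq = [(c * xa - s * ya + tx - xb) ** 2 + (s * xa + c * ya + ty - yb) ** 2
          for (xa, ya), (xb, yb) in zip(pts_a, pts_b)]
    return (sum(sq) / len(sq)) ** 0.5
```

A residual RMS that is large relative to the sensors' noise levels, or that grows between calibration sessions, is a practical sign that the extrinsics need revisiting.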

02 Real-time sensor error compensation algorithms

Algorithms that dynamically detect and compensate for sensor errors during SLAM operation. These methods include drift correction, bias estimation, noise filtering, and adaptive parameter adjustment based on environmental conditions. Real-time error compensation ensures consistent SLAM performance across varying operational scenarios and extends the reliable operation time of autonomous navigation systems.

03 Environmental factor calibration for robust SLAM

Calibration approaches that account for environmental factors affecting sensor performance, such as lighting conditions, reflective surfaces, and weather variations. These methods include adaptive filtering, environment-specific parameter tuning, and contextual calibration models that adjust sensor sensitivity based on detected environmental conditions. Environmental calibration enhances SLAM reliability in challenging and changing operational settings.

04 Machine learning-based calibration optimization

Application of machine learning techniques to optimize sensor calibration parameters for SLAM systems. These approaches use neural networks, reinforcement learning, and other AI methods to automatically determine optimal calibration settings based on performance metrics. Machine learning calibration can adapt to sensor aging, detect calibration drift, and continuously refine parameters during operation to maintain high SLAM accuracy.

05 Loop closure and map optimization for calibration refinement

Techniques that leverage loop closure detection and global map optimization to refine sensor calibration parameters. These methods use accumulated mapping data to retrospectively improve calibration accuracy, correct systematic errors, and ensure global consistency in SLAM results. By analyzing trajectory loops and feature correspondences, these approaches can identify and correct subtle calibration issues that affect long-term SLAM performance.

Leading Companies and Research Institutions in SLAM Technology

Sensor calibration is critical for accurate SLAM (Simultaneous Localization and Mapping) as it directly impacts the precision of environmental perception and navigation. The industry is currently in a growth phase, with the global SLAM market expanding rapidly due to increasing applications in robotics, autonomous vehicles, and AR/VR. Market size is projected to reach significant volumes by 2025, driven by demand for precise navigation solutions. Technologically, the field shows varying maturity levels across different sensor types. Academic institutions like Chongqing University, Harbin Institute of Technology, and Peking University are advancing theoretical frameworks, while companies such as Sony, OPPO, and RobArt are developing commercial implementations with calibration techniques ranging from factory-preset to self-calibrating systems.

Sony Group Corp.

Technical Solution: Sony has developed advanced sensor calibration technologies for SLAM (Simultaneous Localization and Mapping) systems that integrate multiple sensing modalities including visual, inertial, and depth sensors. Their approach employs factory pre-calibration combined with online self-calibration methods to maintain accuracy throughout device operation. Sony's calibration pipeline addresses intrinsic parameters (lens distortion, focal length), extrinsic parameters (sensor-to-sensor transformations), and temporal synchronization between sensors. Their technology implements a continuous self-monitoring system that detects calibration drift during operation and triggers recalibration when necessary. This is particularly important in consumer electronics and robotics applications where devices may experience physical impacts or temperature variations that affect calibration. Sony has also developed specialized calibration algorithms for their industry-leading image sensors that account for manufacturing variations and aging effects to ensure consistent performance over the product lifecycle[1][3].

Strengths: Sony's integration of factory and online calibration provides robust performance across varying environmental conditions. Their extensive sensor manufacturing expertise gives them unique insights into sensor behavior and drift patterns. Weaknesses: Their solutions may be optimized primarily for their own hardware ecosystem, potentially limiting compatibility with third-party components.

National University of Defense Technology

Technical Solution: The National University of Defense Technology (NUDT) has developed comprehensive sensor calibration frameworks for military-grade SLAM systems operating in challenging environments. Their approach emphasizes robustness and reliability under extreme conditions including vibration, temperature fluctuations, and potential electromagnetic interference. NUDT's calibration technology incorporates multi-sensor fusion techniques that dynamically weight sensor inputs based on estimated calibration quality and environmental conditions. Their system implements a hierarchical calibration architecture with three levels: factory calibration establishing baseline parameters, field calibration performed during deployment, and continuous runtime calibration that monitors and adjusts parameters during operation. A distinctive feature of NUDT's approach is their "adversarial calibration" methodology, which deliberately tests SLAM systems under degraded sensor conditions to ensure robustness when calibration is suboptimal. Their research has produced calibration techniques specifically designed for heterogeneous sensor arrays combining traditional sensors (cameras, LiDAR, IMU) with specialized military sensors. NUDT has also developed mathematical models for predicting calibration drift based on environmental factors and usage patterns, allowing preemptive recalibration before accuracy degrades significantly[7][9].
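The general idea of weighting sensor inputs by estimated quality can be illustrated with inverse-variance weighting of scalar estimates. This is a generic textbook technique, shown under the assumption that each sensor's variance (inflated when its calibration is suspect) is known; it is not a description of NUDT's actual scheme, which the profile does not detail. The function name is hypothetical.

```python
def fuse_inverse_variance(estimates):
    """Fuse scalar estimates [(value, variance), ...] by inverse-variance weighting.

    Returns (fused_value, fused_variance). Sensors with larger variance,
    e.g. because their calibration is in doubt, receive less weight.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total
```

For a camera range of 10.0 m with variance 0.04 m² and a LiDAR range of 10.2 m with variance 0.01 m², the fused value is 10.16 m with variance 0.008 m². Doubling the camera's variance after a suspected calibration shift would further reduce its influence.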

Strengths: NUDT's calibration technology offers exceptional reliability under harsh conditions and maintains accuracy even when individual sensors are compromised or degraded. Their solutions incorporate advanced security features to prevent tampering with calibration parameters. Weaknesses: The systems may be overengineered for civilian applications, with higher computational requirements and complexity than necessary for consumer or commercial use cases.

Key Calibration Algorithms and Technical Literature Review

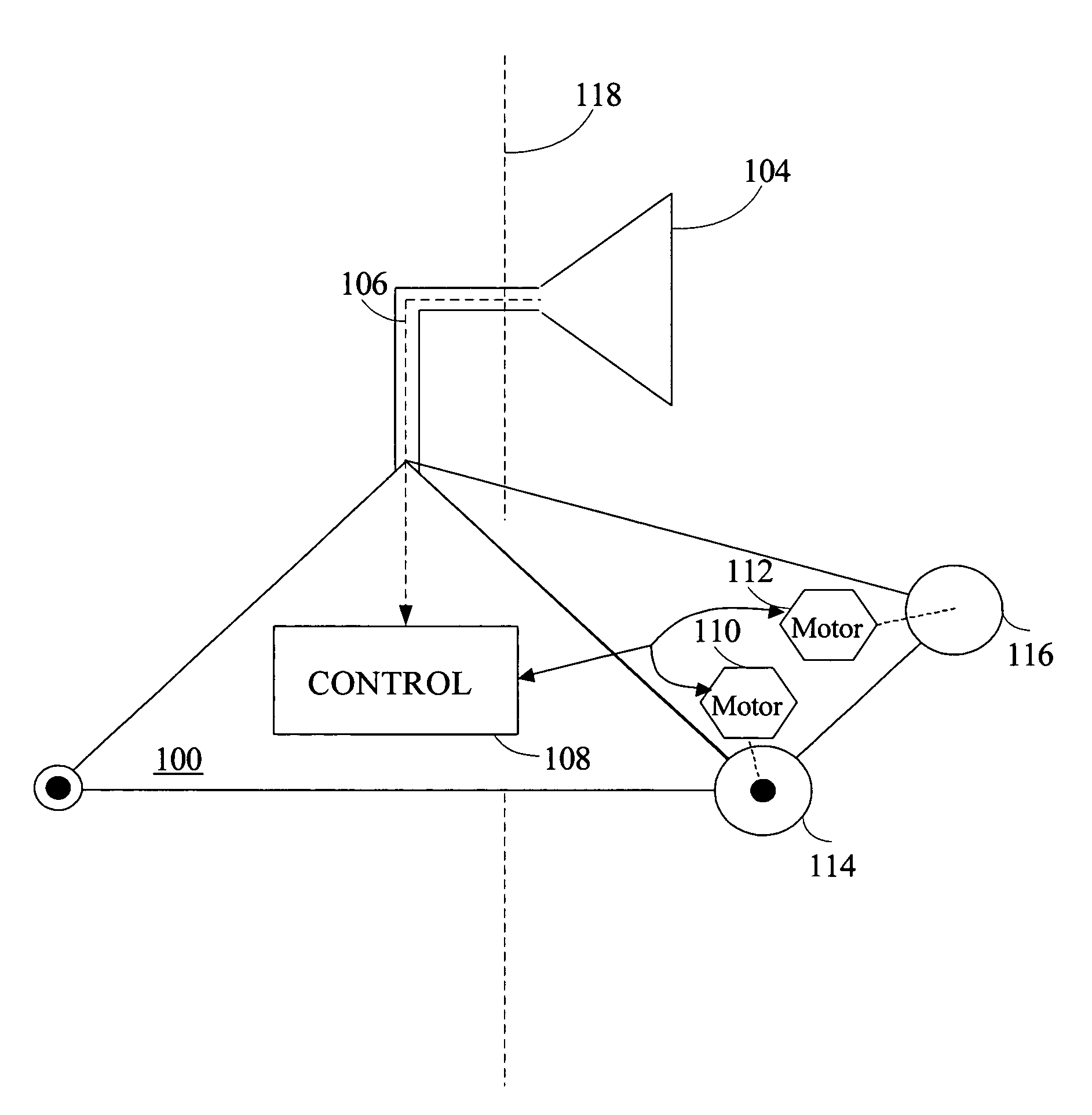

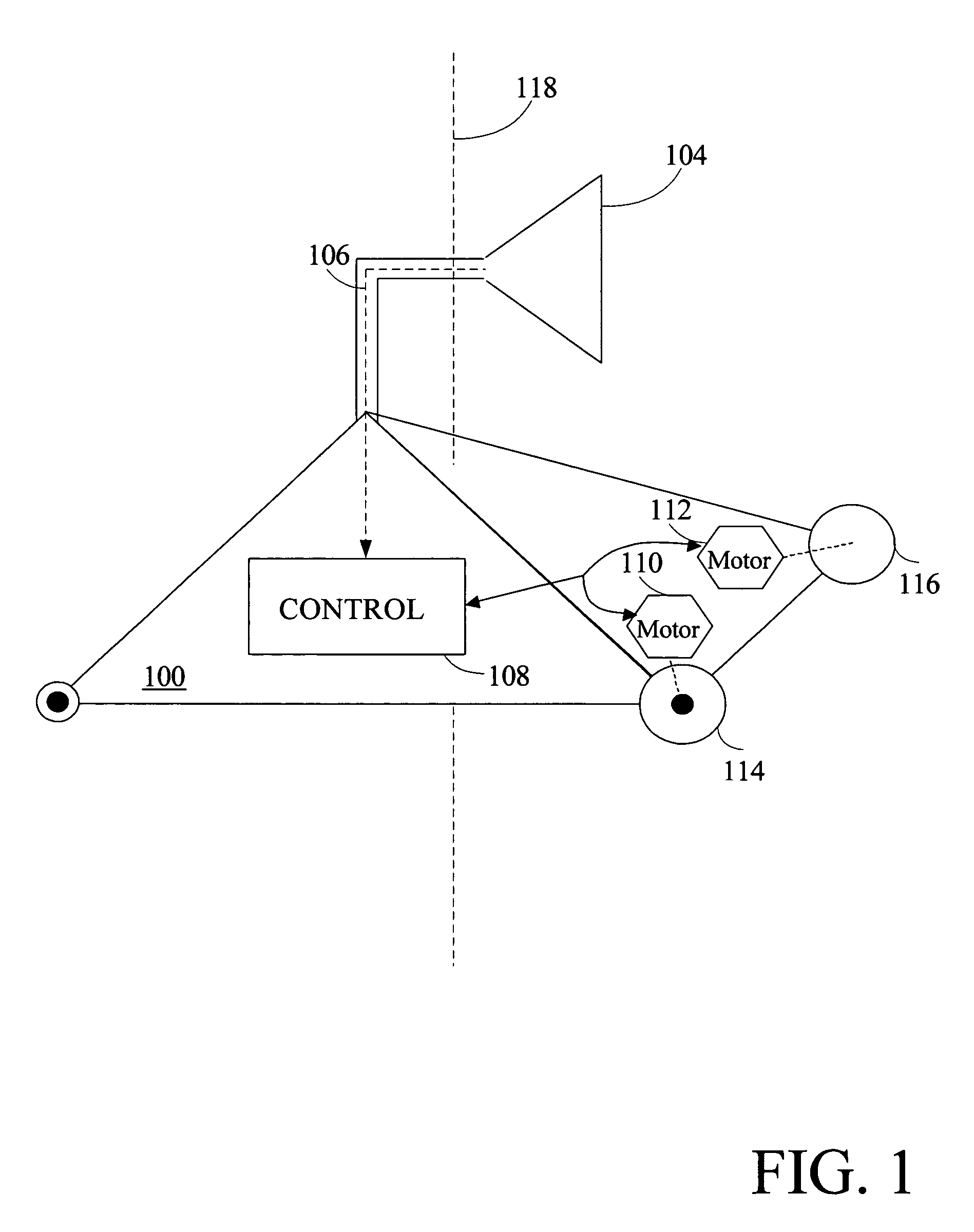

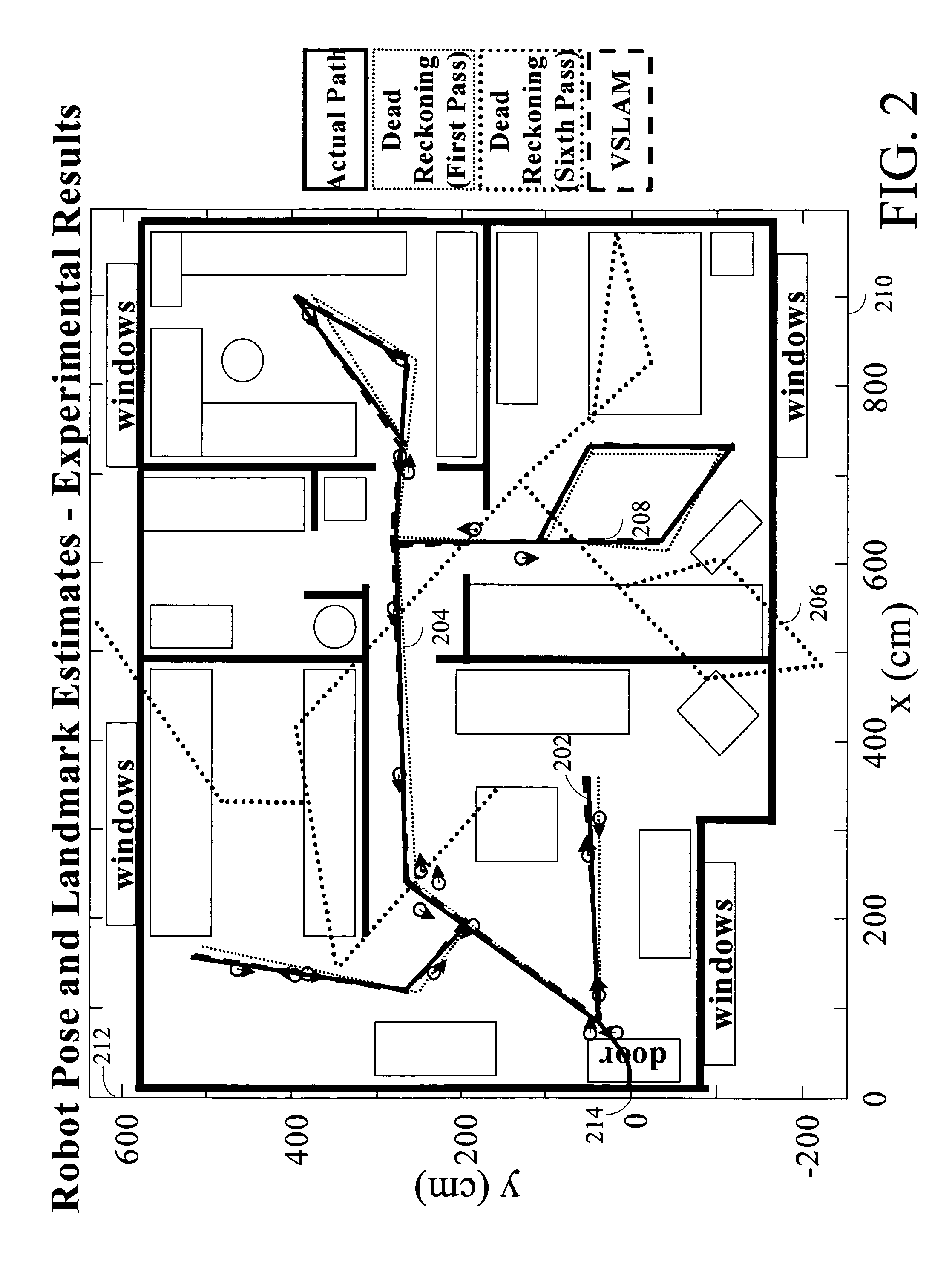

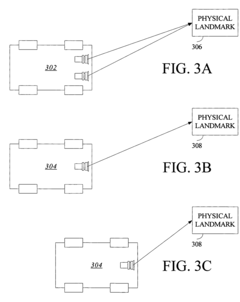

Robust sensor fusion for mapping and localization in a simultaneous localization and mapping (SLAM) system

Patent · Active · US7689321B2

Innovation

- Implements Visual Simultaneous Localization and Mapping (VSLAM) with multiple particles that maintain multiple hypotheses, combining data from visual sensors and dead-reckoning sensors to create and update maps. This allows robust recovery from kidnapping events by correcting drift in dead-reckoning measurements with visual landmarks.

Detection method for positioning accuracy, electronic device and computer storage medium

Patent · WO2018121617A1

Innovation

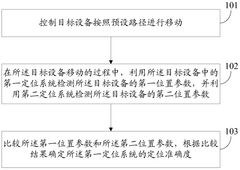

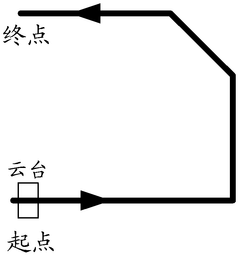

- The target device is moved along a preset path while a first positioning system detects a first position parameter and a second positioning system detects a second position parameter; comparing how close the two parameters are determines the positioning accuracy of the first positioning system. Mobile carrying devices or visual tags are used to detect the positioning parameters.

Error Propagation and System Reliability Assessment

Error propagation in SLAM systems follows a cumulative pattern where small calibration inaccuracies can lead to significant trajectory deviations over time. When sensors like IMUs, cameras, or LiDARs are improperly calibrated, the errors in their measurements do not remain isolated but propagate through the entire estimation pipeline. This propagation effect is particularly pronounced in loop closure scenarios, where accumulated errors can prevent successful recognition of previously visited locations.

The mathematical foundation of error propagation in SLAM can be understood through covariance analysis. Each sensor measurement contributes uncertainty to the state estimate, and these uncertainties combine according to the system's motion and observation models. Calibration errors, however, are systematic rather than random: if they are left unmodeled, the estimator's covariance understates the true error and the estimate becomes inconsistent; if they are absorbed by inflating measurement noise, uncertainty grows faster and estimation performance degrades. Some studies report that even a 1-2% error in intrinsic camera parameters can produce position errors exceeding 10% of the distance traveled in visual SLAM systems.
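A first-order derivation shows how a single extrinsic error propagates. Assume a planar setup in which a LiDAR is mounted with a small unmodeled yaw error δψ and observes a point p_s at range r = ‖p_s‖. Because the derivative of a 2-D rotation is the same rotation advanced by 90°, the displacement has magnitude r|δψ|, and an uncertain yaw with variance σ_ψ² maps into point covariance through the Jacobian J:

```latex
\delta\mathbf{p} \approx J\,\delta\psi,
\qquad
J = \frac{\partial\,\big(R(\psi)\,\mathbf{p}_s\big)}{\partial \psi} = R\!\left(\psi + \tfrac{\pi}{2}\right)\mathbf{p}_s,
\qquad
\|\delta\mathbf{p}\| \approx r\,|\delta\psi|,
\qquad
\Sigma_{\mathbf{p}} \approx J\,\sigma_\psi^{2}\,J^{\top}
```

With δψ = 1° ≈ 0.0175 rad and r = 30 m, the point moves by roughly 0.52 m. The error grows linearly with range, and because it is systematic it does not average out over repeated measurements.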

System reliability in SLAM applications depends critically on robust error management strategies. Without proper calibration, SLAM systems may exhibit inconsistent behavior across different environmental conditions or operational durations. This inconsistency manifests as sudden localization failures, map distortions, or drift that exceeds acceptable bounds for the application. For safety-critical applications like autonomous vehicles or medical robots, such reliability issues present unacceptable risks.

Quantitative assessment of SLAM reliability typically involves metrics such as Absolute Trajectory Error (ATE), Relative Pose Error (RPE), and consistency measures like Normalized Estimation Error Squared (NEES). These metrics degrade significantly when calibration errors are present. Well-calibrated systems maintain consistent performance even under challenging conditions, while poorly calibrated systems can show rapidly compounding error growth.
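Two of these metrics are simple to compute once estimates and ground truth are available. In this sketch, ATE is the RMSE of position differences for 2-D trajectories that are assumed to be already time-associated and aligned (standard evaluation tools perform a rigid or similarity alignment first, which is omitted here), and NEES is `e^T P^-1 e` for a 2-D error `e` with estimated covariance `P`. For a consistent estimator, NEES averages to the state dimension (2 here); averages well above that indicate an overconfident filter, a typical symptom of unmodeled calibration error. Function names are hypothetical.

```python
def ate_rmse(estimated, ground_truth):
    """Absolute Trajectory Error: RMSE of 2-D position differences.

    Assumes both trajectories are time-associated and expressed in the same
    frame; the usual alignment step is omitted in this sketch.
    """
    sq = [(ex - gx) ** 2 + (ey - gy) ** 2
          for (ex, ey), (gx, gy) in zip(estimated, ground_truth)]
    return (sum(sq) / len(sq)) ** 0.5


def nees_2d(error, cov):
    """Normalized Estimation Error Squared e^T P^-1 e for a 2x2 covariance P."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    ex, ey = error
    # Inverse of [[a, b], [c, d]] is [[d, -b], [-c, a]] / det
    return (ex * (d * ex - b * ey) + ey * (-c * ex + a * ey)) / det
```

Averaging `nees_2d` over many time steps (or Monte Carlo runs) and comparing the result with the chi-square bounds for two degrees of freedom is a common consistency check.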

The interdependence between different sensor calibration parameters creates complex error surfaces that are difficult to characterize analytically. For instance, errors in extrinsic calibration between a camera and IMU can interact with intrinsic camera calibration errors in ways that amplify their individual effects. Monte Carlo simulations and sensitivity analyses reveal that these interaction effects can sometimes double or triple the expected error compared to considering each calibration parameter independently.

Practical reliability assessment must therefore include stress testing under varied conditions to ensure calibration robustness. This includes testing across temperature ranges, after mechanical vibrations, and following extended operation periods. Only through such comprehensive testing can the long-term reliability of SLAM systems be assured in real-world deployments where recalibration opportunities may be limited.

The mathematical foundation of error propagation in SLAM can be understood through covariance analysis. Each sensor measurement contributes uncertainty to the state estimation, and these uncertainties combine according to the system's observation model. Calibration errors effectively increase the baseline covariance of measurements, leading to faster uncertainty growth and degraded estimation performance. Studies have shown that even a 1-2% error in intrinsic camera parameters can result in position errors exceeding 10% of the traveled distance in visual SLAM systems.

System reliability in SLAM applications depends critically on robust error management strategies. Without proper calibration, SLAM systems may exhibit inconsistent behavior across different environmental conditions or operational durations. This inconsistency manifests as sudden localization failures, map distortions, or drift that exceeds acceptable bounds for the application. For safety-critical applications like autonomous vehicles or medical robots, such reliability issues present unacceptable risks.

Multi-Sensor Fusion Strategies for Enhanced SLAM Performance

Multi-sensor fusion represents a critical advancement in SLAM (Simultaneous Localization and Mapping) technology, enabling systems to overcome the inherent limitations of single-sensor approaches. By strategically combining data from complementary sensors, fusion strategies significantly enhance the accuracy, robustness, and operational range of SLAM systems across diverse environments.

The fundamental fusion architectures are loose coupling, tight coupling, and deep coupling. Loose coupling maintains an independent processing pipeline for each sensor and fuses their outputs, such as separate pose estimates. This offers modularity but can miss cross-sensor correlations. Tight coupling processes low-level measurements from all sensors jointly in a single estimator, which captures their interdependencies but requires more sophisticated algorithms. Deep coupling represents the most integrated approach: sensor data streams are fused at the feature extraction level, often using deep learning techniques.
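The loose-coupling idea can be sketched minimally as follows. Assume two subsystems, for example visual odometry and wheel odometry, that each already output a position estimate with a covariance. The estimates are merged by inverse-covariance weighting. This is Python with NumPy, and the function name and example values are hypothetical. A tightly coupled system would instead feed the raw measurements of both sensors into one estimator.

```python
import numpy as np


def fuse_loosely(x_a, P_a, x_b, P_b):
    """Fuse two independent estimates of the same state by inverse-covariance weighting.

    Assumes the two estimates have uncorrelated errors. If they share information
    (the cross-sensor correlations loose coupling can miss), the result is overconfident.
    """
    info_a, info_b = np.linalg.inv(P_a), np.linalg.inv(P_b)
    P = np.linalg.inv(info_a + info_b)          # fused covariance
    x = P @ (info_a @ x_a + info_b @ x_b)       # information-weighted mean
    return x, P


# Hypothetical outputs of two independent pipelines (2D position in metres).
x_vo, P_vo = np.array([10.2, 4.9]), np.diag([0.04, 0.04])        # visual odometry
x_wheel, P_wheel = np.array([10.0, 5.1]), np.diag([0.16, 0.01])  # wheel odometry
x_fused, P_fused = fuse_loosely(x_vo, P_vo, x_wheel, P_wheel)
print(x_fused, np.diag(P_fused))
```

When the correlation between the two inputs is unknown, covariance intersection is a common conservative alternative to this weighting.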

Filter-based fusion methods, particularly Extended Kalman Filters (EKF) and particle filters, remain prevalent in real-time applications. These probabilistic approaches manage sensor uncertainties explicitly while maintaining computational efficiency. The EKF linearizes the motion and measurement models around the current estimate. It therefore works well when those models are only mildly non-linear and errors are approximately Gaussian. Particle filters cope better with strongly non-linear models and multimodal uncertainty, but at a higher computational cost.
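The EKF's linearization step can be seen in a minimal predict/update cycle. Here a 2D position state is observed through a non-linear range measurement to a known landmark. This is a sketch in Python with NumPy under simplifying assumptions: an additive motion model, a single known landmark, and hand-picked noise values. The function names are illustrative.

```python
import numpy as np


def ekf_predict(x, P, u, Q):
    """Prediction for the additive motion model x_k = x_{k-1} + u (motion Jacobian = I)."""
    return x + u, P + Q


def ekf_update_range(x, P, z, landmark, R):
    """EKF update with a non-linear range measurement z = ||x - landmark|| + noise."""
    diff = x - landmark
    r_pred = np.linalg.norm(diff)
    H = (diff / r_pred).reshape(1, -1)      # Jacobian of the range w.r.t. position (1 x 2)
    S = H @ P @ H.T + R                     # innovation covariance (1 x 1)
    K = P @ H.T @ np.linalg.inv(S)          # Kalman gain (2 x 1)
    x_new = x + (K * (z - r_pred)).ravel()
    P_new = (np.eye(len(x)) - K @ H) @ P
    return x_new, P_new


x, P = np.array([1.0, 1.0]), 0.5 * np.eye(2)
x, P = ekf_predict(x, P, u=np.array([1.0, 0.0]), Q=0.1 * np.eye(2))
landmark = np.array([0.0, 0.0])
z = np.linalg.norm(np.array([2.2, 0.9]) - landmark)   # simulated noise-free range
range_before = np.linalg.norm(x - landmark)
x, P = ekf_update_range(x, P, z, landmark, R=np.array([[0.01]]))
print(x, np.trace(P))
```

A particle filter would replace the Jacobian-based update by weighting and resampling a set of samples. This handles multimodal beliefs at a higher computational cost.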

Graph-based optimization has emerged as a powerful alternative, especially for visual-inertial systems. These methods represent sensor measurements as constraints (factors) between state variables in a factor graph. They then solve the joint estimation problem across multiple sensor modalities as a single sparse least-squares problem. Advances in incremental smoothing algorithms, such as iSAM2, have made these approaches increasingly viable for real-time applications.
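A deliberately tiny case illustrates the factor-graph formulation: four poses on a line, connected by a prior, three odometry factors, and one loop-closure factor that is weighted more heavily (smaller sigma). Because this 1D problem is linear, a single weighted least-squares solve gives the optimum. Real SLAM back ends work on SE(2) or SE(3) poses and iterate Gauss-Newton or Levenberg-Marquardt, typically through libraries such as GTSAM, g2o, or Ceres Solver. The code is a Python/NumPy sketch with hypothetical measurement values and a hypothetical function name.

```python
import numpy as np


def solve_pose_graph_1d(n_poses, factors):
    """Weighted linear least-squares solution of a 1D pose graph.

    Each factor is (i, j, measured, sigma): a relative factor x_j - x_i ~ measured,
    or a unary prior x_j ~ measured when i is None.
    """
    rows, rhs = [], []
    for i, j, measured, sigma in factors:
        row = np.zeros(n_poses)
        row[j] = 1.0
        if i is not None:
            row[i] = -1.0
        rows.append(row / sigma)            # whiten each residual by its standard deviation
        rhs.append(measured / sigma)
    poses, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return poses


factors = [
    (None, 0, 0.0, 0.01),   # prior anchoring the first pose at the origin
    (0, 1, 1.1, 0.1),       # odometry factors (hypothetical measurements, metres)
    (1, 2, 1.0, 0.1),
    (2, 3, 1.1, 0.1),
    (0, 3, 3.0, 0.05),      # loop closure: pose 3 re-observed 3.0 m from pose 0
]
poses = solve_pose_graph_1d(4, factors)
print(poses)
```

The odometry chain implies 3.2 m while the loop closure says 3.0 m. The optimizer spreads this 0.2 m inconsistency evenly over the three odometry factors, and the more certain loop closure pulls the last pose to about 3.015 m.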

Learning-based fusion strategies represent the cutting edge of multi-sensor integration. Deep neural networks, particularly those employing attention mechanisms, can adaptively weight sensor contributions based on environmental conditions. End-to-end trainable architectures that map multi-sensor inputs directly to pose estimates have shown promising robustness in challenging scenarios where traditional methods struggle.
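The attention-based weighting of sensor contributions can be sketched as scaled dot-product attention over per-sensor feature vectors. In a trained network, the query, keys, and values would come from learned projections of the sensor data. Here they are hand-set placeholder numbers, and the Python/NumPy function names are illustrative. The sketch therefore shows only the weighting mechanism, not a working learned fusion model.

```python
import numpy as np


def softmax(scores):
    """Numerically stable softmax over a 1D array."""
    e = np.exp(scores - np.max(scores))
    return e / e.sum()


def attention_fuse(query, sensor_keys, sensor_values):
    """Scaled dot-product attention across sensors.

    query: (d,) context vector; sensor_keys: (n_sensors, d); sensor_values: (n_sensors, d_v).
    Returns the attention-weighted combination of sensor values and the weights.
    """
    scores = sensor_keys @ query / np.sqrt(query.shape[-1])
    weights = softmax(scores)
    return weights @ sensor_values, weights


# Placeholder features for camera, LiDAR, and IMU (rows). The query stands in for a
# learned "current conditions" embedding, here one that happens to favour LiDAR.
query = np.array([0.2, 1.0, 0.1])
keys = np.array([[1.0, 0.1, 0.0],    # camera
                 [0.1, 1.0, 0.0],    # LiDAR
                 [0.0, 0.2, 1.0]])   # IMU
values = np.array([[1.0, 0.0], [0.8, 0.2], [0.5, 0.5]])
fused, weights = attention_fuse(query, keys, values)
print(weights, fused)
```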

Sensor-specific fusion strategies have evolved for common combinations. Visual-inertial systems exploit complementary properties: cameras provide rich environmental information, while IMUs offer high-frequency motion estimates that remain available when visual tracking degrades. Similarly, LiDAR-radar fusion combines precise geometric mapping with all-weather perception capabilities. Integrating GPS/GNSS with local sensors bounds drift in outdoor environments, because GNSS supplies globally referenced position fixes, while the local sensors maintain accuracy during GNSS signal interruptions.
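A one-dimensional Kalman filter is enough to sketch the trade-off between GNSS and local sensors. Odometry increments drive the prediction, and GNSS fixes correct it when they are available. The noise values, the outage window, and the function name are assumptions chosen for illustration (Python with NumPy), and the model ignores odometry bias.

```python
import numpy as np


def fuse_odometry_gnss(odom_steps, gnss_fixes, q=0.01, r=1.0):
    """1D Kalman filter over position.

    odom_steps: per-step displacement from local sensors (process noise variance q per step).
    gnss_fixes: absolute position fix per step, or None during a GNSS outage (variance r).
    Returns arrays of filtered positions and their variances.
    """
    x, p = 0.0, 0.0
    xs, ps = [], []
    for du, z in zip(odom_steps, gnss_fixes):
        x, p = x + du, p + q                 # predict with the odometry increment
        if z is not None:                    # correct only when a fix is available
            k = p / (p + r)
            x, p = x + k * (z - x), (1.0 - k) * p
        xs.append(x)
        ps.append(p)
    return np.array(xs), np.array(ps)


steps = [1.0] * 300
fixes = [float(i + 1) if not 100 <= i < 200 else None for i in range(300)]  # outage at steps 100-199
_, var = fuse_odometry_gnss(steps, fixes)
print(var[99], var[199], var[299])
```

While fixes arrive, the variance stays near a small steady-state value. During the outage it grows linearly with the odometry process noise, and it contracts again once fixes return.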

Adaptive fusion frameworks represent the next frontier, dynamically adjusting fusion strategies based on environmental conditions and sensor health. Such systems can detect sensor failures and challenging environmental conditions, then automatically reconfigure to maintain performance across diverse operational scenarios.
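One common building block for sensor-health monitoring is an innovation (NIS) consistency gate. Each sensor's measurement residual is compared against its predicted covariance, and statistically implausible measurements are excluded. The sketch below is Python with NumPy. The sensor names, the numbers, and the 95% threshold are illustrative assumptions. A practical system would usually require repeated gate failures before declaring a sensor faulty, since a single failure may just be an outlier.

```python
import numpy as np

# 95% quantile of the chi-square distribution with 2 degrees of freedom (= -2 ln 0.05).
CHI2_95_2DOF = 5.991


def passes_gate(innovation, S, threshold=CHI2_95_2DOF):
    """Normalized Innovation Squared test: nu^T S^-1 nu must not exceed the threshold."""
    nis = float(innovation @ np.linalg.solve(S, innovation))
    return nis <= threshold, nis


def healthy_sensors(innovations, covariances, threshold=CHI2_95_2DOF):
    """Names of sensors whose current 2D innovation passes the gate."""
    return [name for name in innovations
            if passes_gate(innovations[name], covariances[name], threshold)[0]]


innovations = {"camera": np.array([0.1, -0.2]), "lidar": np.array([3.0, 0.0])}
covariances = {"camera": 0.05 * np.eye(2), "lidar": 0.1 * np.eye(2)}
print(healthy_sensors(innovations, covariances))
```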
