A Real-time Semantic Video Segmentation Method

A real-time semantic video segmentation method in the field of computer vision. It addresses the problems of existing techniques, namely high noise, long processing time, and failure to exploit the coherence between video frames, and it improves efficiency while maintaining high accuracy.


AI Technical Summary

Problems solved by technology

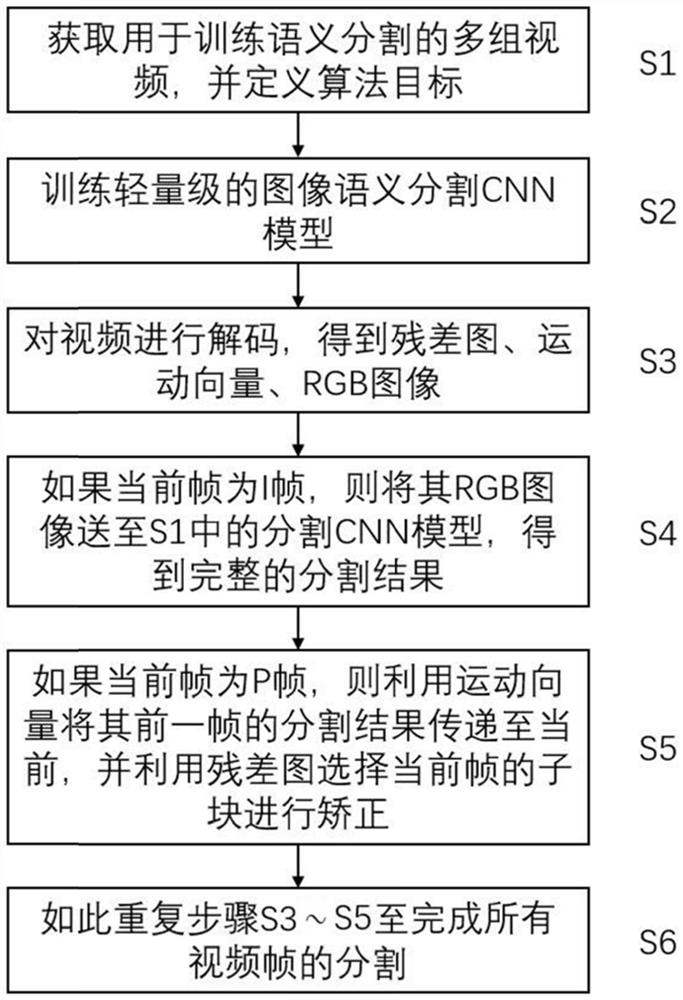

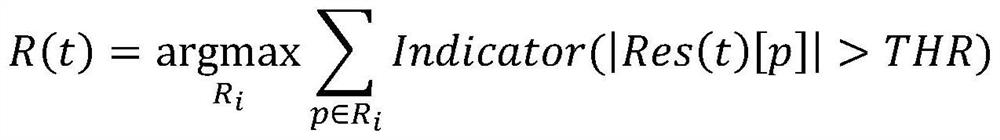

Examples

Embodiment

[0070] The following simulation experiment is based on the above method. The implementation follows the steps already described, so they are not repeated here; only the experimental results are shown below.

[0071] This embodiment uses ICNet as the lightweight CNN model for image semantic segmentation. Several experiments were carried out on the public semantic segmentation dataset Cityscapes, which contains 5000 short video clips; they show that the method significantly improves the efficiency of semantic video segmentation while preserving accuracy. In the algorithm, the GOP (group of pictures) parameter g is set to 12 and the B-frame ratio β is set to 0.
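To make the parameter setting concrete, the following minimal sketch lists the frame types a clip would have under g = 12 and β = 0. The function name `gop_frame_types` and the rule used to place B-frames when β > 0 are illustrative assumptions, not taken from the patent text; only the g = 12, β = 0 case reflects the stated configuration.

```python
from typing import List


def gop_frame_types(num_frames: int, g: int = 12, beta: float = 0.0) -> List[str]:
    """Return a hypothetical frame-type sequence ('I', 'P', 'B') for a clip.

    g    : GOP length, i.e. one I-frame every g frames.
    beta : assumed fraction of the non-I frames that are B-frames.
           The even spreading of B-frames below is an illustrative
           assumption; the source only states that beta is 0.
    """
    if g < 1:
        raise ValueError("g must be at least 1")
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must be in [0, 1)")

    types: List[str] = []
    for i in range(num_frames):
        pos = i % g  # position of this frame inside its GOP
        if pos == 0:
            types.append("I")  # each GOP starts with an intra-coded frame
        elif int(pos * beta) > int((pos - 1) * beta):
            types.append("B")  # assumed placement rule, never hit when beta == 0
        else:
            types.append("P")
    return types


if __name__ == "__main__":
    # With g = 12 and beta = 0, every GOP is one I-frame followed by 11 P-frames.
    print("".join(gop_frame_types(24)))
```

With these settings no B-frames occur, so every non-I frame depends only on earlier frames.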

[0072] The method of the present invention is compared with the traditional approach of segmenting every frame with a CNN. As the algorithm flow shows, the main difference is whether the compressed-domain operations of steps S3-S5 are performed. The implementation ...
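The contrast between the two pipelines can be sketched as follows. This excerpt does not spell out steps S3-S5, so the sketch assumes, purely for illustration, that the CNN runs only on I-frames and that the result for every other frame is derived from the previous result by a cheap compressed-domain step. The names `segment_frame_by_frame`, `segment_compressed_domain`, `fake_cnn`, and `fake_propagate` are hypothetical stand-ins, not the patent's actual steps.

```python
from typing import Callable, List, Sequence, Tuple


def segment_frame_by_frame(frames: Sequence[int],
                           cnn: Callable[[int], str]) -> List[str]:
    """Baseline: run the CNN on every frame."""
    return [cnn(frame) for frame in frames]


def segment_compressed_domain(frames: Sequence[int],
                              frame_types: Sequence[str],
                              cnn: Callable[[int], str],
                              propagate: Callable[[str, int], str]) -> List[str]:
    """Assumed variant: CNN on I-frames only, cheap propagation elsewhere."""
    results: List[str] = []
    for frame, ftype in zip(frames, frame_types):
        if ftype == "I" or not results:
            results.append(cnn(frame))  # full segmentation
        else:
            results.append(propagate(results[-1], frame))  # reuse previous result
    return results


def demo(num_frames: int = 24, g: int = 12) -> Tuple[int, int]:
    """Count CNN calls for both pipelines on a clip with no B-frames."""
    calls = [0]

    def fake_cnn(frame: int) -> str:
        calls[0] += 1  # stand-in for an expensive ICNet forward pass
        return f"mask{frame}"

    def fake_propagate(prev_mask: str, frame: int) -> str:
        return prev_mask  # placeholder for the compressed-domain step

    frames = list(range(num_frames))
    frame_types = ["I" if i % g == 0 else "P" for i in frames]

    segment_frame_by_frame(frames, fake_cnn)
    baseline_calls = calls[0]

    calls[0] = 0
    segment_compressed_domain(frames, frame_types, fake_cnn, fake_propagate)
    return baseline_calls, calls[0]


if __name__ == "__main__":
    print(demo())
```

Under these assumptions the number of CNN forward passes falls from one per frame to one per GOP. The actual speed-up depends on the cost of the compressed-domain operations, which this sketch does not model.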
