606 results for "Linguistic model" patented technology

Linguistic model. [liŋ′gwis·tik ′mäd·əl] (computer science) A method of automatic pattern recognition in which a class of patterns is defined as those patterns satisfying a certain set of relations among suitably defined primitive elements. Also known as syntactic model.

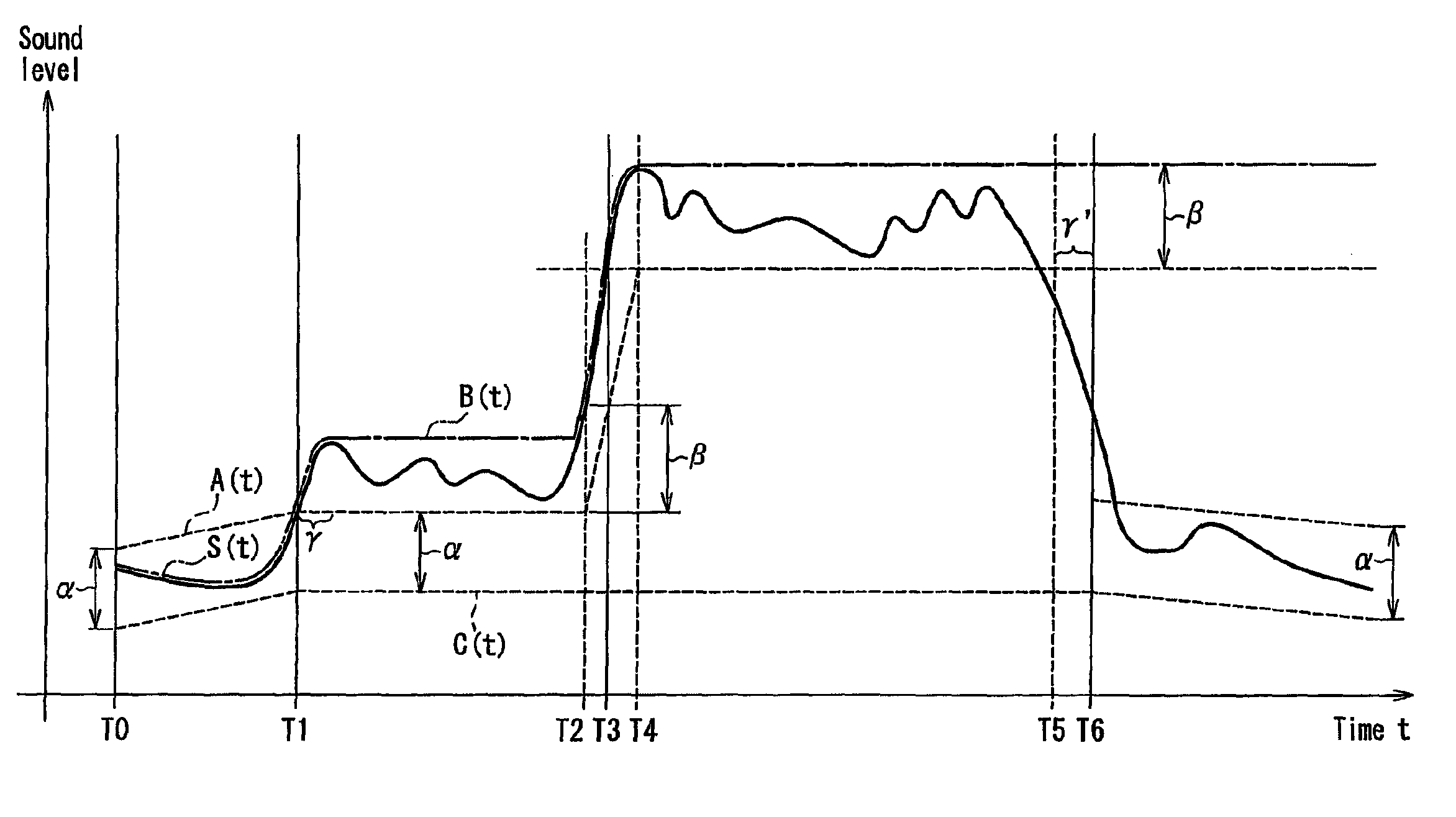

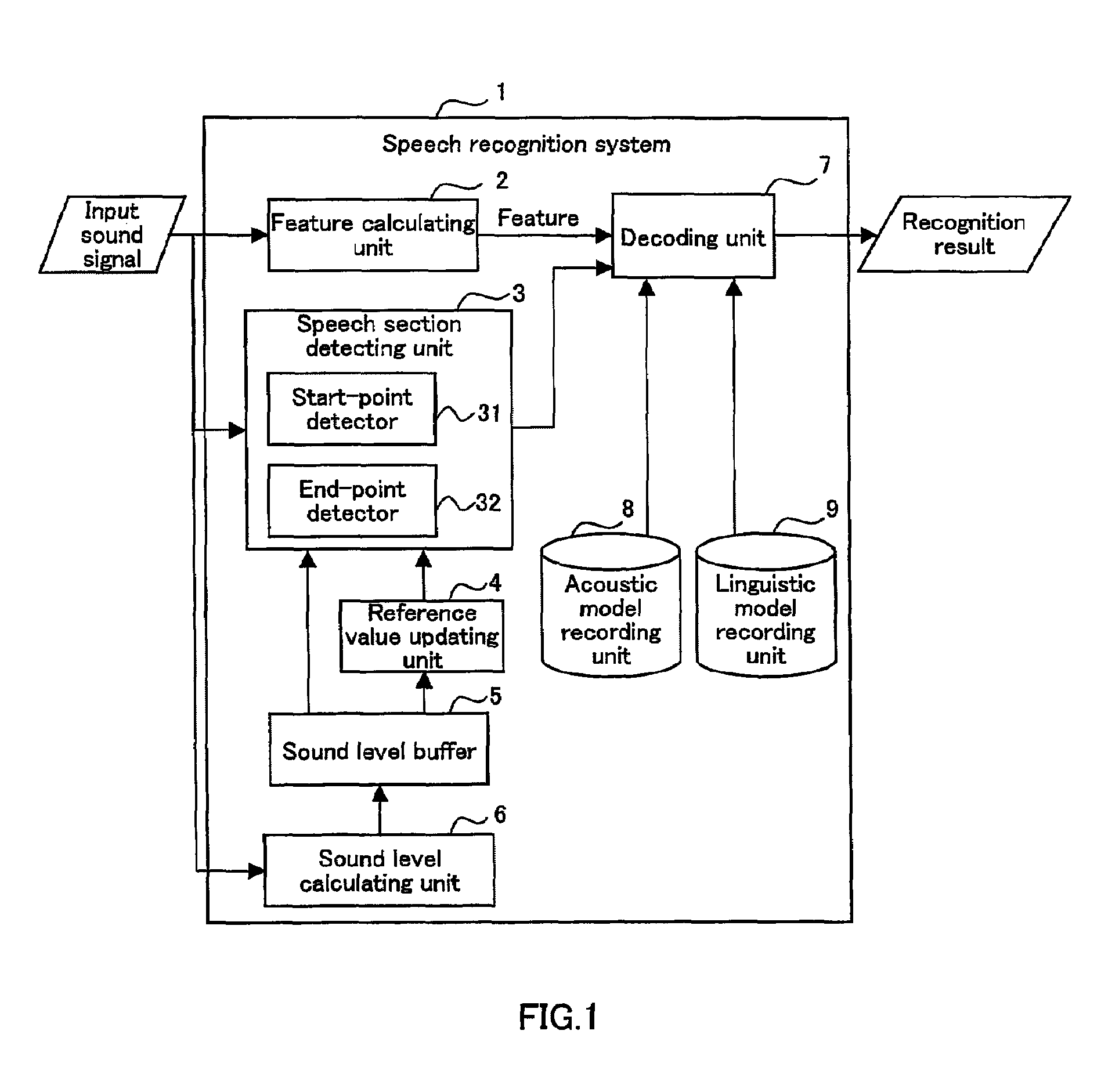

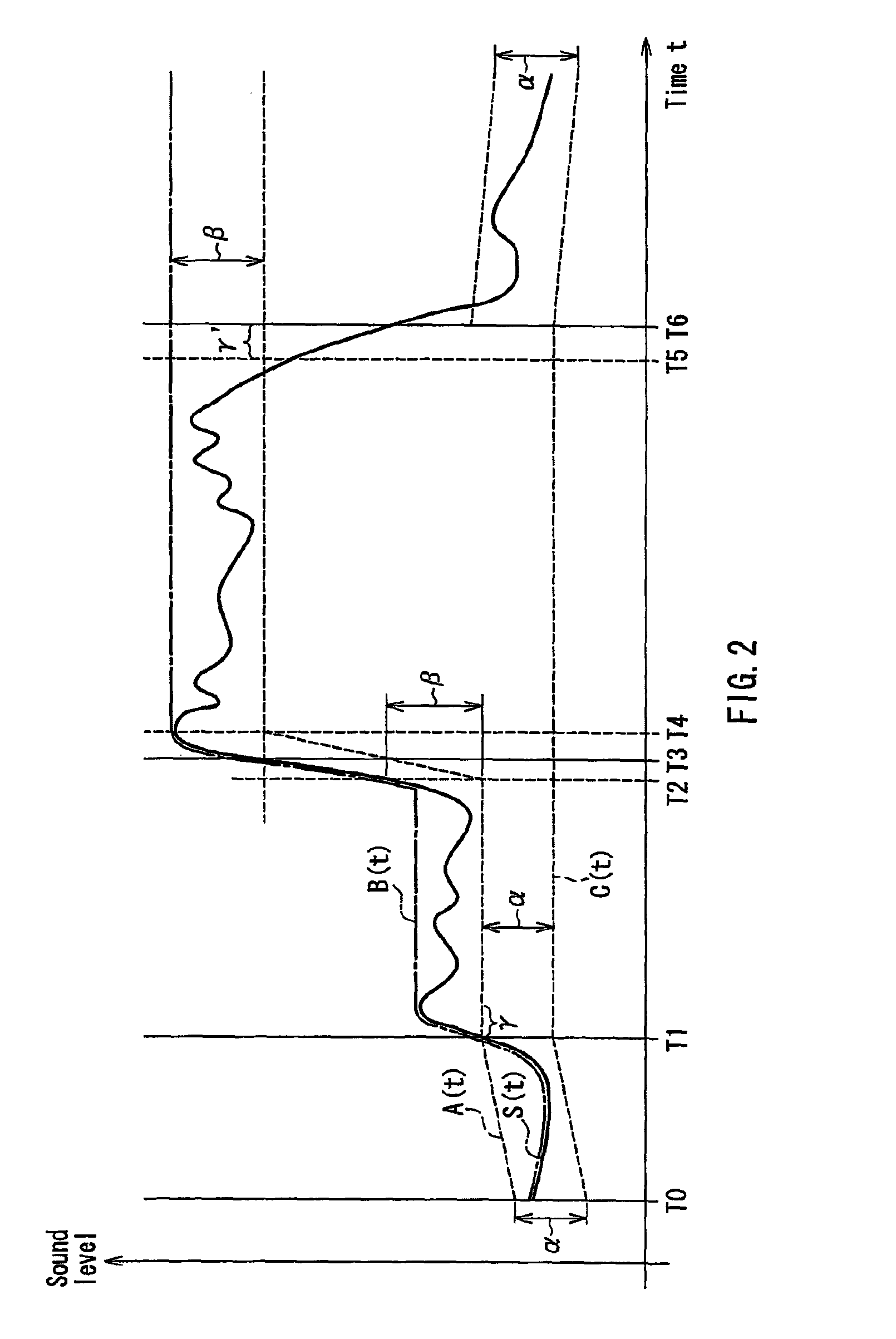

Correction of matching results for speech recognition

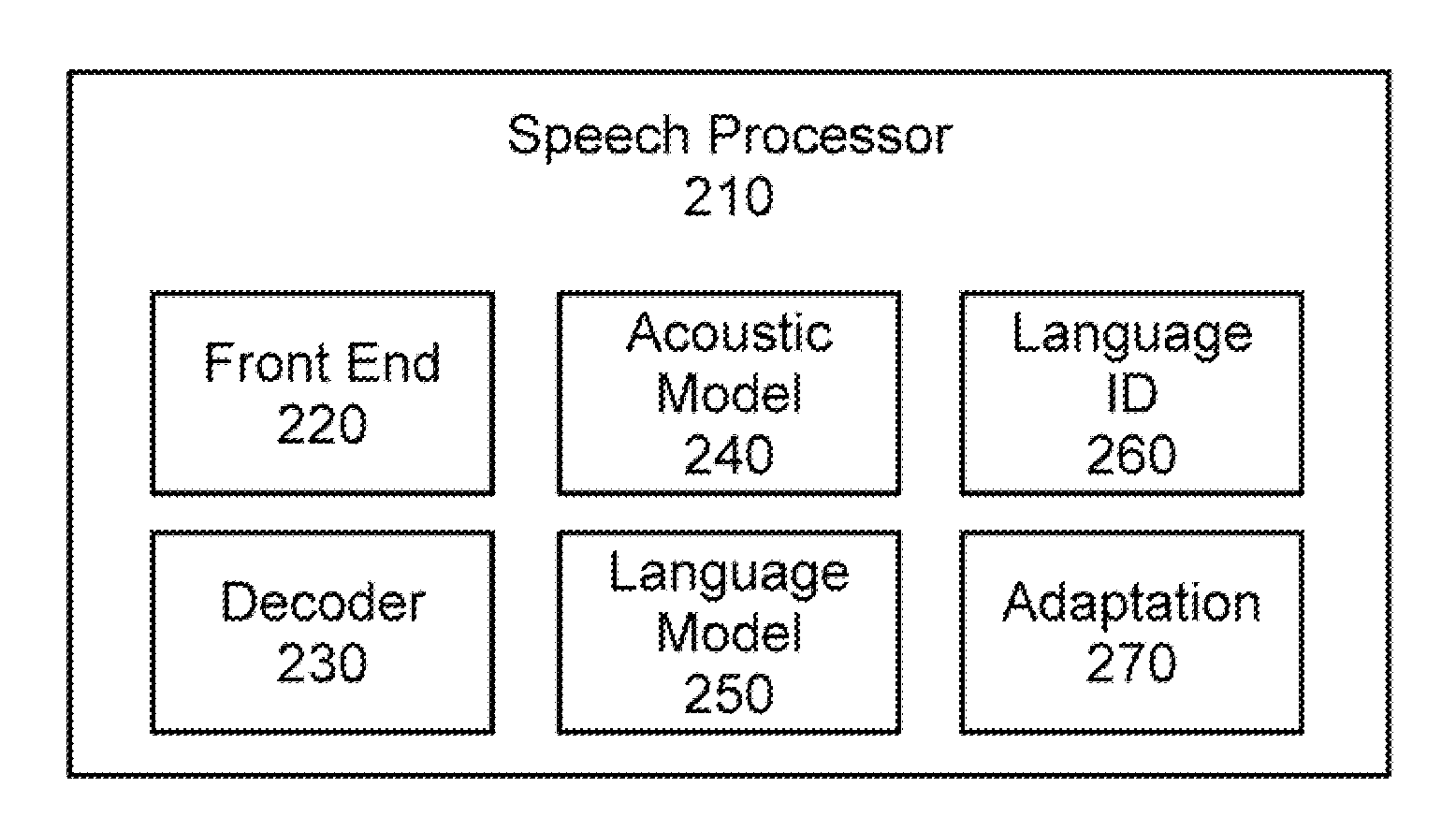

A speech recognition system includes the following: a feature calculating unit; a sound level calculating unit that calculates an input sound level in each frame; a decoding unit that matches the feature of each frame with an acoustic model and a linguistic model, and outputs a recognized word sequence; a start-point detector that determines a start frame of a speech section based on a reference value; an end-point detector that determines an end frame of the speech section based on a reference value; and a reference value updating unit that updates the reference value in accordance with variations in the input sound level. The start-point detector updates the start frame every time the reference value is updated. The decoding unit starts matching before being notified of the end frame and corrects the matching results every time it is notified of the start frame. The speech recognition system can suppress a delay in response time while performing speech recognition based on a proper speech section.

Owner:FUJITSU LTD
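
A minimal sketch of adaptive start/end-point detection in the spirit of the abstract above. It assumes per-frame input levels in dB, a reference value tracked as an exponentially smoothed noise floor, a 10 dB margin, and a three-frame hangover before the end frame is fixed; these parameters and the function and class names are hypothetical. The abstract's re-evaluation of the start frame on every reference update, and the decoder's result correction, are omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SpeechSection:
    start: Optional[int]  # index of the first speech frame, or None
    end: Optional[int]    # index of the last speech frame, or None while still open


def detect_speech_section(levels_db: List[float], margin_db: float = 10.0,
                          alpha: float = 0.9, hangover: int = 3) -> SpeechSection:
    """Simplified adaptive endpoint detector (hypothetical parameters)."""
    reference = levels_db[0] if levels_db else 0.0  # initial noise-floor estimate
    start: Optional[int] = None
    last_speech: Optional[int] = None
    silent_frames = 0
    for i, level in enumerate(levels_db):
        if level >= reference + margin_db:
            # Frame is louder than the adaptive threshold: treat it as speech.
            if start is None:
                start = i
            last_speech = i
            silent_frames = 0
        else:
            # Non-speech frame: let the reference value follow the background level.
            reference = alpha * reference + (1 - alpha) * level
            if start is not None:
                silent_frames += 1
                if silent_frames >= hangover:
                    return SpeechSection(start, last_speech)
    return SpeechSection(start, None)
```

Because the reference value follows the background, a steady 30 dB noise floor does not trigger detection: `detect_speech_section([30, 30, 30, 30, 45, 46, 30, 30, 30])` returns `SpeechSection(start=4, end=5)`.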

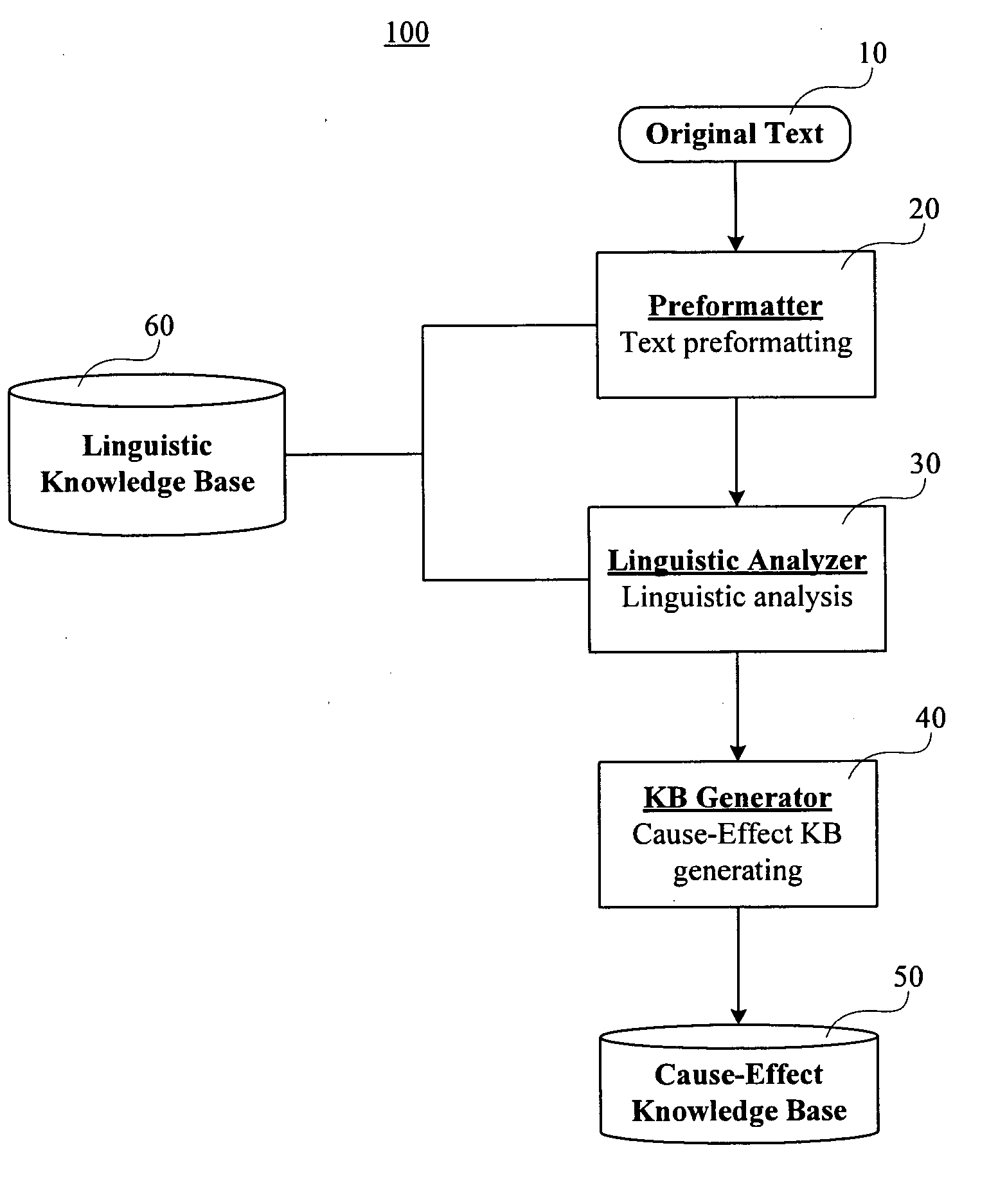

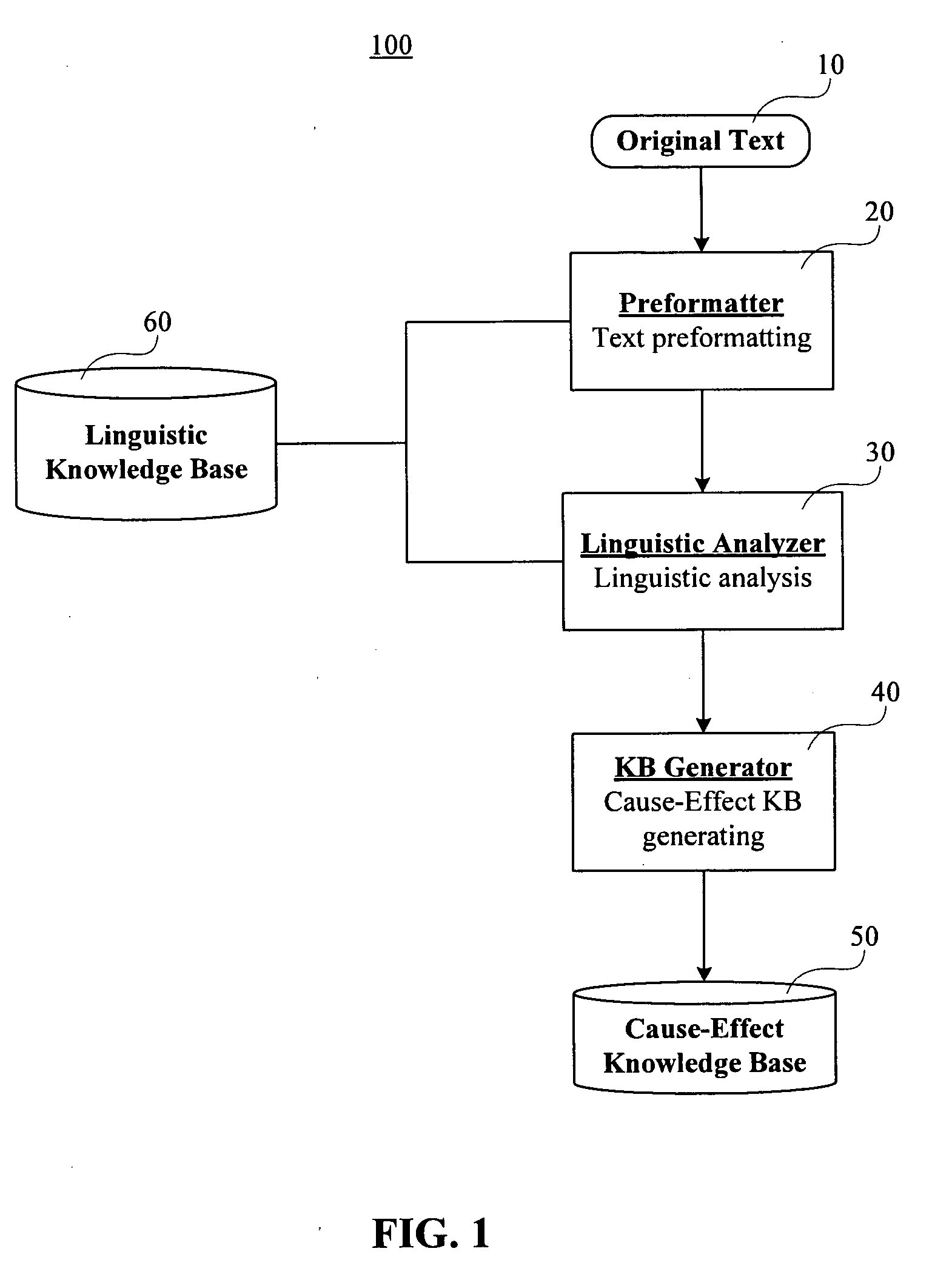

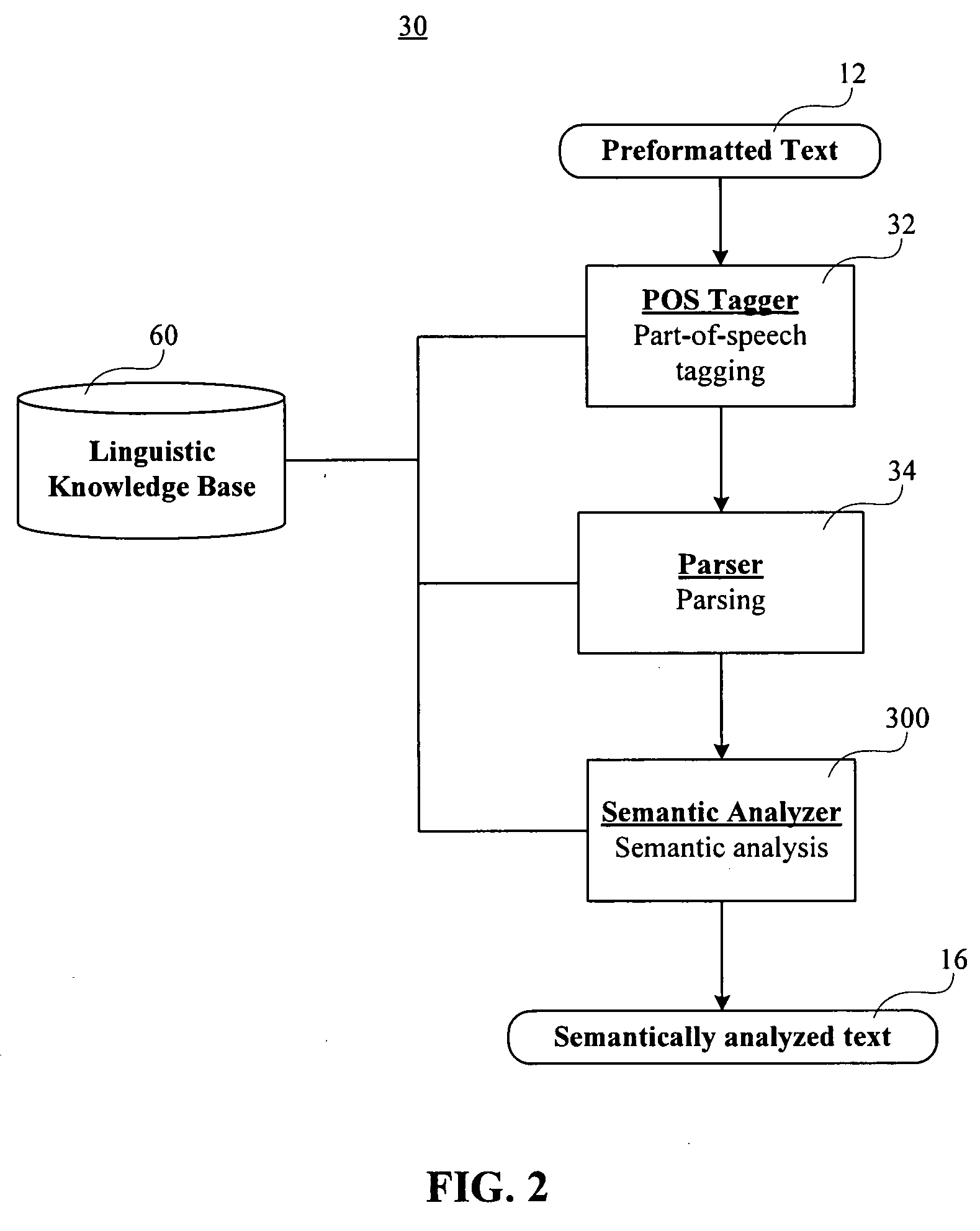

Semantic processor for recognition of cause-effect relations in natural language documents

Status: Active | Publication: US20060041424A1 | Tags: Digital data information retrieval; Semantic analysis; Linguistic model; Causal knowledge

A semantic processor for recognizing cause-effect relations in natural-language documents, comprising a text preformatter, a linguistic analyzer and a cause-effect knowledge base generator. The semantic processor automatically recognizes cause-effect relations both within a single fact and between facts in arbitrary text documents, where the facts themselves are also extracted automatically from the text in the form of seven-field semantic units. Cause-effect relations are recognized on the basis of linguistic (including semantic) text analysis and a number of recognizing linguistic models built in the form of patterns.

Owner:ALLIUM US HLDG LLC

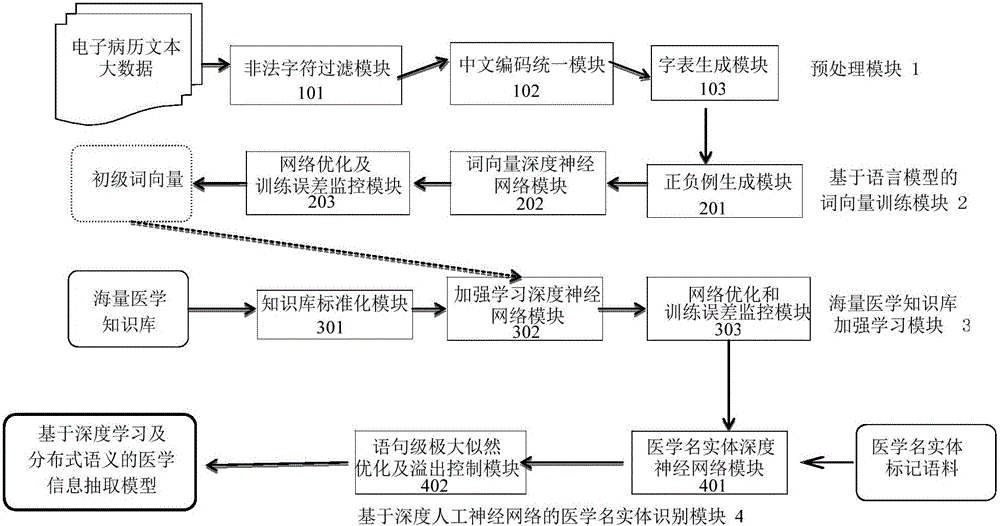

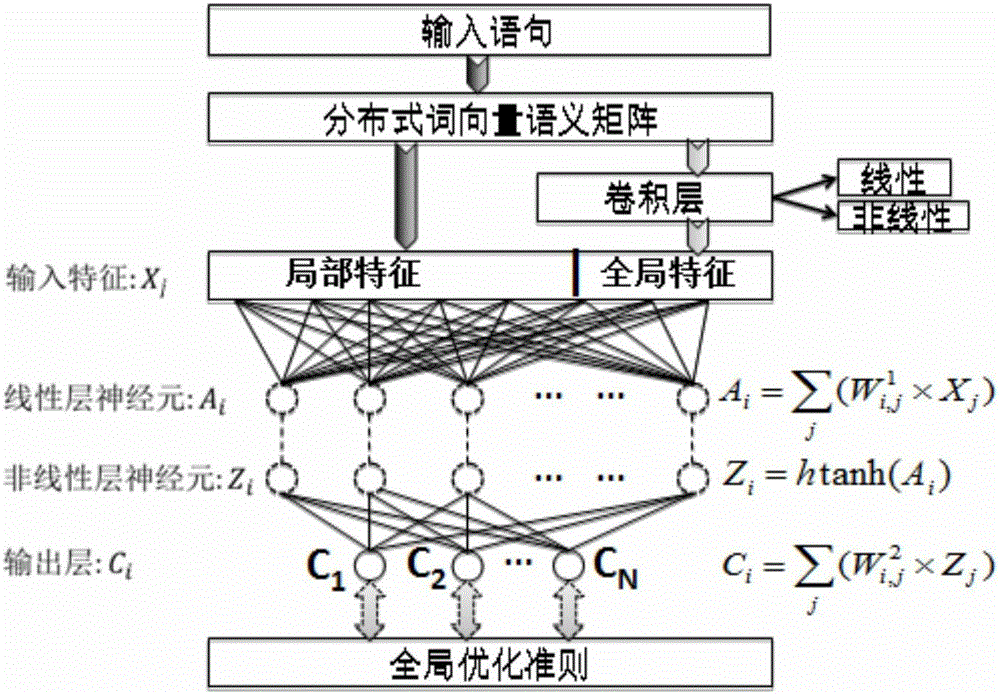

Medical information extraction system and method based on deep learning and distributed semantic features

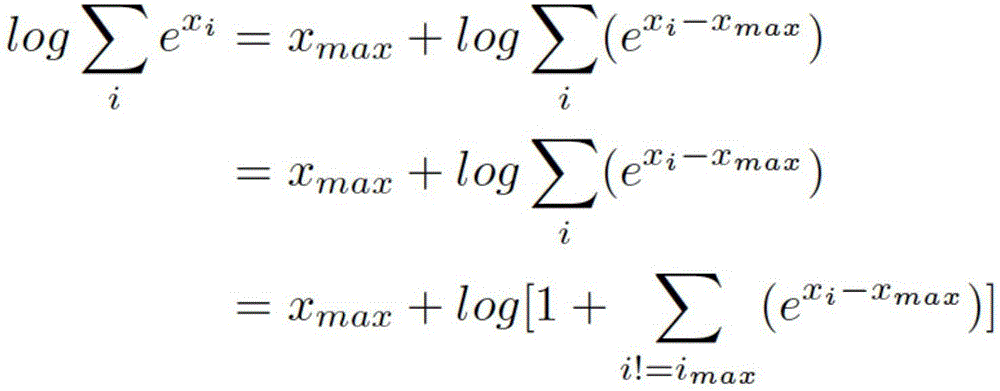

Status: Active | Publication: CN105894088A | Tags: Avoid floating point overflow problems; High precision; Neural learning methods; Nerve network; Study methods

The invention discloses a medical information extraction system and method based on deep learning and distributed semantic features. The system consists of a preprocessing module, a linguistic-model-based word vector training module, a reinforcement learning module driven by a massive medical knowledge base, and a medical term entity recognition module based on a deep artificial neural network. Using deep learning, the generation probability of a linguistic model serves as the optimization objective, and initial word vectors are trained on large-scale medical text. On the basis of the massive medical knowledge base, a second deep artificial neural network is trained, and the knowledge base is incorporated into the feature learning process of deep learning through deep reinforcement learning, yielding distributed semantic features for the medical domain. Chinese medical term entities are then recognized with a deep learning method that optimizes the sentence-level maximum likelihood. Because the word vectors are generated from large amounts of unlabeled text, the tedious feature selection and tuning normally required in medical natural language processing can be avoided.

Owner:神州医疗科技股份有限公司 +1

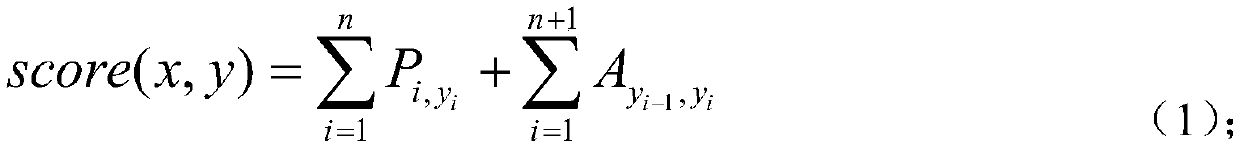

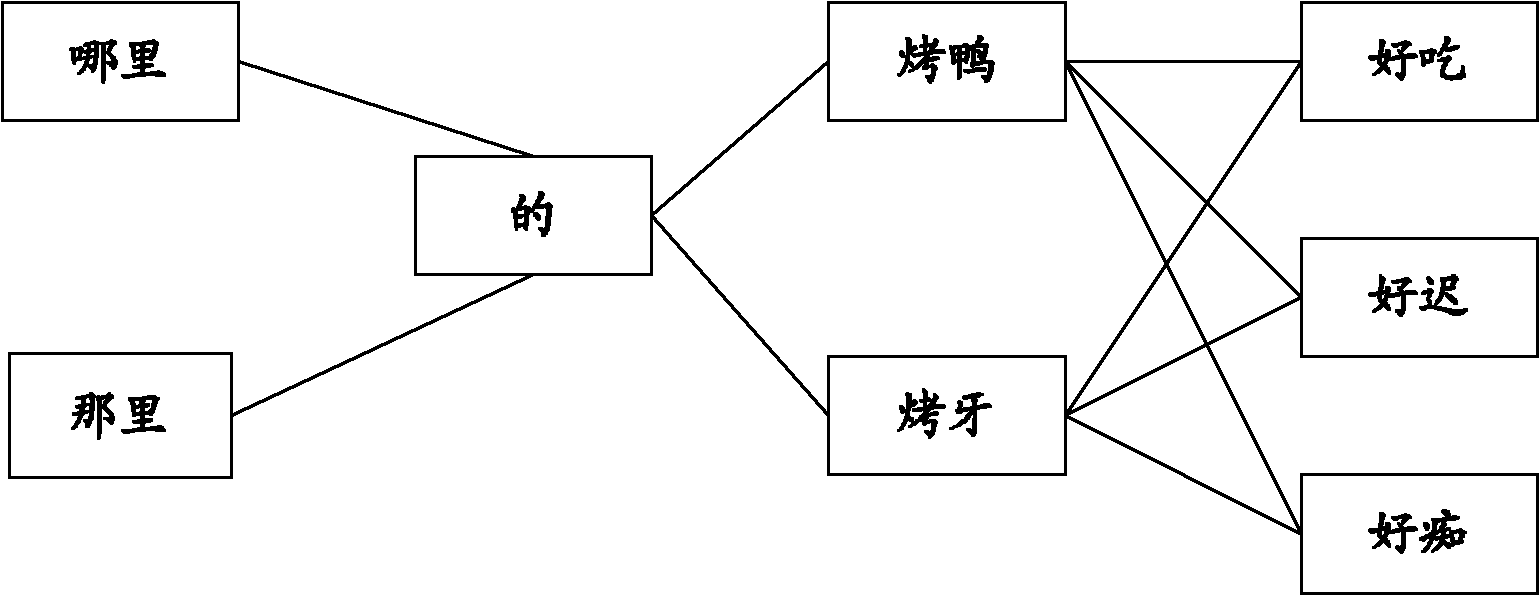

Intelligent error correcting system and method in network searching process

Status: Inactive | Publication: CN101206673A | Tags: Meets preferences; Solve the problem of pinyin error correction; Special data processing applications; Linguistic model; Algorithm

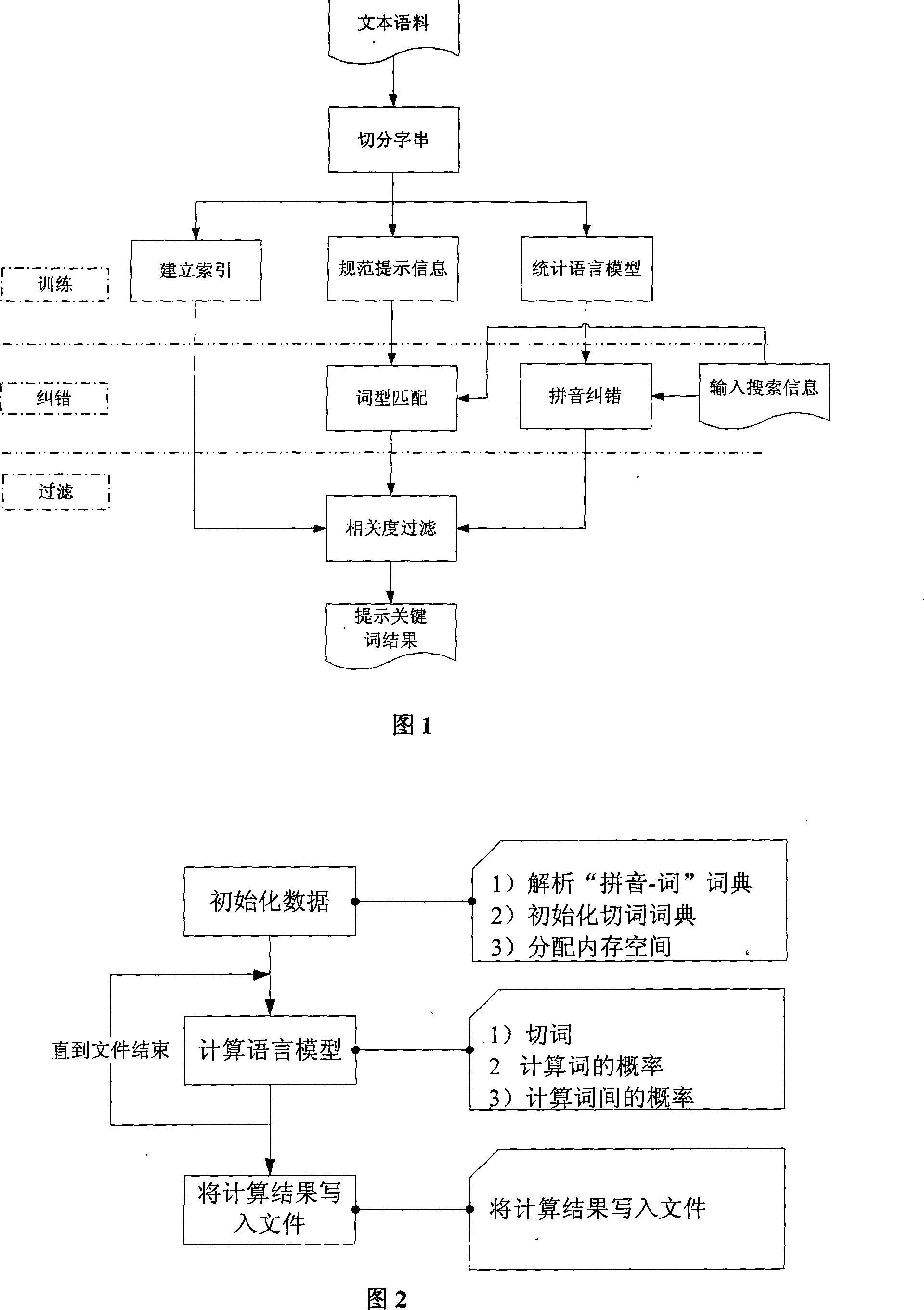

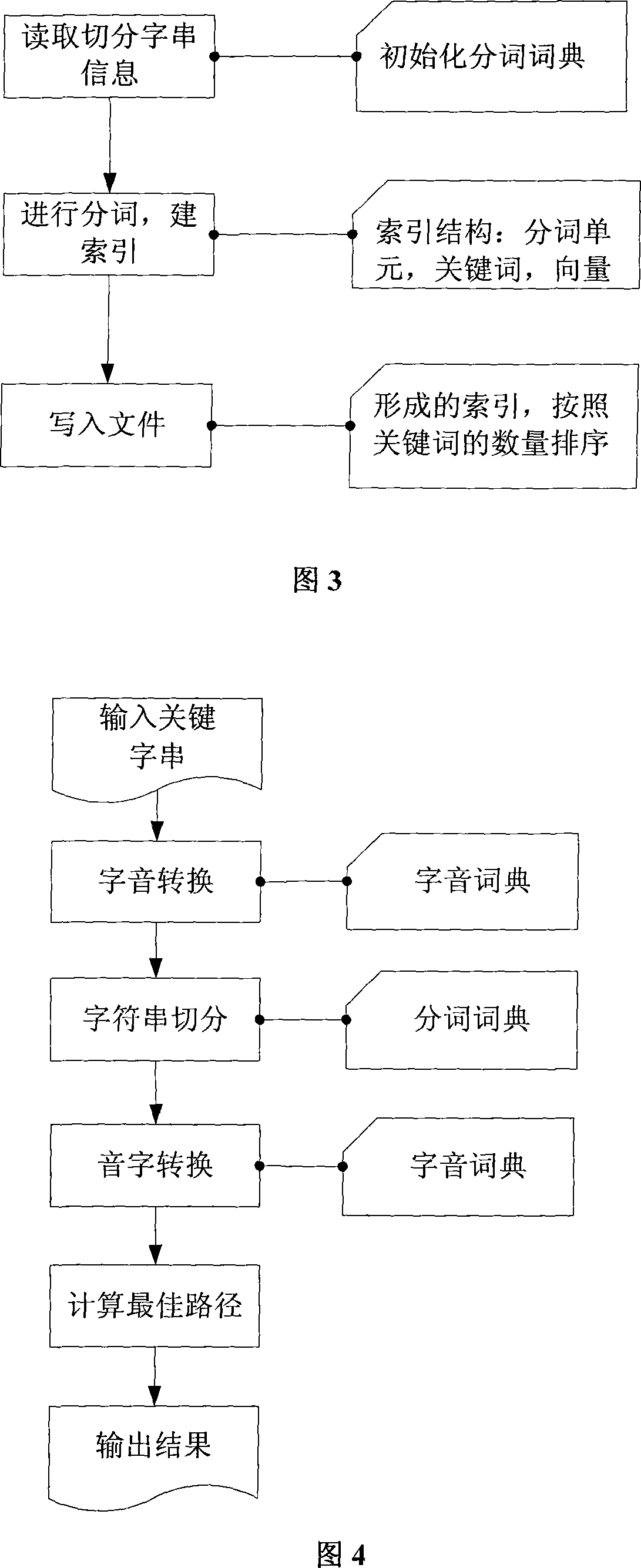

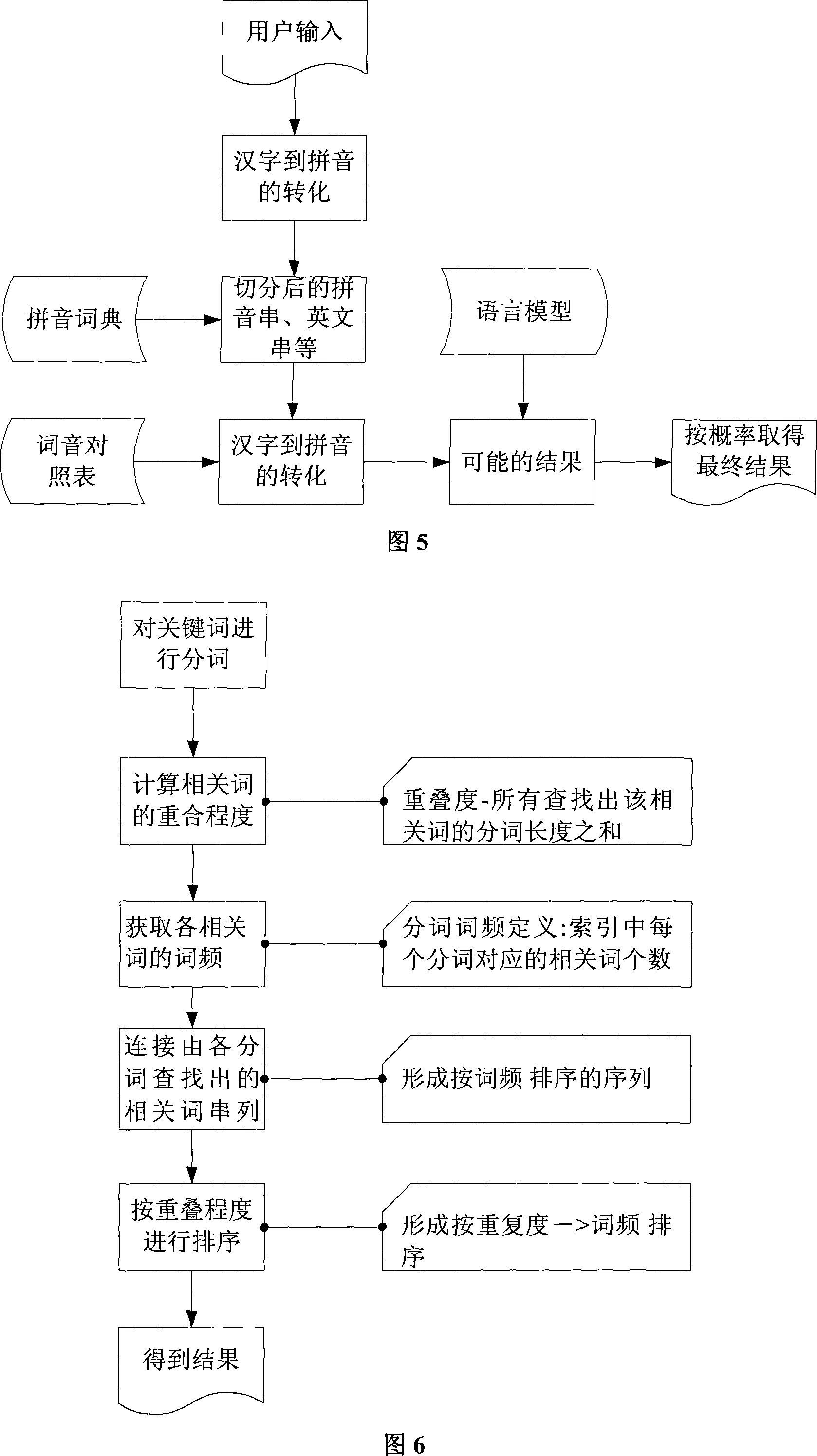

The invention relates to an intelligent system and method for correcting keywords during web search. On an Internet platform, a linguistic model, a corresponding dictionary and a data index database are first built by training on relevant data. Next, when text is entered, a pinyin error-correction component computes pinyin and character errors, and character errors are corrected by fuzzy matching. Finally, all results are filtered by degree of association and sorted to obtain the closest results. Using sound-to-character conversion and fuzzy error-correction techniques, the method corrects polyphone errors and character- and word-level errors made by the user during input, including substituted characters, extra or missing characters, and characters in the wrong position. On this basis, the basic functions are extended with mixed English-Chinese and punctuation error correction, fuzzy matching, related-query prompting and enhanced intelligent error correction.

Owner:北京当当网信息技术有限公司
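
The fuzzy-matching step can be illustrated with edit distance. The sketch below is hypothetical: it assumes a small lexicon mapping each keyword to its pinyin and a query frequency, accepts a keyword if either its characters or its pinyin are within one edit of the typed query, and ranks matches by distance and then by frequency. It does not reproduce the patent's polyphone handling or association filtering.

```python
from typing import Dict, List, Tuple


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance with unit-cost insert, delete and substitute."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def correct_query(query: str, lexicon: Dict[str, Tuple[str, int]],
                  max_dist: int = 1) -> List[str]:
    """Return lexicon keywords whose text or pinyin is within max_dist of the query,
    ordered by distance, then by descending frequency."""
    scored = []
    for word, (pinyin, freq) in lexicon.items():
        dist = min(edit_distance(query, word), edit_distance(query, pinyin))
        if dist <= max_dist:
            scored.append((dist, -freq, word))
    scored.sort()
    return [word for _, _, word in scored]
```

With the toy lexicon `{"手机": ("shouji", 100), "收集": ("shouji", 40), "书籍": ("shuji", 80)}`, the misspelled pinyin in `correct_query("shoji", lexicon)` yields `["手机", "书籍", "收集"]`.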

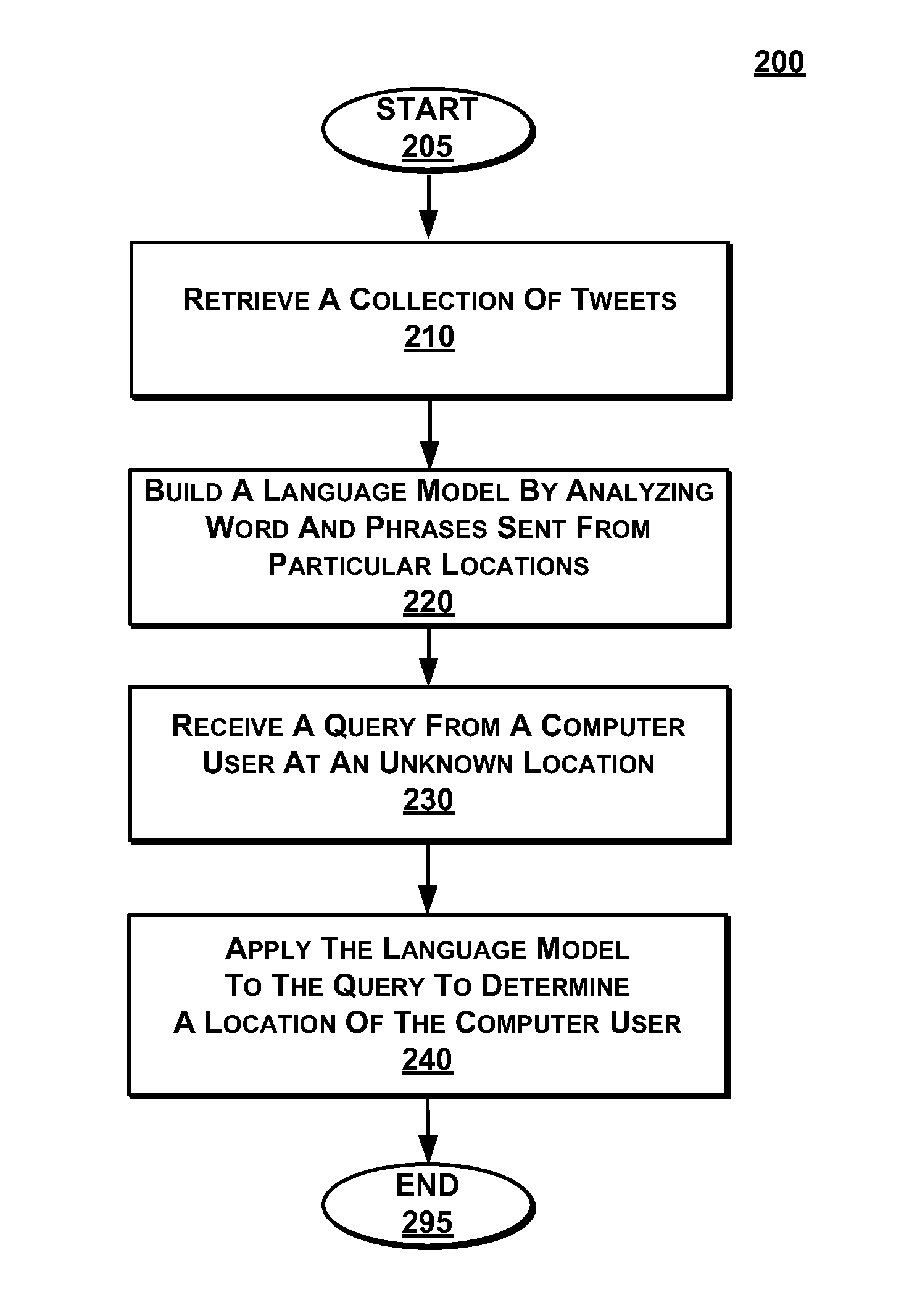

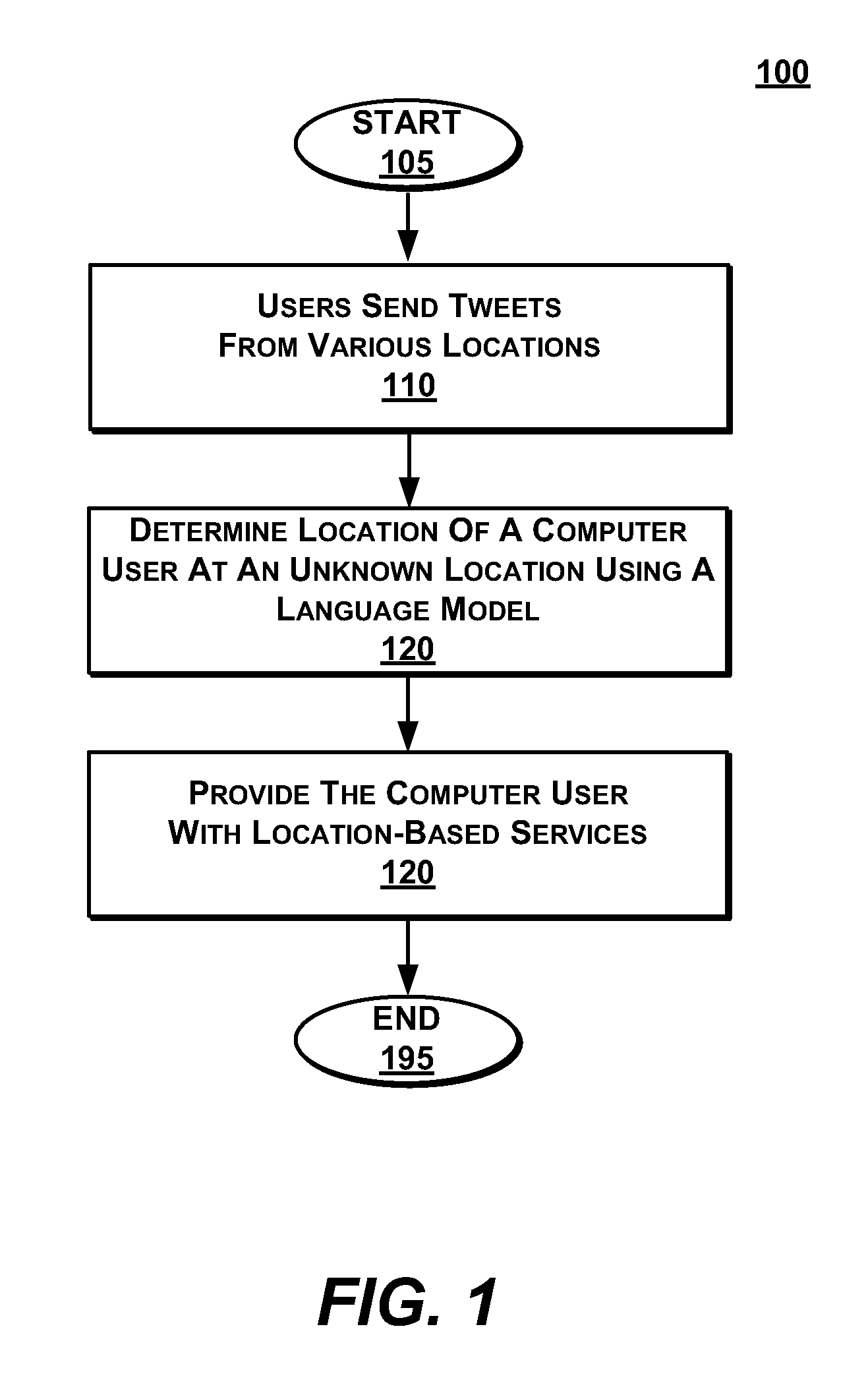

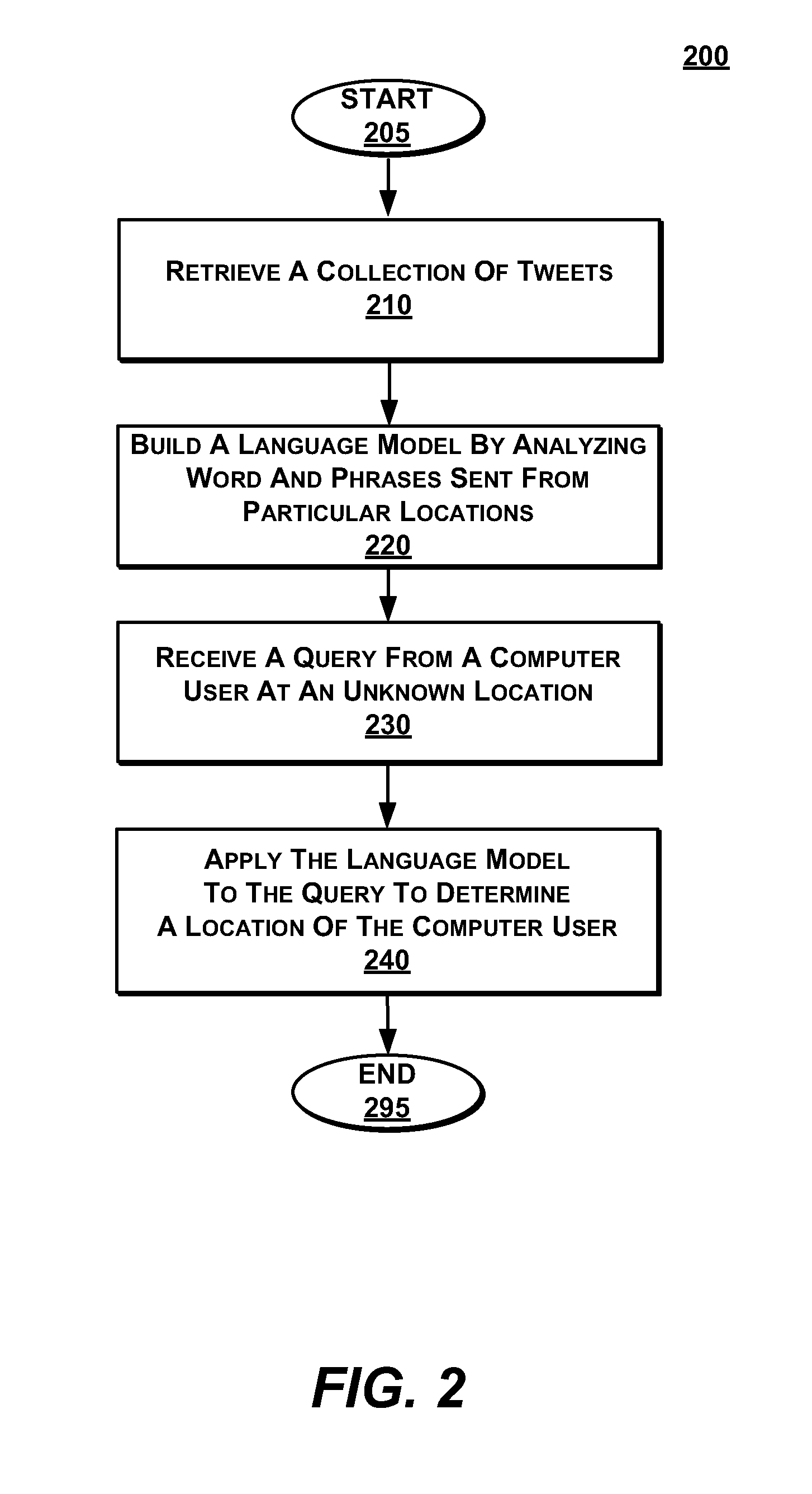

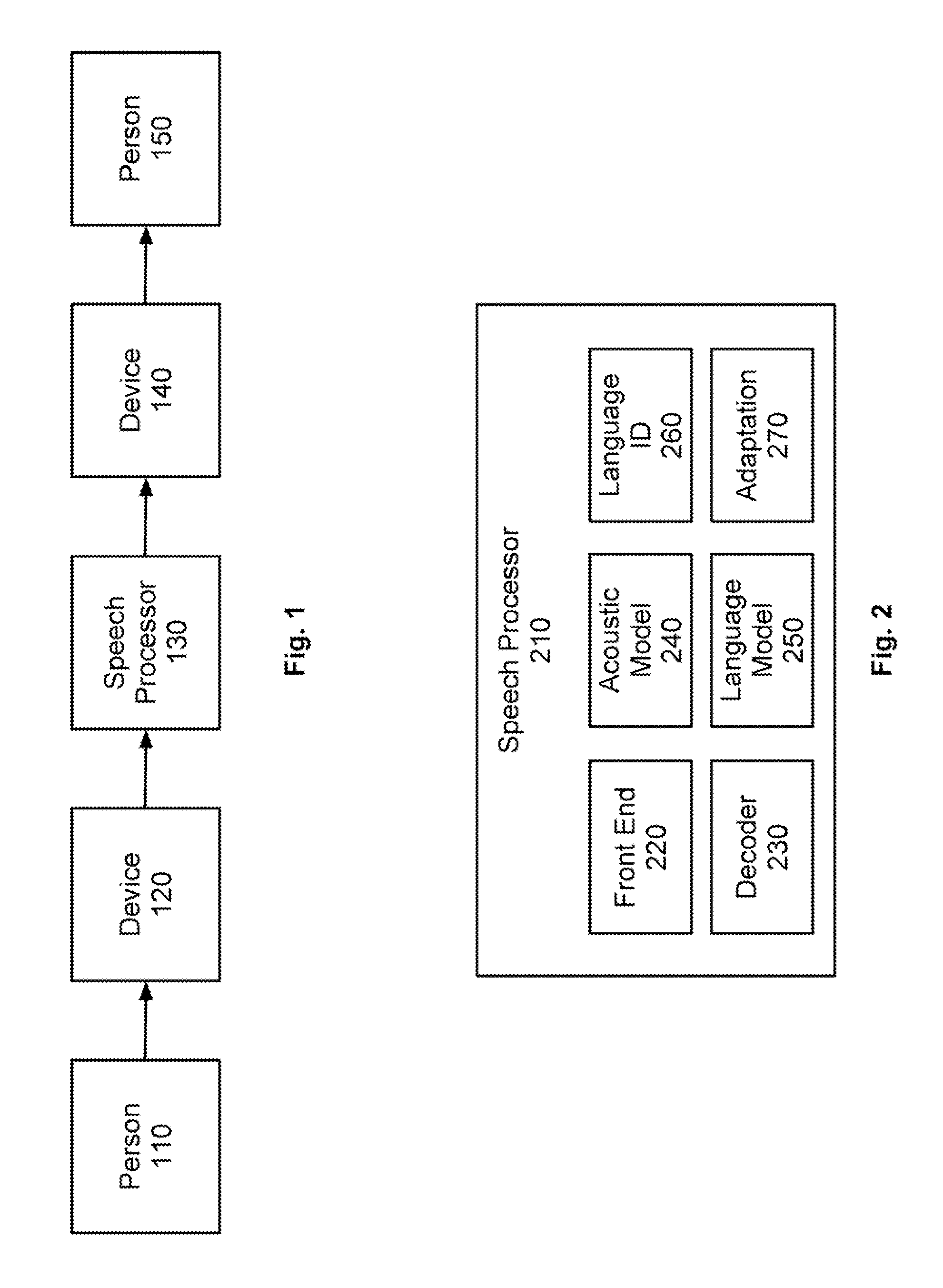

Locating a user based on aggregated tweet content associated with a location

Status: Inactive | Publication: US20120166367A1 | Tags: Robust; Robust location-based services; Mathematical models; Digital computer details; Algorithm; Human language

A user submitting a query from a computer at an unknown location is located using a language model. The language model is derived from an aggregation of tweets that were sent from known locations.

Owner:R2 SOLUTIONS
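
One simple way to realize such a location model is a per-location unigram language model with add-one smoothing, choosing the location under which the query is most likely. The following is an illustrative sketch under that assumption; the smoothing choice, whitespace tokenization and function names are not taken from the patent.

```python
import math
from collections import Counter
from typing import Dict, List


def build_location_models(tweets_by_location: Dict[str, List[str]]) -> Dict[str, Counter]:
    """Aggregate the tweets sent from each known location into unigram counts."""
    return {loc: Counter(w for tweet in tweets for w in tweet.lower().split())
            for loc, tweets in tweets_by_location.items()}


def locate(query: str, models: Dict[str, Counter]) -> str:
    """Return the location whose add-one-smoothed unigram model gives the query
    the highest log-likelihood."""
    vocab = set().union(*models.values())
    vocab_size = len(vocab) + 1  # one extra slot for words never seen anywhere

    def log_likelihood(counts: Counter) -> float:
        total = sum(counts.values())
        return sum(math.log((counts[w] + 1) / (total + vocab_size))
                   for w in query.lower().split())

    return max(models, key=lambda loc: log_likelihood(models[loc]))
```

For example, with models built from `{"seattle": ["rain again in seattle", "coffee and rain"], "phoenix": ["so hot today", "desert heat and sun"]}`, the query `"rain and coffee"` is assigned to `"seattle"`.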

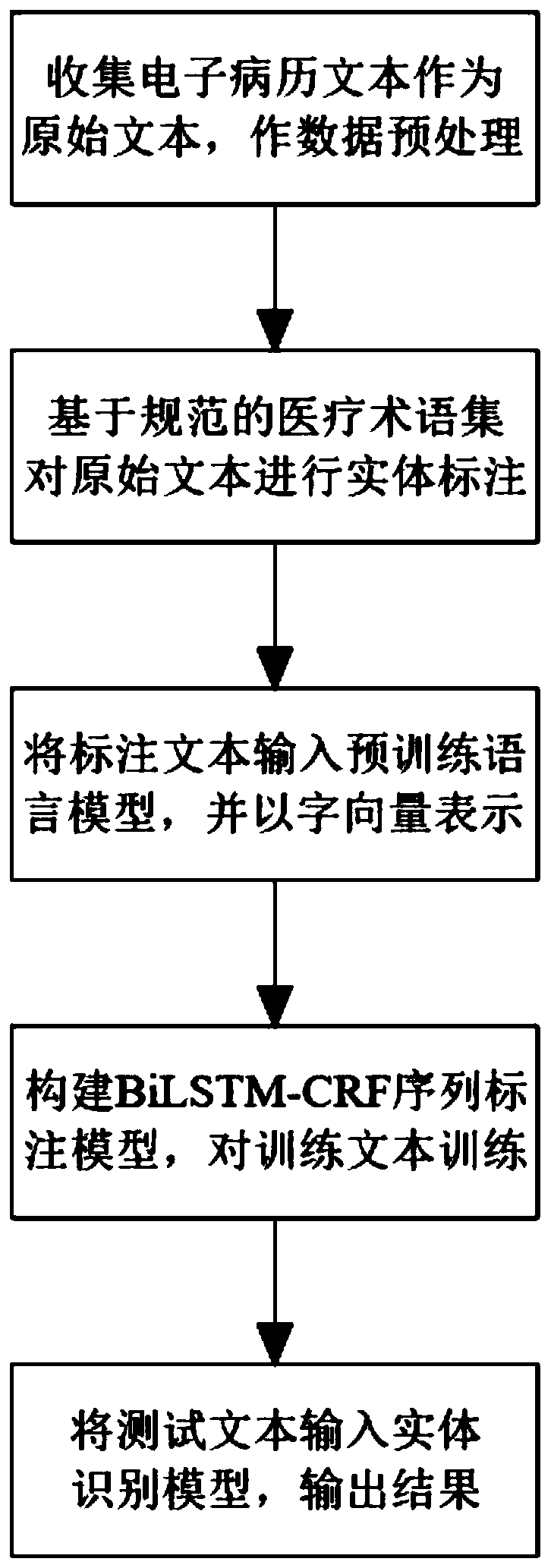

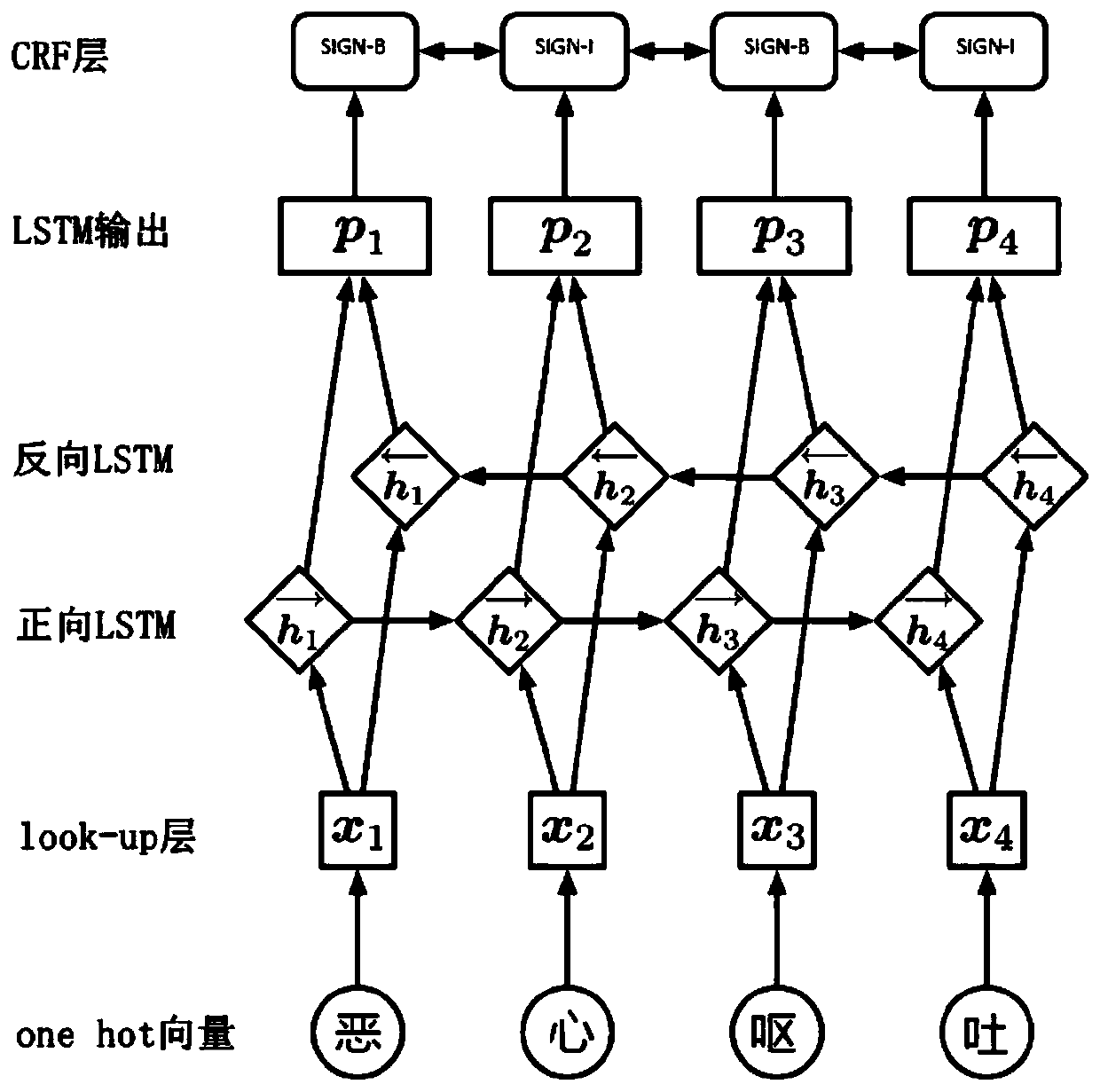

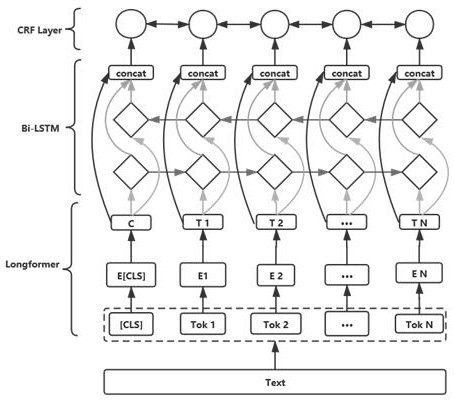

Electronic medical record text named entity recognition method based on pre-trained language model

Status: Pending | Publication: CN110705293A | Tags: Improve recall; Improve accuracy; Semantic analysis; Character and pattern recognition; Medical record; Manual annotation

The invention belongs to the technical field of medical information processing and relates in particular to a named entity recognition method for electronic medical record text based on a pre-trained language model. The method comprises the following steps: collecting electronic medical record text from a public data set as the original text and preprocessing it; annotating entities in the preprocessed text according to a standard medical terminology set to obtain annotated text; feeding the annotated text into a pre-trained language model to obtain training text represented by word vectors; building a BiLSTM-CRF sequence labeling model and training it on the training text; and using the trained labeling model as the entity recognition model, which takes test text as input and outputs a sequence of category labels. Because the deep language model learns text features and semantic information from a very large Chinese corpus, it provides better semantic compression, avoids tedious and complex manual annotation, does not depend on dictionaries or rules, and improves the recall and accuracy of named entity recognition.

Owner:SUZHOU INST OF BIOMEDICAL ENG & TECH CHINESE ACADEMY OF SCI

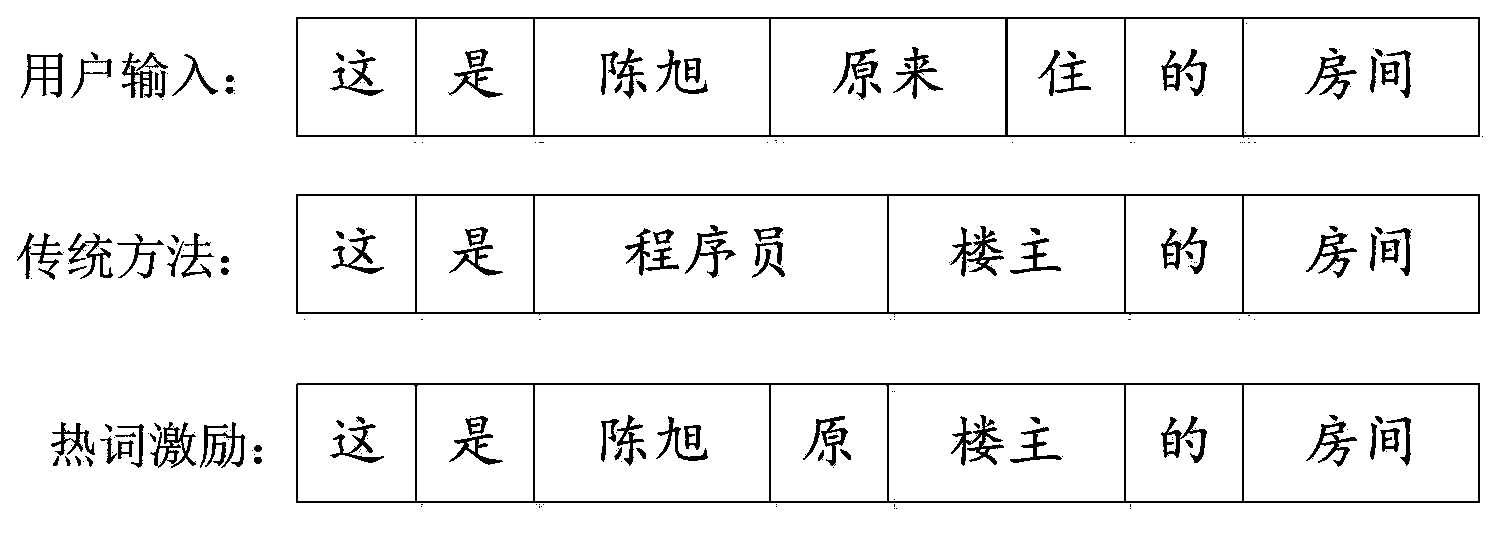

Method and system for improving accuracy of speech recognition

A method and an apparatus for improving the accuracy of speech recognition. The method comprises: (201) matching candidate words in a path set obtained by speech decoding against user preset information to obtain a new path set; (202) correcting the linguistic model probability of candidate words in the new path set using a class-based linguistic model built with the user preset information as its elements; and (203) performing speech decoding according to the corrected linguistic model probabilities of the candidate words. The method improves recognition accuracy for user-specific information and for the context in which that information appears.

Owner:讯飞医疗科技股份有限公司

Method and device for error correction model training and text error correction

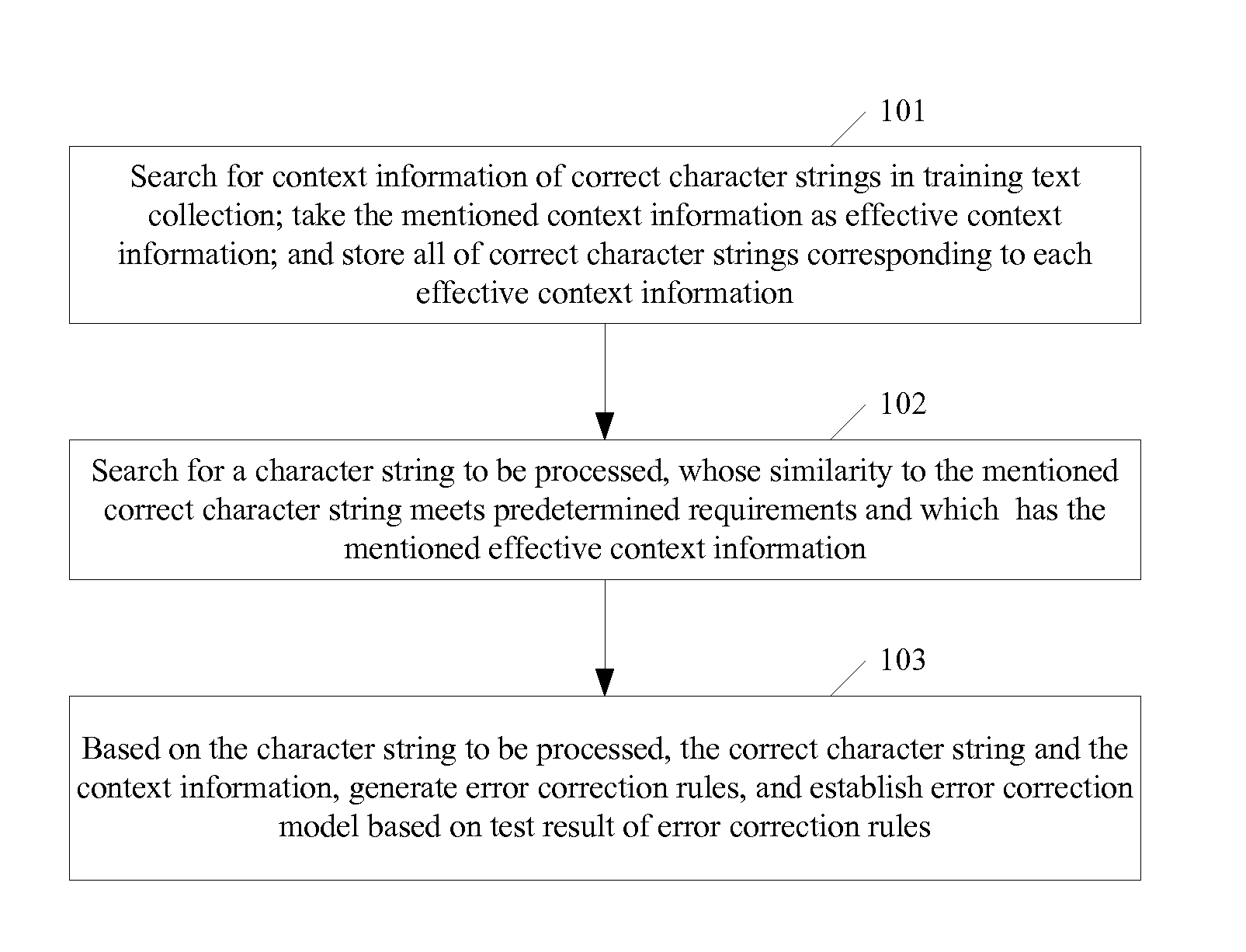

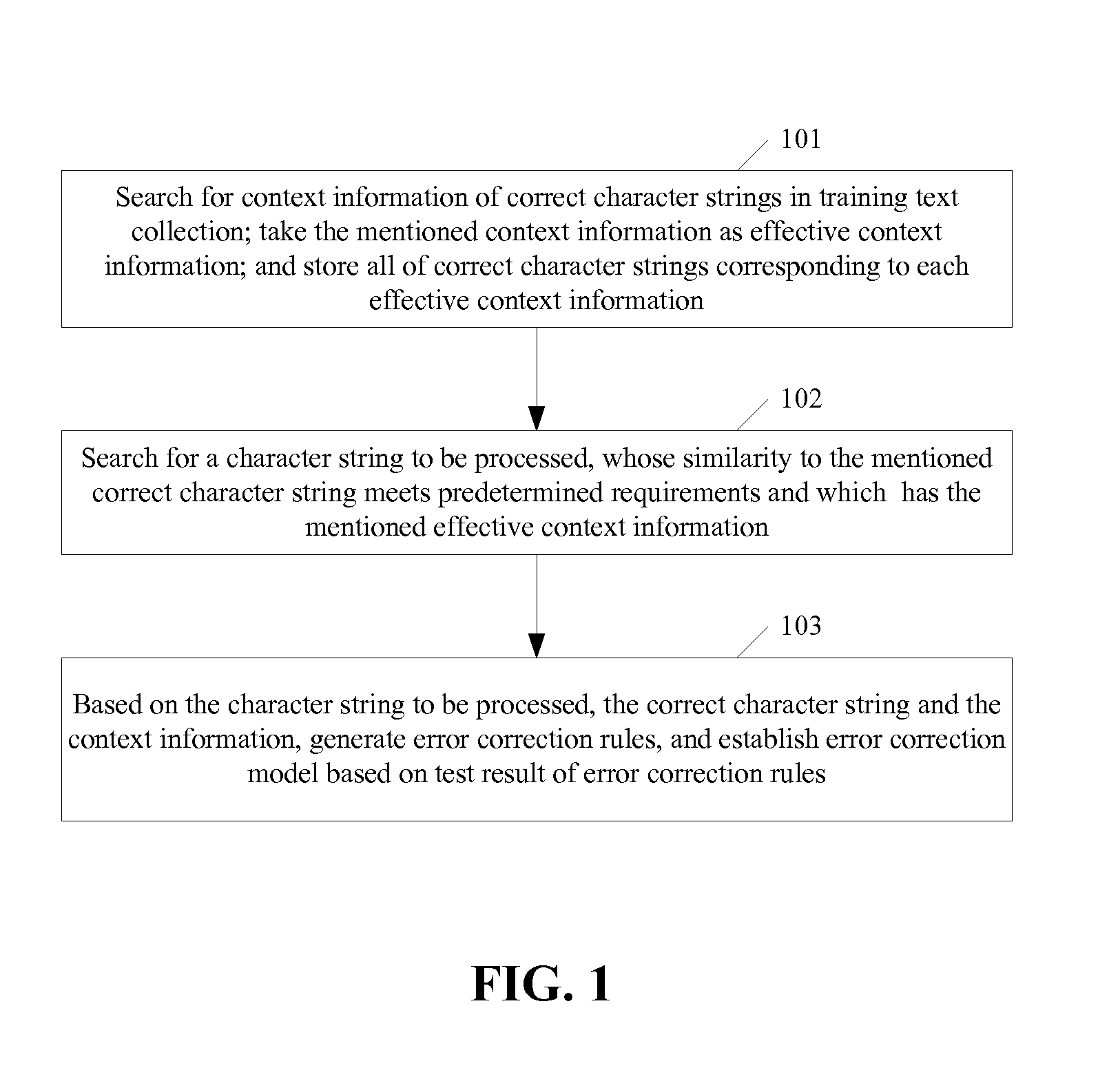

Status: Inactive | Publication: US20140214401A1 | Tags: Improve accuracy; Improve comprehensiveness; Natural language data processing; Special data processing applications; Linguistic model; Performed Procedure

A computer-implemented method is performed at a device having one or more processors and memory storing programs executed by the one or more processors. The method comprises: selecting a target word in a target sentence; from the target sentence, acquiring a first sequence of words that precede the target word and a second sequence of words that follow it; from a sentence database, searching for and acquiring a group of candidate words, each of which separates the first sequence of words from the second sequence of words in some sentence; creating a candidate sentence for each candidate word by replacing the target word in the target sentence with that candidate word; determining the fittest candidate sentence according to a linguistic model; and suggesting the candidate word in the fittest sentence as a correction.

Owner:TENCENT TECH (SHENZHEN) CO LTD
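
The candidate-generation and ranking steps can be sketched with a bigram language model. This is a simplified, hypothetical version: the context is reduced to one word on each side of the target, the sentence database doubles as the language model's training data, and add-one smoothing is used; the patent does not specify these choices.

```python
import math
from collections import Counter
from typing import List, Tuple

BOS, EOS = "<s>", "</s>"


def train_bigram(sentences: List[List[str]]) -> Tuple[Counter, Counter]:
    unigrams, bigrams = Counter(), Counter()
    for s in sentences:
        tokens = [BOS] + s + [EOS]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def sentence_logprob(sentence: List[str], unigrams: Counter, bigrams: Counter) -> float:
    """Add-one-smoothed bigram log-probability of a sentence."""
    tokens = [BOS] + sentence + [EOS]
    vocab_size = len(unigrams)
    return sum(math.log((bigrams[(a, b)] + 1) / (unigrams[a] + vocab_size))
               for a, b in zip(tokens, tokens[1:]))


def suggest_correction(target: List[str], idx: int, database: List[List[str]]) -> str:
    padded = [BOS] + target + [EOS]
    left, right = padded[idx], padded[idx + 2]  # neighbours of target[idx]
    candidates = {target[idx]}                  # keep the original word as a candidate
    for s in database:
        p = [BOS] + s + [EOS]
        for j in range(1, len(p) - 1):
            # Any word that sits between the same neighbours is a candidate.
            if p[j - 1] == left and p[j + 1] == right:
                candidates.add(p[j])
    unigrams, bigrams = train_bigram(database)
    return max(sorted(candidates),
               key=lambda w: sentence_logprob(target[:idx] + [w] + target[idx + 1:],
                                              unigrams, bigrams))
```

With a toy database such as `[["i", "drink", "black", "coffee"], ["you", "drink", "black", "coffee"], ["we", "drink", "hot", "coffee"]]`, the sentence `["i", "drink", "block", "coffee"]` gets the suggestion `"black"` for position 2.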

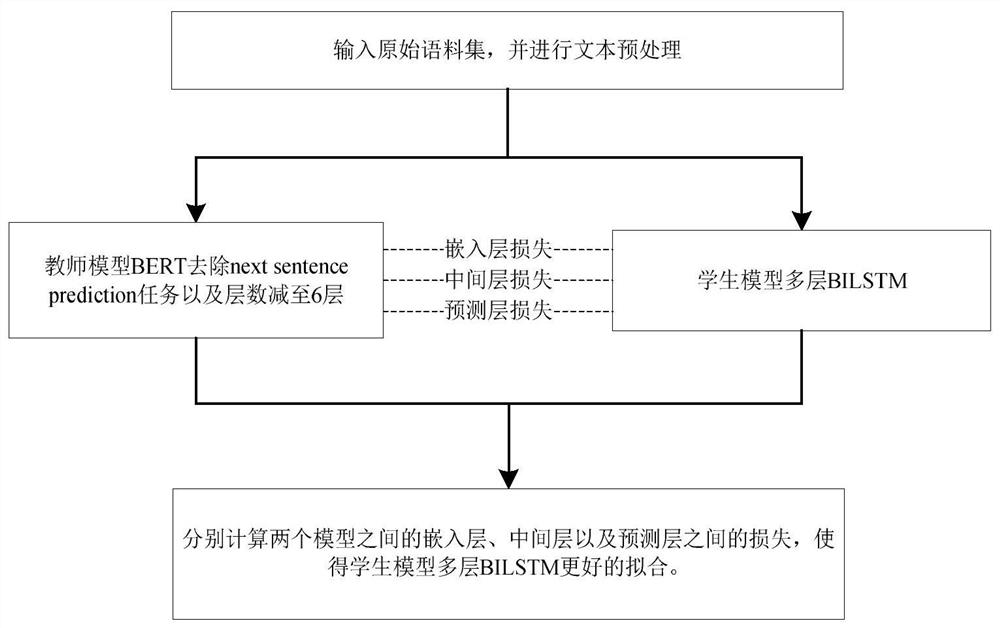

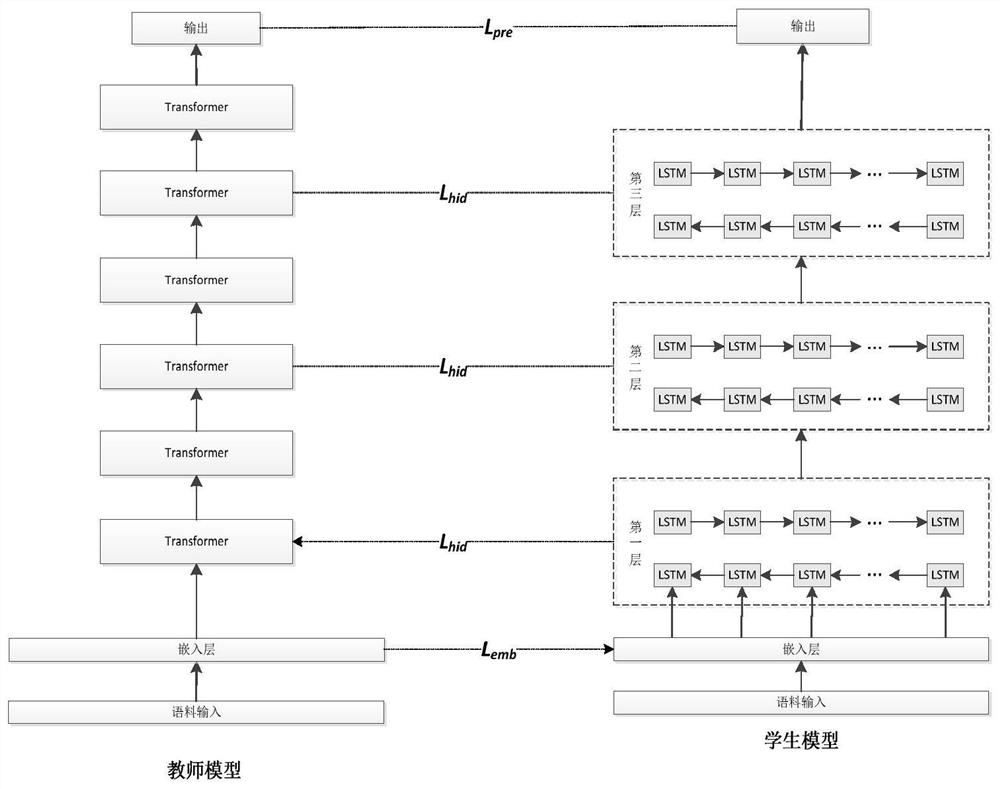

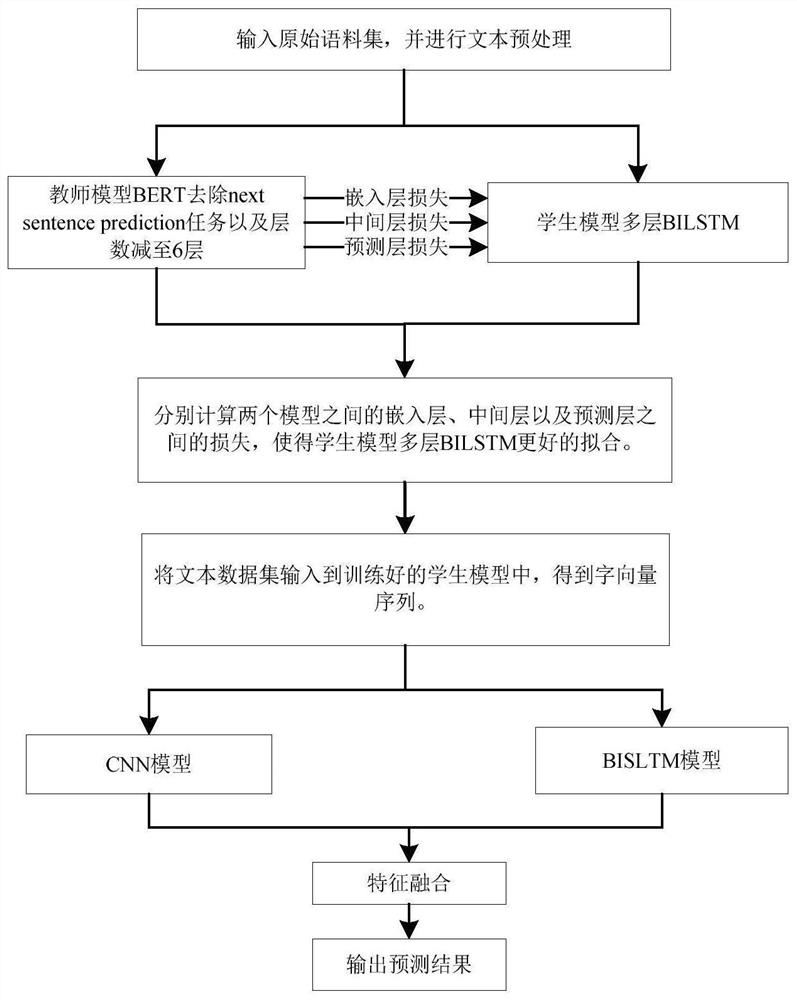

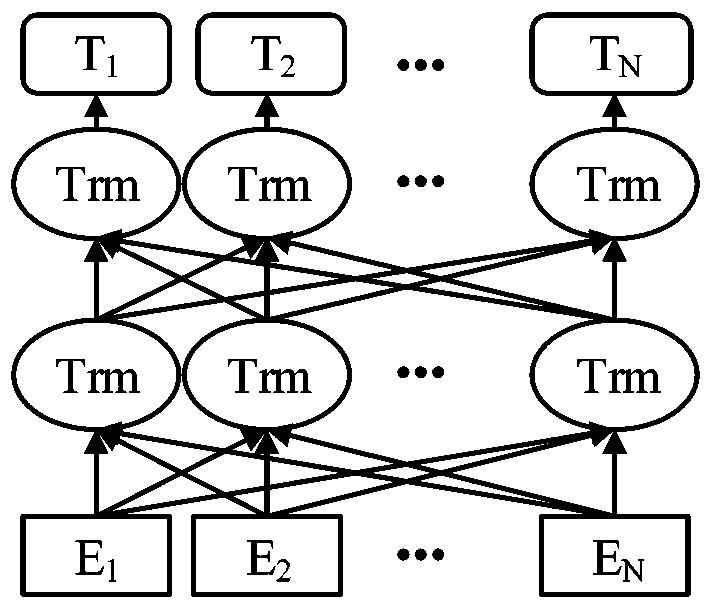

Multilayer neural network language model training method and device based on knowledge distillation

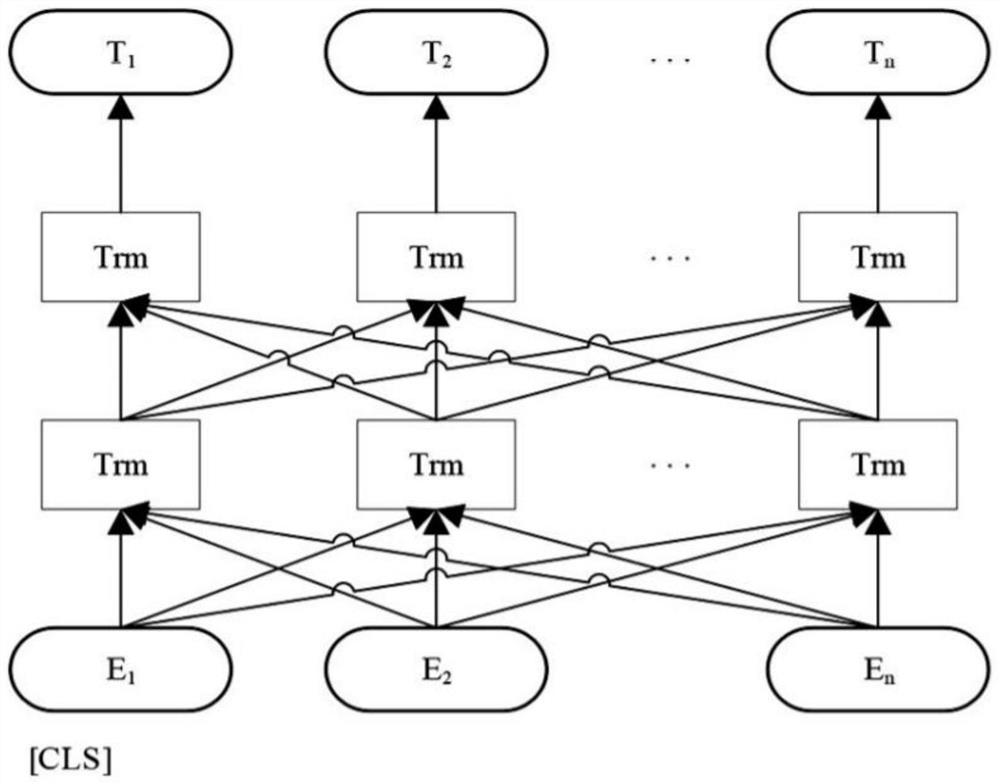

Status: Active | Publication: CN111611377A | Tags: High precision; Improve learning effect; Semantic analysis; Neural architectures; Hidden layer; Linguistic model

The invention discloses a method and device for training a multilayer neural network language model based on knowledge distillation. First, a BERT language model and a multi-layer BiLSTM model are constructed as the teacher model and the student model respectively; the BERT language model comprises six Transformer layers, and the BiLSTM model comprises three BiLSTM layers. After the text corpus is preprocessed, the BERT language model is trained to obtain the teacher model. The preprocessed corpus is then fed into the multi-layer BiLSTM model, which is trained as the student model by knowledge distillation; when learning from the teacher's embedding layer, hidden layers and output layer, linear transformations are used to map between their different representation spaces. The trained student model can convert text into vectors, on which a downstream network is trained to classify text more effectively. The method effectively improves text pre-training efficiency and the accuracy of text classification.

Owner:HUAIYIN INSTITUTE OF TECHNOLOGY
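
For orientation, the widely used soft-target distillation loss on output logits is sketched below in plain Python. It covers only the output-layer term; the embedding- and hidden-layer matching with linear transformations described in the abstract is not shown, and the temperature and weighting defaults are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = temperature ** 2 * sum(pt * math.log(pt / ps)
                                  for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard
```

When the student reproduces the teacher's logits exactly, the soft term vanishes and only the weighted cross-entropy on the true label remains.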

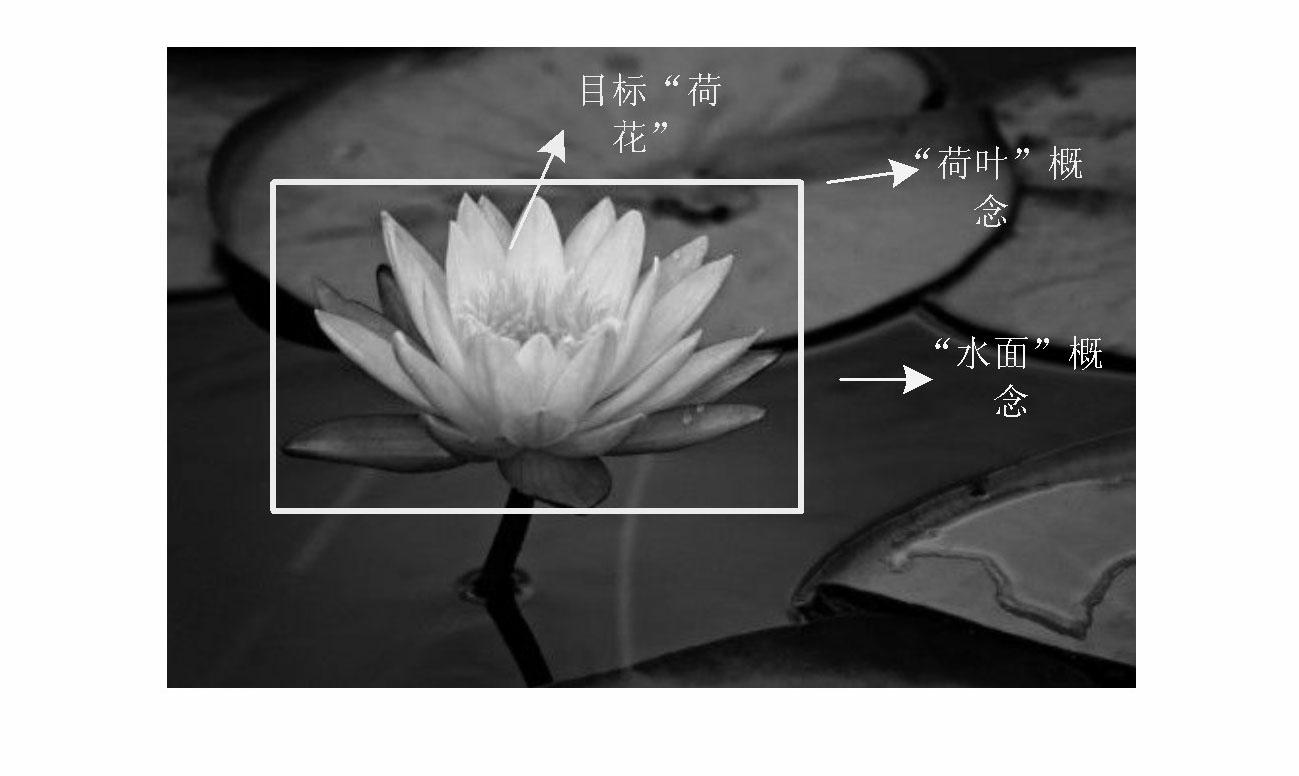

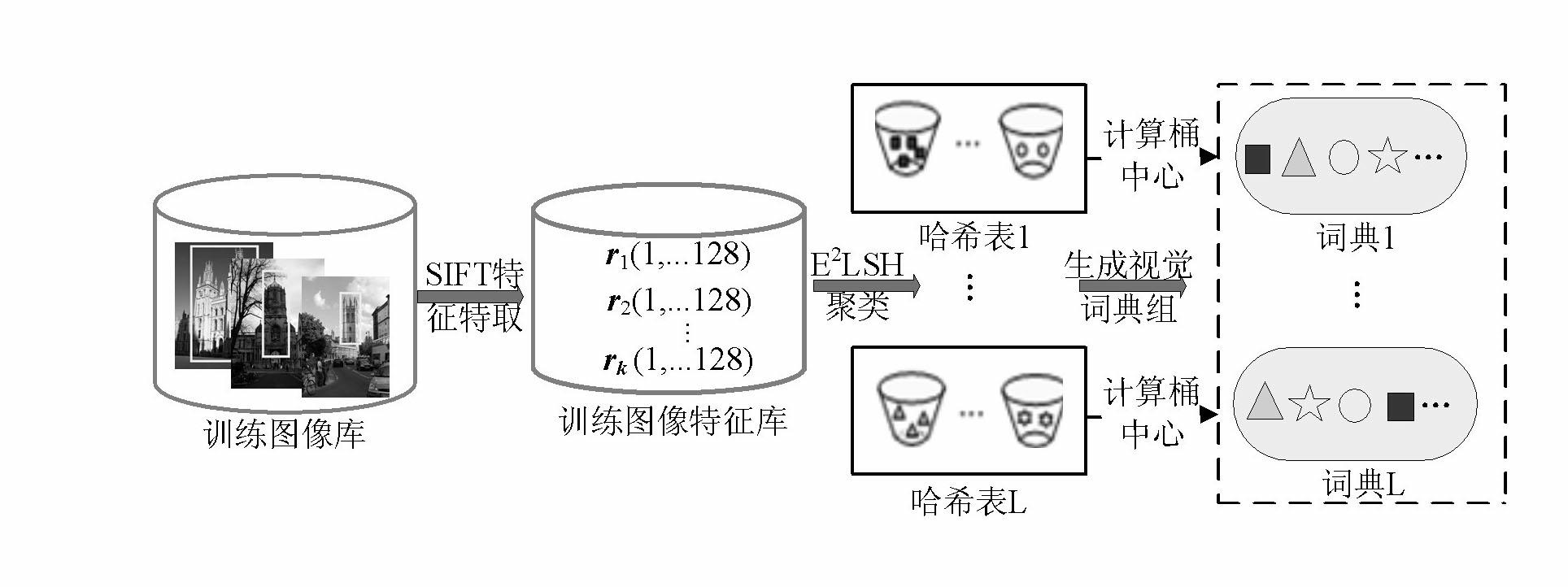

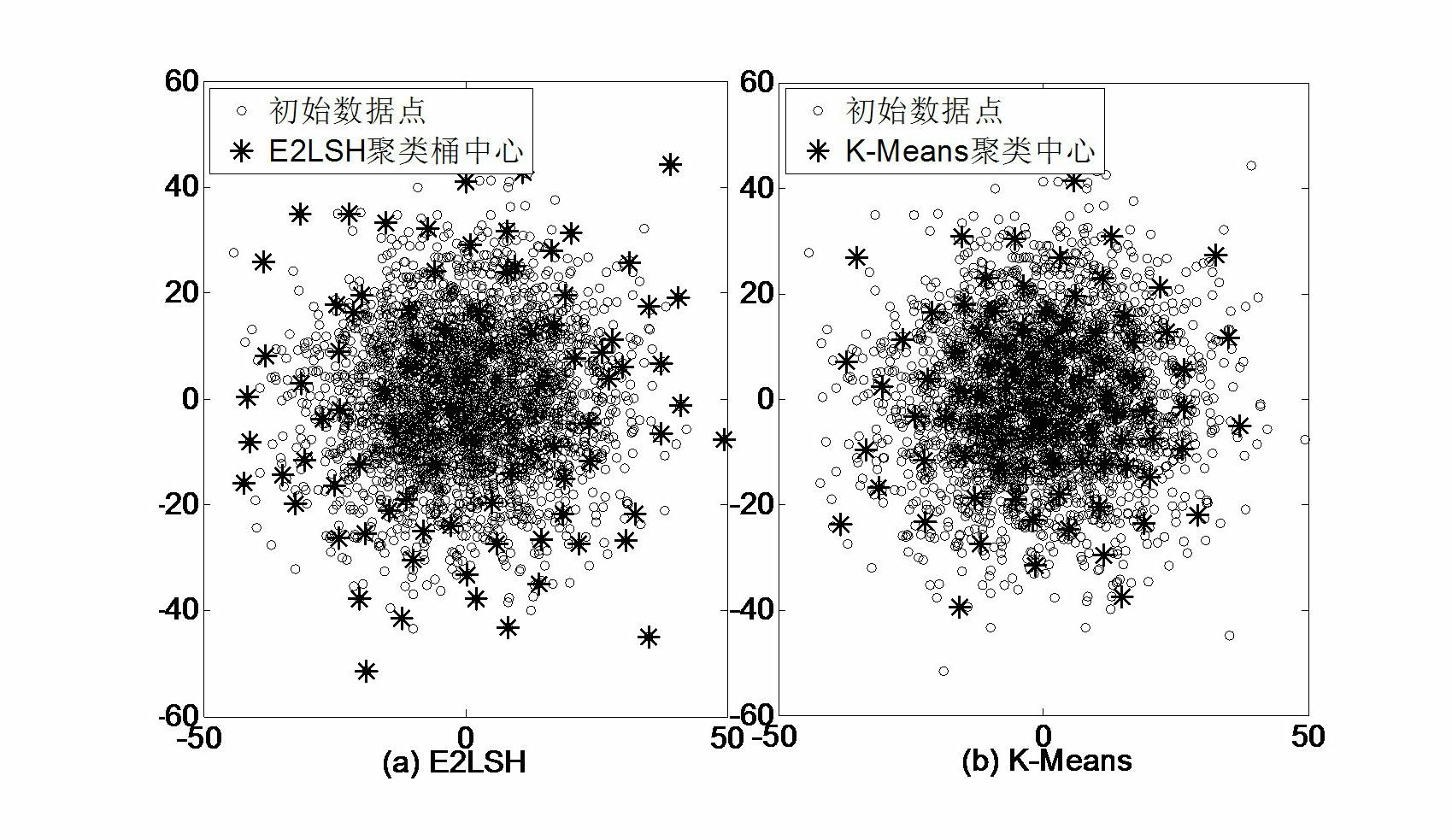

Target retrieval method based on group of randomized visual vocabularies and context semantic information

Status: Inactive | Publication: CN102693311A | Tags: Addressing operational complexity; Reduce the semantic gap; Character and pattern recognition; Special data processing applications; Image database; Similarity measure

The invention relates to a target retrieval method based on a group of randomized visual vocabularies and context semantic information. The method comprises the following steps: clustering local features of a training image library with an exact Euclidean locality-sensitive hash function to obtain a group of dynamically scalable randomized visual vocabularies; selecting a query image, marking the target area with a rectangular box, extracting SIFT (scale-invariant feature transform) features from the query image and the image database, and mapping the SIFT features with E2LSH (exact Euclidean locality-sensitive hashing) to match feature points to visual words; using the query target area and the definition of surrounding visual units to compute a retrieval score for each visual word in the query image, and constructing a target model carrying target context semantic information on the basis of a linguistic model; and storing the feature vectors of the image library as index documents, measuring the similarity between the linguistic model of the target and the linguistic model of each image in the library by applying K-L divergence to the index documents, and obtaining the retrieval result.

Owner:THE PLA INFORMATION ENG UNIV
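
Ranking images by K-L divergence between smoothed visual-word language models can be sketched as follows. Assumptions: each image has already been reduced to a list of visual-word IDs, models are smoothed by linear interpolation with a collection-wide background model (weight 0.8, hypothetical), and lower divergence means higher similarity. The context-weighted retrieval scores from the abstract are not modeled.

```python
import math
from collections import Counter
from typing import Dict, List


def smoothed_model(words: List[str], background: Dict[str, float],
                   lam: float = 0.8) -> Dict[str, float]:
    """Jelinek-Mercer smoothing: mix the document's ML estimate with the background."""
    counts, n = Counter(words), len(words)
    return {w: lam * counts[w] / n + (1 - lam) * p for w, p in background.items()}


def kl_divergence(p: Dict[str, float], q: Dict[str, float]) -> float:
    return sum(pw * math.log(pw / q[w]) for w, pw in p.items() if pw > 0)


def rank_images(query_words: List[str], images: Dict[str, List[str]]) -> List[str]:
    """Return image names ordered from most to least similar (smallest KL first)."""
    all_words = [w for ws in images.values() for w in ws] + list(query_words)
    total = len(all_words)
    background = {w: c / total for w, c in Counter(all_words).items()}
    query_model = smoothed_model(query_words, background)
    return sorted(images, key=lambda name: kl_divergence(
        query_model, smoothed_model(images[name], background)))
```

Smoothing with the background model keeps every probability in the image models positive, so the divergence is always finite.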

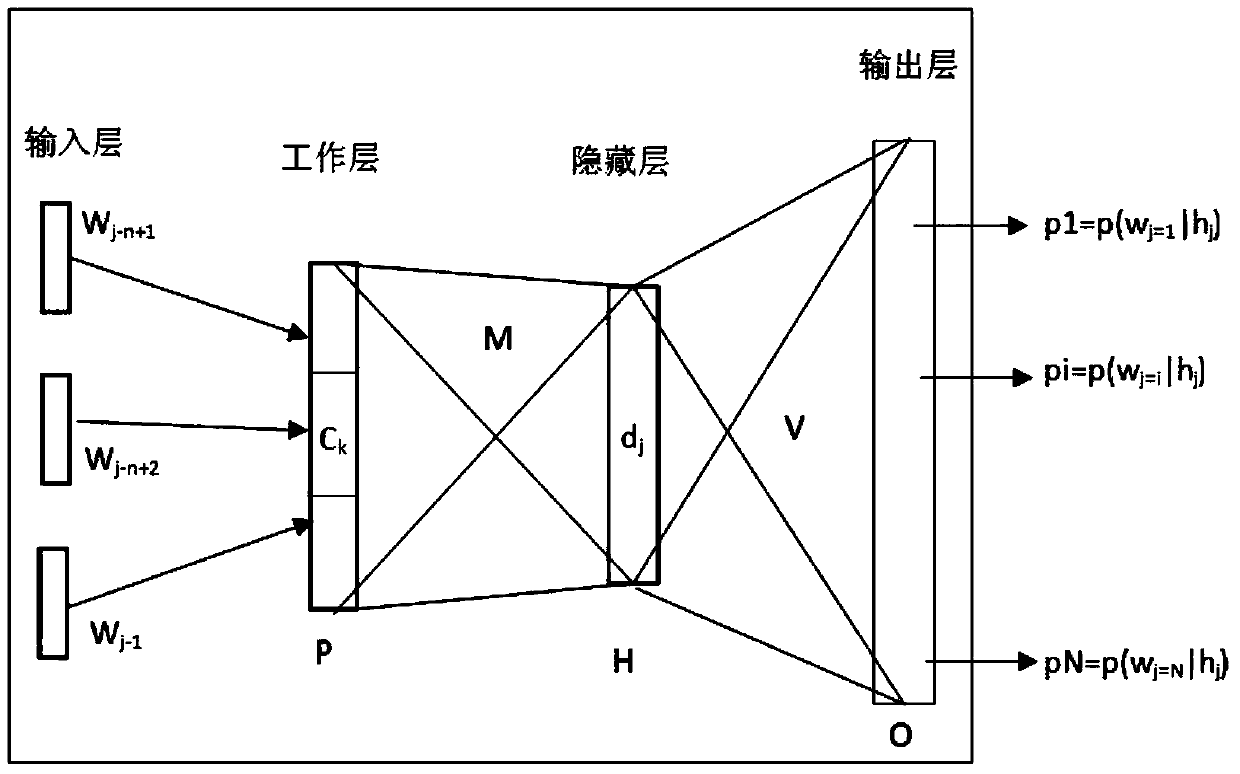

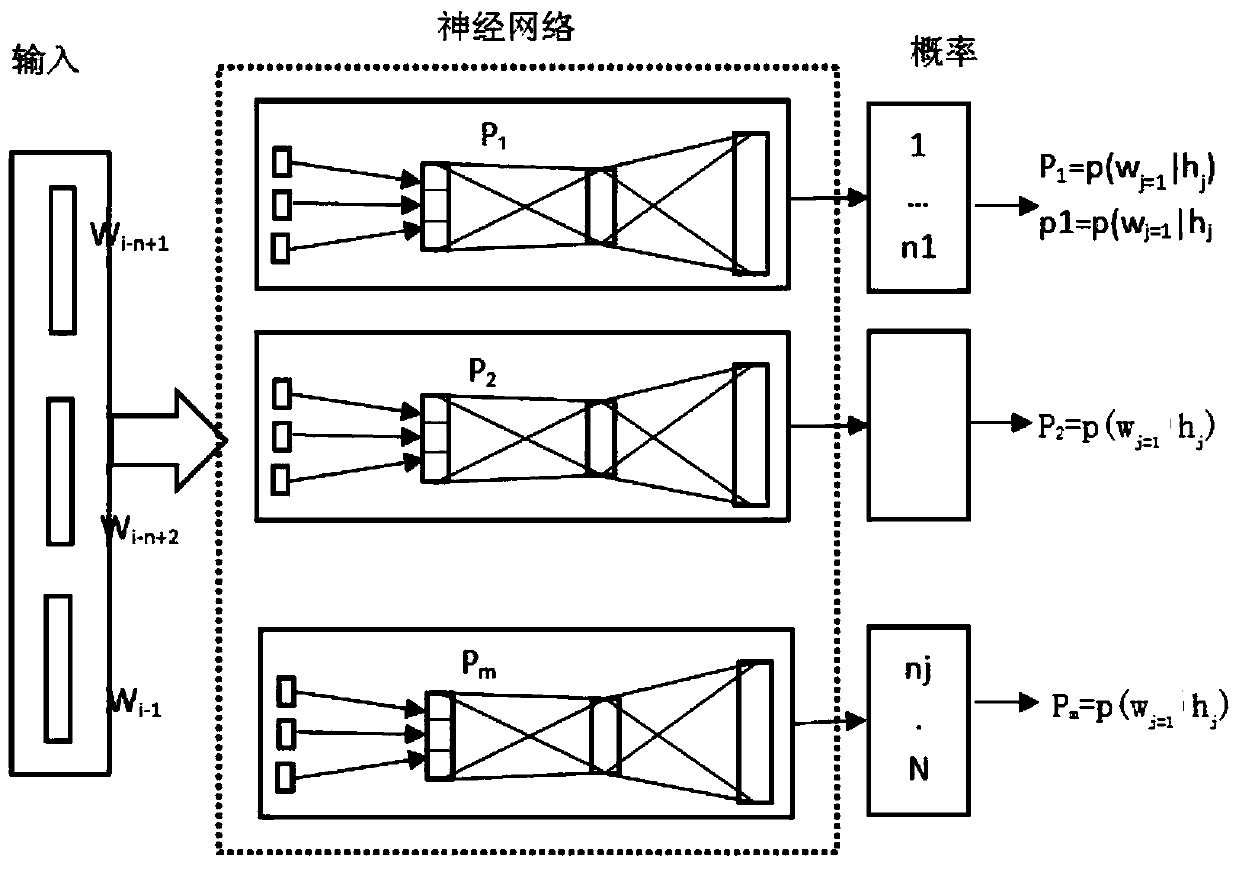

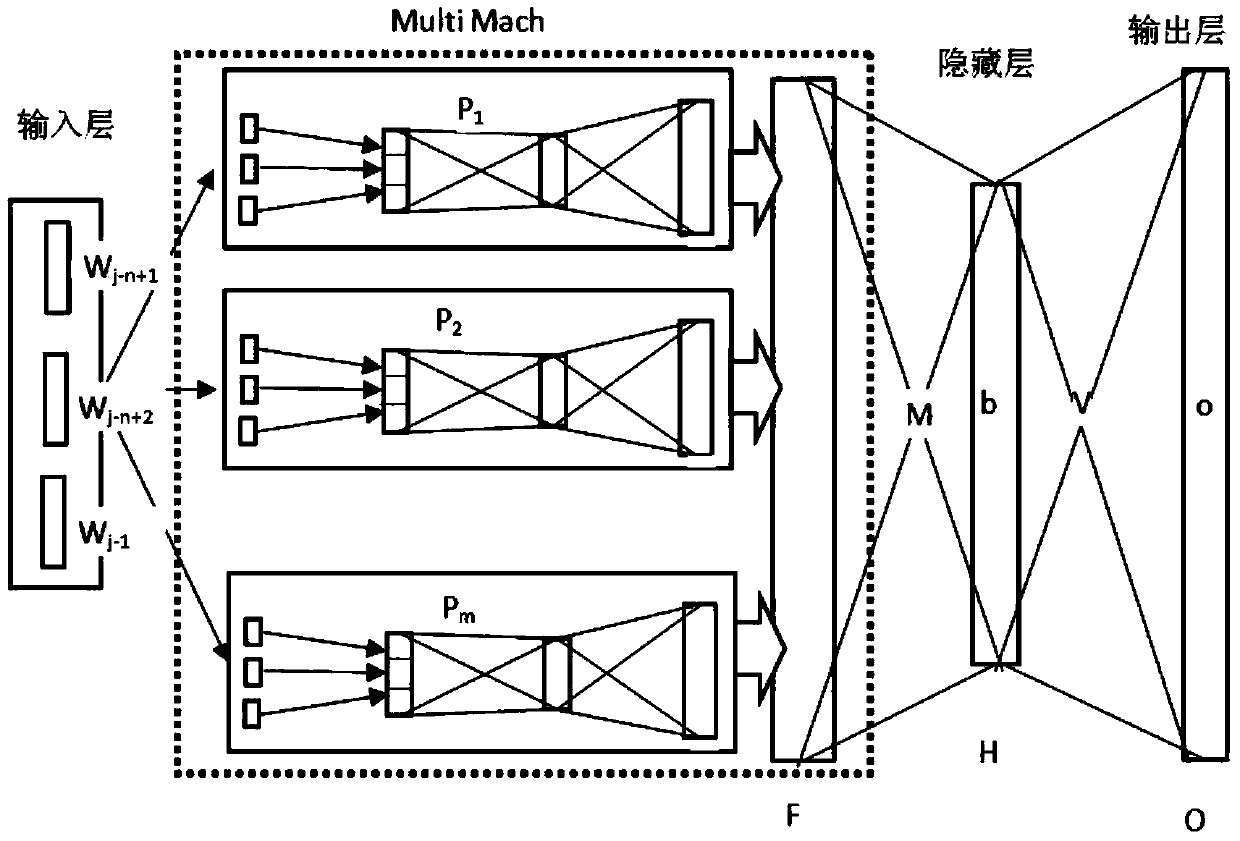

Linguistic model training method and system based on distributed neural networks

Status: Inactive | Publication: CN103810999A | Tags: Resolution time; Solving the problem of underutilizing neural networks; Speech recognition; Linguistic model; Speech identification

The invention discloses a linguistic model training method and system based on distributed neural networks. The method comprises: splitting a large vocabulary into several small vocabularies; assigning each small vocabulary to a neural network linguistic model, where all of these models have the same input dimensionality and each first undergoes independent training; merging the output vectors of the neural network linguistic models and performing a second round of training; and obtaining a normalized neural network linguistic model. The system comprises an input module, a first training module, a second training module and an output module. Because multiple neural networks learn different sub-vocabularies, their learning capacity is fully used and the training time for large vocabularies is greatly reduced. In addition, the outputs over the large vocabulary are normalized, so that the multiple networks are normalized and shared; this lets the neural network language model (NNLM) learn as much information as possible and improves the accuracy of related applications such as large-scale speech recognition and machine translation.

Owner:TSINGHUA UNIV
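
The vocabulary split and the joint normalization of merged outputs can be illustrated as below. This is a sketch under stated assumptions: the trained sub-networks are replaced by fixed score lists (`sub_logits`), and normalization is taken to be a single softmax over the concatenated scores, so that all words share one probability distribution. The patent's second training stage is not shown.

```python
import math
from typing import Dict, List


def split_vocabulary(vocab: List[str], parts: int) -> List[List[str]]:
    """Split a large vocabulary into `parts` roughly equal sub-vocabularies."""
    size = math.ceil(len(vocab) / parts)
    return [vocab[i:i + size] for i in range(0, len(vocab), size)]


def merged_distribution(sub_logits: List[List[float]],
                        sub_vocabs: List[List[str]]) -> Dict[str, float]:
    """Concatenate every sub-model's scores and apply one softmax over the full vocabulary."""
    words = [w for vocab in sub_vocabs for w in vocab]
    logits = [z for scores in sub_logits for z in scores]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {w: e / total for w, e in zip(words, exps)}
```

Normalizing jointly, rather than within each sub-vocabulary, is what makes scores from different sub-networks directly comparable.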

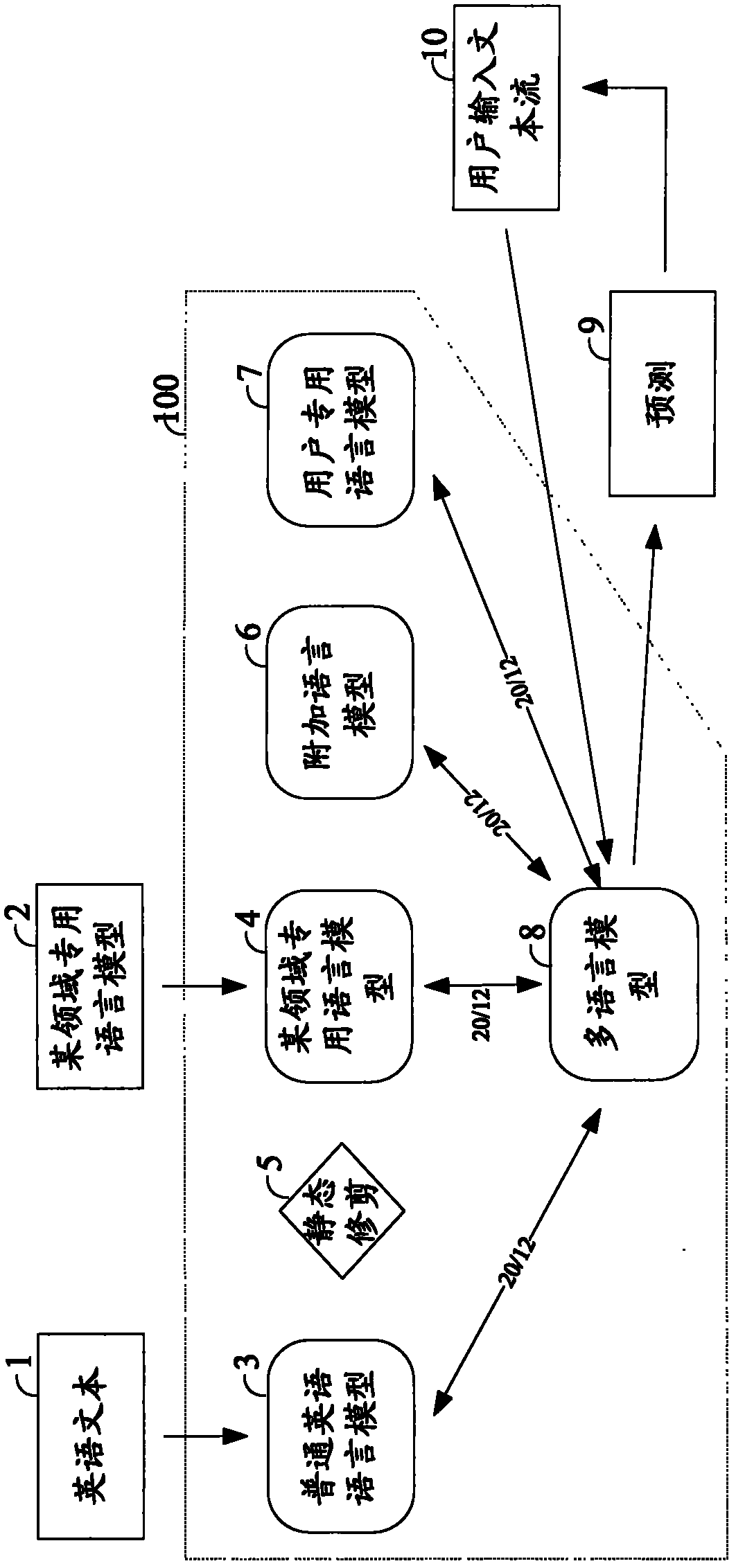

Text input system and method of electronic device

Status: Active | Publication: CN102439542A | Tags: Reduce labor; Natural language data processing; Special data processing applications; Linguistic model; Text entry

The present invention provides a text input system for an electronic device, comprising a user interface configured to receive text input by a user, and a text prediction engine comprising a plurality of language models, configured to receive the input text from the user interface and to generate text predictions concurrently using the plurality of language models; the text prediction engine is further configured to provide the text predictions to the user interface for display and user selection. A text input method for an electronic device, and a user interface for use in the system and method, are also provided.

Owner:MICROSOFT TECH LICENSING LLC
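
A text prediction engine that queries several language models concurrently and merges their outputs might look like the hypothetical sketch below. Each model is assumed to be a callable returning word probabilities for the current context, and the merge is a weighted sum; the patent does not state this particular merging rule.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Assumption: a language model is any callable mapping the current text to {word: probability}.
LanguageModel = Callable[[str], Dict[str, float]]


def predict_next(text: str, models: List[LanguageModel], weights: List[float],
                 top_k: int = 3) -> List[str]:
    # Query all models concurrently.
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda model: model(text), models))
    # Merge: weighted sum of each word's probability across models.
    scores: Dict[str, float] = {}
    for weight, predictions in zip(weights, results):
        for word, prob in predictions.items():
            scores[word] = scores.get(word, 0.0) + weight * prob
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda word: (-scores[word], word))[:top_k]
```

For instance, a general model favoring "the" combined with a personal model favoring "meeting" (equal weights) can rank "meeting" first for the context "see you at the".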

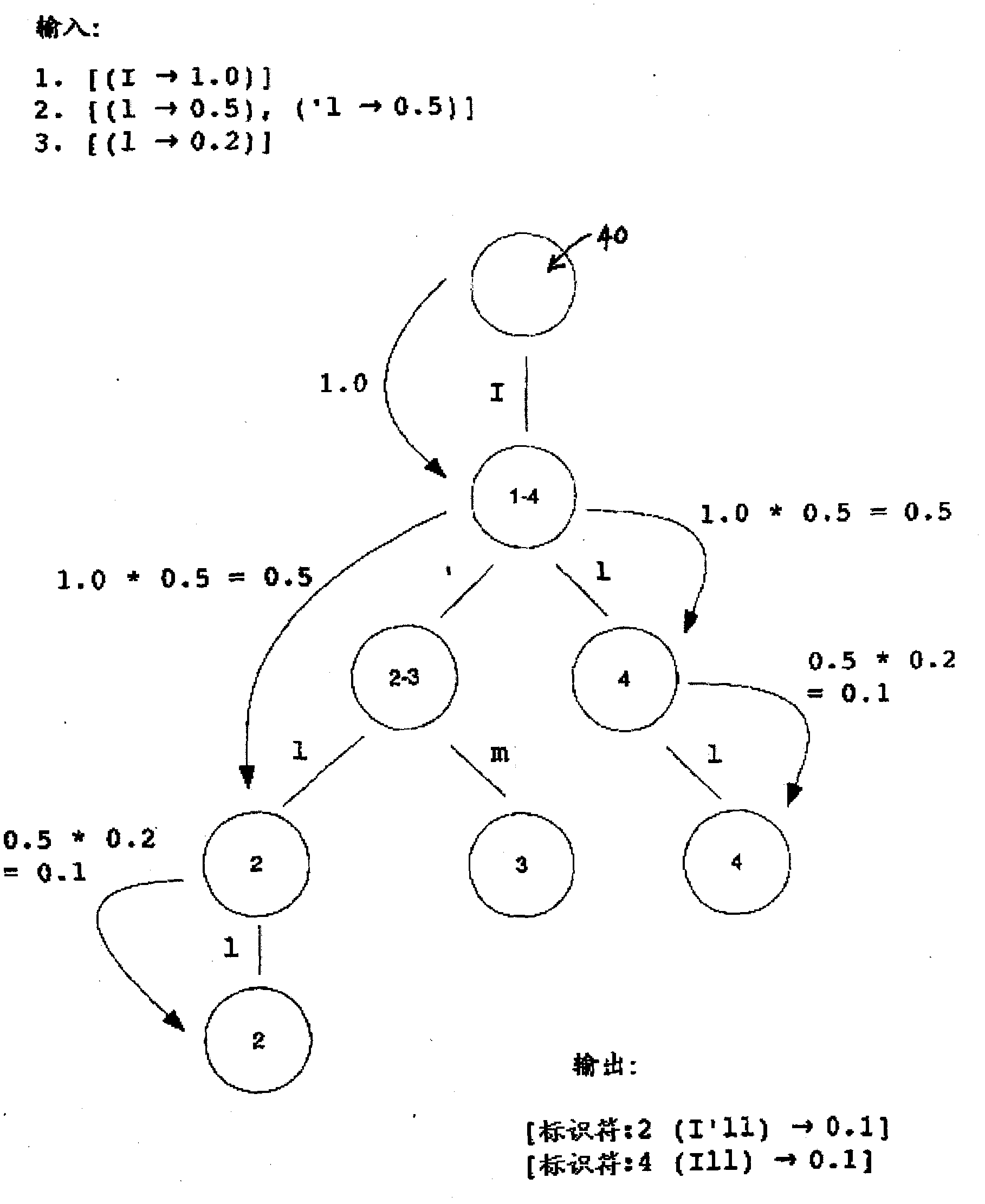

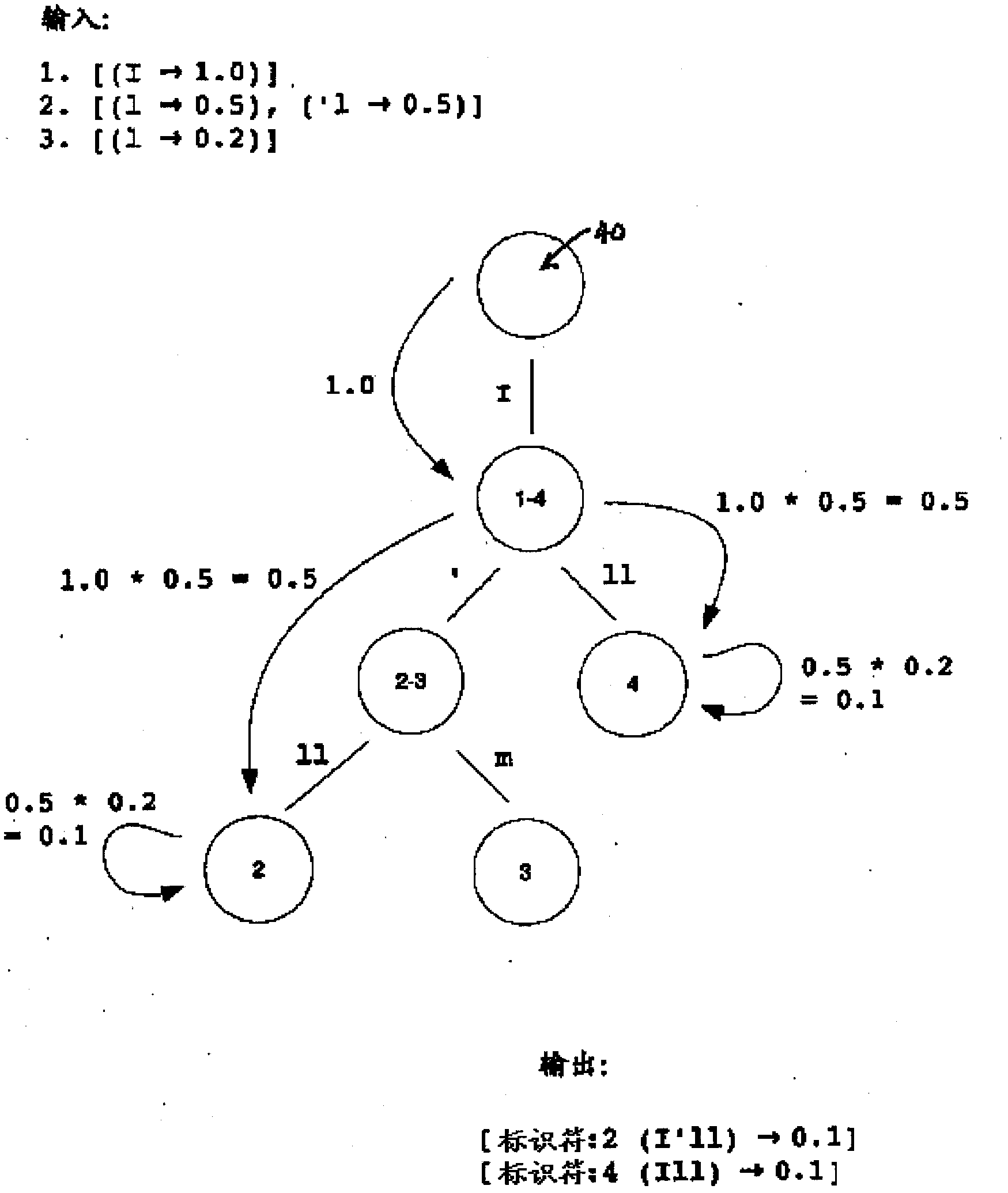

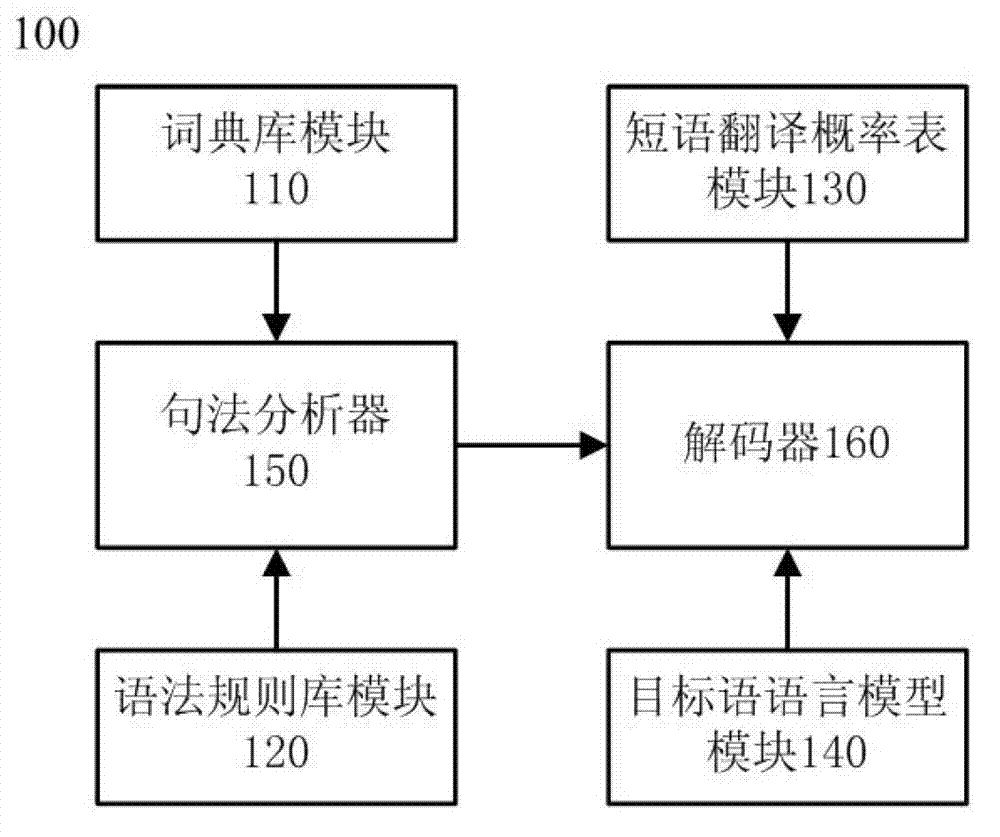

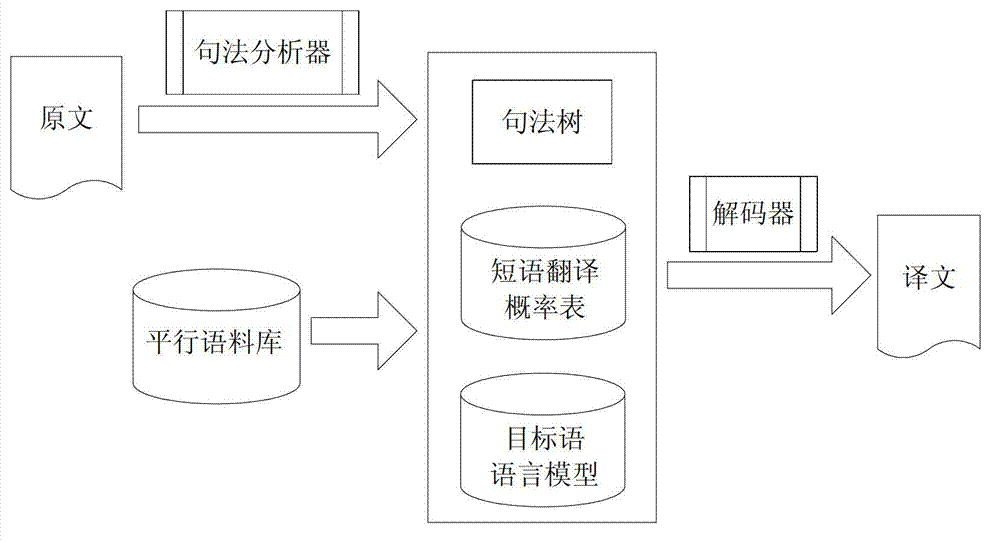

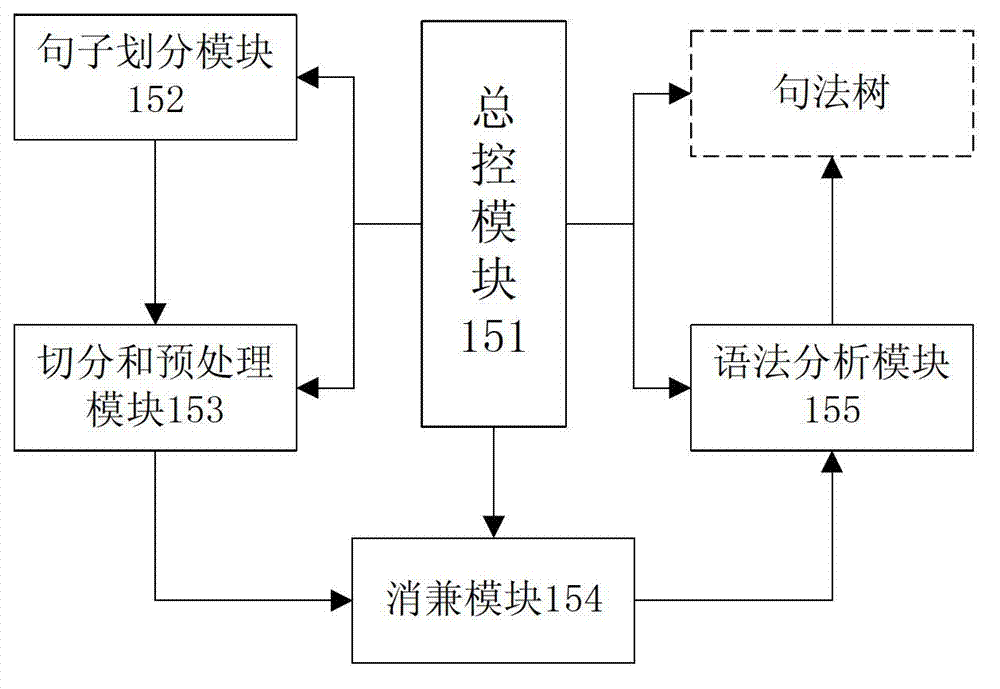

Translation method integrating syntactic tree and statistical machine translation technology and translation device

Status: Inactive | Publication: CN103116578A | Tags: Improve translation quality; Special data processing applications; NODAL; Linguistic model

The invention discloses a translation method and a translation device that integrate a syntactic tree with statistical machine translation technology. The method proceeds as follows. First, a dictionary base, a grammatical rule base, a phrase translation probability table between the languages, and a target-language linguistic model are established. Then word segmentation, part-of-speech processing and grammatical analysis are performed on the input source sentence to generate a syntactic tree. Next, the syntactic tree is traversed top-down, and for each individual node and for runs of contiguous nodes spanning syntactic units, the source text of the leaf nodes is matched against the phrase translation probability table trained by statistical machine translation. The translations from the phrase translation table are combined with the target-language linguistic model to improve the fluency and accuracy of the output. The method thus exploits both the fine-grained knowledge in the phrase translation table and the syntactic tree's strength in handling deep and long-distance structures in a sentence, and can markedly improve the quality of machine-translated text.

Owner:北京赛迪翻译技术有限公司

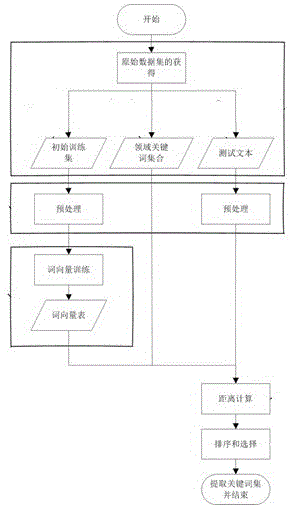

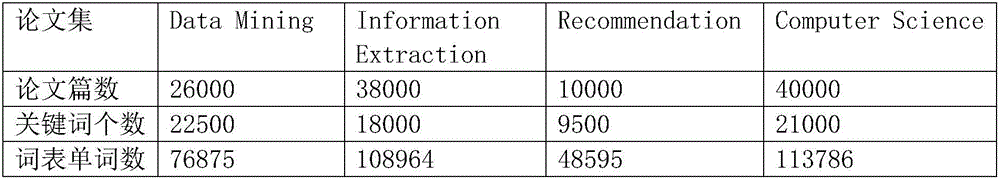

Keyword automatic extraction method based on distributed expression word vector calculation

Status: Inactive | Publication: CN106021272A | Tags: Highlight substantive; Low extractability; Character and pattern recognition; Special data processing applications; Linguistic model; Data set

The invention relates to an automatic keyword extraction method based on distributed word-vector representations. The method generates features automatically and addresses the problem of automatic keyword extraction. Its steps are: step 1, obtain the original training dataset; step 2, preprocess the training set and the test text, including removing punctuation, digits and stop words and filtering words by their features; step 3, train a linguistic model on the training set to convert it into a word vector table; step 4, compute the distance from each keyword vector to the text under test using a distance measure; step 5, using different distance measures, compute the arithmetic mean semantic distance between the distributed word vectors of each keyword in the domain keyword set and those of all words in the test text, then select and rank keywords accordingly. The method offers a new approach to keyword extraction, makes full use of the semantic information in the dataset, and substantially improves the accuracy of automatic extraction.

Owner:SHANGHAI UNIV
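
Steps 4 and 5 can be illustrated with cosine distance as the distance measure. The sketch assumes pre-trained word vectors are available as a dictionary and ranks candidate keywords by their arithmetic-mean distance to the words of the test text; cosine distance is only one of the "different distance calculation methods" the abstract mentions, and the names are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def rank_keywords(keywords: List[str], text_words: List[str],
                  vectors: Dict[str, Sequence[float]], top_k: int = 3) -> List[str]:
    """Rank domain keywords by their arithmetic-mean cosine distance to the text's words."""
    words = [w for w in text_words if w in vectors]  # skip out-of-vocabulary words
    if not words:
        return []

    def mean_distance(kw: str) -> float:
        return sum(cosine_distance(vectors[kw], vectors[w]) for w in words) / len(words)

    known = [kw for kw in keywords if kw in vectors]
    return sorted(known, key=mean_distance)[:top_k]
```

With toy 2-D vectors in which "stock" and "market" point close to "finance", `rank_keywords(["sport", "finance"], ["stock", "market"], vectors, 1)` returns `["finance"]`.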

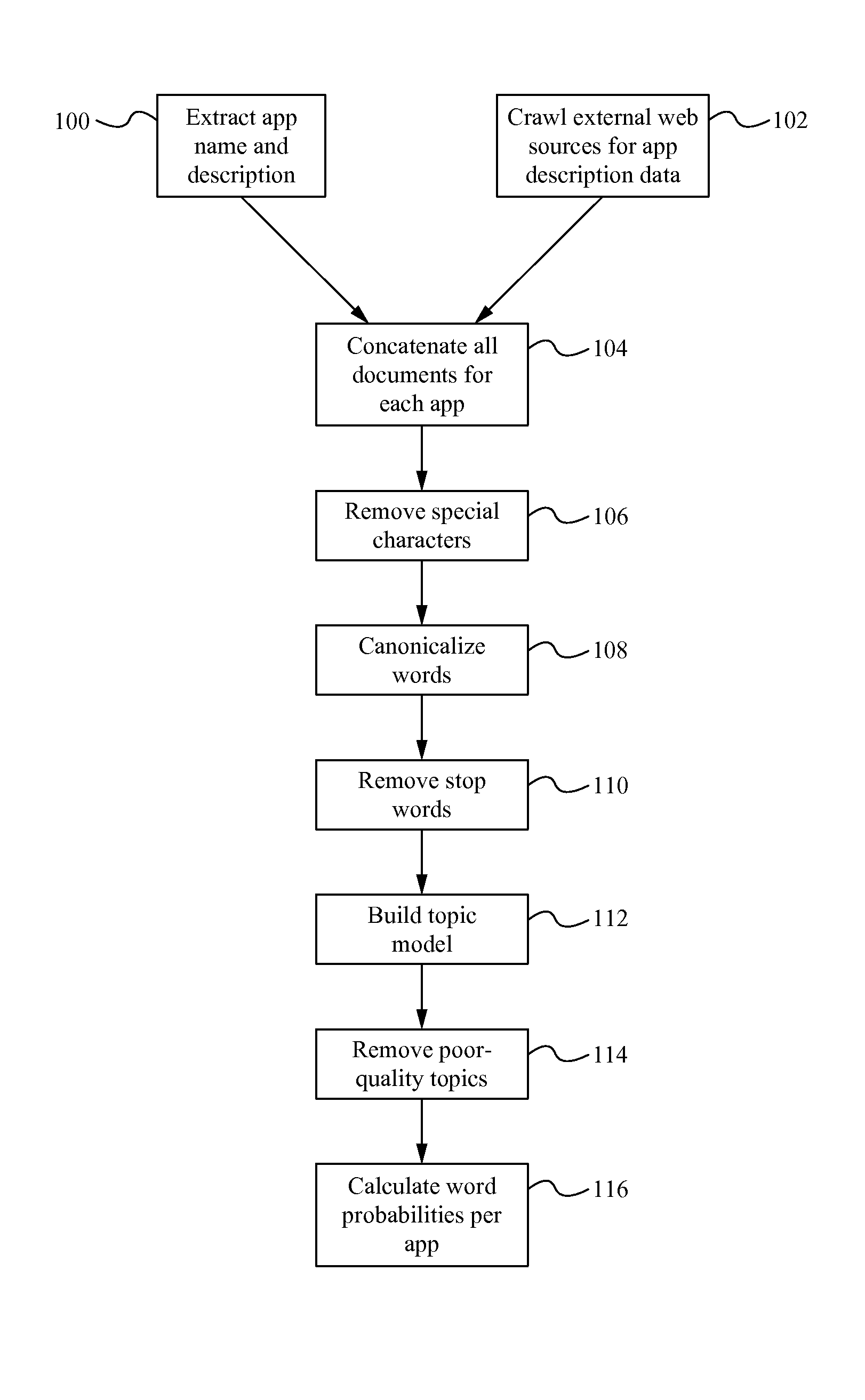

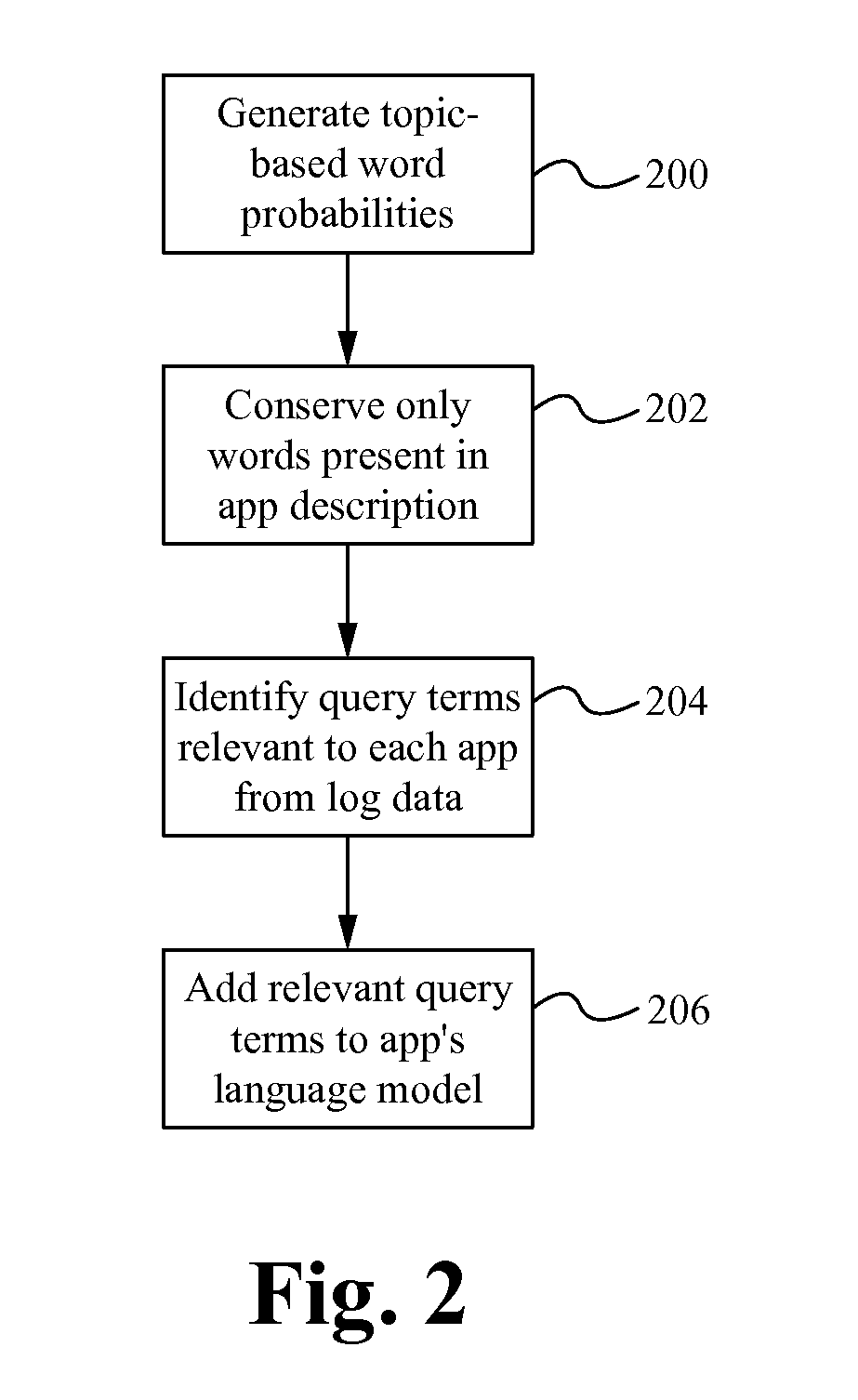

Generation of topic-based language models for an app search engine

Topic-based language models for an application search engine enable a user to search for an application based on the application's function rather than title. To enable a search based on function, information is gathered and processed, including application names, descriptions and external information. Processing the information includes filtering the information, generating a topic model and supplementing the topic model with additional information.

Owner:APPLE INC

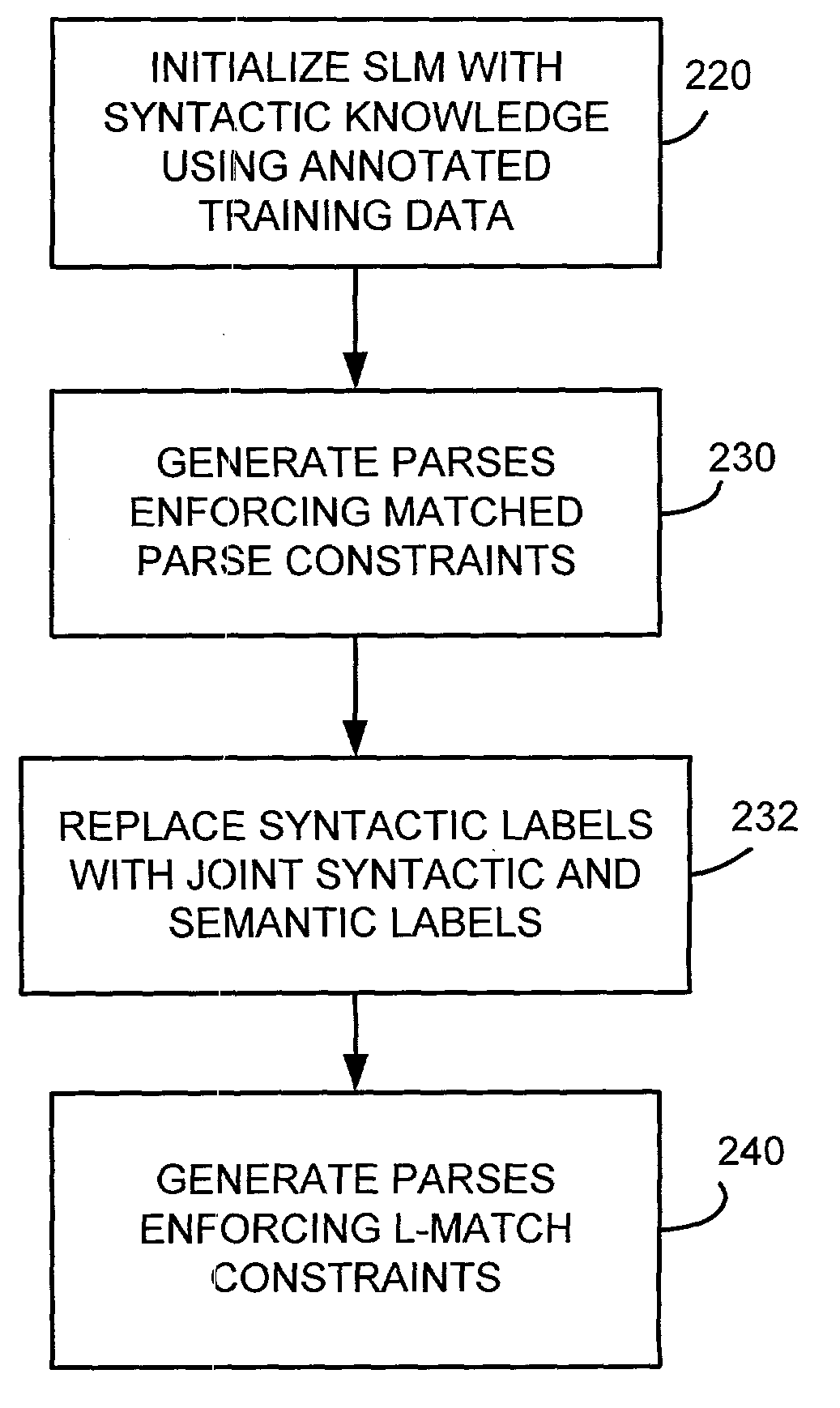

Applying a structured language model to information extraction

Status: Inactive | Publication: US7805302B2 | Tags: Easy to practice; Natural language translation; Semantic analysis; Semantic annotation; Parse tree

One feature of the present invention uses the parsing capabilities of a structured language model in the information extraction process. During training, the structured language model is first initialized with syntactically annotated training data. The model is then trained by generating parses on semantically annotated training data enforcing annotated constituent boundaries. The syntactic labels in the parse trees generated by the parser are then replaced with joint syntactic and semantic labels. The model is then trained by generating parses on the semantically annotated training data enforcing the semantic tags or labels found in the training data. The trained model can then be used to extract information from test data using the parses generated by the model.

Owner:MICROSOFT TECH LICENSING LLC

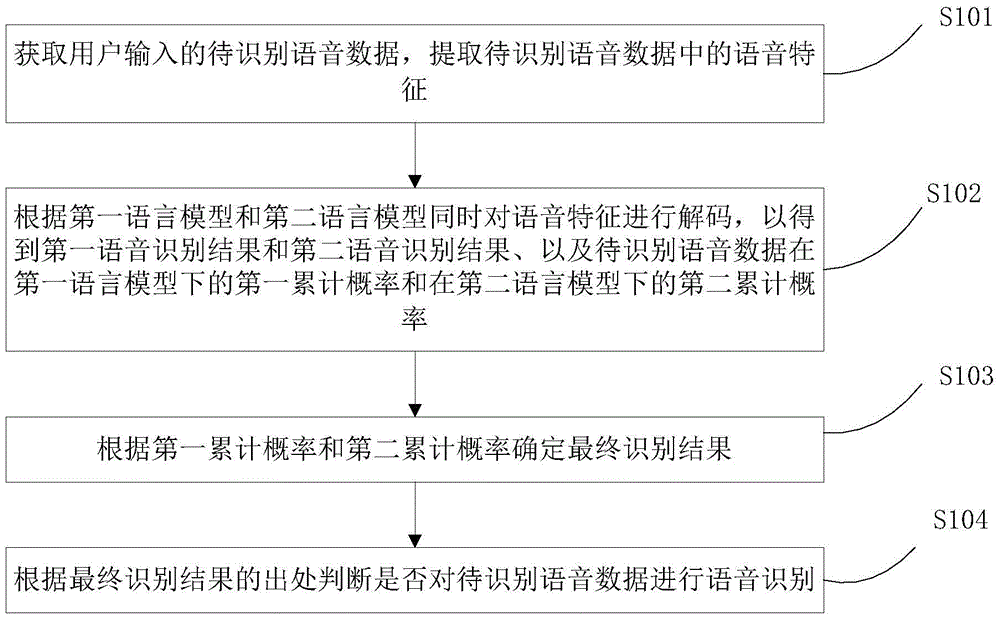

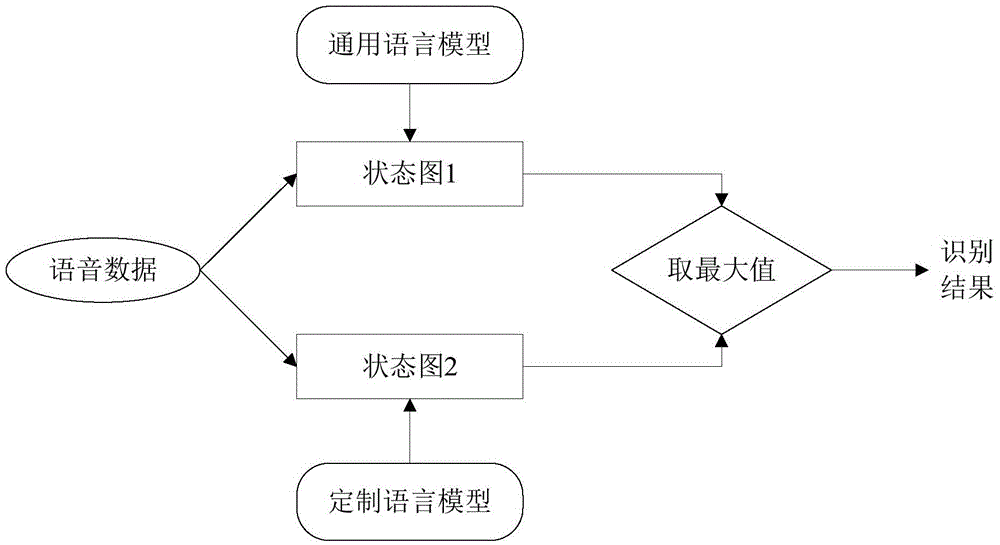

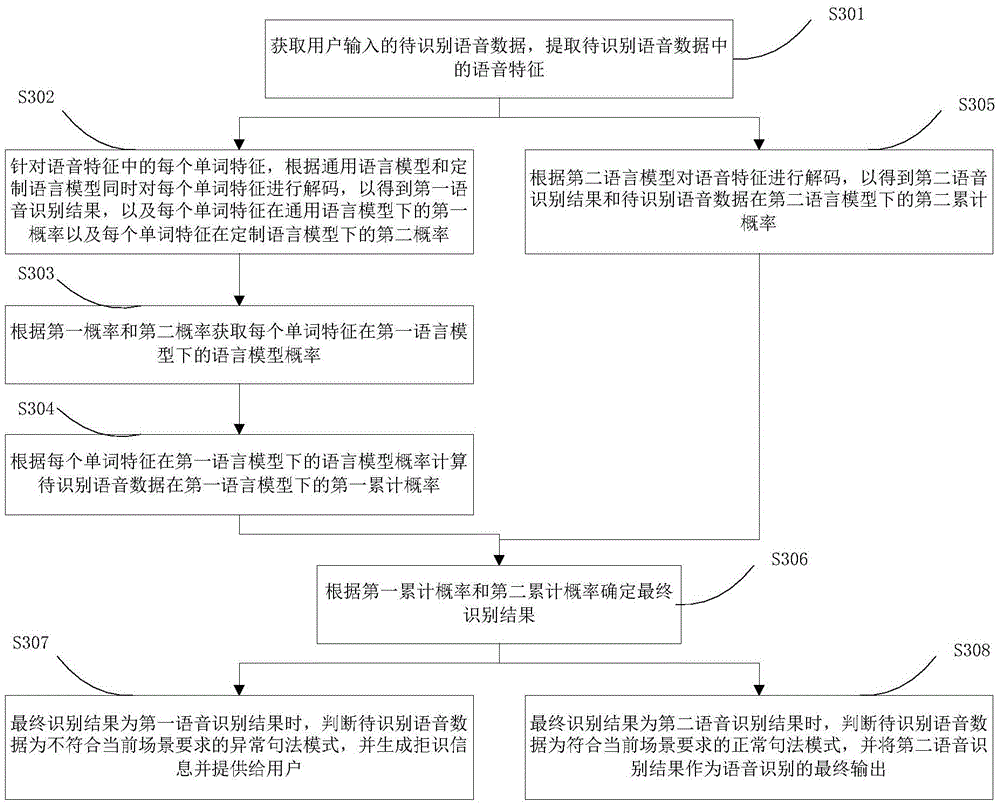

Method and device for voice recognition

Status: Active | Publication: CN105575386A | Tags: Smooth voice interaction; Stable and reliable speech recognition environment; Speech recognition; Linguistic model; Speech identification

The invention discloses a method and a device for speech recognition. The method comprises: obtaining speech data to be recognized and extracting speech features; decoding the speech features simultaneously with a first linguistic model and a second linguistic model to obtain a first recognition result, a second recognition result, a first cumulative probability under the first linguistic model and a second cumulative probability under the second linguistic model; determining the final recognition result from the first and second cumulative probabilities; and deciding, according to which model the final recognition result came from, whether to carry out speech recognition on the speech data. The method provides a stable and reliable speech recognition environment and keeps human-computer interaction smooth.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

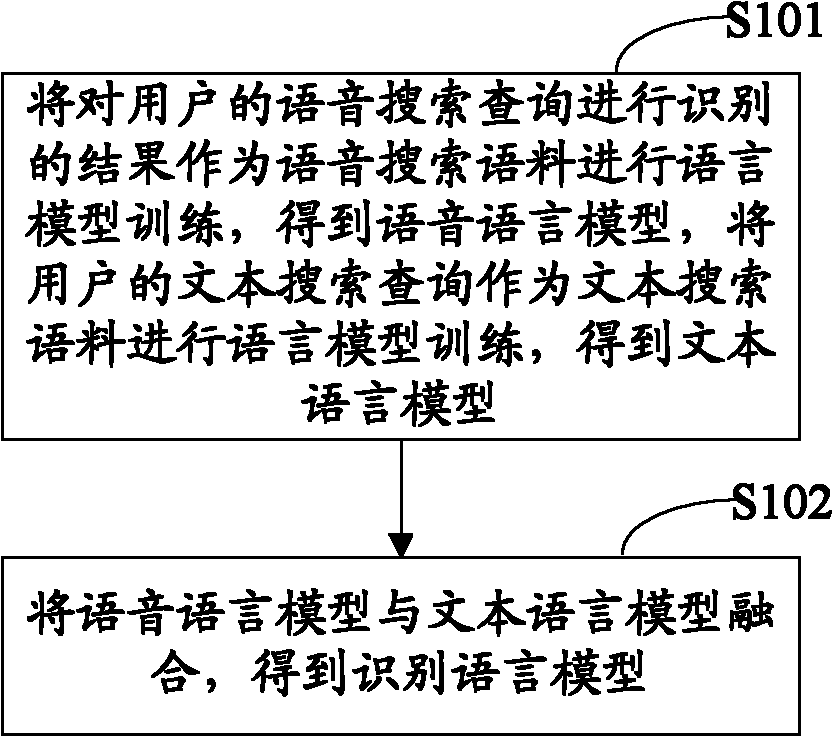

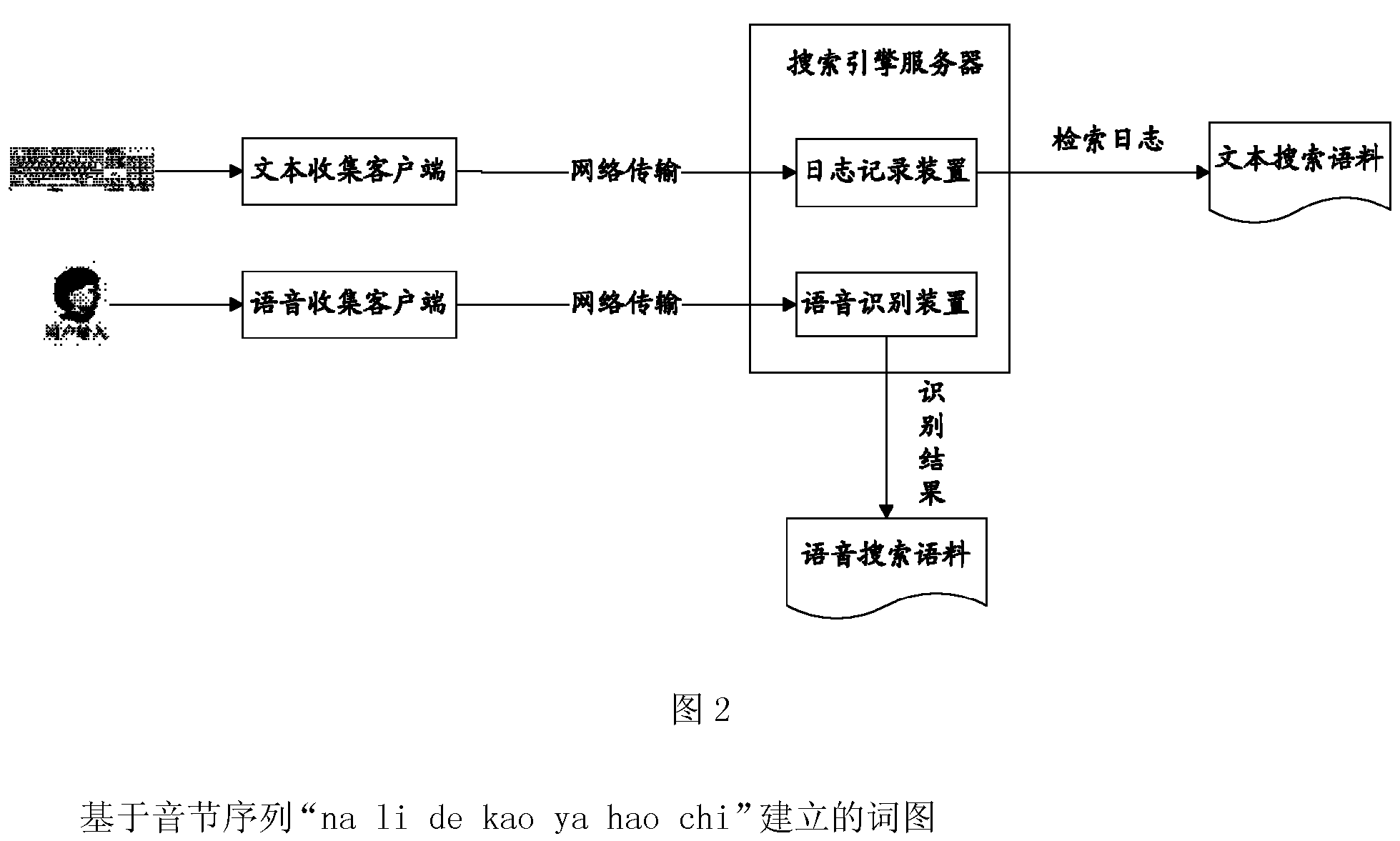

Method and device for establishing linguistic model for voice recognition

Status: Active | Publication: CN103187052A | Tags: High precision; Reflect word preference; Speech recognition; Linguistic model; Speech identification

The invention provides a method and a device for building a linguistic model for speech recognition. In the first step, recognition results of users' voice search queries are used as a voice search corpus to train a voice linguistic model, and users' text search queries are used as a text search corpus to train a text linguistic model; in the second step, the voice linguistic model and the text linguistic model are merged into a recognition linguistic model. The resulting recognition linguistic model reflects users' wording preferences during voice input well and, when applied to speech recognition, improves recognition accuracy.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
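
The model-integration step is commonly done by linear interpolation. The hypothetical sketch below estimates unigram models from voice-query and text-query logs by maximum likelihood and interpolates them with weight `lam`; the patent does not say which integration method or model order it uses.

```python
from collections import Counter
from typing import Dict, List


def unigram_mle(queries: List[str]) -> Dict[str, float]:
    """Maximum-likelihood unigram model estimated from a list of queries."""
    counts = Counter(w for q in queries for w in q.split())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def merge_models(voice: Dict[str, float], text: Dict[str, float],
                 lam: float = 0.5) -> Dict[str, float]:
    """Linear interpolation: P(w) = lam * P_voice(w) + (1 - lam) * P_text(w)."""
    vocab = set(voice) | set(text)
    return {w: lam * voice.get(w, 0.0) + (1 - lam) * text.get(w, 0.0) for w in vocab}
```

Because both inputs are proper distributions, the interpolated model also sums to 1 for any `lam` between 0 and 1.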

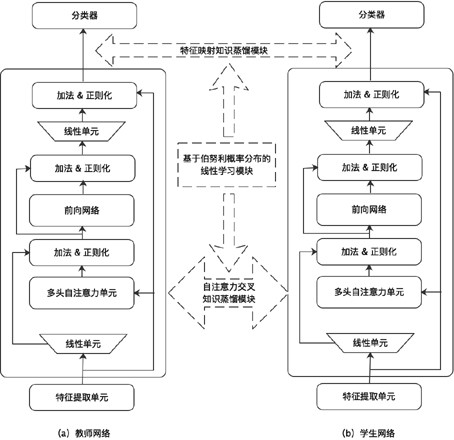

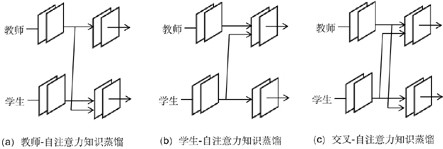

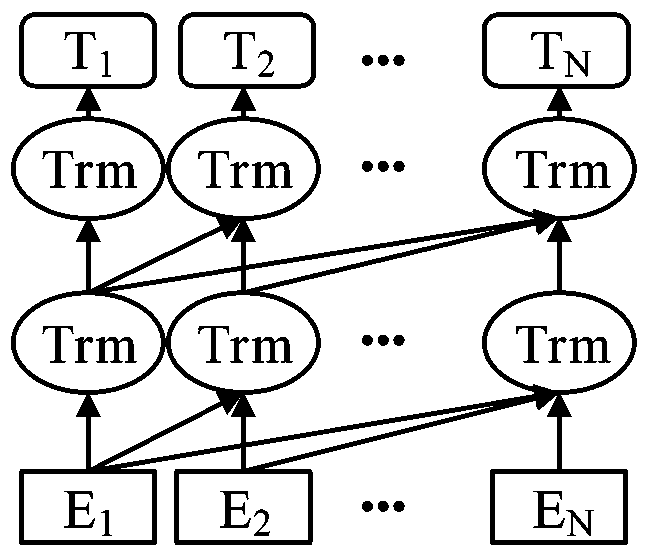

Pre-trained language model compression method and platform based on Knowledge distillation

Status: Active | Publication: CN111767711A | Tags: Improve compression efficiency; Take advantage of; Natural language analysis; Semantic analysis; Linguistic model; Semantics

The invention discloses a method and platform for compressing a pre-trained language model based on knowledge distillation. First, a universal feature-transfer knowledge distillation strategy is designed: while knowledge is distilled from the teacher model into the student model, the features of each student layer are mapped onto and made to approximate the teacher's features, with emphasis on the feature representation ability of the teacher's intermediate layers on small samples, and these features are used to guide the student model. Second, a distillation method based on self-attention cross knowledge is built, exploiting the ability of the teacher model's self-attention distributions to capture semantic and syntactic relations between words. Third, to improve the student model's learning quality early in training and its generalization later in training, a linear transfer strategy based on a Bernoulli probability distribution is designed to gradually complete the feature mapping and the self-attention knowledge transfer from teacher to student. The multi-task-oriented pre-trained language model is thereby compressed automatically, improving the compression efficiency of the language model.

Owner:ZHEJIANG LAB
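
The Bernoulli-based linear transfer strategy is not specified in detail in the abstract. One possible reading, sketched below purely as an assumption, is a probability that grows linearly over training, with one Bernoulli draw per layer deciding whether that layer's teacher-to-student feature mapping is applied at the current step. The starting probability and function names are hypothetical.

```python
import random
from typing import List


def transfer_probability(step: int, total_steps: int, p_start: float = 0.3) -> float:
    """Linearly increase the probability from p_start to 1 over total_steps."""
    progress = min(step, total_steps) / total_steps
    return p_start + (1.0 - p_start) * progress


def sample_transfer_mask(num_layers: int, step: int, total_steps: int,
                         rng: random.Random, p_start: float = 0.3) -> List[bool]:
    """One Bernoulli draw per layer: True means that layer's teacher-to-student
    feature mapping is applied at this step."""
    p = transfer_probability(step, total_steps, p_start)
    return [rng.random() < p for _ in range(num_layers)]
```

Passing an explicit `random.Random` instance keeps the per-step masks reproducible across runs.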

Methods and systems for obtaining language models for transcribing communications

Status: Active | Publication: US20120059652A1 | Tags: Improve performance; Likelihood of spoken words; Speech recognition; Computer science; Human language

A method for transcribing a spoken communication includes acts of receiving a spoken first communication from a first sender to a first recipient, obtaining information relating to a second communication, which is different from the first communication, from a second sender to a second recipient, using the obtained information to obtain a language model, and using the language model to transcribe the spoken first communication.

Owner:AMAZON TECH INC

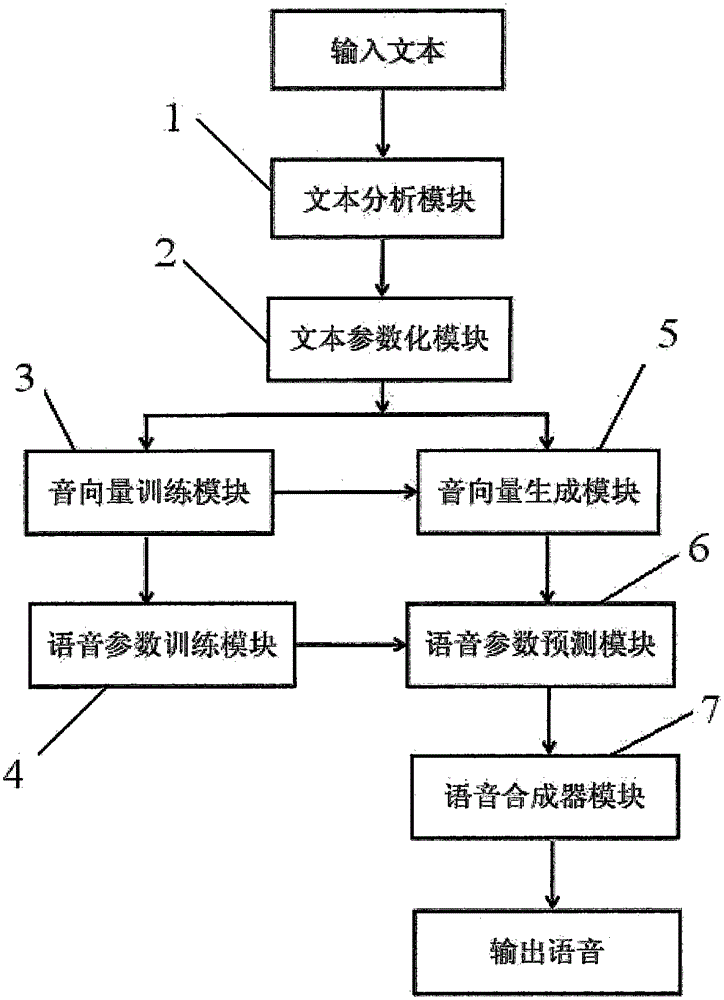

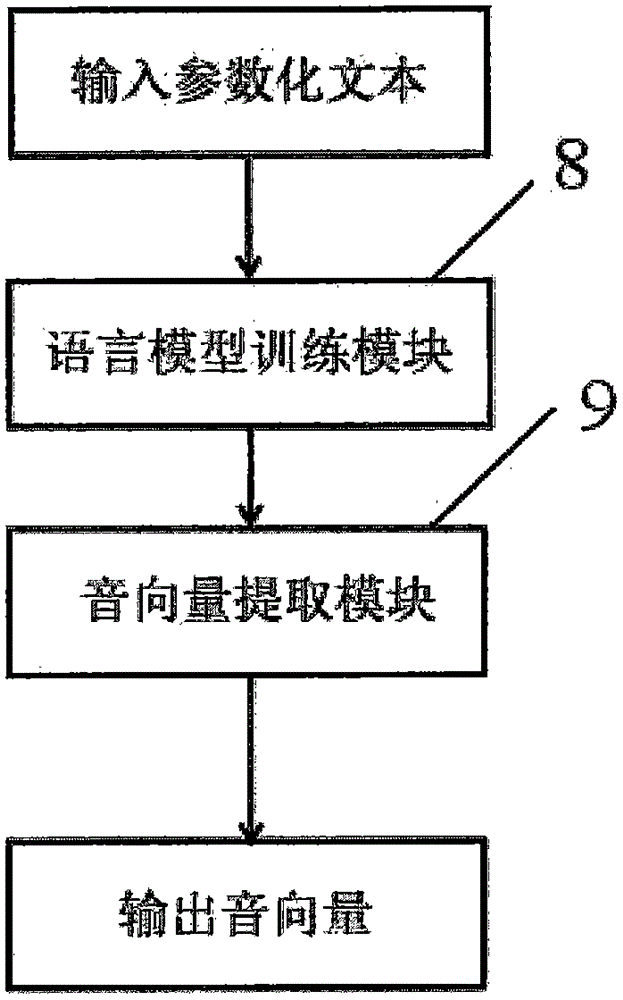

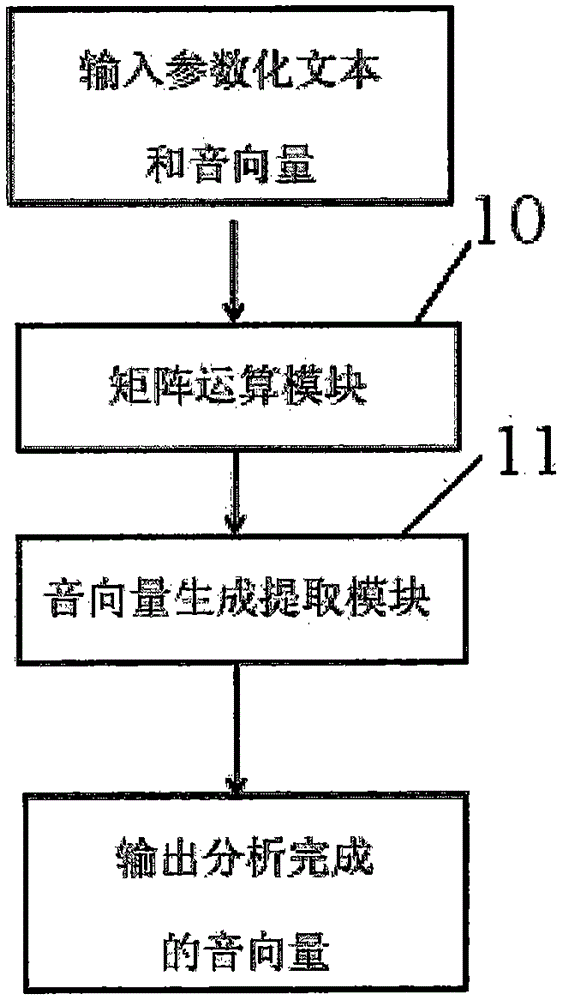

Voice synthesis method based on voice vector textual characteristics

Status: Active | Publication: CN105654939A | Tags: Improve accuracy; Preserve coherence; Speech synthesis; Linguistic model; Synthesis methods

The invention discloses a speech synthesis method based on speech-vector textual features. The method comprises: a text analysis module receives the input text, normalizes the text features and passes the resulting text data to a text parameterization module; the parameterized text is obtained with one-hot encoding; a speech vector training module receives the parameterized text and trains a linguistic model based on speech vectors, whose output is passed to a linguistic parameter training module that trains a mapping model from text to speech parameters; a speech vector generation module receives the outputs of the text parameterization module and the speech vector training module and generates speech vectors for the text data; the speech vectors of the text data and the text-to-speech-parameter mapping model are passed to a linguistic parameter prediction module to obtain the speech parameters corresponding to the speech vectors; and finally, a speech synthesis module synthesizes the speech. The method improves the modeling accuracy of the speech synthesis system and greatly reduces the complexity and manual effort needed to build it.

Owner:中科极限元(杭州)智能科技股份有限公司

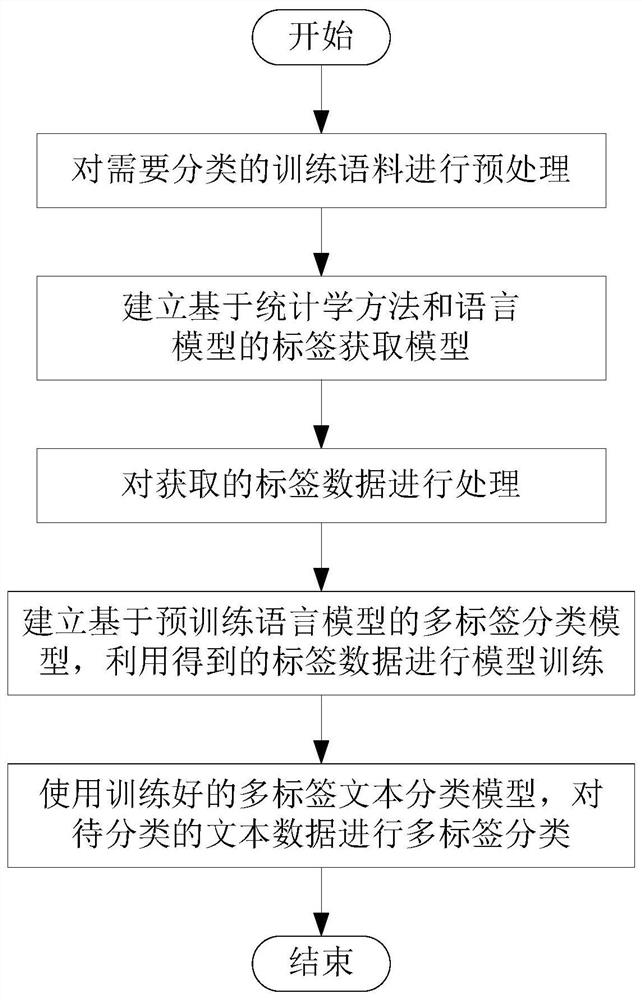

Multi-label text classification method based on statistics and pre-trained language model

Status: Active | Publication: CN112214599A | Tags: Improve accuracy; Semantic analysis; Character and pattern recognition; Data set; Linguistic model

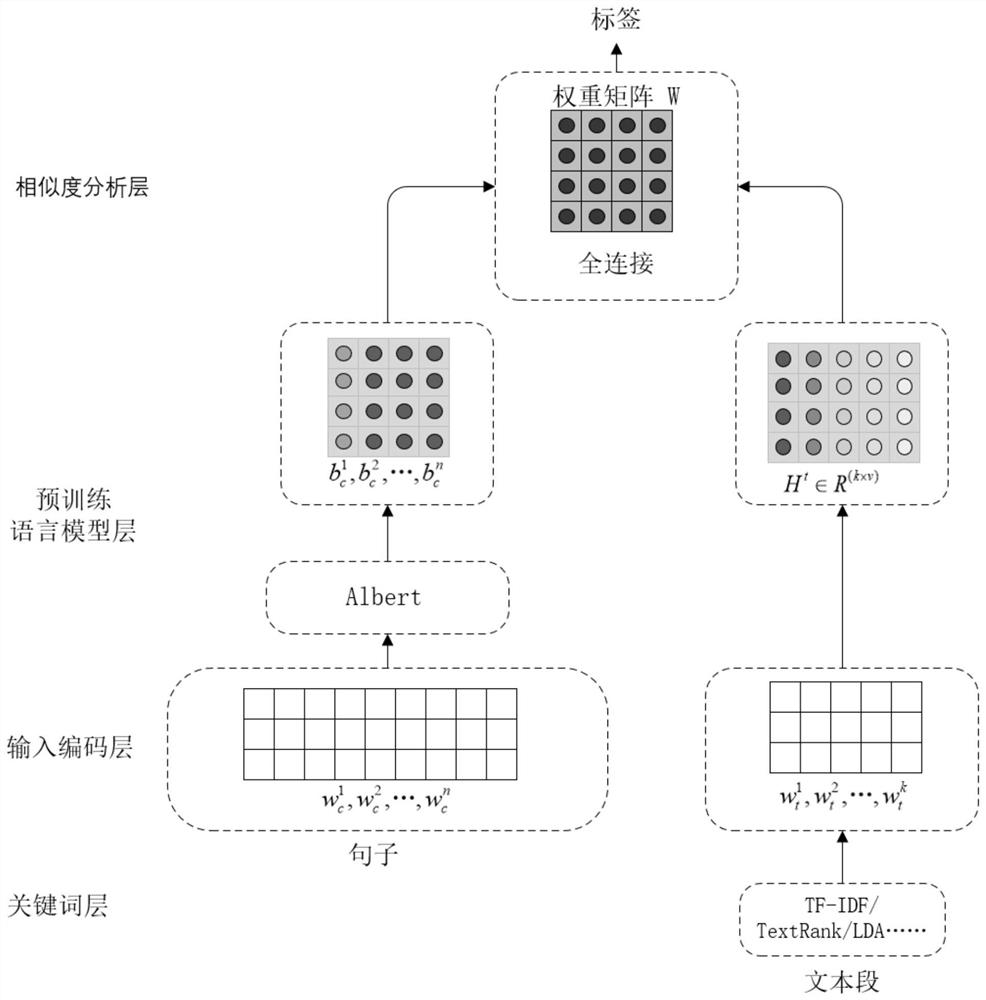

The invention discloses a multi-label text classification method based on statistics and a pre-trained language model, comprising the following steps: S1, preprocessing the training corpus to be classified; S2, building a label acquisition model based on a statistical method and a language model; S3, processing the obtained label data; S4, building a multi-label classification model based on a pre-trained language model and training it on the obtained label data; S5, performing multi-label classification of the text data to be classified with the trained multi-label text classification model. The method combines statistical label acquisition with pre-trained-language-model label acquisition and uses the ALBERT language model to obtain semantic encodings of the text, so the data set does not need to be labeled manually and label acquisition accuracy improves.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

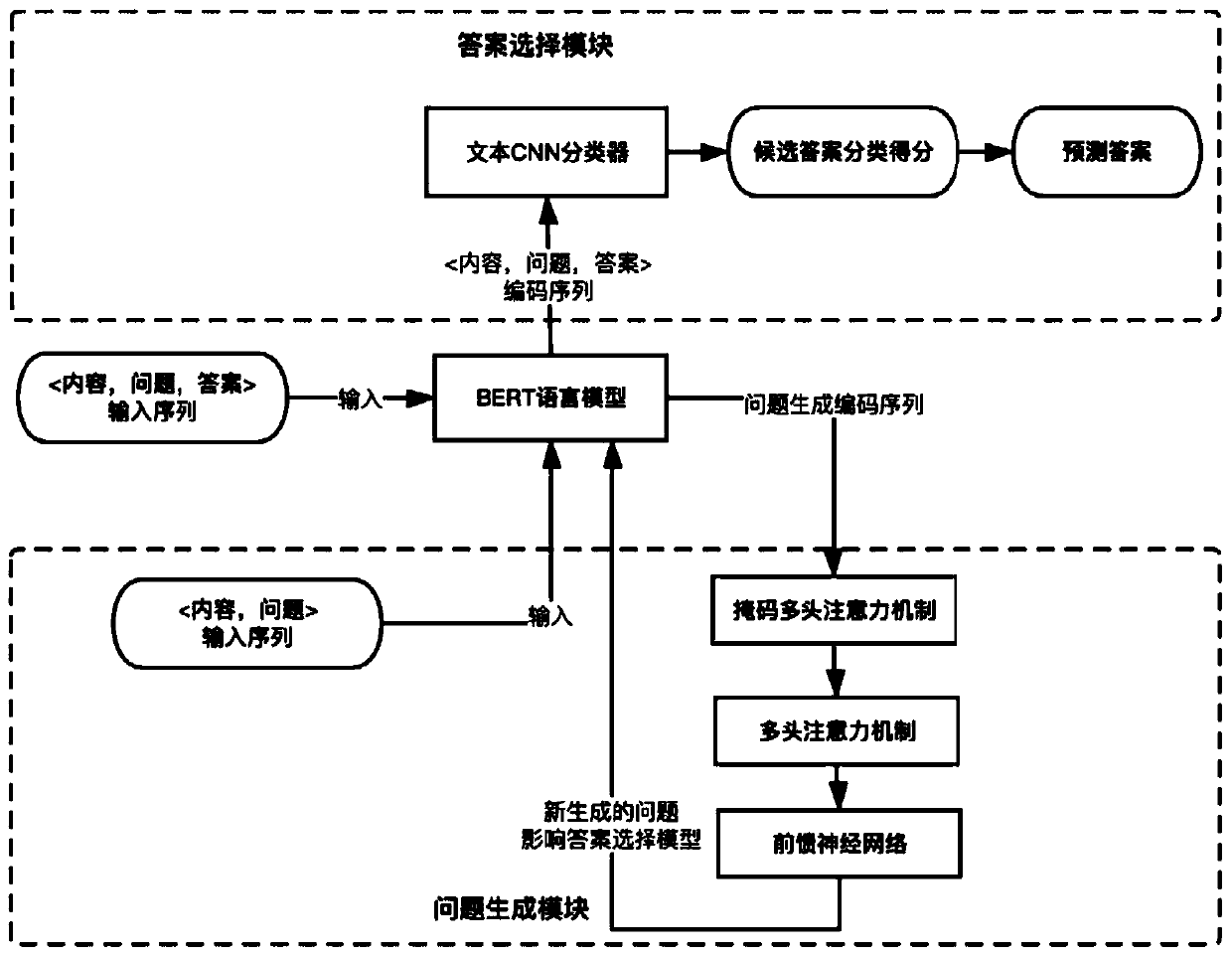

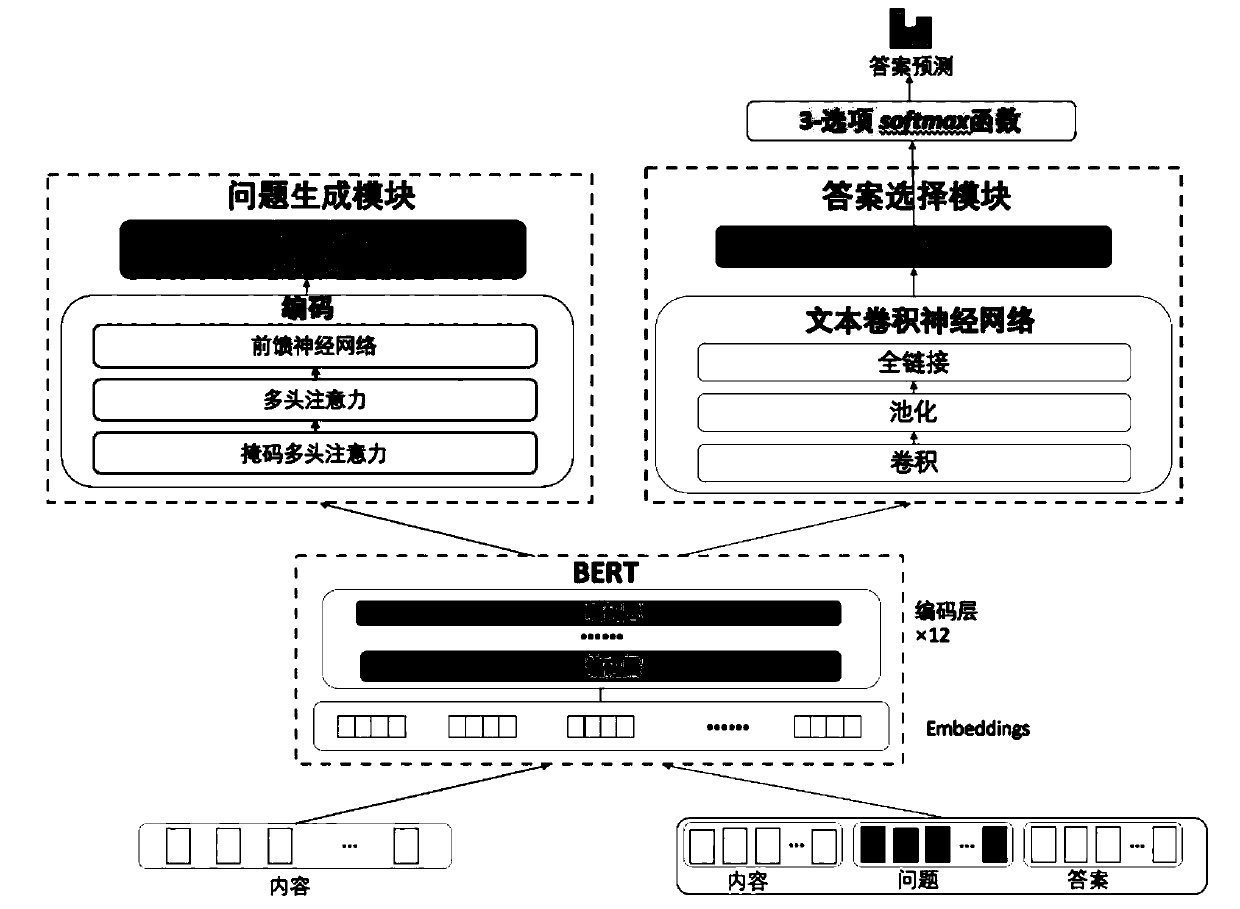

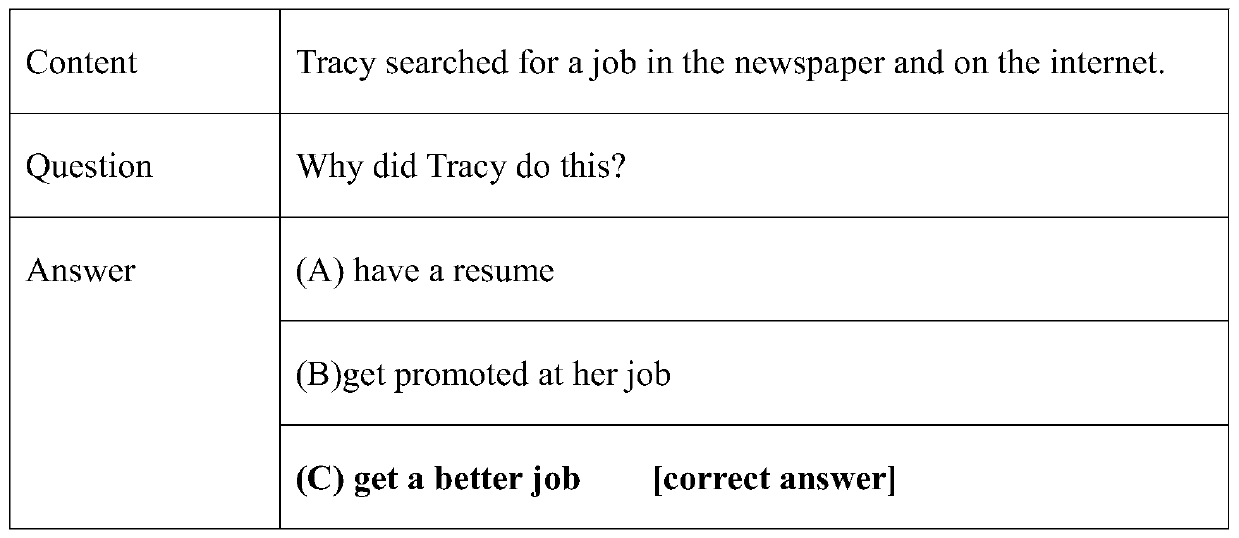

Common sense question-answering method based on question generation and convolutional neural network

Status: Active | Publication: CN110647619A | Tags: Digital data information retrieval; Neural architectures; Question generation; Linguistic model

The invention provides a common-sense question answering method based on question generation and a convolutional neural network. Content-question pairs are encoded into a vector sequence by a BERT language model; the vector sequence is passed to a question generation module and then to a shared BERT language model; the content-question-answer triple is passed through a BERT language model, and the resulting content-question-answer encoding sequence is sent to an answer selection module and classified by a convolutional neural network; finally, the option with the best score is selected as the model's answer.

Owner:SUN YAT SEN UNIV

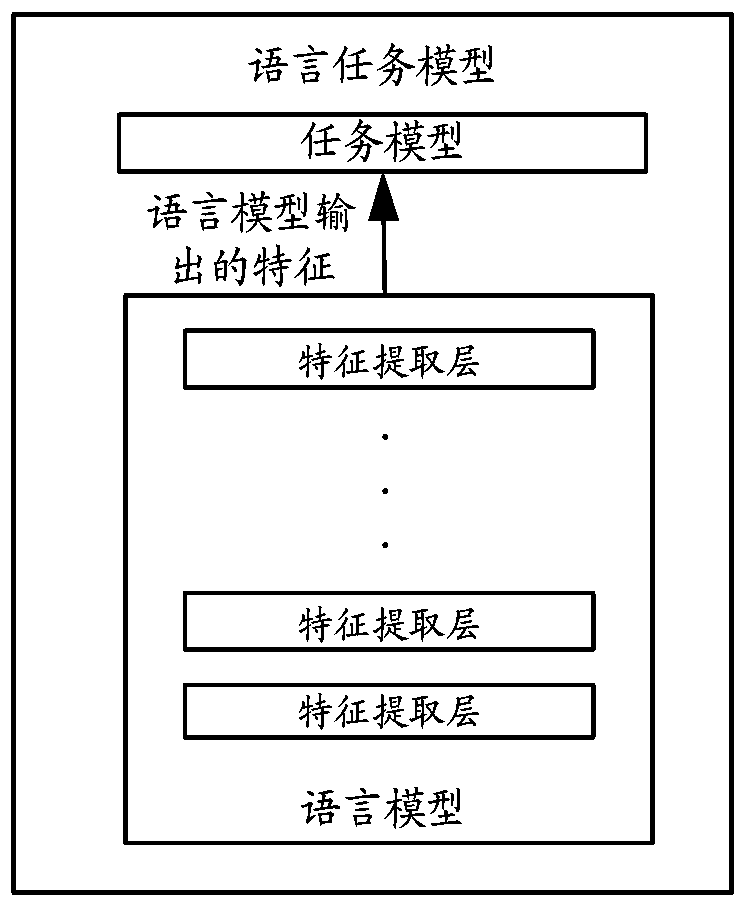

Language task model training method and device, electronic equipment and storage medium

Status: Active | Publication: CN111159416A | Tags: Effective migration; Special data processing applications; Neural learning methods; Linguistic model; Forward propagation

The invention provides a language task model training method and device, electronic equipment and a storage medium. The method comprises: performing hierarchical pre-training of a language model on corpus samples of the corresponding language tasks in a pre-training sample set; forward-propagating corpus samples of the language tasks in a training sample set through the language task model; freezing the parameters of the language model and back-propagating through the language task model to update the task model's parameters; and then performing forward and back propagation of those training samples through the language task model to update the parameters of both the language model and the task model. This prevents catastrophic forgetting in the language model while ensuring that both the language model and the task model reach training results appropriate to their respective learning rates.

Owner:TENCENT TECH (SHENZHEN) CO LTD

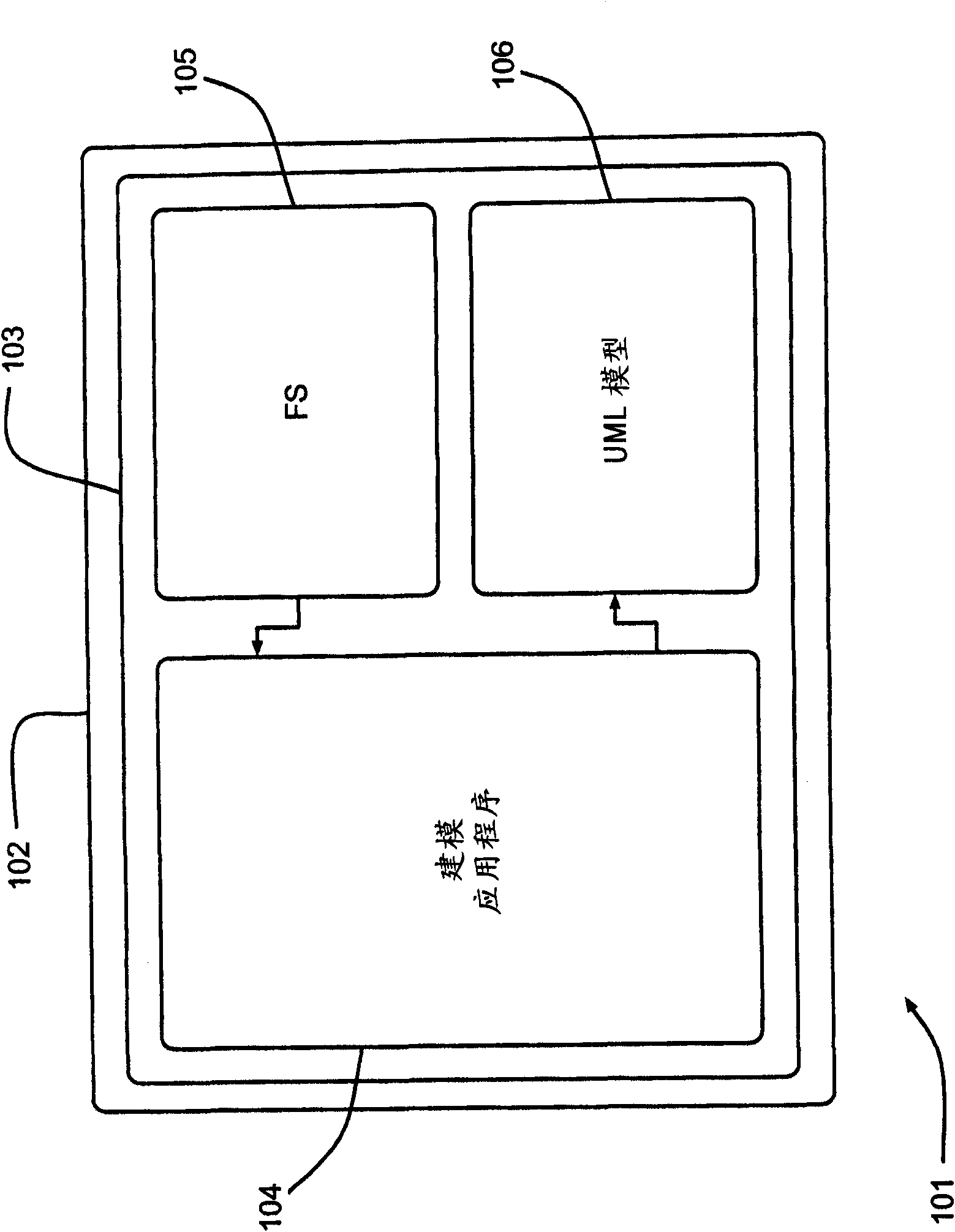

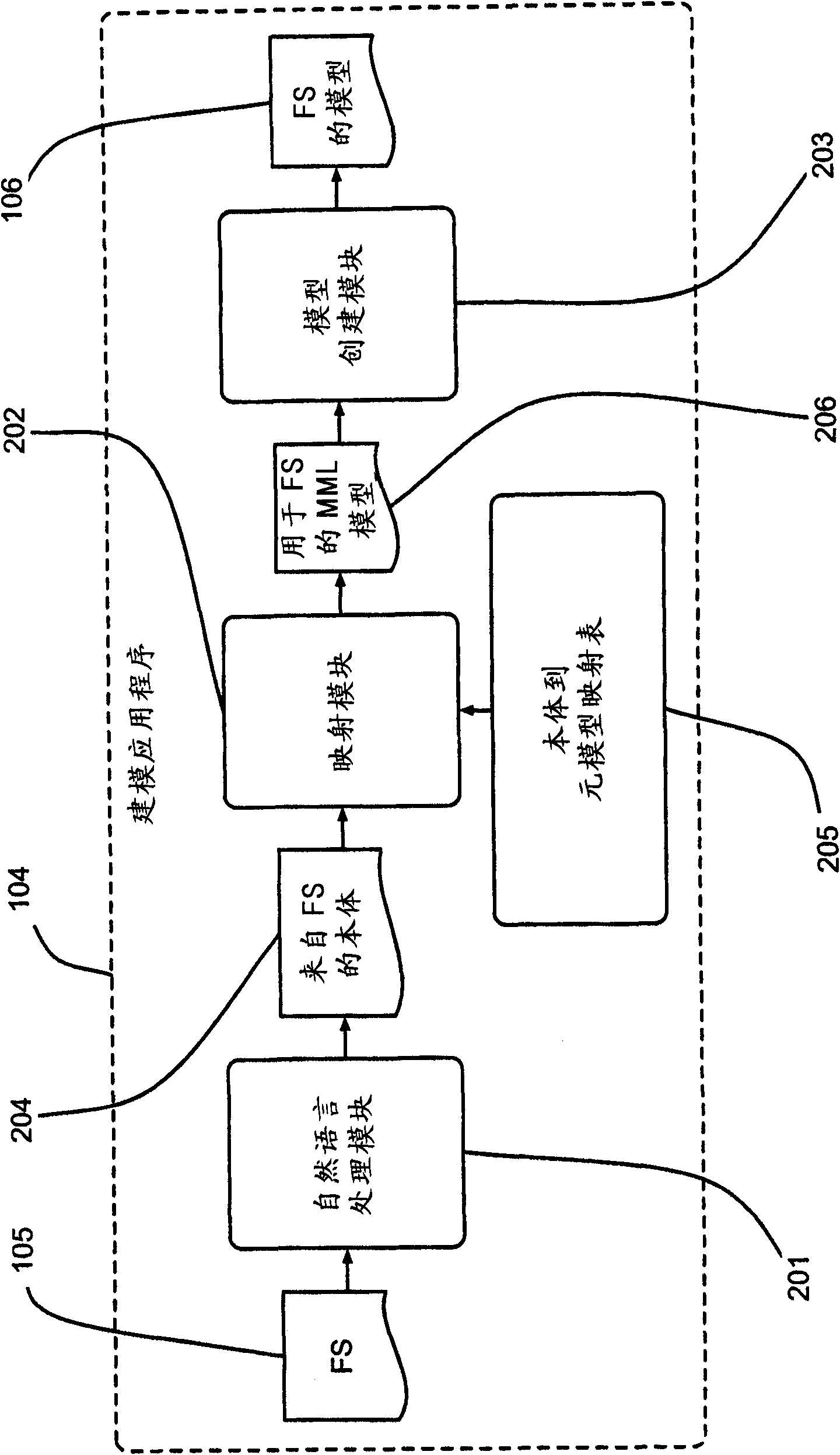

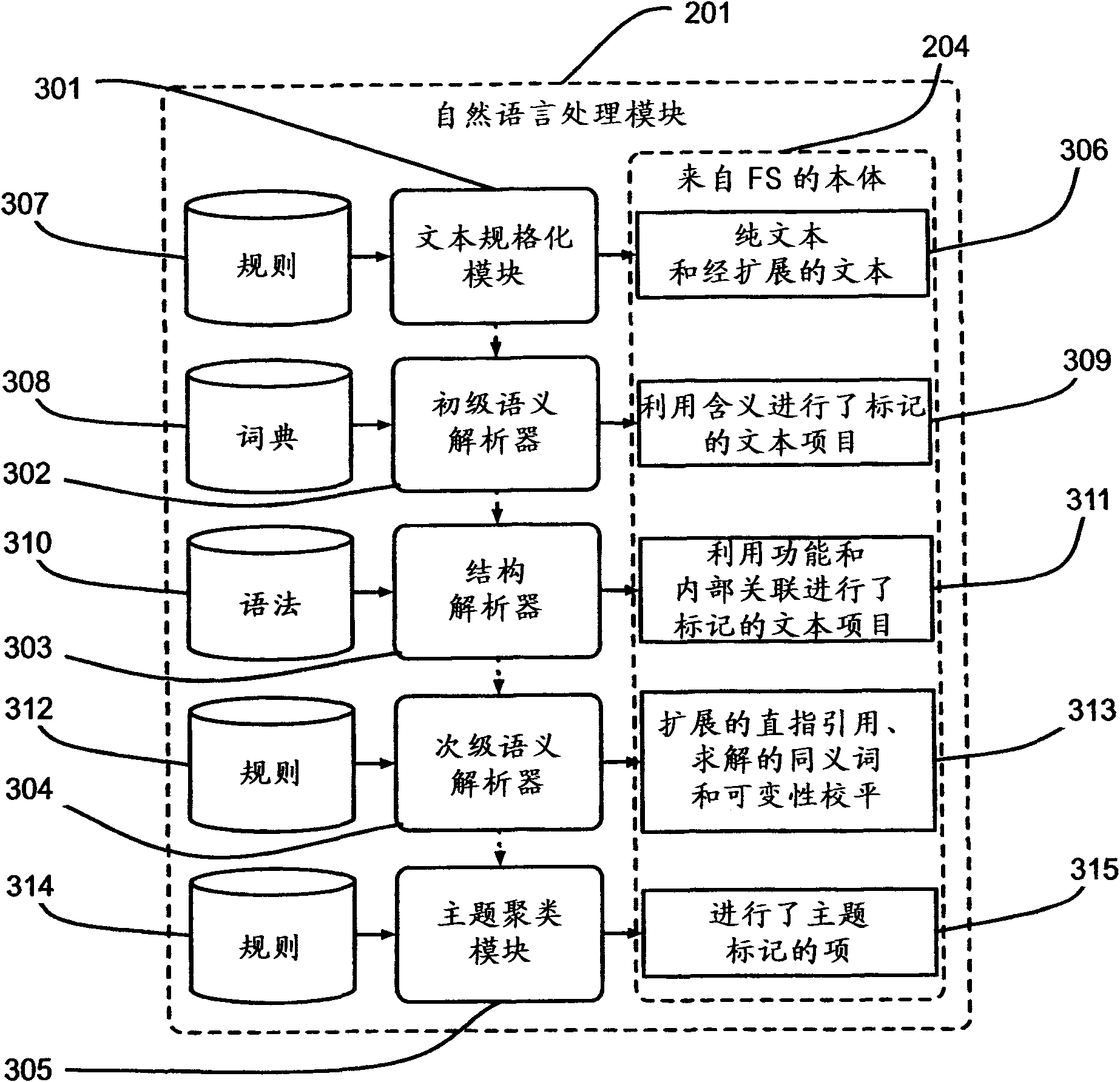

Method and device for automatically extracting language model of system modeling element

Status: Inactive | Publication: CN101872341A | Tags: Natural language data processing; Speech recognition; Language model; Natural language

The invention discloses a method and a device for automatically extracting a language model of system modeling elements, and relates in particular to a method, a device and software for automatically extracting a language model of system modeling elements from a natural-language specification of the system.

Owner:IBM CORP

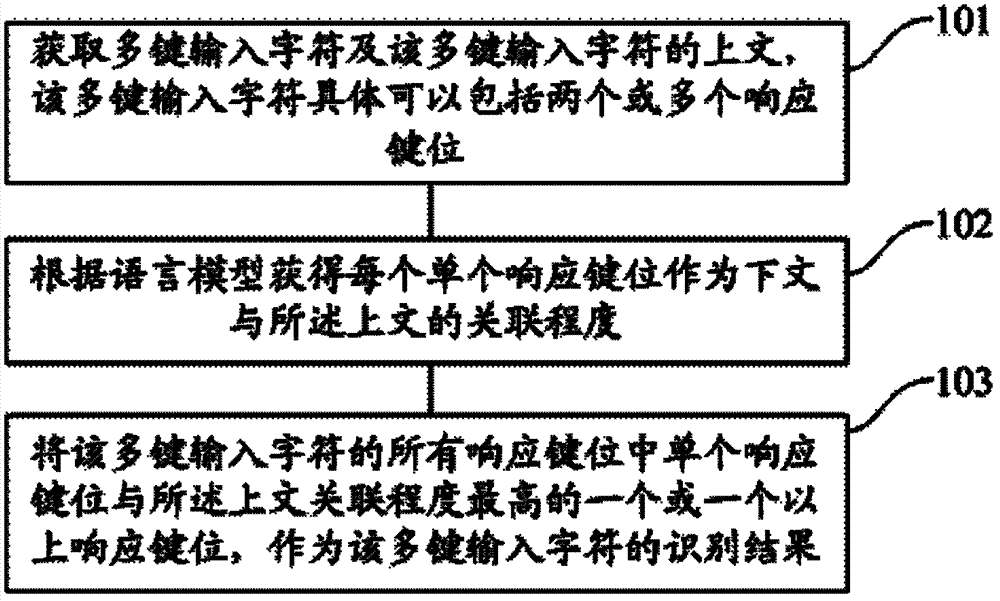

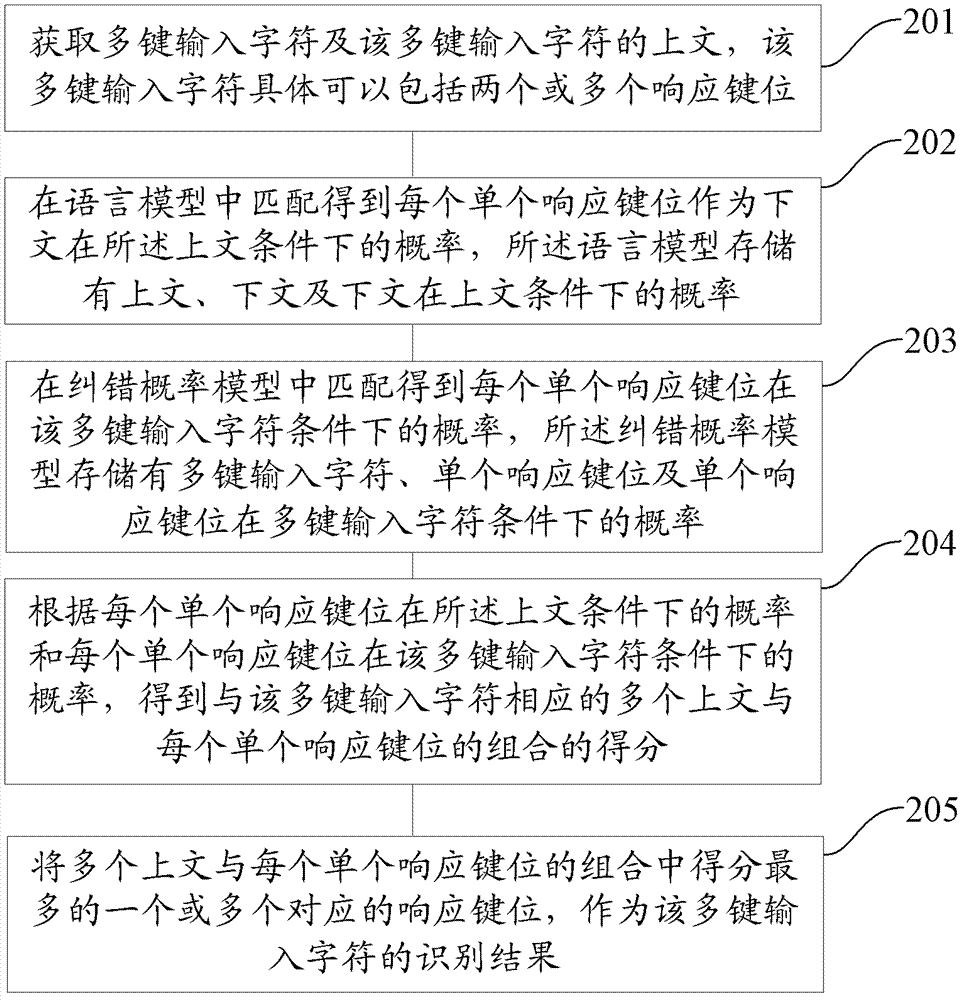

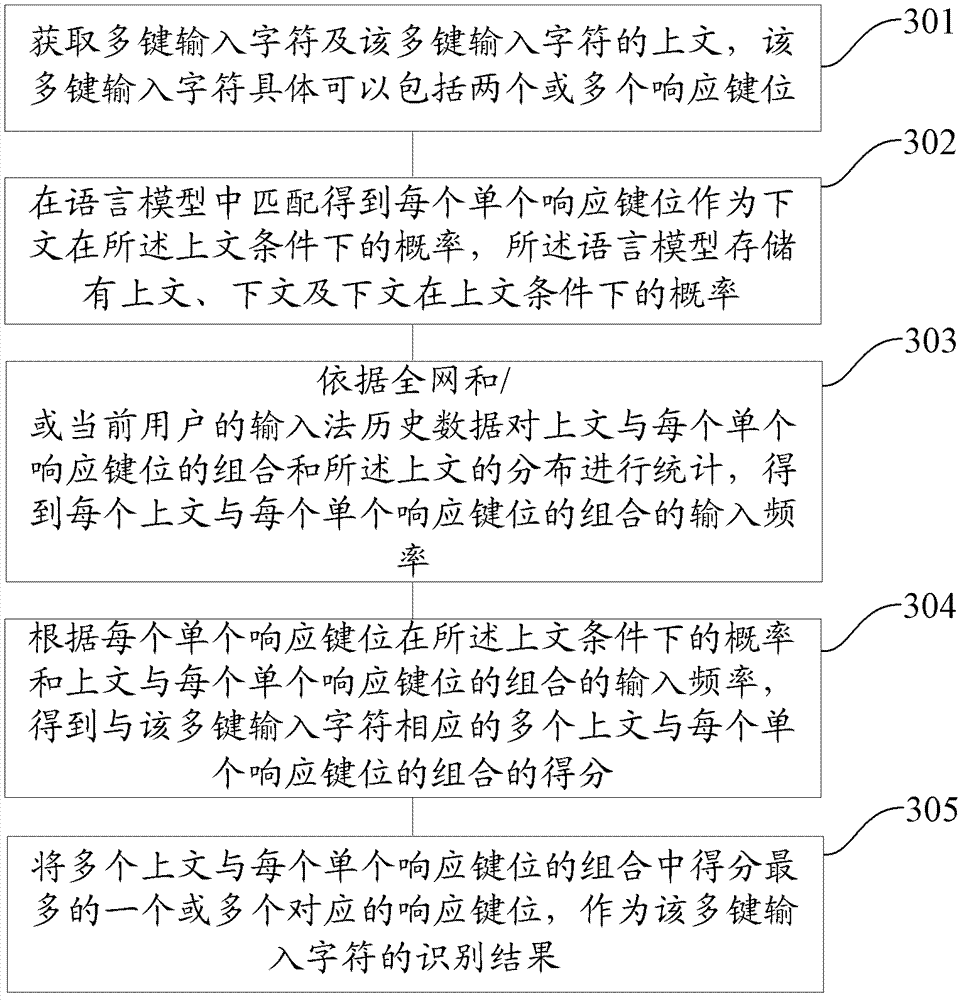

Method and device for identifying multi-key input characters

Status: Active | Publication: CN103365573A | Tags: Reasonable follow-up; Automatic correction of misoperation; Input/output processes for data processing; Linguistic model; Data mining

The application provides a method and a device for identifying multi-key input characters. The method comprises: acquiring the multi-key input character and the text preceding it, where the multi-key input character involves two or more responding key positions; using a linguistic model, computing the degree of correlation between the preceding text and each single responding key position taken as the following text; and taking the single responding key position or positions with the highest correlation to the preceding text as the identification result for the multi-key input character. The method identifies multi-key input characters better and improves users' input efficiency.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD
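The selection rule above (score each pressed key by how well it follows the preceding text, keep the best-scoring ones) can be sketched as follows. This is a minimal sketch, assuming character bigram counts stand in for the linguistic model and that only the last preceding character is used as context; `train_bigram_counts` and `resolve_multi_key` are hypothetical names.

```python
from collections import Counter


def train_bigram_counts(corpus):
    """Count adjacent character pairs; a toy stand-in for the linguistic model."""
    counts = Counter()
    for text in corpus:
        for prev, nxt in zip(text, text[1:]):
            counts[(prev, nxt)] += 1
    return counts


def resolve_multi_key(preceding, candidate_keys, bigrams):
    """Return every candidate key whose bigram count after the last preceding
    character is highest (ties are all kept, matching "one or more")."""
    if not candidate_keys:
        return []
    prev = preceding[-1] if preceding else ""
    scores = {key: bigrams[(prev, key)] for key in candidate_keys}  # Counter gives 0 if unseen
    best = max(scores.values())
    return [key for key in candidate_keys if scores[key] == best]


bigrams = train_bigram_counts(["the", "then", "they", "this", "that"])
result = resolve_multi_key("th", ["e", "r"], bigrams)  # one input hit both "e" and "r"
```

Here `result` is `["e"]`, because "he" occurs in the toy corpus and "hr" never does. A production input method would use a smoothed, longer-context model rather than raw bigram counts.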

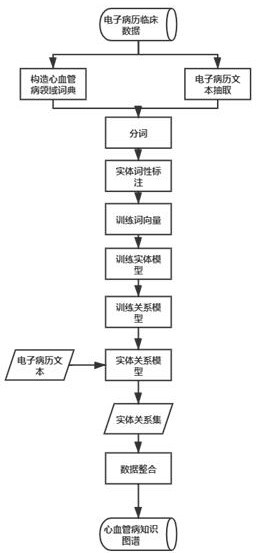

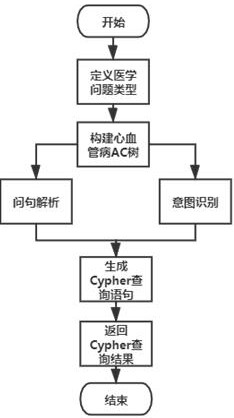

Cardiovascular and cerebrovascular knowledge graph question-answering method based on electronic medical records

Pending | CN112002411A | Enrich Dataset Features | Low cost | Medical automated diagnosis | Natural language data processing | Medical record | Conditional random field

The invention discloses a cardiovascular and cerebrovascular knowledge graph question-answering method based on electronic medical records. The method comprises three parts: (1) building a crawler to collect encyclopedia and cardiovascular and cerebrovascular medical knowledge, fusing it with medical record data to construct a cardiovascular and cerebrovascular domain dictionary, and de-identifying (desensitizing) the electronic medical records; (2) constructing a knowledge graph from the electronic medical records, i.e. extracting entity relationships with a language model, a bidirectional long short-term memory network, a conditional random field, and a convolutional neural network, screening the entity relationships with word2vec, and storing them in Neo4j; and (3) building a question-answering method on traditional rules: an ACTree (Aho-Corasick automaton) and question templates are constructed from the medical feature word set and the domain dictionary to perform question parsing and intent recognition, a Cypher query statement is generated, and the answer is returned. Based on the electronic medical records and an online knowledge base, the method realizes construction of a cardiovascular and cerebrovascular knowledge graph and knowledge-graph-based question answering on user complaints.

Owner:HANGZHOU DIANZI UNIV
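Part 3 of this method (dictionary matching, intent recognition, then Cypher generation) can be sketched as a small template filler. This is a minimal sketch, assuming a naive substring scan in place of the Aho-Corasick automaton and a single one-hop question template; the dictionary entries, the node labels `Disease`/`Drug`, the relationship types `HAS_SYMPTOM`/`TREATED_BY`, and the function names are all hypothetical, not taken from the patent.

```python
DOMAIN_DICT = {  # hypothetical domain dictionary: term -> node label
    "hypertension": "Disease",
    "coronary heart disease": "Disease",
    "aspirin": "Drug",
}
INTENT_WORDS = {  # hypothetical question keywords -> relationship type
    "symptom": "HAS_SYMPTOM",
    "drug": "TREATED_BY",
    "treat": "TREATED_BY",
}


def extract_entities(question, domain_dict):
    """Naive substring scan standing in for the Aho-Corasick (ACTree) matcher."""
    q = question.lower()
    return [(term, label) for term, label in domain_dict.items() if term in q]


def detect_intent(question, intent_words):
    """Return the relationship for the first intent keyword found, else None."""
    q = question.lower()
    for word, relation in intent_words.items():
        if word in q:
            return relation
    return None


def build_cypher(question, domain_dict=DOMAIN_DICT, intent_words=INTENT_WORDS):
    """Fill a one-hop question template; returns a list of (query, params) pairs."""
    relation = detect_intent(question, intent_words)
    if relation is None:
        return []
    queries = []
    for term, label in extract_entities(question, domain_dict):
        query = (f"MATCH (e:{label} {{name: $name}})-[:{relation}]->(t) "
                 "RETURN t.name")
        queries.append((query, {"name": term}))
    return queries


queries = build_cypher("What are the symptoms of hypertension?")
```

The entity name is passed as a Cypher parameter (`$name`) rather than spliced into the query string, which keeps user text out of the query itself; each pair could then be run through a Neo4j driver session.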

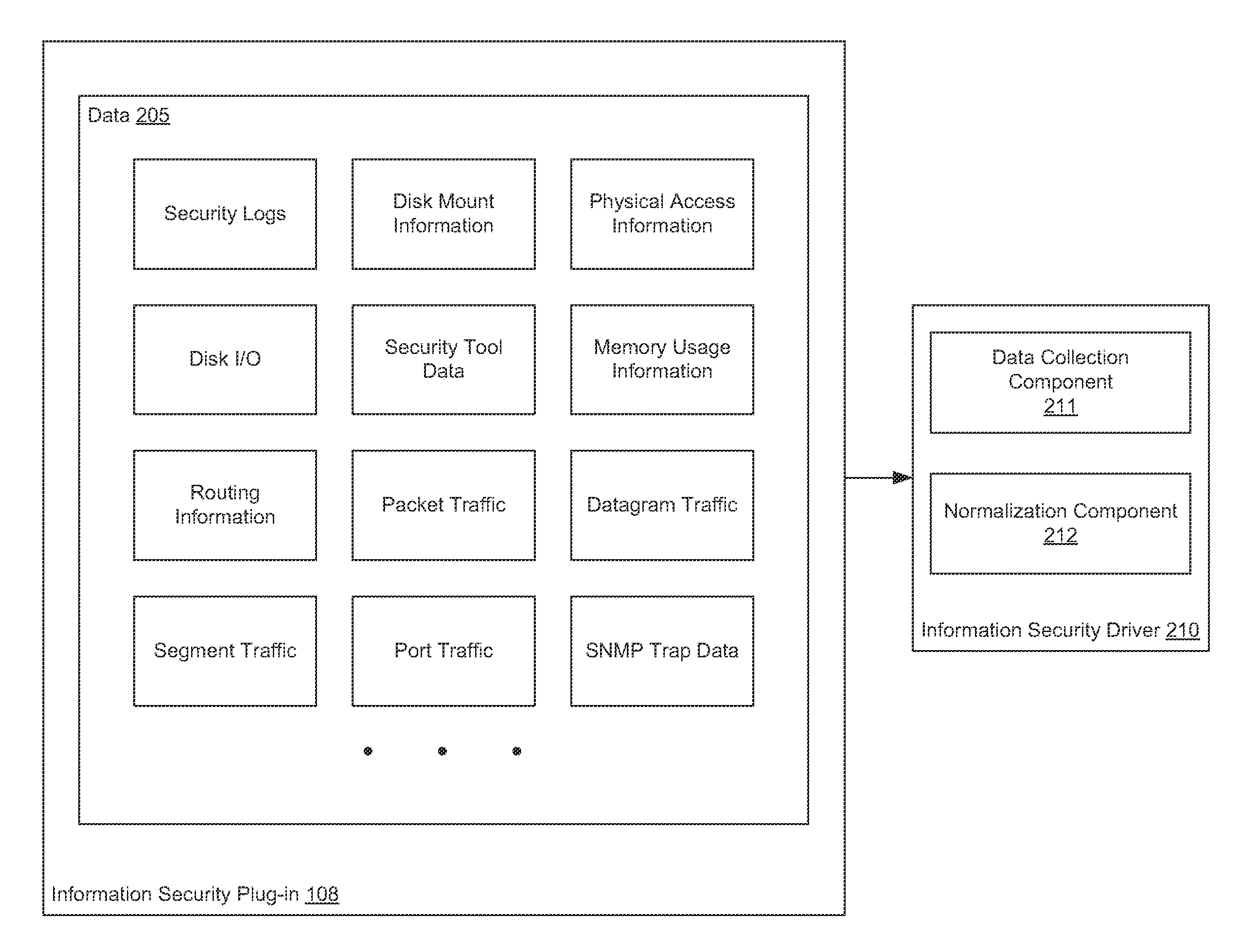

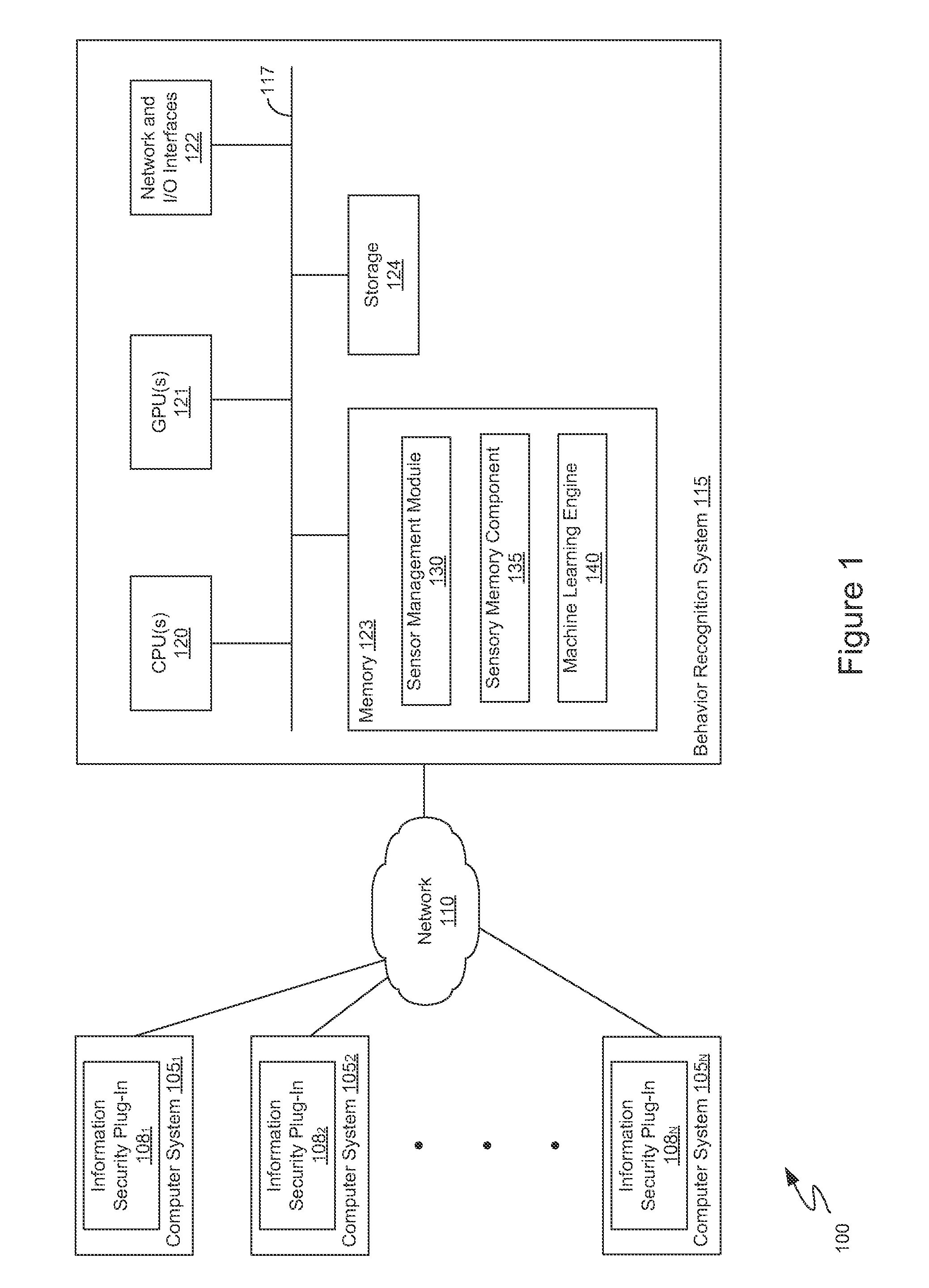

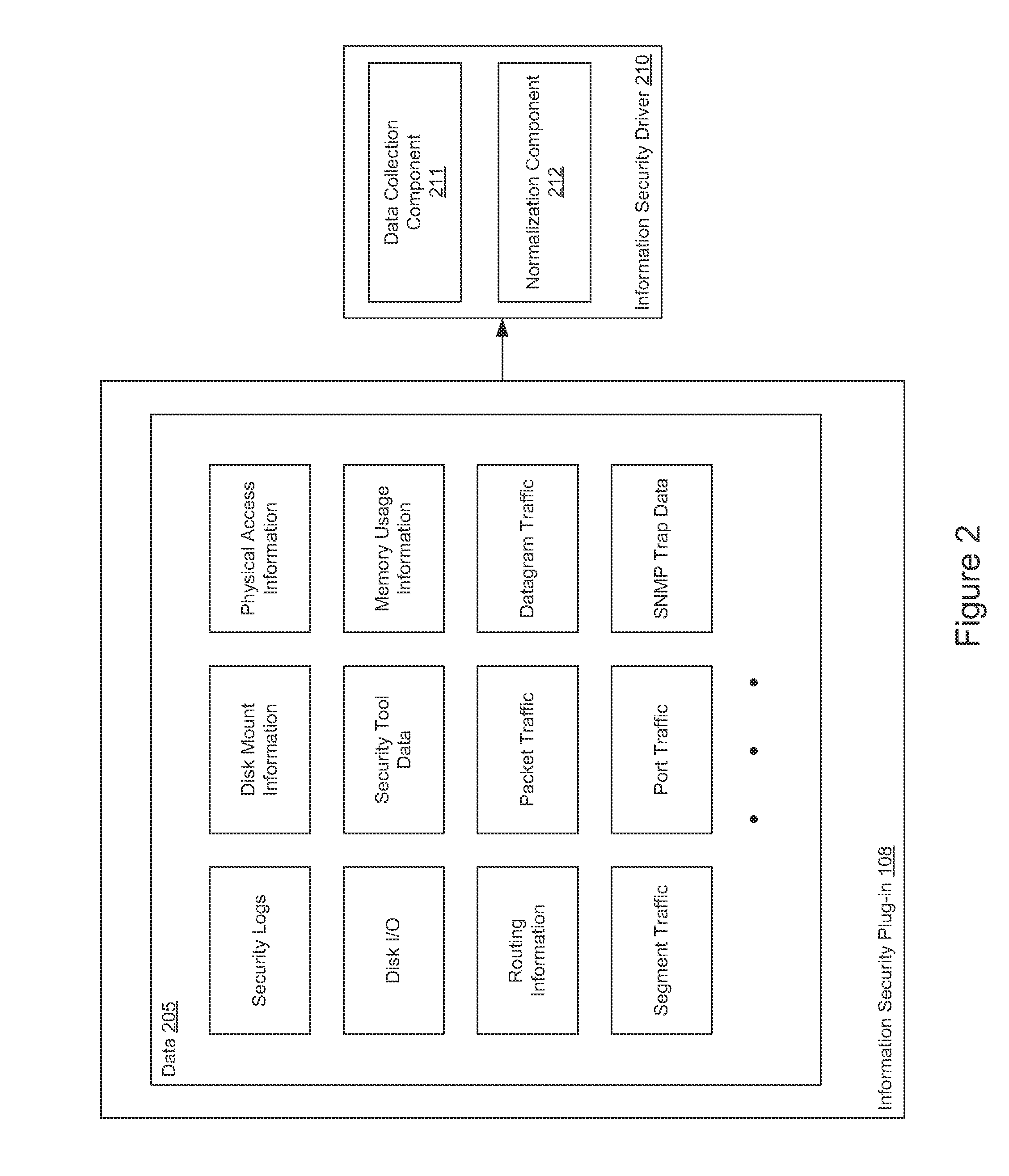

Cognitive information security using a behavioral recognition system

Embodiments presented herein describe a method for processing streams of data from one or more networked computer systems. According to one embodiment of the present disclosure, an ordered stream of normalized vectors corresponding to information security data obtained from one or more sensors monitoring a computer network is received. A neuro-linguistic model of the information security data is generated by clustering the ordered stream of vectors and assigning a letter to each cluster, outputting an ordered sequence of letters based on a mapping of the ordered stream of normalized vectors to the clusters, building a dictionary of words from the ordered output of letters, outputting an ordered stream of words based on the ordered output of letters, and generating a plurality of phrases based on the ordered output of words.

Owner:INTELLECTIVE AI INC
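The vectors-to-letters-to-words-to-phrases pipeline described above can be sketched end to end. This is a minimal sketch under stated assumptions: leader clustering with a fixed radius stands in for the patent's clustering, "words" are letter n-grams that recur at least `min_count` times, words are segmented by greedy longest match, and "phrases" are consecutive word pairs; all function names and parameters are hypothetical.

```python
import math
from collections import Counter
from string import ascii_uppercase


def vectors_to_letters(vectors, radius):
    """Leader clustering: a vector joins the nearest existing cluster if it is
    within `radius`, otherwise it starts a new one. Cluster i gets letter i."""
    centroids, letters = [], []
    for v in vectors:
        dists = [math.dist(v, c) for c in centroids]
        if dists and min(dists) <= radius:
            idx = dists.index(min(dists))
        else:
            if len(centroids) == len(ascii_uppercase):
                raise ValueError("more than 26 clusters")
            centroids.append(tuple(v))
            idx = len(centroids) - 1
        letters.append(ascii_uppercase[idx])
    return "".join(letters)


def build_dictionary(letters, max_len=3, min_count=2):
    """Treat letter n-grams (length 2..max_len) seen at least min_count times as words."""
    counts = Counter(
        letters[i:i + n]
        for n in range(2, max_len + 1)
        for i in range(len(letters) - n + 1)
    )
    return {word for word, count in counts.items() if count >= min_count}


def letters_to_words(letters, dictionary, max_len=3):
    """Greedy longest-match segmentation; unknown letters become 1-letter words."""
    words, i = [], 0
    while i < len(letters):
        for n in range(max_len, 0, -1):
            piece = letters[i:i + n]
            if n == 1 or piece in dictionary:
                words.append(piece)
                i += len(piece)
                break
    return words


def words_to_phrases(words):
    """Phrases as consecutive word pairs."""
    return list(zip(words, words[1:]))


stream = [(0.0, 0.0), (5.0, 5.0), (0.0, 0.1), (5.0, 5.1), (0.0, 0.0), (5.0, 5.0)]
letters = vectors_to_letters(stream, radius=1.0)
words = letters_to_words(letters, build_dictionary(letters))
phrases = words_to_phrases(words)
```

For this alternating toy stream the letters are `"ABABAB"`, the recurring n-grams `AB`, `BA`, `ABA`, and `BAB` become dictionary words, and greedy segmentation yields the words `ABA` and `BAB`. Leader clustering never moves its centroids, which keeps the sketch short but is cruder than an adaptive clustering method.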

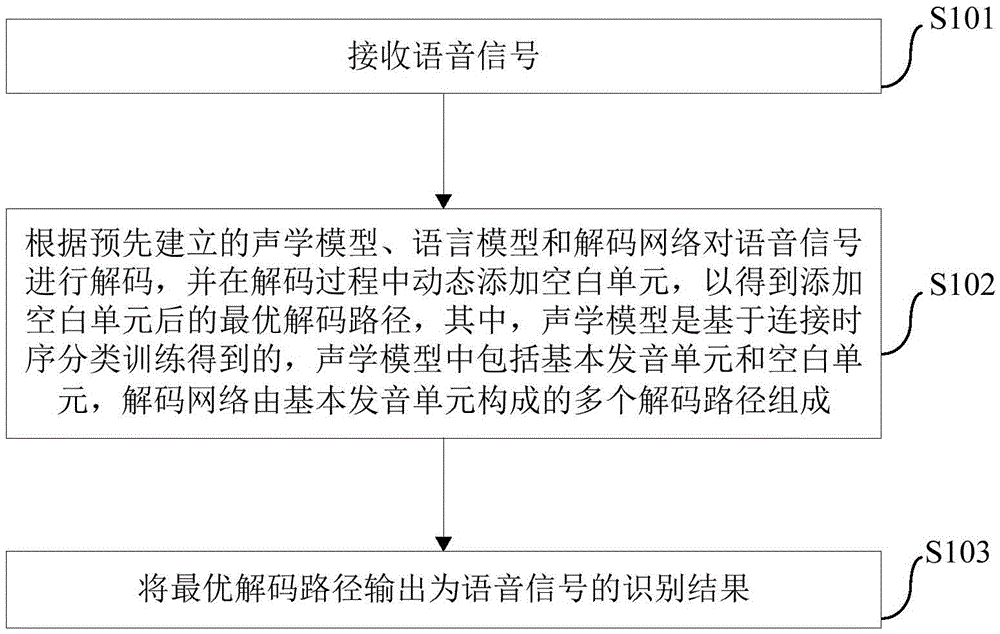

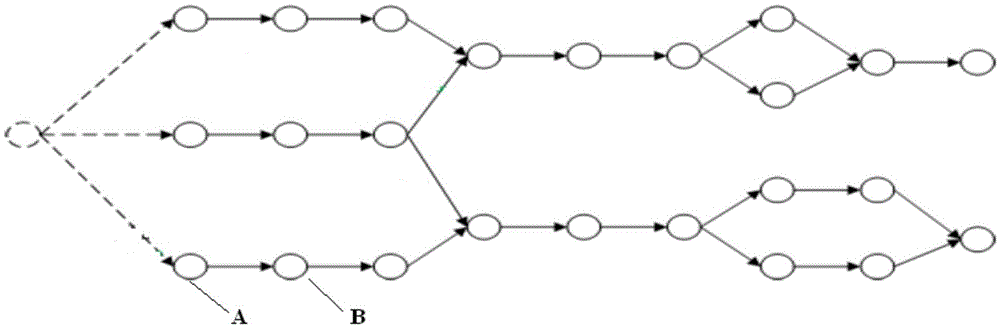

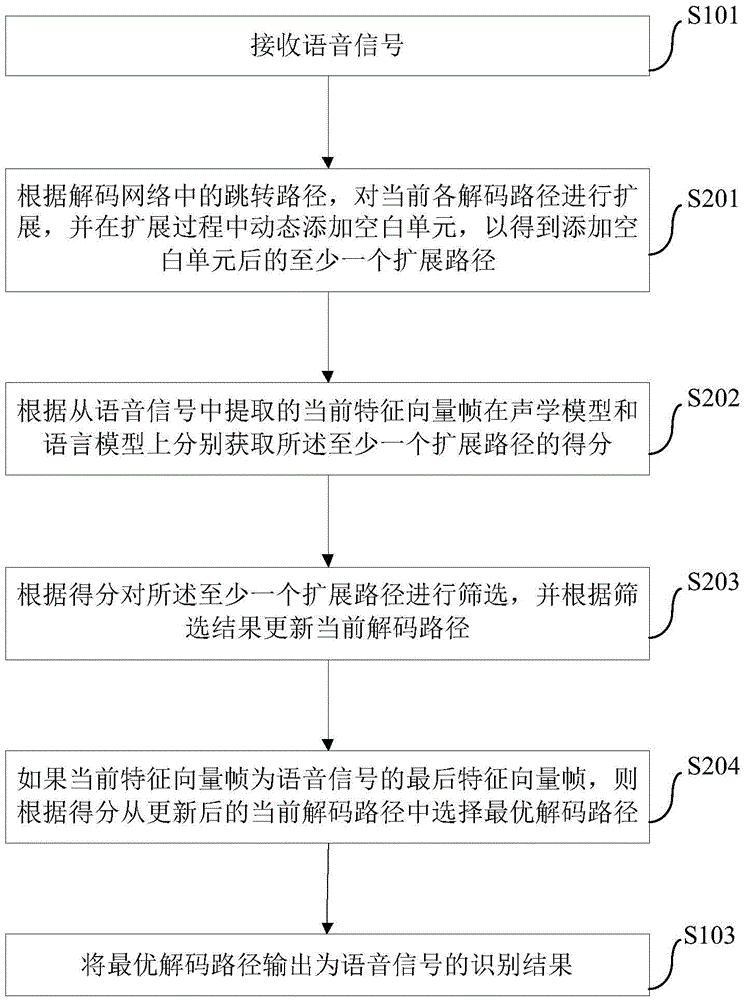

Voice identification method and apparatus

The invention provides a voice identification method and apparatus. The method comprises: receiving a voice signal; decoding the voice signal according to a pre-established acoustic model, a linguistic model, and a decoding network, dynamically adding a blank unit during decoding to obtain an optimized decoding path that includes the added blank unit; and outputting the optimized decoding path as the recognition result for the voice signal. The acoustic model is trained with connectionist temporal classification (CTC) and comprises basic pronunciation units and the blank unit, and the decoding network consists of multiple decoding paths formed from the basic pronunciation units. The method improves both the accuracy of speech recognition and the decoding speed.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
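The role of the CTC blank unit can be seen in the simplest CTC decoder, greedy best-path decoding. This is a minimal sketch and deliberately simpler than the patented method: it uses no linguistic model and no decoding network, and it does not insert blanks dynamically; it only shows how per-frame outputs over pronunciation units plus a blank collapse into a unit sequence. `BLANK` and `ctc_greedy_decode` are hypothetical names.

```python
BLANK = "<b>"  # hypothetical symbol for the CTC blank unit


def ctc_greedy_decode(frame_scores, units):
    """Best-path CTC decoding: take the top unit per frame, merge consecutive
    repeats, then drop blanks. A blank between two identical units keeps both."""
    best_path = [units[max(range(len(s)), key=s.__getitem__)] for s in frame_scores]
    output, prev = [], None
    for unit in best_path:
        if unit != prev and unit != BLANK:
            output.append(unit)
        prev = unit
    return output


units = [BLANK, "n", "i", "h", "ao"]


def one_hot_frame(unit):
    # Toy acoustic-model output: high score for `unit`, low for everything else.
    return [0.9 if u == unit else 0.025 for u in units]


frames = [one_hot_frame(u) for u in ["n", "n", BLANK, "i", "h", "h", BLANK, "ao"]]
decoded = ctc_greedy_decode(frames, units)
```

Here `decoded` is `["n", "i", "h", "ao"]`. A recognizer like the one described would instead search a decoding network, combining these acoustic scores with linguistic-model scores along each path.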

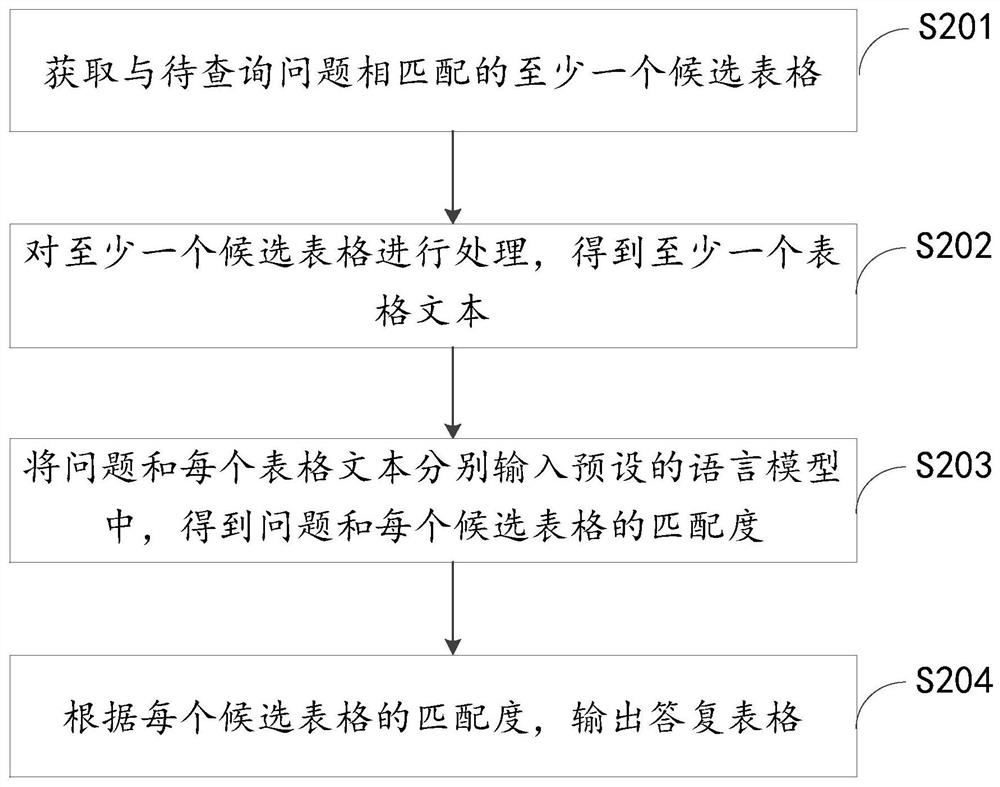

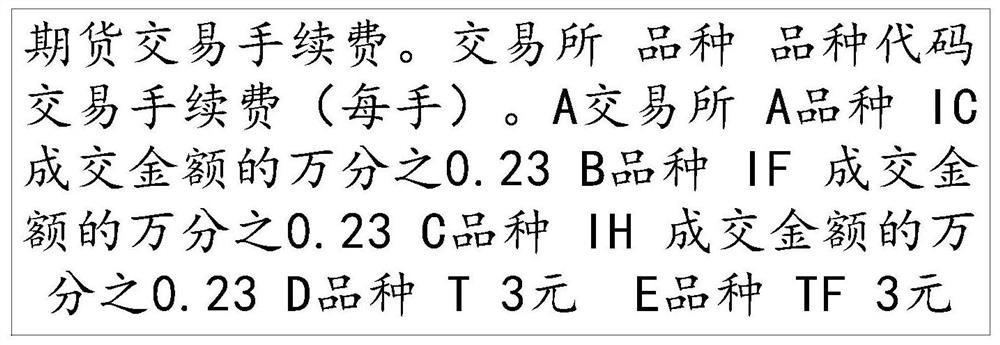

Question and answer processing method and device, language model training method and device, equipment and storage medium

The invention discloses a question-answering processing method and device, a language model training method and device, equipment, and a storage medium, and relates to the field of natural language processing. The specific implementation is as follows: at least one candidate table matching a question to be queried is obtained, each candidate table containing a candidate answer to the question; the candidate tables are processed to obtain at least one table text containing the text content of each field of the candidate table, the fields comprising the title, headers, and cells; the question and each table text are input into a preset language model to obtain a matching degree between the question and each candidate table; and a reply table is output according to these matching degrees, the reply table being a candidate table whose matching degree with the question is greater than a preset value, or the candidate table with the maximum matching degree among the candidates. Using the language model to semantically match questions against table texts improves the accuracy and recall of question-to-table matching.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
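The table-to-text step and the reply-table selection rule in this abstract can be sketched as follows. This is a minimal sketch, assuming word overlap with the question stands in for the language model's matching degree, and reading the selection rule as "return every table above the threshold, or the single best table if none clears it"; `Table`, `table_to_text`, `overlap_score`, `select_reply_tables`, and the threshold value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Table:
    title: str
    headers: list
    rows: list


def table_to_text(table):
    """Flatten the title, headers, and cells into one table text."""
    parts = [table.title, " ".join(table.headers)]
    parts += [" ".join(str(cell) for cell in row) for row in table.rows]
    return " ".join(parts)


def overlap_score(question, text):
    """Fraction of question words found in the table text (language-model stand-in)."""
    q, d = set(question.lower().split()), set(text.lower().split())
    return len(q & d) / len(q) if q else 0.0


def select_reply_tables(question, tables, threshold=0.5, score=overlap_score):
    scored = [(score(question, table_to_text(t)), t) for t in tables]
    above = [t for s, t in scored if s > threshold]
    if above:
        return above
    # Fall back to the single best-matching candidate table.
    return [max(scored, key=lambda st: st[0])[1]] if scored else []


tables = [
    Table("GDP by country", ["country", "gdp"], [["china", 17.7]]),
    Table("Population by city", ["city", "population"], [["beijing", 21.5]]),
]
reply = select_reply_tables("what is the population of beijing", tables)
```

Neither table clears the 0.5 threshold here (the population table matches 2 of 6 question words), so the fallback returns the population table as the best match. Swapping in a real language model only changes the `score` argument.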

© 2025 PatSnap. All rights reserved.