76 results about "Visual vocabularies" patented technology

Visual vocabulary consists of images or pictures that stand for words and their meanings. In the same way that individual words make written language possible, individual images make a visual language possible. The term also applies to a theory of visual communication that says pictures and images can be “read” in the same way that words can.

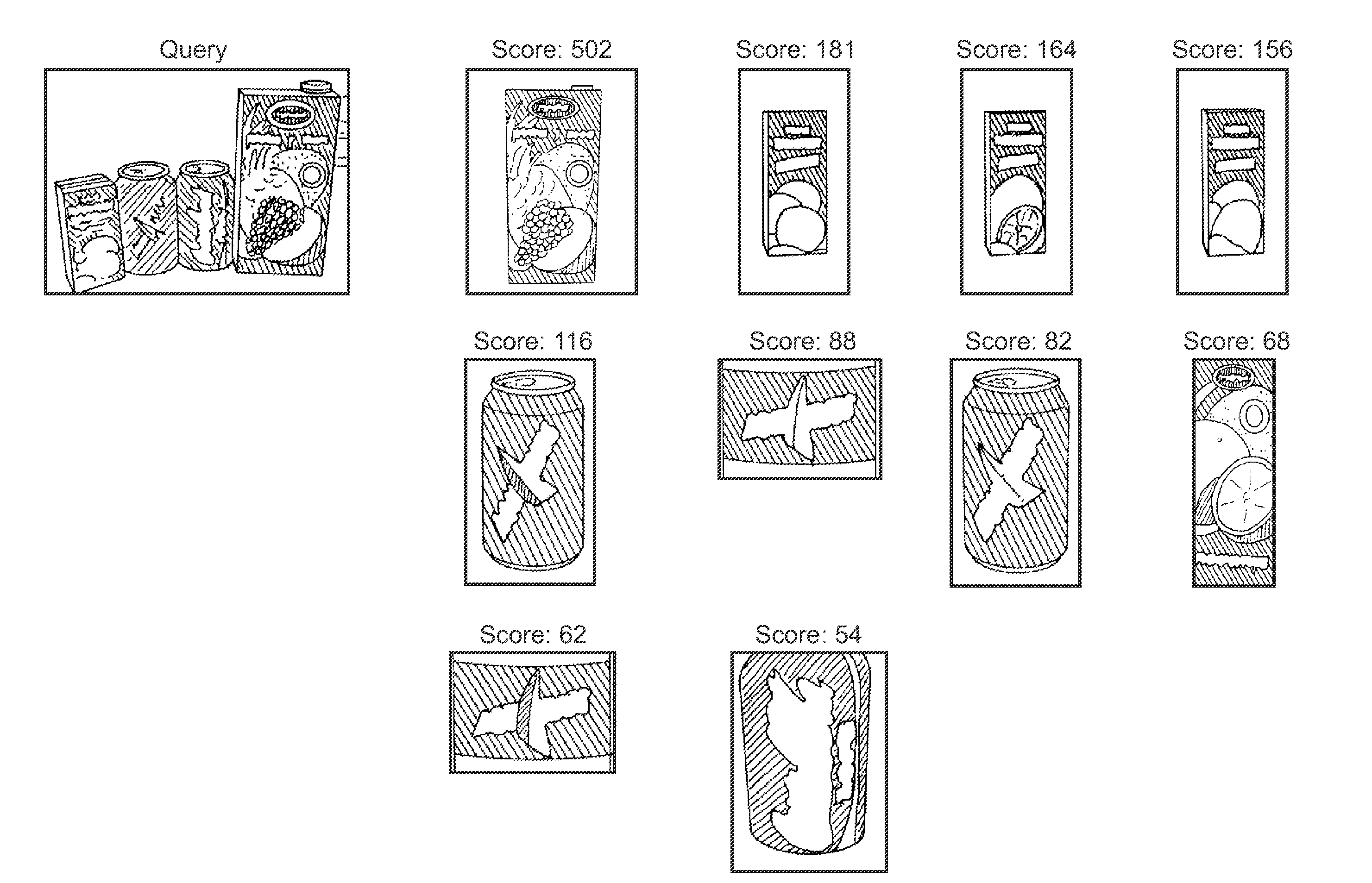

Method and system for fast and robust identification of specific product images

Active · US20130202213A1 · Improve scalability · Simple background · Digital data information retrieval · Character and pattern recognition · Word list · Reference image

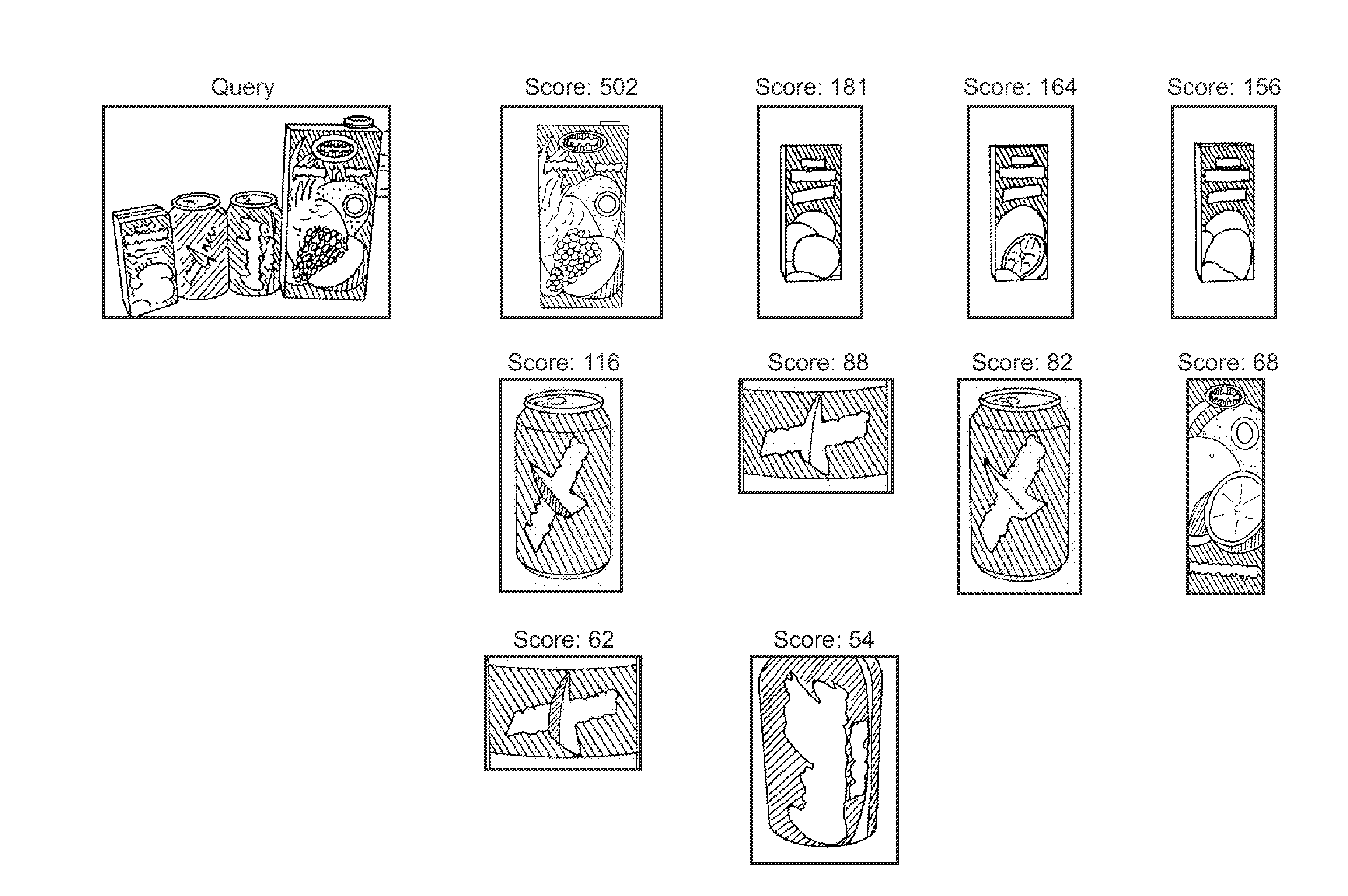

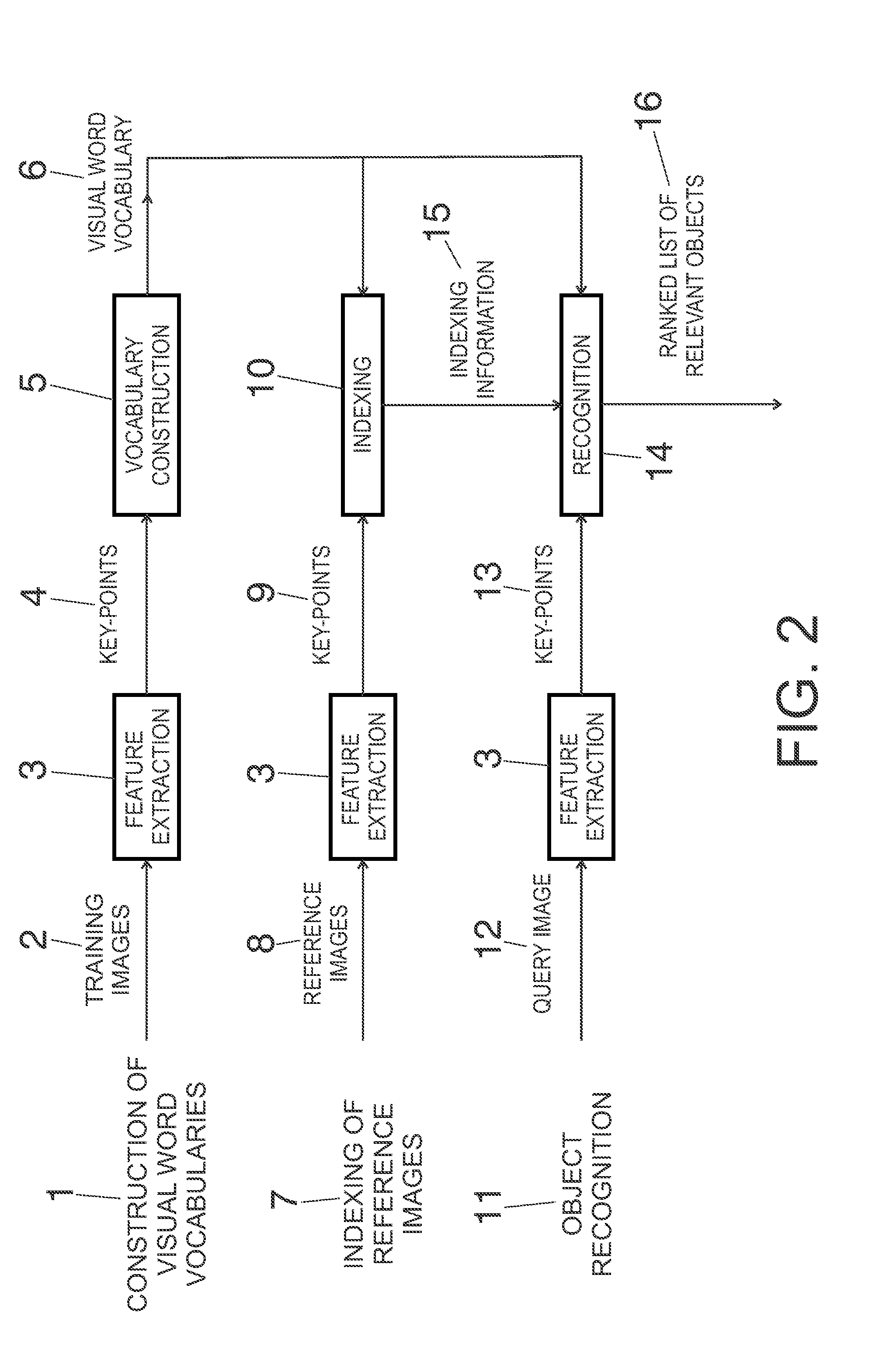

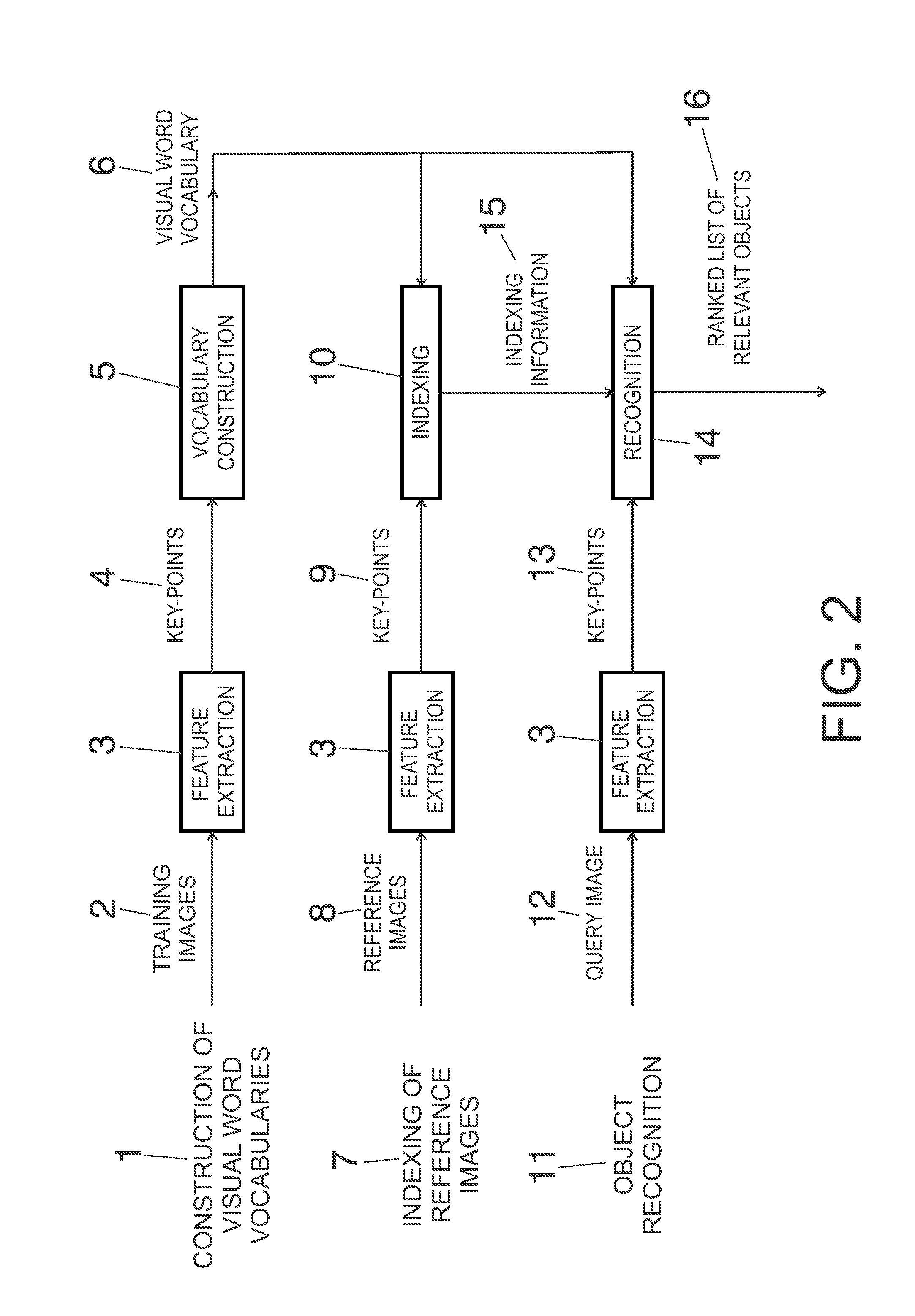

Identification of objects in images. All images are scanned for key-points and a descriptor is computed for each region. A large number of descriptor examples are clustered into a Vocabulary of Visual Words. An inverted file structure is extended to support clustering of matches in the pose space. It has a hit list for every visual word, which stores all occurrences of the word in all reference images. Every hit stores an identifier of the reference image where the key-point was detected and its scale and orientation. Recognition starts by assigning key-points from the query image to the closest visual words. Then, every pairing of the key-point and one of the hits from the list casts a vote into a pose accumulator corresponding to the reference image where the hit was found. Every pair key-point / hit predicts specific orientation and scale of the model represented by the reference image.
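The hit lists and pose voting described above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the function names, the tuple layout `(descriptor, scale, orientation)`, and the binning of the pose space into log-scale and angle bins are all assumptions made for the example.

```python
import math
from collections import defaultdict

def nearest_word(descriptor, vocabulary):
    """Index of the closest visual word (squared Euclidean distance)."""
    return min(range(len(vocabulary)),
               key=lambda i: sum((d - v) ** 2 for d, v in zip(descriptor, vocabulary[i])))

def build_inverted_file(reference_keypoints, vocabulary):
    """Map each visual word to its hit list of (image_id, scale, orientation)."""
    inverted = defaultdict(list)
    for image_id, keypoints in reference_keypoints.items():
        for descriptor, scale, orientation in keypoints:
            inverted[nearest_word(descriptor, vocabulary)].append((image_id, scale, orientation))
    return inverted

def vote(query_keypoints, inverted, vocabulary, angle_bins=8):
    """Cast one vote per (key-point, hit) pair into a per-image pose accumulator."""
    accumulators = defaultdict(lambda: defaultdict(int))
    for descriptor, q_scale, q_orientation in query_keypoints:
        word = nearest_word(descriptor, vocabulary)
        for image_id, r_scale, r_orientation in inverted.get(word, []):
            # Each pair predicts a relative scale and rotation of the model (assumed binning).
            scale_bin = round(math.log2(q_scale / r_scale))
            rotation = (q_orientation - r_orientation) % (2 * math.pi)
            angle_bin = int(rotation / (2 * math.pi) * angle_bins) % angle_bins
            accumulators[image_id][(scale_bin, angle_bin)] += 1
    return accumulators

# Toy data: two visual words, two reference images, one query image.
vocabulary = [(0.0, 0.0), (1.0, 1.0)]
reference = {
    "box": [((0.1, 0.0), 1.0, 0.0), ((0.9, 1.0), 2.0, 0.5)],
    "can": [((0.0, 0.0), 1.0, 3.0)],
}
query = [((0.0, 0.1), 2.0, 0.1), ((1.0, 0.9), 4.0, 0.6)]

votes = vote(query, build_inverted_file(reference, vocabulary), vocabulary)
# The reference image whose fullest pose bin collected the most votes wins.
best_image = max(votes, key=lambda image_id: max(votes[image_id].values()))
```

In the toy data both query key-points agree on the same relative scale and rotation for "box", so their votes land in one accumulator bin, while the single hit for "can" lands in a different bin.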

Owner:CATCHOOM TECH

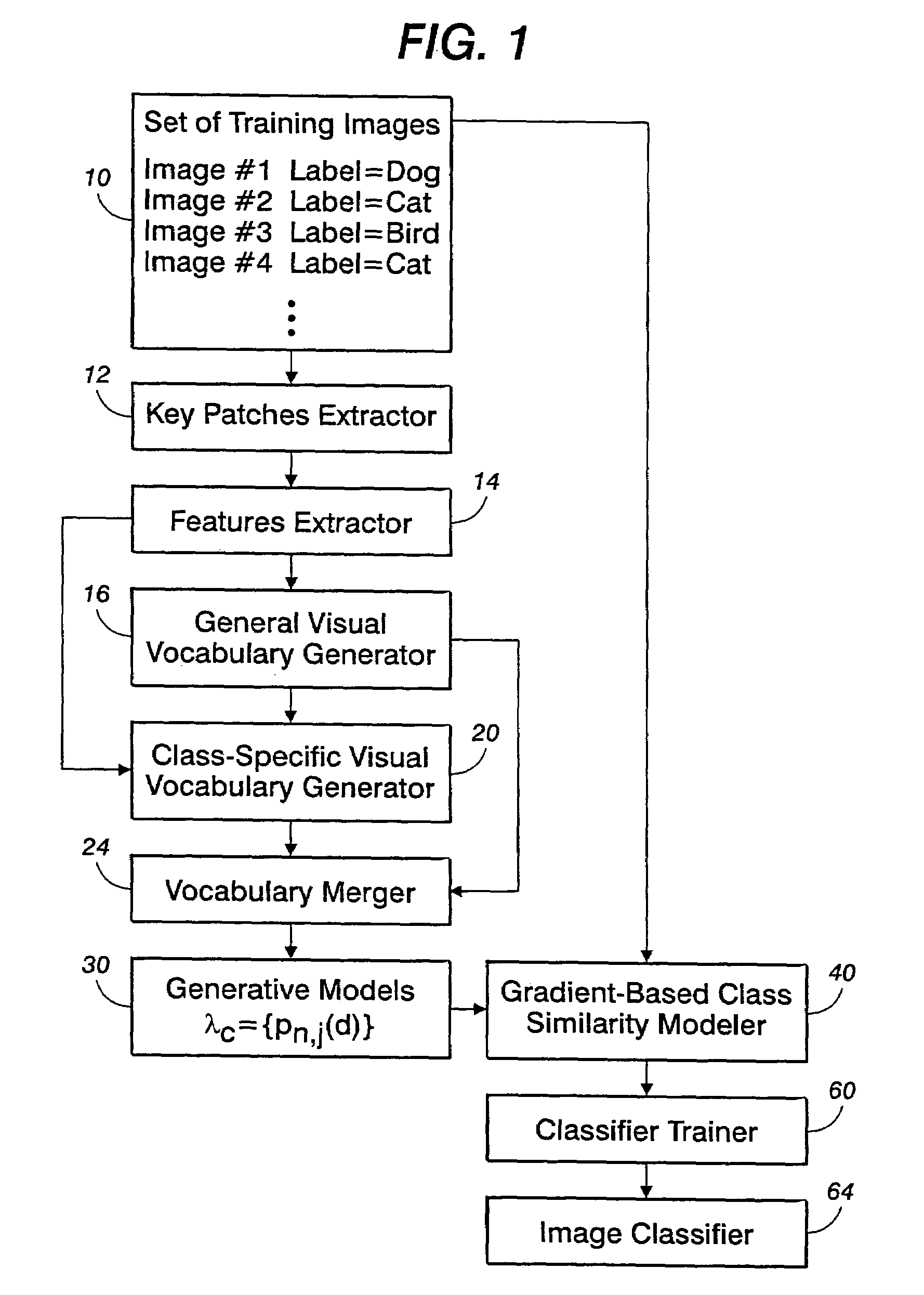

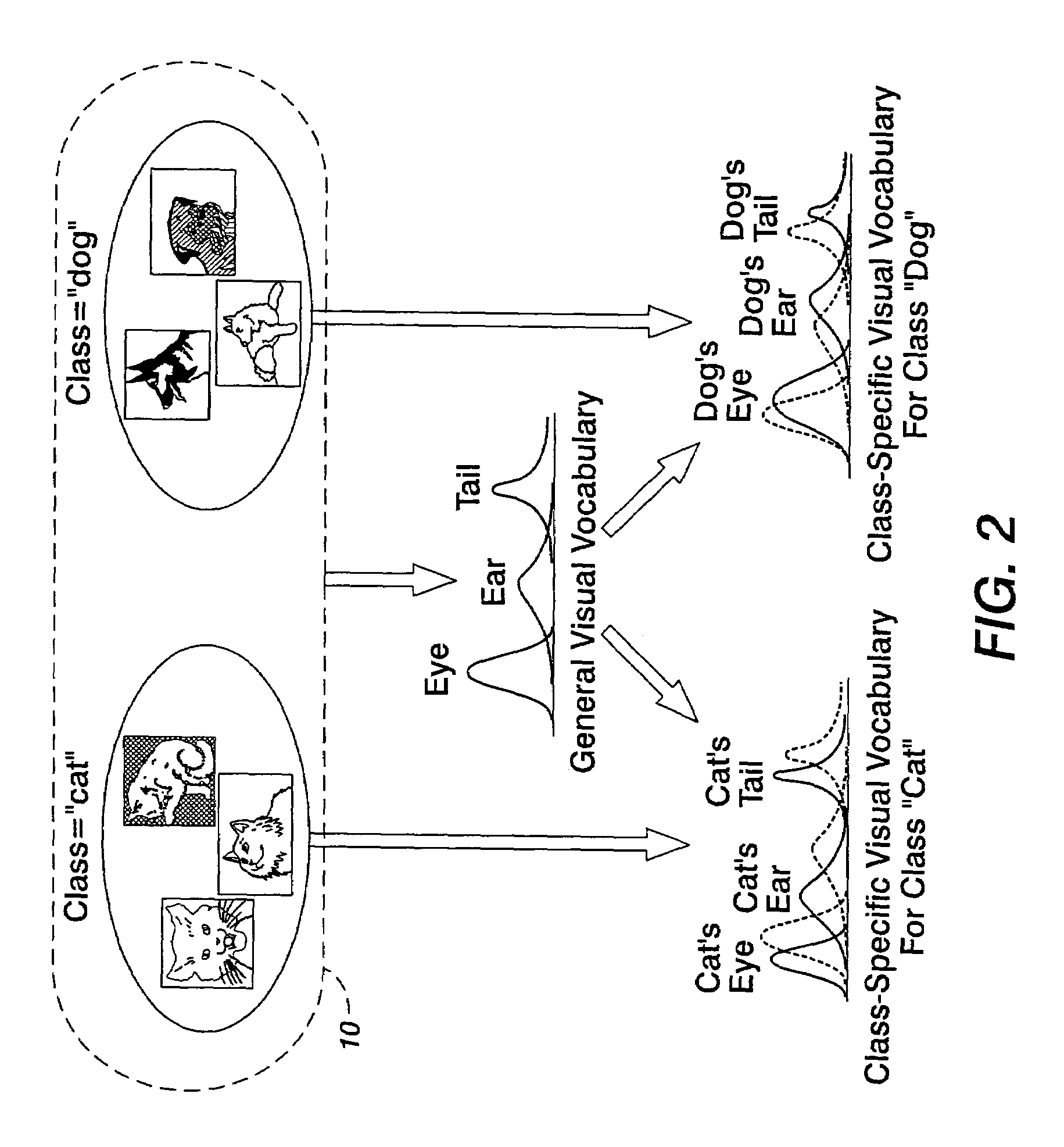

Generic visual classification with gradient components-based dimensionality enhancement

Active · US7680341B2 · Add dimension · Character and pattern recognition · Pattern recognition · Artificial intelligence

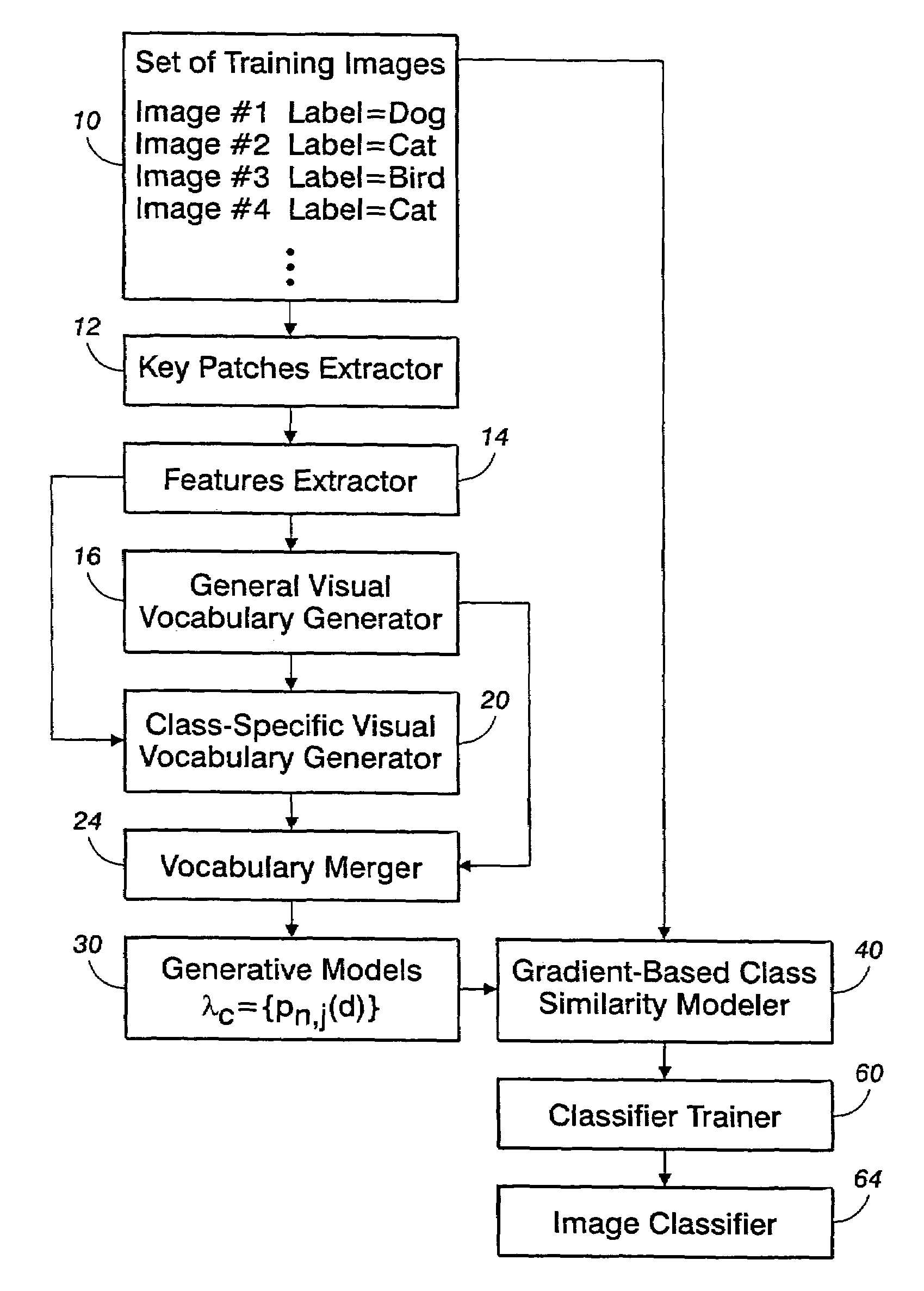

In an image classification system (70), a plurality of generative models (30) correspond to a plurality of image classes. Each generative model embodies a merger of a general visual vocabulary and an image class-specific visual vocabulary. A gradient-based class similarity modeler (40) includes (i) a model fitting data extractor (46) that generates model fitting data of an image (72) respective to each generative model and (ii) a dimensionality enhancer (50) that computes a gradient-based vector representation of the model fitting data with respect to each generative model in a vector space defined by the generative model. An image classifier (76) classifies the image respective to the plurality of image classes based on the gradient-based vector representations of class similarity.

Owner:XEROX CORP

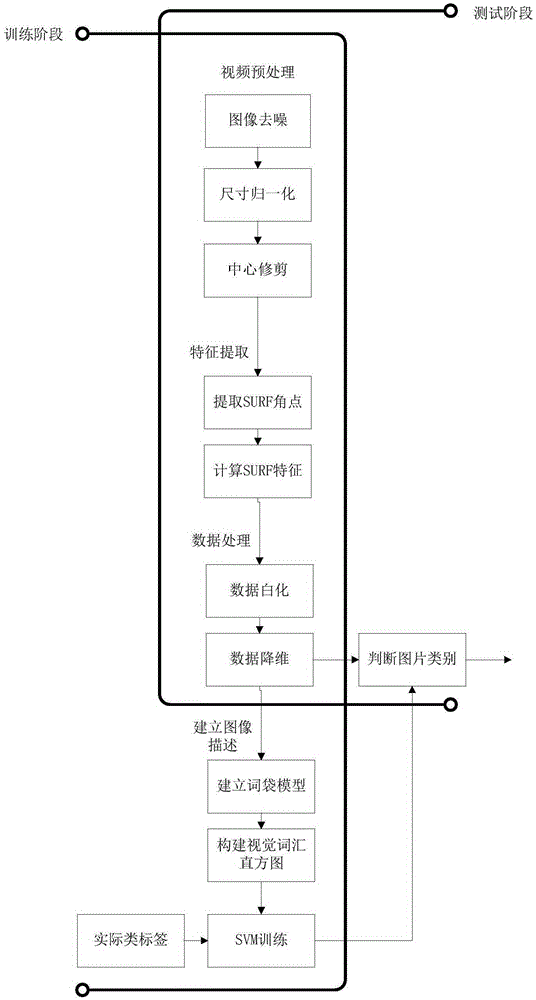

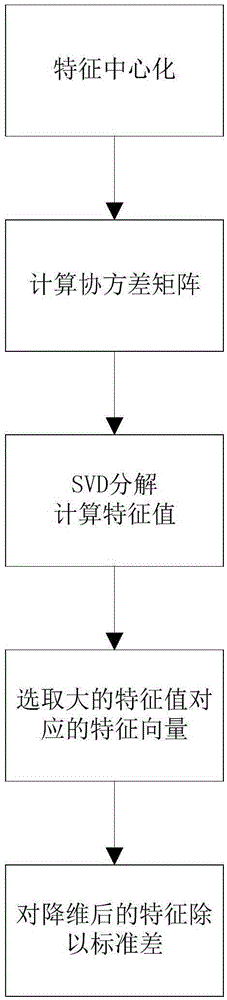

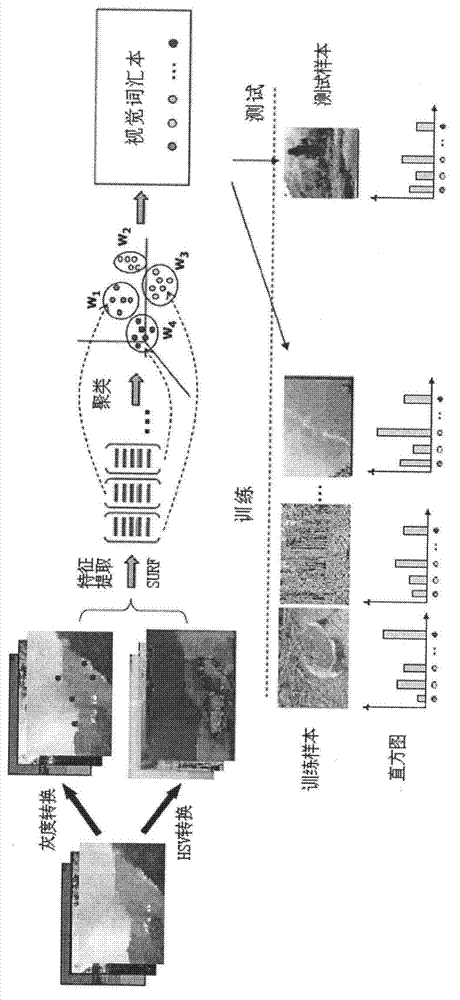

Image object recognition method based on SURF

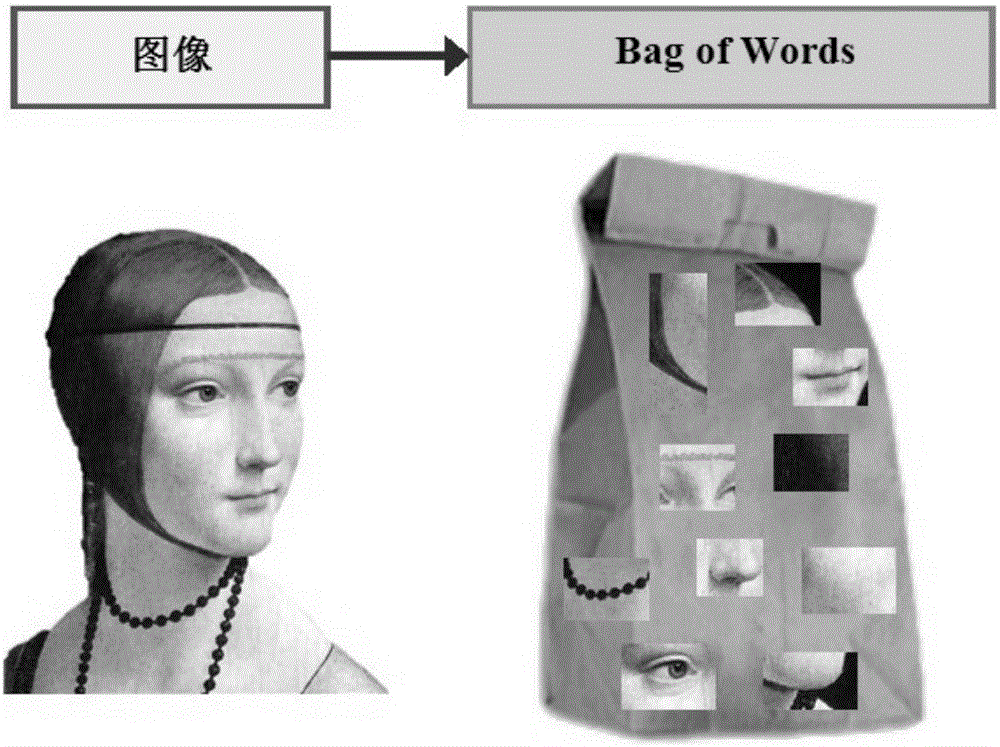

Active · CN105389593A · Realize the recognition function · Objectively and accurately reflect · Character and pattern recognition · Bag-of-words model · Classification methods

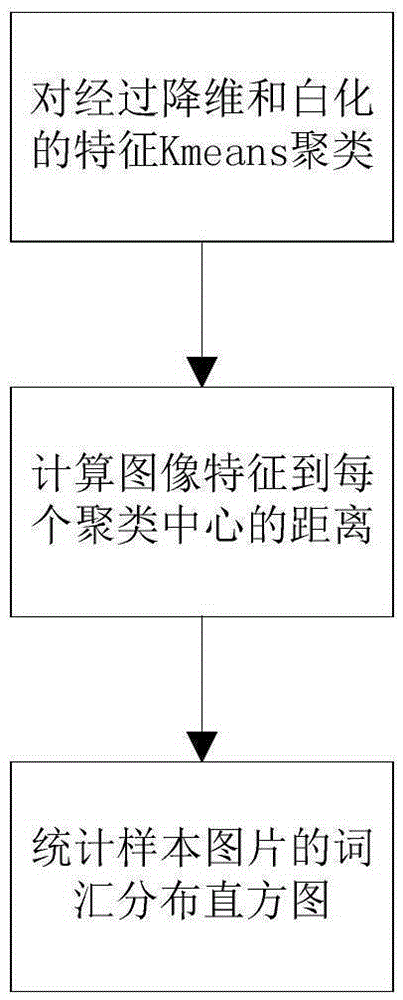

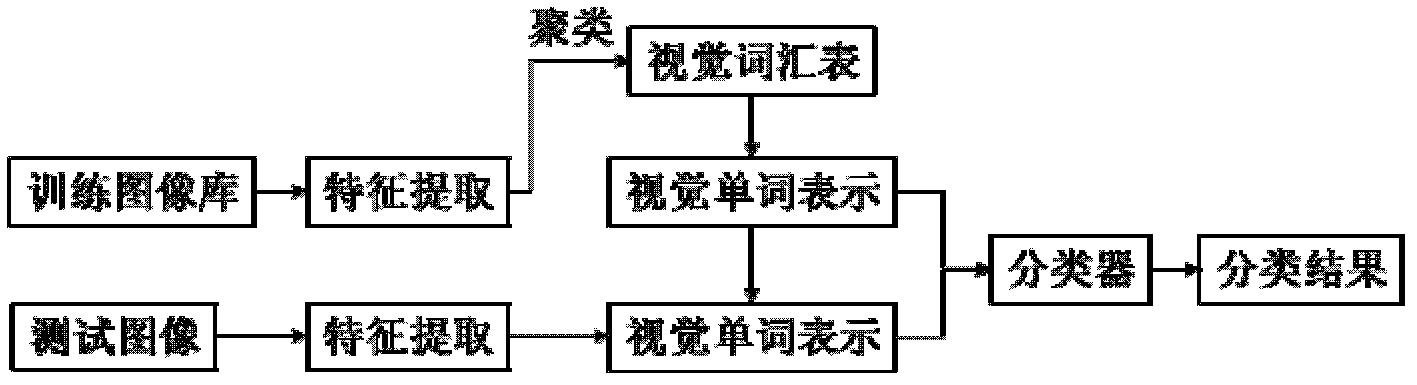

The invention provides an image object recognition method based on SURF (Speeded-Up Robust Features), comprising the following steps: first, preprocessing the images; second, extracting SURF corners and SURF descriptors to describe the image features; third, processing the features through PCA whitening and dimensionality reduction; fourth, establishing a bag-of-visual-words model through k-means clustering of the processed features, and using the model to construct a visual vocabulary histogram for each image; and finally, training a nonlinear support vector machine (SVM) classifier and classifying the images into different categories. After the classification models for the different images are built in the training phase, the images in the test set are evaluated in the testing phase, so that different image objects can be recognized. The method has excellent performance in recognition rate and speed, and reflects the content of images more objectively and accurately. In addition, the classification result of the SVM classifier is optimized, and the classifier's error rate and the limitation imposed by the categories of the training samples are reduced.
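The clustering and histogram steps of such a pipeline can be sketched as below. This is only an illustration under stated assumptions: descriptors are plain tuples rather than real SURF output, the PCA and SVM stages are omitted, and the k-means seeding (first `k` points) and the function names are choices made for the example.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20):
    """Plain Lloyd's k-means; the first k points seed the centroids (an assumption)."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid as the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids

def bovw_histogram(descriptors, centroids):
    """Count nearest-centroid assignments and L1-normalise the counts."""
    counts = [0] * len(centroids)
    for d in descriptors:
        counts[min(range(len(centroids)), key=lambda i: sq_dist(d, centroids[i]))] += 1
    total = sum(counts) or 1
    return [c / total for c in counts]

# Toy descriptors standing in for SURF features pooled over the training images.
training = [(0, 0), (10, 10), (0, 1), (10, 11), (1, 0), (11, 10)]
visual_words = kmeans(training, k=2)

# One image's descriptors become a fixed-length histogram over the vocabulary.
histogram = bovw_histogram([(0, 0.5), (0.5, 0), (10, 10.5), (1, 1)], visual_words)
```

The resulting histogram is the fixed-length vector that a classifier such as an SVM would be trained on.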

Owner:SHANGHAI JIAO TONG UNIV +1

High-spatial resolution remote-sensing image bag-of-word classification method based on linear words

Inactive · CN102496034A · Simple calculation · Feature stabilization · Character and pattern recognition · Feature vector · Word list

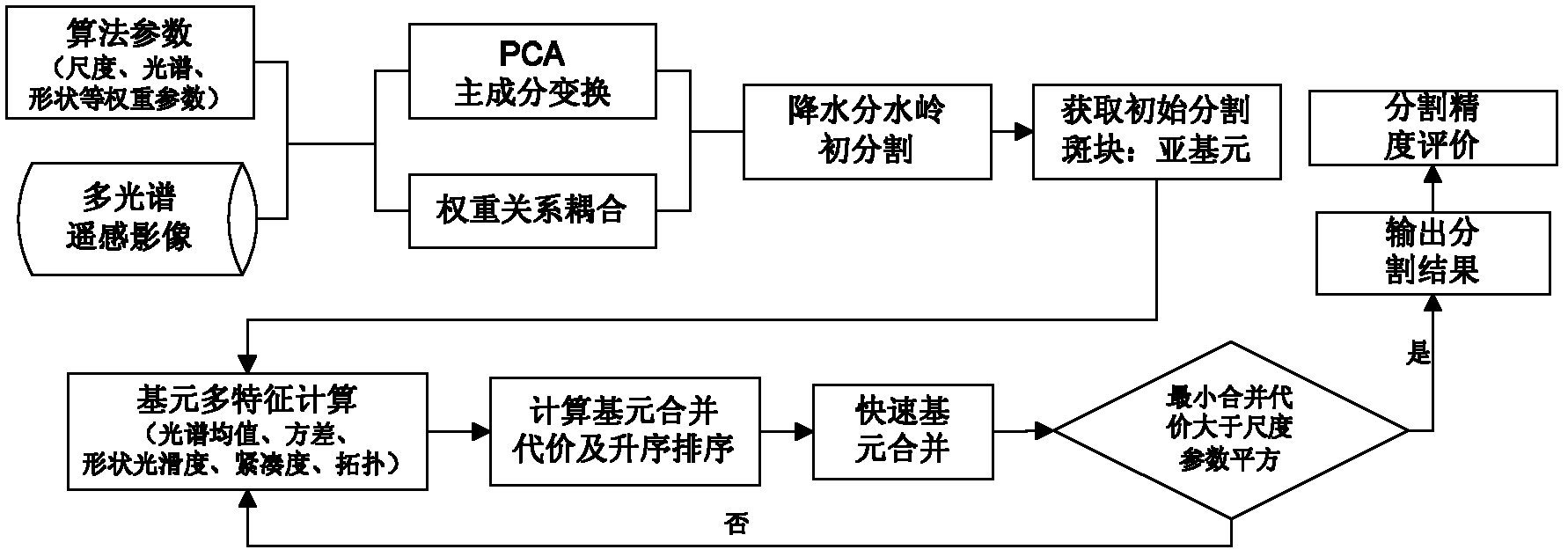

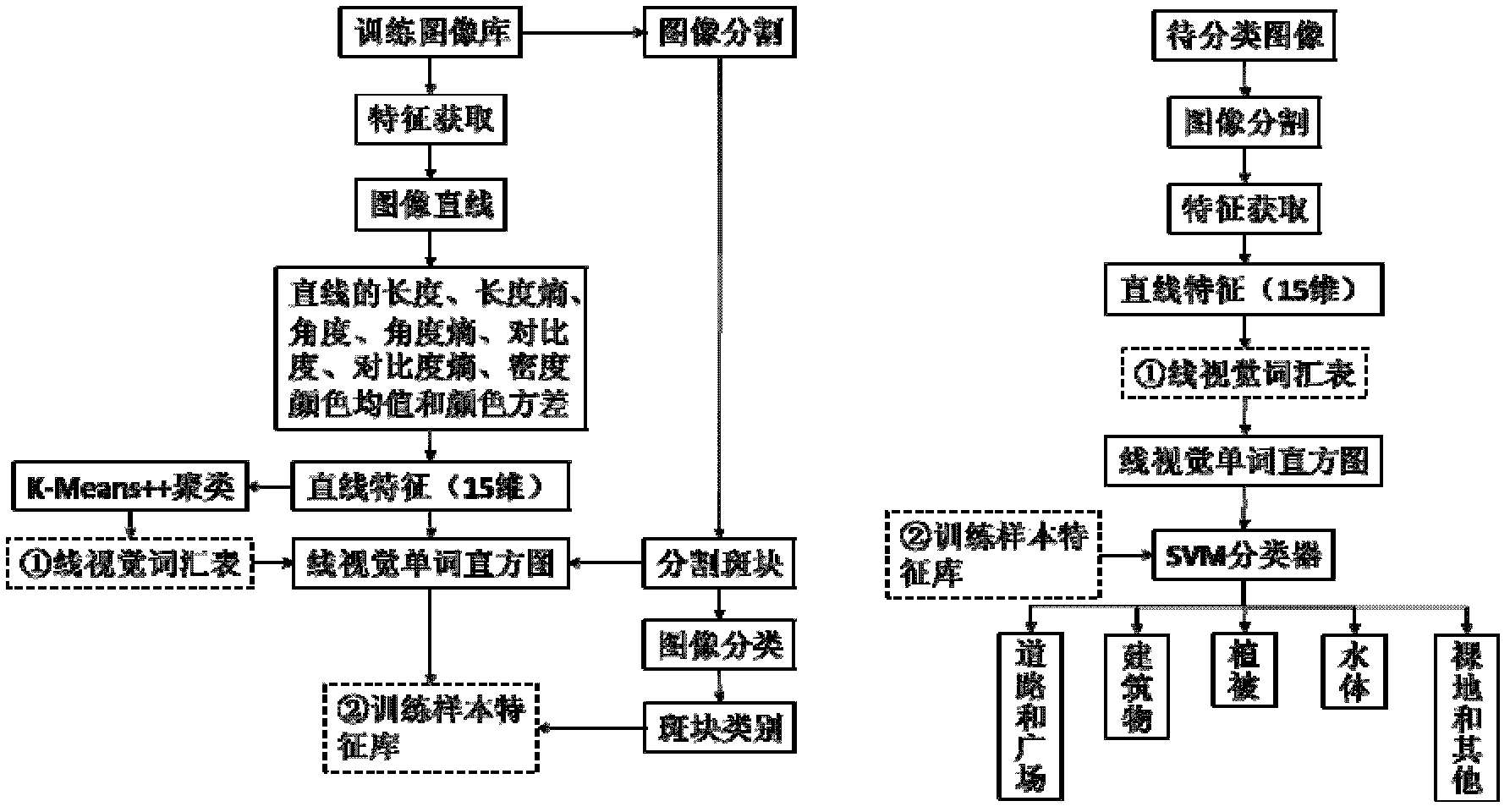

The invention discloses a bag-of-words classification method for high-spatial-resolution remote-sensing images based on linear words. The images are first divided into training samples and samples to be classified. For the training samples, the steps are: collecting the linear features of the training images and computing linear feature vectors; generating a linear visual word list by clustering with the K-Means++ algorithm; segmenting the training images and, on that basis, obtaining a linear visual word histogram for each segment; and labelling each segment with its class and storing the class together with its linear visual word histogram. After training, the steps for the samples to be classified are: collecting the linear features of the images, segmenting the images, computing linear feature vectors on that basis, obtaining the linear visual word histogram of each segment, and using an SVM classifier to classify the images and obtain the classification results. The method builds its bag-of-words model from linear features and achieves better classification of high-spatial-resolution remote-sensing images.

Owner:NANJING NORMAL UNIVERSITY

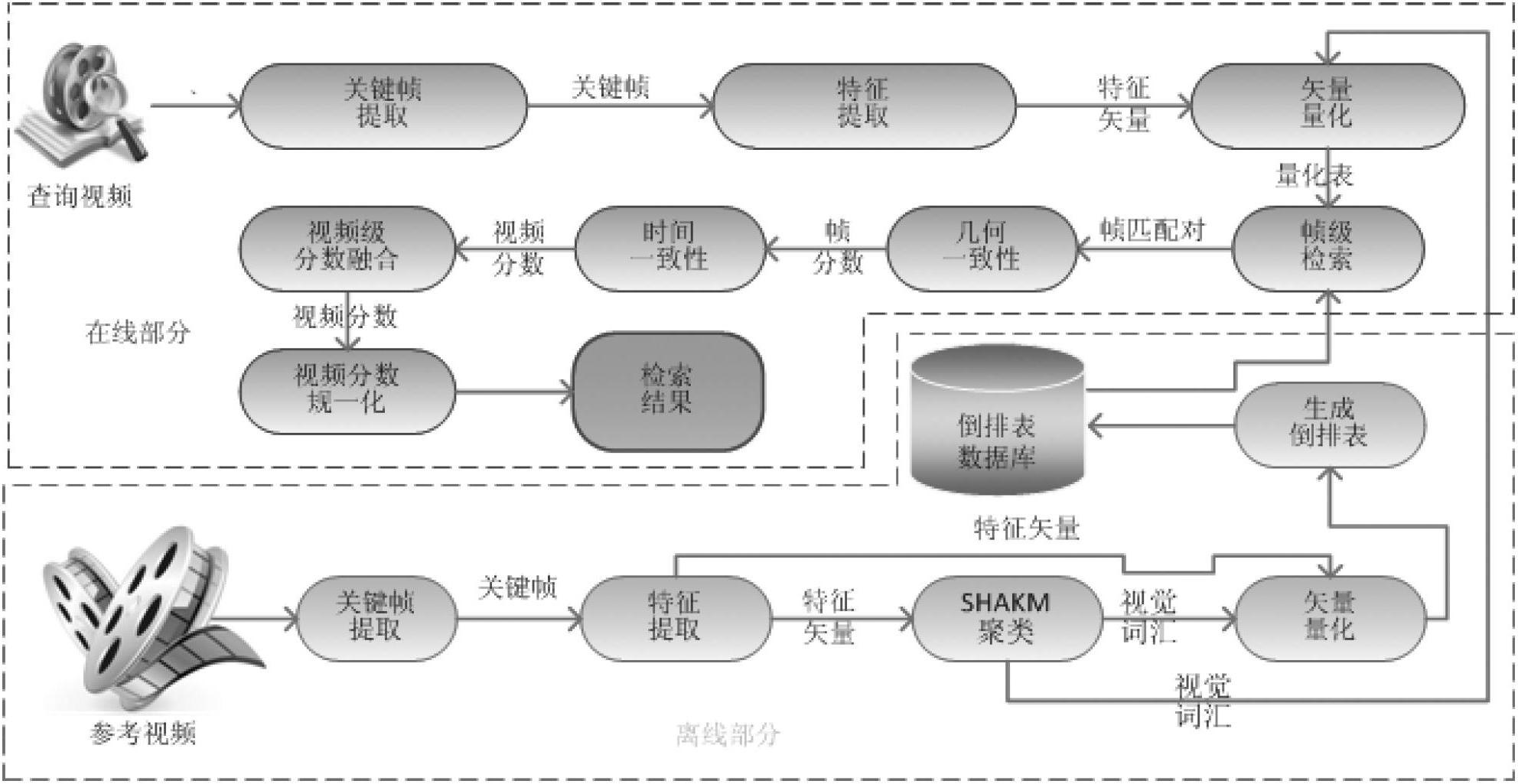

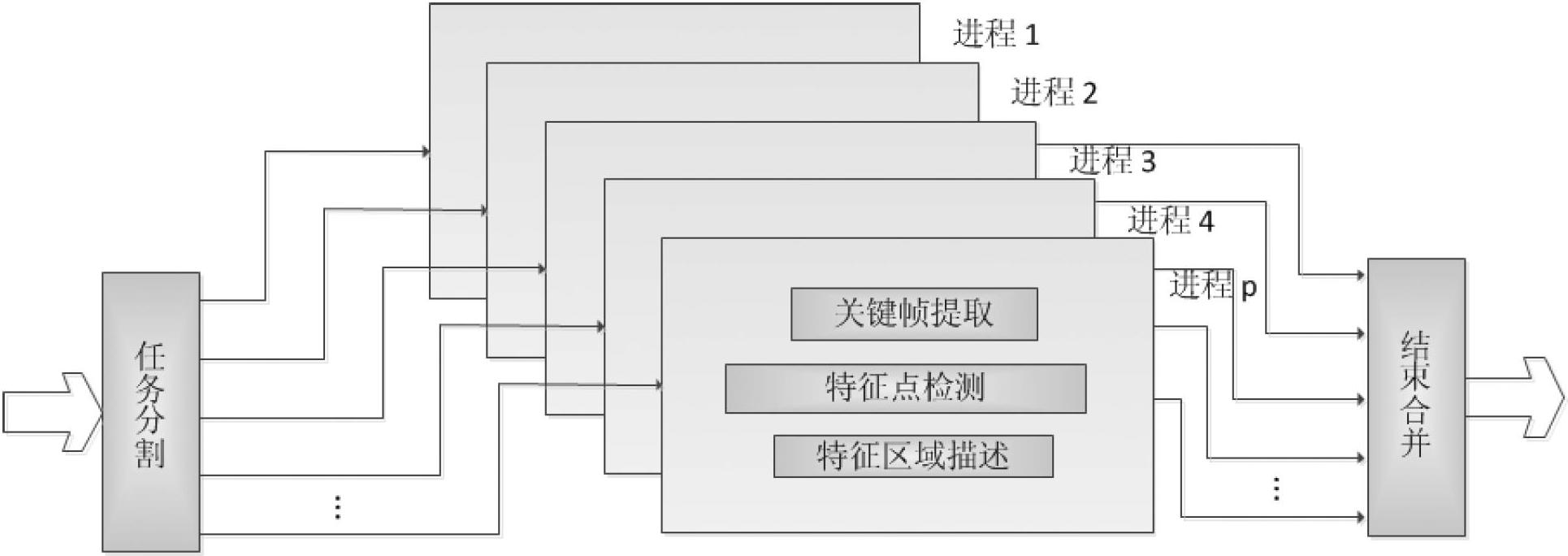

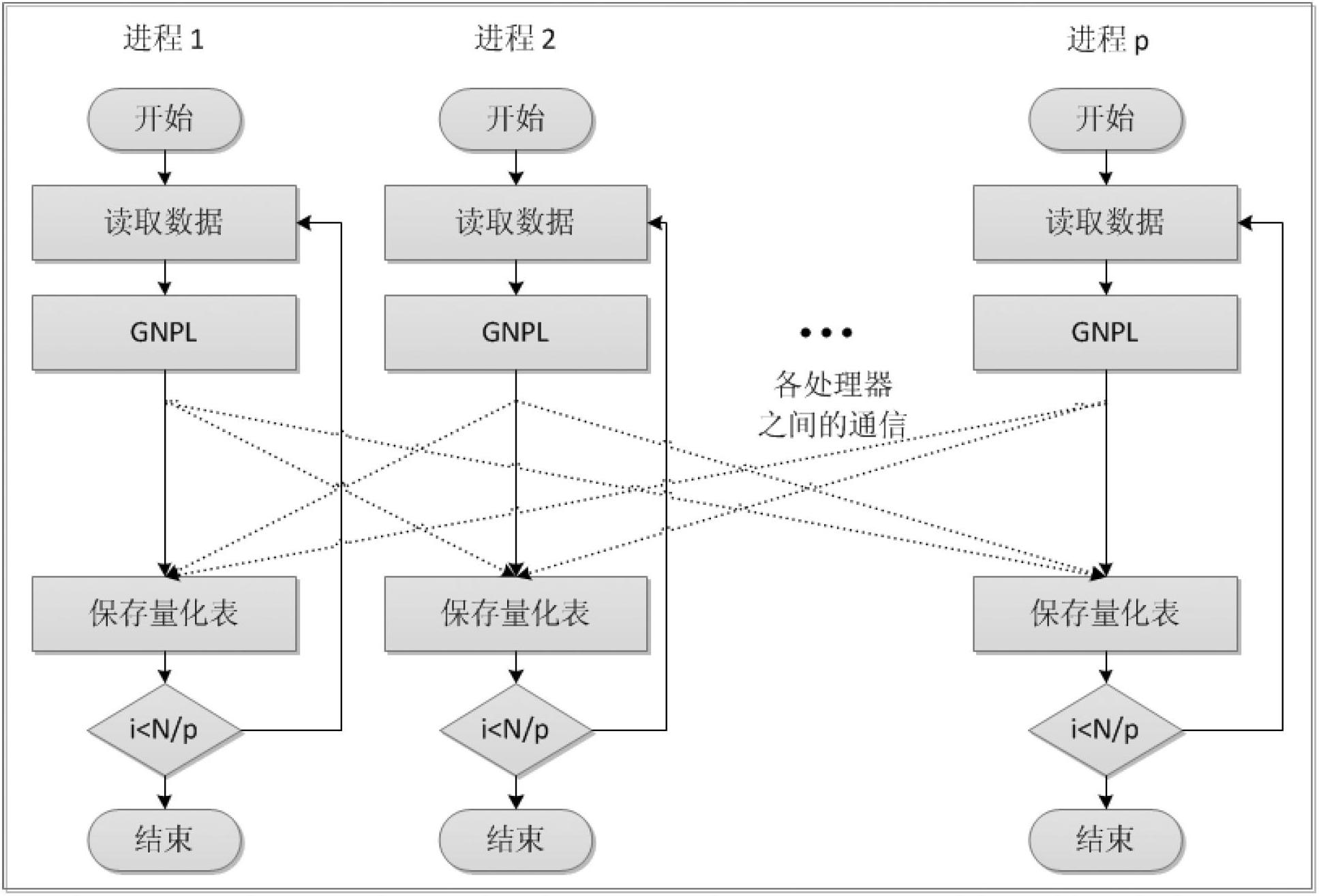

System and method for parallel video copy detection

Inactive · CN102693299A · Improve operational efficiency · Clustering fast · Image analysis · Special data processing applications · Pattern recognition · Vision based

The invention discloses a system and a method for parallel video copy detection. The method includes the steps of: 1, selecting key-frames of a query video and a reference video by a parallel method and extracting MIFT features of the key-frames; 2, clustering the extracted feature data of the reference video by a parallel hierarchical clustering method; 3, quantizing the features of the query video and the reference video according to the clustering results; 4, building indexes over the quantized data of the reference video; and 5, retrieving by the parallel method: the quantized data of the query video is first searched in the indexes to obtain candidate videos, and spatial consistency and temporal consistency are then computed to confirm a copy video. The system adopts a parallel mechanism on top of fast retrieval with the bag-of-features (BoF) visual vocabulary model, which greatly improves detection efficiency.

Owner:XI AN JIAOTONG UNIV

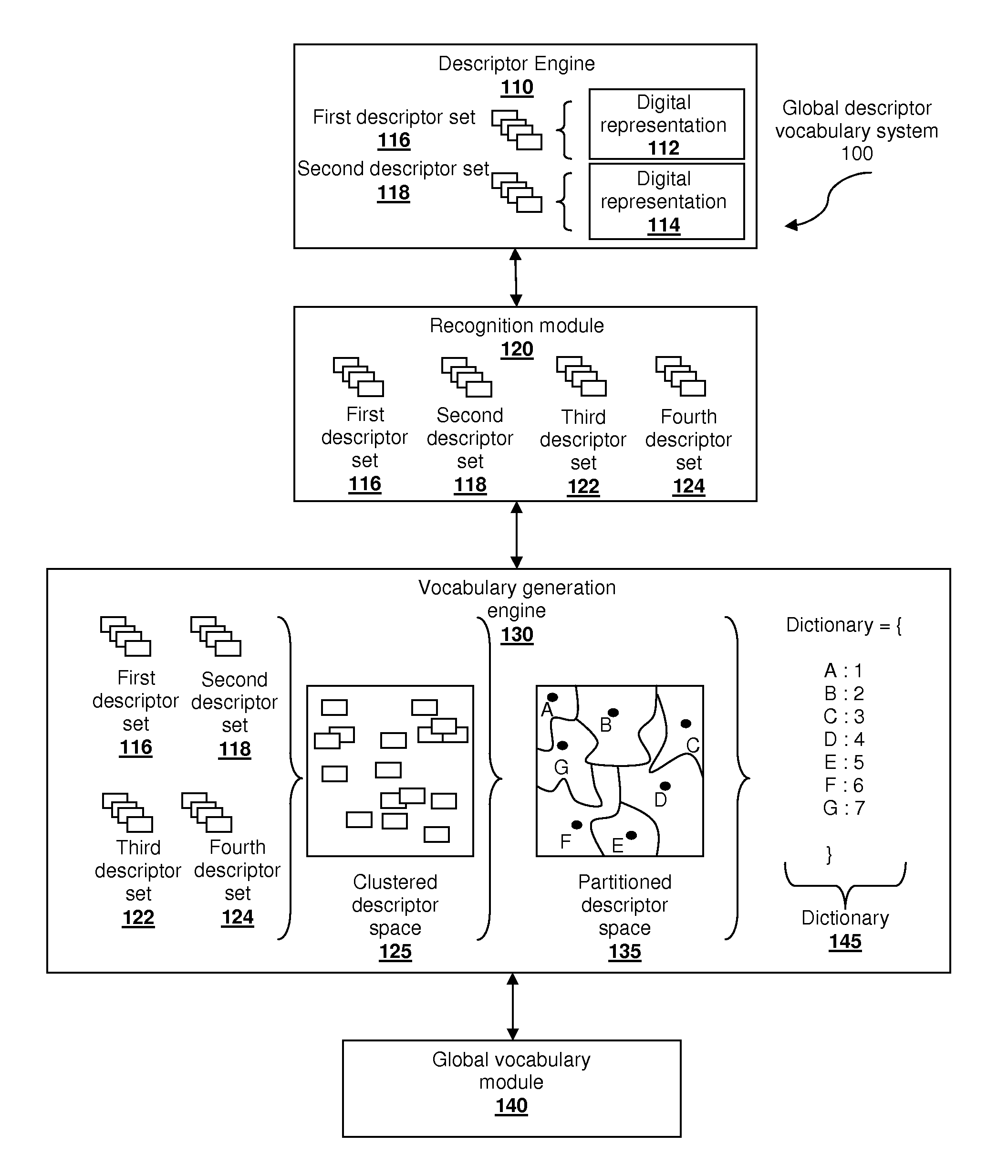

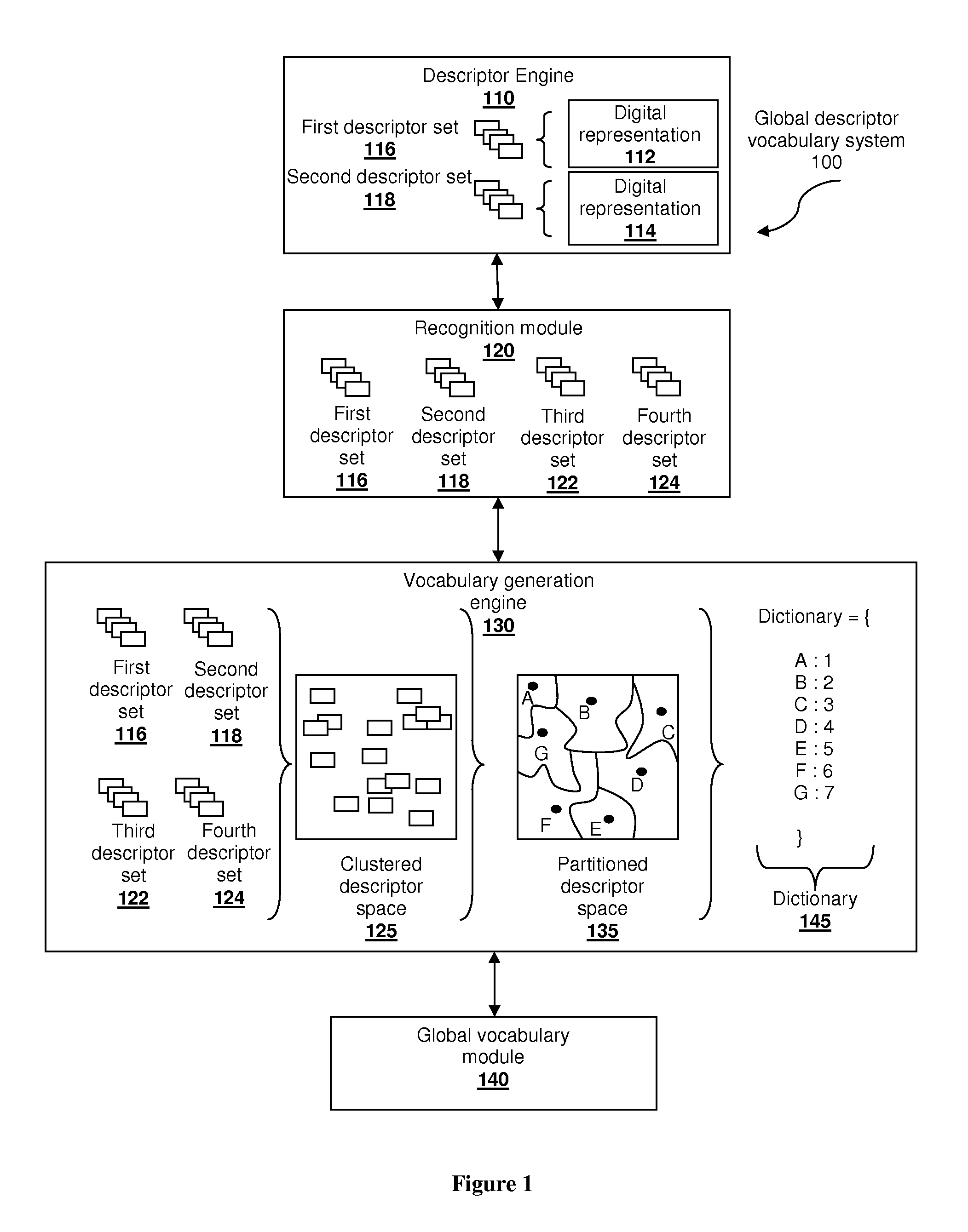

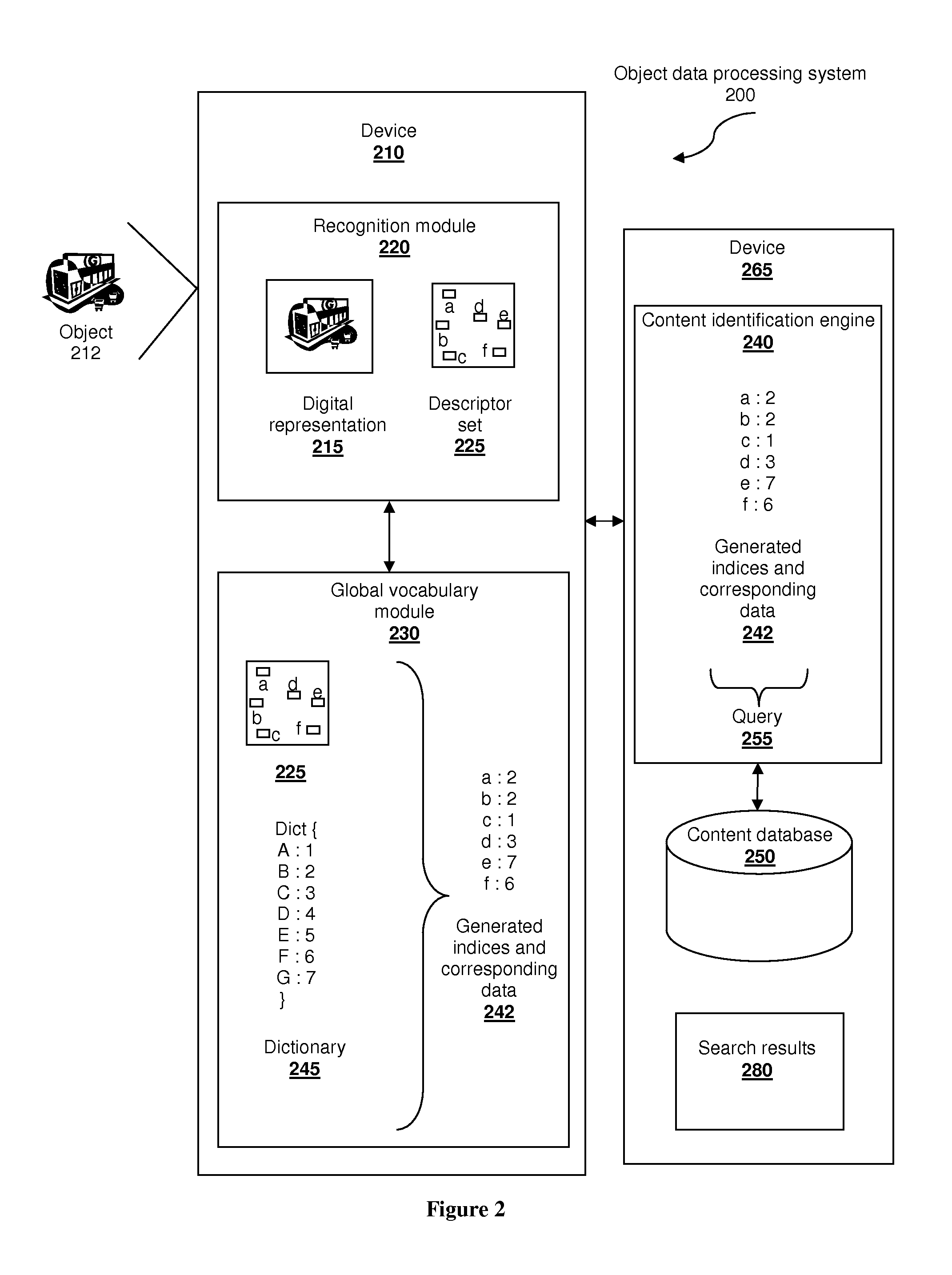

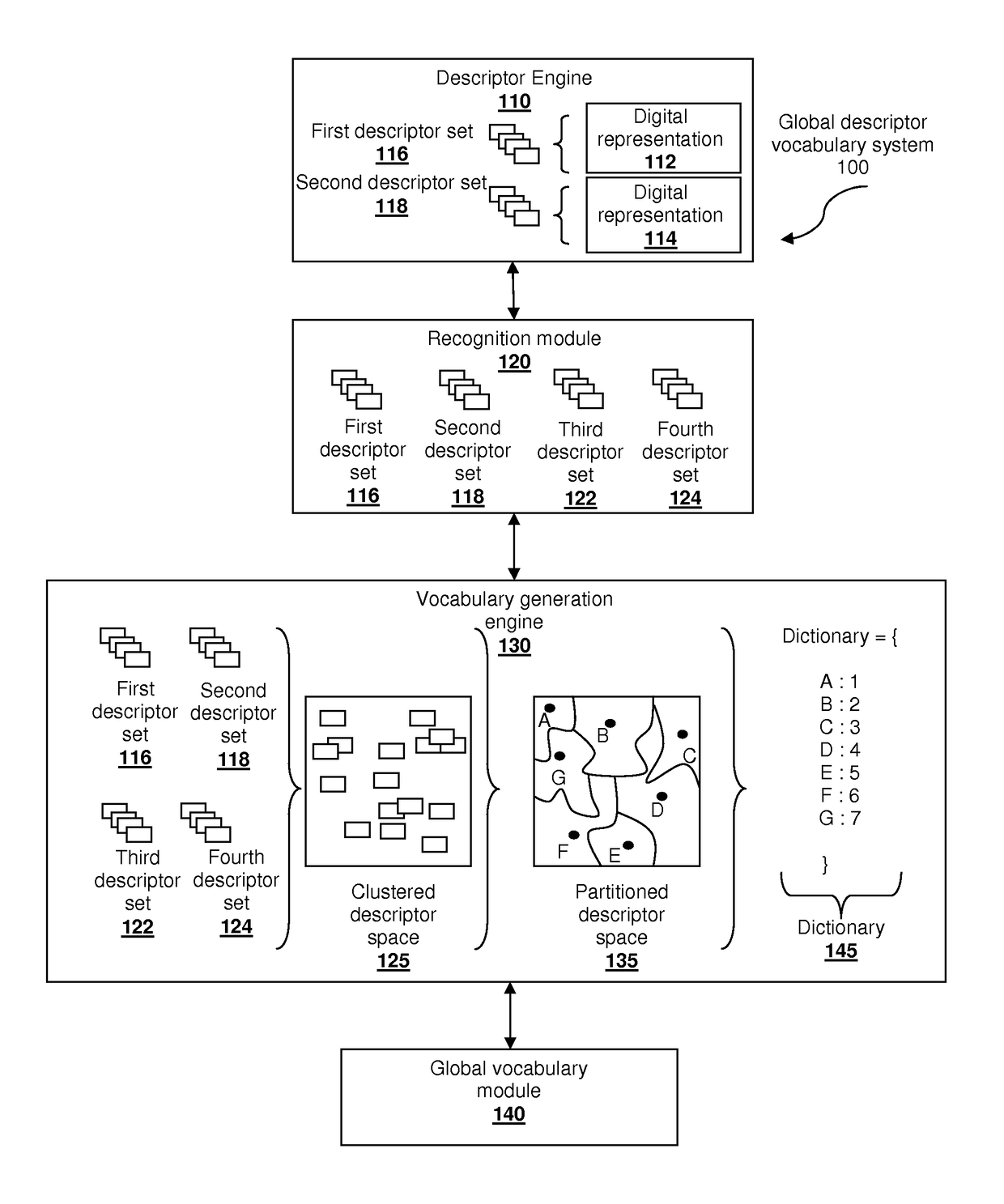

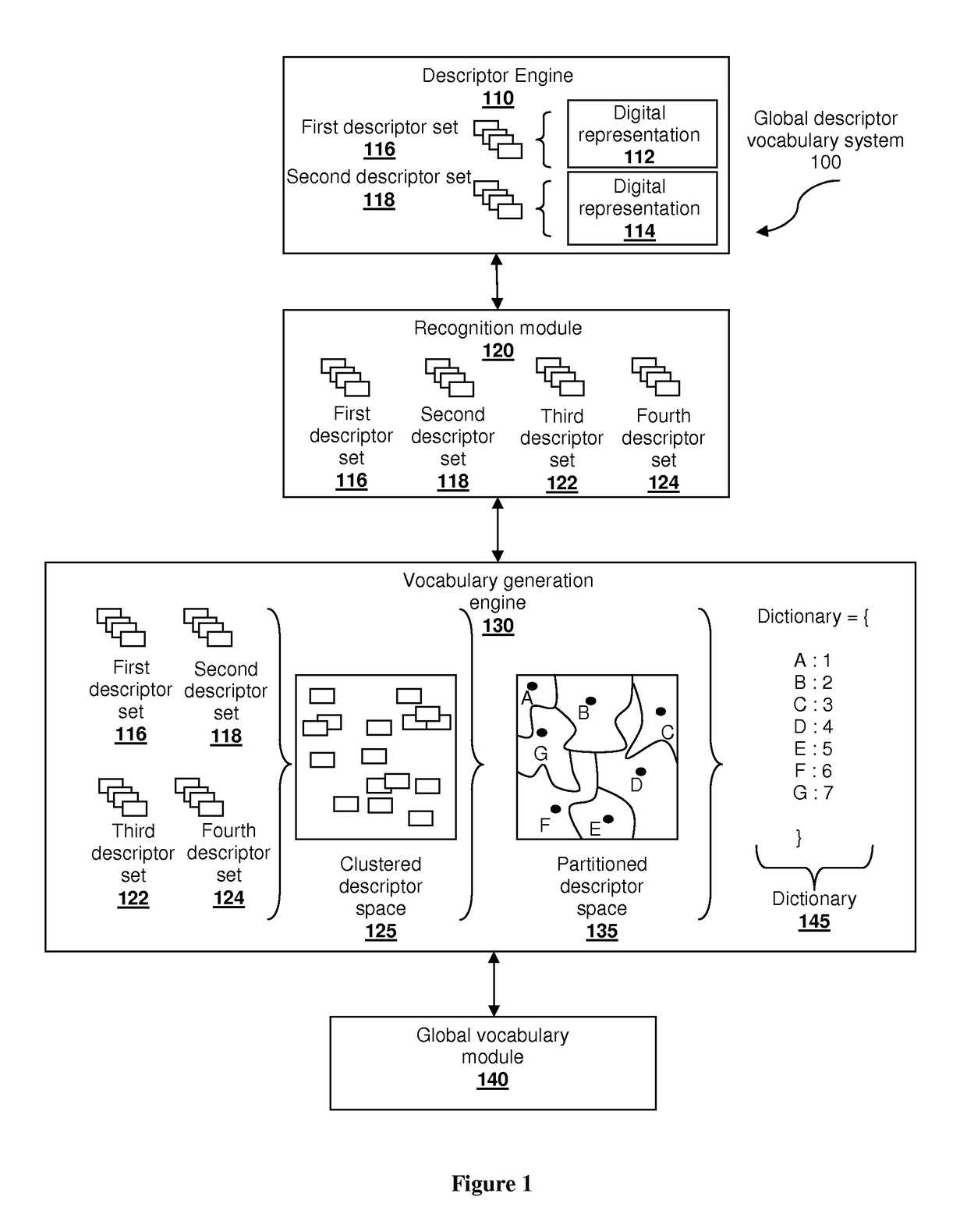

Global visual vocabulary, systems and methods

Systems and methods of generating a compact visual vocabulary are provided. Descriptor sets related to digital representations of objects are obtained, clustered and partitioned into cells of a descriptor space, and a representative descriptor and index are associated with each cell. Generated visual vocabularies could be stored in client-side devices and used to obtain content information related to objects of interest that are captured.

Owner:NANT HLDG IP LLC

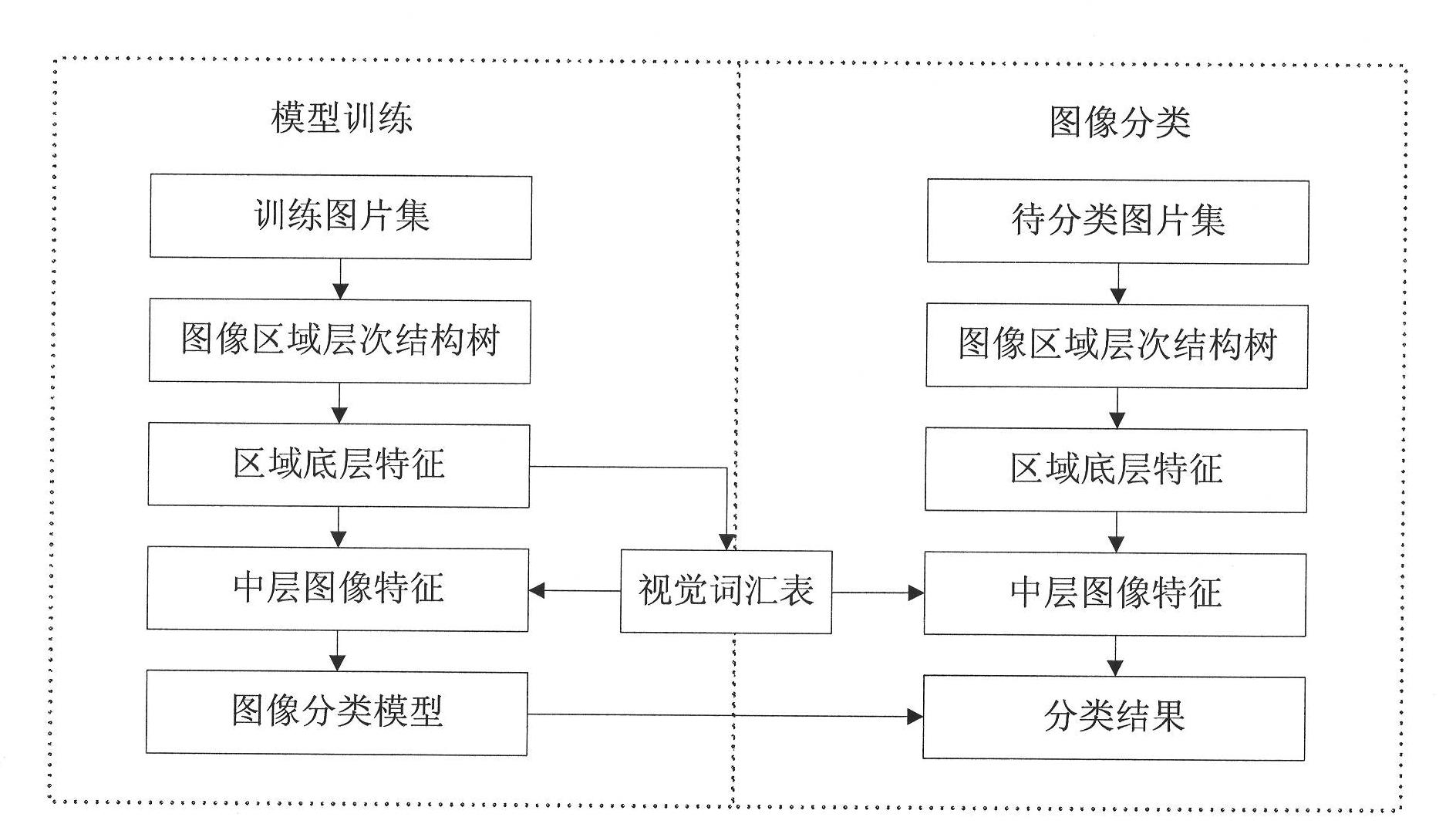

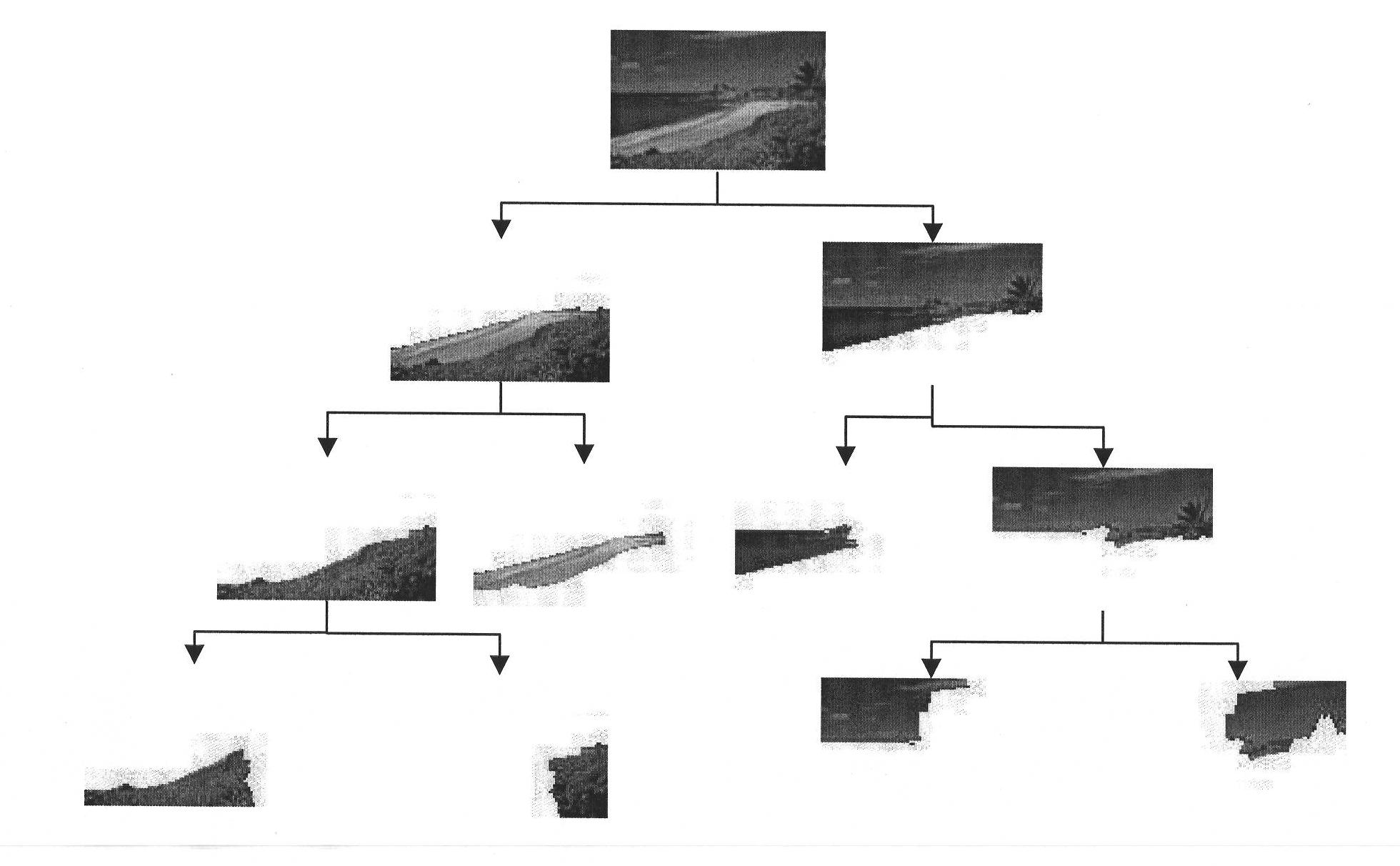

Multilevel content description-based image classification method

Active · CN101923653A · Improve classification accuracy · Easy to handle · Image enhancement · Character and pattern recognition · Feature set · Classification methods

The invention provides a multilevel content description-based image classification method. The method comprises the following steps: 1) presetting a training image set, obtaining a region hierarchy tree for each image by multilevel image segmentation, and extracting low-level features of each node region in the region hierarchy tree; 2) constructing a visual vocabulary from the set of low-level region features of the training image set, and mapping each region hierarchy tree to middle-level image features according to the visual vocabulary to obtain a multilevel content description of the training image set; and 3) establishing an image classification model based on the multilevel content description of the training image set, and classifying the images to be classified according to that model. The method uses multilevel segmentation regions of the images: on one hand, the completeness of the image content description is enhanced; on the other hand, robustness to over-segmentation and under-segmentation of the images is improved. Therefore, a more effective image description can be obtained to achieve higher image classification accuracy.

Owner:PEKING UNIV

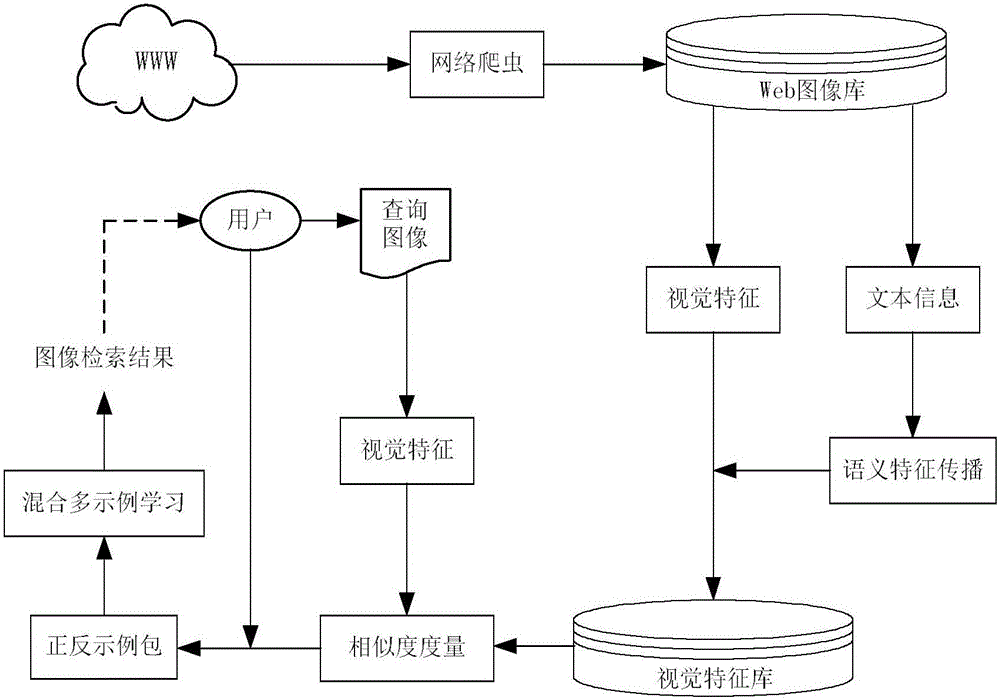

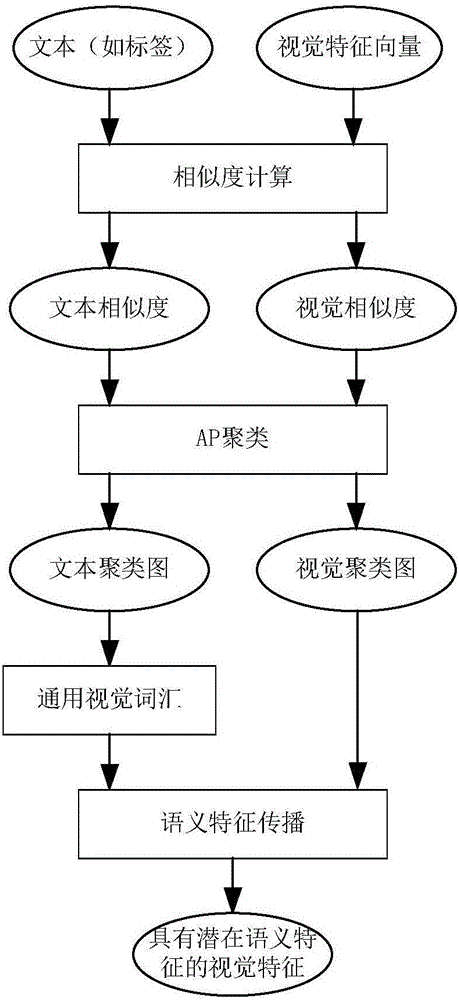

Semantic propagation and mixed multi-instance learning-based Web image retrieval method

Active · CN106202256A · Reduce Extraction Complexity · Improve classification performance · Character and pattern recognition · Special data processing applications · Small sample · The Internet

The invention belongs to the technical field of image processing and particularly provides a semantic propagation and mixed multi-instance learning-based Web image retrieval method. Web image retrieval is performed by combining visual characteristics of images with text information. The method comprises the steps of representing the images as BoW models first, then clustering the images according to visual similarity and text similarity, and propagating semantic characteristics of the images into visual eigenvectors of the images through universal visual vocabularies in a text class; and in a related feedback stage, introducing a mixed multi-instance learning algorithm, thereby solving the small sample problem in an actual retrieval process. Compared with a conventional CBIR (Content Based Image Retrieval) frame, the retrieval method has the advantages that the semantic characteristics of the images are propagated to the visual characteristics by utilizing the text information of the internet images in a cross-modal mode, and semi-supervised learning is introduced in related feedback based on multi-instance learning to cope with the small sample problem, so that a semantic gap can be effectively reduced and the Web image retrieval performance can be improved.

Owner:XIDIAN UNIV

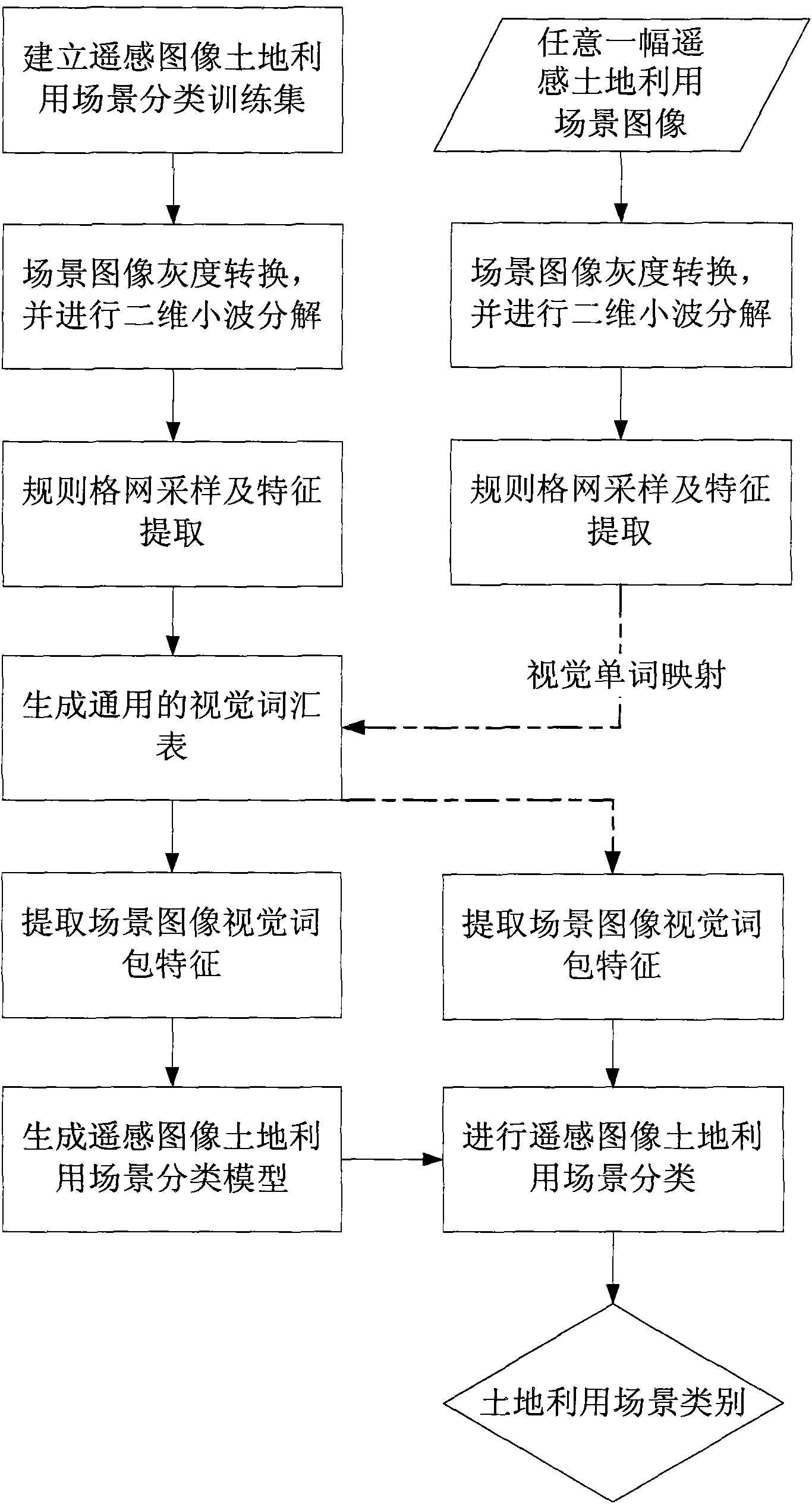

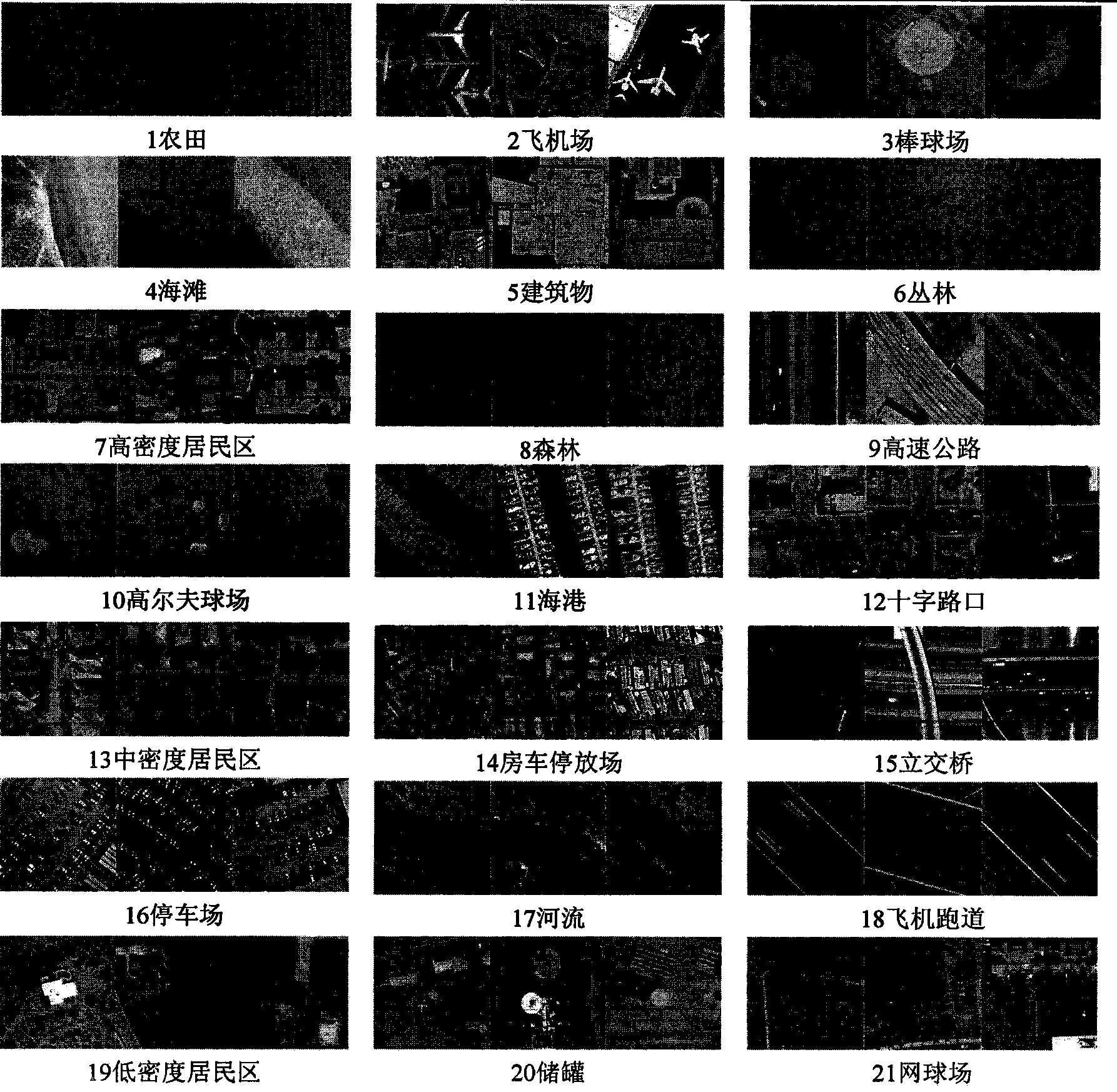

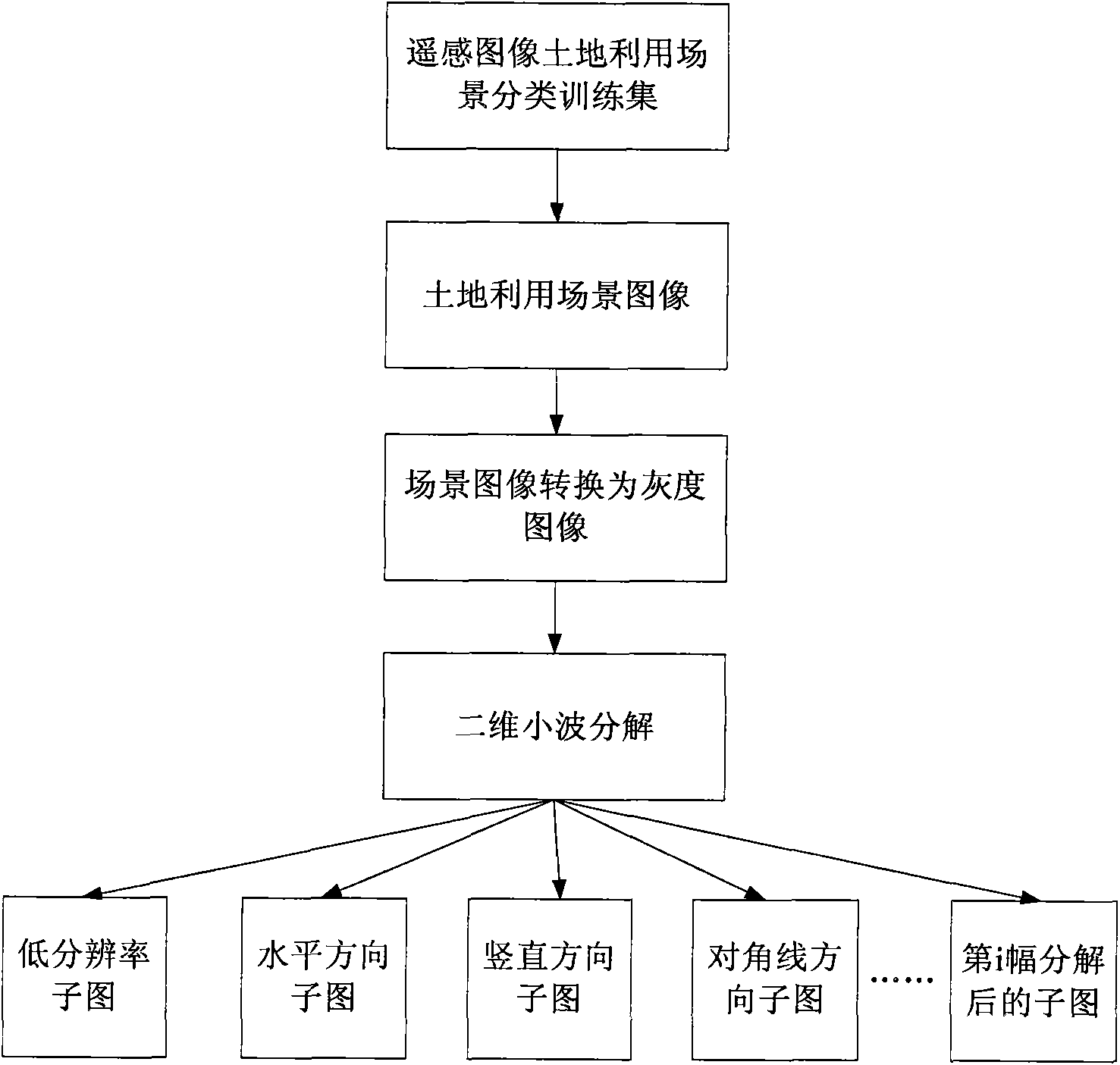

Remote sensing image land utilization scene classification method based on two-dimension wavelet decomposition and visual sense bag-of-word model

Active · CN103413142A · Solve the lack of consideration · Improve utilization · Character and pattern recognition · Decomposition · Classification methods

The invention relates to a remote sensing image land utilization scene classification method based on two-dimension wavelet decomposition and a visual sense bag-of-word model. The method comprises the steps that a remote sensing image land utilization scene classification training set is built; scene images in the training set are converted to grayscale images, and two-dimension decomposition is conducted on the grayscale images; regular-grid sampling and SIFT extracting are conducted on the converted grayscale images and sub-images formed after two-dimension decomposition, and universal visual word lists of the converted grayscale images and the sub-images are independently generated through clustering; visual word mapping is conducted on each image in the training set to obtain bag-of-word characteristics; the bag-of-word characteristics of each image in the training set and corresponding scene category serial numbers serve as training data for generating a classification model through an SVM algorithm; images of each scene are classified according to the classification model. The remote sensing image land utilization scene classification method well solves the problems that remote sensing image texture information is not sufficiently considered through an existing scene classification method based on a visual sense bag-of-word model, and can effectively improve scene classification precision.

Owner:INST OF REMOTE SENSING & DIGITAL EARTH CHINESE ACADEMY OF SCI

Method and system for fast and robust identification of specific product images

Active · US9042659B2 · Quick identification · Noise robust · Digital data information retrieval · Character and pattern recognition · Reference image · Visual perception

Owner:CATCHOOM TECH

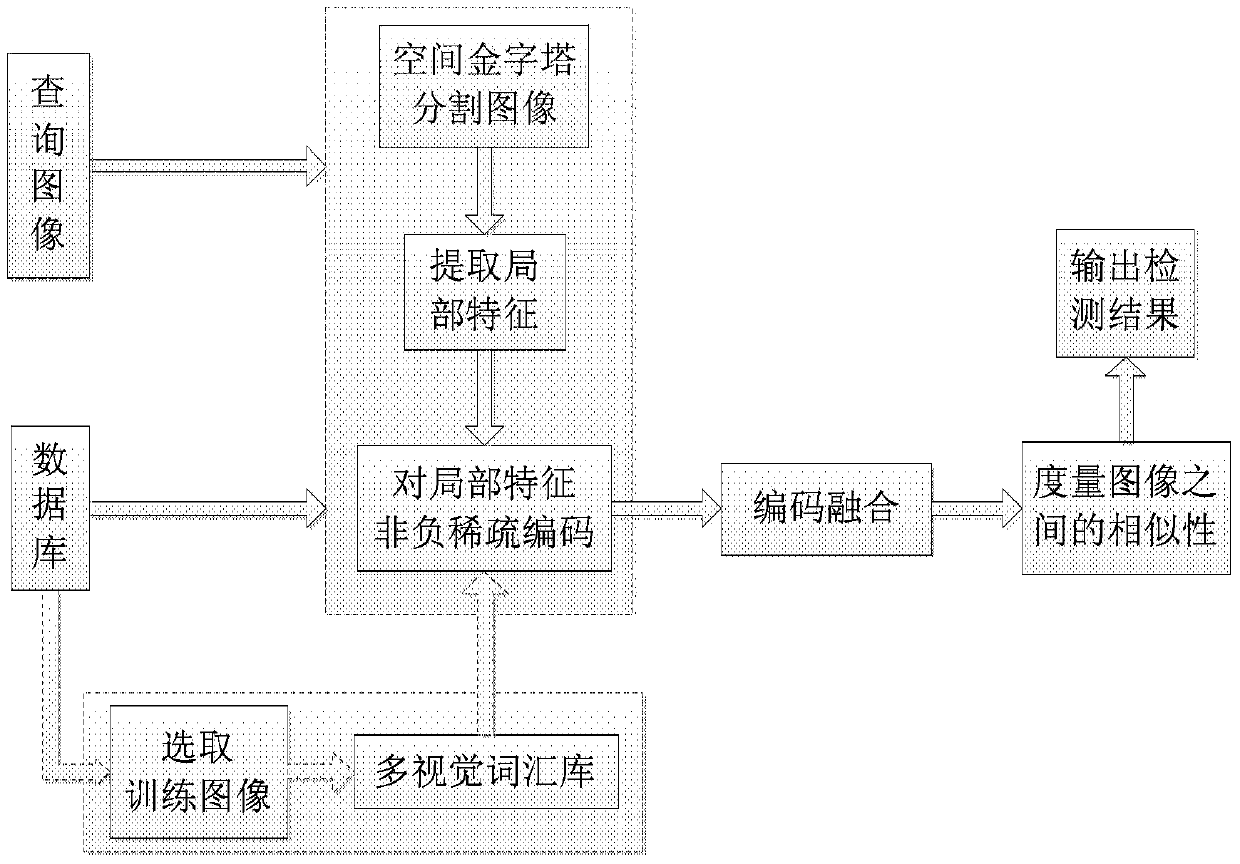

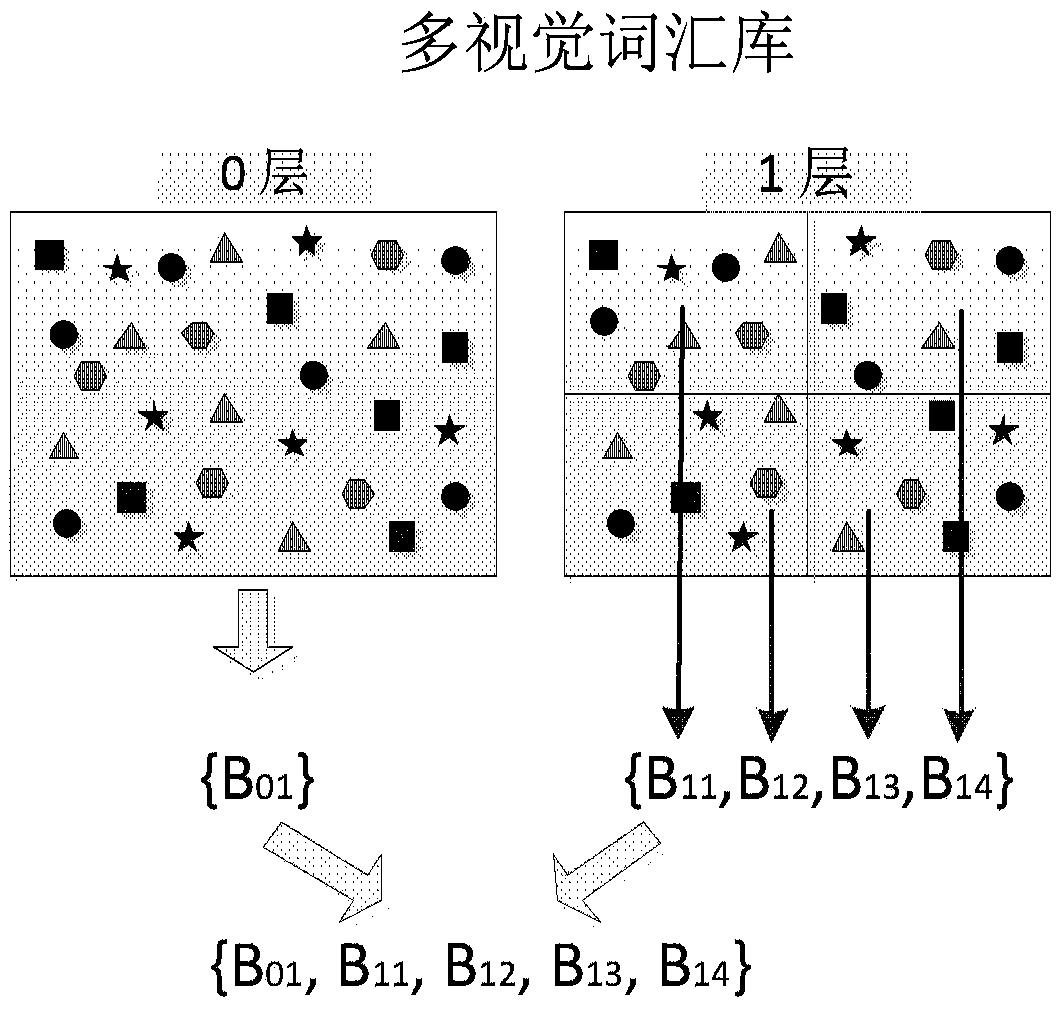

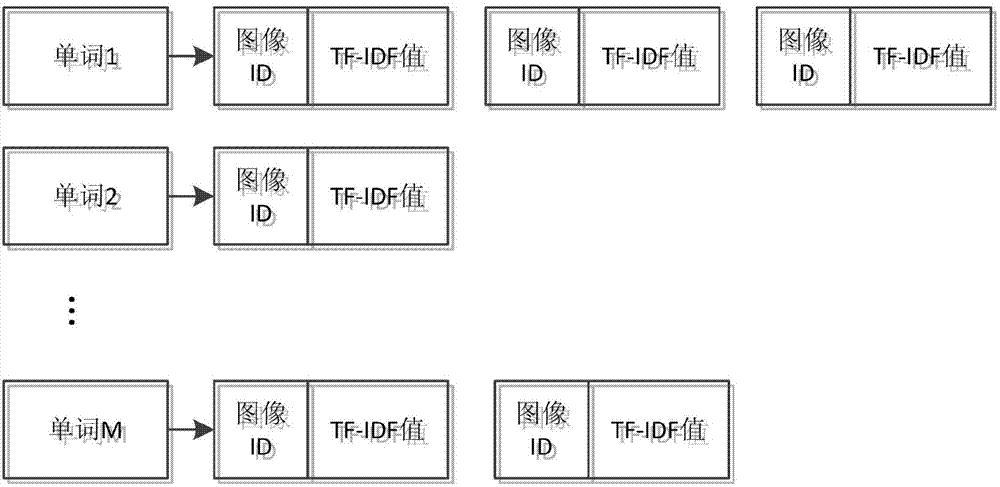

Near-duplicate image detection method

Inactive · CN103593677A · Good near-duplicate image detection · Measure similarity · Character and pattern recognition · Image detection · Histogram

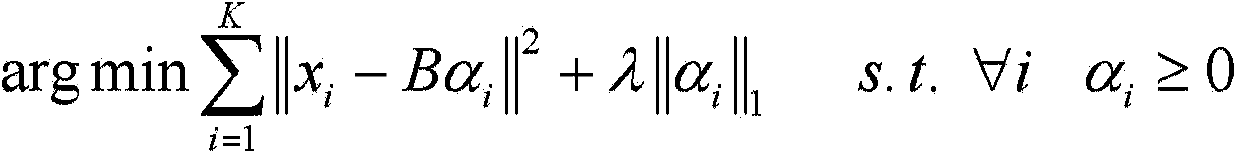

The invention discloses a near-duplicate image detection method. The method comprises the steps that a training image set is obtained, and a multi-vision word bank is learned from the images in the training image set; based on the learned multi-vision word bank, local characteristics of a to-be-detected image and of images in an image database are coded into nonnegative sparse vectors by a nonnegative sparse coding method; via regional integration and spatial combination of the nonnegative sparse vectors, histogram vectors of the to-be-detected image and the images in the image database are obtained; and according to the similarity between tolerance images of the histogram vectors of the to-be-detected image and the images in the image database, an image in the image database that is near-duplicate to the to-be-detected image is output.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

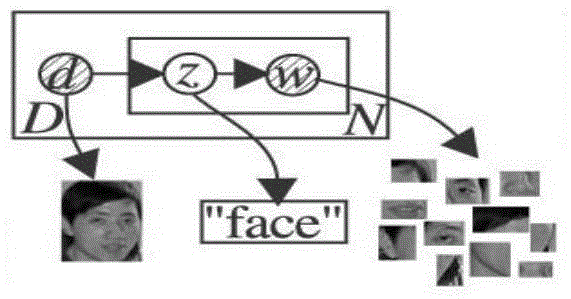

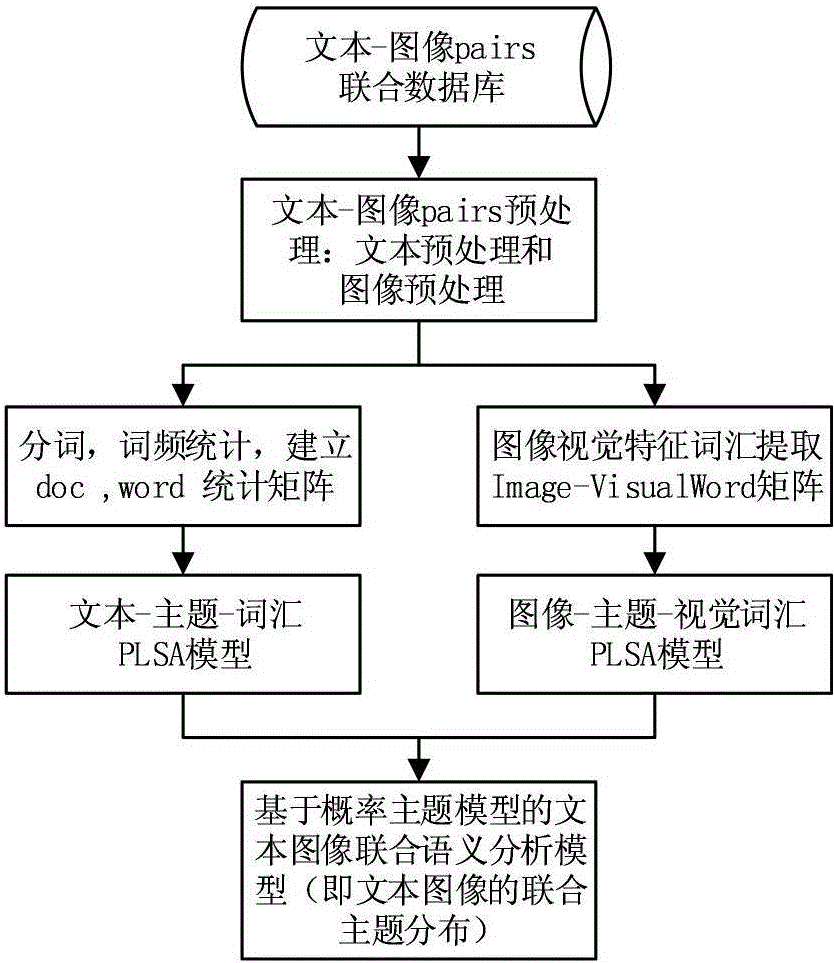

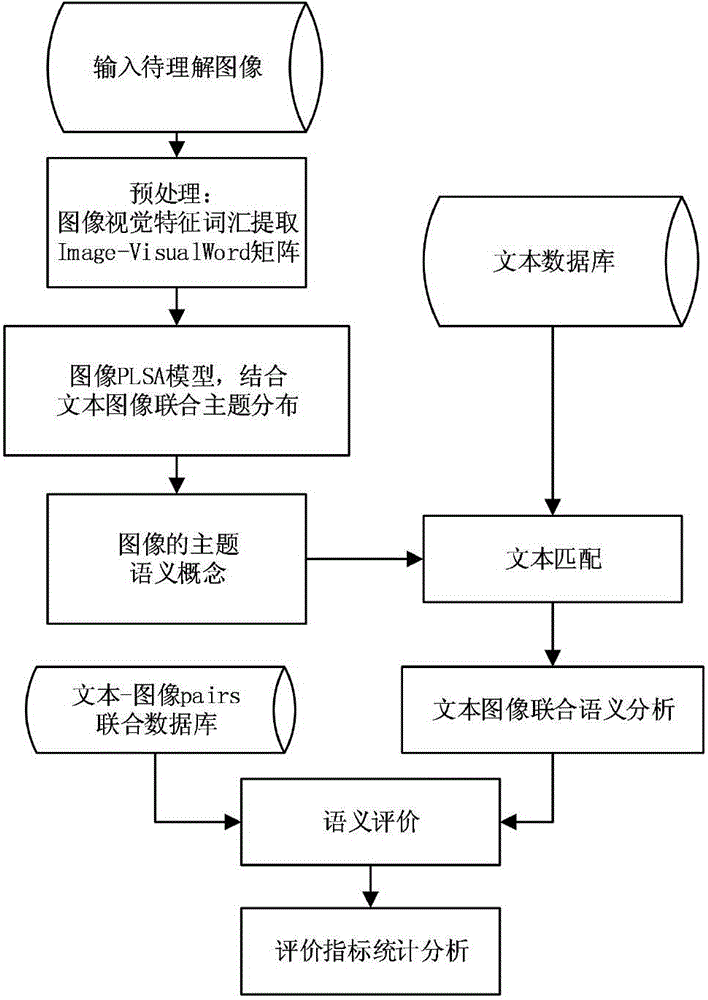

Text image joint semantics analysis method based on probability theme model

Inactive · CN104933029A · Rich semantic information · Special data processing applications · Pattern recognition · Semantics

The invention provides a text image joint semantics analysis method based on a probability theme model. The method comprises the following steps: collecting a large number of texts that contain images, processing the texts and the images appropriately, and forming an image-text pair database in which each image corresponds to one text; training on the samples to obtain a joint theme distribution model for text image semantics analysis; for an input image to be analyzed, extracting a visual characteristic vocabulary; applying a PLSA (Probabilistic Latent Semantic Analysis) model to the image and the visual characteristic vocabulary, and combining the result with the text image joint theme distribution to obtain the theme semantics of the image to be analyzed; matching the theme semantics obtained in the previous step with the themes of the texts in the image-text pair database to select the best-matching text; and, for the matching text obtained, carrying out semantics evaluation in combination with the input image. The method can obtain more semantic knowledge in addition to visualized scene object information.

Owner:TIANJIN UNIV

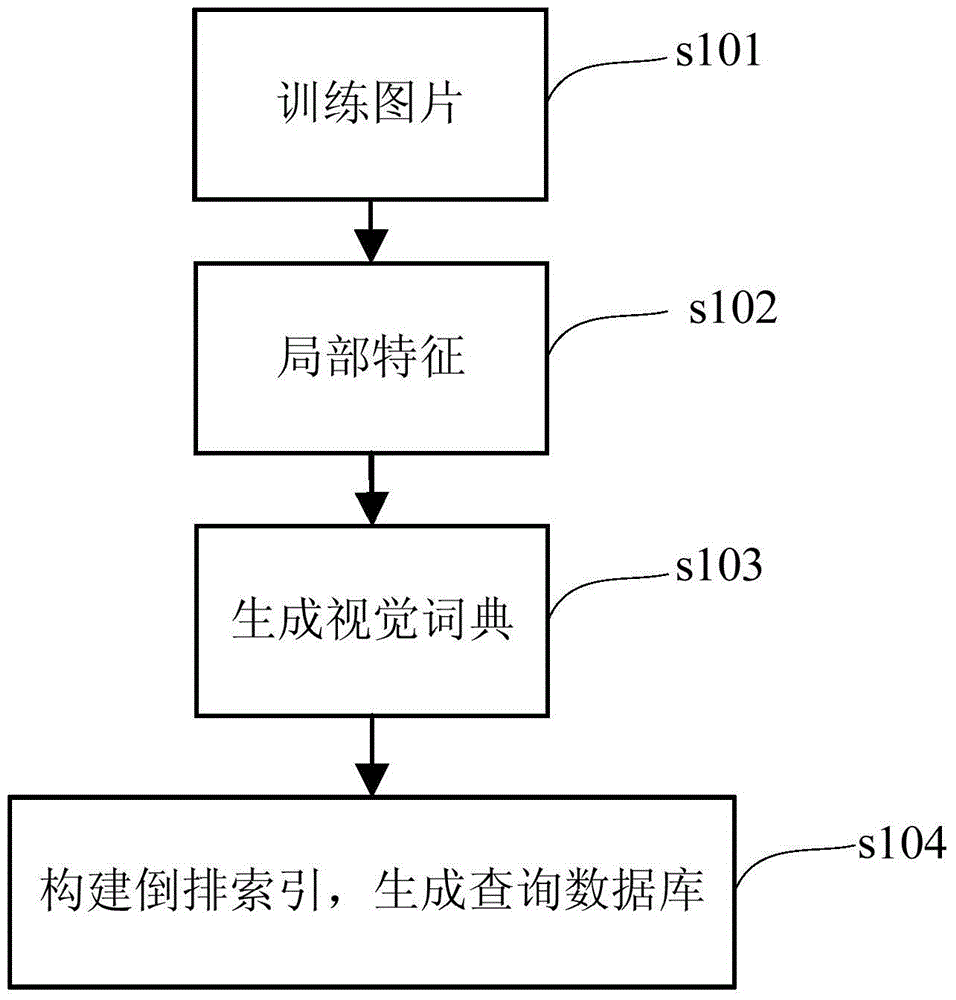

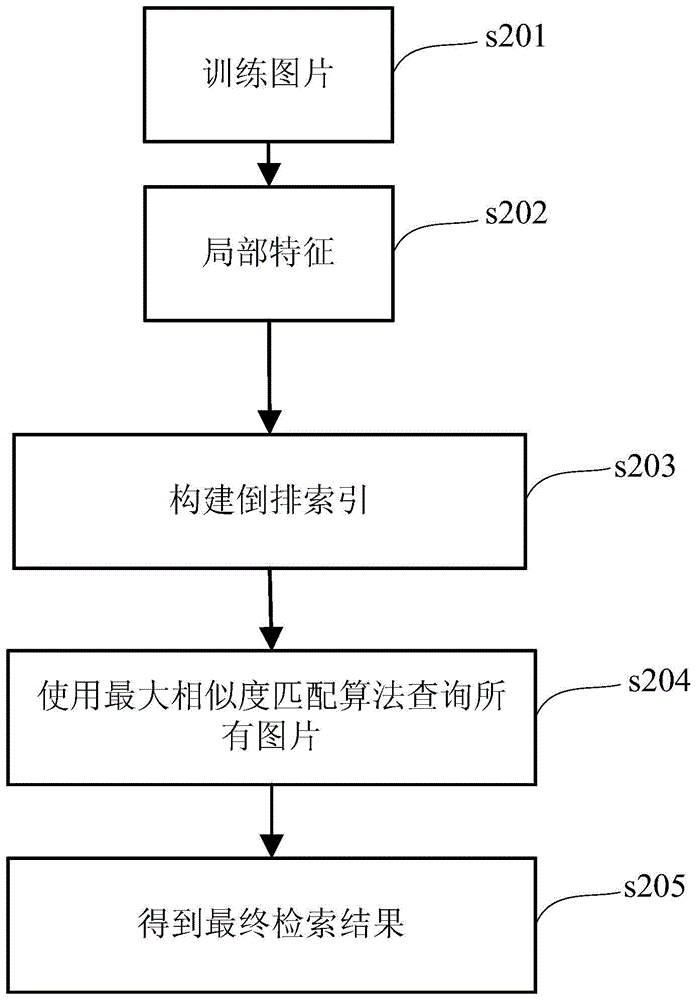

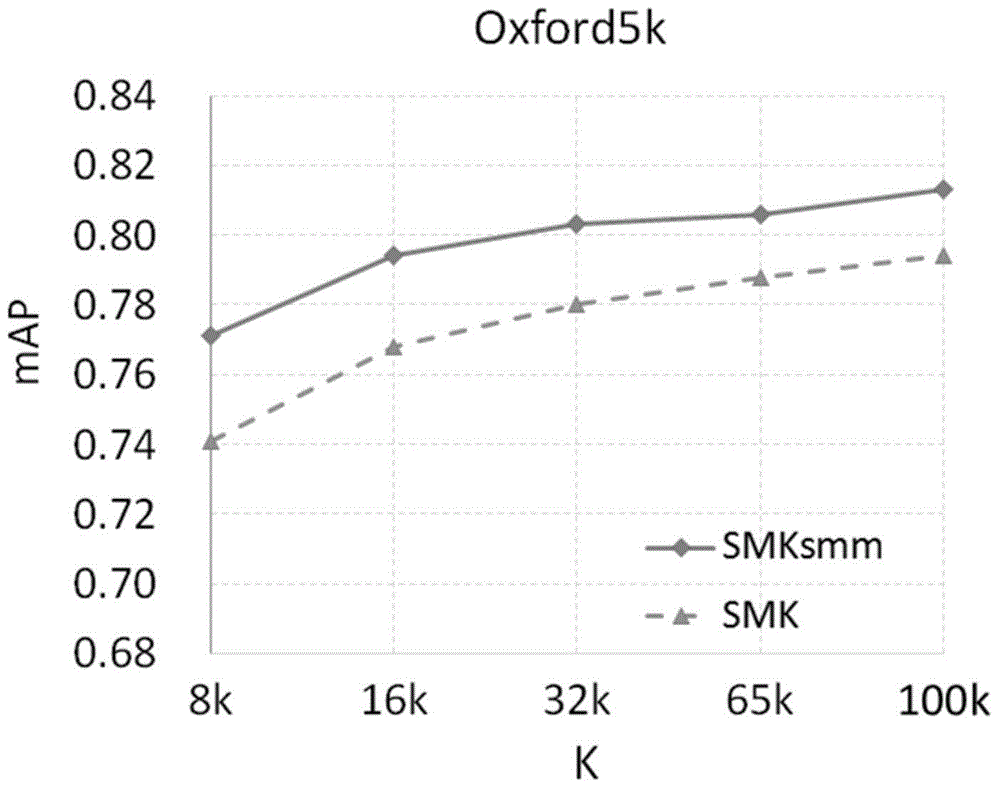

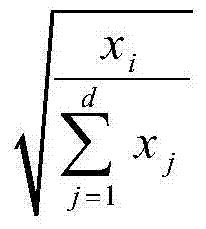

Picture searching method based on maximum similarity matching

Active · CN104615676A · Eliminate multiple matches · Enhanced Visual Compatibility · Still image data indexing · Character and pattern recognition · Feature set · Reverse index

The invention relates to a picture searching method based on maximum similarity matching. The method includes the following steps: (1) a training picture set is acquired; (2) feature points are detected and described for the acquired pictures in a multi-scale space; (3) the feature sets extracted in step (2) are clustered to generate a visual dictionary containing k visual vocabularies; (4) each feature extracted in step (2) is mapped to the visual vocabulary with the smallest L2 distance to that feature, and the normalized residual vector between the feature and its visual vocabulary is stored in a reverse index structure, thereby forming a query database; (5) the pictures to be searched for are acquired, steps (2) and (4) are executed again to obtain their reverse index structure, the query database is searched according to that structure, and the search results are obtained based on maximum similarity matching. Compared with the prior art, the picture searching method has the advantages of good robustness, high computational efficiency and the like.
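Steps (4) and (5) can be sketched roughly as follows. The abstract does not define "maximum similarity matching" precisely, so this example assumes one plausible reading: for each query feature, only the single most similar indexed residual per database picture contributes to that picture's score. All function names and the toy data are hypothetical.

```python
import math
from collections import defaultdict

def nearest_word(feature, dictionary):
    """Index of the visual word with the smallest L2 distance to the feature."""
    return min(range(len(dictionary)), key=lambda i: math.dist(feature, dictionary[i]))

def normalised_residual(feature, word):
    """Unit-length difference between a feature and its visual word."""
    residual = [f - w for f, w in zip(feature, word)]
    norm = math.sqrt(sum(r * r for r in residual))
    return [r / norm for r in residual] if norm > 0 else residual

def add_image(index, image_id, features, dictionary):
    """Store each feature's normalised residual under its visual word."""
    for feature in features:
        w = nearest_word(feature, dictionary)
        index[w].append((image_id, normalised_residual(feature, dictionary[w])))

def search(index, features, dictionary):
    """Score pictures by summing, per query feature, the best residual similarity."""
    scores = defaultdict(float)
    for feature in features:
        w = nearest_word(feature, dictionary)
        query_residual = normalised_residual(feature, dictionary[w])
        best = {}  # best similarity per picture for this query feature (assumed rule)
        for image_id, residual in index.get(w, []):
            similarity = sum(q * r for q, r in zip(query_residual, residual))
            best[image_id] = max(similarity, best.get(image_id, -math.inf))
        for image_id, similarity in best.items():
            scores[image_id] += similarity
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

dictionary = [(0.0, 0.0), (10.0, 10.0)]
index = defaultdict(list)
add_image(index, "a", [(1.0, 0.0), (10.0, 11.0)], dictionary)
add_image(index, "b", [(0.0, 1.0)], dictionary)

results = search(index, [(2.0, 0.0), (10.0, 12.0)], dictionary)
```

Keeping only the best match per picture is what prevents one query feature from being counted several times against the same picture.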

Owner:TONGJI UNIV

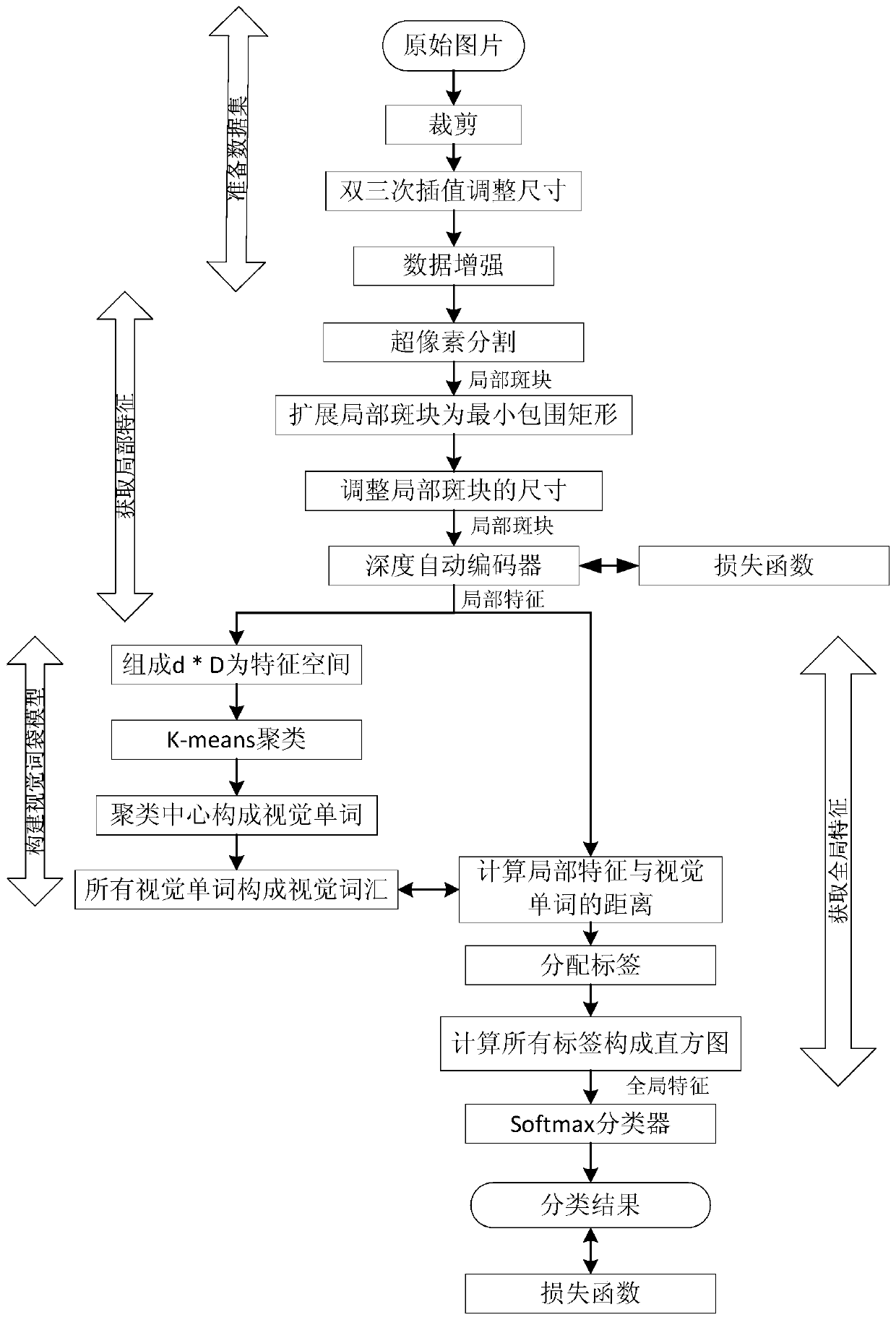

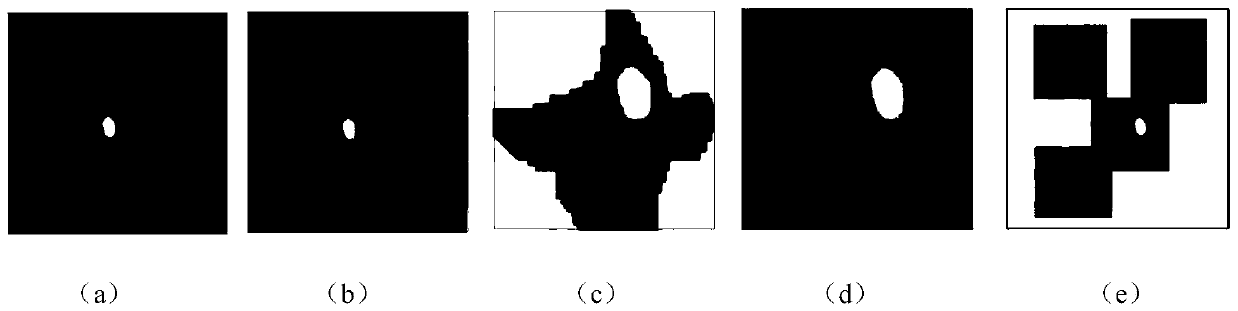

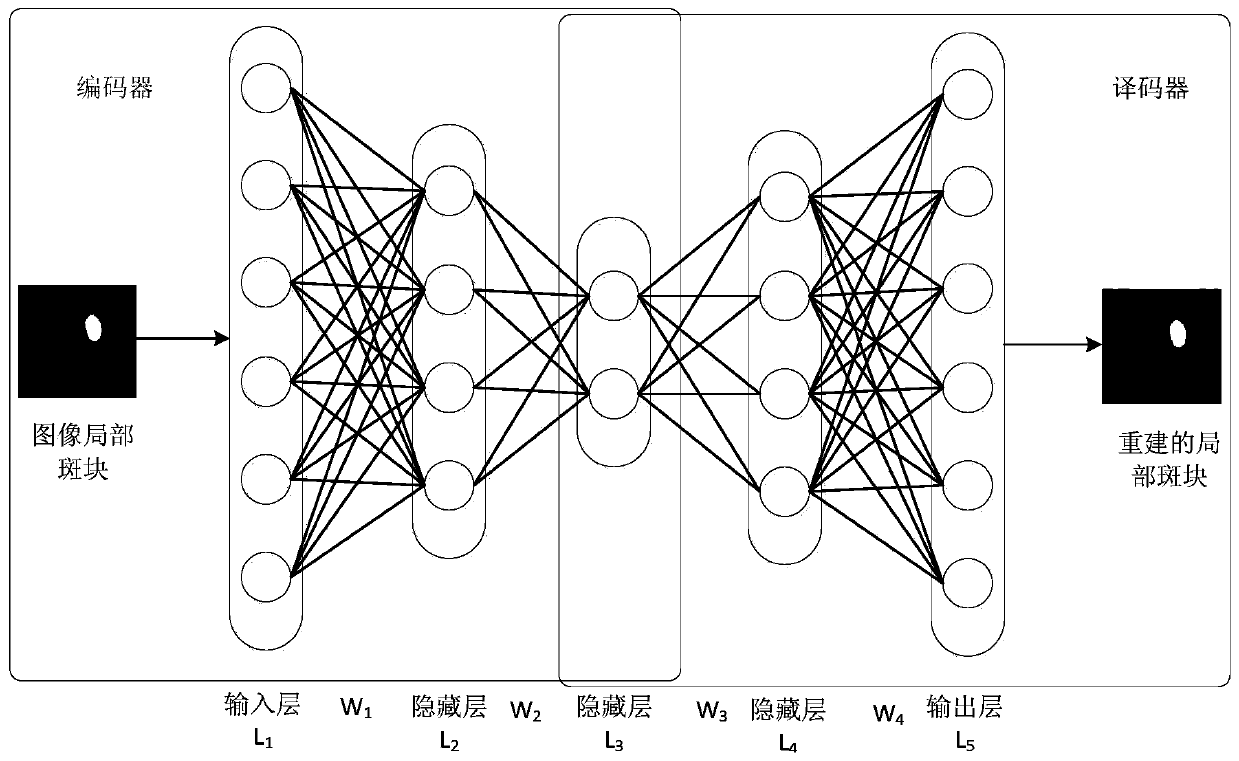

A pulmonary nodule image classification method for constructing feature representation based on an automatic encoder

Inactive · CN109902736A · Improve accuracy · Improve feature extraction · Image analysis · Character and pattern recognition · Pulmonary nodule · Data set

The invention provides a pulmonary nodule image classification method for constructing feature representation based on an automatic encoder, and relates to the technical field of computer vision. The method comprises the following steps: firstly, segmenting a pulmonary nodule image into local patches through superpixels; transforming the patches into local feature vectors with a fixed length by using an unsupervised deep auto-encoder; constructing visual vocabularies on the basis of the local features, and describing global features of the pulmonary nodule image through a bag of visual words; classifying pulmonary nodule types by using a softmax algorithm to complete the design of a model framework for representing pulmonary nodule image characteristics; performing training by using the designed model framework and the ELCAP data set to obtain an automatic classification model for the pulmonary nodule images; and finally, carrying out pulmonary nodule image classification by using the obtained classification model. According to the method provided by the invention, the feature extraction capability of the pulmonary nodule classification model is improved, and the accuracy of pulmonary nodule automatic classification is improved.

Owner:NORTHEASTERN UNIV

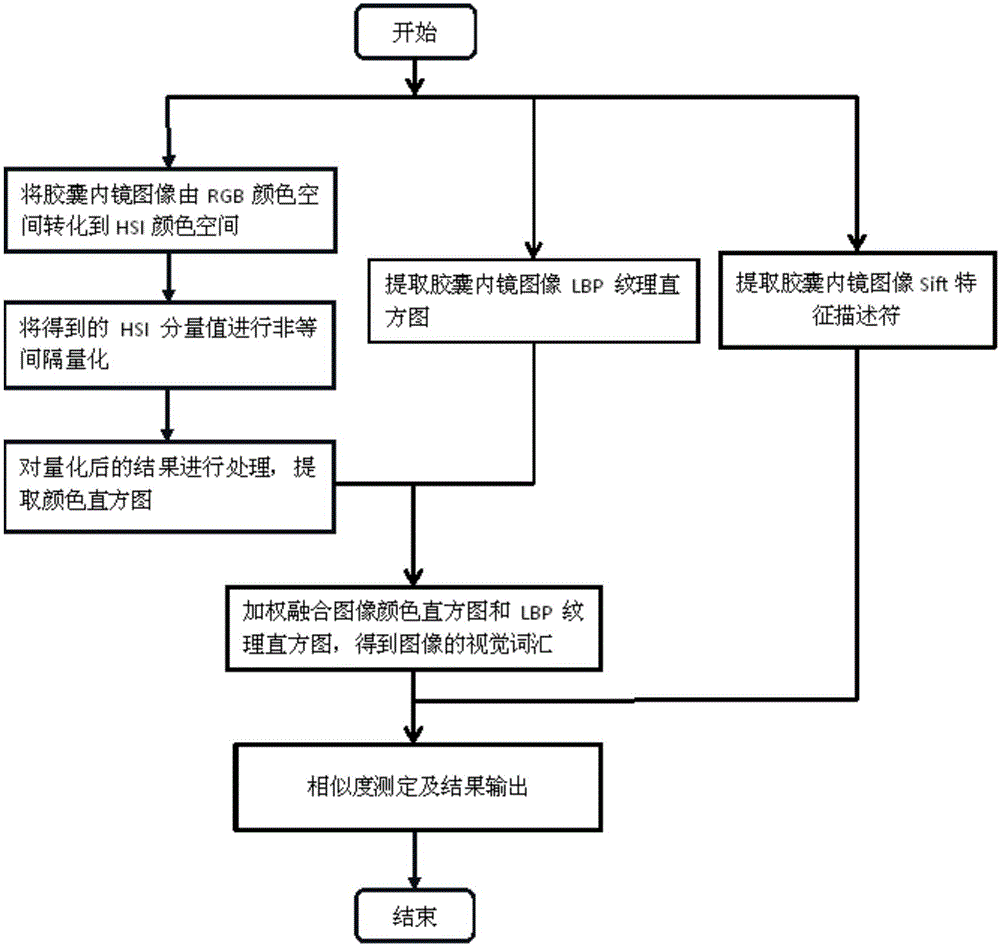

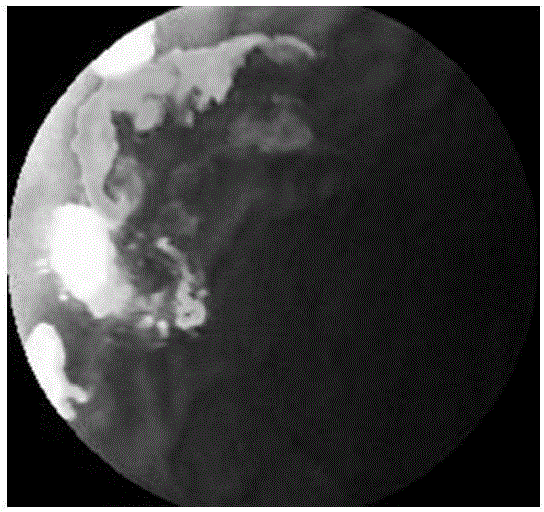

Capsule endoscopy image retrieval method based on visual vocabularies and local descriptors

Inactive · CN105069131A · Achieve retrieval · Improve diagnostic quality · Special data processing applications · Capsule endoscopy · Imaging processing

The invention provides a capsule endoscopy image retrieval method based on visual vocabularies and local descriptors. The method comprises the following steps: building an image library that contains standard case images and images to be retrieved; converting capsule endoscopy images from the RGB (Red, Green and Blue) color space into the HSI (Hue, Saturation and Intensity) color space, performing quantization and extracting a color histogram; extracting an LBP (Local Binary Patterns) texture histogram of the capsule endoscopy images; performing weighted blending of the color features and texture features to obtain the visual vocabularies of the images; extracting SIFT (Scale-Invariant Feature Transform) feature descriptors of the capsule endoscopy images; and performing similarity testing to obtain retrieval results. The method is used for capsule endoscopy image processing; it effectively reduces the workload of the doctors who read the images and improves diagnosis efficiency.
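The color-space conversion and feature blending can be illustrated with a short sketch. The conversion uses the common textbook RGB-to-HSI formulas; the abstract does not say how the weighted blending is done, so concatenating the two histograms with complementary weights is an assumption, as are the function names.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert RGB components in [0, 1] to (hue in radians, saturation, intensity)."""
    intensity = (r + g + b) / 3.0
    saturation = 0.0 if intensity == 0 else 1.0 - min(r, g, b) / intensity
    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if denominator == 0:
        hue = 0.0  # grey pixel: hue is undefined, 0 is used here by convention
    else:
        hue = math.acos(max(-1.0, min(1.0, numerator / denominator)))
        if b > g:
            hue = 2 * math.pi - hue
    return hue, saturation, intensity

def blend_histograms(color_hist, texture_hist, color_weight=0.5):
    """Concatenate two histograms after scaling them by complementary weights (assumed scheme)."""
    return ([color_weight * v for v in color_hist]
            + [(1.0 - color_weight) * v for v in texture_hist])

red = rgb_to_hsi(1.0, 0.0, 0.0)
blue = rgb_to_hsi(0.0, 0.0, 1.0)
grey = rgb_to_hsi(0.5, 0.5, 0.5)
blended = blend_histograms([1.0, 0.0], [0.5, 0.5], color_weight=0.5)
```

The weight lets the retrieval favor color or texture depending on which is more discriminative for the image library at hand.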

Owner:BEIJING UNIV OF TECH

Method for automatically classifying forestry service images

Active · CN102819747A · Describe well · Meet the needs of management · Character and pattern recognition · Forest industry · Functional management

The invention relates to a method for automatically classifying forestry service images, comprising the main steps of training and classifying. The step of training is as follows: converting images, calculating the set of key points on a gray-scale image, describing the key points by determining the main direction of the key points and generating eigenvectors, clustering, and producing histograms to express the images. The step of classifying is as follows: expressing the classified images with the histograms, and classifying by a classifier. Therefore, the classification of the forestry service images is finished. The numerous forestry service images collected by the forest rangers are used for constructing a reasonable visual vocabulary book according to the characteristics and the color information of the data of the forestry service images, the forestry service images are divided accurately into seven categories including forest fires, illegal use of forest land, illegal logging, illegal hunting and the like, and the forestry service images of different categories are respectively transferred to the functional management departments to realize the fast, effective and timely management of forest and the information modernization of the management of the forest.

Owner:ZHEJIANG FORESTRY UNIVERSITY

Image retrieval method based on hierarchical convolutional neural network

Active · CN107908646A · Enhanced Representational Capabilities · Accurate All-Sky Aurora Image Retrieval · Character and pattern recognition · Neural architectures · Feature extraction · Reverse index

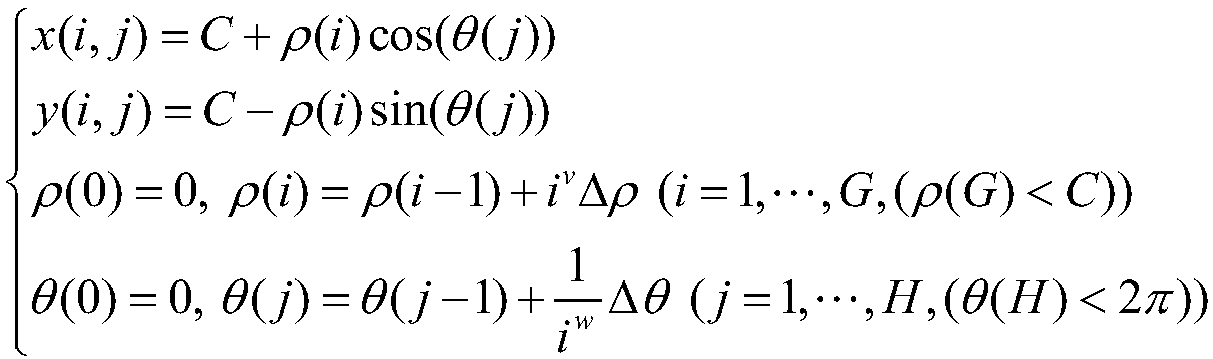

The invention discloses an image retrieval method based on a hierarchical convolutional neural network, and mainly aims at solving the problem that the accuracy of existing all-sky aurora image retrieval is low. The method comprises the implementation steps that 1, local key points of all-sky aurora images are determined by adopting an adaptive polar barrier method; 2, local SIFT features of the all-sky aurora images are extracted, and a visual vocabulary is constructed; 3, the convolutional neural network is pre-trained and fine-tuned, and a polar region pooling layer is constructed; 4, region CNN features and global CNN features of the all-sky aurora images are extracted; 5, all the features are binarized, and hierarchical features are constructed; 6, a reverse index table is constructed, and the global CNN features are saved separately; and 7, hierarchical features of a queried image are extracted, the similarity between the queried image and the database images is calculated, and a retrieval result is output. The method matches local key points through the hierarchical features, which addresses the high false alarm rate of existing image retrieval methods; it achieves a high retrieval accuracy rate and is suitable for real-time image retrieval.

Owner:XIDIAN UNIV

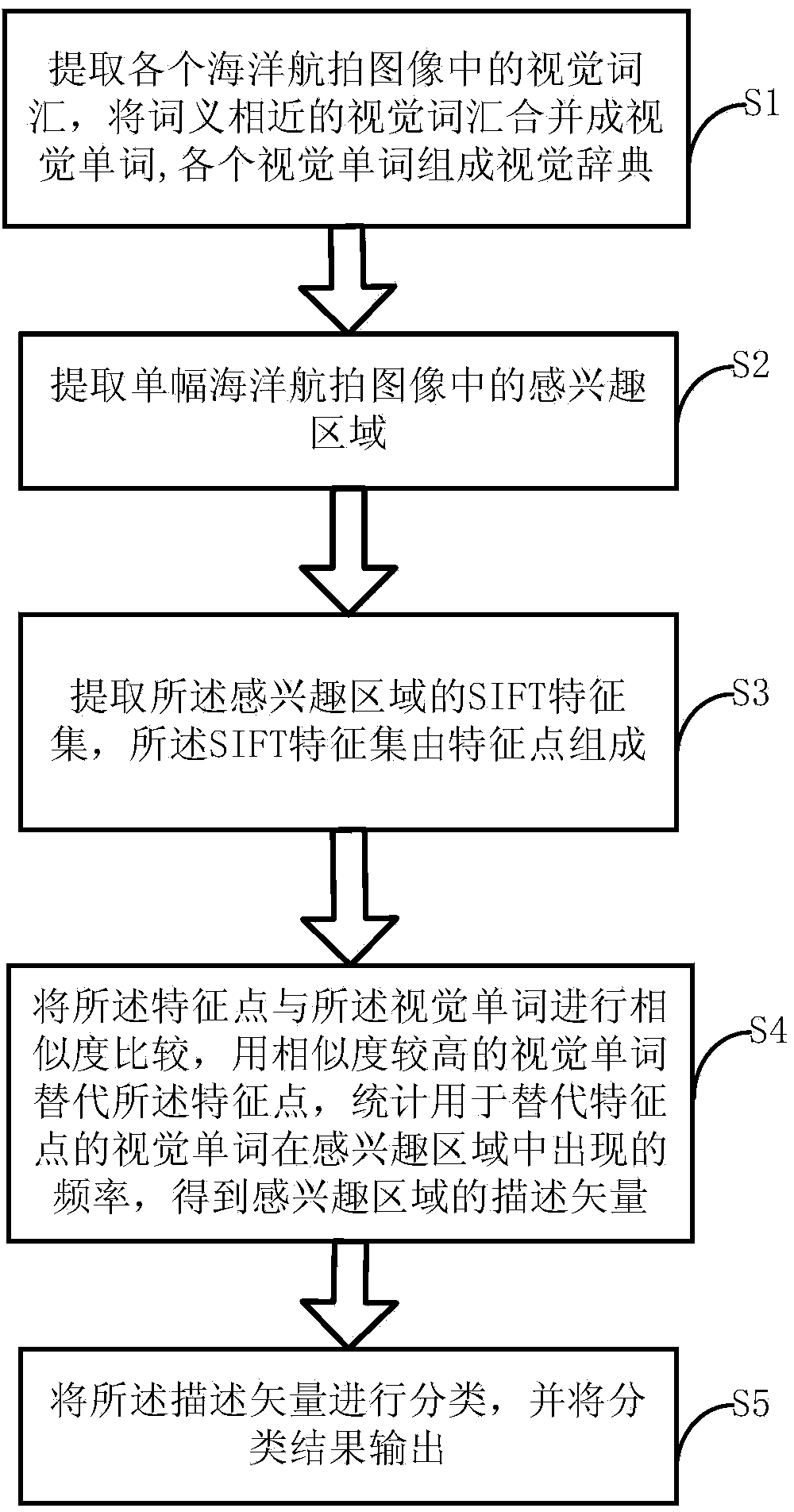

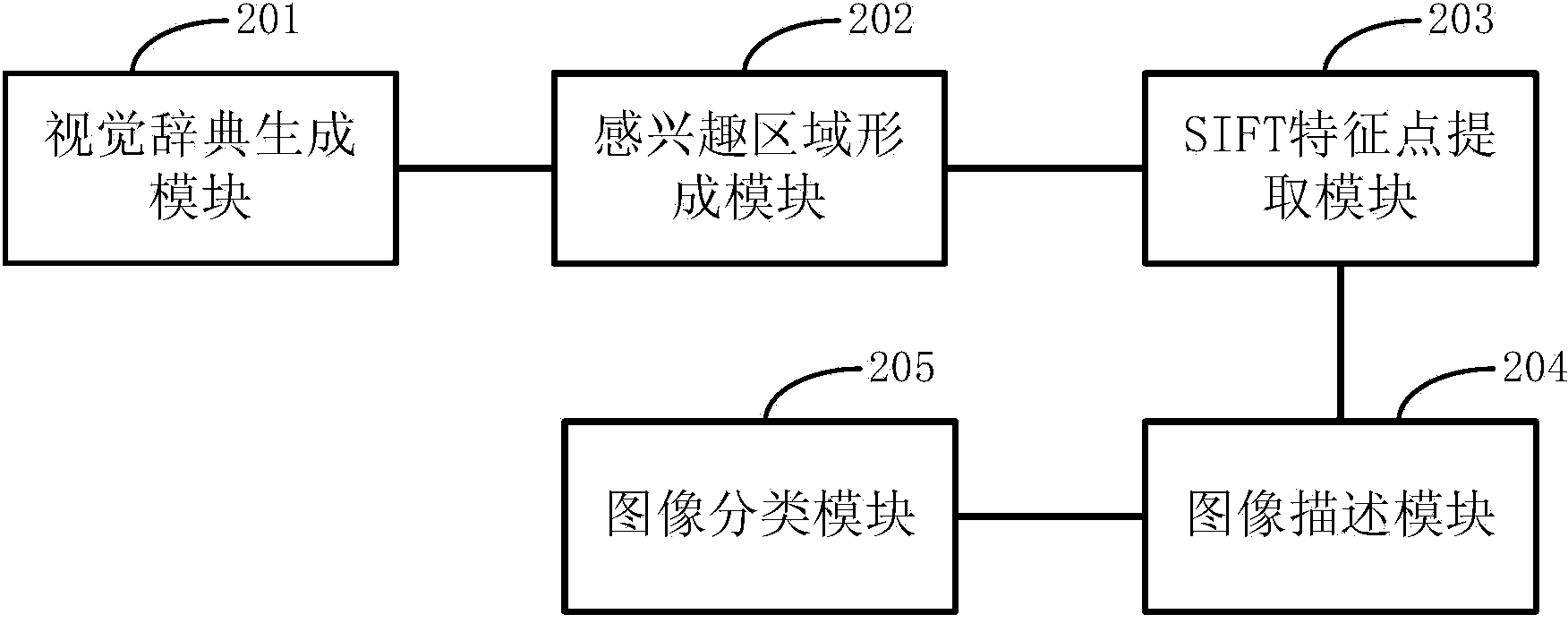

Method and system for target detection and identification of aerial ocean images

Inactive · CN103810487A · Improve recognition rate · Efficient identification · Character and pattern recognition · Feature set · Scale-invariant feature transform

The invention is applicable to ocean target detection technology and provides a method for target detection and identification in aerial ocean images. The method comprises the steps of: 1, extracting visual words from aerial ocean images, merging visual words that are similar in meaning into a single visual word, and forming a visual dictionary from all the visual words; 2, extracting an area of interest in a single aerial ocean image; 3, extracting an SIFT (Scale-Invariant Feature Transform) feature set, composed of feature points, from the area of interest; 4, comparing the feature points with the visual words for similarity, replacing each feature point with the visual word most similar to it, counting how often each substituted visual word appears in the area of interest, and obtaining a description vector of the area of interest; and 5, classifying all the description vectors obtained and outputting a classification result. The method is not affected by illumination variation, occlusion, geometrical transformation, changes in scale and the like, and is capable of accurately detecting and identifying ship objects.

Owner:SHENZHEN UNIV

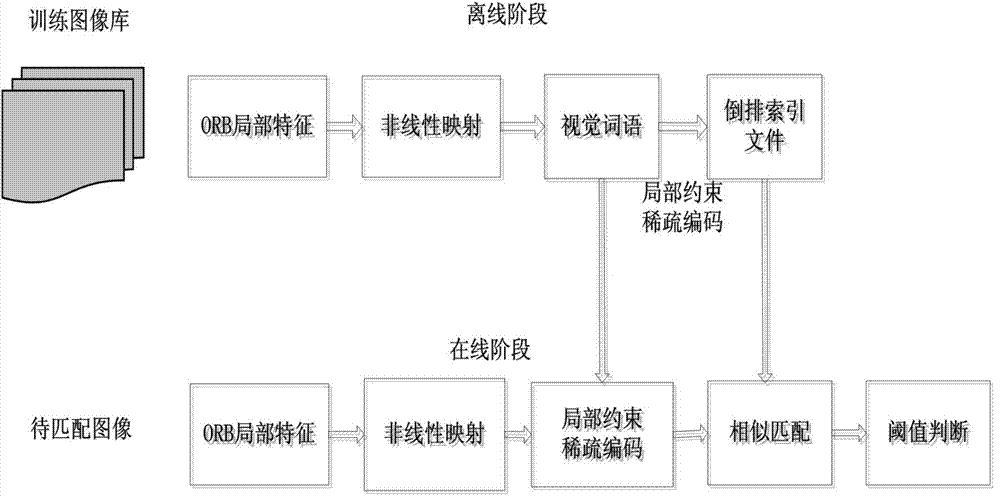

Rapid and high-efficiency near-duplicate image matching method

Active · CN104504406A · Calculation speed · Noise insensitive · Character and pattern recognition · Linear coding · Quantification methods

The invention discloses a rapid and high-efficiency near-duplicate image matching method. The method comprises the steps that 1) the ORB features of each image in a training image library are extracted and nonlinearly mapped, and a visual word table of the training image library is constructed; 2) the nonlinearly mapped ORB features of each image in the training image library are sparsely coded by locality-constrained linear coding according to the constructed visual word table; 3) the ORB features of the image to be matched are extracted and nonlinearly mapped, and then sparsely coded according to the constructed visual word table; and 4) the similarity between the sparse code of the image to be matched and the sparse codes of the images in the training image library is calculated, and matching succeeds if the similarity exceeds the preset threshold value and fails otherwise. The reconstruction error of hard quantization is reduced and matching speed is greatly increased, so the method can be used for real-time matching.

Owner:长安通信科技有限责任公司

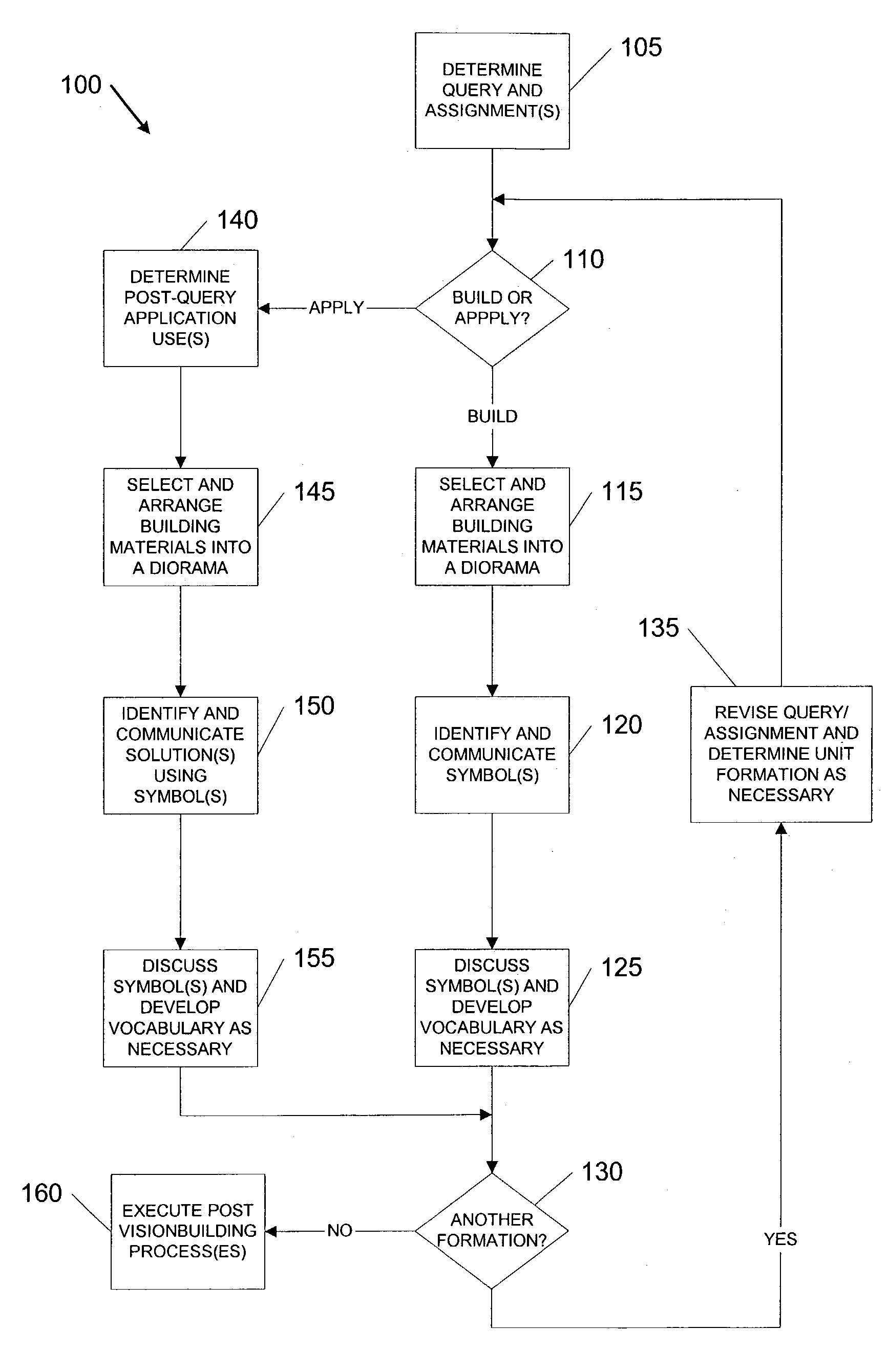

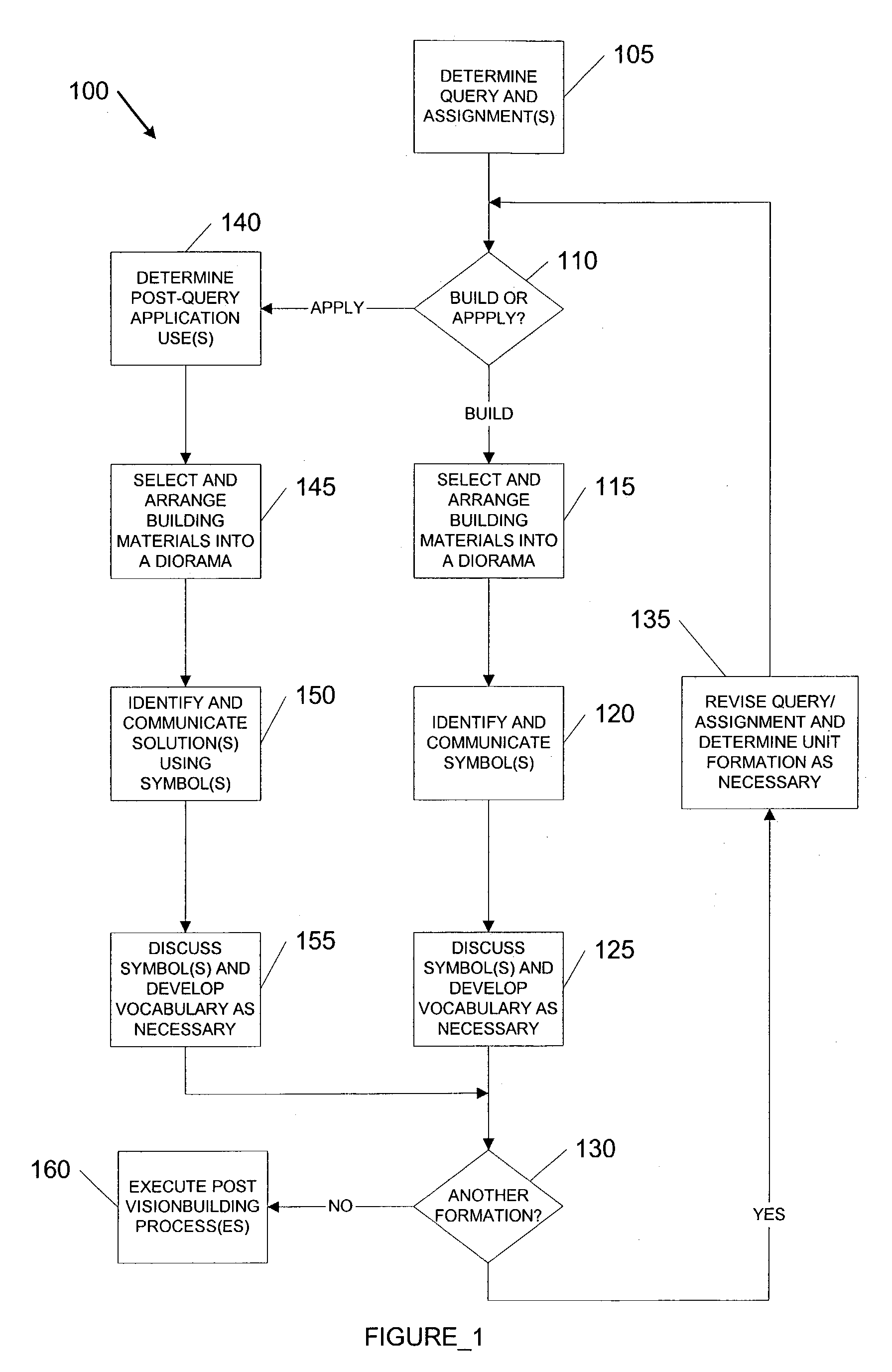

Symbolic vocabulary development and use

Active · US7083417B1 · High bandwidth communication · Accurate and comprehensive · Electrical appliances · Teaching apparatus · Collective model · Distillation

A system and method are disclosed for improving intra- and inter-cultural communications by having people of one or more cultures develop and build a symbolic vocabulary of what is salient in their work and their lives, through successive stages of formations that select and arrange building elements into models having one or more symbols of the visual vocabulary. The symbolic vocabulary is developed by a method that includes the step of creating a collective model by a plurality of participants, the collective model having a collective symbol responsive to an assignment. The model creating step further includes, in the preferred embodiment, the steps of: (a) selecting and arranging a plurality of building elements into a model having a symbol responsive to the assignment; and (b) describing the symbol, discussing symbols and developing a vocabulary. The steps are performed successively by one or more formations of units of the plurality of participants. Each unit performs the steps, and each unit's model symbol, using one or more symbols from any preceding formation's symbol or modifications of them, produces transcendent symbols through successive distillation of resonant symbols from the units of formation.

Owner:WILDFLOWERS INST THE

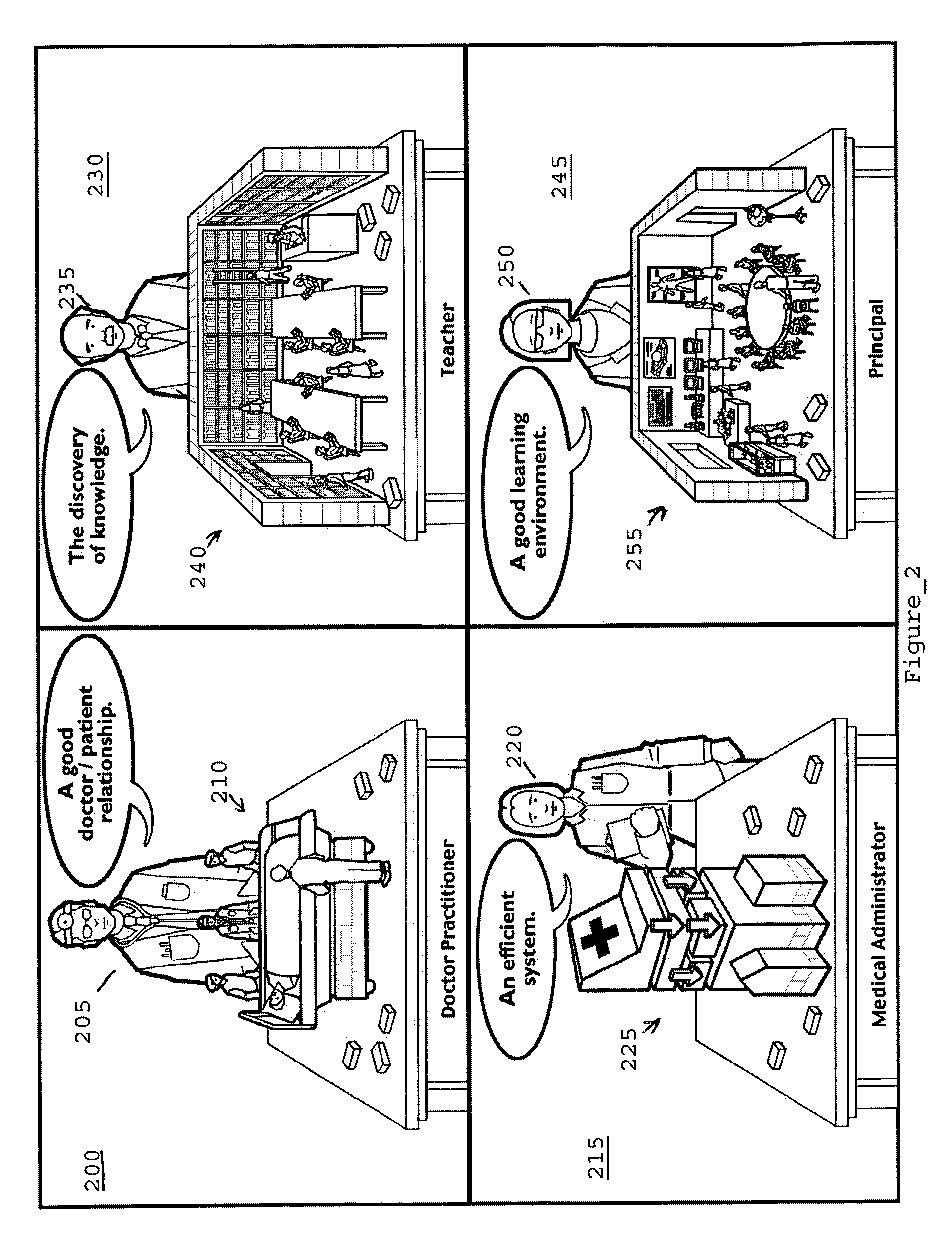

Method for generating context descriptors of visual vocabulary

Active · CN105678349A · Improve retrieval efficiency · Improve retrieval accuracy · Character and pattern recognition · Image retrieval · Document preparation

The invention relates to a method for generating context descriptors of visual vocabulary. The method comprises following steps: off-line learning, context descriptor generating and context descriptor similarity computing. The off-line learning is used for construction of a visual vocabulary dictionary and evaluation of visual vocabulary. The step of context descriptor generating comprises following sub-steps: 1. extracting local characteristic points and quantifying characteristic descriptors; 2. selecting a context; 3. extracting characteristics of the local characteristic points of the context and generating context descriptors. The context descriptor similarity computing is used for verifying whether local characteristic points of two context descriptors match with each other according to the azimuth and principal direction of the local characteristic points of the context descriptors and consistency of the visual vocabulary, and evaluating the similarity of the two context descriptors through the summation of the inverse document frequency of matched visual vocabulary. The context descriptors established by the invention are adapted to influence brought by conversions such as image clipping, rotation and scale-zooming; the method can be applied in image retrieval and classification, etc.
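The similarity computation can be sketched as follows. The abstract gives no data layout or thresholds, so this is an illustration under assumptions: each context point is a dictionary with a visual word id, an azimuth and a principal direction (both in radians), two points match when the word ids are equal and both angles agree within a tolerance, and matching is greedy and one-to-one. The names and the tolerance value are hypothetical.

```python
import math

def angle_diff(a, b):
    """Smallest absolute difference between two angles in radians."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)

def points_match(p, q, tolerance=0.3):
    """Same visual word, and azimuth and principal direction agree within the tolerance."""
    return (p["word"] == q["word"]
            and angle_diff(p["azimuth"], q["azimuth"]) <= tolerance
            and angle_diff(p["direction"], q["direction"]) <= tolerance)

def context_similarity(context_a, context_b, document_frequency, num_images):
    """Sum the inverse document frequency of the visual words of matched points."""
    unmatched = list(context_b)
    score = 0.0
    for p in context_a:
        for q in unmatched:
            if points_match(p, q):
                score += math.log(num_images / document_frequency[p["word"]])
                unmatched.remove(q)  # each point in context_b is matched at most once
                break
    return score

# Word 7 is rare (10 of 1000 images); word 42 occurs in every image and so carries no weight.
document_frequency = {7: 10, 42: 1000}
context_a = [
    {"word": 7, "azimuth": 0.1, "direction": 1.0},
    {"word": 42, "azimuth": 2.0, "direction": 0.5},
    {"word": 7, "azimuth": 3.0, "direction": 0.0},
]
context_b = [
    {"word": 7, "azimuth": 0.2, "direction": 1.1},
    {"word": 42, "azimuth": 2.1, "direction": 0.4},
]
score = context_similarity(context_a, context_b, document_frequency, num_images=1000)
```

Weighting matches by inverse document frequency means that agreement on rare visual words counts for more than agreement on words that appear almost everywhere.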

Owner:杭州远传新业科技股份有限公司

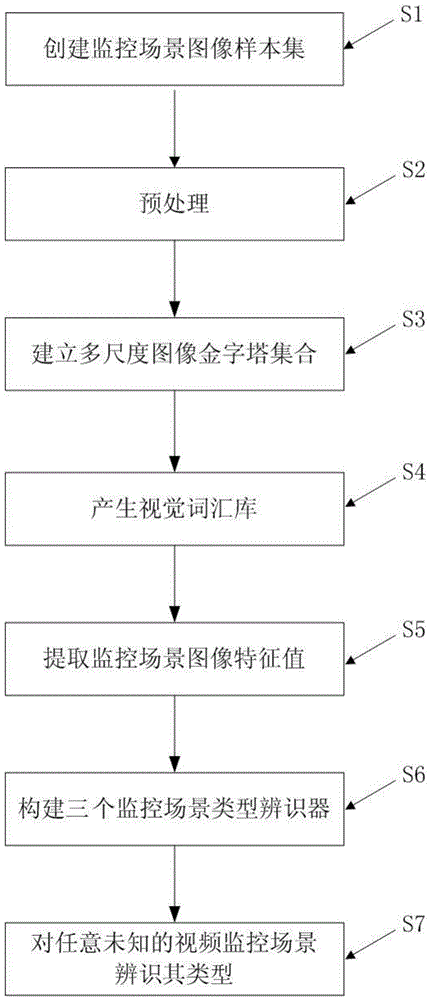

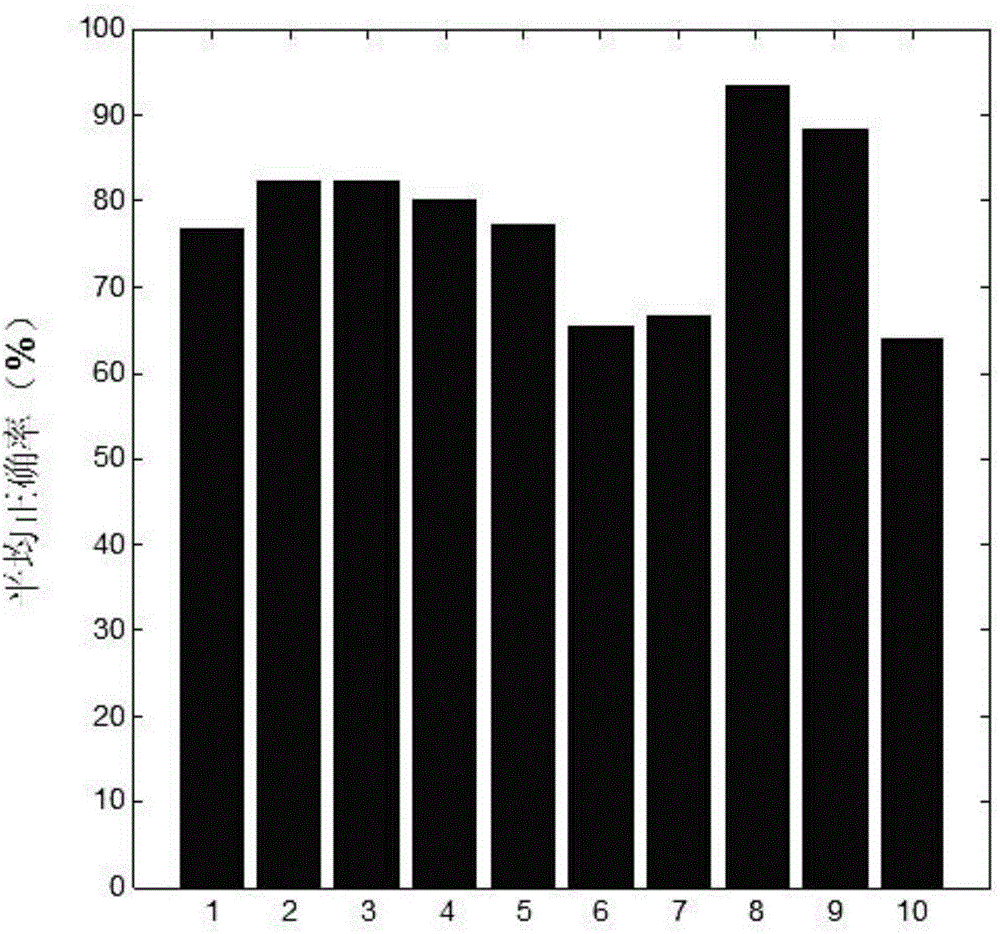

Monitor scene type identification method for intelligent video monitor

Active · CN104616026A · Improve intelligence · Improve accuracy · Character and pattern recognition · Closed circuit television systems · Pattern recognition · Visual perception

Provided is a monitoring scene type identification method for intelligent video monitoring. The method comprises the following steps: creating a monitoring scene image sample set; pre-processing the sample set; establishing a corresponding multi-scale image pyramid set based on the pre-processed sample set; using the multi-scale image pyramid set to generate a visual vocabulary library; using the visual vocabulary library to extract characteristic values of all images in the sample set; constructing three monitoring scene type identifiers; and identifying the scene type of any monitoring scene image of unknown type outside the sample set. The method effectively overcomes problems that commonly exist in video monitoring scene images, such as scale change, viewing angle change and object occlusion, thereby increasing identification accuracy.
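A multi-scale image pyramid can be illustrated with a very small sketch. The abstract does not say how the pyramid is built, so this example assumes the simplest scheme: a grayscale image stored as a list of rows is repeatedly halved by averaging 2x2 blocks. The function names and the stopping rule are assumptions.

```python
def downsample(image):
    """Halve width and height by averaging each 2x2 block of a grayscale image."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [
        [(image[2 * r][2 * c] + image[2 * r][2 * c + 1]
          + image[2 * r + 1][2 * c] + image[2 * r + 1][2 * c + 1]) / 4
         for c in range(cols)]
        for r in range(rows)
    ]

def build_pyramid(image, min_size=1):
    """Return the image followed by successively halved copies, down to min_size pixels per side."""
    levels = [image]
    while len(levels[-1]) // 2 >= min_size and len(levels[-1][0]) // 2 >= min_size:
        levels.append(downsample(levels[-1]))
    return levels

image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]
pyramid = build_pyramid(image)
```

Extracting features at every level of such a pyramid is one common way to make a vocabulary less sensitive to scale changes between scenes.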

Owner:泰山信息科技有限公司

Global visual vocabulary, systems and methods

Systems and methods of generating a compact visual vocabulary are provided. Descriptor sets related to digital representations of objects are obtained, clustered and partitioned into cells of a descriptor space, and a representative descriptor and index are associated with each cell. Generated visual vocabularies could be stored in client-side devices and used to obtain content information related to objects of interest that are captured.

Owner:NANT HLDG IP LLC
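
One common way to partition a descriptor space into indexed cells with a representative descriptor each is k-means clustering. The sketch below uses plain Lloyd iterations as an assumed stand-in; the abstract does not name a specific clustering algorithm, and all function names here are illustrative.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest_cell(descriptor, representatives):
    """Index of the cell whose representative descriptor is closest."""
    return min(range(len(representatives)),
               key=lambda i: squared_distance(descriptor, representatives[i]))

def build_vocabulary(descriptors, initial_representatives, iterations=10):
    """Lloyd's k-means: partition descriptors into cells and return one
    representative descriptor (the cell mean) per cell index."""
    reps = [list(r) for r in initial_representatives]
    for _ in range(iterations):
        cells = [[] for _ in reps]
        for d in descriptors:
            cells[nearest_cell(d, reps)].append(d)
        for i, members in enumerate(cells):
            if members:  # an empty cell keeps its previous representative
                reps[i] = [sum(col) / len(members) for col in zip(*members)]
    return reps
```

Once the representatives are fixed, a client device only needs to store them and call `nearest_cell` to turn each new descriptor into a compact integer index.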

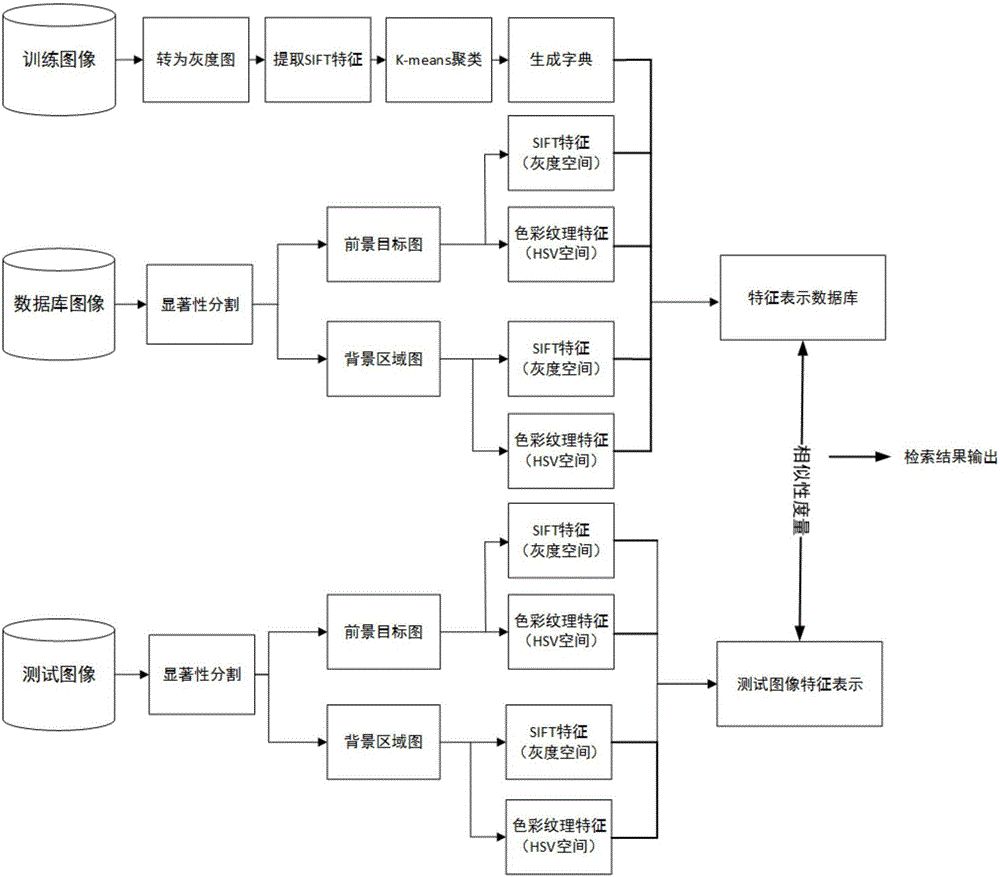

Content-based image retrieval method based on significance segmentation

Inactive | CN106844785A | Bridging the Semantic Gap | Image enhancement | Image analysis | Statistical database | Semantic gap

The invention discloses a content-based image retrieval method based on saliency segmentation. The method comprises a training process and a testing process. The training process comprises four steps: first, a visual vocabulary dictionary is established by analyzing and processing every image in the training image set in turn, for use in subsequent retrieval; second, each image is segmented into a target foreground image and a background region image using its visual saliency features; third, color features and texture features are extracted from the target foreground images and the background region images; fourth, visual vocabulary distribution histograms are computed for all images in the database, based on the dictionary from the first step. The testing process comprises a fifth step in which test images are retrieved on the basis of the preceding four steps. The method effectively narrows the semantic gap and achieves high accuracy.

Owner:ZHEJIANG UNIV OF TECH
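
The histogram and retrieval steps can be illustrated with a small bag-of-visual-words sketch. It assumes each image has already been reduced to a list of visual-word indices, and it ranks by histogram intersection, which is one common choice and not necessarily the measure used in the patent.

```python
from collections import Counter

def word_histogram(word_ids, vocabulary_size):
    """Normalized histogram of visual-word occurrences for one image."""
    counts = Counter(word_ids)
    total = len(word_ids)
    return [counts[w] / total if total else 0.0
            for w in range(vocabulary_size)]

def histogram_intersection(h1, h2):
    """Similarity in [0, 1] between two normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def retrieve(query_hist, database, top_k=3):
    """Rank database images (name -> histogram) by similarity to the query."""
    ranked = sorted(
        database.items(),
        key=lambda item: histogram_intersection(query_hist, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]
```

In a saliency-based variant, separate histograms could be kept for the foreground and background regions and their similarities combined, though the weighting would be a further design choice.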

Object recognition method based on semantic feature extraction and matching

Inactive | CN104008095A | Rich relevant information | Special data processing applications | Scale-invariant feature transform | Support vector machine classifier

The invention provides an object recognition method based on semantic feature extraction and matching, belonging to the field of information retrieval. The method comprises semantic feature extraction and semantic feature matching. Semantic feature extraction first extracts SIFT (Scale Invariant Feature Transform) feature points from training images of a class of objects, then spatially clusters the SIFT feature points by k-means clustering, selects a number of efficient points in each spatial class through a kernel-function-based decision mechanism, and finally trains on the efficient points in each spatial class with a support vector machine classifier. A visual word with semantic features is trained from each spatial class, yielding a visual vocabulary that describes the semantic features of the object class. Semantic feature matching first extracts SIFT feature points from an image of the object to be detected as its semantic description, then uses the support vector machine classifier to match and classify that description against the visual vocabularies of the object classes, and finally computes a histogram of the visual vocabulary of the object to be detected to determine its class.

Owner:武汉三际物联网络科技有限公司

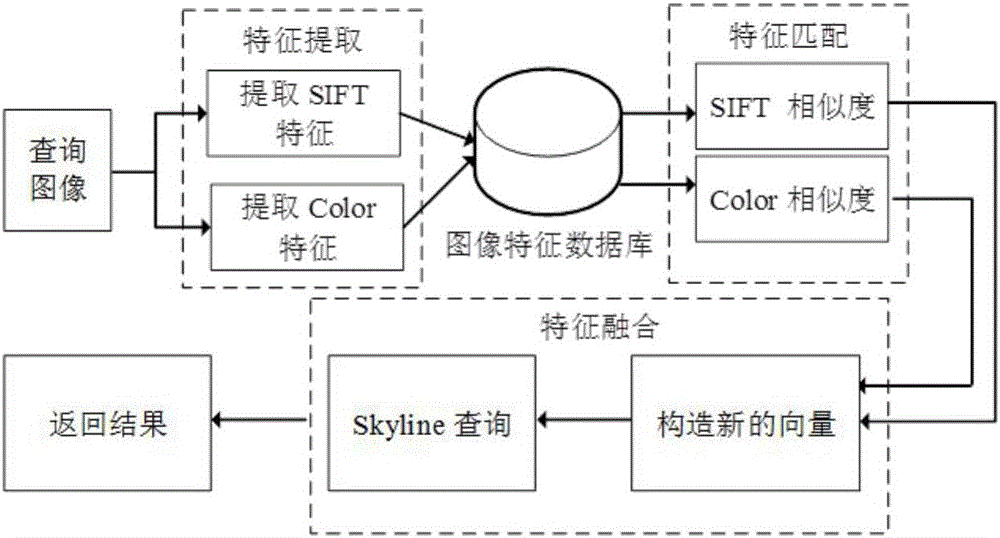

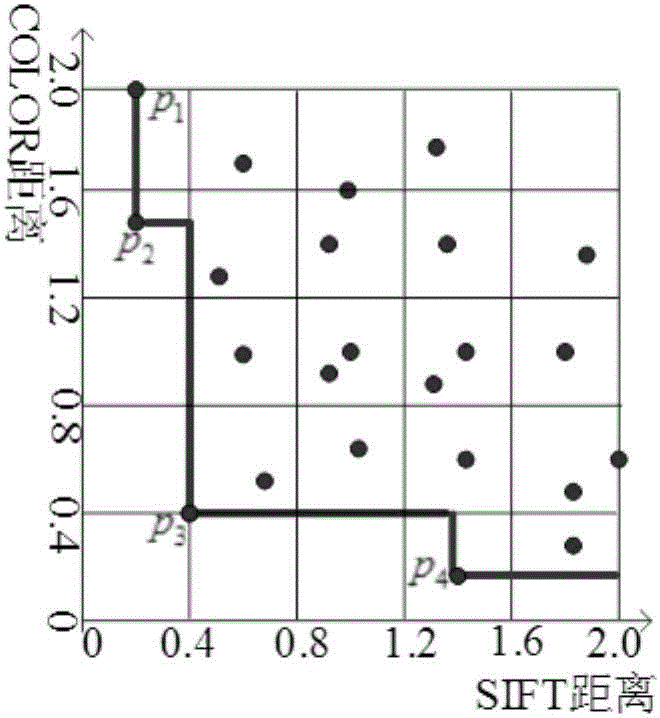

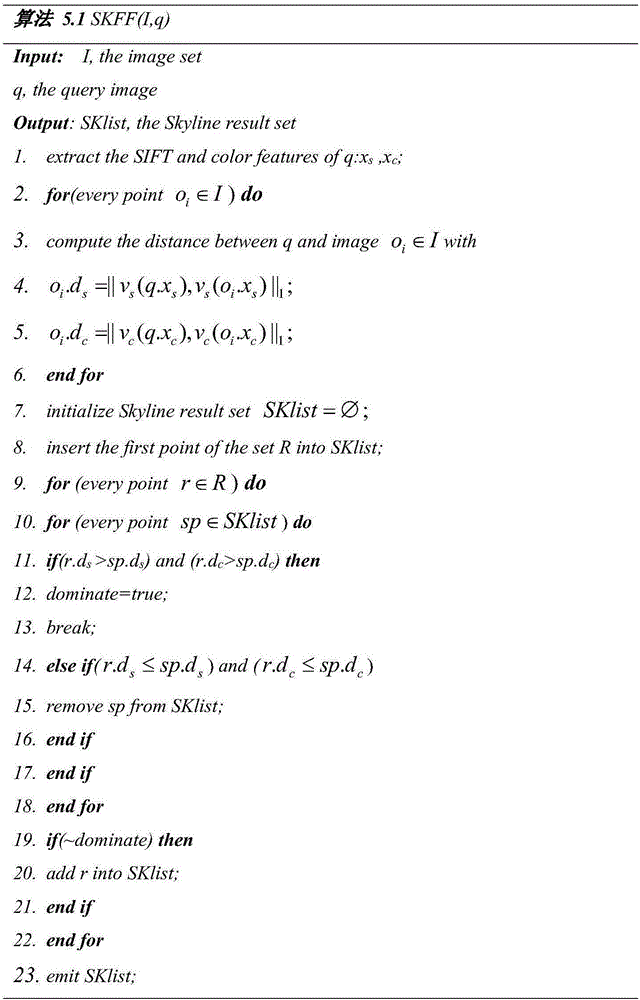

Medical big data retrieval method based on visual vocabulary and multi-feature matching Skyline

A medical big data retrieval method based on visual vocabulary and multi-feature matching Skyline, belonging to the intersection of intelligent medicine and big data processing. The method applies metric-space Skyline queries to content-based medical image retrieval. The main technical points are: feature data such as SIFT and color are extracted; multiple low-level features of an image are fused by a distributed Skyline operation; the similarity of each feature serves as one assessment objective of the Skyline; the returned results are candidate images that are either similar to the query image across multiple feature dimensions or extremely similar to it in one feature dimension; finally, stream processing on a Spark cloud computing system produces query or processing results in real time. The method has the following beneficial effects: information corresponding to an image is obtained from a user terminal, uploaded, and saved to a cloud server; the cloud server then processes it to obtain an optimal medical image clustering scheme, which is fed back to users.

Owner:DALIAN JIAOTONG UNIVERSITY
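
A Skyline query returns the candidates that no other candidate beats on every objective at once. The sketch below shows the idea on per-feature similarity scores; it is a naive single-machine version with hypothetical names, not the distributed Spark operation the abstract refers to.

```python
def dominates(p, q):
    """p dominates q if p is at least as similar on every feature and
    strictly more similar on at least one."""
    return (all(a >= b for a, b in zip(p, q))
            and any(a > b for a, b in zip(p, q)))

def skyline(candidates):
    """Return the sorted names of images not dominated by any other candidate.

    `candidates` maps an image name to a tuple of per-feature similarity
    scores (for example SIFT similarity and color similarity).
    """
    return sorted(
        name
        for name, scores in candidates.items()
        if not any(
            dominates(other, scores)
            for other_name, other in candidates.items()
            if other_name != name
        )
    )
```

An image that is only moderately similar on every feature survives as long as nothing beats it everywhere, and so does an image that is outstanding on a single feature, which matches the two kinds of results the abstract describes.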

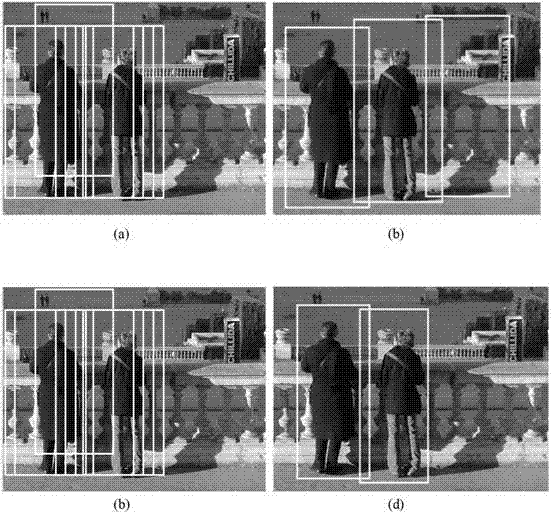

Human body detecting method based on SURF (Speed Up Robust Feature) efficient matching kernel

Inactive | CN102810159A | Optimize detection results | Avoid ambiguity | Character and pattern recognition | Human body | Singular value decomposition

The invention provides a human body detection method based on a SURF efficient matching kernel, and mainly addresses the problem that existing methods do not handle cluttered image backgrounds well. The method comprises the following steps: negative samples are obtained by bootstrapping in the INRIA (Institut National de Recherche en Informatique et en Automatique) database, and a training sample set for the whole human body is formed from these negative samples and the positive samples in the database; SURF descriptor feature points are extracted from the training samples at different image scales; feature points are drawn by random sampling to form the initial vector basis of a visual vocabulary; constrained singular value decomposition is applied to the initial vector basis to obtain the maximum kernel function feature; the maximum kernel function features at different image scales are weighted to obtain features over all image scales; the resulting features are used to train an SVM (Support Vector Machine) classifier, producing a detection classifier; and the image to be detected is input to the classifier to obtain the final detection result. The method detects human bodies accurately and can be used in intelligent monitoring, driver assistance systems, and virtual video.

Owner:XIDIAN UNIV

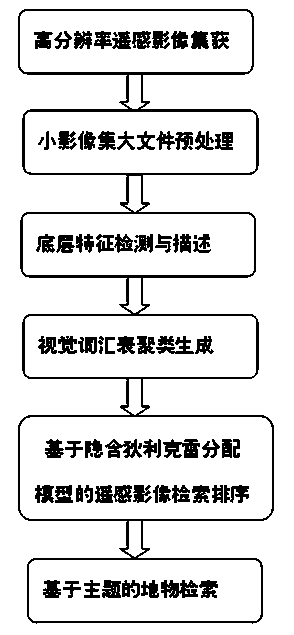

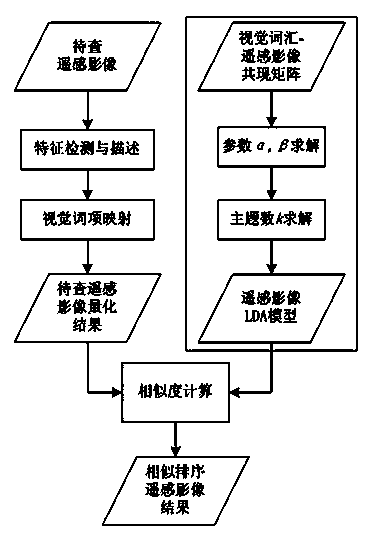

Retrieval method of remote sensing images

Inactive | CN104111947A | Improve scalability | Good dimensionality reduction effect | Character and pattern recognition | Special data processing applications | Visual perception | Computer science

The invention discloses a retrieval method for remote sensing images. The method comprises acquiring and preprocessing the remote sensing images; detecting and describing low-level visual features and clustering them to generate visual vocabularies; performing remote sensing information retrieval based on a latent Dirichlet allocation model; and finally achieving highly accurate ground feature retrieval. The method offers a good dimensionality reduction effect and higher retrieval accuracy, and allows the retrieval map to be annotated accurately.

Owner:KUNSHAN HONGHU INFORMATION TECH SERVICE

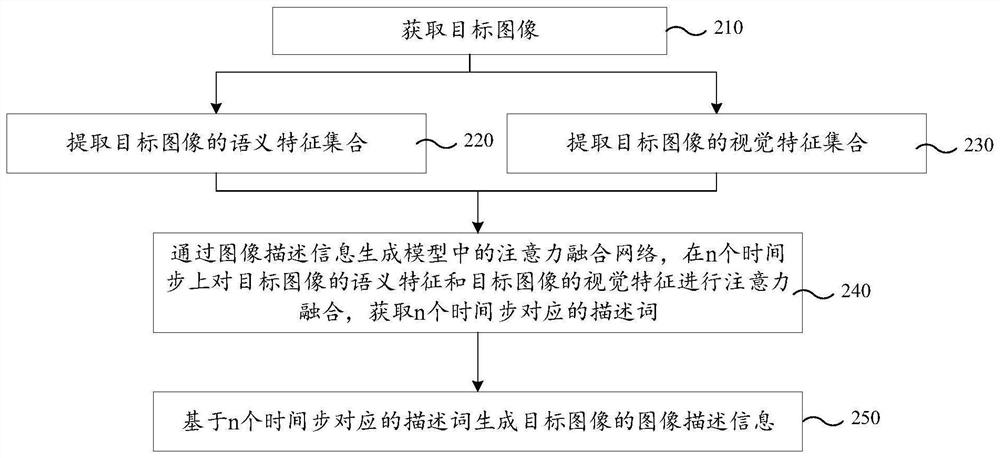

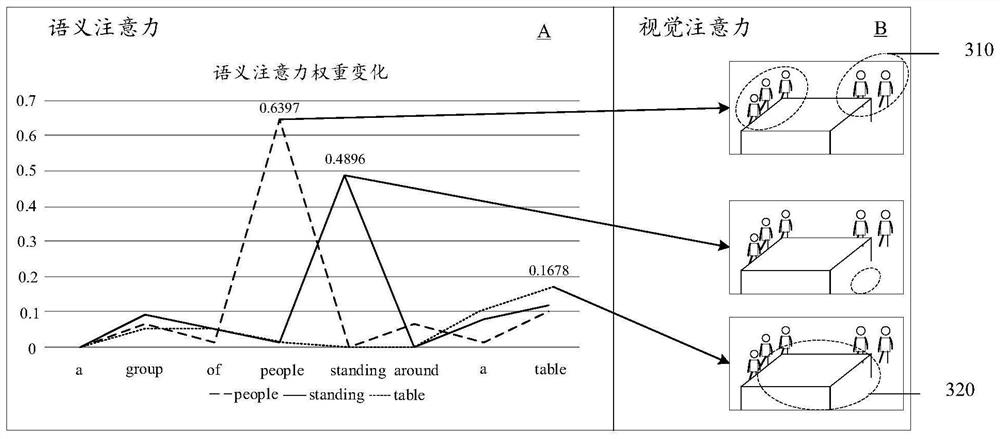

Image description information generation method and device, computer equipment and storage medium

Pending | CN113569892A | Improve accuracy | Semantic analysis | Character and pattern recognition | Feature set | Imaging processing

The invention relates to an image description information generation method and device, computer equipment, and a storage medium, in the technical field of image processing. The method comprises the following steps: acquiring a target image; extracting a semantic feature set and a visual feature set of the target image; performing attention fusion on the semantic features and the visual features of the target image over n time steps, through an attention fusion network in an image description information generation model, to obtain the descriptors corresponding to the n time steps; and generating image description information for the target image based on those descriptors. In generating the image description information, the advantage of the visual features in generating visual vocabularies and the advantage of the semantic features in generating the non-visual features complement each other, which improves the accuracy of the generated image description information.

Owner:TENCENT TECH (SHENZHEN) CO LTD

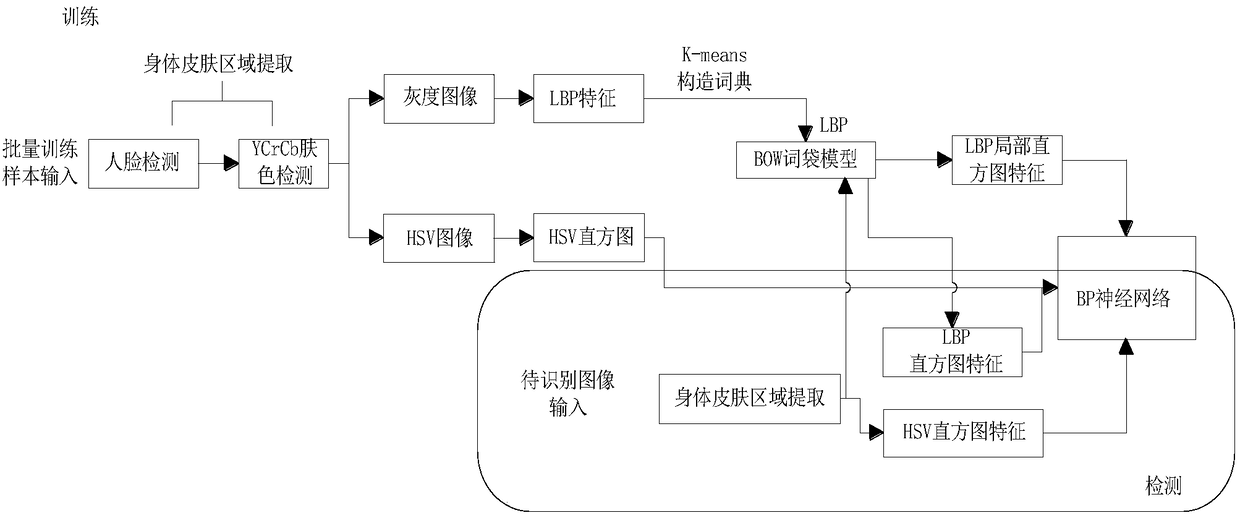

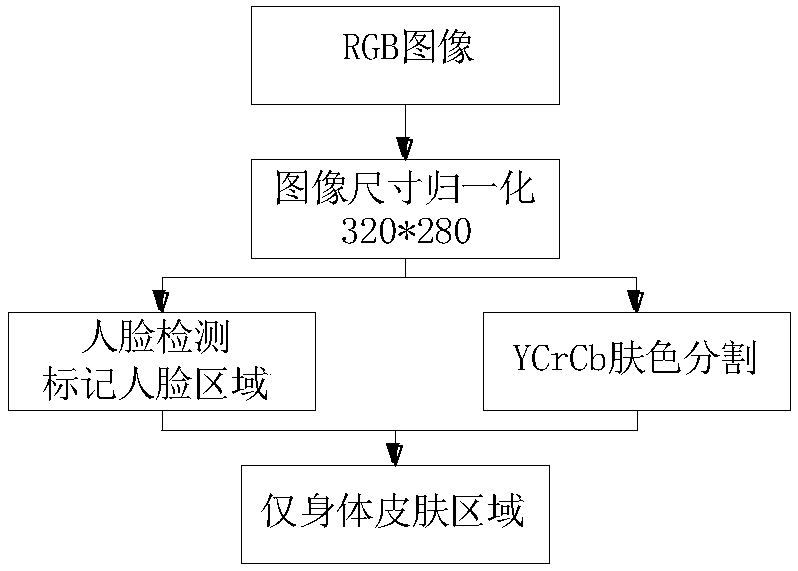

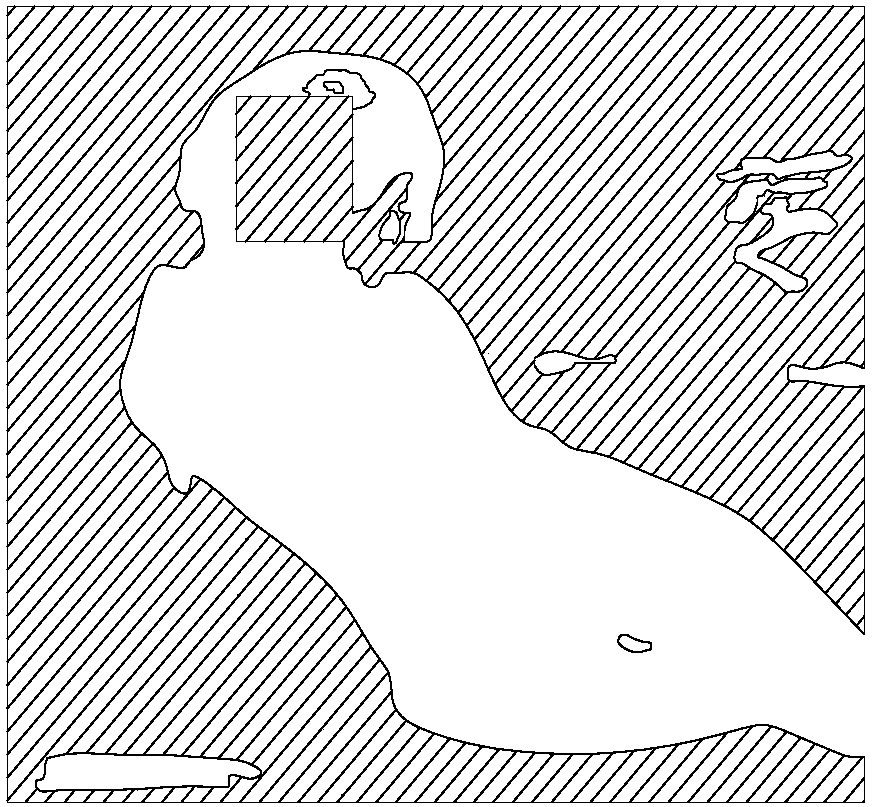

Method for recognizing sensitive images on basis of combinations of HSV (hue, saturation and value) and LBP (local binary pattern) features

Pending | CN108280454A | No loss of features | Rotation invariant | Character and pattern recognition | Neural architectures | Pattern recognition | HSV color model

The invention provides a method for recognizing sensitive images on the basis of combinations of HSV (hue, saturation and value) and LBP (local binary pattern) features. The method comprises: acquiring the skin regions of sensitive RGB (red, green and blue) images and normal RGB images; obtaining the LBP visual vocabulary representation of the sensitive and normal RGB images with the LBP algorithm; obtaining the HSV color features of the sensitive and normal RGB images with the HSV color model; using the LBP visual vocabulary representation and the HSV color features as input parameters to train a BP (back propagation) neural network; and outputting the recognition result for the image to be detected. Because both texture information and global color information are used as image features, loss of image features is prevented, and the method achieves high accuracy and processing speed.

Owner:天津市国瑞数码安全系统股份有限公司
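
As an illustration of the texture feature involved, the sketch below computes the basic 8-neighbor LBP code and its 256-bin histogram for a grayscale image stored as a list of rows. The neighbor ordering and the `>=` comparison are one common convention chosen for the example; the abstract does not specify which LBP variant is used, and this basic form is not rotation invariant.

```python
def lbp_code(image, r, c):
    """8-bit local binary pattern of the pixel at (r, c).

    Neighbors are visited clockwise starting at the top-left; a neighbor
    contributes a 1 bit when its value is >= the center value.
    """
    center = image[r][c]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist
```

A histogram like this, computed over the detected skin region, could be concatenated with color statistics to form the input vector of a classifier.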
