Patented technology related to "Semantic gap": 129 results

The semantic gap characterizes the difference between two descriptions of an object by different linguistic representations, for instance languages or symbols. According to Hein, the semantic gap can be defined as "the difference in meaning between constructs formed within different representation systems". In computer science, the concept is relevant whenever ordinary human activities, observations, and tasks are transferred into a computational representation.

Information retrieval from relational databases using semantic queries

Status: Active | Publication: US20080040308A1 | Tags: Digital data processing details; Relational databases; Semantic gap; Relational database

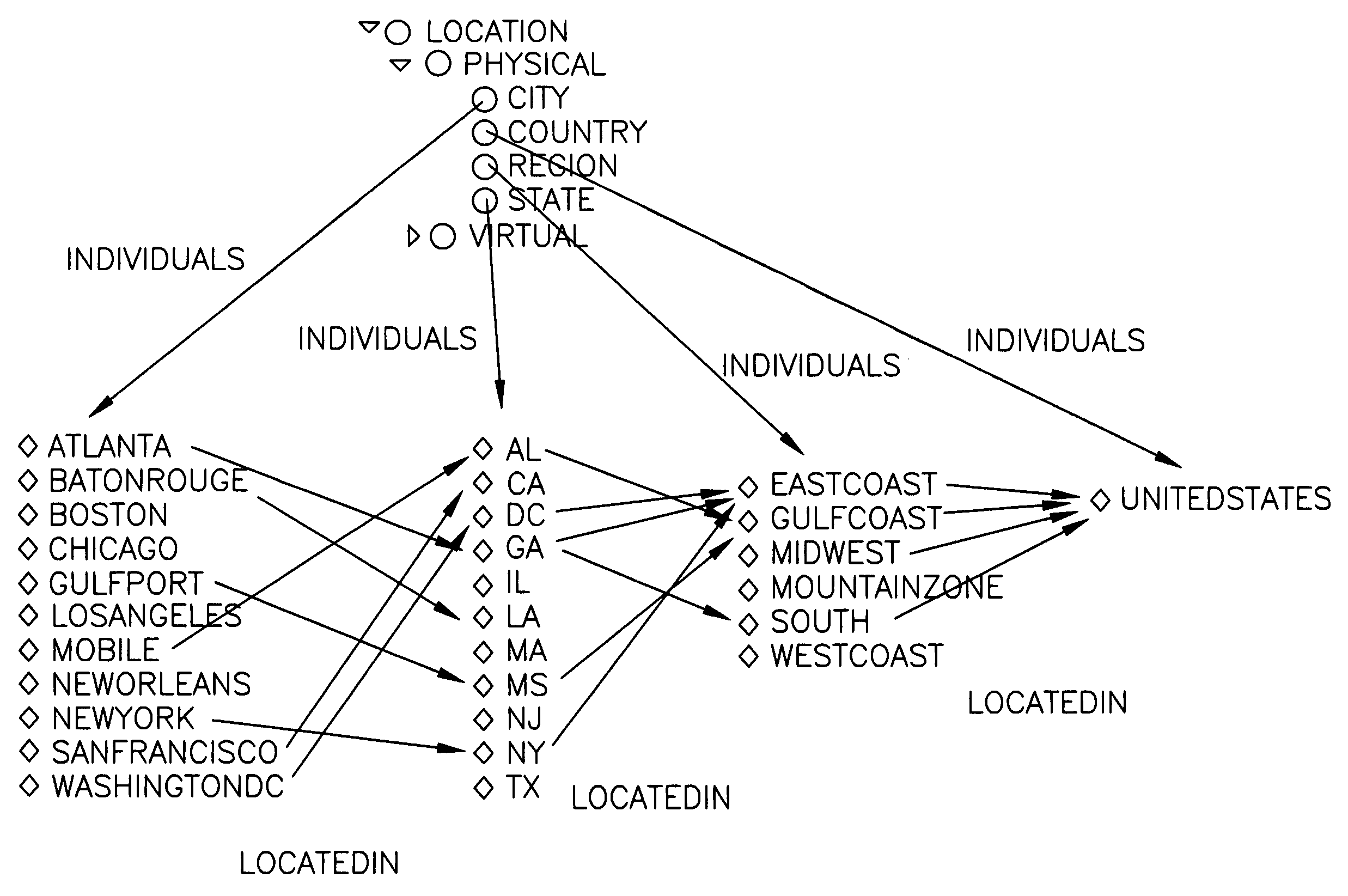

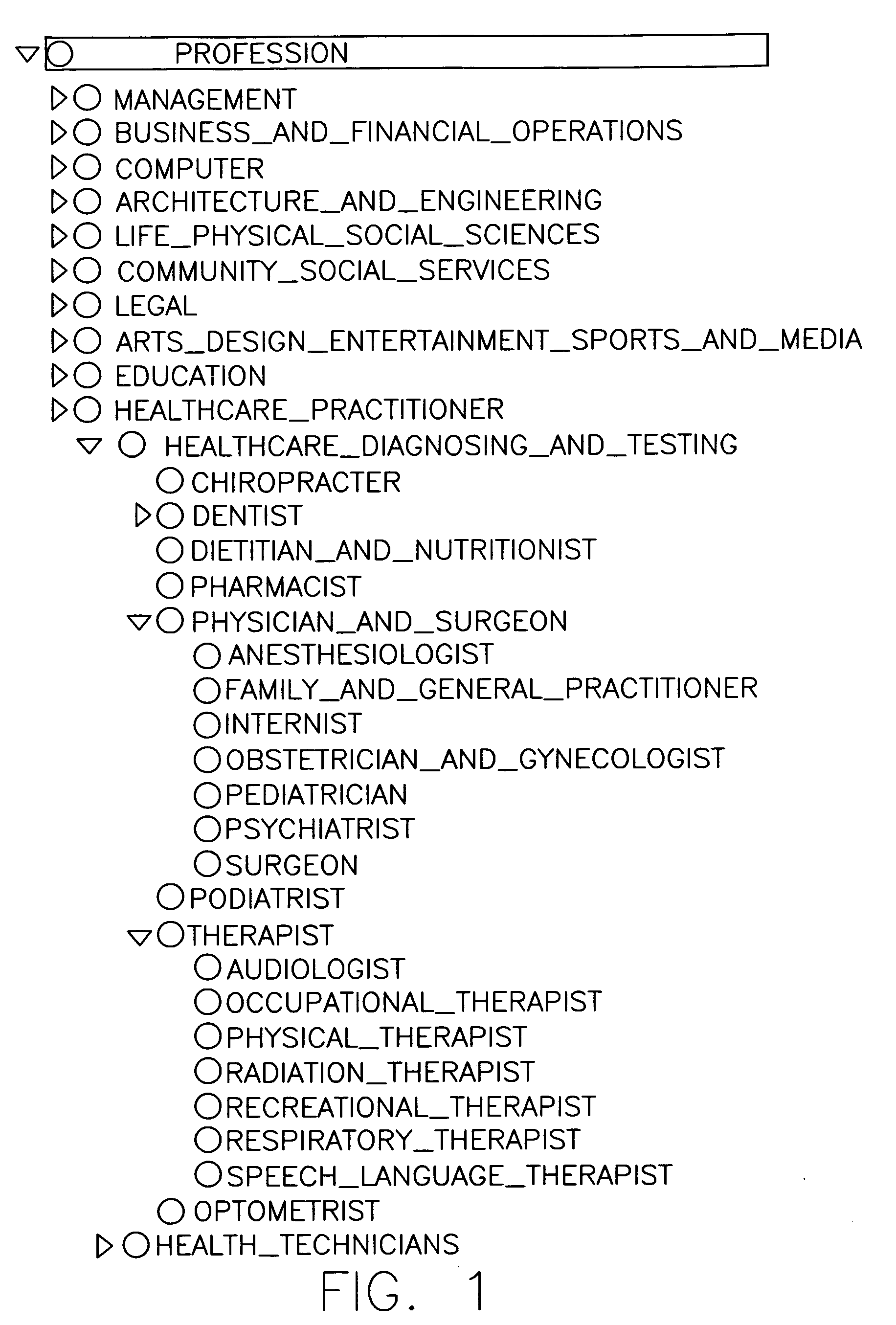

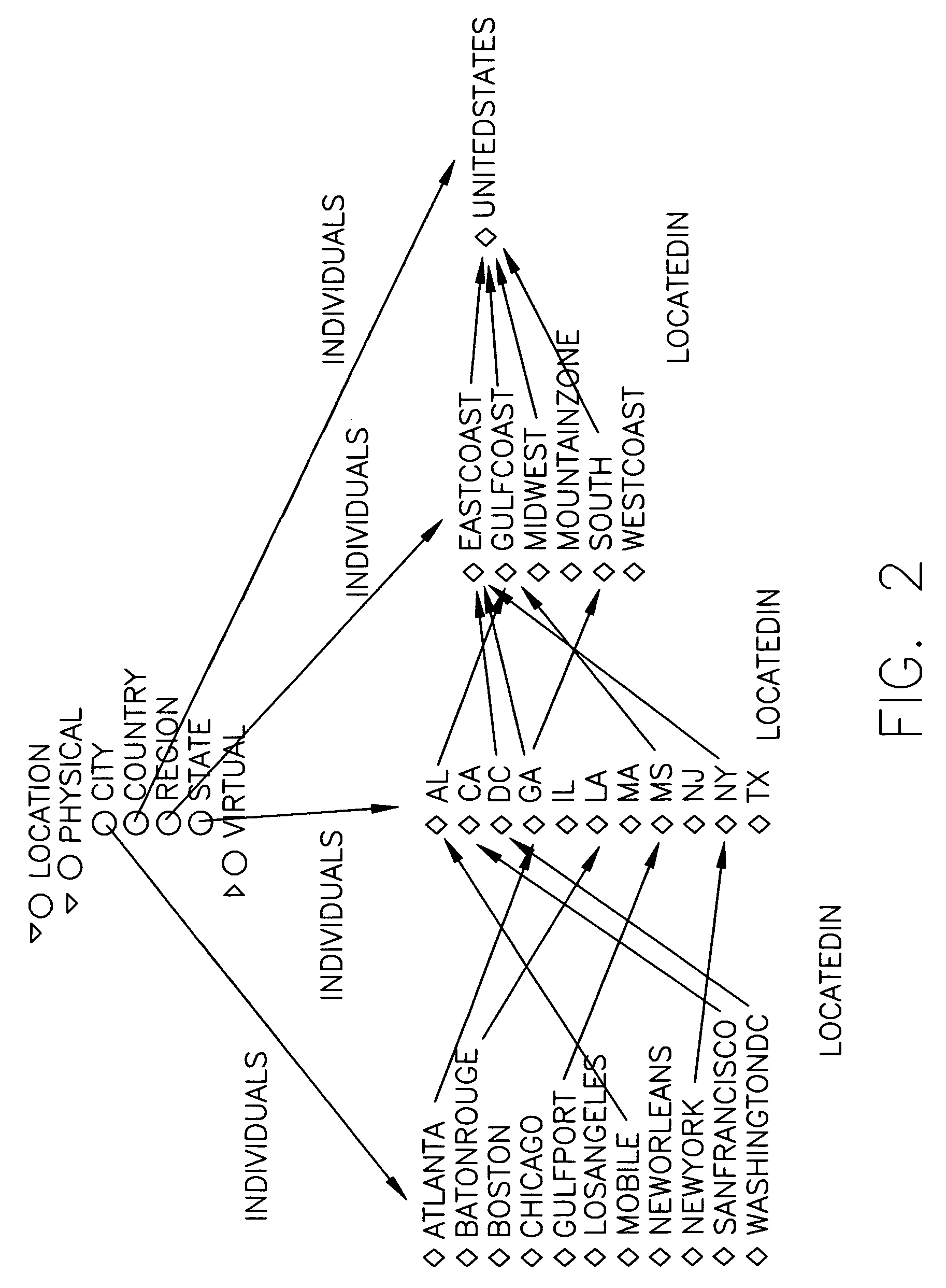

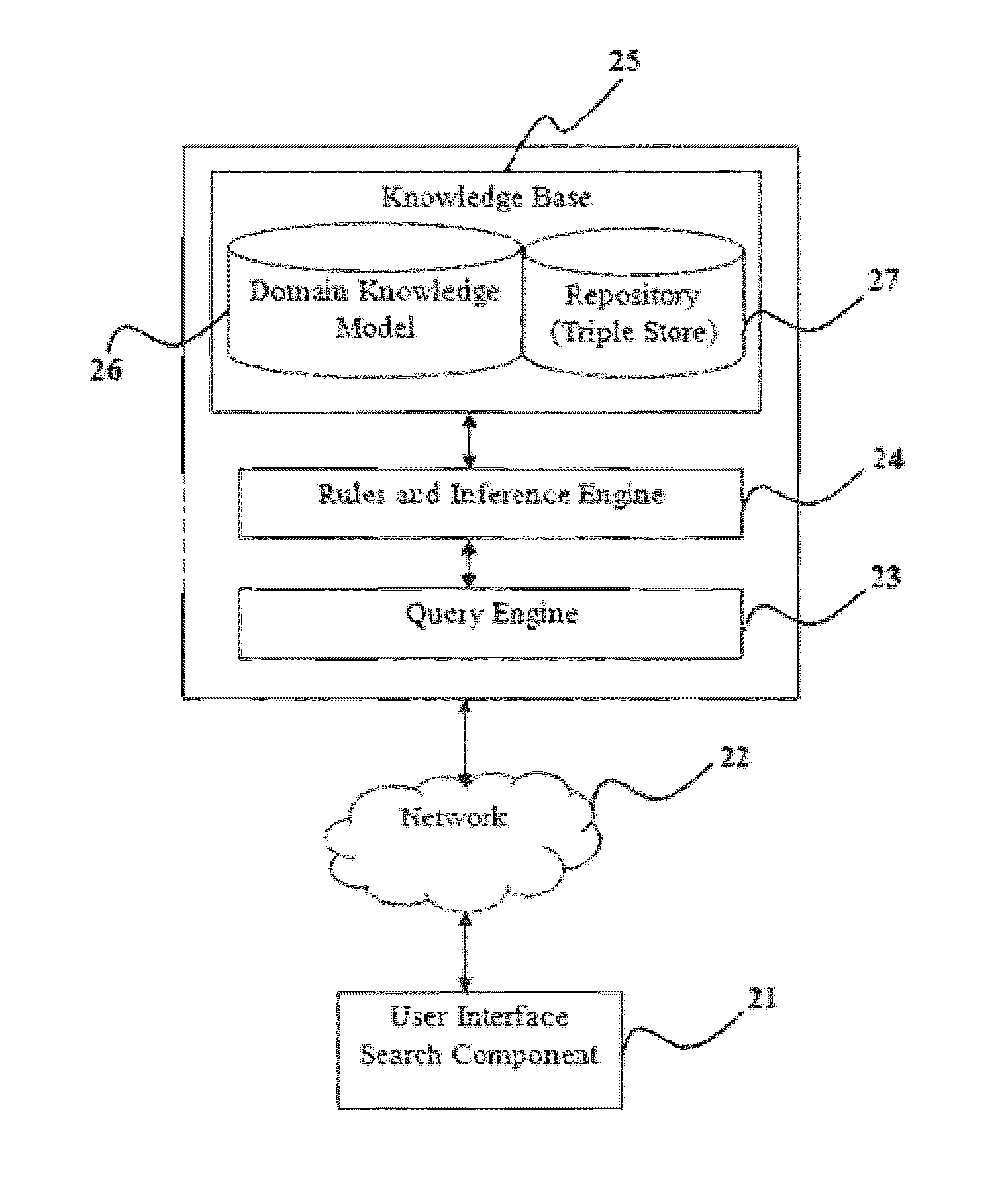

In the field of relational database management, a system is provided that uses both the data in a relational database and domain knowledge in ontologies to return semantically relevant results to a user's query. Broadly contemplated herein, in essence, is a system that uses the domain knowledge contained in ontologies to bridge the semantic gap between the queries users want to express and the queries the database can answer. In accordance with a preferred embodiment of the present invention, such a system extends relational databases with the ability to answer semantic queries expressed in SPARQL, an emerging Semantic Web query language. In particular, users may express their queries in SPARQL, based on a semantic model of the data, and get back semantically relevant results. Also broadly contemplated herein are the definition of different categories of results that are semantically relevant to a user's query and the effective retrieval of such results.

Owner:IBM CORP
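
As a rough illustration of answering a query with ontology knowledge instead of literal matching, the following Python sketch expands a requested concept into its ontology subconcepts before querying a relational table. It is not IBM's method and does not parse SPARQL; the `ONTOLOGY` dictionary, the `items` table, and the functions `descendants` and `semantic_select` are hypothetical, and an in-memory `sqlite3` database simply stands in for the relational store.

```python
import sqlite3

# Hypothetical ontology: each concept maps to its direct subconcepts.
ONTOLOGY = {
    "Vehicle": ["Car", "Truck"],
    "Car": ["SportsCar"],
    "Truck": [],
    "SportsCar": [],
}


def descendants(concept: str) -> list:
    """Return the concept together with all of its transitive subconcepts."""
    found, stack = [], [concept]
    while stack:
        current = stack.pop()
        if current not in found:
            found.append(current)
            stack.extend(ONTOLOGY.get(current, []))
    return found


def semantic_select(conn: sqlite3.Connection, concept: str) -> list:
    """Return names of rows typed with the concept or any of its subconcepts."""
    concepts = descendants(concept)
    placeholders = ", ".join("?" for _ in concepts)
    rows = conn.execute(
        f"SELECT name FROM items WHERE type IN ({placeholders}) ORDER BY name",
        concepts,
    )
    return [name for (name,) in rows]


# Toy relational data; "bike" has a type that is outside the ontology.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (name TEXT, type TEXT)")
conn.executemany(
    "INSERT INTO items VALUES (?, ?)",
    [("roadster", "SportsCar"), ("sedan", "Car"), ("hauler", "Truck"), ("bike", "Bicycle")],
)
print(semantic_select(conn, "Vehicle"))  # ['hauler', 'roadster', 'sedan']
```

A literal `WHERE type = 'Vehicle'` would return no rows here; the ontology expansion is what makes the subclass instances count as relevant results.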

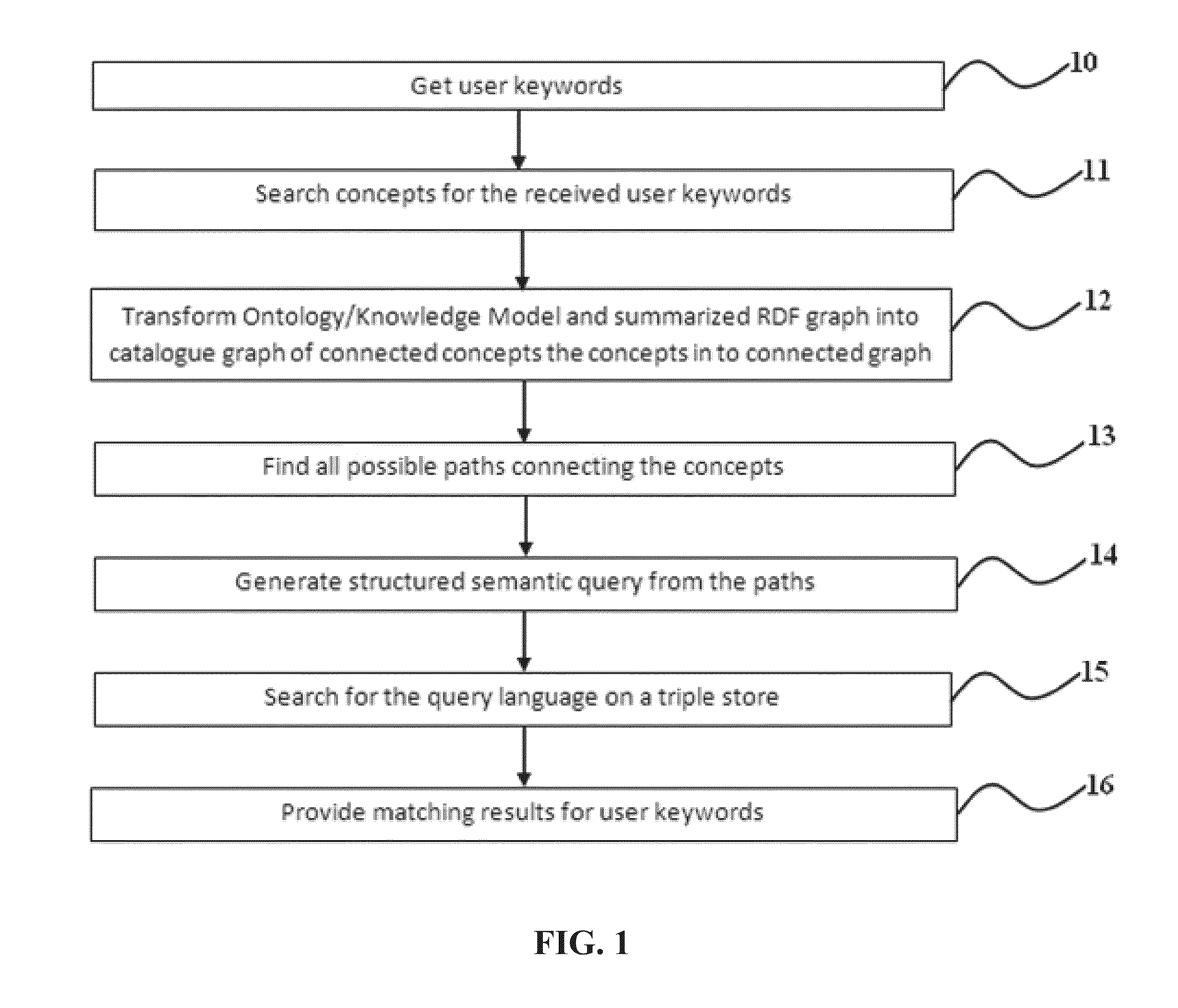

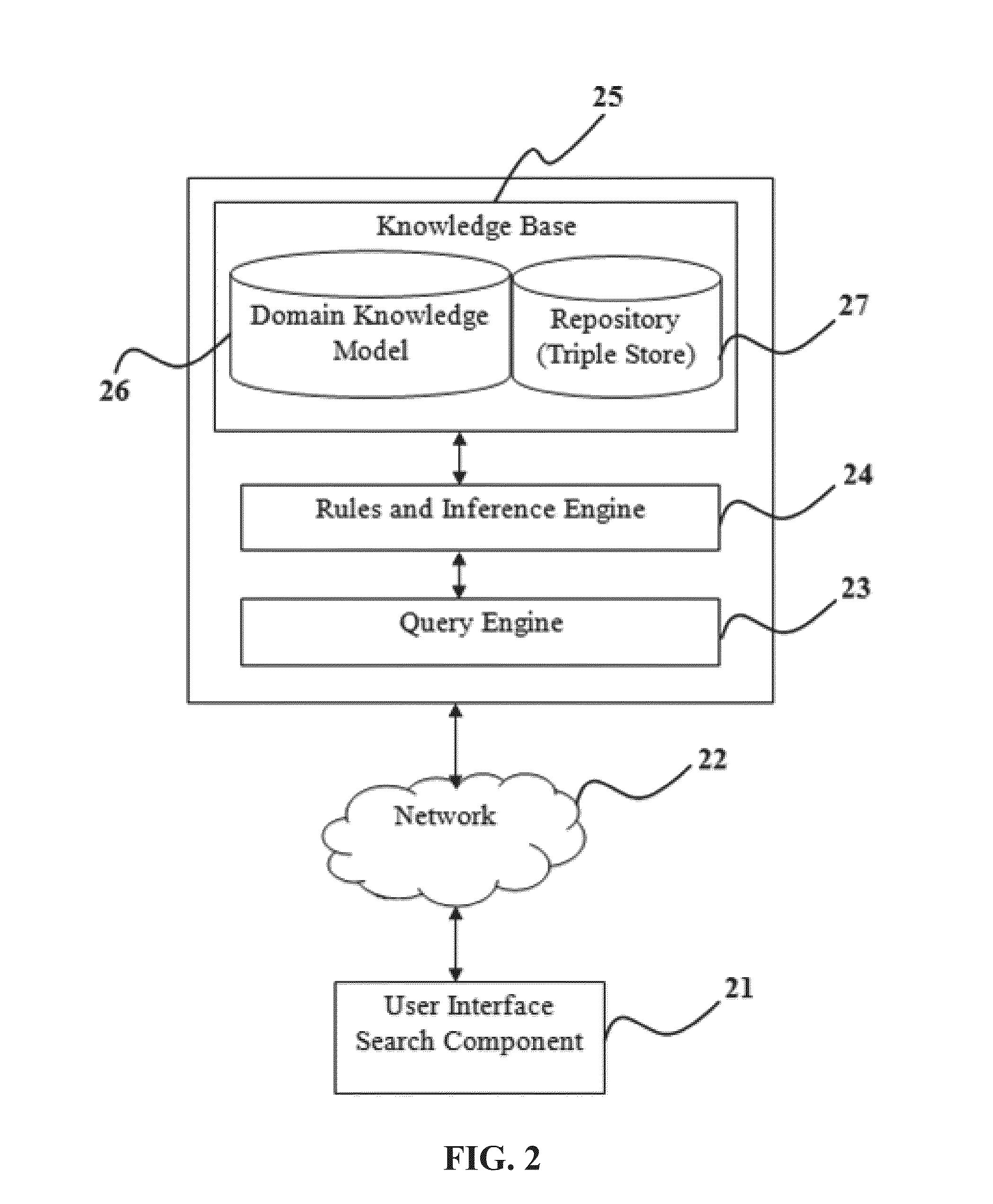

Method and system for translating user keywords into semantic queries based on a domain vocabulary

Status: Active | Publication: US20140379755A1 | Tags: Improve relevance; Digital data information retrieval; Digital data processing details; Semantic gap; Word list

The embodiments of the present invention provide a computer-implemented method and system for translating user keywords into semantic queries based on a domain vocabulary. The system receives the user keywords and searches for the corresponding concepts. The concepts are transformed into a connected graph. The user keywords are translated into precise access paths based on the relationships described in conceptual entity-relationship models, and these paths are then converted into logic-based queries. This bridges the semantic gap between user keywords and logic-based structured queries. It enables users to interact with the semantic system, with the information they seek articulated in a structured query language. It improves the relevance of search results by using semantic technology to drive the mechanics of the search solution.

Owner:INFOSYS LTD
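
A minimal sketch of the keyword-to-query pipeline described above, under loud assumptions: the domain vocabulary (`VOCABULARY`), the conceptual entity-relationship model (`RELATIONSHIPS`), and all entity and column names are hypothetical; the access path is taken as the shortest breadth-first path between the first and last matched concepts; and the "logic-based query" is rendered as a plain SQL join. It is not Infosys's implementation.

```python
from collections import deque

# Hypothetical domain vocabulary: user keyword -> concept (entity) name.
VOCABULARY = {
    "client": "Customer", "customer": "Customer",
    "order": "Purchase", "purchase": "Purchase",
    "item": "Product", "product": "Product",
}

# Hypothetical conceptual ER model: (entity, entity) -> join condition.
RELATIONSHIPS = {
    ("Customer", "Purchase"): "Customer.id = Purchase.customer_id",
    ("Purchase", "Product"): "Purchase.product_id = Product.id",
}


def neighbors(entity):
    """Entities directly related to `entity` in the ER model (either direction)."""
    for a, b in RELATIONSHIPS:
        if a == entity:
            yield b
        elif b == entity:
            yield a


def access_path(start, goal):
    """Breadth-first search for the shortest chain of related entities."""
    queue, seen = deque([[start]]), {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in neighbors(path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def join_condition(a, b):
    return RELATIONSHIPS.get((a, b)) or RELATIONSHIPS[(b, a)]


def keywords_to_sql(keywords):
    """Translate keywords into a join query over the connecting access path."""
    concepts = [VOCABULARY[k.lower()] for k in keywords if k.lower() in VOCABULARY]
    if not concepts:
        raise ValueError("no keyword matched the domain vocabulary")
    path = access_path(concepts[0], concepts[-1])
    if path is None:
        raise ValueError("concepts are not connected in the ER model")
    conditions = [join_condition(a, b) for a, b in zip(path, path[1:])]
    sql = "SELECT * FROM " + ", ".join(path)
    if conditions:
        sql += " WHERE " + " AND ".join(conditions)
    return sql


print(keywords_to_sql(["client", "item"]))
```

Here "client" and "item" never co-occur in one table, so the intermediate `Purchase` entity is pulled in by the path search; that join is the part a keyword search alone would miss.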

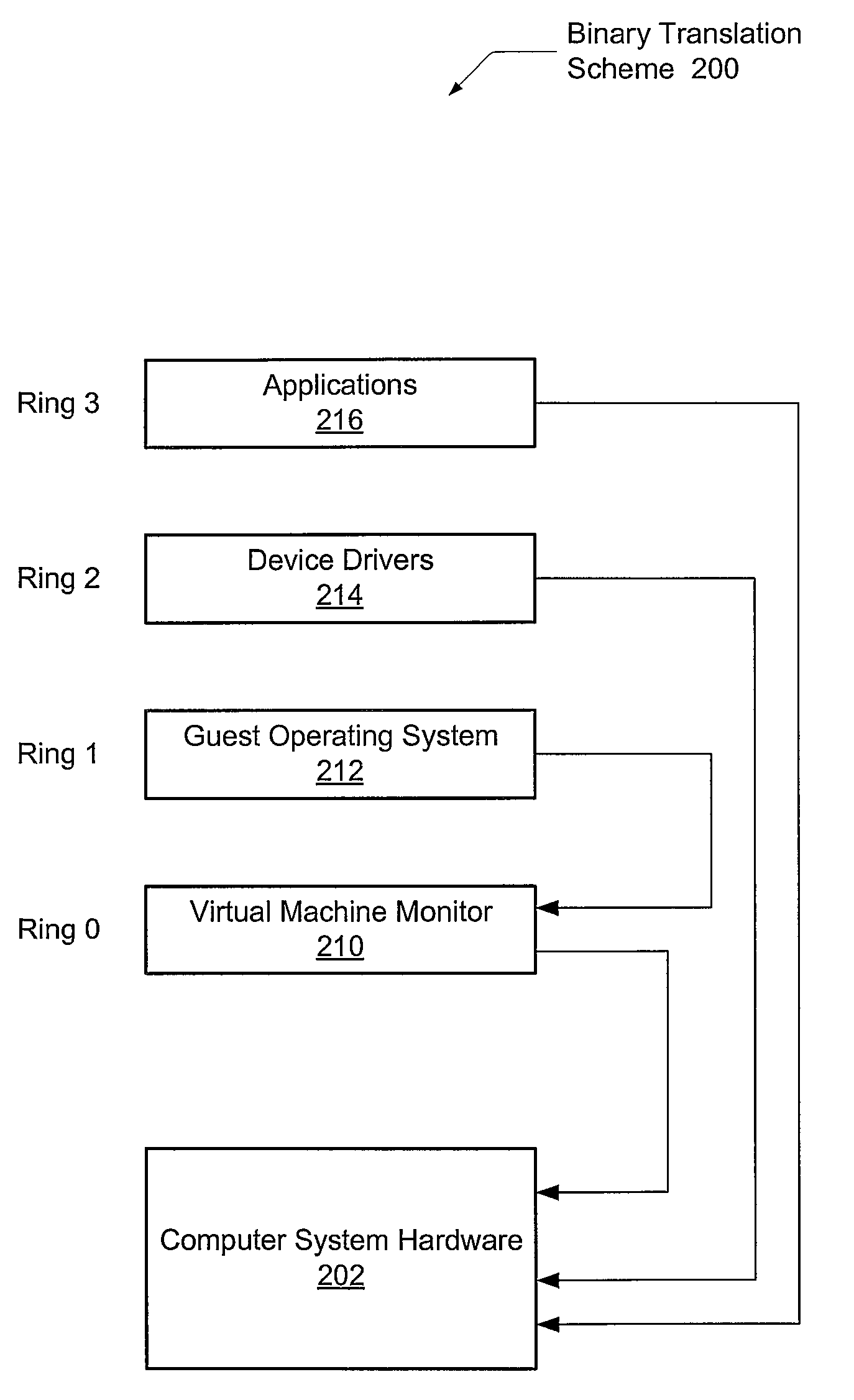

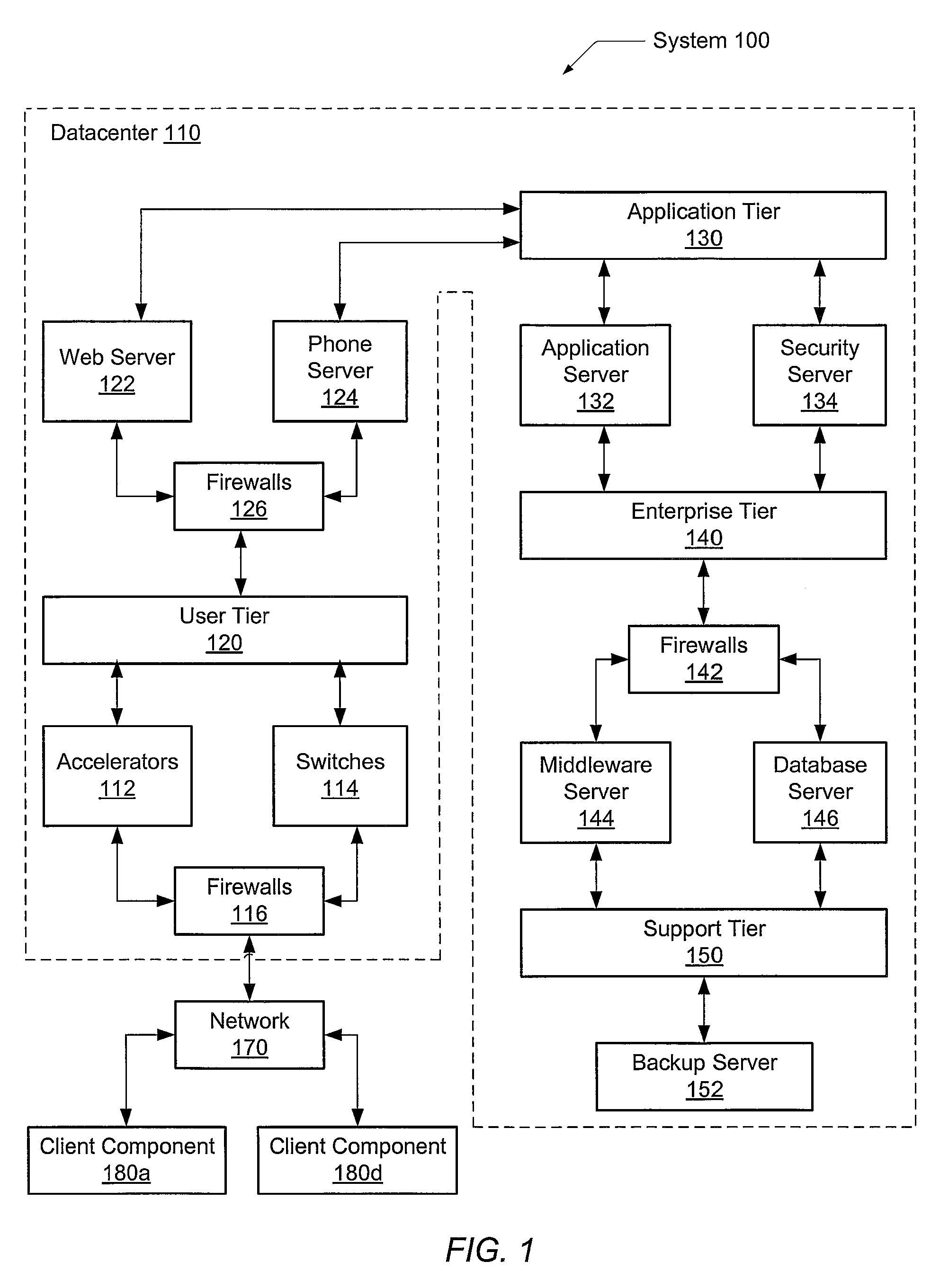

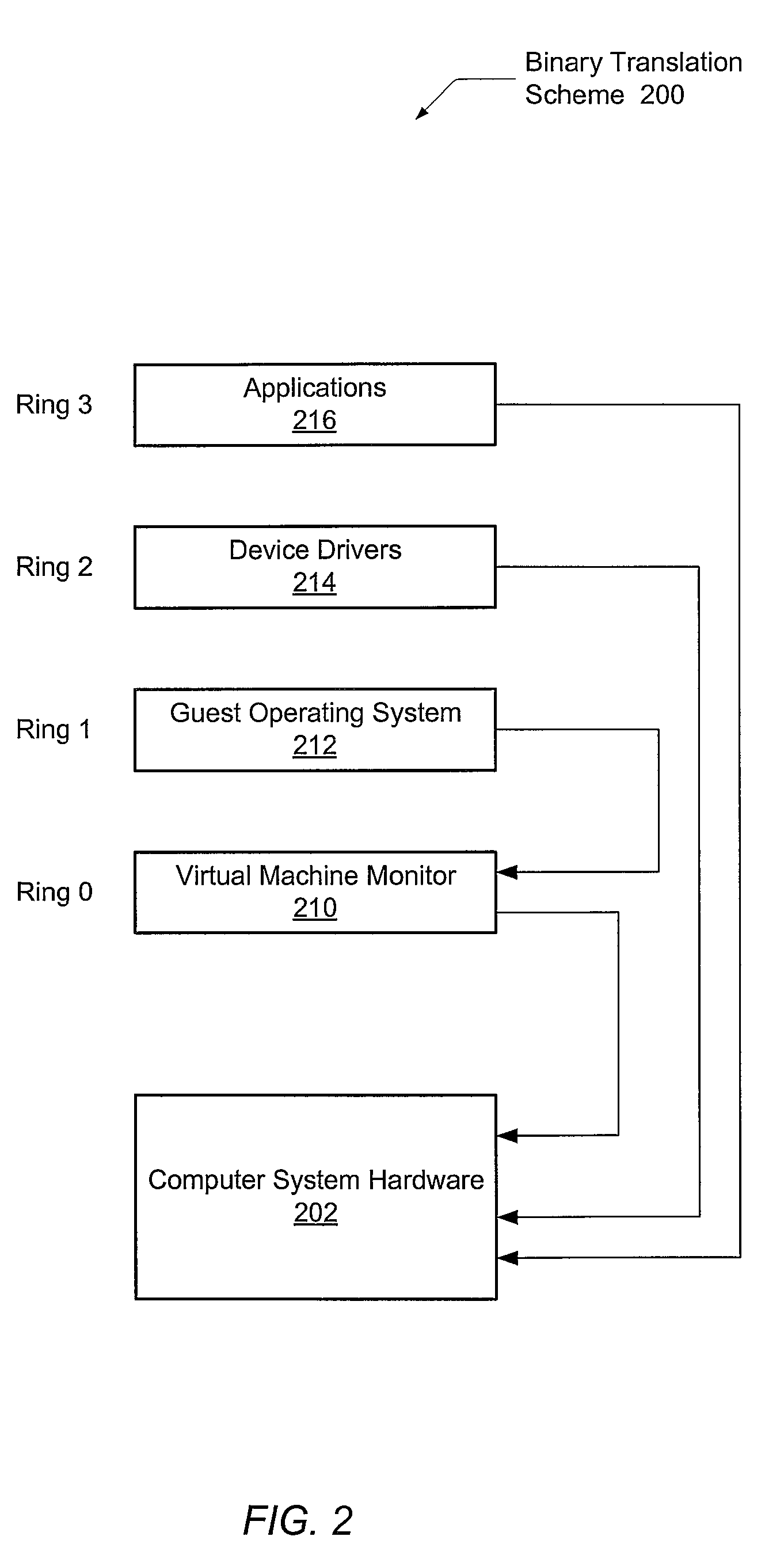

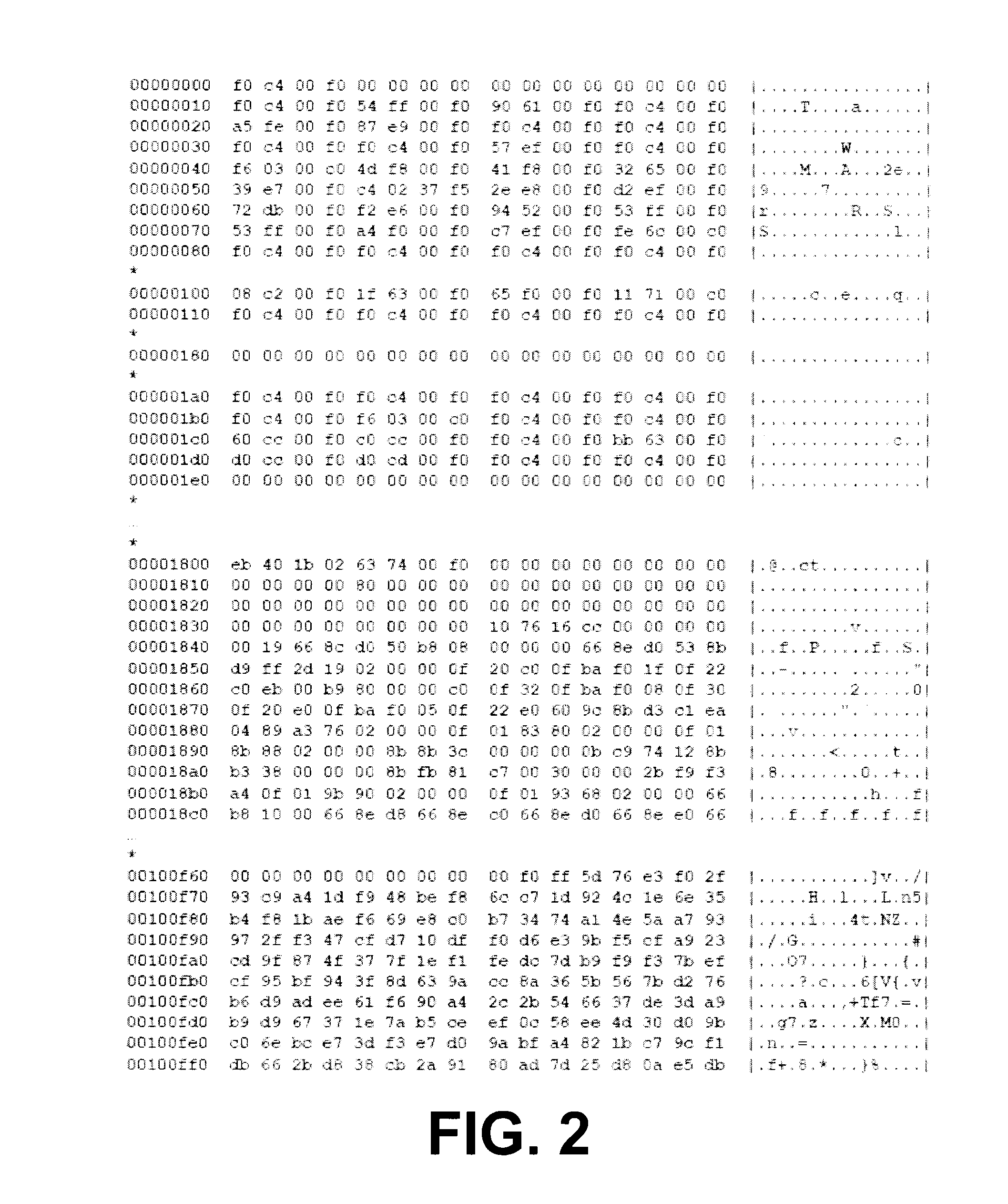

Security driver for hypervisors and operating systems of virtualized datacenters

A system and method for efficient security protocols in a virtualized datacenter environment are contemplated. In one embodiment, a system is provided comprising a hypervisor coupled to one or more protected virtual machines (VMs) and a security VM. Within a private communication channel, a split kernel loader provides an end-to-end communication between a paravirtualized security device driver, or symbiont, and the security VM. The symbiont monitors kernel-level activities of a corresponding guest OS, and conveys kernel-level metadata to the security VM via the private communication channel. Therefore, the well-known semantic gap problem is solved. The security VM is able to read all of the memory of a protected VM, detect locations of memory compromised by a malicious rootkit, and remediate any detected problems.

Owner:CA TECH INC

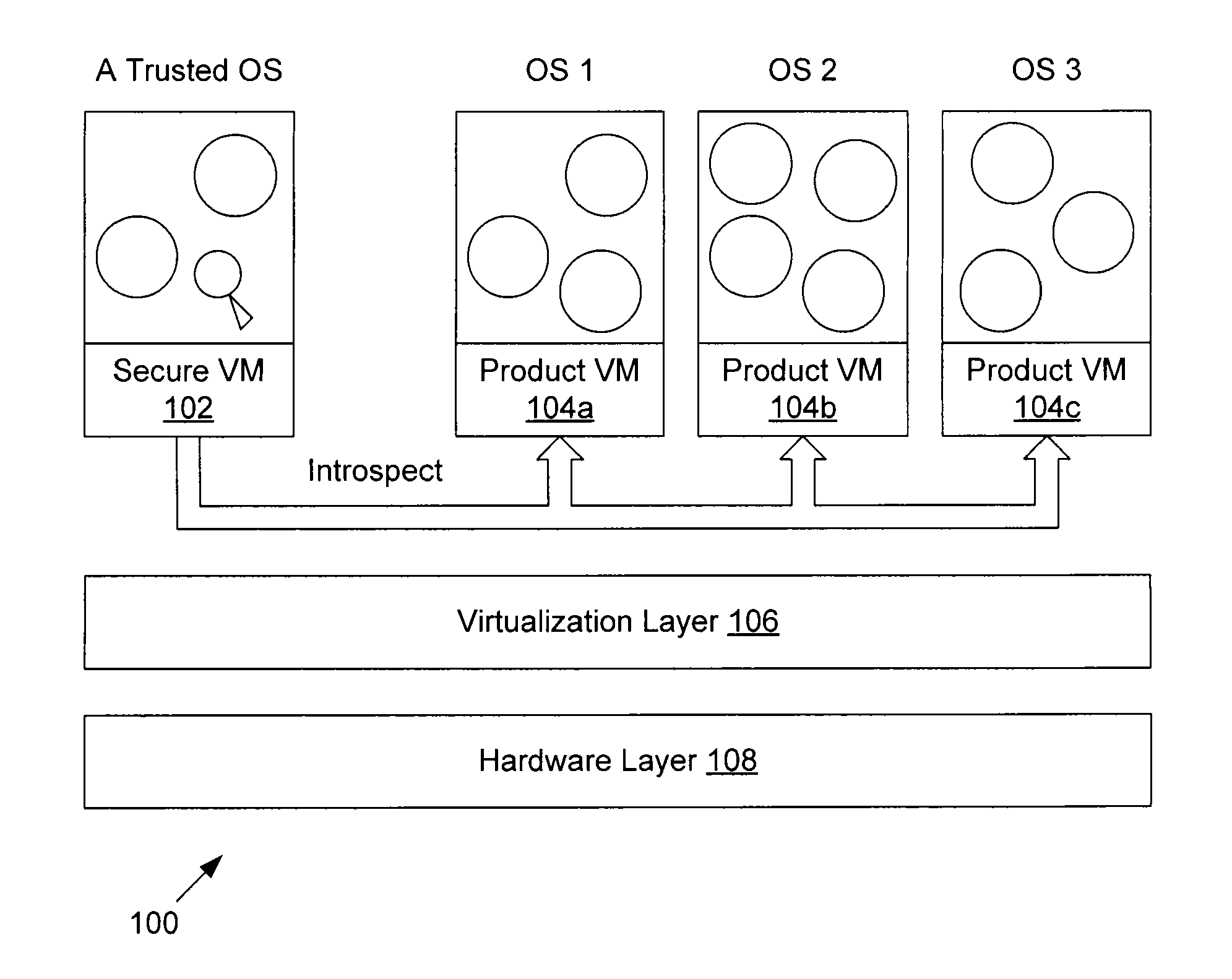

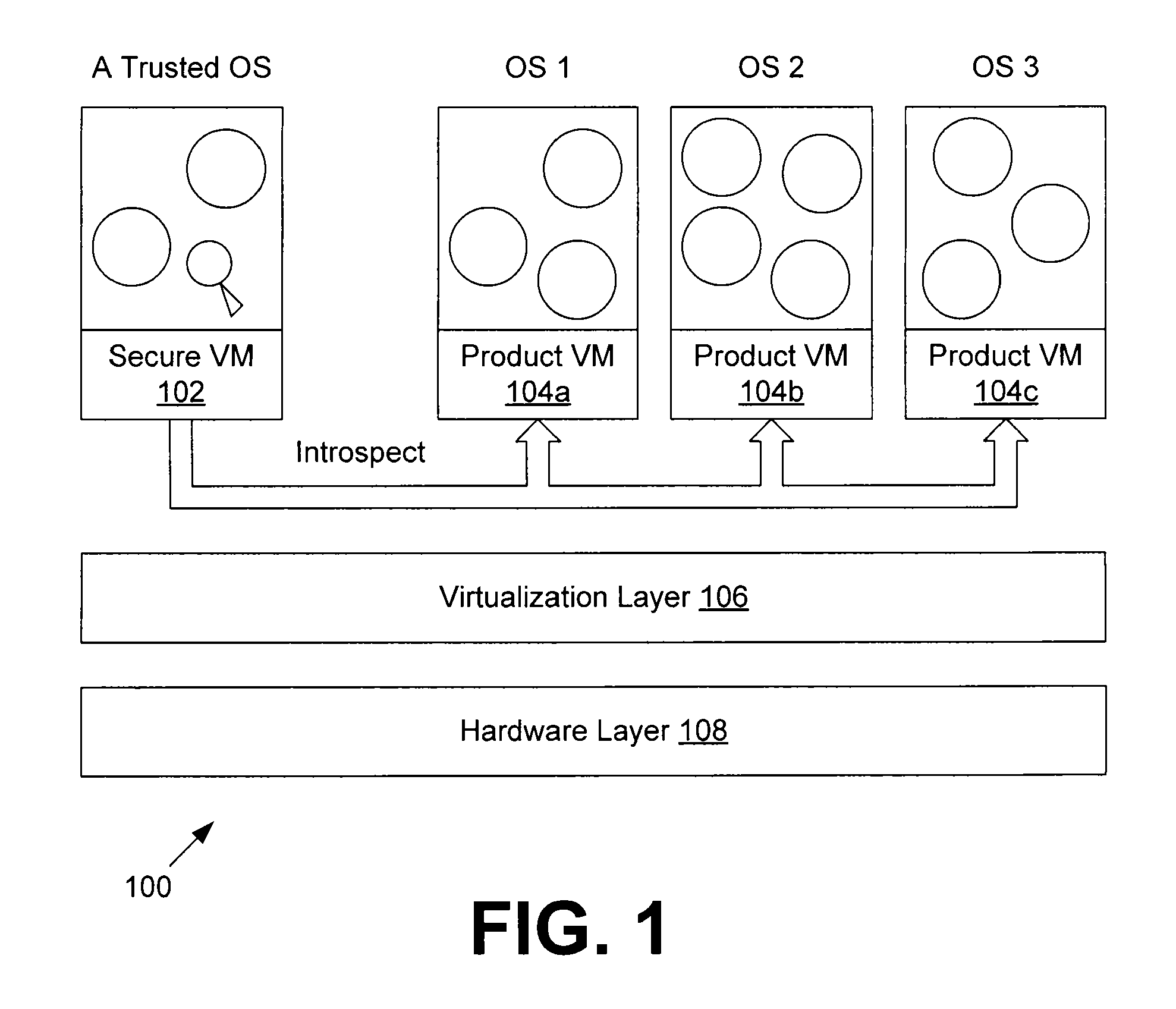

Automatically bridging the semantic gap in machine introspection

Status: Active | Publication: US20150033227A1 | Tags: Error detection/correction; Platform integrity maintenance; Semantic gap; Software engineering

Disclosed are various embodiments that facilitate automatically bridging the semantic gap in machine introspection. It may be determined that a program executed by a first virtual machine is requested to introspect a second virtual machine. A system call execution context of the program may be determined in response to determining that the program is requested to introspect the second virtual machine. Redirectable data in a memory of the second virtual machine may be identified based at least in part on the system call execution context of the program. The program may be configured to access the redirectable data. In various embodiments, the program may be able to modify the redirectable data, thereby facilitating configuration, reconfiguration, and recovery operations to be performed on the second virtual machine from within the first virtual machine.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST

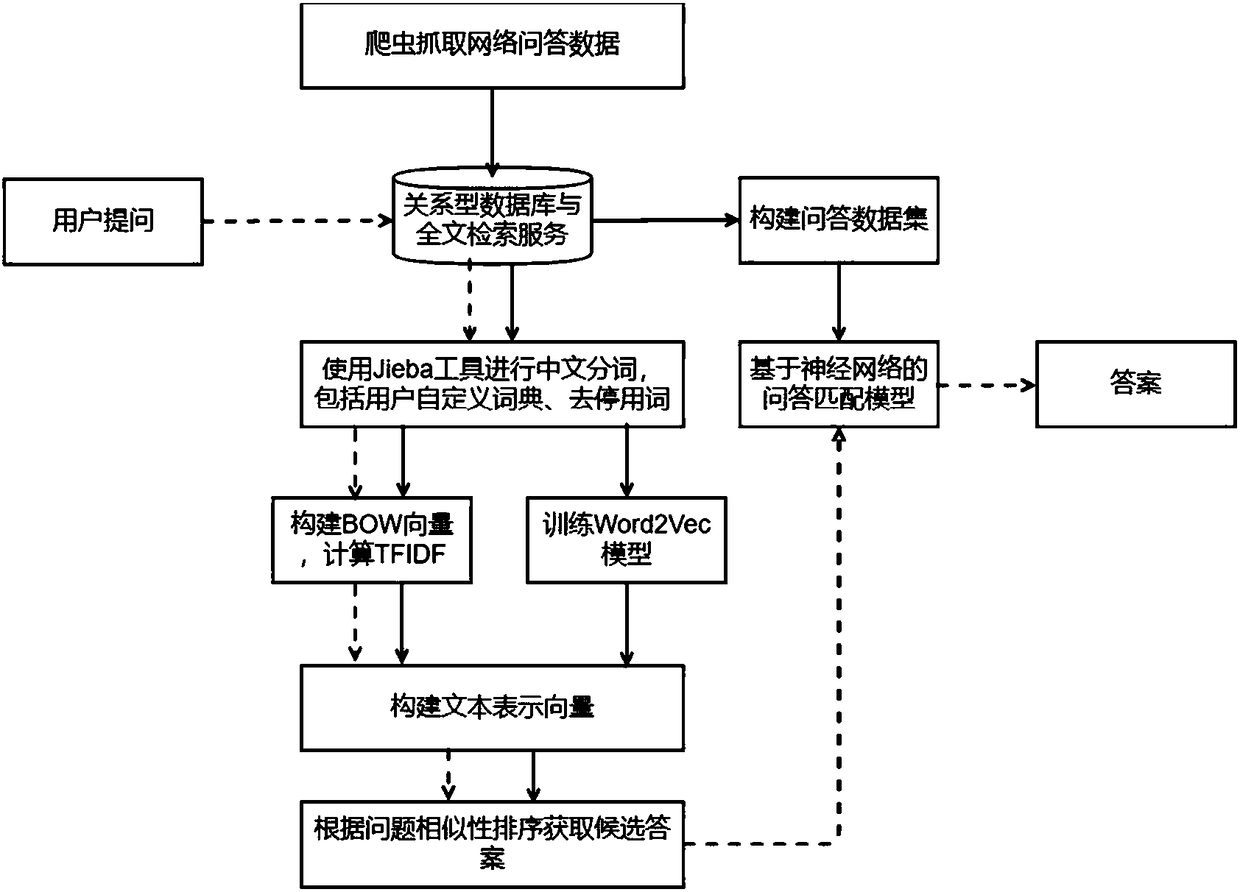

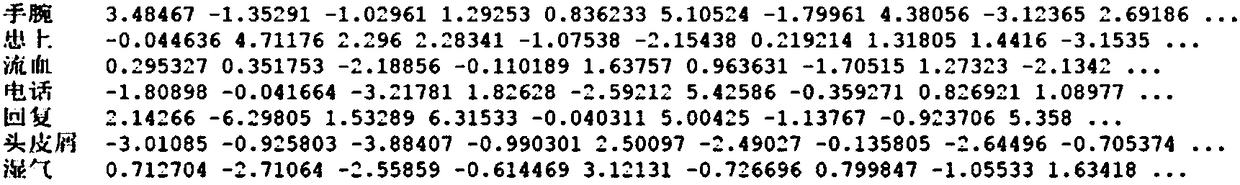

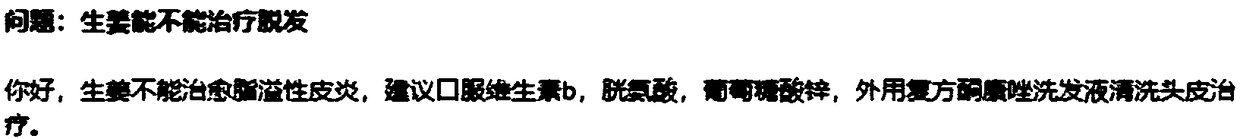

Automatic question-answering method based on deep learning

Status: Inactive | Publication: CN108345585A | Tags: Addressing the semantic gap; Improve accuracy; Semantic analysis; Neural learning methods; Website; Data source

The invention discloses an automatic question-answering method based on deep learning, which aims to provide users with an algorithm-based, fully automatic question-answering scheme. The method uses question-answer pairs crawled from websites as its data source and can answer questions of more complex forms. Building on traditional similar-question retrieval, the method uses a bag-of-words (BOW) model, a TF-IDF model, and a Word2Vec model to represent the text of questions as vectors, and re-ranks and filters similar questions by calculating the similarity between vectors. This introduces semantic knowledge, solves the semantic gap problem in traditional question retrieval, and improves the validity of candidate answers. In addition, the method uses a trained neural network model to score the match between a question and each candidate answer; the model automatically extracts high-level matching features between the question and the answers and automatically produces an answer. This improves the accuracy of the automatic question-answering system while reducing manual intervention and system development costs.

Owner:ZHEJIANG UNIV
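
The similar-question retrieval step can be sketched with TF-IDF vectors and cosine similarity. This Python sketch covers only the TF-IDF part (the BOW and Word2Vec representations and the neural answer-matching model are not shown); whitespace tokenization, the smoothed IDF formula, and the sample questions are assumptions, not details from the patent.

```python
import math
from collections import Counter


def tokenize(text):
    """Lower-case whitespace tokenization (a deliberate simplification)."""
    return text.lower().split()


def build_idf(docs):
    """Smoothed inverse document frequency for every term in the corpus."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))
    return {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}


def tfidf(text, idf):
    """Sparse TF-IDF vector; terms unseen in the corpus are dropped."""
    tf = Counter(tokenize(text))
    return {term: count * idf[term] for term, count in tf.items() if term in idf}


def cosine(u, v):
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_similar(query, questions):
    """Indices of stored questions, most similar to the query first."""
    idf = build_idf(questions)
    query_vec = tfidf(query, idf)
    scores = [cosine(query_vec, tfidf(q, idf)) for q in questions]
    return sorted(range(len(questions)), key=lambda i: (-scores[i], i))


QUESTIONS = [
    "how do i reset my password",
    "how do i change my email address",
    "what is the refund policy",
]
print(rank_similar("reset password", QUESTIONS))  # [0, 1, 2]
```

The candidate answers attached to the top-ranked stored questions would then be passed to the neural scoring stage.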

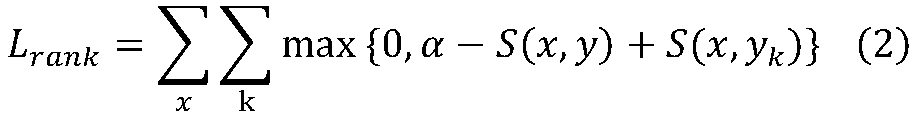

Automatic image annotation method based on deep learning and canonical correlation analysis

The invention discloses an automatic image annotation method based on deep learning and canonical correlation analysis (CCA). The method includes: using a deep Boltzmann machine to extract high-level feature vectors of images and annotation words, fitting the annotation-word samples with multiple Bernoulli distributions and the image features with Gaussian distributions; performing canonical correlation analysis on the high-level features of the images and the annotation words; calculating the Mahalanobis distance between each image to be annotated and the training-set images in the canonical variable space, and performing a distance-weighted calculation to obtain high-level annotation-word features; and generating image annotation words through mean-field estimation. The deep Boltzmann machine comprises an I-DBM and a T-DBM, which extract the high-level feature vectors of the images and of the annotation words, respectively. The I-DBM and the T-DBM each comprise, from bottom to top, a visible layer, a first hidden layer, and a second hidden layer. The method effectively solves the 'semantic gap' problem in image semantic annotation and increases annotation accuracy.

Owner:NAVAL AVIATION UNIV

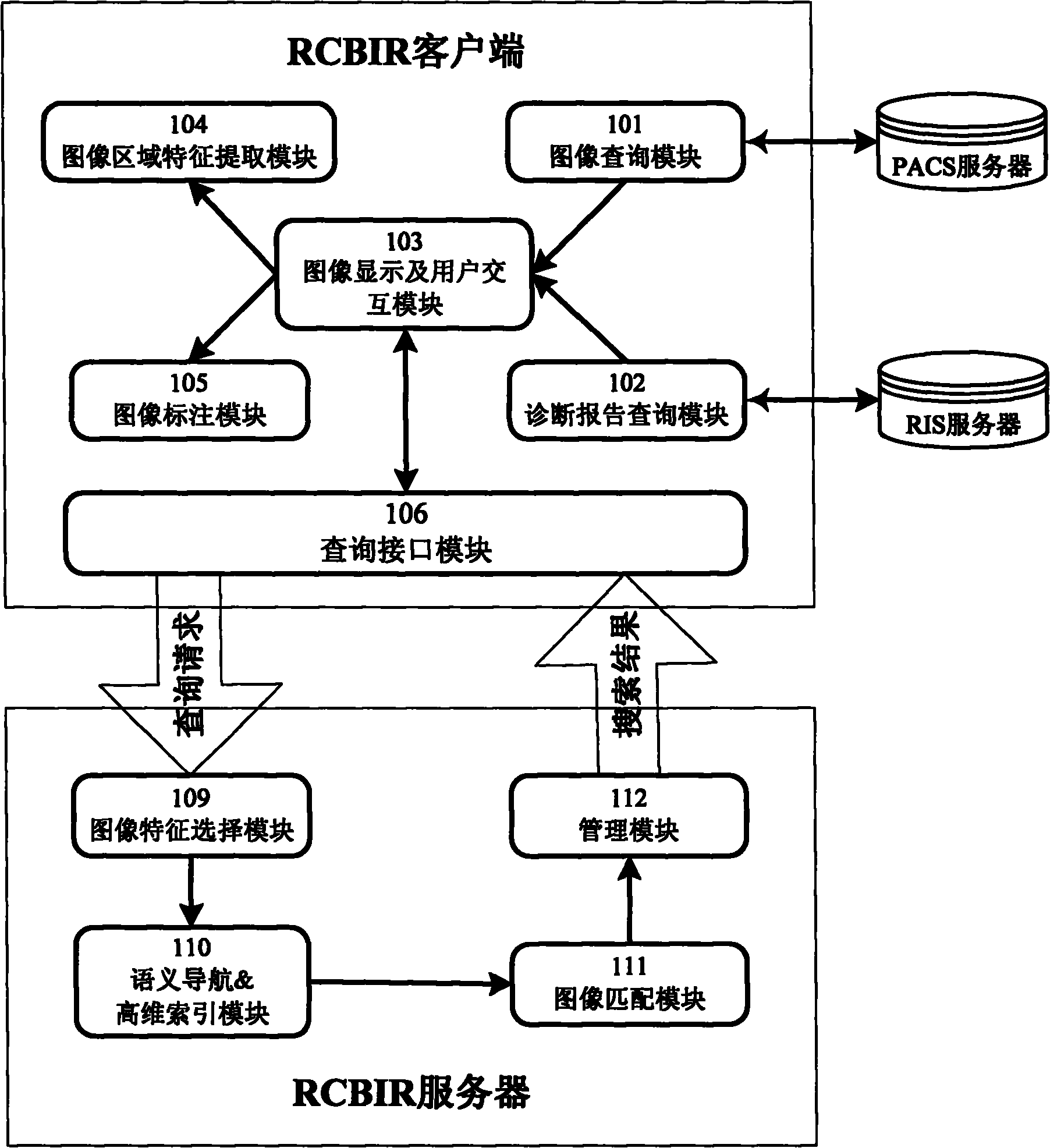

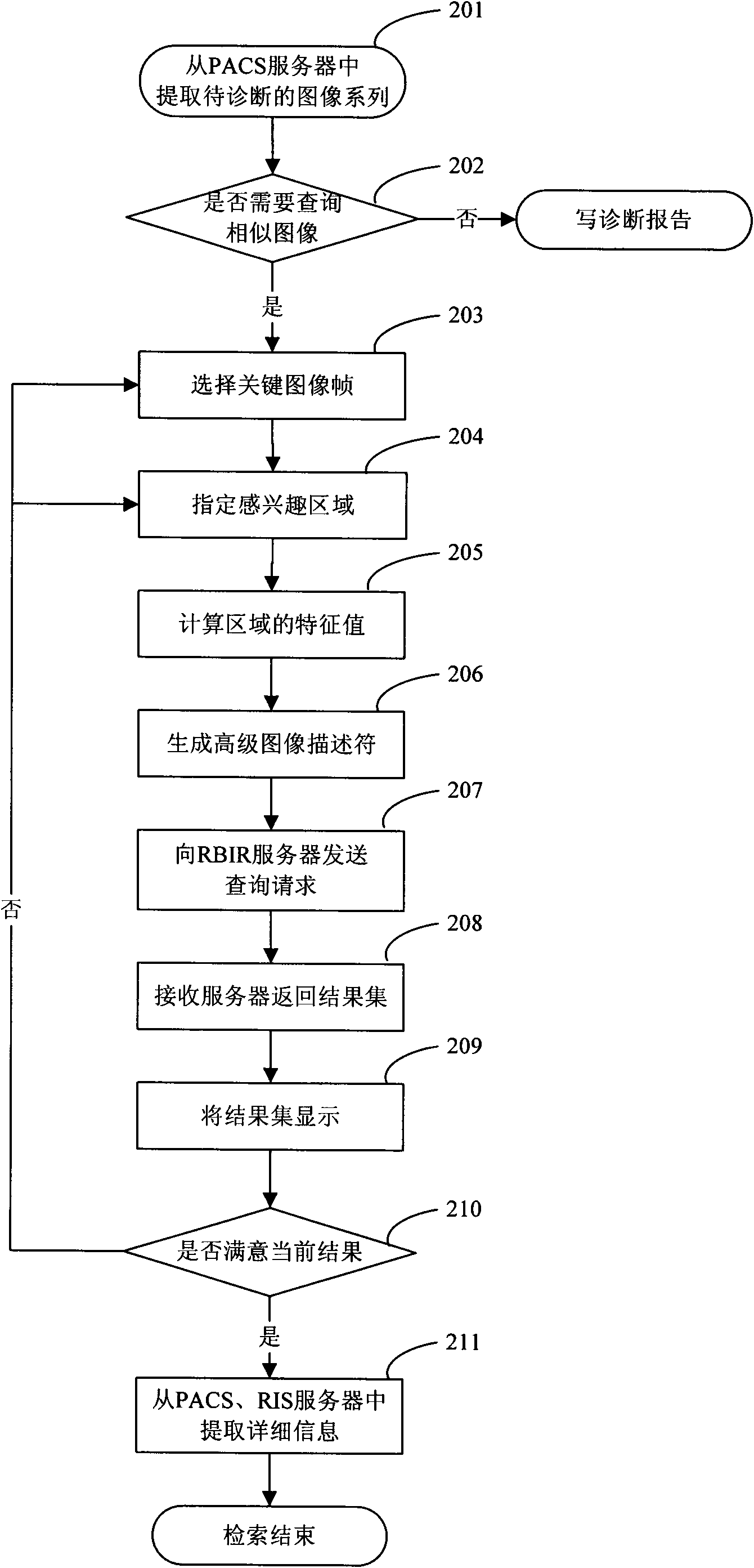

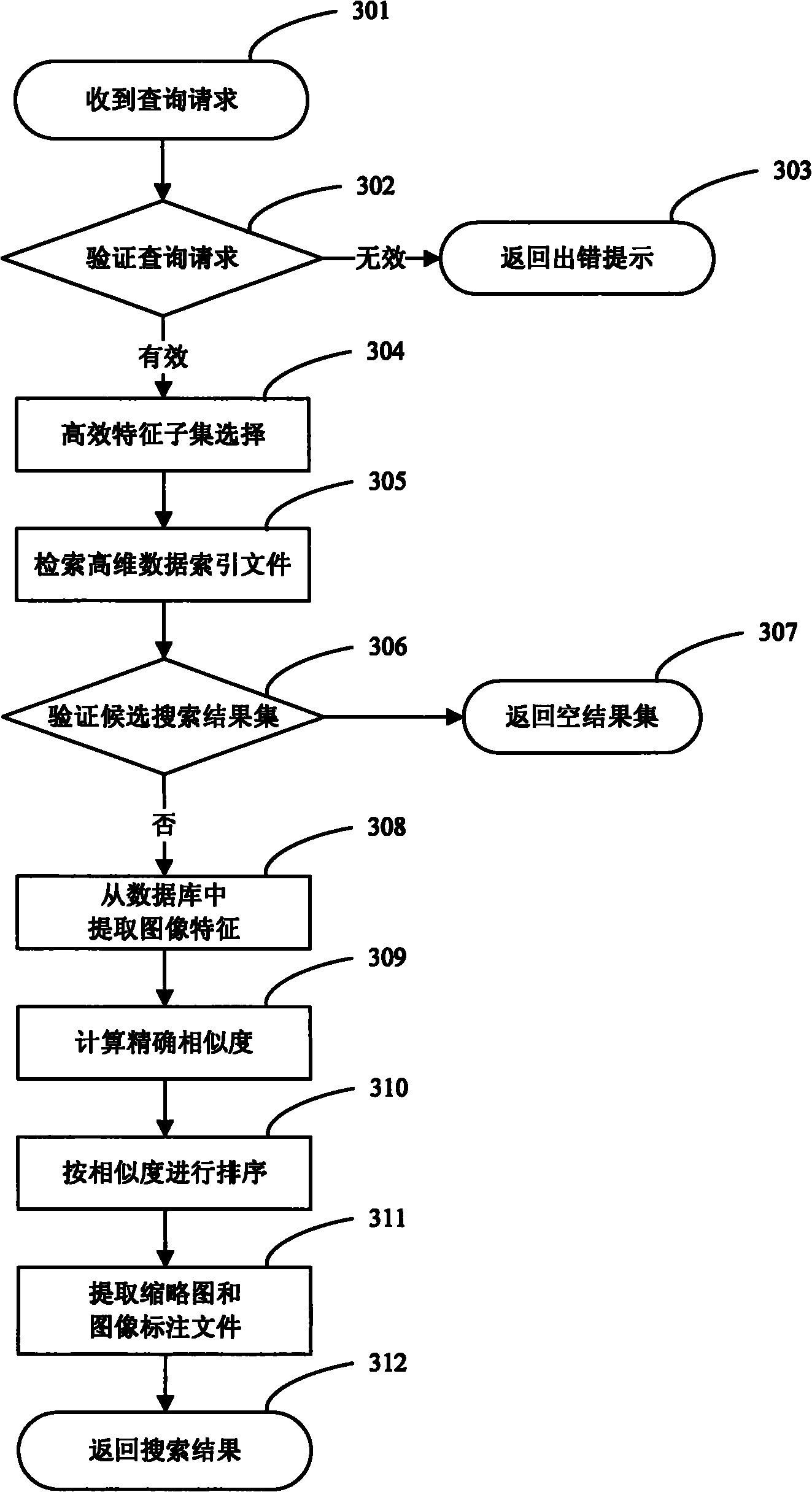

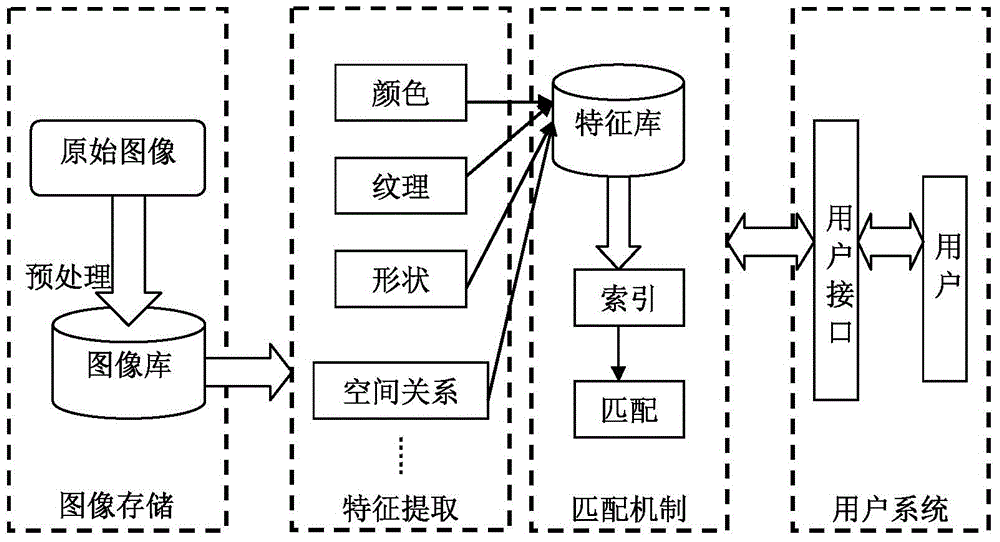

Retrieval system based on multi-lesion region characteristic and oriented to medical image database

Status: Inactive | Publication: CN102156715A | Tags: Precise positioning; Quick search; Special data processing applications; Medical imaging data; Semantic gap

The invention discloses a retrieval system for medical image databases based on multi-lesion region features. Each image is described comprehensively by high-level image descriptors, including region content, pathological manifestations, and anatomical location information, which enables fast localization and matching of multiple lesion regions. By combining semantic navigation with high-dimensional data indexing, the system enables fast retrieval over large-scale image feature values, improves retrieval efficiency, and alleviates, to a certain degree, the 'semantic gap' found in traditional image retrieval. The system makes full use of the historical images and diagnostic data in a PACS (picture archiving and communication system) database, can serve as an effective tool for computer-aided diagnosis, and can be widely applied in clinical medicine, research, and teaching.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

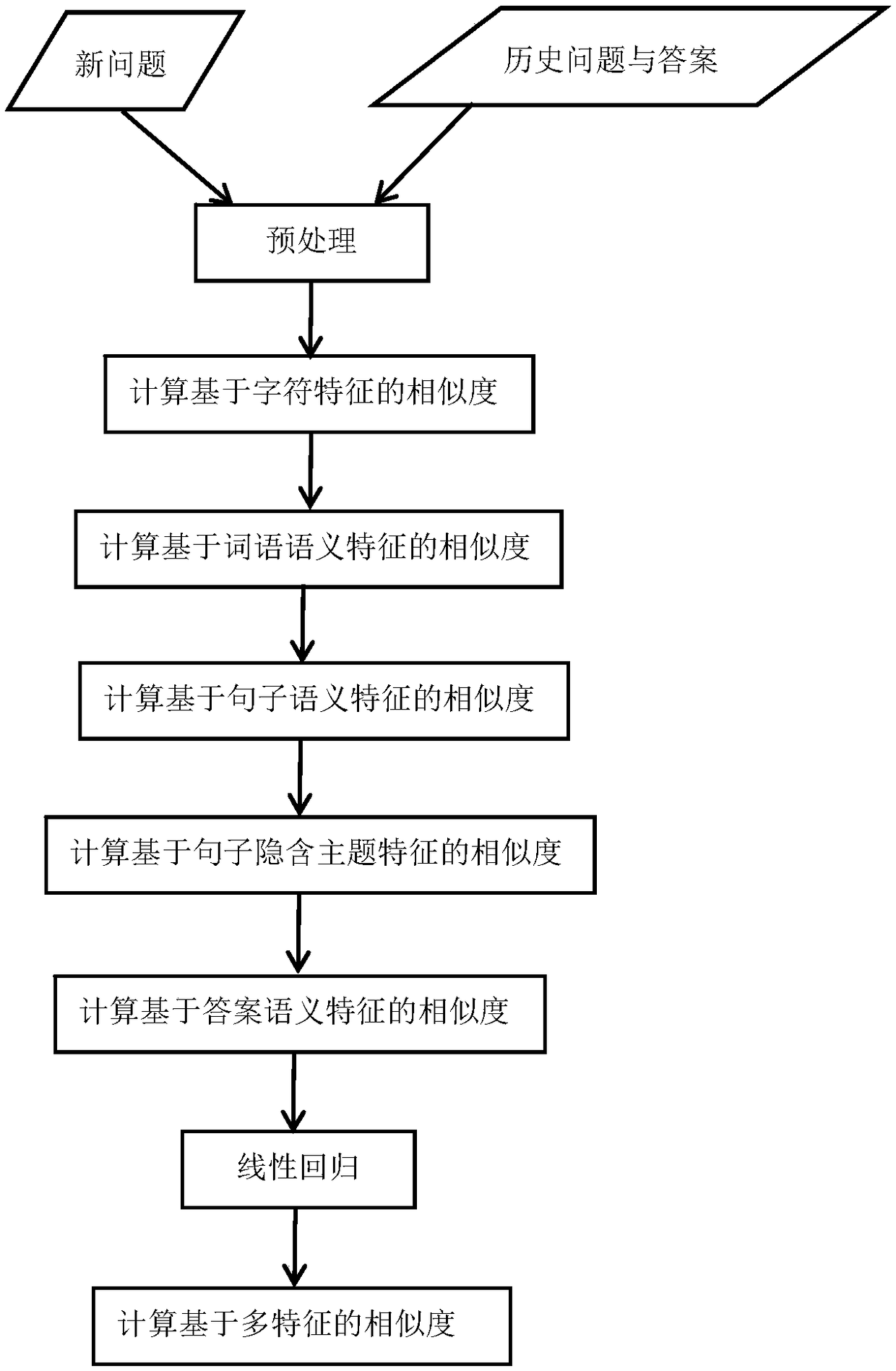

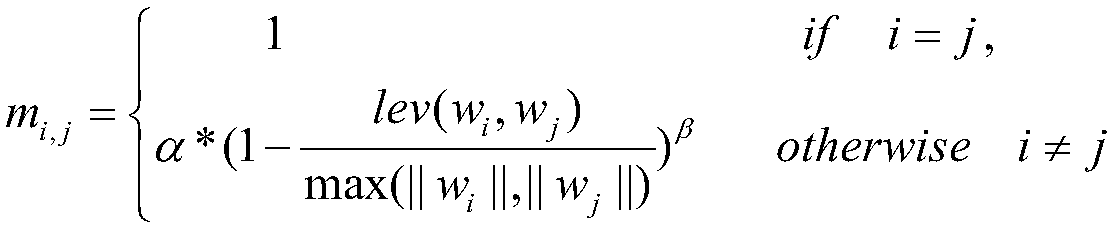

Problem similarity calculation method based on a plurality of features

Status: Active | Publication: CN109344236A | Tags: Increase diversity; Improve generalization ability; Digital data information retrieval; Semantic analysis; Semantic gap; Semantic feature

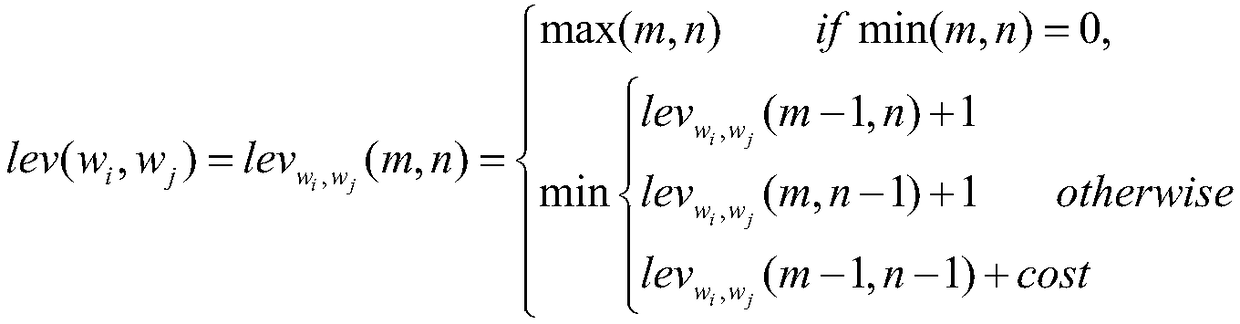

The invention discloses a method for calculating question similarity based on multiple features. For a newly input question sentence, the method compares it with the stored historical questions and their corresponding answers, and calculates the similarity between the new question and each historical question based on character features, word-level semantic features, sentence-level semantic features, latent topic features of sentences, and semantic features of answers. The final similarity is the sum of these five similarities, each multiplied by its corresponding weight; the weights are trained by linear regression. Using multiple features increases the diversity of sample attributes and improves the generalization ability of the model. In addition, a soft cosine measure is used to fuse TF-IDF with edit distance, word semantics, and other information, which overcomes the semantic gap between words and improves the accuracy of the similarity calculation.

Owner:JINAN UNIVERSITY
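
The following sketch shows two ingredients of the method under stated assumptions: a normalized edit-distance similarity (one possible character-level feature) and the final weighted combination of the five similarities. The other four feature scores and all weights in the usage example are hypothetical placeholders; in the patent the weights are learned by linear regression, which is not shown, and the soft cosine fusion is also omitted.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert/delete/substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def edit_similarity(a: str, b: str) -> float:
    """Map edit distance to [0, 1]; 1.0 means identical strings."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def combined_similarity(scores, weights):
    """Final similarity as a weighted sum of per-feature similarities."""
    if len(scores) != len(weights):
        raise ValueError("need exactly one weight per feature score")
    return sum(s * w for s, w in zip(scores, weights))


# Hypothetical per-feature similarities between a new and a stored question:
# character, word semantics, sentence semantics, latent topic, answer semantics.
scores = [edit_similarity("how to reset password", "how do i reset my password"),
          0.8, 0.7, 0.6, 0.5]
weights = [0.3, 0.2, 0.2, 0.15, 0.15]  # placeholder values, not trained weights
print(round(combined_similarity(scores, weights), 3))
```

Because the combination is linear, fitting the weights reduces to an ordinary least-squares problem over labeled question pairs, which is consistent with training them by linear regression.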

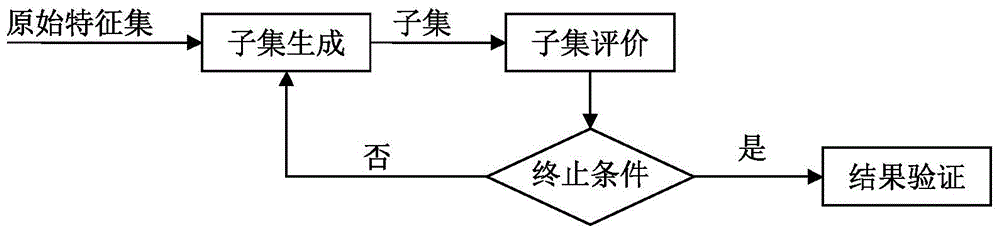

Image retrieval method based on object detection

Status: Active | Publication: CN107256262A | Tags: Reduce distractions; Improve accuracy; Character and pattern recognition; Special data processing applications; Semantic gap; Imaging feature

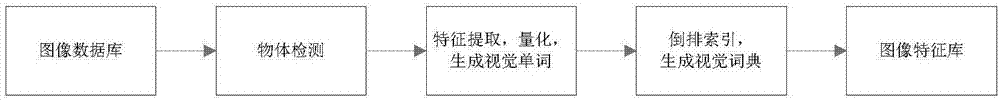

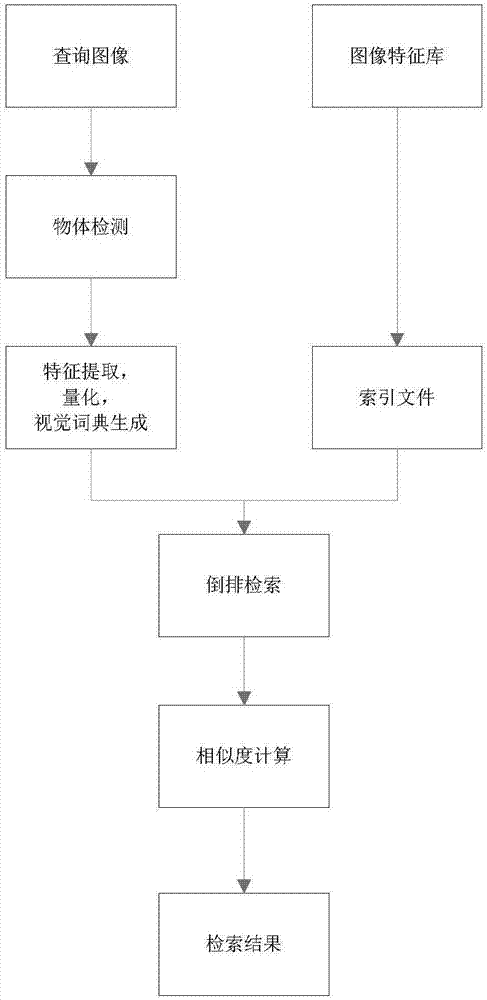

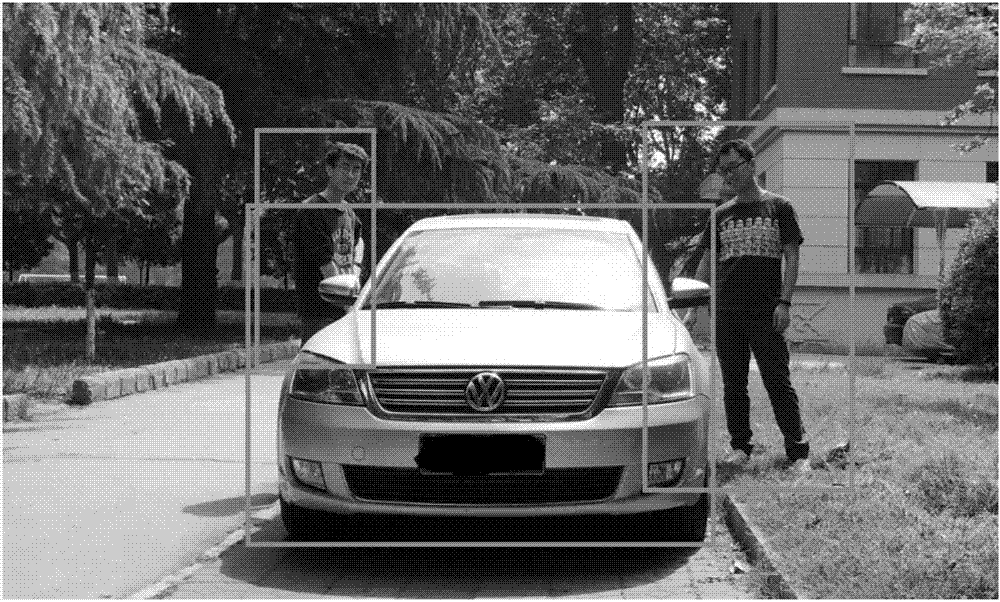

The invention discloses an image retrieval method based on object detection, which addresses the problem that multiple objects in an image are not retrieved individually. Object detection is first performed on each image in an image database to detect one or more objects; SIFT and MSER features of the detected objects are extracted and combined into feature bundles; k-means clustering and a k-d tree are used to quantize the feature bundles into visual words; and an inverted index of the objects' visual words is built for the image database, producing an image feature library. For a query image, the same object detection method is used to convert its objects into visual words, these are compared for similarity against the visual words in the image feature library, and the highest-scoring image is output as the retrieval result. The method allows the objects in an image to be retrieved individually, reduces background interference and the image semantic gap, and improves accuracy, retrieval speed, and efficiency. It can be used to retrieve images of a specific object, including a person.

Owner:XIDIAN UNIV
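
The indexing and query stages can be sketched with a bag-of-visual-words inverted index. In this Python sketch, object detection and SIFT/MSER extraction are assumed to have already produced descriptors (toy 2-D vectors stand in for them), the k-means codebook is given, a linear nearest-centroid scan replaces the k-d tree, and histogram intersection is an assumed scoring rule; none of these specifics come from the patent.

```python
from collections import Counter, defaultdict


def nearest_word(descriptor, codebook):
    """Index of the closest centroid (squared Euclidean distance, linear scan)."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((d - c) ** 2 for d, c in zip(descriptor, codebook[k])),
    )


def to_visual_words(descriptors, codebook):
    """Histogram of visual words for one detected object."""
    return Counter(nearest_word(d, codebook) for d in descriptors)


def build_inverted_index(database, codebook):
    """Map each visual word to {image_id: occurrence count}."""
    index = defaultdict(dict)
    for image_id, descriptors in database.items():
        for word, count in to_visual_words(descriptors, codebook).items():
            index[word][image_id] = count
    return index


def query(descriptors, index, codebook):
    """Score only images sharing at least one visual word (histogram intersection)."""
    scores = Counter()
    for word, q_count in to_visual_words(descriptors, codebook).items():
        for image_id, db_count in index.get(word, {}).items():
            scores[image_id] += min(q_count, db_count)
    return scores.most_common()


CODEBOOK = [(0, 0), (10, 10), (0, 10)]  # assumed output of k-means
DATABASE = {
    "img_a": [(1, 1), (0, 1), (9, 9)],
    "img_b": [(0, 9), (1, 11)],
}
VW_INDEX = build_inverted_index(DATABASE, CODEBOOK)
print(query([(0, 0), (10, 9)], VW_INDEX, CODEBOOK))  # [('img_a', 2)]
```

The inverted index means that only images sharing a visual word with the query are ever scored, which is where the speed-up over a full scan comes from.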

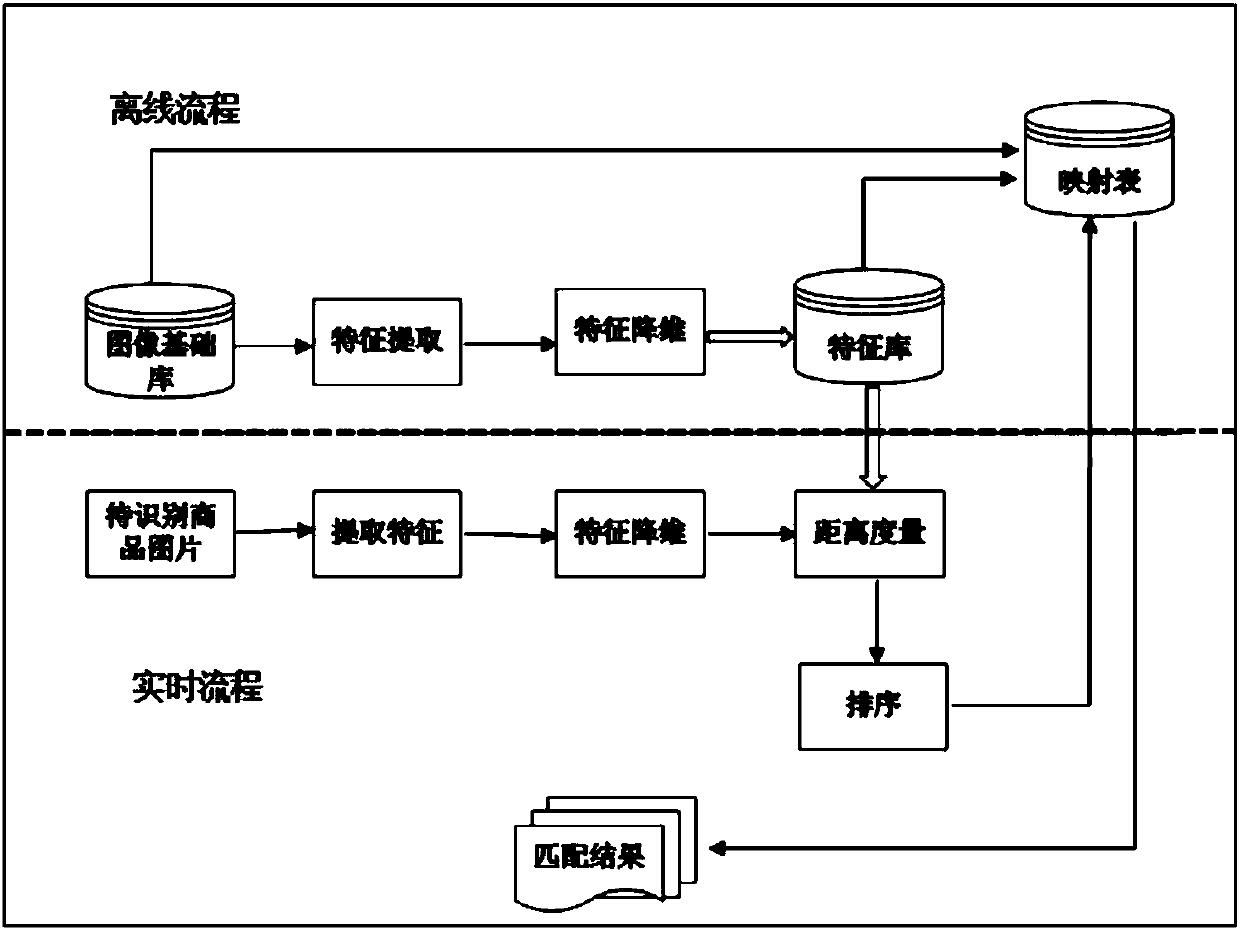

Transfer learning-based multi-view commodity image retrieval and identification method

Status: Inactive | Publication: CN107908685A | Tags: Perfect generalization; Reduce occupancy; Character and pattern recognition; Buying/selling/leasing transactions; Learning based; Semantic gap

The invention discloses a transfer learning-based multi-view commodity image retrieval and identification method. The method comprises the following steps: 1) establishing a multi-view image base library according to a commodity list; fine-tuning a pre-trained deep residual network on a small number of commodity images through transfer learning; using the network to extract features from the image base library and reducing their dimensionality to construct a feature library; and finally establishing a mapping table from the correspondences among the feature library, the image base library, and the commodity types; 2) after the images of the commodities to be identified are obtained, extracting their features with the network and reducing their dimensionality; and 3) measuring the distance between the features of each image to be identified and the features of the images in the base library, taking the most similar image (the one with the shortest distance) as the match, and obtaining the commodity type name of the image through the mapping table. Features with strong representational power are extracted automatically, further bridging the semantic gap, and retrieval efficiency and identification precision are improved while using only a small image base library and low-dimensional features.

Owner:XI AN JIAOTONG UNIV
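
Step 3 (nearest-neighbour matching followed by a mapping-table lookup) can be sketched as follows. The feature vectors are assumed to have already been extracted by the fine-tuned network and reduced in dimensionality; the feature values, view identifiers, and commodity names are hypothetical, and Euclidean distance is an assumed choice of metric.

```python
import math

# Hypothetical reduced feature library: one entry per stored view image.
FEATURE_LIBRARY = {
    "view_001": (0.10, 0.90),
    "view_002": (0.80, 0.20),
    "view_003": (0.75, 0.30),
}
# Mapping table: view image -> commodity type (several views share a type).
MAPPING_TABLE = {
    "view_001": "cola can",
    "view_002": "tissue box",
    "view_003": "tissue box",
}


def identify(query_feature, library=FEATURE_LIBRARY, mapping=MAPPING_TABLE):
    """Return (commodity name, matched view) for the nearest library feature."""
    best_view = min(library, key=lambda view: math.dist(query_feature, library[view]))
    return mapping[best_view], best_view


print(identify((0.78, 0.22)))  # ('tissue box', 'view_002')
```

Storing several views per commodity and resolving them through the mapping table lets a query photographed from any angle match the closest stored view while still returning a single commodity name.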

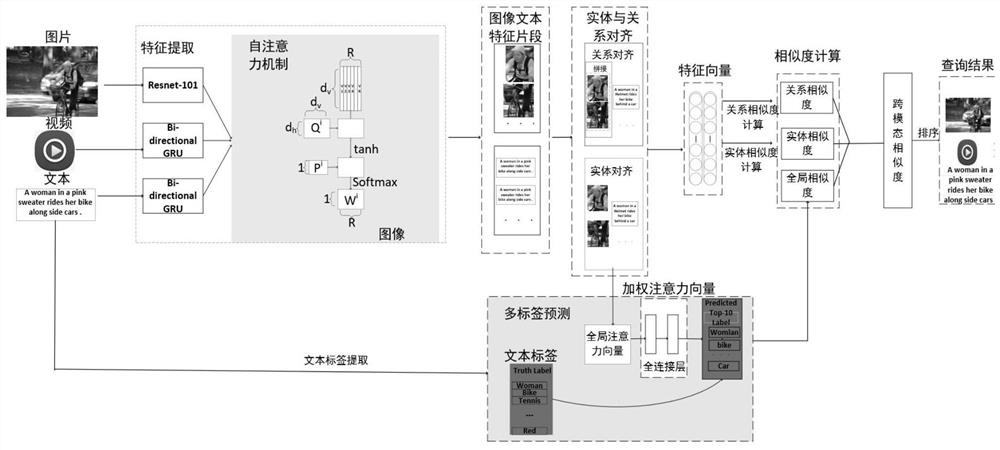

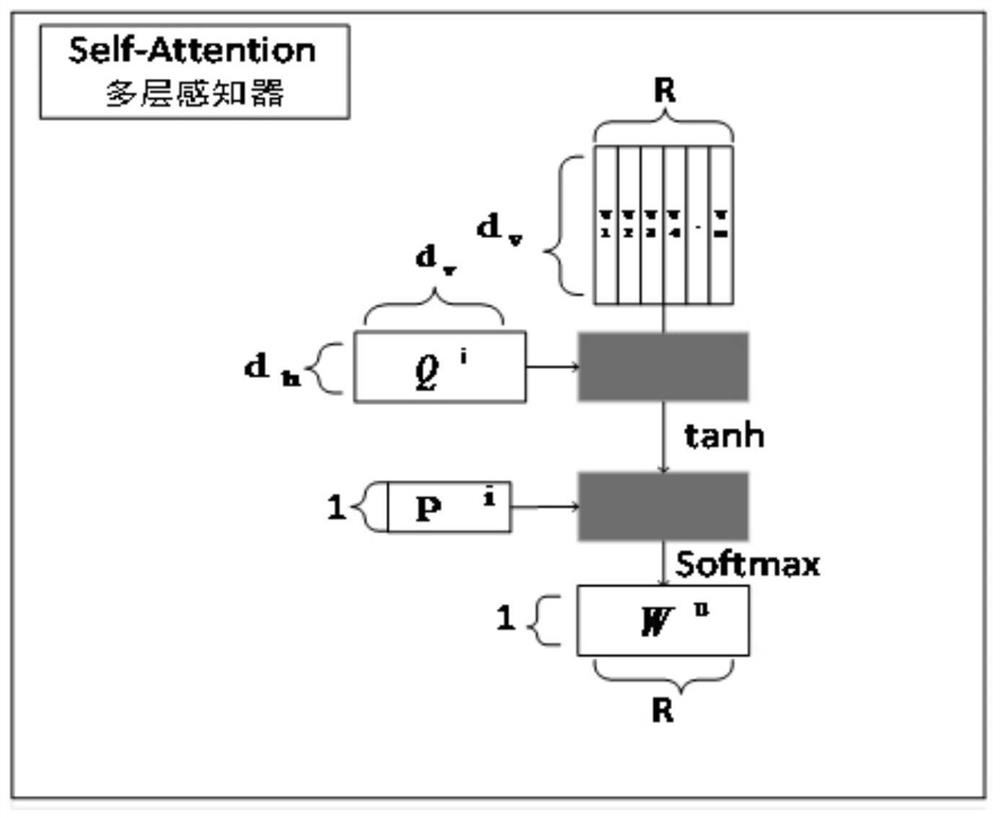

Cross-modal retrieval method based on multilayer semantic alignment

Status: Active | Publication: CN112966127A | Tags: Make up for inaccurate detection; Improve relationship; Multimedia data querying; Energy efficient computing; Semantic alignment; Semantic gap

The invention discloses a cross-modal retrieval method based on multilayer semantic alignment. The method uses a self-attention mechanism to obtain salient fine-grained regions and promote the alignment of entities and relationships across modalities; proposes an image-text matching strategy based on semantic consistency; and extracts semantic tags from a given text dataset and applies a global semantic constraint through multi-label prediction, yielding more accurate cross-modal associations. In this way, the problem of the semantic gap between data of different modalities is solved.

Owner:BEIFANG UNIV OF NATITIES

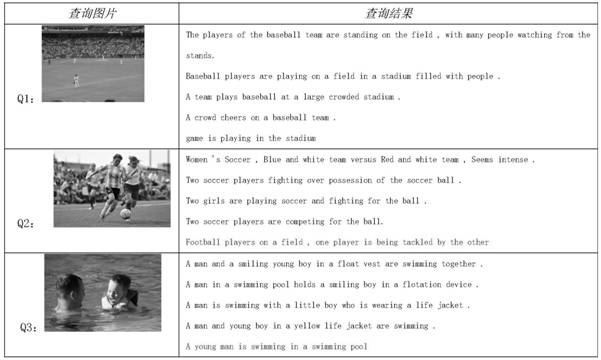

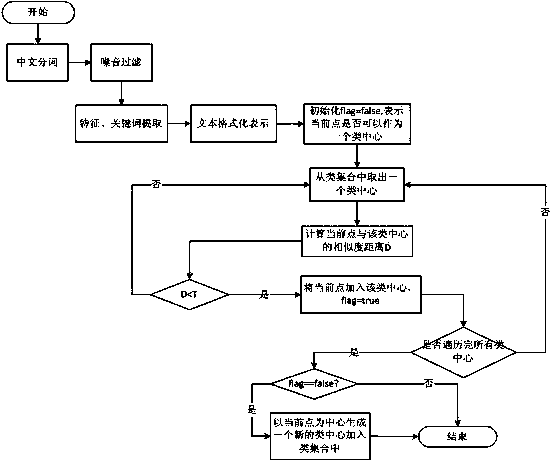

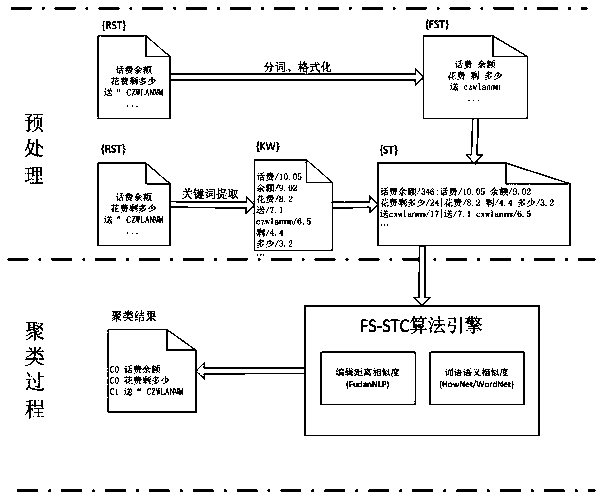

Dialogue short text clustering method based on form and semantic similarity

Status: Inactive | Publication: CN104008166A | Tags: Improve clustering effect; Special data processing applications; Text database clustering/classification; Semantic gap; Edit distance

The invention discloses a dialogue short-text clustering method based on form and semantic similarity. Form similarity uses string edit-distance similarity, and semantic similarity is based on the HowNet and WordNet knowledge bases; weights for the short texts and their words are introduced when calculating short-text similarity. The method addresses, to a certain extent, the irregular expressions, input errors and other noise, synonyms, and semantic gaps found in dialogue short texts, and consequently achieves a considerable improvement over clustering based on bag-of-words vectors.

Owner:EAST CHINA NORMAL UNIV

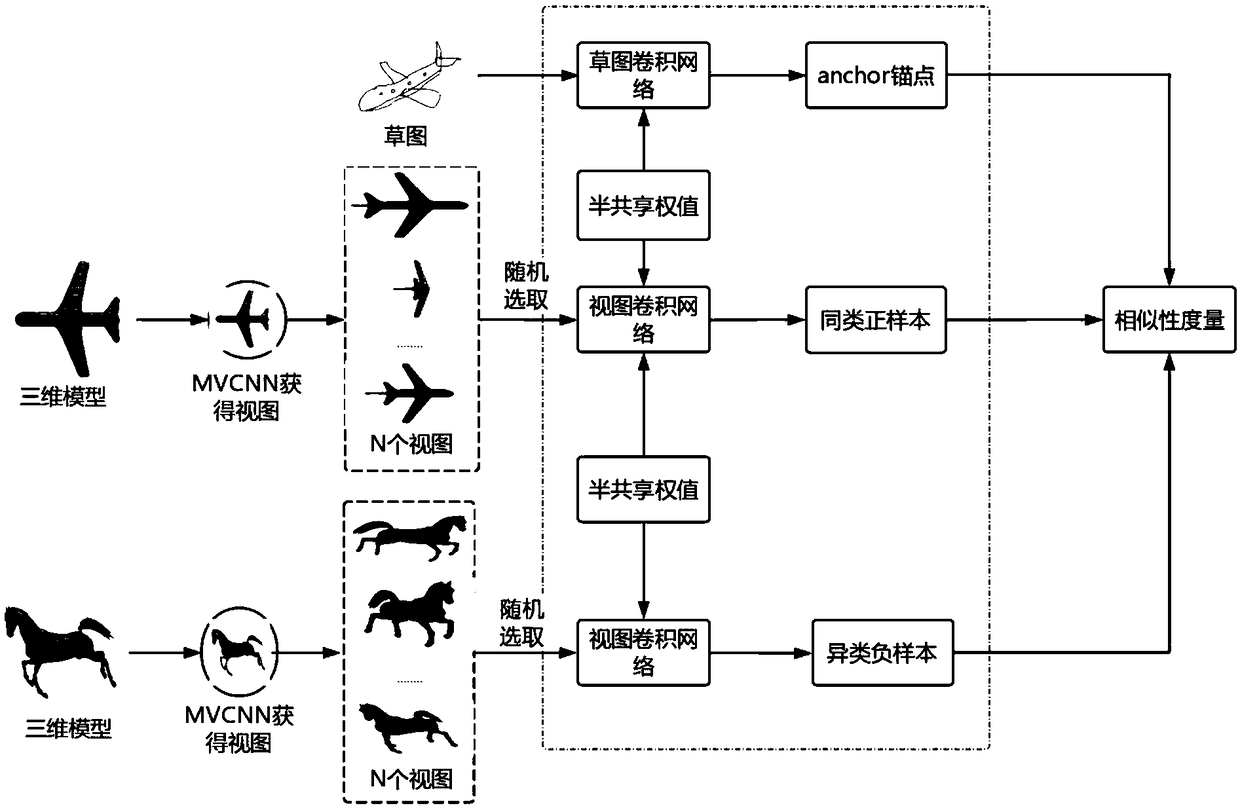

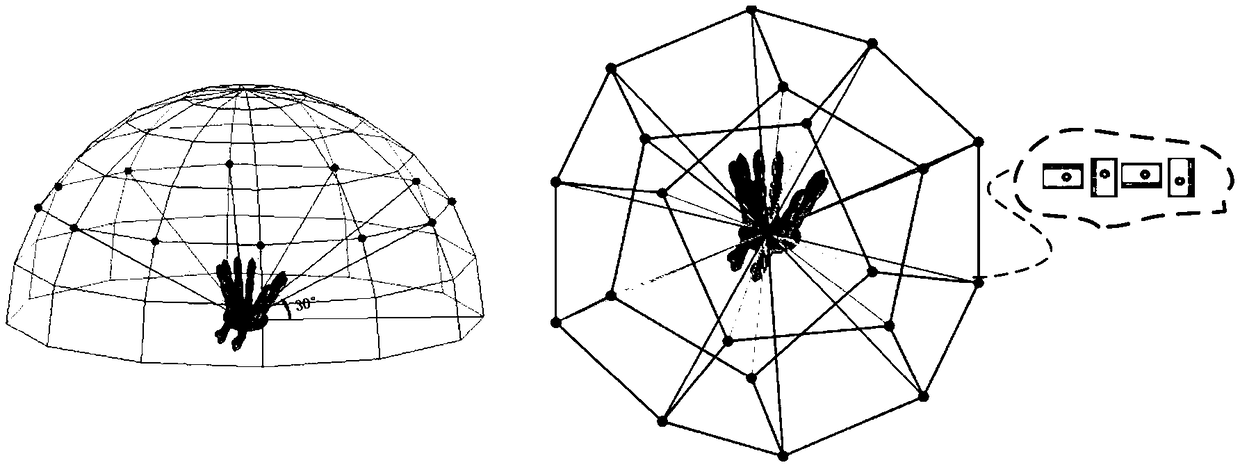

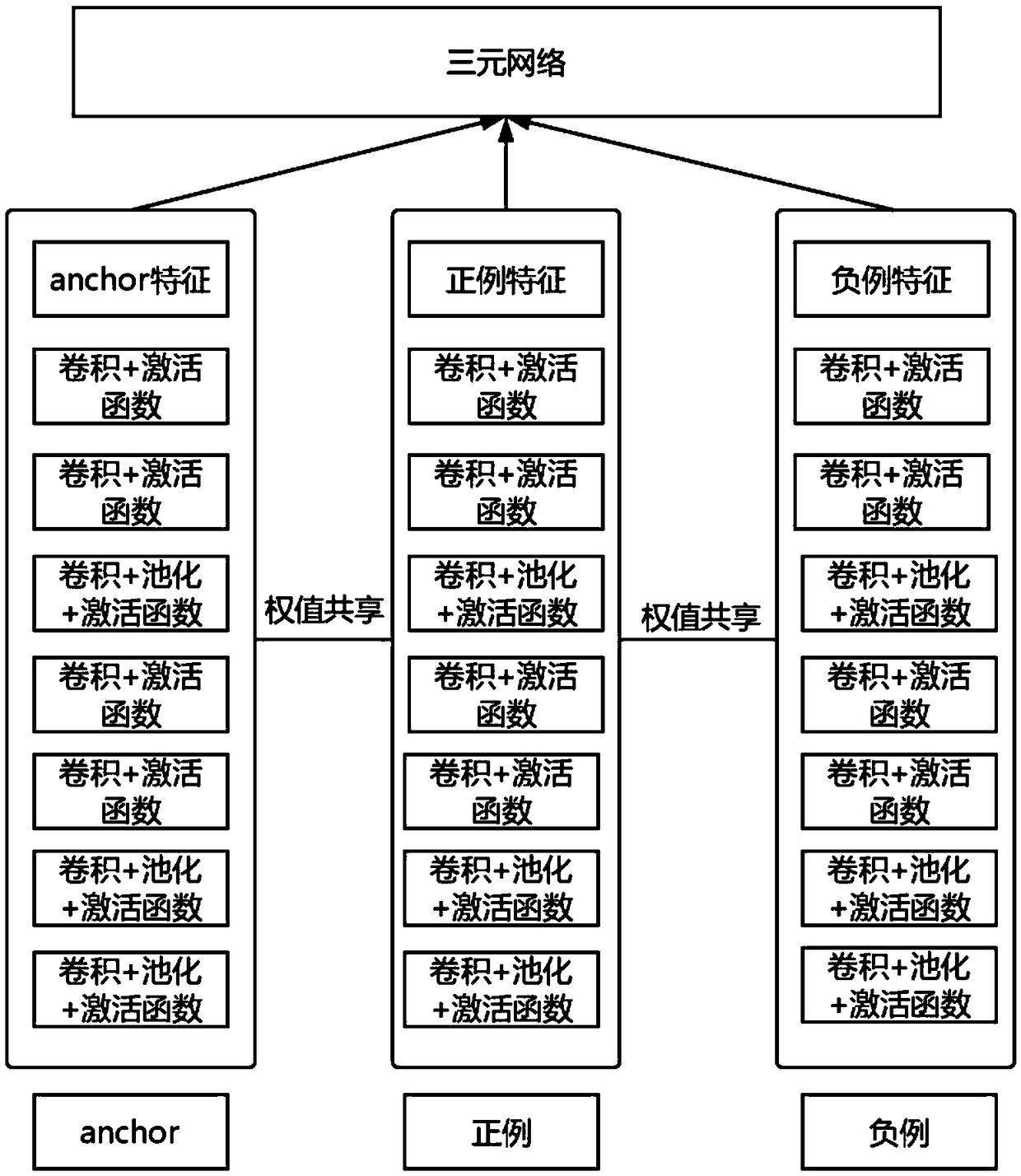

A method for cross-modal retrieval of three-dimensional model based on sketch retrieval

Status: Active | Publication: CN109213884A | Tags: Reduce semantic differences; Reduce distance; Still image data indexing; Character and pattern recognition; Pattern recognition; Semantic gap

The invention discloses a cross-modal method for retrieving three-dimensional models from sketches, comprising the following steps: 1) selecting a dataset; 2) rendering the three-dimensional model data in the dataset to obtain multiple two-dimensional views of each model, which are used to represent that model, and resizing all sketch data uniformly to 256 × 256; 3) training a sketch classifier and a view classifier; 4) constructing the deep metric learning space: the sketches and views of the original dataset are used as the network input, the parameters of the sketch and view classifiers are used as the network parameters, and the retrieval goal is achieved through training. Using the rendered views as an intermediary, the invention minimizes the semantic gap between the 3D models and the sketches. The resulting novel network structure for sketch-based 3D model retrieval achieves very good classification accuracy on SHREC2013 and SHREC2014, and the experimental results show that the network framework can retrieve the corresponding 3D model from a sketch.

Owner:BEIFANG UNIV OF NATITIES

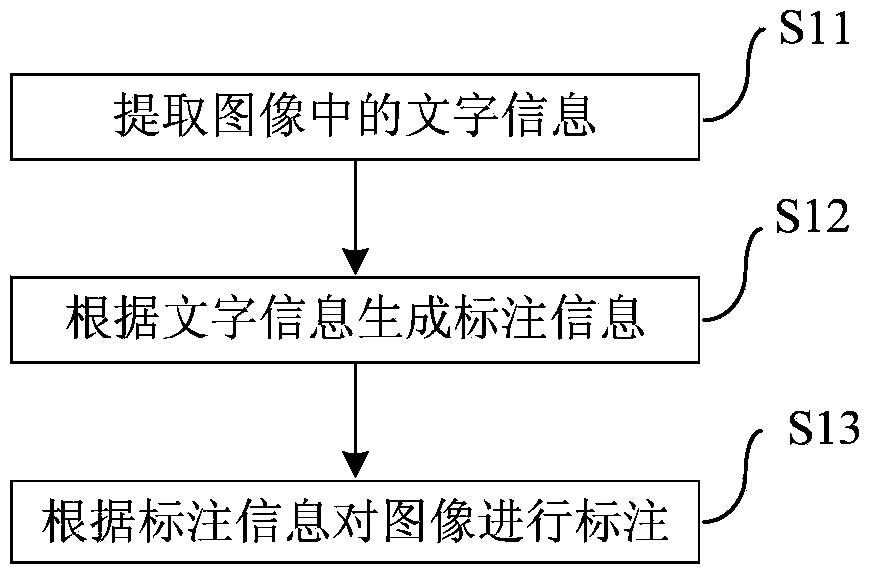

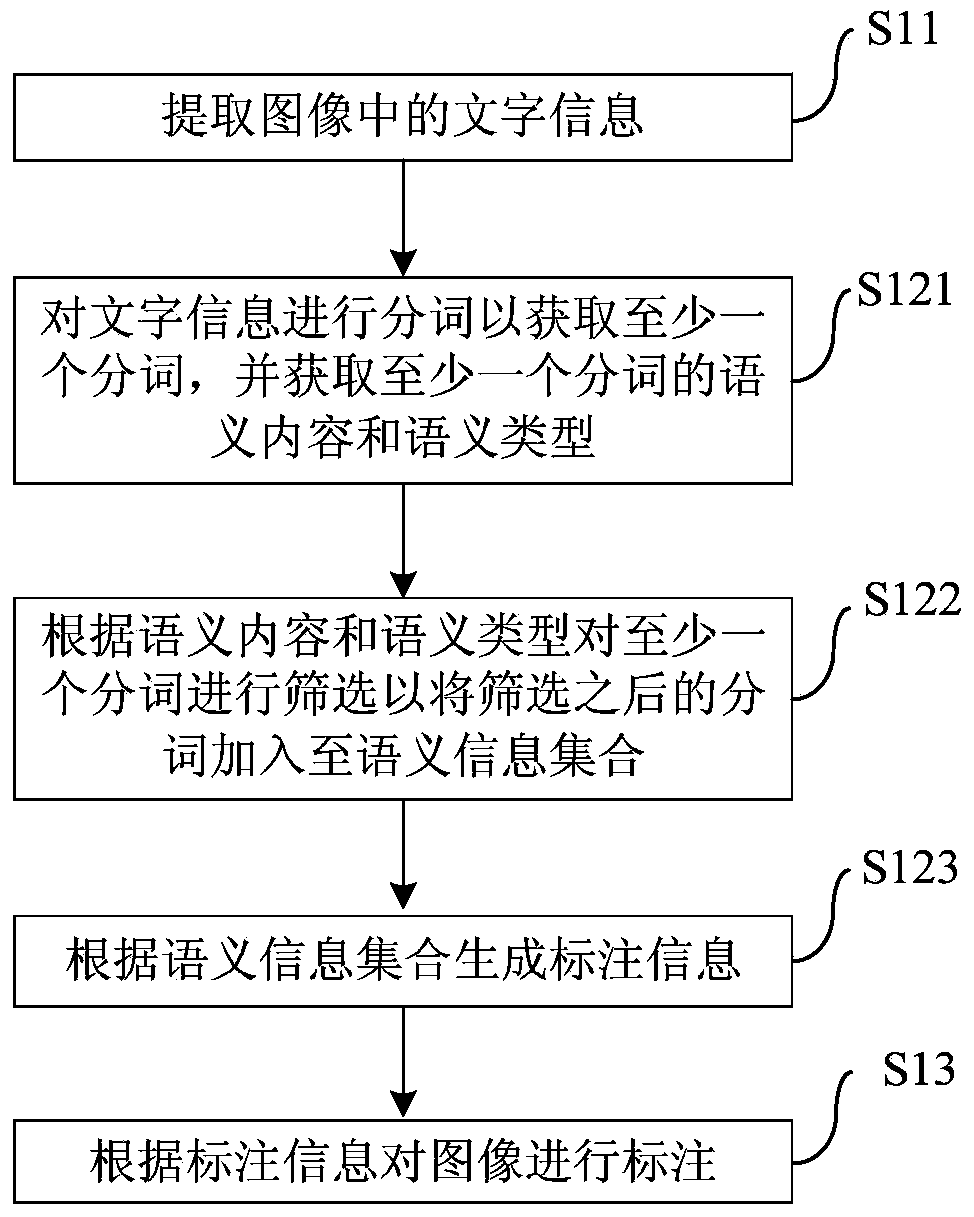

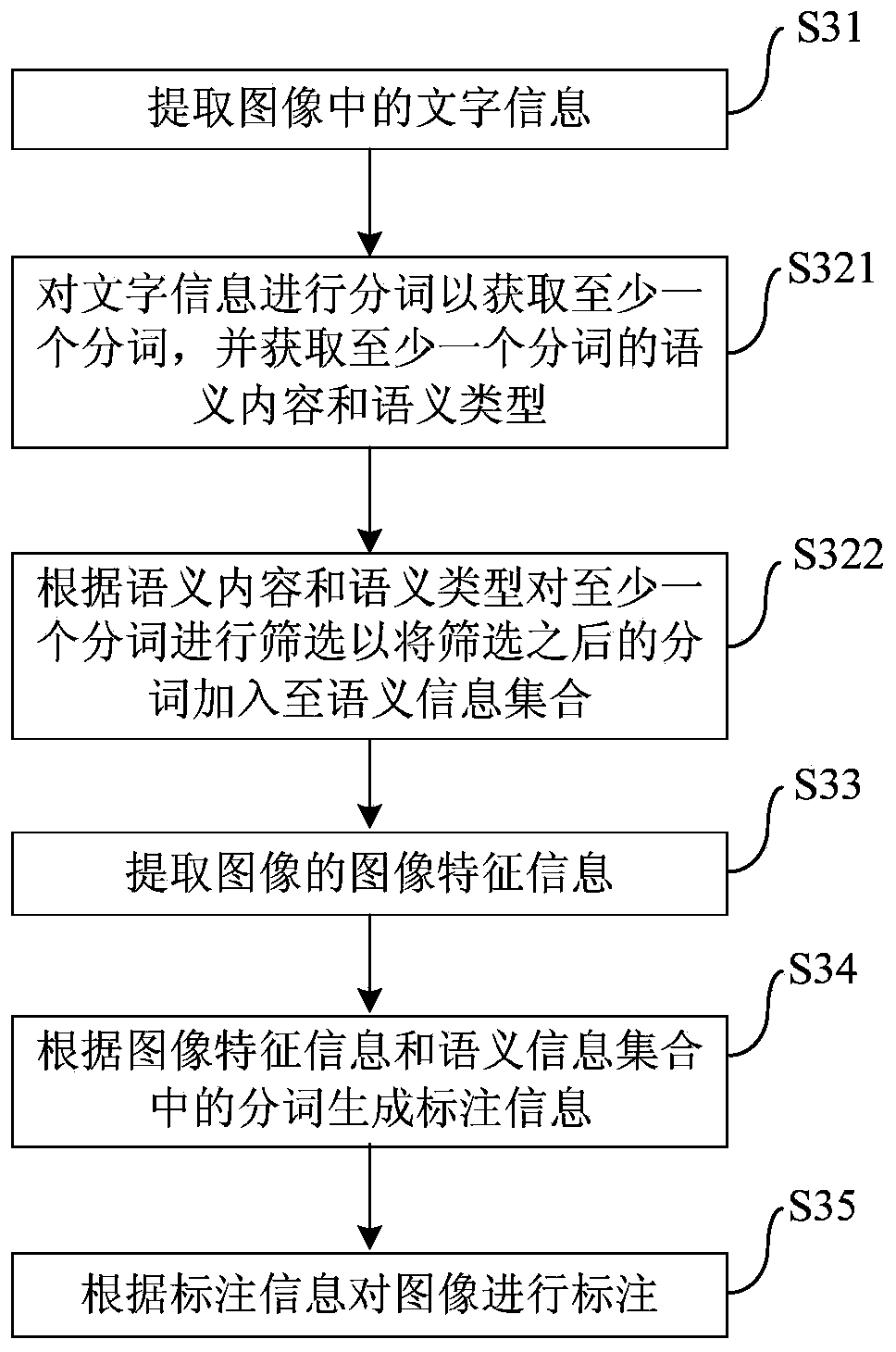

Semantic annotation method, device and client for image

Status: Inactive | Publication: CN103632388A | Tags: Improve stability; Improve consistency; Editing/combining figures or text; Semantic gap; Computer vision

The invention provides a semantic annotation method, a semantic annotation device, and a client for images. The method comprises the following steps: extracting text information from an image; generating annotation information from the text information; and annotating the image according to the annotation information. On one hand, the method omits the manual selection of image categories for training classifiers required by traditional image semantic annotation methods, saving labor and time; it avoids the semantic gap between the low-level features and the semantic information of the image; and it improves the stability and consistency of image semantic annotation. On the other hand, it overcomes the inherent problem of a limited set of semantic tags, improves the completeness of the image's semantic annotation, allows the image content to be described accurately by the annotation information, and increases annotation speed.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

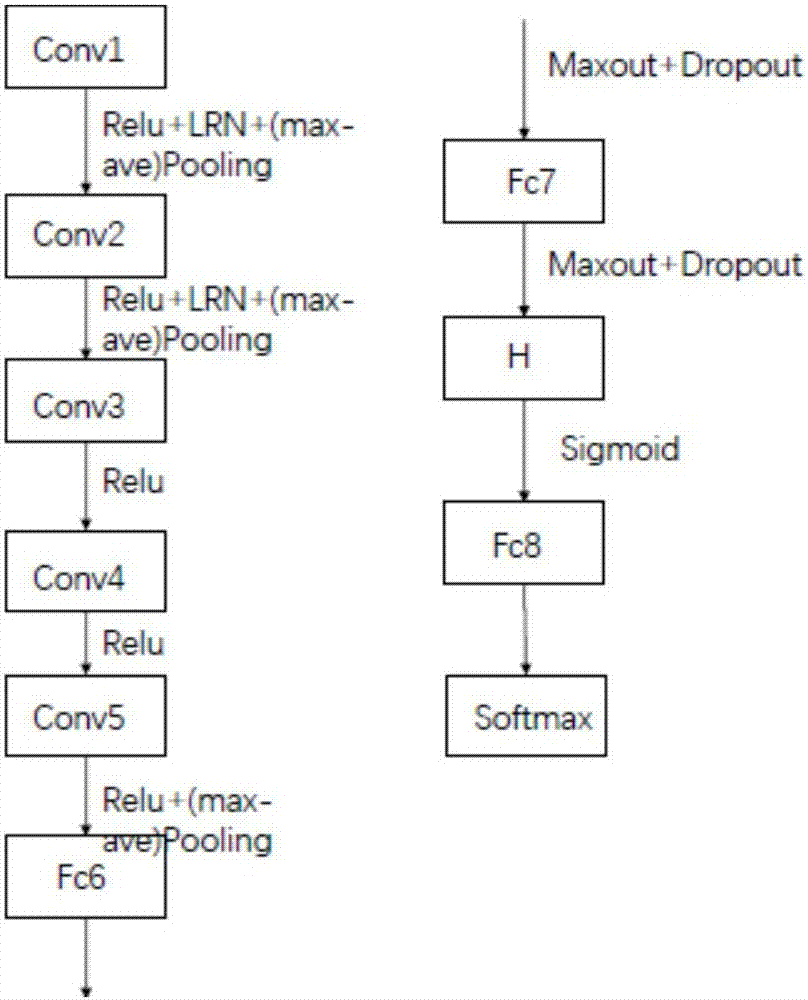

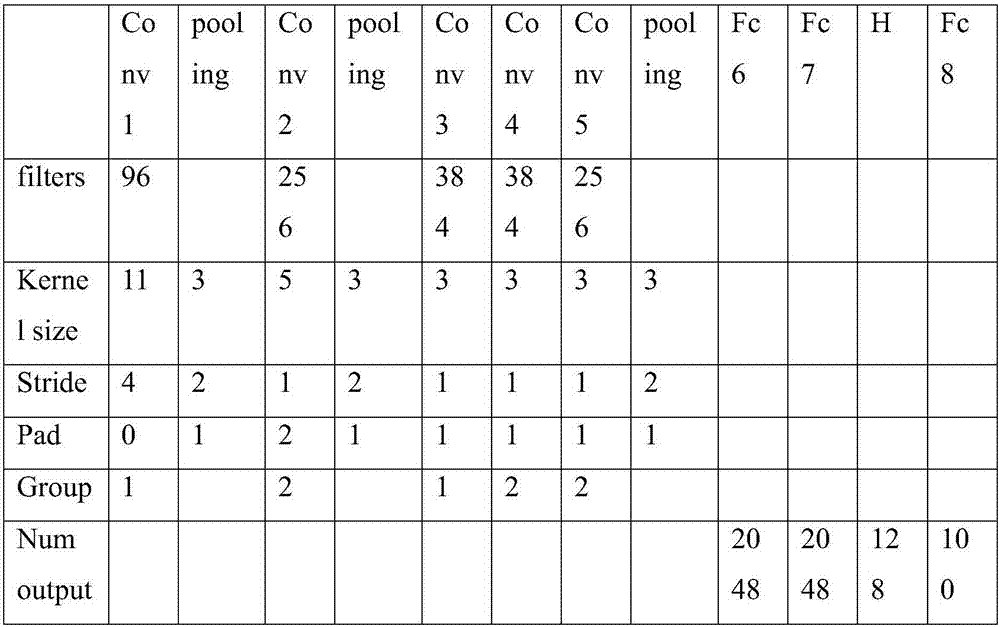

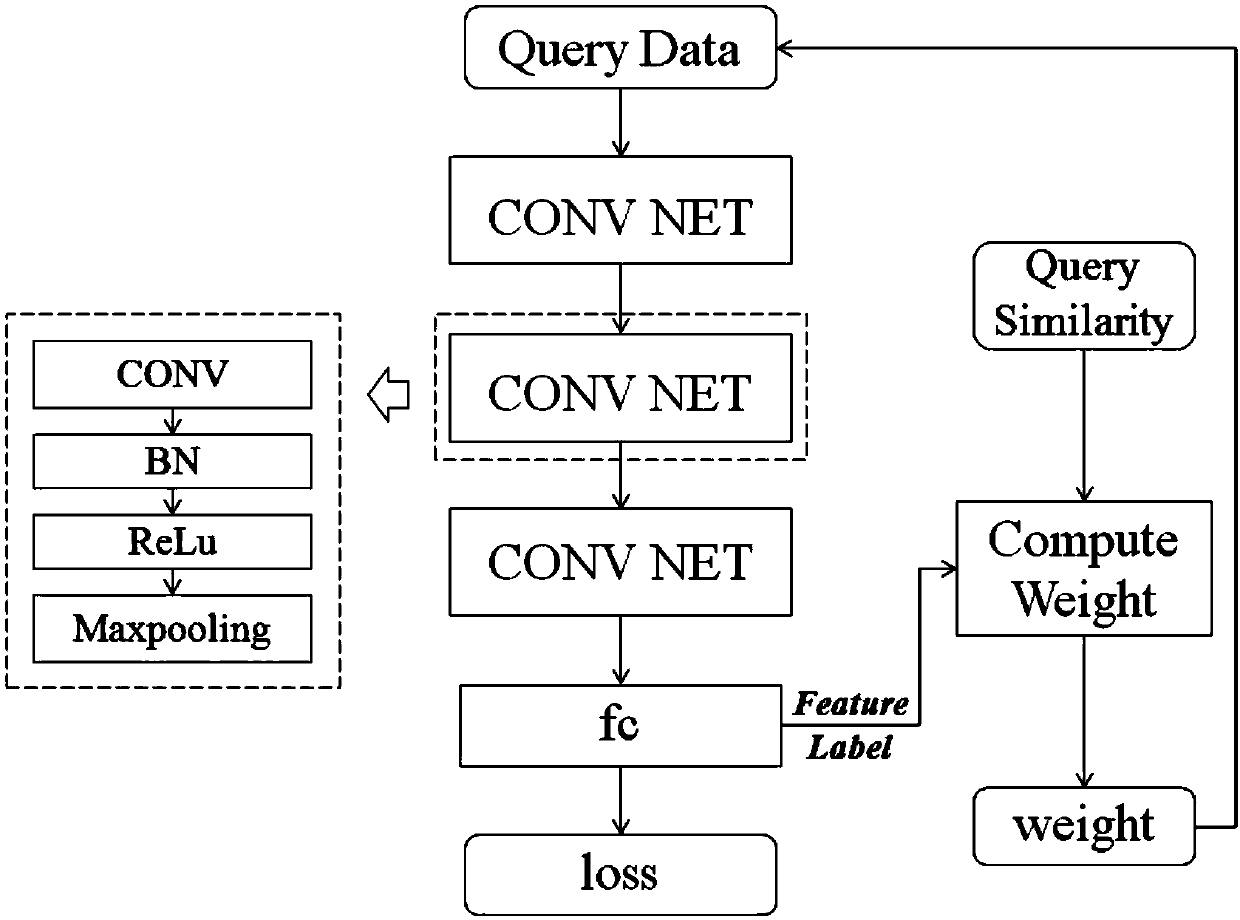

Method for optimizing deep convolutional neural network for image classification

Status: Active | Publication: CN107330446A | Tags: Reduce computational overhead; Character and pattern recognition; Neural architectures; Semantic gap; Data set

The invention relates to a method for optimizing a deep convolutional neural network for image classification, comprising the steps of: first, building an image classification convolutional neural network; second, training it; and third, testing it. In the testing step, a preprocessed test dataset is fed into the trained network model, and the network's Accuracy layer outputs an accuracy value based on the probability values output by the Softmax layer and the label values from the input layer; the accuracy value is the probability that a test image is correctly classified. These steps realize the optimization of the deep convolutional neural network for image classification. The method can effectively reduce the semantic gap and achieves high classification accuracy.

Owner:ZHEJIANG UNIV OF TECH
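
The quantity reported by the Accuracy layer in the testing step can be sketched in Python: apply softmax to each test image's scores, take the most probable class, and compare it with the label. The logits and labels below are made-up test values, and the function names are illustrative rather than taken from any particular framework.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one image's class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def accuracy(batch_logits, labels):
    """Fraction of test images whose most probable class equals the label."""
    correct = 0
    for logits, label in zip(batch_logits, labels):
        probs = softmax(logits)
        predicted = max(range(len(probs)), key=probs.__getitem__)
        correct += predicted == label
    return correct / len(labels)


LOGITS = [[2.0, 0.5, 0.1], [0.2, 0.3, 3.0], [1.0, 2.0, 0.0], [0.0, 0.0, 5.0]]
LABELS = [0, 2, 0, 2]
print(accuracy(LOGITS, LABELS))  # 0.75
```

Softmax does not change which class has the largest score, so the prediction could be taken from the raw logits; the probabilities matter only when they are reported or used by a loss.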

Method for automatically annotating remote sensing images on basis of deep learning

Status: Active | Publication: CN103823845A | Tags: Improve visibility; Improve labeling accuracy; Special data processing applications; Hidden layer; Feature vector

The invention discloses a method for automatically annotating remote sensing images based on deep learning. The method includes extracting visual feature vectors from the remote sensing images to be annotated and inputting these vectors into a DBM (deep Boltzmann machine) model, which annotates the images automatically. The DBM model, obtained by training, comprises, from bottom to top, a visible layer, a first hidden layer, a second hidden layer, and a tag layer. Because the model has two hidden layers, it can effectively solve the 'semantic gap' problem in image semantic annotation and improve overall annotation accuracy.

Owner:ZHEJIANG UNIV

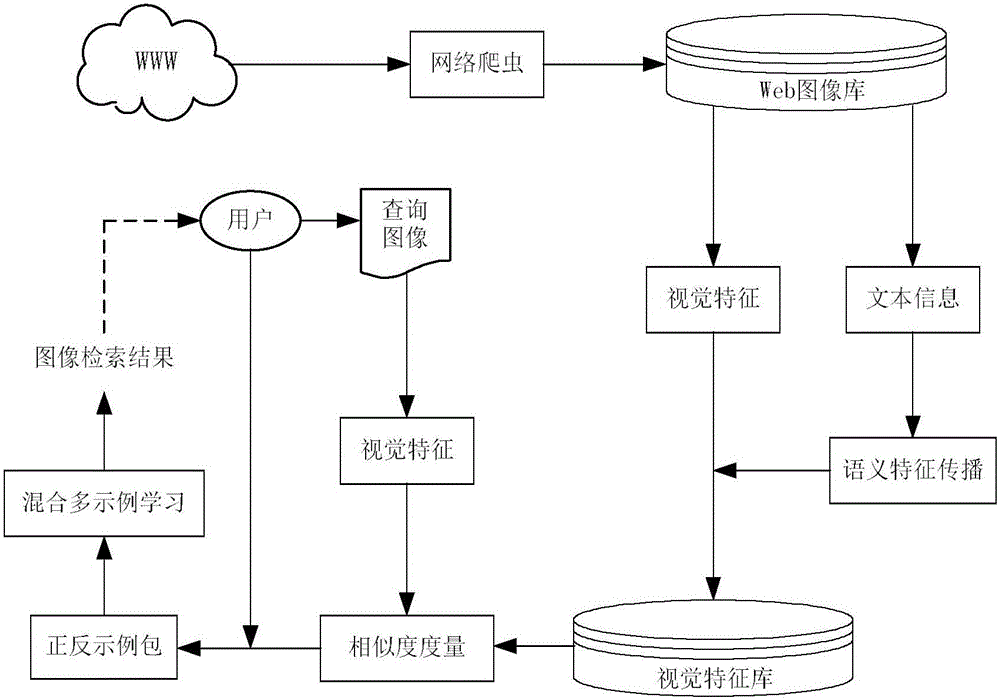

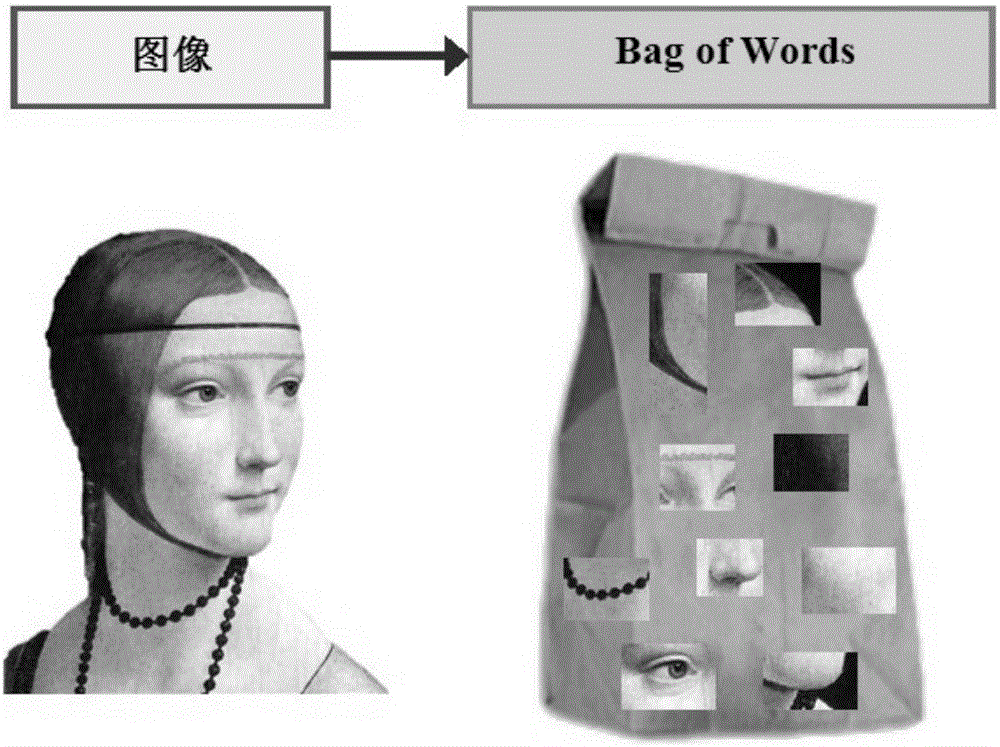

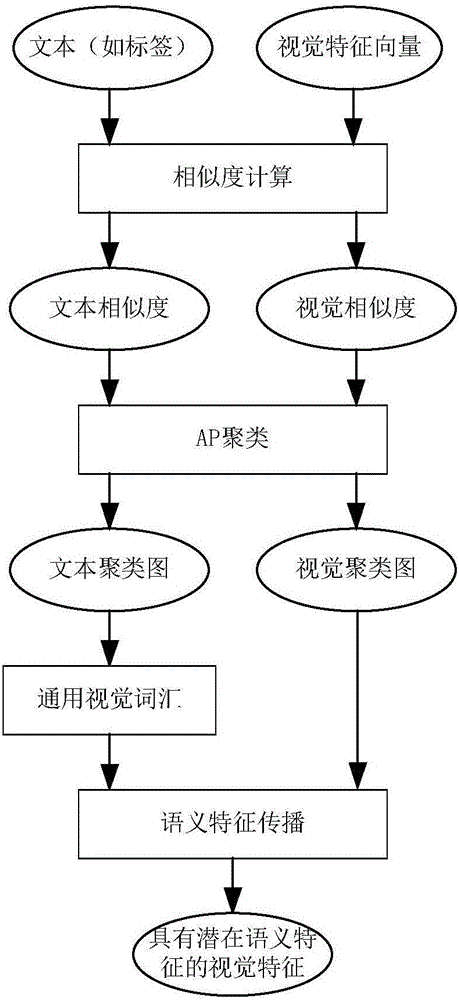

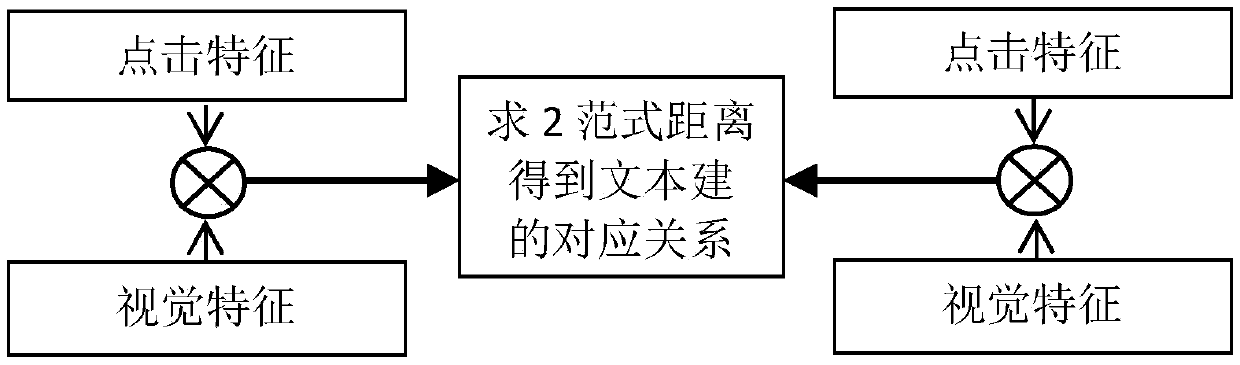

Semantic propagation and mixed multi-instance learning-based Web image retrieval method

Status: Active | Publication: CN106202256A | Tags: Reduce extraction complexity; Improve classification performance; Character and pattern recognition; Special data processing applications; Small sample; The Internet

The invention belongs to the technical field of image processing and provides a Web image retrieval method based on semantic propagation and mixed multi-instance learning, in which Web image retrieval combines the visual characteristics of images with their text information. The method first represents the images as bag-of-words (BoW) models, then clusters them by visual similarity and text similarity, and propagates the semantic characteristics of the images into their visual feature vectors through universal visual vocabularies within each text class; in the relevance feedback stage, a mixed multi-instance learning algorithm is introduced to solve the small-sample problem encountered in actual retrieval. Compared with a conventional CBIR (content-based image retrieval) framework, the method propagates the semantic characteristics of images to their visual characteristics in a cross-modal manner using the text information of Internet images, and introduces semi-supervised learning into multi-instance-learning-based relevance feedback to cope with the small-sample problem, so that the semantic gap is effectively reduced and Web image retrieval performance is improved.

Owner:XIDIAN UNIV

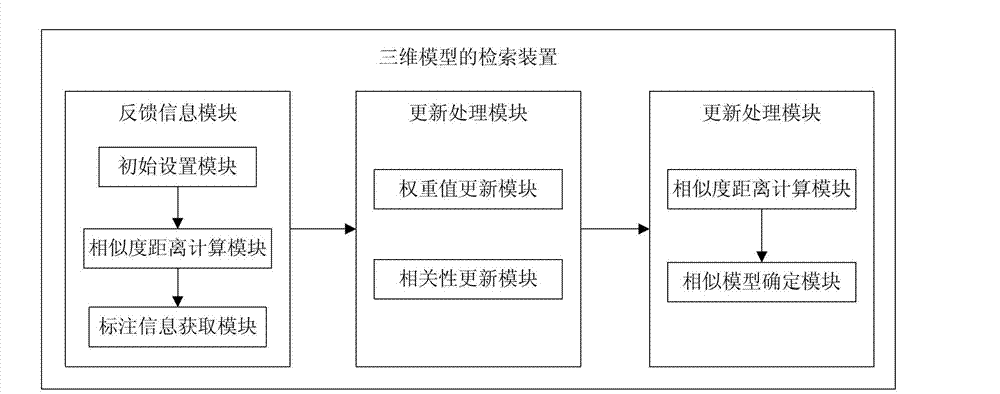

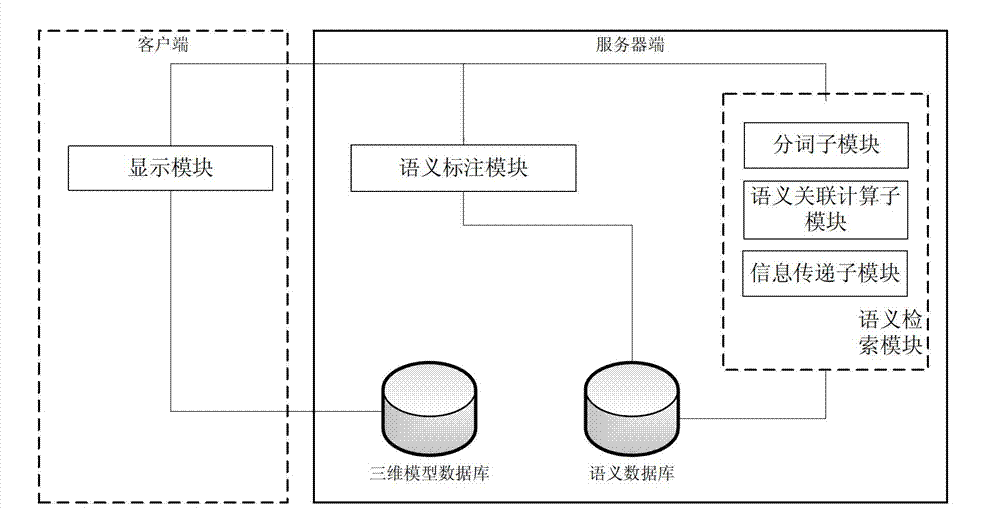

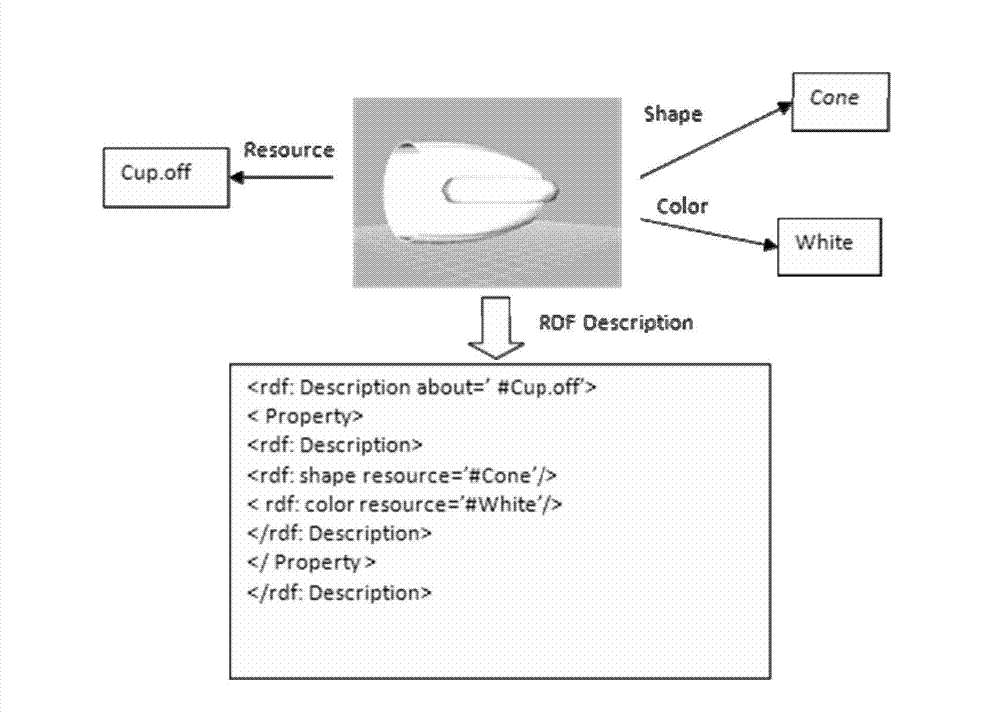

Semantic-based three-dimensional model retrieval system and method

Status: Active | Publication: CN102955848A | Tags: Overcome limitations; Expand your search; Special data processing applications; Semantic gap; Semantic search

The invention provides a semantics-based three-dimensional model retrieval system and method. The system comprises a semantic retrieval module and a semantic database. The semantic retrieval module segments and filters the query sentence to obtain a semantic concept. The method comprises: looking up synonymous semantic concepts in WordNet for the semantic concept, calculating the similarity between the semantic concept and each retrieved concept, and filtering by similarity to obtain other semantic concepts associated with it; and combining the semantic concept with these associated concepts to retrieve the corresponding three-dimensional information from the semantic database, yielding the retrieval result. The system and method bridge the semantic gap between high-level semantic information and low-level information, expand the retrieval range for three-dimensional models, and improve retrieval precision.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY

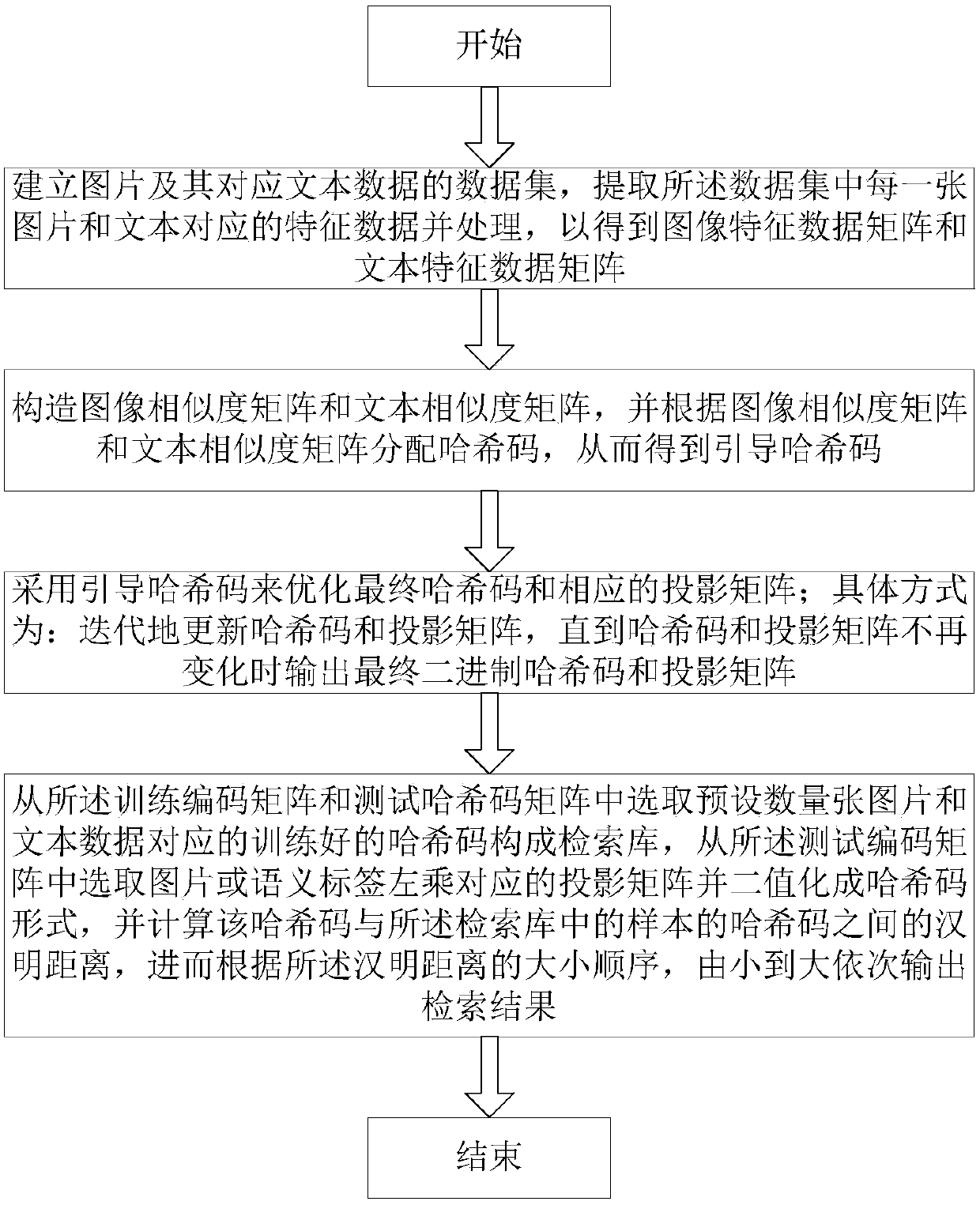

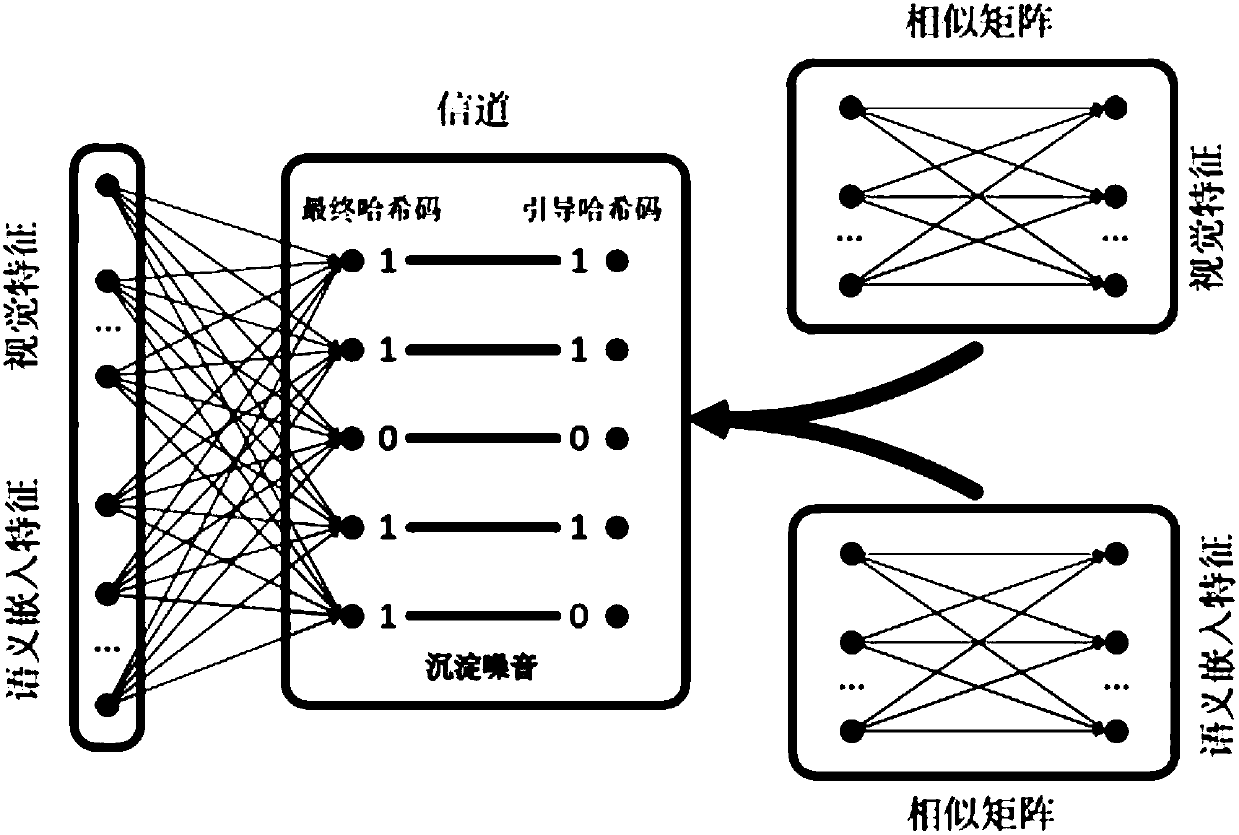

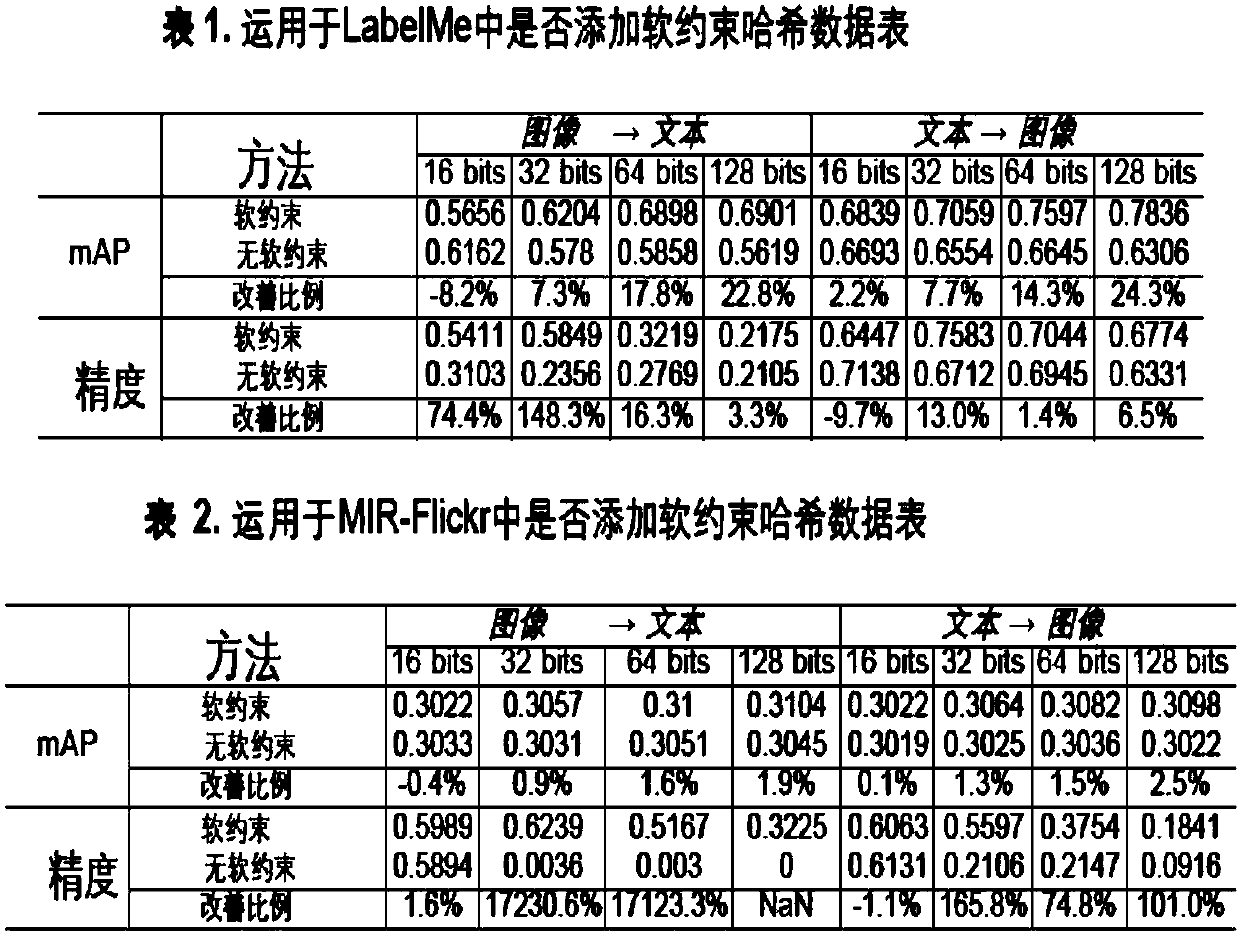

Image search method based on soft constraint non-supervision cross-modality hash

Status: Inactive | Publication: CN107766555A | Tags: Reduce quantization loss; Reduce noise; Special data processing applications; Cross modality; Hat matrix

The invention discloses an image search method based on soft-constraint unsupervised cross-modal hashing. The method comprises the following steps in sequence: building a dataset of images and their corresponding text to obtain an image feature data matrix and a text feature data matrix; constructing an image similarity matrix and a text similarity matrix and assigning hash codes according to them to obtain guiding hash codes; using the guiding hash codes to optimize the final hash codes and the corresponding projection matrix; and calculating the Hamming distance between the query's hash code and the hash code of each sample in the search database, then outputting the search results ranked by Hamming distance. The method lowers the quantization loss of the hash codes, narrows the semantic gap, obtains discrete solutions, and thereby improves the accuracy and efficiency of cross-modal image-text search.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
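
The final ranking step can be sketched as follows, assuming the learned hash codes are already available and stored as Python integers (the hash learning itself is not shown). The codes and sample identifiers are hypothetical; nearest codes come first and ties are broken by identifier.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code, database):
    """Sample ids sorted by Hamming distance to the query (nearest first)."""
    return sorted(database, key=lambda sid: (hamming(query_code, database[sid]), sid))


CODES = {"txt_1": 0b10110011, "txt_2": 0b01001101, "txt_3": 0b10100010}
print(rank_by_hamming(0b10110010, CODES))  # ['txt_1', 'txt_3', 'txt_2']
```

XOR followed by a bit count is why hashing-based search is fast: comparing two codes costs a couple of integer operations instead of a floating-point distance over full feature vectors.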

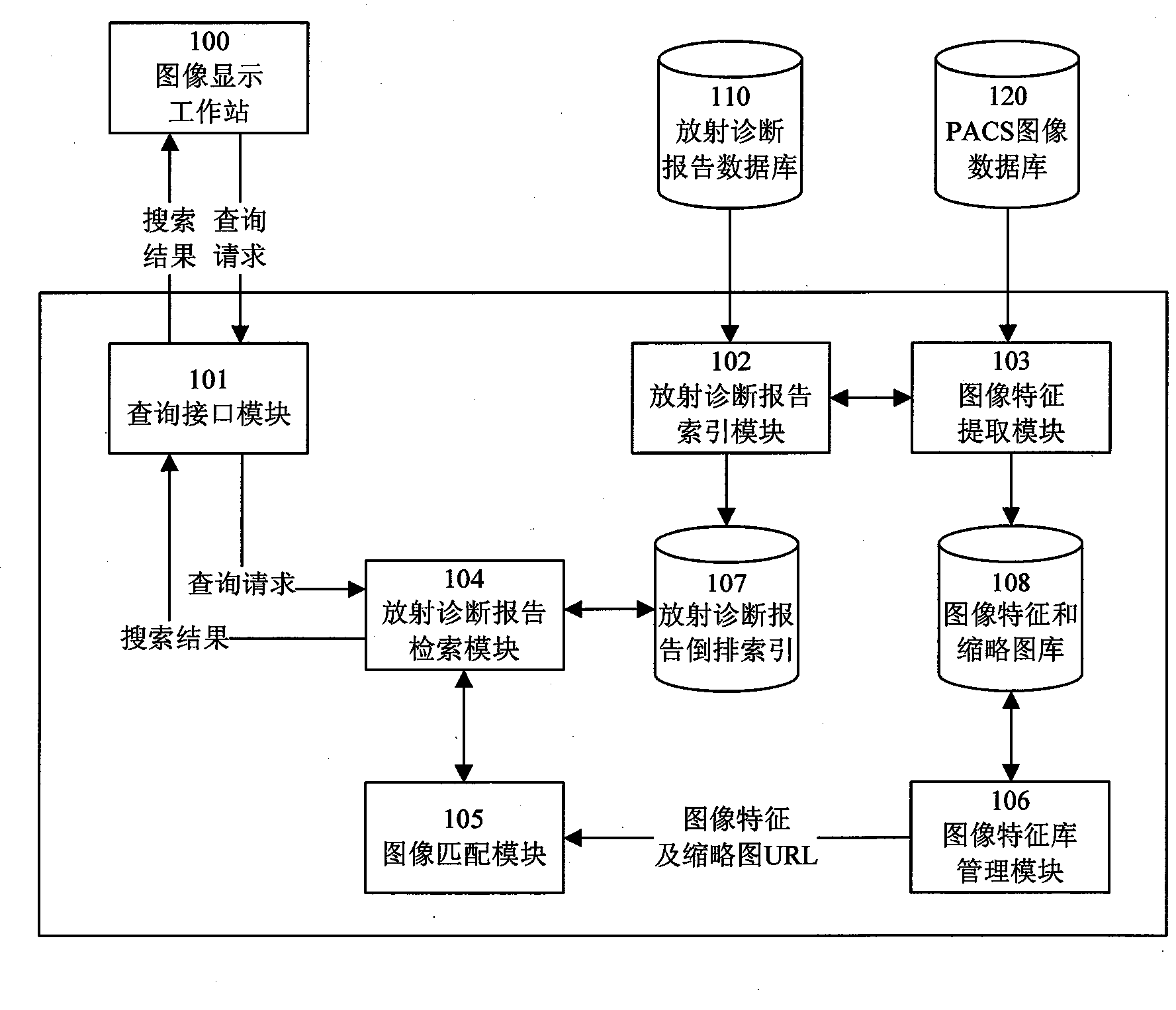

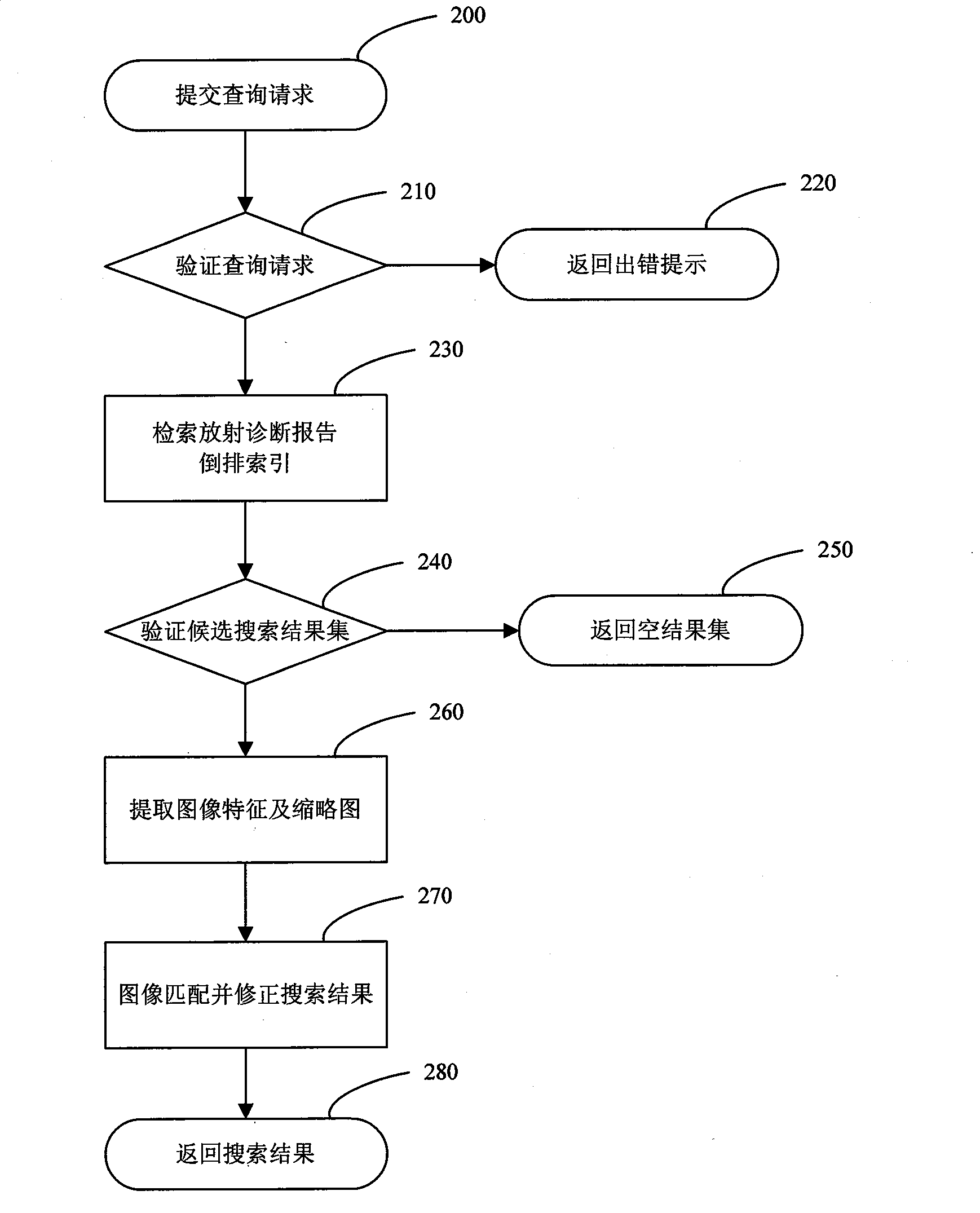

Search method and system facing to radiation image in PACS database based on content

Status: Inactive | Publication: CN101441658A | Tags: Reduce in quantity; Improve performance; Special data processing applications; Semantic gap; Reverse index

The invention discloses a content-based retrieval method and system for radiographs in a PACS database. Content-based retrieval of radiographs is realized by associating the radiology diagnostic reports in a radiology information system (RIS) with the radiographs in the PACS database, building an inverted index of the reports and a feature index library of the radiographs, and then applying a two-phase search algorithm. By effectively integrating text retrieval with content-based image retrieval, the method overcomes the unsuitability of existing content-based image retrieval technology for large-scale image databases, alleviates to a certain extent the semantic gap in that technology, and has high application value.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI
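
A two-phase search of the kind described can be sketched as a text filter followed by content-based re-ranking. The patent does not detail its algorithm; in this sketch the reports, identifiers, and 2-D features are hypothetical, phase 1 keeps only images whose reports contain every query term, and phase 2 ranks those candidates by Euclidean feature distance.

```python
import math
from collections import defaultdict


def build_report_index(reports):
    """Inverted index: report term -> set of associated image ids."""
    index = defaultdict(set)
    for image_id, text in reports.items():
        for term in text.lower().split():
            index[term].add(image_id)
    return index


def two_phase_search(query_terms, query_feature, index, features, top_k=3):
    # Phase 1: text retrieval keeps images whose reports contain every query term.
    candidate_sets = [index.get(term.lower(), set()) for term in query_terms]
    candidates = set.intersection(*candidate_sets) if candidate_sets else set()
    # Phase 2: content-based ranking of the (much smaller) candidate set only.
    ranked = sorted(candidates, key=lambda i: (math.dist(query_feature, features[i]), i))
    return ranked[:top_k]


REPORTS = {
    "img1": "nodule in left lung",
    "img2": "nodule in right lung",
    "img3": "fracture of left femur",
}
FEATURES = {"img1": (0.2, 0.8), "img2": (0.9, 0.1), "img3": (0.25, 0.75)}
REPORT_INDEX = build_report_index(REPORTS)
print(two_phase_search(["nodule"], (0.85, 0.15), REPORT_INDEX, FEATURES))  # ['img2', 'img1']
```

Filtering by report text first means the image-feature comparison runs only over the candidates, which is what keeps the approach practical for large image databases.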

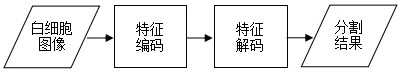

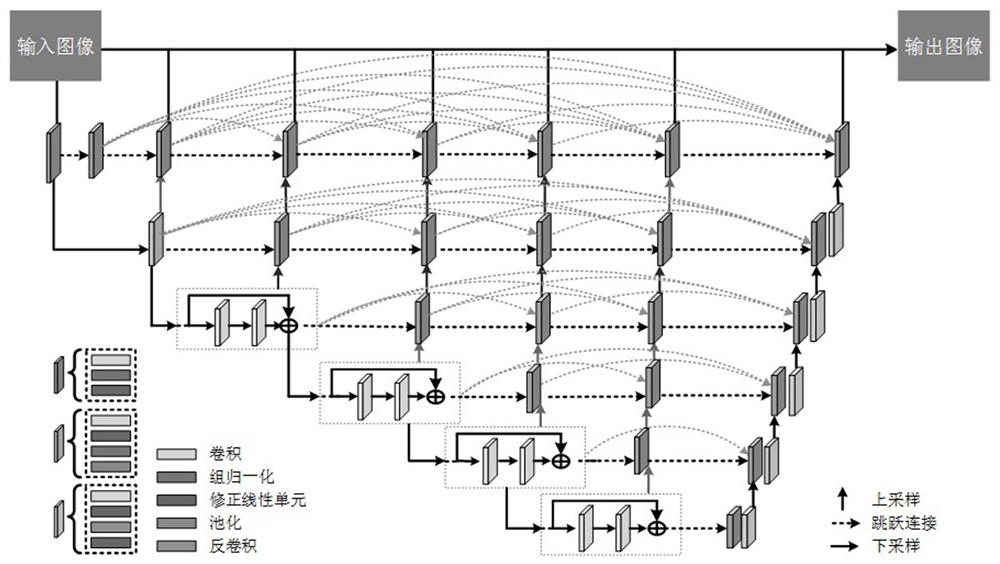

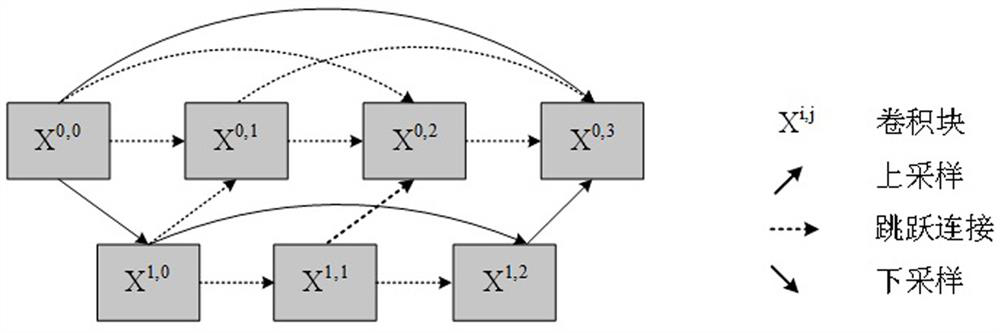

Blood leukocyte image segmentation method based on UNet++ and ResNet

Status: Pending | Publication: CN112070772A | Tags: Robust; Improve segmentation accuracy; Image enhancement; Image analysis; Semantic gap; White blood cell

The invention relates to a blood leukocyte image segmentation method based on UNet++ and ResNet. The method comprises the following steps: first, extracting multi-scale shallow image features with an encoder built from convolution blocks and residual blocks; then extracting deep image features with a decoder using convolution and deconvolution, and fusing the shallow and deep features through hybrid skip connections to reduce the semantic gap between them; finally, designing a loss function based on cross-entropy and the Tversky index and computing the loss value at each layer to guide the model to learn effective image features, which solves the low training efficiency caused by class imbalance in conventional segmentation loss functions.

Owner:MINJIANG UNIV
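
The loss described above can be sketched for a flattened binary segmentation mask. The Tversky index is TI = TP / (TP + αFP + βFN), computed here on soft predictions; the α/β values, the equal weighting of cross-entropy and Tversky loss, and the function names are assumptions, not values taken from the patent.

```python
import math


def tversky_index(pred, target, alpha=0.3, beta=0.7, eps=1e-7):
    """Soft Tversky index; beta > alpha penalizes false negatives more."""
    tp = sum(p * t for p, t in zip(pred, target))
    fp = sum(p * (1 - t) for p, t in zip(pred, target))
    fn = sum((1 - p) * t for p, t in zip(pred, target))
    return (tp + eps) / (tp + alpha * fp + beta * fn + eps)


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean pixel-wise binary cross-entropy with clamped probabilities."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(pred)


def combined_loss(pred, target, alpha=0.3, beta=0.7, weight=0.5):
    """Weighted sum of cross-entropy and Tversky loss (1 - Tversky index)."""
    bce = binary_cross_entropy(pred, target)
    tversky_loss = 1 - tversky_index(pred, target, alpha, beta)
    return weight * bce + (1 - weight) * tversky_loss


print(combined_loss([0.9, 0.2, 0.8, 0.1], [1, 0, 1, 0]))
```

For per-layer (deep) supervision, one would evaluate `combined_loss` on each output layer and sum the results; with α = β = 0.5 the Tversky index reduces to the Dice coefficient.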

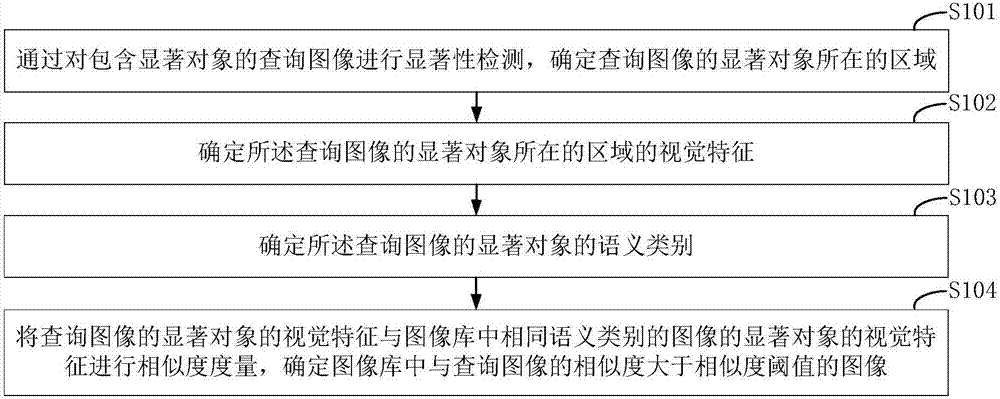

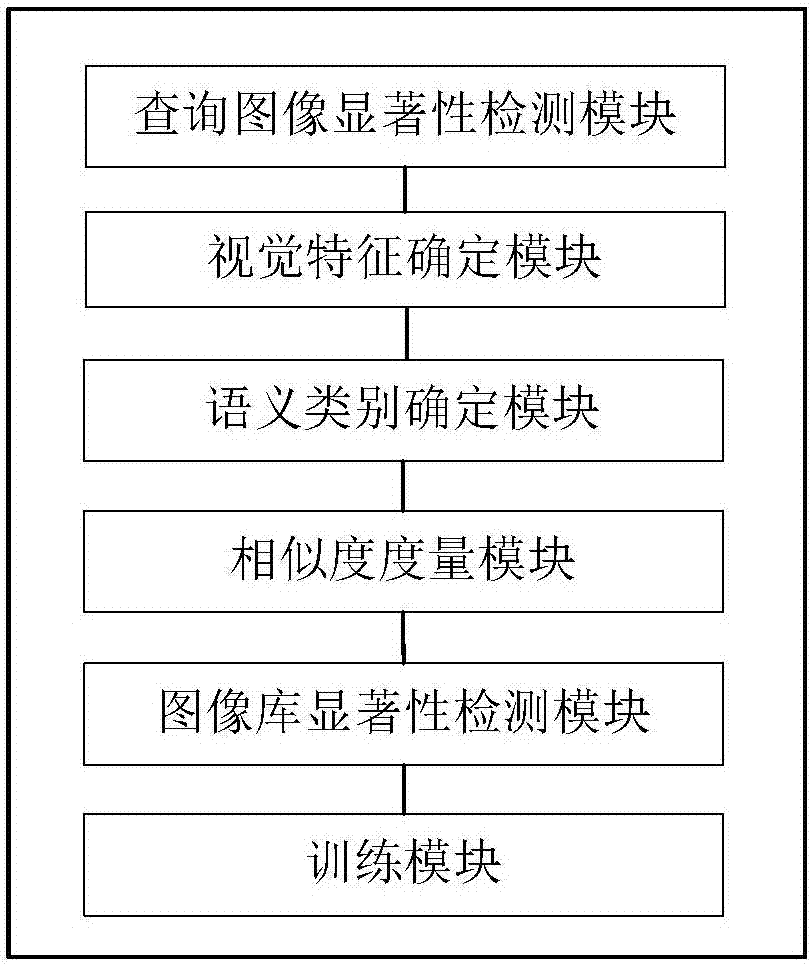

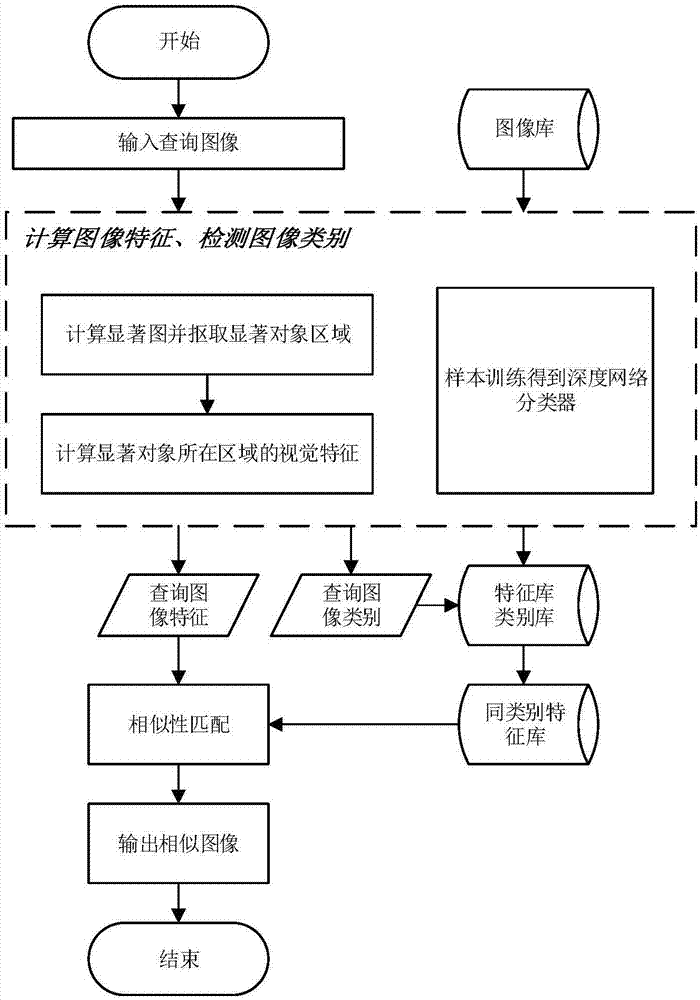

Salient object-based image retrieval method and system

Status: Inactive | Publication: CN107291855A | Tags: Improve accuracy; Reduce complexity; Image analysis; Character and pattern recognition; Salient objects; Semantic gap

The invention discloses a salient object-based image retrieval method and system. The method comprises: performing saliency detection on a query image containing a salient object to determine the region where the salient object is located; determining the visual features of that region; determining the semantic type of the salient object; and measuring the similarity between these visual features and the visual features of the salient objects in library images of the same semantic type, returning the library images whose similarity to the query image exceeds a similarity threshold. Because retrieval uses the visual features of the salient-object region, background interference is avoided; and because library images of other semantic types are filtered out, the semantic gap in image retrieval is reduced, retrieval complexity is lowered, and retrieval accuracy is further improved.

Owner:NO 54 INST OF CHINA ELECTRONICS SCI & TECH GRP +1
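
The filter-then-match retrieval logic can be sketched as follows. Saliency detection, feature extraction, and semantic-type classification are assumed to have been done already; the cosine similarity measure, the threshold value, and the library contents are assumptions rather than details from the patent.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_feature, query_type, library, threshold=0.9):
    """Images of the same semantic type whose similarity exceeds the threshold."""
    results = []
    for image_id, (obj_type, feature) in library.items():
        if obj_type != query_type:
            continue  # semantic-type filter: skip objects of other types
        similarity = cosine(query_feature, feature)
        if similarity > threshold:
            results.append((image_id, similarity))
    return sorted(results, key=lambda r: r[1], reverse=True)


# Hypothetical library: image id -> (salient-object type, salient-region feature).
LIBRARY = {
    "a": ("dog", (1.0, 0.1)),
    "b": ("cat", (1.0, 0.1)),
    "c": ("dog", (0.0, 1.0)),
    "d": ("dog", (0.9, 0.2)),
}
print([image_id for image_id, _ in retrieve((1.0, 0.0), "dog", LIBRARY)])  # ['a', 'd']
```

Image "b" has exactly the same feature as "a" but is never compared, which is the point of the type filter: visually similar objects of a different semantic type are excluded before any feature matching.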

Cross-modal retrieval method based on graph convolutional neural network

Status: Active | Publication: CN111598214A | Tags: Efficient extraction; Efficient retrieval; Multimedia data querying; Multimedia data clustering/classification; Semantic gap; Semantic representation

The invention discloses a cross-modal retrieval method based on a graph convolutional neural network, comprising four processes: network construction, dataset preprocessing, network training, and retrieval and precision testing. Graph convolutional neural networks are used to learn semantic representations in the image modality and the text modality, respectively. The method can capture the latent relationships among modal features and introduces associated data from a third modality to reduce the semantic gap between modalities, which can significantly improve the accuracy and stability of cross-modal retrieval.

Owner:ZHEJIANG UNIV OF TECH
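
The patent does not state which graph convolution it uses. As an assumption, this sketch implements one layer of the widely used symmetric-normalized rule H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W), where D is the degree matrix of A + I, in plain Python. The adjacency matrix, node features, and weights in the example are toy values.

```python
import math


def matmul(a, b):
    """Dense matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adjacency, features, weights):
    """One graph convolution: normalize A + I, propagate features, apply ReLU."""
    n = len(adjacency)
    # Add self-loops so each node keeps part of its own representation.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization D^(-1/2) (A + I) D^(-1/2).
    norm = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]
    propagated = matmul(matmul(norm, features), weights)
    return [[max(0.0, x) for x in row] for row in propagated]


# Two connected nodes: node 0 has feature 1.0, node 1 has feature 0.0.
print(gcn_layer([[0, 1], [1, 0]], [[1.0], [0.0]], [[1.0]]))  # [[0.5], [0.5]]
```

After one layer both nodes carry the averaged feature, illustrating how graph convolution lets related items (here, across modalities) share information before their representations are compared.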

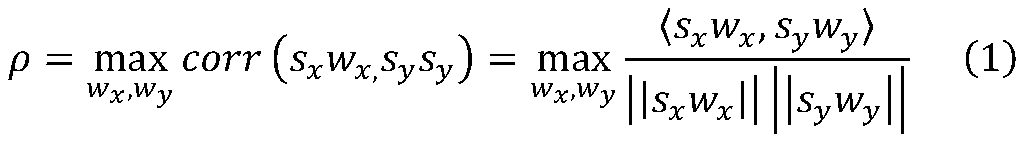

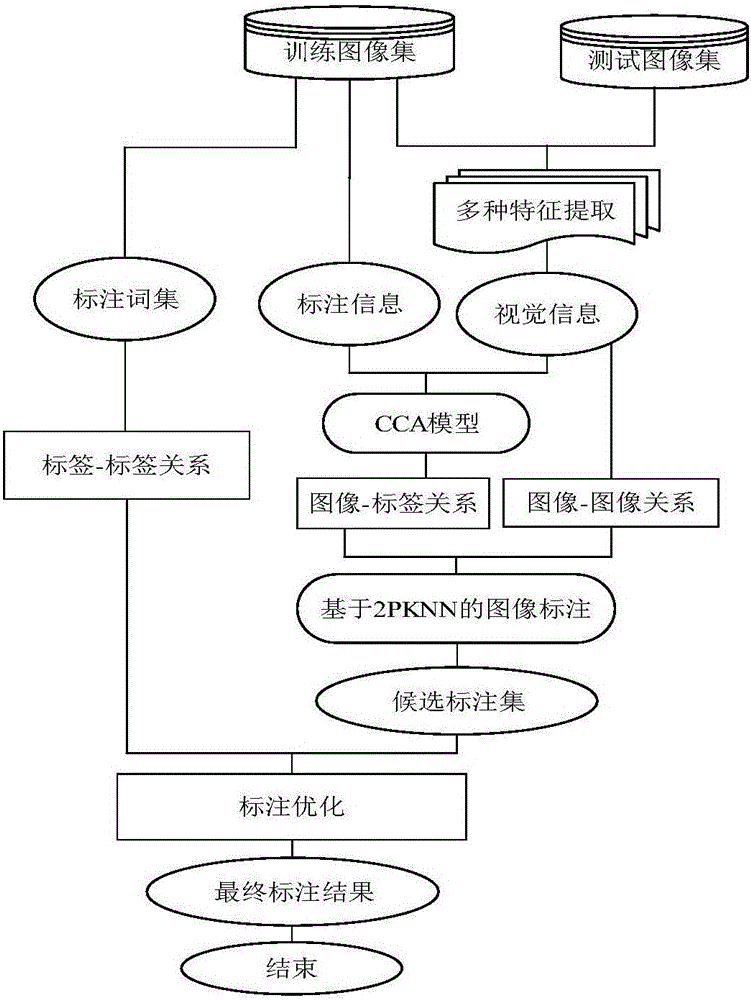

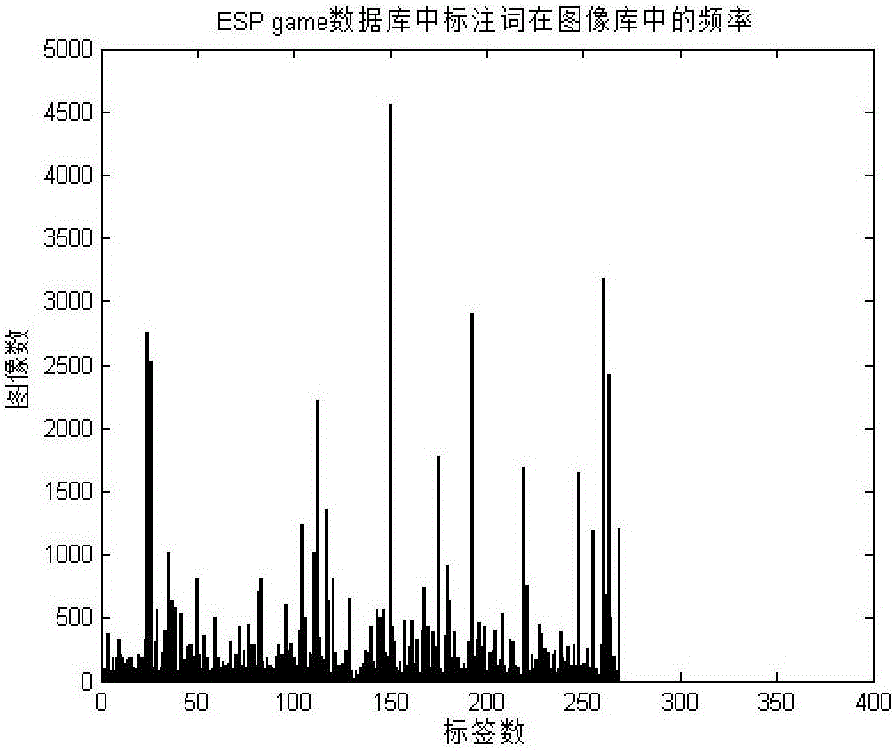

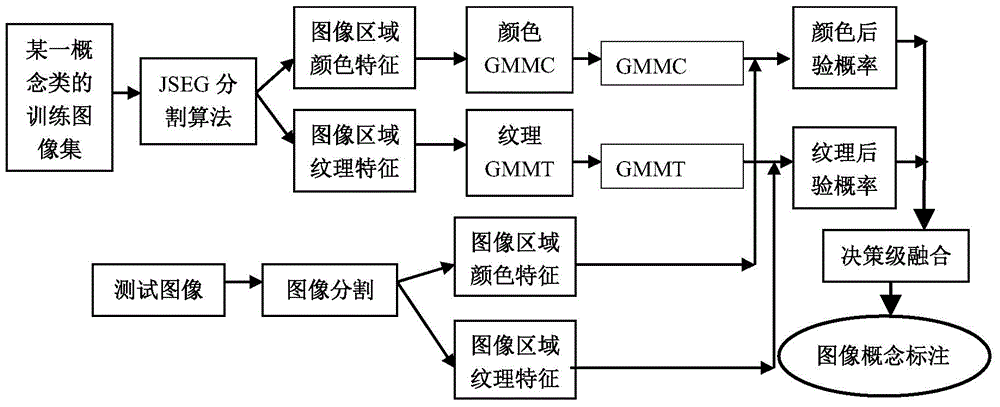

CCA and 2PKNN based automatic image annotation method

Status: Active | Publication: CN105808752A | Tags: Character and pattern recognition; Special data processing applications; Pattern recognition; Semantic gap

The invention belongs to the learning theory and applications subfield of computer application technology and relates to an automatic image annotation method based on CCA and 2PKNN, which addresses the semantic gap, weak annotation, and class imbalance problems in automatic image annotation. For the semantic gap problem, two kinds of features are mapped into a CCA subspace and the distance between them is computed in that subspace; for the weak annotation problem, a semantic space is established for each annotation; for the class imbalance problem, the KNN algorithm is used to find the k nearest neighbors of a test image in the semantic space of each annotation, these neighbors are combined into an image subset, and, using the visual distance between the subset and the test image together with Bayes' formula, the few highest-scoring annotations are assigned to the test image; finally, the annotation result is refined using the correlations between annotations. The method substantially improves image annotation performance.

Owner:DALIAN UNIV OF TECH
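The annotation step can be pictured with a small two-pass KNN sketch. It assumes the visual features have already been projected into the CCA subspace (the CCA fit itself is omitted), uses Euclidean distance there, and scores labels with a distance-weighted vote, exp(-w * d), which plays the role of the Bayesian combination up to normalization. The function and its parameters `k1`, `w`, and `top` are hypothetical, and the final label-correlation refinement is not shown.

```python
import math
from collections import defaultdict

def two_pass_knn(test_feat, train, k1=2, w=1.0, top=3):
    """Rank labels for test_feat.

    train: list of (feature_vector, set_of_labels); features assumed CCA-projected.
    """
    # Pass 1: for every label, keep the k1 training images carrying that label
    # that lie closest to the test image (one semantic group per label).
    groups = defaultdict(list)
    for idx, (_, labels) in enumerate(train):
        for label in labels:
            groups[label].append(idx)
    subset = set()
    for members in groups.values():
        members.sort(key=lambda i: math.dist(test_feat, train[i][0]))
        subset.update(members[:k1])

    # Pass 2: each selected neighbour votes for its labels with weight exp(-w * distance),
    # i.e. a distance-based likelihood times a 0/1 label term.
    scores = defaultdict(float)
    for idx in subset:
        feat, labels = train[idx]
        weight = math.exp(-w * math.dist(test_feat, feat))
        for label in labels:
            scores[label] += weight
    return sorted(scores, key=lambda lab: (-scores[lab], lab))[:top]
```

Because every label contributes up to `k1` neighbours to the subset, rare labels are not crowded out by frequent ones, which is how the per-label semantic spaces counter category imbalance.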

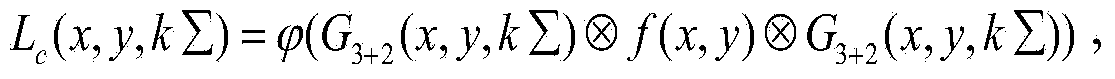

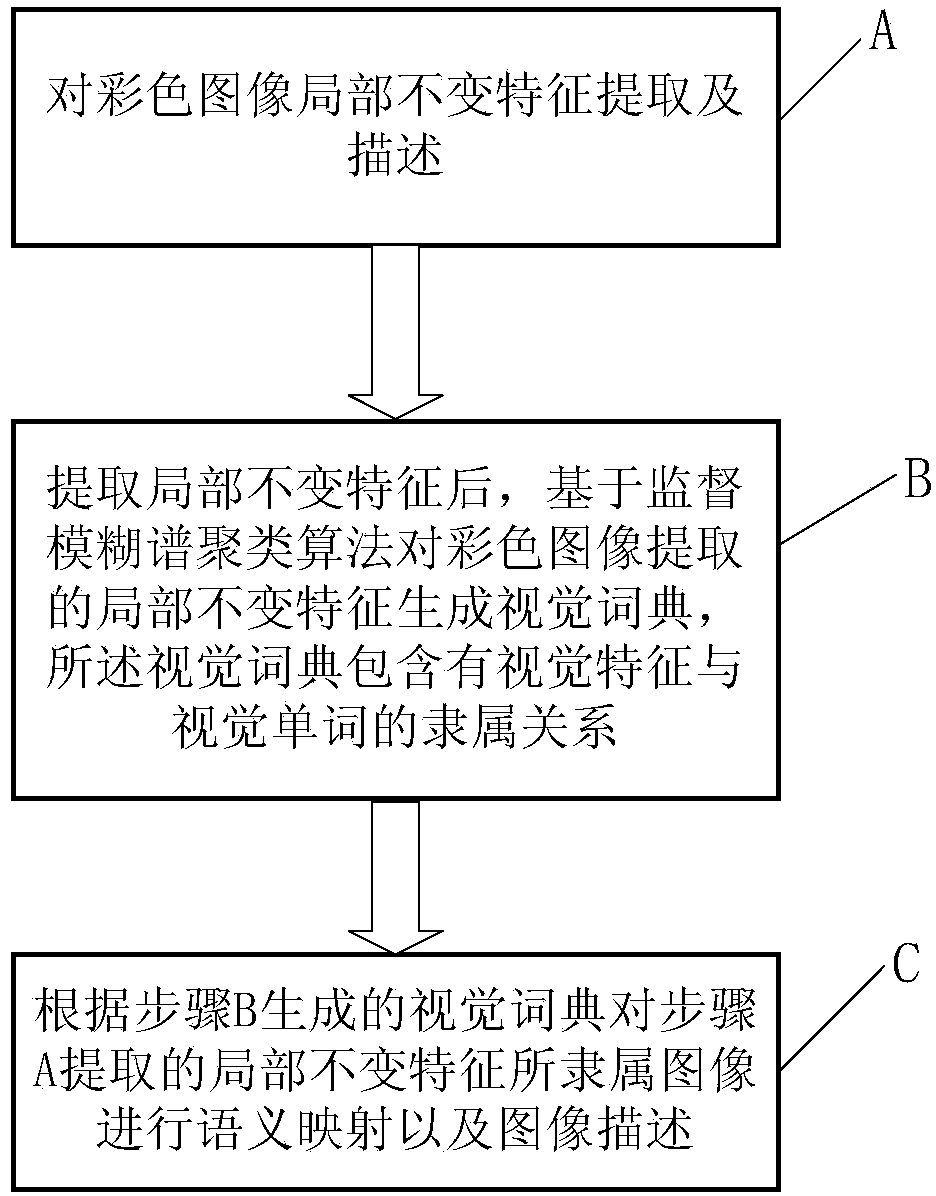

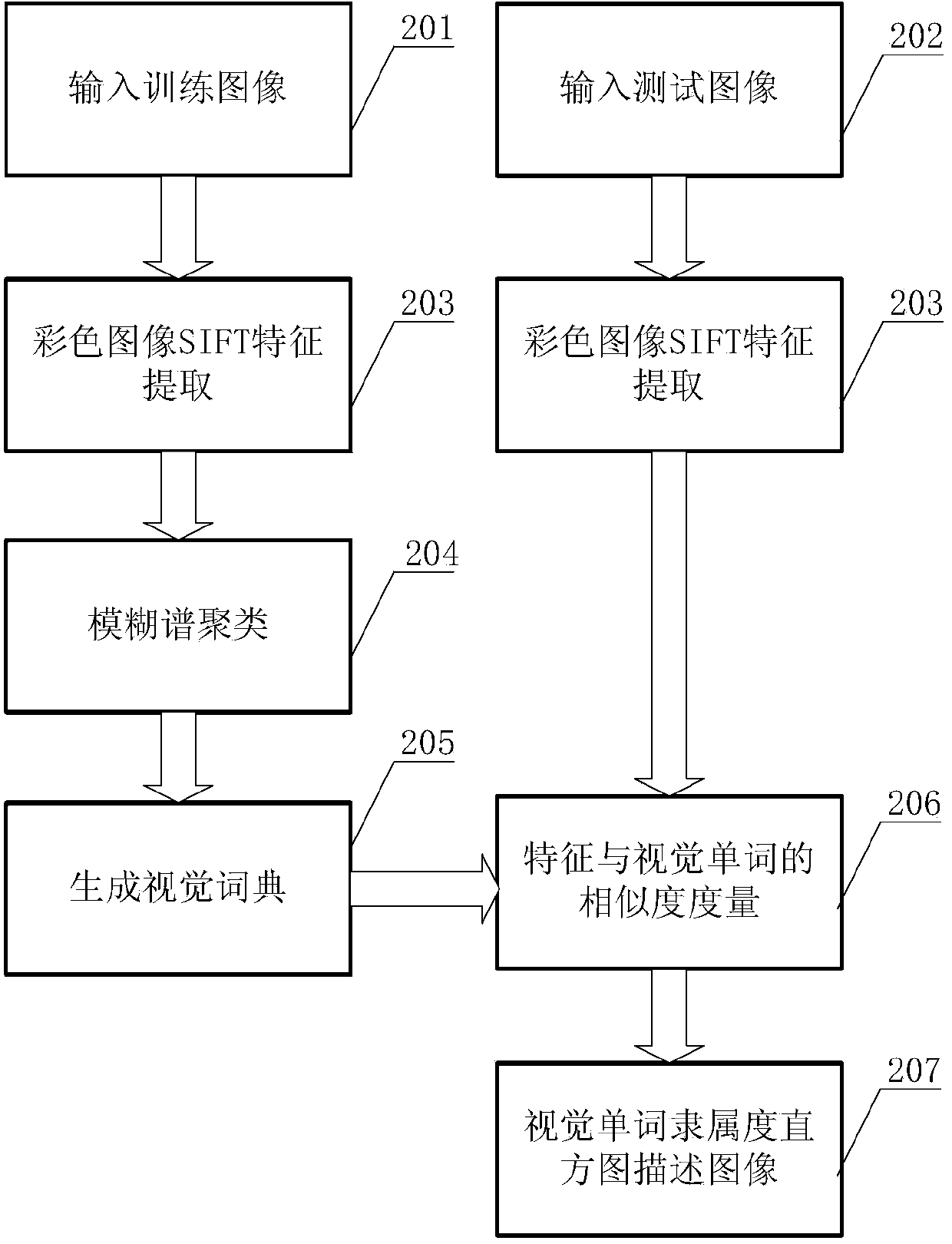

Semantic mapping method and system for local invariant features of images

Active · CN103530633A · Improve accuracy · Bridging the Semantic Gap · Character and pattern recognition · Special data processing applications · Spectral clustering algorithm · Semantic gap

The invention is applicable to the technical field of image processing and provides a semantic mapping method for the local invariant features of an image. The method comprises the following steps. Step A: extracting and describing the local invariant features of a color image. Step B: generating, from the extracted local invariant features, a visual dictionary using a supervised fuzzy spectral clustering algorithm, wherein the visual dictionary records the membership relations between visual features and visual words. Step C: performing semantic mapping and image description on the image whose local invariant features were extracted in Step A, according to the visual dictionary generated in Step B. The claimed advantages are that the semantic gap problem can be eliminated, the accuracy of image classification, image search, and object recognition is improved, and the development of machine vision theory and methods is promoted.

Owner:Hunan Plant Protection UAV Technology Co., Ltd. (湖南植保无人机技术有限公司)
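Step C relies on soft (fuzzy) assignments of local descriptors to visual words. The sketch below does not reproduce the supervised fuzzy spectral clustering of Step B; it assumes a visual dictionary is already available as a list of word centres and uses the fuzzy c-means membership formula u_j = 1 / sum_k (d_j / d_k)^(2/(m-1)) to build a normalized bag-of-visual-words description. The fuzzifier `m` and all function names are assumptions.

```python
import math

def fuzzy_memberships(descriptor, words, m=2.0, eps=1e-12):
    """Fuzzy c-means style membership of one descriptor in each visual word (sums to 1)."""
    dists = [max(math.dist(descriptor, w), eps) for w in words]  # eps avoids division by zero
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((d_j / d_k) ** power for d_k in dists) for d_j in dists]

def describe_image(descriptors, words, m=2.0):
    """Accumulate memberships over all descriptors into a normalized visual-word histogram."""
    hist = [0.0] * len(words)
    for desc in descriptors:
        for j, u in enumerate(fuzzy_memberships(desc, words, m)):
            hist[j] += u
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

Compared with hard assignment, a descriptor lying between two words contributes to both bins, so small feature shifts change the description smoothly instead of flipping a whole count.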

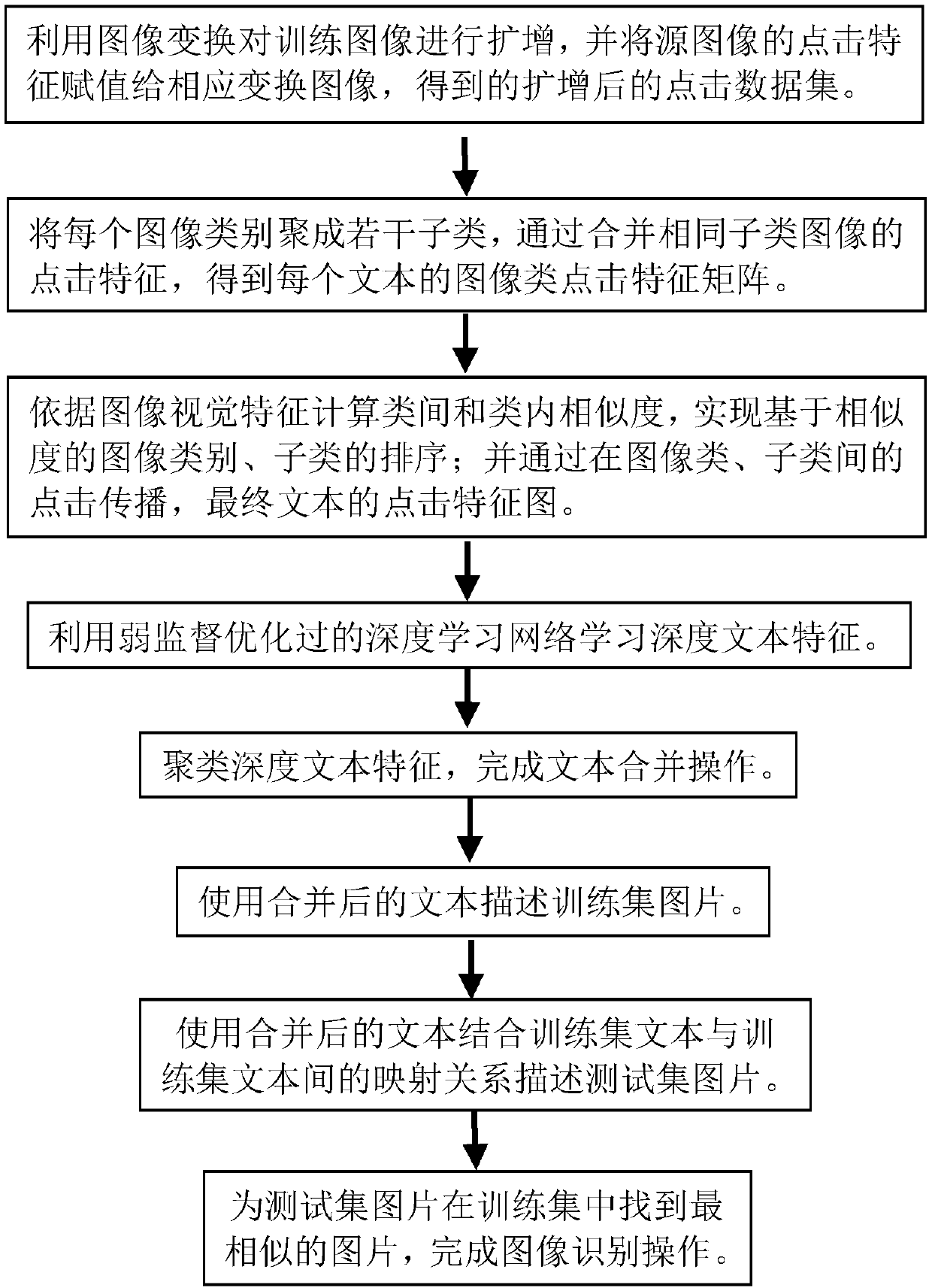

Text clustering method based on weakly supervised deep learning

Inactive · CN109582782A · Emphasize the importance · Enhanced description ability · Character and pattern recognition · Neural architectures · Data set · Semantic gap

The invention discloses a text clustering method based on weakly supervised deep learning. The method comprises the following steps: (1) using an image data set with text click information, together with image visual information and image category labels, and applying image augmentation and clustering to construct an image-category click feature matrix for each text; (2) applying a ranking and propagation method to the initial category click matrix to obtain a smoothed image click feature map, clustering the texts on this feature map to obtain initial text categories, and initializing the text weights from the click prior; (3) building a deep text clustering model that learns deep text features by minimizing the intra-class mean squared error; (4) jointly optimizing the deep model and the text weights by weakly supervised learning, updating both iteratively; and (5) extracting deep text features with the deep text model and clustering them with the K-means method. The method is highly general and effectively addresses the semantic gap in image recognition.

Owner:HANGZHOU DIANZI UNIV
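Step (5) ends with K-means, and the intra-class mean squared error named in step (3) is the same quantity K-means minimizes. A minimal sketch on already extracted deep text features, assuming deterministic initialization from the first k points (k-means++ is the more common choice in practice); the deep model and the weak-supervision loop are not shown, and the function names are hypothetical.

```python
import math

def kmeans(points, k, max_iter=100):
    """Plain K-means; initial centres are the first k points (an assumption)."""
    centers = [list(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assign each point to its nearest centre.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        if new_labels == labels:  # assignments stable: converged
            break
        labels = new_labels
        # Move each centre to the mean of its members (empty clusters keep their centre).
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centers

def intra_class_mse(points, labels, centers):
    """Mean squared distance of each point to its assigned centre."""
    return sum(math.dist(p, centers[lab]) ** 2 for p, lab in zip(points, labels)) / len(points)
```

Each assignment step and each centre update can only lower `intra_class_mse`, which is why the loop stops once assignments no longer change.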

Method for marking picture semantics based on Gaussian mixture model

Inactive · CN104820843A · Improve labeling ability · Add steps to remove noisy regions · Character and pattern recognition · Expectation–maximization algorithm · Semantic gap

The invention discloses a method for labeling image semantics based on a Gaussian mixture model, which belongs to the technical field of image retrieval and automatic image annotation. The method comprises the following steps. S1: obtaining the relationship between the low-level visual features of the image and semantic concepts through supervised Bayesian learning, and obtaining an image feature set. S2: establishing two Gaussian mixture models for each semantic concept by means of the expectation-maximization algorithm, with an added step for eliminating noisy regions. S3: according to the image feature set, calculating the region-level color posterior probability and pattern posterior probability; sorting the image's color posterior probabilities over all concepts in descending order to obtain the color rank of each concept; likewise sorting the pattern posterior probabilities to obtain the pattern rank of each concept; and labeling the image with the top R concept classes, namely those with the smallest sums of rank values. According to the invention, the difference between the low-level visual features of the image and the high-level semantic concept expression is markedly reduced, effectively addressing the semantic gap problem.

Owner:Changshu Soochow University Low-Carbon Application Technology Research Institute Co., Ltd. (常熟苏大低碳应用技术研究院有限公司)
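Step S3 can be sketched as follows, assuming the per-concept mixtures have already been fitted by EM and, for brevity, that each cue (color or pattern) is a single scalar feature modeled by a 1-D Gaussian mixture. Posteriors come from Bayes' rule over concepts, each cue is ranked separately (rank 1 = highest posterior), and the R concepts with the smallest rank sums are chosen. Ties are broken alphabetically here, which is an arbitrary choice; all names are hypothetical.

```python
import math

def gmm_density(x, components):
    """Density of a 1-D Gaussian mixture; components = [(weight, mean, std), ...]."""
    return sum(w * math.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))
               for w, mu, sd in components)

def concept_posteriors(x, models, priors):
    """Bayes' rule: P(concept | x) proportional to prior * mixture likelihood."""
    joint = {c: priors[c] * gmm_density(x, models[c]) for c in models}
    total = sum(joint.values())
    return {c: v / total for c, v in joint.items()}

def rank_positions(posteriors):
    """Map each concept to its rank after sorting posteriors in descending order."""
    order = sorted(posteriors, key=lambda c: (-posteriors[c], c))
    return {c: i + 1 for i, c in enumerate(order)}

def label_image(color_post, pattern_post, r):
    """Pick the r concepts with the smallest color-rank + pattern-rank sums."""
    color_rank = rank_positions(color_post)
    pattern_rank = rank_positions(pattern_post)
    rank_sum = {c: color_rank[c] + pattern_rank[c] for c in color_post}
    return sorted(rank_sum, key=lambda c: (rank_sum[c], c))[:r]
```

Fusing ranks rather than raw probabilities keeps one cue with sharply peaked posteriors from overwhelming the other.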

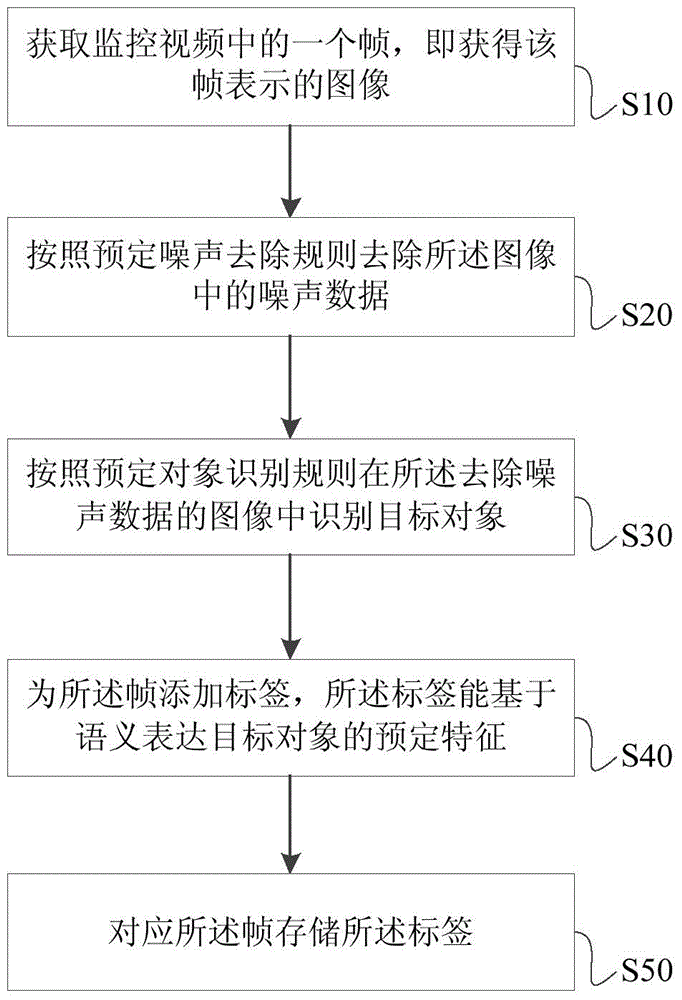

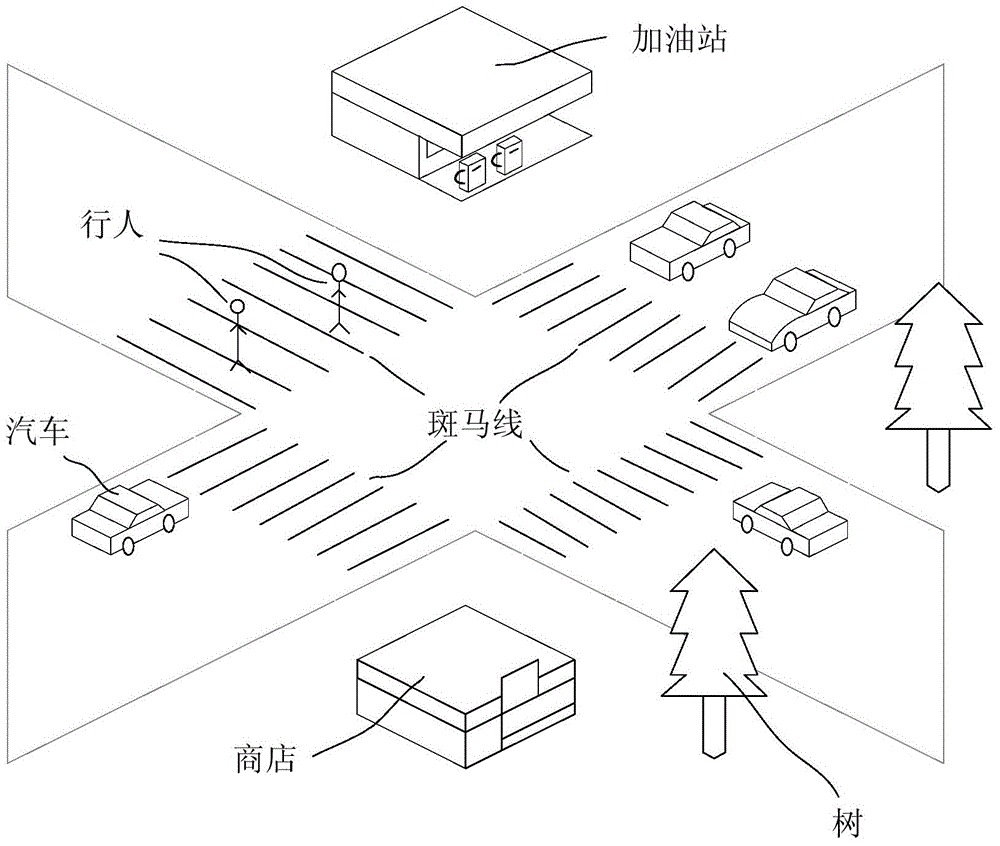

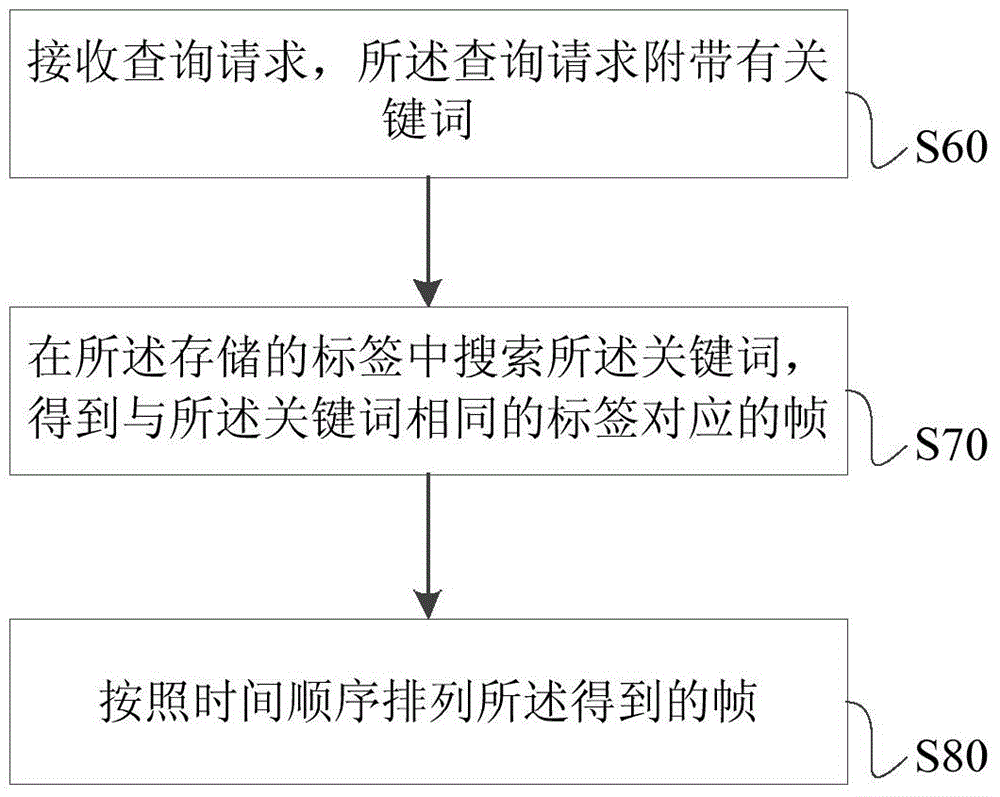

Video monitoring system image acquisition method and apparatus

Inactive · CN105049790A · Easy access · Efficient acquisition · Character and pattern recognition · Closed circuit television systems · Video monitoring · Pattern recognition

The invention provides an image acquisition method for a video monitoring system. The method comprises the following steps: acquiring a frame of the monitoring video, that is, acquiring the image represented by that frame; removing noise data from the image according to a preset noise removal rule; identifying a target object in the denoised image according to a preset target identification rule; adding a label to the frame, wherein the label expresses a preset semantic characteristic of the target object; and storing the label corresponding to the frame. Extracted low-level visual features are mapped, according to a preset algorithm, to high-level semantic information that humans can readily understand; on this basis, video monitoring image data are classified and labeled, and their meaning is well expressed. The "semantic gap" between low-level image features and the rich semantic content perceived by humans is reduced or even eliminated, and fast, efficient acquisition of video monitoring images is achieved.

Owner:CHINESE PEOPLE'S PUBLIC SECURITY UNIVERSITY
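The acquisition steps (denoise, identify a target, attach a semantic label, store it per frame) can be strung together as below. Every rule is a hypothetical stand-in for the patent's unspecified "preset" rules: a 3x3 median filter for noise removal, a brightness threshold for target identification, and a toy shape rule for the semantic label. Frames are assumed to be grayscale images given as nested lists of ints, and an in-memory dict stands in for storage.

```python
from statistics import median

label_store = {}  # hypothetical storage: frame id -> label record

def median_denoise(img):
    """3x3 median filter; border pixels reuse the nearest edge pixel."""
    h, w = len(img), len(img[0])
    def px(y, x):
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]
    return [[median([px(y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)])
             for x in range(w)] for y in range(h)]

def find_target(img, threshold):
    """Hypothetical target rule: bounding box (top, left, bottom, right) of pixels above threshold."""
    pts = [(y, x) for y, row in enumerate(img) for x, v in enumerate(row) if v > threshold]
    if not pts:
        return None
    ys = [y for y, _ in pts]
    xs = [x for _, x in pts]
    return (min(ys), min(xs), max(ys), max(xs))

def semantic_label(box):
    """Hypothetical semantic rule based on the target's shape."""
    if box is None:
        return "no target"
    height = box[2] - box[0] + 1
    width = box[3] - box[1] + 1
    return "upright object" if height > width else "wide object"

def process_frame(frame_id, img, threshold=128):
    """Denoise, identify the target, label the frame, and store the label."""
    clean = median_denoise(img)
    box = find_target(clean, threshold)
    label_store[frame_id] = {"box": box, "label": semantic_label(box)}
    return label_store[frame_id]
```

The median filter removes isolated bright specks while keeping solid regions, so a single noisy pixel does not produce a spurious target.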

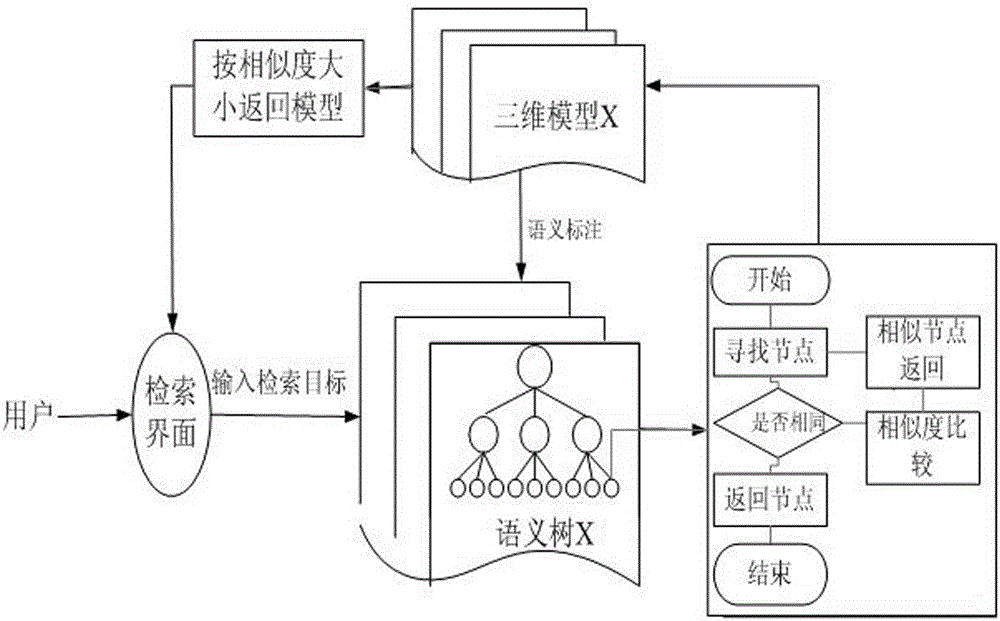

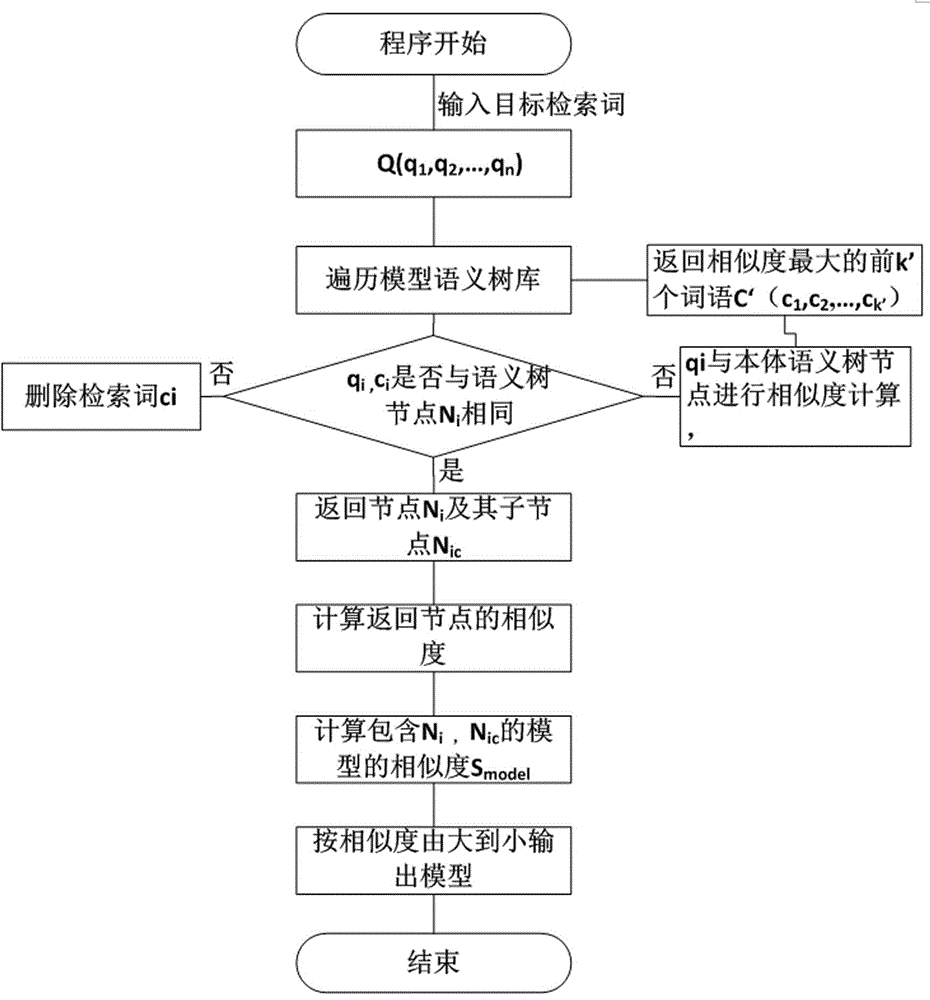

CAD semantic model search method based on design intent

Active · CN106528770A · Addressing the Semantic Gap · Improve retrieval recall · Special data processing applications · Search words · NODAL

The invention discloses a CAD semantic model search method based on design intent. The method comprises the steps of: A, establishing a three-dimensional CAD model database and annotating the design intent of each model in 3D with the PMI module of UG, according to the model's modeling, analysis, and manufacturing features; B, classifying the annotation information of the three-dimensional models into modeling information, analysis information, and manufacturing information, and establishing a design-intent semantic tree for each model; C, establishing a domain ontology semantic model tree from the database of three-dimensional semantic trees; D, building a search index from the ontology semantic tree; E, comparing the similarity between the target search word set and the semantic tree nodes, and returning identical or similar nodes together with their sub-nodes; and F, calculating the semantic similarity of the corresponding models from the returned nodes, and returning the three-dimensional models with high semantic similarity to the user. The method solves the semantic gap problem of content-based search methods: similarity is computed by matching target search words against semantic annotation words, which improves search recall.

Owner:DALIAN POLYTECHNIC UNIVERSITY
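Steps E and F can be sketched on a toy ontology tree. The similarity measure (Jaccard overlap of character bigrams), the threshold, the rule that sub-nodes inherit their matched ancestor's score, and the max-score aggregation per model are all assumptions; the abstract does not say which measures the method uses. The `Node` class and function names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    term: str
    models: set = field(default_factory=set)      # IDs of CAD models annotated with this term
    children: list = field(default_factory=list)

def bigrams(text):
    text = text.lower()
    return {text[i:i + 2] for i in range(len(text) - 1)}

def term_similarity(a, b):
    """Hypothetical measure: Jaccard overlap of character bigrams."""
    x, y = bigrams(a), bigrams(b)
    if not x and not y:
        return 1.0 if a.lower() == b.lower() else 0.0
    return len(x & y) / len(x | y)

def matched_nodes(node, query_terms, threshold, inherited=0.0):
    """Step E: yield (score, node) for nodes similar to a query term and for their sub-nodes."""
    best = max(term_similarity(node.term, q) for q in query_terms)
    score = max(best if best >= threshold else 0.0, inherited)
    if score > 0.0:
        yield score, node
    for child in node.children:
        yield from matched_nodes(child, query_terms, threshold, score)

def rank_models(root, query_terms, threshold=0.5):
    """Step F: score each model by its best-matching node, highest first."""
    scores = {}
    for score, node in matched_nodes(root, query_terms, threshold):
        for model_id in node.models:
            scores[model_id] = max(scores.get(model_id, 0.0), score)
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
```

For example, the query "holes" matches a "hole" node and, through inheritance, its "threaded hole" child, so models annotated at either node are returned; that inheritance is what lifts recall over exact keyword matching.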

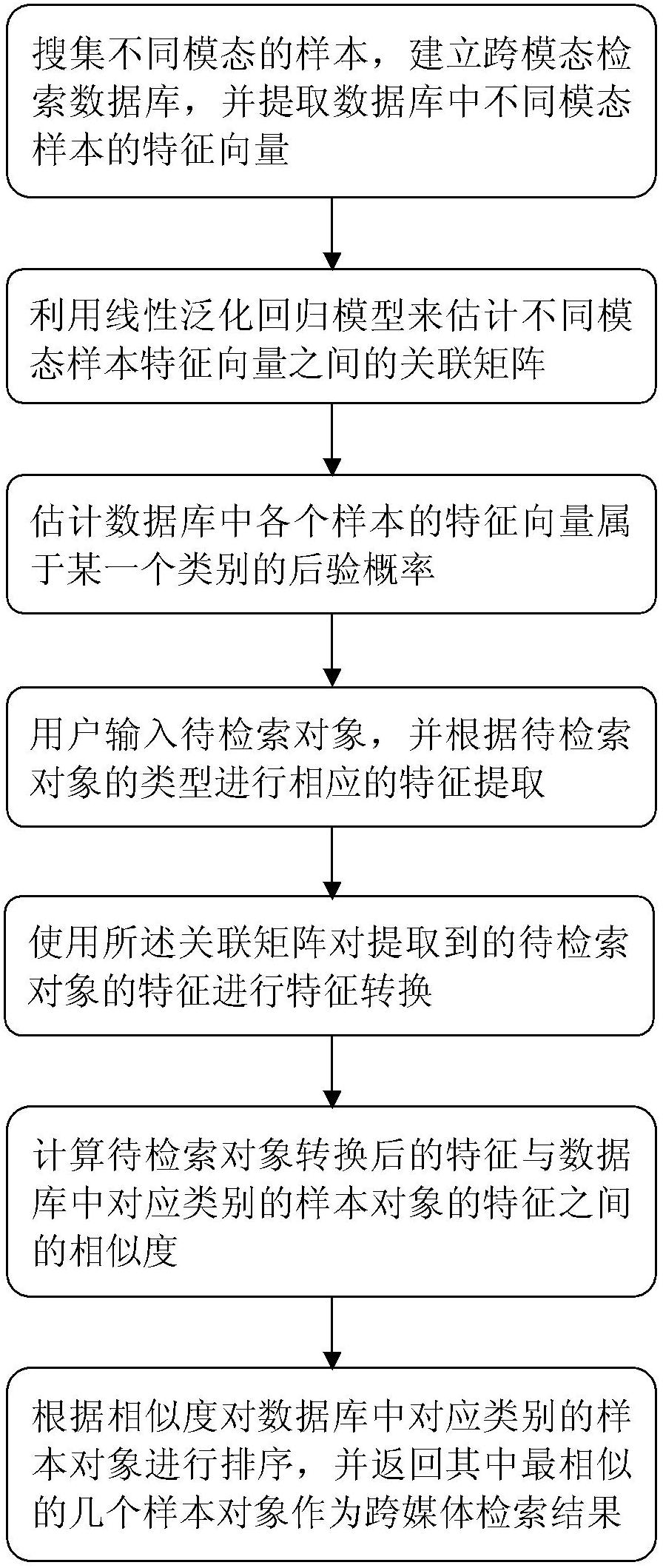

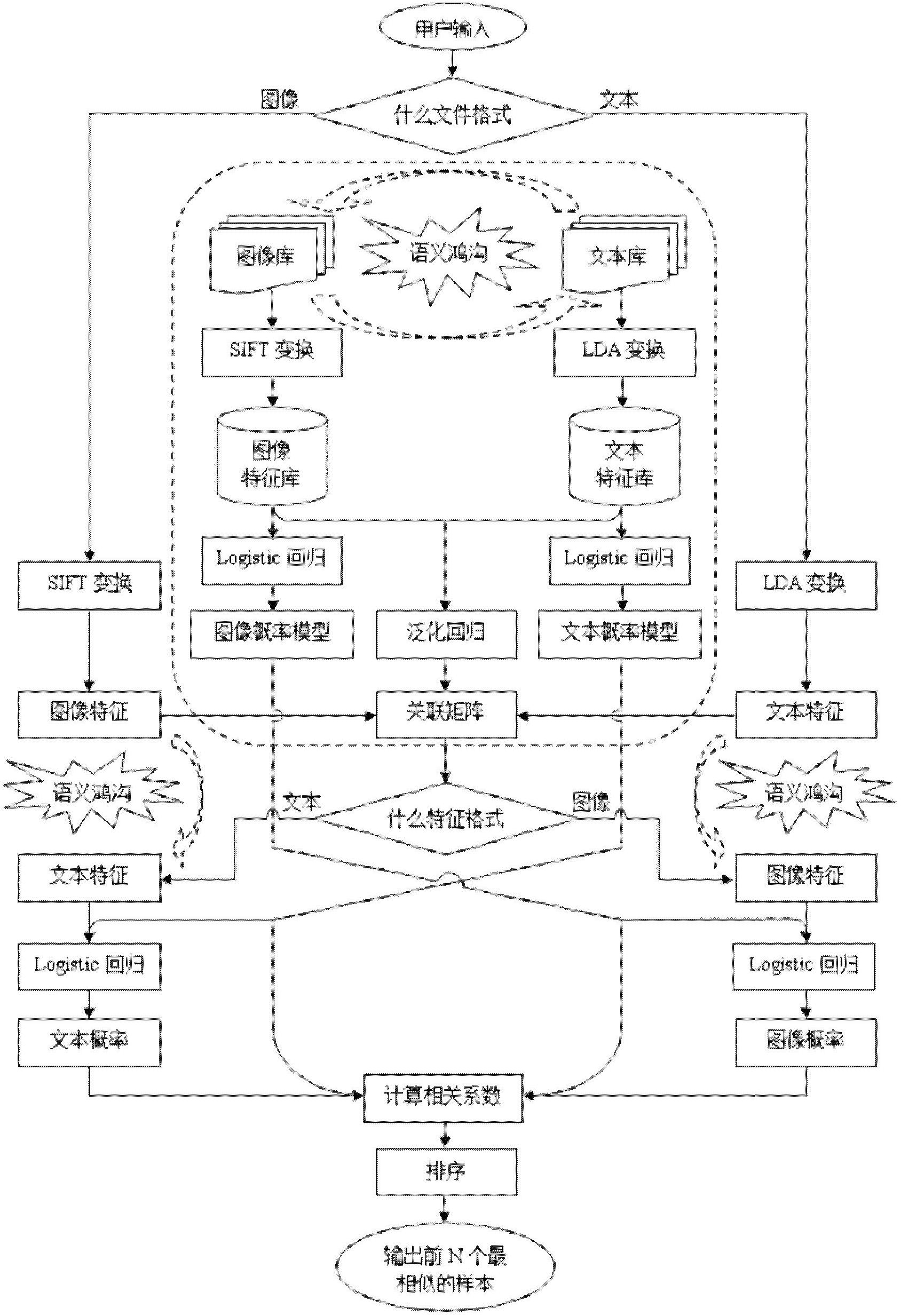

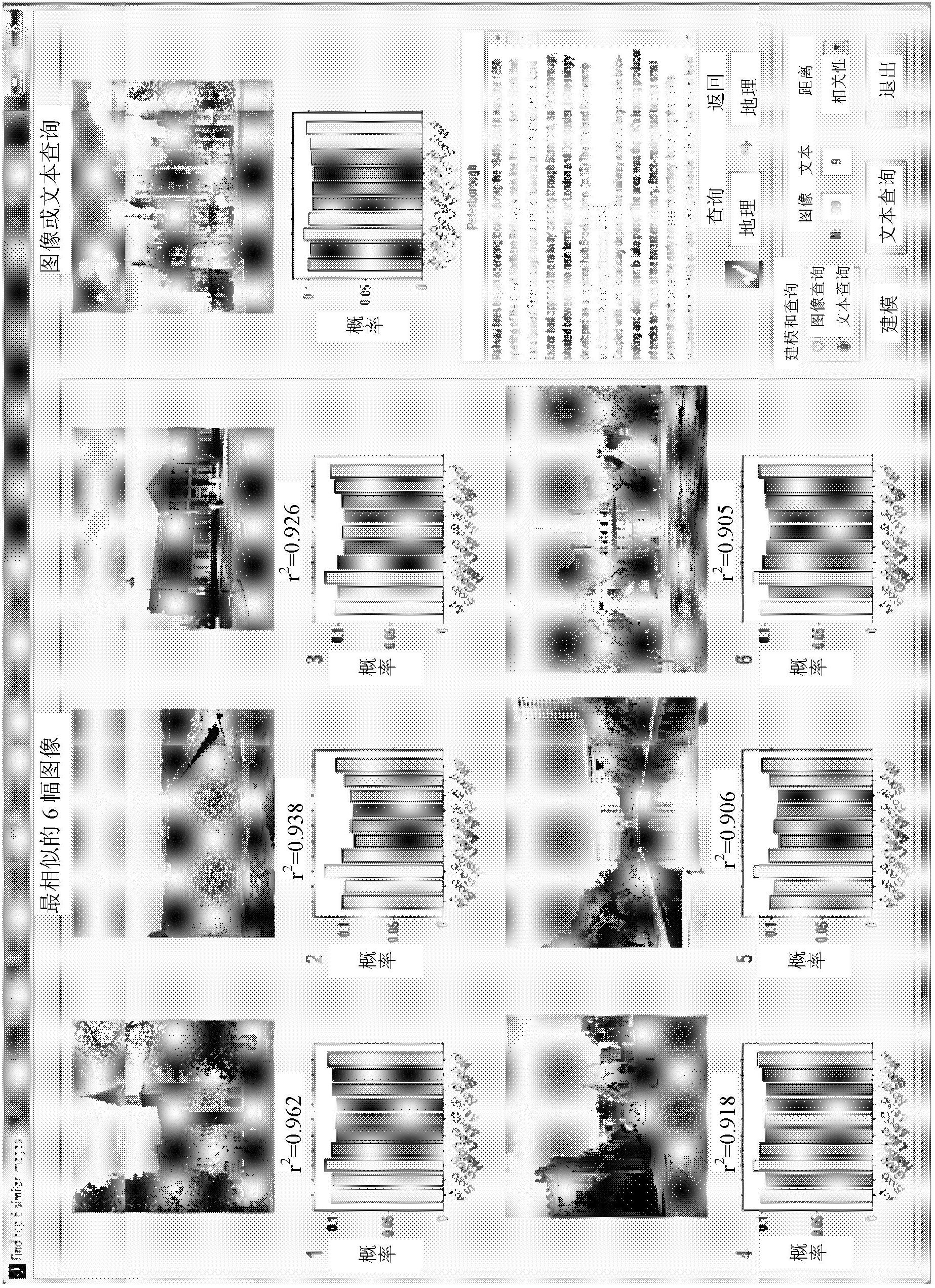

Linear generalization regression model based cross-media retrieval method

Active · CN102693316A · Eliminate distractions · Improve effectiveness · Special data processing applications · Semantic gap · Information transmission

The invention discloses a cross-media retrieval method based on a linear generalization regression model. The method includes: extracting semantic features of objects in different modalities, and establishing regression relations between modalities with the linear generalization regression model so that the features of one modality can be converted into another; after feature conversion, estimating the posterior probability distribution of the objects with a multi-class logistic regression algorithm; and computing the distance between a test sample and each database sample with a distance metric, then outputting the indices of the top N most similar database samples. The method can bridge the semantic gap between modalities and preserve, to the greatest extent, the useful information of each modality during conversion, thereby ensuring effective information transfer between modalities and further improving the robustness and accuracy of cross-media retrieval. It has good application prospects and considerable market value.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
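The three retrieval stages can be sketched with already trained parameters: a linear map `W`, `b` that converts image features into the text-feature space (standing in for the fitted regression, whose training is omitted), a multi-class logistic regression (softmax) with class weights `theta`, and Euclidean distance between posterior vectors to return the top-N database indices. All names and the choice of Euclidean distance are assumptions.

```python
import math

def linear_map(W, b, x):
    """Convert a feature vector with a (pre-fitted) linear regression: W @ x + b."""
    return [sum(w * xj for w, xj in zip(row, x)) + bias for row, bias in zip(W, b)]

def softmax_posterior(theta, x):
    """Multi-class logistic regression: P(class k | x) proportional to exp(theta_k . x)."""
    logits = [sum(t * xj for t, xj in zip(row, x)) for row in theta]
    peak = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_n(query_post, db_posts, n):
    """Indices of the n database samples whose posteriors are closest to the query's."""
    ranked = sorted((math.dist(query_post, p), i) for i, p in enumerate(db_posts))
    return [i for _, i in ranked[:n]]
```

Comparing posterior vectors instead of raw features means both modalities are matched in the same class-probability space once one side has been converted.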


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com