193 results about "Visual dictionary" patented technology

A visual dictionary is a dictionary that primarily uses pictures to illustrate the meaning of words. Visual dictionaries are often organized by theme rather than as an alphabetical list of words. For each theme, an image is labeled with the correct word for each component of the item shown. Visual dictionaries can be monolingual or multilingual, giving the names of items in several languages. An index of all defined words is usually included to help the reader find the illustration that defines a given word.

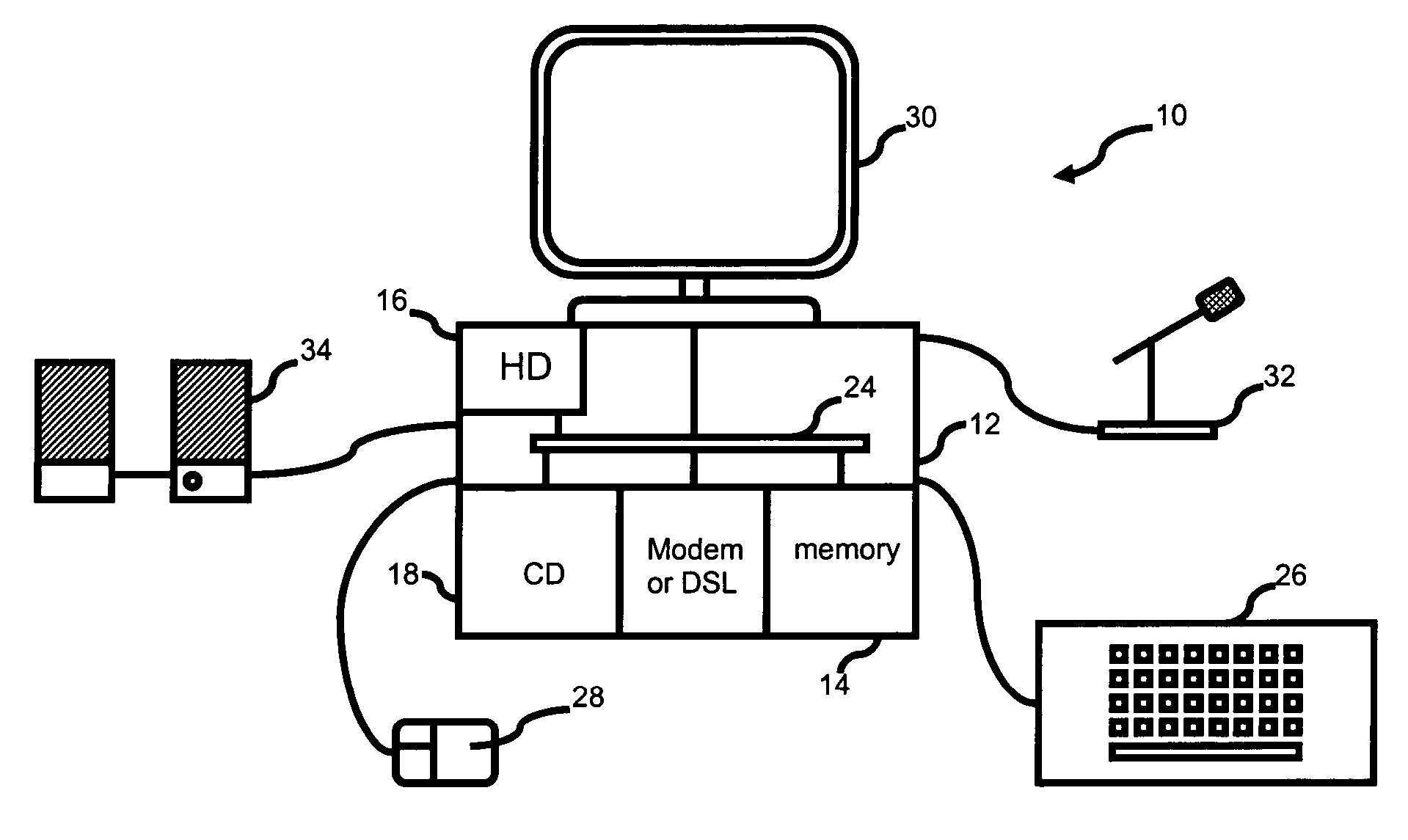

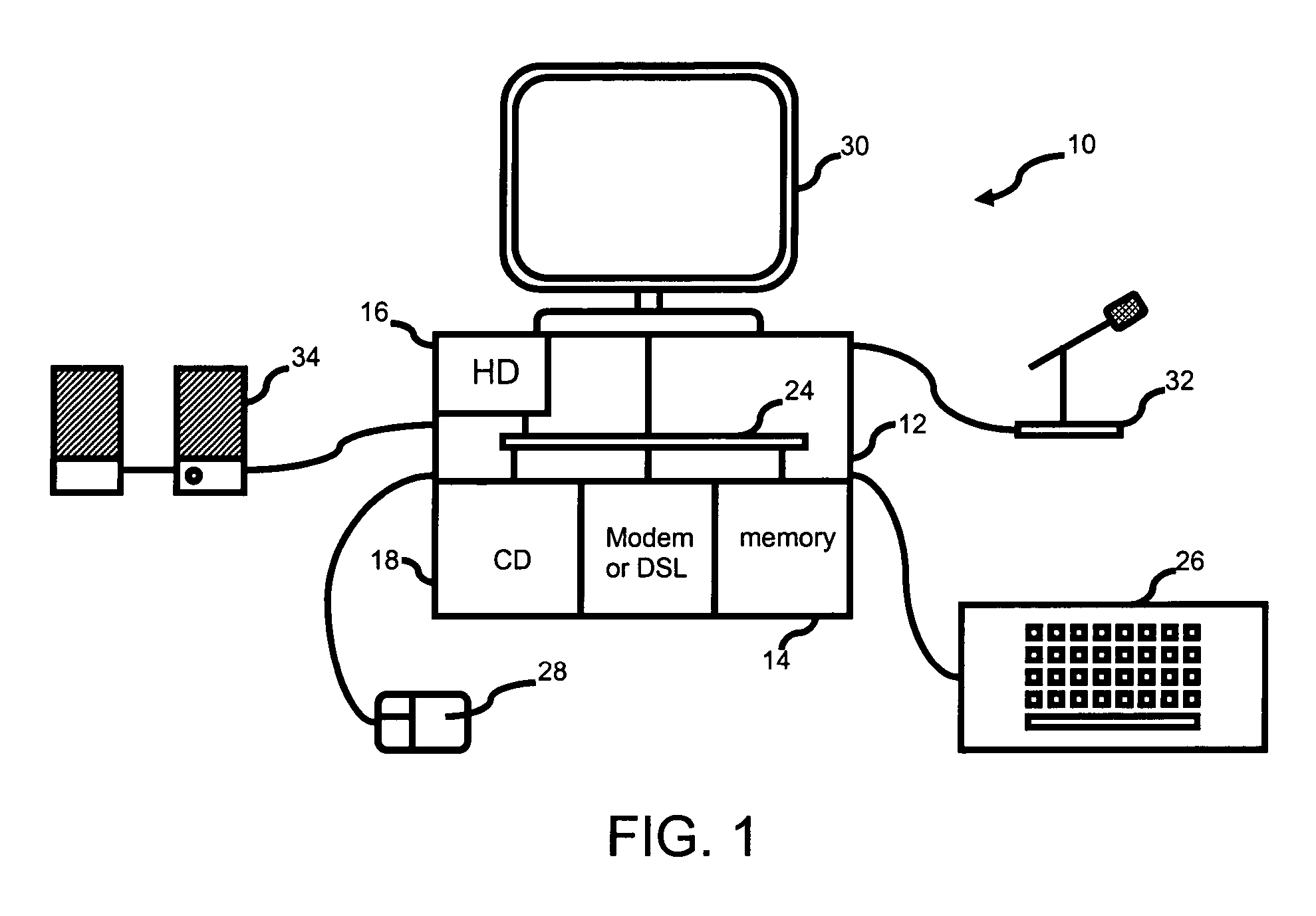

Method and system for interactive teaching and practicing of language listening and speaking skills

An interactive language instruction system provides a setting and characters with which a learner can interact through spoken and written communication. The learner moves through an environment that simulates a cultural setting appropriate to the language being learned. Interactions with characters are governed by a skill-level matrix that provides varying levels of difficulty. A visual dictionary lets the learner query objects depicted in the environment for spoken and written definitions, and also supports testing to determine the learner's skill level.

Owner:COCCINELLA DEV

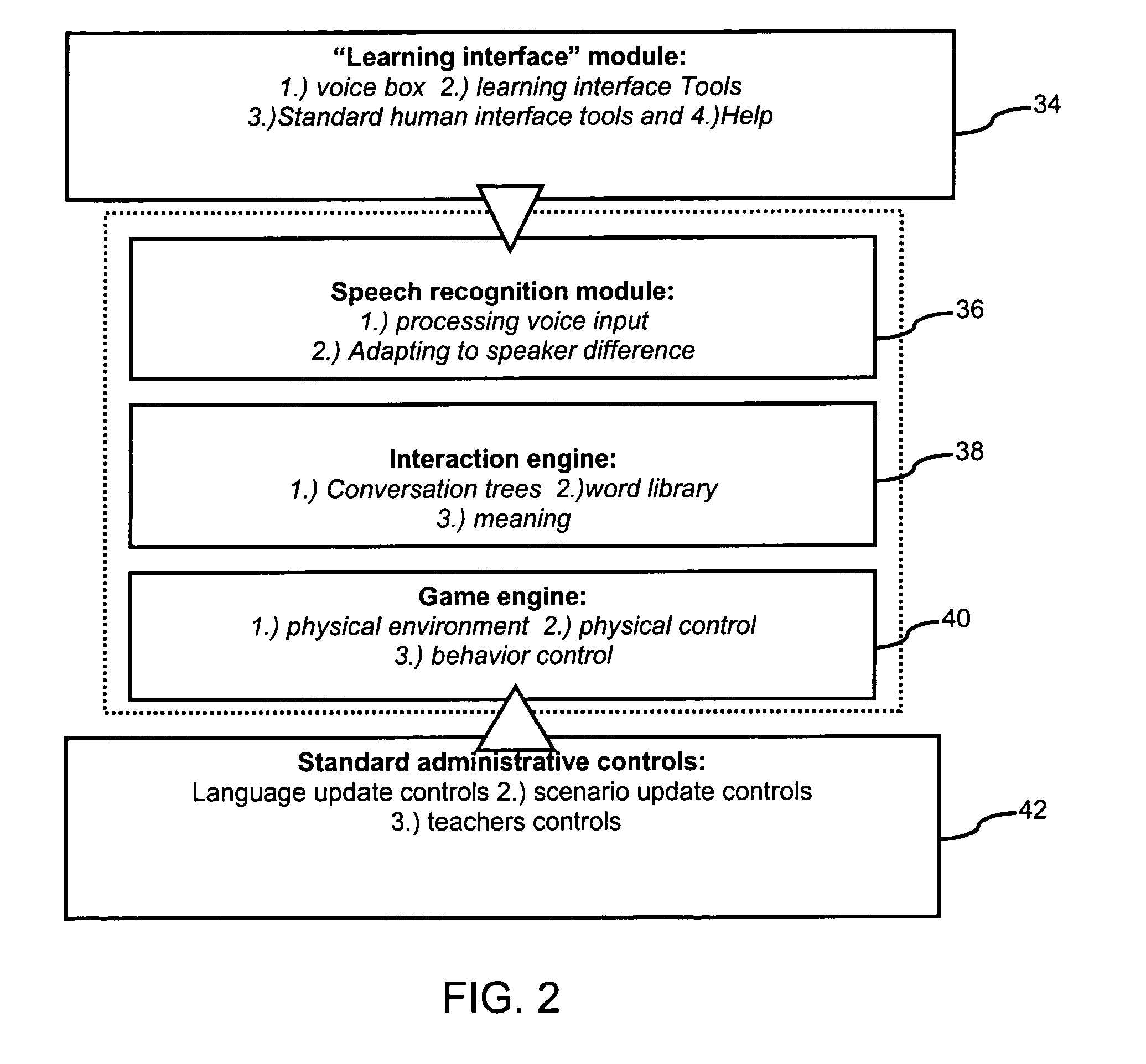

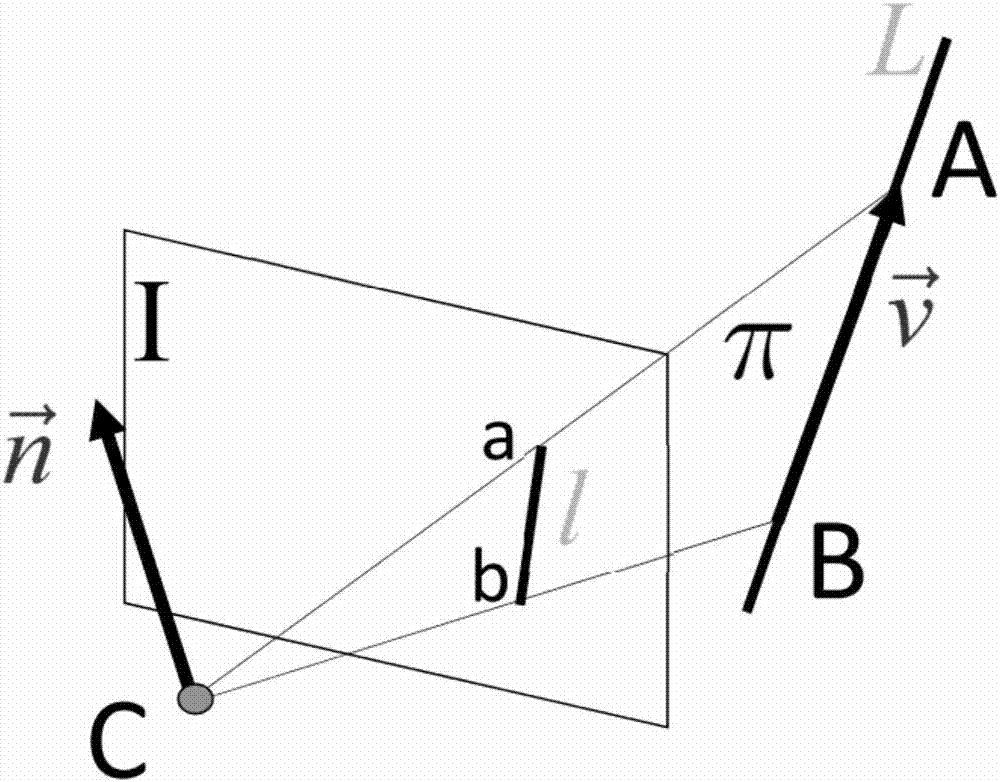

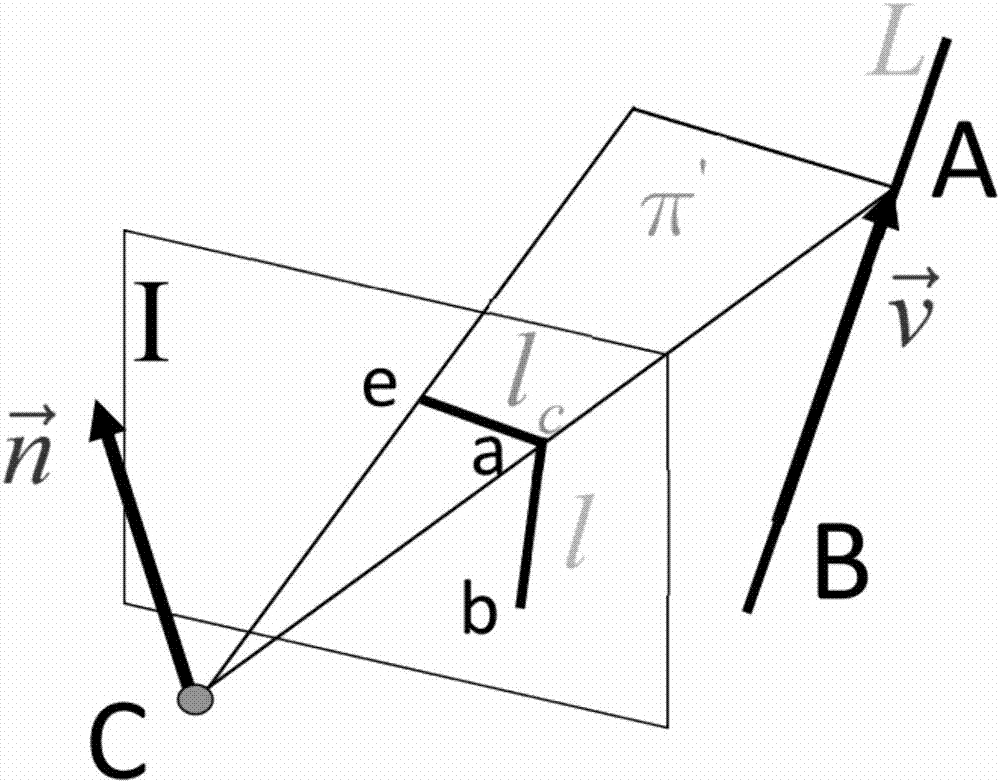

Visual simultaneous localization and mapping method based on integrated point and line features

Active | CN106909877A | Improve clustering effect | Character and pattern recognition | Simultaneous localization and mapping | Closed loop

The invention discloses a visual simultaneous localization and mapping (SLAM) method based on integrated point and line features. The method uses both the point features and the line features extracted from binocular (stereo) camera images and can be used for robot localization and attitude estimation in both outdoor and indoor environments. Because point and line features are used together, the system is more robust and more accurate. Line features are parameterized with Plücker coordinates for line computations, including geometric transformations and 3D reconstruction. In back-end optimization, the orthonormal representation of a line is used to minimize the number of line parameters. A visual dictionary for the integrated point and line features, built offline, is used for loop closure detection; flag bits are added so that point features and line features are treated differently in the visual dictionary, when the image database is created, and when image similarity is computed. The invention can be applied to scene mapping both indoors and outdoors, and because the resulting map contains both feature points and feature lines, it provides richer information.

Owner:ZHEJIANG UNIV
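The abstract parameterizes 3D lines with Plücker coordinates. As a minimal sketch (not the patented implementation), a line through points p and q can be stored as a direction d = q - p and a moment m = p × q; every valid pair satisfies d · m = 0, and a rigid motion x' = R x + t maps it to (R d, R m + t × R d). The `flagged_word` helper is a hypothetical illustration of one way a flag bit could separate point words from line words in a shared vocabulary.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plucker_from_points(p: Vec3, q: Vec3) -> Tuple[Vec3, Vec3]:
    """Plücker coordinates (d, m) of the line through p and q."""
    d = (q[0] - p[0], q[1] - p[1], q[2] - p[2])  # direction
    m = cross(p, q)                               # moment about the origin (equals p x d)
    return d, m


def transform_line(R, t: Vec3, d: Vec3, m: Vec3) -> Tuple[Vec3, Vec3]:
    """Apply the rigid motion x' = R x + t to a line given as (d, m)."""
    Rd = tuple(dot(row, d) for row in R)
    Rm = tuple(dot(row, m) for row in R)
    txRd = cross(t, Rd)
    return Rd, (Rm[0] + txRd[0], Rm[1] + txRd[1], Rm[2] + txRd[2])


def flagged_word(word_id: int, is_line: bool) -> int:
    """Hypothetical encoding: the lowest bit marks a line word (1) or a point word (0)."""
    return (word_id << 1) | int(is_line)
```

Transforming the two endpoints first and then calling `plucker_from_points` gives the same (d, m) as `transform_line`, which is a convenient self-check.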

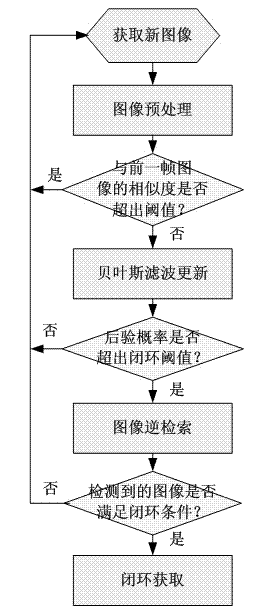

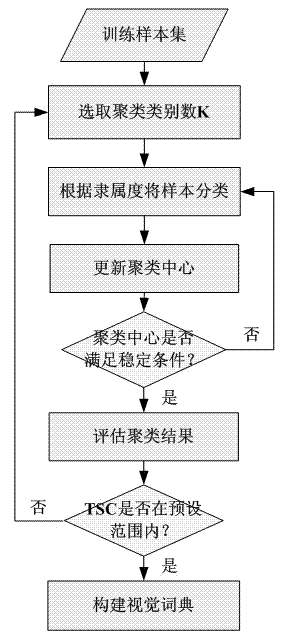

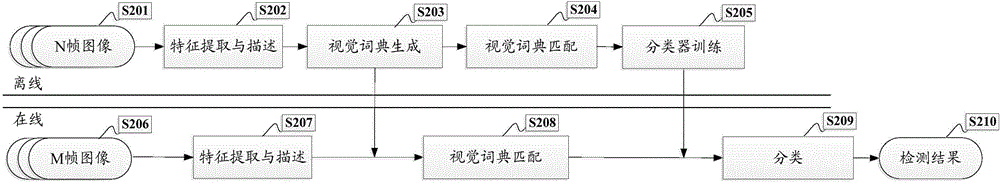

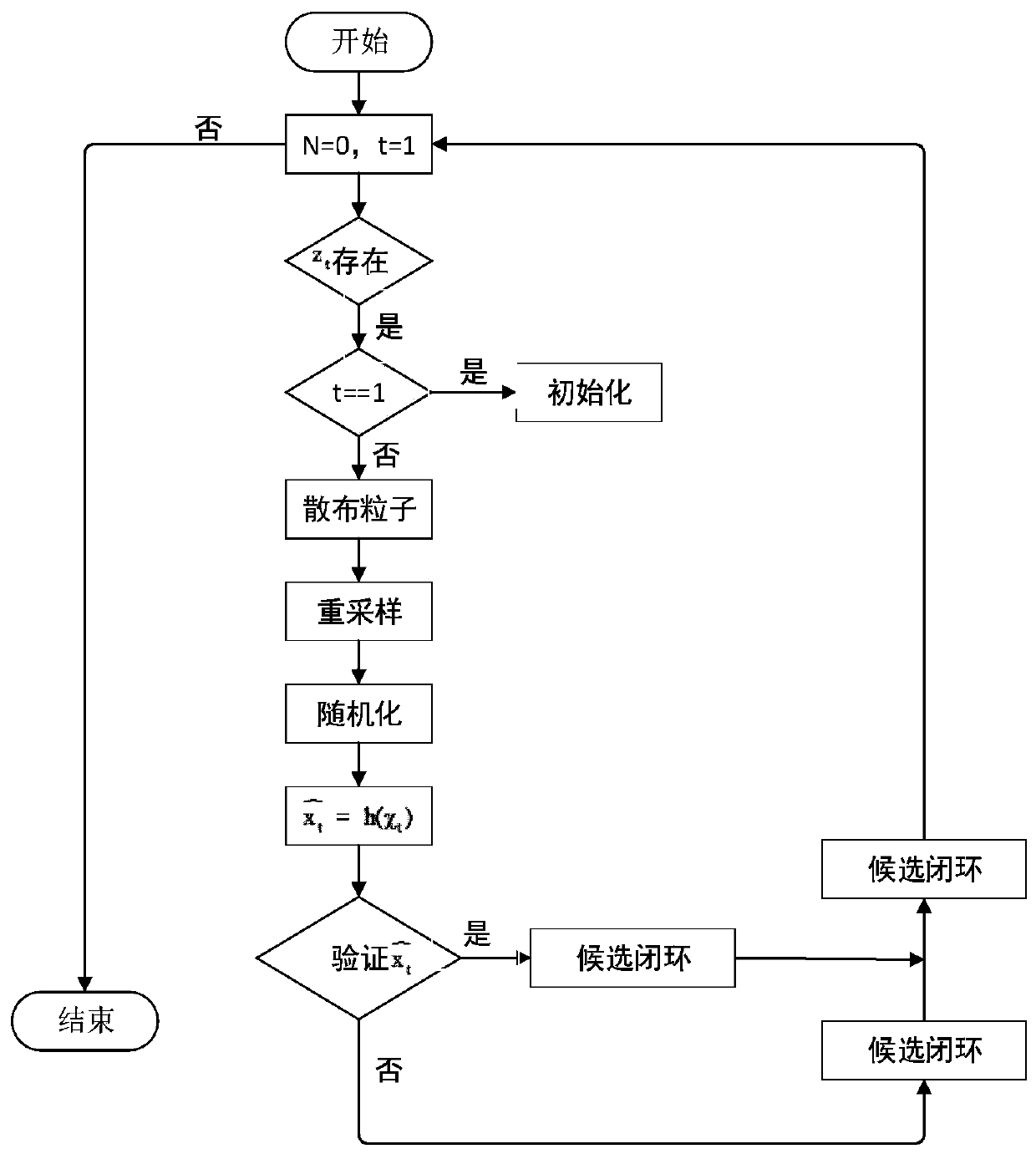

Image appearance based loop closure detecting method in monocular vision SLAM (simultaneous localization and mapping)

Inactive | CN102831446A | Reduce the amount of calculation | Lower requirement | Character and pattern recognition | Position/course control in two dimensions | Simultaneous localization and mapping | Visual perception

The invention discloses an appearance-based loop closure detection method for monocular visual SLAM (simultaneous localization and mapping). The method: acquires images of the current scene with a monocular camera carried by a mobile robot as it moves, and extracts bag-of-visual-words features from them; preprocesses the images by measuring image similarity as the inner product of image weight vectors and rejecting the current image if it is highly similar to a previous image; updates the posterior probability of the loop closure hypotheses with a Bayesian filter to decide whether the current image closes a loop; and verifies the resulting detections with a reverse image retrieval step. In addition, when the visual dictionary is built, the number of clusters is adjusted dynamically according to TSC (tightness and separation criterion) values, which serve as the evaluation criterion for the clustering results. Compared with the prior art, the method offers good real-time performance and high detection precision.

Owner:NANJING UNIV OF POSTS & TELECOMM
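The Bayesian filtering step can be illustrated with a small discrete filter. This is a sketch under stated assumptions, not the patent's model: hypothesis 0 means "no loop" and hypothesis i means "the current image closes a loop with past image i"; the transition probabilities (`p_stay`) and the likelihood built from the similarity scores' mean and standard deviation are illustrative choices, and at least one past image is assumed.

```python
import math
from typing import List


def predict(posterior: List[float], p_stay: float = 0.9) -> List[float]:
    """Transition step. Index 0 = 'no loop'; index i >= 1 = 'loop with past image i'."""
    n = len(posterior) - 1
    if n == 0:
        return [1.0]
    pred = [0.0] * (n + 1)
    pred[0] += posterior[0] * p_stay                  # stay in 'no loop'
    for i in range(1, n + 1):
        pred[i] += posterior[0] * (1 - p_stay) / n     # a loop may start at any image
        pred[0] += posterior[i] * (1 - p_stay)         # a loop may end
        neighbours = [j for j in (i - 1, i, i + 1) if 1 <= j <= n]
        for j in neighbours:                           # loops drift to adjacent images
            pred[j] += posterior[i] * p_stay / len(neighbours)
    return pred


def update(prior: List[float], scores: List[float]) -> List[float]:
    """Bayes update given similarity scores of the current image vs past images 1..n."""
    pred = predict(prior)
    mu = sum(scores) / len(scores)
    sigma = math.sqrt(sum((s - mu) ** 2 for s in scores) / len(scores)) or 1.0
    # Assumed likelihood: images scoring above the mean get proportionally more support.
    likelihood = [1.0] + [1.0 + max(0.0, (s - mu) / sigma) for s in scores]
    post = [p * l for p, l in zip(pred, likelihood)]
    z = sum(post)
    return [p / z for p in post]
```

A loop would then be declared only when one hypothesis's posterior exceeds a chosen threshold, and the candidate would still go through the verification step described in the abstract.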

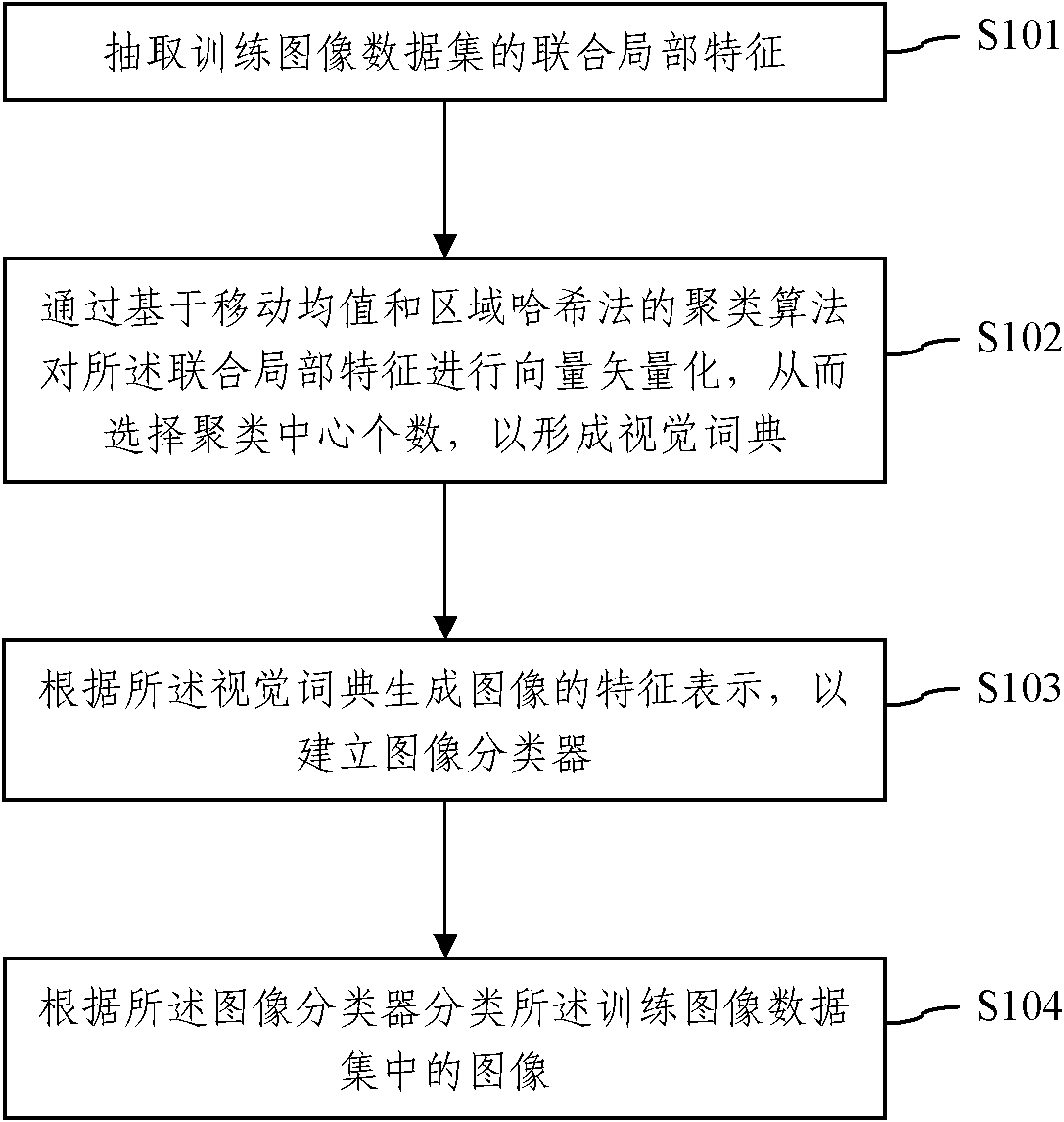

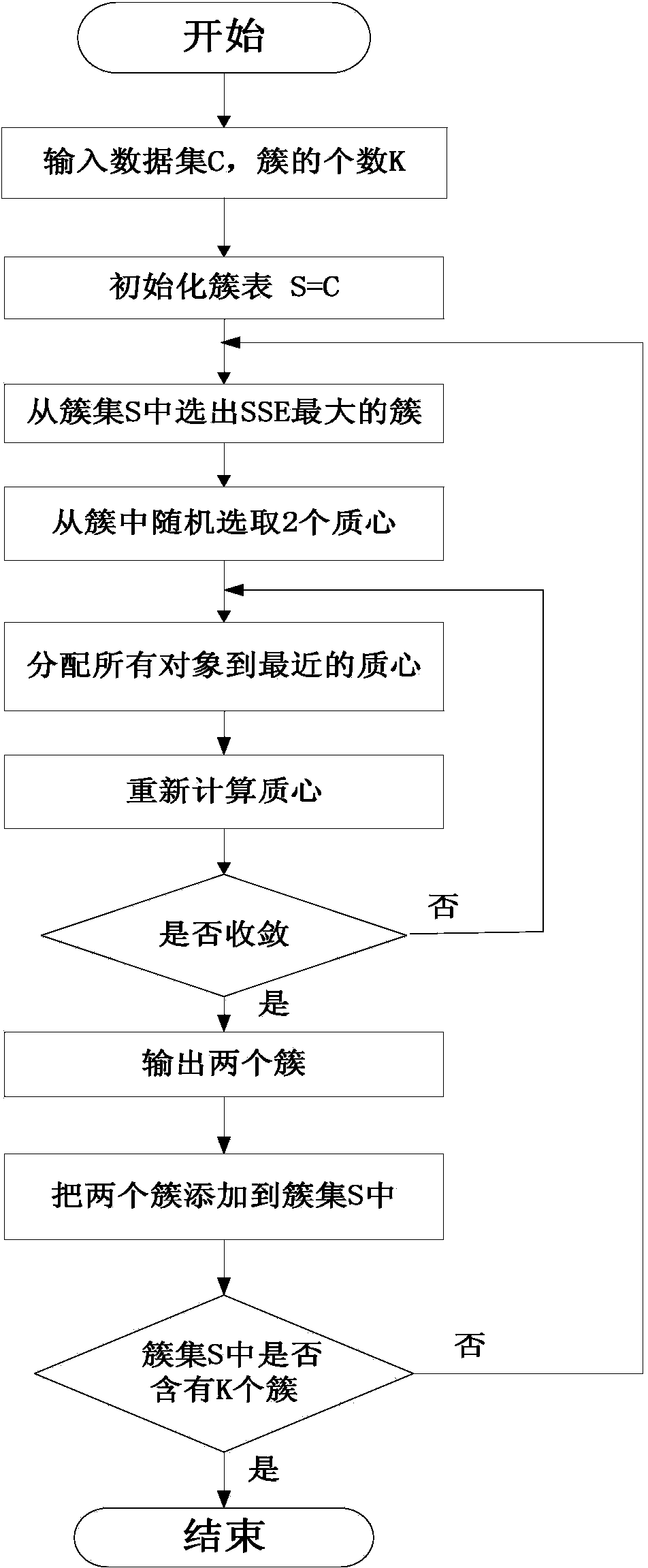

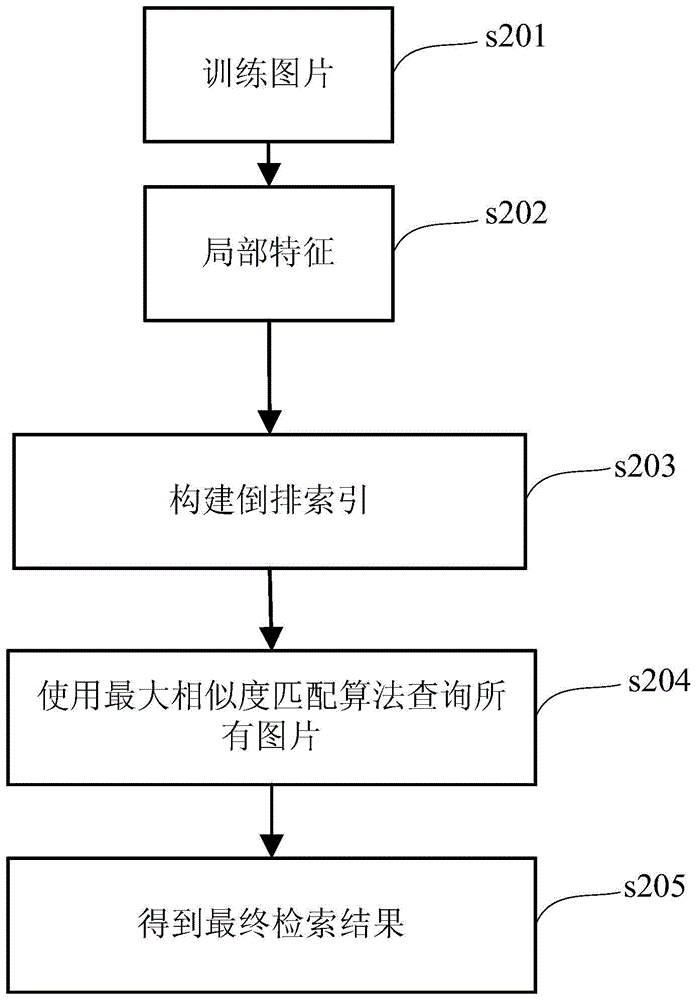

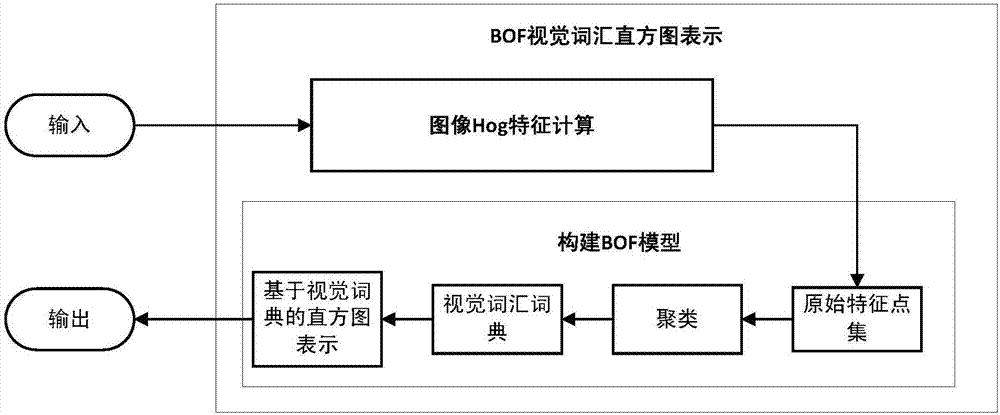

Image classification method based on visual dictionary

Active | CN102208038A | Adaptable | Improve robustness | Character and pattern recognition | Digital signal processing | Cluster algorithm

The invention discloses an image classification method based on a visual dictionary, in the technical field of digital image processing. The method comprises the following steps: 1) extracting joint local features from a training image data set; 2) vector-quantizing these local features with a clustering algorithm based on mean shift and a regional hashing method, which selects the number of cluster centers and forms the visual dictionary; 3) generating a feature representation of each image from the visual dictionary and building an image classifier from it; and 4) classifying the images in the training image data set with the image classifier. The method yields a discriminative visual dictionary, so it adapts to the sample-space distribution of the image data set; it is highly resistant to affine transformations and lighting variation, robust to partial anomalies, noise, and cluttered backgrounds, broadly applicable, and of practical value for classifying many kinds of images.

Owner:TSINGHUA UNIV +1
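Step 2 relies on a clustering method that selects the number of cluster centers itself. A minimal sketch, assuming the "moving mean value" algorithm is flat-kernel mean shift (the hashing step used to speed it up is omitted): each point climbs to the mean of its neighbours within `bandwidth`, and modes that converge together are merged, so the vocabulary size is an output rather than an input.

```python
import math
from typing import List, Sequence


def mean_shift(points: Sequence[Sequence[float]], bandwidth: float,
               tol: float = 1e-6, max_iter: int = 100) -> List[List[float]]:
    """Flat-kernel mean shift; the number of returned centres is not fixed in advance."""
    modes = []
    for p in points:
        x = list(p)
        for _ in range(max_iter):
            # Points inside the window around x; never empty, because the window mean
            # always lies within `bandwidth` of at least one point.
            window = [q for q in points if math.dist(q, x) <= bandwidth]
            new_x = [sum(c) / len(window) for c in zip(*window)]
            shift = math.dist(new_x, x)
            x = new_x
            if shift < tol:
                break
        modes.append(x)
    # Merge modes that converged to (nearly) the same place; survivors are visual words.
    centres: List[List[float]] = []
    for m in modes:
        if all(math.dist(m, c) > bandwidth / 2 for c in centres):
            centres.append(m)
    return centres
```

This brute-force version is quadratic in the number of descriptors per iteration, which is why practical systems pair mean shift with some form of hashing or spatial indexing.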

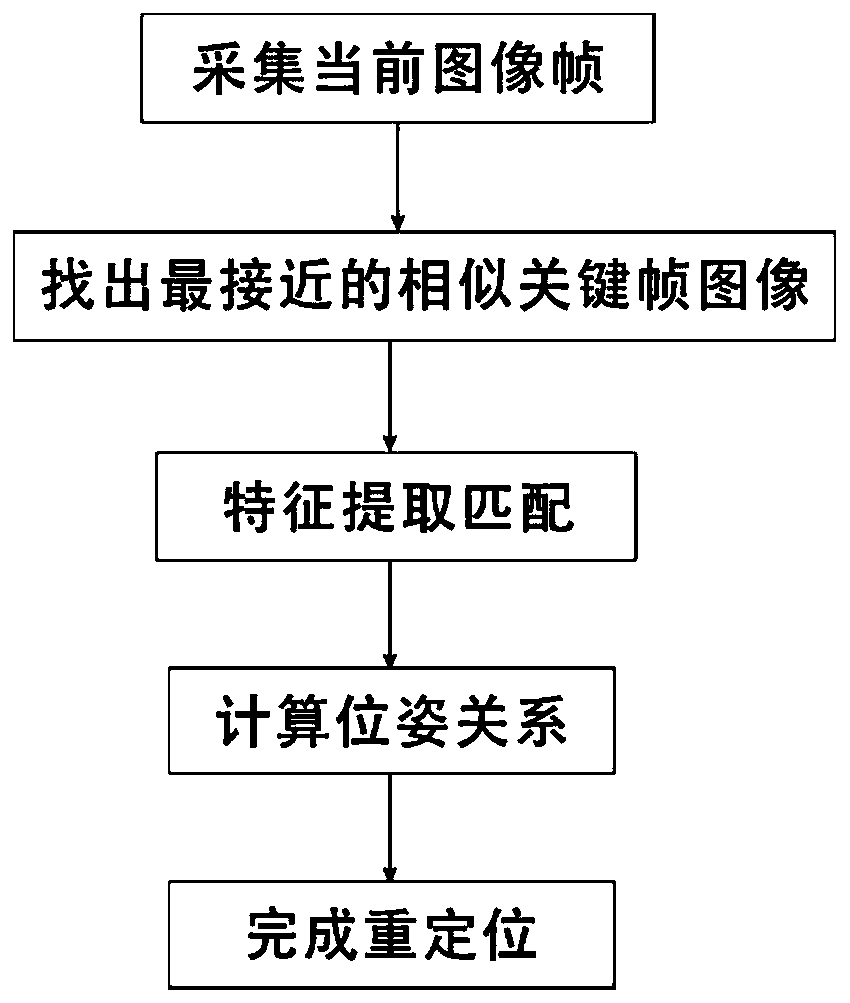

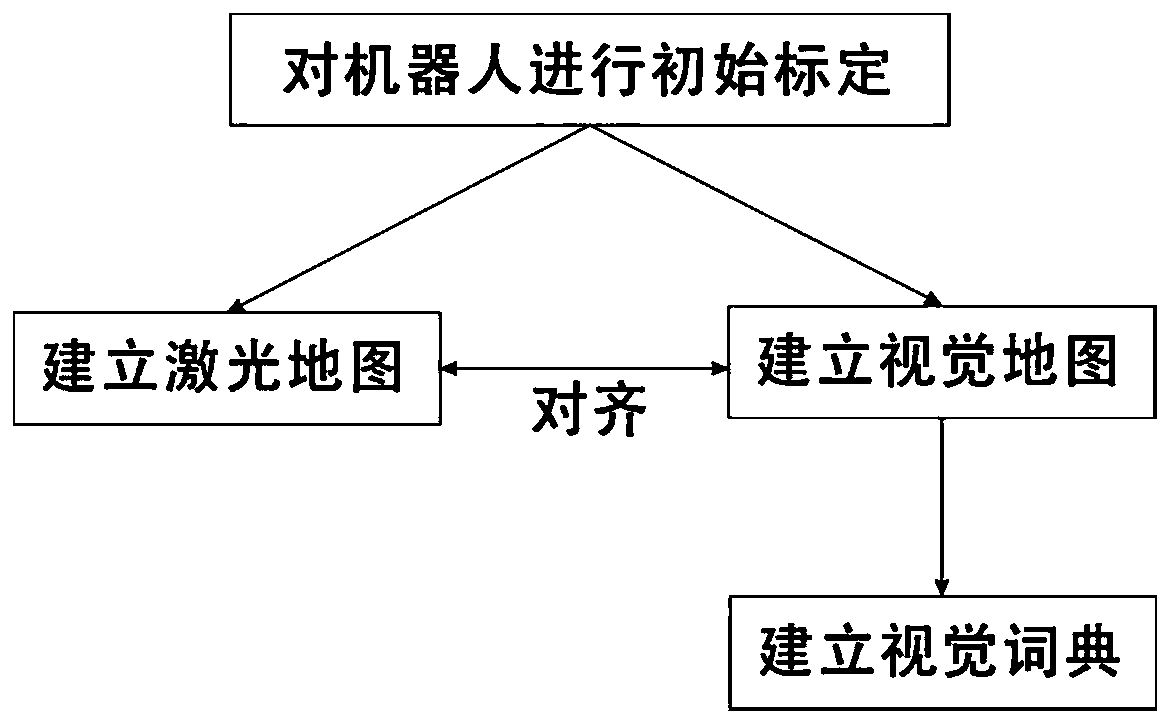

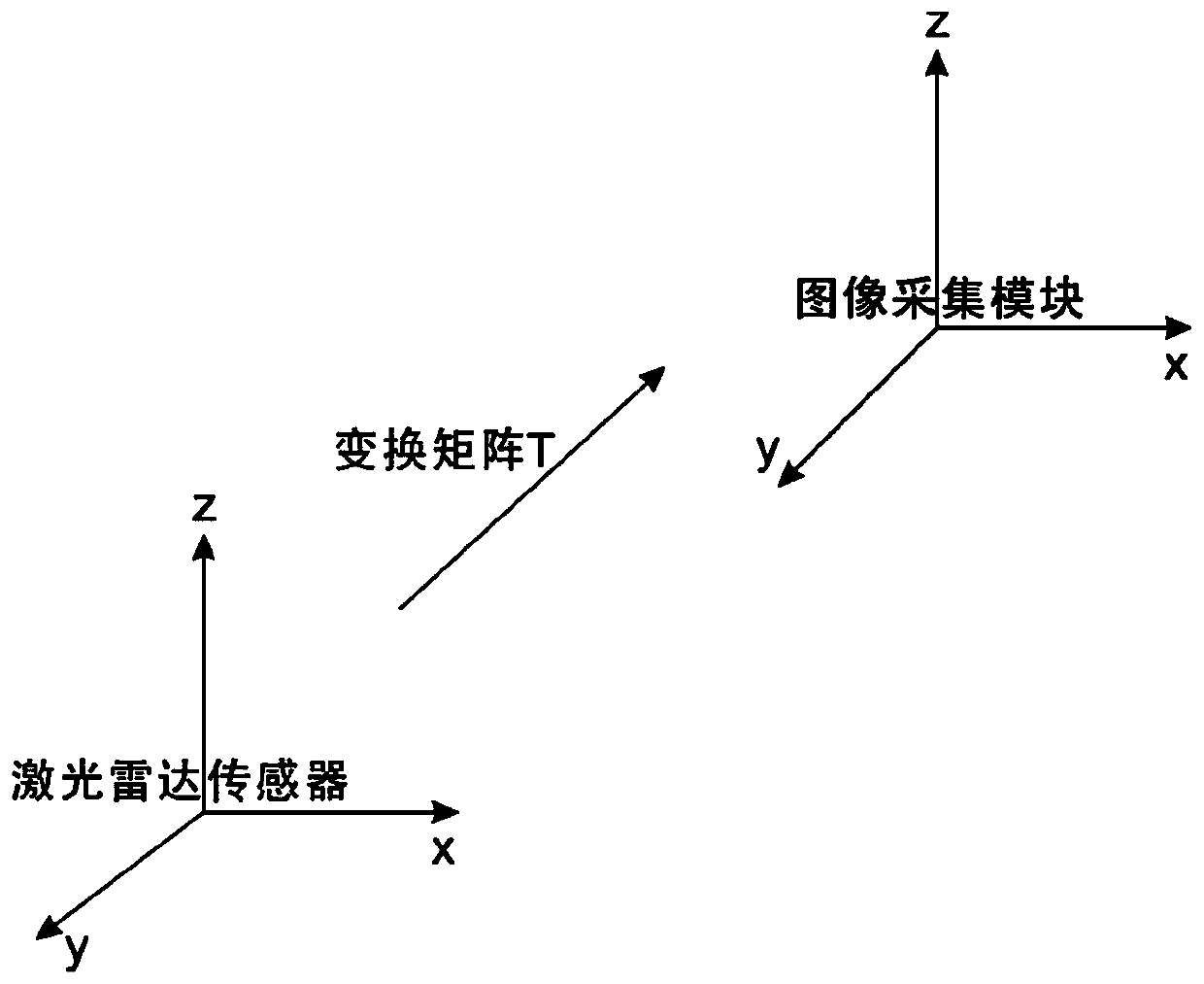

Robot rapid repositioning method and system based on visual dictionary

The invention discloses a robot rapid repositioning method and system based on a visual dictionary. The method comprises the following steps: obtaining the current image frame through an image acquisition module, comparing it with the key frames stored in a visual map, and finding the most similar key frame; performing feature matching between the current frame and that key frame to obtain the pose of the current frame relative to the key frame; and obtaining the current pose of the robot from the pose of the similar key frame in the laser map and the relative pose of the current frame, which completes repositioning. Because images are acquired by the image acquisition module, repositioning can be performed when the robot fails to localize with its lidar sensor; the method is simple, highly robust, and requires no manual intervention.

Owner:的卢技术有限公司 (Dilu Technology Co., Ltd.)
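The repositioning pipeline reduces to two operations: retrieve the most similar key frame, then chain poses. A minimal 2D sketch, assuming bag-of-visual-words histograms for each frame, a normalized L1 similarity (the patent does not name its metric), and a relative pose already estimated by feature matching; `KeyFrame` and the function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, heading in radians)


@dataclass
class KeyFrame:
    hist: List[float]  # bag-of-visual-words histogram
    pose: Pose2D       # pose of the key frame in the laser map


def similarity(a: List[float], b: List[float]) -> float:
    """Normalized L1 similarity in [0, 1]; 1 means identical word distributions."""
    sa, sb = sum(a), sum(b)
    return 1.0 - 0.5 * sum(abs(x / sa - y / sb) for x, y in zip(a, b))


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """SE(2) composition: pose b, expressed in frame a, mapped into the map frame."""
    x, y, th = a
    bx, by, bth = b
    h = th + bth
    return (x + math.cos(th) * bx - math.sin(th) * by,
            y + math.sin(th) * bx + math.cos(th) * by,
            math.atan2(math.sin(h), math.cos(h)))  # wrap heading to (-pi, pi]


def relocalize(current_hist: List[float], keyframes: List[KeyFrame],
               relative_pose: Pose2D) -> Pose2D:
    """relative_pose: current frame w.r.t. the best key frame (from feature matching)."""
    best = max(keyframes, key=lambda kf: similarity(current_hist, kf.hist))
    return compose(best.pose, relative_pose)
```

For example, a robot one metre ahead of a key frame at (2, 1) facing +y ends up at (2, 2) with the same heading.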

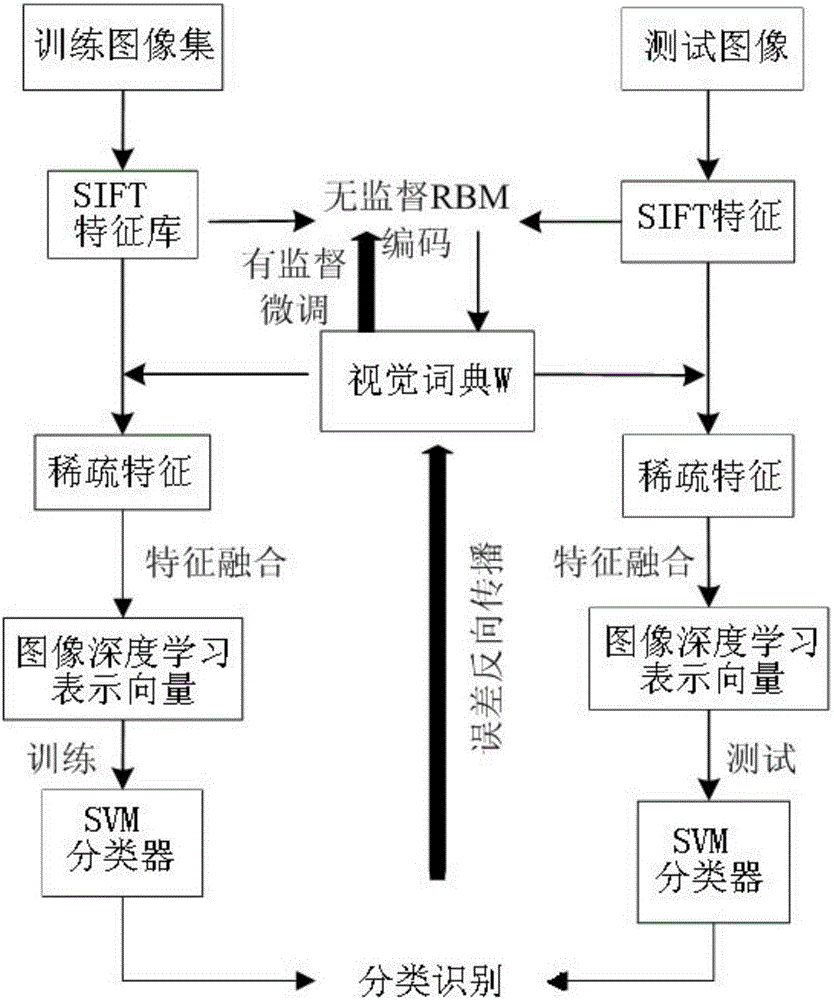

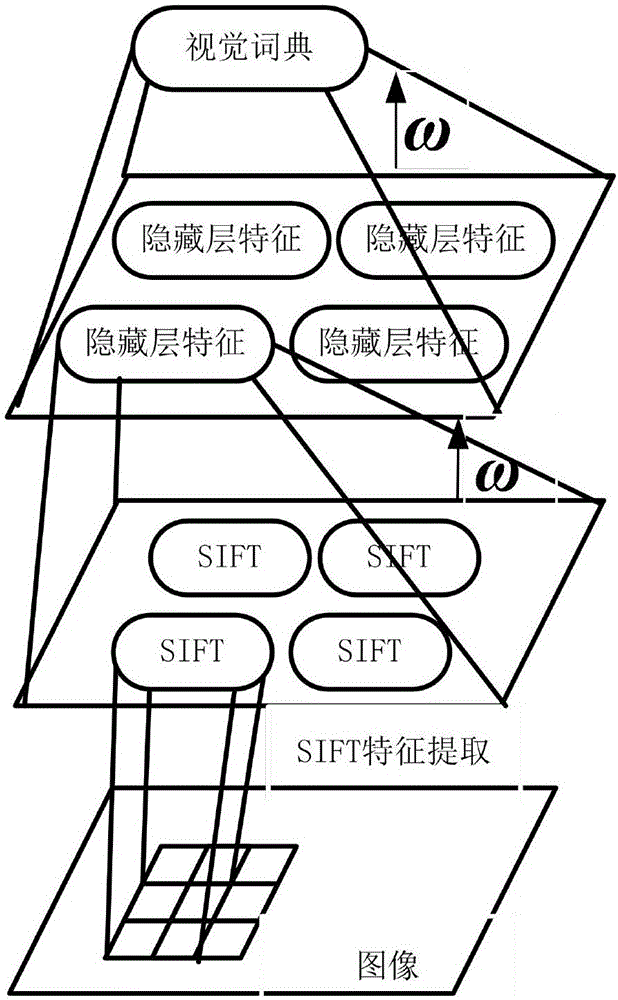

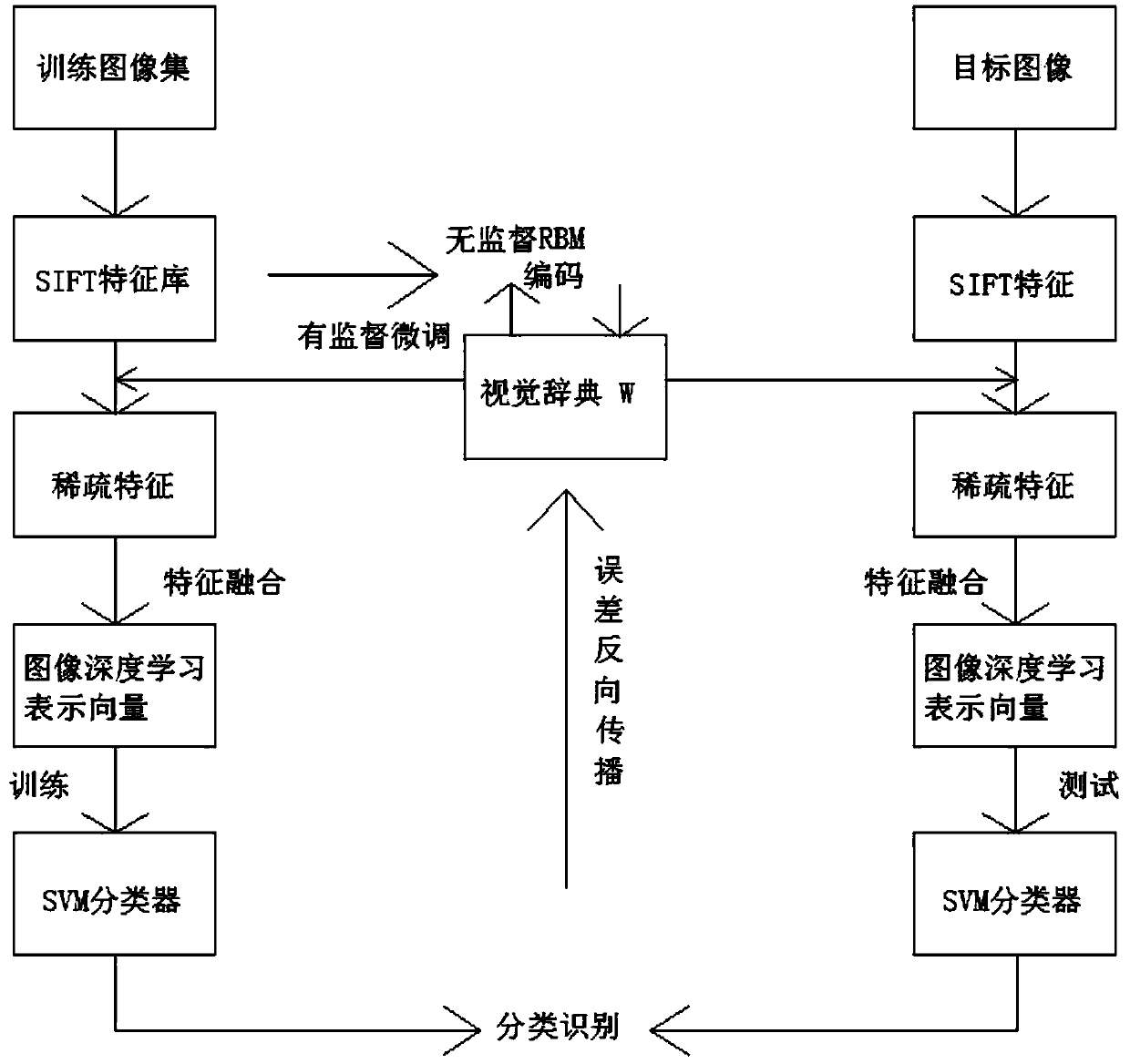

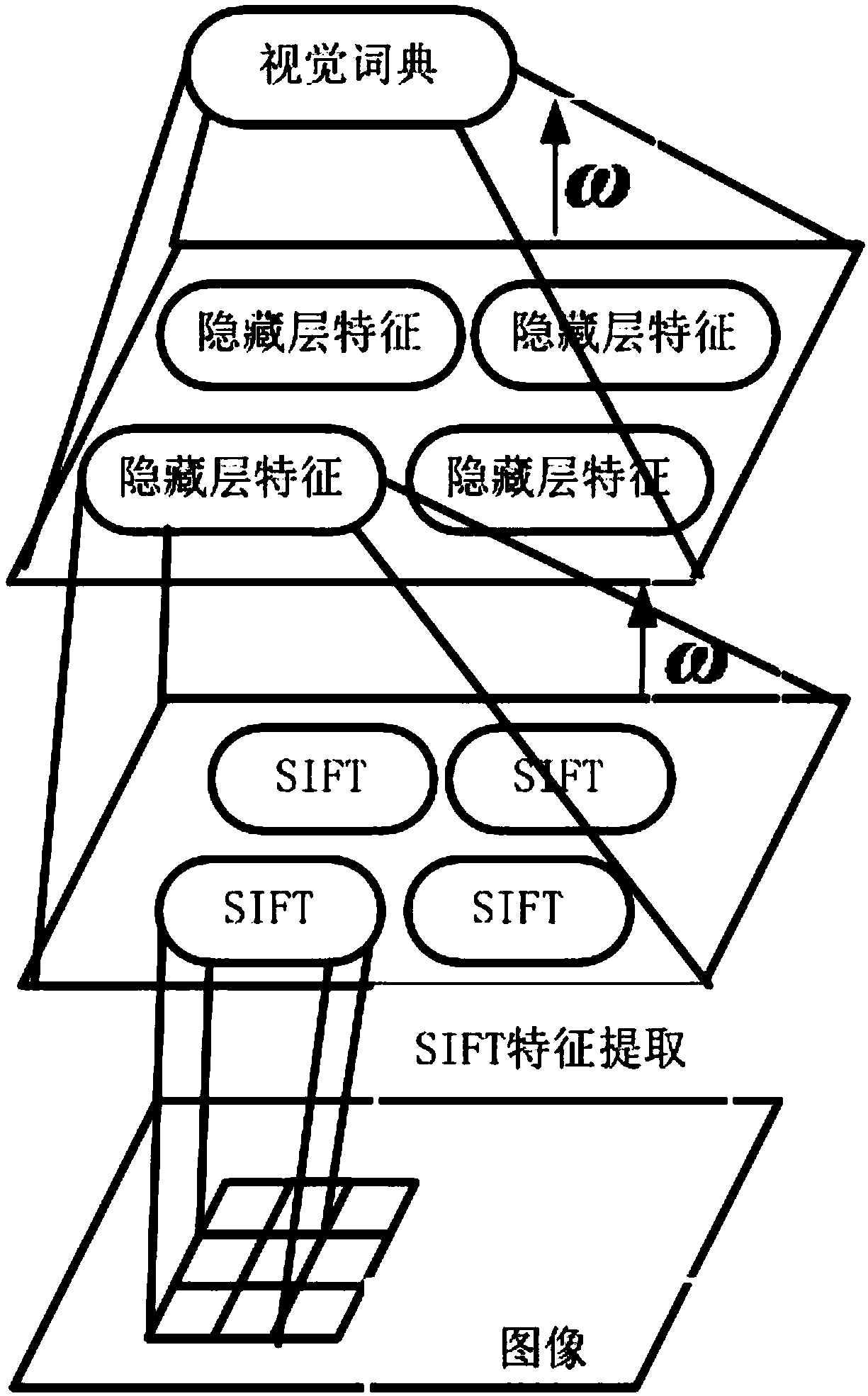

Method for re-identifying persons on basis of deep learning encoding models

Inactive | CN106778921A | Improve accuracy | Improve resolution | Character and pattern recognition | Neural learning methods | Restricted Boltzmann machine

The invention relates to a method for re-identifying persons based on deep learning encoding models. The method comprises: first, encoding initial SIFT features bottom-up with unsupervised RBM (restricted Boltzmann machine) networks to obtain visual dictionaries; second, performing supervised fine-tuning of the overall network parameters top-down; third, fine-tuning the initial visual dictionaries with supervised error back-propagation to obtain a new representation of the video images, namely image deep learning representation vectors; and fourth, training linear SVM (support vector machine) classifiers on these vectors to classify and identify pedestrians. The method addresses the poor performance and low robustness of traditional feature extraction caused by low surveillance video quality and by differences in viewing angle and illumination, as well as the high computational complexity of traditional classifiers. It improves person detection accuracy and feature representation performance, so that pedestrians in surveillance video can be identified efficiently.

Owner:张烜 (Zhang Xuan)
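The first step encodes SIFT descriptors with an unsupervised RBM. The sketch below is a minimal Bernoulli RBM trained with one-step contrastive divergence (CD-1), assuming descriptors have been scaled to [0, 1]; the hidden-unit probabilities play the role of the learned code. It does not cover the supervised fine-tuning or SVM stages, and the class and parameter names are illustrative.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """Bernoulli RBM; inputs are assumed to be scaled to [0, 1]."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0):
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_visible)]
                  for _ in range(n_hidden)]          # W[j][i]: hidden j <-> visible i
        self.b = [0.0] * n_visible                    # visible biases
        self.c = [0.0] * n_hidden                     # hidden biases

    def hidden_probs(self, v: List[float]) -> List[float]:
        """p(h_j = 1 | v); used as the learned code for a descriptor."""
        return [sigmoid(cj + sum(w * x for w, x in zip(row, v)))
                for row, cj in zip(self.W, self.c)]

    def visible_probs(self, h: List[float]) -> List[float]:
        """p(v_i = 1 | h); the reconstruction of the input."""
        return [sigmoid(bi + sum(self.W[j][i] * h[j] for j in range(len(h))))
                for i, bi in enumerate(self.b)]

    def cd1(self, v0: List[float], lr: float = 0.1) -> None:
        """One contrastive-divergence (CD-1) update on a single input vector."""
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hidden states
        v1 = self.visible_probs(h0)                                 # mean-field reconstruction
        ph1 = self.hidden_probs(v1)
        for j, row in enumerate(self.W):
            for i in range(len(row)):
                row[i] += lr * (ph0[j] * v0[i] - ph1[j] * v1[i])
            self.c[j] += lr * (ph0[j] - ph1[j])
        for i in range(len(self.b)):
            self.b[i] += lr * (v0[i] - v1[i])
```

Real-valued descriptors are often better served by a Gaussian-Bernoulli RBM; the Bernoulli form is used here only to keep the sketch short.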

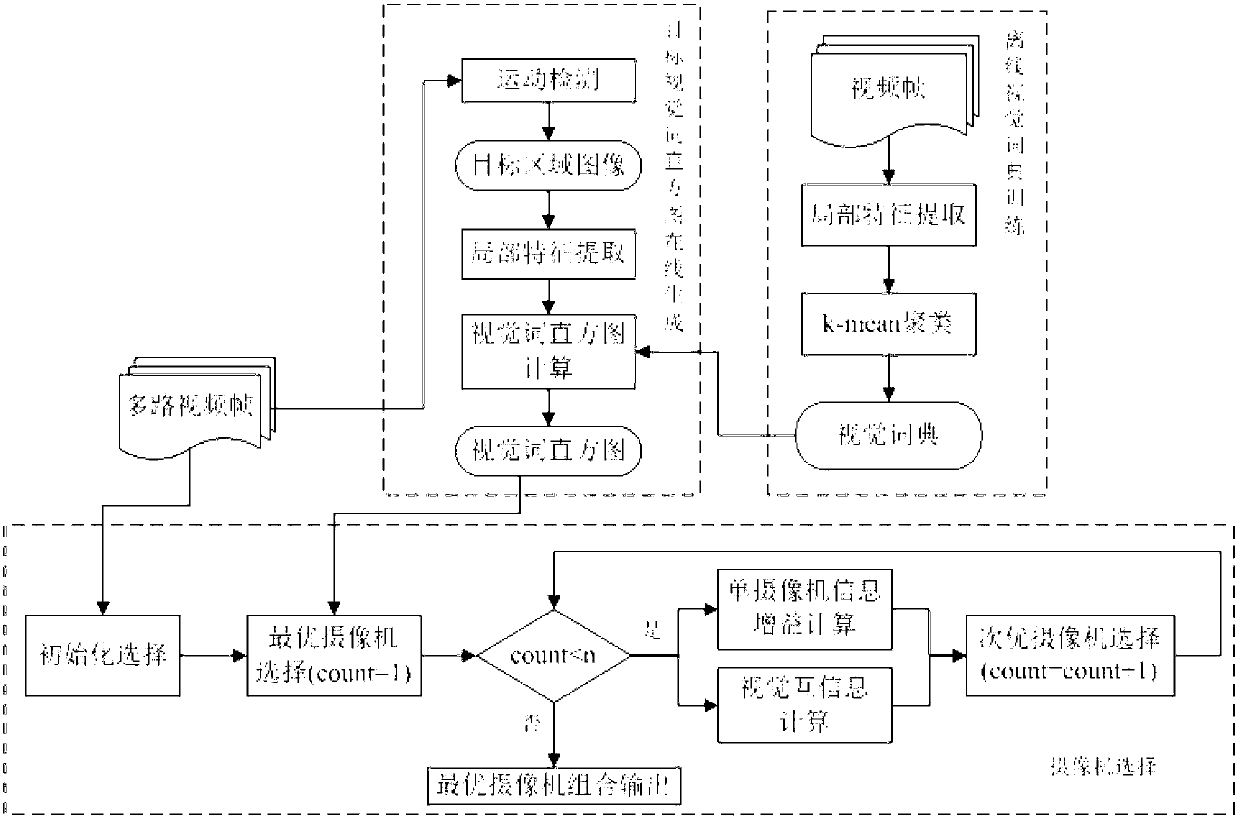

Method for selecting camera combination in visual perception network

Inactive | CN102932605A | Reduce information redundancy | High level of detail | Television system details | Image analysis | Visual dictionary | Histogram

The invention discloses a method for selecting a combination of cameras in a visual perception network. The method has two stages. Online generation of a target image visual histogram: when the fields of view of several cameras overlap, motion detection is performed on video data obtained online from the multiple cameras observing the same object; the subregion occupied by the object in the video frame is determined from the detection result to obtain a target image region; local features are extracted from the target image region, and the visual histogram of the region from that viewpoint is computed with a visual dictionary generated by pre-training. Sequential forward camera selection: the optimal viewpoint, that is, the optimal camera, is selected first; then the next-best camera is selected from the set of unselected cameras, added to the set of selected cameras, and removed from the set of candidate cameras; these steps are repeated until the number of selected cameras reaches the number required.

Owner:NANJING UNIV

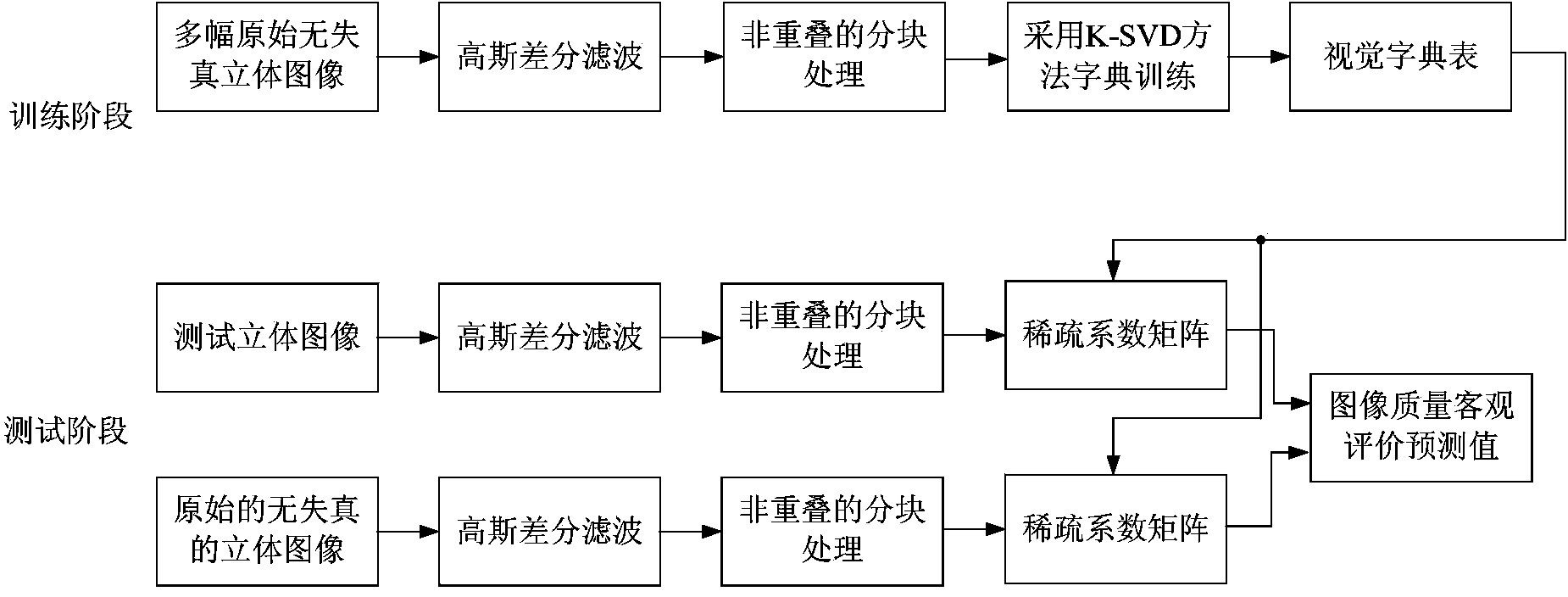

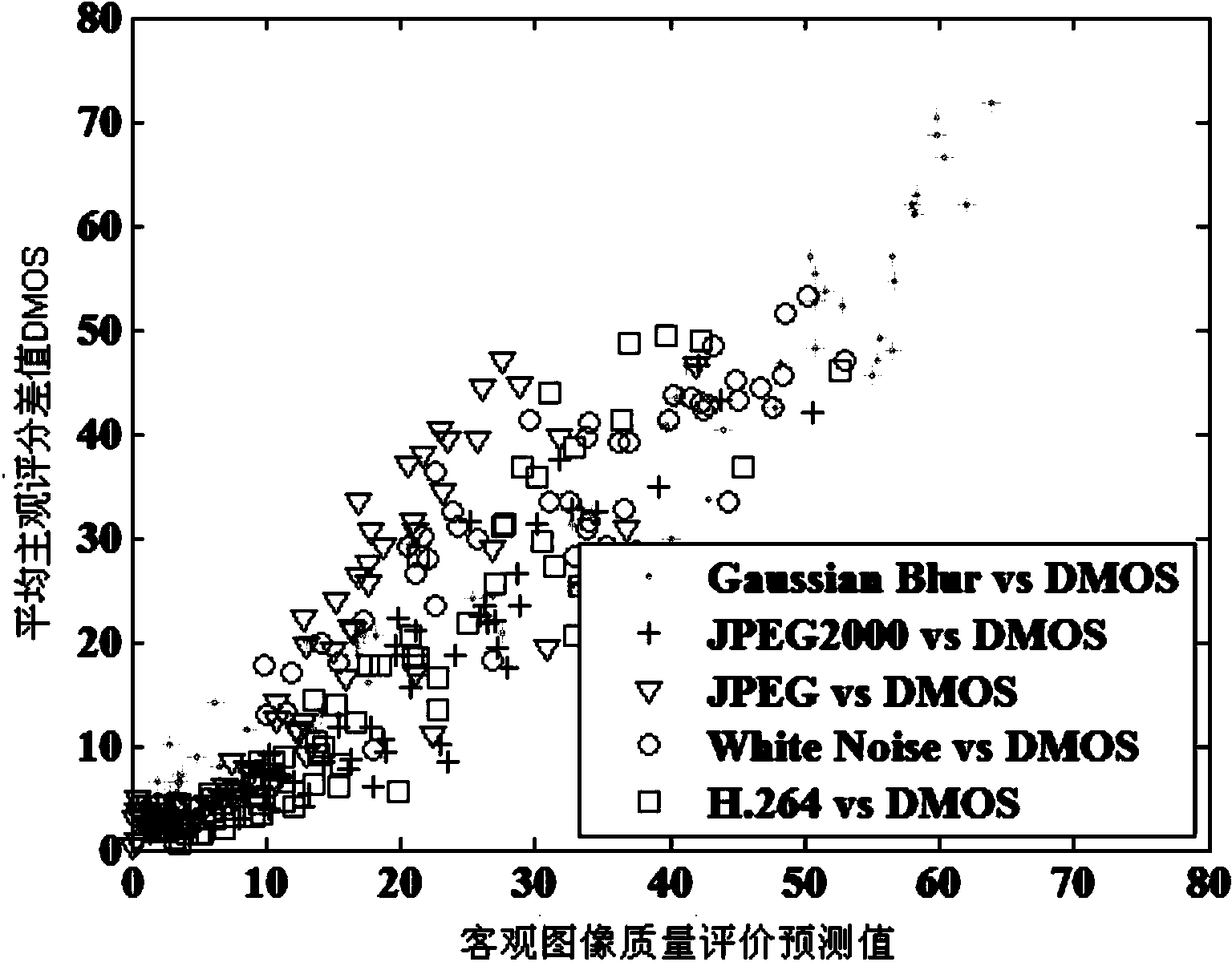

Three-dimensional image quality objective evaluation method based on sparse representation

Active | CN104036501A | Avoid learning the training process | Reduce computational complexity | Image analysis | Character and pattern recognition | Pattern recognition | Viewpoints

The invention discloses an objective quality evaluation method for three-dimensional images based on sparse representation. In the training stage, the left-viewpoint images of several original undistorted three-dimensional images are selected to form a training image set; each image in the set is filtered with difference-of-Gaussians filters to obtain filtered images at different scales; and the K-SVD method is used to train a dictionary on the set of all sub-blocks of all these filtered images, constructing a visual dictionary table. In the test stage, difference-of-Gaussians filtering is applied to the tested three-dimensional image and to the corresponding original undistorted three-dimensional image to obtain filtered images at different scales; these filtered images are partitioned into non-overlapping blocks, and an objective quality prediction value for the tested image is obtained. The advantages of the method are that no complex machine-learning training process is needed in the training stage, that in the test stage the objective quality prediction only needs to be computed from sparse coefficient matrices, and that the predictions agree better with subjective evaluation values.

Owner:创客帮(山东)科技服务有限公司 (Chuangkebang (Shandong) Technology Service Co., Ltd.)
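K-SVD alternates a sparse-coding step (commonly orthogonal matching pursuit) with per-atom dictionary updates. As a hedged illustration of the sparse-coding half only, the sketch below uses plain matching pursuit, a simpler relative of OMP, and assumes unit-norm dictionary atoms; it is not the patent's code and omits the atom-update step.

```python
from typing import List, Sequence, Tuple


def matching_pursuit(signal: Sequence[float], atoms: Sequence[Sequence[float]],
                     n_nonzero: int) -> Tuple[List[float], List[float]]:
    """Greedy sparse code of `signal` over unit-norm `atoms`; returns (coeffs, residual)."""
    residual = list(signal)
    coeffs = [0.0] * len(atoms)
    for _ in range(n_nonzero):
        # Correlation of the residual with every atom.
        corr = [sum(a * r for a, r in zip(atom, residual)) for atom in atoms]
        k = max(range(len(atoms)), key=lambda i: abs(corr[i]))
        coeffs[k] += corr[k]
        # Remove the chosen atom's contribution from the residual.
        residual = [r - corr[k] * a for r, a in zip(residual, atoms[k])]
    return coeffs, residual
```

OMP differs by re-fitting all selected coefficients with least squares after each pick, which keeps the residual orthogonal to every chosen atom.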

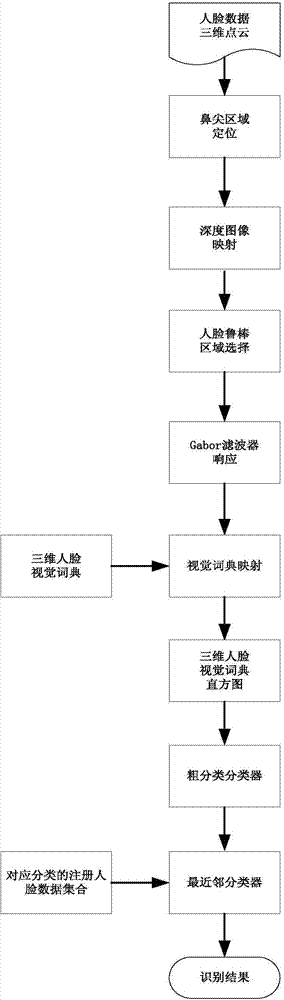

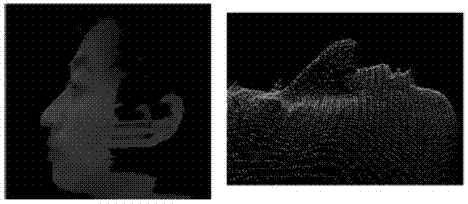

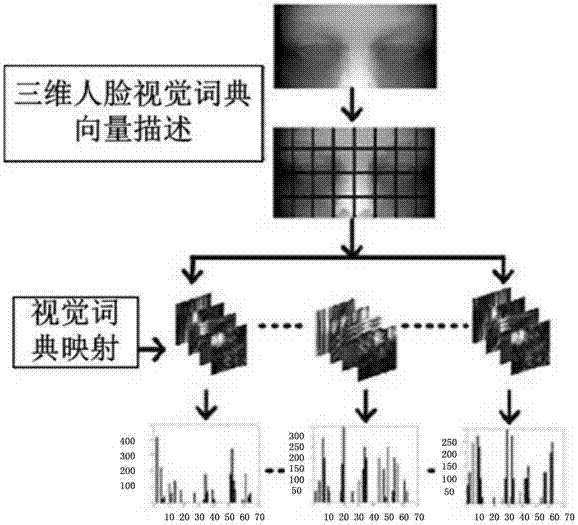

Three-Dimensional Face Recognition Device Based on Three Dimensional Point Cloud and Three-Dimensional Face Recognition Method Based on Three-Dimensional Point Cloud

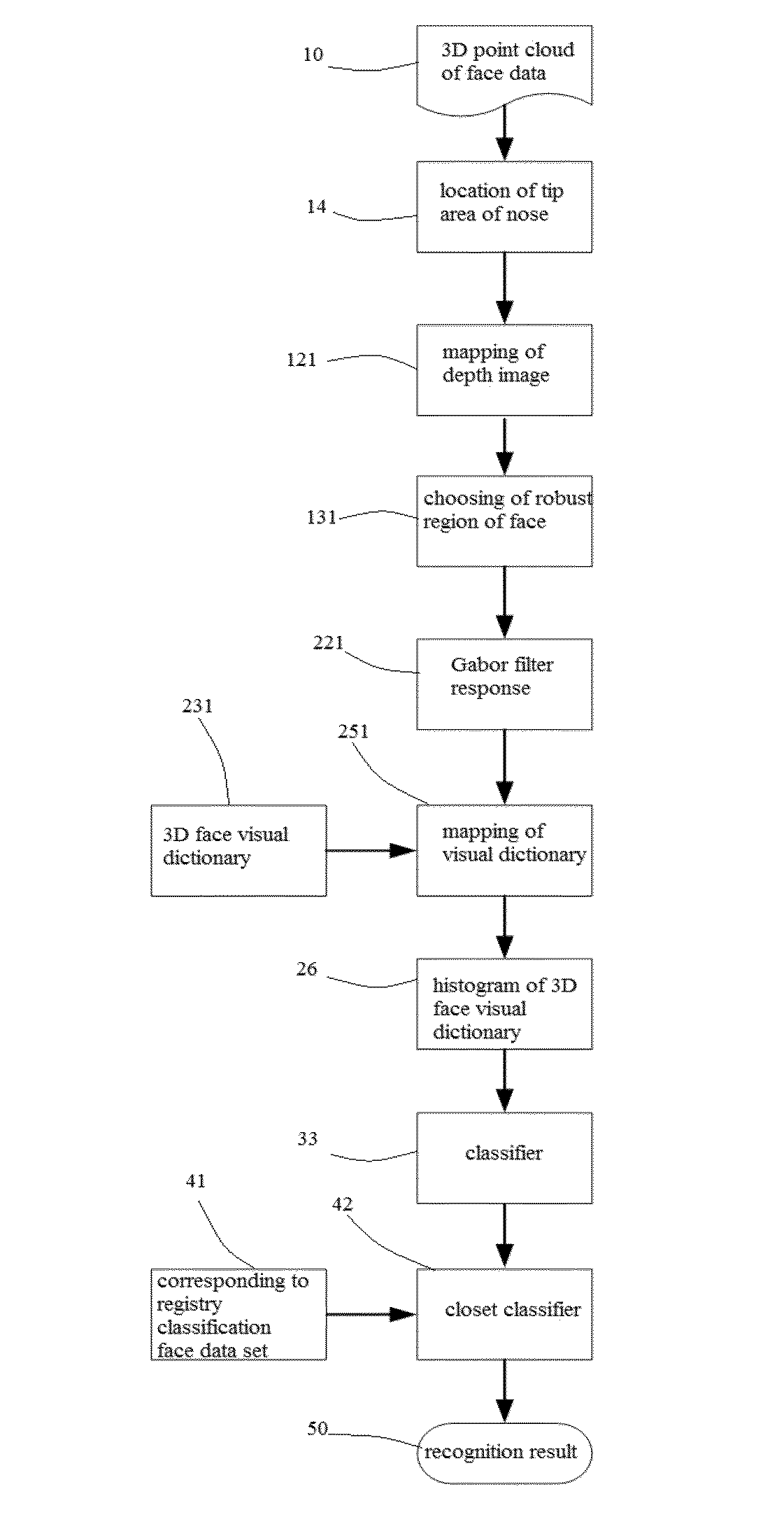

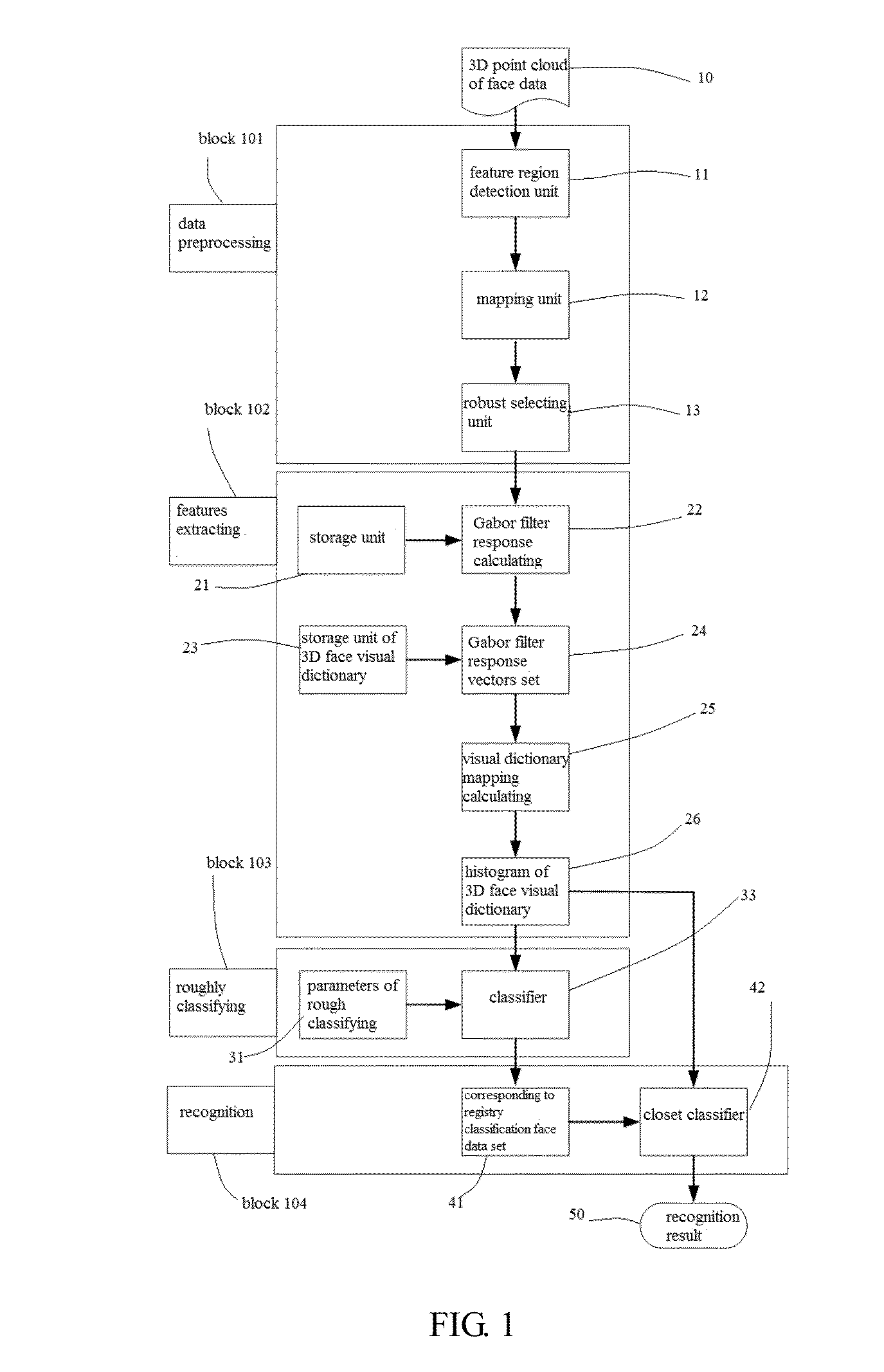

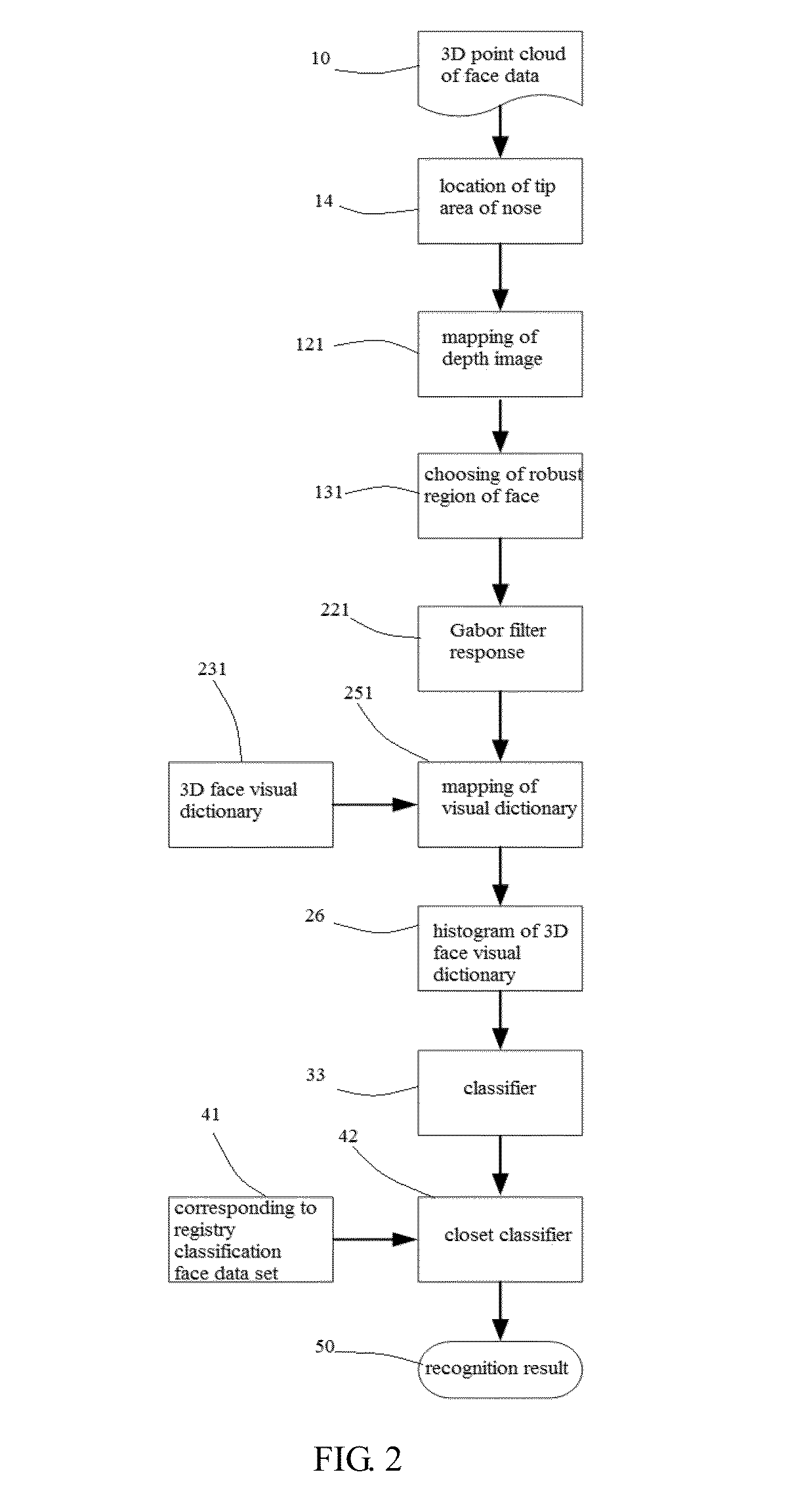

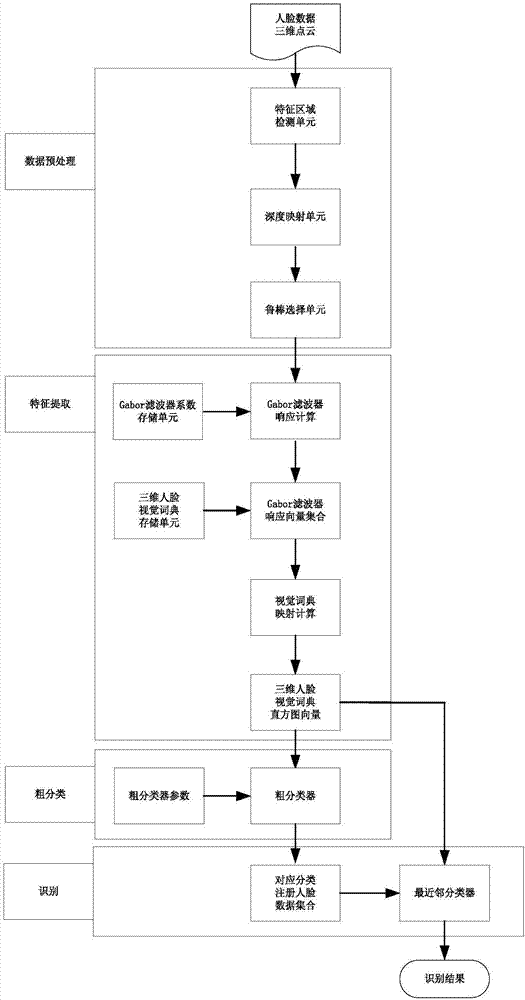

Inactive | US20160196467A1 | Improve abilities | Improve adaptability | Image enhancement | Image analysis | Point cloud | Visual perception

The invention describes a three-dimensional face recognition device and method based on three-dimensional point clouds. The device includes: a feature region detection unit that locates a feature region of the point cloud; a mapping unit that maps the point cloud into a depth image space with normalization; a statistics calculation unit that computes responses of the three-dimensional face data at different scales and orientations using Gabor filters of different scales and orientations; a storage unit that stores a visual dictionary of three-dimensional face data obtained by training; a map calculation unit that performs histogram mapping of the Gabor response vector of each pixel against the visual dictionary; a classification calculation unit that coarsely classifies the three-dimensional face data; and a recognition calculation unit that recognizes the three-dimensional face data.

Owner:SHENZHEN WEITESHI TECH
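The statistics calculation unit applies a bank of Gabor filters at several scales and orientations to the depth image. A minimal sketch using the real-valued Gabor kernel (Gaussian envelope times a cosine carrier); the kernel size, the wavelength choice `lam = 2 * sigma`, and the aspect ratio `gamma` are assumptions, and many pipelines use the magnitude of a complex Gabor response instead.

```python
import math
from typing import List, Sequence


def gabor_kernel(size: int, sigma: float, theta: float, lam: float,
                 gamma: float = 0.5, psi: float = 0.0) -> List[List[float]]:
    """Real Gabor kernel: Gaussian envelope times a cosine carrier along angle theta."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)    # rotate coordinates
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + gamma * gamma * yr * yr) / (2 * sigma * sigma))
            row.append(envelope * math.cos(2 * math.pi * xr / lam + psi))
        kernel.append(row)
    return kernel


def response_at(image, r: int, c: int, kernel) -> float:
    """Correlate the kernel centred on pixel (r, c); pixels outside the image count as 0."""
    half = len(kernel) // 2
    total = 0.0
    for dy, row in enumerate(kernel):
        for dx, k in enumerate(row):
            y, x = r + dy - half, c + dx - half
            if 0 <= y < len(image) and 0 <= x < len(image[0]):
                total += k * image[y][x]
    return total


def gabor_vector(image, r: int, c: int, scales: Sequence[float],
                 orientations: Sequence[float], size: int = 9) -> List[float]:
    """Per-pixel response vector over a bank of scales x orientations."""
    return [response_at(image, r, c, gabor_kernel(size, s, o, lam=2.0 * s))
            for s in scales for o in orientations]
```

Each pixel's `gabor_vector` is what the map calculation unit would then assign to visual words to build the histogram.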

Three-dimensional face recognition device and method based on three-dimensional point cloud

Inactive | CN104504410A | Strong detail texture description ability | Good quality adaptability | Image analysis | Three-dimensional object recognition | Point cloud | Visual perception

The invention discloses a three-dimensional face recognition device and method based on three-dimensional point clouds. The device comprises: a feature region detection unit that locates a feature region of the point cloud; a mapping unit that performs normalized mapping of the point cloud into depth image space; a data calculation unit that uses Gabor filters of different scales and orientations to compute the responses of the three-dimensional face data at those scales and orientations; a storage unit that stores the visual dictionary of three-dimensional face data obtained by training; a mapping calculation unit that projects the Gabor response vector obtained at each pixel onto the visual dictionary as a histogram; a classification calculation unit for coarse classification of the three-dimensional face data; and an identification calculation unit that identifies the three-dimensional face data. With this scheme, the detailed texture of three-dimensional data is described more precisely and the method adapts better to the quality of the input point-cloud face data, giving it good application prospects.

Owner:SHENZHEN WEITESHI TECH

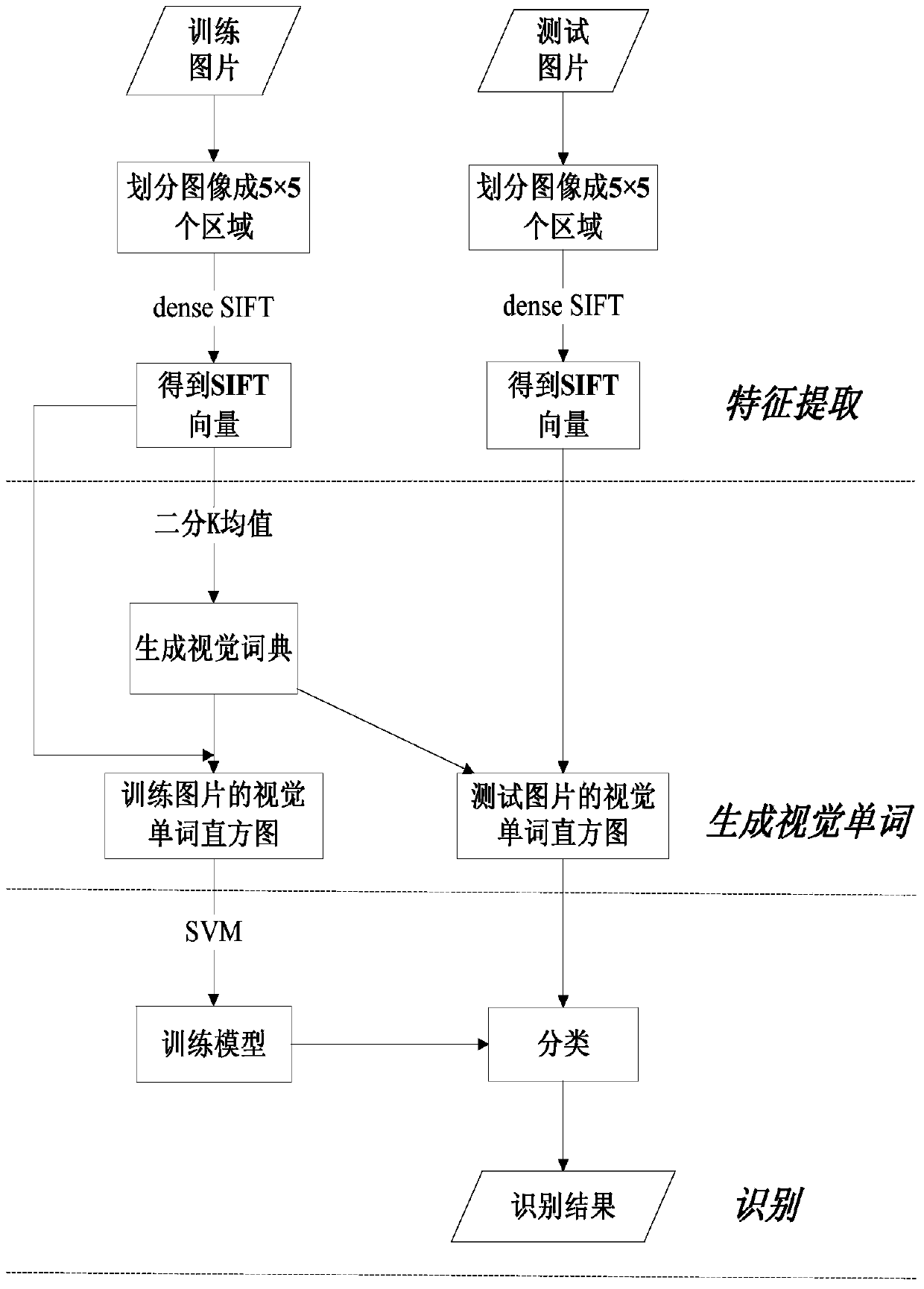

Facial image identification method based on bag-of-words model

Active | CN103745200A | Improve recognition rate | Reduce running time | Character and pattern recognition | Cluster algorithm | Feature vector

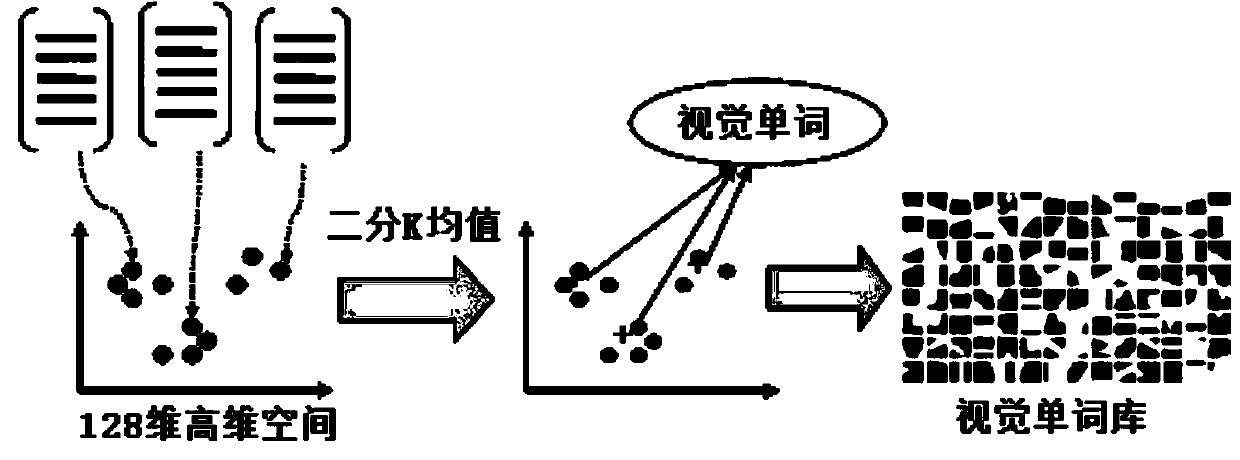

The invention relates to a facial image identification method based on a bag-of-words model. The method comprises the following steps: 1) extracting a facial image from a database, partitioning it into 5×5 regions, and performing dense feature extraction on each region to obtain a series of feature vectors; 2) clustering the feature vectors of each region with a bisecting k-means clustering algorithm to generate a visual dictionary, matching the feature vectors against the visual dictionary to generate a histogram for the corresponding region, and representing the facial image by these visual word histograms; and 3) inputting the visual word histogram of each facial image into a classifier for training and classification to obtain the identification result.

Owner:HARBIN ENG UNIV
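Steps 1 and 2 produce one histogram per region of a 5×5 grid. A minimal sketch of that encoding, assuming each feature carries its (x, y) position and that each region has its own dictionary of the same size (the abstract clusters each area separately); the dictionaries are taken as given rather than learned with bisecting k-means, and all names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Feature = Tuple[float, float, Sequence[float]]   # (x, y, descriptor)


def nearest_word(desc: Sequence[float], dictionary: Sequence[Sequence[float]]) -> int:
    return min(range(len(dictionary)), key=lambda k: math.dist(desc, dictionary[k]))


def regional_histogram(features: Sequence[Feature], width: float, height: float,
                       dictionaries: Sequence[Sequence[Sequence[float]]],
                       grid: int = 5) -> List[int]:
    """Concatenate one visual-word histogram per cell of a grid x grid partition."""
    n_words = len(dictionaries[0])
    hists = [[0] * n_words for _ in range(grid * grid)]
    for x, y, desc in features:
        col = min(int(x * grid / width), grid - 1)
        row = min(int(y * grid / height), grid - 1)
        cell = row * grid + col
        hists[cell][nearest_word(desc, dictionaries[cell])] += 1
    return [count for h in hists for count in h]
```

Concatenating per-region histograms keeps coarse spatial layout (eyes versus mouth) that a single global histogram would discard.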

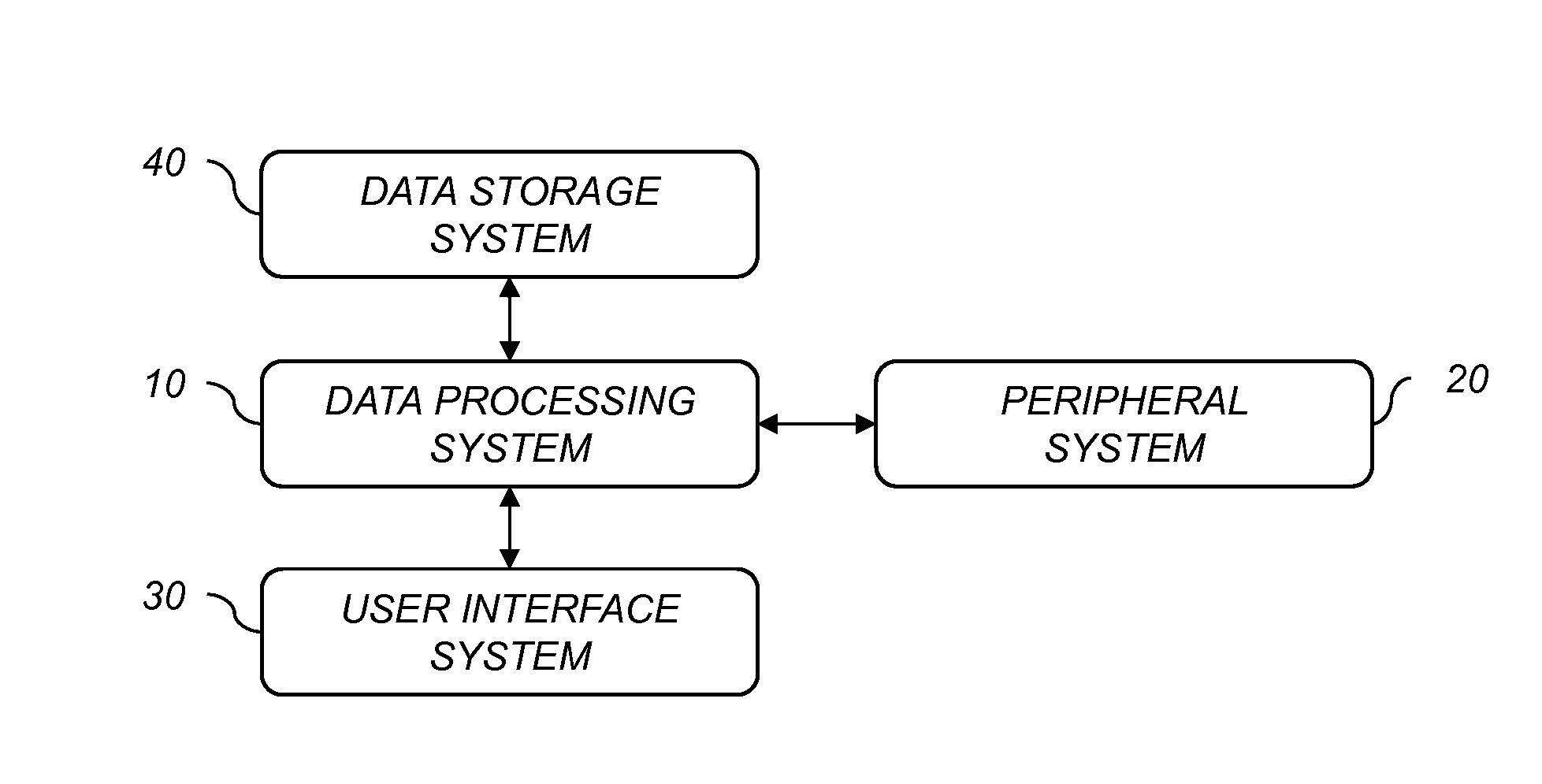

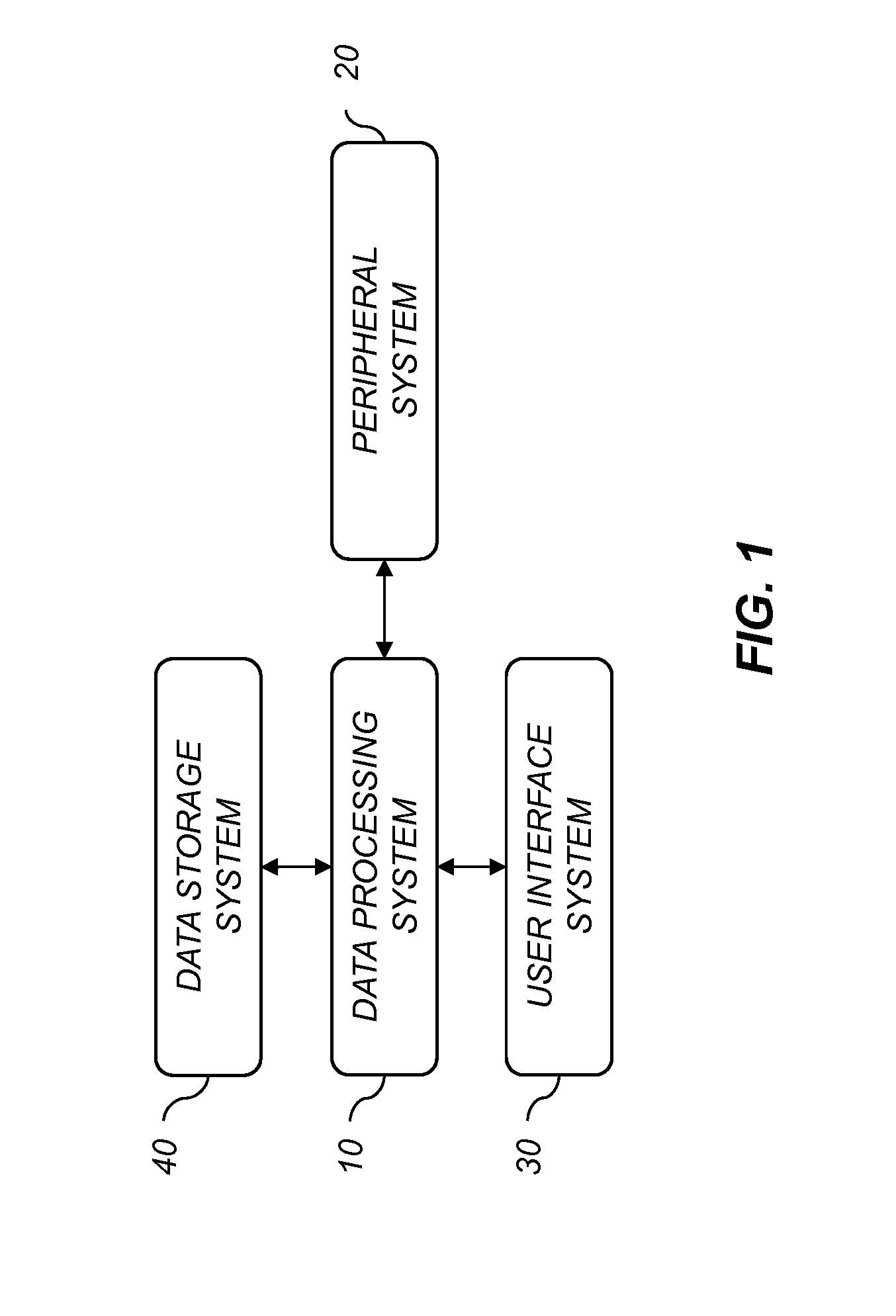

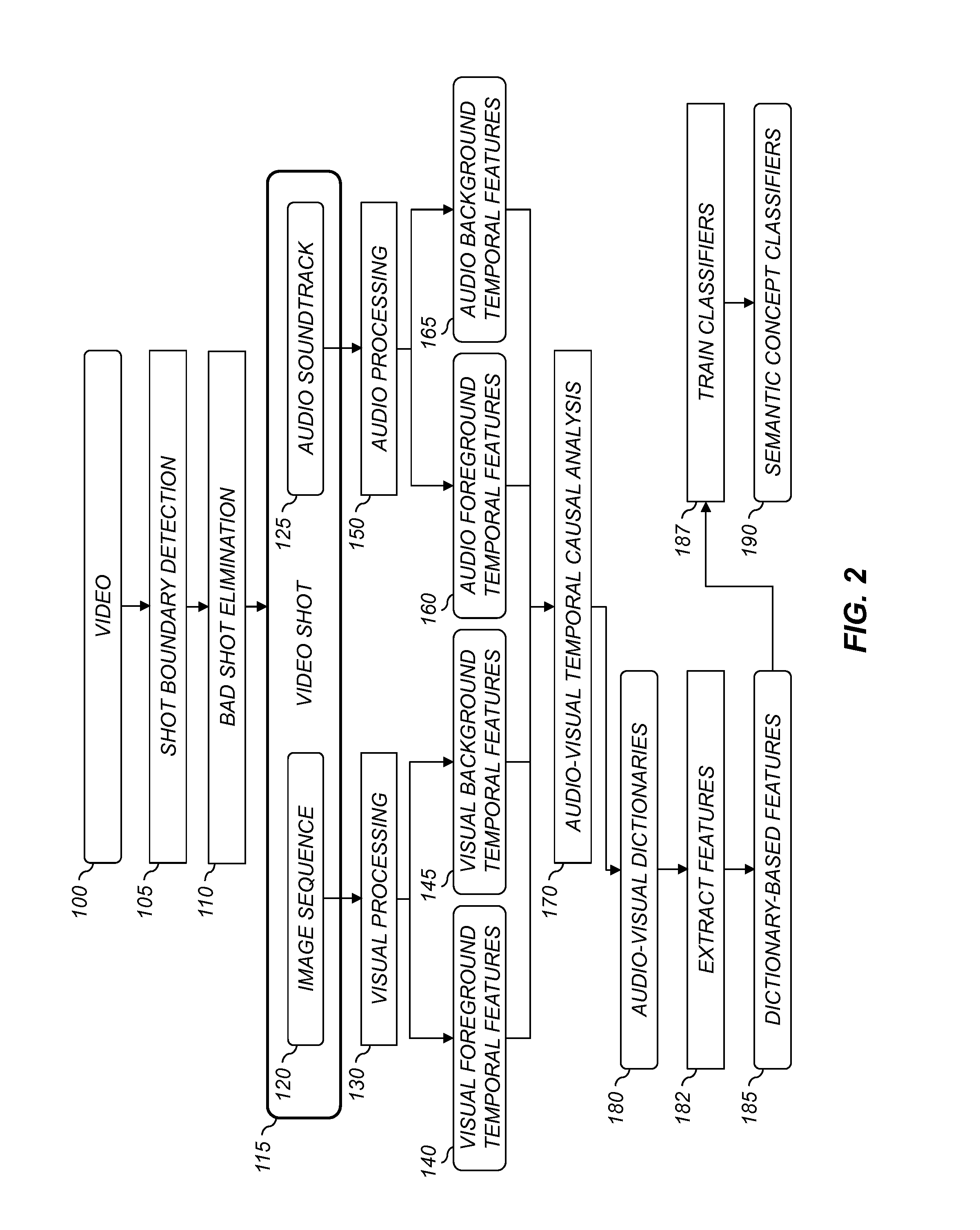

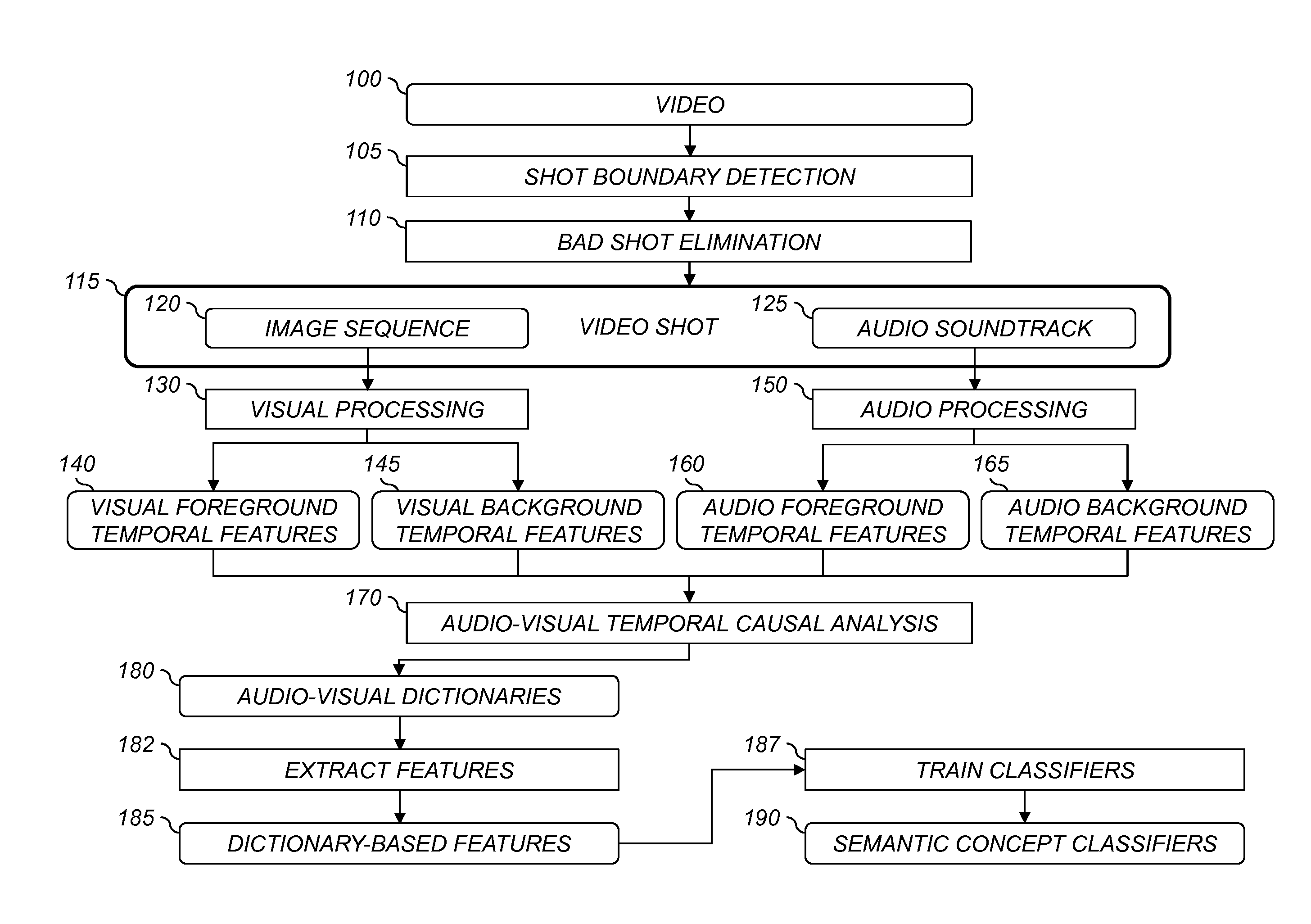

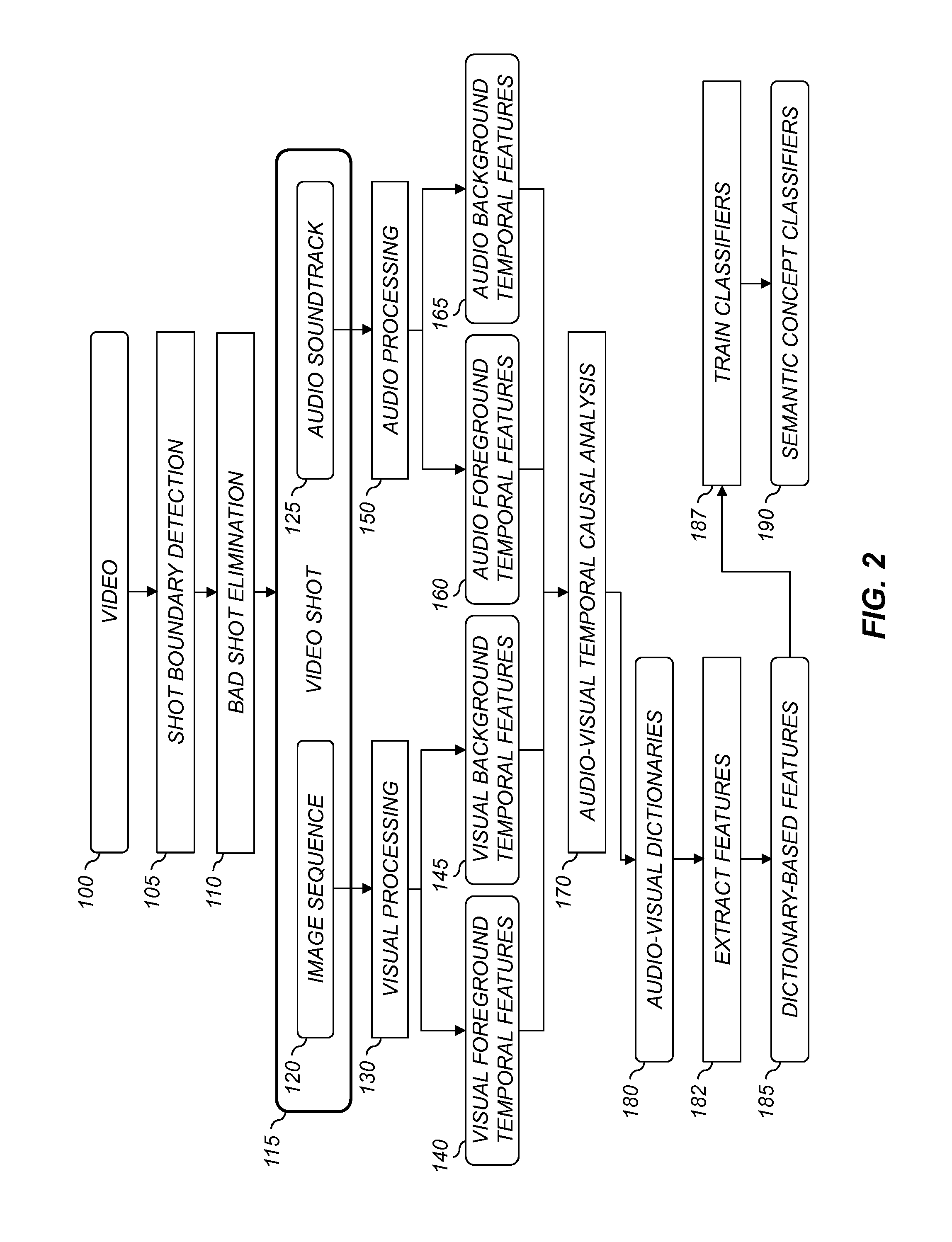

Video concept classification using video similarity scores

Inactive | US20130089304A1 | Robustly classified | Improve classification performance | Television system details | Recording carrier details | Pattern recognition | Digital video

A method for determining a semantic concept classification for a digital video clip, comprising: receiving an audio-visual dictionary including a plurality of audio-visual grouplets, the audio-visual grouplets including visual background and foreground codewords and audio background and foreground codewords, wherein the codewords in a particular audio-visual grouplet were determined to be correlated with each other; determining reference video codeword similarity scores for a set of reference video clips; determining codeword similarity scores for the digital video clip; determining, for each reference video clip, a reference video similarity score representing the similarity between the digital video clip and that reference video clip, responsive to the audio-visual grouplets, the codeword similarity scores, and the reference video codeword similarity scores; and determining one or more semantic concept classifications using trained semantic classifiers responsive to the determined reference video similarity scores.

Owner:MONUMENT PEAK VENTURES LLC

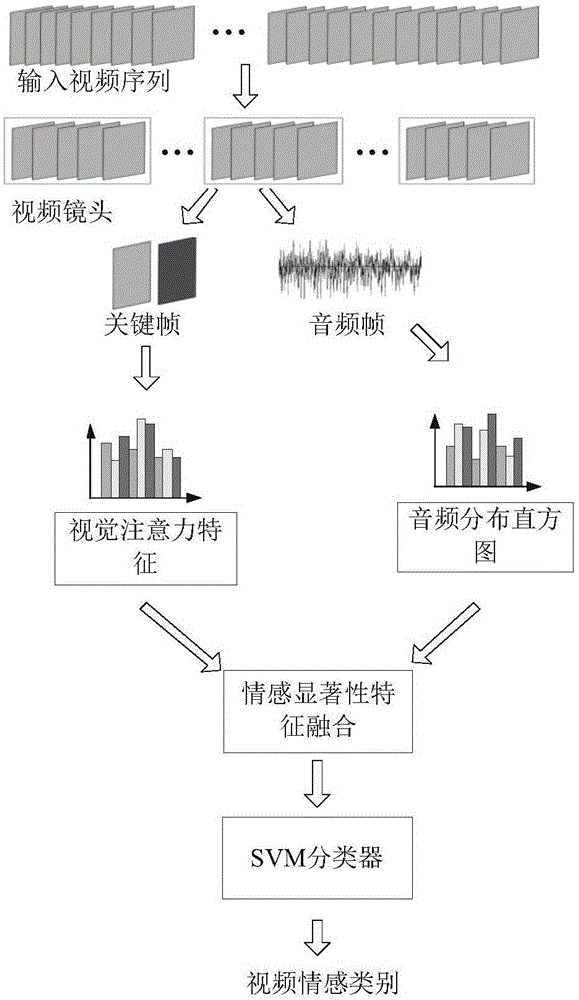

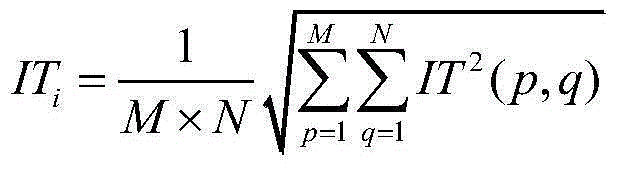

Video emotion identification method based on emotion significant feature integration

Active | CN105138991A | Discriminating | Easy to implement | Character and pattern recognition | Integration algorithm | Vision based

The invention discloses a video emotion identification method based on the integration of emotion-salient features. A training video set is acquired, and video shots are extracted from each video. An emotion key frame is selected for each shot. The audio features and visual emotion features of each shot in the training video set are extracted. The audio features are based on a bag-of-words model and form an emotion distribution histogram feature. The visual emotion features are based on a visual dictionary and form an emotion attention feature. The emotion attention feature and the emotion distribution histogram feature are fused top-down to form a video feature with emotional salience. The video features formed from the training video set are fed into an SVM classifier for training, and the parameters of the trained model are obtained. The trained model is used to predict the emotion category of a test video. The integration algorithm is simple to implement, uses a mature and reliable trainer, predicts quickly, and can complete the video emotion identification process efficiently.

Owner:SHANDONG INST OF BUSINESS & TECH

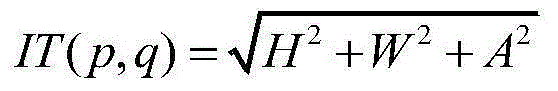

Method for identifying faces in videos based on incremental learning of face partitioning visual representations

Inactive | CN103279768A | Efficient automatic detection | Facilitates automatic detection | Character and pattern recognition | Incremental learning | Adaboost algorithm

The invention provides a method for identifying faces in videos based on incremental learning of patch-based face visual representations, in the field of pattern recognition. An Adaboost algorithm detects the frontal face image in the first frame of a face video, and a Camshift algorithm tracks it to obtain all the face images. While the face images in the video are read, they are clustered incrementally, and a representative image is selected from each cluster. The representative images are processed, and a visual dictionary based on patch visual representations is learned; the visual dictionary is then used to represent the face images. Finally, the videos composed of face images are identified according to similarity matrices. The method improves the identification rate and robustness for faces in video when illumination, pose, and tracking results are not ideal, and faces in videos can be detected, tracked, and identified effectively, conveniently, and automatically.

Owner:BEIHANG UNIV
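The incremental clustering and representative-selection steps can be sketched as single-pass "leader" clustering. Assumptions: each tracked face image has already been turned into a feature vector, a fixed distance `threshold` decides whether a face joins an existing cluster, and the representative is the member nearest the cluster mean; the patent's actual criteria are not given in the abstract.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def incremental_cluster(stream: Sequence[Vector], threshold: float) -> List[Dict]:
    """Single-pass clustering: join the nearest cluster if close enough, else start one."""
    clusters: List[Dict] = []
    for idx, v in enumerate(stream):
        best, best_d = None, math.inf
        for c in clusters:
            d = math.dist(v, c["centroid"])
            if d < best_d:
                best, best_d = c, d
        if best is not None and best_d <= threshold:
            best["members"].append(idx)
            n = len(best["members"])
            # Running-mean update of the centroid.
            best["centroid"] = [m + (x - m) / n for m, x in zip(best["centroid"], v)]
        else:
            clusters.append({"centroid": list(v), "members": [idx]})
    return clusters


def representatives(stream: Sequence[Vector], clusters: List[Dict]) -> List[int]:
    """For each cluster, the member closest to the centroid."""
    return [min(c["members"], key=lambda i: math.dist(stream[i], c["centroid"]))
            for c in clusters]
```

Because frames are processed as they arrive, memory stays proportional to the number of clusters rather than the length of the video.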

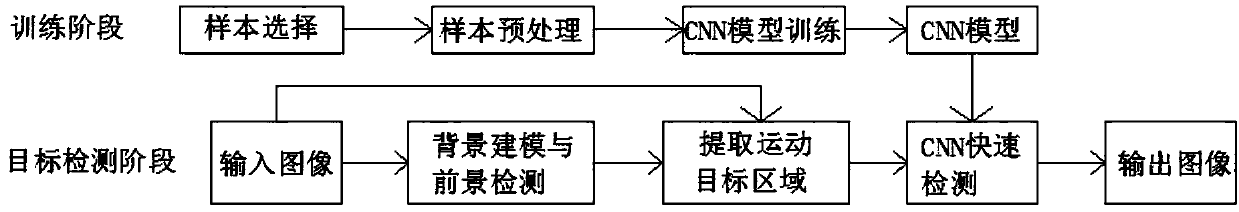

Personnel reidentification method based on deep learning and distance metric learning

Pending | CN108345860A | High selectivity | Improve re-identification accuracy | Character and pattern recognition | Neural learning methods | Method of characteristics | Semantics

The invention relates to identification methods, and in particular to a person re-identification method based on deep learning and distance metric learning. The method comprises the following steps: (1) a pedestrian detection method based on a convolutional neural network processes the video data to detect pedestrian targets in the video; (2) the initial features of each pedestrian target are encoded bottom-up with an unsupervised RBM network to obtain a visual dictionary with sparsity and selectivity; (3) the initial visual dictionary is fine-tuned with supervised error back-propagation to obtain a new representation of the video image, namely the image deep learning representation vector; and (4) a metric space closer to the true semantics is obtained with a distance metric learning method based on feature grouping and eigenvalue optimization, and the pedestrian target is identified with a linear SVM classifier. The essential attributes of the image are expressed more accurately, which greatly improves the accuracy of pedestrian re-identification.

Owner:江苏测联空间大数据应用研究中心有限公司 (Jiangsu Celian Spatial Big Data Application Research Center Co., Ltd.)

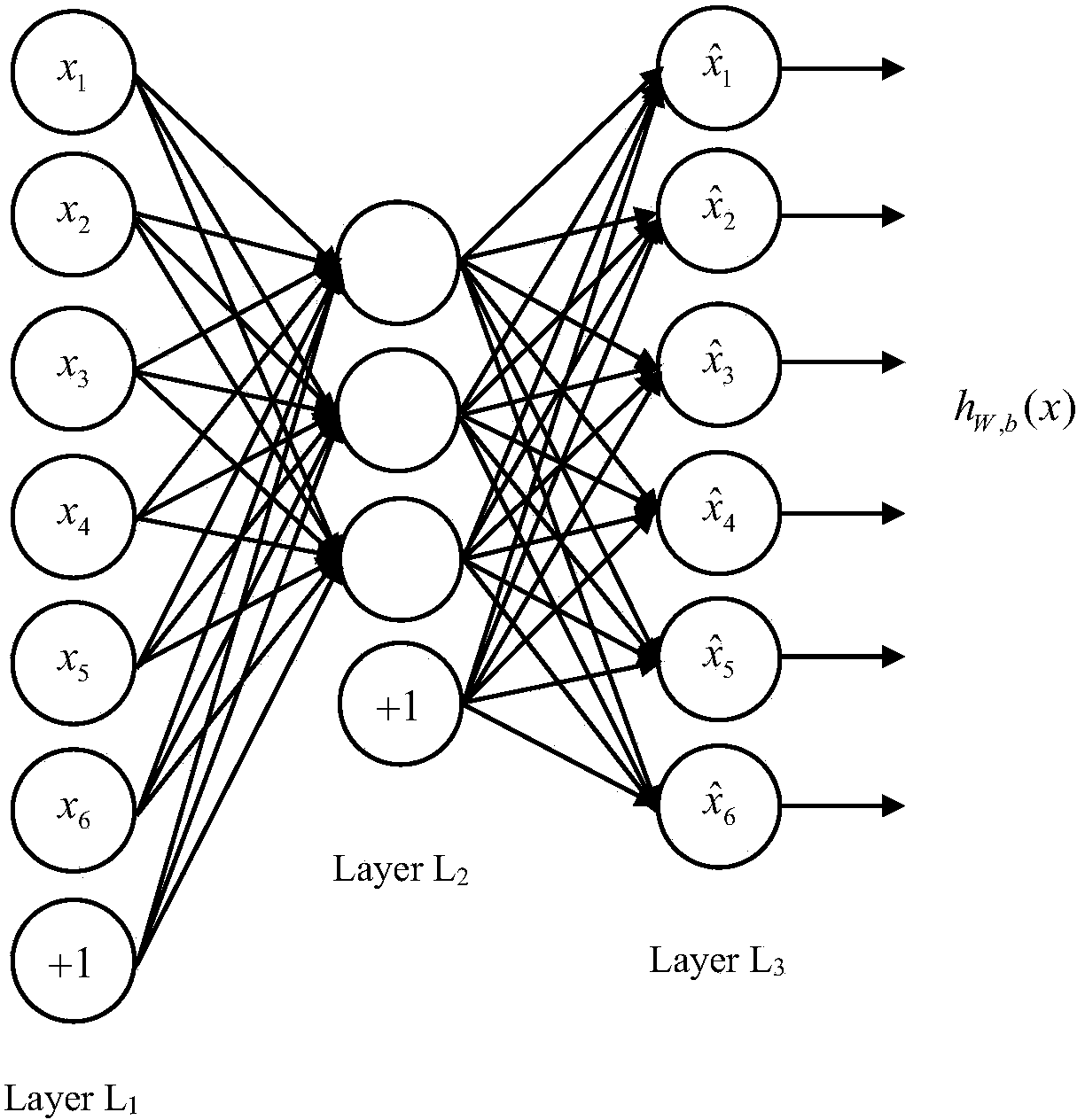

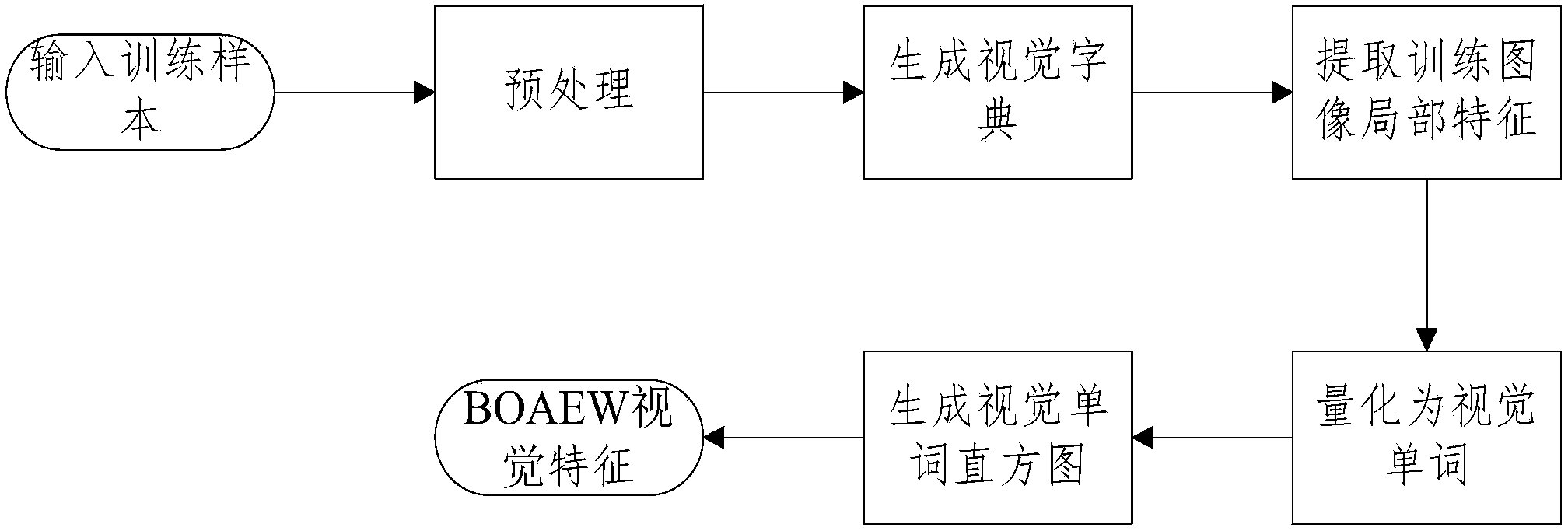

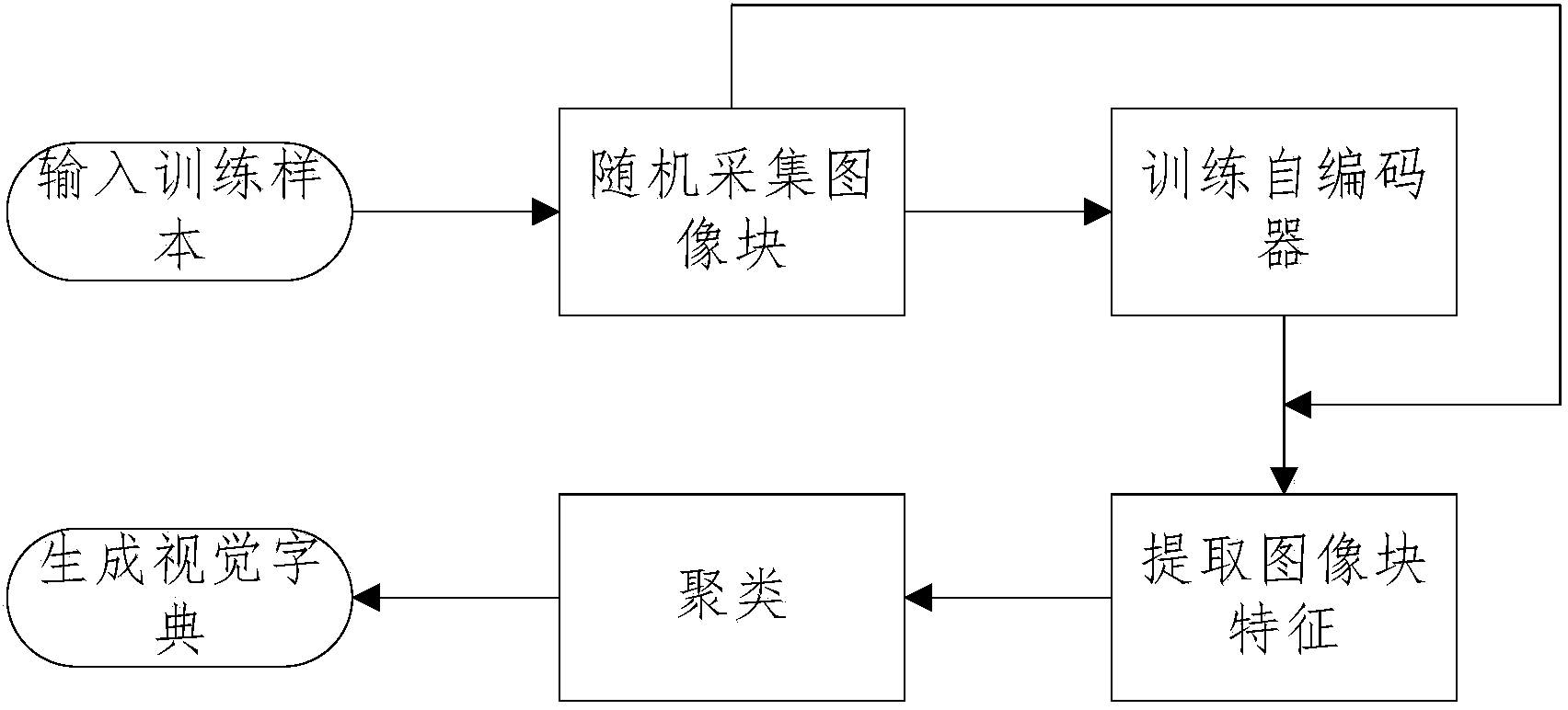

Visual feature representation method based on autoencoder bag of words

Active | CN104239897A | Requirements for reducing the number of training samples | Proof of validity | Character and pattern recognition | Neural learning methods | Sliding window | Visual perception

The invention relates to a visual feature representation method based on an autoencoder bag of words. The method comprises: inputting training samples to form a training set; preprocessing the training samples to reduce the influence of illumination, noise, and similar factors on representation accuracy; generating a visual dictionary, in which an autoencoder extracts features from random image blocks, a clustering method groups these features into a number of visual words, and the visual dictionary is composed of those visual words; collecting image blocks from each training image with a sliding window and feeding them to the autoencoder, whose outputs are the local features of the image; quantizing the local features into visual words according to the visual dictionary; and counting visual word frequencies to generate a visual word histogram, which is the overall visual feature representation of the image. The autoencoder learns the feature representation autonomously, and the BoVW framework reduces the number of training samples required.

Owner:TIANJIN UNIV
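The sliding-window collection, quantization, and frequency-counting steps can be sketched as follows. The autoencoder is represented by a hypothetical `encode` callable (any function from a flattened patch to a feature vector); the patch size, stride, and histogram normalization are assumptions rather than values from the patent.

```python
from typing import Callable, List, Sequence


def sliding_patches(image: Sequence[Sequence[float]], size: int, stride: int) -> List[List[float]]:
    """Flattened size x size patches taken at every `stride` pixels."""
    h, w = len(image), len(image[0])
    patches = []
    for r in range(0, h - size + 1, stride):
        for c in range(0, w - size + 1, stride):
            patches.append([image[r + i][c + j] for i in range(size) for j in range(size)])
    return patches


def bovw_histogram(image: Sequence[Sequence[float]], dictionary: Sequence[Sequence[float]],
                   encode: Callable[[List[float]], Sequence[float]],
                   size: int = 2, stride: int = 2) -> List[float]:
    """Normalized visual-word frequency histogram of an image."""
    hist = [0] * len(dictionary)
    for patch in sliding_patches(image, size, stride):
        f = encode(patch)  # stands in for the autoencoder's hidden-layer output
        k = min(range(len(dictionary)),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(f, dictionary[i])))
        hist[k] += 1
    total = sum(hist)
    return [v / total for v in hist] if total else [0.0] * len(hist)
```

Passing `encode=lambda p: p` reduces this to a raw-pixel bag of words, which is a handy baseline for checking the rest of the pipeline before plugging in a trained encoder.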

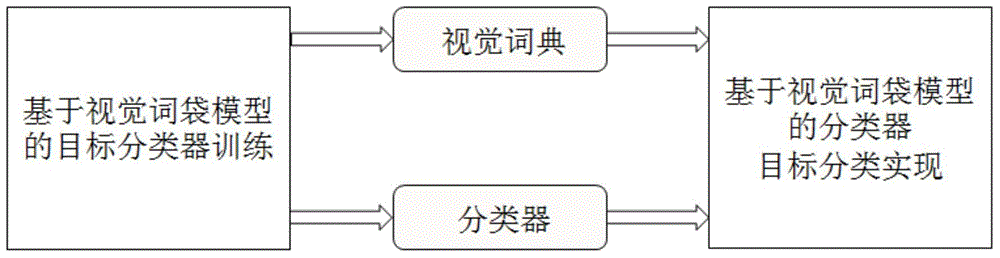

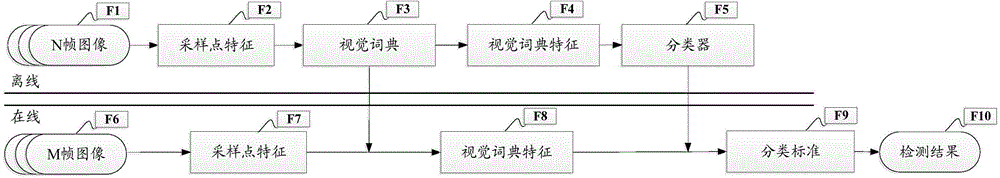

Object classification method and system based on bag-of-visual-words model

Active | CN104915673A | Reduce consumption | Flexible and more accurate classification and identification methods | Character and pattern recognition | Classification methods | Visual perception

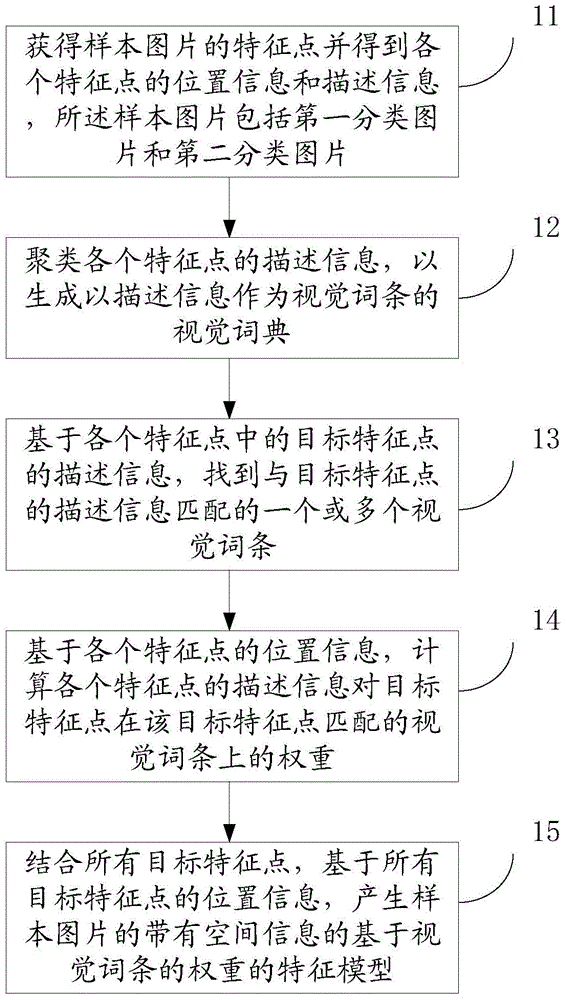

The invention provides an object classification method and system based on a bag-of-visual-words model. The method comprises the following steps: obtaining feature points of sample pictures, together with the position information and description information of each feature point, where the sample pictures comprise pictures of a first class and pictures of a second class; clustering the description information of the feature points to generate a visual dictionary whose visual terms are description information; for each target feature point, finding one or more visual terms that match its description information; computing, from the position information of each feature point, the weight with which the description information of that feature point contributes to the visual terms matching the target feature points; and combining all target feature points, based on their position information, to generate a feature model of the sample picture that carries spatial information and is based on the weights of the visual terms.

Owner:RICOH KK

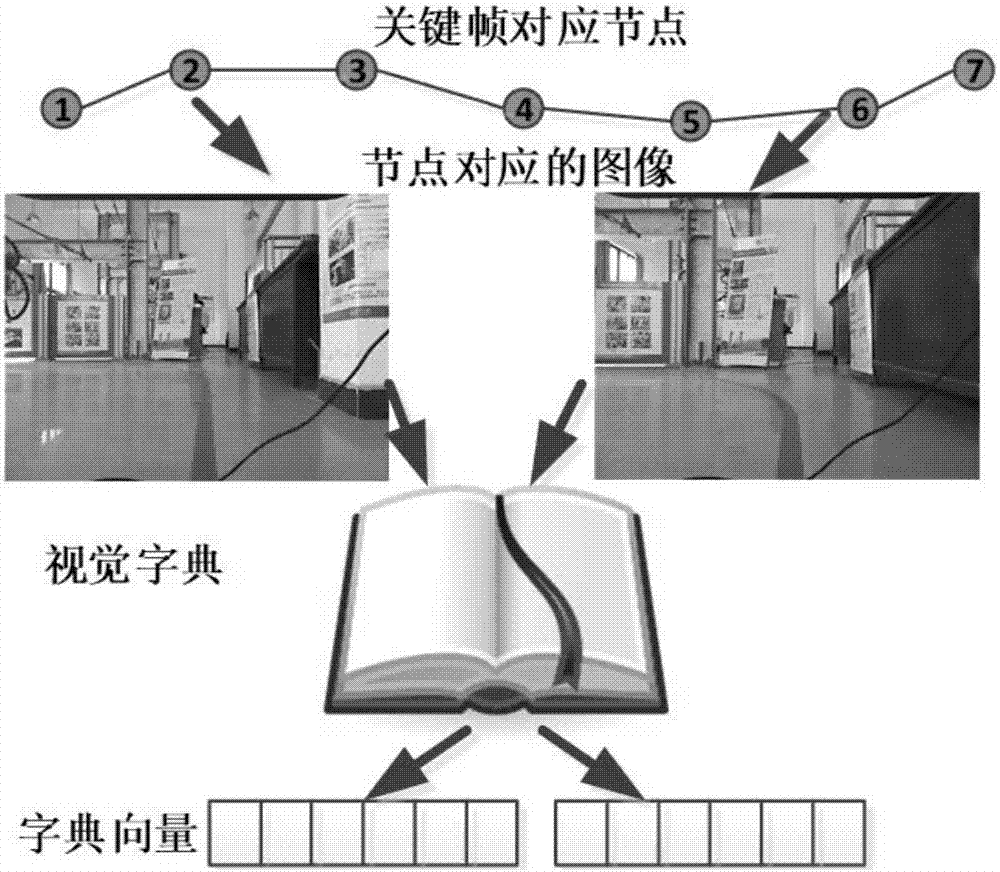

Bag of visual words-based closed-loop detection method for mobile robot maps

Active | CN107886129A | Accurate detection | Calculation speed | Character and pattern recognition | Pattern recognition | Closed loop

The invention discloses a bag-of-visual-words-based loop closure detection method for mobile robot mapping. The method provides an image similarity detection algorithm based on a visual dictionary and uses it as the front end of loop closure detection, that is, candidate loop closure nodes are identified by image similarity detection; the loop closure nodes are then confirmed with a time constraint and spatial position verification. Extensive experiments show that the method correctly detects a variety of different loop closures, computes quickly, and meets the relatively strict real-time requirements of the loop closure component of SLAM.

Owner:HUNAN UNIV +1
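The front end plus time constraint can be sketched as: ignore matches to recently captured frames, keep the best sufficiently similar older frame, and accept a loop only when several consecutive frames agree on nearby candidates. The gap, threshold, and consistency parameters are assumptions, and the spatial position verification (typically a geometric check on matched features) is not shown.

```python
from typing import List, Optional, Sequence, Tuple


def detect_loop(score_rows: Sequence[Sequence[float]], min_gap: int, threshold: float,
                consecutive: int = 3, window: int = 2) -> List[Tuple[int, int]]:
    """score_rows[t][i] is the similarity of frame t to an earlier frame i (i < t).

    Returns (current_frame, matched_frame) pairs accepted as loop closures.
    """
    streak, last = 0, None  # type: int, Optional[int]
    loops: List[Tuple[int, int]] = []
    for t, row in enumerate(score_rows):
        # Time constraint: frames closer than min_gap are never loop candidates.
        eligible = [(s, i) for i, s in enumerate(row) if t - i > min_gap and s >= threshold]
        if not eligible:
            streak, last = 0, None
            continue
        _, i = max(eligible)
        # Temporal consistency: successive matches should land near each other.
        streak = streak + 1 if last is not None and abs(i - last) <= window else 1
        last = i
        if streak >= consecutive:
            loops.append((t, i))
    return loops
```

In the test data below, frame 5 is very similar to frame 4, but the time constraint suppresses it because consecutive frames of a moving robot naturally look alike.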

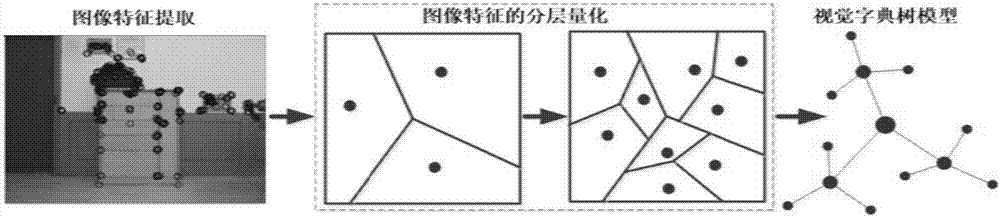

Image characteristic extracting and describing method

Inactive | CN102663401A | Guaranteed scale invariance | Efficient use of | Character and pattern recognition | Special data processing applications | Imaging processing | Scale-invariant feature transform

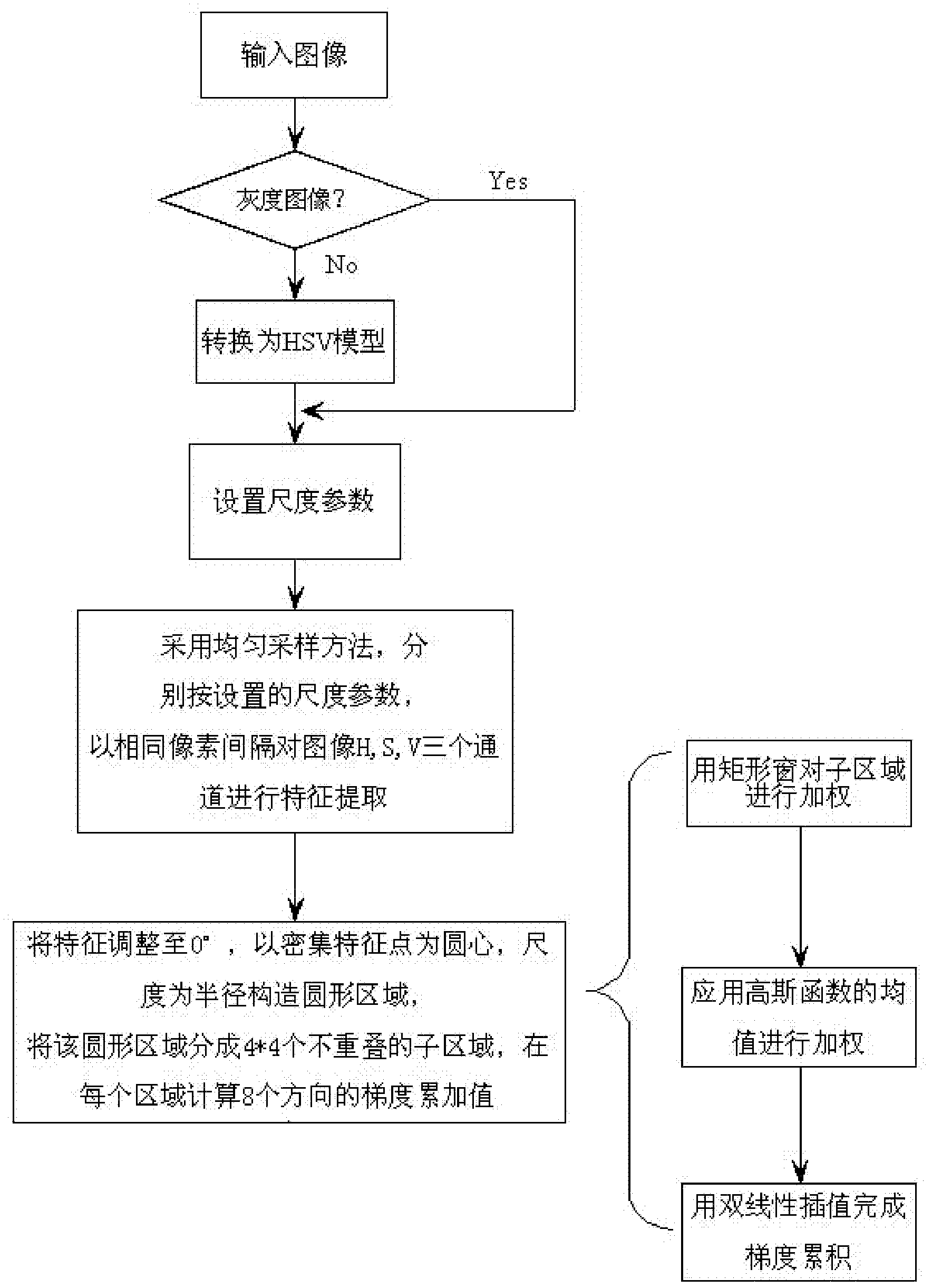

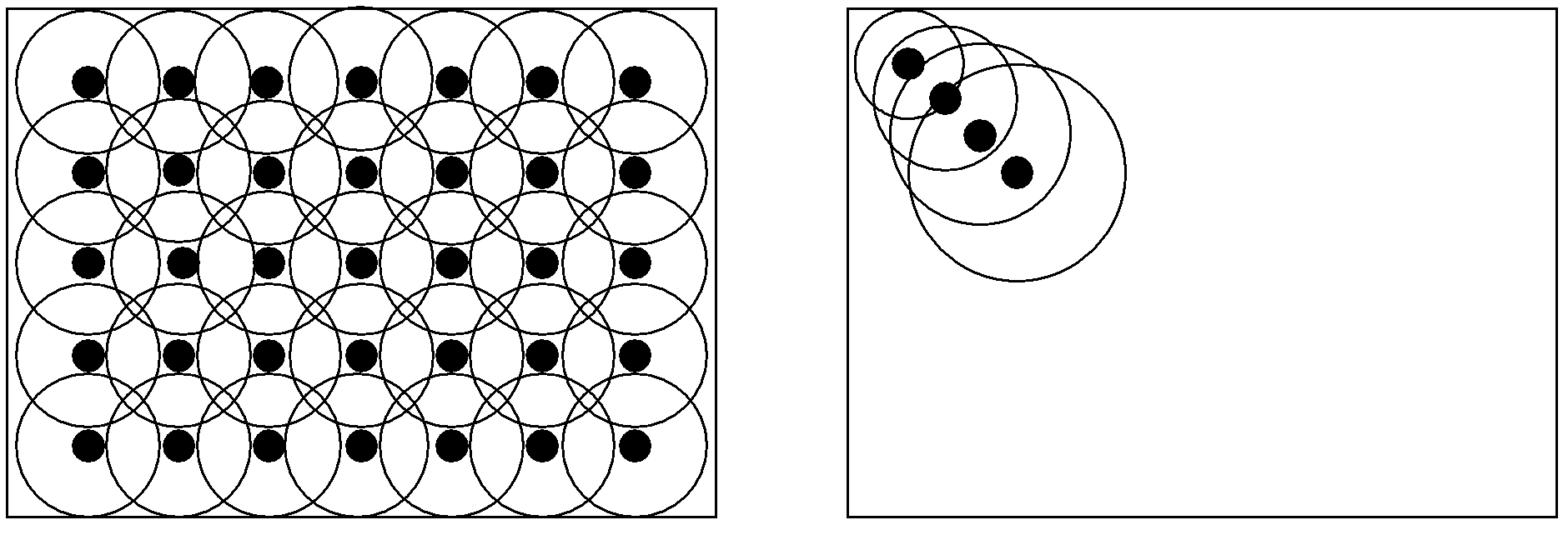

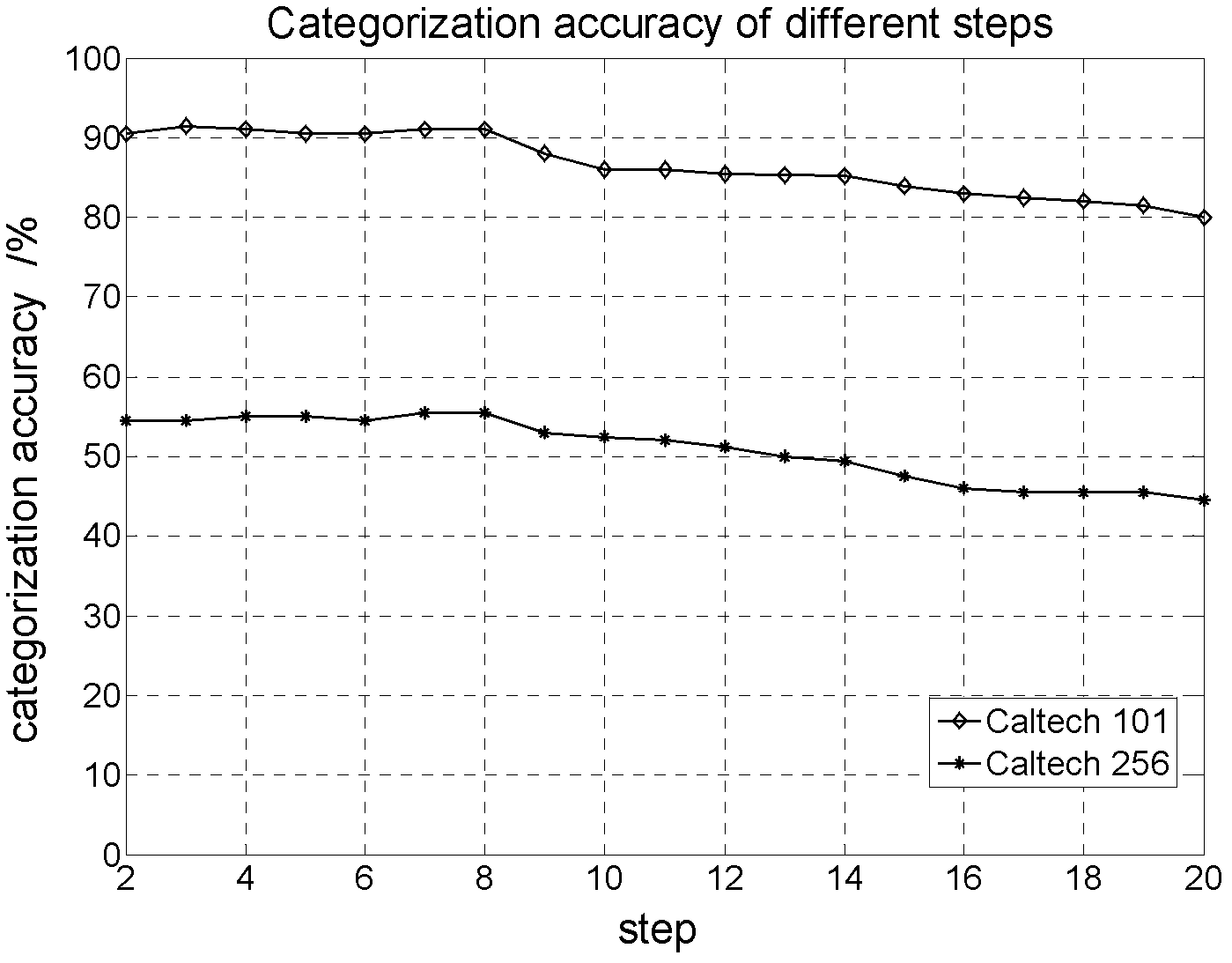

The invention relates to image processing and computer vision and, in particular, provides an image feature extraction and description method that is suited to the BoW (bag of words) model and applied in computer vision. The method comprises: checking the format of the input image, leaving it unchanged if it is a grayscale image and otherwise converting it to the HSV (hue, saturation, value) model; selecting scale parameters; using uniform sampling to extract feature points at equal pixel intervals according to the selected scale parameters, computing DF-SIFT (Dense Fast-Scale Invariant Feature Transform) descriptors on the H (hue), S (saturation), and V (value) channels so that color information is used in the classification task, and controlling the sampling density with a step parameter to obtain dense features of the image; and describing the dense features. Dense sampling makes the visual dictionary more accurate and reliable, and replacing the convolution of the image with a Gaussian kernel by bilinear interpolation makes the implementation simpler and more efficient.

Owner:HARBIN ENG UNIV
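The preprocessing and sampling steps (grayscale check, HSV conversion, keypoints at equal pixel intervals controlled by a step parameter) can be sketched with the standard library's `colorsys`. Assumptions: color images are rows of 8-bit (R, G, B) tuples, grayscale images are rows of numbers, and `margin` keeps keypoints away from the border; the DF-SIFT descriptor itself is not implemented here.

```python
import colorsys
from typing import List, Tuple


def split_channels(image) -> List[List[List[float]]]:
    """Return the channel images to describe: [gray] or [H, S, V]."""
    if not isinstance(image[0][0], tuple):        # already grayscale: leave unchanged
        return [image]
    hsv = [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for (r, g, b) in row]
           for row in image]
    return [[[px[k] for px in row] for row in hsv] for k in range(3)]


def dense_grid(width: int, height: int, step: int, margin: int) -> List[Tuple[int, int]]:
    """Keypoints at equal pixel intervals; a smaller `step` gives denser sampling."""
    return [(x, y) for y in range(margin, height - margin, step)
            for x in range(margin, width - margin, step)]
```

A descriptor would then be computed at every grid point on each returned channel, and the per-channel descriptors concatenated before quantization.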

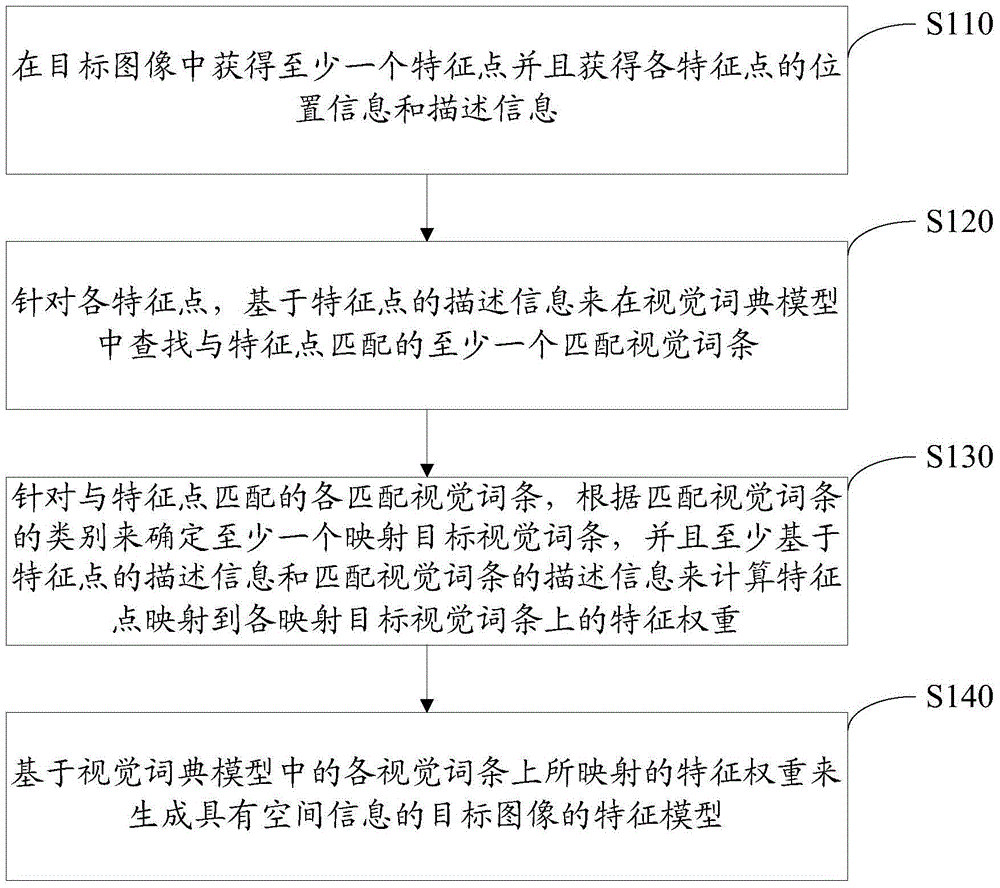

Feature model generating method and feature model generating device

The invention discloses a feature model generating method and device. The method comprises: acquiring feature points in a target image together with their location information and description information; searching, based on the description information, for visual entries matching the feature points in a visual dictionary model that consists of a first type of visual entries, which are spatially related to other visual entries, and a second type of visual entries; determining the mapping target visual entries according to the types of the matching visual entries, and calculating the feature weight with which each feature point is mapped onto its mapping target visual entries, based at least on the description information of the feature point and the matching visual entries; and generating a feature model of the target image that carries spatial information, based on the feature weights on the visual entries in the visual dictionary model. The method can therefore generate a feature model of the target image that includes spatial information.

Owner:RICOH KK

Three-dimensional face identification device and method based on three-dimensional point cloud

Active | CN104298995A | Improve recognition accuracy | Acquisition of 3D object measurements | Point cloud | Identification device

The invention discloses a three-dimensional face identification device and method based on three-dimensional point clouds. The device comprises: a feature region detection unit that locates the feature region of the point cloud; a depth image mapping unit that maps the point cloud into depth image space with normalization; a Gabor response computation unit that computes responses of the three-dimensional face data at different scales and orientations using Gabor filters of different scales and orientations; a storage unit that stores the visual dictionary of three-dimensional face data obtained by training; and a histogram mapping computation unit that maps the Gabor response vector obtained at each pixel to a histogram over the visual dictionary. First, the feature region of the three-dimensional face is located and registered; next, the point cloud data are mapped to a depth image according to the depth information; then, the visual dictionary histogram vector of the three-dimensional data is computed with the trained three-dimensional face visual dictionary; and finally, identification is performed with a classifier, with high identification precision.

Owner:SHENZHEN WEITESHI TECH
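The depth image mapping unit can be sketched as an orthographic projection. Assumptions: the face point cloud is already registered so that +z points toward the viewer, x and y are normalized to the point cloud's bounding box, depth is normalized to [0, 1], and the nearest point wins when several fall in one pixel; empty pixels are left at 0.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def point_cloud_to_depth(points: Sequence[Point], res: int) -> List[List[float]]:
    """Orthographic projection of a registered face point cloud onto a res x res depth image."""
    xs, ys, zs = zip(*points)
    x0, x1 = min(xs), max(xs)
    y0, y1 = min(ys), max(ys)
    z0, z1 = min(zs), max(zs)
    depth = [[0.0] * res for _ in range(res)]        # 0.0 marks empty pixels
    for x, y, z in points:
        col = min(int((x - x0) / (x1 - x0 or 1) * res), res - 1)
        row = min(int((y1 - y) / (y1 - y0 or 1) * res), res - 1)   # rows grow downward
        d = (z - z0) / (z1 - z0 or 1)                 # normalize depth to [0, 1]
        depth[row][col] = max(depth[row][col], d)     # keep the point nearest the viewer
    return depth
```

Normalizing all three axes makes the depth image independent of the sensor's absolute scale, which is what lets a single trained visual dictionary serve faces captured at different distances.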

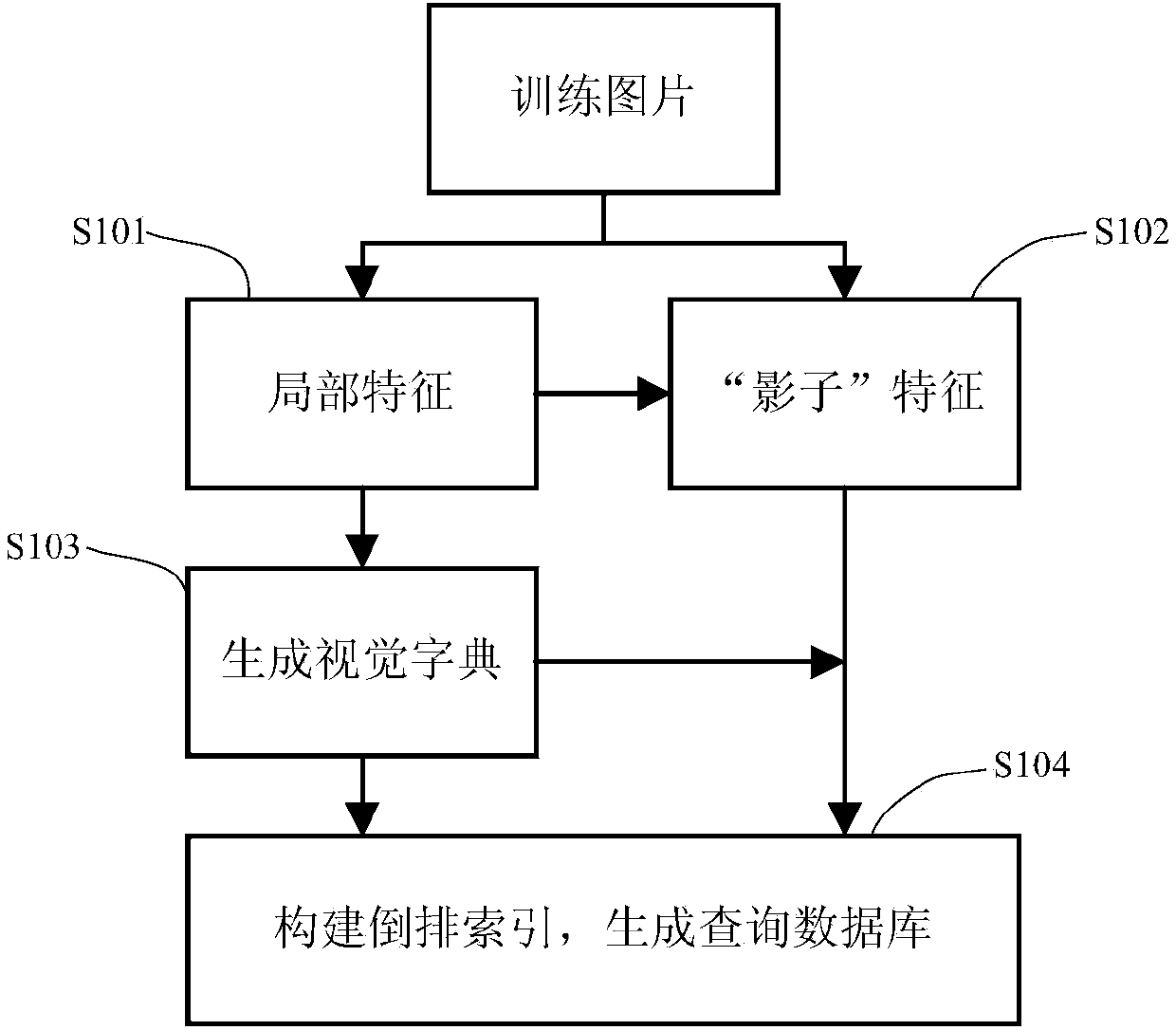

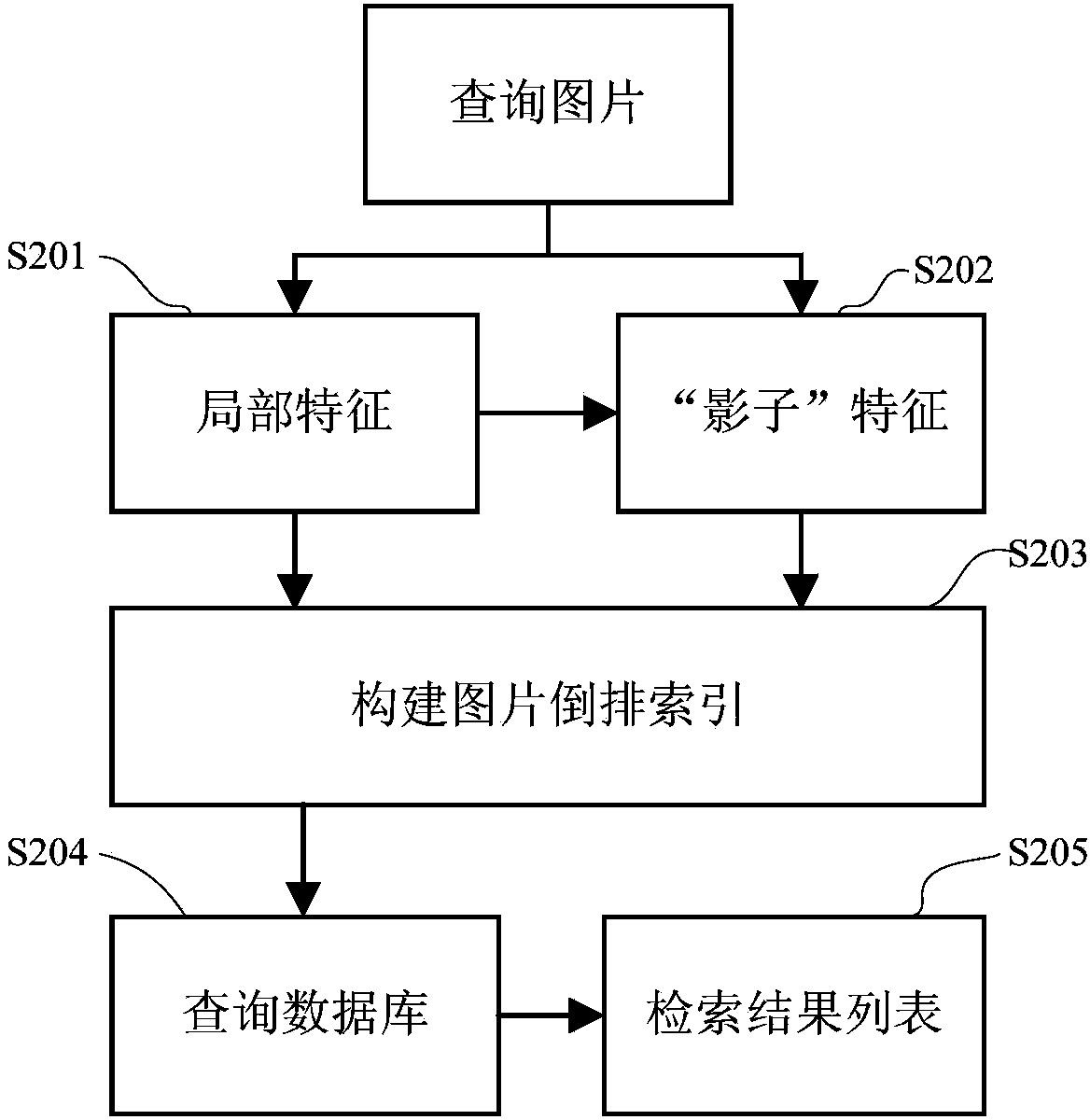

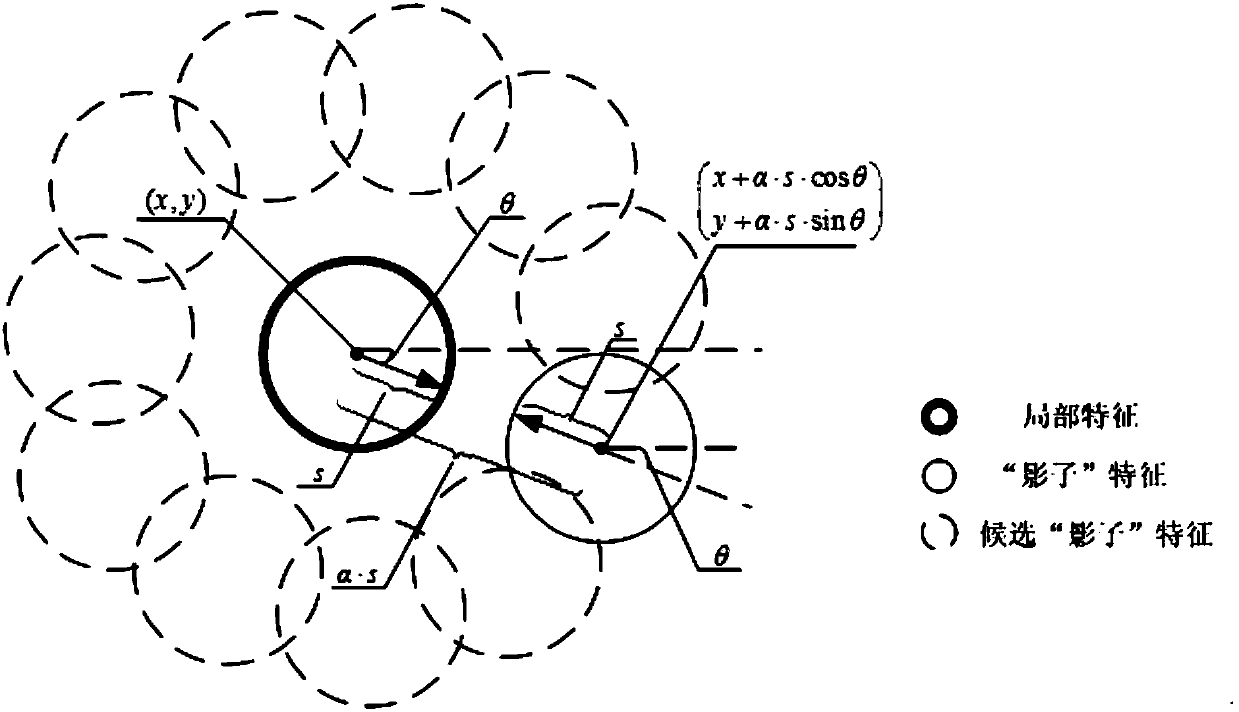

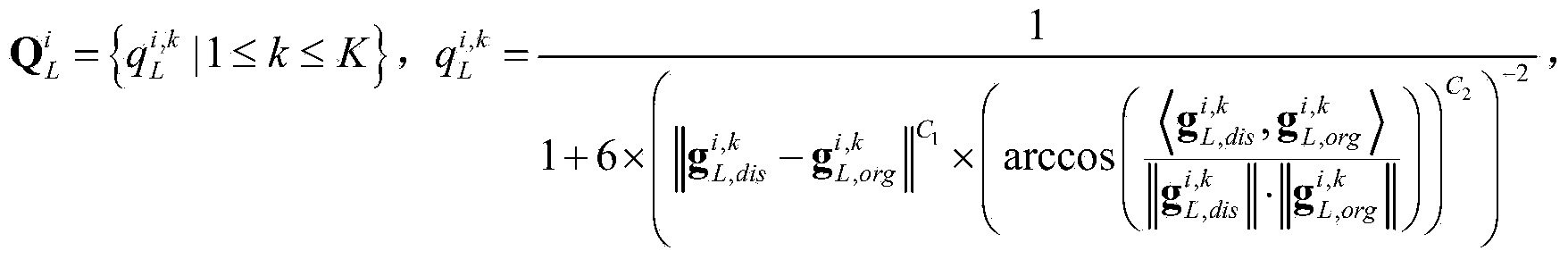

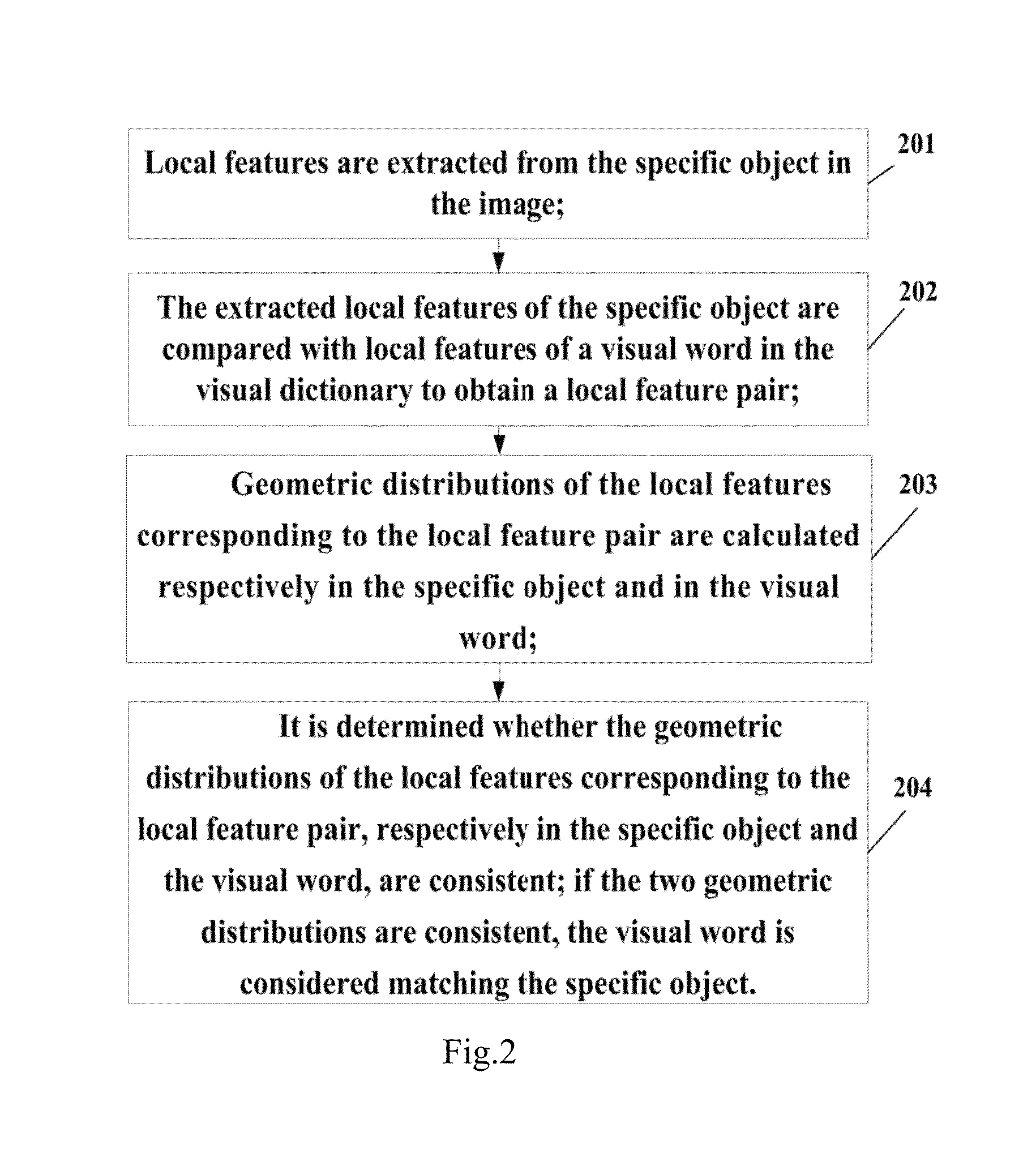

Similar image retrieval method based on local feature neighborhood information

Active | CN104199842A | Enhanced Visual Compatibility | Improve accuracy | Character and pattern recognition | Metadata still image retrieval | Inverted index | Visual dictionary

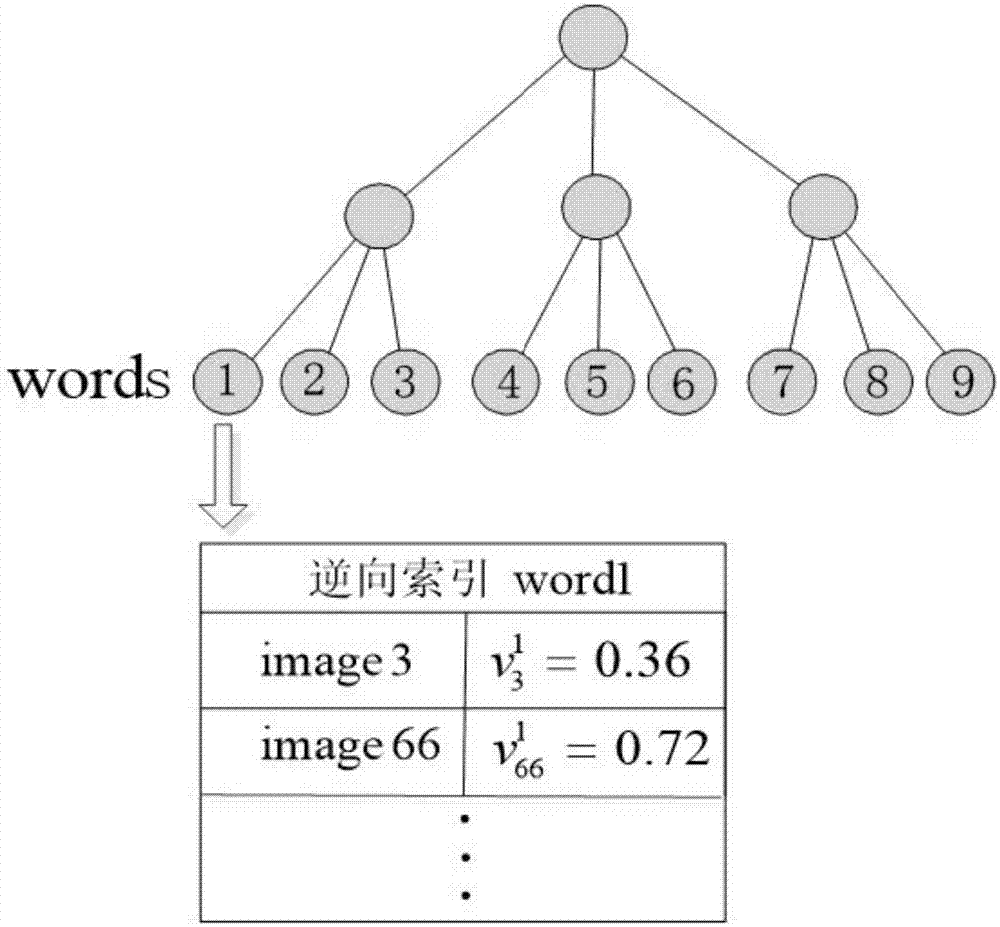

The invention relates to a similar image retrieval method based on local feature neighborhood information. The method includes the steps of: (1) obtaining training pictures; (2) detecting and describing features of the pictures in a multi-scale space with the Hessian-Affine feature point detector and the SIFT local feature descriptor; (3) constructing the corresponding shadow features from the features extracted in step (2); (4) clustering the features extracted in step (2) with the k-means clustering algorithm to generate a visual dictionary containing k visual words; (5) mapping each feature to the visual word at the smallest L2 distance from it and storing the features in an inverted index structure; (6) storing the inverted index structure to form a query database; and (7) obtaining the corresponding inverted index for the query pictures, comparing it with the query database, and obtaining a list of retrieval results. Compared with the prior art, the method achieves higher picture retrieval accuracy.

Owner:TONGJI UNIV
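
Steps (4) to (7) follow the usual bag-of-visual-words pattern. A minimal sketch, assuming plain Python lists as descriptors (standing in for SIFT vectors) and a simple vote count as the comparison in step (7), might look like this; the shadow features of step (3) are not modeled, and the function names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence

Vector = Sequence[float]


def sq_l2(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_word(feature: Vector, words: List[Vector]) -> int:
    """Index of the visual word with the smallest L2 distance to the feature (step 5)."""
    return min(range(len(words)), key=lambda i: sq_l2(feature, words[i]))


def kmeans(features: List[Vector], k: int, iterations: int = 20, seed: int = 0) -> List[List[float]]:
    """Plain Lloyd's k-means; the k centroids form the visual dictionary (step 4)."""
    rng = random.Random(seed)
    centroids = [list(f) for f in rng.sample(list(features), k)]
    for _ in range(iterations):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for f in features:
            clusters[nearest_word(f, centroids)].append(f)
        for j, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def build_inverted_index(images: Dict[str, List[Vector]], words: List[Vector]) -> Dict[int, List[str]]:
    """Map each visual word to the images that contain a feature quantized to it (steps 5-6)."""
    index: Dict[int, List[str]] = defaultdict(list)
    for image_id, feats in images.items():
        for f in feats:
            index[nearest_word(f, words)].append(image_id)
    return index


def query(index: Dict[int, List[str]], feats: List[Vector], words: List[Vector]) -> List[str]:
    """Rank database images by how many inverted-list hits they share with the query (step 7)."""
    votes: Dict[str, int] = defaultdict(int)
    for f in feats:
        for image_id in index.get(nearest_word(f, words), []):
            votes[image_id] += 1
    return sorted(votes, key=lambda i: (-votes[i], i))
```

The inverted index means a query only touches the images listed under its own visual words, rather than comparing against every image in the database.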

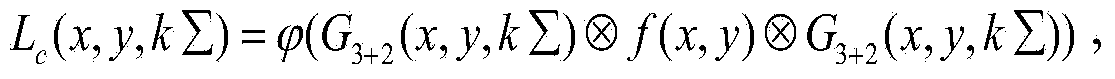

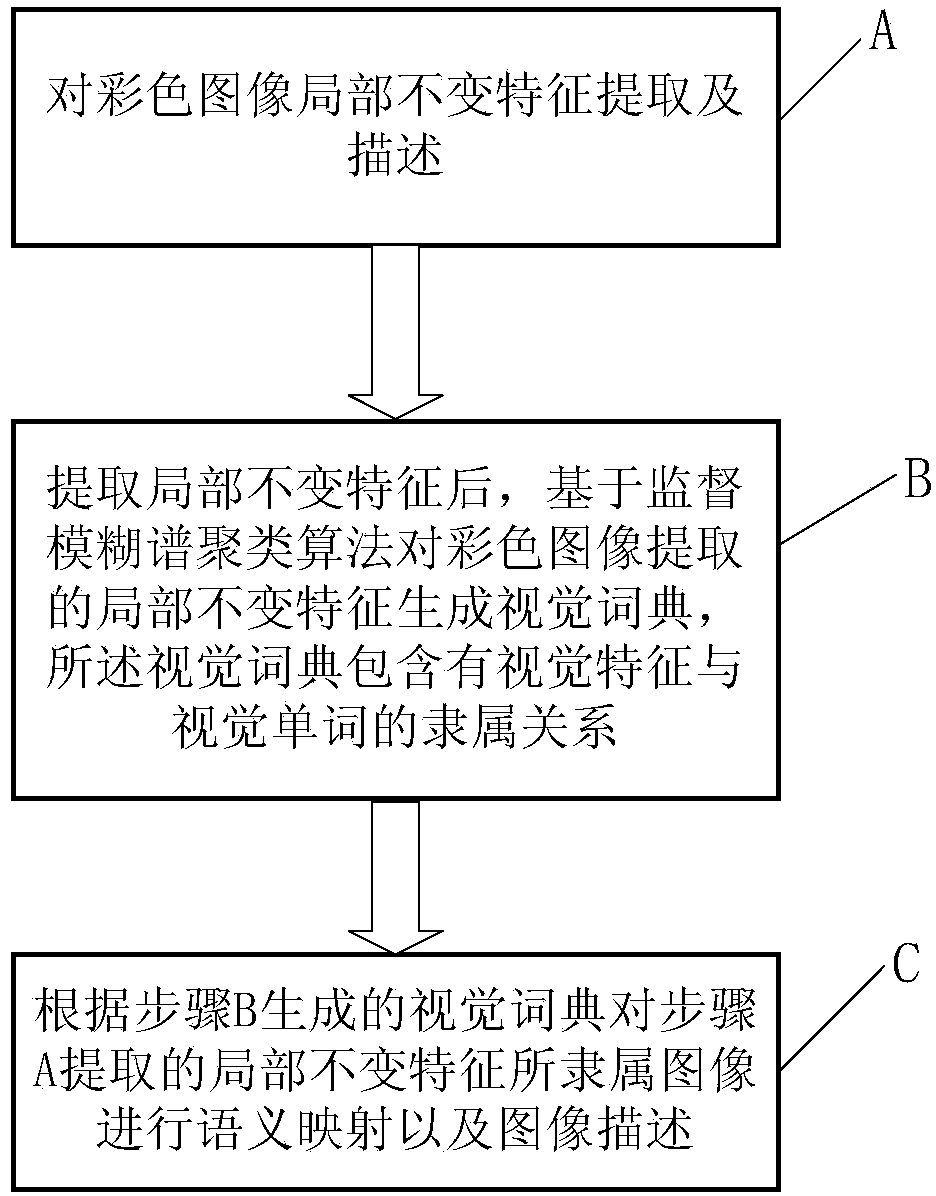

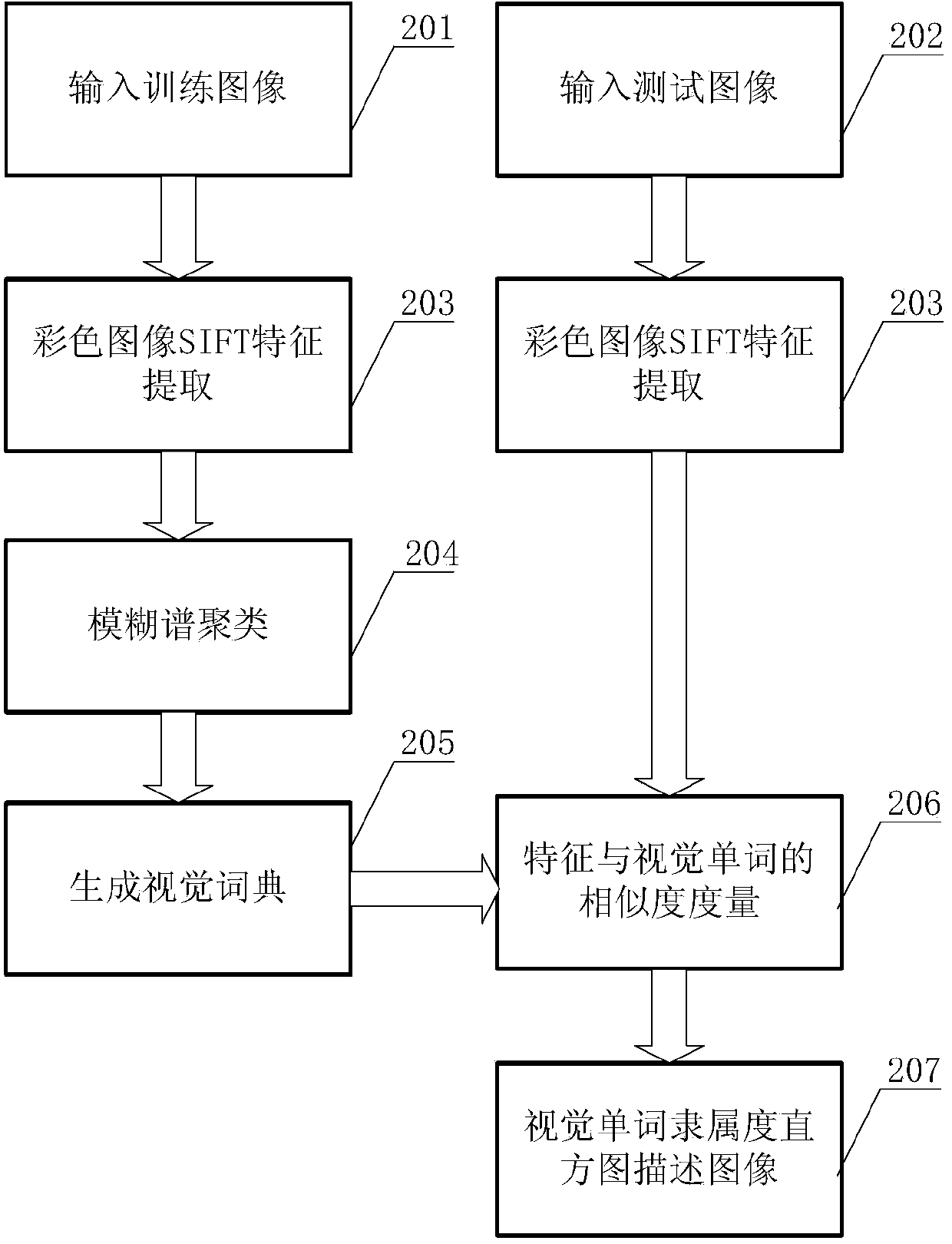

Semantic mapping method of local invariant feature of image and semantic mapping system

Active | CN103530633A | Improve accuracy | Bridging the Semantic Gap | Character and pattern recognition | Special data processing applications | Spectral clustering algorithm | Semantic gap

The invention belongs to the technical field of image processing and provides a semantic mapping method for the local invariant features of an image. The method comprises the following steps. Step A: extracting and describing the local invariant features of a color image. Step B: generating a visual dictionary from the extracted local invariant features with a supervised fuzzy spectral clustering algorithm, where the visual dictionary contains the membership relations between visual features and visual words. Step C: performing semantic mapping and image description for the local invariant features extracted in step A, according to the visual dictionary generated in step B. The advantages of the method are that the semantic gap problem can be eliminated, the accuracy of image classification, image search and target recognition is improved, and the development of machine vision theory and methods can be promoted.

Owner:湖南植保无人机技术有限公司 (Hunan Plant Protection UAV Technology Co., Ltd.)
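
The abstract does not spell out how the supervised fuzzy spectral clustering assigns membership. As an illustrative stand-in, the sketch below uses the fuzzy c-means membership formula, u_j = 1 / sum_k (d_j / d_k)^(2 / (m - 1)), to express how strongly one local feature belongs to each visual word, and sums the memberships into a soft image descriptor. This is not the patented clustering algorithm; the fuzzifier `m` and the function names are assumptions.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def fuzzy_memberships(feature: Vector, words: List[Vector], m: float = 2.0) -> List[float]:
    """Fuzzy c-means style membership of one feature in each visual word; values sum to 1."""
    if m <= 1.0:
        raise ValueError("fuzzifier m must be greater than 1")
    dists = [math.dist(feature, w) for w in words]
    exact = [i for i, d in enumerate(dists) if d == 0.0]
    if exact:  # feature coincides with one or more words: share membership among them
        return [1.0 / len(exact) if i in exact else 0.0 for i in range(len(words))]
    exponent = 2.0 / (m - 1.0)
    return [1.0 / sum((dj / dk) ** exponent for dk in dists) for dj in dists]


def fuzzy_histogram(features: List[Vector], words: List[Vector], m: float = 2.0) -> List[float]:
    """Sum of memberships over all features: a soft bag-of-visual-words description."""
    hist = [0.0] * len(words)
    for f in features:
        for i, u in enumerate(fuzzy_memberships(f, words, m)):
            hist[i] += u
    return hist
```

Unlike a hard nearest-word assignment, a feature lying between two words contributes to both, which is the property a membership relation is meant to capture.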

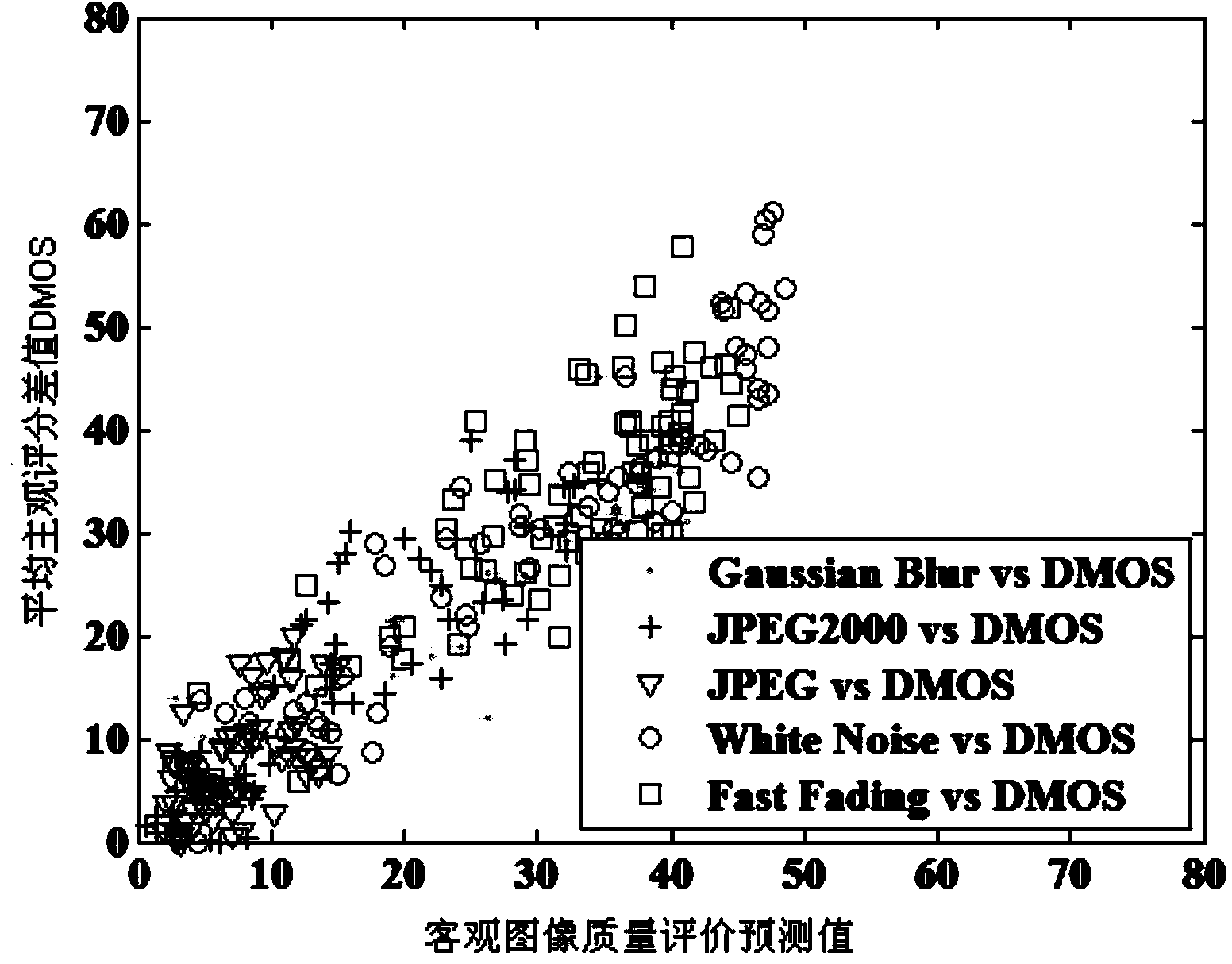

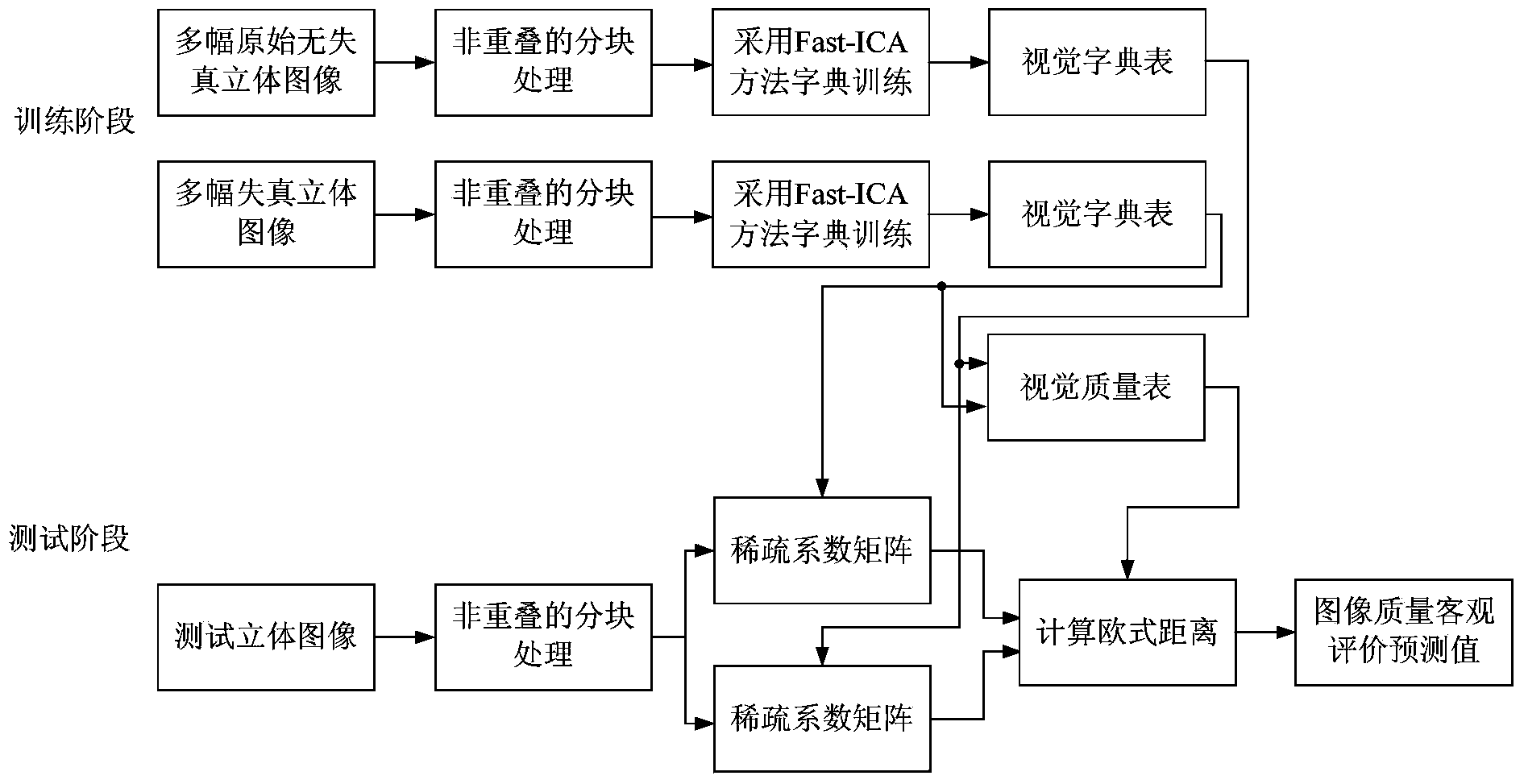

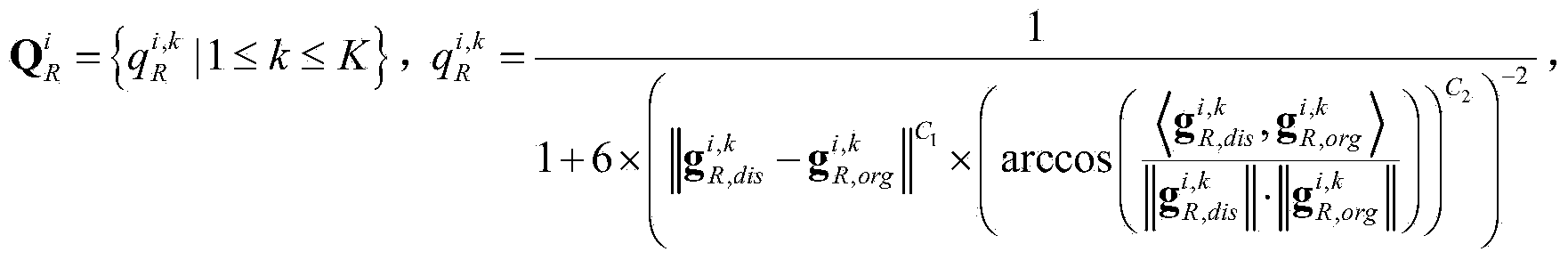

No-reference fuzzy distorted stereo image quality evaluation method

Active | CN104036502A | Reduce computational complexity | Improve consistency | Image analysis | Character and pattern recognition | Computation complexity | Imaging quality

The invention discloses a no-reference quality evaluation method for blur-distorted stereo images, comprising the following steps. In the training stage, several undistorted stereo images and their corresponding blur-distorted versions are selected to form a training image set; dictionary training is then performed with the Fast-ICA (fast independent component analysis) method to construct a visual dictionary table for each image in the training set; and a visual quality table for the visual dictionary table of each distorted stereo image is constructed by calculating the distance between the visual dictionary table of each undistorted stereo image and that of its corresponding blur-distorted stereo image. In the testing stage, the left-viewpoint and right-viewpoint images of any tested stereo image are partitioned into non-overlapping blocks, and an objective prediction of the tested stereo image's quality is obtained from the constructed visual dictionary table and visual quality table. The method has low computational complexity, and its objective evaluation results correlate well with subjective perception.

Owner:创客帮(山东)科技服务有限公司 (Chuangkebang (Shandong) Technology Service Co., Ltd.)
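
The non-overlapping partitioning of the left and right views in the testing stage is easy to make concrete. The sketch below assumes grayscale views stored as lists of rows and an 8 x 8 default block size (the abstract gives no size); incomplete blocks at the right and bottom edges are dropped, which is also an assumption. The Fast-ICA dictionary training and the quality-table lookup are not shown, and the function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]


def non_overlapping_blocks(image: Image, block: int = 8) -> List[List[float]]:
    """Split an image into non-overlapping block x block patches, each flattened row by row.

    Rows and columns at the bottom/right edge that cannot fill a whole block are dropped.
    """
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h - block + 1, block):
        for left in range(0, w - block + 1, block):
            patches.append([image[top + dy][left + dx]
                            for dy in range(block) for dx in range(block)])
    return patches


def partition_stereo_pair(left: Image, right: Image,
                          block: int = 8) -> Tuple[List[List[float]], List[List[float]]]:
    """Partition the left-viewpoint and right-viewpoint images of one tested stereo image."""
    return non_overlapping_blocks(left, block), non_overlapping_blocks(right, block)
```

Each flattened patch is a vector of the same length as a dictionary atom learned on equally sized training patches, which is what allows the patches to be compared against the visual dictionary table.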

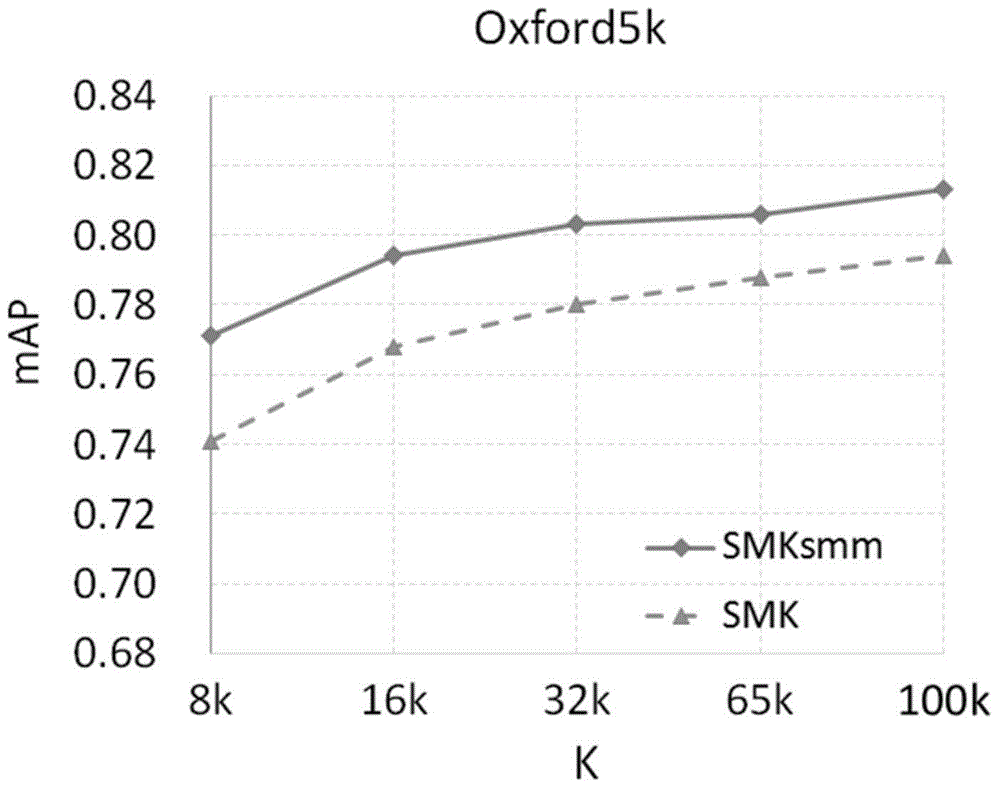

Picture searching method based on maximum similarity matching

Active | CN104615676A | Eliminate multiple matches | Enhanced Visual Compatibility | Still image data indexing | Character and pattern recognition | Feature set | Reverse index

The invention relates to a picture searching method based on maximum similarity matching. The method includes the following steps: (1) acquiring a training picture set; (2) detecting and describing feature points of the acquired pictures in a multi-scale space; (3) clustering the feature sets extracted in step (2) to generate a visual dictionary containing k visual words; (4) mapping each feature extracted in step (2) to the visual word at the smallest L2 distance from it, and storing the feature's normalized residual vector with respect to that visual word in a reverse (inverted) index structure, thereby forming a query database; and (5) acquiring the pictures to be searched, repeating steps (2) and (4) to obtain their reverse index structure, searching the query database with that structure, and obtaining the search results based on maximum similarity matching. Compared with the prior art, the method offers good robustness and high computational efficiency.

Owner:TONGJI UNIV
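
Step (4) stores, for each feature, its normalized residual with respect to the nearest visual word. The sketch below builds such an index and scores database images with one plausible reading of maximum similarity matching: each query feature contributes only its single best cosine match per image within the same visual word, so repeated matches are not counted. The scoring rule and all names are assumptions, not the patent's exact formula.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
Index = Dict[int, List[Tuple[str, List[float]]]]


def nearest_word(feature: Vector, words: List[Vector]) -> int:
    """Index of the visual word with the smallest L2 distance to the feature."""
    return min(range(len(words)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(feature, words[i])))


def normalized_residual(feature: Vector, word: Vector) -> List[float]:
    """Residual (feature - word) scaled to unit L2 norm; left as zeros if the feature equals the word."""
    r = [f - w for f, w in zip(feature, word)]
    norm = math.sqrt(sum(x * x for x in r))
    return [x / norm for x in r] if norm > 0 else r


def build_residual_index(images: Dict[str, List[Vector]], words: List[Vector]) -> Index:
    """Inverted index: word id -> list of (image id, normalized residual)."""
    index: Index = defaultdict(list)
    for image_id, feats in images.items():
        for f in feats:
            w = nearest_word(f, words)
            index[w].append((image_id, normalized_residual(f, words[w])))
    return index


def max_similarity_scores(index: Index, query_feats: List[Vector], words: List[Vector]) -> Dict[str, float]:
    """Hypothetical scoring: per query feature, keep only the best match in each image."""
    scores: Dict[str, float] = defaultdict(float)
    for f in query_feats:
        w = nearest_word(f, words)
        q = normalized_residual(f, words[w])
        best: Dict[str, float] = {}
        for image_id, r in index.get(w, []):
            sim = sum(a * b for a, b in zip(q, r))  # cosine similarity of unit vectors
            best[image_id] = max(sim, best.get(image_id, sim))
        for image_id, sim in best.items():
            scores[image_id] += sim
    return dict(scores)
```

Storing residuals rather than only word ids lets two features that fall into the same visual word still be told apart by the direction in which they deviate from it.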

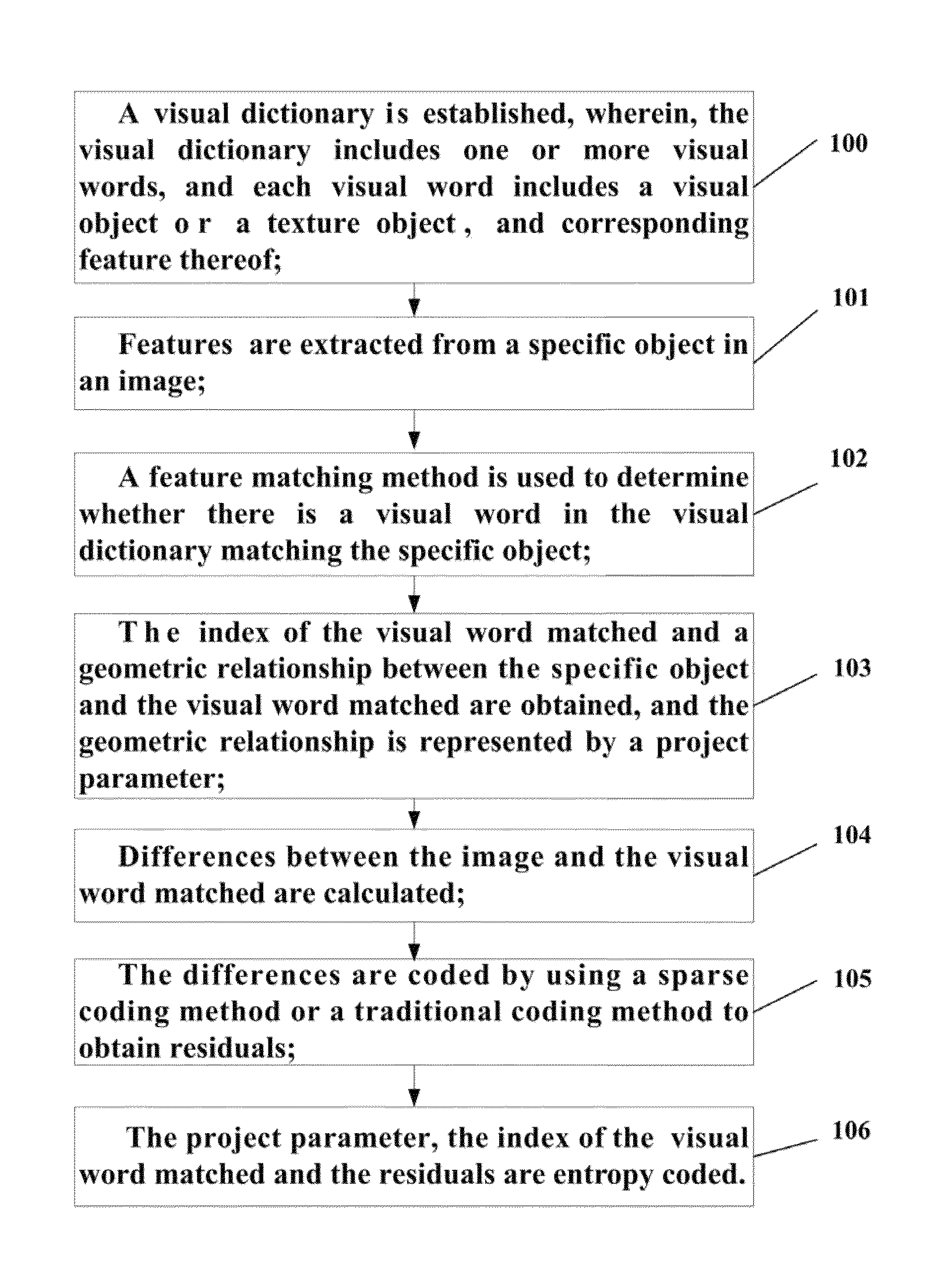

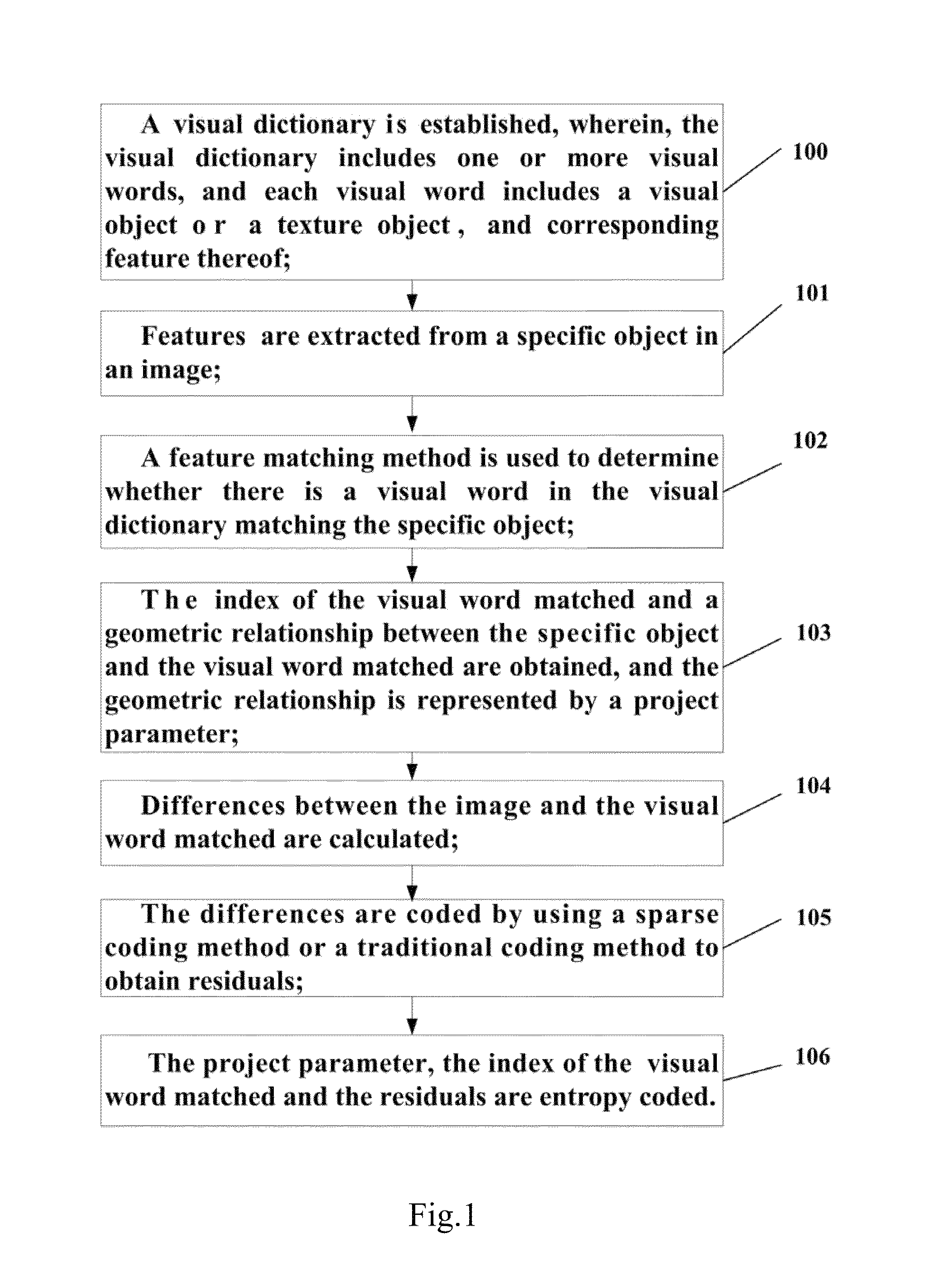

Coding and decoding method for images or videos

Active | US9271006B2 | Improve codec efficiency | Reduce data size | Image coding | Digital video signal modification | Decoding methods | Visual perception

Owner:PEKING UNIV

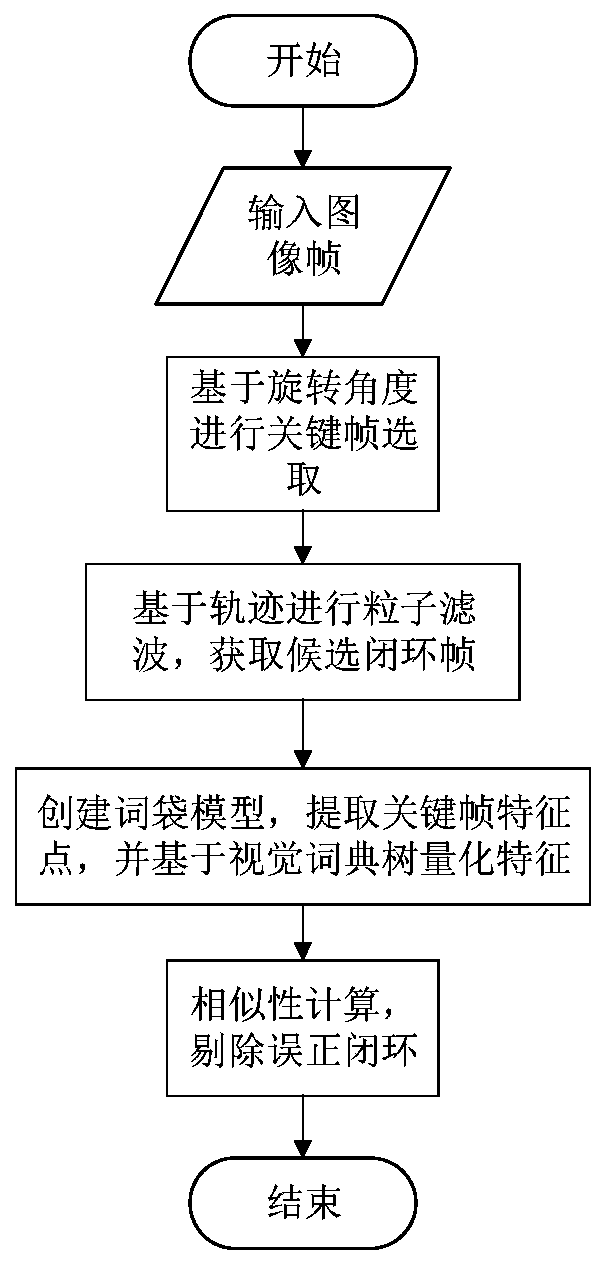

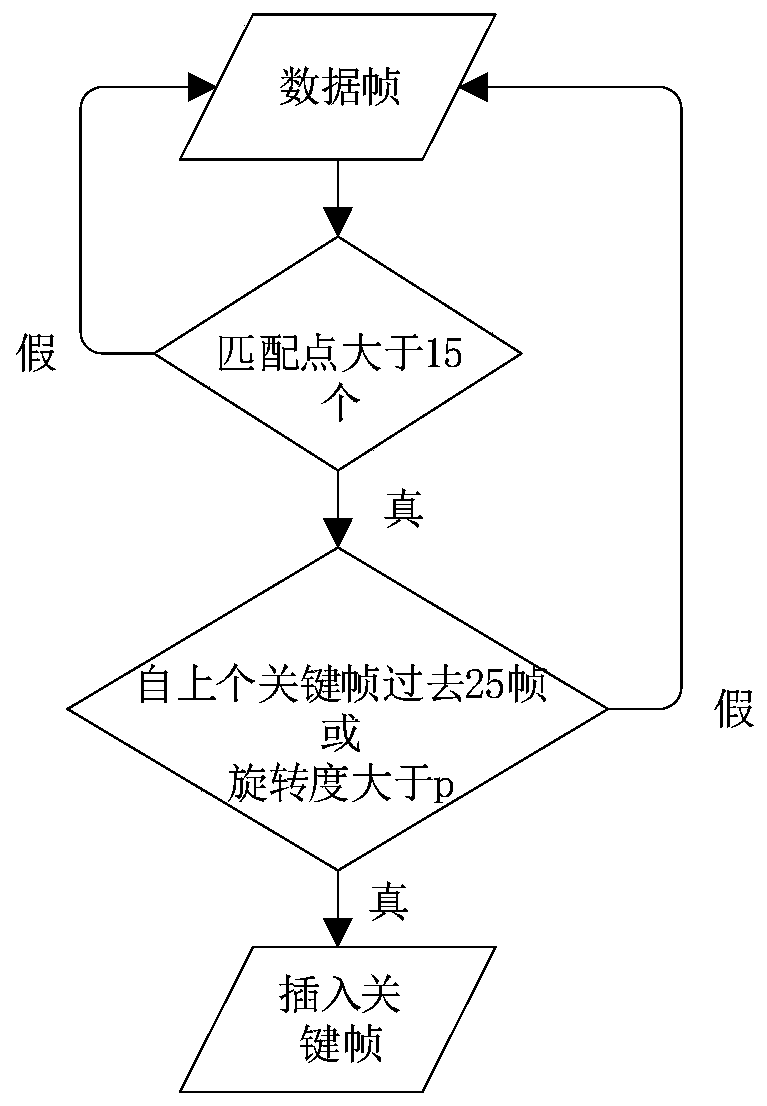

VI-SLAM closed-loop detection method based on inertial navigation attitude track information assistance

The invention provides a VI-SLAM closed-loop detection method assisted by inertial navigation attitude and trajectory information, comprising the following steps: obtaining the attitude of a moving object from inertial navigation, calculating the rotation angle of each image frame relative to the previous frame, and selecting key frames based on the rotation angle; then, based on the inertial navigation trajectory, performing particle filtering on the key frames to obtain candidate closed-loop frames under relaxed conditions; creating a bag-of-words model and quantizing the features of the candidate closed-loop frames and the current frame with a visual dictionary tree; and finally, matching the current frame against the candidate closed-loop frames one by one with an improved pyramid TF-IDF score matching method, thereby extracting closed loops. Compared with the traditional bag-of-words method, the method can effectively improve both accuracy and computational efficiency.

Owner:CENT SOUTH UNIV
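
The TF-IDF scoring that the improved pyramid method builds on can be sketched as follows. Frames are represented as lists of visual-word ids produced by the dictionary tree (the tree itself is not shown), and two bag-of-words vectors are compared with the L1-based score s = 1 - 0.5 * || v1/|v1| - v2/|v2| ||_1 used in common bag-of-words loop-closure libraries such as DBoW2. This is the conventional score, not the patent's improved pyramid variant, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List

BowVector = Dict[int, float]


def idf_weights(database: List[List[int]]) -> Dict[int, float]:
    """idf(w) = log(N / n_w), where n_w is the number of frames that contain word w."""
    n_frames = len(database)
    doc_freq: Counter = Counter()
    for frame_words in database:
        doc_freq.update(set(frame_words))
    return {w: math.log(n_frames / n) for w, n in doc_freq.items()}


def tfidf_vector(frame_words: List[int], idf: Dict[int, float]) -> BowVector:
    """Term frequency times idf; words unseen in the database get weight 0."""
    counts = Counter(frame_words)
    total = len(frame_words)
    return {w: (c / total) * idf.get(w, 0.0) for w, c in counts.items()}


def l1_score(v1: BowVector, v2: BowVector) -> float:
    """1 - 0.5 * L1 distance of the L1-normalized vectors; 1 = identical, 0 = disjoint."""
    n1 = sum(abs(x) for x in v1.values())
    n2 = sum(abs(x) for x in v2.values())
    if n1 == 0 or n2 == 0:
        return 0.0
    keys = set(v1) | set(v2)
    return 1.0 - 0.5 * sum(abs(v1.get(k, 0.0) / n1 - v2.get(k, 0.0) / n2) for k in keys)


def best_loop_candidate(current: List[int], candidates: Dict[str, List[int]],
                        idf: Dict[int, float]) -> str:
    """Return the id of the candidate frame with the highest score against the current frame."""
    q = tfidf_vector(current, idf)
    return max(candidates, key=lambda cid: l1_score(q, tfidf_vector(candidates[cid], idf)))
```

Because the inertial prefiltering has already reduced the candidates to a few key frames, scoring each of them in full like this stays cheap.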

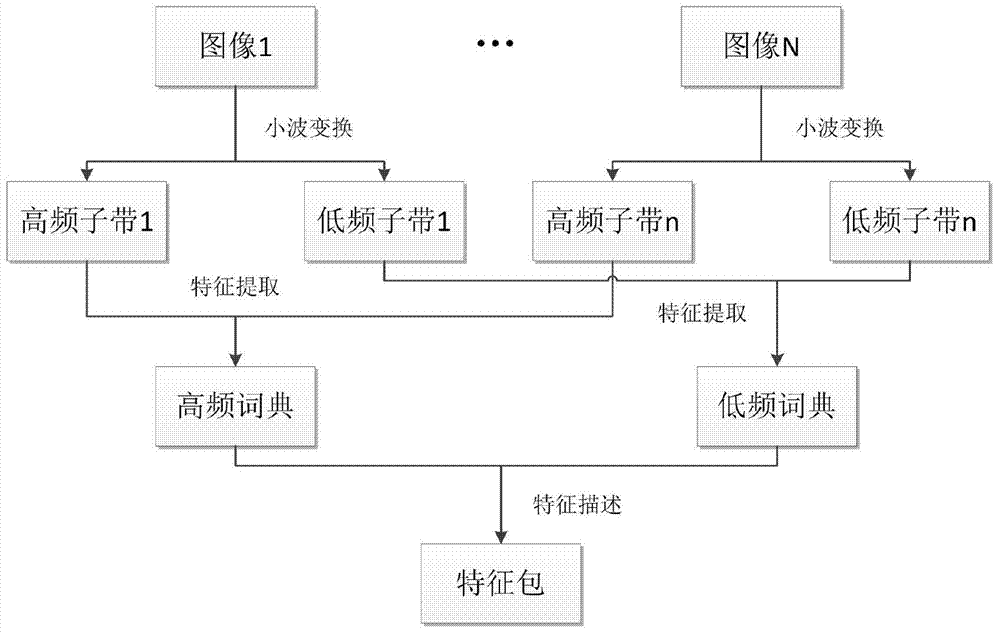

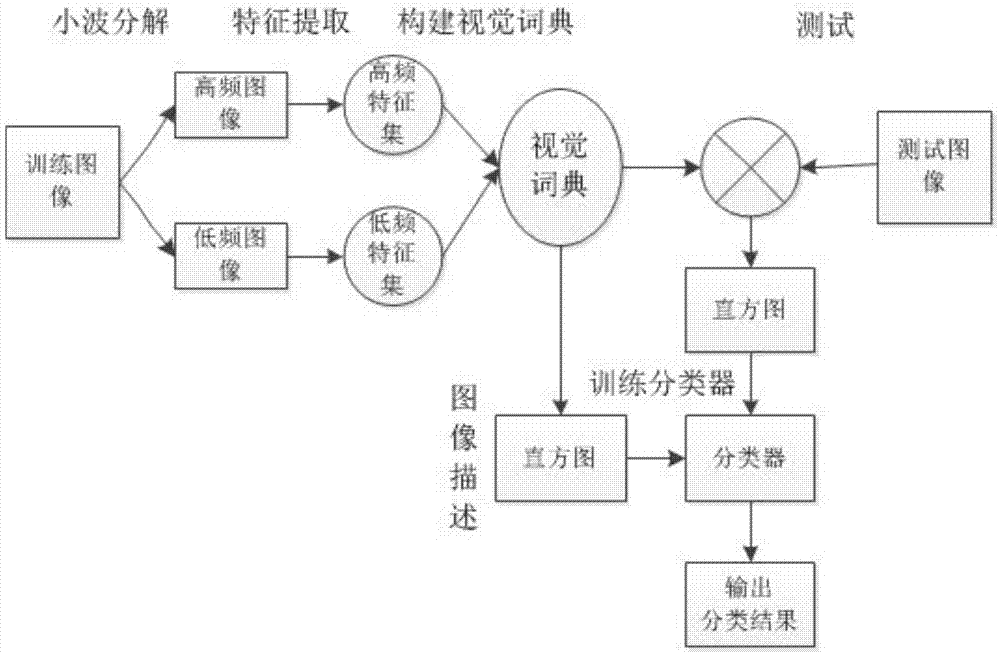

Multi-scale analysis based image feature bag constructing method

The invention provides a method for constructing an image feature bag based on multi-scale analysis, by introducing the concept of multi-scale image analysis into the feature bag model. The method first decomposes an image with the wavelet transform, then extracts local region features from the high-frequency and low-frequency sub-bands of the image separately, constructs a high-frequency visual dictionary and a low-frequency visual dictionary, describes the image with these visual dictionaries, and generates the image feature bag. Because the method works at the level of multi-scale feature extraction and semantic description of the image, it captures detail information in the image better when generating the visual vocabulary. The new feature bag model can be applied to the classification and retrieval of digital image data such as medical images, remote sensing images and web images.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA
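
The wavelet step and the two-dictionary description can be illustrated with a one-level 2-D Haar transform. In this toy sketch the low-frequency feature is each low-band coefficient and the high-frequency feature is the vector of the three detail coefficients at the same position; the patent instead extracts local region features from each sub-band, and the dictionaries `low_words` and `high_words` are assumed to have been built already (for example by k-means). Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def haar_level1(image: Image) -> Tuple[Image, Image, Image, Image]:
    """One level of an averaging 2-D Haar transform on an image with even height and width.

    Returns the low-frequency band (local 2 x 2 mean) and three high-frequency
    detail bands: top-minus-bottom, left-minus-right and diagonal differences.
    """
    h, w = len(image), len(image[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    low, d_rows, d_cols, d_diag = [], [], [], []
    for r in range(0, h, 2):
        lo_row, dr_row, dc_row, dd_row = [], [], [], []
        for c in range(0, w, 2):
            a, b = image[r][c], image[r][c + 1]
            d, e = image[r + 1][c], image[r + 1][c + 1]
            lo_row.append((a + b + d + e) / 4.0)
            dr_row.append((a + b - d - e) / 4.0)
            dc_row.append((a - b + d - e) / 4.0)
            dd_row.append((a - b - d + e) / 4.0)
        low.append(lo_row)
        d_rows.append(dr_row)
        d_cols.append(dc_row)
        d_diag.append(dd_row)
    return low, d_rows, d_cols, d_diag


def word_histogram(features: List[Sequence[float]], words: List[Sequence[float]]) -> List[float]:
    """Normalized histogram of nearest-word assignments."""
    hist = [0.0] * len(words)
    for f in features:
        i = min(range(len(words)), key=lambda j: sum((x - y) ** 2 for x, y in zip(f, words[j])))
        hist[i] += 1.0
    total = sum(hist)
    return [v / total for v in hist] if total else hist


def multiscale_feature_bag(image: Image, low_words: List[Sequence[float]],
                           high_words: List[Sequence[float]]) -> List[float]:
    """Concatenate the low-frequency and high-frequency visual-word histograms."""
    low, d_rows, d_cols, d_diag = haar_level1(image)
    low_feats = [[v] for row in low for v in row]
    high_feats = [[d_rows[r][c], d_cols[r][c], d_diag[r][c]]
                  for r in range(len(low)) for c in range(len(low[0]))]
    return word_histogram(low_feats, low_words) + word_histogram(high_feats, high_words)
```

Keeping two separate dictionaries means that edges and texture (high band) do not compete for visual words with smooth intensity structure (low band).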

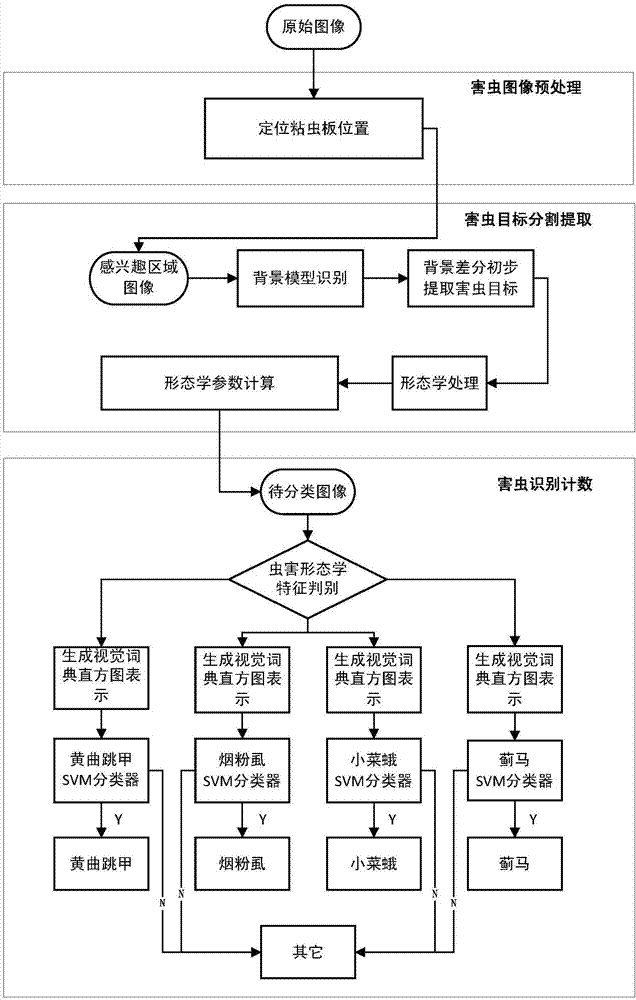

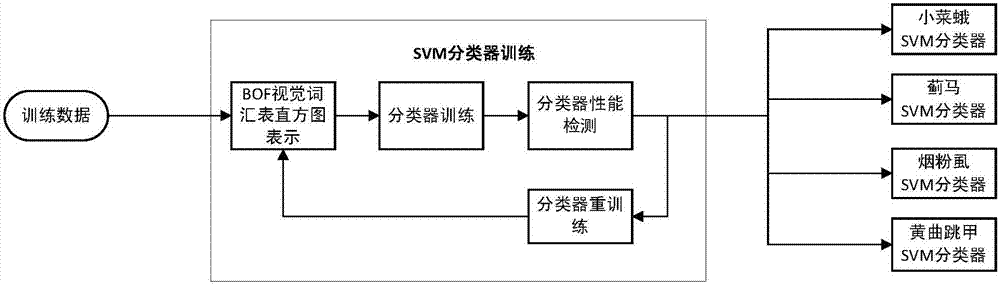

Method for detecting and counting major vegetable pests in South China based on machine vision

Inactive | CN107292891A | Reduce mistakes | Accurate count | Image enhancement | Image analysis | Machine vision | SVM classifier

The present invention discloses a method for detecting and counting major vegetable pests in South China based on machine vision, comprising a pest image preprocessing step, a pest target segmentation and extraction step, and a pest recognition and counting step. In detail, SVM classifiers are trained for the different pests: the pest images are standardized and optimized; HOG features are extracted and described; the extracted features are clustered with the K-Means algorithm to construct a visual dictionary; and spatial pyramid matching (SPM) is used to generate the histogram representation of each image. Finally, a preliminary classification is made from the morphological data of the target image, the corresponding SVM classifier is selected, and its recognition results are output to identify and count the pests. Built on automatic image-based pest identification and counting, the method can rapidly identify and count images of the main vegetable pests in South China, helping farmers and grassroots plant protection personnel monitor vegetable pests and greatly reducing their labor intensity.

Owner:SOUTH CHINA AGRI UNIV +1
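
The SPM step turns the visual-word assignments of the HOG features into one histogram that also encodes where the features occur. A minimal sketch, assuming each feature has already been quantized to a word id and has an (x, y) position, concatenates per-cell histograms for 1 x 1, 2 x 2, ..., 2^L x 2^L grids, using the level weights from the original SPM formulation (1/2^L for level 0 and 1/2^(L-l+1) for level l >= 1). The function name and defaults are hypothetical.

```python
from typing import List, Tuple


def spm_histogram(points: List[Tuple[float, float, int]], width: float, height: float,
                  vocab_size: int, levels: int = 2) -> List[float]:
    """Spatial pyramid histogram from (x, y, word_id) triples.

    Level l splits the image into 2^l x 2^l cells; each cell gets its own
    vocab_size-bin histogram, scaled by the level weight.
    """
    result: List[float] = []
    for level in range(levels + 1):
        cells = 2 ** level
        weight = 1.0 / 2 ** levels if level == 0 else 1.0 / 2 ** (levels - level + 1)
        hist = [0.0] * (cells * cells * vocab_size)
        for x, y, word in points:
            cx = min(int(x * cells / width), cells - 1)   # clamp points on the right edge
            cy = min(int(y * cells / height), cells - 1)  # clamp points on the bottom edge
            hist[(cy * cells + cx) * vocab_size + word] += weight
        result.extend(hist)
    return result
```

The output length is vocab_size * (1 + 4 + ... + 4^L), so with L = 2 and a 3-word vocabulary each image becomes a 63-dimensional vector that can be fed to the SVM classifiers.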

Video concept classification using audio-visual grouplets

Inactive | US8867891B2 | Strong temporal correlations in videos | Robustly classified | Television system details | Color television signals processing | Digital video | Visual perception

A method for determining a semantic concept classification for a digital video clip, comprising: receiving an audio-visual dictionary including a plurality of audio-visual grouplets, the audio-visual grouplets including visual background and foreground codewords and audio background and foreground codewords, wherein the codewords in a particular audio-visual grouplet were determined to be correlated with each other; analyzing the digital video clip to determine a set of visual features and a set of audio features; determining similarity scores between the digital video clip and each of the audio-visual grouplets by comparing the set of visual features to any visual background and foreground codewords associated with a particular audio-visual grouplet, and comparing the set of audio features to any audio background and foreground codewords associated with the particular audio-visual grouplet; and determining one or more semantic concept classifications using trained semantic classifiers.

Owner:MONUMENT PEAK VENTURES LLC
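
The claim leaves the comparison between a clip and a grouplet open. A minimal sketch, assuming the clip's visual and audio features have already been quantized to codeword ids, scores each grouplet by the fraction of its codewords that also occur in the clip; the resulting vector of scores is what trained semantic classifiers would then consume. The overlap score, the `Grouplet` type and the function names are all assumptions, not the patented scoring.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Grouplet:
    """Hypothetical container: correlated codeword ids from one audio-visual grouplet."""
    visual: FrozenSet[int]  # visual background and foreground codewords
    audio: FrozenSet[int]   # audio background and foreground codewords


def grouplet_similarity(clip_visual: Set[int], clip_audio: Set[int], g: Grouplet) -> float:
    """Fraction of the grouplet's codewords that also occur in the clip (0.0 to 1.0)."""
    size = len(g.visual) + len(g.audio)
    if size == 0:
        return 0.0
    hits = len(g.visual & clip_visual) + len(g.audio & clip_audio)
    return hits / size


def grouplet_scores(clip_visual: Set[int], clip_audio: Set[int],
                    dictionary: List[Grouplet]) -> List[float]:
    """One similarity score per grouplet, in dictionary order."""
    return [grouplet_similarity(clip_visual, clip_audio, g) for g in dictionary]
```

Scoring visual and audio codewords of the same grouplet together is what lets a correlated sound and image pattern reinforce each other in the final feature vector.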

© 2025 PatSnap. All rights reserved.