10558 results for "Image frame" patented technology

Surgical imaging device

A surgical imaging device includes at least one light source for illuminating an object, at least two image sensors configured to generate image data corresponding to the object in the form of an image frame, and a video processor configured to receive from each image sensor the image data corresponding to the image frames and to process the image data so as to generate a composite image. The video processor may be configured to normalize, stabilize, orient and / or stitch the image data received from each image sensor to generate the composite image. Preferably, the video processor stitches the image data by processing a portion of the image data from one image sensor that overlaps a portion of the image data from another image sensor. Alternatively, the surgical device may be, e.g., a circular stapler that includes a first part, e.g., a DLU portion, having an image sensor, and a second part, e.g., an anvil portion, that is movable relative to the first part. The second part includes an arrangement, e.g., a bore extending therethrough, for conveying the image to the image sensor. The arrangement enables the image sensor to receive the image without removing the surgical device from the surgical site.

Owner:TYCO HEALTHCARE GRP LP

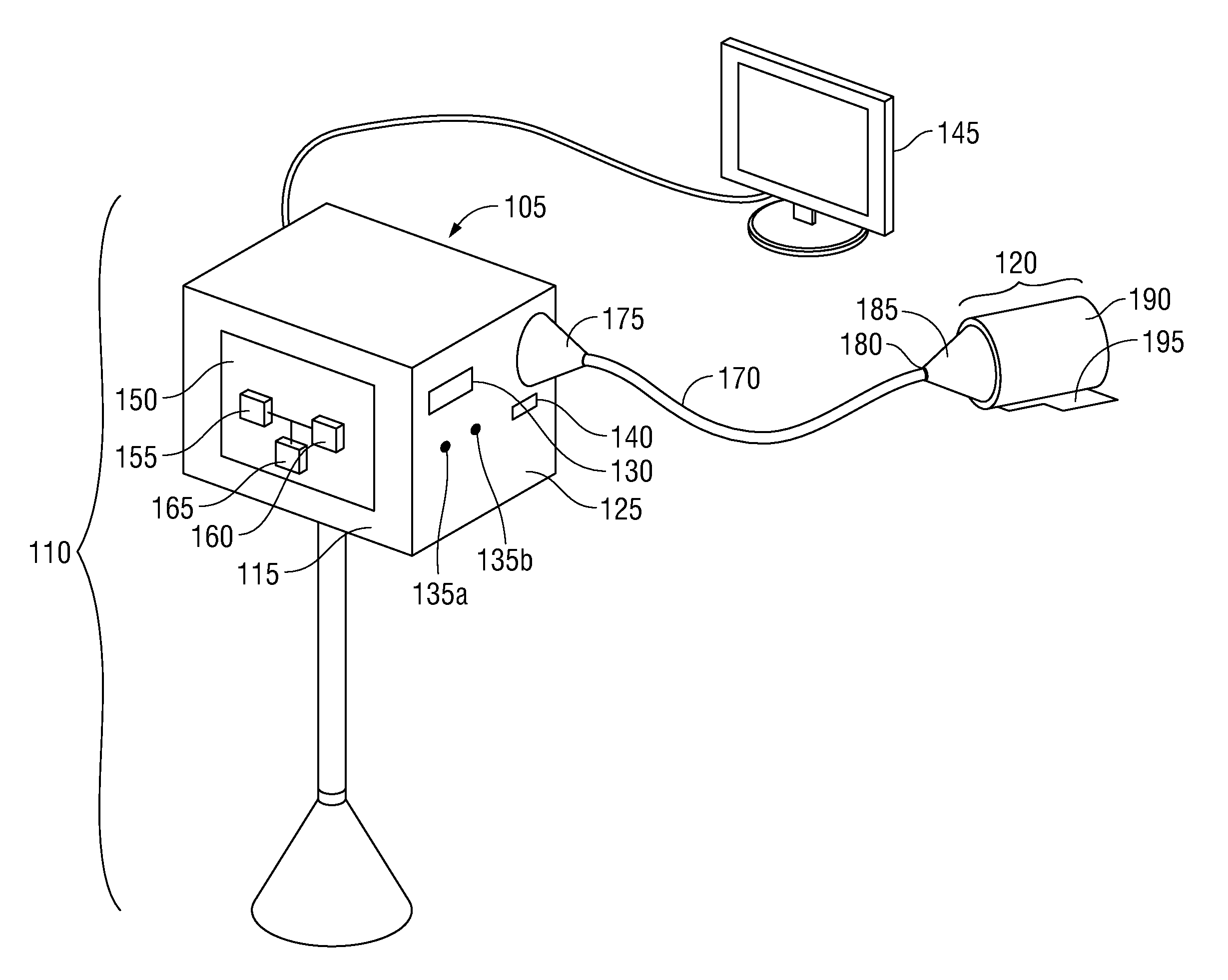

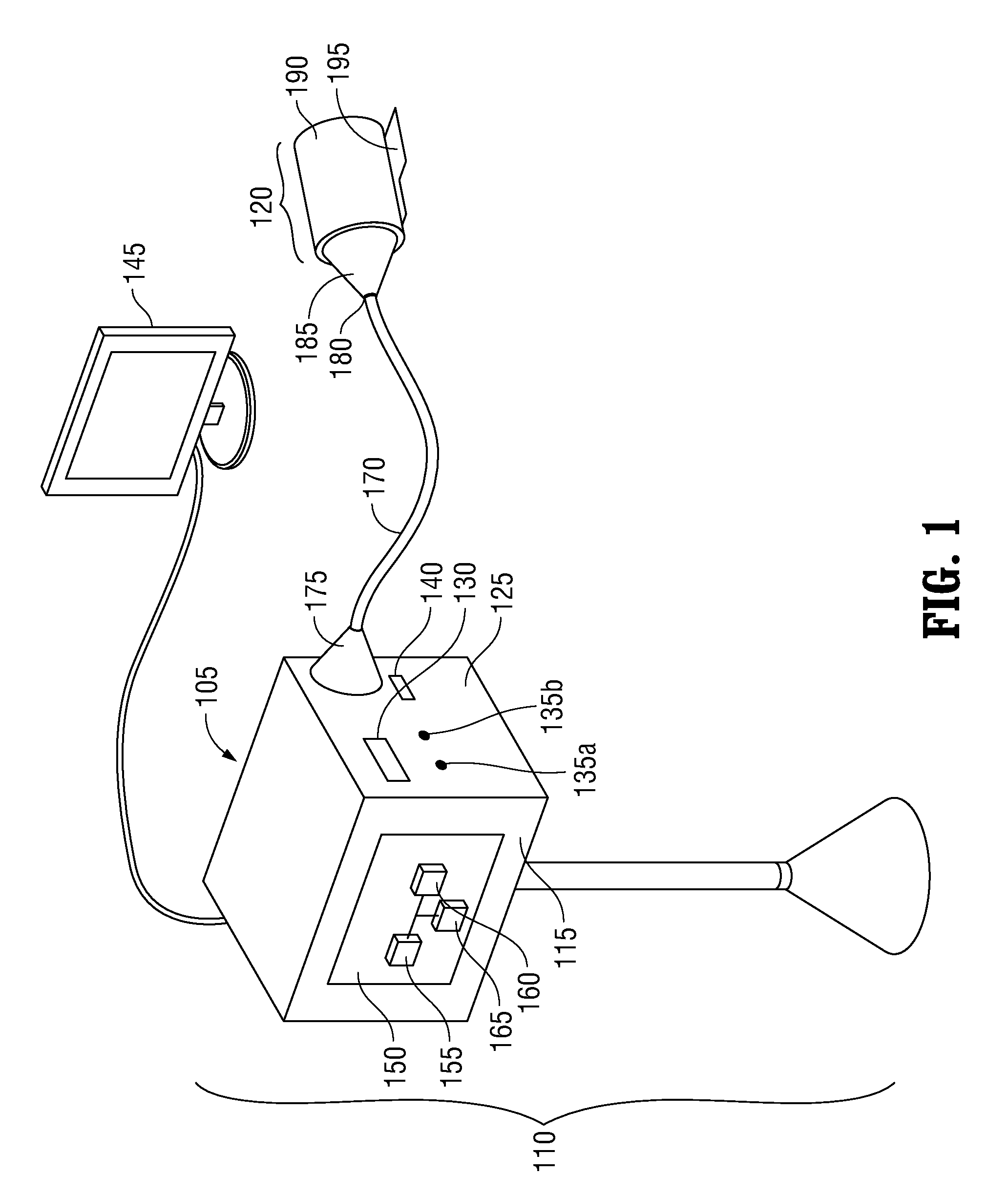

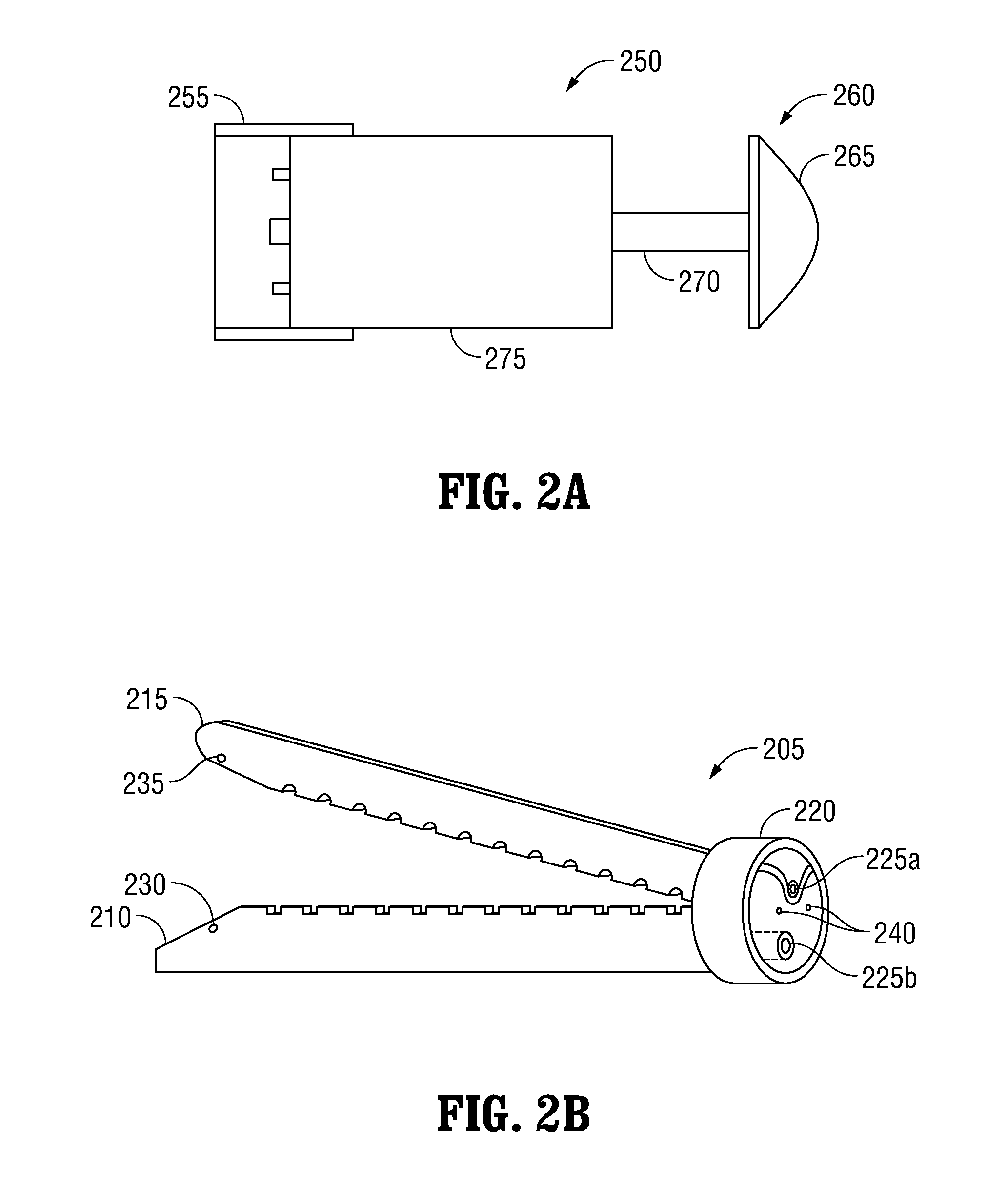

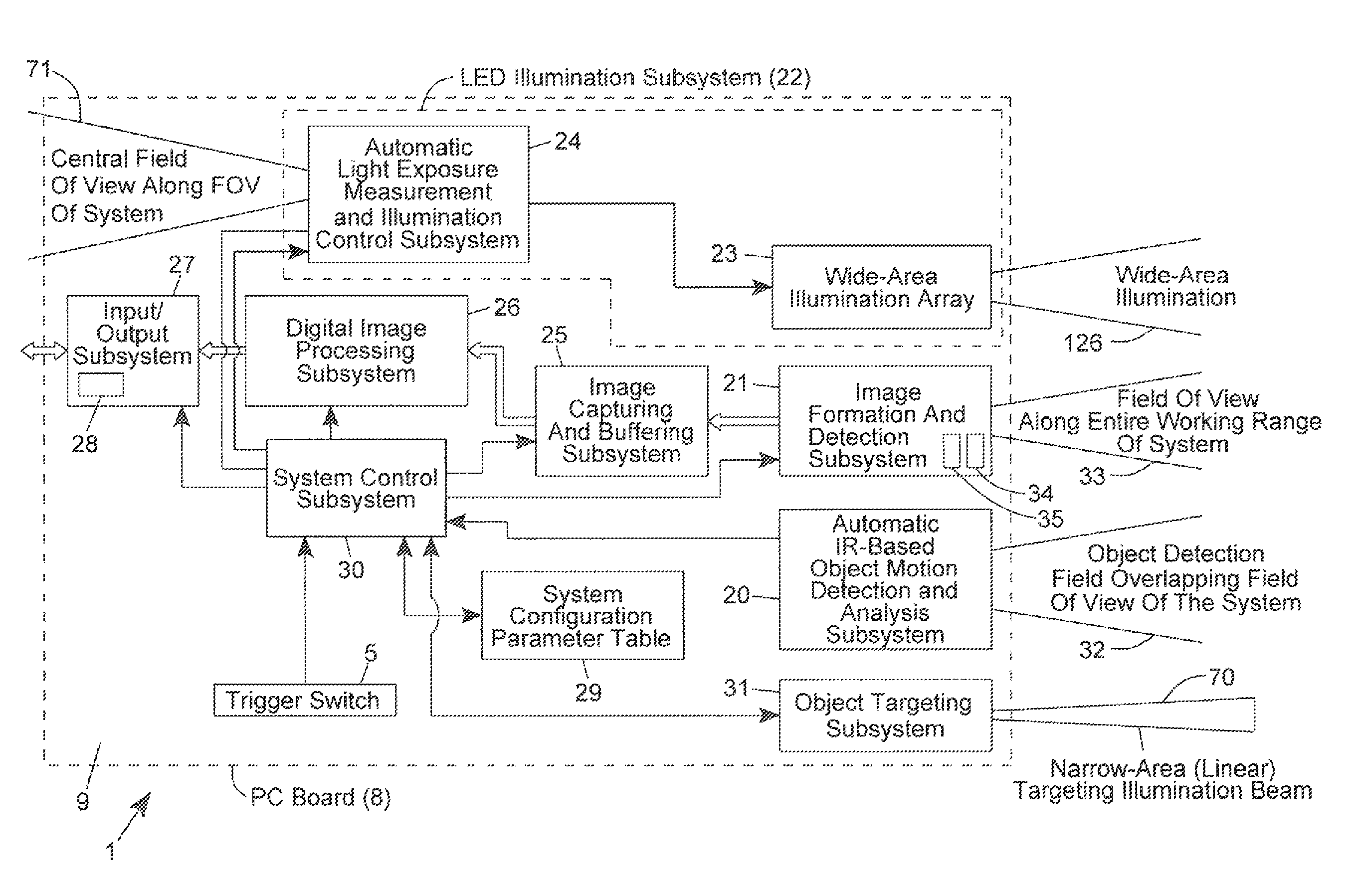

Auto-exposure method using continuous video frames under controlled illumination

Active | US8408464B2 | Improve the level of | Capture performance | Television system details | Mechanical apparatus | Graphics | Real time analysis

An adaptive strobe illumination control process for use in a digital image capture and processing system. In general, the process involves: (i) illuminating an object in the field of view (FOV) with several different pulses of strobe (i.e. stroboscopic) illumination over a pair of consecutive video image frames; (ii) detecting digital images of the illuminated object over these consecutive image frames; and (iii) decode processing the digital images in an effort to read a code symbol graphically encoded therein. In a first illustrative embodiment, upon failure to read a code symbol graphically encoded in one of the first and second images, these digital images are analyzed in real-time, and based on the results of this real-time image analysis, the exposure time (i.e. photonic integration time interval) is automatically adjusted during subsequent image frames (i.e. image acquisition cycles) according to the principles of the present disclosure. In a second illustrative embodiment, upon failure to read a code symbol graphically encoded in one of the first and second images, these digital images are analyzed in real-time, and based on the results of this real-time image analysis, the energy level of the strobe illumination is automatically adjusted during subsequent image frames (i.e. image acquisition cycles) according to the principles of the present disclosure.

Owner:METROLOGIC INSTR
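
The abstract does not state the exposure-update rule. A minimal sketch of the first embodiment's feedback step, assuming a simple proportional rule: after a failed decode, scale the exposure time by a target mean brightness divided by the measured mean brightness of the two consecutive frames, clamped to sensor limits. `next_exposure`, `TARGET_MEAN` and the limits are hypothetical, not taken from the patent.

```python
from statistics import mean

# Hypothetical sensor limits and brightness target (8-bit pixel scale).
MIN_EXPOSURE_US = 50.0
MAX_EXPOSURE_US = 10_000.0
TARGET_MEAN = 128.0


def frame_mean(frame):
    """Mean pixel value of a frame given as a list of rows."""
    return mean(p for row in frame for p in row)


def next_exposure(exposure_us, frame_a, frame_b, decoded):
    """Return the exposure time for the next image acquisition cycle.

    If a code symbol was decoded, the exposure is left unchanged. Otherwise
    the two consecutive frames are analyzed and the exposure is scaled toward
    the brightness target (proportional rule assumed for illustration).
    """
    if decoded:
        return exposure_us
    measured = (frame_mean(frame_a) + frame_mean(frame_b)) / 2
    if measured <= 0:
        return MAX_EXPOSURE_US  # completely dark frames: use the longest exposure
    proposed = exposure_us * TARGET_MEAN / measured
    return max(MIN_EXPOSURE_US, min(MAX_EXPOSURE_US, proposed))
```

With frames averaging 32 and a current exposure of 1000 µs, a failed decode yields 4000.0 µs. The second embodiment would apply the same kind of rule to the strobe energy level instead of the exposure time.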

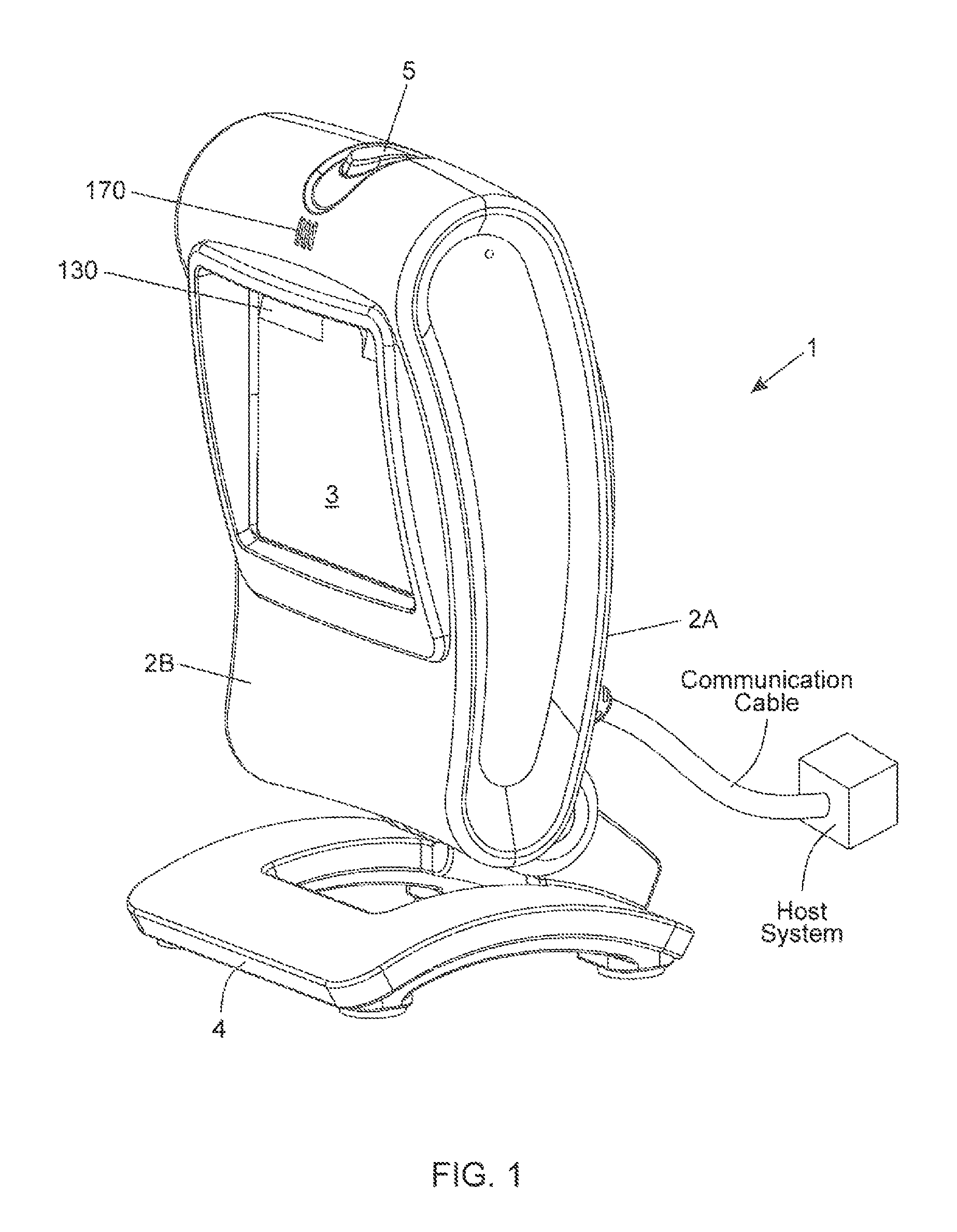

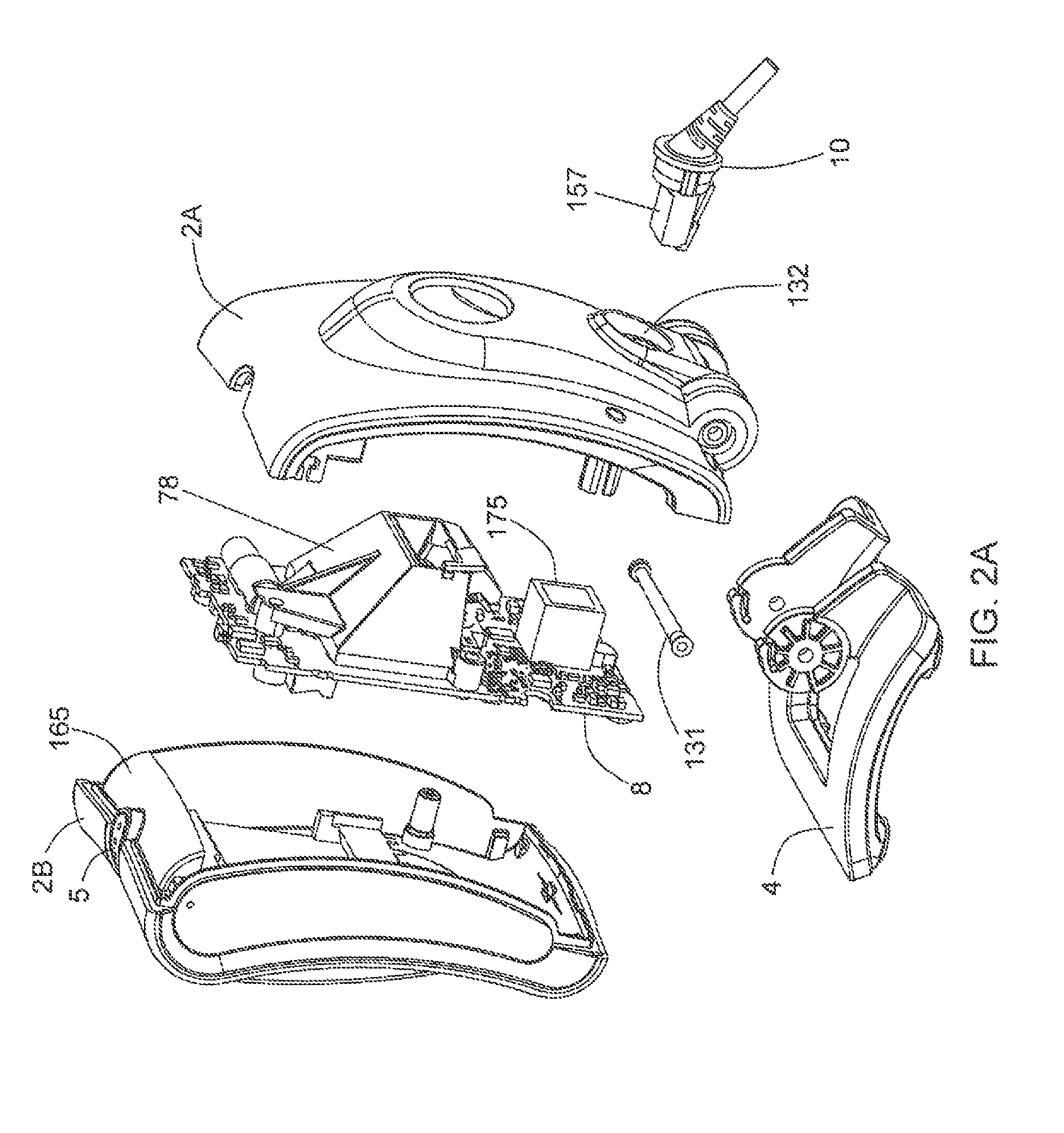

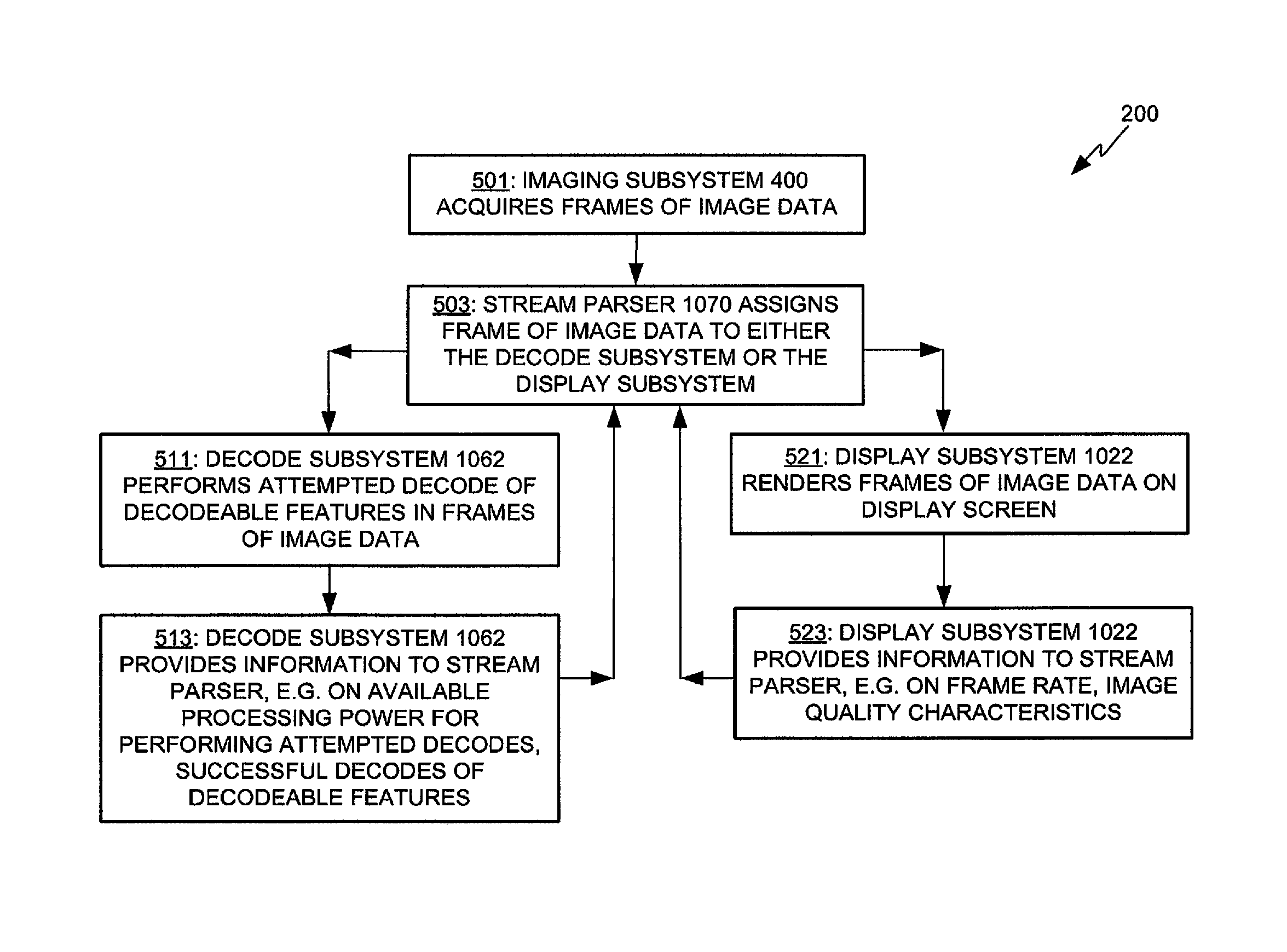

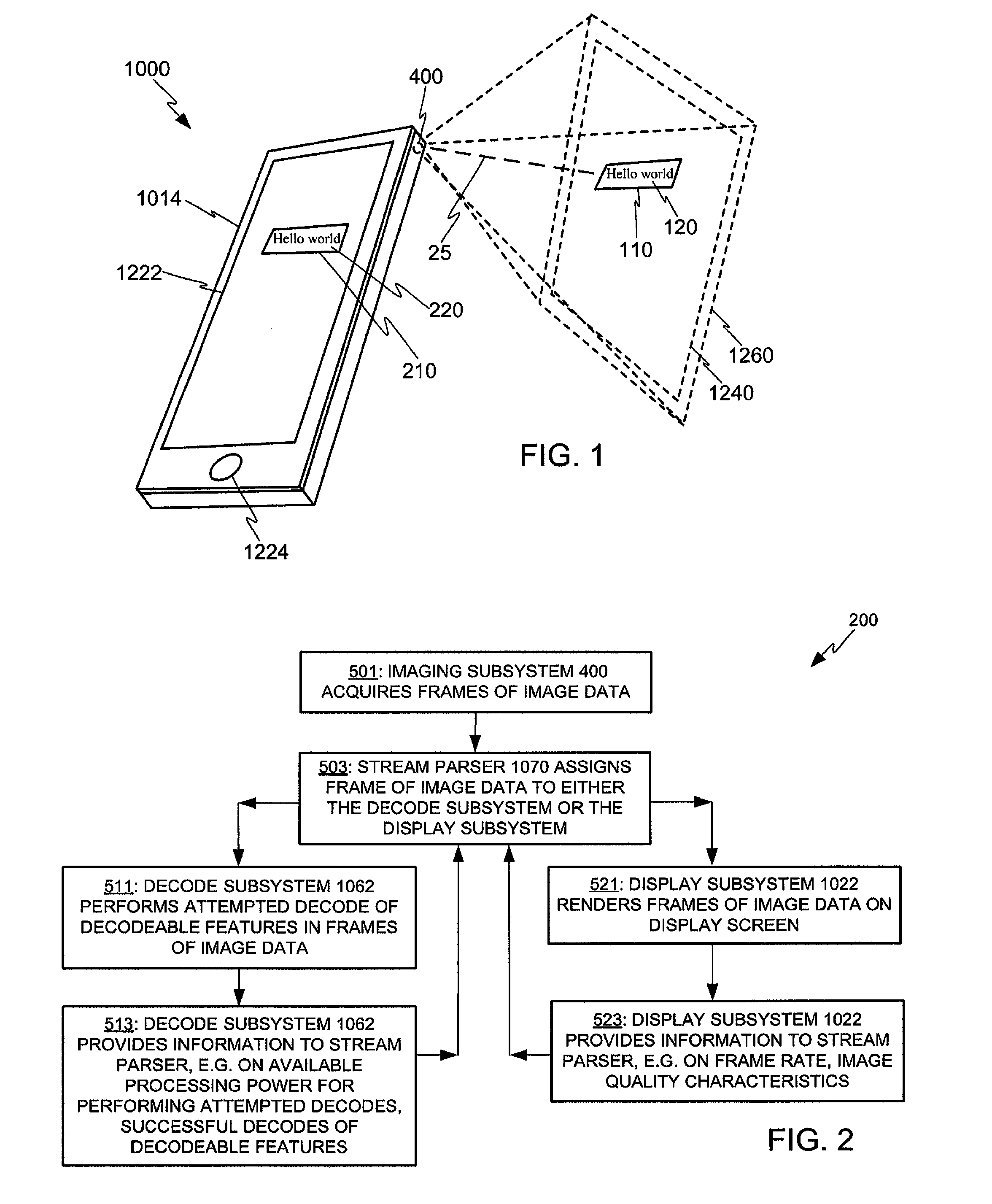

Adaptive video capture decode system

Active | US8879639B2 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Computer graphics (images) | Adaptive video

Devices, methods, and software are disclosed for an adaptive video capture decode system that efficiently manages a stream of image frames between a device display screen and a processor performing decode attempts on decodable features in the image frames. In an illustrative embodiment, a device assigns frames of image data from a stream of frames of image data to either a display subsystem or a decode subsystem. The display subsystem is operative for rendering the frames of image data on a display screen. The decode subsystem is operative for receiving frames of image data and performing an attempted decode of a decodable indicia represented in at least one of the frames of image data. None of the frames of data are assigned to both the display subsystem and the decode subsystem.

Owner:HAND HELD PRODS
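
The key constraint in the abstract is that the display and decode subsystems receive disjoint sets of frames. A minimal sketch of one possible assignment policy, assuming (hypothetically) that every `decode_every`-th frame goes to decoding and the rest to display; the patent's actual policy is adaptive and is not reproduced here.

```python
from collections import deque


def assign_frames(frames, decode_every=3):
    """Split a stream of frames between a display queue and a decode queue.

    Hypothetical fixed policy: every `decode_every`-th frame goes to the
    decode subsystem and all others go to the display subsystem, so no frame
    is ever assigned to both.
    """
    if decode_every < 1:
        raise ValueError("decode_every must be >= 1")
    display, decode = deque(), deque()
    for index, frame in enumerate(frames):
        if index % decode_every == decode_every - 1:
            decode.append(frame)
        else:
            display.append(frame)
    return display, decode
```

An adaptive variant could change `decode_every` at run time, for example decoding more often while no indicia has been found.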

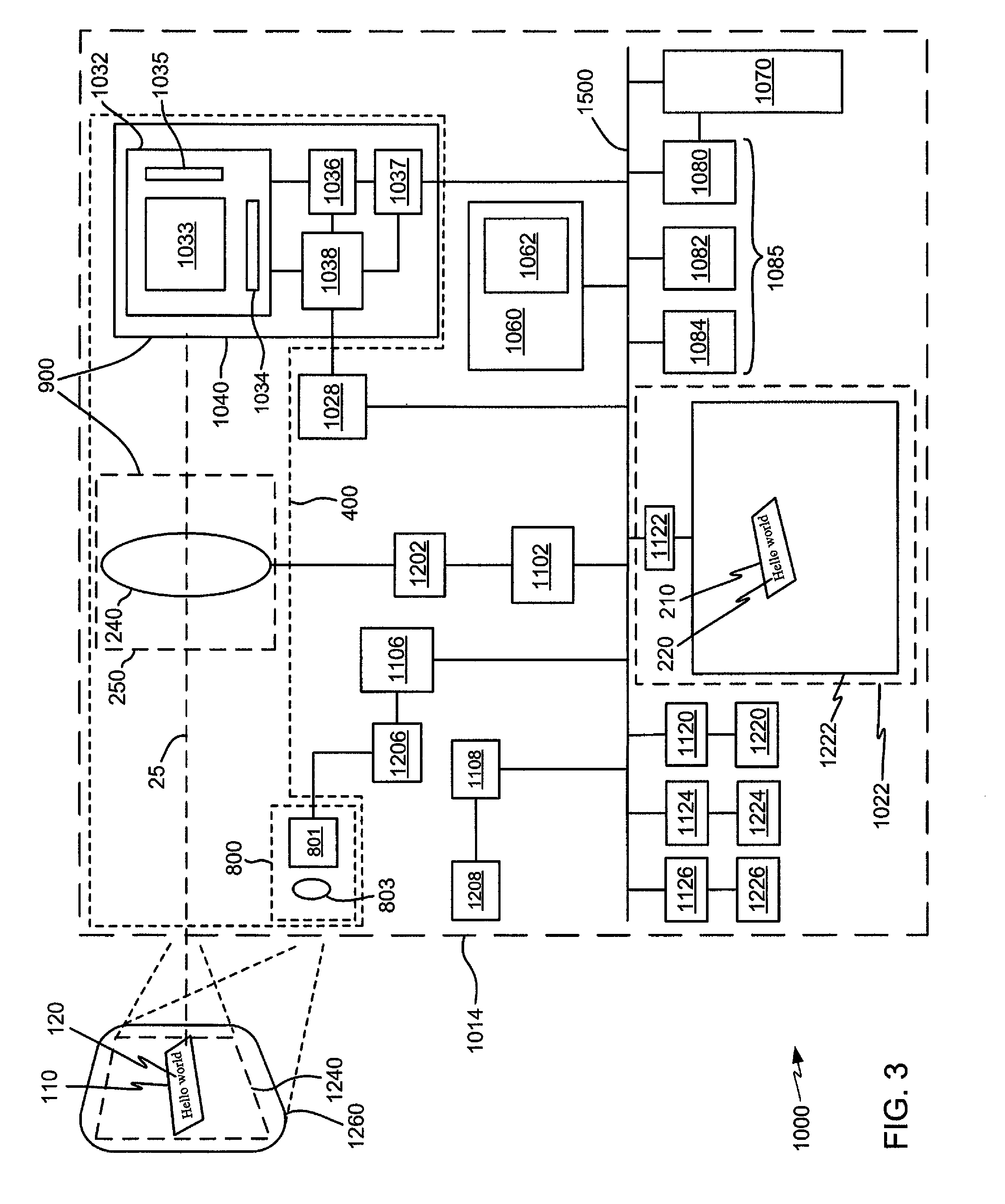

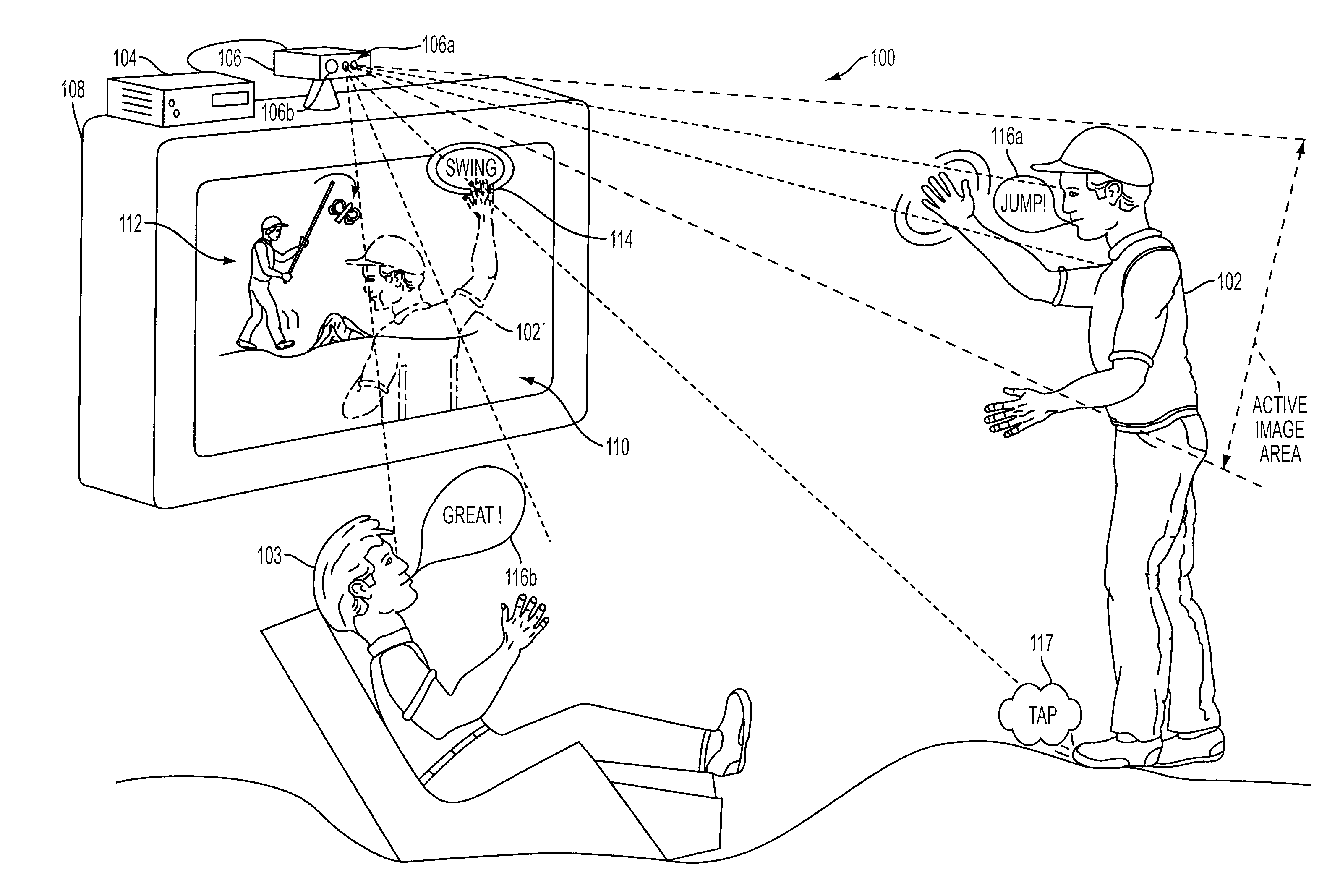

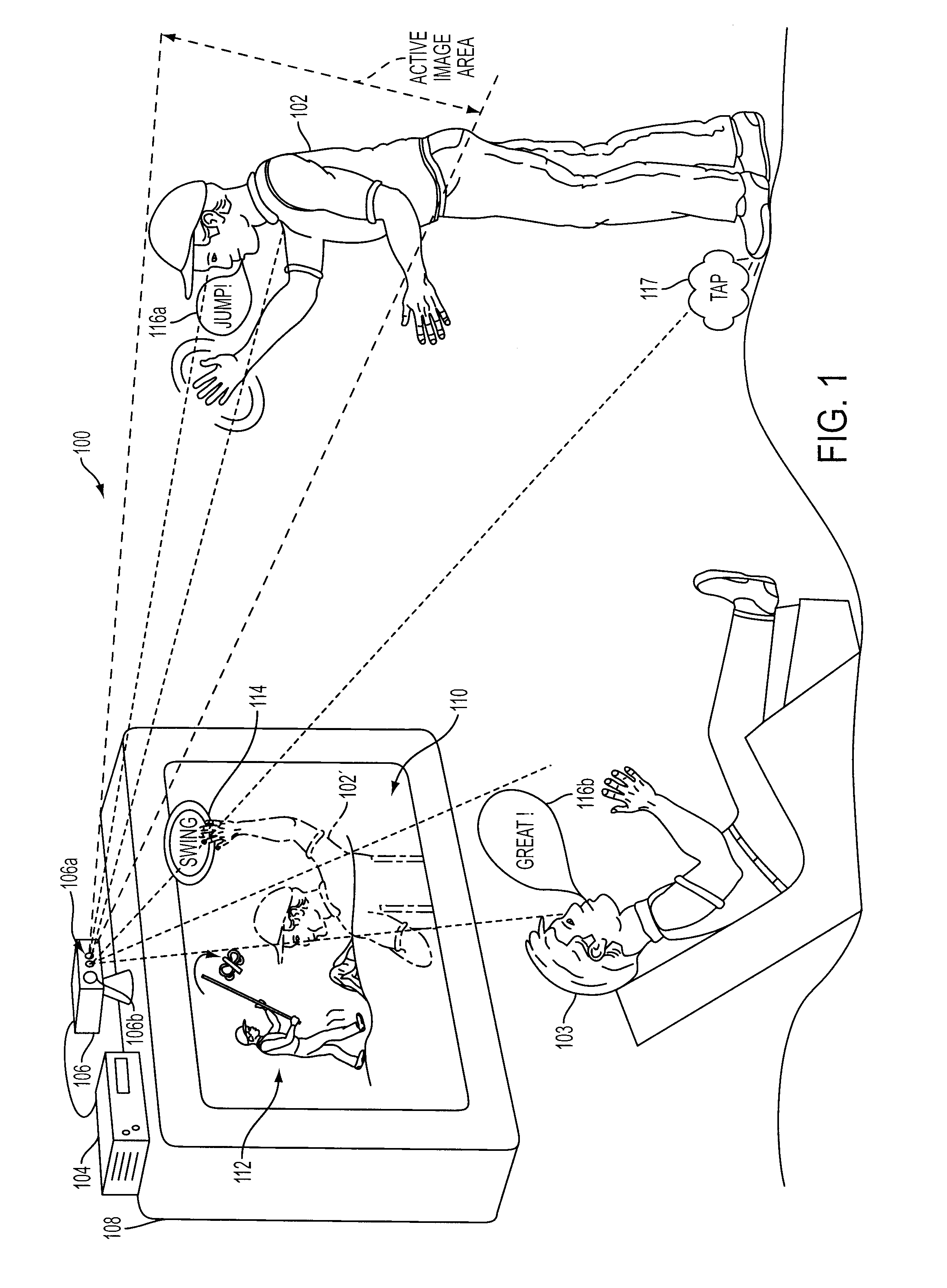

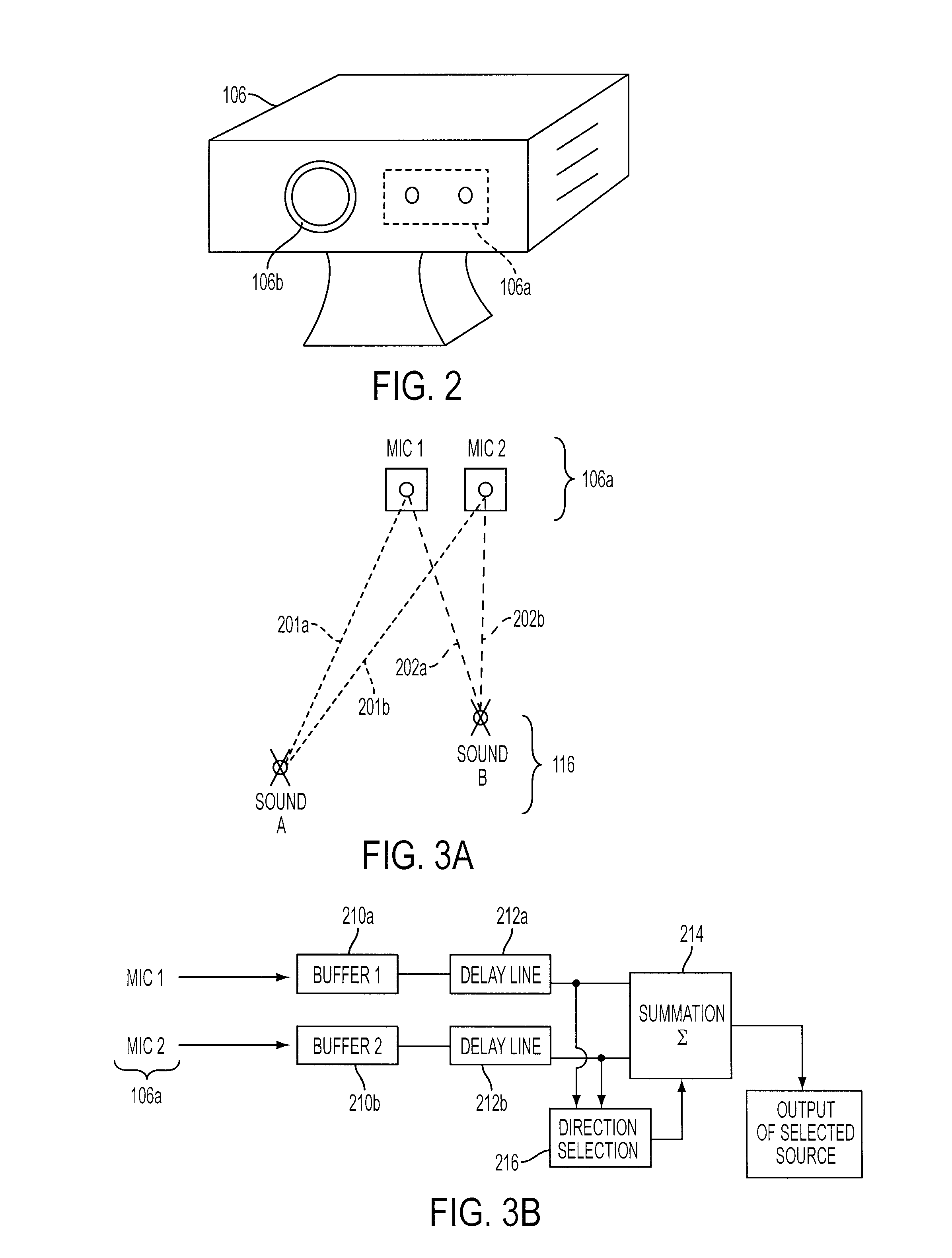

Methods and apparatus for targeted sound detection and characterization

Active | US20060239471A1 | Enhanced interaction | Respond | Microphones | Signal processing | Sound detection | Sound sources

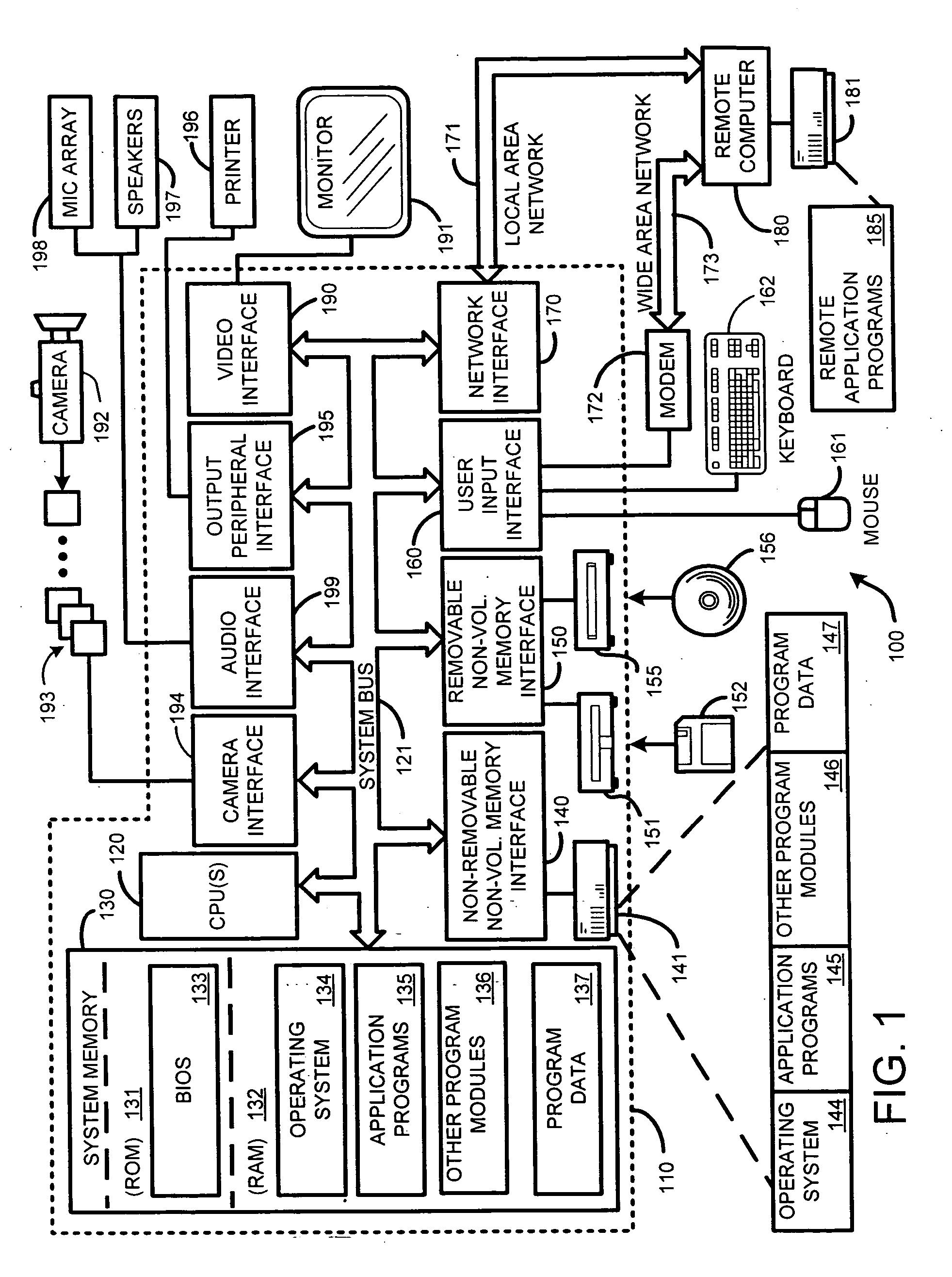

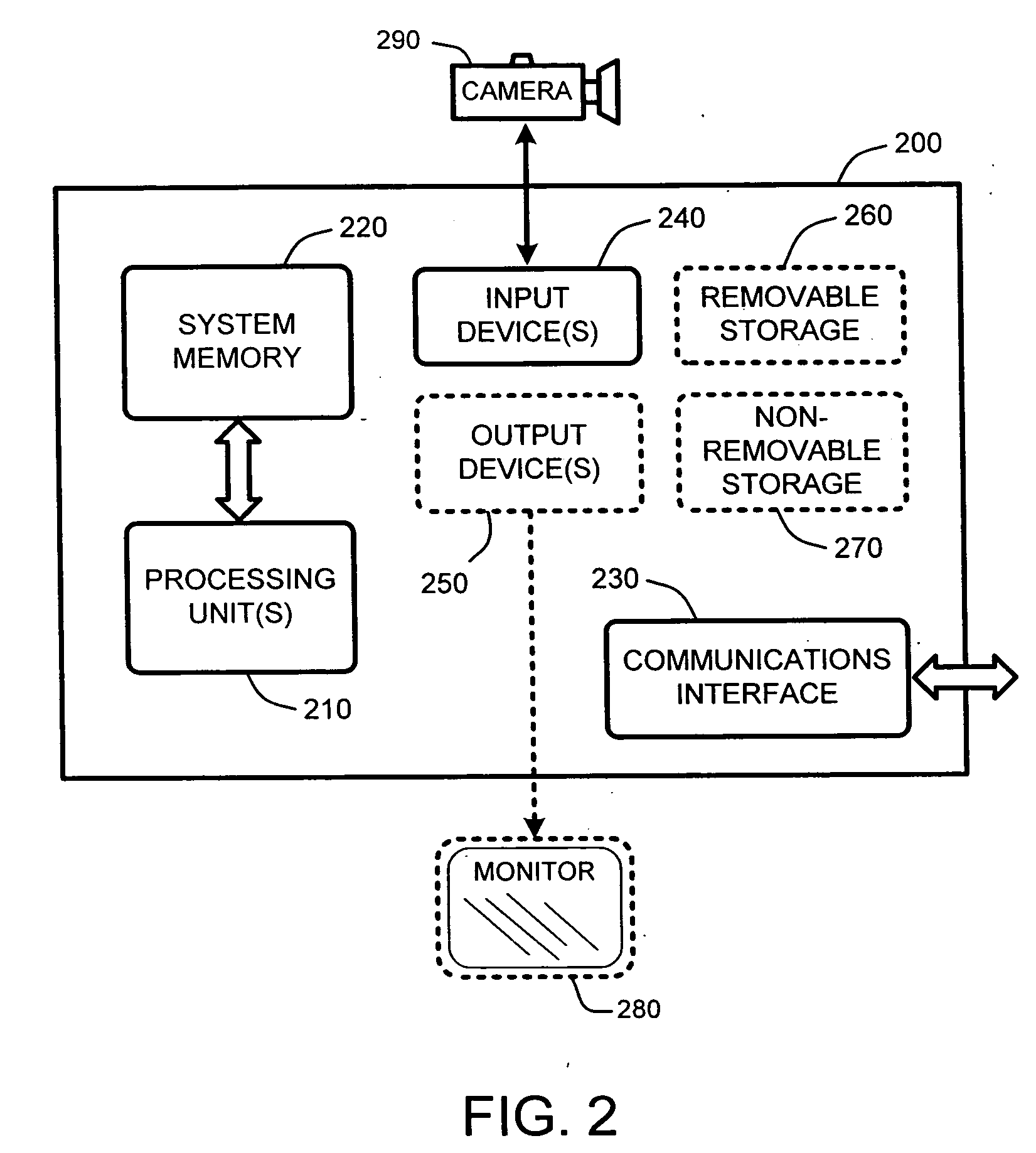

Sound processing methods and apparatus are provided. A sound capture unit is configured to identify one or more sound sources. The sound capture unit generates data capable of being analyzed to determine a listening zone at which to process sound to the substantial exclusion of sounds outside the listening zone. Sound captured and processed for the listening zone may be used for interactivity with a computer program. The listening zone may be adjusted based on the location of a sound source. One or more listening zones may be pre-calibrated. The apparatus may optionally include an image capture unit configured to capture one or more image frames, and the listening zone may be adjusted based on the image. A video game unit may be controlled by generating inertial, optical and / or acoustic signals with a controller and tracking a position and / or orientation of the controller using the inertial, acoustic and / or optical signals.

Owner:SONY COMPUTER ENTERTAINMENT INC

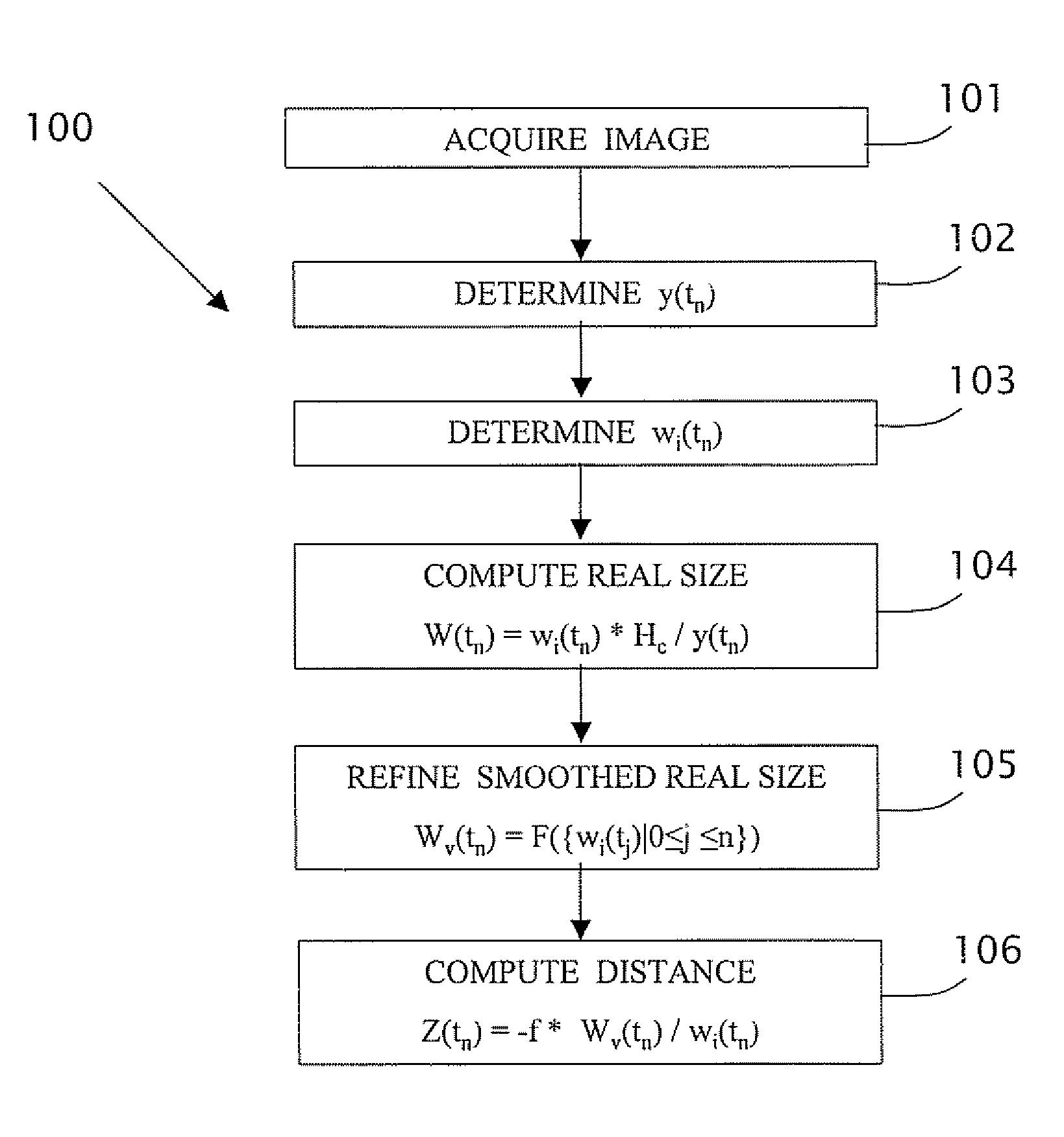

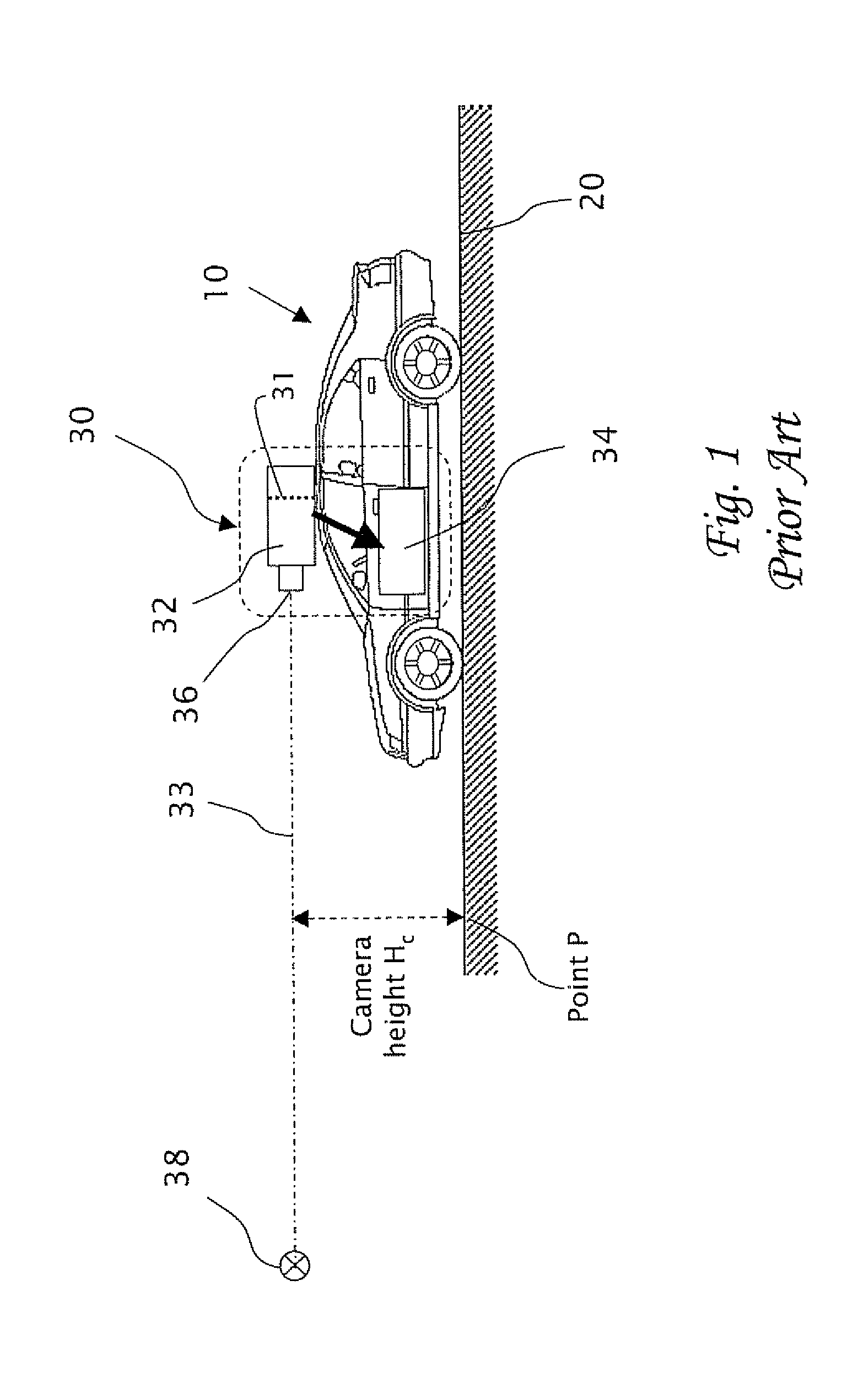

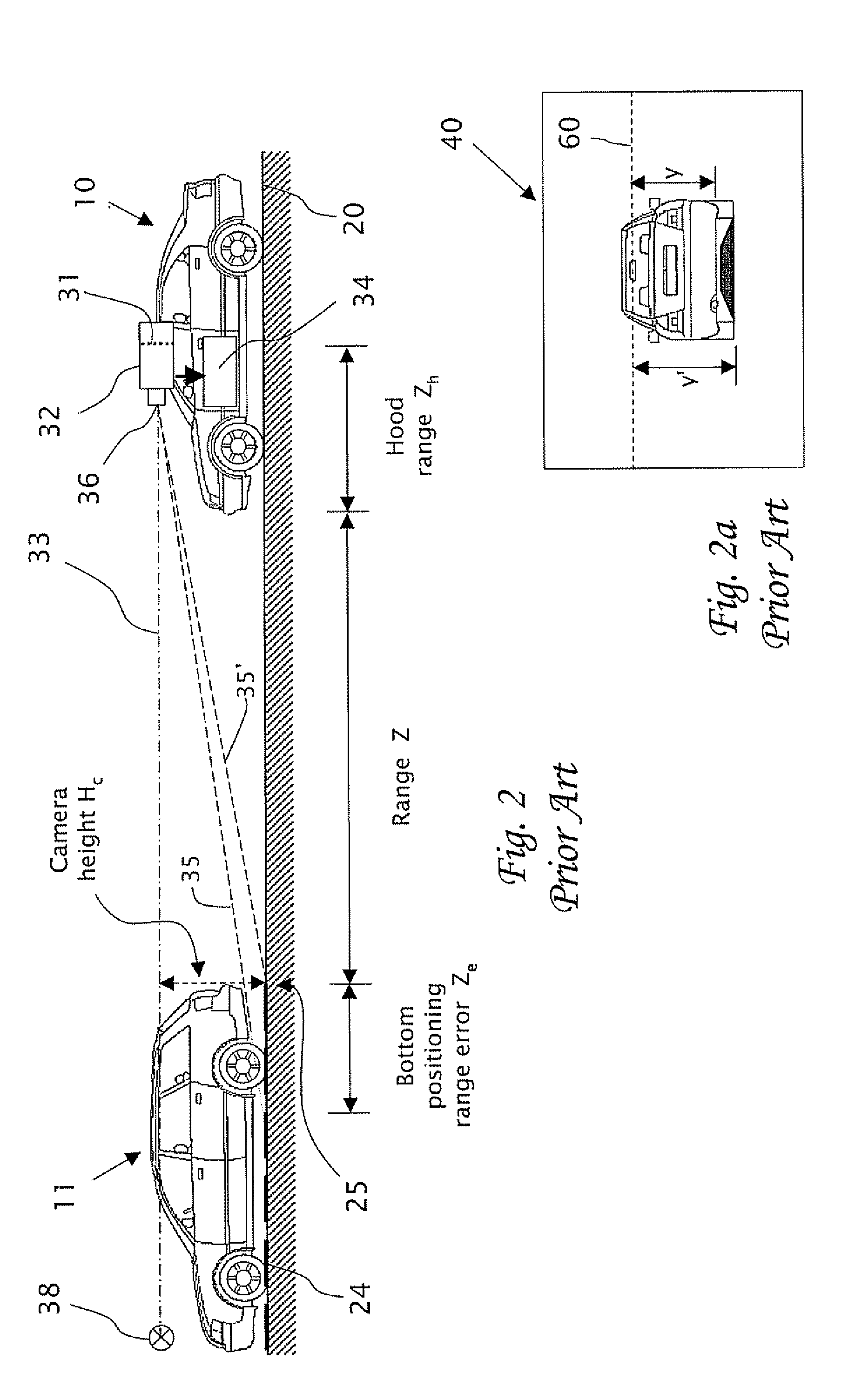

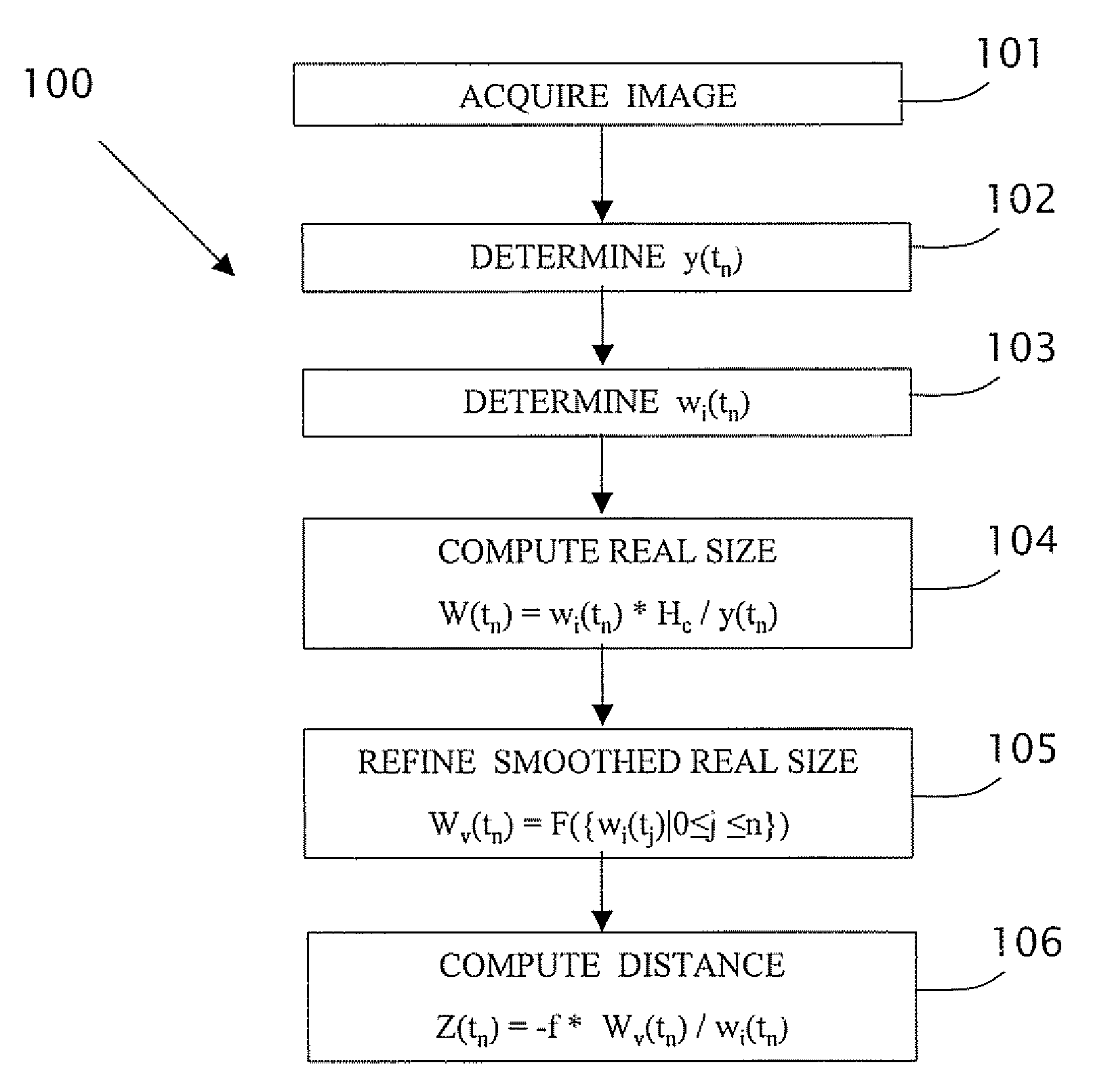

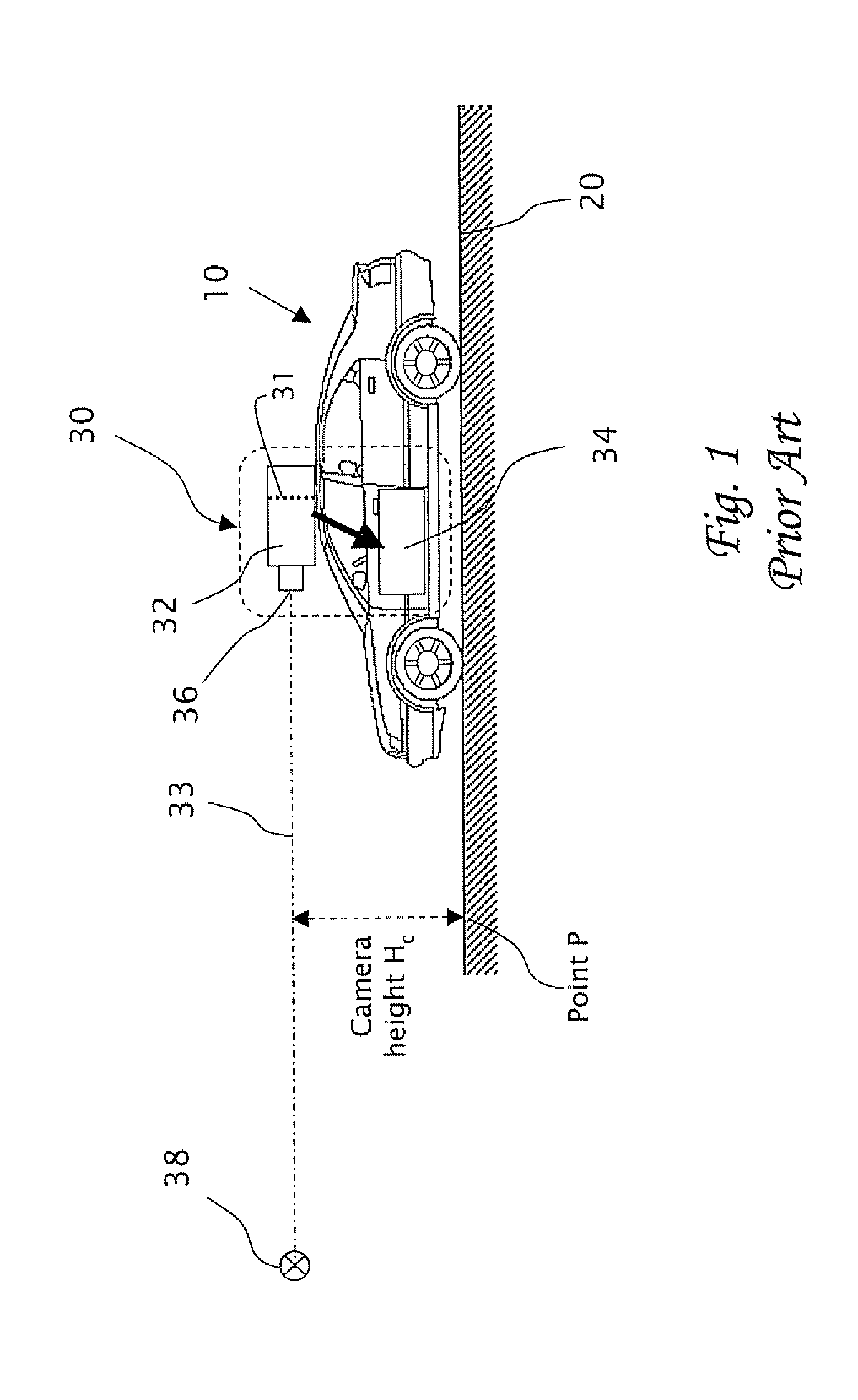

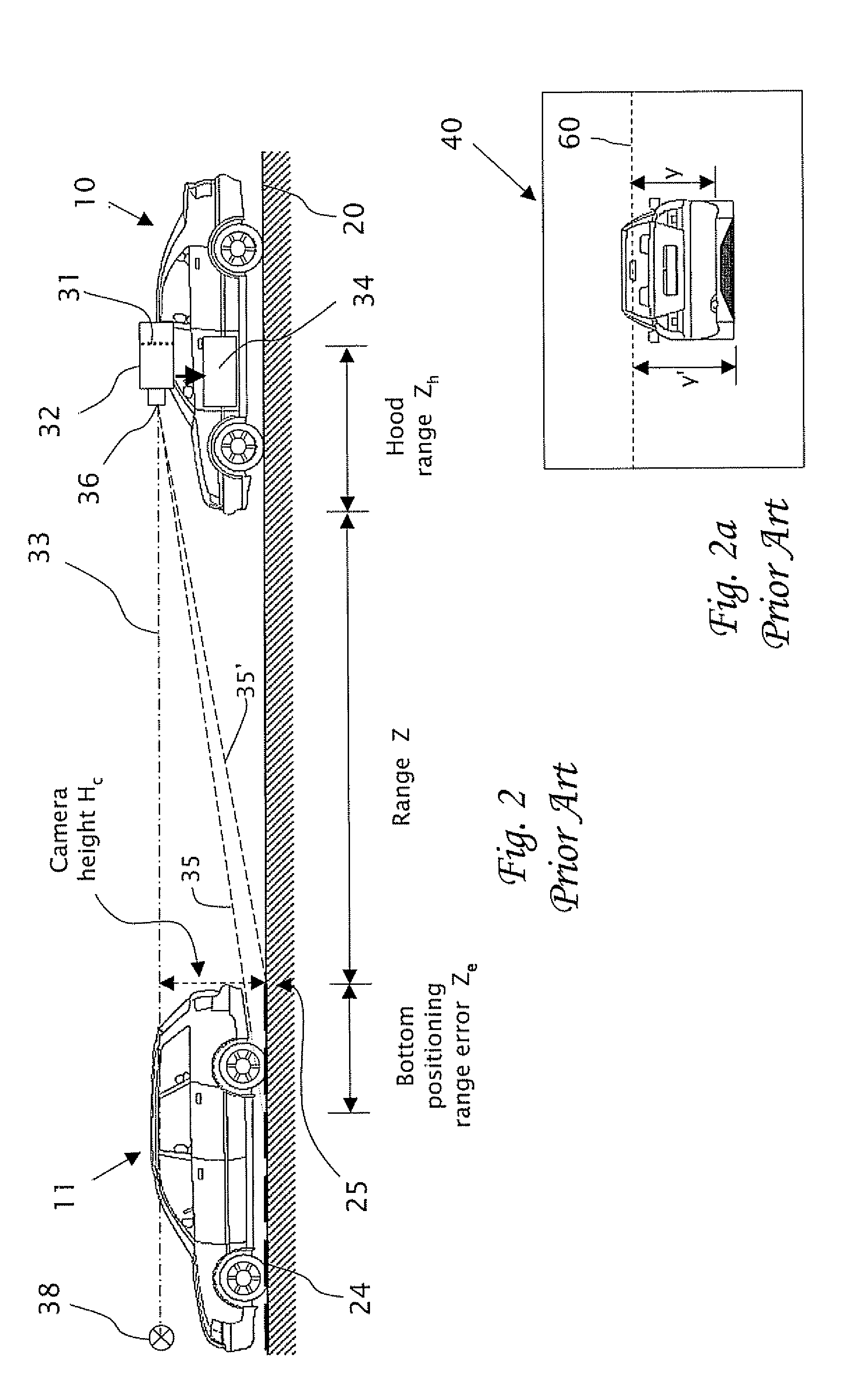

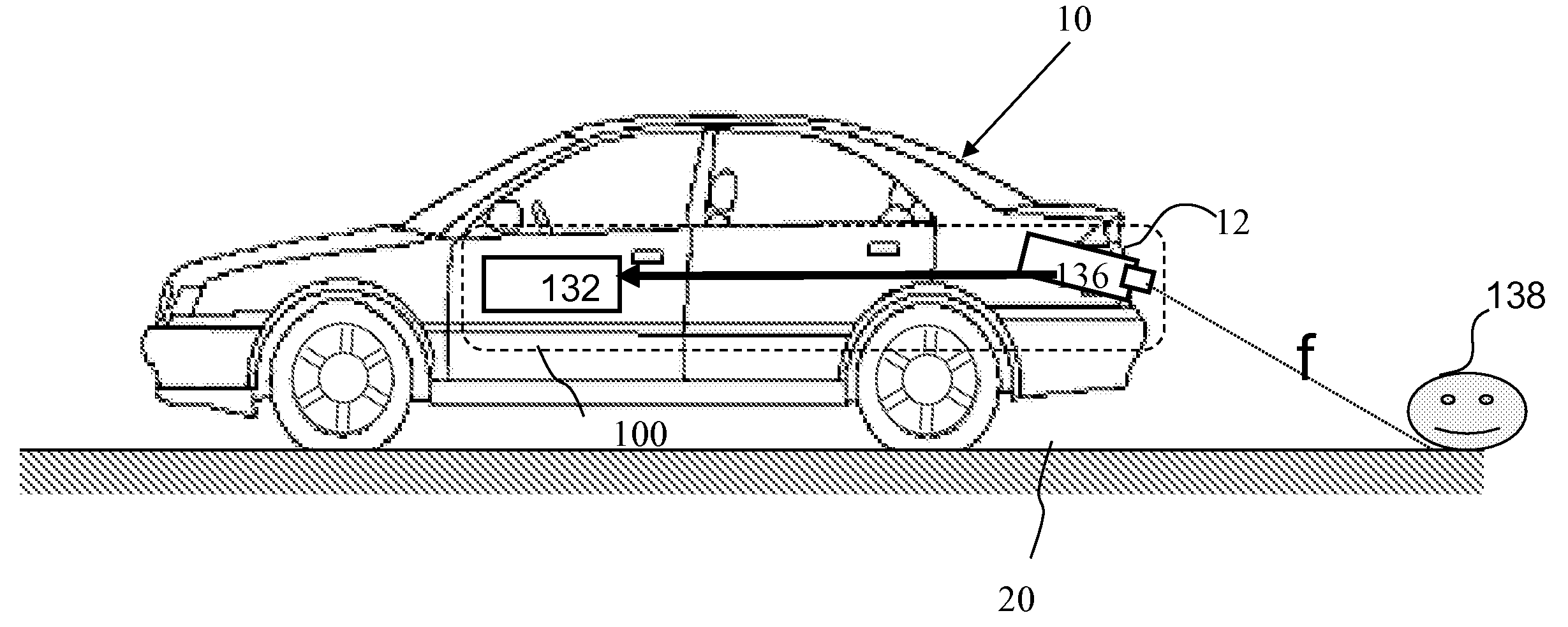

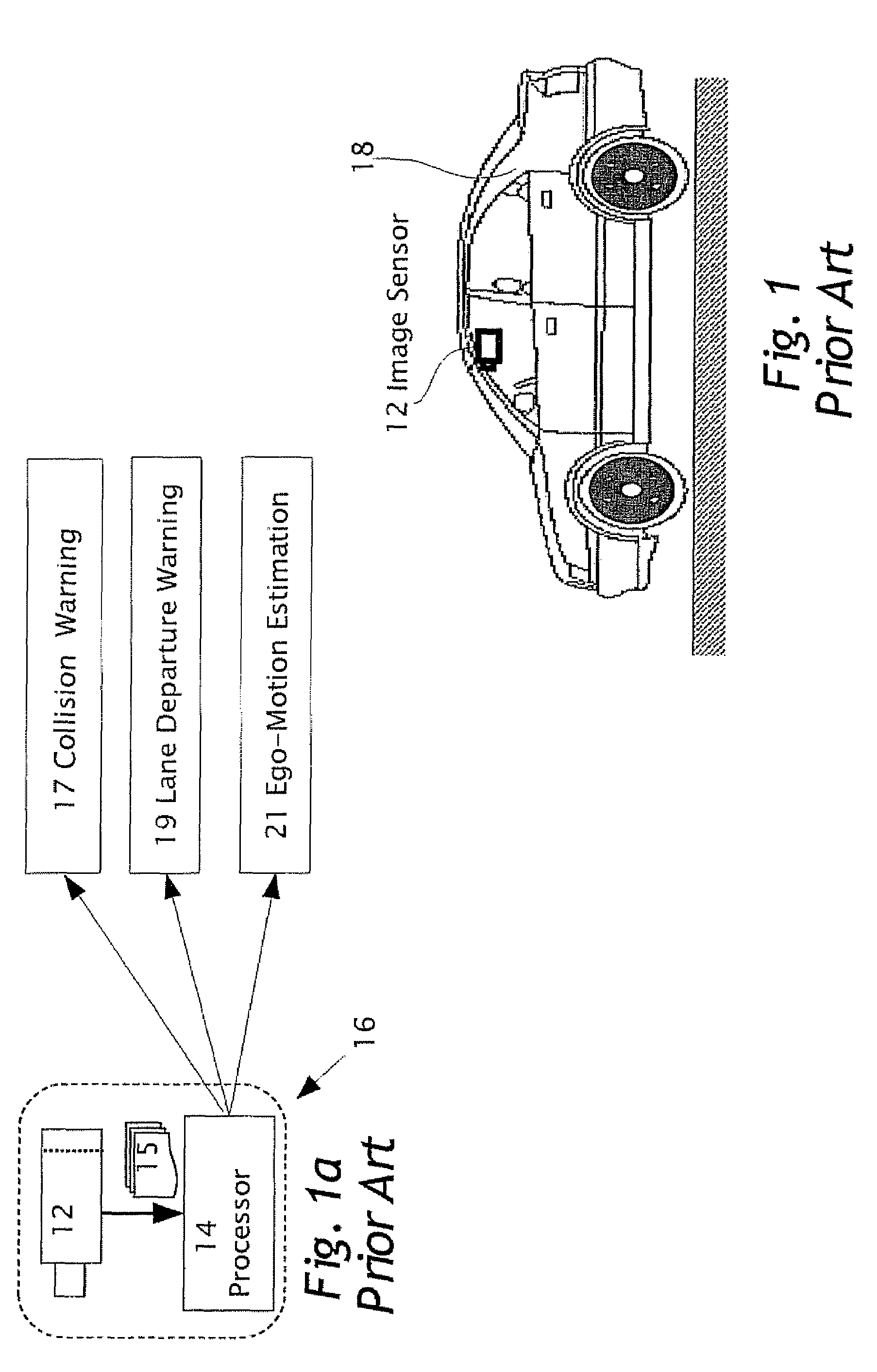

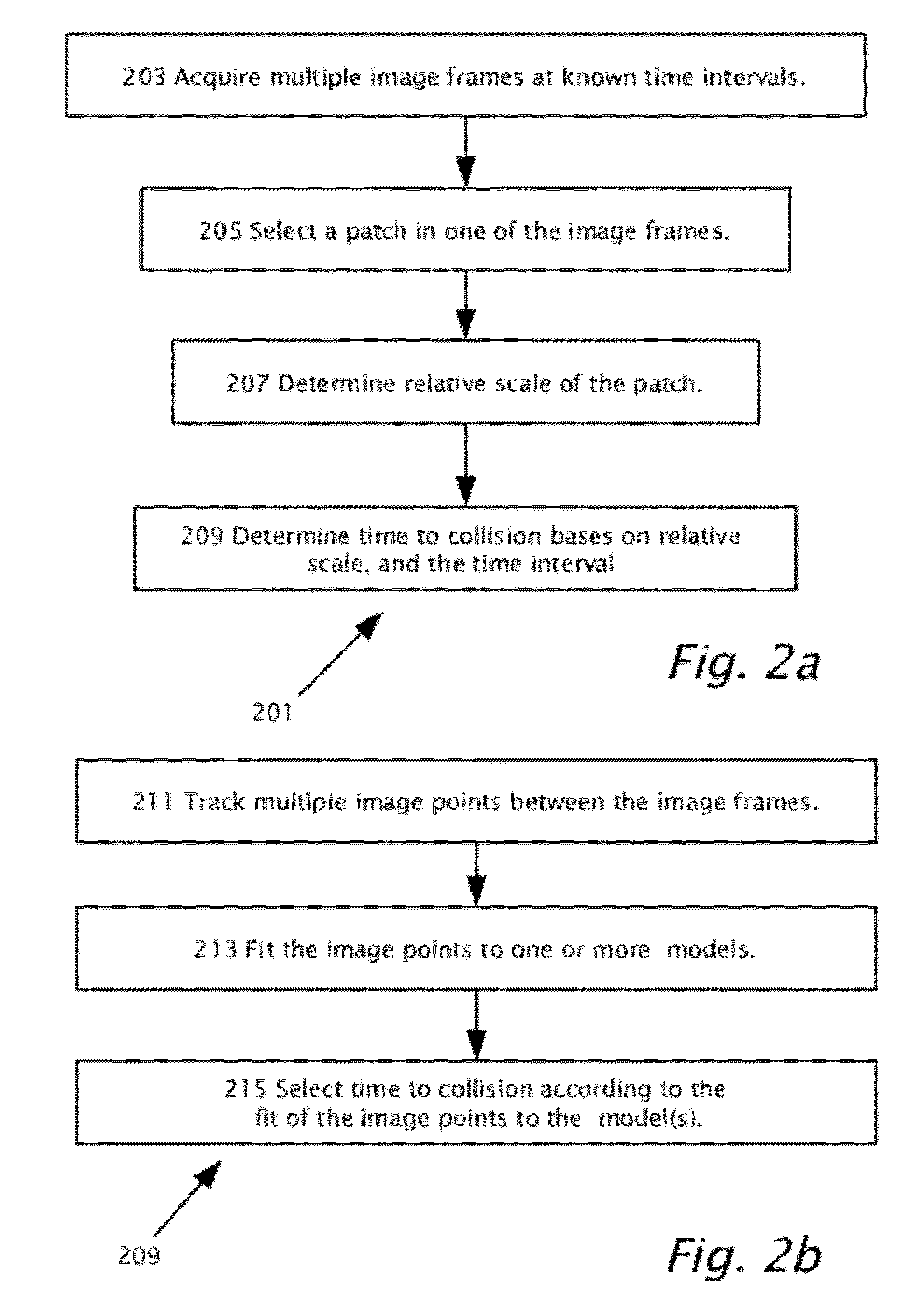

Estimating distance to an object using a sequence of images recorded by a monocular camera

A computerized system includes a camera mounted in a moving vehicle. The camera acquires image frames consecutively in real time, including images of an object within the camera's field of view. The range from the moving vehicle to the object is determined in real time. A dimension, e.g. a width, is measured in the respective images of two or more image frames, producing measurements of the dimension. The measurements are processed to produce a smoothed measurement of the dimension. The dimension is then measured in one or more subsequent frames, and the range from the vehicle to the object is calculated in real time based on the smoothed measurement and the subsequent measurements. The processing preferably includes recursively calculating the smoothed dimension using a Kalman filter.

Owner:MOBILEYE VISION TECH LTD
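
A minimal sketch of the smoothing-then-ranging idea, under stated assumptions: a scalar Kalman filter with a random-walk state model smooths the measured image width, and range is taken from the standard pinhole relation Z = f·W/w with a known focal length f (pixels) and an assumed real-world object width W (metres). The abstract does not give the filter model or the range formula; `ScalarKalman`, `range_from_width` and the noise values are hypothetical.

```python
class ScalarKalman:
    """1-D Kalman filter with a random-walk state model (assumed for illustration)."""

    def __init__(self, initial, initial_var=4.0, process_var=0.05, meas_var=1.0):
        self.x = initial        # smoothed width estimate, pixels
        self.p = initial_var    # variance of the estimate
        self.q = process_var    # process noise variance per frame
        self.r = meas_var       # measurement noise variance

    def update(self, z):
        """Fold one new width measurement into the estimate and return it."""
        self.p += self.q                    # predict: state unchanged, uncertainty grows
        k = self.p / (self.p + self.r)      # Kalman gain, always in (0, 1)
        self.x += k * (z - self.x)          # correct toward the new measurement
        self.p *= 1 - k
        return self.x


def range_from_width(width_px, focal_px, real_width_m):
    """Pinhole-camera range: Z = f * W / w."""
    return focal_px * real_width_m / width_px
```

For example, feeding widths of 52, 49 and 51 pixels after an initial 50 keeps the estimate between 49 and 52, and a 50-pixel width with f = 1000 px and W = 1.8 m gives a range of 36 m.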

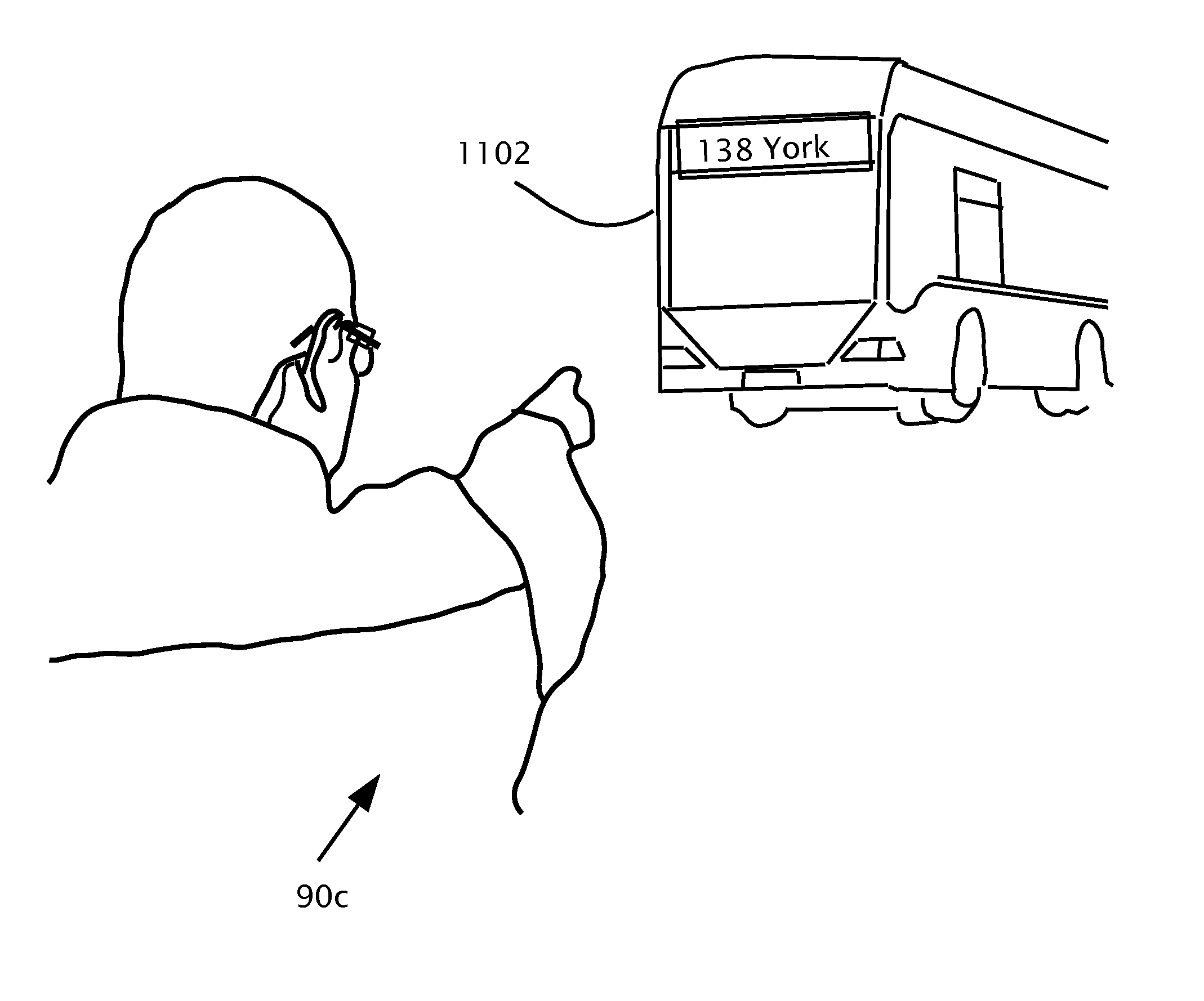

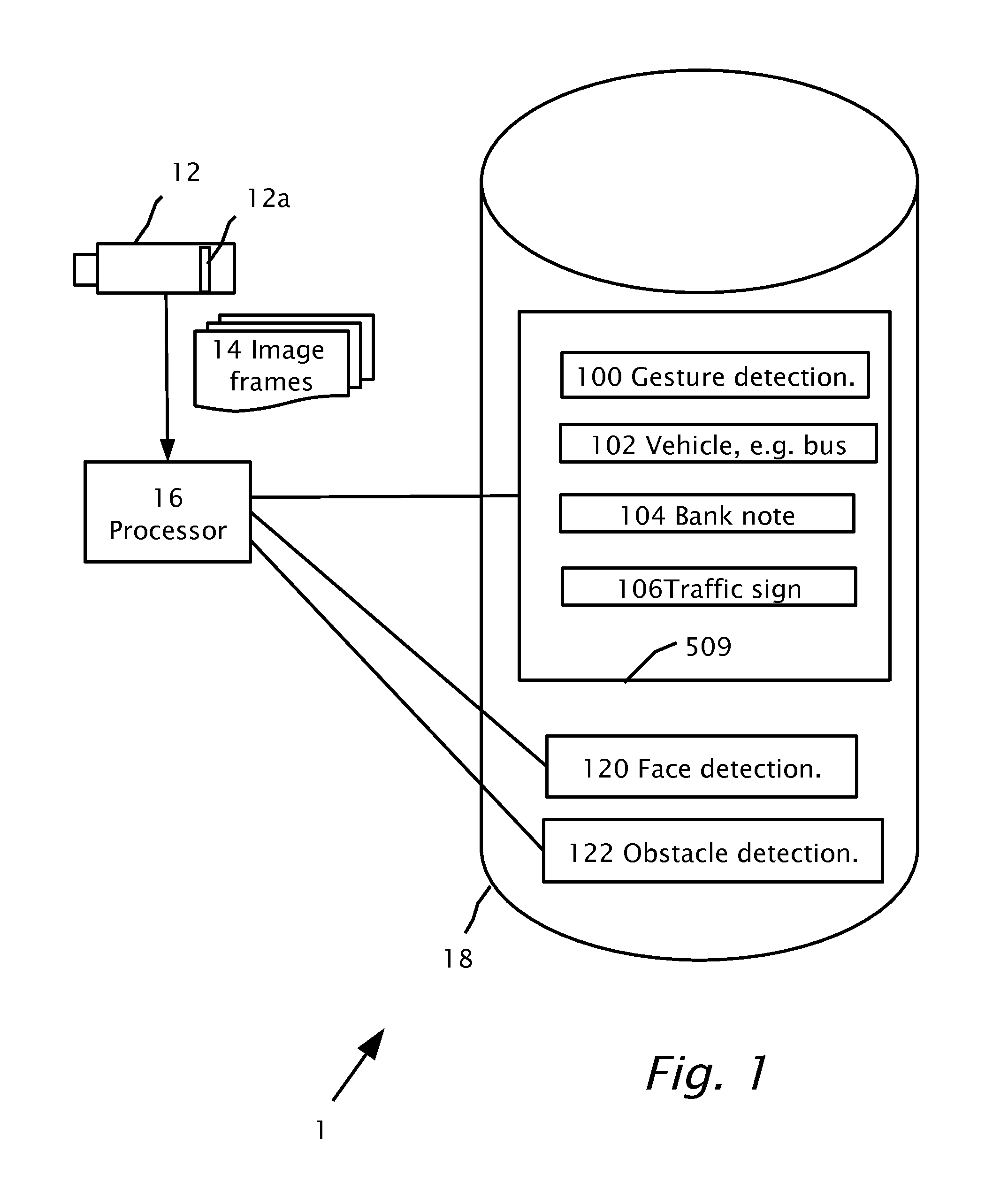

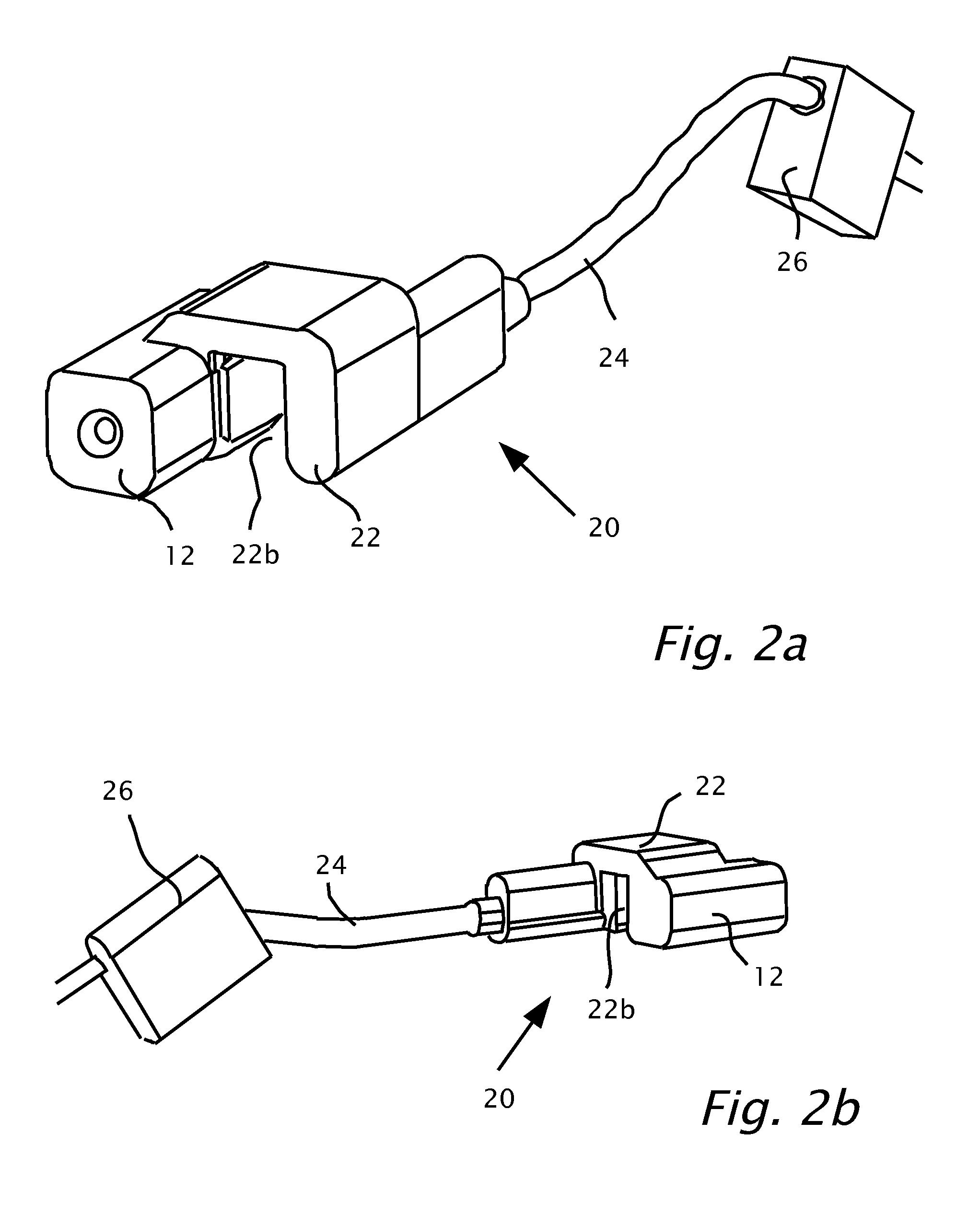

User wearable visual assistance system

Inactive | US20120212593A1 | High resolution | Character and pattern recognition | Color television details | Multiple image | Image frame

A visual assistance device wearable by a person. The device includes a camera and a processor. The processor captures multiple image frames from the camera. A candidate image of an object is searched for in the image frames. The candidate image may be classified as an image of a particular object or of a particular class of objects and is thereby recognized. The person is notified of an attribute related to the object.

Owner:ORCAM TECH

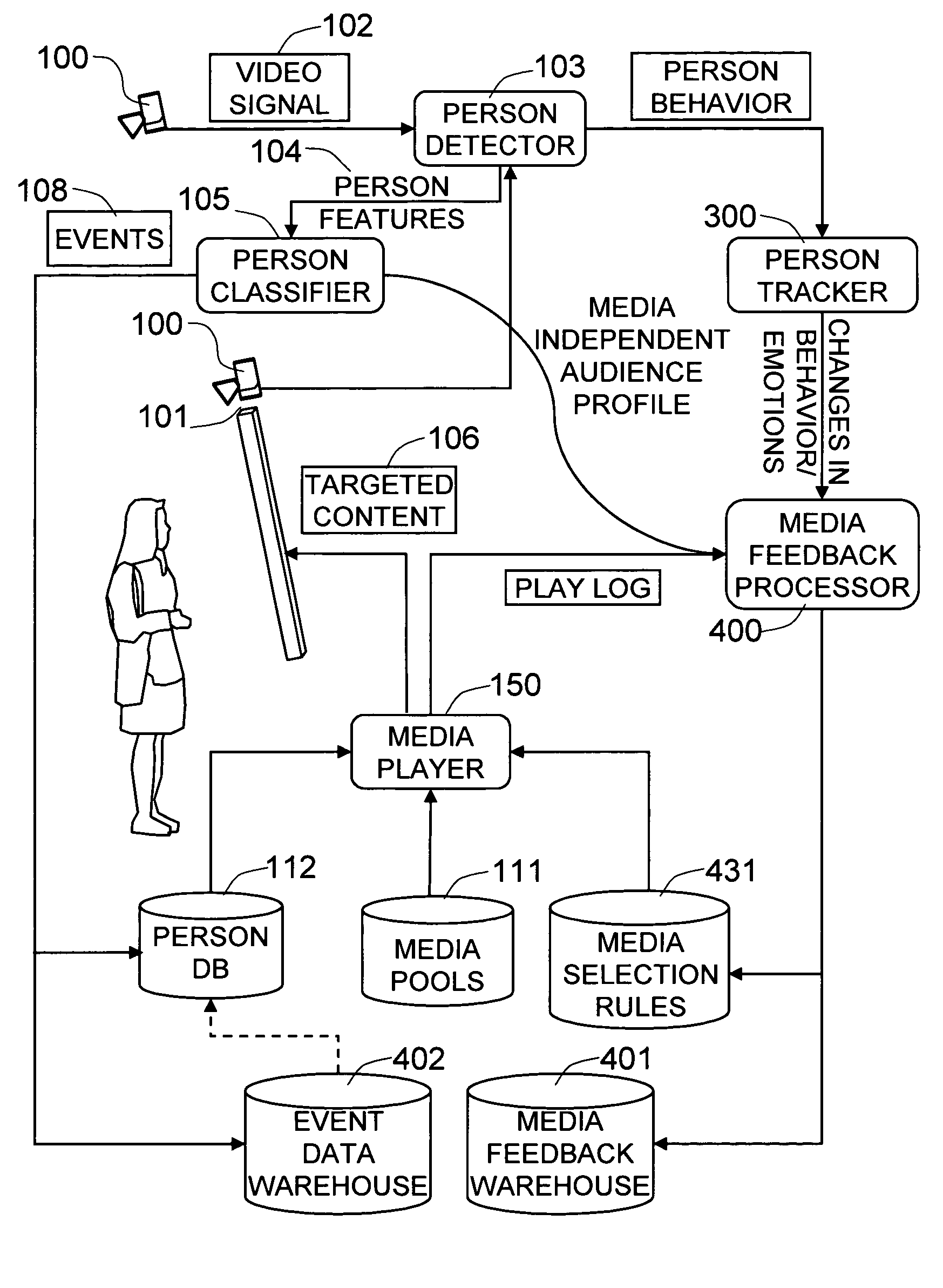

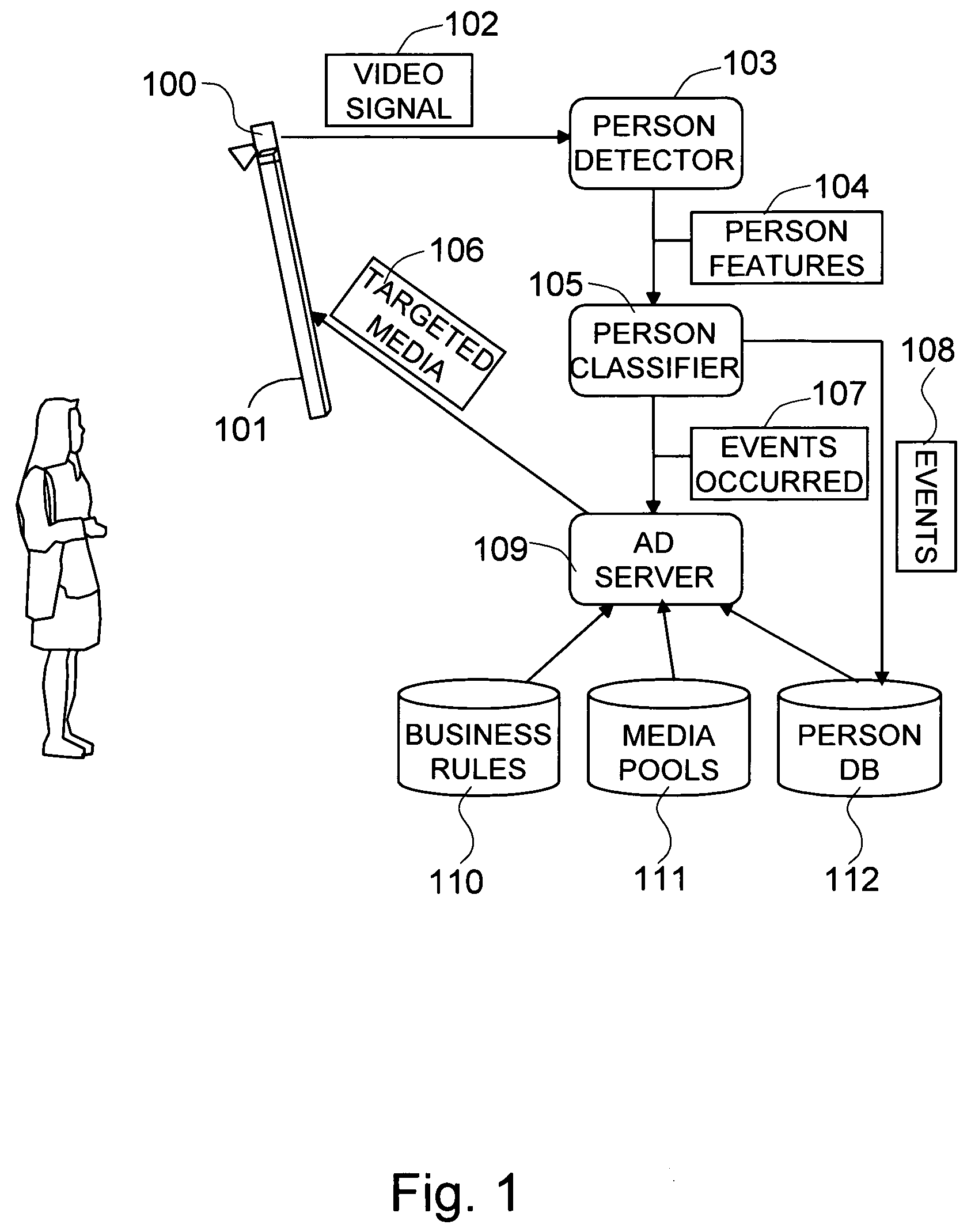

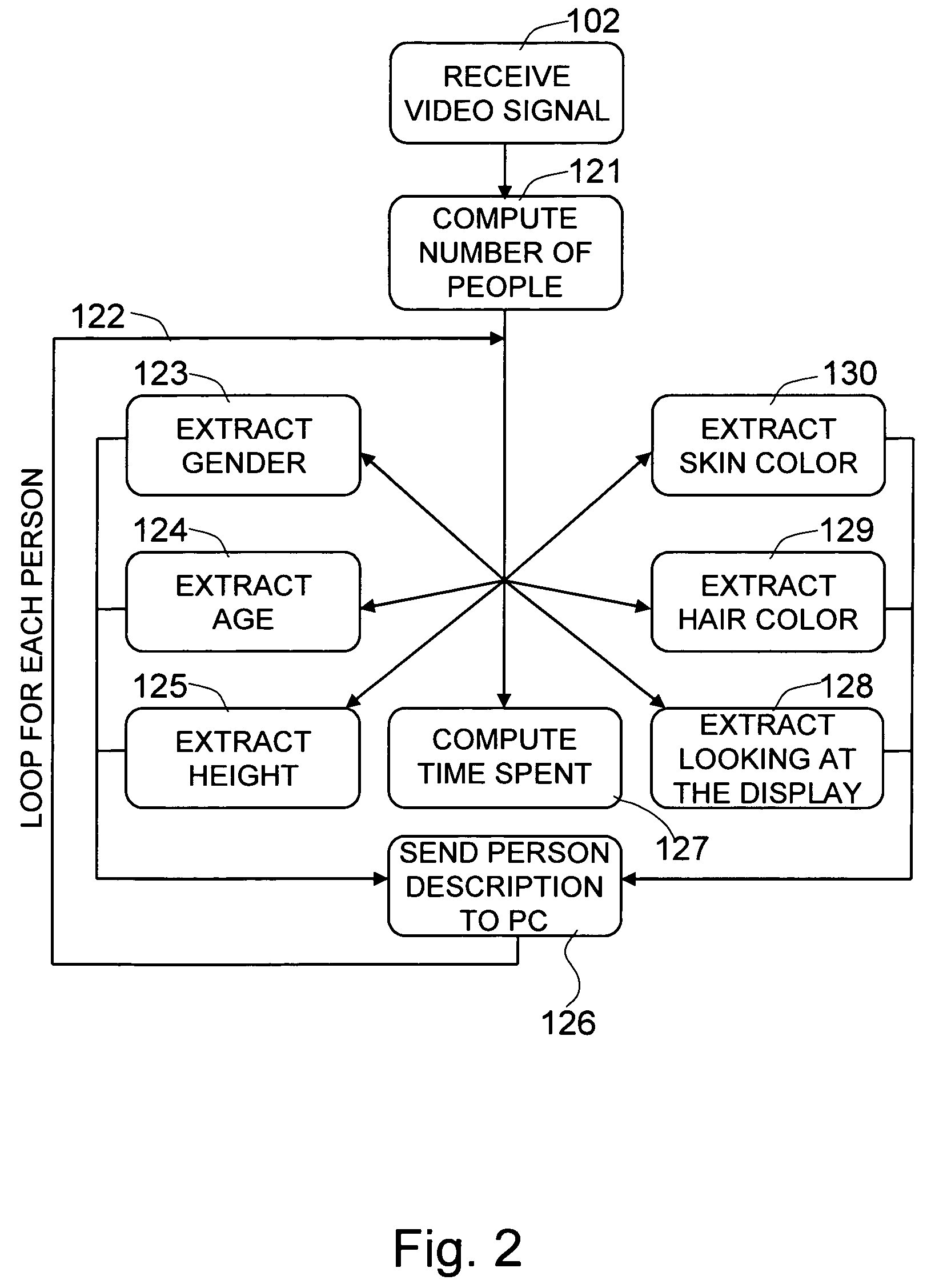

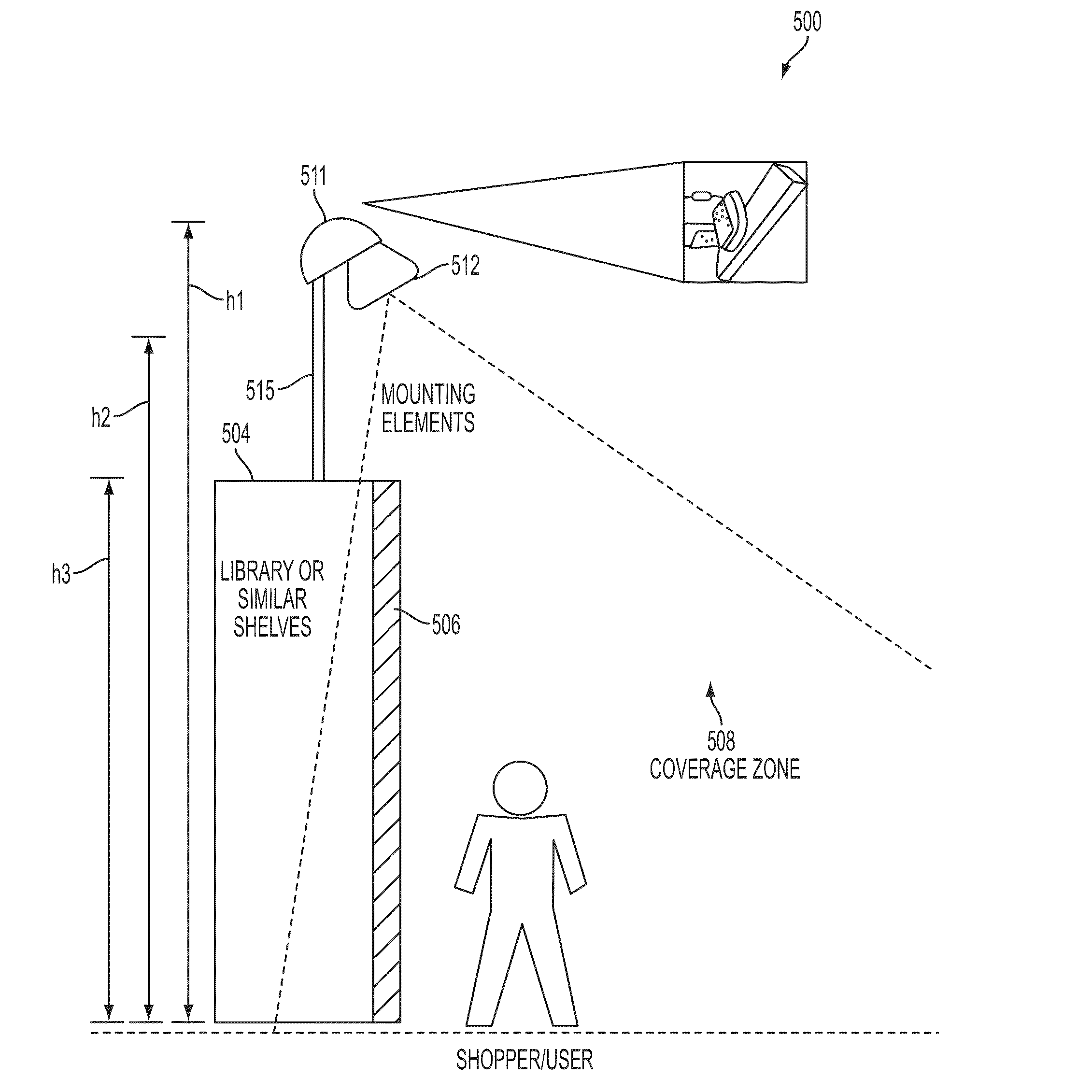

Method and system for dynamically targeting content based on automatic demographics and behavior analysis

Inactive | US7921036B1 | Computer security arrangements | Payment architecture | Behavioral analytics | Computer graphics (images)

The present invention is a method and system for selectively executing content on a display based on the automatic recognition of predefined characteristics, including visually perceptible attributes such as the demographic profile of people identified automatically from a sequence of image frames in a video stream. The invention detects images of the individual or people in captured images and automatically extracts, in real time, their visually perceptible attributes, including demographic information, local behavior analysis, and emotional status. The visually perceptible attributes further comprise height, skin color, hair color, the number of people in the scene, the time spent by the people, and whether a person looked at the display. Targeted media is selected from a set of media pools according to the automatically extracted, visually perceptible attributes and the feedback from the people.

Owner:ACCESSIFY LLC

Methods and systems for measuring human interaction

Inactive | US20140132728A1 | Character and pattern recognition | Stereoscopic systems | Human interaction | Point cloud

A method and system for measuring and reacting to human interaction with elements in a space, such as a public place (retail stores, showrooms, etc.), is disclosed. It may determine information about an interaction of a three dimensional object of interest within a three dimensional zone of interest using a point cloud 3D scanner. The scanner has an image frame generator that generates a point cloud 3D scanner frame comprising an array of depth coordinates for respective two dimensional coordinates of at least part of a surface of the object of interest within the three dimensional zone of interest. The zone of interest comprises a three dimensional coverage zone encompassing a three dimensional engagement zone, and a computing device compares respective frames to determine the time and location of a collision between the object of interest and a surface of at least one of the three dimensional coverage zone or the three dimensional engagement zone encompassed by it.

Owner:SHOPPERCEPTION

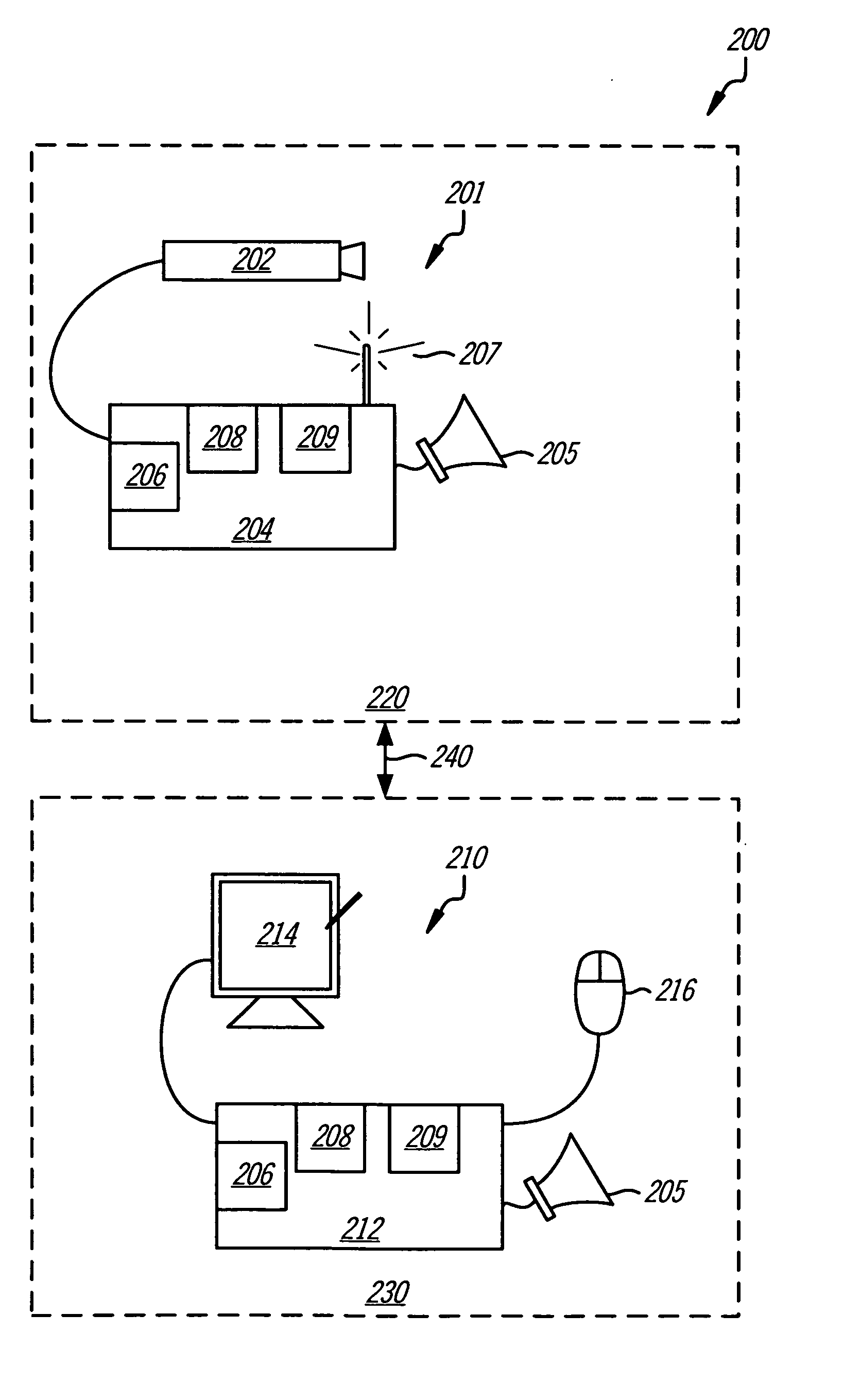

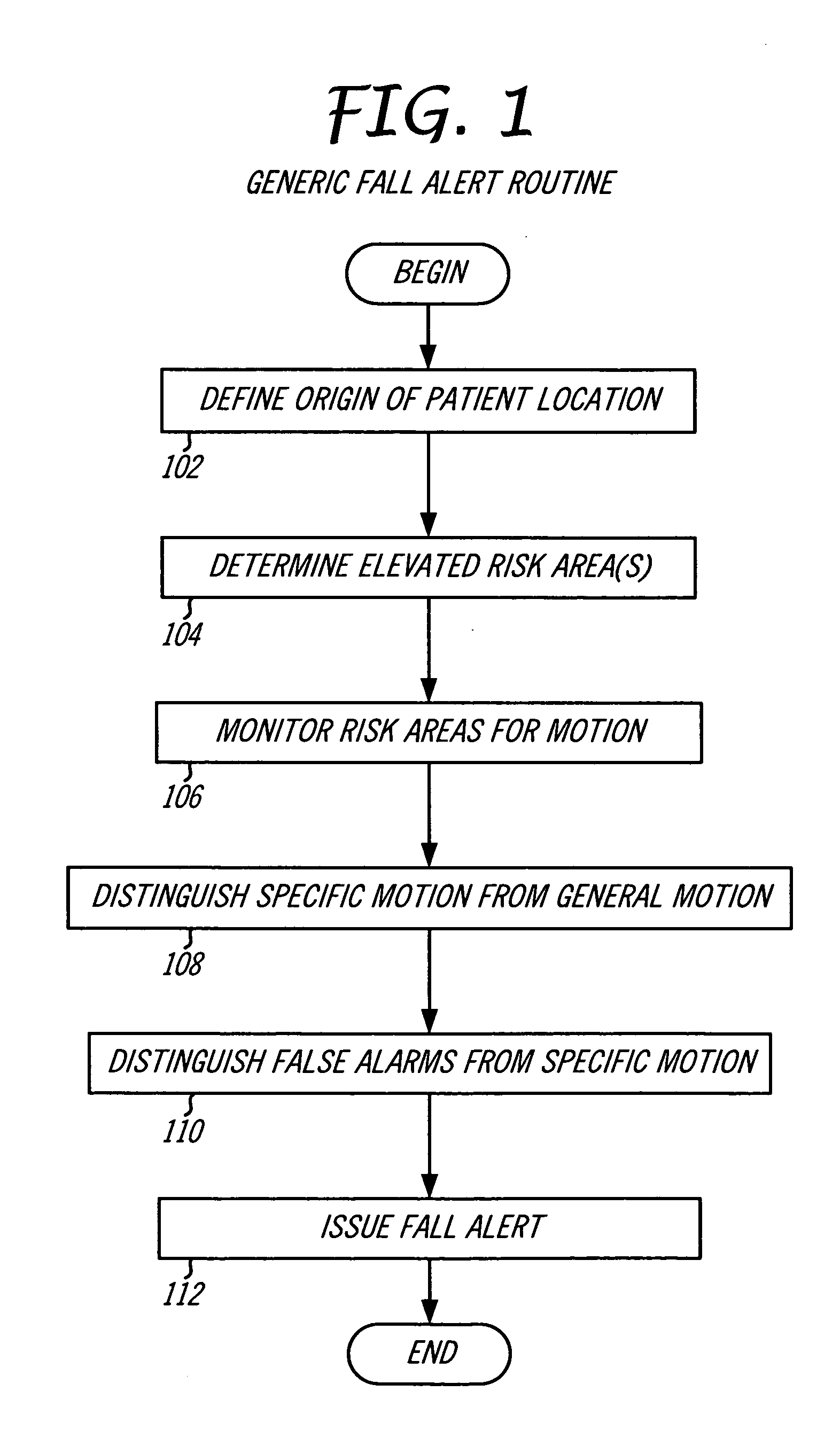

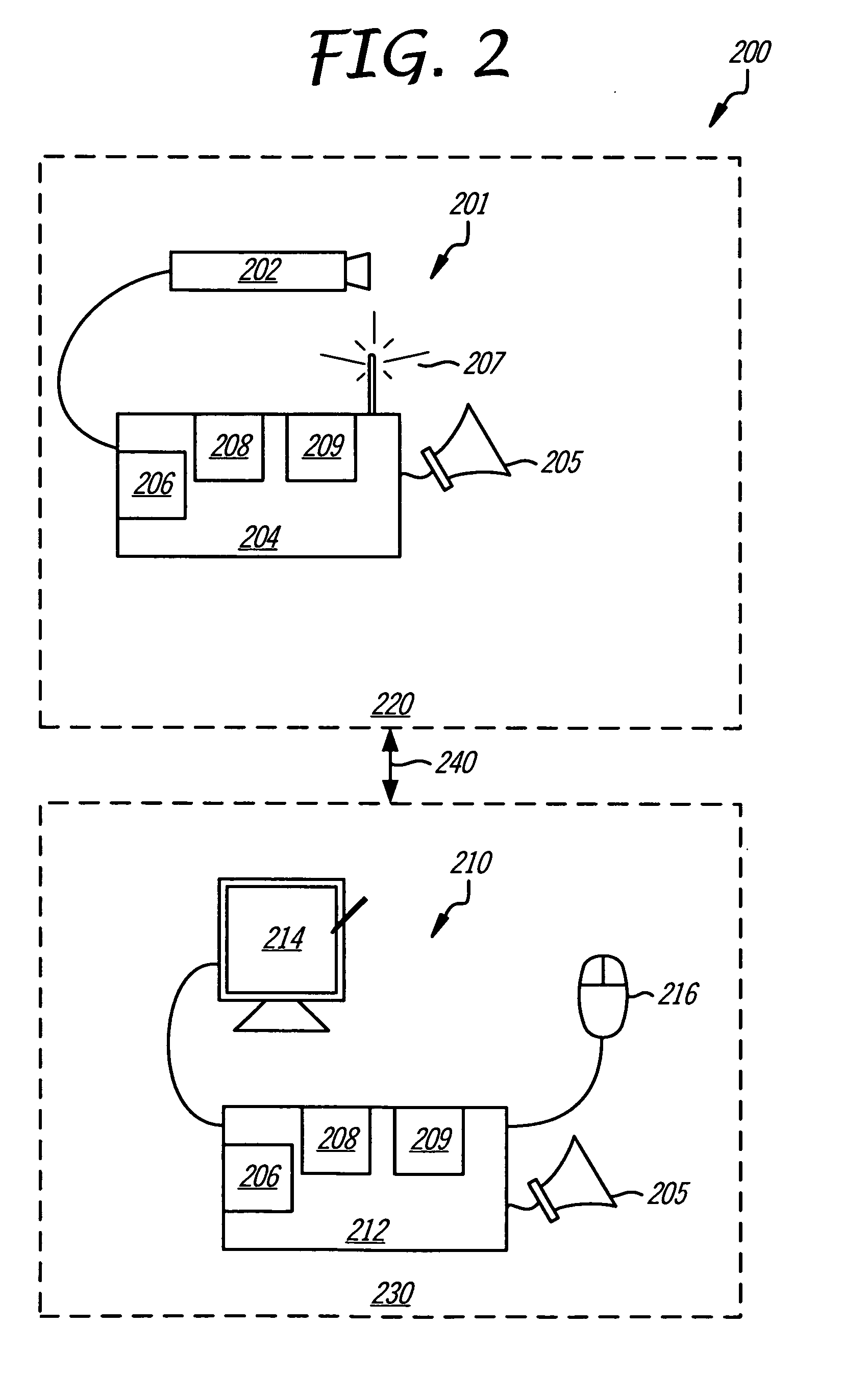

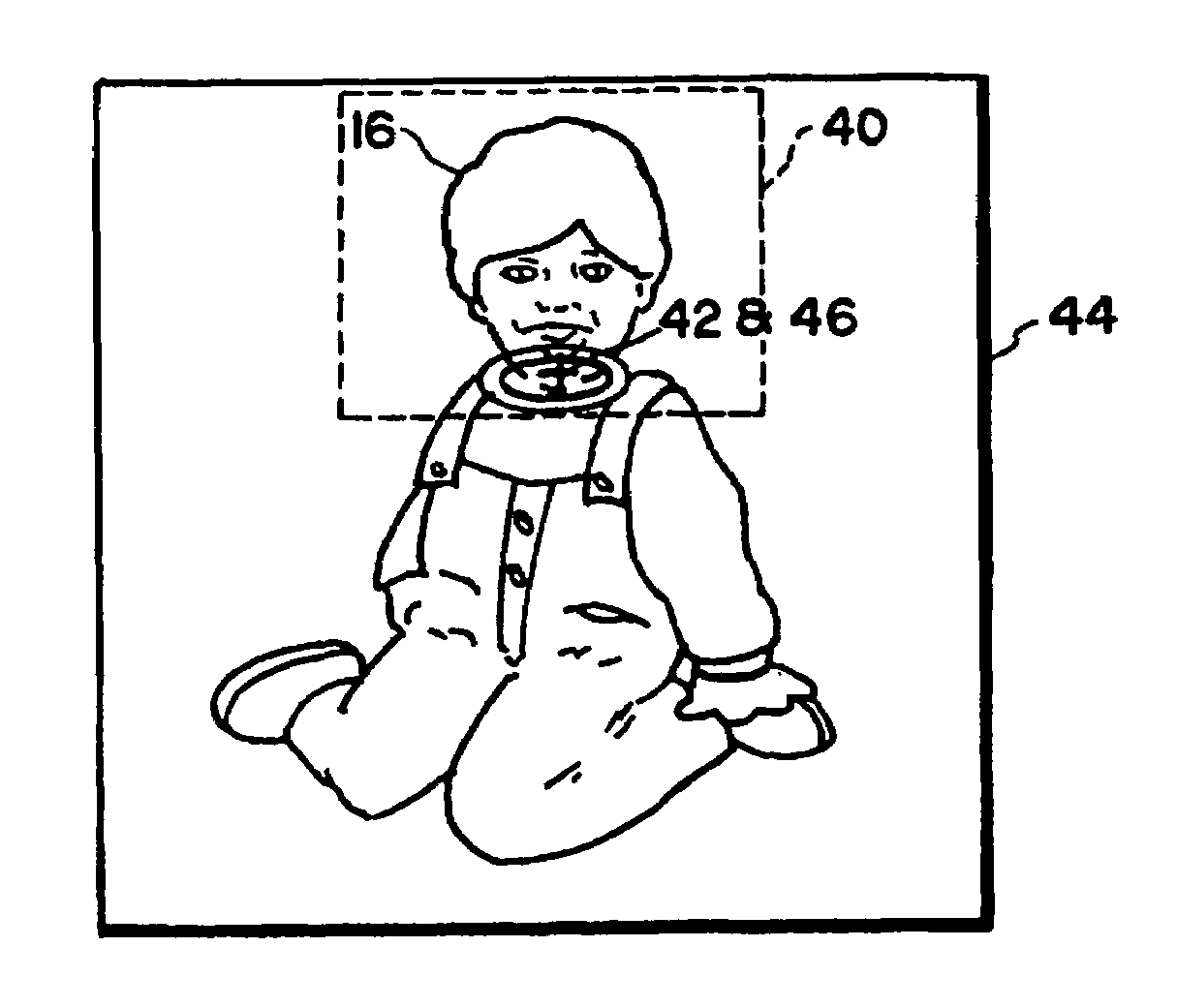

System and method for predicting patient falls

Active | US20090278934A1 | Character and pattern recognition | Closed circuit television systems | Graphics | Viewpoints

A patient fall prediction system receives video image frames from a surveillance camera positioned in a patient's room and analyzes the frames for movement that may be a precursor to a patient fall. In the setup phase, the camera's viewpoint is directed at a risk area associated with patient falls, such as beds, chairs, and wheelchairs, and the risk area is marked graphically in the viewport. The system generates a plurality of concurrent motion detection zones situated proximate to the graphic markings of the risk areas. These zones are monitored for changes between video image frames that indicate movement. The pattern of detections is recorded and compared to a fall movement detection signature. One such signature is a sequential detection order, in frames associated with patient falls, running from the motion detection zone closest to the risk area to the zone farthest from it. The system continually monitors the motion detection zones for changes between image frames and compiles detection lists that are compared to known movement detection signatures, such as the fall movement detection signature. Once a match is identified, the system issues a fall warning to a healthcare provider.

Owner:CAREVIEW COMM INC
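
A minimal sketch of matching the closest-to-farthest detection order, under stated assumptions: frames are small grayscale arrays (lists of rows), each motion detection zone is an axis-aligned rectangle `(r0, c0, r1, c1)` with exclusive end bounds, zones are listed from closest to farthest from the risk area, and "movement" means the zone's mean absolute pixel difference exceeds a hypothetical threshold. All function names are illustrative.

```python
def zone_changed(prev, curr, zone, threshold):
    """True if the mean absolute pixel difference inside `zone` exceeds `threshold`."""
    r0, c0, r1, c1 = zone
    diffs = [abs(curr[r][c] - prev[r][c]) for r in range(r0, r1) for c in range(c0, c1)]
    return bool(diffs) and sum(diffs) / len(diffs) > threshold


def detection_sequence(frames, zones, threshold=20):
    """Return zone indices in the order in which each first registers movement."""
    order = []
    for prev, curr in zip(frames, frames[1:]):
        for i, zone in enumerate(zones):
            if i not in order and zone_changed(prev, curr, zone, threshold):
                order.append(i)
    return order


def matches_fall_signature(order, n_zones):
    """Assumed fall signature: every zone fires, closest to farthest."""
    return order == list(range(n_zones))
```

Zones that change within the same frame pair are recorded in index order, which is a simplification; a real detection list would also carry timestamps.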

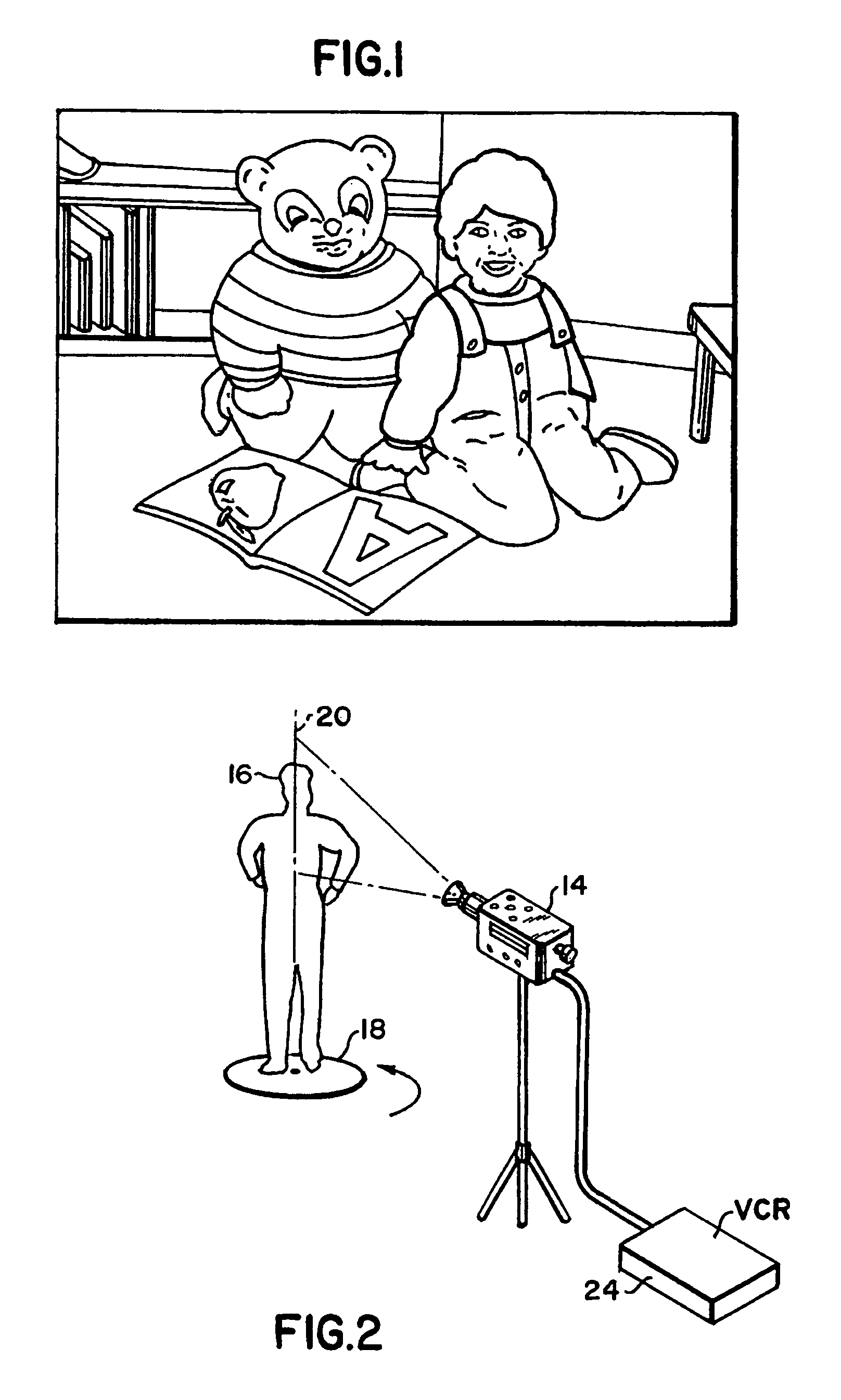

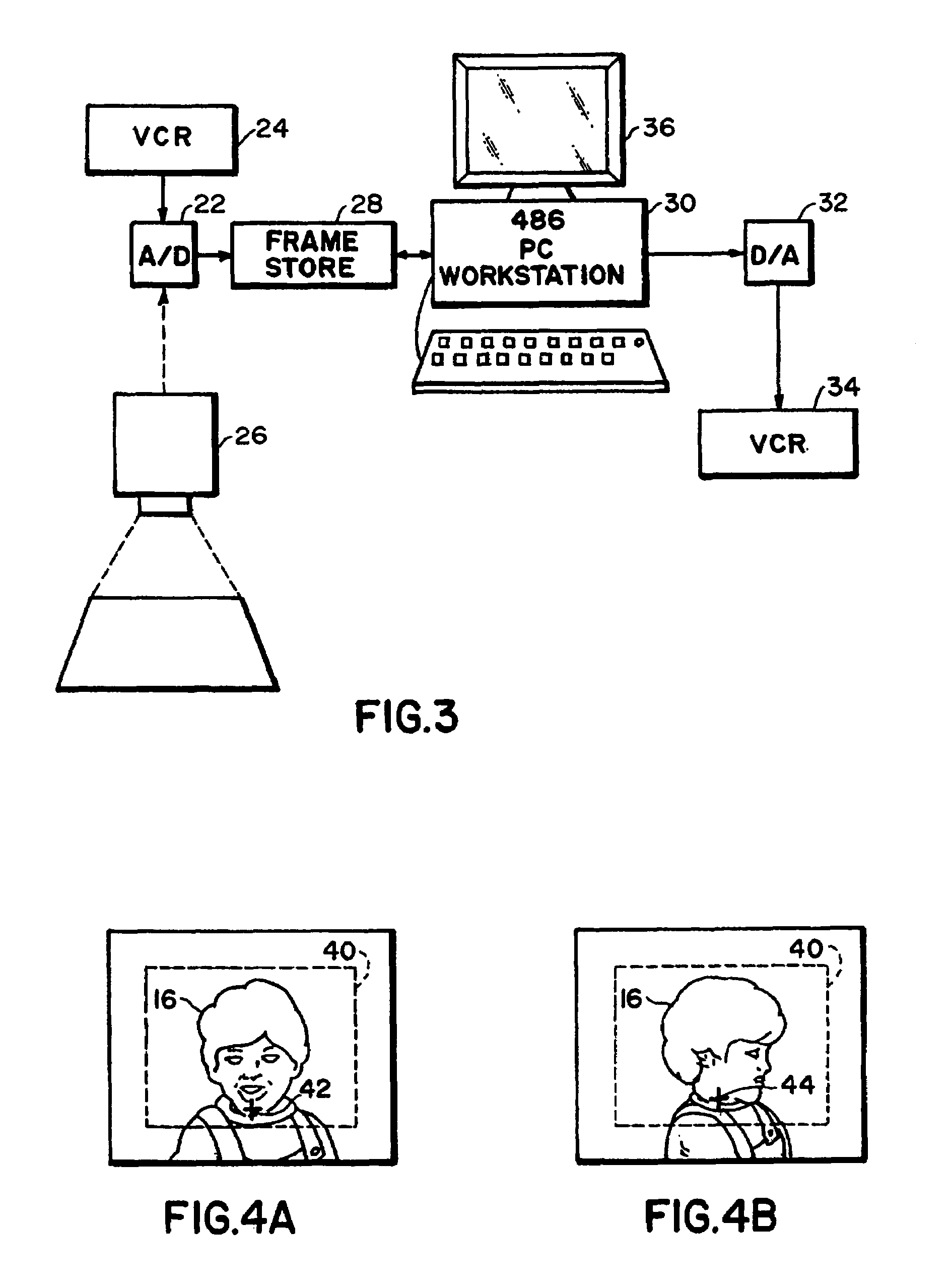

Object customization and presentation system

Inactive | US7859551B2 | Less time-consuming | Less costly | Television system details | Picture frames | Personalization | Print version

A method for generating a personalized presentation, comprising providing an Internet browser user interface for selecting an image and a surrounding context; receiving the selected image and surrounding context by an Internet web server; accounting for the user activity in a financial accounting system; and delivering the selected image and surrounding context to the user. The surrounding context may comprise a physical frame for a picture, with a printed version of the selected image framed therein. The accounting step may provide consideration to a rights holder of the selected image, or provide for receipt of consideration from a commercial advertiser. A plurality of images may be selected, wherein the context defines a sequence of display of the plurality of images.

Owner:BIG TENT ENTERTAINMENT +1

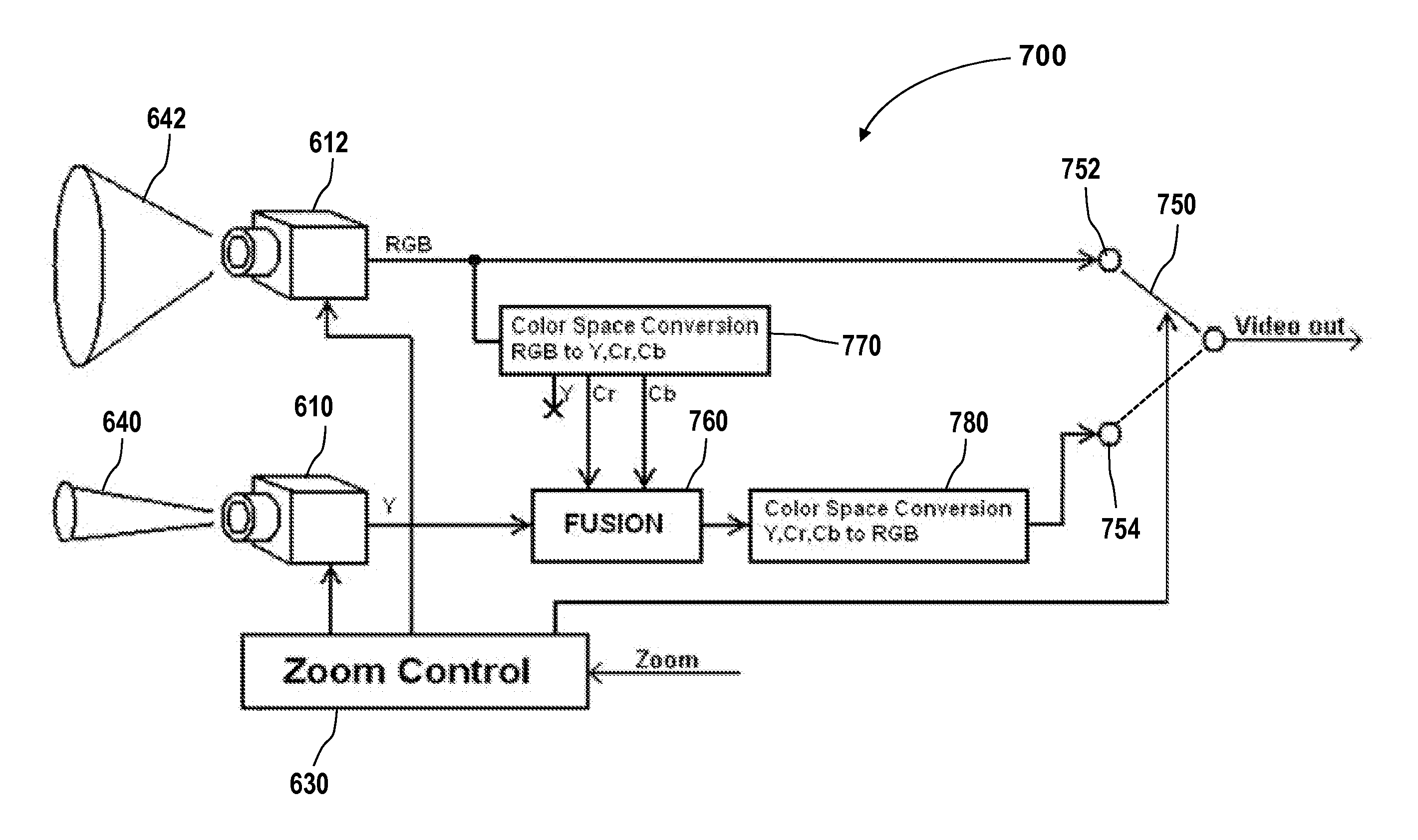

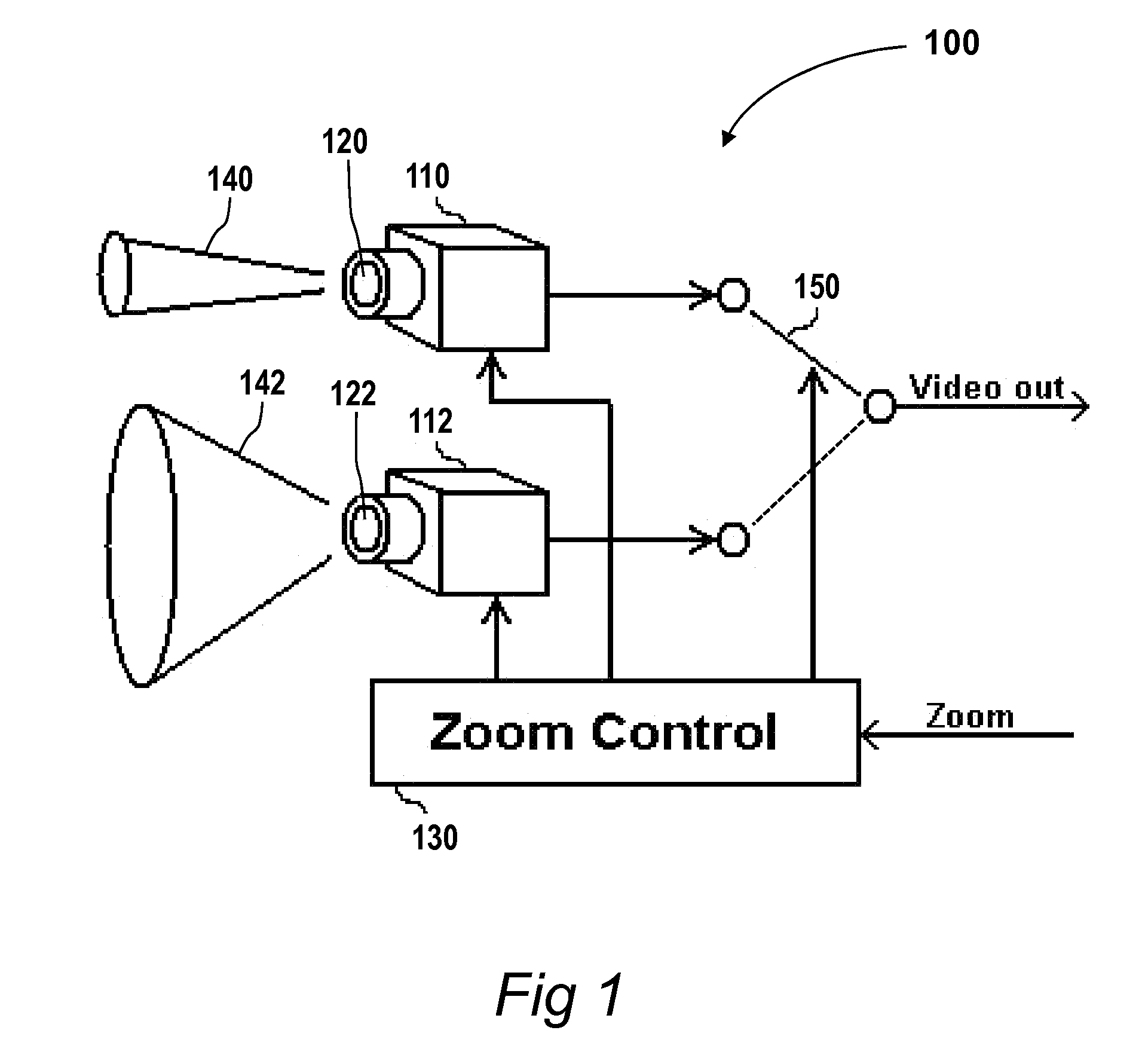

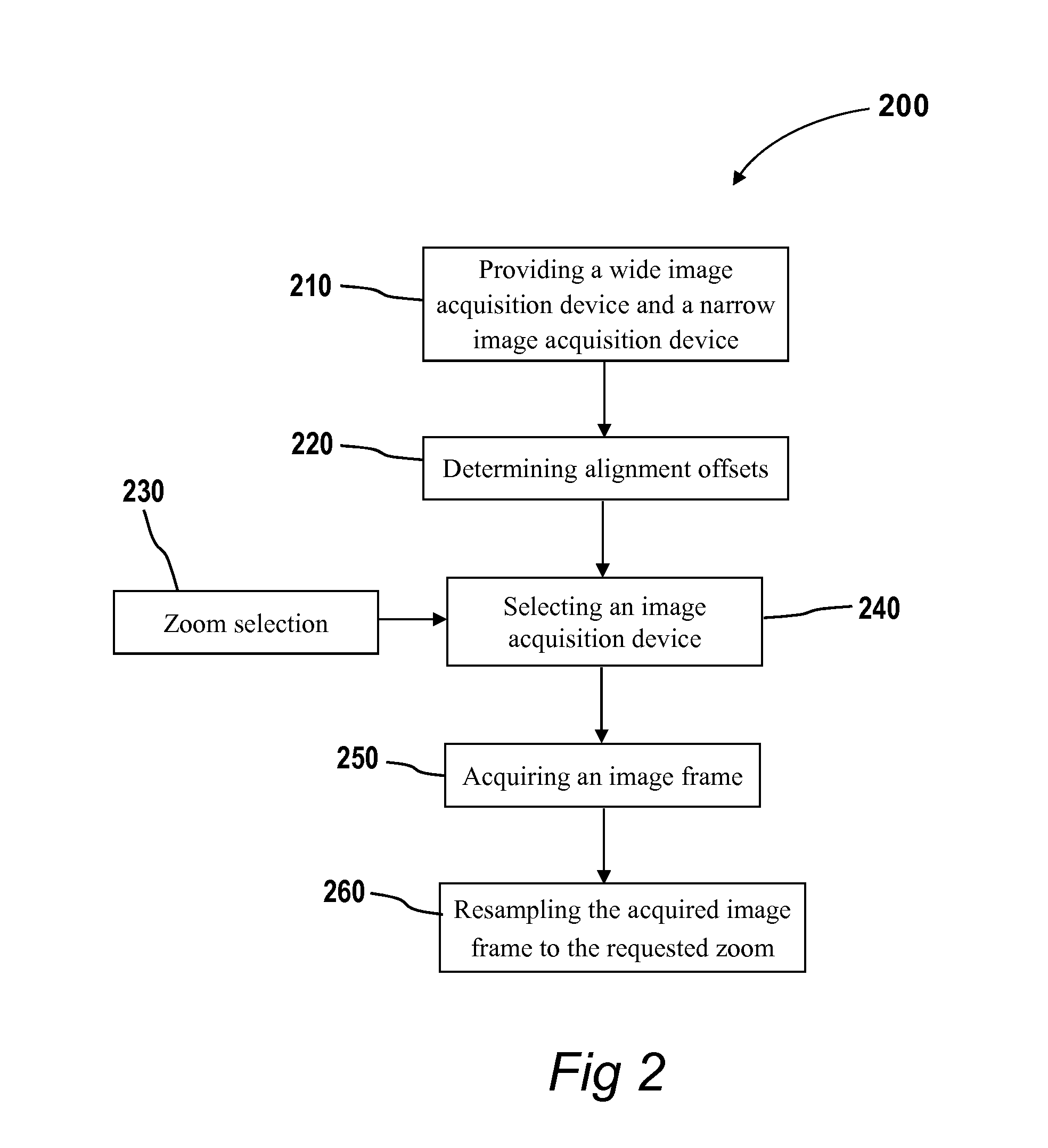

Continuous electronic zoom for an imaging system with multiple imaging devices having different fixed FOV

Inactive | US20120026366A1 | Facilitates a light weight electronic zoom | Large zoom range | Television system details | Color television details | Sensor array | Camera lens

A method for continuous electronic zoom in a computerized image acquisition system having a wide image acquisition device and a tele image acquisition device, the tele device having a tele image sensor array coupled with a tele lens having a narrow FOV, and a tele electronic zoom. The method includes providing a user of the system with a zoom selection control to obtain a requested zoom; selecting one of the image acquisition devices based on the requested zoom; acquiring an image frame; and digitally zooming the acquired image frame to obtain the requested zoom. The alignment between the wide image sensor array and the tele image sensor array is computed to facilitate continuous electronic zoom with uninterrupted imaging when switching back and forth between the two sensor arrays.

Owner:NEXTVISION STABILIZED SYST
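
A minimal sketch of the device-selection step, assuming the requested zoom is expressed relative to the wide camera's full FOV and that the tele camera's optical magnification relative to the wide camera (`tele_ratio`) is known. The switching rule and the names `plan_zoom` and `ZoomPlan` are hypothetical, and the sensor-alignment step from the abstract is not modeled.

```python
from dataclasses import dataclass


@dataclass
class ZoomPlan:
    device: str          # "wide" or "tele"
    digital_zoom: float  # residual crop/scale factor applied to the acquired frame


def plan_zoom(requested_zoom, tele_ratio):
    """Pick a camera and the residual digital zoom for a requested zoom level.

    Below the tele camera's magnification the wide camera is used and all of
    the zoom is digital; at or above it, the tele camera supplies `tele_ratio`
    optically and the remainder is applied digitally.
    """
    if requested_zoom < 1.0:
        raise ValueError("requested_zoom must be >= 1.0")
    if requested_zoom < tele_ratio:
        return ZoomPlan("wide", requested_zoom)
    return ZoomPlan("tele", requested_zoom / tele_ratio)
```

With `tele_ratio=4.0`, a requested 2x zoom uses the wide camera with 2x digital zoom, while 8x uses the tele camera with 2x digital zoom.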

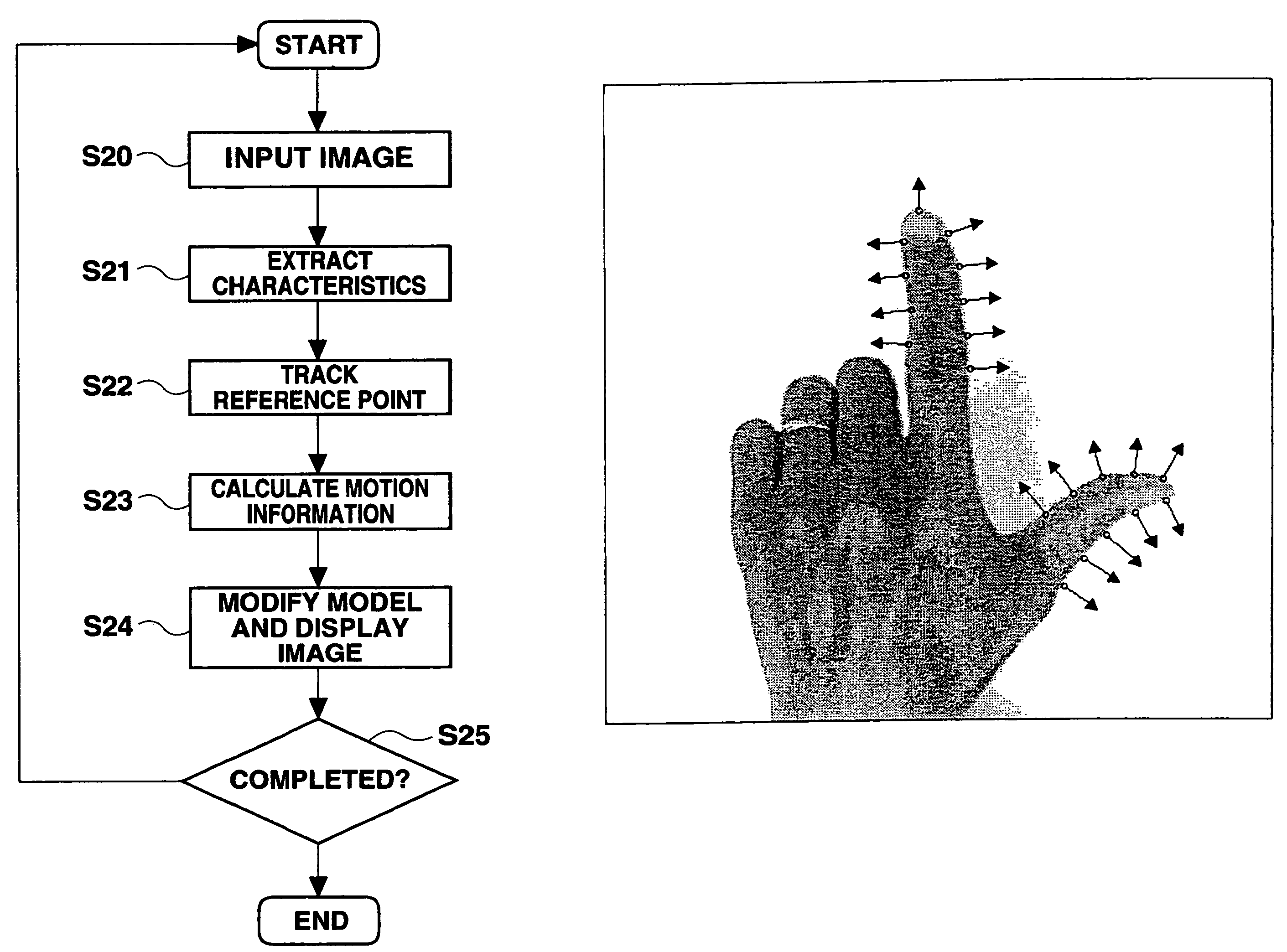

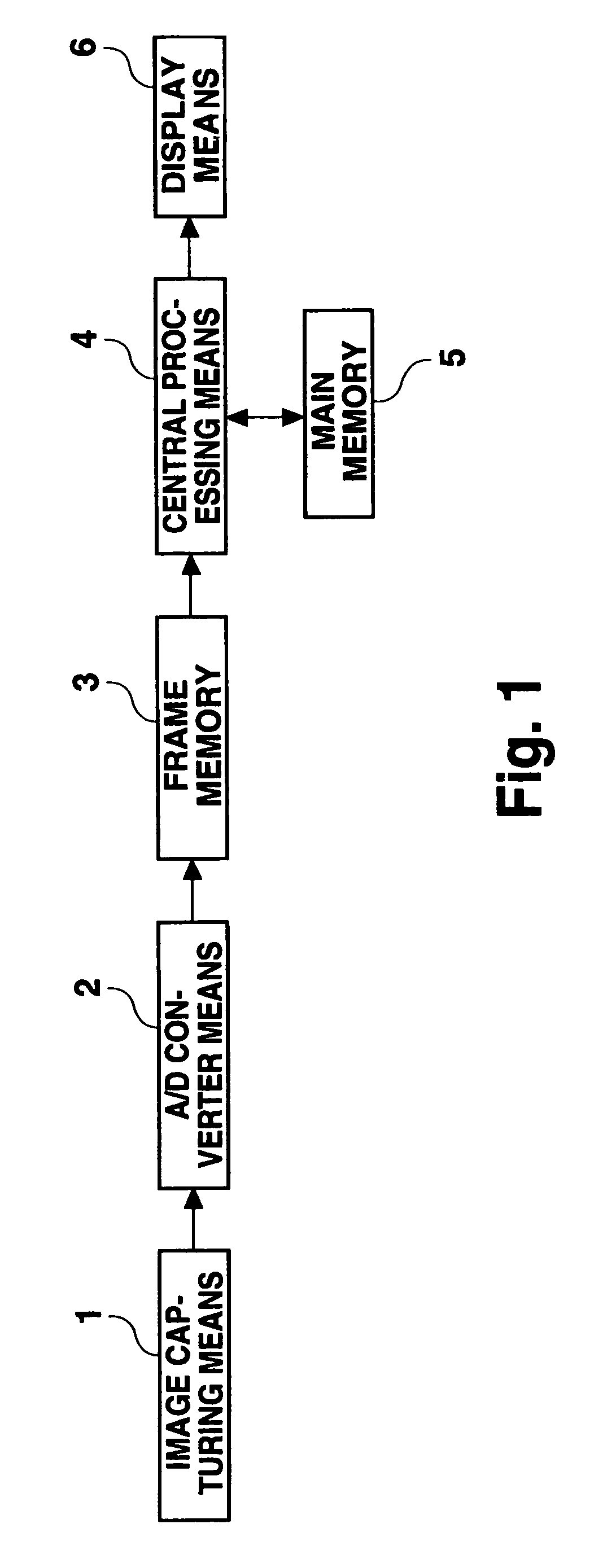

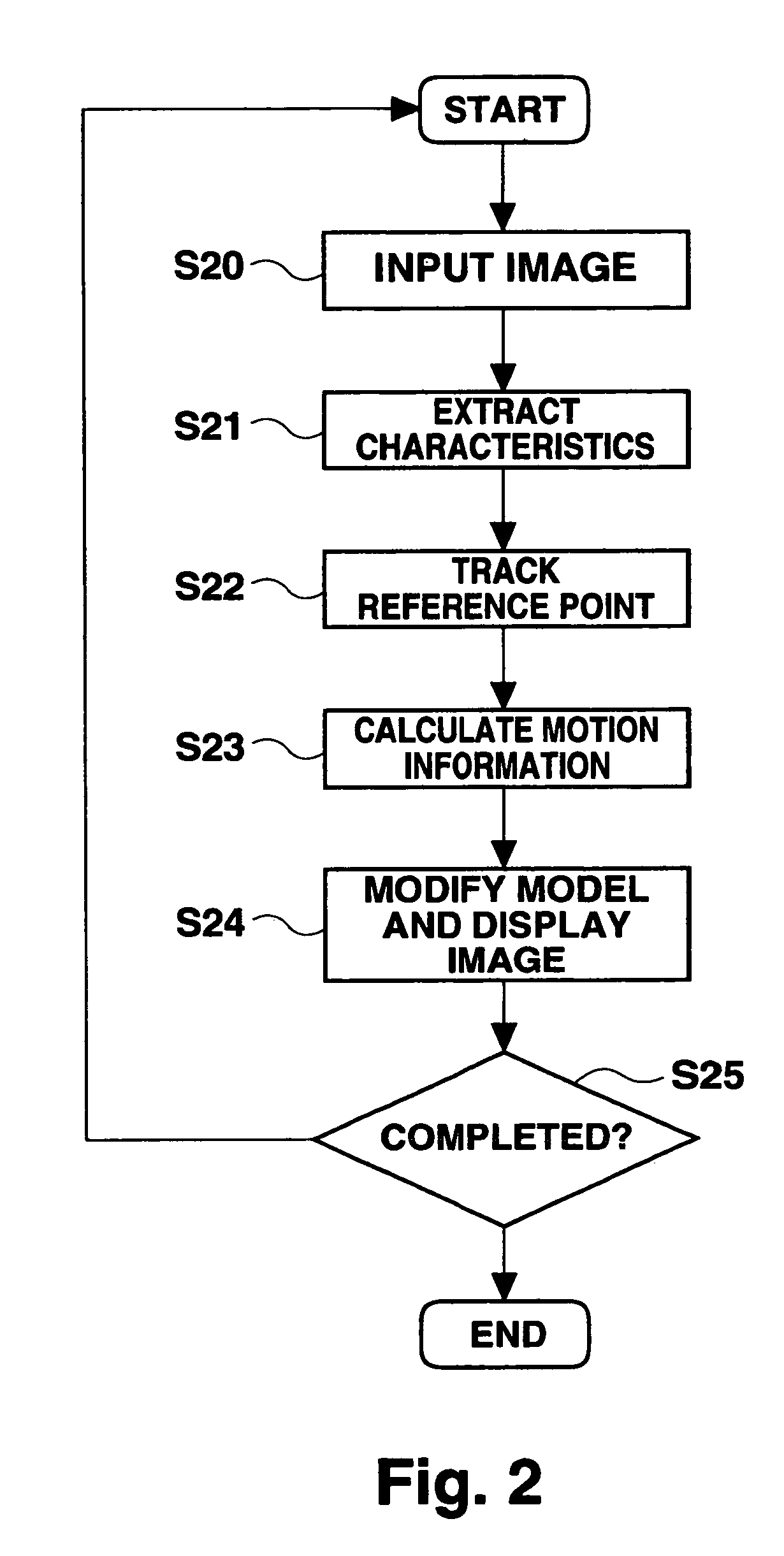

Dynamic image processing method and device and medium

A motion image processing method and device for authenticating a user of a specific device using motion information of an object. Time-series monochrome images, obtained by photographing an object with a camera, are input. An object is detected from an initial frame of the input time-series images using a basic shape characteristic, and a plurality of reference points to be tracked are automatically determined in the object. Then, points corresponding to the respective reference points are detected in an image frame other than the initial frame among the input time-series images. Subsequently, motion information of a finger is calculated based on the result of tracking the respective reference points and an assumption of limited motion in a 3D plane. Based on the calculated motion parameter, a solid object is subjected to coordinate conversion and displayed if necessary. As a result of the tracking, a reference point in each frame is updated. Tracking, motion parameter calculation, and displaying are repeated for subsequent frames.

Owner:SANYO ELECTRIC CO LTD

Generating synchronized interactive link maps linking tracked video objects to other multimedia content in real-time

Inactive | US20090083815A1 | Facilitate product advertising | Great product awareness | Two-way working systems | Selective content distribution | Pattern recognition | Computer graphics (images)

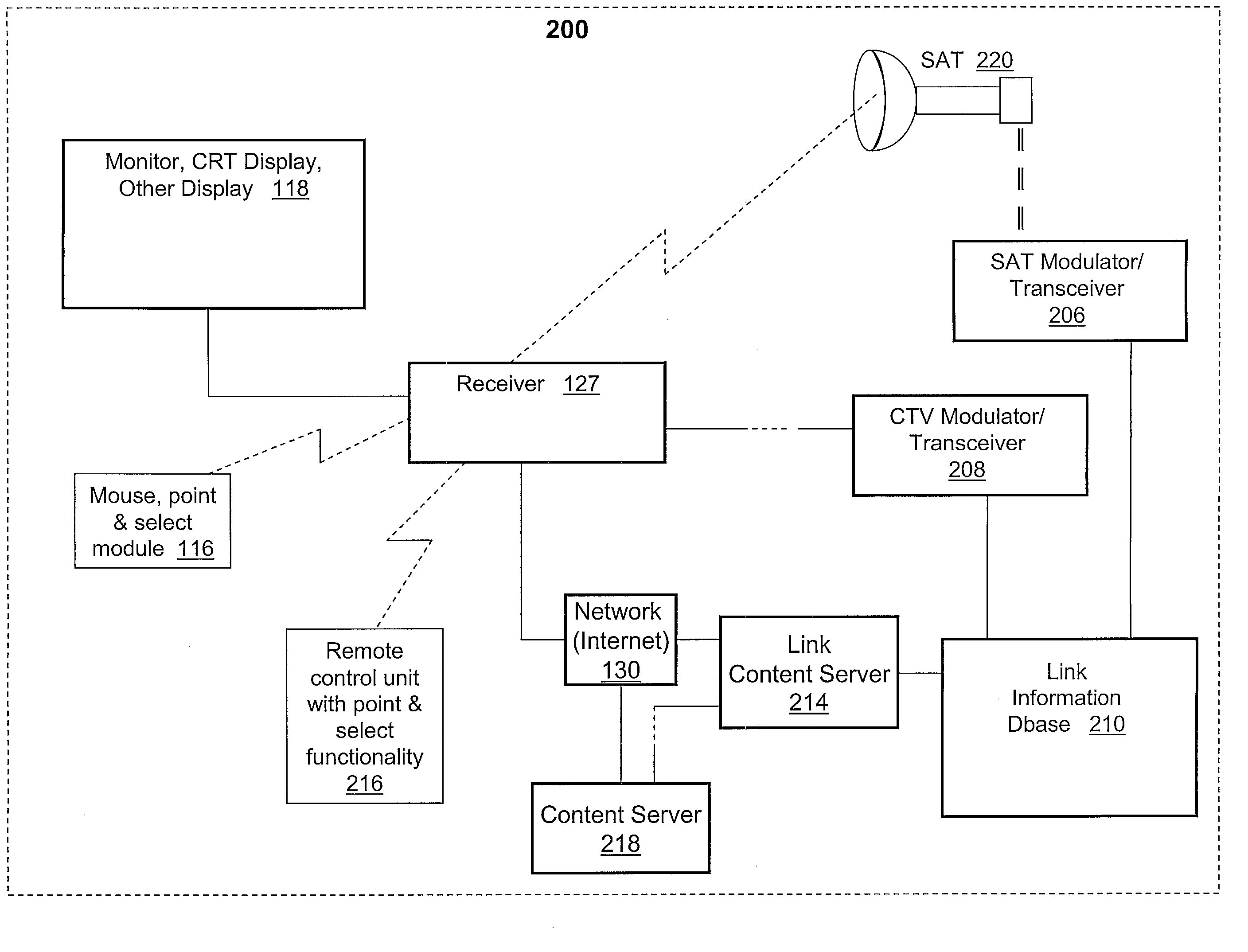

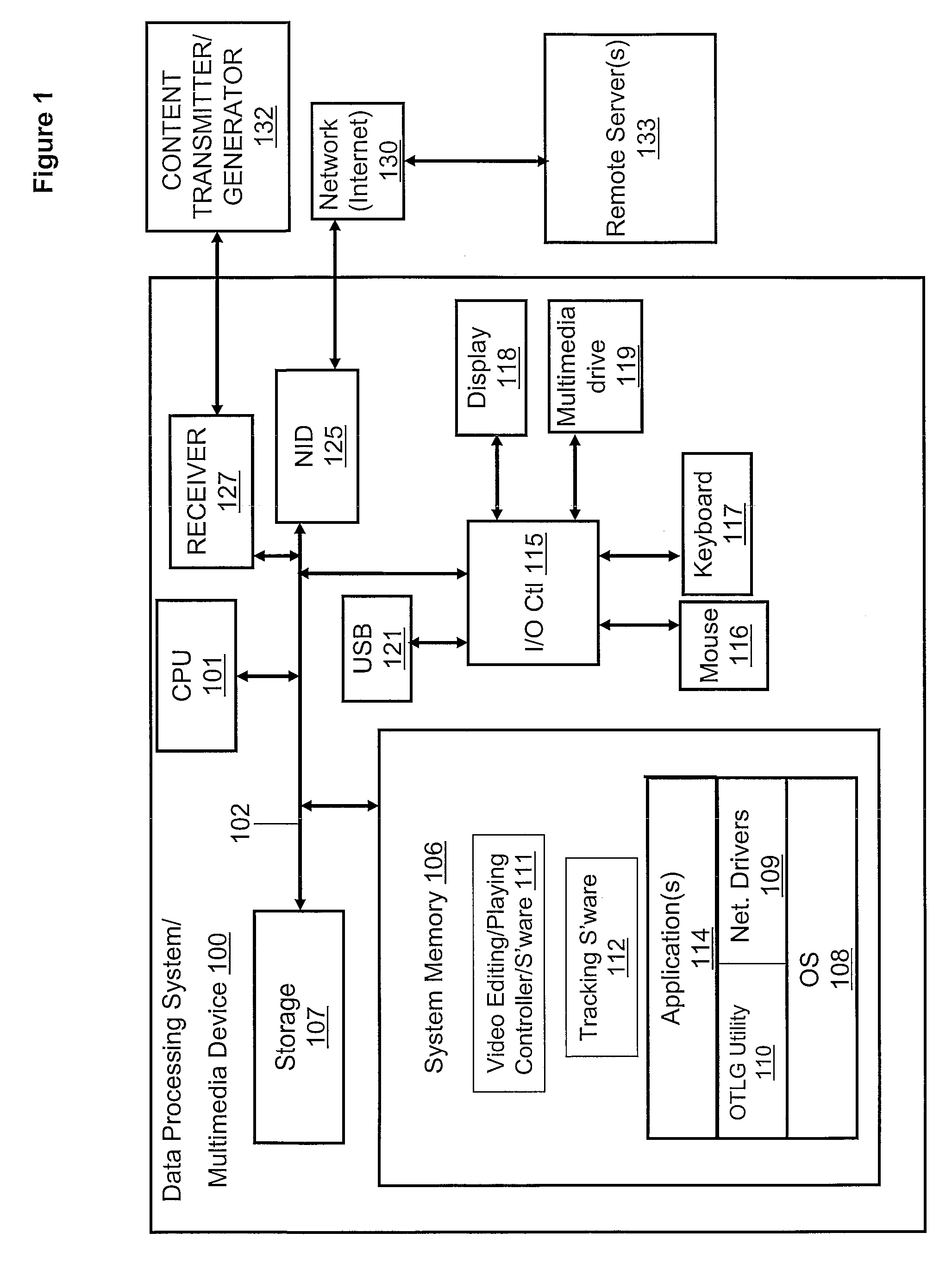

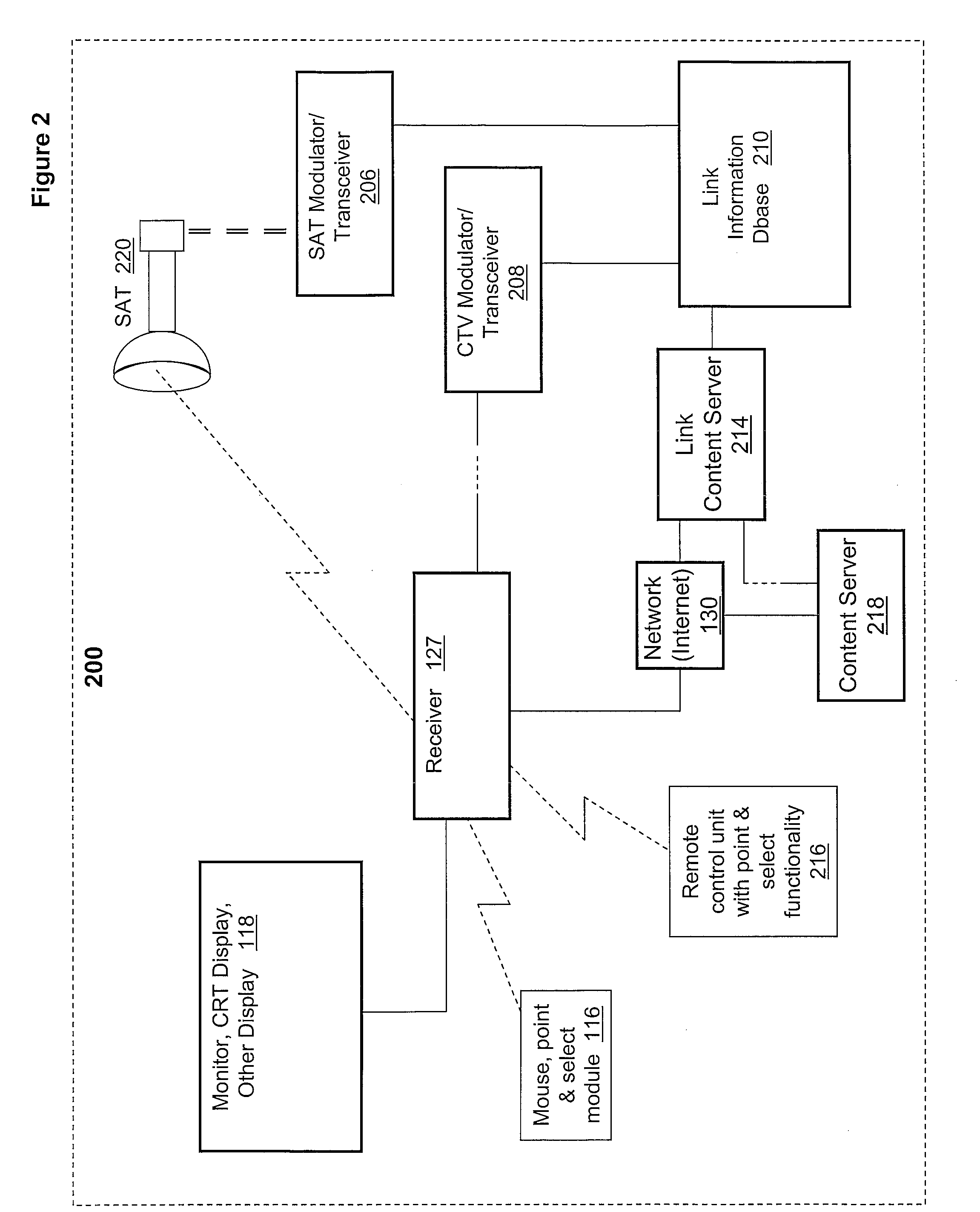

The method, system and computer program product generate online interactive maps linking tracked objects (in a live or pre-recorded video sequence) to multimedia content in real time. Specifically, an object tracking and link generation (OTLG) utility allows a user to access multimedia content by clicking on moving (or still) objects within the frames of a video (or image) sequence. The OTLG utility identifies and stores one or more clear images of each object to be tracked and initiates a mechanism to track the identified objects over a sequence of video or image frames. The OTLG utility uses the results of the tracking mechanism to generate, for each video frame, an interactive map frame with interactive links placed at locations corresponding to the objects' tracked locations in that video frame.

Owner:MCMASTER ORLANDO +1

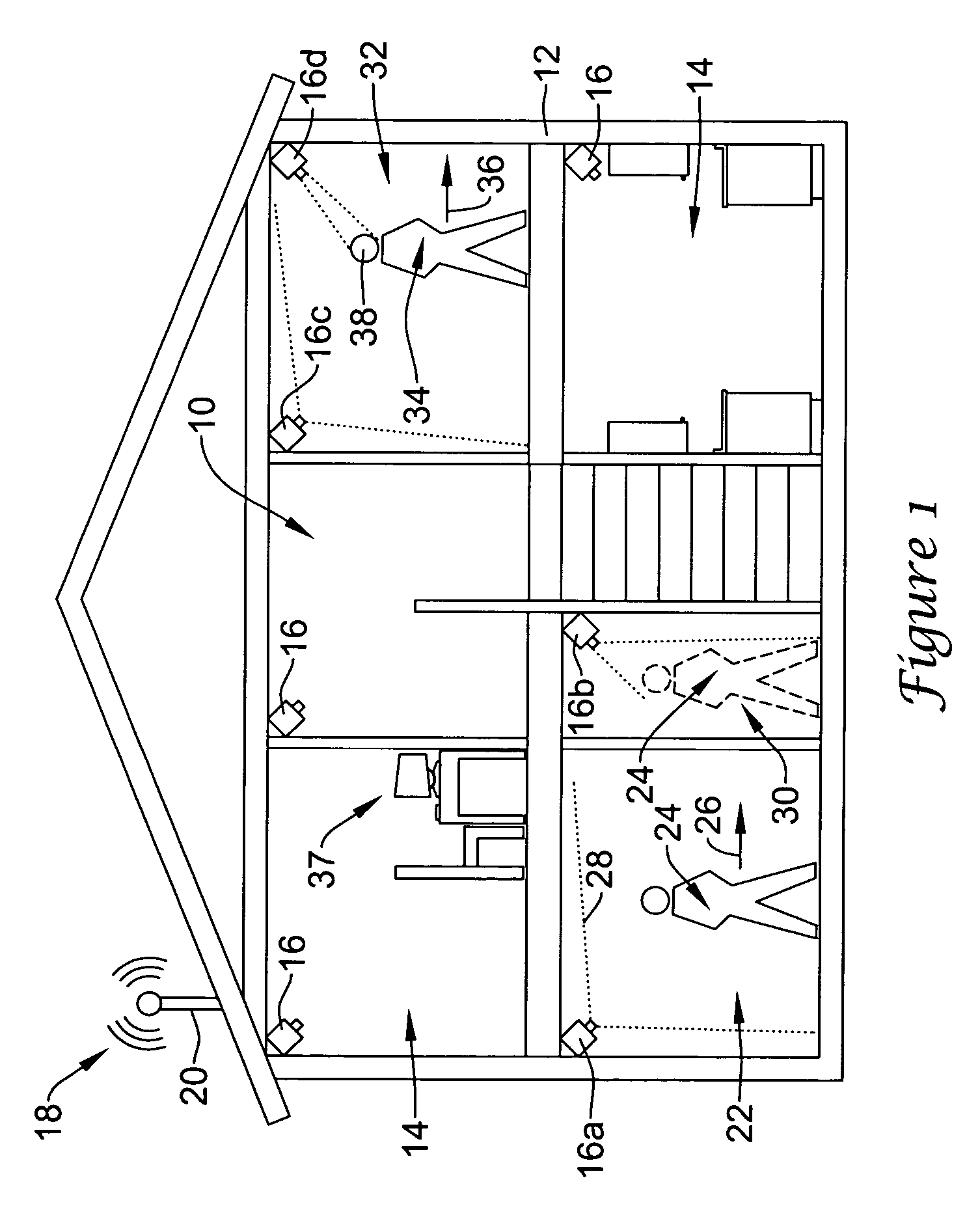

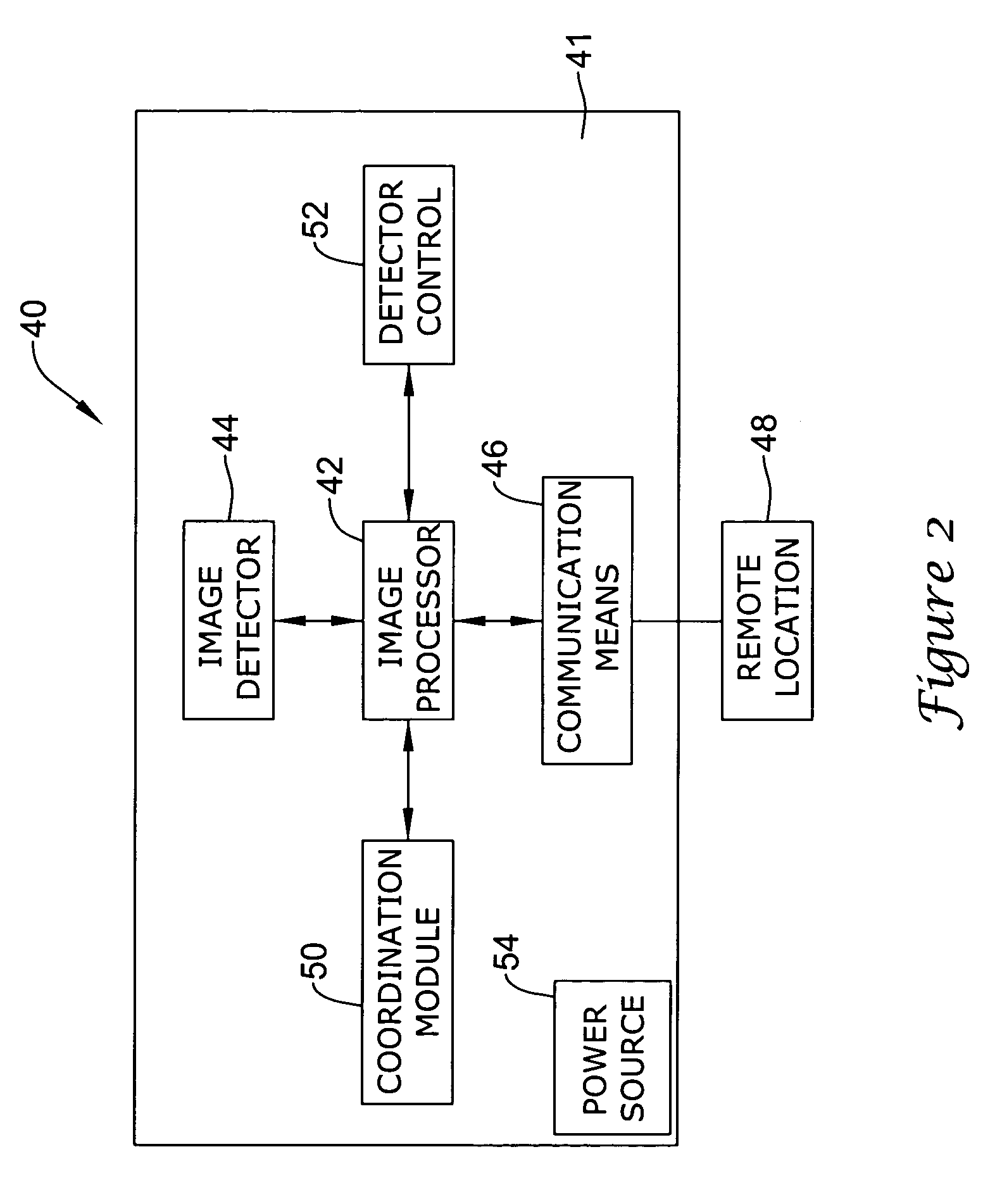

Monitoring devices

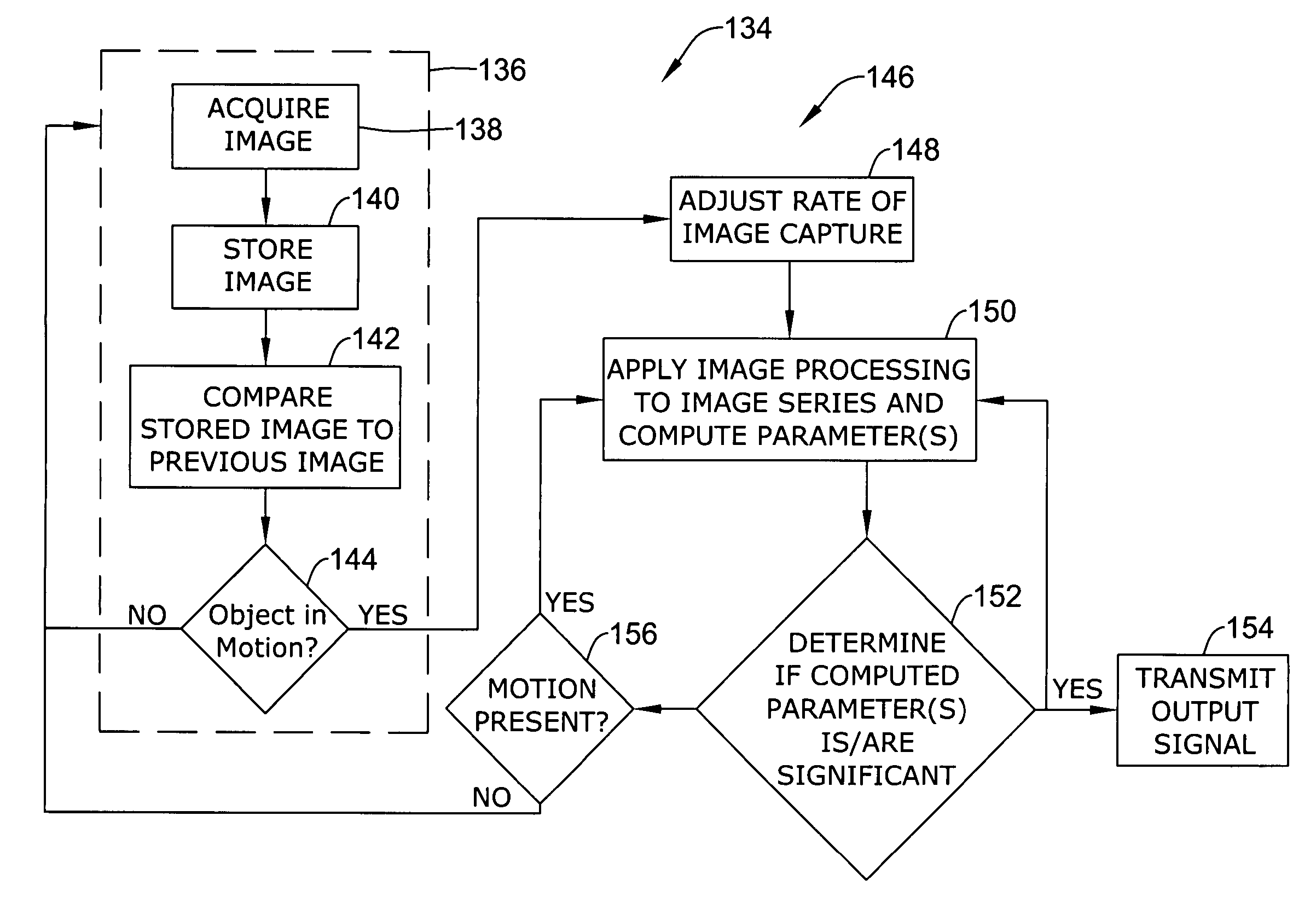

Monitoring systems, devices, and methods for monitoring one or more objects within an environment are disclosed. An illustrative monitoring device in accordance with the present invention can include an image detector, an on-board image processor, and communication means for transmitting an imageless signal to a remote location. The image processor can be configured to run one or more routines that can be used to determine a number of parameters relating to each object detected. In some embodiments, the monitoring device can be configured to run an image differencing routine that can be used to initially detect the presence of motion. Once motion is detected, the monitoring device can be configured to initiate a higher rate mode wherein image frames are processed at a higher frame rate to permit the image processor to compute higher-level information about the moving object.

Owner:HONEYWELL INT INC
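
A minimal sketch of the image-differencing trigger, assuming frames are equally sized grayscale arrays and that "motion" means the mean absolute difference between consecutive frames exceeds a threshold. The two frame rates and the threshold are hypothetical values, not figures from the patent.

```python
LOW_RATE_FPS = 1     # hypothetical idle frame rate
HIGH_RATE_FPS = 30   # hypothetical rate once motion is detected


def mean_abs_diff(prev, curr):
    """Mean absolute pixel difference between two equally sized frames."""
    diffs = [abs(a - b) for row_a, row_b in zip(prev, curr) for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def choose_frame_rate(prev, curr, threshold=10.0):
    """Image differencing: switch to the higher-rate mode when motion is detected."""
    return HIGH_RATE_FPS if mean_abs_diff(prev, curr) > threshold else LOW_RATE_FPS
```

Only the resulting parameters, not the frames, would then be sent to the remote location, consistent with the imageless signal described above.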

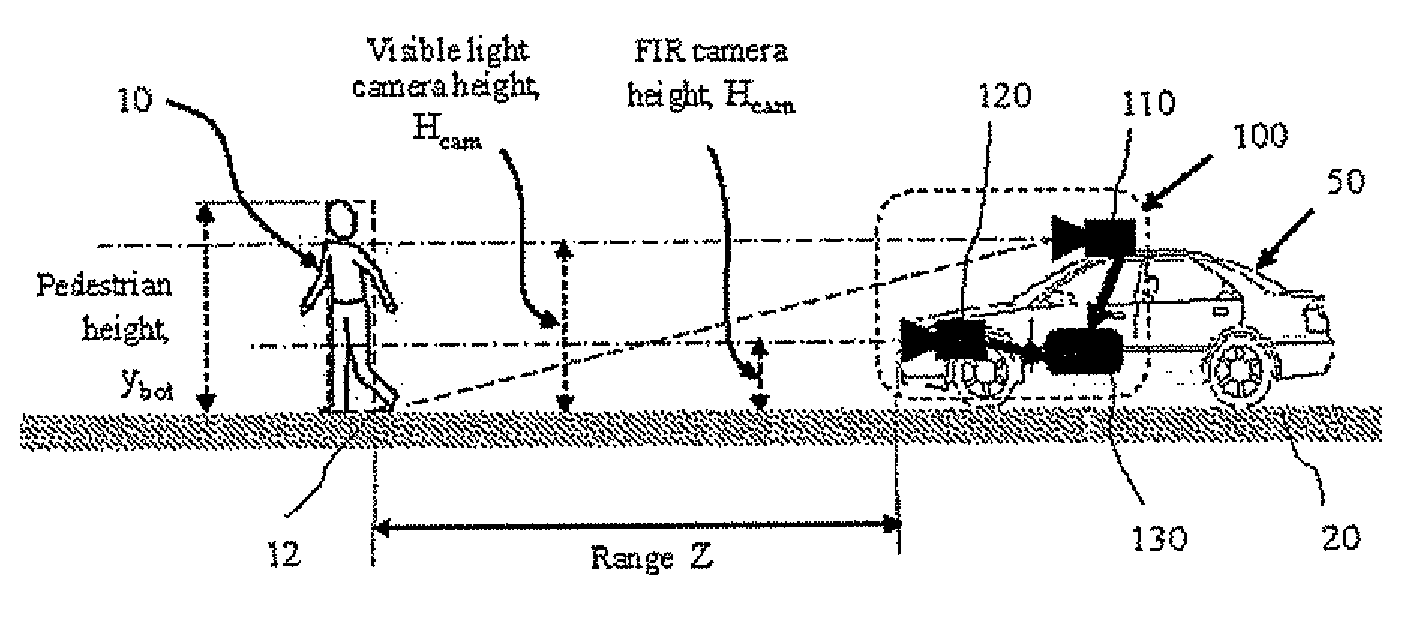

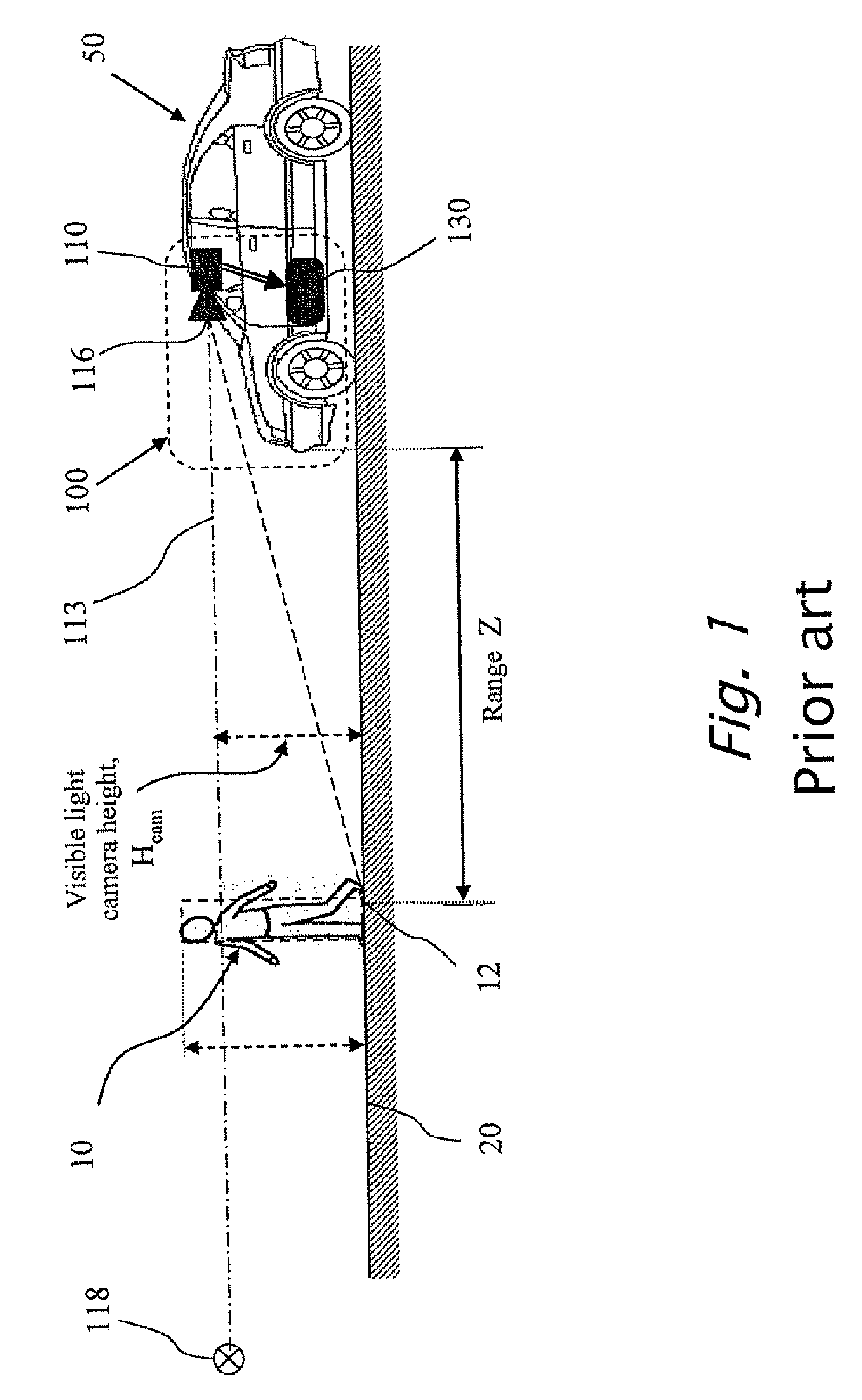

Fusion of far infrared and visible images in enhanced obstacle detection in automotive applications

Inactive | US7786898B2 | Improve visibility | Better triangulation | Image enhancement | Image analysis | Computerized system | Far infrared

A method in a computerized system mounted on a vehicle that includes a cabin and an engine. The system includes a visible-light (VIS) camera mounted inside the cabin, which acquires multiple image frames consecutively in real time, including VIS images of an object within its field of view and in the environment of the vehicle. The system also includes a far infrared (FIR) camera mounted on the vehicle in front of the engine, which acquires multiple FIR image frames consecutively in real time, including FIR images of the object within its field of view and in the environment of the vehicle. The FIR and VIS images are processed simultaneously, producing a detected object when the object is present in the environment.

Owner:MOBILEYE VISION TECH LTD

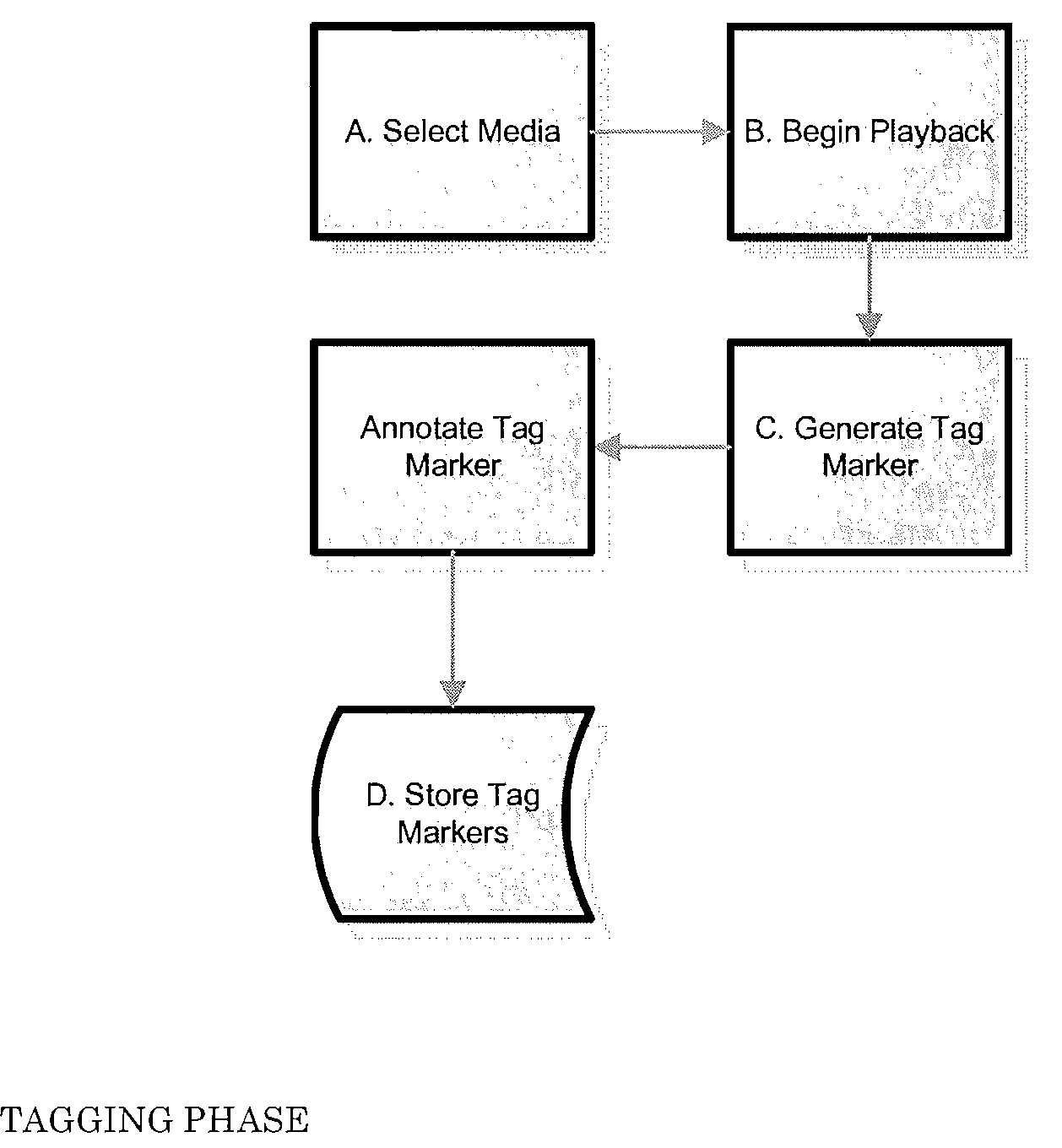

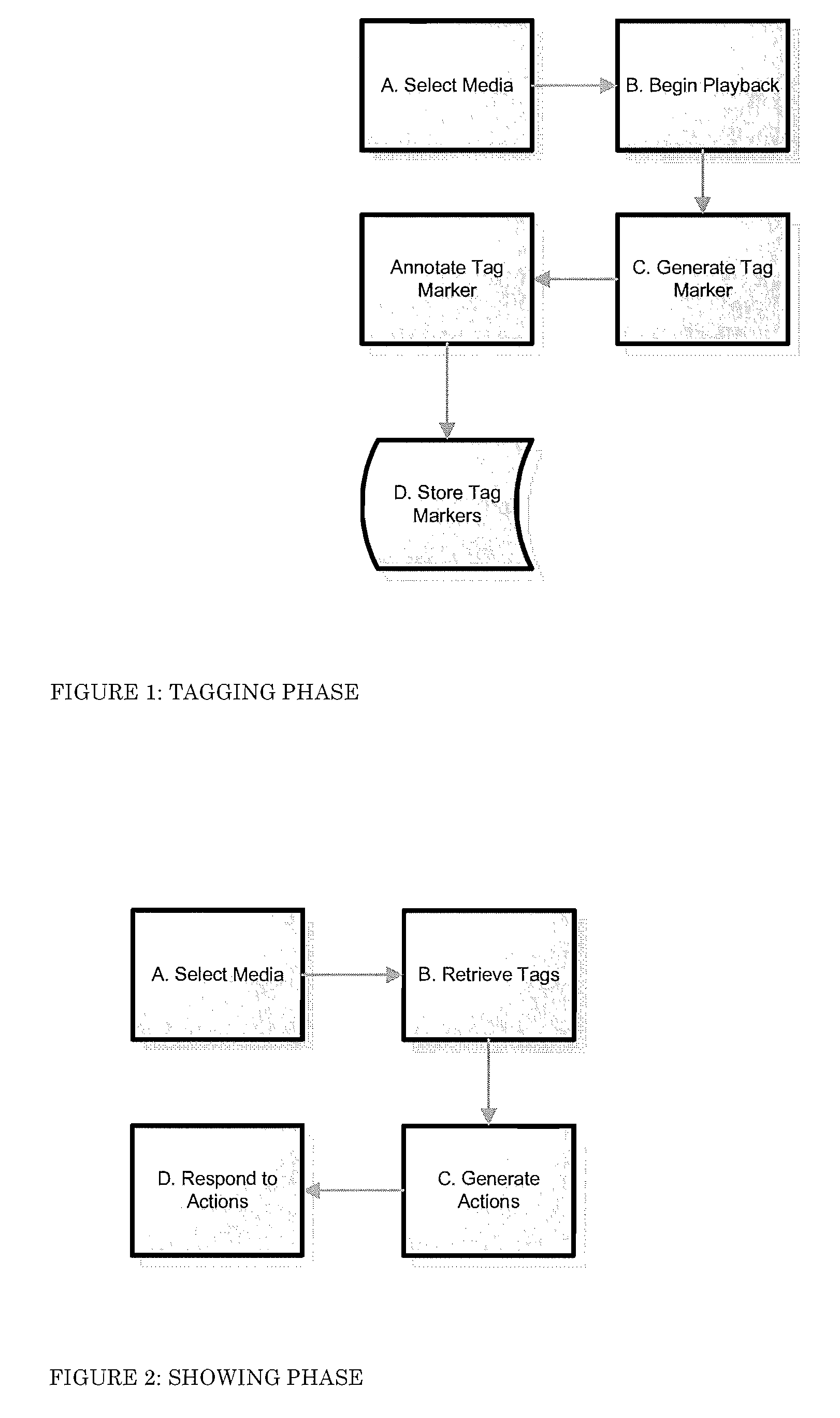

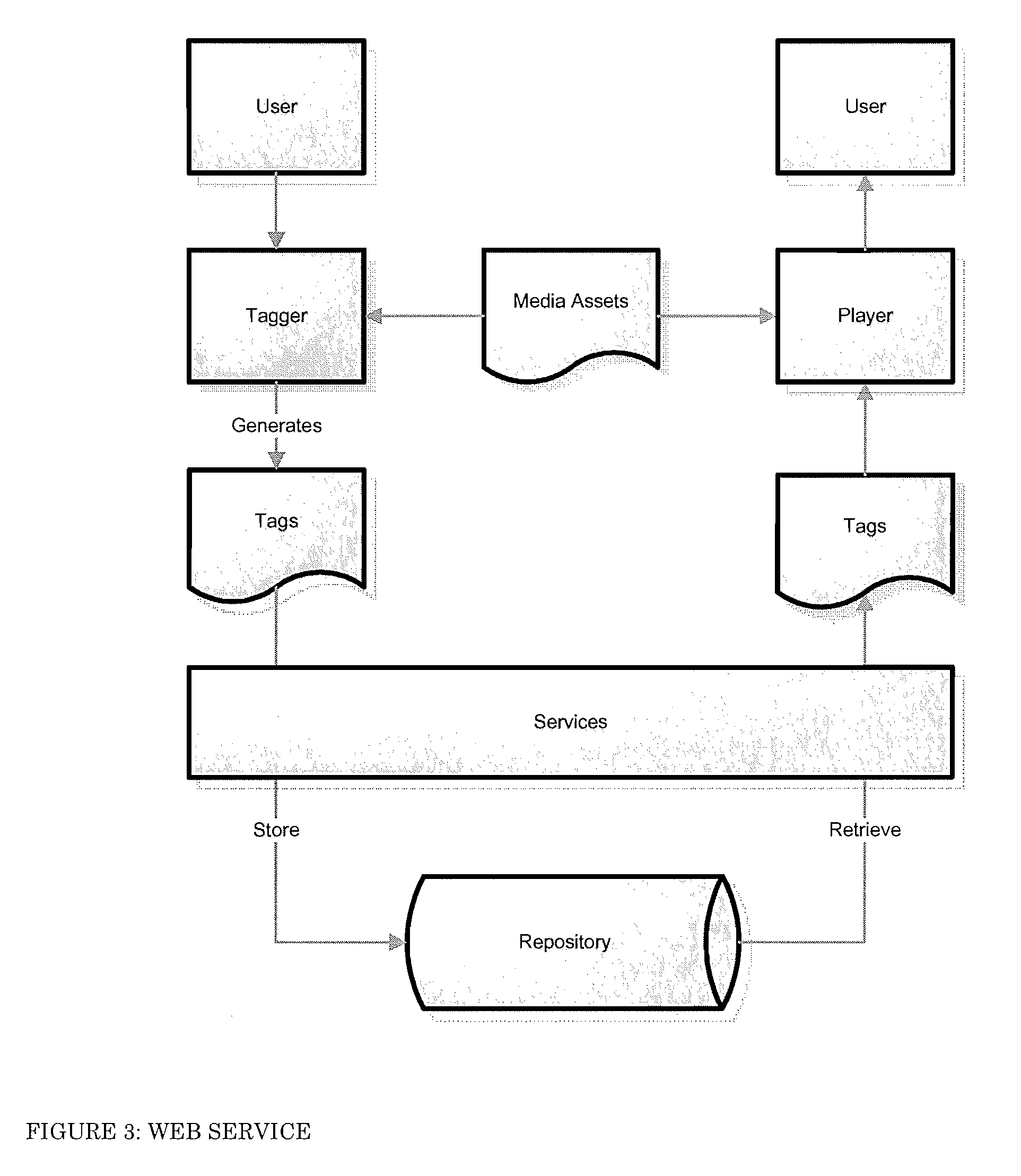

System and method for tagging, searching for, and presenting items contained within video media assets

Inactive | US20080126191A1 | Simple keyword searching | Speed transmission | Marketing | Special data processing applications | Thumbnail | Result list

A system and method for computerized searching for items of interest contained in visual media assets, such as a movie or video, stores digital tag information for tagged visual media assets which includes for each tag a time code for a representative image frame, an address code for the storage location of a captured image-still of the frame, and one or more keywords representing the item(s) of interest or their characteristics. When a search request is entered with keywords for items of interest, the search result lists entries from tags containing those keywords, and can also display the captured image-stills (or thumbnail photos thereof) as a visual depiction of the search results. The search service enables advertisers and vendors to bid on rights to link their advertising and other information displays to the search results. The search service can also be used for playback of clips from media assets to viewers on a video viewing website or on a networked playback device.

Owner:SCHIAVI RICHARD
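
A minimal sketch of the tag store and keyword search, assuming a simple in-memory inverted index and treating a multi-word query as requiring every keyword (the abstract does not say whether keywords are combined with AND or OR). The `Tag` fields mirror the three pieces of tag information described above; all names and sample values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Tag:
    time_code: str       # time code of the representative image frame
    still_address: str   # storage location of the captured image-still
    keywords: set = field(default_factory=set)


def build_index(tags):
    """Map each lowercase keyword to the list of tags that contain it."""
    index = {}
    for tag in tags:
        for kw in tag.keywords:
            index.setdefault(kw.lower(), []).append(tag)
    return index


def search(index, query):
    """Return the tags that contain every keyword in the query (AND semantics)."""
    words = [w.lower() for w in query.split()]
    if not words:
        return []
    results = index.get(words[0], [])
    for w in words[1:]:
        matching = index.get(w, [])
        results = [t for t in results if t in matching]
    return results
```

Each result carries `still_address`, so a results page could show the captured image-still or its thumbnail next to the matching time code.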

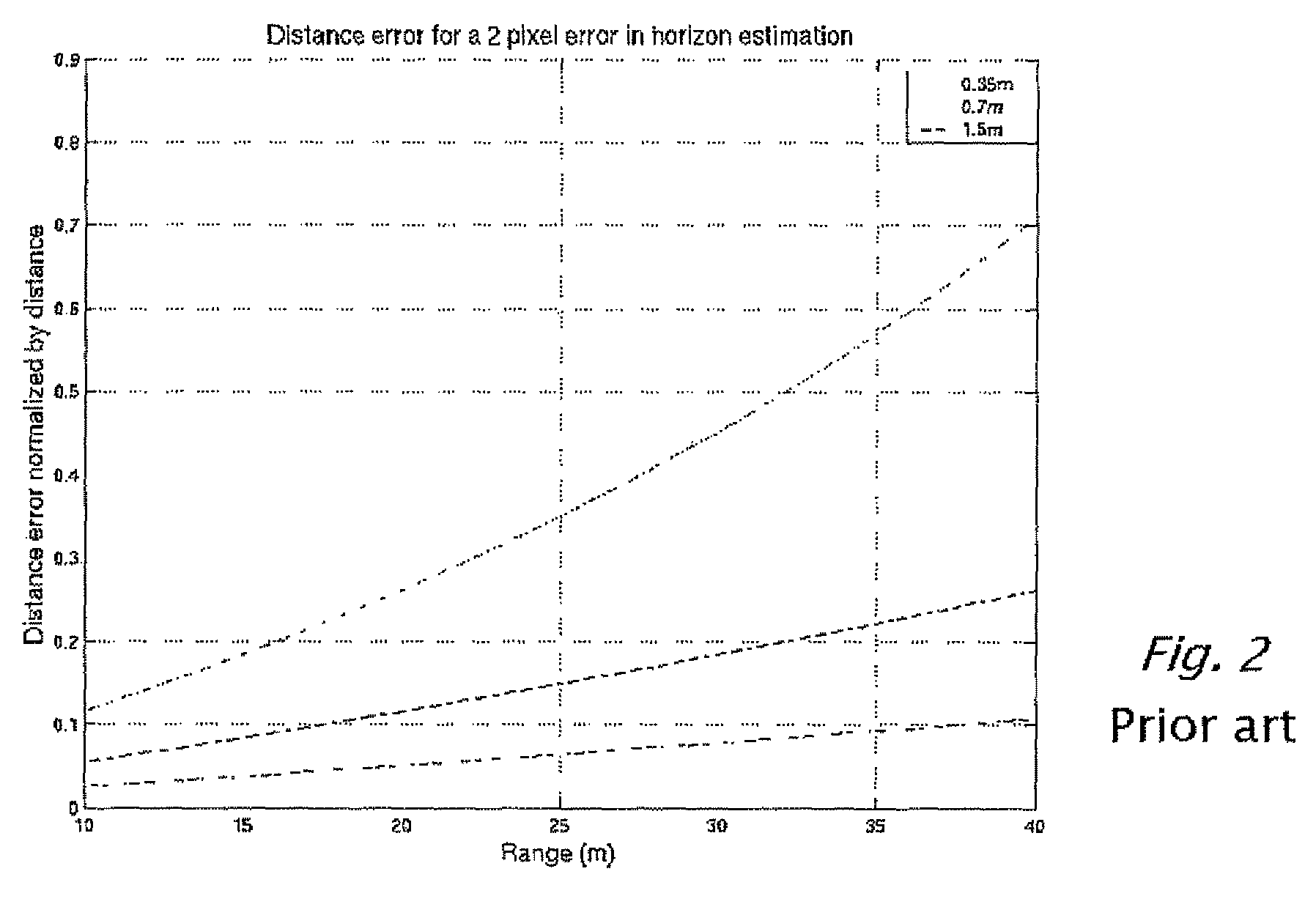

Estimating Distance To An Object Using A Sequence Of Images Recorded By A Monocular Camera

A computerized system includes a camera mounted in a moving vehicle. The camera acquires image frames consecutively in real time, including images of an object within the camera's field of view. The range from the moving vehicle to the object is determined in real time. A dimension, e.g. a width, is measured in the respective images of two or more image frames, producing measurements of the dimension. The measurements are processed to produce a smoothed measurement of the dimension. The dimension is then measured in one or more subsequent frames, and the range from the vehicle to the object is calculated in real time based on the smoothed measurement and the subsequent measurements. The processing preferably includes recursively calculating the smoothed dimension using a Kalman filter.

Owner:MOBILEYE VISION TECH LTD

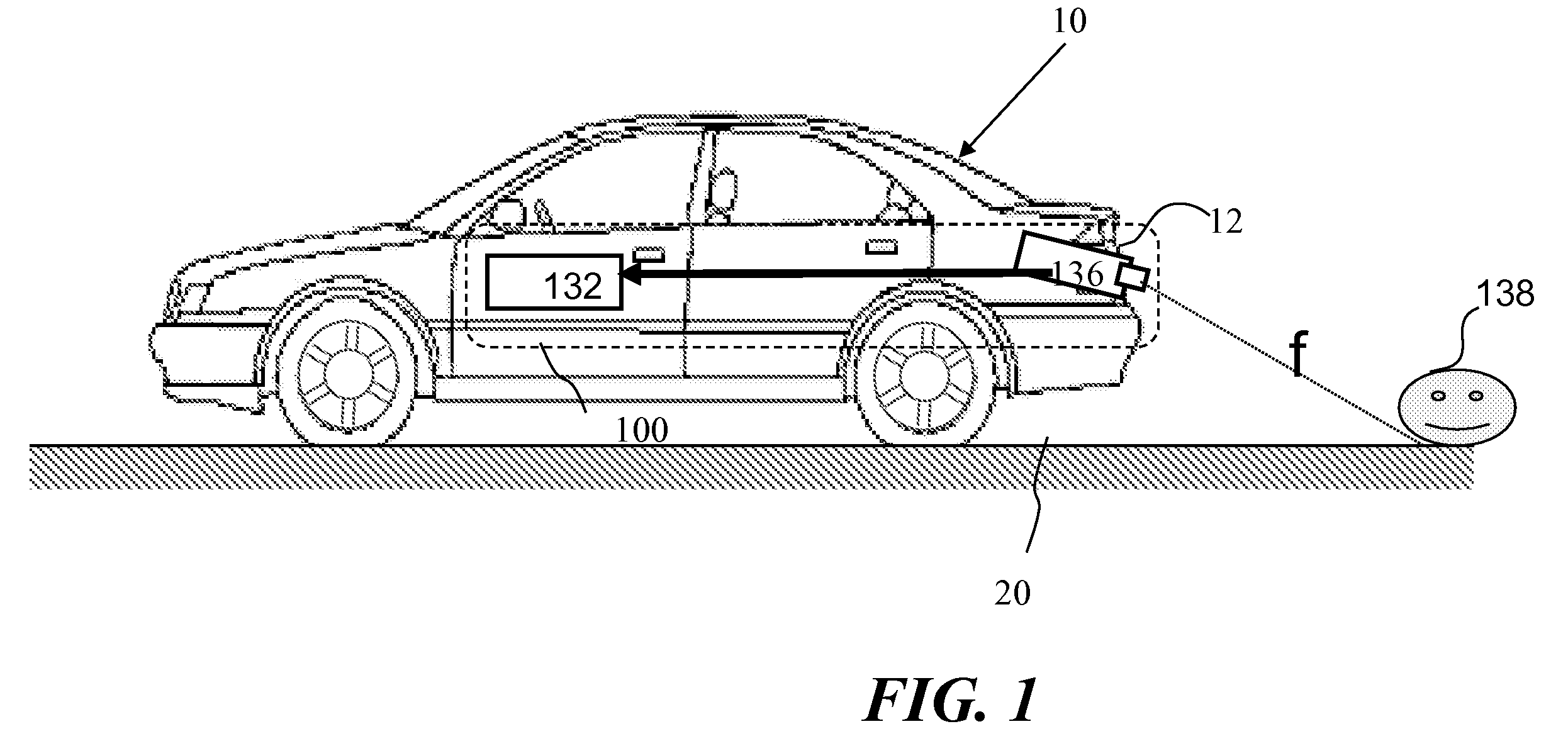

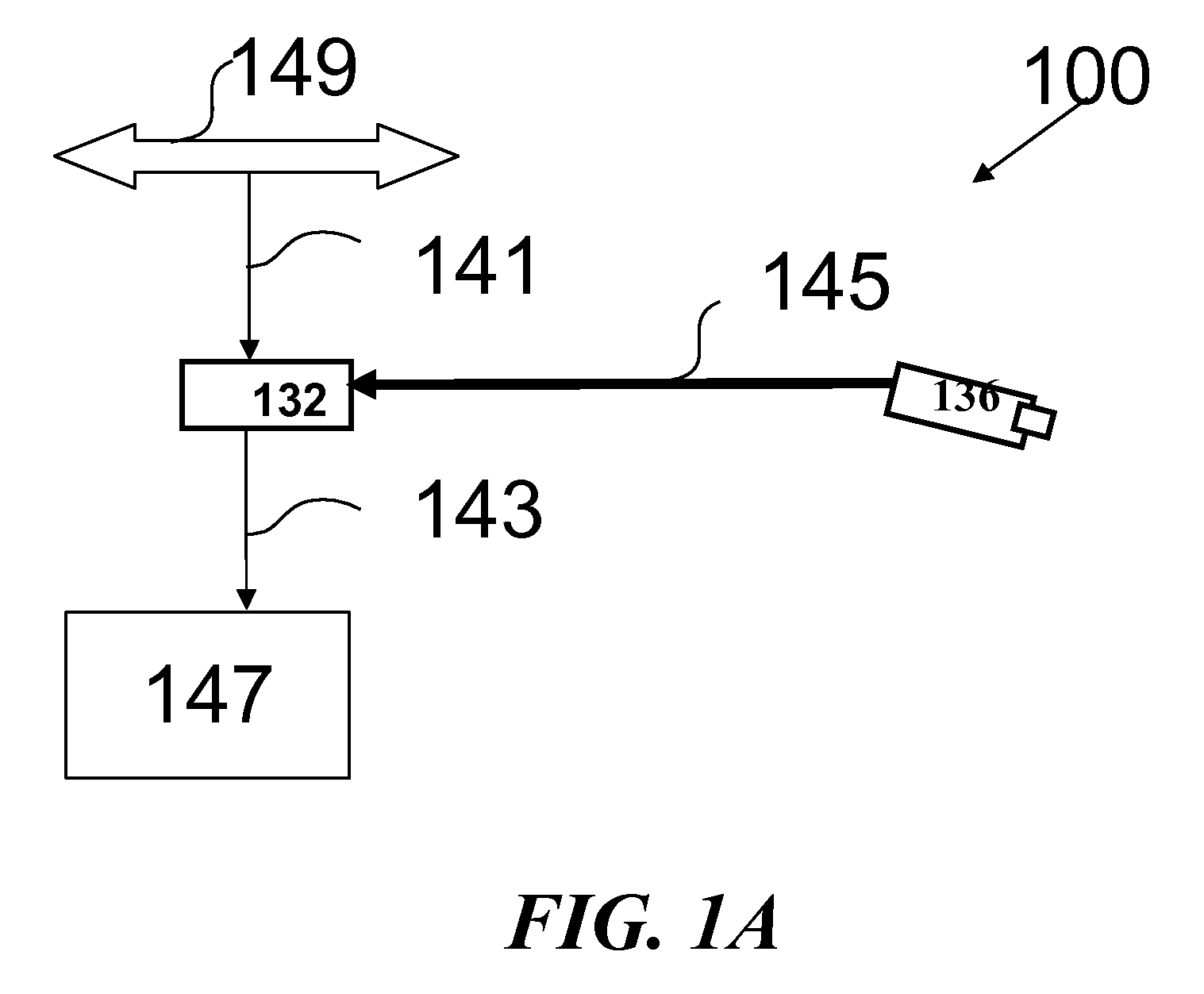

Rear obstruction detection

A method is provided using a system mounted in a vehicle. The system includes a rear-viewing camera and a processor attached to it. When the driver shifts the vehicle into reverse gear, and while the vehicle is still stationary, image frames are captured of the immediate vicinity behind the vehicle, which lies in the field of view of the rear-viewing camera. The image frames are processed to detect any object in the immediate vicinity behind the vehicle that would obstruct the vehicle's motion. The processing is preferably performed in parallel for a plurality of classes of obstructing objects using a single one of the image frames.

Owner:MOBILEYE VISION TECH LTD

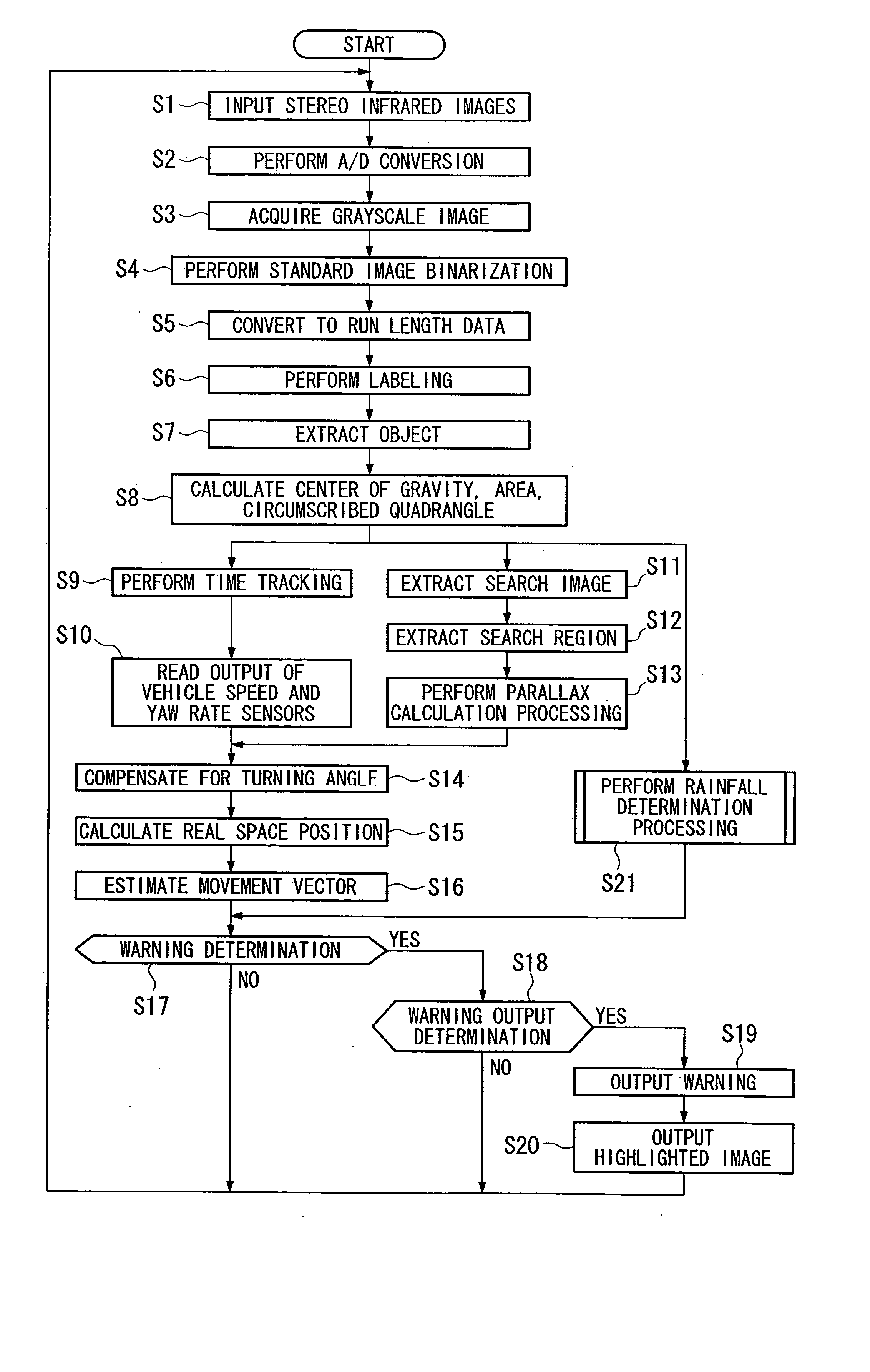

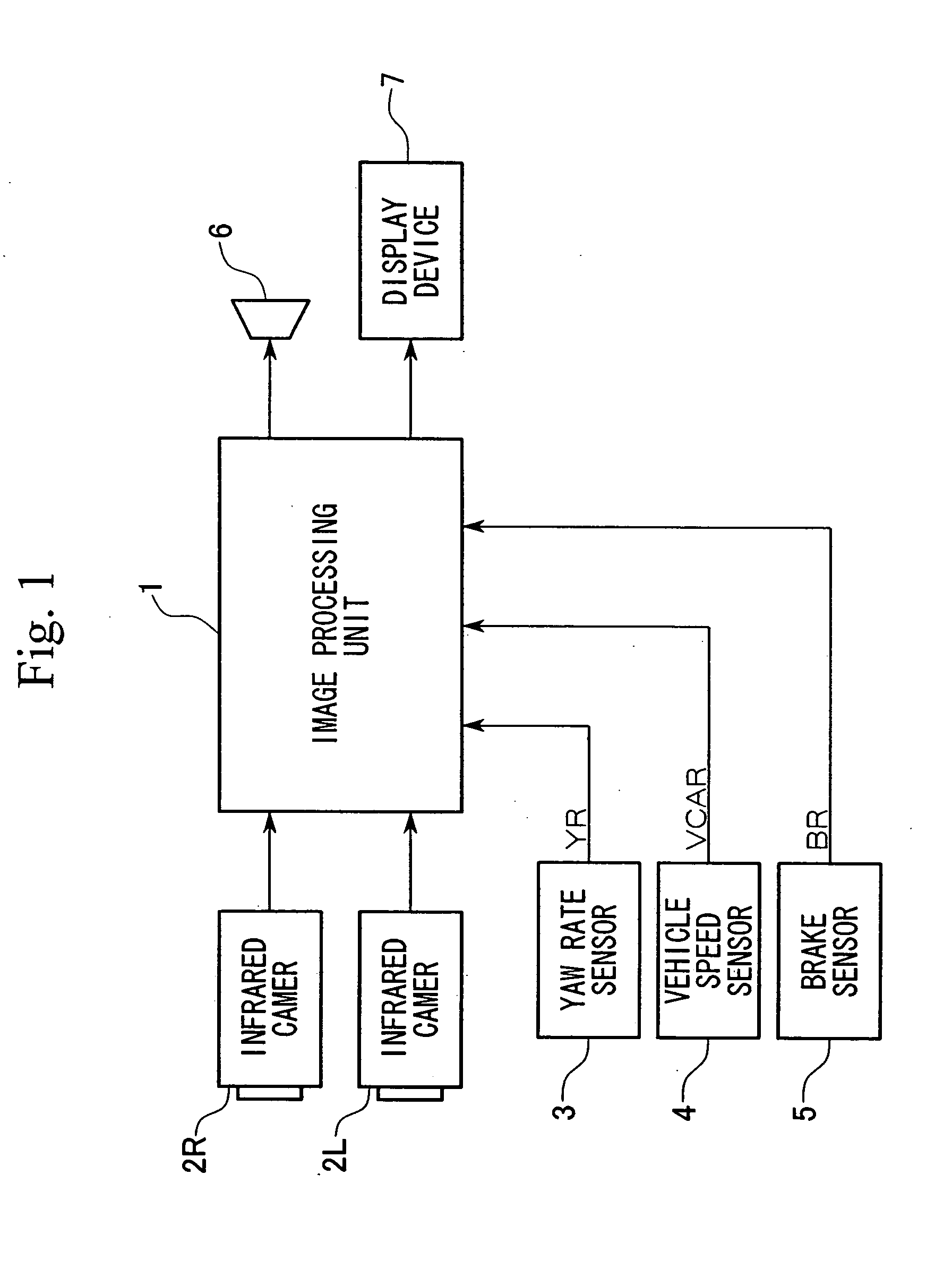

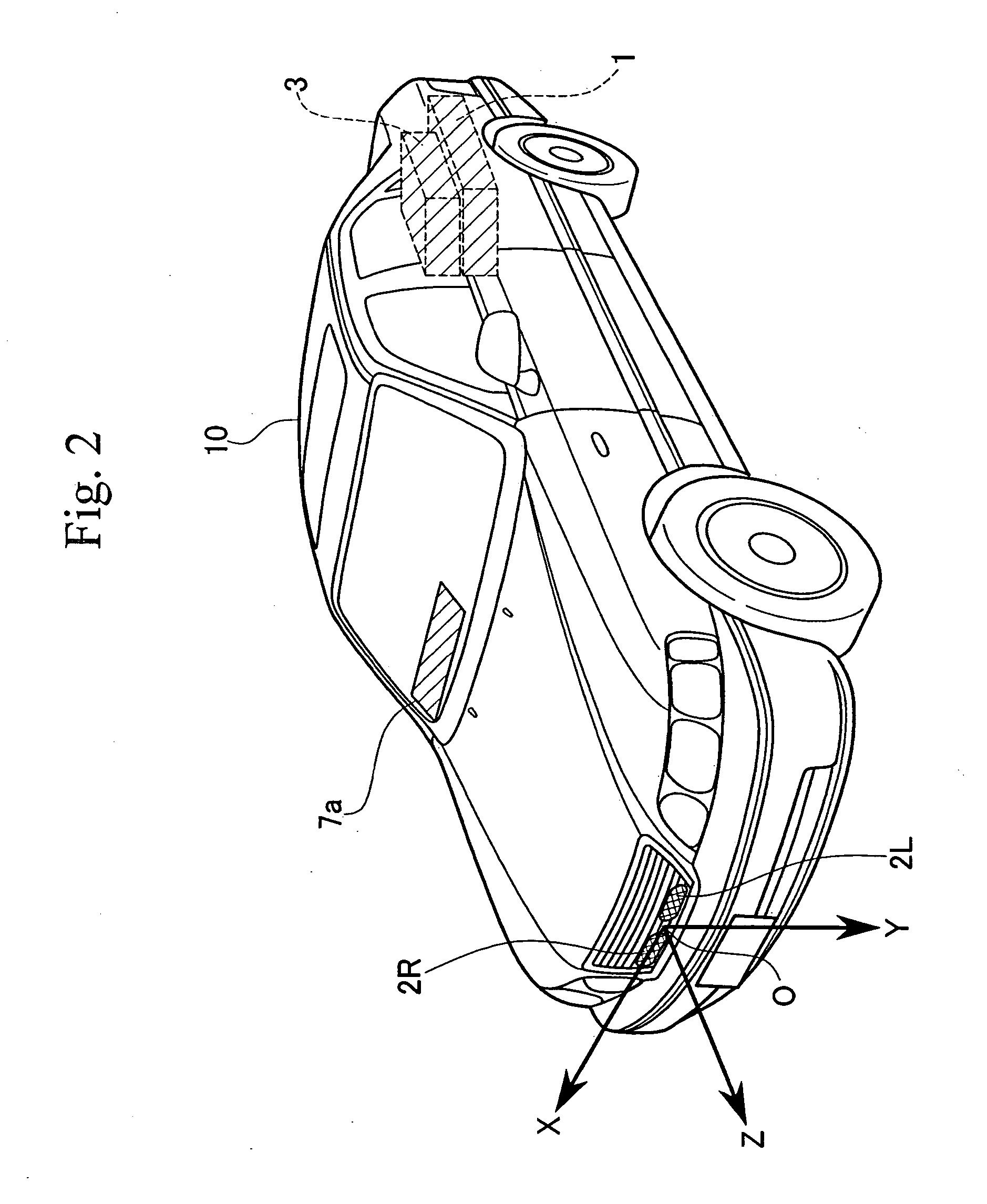

Vehicle environment monitoring device

Active | US20050063565A1 | Improve resource utilization | Accurate judgment | Image enhancement | Image analysis | Height difference | State of the Environment

A vehicle environment monitoring device identifies objects from an image taken by an infrared camera. Object extraction is performed in accordance with the state of the environment, as determined from measurements extracted from the images, as follows. N1 binarized objects are extracted from a single frame, and the height of the grayscale object corresponding to each binarized object is calculated. If the ratio of C, the number of binarized objects for which the absolute value of the height difference is less than a predetermined value ΔH, to the total number N1 of binarized objects is greater than a predetermined value X1, the image frame is determined to be rainfall-affected. If the ratio is less than X1, the image frame is determined to be a normal frame. A warning is provided if the object is determined to be a pedestrian and a collision is likely.

Owner:ARRIVER SOFTWARE AB
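
A minimal sketch of the frame test C/N1 > X1, assuming the "height difference" is taken between each binarized object and its corresponding grayscale object (the abstract does not spell this out). A ratio exactly equal to X1 is not covered by the abstract; this sketch treats it as normal. The function name is hypothetical.

```python
def classify_frame(bin_heights, gray_heights, delta_h, x1):
    """Classify a frame as rainfall-affected or normal.

    bin_heights[i] and gray_heights[i] are the heights of the i-th binarized
    object and its corresponding grayscale object. C counts objects whose
    absolute height difference is below delta_h; N1 is the total count.
    """
    n1 = len(bin_heights)
    if n1 == 0:
        return "normal"
    c = sum(1 for hb, hg in zip(bin_heights, gray_heights) if abs(hb - hg) < delta_h)
    return "rainfall-affected" if c / n1 > x1 else "normal"
```

With ΔH = 3 and X1 = 0.5, four objects of which three have a height difference below 3 give C/N1 = 0.75, so the frame is classified as rainfall-affected.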

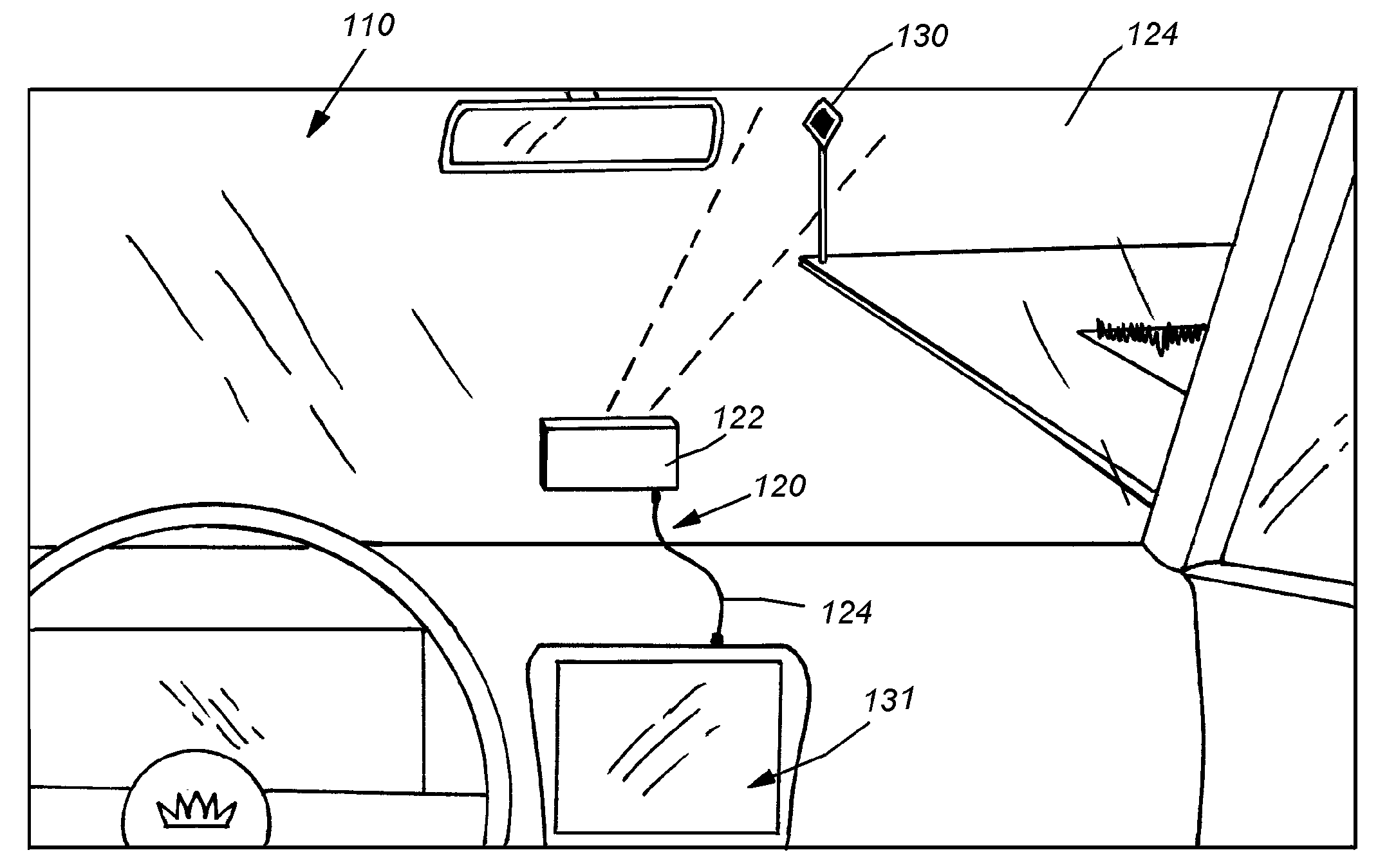

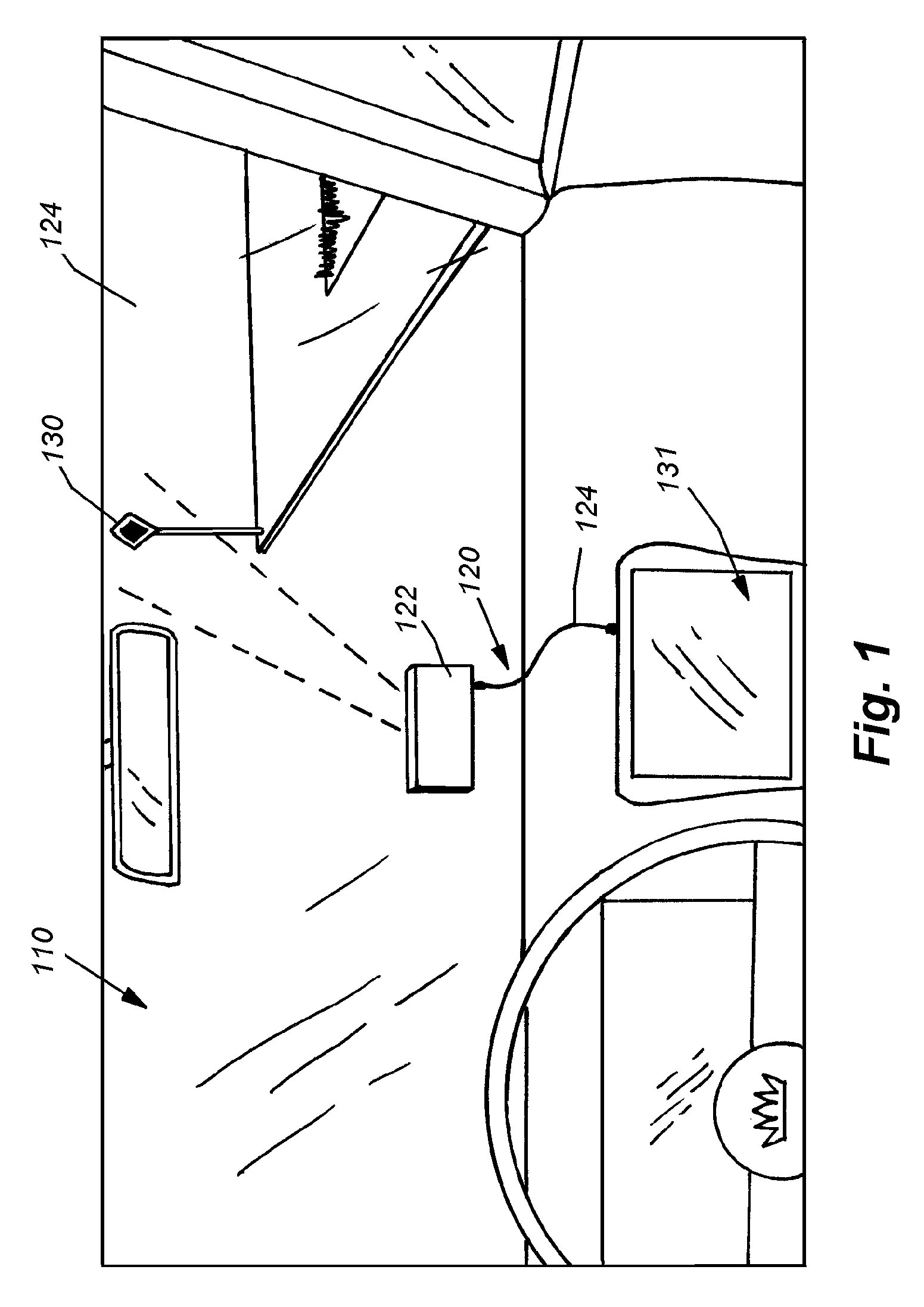

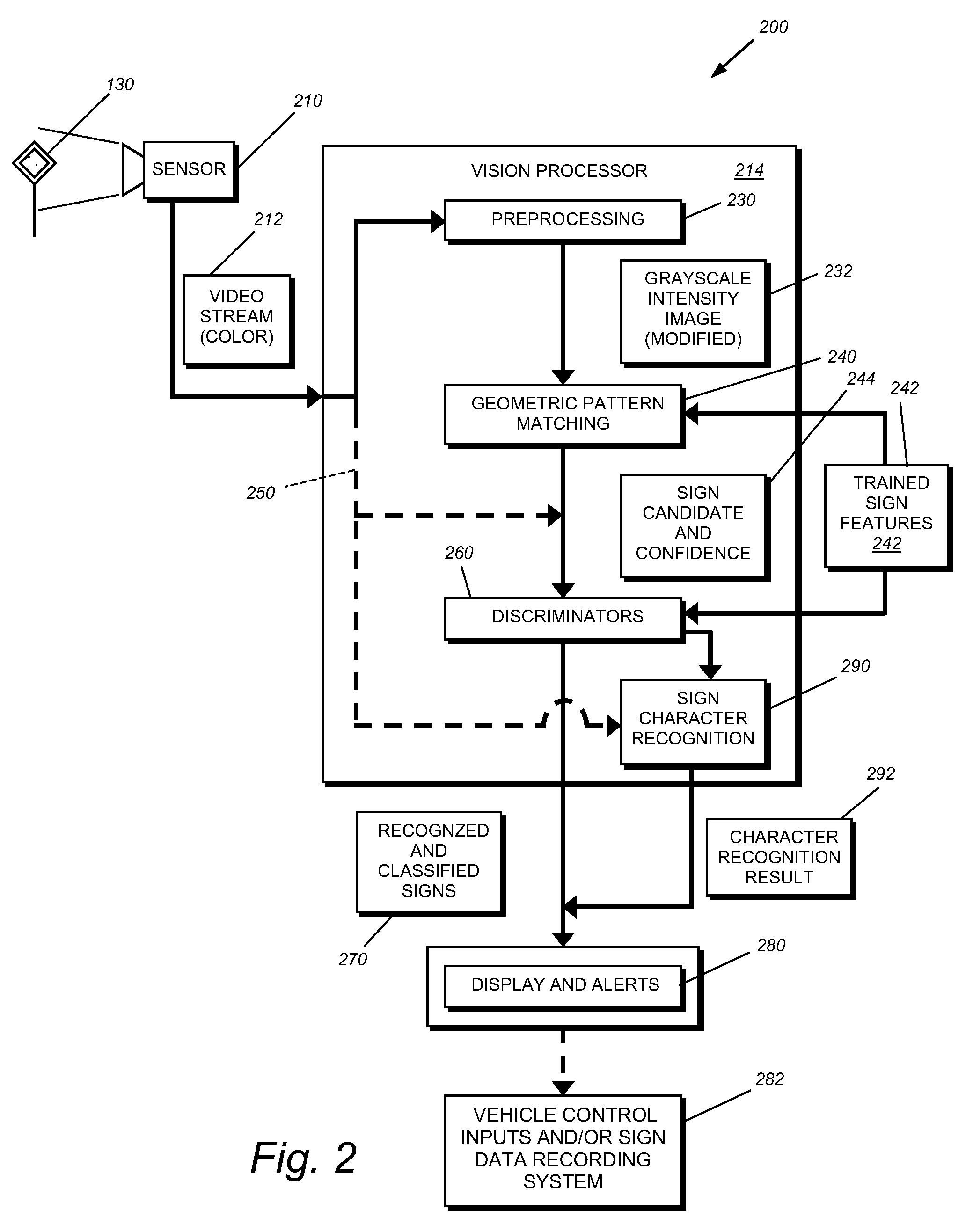

System and method for traffic sign recognition

Inactive | US20090074249A1 | Improve accuracy | Improve efficiency | Character and pattern recognition | Color image | Traffic sign recognition

This invention provides a vehicle-borne system and method for traffic sign recognition that provides greater accuracy and efficiency in the location and classification of various types of traffic signs by employing rotation and scale-invariant (RSI)-based geometric pattern-matching on candidate traffic signs acquired by a vehicle-mounted forward-looking camera, and by applying one or more discrimination processes to the sign candidates recognized by the pattern-matching process to increase or decrease the confidence of the recognition. These discrimination processes include discrimination based upon sign color versus model sign color arrangements, discrimination based upon the pose of the sign candidate versus vehicle location and / or changes in the pose between image frames, and / or discrimination of the sign candidate versus stored models of fascia characteristics. The sign candidates that pass with high confidence are classified based upon the associated model data, and the driver / vehicle is informed of their presence. In an illustrative embodiment, a preprocessing step converts a color image of the sign candidates into a grayscale image in which the contrast between sign colors is appropriately enhanced to assist the pattern-matching process.

Owner:COGNEX CORP

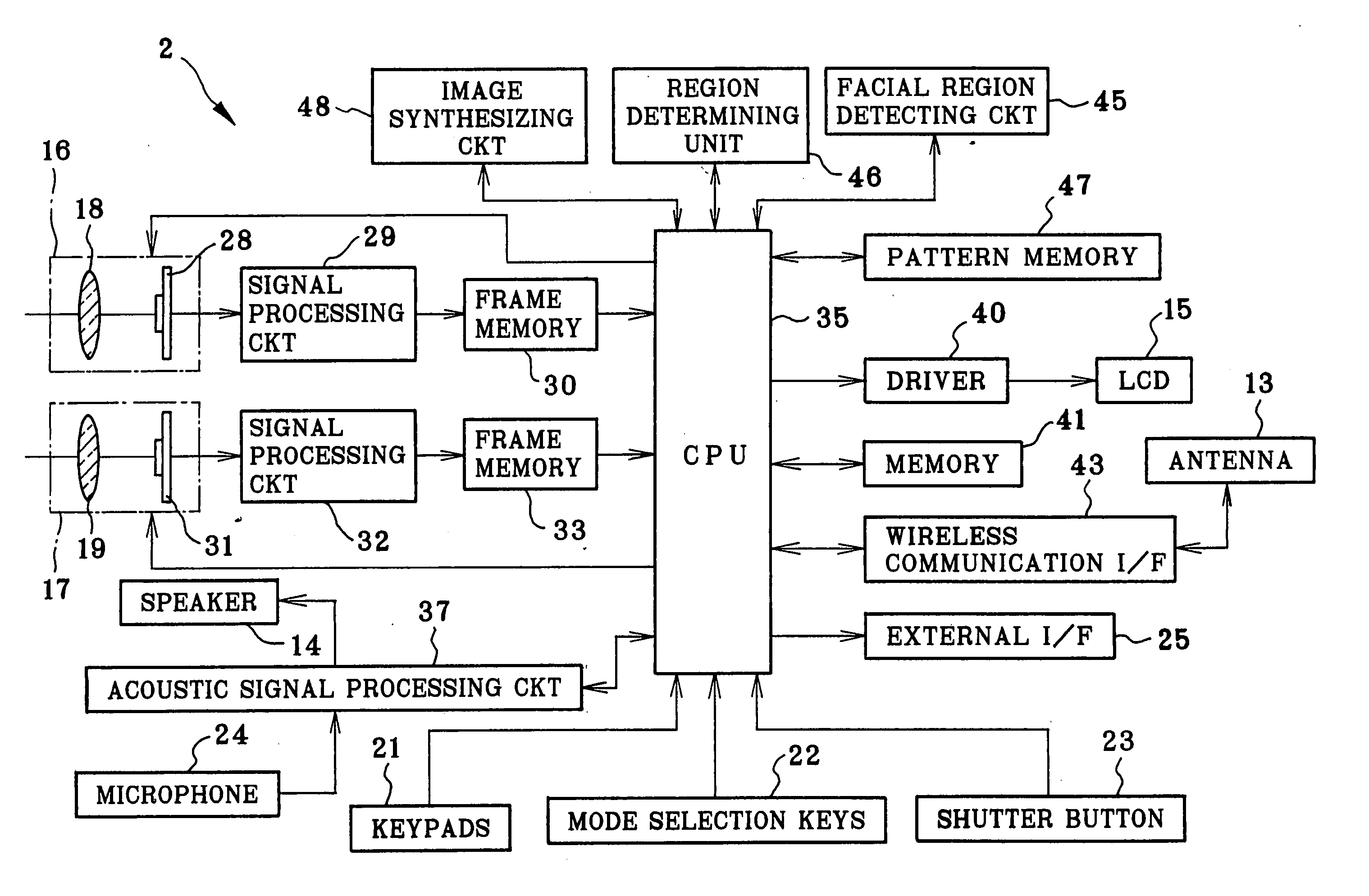

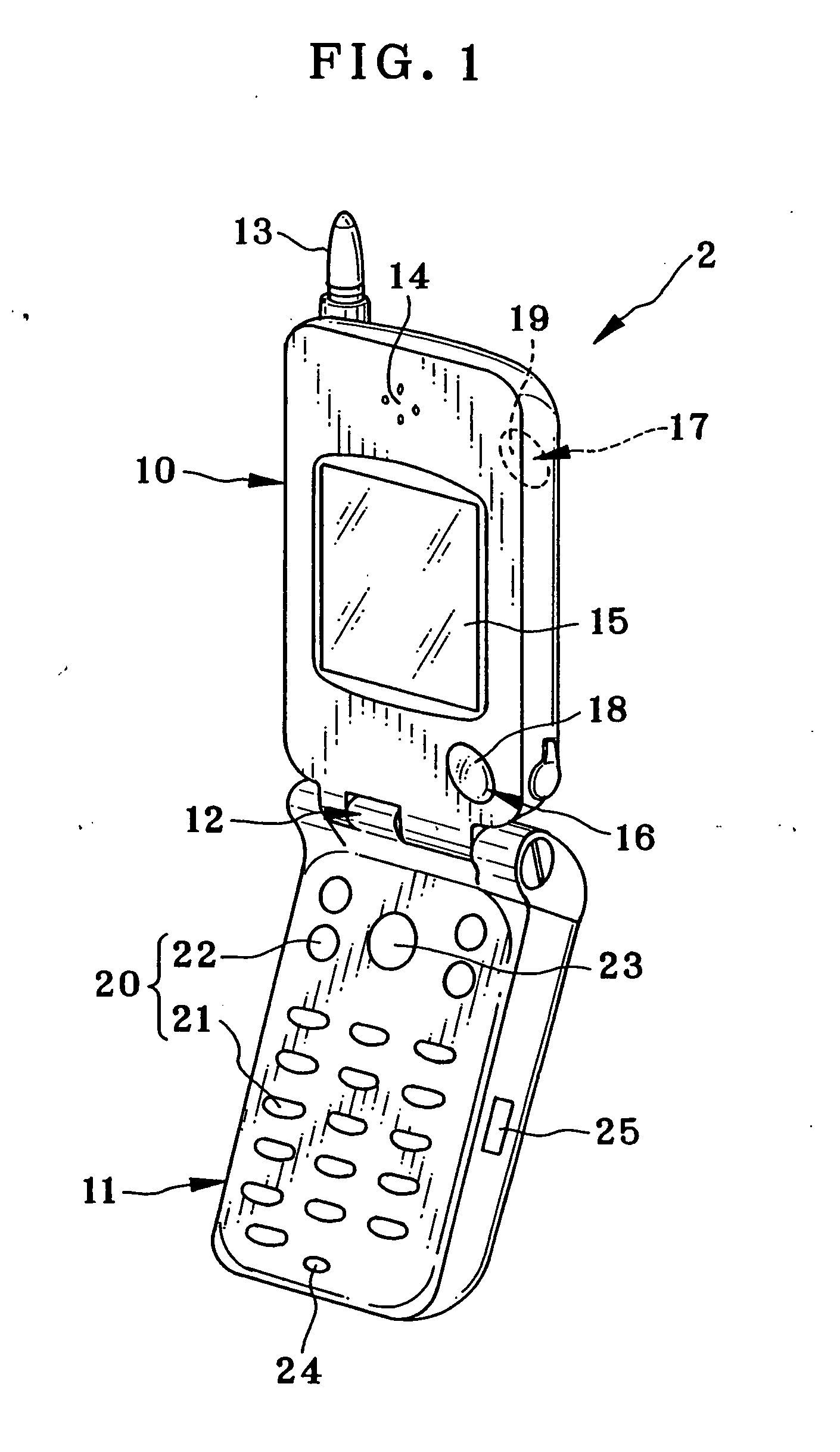

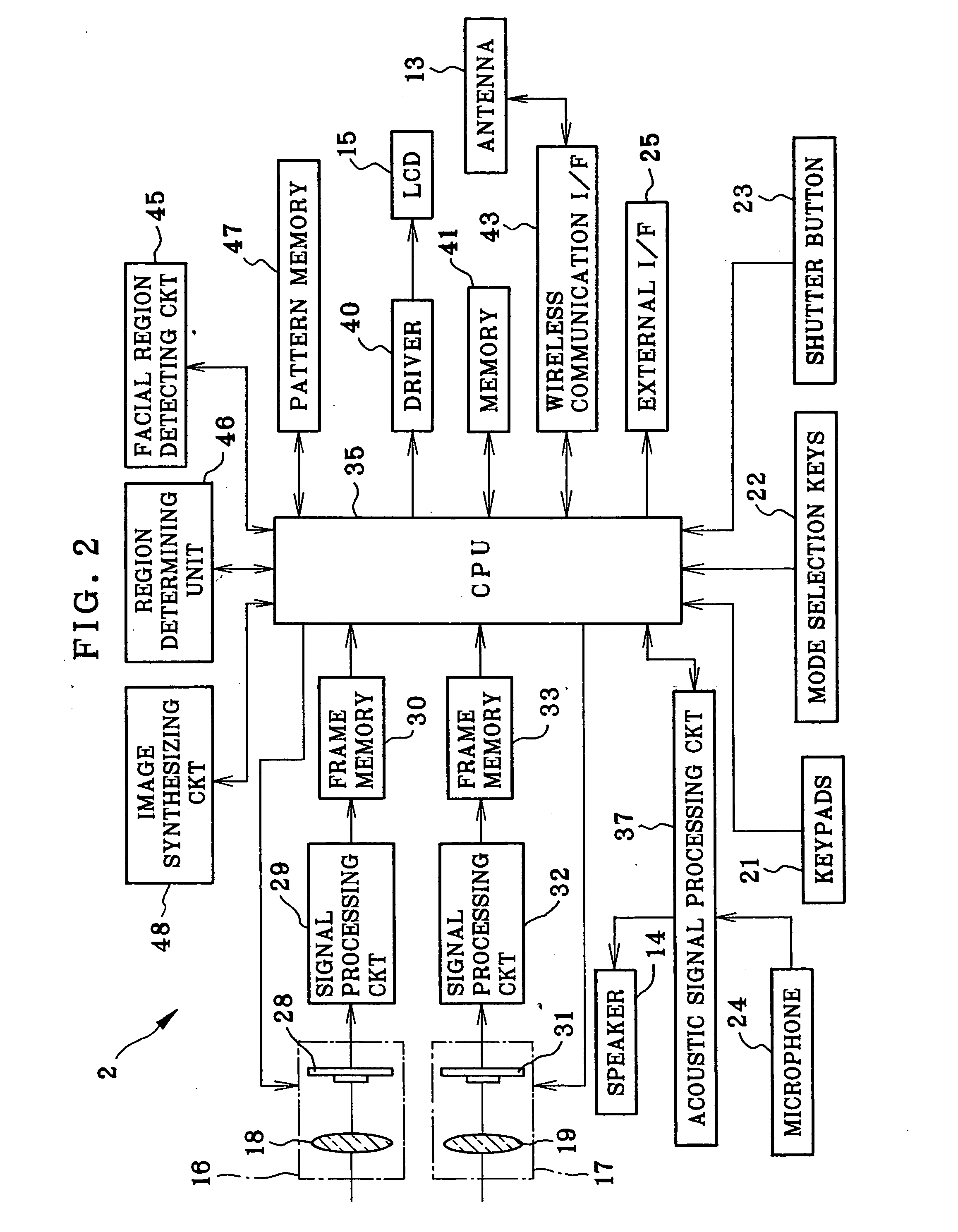

Image pickup device and image synthesizing method

Inactive | US20050036044A1 | Easy to synthesize | Edited readily and easily | Television system details | Image enhancement | Facial region | Imaging data

An image pickup device is a cellular phone handset in which an image pickup unit photographs the user as a first object and outputs image data of a first image frame. A facial region detecting circuit retrieves the user's facial image portion from the image data of the first image frame. An image synthesizing circuit is supplied with image data of a second image frame including persons as a second object. The facial image portion is synthesized into a background region defined outside the persons within the second image frame.

Owner:FUJIFILM CORP +1

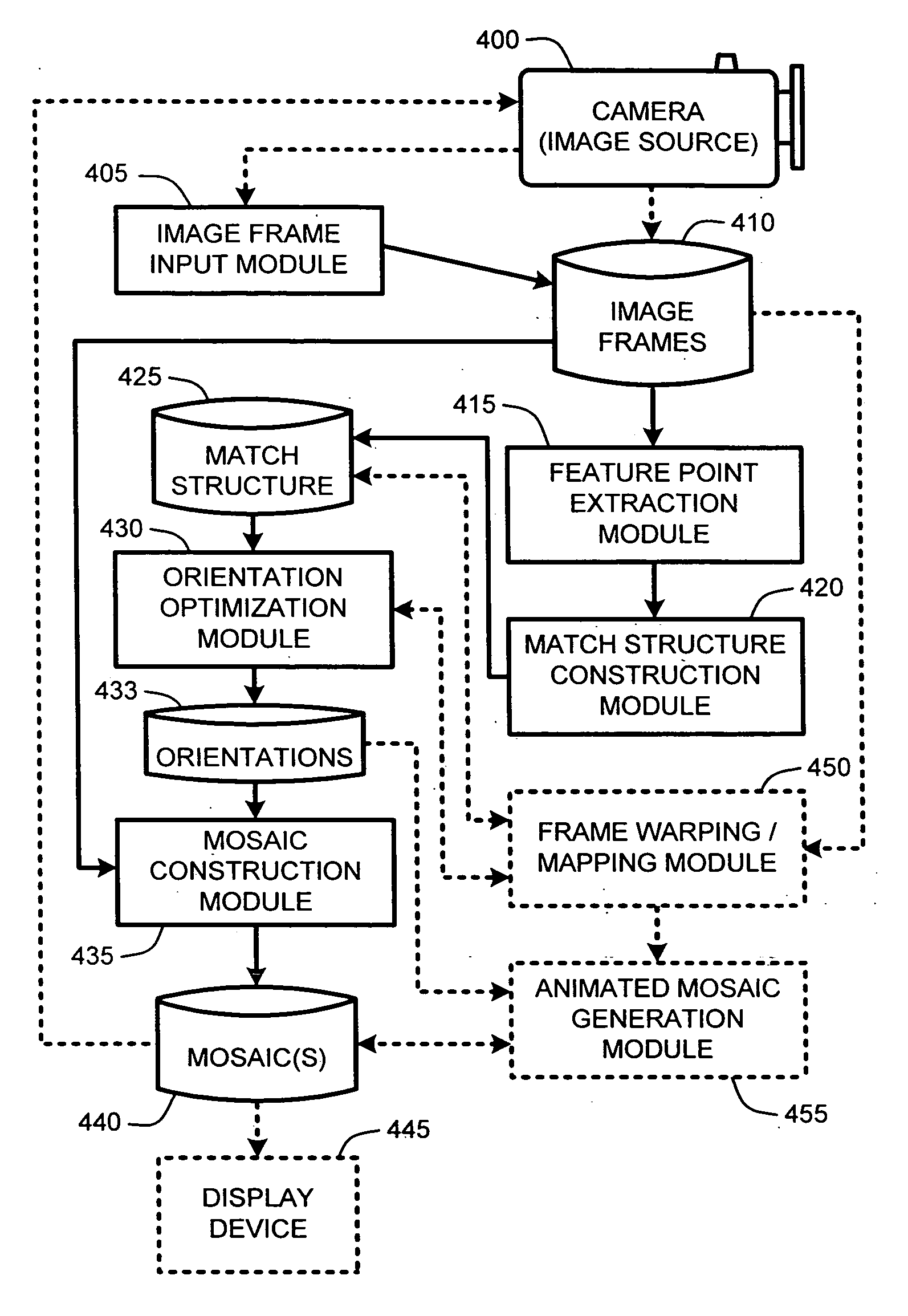

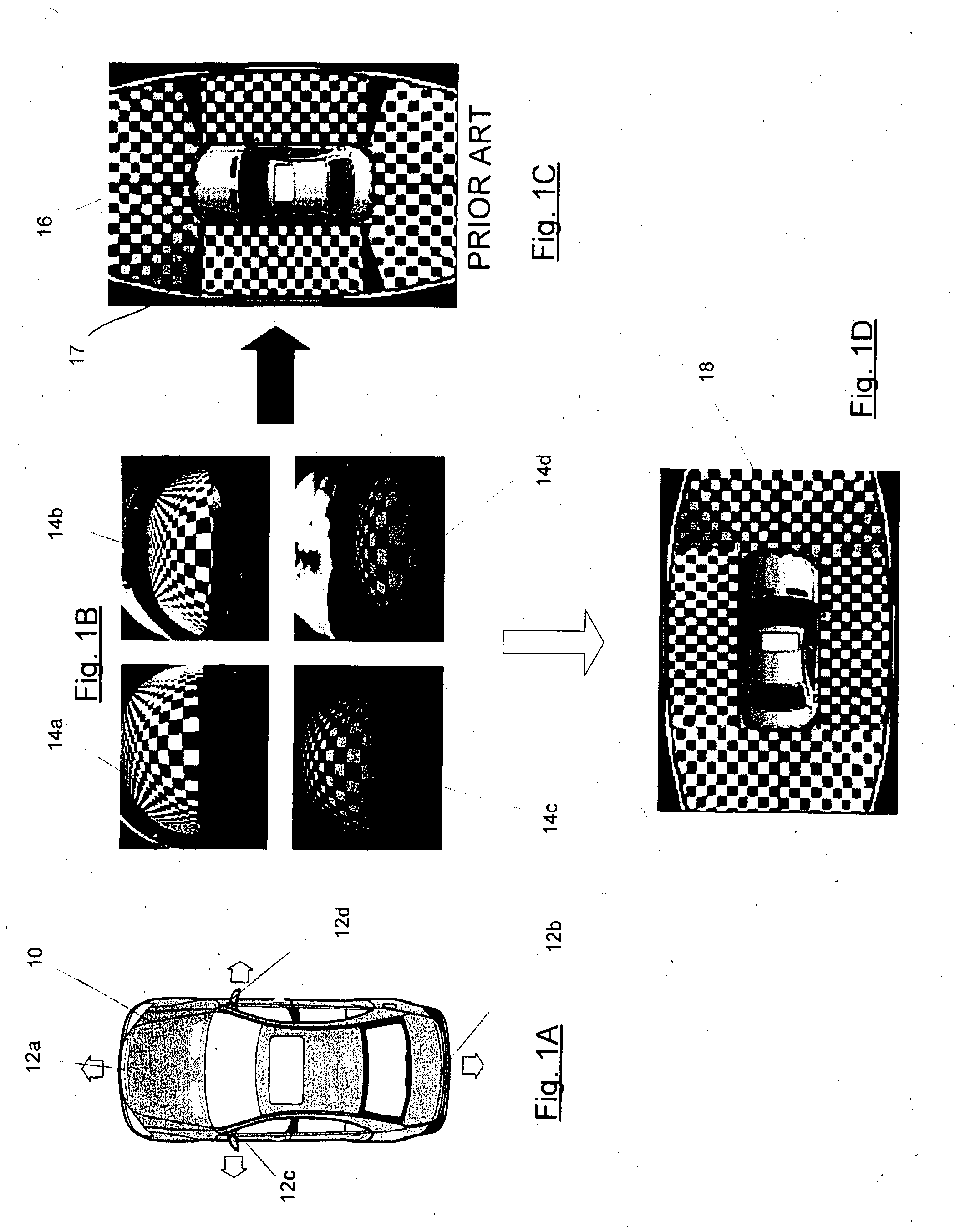

Video registration and image sequence stitching

Active | US20070031062A1 | Reduce computational complexity | Easy construction | Television system details | Digital data information retrieval | Intermediate image | Image pair

A “Keyframe Stitcher” provides an efficient technique for building mosaic panoramic images by registering or aligning video frames to construct a mosaic panoramic representation. Matching of image pairs is performed by extracting feature points from every image frame and matching those points between image pairs. Further, the Keyframe Stitcher preserves accuracy of image stitching when matching image pairs by utilizing ordering information inherent in the video. The cost of searching for matches between image frames is reduced by identifying “keyframes” based on computed image-to-image overlap. Keyframes are then matched to all other keyframes, but intermediate image frames are only matched to temporally neighboring keyframes and neighboring intermediate frames to construct a “match structure.” Image orientations are then estimated from this match structure and used to construct the mosaic. Matches between image pairs may be compressed to reduce computational overhead by replacing groups of feature points with representative measurements.

Owner:MICROSOFT TECH LICENSING LLC

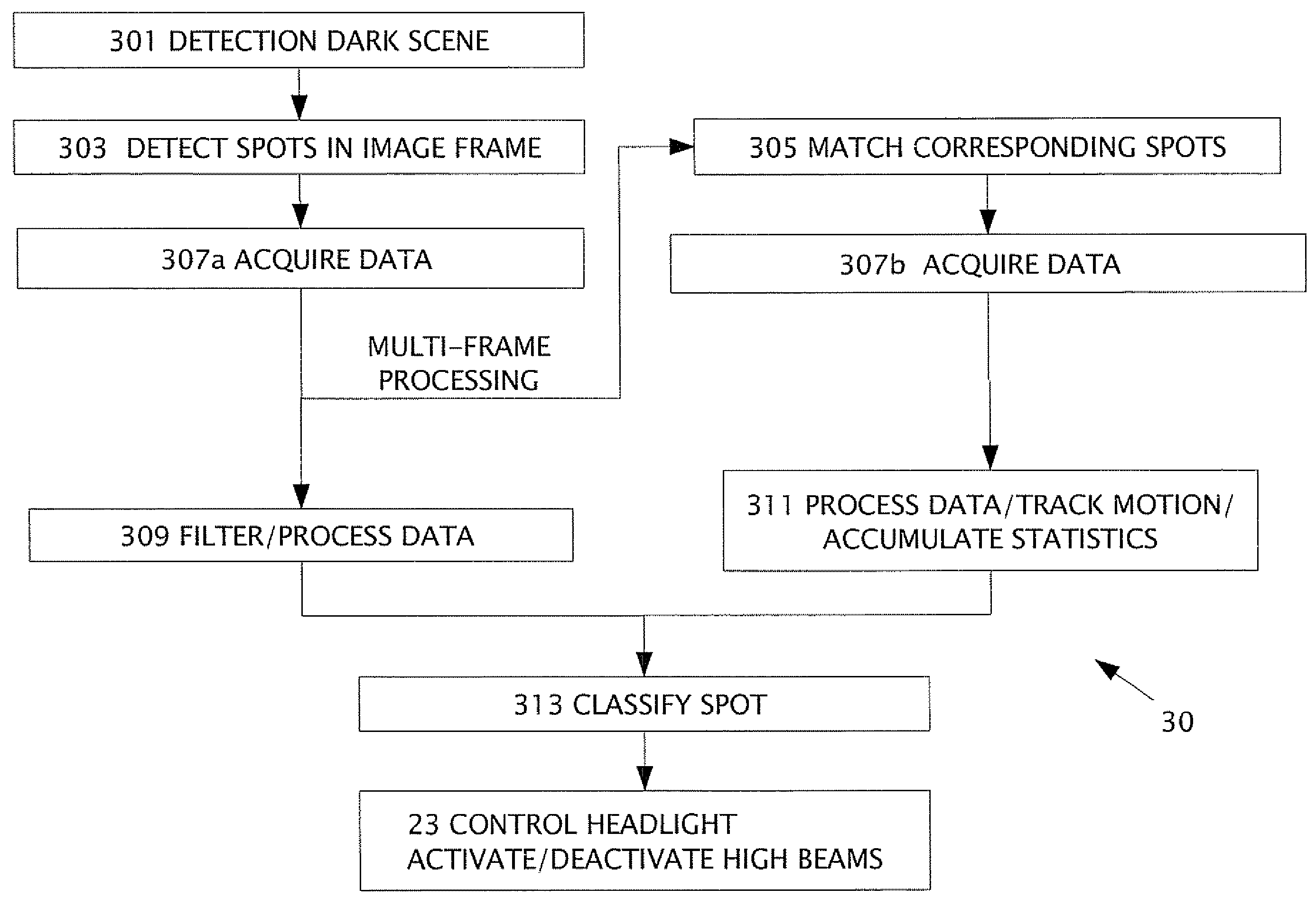

Headlight, taillight and streetlight detection

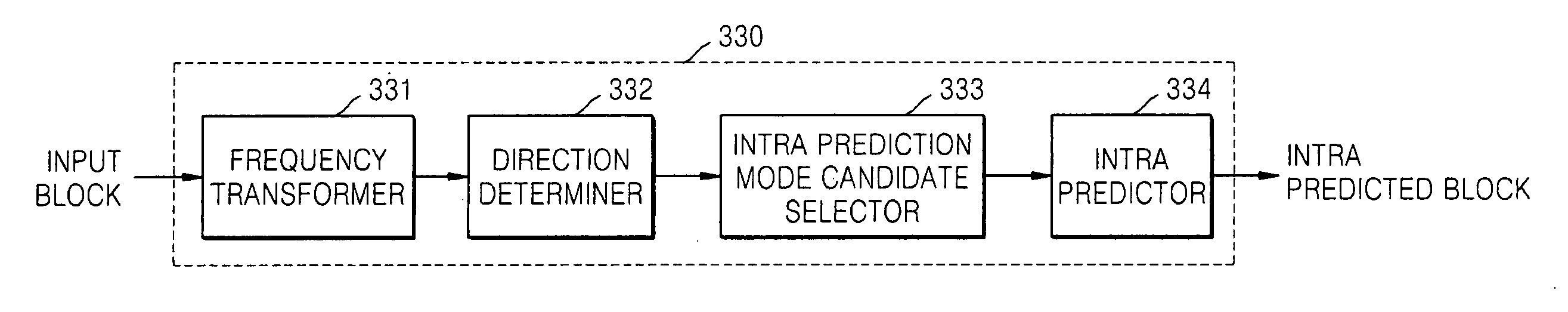

Active | US7566851B2 | Photometry using reference value | Vehicle headlamps | Lane departure warning system | Ego motion estimation

A method in a computerized system including an image sensor mounted in a moving vehicle. The image sensor captures image frames consecutively in real time. In one of the image frames, a spot of measurable brightness is detected, and the spot is matched to a corresponding spot in subsequent image frames. The image frames are available for sharing between the computerized system and another vehicle control system. The spot and the corresponding spot are images of the same object, which is typically one or more of: headlights of an oncoming vehicle, taillights of a leading vehicle, streetlights, street signs and / or traffic signs. Data is acquired from the spot and from the corresponding spot, and by processing the data the object (or spot) is classified, producing an object classification. The vehicle control system preferably controls the headlights of the moving vehicle based on the object classification. The other vehicle control system using the image frames is one or more of: a lane departure warning system, a collision warning system and / or an ego-motion estimation system.

Owner:MOBILEYE VISION TECH LTD

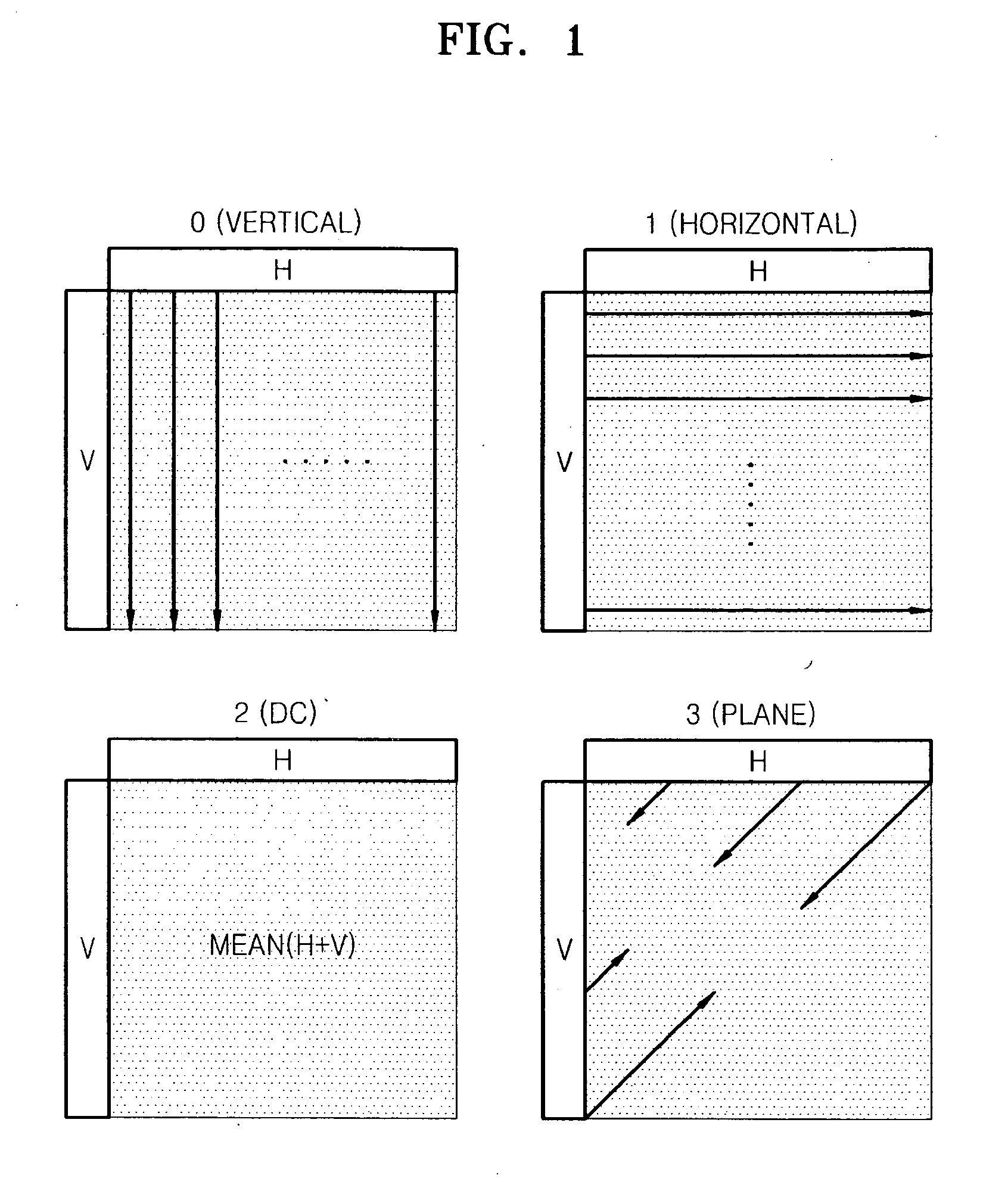

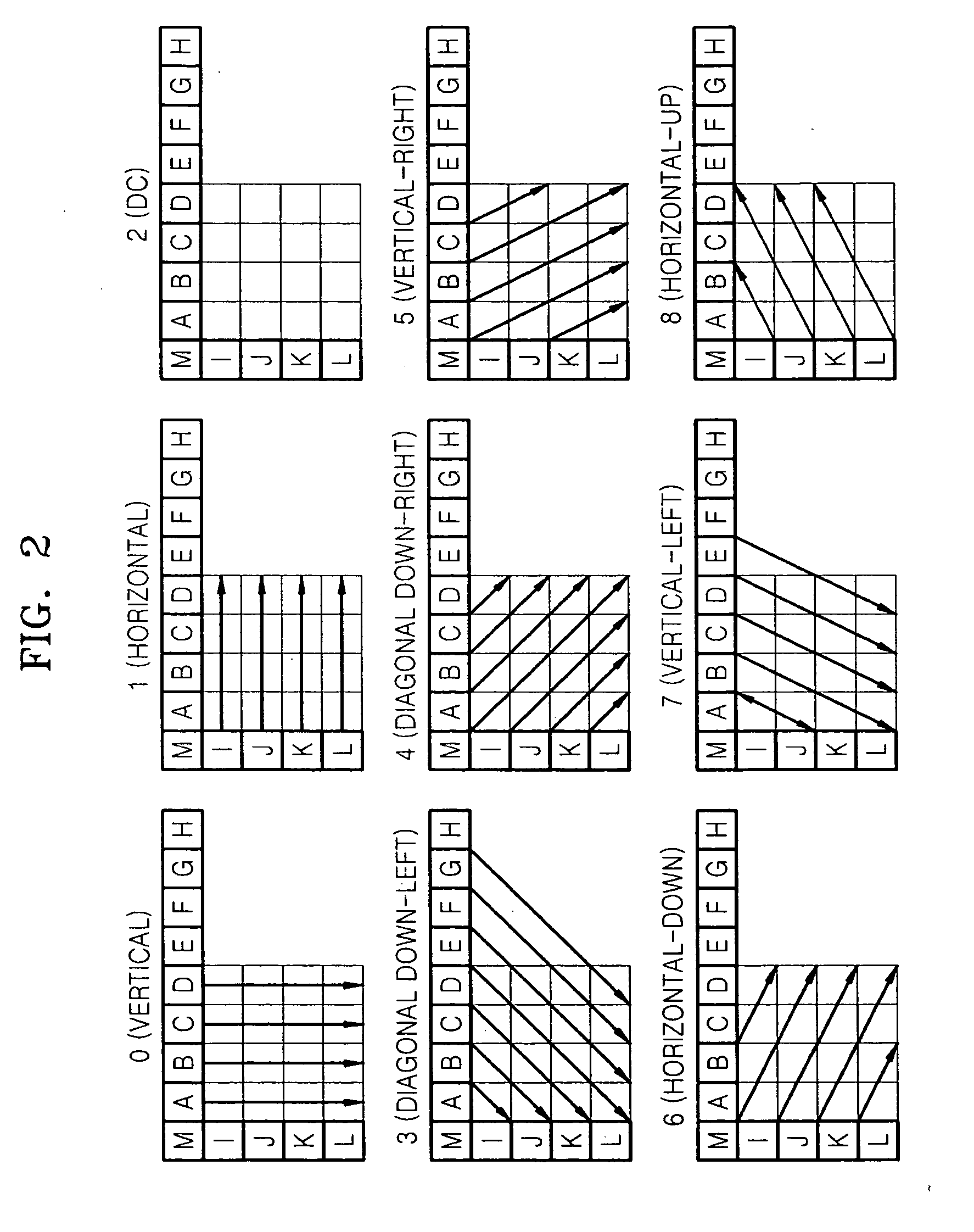

Method and device for intra prediction coding and decoding of image

Active | US20070133891A1 | Reduce complexity | Reducing complexity and amount of calculation | Character and pattern recognition | Digital video signal modification | Image frame

Owner:SAMSUNG ELECTRONICS CO LTD

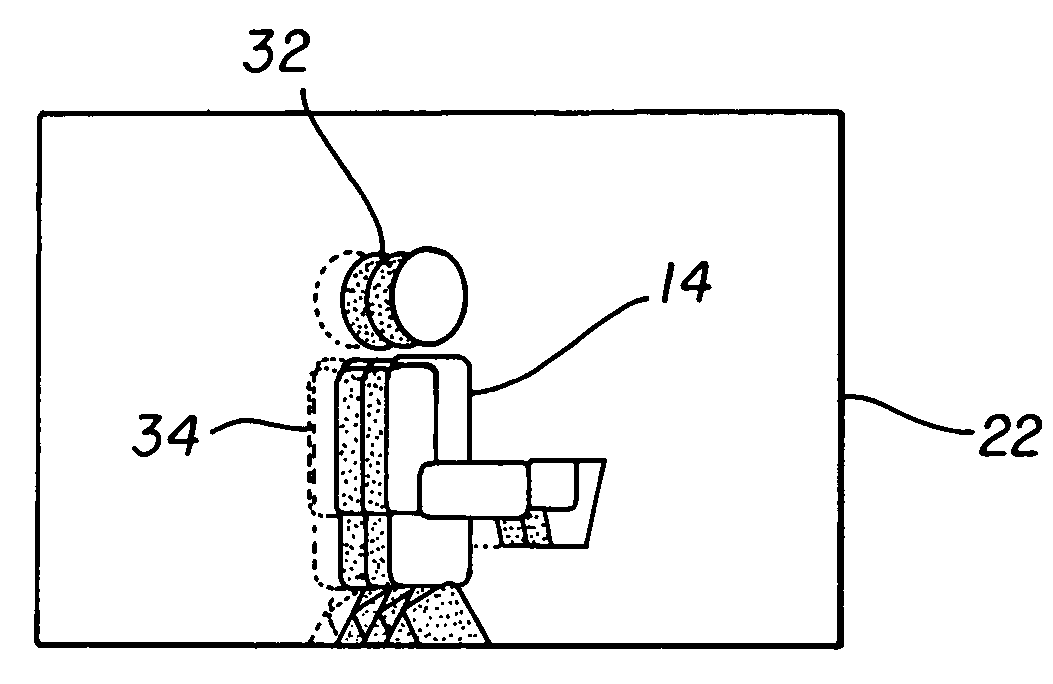

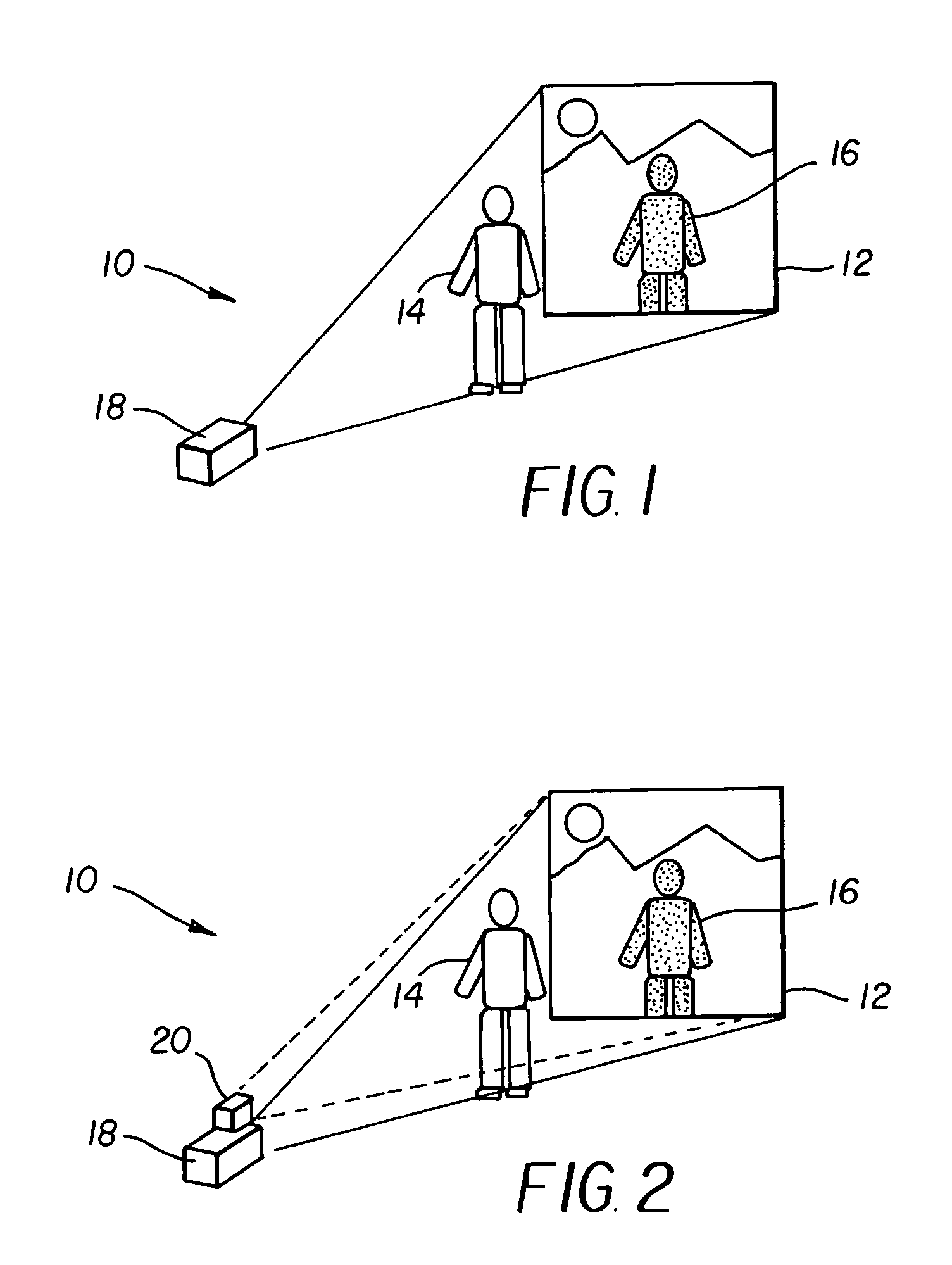

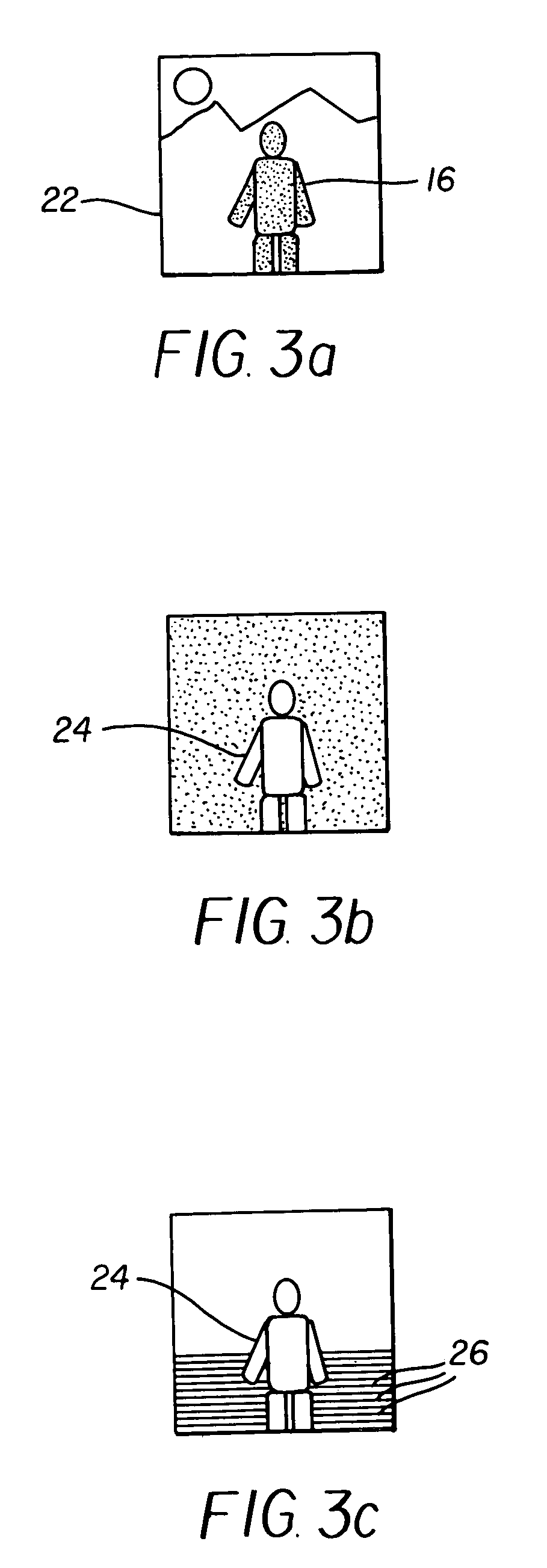

Laser projector having silhouette blanking for objects in the output light path

A projection apparatus (18) forms an image frame (22) on a display surface (12), where the image frame (22) is a two-dimensional array of pixels. The projection apparatus (18) has a laser (40) light source, an image modulator (42) for forming an image-bearing beam according to scanned line data, and projection optics (44) for projecting the image-bearing beam toward the display surface (12). A camera (20) obtains a sensed pixel array by sensing the two-dimensional array of pixels from the display surface (12). A control logic processor (28) compares the sensed pixel array with corresponding image data to identify any portion of the image-bearing beam that is obstructed from the display surface (12) and to disable obstructed portions of the image-bearing beam for at least one subsequent image frame (22).

Owner:IMAX THEATERS INT
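
A minimal sketch of the compare-and-disable step, assuming the camera's sensed pixel array has already been registered one-to-one with the projected image and put on the same brightness scale (the abstract does not describe that registration). A pixel is treated as obstructed when the camera sees much less light than the image data calls for; the `tolerance` value and function names are hypothetical.

```python
def obstruction_mask(expected, sensed, tolerance=40):
    """Per-pixel mask: True where the image-bearing beam appears obstructed."""
    return [
        [exp - sen > tolerance for exp, sen in zip(exp_row, sen_row)]
        for exp_row, sen_row in zip(expected, sensed)
    ]


def blank_next_frame(frame, mask):
    """Disable (zero) the obstructed pixels in the next frame to be projected."""
    return [
        [0 if blocked else value for value, blocked in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]
```

Zeroing the masked pixels corresponds to turning off the laser for the obstructed portions of the beam in the subsequent image frame.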

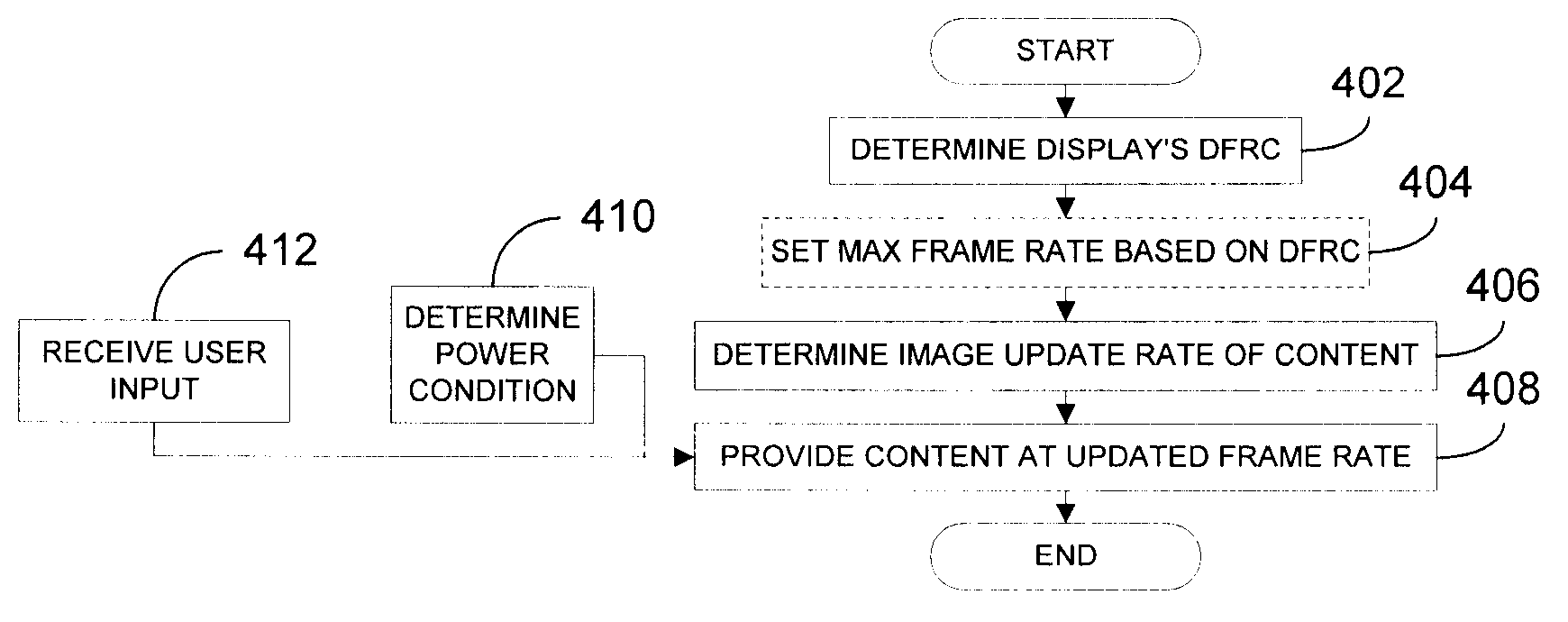

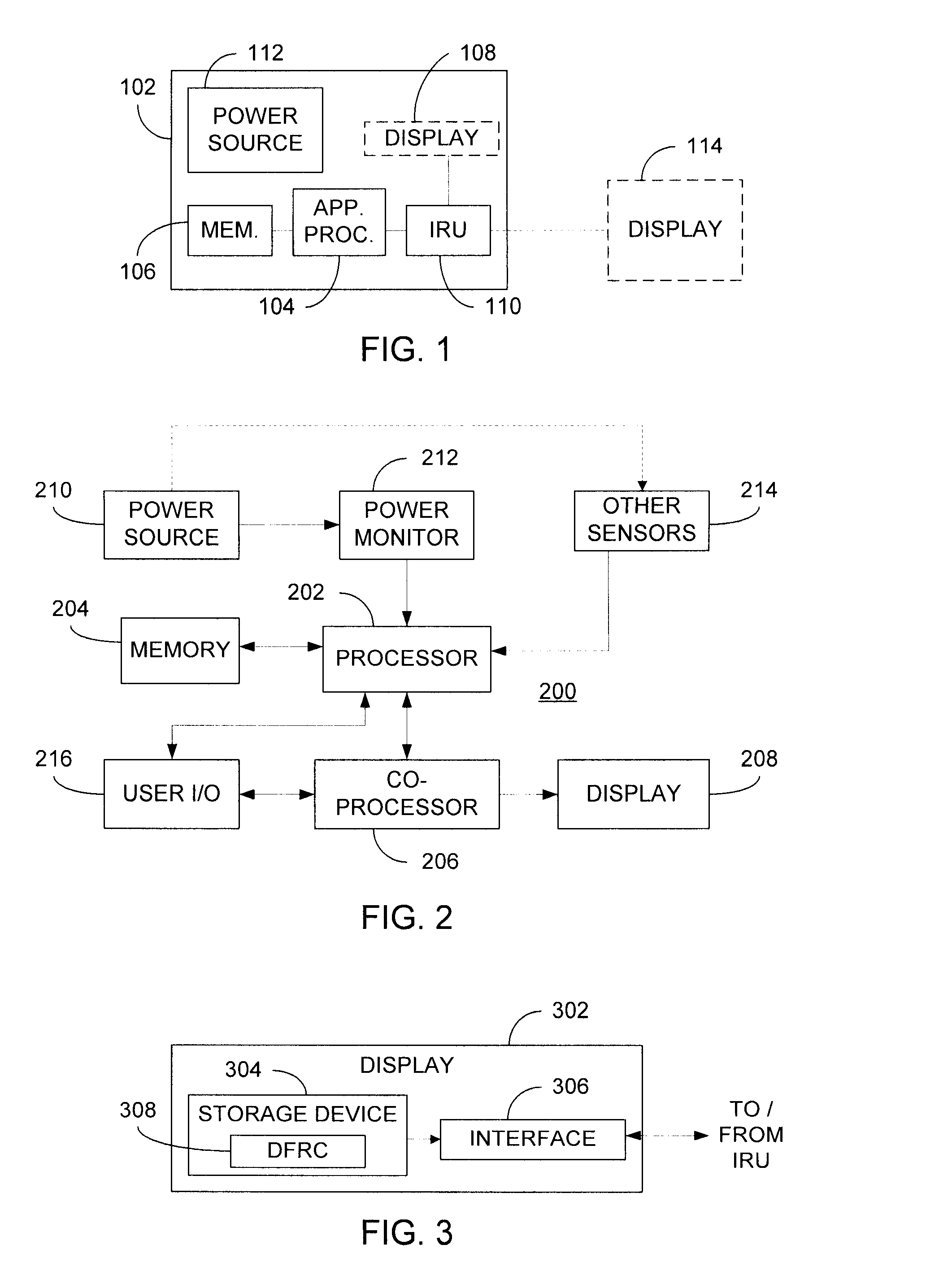

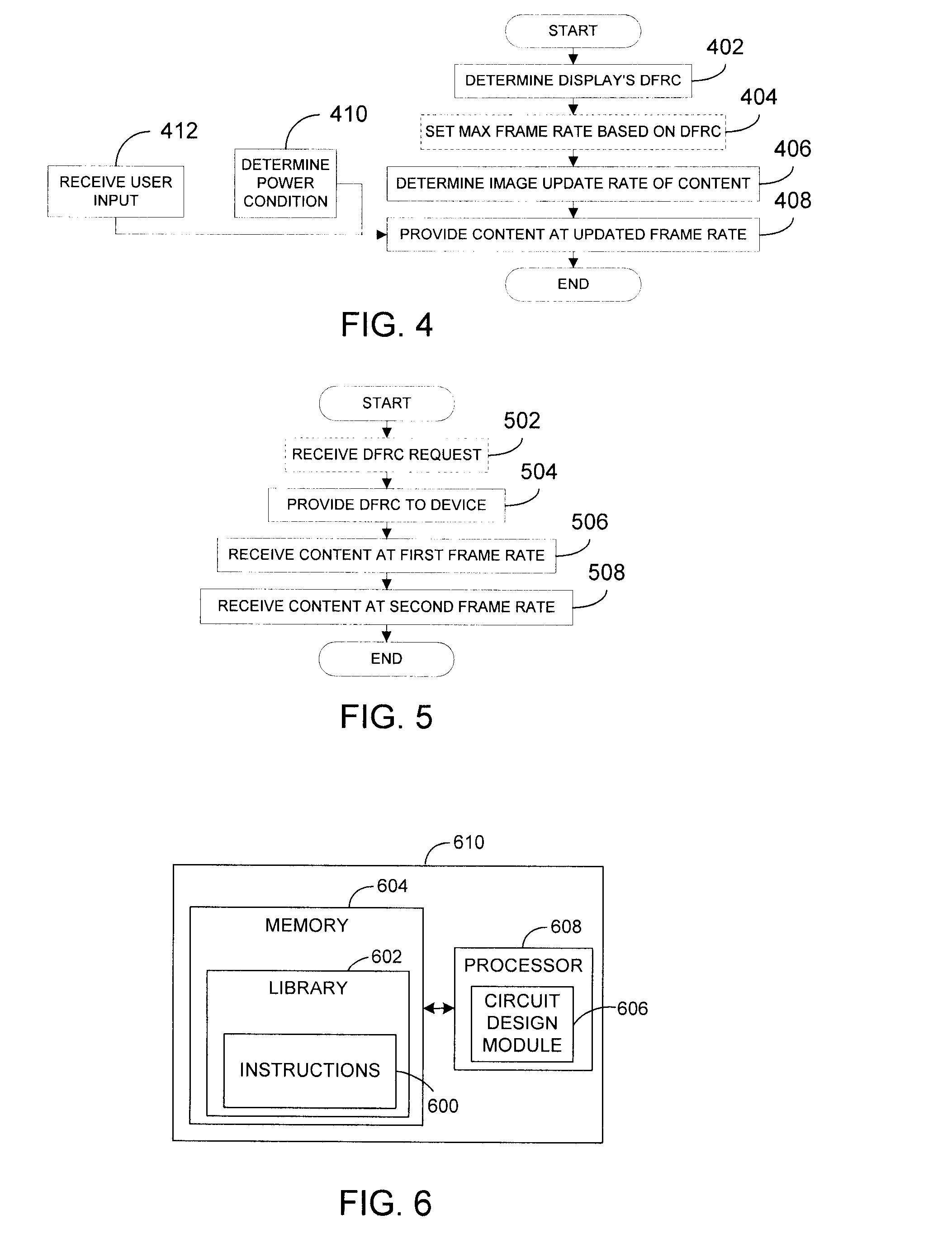

Dynamic frame rate adjustment

Inactive | US20080055318A1 | Television system details | Static indicating devices | Computer graphics (images) | Display device

An image rendering unit (IRU) of a device determines the dynamic frame rate capabilities (DFRCs) of a display and an image frame rate of content to be displayed. Preferably, the DFRCs are stored in a storage device deployed within the display itself. Based on the DFRCs and the image frame rate for the content, the IRU determines an updated frame rate and thereafter provides the content to the display at the updated frame rate. Where control of power consumption is desired, selection of a reduced frame rate can effect a power savings. In this manner, the present invention provides flexible control over display frame rates and / or power consumption of the device.

Owner:ATI TECH INC
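
A minimal sketch of choosing an updated frame rate from the display's capabilities, assuming the DFRCs are available as a list of integer refresh rates and the content rate is an integer frame rate. The policy (lowest supported rate that is a whole multiple of the content rate, to favor power savings, else the highest supported rate) is a hypothetical illustration, not the patent's method.

```python
def select_frame_rate(supported_hz, content_fps):
    """Pick the lowest supported display rate that is a whole multiple of the content rate.

    Lower rates are preferred because they can reduce power consumption. Falls
    back to the highest supported rate when no exact multiple exists.
    """
    candidates = [hz for hz in sorted(supported_hz)
                  if hz >= content_fps and hz % content_fps == 0]
    return candidates[0] if candidates else max(supported_hz)
```

For 24 fps content, a display supporting 24, 30, 48 and 60 Hz would be driven at 24 Hz, while one supporting 30, 60 and 120 Hz would be driven at 120 Hz.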

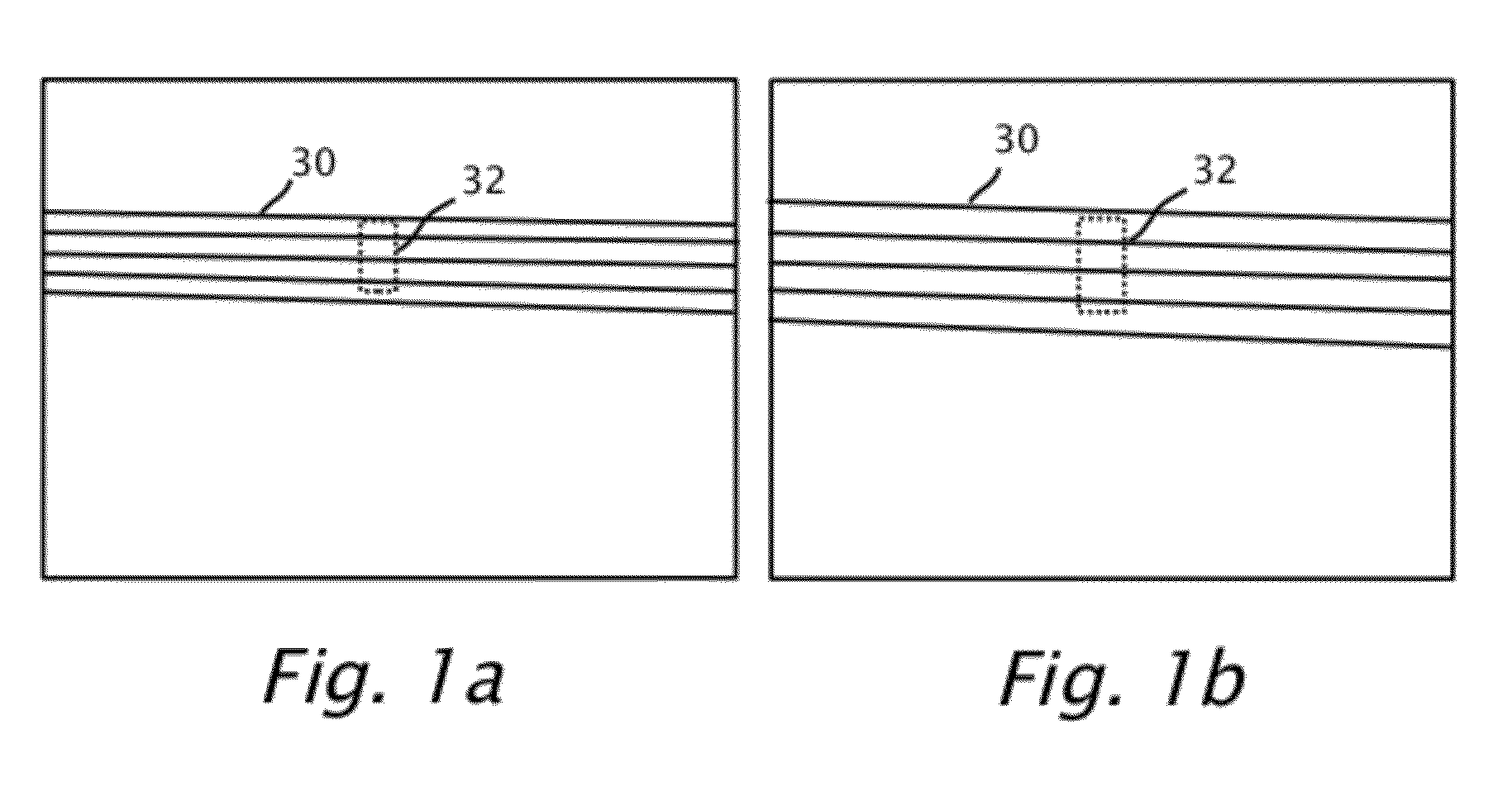

Pedestrian collision warning system

Active | US20120314071A1 | Avoid collision | Eliminate and reduce false collision warning | Image enhancement | Image analysis | Simulation | Optical flow

A method is provided for preventing a collision between a motor vehicle and a pedestrian. The method uses a camera and a processor mountable in the motor vehicle. A candidate image is detected. Based on a change of scale of the candidate image, it may be determined that the motor vehicle and the pedestrian are expected to collide, thereby producing a potential collision warning. Further information from the image frames may be used to validate the potential collision warning. The validation may include an analysis of the optical flow of the candidate image, a check of whether lane markings predict a straight road, a calculation of the lateral motion of the pedestrian, and checks of whether the pedestrian is crossing a lane mark or curb and / or whether the vehicle is changing lanes.

Owner:MOBILEYE VISION TECH LTD
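
One common way to turn a change of scale into a collision estimate is time to contact (TTC). Assuming a pinhole camera and a constant closing speed v, image width is inversely proportional to distance Z, so the scale ratio s = w_curr / w_prev equals Z_prev / Z_curr = (Z_curr + v·Δt) / Z_curr, which gives TTC = Z_curr / v = Δt / (s − 1). The sketch below applies that relation; the warning threshold and function names are hypothetical, and the validation steps listed above are not modeled.

```python
import math


def time_to_contact(width_prev, width_curr, dt):
    """Time to contact from the change of scale of a candidate image.

    Assumes a pinhole camera and constant closing speed, so with
    s = width_curr / width_prev, TTC = dt / (s - 1). Returns math.inf when
    the candidate image is not growing (no approach).
    """
    s = width_curr / width_prev
    if s <= 1.0:
        return math.inf
    return dt / (s - 1.0)


def potential_collision_warning(width_prev, width_curr, dt, ttc_threshold=2.0):
    """Flag a potential collision when the TTC falls below a hypothetical threshold."""
    return time_to_contact(width_prev, width_curr, dt) < ttc_threshold
```

A candidate that grows from 40 to 44 pixels in 0.1 s has s = 1.1 and a TTC of about 1 s, which would raise a potential warning under a 2 s threshold.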

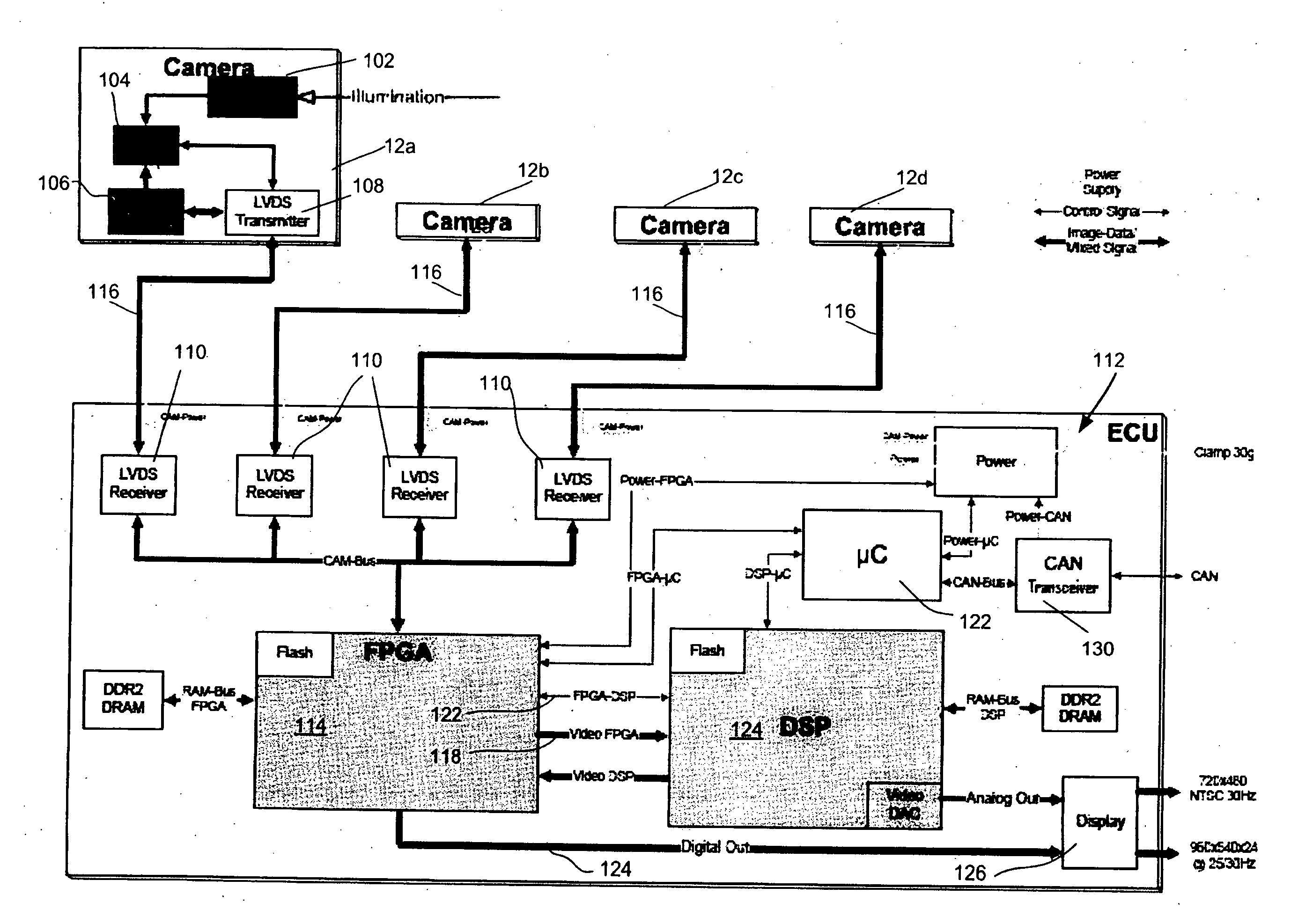

Method and system for dynamically calibrating vehicular cameras

A method of dynamically calibrating a given camera relative to a reference camera of a vehicle includes identifying an overlapping region in an image frame provided by the given camera and an image frame provided by the reference camera, and selecting at least a portion of an object in the overlapping region of the reference image frame. Expected pixel positions of the selected object portion in the given image frame are determined based on the location of the selected object portion in the reference image frame, and the pixel positions of the selected object portion as detected in the given image frame are located. An alignment of the given camera is determined based on a comparison of the detected pixel positions of the selected object portion in the given image frame to its expected pixel positions in the given image frame.

Owner:MAGNA ELECTRONICS
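
A minimal sketch of the final comparison step, assuming a translation-only misalignment model: the alignment error is estimated as the mean offset between detected and expected pixel positions of the selected object portion. A real calibration would typically also estimate rotation; `estimate_misalignment` is a hypothetical name.

```python
def estimate_misalignment(expected_px, detected_px):
    """Average pixel offset (dx, dy) of detected versus expected positions.

    Both arguments are equally sized lists of (x, y) pixel coordinates in
    the given camera's image frame.
    """
    if len(expected_px) != len(detected_px) or not expected_px:
        raise ValueError("need equally sized, non-empty point lists")
    n = len(expected_px)
    dx = sum(d[0] - e[0] for e, d in zip(expected_px, detected_px)) / n
    dy = sum(d[1] - e[1] for e, d in zip(expected_px, detected_px)) / n
    return dx, dy
```

An offset of (3.0, -2.0) would mean the given camera's view is shifted 3 pixels right and 2 pixels up relative to where the reference camera predicts the object.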

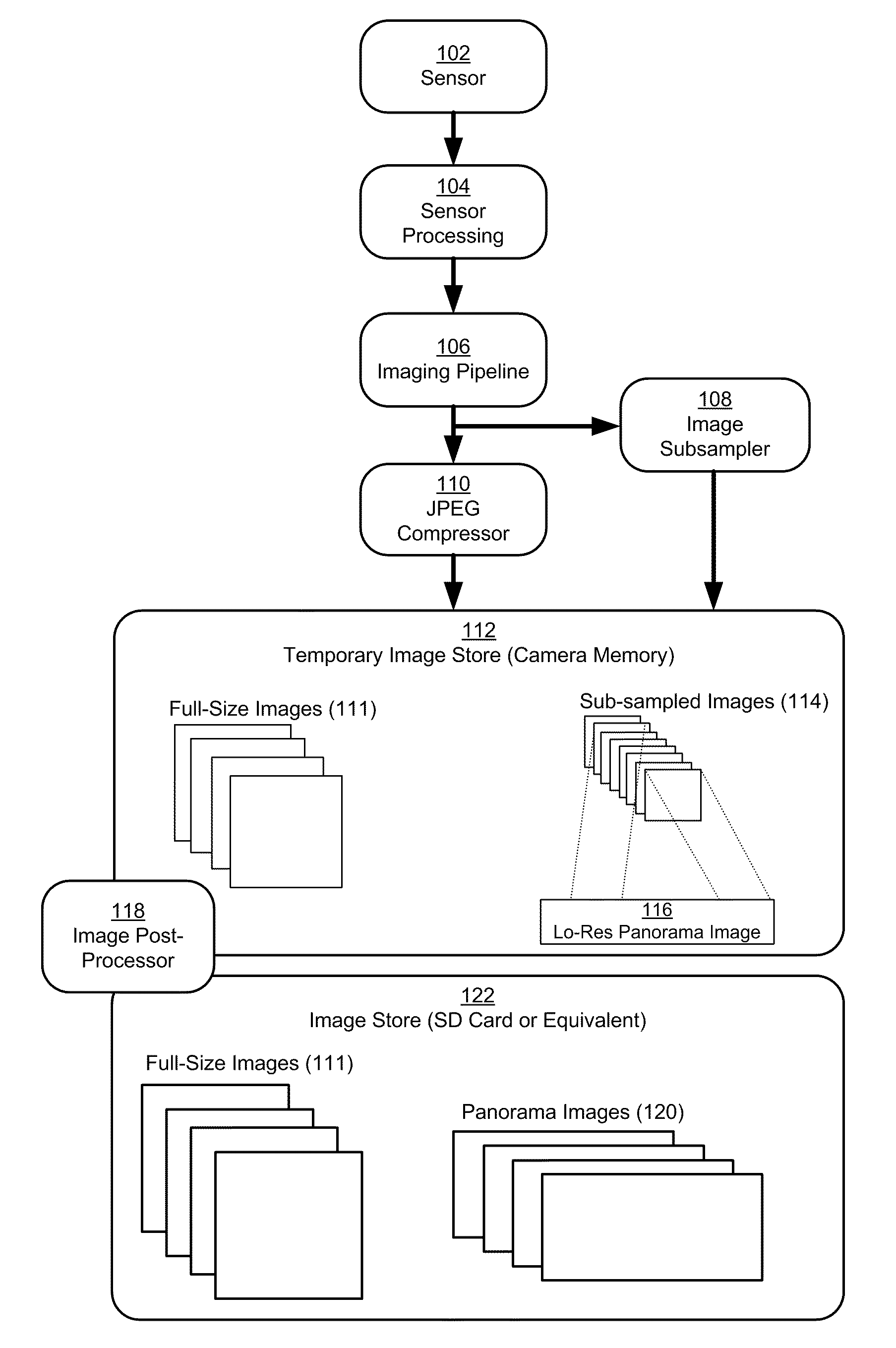

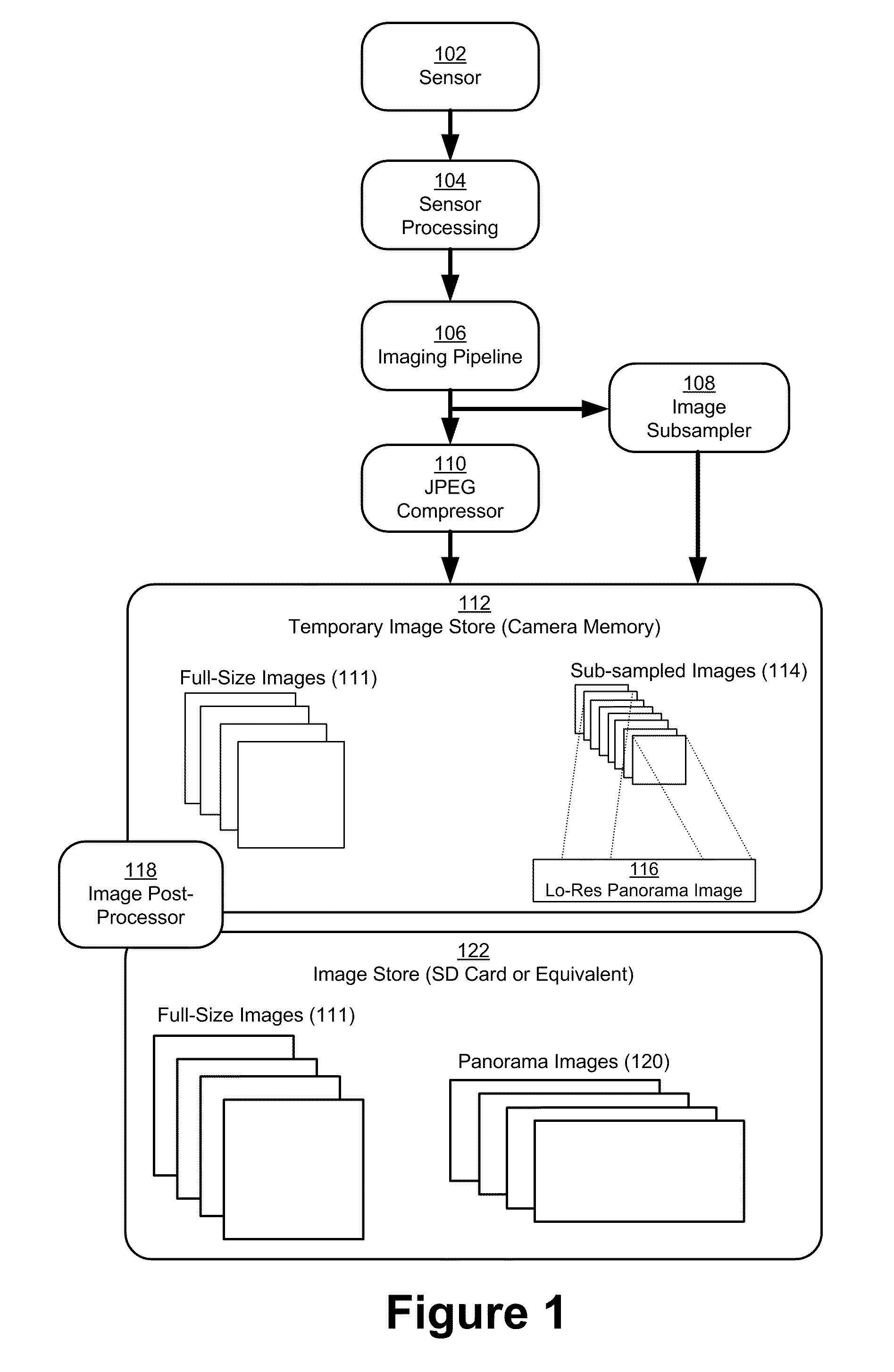

Stereoscopic (3D) panorama creation on handheld device

A technique for generating a stereoscopic panorama image includes panning a portable camera device and acquiring multiple, at least partially overlapping image frames of portions of the scene. The method involves registering the image frames, including determining displacements of the imaging device between acquisitions of image frames. Multiple panorama images are generated by joining image frames of the scene according to their spatial relationships, and stereoscopic counterpart relationships between the multiple panorama images are determined. The multiple panorama images are processed based on the stereoscopic counterpart relationships to form a stereoscopic panorama image.

Owner:FOTONATION LTD

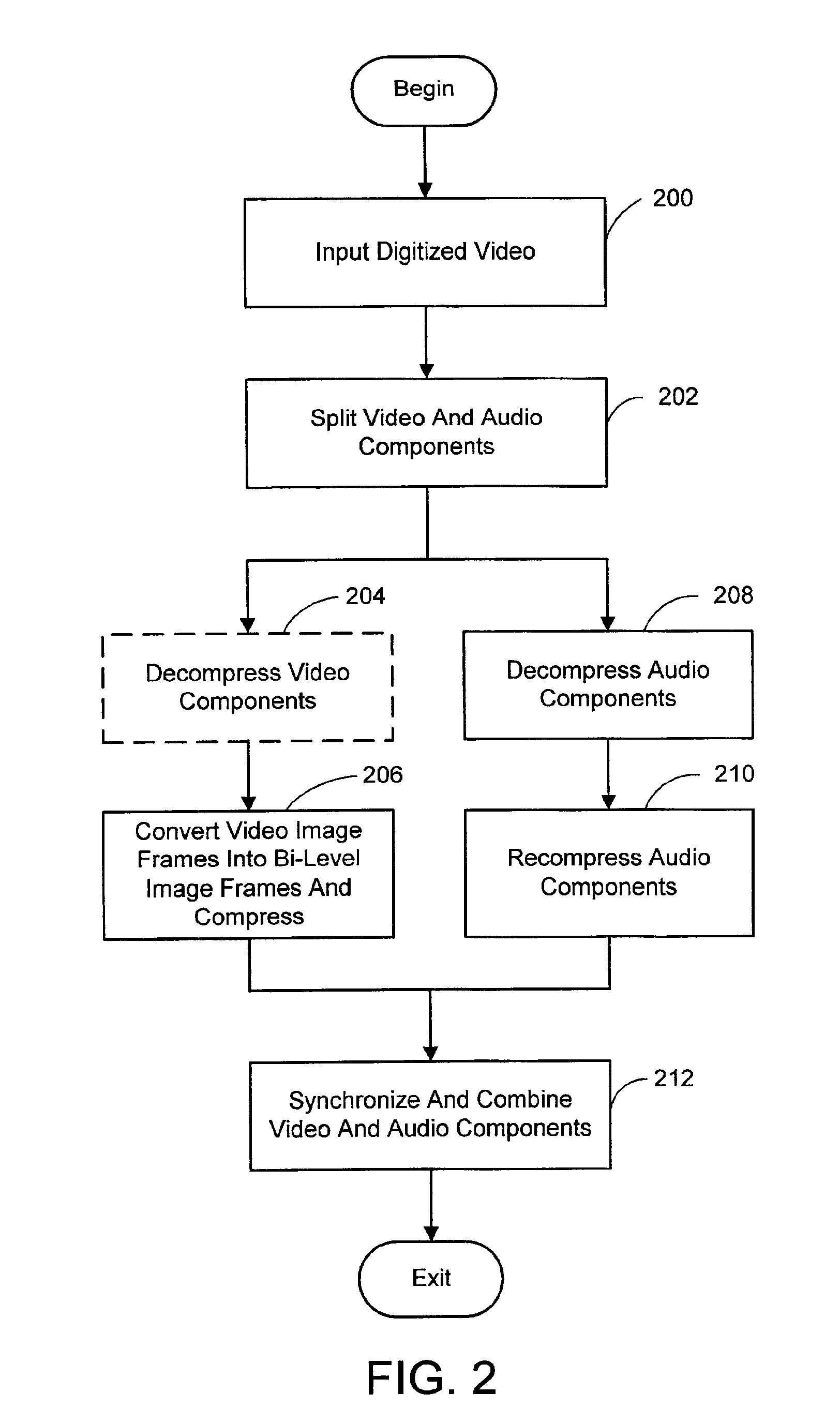

System and process for broadcast and communication with very low bit-rate bi-level or sketch video

Inactive | US6888893B2 | Clear imaging | Low bandwidth | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Computer graphics (images) | Arithmetic coding

A system and process for broadcast and communication with bi-level or sketch video at extremely low bandwidths is described. Essentially, bi-level and sketch video presents the outlines of the objects in the scene being depicted. Bi-level and sketch video provides clearer shapes, smoother motion, shorter initial latency and lower computational cost than conventional DCT-based video compression methods. This is accomplished by converting each color or gray-scale image frame to a bi-level or sketch image frame using an adaptive thresholding method, and compressing the bi-level or sketch image frames into bi-level or sketch video using an adaptive context-based arithmetic coding method. Bi-level or sketch video is particularly suitable for small devices, such as Pocket PCs and mobile phones, that have small display screens, low-bandwidth connections, and limited computational power.

Owner:ZHIGU HLDG
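
The abstract names adaptive thresholding without specifying it. A minimal sketch of one common form, a local-mean threshold, is shown below: each pixel becomes 1 if it is brighter than the mean of its neighbourhood minus an offset. The window radius, offset, and function name are illustrative choices, not the patent's method, and the arithmetic-coding stage is not shown.

```python
def adaptive_threshold(gray, radius=1, offset=0):
    """Convert a grayscale frame (list of rows) to a bi-level frame.

    Each pixel becomes 1 (white) if it is brighter than the mean of its
    (2*radius+1) x (2*radius+1) neighbourhood, clipped at the borders,
    minus `offset`; otherwise it becomes 0 (black).
    """
    h, w = len(gray), len(gray[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            r0, r1 = max(0, r - radius), min(h, r + radius + 1)
            c0, c1 = max(0, c - radius), min(w, c + radius + 1)
            window = [gray[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            local_mean = sum(window) / len(window)
            row.append(1 if gray[r][c] > local_mean - offset else 0)
        out.append(row)
    return out
```

Because the threshold follows the local mean, a bright outline stays white even where overall scene brightness varies; with `offset=0`, perfectly uniform regions come out black, and a positive offset makes them white.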

© 2025 PatSnap. All rights reserved.