1014 results about "Candidate image" patented technology

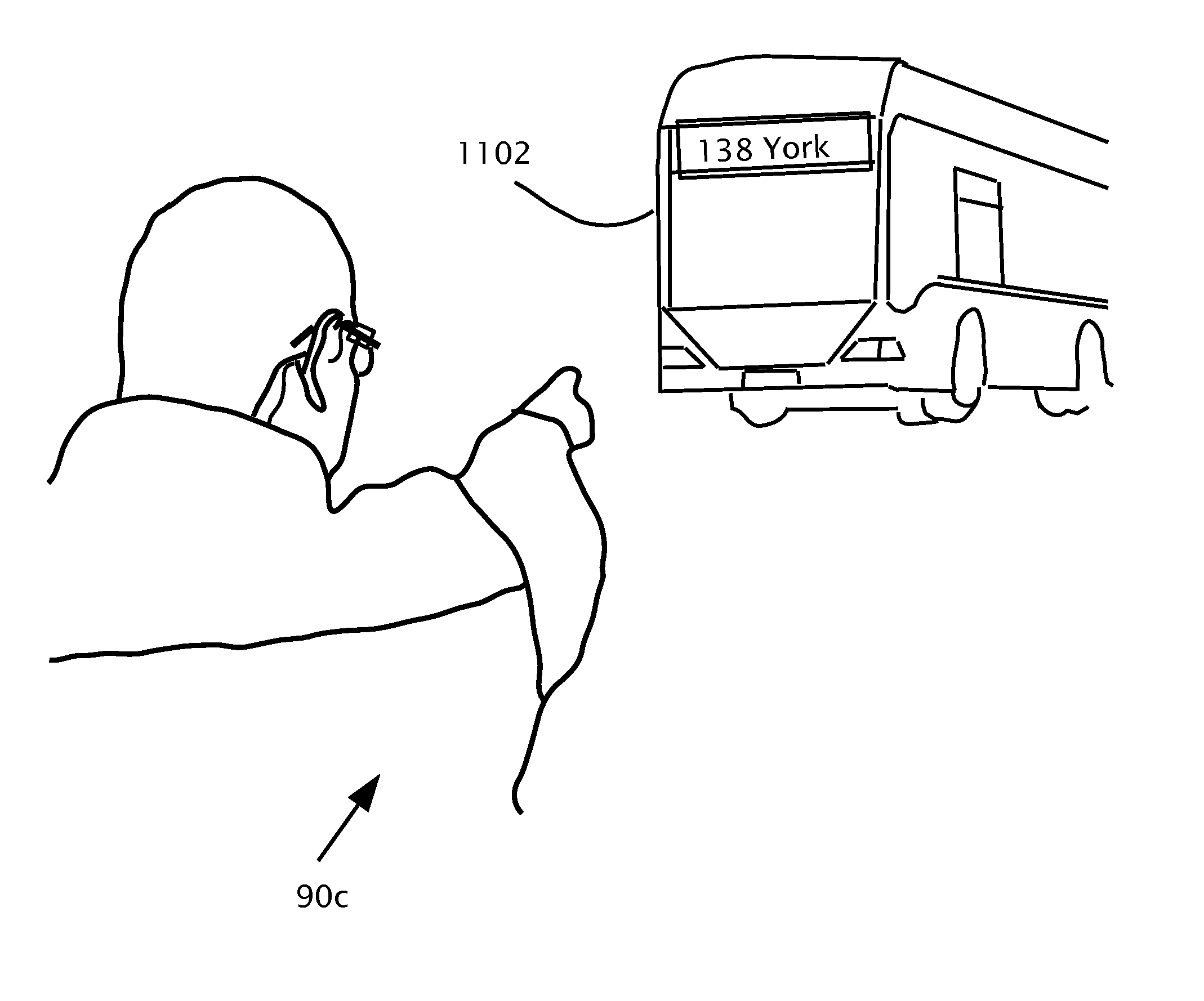

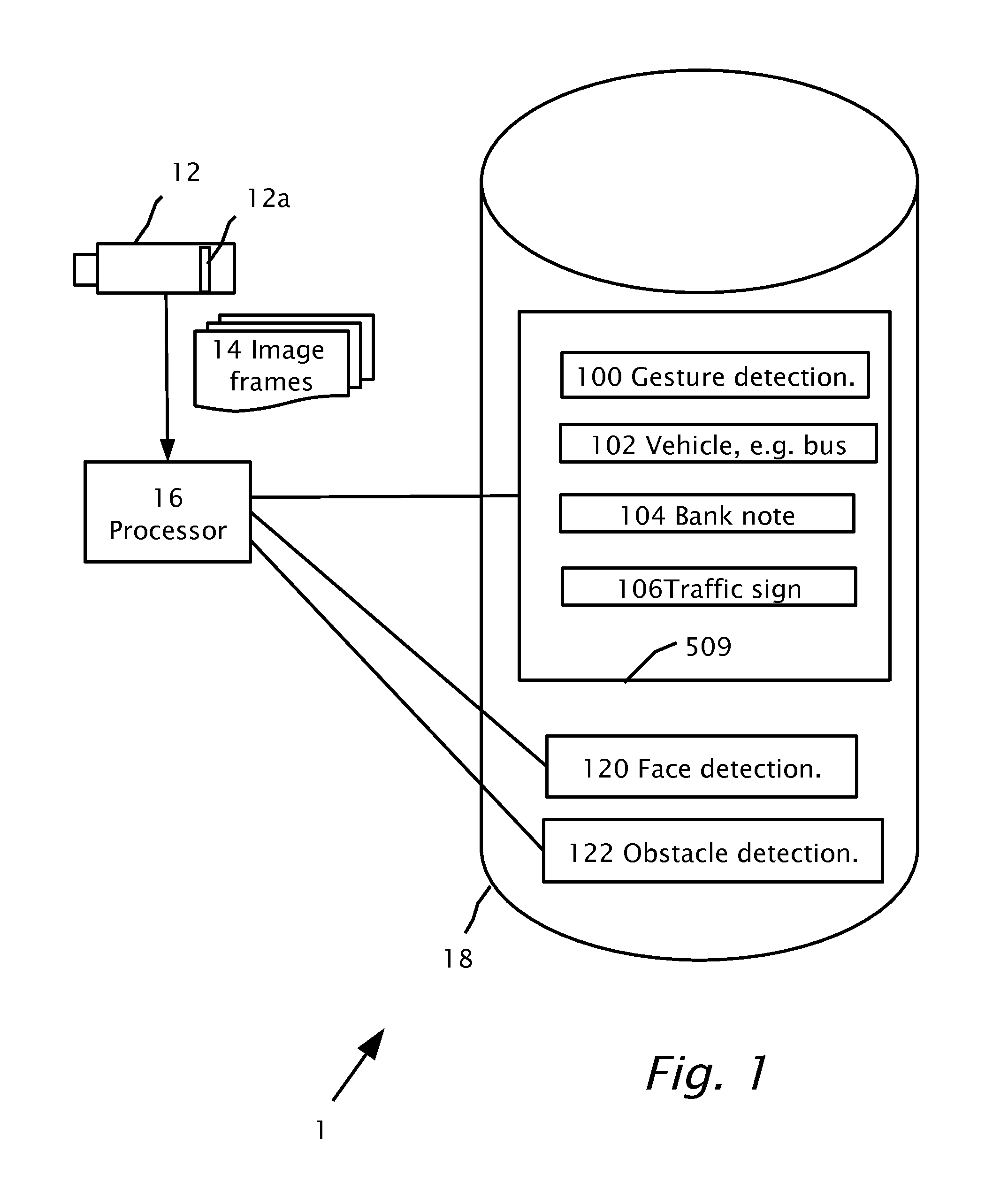

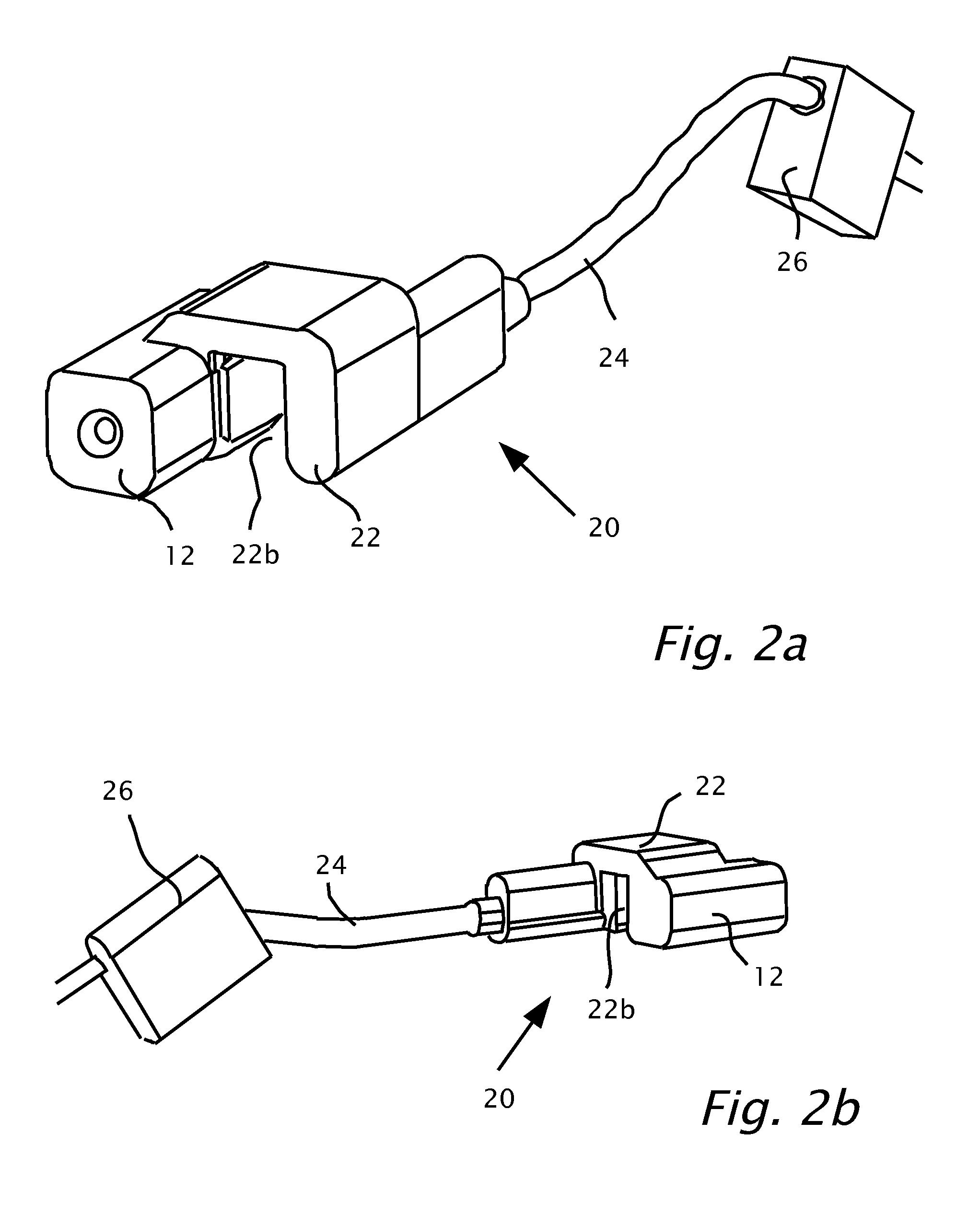

User wearable visual assistance system

Inactive | US20120212593A1 | High resolution | Character and pattern recognition | Color television details | Multiple image | Image frame

A visual assistance device wearable by a person. The device includes a camera and a processor. The processor captures multiple image frames from the camera and searches the image frames for a candidate image of an object. The candidate image may be classified as an image of a particular object or of a particular class of objects, and the object is thereby recognized. The person is notified of an attribute related to the object.

Owner:ORCAM TECH

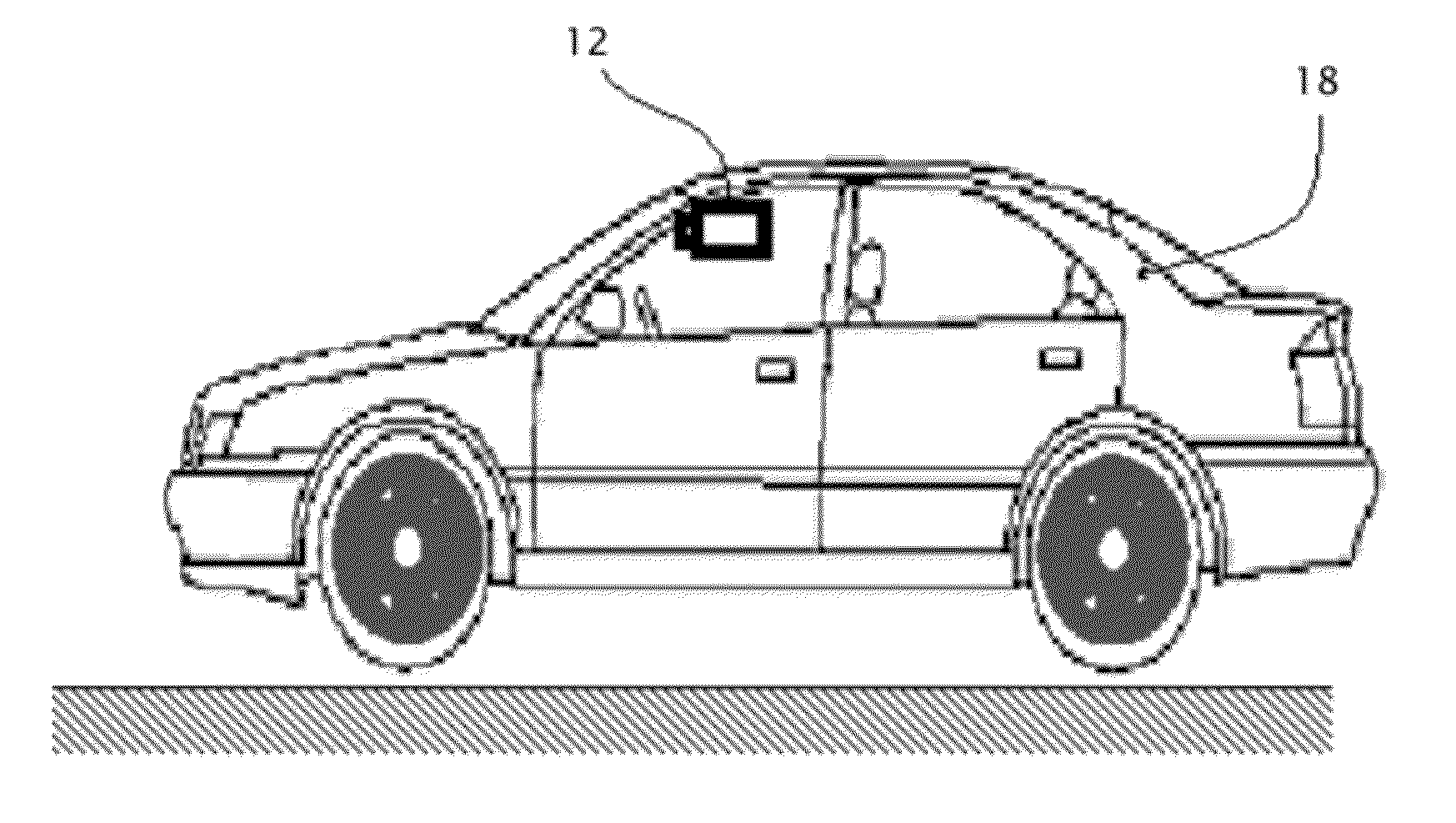

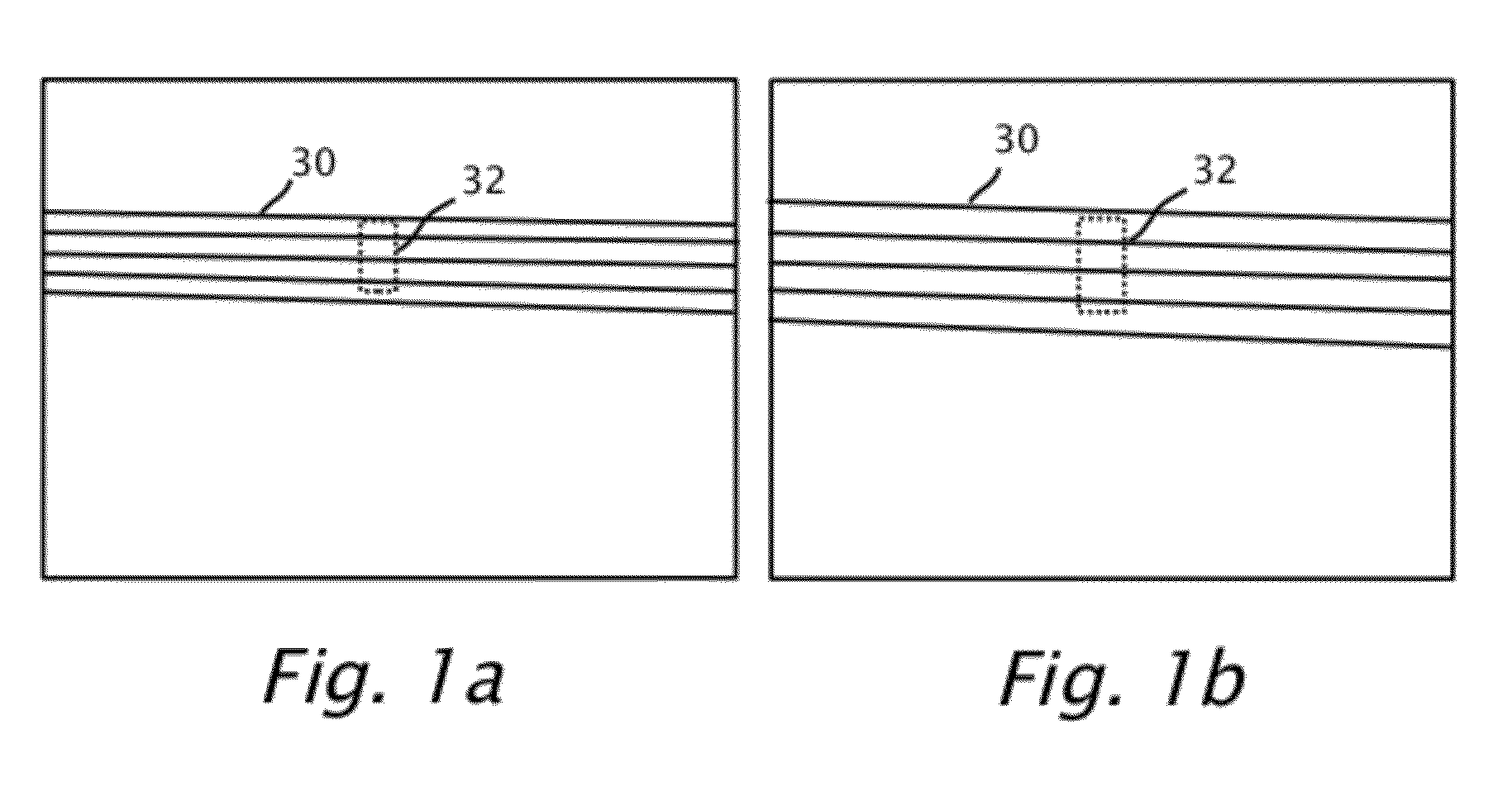

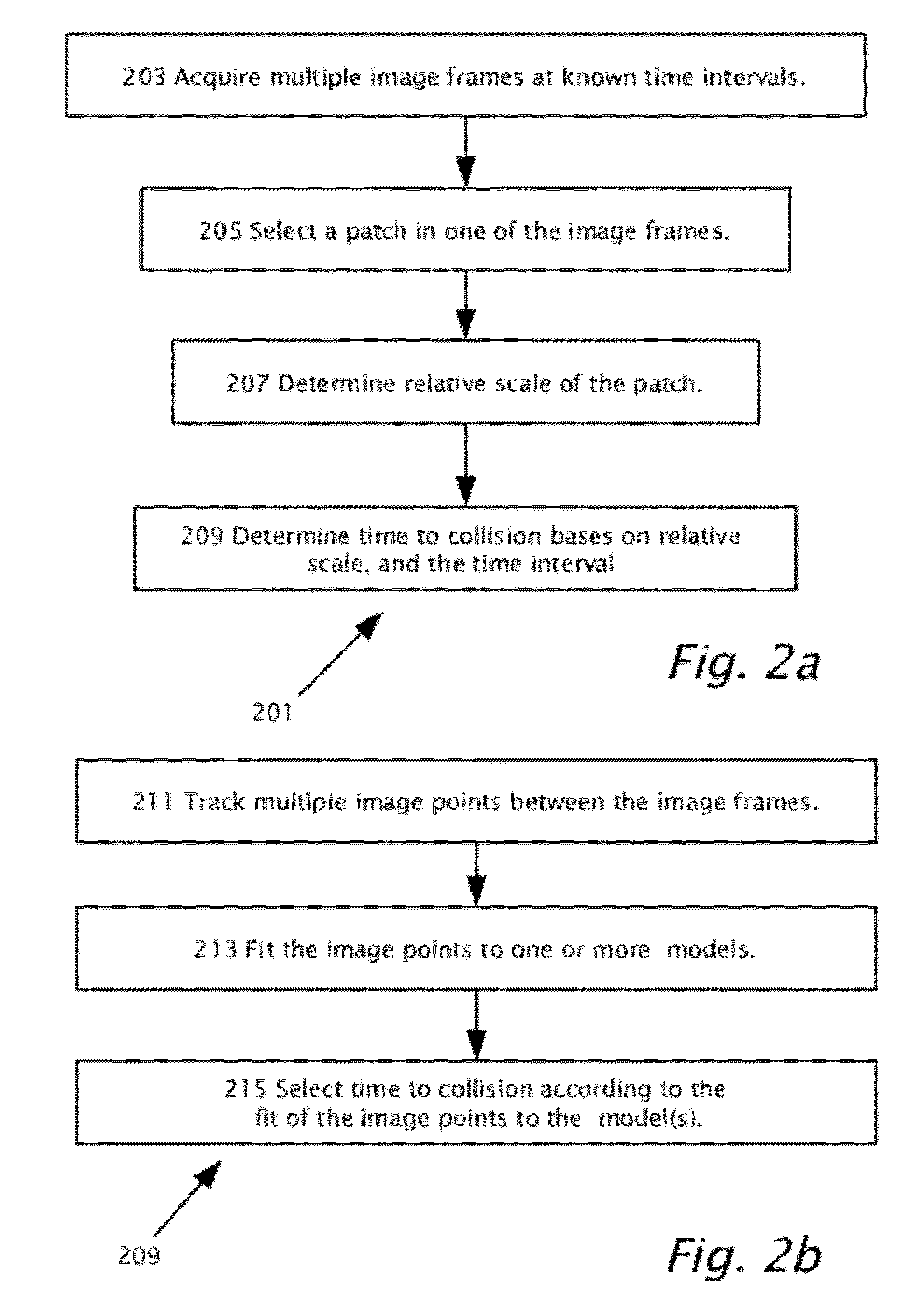

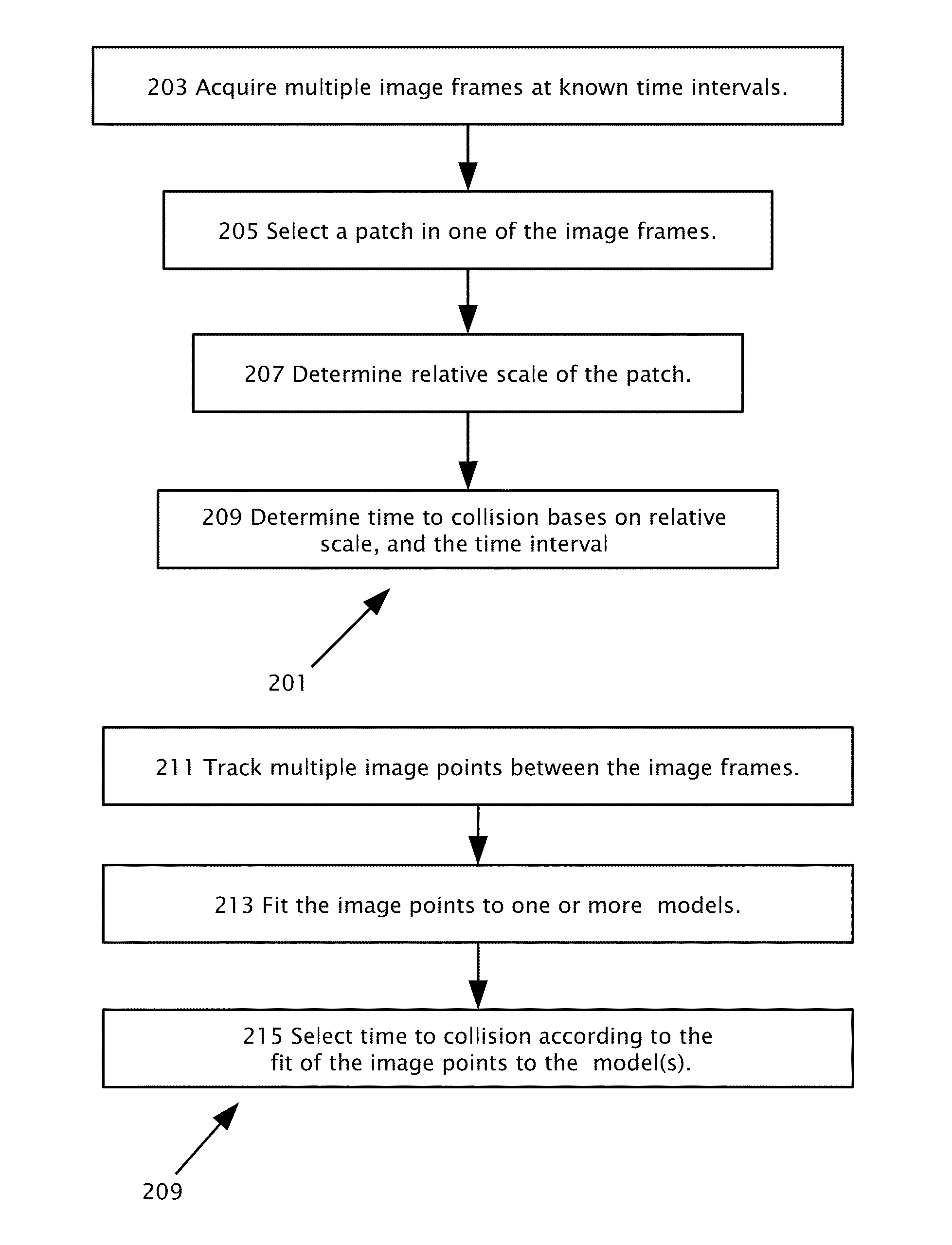

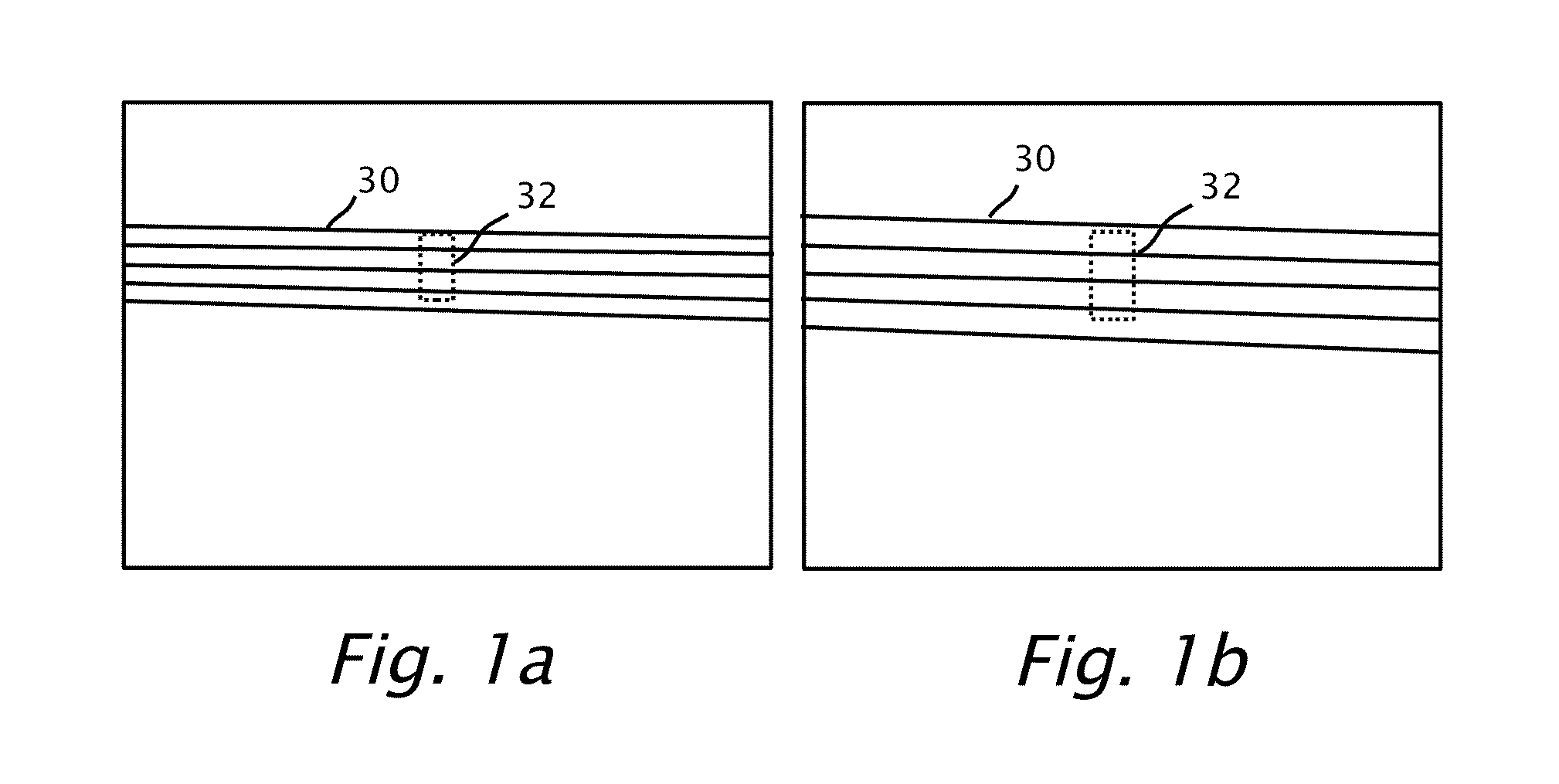

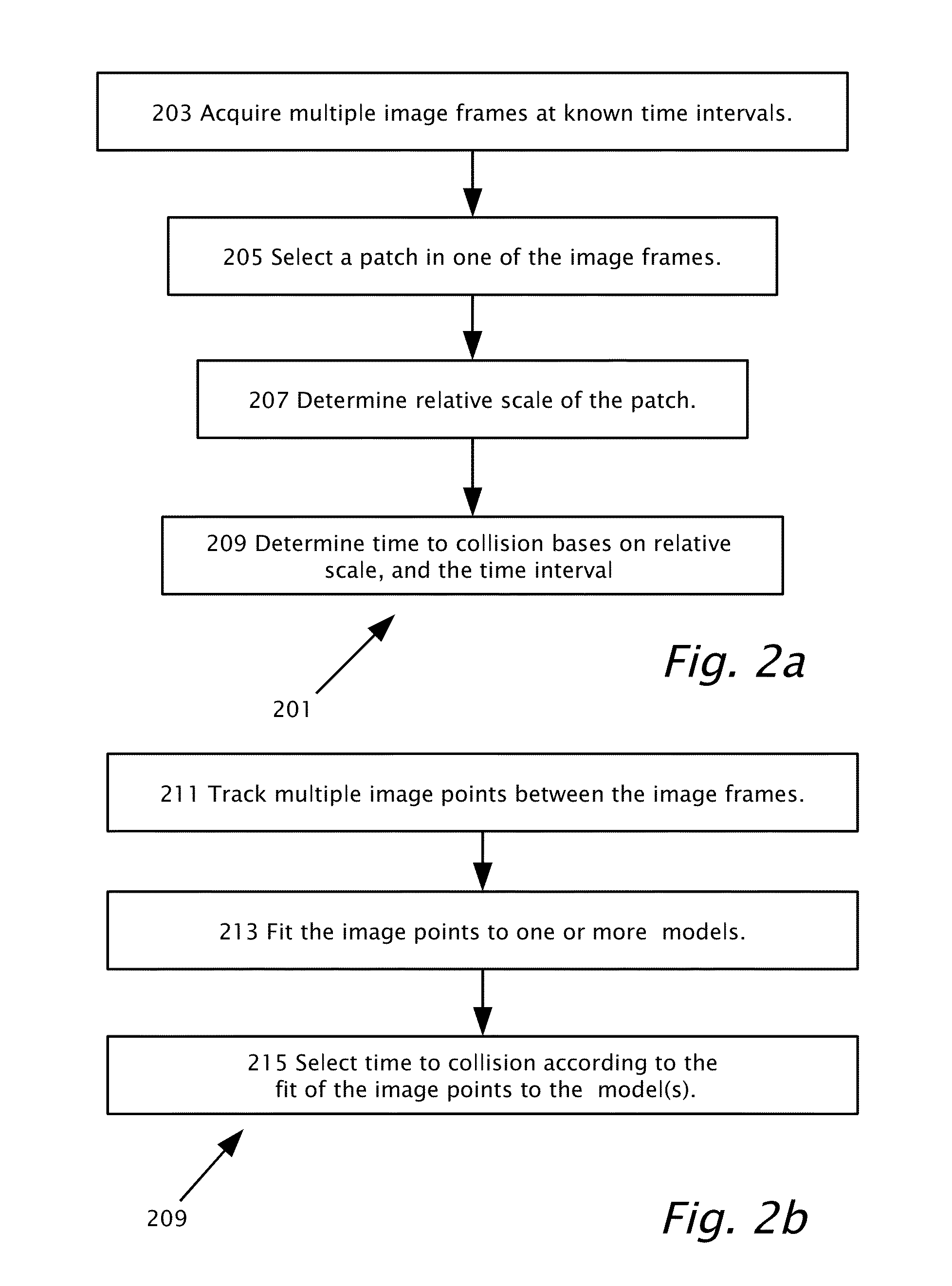

Pedestrian collision warning system

Active | US20120314071A1 | Avoid collision | Eliminate and reduce false collision warning | Image enhancement | Image analysis | Simulation | Optical flow

A method is provided for preventing a collision between a motor vehicle and a pedestrian. The method uses a camera and a processor mountable in the motor vehicle. A candidate image is detected. Based on a change of scale of the candidate image, it may be determined that the motor vehicle and the pedestrian are expected to collide, thereby producing a potential collision warning. Further information from the image frames may be used to validate the potential collision warning. The validation may include an analysis of the optical flow of the candidate image, a determination that the lane markings predict a straight road, a calculation of the lateral motion of the pedestrian, whether the pedestrian is crossing a lane mark or curb, and/or whether the vehicle is changing lanes.

Owner:MOBILEYE VISION TECH LTD
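
The abstract above ties the warning to a change of scale of the candidate image. The following is a minimal sketch of one common way to turn a scale change into a time-to-collision estimate, assuming a constant closing speed and a pinhole camera (so image width is inversely proportional to distance). The function names, the 2-second threshold, and the use of bounding-box width are illustrative assumptions, not details taken from the patent.

```python
def time_to_collision(width_prev, width_curr, dt):
    """Estimate time to collision (seconds) from the scale change between two frames.

    Assumes constant closing speed and image width proportional to 1/distance,
    which gives TTC = dt / (scale - 1) measured from the current frame.
    """
    if width_prev <= 0 or width_curr <= 0 or dt <= 0:
        raise ValueError("widths and dt must be positive")
    scale = width_curr / width_prev
    if scale <= 1.0:
        # The candidate is not growing in the image, so no approach is detected.
        return float("inf")
    return dt / (scale - 1.0)


def potential_collision_warning(width_prev, width_curr, dt, ttc_threshold=2.0):
    """Return True when the estimated TTC falls below a hypothetical threshold."""
    return time_to_collision(width_prev, width_curr, dt) < ttc_threshold
```

A warning produced this way would still need the validation steps the abstract lists (optical flow, lane markings, lateral motion) before being shown to the driver.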

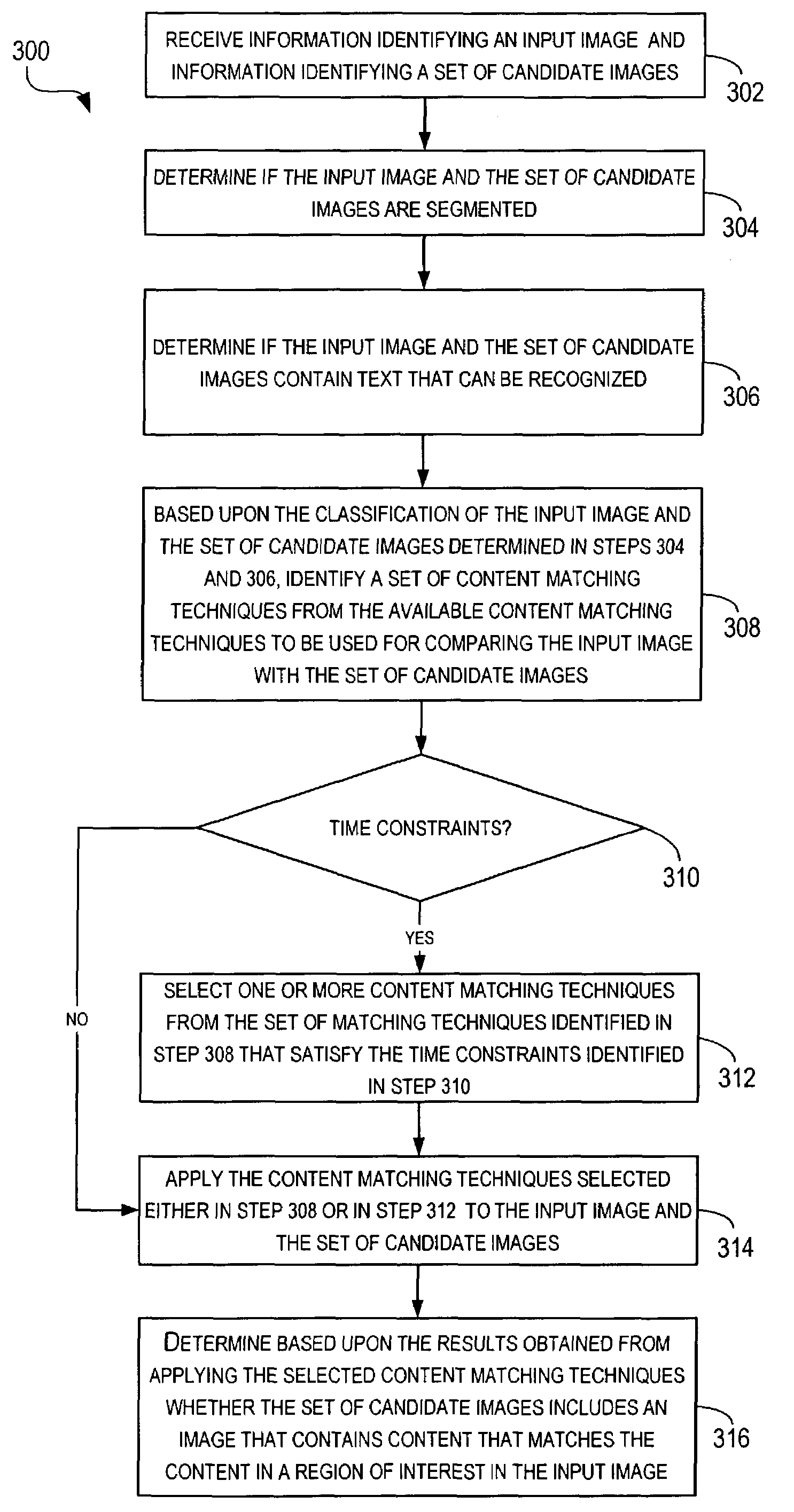

Automated techniques for comparing contents of images

Active | US7236632B2 | High confidence score | Image analysis | Digital data information retrieval | Region of interest | Candidate image

Automated techniques for comparing contents of images. For a given image (referred to as an “input image”), a set of images (referred to as “a set of candidate images”) is processed to determine whether the set of candidate images comprises an image whose contents or portions thereof match contents included in a region of interest in the input image.

Owner:RICOH KK

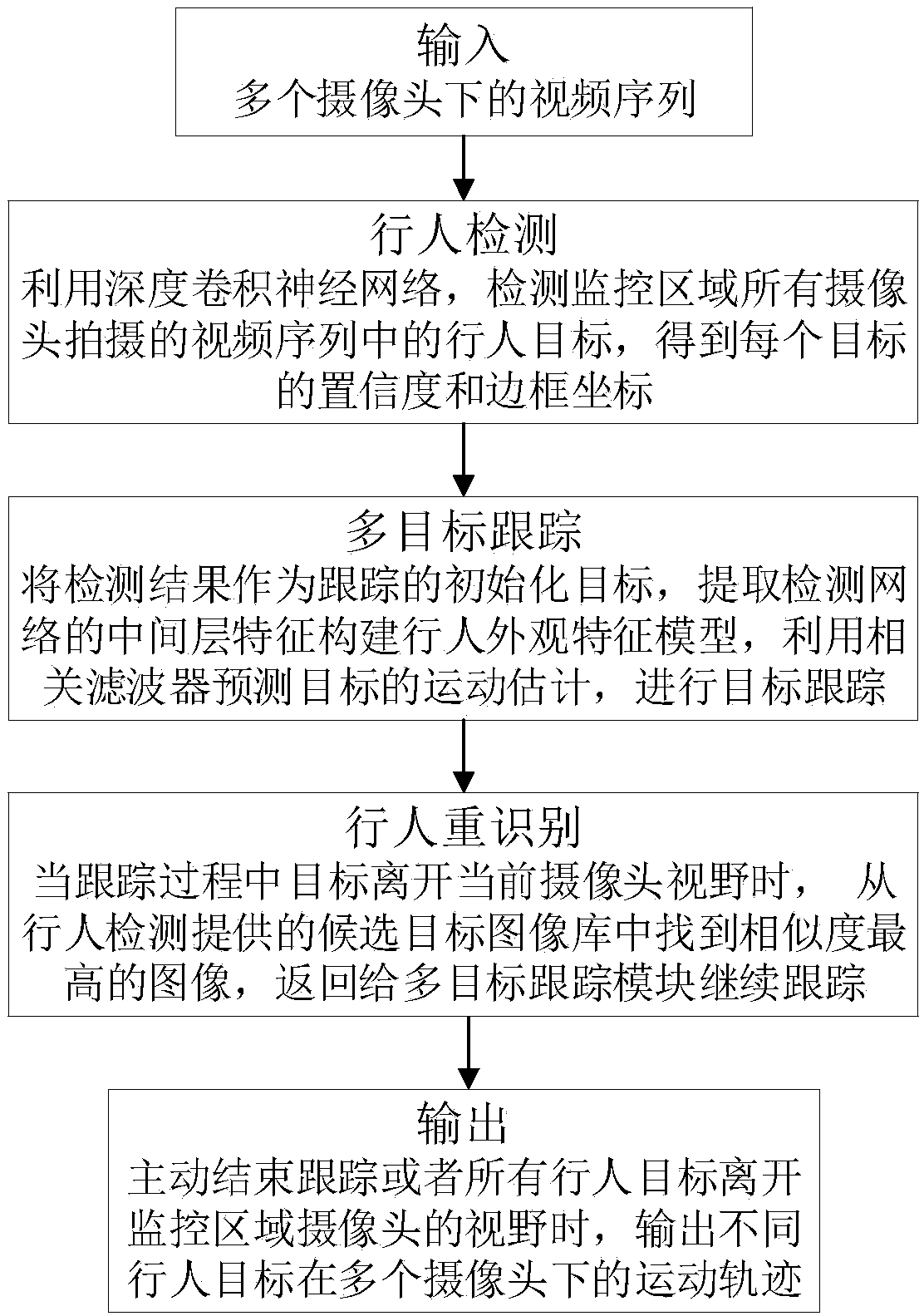

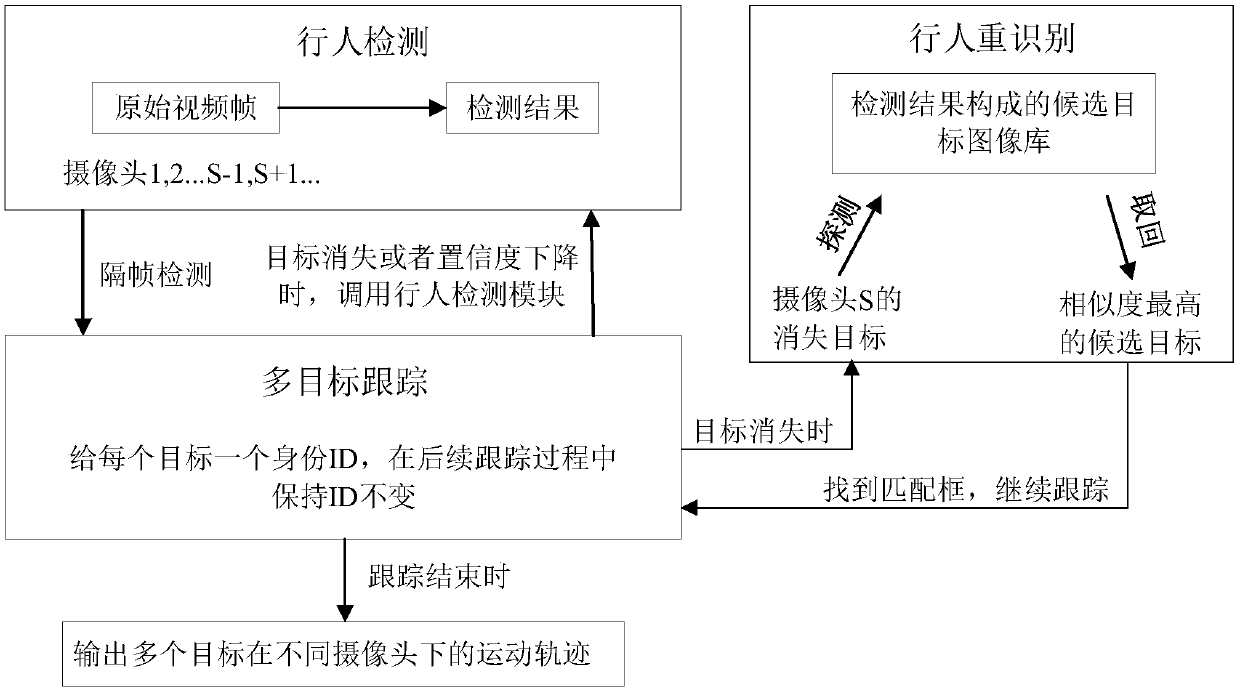

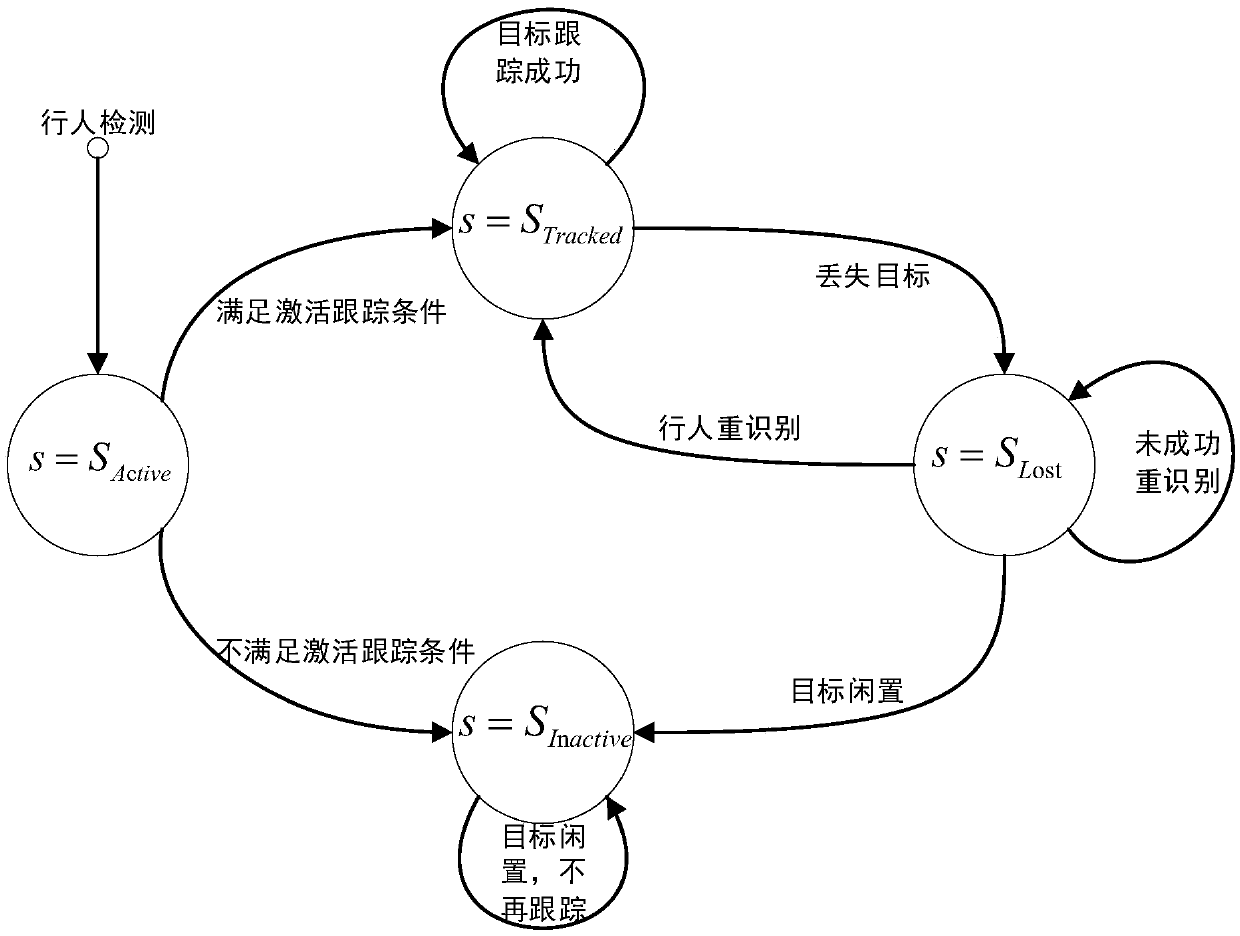

Cross-camera pedestrian detection tracking method based on deep learning

Active | CN108875588A | Overcome occlusion | Overcome lighting changes | Character and pattern recognition | Neural architectures | Multi target tracking | Recognition algorithm

The invention discloses a cross-camera pedestrian detection and tracking method based on deep learning, which comprises the steps of: training a pedestrian detection network and carrying out pedestrian detection on an input surveillance video sequence; initializing tracking targets from the target boxes obtained by pedestrian detection, extracting shallow-layer and deep-layer features of the region corresponding to each candidate box in the pedestrian detection network, and carrying out tracking; when a target disappears, carrying out pedestrian re-identification, which comprises, after target disappearance information is obtained, finding the images that best match the disappeared target among the candidate images obtained by the pedestrian detection network and continuing to track; and, when tracking ends, outputting the motion tracks of the pedestrian targets across multiple cameras. The features extracted by the method are robust to illumination variations and viewing-angle variations; moreover, both the tracking and the pedestrian re-identification parts take their features from the pedestrian detection network, so pedestrian detection, multi-target tracking and pedestrian re-identification are tightly integrated, and accurate cross-camera pedestrian detection and tracking in large-scale scenes is achieved.

Owner:WUHAN UNIV

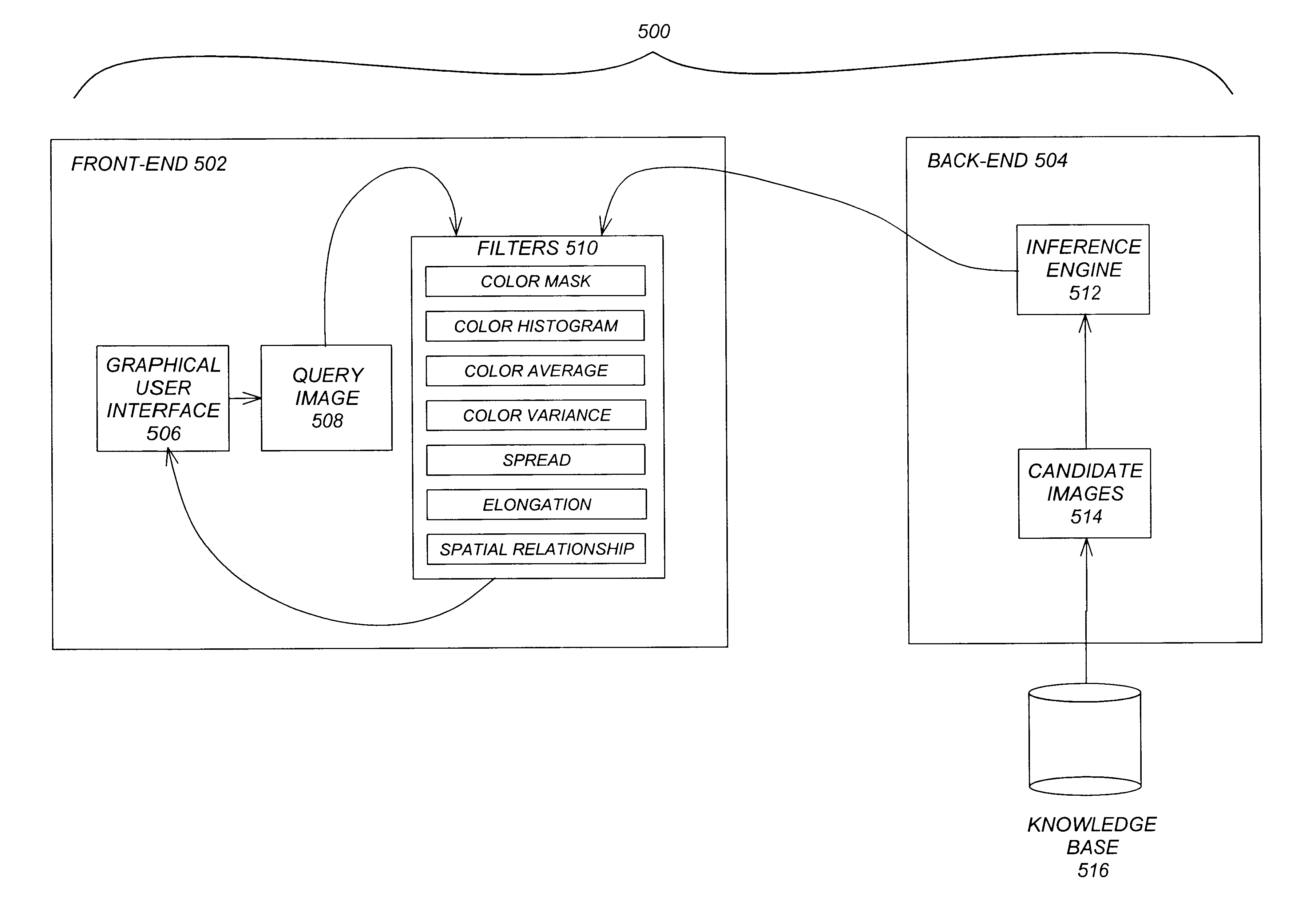

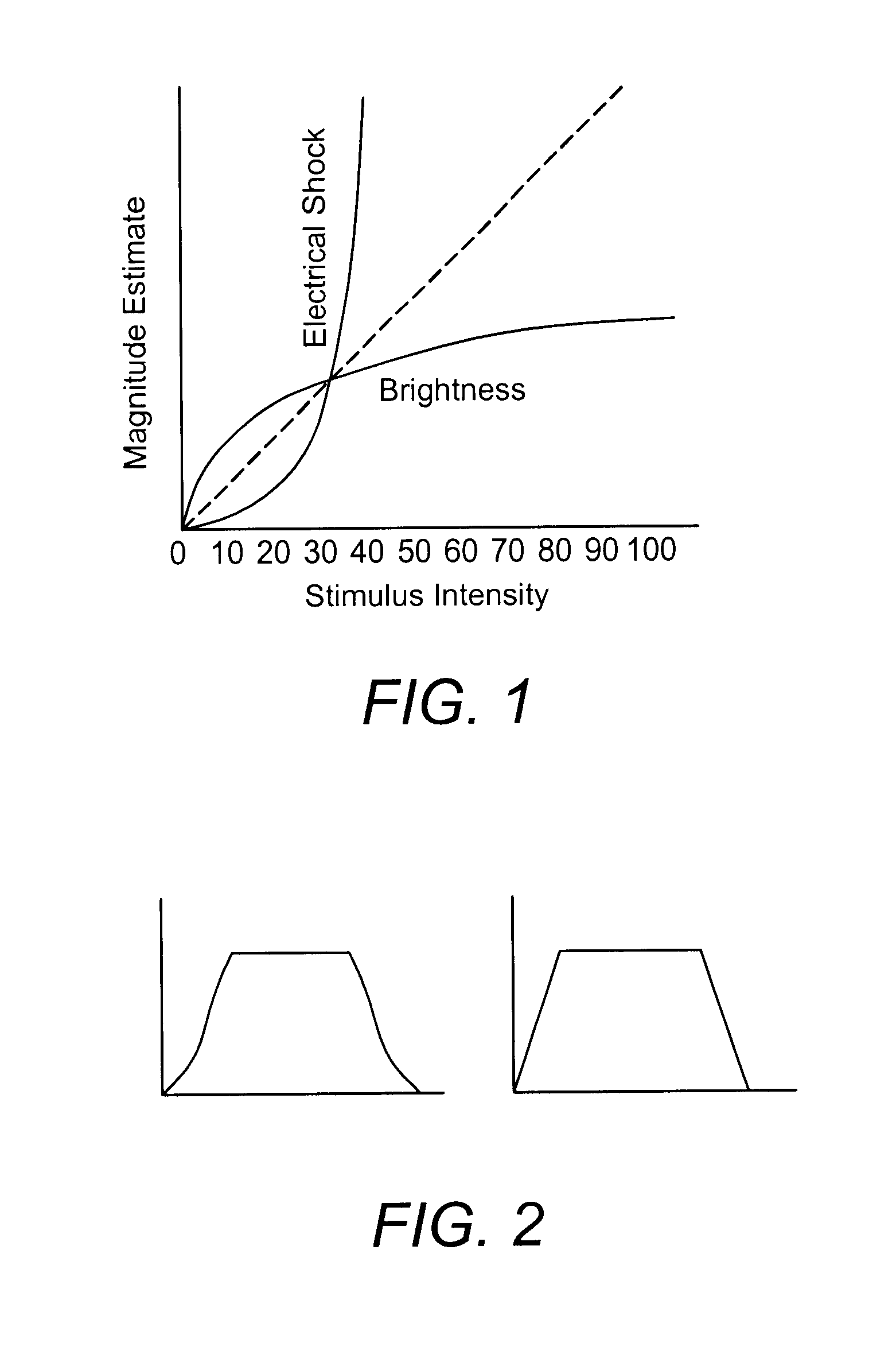

Perception-based image retrieval

Inactive | US6865302B2 | Data processing applications | Digital data information retrieval | Average filter | Pattern perception

A content-based image retrieval (CBIR) system has a front-end that includes a pipeline of one or more dynamically-constructed filters for measuring perceptual similarities between a query image and one or more candidate images retrieved from a back-end comprised of a knowledge base accessed by an inference engine. The images include at least one color set having a set of properties including a number of pixels each having at least one color, a culture color associated with the color set, a mean and variance of the color set, a moment invariant, and a centroid. The filters analyze and compare the set of properties of the query image to the set of properties of the candidate images. Various filters are used, including: a Color Mask filter that identifies identical culture colors in the images, a Color Histogram filter that identifies a distribution of colors in the images, a Color Average filter that performs a similarity comparison on the average of the color sets of the images, a Color Variance filter that performs a similarity comparison on the variances of the color sets of the images, a Spread filter that identifies a spatial concentration of a color in the images, an Elongation filter that identifies a shape of a color in the images, and a Spatial Relationship filter that identifies a spatial relationship between the color sets in the images.

Owner:RGT UNIV OF CALIFORNIA
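
One of the filters named in the abstract above compares the distribution of colors in the query and candidate images. As a rough illustration only, the sketch below uses histogram intersection over coarsely quantized RGB values. The quantization into 4 bins per channel, the intersection measure, the 0.5 cutoff, and all function names are assumptions for illustration, not the patent's definition of its Color Histogram filter.

```python
from collections import Counter


def color_histogram(pixels, bins_per_channel=4):
    """Normalized histogram of (r, g, b) pixels quantized into coarse bins."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("at least one pixel is required")
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = sum(counts.values())
    return {bin_id: count / total for bin_id, count in counts.items()}


def histogram_intersection(hist_a, hist_b):
    """Similarity in [0, 1]: sum of the per-bin minimum of two normalized histograms."""
    return sum(min(p, hist_b.get(bin_id, 0.0)) for bin_id, p in hist_a.items())


def histogram_filter(query_pixels, candidates, min_similarity=0.5):
    """Keep the candidate images whose color distribution resembles the query."""
    query_hist = color_histogram(query_pixels)
    return [
        name
        for name, pixels in candidates.items()
        if histogram_intersection(query_hist, color_histogram(pixels)) >= min_similarity
    ]
```

In a pipeline like the one described, a cheap filter of this kind would typically run early so that later, more expensive filters see fewer candidates.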

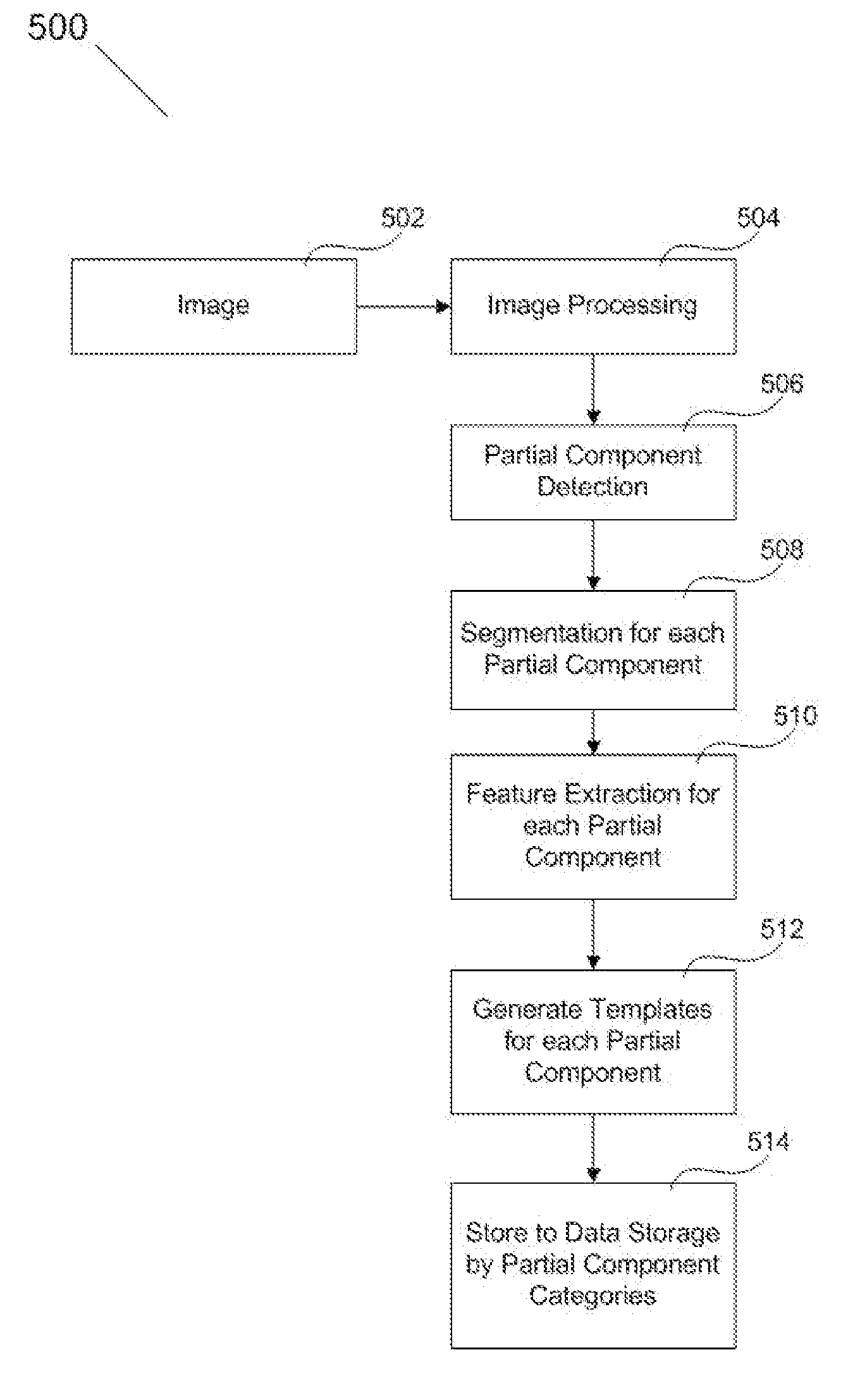

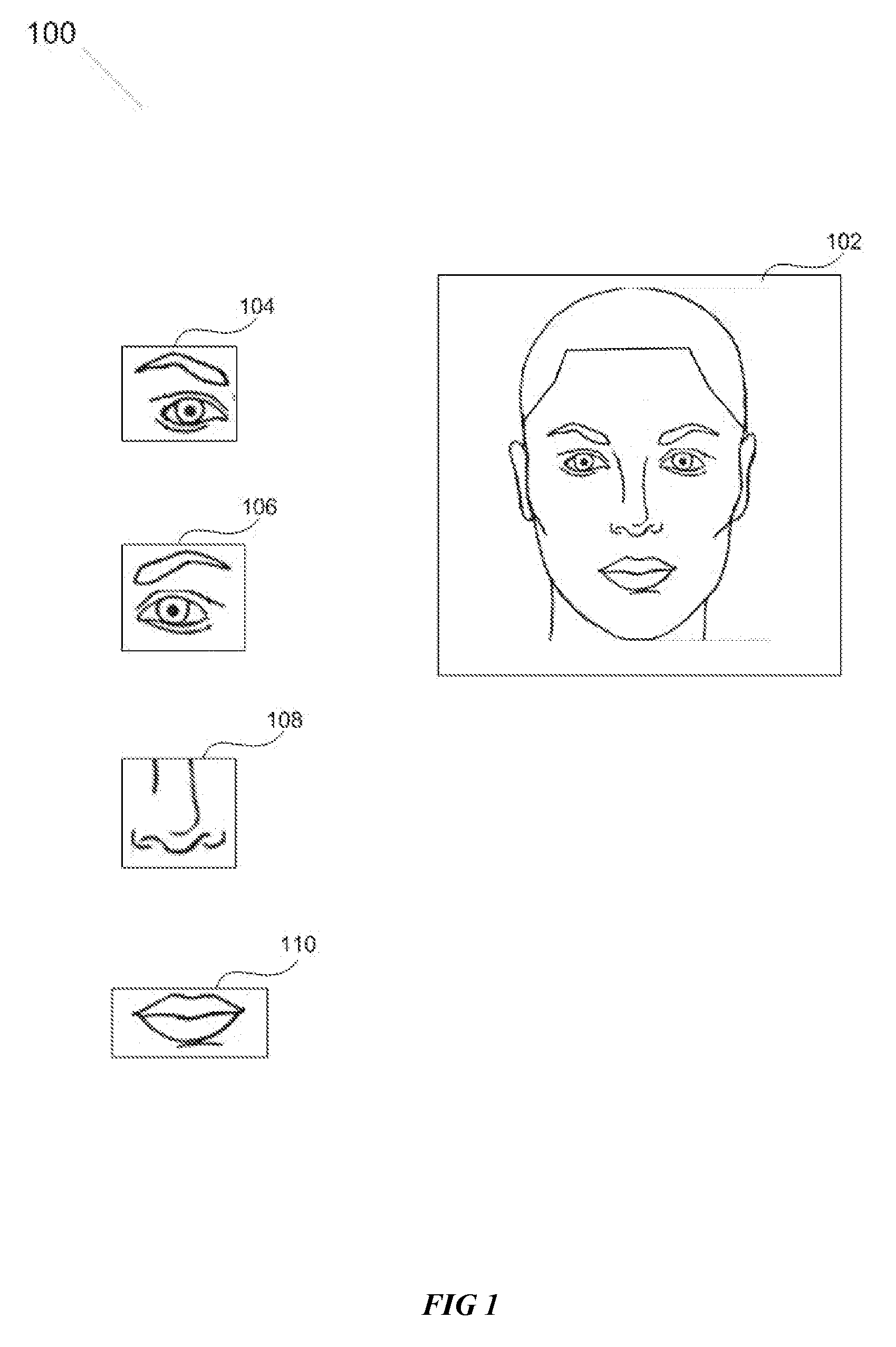

Apparatus and method for partial component facial recognition

A method and system for identifying a human being or verifying two human beings by partial component(s) of their face which may include one or multiple of left eye(s), right eye(s), nose(s), mouth(s), left ear(s), or right ear(s). A gallery database for face recognition is constructed from a plurality of human face images by detecting and segmenting a plurality of partial face components from each of the human face images, creating a template for each of the plurality of partial face components, and storing the templates in the gallery database. Templates from a plurality of partial face components from a same human face image are linked with one ID in the gallery database. A probe human face image is identified from the gallery database by detecting and segmenting a plurality of partial face components from the probe human face image; creating a probe template for each of the partial face components from the probe human face image, comparing each of the probe templates against a category of templates in the gallery database to generate similarity scores between the probe templates and templates in the gallery database; generating a plurality of sub-lists of candidate images having partial face component templates with the highest similarity scores over a first preset threshold; generating for each candidate image from each sub-list a combined similarity score; and generating a final list of candidates from said candidates of combined similarity scores over a second preset threshold.

Owner:INTELITRAC
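
The matching procedure in the abstract above uses two thresholds: one to build per-component sub-lists and one on a combined score. The sketch below shows that two-stage shape under stated assumptions: similarity scores are already computed, and the combined score is a plain average over components. The averaging rule and all names are hypothetical, since the abstract does not say how scores are combined.

```python
def final_candidates(scores, first_threshold, second_threshold):
    """Two-stage shortlist over per-component similarity scores.

    scores maps a face component name (e.g. "left_eye") to a dict of
    {gallery_id: similarity}. Returns (gallery_id, combined_score) pairs,
    best first.
    """
    # Stage 1: per-component sub-lists of gallery IDs above the first threshold.
    sub_lists = {
        component: {gid for gid, s in by_id.items() if s > first_threshold}
        for component, by_id in scores.items()
    }
    shortlisted = set().union(*sub_lists.values())

    # Stage 2: combine scores across components (assumed here: simple mean).
    combined = {}
    for gid in shortlisted:
        values = [by_id[gid] for by_id in scores.values() if gid in by_id]
        combined[gid] = sum(values) / len(values)

    ranked = sorted(combined.items(), key=lambda item: item[1], reverse=True)
    return [(gid, s) for gid, s in ranked if s > second_threshold]
```

For example, an ID that scores highly on only one component enters the shortlist at stage 1 but can still be dropped at stage 2 if its other components pull the combined score down.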

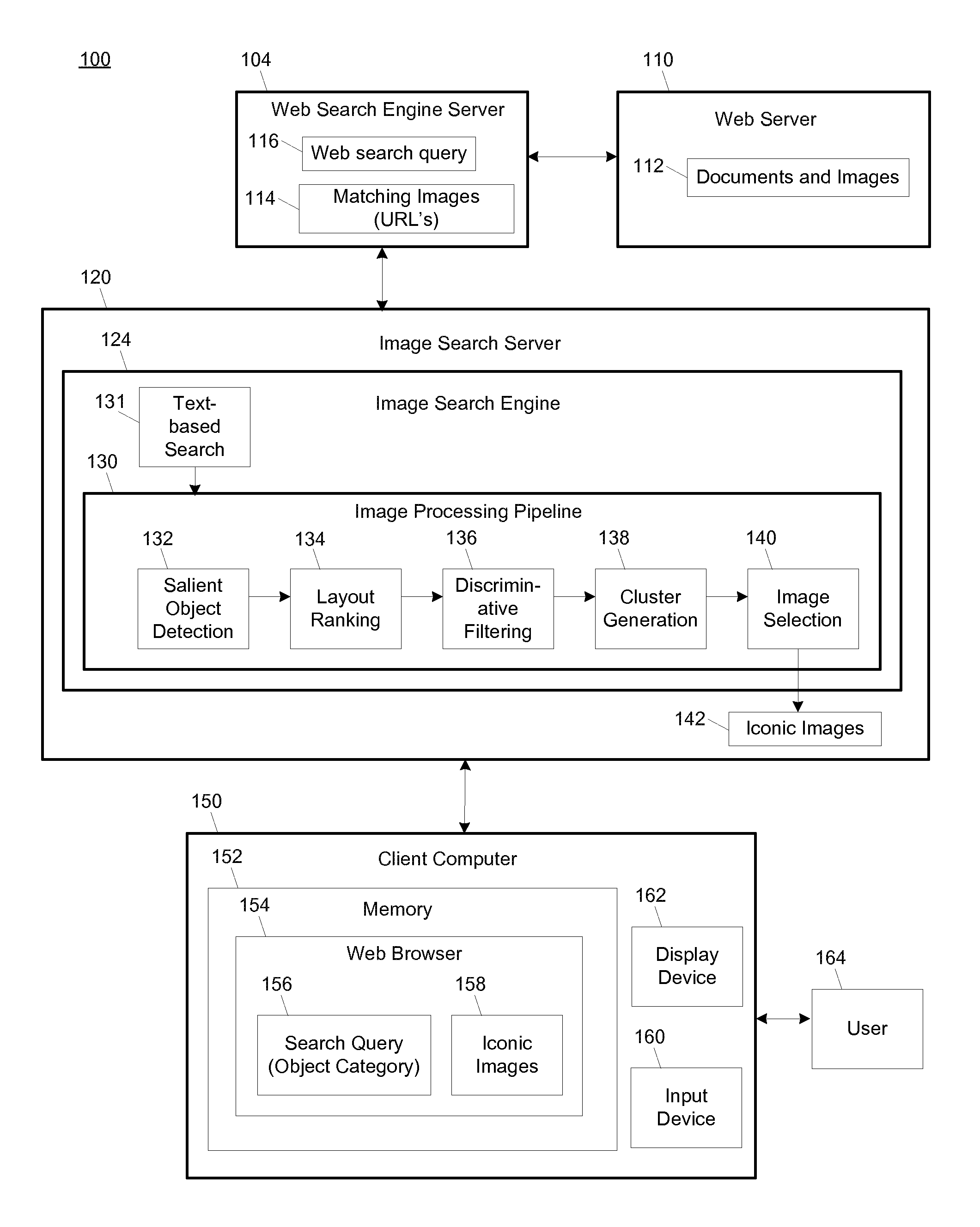

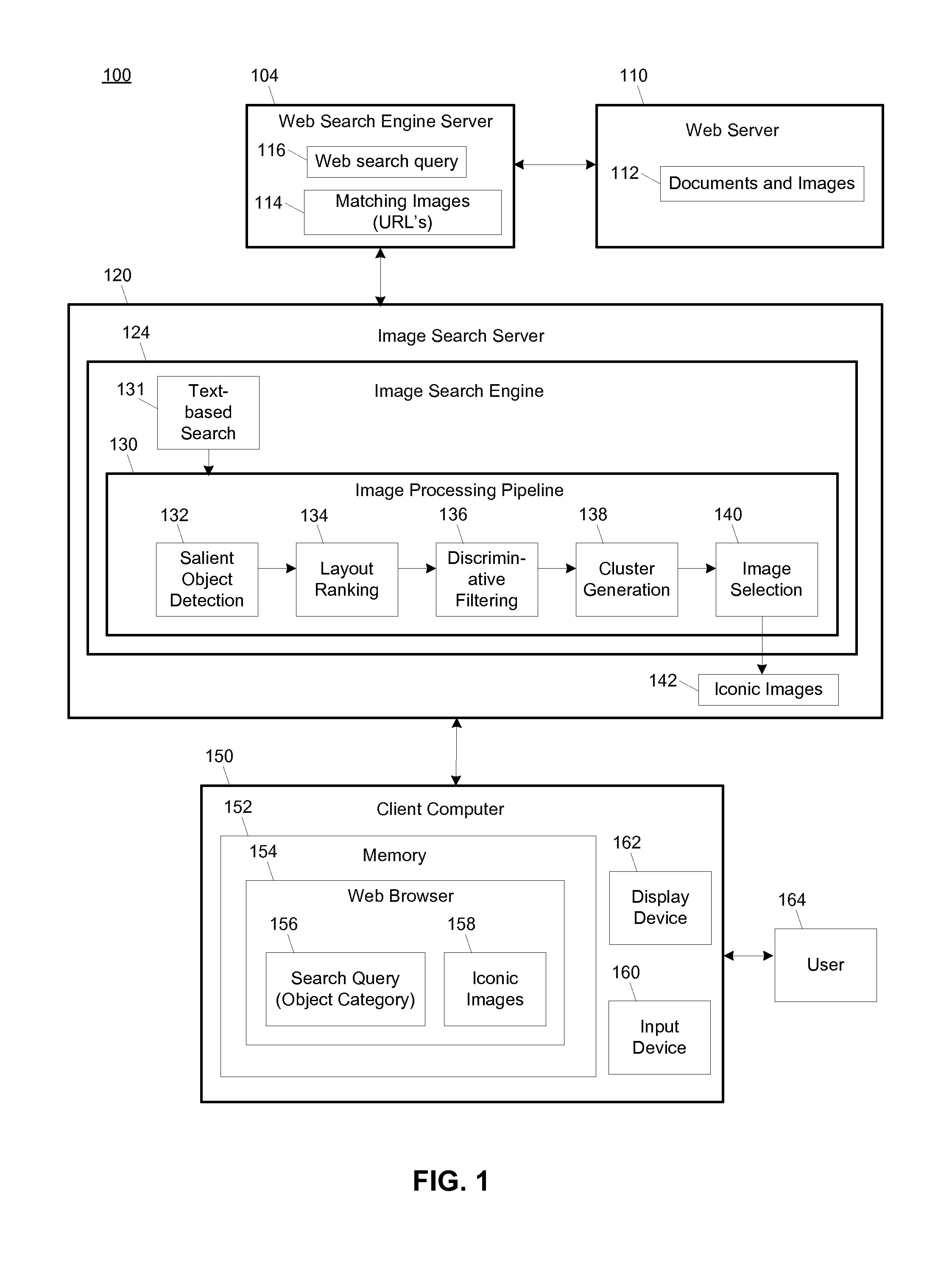

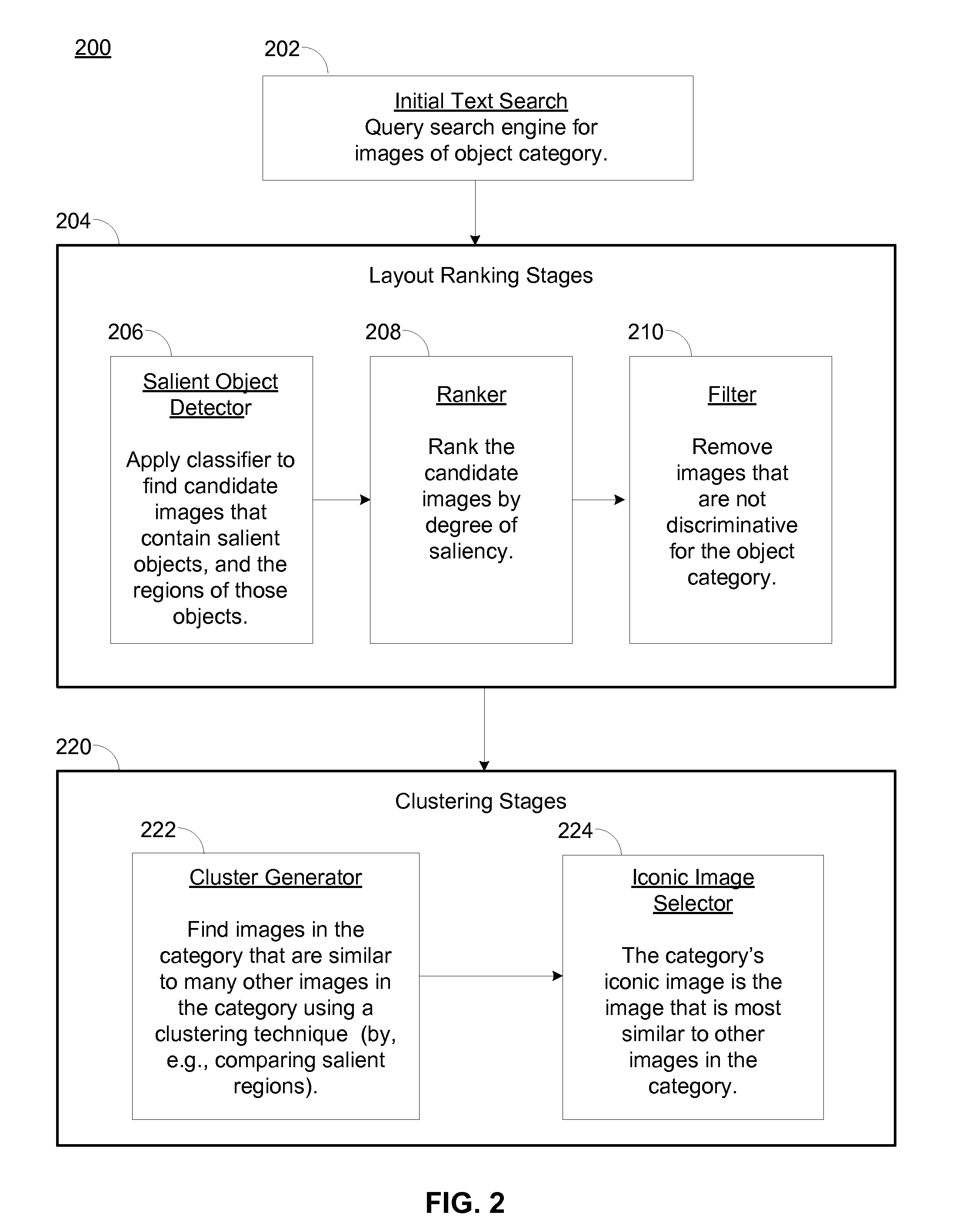

Finding iconic images

Active | US20100303342A1 | High energy | Reduce decrease | Character and pattern recognition | Special data processing applications | Hue | Discriminative model

Iconic images for a given object or object category may be identified in a set of candidate images by using a learned probabilistic composition model to divide each candidate image into a most probable rectangular object region and a background region, ranking the candidate images according to the maximal composition score of each image, removing non-discriminative images from the candidate images, clustering highest-ranked candidate images to form clusters, wherein each cluster includes images having similar object regions according to a feature match score, selecting a representative image from each cluster as an iconic image of the object category, and causing display of the iconic image. The composition model may be a Naïve Bayes model that computes composition scores based on appearance cues such as hue, saturation, focus, and texture. Iconic images depict an object or category as a relatively large object centered on a clean or uncluttered contrasting background.

Owner:VERIZON PATENT & LICENSING INC

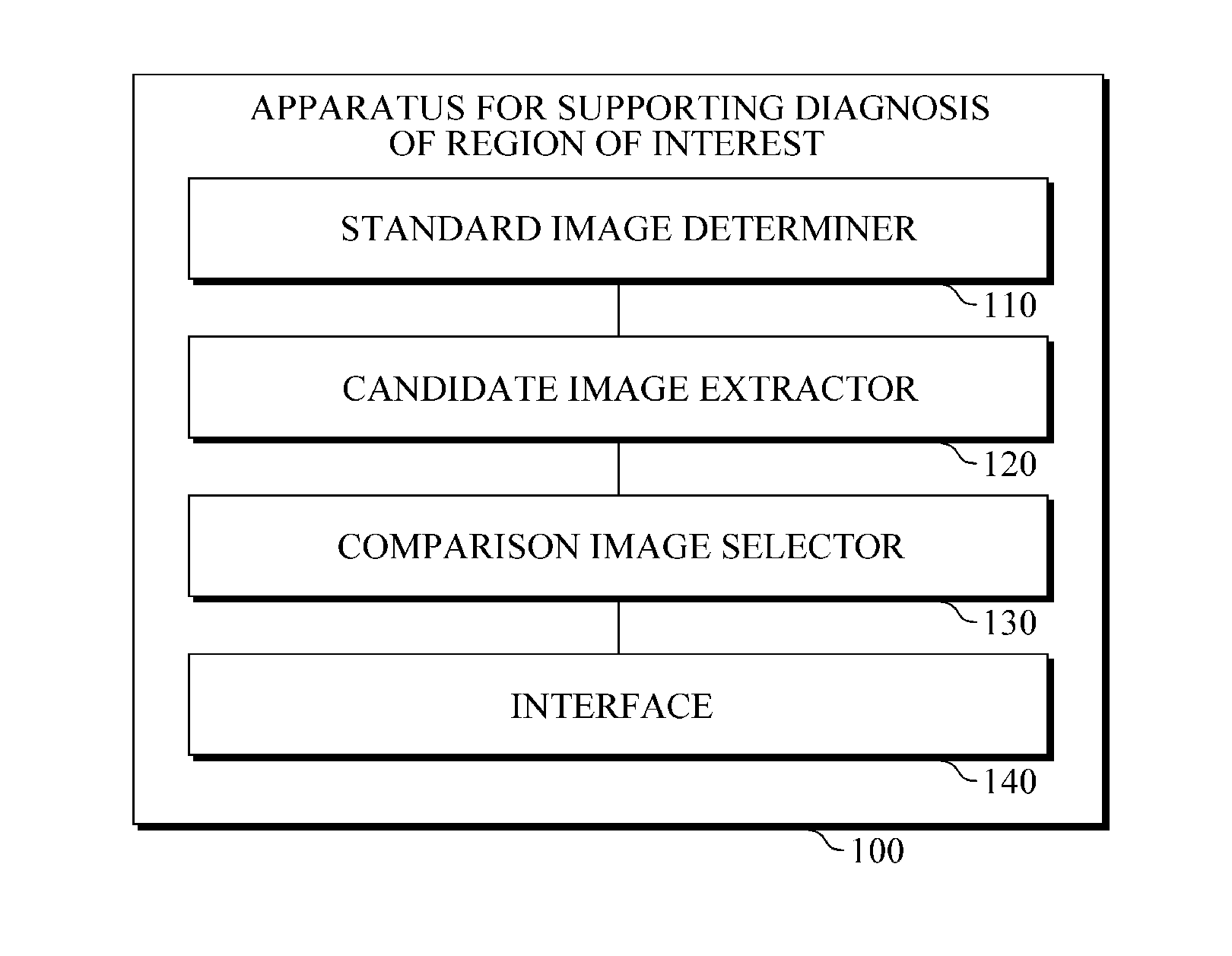

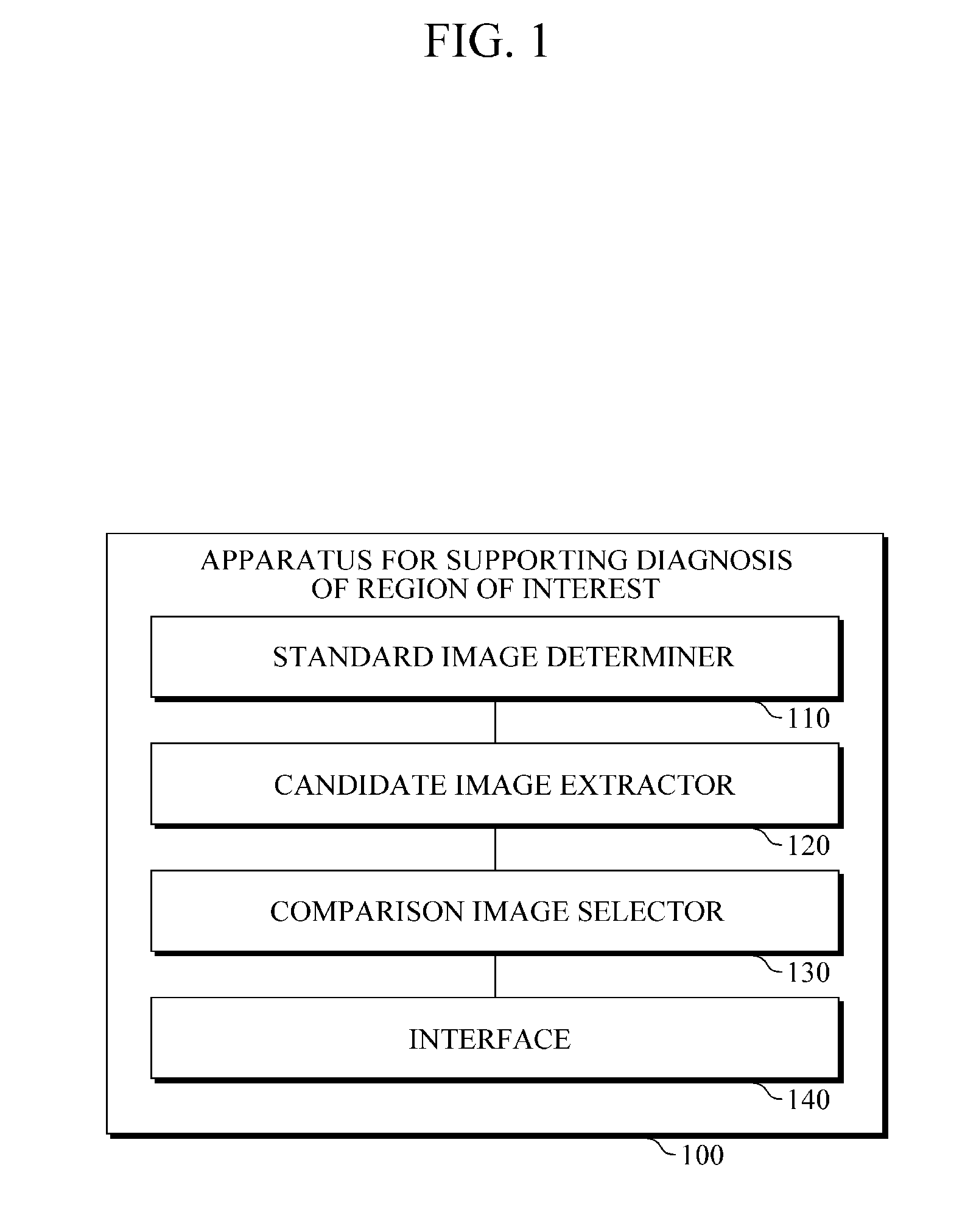

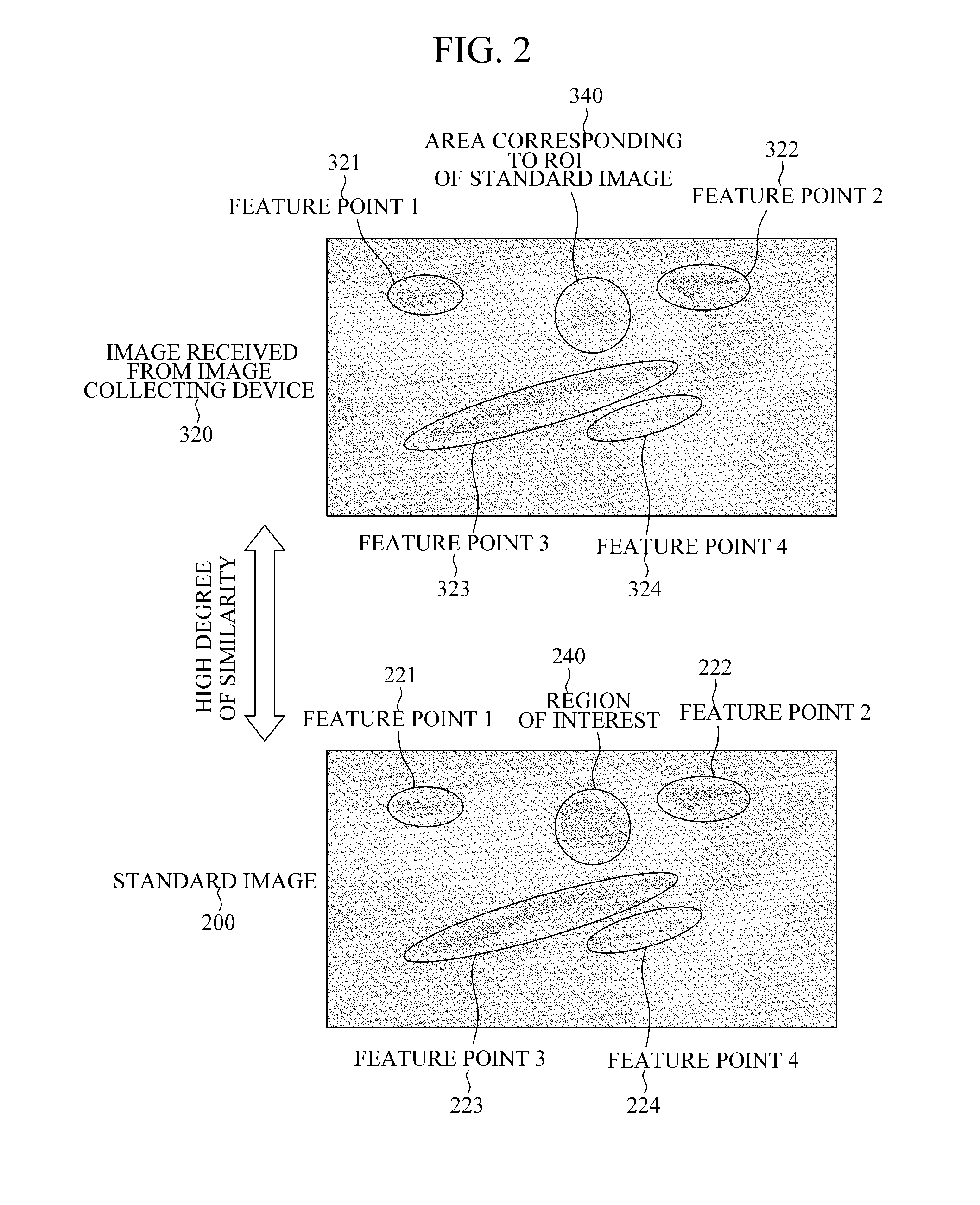

Method and apparatus for supporting diagnosis of region of interest by providing comparison image

Technology related to an apparatus and method for supporting a diagnosis of a lesion is provided. The apparatus includes a standard image determiner to determine, as a standard image, an image where a region of interest (ROI) is detected among a plurality of images received from an image collecting device, a candidate image extractor to extract one or more candidate images with respect to the determined standard image, a comparison image selector to select one or more comparison images for supporting a diagnosis of the ROI from the one or more candidate images, and an interface to provide a user with an output result of the standard image and the one or more selected comparison images.

Owner:SAMSUNG ELECTRONICS CO LTD

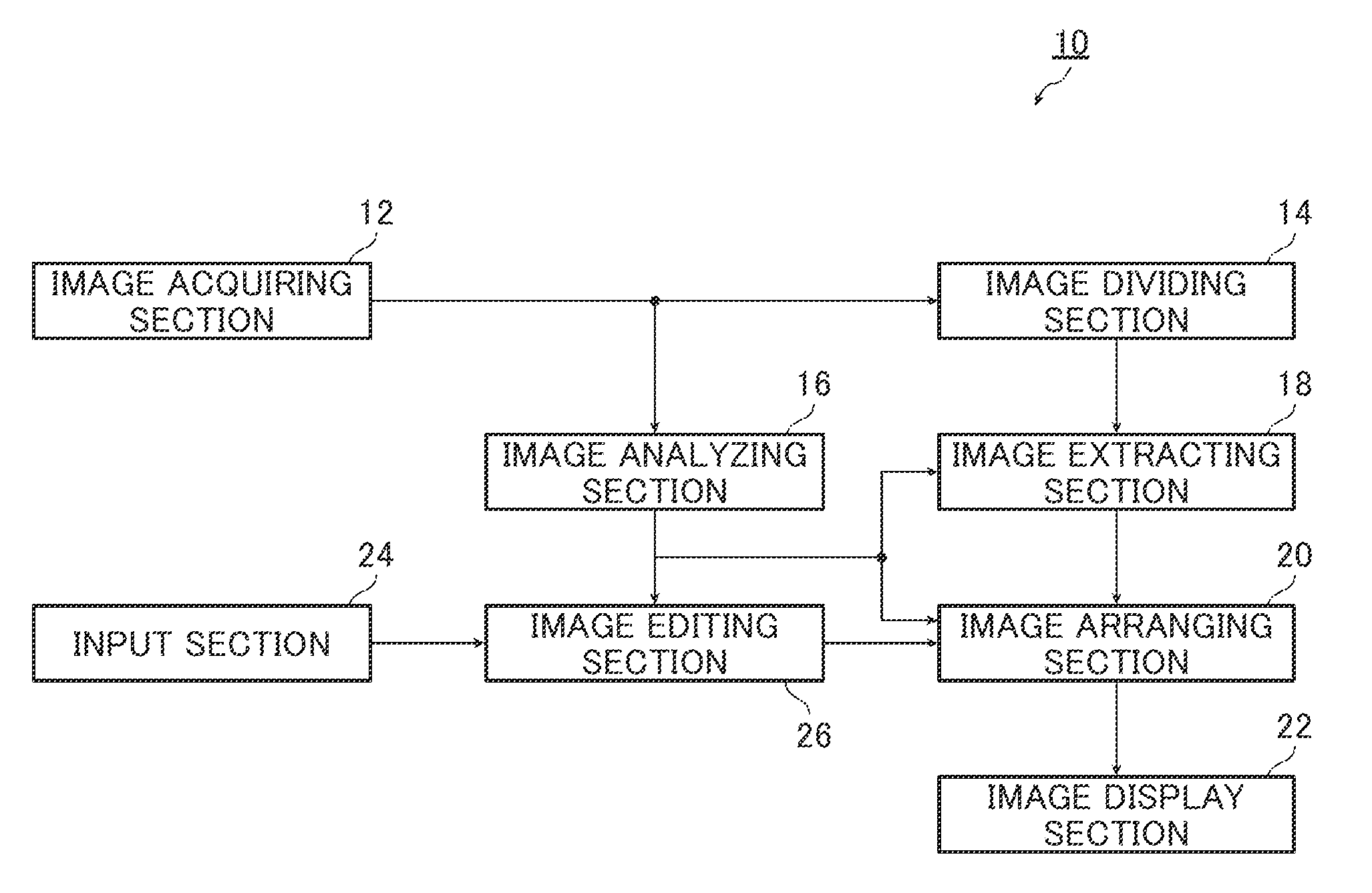

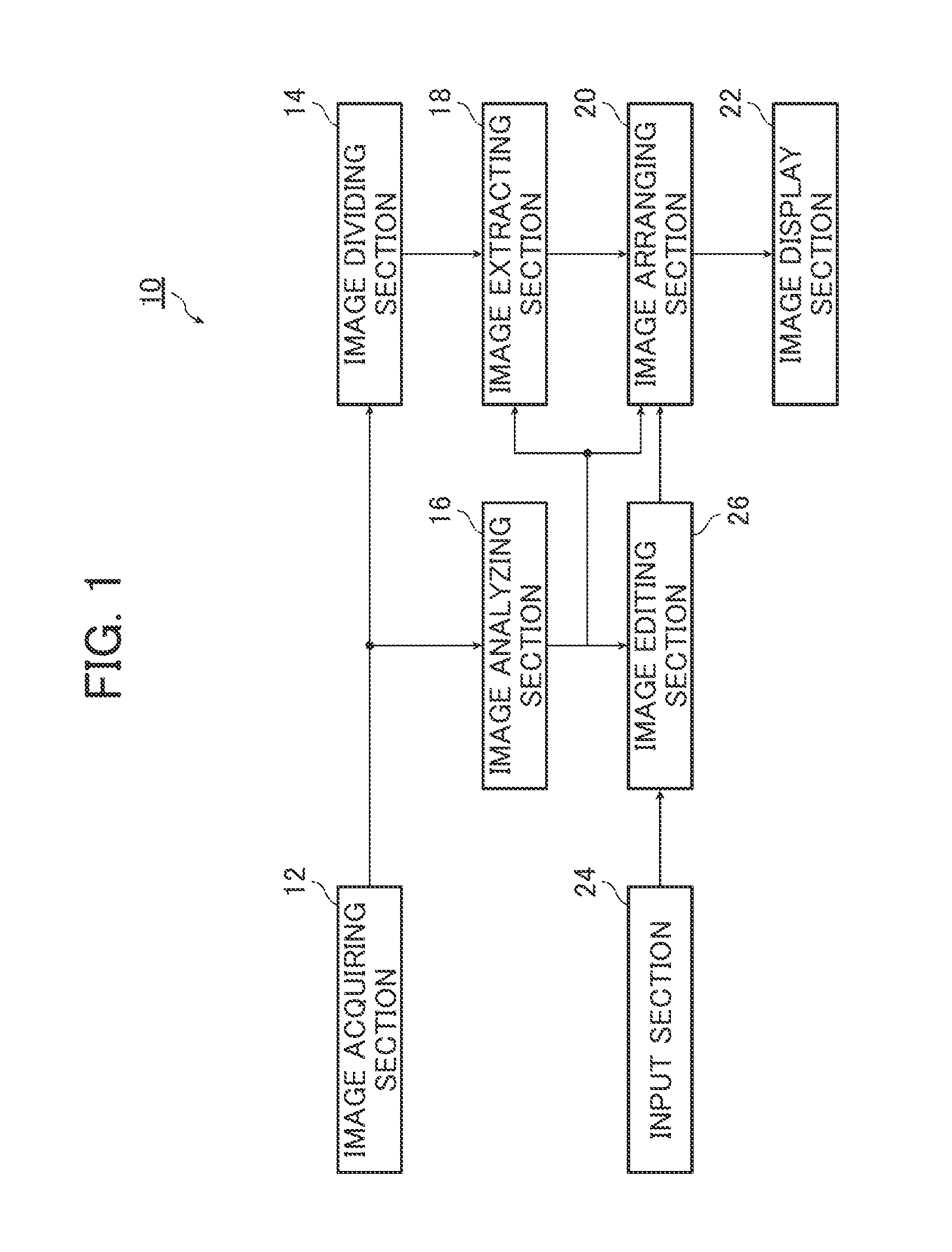

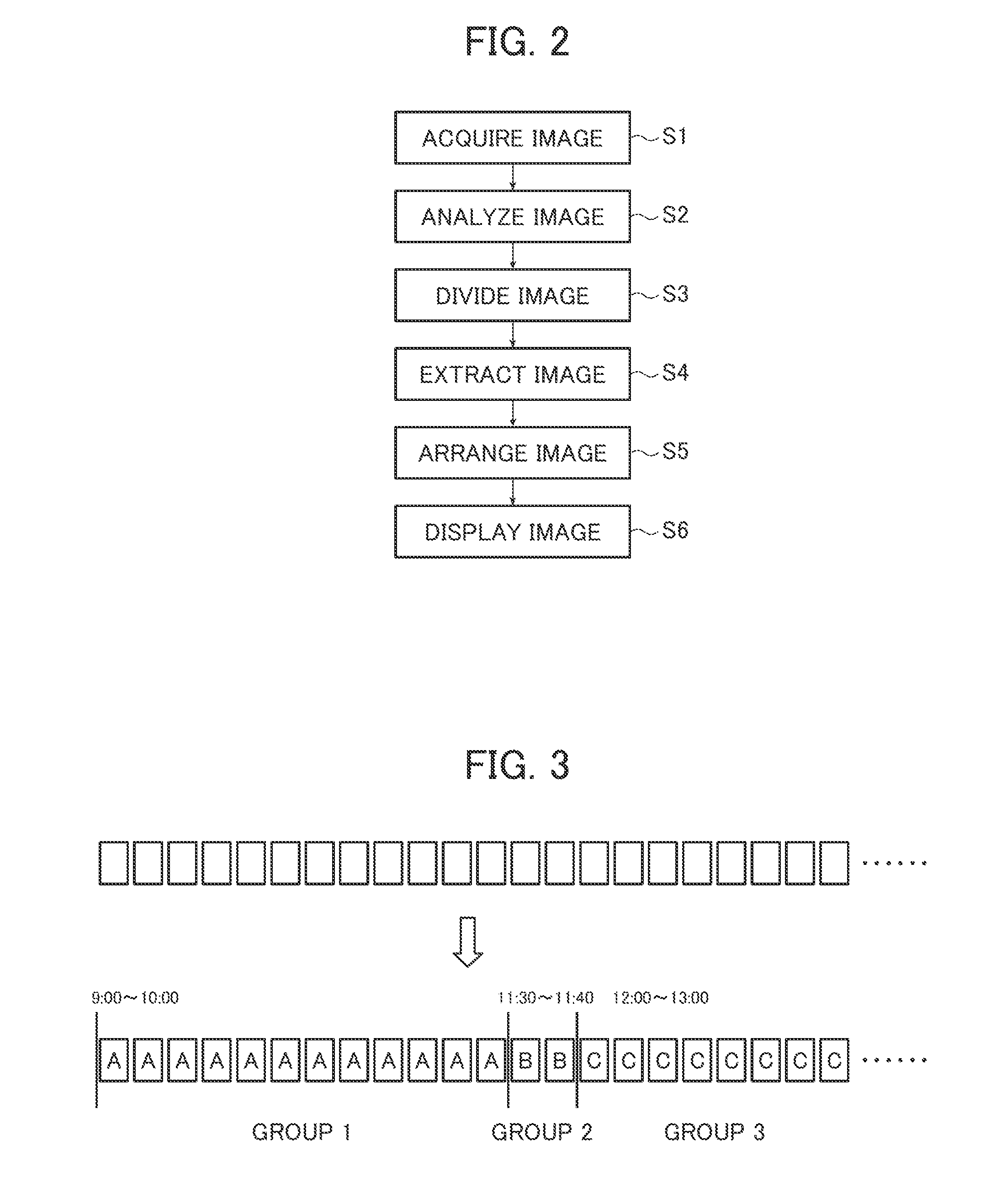

Image processing device, image processing method, and image processing program

Active | US20130004073A1 | Easy to implement | Character and pattern recognition | Pictorial communication | Imaging processing | Imaging analysis

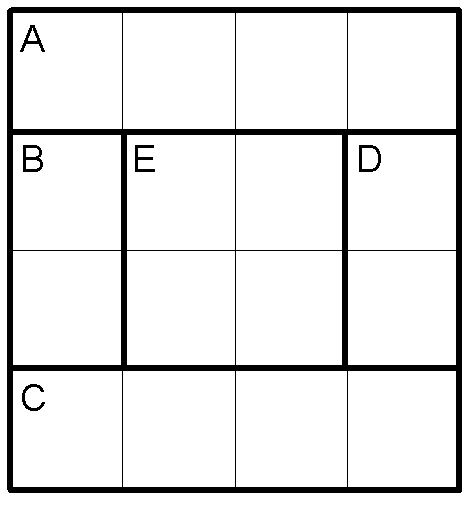

The image processing device divides images into groups, generates image analysis information of the images, extracts a predetermined number of images from each group based on the image analysis information and arranges the images extracted from each group on a corresponding page of a photo book. The image display section displays an image editing area for displaying images arranged on a page to be edited and a candidate image display area for displaying candidate images included in a group corresponding to the page to be edited and being usable for editing the image, and the image editing section uses the candidate images displayed in the candidate image display area to edit the image layout on the page to be edited based on the user's instruction.

Owner:FUJIFILM CORP

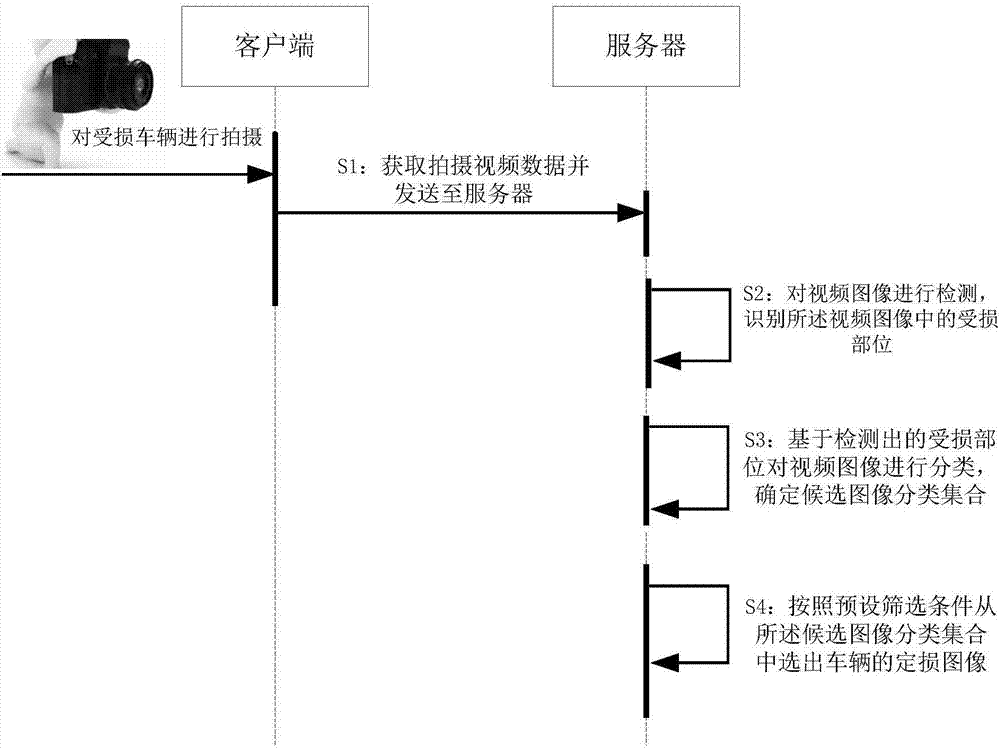

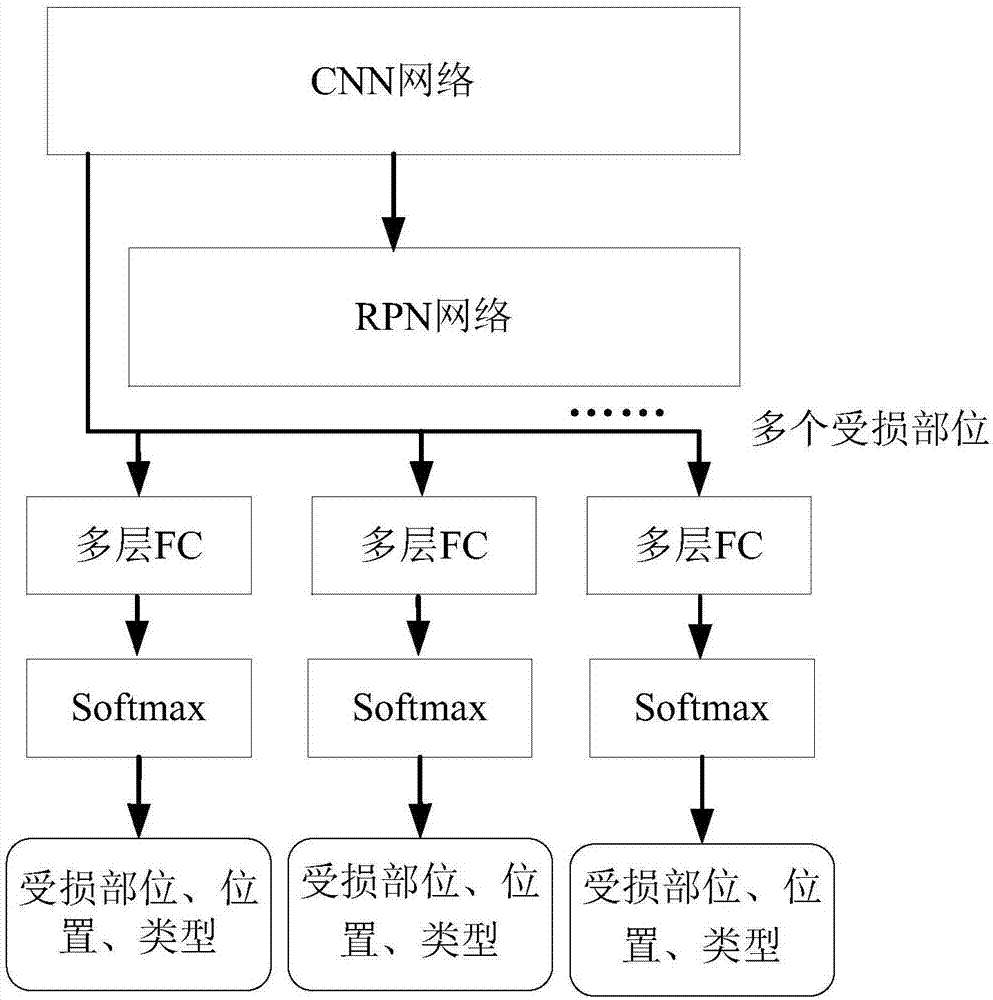

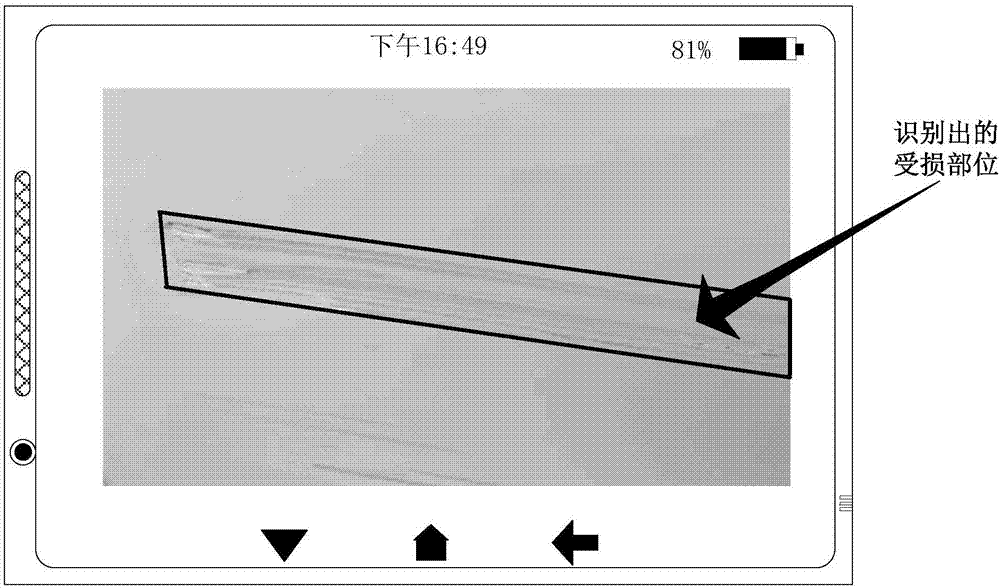

Vehicle loss assessment image obtaining method and apparatus, server and terminal device

Active | CN107194323A | Improve acquisition efficiency | Lower acquisition costs | Image enhancement | Image analysis | Computer graphics (images) | Terminal equipment

Embodiments of the invention disclose a vehicle loss assessment image obtaining method and apparatus, a server and a terminal device. A client obtains shooting video data and sends the shooting video data to the server; the server detects video images in the shooting video data and identifies damaged parts in the video images; the server classifies the video images based on the detected damaged parts and determines a candidate image classification set of the damaged parts; and vehicle loss assessment images are selected from the candidate image classification set according to preset screening conditions. With the method and the apparatus, high-quality loss assessment images that meet loss assessment processing demands can be generated automatically and quickly, and the efficiency of obtaining loss assessment images is improved.

Owner:ADVANCED NEW TECH CO LTD

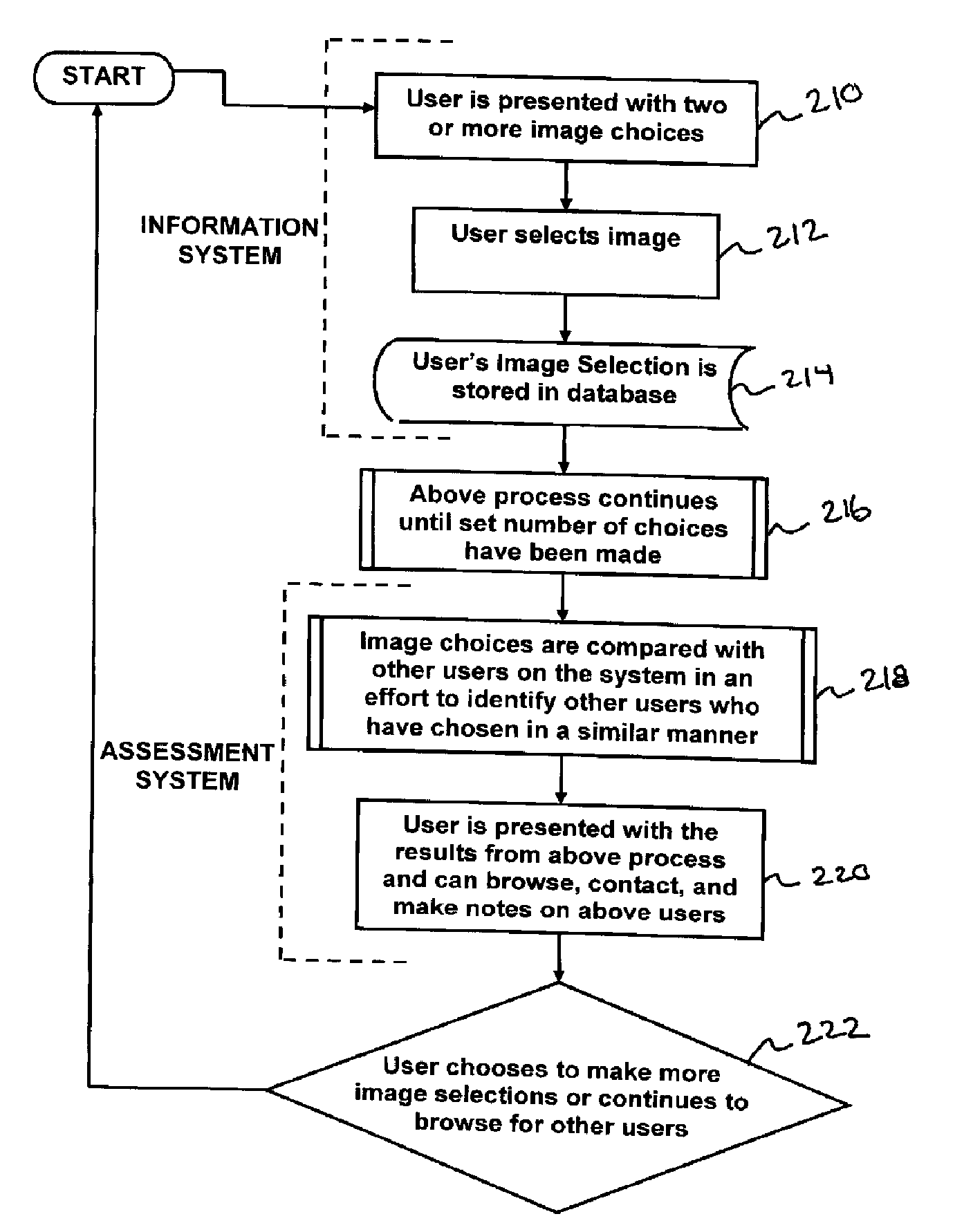

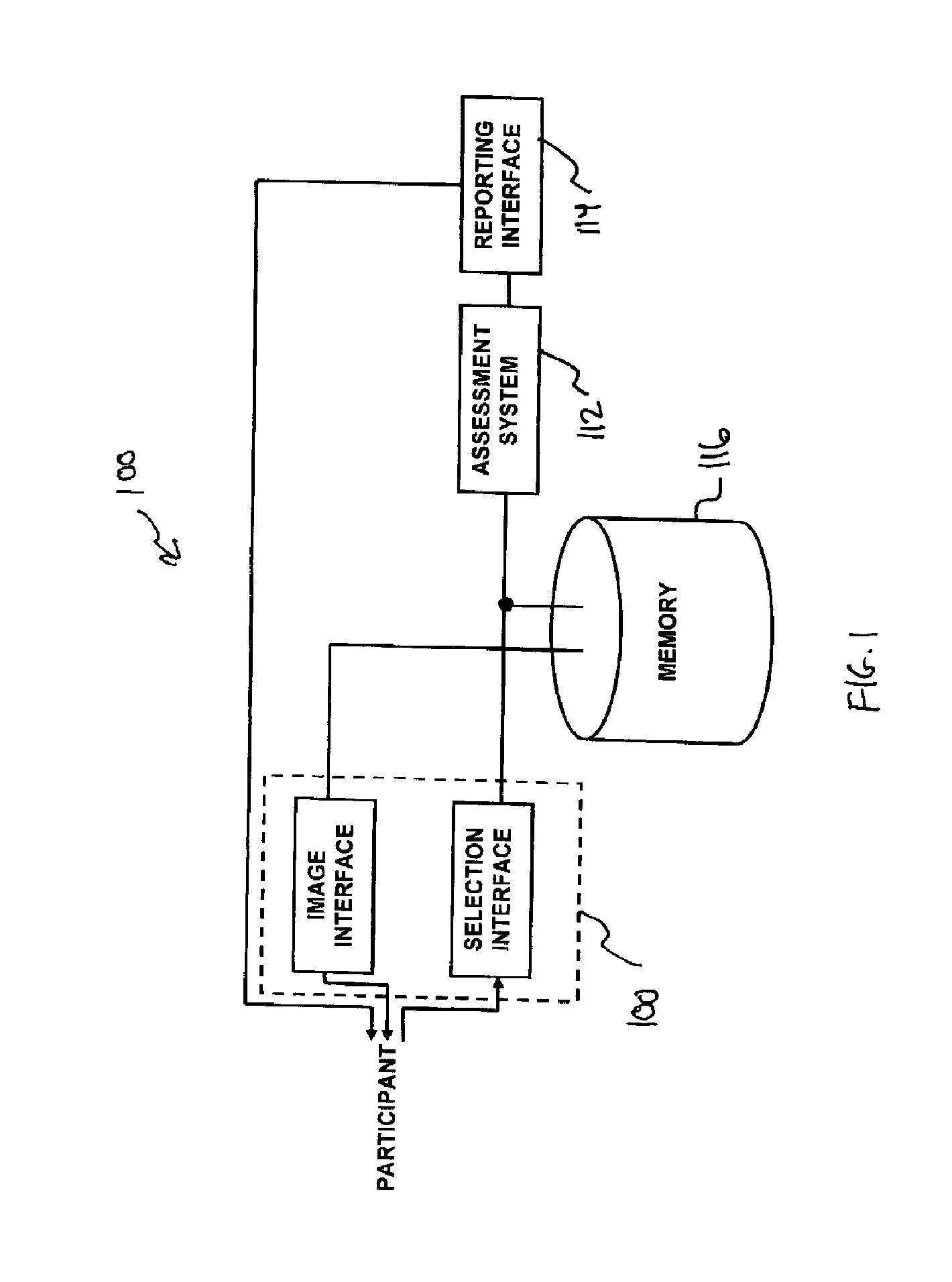

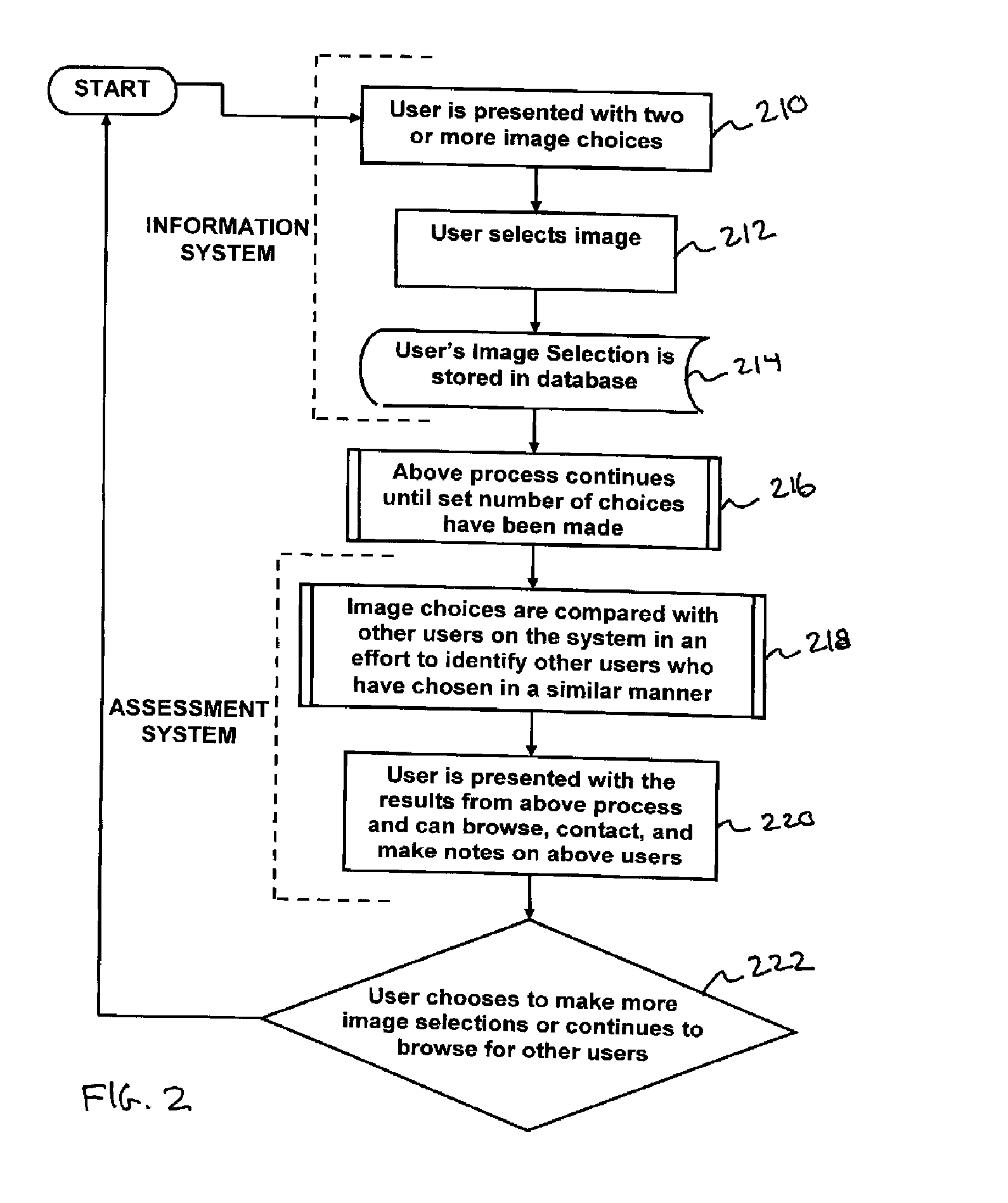

System and method for determining like-mindedness

Methods and apparatus for a participant response system according to various aspects of the present invention operate in conjunction with an information system and an assessment system. The information system may store selection information in response to a first participant's selection of images from a set of candidate images. The assessment system is responsive to the information system and identifies a correlation between the selection information of the first participant and a set of comparison data.

Owner:MIND METRICS

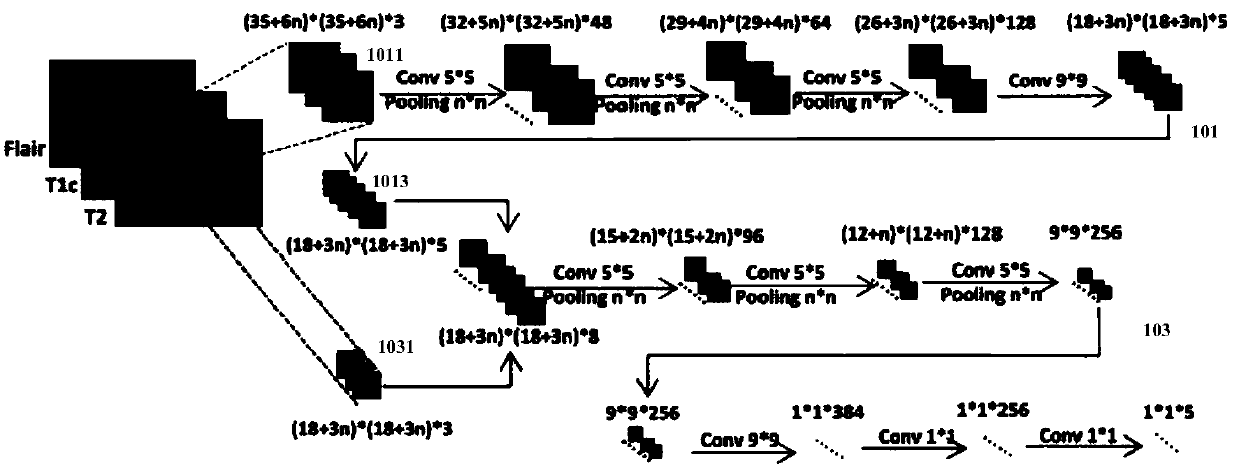

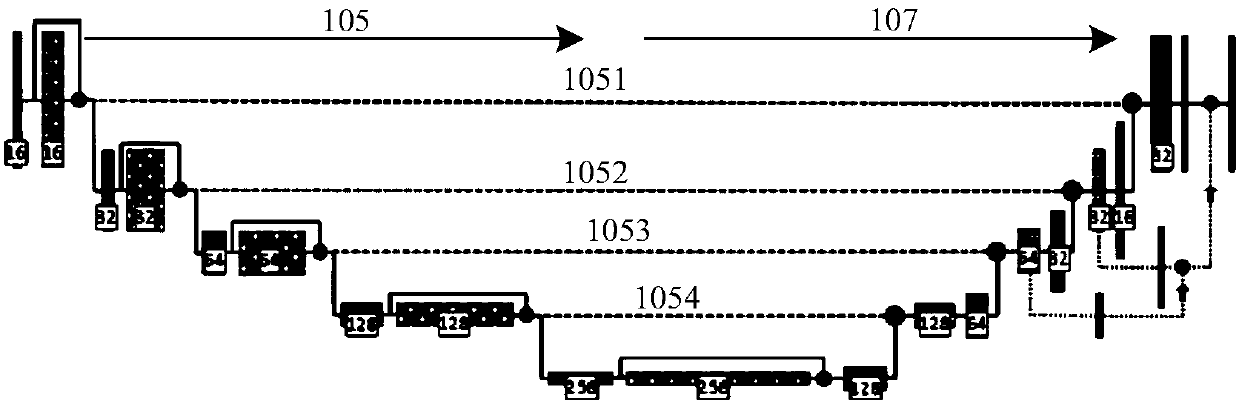

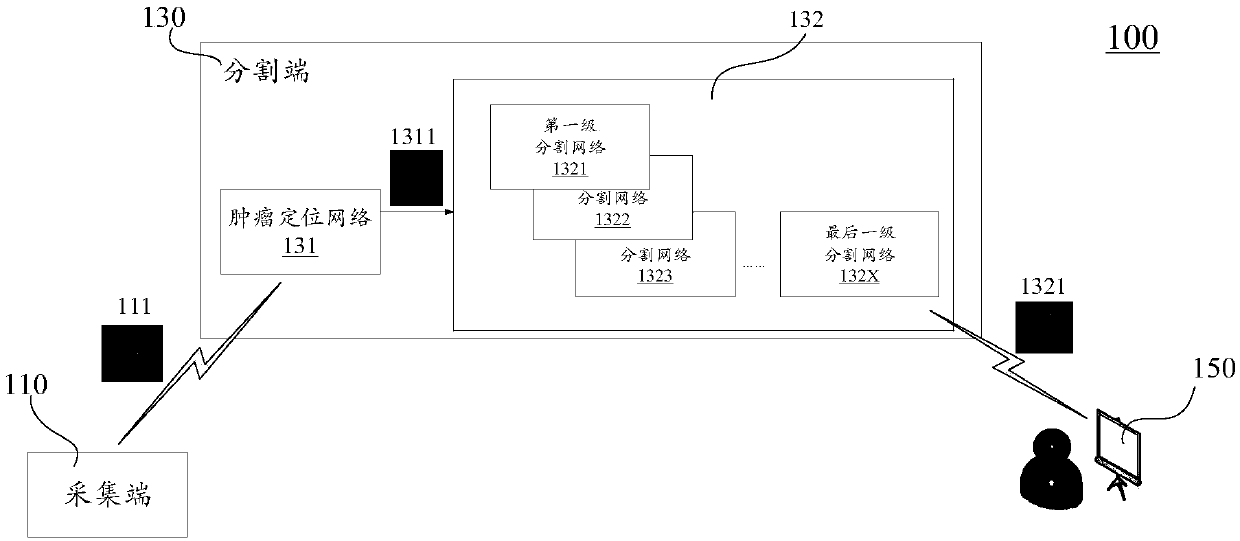

Image segmentation method and device, diagnosis system and storage medium

Active | CN109598728A | Troubleshoot poor segmentation | Image enhancement | Mathematical models | Tumor region | Image segmentation

The invention discloses an image segmentation method and device, a diagnosis system and a storage medium. The image segmentation method comprises the steps of: obtaining a tumor image; carrying out tumor positioning on the obtained tumor image to obtain a candidate image indicating the position of the whole tumor region in the tumor image; inputting the candidate image into a cascade segmentation network constructed based on a machine learning model; and carrying out image segmentation on the whole tumor region in the candidate image, starting from the first-stage segmentation network in the cascade segmentation network and proceeding stage by stage to the last-stage segmentation network, which carries out image segmentation on the enhancing tumor core region, to obtain a segmented image. With the image segmentation method and device, the diagnosis system and the storage medium provided by the invention, the problem of poor tumor image segmentation in the prior art is solved.

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

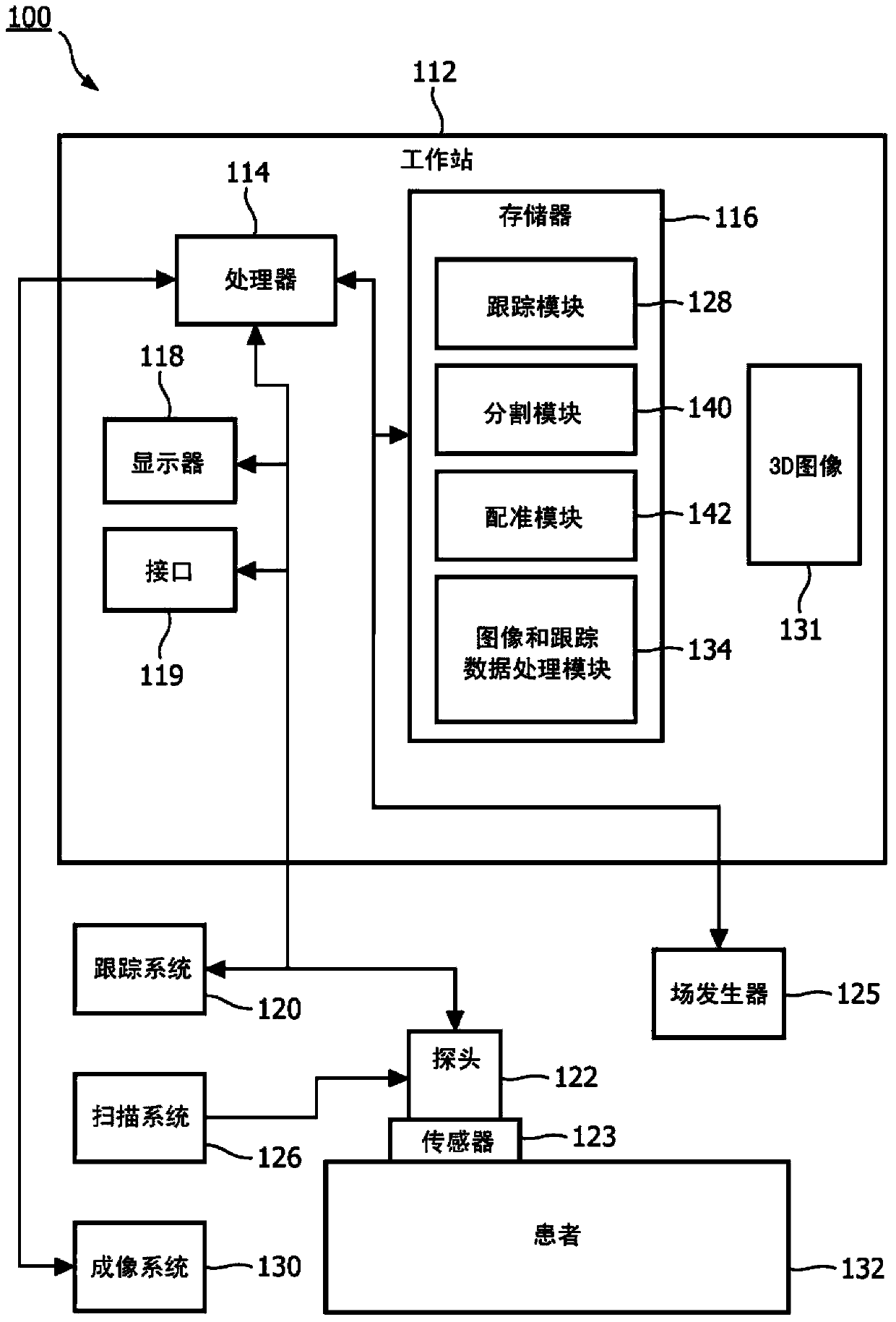

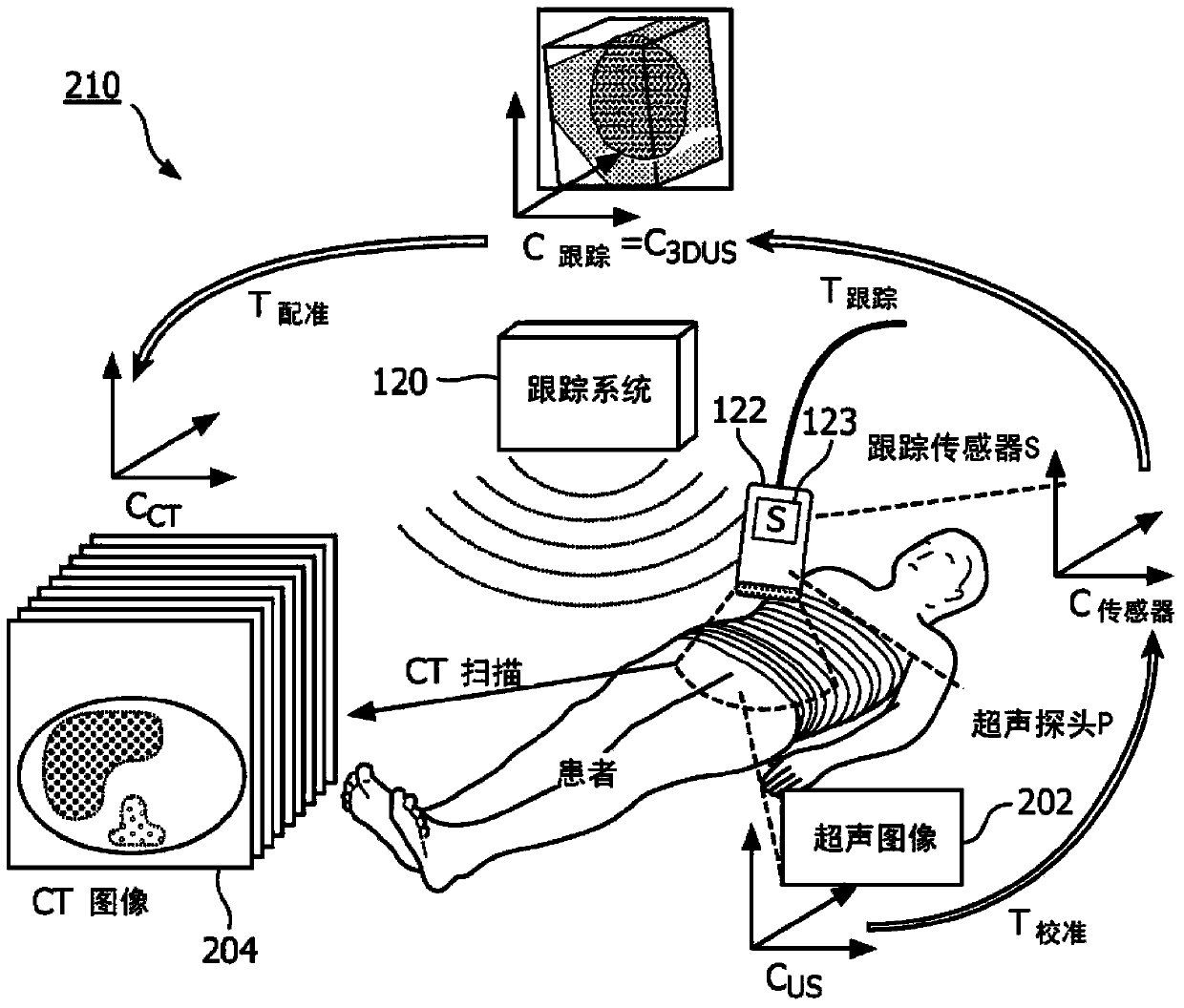

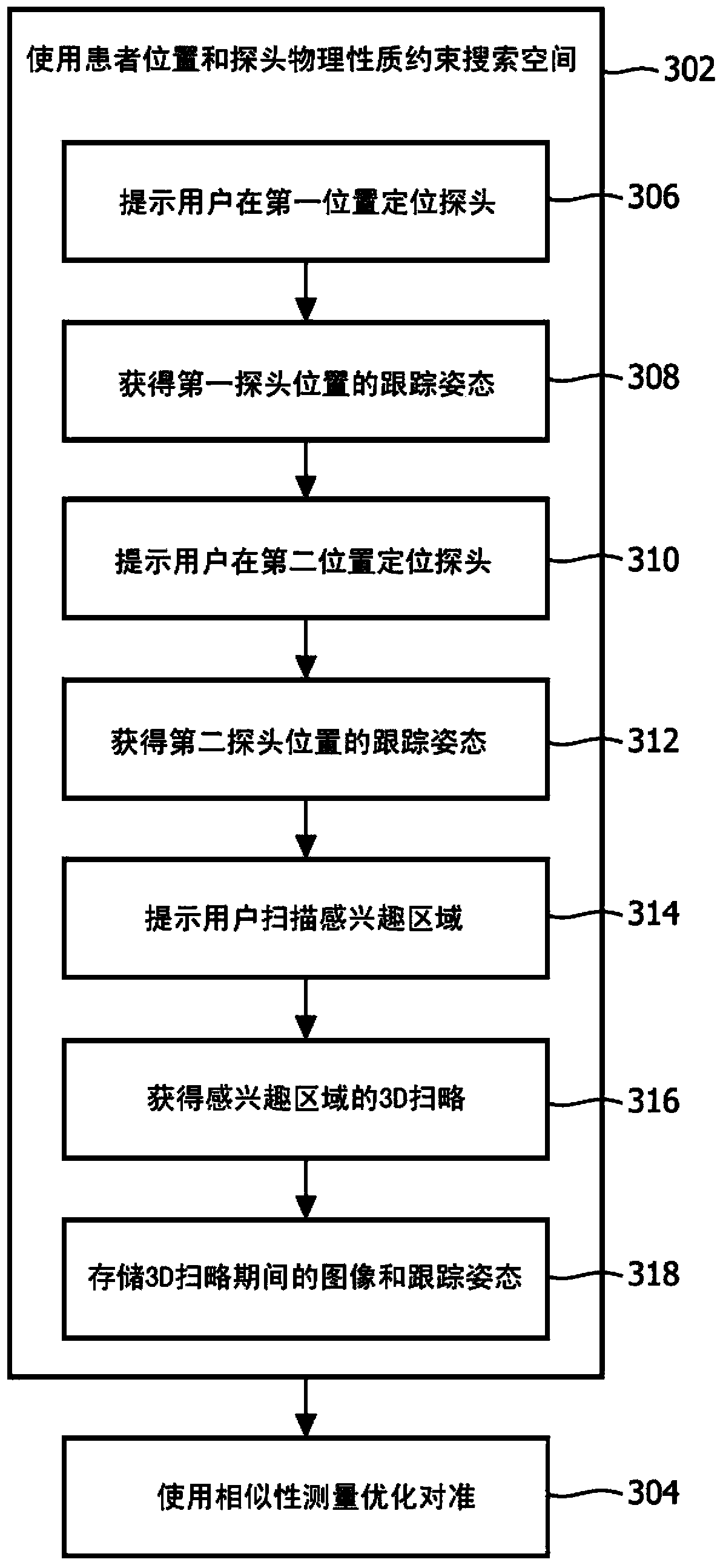

System and method for automated initialization and registration of navigation system

Active | CN103402453A | Accurate initialization | Good registration effect | Ultrasonic/sonic/infrasonic diagnostics | Reconstruction from projection | Skin surface | Navigation system

A system and method for image registration includes tracking (508) a scanner probe in a position along a skin surface of a patient. Image planes corresponding to the position are acquired (510). A three-dimensional volume of a region of interest is reconstructed (512) from the image planes. A search of an image volume is initialized (514) to determine candidate images to register the image volume with the three-dimensional volume by employing pose information of the scanner probe during image plane acquisition, and physical constraints of a pose of the scanner probe. The image volume is registered (522) with the three-dimensional volume.

Owner:KONINKLIJKE PHILIPS NV

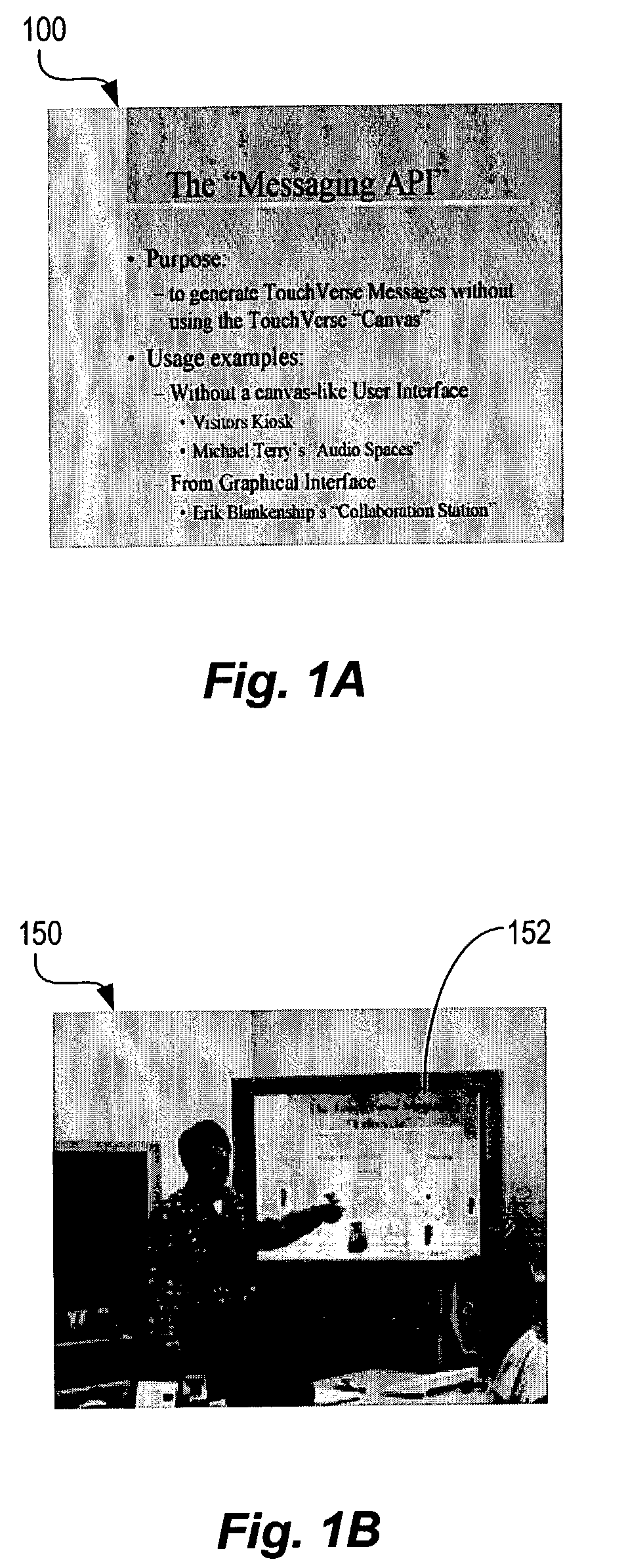

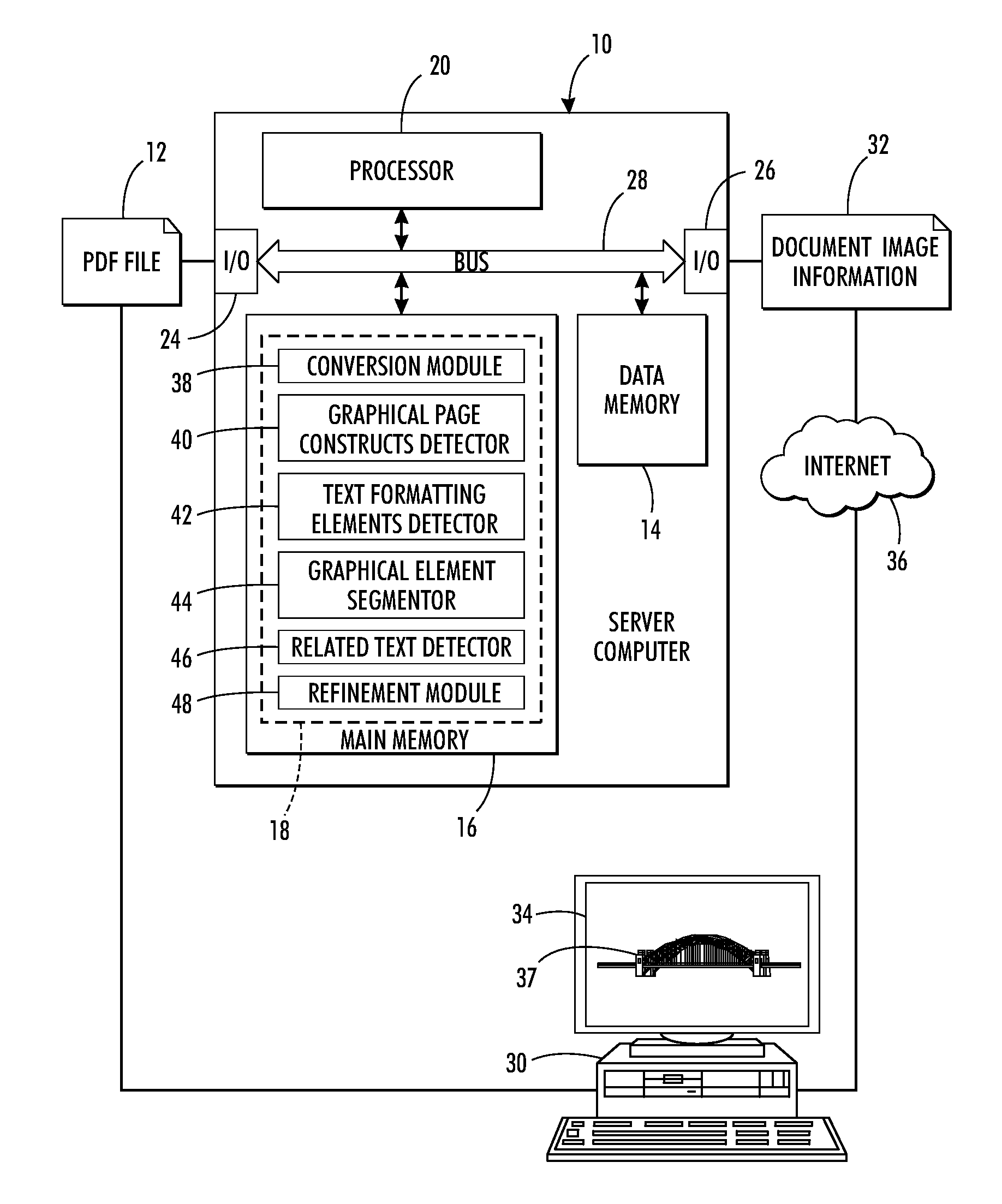

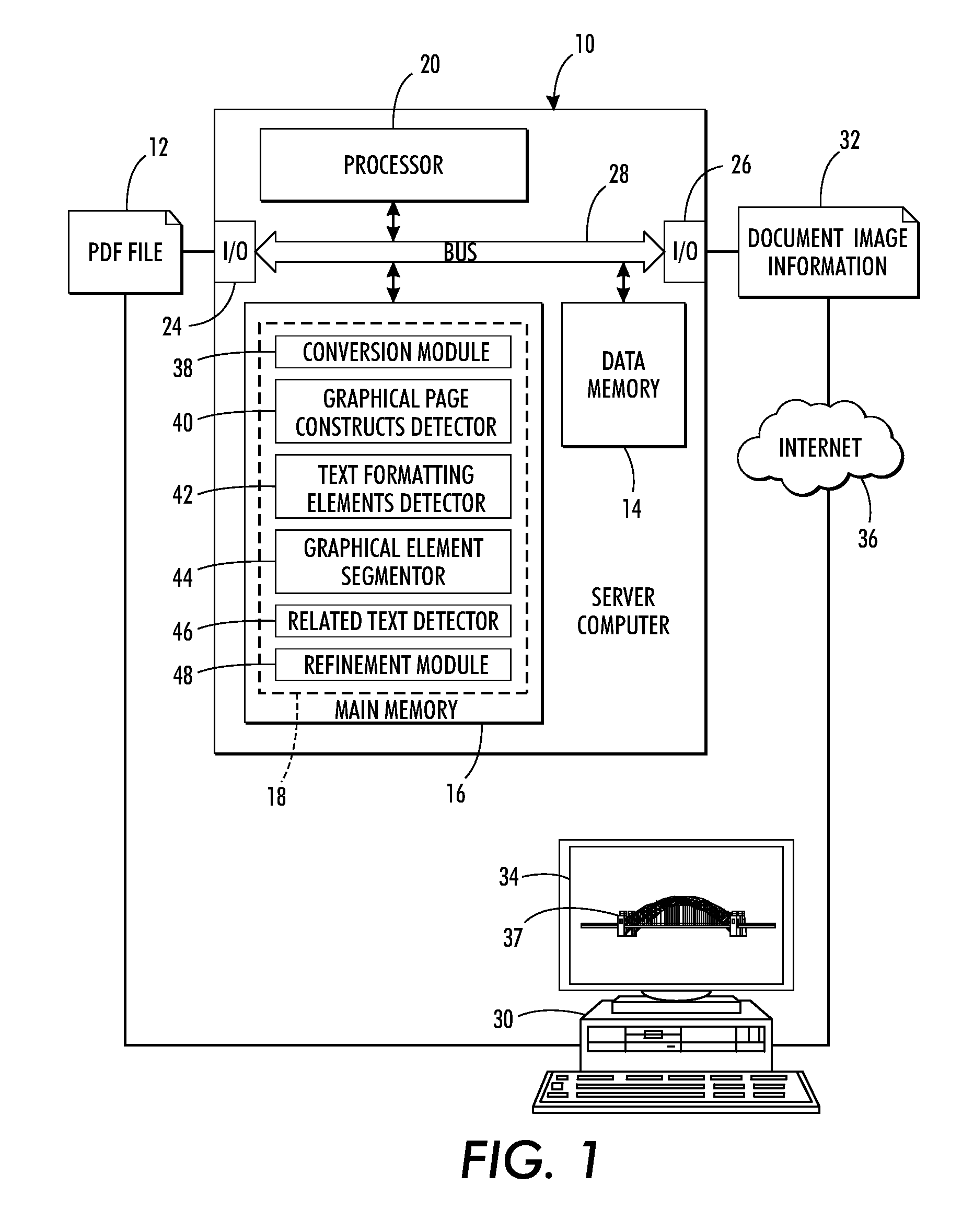

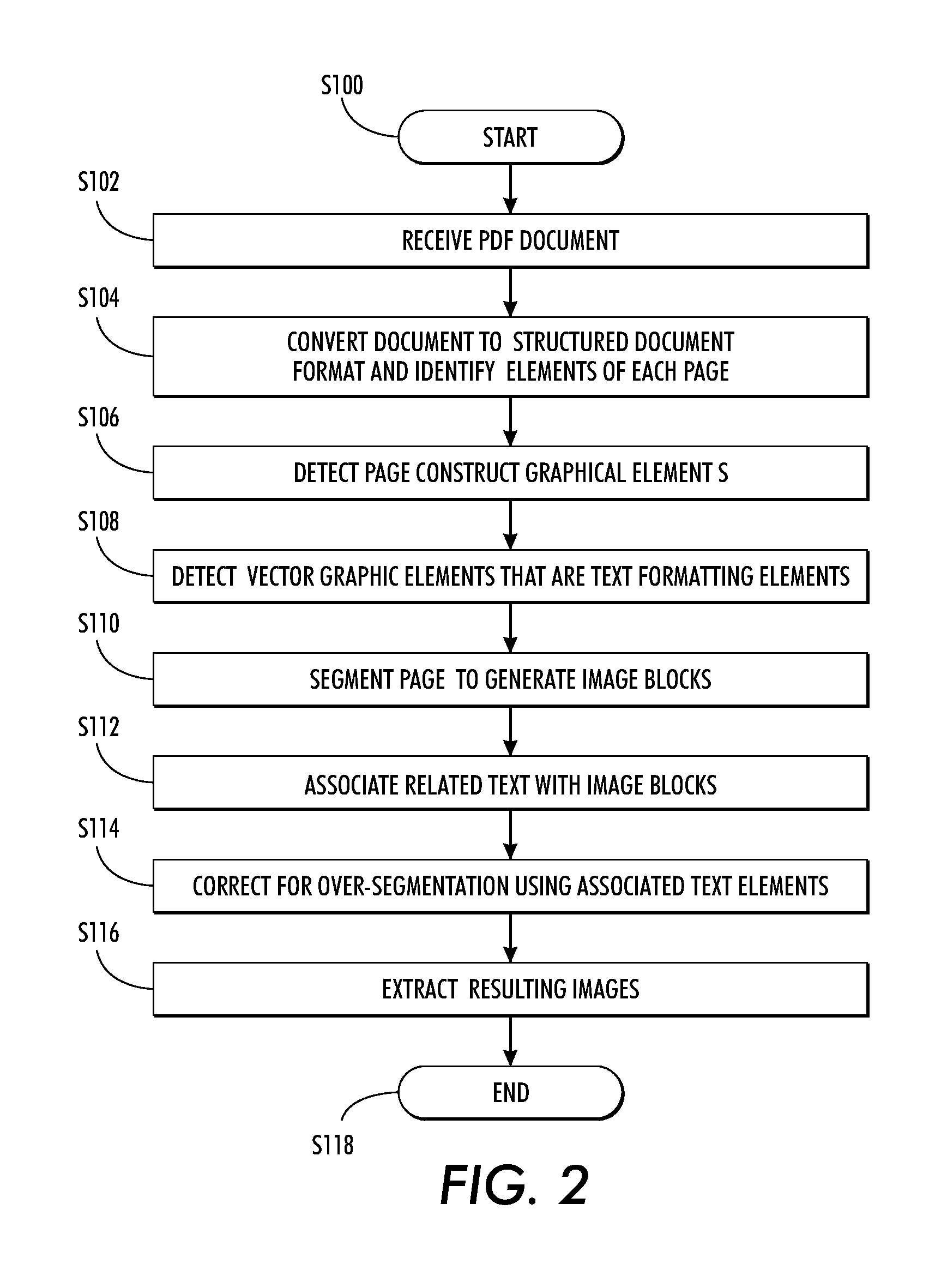

Detection and extraction of elements constituting images in unstructured document files

Inactive | US20120324341A1 | Natural language data processing | Special data processing applications | Electronic document | Graphics

A method and a system for detecting and extracting images in an electronic document are disclosed. The method includes receiving an electronic document comprising a plurality of pages and, for each of at least one of the pages of the document, identifying elements of the page. The identified elements include a set of graphical elements and a set of text elements. The method may include identifying and excluding, from the set of graphical elements, those which serve as graphical page constructs and / or text formatting elements. The page can then be segmented, based on (remaining) graphical elements and identified white spaces, to generate a set of image blocks, each including a respective one or more of the graphical elements. Text elements that are associated with a respective image block are identified as captions. Overlapping candidate images, each including an image block and its caption(s), if any, are then grouped to form a new image. The new image can thus include candidate images which would, without the identification of their caption(s), each be treated as a respective image.

Owner:XEROX CORP

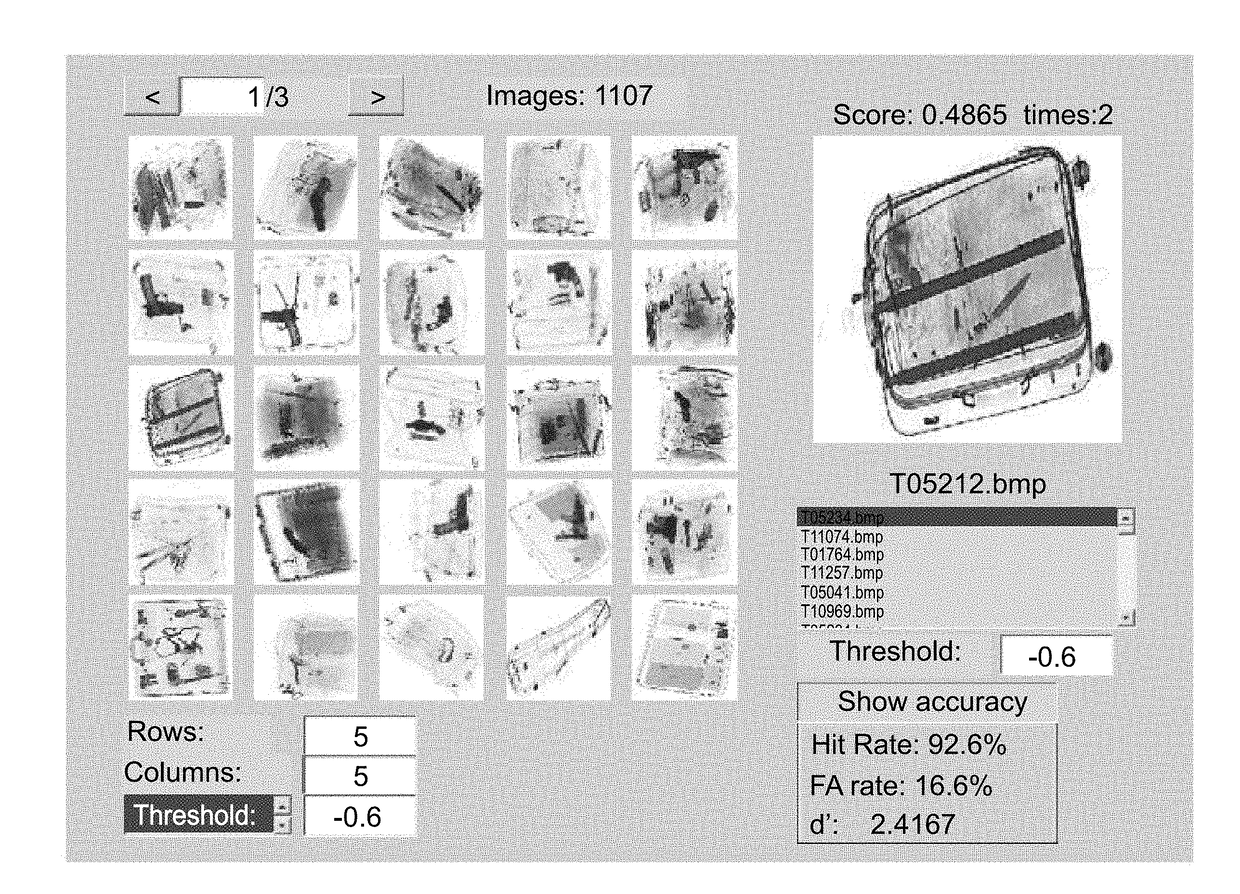

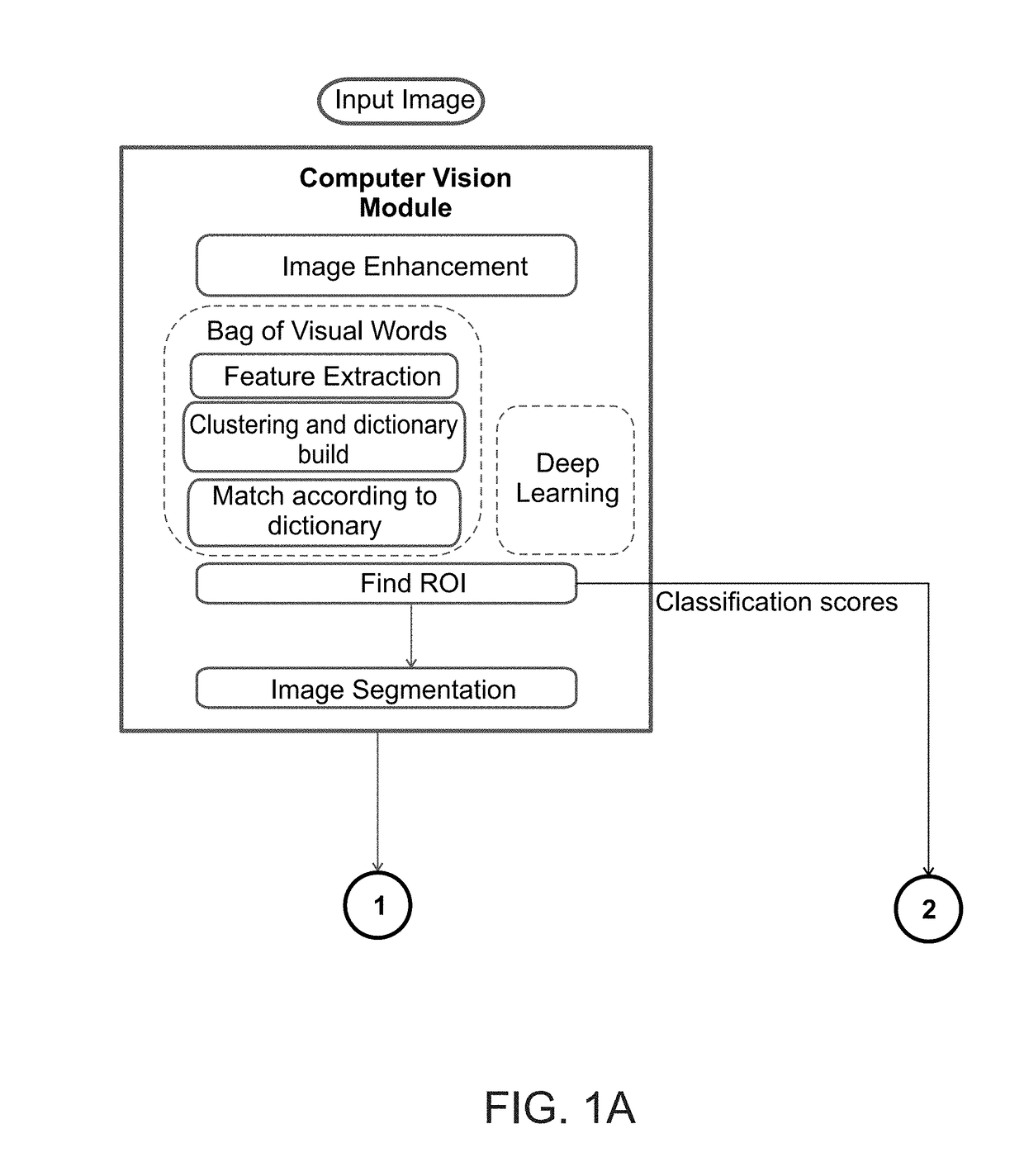

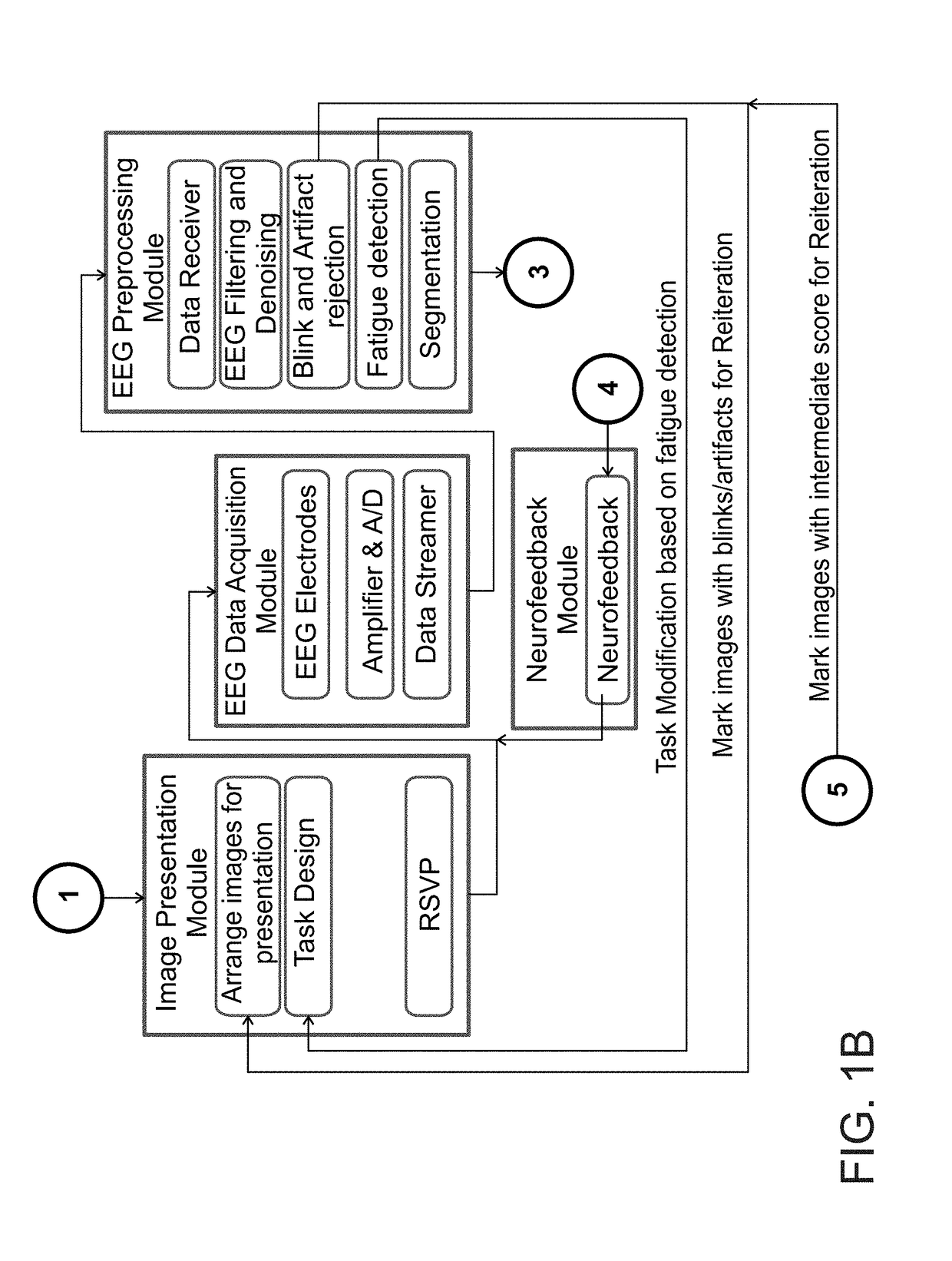

Image classification by brain computer interface

Active | US20180089531A1 | Input/output for user-computer interaction | Electroencephalography | Brain computer interfacing | Application computers

A method of classifying an image is disclosed. The method comprises: applying a computer vision procedure to the image to detect therein candidate image regions suspected as being occupied by a target; presenting to an observer each candidate image region as a visual stimulus, while collecting neurophysiological signals from a brain of the observer; processing the neurophysiological signals to identify a neurophysiological event indicative of a detection of the target by the observer; and determining an existence of the target in the image based, at least in part, on the identification of the neurophysiological event.

Owner:INNEREYE

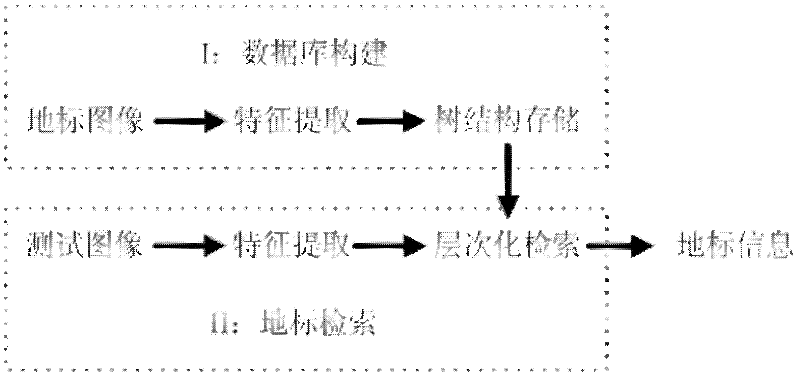

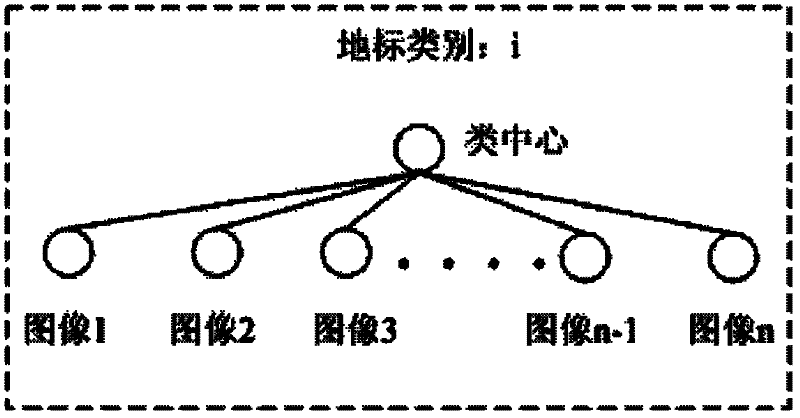

Hierarchical landmark identification method integrating global visual characteristics and local visual characteristics

Active | CN102542058A | Improve accuracy | Reduce complexity | Special data processing applications | Tree shaped | High dimensional

The invention discloses a hierarchical landmark identification method integrating global visual characteristics and local visual characteristics. High-dimensional characteristic vectors of landmark images are obtained and used as the global visual characteristics of the landmark images; the local visual characteristics of the landmark images are obtained; the global visual characteristics and the local visual characteristics are stored in a hierarchical tree-shaped structure, and a visual characteristic set is obtained; each image is characterized according to the visual characteristic set; the images are pre-retrieved according to the global visual characteristics xi, and first candidate images are obtained; the first candidate images are further retrieved according to statistical characteristics vi of local outstanding points, and second candidate images are obtained; and the second candidate images are further retrieved according to a characteristic set yi of the local outstanding points, and final candidate images are obtained and fed back to a user. With the hierarchical landmark identification method, the images to be identified can be retrieved rapidly and accurately, which satisfies the user's need for convenient information acquisition; in addition, by removing certain mismatching points, the landmark identification accuracy is improved and the landmark identification complexity is reduced.

Owner:TIANJIN UNIV
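
The abstract above describes a three-stage narrowing of candidates: global characteristics first, then a statistic of the local points, then the local characteristic set. The sketch below shows that coarse-to-fine shape only. The three distance measures (Euclidean, absolute difference, Jaccard), the dictionary keys, and the per-stage keep counts are assumptions chosen for illustration, not the patent's actual features or tree structure.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def jaccard_distance(a, b):
    """1 - |a & b| / |a | b| for two sets of local feature identifiers."""
    union = a | b
    return 1.0 - len(a & b) / len(union) if union else 0.0


def cascade_retrieve(query, database, keep=(3, 2, 1)):
    """Narrow the candidate images in three stages, cheapest comparison first.

    query and each database entry are dicts with hypothetical keys:
    "global" (vector), "stat" (number), "local" (set).
    """
    stages = [
        lambda img: euclidean(query["global"], img["global"]),      # first candidates
        lambda img: abs(query["stat"] - img["stat"]),               # second candidates
        lambda img: jaccard_distance(query["local"], img["local"]), # final candidates
    ]
    candidates = list(database)
    for distance, k in zip(stages, keep):
        candidates = sorted(candidates, key=lambda name: distance(database[name]))[:k]
    return candidates
```

The point of the ordering is cost: the most expensive comparison runs only on the few images that survive the earlier stages.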

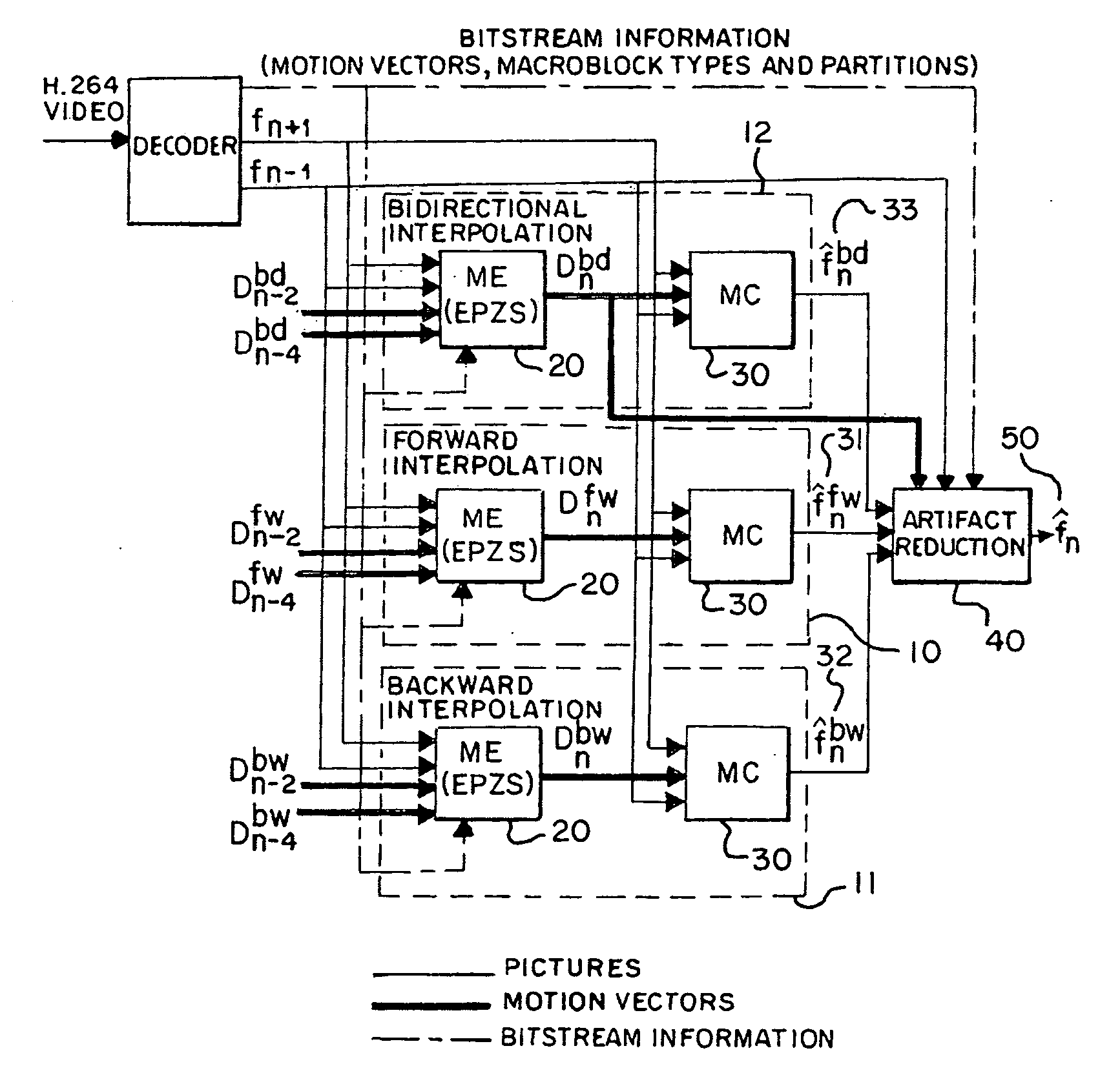

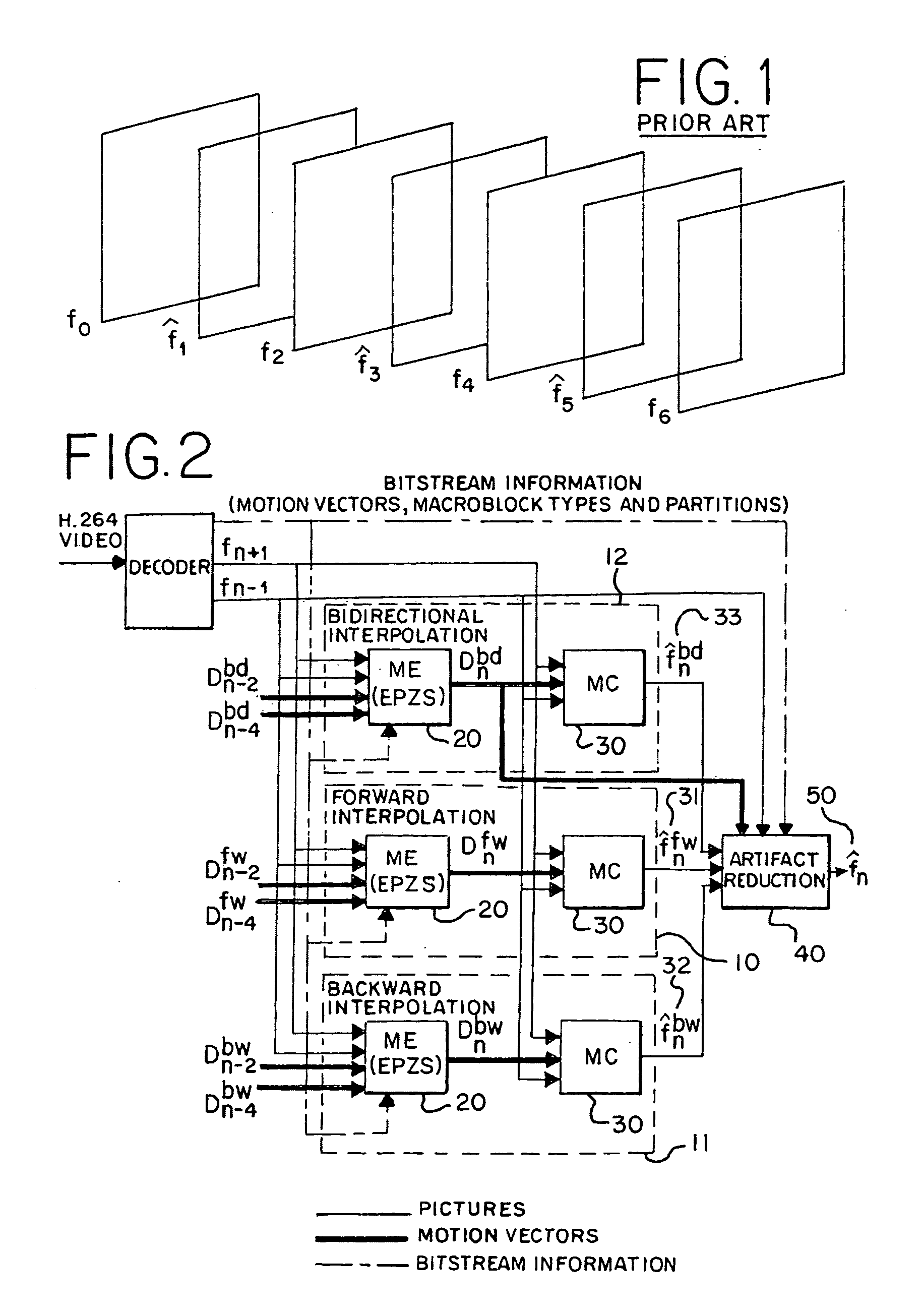

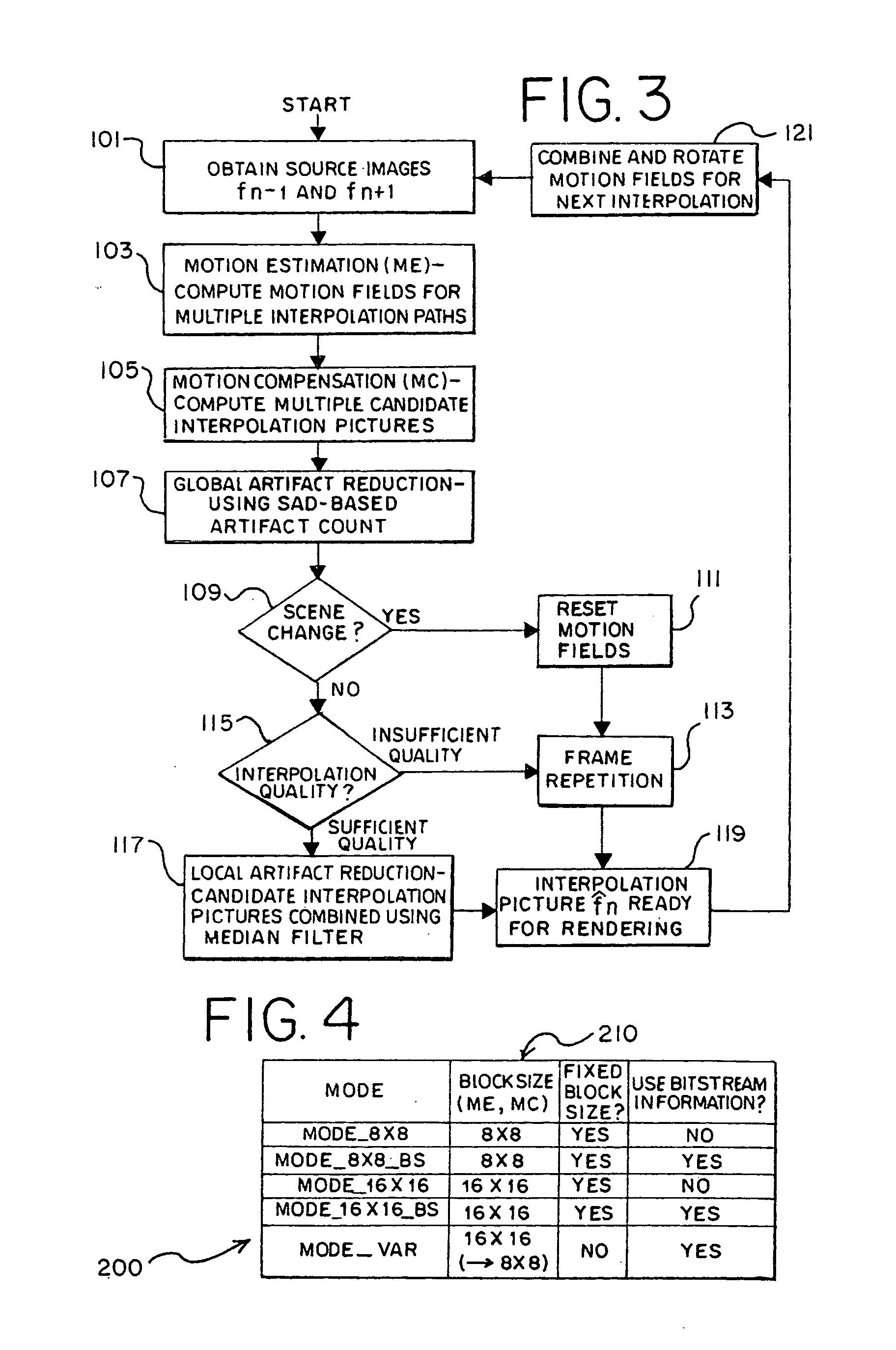

System and method for frame interpolation for a compressed video bitstream

Inactive | US20100201870A1 | Without using | Improve interpolation quality | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Video bitstream | Motion field

A system and a method perform frame interpolation for a compressed video bitstream. The system and the method may combine candidate pictures to generate an interpolated video picture inserted between two original video pictures. The system and the method may generate the candidate pictures from different motion fields. The candidate pictures may be generated partially or wholly from motion vectors extracted from the compressed video bitstream. The system and the method may reduce computation required for interpolation of video frames without a negative impact on visual quality of a video sequence.

Owner:LUESSI MARTIN +4

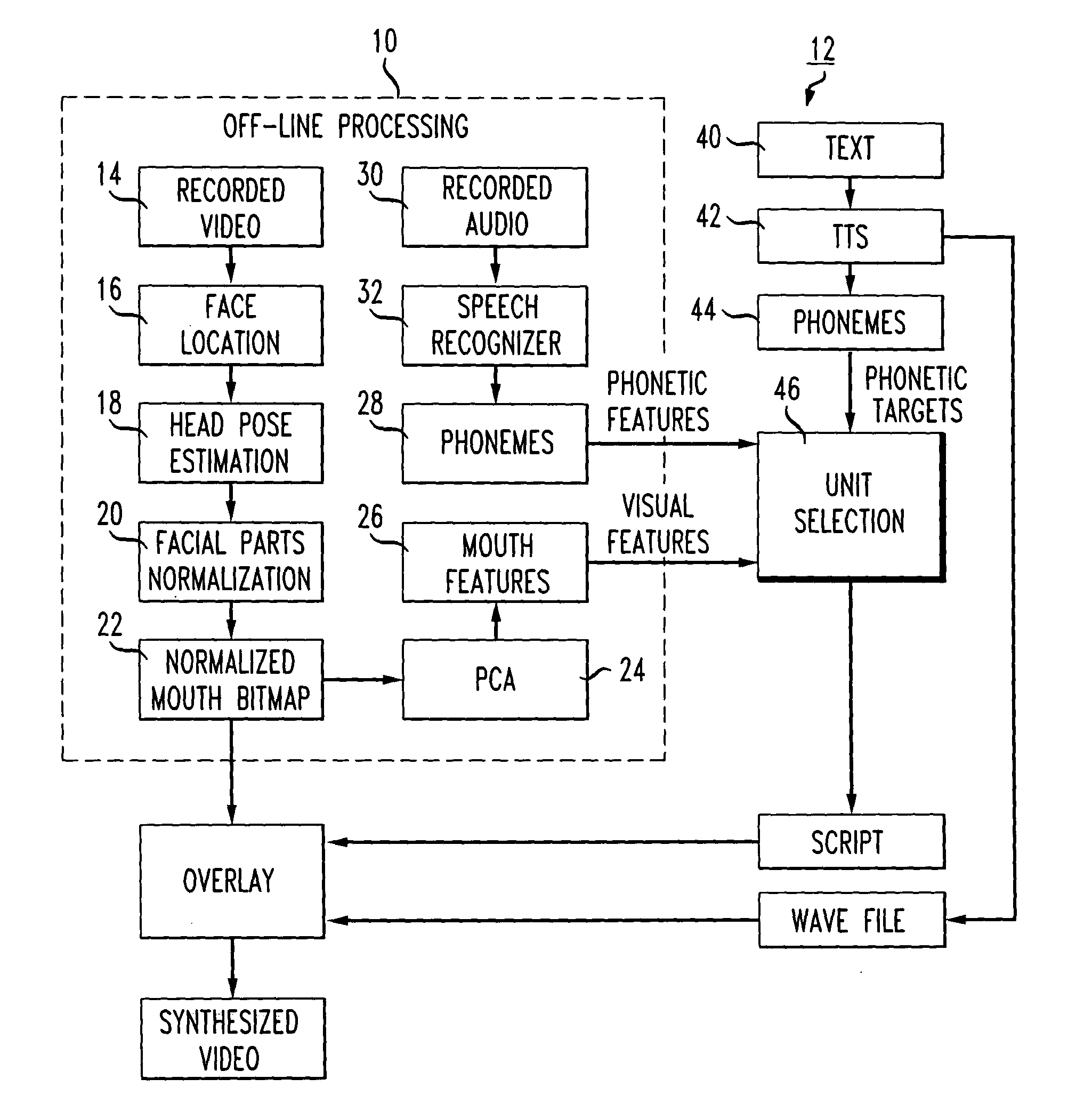

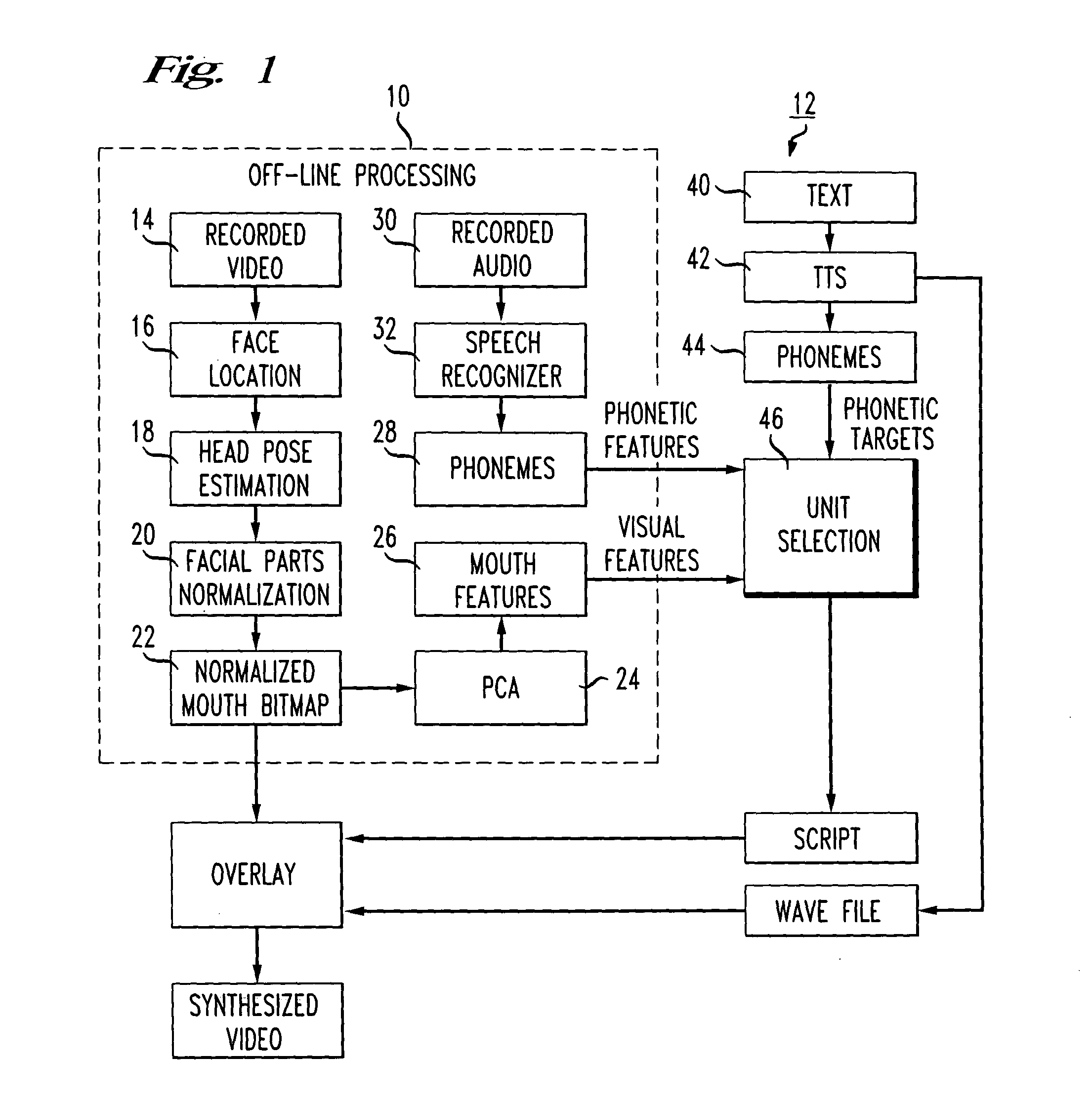

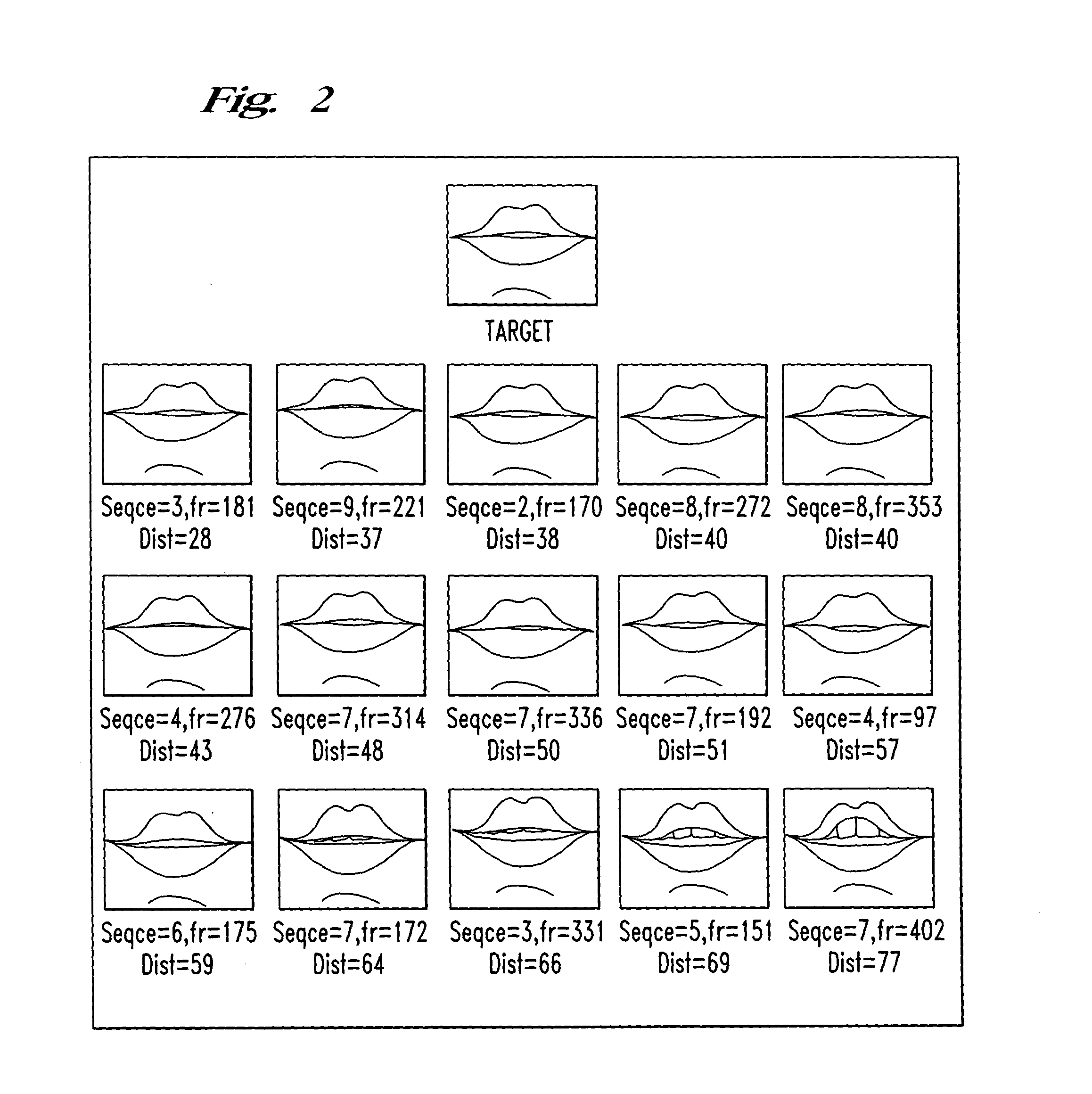

Audio-visual selection process for the synthesis of photo-realistic talking-head animations

A system and method for generating photo-realistic talking-head animation from a text input utilizes an audio-visual unit selection process. The lip-synchronization is obtained by optimally selecting and concatenating variable-length video units of the mouth area. The unit selection process utilizes the acoustic data to determine the target costs for the candidate images and utilizes the visual data to determine the concatenation costs. The image database is prepared in a hierarchical fashion, including high-level features (such as a full 3D modeling of the head, geometric size and position of elements) and pixel-based, low-level features (such as a PCA-based metric for labeling the various feature bitmaps).

Owner:RAKUTEN GRP INC

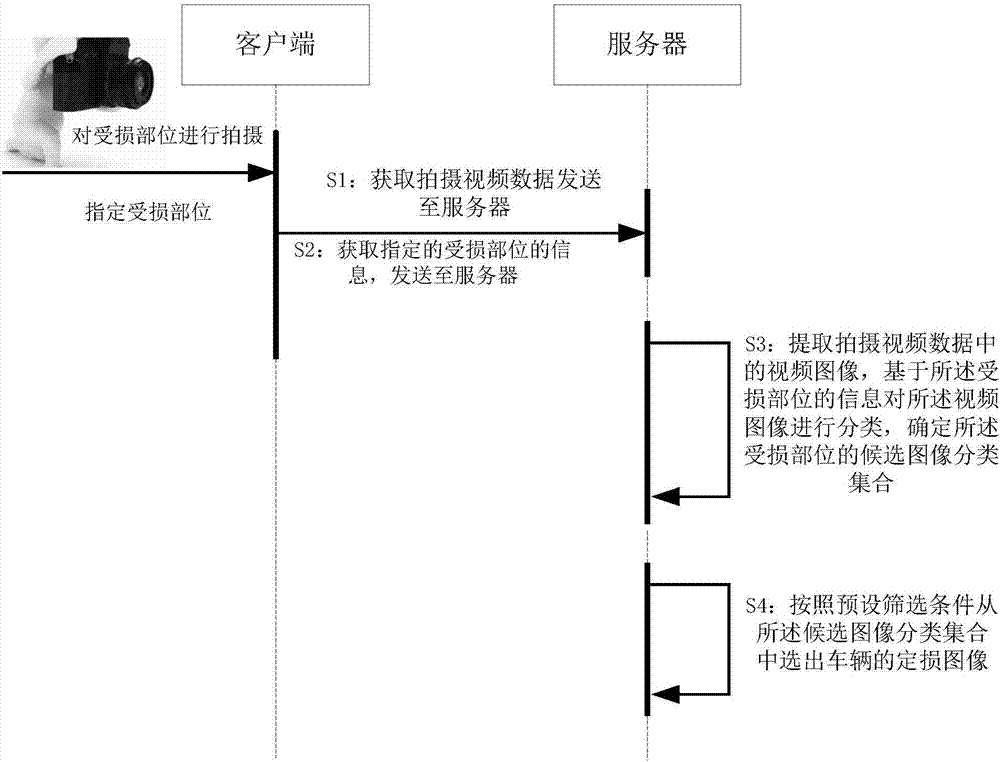

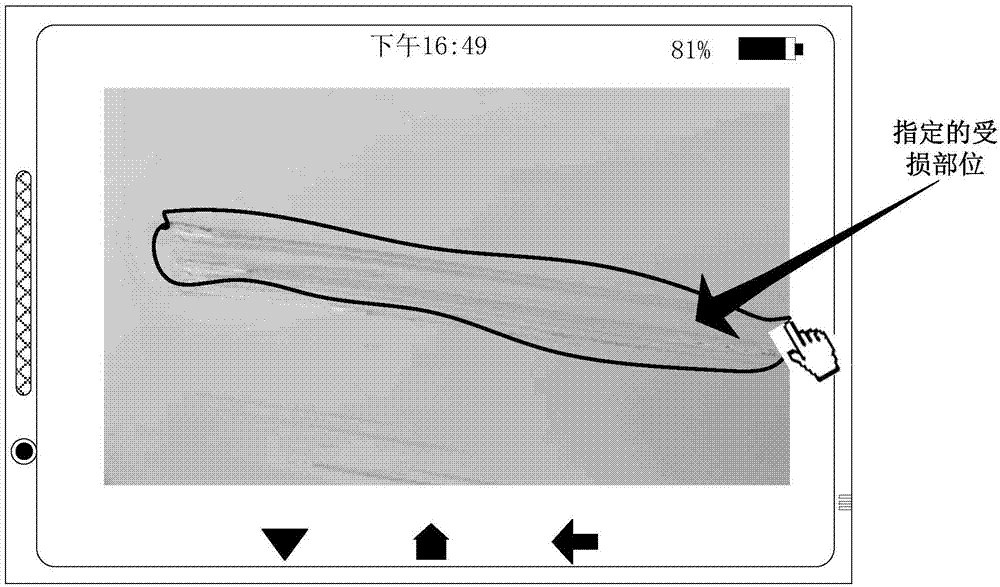

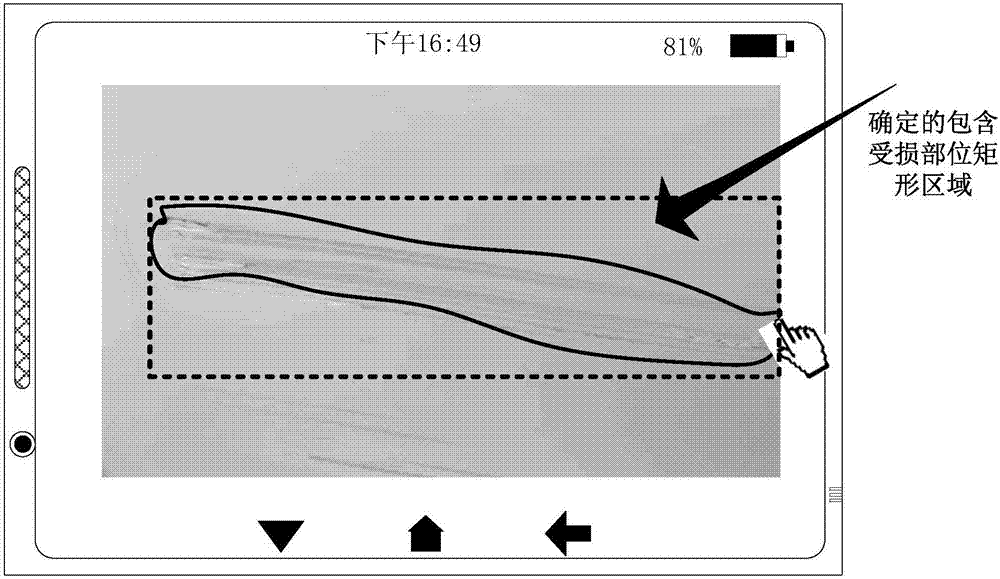

Vehicle loss assessment image obtaining method and device, server, and terminal equipment

Active | CN107368776A | Improve acquisition efficiency | Lower acquisition costs | Finance | Character and pattern recognition | Computer graphics (images) | Terminal equipment

The embodiment of the invention discloses a vehicle loss assessment image obtaining method and device, a server, and terminal equipment. A client obtains photographed video data and transmits the photographed video data to the server. The client receives the information of a specified damaged part of a damaged vehicle, and transmits the information of the damaged part to the server. The server receives the photographed video data and the information of the damaged part uploaded by the client, extracts video images from the photographed video data, classifies the video images according to the information of the damaged part, and determines a candidate image classification set of the damaged part. The server selects a loss assessment image of the vehicle from the candidate image classification set according to a preset screening condition. According to the embodiments of the invention, a high-quality loss assessment image that meets the loss assessment processing demands can be generated automatically and quickly, and the efficiency of obtaining loss assessment images is improved.

Owner:ADVANCED NEW TECH CO LTD

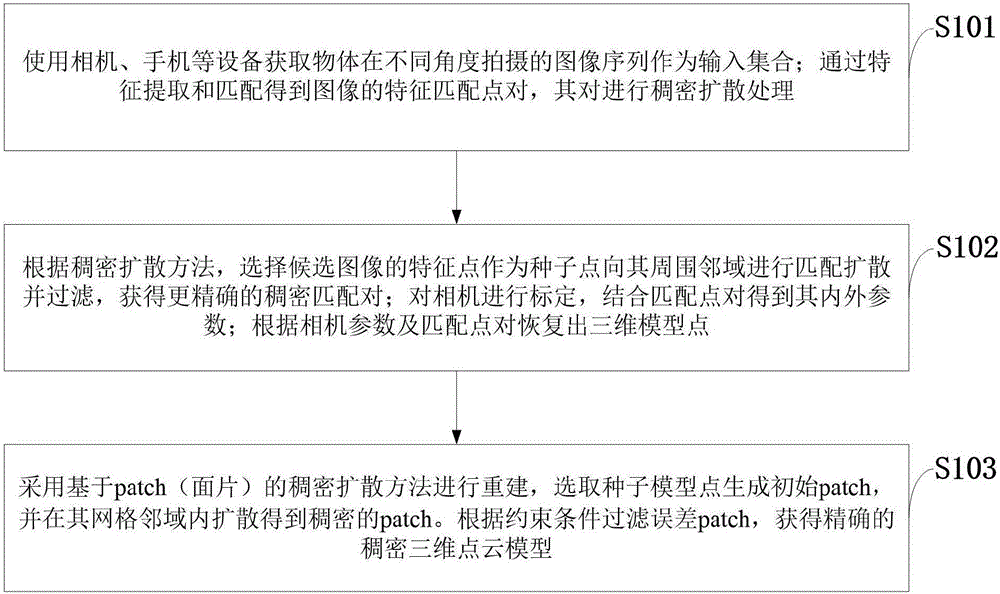

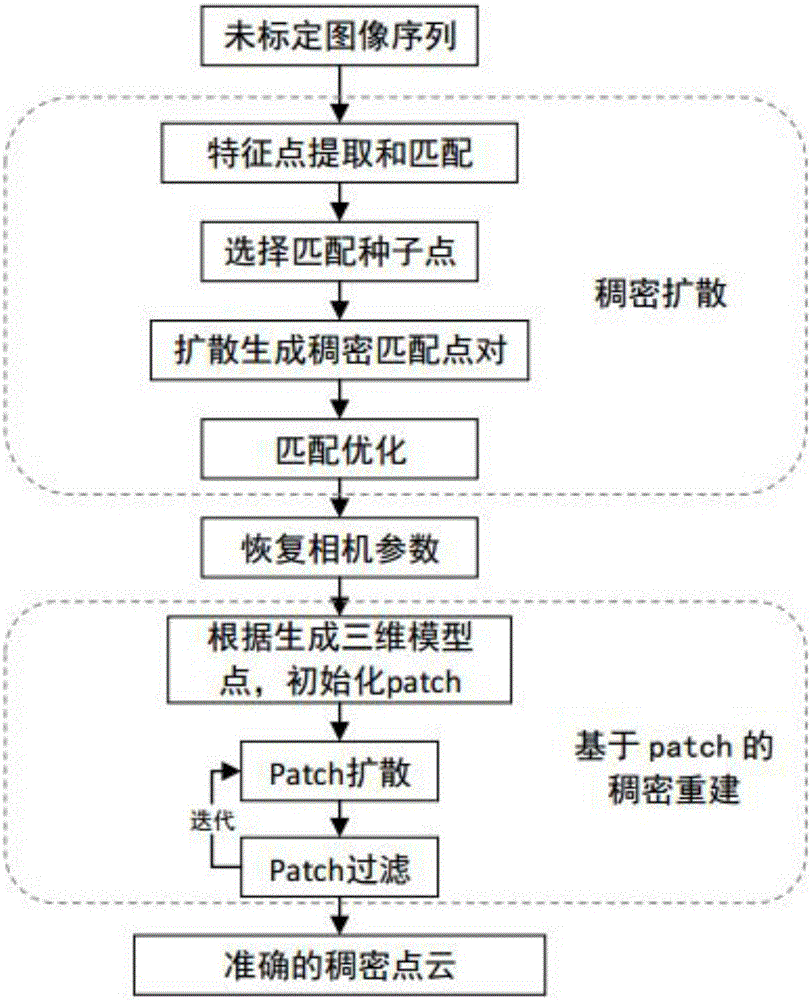

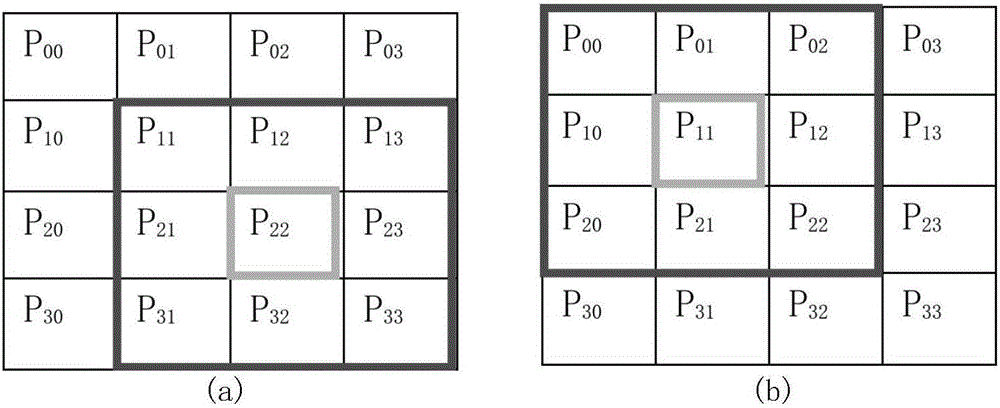

Three-dimensional point cloud reconstruction method based on multiple uncalibrated images

The invention discloses a three-dimensional point cloud reconstruction method based on multiple uncalibrated images. The method comprises the following steps: obtaining the image sequence of objects shot at different angles as an input set; obtaining feature matching point pairs of images through feature extraction and matching, and carrying out dense diffusion treatment; selecting feature points of candidate images as seed points to be matched, diffused and filtered towards the peripheral area of the points, and obtaining the dense matching point pairs; calibrating a camera, and combining the matching point pairs to obtain internal and external parameters; recovering a three-dimensional model point according to camera parameters and the matching point pairs; carrying out reconstruction, selecting seed model points to generate initial patches, and diffusing the patches in the grid area to obtain dense patches; and filtering error patches according to constraint conditions, and obtaining an accurate dense three-dimensional point cloud model. According to the method, the high-precision dense point cloud model can be quickly obtained, the generation speed of the model is quickened, the accuracy of matching density is increased, and the density and accuracy of three-dimensional point cloud are improved.

Owner:XIDIAN UNIV

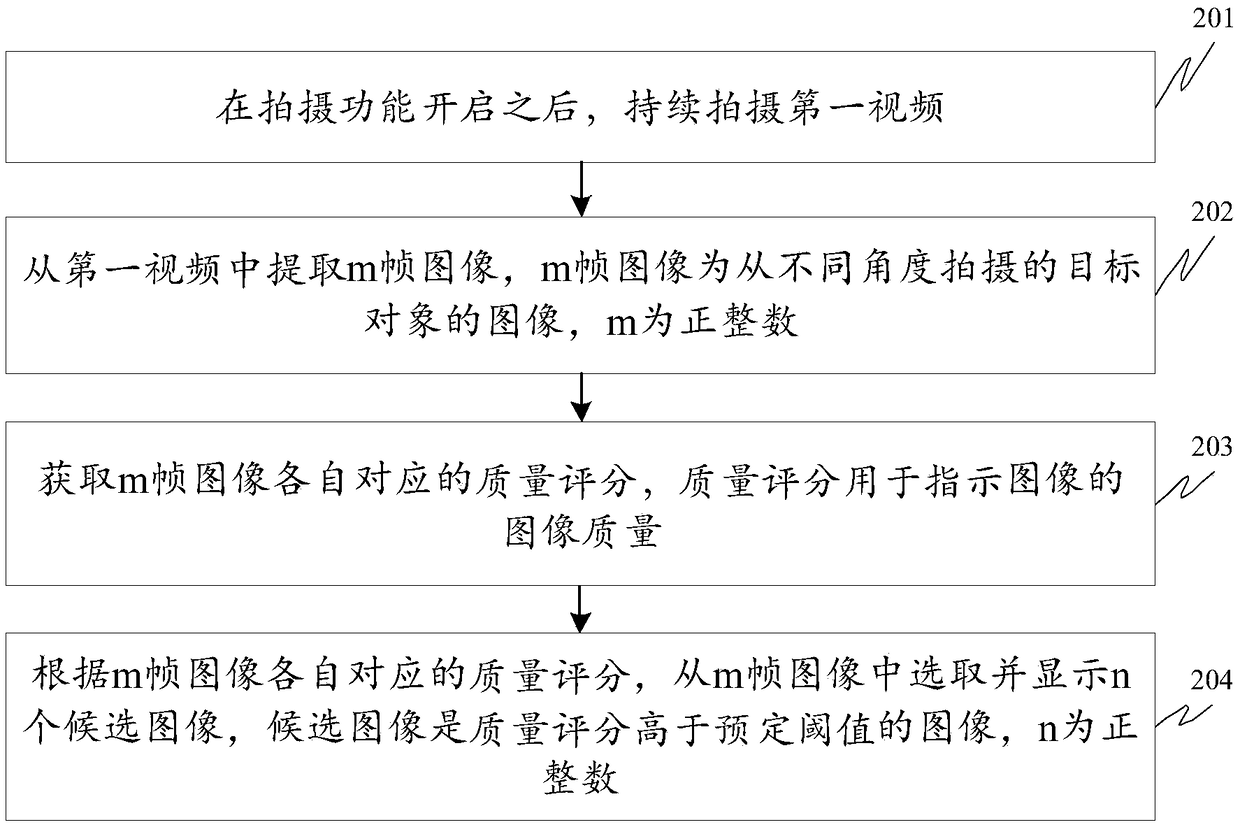

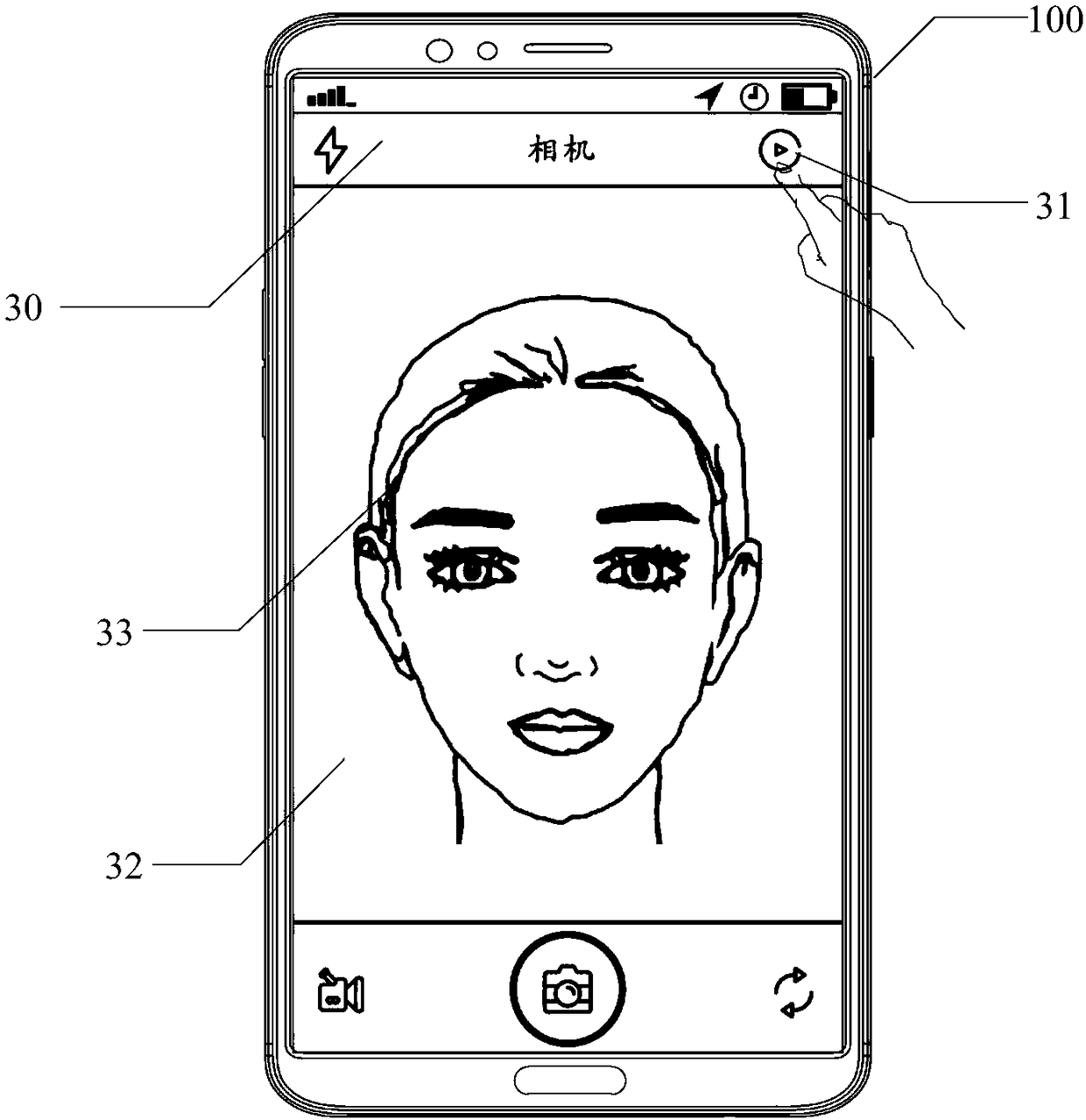

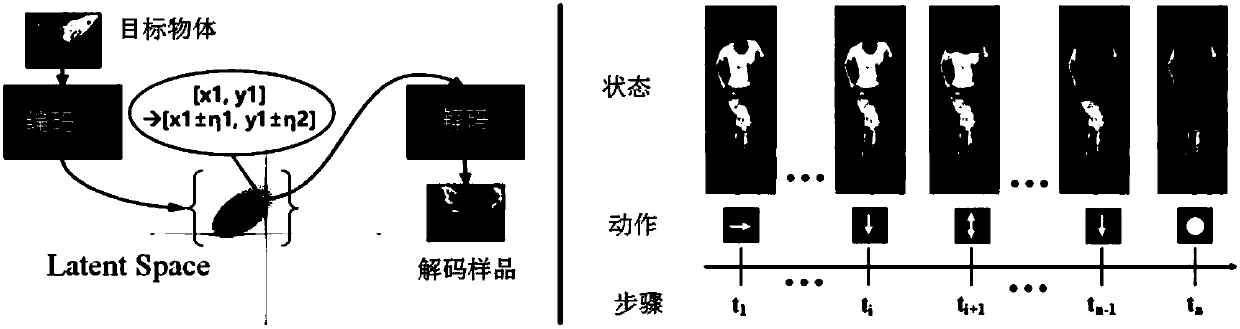

Image processing method and device, terminal and storage medium

Active | CN108234870A | Avoid manual adjustment of shooting parameters | Reduce the difficulty of operation | Television system details | Image enhancement | Imaging processing | Imaging quality

The invention discloses an image processing method and device, a terminal and a storage medium, and belongs to the technical field of terminals. The method comprises the steps of: continuously shooting a first video after a shooting function is enabled; extracting m frames of images from the first video, wherein the m frames of images are images of a target object shot from different angles; obtaining a quality score for each of the m frames of images, wherein the quality score indicates the image quality of the image; and selecting and displaying n candidate images from the m frames of images based on their quality scores, wherein the candidate images are images whose quality score is higher than a preset threshold. The terminal automatically outputs n high-quality candidate images that include the target object, and the user can then select images that meet expectations from the displayed n candidate images, so that the user does not have to adjust shooting parameters for every shot, and the operational difficulty of shooting high-quality images is greatly reduced.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
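
The selection step in the abstract above (pick n candidate images whose quality score exceeds a preset threshold) can be sketched as follows. This assumes the per-frame quality scores have already been computed by some scoring model, which the abstract does not specify; the function name and the choice to return the best-scoring frames first are illustrative assumptions.

```python
def select_candidates(quality_scores, n, threshold):
    """Return the indices of up to n frames whose score exceeds the threshold.

    quality_scores is a list with one score per extracted frame. Frames are
    returned best first.
    """
    above = [(index, score) for index, score in enumerate(quality_scores) if score > threshold]
    above.sort(key=lambda pair: pair[1], reverse=True)
    return [index for index, _ in above[:n]]
```

If fewer than n frames pass the threshold, the sketch simply returns fewer candidates rather than lowering the bar.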

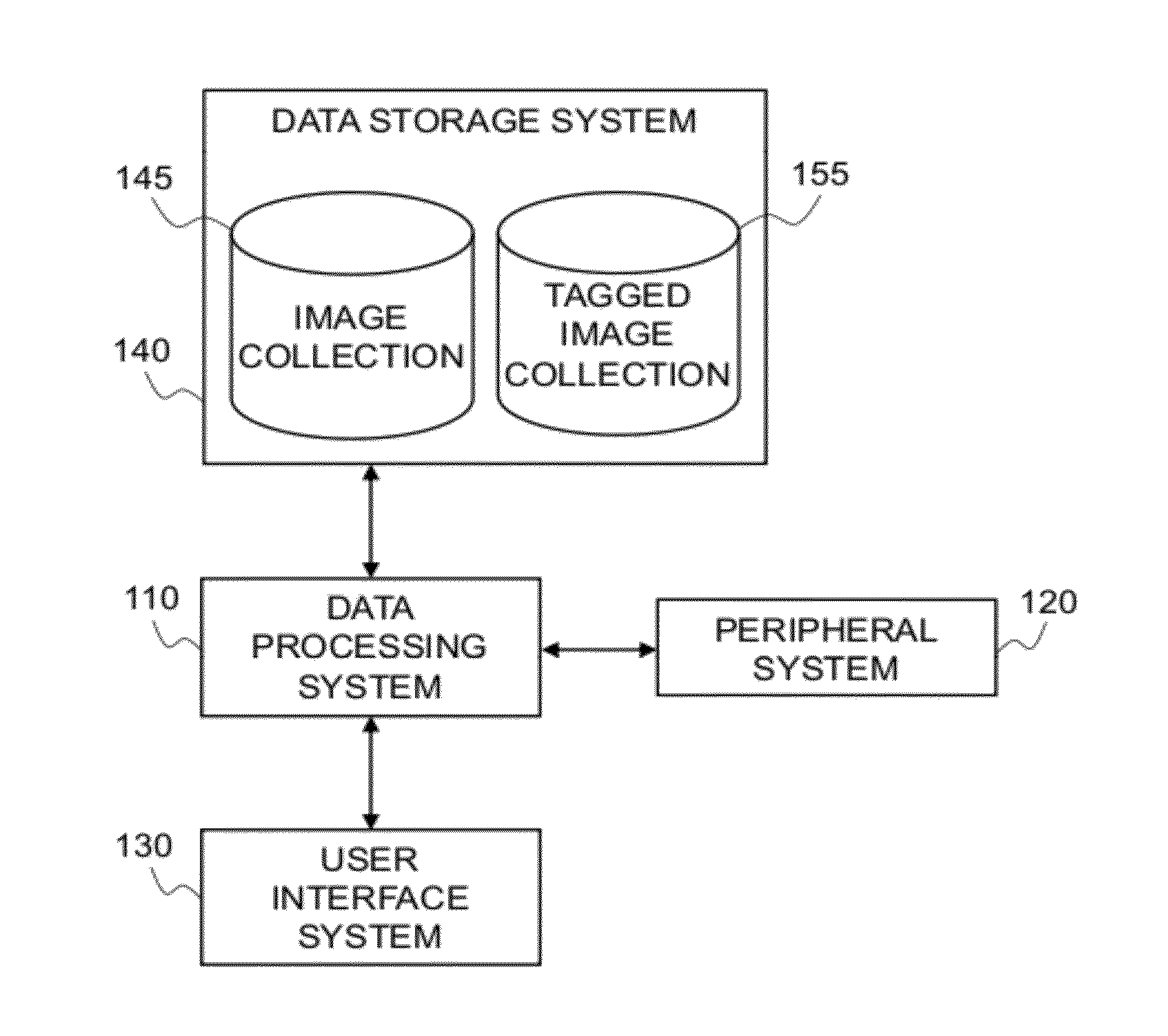

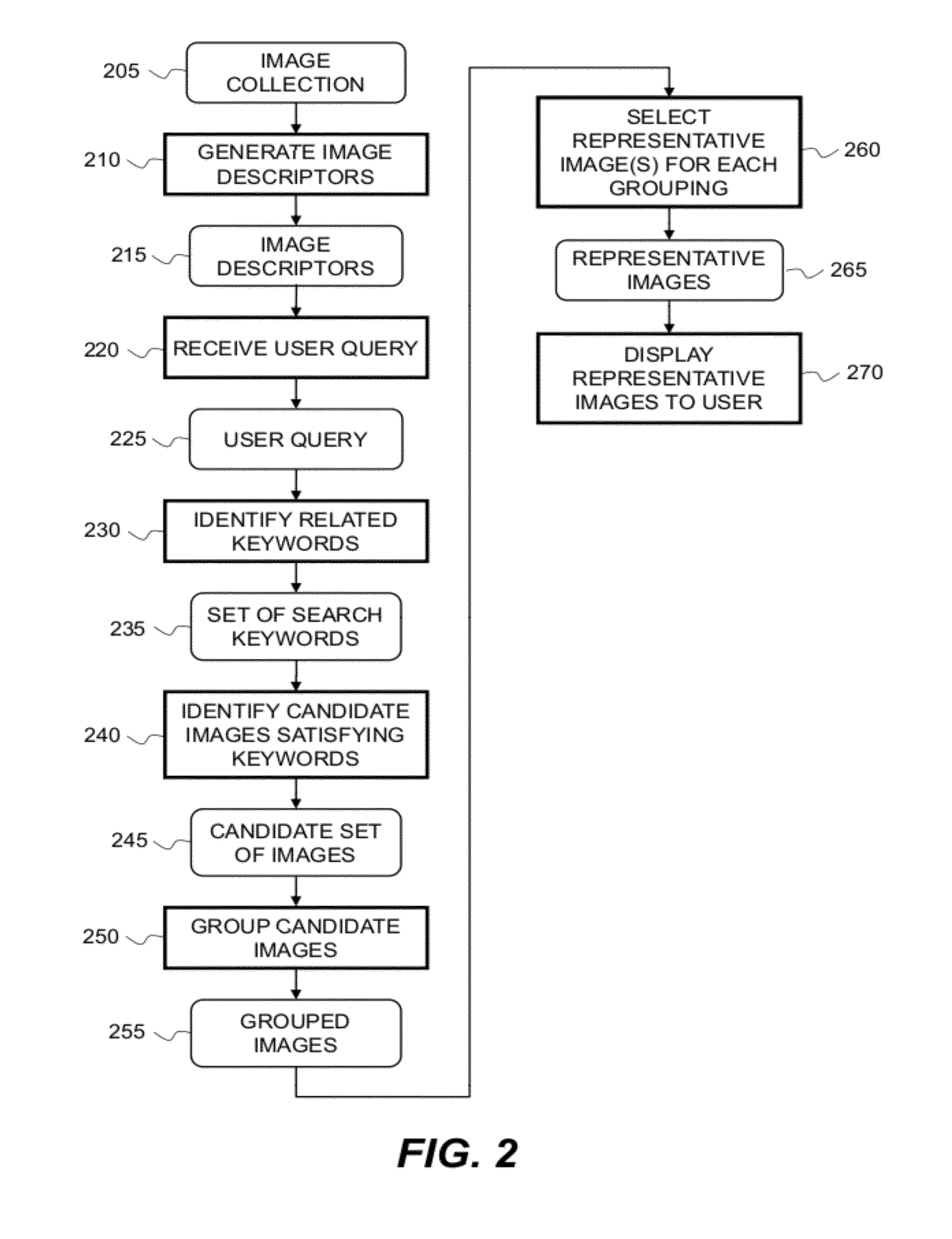

Identifying particular images from a collection

Active | US20120203764A1 | Improve abilities | Easy to view | Still image data retrieval | Digital data processing details | Co-occurrence | Image display

A method of identifying one or more particular images from an image collection, includes indexing the image collection to provide image descriptors for each image in the image collection such that each image is described by one or more of the image descriptors; receiving a query from a user specifying at least one keyword for an image search; and using the keyword(s) to search a second collection of tagged images to identify co-occurrence keywords. The method further includes using the identified co-occurrence keywords to provide an expanded list of keywords; using the expanded list of keywords to search the image descriptors to identify a set of candidate images satisfying the keywords; grouping the set of candidate images according to at least one of the image descriptors, and selecting one or more representative images from each grouping; and displaying the representative images to the user.

Owner:KODAK ALARIS INC
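
The query-expansion idea in the abstract above (use a second, tagged collection to find keywords that co-occur with the user's keywords, then search the indexed descriptors with the expanded list) can be illustrated with a small sketch. The counting rule, the `top_k` cutoff, the any-keyword matching, and the function names are assumptions for illustration and are not taken from the patent.

```python
from collections import Counter


def expand_keywords(keywords, tagged_images, top_k=2):
    """Append the top_k tags that most often co-occur with the query keywords.

    tagged_images is a list of tag sets from a second, tagged collection.
    Ties are broken alphabetically so the result is deterministic.
    """
    query = set(keywords)
    counts = Counter()
    for tags in tagged_images:
        if query & tags:
            counts.update(tags - query)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return list(keywords) + [tag for tag, _ in ranked[:top_k]]


def find_candidates(expanded_keywords, descriptors):
    """Return the images whose descriptor set shares a term with the expanded list."""
    wanted = set(expanded_keywords)
    return [name for name, terms in descriptors.items() if wanted & terms]
```

The grouping and representative-image selection that the abstract describes would then operate on the returned candidate set.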

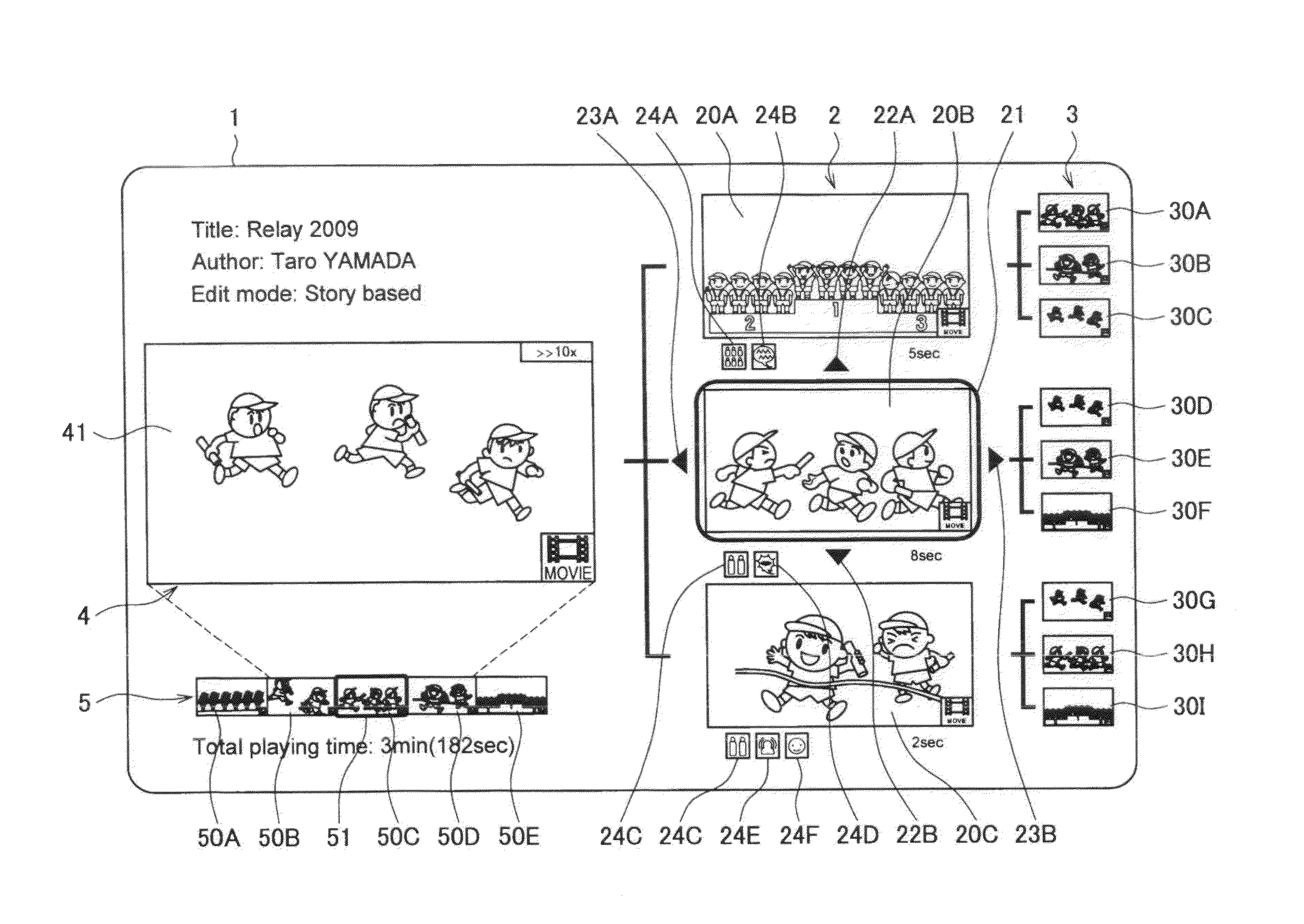

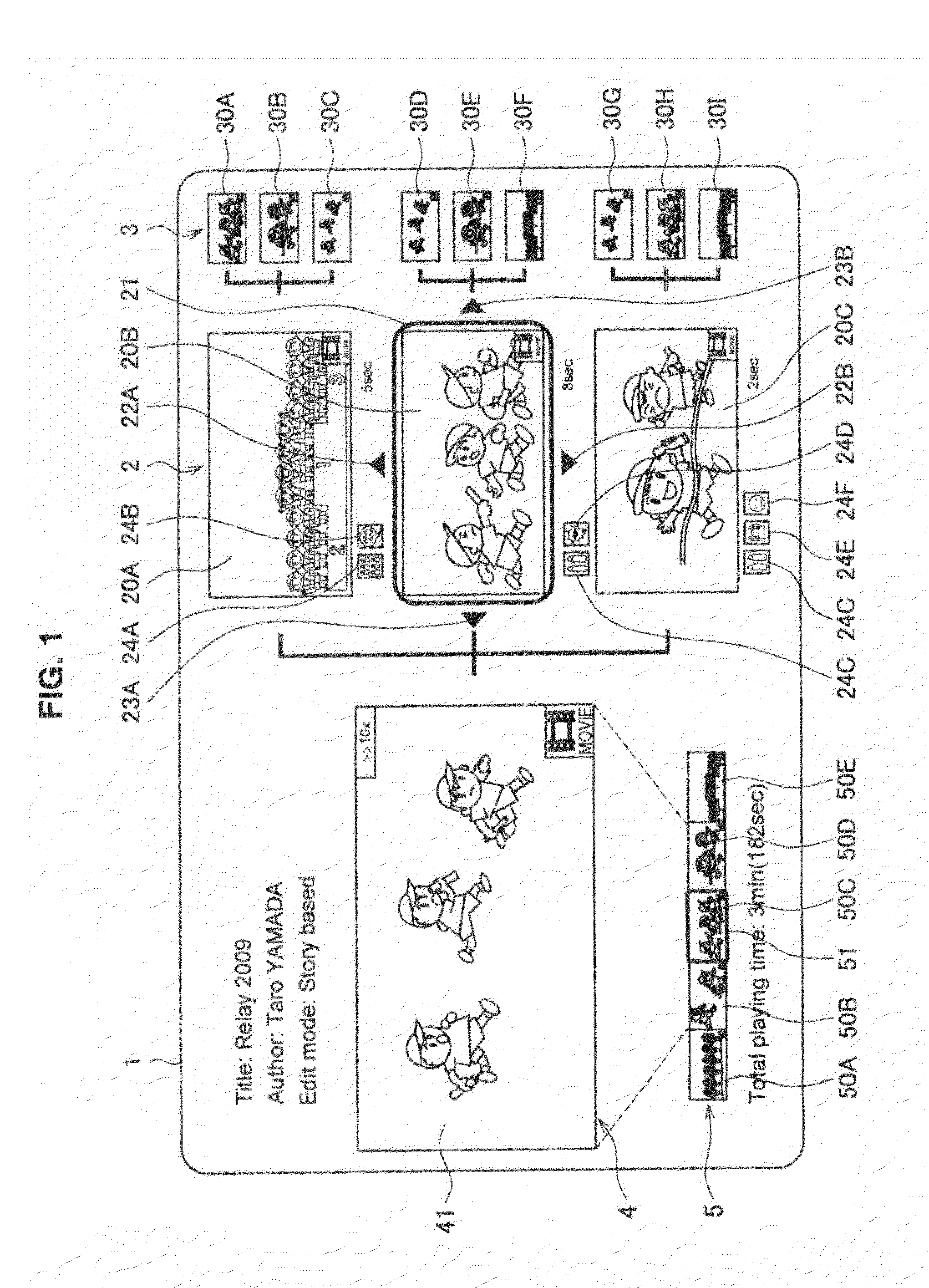

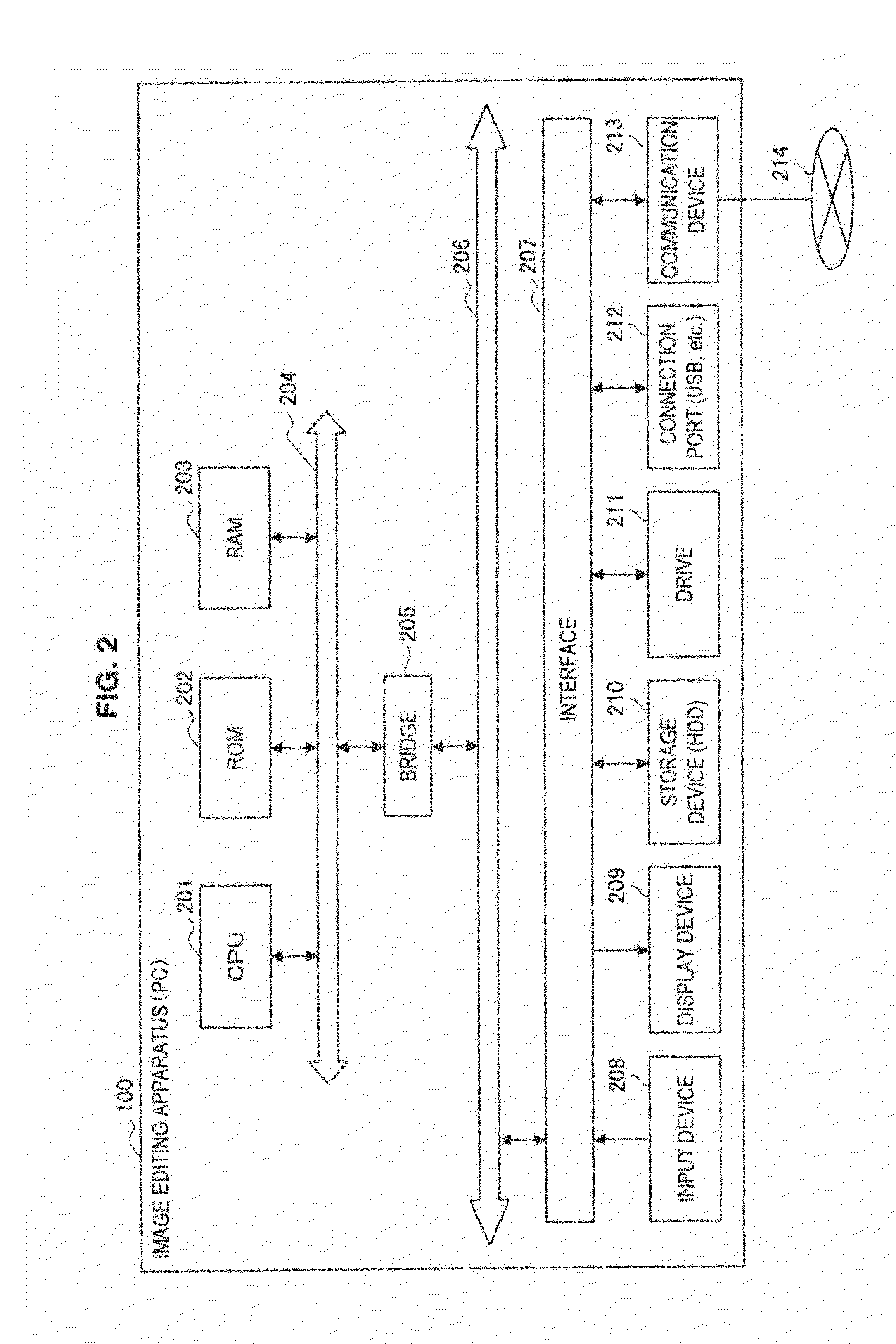

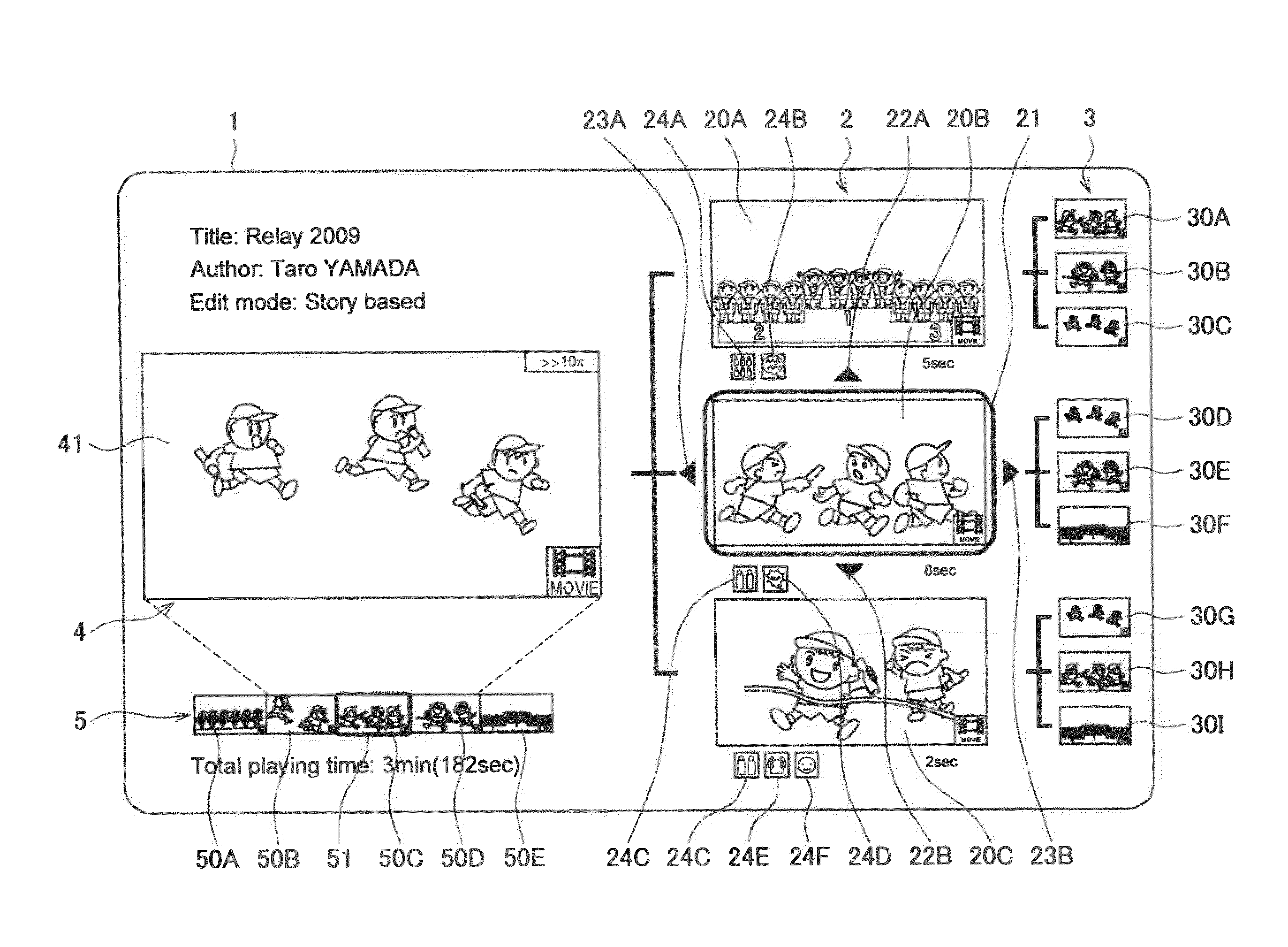

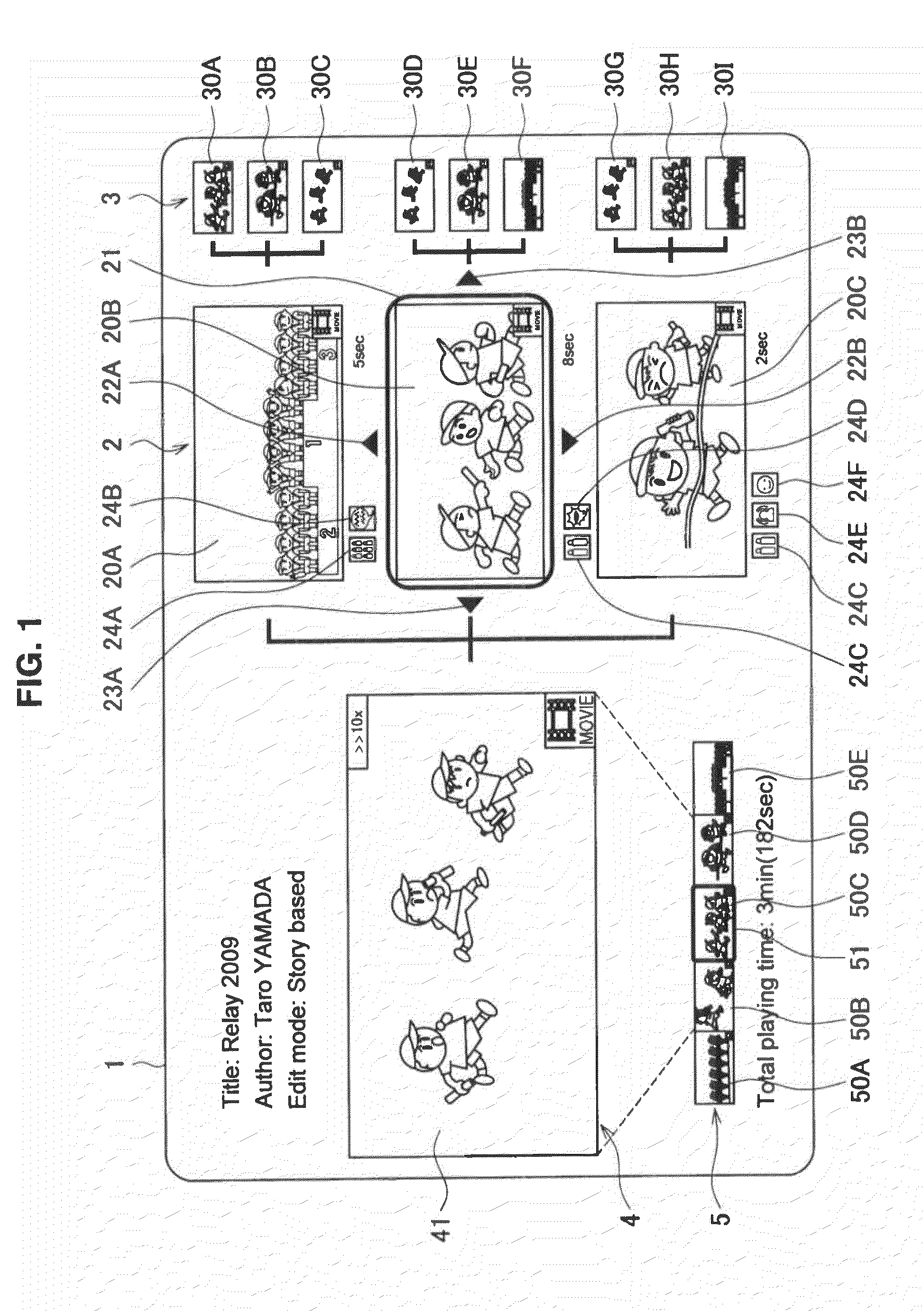

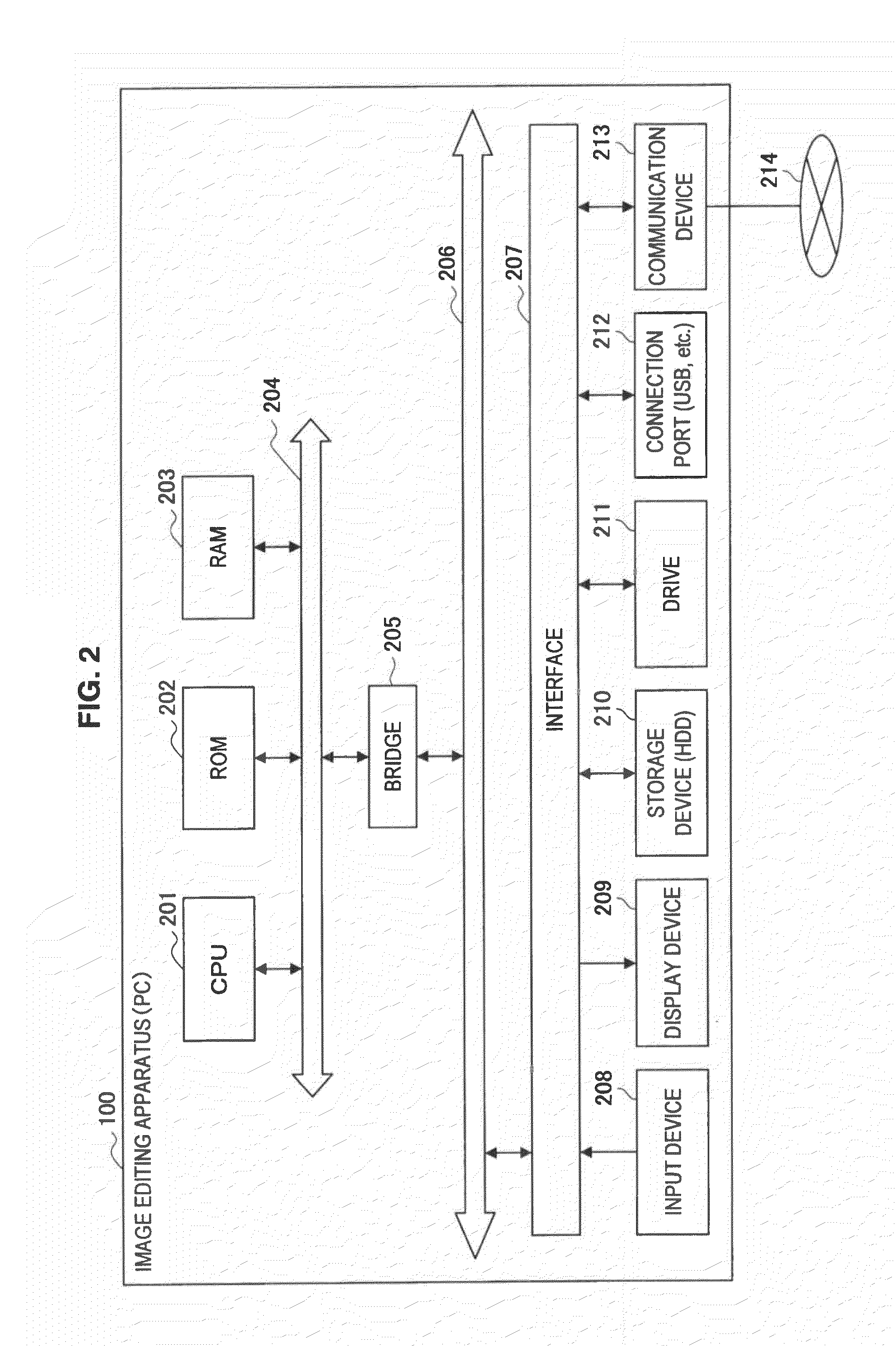

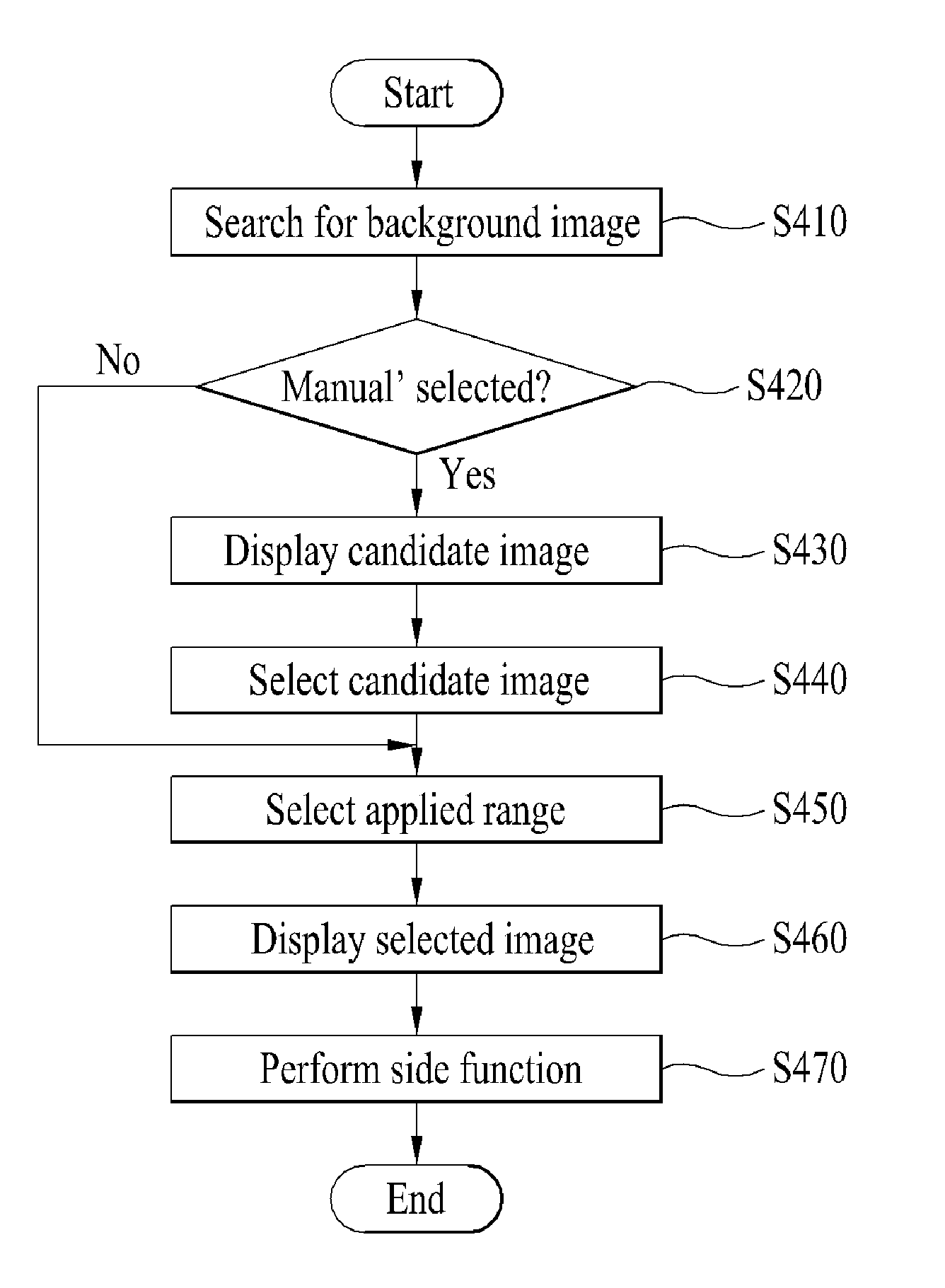

Image editing apparatus, image editing method and program

Inactive | US20140033043A1 | Easy to produce | Television system details | Electronic editing digitised analogue information signals | Graphics | Information processing

Method and information processing apparatus for generating an edited work including a subset of a plurality of scenes in an image material. The information processing apparatus includes a memory configured to store the image material including the plurality of scenes. The information processing apparatus further includes a processor configured to select, for an n-th scene of the edited work, a plurality of first candidate scenes from the plurality of scenes based on at least one feature of a preceding (n−1-th) scene of the edited work and features of the plurality of scenes. The processor is also configured to generate a graphical user interface including a scene selection area and a preview area. The scene selection area includes one or more first candidate images corresponding to at least one of the plurality of first candidate scenes, and the preview area includes a preview of the preceding (n−1-th) scene.

Owner:SONY CORP

High-resolution remote sensing target extraction method based on multi-scale semantic model

The invention discloses a high-resolution remote sensing target extraction method based on a multi-scale semantic model, and relates to a remote sensing image technology. The high-resolution remote sensing target extraction method comprises the following steps of: establishing a high-resolution remote sensing ground object target image data set; performing multi-scale segmentation on images in a training set, and obtaining a candidate image area block of the target; establishing a semantic model of the target, and calculating the implied category semantic features of the target; performing semantic feature analysis on candidate image blocks on all levels; and finally, calculating a semantic correlation coefficient of the candidate area and the target model, and extracting the target through maximizing semantic correlation coefficient. By the method, the target in the high-resolution remote sensing image is extracted by comprehensively utilizing the multi-scale image segmentation and target category semantic information; the method is accurate in extraction result, high in robustness and applicability, and has a certain practical value in the construction of the geographic information system and digital earth system; and the manual involvement degree is reduced.

Owner:济钢防务技术有限公司

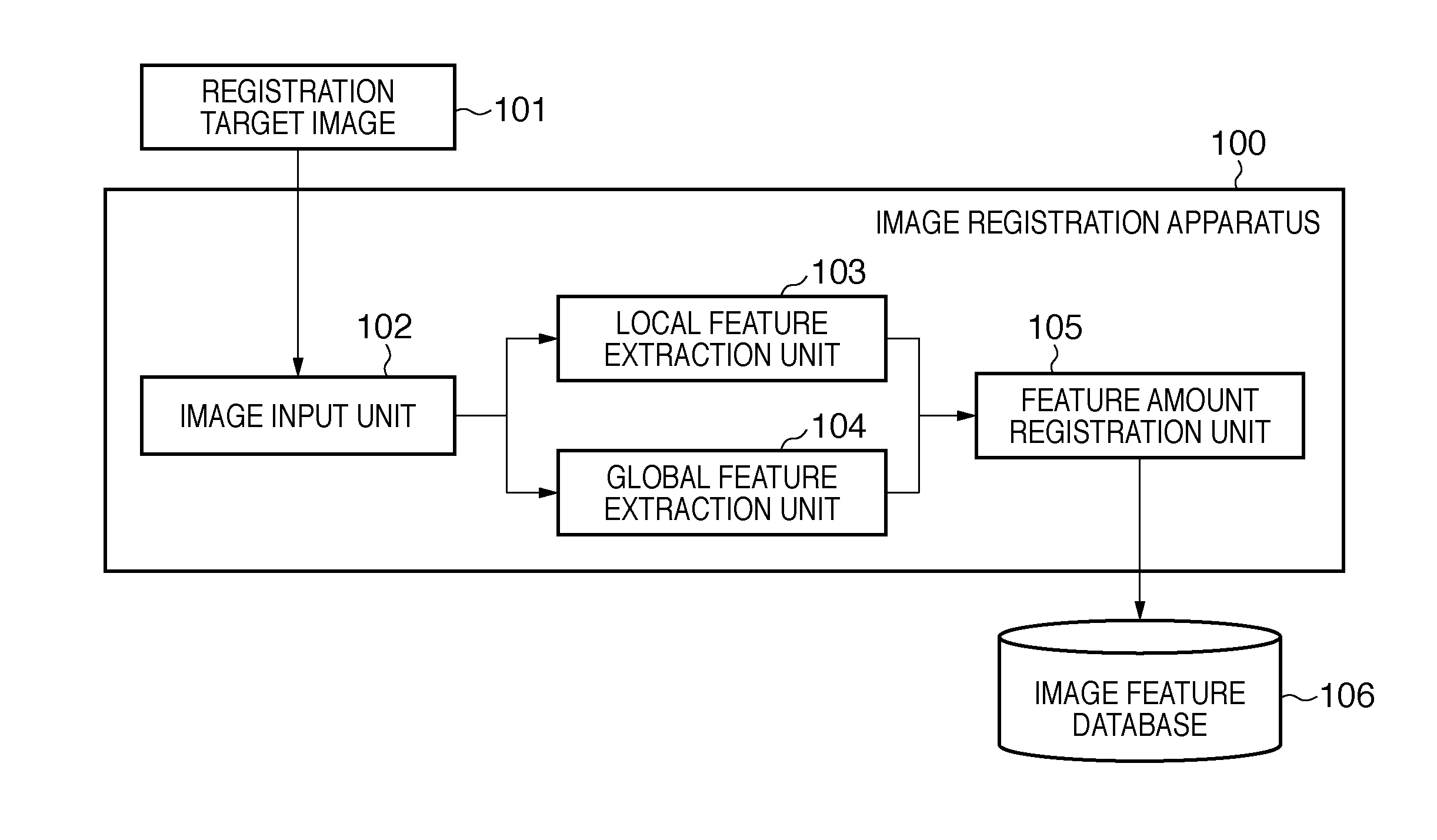

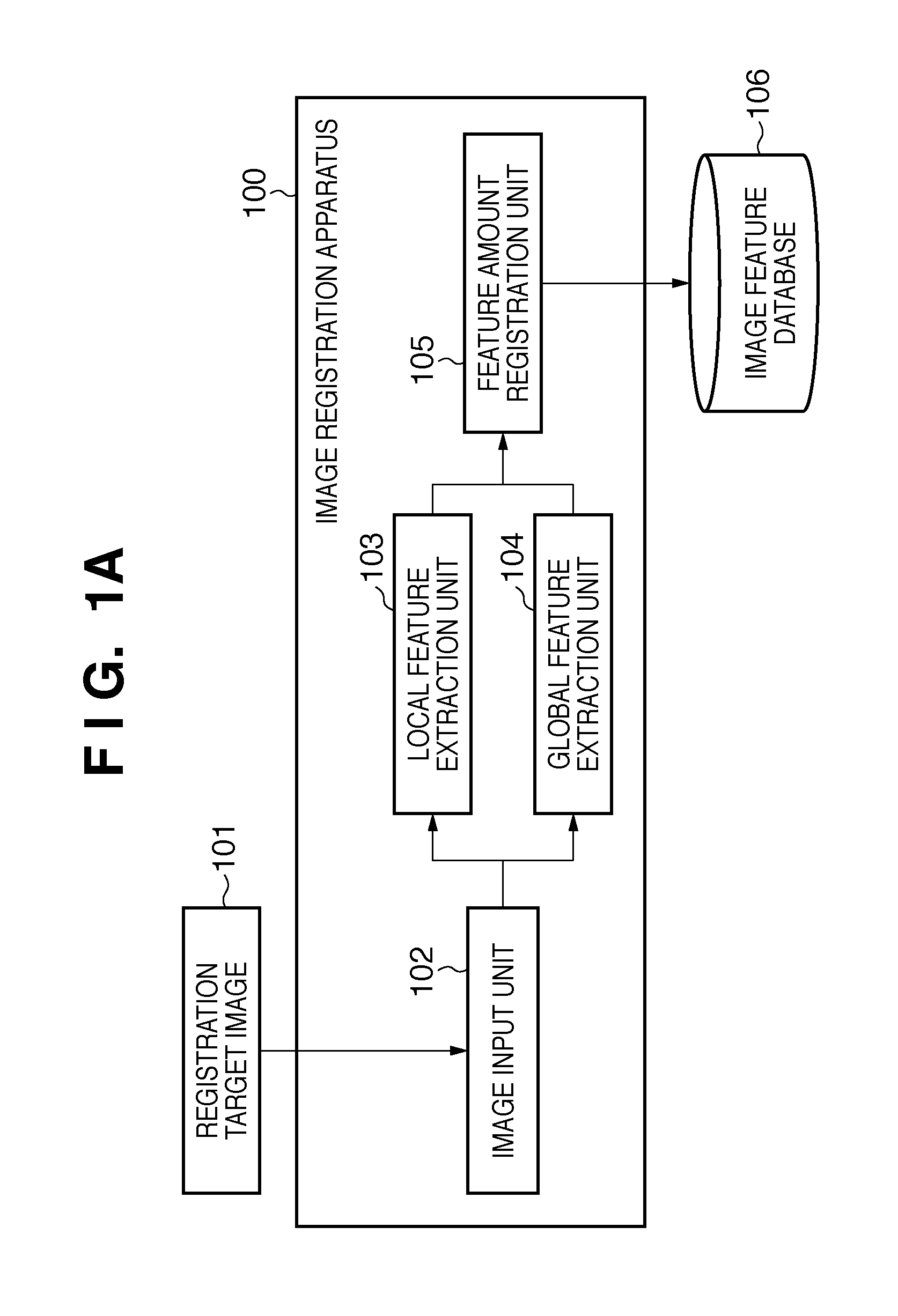

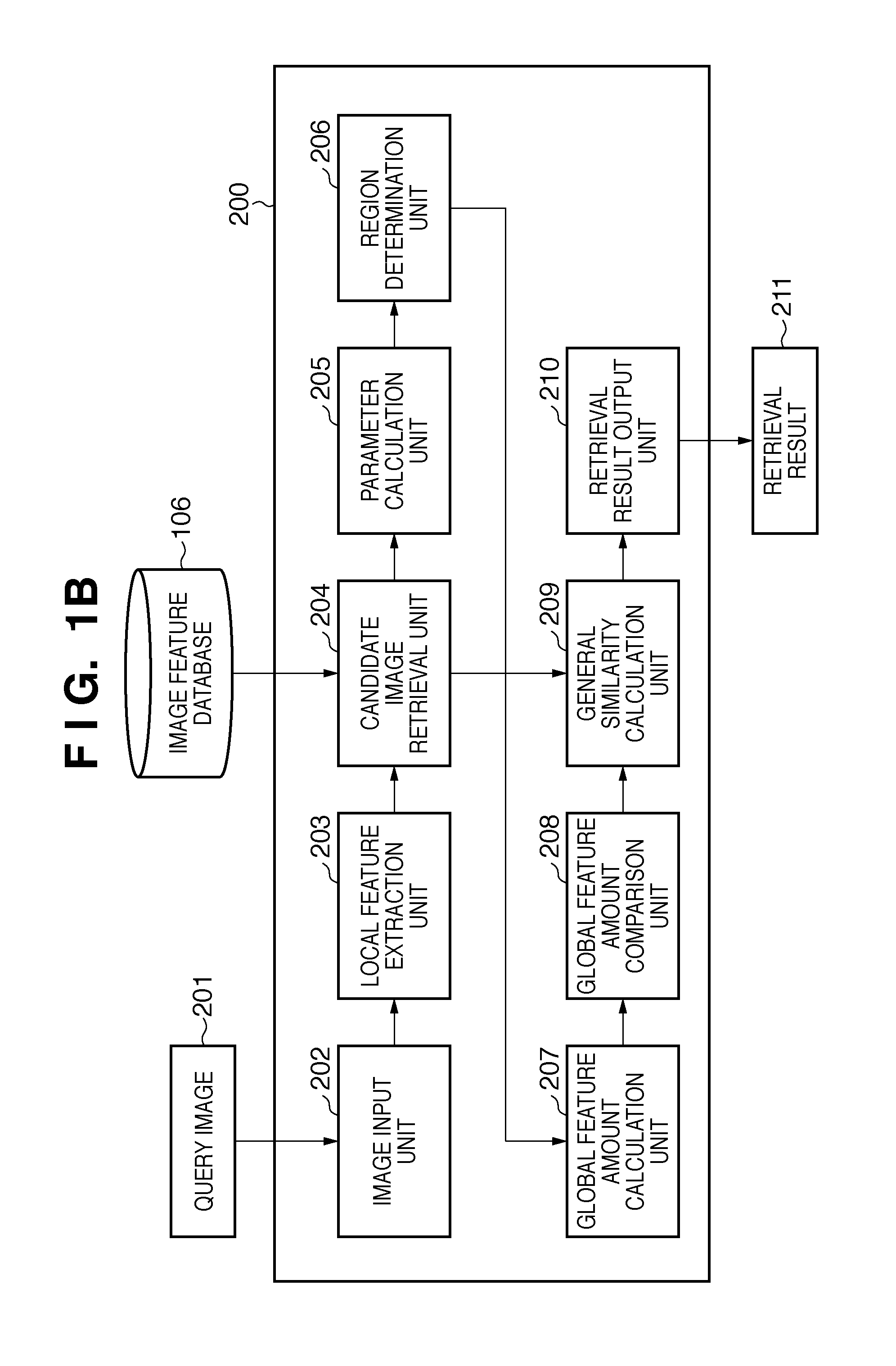

Image retrieval apparatus, control method for the same, and storage medium

Active | US20100290708A1 | Deterioration of retrieval accuracy | Improve accuracy | Character and pattern recognition | Image retrieval | Retrieval result

An image retrieval apparatus configured so as to enable a global feature method and a local feature method to complement each other is provided. After obtaining a retrieval result candidate using the local feature method, the image retrieval apparatus further verifies global features already registered in a database, with regard to the retrieval result candidate image. A verification position of the global features is estimated using the local features.

Owner:CANON KK

Image editing apparatus, image editing method and program

Inactive | US20110026901A1 | Easy to produce | Easy to operate | Television system details | Electronic editing digitised analogue information signals | Information processing | Graphics

Method and information processing apparatus for generating an edited work including a subset of a plurality of scenes in an image material. The information processing apparatus includes a memory configured to store the image material including the plurality of scenes. The information processing apparatus further includes a processor configured to select, for an n-th scene of the edited work, a plurality of first candidate scenes from the plurality of scenes based on at least one feature of a preceding (n−1-th) scene of the edited work and features of the plurality of scenes. The processor is also configured to generate a graphical user interface including a scene selection area and a preview area. The scene selection area includes one or more first candidate images corresponding to at least one of the plurality of first candidate scenes, and the preview area includes a preview of the preceding (n−1-th) scene.

Owner:SONY CORP
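
One possible reading of the candidate-selection step in the abstract above is sketched below. The abstract only says candidates are chosen from the features of the preceding scene and of all scenes; ranking unused scenes by feature distance to the preceding scene is an assumption made for illustration, as are the scene IDs and feature values.

```python
def select_candidates(previous_scene, scenes, used_ids, k=2):
    """Return IDs of the k unused scenes closest to the preceding scene.

    Assumption: "closest" means smallest L1 distance between feature
    vectors; the patent abstract does not specify the criterion.
    """
    def distance(scene):
        return sum(
            abs(a - b)
            for a, b in zip(previous_scene["features"], scene["features"])
        )

    pool = [scene for scene in scenes if scene["id"] not in used_ids]
    return [scene["id"] for scene in sorted(pool, key=distance)[:k]]


# Hypothetical scenes with two-dimensional features.
scenes = [
    {"id": "s1", "features": [0.20, 0.80]},
    {"id": "s2", "features": [0.90, 0.10]},
    {"id": "s3", "features": [0.25, 0.70]},
    {"id": "s4", "features": [0.50, 0.50]},
]

# "s1" is already placed as scene n-1, so candidates for scene n exclude it.
first_candidates = select_candidates(scenes[0], scenes, used_ids={"s1"})
print(first_candidates)
```

In a user interface like the one described, these IDs would drive the thumbnails in the scene selection area while the preview area shows the preceding scene.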

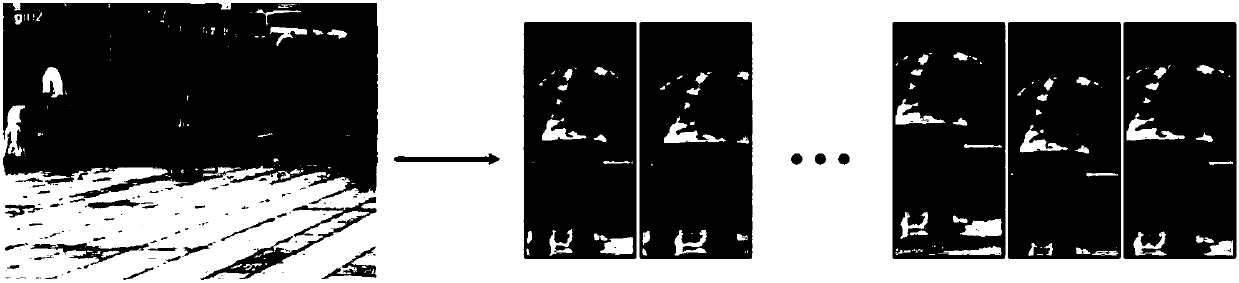

Target tracking method based on difficult positive sample generation

Active | CN108596958A | Improve robustness | Improve tracking accuracy | Image enhancement | Image analysis | Positive sample | Video processing

The invention discloses a target tracking method based on difficult positive sample generation. For each video in the training data, a variational auto-encoder is used to learn the corresponding manifold (the positive sample generation network); the code obtained by encoding an input image is slightly perturbed, and a large number of positive samples are generated. The positive samples are then fed into a difficult positive sample conversion network, in which an agent is trained to occlude the target object with a background image block. The agent continuously adjusts the bounding box so that the samples become difficult to recognize, and occluded difficult positive samples are output. Based on the generated difficult positive samples, a Siamese network is trained and used to match the target image block against candidate image blocks, locating the target in the current frame; this is repeated until the whole video has been processed. With this method, the manifold distribution of the target is learned directly from the data, and a large number of diverse positive samples can be obtained.

Owner:ANHUI UNIVERSITY
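
The matching step in the abstract above (compare the target block with each candidate block and keep the best match) can be illustrated with the sketch below. The `embed` function is a hypothetical stand-in for the trained shared network branch, and cosine similarity is an assumed scoring function; the abstract specifies neither.

```python
from math import sqrt


def embed(patch):
    """Hypothetical stand-in for the shared network branch:
    flatten the patch and subtract its mean intensity."""
    flat = [pixel for row in patch for pixel in row]
    mean = sum(flat) / len(flat)
    return [pixel - mean for pixel in flat]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def locate(target_patch, candidate_patches):
    """Return (index, score) of the candidate most similar to the target."""
    target_vec = embed(target_patch)
    scores = [cosine(target_vec, embed(c)) for c in candidate_patches]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Hypothetical 2x2 grayscale patches.
target = [[0, 9], [9, 0]]
candidates = [
    [[9, 0], [0, 9]],  # inverted pattern
    [[1, 8], [8, 1]],  # same pattern, lower contrast
    [[5, 5], [5, 5]],  # flat background
]

index, score = locate(target, candidates)
print(index, score)
```

Both inputs pass through the same `embed` function, which mirrors the weight sharing between the two branches of a Siamese network.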

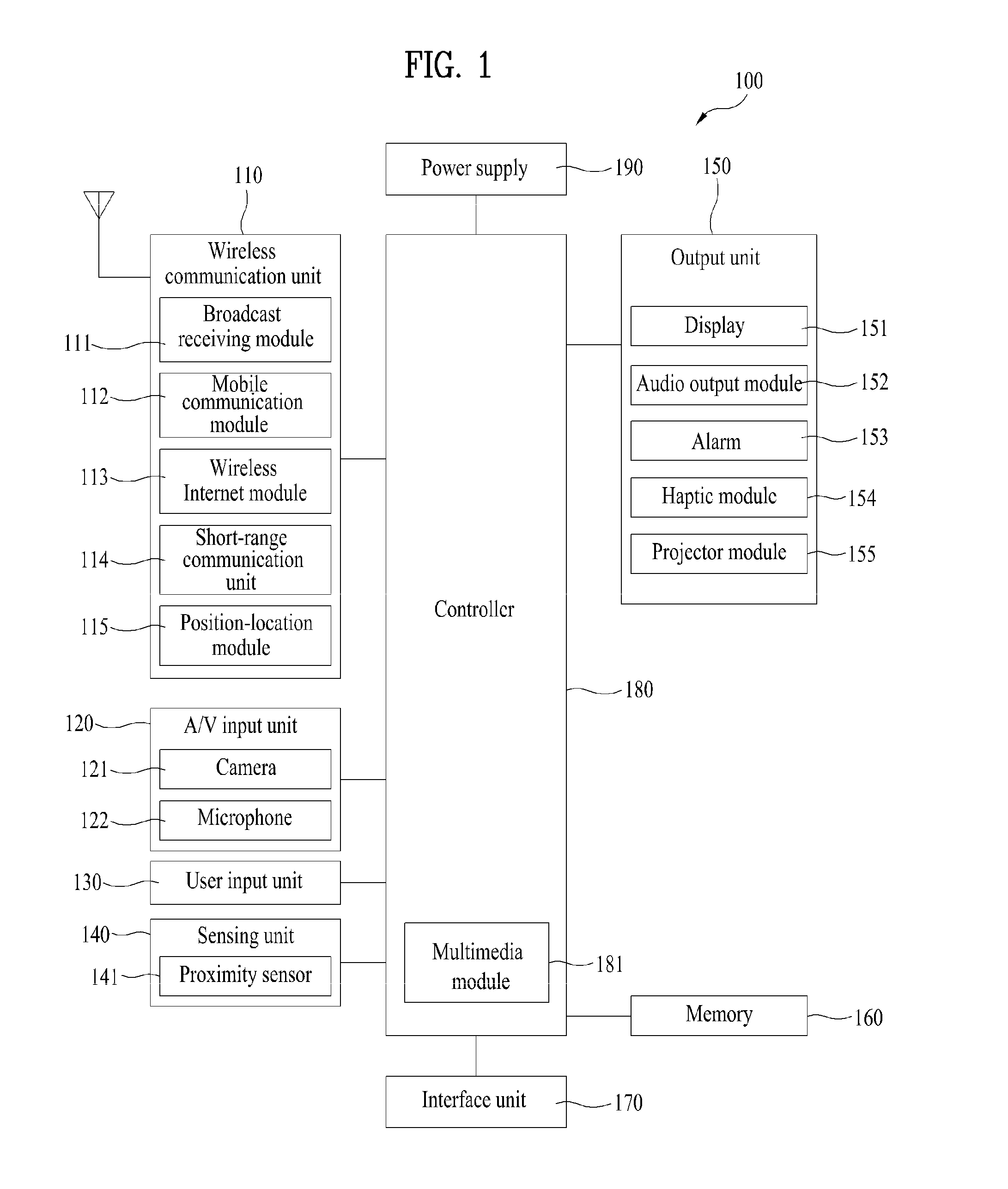

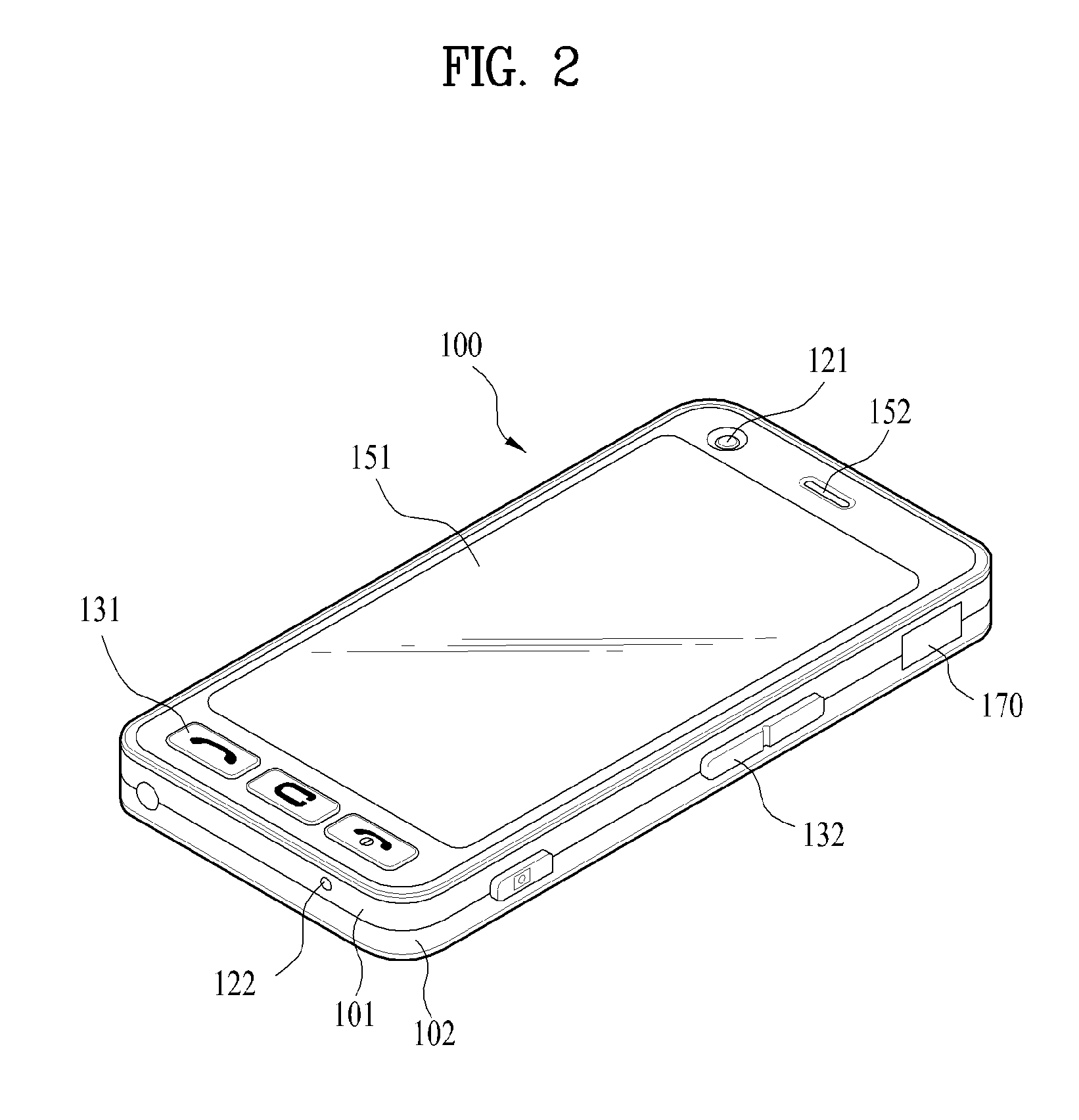

Mobile terminal and controlling method thereof

Active | US20120303603A1 | Easy to read | Convenient set | Input/output for user-computer interaction | Digital data information retrieval | Computer hardware | Search words

A mobile terminal and a controlling method thereof are disclosed, by which a more convenient e-book reading environment can be provided through background video and/or audio settings. The mobile terminal includes a touchscreen configured to display a first page of an e-book having one or more pages, a memory unit configured to store the e-book and at least one image, a communication unit configured to exchange data with an external device over a wired or wireless connection, and a controller. The controller searches at least one of the memory unit and a search server connected via the communication unit for one or more candidate images, using a search word determined from at least a portion of the content of the first page, and controls a first one of the found candidate images to be displayed as a background image of the first page.

Owner:LG ELECTRONICS INC

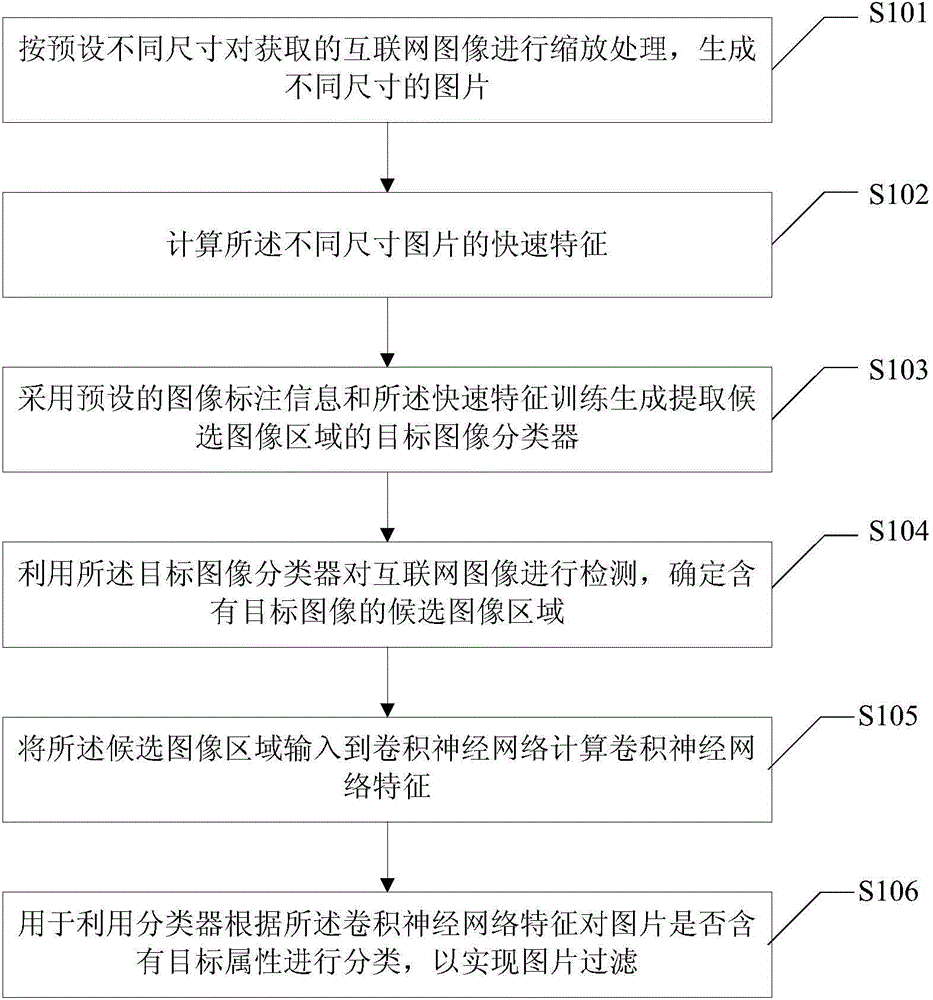

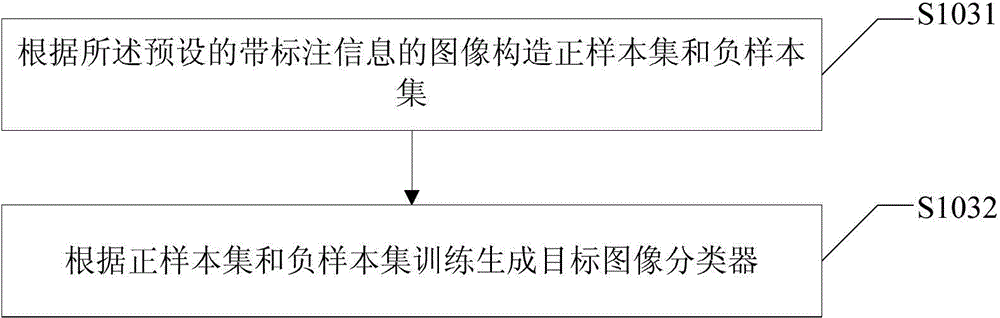

Internet picture filtering method and device

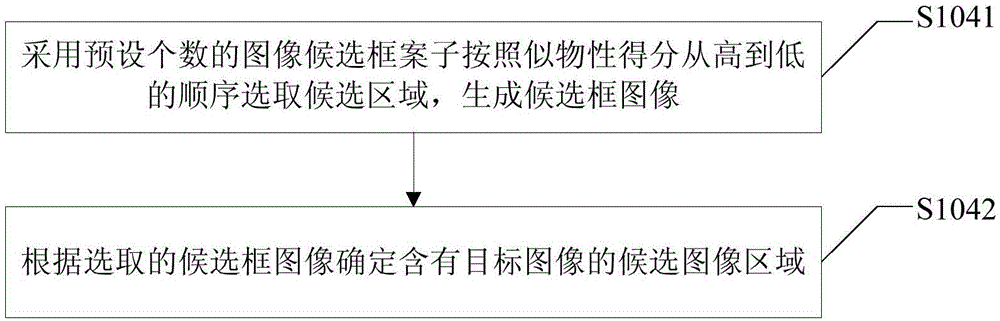

Active | CN105808610A | Implement filtering | Achieve fine-tuning | Biological neural network models | Character and pattern recognition | Nerve network | The Internet

The invention provides an Internet picture filtering method and device. The method comprises the following steps: scaling an obtained Internet image to several preset sizes to generate pictures of different sizes; calculating fast features of the pictures of different sizes; training a target image classifier using preset image annotation information and the fast features; using the target image classifier to detect the Internet image and determine candidate image regions that contain target attributes; inputting the candidate image regions into a convolutional neural network to compute convolutional neural network features; and using a classifier to decide, from those features, whether the picture contains a target image, thereby filtering pictures. According to the abstract, Internet pictures can be filtered effectively, calculation efficiency is improved, and a user can fine-tune the deep neural network online, so that the detection performance of the method is better than that of other methods.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
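
The first step of the abstract above (scaling one image to several preset sizes) is commonly implemented as an image pyramid. The sketch below is a minimal illustration using nearest-neighbour resampling on a nested-list image; the scale values and function names are hypothetical and not taken from the patent.

```python
def resize_nearest(image, scale):
    """Resize a 2D list-of-lists image by `scale` with nearest-neighbour sampling."""
    src_h, src_w = len(image), len(image[0])
    dst_h = max(1, round(src_h * scale))
    dst_w = max(1, round(src_w * scale))
    return [
        [
            # Map each destination pixel back to a source pixel, clamped to bounds.
            image[min(src_h - 1, int(r / scale))][min(src_w - 1, int(c / scale))]
            for c in range(dst_w)
        ]
        for r in range(dst_h)
    ]


def build_pyramid(image, scales=(1.0, 0.5, 0.25)):
    """Return a dict mapping each preset scale to the resized image."""
    return {scale: resize_nearest(image, scale) for scale in scales}


# Hypothetical 4x4 grayscale image with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]

pyramid = build_pyramid(image)
for scale, level in pyramid.items():
    print(scale, level)
```

A fast classifier would then be run on every pyramid level so that targets of different apparent sizes can be proposed as candidate regions before the more expensive convolutional neural network stage.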

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com