20941 results about "Video image" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

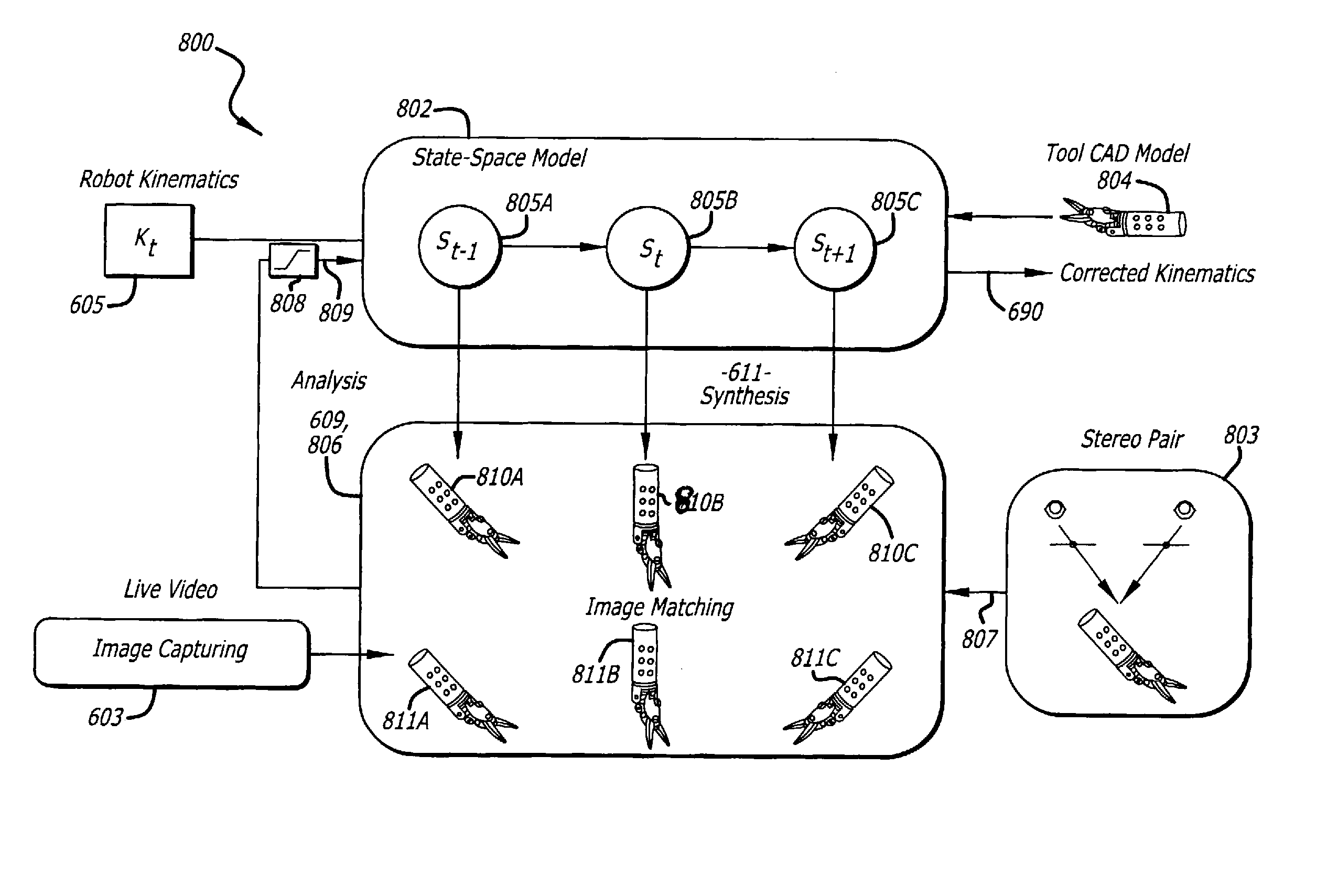

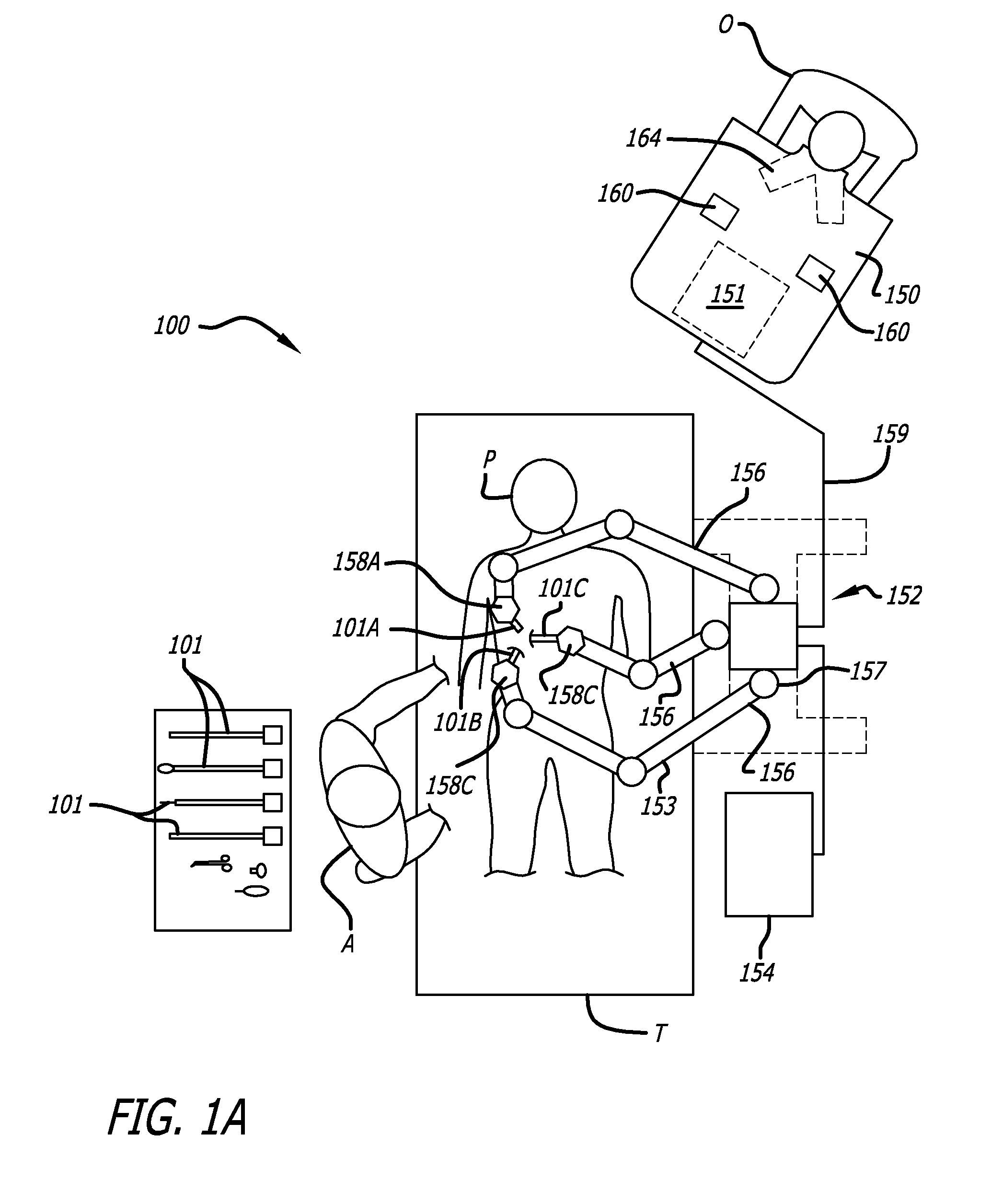

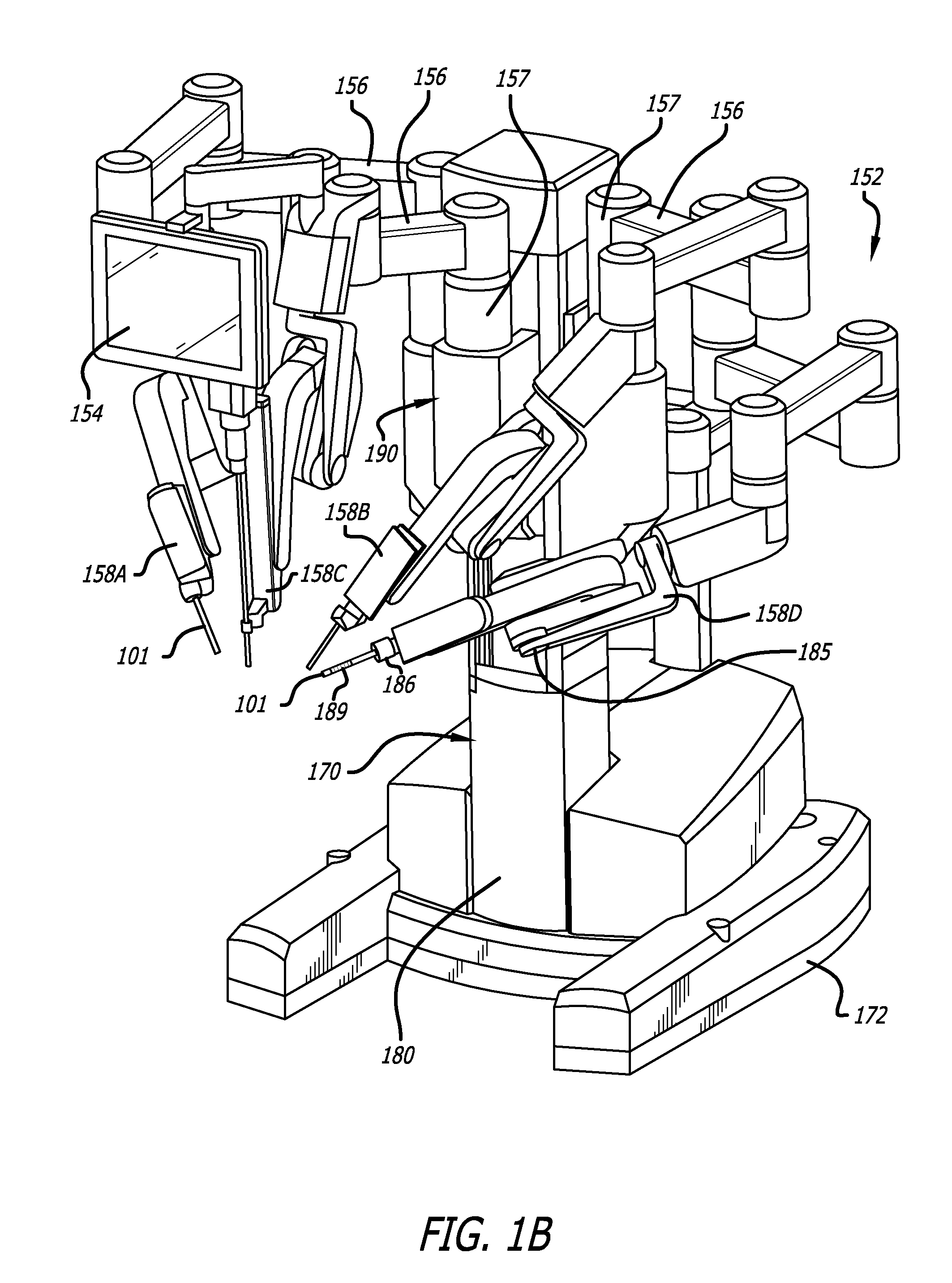

Methods and systems for robotic instrument tool tracking with adaptive fusion of kinematics information and image information

Owner:INTUITIVE SURGICAL OPERATIONS INC

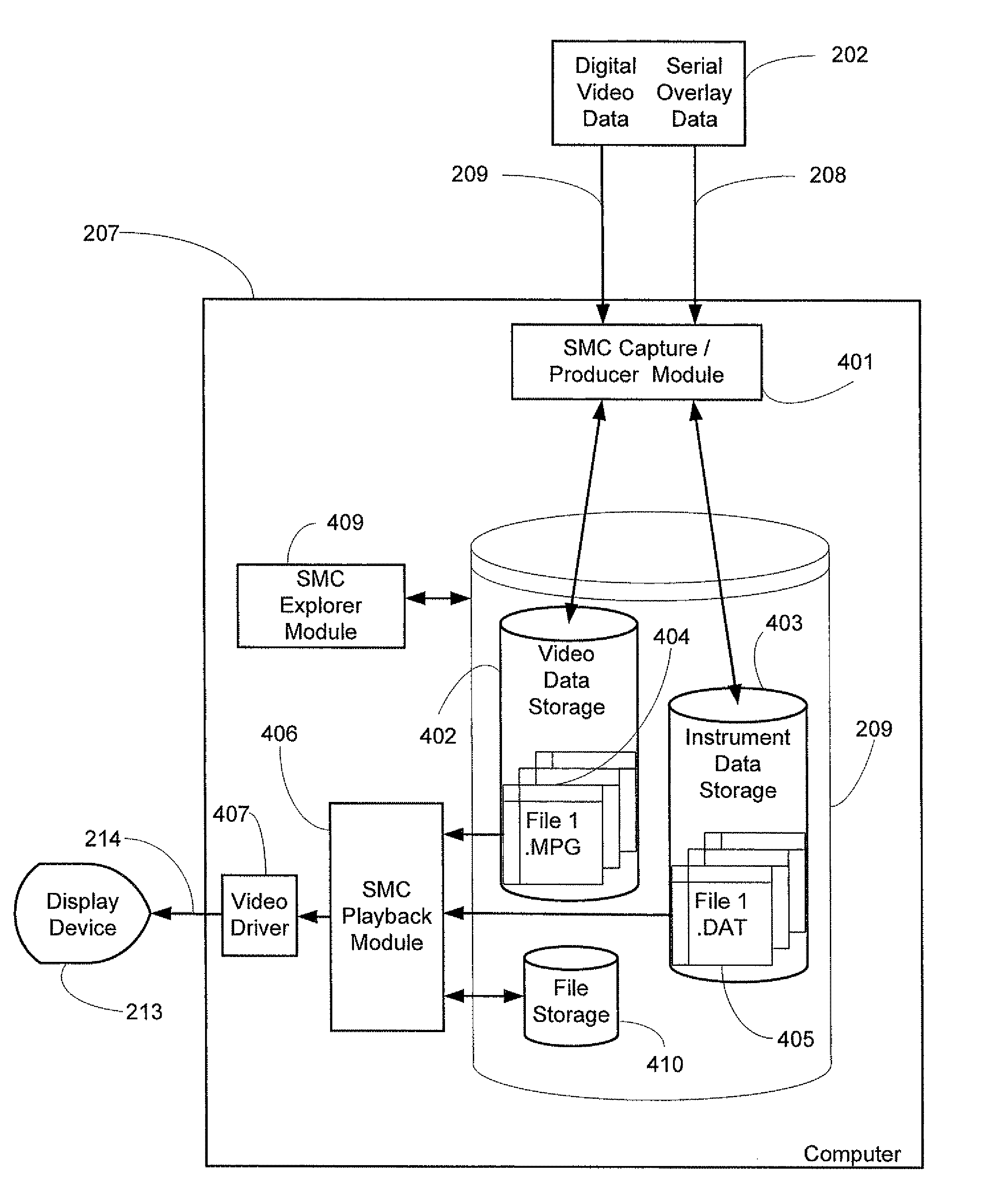

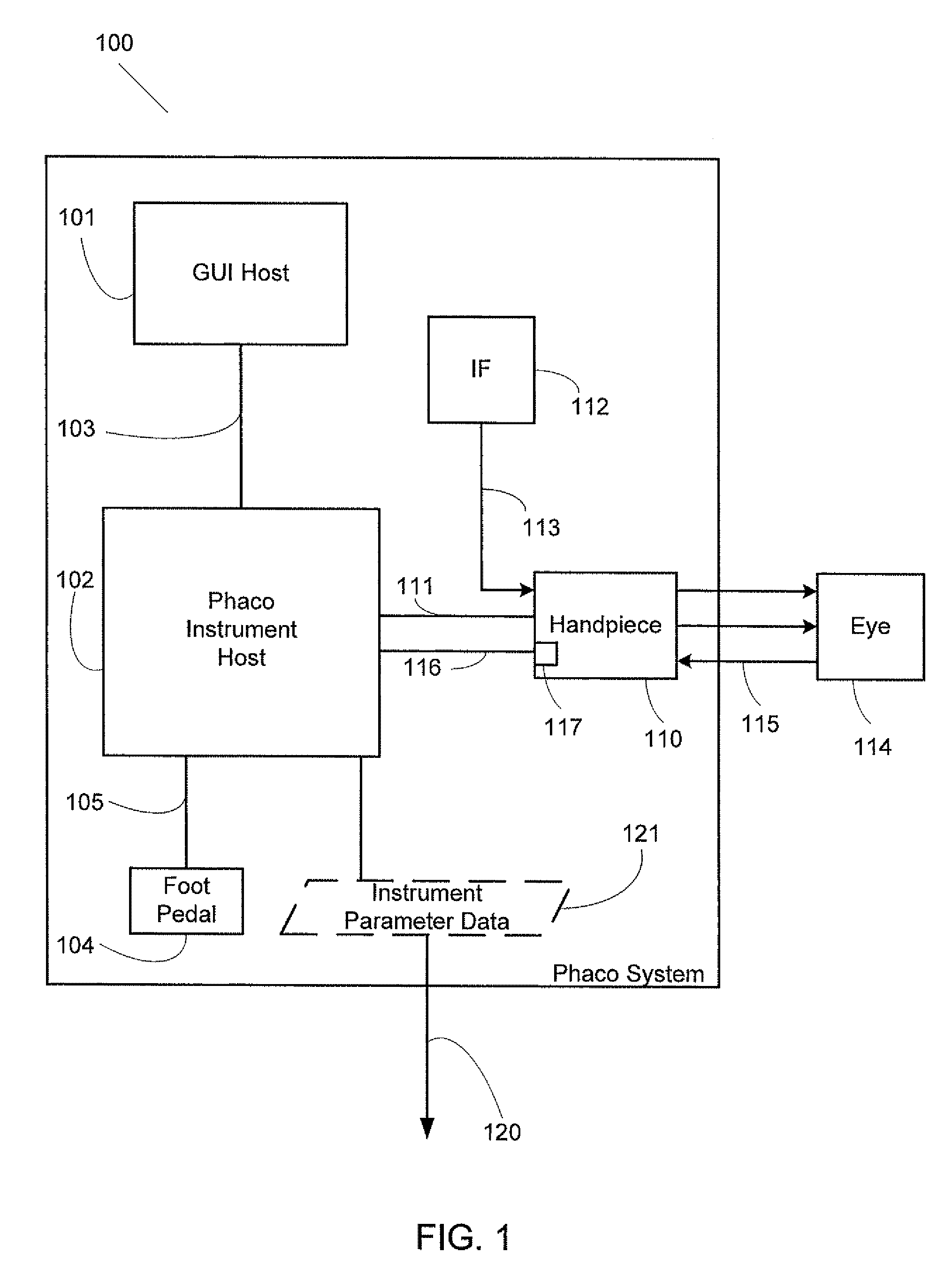

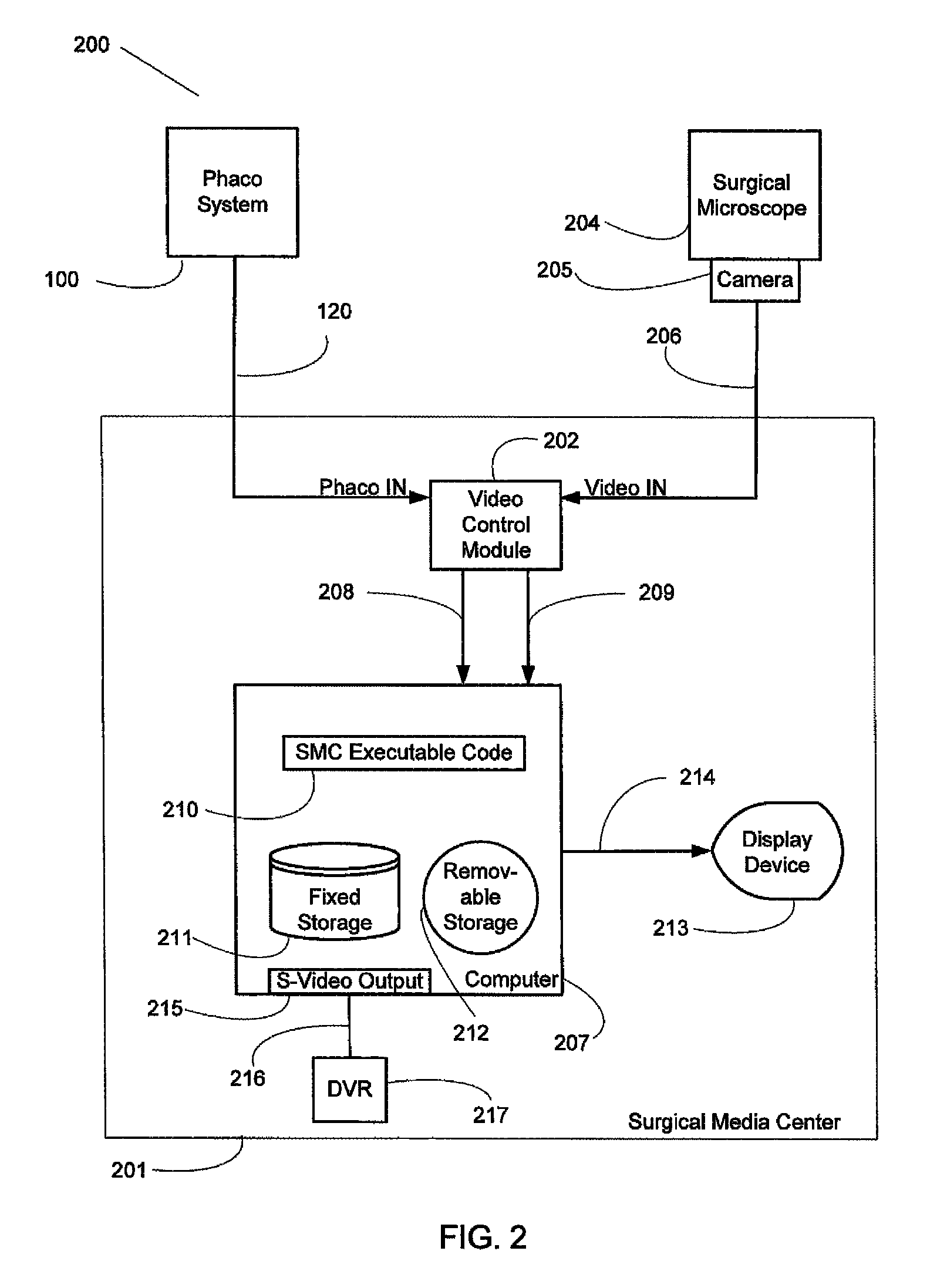

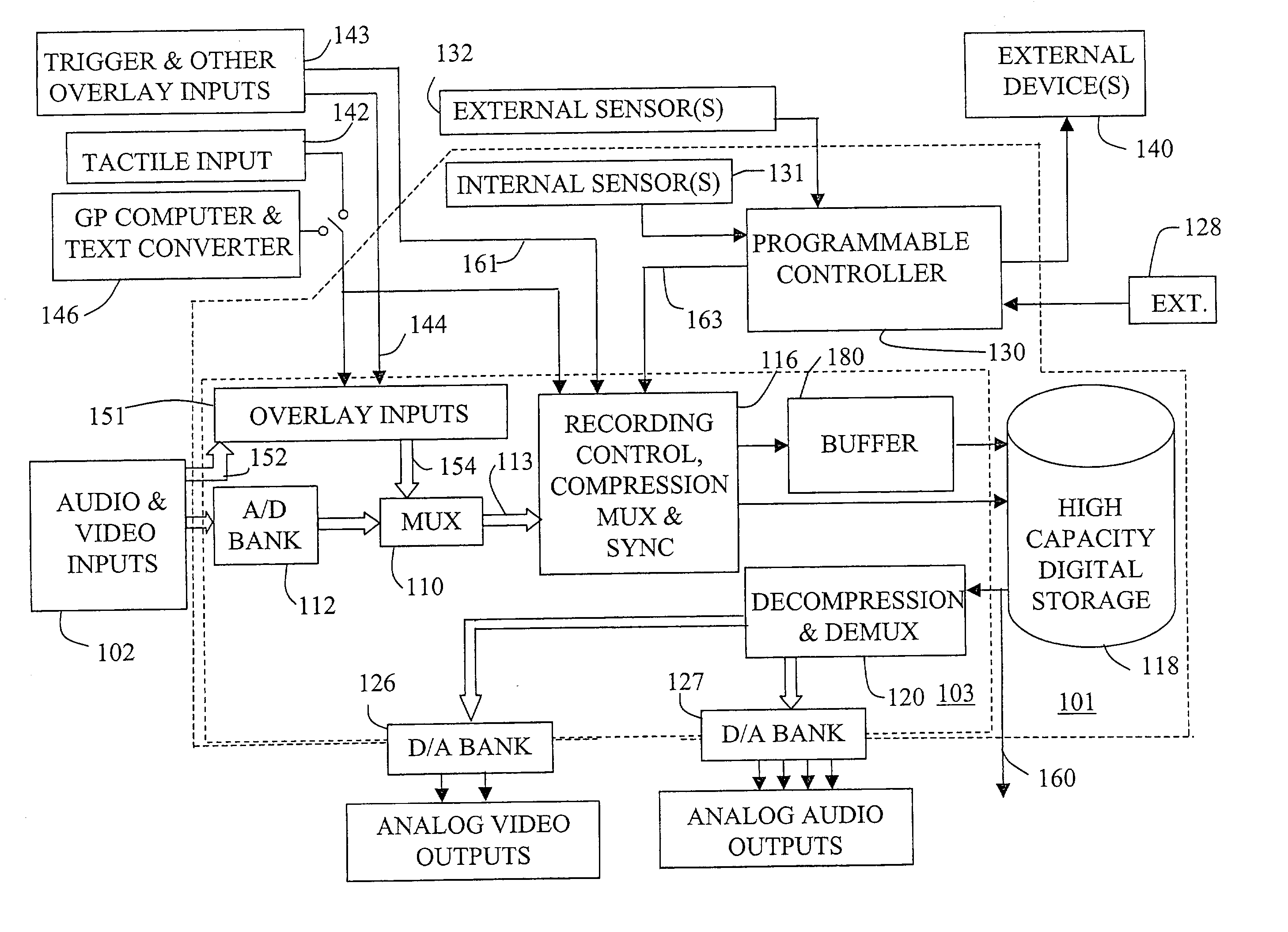

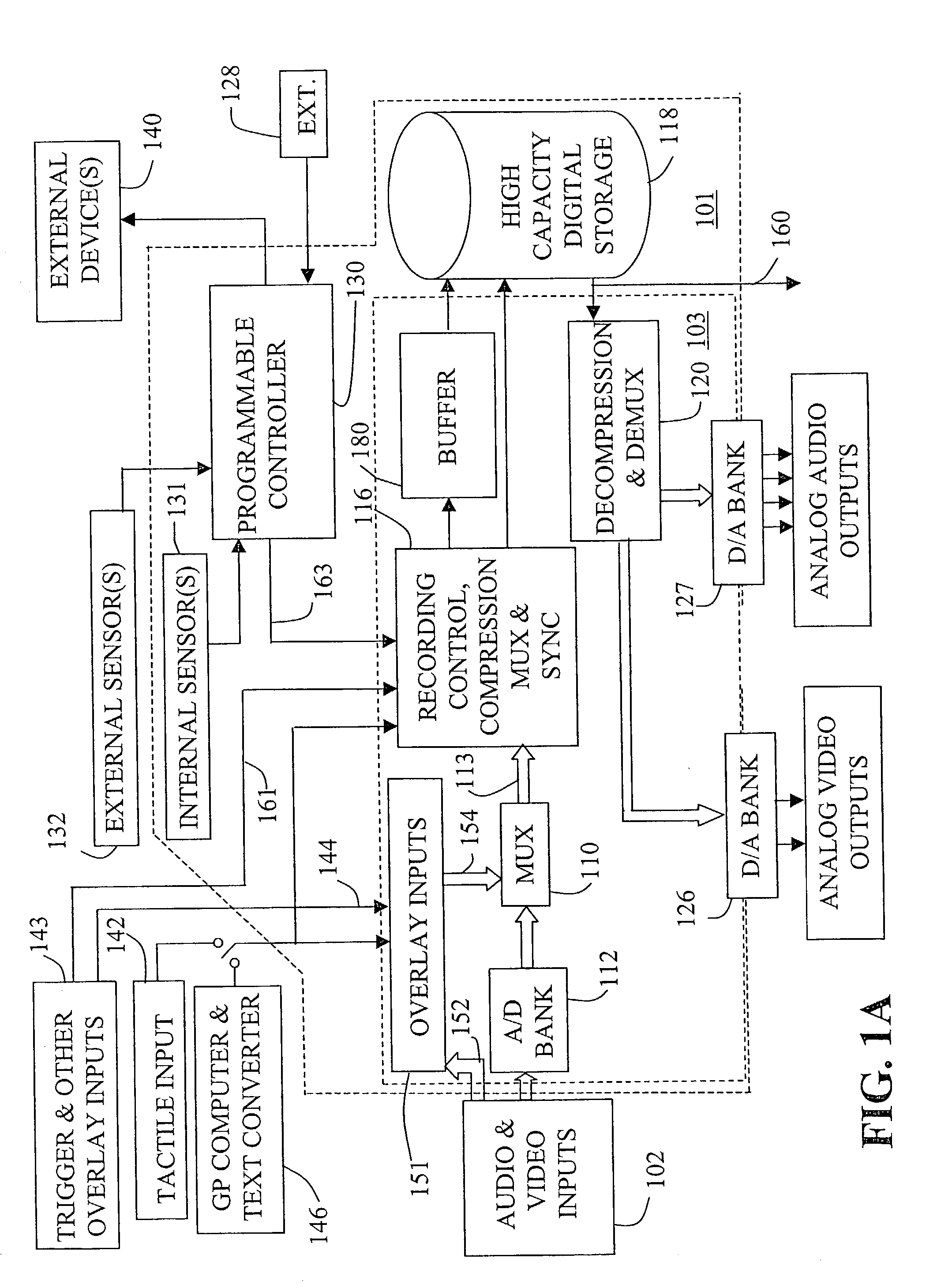

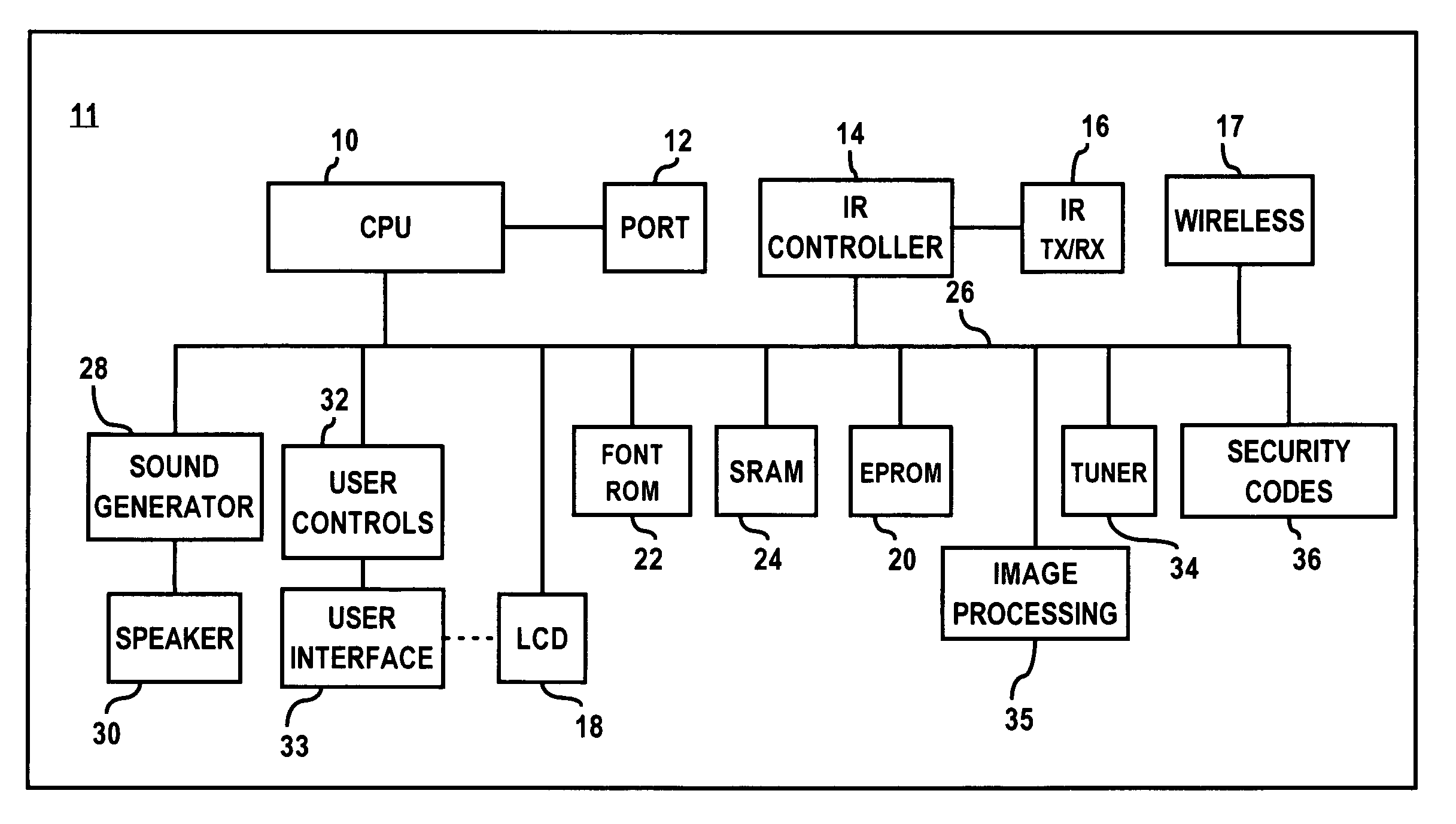

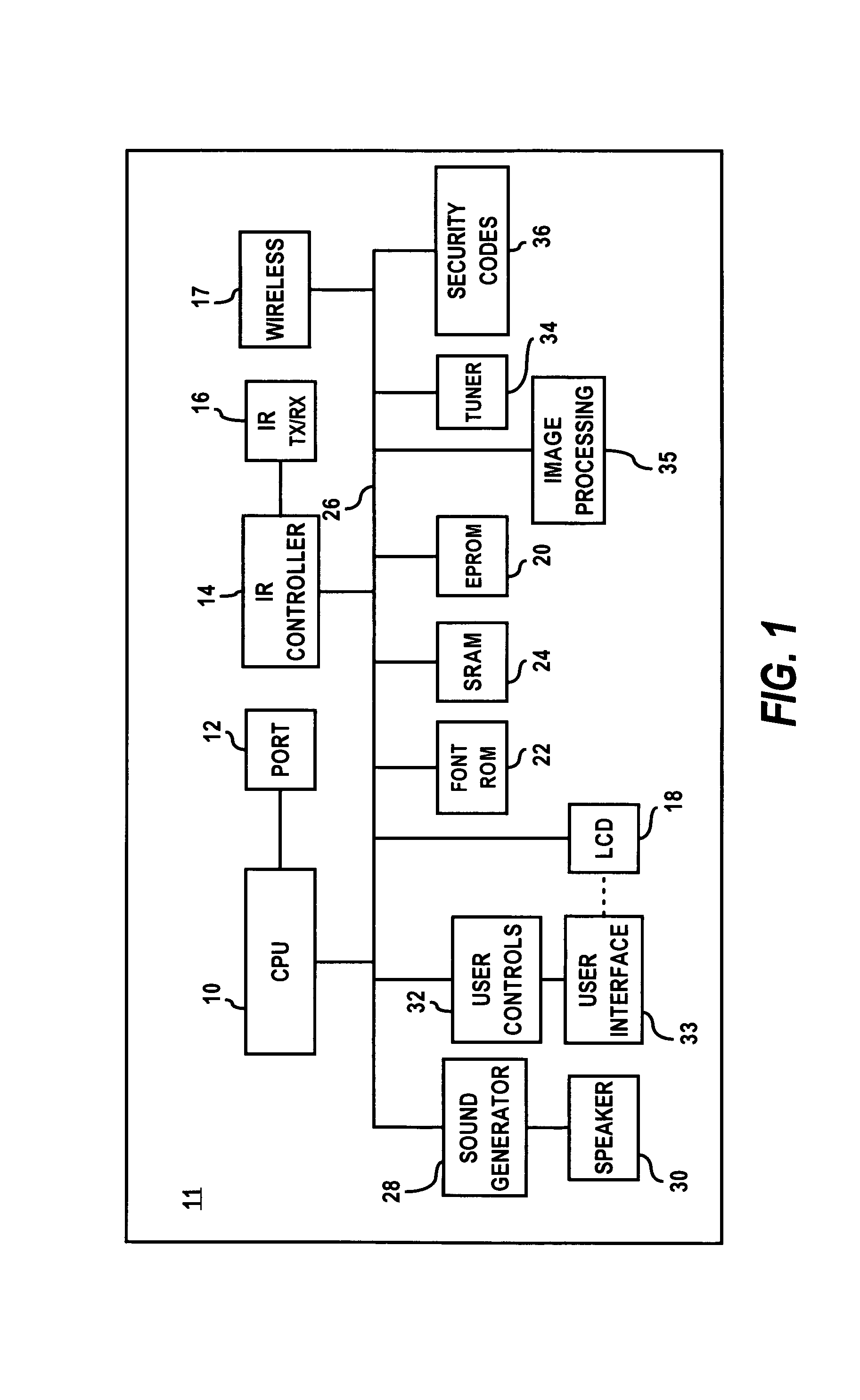

Digital video capture system and method with customizable graphical overlay

Owner:JOHNSON & JOHNSON SURGICAL VISION INC

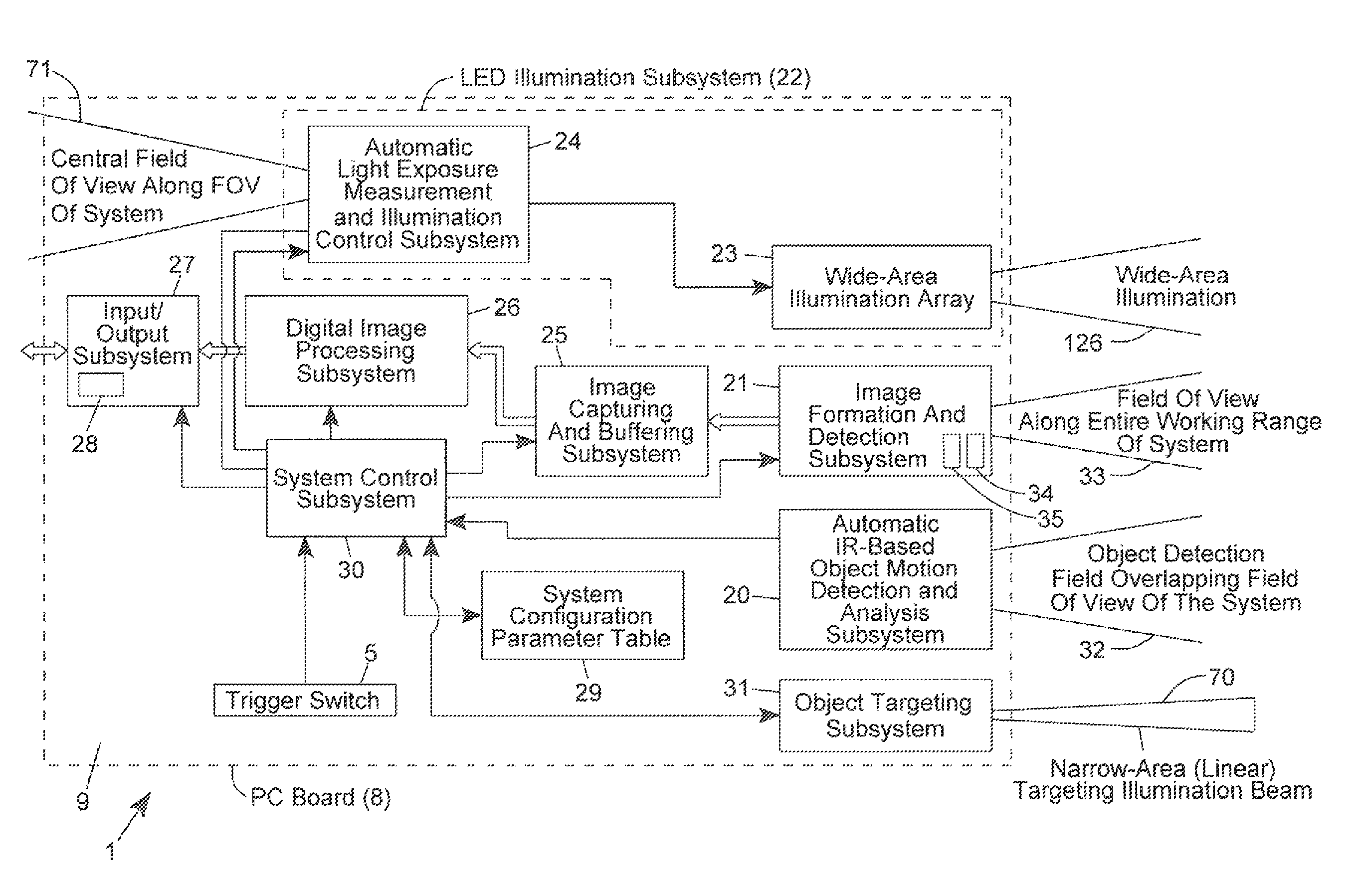

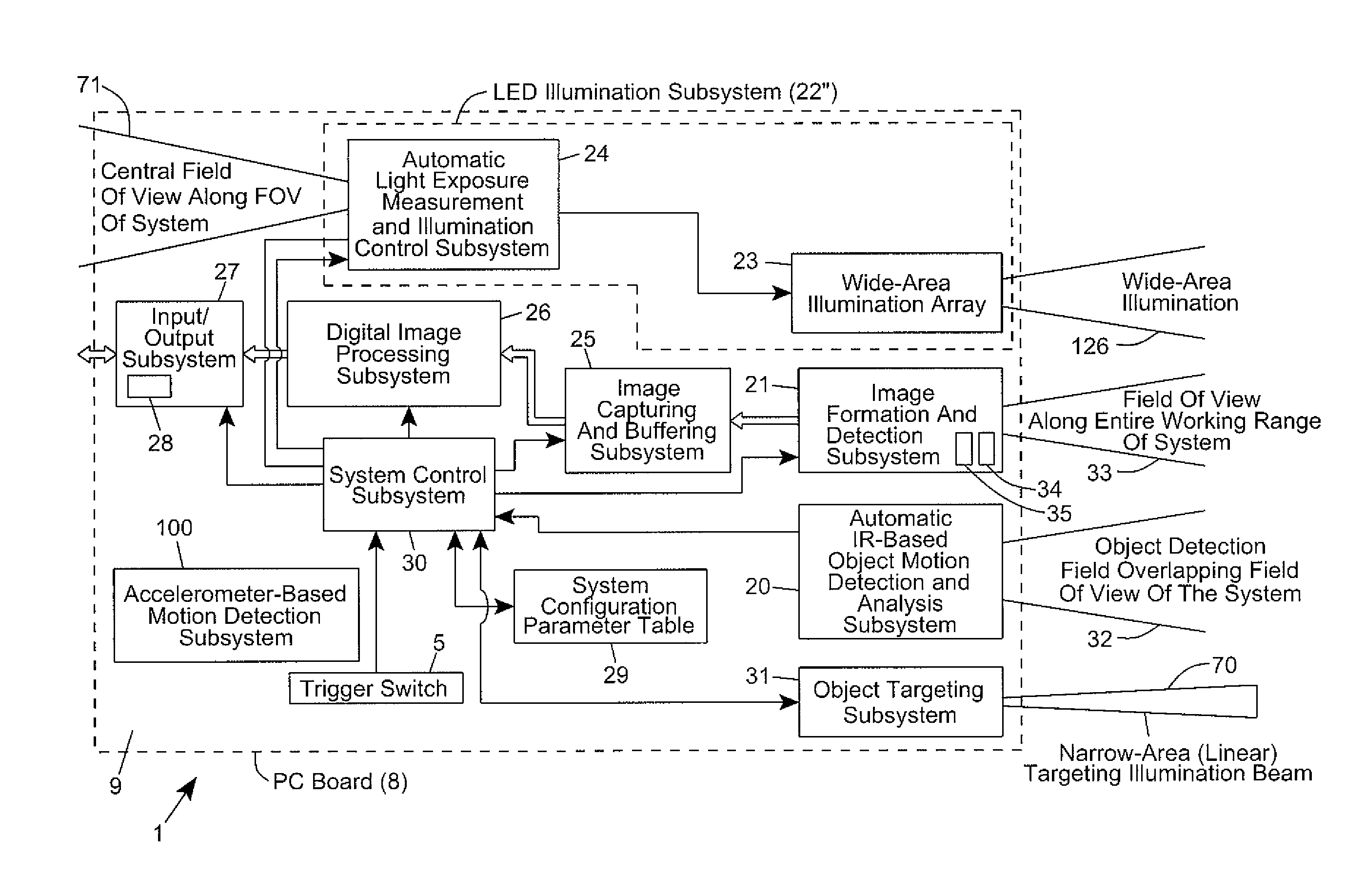

Auto-exposure method using continuous video frames under controlled illumination

Active | US8408464B2 | Tags: Improve the level of, Capture performance, Television system details, Mechanical apparatus, Graphics, Real time analysis

An adaptive strobe illumination control process for use in a digital image capture and processing system. In general, the process involves: (i) illuminating an object in the field of view (FOV) with several different pulses of strobe (i.e. stroboscopic) illumination over a pair of consecutive video image frames; (ii) detecting digital images of the illuminated object over these consecutive image frames; and (iii) decode processing the digital images in an effort to read a code symbol graphically encoded therein. In a first illustrative embodiment, upon failure to read a code symbol graphically encoded in one of the first and second images, these digital images are analyzed in real-time, and based on the results of this real-time image analysis, the exposure time (i.e. photonic integration time interval) is automatically adjusted during subsequent image frames (i.e. image acquisition cycles) according to the principles of the present disclosure. In a second illustrative embodiment, upon failure to read a code symbol graphically encoded in one of the first and second images, these digital images are analyzed in real-time, and based on the results of this real-time image analysis, the energy level of the strobe illumination is automatically adjusted during subsequent image frames (i.e. image acquisition cycles) according to the principles of the present disclosure.

Owner:METROLOGIC INSTR
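The exposure-adjustment step of the first embodiment can be sketched in Python. This is a minimal illustration under stated assumptions, not the patented method: it assumes the "real-time image analysis" reduces to the mean pixel brightness of the frames that failed to decode, and that exposure is scaled proportionally toward a target brightness. `TARGET_BRIGHTNESS`, the exposure limits, and `next_exposure` are hypothetical names and values.

```python
from statistics import mean

# Hypothetical tuning constants; the abstract does not specify any values.
TARGET_BRIGHTNESS = 128.0    # desired mean 8-bit pixel value
MIN_EXPOSURE_US = 50.0       # shortest photonic integration time (microseconds)
MAX_EXPOSURE_US = 10_000.0   # longest photonic integration time (microseconds)


def mean_brightness(frame: list[list[int]]) -> float:
    """Average pixel value of a grayscale frame given as rows of ints."""
    return mean(px for row in frame for px in row)


def next_exposure(current_us: float, failed_frames: list[list[list[int]]]) -> float:
    """Exposure time for the next acquisition cycle after a decode failure.

    Assumes brightness scales roughly linearly with integration time, so the
    exposure is multiplied by target/measured and clamped to sensor limits.
    """
    measured = mean(mean_brightness(f) for f in failed_frames)
    if measured <= 0:
        return MAX_EXPOSURE_US  # completely dark frames: use the longest exposure
    proposed = current_us * TARGET_BRIGHTNESS / measured
    return max(MIN_EXPOSURE_US, min(MAX_EXPOSURE_US, proposed))
```

For two consecutive dark frames with mean value 32 captured at 1000 µs, the sketch proposes 4000 µs for the next frame. The second embodiment would apply the same feedback idea to strobe energy instead of exposure time.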

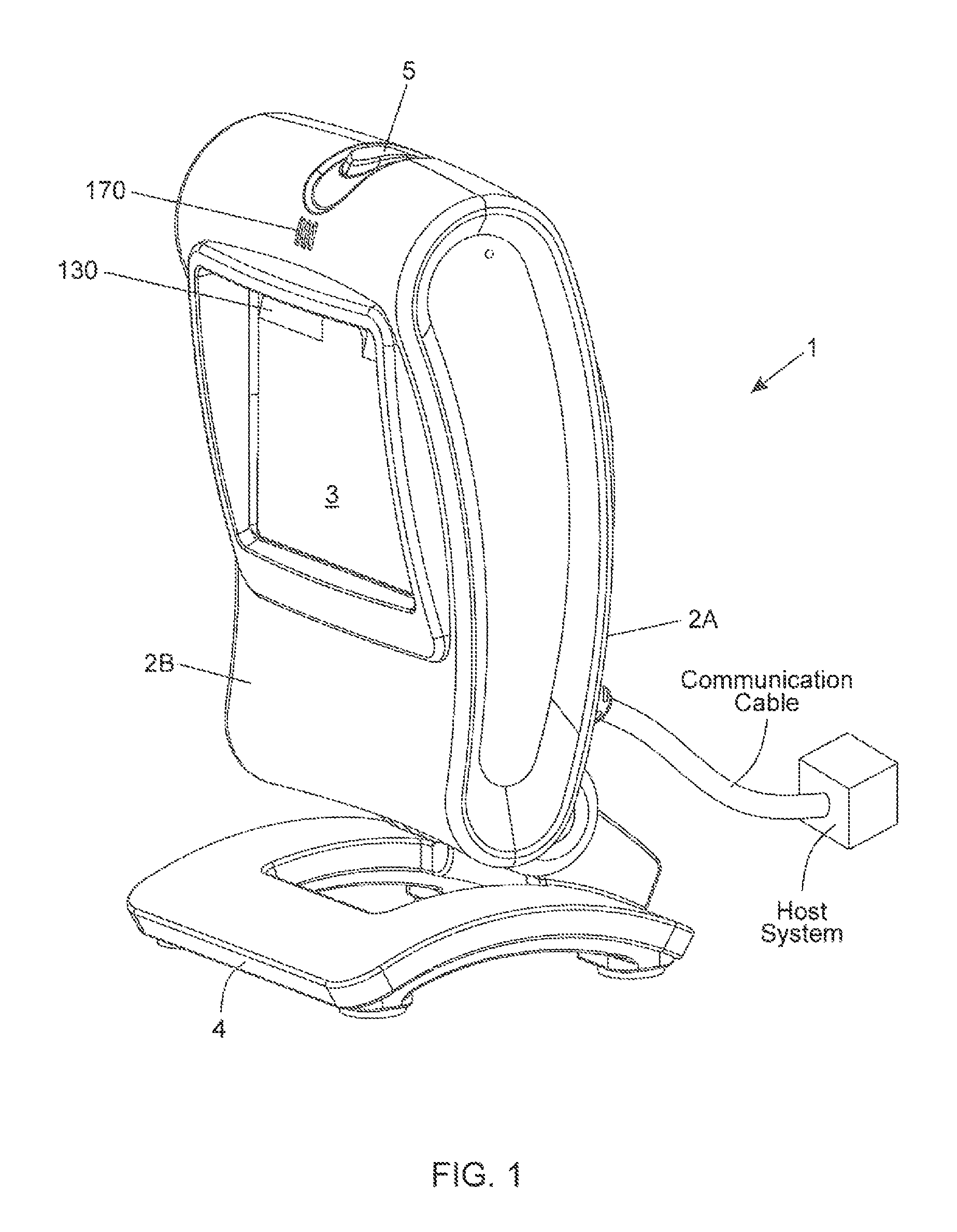

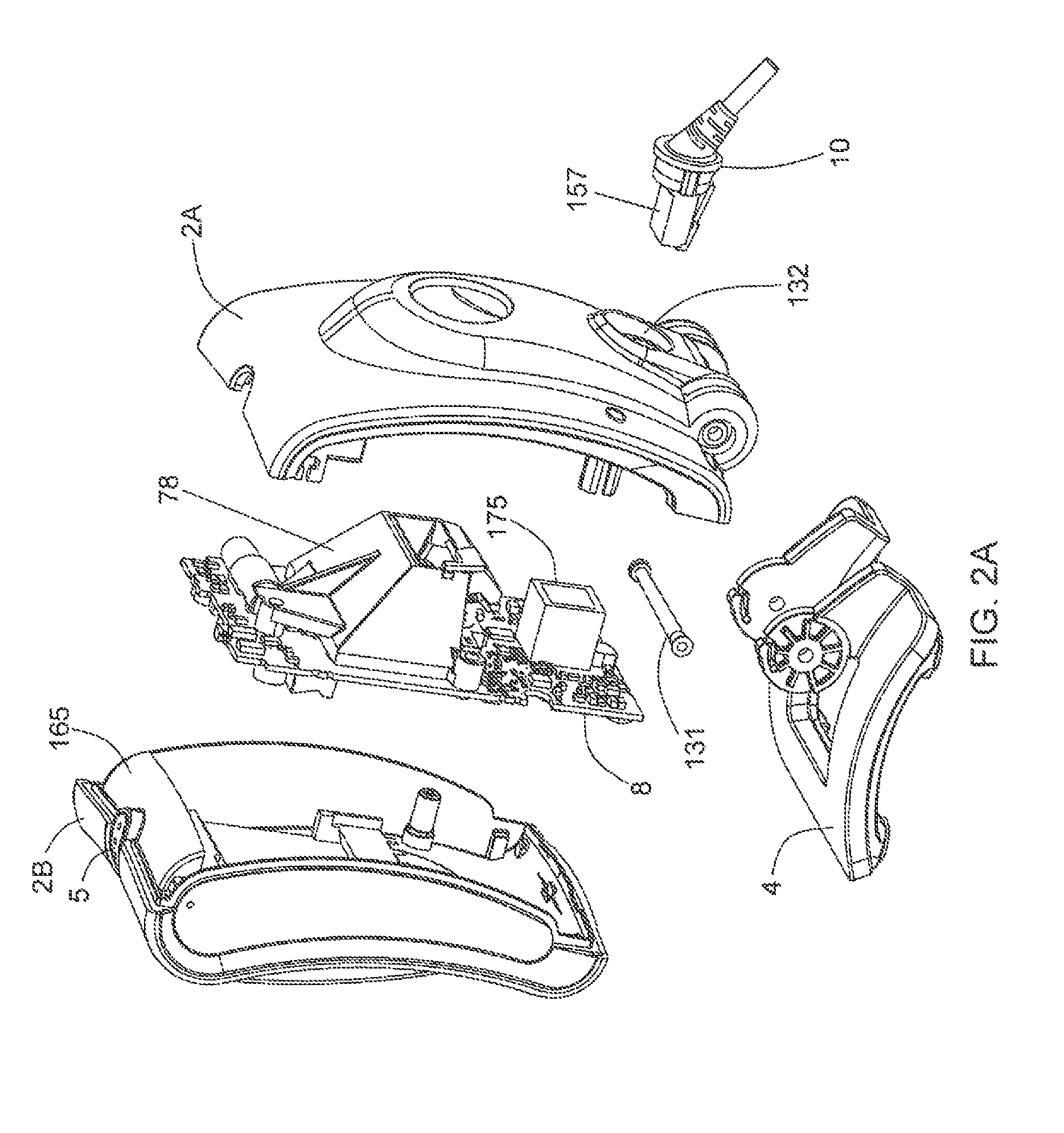

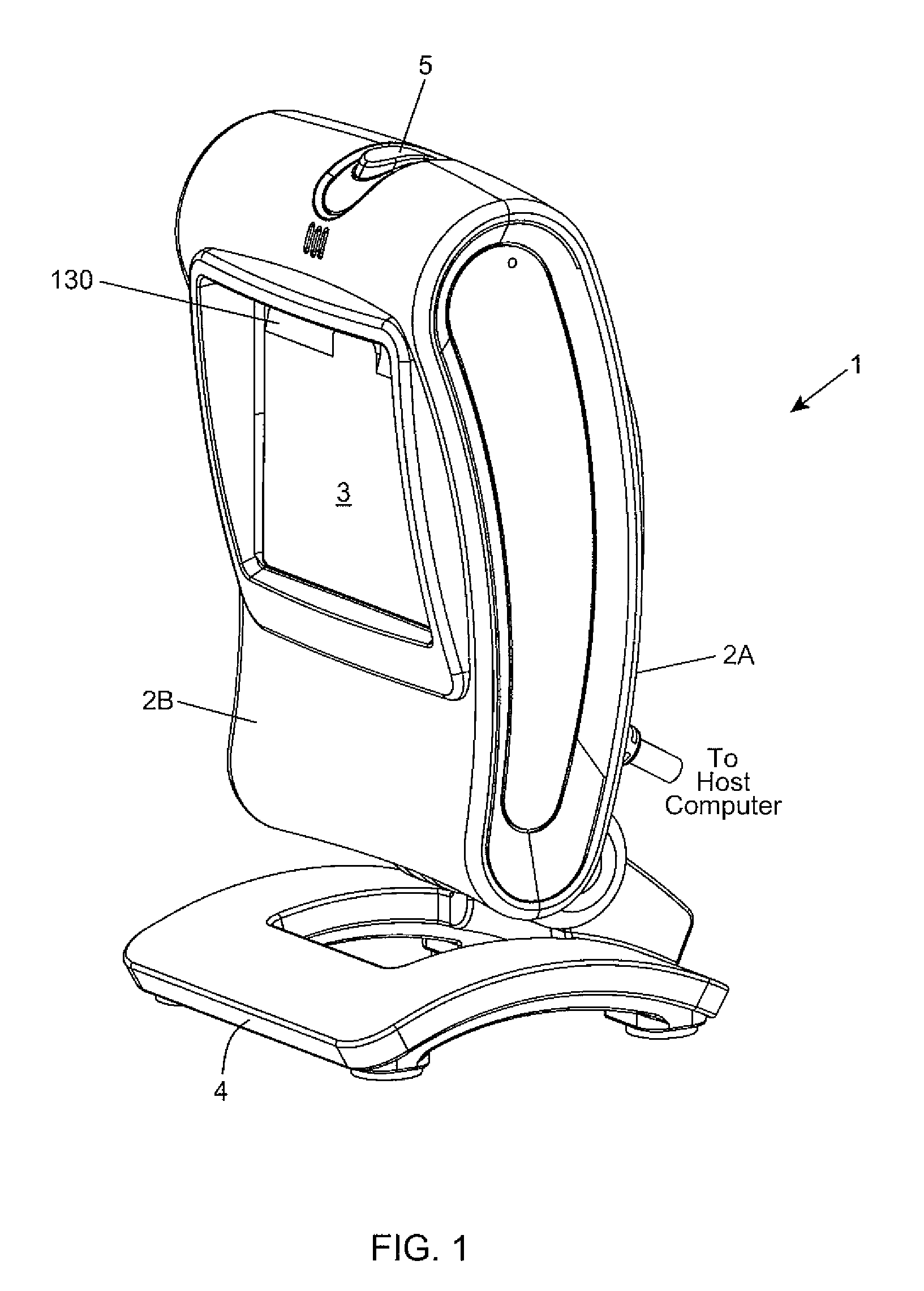

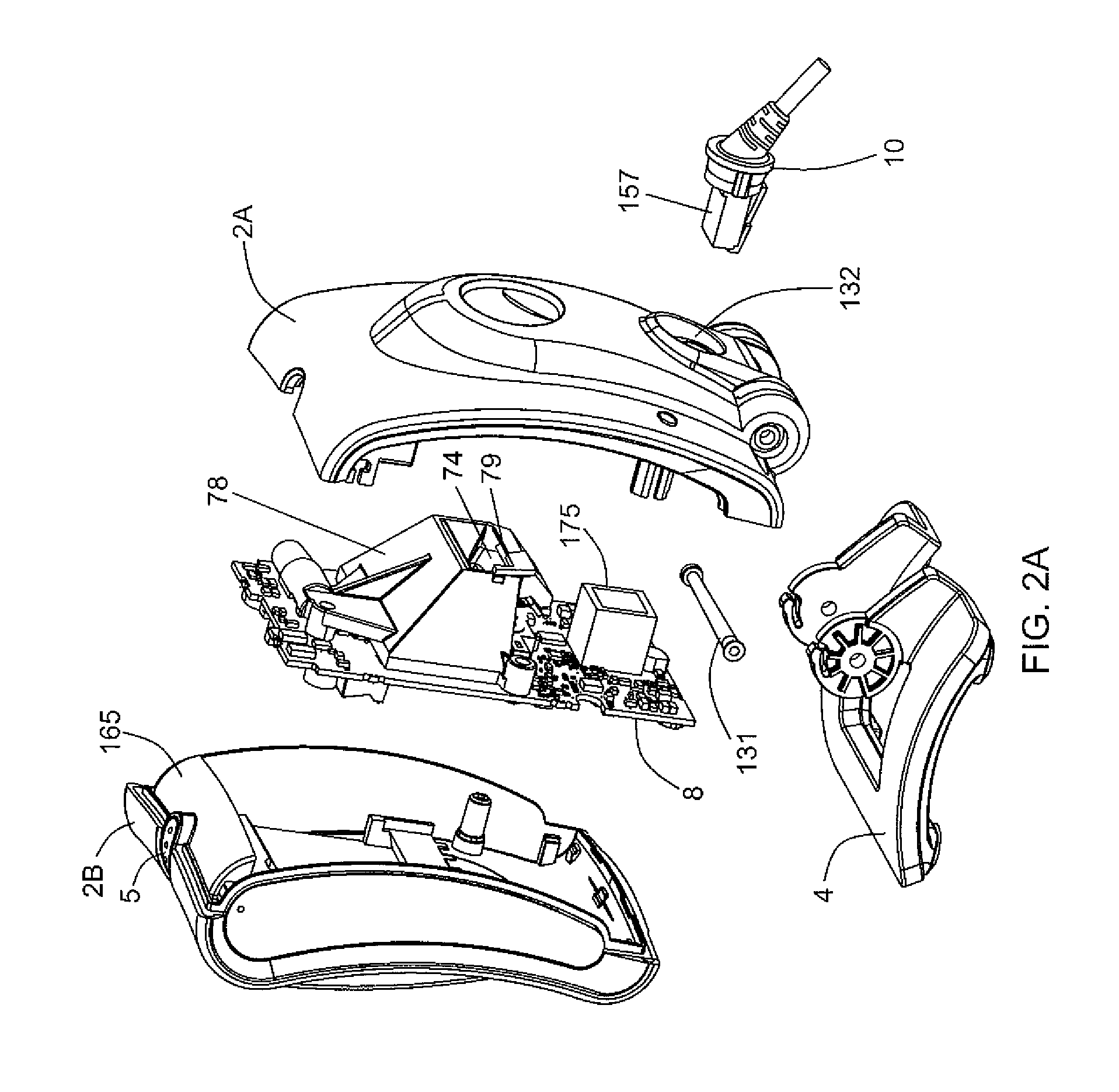

Hand-supportable digital-imaging based code symbol reading system supporting motion blur reduction using an accelerometer sensor

Inactive | US8322622B2 | Tags: Reduce motion blur, Verifying markings correctness, Sensing by electromagnetic radiation, Base code, Accelerometer

A digital-imaging based code symbol reading system that automatically detects hand-induced vibration when the user attempts to read one or more 1D and/or 2D code symbols on an object, and controls system operation to reduce motion blur in digital images captured by the hand-supportable system, whether it is operated in snapshot or video image capture mode. An accelerometer sensor automatically detects hand/system acceleration in a vector space during system operation. In a first embodiment, digital image capture is initiated when the user manually depresses a trigger switch, and the captured images are decode-processed only when the measured acceleration of the hand-supportable housing is below predetermined acceleration threshold levels. In another embodiment, digital image capture is initiated when an object is automatically detected in the field of view of the system, and the captured images are again decode-processed only when the measured acceleration of the hand-supportable housing is below predetermined acceleration threshold levels.

Owner:METROLOGIC INSTR INC
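The motion-gating rule (decode only while the housing is steady) can be sketched as a filter over captured frames. The per-axis threshold values, the assumption that gravity has already been removed from the accelerometer readings, and the names `is_steady` and `frames_to_decode` are all hypothetical; the abstract states only that acceleration is compared against predetermined threshold levels.

```python
# Hypothetical per-axis thresholds in m/s^2 (gravity assumed already removed).
THRESHOLDS = (0.5, 0.5, 0.5)


def is_steady(accel: tuple[float, float, float],
              thresholds: tuple[float, float, float] = THRESHOLDS) -> bool:
    """True when every axis of the measured acceleration is below its threshold."""
    return all(abs(a) < t for a, t in zip(accel, thresholds))


def frames_to_decode(samples):
    """Yield only the frames captured while the housing was steady.

    `samples` is an iterable of (frame, (ax, ay, az)) pairs that associate
    each captured image with the acceleration measured at capture time.
    """
    for frame, accel in samples:
        if is_steady(accel):
            yield frame
```

The same filter applies to both embodiments; only the event that starts capture (trigger switch or automatic object detection) differs.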

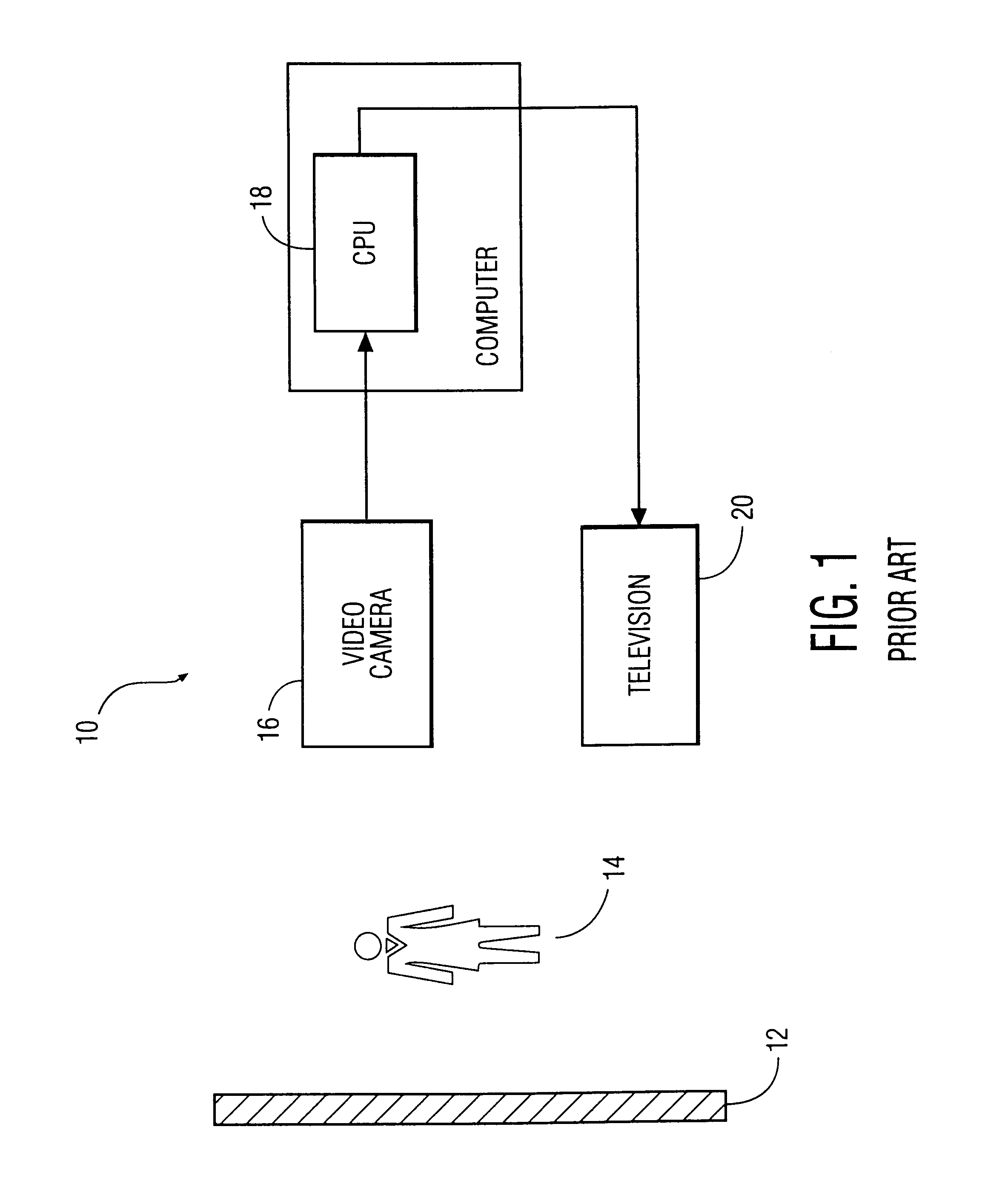

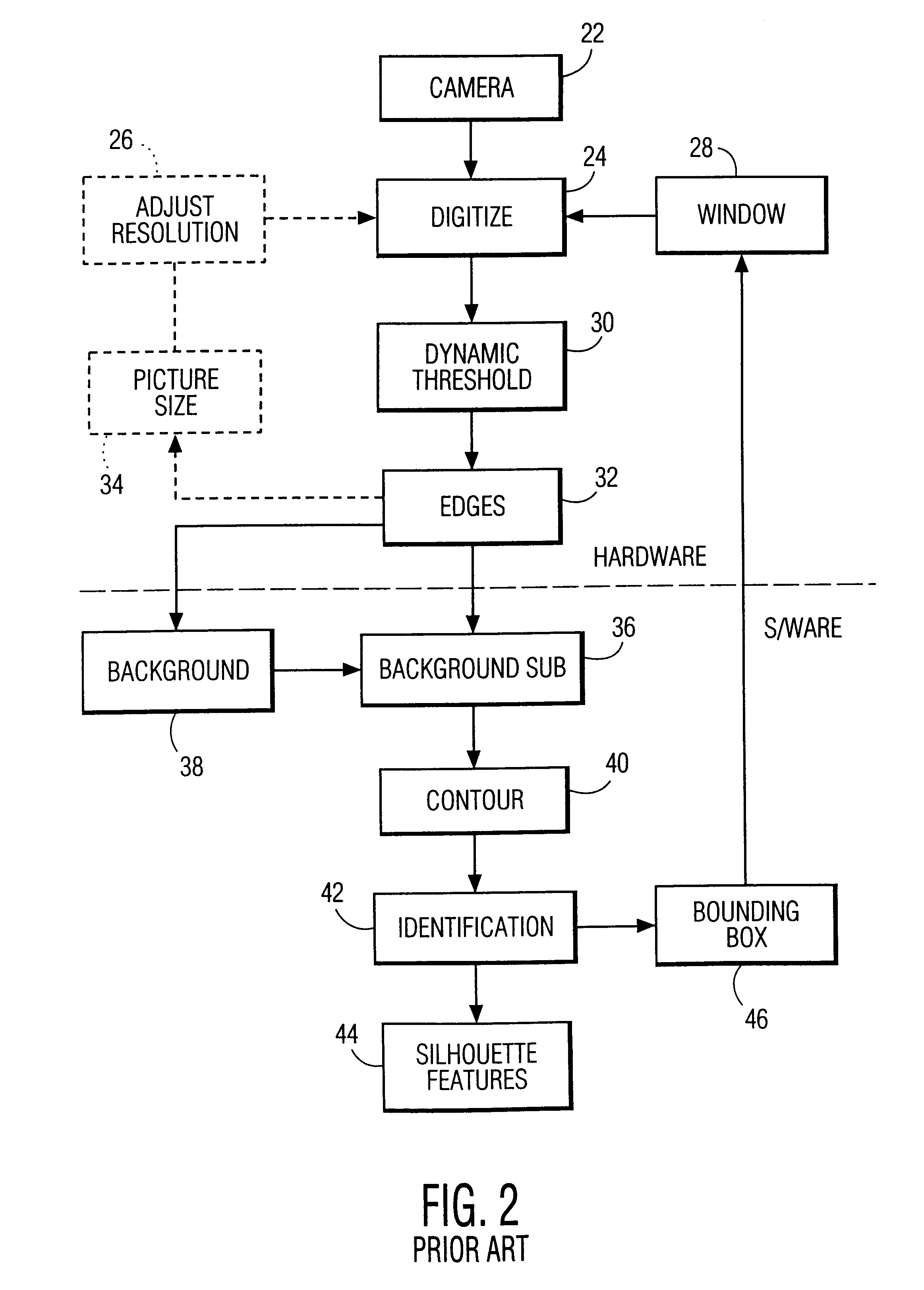

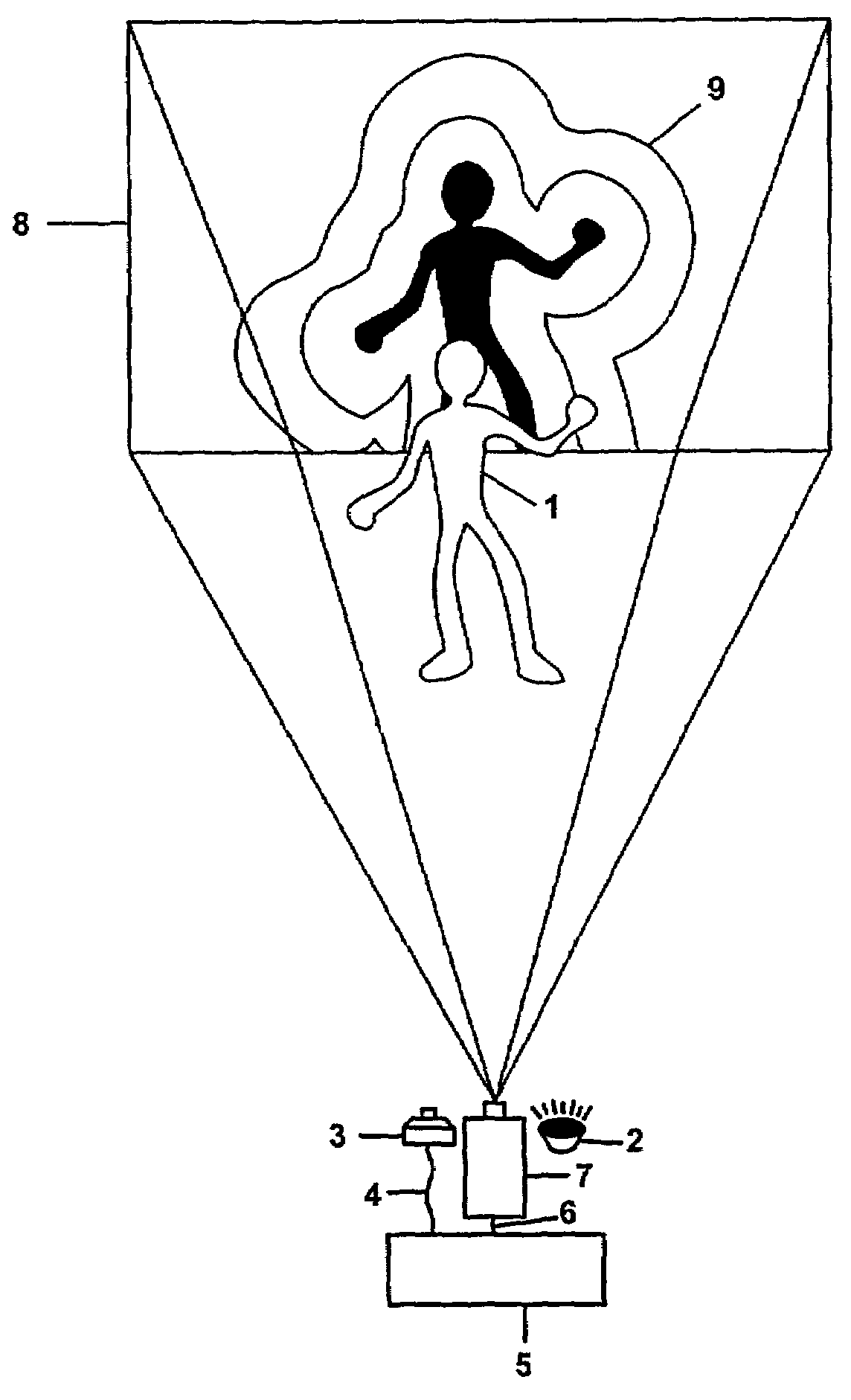

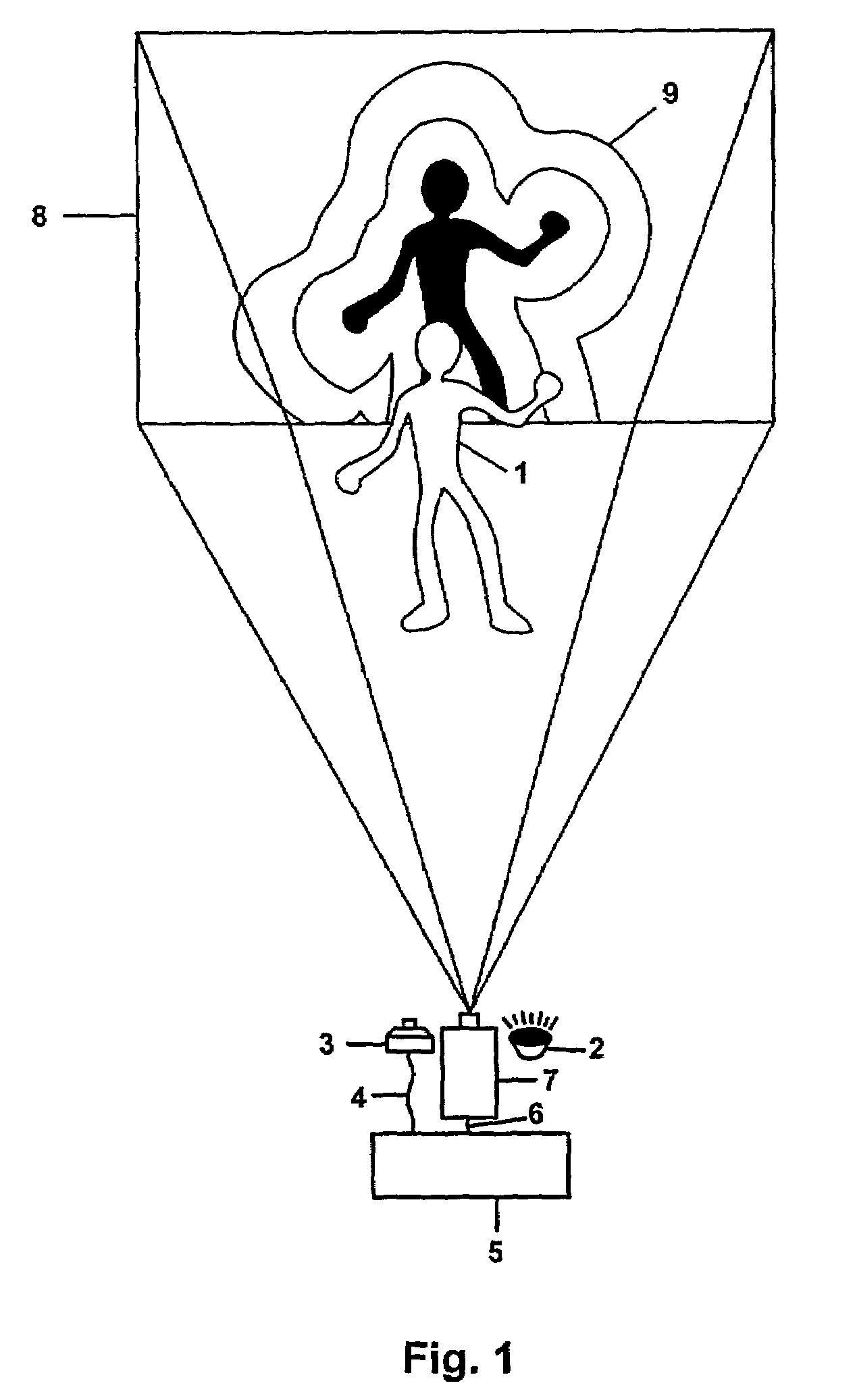

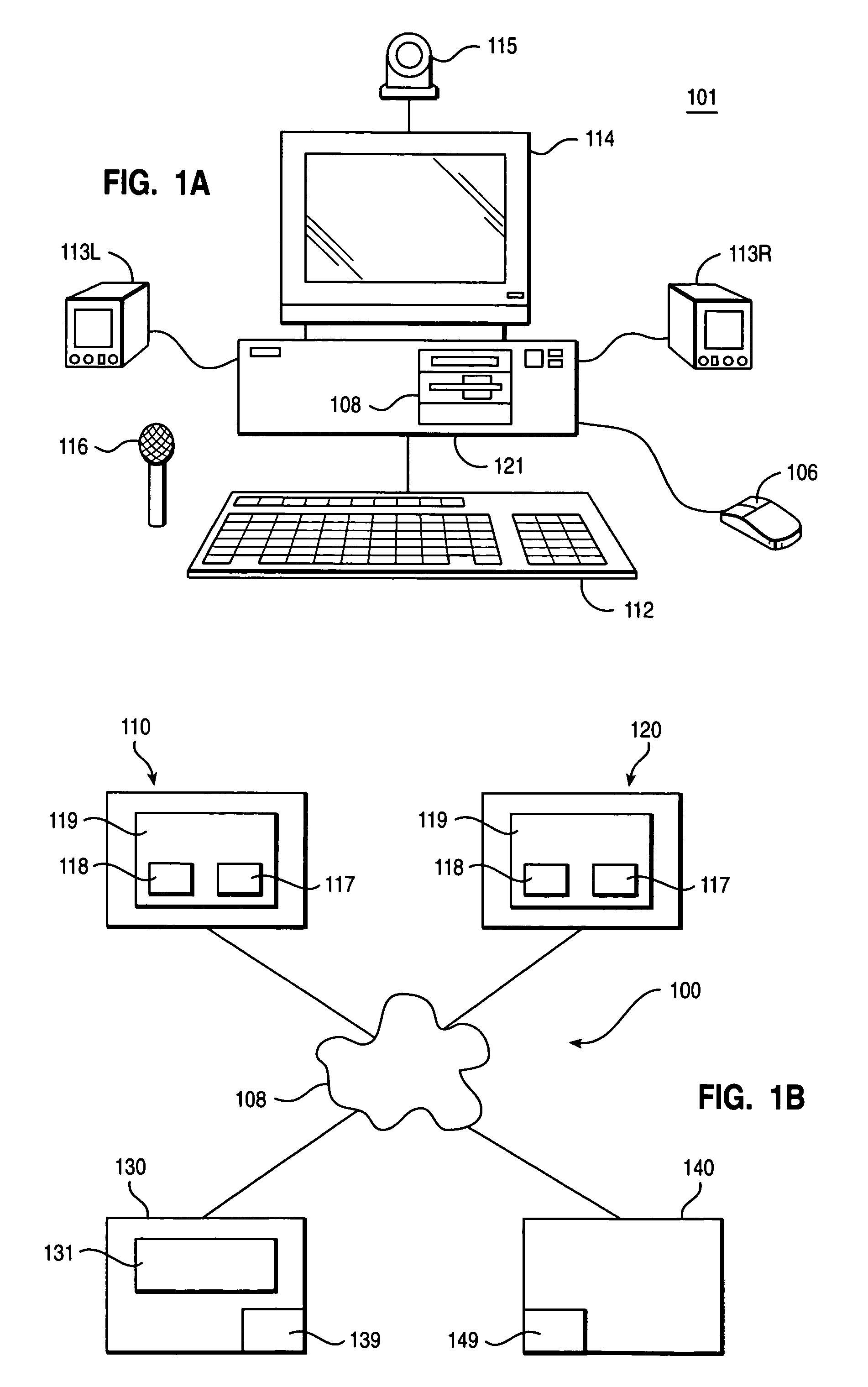

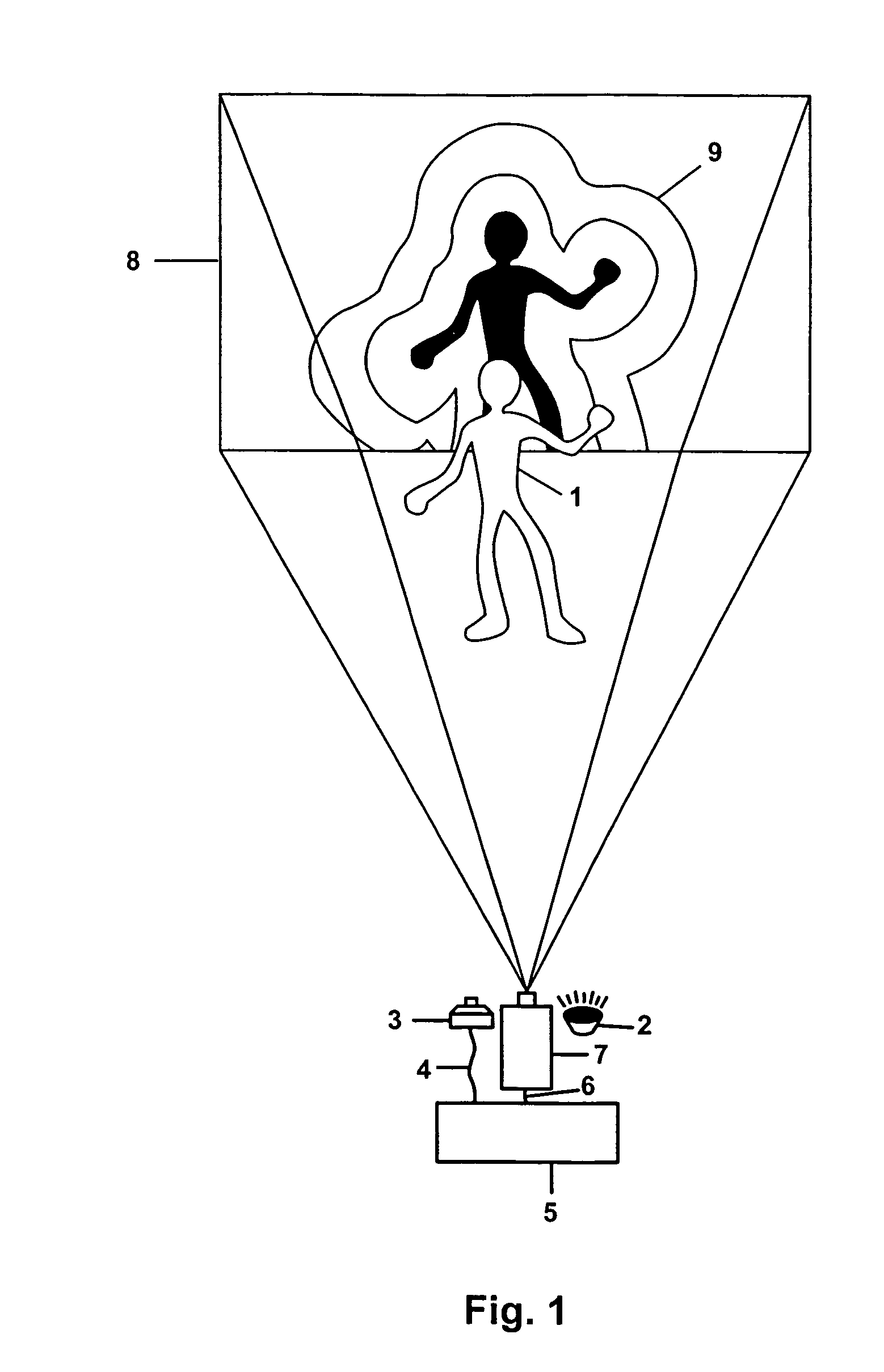

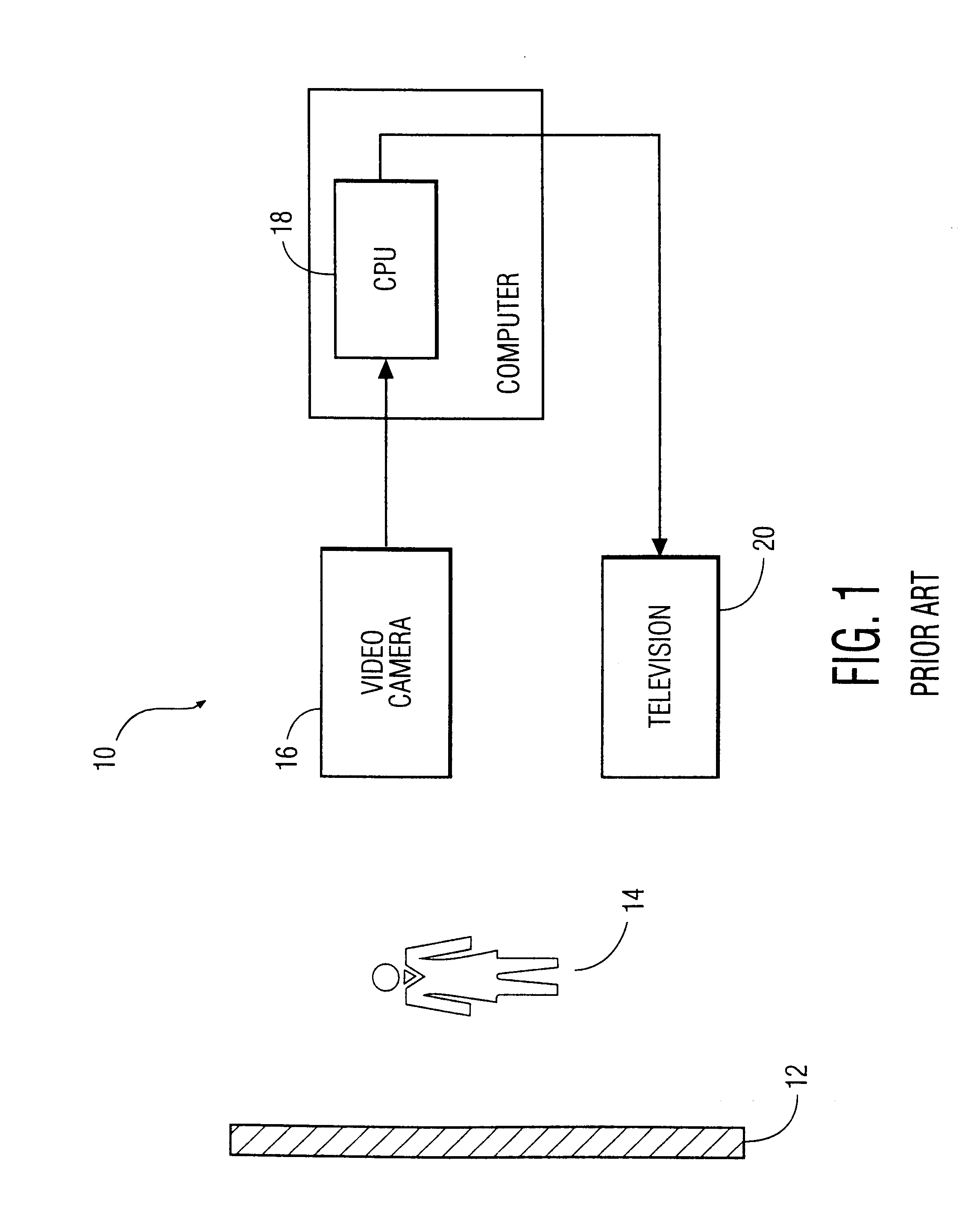

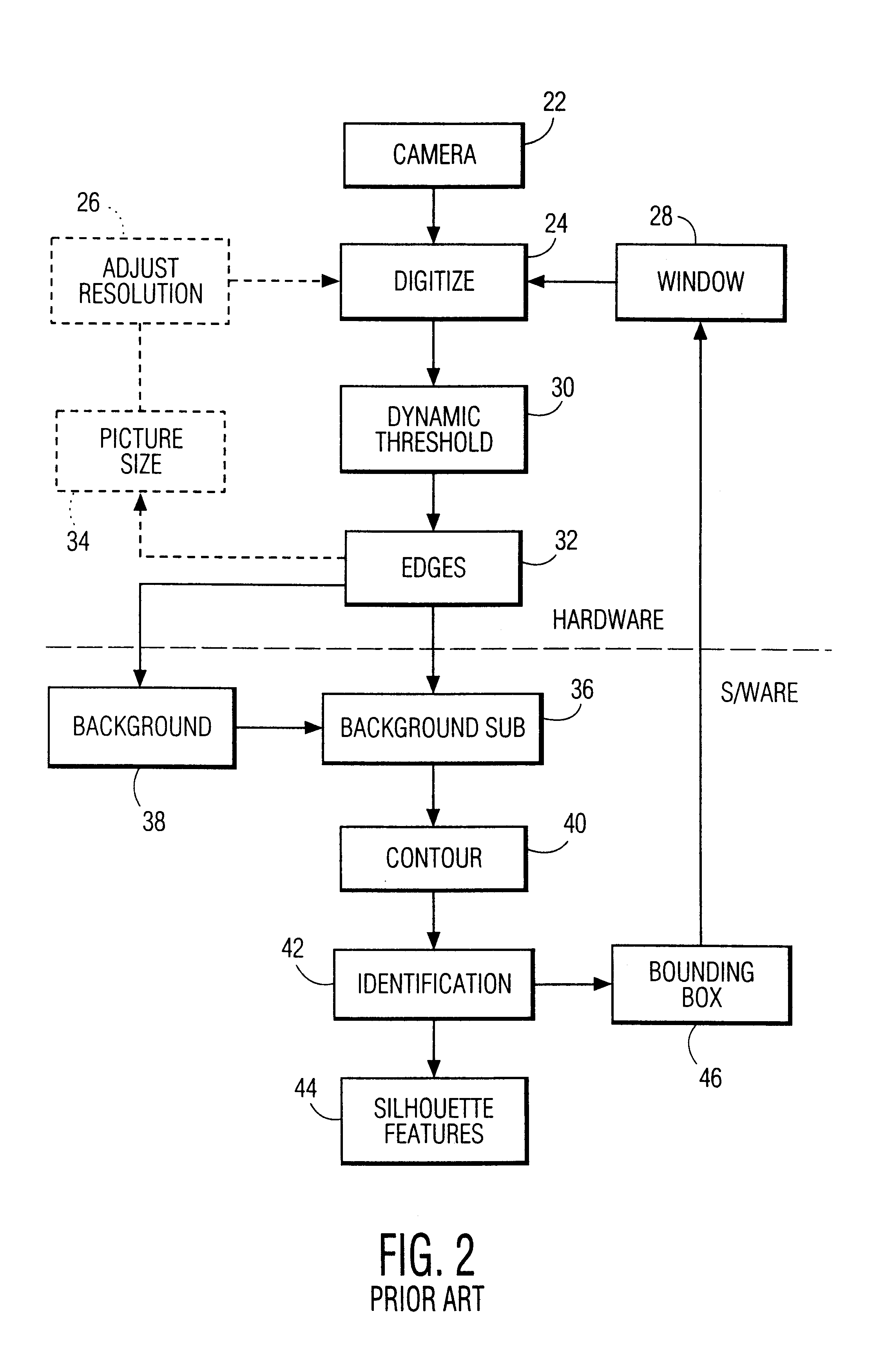

System and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs

Inactive | US6181343B1 | Tags: Input/output for user-computer interaction, Cosmonautic condition simulations, Display device, Three dimensional graphics

A system and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs of a system user. The system comprises a computer-readable memory, a video camera for generating video signals indicative of the gestures of the system user and an interaction area surrounding the system user, and a video image display. The video image display is positioned in front of the system user. The system further comprises a microprocessor for processing the video signals, in accordance with a program stored in the computer-readable memory, to determine the three-dimensional positions of the body and principal body parts of the system user. The microprocessor constructs three-dimensional images of the system user and interaction area on the video image display based upon the three-dimensional positions of the body and principal body parts of the system user. The video image display shows three-dimensional graphical objects within the virtual reality environment, and movement by the system user permits apparent movement of the three-dimensional objects displayed on the video image display so that the system user appears to move throughout the virtual reality environment.

Owner:PHILIPS ELECTRONICS NORTH AMERICA

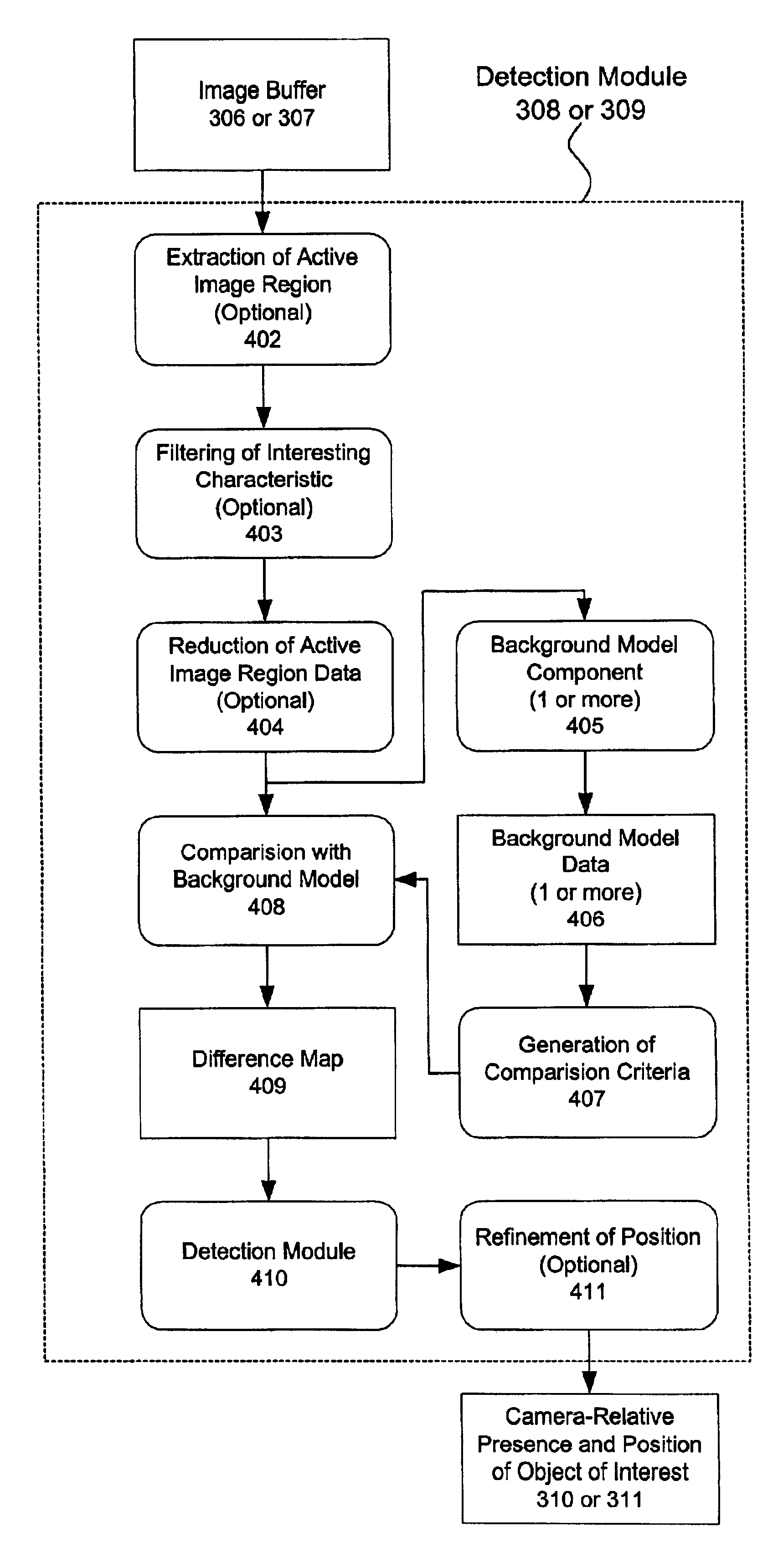

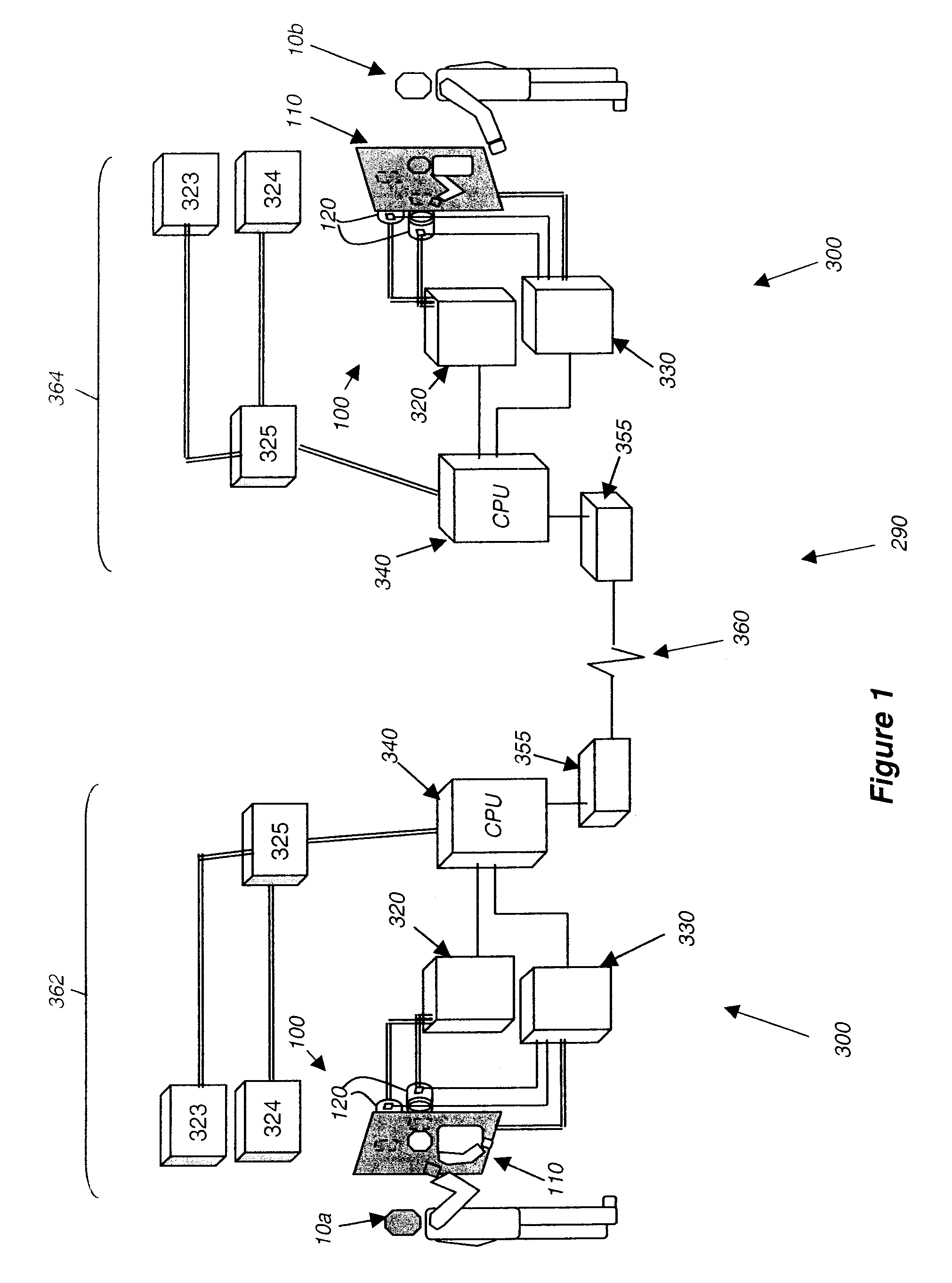

Multiple camera control system

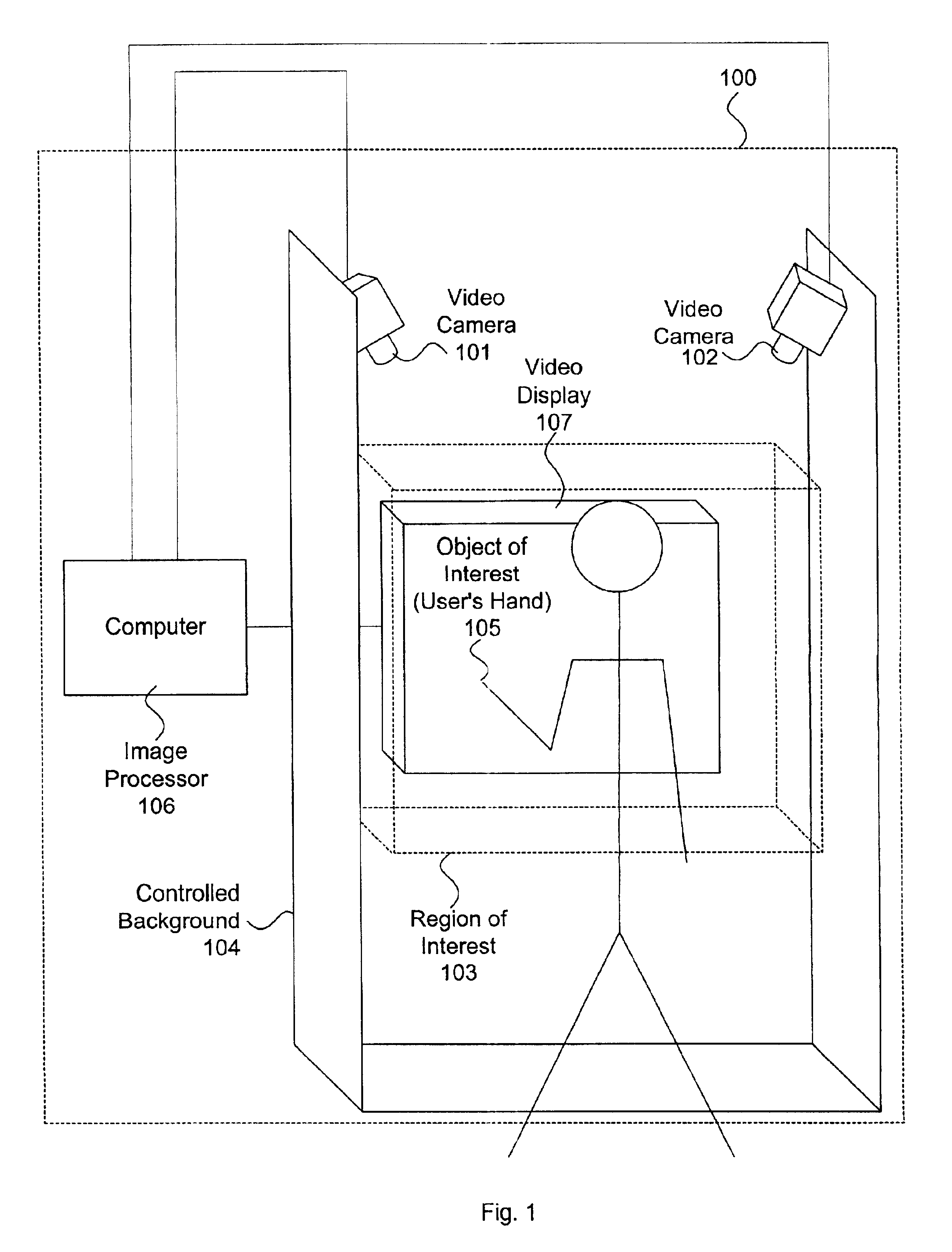

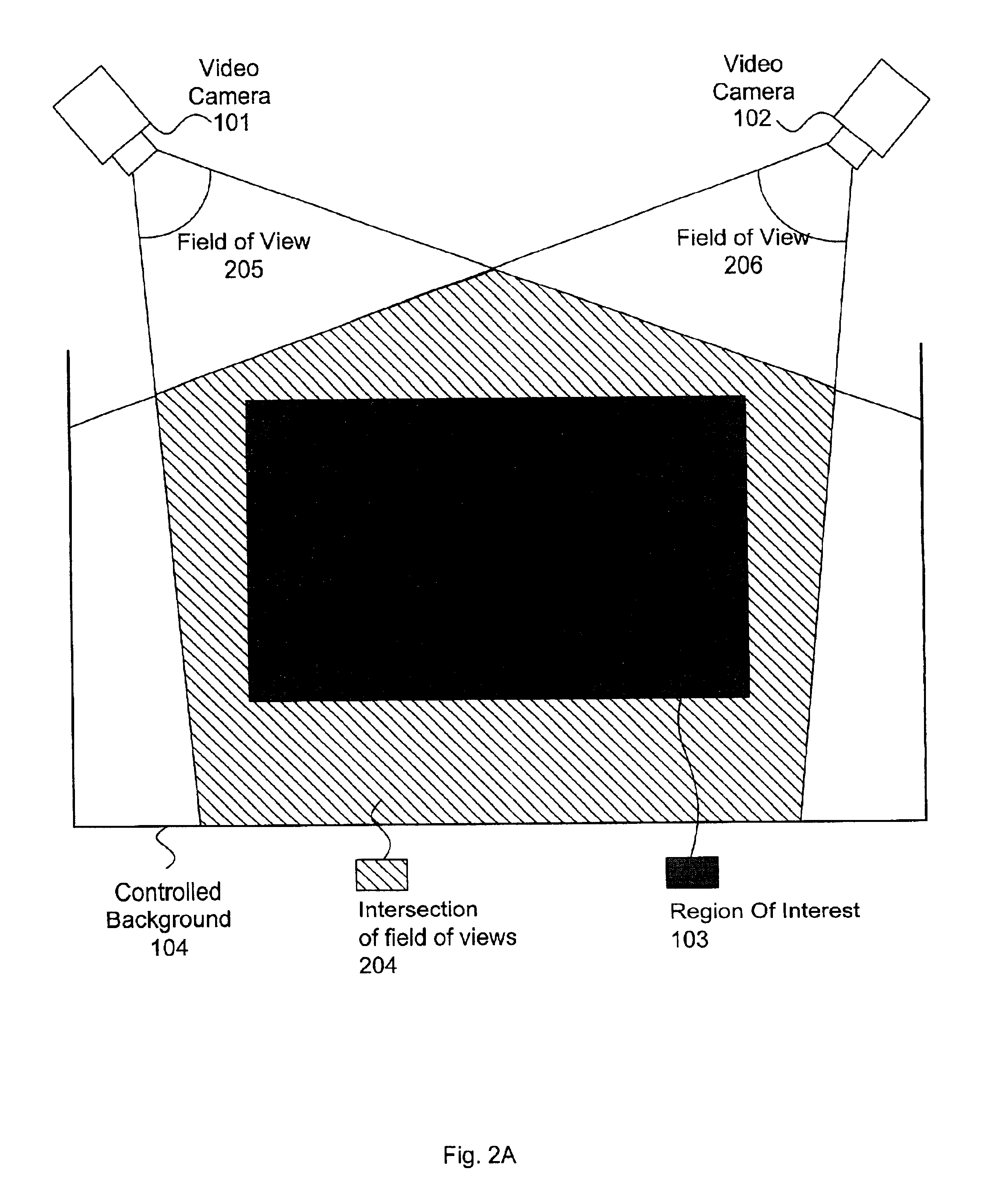

A multiple camera tracking system for interfacing with an application program running on a computer is provided. The tracking system includes two or more video cameras that are arranged to provide different viewpoints of a region of interest and are operable to produce a series of video images. A processor is operable to receive the series of video images and detect objects appearing in the region of interest. The processor executes a process to generate a background data set from the video images, generate an image data set for each received video image, compare each image data set to the background data set to produce a difference map for each image data set, detect a relative position of an object of interest within each difference map, produce an absolute position of the object of interest from those relative positions, and map the absolute position to a position indicator associated with the application program.

Owner:QUALCOMM INC
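The processing chain above (background data set, difference map, relative position, absolute position, position indicator) can be sketched for two cameras. This is a simplified illustration, not the patented process: the background is a per-pixel mean, the relative position is the horizontal centroid of changed pixels, and the fusion step assumes a hypothetical geometry in which a front camera gives the left/right coordinate and a side camera gives the front/back coordinate. All function names are hypothetical.

```python
from statistics import mean
from typing import Optional

Frame = list[list[float]]


def build_background(frames: list[Frame]) -> Frame:
    """Background data set: per-pixel mean of frames captured with no object present."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[mean(f[r][c] for f in frames) for c in range(cols)] for r in range(rows)]


def difference_map(frame: Frame, background: Frame, threshold: float) -> list[list[bool]]:
    """Mark pixels that differ from the background by more than `threshold`."""
    return [[abs(p - b) > threshold for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]


def relative_position(diff: list[list[bool]]) -> Optional[float]:
    """Normalized horizontal centroid (0.0 = left edge, 1.0 = right edge) of changed pixels."""
    cols = [c for row in diff for c, hit in enumerate(row) if hit]
    if not cols:
        return None  # no object of interest in this view
    return mean(cols) / max(len(diff[0]) - 1, 1)


def to_cursor(rel_front: float, rel_side: float, screen_w: int, screen_h: int) -> tuple[int, int]:
    """Fuse two per-camera relative positions into a screen position.

    Hypothetical geometry: the front camera yields the object's left/right
    coordinate and the side camera its front/back coordinate, mapped here
    onto the vertical axis of the application's cursor.
    """
    return round(rel_front * (screen_w - 1)), round(rel_side * (screen_h - 1))
```

A real multi-camera system would typically triangulate using calibrated camera poses rather than assume orthogonal views.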

High-density illumination system

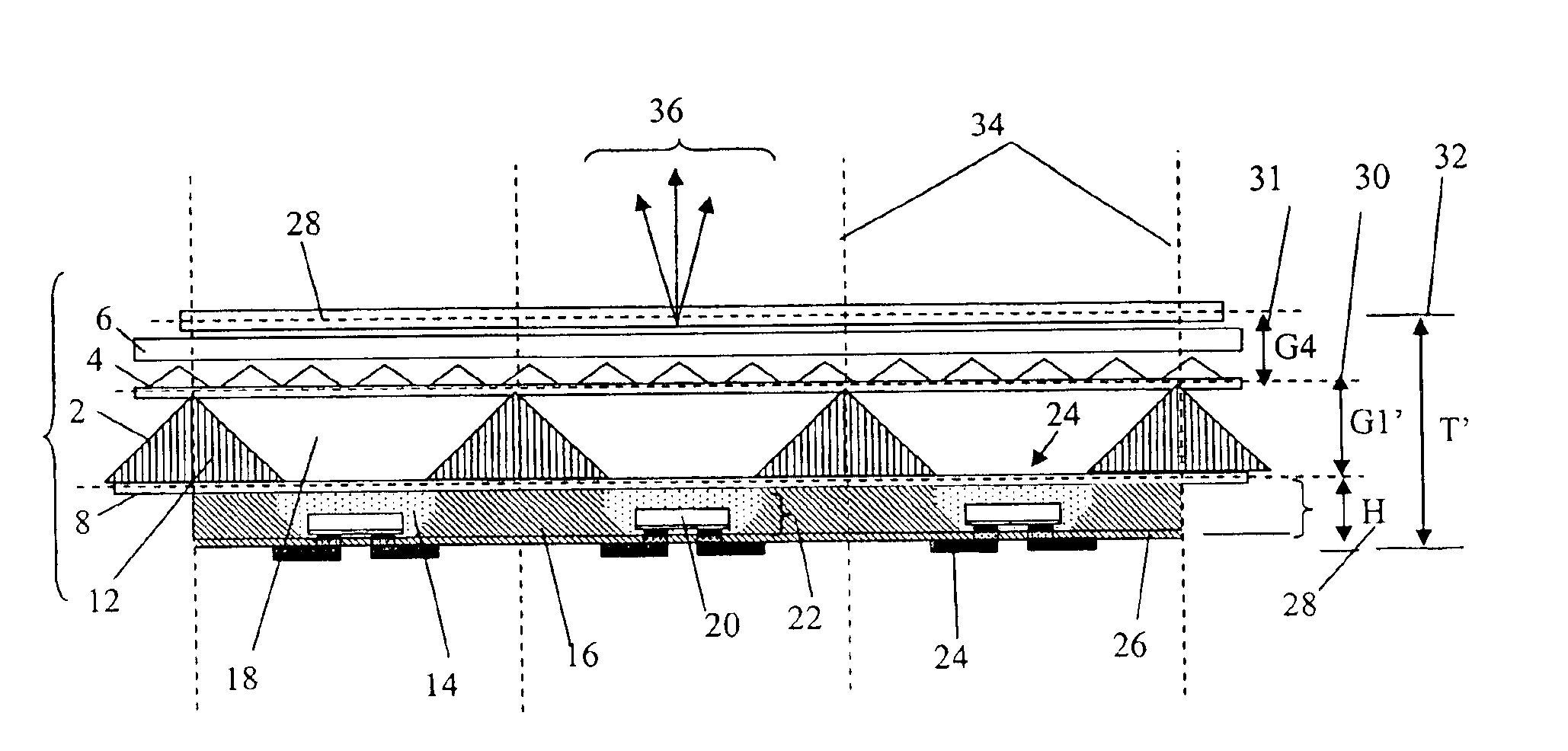

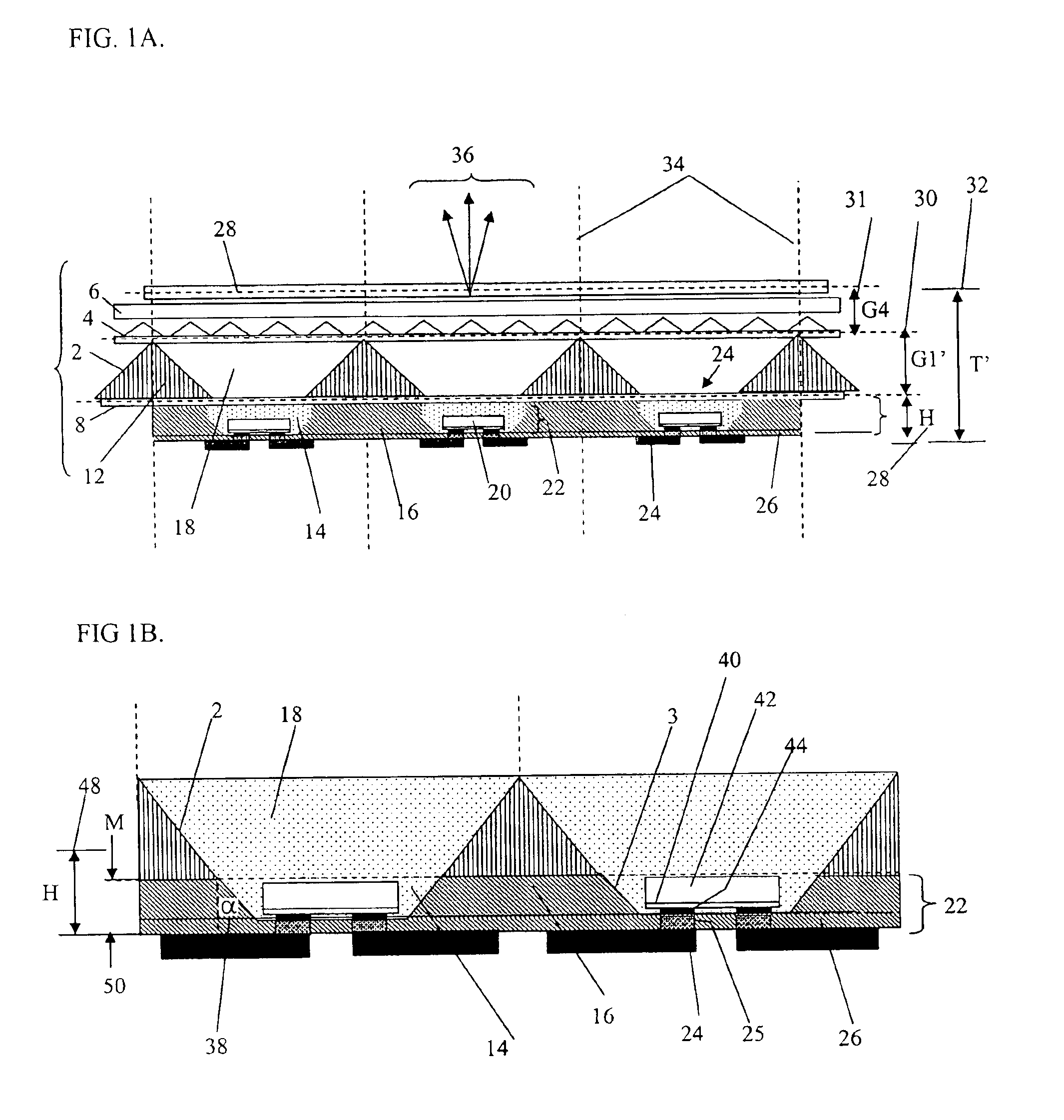

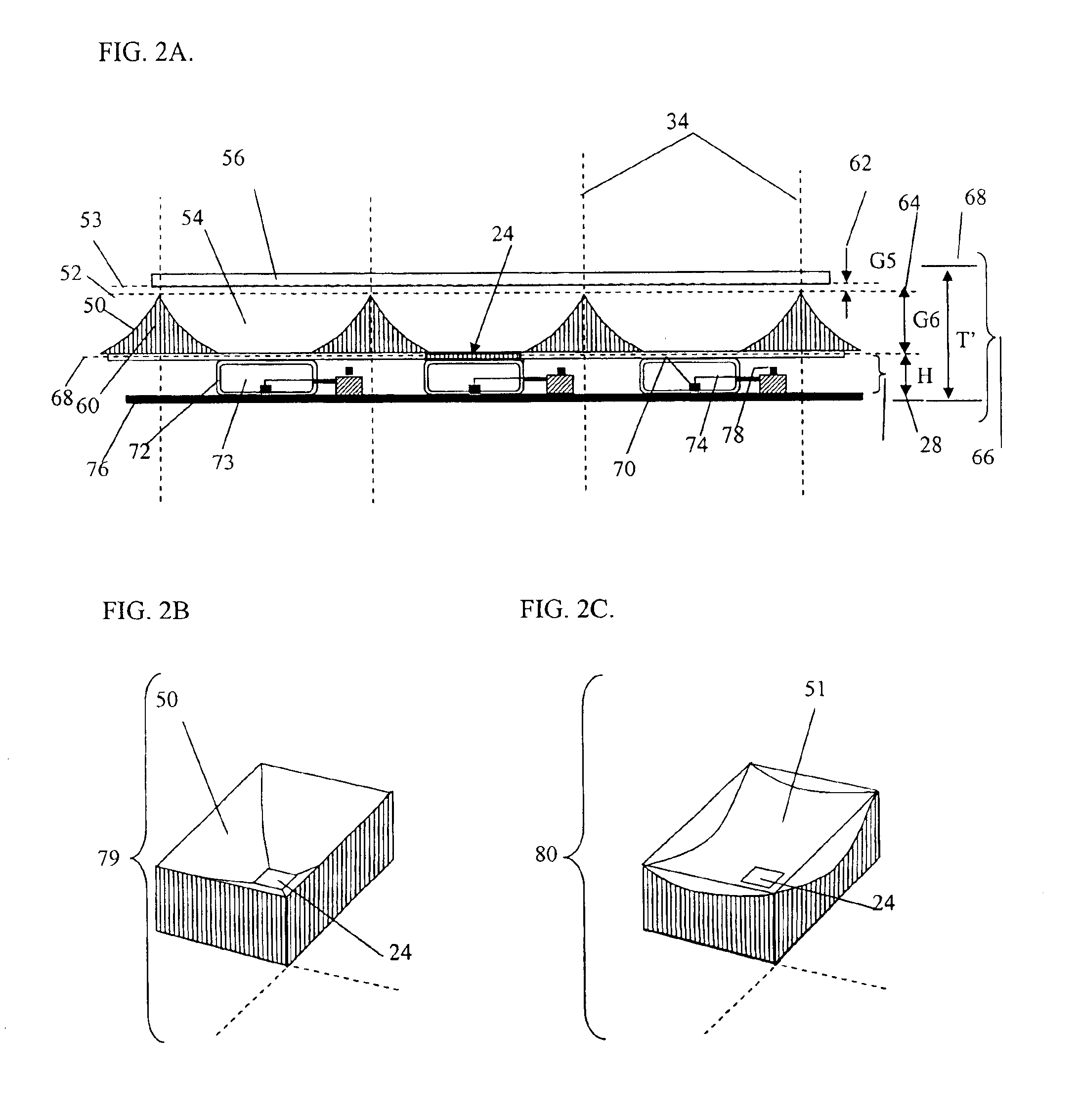

Active | US6871982B2 | Tags: Valid conversion, Increase Lumen Density, Prisms, Mechanical apparatus, High density, Led array

A compact and efficient optical illumination system featuring planar multi-layered LED light source arrays that concentrate their polarized or unpolarized output within a limited angular range. The optical system manipulates light emitted by a planar array of electrically interconnected LED chips positioned within the input apertures of a corresponding array of shaped metallic reflecting bins, using at least one of elevated prismatic films, polarization-converting films, micro-lens arrays, and external hemispherical or ellipsoidal reflecting elements. Practical applications of the LED array illumination systems include compact LCD or DMD video image projectors, as well as general lighting, automotive lighting, and LCD backlighting.

Owner:SNAPTRACK

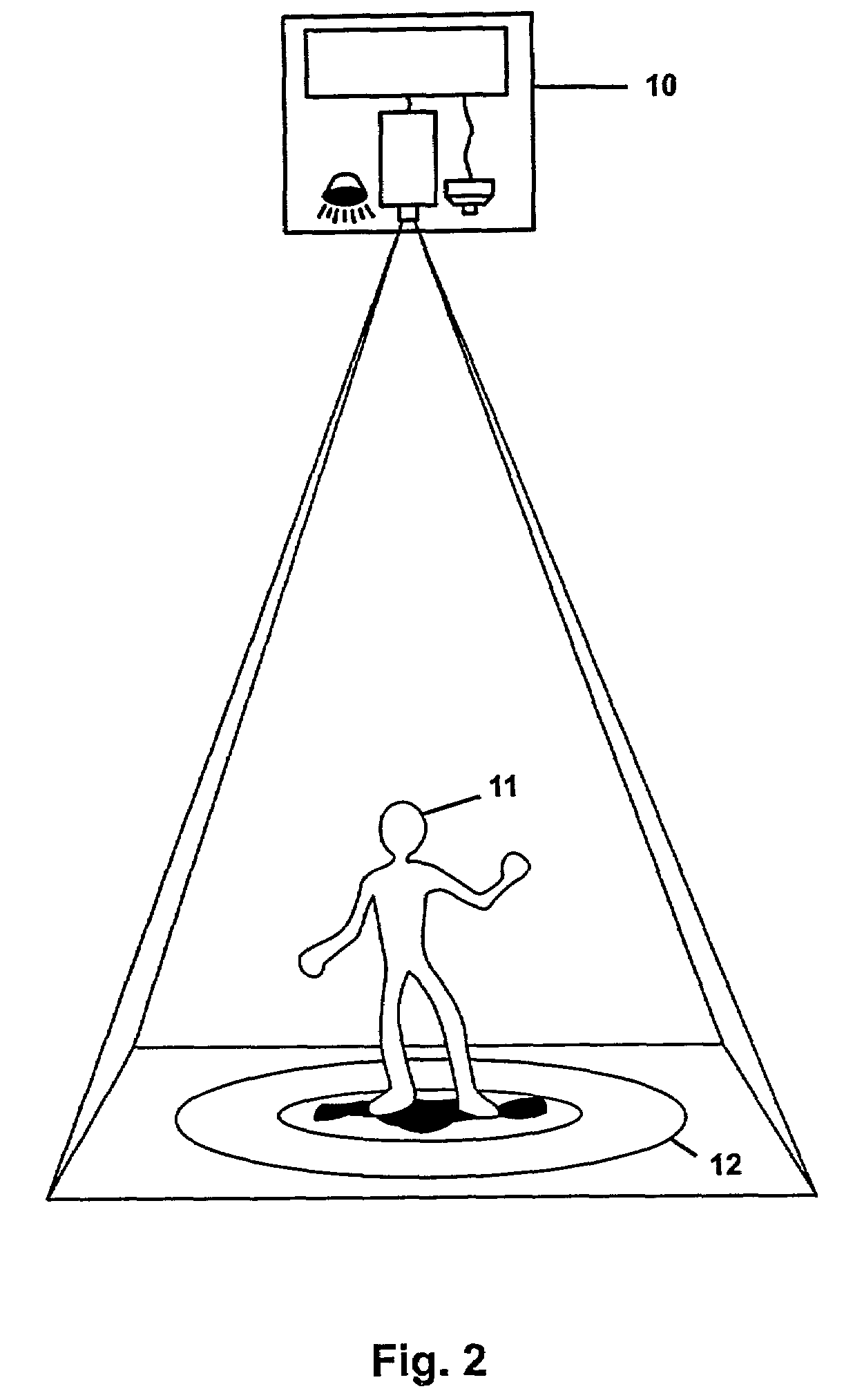

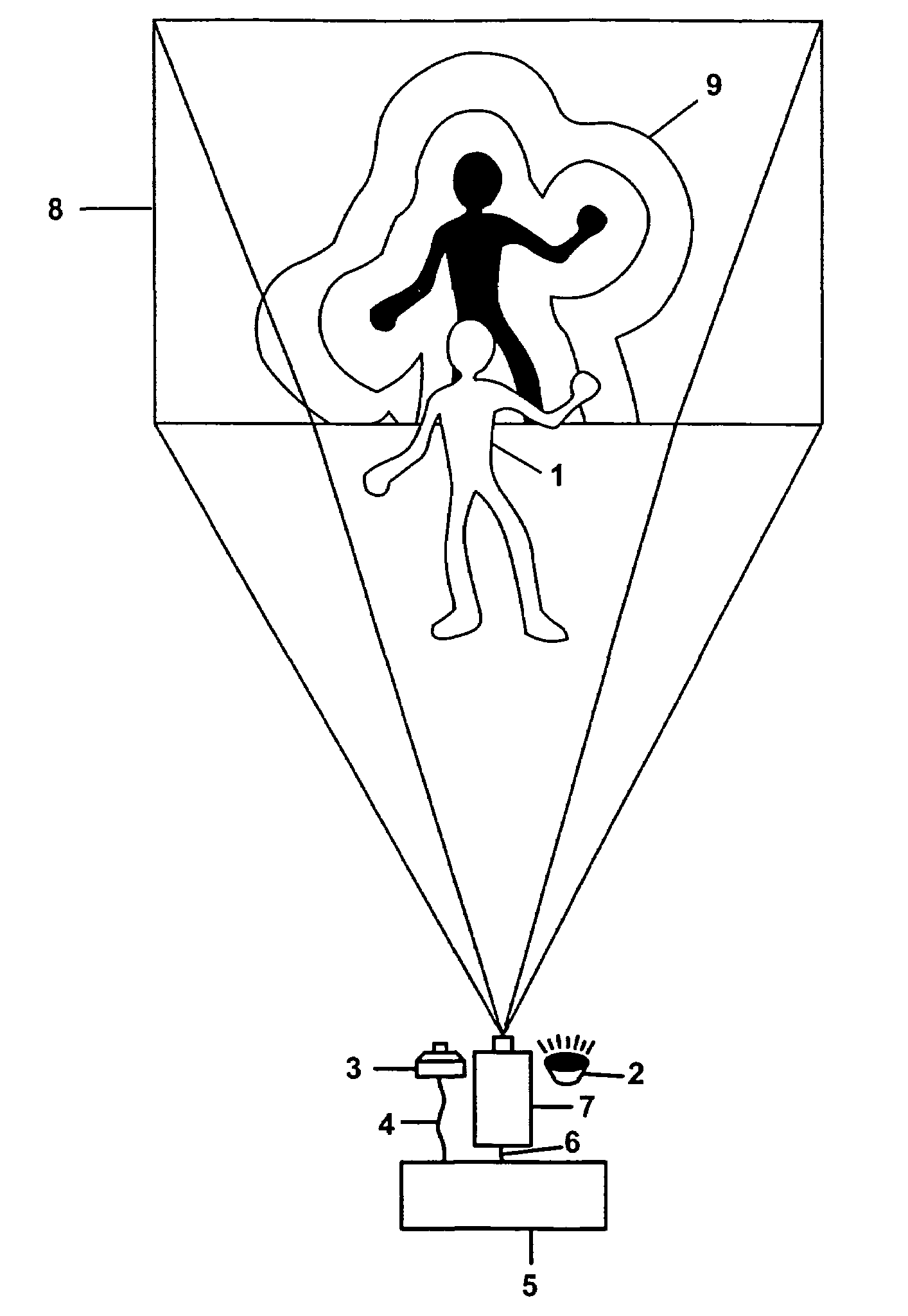

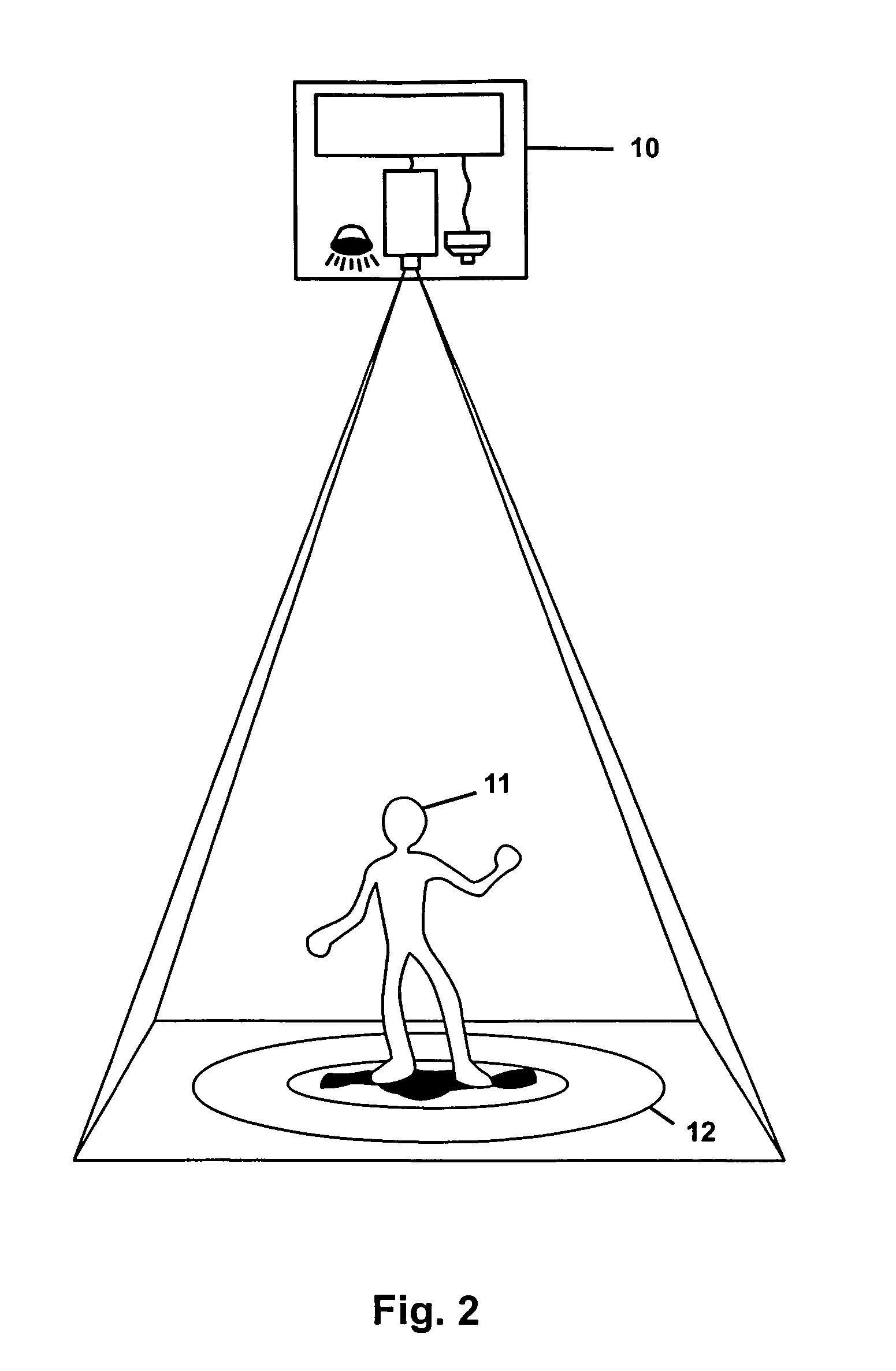

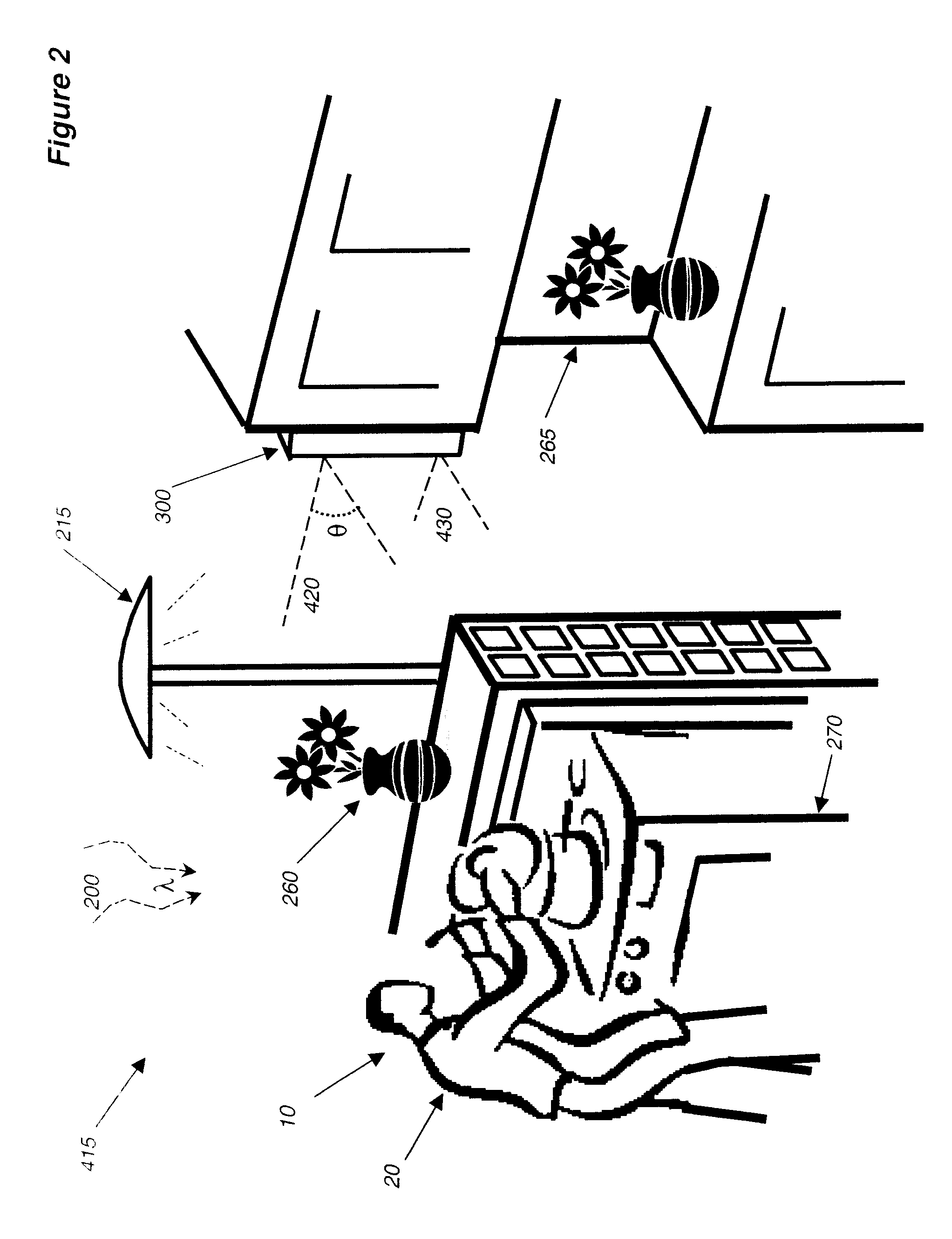

Interactive video display system

Active | US7259747B2 | Tags: Improve aesthetics, Interference, Input/output for user-computer interaction, Television system details, Information space, Interactive video

A device allows easy and unencumbered interaction between a person and a computer display system, using the person's (or another object's) movement and position as input to the computer. In some configurations, the display can be projected around the user so that the person's actions are displayed around them. The video camera and projector operate on different wavelengths so that they do not interfere with each other. Uses for such a device include, but are not limited to, interactive lighting effects for people at clubs or events and interactive advertising displays. Computer-generated characters and virtual objects can be made to react to the movements of passers-by, and the device can generate interactive ambient lighting for social spaces such as restaurants, lobbies, and parks, serve as a video game system, and create interactive information spaces and art installations. Patterned illumination and brightness and gradient processing can be used to improve the ability to detect an object against a background of video images.

Owner:MICROSOFT TECH LICENSING LLC

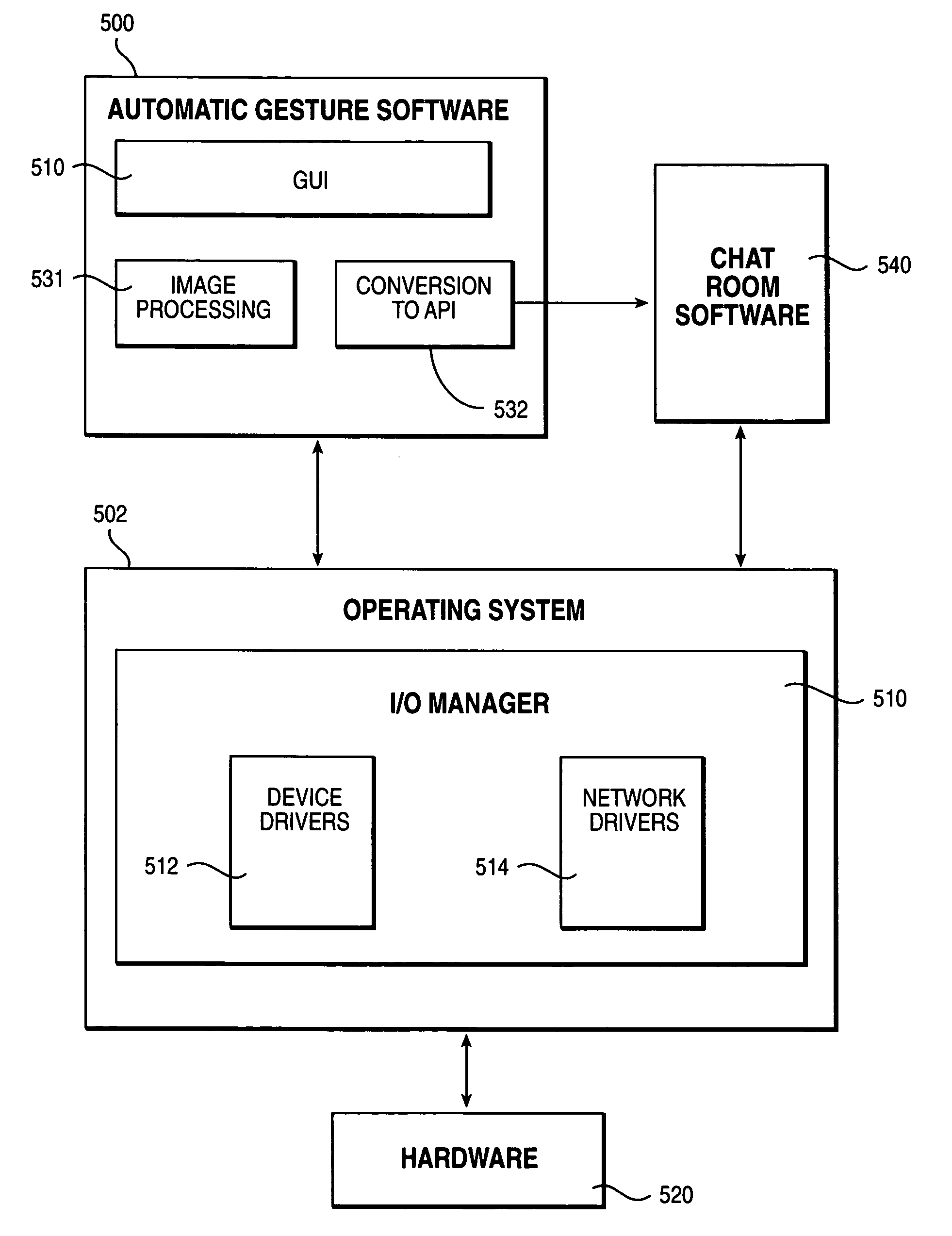

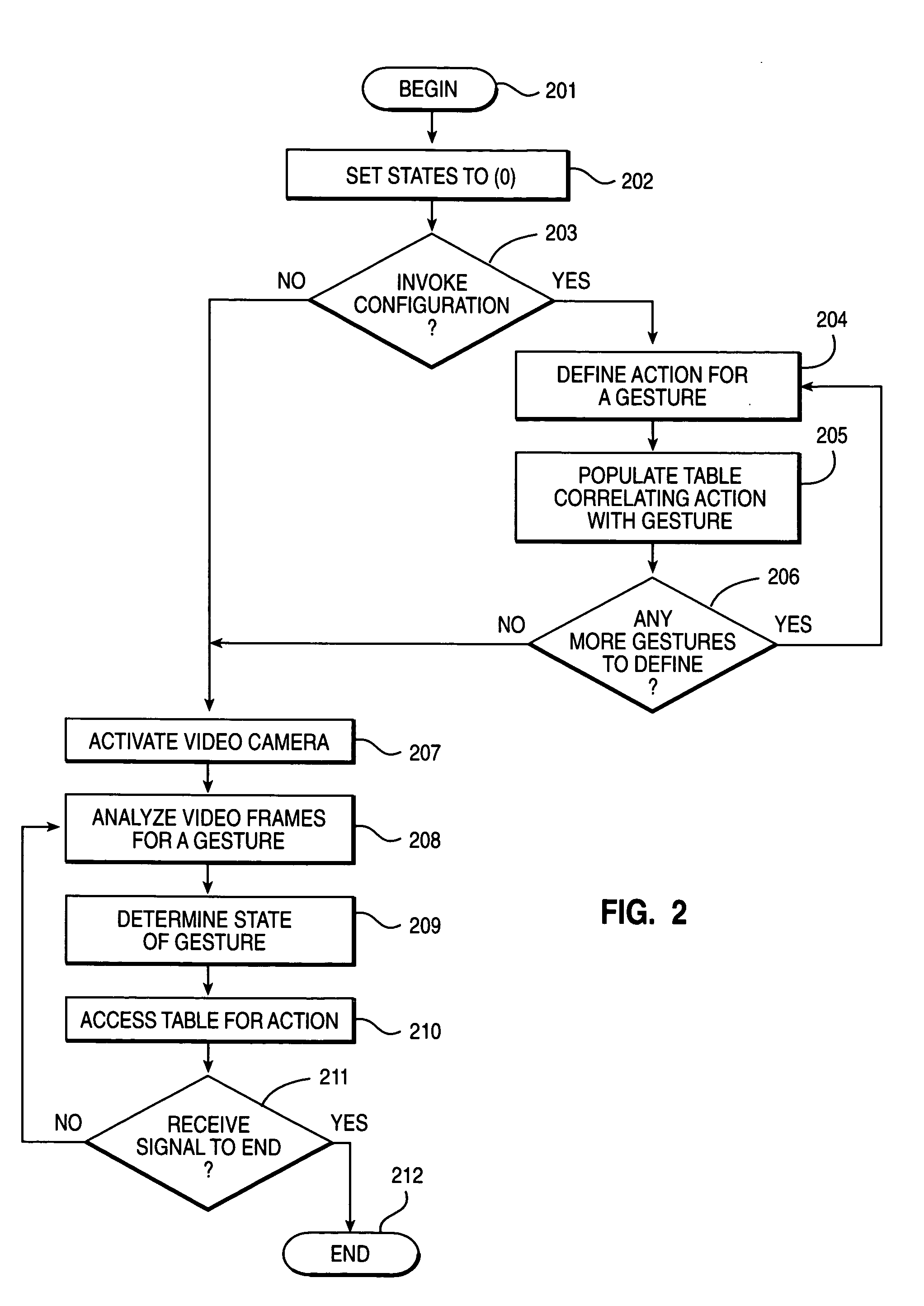

Using video image analysis to automatically transmit gestures over a network in a chat or instant messaging session

Inactive | US7039676B1 | Tags: Minimizing chance, Television system details, Character and pattern recognition, Chat room, Image processing software

The system, method, and program of the invention capture actual physical gestures made by a participant during a chat room, instant messaging, or other real-time communication session between participants over a network, and automatically transmit a representation of the gestures to the other participants. Image processing software analyzes successive video images, received as input from a video camera, for an actual physical gesture made by a participant. When the analysis detects a physical gesture, the state of the gesture is also determined; the state identifies whether this is the first occurrence of the gesture or a subsequent one. An action, and a parameter for the action, are determined for the gesture and its particular state. A command to the API of the communication software, such as chat room software, is then automatically generated, which transmits a representation of the gesture to the participants through the communication software.

Owner:MICROSOFT TECH LICENSING LLC
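The gesture-state logic above (first versus subsequent occurrence, then an action and parameter per gesture and state) can be sketched as a small state tracker. The gesture vocabulary in `ACTIONS`, the class name `GestureRelay`, and the returned command string are hypothetical stand-ins; a real implementation would call the chat software's actual API instead of formatting a string.

```python
from typing import Optional

# Hypothetical gesture vocabulary: (gesture, state) -> (action, parameter).
ACTIONS = {
    ("wave", "first"): ("send_emote", "waves hello"),
    ("wave", "subsequent"): ("send_emote", "keeps waving"),
    ("nod", "first"): ("send_emote", "nods"),
    ("nod", "subsequent"): ("send_emote", "nods again"),
}


class GestureRelay:
    """Turns recognized gestures into commands for the communication software."""

    def __init__(self) -> None:
        self.seen: set[str] = set()

    def on_gesture(self, gesture: str) -> Optional[str]:
        # State: is this the first occurrence of the gesture or a later one?
        state = "subsequent" if gesture in self.seen else "first"
        self.seen.add(gesture)
        mapping = ACTIONS.get((gesture, state))
        if mapping is None:
            return None  # unrecognized gesture: send nothing
        action, param = mapping
        # Stand-in for a call to the communication software's API.
        return f"{action}({param!r})"
```

Feeding "wave" twice produces the first-occurrence command and then the subsequent-occurrence command.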

Interactive video display system

Inactive | US7834846B1 | Tags: Interference, Interference minimization, Input/output for user-computer interaction, Television system details, Information space, Interactive video

A device allows easy and unencumbered interaction between a person and a computer display system, using the person's (or another object's) movement and position as input to the computer. In some configurations, the display can be projected around the user so that the person's actions are displayed around them. The video camera and projector operate on different wavelengths so that they do not interfere with each other. Uses for such a device include, but are not limited to, interactive lighting effects for people at clubs or events and interactive advertising displays. Computer-generated characters and virtual objects can be made to react to the movements of passers-by, and the device can generate interactive ambient lighting for social spaces such as restaurants, lobbies, and parks, serve as a video game system, and create interactive information spaces and art installations. Patterned illumination and brightness and gradient processing can be used to improve the ability to detect an object against a background of video images.

Owner:MICROSOFT TECH LICENSING LLC

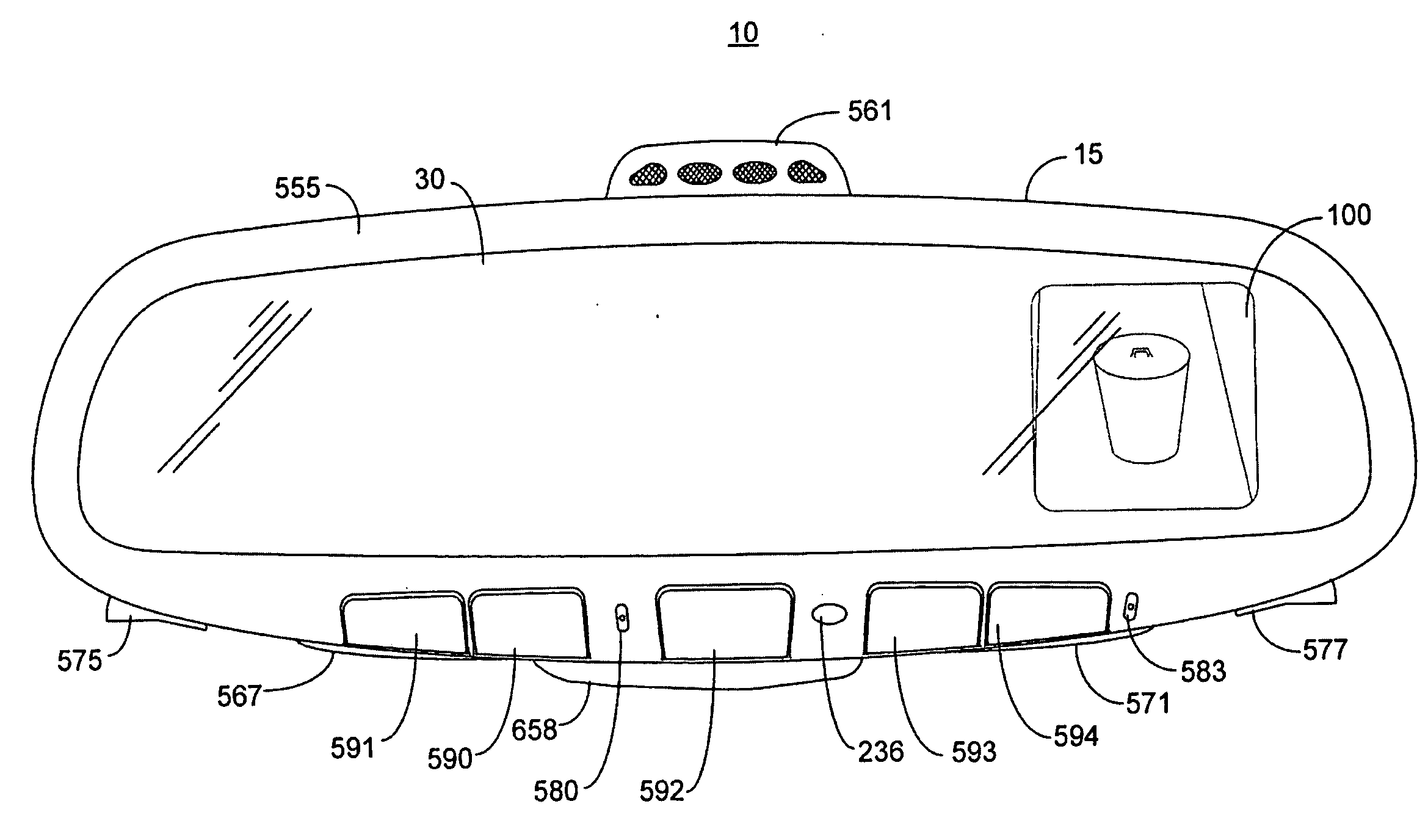

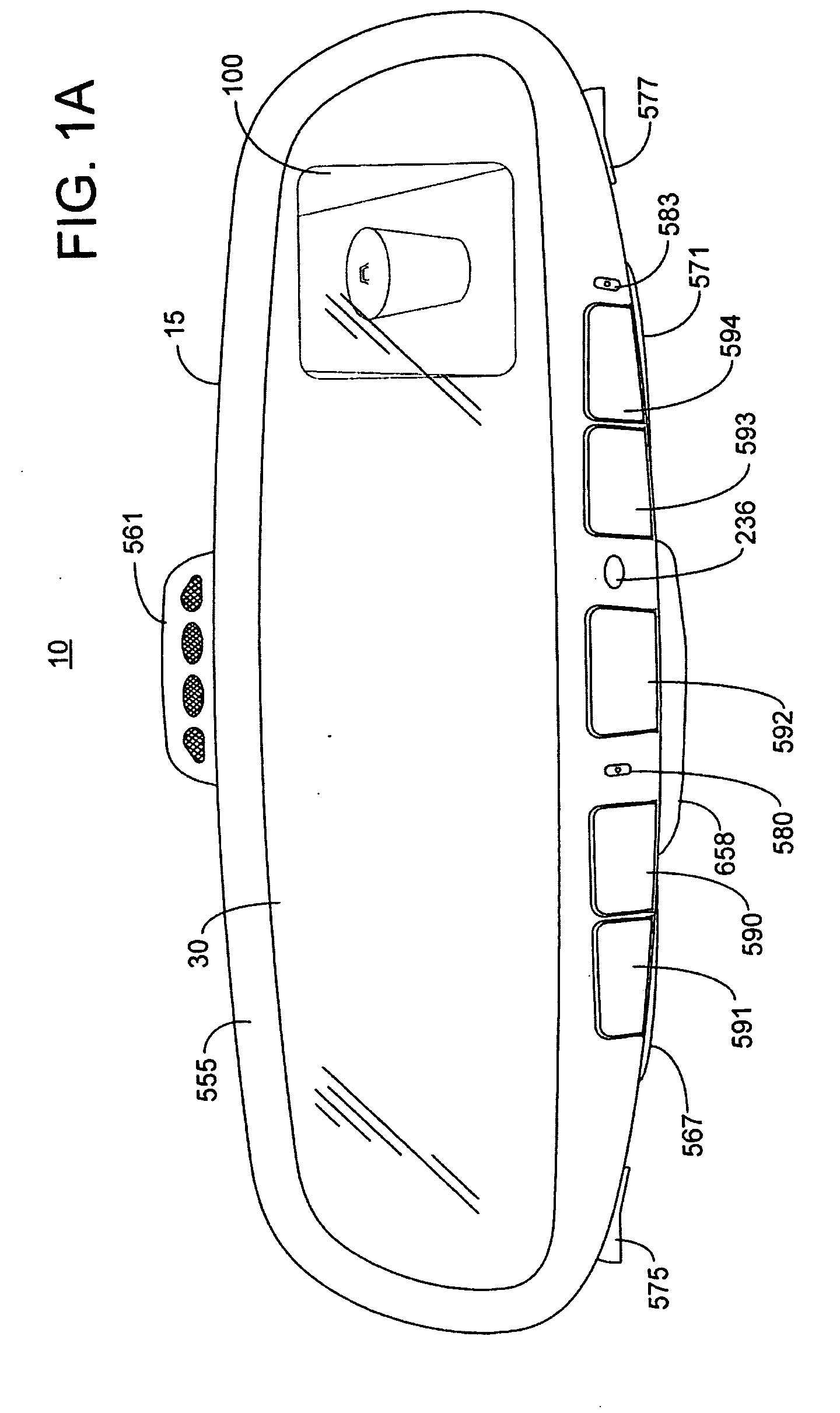

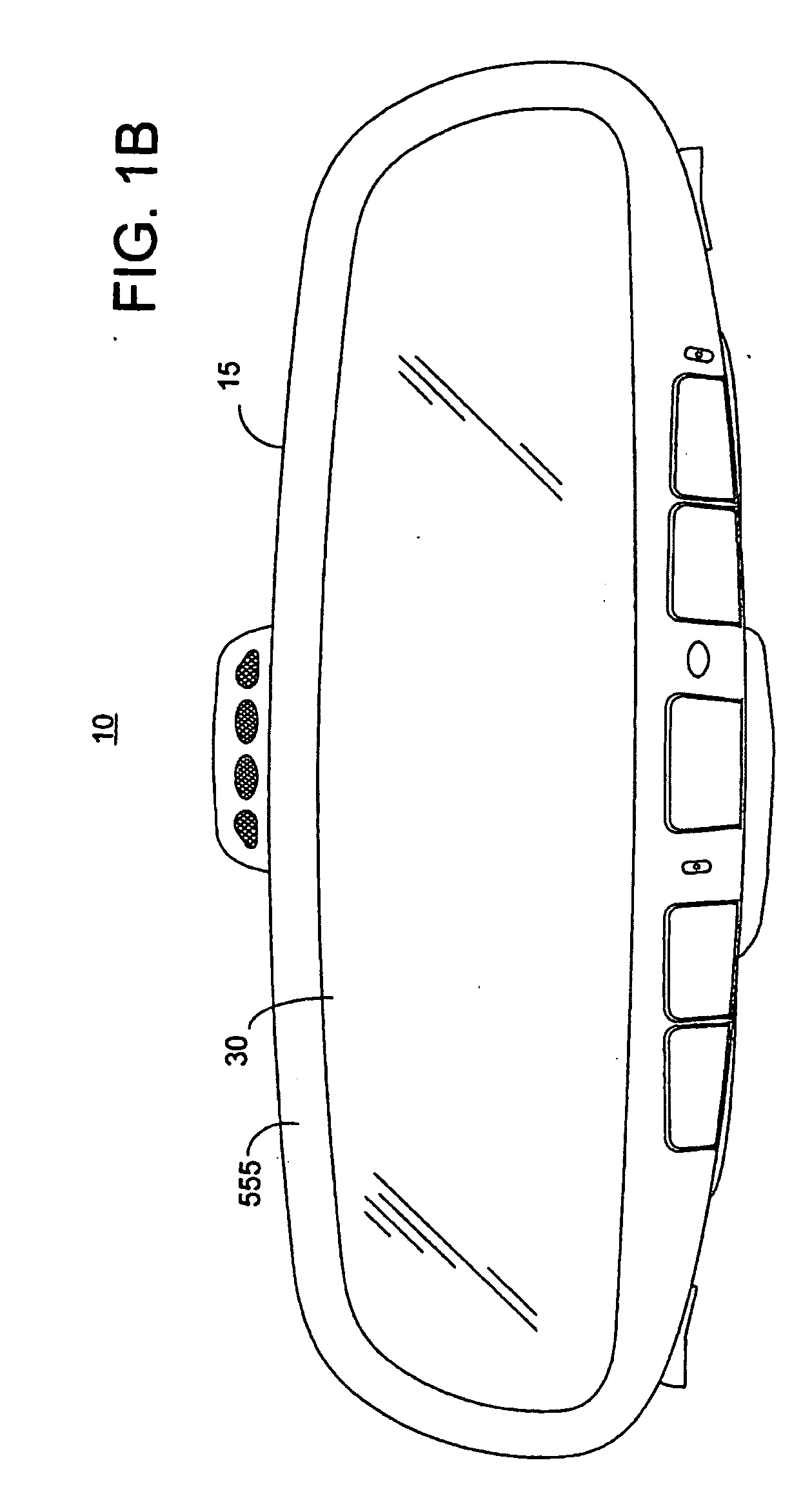

Vehicle Rearview Assembly Including a Display for Displaying Video Captured by a Camera and User Instructions

Inactive | US20090096937A1 | Tags: Television system details, Electric signal transmission systems, Graphics, Graphical user interface

An inventive rearview assembly (10) for a vehicle may comprise a mirror element (30) and a display (100) including a light management subassembly (101b). The subassembly may comprise an LCD placed behind a transflective layer of the mirror element. Despite a low transmittance through the transflective layer, the inventive display is capable of generating a viewable display image having an intensity of at least 250 cd/m² and up to 6000 cd/m². The rearview assembly may further comprise a trainable transmitter (910) and a graphical user interface (920) that includes at least one user-actuated switch (902, 904, 906). The graphical user interface generates instructions for operation of the trainable transmitter. The video display selectively displays video images captured by a camera and the instructions supplied from the graphical user interface.

Owner:GENTEX CORP

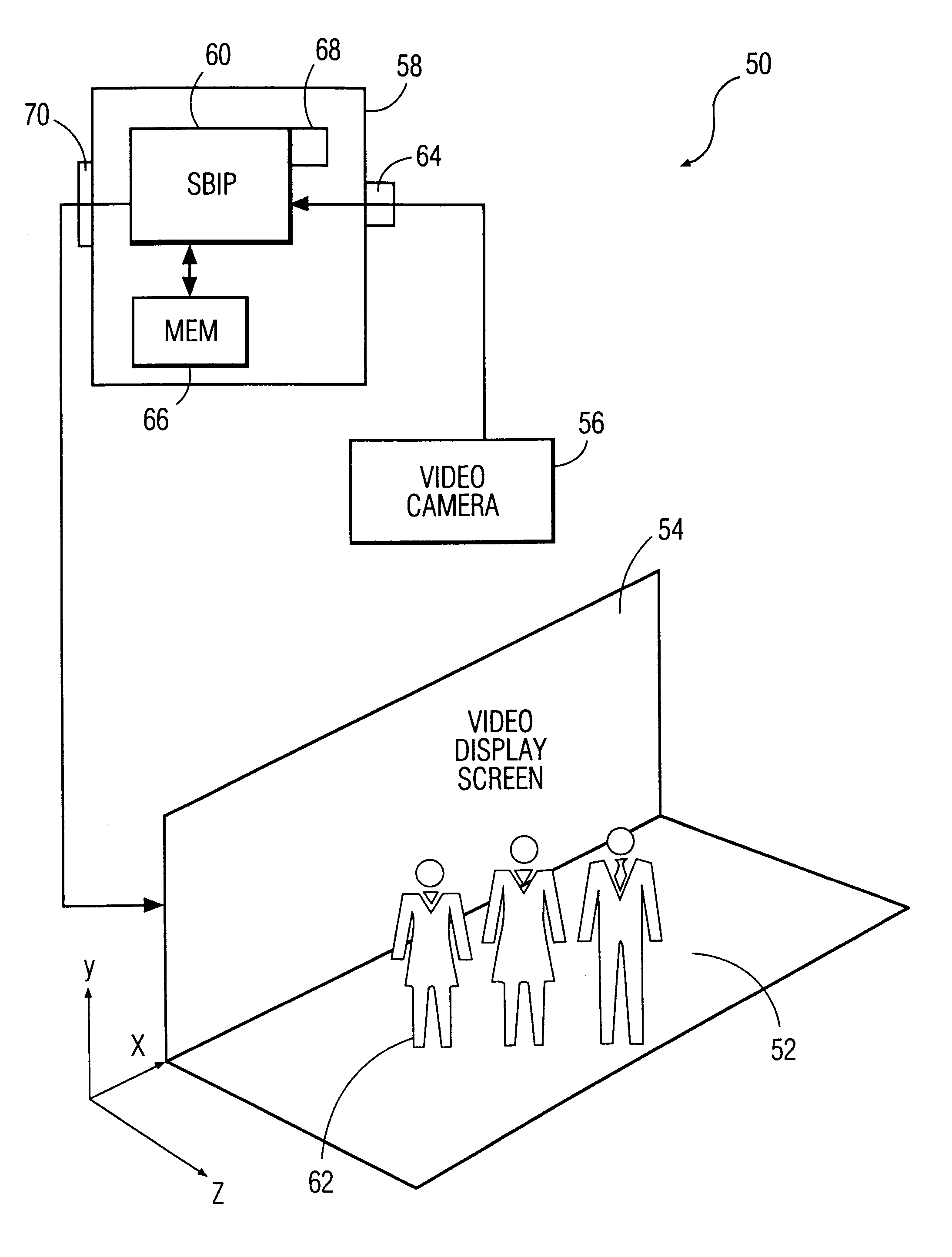

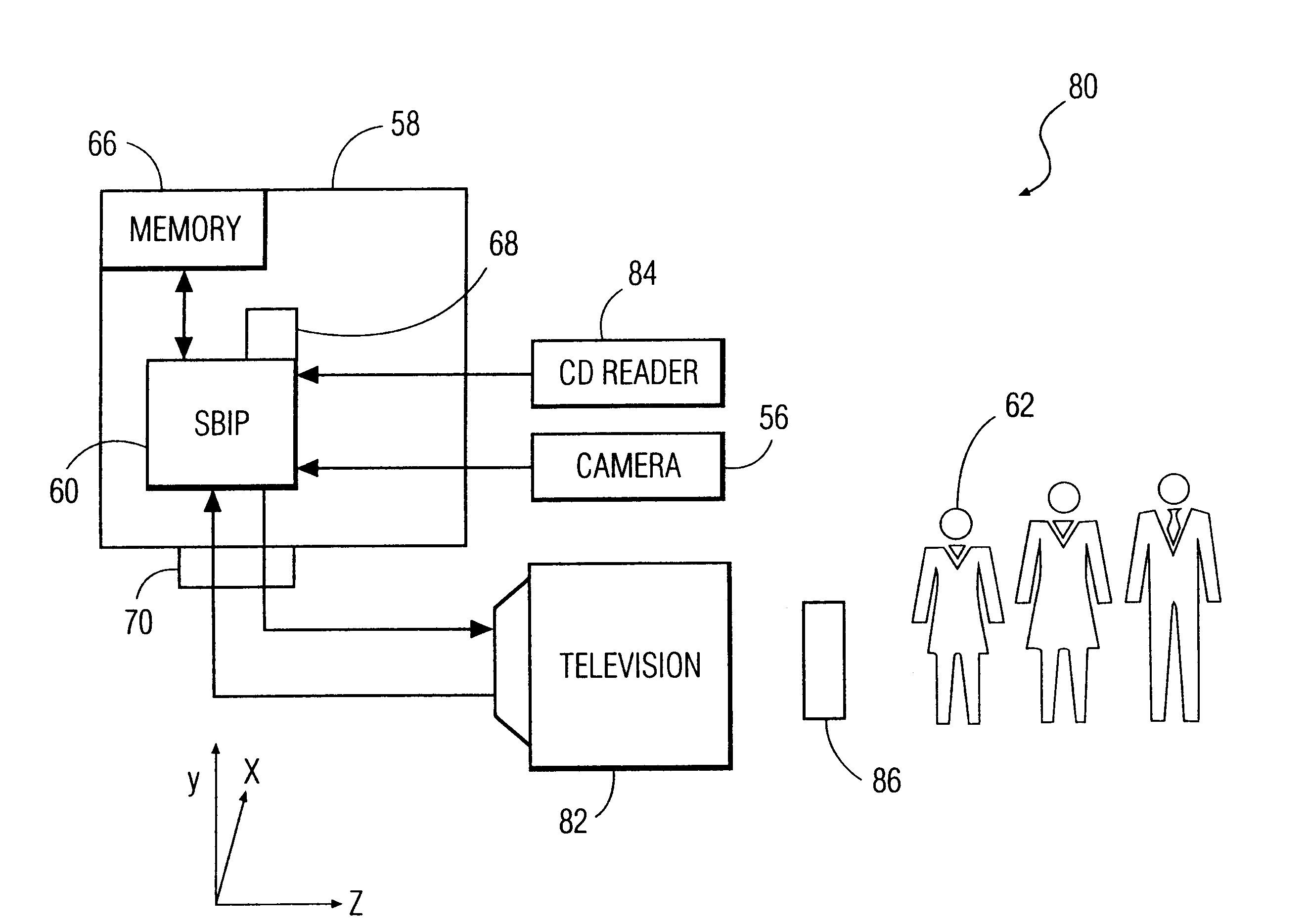

System and method for constructing three-dimensional images using camera-based gesture inputs

Inactive | US6195104B1 | Tags: Input/output for user-computer interaction, Television system details, Body area, Display device

A system and method for constructing three-dimensional images using camera-based gesture inputs of a system user. The system comprises a computer-readable memory, a video camera for generating video signals indicative of the gestures of the system user and an interaction area surrounding the system user, and a video image display. The video image display is positioned in front of the system user. The system further comprises a microprocessor for processing the video signals, in accordance with a program stored in the computer-readable memory, to determine the three-dimensional positions of the body and principal body parts of the system user. The microprocessor constructs three-dimensional images of the system user and interaction area on the video image display based upon the three-dimensional positions of the body and principal body parts of the system user. The video image display shows three-dimensional graphical objects superimposed to appear as if they occupy the interaction area, and movement by the system user causes apparent movement of the superimposed, three-dimensional objects displayed on the video image display.

Owner:PHILIPS ELECTRONICS NORTH AMERICA

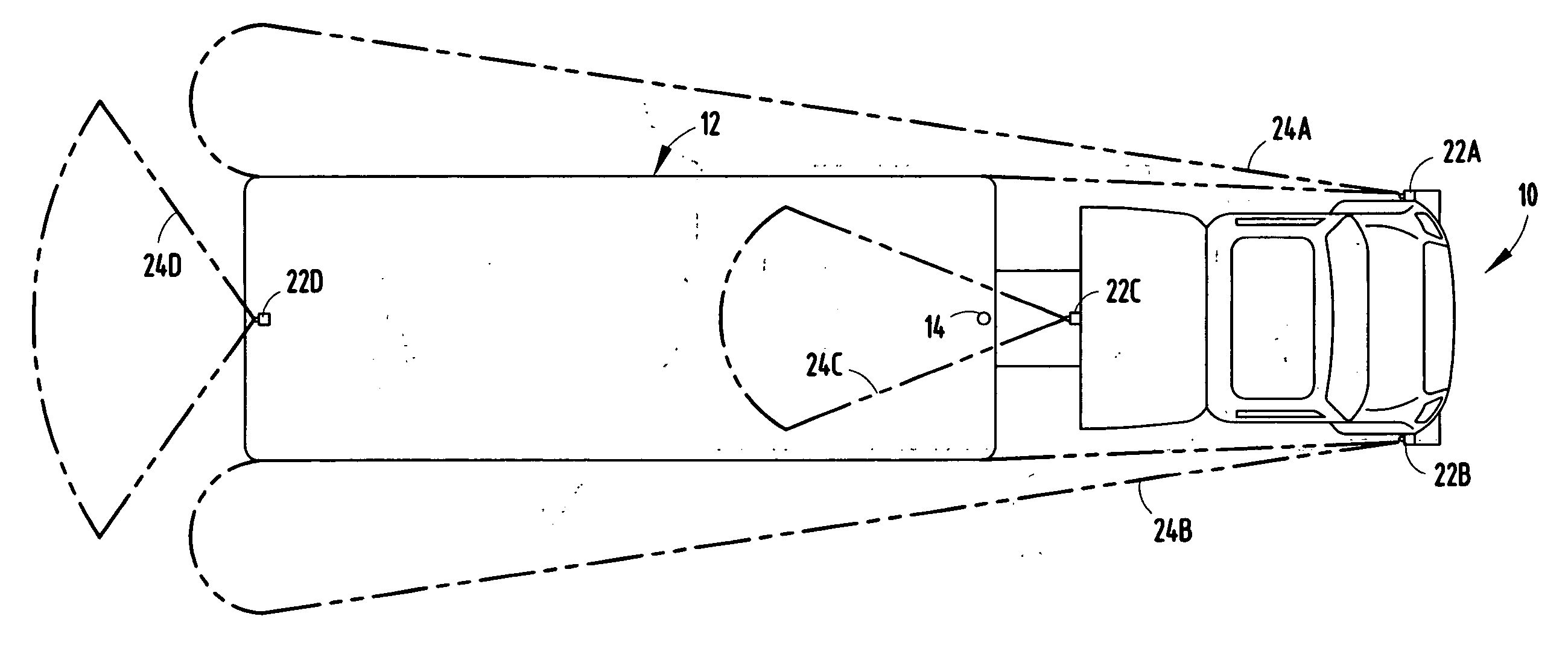

Camera System for Creating an Image From a Plurality of Images

Inactive | US20100097444A1 | Tags: Reduce the misalignment angle to negligible, Television system details, Image analysis, Computer graphics (images), Video image

Methods and apparatus to create and display stereoscopic and panoramic images are disclosed. Apparatus is provided to control the position of a lens in relation to a reference lens. Methods and apparatus are provided to generate multiple images that are combined into a stereoscopic or a panoramic image. An image may be a static image or a video image. A controller provides correct camera settings for different conditions. An image processor creates a stereoscopic or a panoramic image from the correct settings provided by the controller. A panoramic video wall system is also disclosed.

Owner:LABLANS PETER

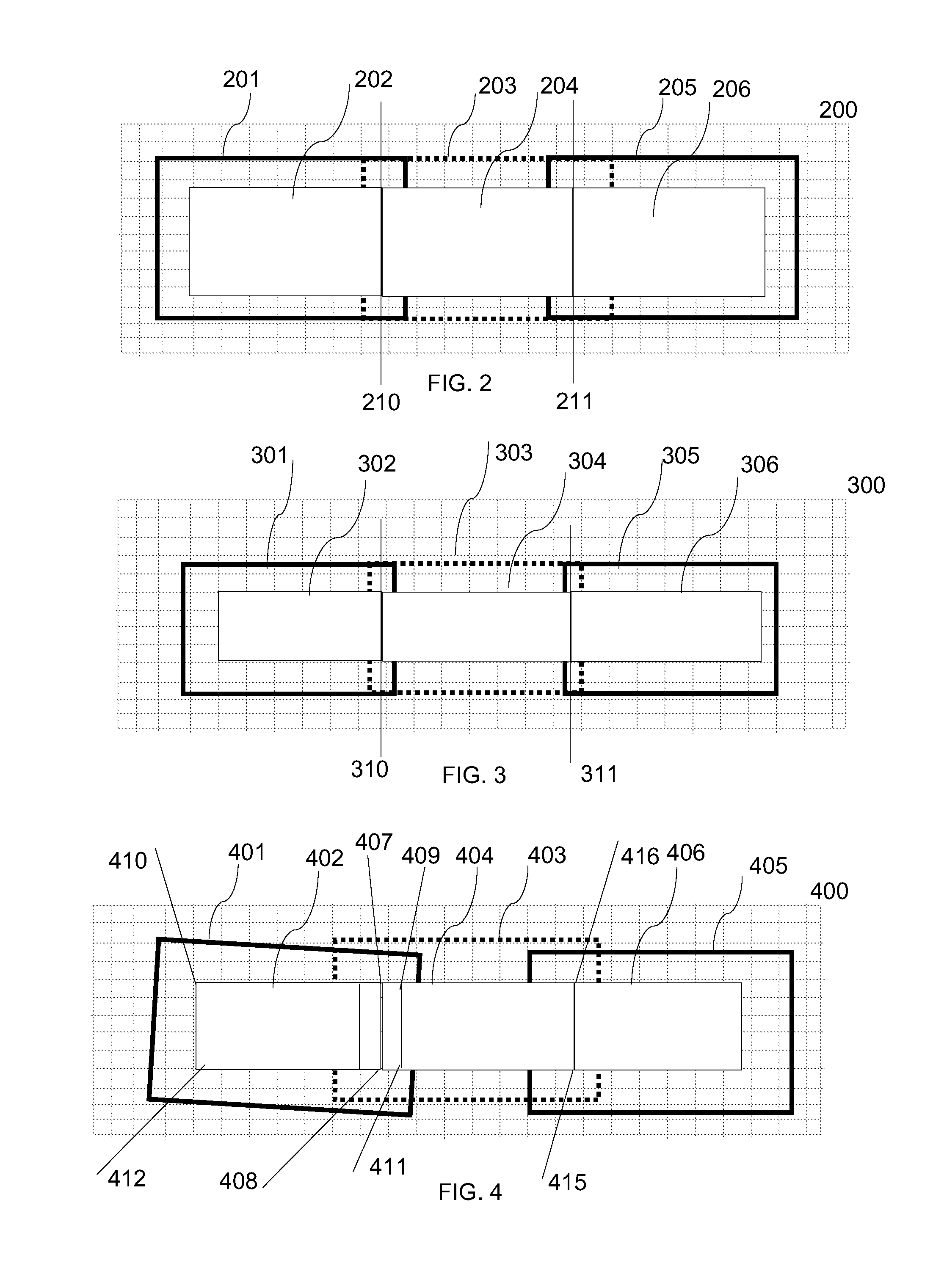

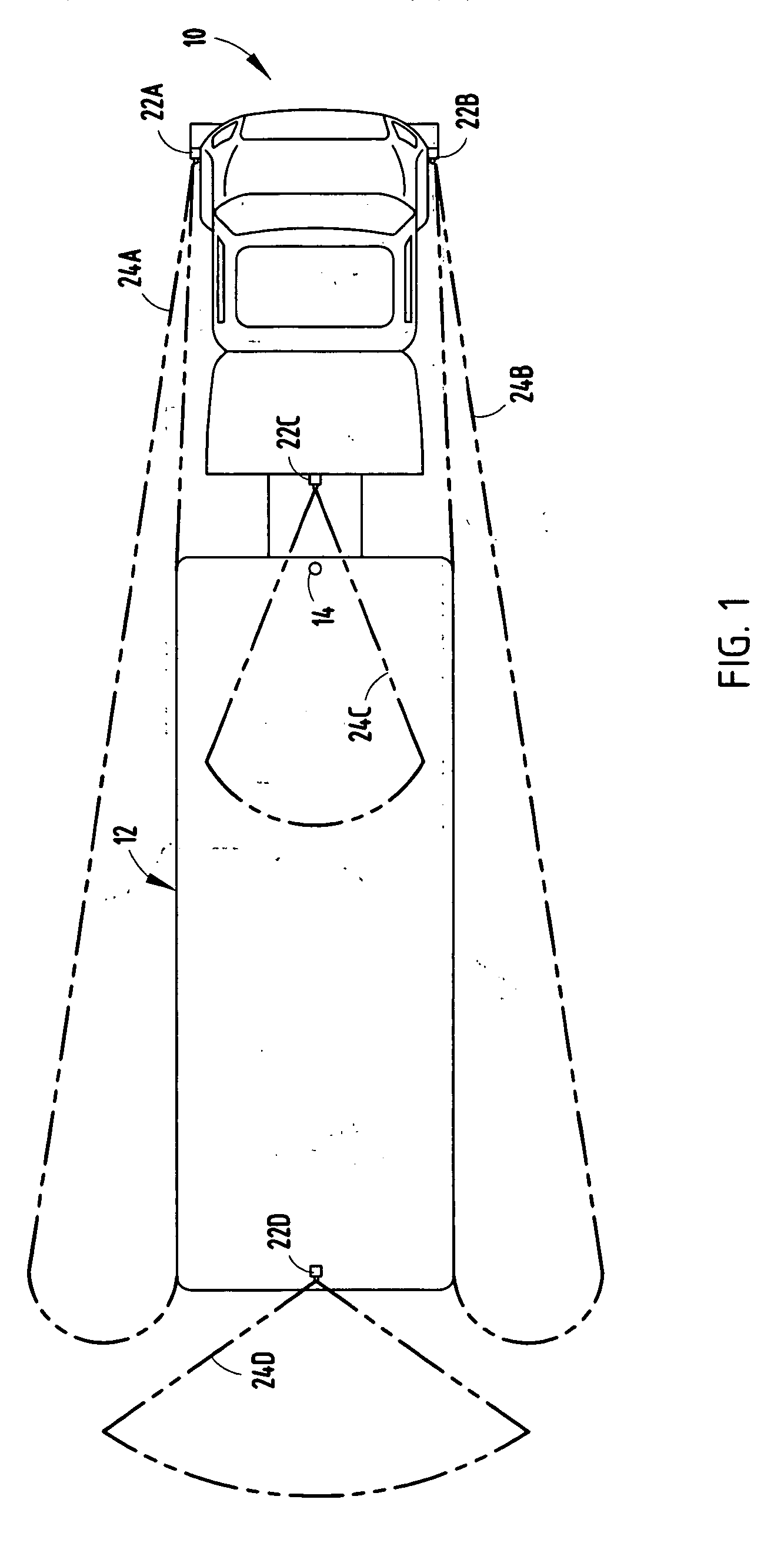

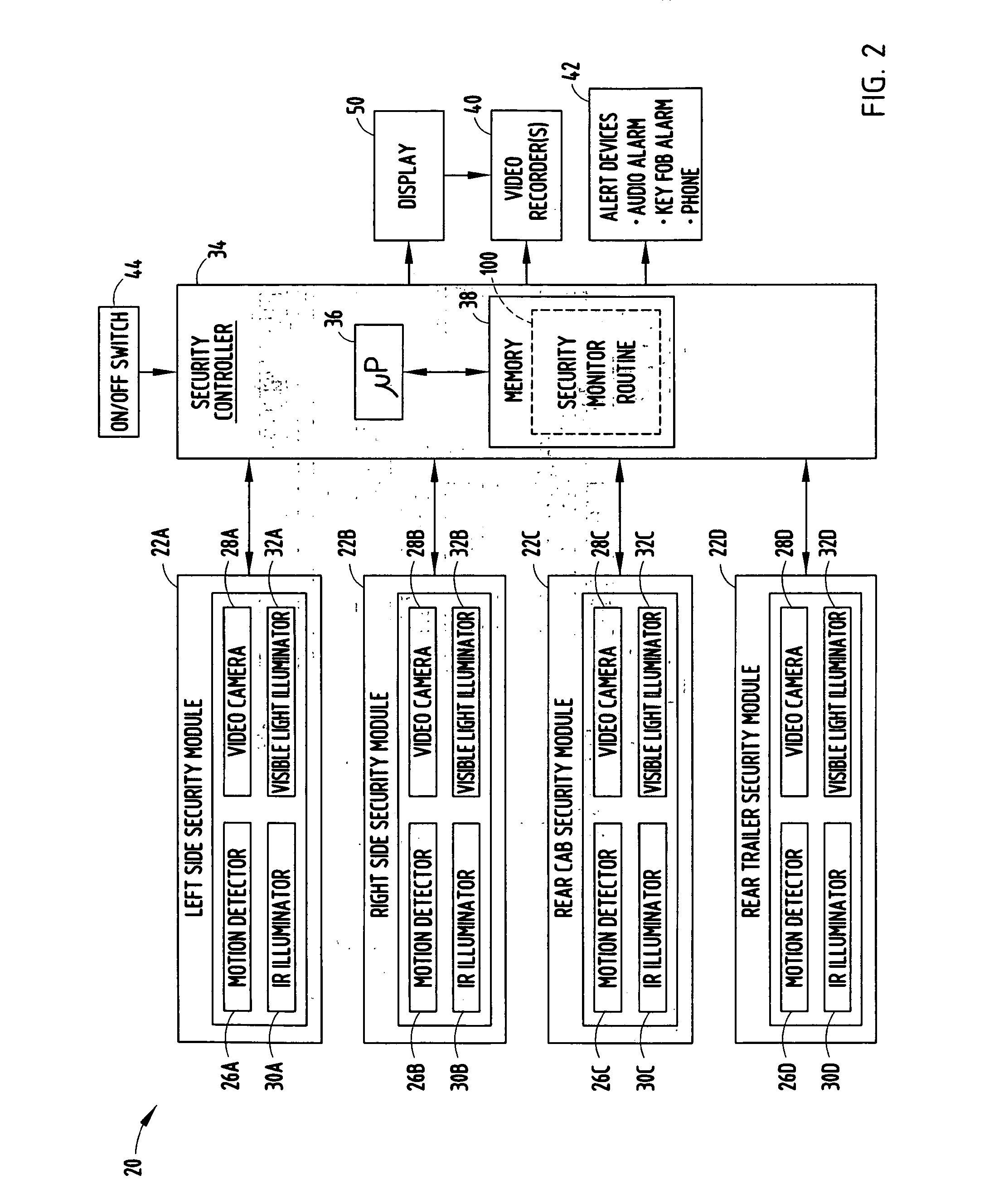

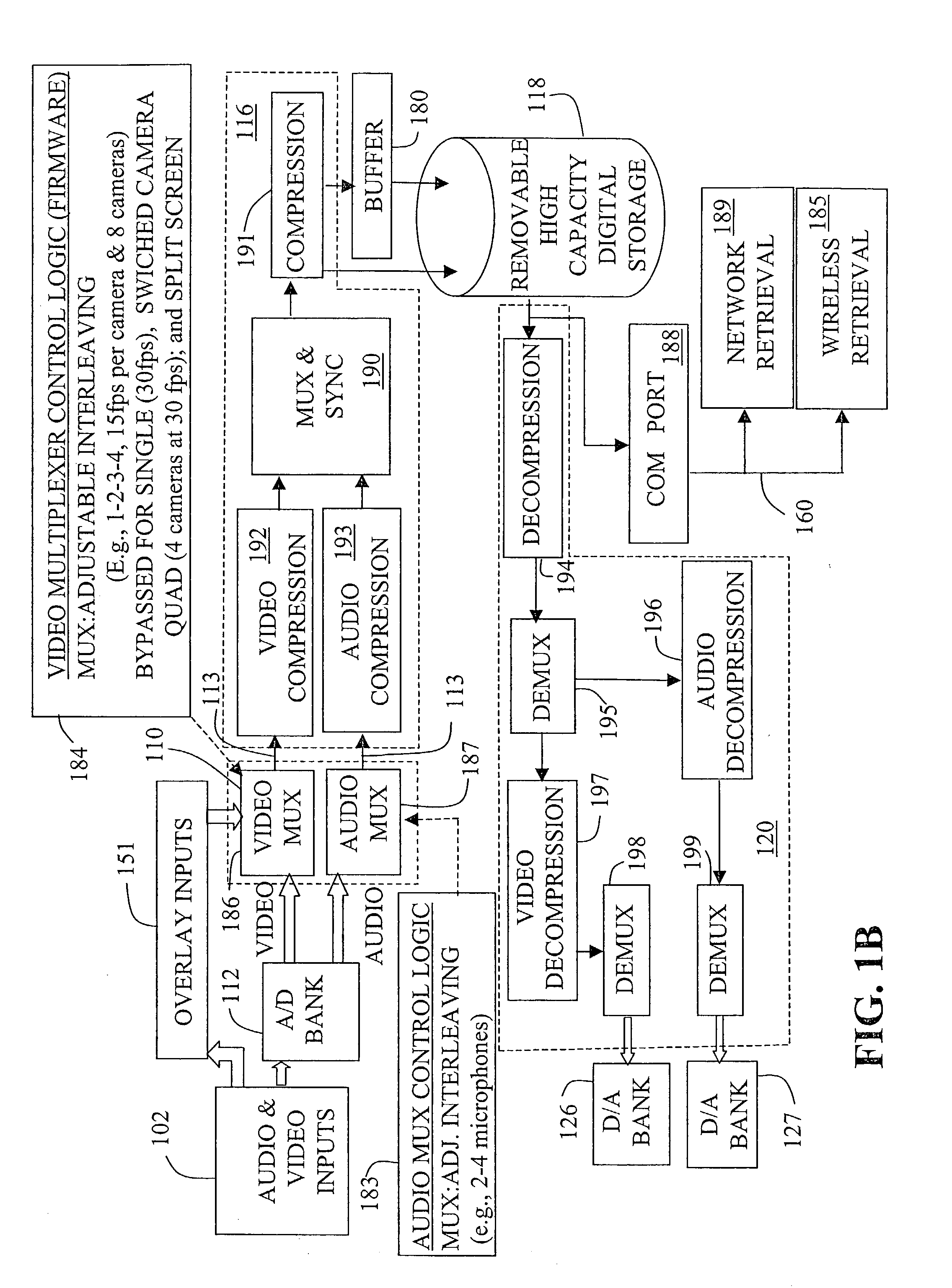

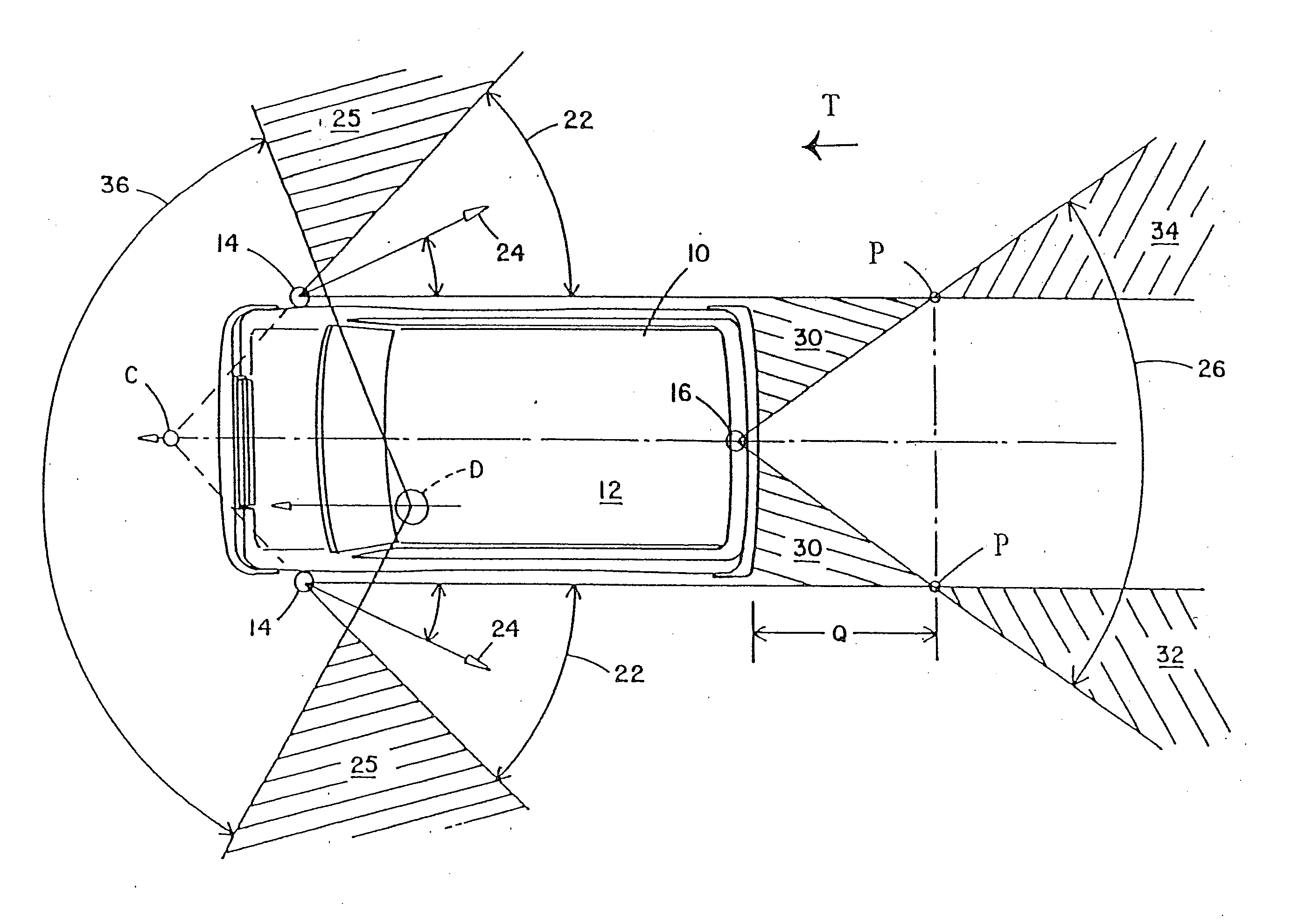

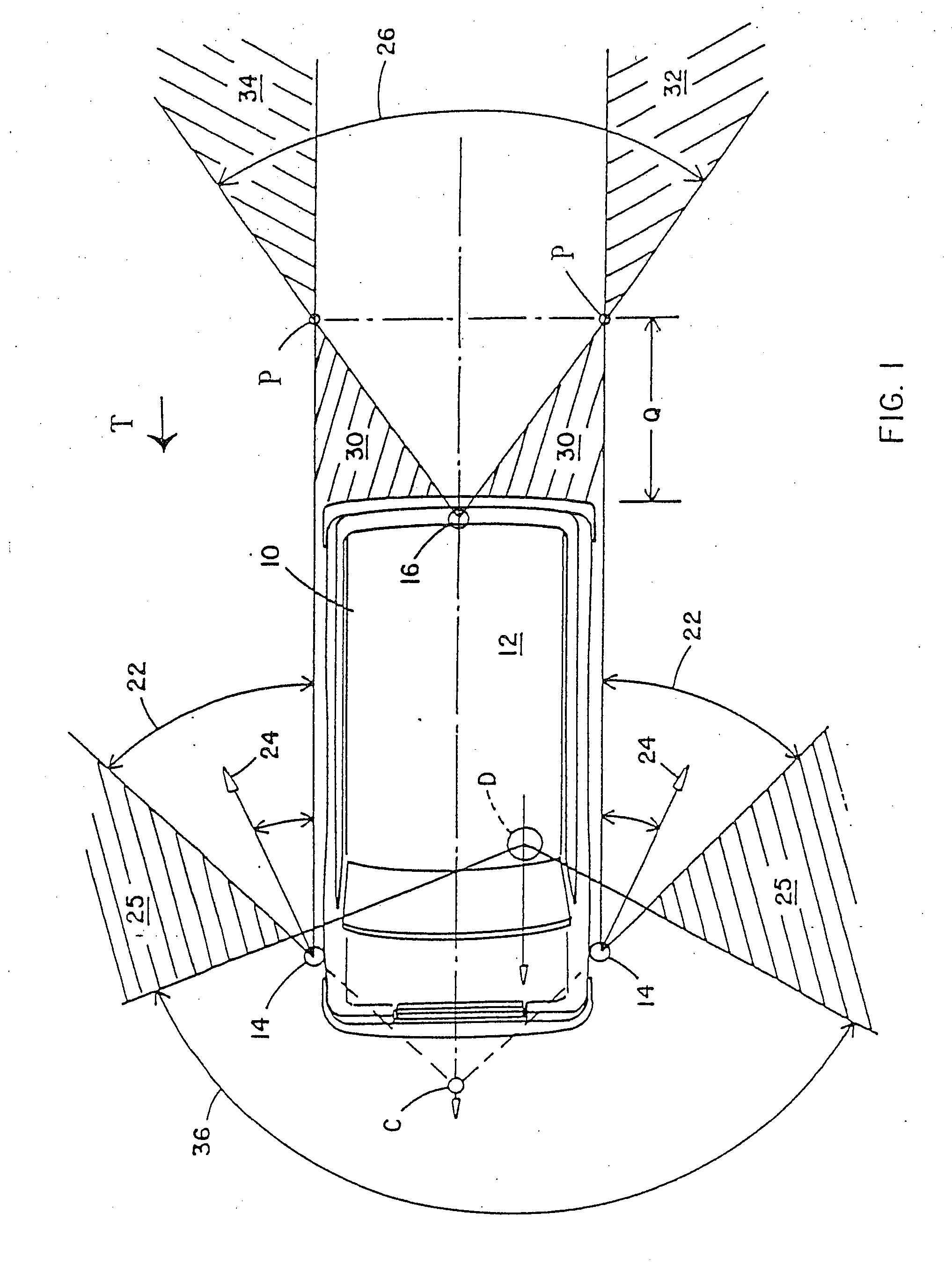

Vehicle security monitor system and method

Inactive | US20060250501A1 | Tags: Prevent and minimize theft, Improve security, Anti-theft devices, Color television details, Motion detector, Display device

A vehicle security monitoring system and method is provided for monitoring security in detection zones near a vehicle. The system includes motion detectors positioned to detect motion of an object within security zones of a vehicle and cameras positioned to generate video images of the security zones. A display located onboard the vehicle displays images generated by a camera when the camera is activated. A controller controls activation of the camera and presentation of images on the display. The controller activates the camera and controls the display to output images captured by the camera when a moving object is detected in the security zone.

Owner:DELPHI TECH INC

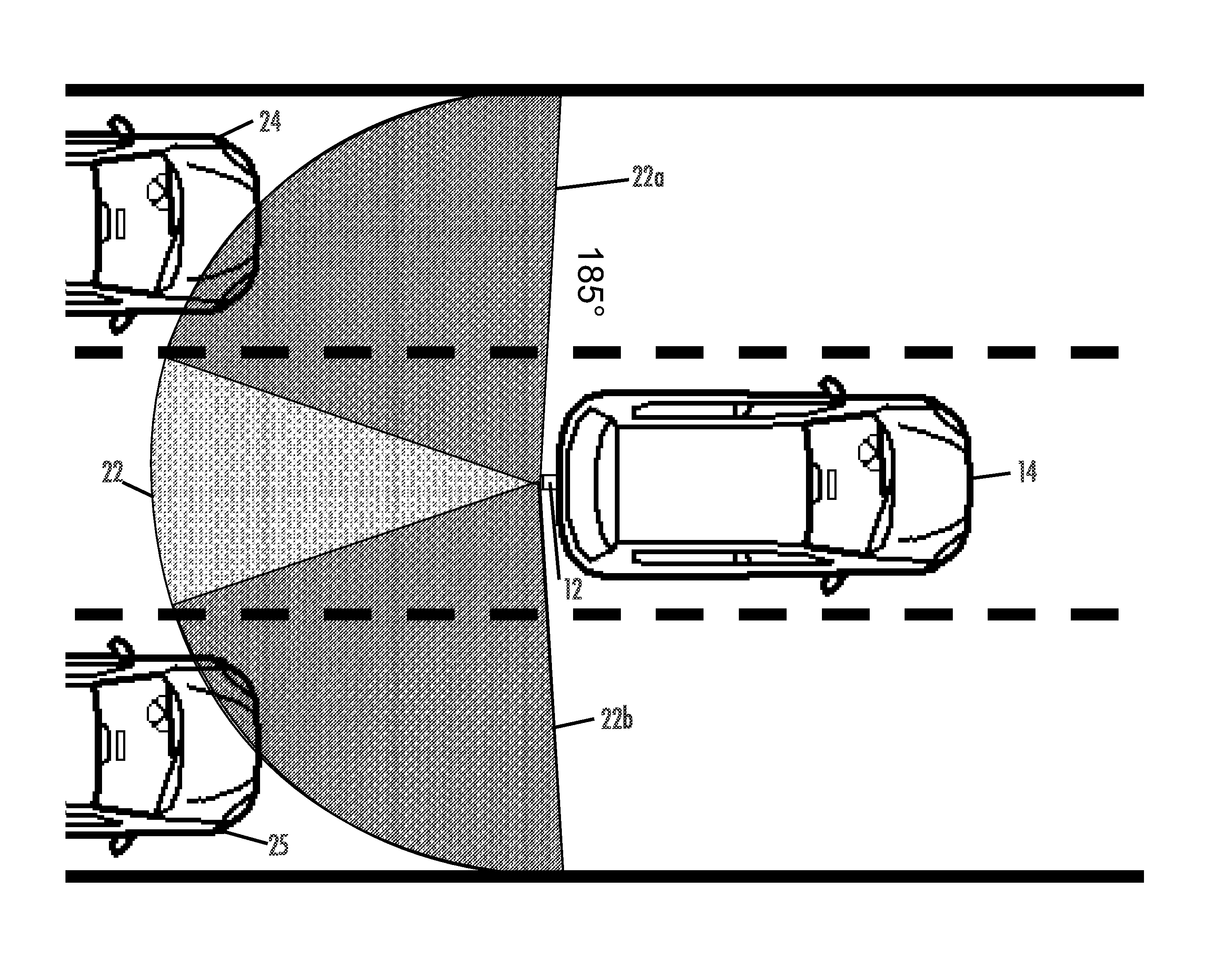

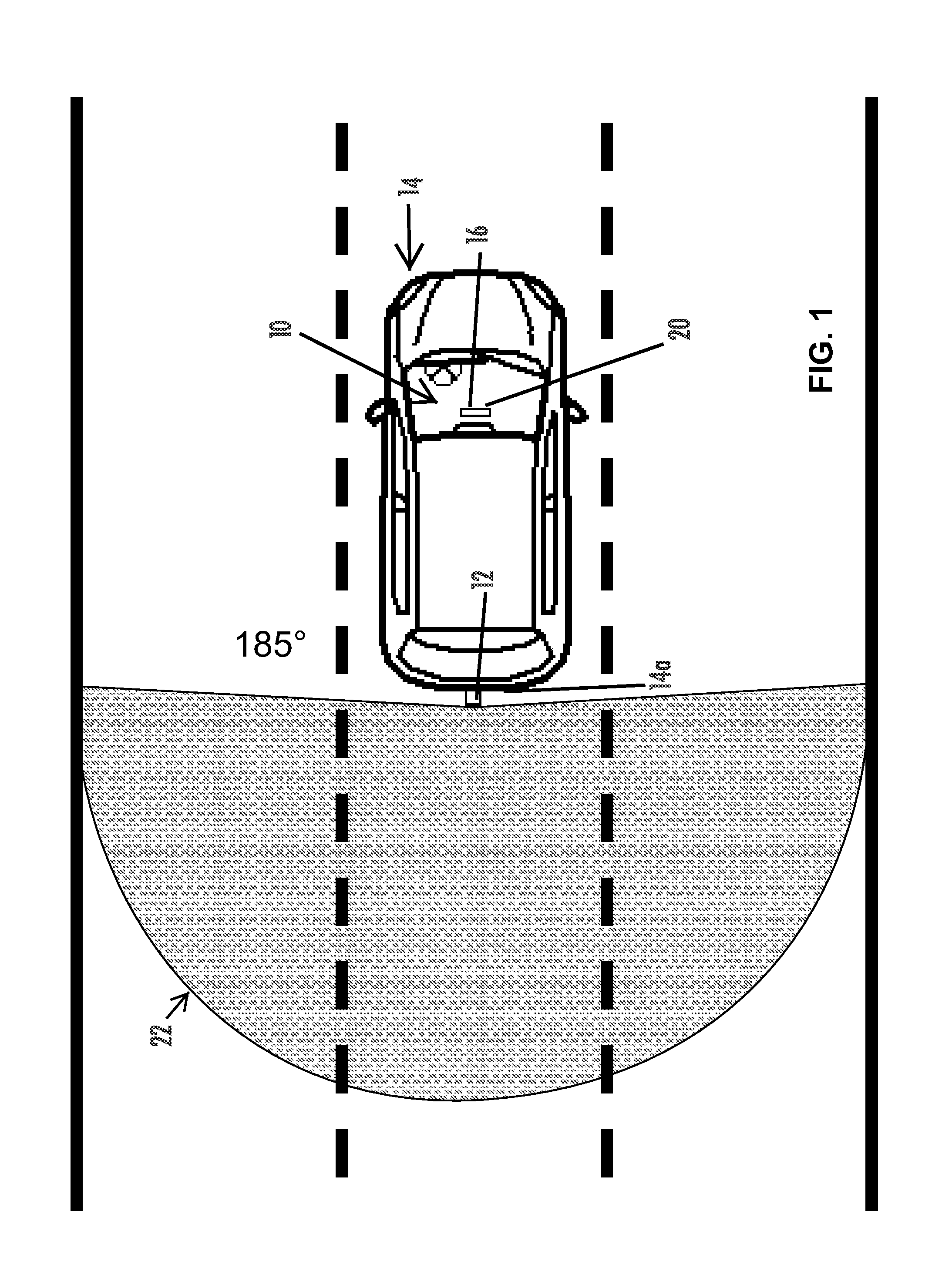

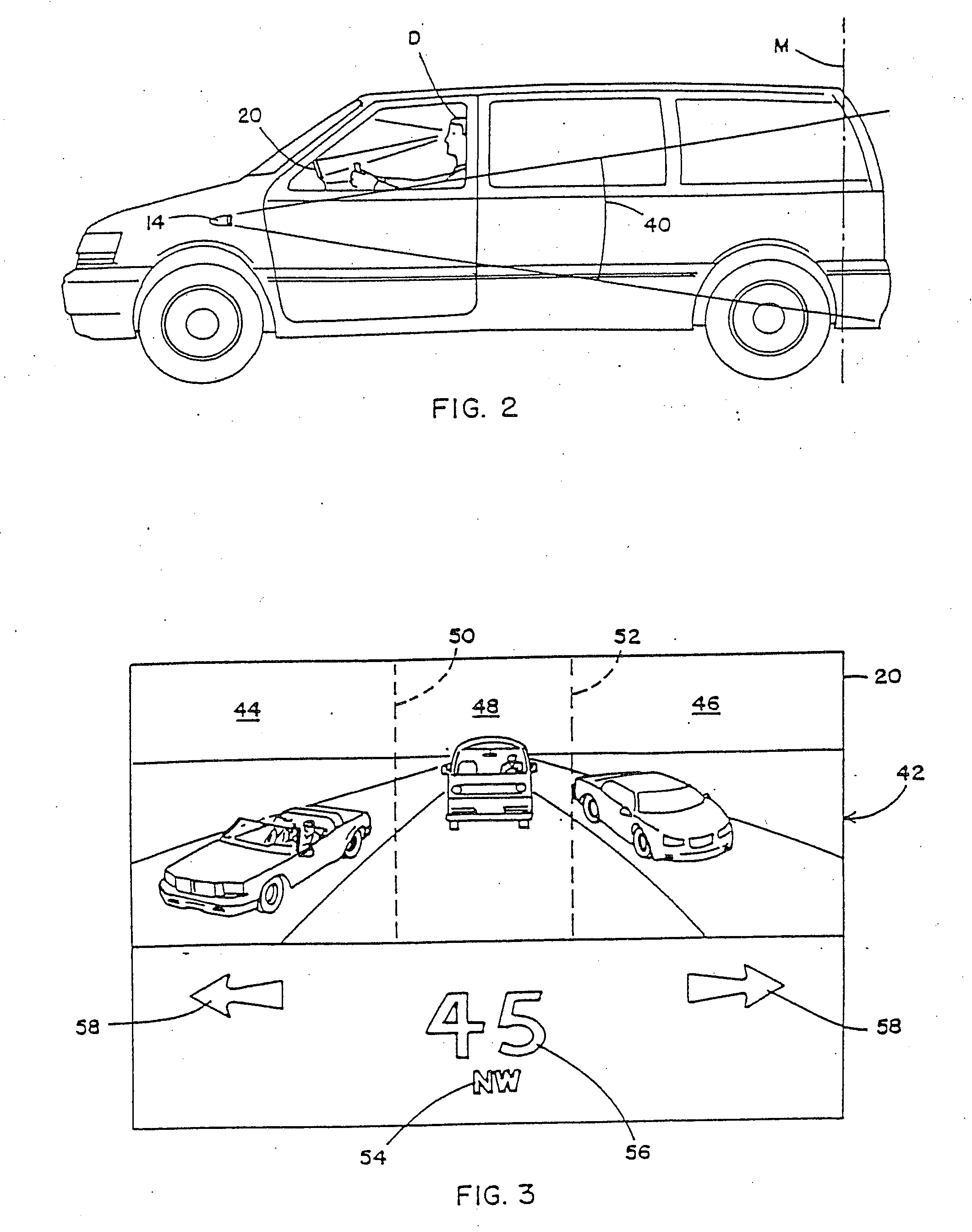

Imaging and display system for vehicle

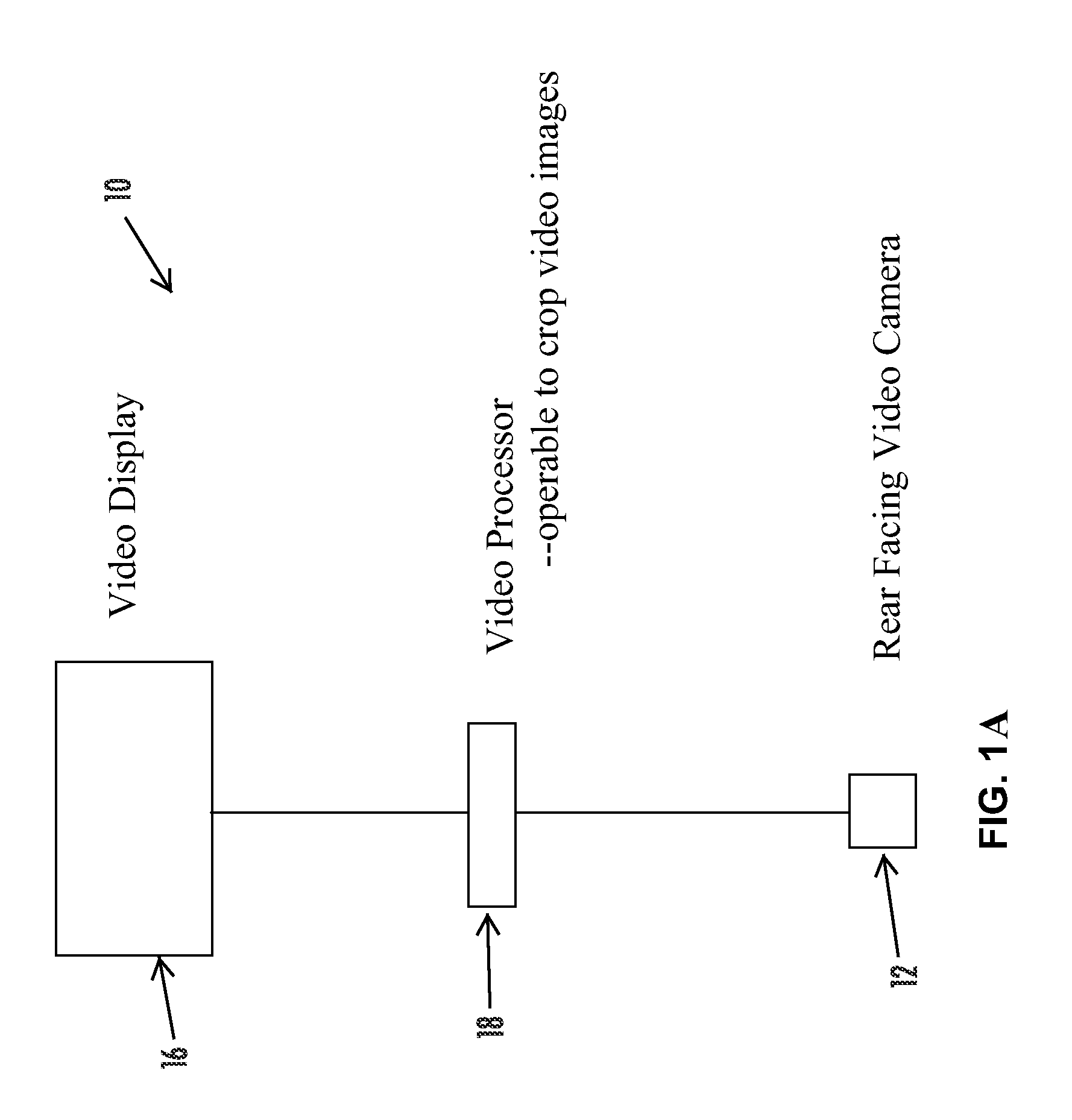

Active | US20120154591A1 | Tags: Enhanced Situational Awareness, Increase awareness, Road vehicles traffic control, Color television details, Lane departure warning system, Video processing

A vehicular imaging and display system includes a rear backup camera at a rear portion of a vehicle, a video processor for processing image data captured by the rear camera, and a video display screen responsive to the video processor to display video images. During a reversing maneuver of the equipped vehicle, the video display screen displays video images captured by the rear camera. During forward travel of the equipped vehicle, the video display screen is operable to display images representative of a portion of the rear camera's field of view, showing an area sideward of the equipped vehicle, in response to at least one of (a) actuation of a turn signal indicator of the vehicle, (b) detection of a vehicle in a side lane adjacent to the equipped vehicle, and (c) a lane departure warning system of the vehicle.

Owner:MAGNA ELECTRONICS
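The display-mode decision described above can be sketched as a small selection function. The `View` names and the choice to show no camera view during forward travel when no trigger is active are assumptions; the abstract does not say what the screen shows in that case.

```python
from enum import Enum


class View(Enum):
    REAR_FULL = "full rear-camera view"
    SIDE_PORTION = "sideward portion of the rear-camera view"
    NONE = "no camera view"  # assumed default during untriggered forward travel


def select_view(reversing: bool, turn_signal: bool,
                side_vehicle_detected: bool, lane_departure_warning: bool) -> View:
    """Pick what the video display screen shows from the vehicle state."""
    if reversing:
        return View.REAR_FULL
    # Forward travel: any one of the three triggers shows the sideward area.
    if turn_signal or side_vehicle_detected or lane_departure_warning:
        return View.SIDE_PORTION
    return View.NONE
```

Reversing takes priority over the forward-travel triggers, matching the abstract's split between the two driving phases.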

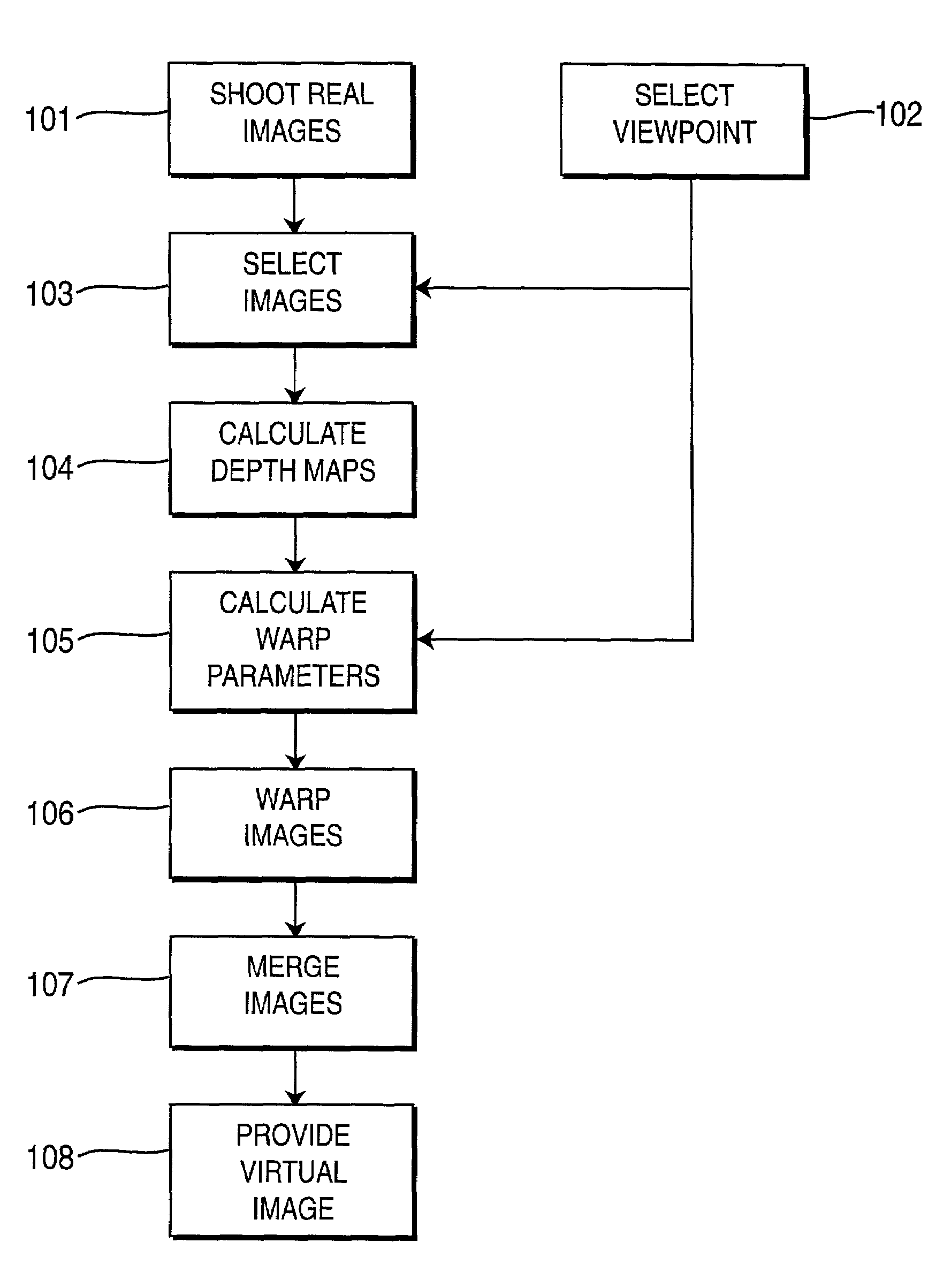

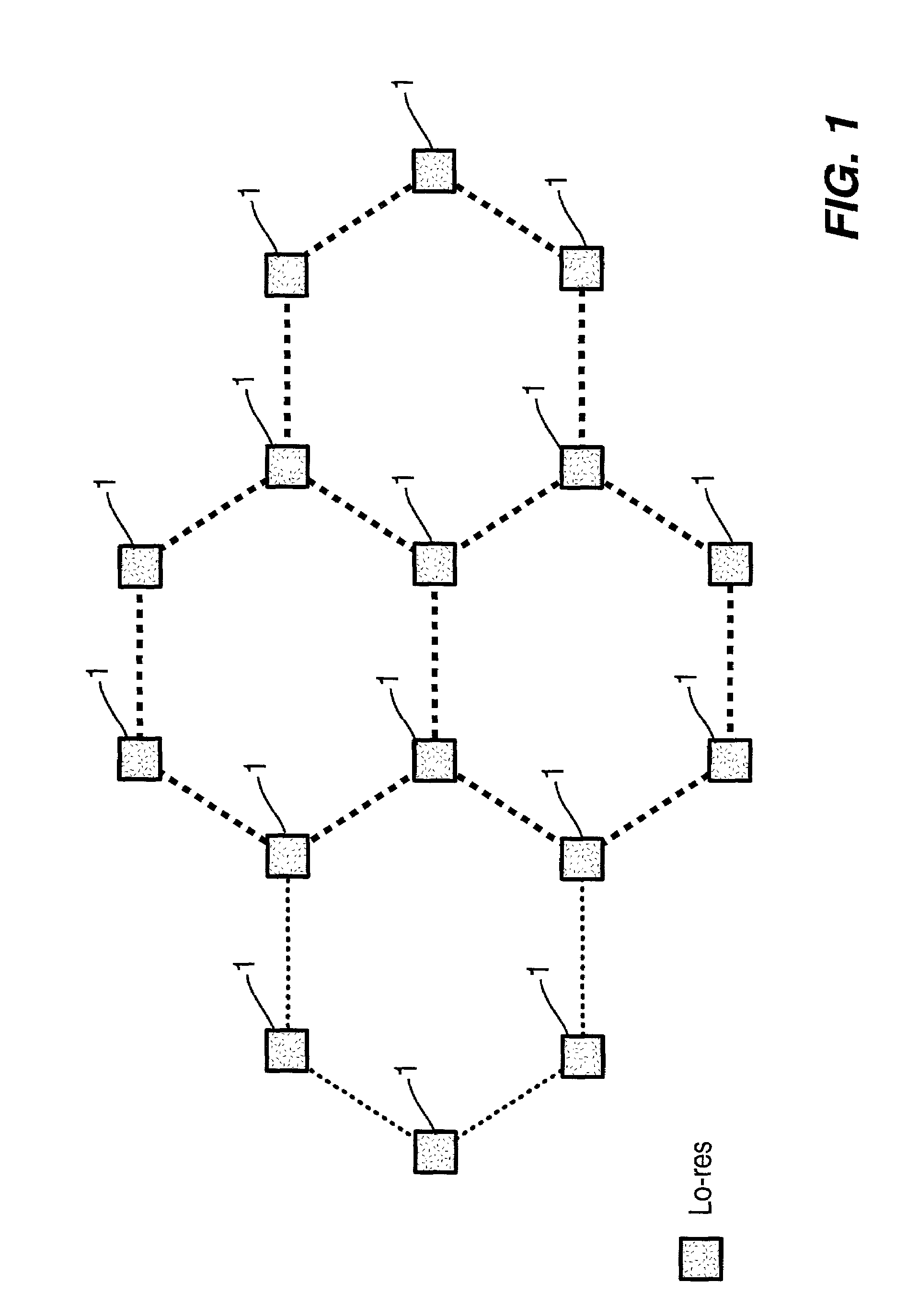

Method and apparatus for synthesizing new video and/or still imagery from a collection of real video and/or still imagery

Active | US7085409B2 | Tags: Quality improvement, Increase speed, Image enhancement, Image analysis, Viewpoints, Virtual position

An image-based telepresence system forward-warps video images selected from a plurality of fixed imagers using local depth maps, and merges the warped images to form high-quality images that appear as if seen from a virtual position. At least two of the images produced by the imagers are selected for creating a virtual image. Depth maps are generated corresponding to each of the selected images. The selected images are warped to the virtual viewpoint using warp parameters calculated from the corresponding depth maps. Finally, the warped images are merged to create the high-quality virtual image as seen from the selected viewpoint. The system employs a video blanket of imagers, which helps both to optimize the number of imagers and to attain higher resolution. In an exemplary video blanket, cameras are deployed in a geometric pattern on a surface.

Owner:SRI INTERNATIONAL
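Depth-based forward warping and merging can be illustrated on a single scanline. This is a heavily simplified sketch, not the system's warp model: it assumes rectified cameras and a virtual viewpoint translated only horizontally by `baseline`, so a pixel at depth Z shifts by `-focal * baseline / Z` columns. A z-buffer keeps the nearest surface when pixels collide, and merging averages the valid samples. Function names are hypothetical.

```python
from typing import Optional

Scanline = list[Optional[float]]


def forward_warp(pixels: list[float], depths: list[float], focal: float,
                 baseline: float) -> Scanline:
    """Forward-warp one rectified scanline to a horizontally shifted virtual camera.

    None marks holes (target columns that no source pixel reached).
    """
    width = len(pixels)
    out: Scanline = [None] * width
    zbuf = [float("inf")] * width
    for x, (value, z) in enumerate(zip(pixels, depths)):
        tx = round(x - focal * baseline / z)  # disparity shrinks with depth
        if 0 <= tx < width and z < zbuf[tx]:  # nearer surface wins
            zbuf[tx] = z
            out[tx] = value
    return out


def merge(warped: list[Scanline]) -> Scanline:
    """Average the valid samples from each warped view; keep holes as None."""
    merged: Scanline = []
    for samples in zip(*warped):
        valid = [s for s in samples if s is not None]
        merged.append(sum(valid) / len(valid) if valid else None)
    return merged
```

Warping two views that bracket the virtual position and merging them tends to fill each view's disocclusion holes with samples from the other.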

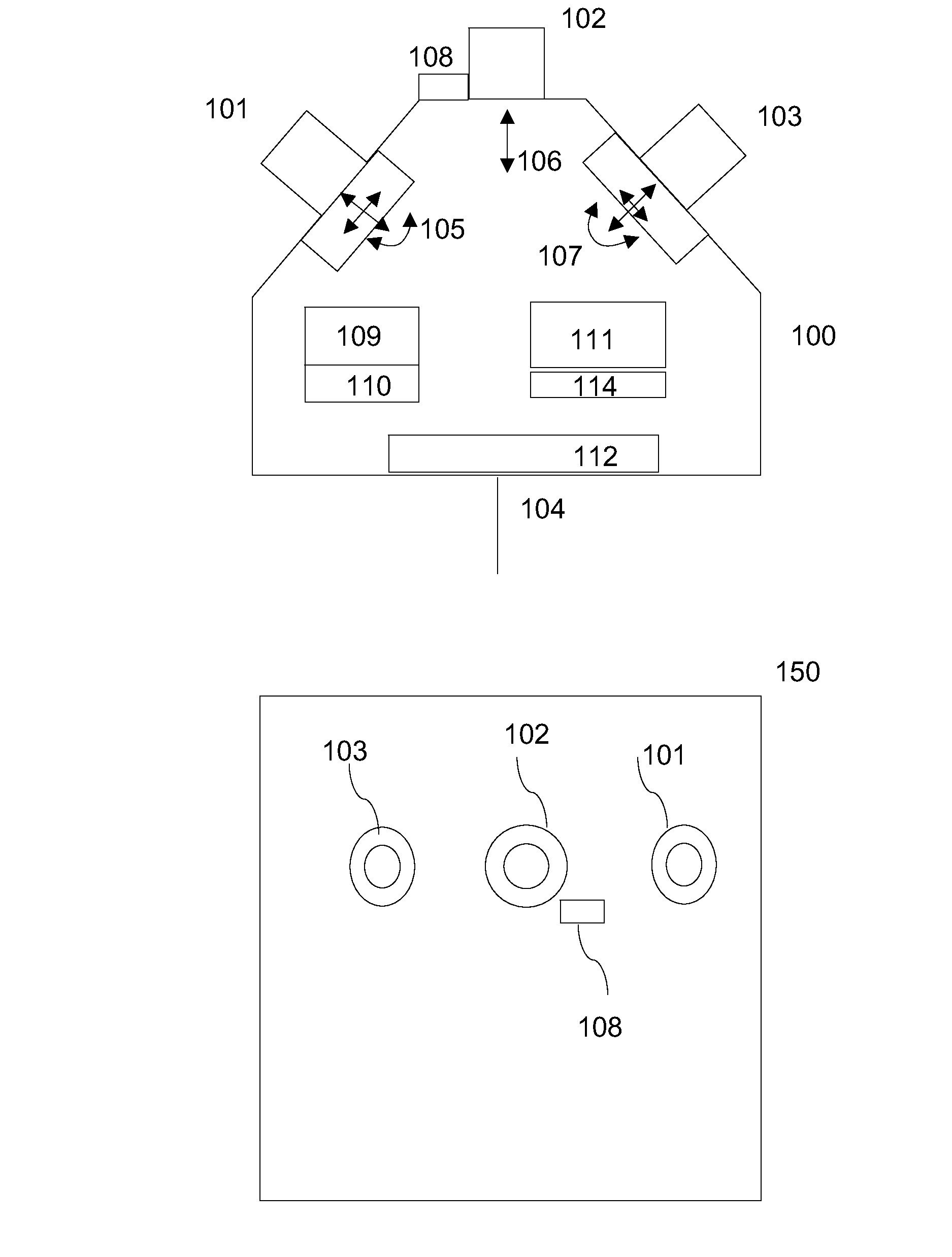

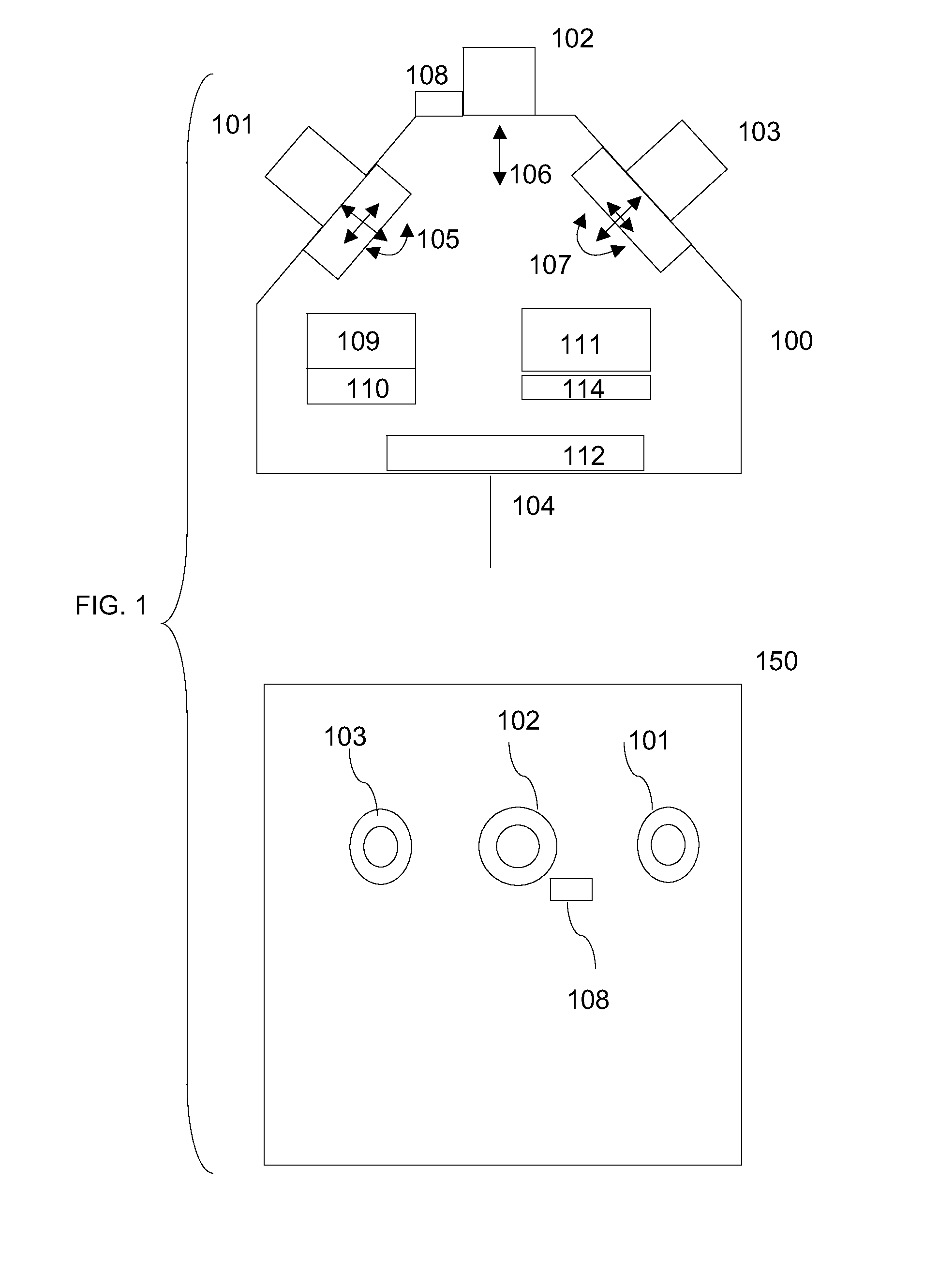

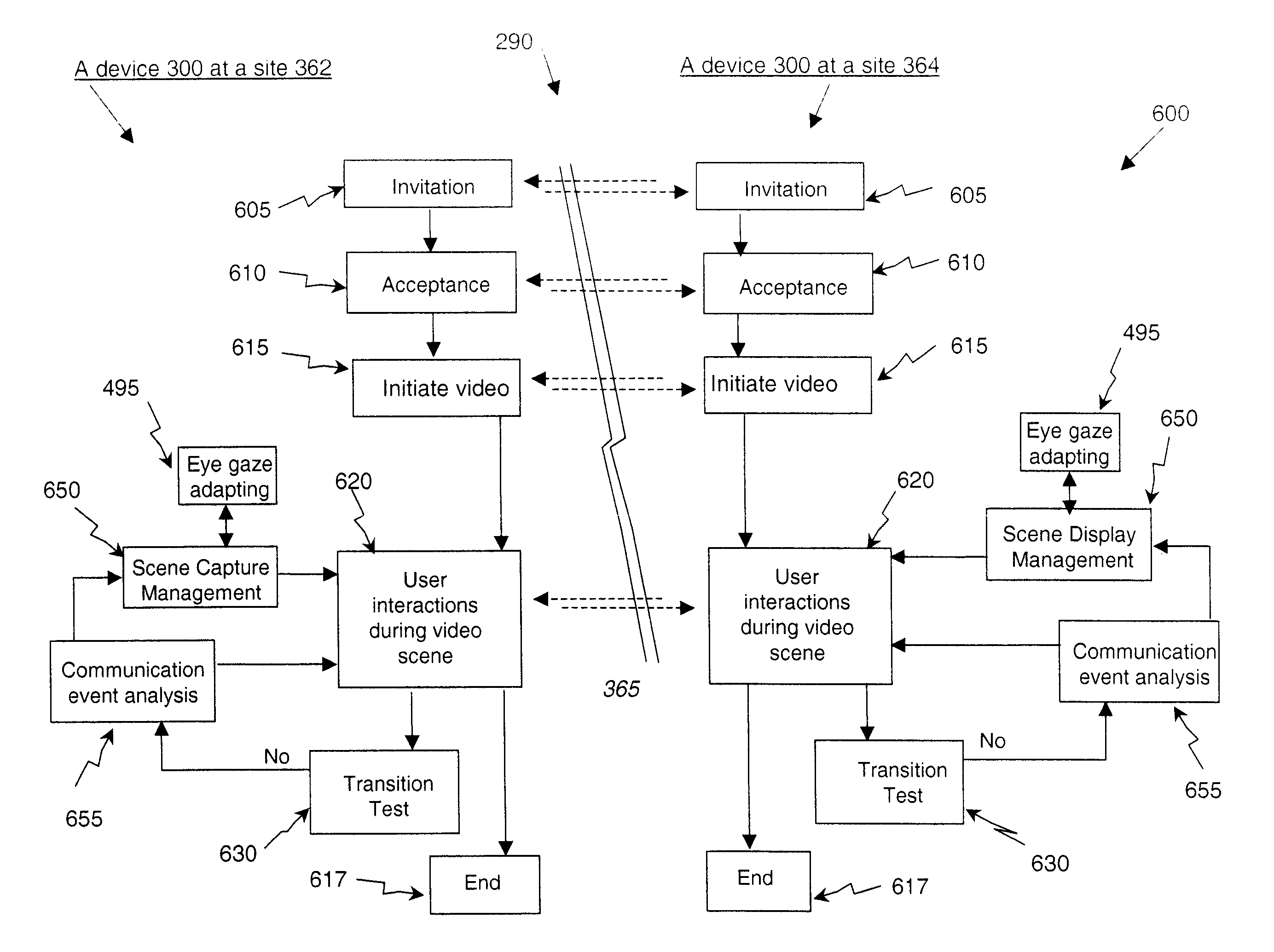

Residential video communication system

Inactive | US20080298571A1 | Tags: Reduce the impact, Reduce impact, Television conference systems, Substation equipment, Display device, Local environment

A video communication system and method for operating a video communication system are provided. The video communication system has a video communication device having an image display device and at least one image capture device, which acquires video images of a local environment and an individual therein according to defined video capture settings; an audio system having an audio emission device and an audio capture device; and a computer operable to interact with a contextual interface, a privacy interface, an image processor, and a communication controller to enable a communication event including at least one video scene in which outgoing video images are sent to a remote site. The contextual interface includes scene analysis algorithms for identifying potential scene transitions and capture management algorithms for making changes in video capture settings appropriate to any identified scene transitions. The privacy interface provides privacy settings that control the capture, transmission, display, or recording of video image content from the local environment.

Owner:EASTMAN KODAK CO

Event-based vehicle image capture

Inactive | US20030080878A1 | Tags: Quick analysis, Simple process, Disc-shaped record carriers, Registering/indicating working of vehicles, Hard disc drive, Traffic signal

Provided is a system for identifying the vehicles of traffic violators. The system includes a video camera that provides, in real time, a video signal representing plural sequential video image frames (either perceptually continuous video, such as 30 frames per second, or non-perceptually continuous video, such as 1-2 fps); a traffic violation detector (e.g., a radar gun, an in-ground loop, a pair of self-powered wireless transponders or transmitters, a camera-based speed detection system, or any other speed sensor) that provides a trigger signal (e.g., based on vehicle speed and detection of the state of a traffic signal); and a video recorder that receives the video signal from the camera and records it in a buffer until a trigger signal is received. At that point, at least a portion of the video signal stored in the buffer is preserved, and direct real-time storage of the video signal to a hard drive or other high-capacity storage medium begins. As a result, the video signal is preserved for a pre-programmable sliding (or rolling) time interval before the trigger signal.

Owner:HUBB SYST
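The pre-trigger buffering described above maps naturally onto a bounded ring buffer. In this sketch, `PreTriggerRecorder` is a hypothetical name, `collections.deque(maxlen=...)` stands in for the rolling buffer, and a plain list stands in for the hard drive.

```python
from collections import deque


class PreTriggerRecorder:
    """Keep the most recent `pre_frames` frames; on trigger, preserve them
    and then store every later frame directly."""

    def __init__(self, pre_frames: int):
        self.buffer = deque(maxlen=pre_frames)  # oldest frames drop off automatically
        self.storage: list = []                 # stand-in for the hard drive
        self.triggered = False

    def on_frame(self, frame) -> None:
        if self.triggered:
            self.storage.append(frame)  # direct real-time storage after the trigger
        else:
            self.buffer.append(frame)   # rolling pre-trigger window

    def on_trigger(self) -> None:
        if not self.triggered:
            self.storage.extend(self.buffer)  # preserve the pre-trigger interval
            self.buffer.clear()
            self.triggered = True
```

The sliding interval equals `pre_frames` divided by the frame rate. For example, a 5-second window at 30 frames per second needs `pre_frames=150`, and at 2 fps it needs only 10.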

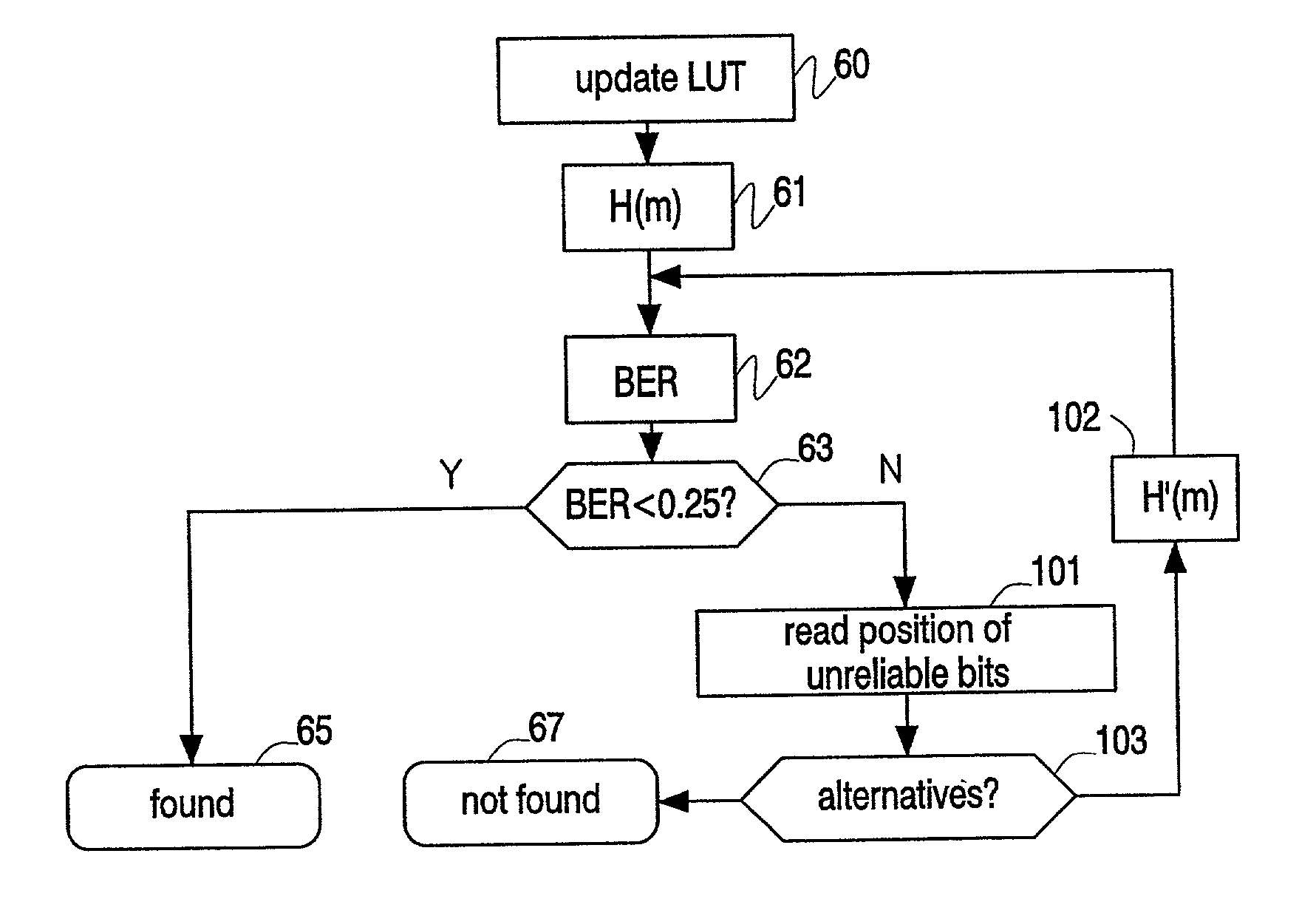

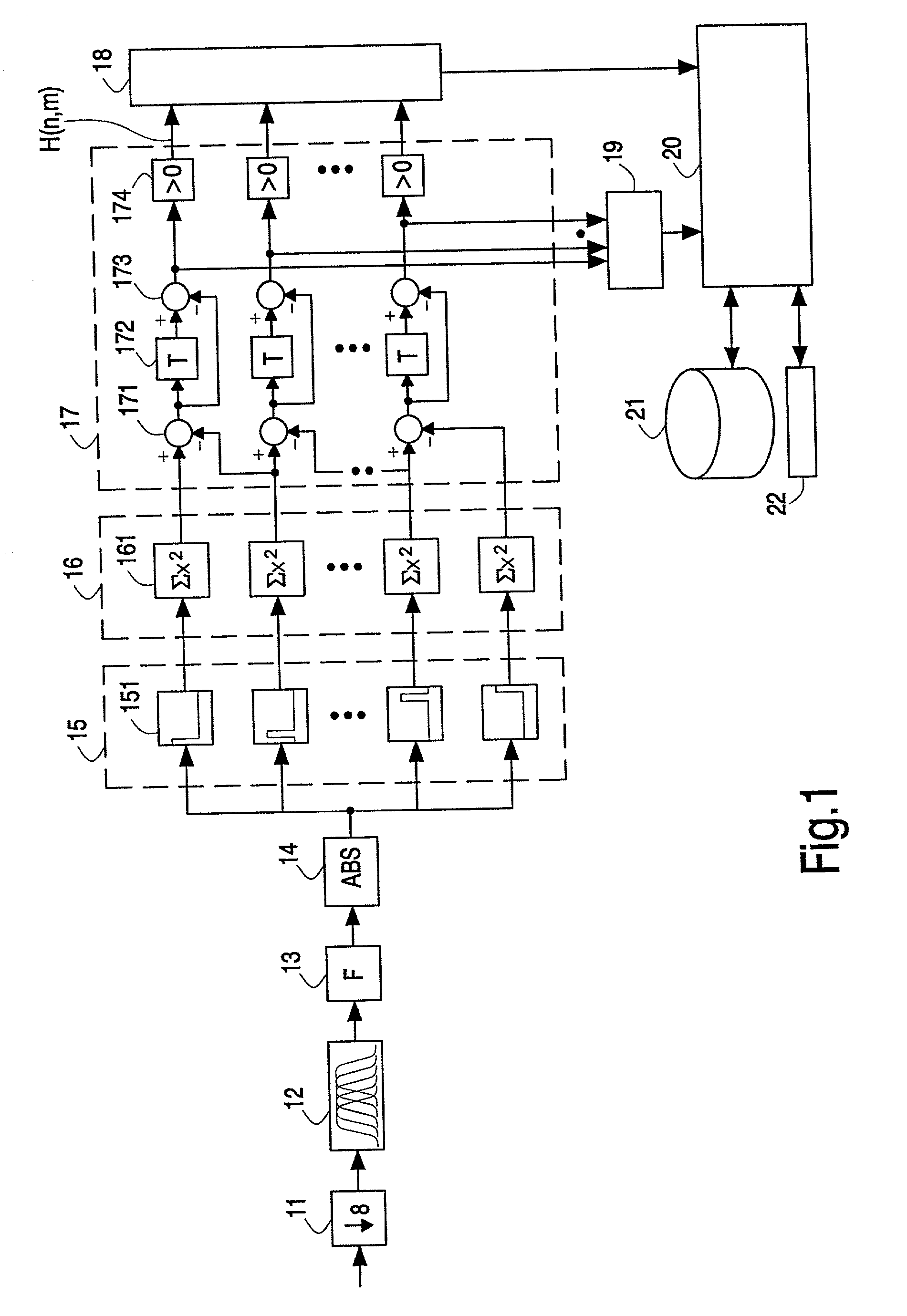

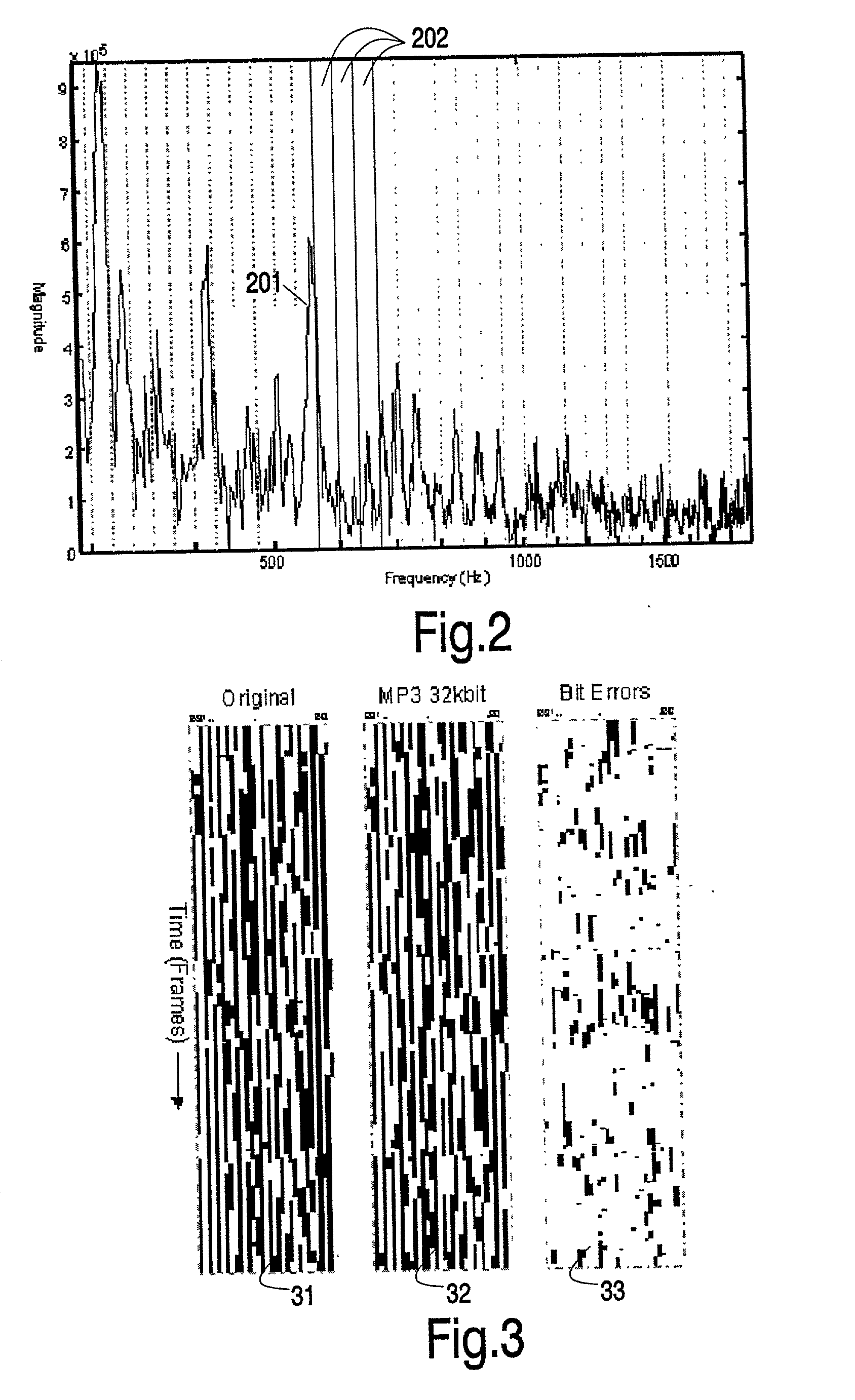

Generating and matching hashes of multimedia content

Active | US20020178410A1 | Tags: Robust, Robust hashing, Electrophonic musical instruments, Code conversion, Frequency spectrum, Algorithm

Hashes are short summaries or signatures of data files which can be used to identify the file. Hashing multimedia content (audio, video, images) is difficult because the hash of original content and processed (e.g. compressed) content may differ significantly. The disclosed method generates robust hashes for multimedia content, for example, audio clips. The audio clip is divided (12) into successive (preferably overlapping) frames. For each frame, the frequency spectrum is divided (15) into bands. A robust property of each band (e.g. energy) is computed (16) and represented (17) by a respective hash bit. An audio clip is thus represented by a concatenation of binary hash words, one for each frame. To identify a possibly compressed audio signal, a block of hash words derived therefrom is matched by a computer (20) with a large database (21). Such matching strategies are also disclosed. In an advantageous embodiment, the extraction process also provides information (19) as to which of the hash bits are the least reliable. Flipping these bits considerably improves the speed and performance of the matching process.

Owner:GRACENOTE
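The framing, band-splitting, and one-bit-per-band steps can be sketched as follows. The abstract leaves the exact bit rule open ("a robust property of each band (e.g. energy)"). This sketch assumes a known choice: the sign of the change, from one frame to the next, in the energy difference between adjacent bands. It uses a naive DFT with equal-width bands for brevity. Frame size, hop, band count, and all function names are hypothetical. Matching is shown only as a bit error rate between hash blocks; the reliability-based bit flipping is not sketched.

```python
import cmath
import math


def split_frames(signal: list[float], size: int, hop: int) -> list[list[float]]:
    """Successive overlapping frames (overlap = size - hop samples)."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]


def band_energies(frame: list[float], n_bands: int) -> list[float]:
    """Spectral energy in n_bands equal-width bands (naive DFT, positive frequencies)."""
    n = len(frame)
    power = []
    for k in range(n // 2):
        z = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        power.append(z.real ** 2 + z.imag ** 2)
    width = len(power) // n_bands
    return [sum(power[b * width:(b + 1) * width]) for b in range(n_bands)]


def hash_words(signal: list[float], size: int = 64, hop: int = 32,
               n_bands: int = 9) -> list[int]:
    """One (n_bands - 1)-bit hash word per frame after the first.

    Bit m is 1 when the energy difference between bands m and m+1 grew
    relative to the previous frame (assumed bit rule, see lead-in).
    """
    energies = [band_energies(f, n_bands) for f in split_frames(signal, size, hop)]
    words = []
    for prev, cur in zip(energies, energies[1:]):
        word = 0
        for m in range(n_bands - 1):
            delta = (cur[m] - cur[m + 1]) - (prev[m] - prev[m + 1])
            word = (word << 1) | (1 if delta > 0 else 0)
        words.append(word)
    return words


def bit_error_rate(a: list[int], b: list[int], bits: int) -> float:
    """Fraction of differing bits between two equal-length blocks of hash words."""
    diff = sum(bin(x ^ y).count("1") for x, y in zip(a, b))
    return diff / (bits * len(a))
```

Because each bit depends only on the sign of an energy difference, uniformly scaling the volume leaves the hash unchanged. A database lookup would accept a candidate whose bit error rate falls below some chosen threshold.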

Device for capturing video image data and combining with original image data

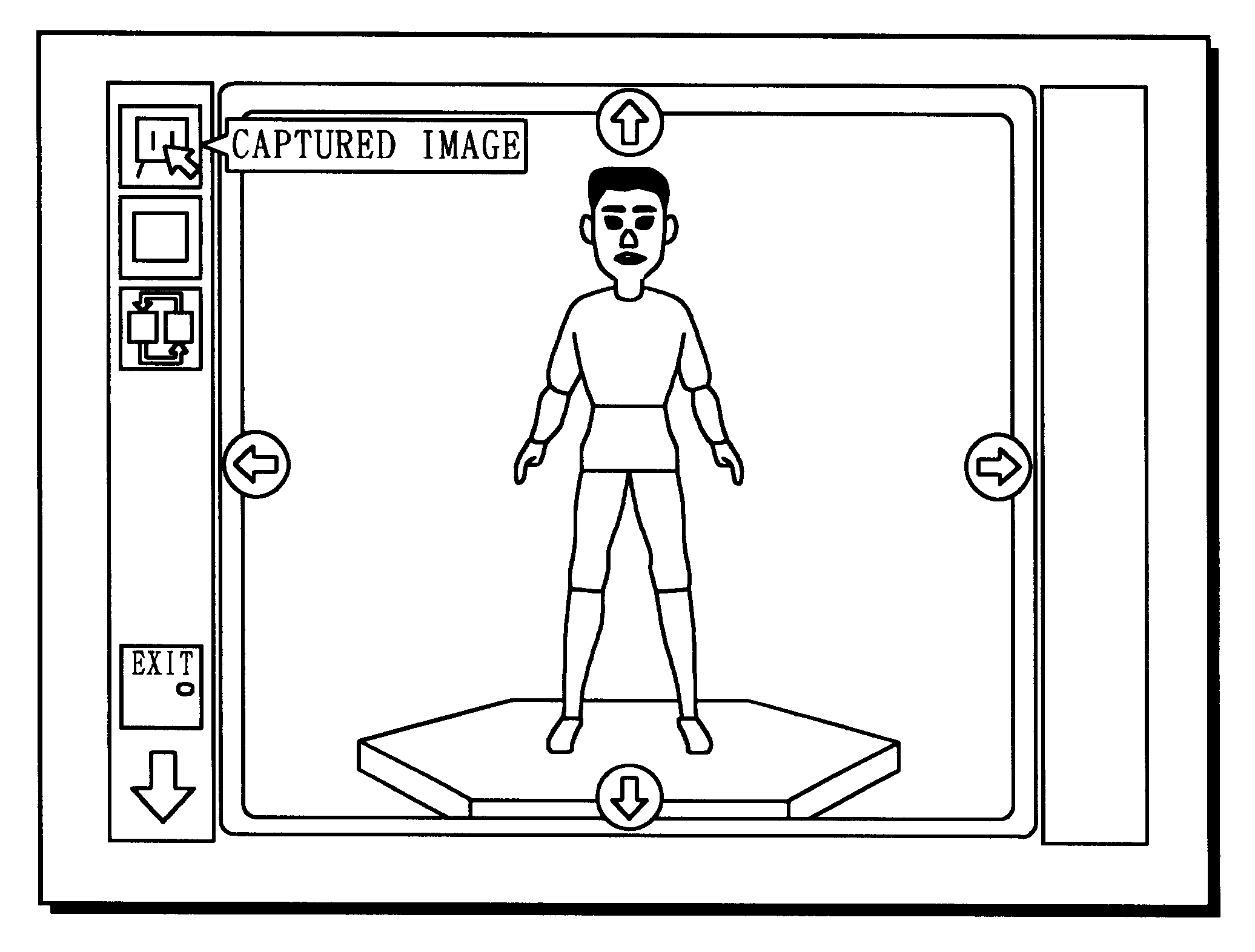

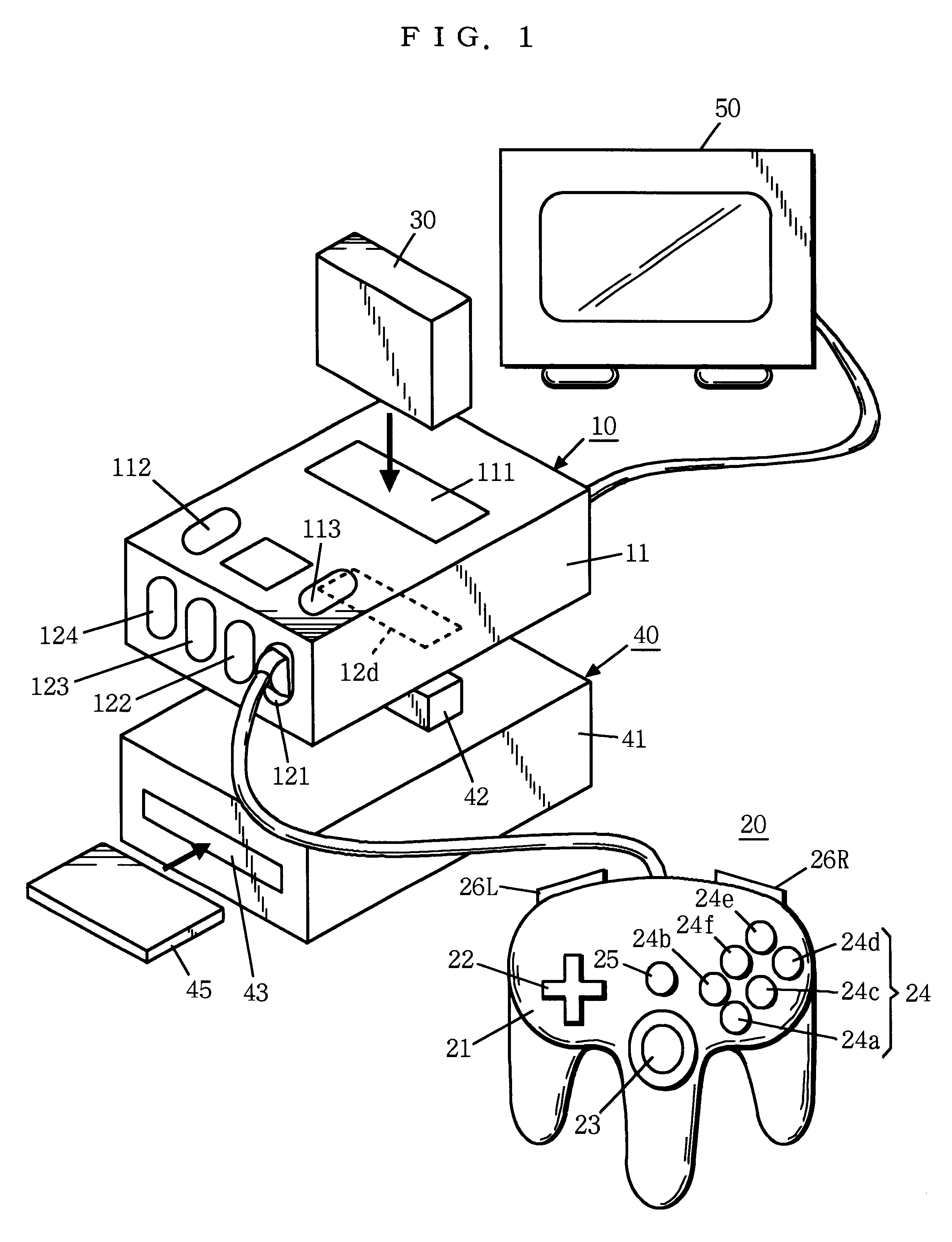

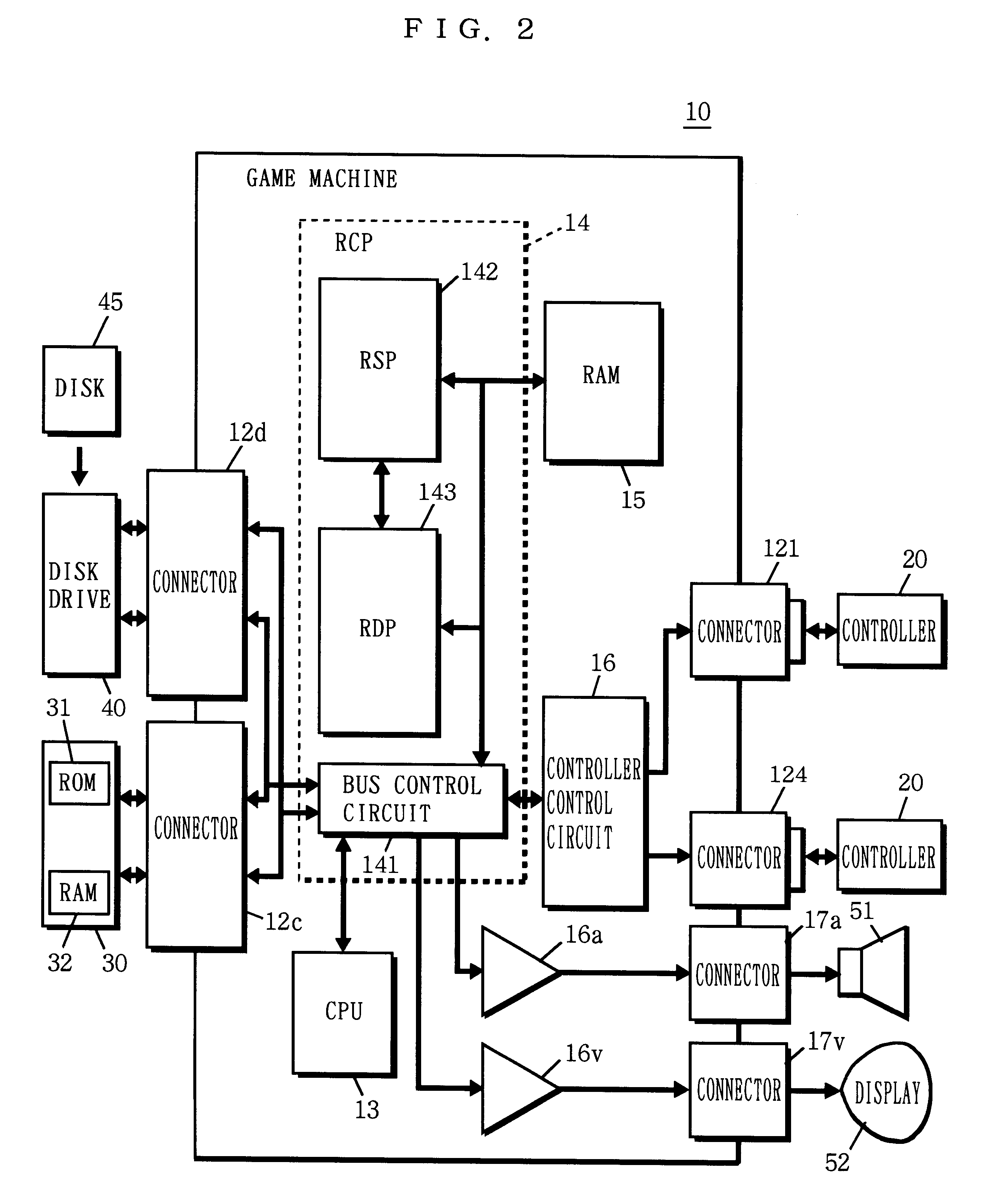

Inactive | US6285381B1 | Tags: Image can be created, Avoid difficult choices, Indoor games, Geometric image transformation, Television receivers, Video image

A frame of still picture data is captured, at an instant specified by a user, from video signals supplied by a given video source, such as a television receiver or a video camera, and the image data is displayed. When the user specifies an area of the image to be cut out from the displayed still picture, the image data in the specified area is cut out and recorded as a cutout image. Each recorded cutout image is displayed as an icon. When the user selects one of the icons, the corresponding cutout image data is read and pasted into the part of the original image data that is to be changed. Thus, the user can easily create an image from the cutouts of their choice.

Owner:NINTENDO CO LTD
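The cut-out and paste steps can be sketched on grayscale images stored as lists of rows. The sketch assumes the user-specified area is a rectangle (the abstract says only "an area"), and `cut_out` and `paste` are hypothetical names. Pasting returns a new image and clips the cutout to the original's bounds.

```python
Image = list[list[int]]


def cut_out(still: Image, top: int, left: int, height: int, width: int) -> Image:
    """Copy the user-specified rectangle out of the captured still picture."""
    return [row[left:left + width] for row in still[top:top + height]]


def paste(original: Image, cutout: Image, top: int, left: int) -> Image:
    """Return a copy of `original` with `cutout` pasted at (top, left),
    clipped to the original's bounds."""
    result = [row[:] for row in original]
    for dy, src_row in enumerate(cutout):
        y = top + dy
        if not 0 <= y < len(result):
            continue
        for dx, value in enumerate(src_row):
            x = left + dx
            if 0 <= x < len(result[y]):
                result[y][x] = value
    return result
```

A set of recorded cutouts could then be kept in a dictionary keyed by icon, with `paste` called on the selected entry.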

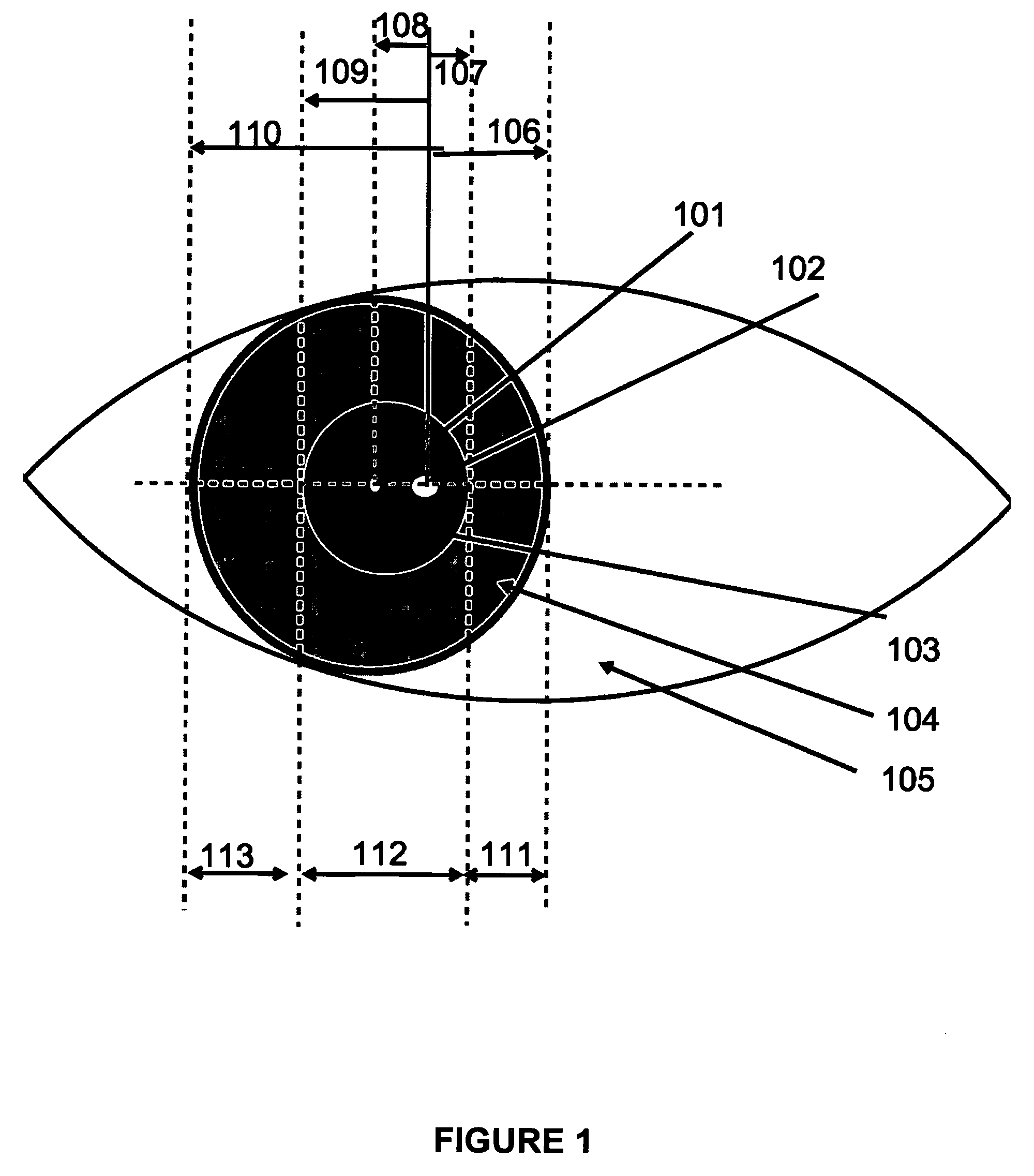

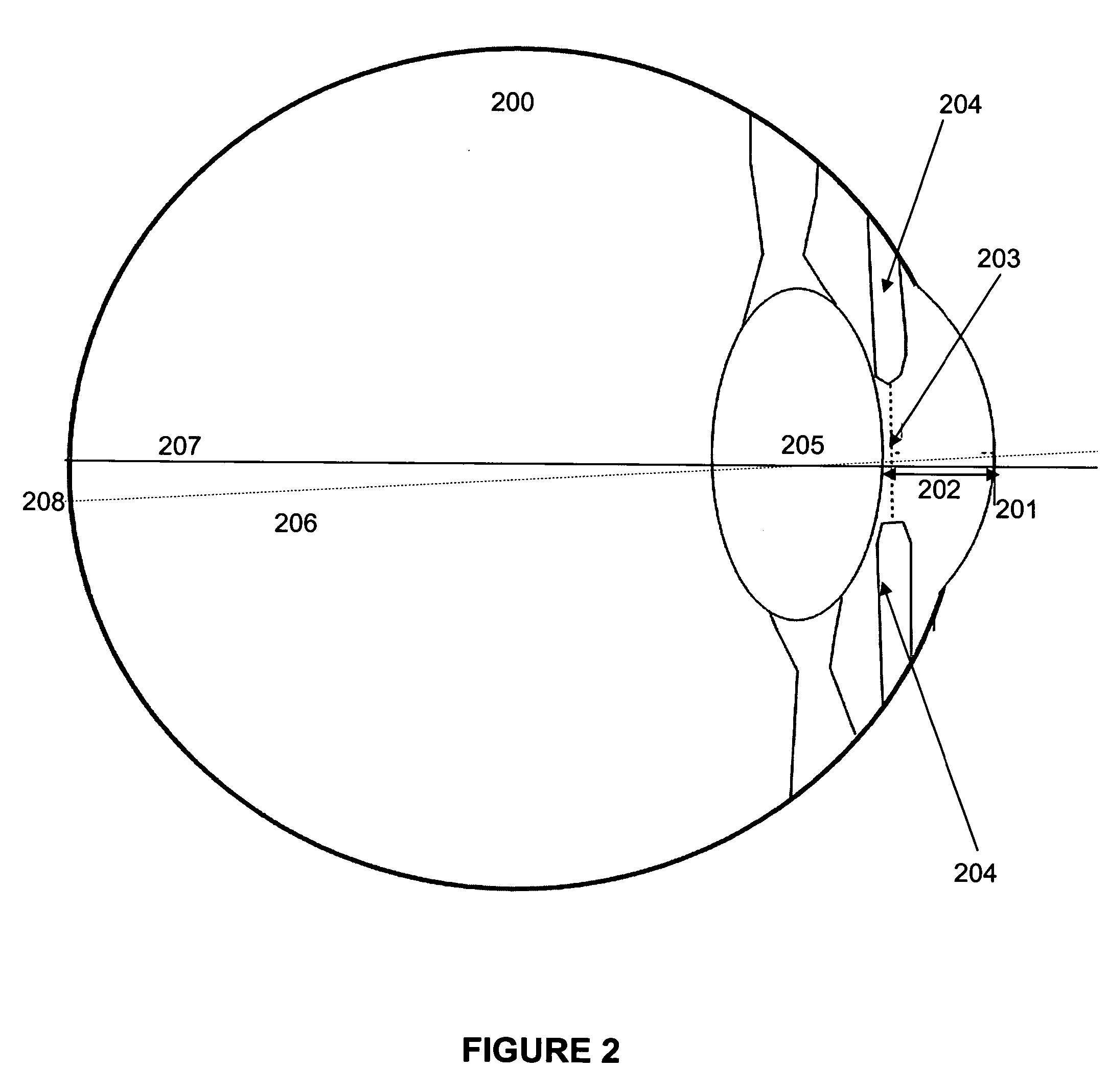

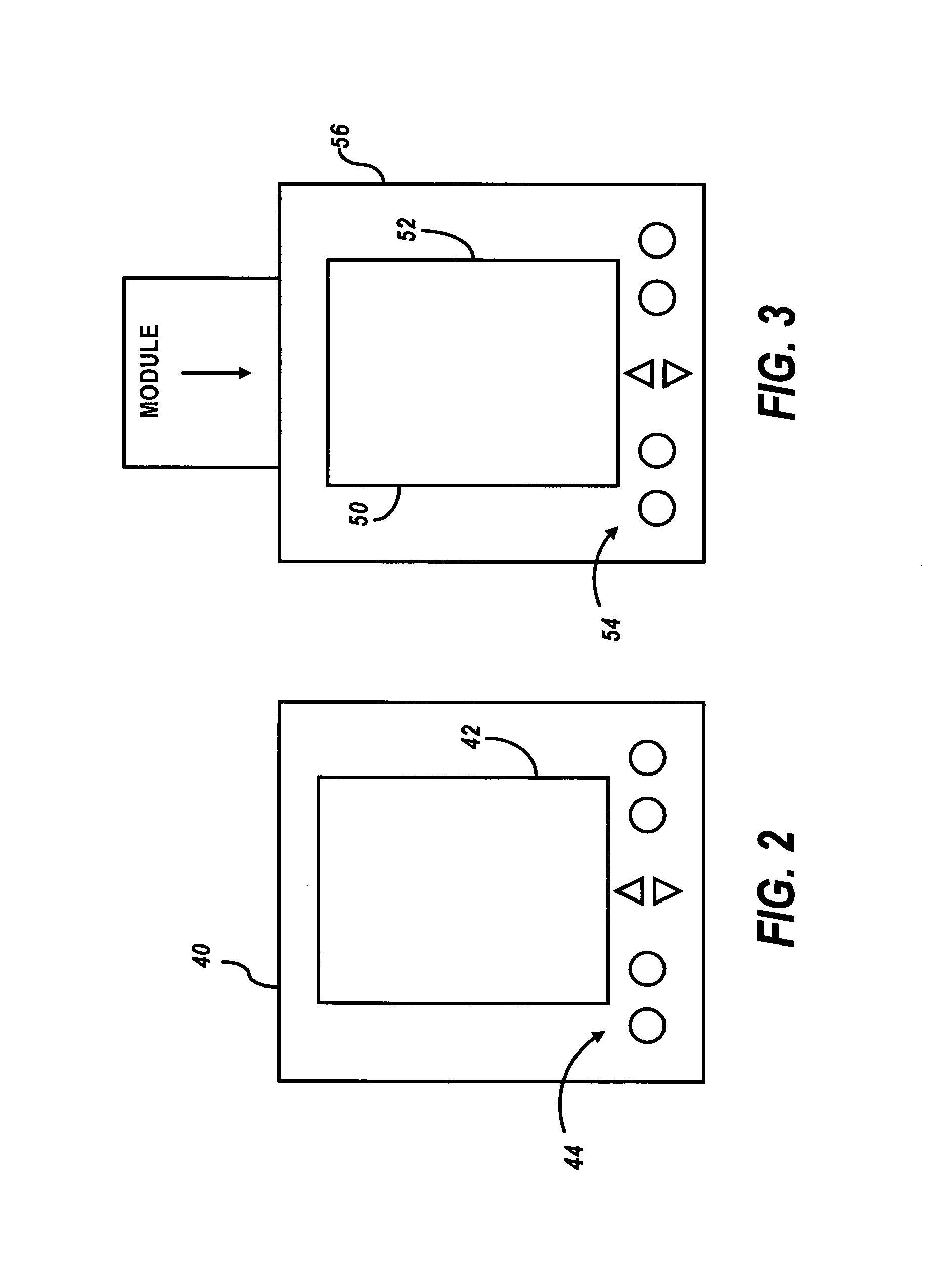

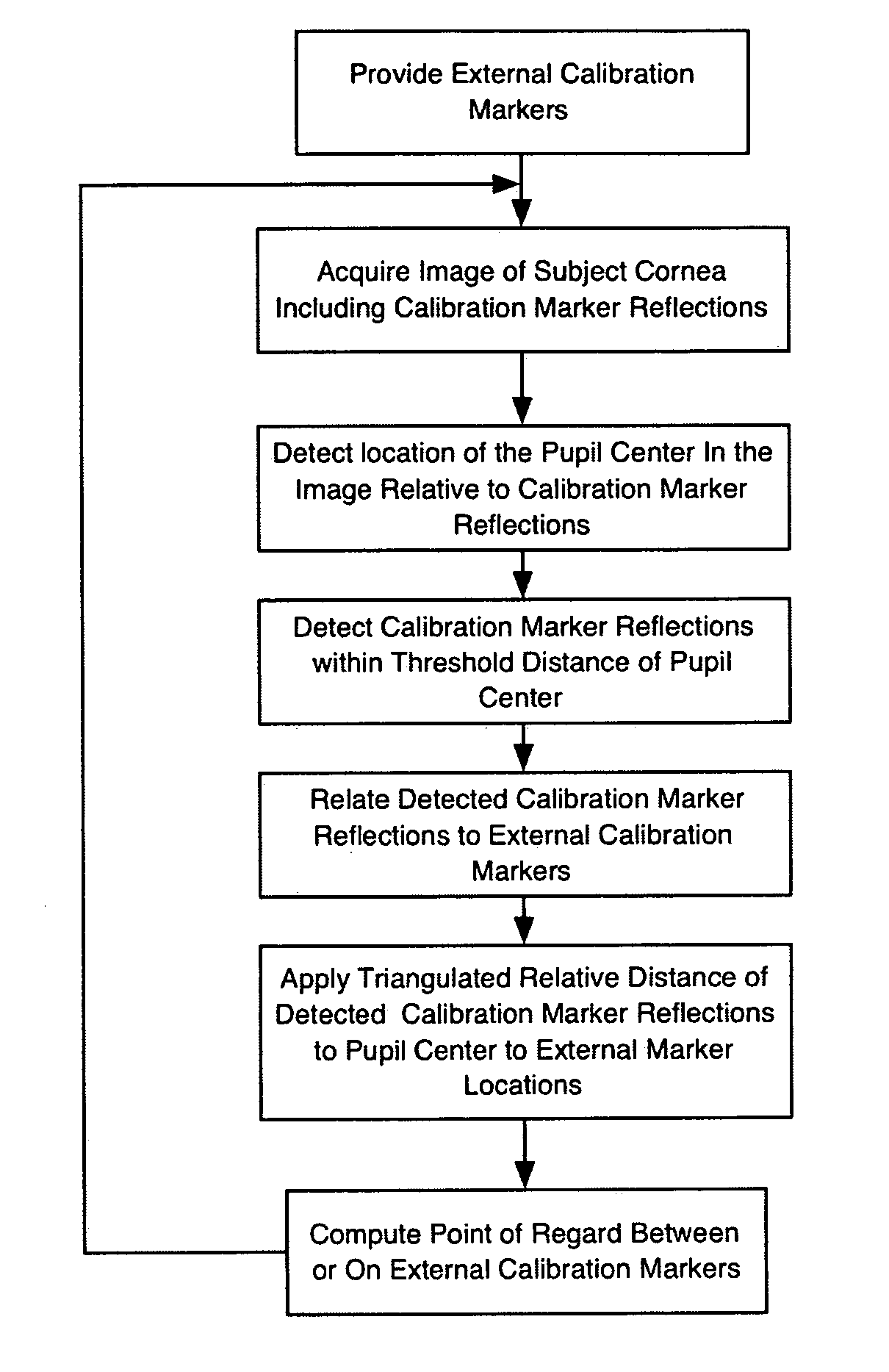

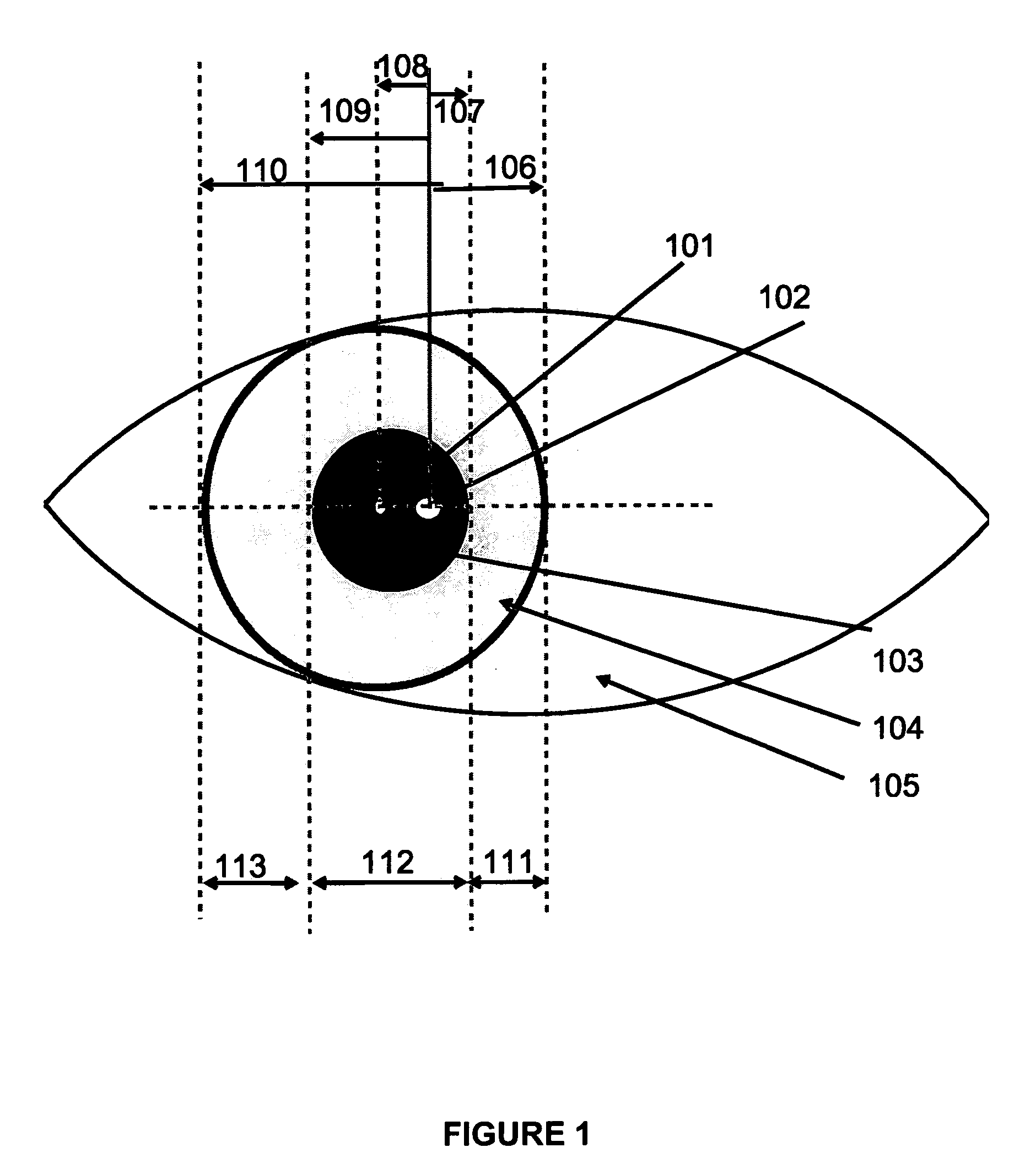

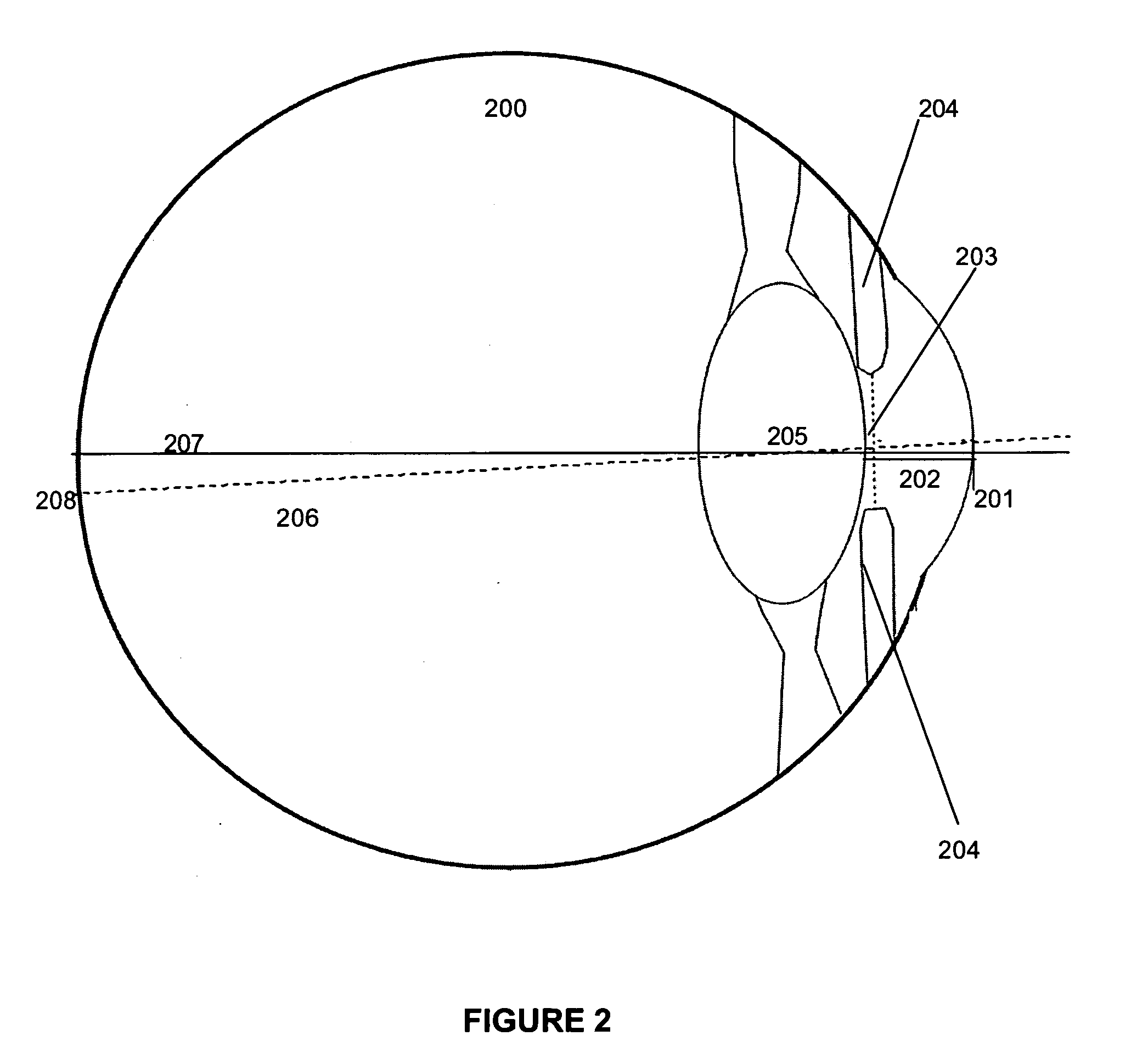

Method and apparatus for calibration-free eye tracking

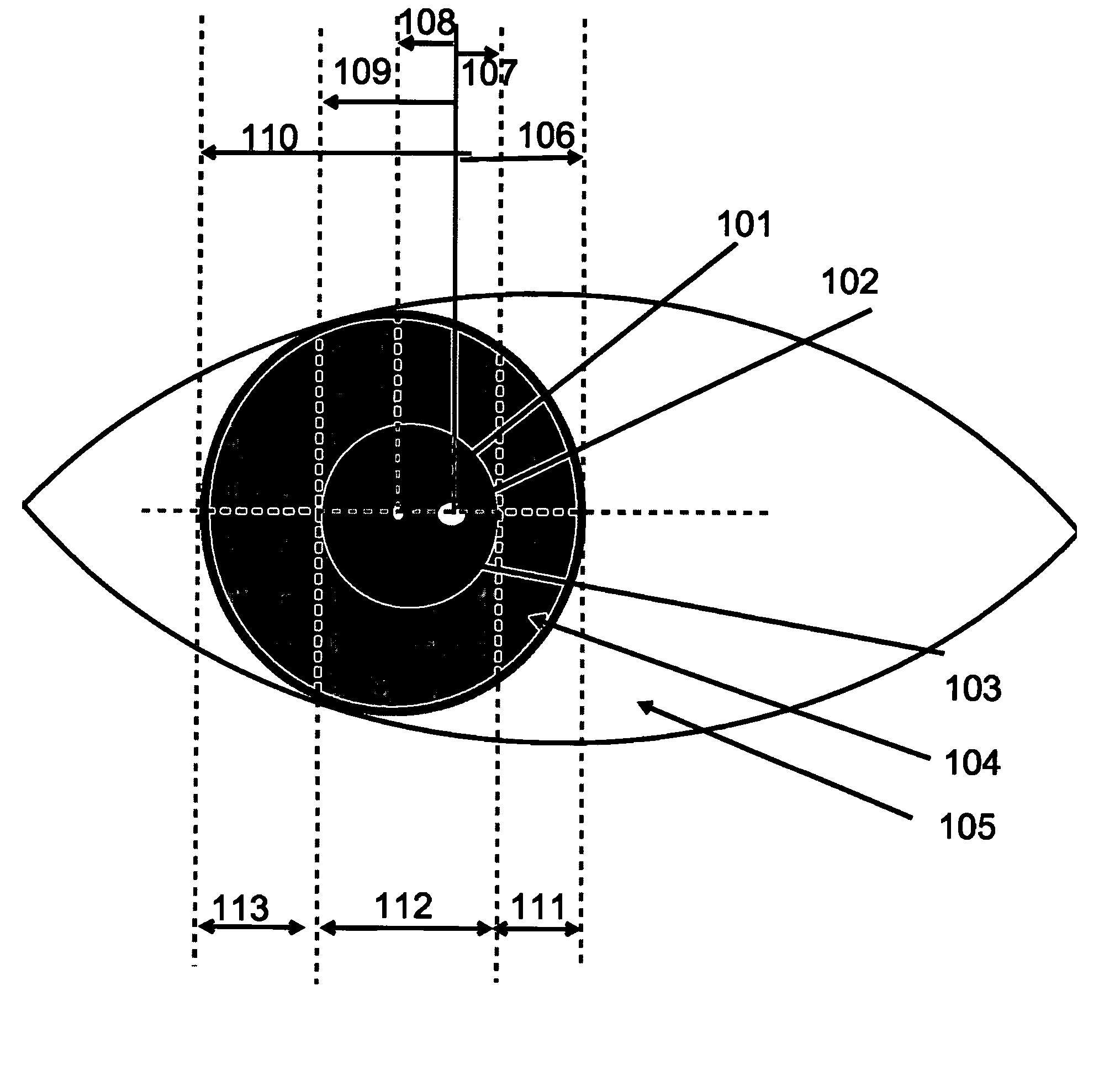

Active | US20060110008A1 | Tags: Extended duration, Image enhancement, Image analysis, Corneal surface, Angular distance

A system and method for eye gaze tracking in human or animal subjects without calibration of cameras, specific measurements of eye geometries, or the tracking of a cursor image on a screen by the subject through a known trajectory. The preferred embodiment includes one uncalibrated camera for acquiring video images of the subject's eye(s), optionally having an on-axis illuminator, and a surface, object, or visual scene with embedded off-axis illuminator markers. The off-axis markers are reflected on the corneal surface of the subject's eyes as glints. The glints indicate the distance between the point of gaze in the surface, object, or visual scene and the corresponding marker on the surface, object, or visual scene. The marker that causes a glint to appear in the center of the subject's pupil is determined to be located on the line of regard of the subject's eye, and to intersect with the point of gaze. The point of gaze on the surface, object, or visual scene is calculated in four steps. First, determine which marker glints, as provided by the corneal reflections of the markers, are closest to the center of the pupil in either or both of the subject's eyes; this subset of glints forms a region of interest (ROI). Second, determine the gaze vector (relative angular or Cartesian distance to the pupil center) for each of the glints in the ROI. Third, relate each glint in the ROI to the location or identification (ID) of a corresponding marker on the surface, object, or visual scene observed by the eyes. Fourth, interpolate the known locations of each of these markers on the surface, object, or visual scene according to the relative angular distance of their corresponding glints to the pupil center.

Owner:CHENG DANIEL +3
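The four-step point-of-gaze calculation can be sketched as follows. Two choices here are assumptions, not taken from the abstract: image-plane distance stands in for the "relative angular distance", and the interpolation in step four uses inverse-distance weighting. The ROI size `k` and the function name are hypothetical.

```python
import math

Point = tuple[float, float]


def point_of_gaze(pupil: Point, glints: list[tuple[Point, Point]], k: int = 3) -> Point:
    """Estimate the point of gaze from marker glints near the pupil center.

    `glints` pairs each glint's image position with the known scene
    position of the marker that produced it.
    """
    # Step 1: the ROI is the k glints closest to the pupil center.
    roi = sorted(glints, key=lambda g: math.dist(g[0], pupil))[:k]
    weighted = []
    for glint_pos, marker_pos in roi:
        # Steps 2-3: gaze-vector length per glint, tied to its marker location.
        d = math.dist(glint_pos, pupil)
        if d == 0:
            return marker_pos  # glint centered in the pupil: marker lies on the line of regard
        weighted.append((1.0 / d, marker_pos))
    # Step 4: interpolate marker locations, weighting nearer glints more.
    total = sum(w for w, _ in weighted)
    x = sum(w * m[0] for w, m in weighted) / total
    y = sum(w * m[1] for w, m in weighted) / total
    return (x, y)
```

With two glints equidistant from the pupil, the estimate falls midway between their markers. With a glint exactly at the pupil center, its marker is returned directly.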

Providing multiple perspectives for a venue activity through an electronic hand held device

Methods and systems for receiving and displaying venue-based data at hand held devices are disclosed herein. Data transmitted from one or more venue-based data sources may be received by at least one hand held device present within a venue, such as a sports stadium or concert arena. Such data can be processed for display on a display screen associated with the hand held device. The processed data may then be displayed on the display screen, enabling a user of the hand held device to view venue-based data through it. Such venue-based data viewable through the hand held device within the venue may include real-time and instant replay video images and clips, advertising and promotional information, scheduling, statistical, historical, and other informational data, or a combination thereof.

Owner:FRONT ROW TECH

Method and apparatus for calibration-free eye tracking using multiple glints or surface reflections

Inactive | US20050175218A1 | Tags: Input/output for user-computer interaction, Image enhancement, Corneal surface, Angular distance

A system and method for eye gaze tracking in human or animal subjects without calibration of cameras, specific measurements of eye geometries, or the tracking of a cursor image on a screen by the subject through a known trajectory. The preferred embodiment includes one uncalibrated camera for acquiring video images of the subject's eye(s), optionally having an on-axis illuminator, and a surface, object, or visual scene with embedded off-axis illuminator markers. The off-axis markers are reflected on the corneal surface of the subject's eyes as glints. The glints indicate the distance between the point of gaze in the surface, object, or visual scene and the corresponding marker on the surface, object, or visual scene. The marker that causes a glint to appear in the center of the subject's pupil is determined to be located on the line of regard of the subject's eye, and to intersect with the point of gaze. The point of gaze on the surface, object, or visual scene is calculated in four steps. First, determine which marker glints, as provided by the corneal reflections of the markers, are closest to the center of the pupil in either or both of the subject's eyes; this subset of glints forms a region of interest (ROI). Second, determine the gaze vector (relative angular or Cartesian distance to the pupil center) for each of the glints in the ROI. Third, relate each glint in the ROI to the location or identification (ID) of a corresponding marker on the surface, object, or visual scene observed by the eyes. Fourth, interpolate the known locations of each of these markers on the surface, object, or visual scene according to the relative angular distance of their corresponding glints to the pupil center.

Owner:CHENG DANIEL +3

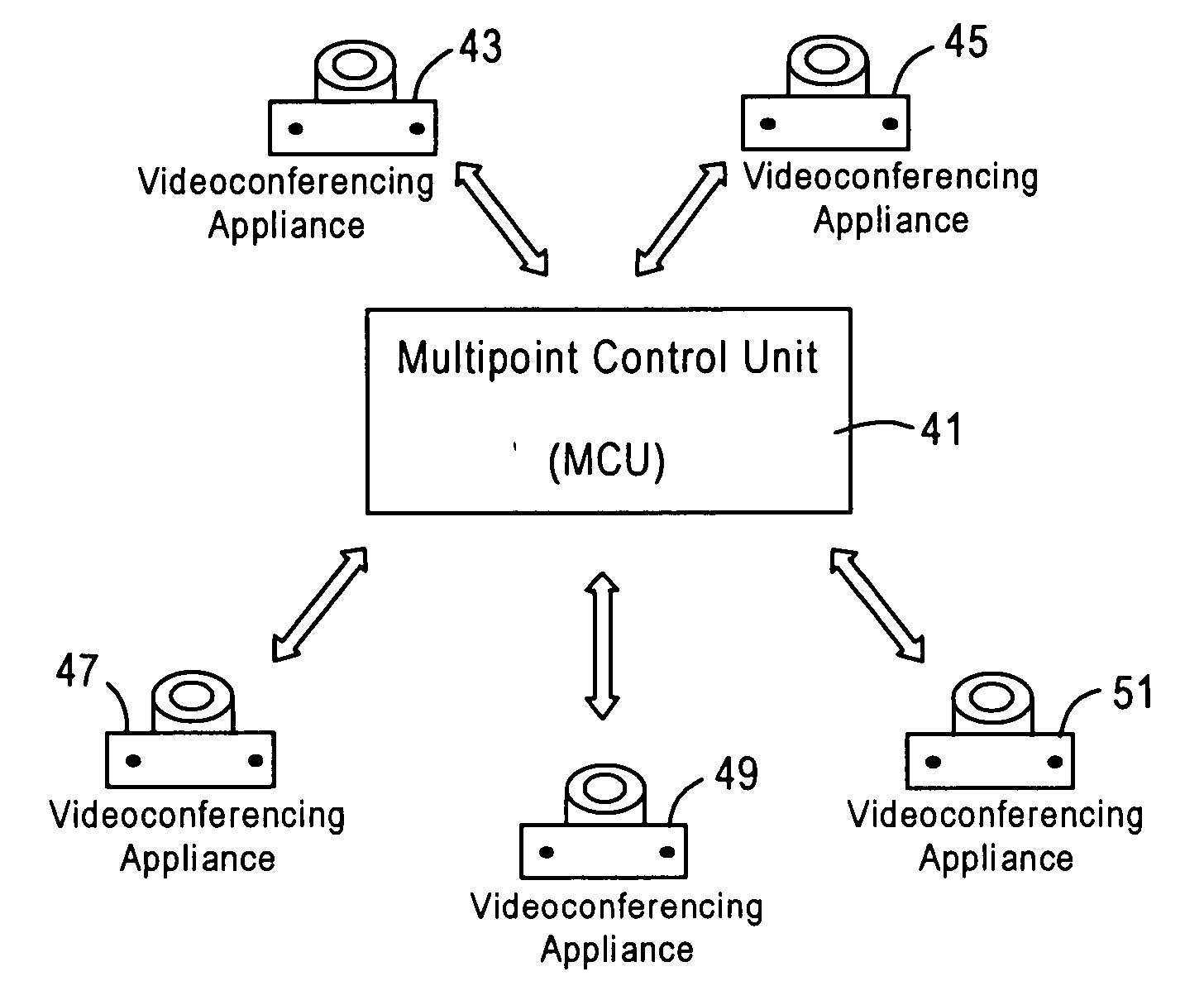

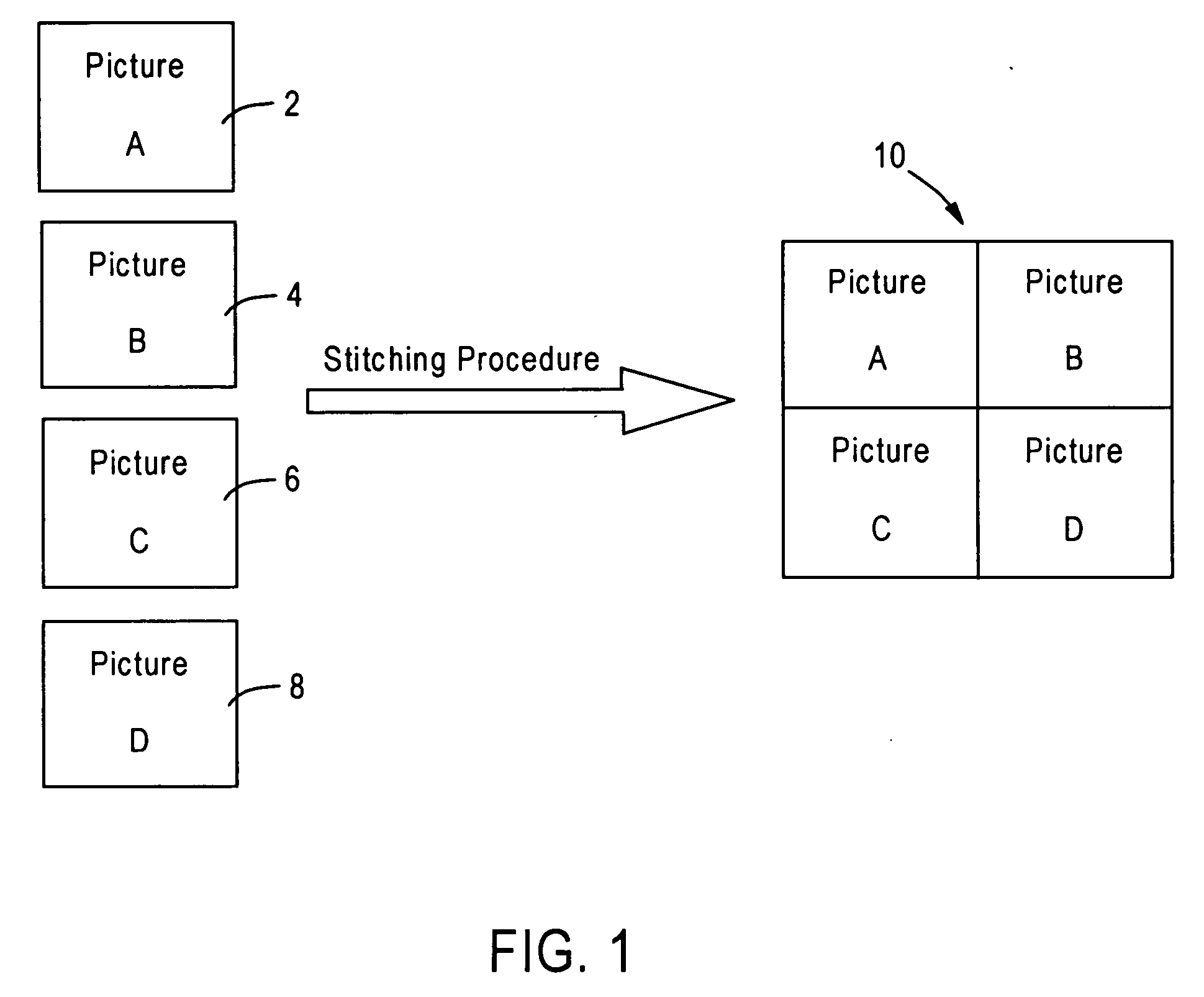

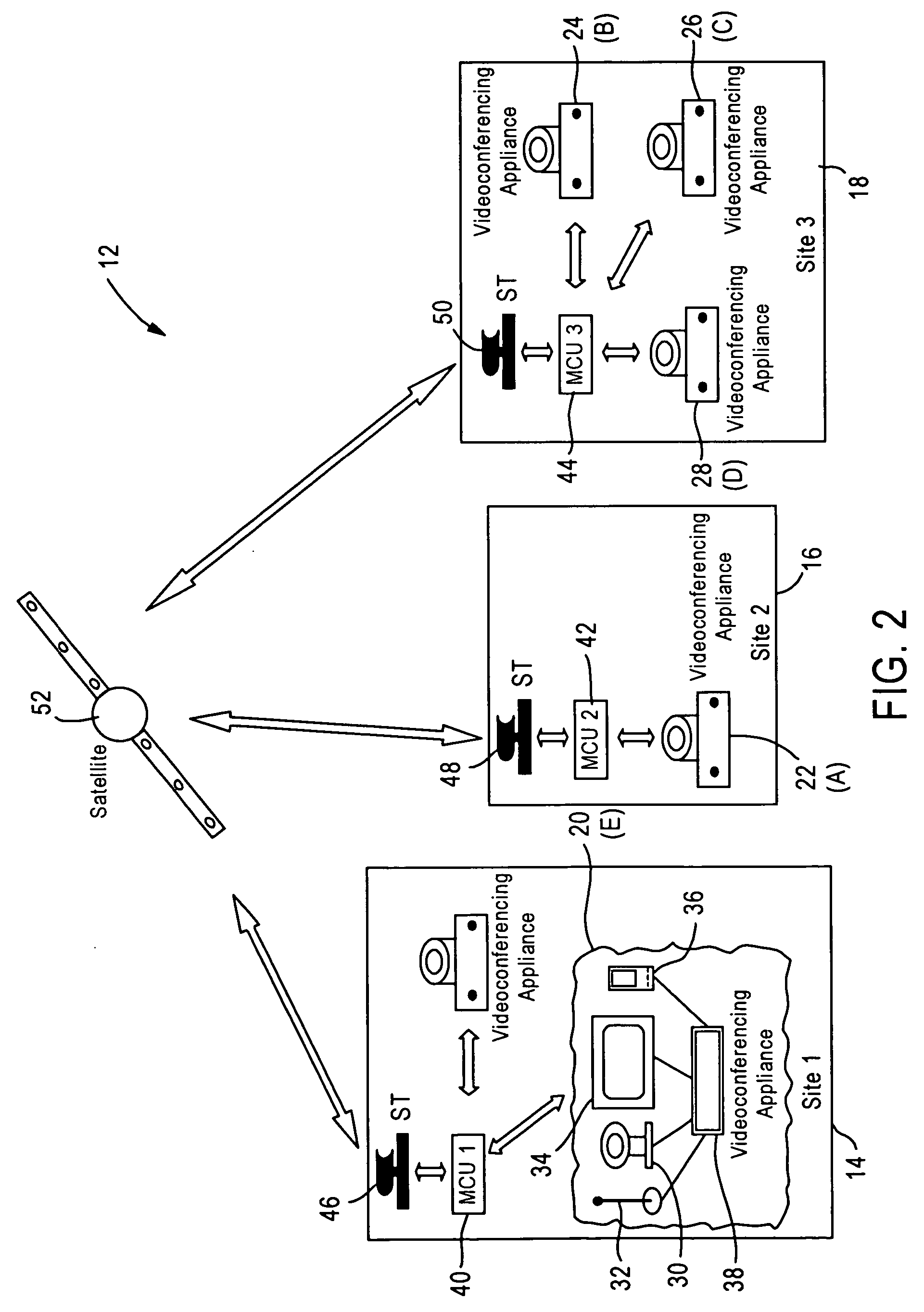

Stitching of video for continuous presence multipoint video conferencing

Inactive | US20050008240A1 | Tags: Avoid mistakes, Television system details, Television conference systems, Video bitstream, Video sequence

A drift-free hybrid method of performing video stitching is provided. The method includes decoding a plurality of video bitstreams and storing prediction information. The decoded bitstreams form video images that are spatially composed into a combined image, and these combined images form the frames of an ideal stitched video sequence. The method uses the prediction information in conjunction with previously generated frames to predict pixel blocks in the next frame. A stitched predicted block in the next frame is subtracted from a corresponding block in a corresponding frame to create a stitched raw residual block. The raw residual block is forward transformed, quantized, entropy encoded, and added to the stitched video bitstream along with the prediction information. The quantized residual is also dequantized and inverse transformed to create a stitched decoded residual block. This decoded residual block is added to the predicted block to generate the stitched reconstructed block in the next frame of the sequence.

Owner:HUGHES NETWORK SYST
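The residual loop described above can be sketched for a single block. To keep the sketch short, the forward and inverse transforms are treated as identity and quantization is a uniform scalar quantizer with step `step`. Both are simplifying assumptions; a real codec would use e.g. a DCT and its own quantizer. The point illustrated is why the method is drift-free: the encoder reconstructs from the same dequantized residual that a decoder will see, not from the raw residual.

```python
def quantize(block: list[int], step: int) -> list[int]:
    """Uniform scalar quantization (Python's round() rounds halves to even)."""
    return [round(v / step) for v in block]


def dequantize(levels: list[int], step: int) -> list[int]:
    return [q * step for q in levels]


def stitch_block(target: list[int], predicted: list[int], step: int):
    """Encode one stitched block and return (levels, reconstructed).

    target: block of the ideal stitched frame.
    predicted: stitched predicted block formed from stored prediction
    information and previously reconstructed stitched frames.
    """
    raw_residual = [t - p for t, p in zip(target, predicted)]
    levels = quantize(raw_residual, step)          # these would be entropy coded
    decoded_residual = dequantize(levels, step)    # same values the decoder recovers
    reconstructed = [p + r for p, r in zip(predicted, decoded_residual)]
    return levels, reconstructed
```

The reconstructed block, not the ideal target block, is what later predictions should reference, so the encoder and decoder stay in lockstep.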

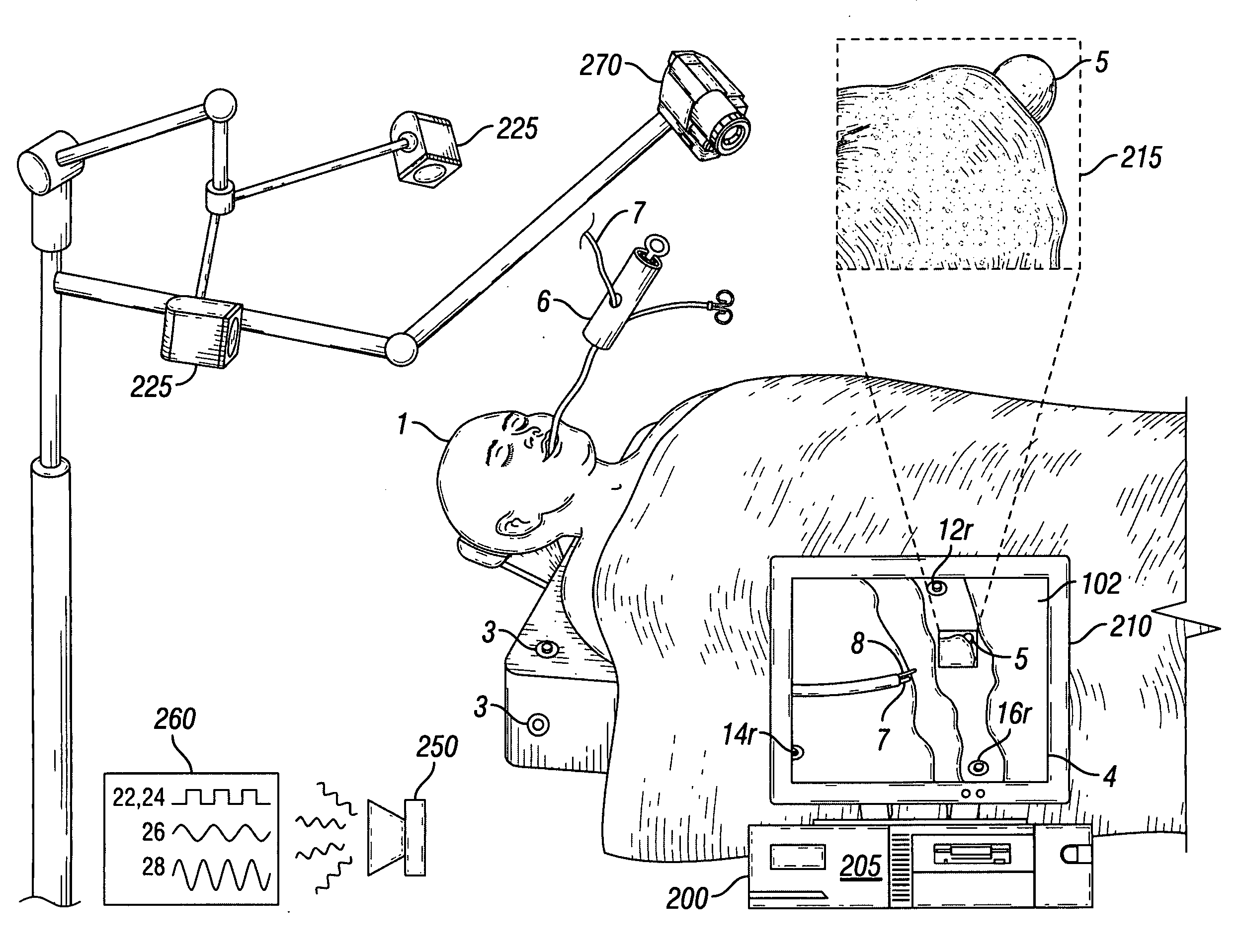

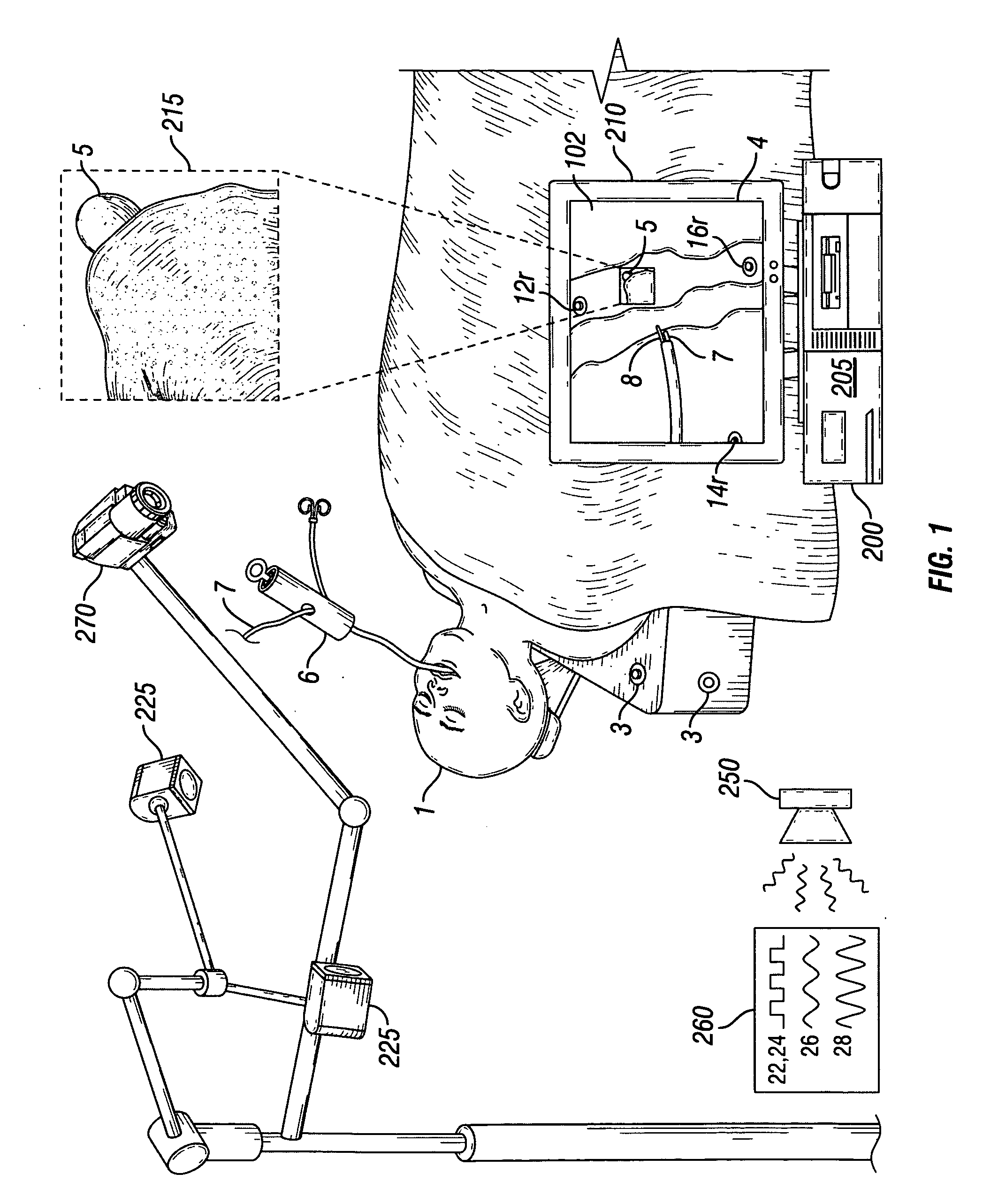

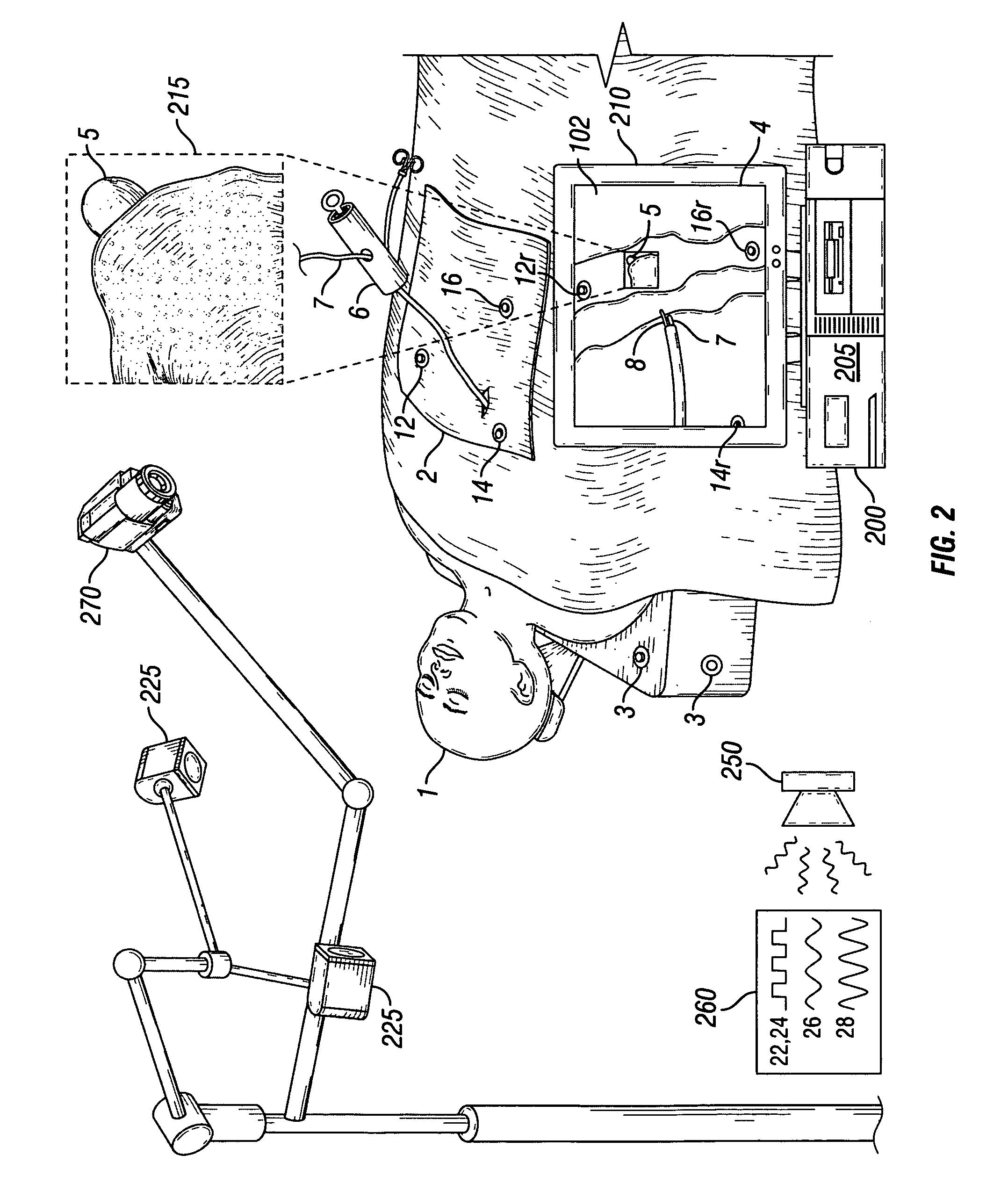

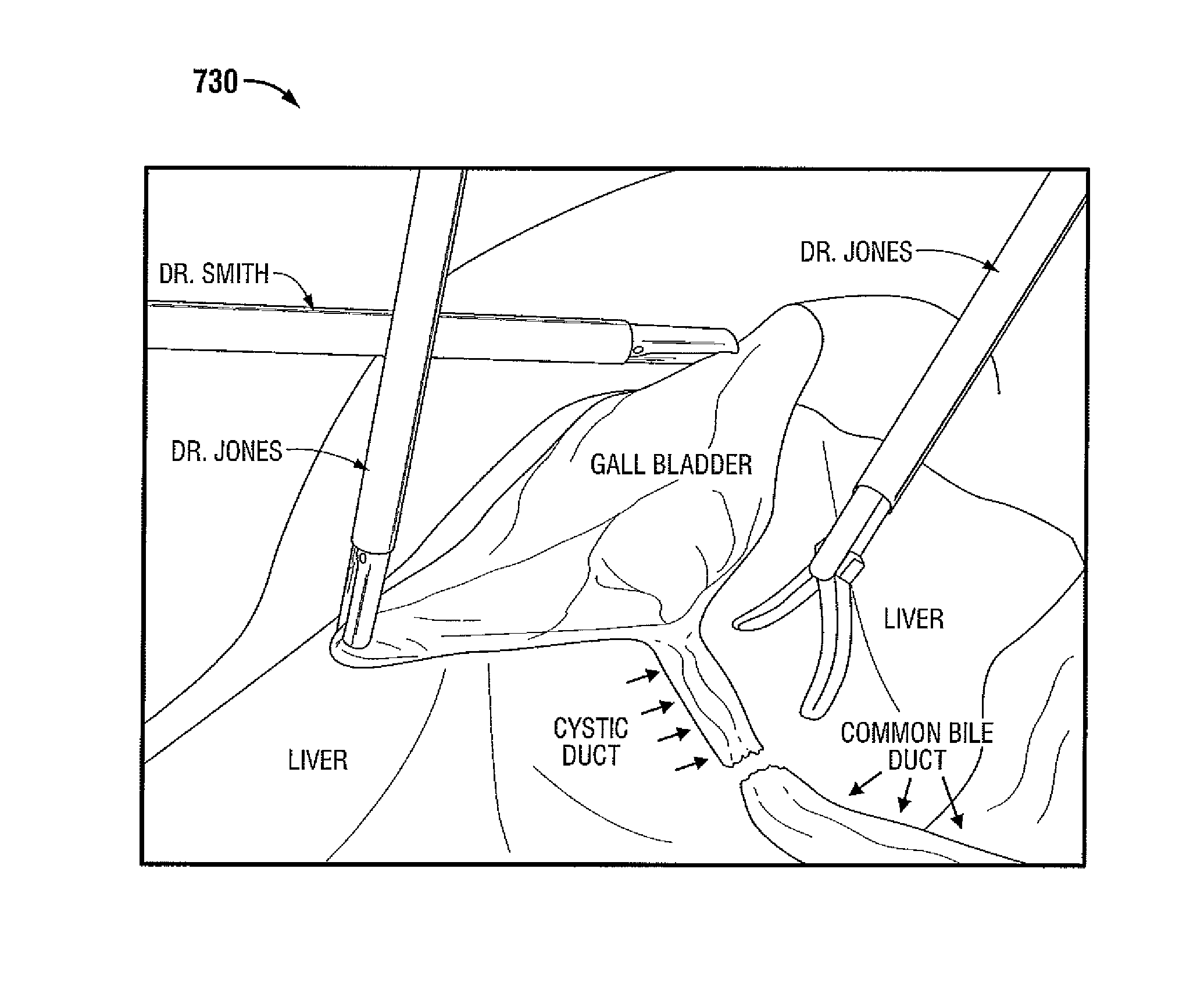

Videotactic and audiotactic assisted surgical methods and procedures

Inactive | US20080243142A1 | Tags: Medical simulation, Mechanical/radiation/invasive therapies, Anatomical structures, Surgical operation

The present invention provides video- and audio-assisted surgical techniques and methods. Novel features of the techniques and methods provided by the present invention include presenting a surgeon with a video compilation that displays an endoscopic-camera-derived image, a reconstructed view of the surgical field (including fiducial markers indicative of anatomical locations on or in the patient), and/or a real-time video image of the patient. The real-time image can be obtained with the video camera that is part of the image-localized endoscope, with an image-localized video camera without an endoscope, or with both. In certain other embodiments, the methods of the present invention include the use of anatomical atlases related to pre-operatively generated images derived from three-dimensional reconstructed CT, MRI, x-ray, or fluoroscopy. Images can also be obtained from pre-operative imaging; spatial shifting of anatomical structures can then be identified by intraoperative imaging and appropriately corrected.

Owner:GILDENBERG PHILIP L

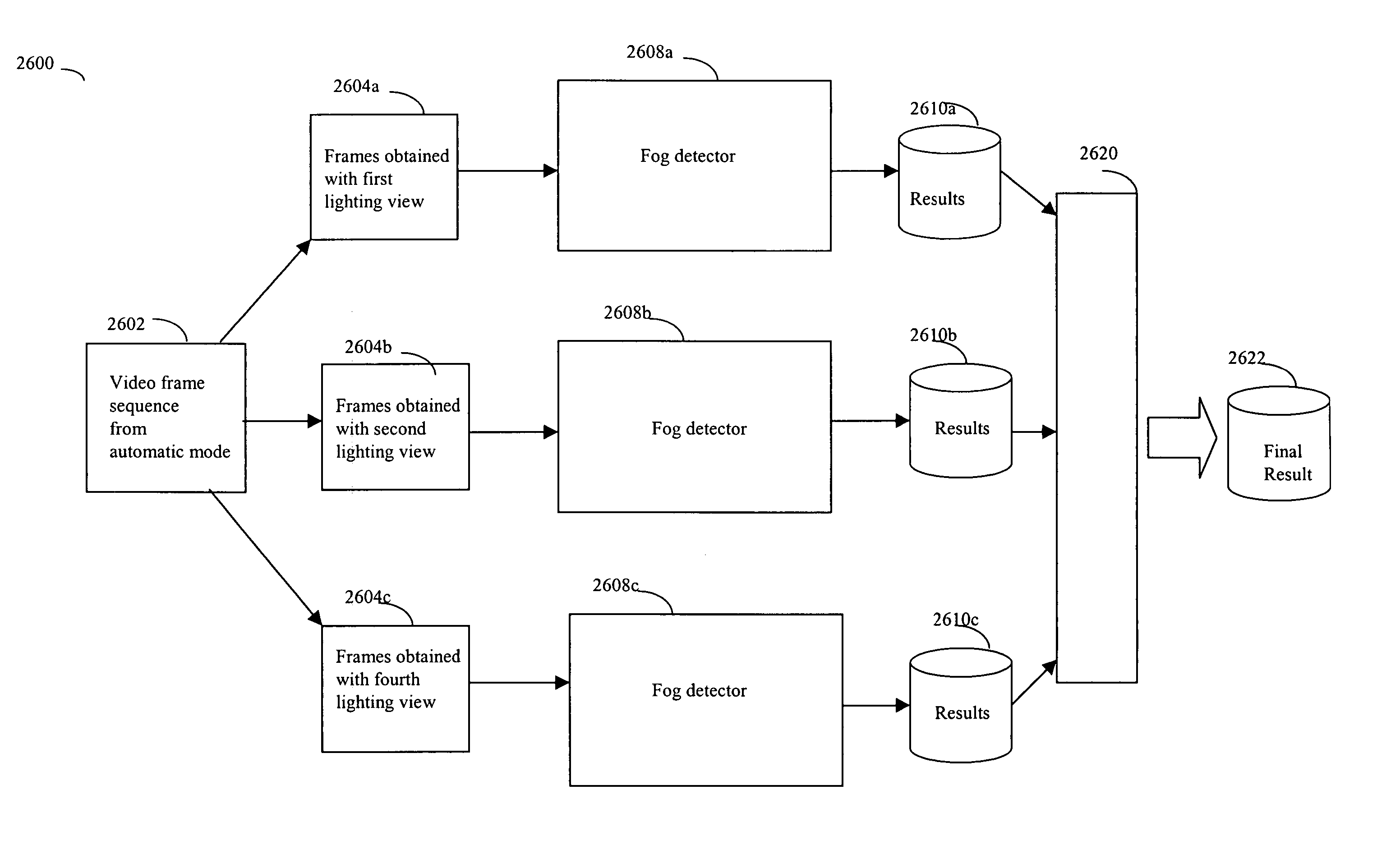

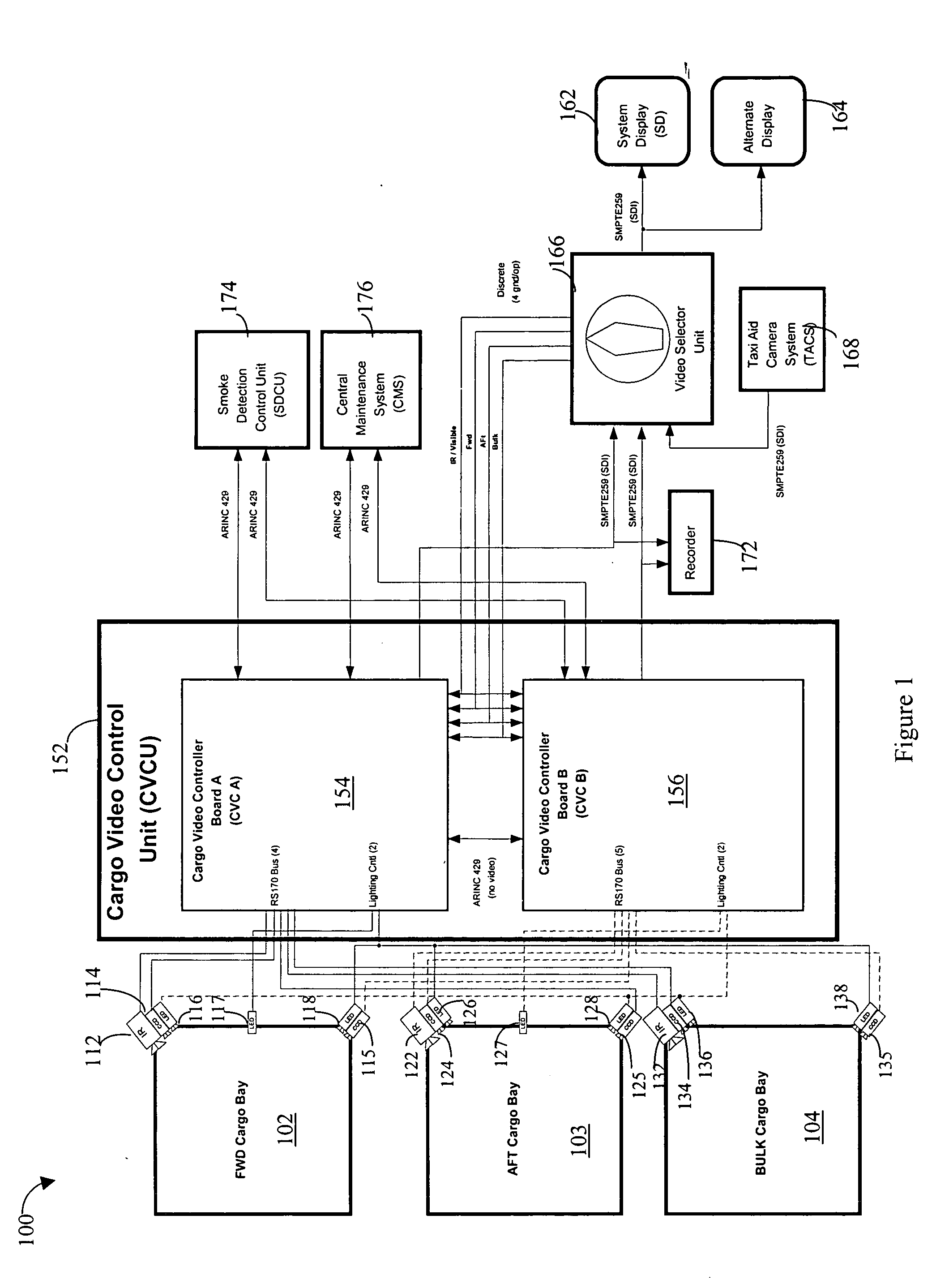

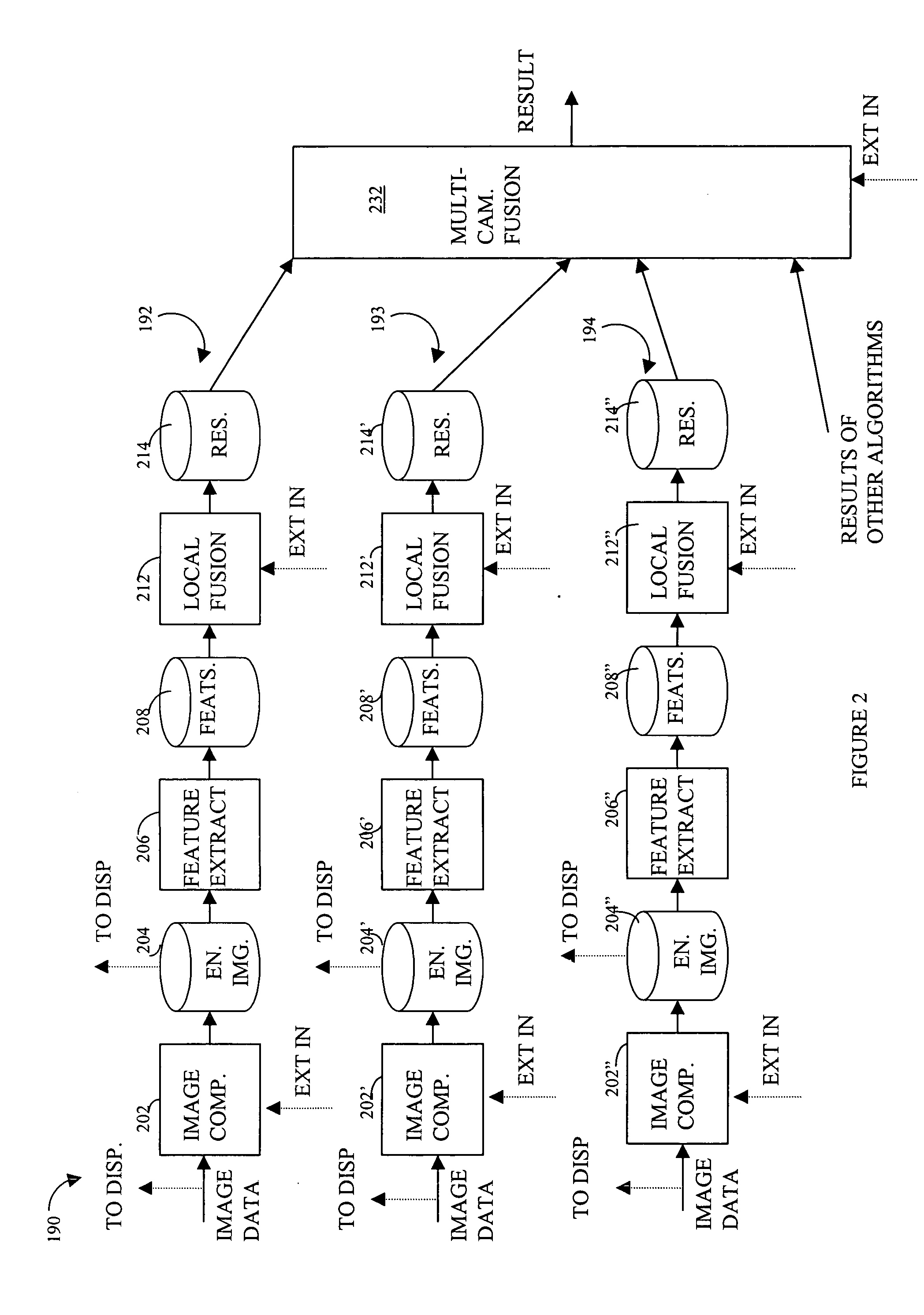

Method for detection and recognition of fog presence within an aircraft compartment using video images

Detecting video phenomena, such as fire in an aircraft cargo bay, includes receiving a plurality of video images from a plurality of sources, compensating the images to provide enhanced images, extracting features from the enhanced images, and combining the features from the plurality of sources to detect the video phenomena. Extracting features may include determining an energy indicator for each of a subset of the plurality of frames. Detecting video phenomena may also include comparing the energy indicator of each frame in the subset to that of a reference frame. The reference frame is a video frame taken when no fire is present, the video frame immediately preceding each frame of the subset, or the video frame immediately preceding the frame that immediately precedes each frame of the subset. Image-based and non-image-based techniques are described herein in connection with fire detection and/or verification and other applications.

Owner:SIMMONDS PRECISION PRODS
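
The fire-detection abstract above names an energy indicator but does not define it. The sketch below assumes, purely for illustration, that the indicator is the mean squared pixel difference between a frame and its reference, and it implements the three reference choices the abstract lists (a no-fire baseline, the previous frame, or the frame two back). The function names, the `mode` strings, and the fixed threshold are hypothetical and are not taken from the patent.

```python
from typing import List, Optional, Sequence

# A grayscale frame stored as rows of pixel intensities.
Frame = List[List[float]]


def energy_indicator(frame: Frame, reference: Frame) -> float:
    """Hypothetical energy indicator: mean squared difference from a reference frame."""
    total = 0.0
    count = 0
    for row_f, row_r in zip(frame, reference):
        for p, q in zip(row_f, row_r):
            total += (p - q) ** 2
            count += 1
    return total / count if count else 0.0


def frame_energies(frames: Sequence[Frame], mode: str = "previous",
                   baseline: Optional[Frame] = None) -> List[float]:
    """Energy of each frame from index 2 onward against the chosen reference.

    Starting at index 2 gives every mode the same subset of frames.
    mode = "baseline": a frame captured when no fire is present
    mode = "previous": the immediately preceding frame
    mode = "two_back": the frame two positions earlier
    """
    energies = []
    for i in range(2, len(frames)):
        if mode == "baseline":
            if baseline is None:
                raise ValueError("baseline mode needs a no-fire reference frame")
            ref = baseline
        elif mode == "previous":
            ref = frames[i - 1]
        elif mode == "two_back":
            ref = frames[i - 2]
        else:
            raise ValueError(f"unknown mode: {mode}")
        energies.append(energy_indicator(frames[i], ref))
    return energies


def flag_frames(energies: Sequence[float], threshold: float) -> List[bool]:
    """Mark frames whose energy exceeds a (hypothetical) fixed threshold."""
    return [e > threshold for e in energies]


if __name__ == "__main__":
    dark = [[0.0, 0.0], [0.0, 0.0]]
    bright = [[10.0, 10.0], [10.0, 10.0]]
    e = frame_energies([dark, dark, dark, bright], mode="previous")
    print(e, flag_frames(e, threshold=50.0))  # [0.0, 100.0] [False, True]
```

A real detector would combine such per-source features across cameras, as the abstract describes; this sketch covers only the single-source energy comparison.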

Image sensing system for a vehicle

Inactive | US20070120657A1 | Easy to explain, Remove distortion, Vehicle seats, Vehicle headlamps, Driver/operator, Display device

An image sensing system for a vehicle includes an imaging sensor and a logic and control circuit. The imaging sensor comprises a two-dimensional array of light-sensing photosensor elements formed on a semiconductor substrate and has a field of view exterior to the vehicle. The logic and control circuit comprises an image processor for processing image data derived from the imaging sensor. The image sensing system may generate an indication of the presence of an object within the field of view of the imaging sensor. Preferably, video images captured by the imaging sensor may be displayed on a display device for viewing by the driver while operating the vehicle. The logic and control circuit may generate at least one control output, and the at least one control output may control an enhancement of the video images.

Owner:DONNELLY CORP

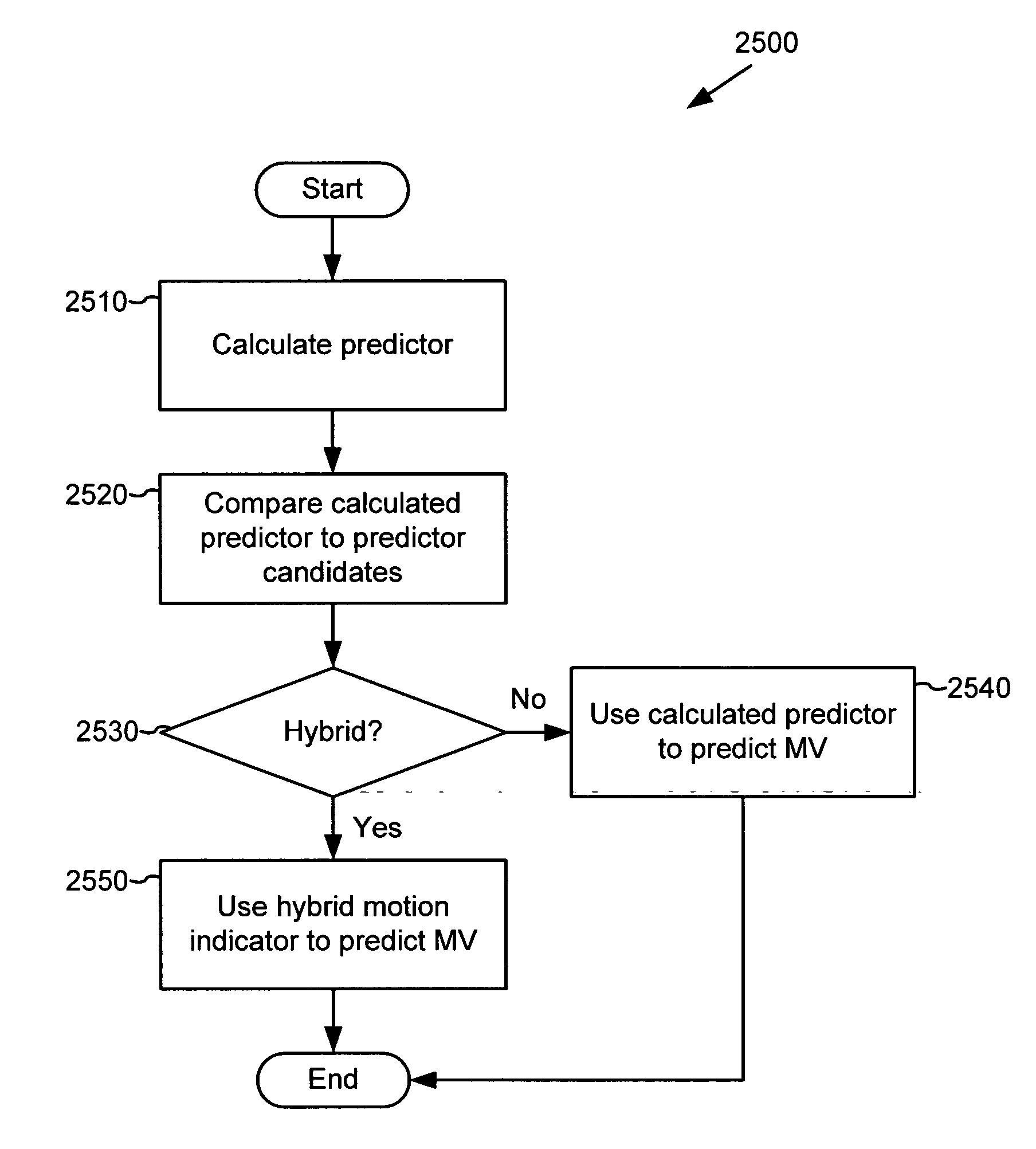

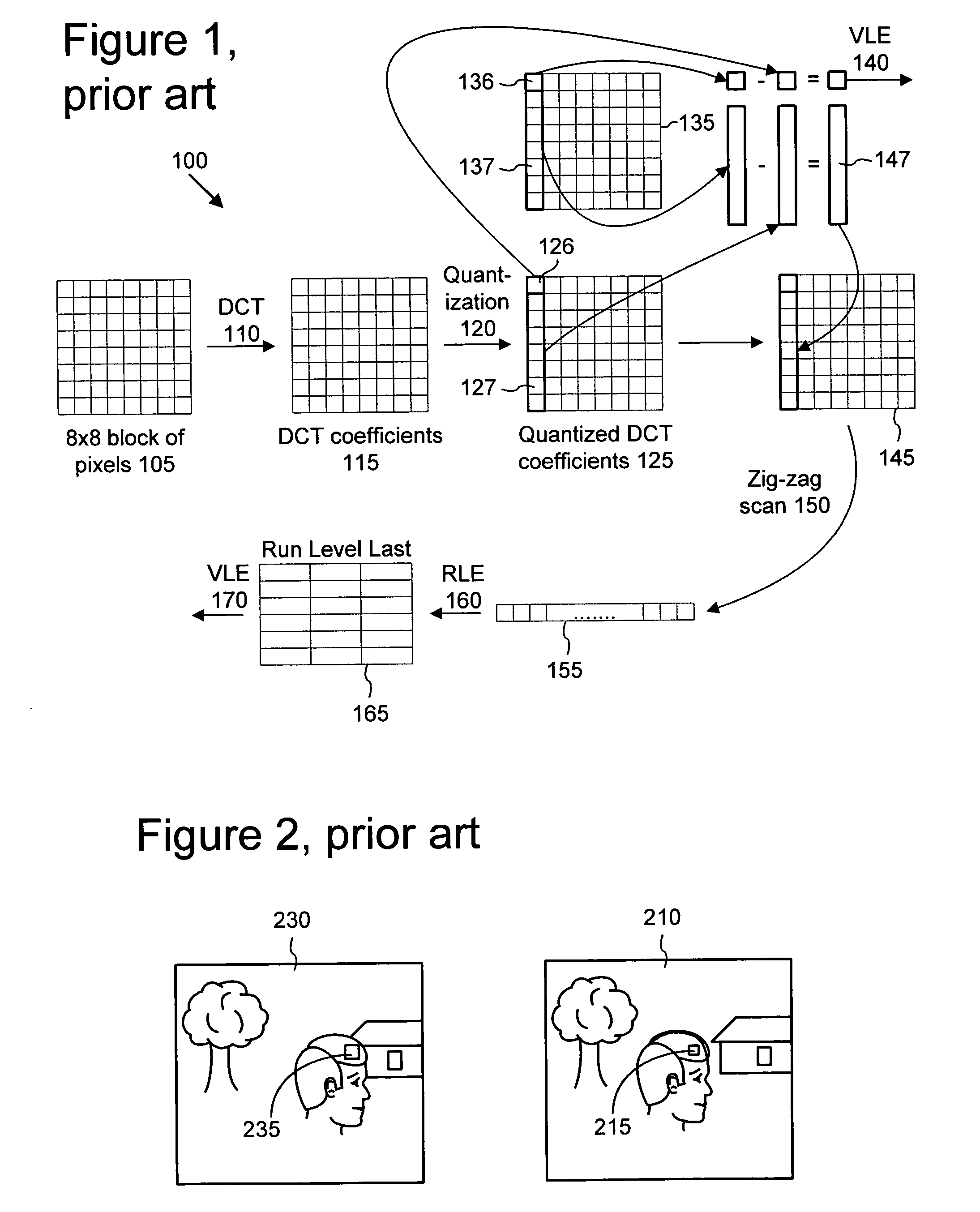

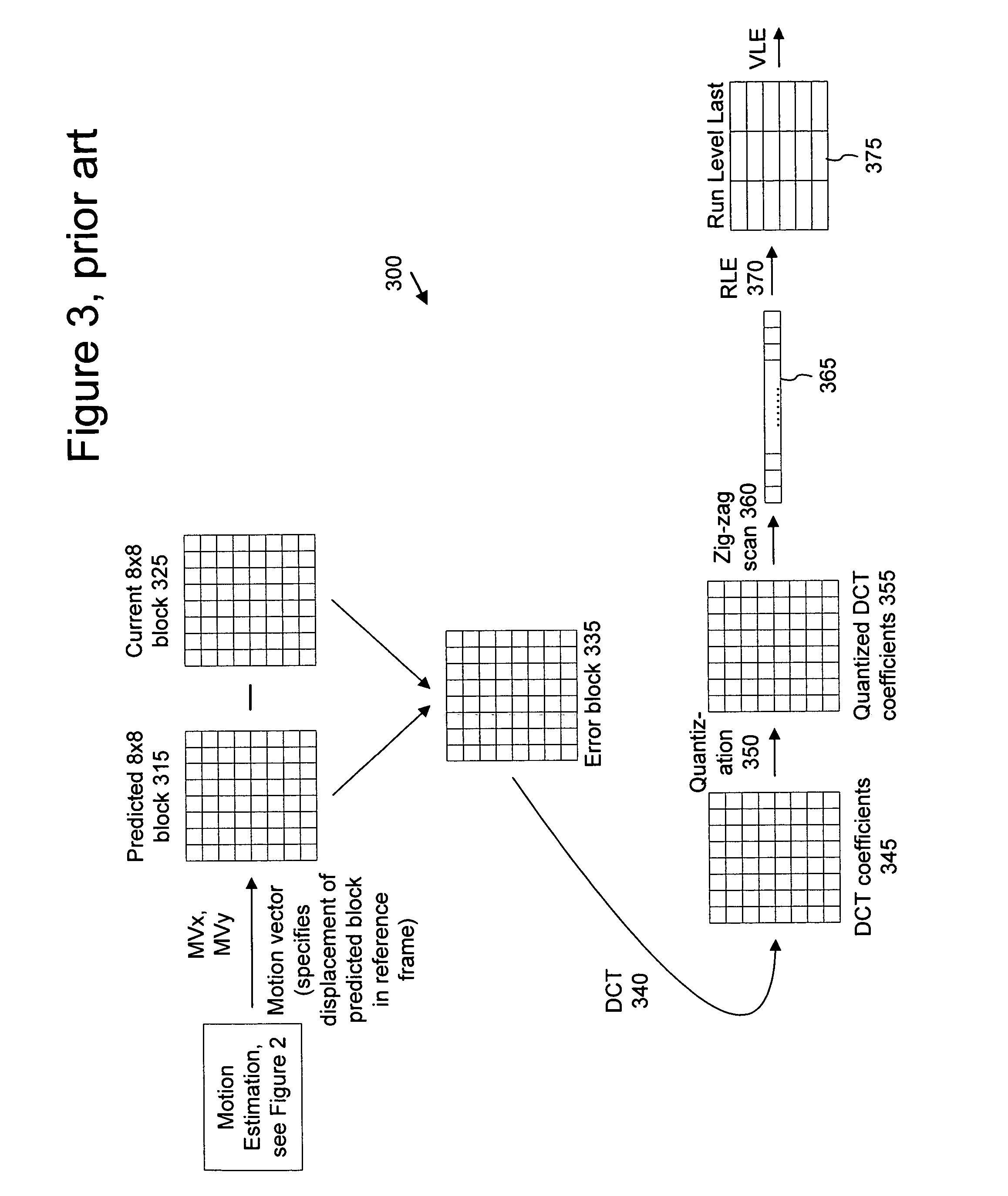

Coding of motion vector information

Inactive | US20050013498A1 | Pulse modulation television signal transmission, Character and pattern recognition, Pattern recognition, Encoder decoder

Techniques and tools for encoding and decoding motion vector information for video images are described. For example, a video encoder yields an extended motion vector code by jointly coding, for a set of pixels, a switch code, motion vector information, and a terminal symbol indicating whether subsequent data is encoded for the set of pixels. In another aspect, an encoder / decoder selects motion vector predictors for macroblocks. In another aspect, a video encoder / decoder uses hybrid motion vector prediction. In another aspect, a video encoder / decoder signals a motion vector mode for a predicted image. In another aspect, a video decoder decodes a set of pixels by receiving an extended motion vector code, which reflects joint encoding of motion information together with intra / inter-coding information and a terminal symbol. The decoder determines whether subsequent data exists for the set of pixels based on, for example, the terminal symbol.

Owner:MICROSOFT TECH LICENSING LLC
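
The motion vector abstract above names predictor selection and hybrid motion vector prediction without spelling them out. As a hedged illustration only, the sketch below uses the widely known component-wise median of the left, top, and top-right neighbors' motion vectors as the default predictor, and falls back to an explicitly signalled choice between the left and top neighbors when the median lies far from either of them. The threshold of 32, the one-bit `use_top` flag, and all function names are assumptions for illustration, not the patent's or any codec's normative definition.

```python
from typing import Optional, Tuple

MV = Tuple[int, int]  # (horizontal, vertical) motion vector components


def median3(a: int, b: int, c: int) -> int:
    """Median of three integers."""
    return max(min(a, b), min(max(a, b), c))


def l1(u: MV, v: MV) -> int:
    """Sum of absolute component differences between two motion vectors."""
    return abs(u[0] - v[0]) + abs(u[1] - v[1])


def median_predictor(left: MV, top: MV, top_right: MV) -> MV:
    """Component-wise median of three neighboring motion vectors."""
    return (median3(left[0], top[0], top_right[0]),
            median3(left[1], top[1], top_right[1]))


def needs_hybrid_flag(left: MV, top: MV, top_right: MV, threshold: int = 32) -> bool:
    """True when the median is far from the left or top neighbor (illustrative rule)."""
    pred = median_predictor(left, top, top_right)
    return l1(pred, left) > threshold or l1(pred, top) > threshold


def encode_predictor(actual: MV, left: MV, top: MV, top_right: MV,
                     threshold: int = 32) -> Tuple[MV, Optional[bool]]:
    """Encoder side: pick the predictor and, if needed, the hybrid flag to signal."""
    if not needs_hybrid_flag(left, top, top_right, threshold):
        return median_predictor(left, top, top_right), None
    # Choose whichever neighbor is closer to the actual vector (ties go to left).
    use_top = l1(actual, top) < l1(actual, left)
    return (top if use_top else left), use_top


def decode_predictor(left: MV, top: MV, top_right: MV,
                     use_top: Optional[bool], threshold: int = 32) -> MV:
    """Decoder side: mirror the encoder's decision using the received flag."""
    if not needs_hybrid_flag(left, top, top_right, threshold):
        return median_predictor(left, top, top_right)
    if use_top is None:
        raise ValueError("hybrid flag expected but not received")
    return top if use_top else left


if __name__ == "__main__":
    left, top, top_right = (0, 0), (100, 0), (50, 0)
    actual = (98, 1)
    pred, flag = encode_predictor(actual, left, top, top_right)
    residual = (actual[0] - pred[0], actual[1] - pred[1])
    print(pred, flag, residual)  # (100, 0) True (-2, 1)
    assert decode_predictor(left, top, top_right, flag) == pred
```

In this example the median (50, 0) would leave a large residual, so signalling one extra bit to pick the top neighbor shrinks the residual to (-2, 1). That trade-off is the general motivation for hybrid prediction.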

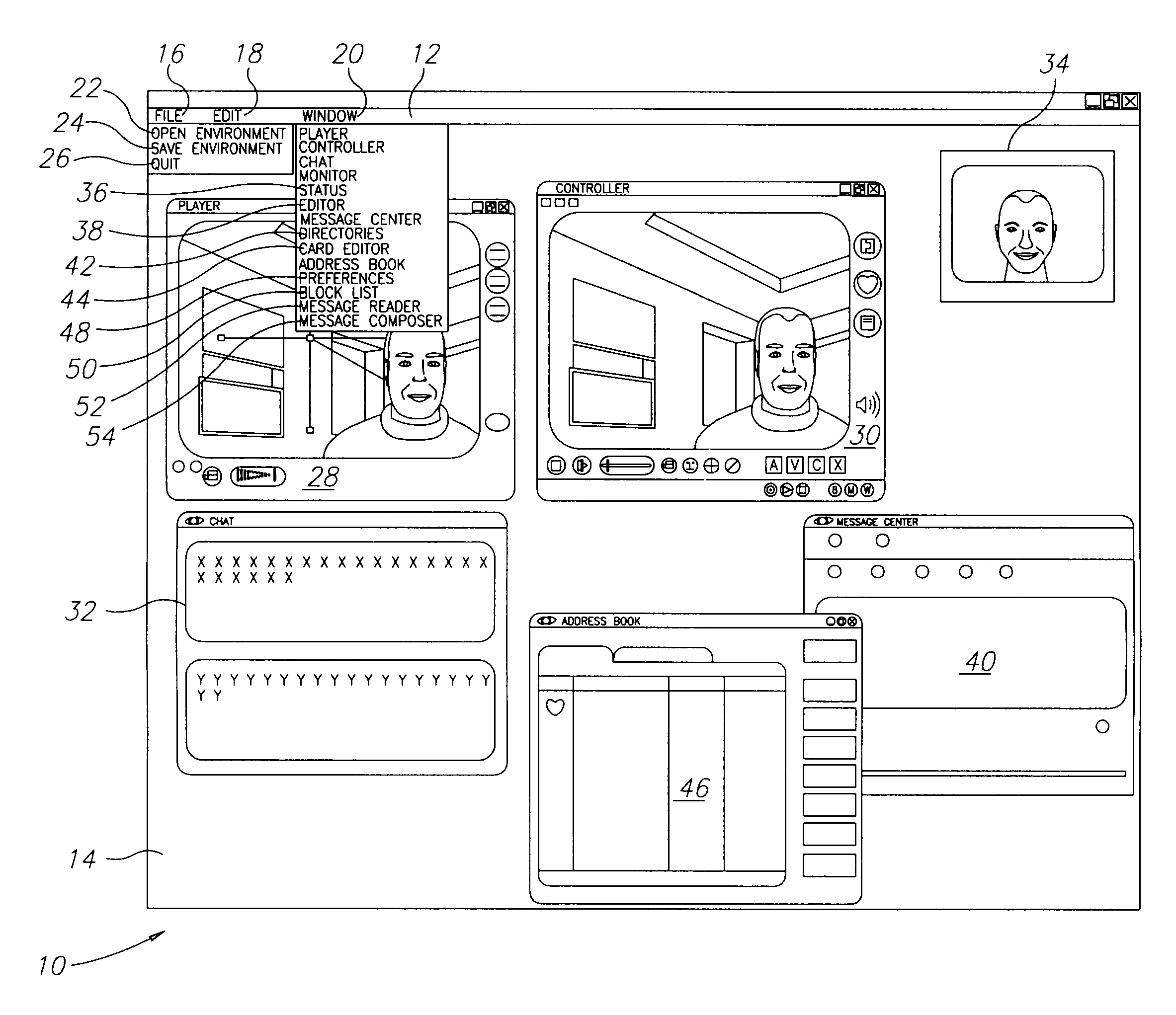

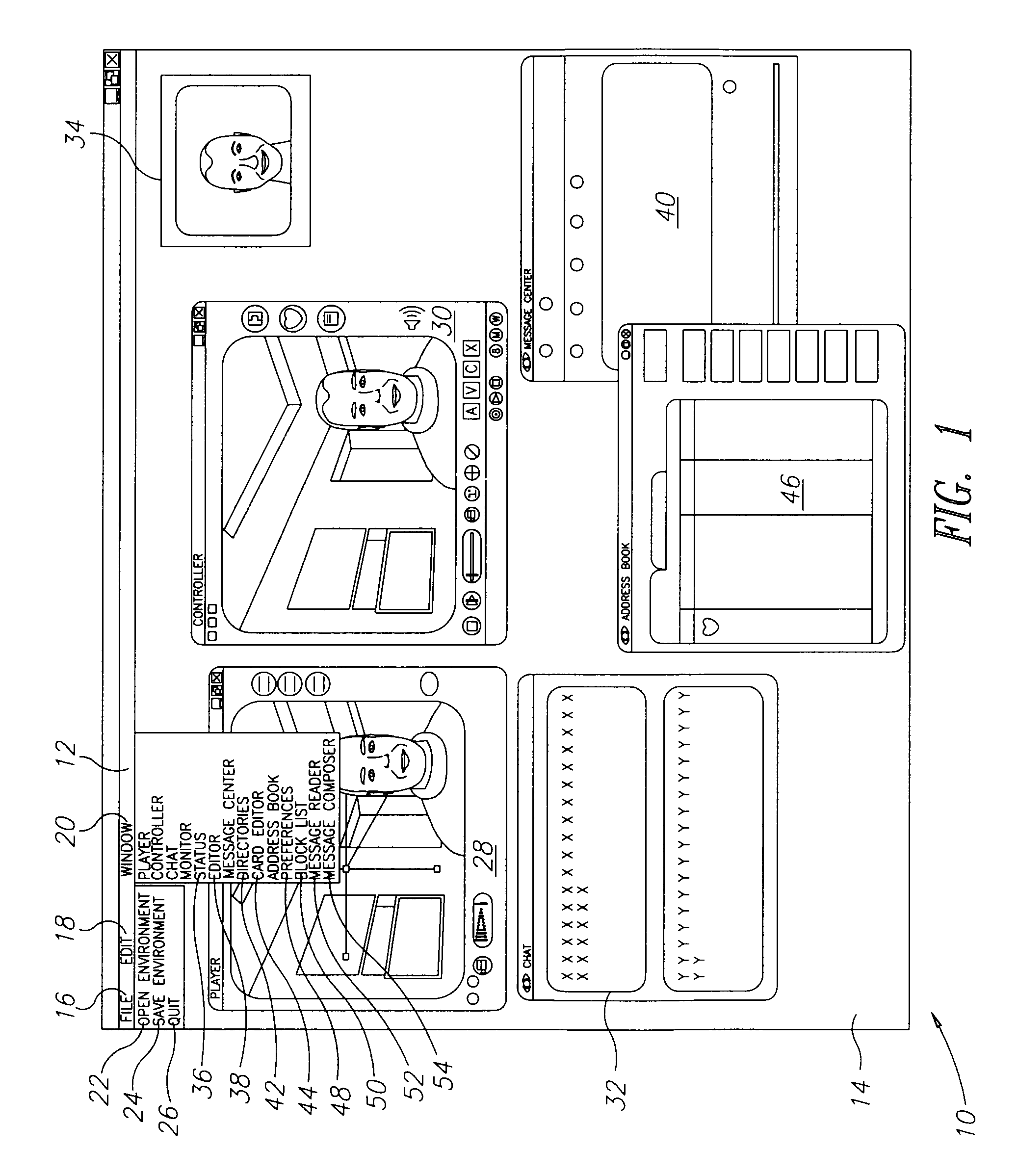

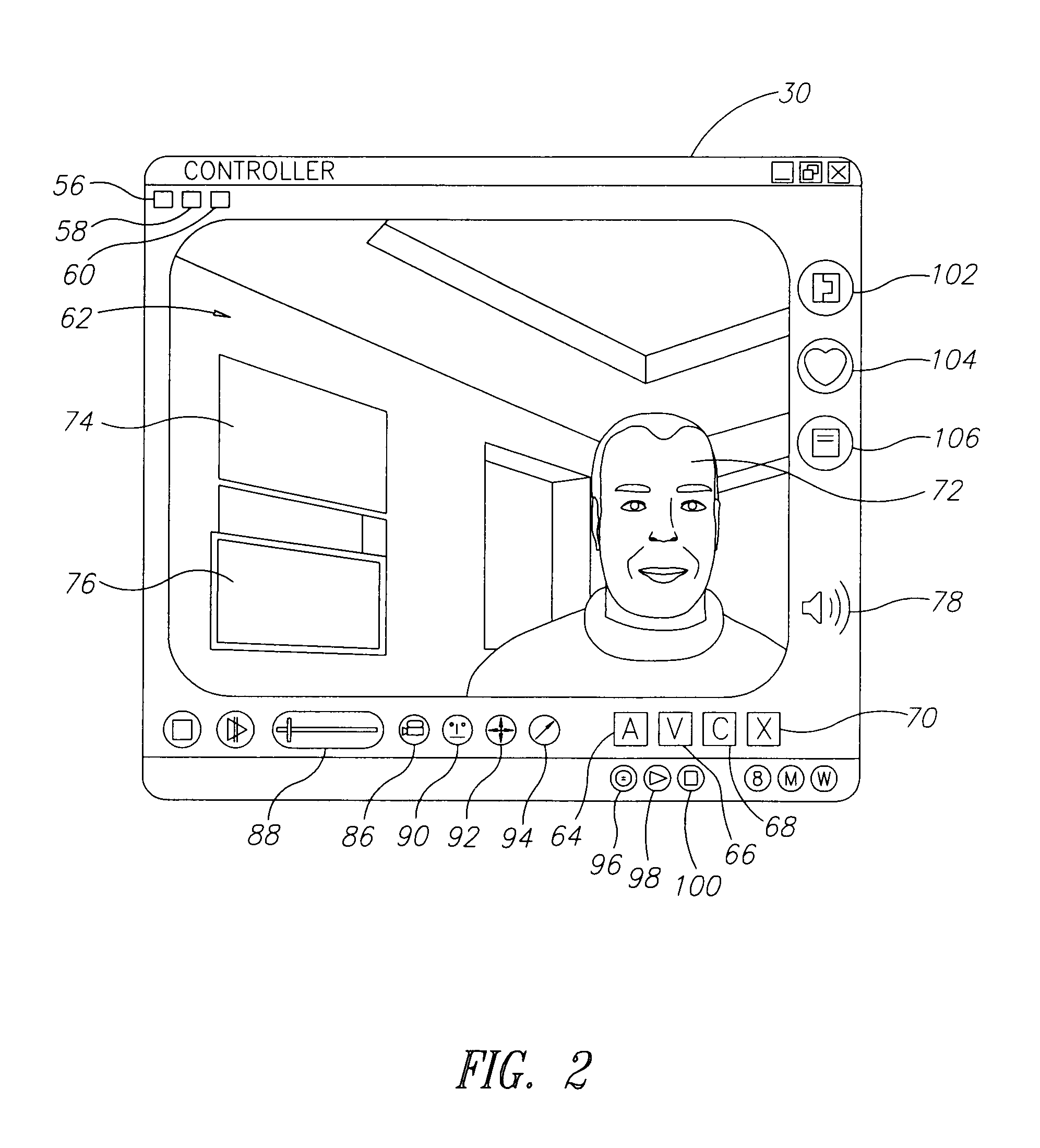

Communication system and method including rich media tools

Inactive | US6948131B1 | Improved and cost, Recording carrier details, Data processing applications, Communications system, Animation

The rich media communication system of the present invention provides a user with a three-dimensional communication space, or theater, having rich media functions. The user may be represented in the theater as a segmented video image or as an avatar, and can communicate by presenting images, videos, audio files, or text within the theater. The system may include tools for lowering the cost of animation, improving collaboration between users, and presenting episodic content, web casts, newscasts, infotainment, advertising, music clips, video conferencing, customer support, distance learning, social spaces, and interactive game shows and content.

Owner:VIDIATOR ENTERPRISES INC

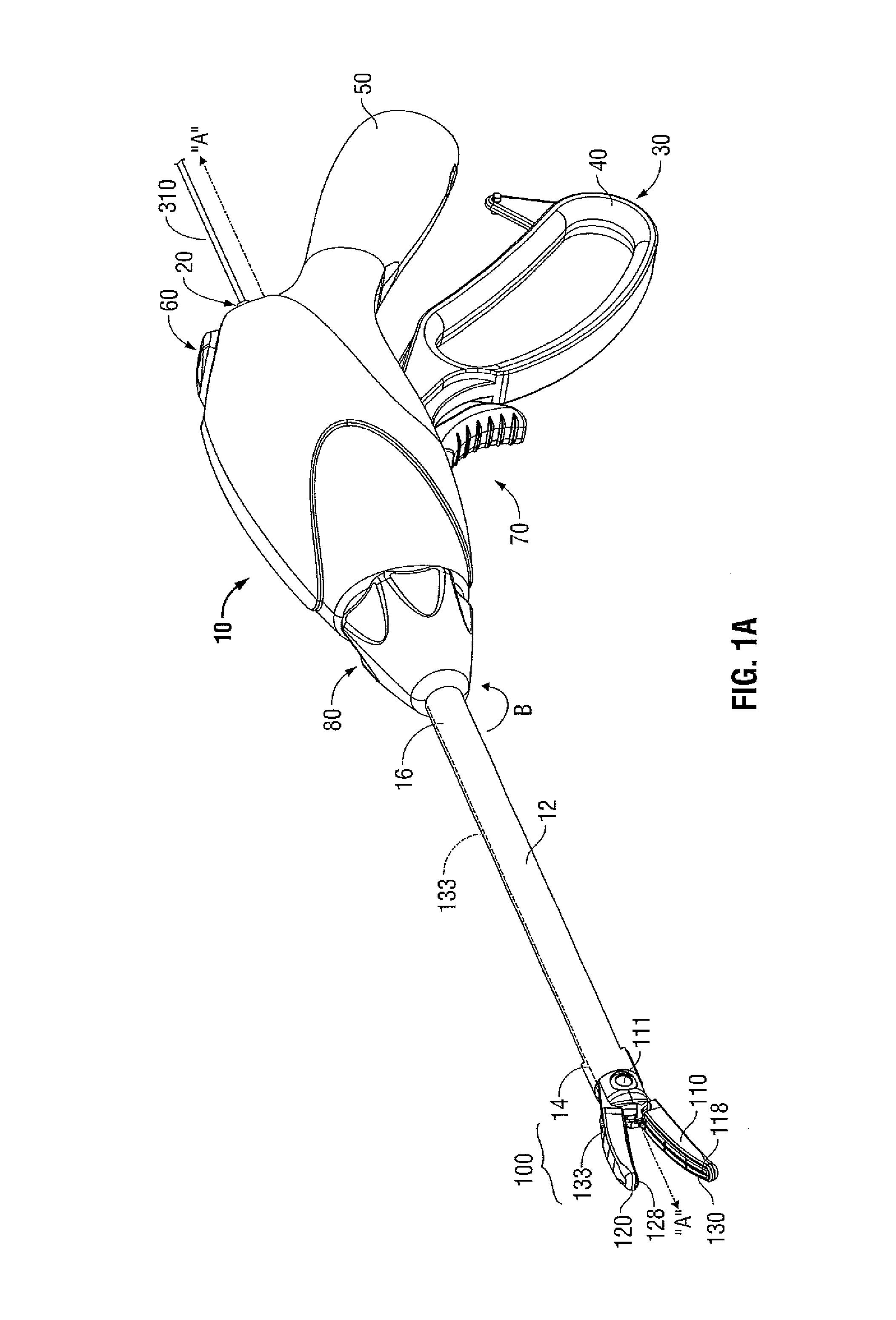

Apparatus and method for using augmented reality vision system in surgical procedures

Active | US9123155B2 | Improve eyesight, Avoid entering, Mechanical/radiation/invasive therapies, Surgery, Surgical site, X-ray

A system and method for improving a surgeon's vision by overlaying augmented reality (AR) information onto a video image of the surgical site. A high-definition video camera transmits the video image in real time. Before surgery, a pre-operative image is created by MRI, x-ray, ultrasound, or another diagnostic imaging method and stored in the computer. The computer processes the pre-operative image to identify organs, anatomical geometries, vessels, tissue planes, orientation, and other structures. As the surgeon operates, the AR controller augments the real-time video image with the processed pre-operative image and displays the augmented image on an interface, providing further guidance during the procedure.

Owner:TYCO HEALTHCARE GRP LP
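
In the AR abstract above, the last step augments the live video image with the processed pre-operative image. A common way to do this is per-pixel alpha blending, sketched below under stated assumptions: the pre-operative overlay has already been registered (aligned) to the current video frame, both images are grayscale and the same size, and a binary mask marks where segmented structures should appear. The function name and the default opacity of 0.4 are hypothetical; the patent does not specify its blending method.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of intensities in [0, 255]
Mask = List[List[int]]     # 1 where a segmented structure should be drawn, else 0


def blend_overlay(frame: Image, overlay: Image, mask: Mask, alpha: float = 0.4) -> Image:
    """Alpha-blend a registered overlay onto a video frame wherever mask == 1.

    out = (1 - alpha) * frame + alpha * overlay   inside the mask
    out = frame                                   outside the mask
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    out: Image = []
    for f_row, o_row, m_row in zip(frame, overlay, mask):
        row = []
        for f, o, m in zip(f_row, o_row, m_row):
            # Blend only where the mask marks a structure; elsewhere keep the live pixel.
            row.append((1.0 - alpha) * f + alpha * o if m else f)
        out.append(row)
    return out


if __name__ == "__main__":
    frame = [[100.0, 100.0]]
    overlay = [[200.0, 0.0]]
    mask = [[1, 0]]
    print(blend_overlay(frame, overlay, mask, alpha=0.5))  # [[150.0, 100.0]]
```

Restricting the blend to the mask leaves the unannotated parts of the surgical view untouched. The `alpha` parameter sets how strongly the pre-operative structures show through the live image.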

© 2025 PatSnap. All rights reserved.