5013 results for "Augmented reality" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

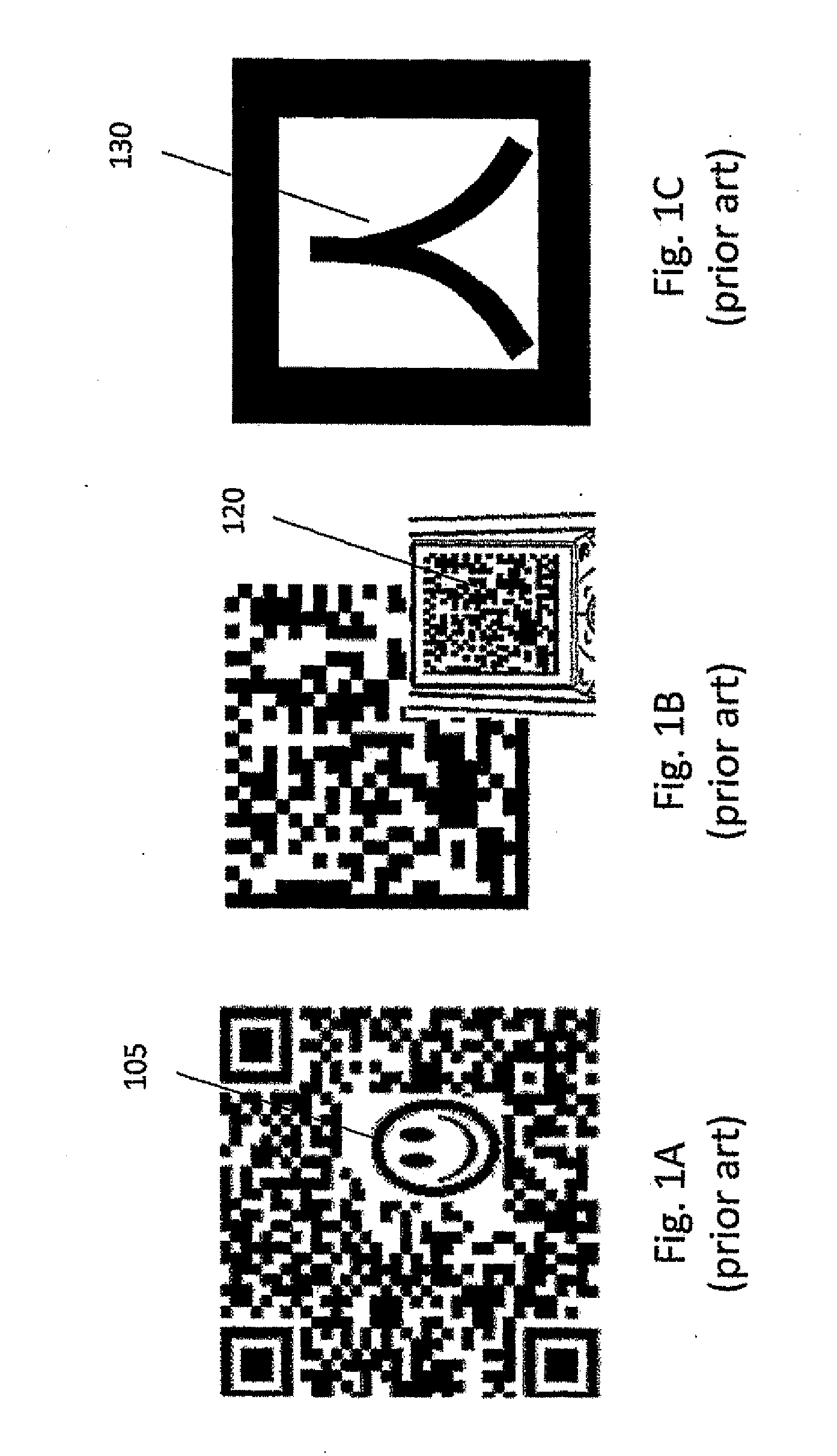

Augmented reality (AR) is an interactive experience of a real-world environment where the objects that reside in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory and olfactory. AR can be defined as a system that fulfills three basic features: a combination of real and virtual worlds, real-time interaction, and accurate 3D registration of virtual and real objects. The overlaid sensory information can be constructive (i.e. additive to the natural environment), or destructive (i.e. masking of the natural environment). This experience is seamlessly interwoven with the physical world such that it is perceived as an immersive aspect of the real environment. In this way, augmented reality alters one's ongoing perception of a real-world environment, whereas virtual reality completely replaces the user's real-world environment with a simulated one. Augmented reality is related to two largely synonymous terms: mixed reality and computer-mediated reality.

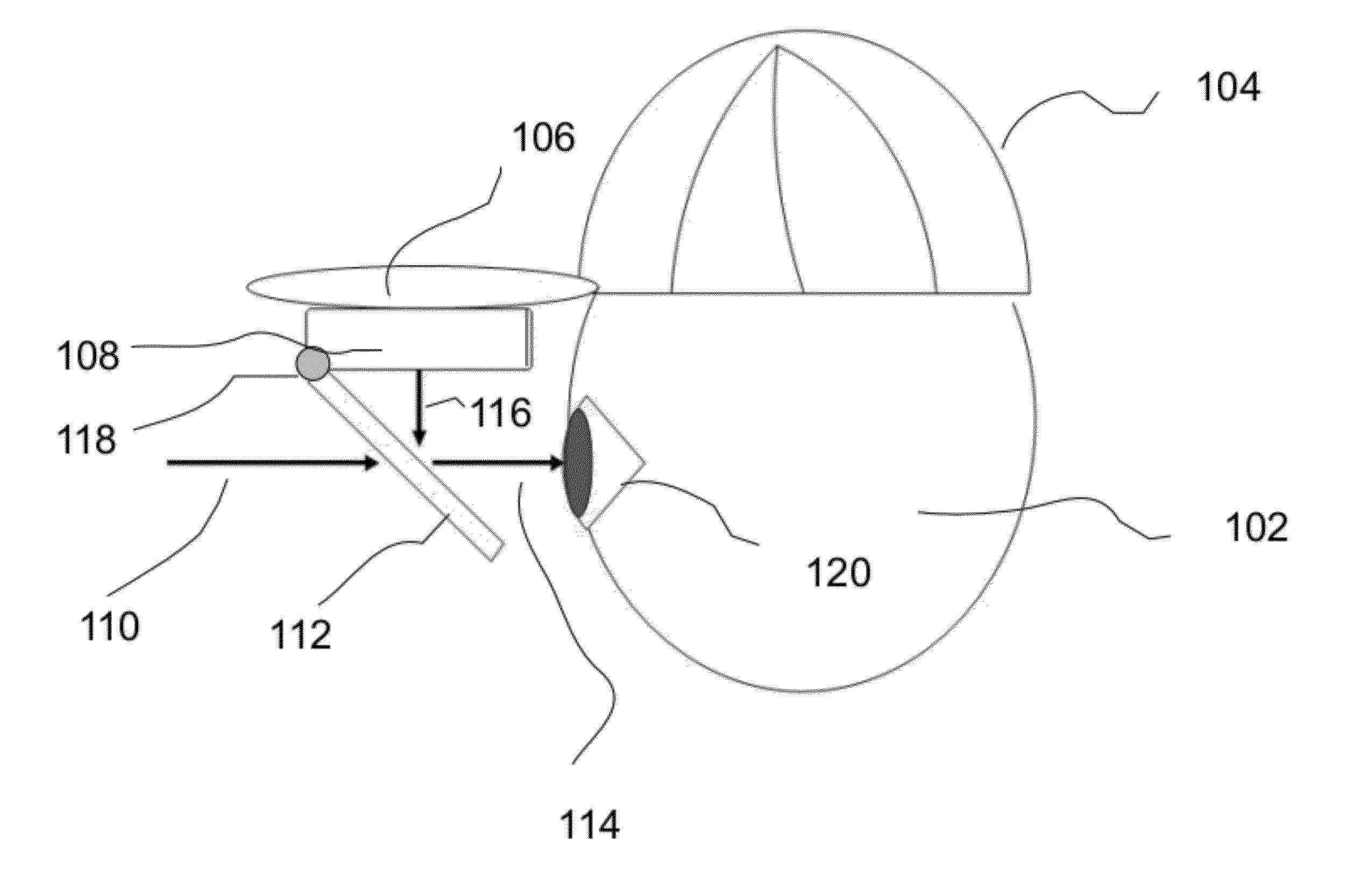

Augmented reality device and method

Status: Inactive | Publication: US20060176242A1 | Tags: Precise alignment; Ultrasonic/sonic/infrasonic diagnostics; Diagnostics using light; Eyepiece; Display device

An augmented reality device to combine a real world view with an object image. An optical combiner combines the object image with a real world view of the object and conveys the combined image to a user. A tracking system tracks one or more objects. At least a part of the tracking system is at a fixed location with respect to the display. An eyepiece is used to view the combined object and real world images, and fixes the user location with respect to the display and optical combiner location.

Owner:BLUE BELT TECH

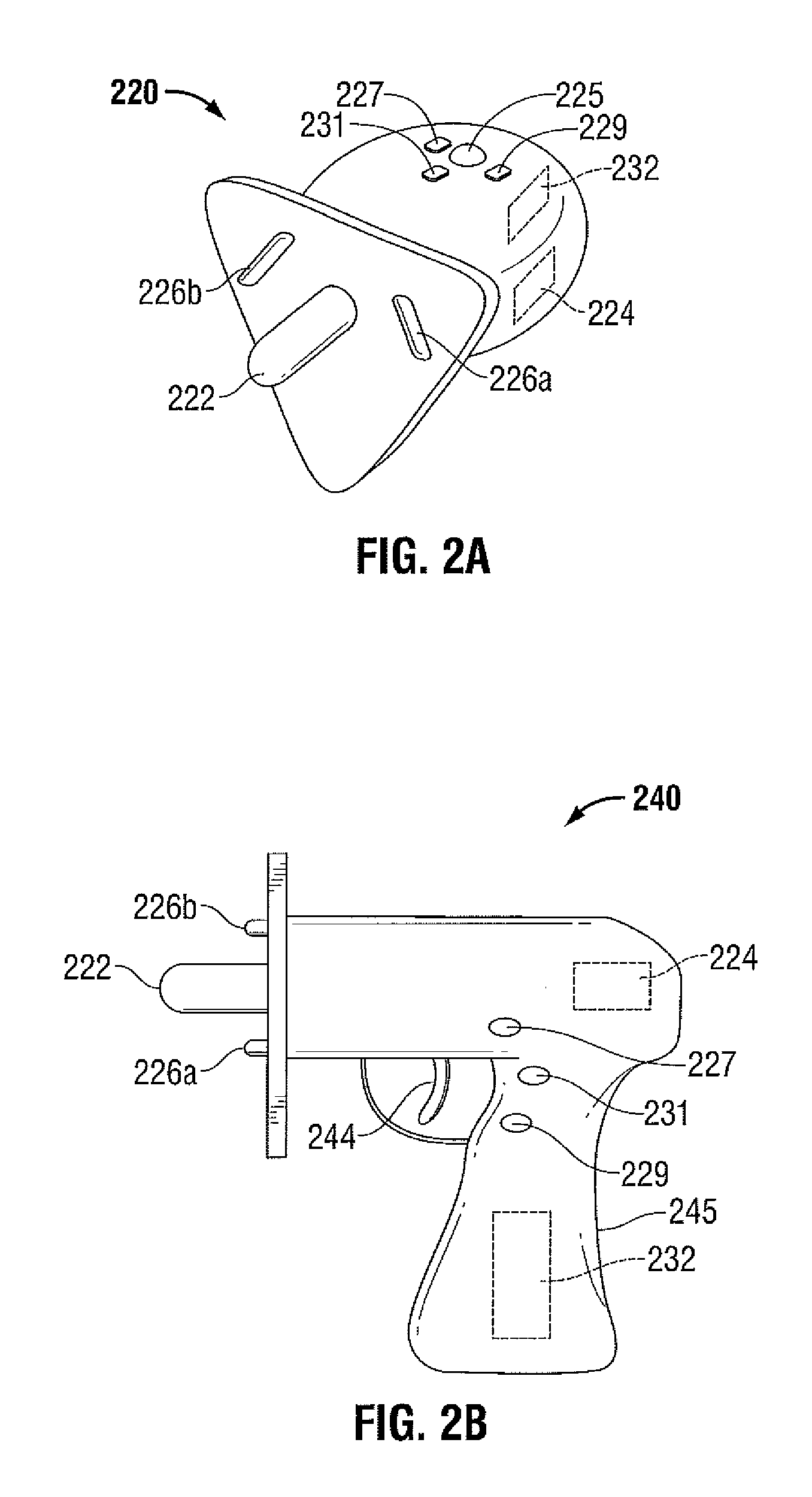

Customizable Haptic Assisted Robot Procedure System with Catalog of Specialized Diagnostic Tips

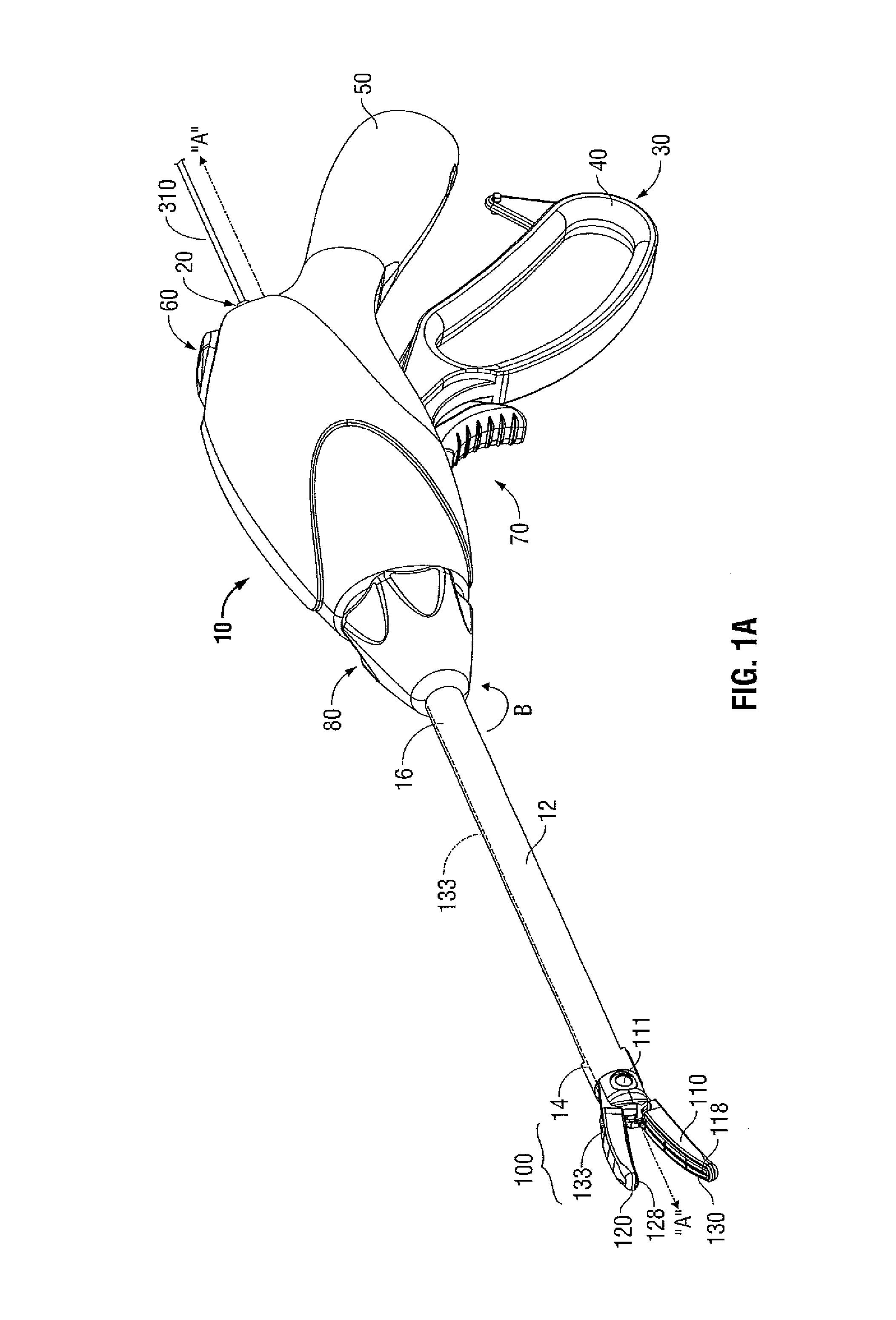

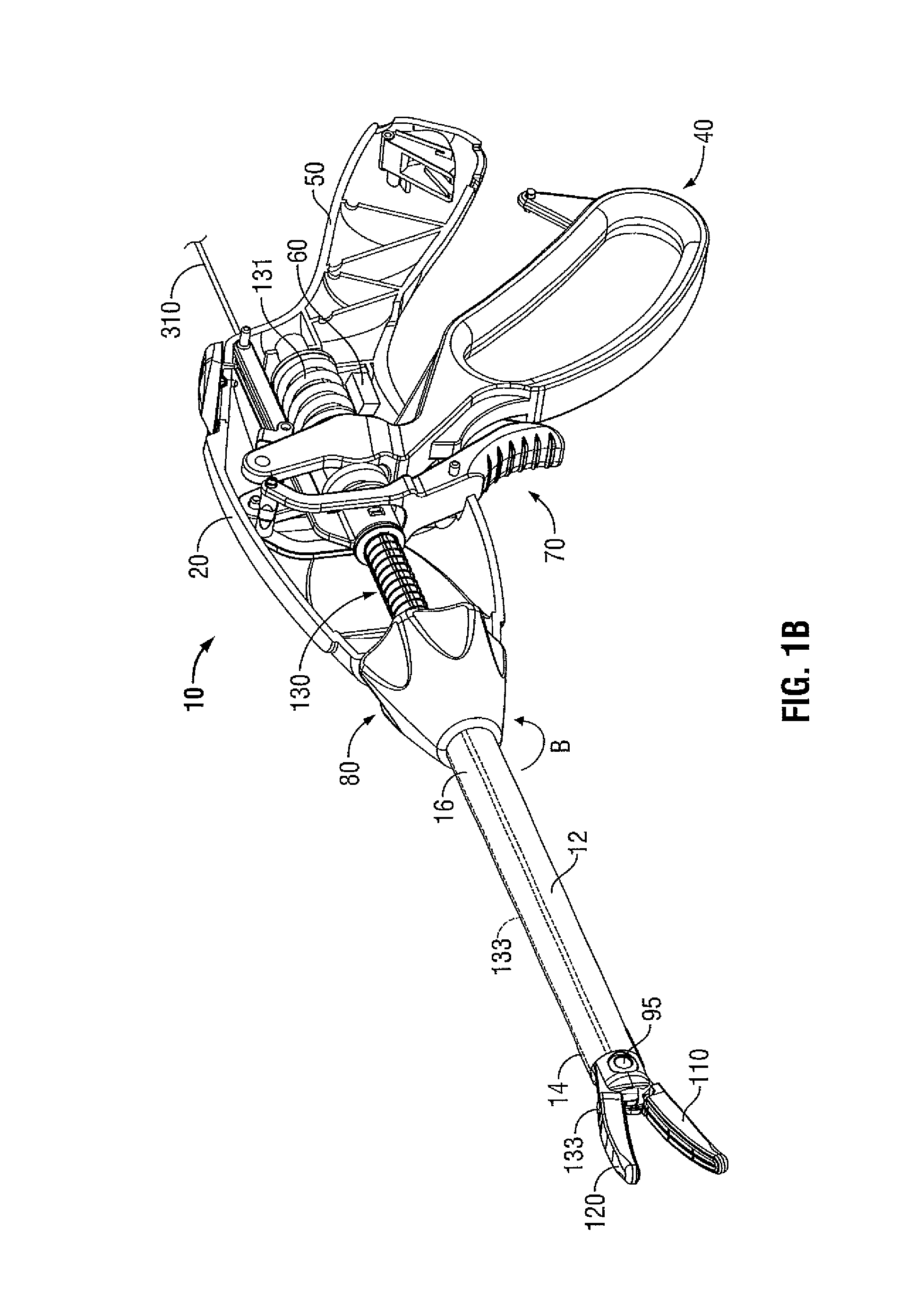

In accordance with the present disclosure, a system and method are provided for using a remote control to operate an electrosurgical instrument. The remote-controlled (RC) electrosurgical instrument has a universal coupling mechanism that allows switching among an interchangeable catalog of diagnostic tools. A controller within the base of the RC electrosurgical instrument identifies the type of disposable tip attached to the base, then activates the features needed for the identified tip and deactivates any unnecessary features. A surgeon controls the RC electrosurgical instrument 10 with a remote that has at least one momentum sensor. The surgeon rotates his hand to mimic the movements of a handheld electrosurgical instrument, and those movements are translated and sent to the RC electrosurgical instrument. The surgeon may also use an augmented reality (AR) vision system to assist in viewing the surgical site.

Owner:TYCO HEALTHCARE GRP LP
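
The tip-identification behavior described above can be sketched as a catalog lookup: identify the attached disposable tip, enable only the features it needs, and disable the rest. This is a minimal illustration, not the patented design. The tip IDs, the feature names, and the fail-safe on unknown tips are all hypothetical assumptions, because the abstract does not say how tips are encoded or which features exist.

```python
from dataclasses import dataclass, field

# Hypothetical catalog: tip ID -> features that tip requires.
# The real encoding and feature set are not described in the abstract.
TIP_CATALOG = {
    "BIPOLAR_FORCEPS": {"bipolar_energy", "grip_force"},
    "MONOPOLAR_HOOK": {"monopolar_energy"},
    "BIOPSY_PROBE": {"suction", "camera"},
}

# Every feature the (hypothetical) base can control.
ALL_FEATURES = set().union(*TIP_CATALOG.values())


@dataclass
class InstrumentBase:
    active: set = field(default_factory=set)

    def attach_tip(self, tip_id: str) -> set:
        """Identify the tip and enable exactly the features it needs."""
        if tip_id not in TIP_CATALOG:
            # Unknown tip: fail safe with every feature disabled.
            self.active = set()
            raise ValueError(f"unrecognized tip: {tip_id}")
        # Activate the needed features (as a copy, so the catalog is never mutated)
        # and implicitly deactivate everything else.
        self.active = set(TIP_CATALOG[tip_id])
        return self.active

    def disabled(self) -> set:
        """Features currently switched off."""
        return ALL_FEATURES - self.active
```

An unknown tip leaves the base with nothing enabled. That conservative default is an assumption made for the sketch.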

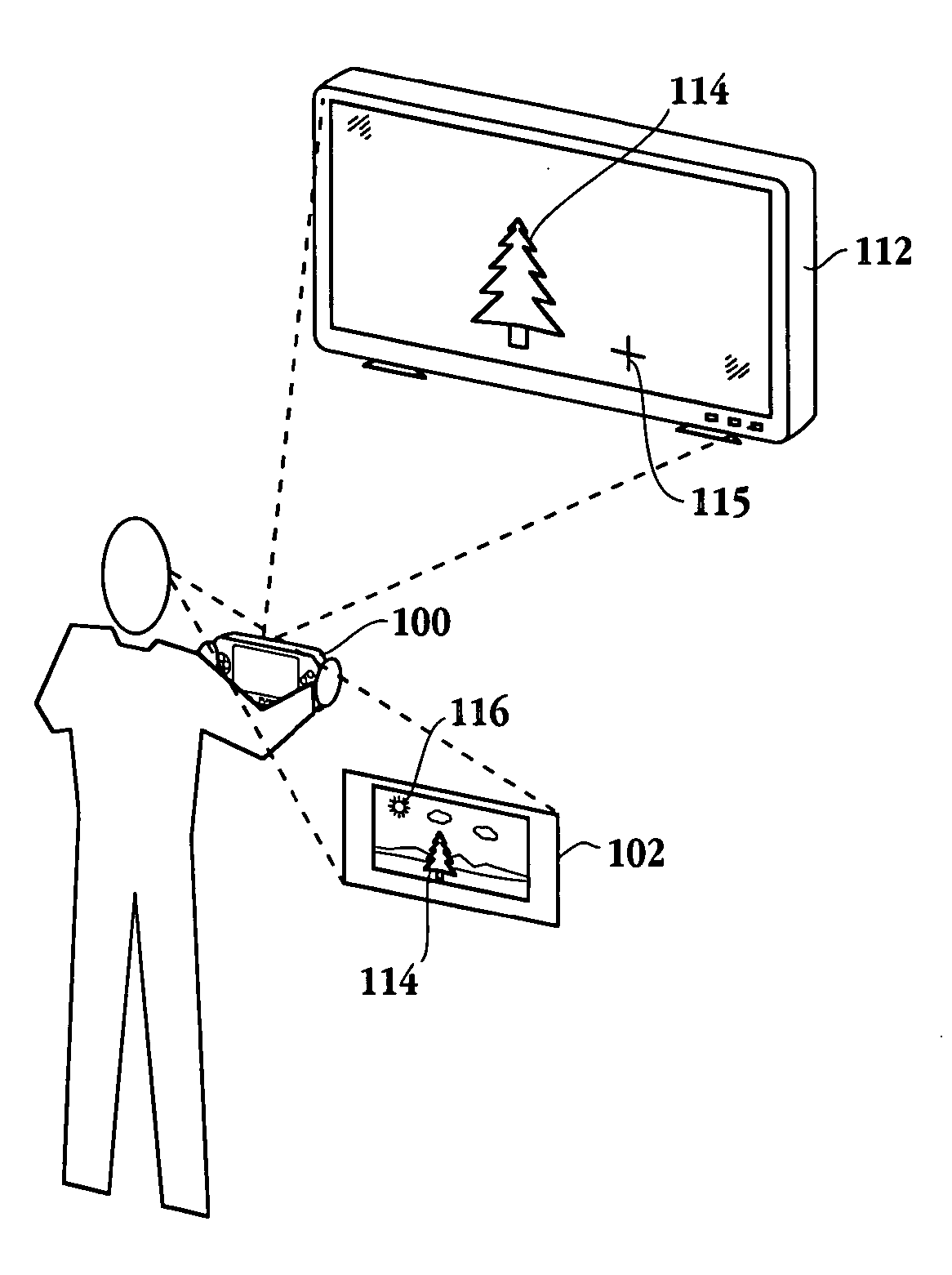

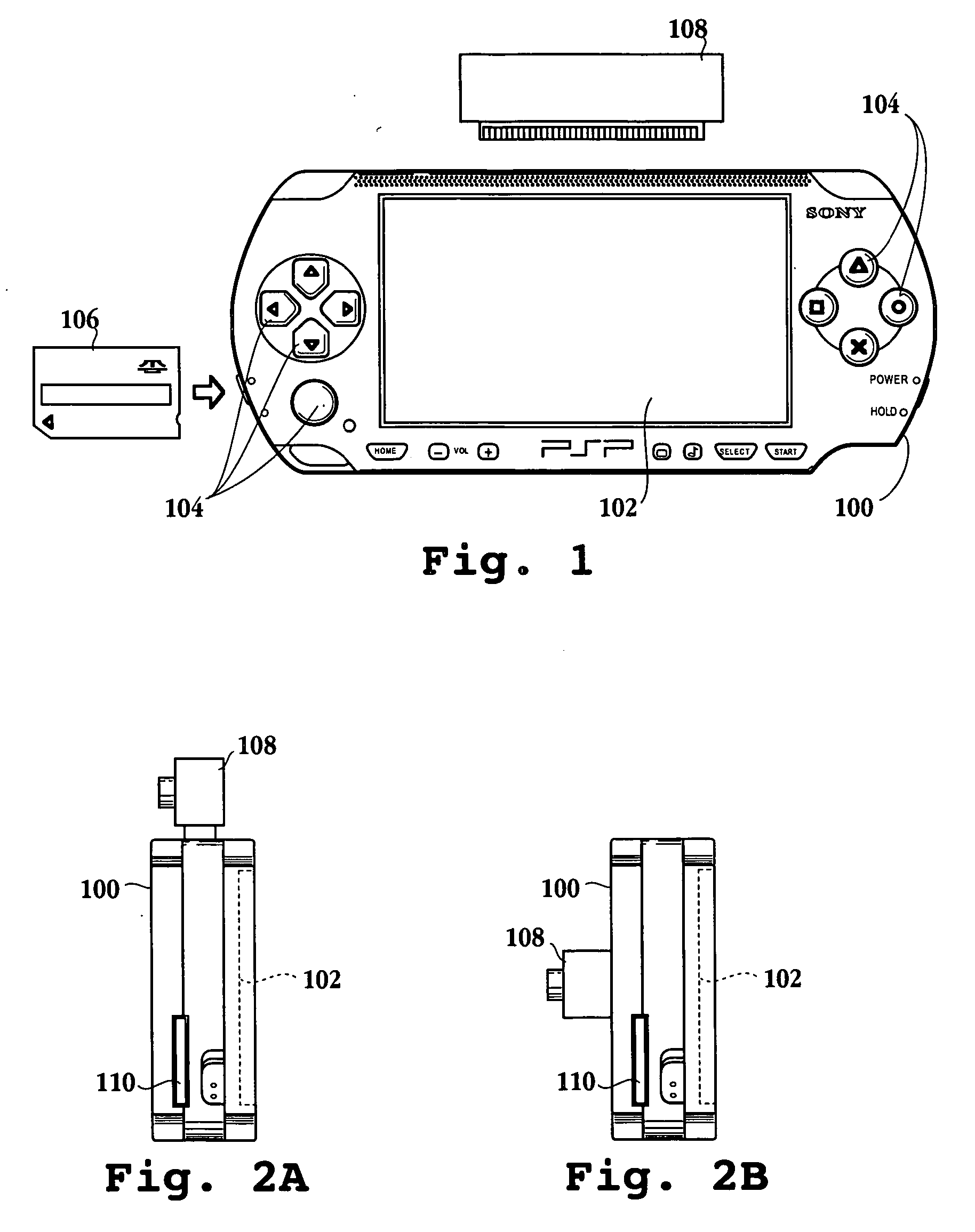

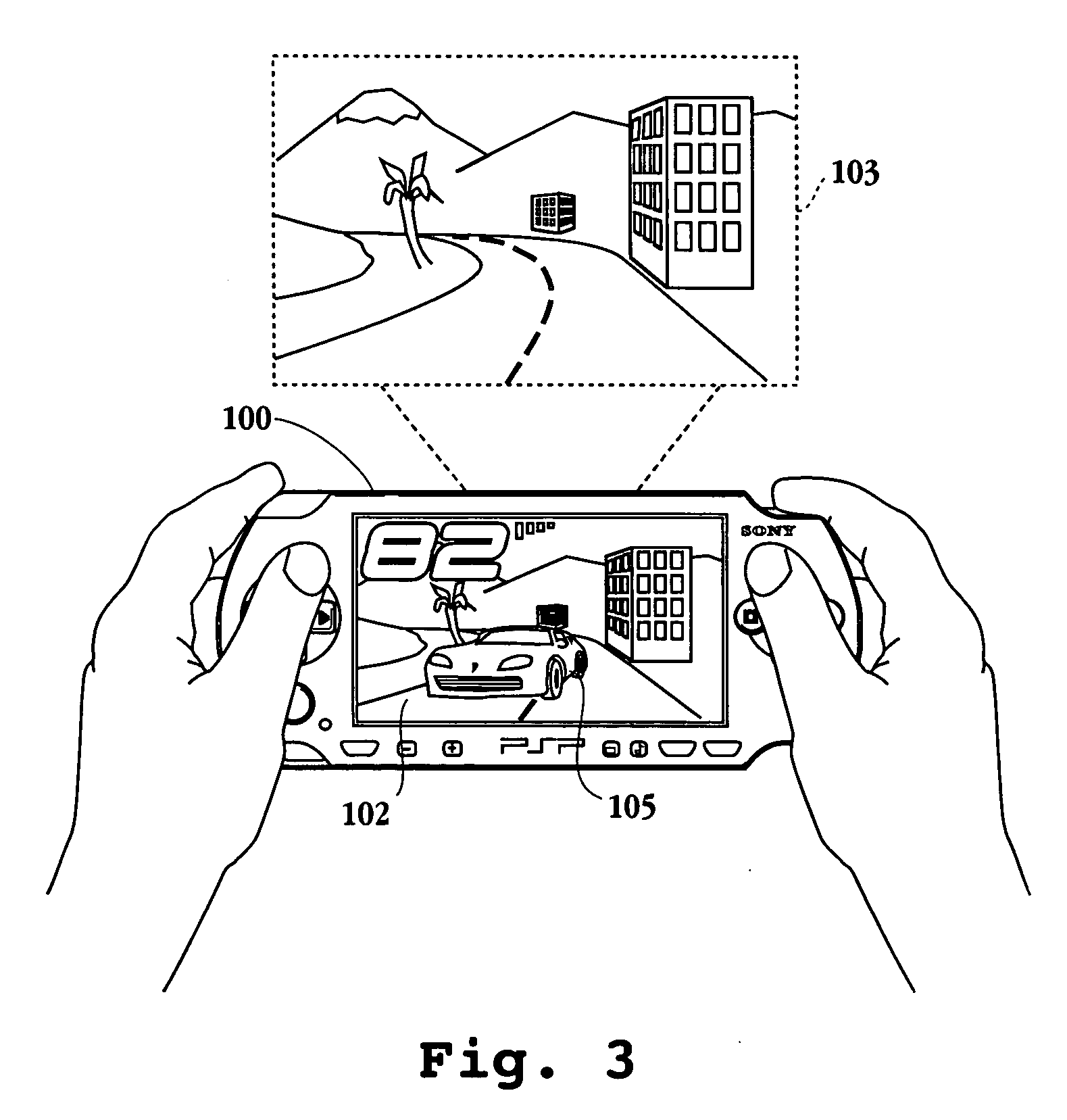

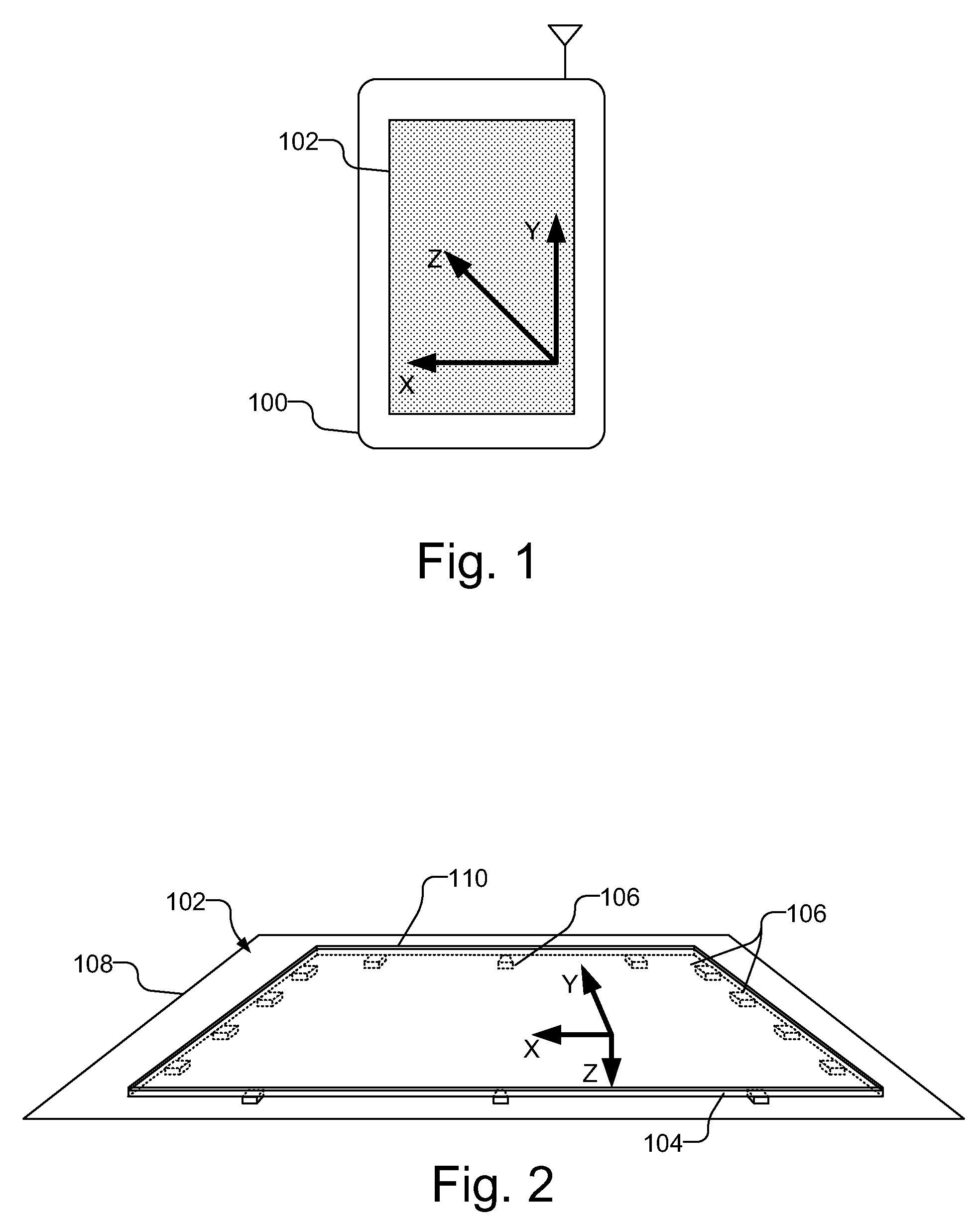

Portable augmented reality device and method

Status: Active | Publication: US20060038833A1 | Tags: Digital data processing details; Particular environment based services; Computer graphics (images); Image capture

A portable device configured to provide an augmented reality experience is provided. The portable device has a display screen configured to display a real world scene. The device includes an image capture device associated with the display screen. The image capture device is configured to capture image data representing the real world scene. The device includes image recognition logic configured to analyze the image data representing the real world scene. Image generation logic responsive to the image recognition logic is included. The image generation logic is configured to incorporate an additional image into the real world scene. A computer readable medium and a system providing an augmented reality environment are also provided.

Owner:SONY COMPUTER ENTERTAINMENT INC

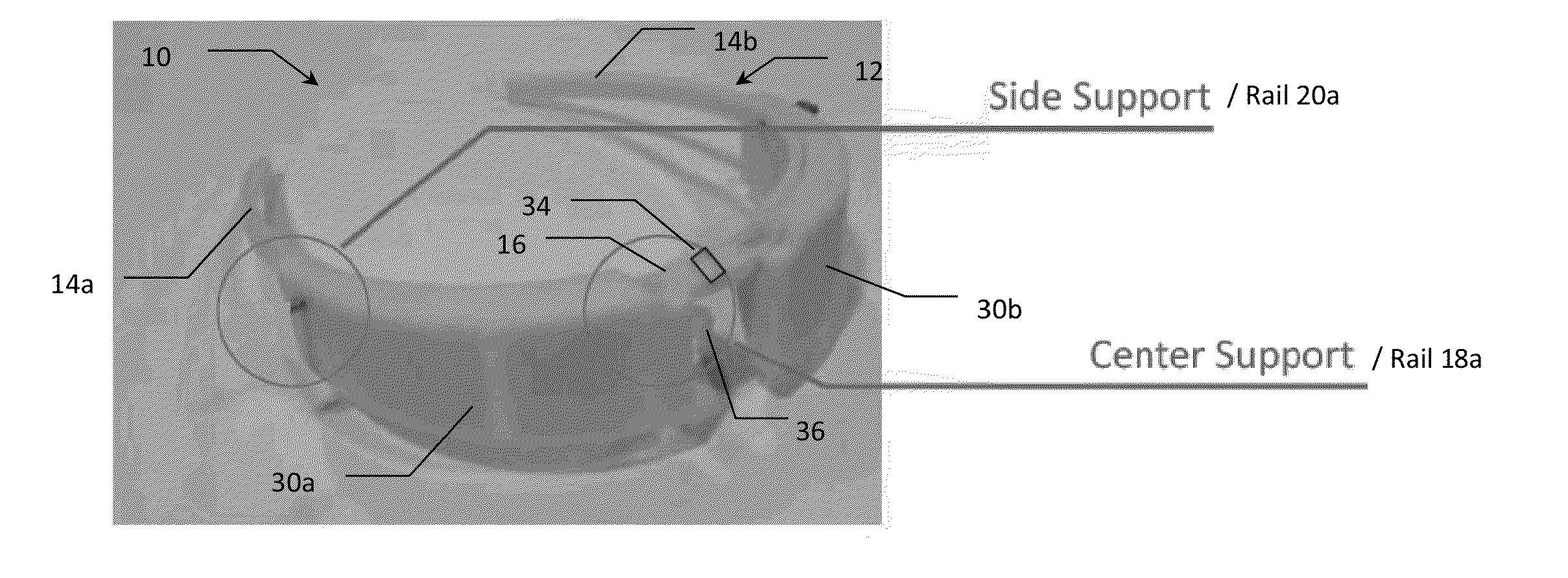

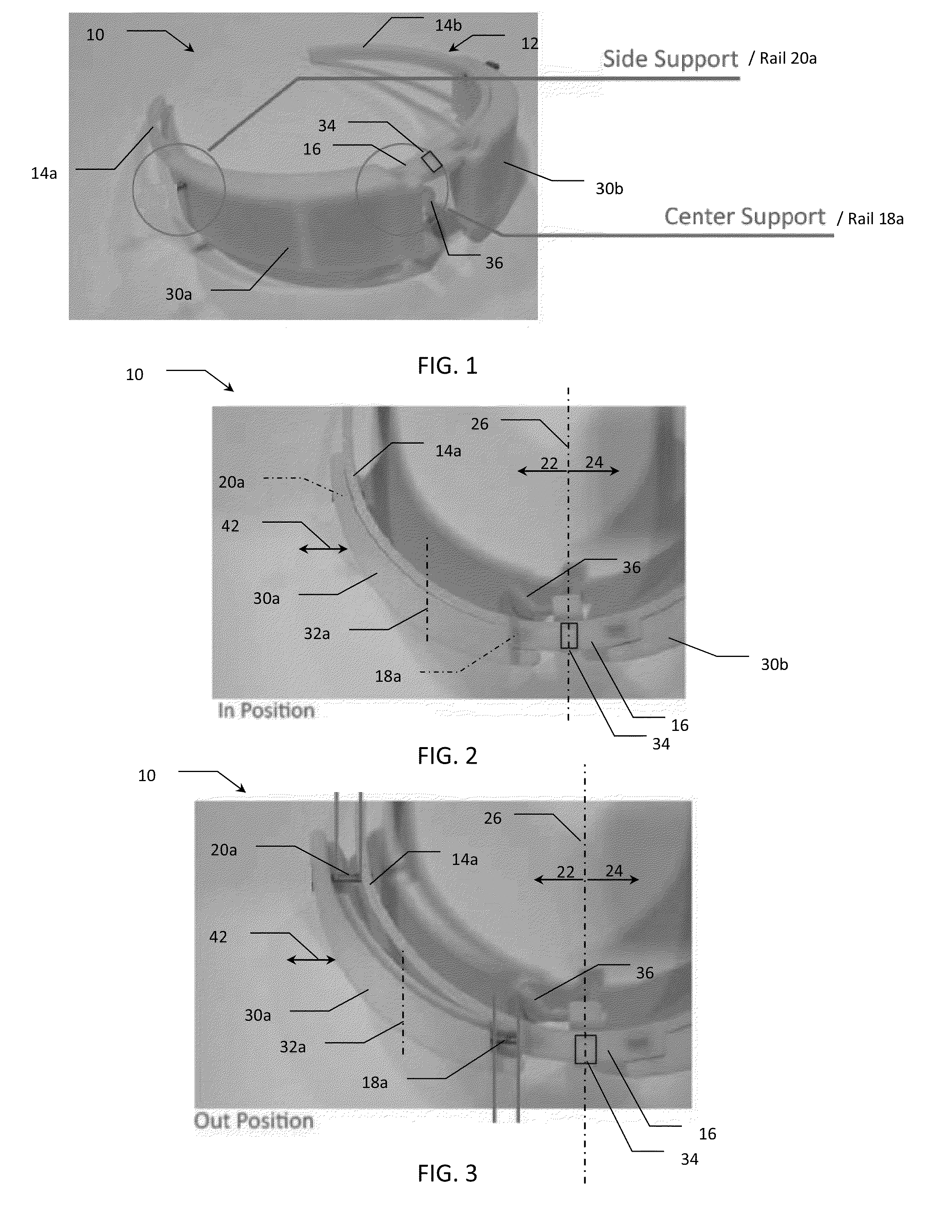

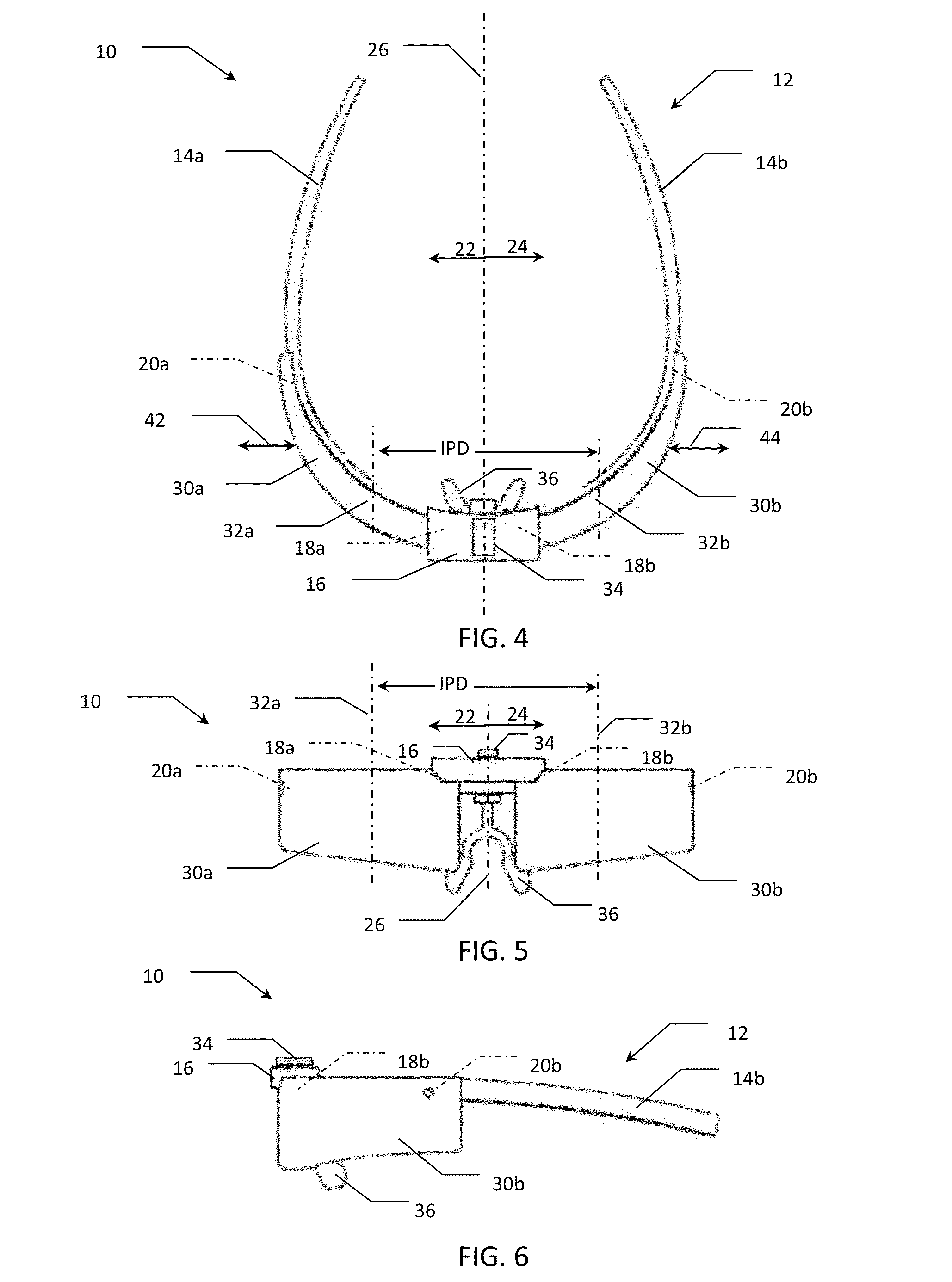

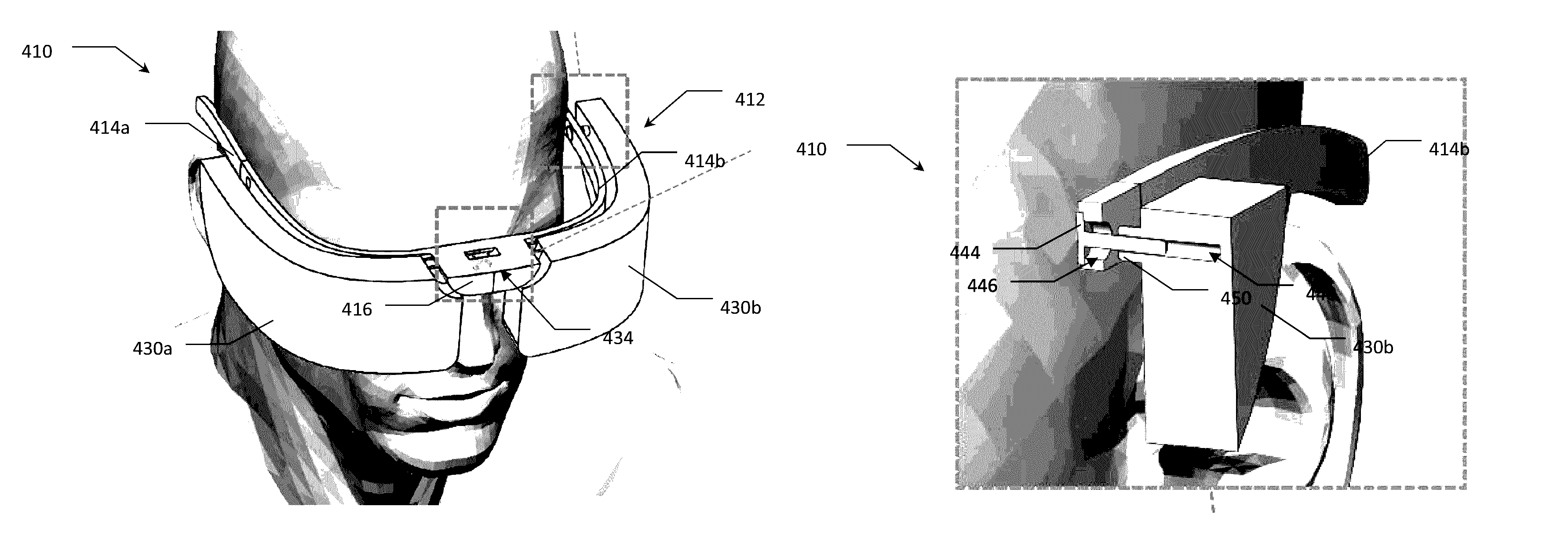

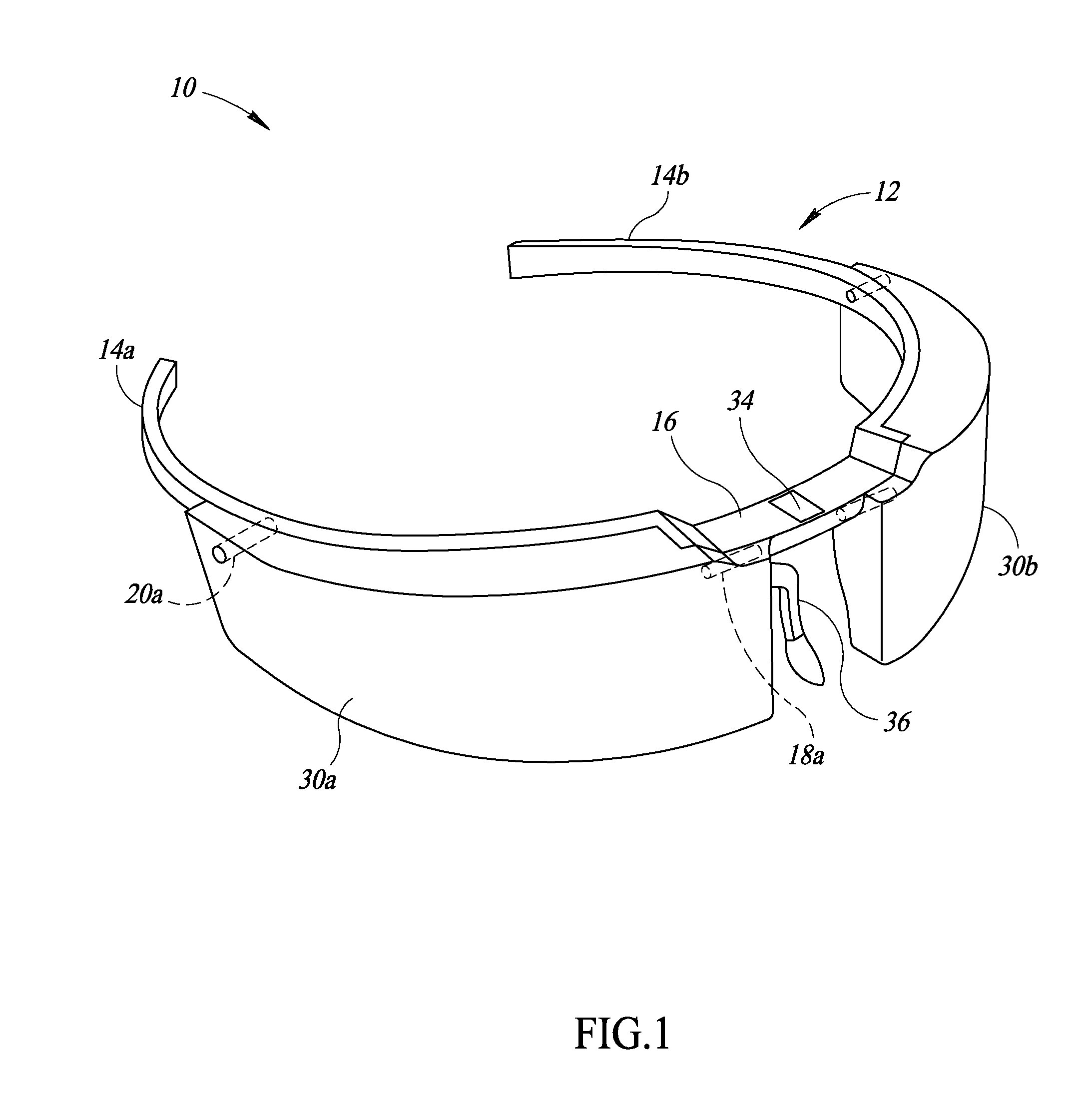

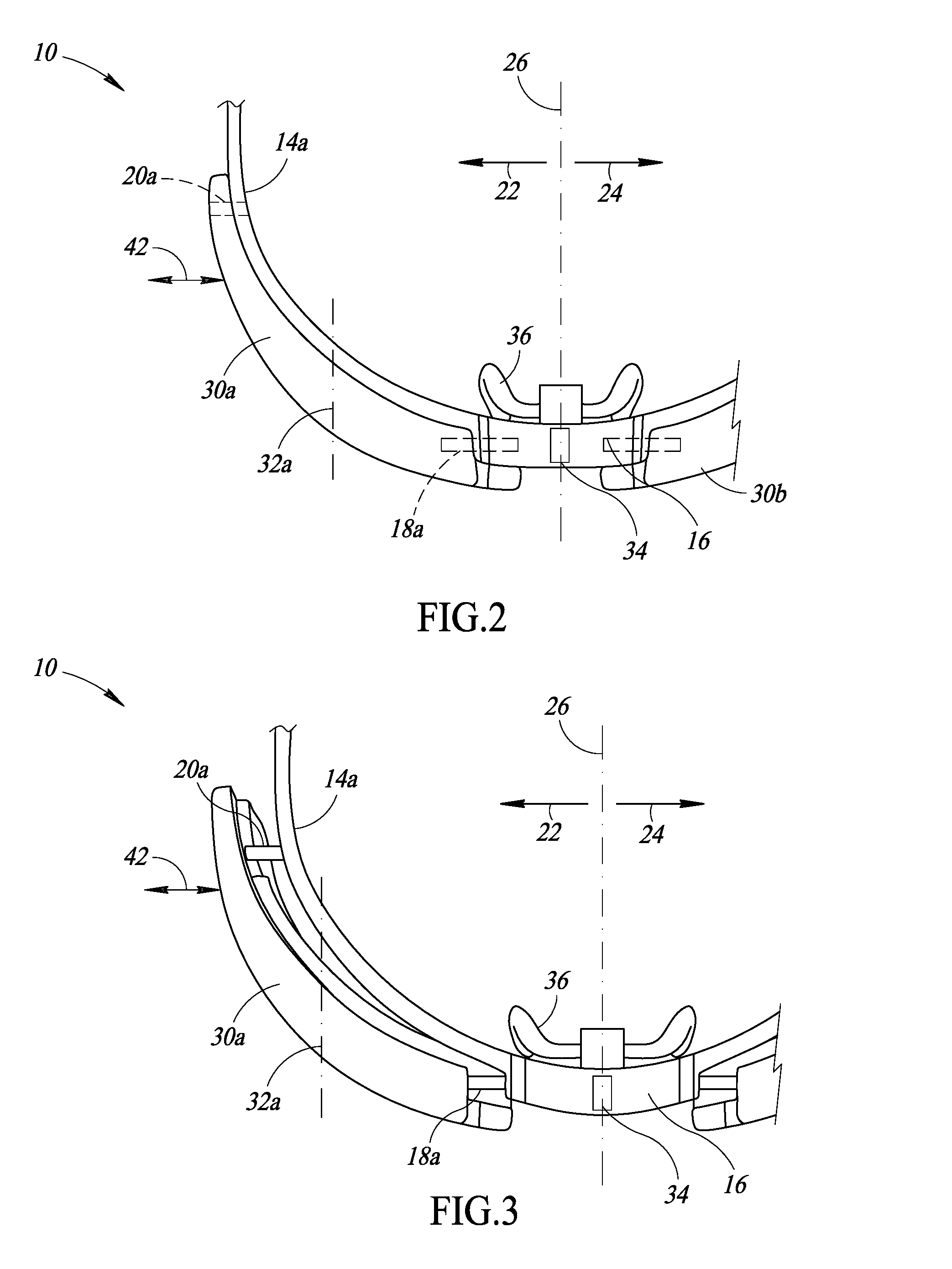

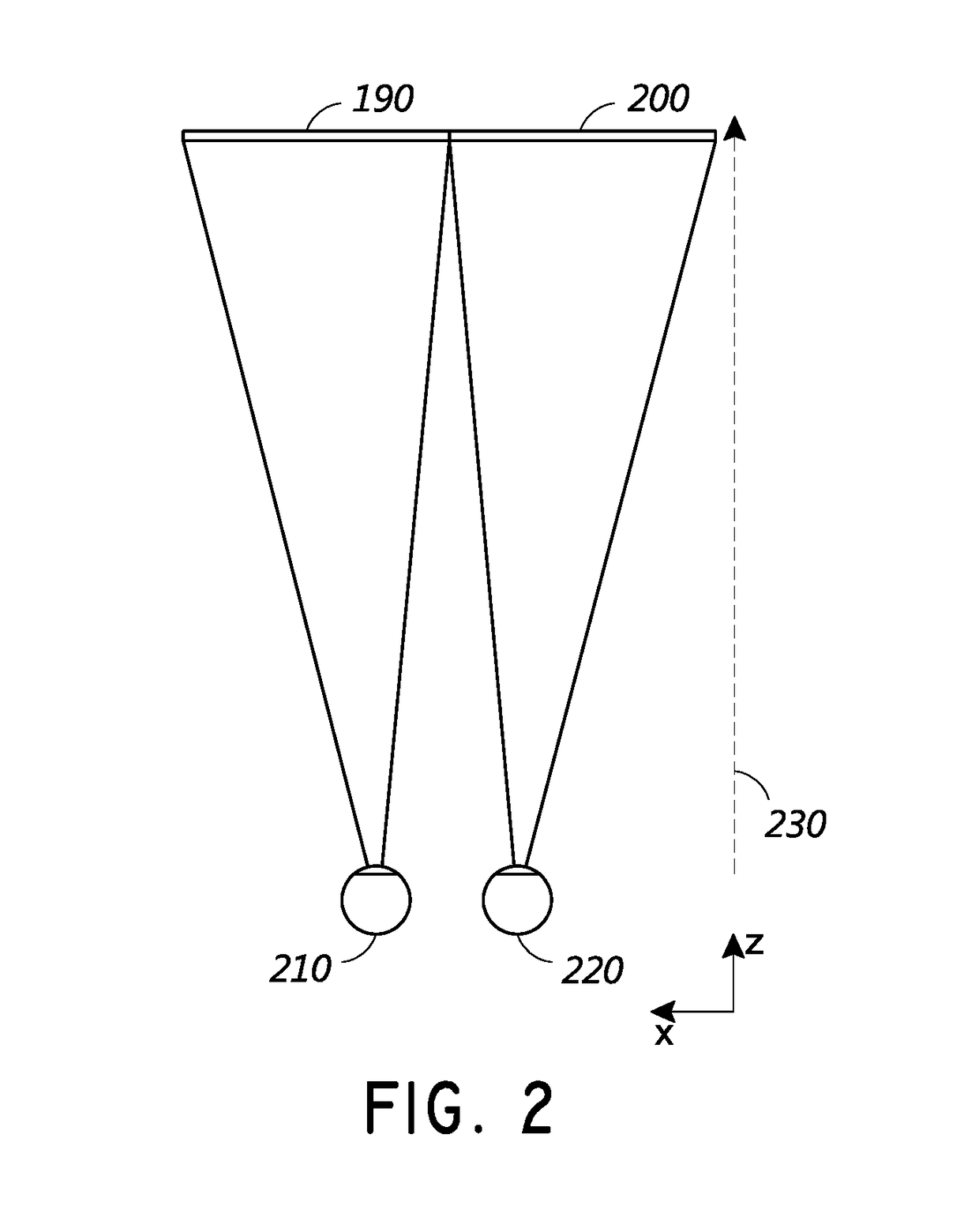

Virtual or augmented reality headsets having adjustable interpupillary distance

A virtual or augmented reality headset is provided having a frame, a pair of virtual or augmented reality eyepieces, and an interpupillary distance adjustment mechanism. The frame includes opposing arm members, a bridge positioned intermediate the opposing arm members, and one or more linear rails. The adjustment mechanism is coupled to the virtual or augmented reality eyepieces and operable to simultaneously move the eyepieces in adjustment directions aligned with the plurality of linear rails to adjust the interpupillary distance of the eyepieces.

Owner:MAGIC LEAP

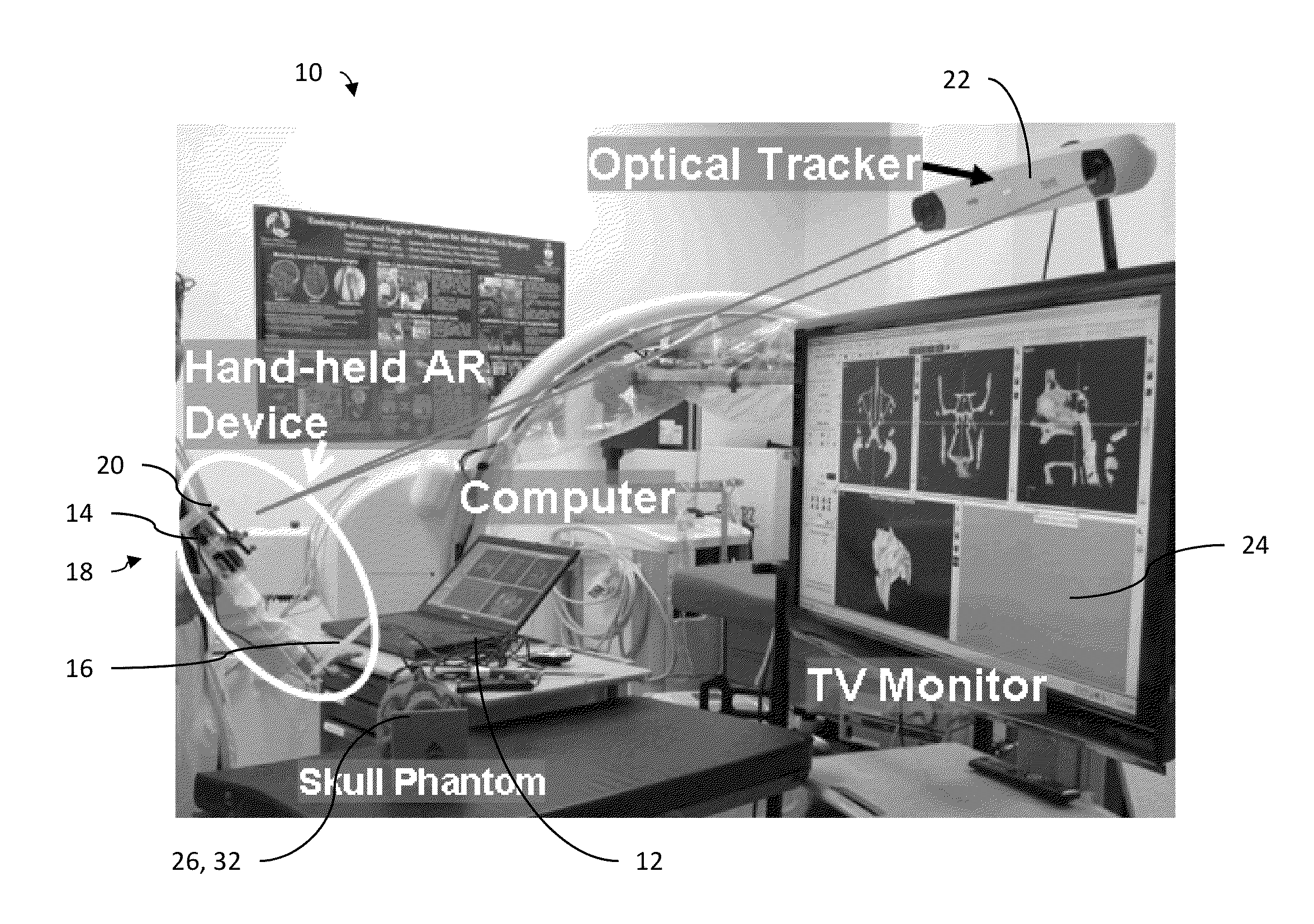

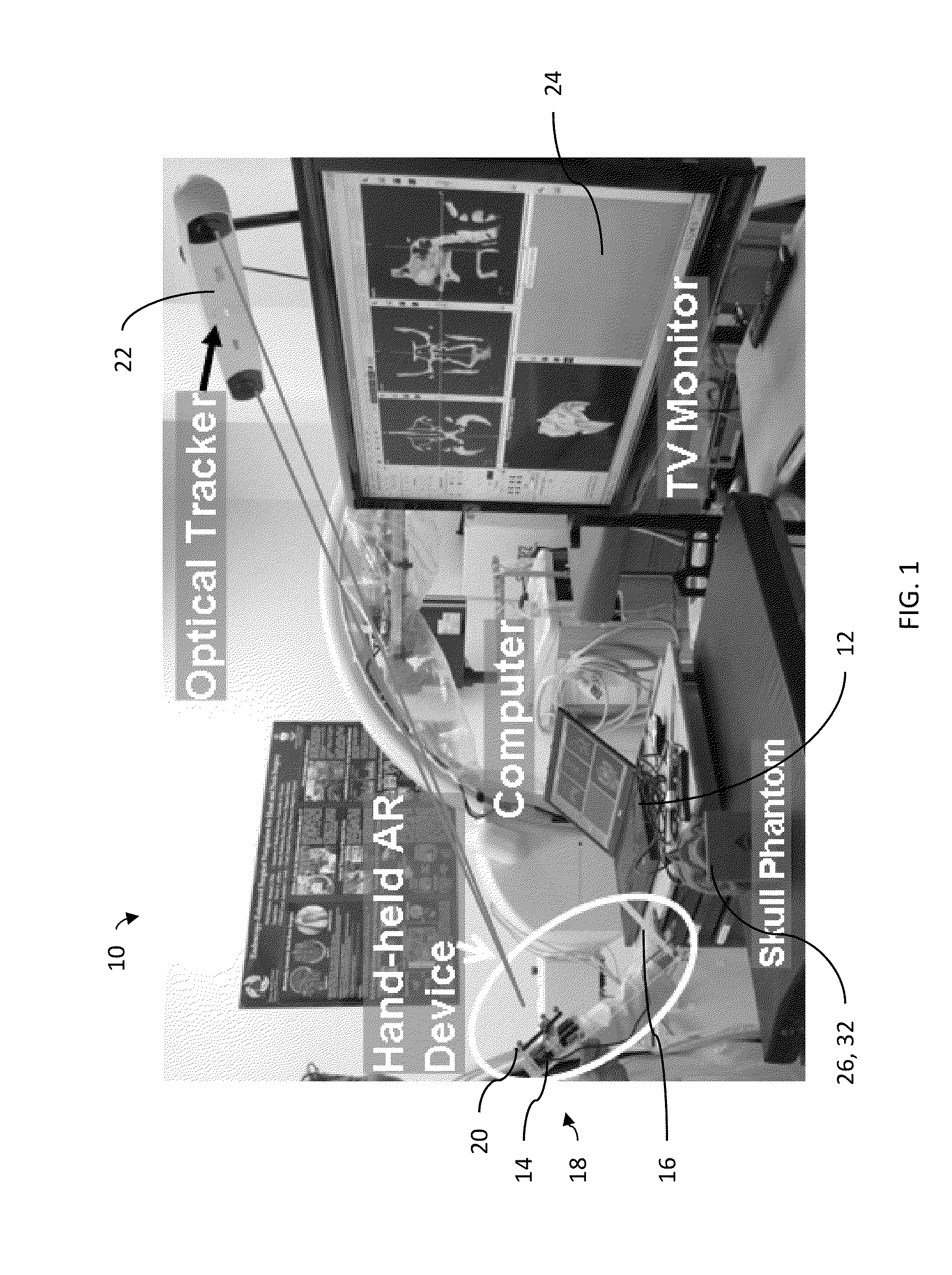

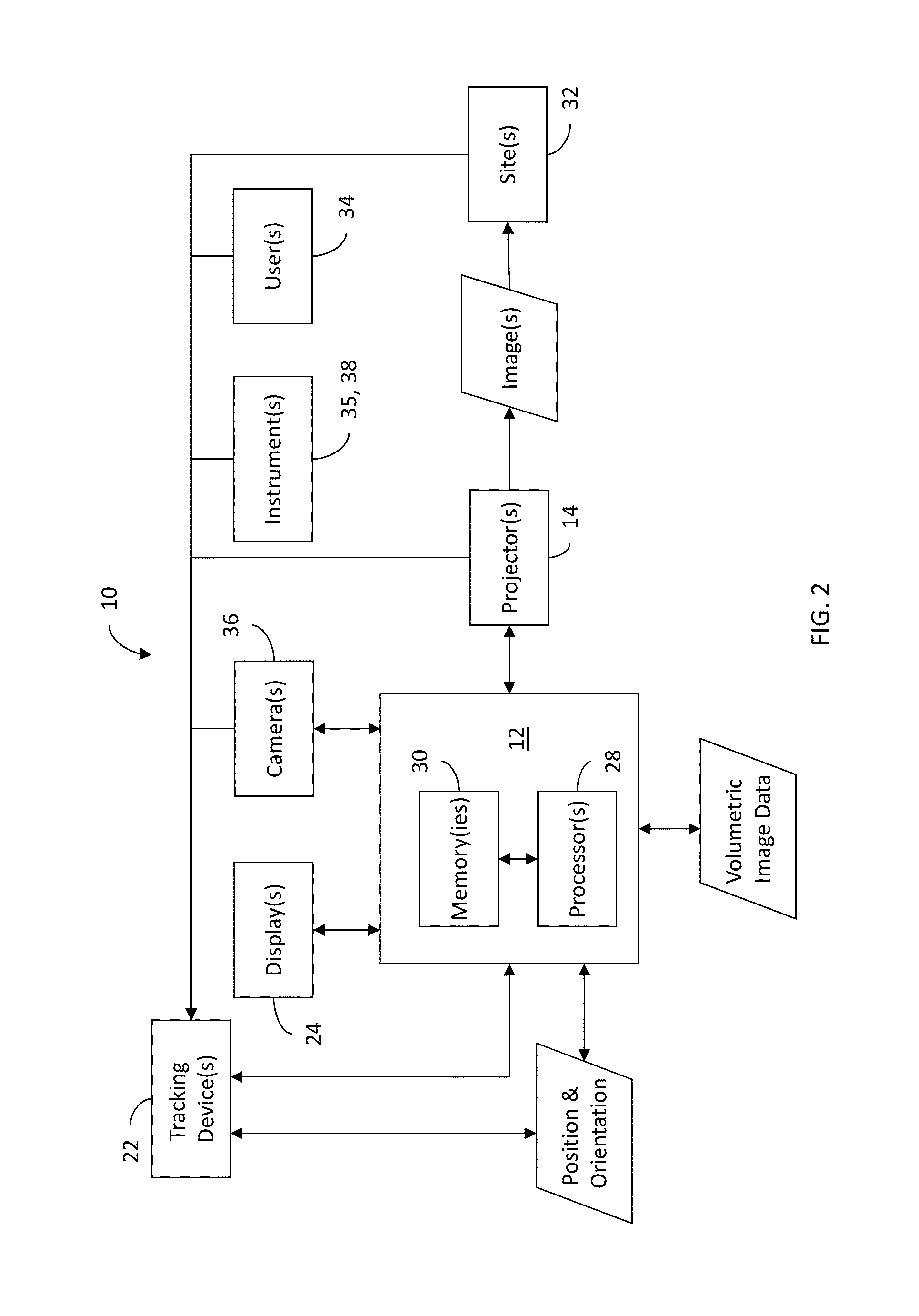

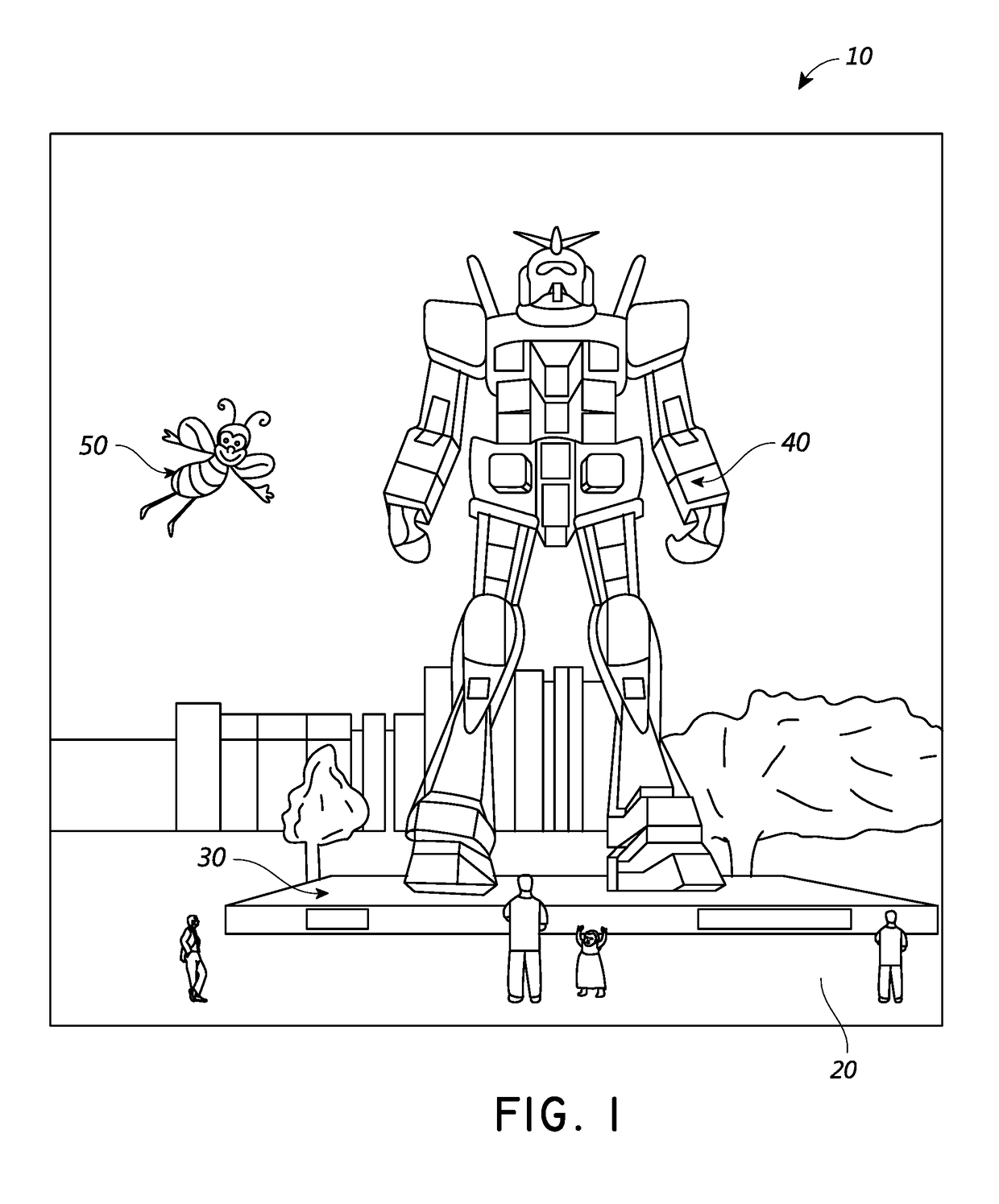

Augmented reality apparatus

Augmented reality apparatus (10) for use during intervention procedures on a trackable intervention site (32) are disclosed herein. The apparatus (10) comprises a data processor (28), a trackable projector (14) and a medium (30) including machine-readable instructions executable by the processor (28). The projector (14) is configured to project an image overlaying the intervention site (32) based on instructions from the data processor (28). The machine readable instructions are configured to cause the processor (28) to determine a spatial relationship between the projector (14) and the intervention site (32) based on a tracked position and orientation of the projector (14) and on a tracked position and orientation of the intervention site (32). The machine readable instructions are also configured to cause the processor to generate data representative of the image projected by the projector based on the determined spatial relationship between the projector and the intervention site.

Owner:UNIV HEALTH NETWORK
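
The core computation in this abstract is the spatial relationship between the tracked projector and the tracked intervention site. It is a change of reference frame: if the tracker reports both poses in its own frame, the site's pose in the projector frame equals the inverse of the projector pose multiplied by the site pose. The sketch below assumes 4x4 homogeneous rigid transforms and, to stay short, builds poses with a rotation about z only. The function names are hypothetical, and the abstract does not specify the tracking system or the math used.

```python
import math


def pose(yaw_rad, tx, ty, tz):
    """4x4 homogeneous rigid transform: rotation about z, then translation (tx, ty, tz)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(t):
    """Invert a rigid transform as [R^T | -R^T p], avoiding a general matrix inverse."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]  # rotation transposed
    p = [t[i][3] for i in range(3)]                        # translation
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def site_in_projector_frame(tracker_T_projector, tracker_T_site):
    """Pose of the intervention site expressed in the projector's coordinate frame."""
    return matmul(rigid_inverse(tracker_T_projector), tracker_T_site)
```

The rigid-inverse shortcut works because a rotation matrix's inverse is its transpose. It is cheaper and numerically steadier than a general 4x4 inverse.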

Virtual or augmented reality headsets having adjustable interpupillary distance

Owner:MAGIC LEAP INC

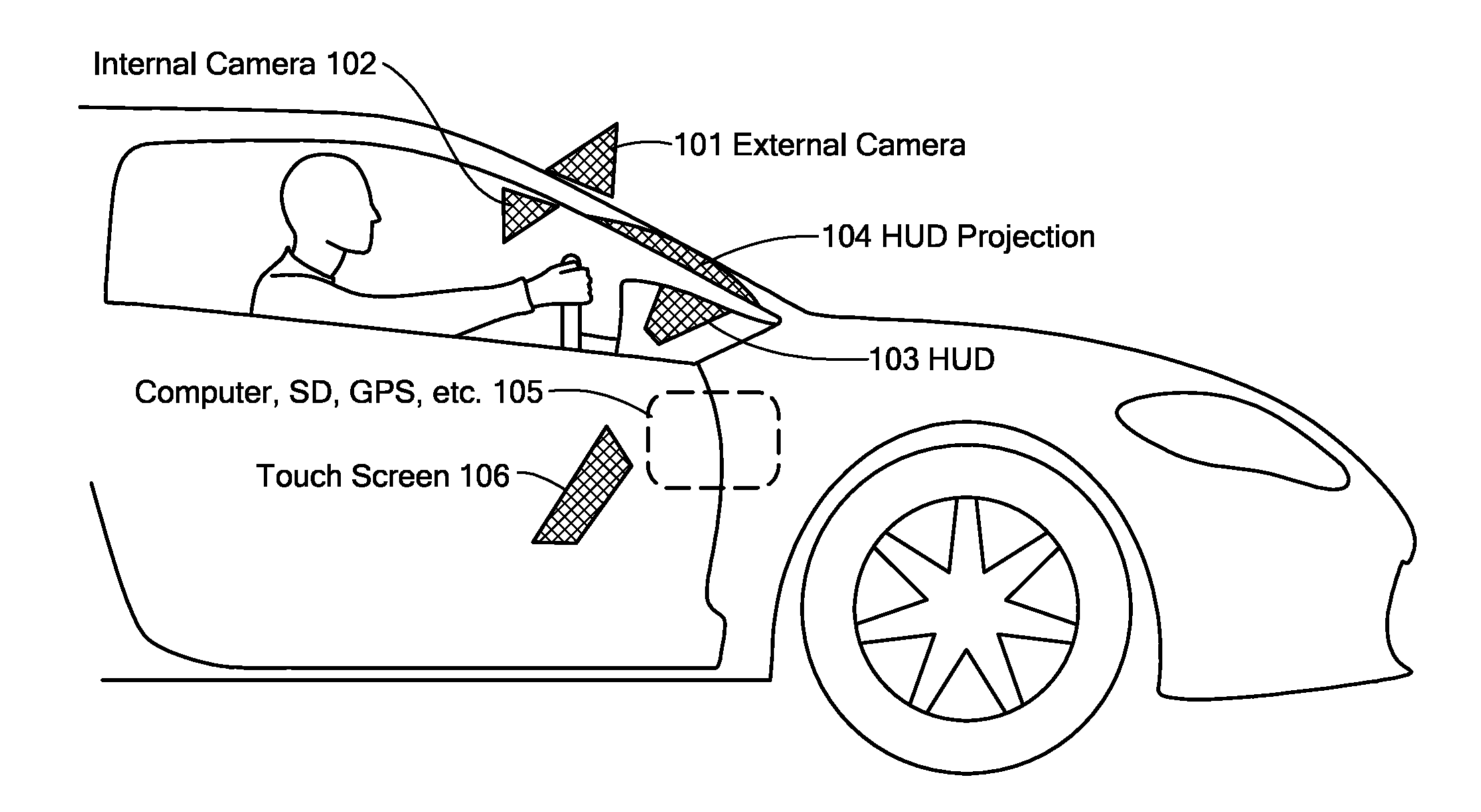

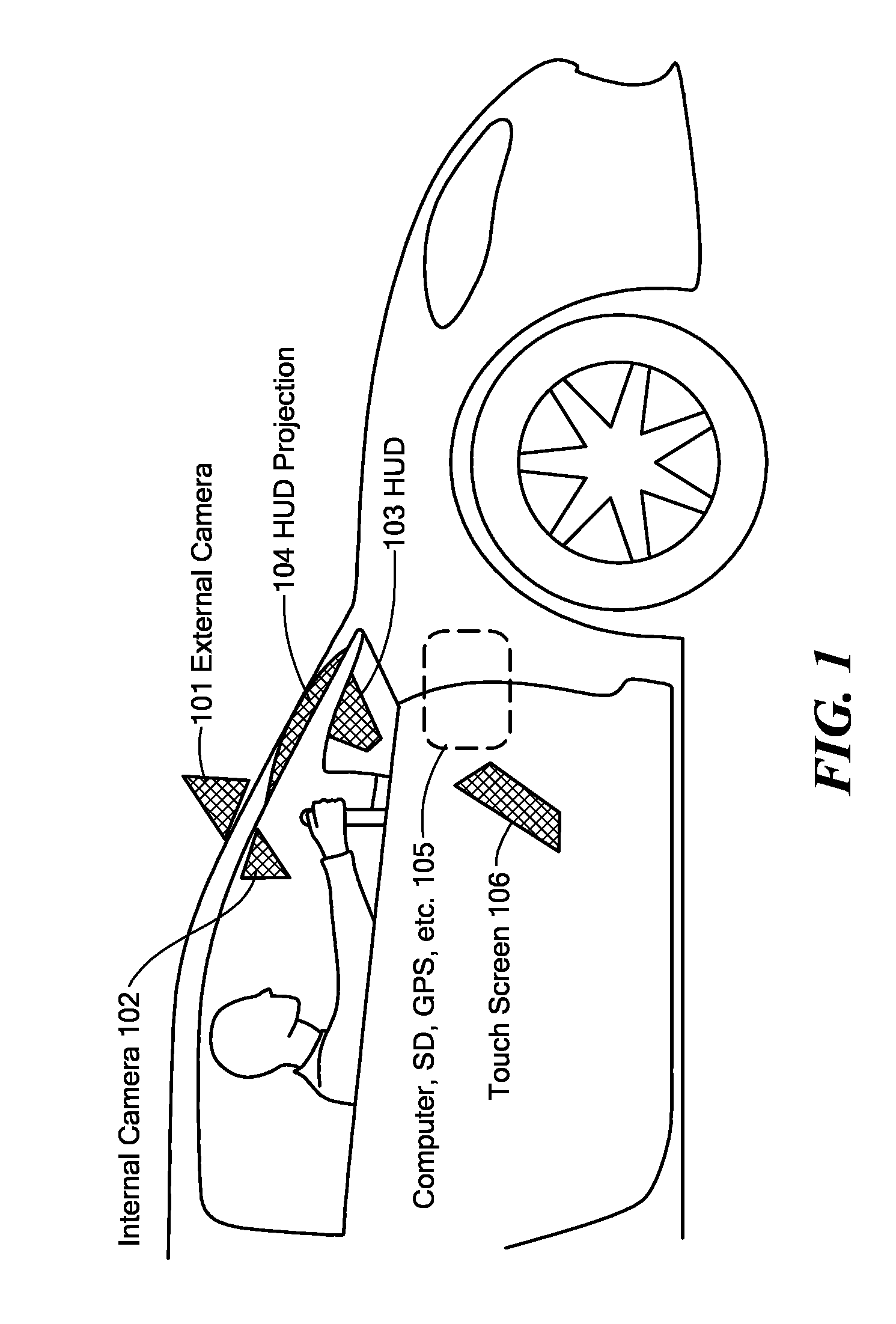

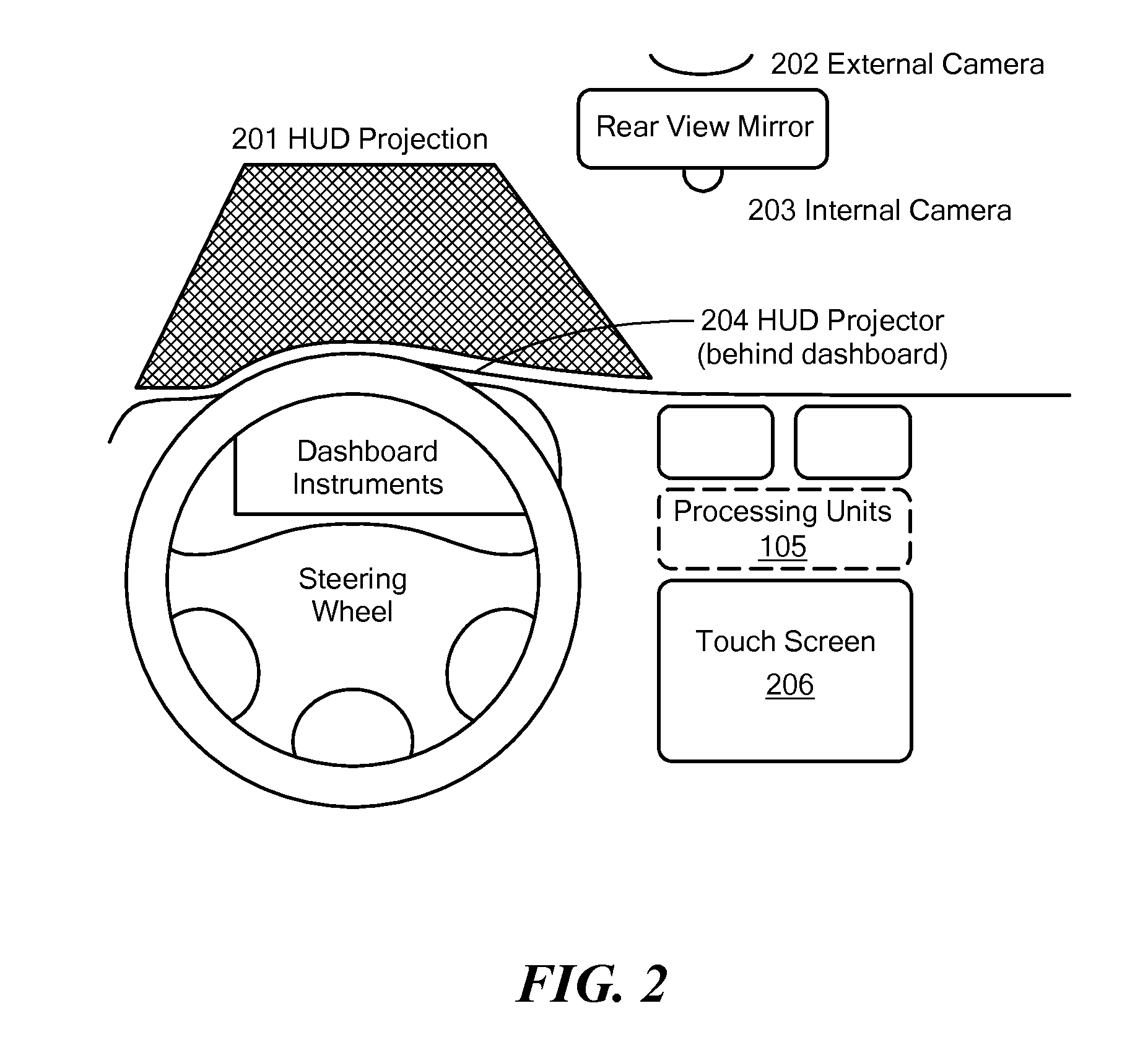

Reducing Driver Distraction Using a Heads-Up Display

Status: Inactive | Publication: US20120224060A1 | Tags: Reduce driver distraction; Reduce distractions; Instrument arrangements/adaptations; Color television details; Head-up display; Camera image

Driver distraction is reduced by providing information only when necessary to assist the driver, and in a visually pleasing manner. Obstacles such as other vehicles, pedestrians, and road defects are detected based on analysis of image data from a forward-facing camera system. An internal camera images the driver to determine a line of sight. Navigational information, such as a line with an arrow, is displayed on a windshield so that it appears to overlay and follow the road along the line of sight. Brightness of the information may be adjusted to correct for lighting conditions, so that the overlay will appear brighter during daylight hours and dimmer during the night. A full augmented reality is modeled and navigational hints are provided accordingly, so that the navigational information indicates how to avoid obstacles by directing the driver around them. Obstacles also may be visually highlighted.

Owner:INTEGRATED NIGHT VISION SYST
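
The brightness rule in this abstract (a brighter overlay by day, a dimmer one at night) can be sketched as a clamped mapping from ambient illuminance to display level. The log-scale interpolation, the 1 lux and 10,000 lux calibration endpoints, and the output range are all assumptions chosen for illustration. The abstract only states the direction of the adjustment.

```python
import math


def overlay_brightness(ambient_lux, night_lux=1.0, day_lux=10_000.0,
                       min_level=0.1, max_level=1.0):
    """Return a display level in [min_level, max_level] that rises with ambient light.

    Interpolates on a log10 scale because perceived brightness is roughly
    logarithmic in luminance. All defaults are hypothetical calibration values.
    """
    lux = min(max(ambient_lux, night_lux), day_lux)  # clamp to the calibrated range
    t = (math.log10(lux) - math.log10(night_lux)) / (math.log10(day_lux) - math.log10(night_lux))
    return min_level + t * (max_level - min_level)
```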

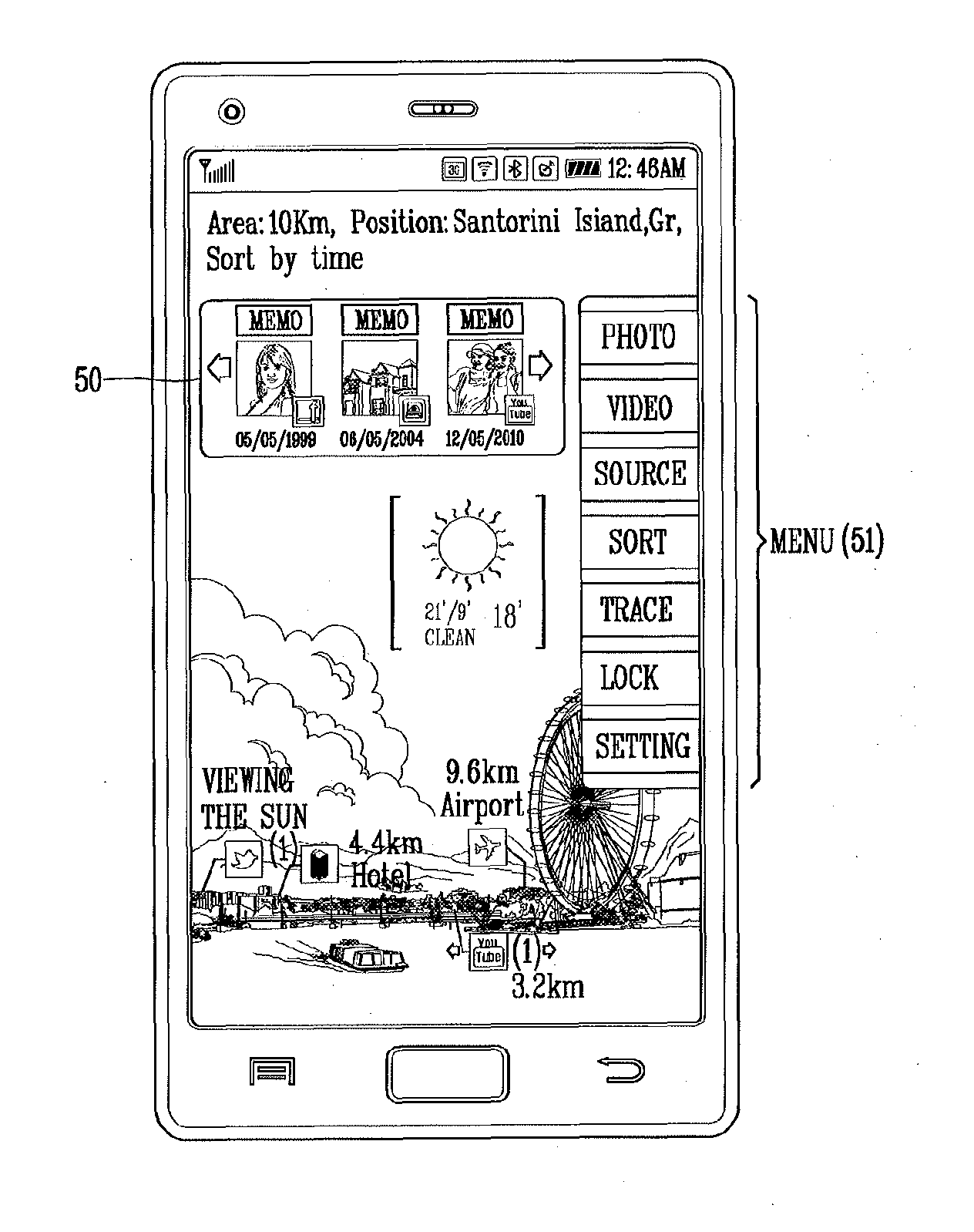

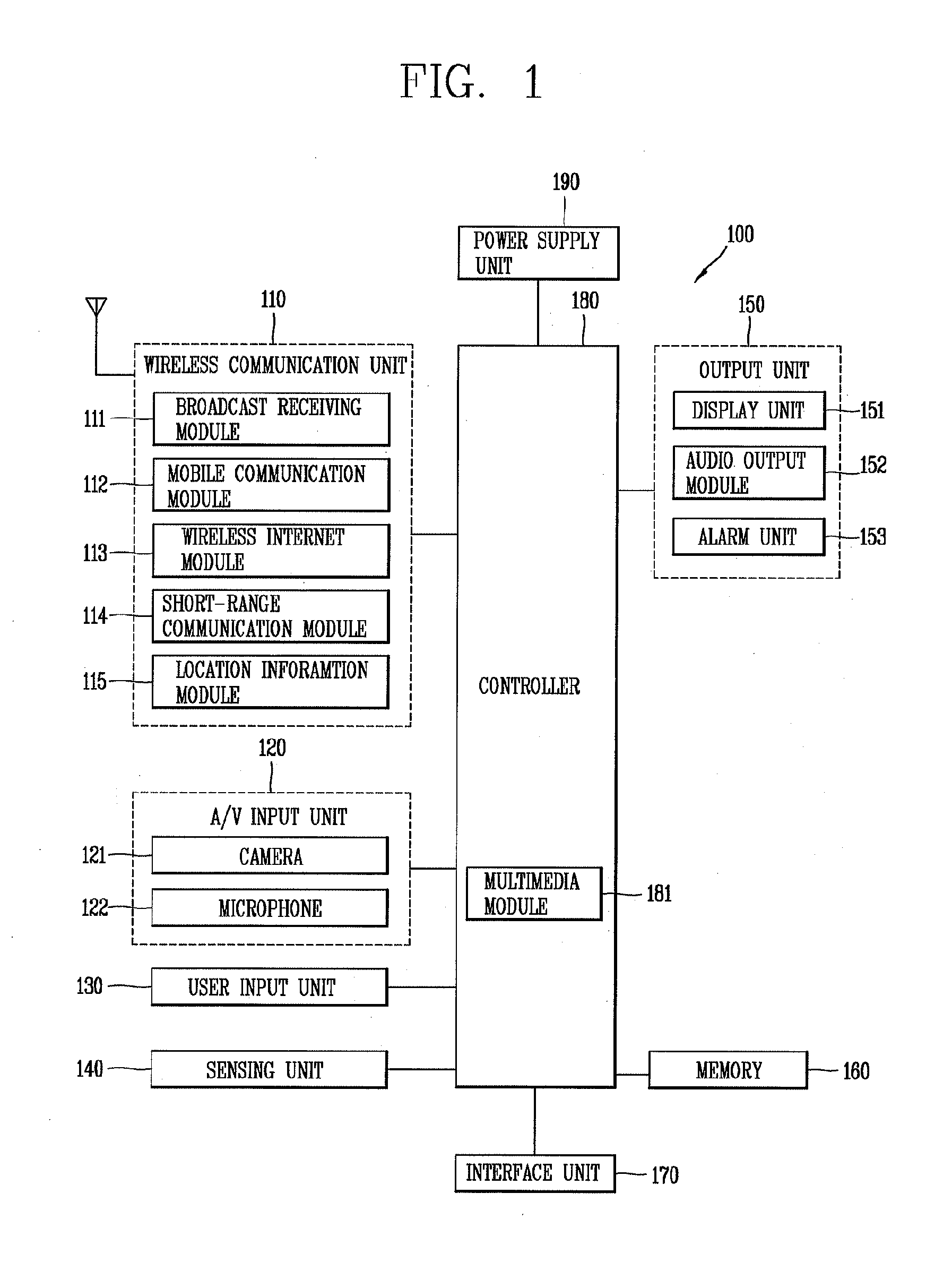

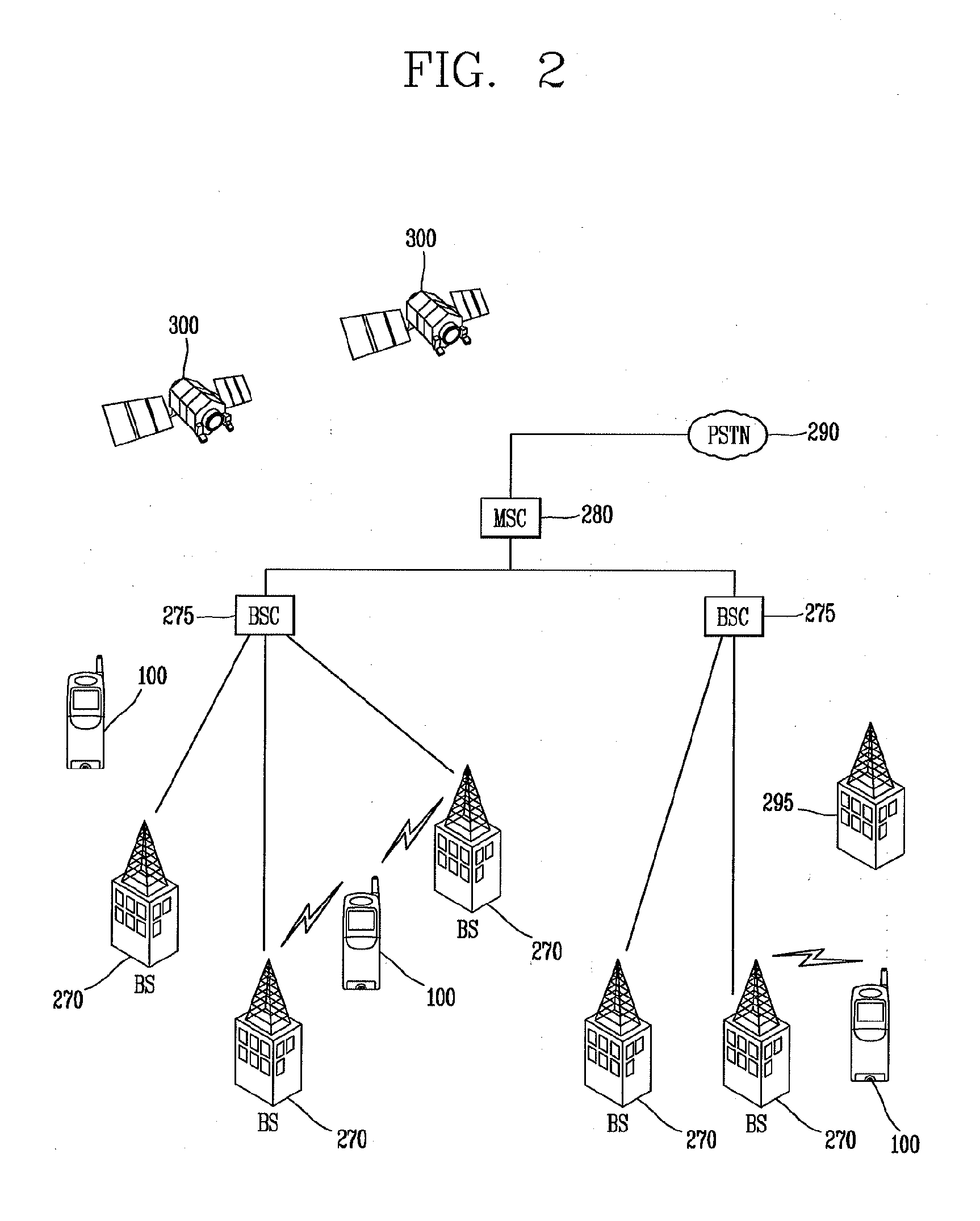

Mobile terminal and photo searching method thereof

Status: Inactive | Publication: US20130198176A1 | Tags: Digital data processing details; Metadata still image retrieval; Position dependent; Augmented reality

The present disclosure relates to a mobile terminal and a photo search method thereof capable of searching and displaying photos shared by the cloud and SNS systems in connection with a specific location on a camera view using augmented reality. To this end, according to the present disclosure, when a photo search function is selected on an augmented reality based camera view, photo information associated with at least one location on which the focus of the camera view is placed is searched from a network system to sort and display the searched photo information on the camera view, thereby allowing the user to conveniently search photos associated with old memories.

Owner:LG ELECTRONICS INC
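
The photo-search step in this abstract finds shared photos tied to the location the camera view is focused on and sorts them for display. It can be sketched as a great-circle distance filter. The haversine formula, the 200 m default radius, the `Photo` record, and sorting by distance are assumptions for illustration. The abstract does not say how photos are matched to locations or how results are ordered.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class Photo:
    url: str
    lat: float
    lon: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def photos_near(focus_lat, focus_lon, photos, radius_m=200.0):
    """Photos within radius_m of the focused location, nearest first."""
    scored = [(haversine_m(focus_lat, focus_lon, p.lat, p.lon), p) for p in photos]
    # Sort on distance only, so Photo objects never need to be comparable.
    return [p for d, p in sorted(scored, key=lambda x: x[0]) if d <= radius_m]
```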

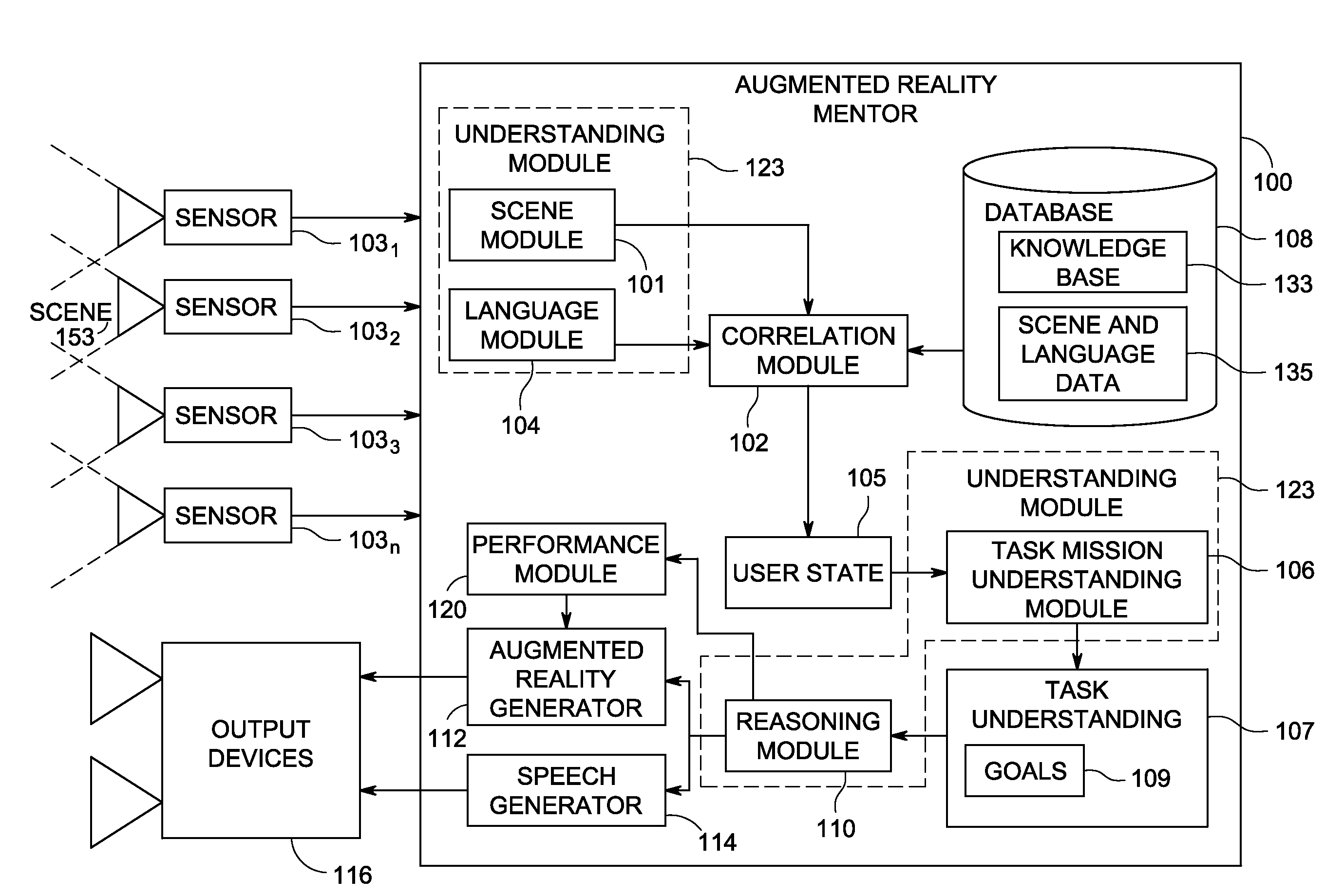

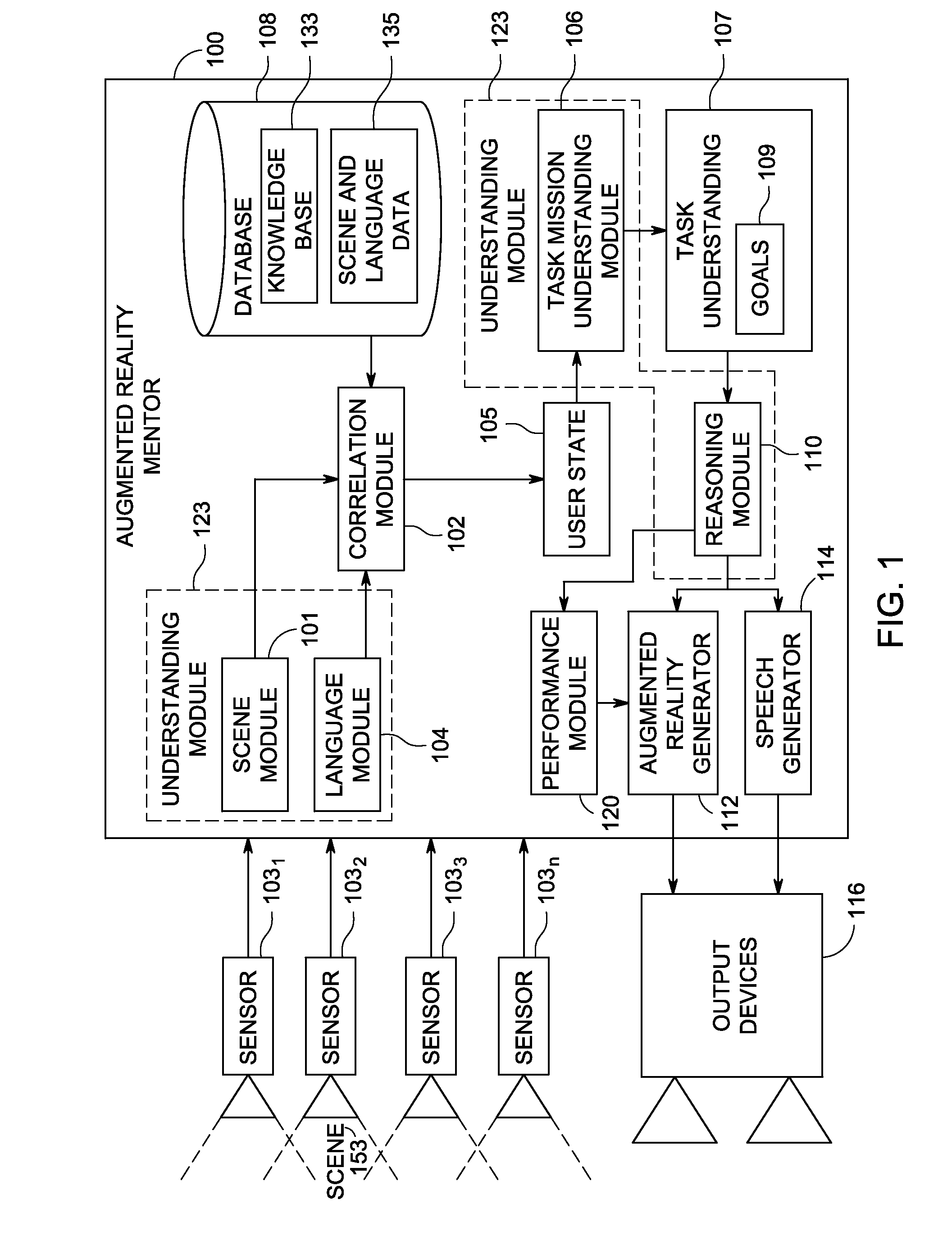

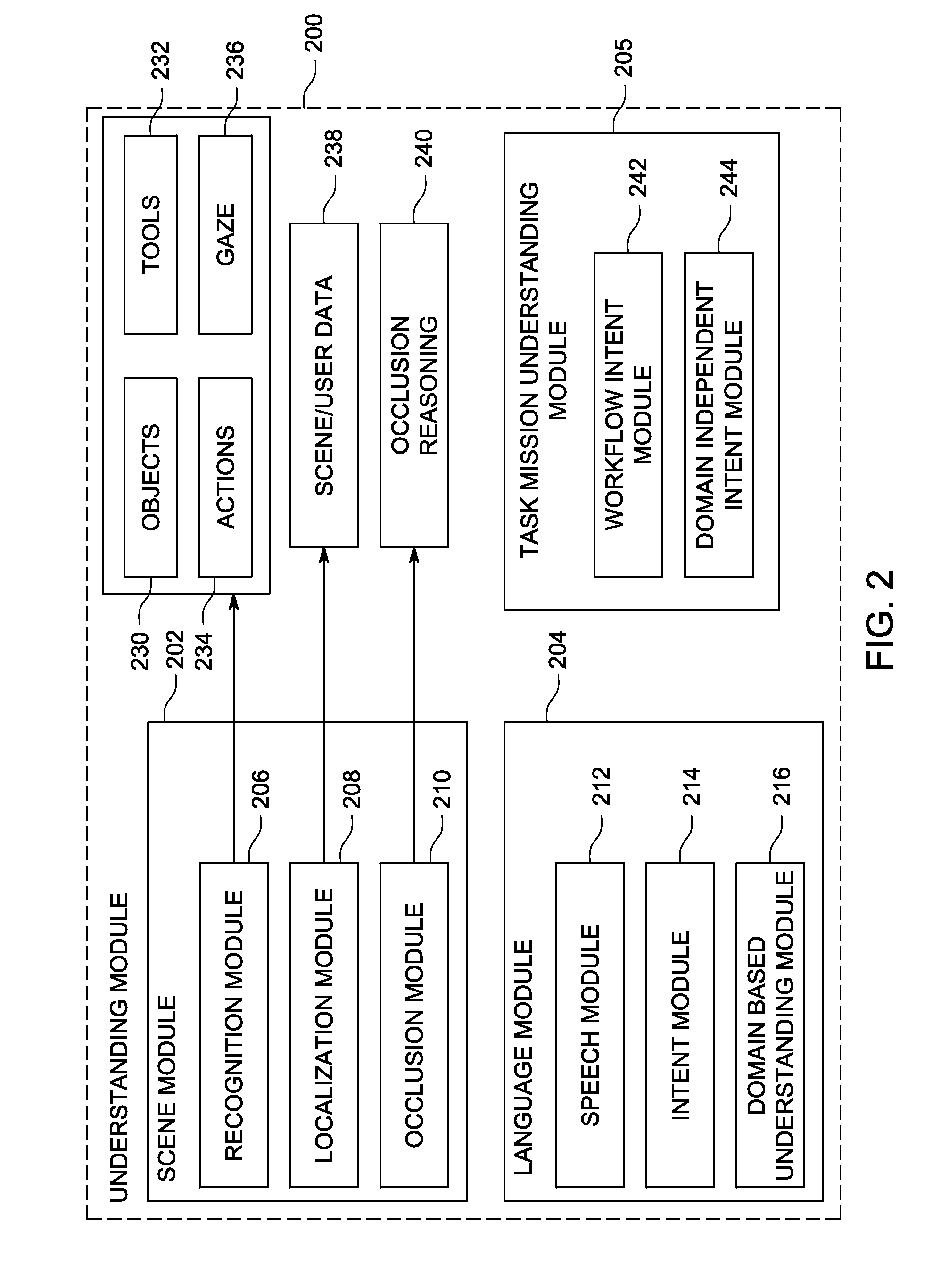

Method and apparatus for mentoring via an augmented reality assistant

Status: Active | Publication: US20140176603A1 | Tags: Cathode-ray tube indicators; Input/output processes for data processing; Visual perception; Knowledge base

A method and apparatus for training and guiding users. A scene understanding is generated from video and audio input of a user performing a task in a scene. The scene understanding is correlated with a knowledge base to produce a task understanding of the user's current activity, comprising one or more goals. Based on the task understanding and the user's current state, the system reasons about a next step that advances the user toward completing one of those goals. It then overlays the scene with an augmented reality view containing one or more visual and audio representations of that next step.

Owner:SRI INTERNATIONAL
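
The "reason about the next step" stage can be illustrated with a deliberately simple stand-in. The task understanding is represented as an ordered plan of steps with prerequisites, and the system picks the first unfinished step whose prerequisites are done. This is a hypothetical sketch, not SRI's reasoning method, which the abstract does not describe. The repair-task step names are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    requires: frozenset = frozenset()  # names of steps that must be completed first


def next_step(plan, completed):
    """First step (in plan order) not yet done whose prerequisites are all done, else None."""
    for step in plan:
        if step.name not in completed and step.requires <= completed:
            return step
    return None  # every step is done, or the remaining steps are blocked


# Hypothetical repair task used for illustration.
PLAN = [
    Step("remove cover"),
    Step("disconnect battery", frozenset({"remove cover"})),
    Step("replace fuse", frozenset({"disconnect battery"})),
]
```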

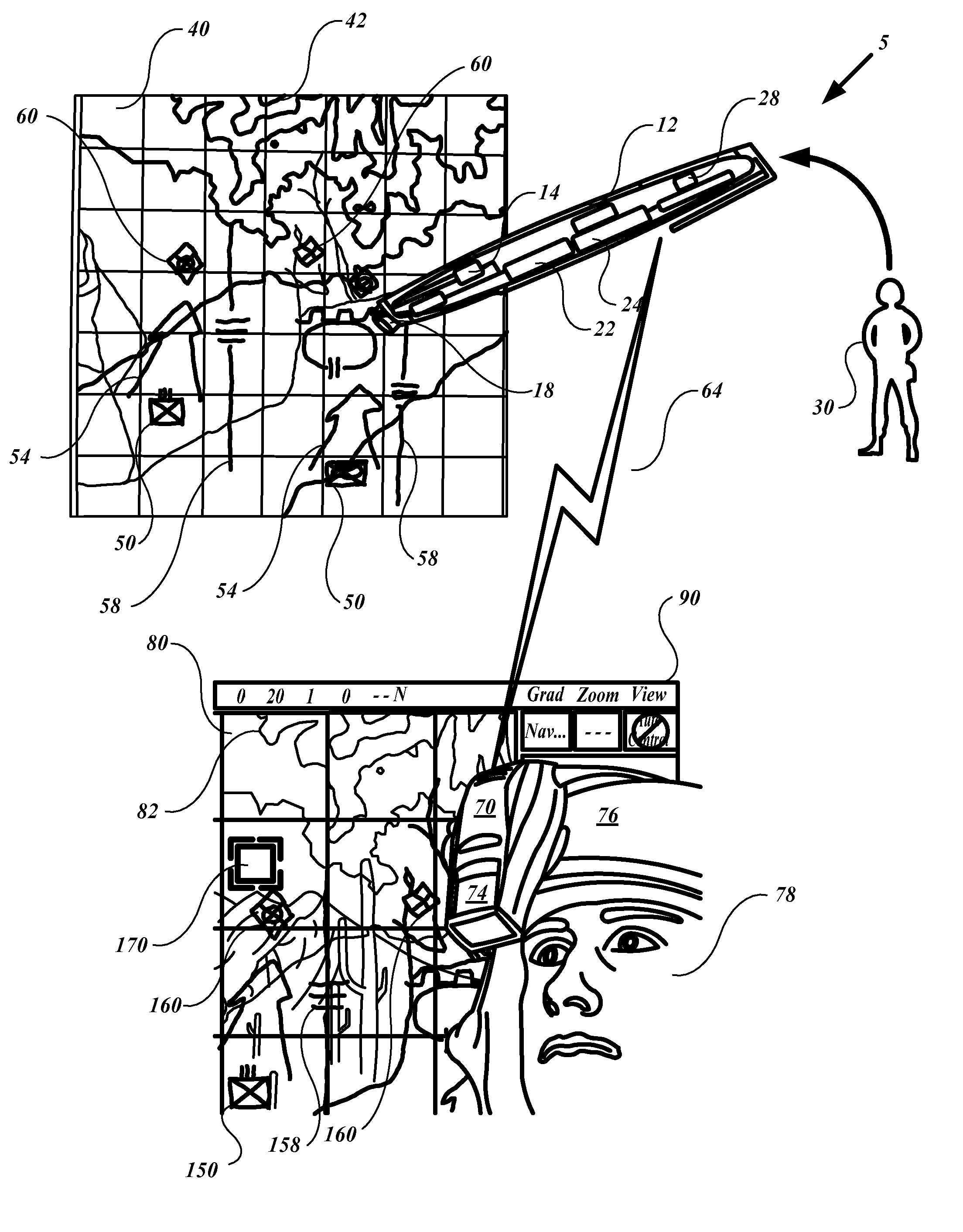

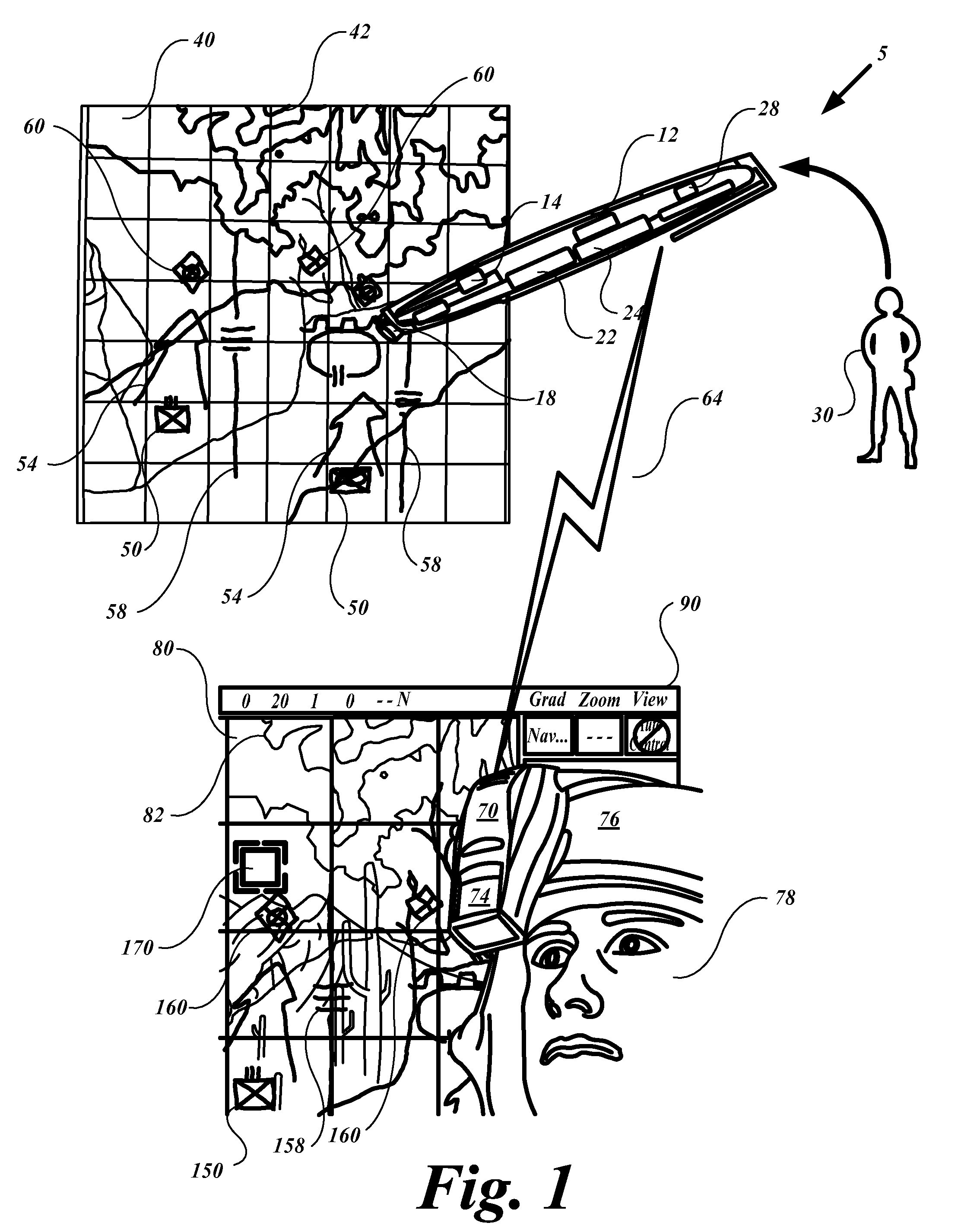

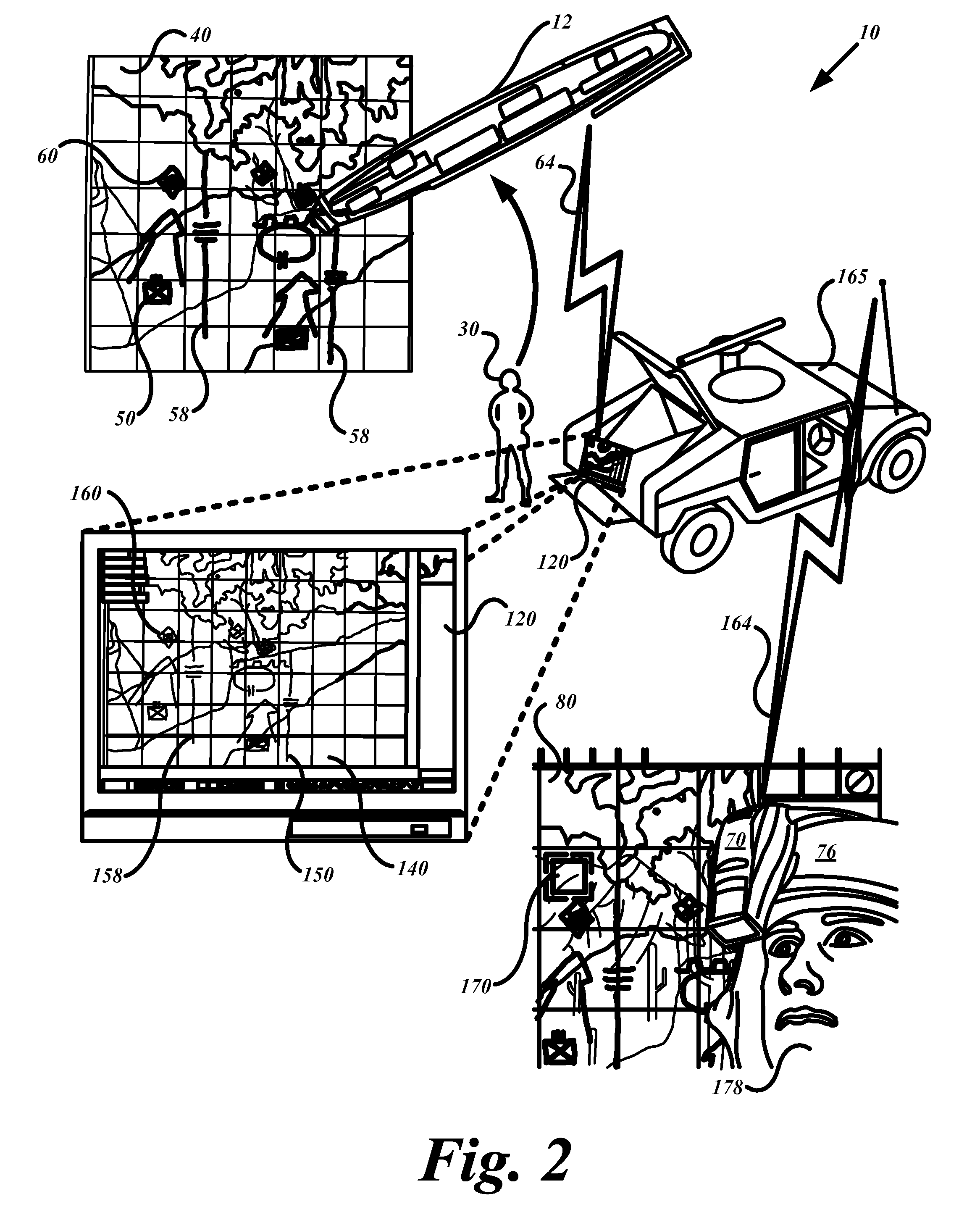

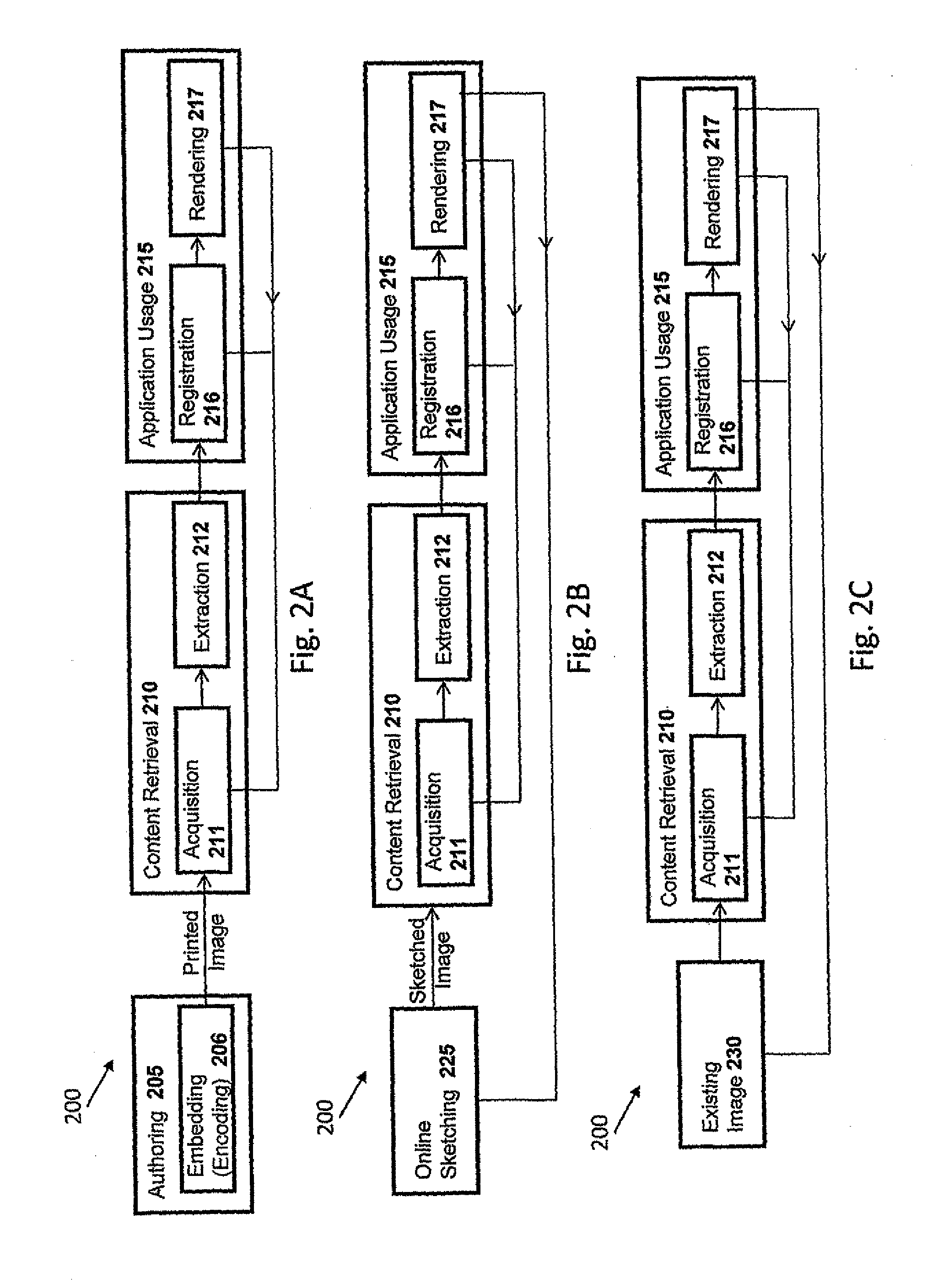

Systems and methods for data annotation, recordation, and communication

Status: Inactive | Publication: US20080186255A1 | Tags: Enhance tactical; Enhance strategic situation awareness; Cathode-ray tube indicators; Input/output processes for data processing; Sensory Feedbacks; Graphics

Systems, devices, and methods provide tools that enhance the tactical or strategic situation awareness of on-scene and remotely located personnel involved in the surveillance of a region of interest, using field-of-view sensory augmentation tools. The sensory augmentation tools provide updated visual, text, audio, and graphic information associated with the region of interest. This information is adjusted for the positional frame of reference of the on-scene or remote personnel viewing the region of interest, map, document, or other surface. Annotations and augmented reality graphics are projected onto, and positionally registered with, objects or regions of interest visible within the field of view of a user looking through a see-through monitor. The user may select the projected graphics for editing and manipulation through sensory feedback.

Owner:ADAPX INC

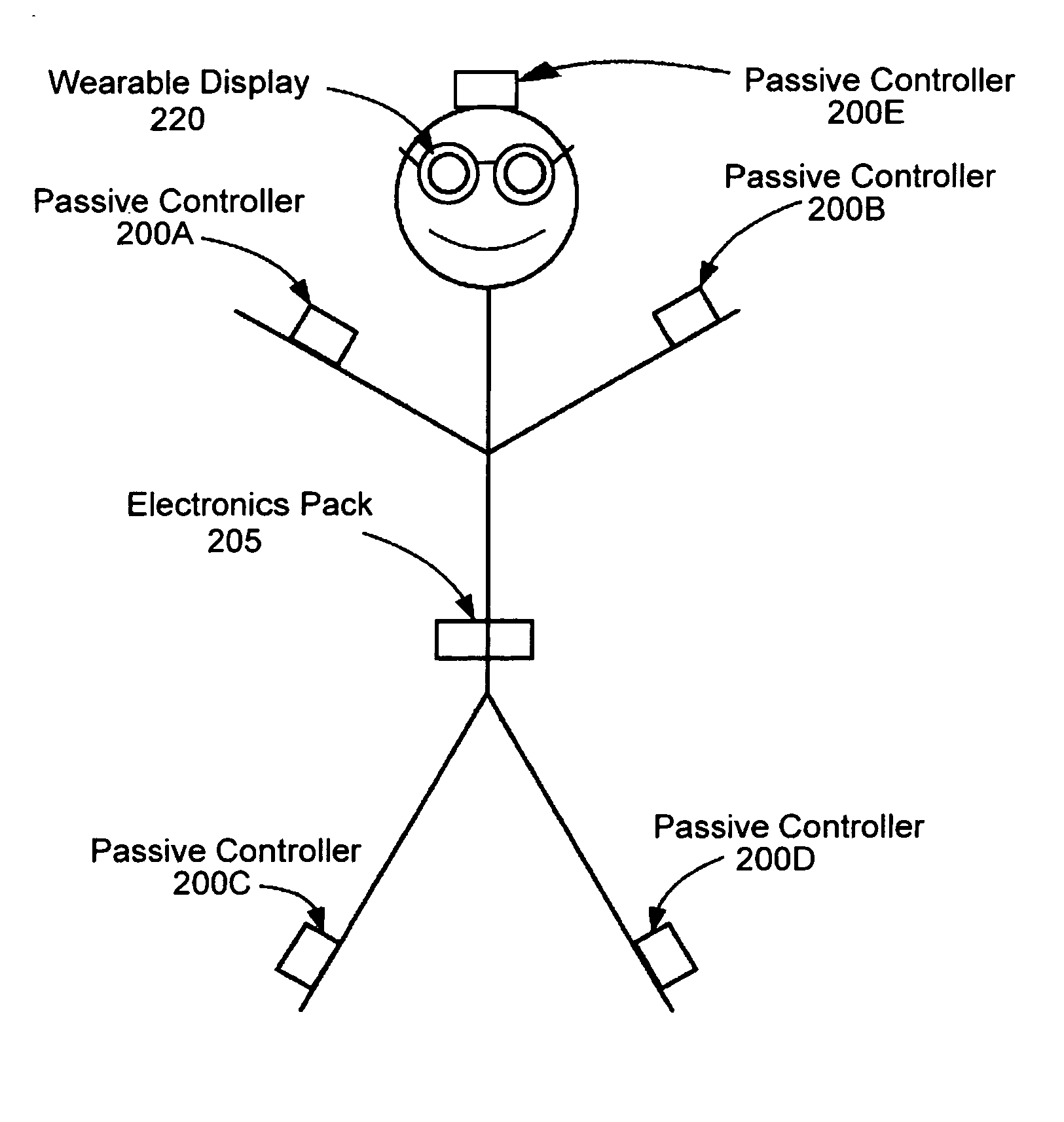

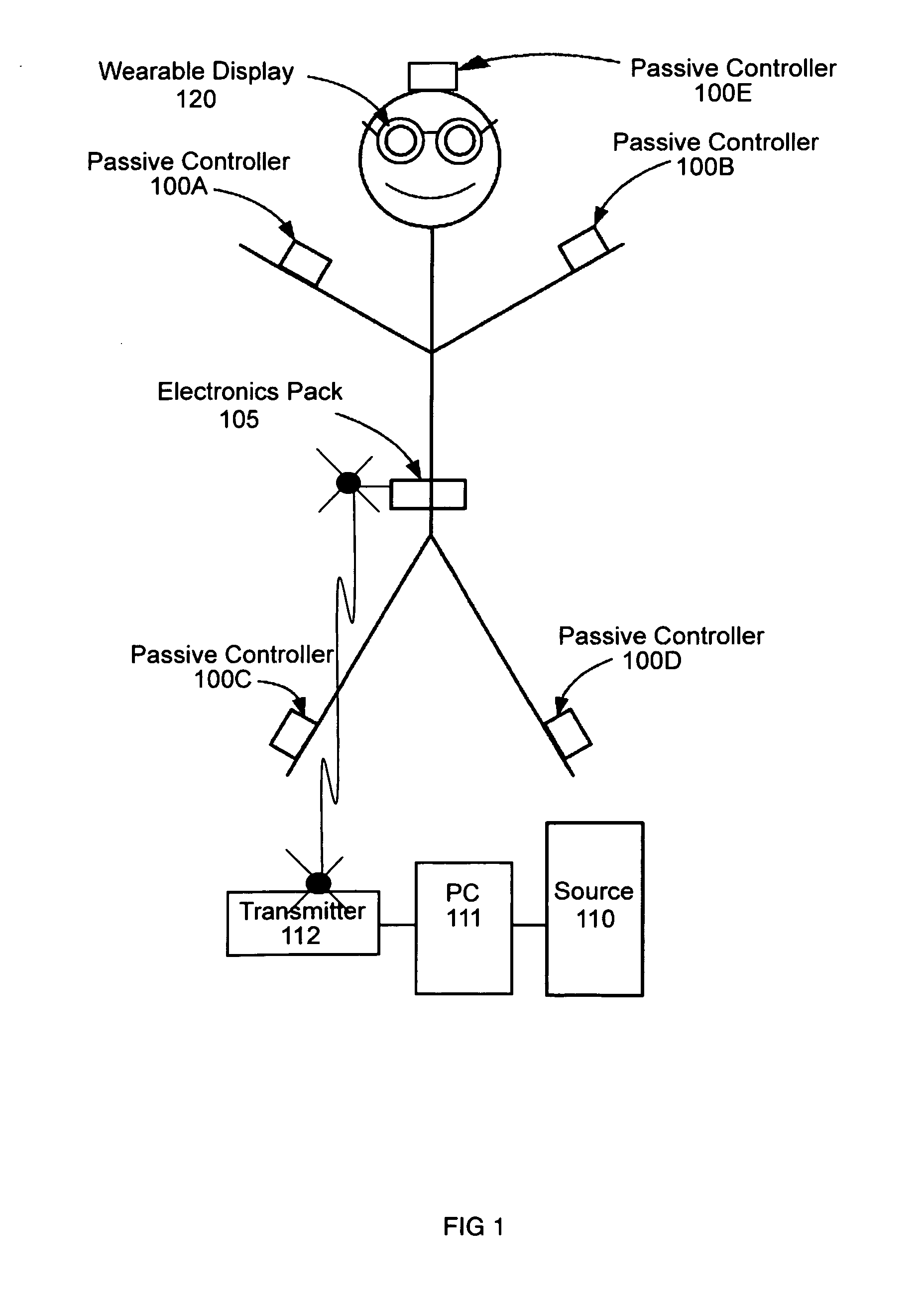

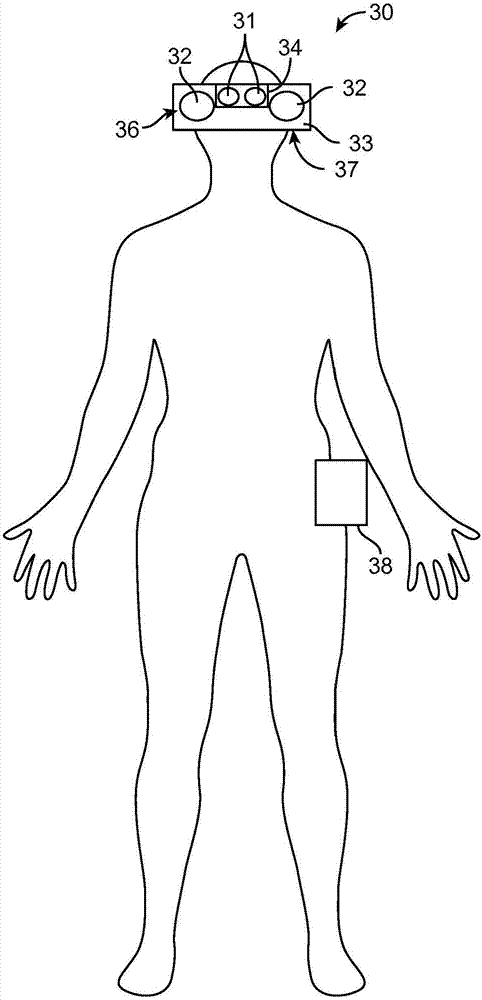

Augmented reality for testing and training of human performance

Status: Inactive | Publication: US20110270135A1 | Tags: Facilitate communication; Improve injury; Physical therapies and activities; Health-index calculation; Eyewear; Cause injury

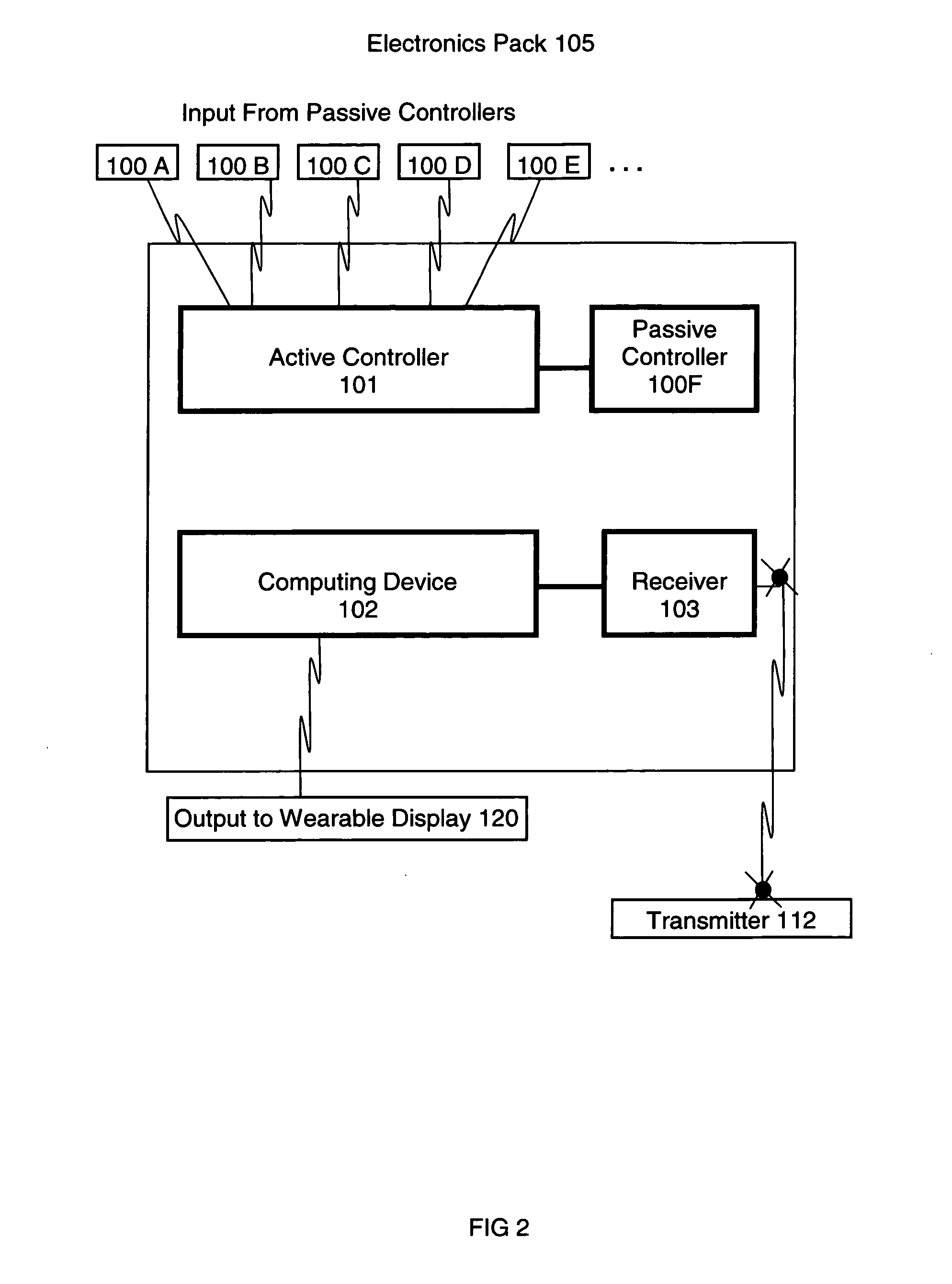

A system continuously monitors a user's motion and provides real-time visual physical performance information while the user is moving. This lets the user detect physical performance constructs that expose the user to increased risk of injury or that reduce performance. The system includes multiple passive controllers 100A-F that measure the user's motion and a computing device 102 that communicates with wearable display glasses 120 and the passive controllers 100A-F to provide real-time physical performance feedback. The computing device 102 also transmits physical performance constructs to the wearable display glasses 120 so the user can determine whether his or her movement could cause injury or reduce physical performance.

Owner:FRENCH BARRY JAMES

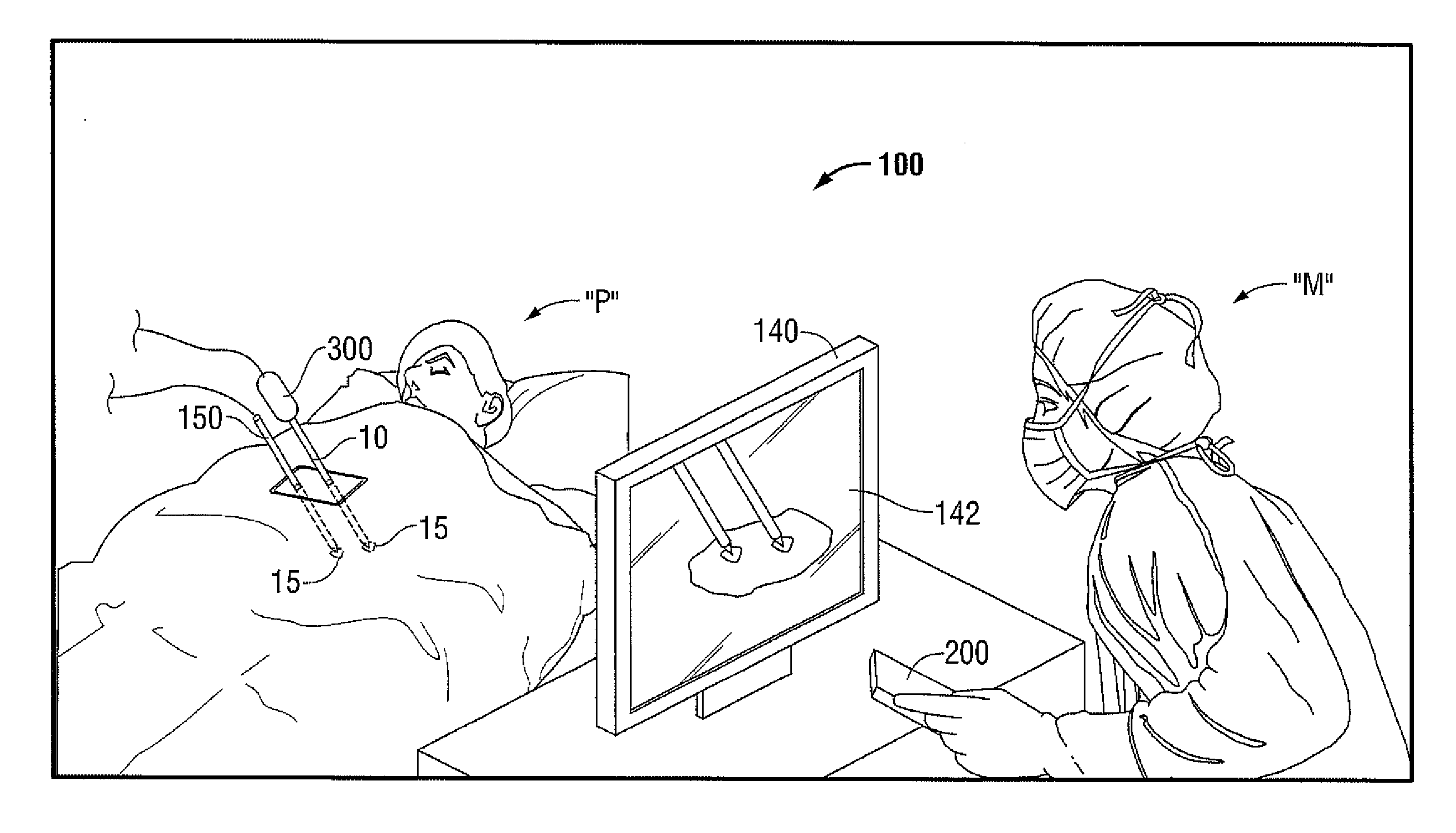

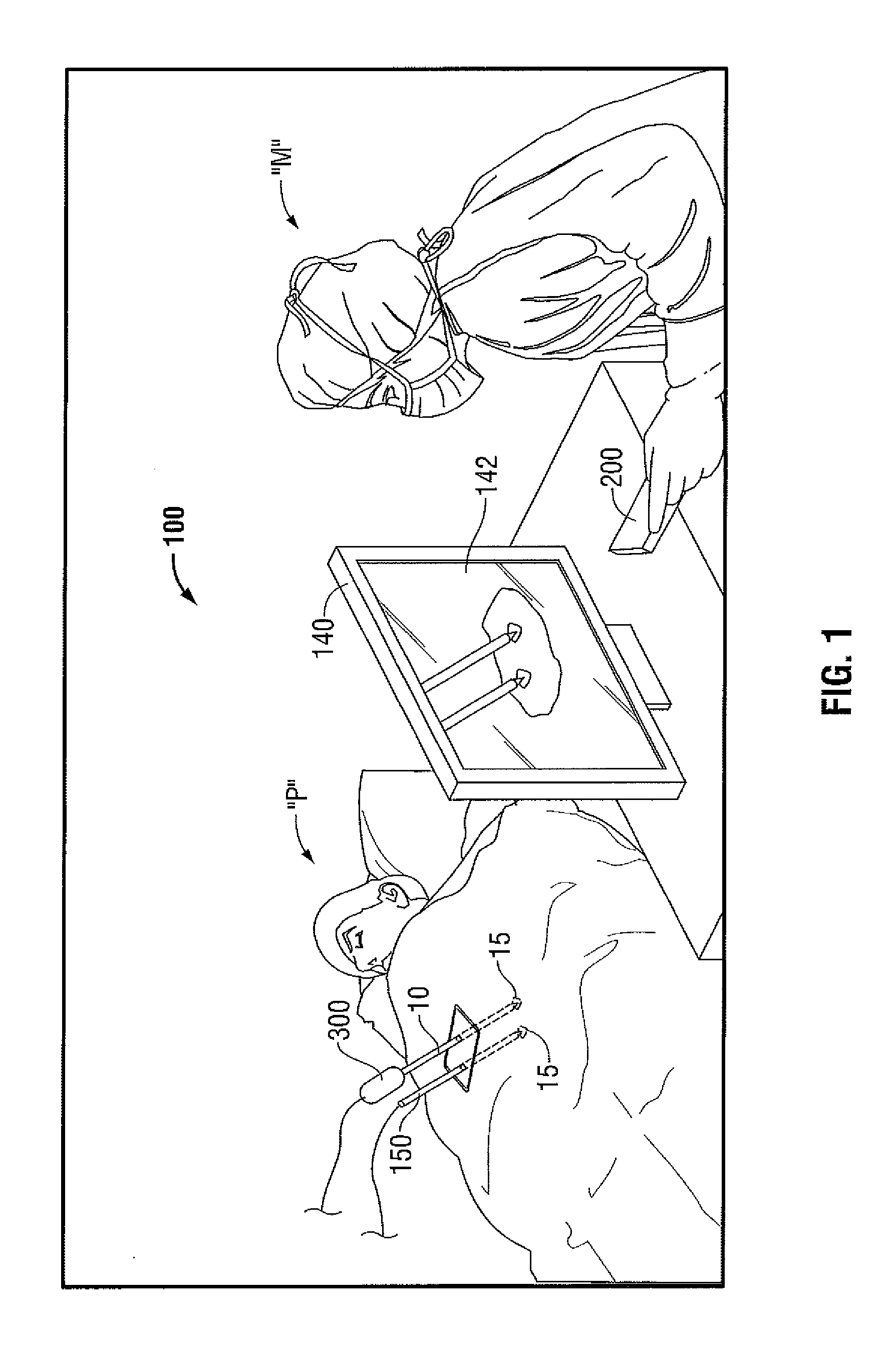

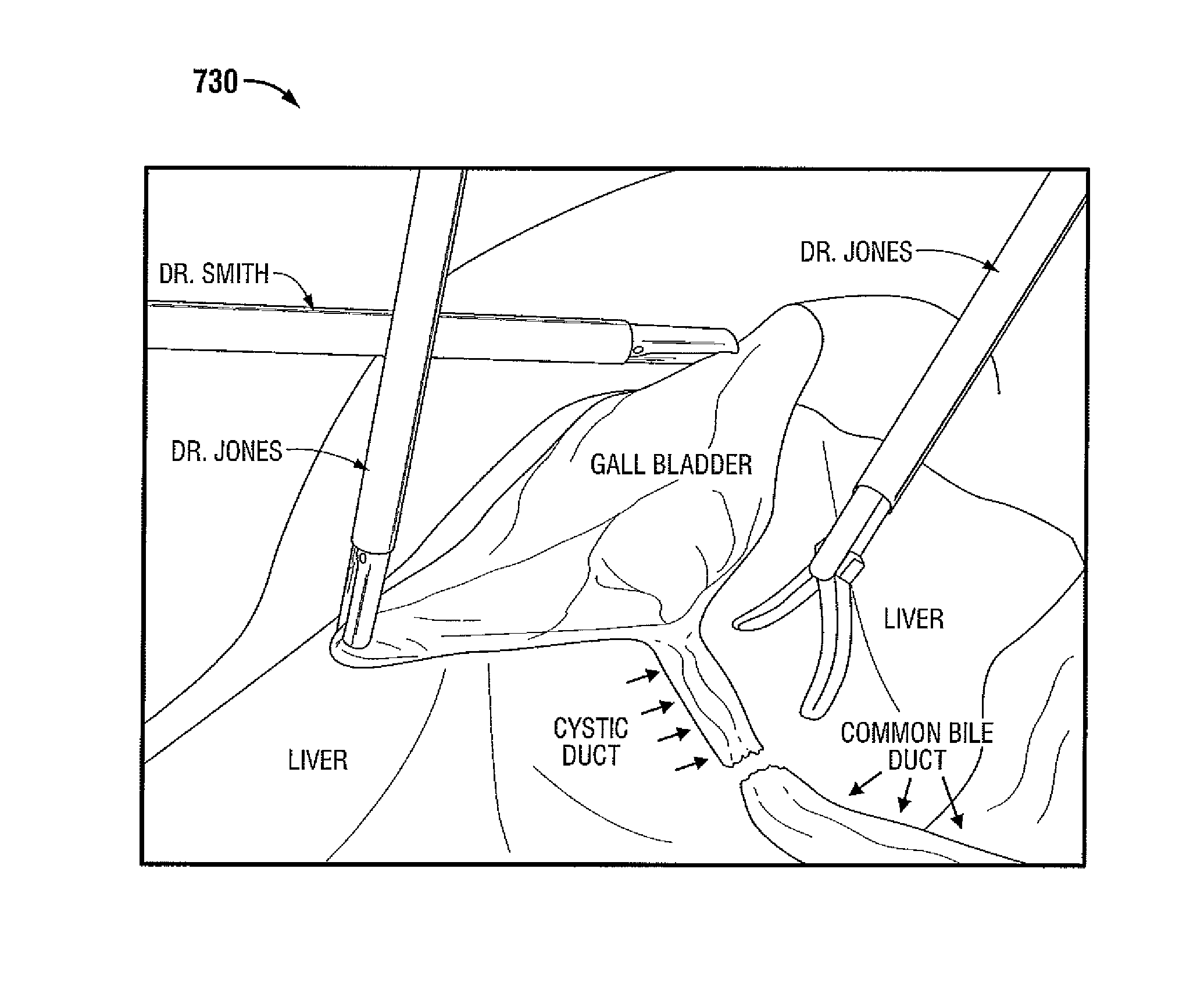

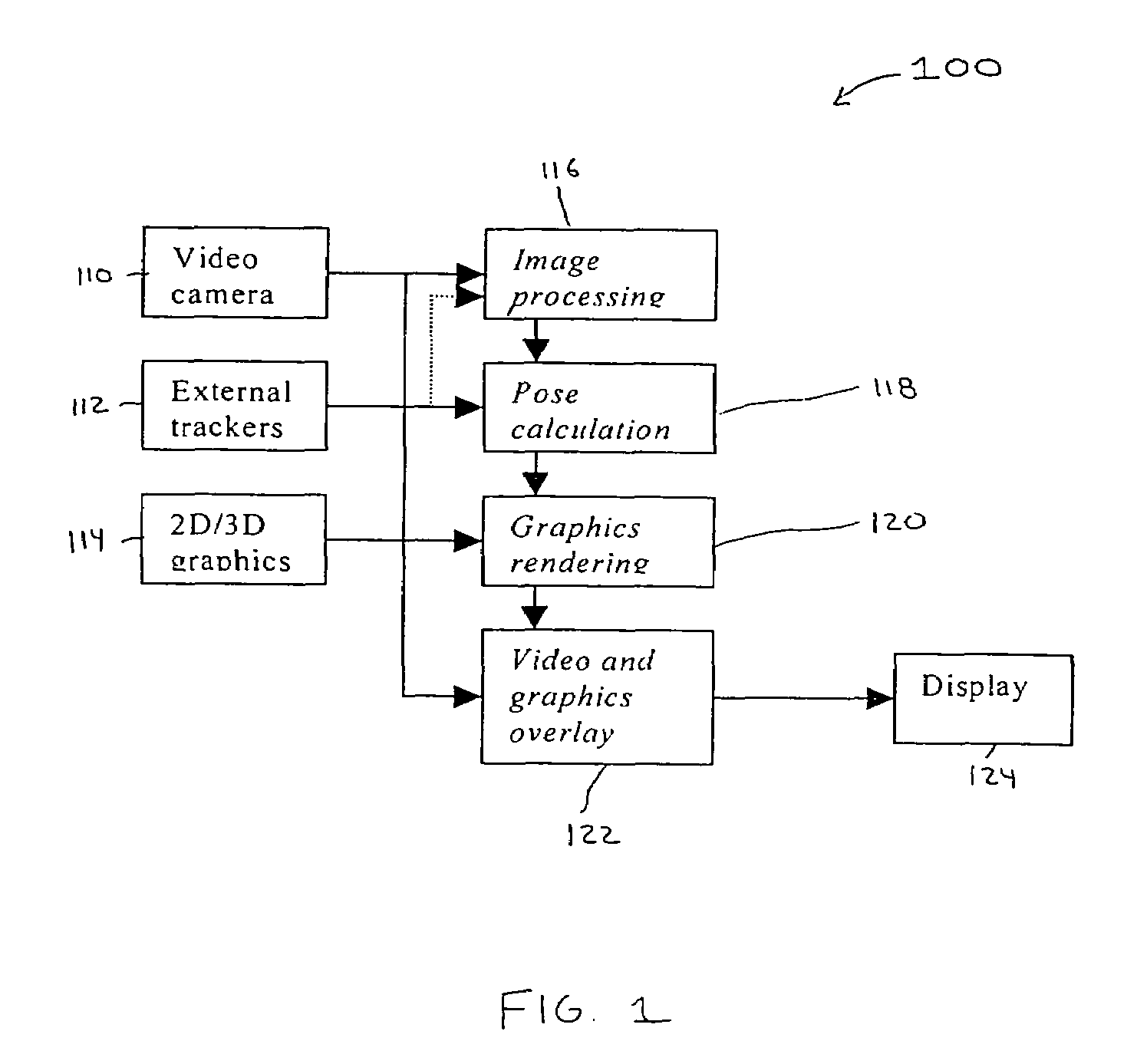

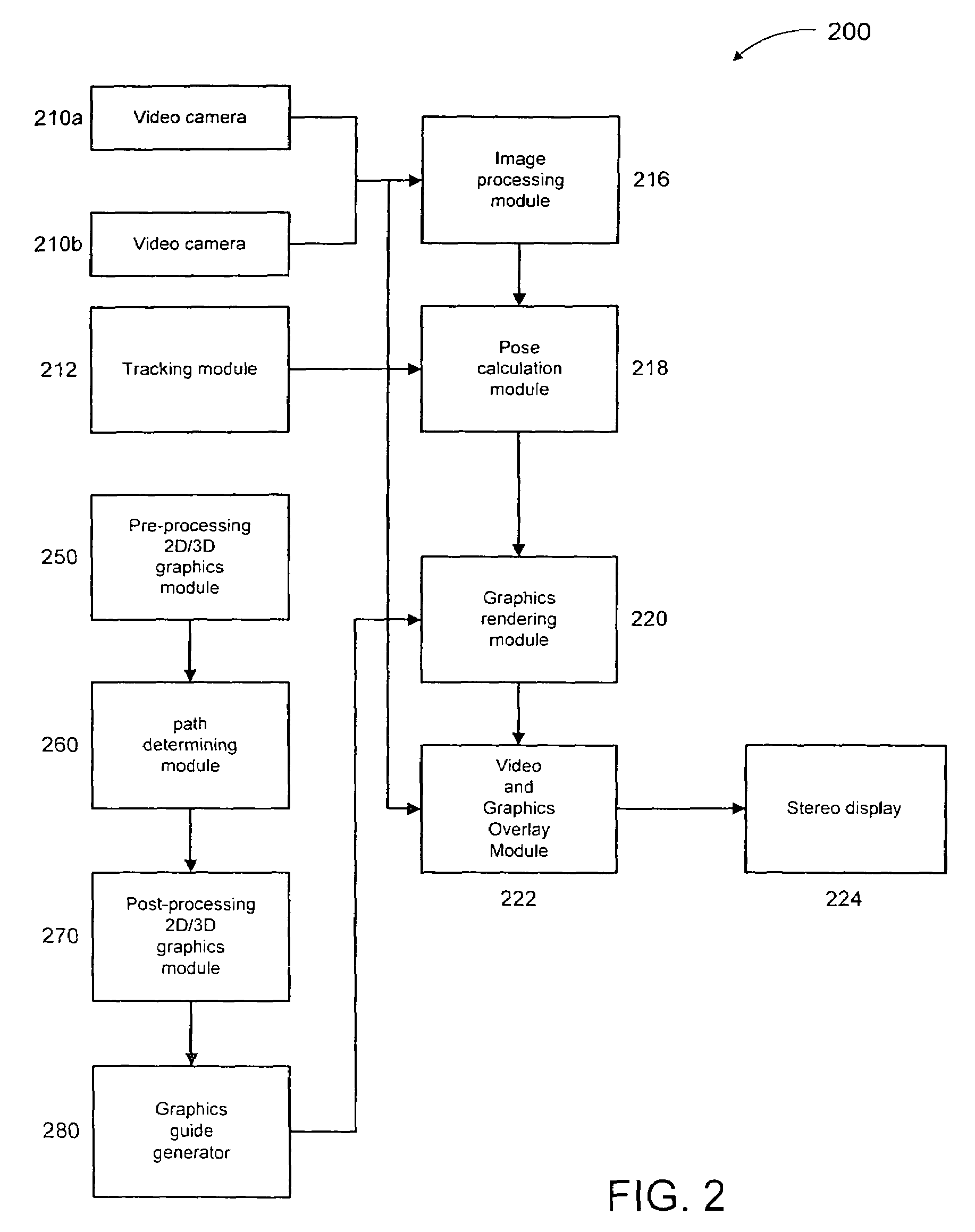

Apparatus and method for using augmented reality vision system in surgical procedures

Status: Active | Publication: US9123155B2 | Tags: Improve eyesight; Avoid entering; Mechanical/radiation/invasive therapies; Surgery; Surgical site; X-ray

A system and method for improving a surgeon's vision by overlaying augmented reality information onto a video image of the surgical site. A high-definition video camera sends a video image in real time. Before the surgery, a pre-operative image is created from MRI, X-ray, ultrasound, or another imaging-based diagnostic method and stored in the computer. The computer processes the pre-operative image to identify organs, anatomical geometries, vessels, tissue planes, orientation, and other structures. As the surgeon operates, the AR controller augments the real-time video image with the processed pre-operative image. It displays the augmented image on an interface to provide further guidance during the surgical procedure.

Owner:TYCO HEALTHCARE GRP LP
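
The final step in this abstract, augmenting the live video with the processed pre-operative image, amounts to compositing. A common way to composite is per-pixel alpha blending, out = alpha * overlay + (1 - alpha) * video, applied only where a mask marks segmented structures. The sketch assumes the pre-operative image has already been registered to the video frame (registration is not shown). It uses plain nested lists of RGB tuples. The default alpha of 0.4 and the mask are illustrative assumptions, not values from the patent.

```python
def blend_pixel(video_rgb, overlay_rgb, alpha):
    """Alpha-blend one overlay pixel onto one video pixel: alpha*overlay + (1-alpha)*video."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return tuple(round(alpha * o + (1.0 - alpha) * v) for v, o in zip(video_rgb, overlay_rgb))


def augment_frame(frame, overlay, mask, alpha=0.4):
    """Blend the registered overlay only where mask is True (e.g. a segmented vessel).

    frame, overlay: rows of (r, g, b) tuples with identical dimensions.
    mask: rows of booleans with the same dimensions.
    """
    return [
        [blend_pixel(v, o, alpha) if m else v for v, o, m in zip(vrow, orow, mrow)]
        for vrow, orow, mrow in zip(frame, overlay, mask)
    ]
```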

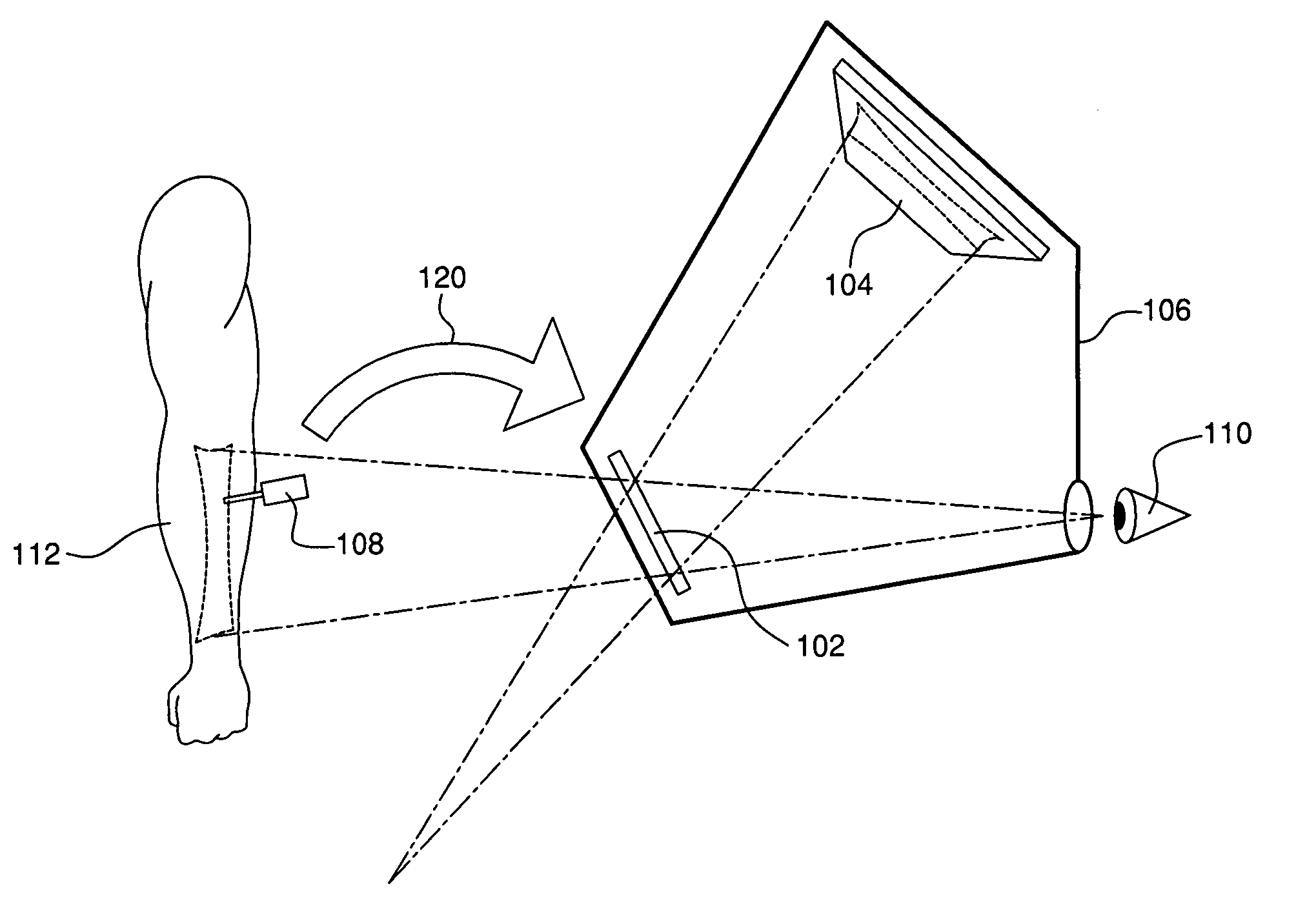

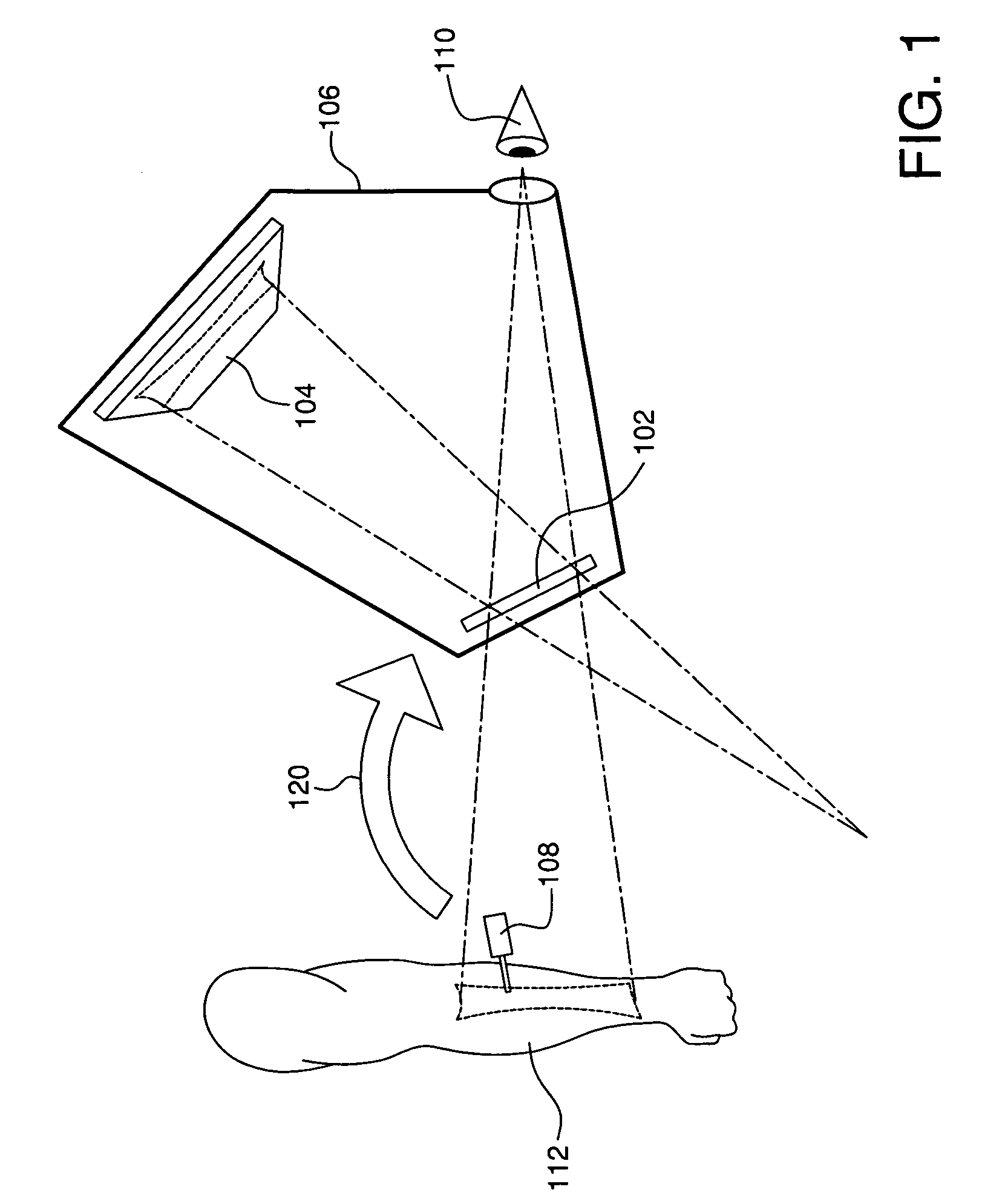

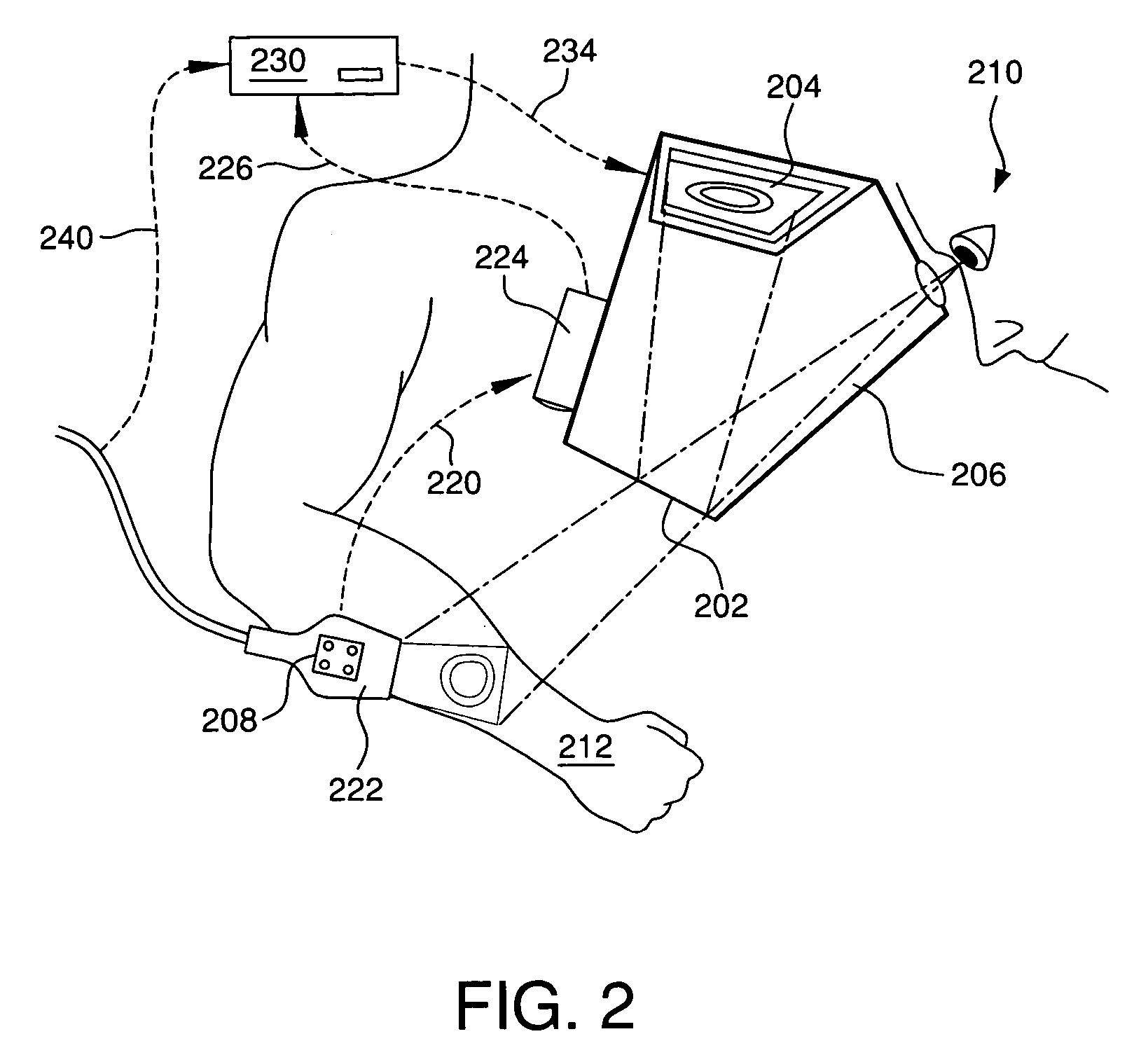

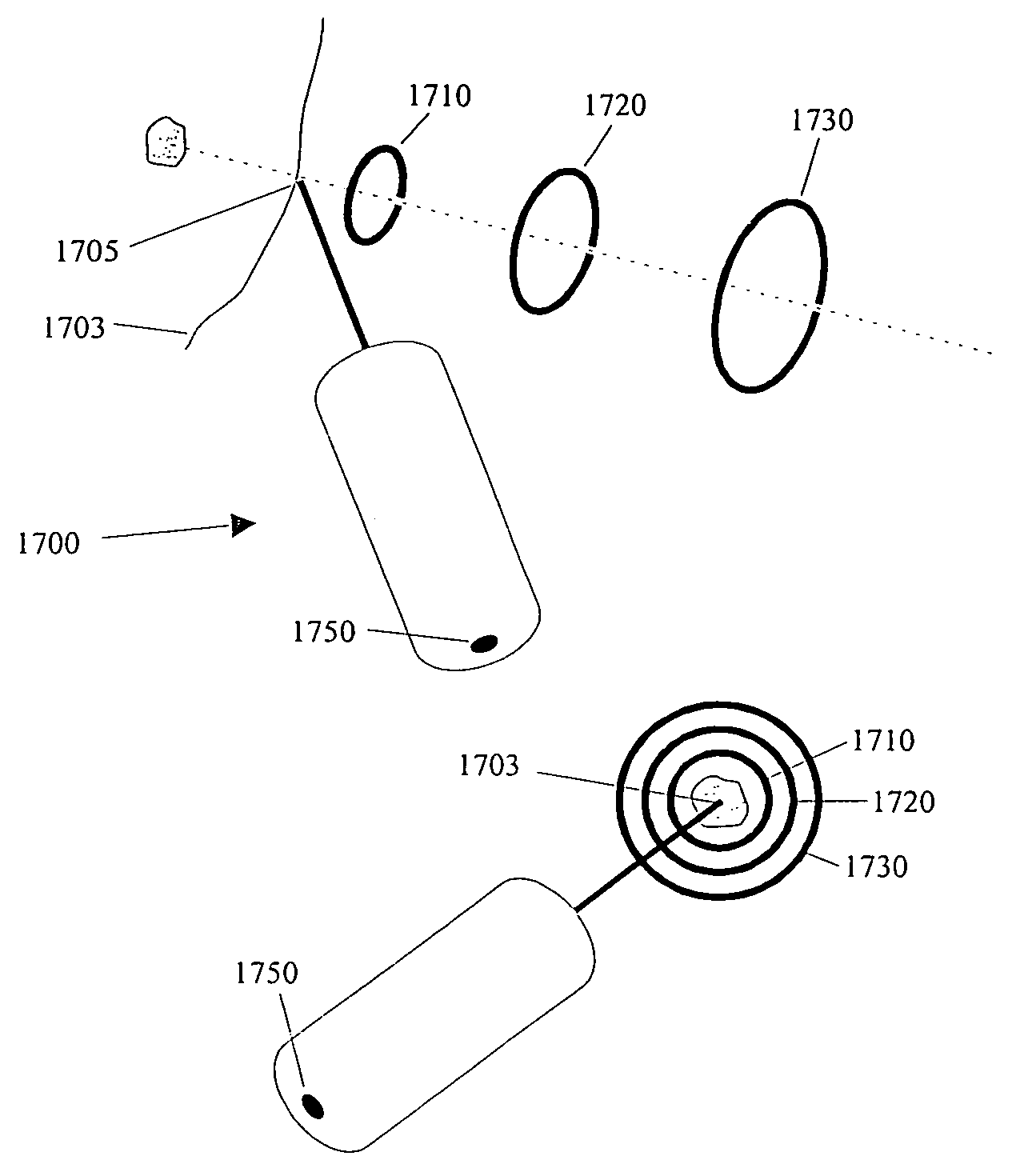

Augmented reality guided instrument positioning with depth determining graphics

Status: Inactive | Publication: US7605826B2 | Tags: Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Instrumentation

There is provided a method for augmented reality guided instrument positioning. At least one graphics proximity marker is determined for indicating a proximity of a predetermined portion of an instrument to a target. The at least one graphics proximity marker is rendered such that the proximity of the predetermined portion of the instrument to the target is ascertainable based on a position of a marker on the instrument with respect to the at least one graphics proximity marker.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC
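
The depth cue in this abstract can be sketched geometrically. Graphic proximity markers are placed on the planned approach path at fixed distances short of the target, and the system reports which marker the instrument tip has reached. The marker distances (20, 10, and 5 mm), the straight-line approach direction, and the function names are hypothetical. The abstract does not define how markers are placed.

```python
import math

MARKER_DISTANCES_MM = (20.0, 10.0, 5.0)  # hypothetical spacing of the proximity markers


def proximity_markers(target, approach_dir, distances_mm=MARKER_DISTANCES_MM):
    """Marker positions on the approach path, each a given distance short of the target.

    approach_dir is a unit vector pointing from the entry side toward the target.
    """
    return {d: tuple(t - d * u for t, u in zip(target, approach_dir)) for d in distances_mm}


def reached_marker(tip, target, distances_mm=MARKER_DISTANCES_MM):
    """Smallest marker distance the tip is already within, or None if outside all of them."""
    remaining = math.dist(tip, target)  # straight-line tip-to-target distance
    passed = [d for d in distances_mm if remaining <= d]
    return min(passed) if passed else None
```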

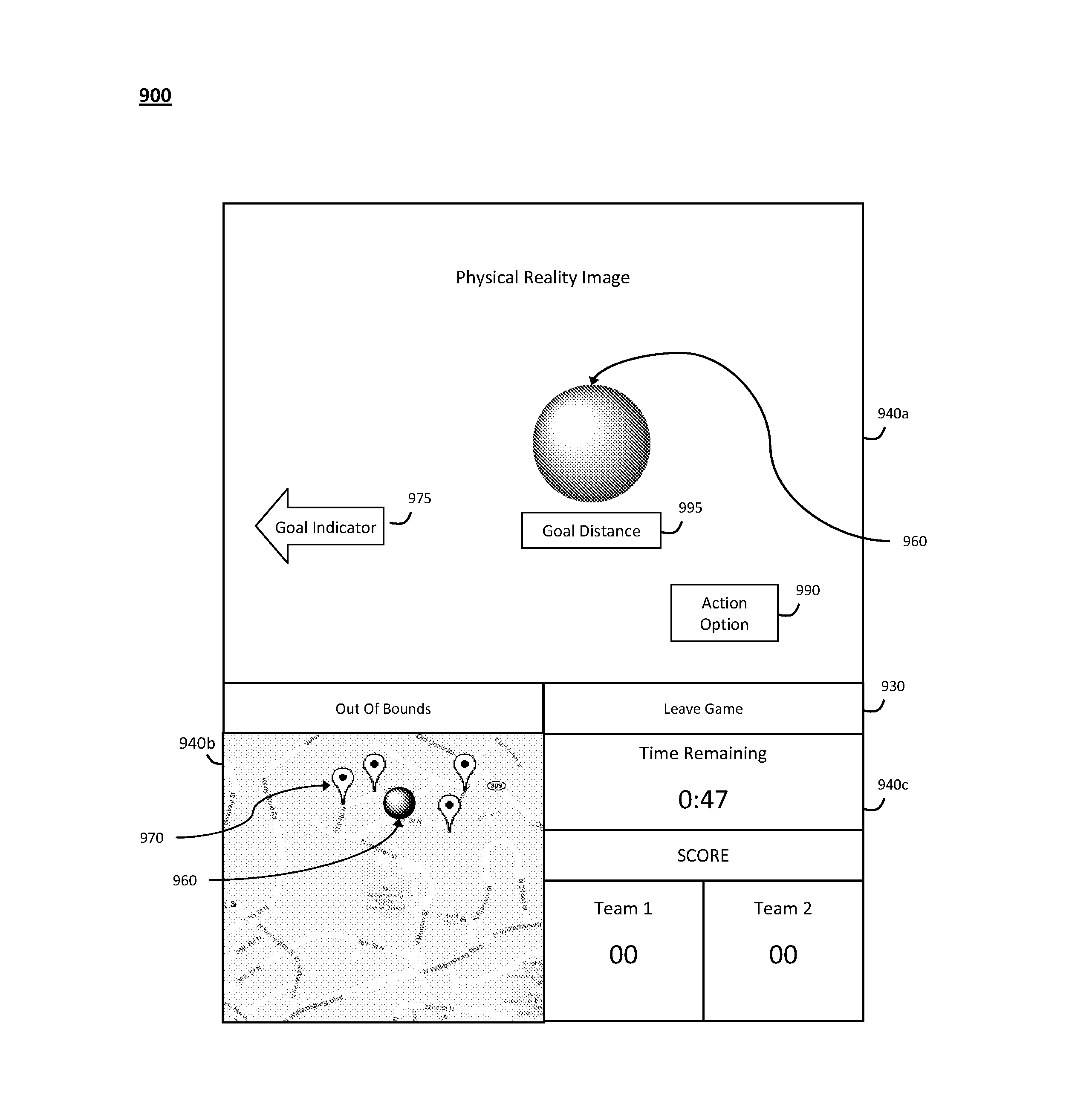

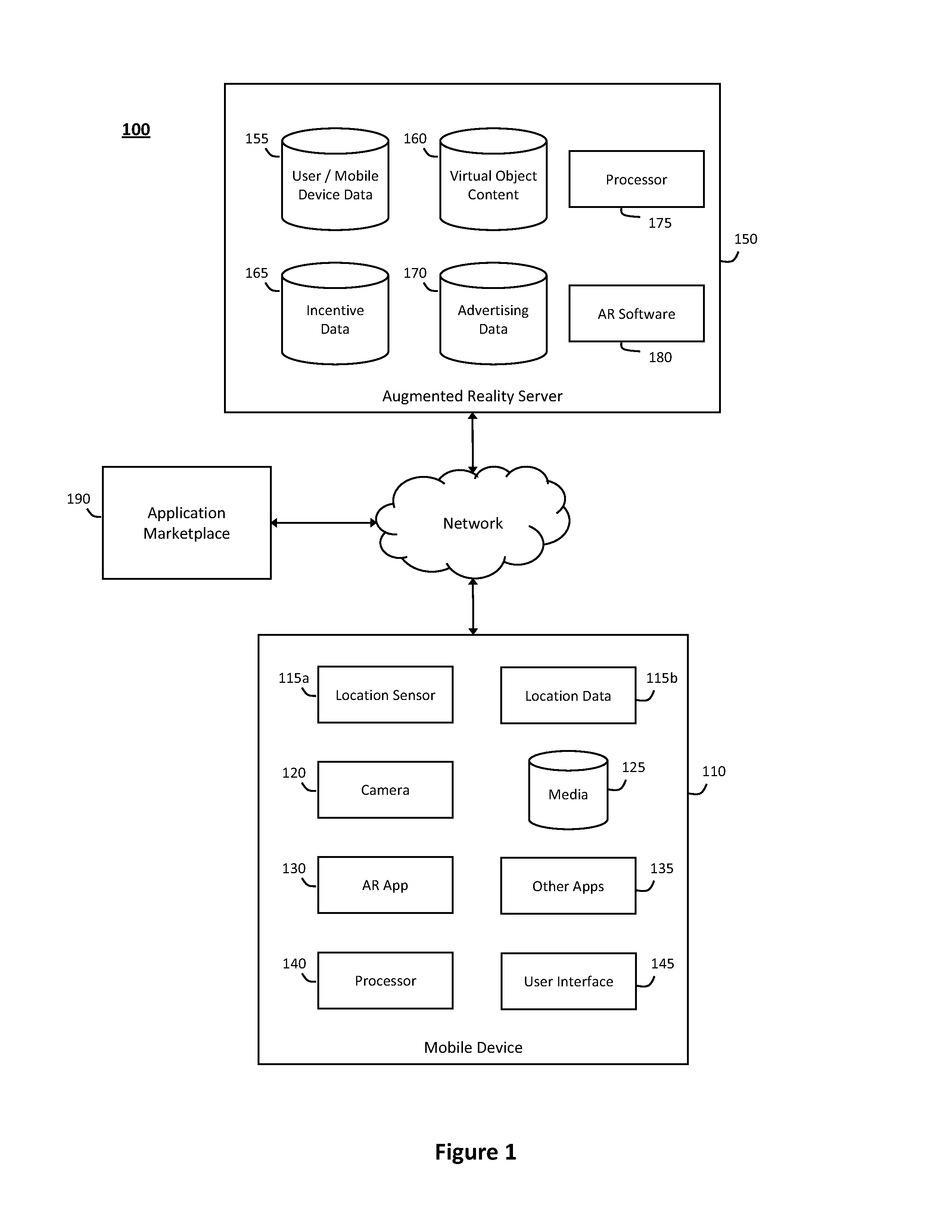

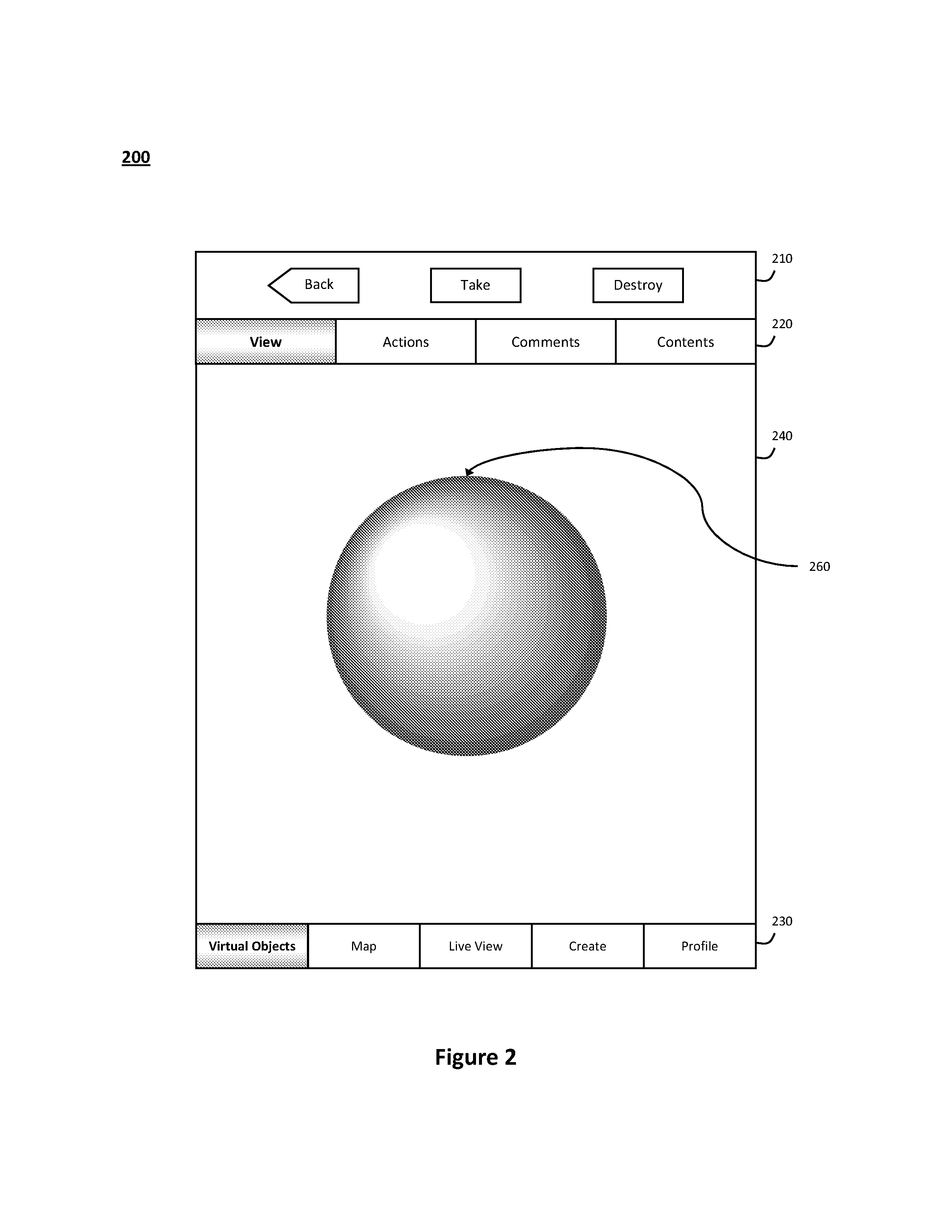

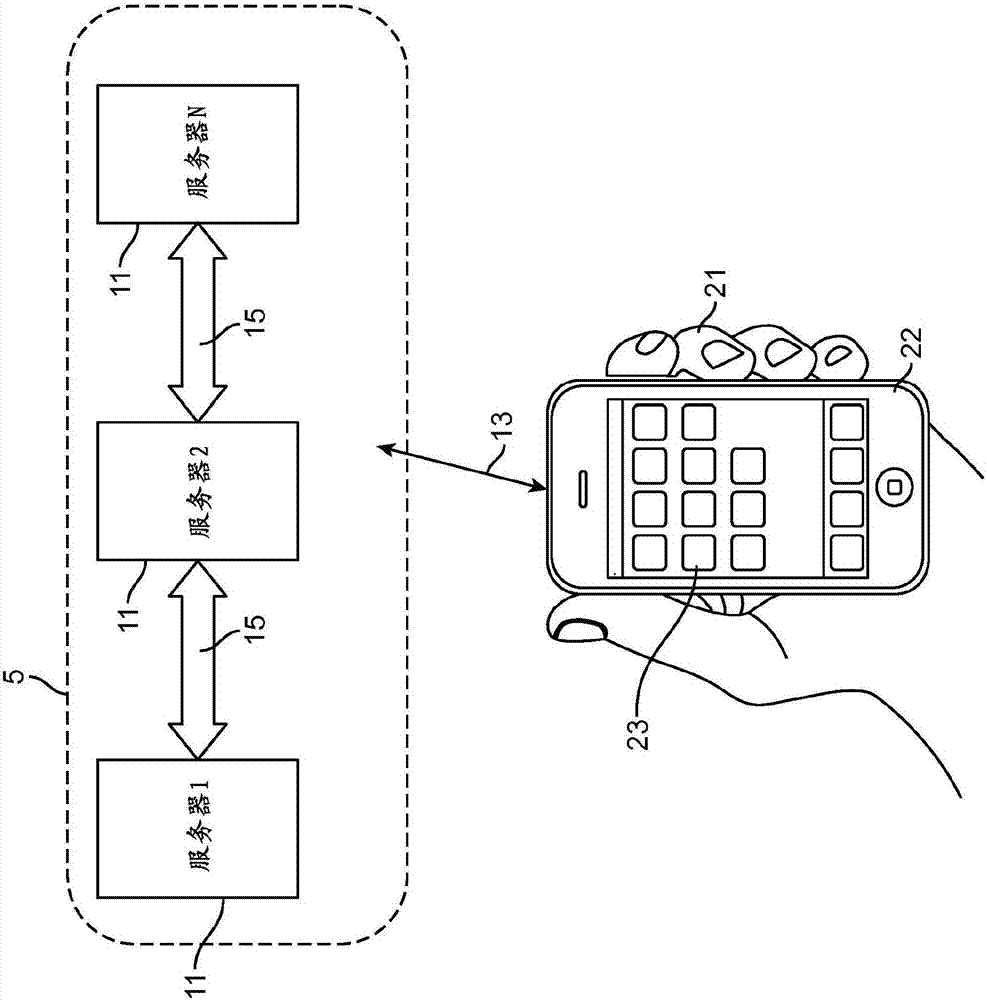

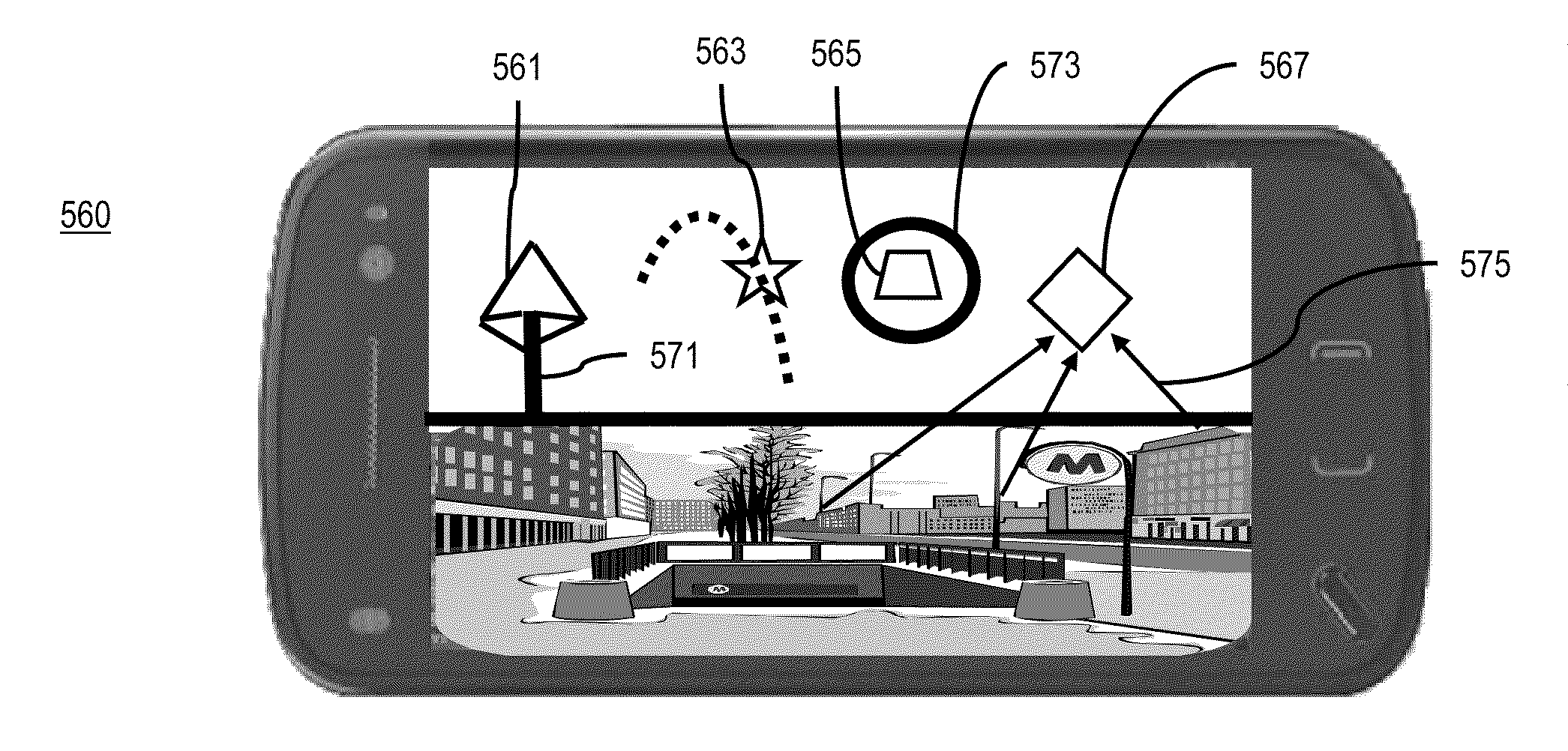

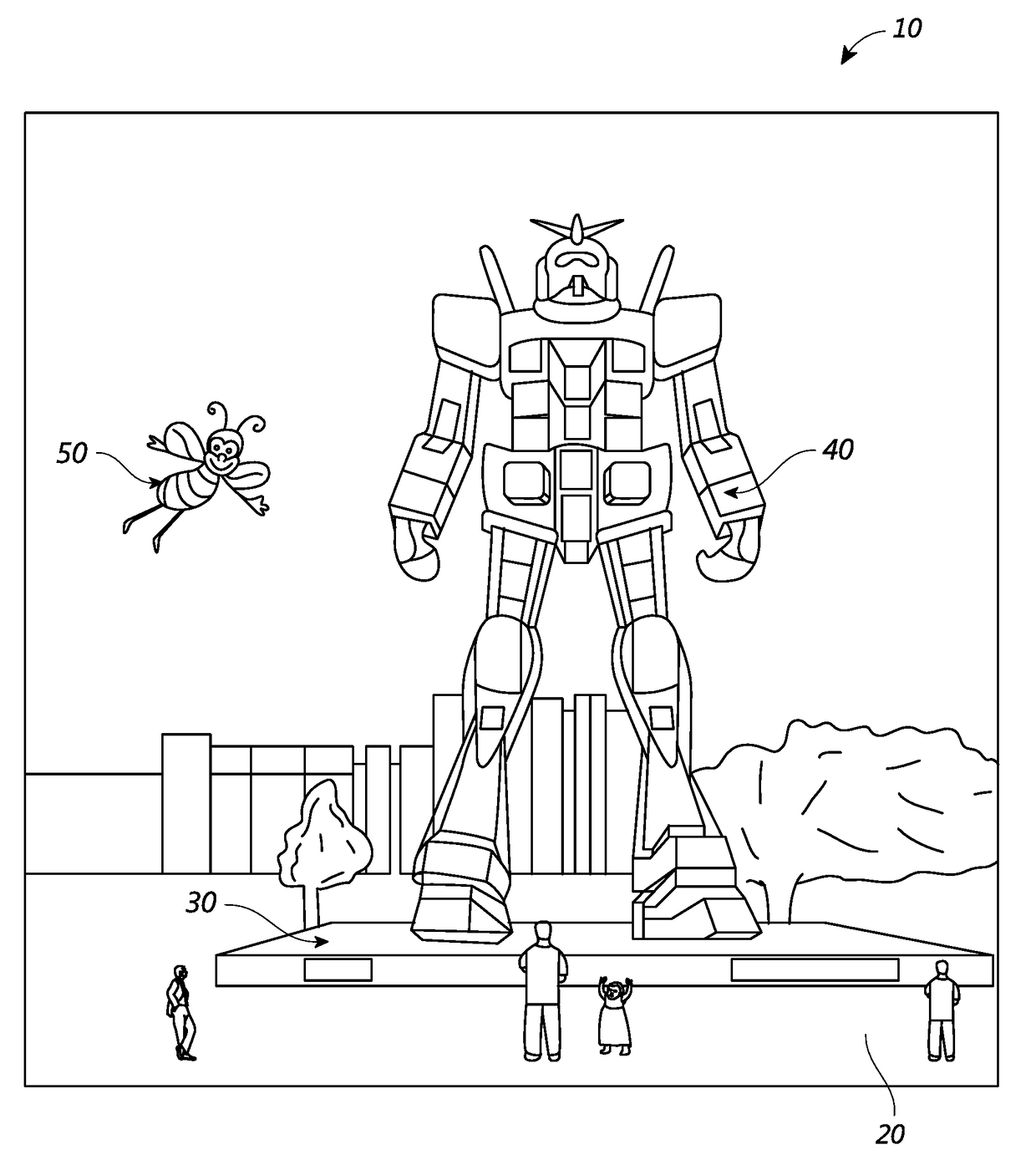

System and method for interacting with virtual objects in augmented realities

Status: Inactive | Publication: US20130178257A1 | Tags: Reduce and eliminate fee; Cathode-ray tube indicators; Video games; Mobile device; Social web

The system and method described herein may be used to interact with virtual objects in augmented realities. In particular, they may include an augmented reality server that hosts software supporting interaction with virtual objects in augmented realities on a mobile device through an augmented reality application. The augmented reality application may be used for several purposes, among other things:
- creating virtual objects with custom designs and embedded content, and deploying them to any suitable location where other users can share them
- interacting with virtual objects and embedded content that other users created and deployed using the application
- participating in games that involve interacting with the virtual objects
- obtaining incentives and targeted advertisements associated with the virtual objects
- engaging in social networking to stay in touch with friends or meet new people through interaction with the virtual objects

Owner:AUGAROO

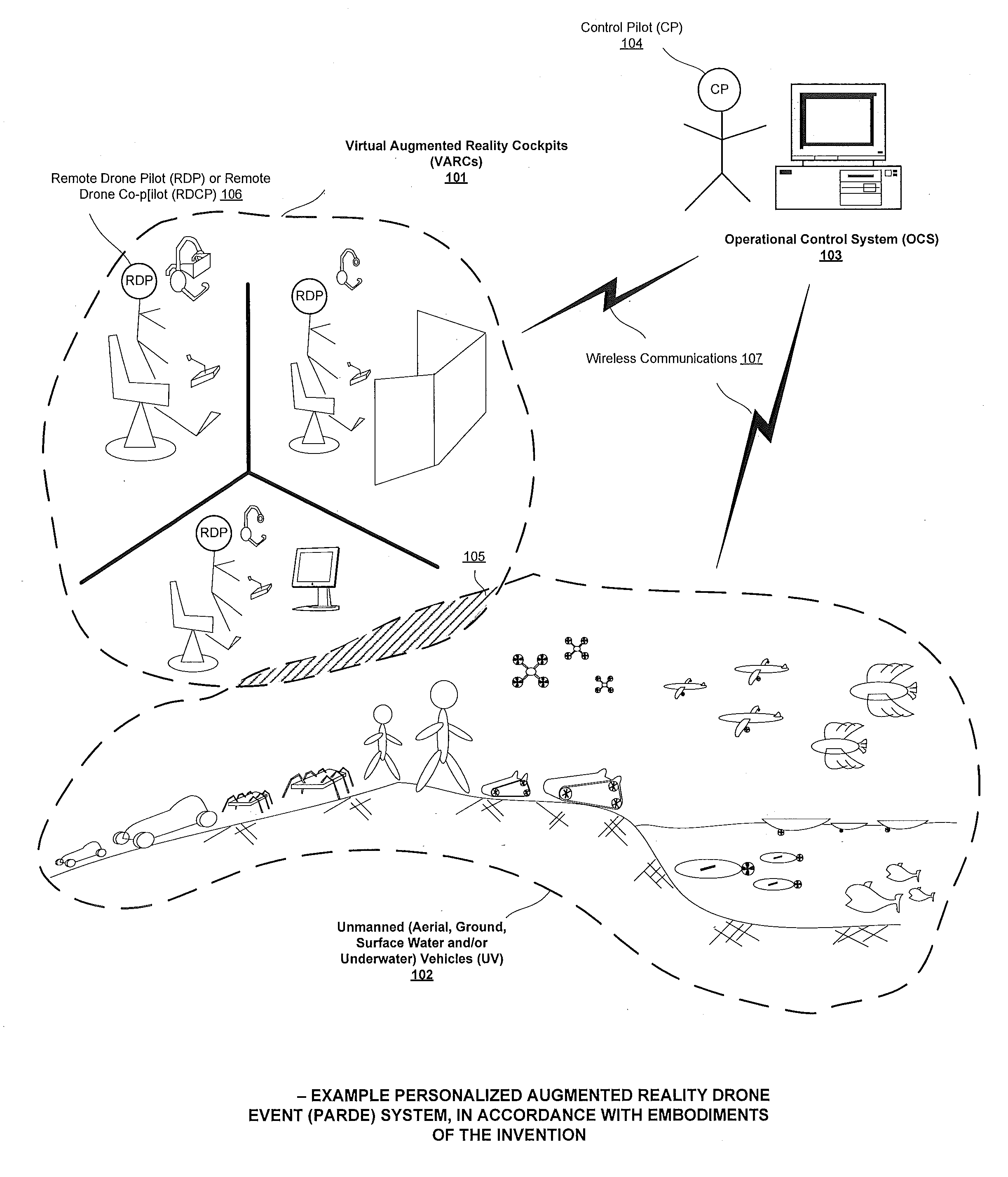

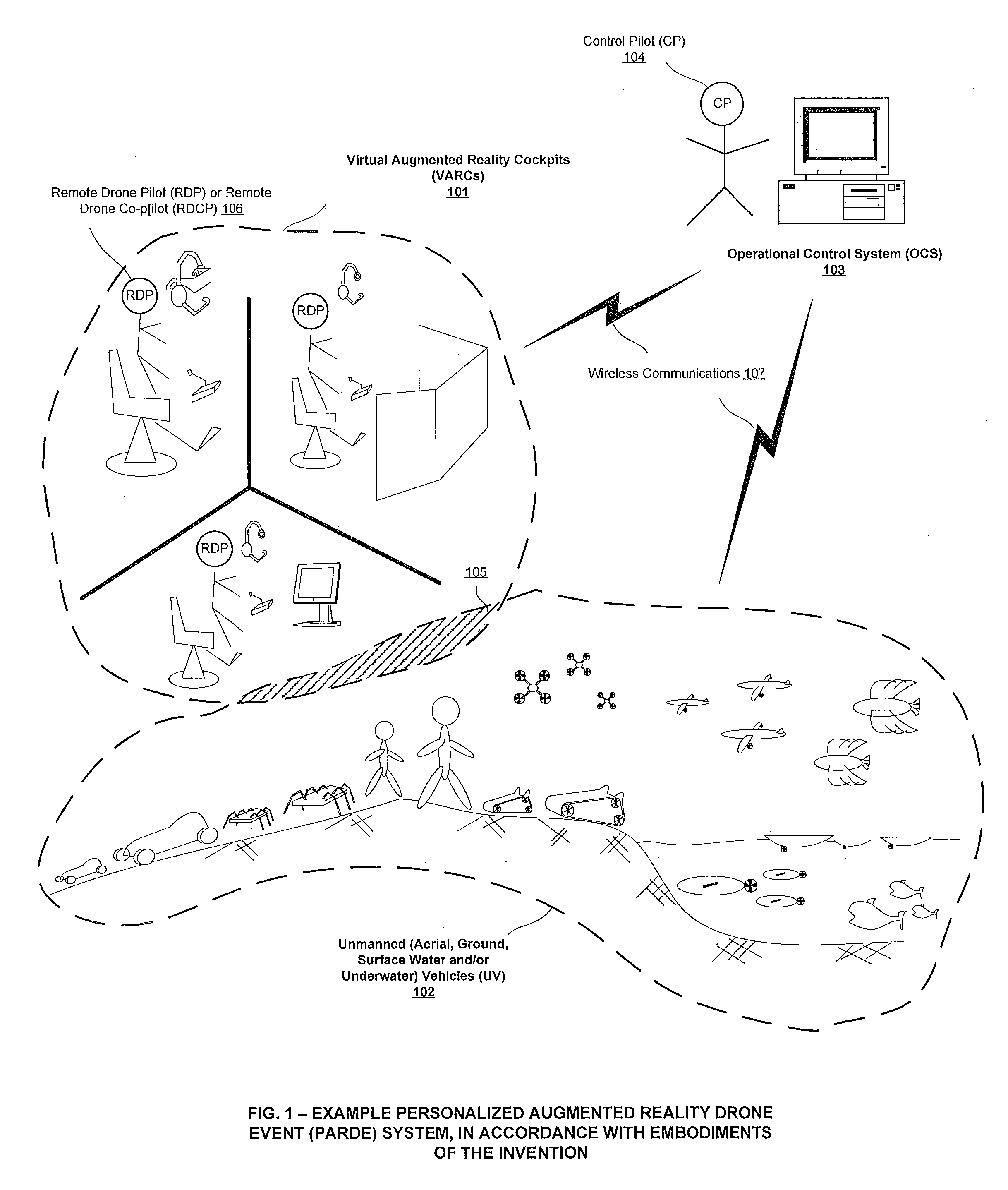

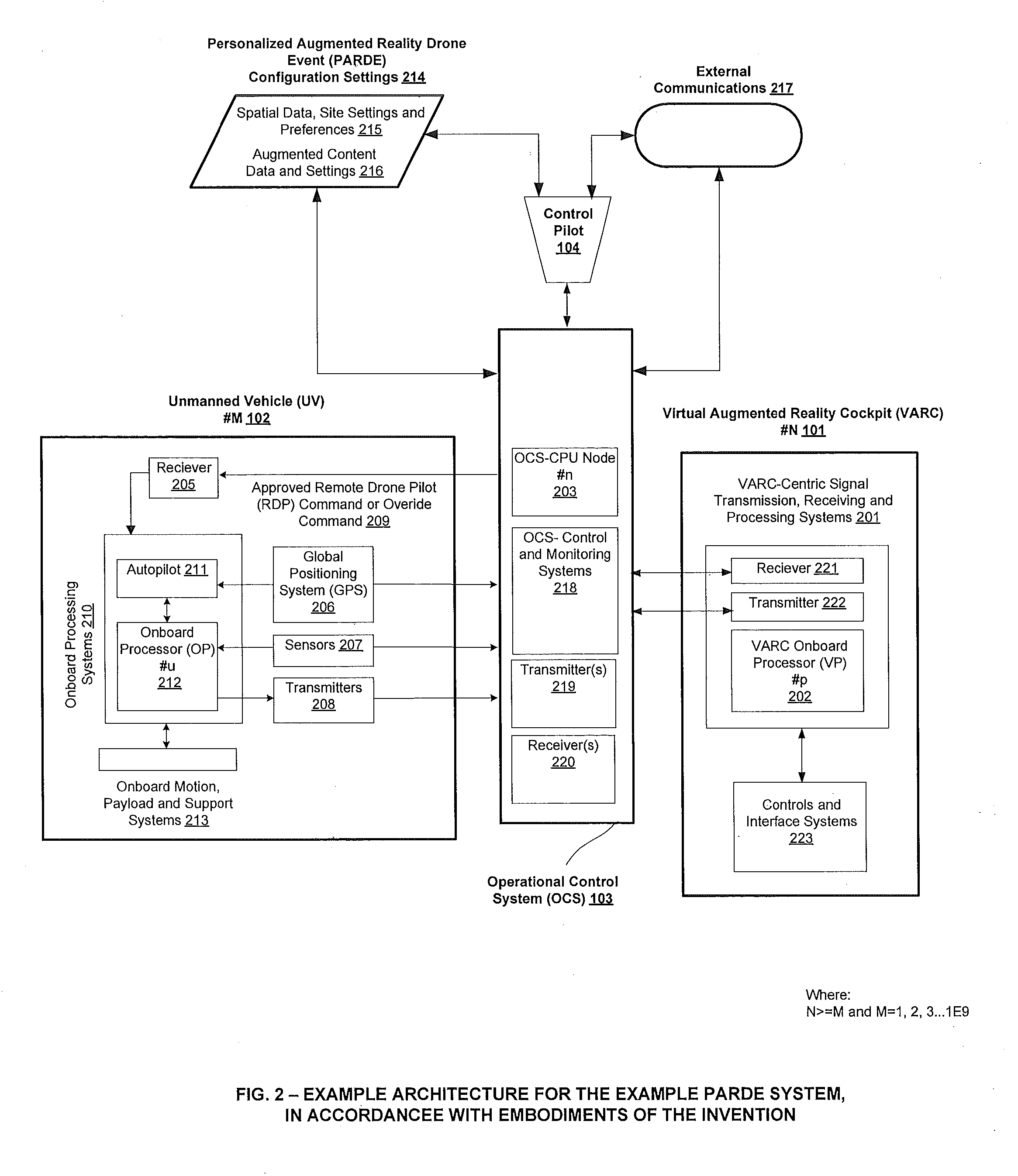

Virtual and Augmented Reality Cockpit and Operational Control Systems

Status: Active | Publication: US20150346722A1 | Tags: Insufficient in environment; Increase probability; Digital data processing details; Satellite radio beaconing; Control system; Multiple sensor

Architecture for a multimodal, multiplatform-switching unmanned vehicle (UV) swarm system that can execute missions in diverse environments. The architecture includes onboard and ground processors that handle and integrate multiple sensor inputs, generating a unique UV piloting experience for a remote drone pilot (RDP) through a virtual augmented reality cockpit (VARC). The RDP is monitored by an operational control system and an experienced control pilot. A ground processor handles real-time localization, command forwarding, and the generation and delivery of augmented content to users, along with safety features and overrides. The UVs' onboard processors and autopilot execute the commands and provide a redundant source of safety features and overrides in case of signal loss. The UVs perform customizable missions, with adjustable rules for differing skill levels. RDPs experience real-time virtual piloting of the UV, with augmented interactive and actionable visual and audio content delivered to them through VARC systems.

Owner:RECREATIONAL DRONE EVENT SYST

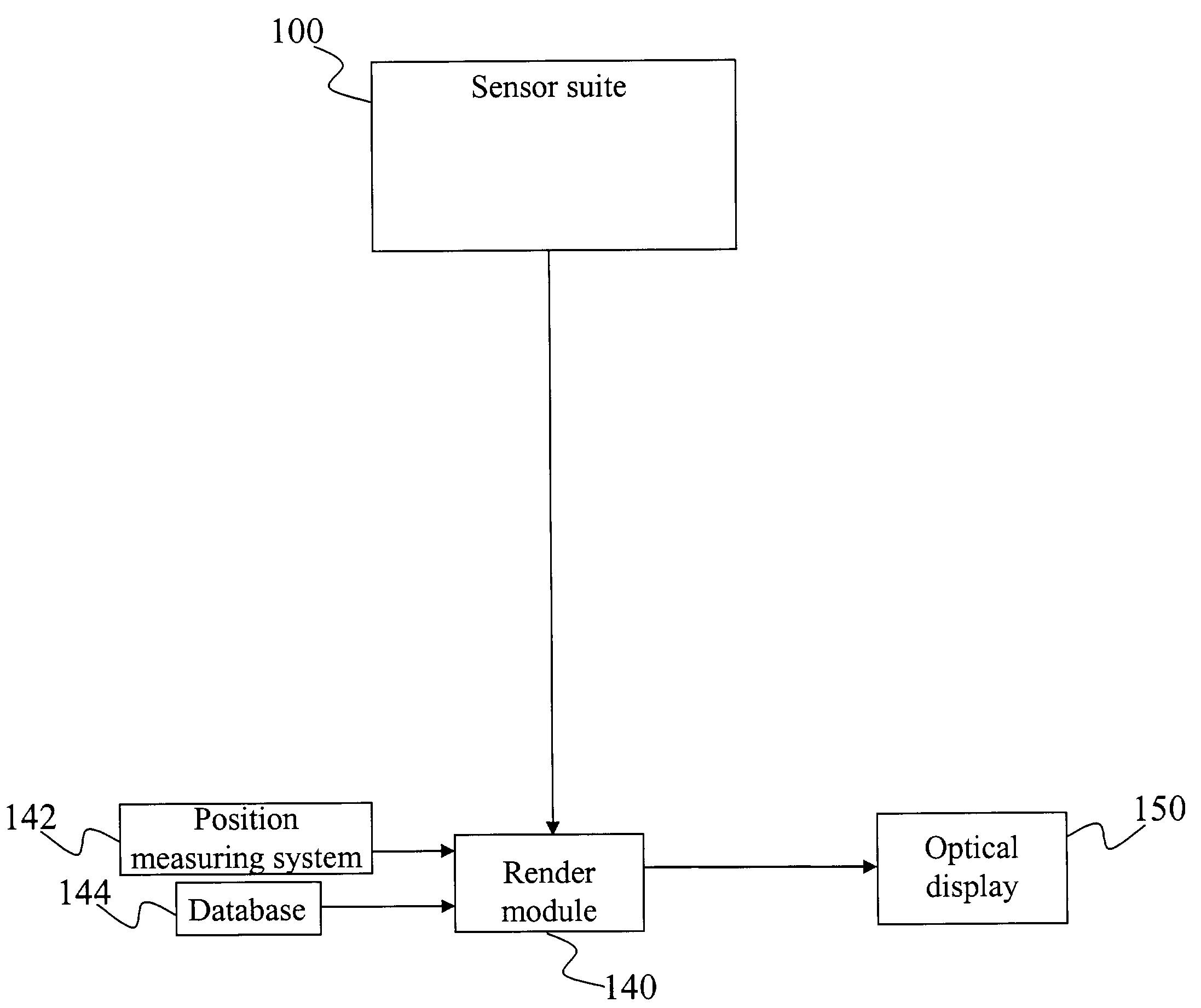

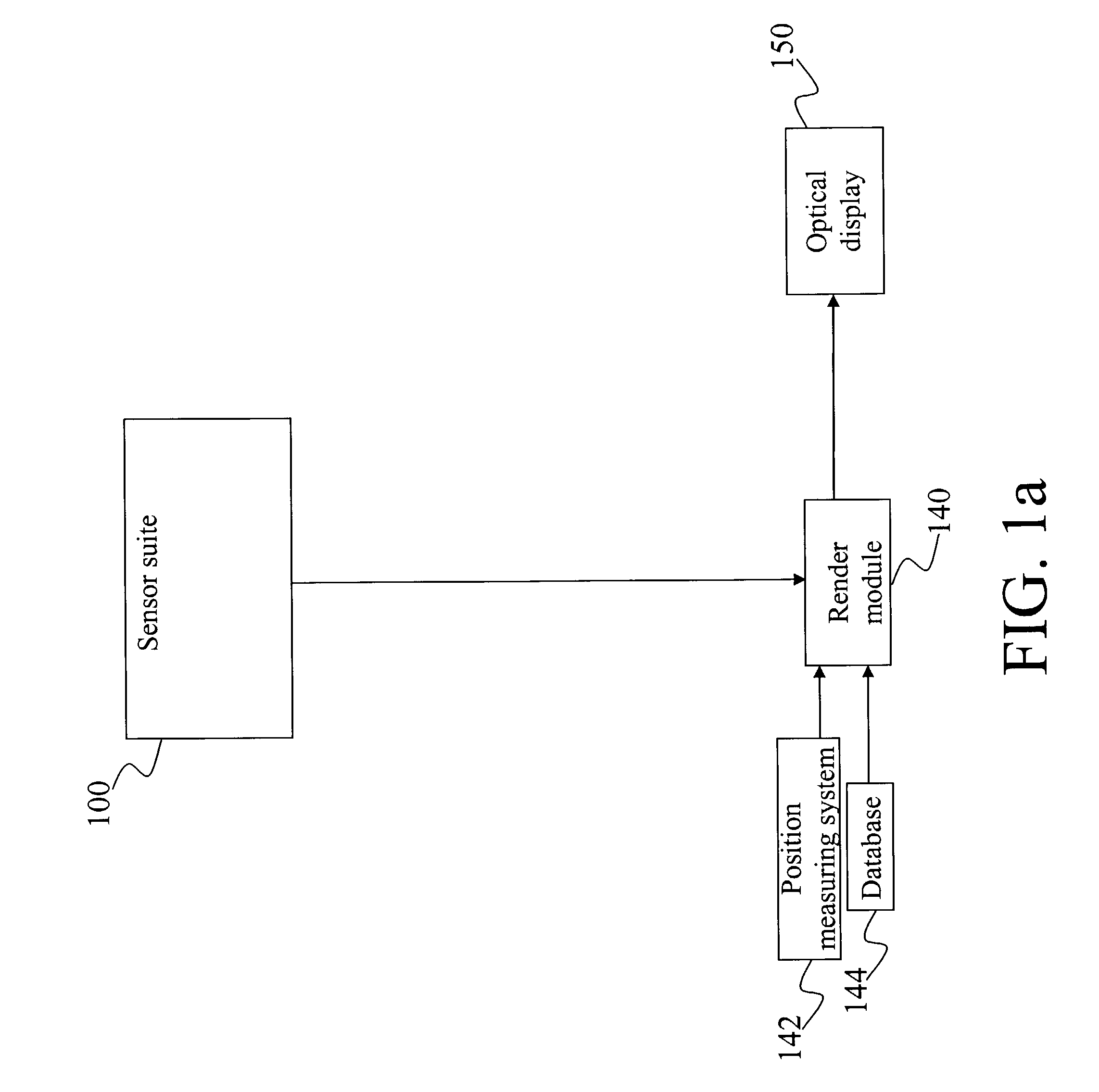

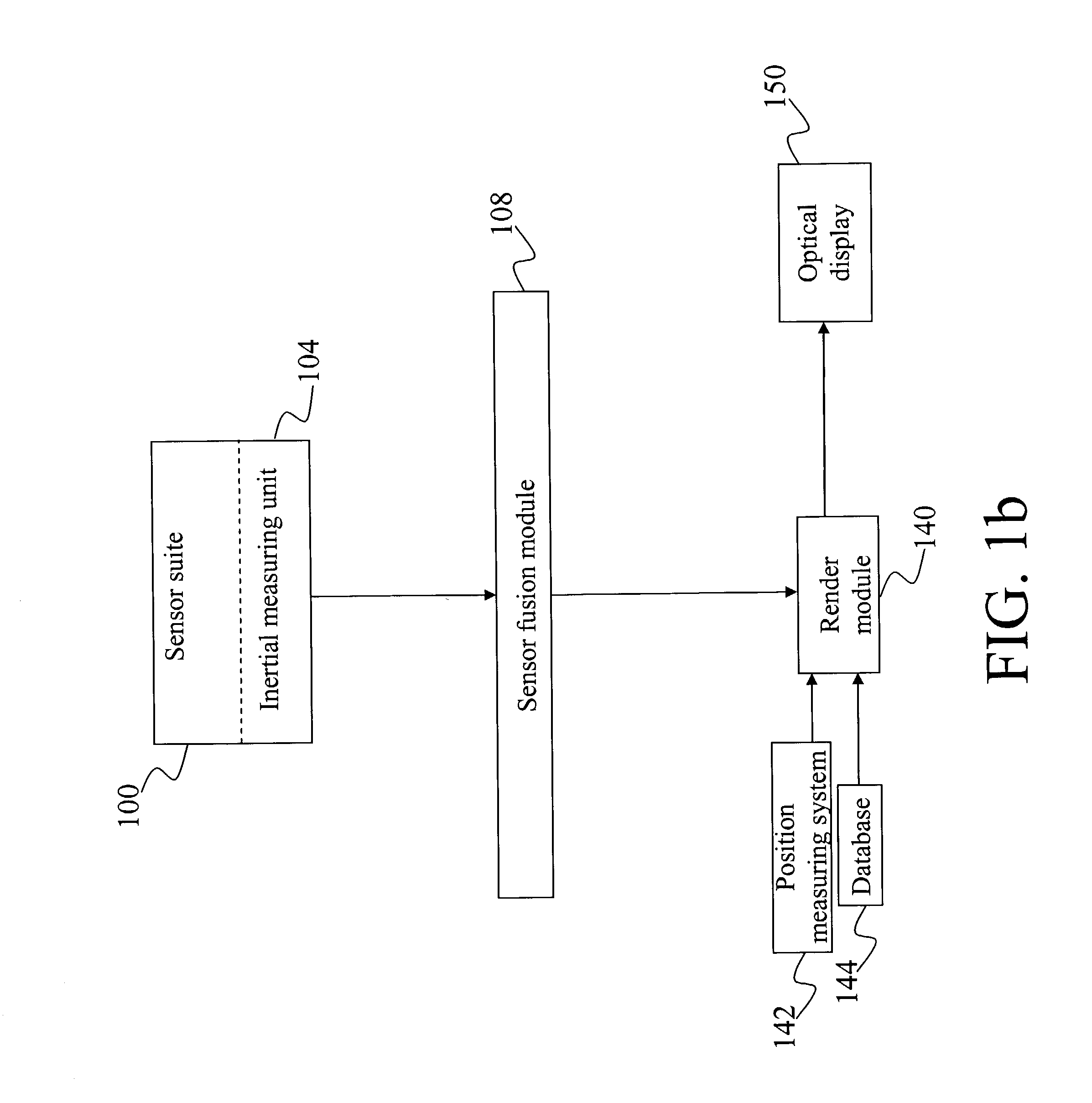

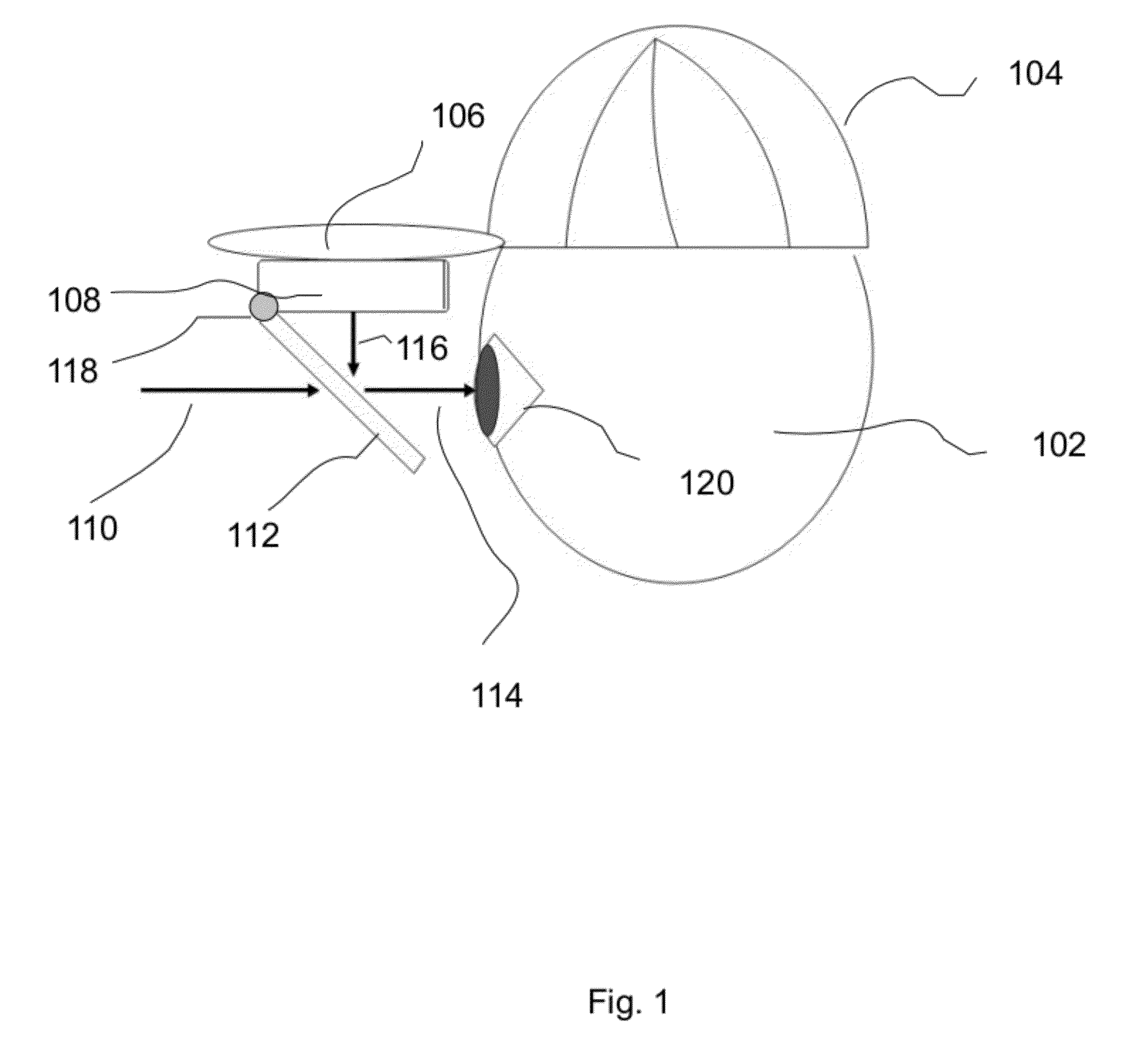

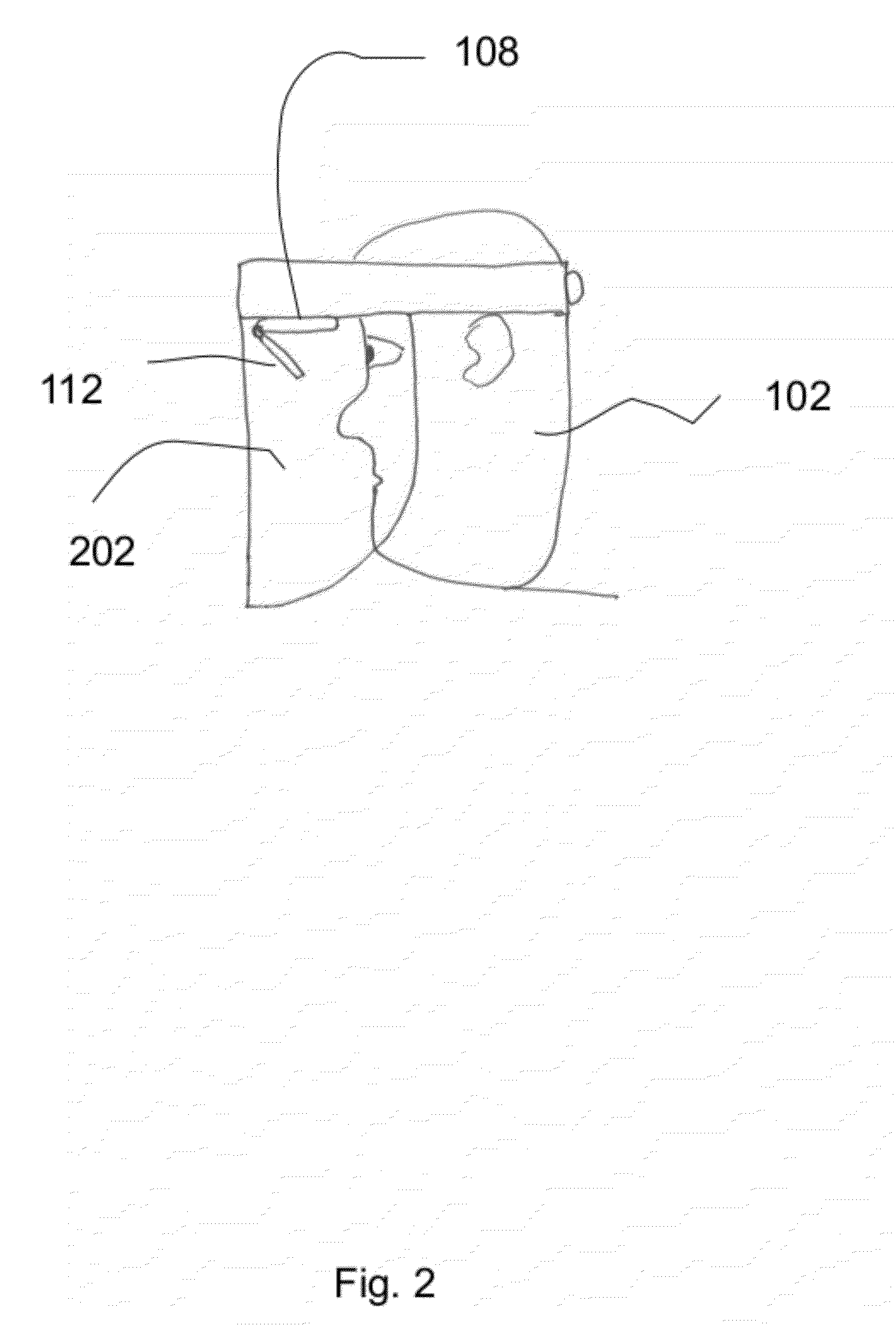

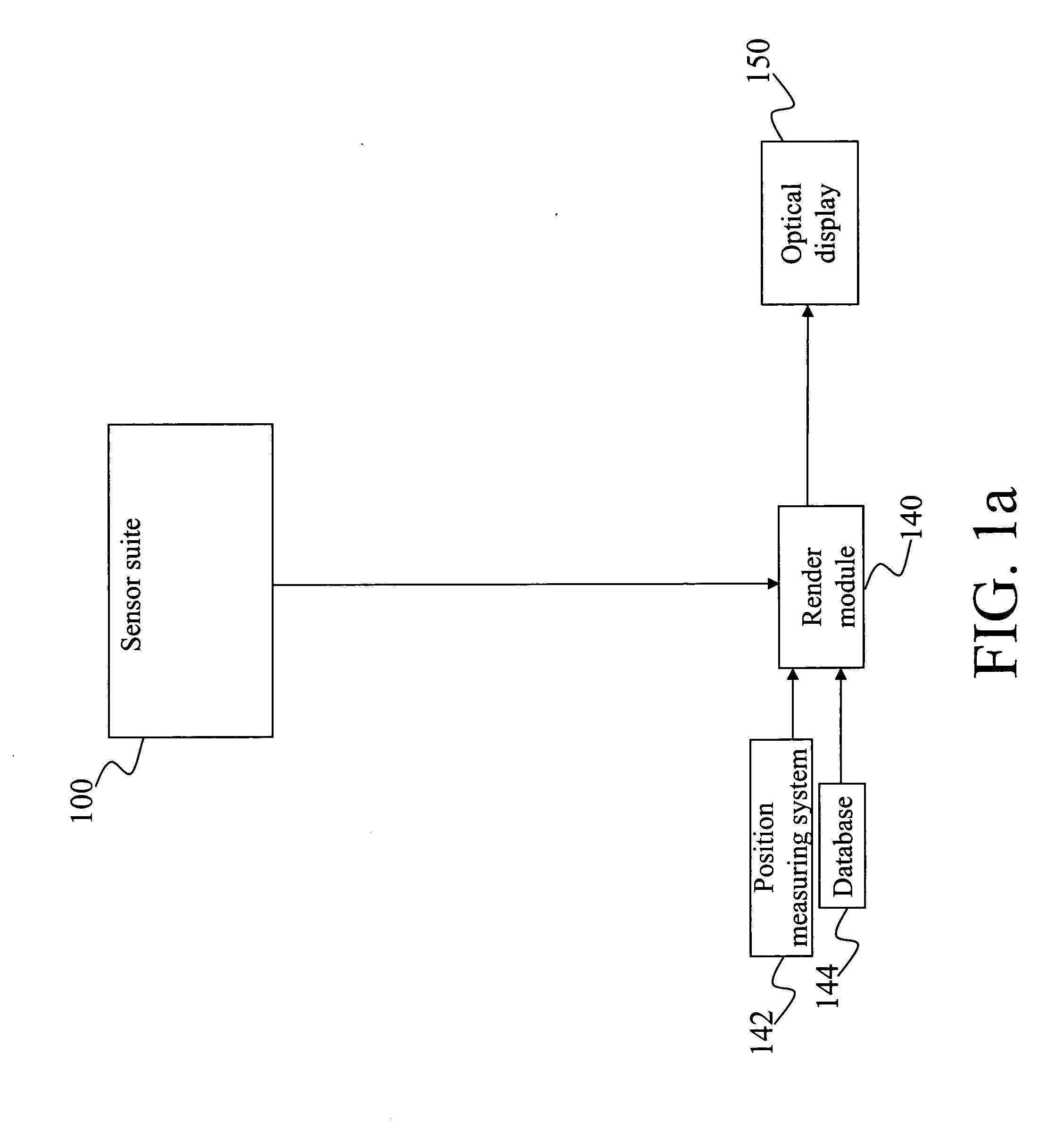

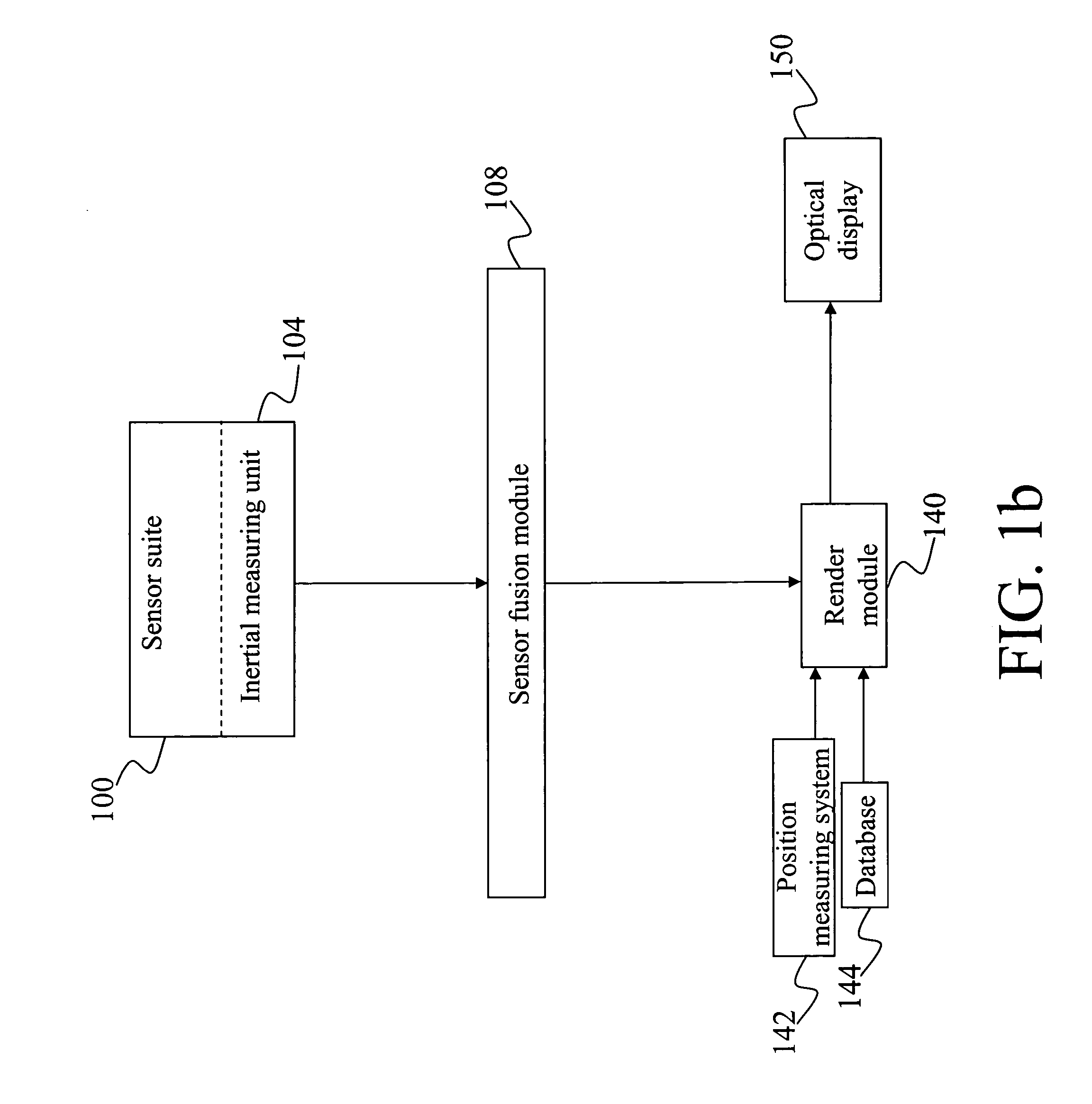

Optical see-through augmented reality modified-scale display

Status: Inactive | Publication: US7002551B2 | Tags: Improve accuracy; Accurate current; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Display device

A method and system for providing an optical see-through augmented reality modified-scale display. This aspect includes a sensor suite 100, comprising a compass 102, an inertial measuring unit 104, and a video camera 106, for precise measurement of a user's current orientation and angular rotation rate. A sensor fusion module 108 may be included to produce a unified estimate of the user's angular rotation rate and current orientation, which is provided to an orientation and rate estimate module 120. The orientation and rate estimate module 120 operates in a static or dynamic (prediction) mode. A render module 140 receives an orientation. It uses that orientation, together with a position from a position measuring system 142 and data from a database 144, to render graphic images of an object in their correct orientations and positions in an optical display 150.

Owner:HRL LAB
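
The abstract says a sensor fusion module "may" combine compass, inertial, and camera data into one orientation and rate estimate, and that the estimator runs in a static or dynamic (prediction) mode. It does not give the method. A complementary filter is a common, simple substitute: it integrates the gyro rate for smoothness and pulls gently toward the compass heading to cancel drift. Prediction mode then extrapolates by the render latency. This single-axis (yaw) sketch, its gain, and its names are assumptions, not HRL's design.

```python
import math


def wrap(angle):
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


class YawEstimator:
    """Single-axis complementary filter (a stand-in; the patent's fusion method is unspecified)."""

    def __init__(self, gain=0.02):
        self.gain = gain  # weight given to each compass reading, 0..1
        self.yaw = 0.0    # fused heading estimate, radians
        self.rate = 0.0   # latest angular rate, rad/s

    def update(self, gyro_rate, compass_yaw, dt):
        # 1) Propagate with the gyro: smooth, but drifts over time.
        self.rate = gyro_rate
        predicted = self.yaw + gyro_rate * dt
        # 2) Nudge toward the compass: absolute, but noisy. Wrap the error so that
        #    headings near +/-pi do not produce a spurious 2*pi correction.
        self.yaw = wrap(predicted + self.gain * wrap(compass_yaw - predicted))
        return self.yaw

    def estimate(self, latency=0.0):
        """Static mode: latency=0. Dynamic (prediction) mode: extrapolate over render latency."""
        return wrap(self.yaw + self.rate * latency)
```

A small gain trusts the gyro over short intervals and the compass over long ones. Raising it corrects drift faster but lets more compass noise through.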

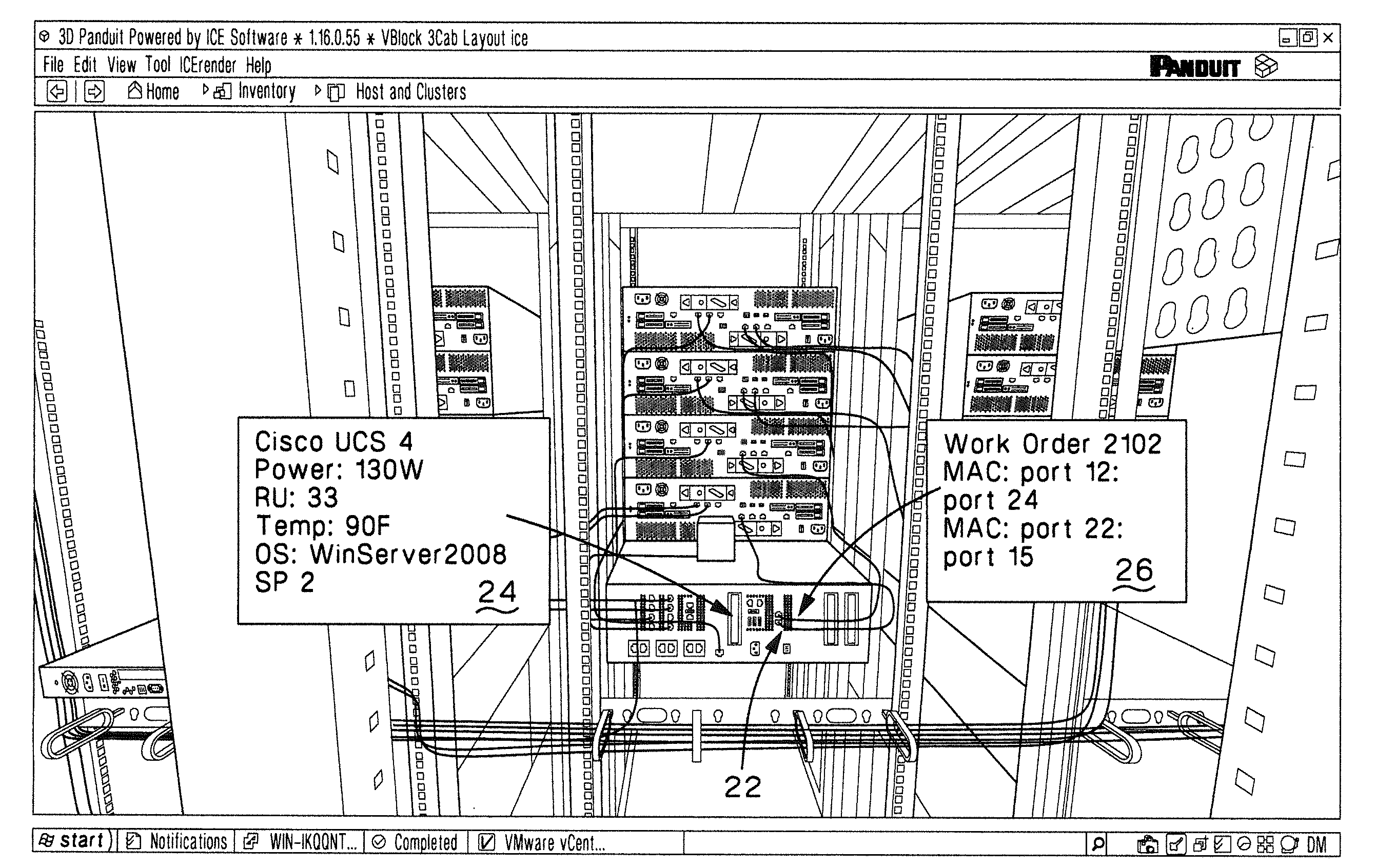

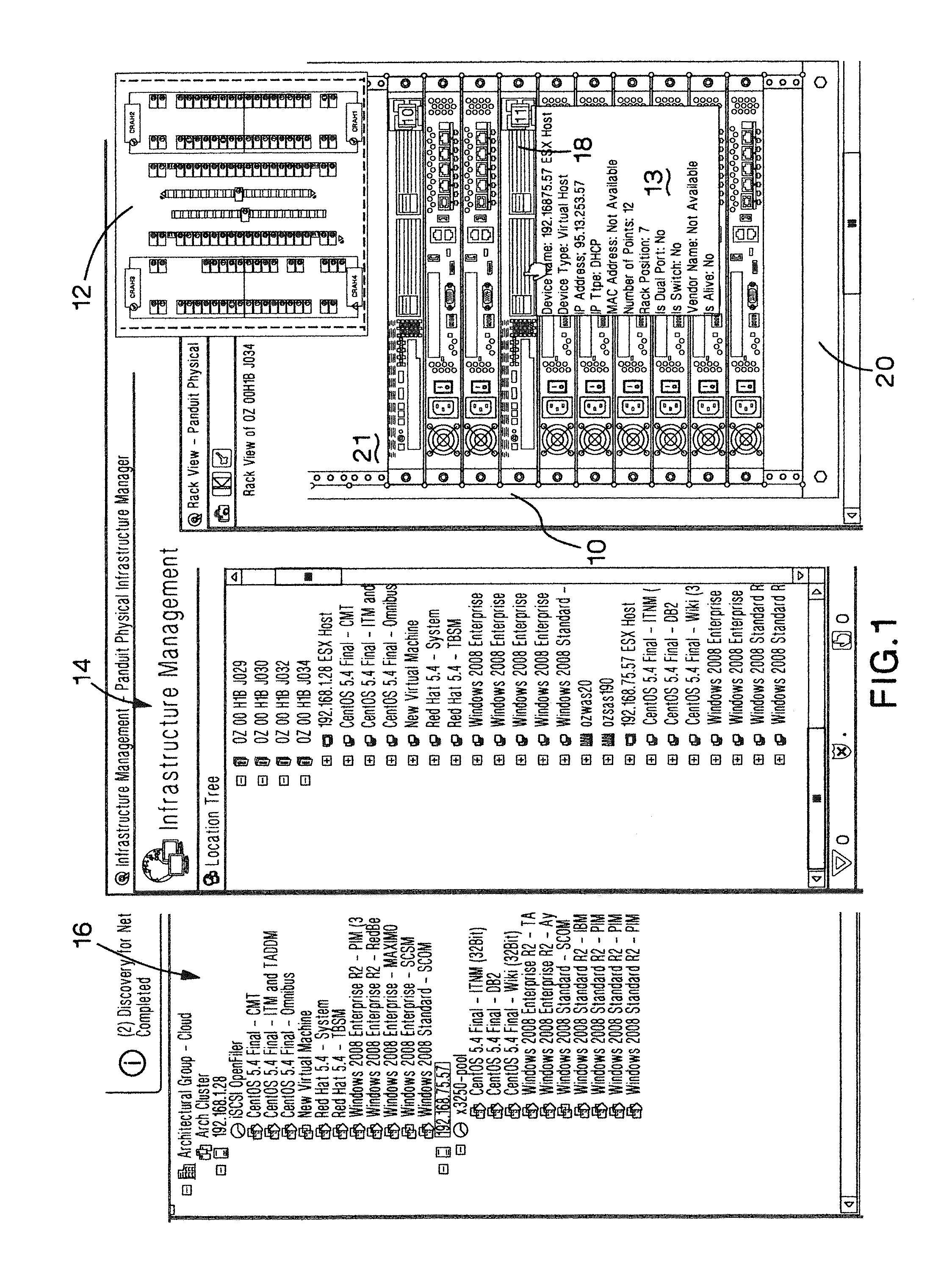

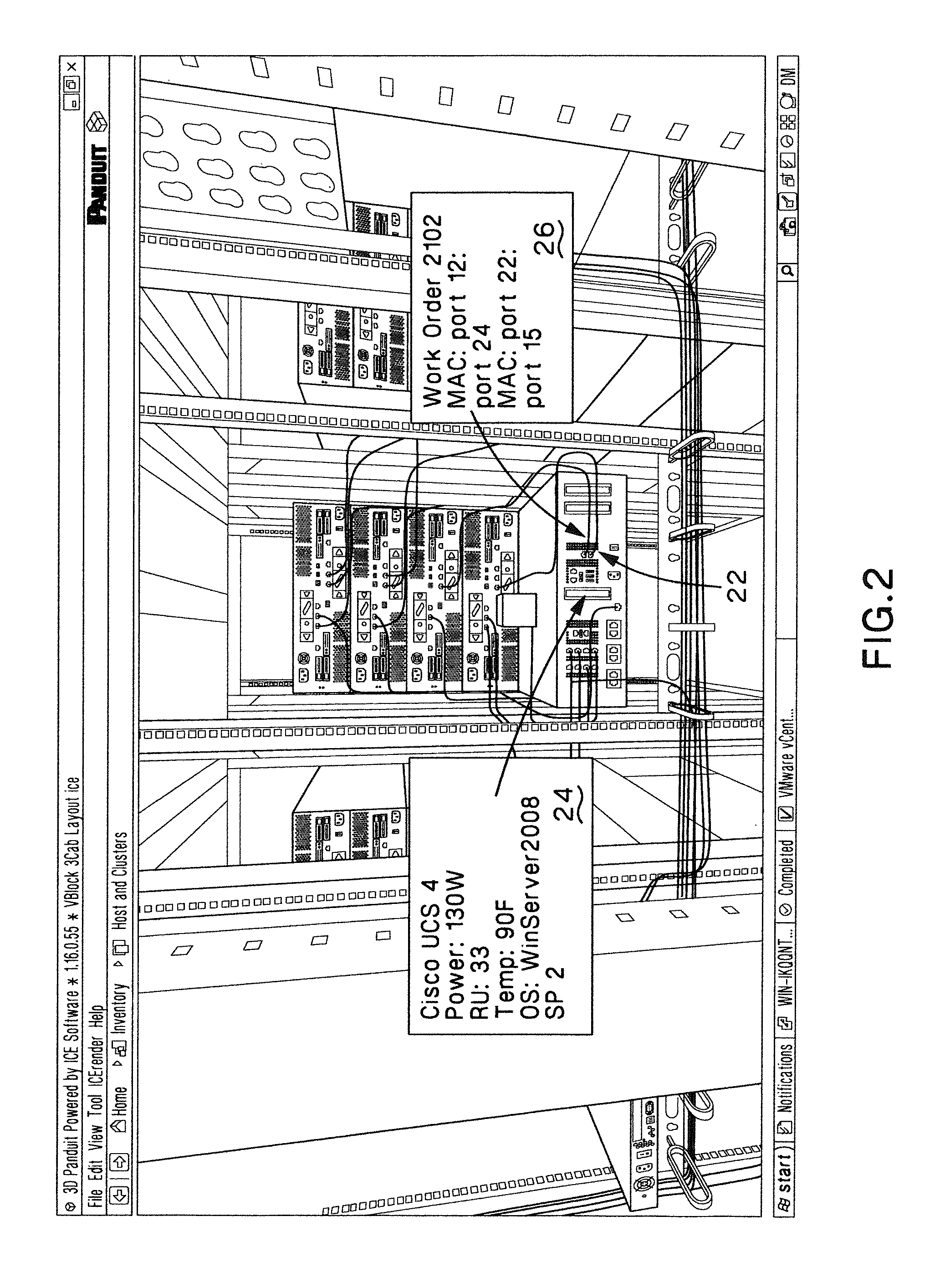

Augmented Reality Data Center Visualization

Status: Inactive | Publication: US20120249588A1 | Tags: Digital data information retrieval; Digital data processing details; Data set; Data center

Datacenter datasets and other information are visually displayed in an augmented reality view using a portable device. The visual display of this information is presented along with a visual display of the actual datacenter environment. The combination of these two displays allows installers and technicians to view instructions or other data that are visually correlated to the environment in which they are working.

Owner:PANDUIT

Wearable augmented reality computing apparatus

Status: Inactive | Publication: US20120050144A1 | Tags: Improve image quality; Devices with GPS signal receiver; Devices with sensor; Display device; Input device

A wearable augmented reality computing apparatus comprises a display screen, a reflective device, a computing device, and a head-mounted harness that contains these components. The display device and reflective device are configured so that the user sees the reflection from the display device superimposed on the view of reality. One embodiment uses a switchable mirror as the reflective device. One use of the apparatus is vehicle or pedestrian navigation. The portable display and general-purpose computing device can be combined in a single device such as a smartphone. Additional components include orientation sensors and non-handheld input devices.

Owner:MORLOCK CLAYTON RICHARD

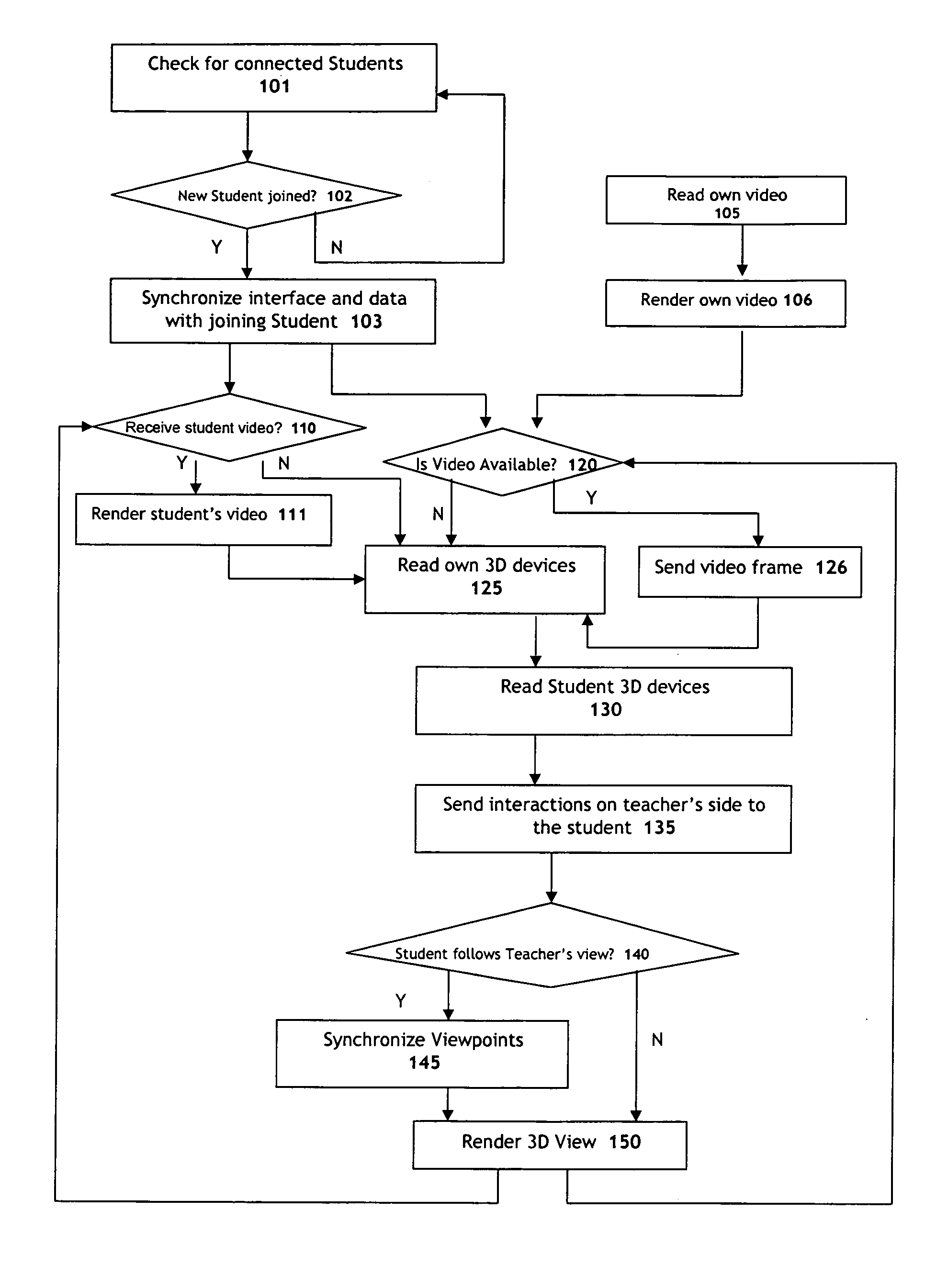

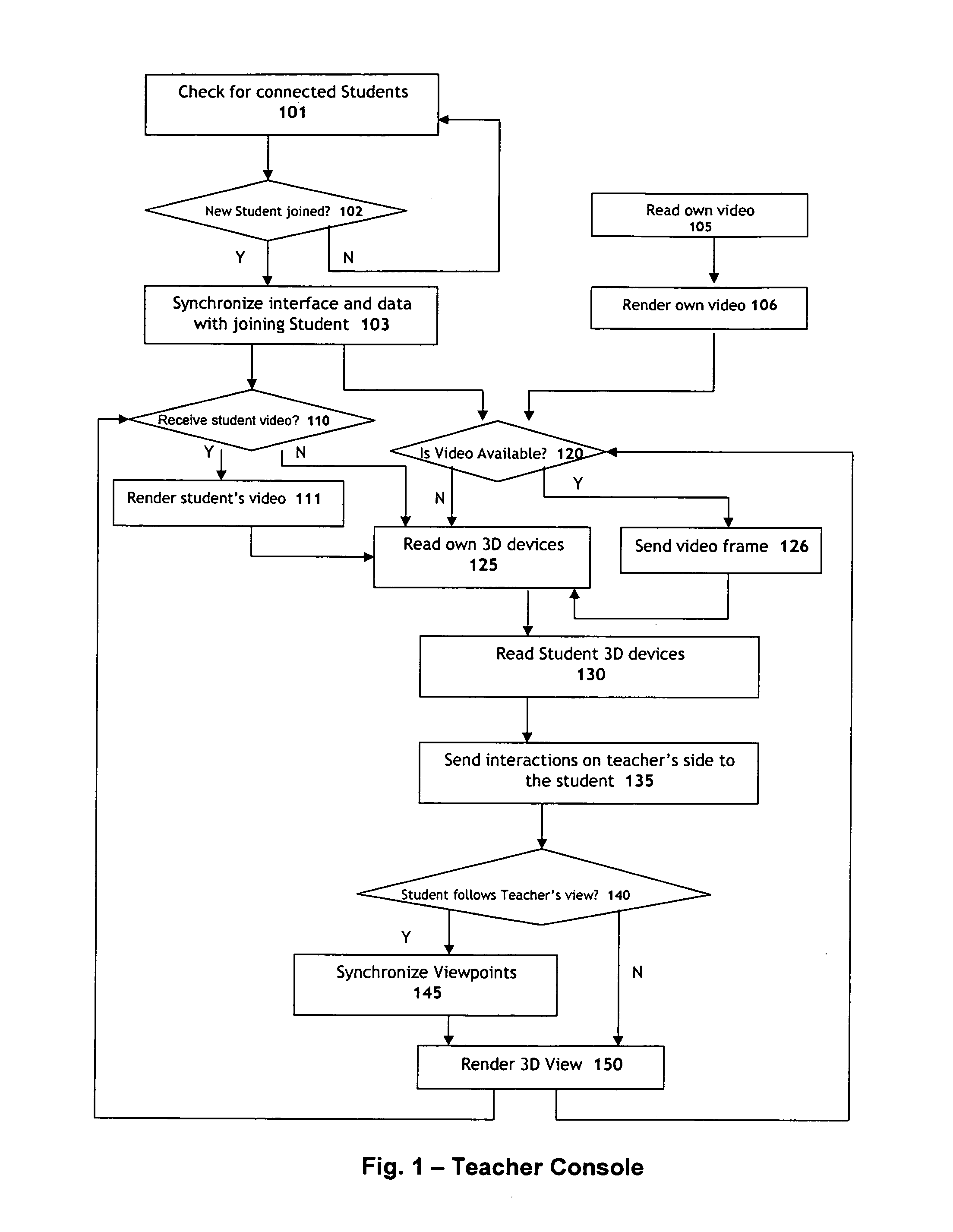

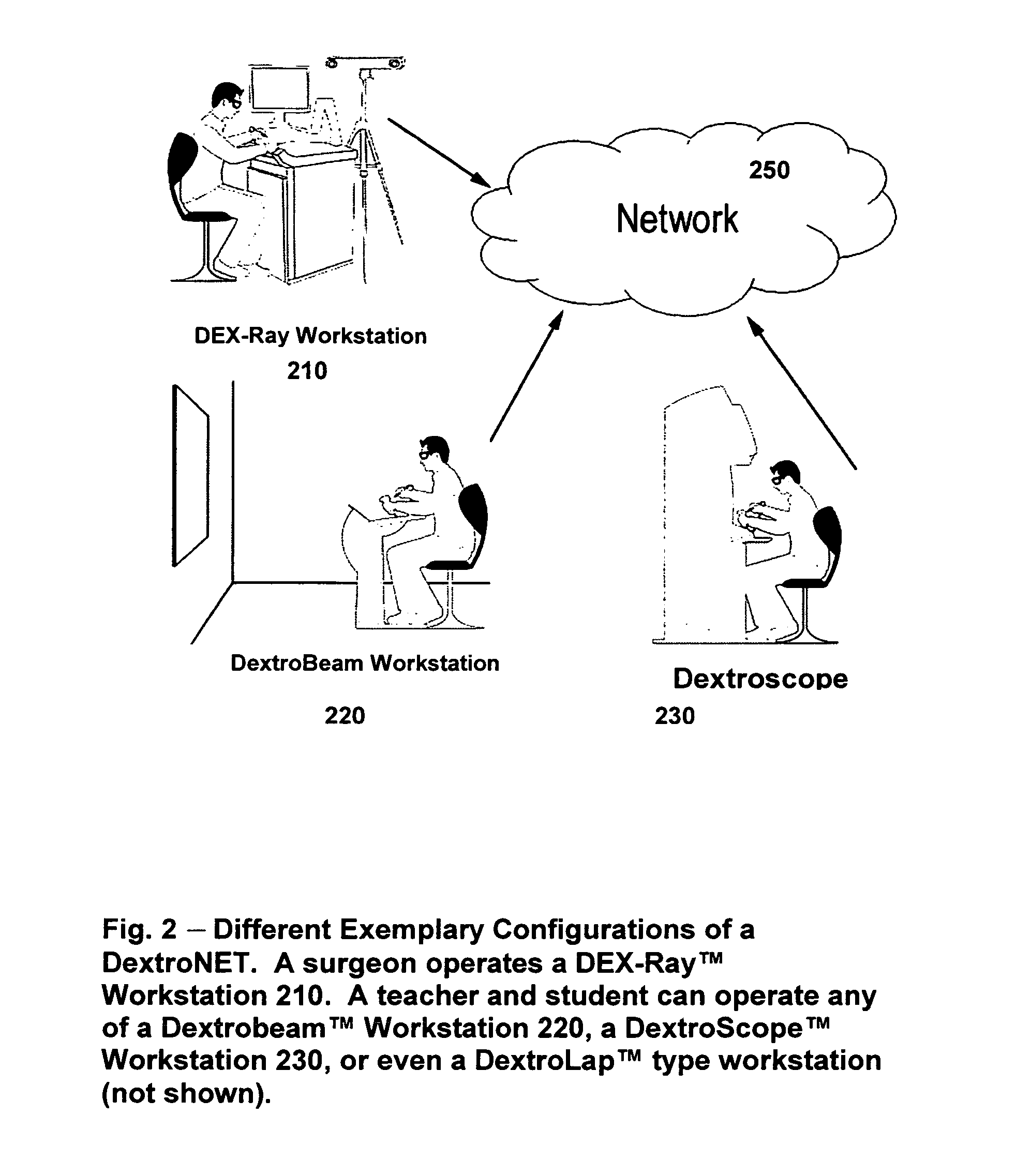

Systems and methods for collaborative interactive visualization of 3D data sets over a network ("DextroNet")

Owner:BRACCO IMAGING SPA

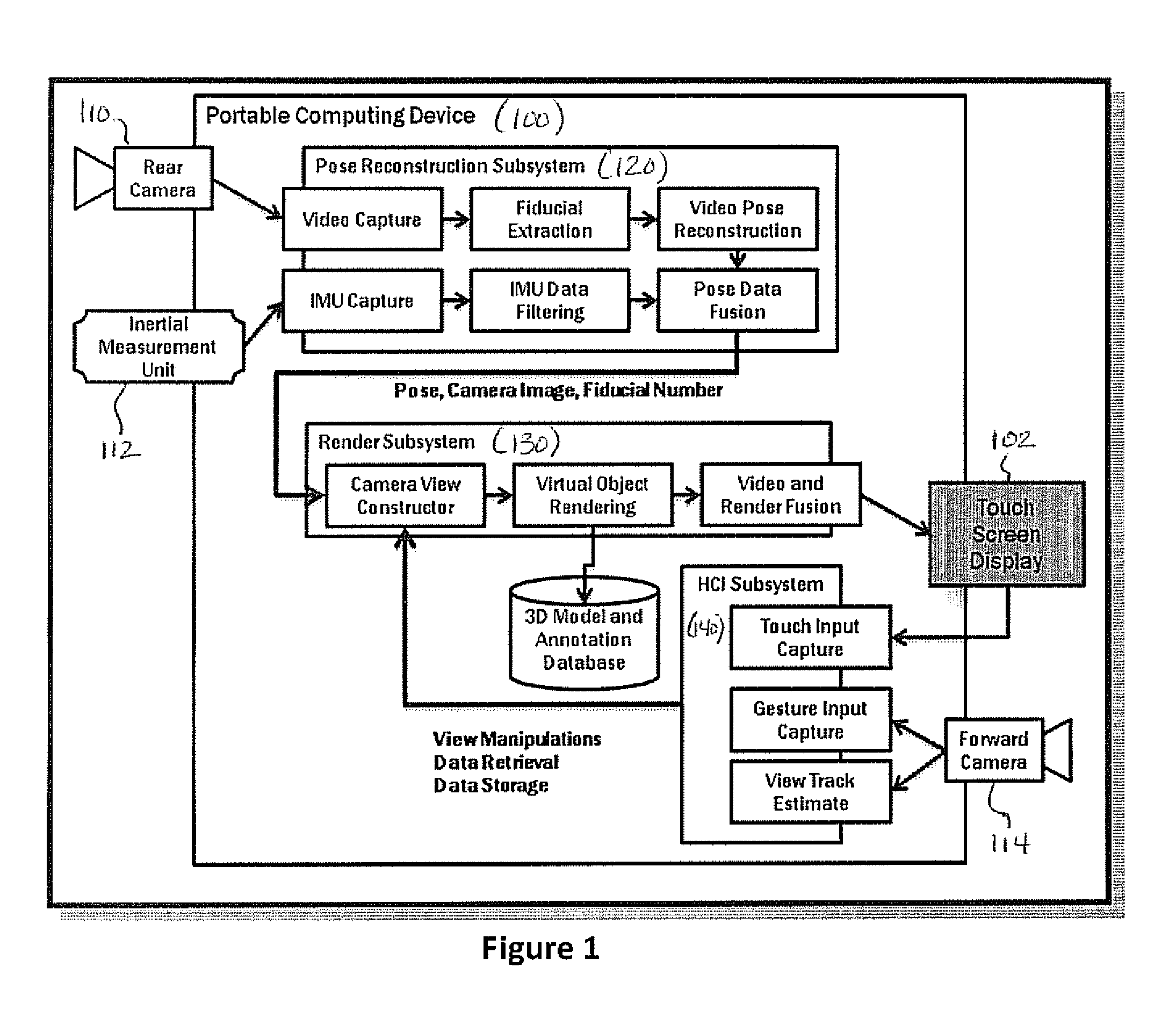

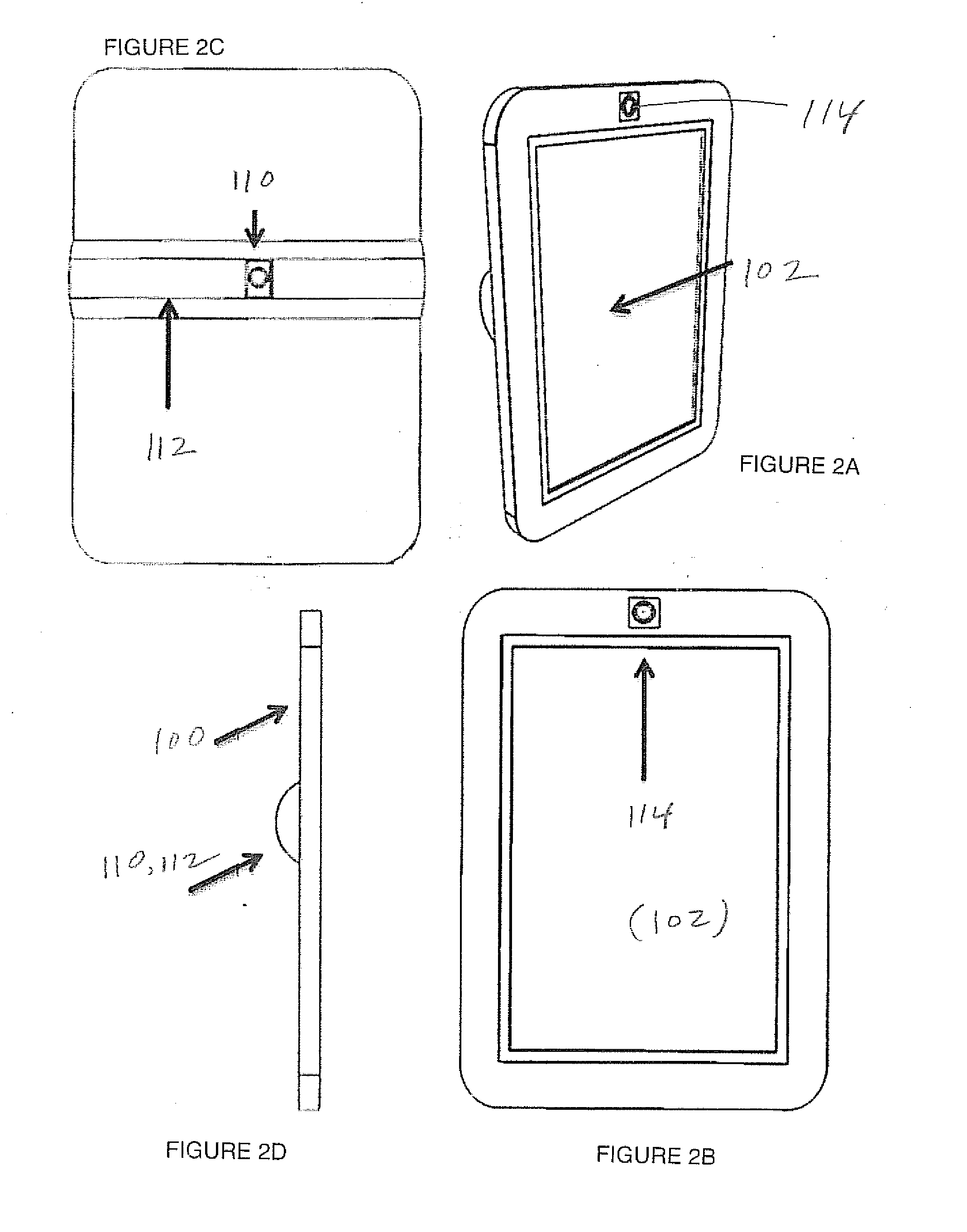

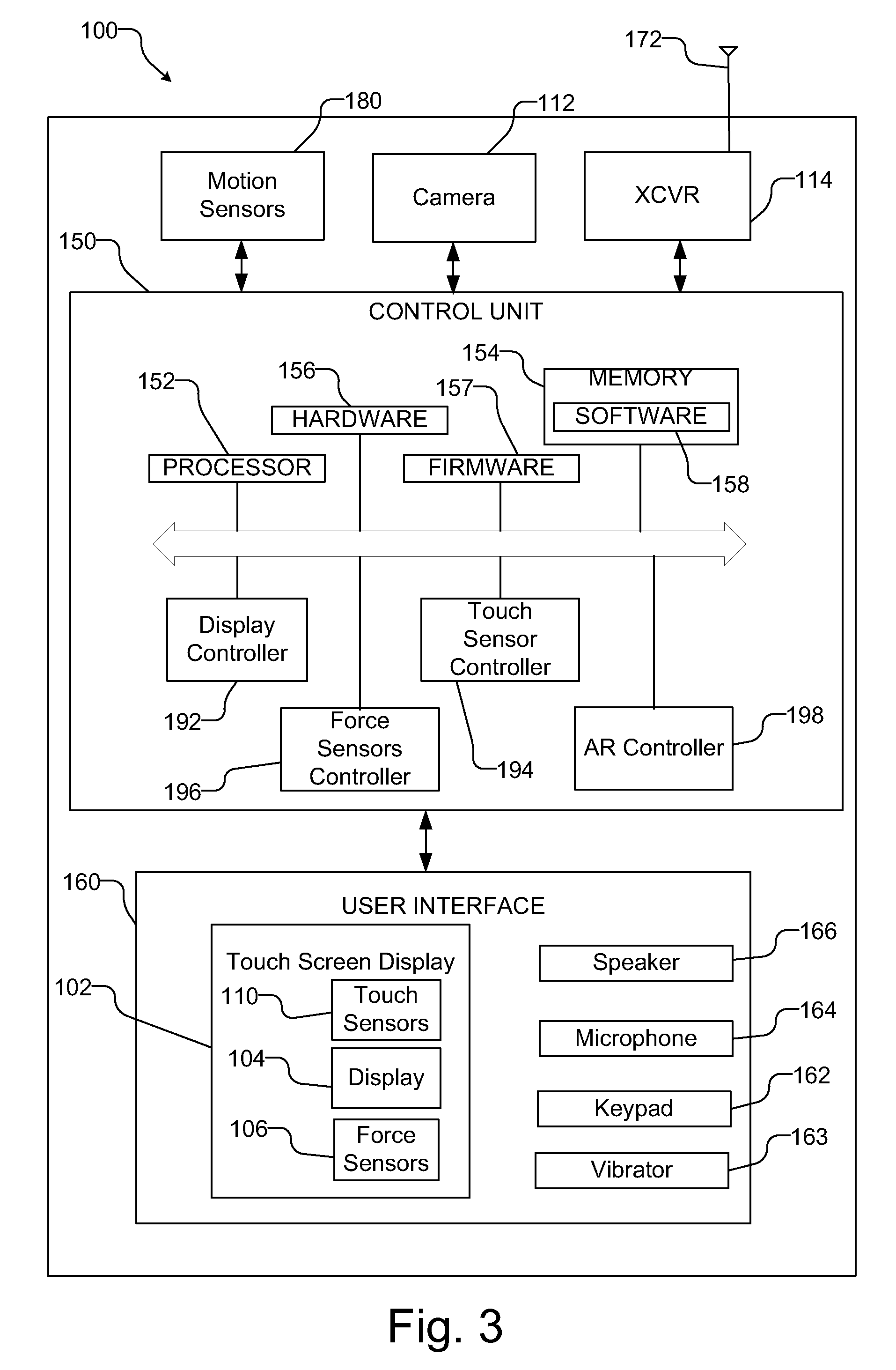

Touch screen augmented reality system and method

Status: Inactive | Publication: US20090322671A1 | Tags: Character and pattern recognition; Cathode-ray tube indicators; Graphics; Display device

An improved augmented reality (AR) system integrates a human interface and computing system into a single hand-held device. A touch-screen display and a rear-mounted camera allow a user to interact with the AR content more intuitively. A database stores graphical images or textual information about objects to be augmented. A processor is operative to:
- analyze the imagery from the camera to locate one or more fiducials associated with a real object
- determine the pose of the camera from the position or orientation of the fiducials
- search the database for graphical images or textual information associated with the real object
- display that information in overlying registration with the camera imagery

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

Methods and systems for determining the pose of a camera with respect to at least one object of a real environment

Owner:APPLE INC

Methods and systems for creating virtual and augmented reality

Configurations are disclosed for presenting virtual reality and augmented reality experiences to users. The system may comprise an image capturing device and a processor communicatively coupled to it. The image capturing device captures one or more images corresponding to the field of view of a user of a head-mounted augmented reality device. The processor extracts a set of map points from the set of images, identifies a set of sparse points and a set of dense points from the extracted map points, and performs a normalization on the set of map points.

Owner:MAGIC LEAP INC

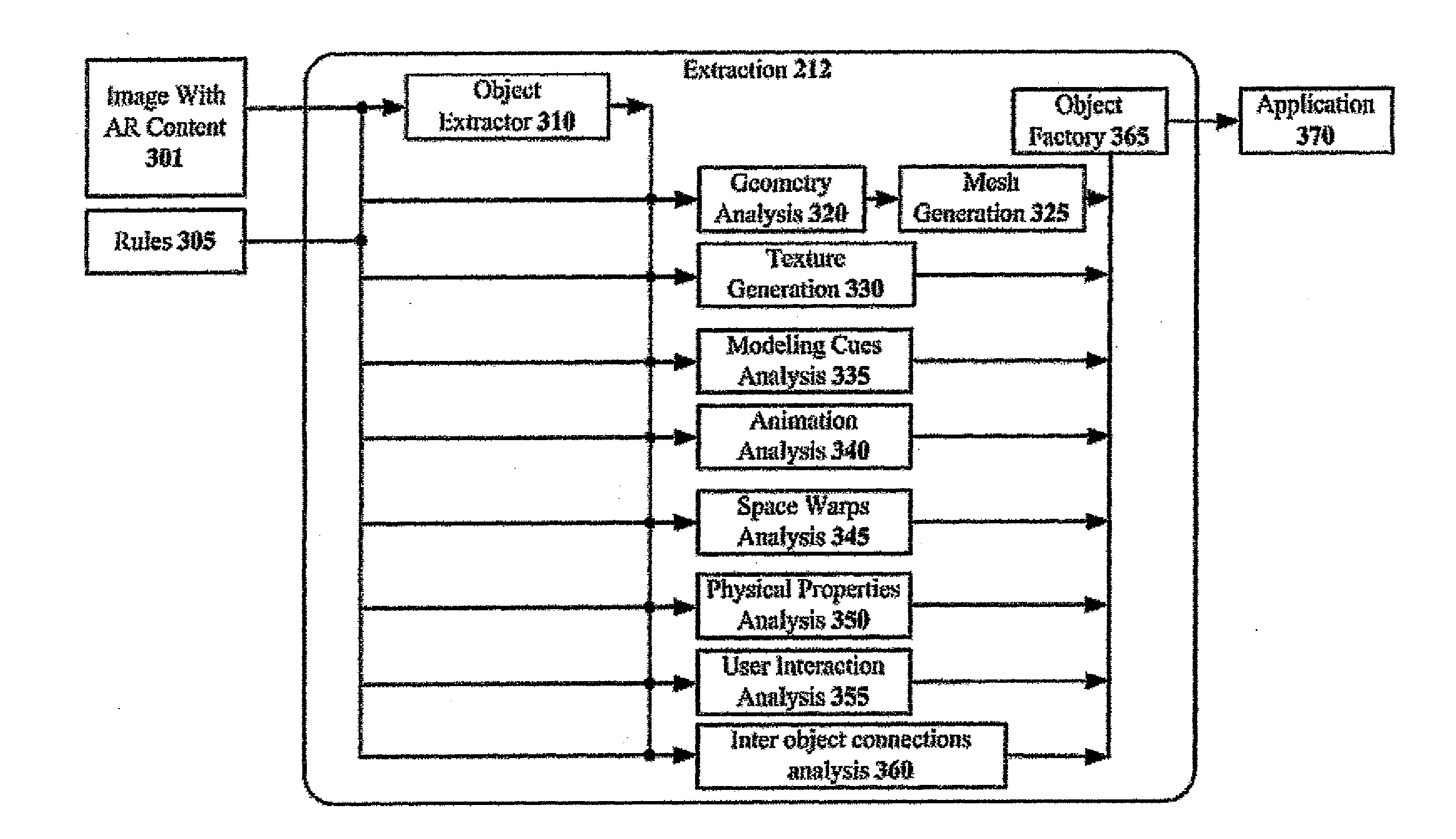

Method and System for Compositing an Augmented Reality Scene

Status: Active | Publication: US20120069051A1 | Tags: Eliminate need; Cathode-ray tube indicators; Image data processing; Computer graphics (images); Virtual model

Disclosed are systems and methods for compositing an augmented reality (AR) scene. The methods include the following steps:
- extracting, by an extraction component into a memory of a data-processing machine, at least one object from a real-world image detected by a sensing device
- geometrically reconstructing at least one virtual model from the at least one object
- compositing AR content from the at least one virtual model so as to augment the real-world image with the AR content, thereby creating an AR scene

Preferably, the method further includes extracting at least one annotation from the real-world image into the memory of the data-processing machine for modifying at least one virtual model according to that annotation. Preferably, the method further includes interacting with the AR scene by modifying AR content based on modification of at least one object and/or at least one annotation in the real-world image.

Owner:APPLE INC

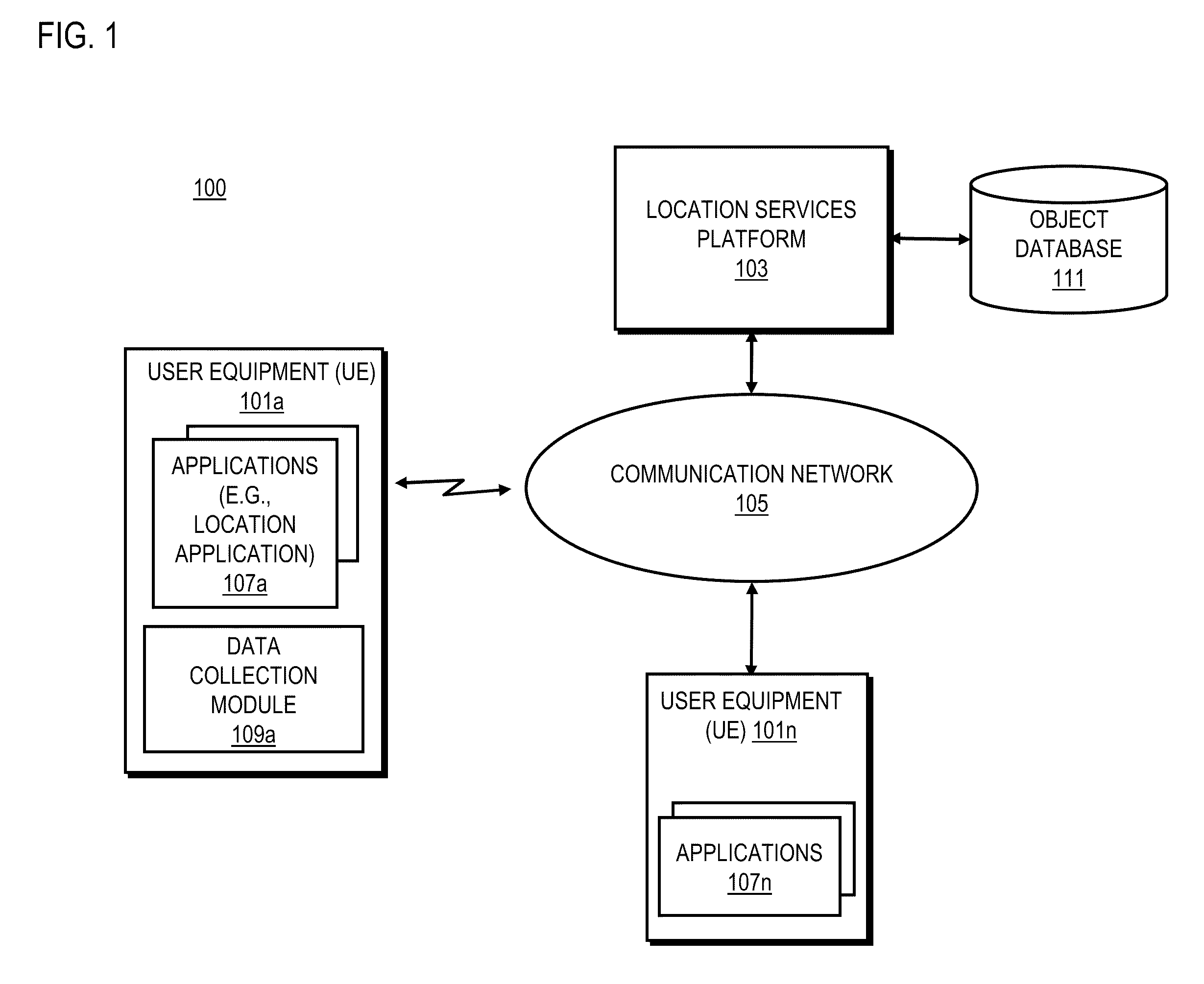

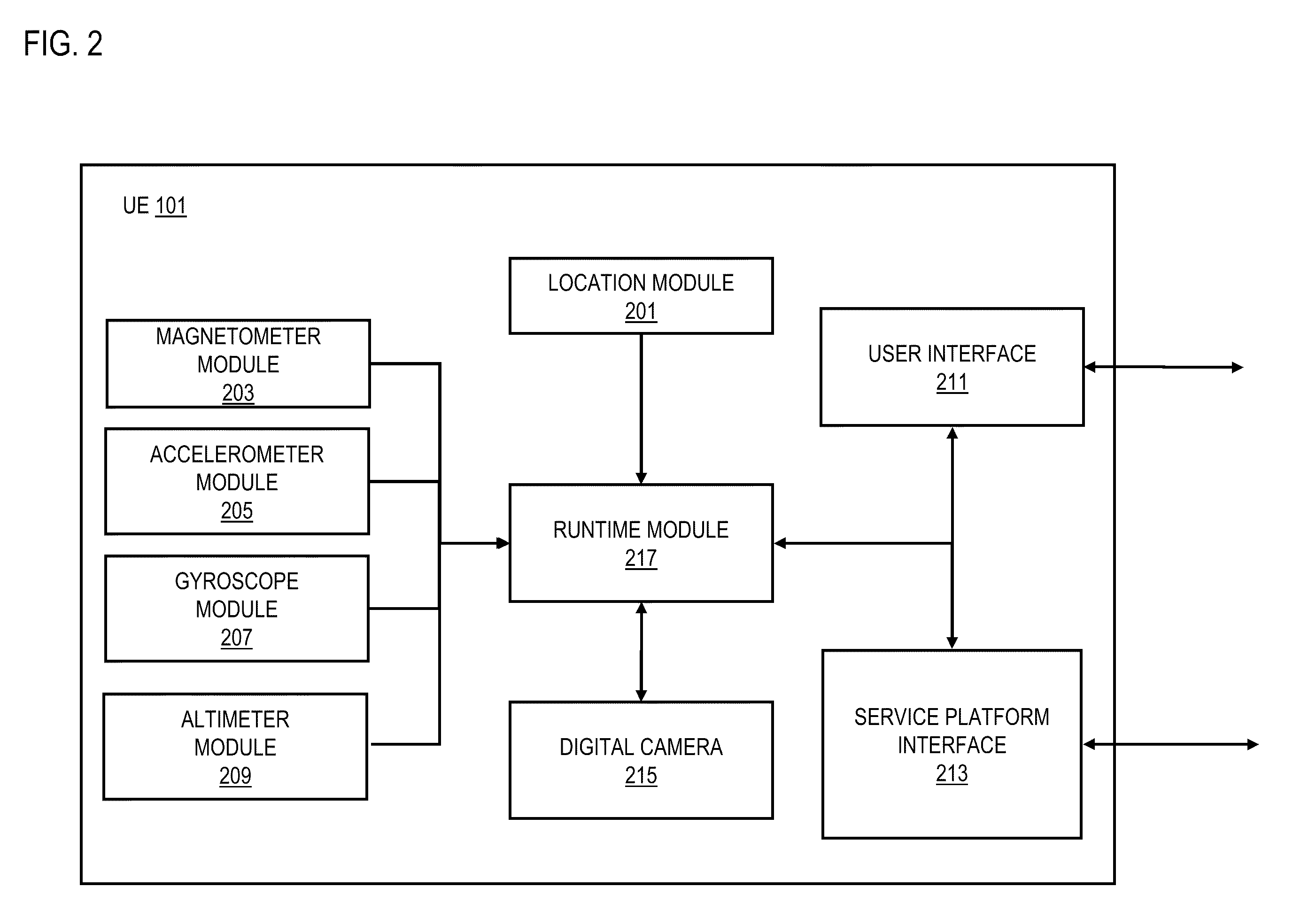

Method and apparatus for an augmented reality user interface

Status: Active | Publication: US20100328344A1 | Tags: Digital data processing details; Cathode-ray tube indicators; Horizon; User equipment

An approach is provided for an augmented reality user interface. An image representing a physical environment is received, and data relating to a horizon within the physical environment is retrieved. Based on the horizon data, a section of the image in which to overlay location information is determined. Presentation of the location information within that section is then initiated on a user equipment.

Owner:NOKIA TECHNOLOGIES OY
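
The abstract says the overlay section is chosen from horizon data but not how. Assume a pinhole camera with zero roll and a pitch angle from the device's orientation sensors. Then the horizon sits at row h/2 + f tan(pitch), where h is the image height and f = (h/2) / tan(vfov/2) is the focal length in pixels, and labels can go in a band just above that row. The band height and function names are hypothetical.

```python
import math


def horizon_row(image_height, vertical_fov_deg, pitch_deg):
    """Image row (0 = top) where the horizon falls, for a pinhole camera with zero roll.

    pitch_deg > 0 means the camera is tilted up, which pushes the horizon down the image.
    """
    focal_px = (image_height / 2) / math.tan(math.radians(vertical_fov_deg) / 2)
    return image_height / 2 + focal_px * math.tan(math.radians(pitch_deg))


def label_band(image_height, vertical_fov_deg, pitch_deg, band_px=80):
    """Rows (top, bottom) just above the horizon, clamped to the image, for location labels."""
    h = horizon_row(image_height, vertical_fov_deg, pitch_deg)
    bottom = min(max(h, 0.0), float(image_height))  # horizon may lie outside the frame
    top = max(bottom - band_px, 0.0)
    return top, bottom
```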

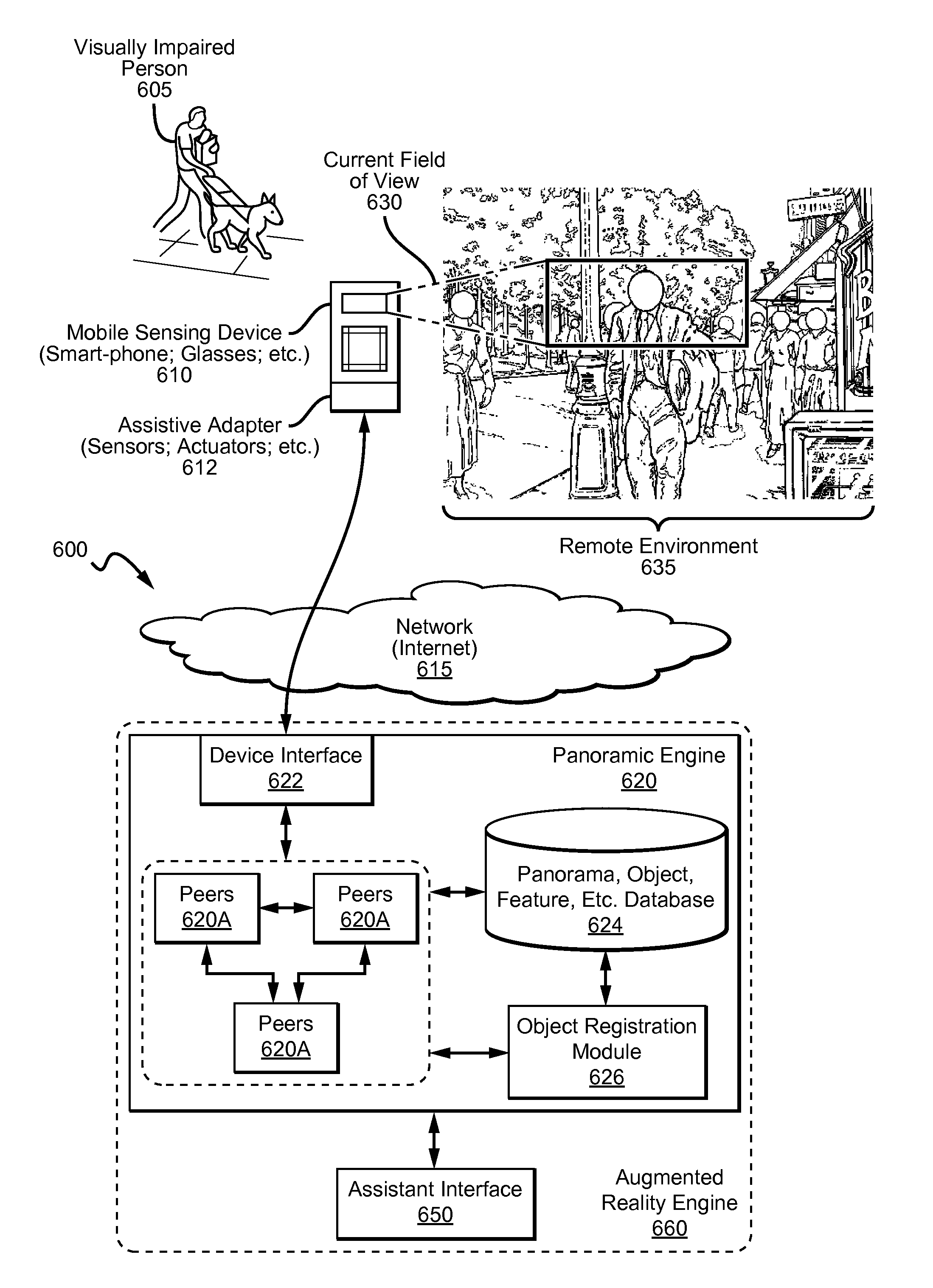

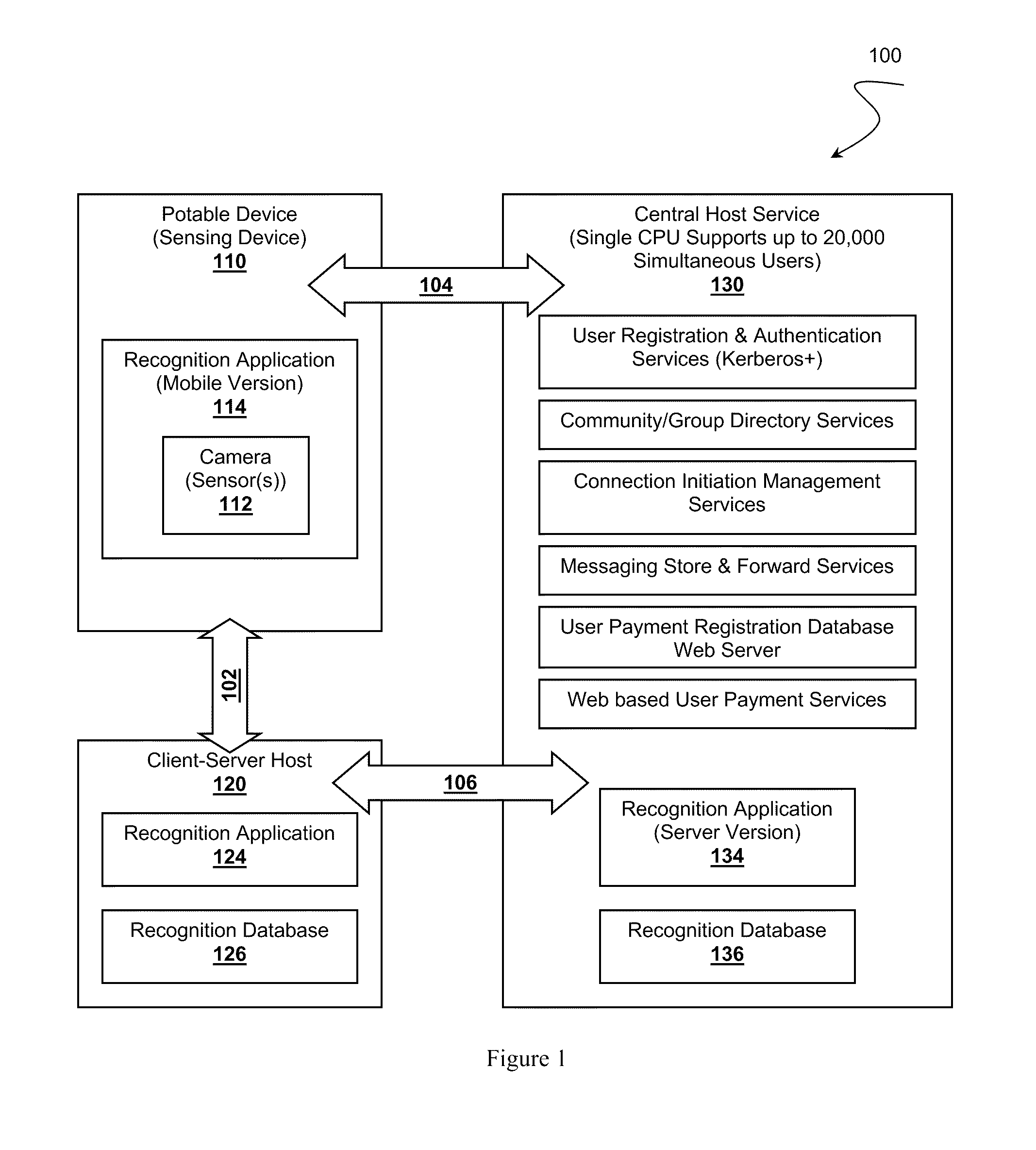

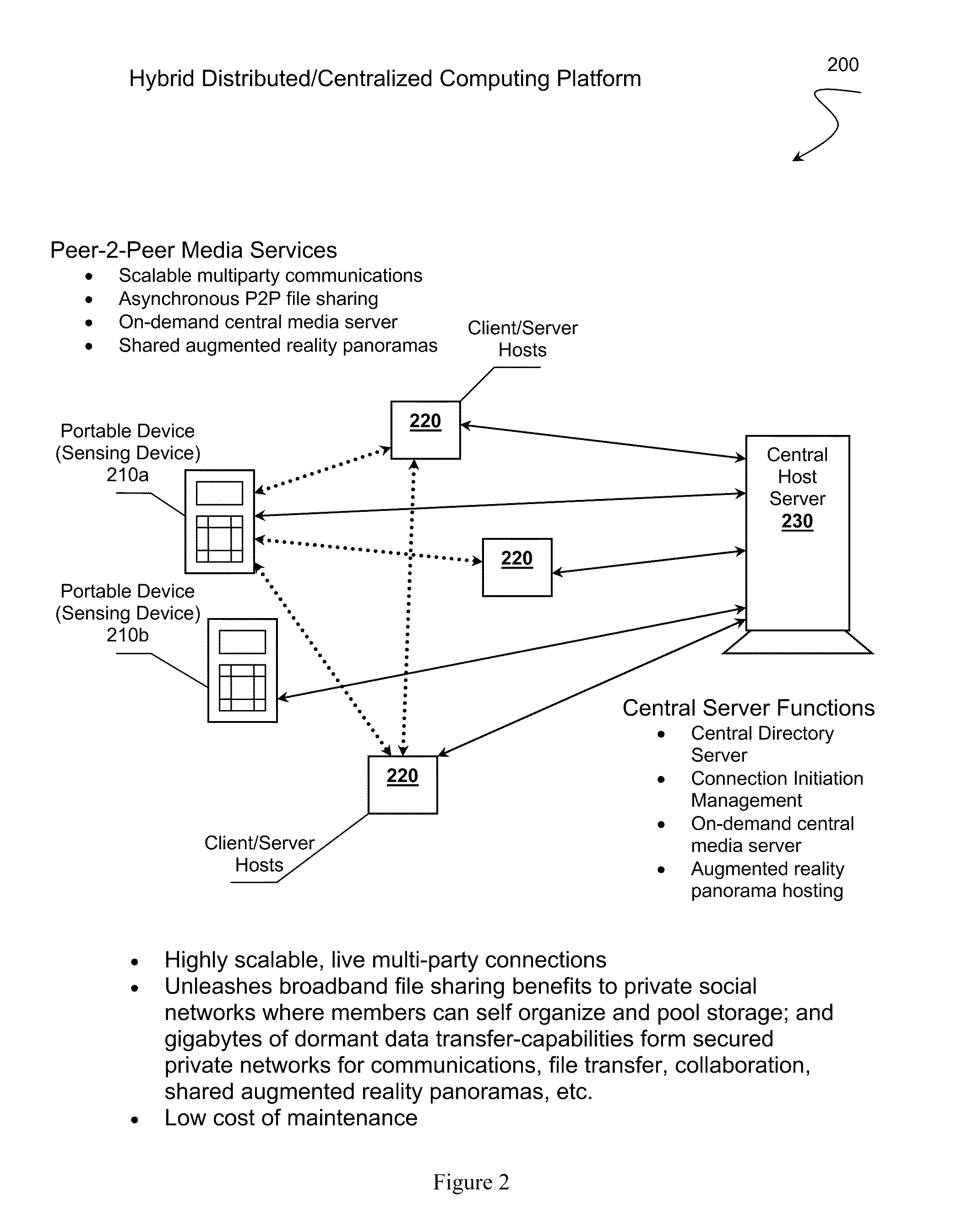

Augmented reality panorama supporting visually impaired individuals

There is presented a system and method for providing real-time object recognition to a remote user. The system comprises a portable communication device including a camera, at least one client-server host device remote from and accessible by the portable communication device over a network, and a recognition database accessible by the client-server host device or devices. A recognition application residing on the client-server host device or devices is capable of utilizing the recognition database to provide real-time object recognition of visual imagery captured using the portable communication device to the remote user of the portable communication device. In one embodiment, a sighted assistant shares an augmented reality panorama with a visually impaired user of the portable communication device where the panorama is constructed from sensor data from the device.

Owner:NANT HLDG IP LLC

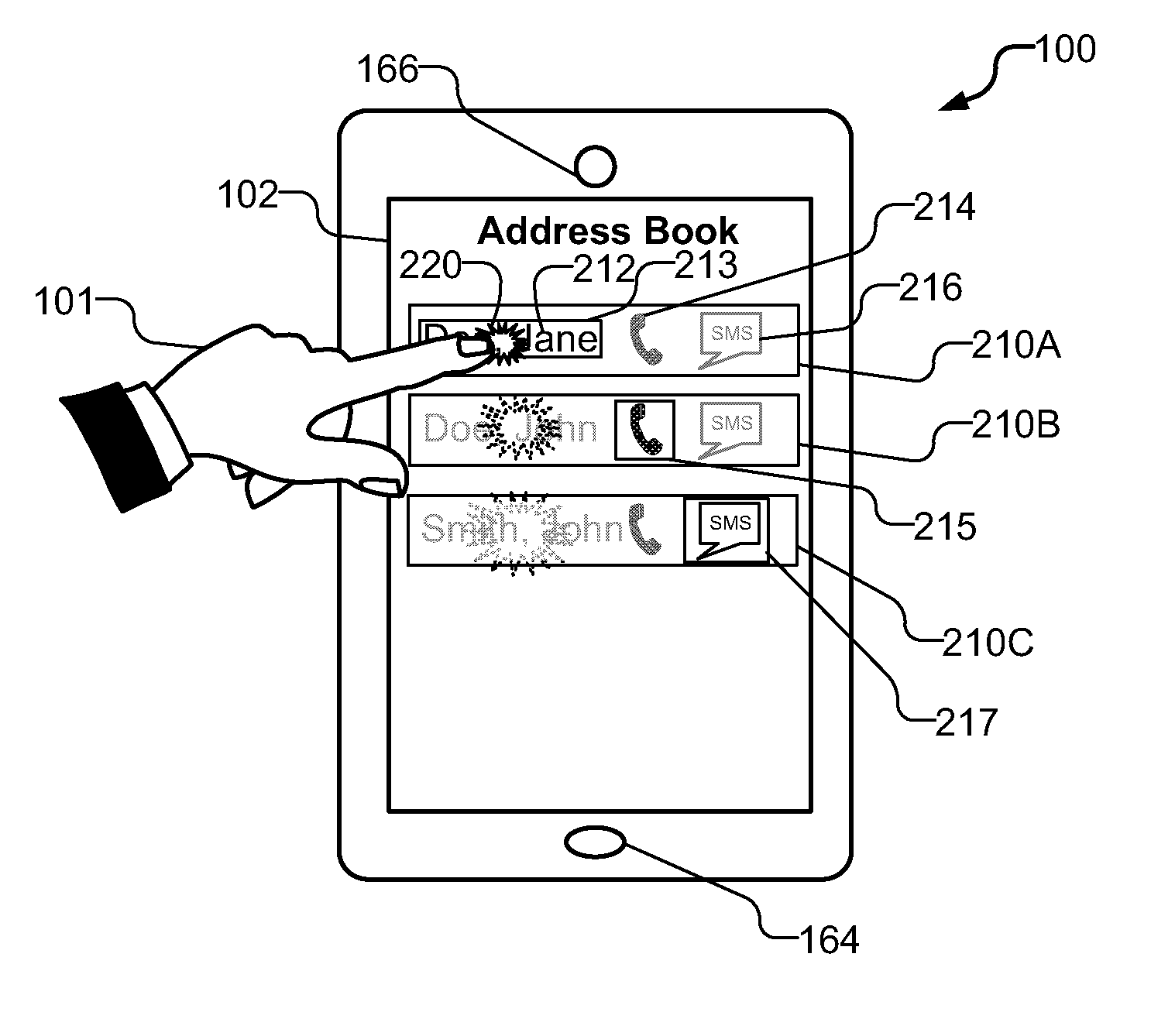

User interface elements augmented with force detection

Status: Active | Publication: US8952987B2 | Tags: Inhibition of activation; Program control using stored programs; Cathode-ray tube indicators; Display device; Biological activation

A computing device includes a touch screen display with at least one force sensor, each of which provides a signal in response to contact with the touch screen display. Using force signals from the at least one force sensor that result from contact with the touch screen, the operation of the computing device may be controlled, e.g. to select one of a plurality of overlaying interface elements, to prevent the unintended activation of suspect commands that require secondary confirmation, and to mimic the force requirements of real-world objects in augmented reality applications.

Owner:QUALCOMM INC
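
One use listed in this abstract, preventing unintended activation of suspect commands, can be sketched as force thresholds on a touch event. The newton thresholds and the three-way outcome are hypothetical. The abstract does not specify values or the confirmation flow.

```python
from enum import Enum


class Outcome(Enum):
    EXECUTE = "execute"
    CONFIRM = "ask for confirmation"
    IGNORE = "ignore"


def handle_press(force_newtons, suspect, light_touch=0.2, firm_press=1.5):
    """Decide what to do with a touch from its measured force (thresholds are hypothetical)."""
    if force_newtons < light_touch:
        return Outcome.IGNORE   # grazing contact, likely unintended
    if suspect and force_newtons < firm_press:
        return Outcome.CONFIRM  # risky command: require a firm press or a second confirmation
    return Outcome.EXECUTE
```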

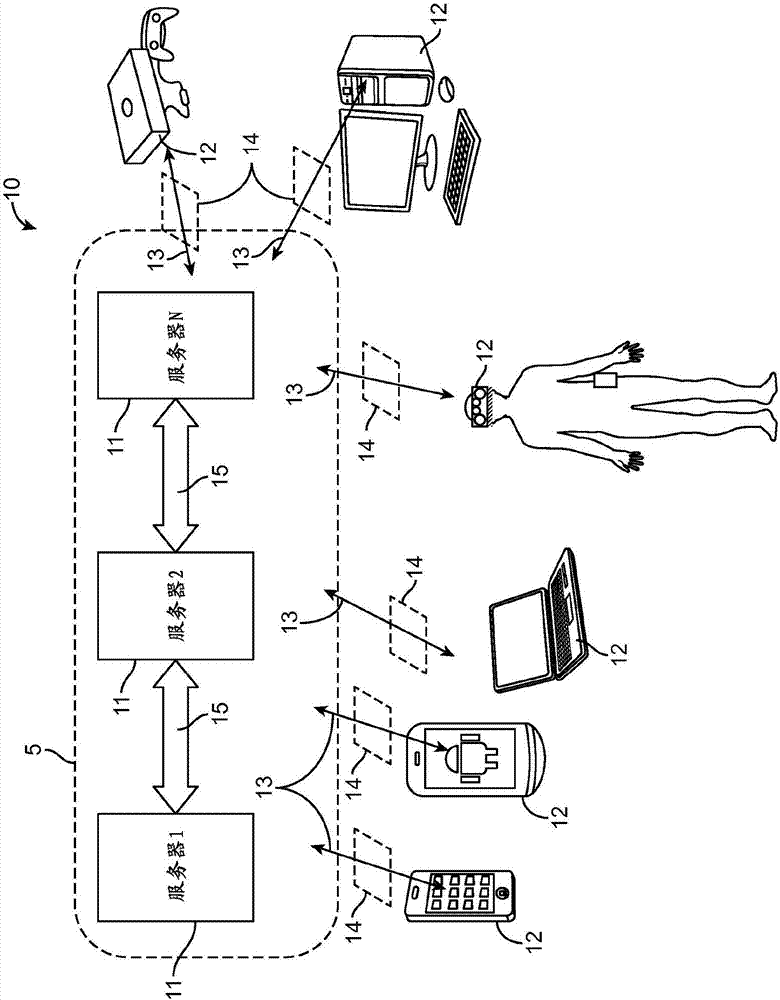

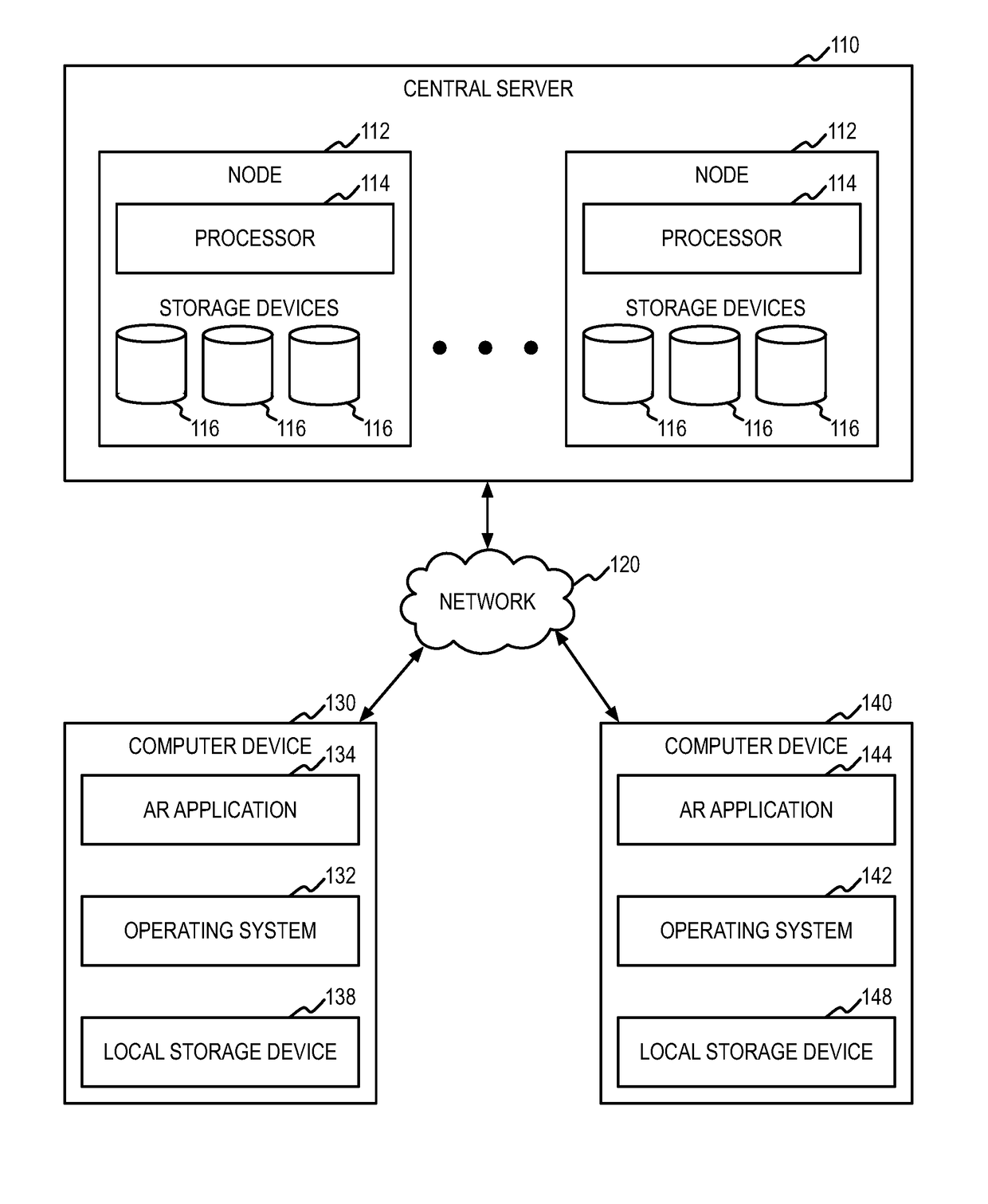

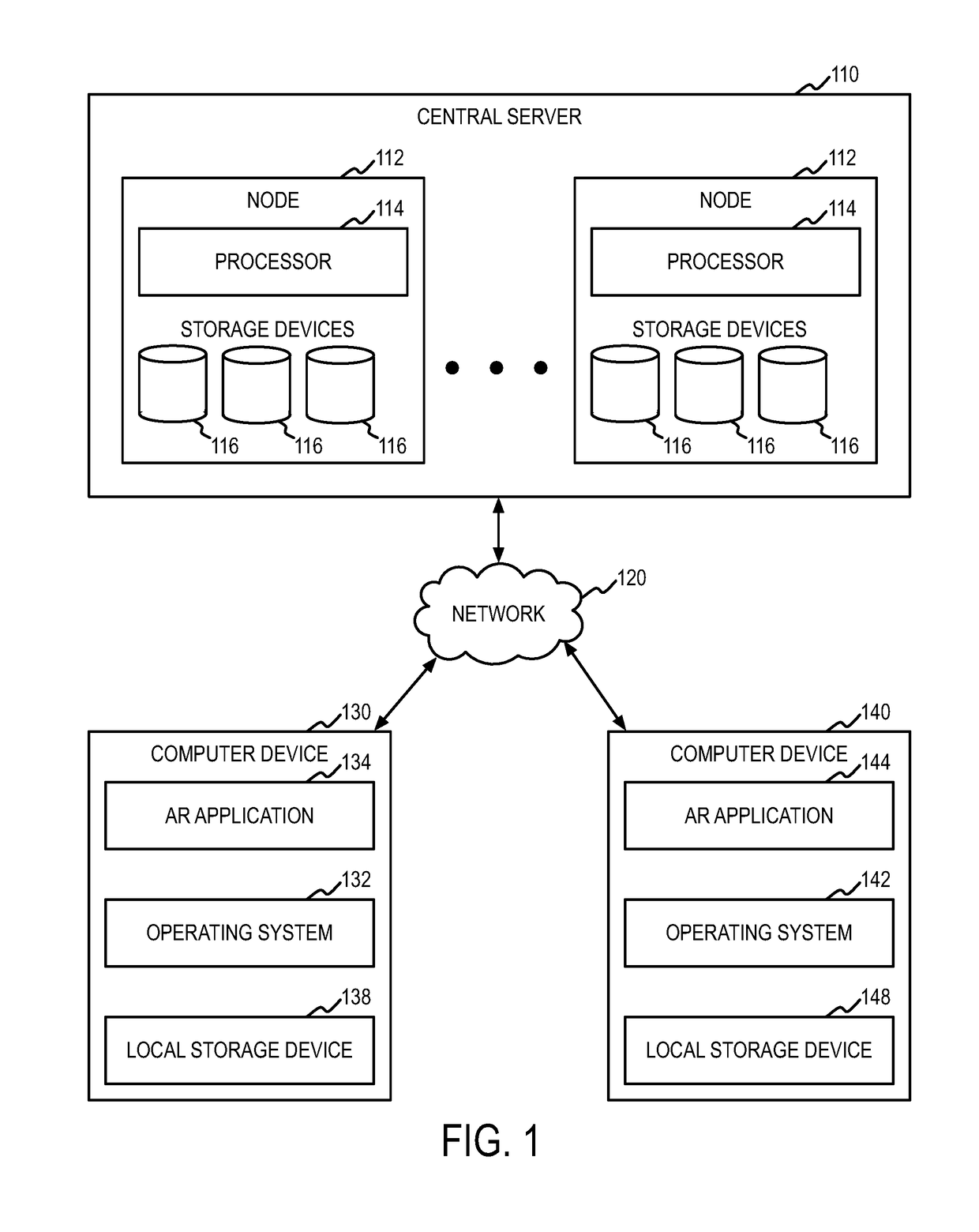

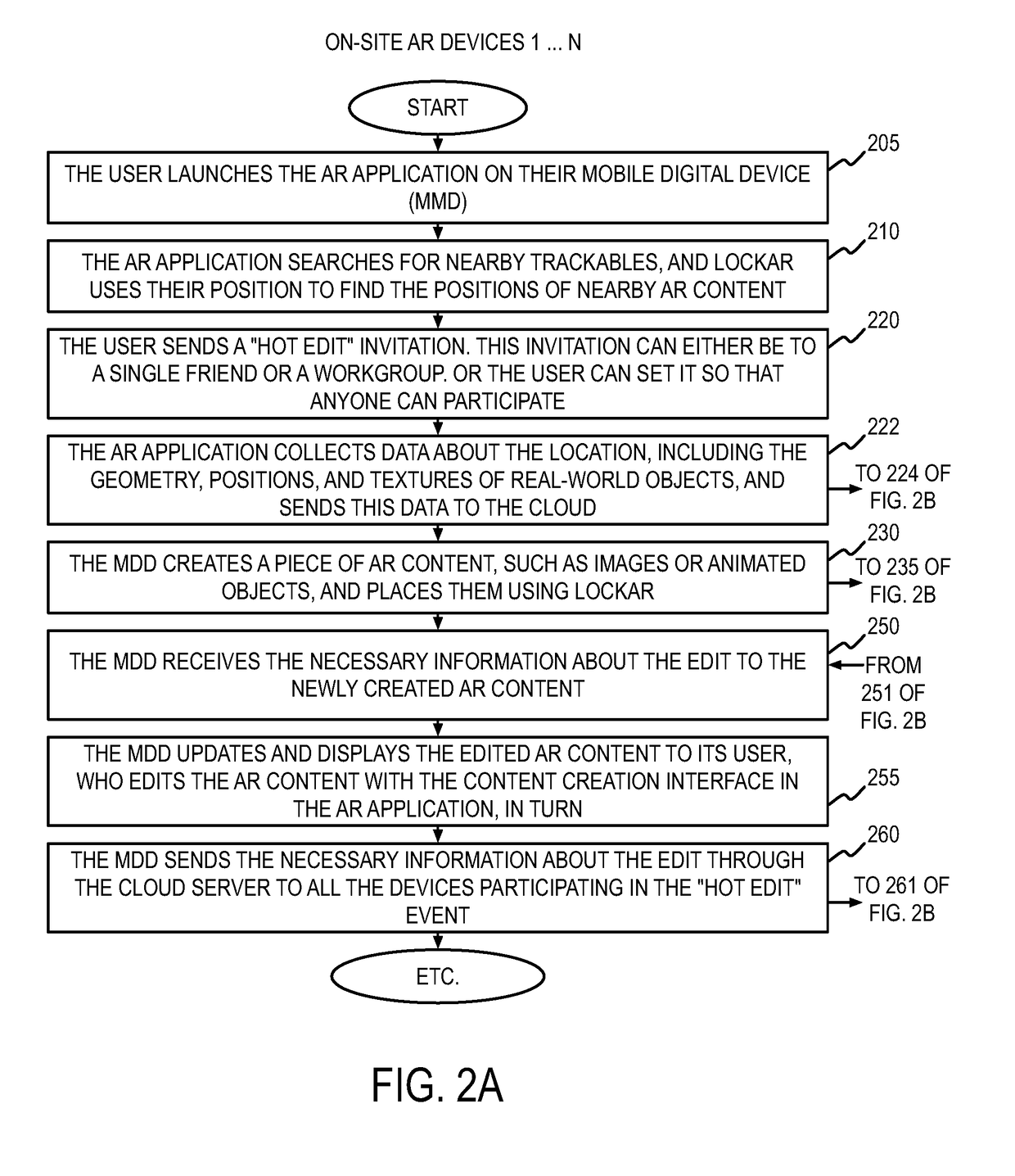

Real-time shared augmented reality experience

A method and system are provided for enabling a shared augmented reality experience. The system may include one or more onsite devices for generating AR representations of a real-world location, and one or more offsite devices for generating virtual AR representations of the real-world location. The AR representations may include data and/or content incorporated into live views of a real-world location. The virtual AR representations of the AR scene may incorporate images and data from a real-world location and include additional AR content. The onsite devices may synchronize content used to create the AR experience with the offsite devices in real time, such that the onsite AR representations and the offsite virtual AR representations are consistent with each other.

Owner:YOUAR INC
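
The abstract says onsite devices synchronize AR content with offsite devices in real time so that both views stay consistent. It does not say how conflicts are resolved. A last-writer-wins merge keyed on a per-item version, with the device ID as a tie-breaker, is one common stand-in that makes any two replicas converge regardless of merge order. It is swapped in here for illustration, and the `ContentItem` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentItem:
    item_id: str
    payload: str    # e.g. a serialized pose plus asset reference (hypothetical)
    version: int    # incremented on every edit
    device_id: str  # tie-breaker when two devices publish the same version


def merge(local, remote):
    """Merge two replicas {item_id: ContentItem}; the higher (version, device_id) wins."""
    merged = dict(local)
    for item_id, item in remote.items():
        mine = merged.get(item_id)
        if mine is None or (item.version, item.device_id) > (mine.version, mine.device_id):
            merged[item_id] = item
    return merged
```

Because the winner is decided by a total order on (version, device_id), merging in either direction gives the same result, as long as no two edits share both values.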

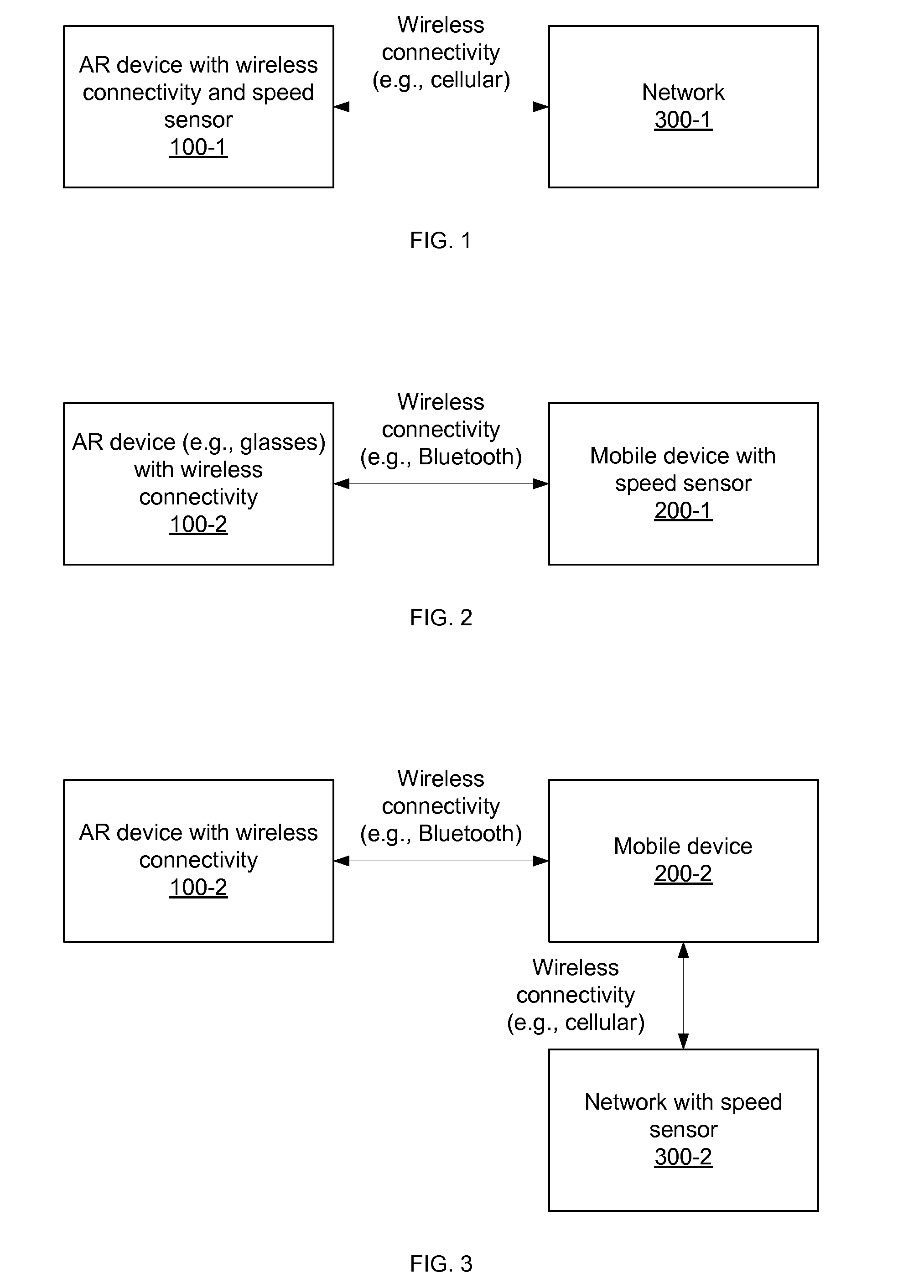

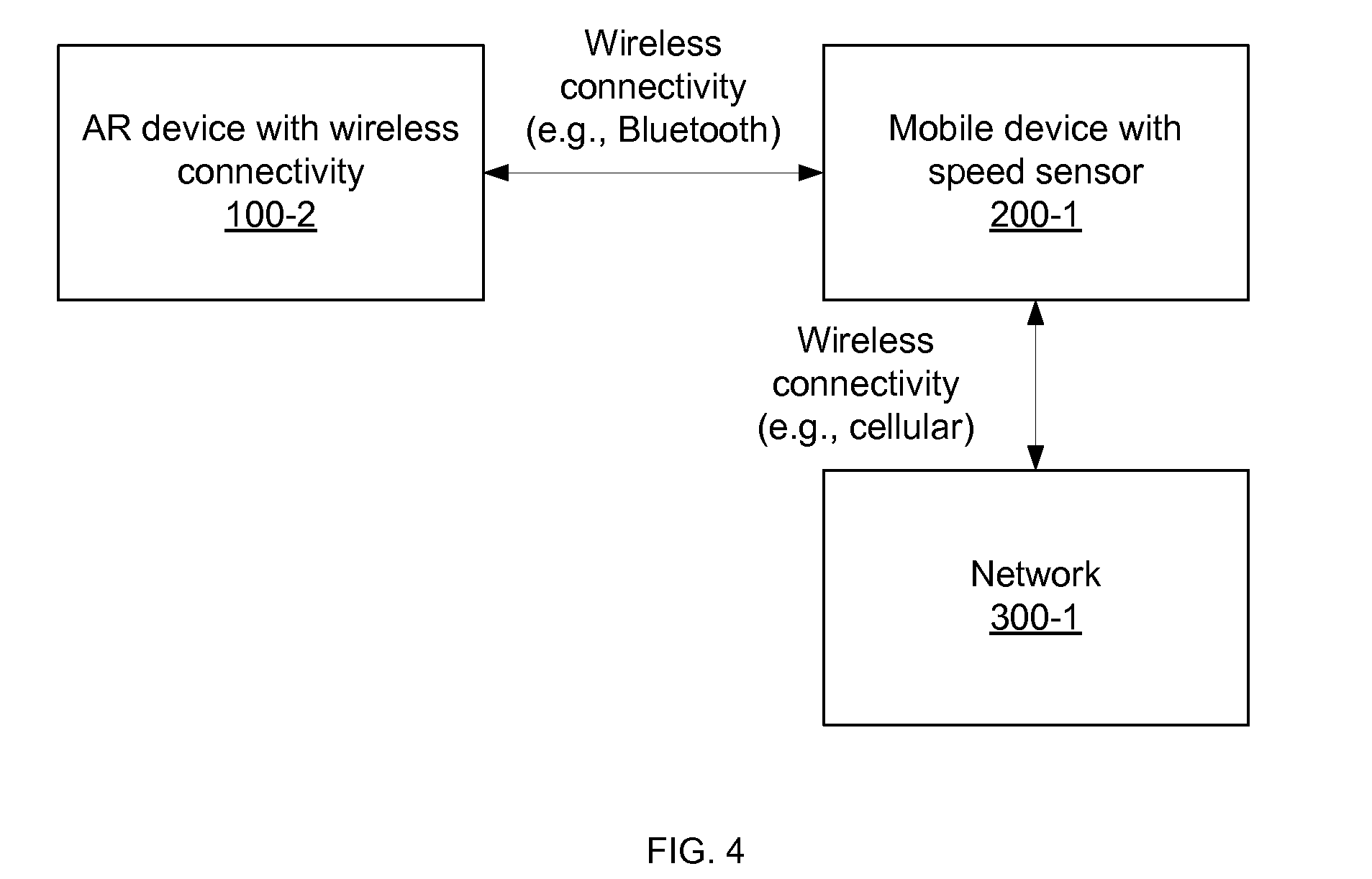

Disabling augmented reality (AR) devices at speed

Status: Active | Publication: US20140253588A1 | Tags: Reduce distractions; Cathode-ray tube indicators; Vehicle components; Distraction; Display device

Systems, apparatus, and methods are presented for limiting information on an augmented reality (AR) display based on various speeds of an AR device. Displayed information often becomes a distraction when the wearer is driving, running, or even walking. The described systems, devices, and methods therefore limit the information shown on an AR display according to three or more levels of movement (e.g., stationary, walking, driving). As a result, the wearer is less distracted when real-world activities demand more concentration.

Owner:QUALCOMM INC
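
The speed-gated display in this abstract can be sketched as two small tables. The first maps speed thresholds to motion levels, and the second gives a per-level allow-list of content categories. The four levels (the abstract allows "three or more"), the m/s thresholds, and the category names are all hypothetical assumptions. The abstract does not give numbers or say how speed is measured.

```python
from enum import IntEnum


class Motion(IntEnum):
    STATIONARY = 0
    WALKING = 1
    RUNNING = 2
    DRIVING = 3


# Hypothetical upper speed bound (m/s) for each level; anything faster counts as DRIVING.
THRESHOLDS = [(0.3, Motion.STATIONARY), (2.5, Motion.WALKING), (6.0, Motion.RUNNING)]

# Hypothetical policy: content categories that stay visible at each level.
ALLOWED = {
    Motion.STATIONARY: {"notifications", "media", "navigation", "alerts"},
    Motion.WALKING: {"navigation", "alerts", "notifications"},
    Motion.RUNNING: {"navigation", "alerts"},
    Motion.DRIVING: {"alerts"},
}


def classify(speed_mps):
    """Map a measured device speed to a motion level."""
    for upper, level in THRESHOLDS:
        if speed_mps < upper:
            return level
    return Motion.DRIVING


def visible(items, speed_mps):
    """Keep only the (category, text) items allowed at the current motion level."""
    allowed = ALLOWED[classify(speed_mps)]
    return [(cat, text) for cat, text in items if cat in allowed]
```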

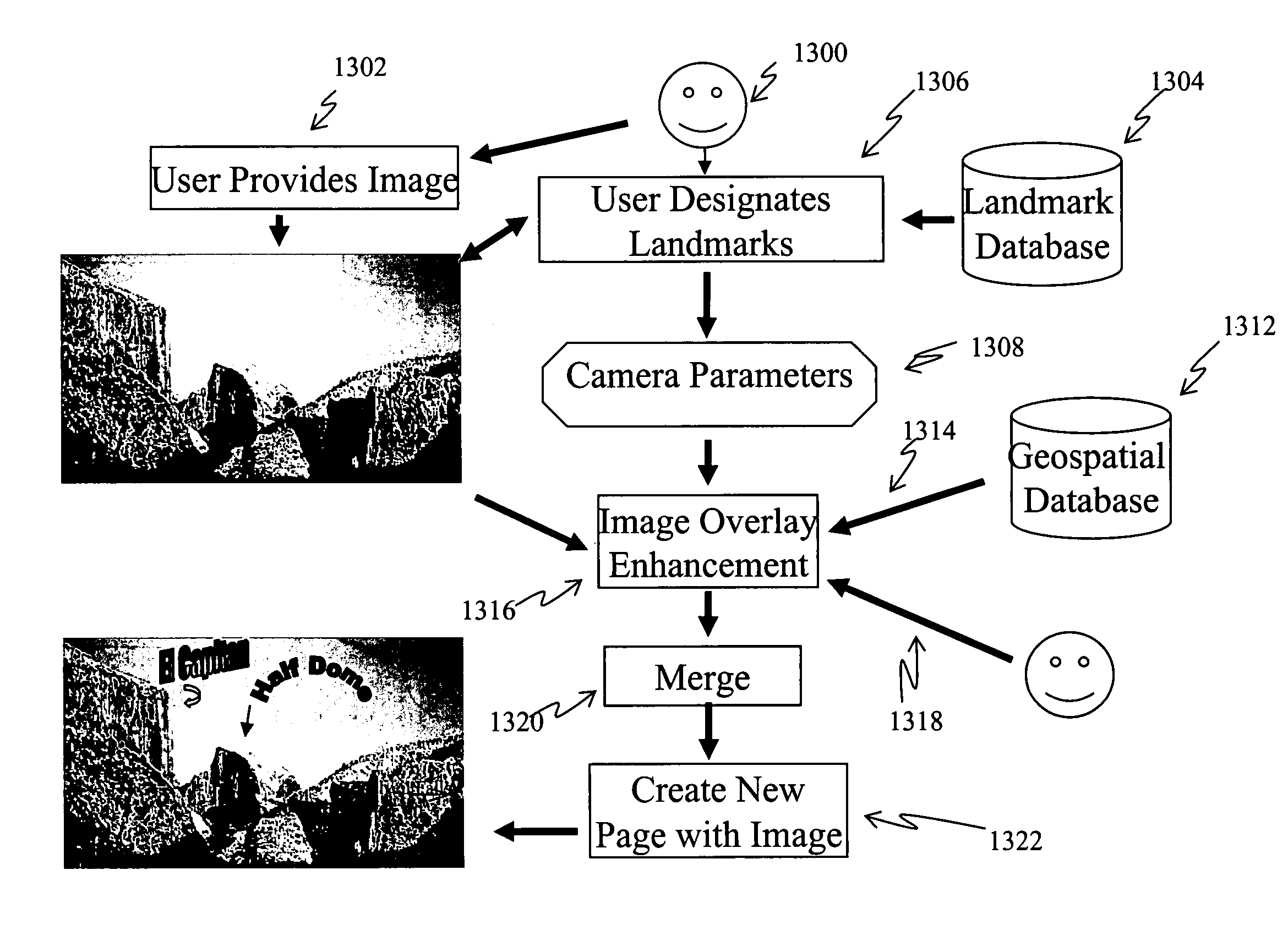

Method and apparatus for image enhancement

Status: Inactive | Publication: US20070035562A1 | Tags: Improve accuracy; Accurate current; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Image enhancement

The present invention is generally related to image enhancement and augmented reality (“AR”). More specifically, this invention presents a method and an apparatus for static image enhancement and the use of an optical display and sensing technologies to superimpose, in real time, graphical information upon a user's magnified view of the real world.

Owner:HRL LAB

Augmented reality display system for evaluation and modification of neurological conditions, including visual processing and perception conditions

Status: Active | Publication: US20170365101A1 | Tags: Increase alertness; Improve plasticity; Medical automated diagnosis; Mental therapies; Wavefront; Pattern perception

In some embodiments, a display system comprising a head-mountable, augmented reality display is configured to perform a neurological analysis and to provide a perception aid based on an environmental trigger associated with the neurological condition. Performing the neurological analysis may include determining a reaction to a stimulus by receiving data from the one or more inwardly-directed sensors; and identifying a neurological condition associated with the reaction. In some embodiments, the perception aid may include a reminder, an alert, or virtual content that changes a property, e.g. a color, of a real object. The augmented reality display may be configured to display virtual content by outputting light with variable wavefront divergence, and to provide an accommodation-vergence mismatch of less than 0.5 diopters, including less than 0.25 diopters.

Owner:MAGIC LEAP
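
The diopter figures in this abstract follow from the fact that optical power in diopters is the reciprocal of distance in meters. The accommodation-vergence mismatch is therefore |1/d_vergence - 1/d_focal|. For example, content rendered to converge at 1 m (1.0 D) on a focal plane at 1.5 m (about 0.67 D) has a mismatch of about 0.33 D. That is inside the 0.5 D bound but not the 0.25 D one. The focal-plane distances used below are hypothetical, not Magic Leap's actual display design.

```python
def diopters(distance_m):
    """Optical power for a viewing distance: D = 1 / meters (1/inf == 0.0 covers optical infinity)."""
    return 1.0 / distance_m


def av_mismatch(vergence_distance_m, focal_plane_m):
    """Accommodation-vergence mismatch in diopters between where the eyes converge and focus."""
    return abs(diopters(vergence_distance_m) - diopters(focal_plane_m))


def best_focal_plane(vergence_distance_m, focal_planes_m):
    """Pick the display focal plane that minimizes the mismatch for content at a given distance."""
    return min(focal_planes_m, key=lambda plane: av_mismatch(vergence_distance_m, plane))
```

With hypothetical focal planes at 0.75 m and 3 m, content at 1 m maps to the 0.75 m plane (a mismatch of about 0.33 D) rather than the 3 m plane (about 0.67 D).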

© 2025 PatSnap. All rights reserved.