181 results about "Handle" patented technology

In computer programming, a handle is an abstract reference to a resource. Handles are used when application software references blocks of memory or objects managed by another system, such as a database or an operating system. A resource handle can be an opaque identifier, in which case it is often an integer number (often an array index in an array or "table" that is used to manage that type of resource), or it can be a pointer that allows access to further information.
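The integer-handle case described above can be illustrated with a minimal sketch. The `ResourceTable` class and its method names are hypothetical, chosen only for this example; real systems such as operating system file descriptor tables are considerably more involved.

```python
class ResourceTable:
    """Hypothetical resource manager that exposes only integer handles."""

    def __init__(self):
        self._slots = {}        # handle -> managed resource
        self._next_handle = 1   # 0 is reserved here to mean "no handle"

    def open(self, resource):
        """Store the resource and return an opaque integer handle for it."""
        handle = self._next_handle
        self._next_handle += 1
        self._slots[handle] = resource
        return handle

    def get(self, handle):
        """Resolve a handle; raises KeyError for unknown or closed handles."""
        return self._slots[handle]

    def close(self, handle):
        """Invalidate the handle and drop the resource."""
        del self._slots[handle]
```

The caller never holds the resource directly, so the manager stays free to move, replace, or revoke it behind the handle.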

Extensible information system

Inactive | US20020184401A1 | Determining correlation | Easy to determine | Database management systems | Interprogram communication | Information system | Application software

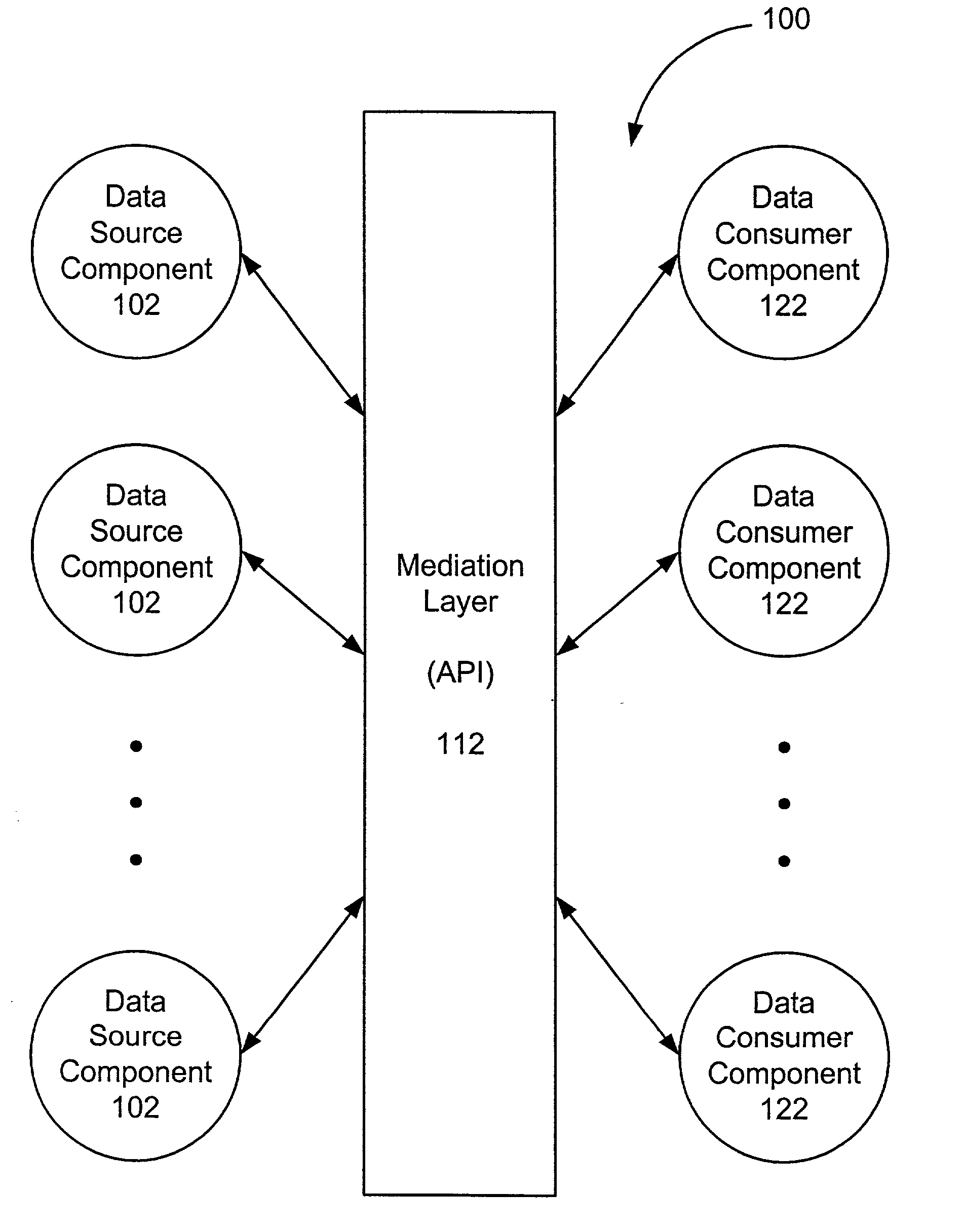

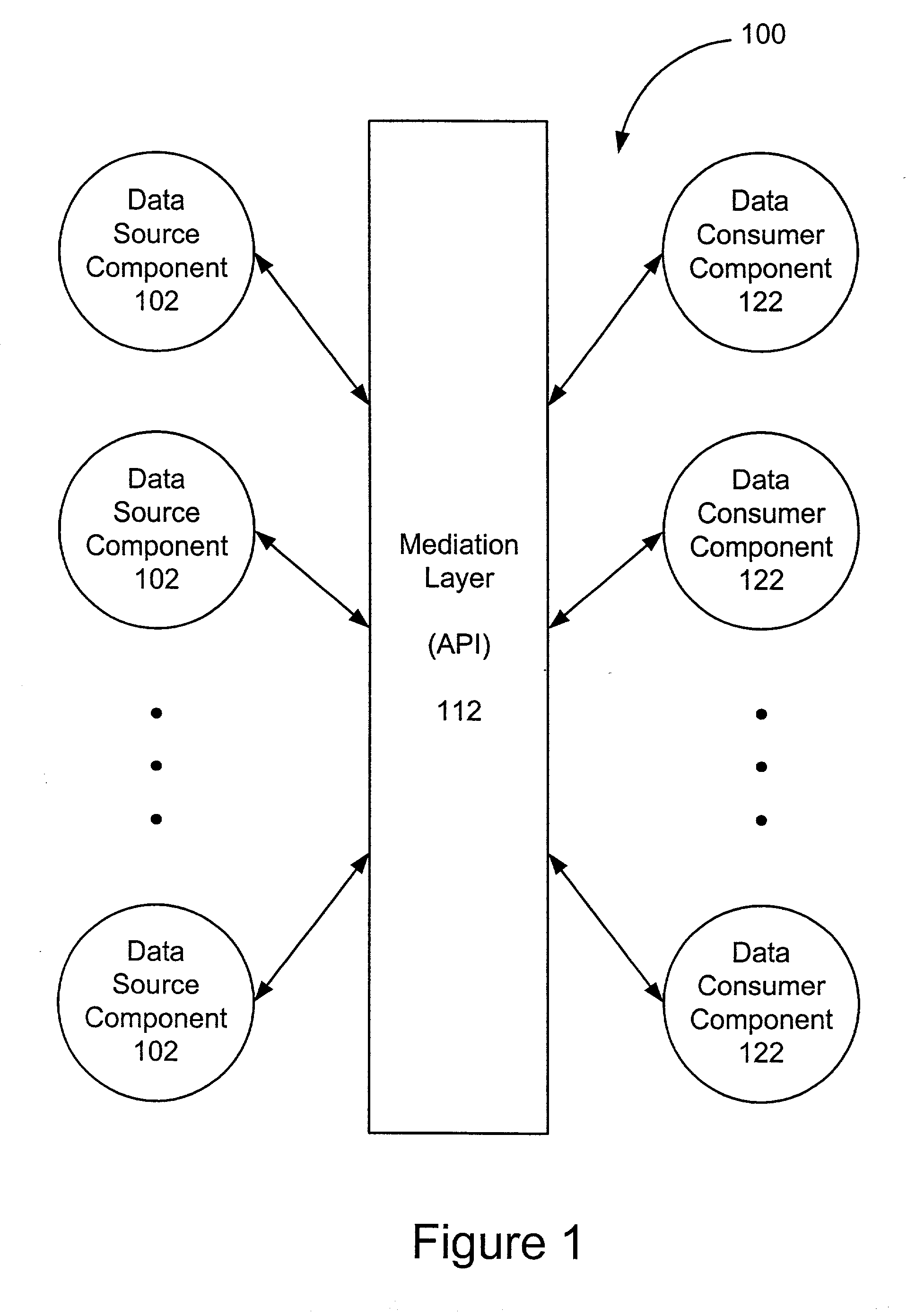

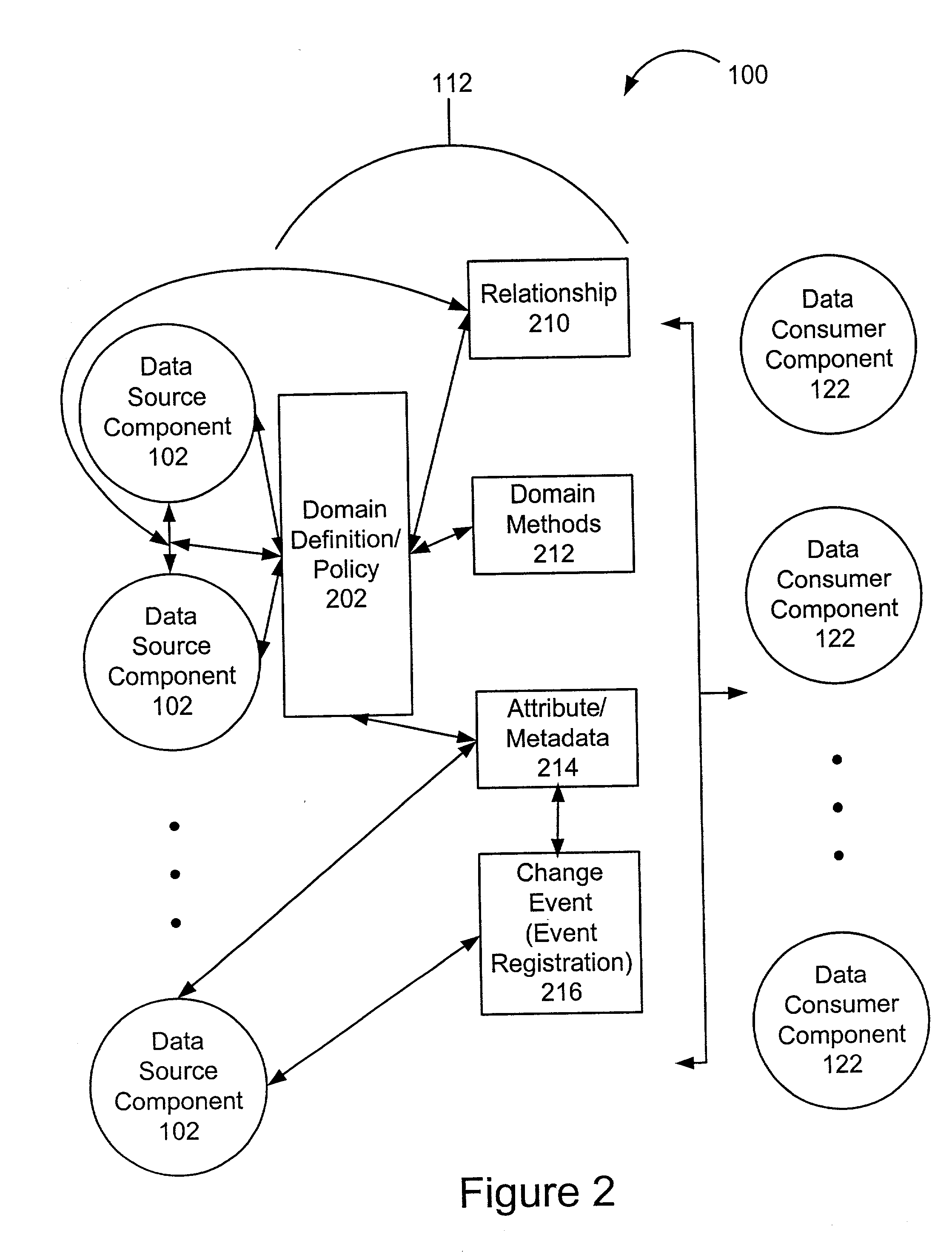

A framework enables data source components to be developed independently of data consumer components. A mediation layer, typically implemented as a group of APIs (application programming interfaces), handles and defines the mediation and interface between the data source and data consumer components. The framework, called XIS (extensible information system), is especially suited for development of information-handling systems and applications. Data source components and data consumer components are typically designed to communicate with each other via several interfaces. Domain, relationship, attribute / metadata, and change event interfaces are defined within the mediation layer. Other interfaces may also be defined. Data source components that are written for non-XIS aware environments or frameworks may still be used with XIS by "wrapping" such source components with code to conform to the interface requirements. Java objects are examples of data source components. Data consumer components thus are able to use or consume various source components regardless of the data types and the data source. Thus, once a data consumer component is developed within the XIS framework, it may consume any data source component within the XIS framework.

Owner:POLEXIS

Representation of data records

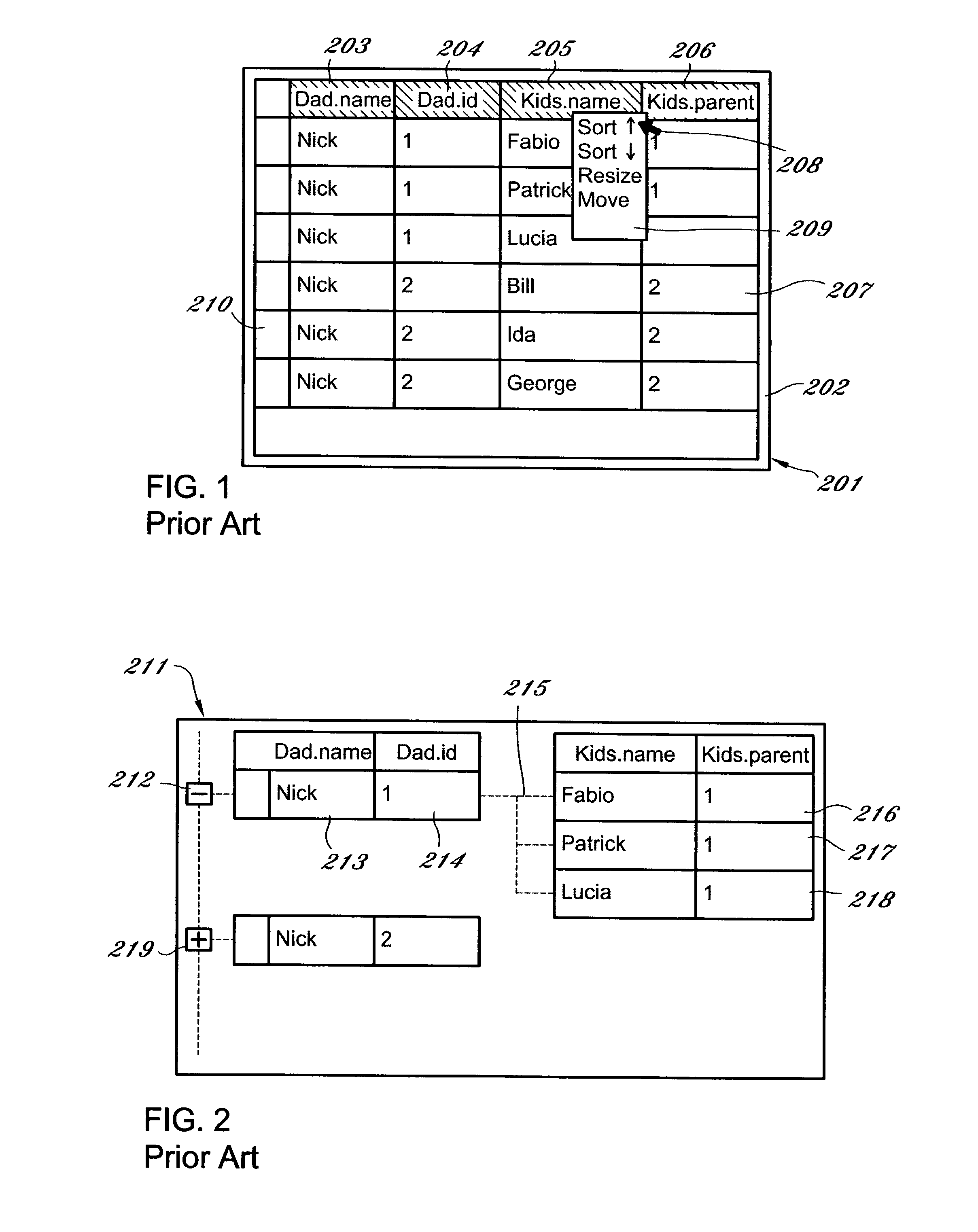

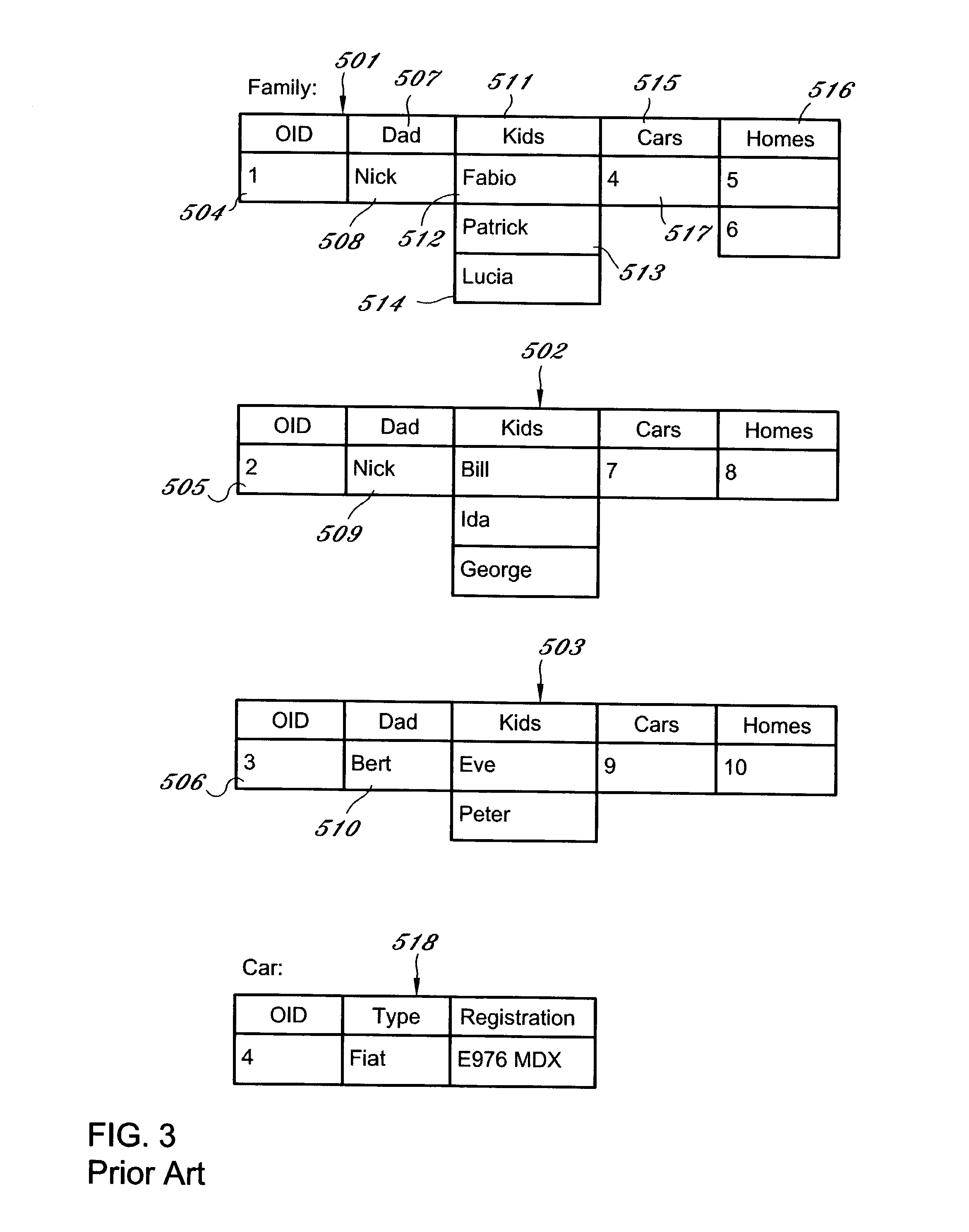

Active | US7461077B1 | Easy to browse | Consistent interface | Digital data information retrieval | Special data processing applications | Data source | Display device

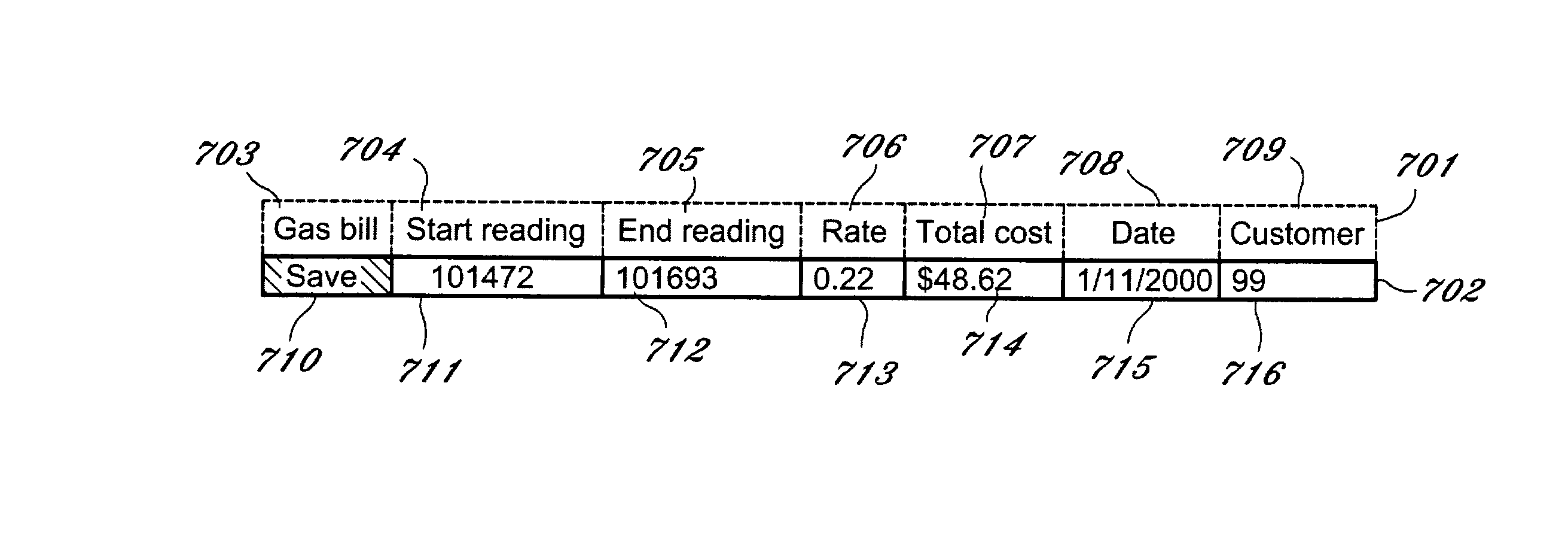

A computerized method for representing a data record comprising: querying a data source to obtain data selected from the group consisting of a data element in a record, and metadata concerning the record; presenting in a display a record handle for manipulation of the record; presenting in the display a data item wherein the data item is a list of data items or a reference to another record; and, optionally, presenting on the display the metadata above the data item. In some embodiments, the method includes the step of retrieving one or more heterogeneous records from a plurality of databases for display and manipulation. The invention is also a grid control programmed to implement a disclosed method and is a computer-readable medium having computer-executable instructions for performing a disclosed method. The invention links the grid control of the invention with automatic query generation using hierarchical data schema trees. Both the trees and the grid records represent relational foreign keys as extra reference columns. In the grid control, these reference columns are additional embedded record handles.

Owner:MUSICQUBED INNOVATIONS LLC

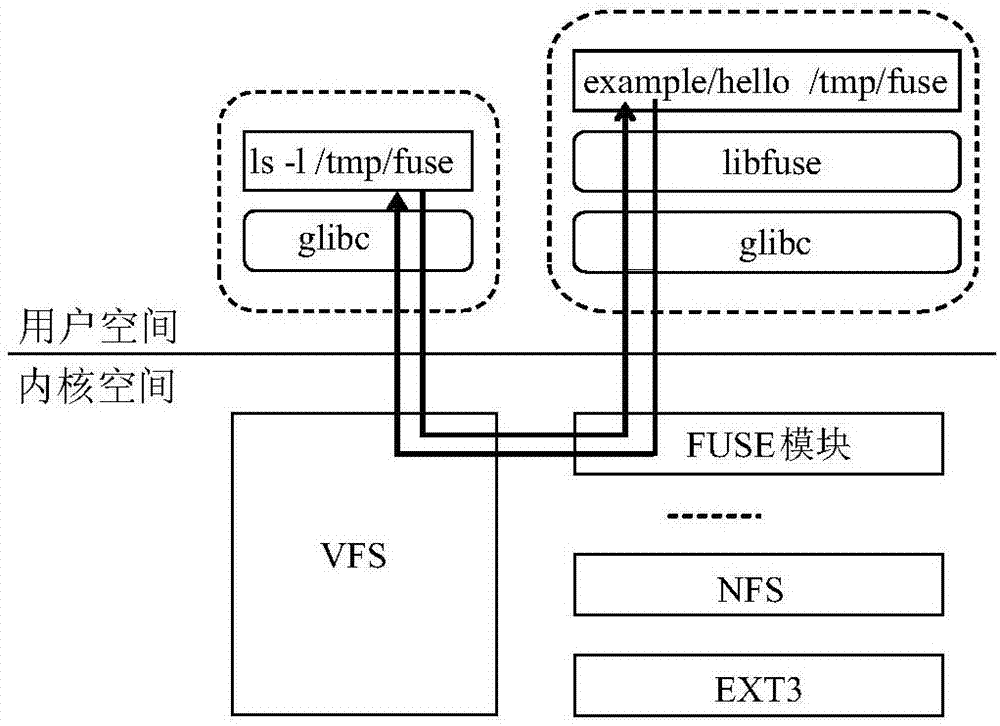

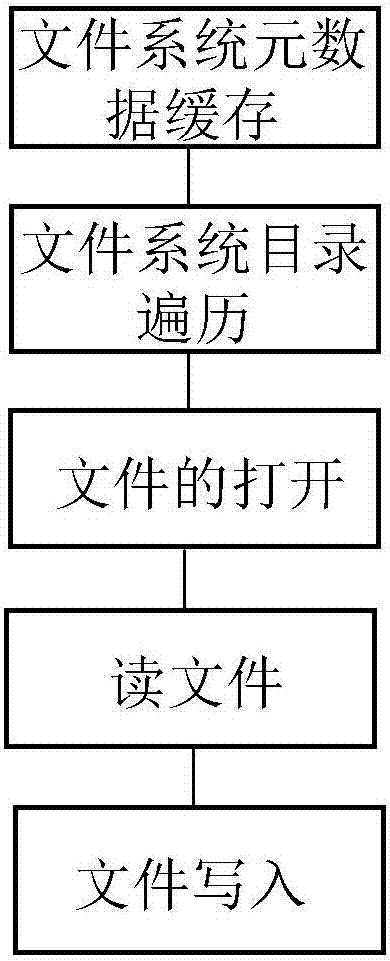

Method for realizing local file system through object storage system

Inactive | CN107045530A | Reduce the number of interactions | Improve the performance of accessing swift storage system | Special data processing applications | Application server | File system

The invention discloses a method for realizing a local file system on top of an object storage system. A file system metadata cache algorithm and an in-memory description structure reduce the frequency of interaction between an application and the Swift storage back end, improving the application's access performance. A policy of pre-allocating a memory pool and reclaiming idle memory blocks in delayed batches improves the efficiency of traversing a directory that contains a large number of subdirectories and files. An in-memory description structure for open file handles lets the application read and write files efficiently. A read-ahead policy reduces the frequency of network interaction between the application server and the Swift storage back end and improves the read performance of the file system. A zero-copy, block-write policy means that no data is copied or cached during a file write and that the system call for each write is a complete block write operation, which improves file write efficiency.

Owner:HUAZHONG UNIV OF SCI & TECH

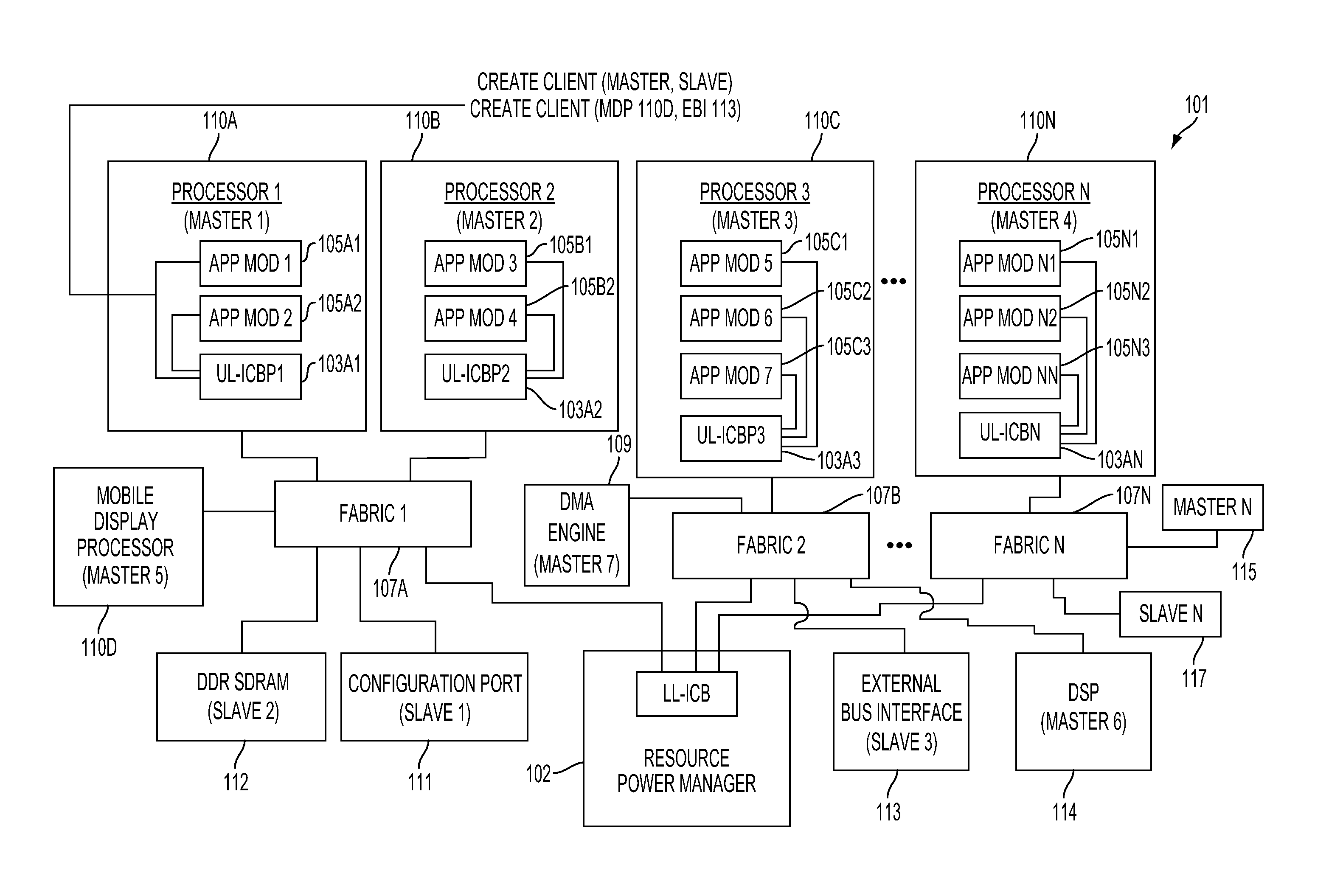

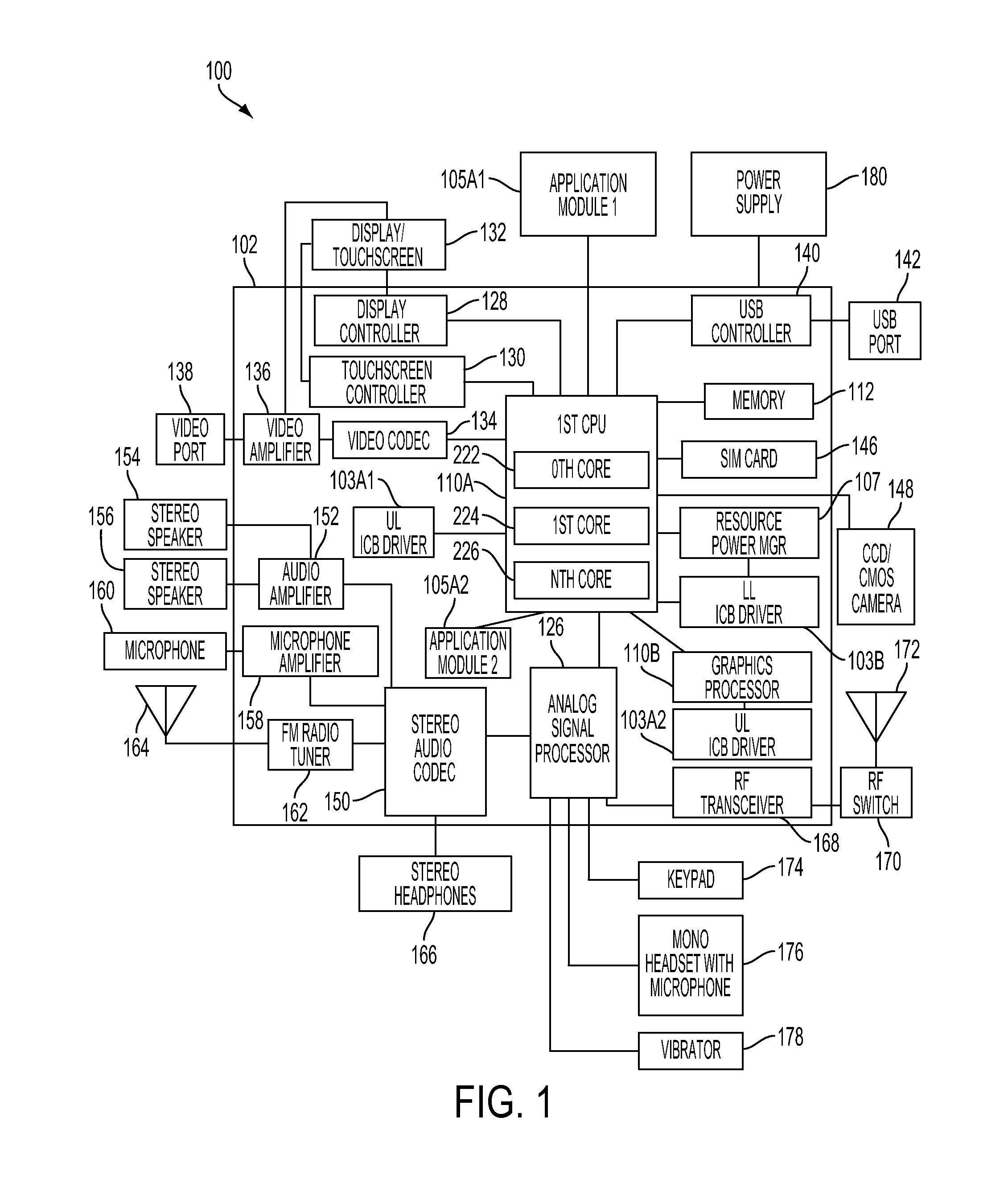

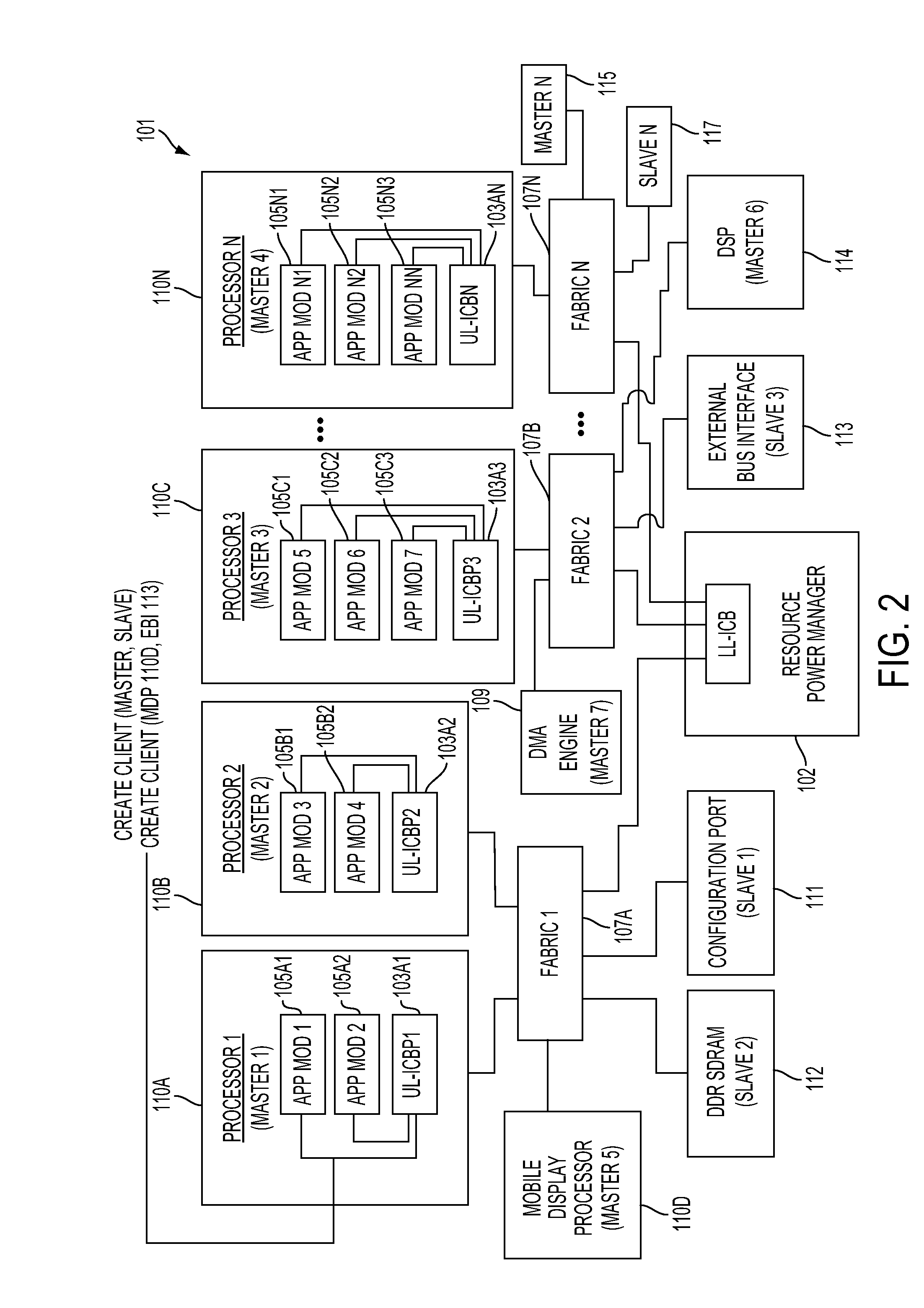

Method and system for dynamically creating and servicing master-slave pairs within and across switch fabrics of a portable computing device

Active | US20120284354A1 | Multiple digital computer combinations | Transmission | Layered structure | Unique identifier

A method and system for dynamically creating and servicing master-slave pairs within and across switch fabrics of a portable computing device (“PCD”) are described. The system and method include receiving a client request comprising a master-slave pair and conducting a search for a slave corresponding to the master-slave pair. A route for communications within and across switch fabrics that corresponds to the master-slave pair is created. One or more handles or arrays that correspond to the created route may be stored in a memory device. Next, bandwidth across the route may be set. After the bandwidth across the newly created route is set, the client request originating the master-slave pair may be serviced using the created route. Conducting the search for the slave may include comparing unique identifiers assigned to each slave in a master-slave hierarchy. The search within and across switch fabrics may also include reviewing a fabric route check table for slaves that can be interrogated within a switch fabric.

Owner:QUALCOMM INC

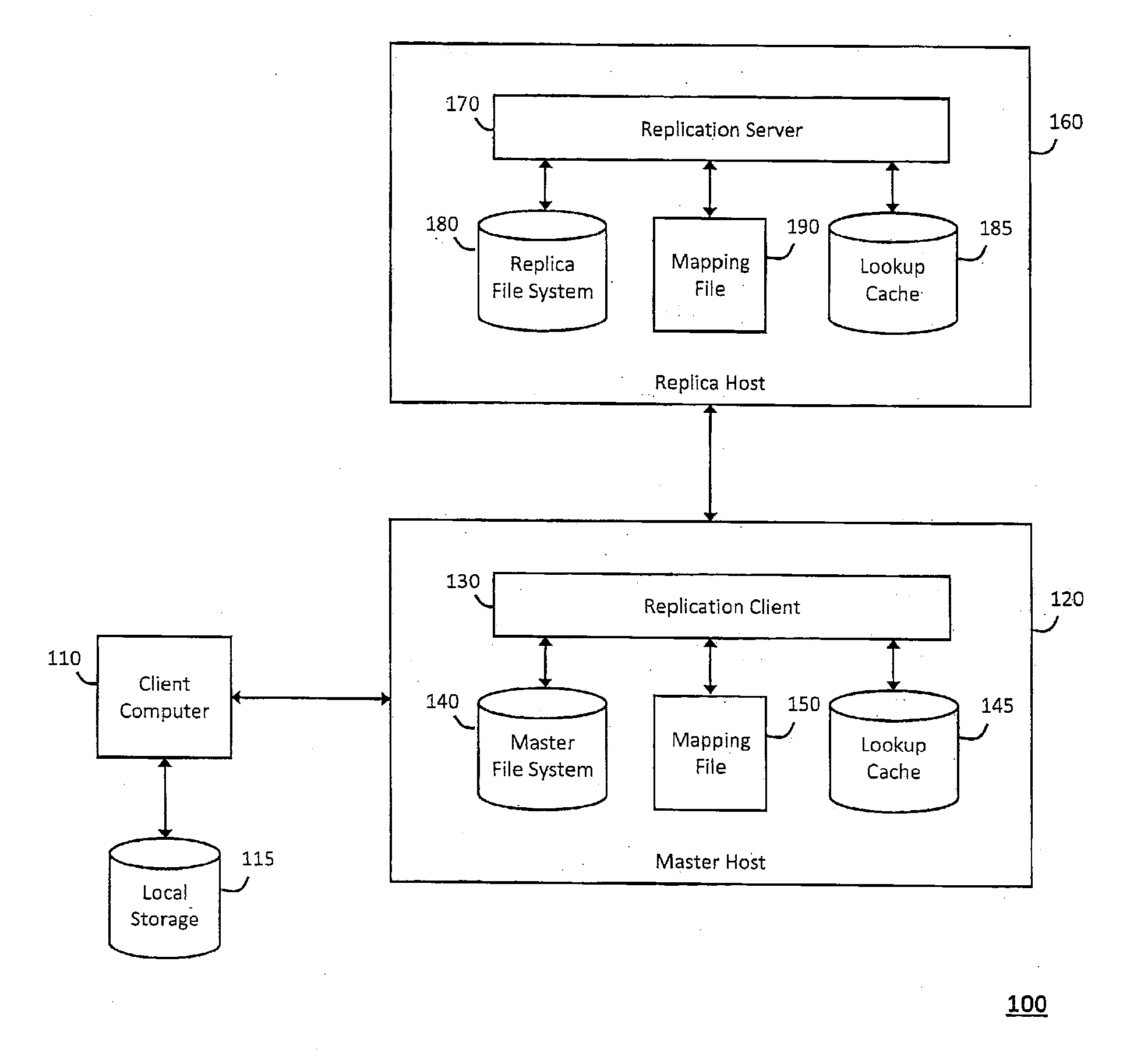

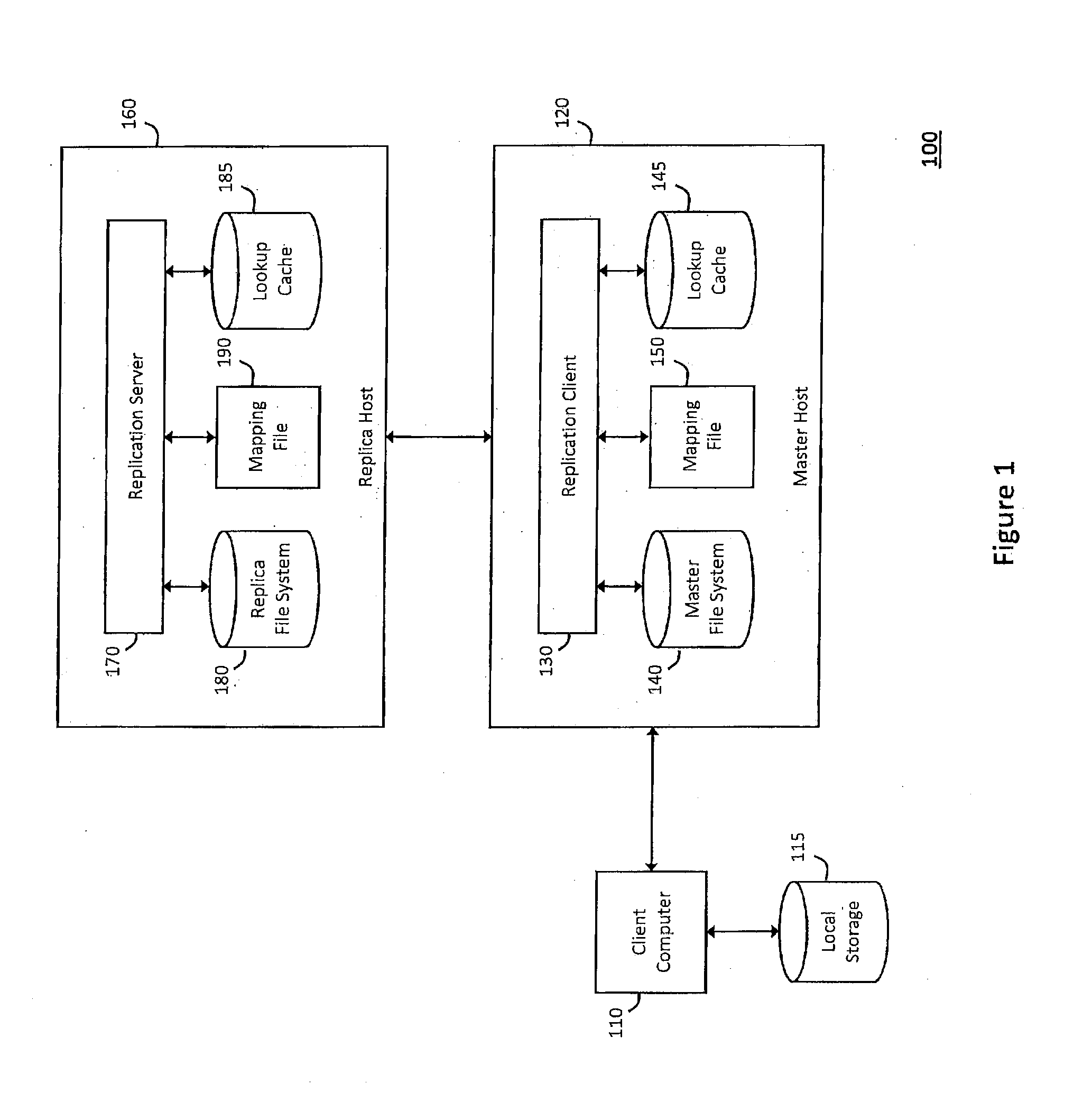

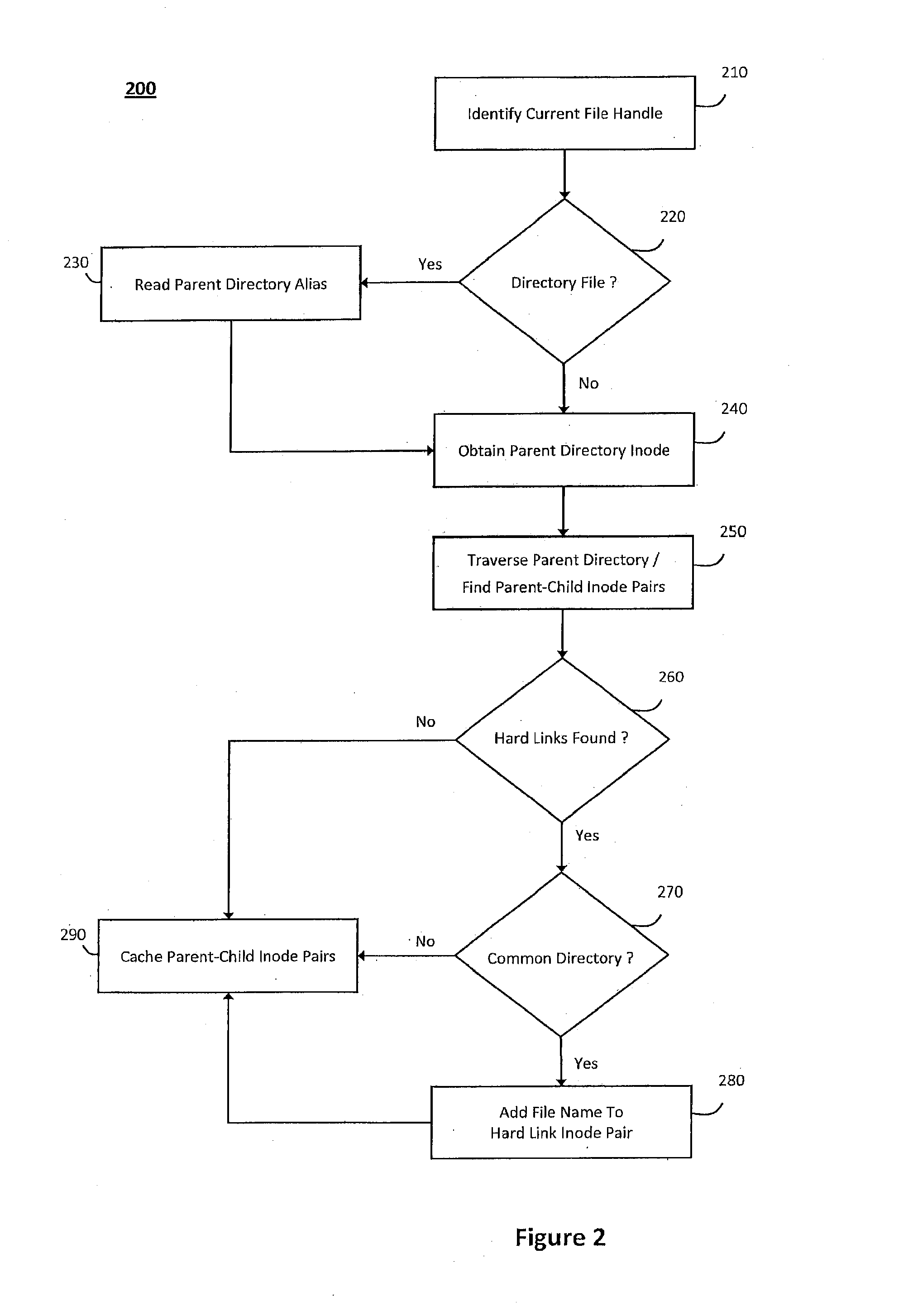

System and method for network file system server replication using reverse path lookup

Active | US20140122428A1 | Easy to copy | Reduce and eliminate need | Digital data information retrieval | Error detection/correction | Server replication | File system

The system and method described herein may use reverse path lookup to build mappings between file handles that represent network file system objects and full path names associated therewith and distinguish hard links between different file system objects having the same identifier with different parents or file names. The mappings and information distinguishing the hard links may then be cached to enable replicating changes to the file system. For example, a server may search the cached information using a file handle associated with a changed file system object to obtain the file name and full path name associated therewith. The server may then send the file name and full path name and metadata describing how the file system object was changed to a replica host, which may then replicate the change to the file system object.

Owner:CA TECH INC
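As a rough illustration of the reverse path lookup idea in this abstract, the sketch below caches, per file handle, the set of (parent handle, file name) links and walks upward to rebuild full path names. The class name, the string handles, and the `ROOT` marker are assumptions made for this example and are not taken from the patent.

```python
class ReversePathCache:
    """Hypothetical cache mapping file handles back to full path names."""

    ROOT = "root-fh"  # assumed handle of the exported root directory

    def __init__(self):
        # file handle -> set of (parent handle, name); more than one entry
        # means the same object is reachable through several hard links
        self._links = {}

    def add_link(self, handle, parent, name):
        self._links.setdefault(handle, set()).add((parent, name))

    def full_paths(self, handle):
        """Return every full path name that leads to the given handle."""
        if handle == self.ROOT:
            return ["/"]
        paths = []
        for parent, name in sorted(self._links.get(handle, ())):
            for prefix in self.full_paths(parent):
                paths.append(prefix.rstrip("/") + "/" + name)
        return paths
```

A server that sees a change reported against a handle could look up `full_paths(handle)` and forward the resulting names to a replica; how the cache is populated and kept current is outside this sketch.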

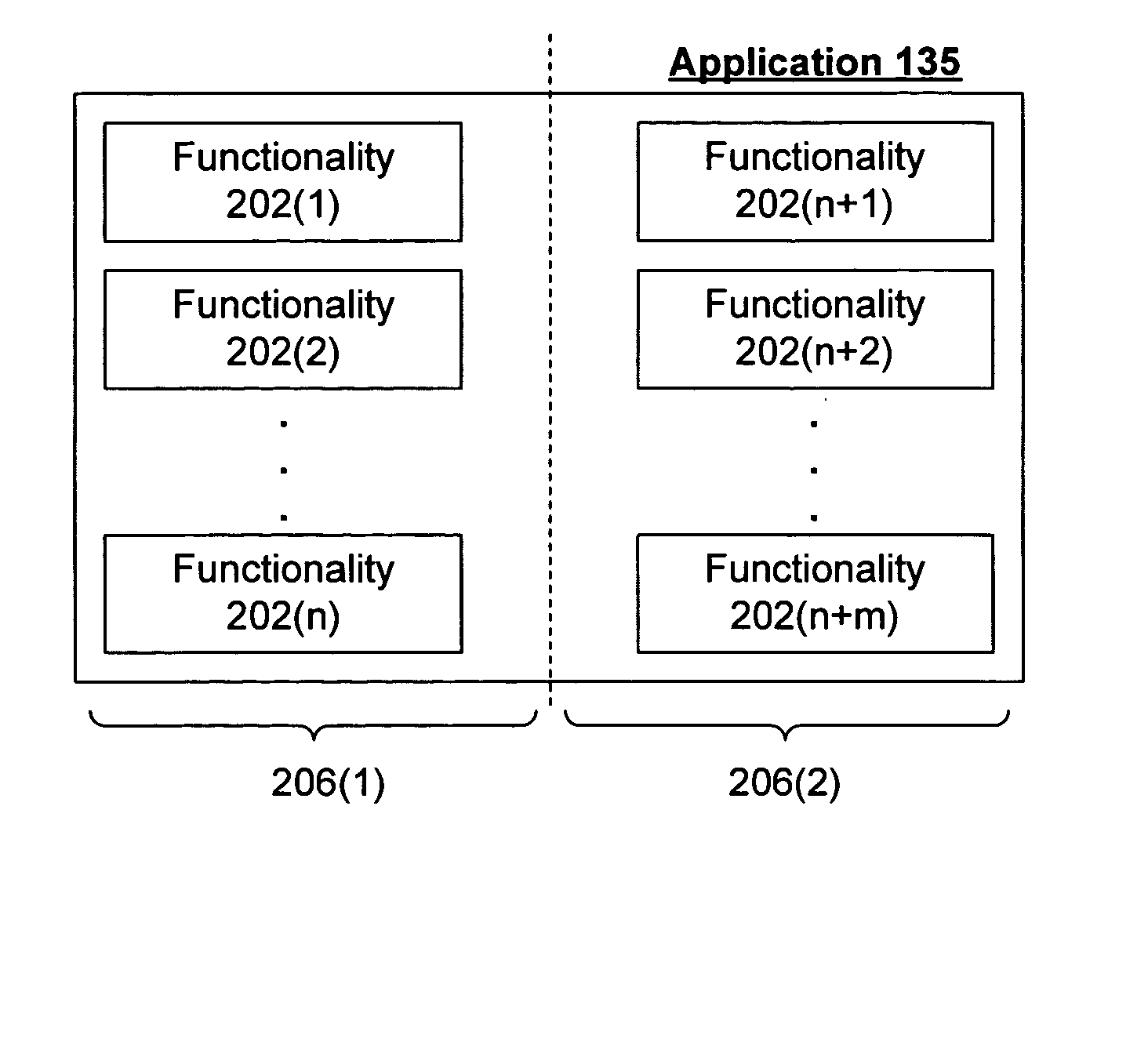

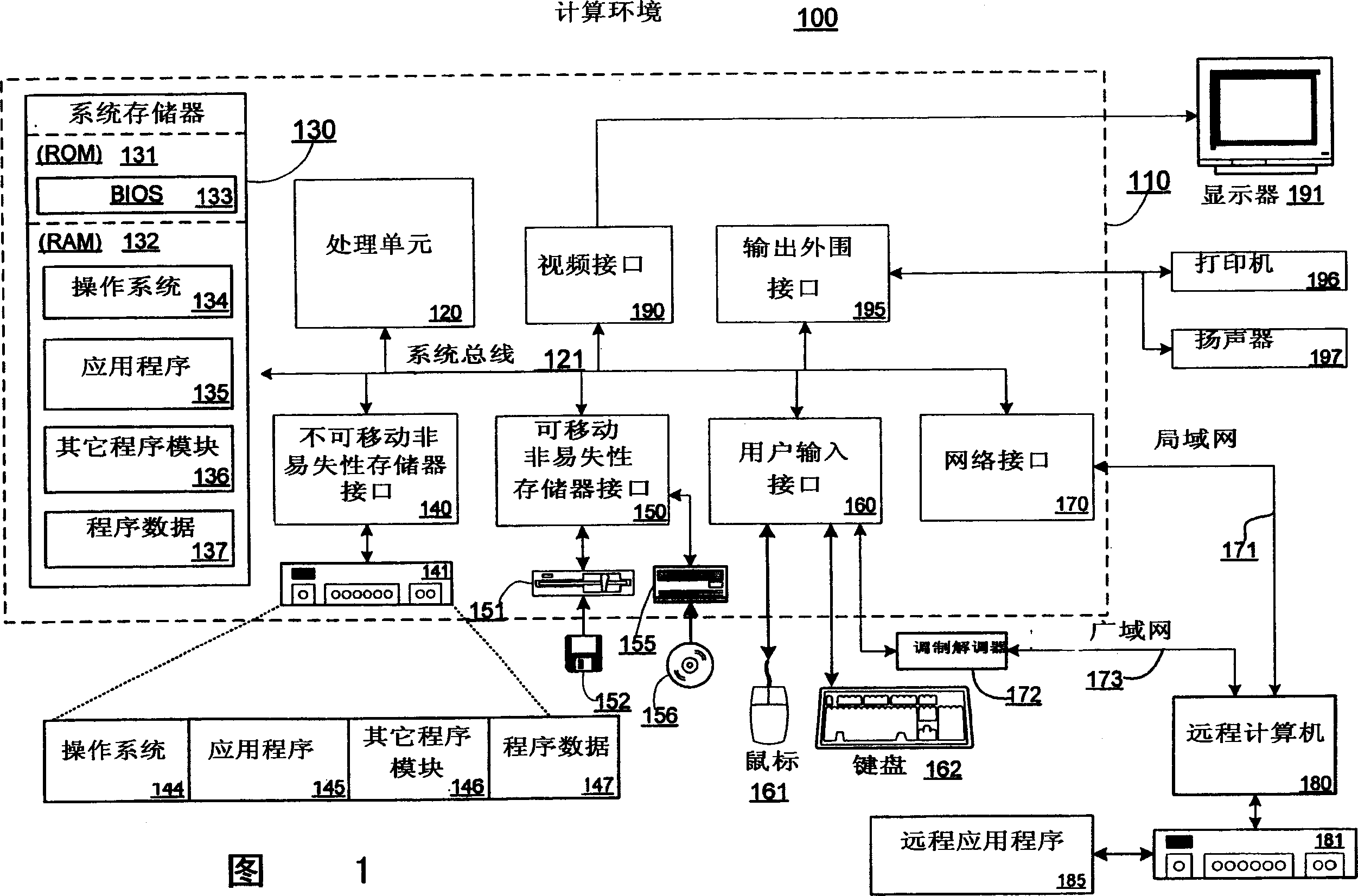

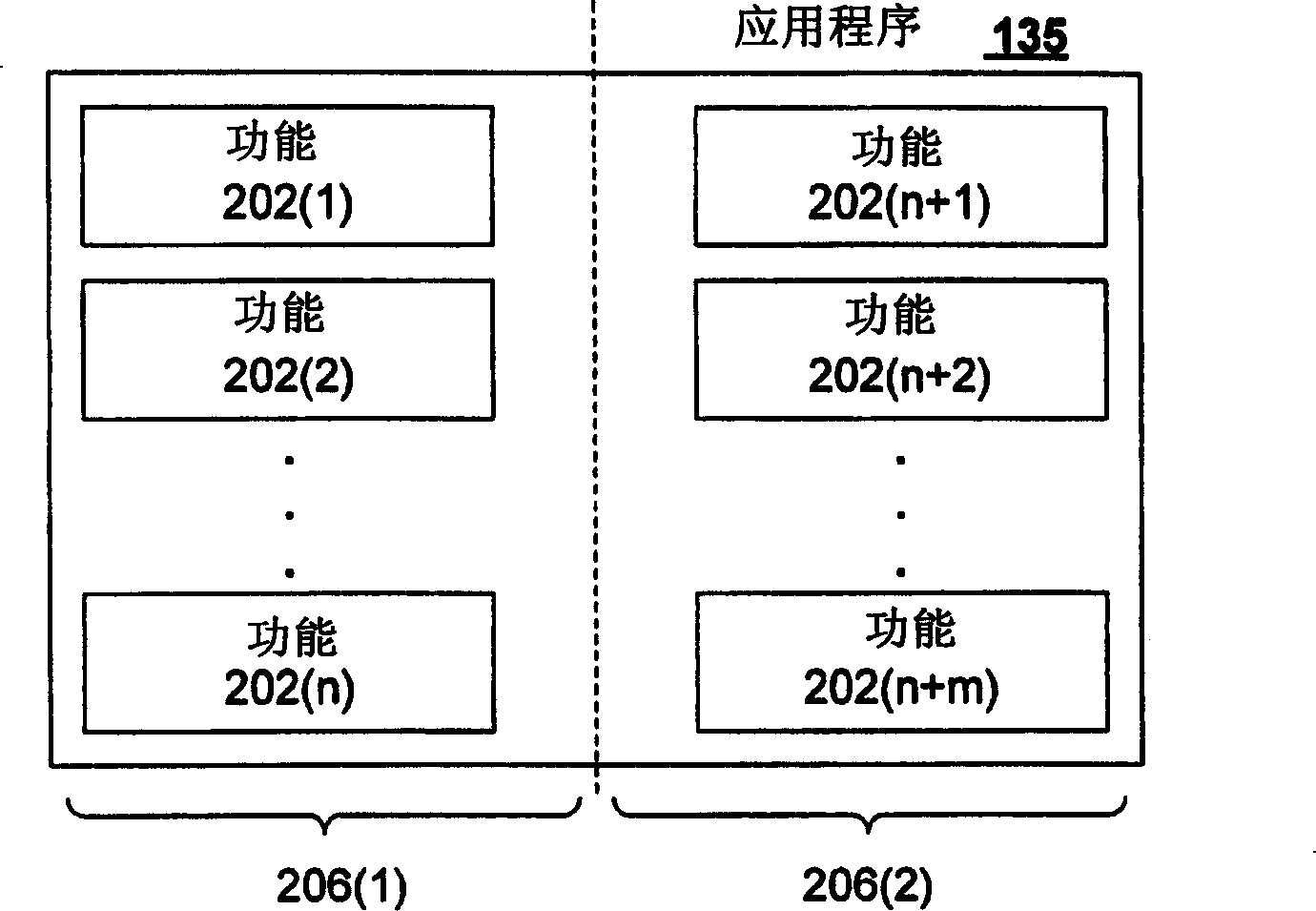

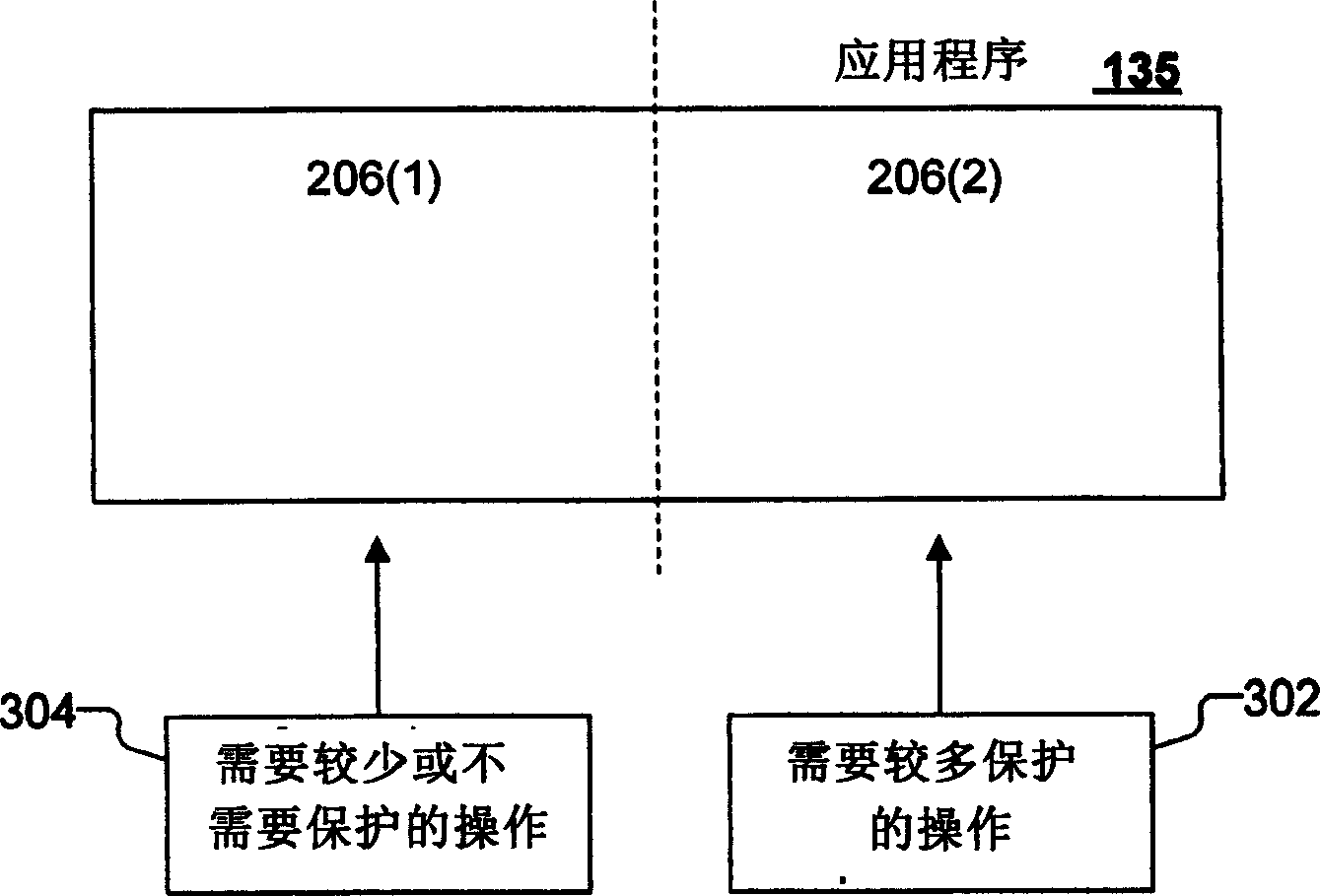

Integration of high-assurance features into an application through application factoring

Active | US20050091661A1 | Better at ensuring correct behavior of their hosted environments | Transformation of program code | Digital data processing details | Chain of trust | Application software

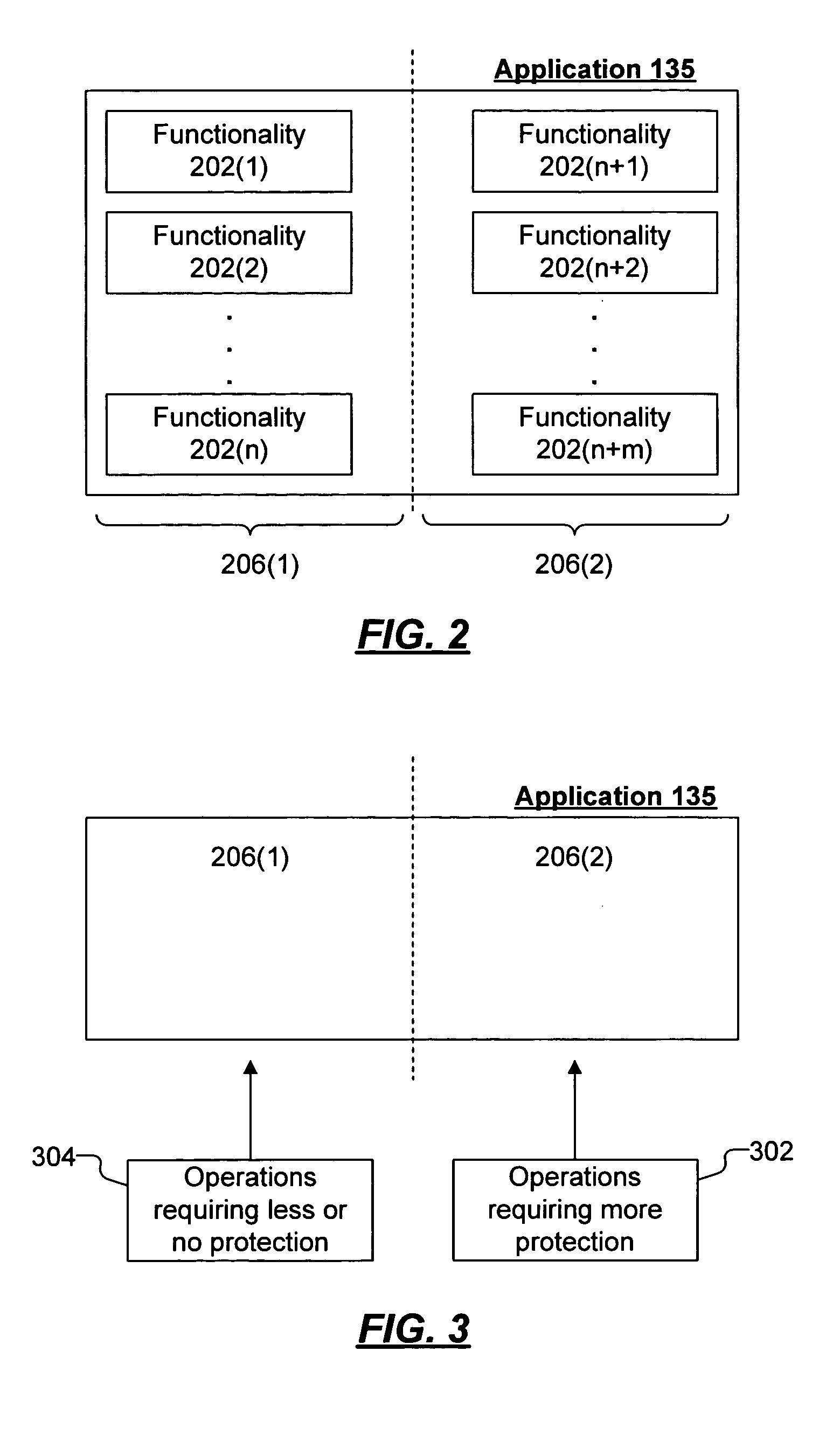

Application factoring or partitioning is used to integrate secure features into a conventional application. An application's functionality is partitioned into two sets according to whether a given action does, or does not, involve the handling of sensitive data. Separate software objects (processors) are created to perform these two sets of actions. A trusted processor handles secure data and runs in a high-assurance environment. When another processor encounters secure data, that data is sent to the trusted processor. The data is wrapped in such a way that allows it to be routed to the trusted processor, and prevents the data from being deciphered by any entity other than the trusted processor. An infrastructure is provided that wraps objects, routes them to the correct processor, and allows their integrity to be attested through a chain of trust leading back to a base component that is known to be trustworthy.

Owner:MICROSOFT TECH LICENSING LLC

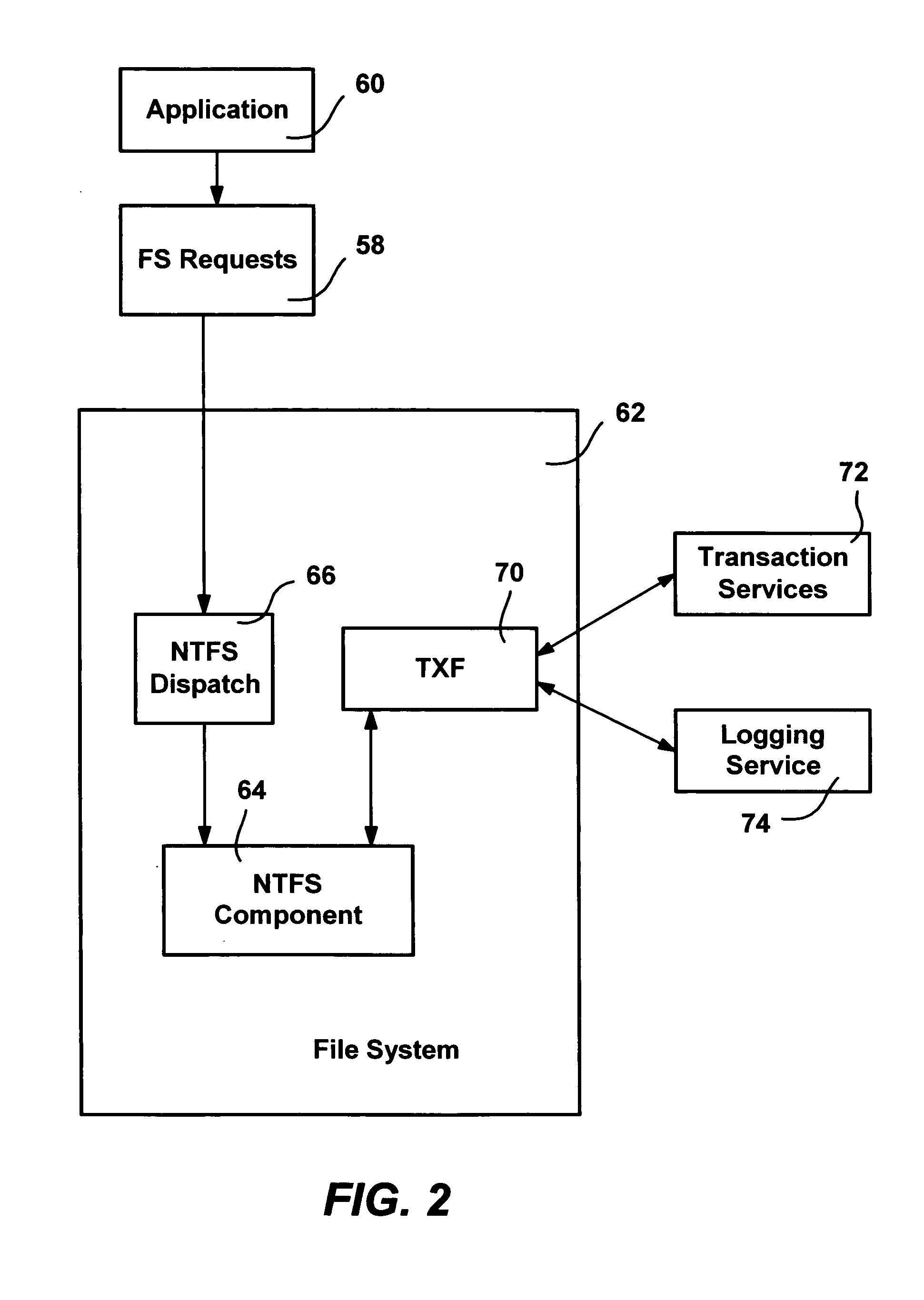

Transactional file system

Inactive | US20050120059A1 | Easy to implement | Multiple operation | Data processing applications | Digital data information retrieval | File system | Resource management

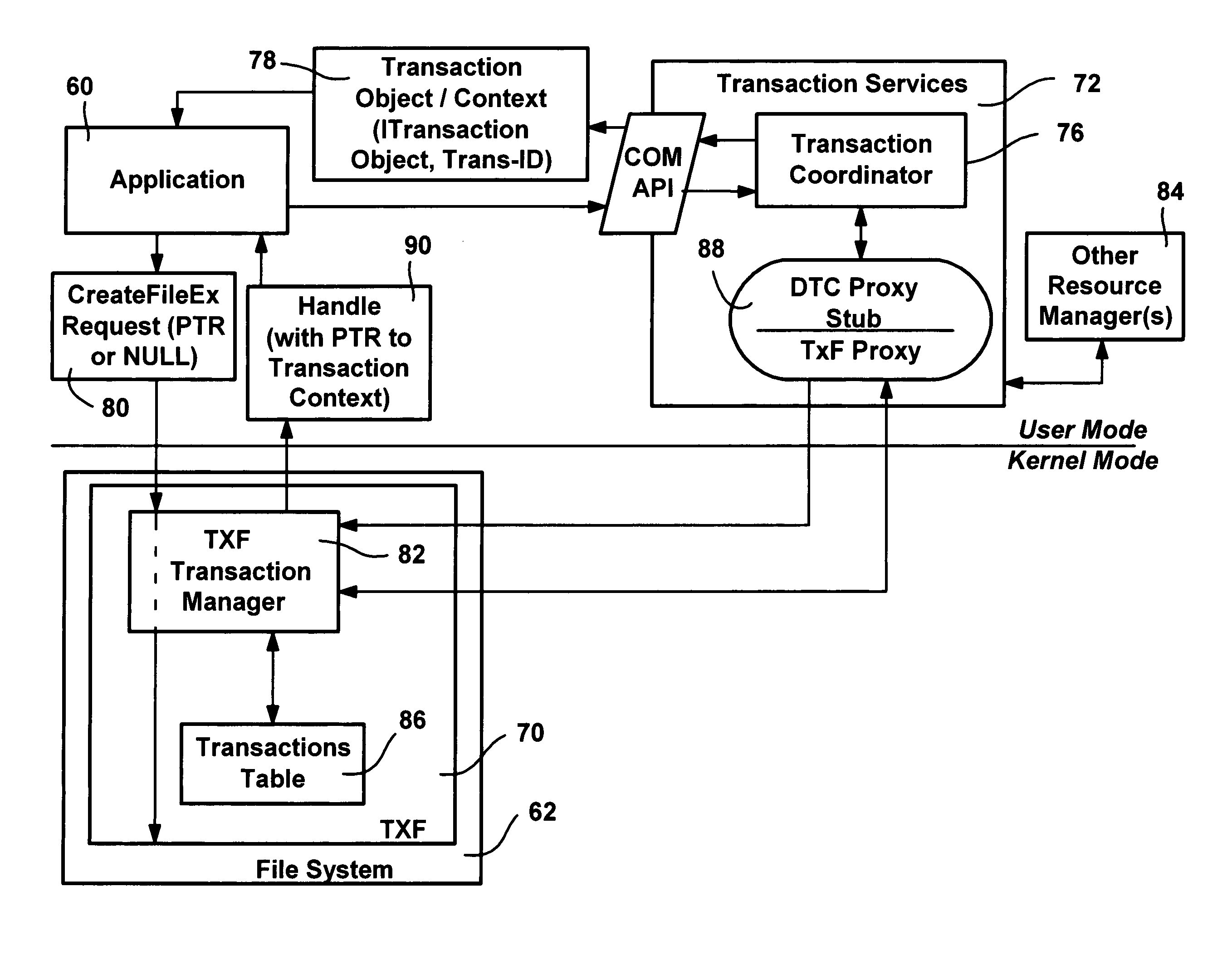

A transactional file system wherein multiple file system operations may be performed as part of a user-level transaction. An application specifies that the operations on a file, or the file system operations of a thread, should be handled as part of a transaction, and the application is given a file handle associated with a transaction context. For file system requests associated with a transaction context, a component within the file system manages the operations consistent with transactional behavior. The component, which may be a resource manager for distributed transactions, provides data isolation by providing multiple versions of a file by tracking copies of pages that have changed, such that transactional readers do not receive changes to a file made by transactional writers, until the transactional writer commits the transaction and the reader reopens the file. The component also handles namespace logging operations in a multiple-level log that facilitates logging and recovery. Page data is also logged separate from the main log, with a unique signature that enables the log to determine whether a page was fully flushed to disk prior to a system crash. Namespace isolation is provided until a transaction commits via isolation directories, whereby until committed, a transaction sees the effects of its own operations not the operations of other transactions. Transactions over a network are also facilitated via a redirector protocol.

Owner:MICROSOFT TECH LICENSING LLC

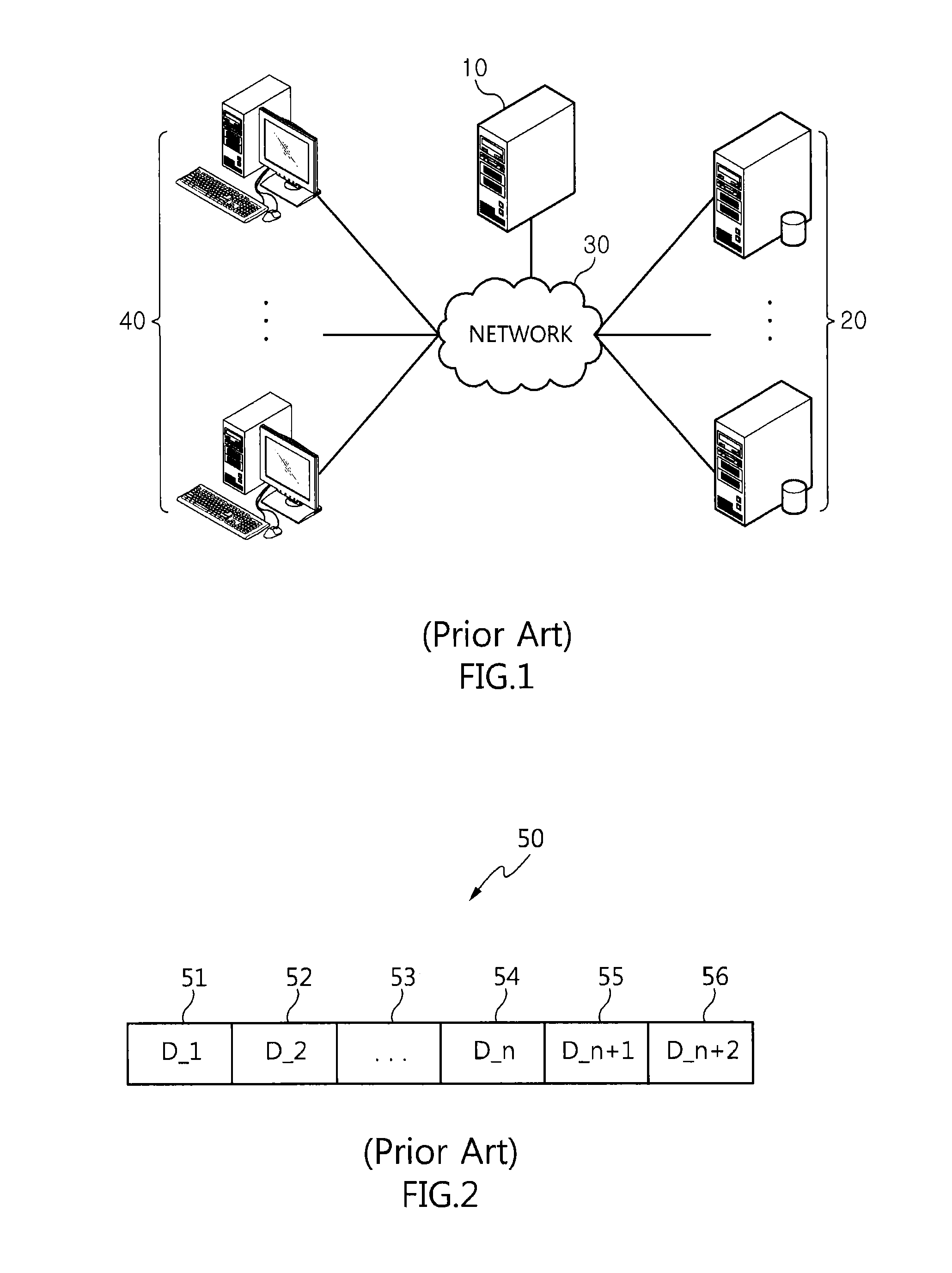

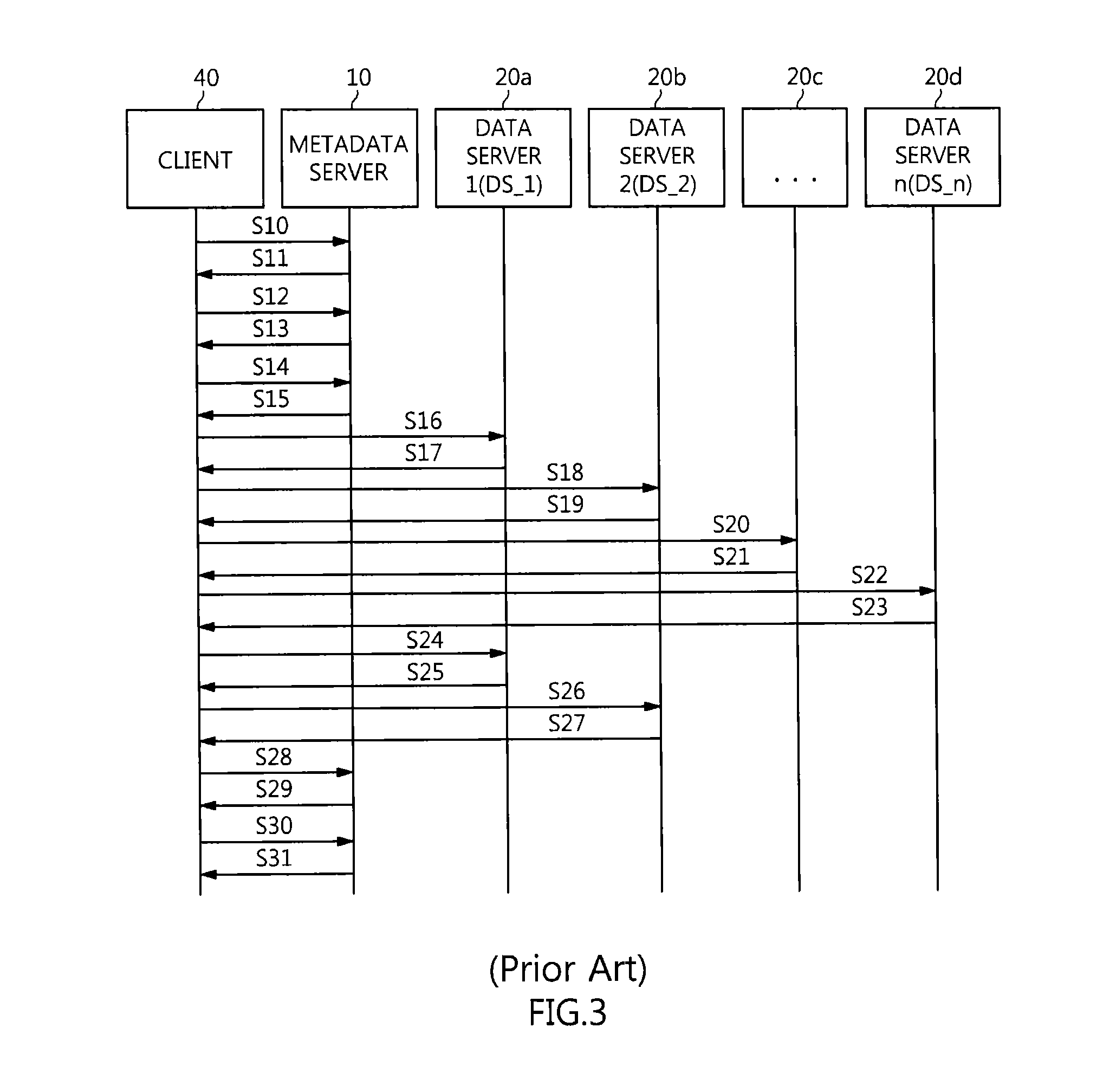

Method of managing data in asymmetric cluster file system

Active | US20130332418A1 | Effective control | Meet level requirements | Digital data processing details | Database distribution/replication | File replication | Clustered file system

Disclosed herein is a method of managing data in an asymmetric cluster file system. In this method, if an OPEN request for the opening of an absent file has been received, a metadata server assigns a file handle value and a file name and then generates a file in a data server. Thereafter, the metadata server copies a file stored in the data server or the generated file to one or more data servers based on a preset copying level. Thereafter, a client performs a file operation on the files stored in the data servers.

Owner:ELECTRONICS & TELECOMM RES INST

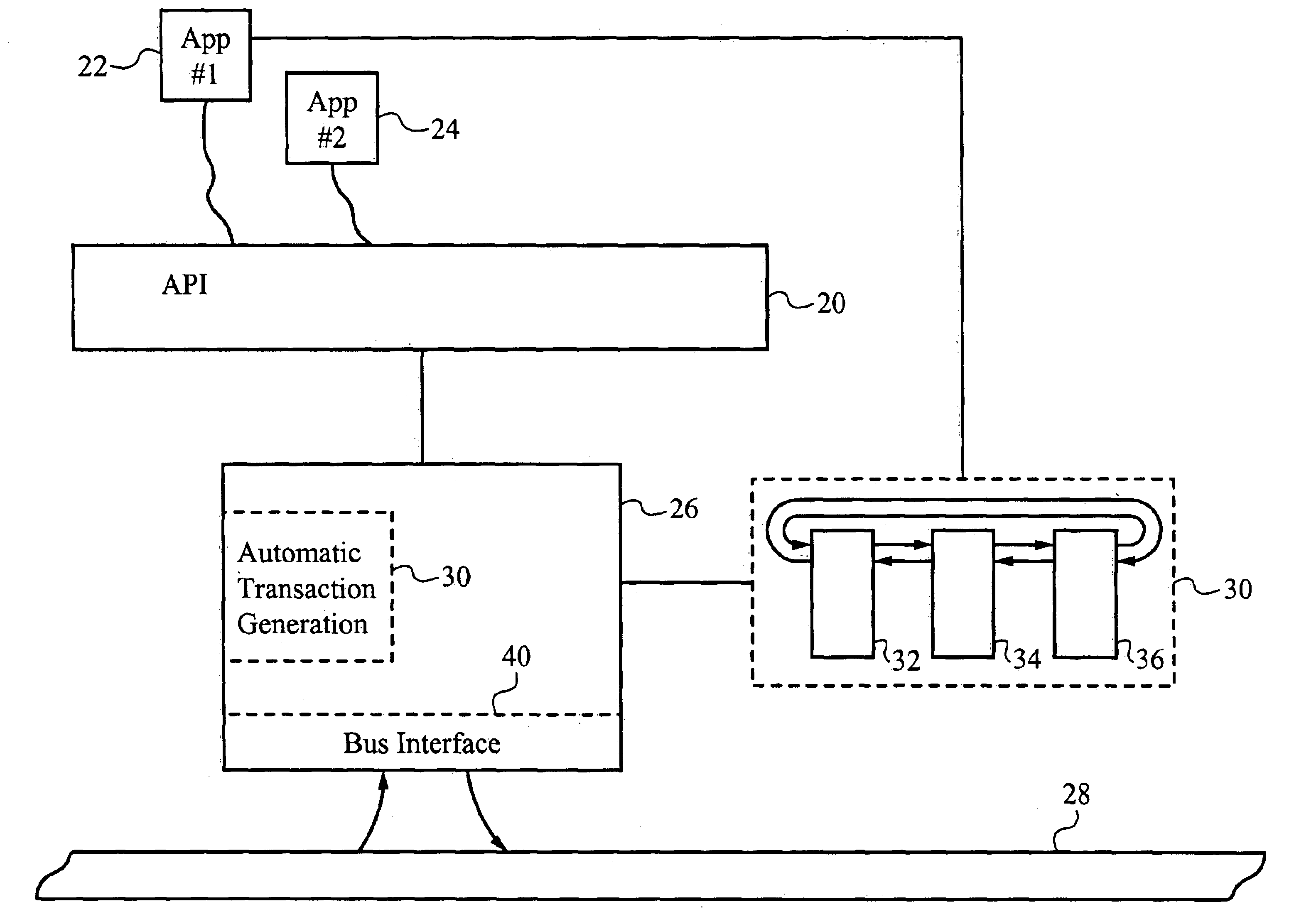

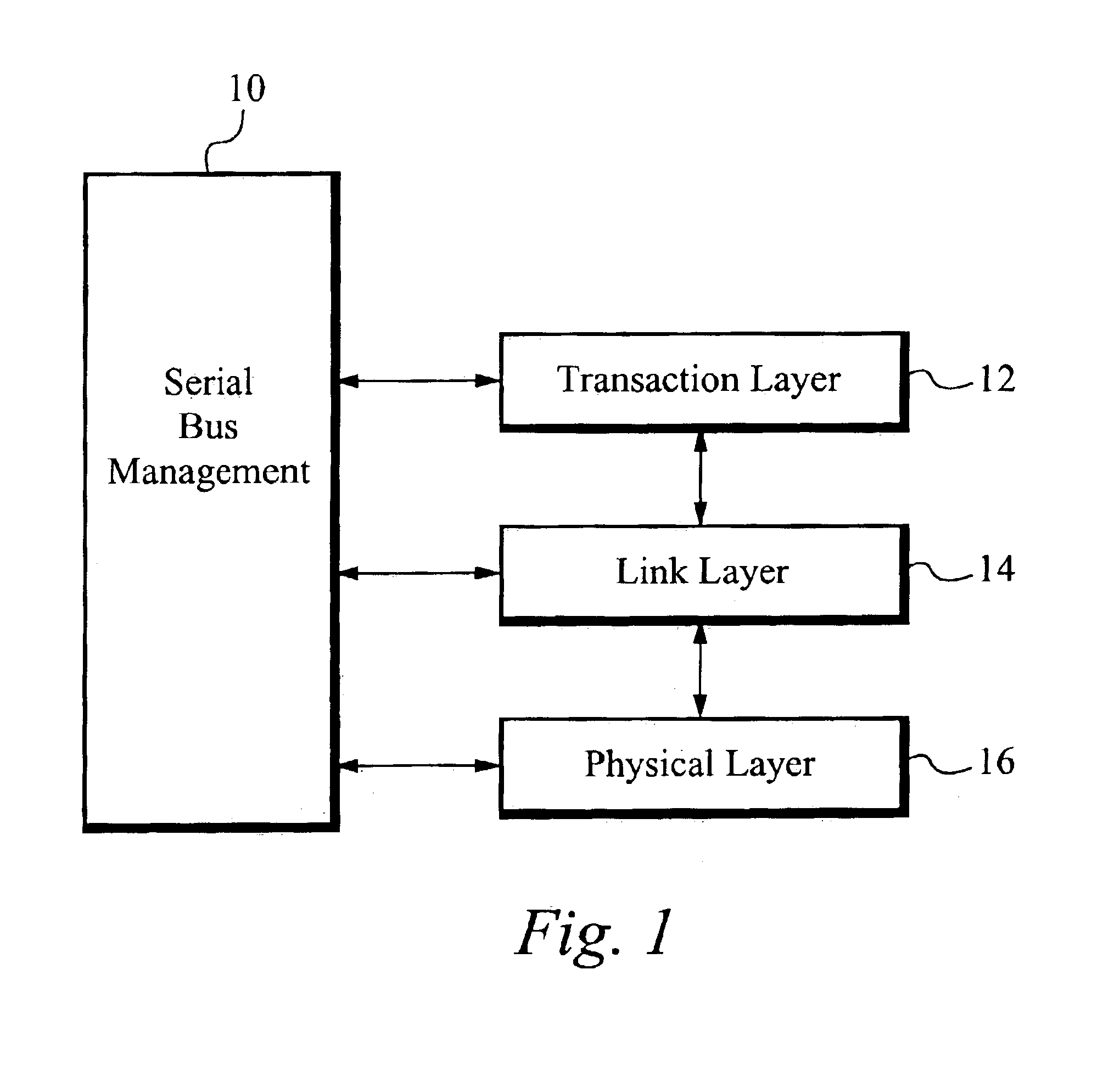

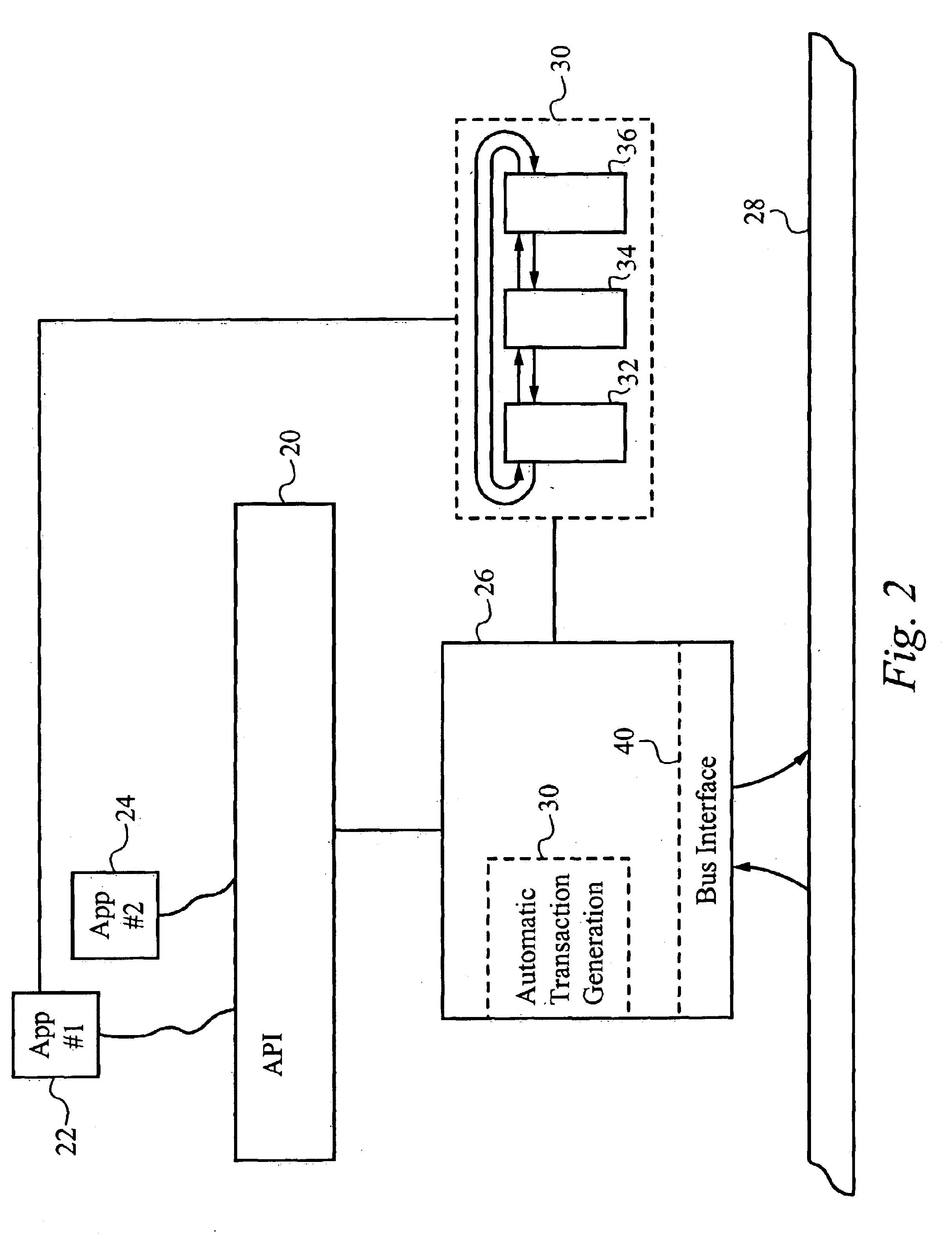

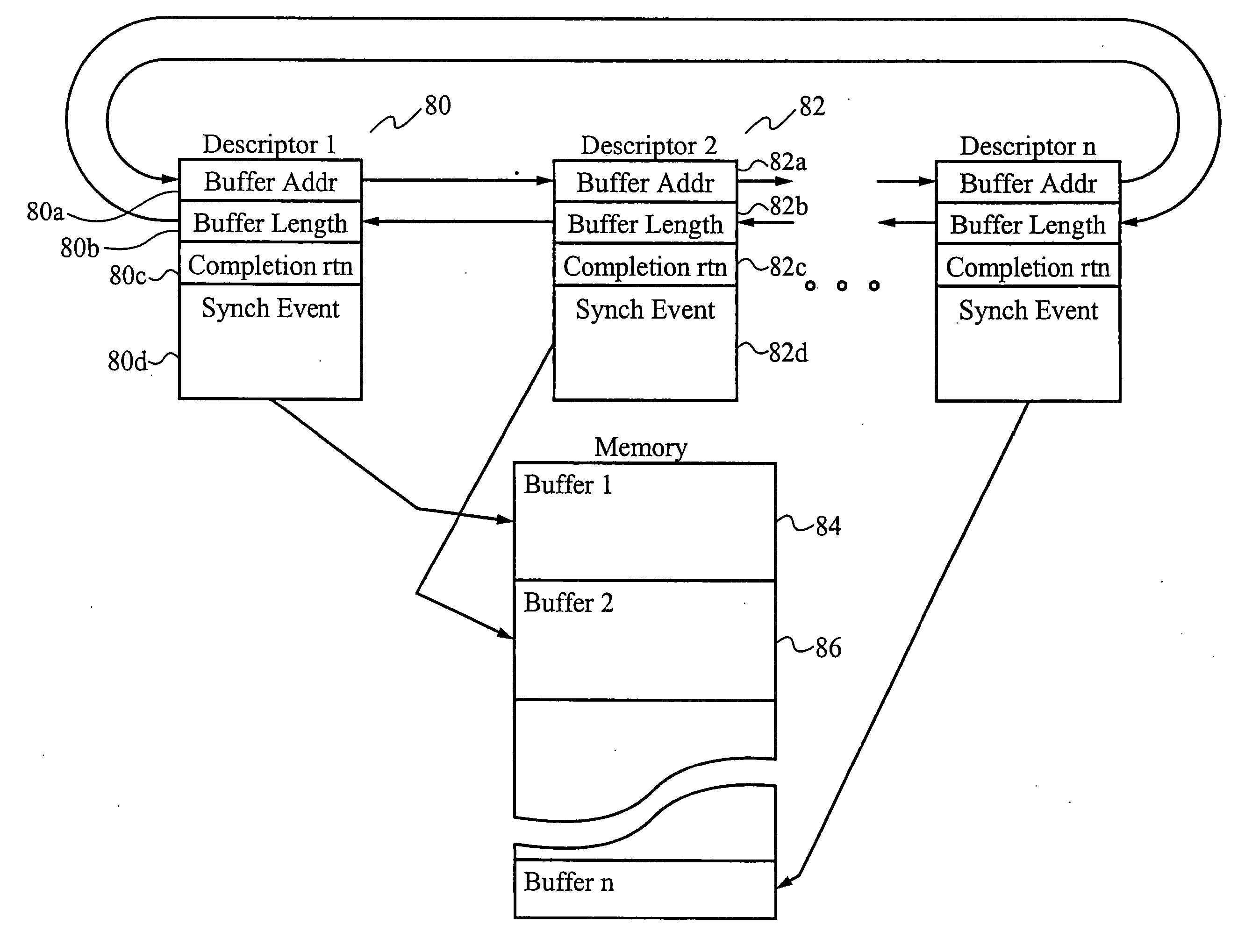

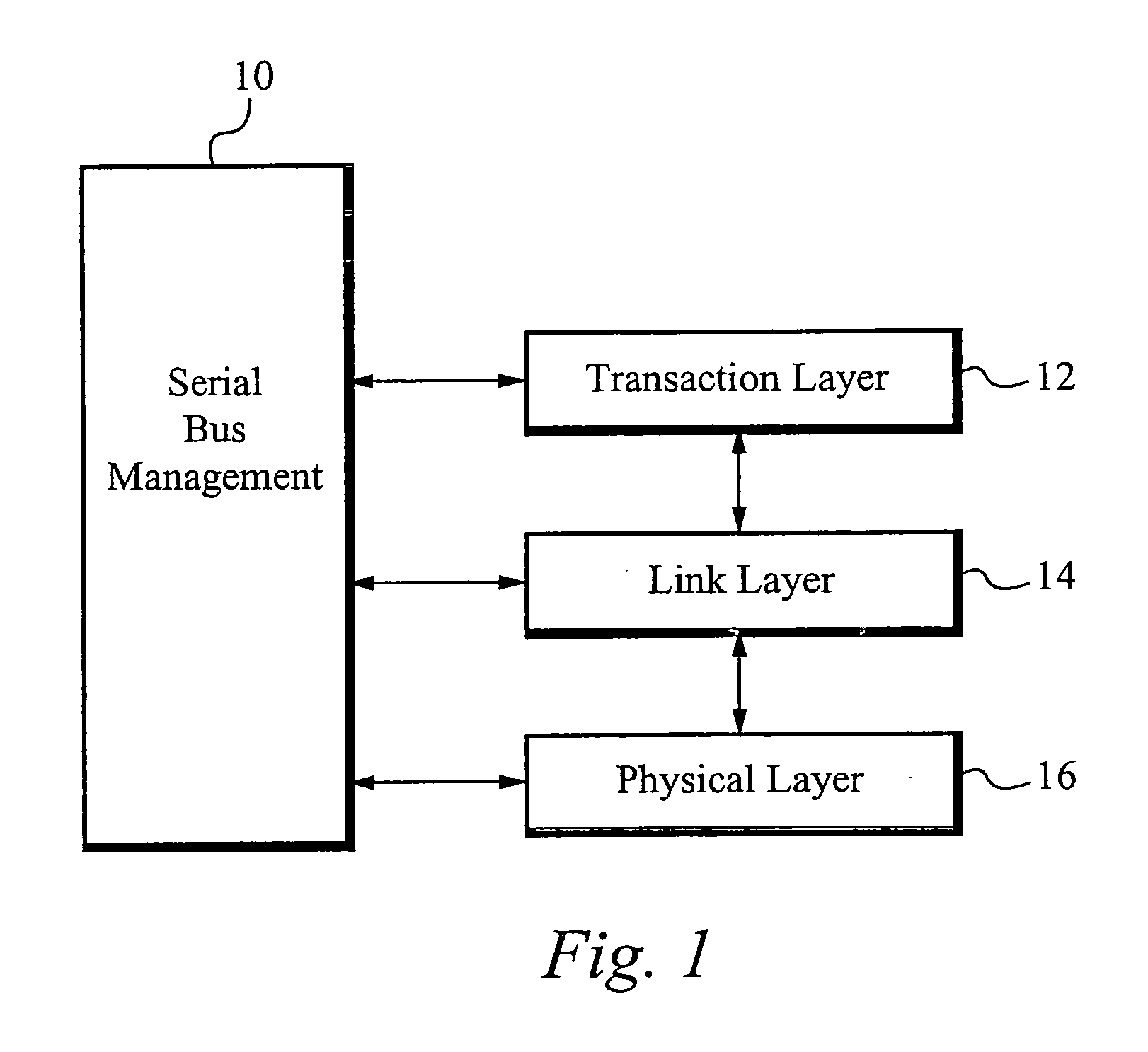

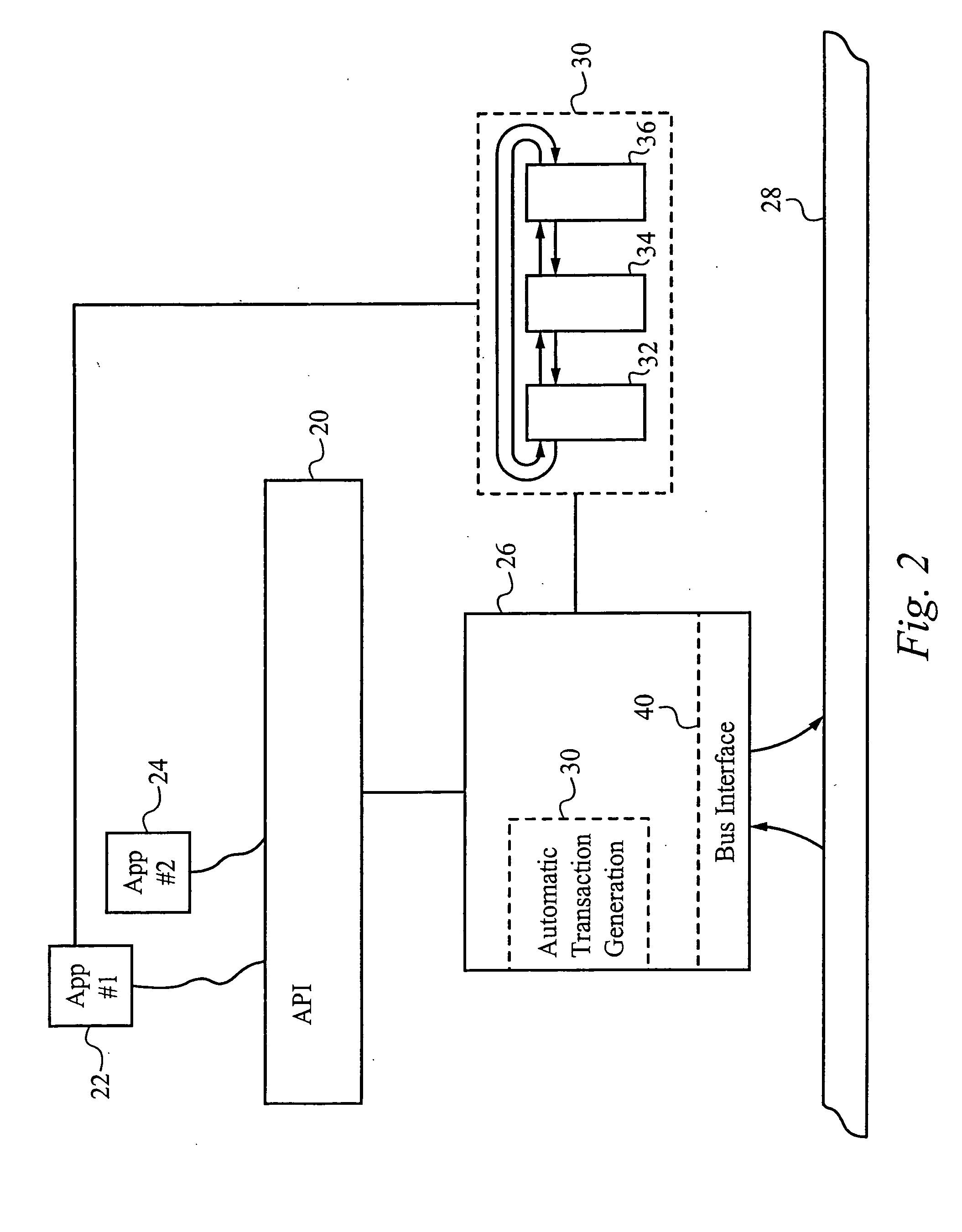

Application programming interface for data transfer and bus management over a bus structure

Inactive | US6901474B2 | Hybrid switching systems | Input/output processes for data processing | Data stream | Application programming interface

In a first embodiment, an applications programming interface (API) implements and manages isochronous and asynchronous data transfer operations between an application and a bus structure. During an asynchronous transfer the API includes the ability to transfer any amount of data between one or more local data buffers within the application and a range of addresses over the bus structure using one or more asynchronous transactions. An automatic transaction generator may be used to automatically generate the transactions necessary to complete the data transfer. The API also includes the ability to transfer data between the application and another node on the bus structure isochronously over a dedicated channel. During an isochronous data transfer, a buffer management scheme is used to manage a linked list of data buffer descriptors. During isochronous transfer of data, the API provides implementation of a resynchronization event in the stream of data allowing for resynchronization by the application to a specific point within the data. Implementation is also provided for a callback routine for each buffer in the list which calls the application at a predetermined point during the transfer of data. An isochronous API of the preferred embodiment presents a virtual representation of a plug, using a plug handle, to the application. The isochronous API notifies a client application of any state changes on a connected plug through the event handle. The isochronous API also manages buffers utilized during a data operation by attaching and detaching the buffers to the connected plug, as appropriate, to manage the data flow.

Owner:SONY CORP +1

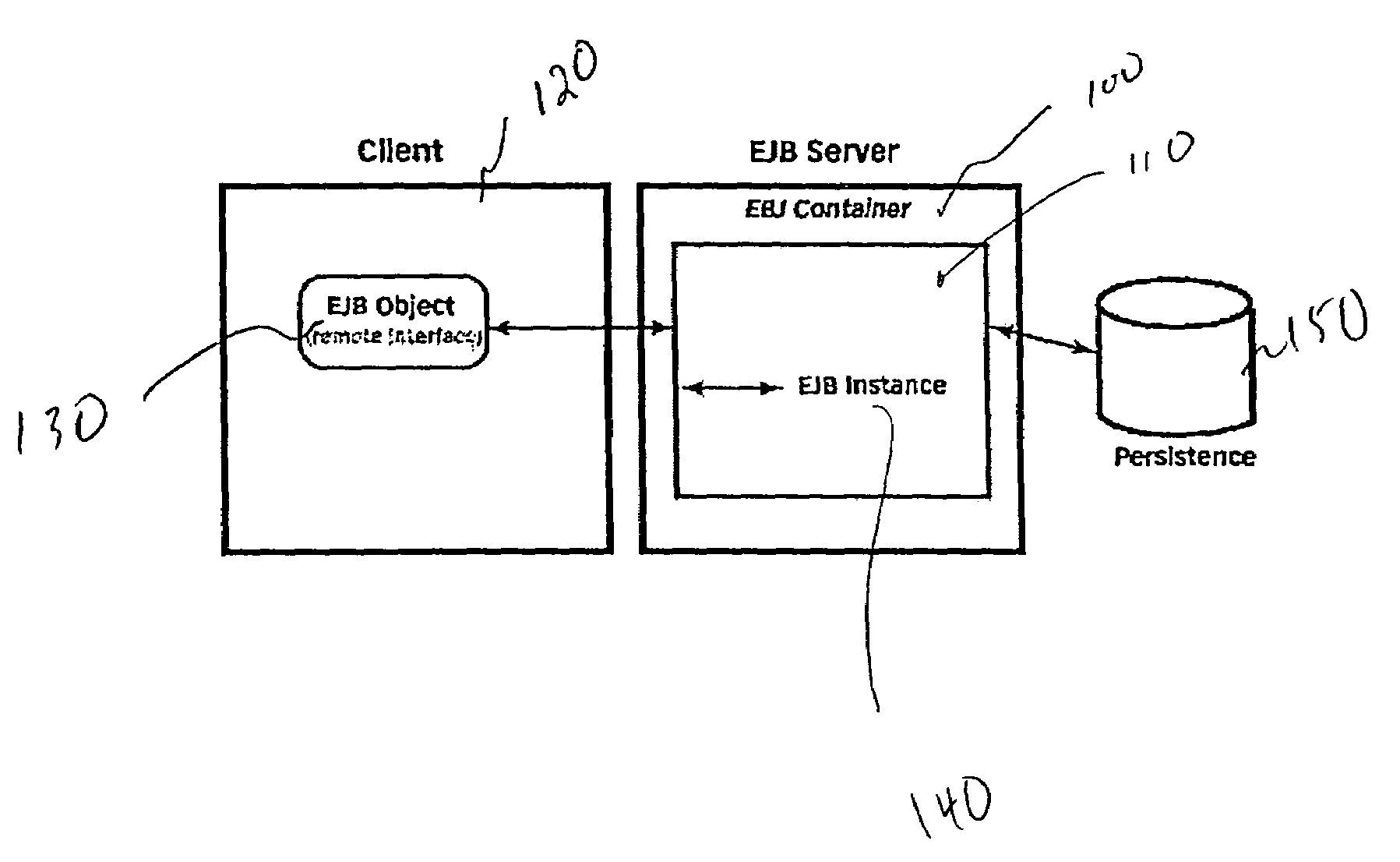

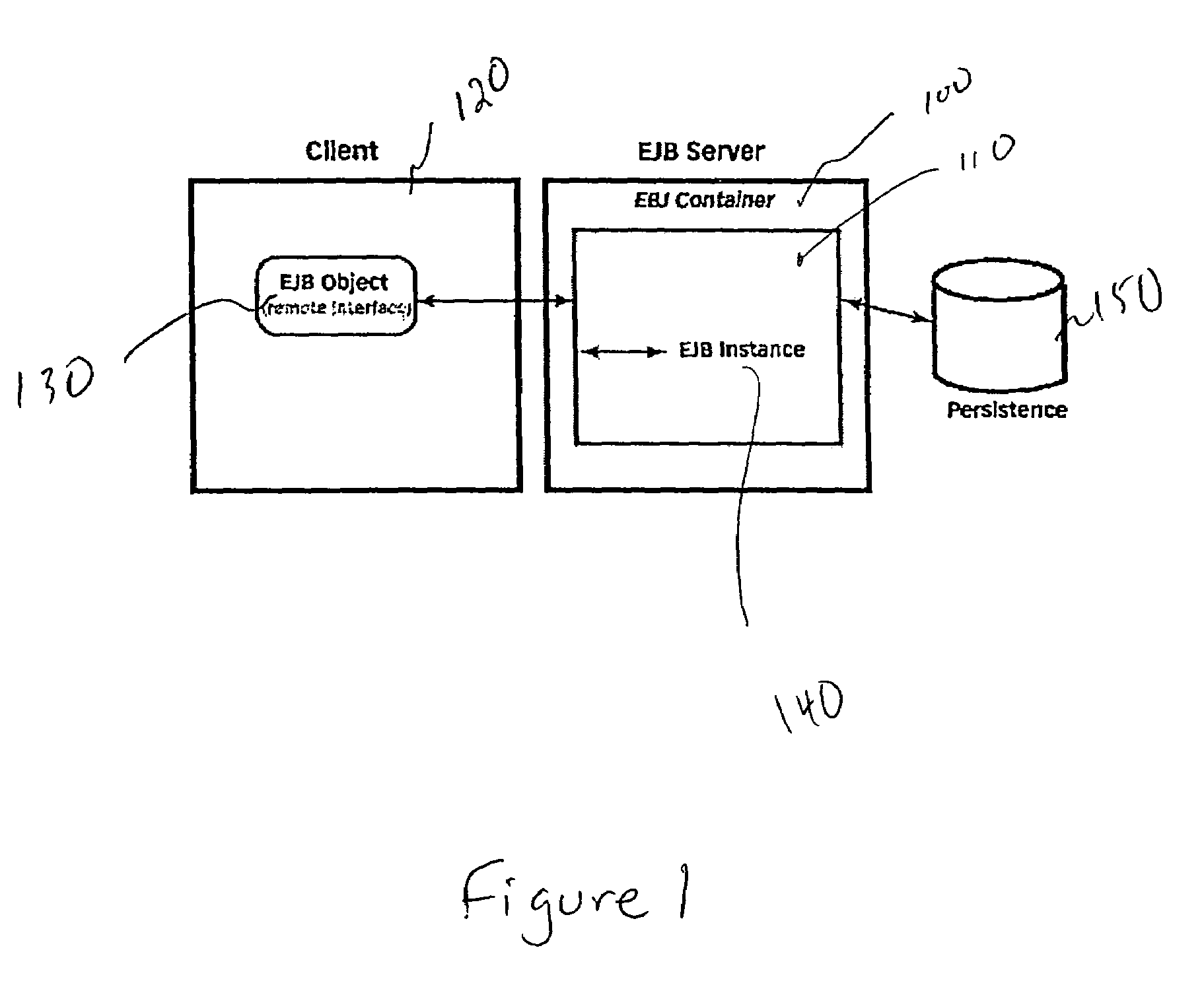

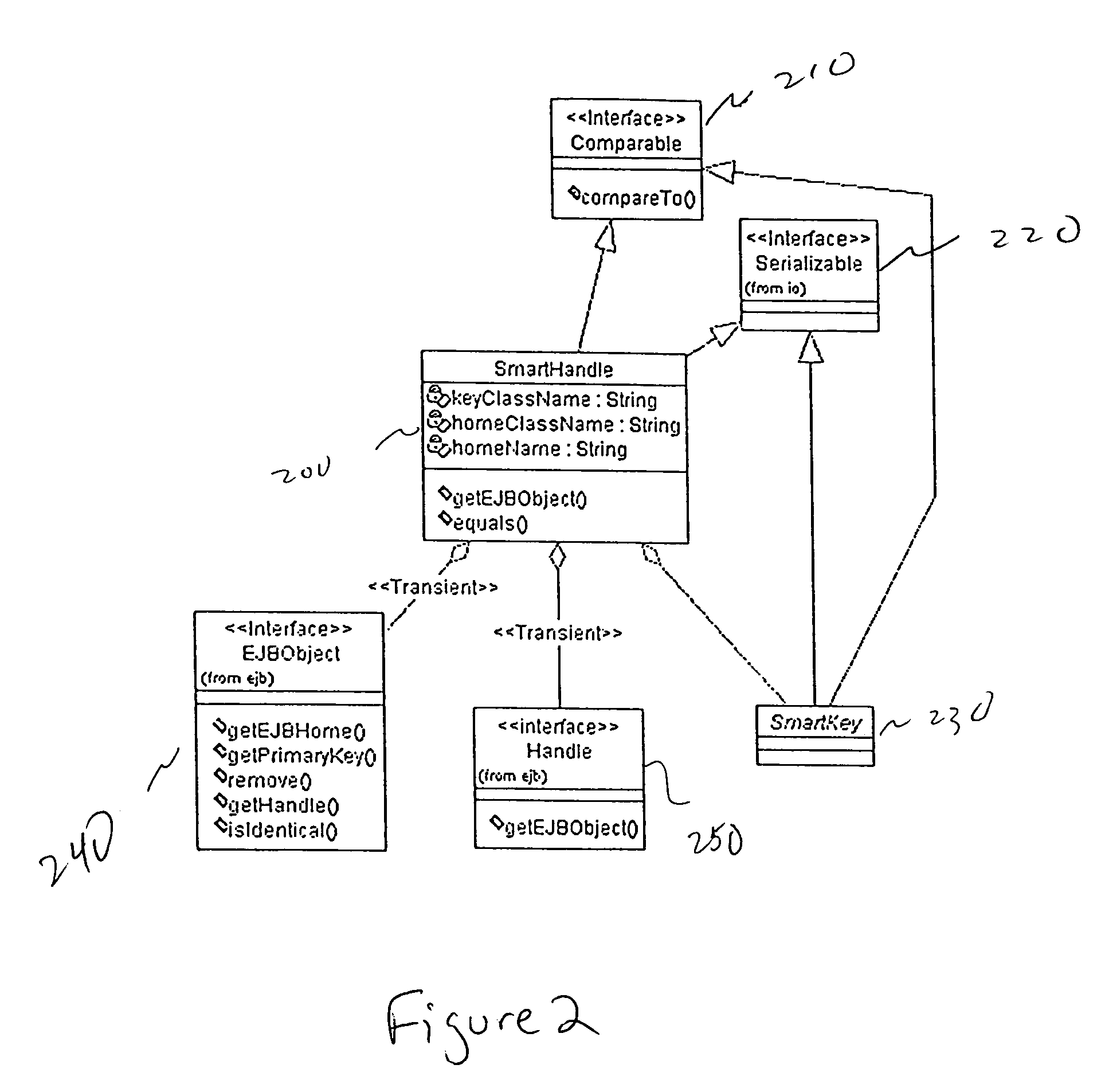

Smart handle

Inactive | US6944680B1 | Increase capacity | Multiple digital computer combinations | Website content management | Relational database | Engineering

A SmartHandle and method are provided which can extend the capabilities of the EJB Handle. The SmartHandle can be mapped to a multi-column relational database. Additionally, the SmartHandle enables two EJB Handles to be compared without instantiating the actual EJB objects.

Owner:ORACLE INT CORP
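The two properties named in this abstract, mapping to several database columns and comparison without instantiating the referenced object, can be sketched as a small value type. This is only an illustration in Python, not the EJB API; `SmartHandleSketch` and its fields are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SmartHandleSketch:
    """Hypothetical handle identified purely by a type name and key columns."""

    bean_type: str
    key_columns: Tuple[object, ...]

    def to_row(self):
        """Flatten the handle into one value per relational column."""
        return (self.bean_type, *self.key_columns)
```

Because equality and hashing are derived from the fields, two handles can be compared (or used as dictionary keys) without ever loading the objects they refer to.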

System and methods for caching in connection with authorization in a computer system

Inactive | US7096367B2 | Easy to operate | More efficient | Data processing applications | Digital data processing details | Computer resources | Computerized system

An authorization handle is supported for each access policy determination that is likely to be repeated. In particular, an authorization handle may be assigned to access check results associated with the same discretionary access control list and the same client context. This likelihood may be determined based upon pre-set criteria for the application or service, based on usage history and the like. Once an access policy determination is assigned an authorization handle, the static maximum allowed access is cached for that policy determination. From access check to access check, the set of permissions desired by the client may change, and dynamic factors that might affect the overall privilege grant may also change; however, generally there is still a set of policies that is unaffected by the changes and common across access requests. The cached static maximum allowed access data is thus used to provide efficient operations for the evaluation of common policy sets. In systems having access policy evaluations that are repeated, authorization policy evaluations are more efficient, computer resources are free for other tasks, and performance improvements are observed.

Owner:MICROSOFT TECH LICENSING LLC
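A minimal sketch of the caching idea in this abstract follows, assuming the static maximum allowed access can be computed from an access control list identifier and a client identifier. `AuthorizationCache`, `compute_static_max`, and the identifiers are hypothetical, and the dynamic factors mentioned in the abstract are deliberately left out.

```python
class AuthorizationCache:
    """Hypothetical cache of static maximum allowed access, keyed by handle."""

    def __init__(self, compute_static_max):
        self._compute = compute_static_max  # (acl_id, client_id) -> rights
        self._handles = {}                  # (acl_id, client_id) -> handle
        self._cached = {}                   # handle -> frozenset of rights
        self.evaluations = 0                # counts full policy evaluations

    def get_handle(self, acl_id, client_id):
        """Return the handle for this ACL/client pair, evaluating only once."""
        key = (acl_id, client_id)
        if key not in self._handles:
            handle = len(self._handles) + 1
            self._handles[key] = handle
            self._cached[handle] = frozenset(self._compute(acl_id, client_id))
            self.evaluations += 1
        return self._handles[key]

    def access_check(self, handle, desired):
        """Grant only if every desired right is within the cached maximum."""
        return set(desired) <= self._cached[handle]
```

Repeated access checks against the same handle reuse the cached set, so only the requested permissions vary from check to check.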

Plug-in management method, plug-in manager and set top box

Inactive | CN101950255A | Shorten the development cycle | Achieve modularity | Television system details | Multiprogramming arrangements | Coupling | Computer module

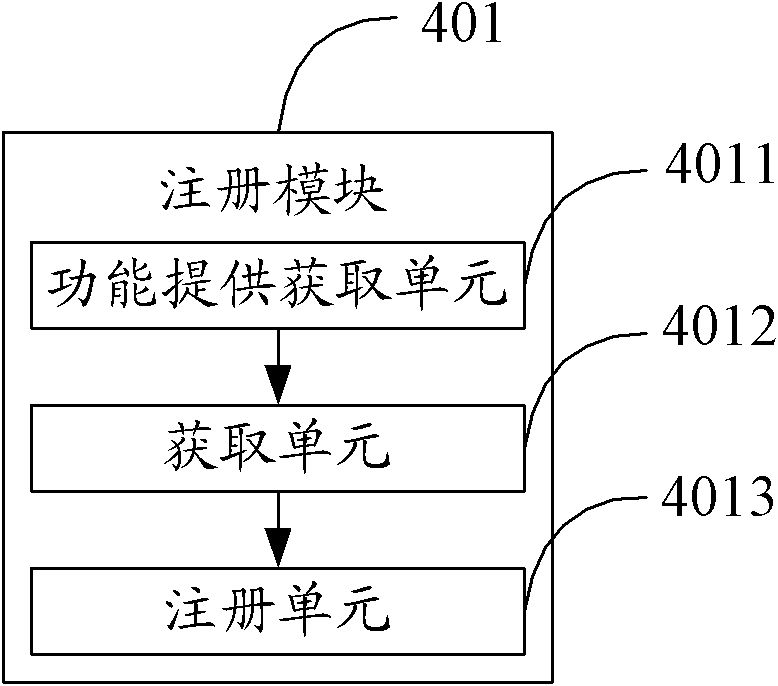

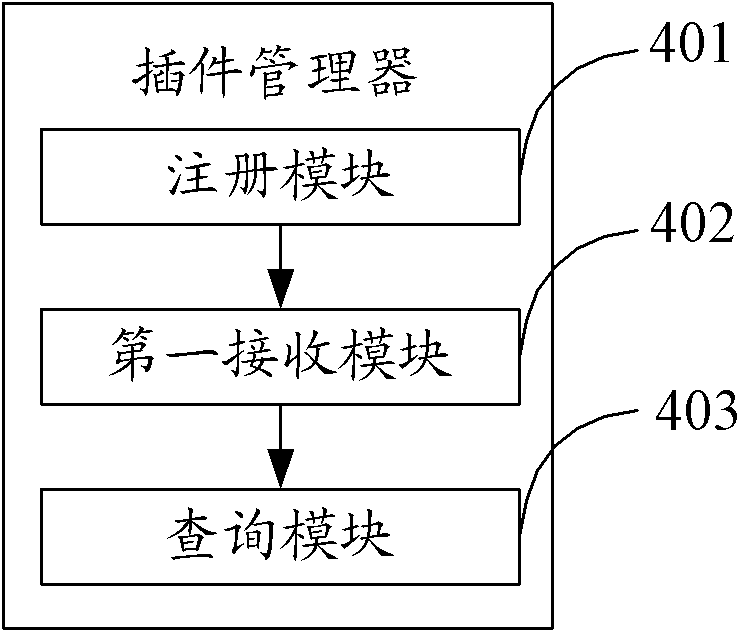

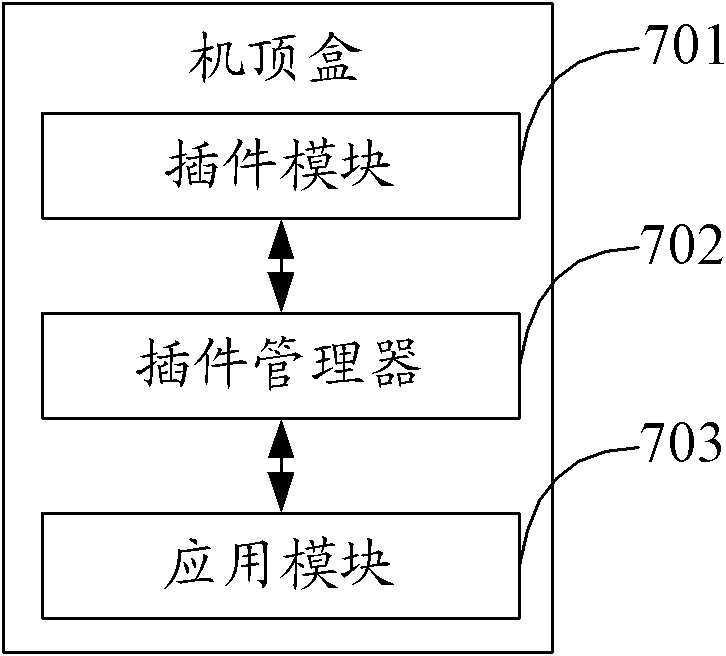

The invention relates to a plug-in management method, a plug-in manager and a set top box. The method comprises the following steps: registering a plug-in module through a plug-in manager when the plug-in module is connected with the plug-in manager, and acquiring the description information of the plug-in module; receiving keyword information, sent by an application module, querying whether a corresponding plug-in module is registered; and returning a handle of the corresponding plug-in module to the application module when the keyword information matches the keyword information part of the description information, so that the application module invokes the functions of the corresponding plug-in module according to the handle. By arranging plug-ins and the plug-in manager in the set top box, the invention modularizes the set top box software, reduces the coupling between the functional modules of the set top box, improves the portability of the set top box software across different products, and shortens the software development cycle; and by dynamically loading plug-ins and dynamically selecting among plug-ins of the same kind, the system functions are expanded and the flexibility of the product is enhanced.

Owner:山东浪潮数字媒体科技有限公司
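The register, query, and invoke flow in this abstract can be sketched as follows. All names (`PluginManager`, `register`, `find`, `invoke`, and the `keywords` field of the description) are assumptions for illustration and do not come from the patent.

```python
class PluginManager:
    """Hypothetical plug-in manager that returns handles to applications."""

    def __init__(self):
        self._plugins = {}  # handle -> (description, functions)
        self._next = 1

    def register(self, description, functions):
        """Record a plug-in's description and callables; return its handle."""
        handle = self._next
        self._next += 1
        self._plugins[handle] = (description, functions)
        return handle

    def find(self, keyword):
        """Return the handle of the first plug-in matching the keyword."""
        for handle, (description, _) in self._plugins.items():
            if keyword in description.get("keywords", ()):
                return handle
        return None

    def invoke(self, handle, name, *args):
        """Call a named plug-in function through its handle."""
        return self._plugins[handle][1][name](*args)
```

The application module depends only on the keyword and the returned handle, which is what keeps it decoupled from any particular plug-in implementation.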

Generation and validation of reference handles

Inactive | US6105039A | Efficient release | Guaranteed to work | Data processing applications | Resource allocation | Hysteresis | Computerized system

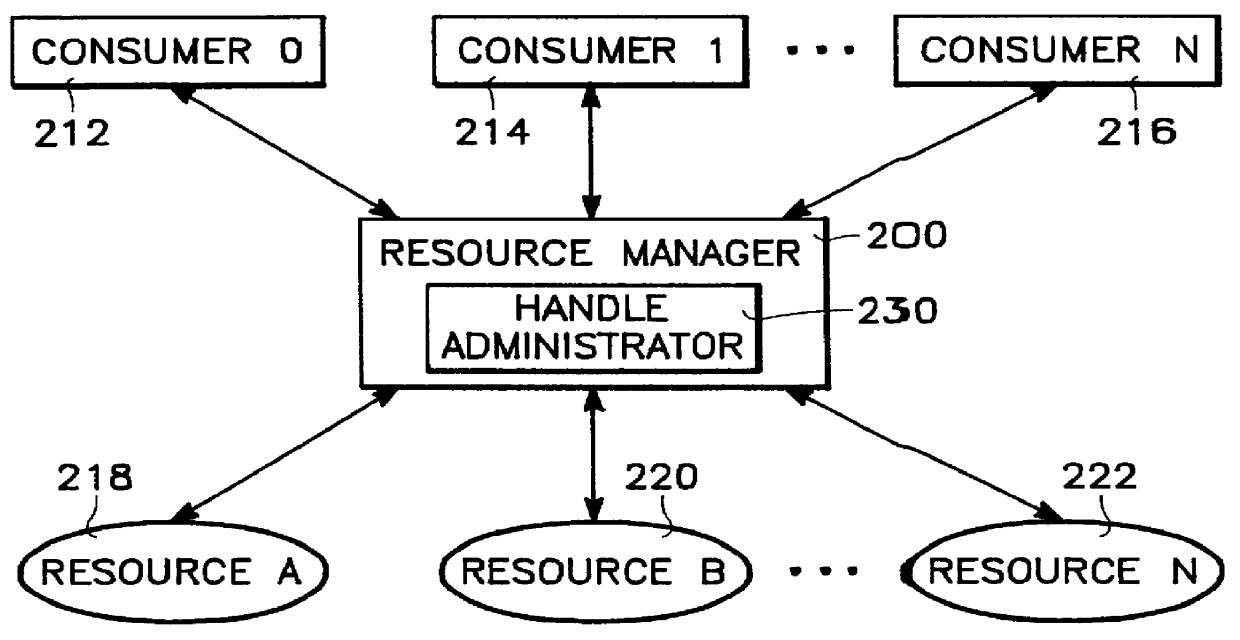

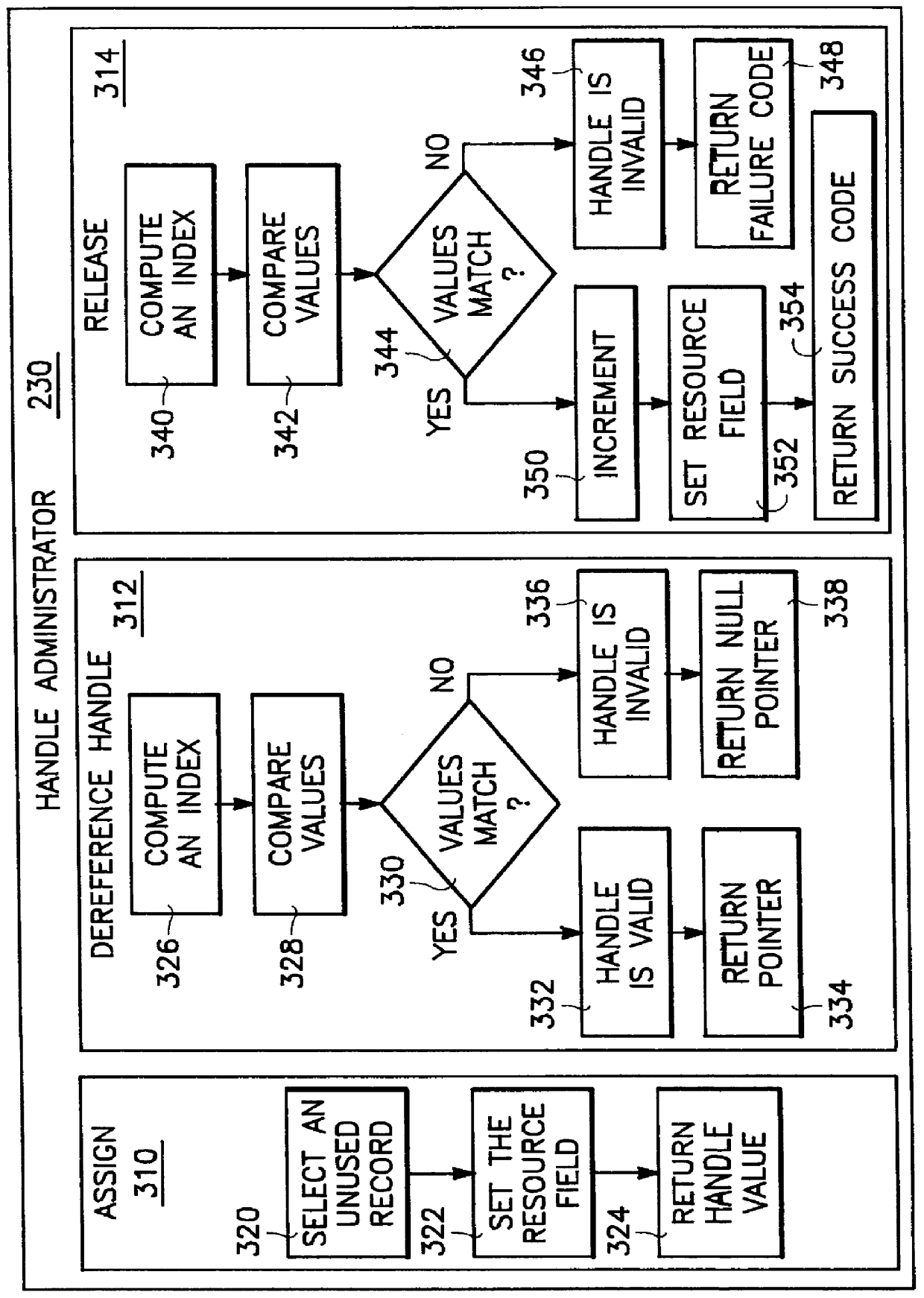

The present invention is embodied in a system and method for generating and validating reference handles for consumers requiring access to resources in a computer system. The system of the present invention includes a resource manager having a handle administrator, a plurality of consumers, and a plurality of resources. The handle administrator includes an assignment routine, a release routine, and a dereference routine. The assignment routine issues new handles, the release routine releases handles that are no longer required (thus rendering the handle invalid), and the dereference routine dereferences handles into a pointer to a resource, which entails verifying that the handle is valid. Also included is an auxiliary sub-routine for managing used and unused records, an expansion sub-routine for efficiently expanding the handle database, a handle recycling sub-routine for recycling handles, a contraction sub-routine for efficiently contracting the handle database, a hysteresis sub-routine for probabilistically contracting the handle database, and a memory allocation failure sub-routine to improve functionality in the event of memory allocation failure.

Owner:MICROSOFT TECH LICENSING LLC
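The assignment, release, and dereference routines can be sketched with a generation counter per record, so that a recycled slot never validates a stale handle. The generation-counter scheme is a common technique substituted here for illustration; it is not claimed to be the patent's mechanism, and the class and method names are hypothetical.

```python
class HandleAdministrator:
    """Hypothetical handle administrator using (index, generation) handles."""

    def __init__(self):
        self._records = []  # each record: [generation, in_use, resource]
        self._free = []     # indices of records available for recycling

    def assign(self, resource):
        """Issue a new handle, recycling a released record when possible."""
        if self._free:
            index = self._free.pop()
            record = self._records[index]
            record[1], record[2] = True, resource
        else:
            index = len(self._records)
            self._records.append([0, True, resource])
        return (index, self._records[index][0])

    def dereference(self, handle):
        """Validate the handle, then return the resource it refers to."""
        index, generation = handle
        if not 0 <= index < len(self._records):
            raise KeyError("invalid handle")
        record = self._records[index]
        if not record[1] or record[0] != generation:
            raise KeyError("invalid handle")
        return record[2]

    def release(self, handle):
        """Invalidate the handle and make its record reusable."""
        self.dereference(handle)  # raises if the handle is already invalid
        record = self._records[handle[0]]
        record[0] += 1            # bump generation so old handles fail
        record[1], record[2] = False, None
        self._free.append(handle[0])
```

Expansion and contraction of the handle database, which the abstract also mentions, are not modeled in this sketch.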

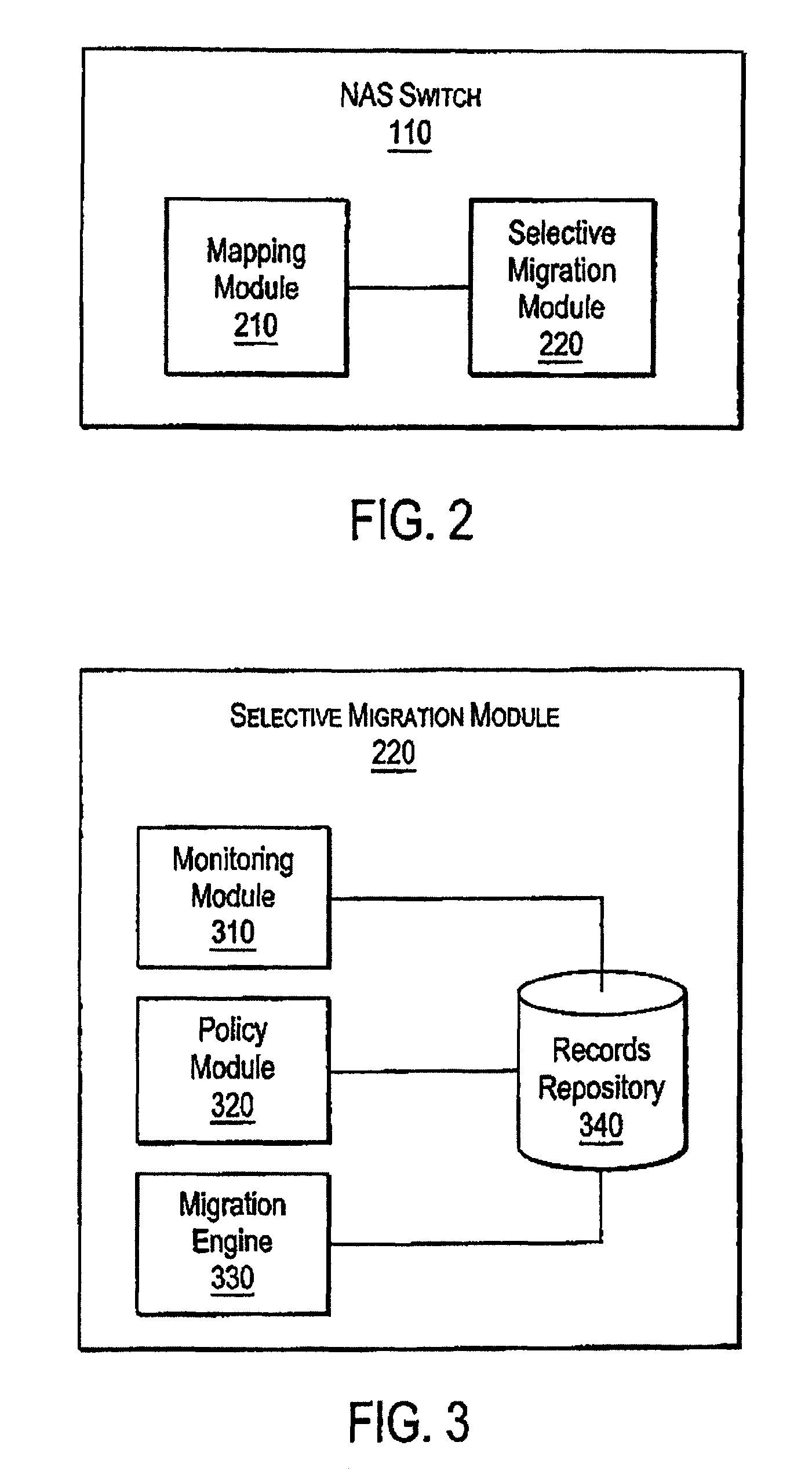

Accumulating access frequency and file attributes for supporting policy based storage management

Active | US8131689B2 | Digital data information retrieval | Digital data processing details | Computer module | Access frequency

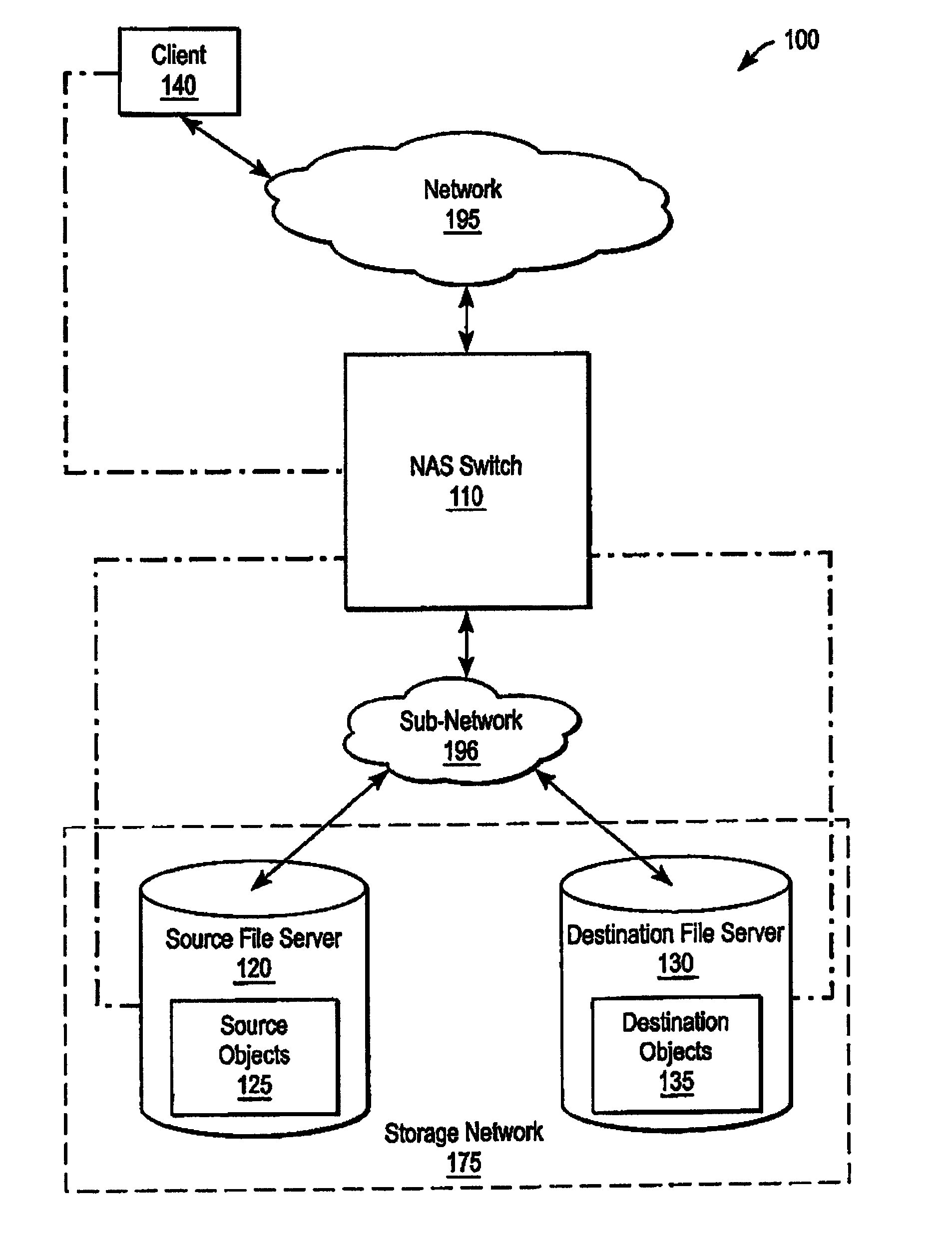

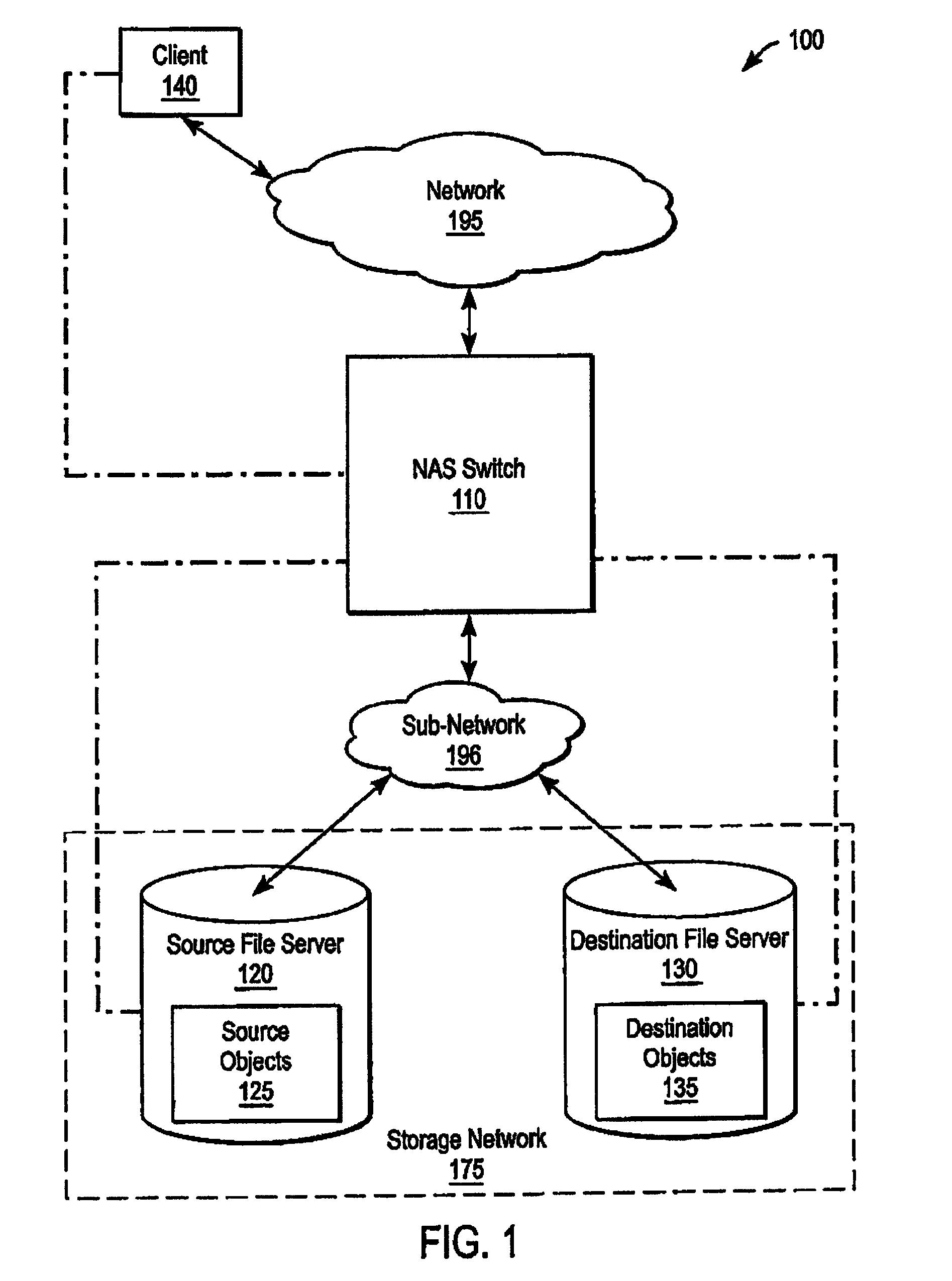

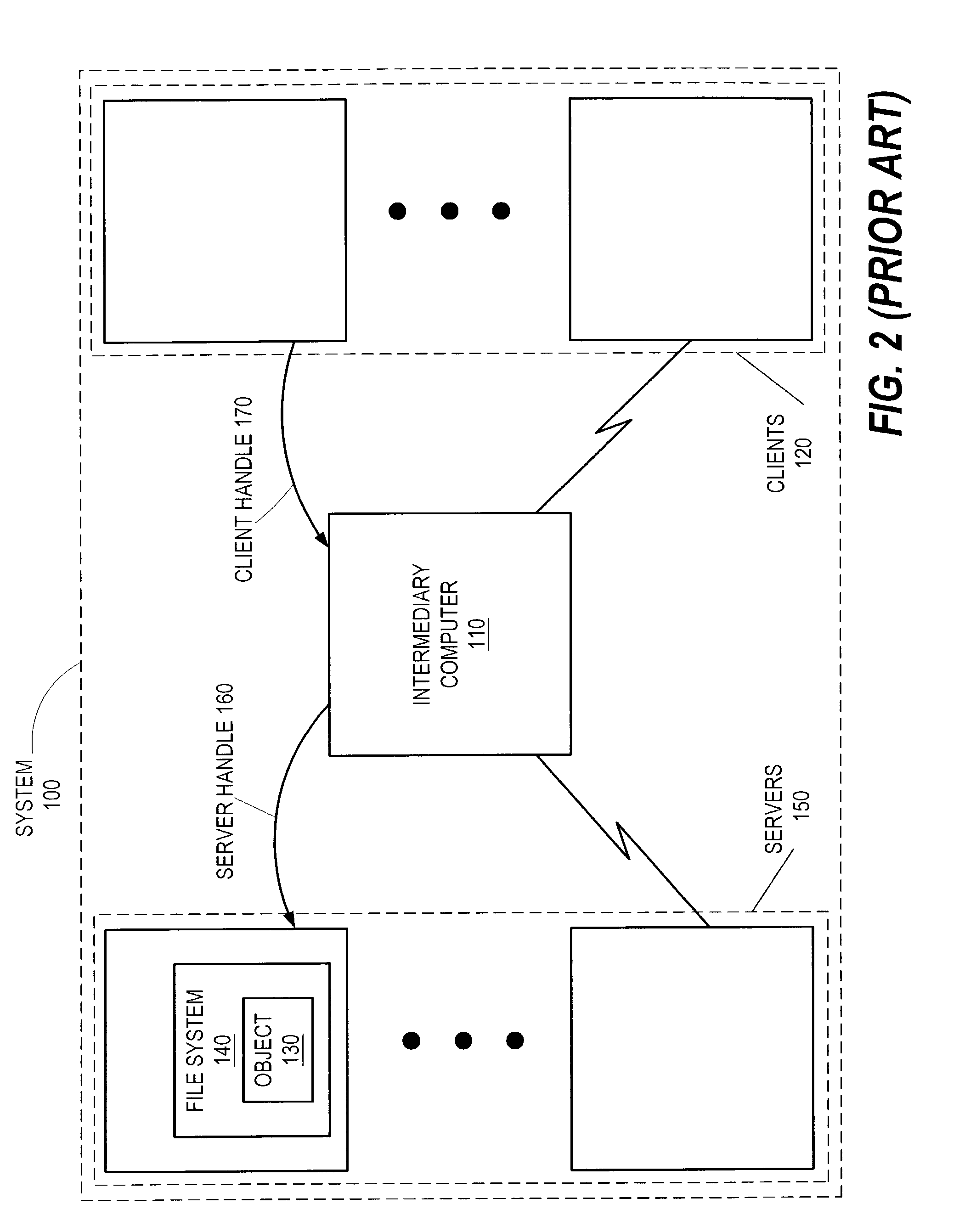

A system and method for performing policy-based storage management using data related to access frequency and file attribute accumulation. A switch device provides transparency for transactions between a client and a storage network. The transparency allows objects (e.g., files or directories) to be moved (e.g., migrated) on the storage network without affecting a reference to the object used by the client (e.g., a file handle). A monitoring module generates accumulation data associated with the transactions for use in policy-based management. The accumulation data can describe uses of the file such as how often certain files are accessed, modifications to files such as creations of new directories or files, and other uses.

Owner:CISCO TECH INC

Application programming interface for data transfer and bus management over a bus structure

Inactive | US20050097245A1 | Hybrid switching systems | Input/output processes for data processing | Data stream | Application programming interface

In a first embodiment, an applications programming interface (API) implements and manages isochronous and asynchronous data transfer operations between an application and a bus structure. During an asynchronous transfer the API includes the ability to transfer any amount of data between one or more local data buffers within the application and a range of addresses over the bus structure using one or more asynchronous transactions. An automatic transaction generator may be used to automatically generate the transactions necessary to complete the data transfer. The API also includes the ability to transfer data between the application and another node on the bus structure isochronously over a dedicated channel. During an isochronous data transfer, a buffer management scheme is used to manage a linked list of data buffer descriptors. During isochronous transfers of data, the API provides implementation of a resynchronization event in the stream of data allowing for resynchronization by the application to a specific point within the data. Implementation is also provided for a callback routine for each buffer in the list which calls the application at a predetermined point during the transfer of data. An isochronous API of the preferred embodiment presents a virtual representation of a plug, using a plug handle, to the application. The isochronous API notifies a client application of any state changes on a connected plug through the event handle. The isochronous API also manages buffers utilized during a data operation by attaching and detaching the buffers to the connected plug, as appropriate, to manage the data flow.

Owner:SONY CORP +1

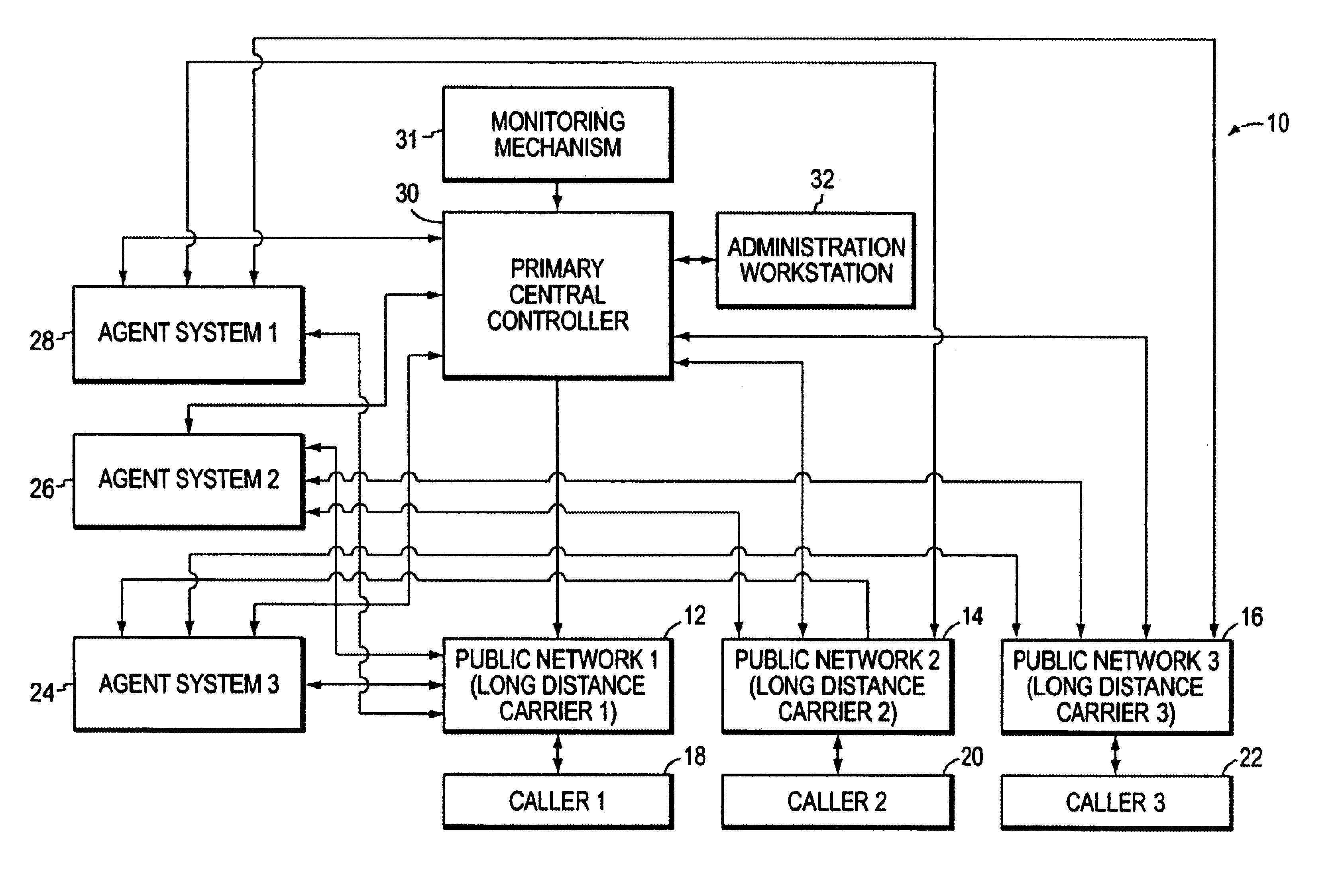

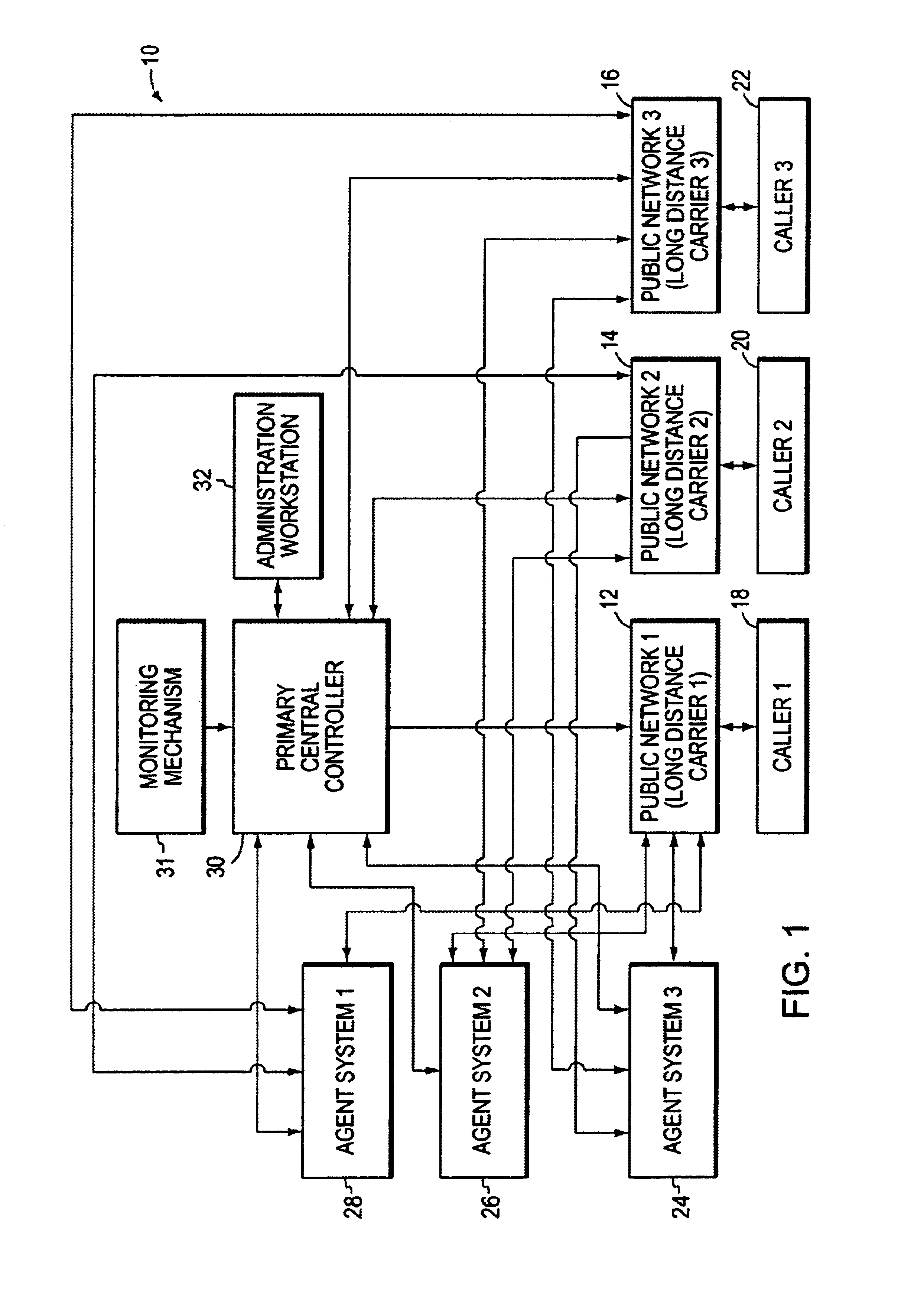

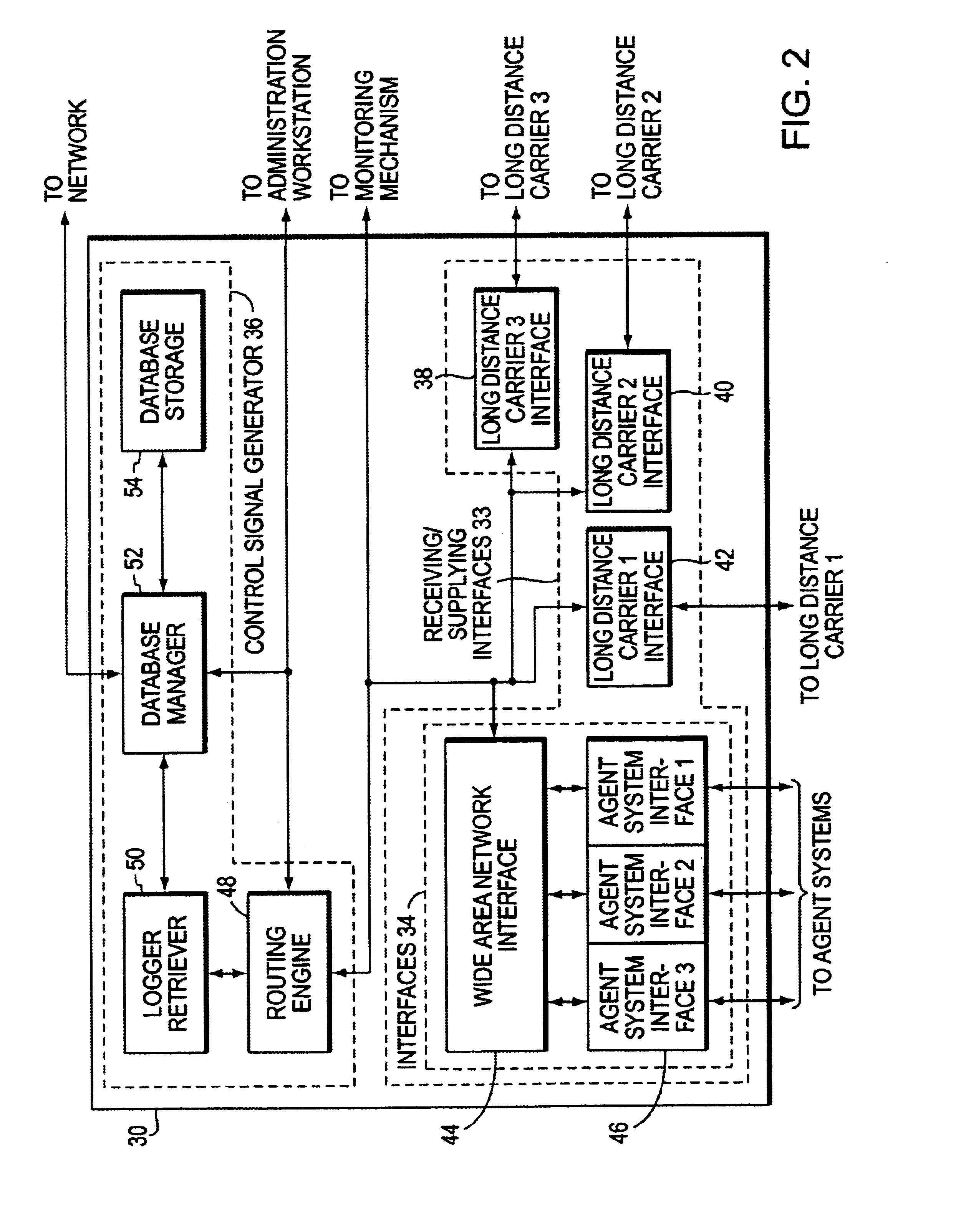

Generation of communication system control scripts

Inactive | US6819754B1 | Easy to adapt | Easy to determine | Multiplex system selection arrangements | Error detection/correction | Programming language | Communications system

Techniques are provided for use in generating call routing control scripts. The techniques permit call routing control scripts, initially generated for use in controlling a first communication system, to be adapted for use in controlling a second, different communication system. More specifically, in these techniques, values (e.g., numerical values) used to identify script-controlled objects (i.e., physical or logical entities in the first system) in the initially-generated script, are replaced with values identifying equivalent objects in the second system, to thereby facilitate creation of another script that is executable by the second system. The initially-generated script contains a section in which the object identification values used in the initially-generated script are grouped together, in association with respective logical handles / names and respective object classifications of the objects that they identify, in order to facilitate determination of the equivalent objects.

Owner:CISCO TECH INC

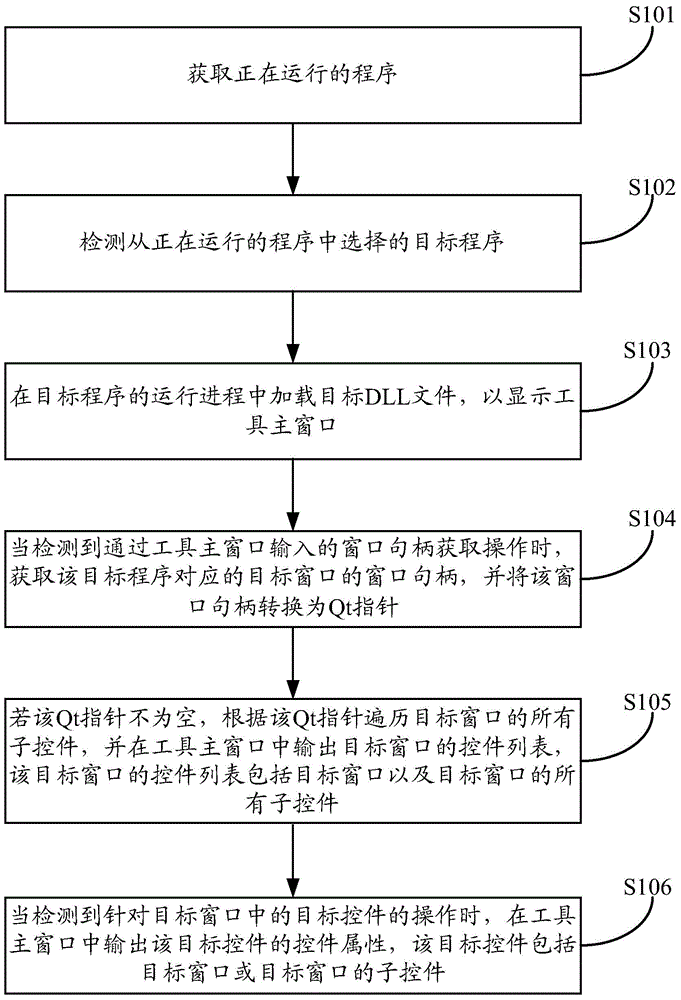

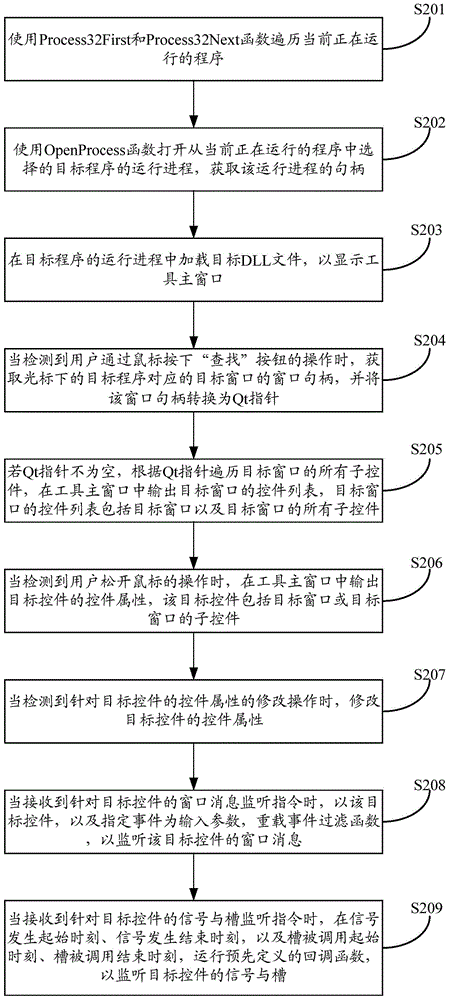

Control capture method and equipment

Active | CN104598282A | Realize identification | Program loading/initiating | Graphical user interface | Target control

The embodiment of the invention discloses a control capture method. The method comprises the steps of: acquiring the running programs; detecting a target program selected from the running programs; loading a target DLL file into the running process of the target program to display a tool main window; when a window handle acquisition operation input through the tool main window is detected, acquiring the window handle of the target window corresponding to the target program and converting the window handle into a Qt pointer; if the Qt pointer is not null, traversing all sub-controls of the target window according to the Qt pointer and outputting a control list of the target window in the tool main window; and when an operation on a target control in the target window is detected, outputting the control attributes of the target control in the tool main window. The embodiment of the invention further discloses control capture equipment. With the control capture method and equipment, controls of Qt-based graphical user interface programs can be identified.

Owner:GUANGZHOU HUADUO NETWORK TECH

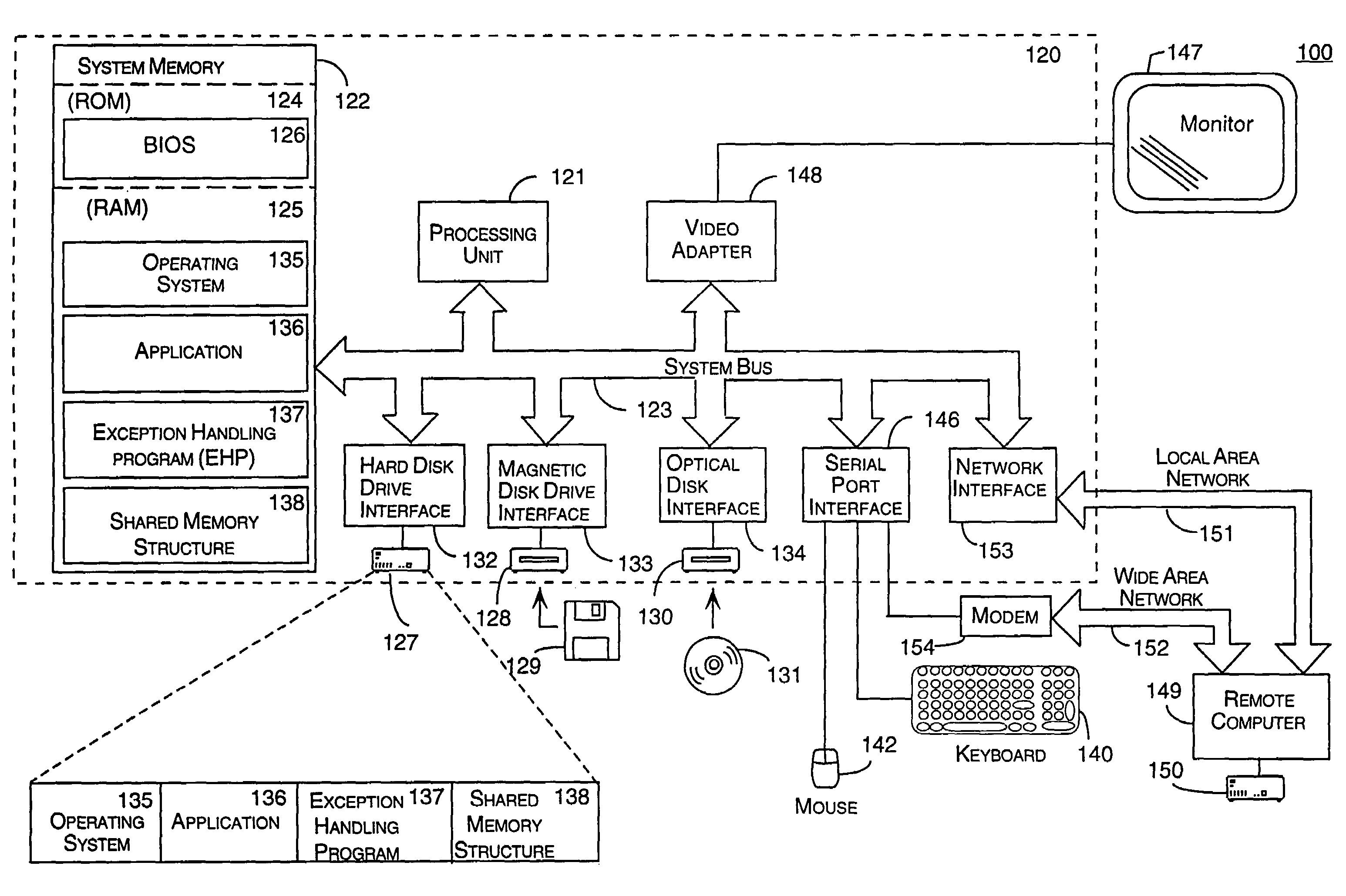

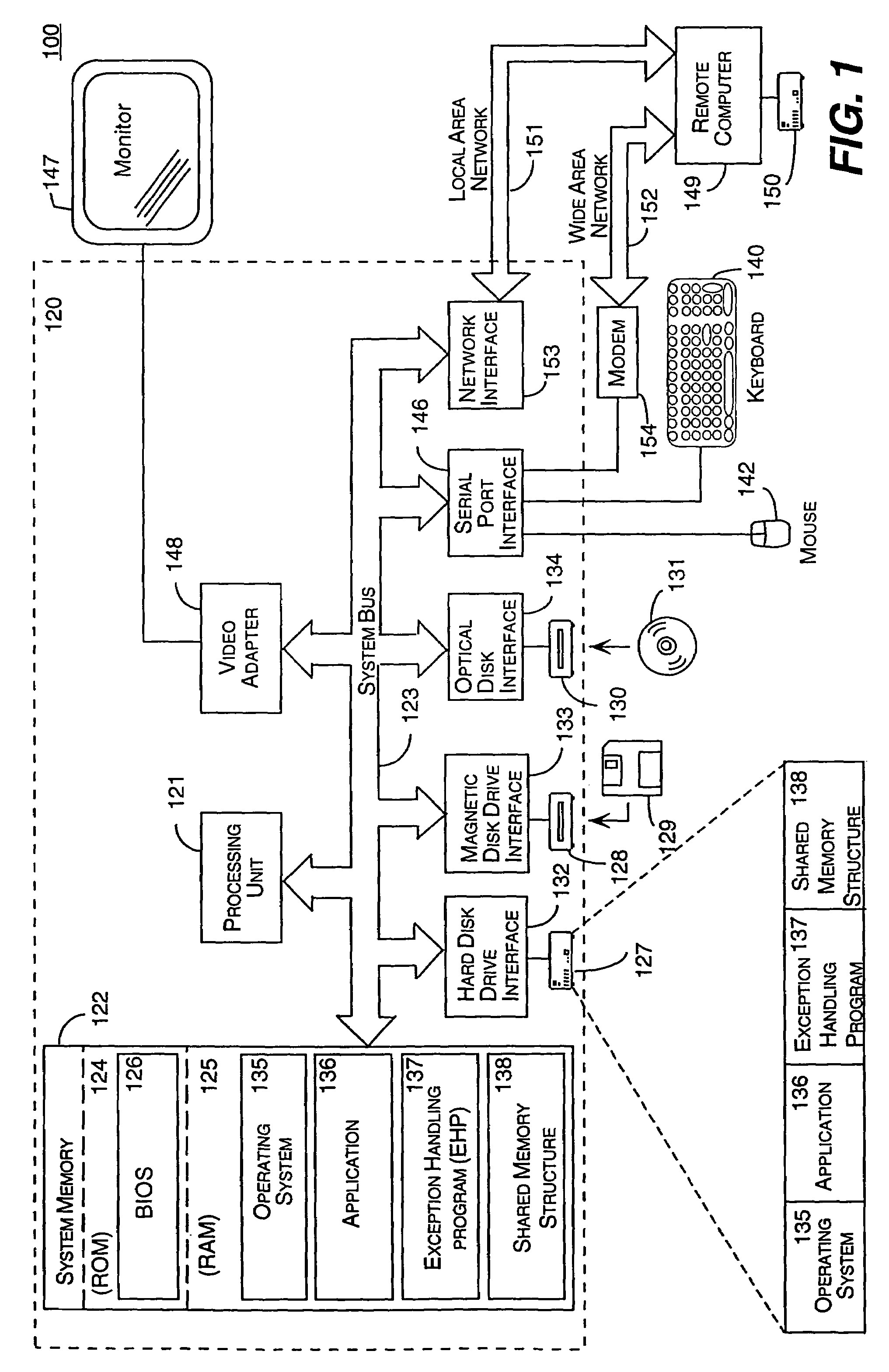

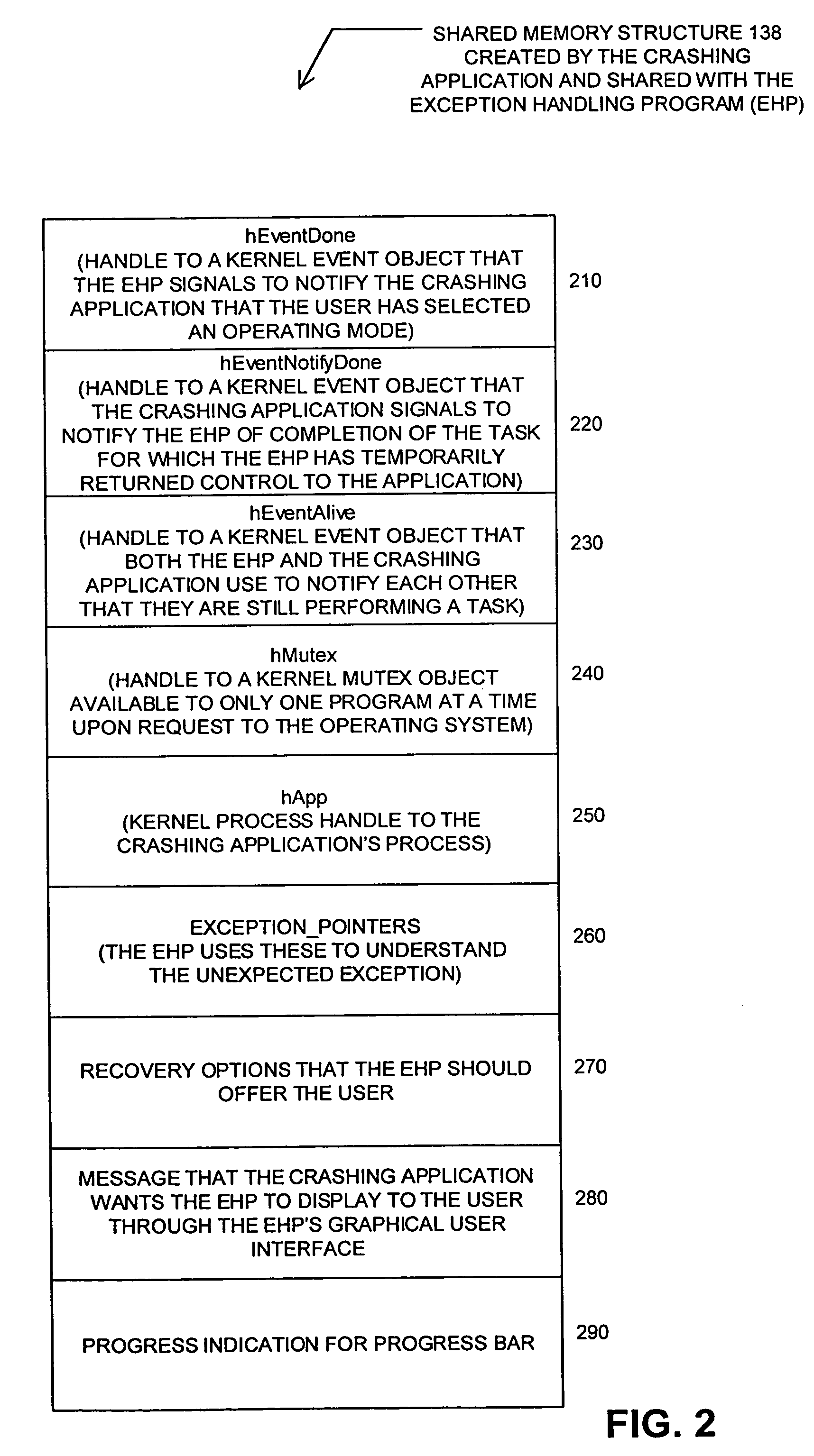

Method and system for handling an unexpected exception generated by an application

Inactive | US7089455B2 | Improve security | Simple method | Program initiation/switching | Error detection/correction | Graphics | Graphical user interface

A system and method for handling the generation of an unexpected exception by an application. When the application generates an unexpected exception (i.e., crashes), the application's exception filter launches an outside exception handling program (EHP) that is separate and distinct from the application. Through a special protocol, the application and the EHP collaborate in responding to the unexpected exception. In this protocol, the application and the EHP communicate through kernel objects accessible by handles in a shared memory structure that the application creates before launching of the exception handling program and then shares with the EHP. Through this shared memory, the application also provides the EHP with information about the types of recovery options to offer the user. Through a graphical user interface, the EHP is then responsible for notifying the user of the application that an unexpected exception has occurred. Through the graphical user interface, the EHP also provides the user with the ability to specify various courses of action in response to the unexpected exception.

Owner:ZHIGU HLDG

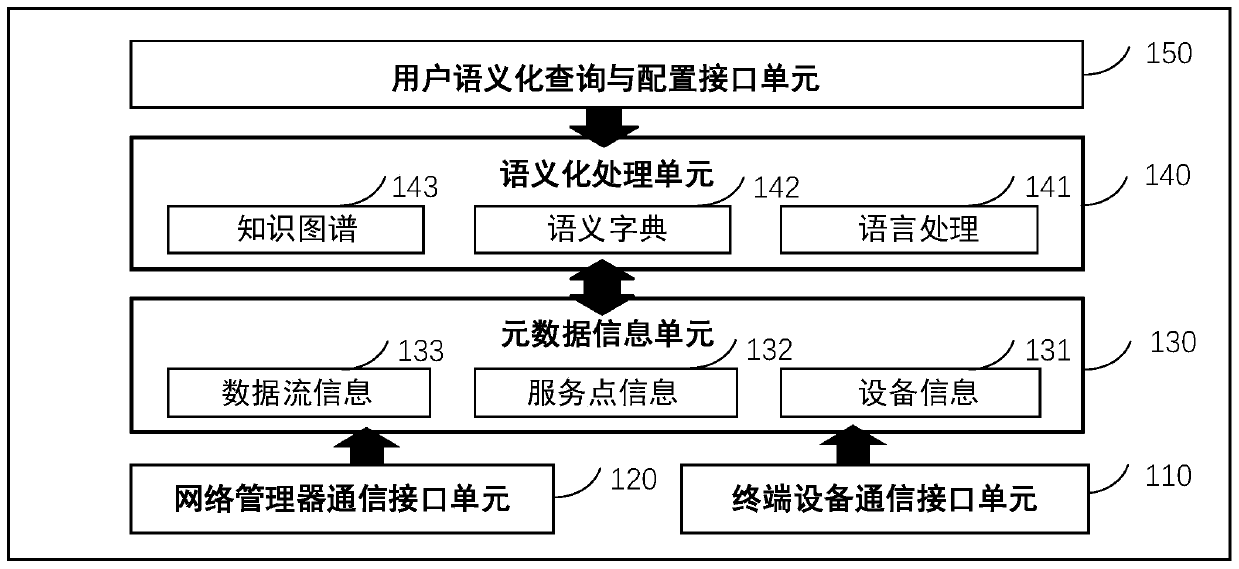

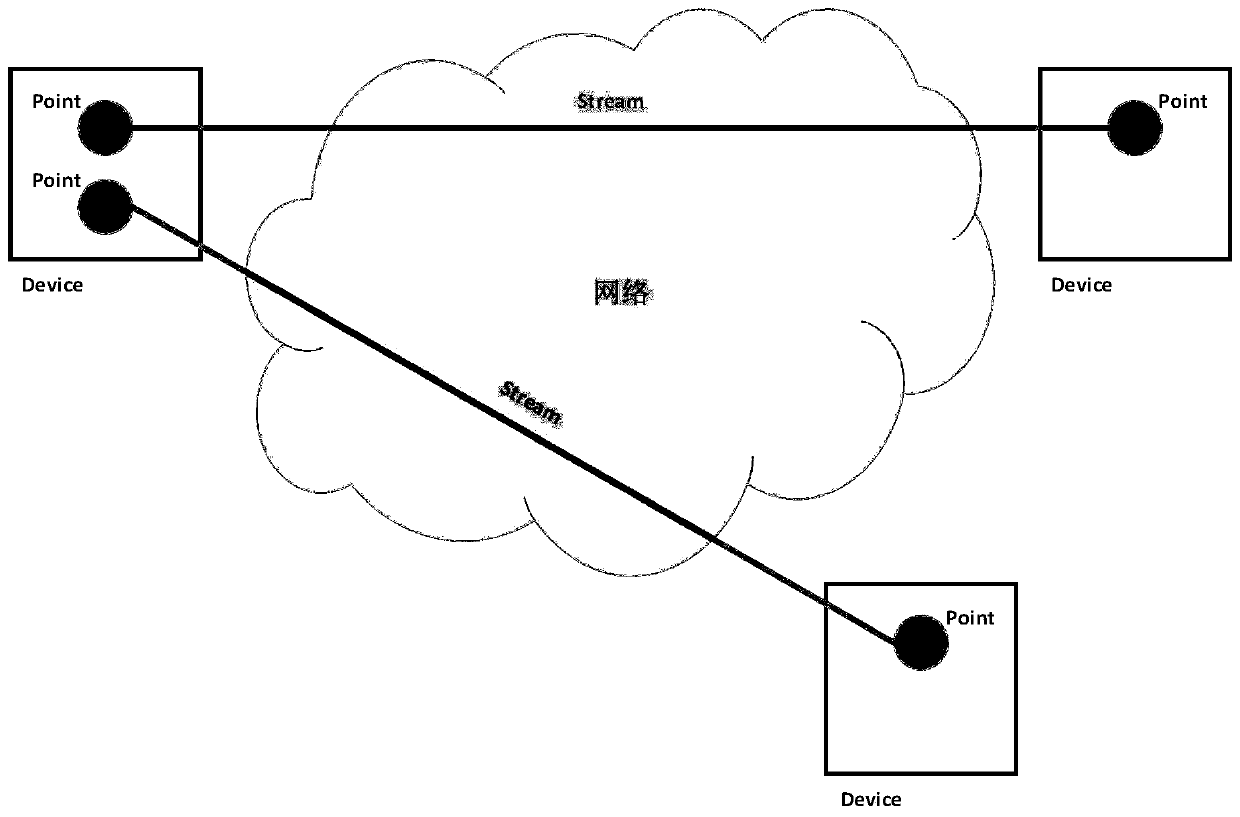

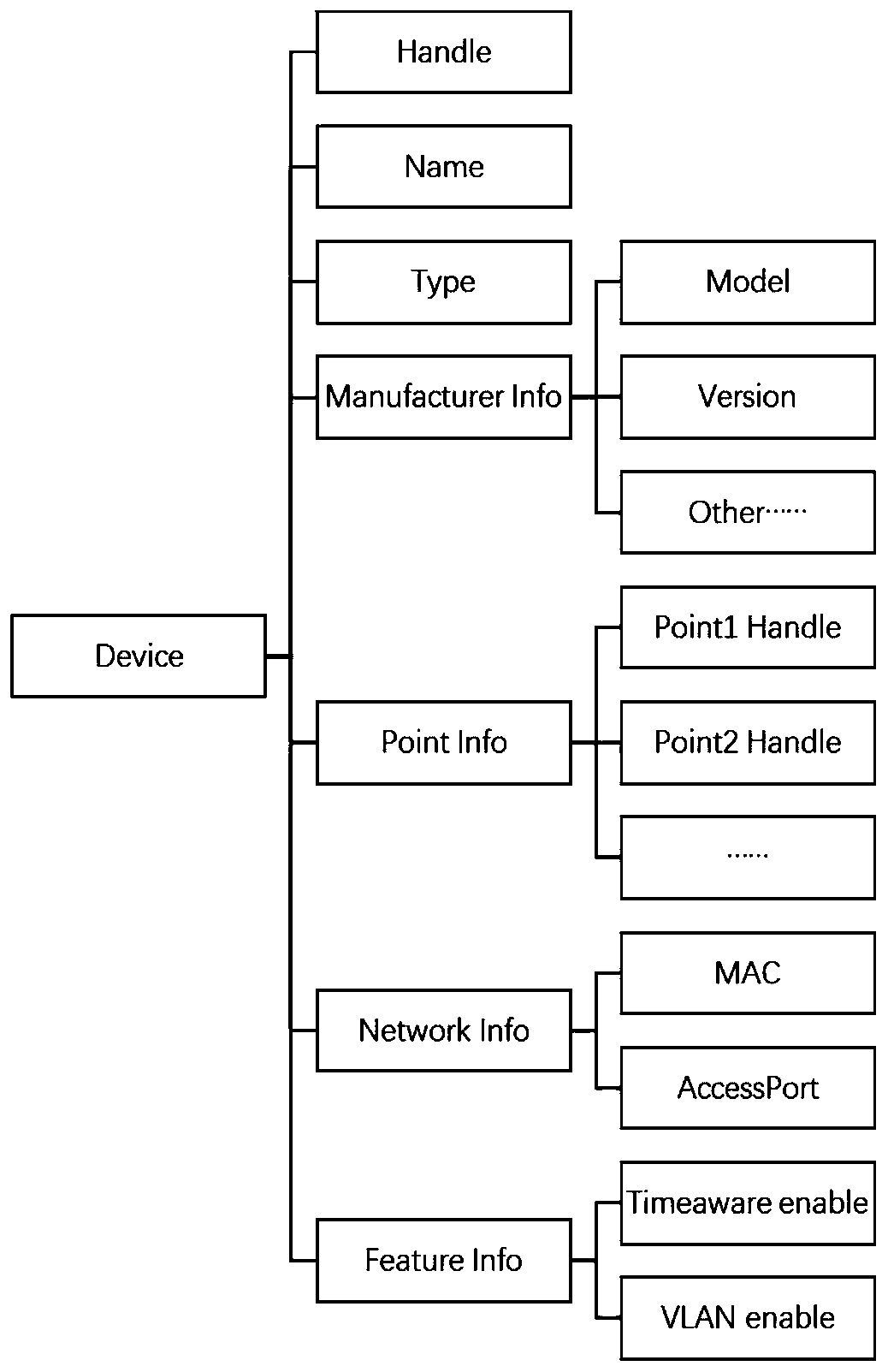

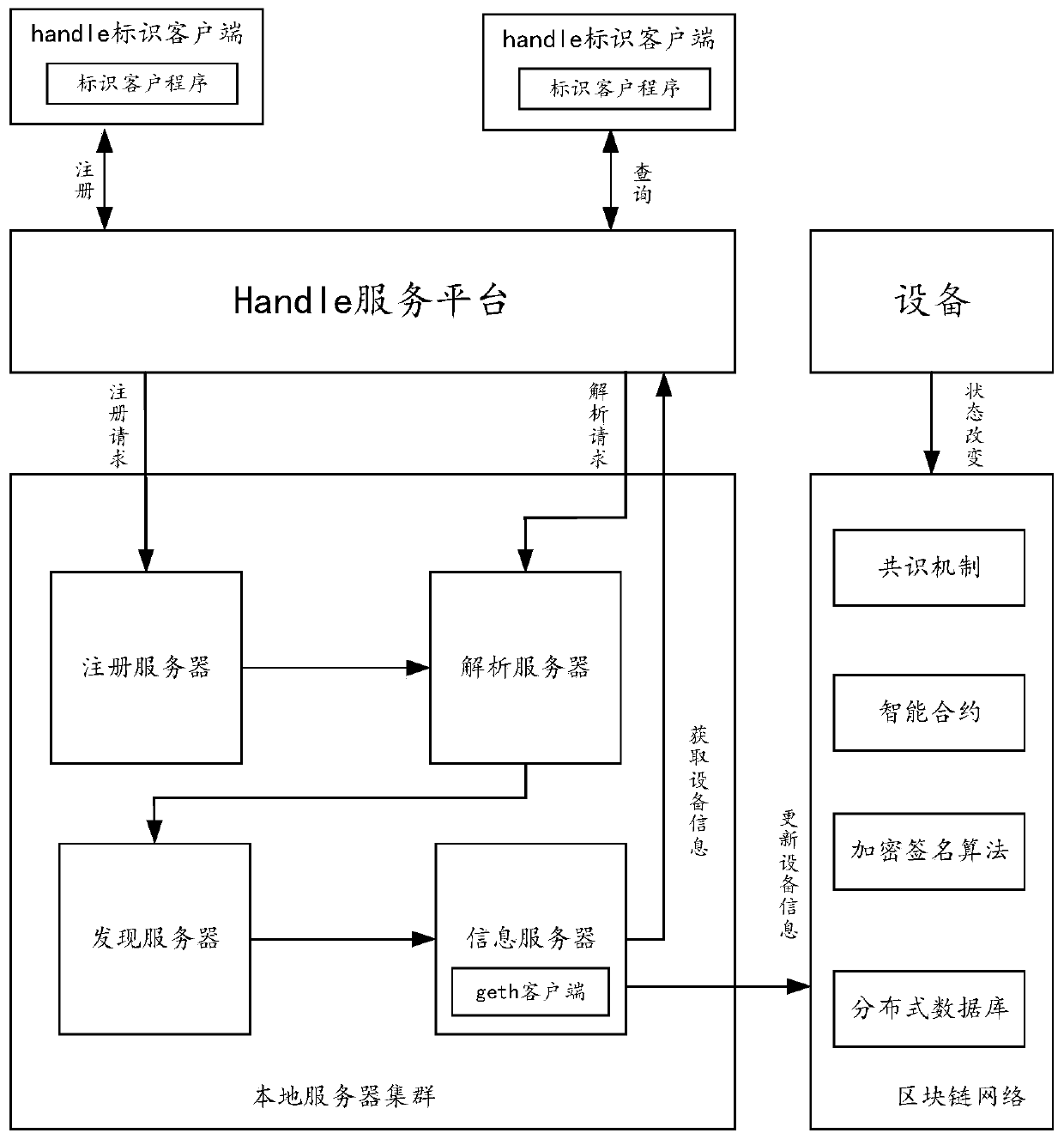

A semantic industrial network service interface system based on Handle identification

Active | CN109714408A | Uniqueness guaranteed | Easy to identify | Metadata text retrieval | Transmission | Communication interface | SERCOS interface

The invention discloses a semantic industrial network service interface system based on Handle identification. The service interface comprises a user semantic query and configuration interface unit, a semantic processing unit, a metadata information unit, a network manager communication interface unit and a terminal device communication interface unit. With the system, equipment and service of the invention, Handle identification resolution technology is adopted to carry out unified information identification, which ensures the uniqueness of information globally and facilitates identification and understanding by extranet users; intention-oriented semantic analysis and processing are supported, which makes the system more convenient to use; and service descriptions related to network transmission quality assurance are supported, so the system is suitable for industrial network transmission.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI

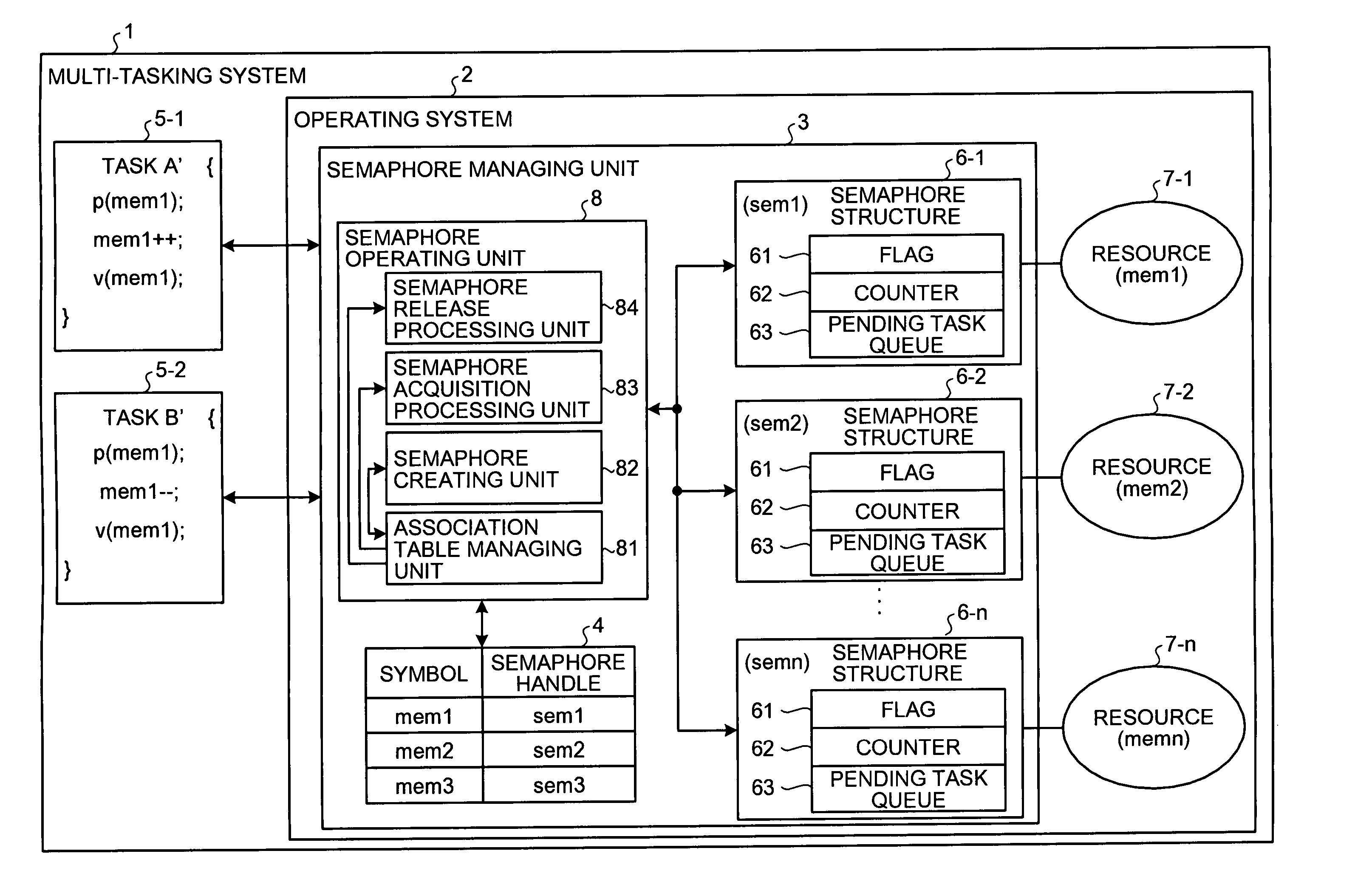

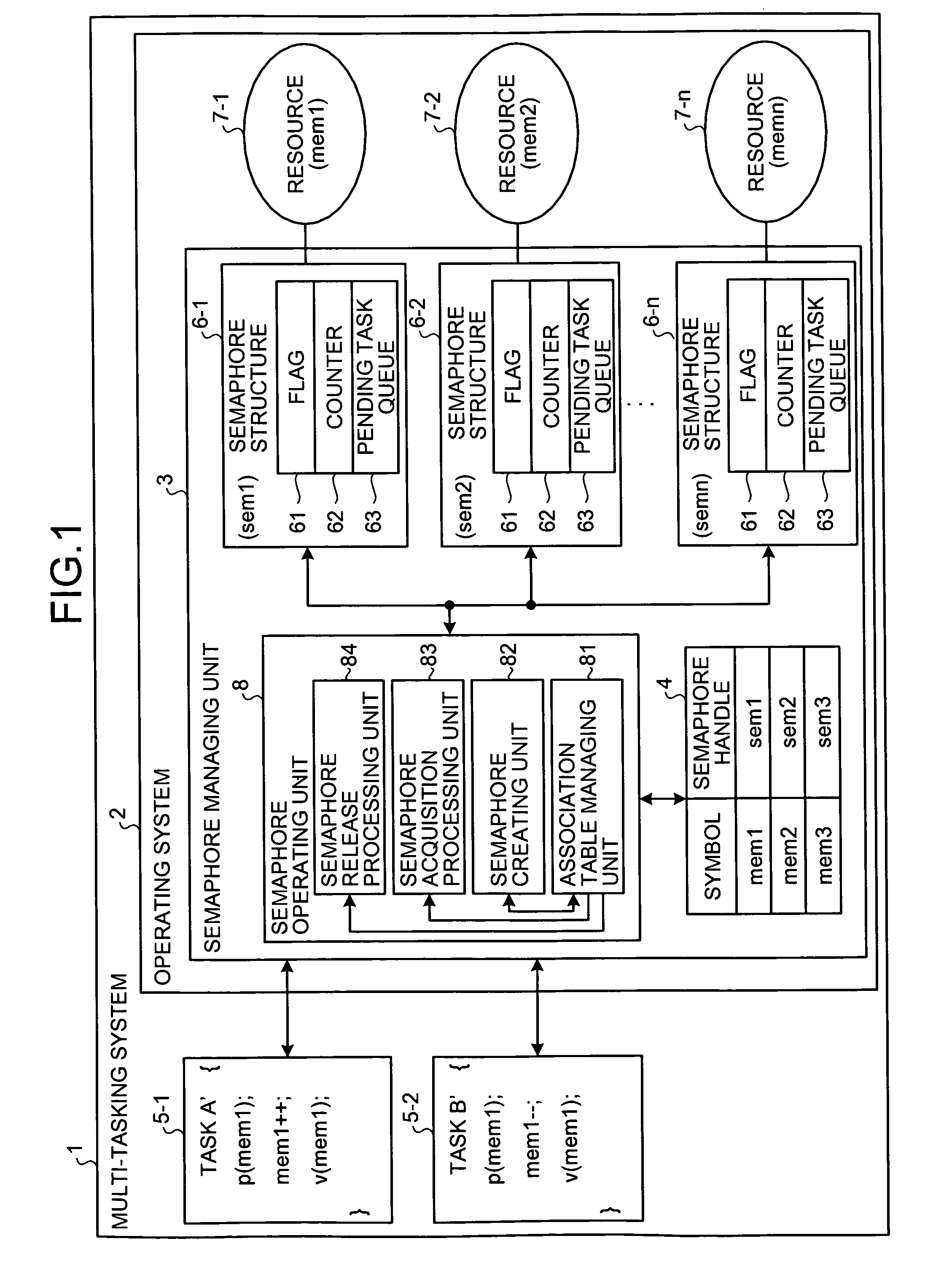

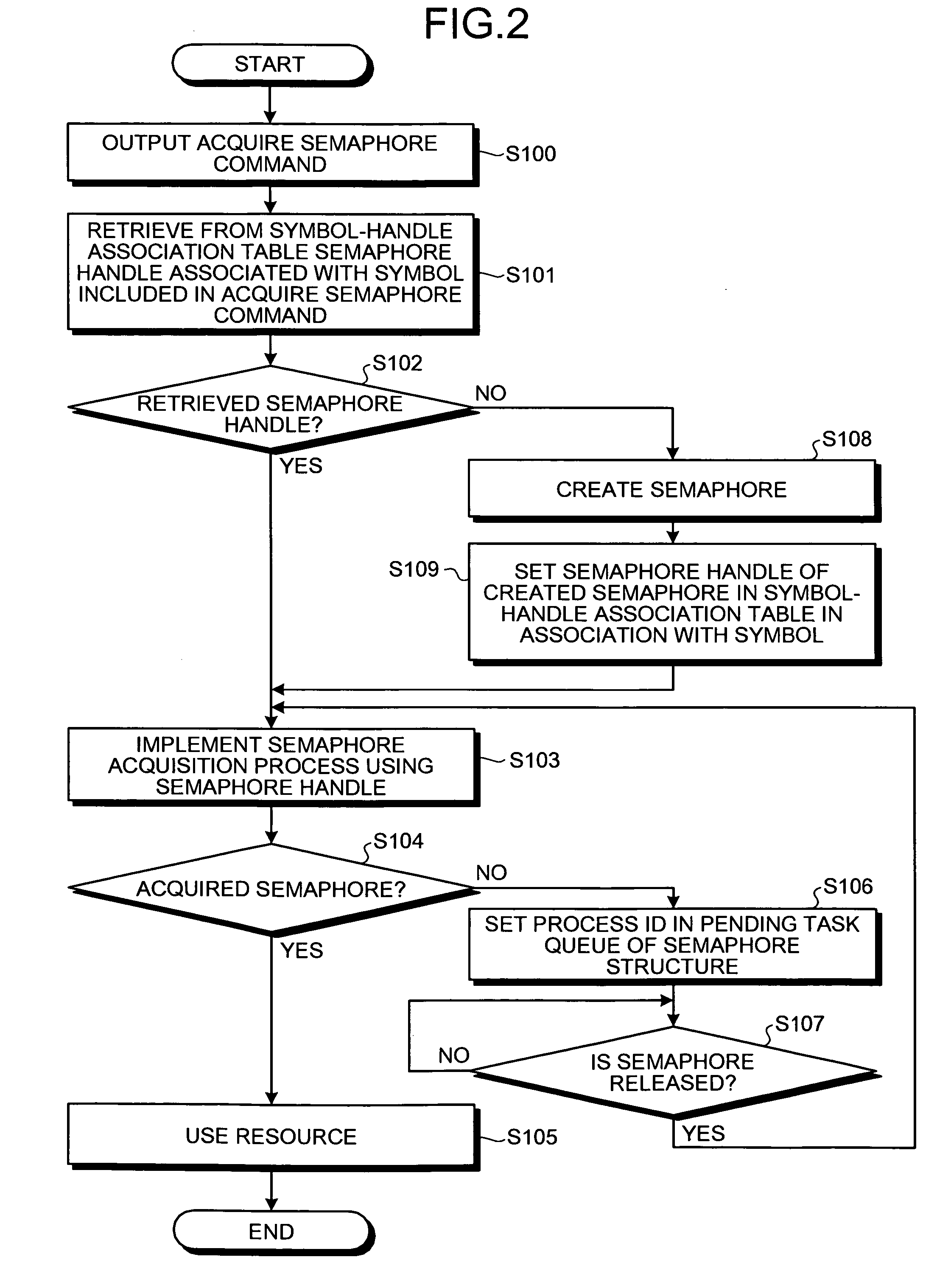

Semaphore management method and computer product

Inactive | US20070150899A1 | Solve problems | Multiprogramming arrangements | Memory systems | Resource allocation | Distributed computing

A semaphore handle is retrieved from a symbol-handle table, which stores a semaphore handle of a semaphore controlling a resource in association with a resource symbol included in a semaphore operation command issued by a task. In case of an “Acquire semaphore” command, the semaphore indicated by the retrieved semaphore handle is acquired, and the resource managed by the acquired semaphore is assigned to the task that issued the “Acquire semaphore” command. In case of a “Release semaphore” command, the semaphore indicated by the retrieved semaphore handle is released, and the resource managed by the semaphore is released from the task that issued the “Release semaphore” command.

Owner:MITSUBISHI ELECTRIC CORP
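The symbol-handle table lookup described in this abstract can be sketched with Python's standard `threading.Semaphore`. The `SemaphoreManager` class, the integer handles, and the command strings are assumptions made for this example only.

```python
import threading


class SemaphoreManager:
    """Hypothetical manager resolving resource symbols to semaphore handles."""

    def __init__(self):
        self._semaphores = {}        # semaphore handle -> threading.Semaphore
        self._symbol_to_handle = {}  # symbol-handle table
        self._next = 1

    def register(self, symbol, count=1):
        """Create a semaphore for a resource symbol and record its handle."""
        handle = self._next
        self._next += 1
        self._semaphores[handle] = threading.Semaphore(count)
        self._symbol_to_handle[symbol] = handle
        return handle

    def execute(self, command, symbol, blocking=True):
        """Look up the handle for the symbol, then acquire or release."""
        handle = self._symbol_to_handle[symbol]
        semaphore = self._semaphores[handle]
        if command == "acquire":
            return semaphore.acquire(blocking=blocking)
        if command == "release":
            semaphore.release()
            return True
        raise ValueError(f"unknown command: {command}")
```

Tasks name only the resource symbol in their commands; the table lookup hides which semaphore actually guards that resource.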

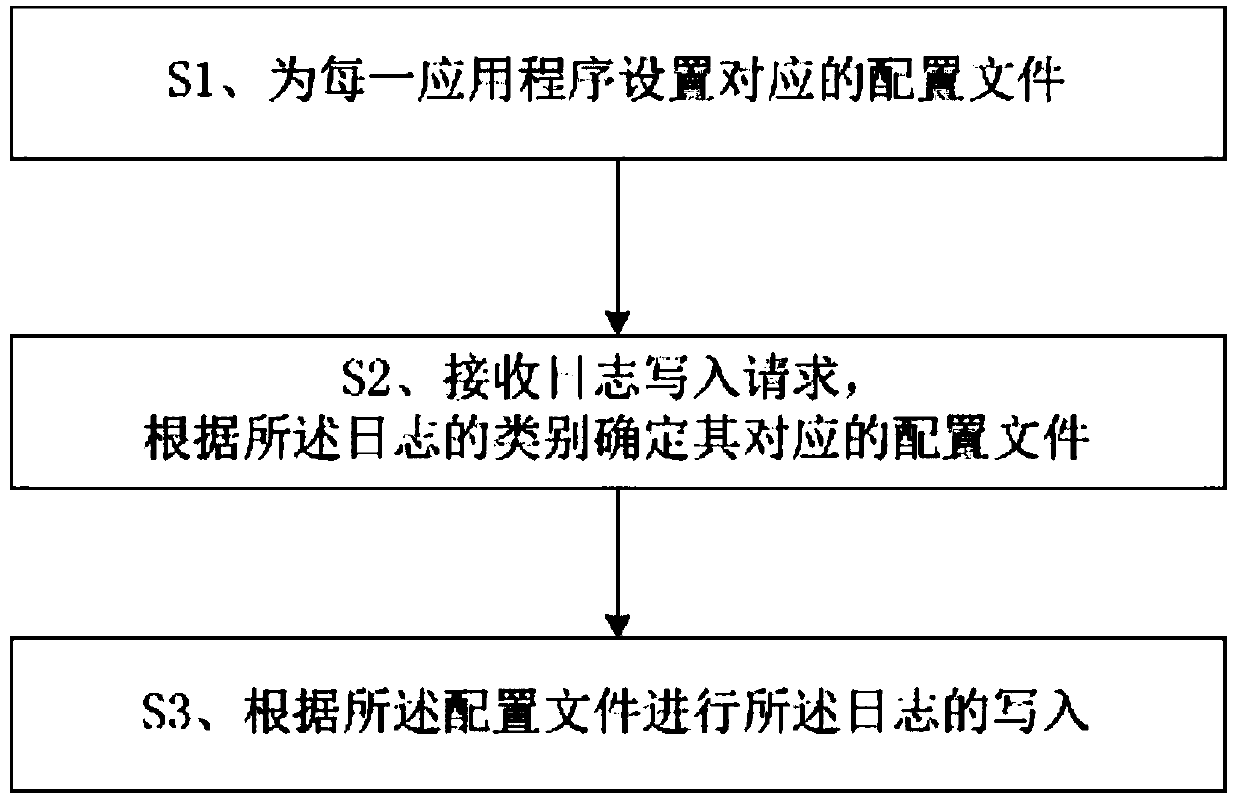

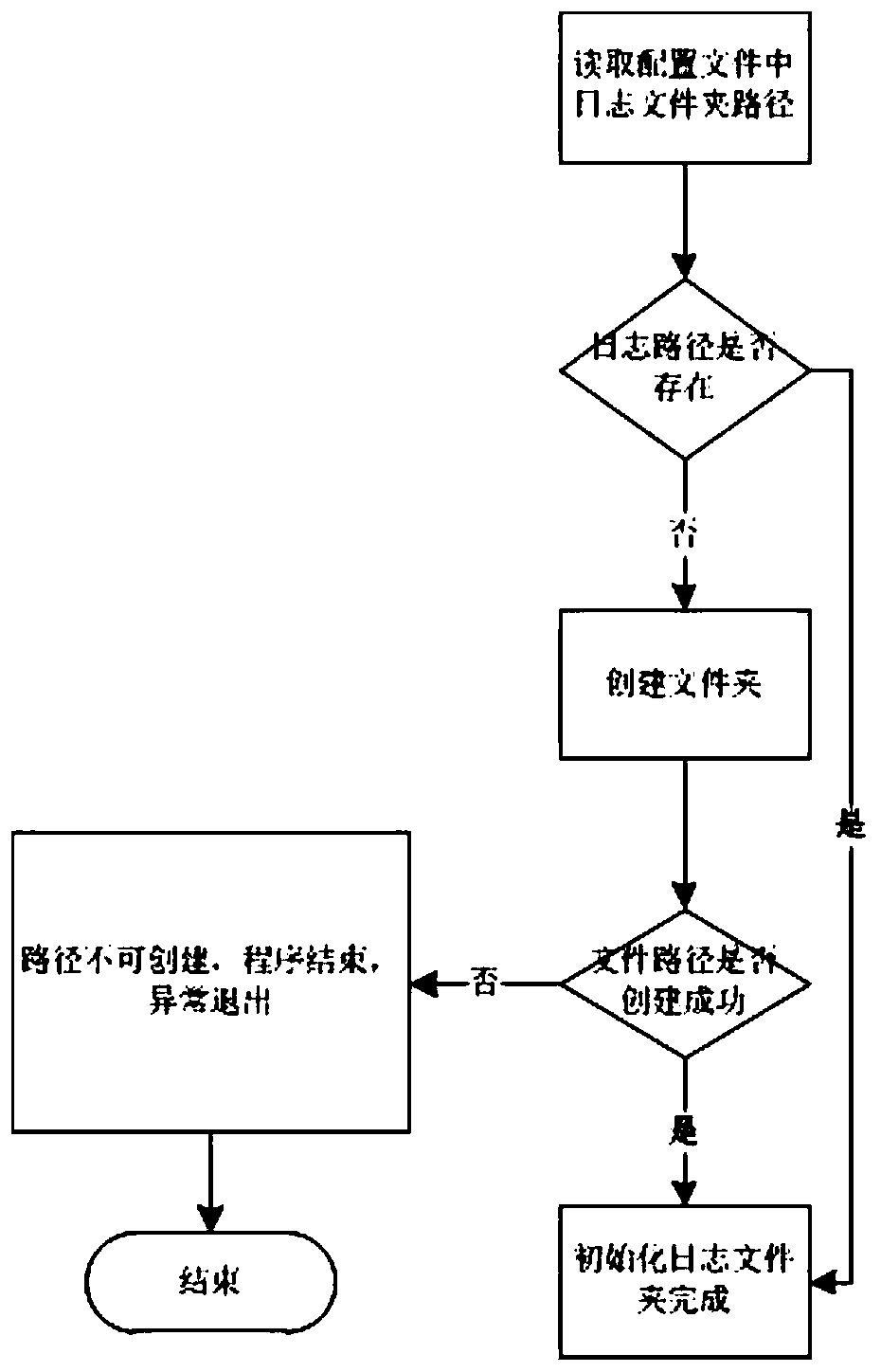

Log management method and terminal

Pending | CN111459764A | Easy to operate and manage | Easy to expand and develop | Hardware monitoring | Log management | Handle

The invention discloses a log management method and a terminal. The method includes setting a corresponding configuration file for each application program; receiving a log writing request and determining the configuration file corresponding to the log according to the category of the log; and writing the log according to the configuration file. The configuration file constrains log file attributes and log writing, so logs generated by different application programs in the embedded system can be managed in a unified manner. Because the configuration file is determined by log category, configuration is more flexible. A unified write interface for log write operations guarantees a uniform log format, which makes log files easier to search and read later. Files are located by updating log file handles: when a new log file needs to be written, the interface does not need to be reset and only the corresponding handle needs to be updated, which saves system resources.

Owner:江苏盛海智能科技有限公司

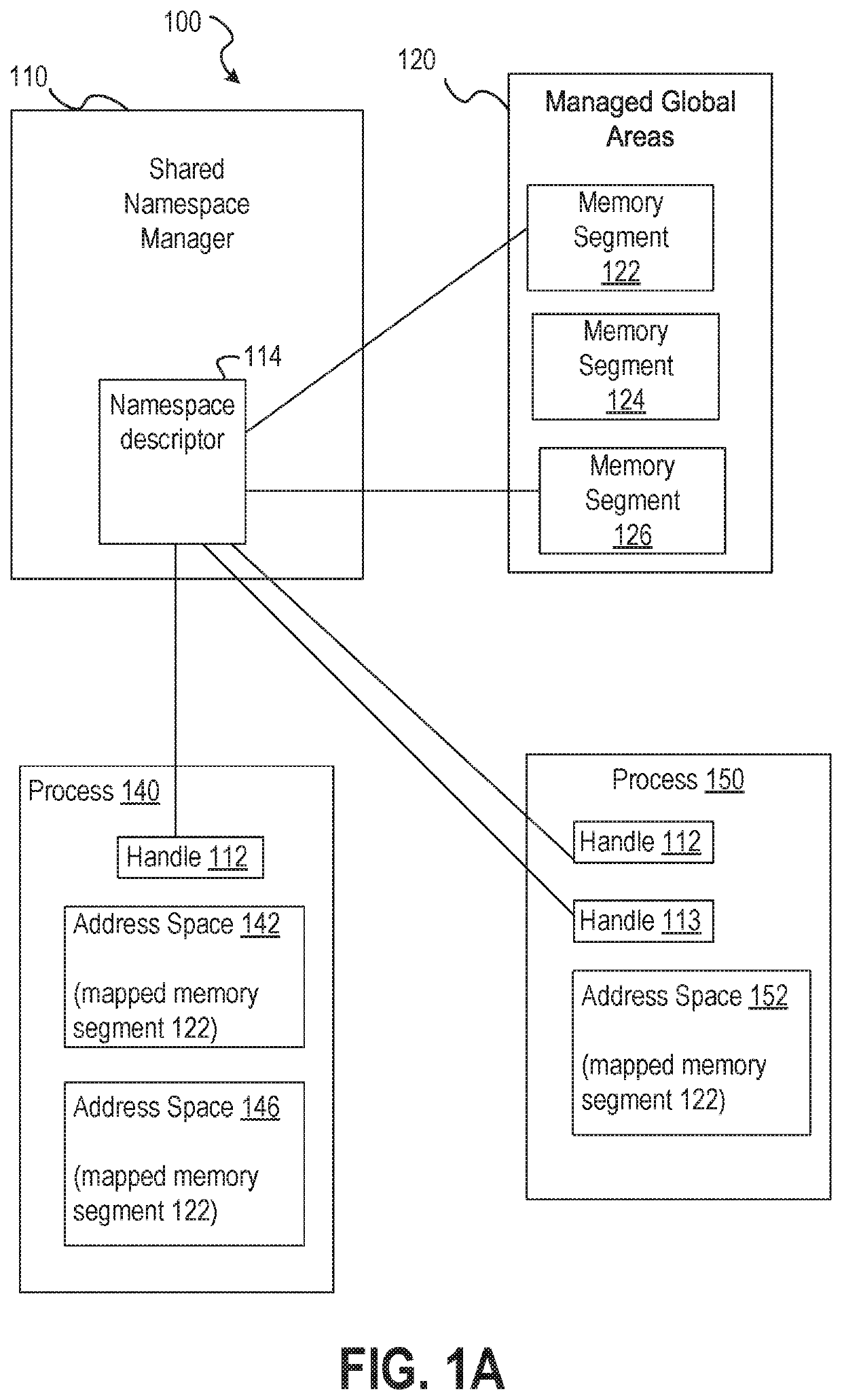

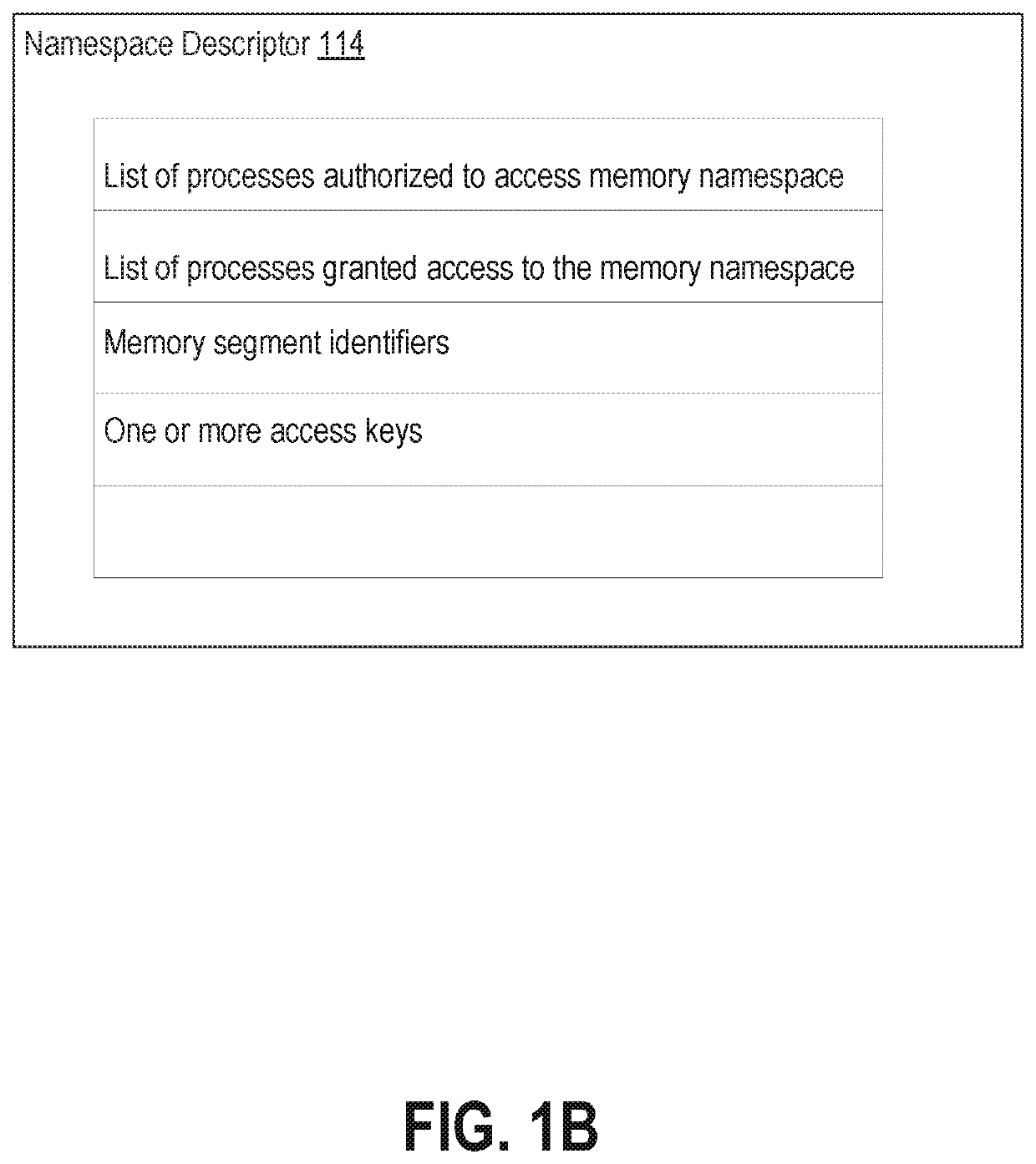

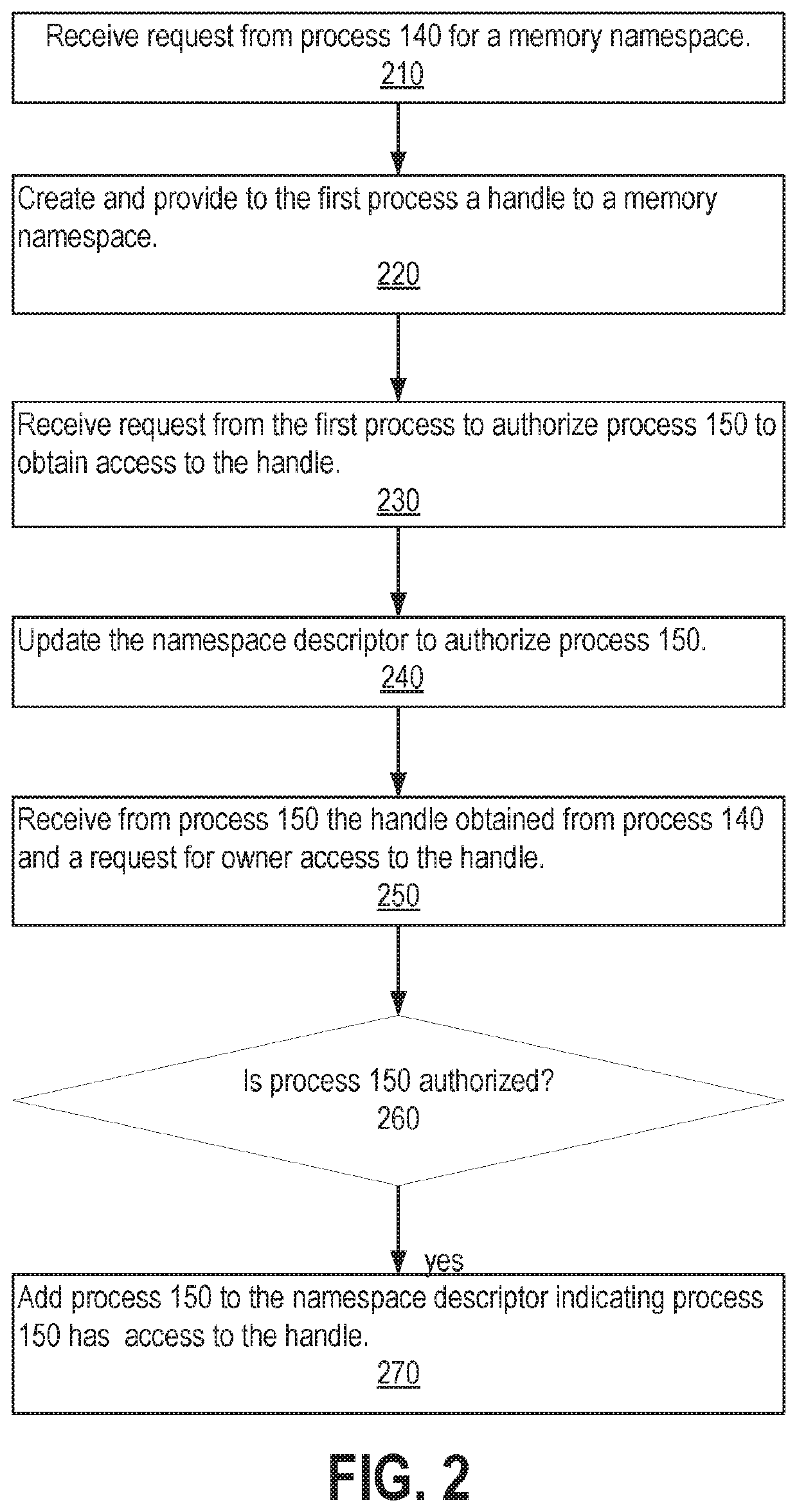

Fine grained memory and heap management for sharable entities across coordinating participants in database environment

Active | US20190377694A1 | Memory architecture accessing/allocation | Program initiation/switching | Client-side | Application software

Many computer applications comprise multiple threads of execution. Some client application requests are fulfilled by multiple cooperating processes. Techniques are disclosed for creating and managing memory namespaces that may be shared among a group of cooperating processes in which the memory namespaces are not accessible to processes outside of the group. The processes sharing the memory each have a handle that references the namespace. A process having the handle may invite another process to share the memory by providing the handle. A process sharing the private memory may change the private memory or the processes sharing the private memory according to a set of access rights assigned to the process. The private shared memory may be further protected from non-sharing processes by tagging memory segments allocated to the shared memory with a protection key and / or an encryption key used to encrypt / decrypt data stored in the memory segments.

Owner:ORACLE INT CORP

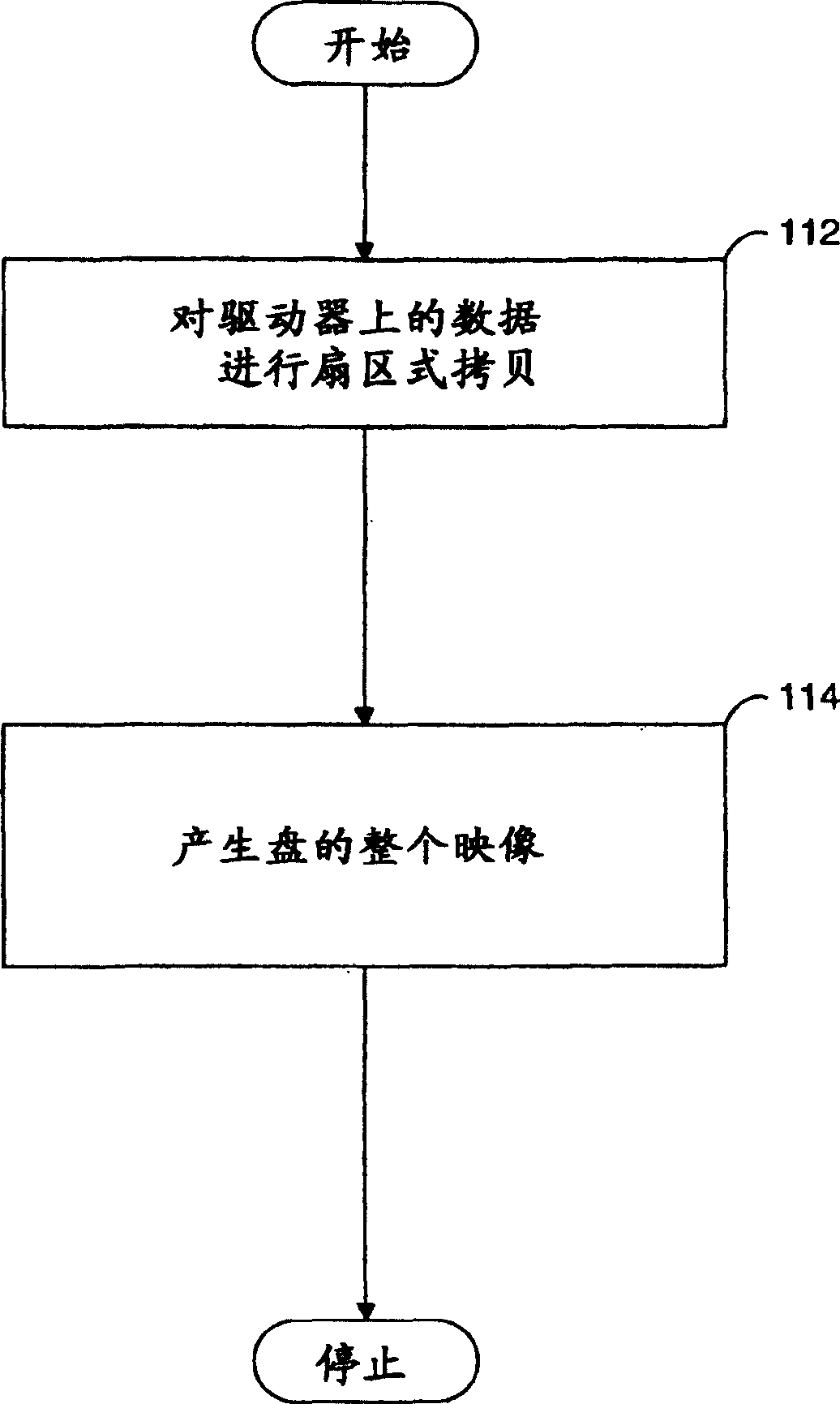

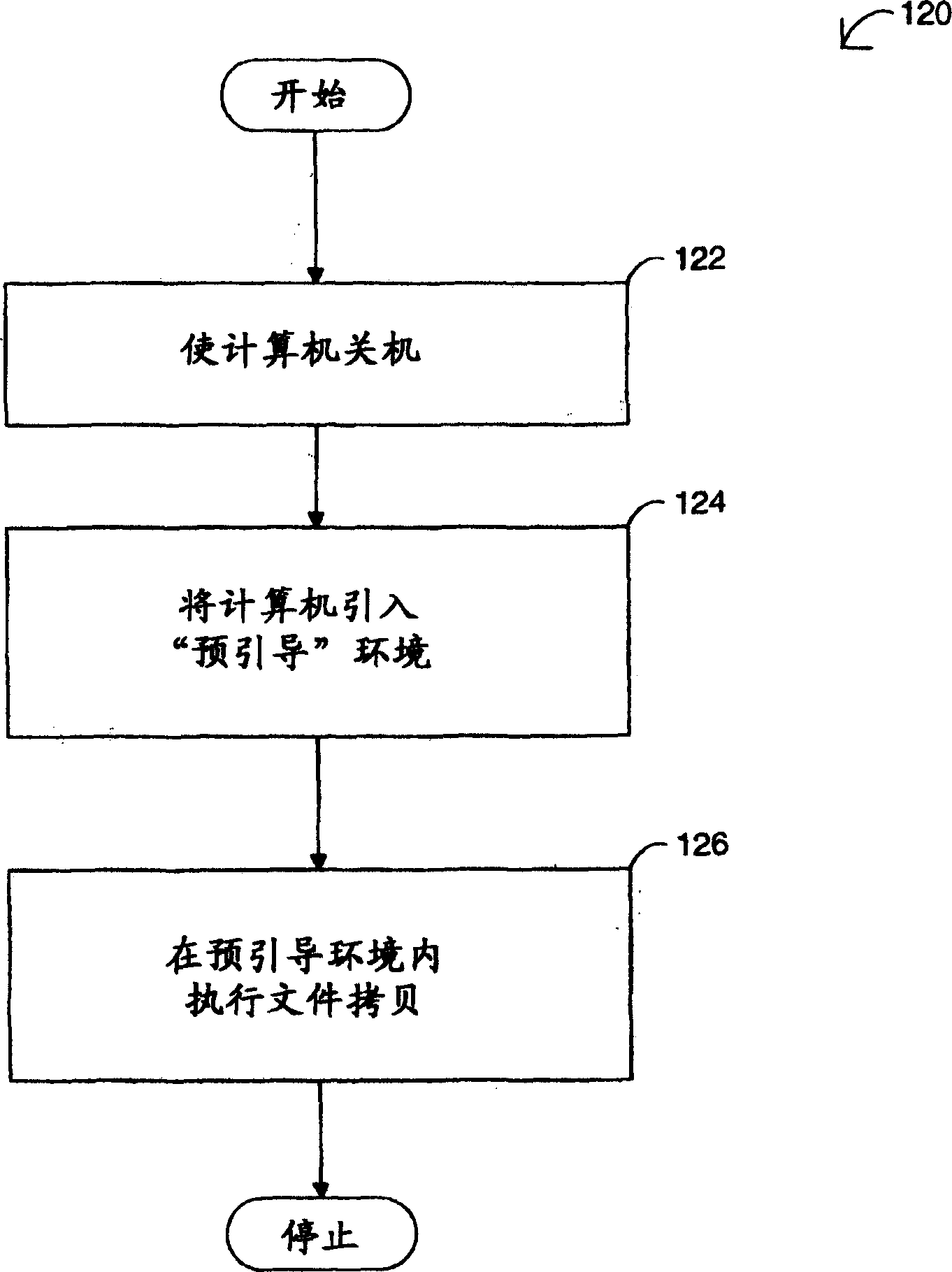

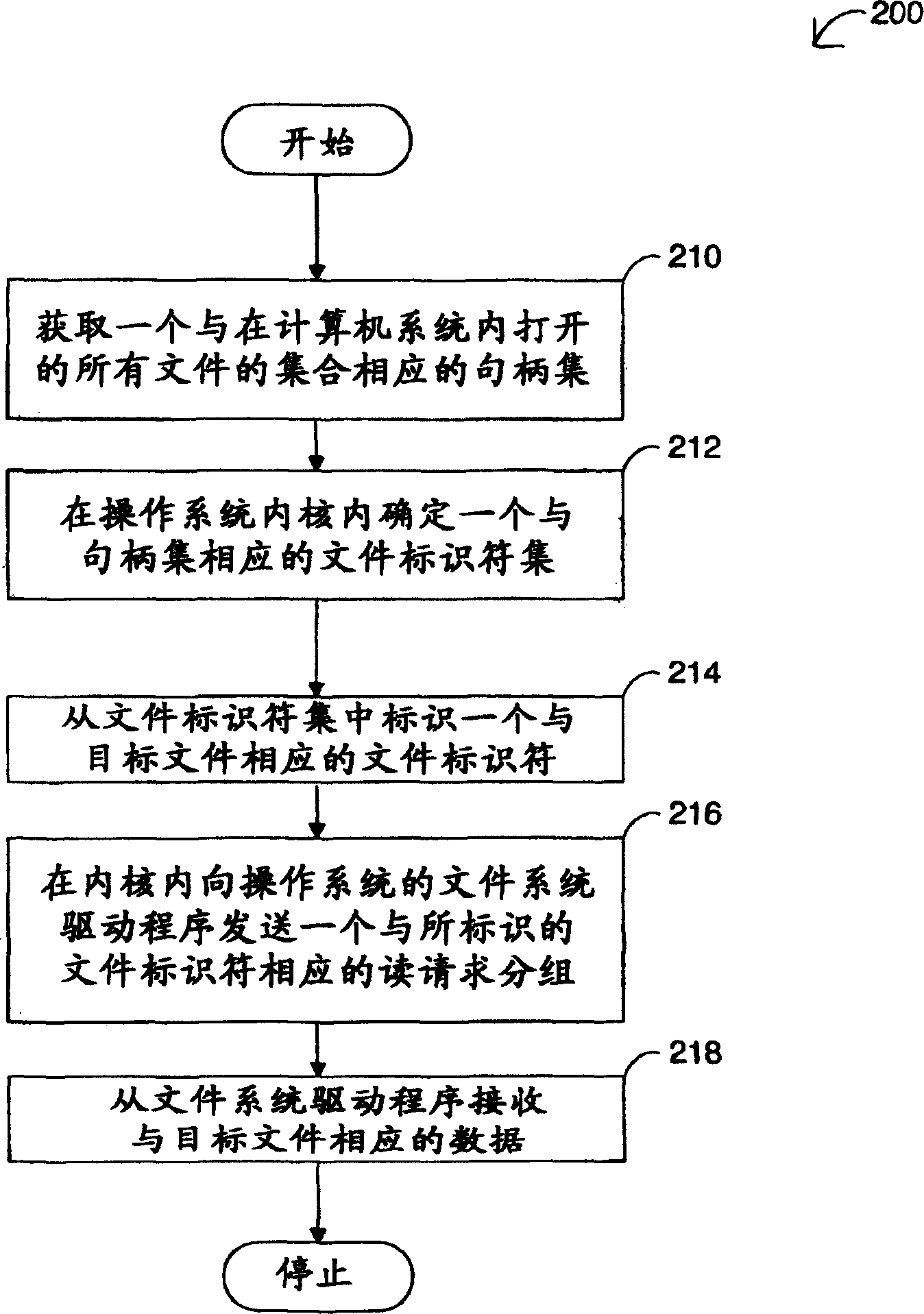

Method and system of accessing at least one target file in a computer system

Inactive | CN1667608A | Digital data information retrieval | Program control using stored programs | Operational system | File system

The present invention provides a method and system for accessing at least one target file in a computer system having an operating system that implements file locking while the file is open. In a typical embodiment, the method and system include: (1) obtaining a set of handles corresponding to the set of all files opened in the computer system; (2) determining a set of file identifiers corresponding to the set of handles in the kernel of the operating system; (3) identifying a file identifier corresponding to the target file from the set of file identifiers; (4) sending a read request packet corresponding to the identified file identifier to the file system driver of the operating system in the kernel; and (5) receiving data corresponding to the target file from the file system driver.

Owner:LENOVO (SINGAPORE) PTE LTD

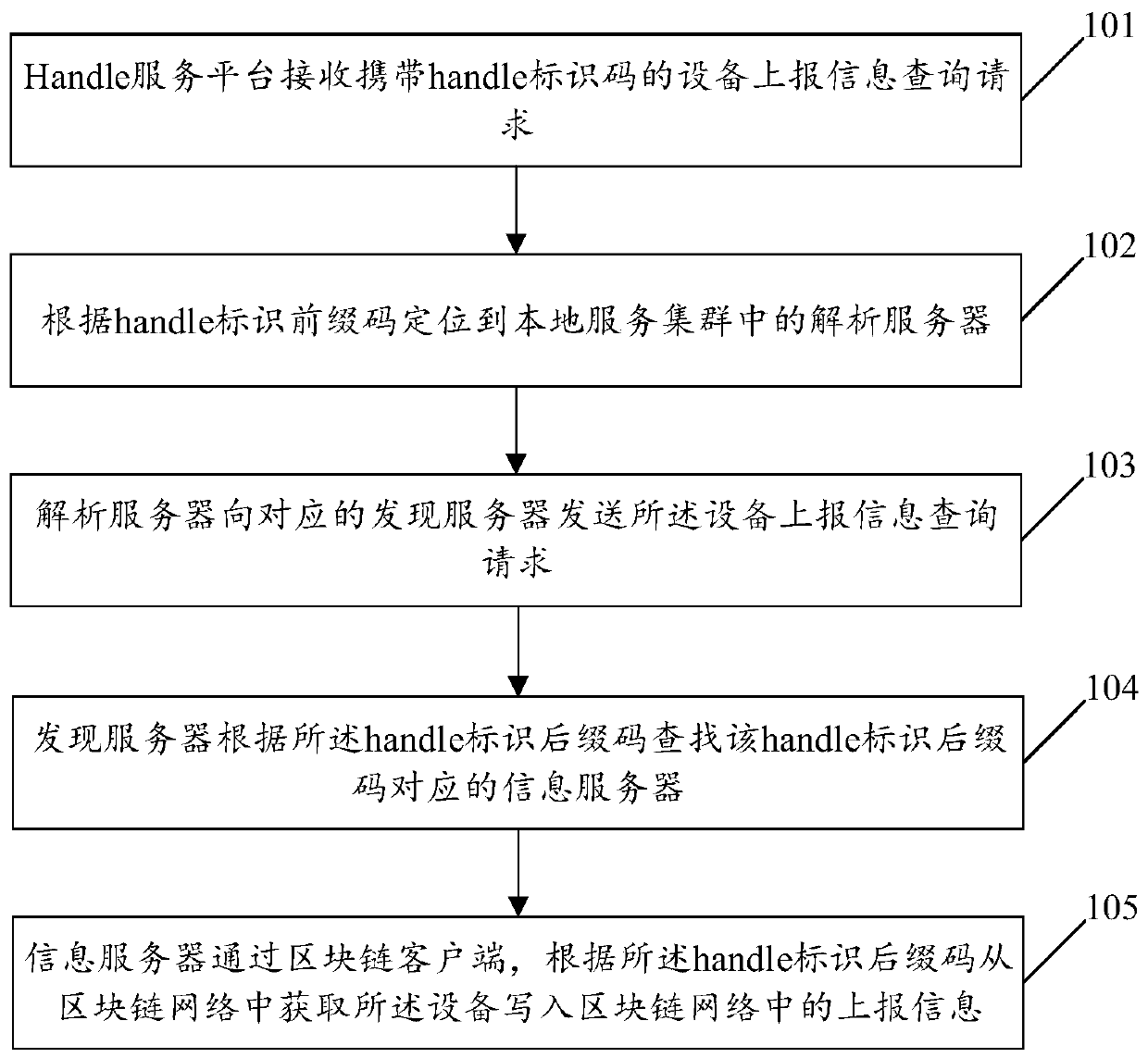

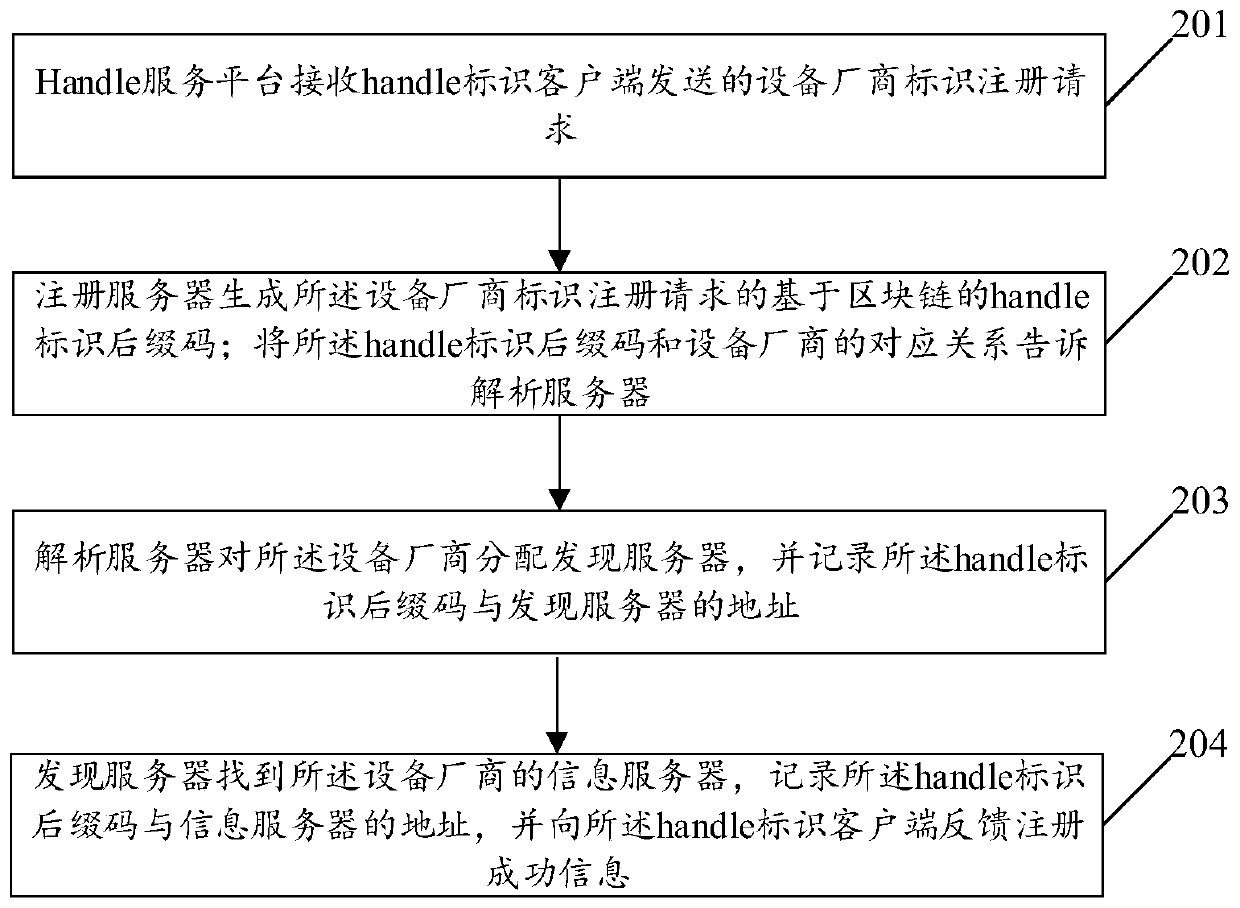

Handle recognition system based on block chain and data processing method

Active | CN111524005A | Guarantee data security | Finance | Digital data protection | Software engineering | Network data

The invention discloses a Handle recognition system based on blockchain and a data processing method. The method comprises the following steps: a Handle service platform receives an information query request reported by equipment carrying a Handle recognition suffix code; a discovery server looks up the information server corresponding to the Handle recognition suffix code; and the blockchain client of that information server uses the Handle recognition suffix code to retrieve, from the blockchain network, the report information that the equipment wrote to it. The technical scheme combines the Handle recognition system with blockchain technology and uses the blockchain to ensure the security of network data.

Owner:四川赛康智能科技股份有限公司 +1

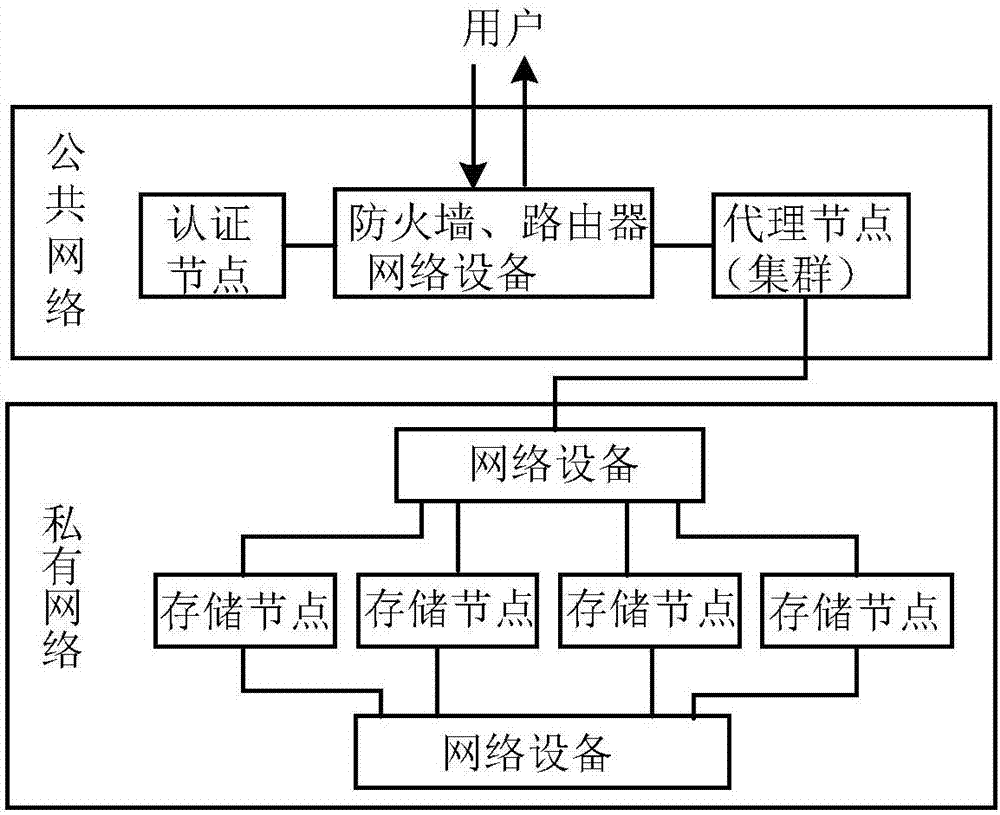

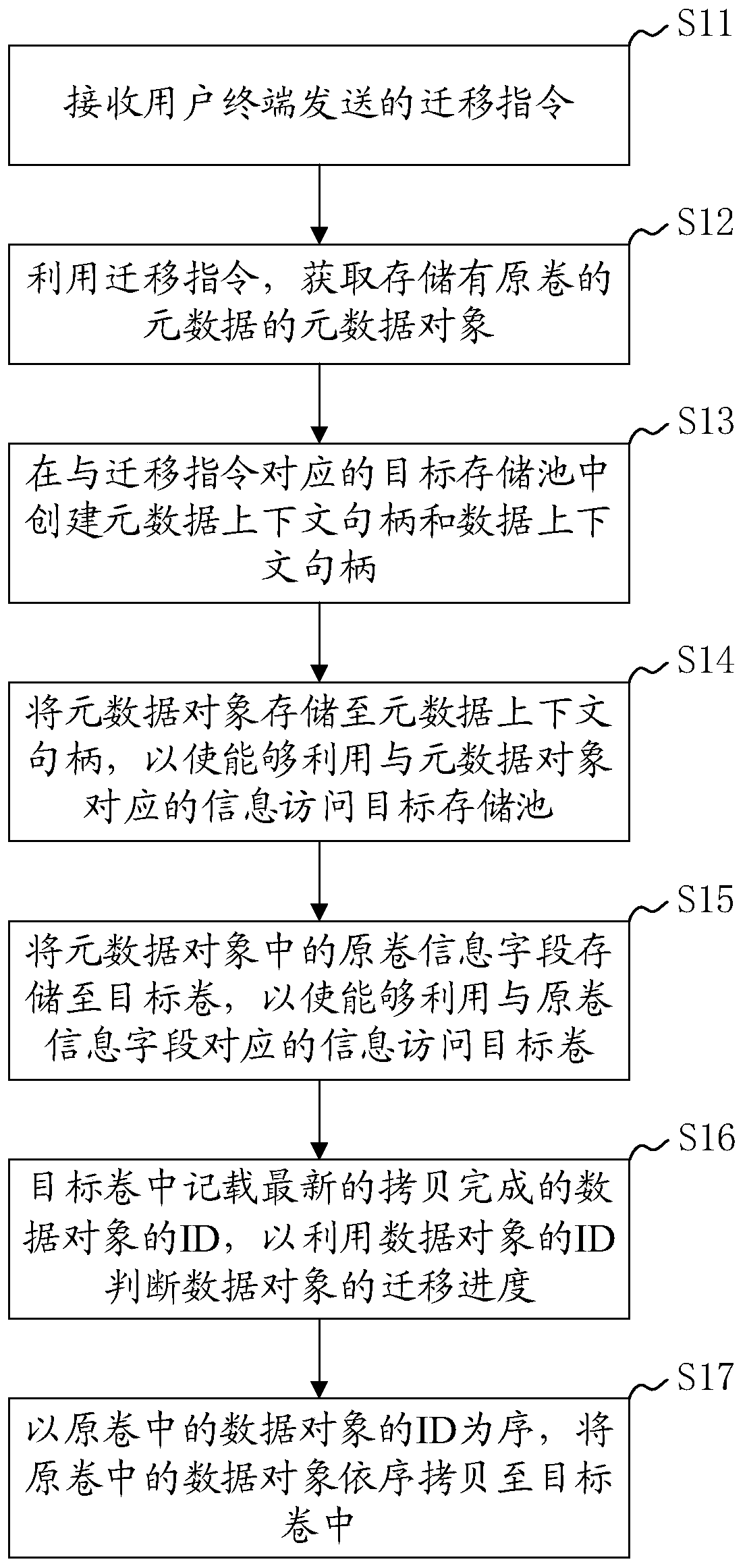

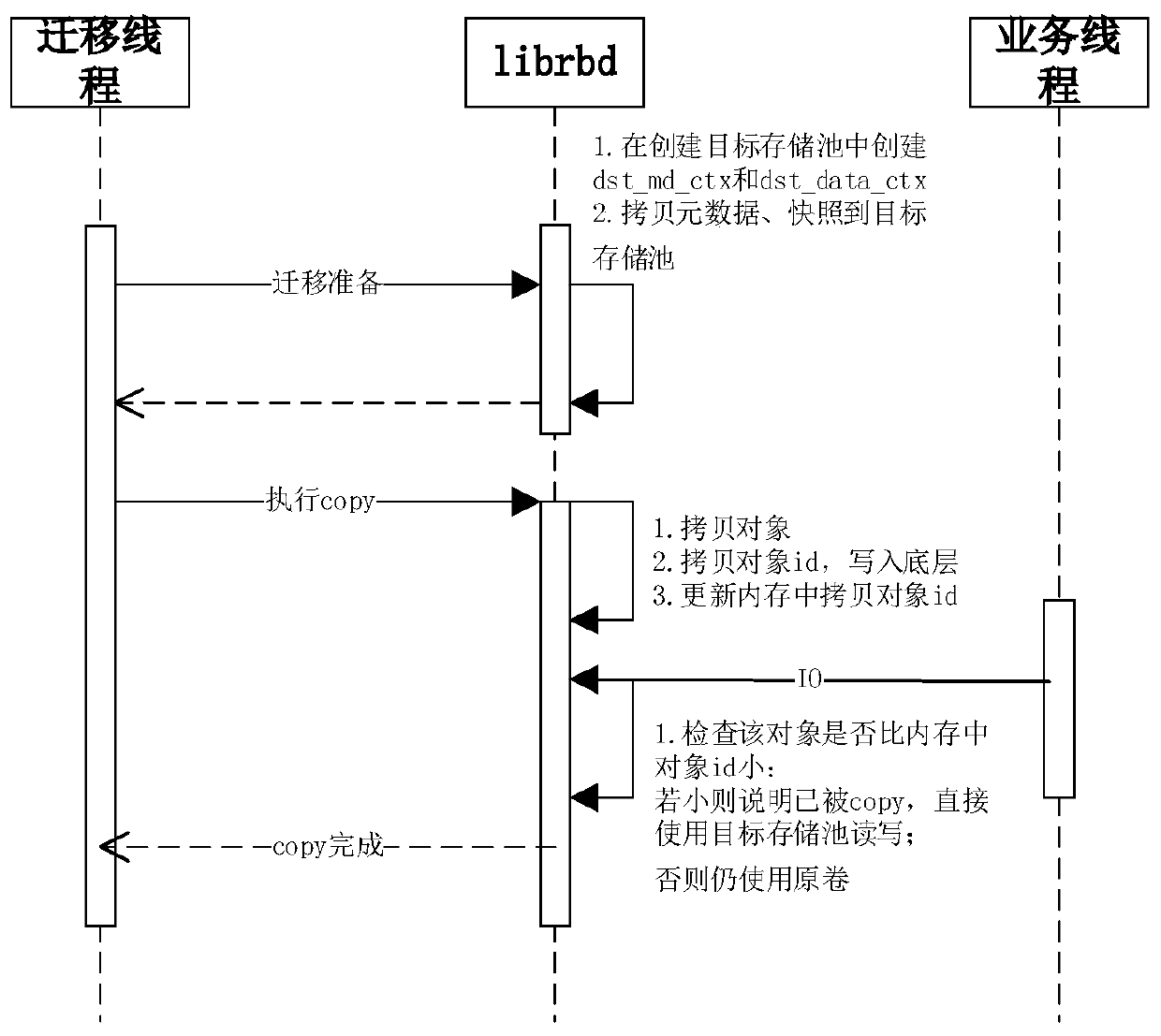

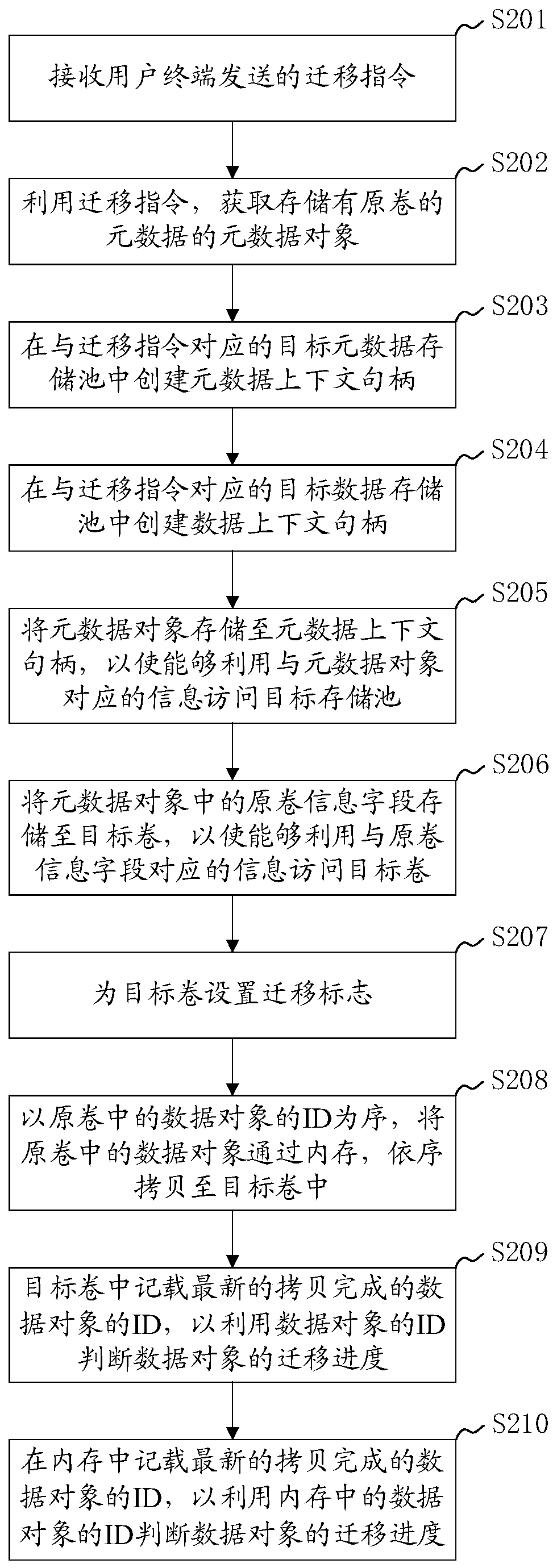

Distributed storage volume online migration method, system and device and readable storage medium

Active | CN111367470A | Does not destroy index relations | Realize online migration | Input/output to record carriers | Information access | Object store

The invention discloses a distributed storage volume online migration method, system, and device, and a computer-readable storage medium. The method comprises: obtaining a metadata object that stores the metadata of an original volume; creating a metadata context handle and a data context handle in the target storage pool corresponding to the migration instruction; storing the metadata object to the metadata context handle; storing the original volume information field of the metadata object to the target volume; and copying the data objects of the original volume into the target volume in sequence, with the ID of each copied data object recorded in the target volume. Because the metadata object of the original volume is stored in both the target storage pool and the target volume, the original index relationship of the data in the original volume is not damaged, and the target volume can be accessed using the information of the original volume. The recorded data object IDs ensure that the data of the original volume can still be accessed during migration and that data already migrated to the target volume is accessed preferentially, so the storage volume is migrated online transparently.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

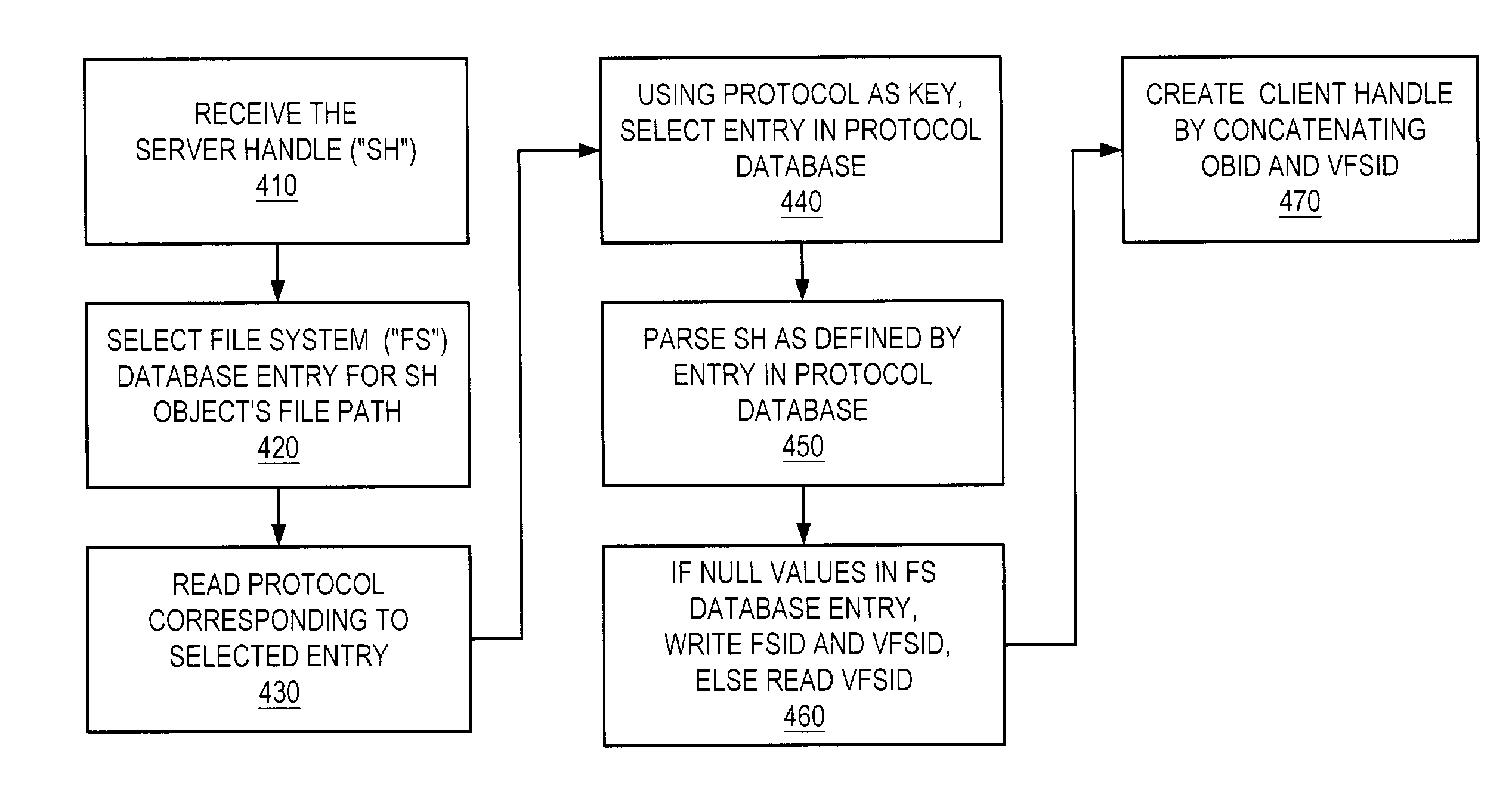

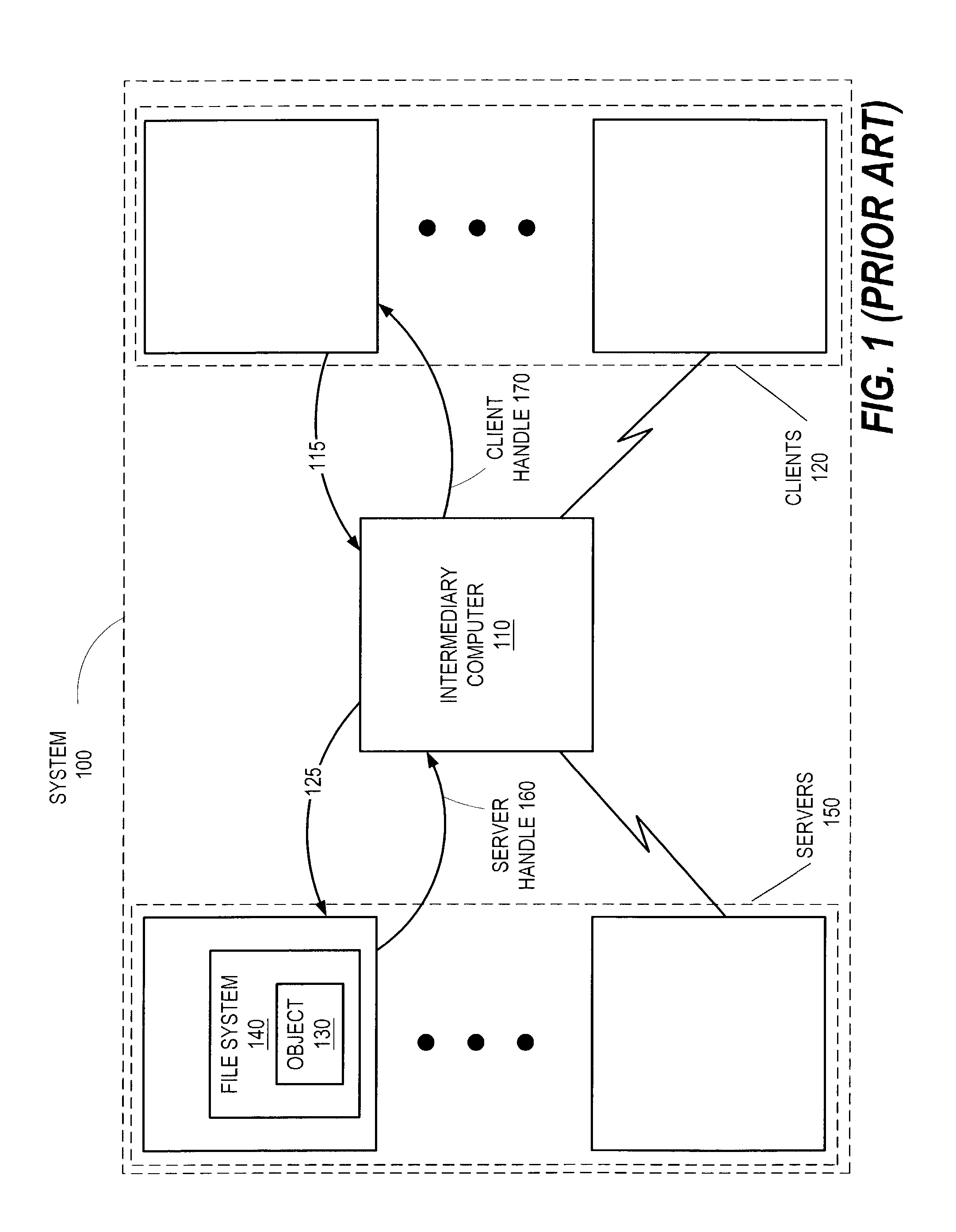

Method, apparatus and computer program product for mapping file handles

Inactive | US6980994B2 | Data processing applications | Digital data processing details | File system | Data element

In one form, in a method for mapping file handles, protocol data elements are created for respective file system protocols. Such a protocol data element identifies a structure of server handles for the data element's corresponding protocol. File system data elements are created for server file systems. Such a file system data element includes a file system identification (FSID) attribute. Responsive to accessing an object of one of the server file systems, a value for the FSID attribute of the corresponding file system data element is created for reconstructing the object's server handle. Creating the value includes parsing, responsive to one of the protocol data elements, an FSID of a server handle for the object.
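The relationship between protocol data elements, file system data elements and server handles can be sketched roughly as follows. This is an illustration under stated assumptions, not the patented method: the fixed byte layout (`fsid_offset`, `fsid_length`) and the names `ProtocolElement`, `FileSystemElement`, `map_handle` and `reconstruct` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolElement:
    """Describes where the FSID sits inside a server handle (assumed fixed layout)."""
    name: str
    fsid_offset: int
    fsid_length: int

    def split(self, server_handle: bytes):
        # Parse the FSID out of the handle and return it with the remaining bytes.
        end = self.fsid_offset + self.fsid_length
        fsid = server_handle[self.fsid_offset:end]
        rest = server_handle[:self.fsid_offset] + server_handle[end:]
        return fsid, rest

    def join(self, fsid: bytes, rest: bytes) -> bytes:
        # Inverse of split: put the FSID back at its offset.
        return rest[:self.fsid_offset] + fsid + rest[self.fsid_offset:]


@dataclass
class FileSystemElement:
    protocol: ProtocolElement
    fsid: bytes = b""  # FSID attribute, filled in when an object is accessed


def map_handle(fs: FileSystemElement, server_handle: bytes) -> bytes:
    """Record the FSID on the file system element and return the shortened handle."""
    fsid, rest = fs.protocol.split(server_handle)
    fs.fsid = fsid
    return rest


def reconstruct(fs: FileSystemElement, short_handle: bytes) -> bytes:
    """Rebuild the full server handle from the stored FSID and the short handle."""
    return fs.protocol.join(fs.fsid, short_handle)
```

Storing the FSID once per file system, rather than in every mapped handle, is the design choice this sketch tries to show; whether the patent uses the same split is not stated in the abstract.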

Owner:IBM CORP

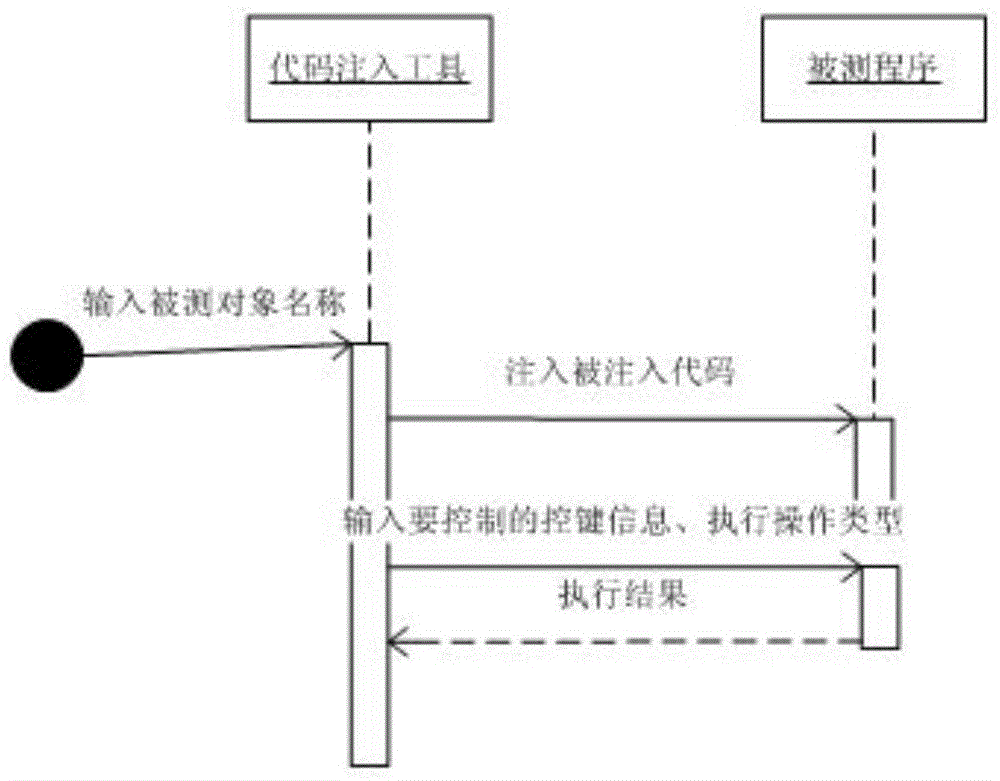

Automatic testing auxiliary recognition method based on code injection

Active | CN104636248A | Solve the problem of unrecognizable and untestable functions | Solve the problem of unrecognizable and untestable | Software testing/debugging | Memory address | Control data

The invention discloses an automatic testing auxiliary recognition method based on code injection. The method comprises the following steps: a process handle of the program under test is obtained; code is injected into the address space of the program under test, and the memory needed for subsequent operations is allocated; the control information to be tested and an operation type are passed to the injected code; the injected code locates the corresponding control in the memory of the program under test according to the control information, and performs the corresponding operation or obtains the corresponding control data according to the operation type; and the operation result or the control data returned by the injected code is received.

Owner:AEROSPACE INFORMATION

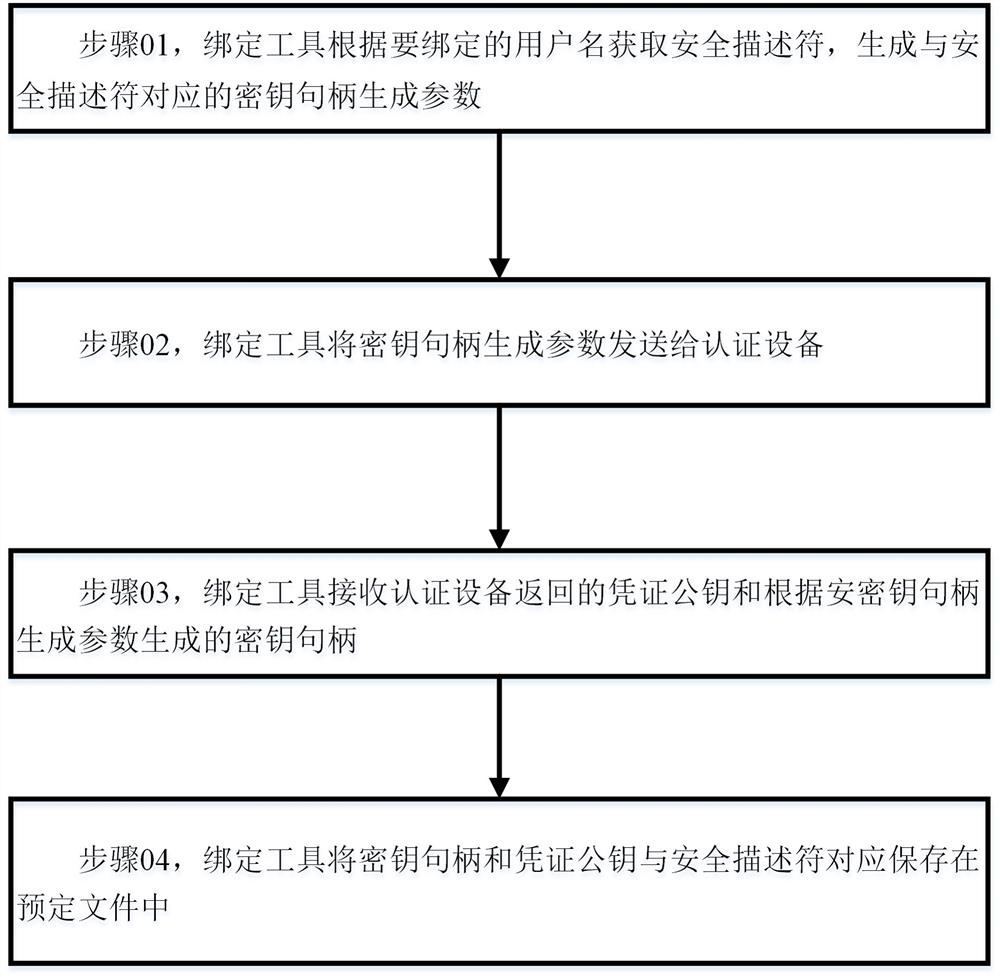

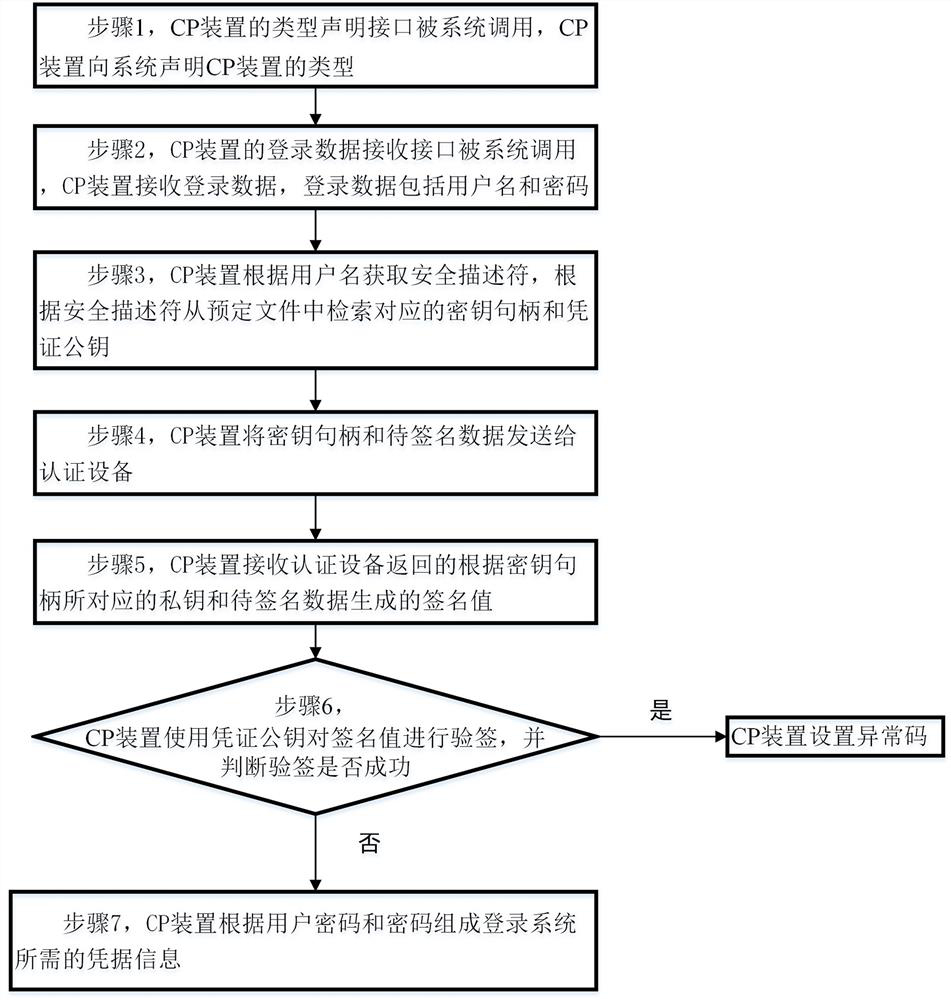

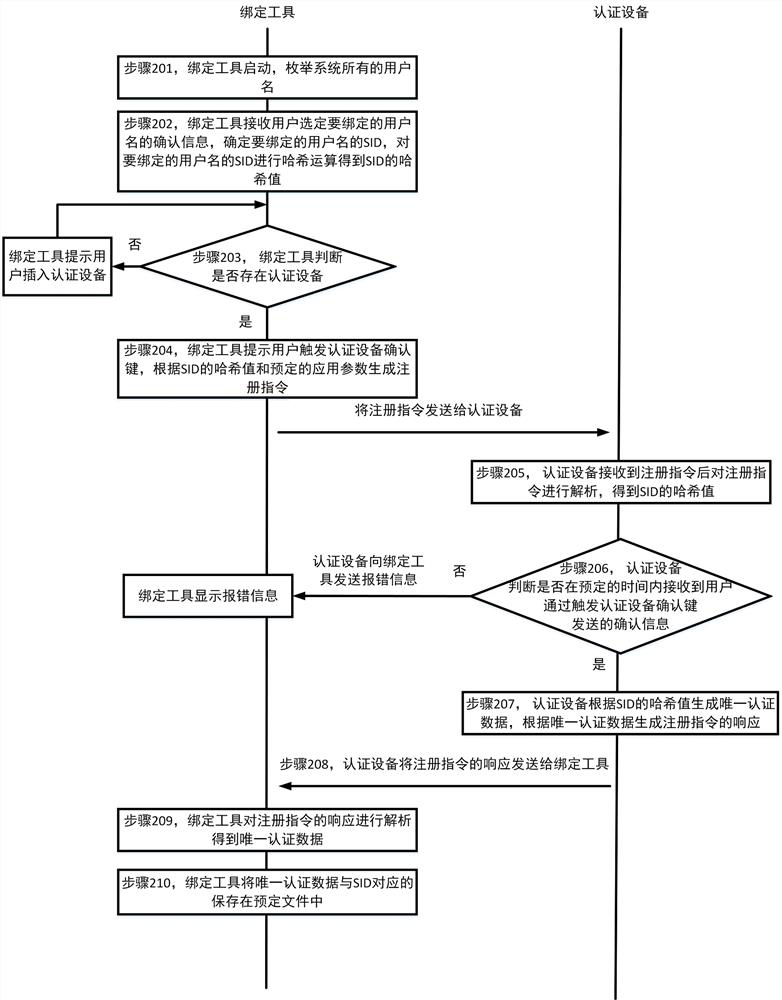

Method and system for logging in Windows operating system

Active | CN112287312A | Overcome the limitation of not being able to provide a login method in a non-domain environment | Digital data authentication | Operational system | Password

The invention discloses a method and a system for logging in to a Windows operating system. The method comprises a binding process and a login process. In the binding process, the binding tool sends key handle generation parameters, obtained from the user name to be bound, to the authentication device, and stores the certificate public key returned by the authentication device, the key handle generated from the key handle generation parameters, and the security descriptor together in a predetermined file. In the login process, a credential providing device receives login data, obtains a security descriptor according to the user name, and retrieves the corresponding key handle and credential public key from the predetermined file according to the security descriptor; sends the key handle and the to-be-signed data to the authentication device; receives the signature value that the authentication device returns, generated from the to-be-signed data and the private key corresponding to the key handle; verifies the signature value using the certificate public key; and, when the verification succeeds, forms the credential information required for logging in to the system from the user name and the password.

Owner:FEITIAN TECHNOLOGIES

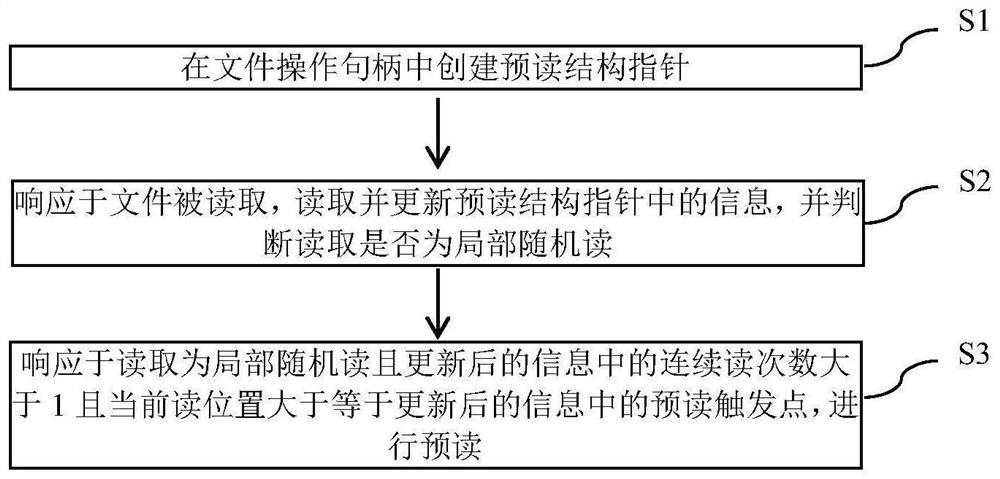

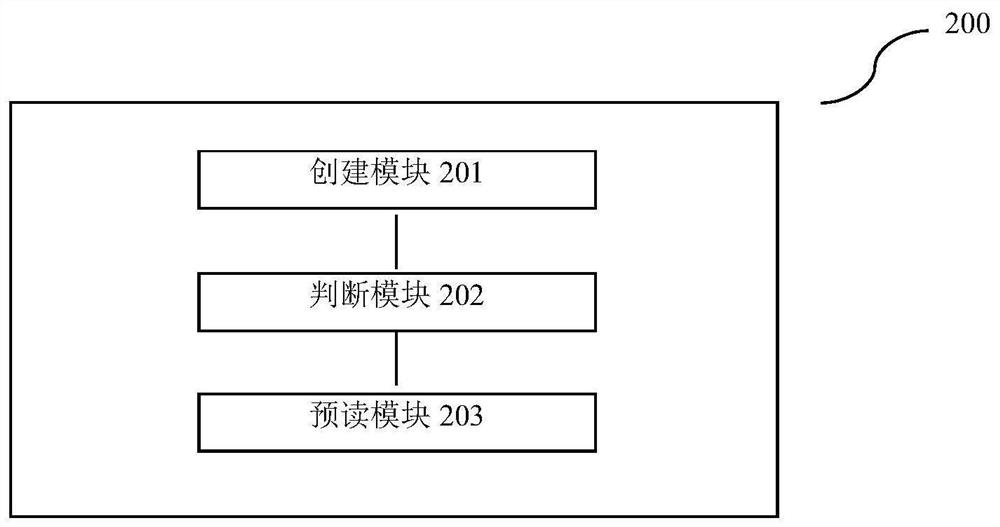

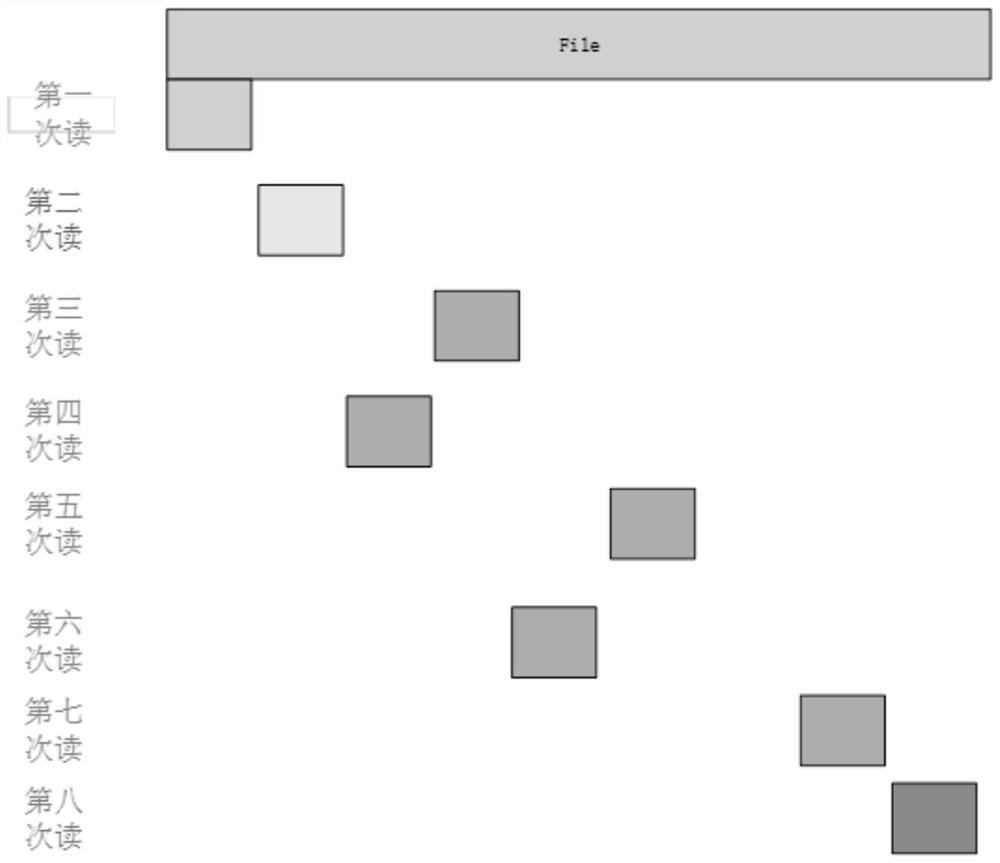

Method and equipment for locally and randomly pre-reading distributed file system file

Active | CN111625503A | Avoid disk stress | Disk pressure drop | File access structures | Special data processing applications | Computer hardware | Computer network

The invention provides a method and equipment for pre-reading a distributed file system file under local random reads. The method comprises the following steps: creating a pre-reading structure pointer in the file operation handle; when the file is read, reading and updating the information in the pre-reading structure and judging whether the read is a local random read; and, when the read is a local random read, the consecutive read count in the updated information is greater than 1, and the current read position is greater than or equal to the pre-reading trigger point in the updated information, carrying out pre-reading. The scheme improves pre-reading performance when a large file is read in a local random pattern, avoids the pressure that small I/O blocks put on a network disk, and reduces network and disk load in that scenario.
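The trigger condition in this abstract can be sketched as follows. The abstract does not say how a "local random read" is detected, how large the pre-read is, or how the trigger point moves, so `LOCAL_WINDOW`, `READAHEAD_SIZE`, the distance-based locality test and the trigger update below are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

LOCAL_WINDOW = 1 << 20    # assumed: a read within 1 MiB of the previous one is "local"
READAHEAD_SIZE = 4 << 20  # assumed: number of bytes to pre-read


@dataclass
class ReadaheadState:
    last_pos: int = -1    # offset of the previous read, -1 before the first read
    consecutive: int = 0  # consecutive local reads seen so far
    trigger: int = 0      # position at or beyond which pre-reading fires


@dataclass
class FileHandle:
    path: str
    # The pre-reading structure lives in the file operation handle.
    ra: ReadaheadState = field(default_factory=ReadaheadState)


def on_read(fh: FileHandle, pos: int, size: int):
    """Update the pre-reading state; return (start, length) to pre-read, or None."""
    ra = fh.ra
    is_local = ra.last_pos >= 0 and abs(pos - ra.last_pos) <= LOCAL_WINDOW
    ra.consecutive = ra.consecutive + 1 if is_local else 0
    ra.last_pos = pos
    # Conditions from the abstract: local random read, count > 1, position >= trigger.
    if is_local and ra.consecutive > 1 and pos >= ra.trigger:
        start = pos + size
        ra.trigger = start + READAHEAD_SIZE // 2  # assumed: next trigger mid-window
        return (start, READAHEAD_SIZE)
    return None
```

With these assumed constants, the first two reads of a fresh handle never trigger a pre-read, and a read far from the previous one resets the consecutive count.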

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

Integration of high-assurance features into an application through application factoring

Application factoring or partitioning is used to integrate secure features into a conventional application. An application's functionality is partitioned into two sets according to whether a given action does, or does not, involve the handling of sensitive data. Separate software objects (processors) are created to perform these two sets of actions. A trusted processor handles secure data and runs in a high-assurance environment. When another processor encounters secure data, that data is sent to the trusted processor. The data is wrapped in a way that allows it to be routed to the trusted processor and prevents it from being deciphered by any entity other than the trusted processor. An infrastructure is provided that wraps objects, routes them to the correct processor, and allows their integrity to be attested through a chain of trust leading back to a base component that is known to be trustworthy.

Owner:MICROSOFT TECH LICENSING LLC
