18010 results about "Memory architecture accessing/allocation" patented technology

Garbage collection in a storage system

Active | US8527544B1 | Memory architecture accessing/allocation | Digital data information retrieval | Refuse collection | Control store

A system and method for performing garbage collection. A system includes a storage medium, a first table including entries which map a virtual address to locations in the storage medium, and a second table with entries which include a reverse mapping of a physical address in a data storage medium to one or more virtual addresses. A storage controller is configured to perform garbage collection. During garbage collection, the controller is configured to identify one or more entries in the second table which correspond to a segment to be garbage collected. In response to determining the first table includes a valid mapping for a virtual address included in an entry of the one or more entries, the controller is configured to copy data from a first location identified in the entry to a second location in the data storage medium, and reclaim the first storage location.

Owner:PURE STORAGE
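
The two-table scheme in the abstract above can be sketched roughly as follows. This is only an illustrative toy model, not the patented implementation: the `Store` class, its `(segment, offset)` location format, and the method names are all assumptions made for the example.

```python
from collections import defaultdict


class Store:
    """Toy model (hypothetical): a forward table plus a reverse table."""

    def __init__(self):
        self.forward = {}                    # virtual address -> (segment, offset)
        self.reverse = defaultdict(set)      # (segment, offset) -> virtual addresses
        self.media = {}                      # (segment, offset) -> stored data
        self.next_offset = defaultdict(int)  # next free offset per segment

    def _append(self, segment, data):
        loc = (segment, self.next_offset[segment])
        self.next_offset[segment] += 1
        self.media[loc] = data
        return loc

    def write(self, vaddr, data, segment):
        loc = self._append(segment, data)
        self.forward[vaddr] = loc            # an overwrite leaves the old location stale
        self.reverse[loc].add(vaddr)
        return loc

    def collect(self, victim, target):
        """Reclaim segment `victim`, copying still-valid data into `target`."""
        victim_locs = [loc for loc in list(self.reverse) if loc[0] == victim]
        for loc in victim_locs:
            # A reverse entry is live only if the forward table still points here.
            live = [v for v in self.reverse[loc] if self.forward.get(v) == loc]
            if live:
                new_loc = self._append(target, self.media[loc])
                for v in live:
                    self.forward[v] = new_loc
                    self.reverse[new_loc].add(v)
            del self.reverse[loc]            # reclaim the first location
            self.media.pop(loc, None)
```

Stale locations are detected purely by comparing the reverse entry against the forward table, so no per-block validity bitmap is needed in this sketch.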

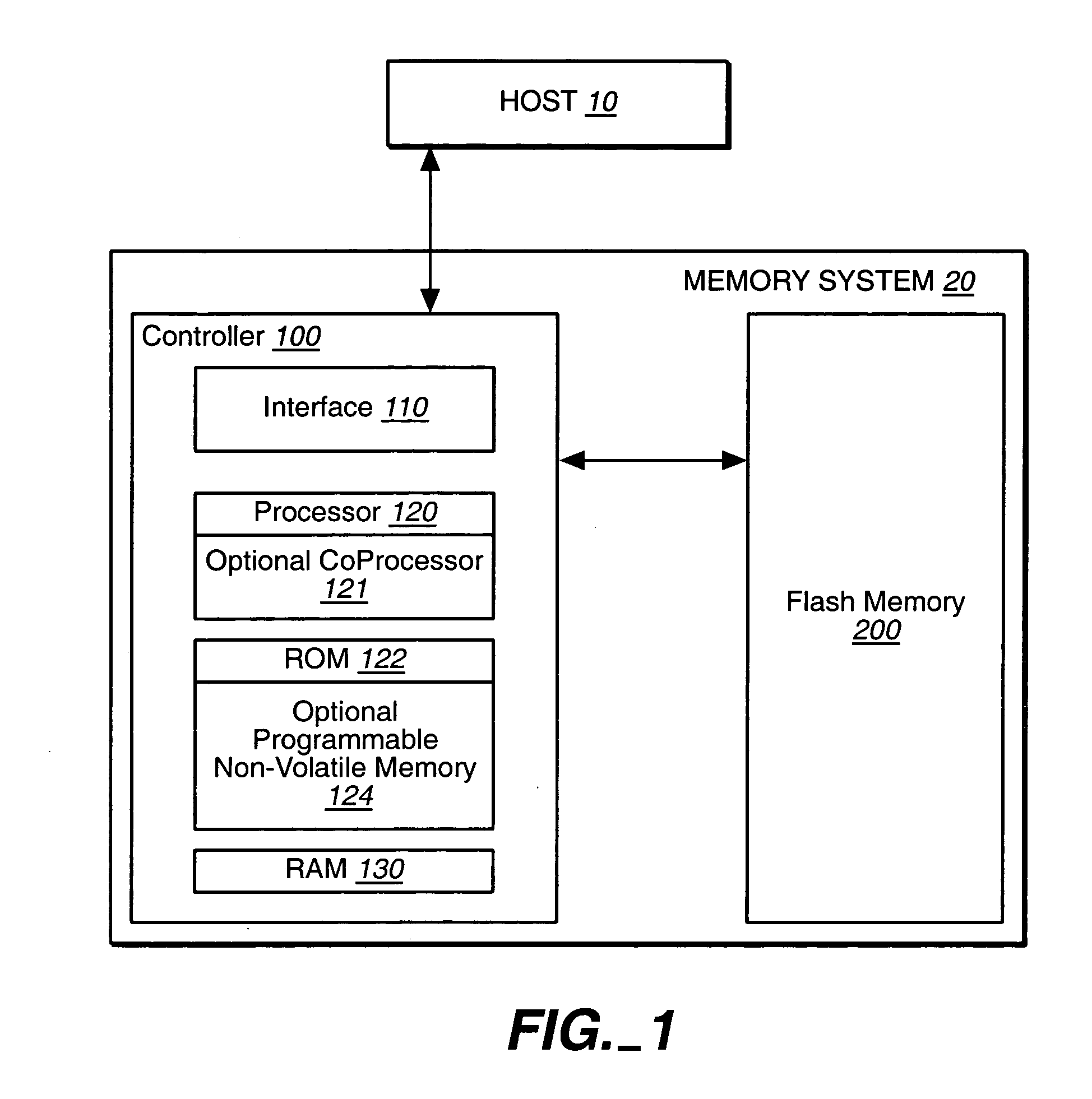

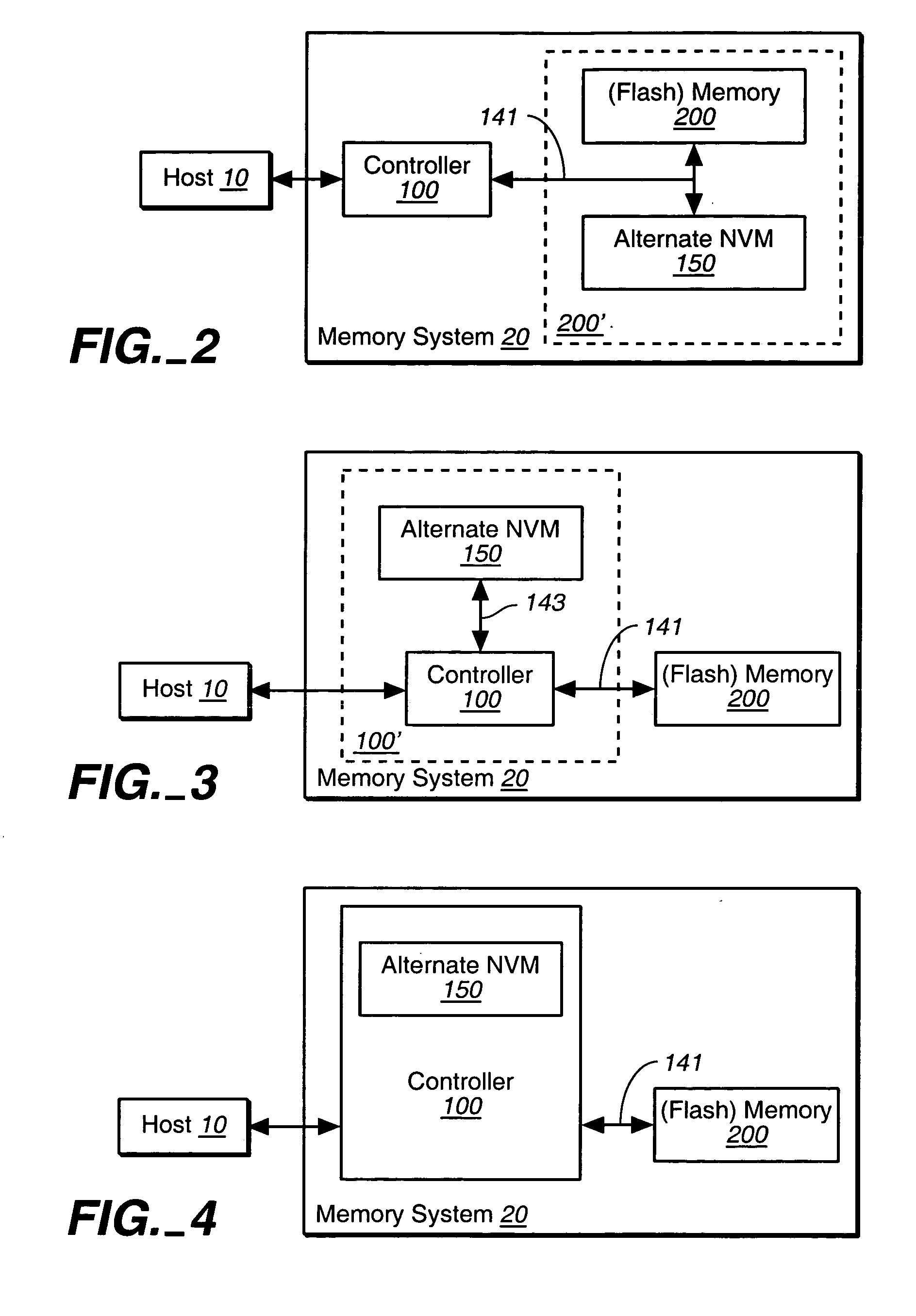

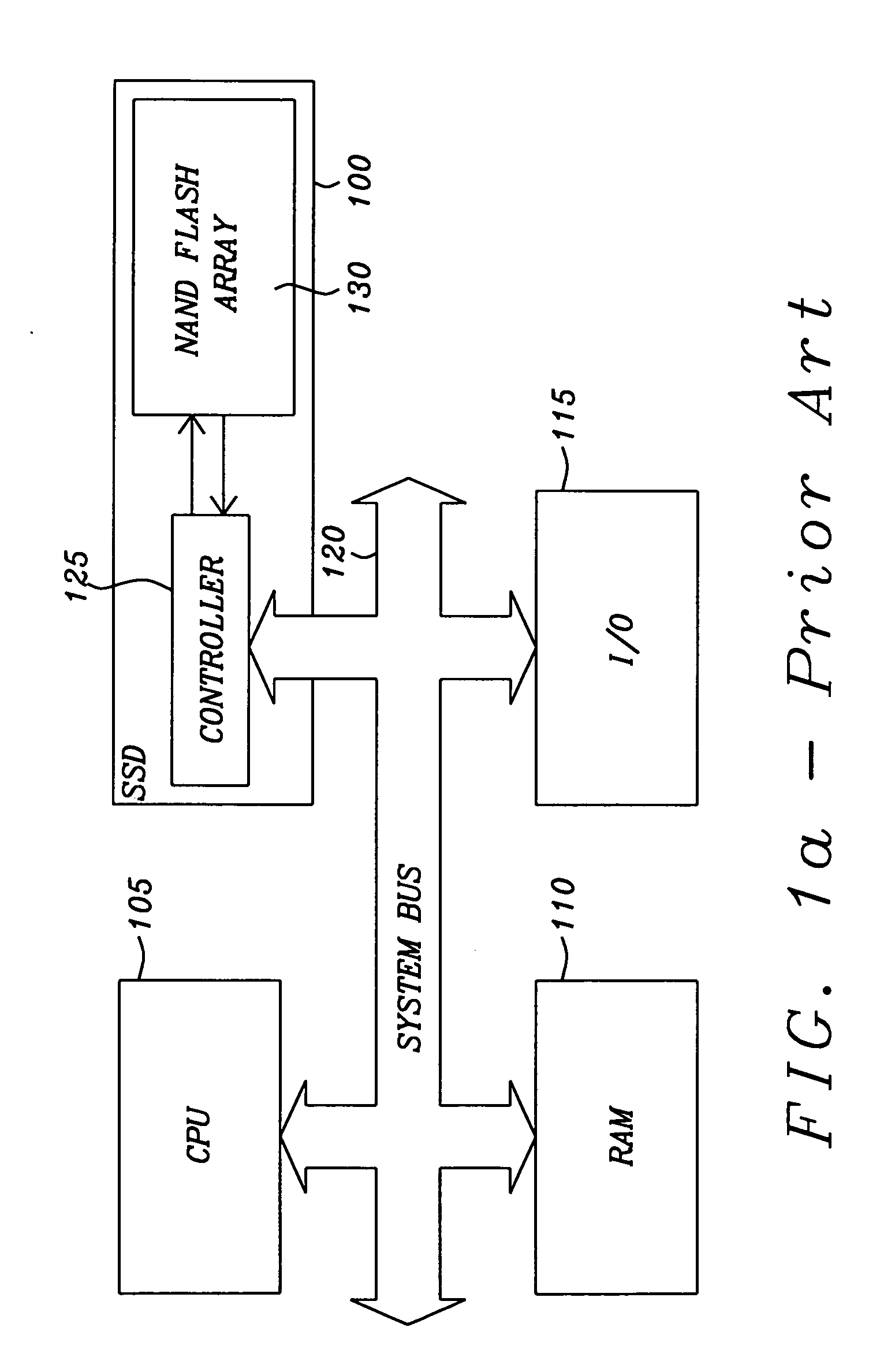

Hybrid non-volatile memory system

Inactive | US20050251617A1 | Easy access | Memory architecture accessing/allocation | Input/output to record carriers | Data control | Control data

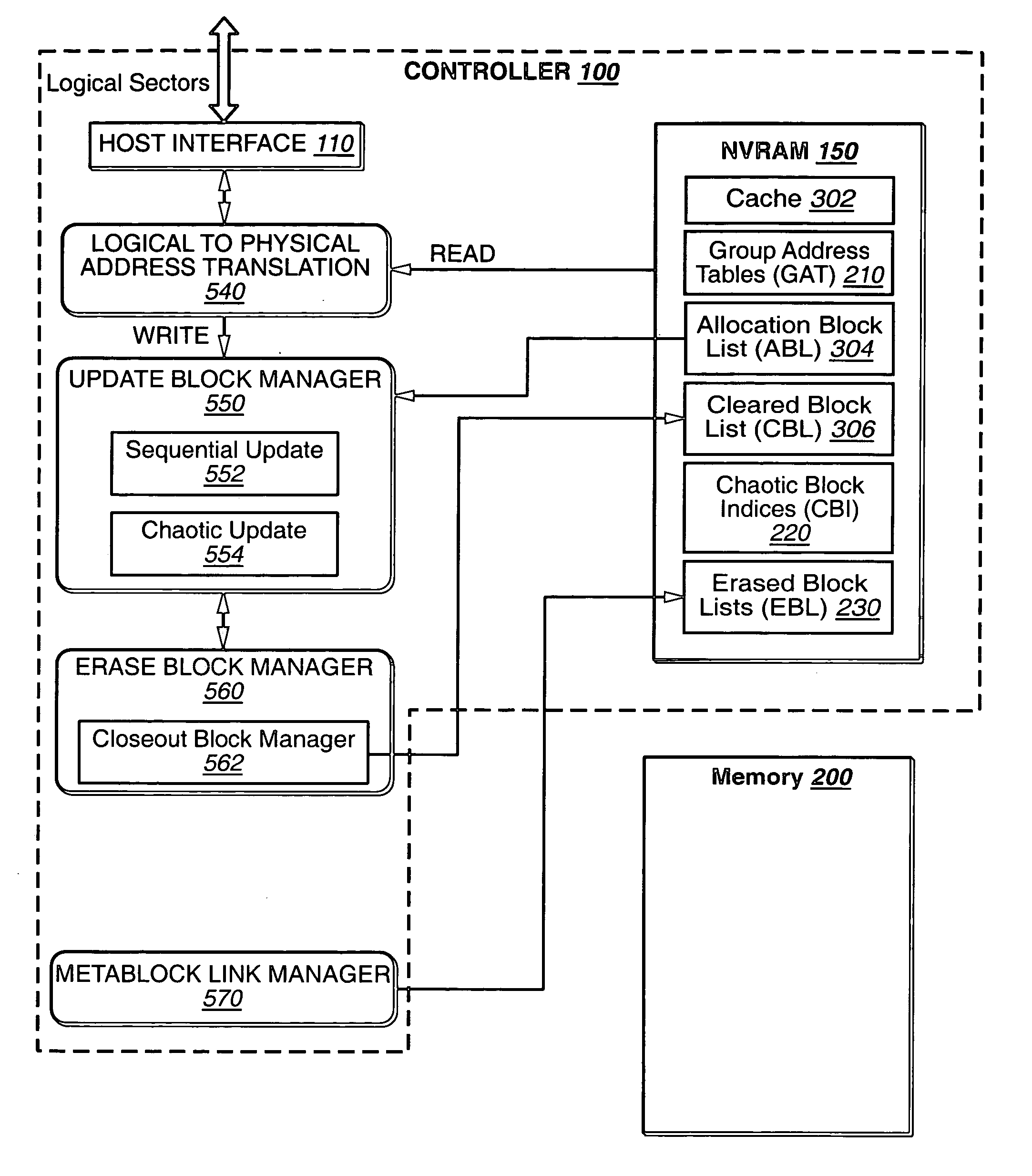

The present invention presents a hybrid non-volatile system that uses non-volatile memories based on two or more different non-volatile memory technologies in order to exploit the relative advantages of each of these technologies with respect to the others. In an exemplary embodiment, the memory system includes a controller and a flash memory, where the controller has a non-volatile RAM based on an alternate technology such as FeRAM. The flash memory is used for the storage of user data and the non-volatile RAM in the controller is used for system control data used by the controller to manage the storage of host data in the flash memory. The use of an alternate non-volatile memory technology in the controller allows for a non-volatile copy of the most recent control data to be accessed more quickly as it can be updated on a bit by bit basis. In another exemplary embodiment, the alternate non-volatile memory is used as a cache where data can safely be staged prior to its being written to the memory or read back to the host.

Owner:SANDISK TECH LLC

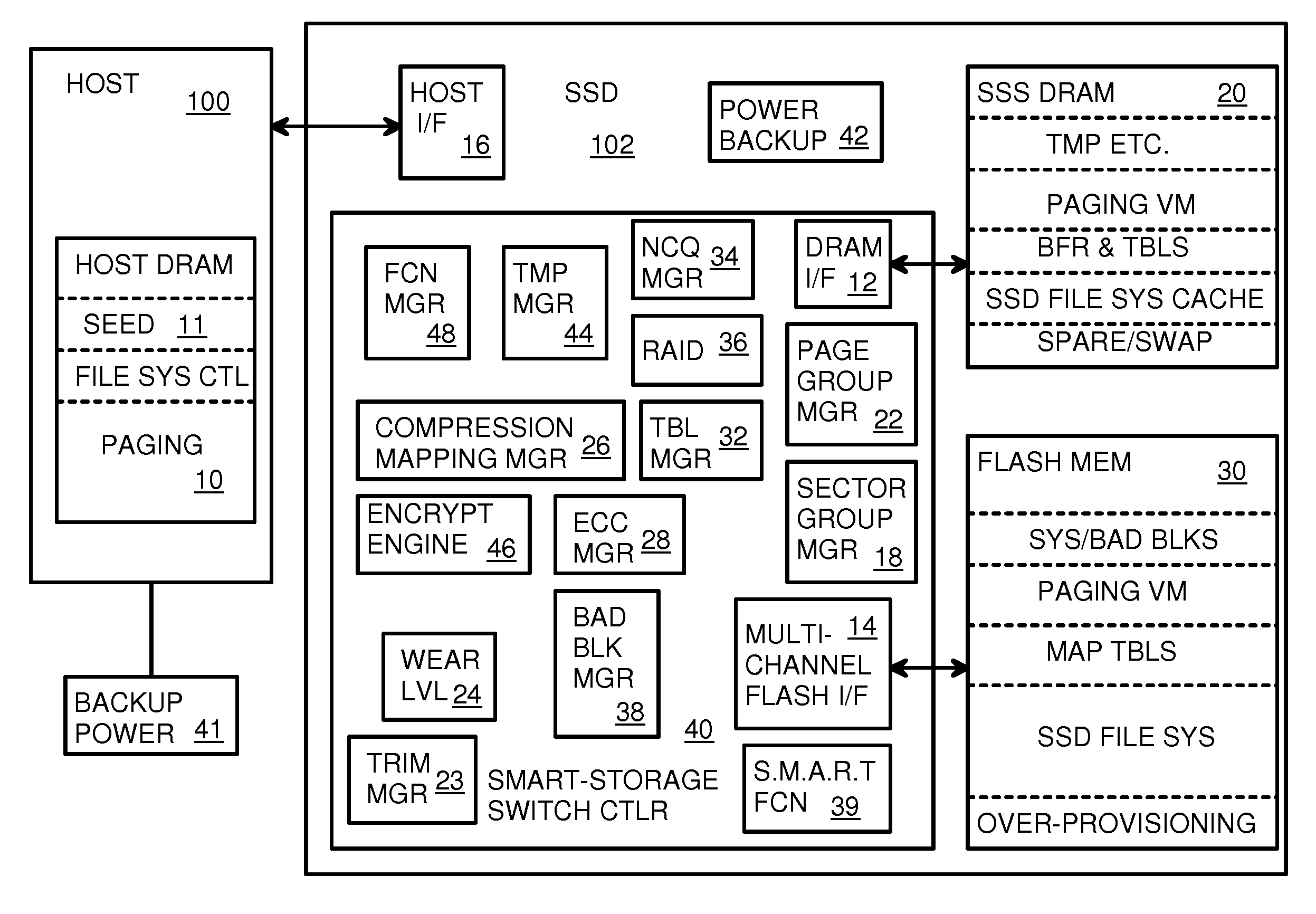

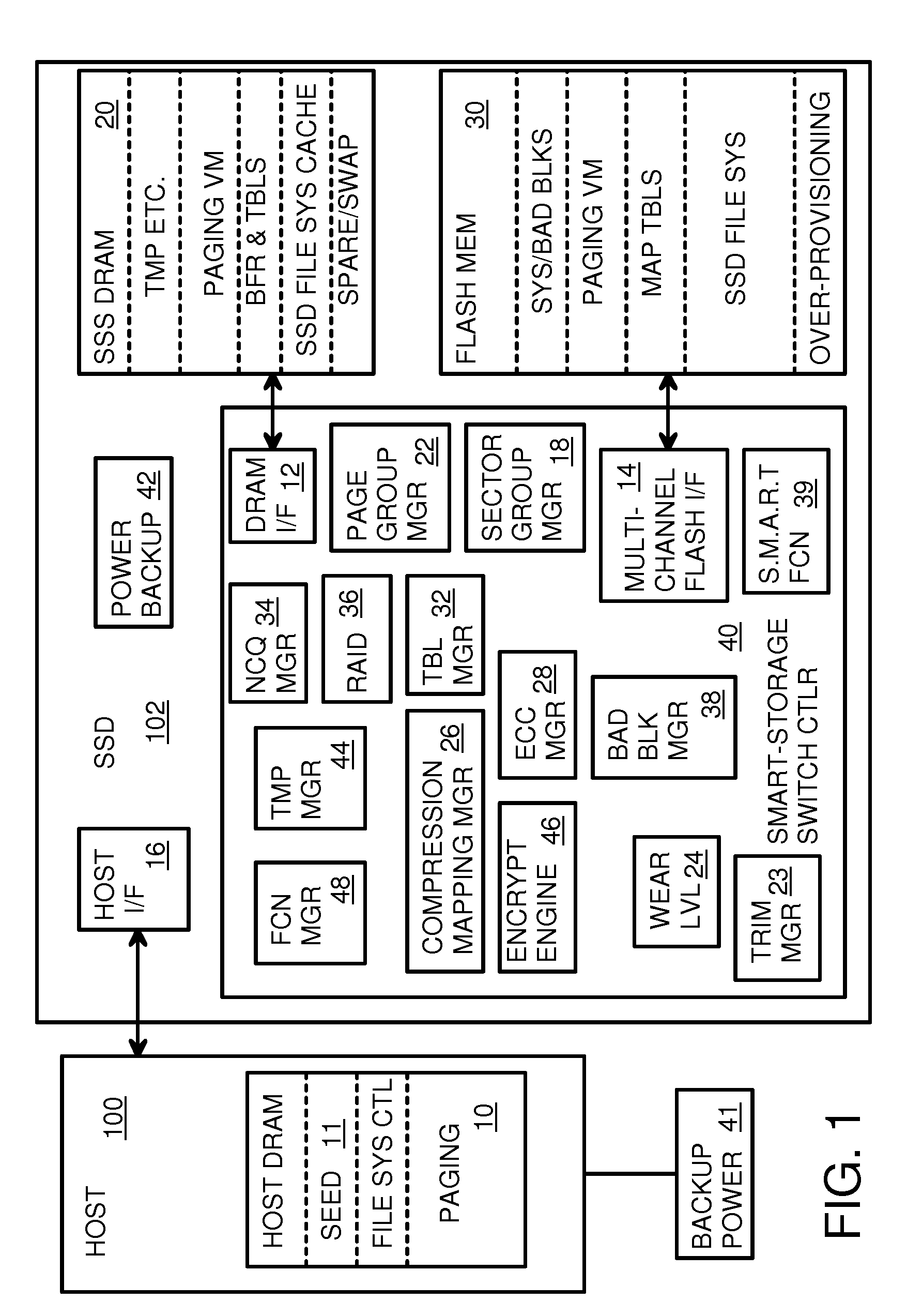

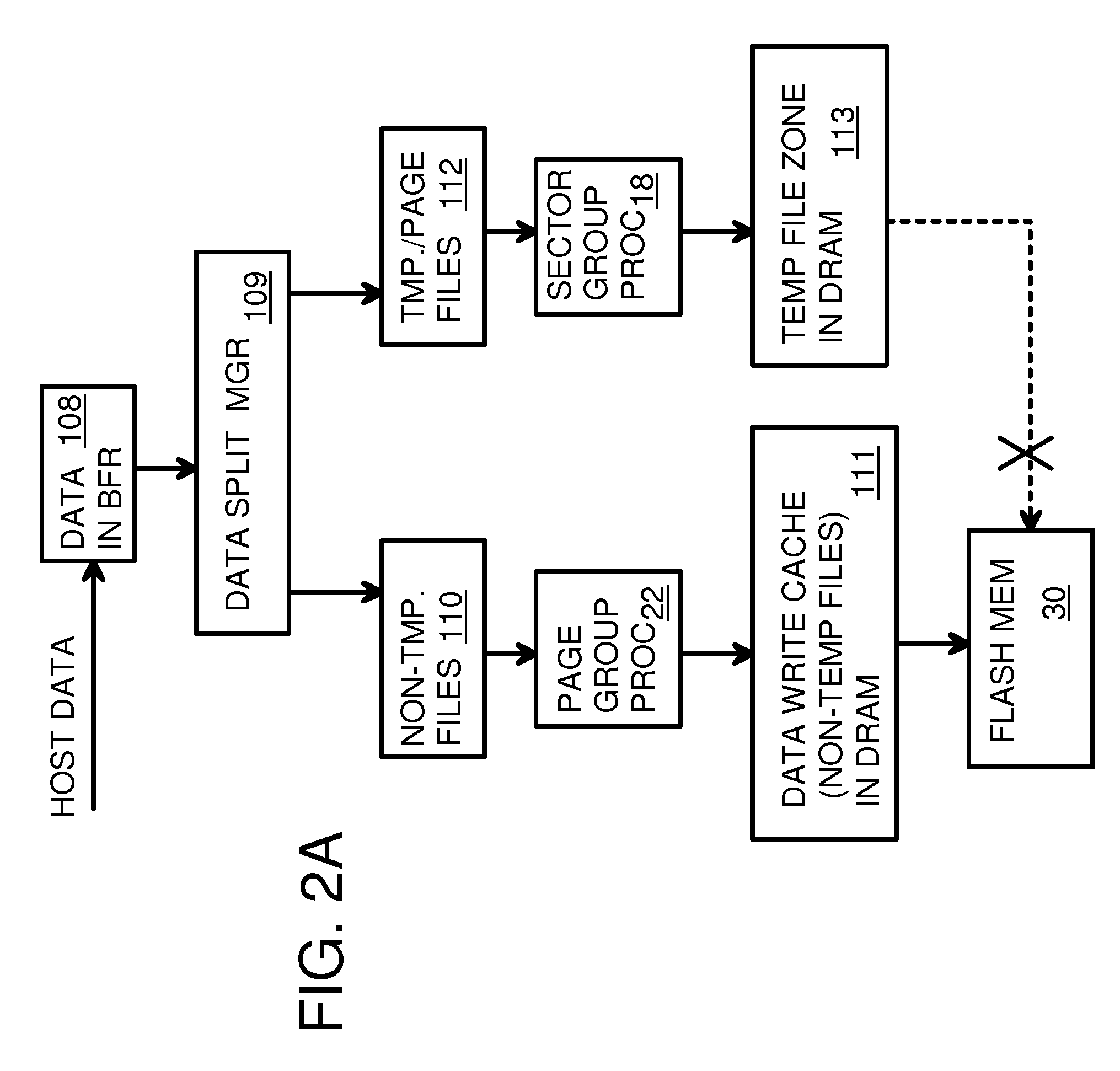

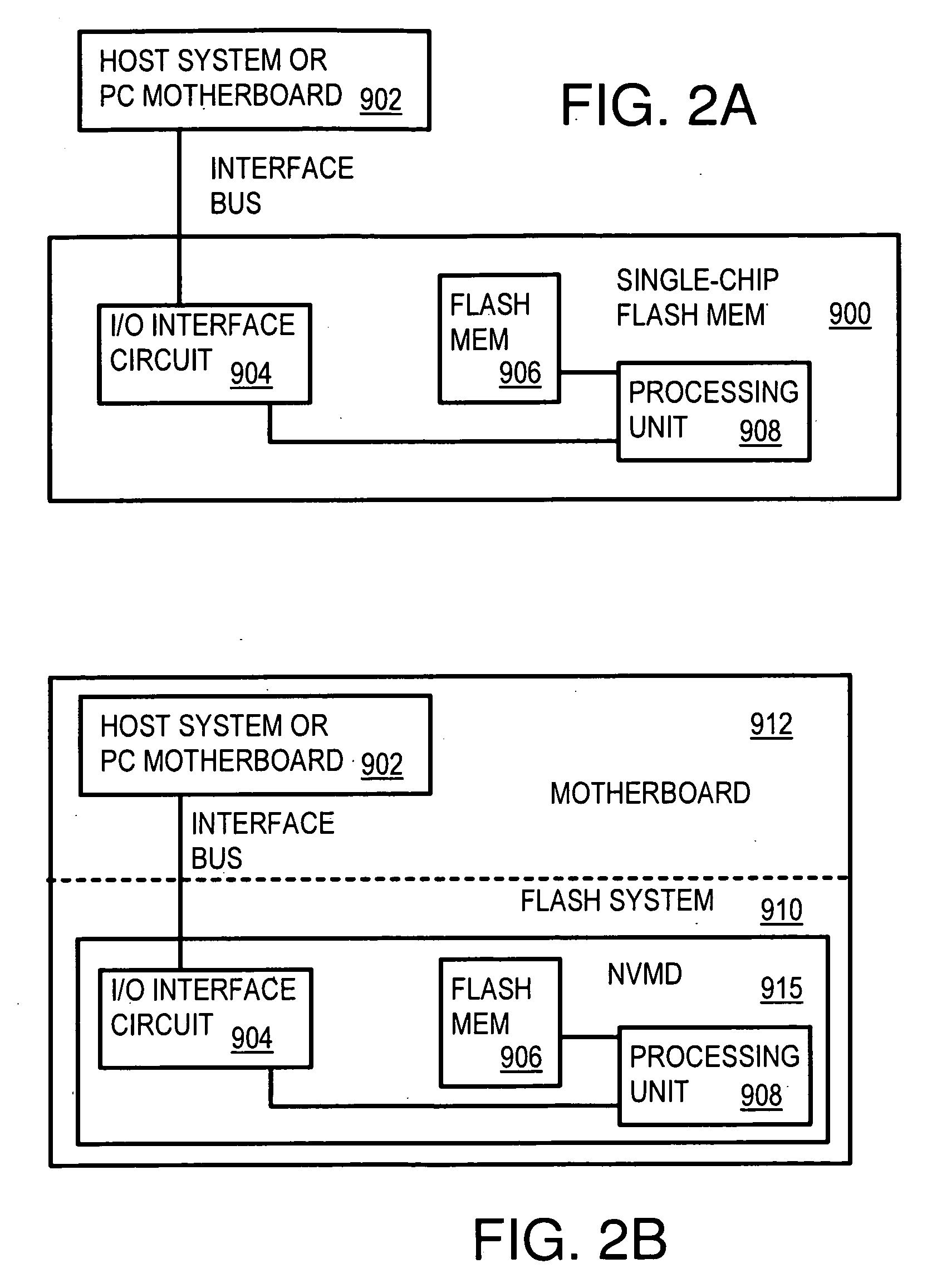

Super-Endurance Solid-State Drive with Endurance Translation Layer (ETL) and Diversion of Temp Files for Reduced Flash Wear

Active | US20120284587A1 | Memory architecture accessing/allocation | Digital storage | Filename extension | Data file

A flash drive has increased endurance and longevity by reducing writes to flash. An Endurance Translation Layer (ETL) is created in a DRAM buffer and provides temporary storage to reduce flash wear. A Smart Storage Switch (SSS) controller assigns data-type bits when categorizing host accesses as paging files used by memory management, temporary files, File Allocation Table (FAT) and File Descriptor Block (FDB) entries, and user data files, using address ranges and file extensions read from FAT. Paging files and temporary files are never written to flash. Partial-page data is packed and sector mapped by sub-sector mapping tables that are pointed to by a unified mapping table that stores the data-type bits and pointers to data or tables in DRAM. Partial sectors are packed together to reduce DRAM usage and flash wear. A spare/swap area in DRAM reduces flash wear. Reference voltages are adjusted when error correction fails.

Owner:SUPER TALENT TECH CORP

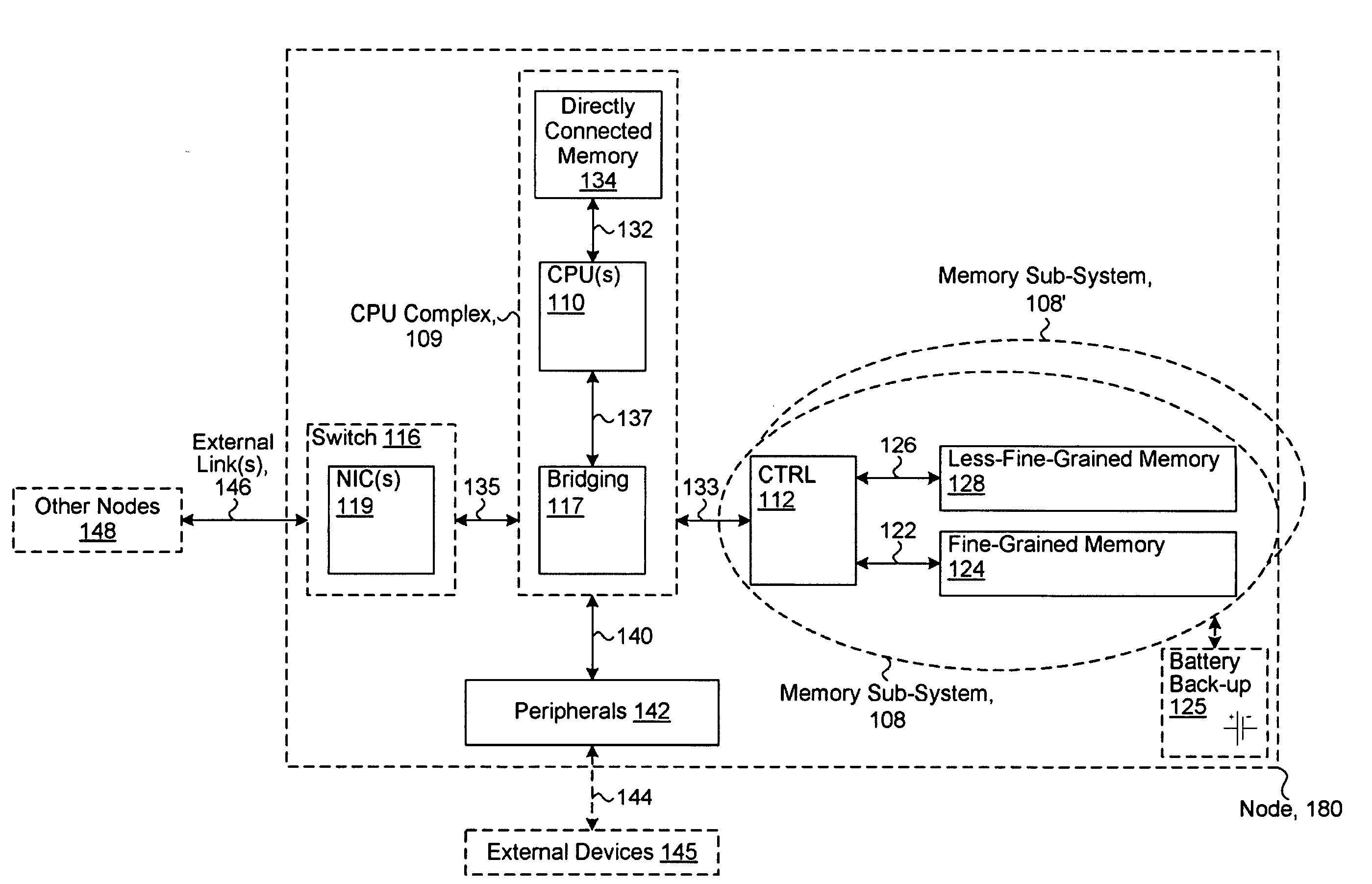

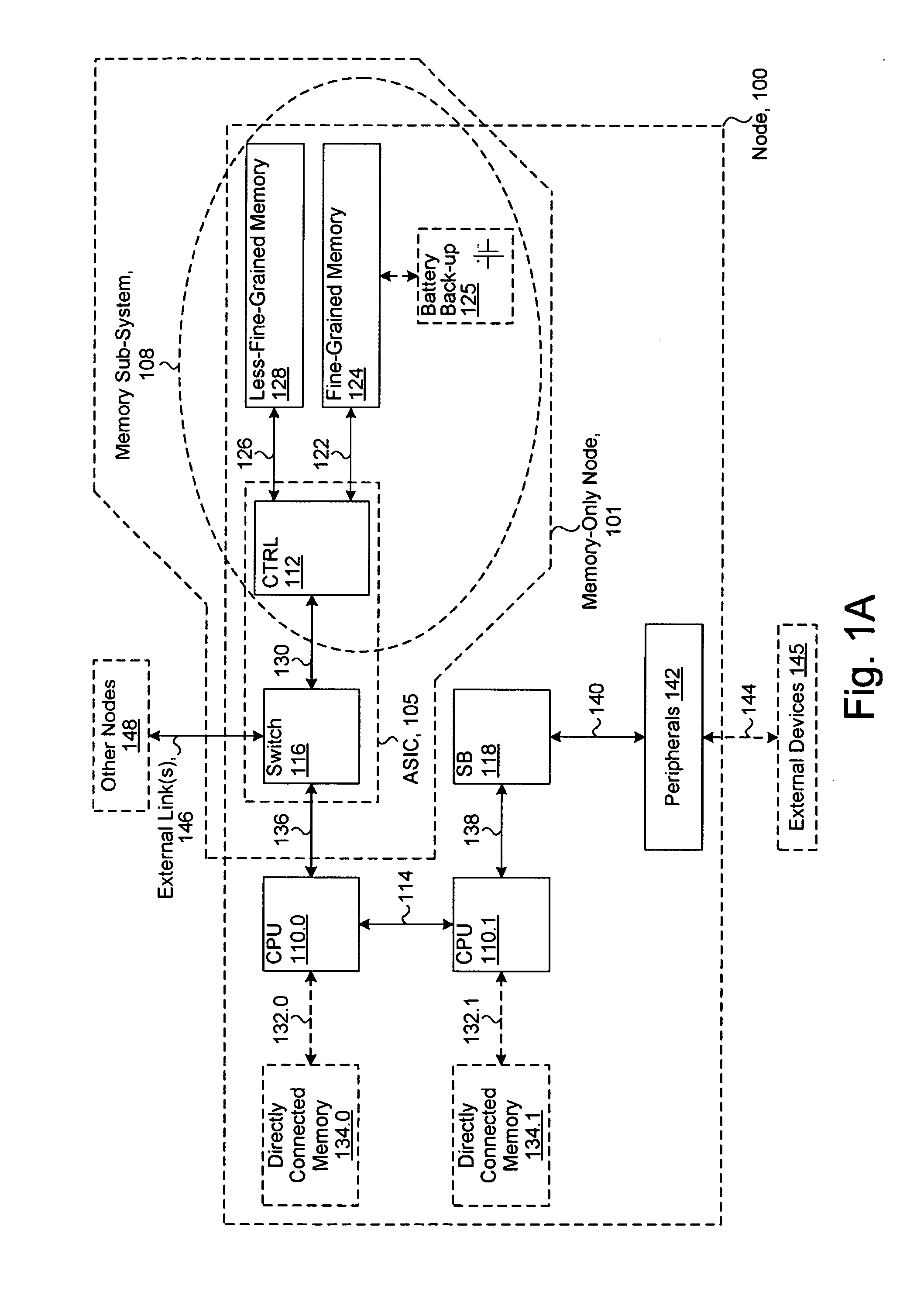

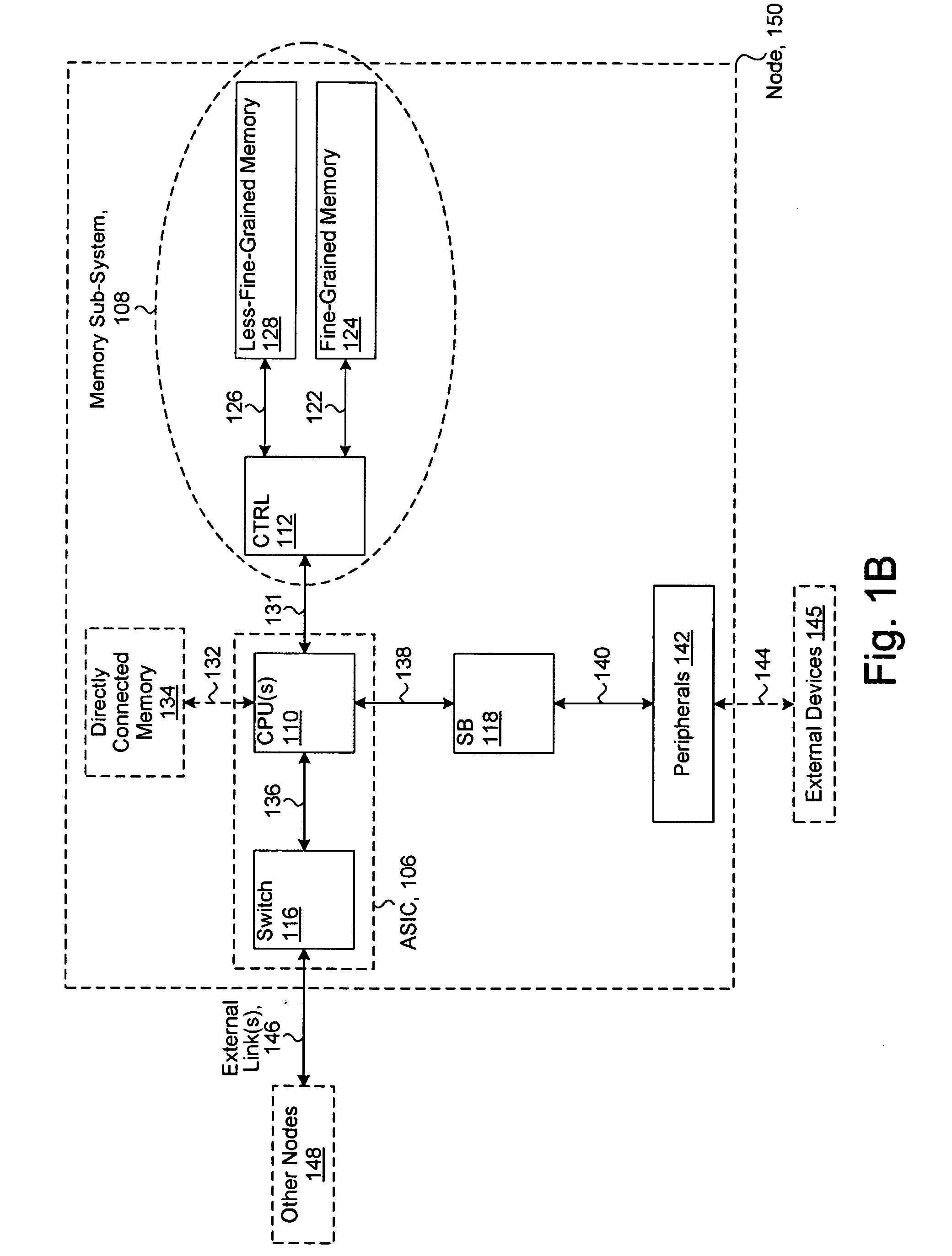

System including a fine-grained memory and a less-fine-grained memory

Active | US20080301256A1 | Memory architecture accessing/allocation | Energy efficient ICT | Data processing system | Write buffer

A data processing system includes one or more nodes, each node including a memory sub-system. The sub-system includes a fine-grained memory and a less-fine-grained (e.g., page-based) memory. The fine-grained memory optionally serves as a cache and/or as a write buffer for the page-based memory. Software executing on the system uses a node address space which enables access to the page-based memories of all nodes. Each node optionally provides ACID memory properties for at least a portion of the space. In at least a portion of the space, memory elements are mapped to locations in the page-based memory. In various embodiments, some of the elements are compressed, the compressed elements are packed into pages, the pages are written into available locations in the page-based memory, and a map maintains an association between those elements and the locations.

Owner:SANDISK TECH LLC
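
The compress, pack, and map steps mentioned at the end of the abstract above might look something like the sketch below. Everything here is an assumption for illustration: the 4096-byte `PAGE_SIZE`, the use of `zlib`, and the `(page, offset, length)` map format are not taken from the patent.

```python
import zlib

PAGE_SIZE = 4096  # assumed page size for the page-based memory


def pack_elements(elements):
    """Compress each element and pack the results into fixed-size pages.

    elements: dict mapping an element name to its bytes.
    Returns (pages, element_map), where element_map[name] is
    (page index, offset within page, compressed length).
    """
    pages, element_map = [bytearray()], {}
    for name, data in elements.items():
        blob = zlib.compress(data)
        if len(blob) > PAGE_SIZE:
            raise ValueError("compressed element does not fit in one page")
        if len(pages[-1]) + len(blob) > PAGE_SIZE:
            pages.append(bytearray())       # current page is full, start a new one
        element_map[name] = (len(pages) - 1, len(pages[-1]), len(blob))
        pages[-1] += blob
    return pages, element_map


def read_element(pages, element_map, name):
    """Look the element up in the map and decompress it."""
    page, offset, length = element_map[name]
    return zlib.decompress(bytes(pages[page][offset:offset + length]))
```

The map is what lets a fine-grained element be found again after it has been folded into a coarse page.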

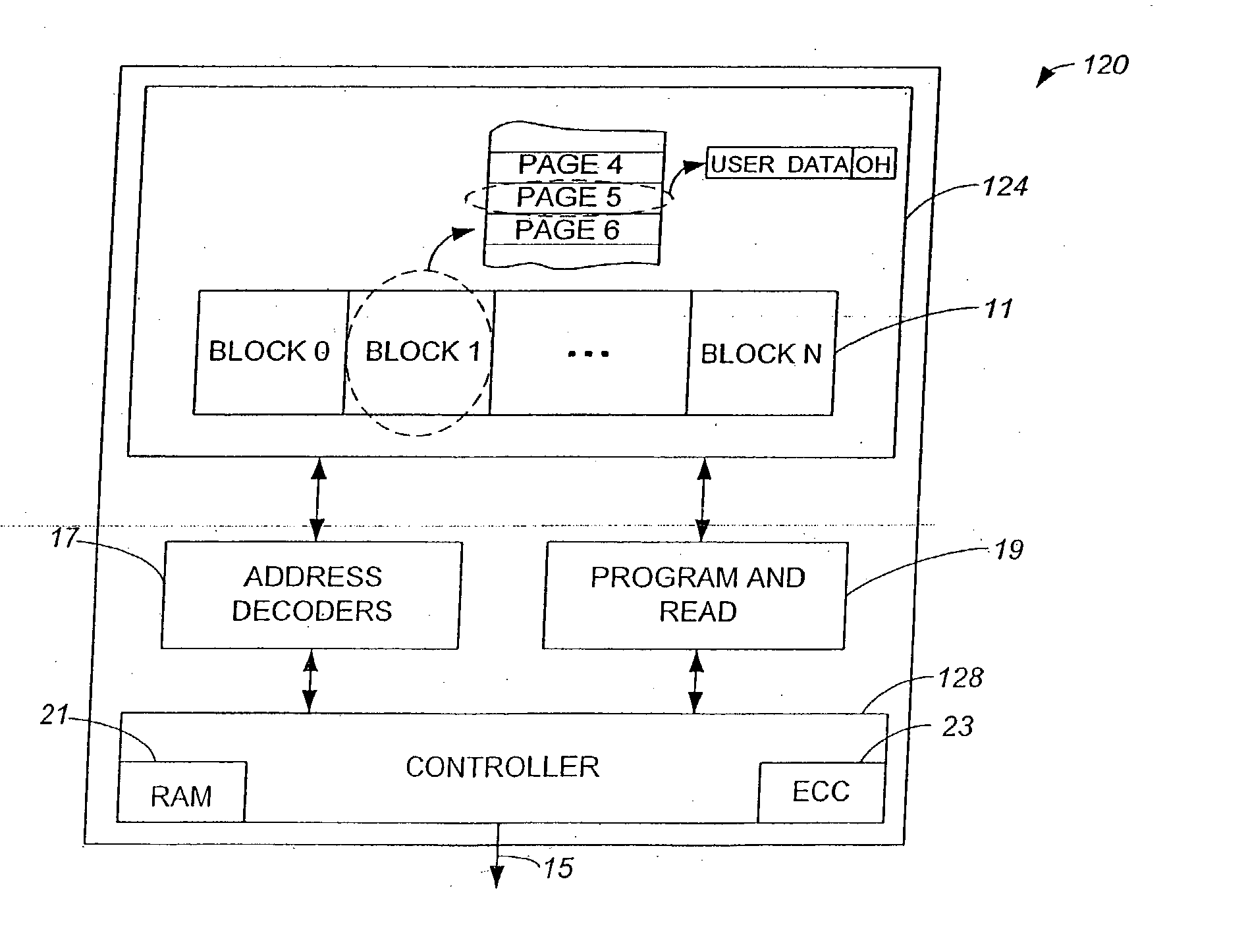

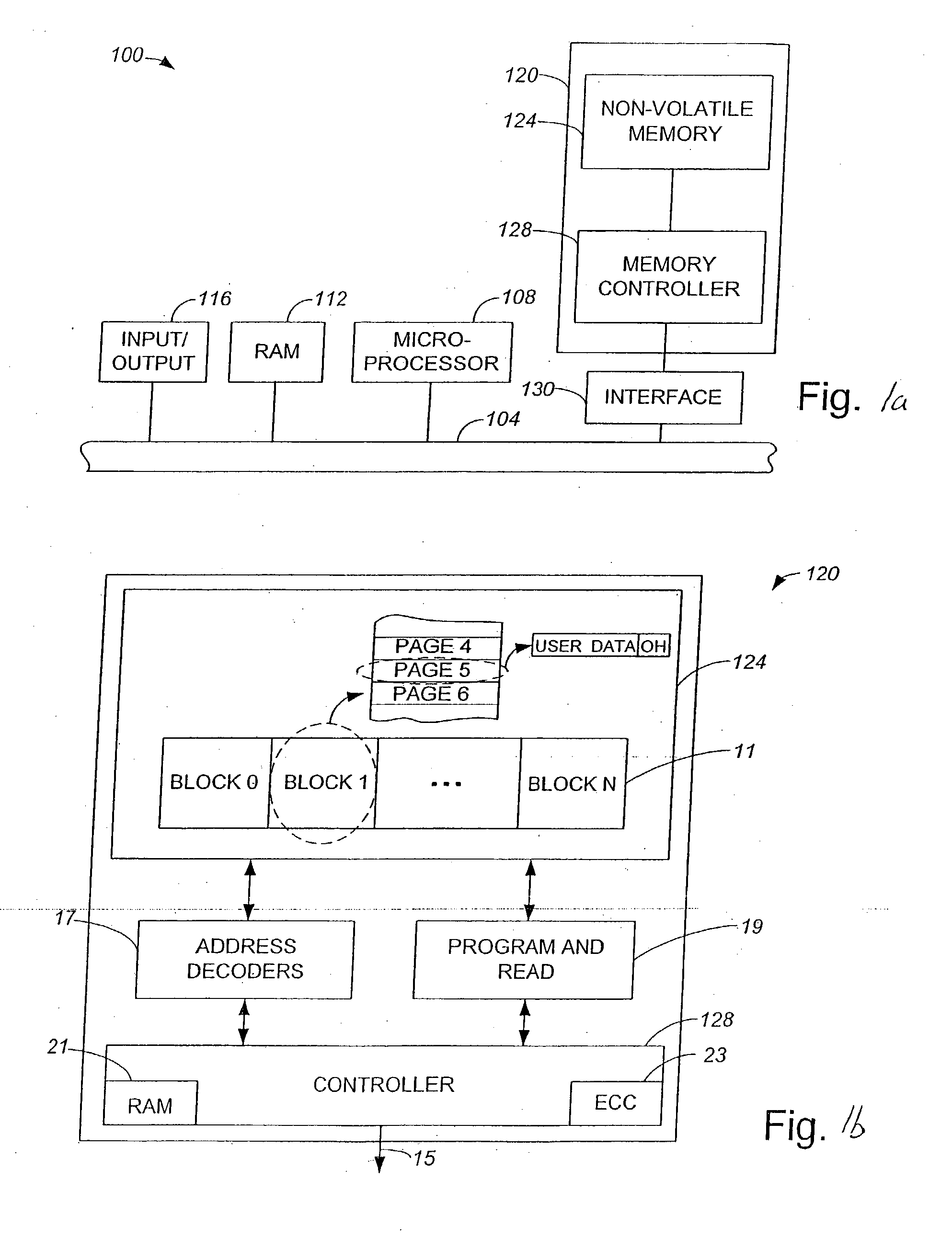

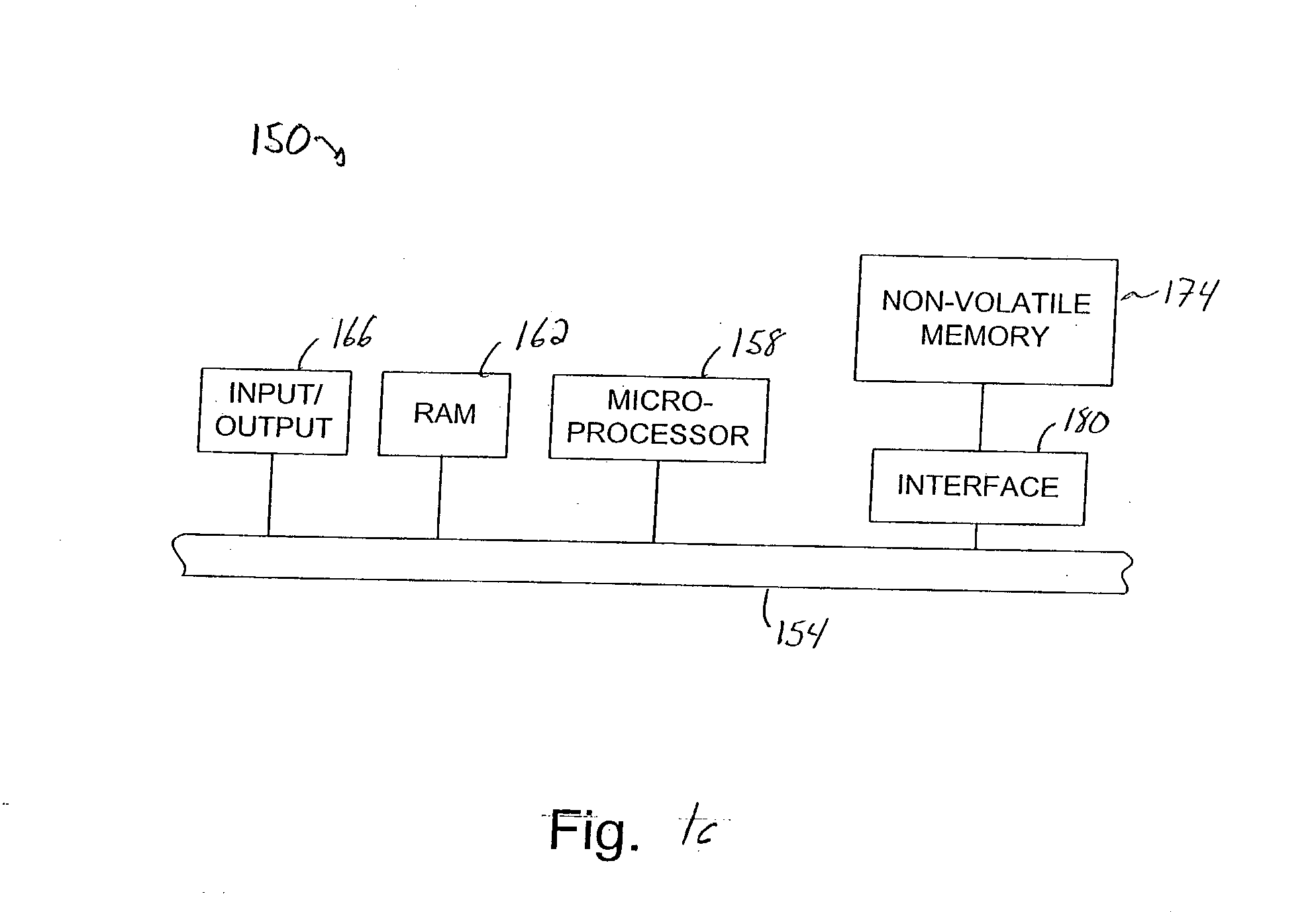

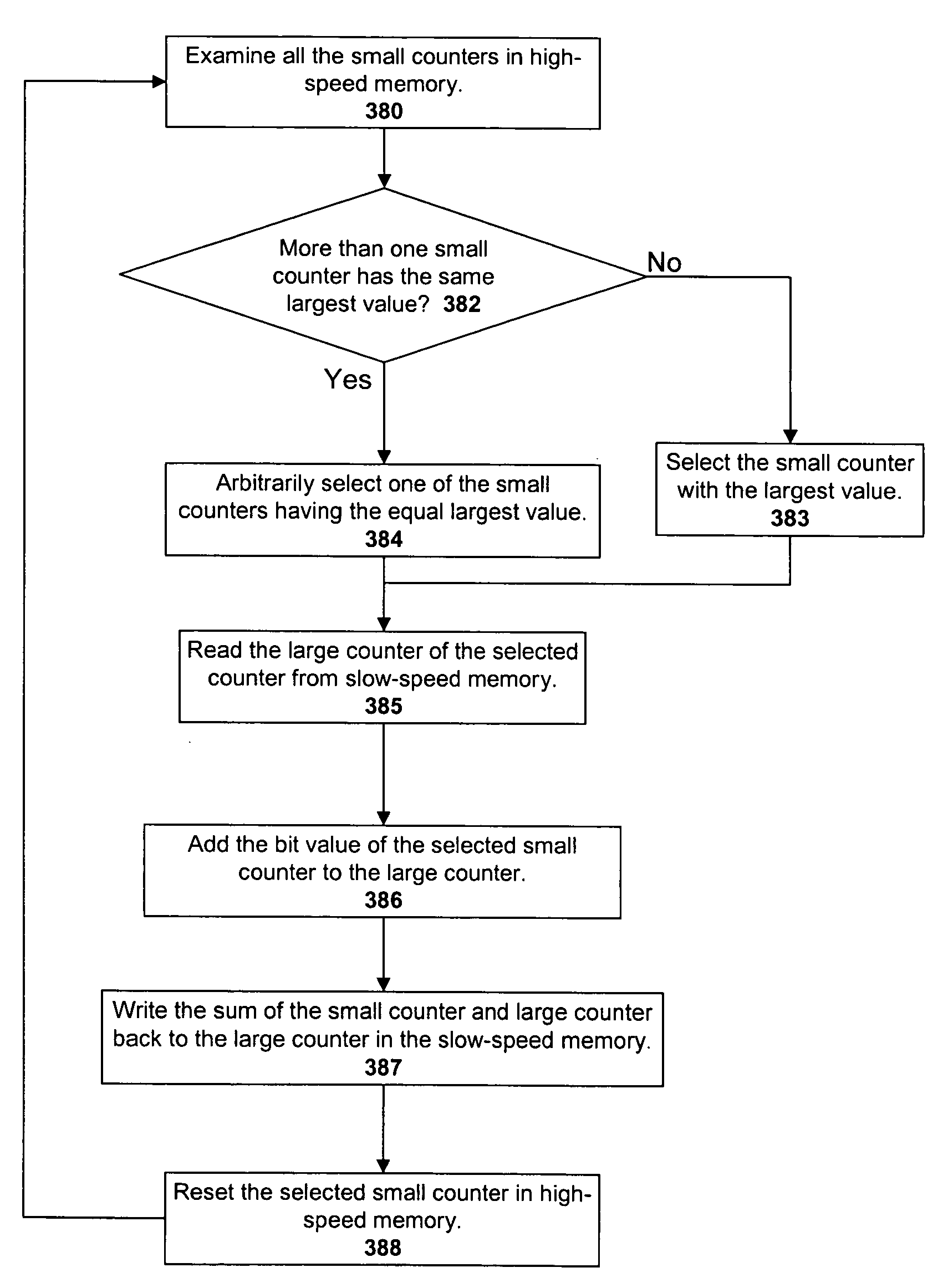

Maintaining erase counts in non-volatile storage systems

Inactive | US20040080985A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data structure | Volatile memory

Methods and apparatus for storing erase counts in a non-volatile memory of a non-volatile memory system are disclosed. According to one aspect of the present invention, a data structure in a non-volatile memory includes a first indicator that provides an indication of a number of times a first block of a plurality of blocks in a non-volatile memory has been erased. The data structure also includes a header that is arranged to contain information relating to the blocks in the non-volatile memory.

Owner:SANDISK TECH LLC

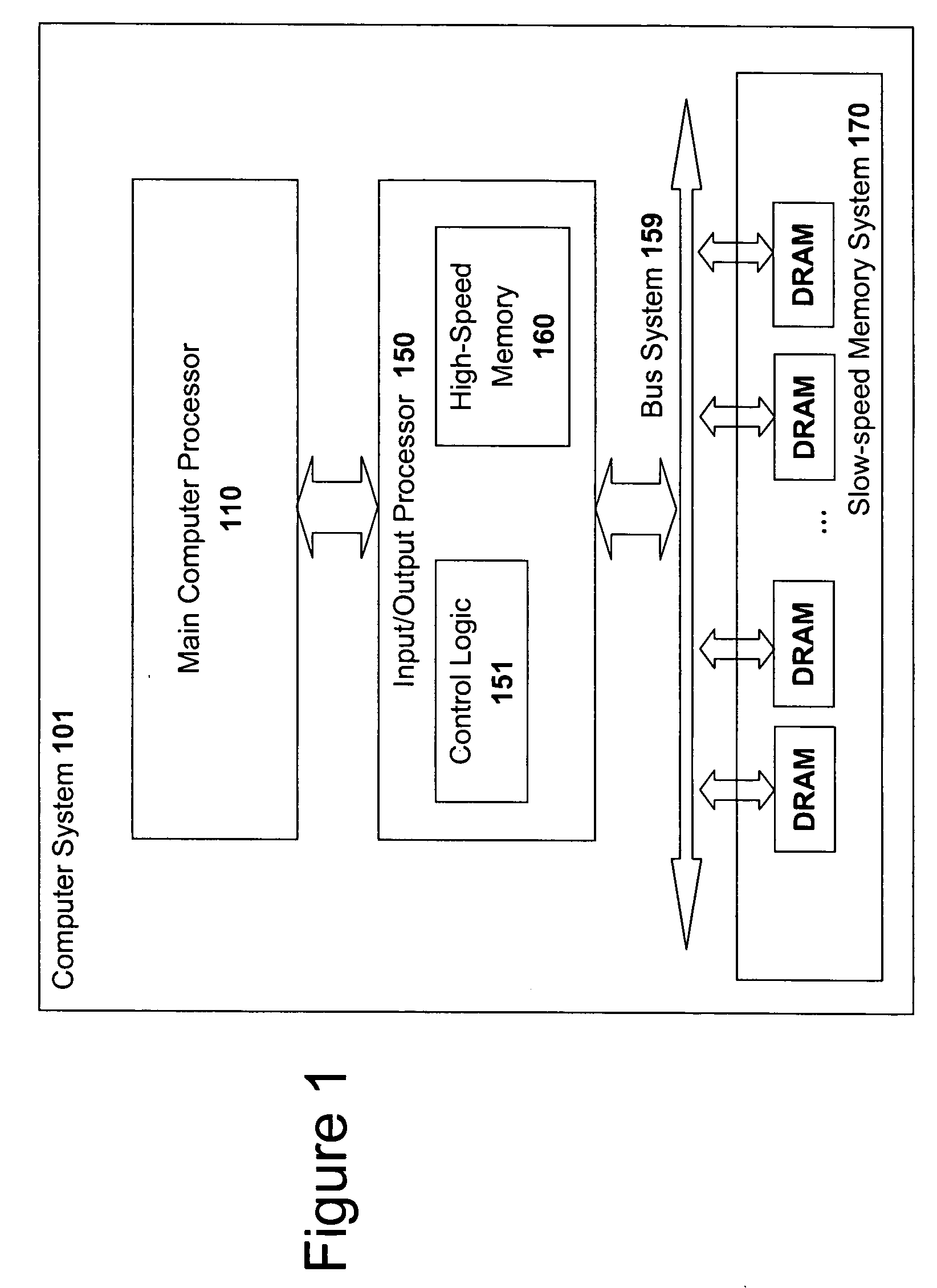

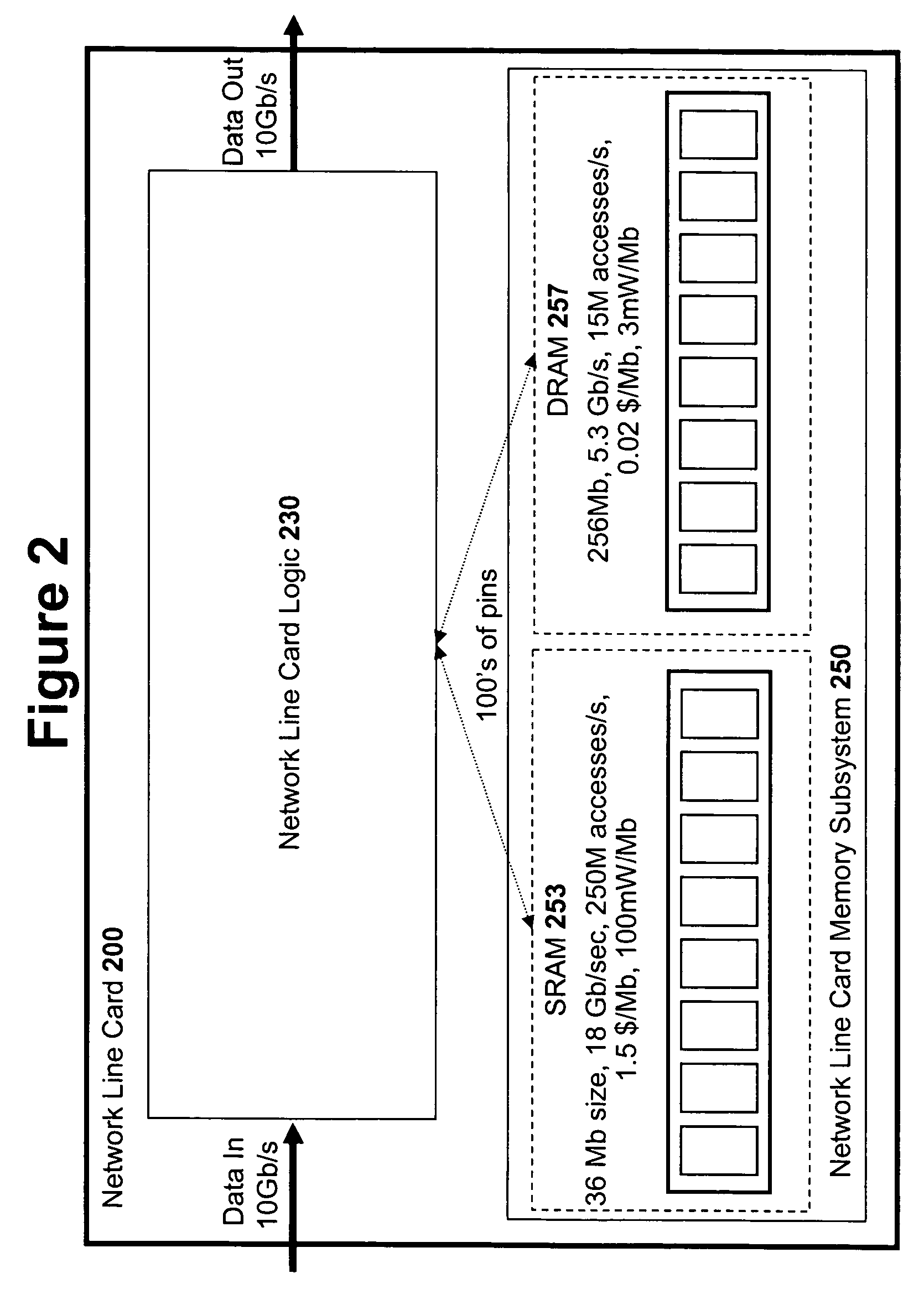

High speed memory control and I/O processor system

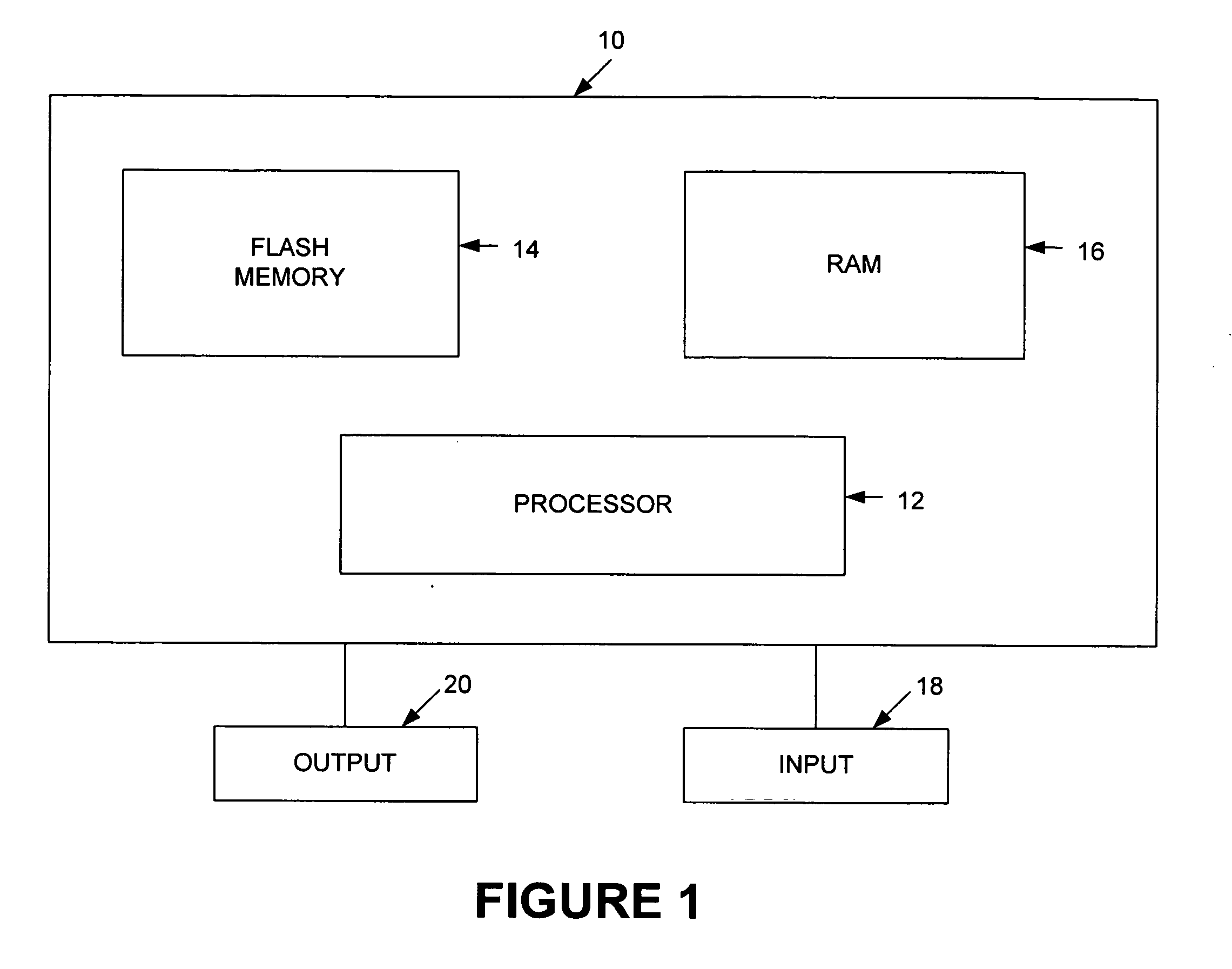

Active | US20050240745A1 | Easy to handle | Simplify memory access task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Tailored approach

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access tasks into a compute-intensive part that is handled by the processor and an I/O or memory-intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

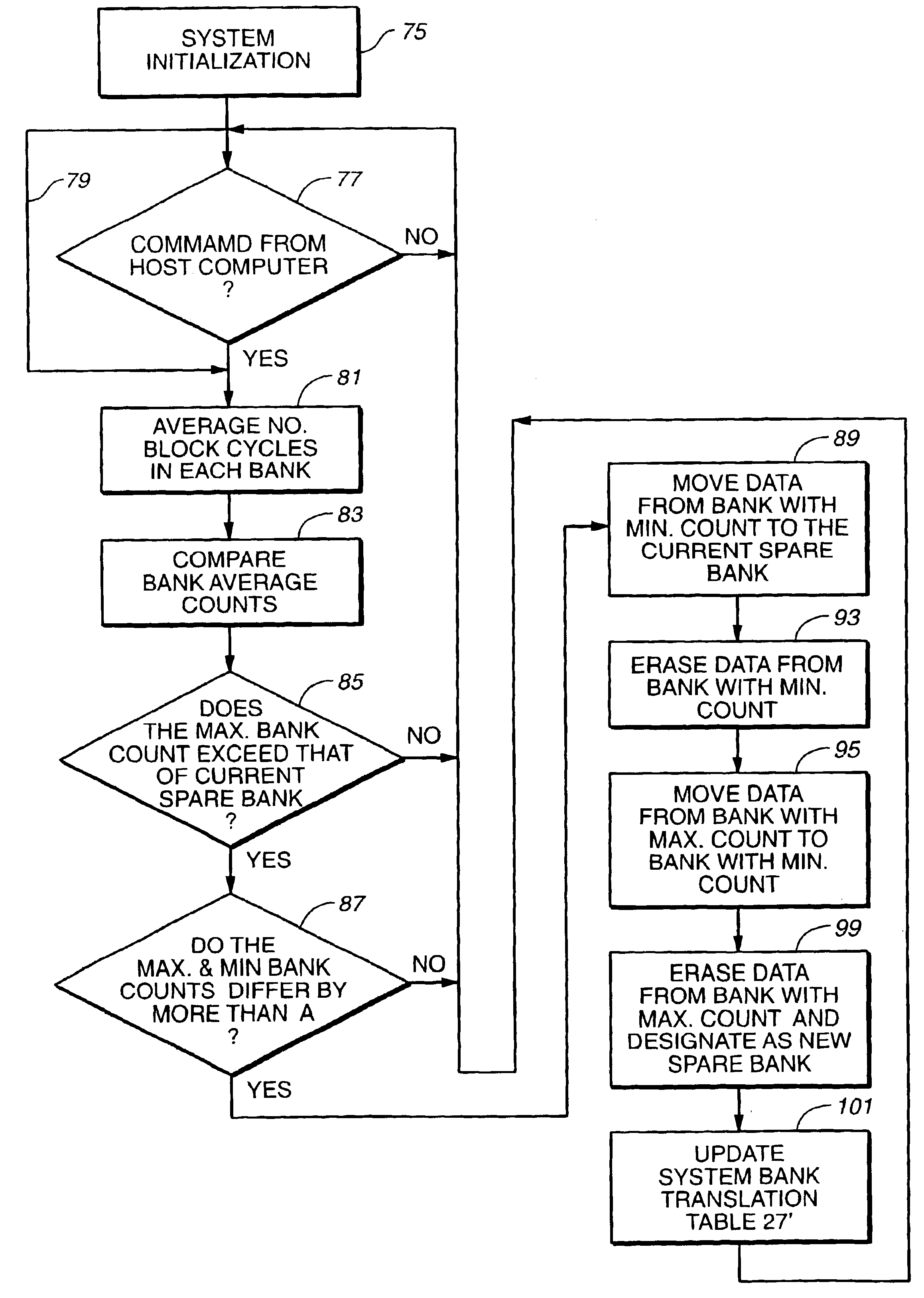

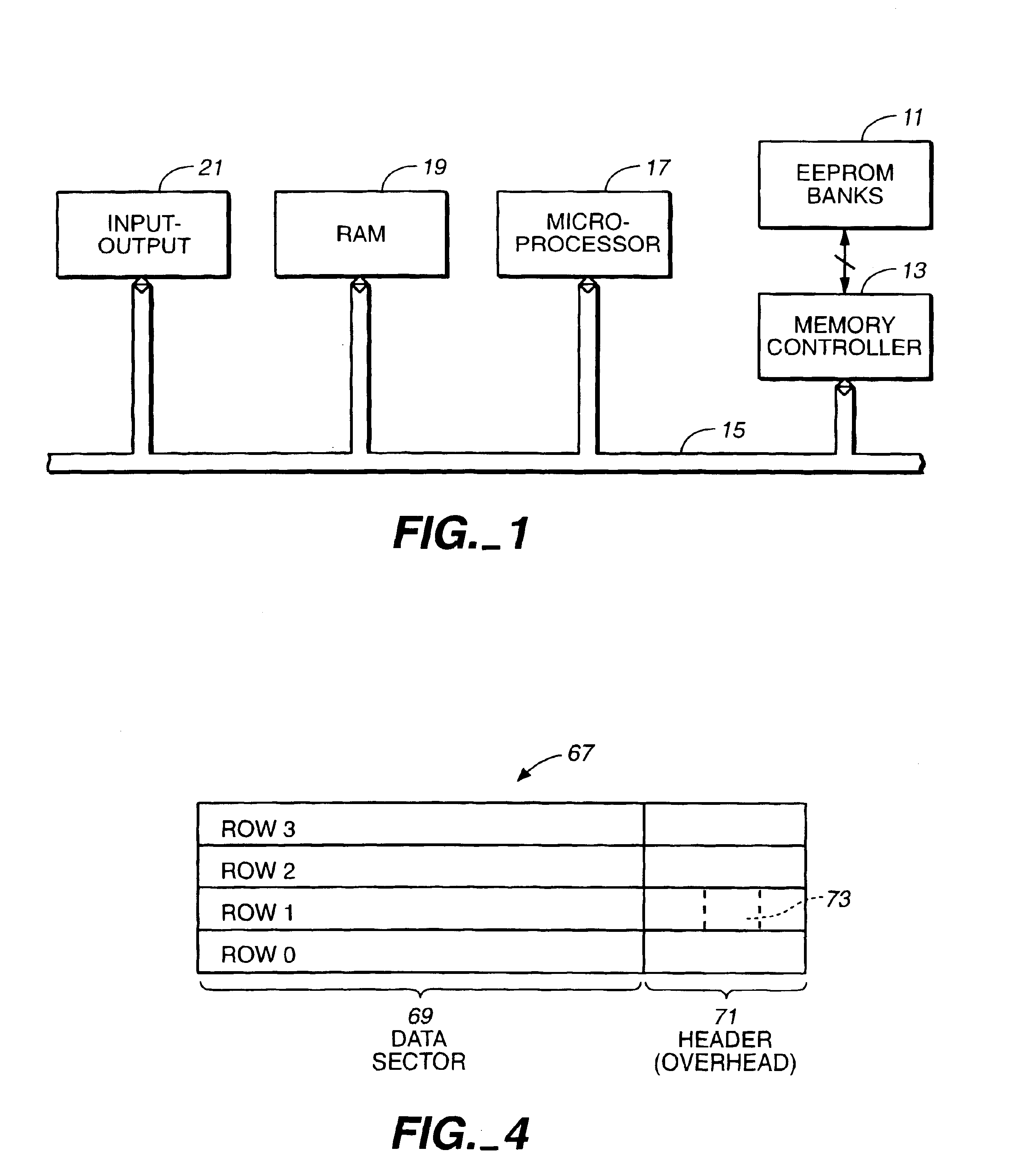

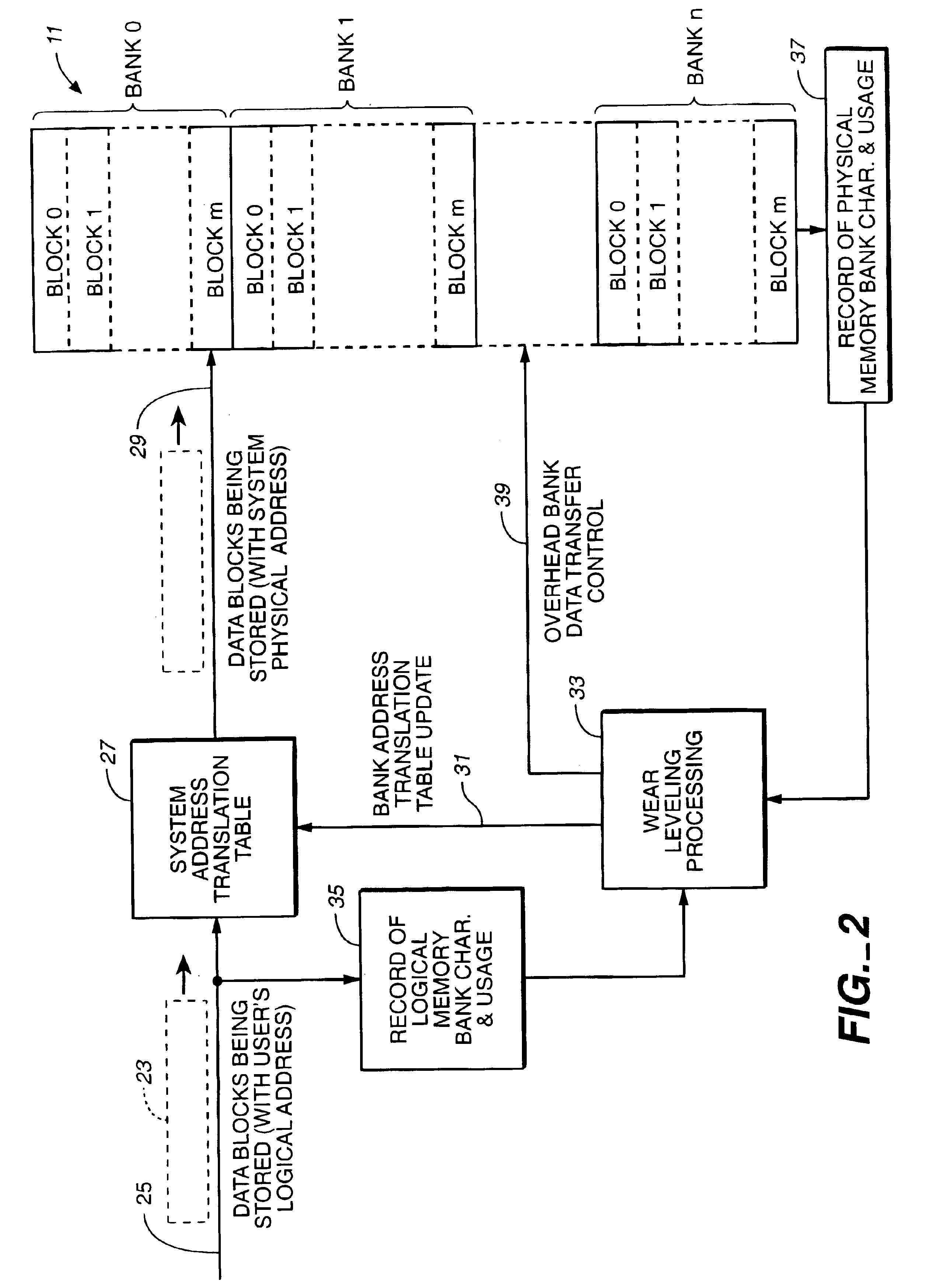

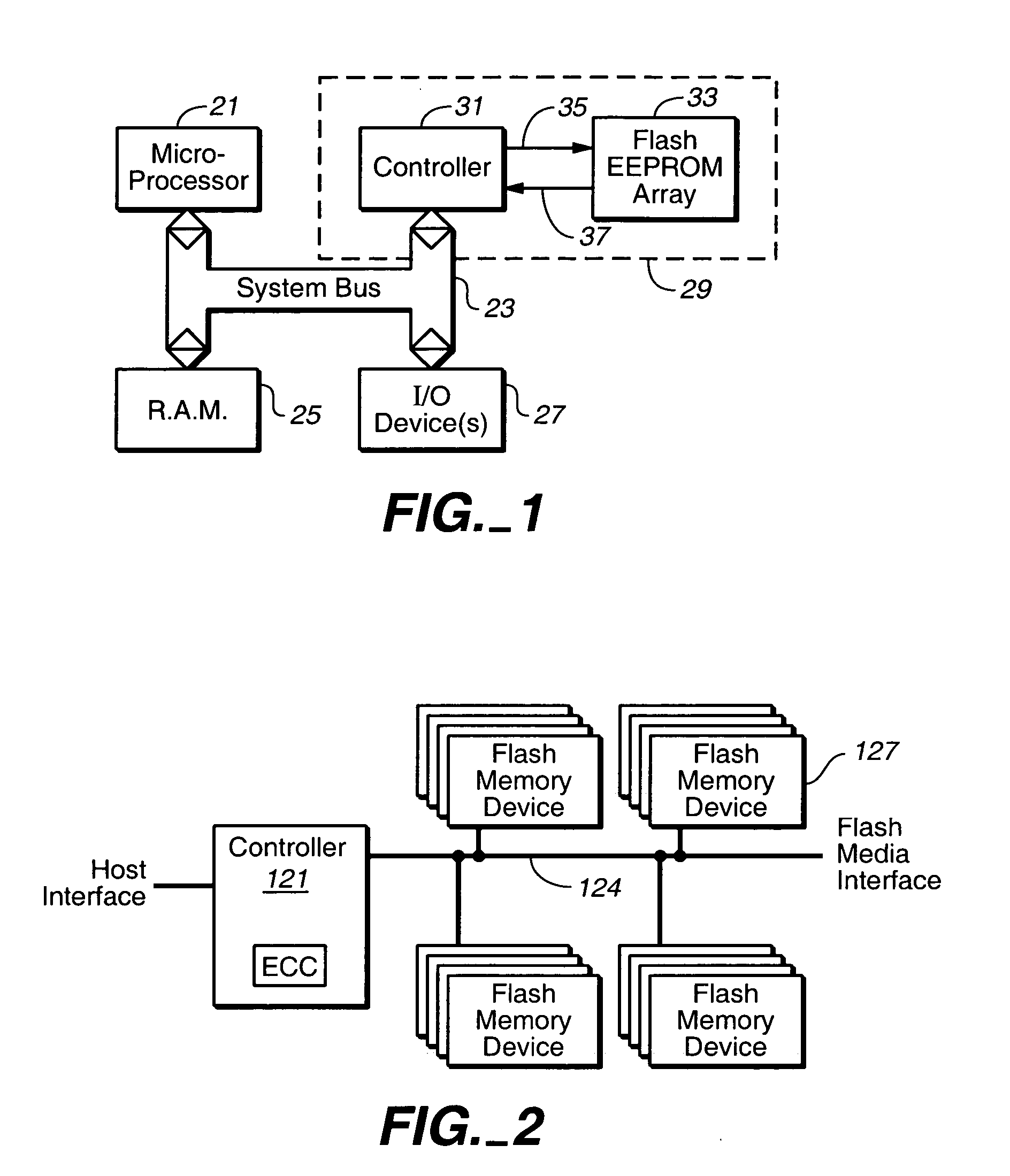

Wear leveling techniques for flash EEPROM systems

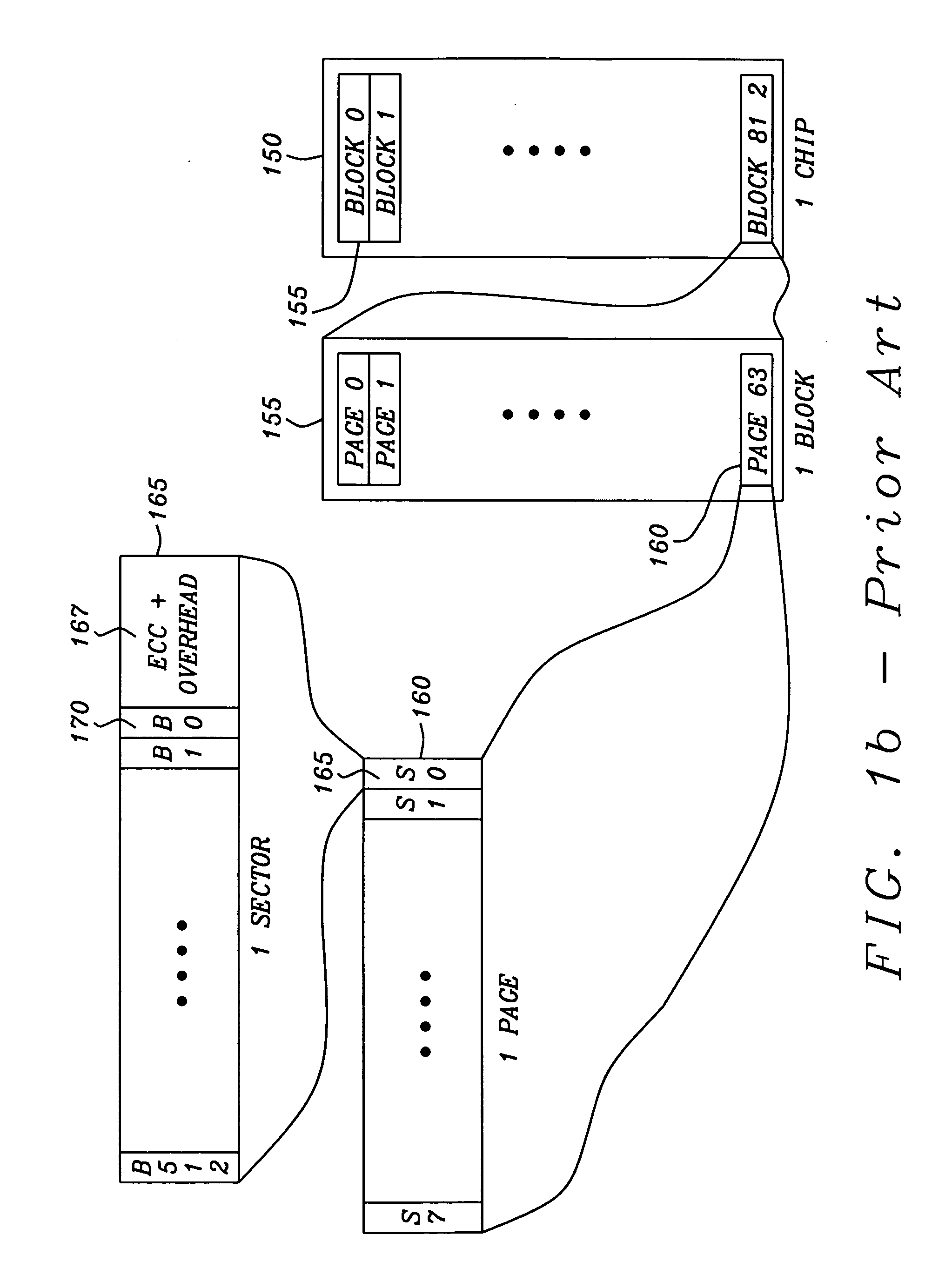

Inactive | US6850443B2 | Avoid uneven wear | Extended service life | Memory architecture accessing/allocation | Read-only memories | Electricity | Programmable read-only memory

A mass storage system made of flash electrically erasable and programmable read only memory (“EEPROM”) cells organized into blocks, the blocks in turn being grouped into memory banks, is managed to even out the numbers of erase and rewrite cycles experienced by the memory banks in order to extend the service lifetime of the memory system. Since this type of memory cell becomes unusable after a finite number of erase and rewrite cycles, although in the tens of thousands of cycles, uneven use of the memory banks is avoided so that the entire memory does not become inoperative because one of its banks has reached its end of life while others of the banks are little used. Relative use of the memory banks is monitored and, in response to detection of uneven use, the physical addresses of the banks are periodically swapped for each other in order to even out their use over the lifetime of the memory.

Owner:SANDISK TECH LLC
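
As a rough illustration of the bank-swapping idea in the abstract above, the sketch below tracks an erase count per physical bank and swaps the logical-to-physical assignment once the gap grows too large. The `BankedFlash` class, the fixed `threshold` trigger, and the omission of the actual data copy are all assumptions for the example; the patent does not specify this exact policy.

```python
class BankedFlash:
    """Hypothetical model of bank-level wear leveling."""

    def __init__(self, num_banks, threshold):
        self.erase_counts = [0] * num_banks              # per physical bank
        self.logical_to_physical = list(range(num_banks))
        self.threshold = threshold                       # assumed imbalance trigger

    def erase(self, logical_bank):
        physical = self.logical_to_physical[logical_bank]
        self.erase_counts[physical] += 1

        # Find the least-worn physical bank and compare against the one just used.
        coldest = min(range(len(self.erase_counts)),
                      key=self.erase_counts.__getitem__)
        if self.erase_counts[physical] - self.erase_counts[coldest] >= self.threshold:
            # Swap the two banks' physical addresses. A real controller would
            # also exchange the data held in the two banks at this point.
            other_logical = self.logical_to_physical.index(coldest)
            self.logical_to_physical[logical_bank] = coldest
            self.logical_to_physical[other_logical] = physical
```

With four banks and a threshold of 3, twelve erases aimed at a single logical bank end up spread evenly, three per physical bank.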

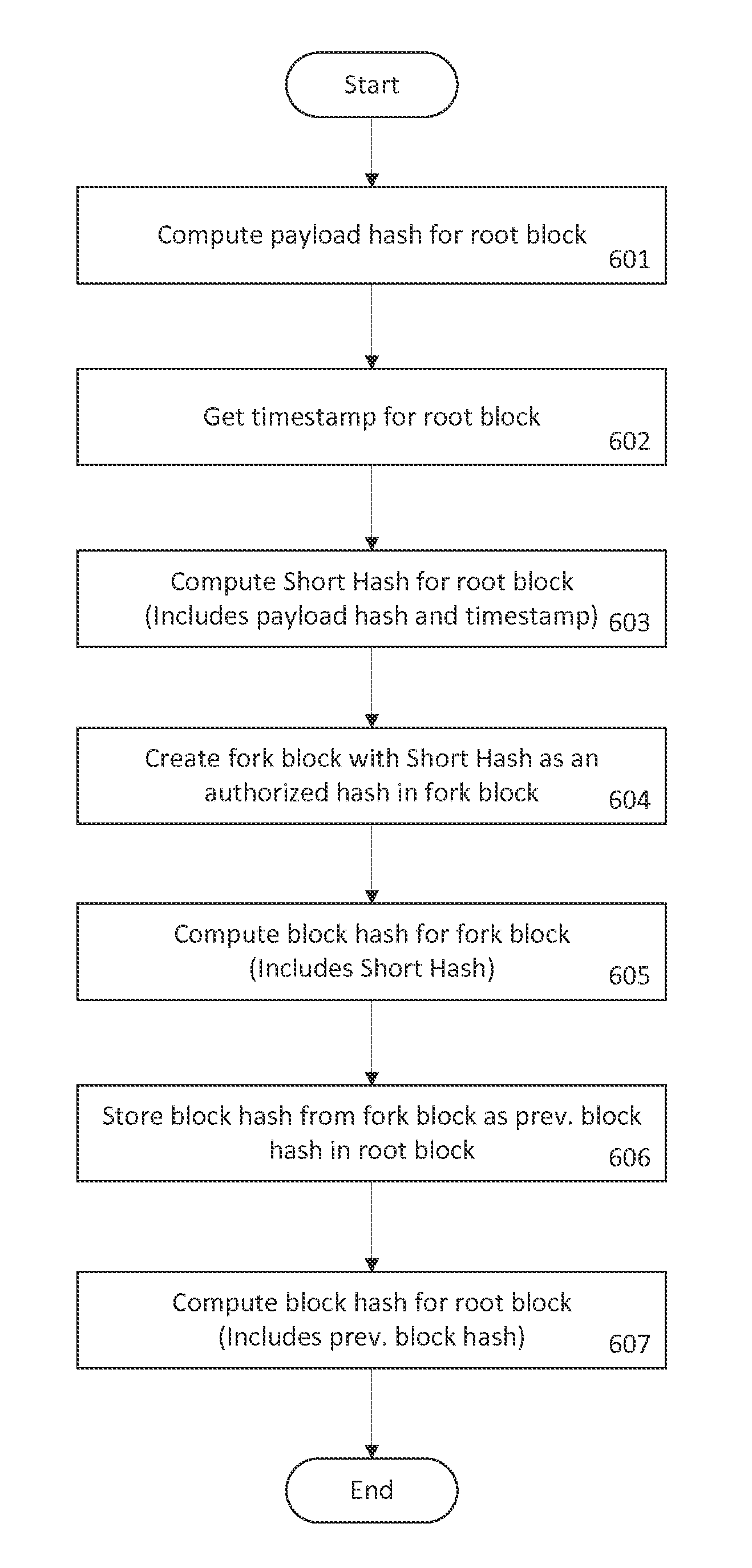

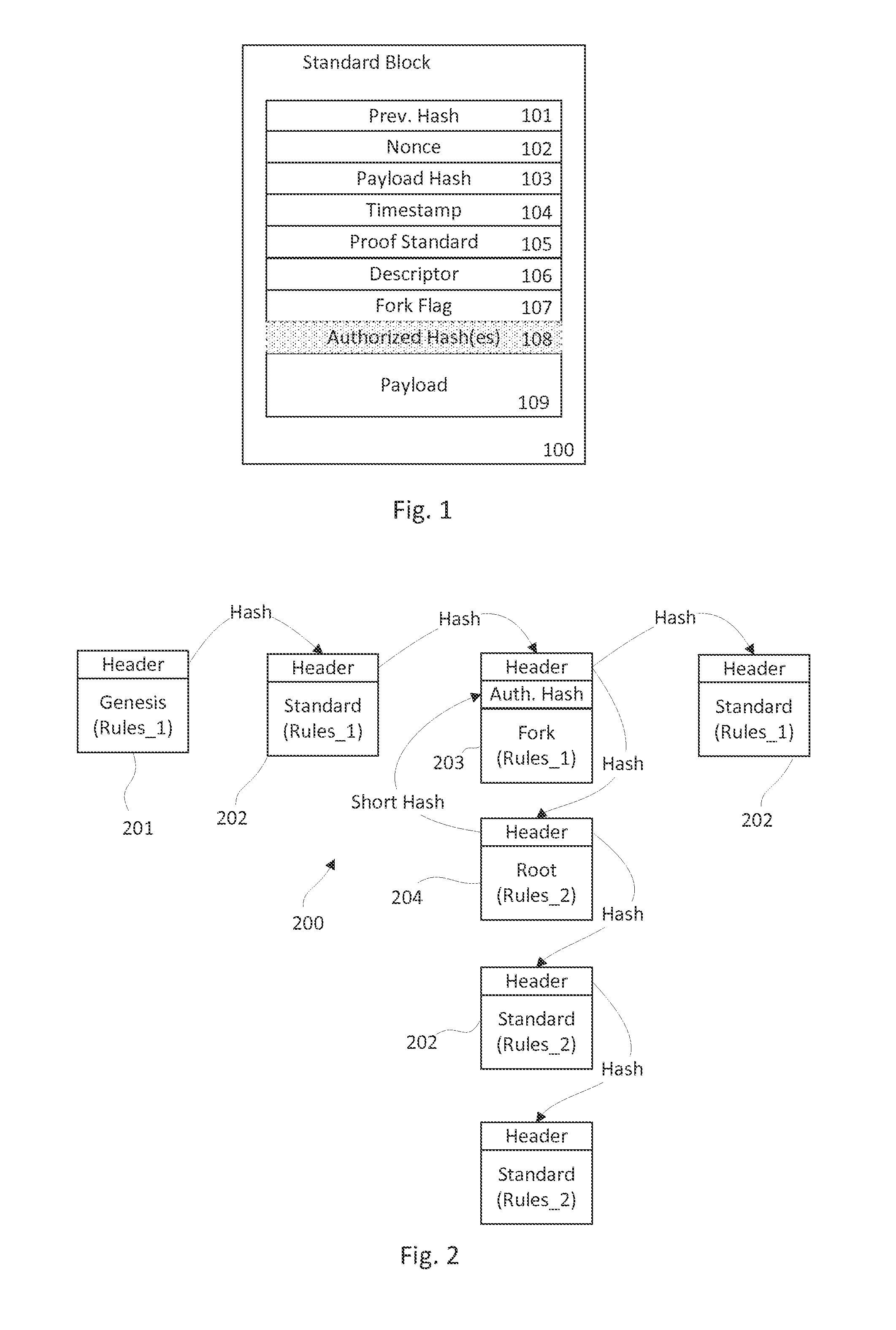

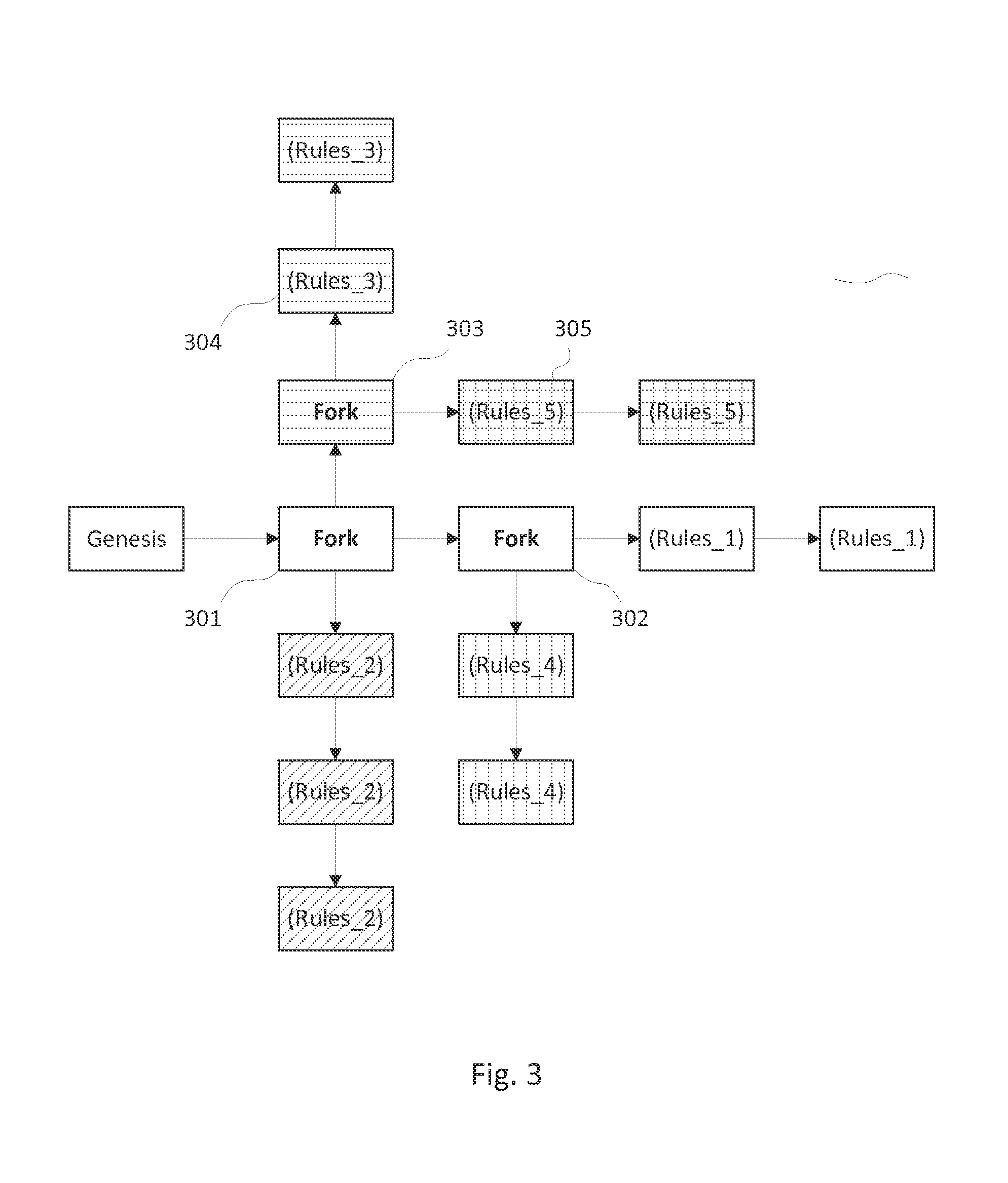

System and method for creating a multi-branched blockchain with configurable protocol rules

Active | US20160028552A1 | Improve security | Quickly become obsolete | Memory architecture accessing/allocation | User identity/authority verification | Primitive state | Emerging technologies

The present invention generally relates to blockchain technology. Specifically, this invention relates to creating a blockchain called a slidechain that allows for multiple valid branches or forks to propagate simultaneously with a customized set of protocol rules embedded in and applied to each fork chain that branches from another chain. The invention generally provides a computer-implemented method for accessing, developing and maintaining a decentralized database through a peer-to-peer network, to preserve the original state of data inputs while adapting to changing circumstances, user preferences, and emerging technological capabilities.

Owner:BLOCKTECH LLC

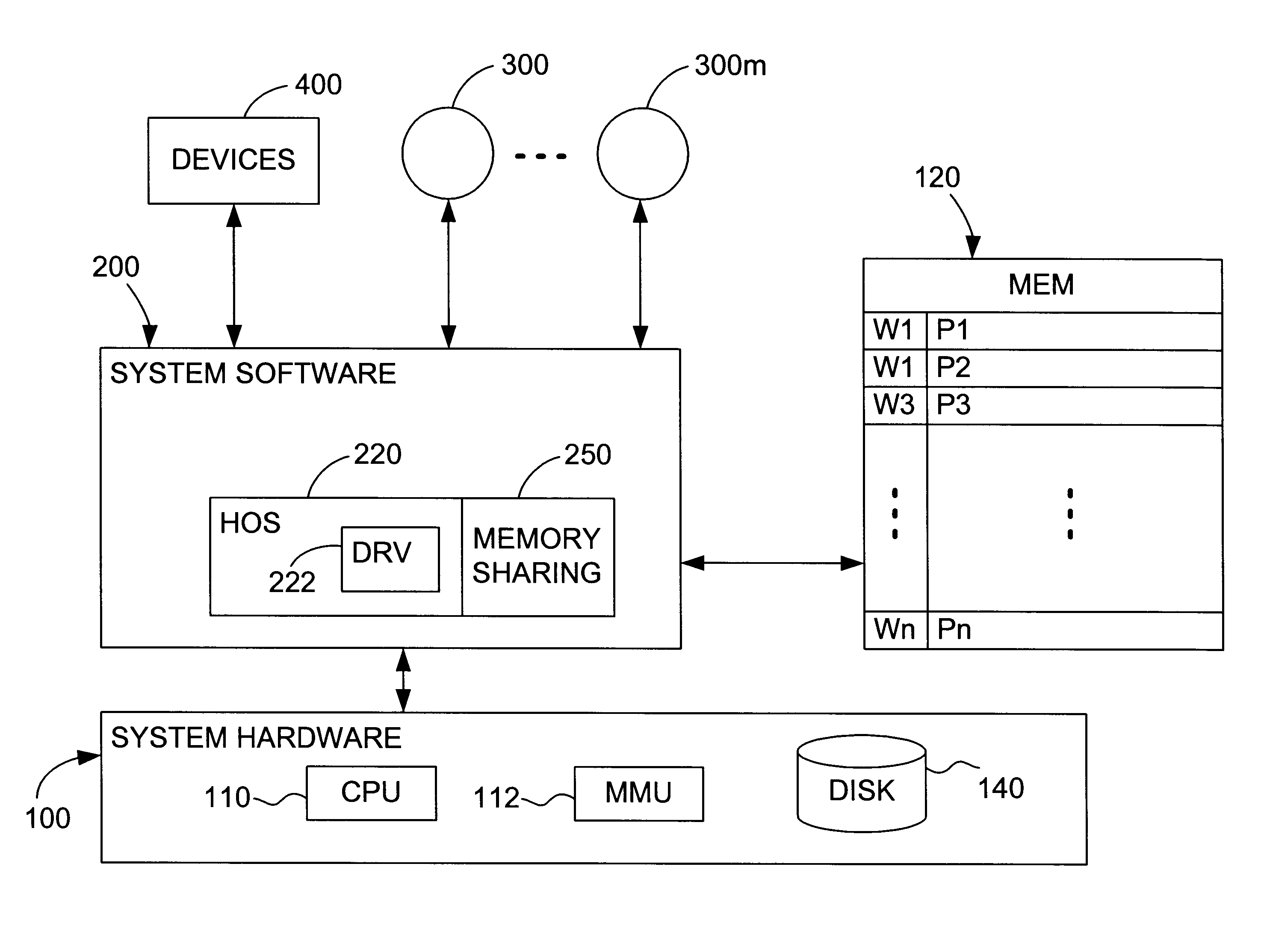

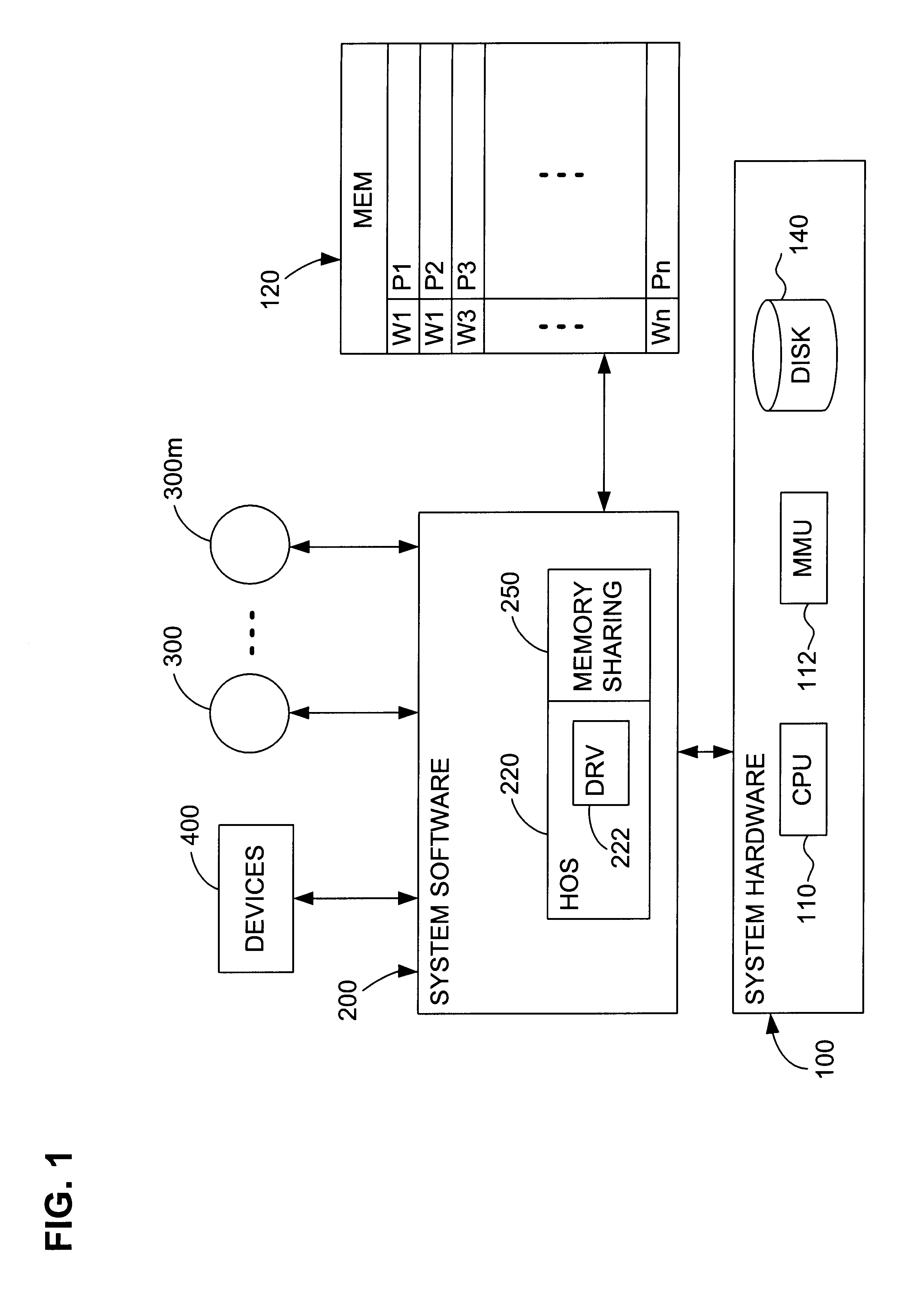

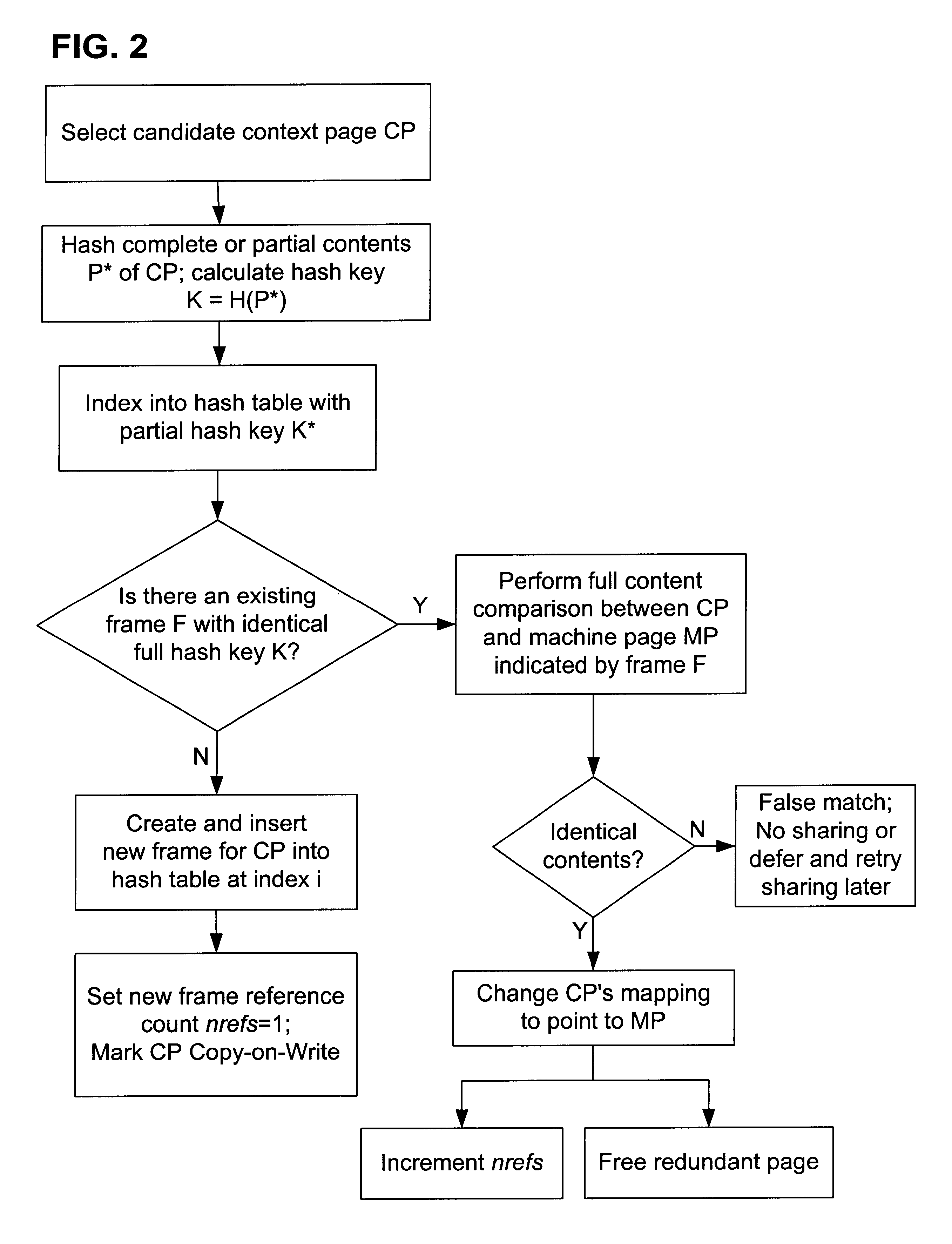

Content-based, transparent sharing of memory units

Inactive | US6789156B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer hardware | Multiple context

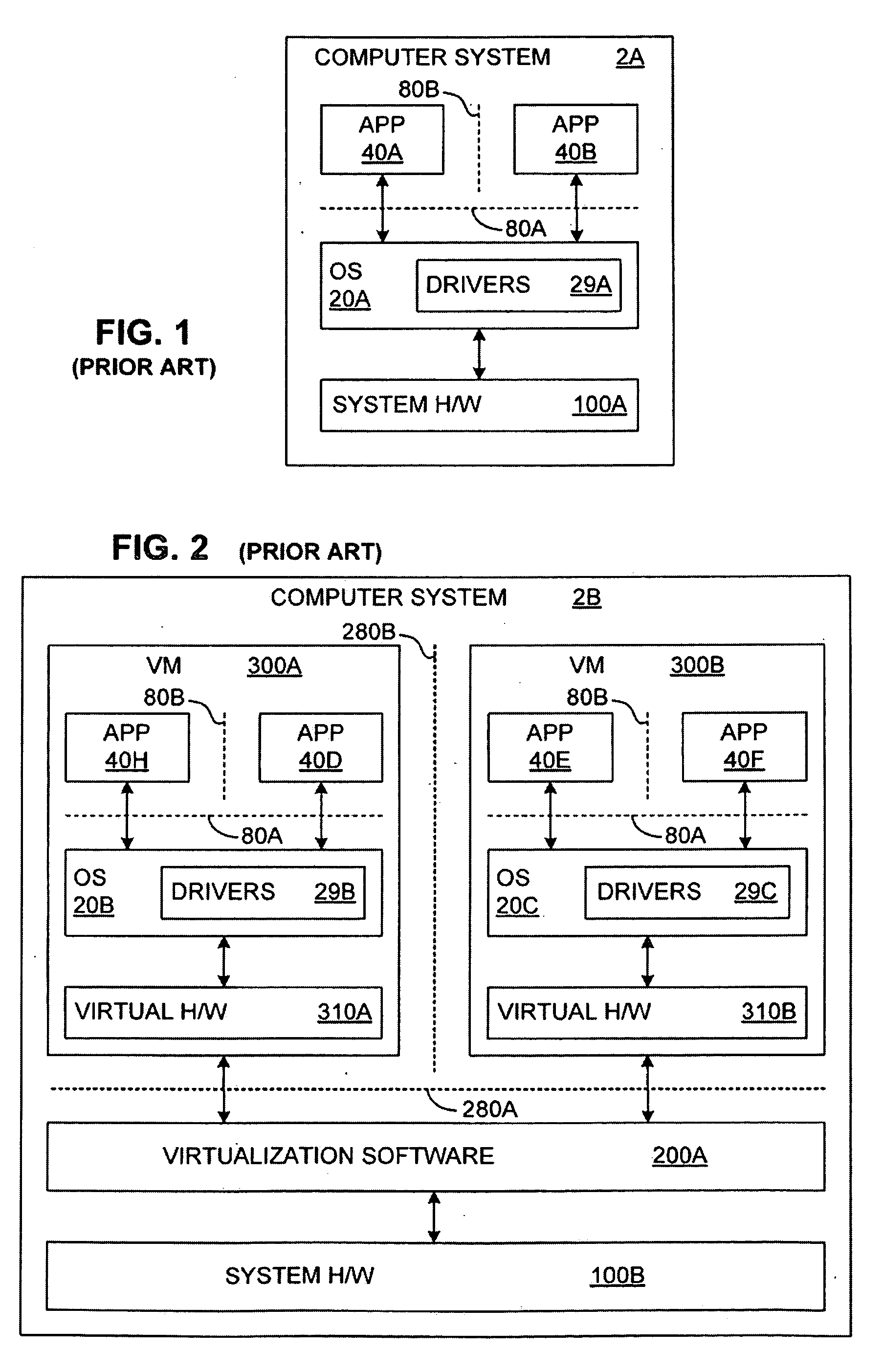

A computer system has one or more software contexts that share use of a memory that is divided into units such as pages. In the preferred embodiment of the invention, the contexts are, or include, virtual machines running on a common hardware platform. The contents, as opposed to merely the addresses or page numbers, of virtual memory pages that are accessible to one or more contexts are examined. If two or more context pages are identical, then their memory mappings are changed to point to a single, shared copy of the page in the hardware memory, thereby freeing the memory space taken up by the redundant copies. The shared copy is then preferably marked copy-on-write. Sharing is preferably dynamic, whereby the presence of redundant copies of pages is preferably determined by hashing page contents and performing full content comparisons only when two or more pages hash to the same key.

Owner:VMWARE INC
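
A minimal sketch of the hash-then-compare sharing described in the abstract above follows. It is a simplification under stated assumptions: the `PageSharer` class and its methods are hypothetical, SHA-1 is an arbitrary choice of hash, duplicates are detected when a page is mapped rather than by a background scan, and copy-on-write is modelled by never modifying a frame in place.

```python
import hashlib


class PageSharer:
    """Hypothetical content-based page sharing across contexts."""

    def __init__(self):
        self.frames = {}     # frame id -> page contents
        self.refcount = {}   # frame id -> number of mappings
        self.by_hash = {}    # content hash -> list of frame ids
        self.mappings = {}   # (context, page number) -> frame id
        self._next = 0

    def map_page(self, context, page, data):
        key = hashlib.sha1(data).digest()
        for fid in self.by_hash.get(key, []):
            if self.frames[fid] == data:     # full comparison only on a hash match
                break
        else:
            fid = self._next                 # no identical page: allocate a frame
            self._next += 1
            self.frames[fid] = data
            self.refcount[fid] = 0
            self.by_hash.setdefault(key, []).append(fid)
        self.refcount[fid] += 1
        self.mappings[(context, page)] = fid
        return fid

    def write_page(self, context, page, data):
        """Copy-on-write: drop the old mapping and map the new contents."""
        old = self.mappings.pop((context, page))
        self.refcount[old] -= 1
        if self.refcount[old] == 0:          # last user gone, free the frame
            stale = self.frames.pop(old)
            del self.refcount[old]
            self.by_hash[hashlib.sha1(stale).digest()].remove(old)
        return self.map_page(context, page, data)
```

Two contexts mapping identical zero-filled pages end up on one frame; a later write by either context moves only that context to a different frame.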

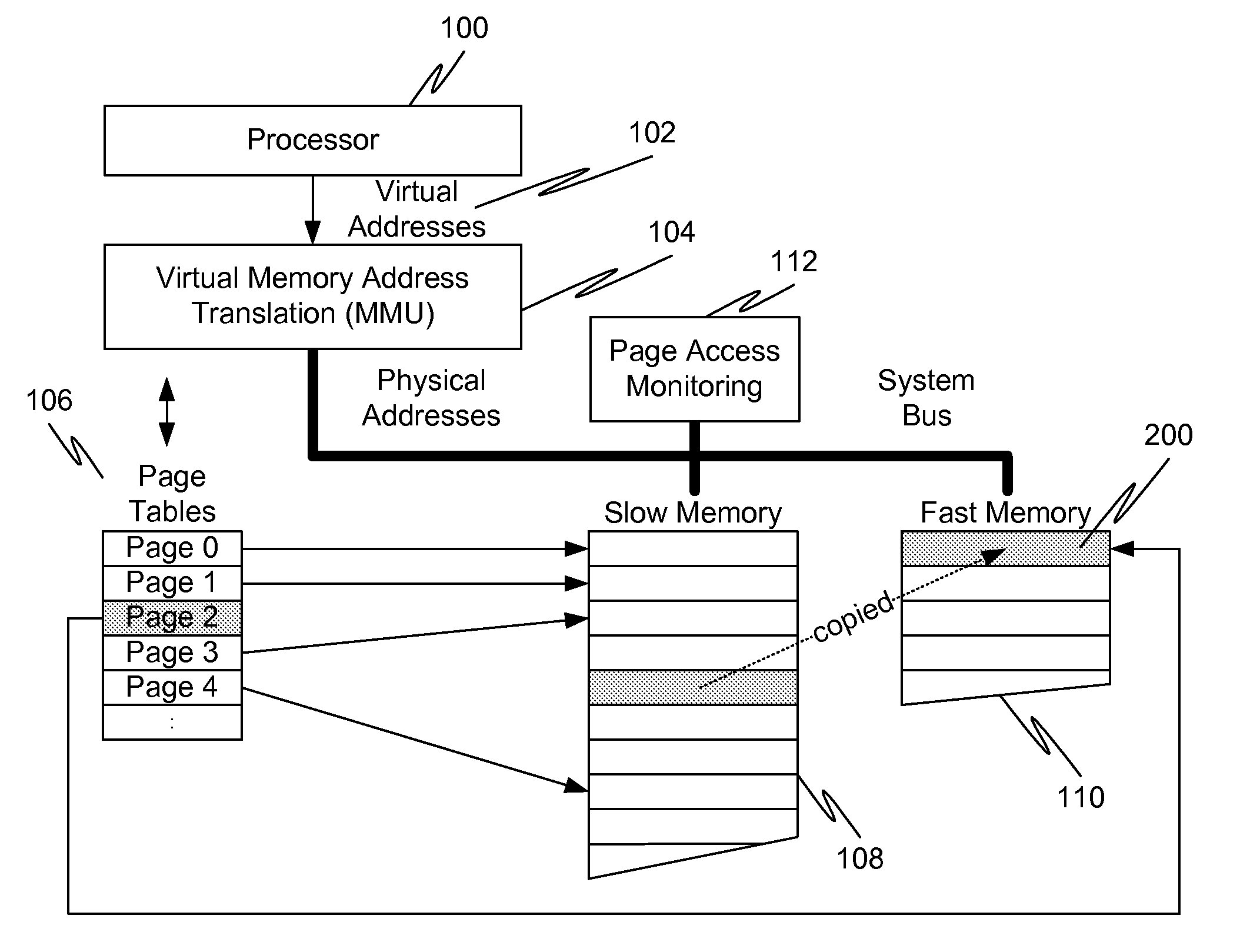

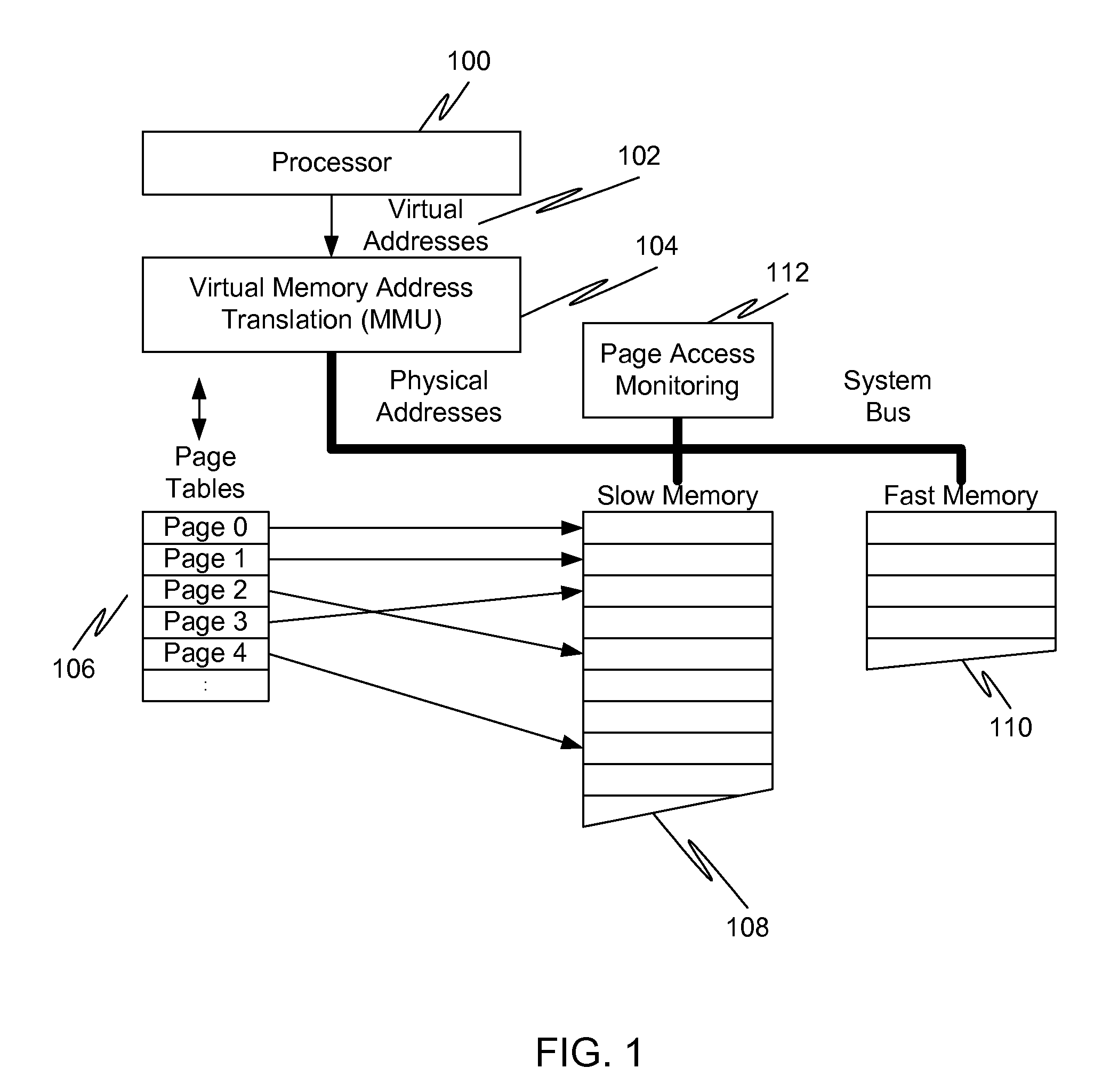

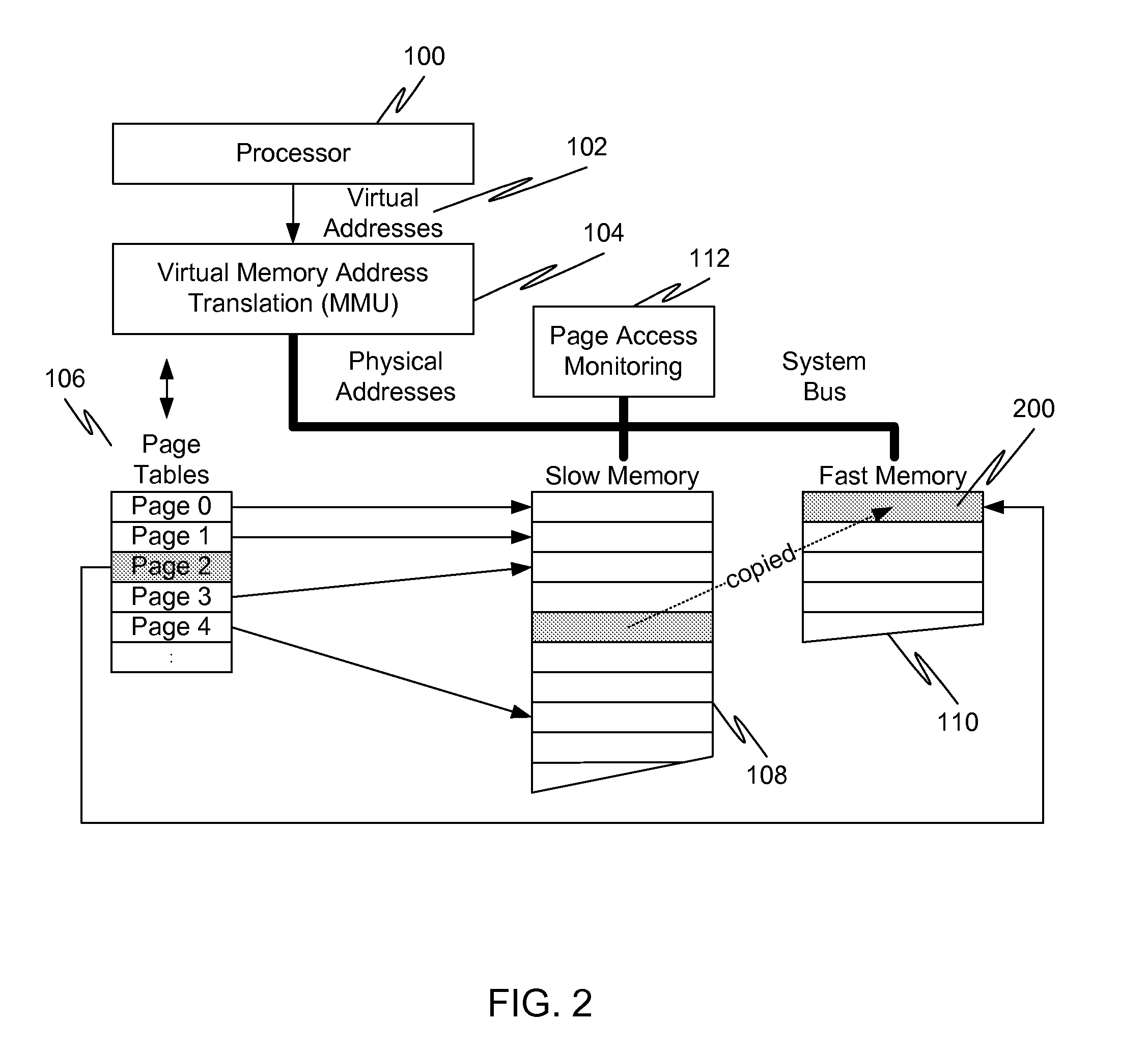

Caching using virtual memory

Inactive | US20120017039A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Page table

In a first embodiment of the present invention, a method for caching in a processor system having virtual memory is provided, the method comprising: monitoring slow memory in the processor system to determine frequently accessed pages; for a frequently accessed page in slow memory: copy the frequently accessed page from slow memory to a location in fast memory; and update virtual address page tables to reflect the location of the frequently accessed page in fast memory.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
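
The monitor, copy, and remap steps in the abstract above could be modelled along these lines. The `TieredMemory` class, the access-count threshold used to decide what counts as "frequently accessed", and the frame numbering are assumptions for illustration only; the data copy itself is left out.

```python
from collections import Counter


class TieredMemory:
    """Hypothetical page table that promotes hot pages from slow to fast memory."""

    def __init__(self, fast_frames, hot_threshold):
        self.page_table = {}                       # virtual page -> (tier, frame)
        self.access_counts = Counter()             # monitoring of slow-memory pages
        self.free_fast = list(range(fast_frames))  # unused fast-memory frames
        self.hot_threshold = hot_threshold         # assumed definition of "frequent"
        self._next_slow = 0

    def allocate(self, vpage):
        self.page_table[vpage] = ("slow", self._next_slow)
        self._next_slow += 1

    def access(self, vpage):
        tier, _frame = self.page_table[vpage]
        if tier == "slow":
            self.access_counts[vpage] += 1
            hot = self.access_counts[vpage] >= self.hot_threshold
            if hot and self.free_fast:
                # Copying the page contents to fast memory would happen here;
                # afterwards the page table is updated to the new location.
                self.page_table[vpage] = ("fast", self.free_fast.pop(0))
        return self.page_table[vpage]
```

Because only the page table changes, software using the virtual address sees faster accesses without being aware of the move.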

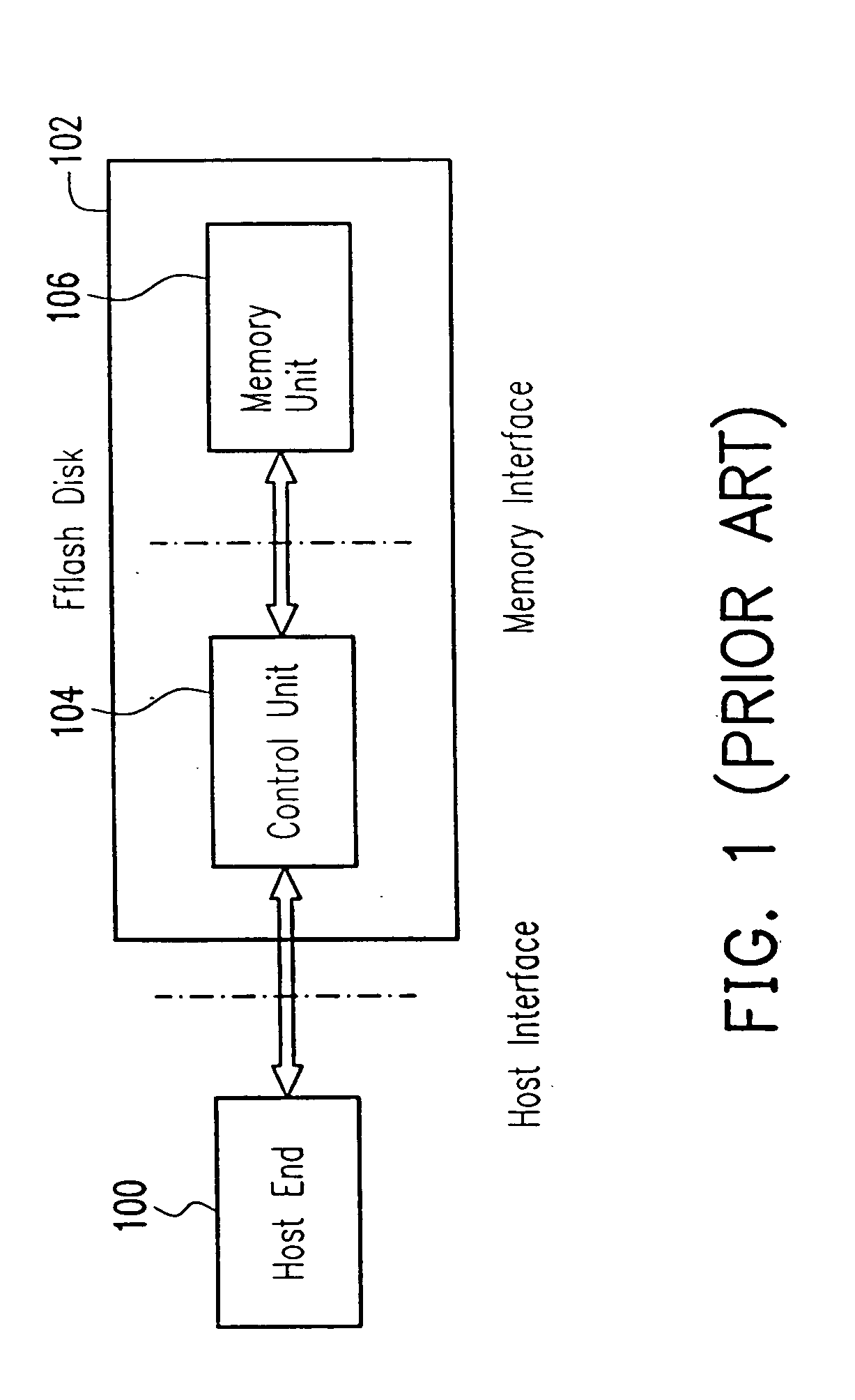

Non-volatile memory storage system

Inactive | US20100125695A1 | Speed up data transfer | Cost-effective structure | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | RAID | Computer science

The present invention discloses a flash memory storage system, comprising at least one RAID controller; a plurality of flash memory cards electrically connected with the RAID controller; and a cache memory electrically connected with the RAID controller and shared by the RAID controller and the flash memory cards. The cache memory efficiently enhances the system performance. The storage system may comprise more RAID controllers to construct a nested RAID architecture.

Owner:NANOSTAR CORP

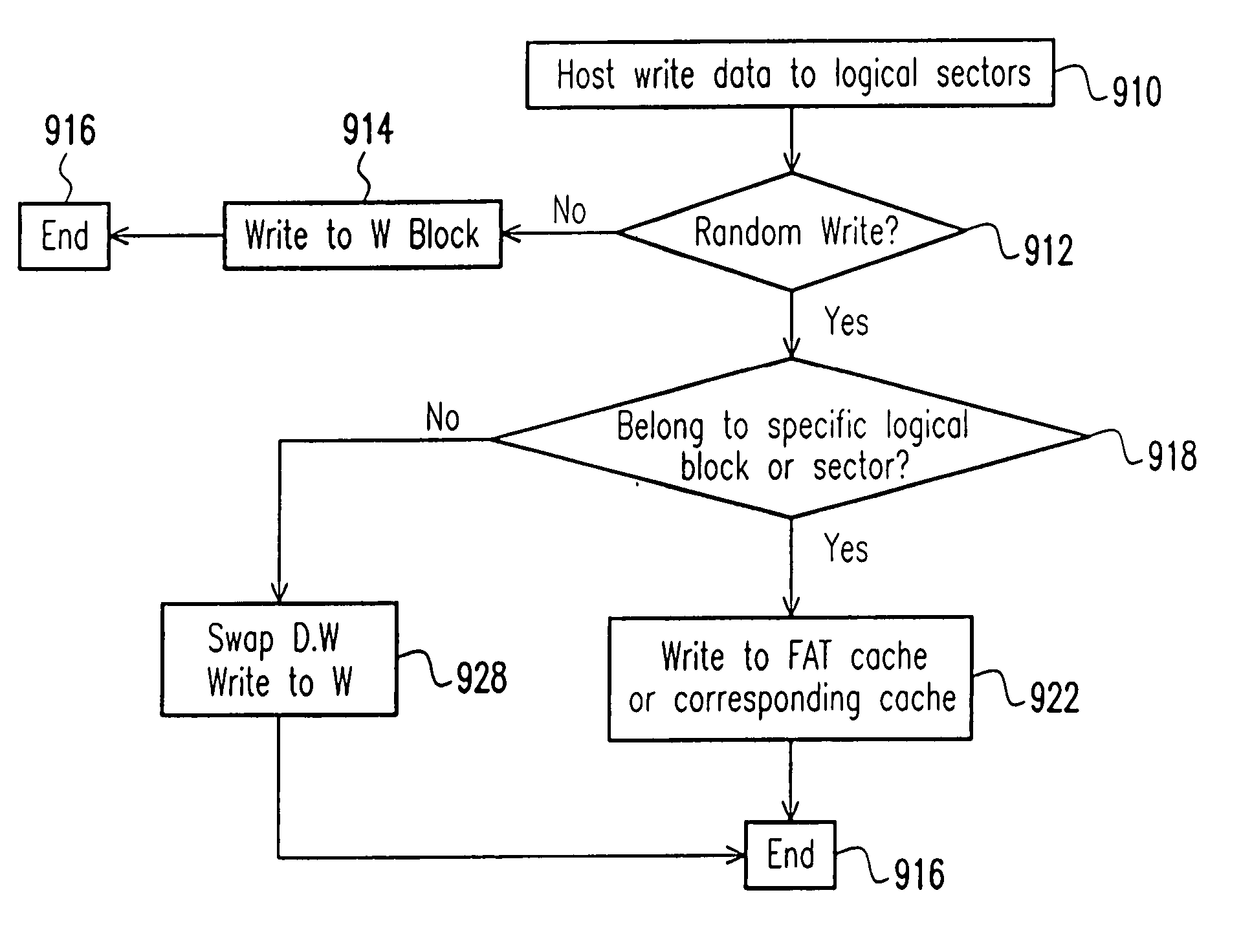

Nonvolatile memory unit with specific cache

Inactive | US20050015557A1 | Reduce frequency | Avoid action | Memory architecture accessing/allocation | Input/output to record carriers | Operating system | Volatile memory

The invention provides a method for organizing a writing operation to a nonvolatile memory. The method comprises setting a specific cache area, into which specific data belonging to a specific group of logical blocks is to be written. It is determined whether or not the writing operation is a random write. If the writing operation is a random write, then the following steps are performed: determining whether or not the writing operation writes data that belongs to the specific group of logical blocks; and writing the data into the specific cache area if the data belongs to the specific group of logical blocks. As a result, a swap action between a data block and a writing block can be avoided during a random write operation. A storage structure in a nonvolatile memory device is organized to perform the foregoing writing operation.

Owner:SOLID STATE SYST

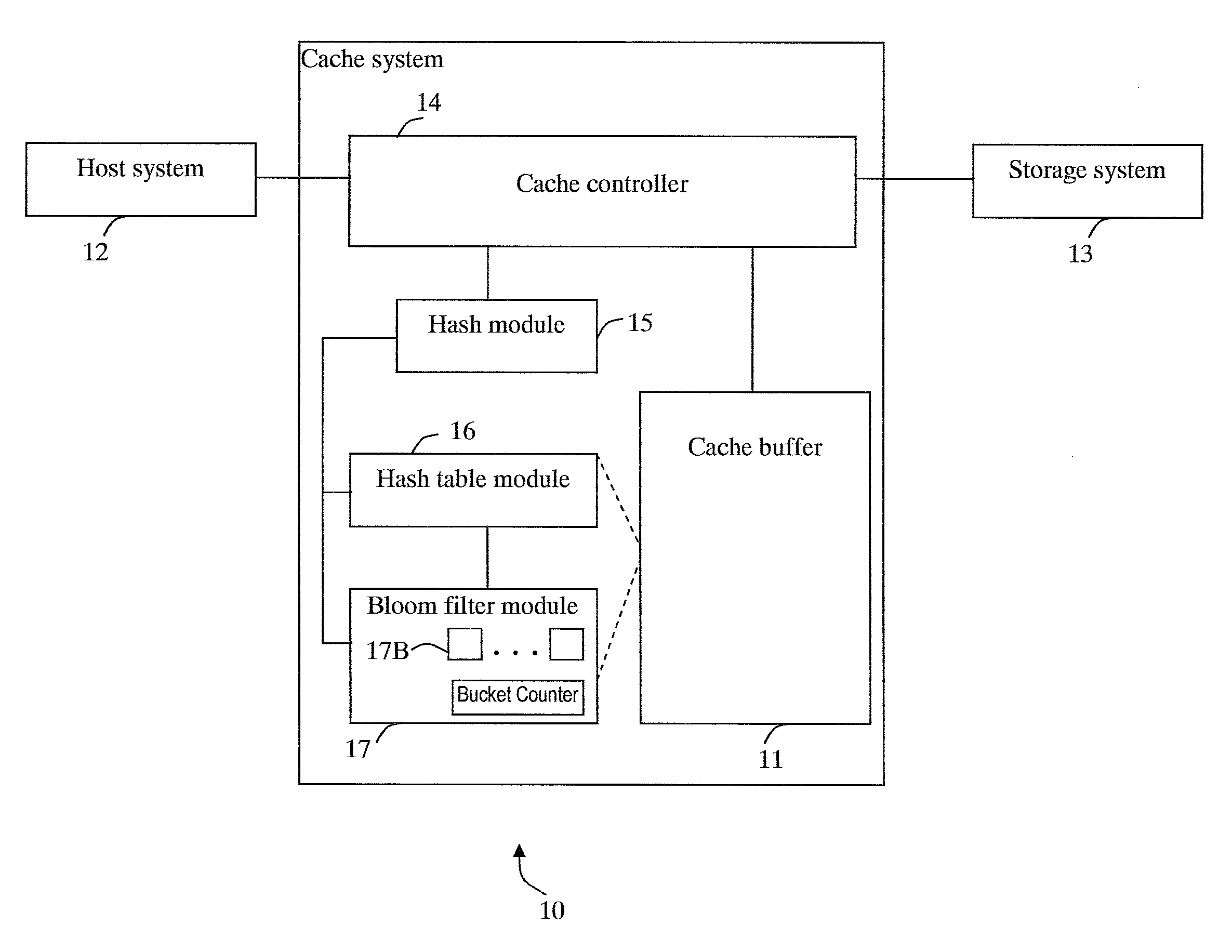

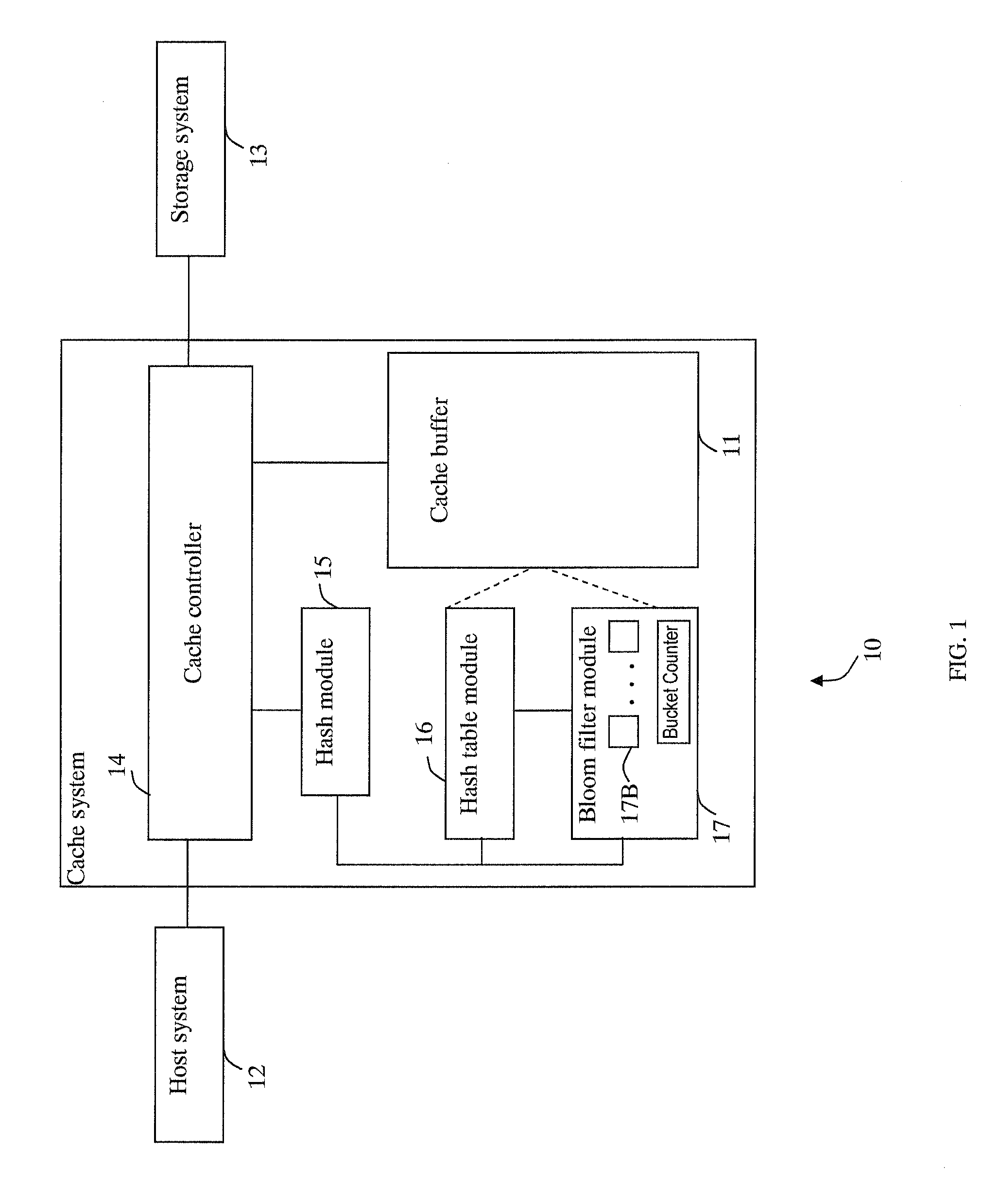

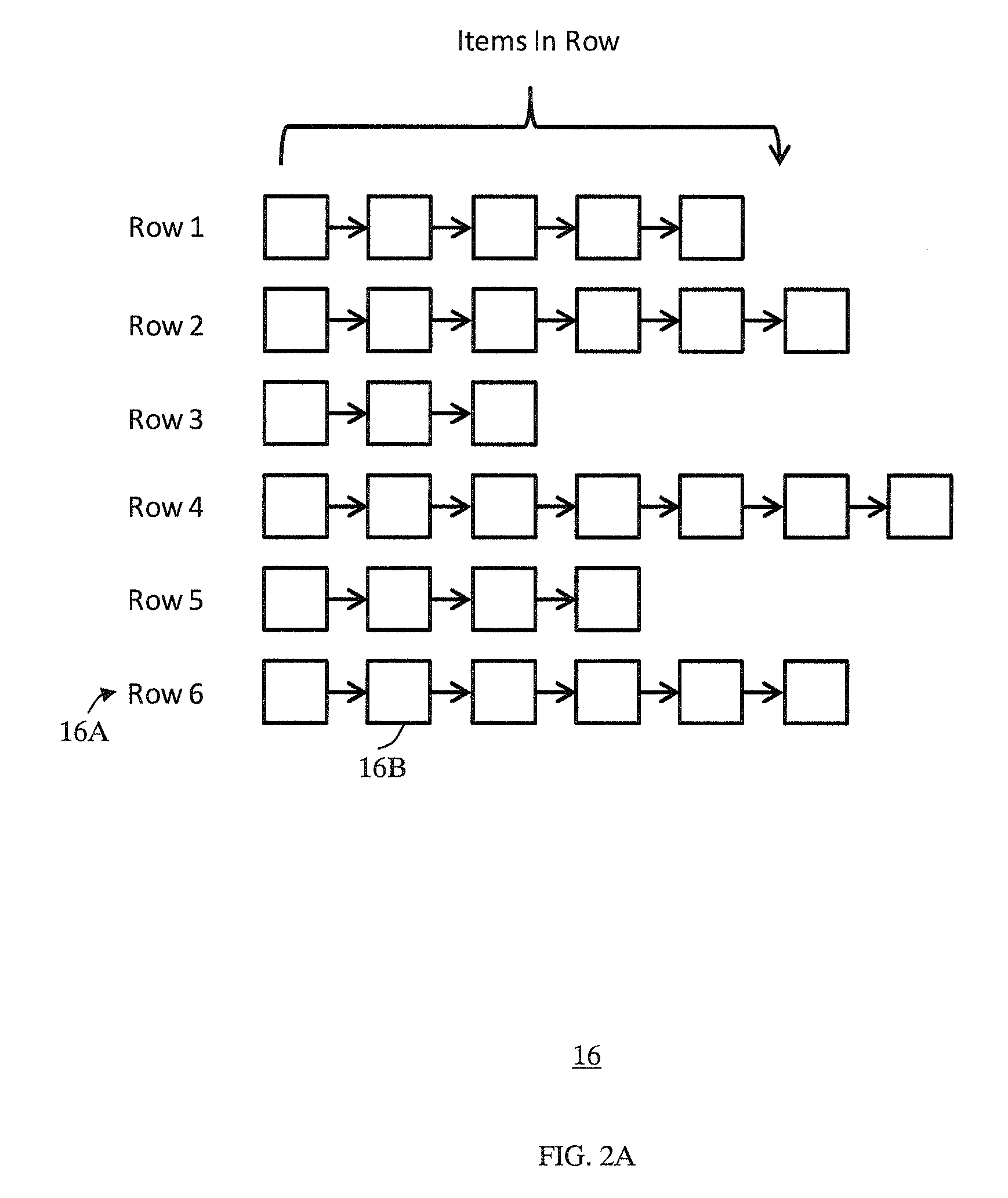

Cache system optimized for cache miss detection

Active | US20130326154A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Logical block addressing | Bloom filter

According to an embodiment of the invention, cache management comprises maintaining a cache comprising a hash table including rows of data items in the cache, wherein each row in the hash table is associated with a hash value representing a logical block address (LBA) of each data item in that row. Searching for a target data item in the cache includes calculating a hash value representing a LBA of the target data item, and using the hash value to index into a counting Bloom filter that indicates that the target data item is either not in the cache, indicating a cache miss, or that the target data item may be in the cache. If a cache miss is not indicated, using the hash value to select a row in the hash table, and indicating a cache miss if the target data item is not found in the selected row.

Owner:SAMSUNG ELECTRONICS CO LTD
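
One way to picture the lookup path in the abstract above is the sketch below: a single hash of the LBA indexes both a counting Bloom filter and a hash-table row, and the row is only searched when the filter cannot rule the item out. The `BloomFrontedCache` class, the table sizes, the three probes, and the use of BLAKE2b are assumptions chosen for the example.

```python
import hashlib


class BloomFrontedCache:
    """Hypothetical cache with a counting Bloom filter in front of a hash table."""

    def __init__(self, rows=64, counters=256, probes=3):
        self.rows = [dict() for _ in range(rows)]  # each row maps LBA -> data
        self.counts = [0] * counters               # counting Bloom filter
        self.probes = probes

    def _hash(self, lba):
        digest = hashlib.blake2b(lba.to_bytes(8, "little"), digest_size=8).digest()
        return int.from_bytes(digest, "little")

    def _slots(self, h):
        # Derive several filter indexes from the one hash value (double hashing).
        step = (h >> 32) | 1
        return [(h + i * step) % len(self.counts) for i in range(self.probes)]

    def insert(self, lba, data):
        h = self._hash(lba)
        row = self.rows[h % len(self.rows)]
        if lba not in row:                         # count each cached item once
            for s in self._slots(h):
                self.counts[s] += 1
        row[lba] = data

    def evict(self, lba):
        h = self._hash(lba)
        row = self.rows[h % len(self.rows)]
        if lba in row:
            del row[lba]
            for s in self._slots(h):               # counters allow removal
                self.counts[s] -= 1

    def lookup(self, lba):
        """Return (data, where), with where in {"filter", "hit", "row"}."""
        h = self._hash(lba)
        if any(self.counts[s] == 0 for s in self._slots(h)):
            return None, "filter"                  # definite miss, row not searched
        row = self.rows[h % len(self.rows)]
        if lba in row:
            return row[lba], "hit"
        return None, "row"                         # filter false positive
```

Counters rather than single bits are what make eviction possible without rebuilding the filter.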

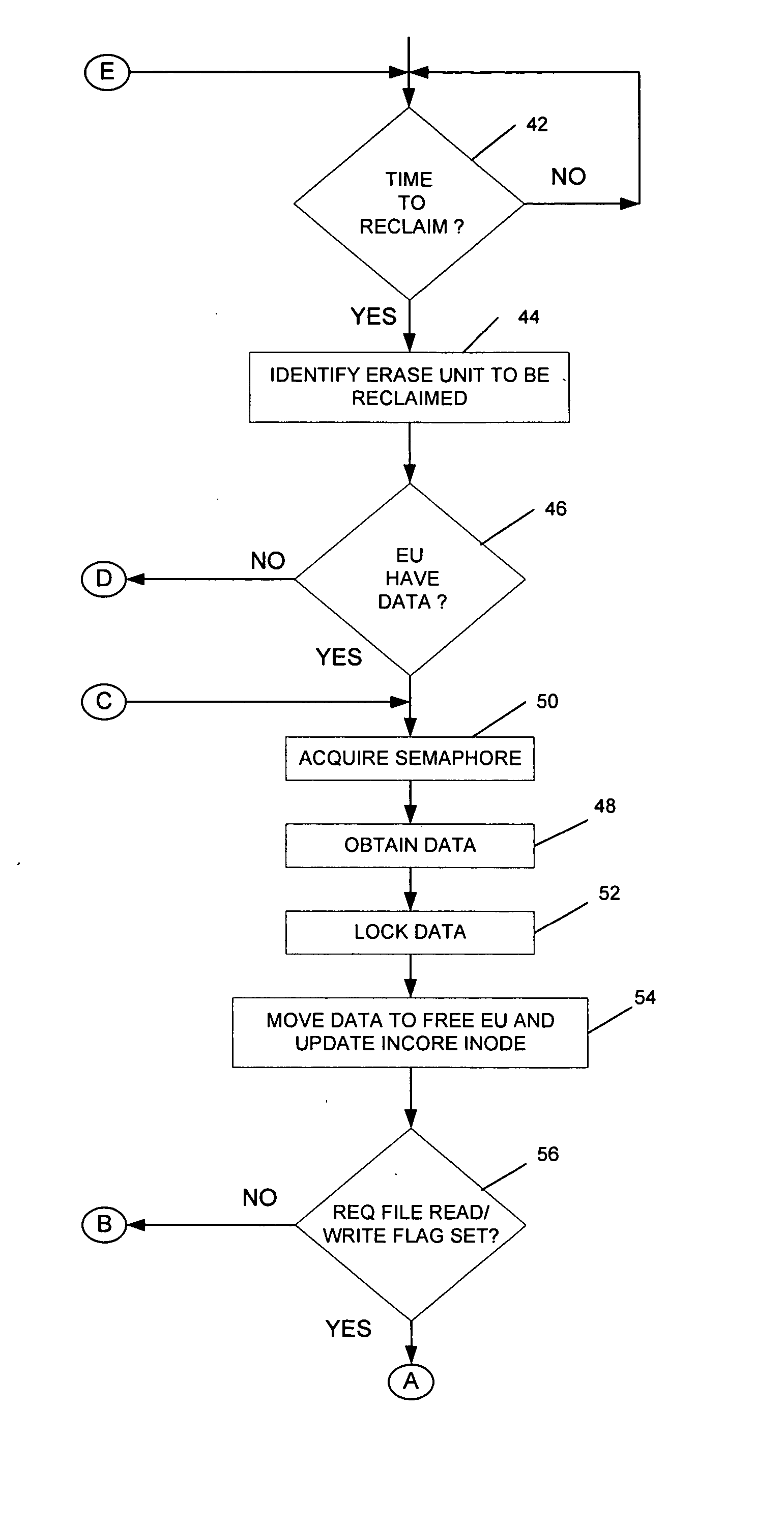

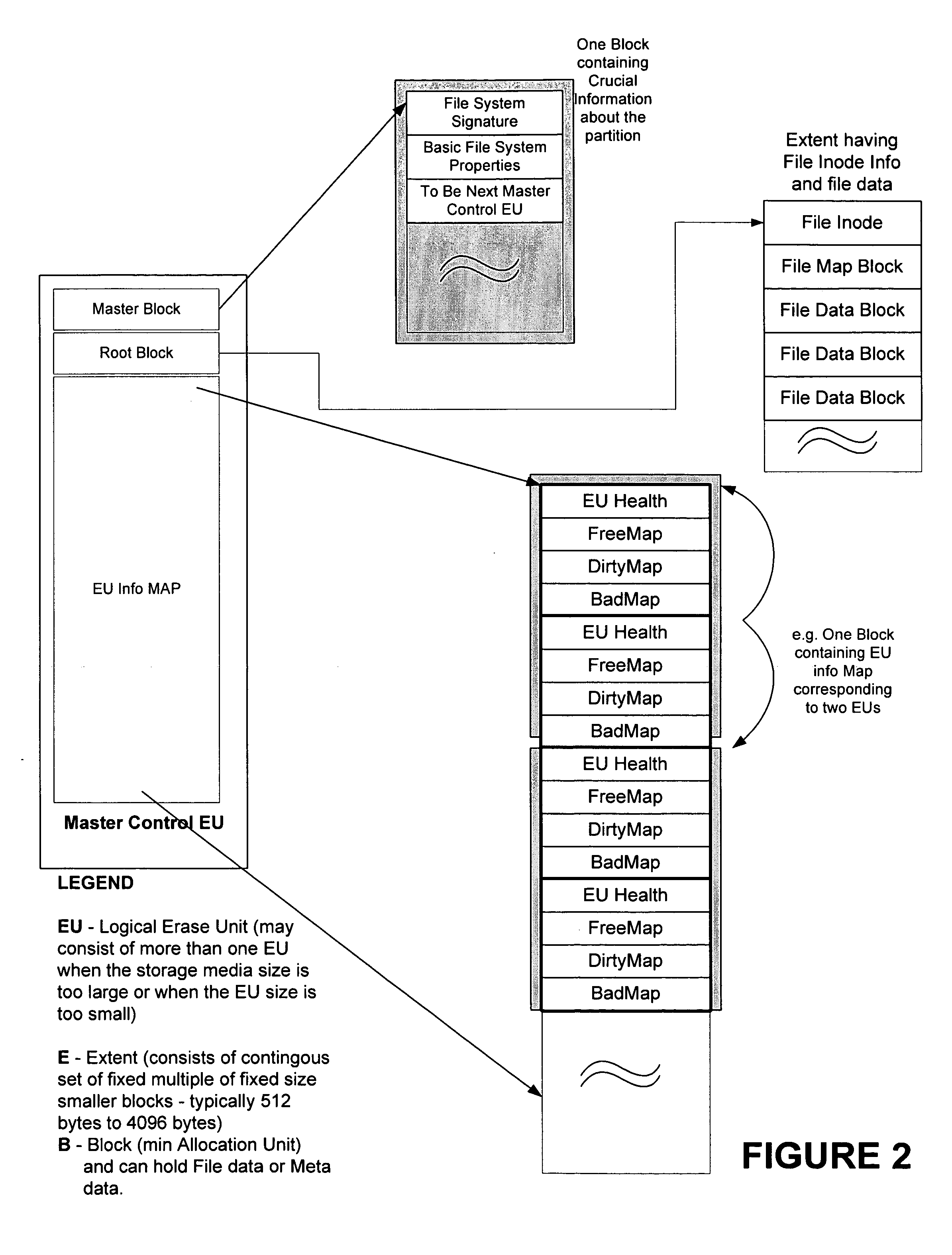

Effective wear-leveling and concurrent reclamation method for embedded linear flash file systems

Inactive | US20070150689A1 | Memory architecture accessing/allocation | Computer security arrangements | Recovery method | Flash file system

Reclamation of an Erase Unit of a flash memory is performed concurrently with a file operation on the flash memory by initiating a reclamation operation on the individually erasable portion of the memory, by suspending the reclamation operation for the file operation, by performing the file operation, and by resuming the reclamation operation.

Owner:HONEYWELL INT INC
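
The suspend-and-resume behaviour in the abstract above can be mimicked with a Python generator, where each `yield` is a point at which reclamation may be suspended for a file operation. This is a loose analogy rather than the patented method: the dictionary layout of an Erase Unit and the `reclaim` and `run` helpers are invented for the example.

```python
def reclaim(erase_unit, spare_unit):
    """Copy live blocks out of erase_unit one at a time, then erase it.

    Yields after every block so the caller can suspend reclamation,
    service a file operation, and resume where it left off.
    """
    for block in erase_unit["blocks"]:
        if block["live"]:
            spare_unit["blocks"].append(dict(block))
        yield
    erase_unit["blocks"].clear()          # the erase itself
    erase_unit["erase_count"] += 1


def run(erase_unit, spare_unit, pending_file_ops, log):
    """Interleave queued file operations with the reclamation steps."""
    pending = list(pending_file_ops)
    for _ in reclaim(erase_unit, spare_unit):
        if pending:                       # reclamation is suspended here
            log.append(pending.pop(0)())
    for op in pending:                    # anything left after reclamation ends
        log.append(op())
```

File operations here are plain callables; in a real file system they would be reads or writes that must not wait for a full erase cycle.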

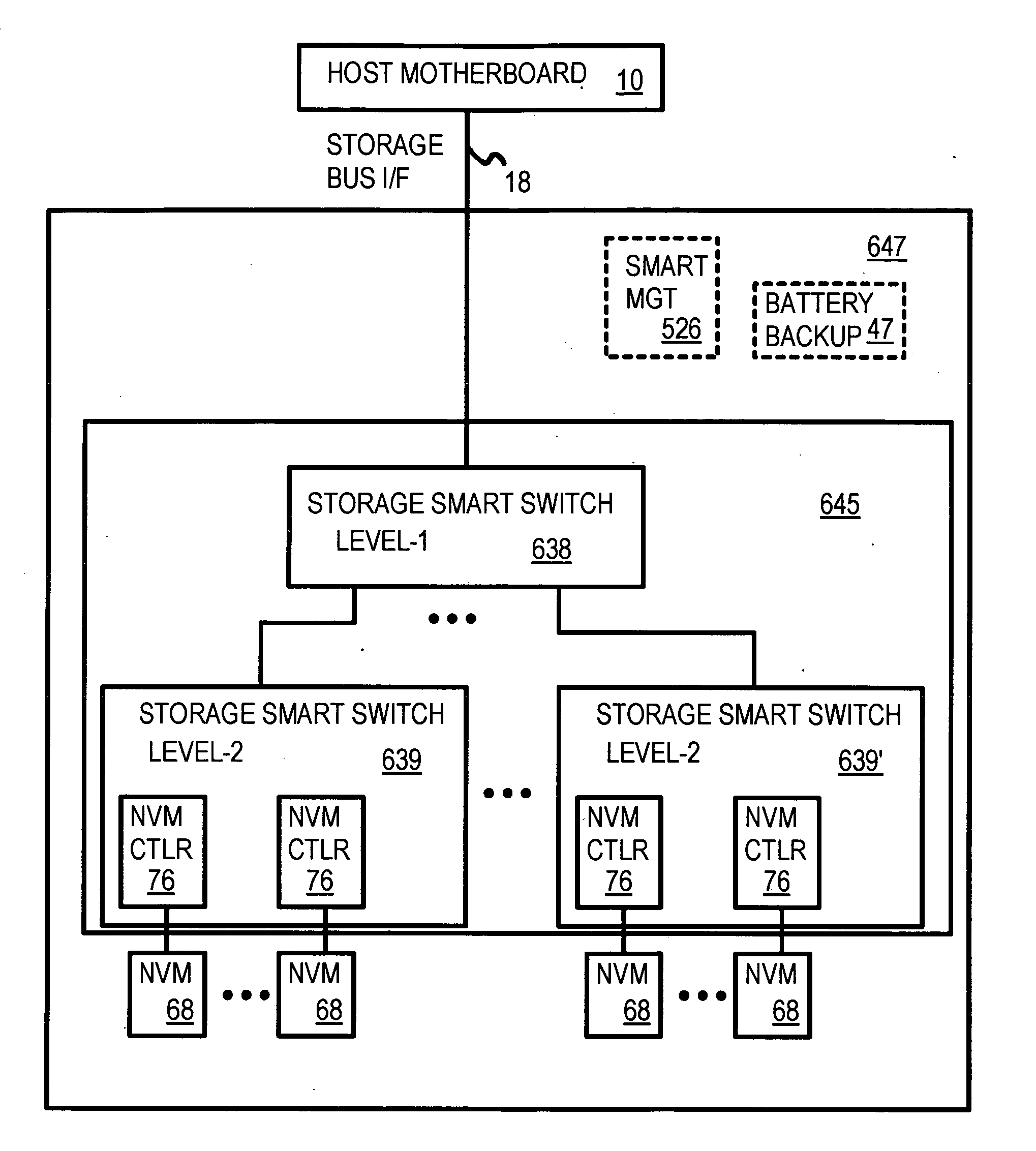

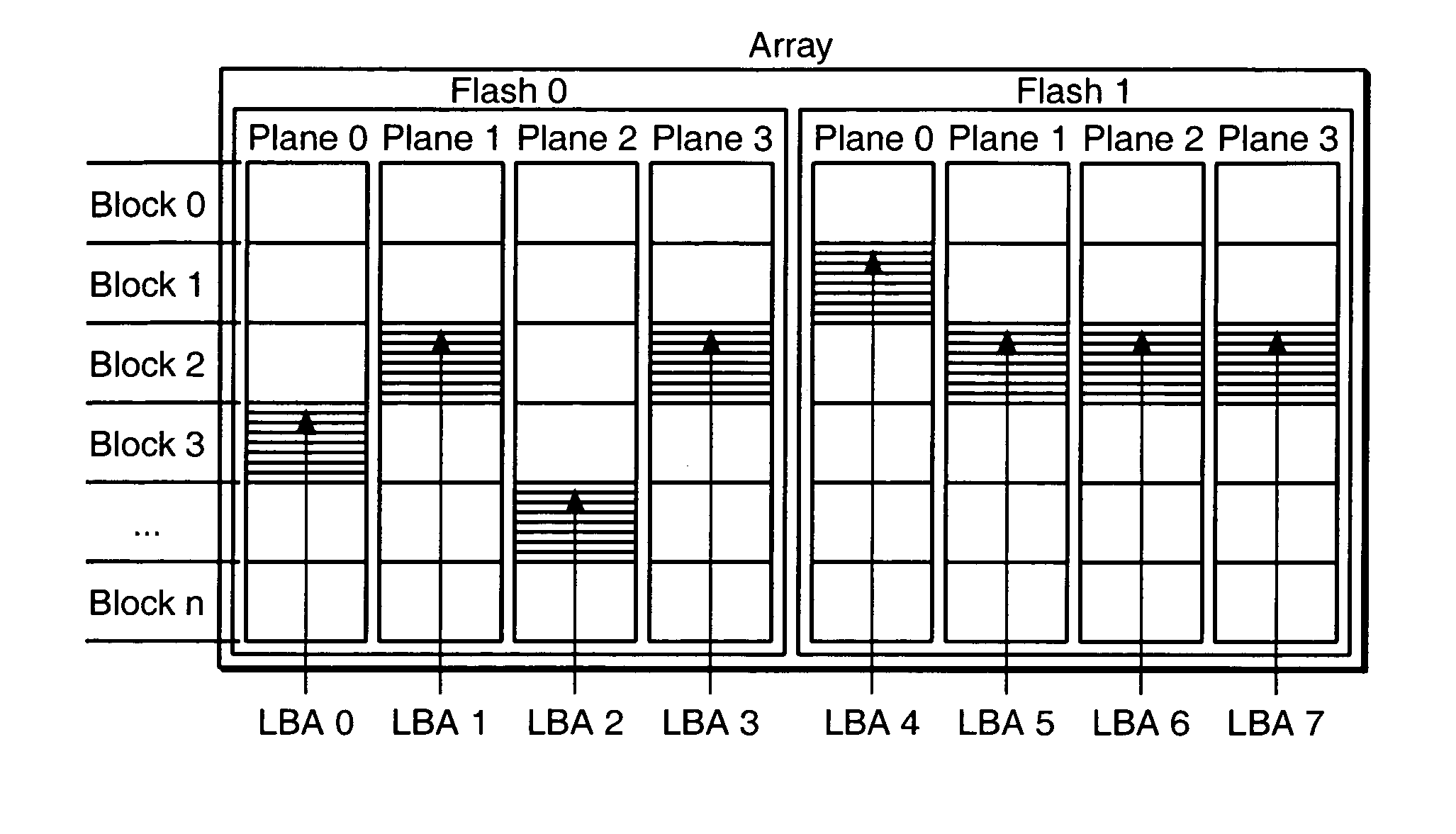

Multi-Level Striping and Truncation Channel-Equalization for Flash-Memory System

Inactive | US20090240873A1 | Memory architecture accessing/allocation | Error prevention | Logical block addressing | Data access

Truncation reduces the available striped data capacity of all flash channels to the capacity of the smallest flash channel. A solid-state disk (SSD) has a smart storage switch that salvages flash storage removed from the striped data capacity by truncation. Extra storage beyond the striped data capacity is accessed as scattered data that is not striped. The size of the striped data capacity is reduced over time as more bad blocks appear. A first-level striping map stores striped and scattered capacities of all flash channels and maps scattered and striped data. Each flash channel has a Non-Volatile Memory Device (NVMD) with a lower-level controller that converts logical block addresses (LBA) to physical block addresses (PBA) that access flash memory in the NVMD. Wear-leveling and bad block remapping are performed by each NVMD. Source and shadow flash blocks are recycled by the NVMD. Two levels of smart storage switches enable three-level controllers.

Owner:SUPER TALENT TECH CORP
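
The truncation arithmetic in the abstract above can be worked through in a few lines. The function names, the one-block stripe unit, and the choice to place scattered data channel by channel after the striped region are assumptions made for this sketch.

```python
def striping_map(channel_capacities):
    """Split each channel's capacity (in blocks) into striped and scattered parts."""
    per_channel = min(channel_capacities)          # truncate to the smallest channel
    return {
        "striped_per_channel": per_channel,
        "striped_total": per_channel * len(channel_capacities),
        "scattered": [c - per_channel for c in channel_capacities],
    }


def locate(lba, channel_capacities):
    """Map a logical block to (channel, block within channel)."""
    n = len(channel_capacities)
    m = striping_map(channel_capacities)
    if lba < m["striped_total"]:
        return lba % n, lba // n                   # round-robin striping
    rest = lba - m["striped_total"]                # beyond the stripe: scattered data
    for channel, extra in enumerate(m["scattered"]):
        if rest < extra:
            return channel, m["striped_per_channel"] + rest
        rest -= extra
    raise ValueError("LBA beyond total capacity")
```

For channels of 100, 96, 98, and 90 blocks, 360 blocks are striped and the remaining 24 blocks are salvaged as scattered storage instead of being lost.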

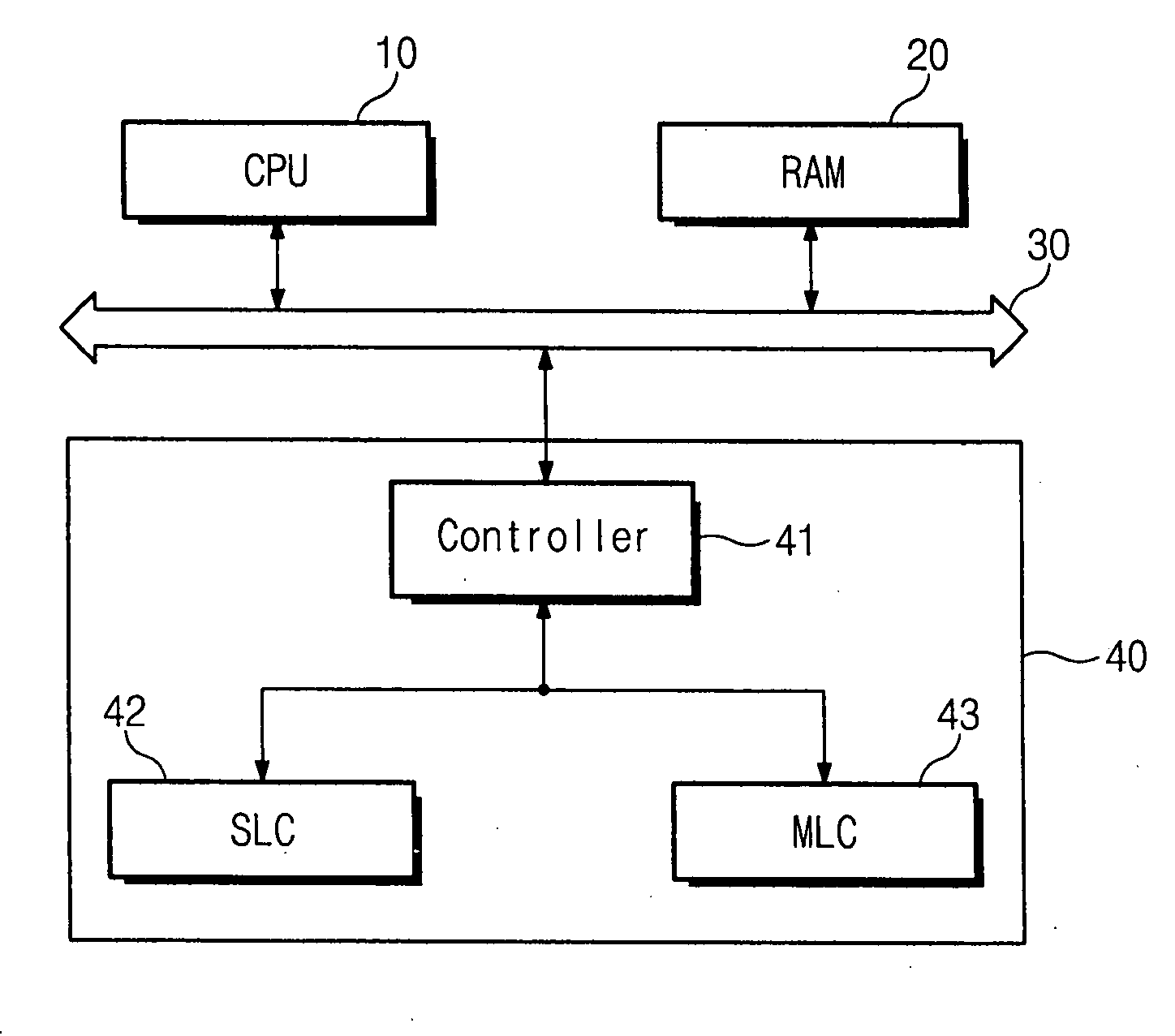

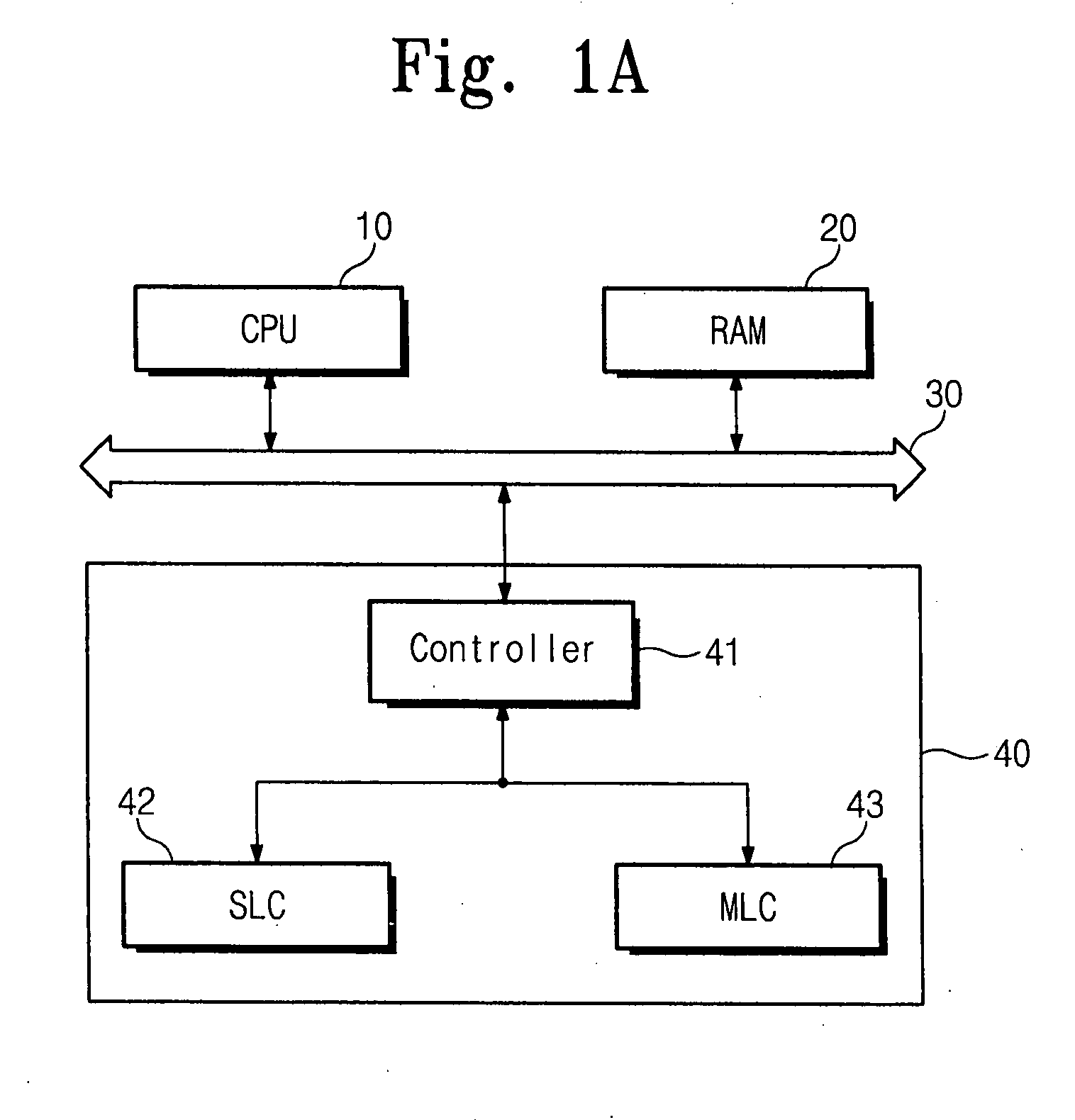

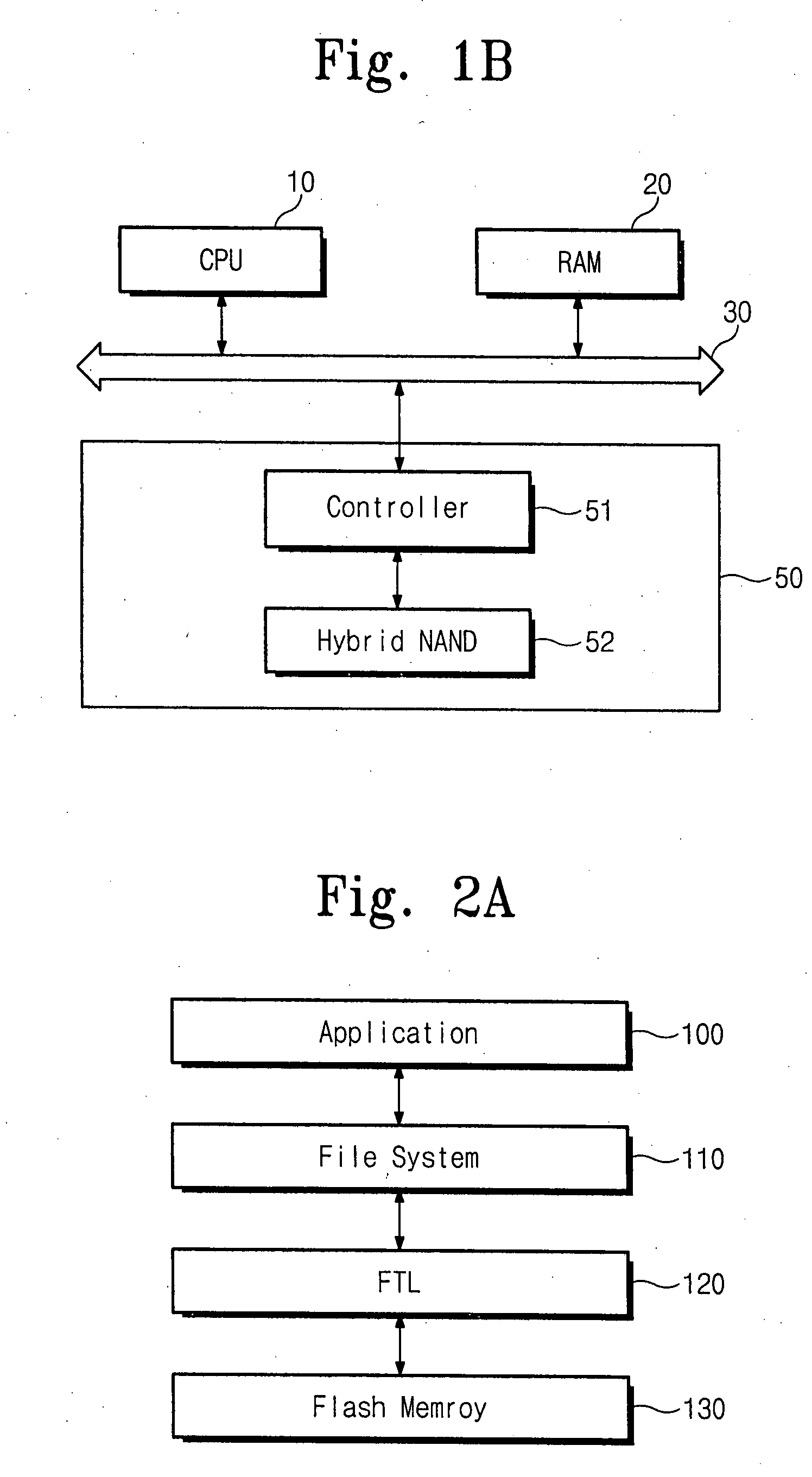

Flash memory device with multi-level cells and method of writing data therein

Active | US20080104309A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi-level cell | Address mapping

In one aspect, a method of writing data in a flash memory system is provided. The flash memory system forms an address mapping pattern according to a log block mapping scheme. The method includes determining a writing pattern of data to be written in a log block, and allocating one of SLC and MLC blocks to the log block in accordance with the writing pattern of the data.

Owner:SAMSUNG ELECTRONICS CO LTD

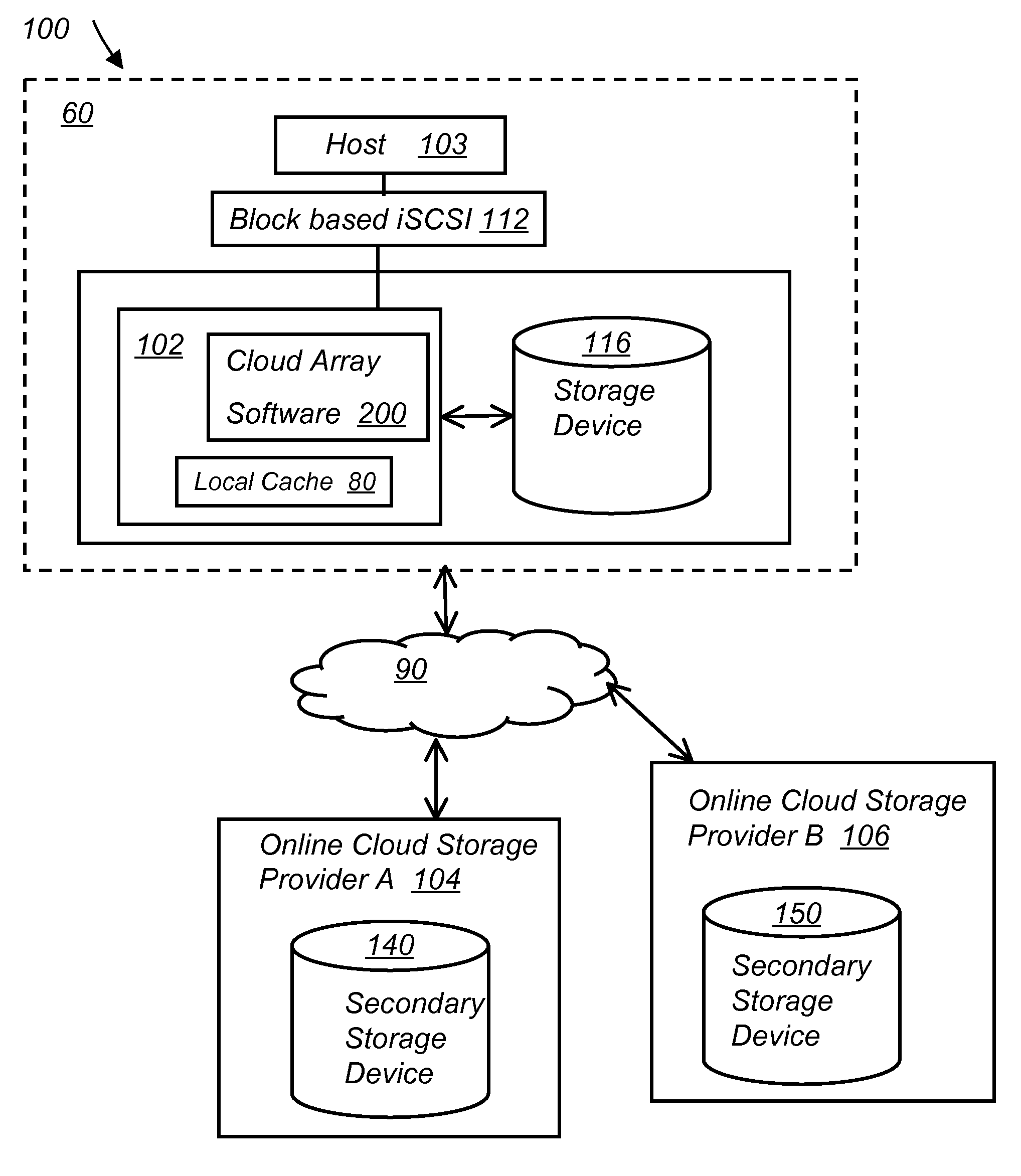

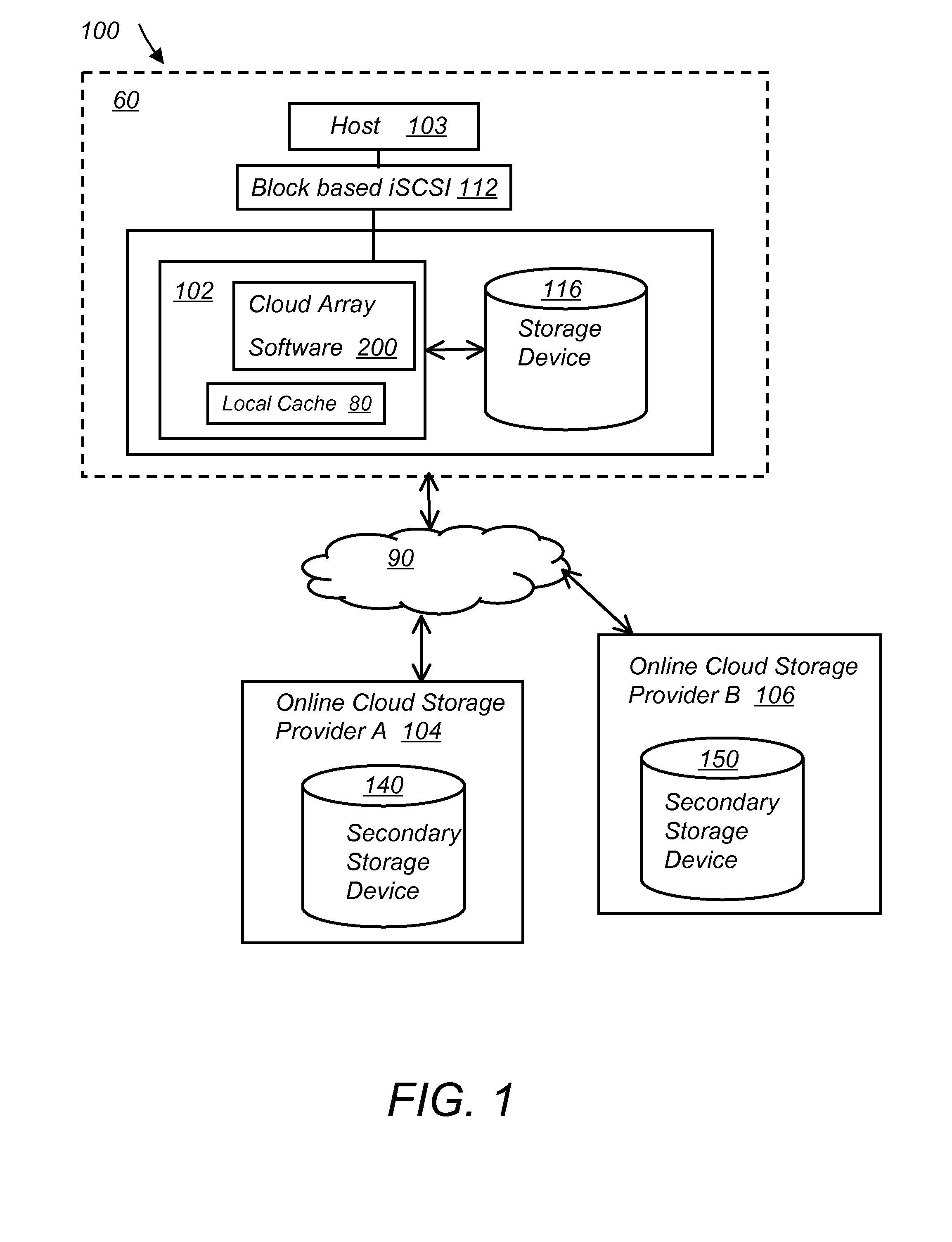

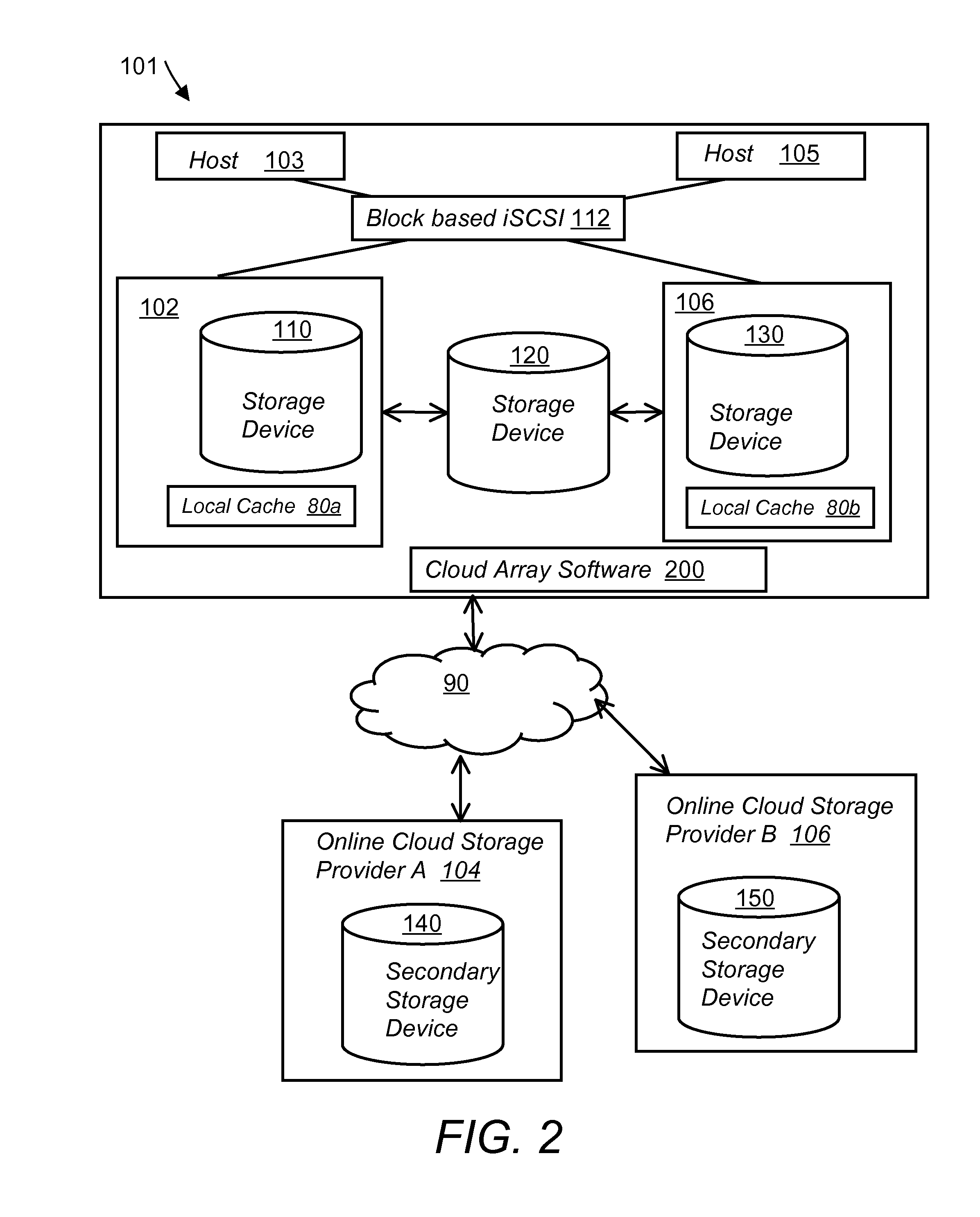

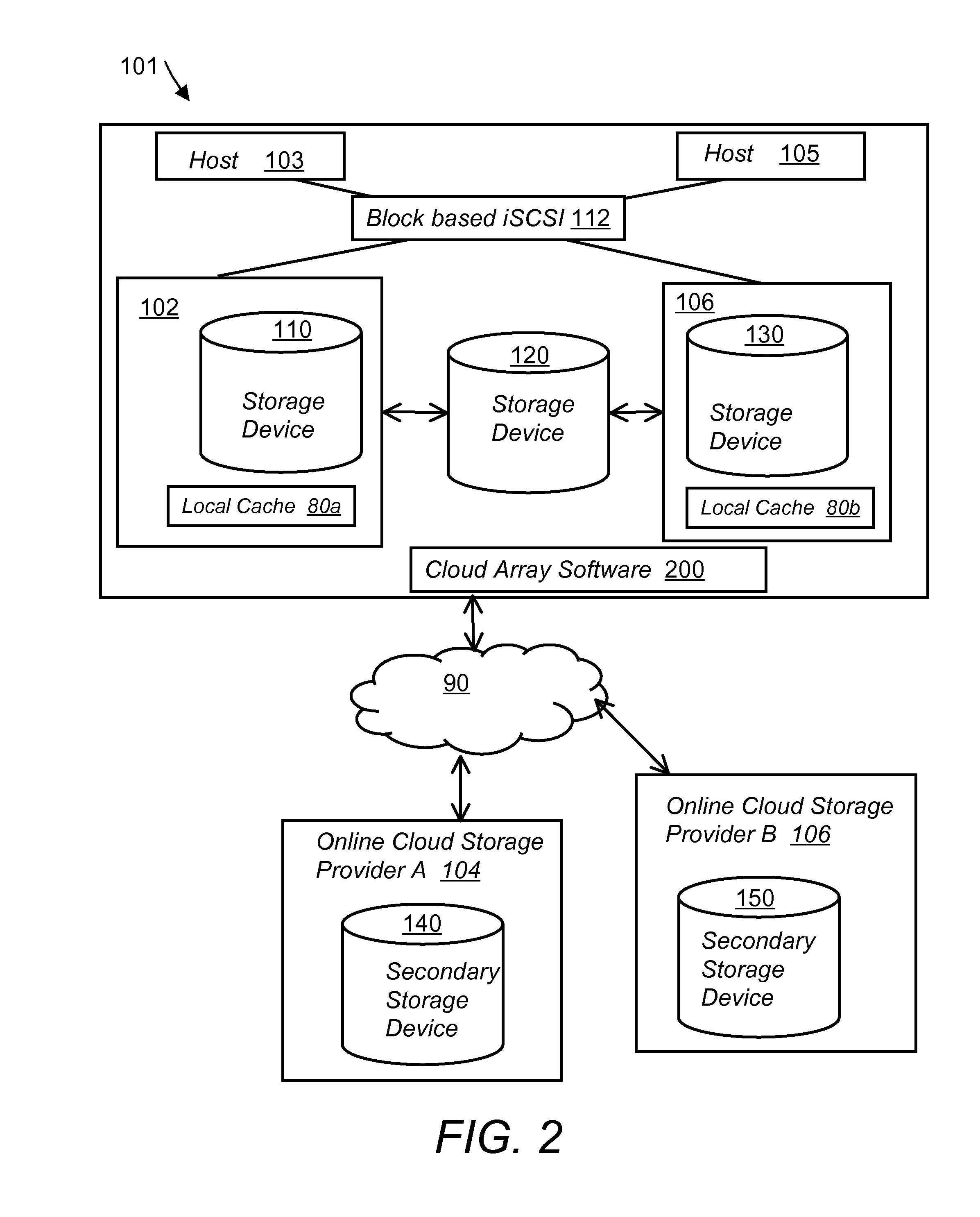

System and method for secure and reliable multi-cloud data replication

Active | US8762642B2 | Reduce the amount required | Reduce storage costs | Memory architecture accessing/allocation | Unauthorized memory use protection | Reliable computing | Network connection

Owner:EMC IP HLDG CO LLC

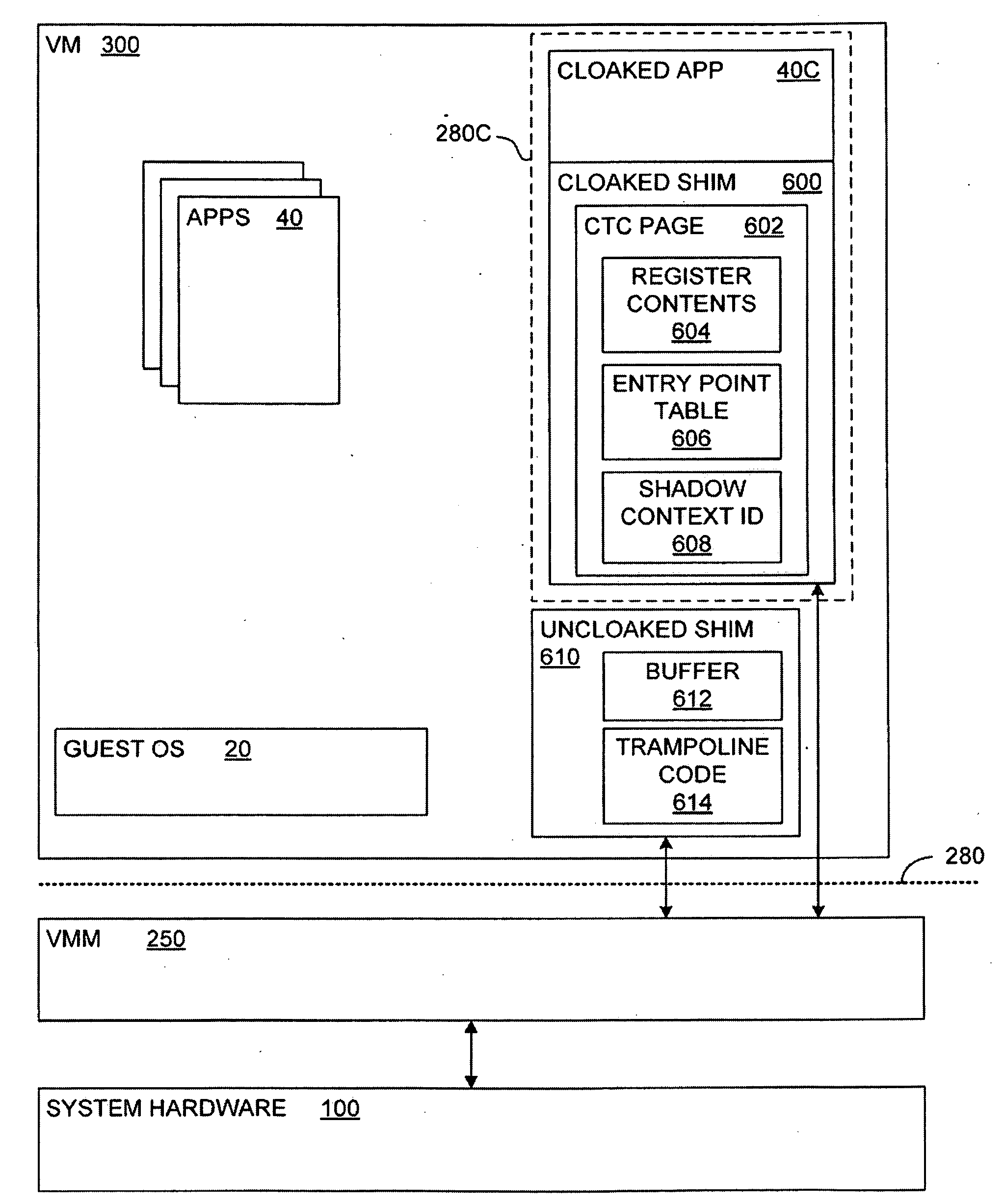

Transparent Memory-Mapped Emulation of I/O Calls

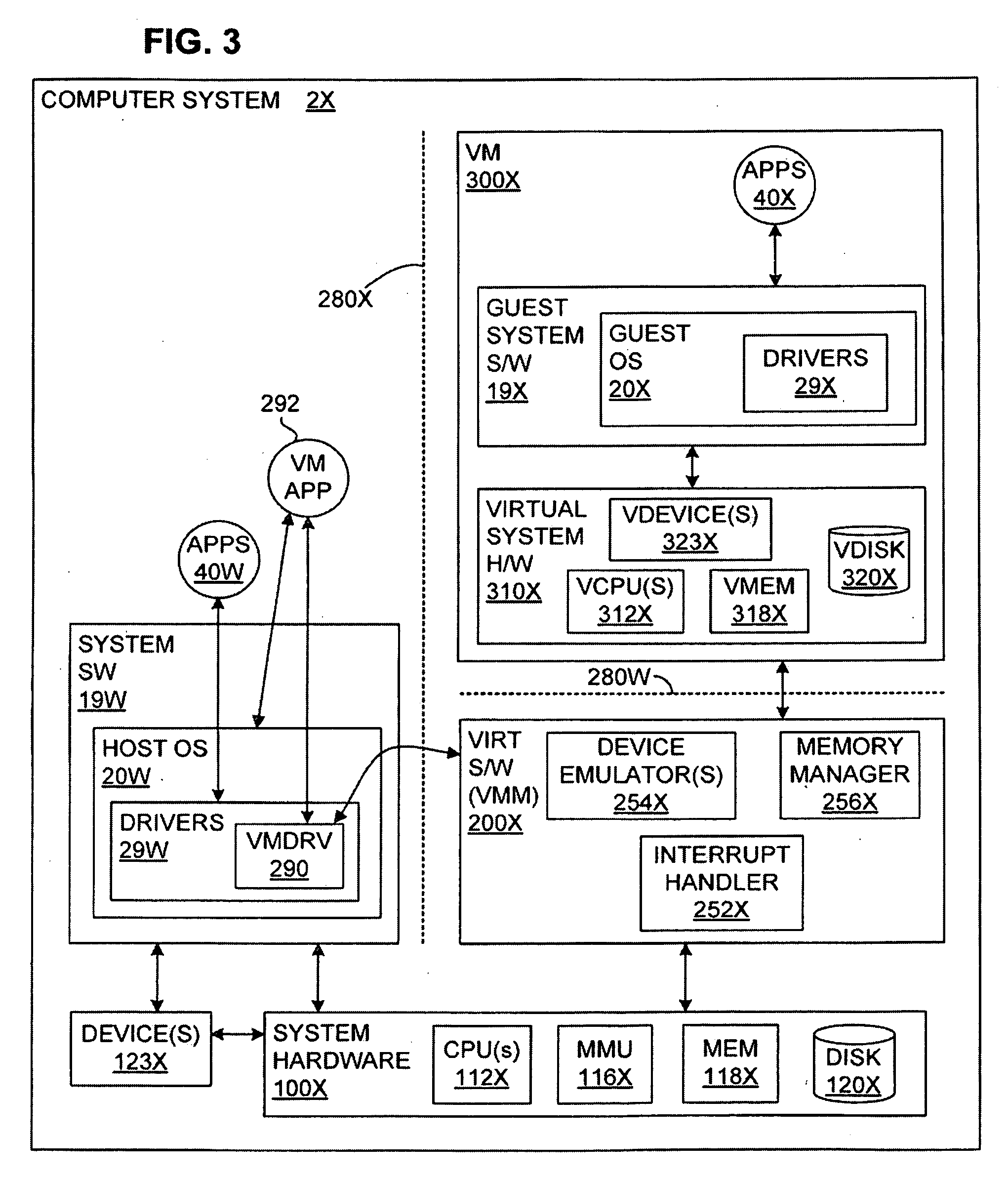

Active | US20090113425A1 | Memory architecture accessing/allocation | Error detection/correction | Semantics | Memory map

A virtual-machine-based system provides a mechanism to implement application file I/O operations of protected data by implementing the I/O operations semantics in a shim layer with memory-mapped regions. The semantics of these I/O operations are emulated in a shim layer with memory-mapped regions by using a mapping between a process' address space and a file or shared memory object. Data that is protected from viewing by a guest OS running in a virtual machine may nonetheless be accessed by the process.

Owner:VMWARE INC

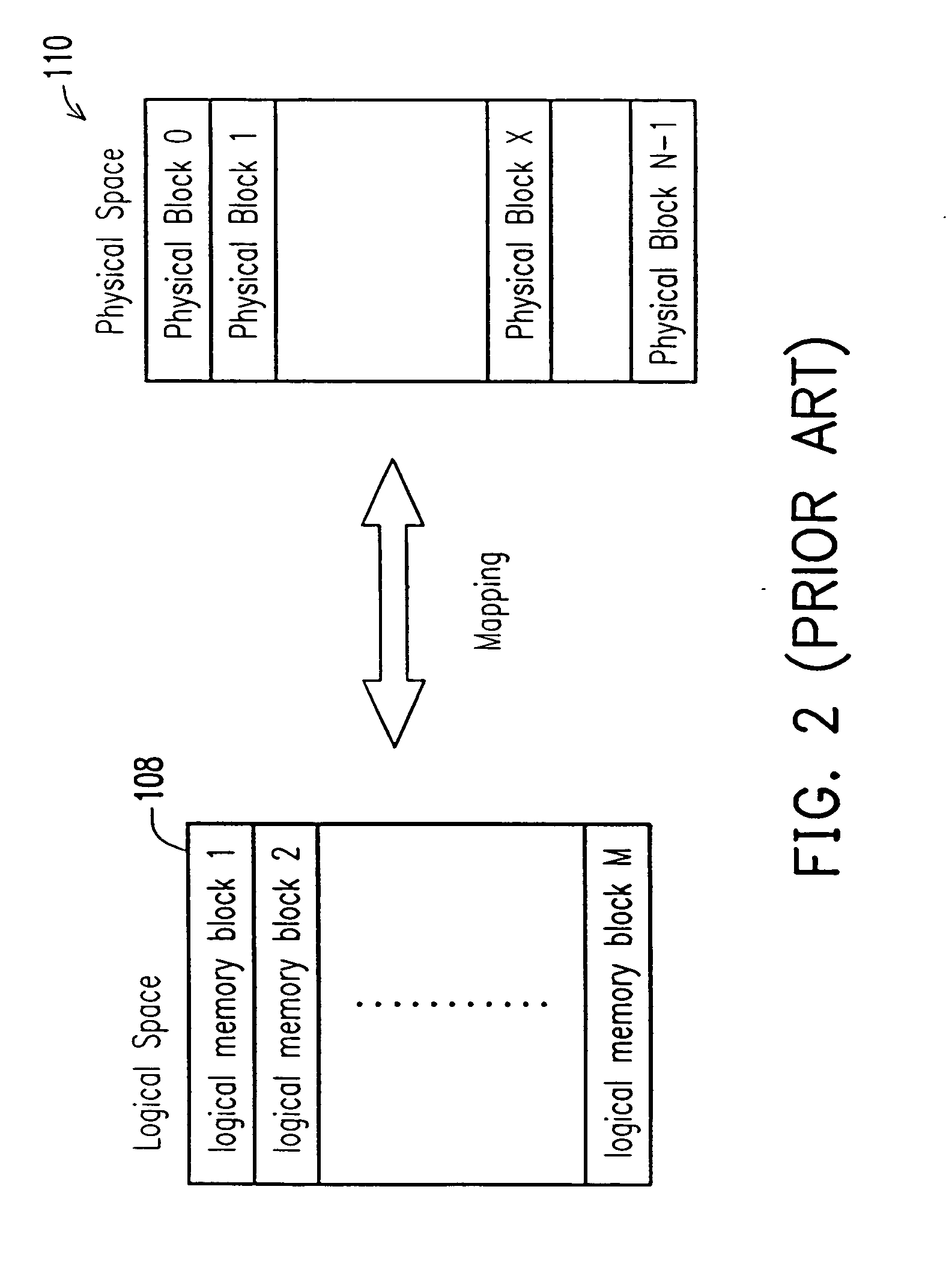

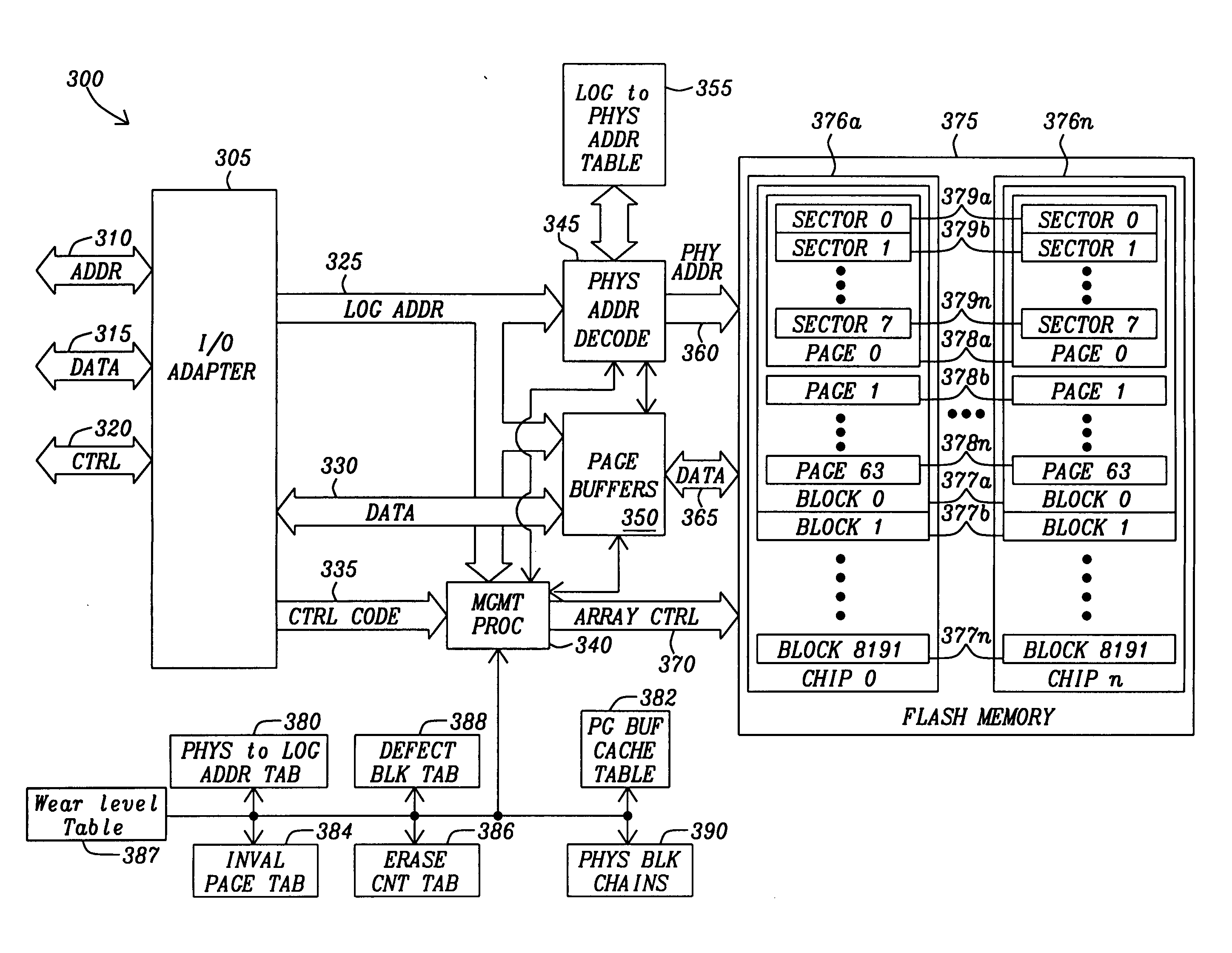

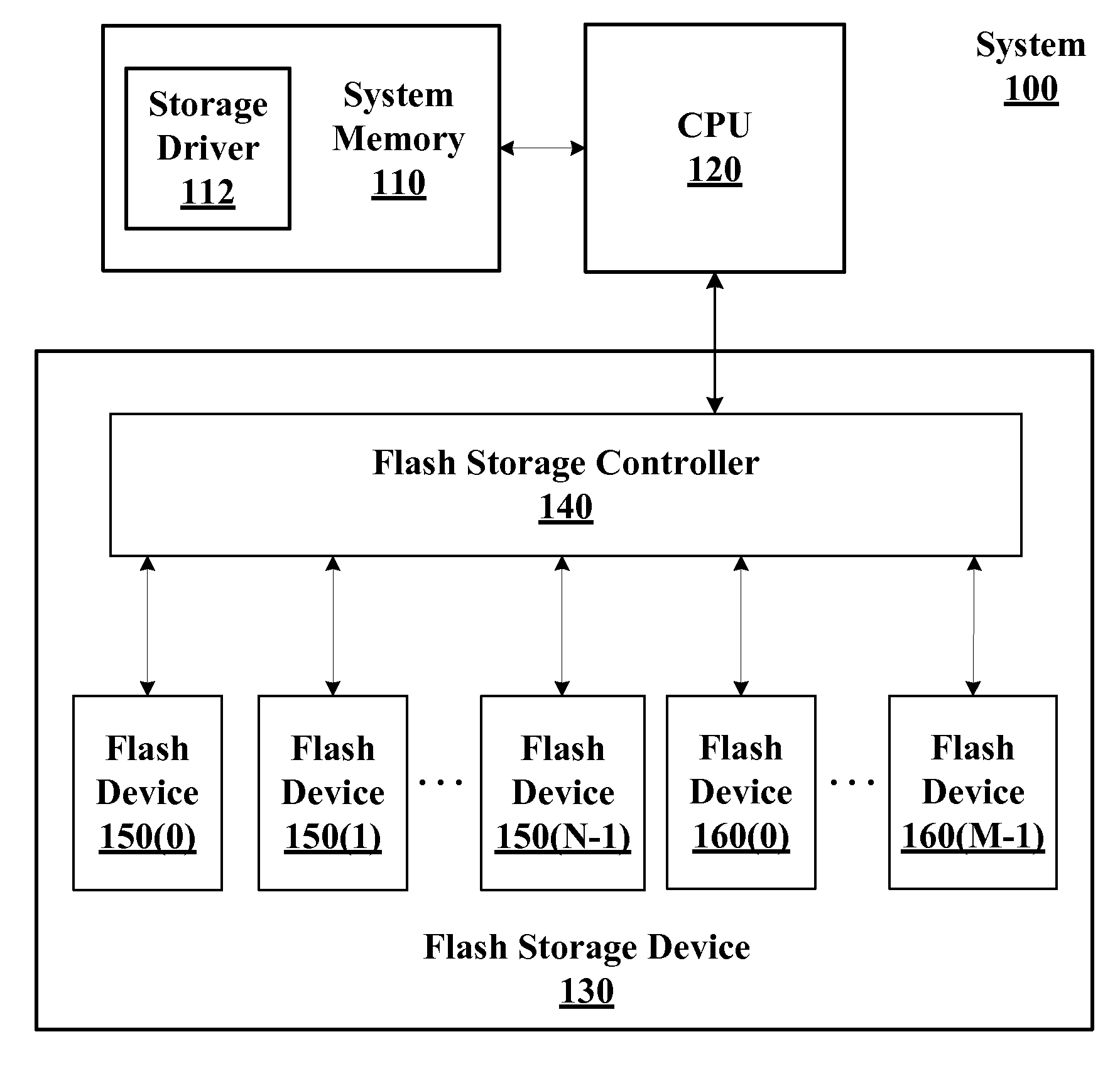

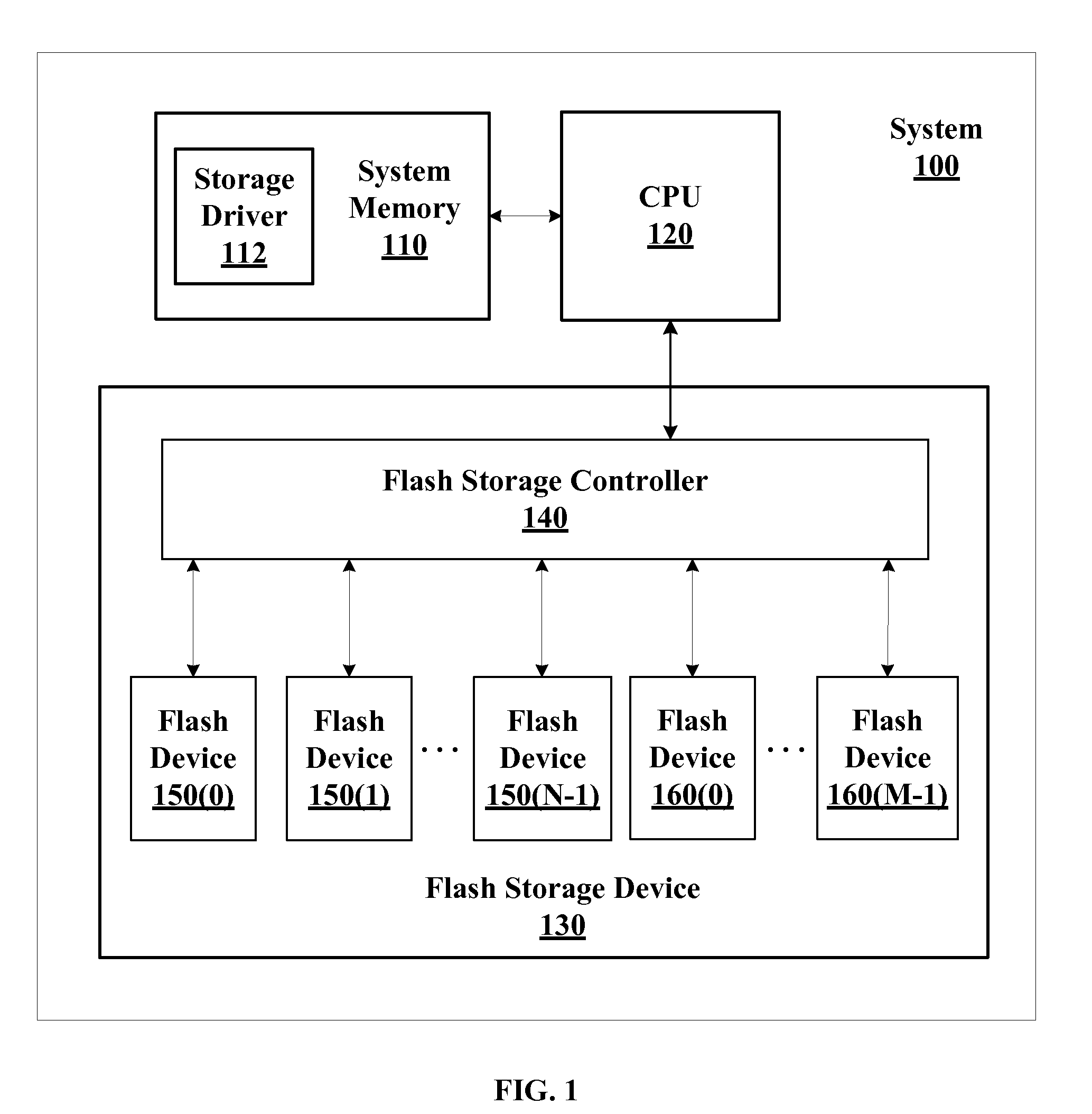

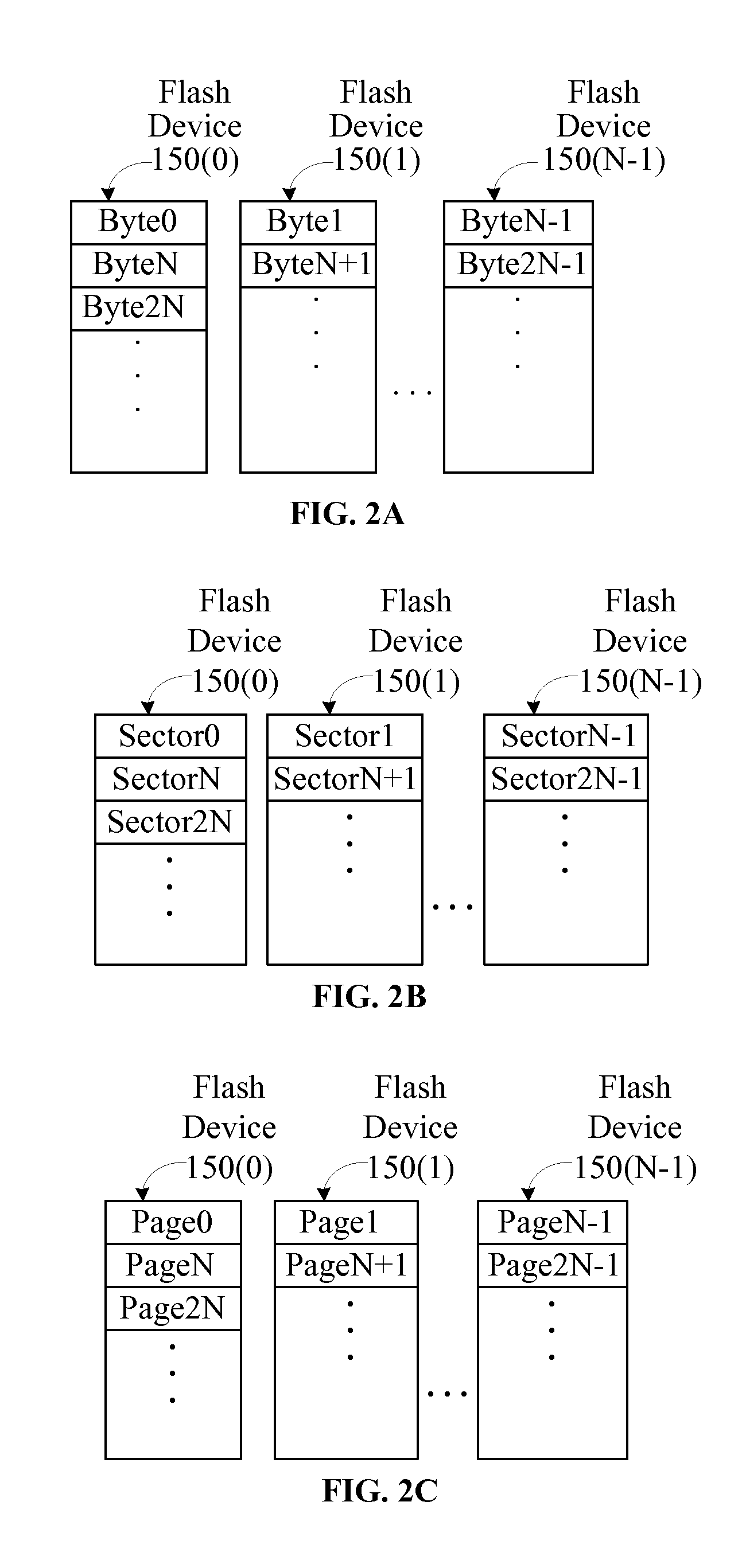

Page based management of flash storage

Inactive | US20110055458A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Control signal | Paging

Methods and circuits for page-based management of an array of Flash RAM nonvolatile memory devices provide page-based reading and writing and block erasure of a flash storage system. The memory management system includes a management processor, a page buffer, and a logical-to-physical translation table. The management processor is in communication with an array of nonvolatile memory devices within the flash storage system to provide control signals for the programming of selected pages, erasing selected blocks, and reading selected pages of the array of nonvolatile memory devices.

Owner:PIONEER CHIP TECH

Flash memory storage system and method

Active | US20070061502A1 | Overcome disadvantages | Equally distributed | Memory architecture accessing/allocation | Read-only memories | Parallel computing | Storage cell

A flash memory storage system includes a memory array containing a plurality of memory cells and a controller for controlling the flash memory array. The controller dedicates a first group of memory cells to operate with a first number of bits per cell and a second, separate group of memory cells to operate with a second number of bits per cell. A mechanism is provided to apply wear leveling techniques separately to the two groups of cells to evenly wear out the memory cells.

Owner:WESTERN DIGITAL ISRAEL LTD

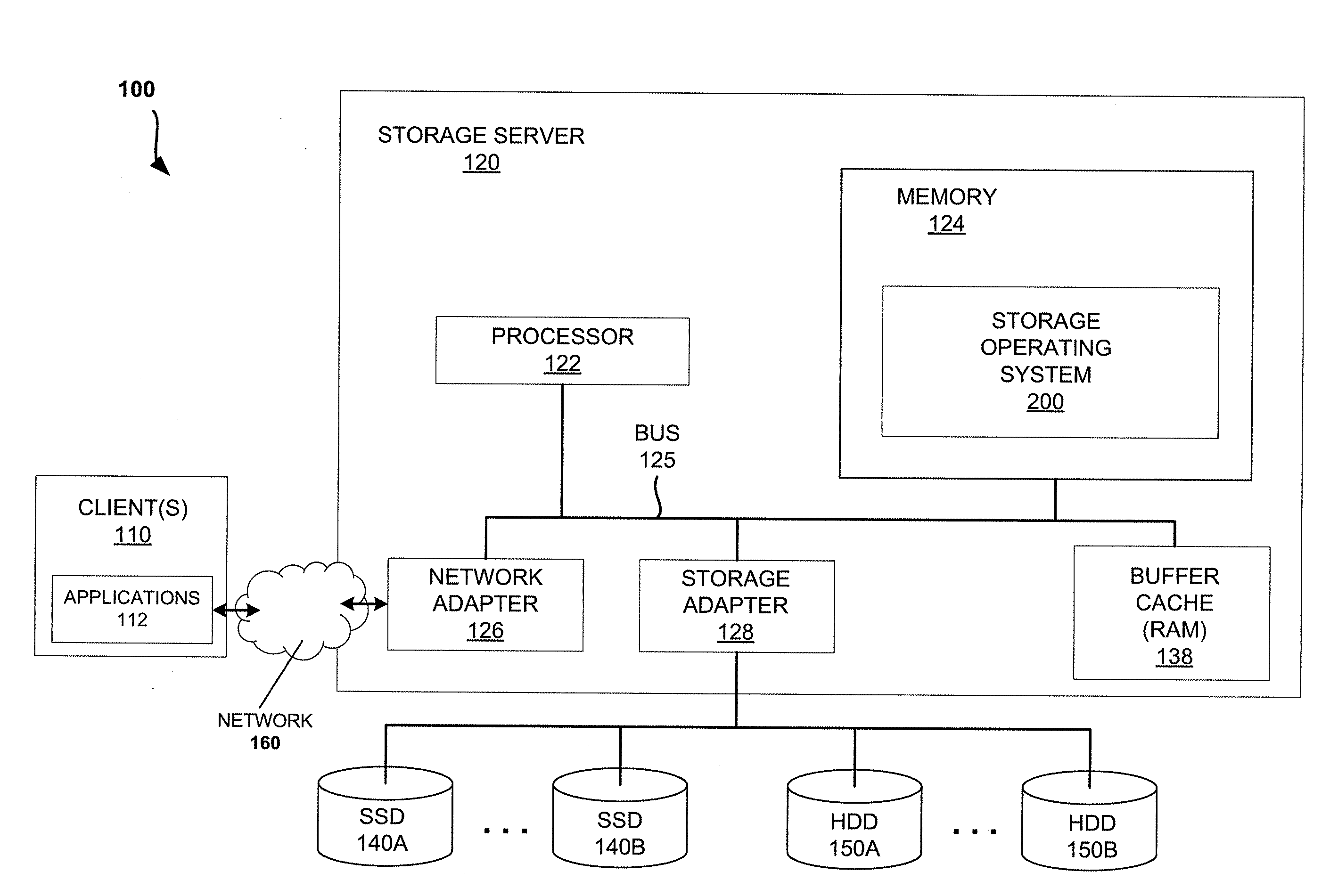

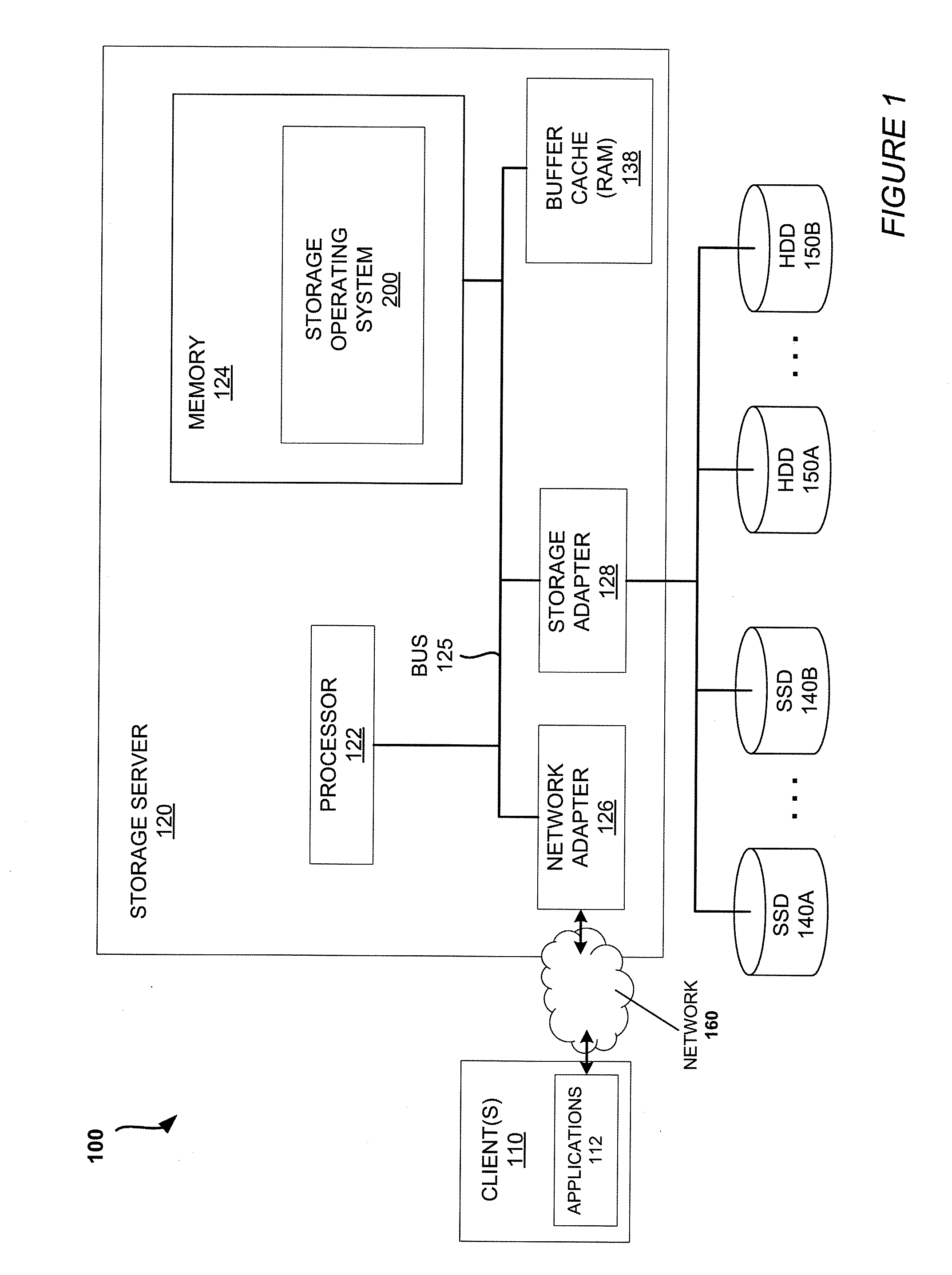

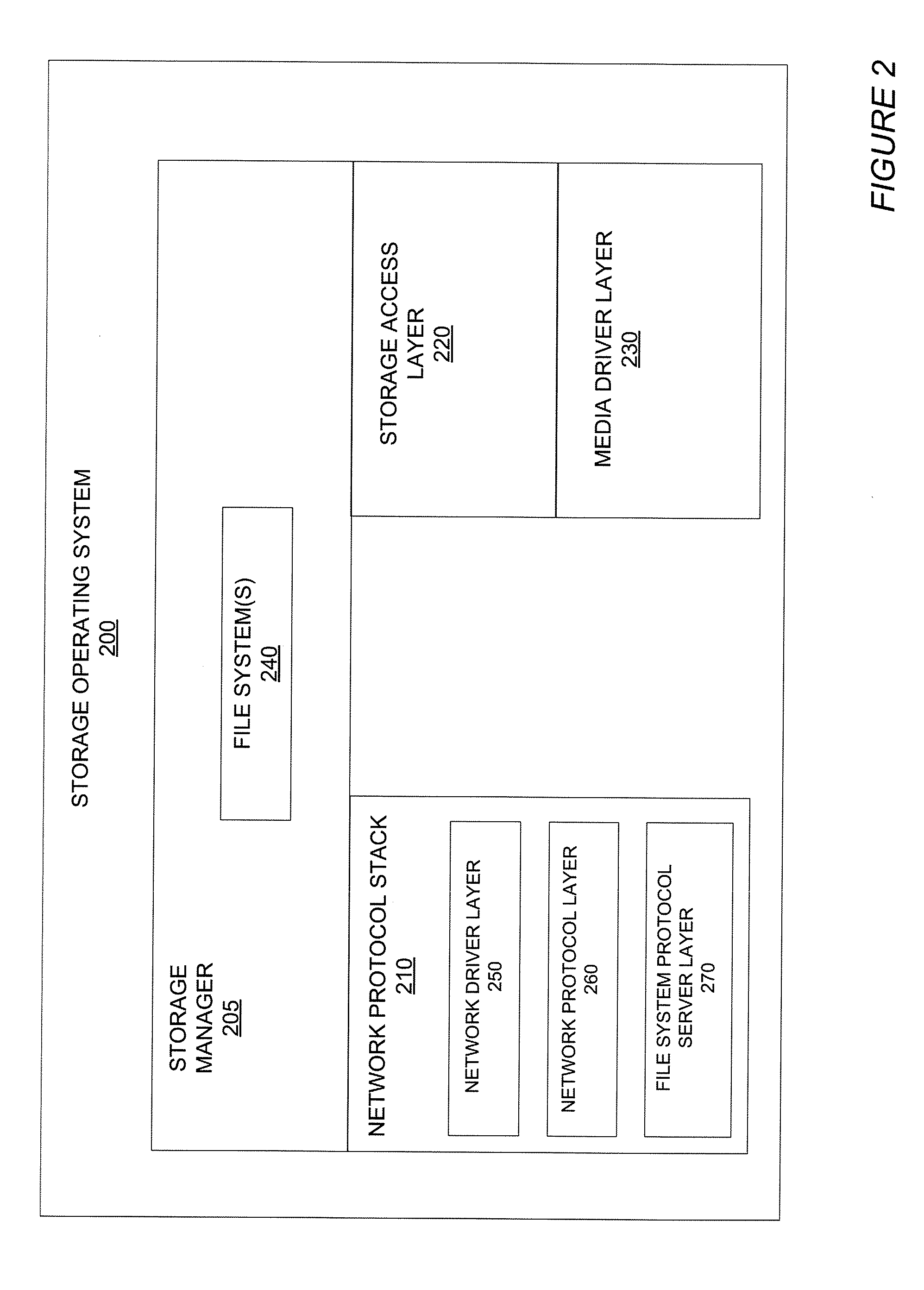

Mechanisms for moving data in a hybrid aggregate

Active | US20100281230A1 | Improved performance characteristics | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Policy decision | File system

At least certain embodiments disclose a method, system and apparatus for relocating data between tiers of storage media in a hybrid storage aggregate encompassing multiple tiers of heterogeneous physical storage media, including a file system to automatically relocate the data between tiers. The hybrid storage aggregate includes one or more volumes, each volume including a volume block number space spanning at least a first tier of storage media and a second tier of storage media of the multiple tiers of heterogeneous physical storage media. The hybrid storage aggregate further includes a control module to cooperatively manage the tiers of the multiple tiers of heterogeneous physical storage media and a file system coupled with the control module. The file system includes a policy module configured to make policy decisions based on a set of one or more policies and configured to automatically relocate data between different tiers of the multiple tiers of heterogeneous physical storage media based on the set of policies.

Owner:NETWORK APPLIANCE INC

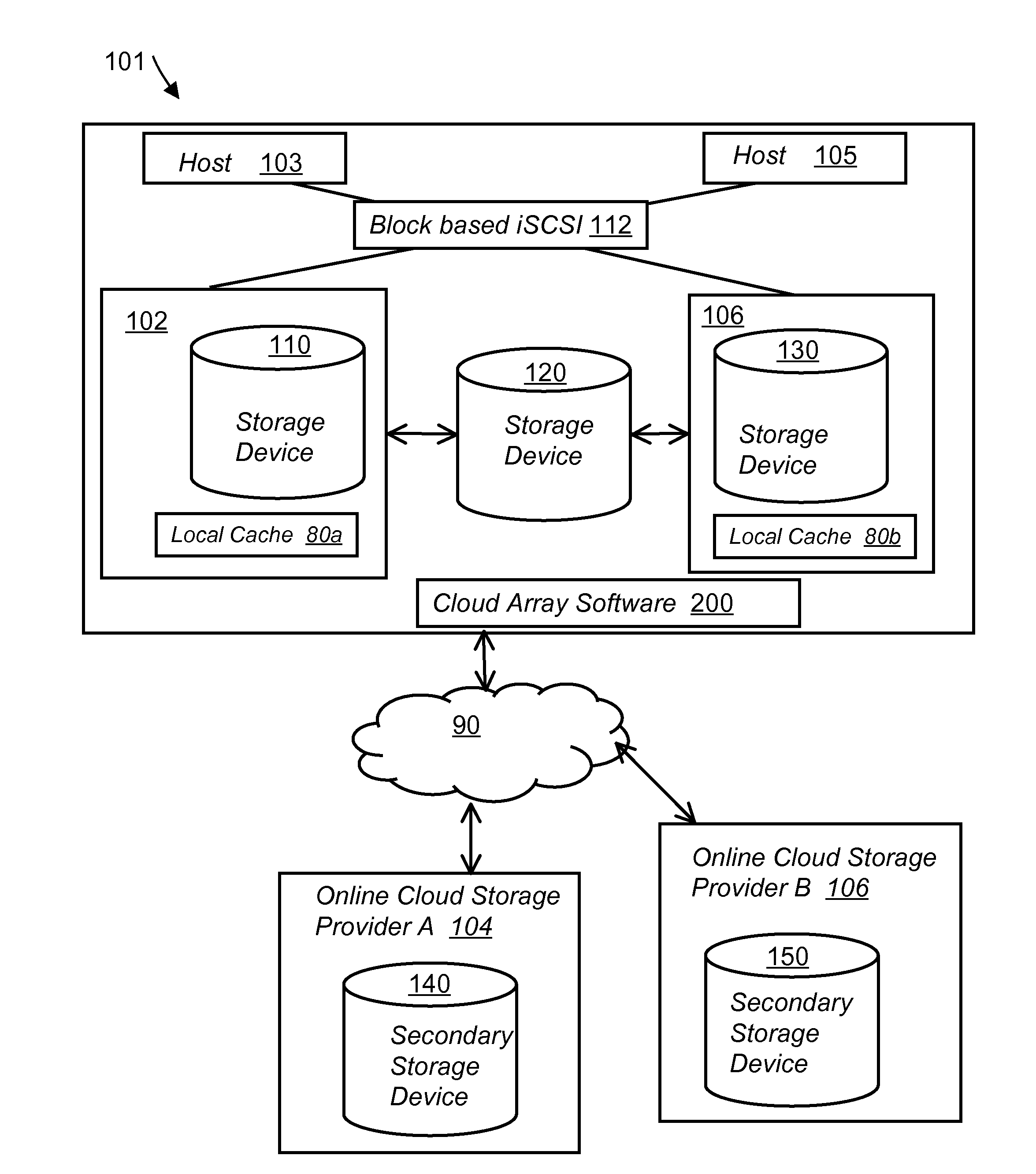

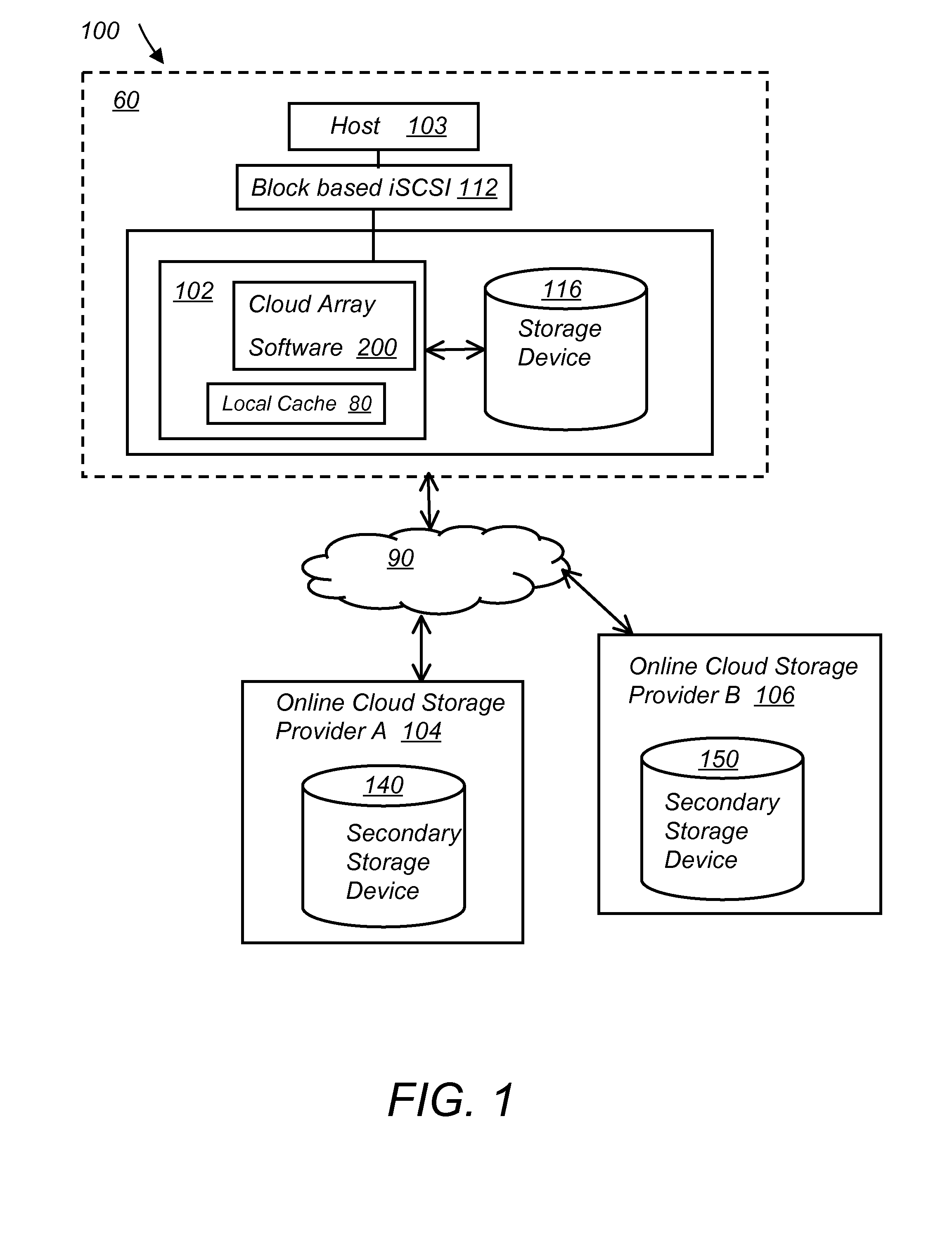

System and method for secure and reliable multi-cloud data replication

Active | US20100199042A1 | Reduce the amount required | Reduce storage costs | Memory architecture accessing/allocation | Memory loss protection | Reliable computing | Replication method

A multi-cloud data replication method includes providing a data replication cluster comprising at least a first host node and at least a first online storage cloud. The first host node is connected to the first online storage cloud via a network and comprises a server, a cloud array application and a local cache. The local cache comprises a buffer and a first storage volume comprising data cached in one or more buffer blocks of the local cache's buffer. Next, requesting authorization to perform cache flush of the cached first storage volume data to the first online storage cloud. Upon receiving approval of the authorization, encrypting the cached first storage volume data in each of the one or more buffer blocks with a data private key. Next, assigning metadata comprising at least a unique identifier to each of the one or more buffer blocks and then encrypting the metadata with a metadata private key. Next, transmitting the one or more buffer blocks with the encrypted first storage volume data to the first online cloud storage. Next, creating a sequence of updates of the metadata, encrypting the sequence with the metadata private key and then transmitting the sequence of metadata updates to the first online storage cloud.

Owner:EMC IP HLDG CO LLC

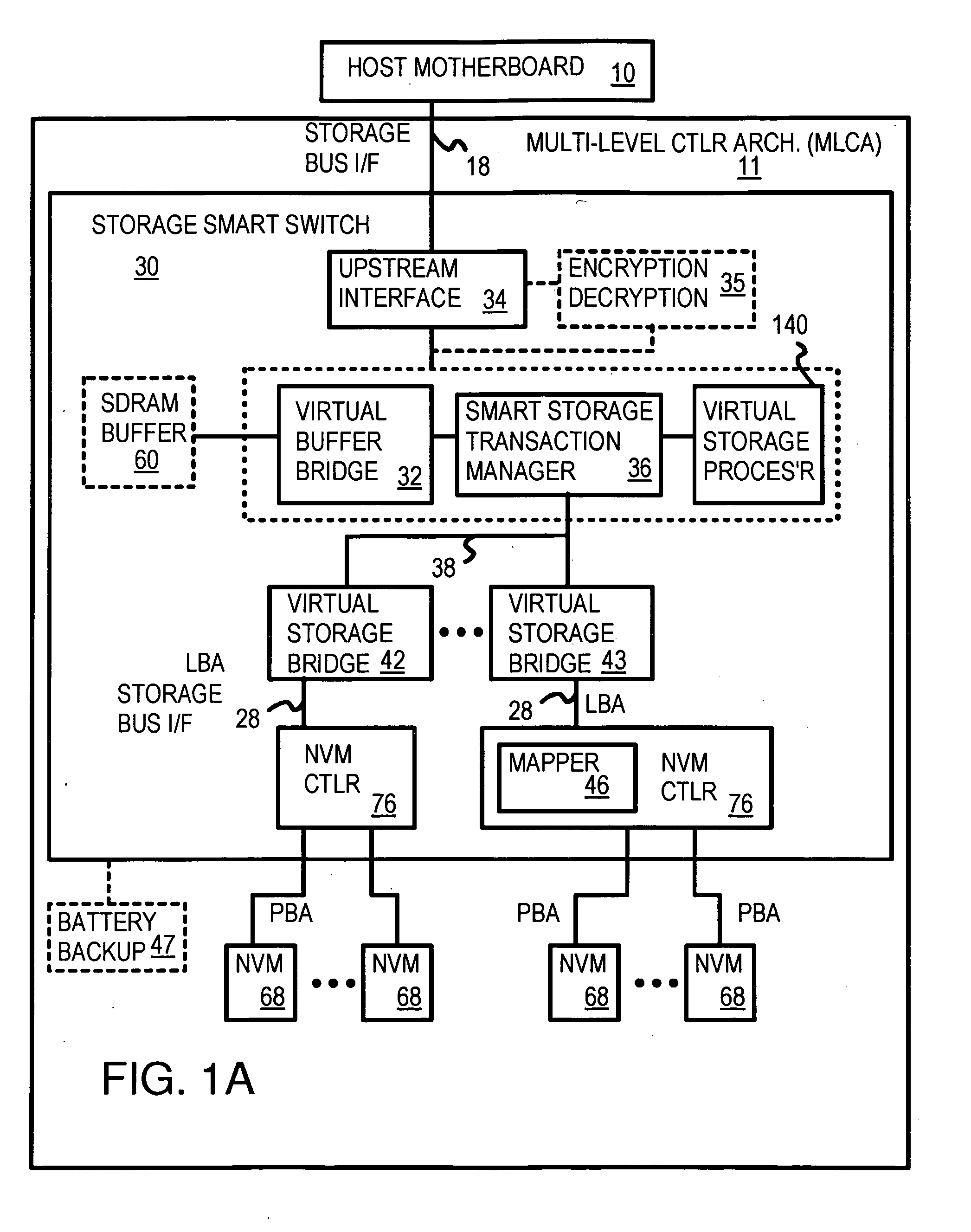

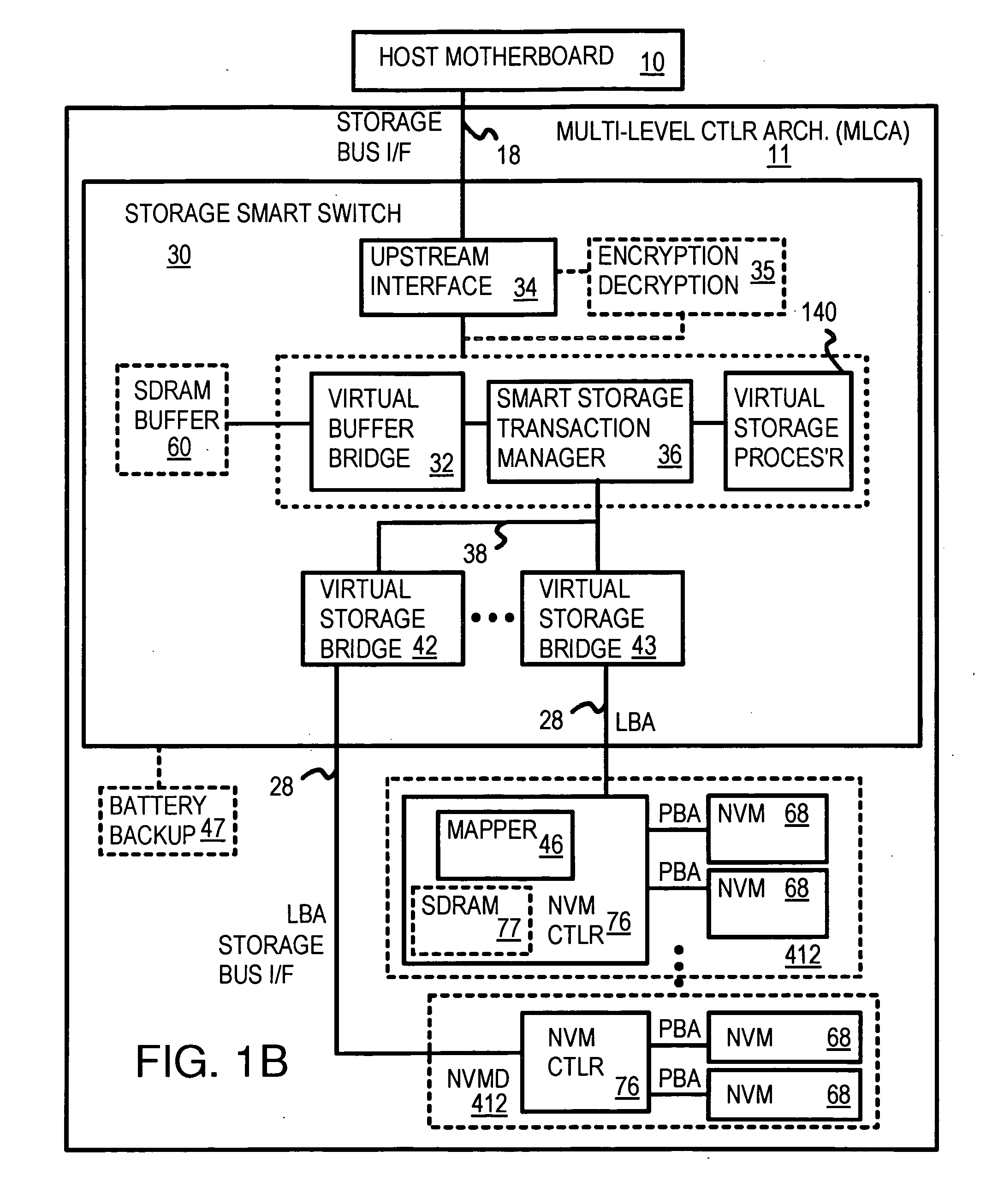

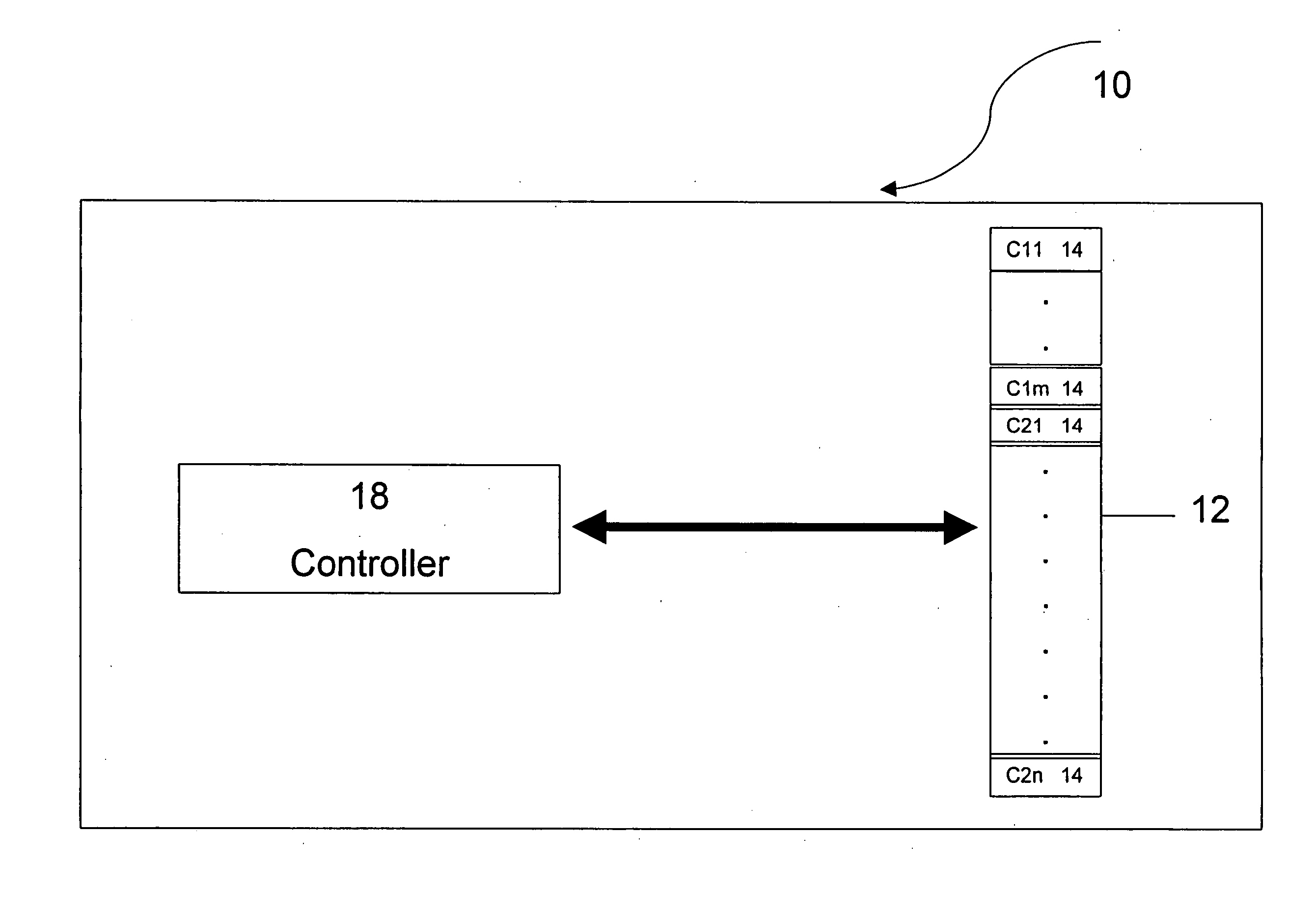

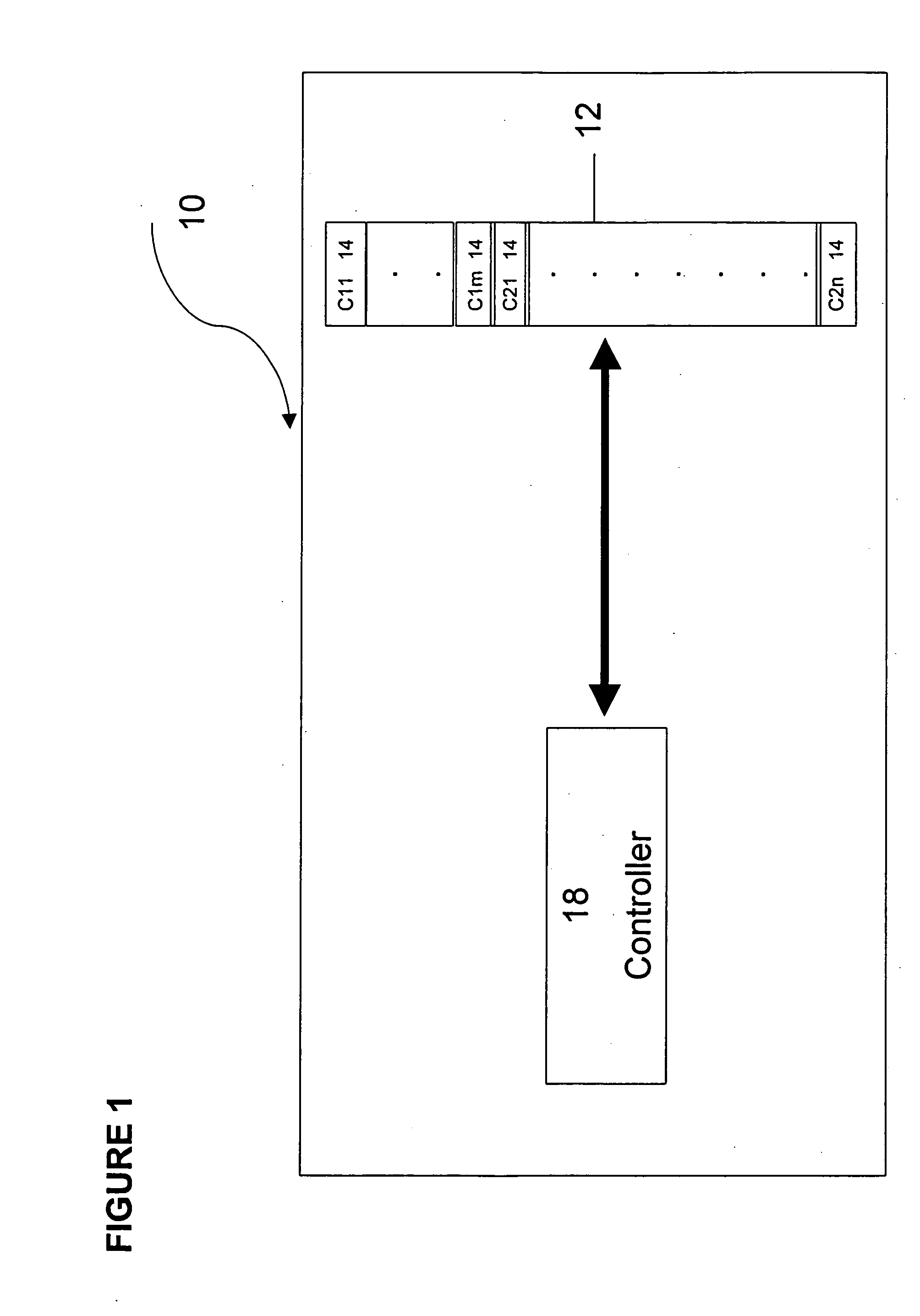

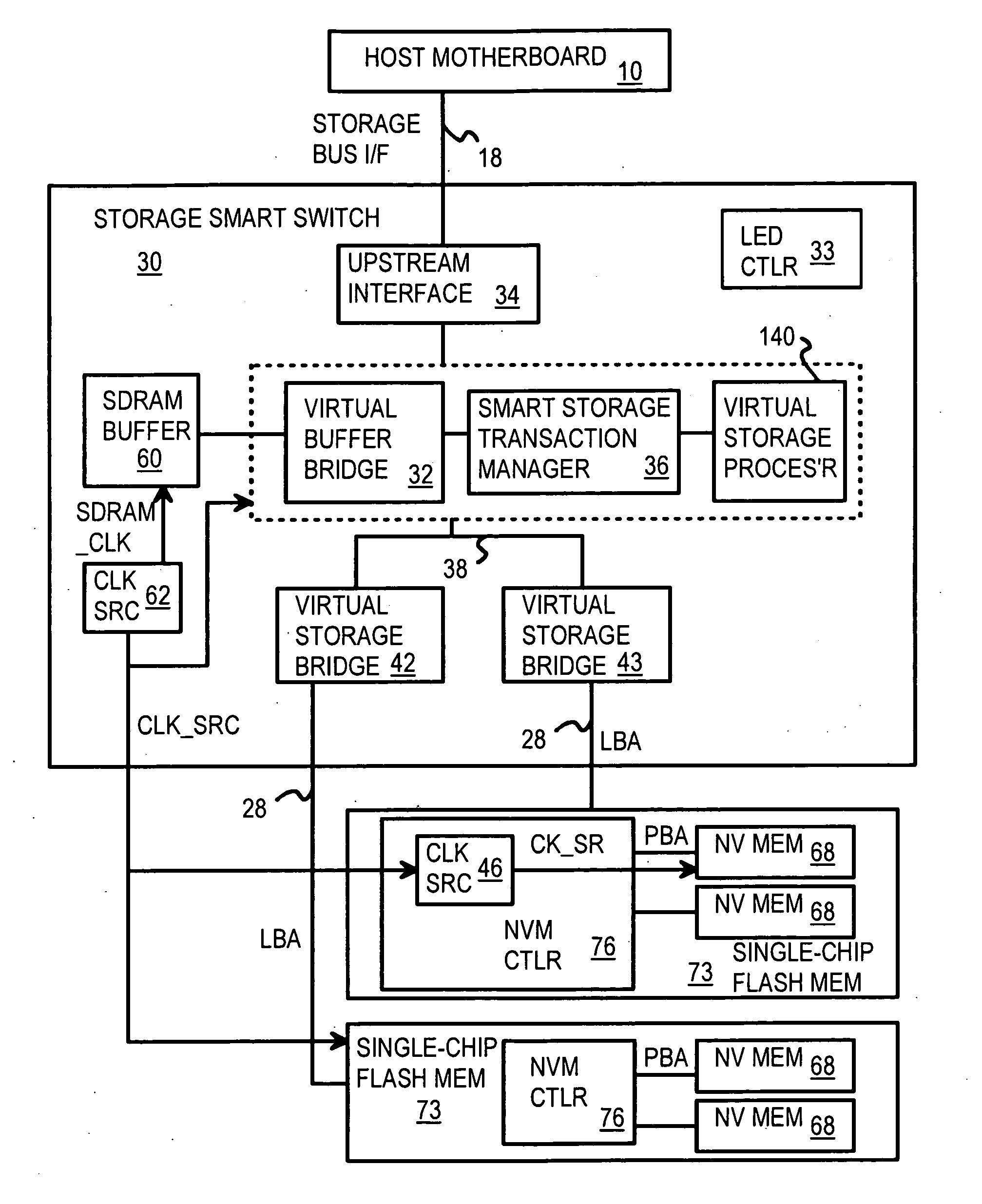

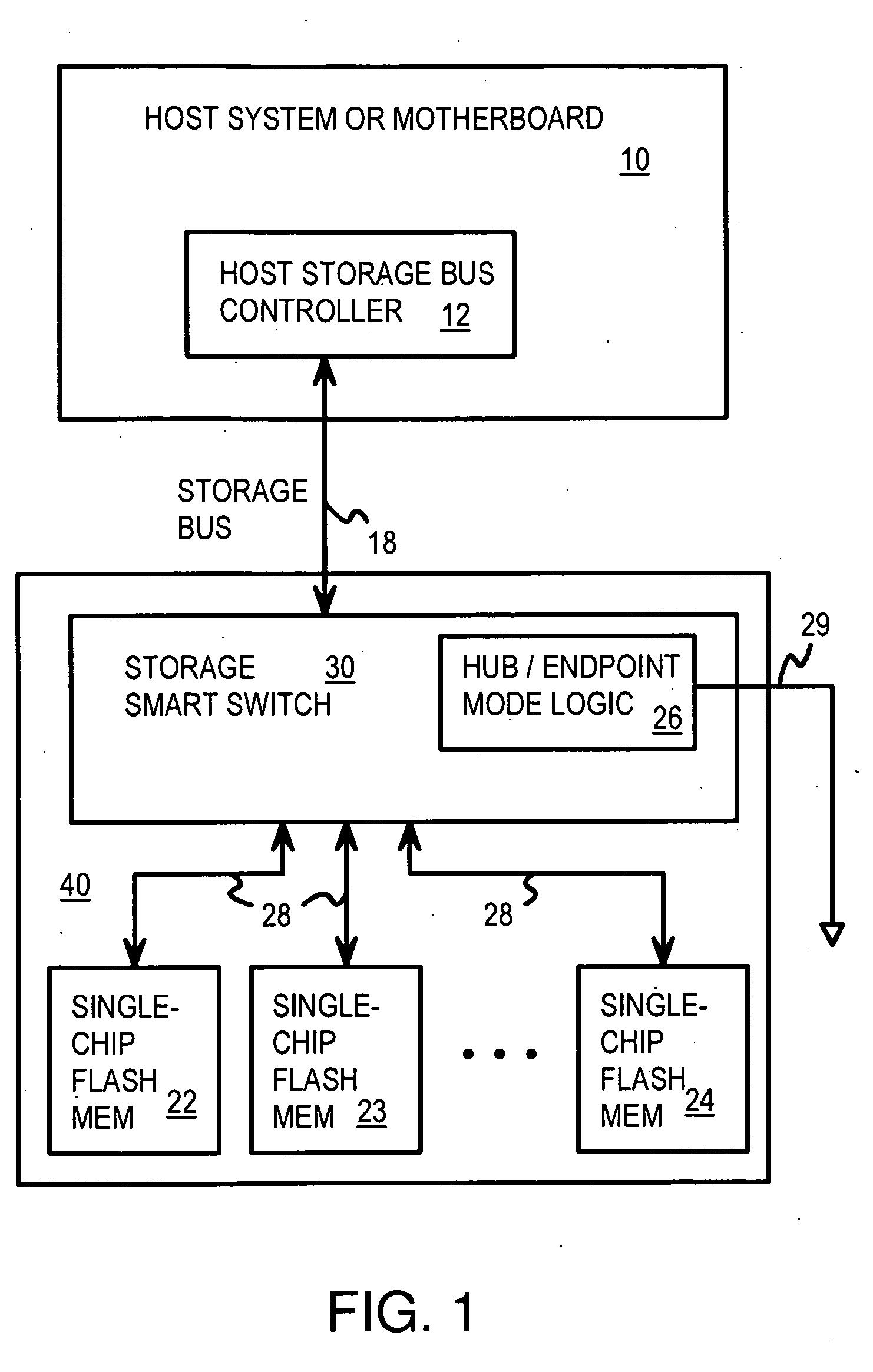

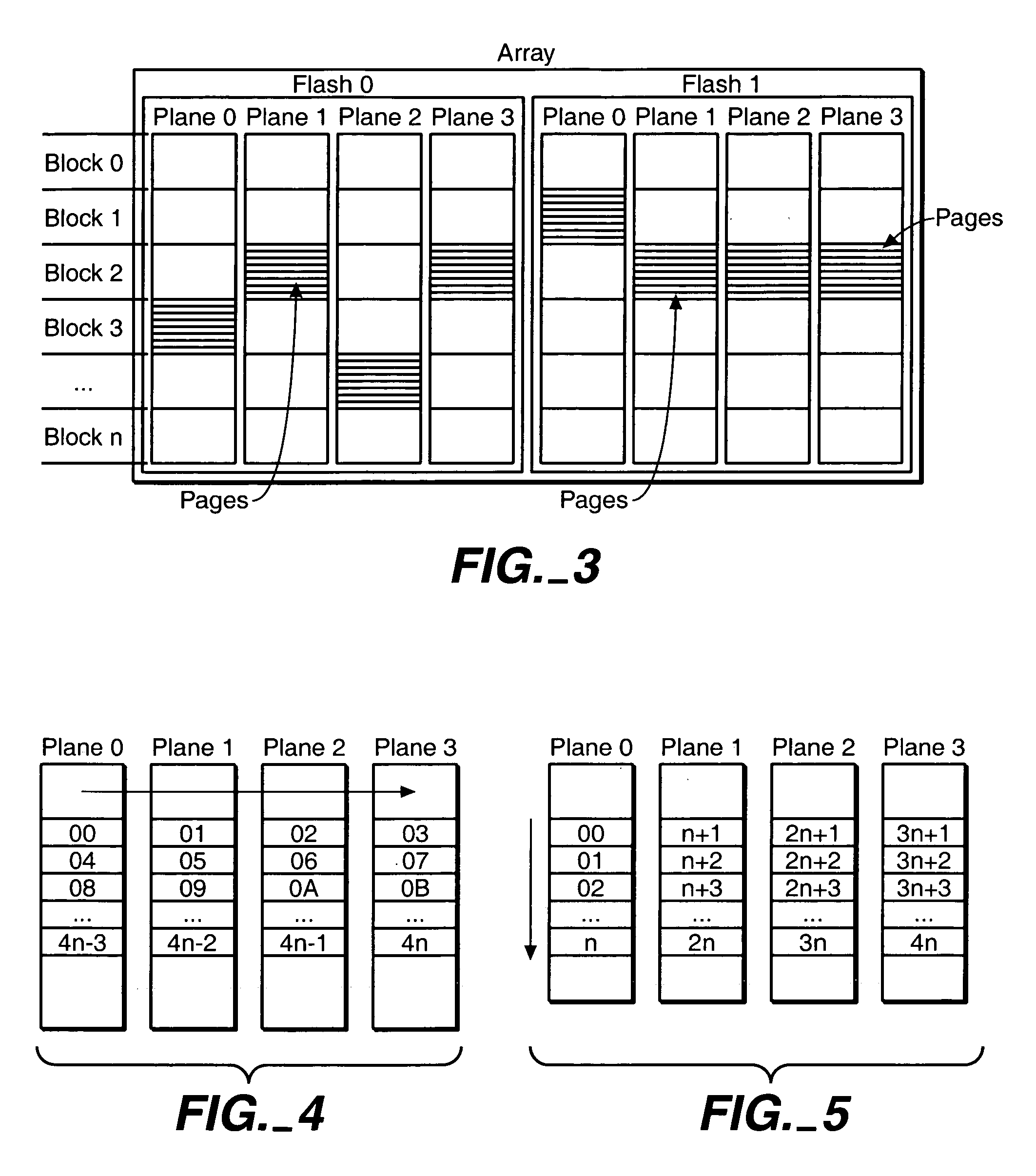

Multi-Level Controller with Smart Storage Transfer Manager for Interleaving Multiple Single-Chip Flash Memory Devices

Inactive | US20080320214A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Logical block addressing | Solid-state drive

A solid-state disk (SSD) has a smart storage switch with a smart storage transaction manager that re-orders host commands for accessing downstream single-chip flash-memory devices. Each single-chip flash-memory device has a lower-level controller that converts logical block addresses (LBA) to physical block addresses (PBA) that access flash memory blocks in the single-chip flash-memory device. Wear-leveling and bad block remapping are performed by each single-chip flash-memory device, and at a higher level by a virtual storage processor in the smart storage switch. Virtual storage bridges between the smart storage transaction manager and the single-chip flash-memory devices bridge LBA transactions over LBA buses to the single-chip flash-memory devices. Data striping and interleaving among multiple channels of the single-chip flash-memory device is controlled at a high level by the smart storage transaction manager, while further interleaving and remapping may be performed within each single-chip flash-memory device.

Owner:SUPER TALENT TECH CORP

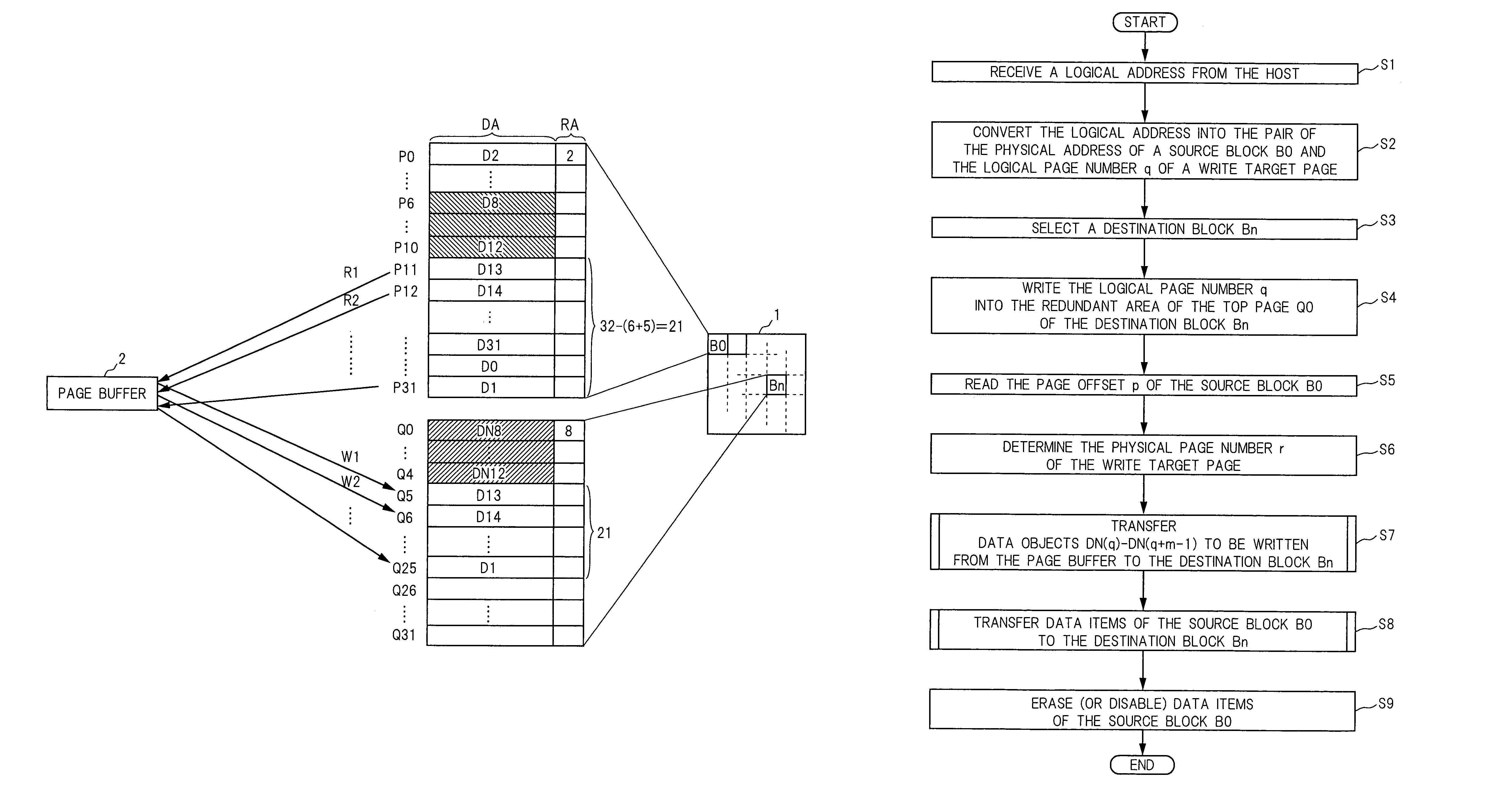

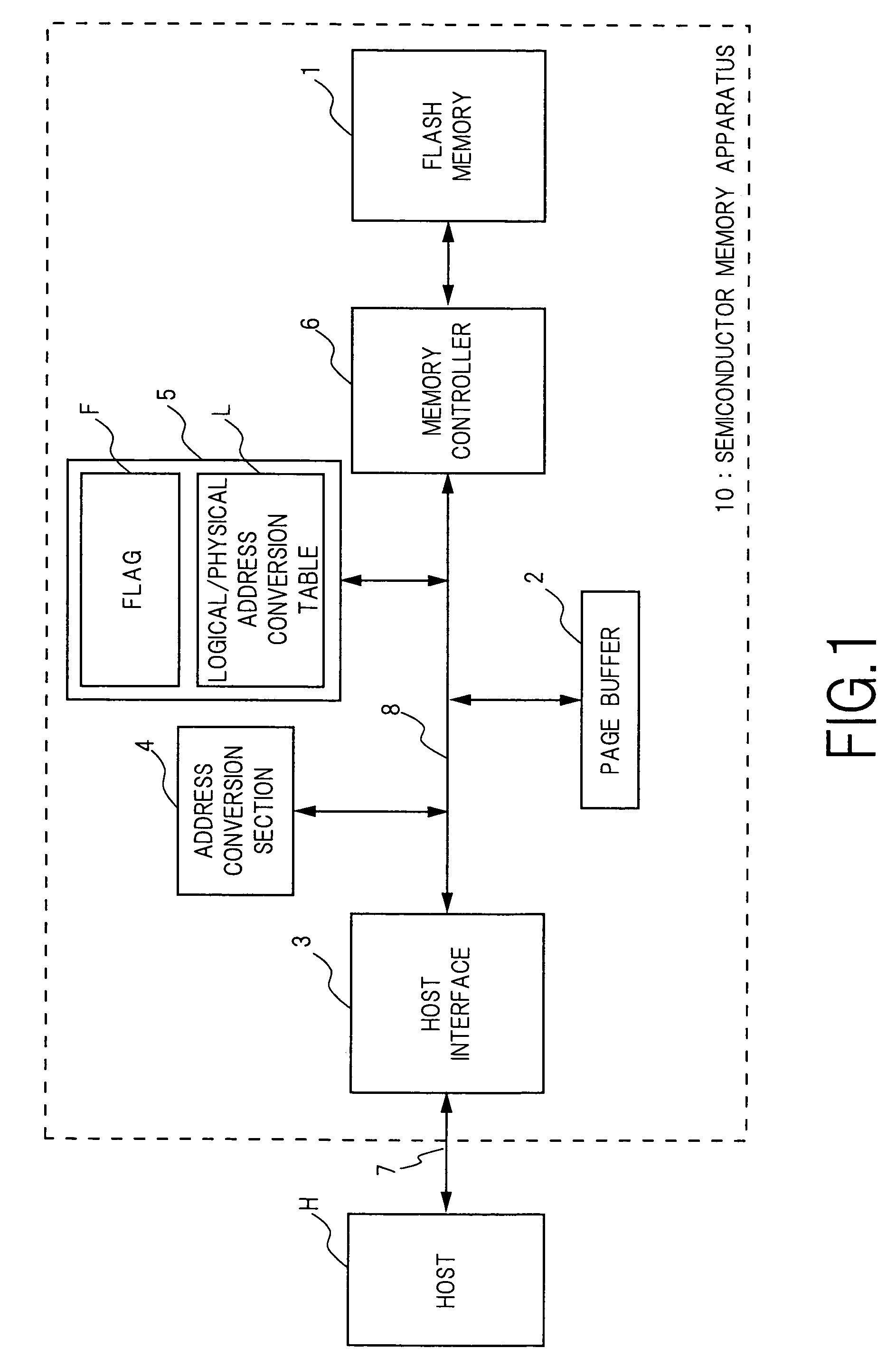

Semiconductor memory device and method for writing data into flash memory

Active | US7107389B2 | Small size | Reduce waiting time | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Home page | Database

A source block (B0) and the logical page number (“8”) of a write target page are identified from the logical address of the write target page. Data objects (DN8, DN9, . . . , DN12) to be written, which a host stores in a page buffer (2), are written into the data areas (DA) of the pages (Q0, Q1, . . . , Q4) of a destination block (Bn), starting from the top page (Q0) in sequence. The logical page number (“8”) of the write target page is written into the redundant area (RA) of the top page (Q0). The physical page number (“6=8−2”) of the write target page is identified, based on the logical page number (“8”) of the write target page and the page offset (“2”) of the source block (B0). When notified by the host of the end of the sending of the data objects (DN8, . . . , DN12), the data items (D13, . . . , D31, D0, D1, . . . , D7) in the source block (B0) are transferred to the pages (Q5, Q6, . . . , Q31) in the destination block (Bn) via the page buffer (2) sequentially and cyclically, starting from the page (P11) situated cyclically behind the write target page (P6) by the number (“5”) of pages of the data objects (DN8, . . . , DN12).

Owner:PANASONIC CORP
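
The numbers in the abstract above (logical page 8, page offset 2, physical page 6, five new data objects, cyclic copy starting at P11) can be reproduced with a small simulation. The 32-page block size is inferred from the page labels Q0 to Q31; the function names and the idea of returning the logical page number recorded with the top page are this sketch's own reading, not a statement of the patented design.

```python
PAGES_PER_BLOCK = 32  # inferred from pages Q0..Q31 in the abstract


def physical_page(logical_page, page_offset):
    """Physical page holding a logical page in a block with the given offset."""
    return (logical_page - page_offset) % PAGES_PER_BLOCK


def rewrite_block(source, source_offset, target_logical, new_data):
    """Build the destination block from new data plus the surviving source pages.

    source[p] is the data held in physical page p of the source block.
    Returns (destination pages, logical page number stored with the top page).
    """
    dest = list(new_data)                           # new objects fill Q0, Q1, ...
    # First source page after the overwritten run, e.g. 6 + 5 = 11.
    start = physical_page(target_logical, source_offset) + len(new_data)
    for i in range(PAGES_PER_BLOCK - len(new_data)):
        dest.append(source[(start + i) % PAGES_PER_BLOCK])   # cyclic copy
    return dest, target_logical
```

Writing the new data first and wrapping the old data around it is what lets the host's write start immediately, without first copying the pages that precede the target.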

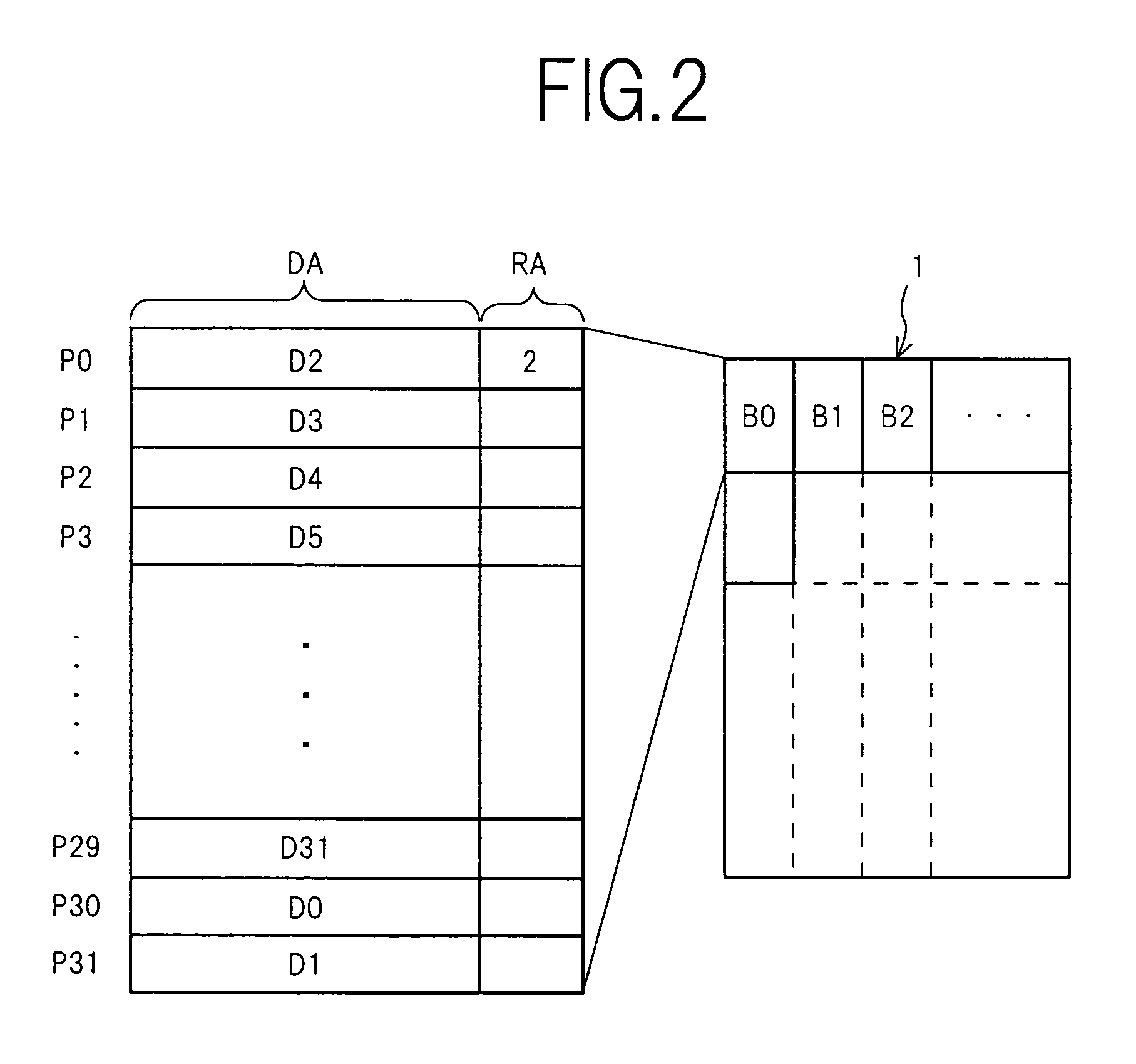

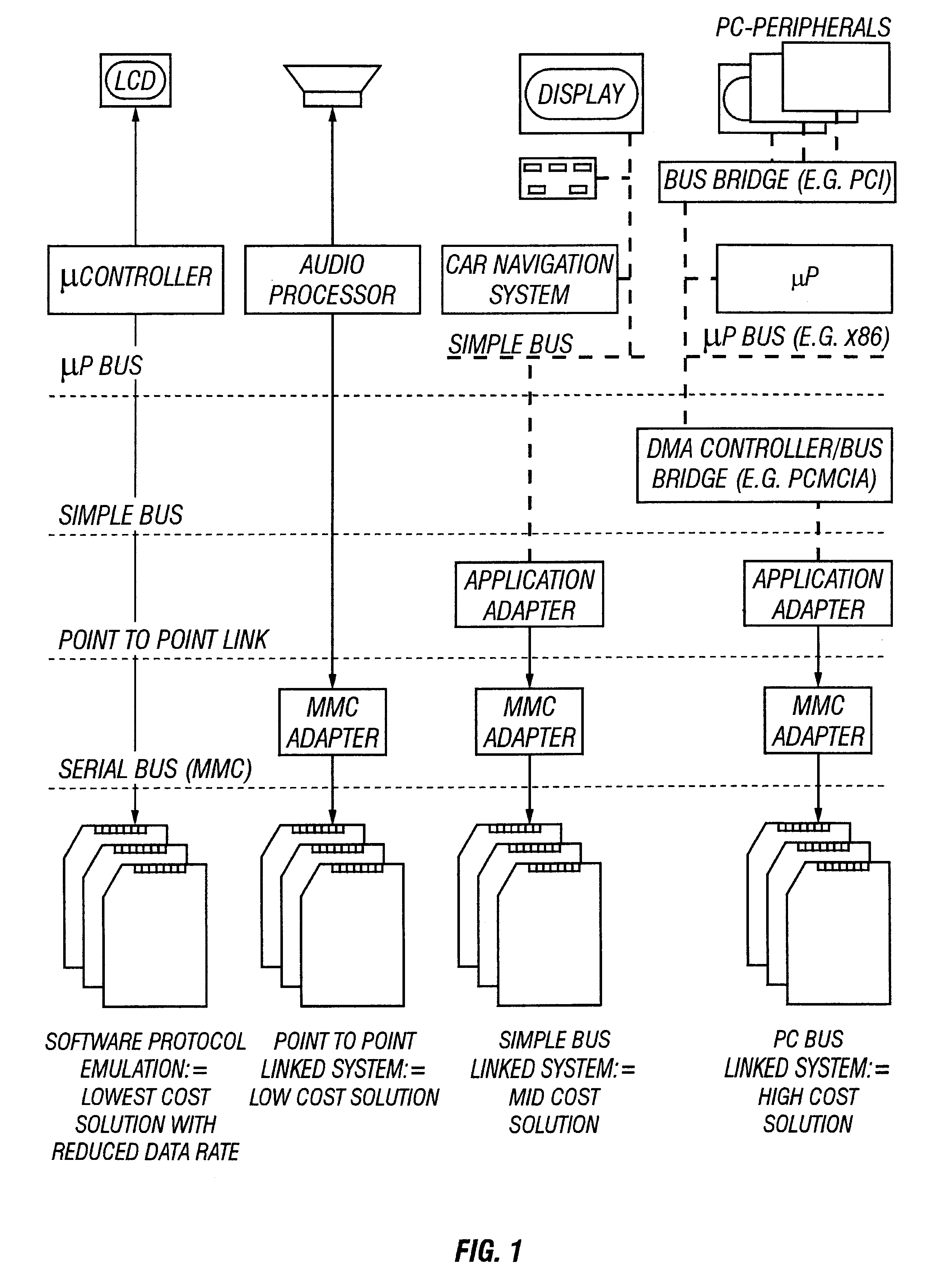

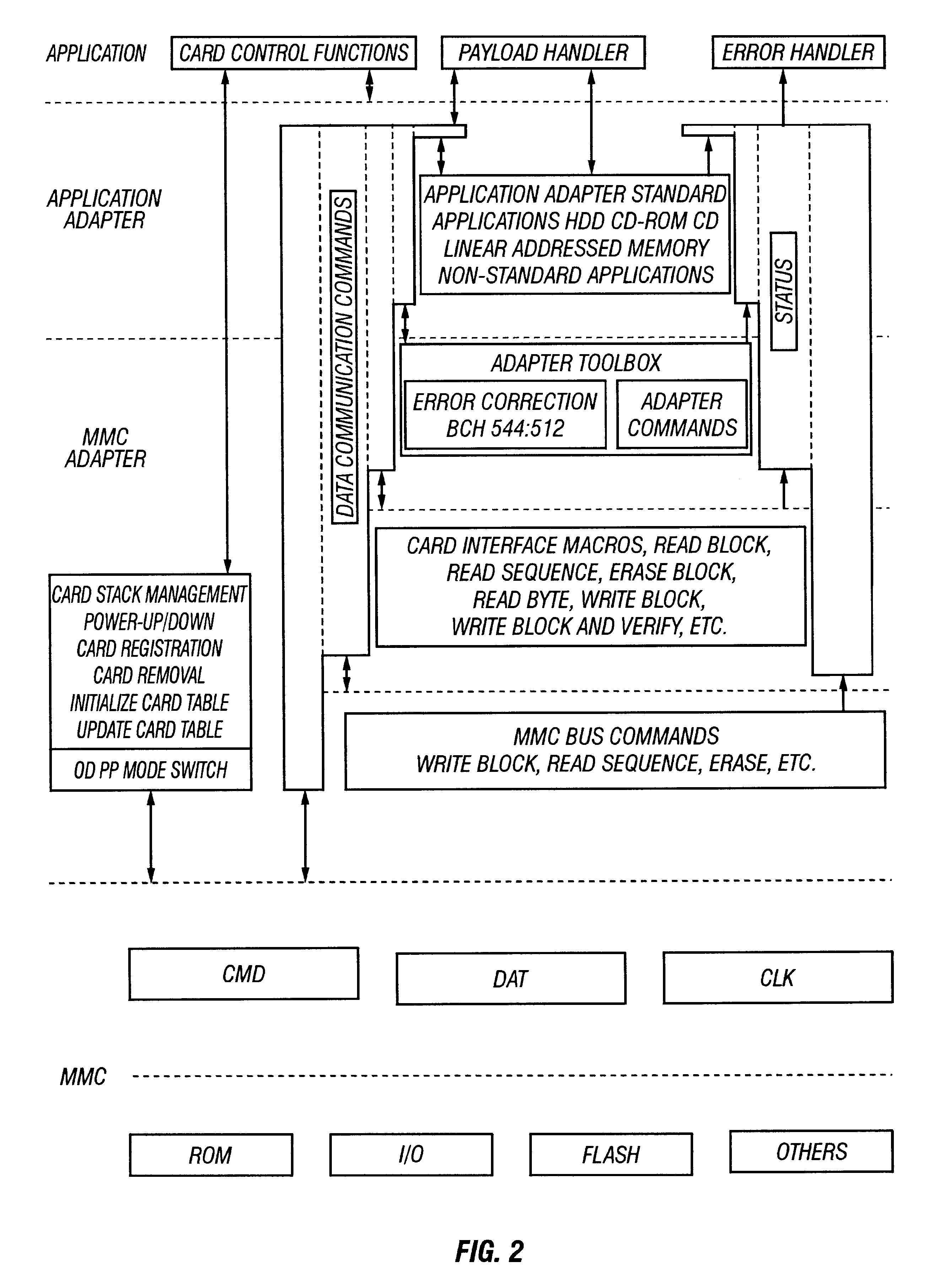

Voltage negotiation in a single host multiple cards system

Inactive | US6279114B1 | Avoid writing | Memory architecture accessing/allocation | Volume/mass flow measurement | Computer hardware | Communications system

A low cost data storage and communication system. The low cost data storage and communication system has a host and at least one card connected to the host. A voltage negotiator located in the system determines a common operating voltage range that is a common denominator of all independent operating voltage ranges of all of the cards connected to the system. In addition, there is a novel feature of partitioning the memory storages of the card. This feature provides the host the ability to simultaneously erase any combination of sectors in a single erase group, or any combination of the entire erase groups. Another feature provided by this novel method of partitioning the memory storages is the ability to write protect any combination of memory groups in the card.

Owner:SANDISK TECH LLC
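
The "common denominator" of the cards' voltage ranges in the abstract above amounts to an interval intersection. A minimal sketch follows, assuming each card reports a `(low, high)` range in volts; the function name and the example ranges are made up for illustration.

```python
def negotiate_voltage(card_ranges):
    """Return the (low, high) range supported by every card, or None if there is none."""
    low = max(lo for lo, _ in card_ranges)    # highest of the minimum voltages
    high = min(hi for _, hi in card_ranges)   # lowest of the maximum voltages
    return (low, high) if low <= high else None
```

For cards supporting 2.7 to 3.6 V, 2.0 to 3.3 V, and 3.0 to 3.6 V, the common range is 3.0 to 3.3 V; a 1.7 to 1.95 V card alongside a 2.7 to 3.6 V card has no common range.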

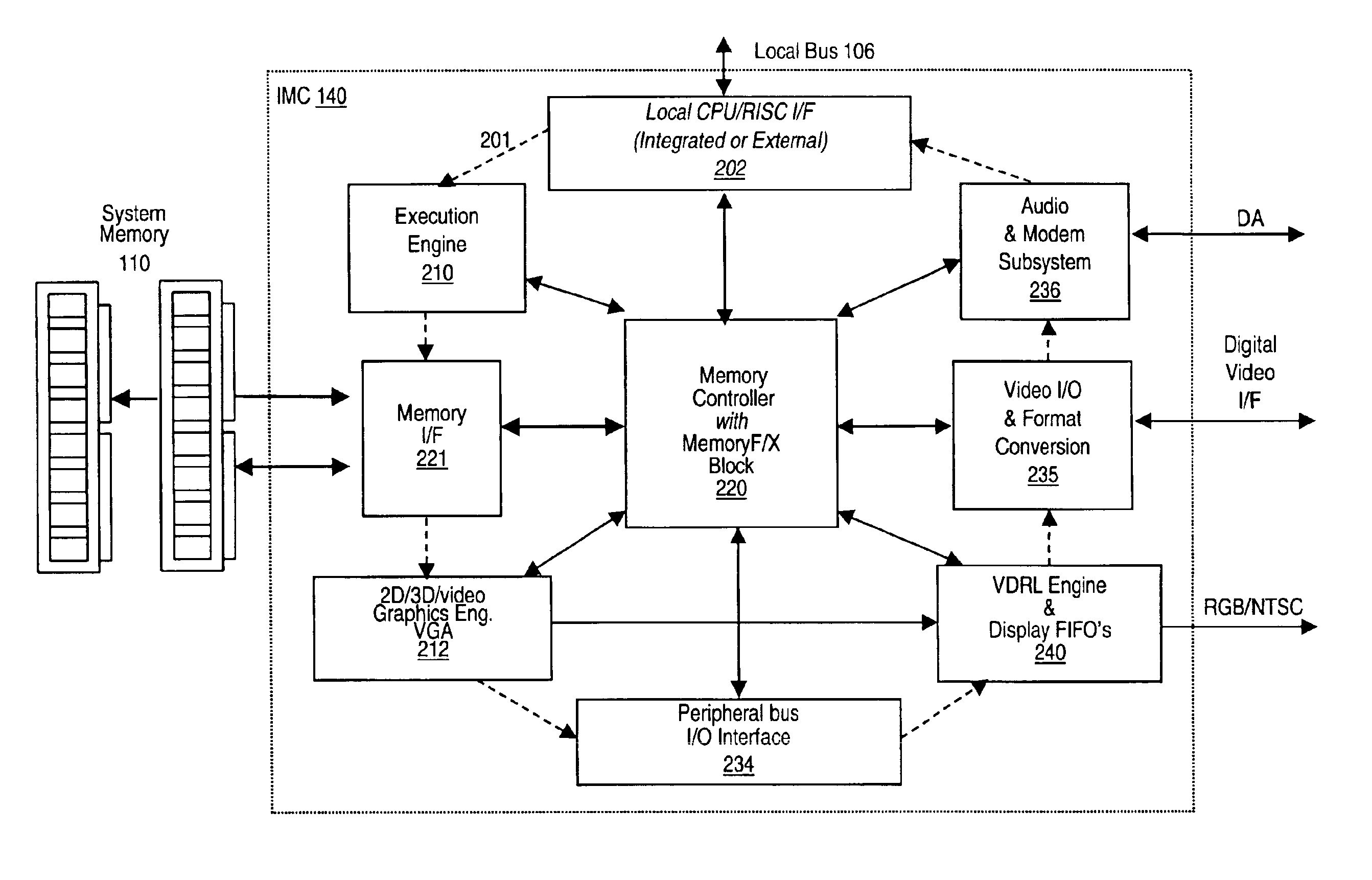

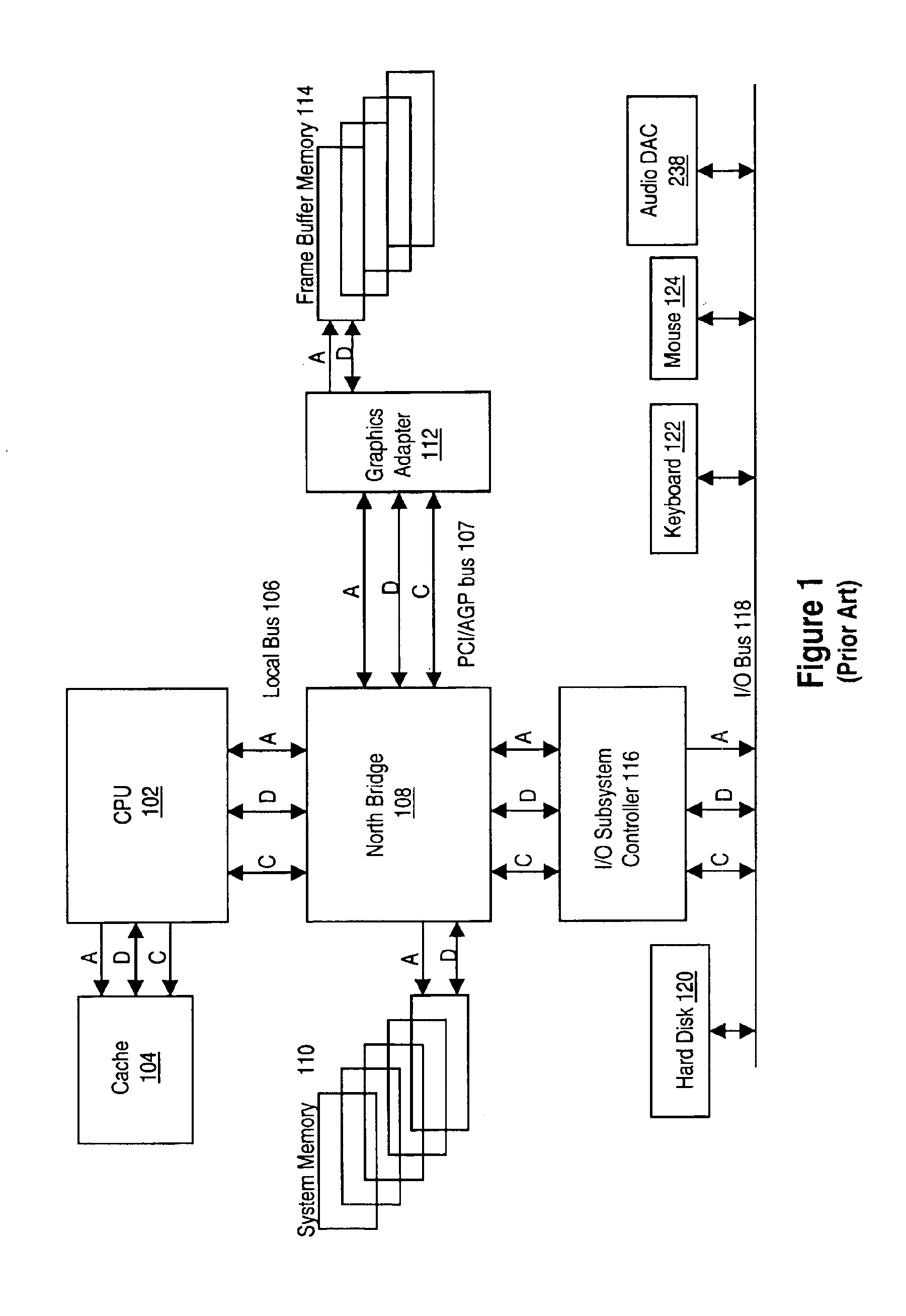

Memory module including scalable embedded parallel data compression and decompression engines

Inactive | US6879266B1 | Low cost | Small data storage requirement | Memory architecture accessing/allocation | Energy efficient ICT | Parallel compression | Parallel computing

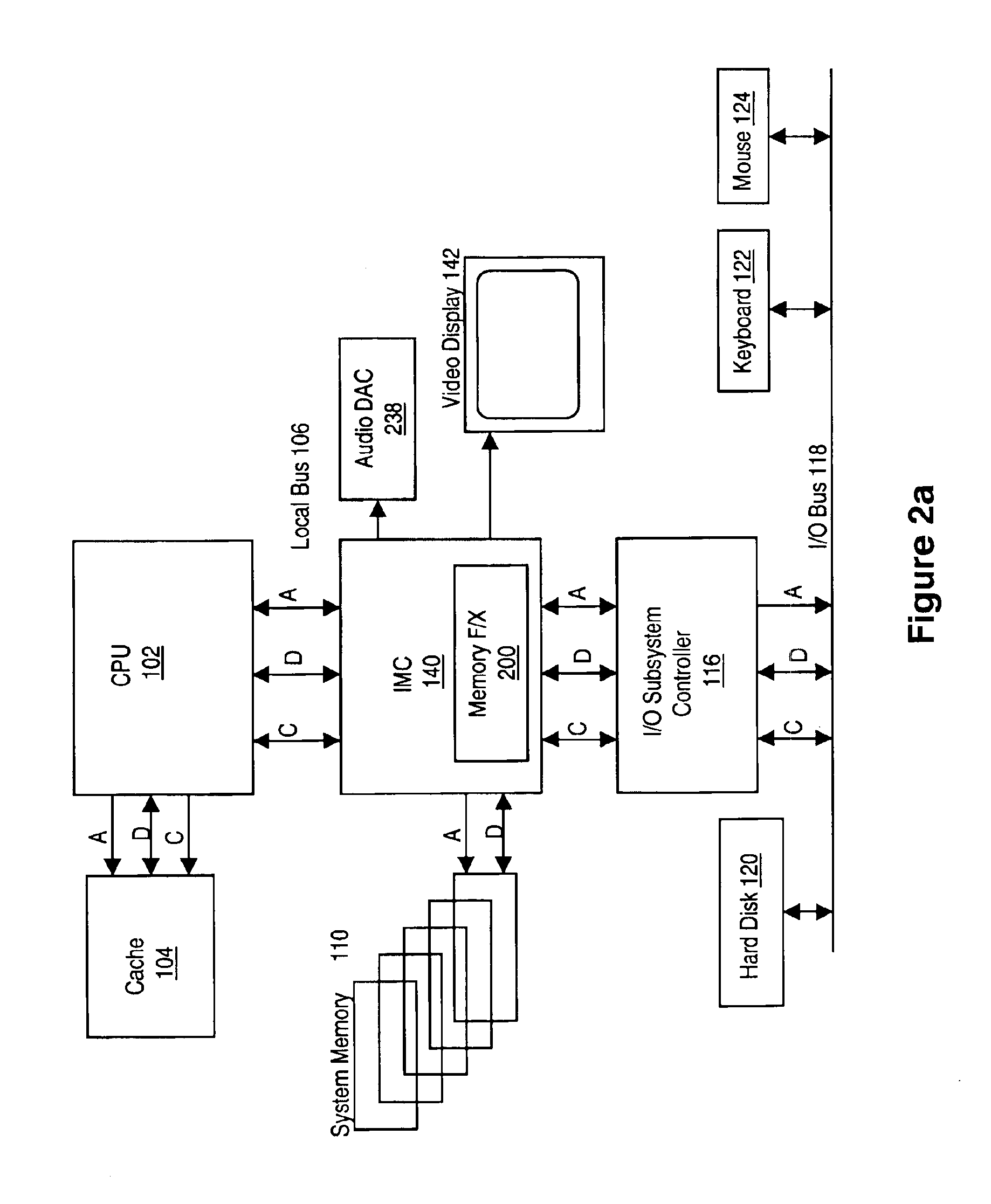

A memory module including parallel data compression and decompression engines for improved performance. The memory module includes MemoryF/X Technology. To improve latency and reduce performance degradations normally associated with compression and decompression techniques, the MemoryF/X Technology encompasses multiple novel techniques such as: 1) parallel lossless compression/decompression; 2) selectable compression modes such as lossless, lossy or no compression; 3) priority compression mode; 4) data cache techniques; 5) variable compression block sizes; 6) compression reordering; and 7) unique address translation, attribute, and address caches. The parallel compression and decompression algorithm allows high-speed parallel compression and high-speed parallel decompression operation. The memory module-integrated data compression and decompression capabilities remove system bottlenecks and increase performance. This allows lower cost systems due to smaller data storage, reduced bandwidth requirements, reduced power and noise.

Owner:INTELLECTUAL VENTURES I LLC

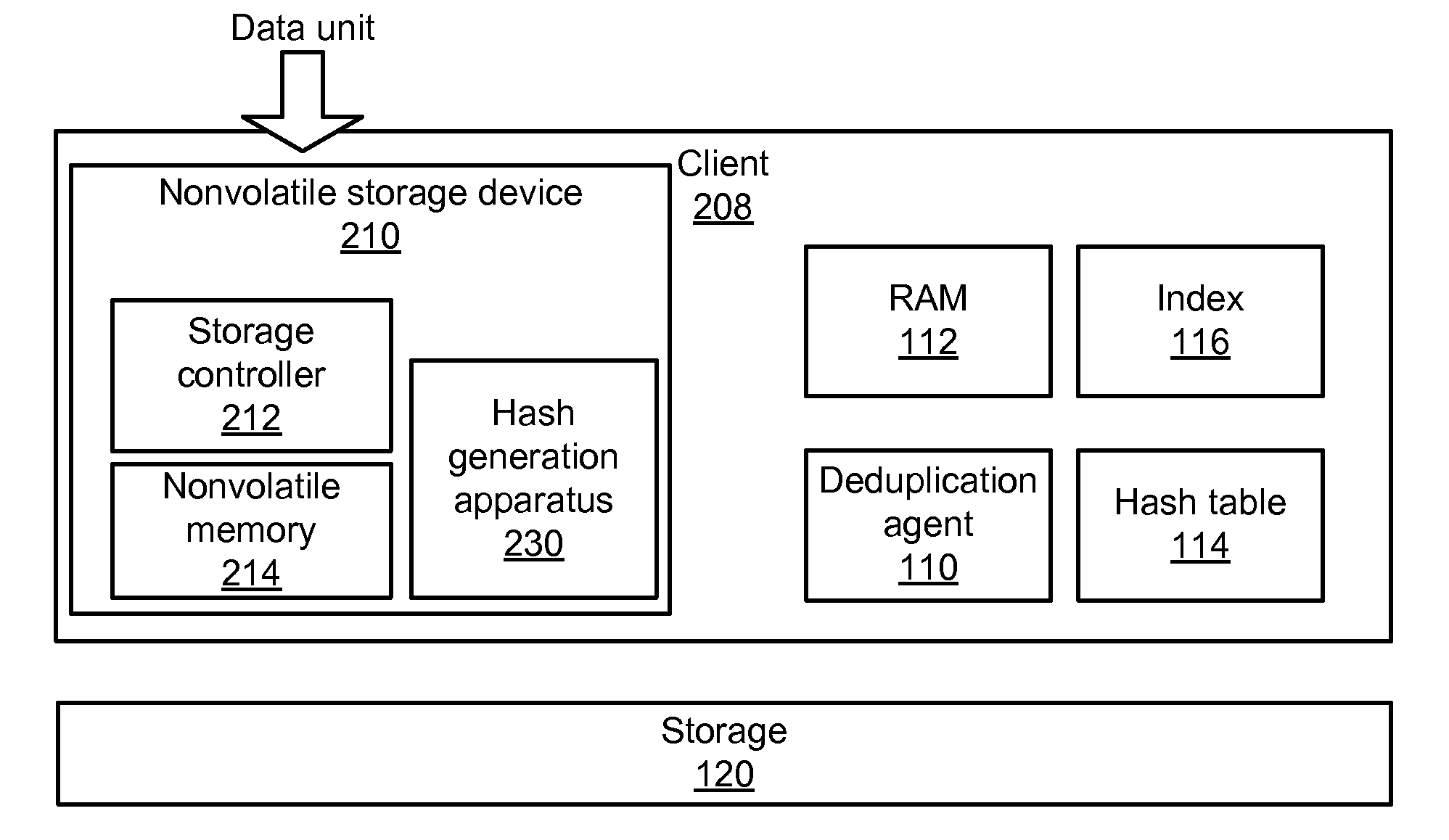

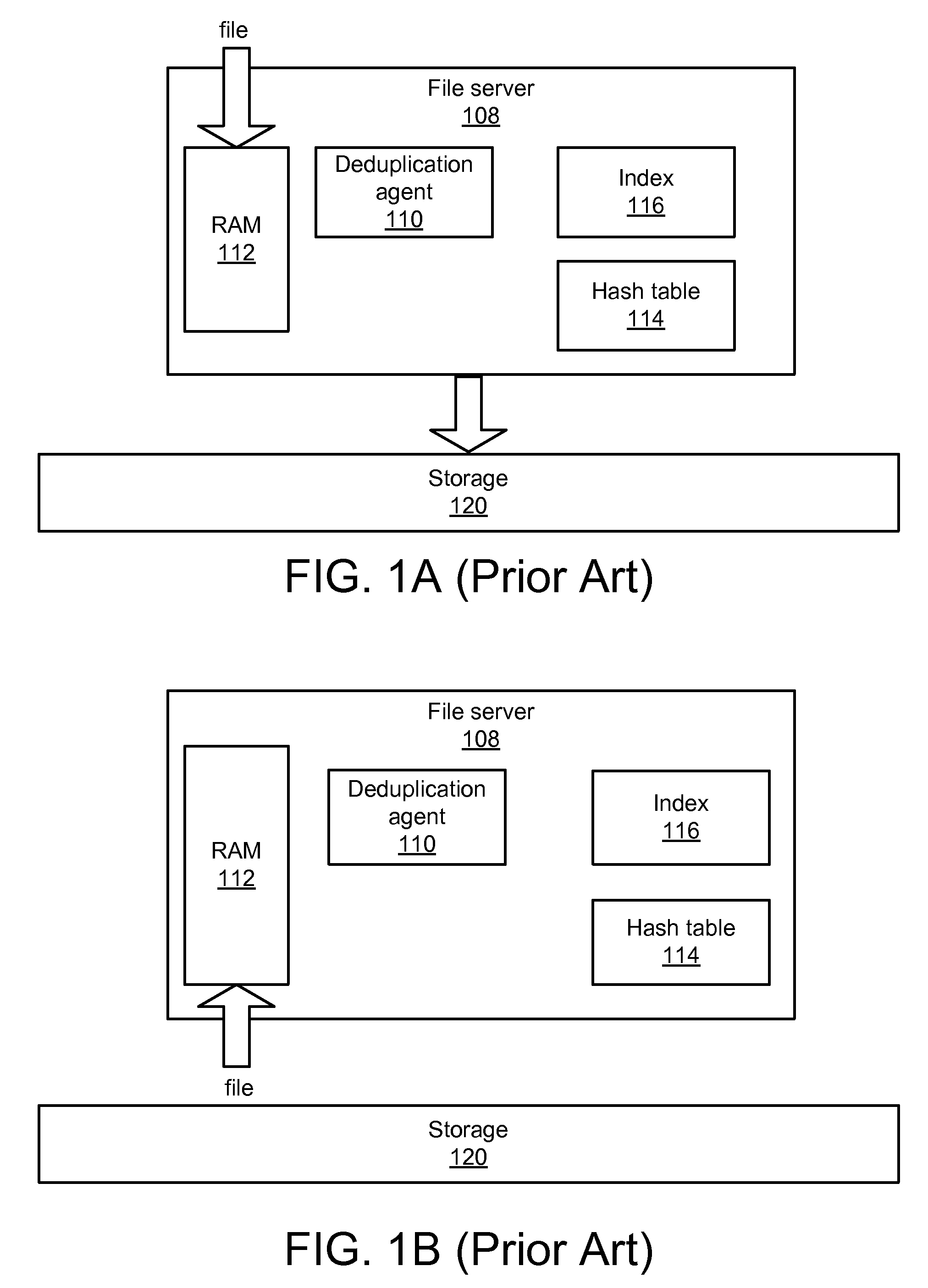

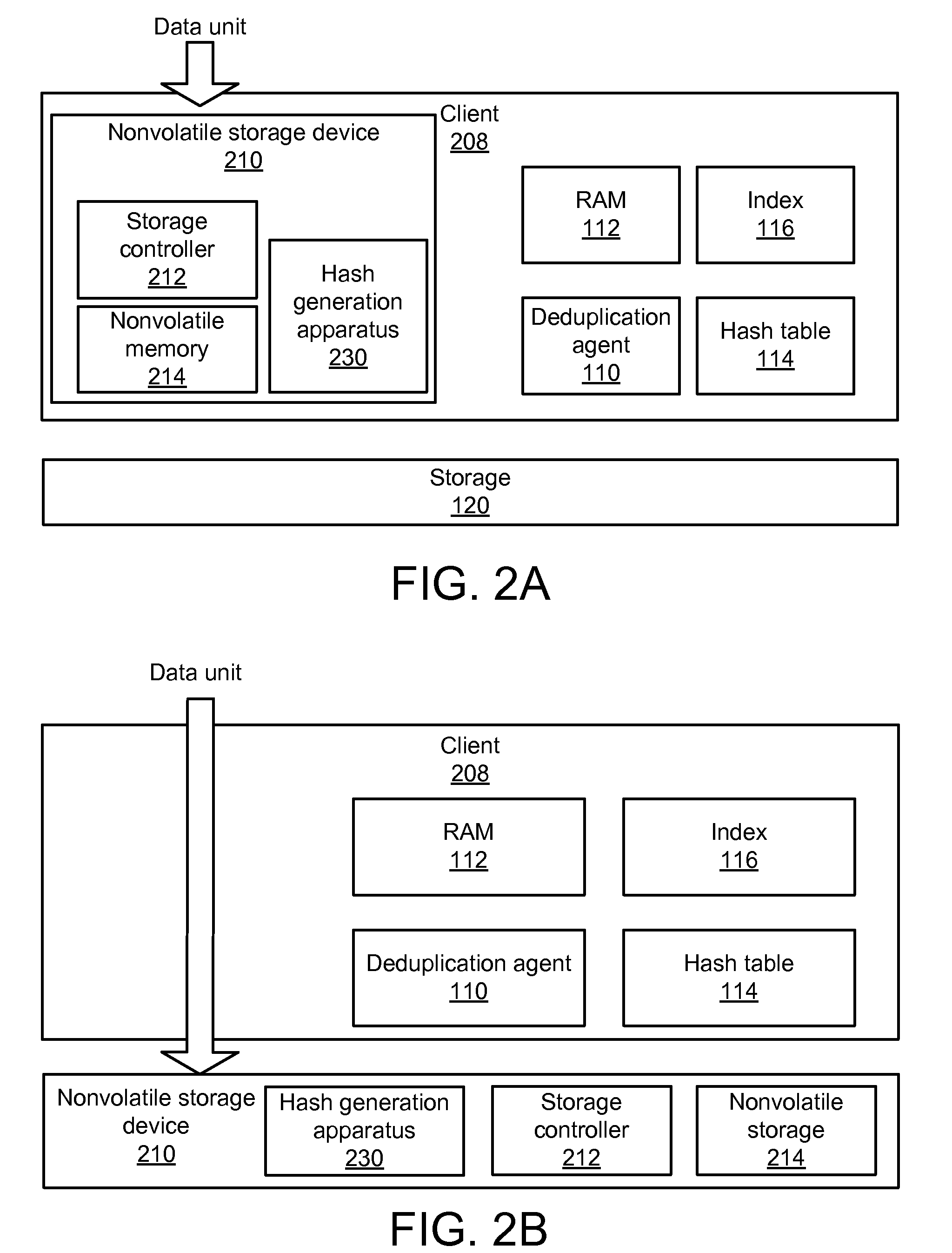

Apparatus, system, and method for improved data deduplication

Inactive | US20110055471A1 | Memory architecture accessing/allocation | Memory systems | Computer hardware | Hash function

An apparatus, system, and method are disclosed for improved deduplication. The apparatus includes an input module, a hash module, and a transmission module that are implemented in a nonvolatile storage device. The input module receives hash requests from requesting entities that may be internal or external to the nonvolatile storage device; the hash requests include a data unit identifier that identifies the data unit for which the hash is requested. The hash module generates a hash for the data unit using a hash function. The hash is generated using the computing resources of the nonvolatile storage device. The transmission module sends the hash to a receiving entity when the input module receives the hash request. A deduplication agent uses the hash to determine whether or not the data unit is a duplicate of a data unit already stored in the storage system that includes the nonvolatile storage device.

Owner:SANDISK TECH LLC
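
The division of labour in the abstract above, where the storage device computes the hash and a deduplication agent decides what is a duplicate, might be sketched as follows. The `HashingDevice` and `DedupAgent` classes, the string unit identifiers, and the choice of SHA-256 are assumptions for the example.

```python
import hashlib


class HashingDevice:
    """Stand-in for a storage device that hashes data units with its own resources."""

    def __init__(self):
        self.units = {}                       # data unit identifier -> bytes

    def store(self, unit_id, data):
        self.units[unit_id] = data

    def hash_request(self, unit_id):
        # Input module receives the identifier, hash module computes the digest,
        # transmission module returns it to the requester.
        return hashlib.sha256(self.units[unit_id]).hexdigest()


class DedupAgent:
    """Uses device-generated hashes to spot duplicate data units."""

    def __init__(self, device):
        self.device = device
        self.seen = {}                        # hash -> first unit id with that hash

    def check(self, unit_id):
        """Return the id of the unit that first held this content."""
        digest = self.device.hash_request(unit_id)
        return self.seen.setdefault(digest, unit_id)
```

If `check` returns a different identifier than the one passed in, the unit duplicates data already stored and could be replaced by a reference.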

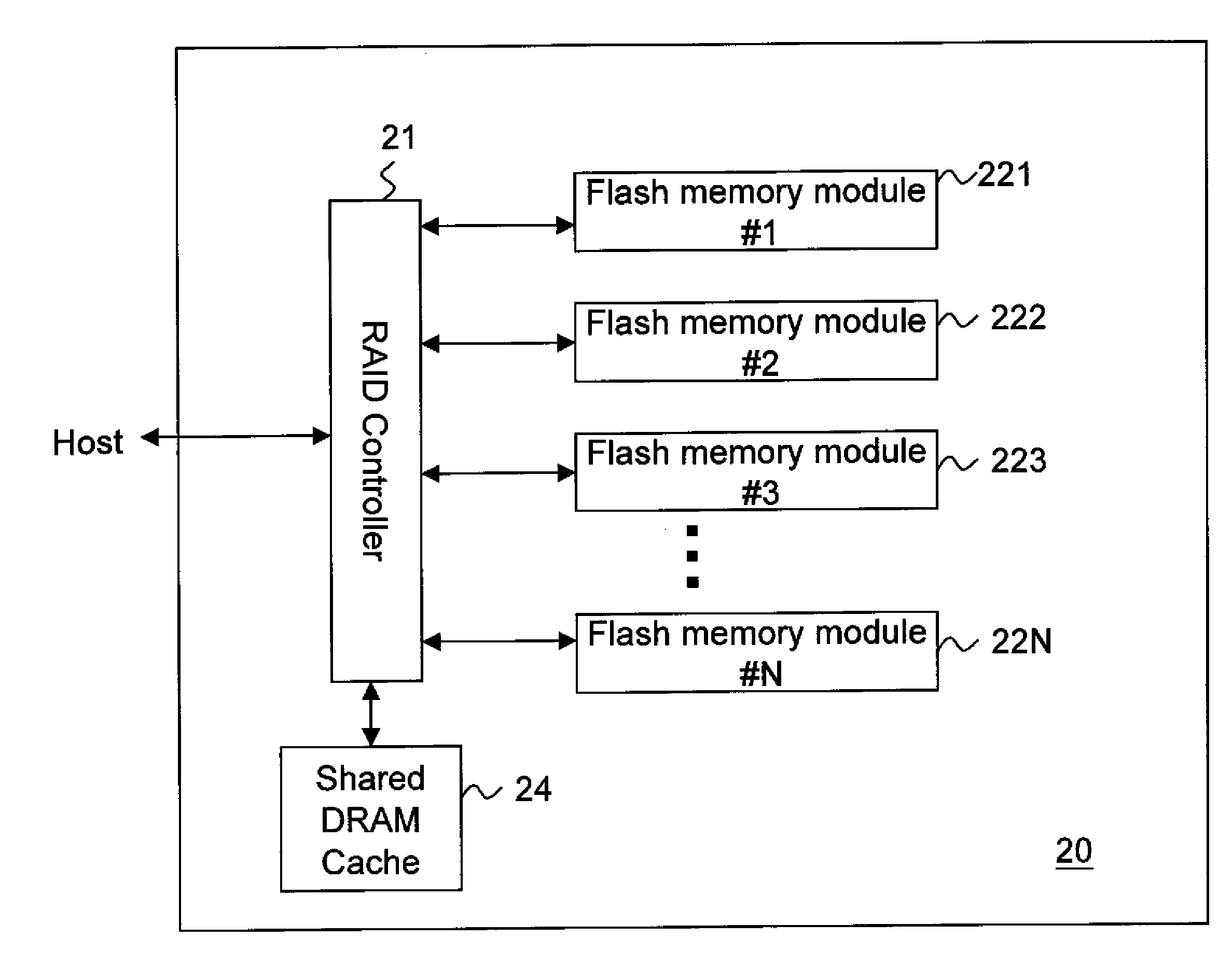

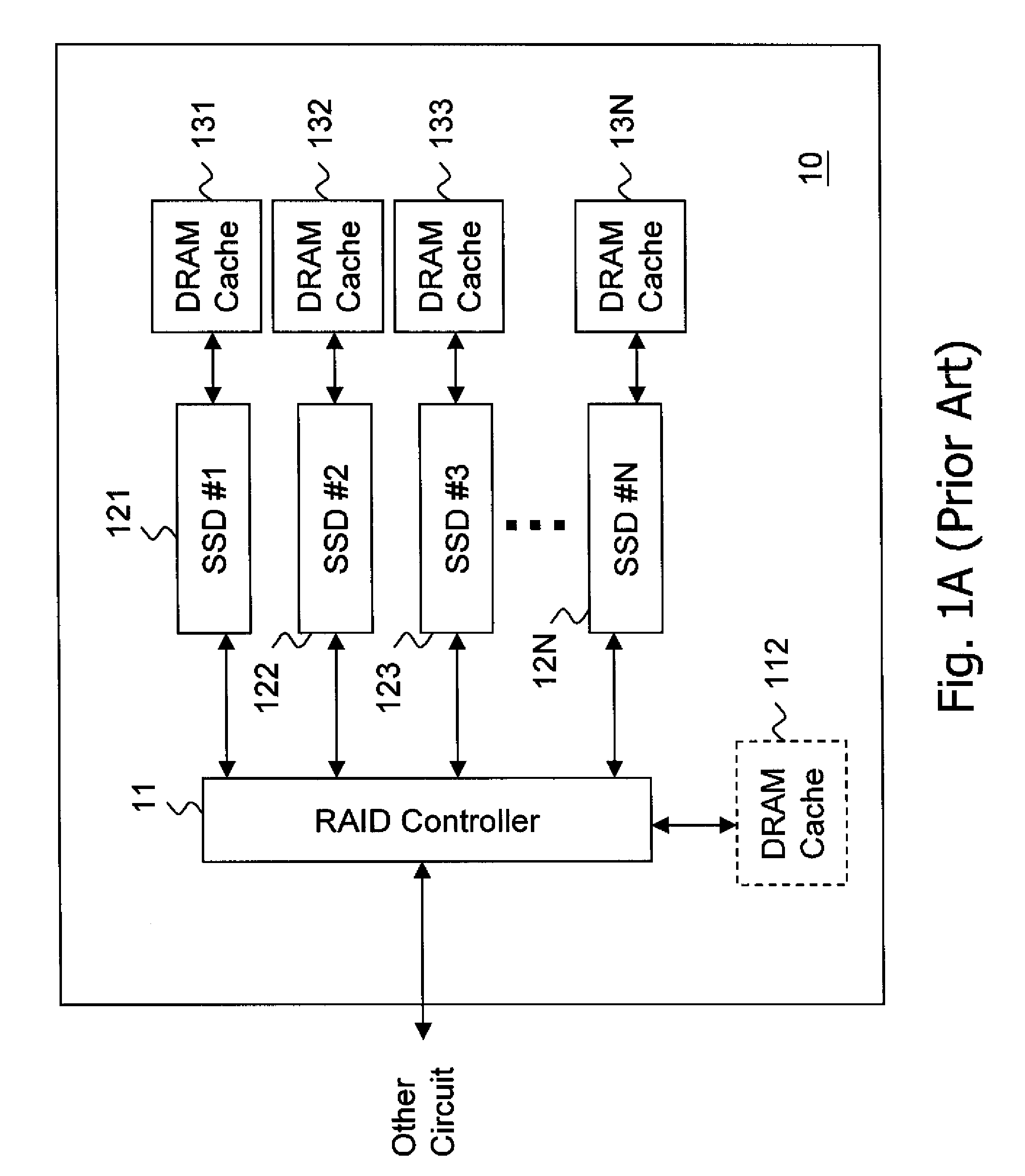

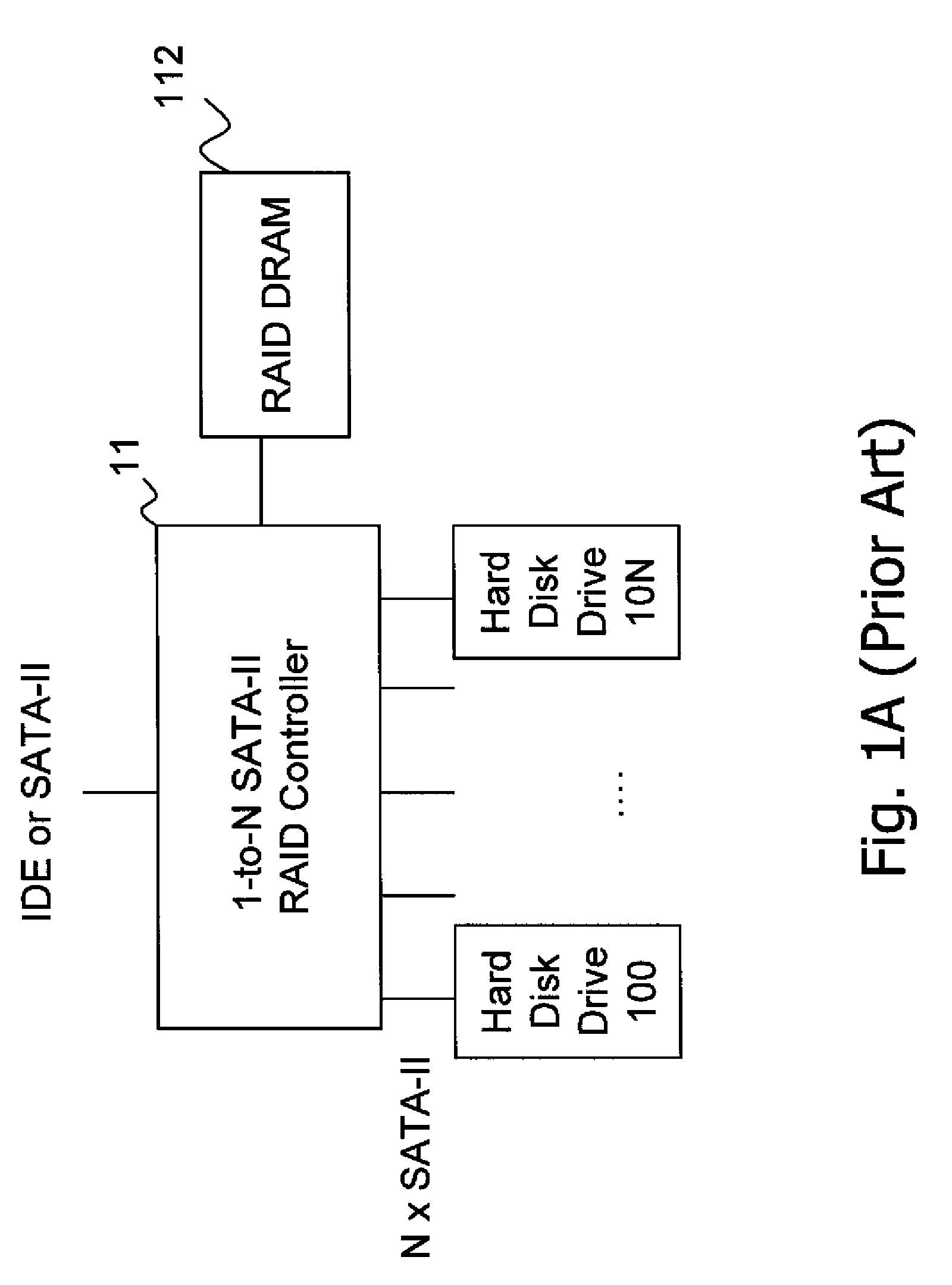

Flash devices with raid

Inactive | US20090172335A1 | Improve reliability | Improve performance | Memory architecture accessing/allocation | Energy efficient ICT | RAID | Hard disc drive

Methods and apparatus of the present invention include multiple flash storage devices that are configured to form a single storage device that is flexible and scalable. Reliability and performance are improved while keeping the power consumption benefits compared to conventional hard disk drives.

Owner:HITACHI GLOBAL STORAGE TECH NETHERLANDS BV

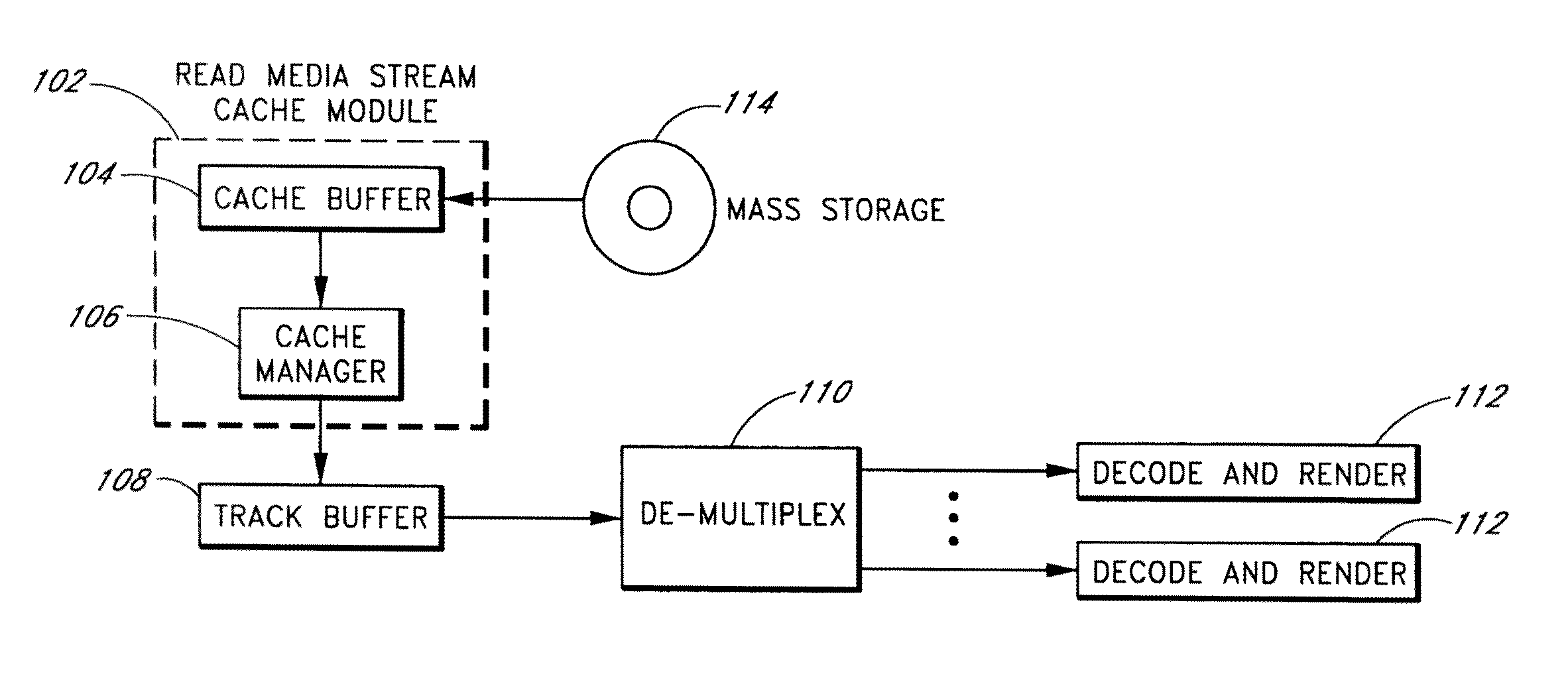

Adaptive mode switching of flash memory address mapping based on host usage characteristics

Active | US20050144361A1 | Reduced useful life | Optimal performance characteristic | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Degree of parallelism | Self adaptive

In a non-volatile memory storage system such as a flash EEPROM system, a controller switches the manner in which data sectors are mapped into blocks and metablocks of the memory in response to host programming and controller data consolidation patterns, in order to improve performance and reduce wear. Data are programmed into the memory with different degrees of parallelism.

Owner:SANDISK TECH LLC

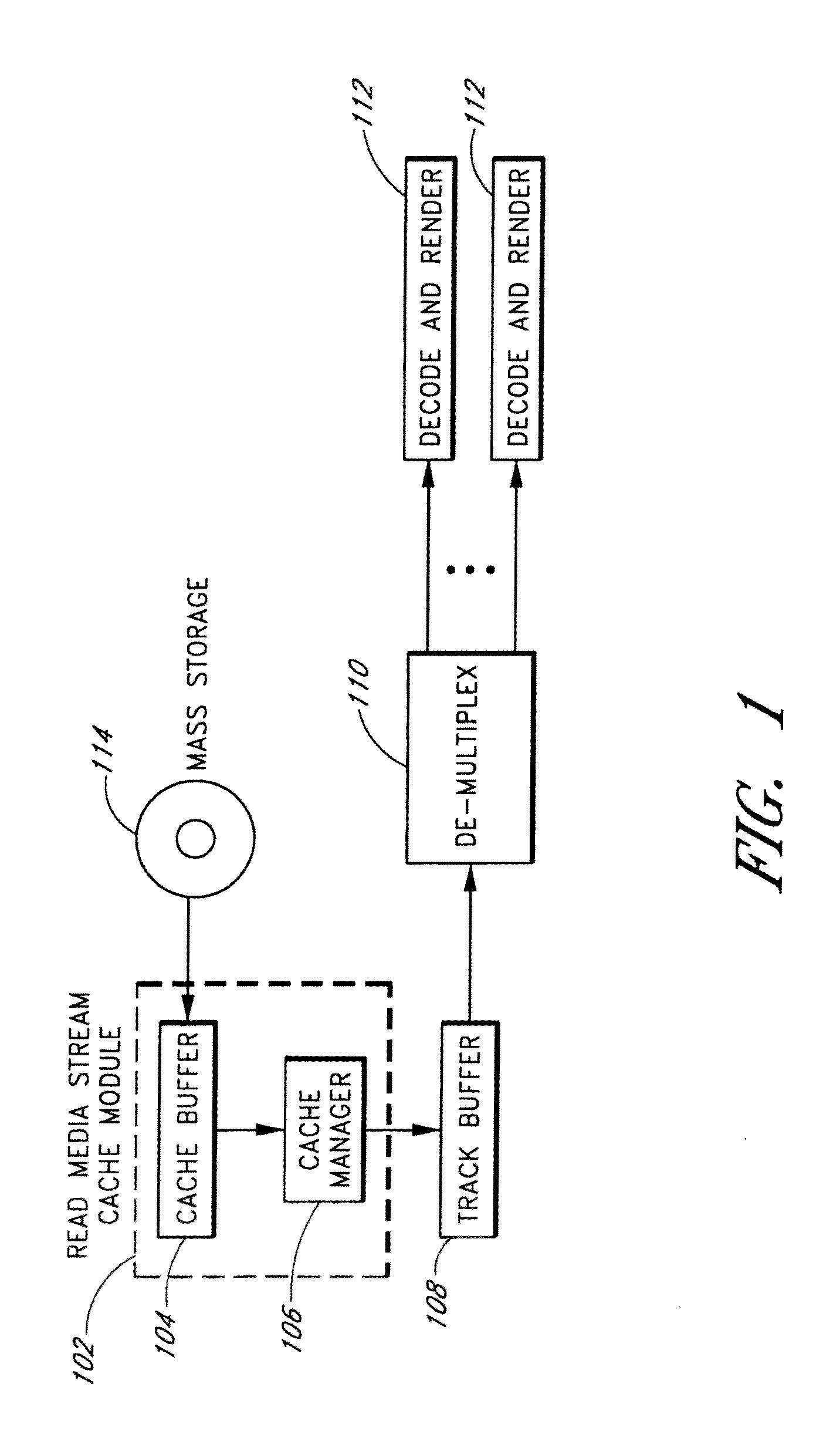

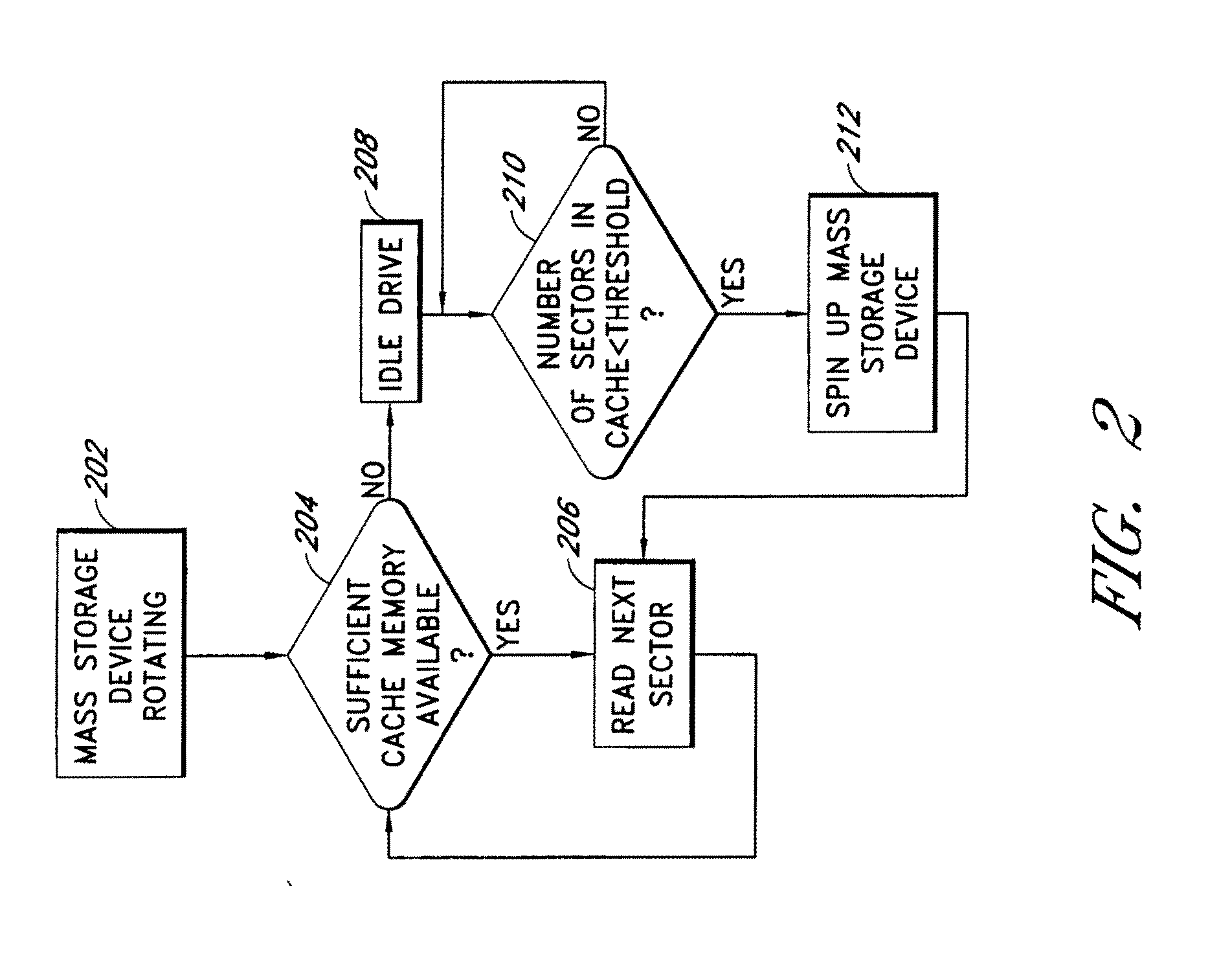

System and Method for Caching Multimedia Data

Inactive | US20100332754A1 | Data augmentation | Improve functionality | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data system

Systems and methods are provided for caching media data to thereby enhance media data read and/or write functionality and performance. A multimedia apparatus comprises a cache buffer configured to be coupled to a storage device, wherein the cache buffer stores multimedia data, including video and audio data, read from the storage device. A cache manager is coupled to the cache buffer, wherein the cache buffer is configured to cause the storage device to enter into a reduced power consumption mode when the amount of data stored in the cache buffer reaches a first level.

Owner:COREL CORP
