1189 results about "Cache management" patented technology

Cache Management. Magento’s cache management system is an easy way to improve the performance of your site. Whenever a cache needs to be refreshed, a notice appears at the top of the workspace to guide you through the process. Follow the link to Cache Management, and refresh the invalid caches.

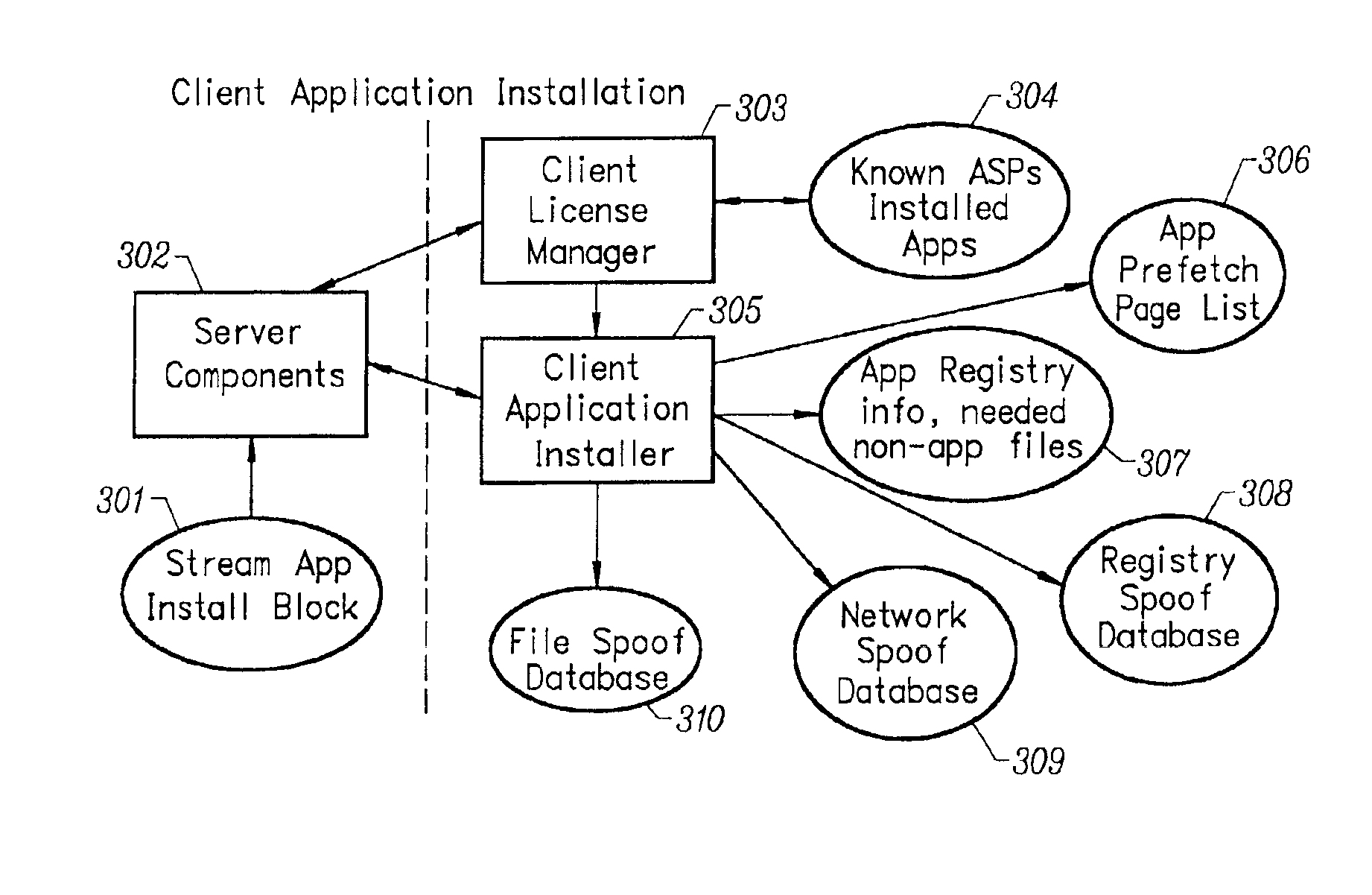

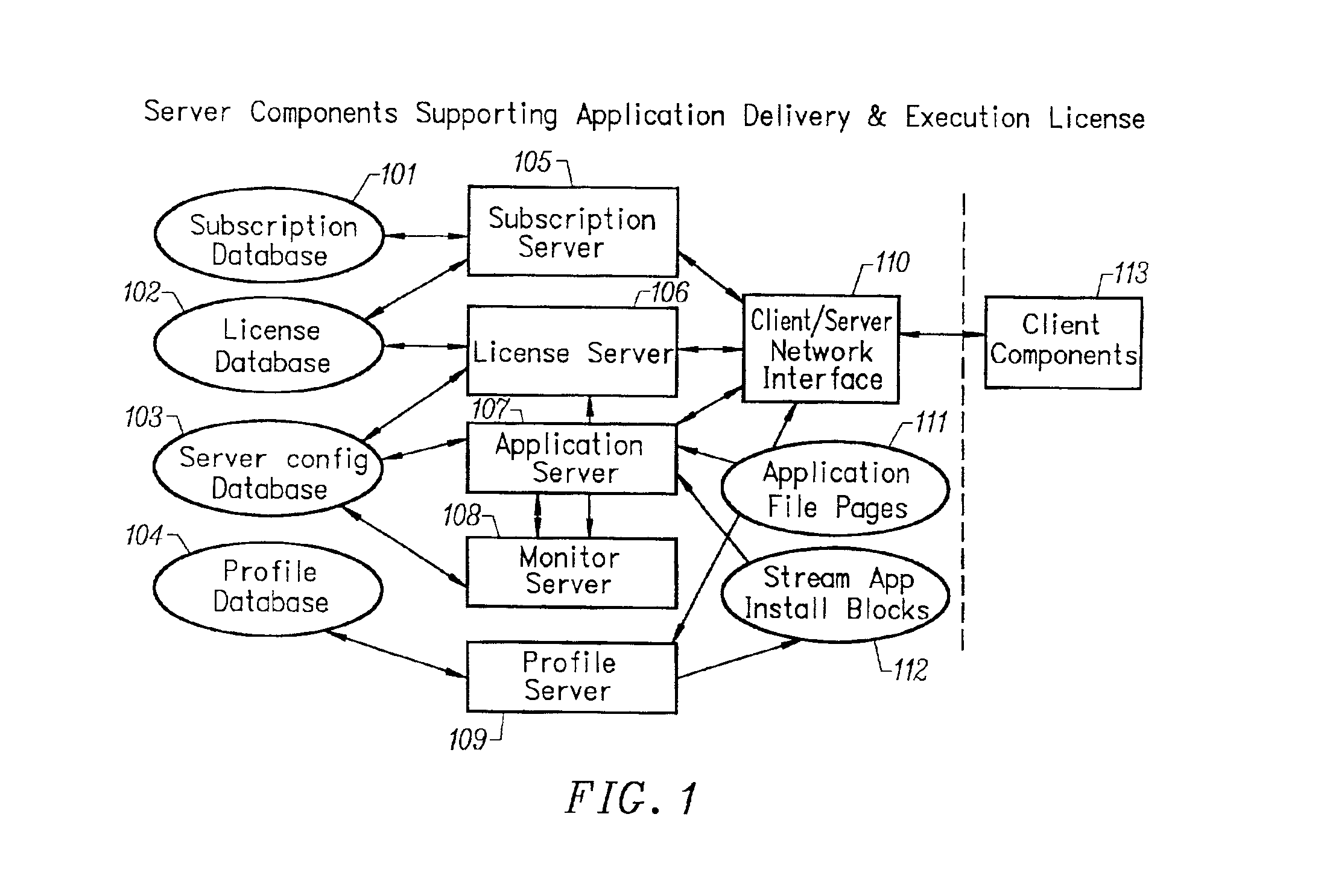

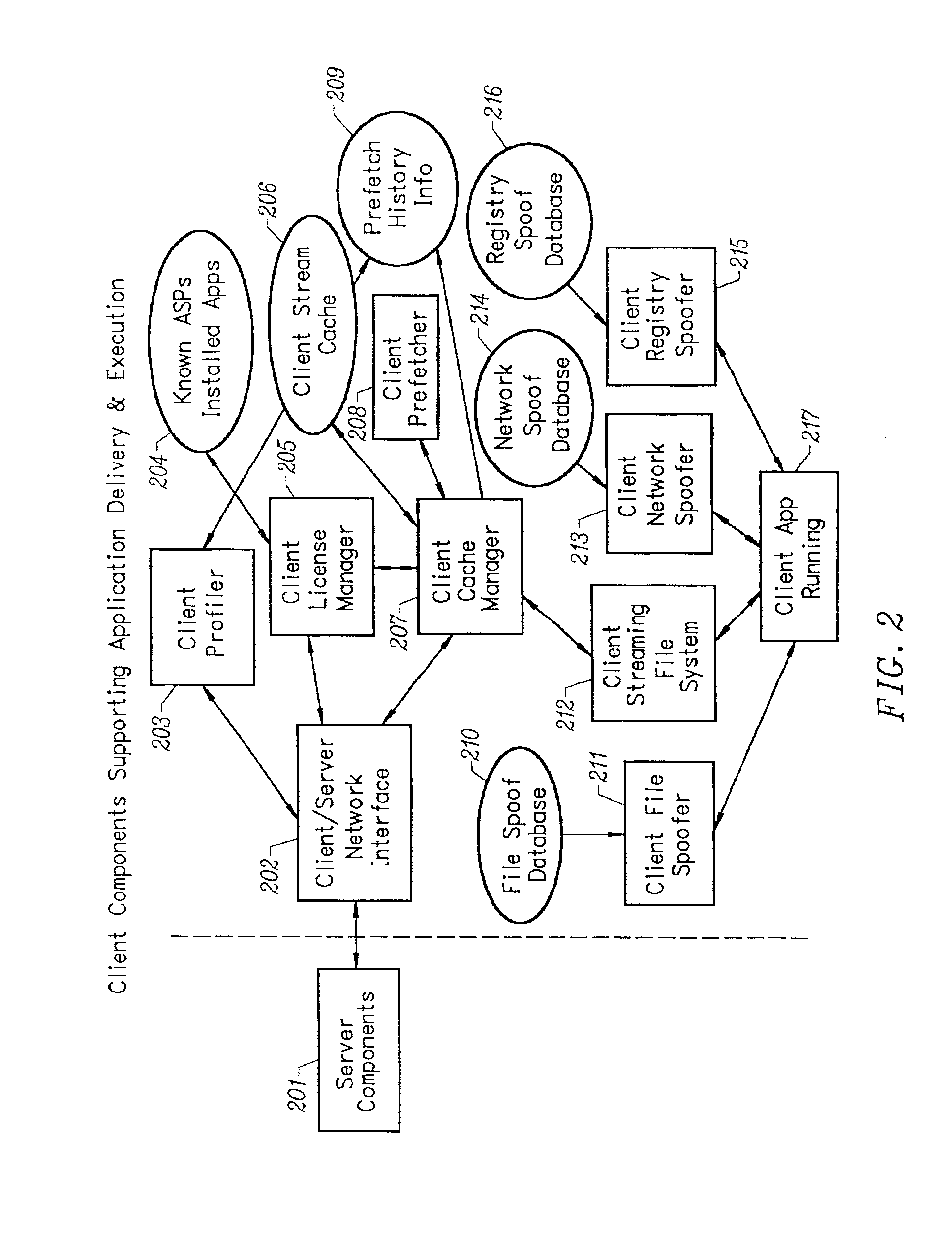

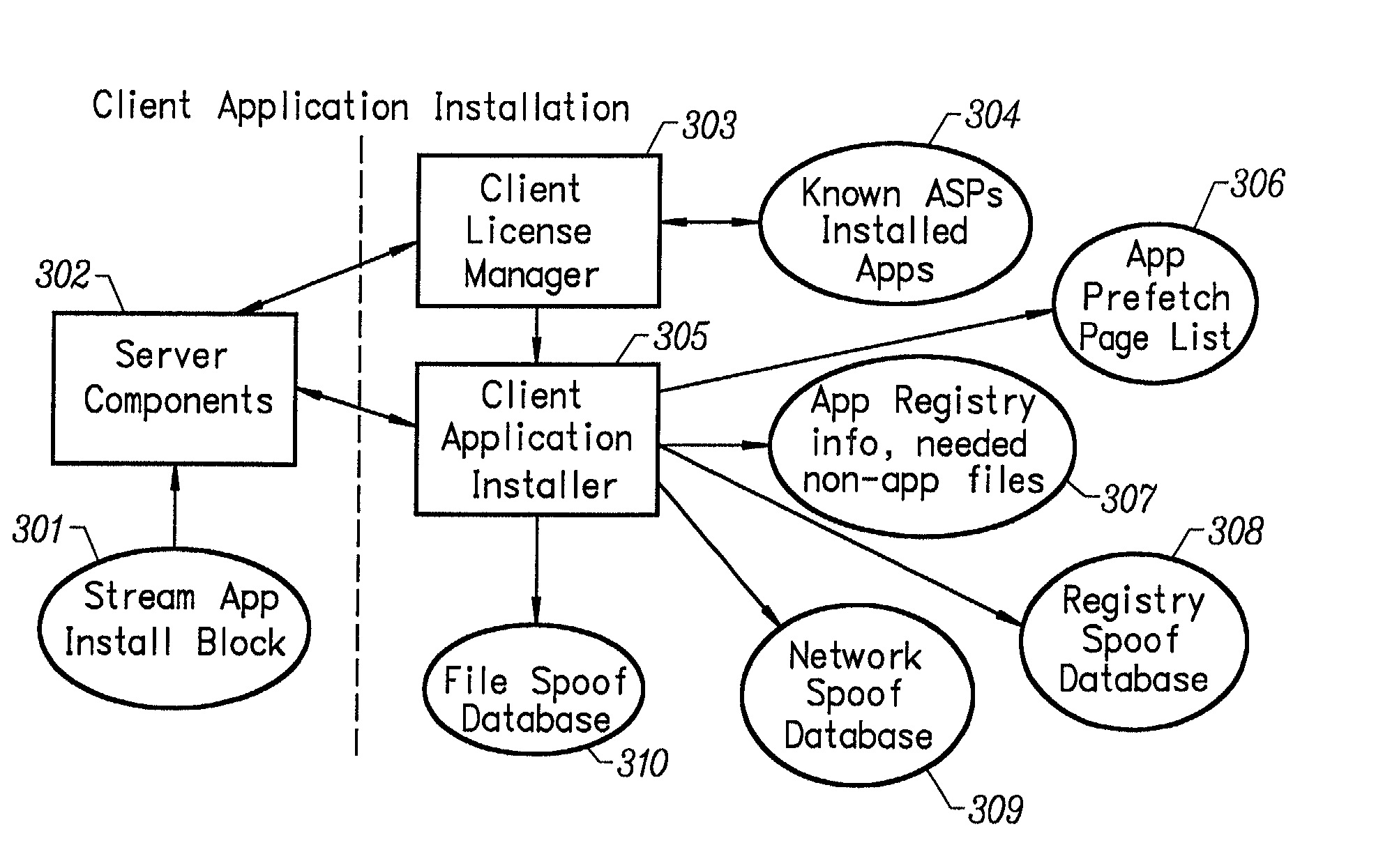

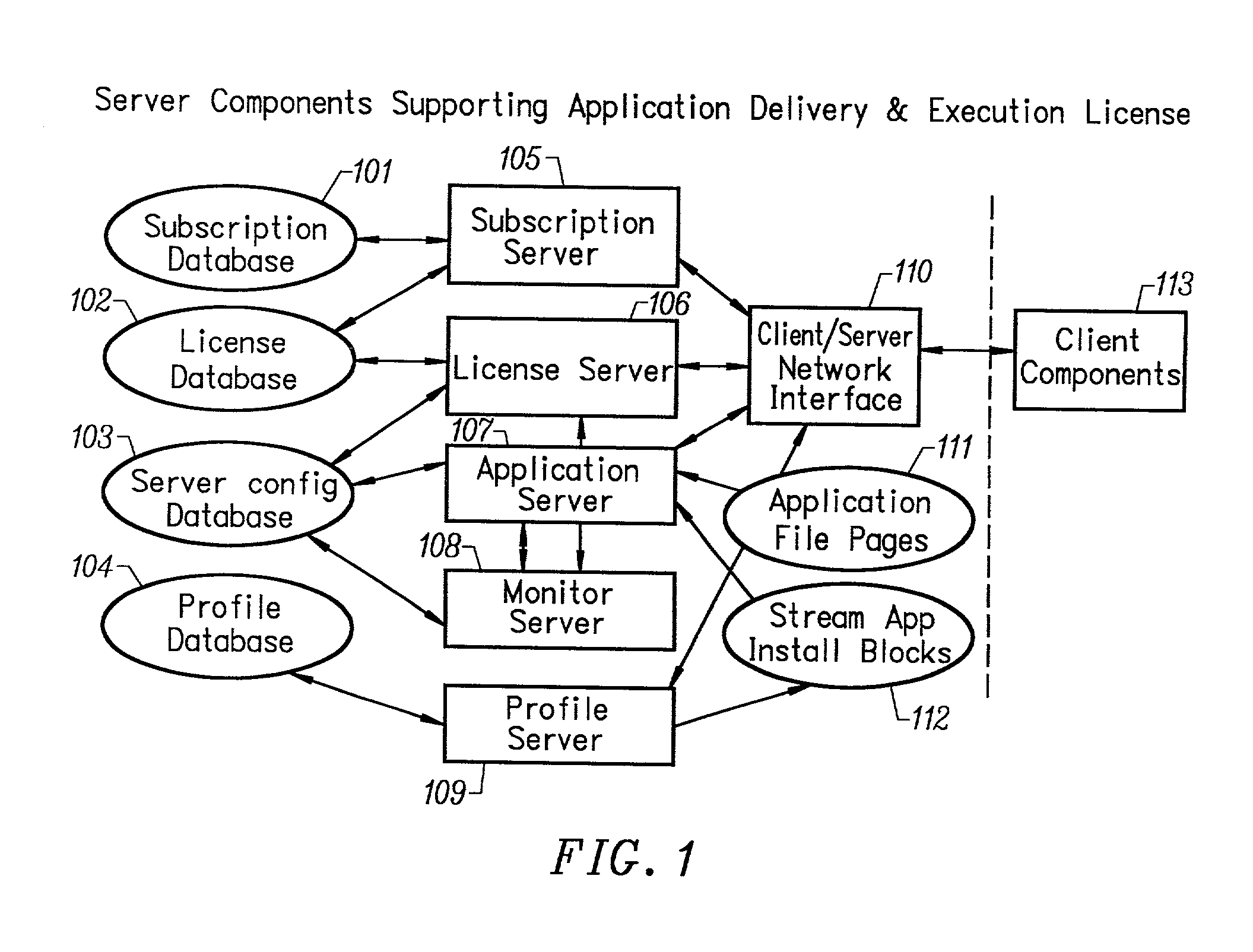

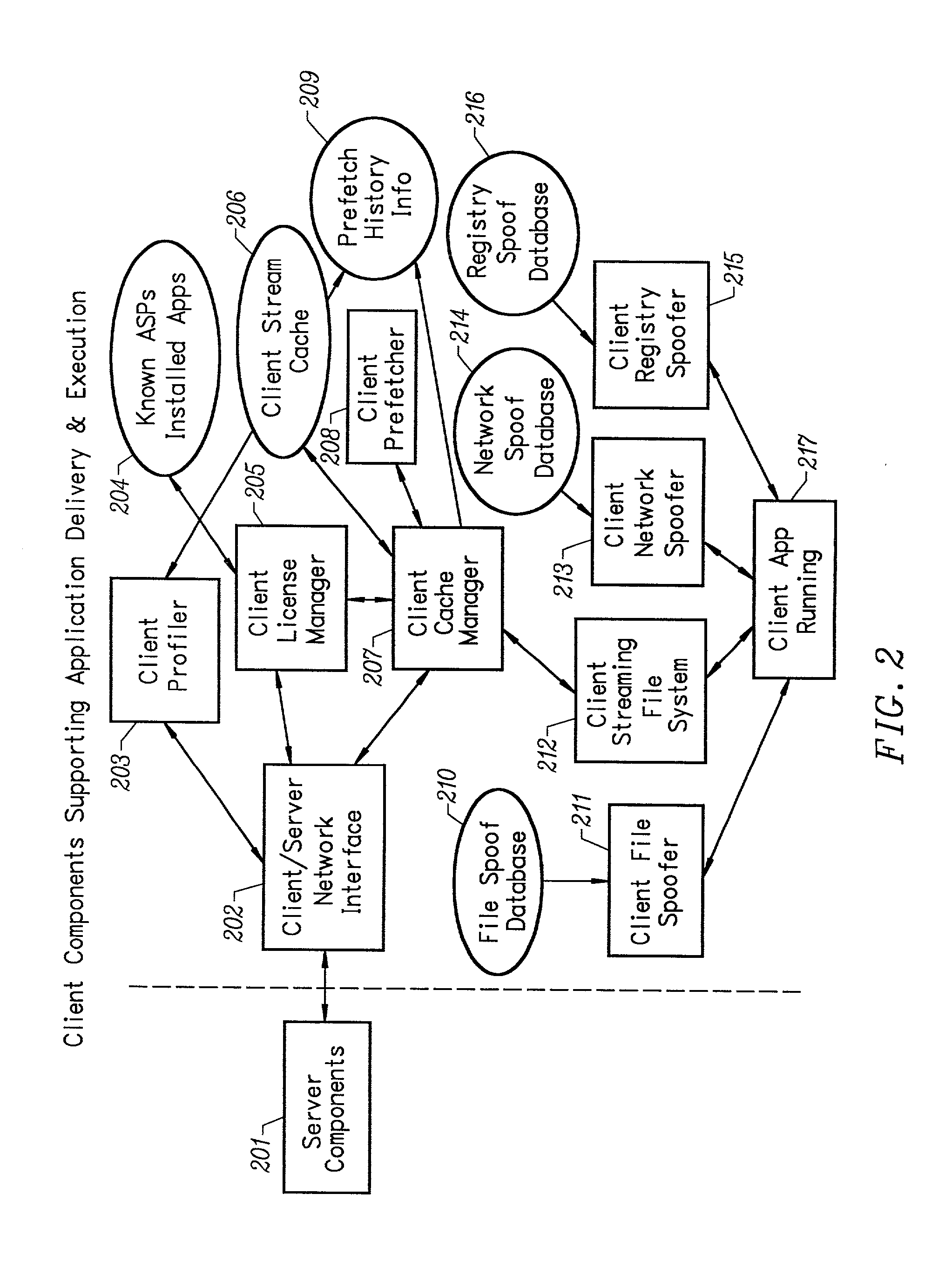

Client installation and execution system for streamed applications

Inactive | US6918113B2 | Multiple digital computer combinations | Program loading/initiating | Registry data | File system

Owner:NUMECENT HLDG +1

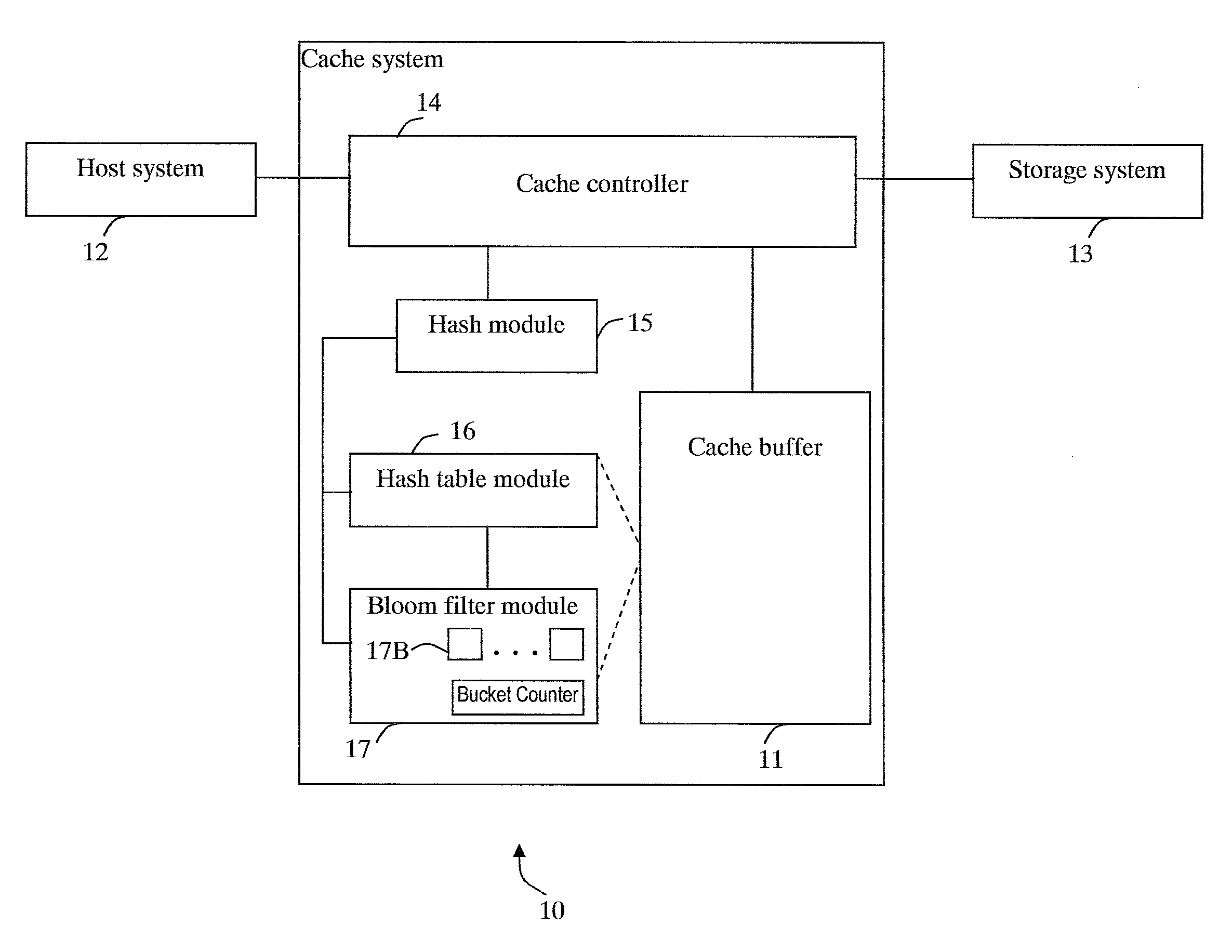

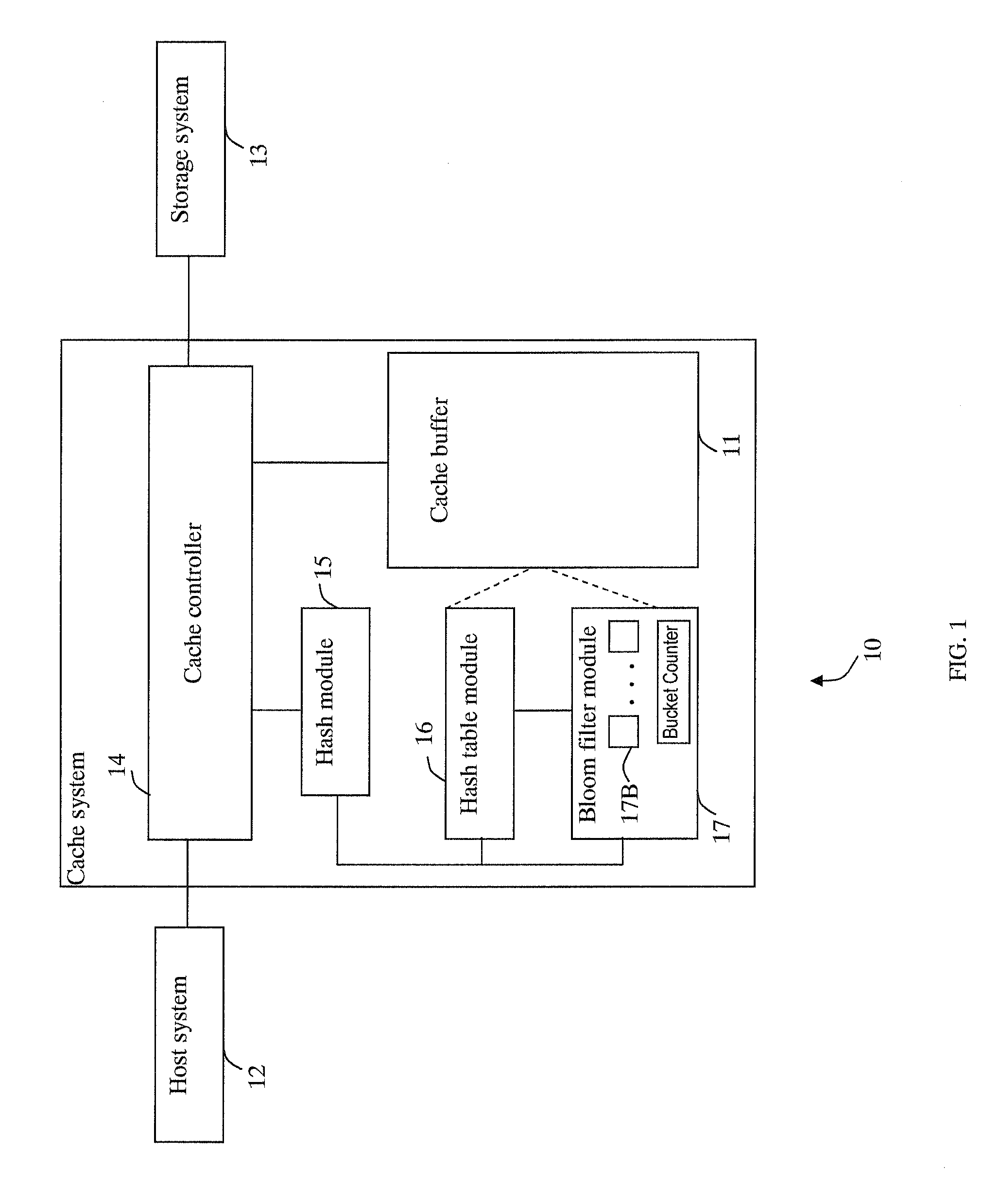

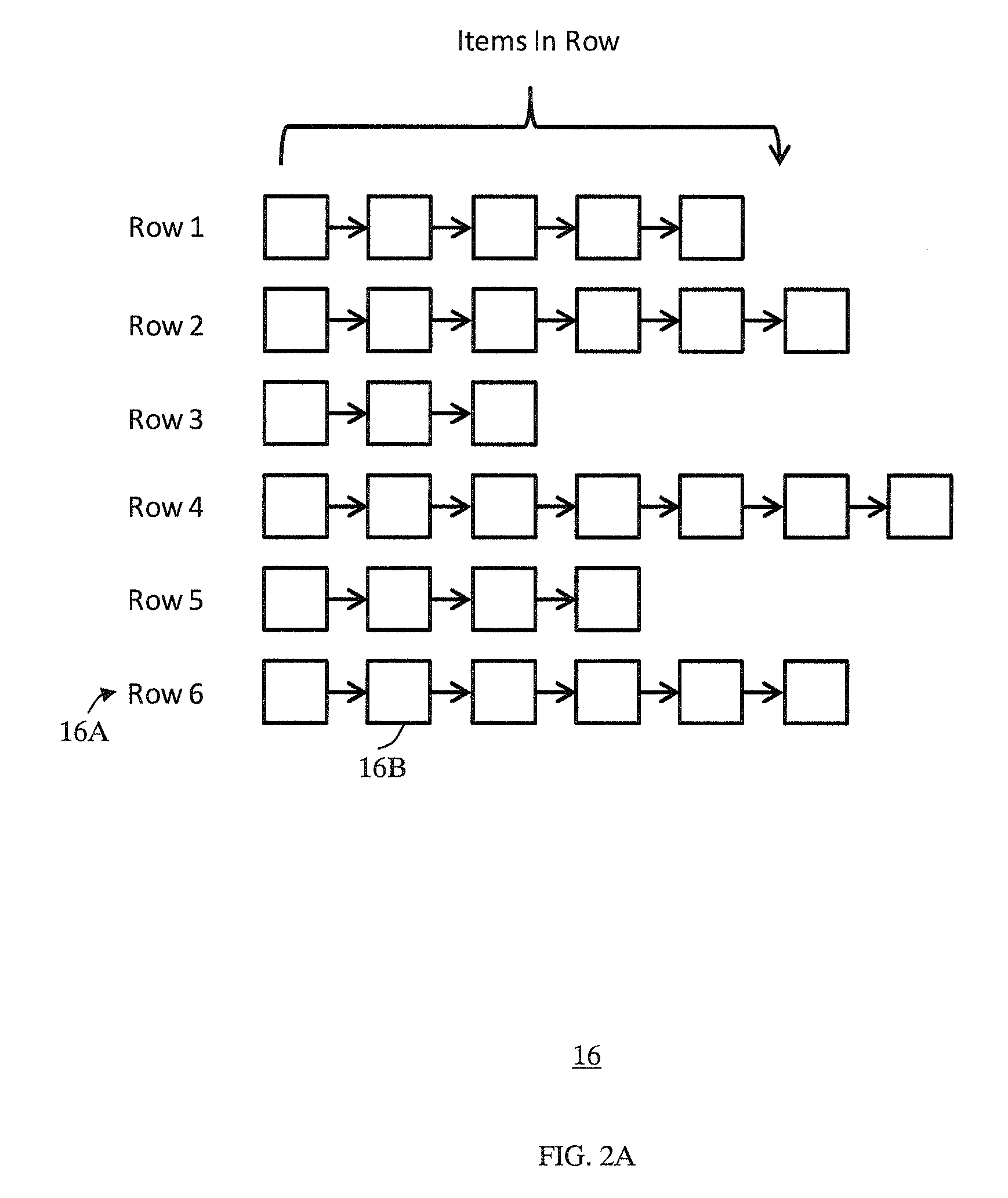

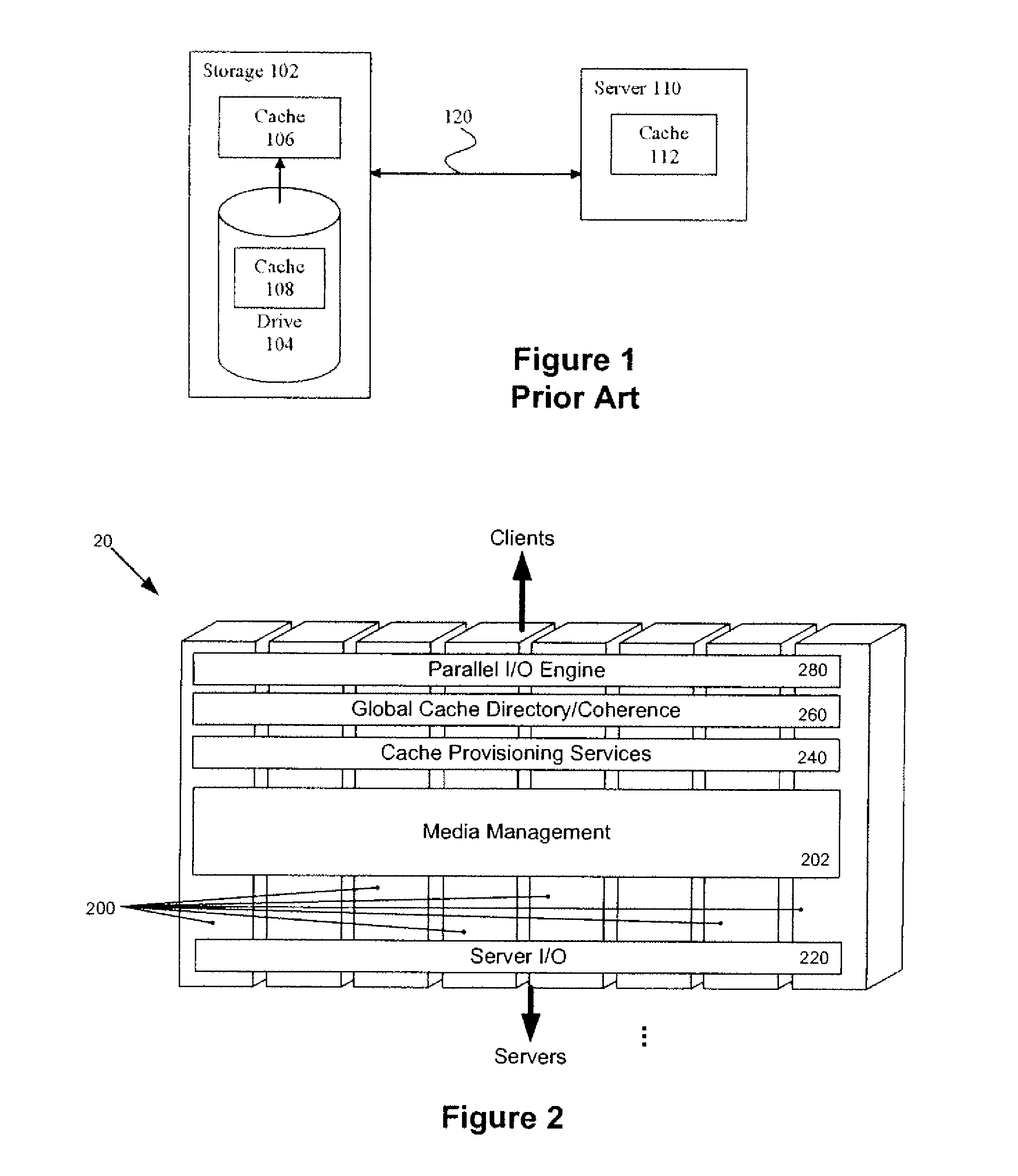

Cache system optimized for cache miss detection

Active | US20130326154A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Logical block addressing | Bloom filter

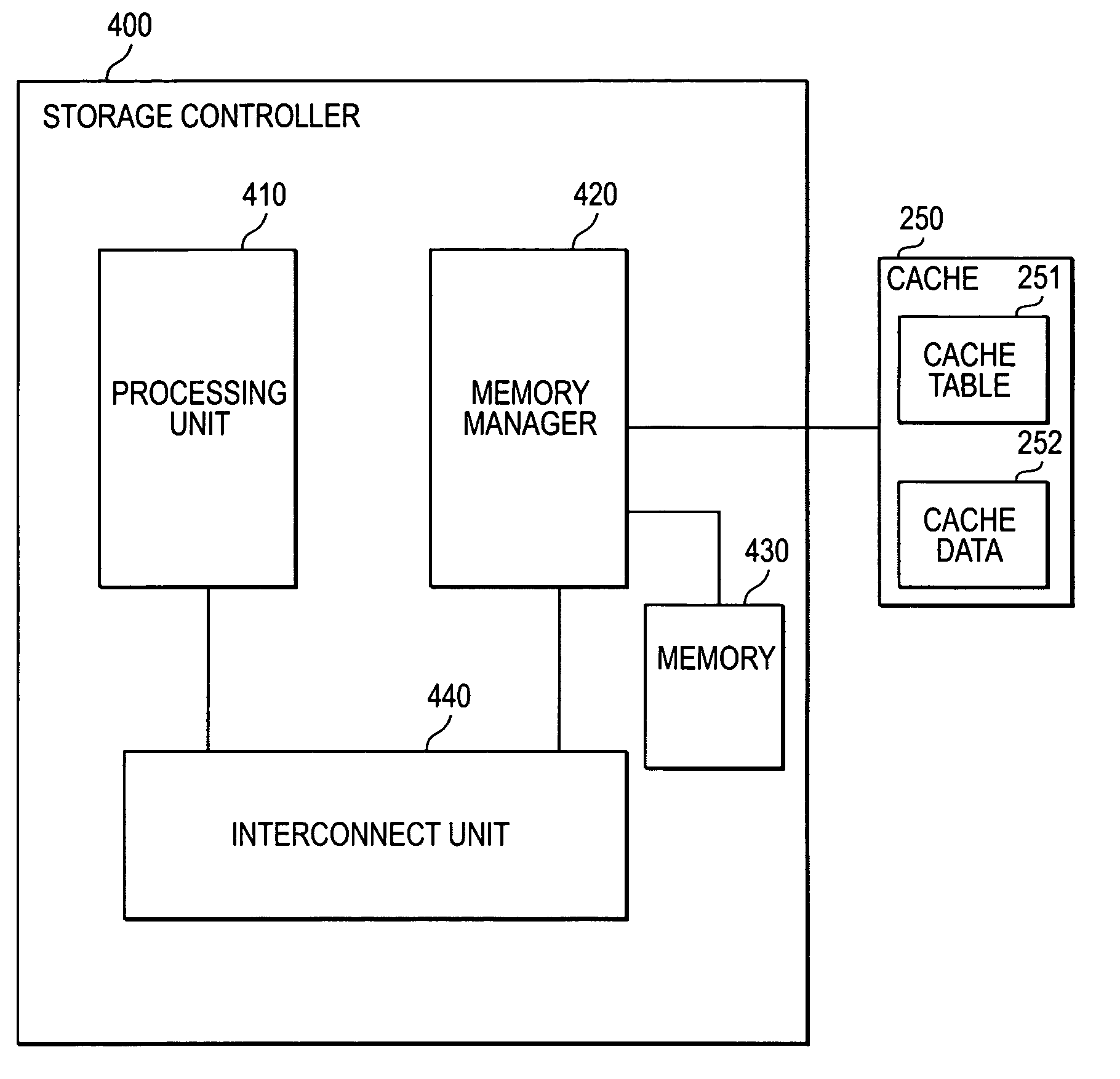

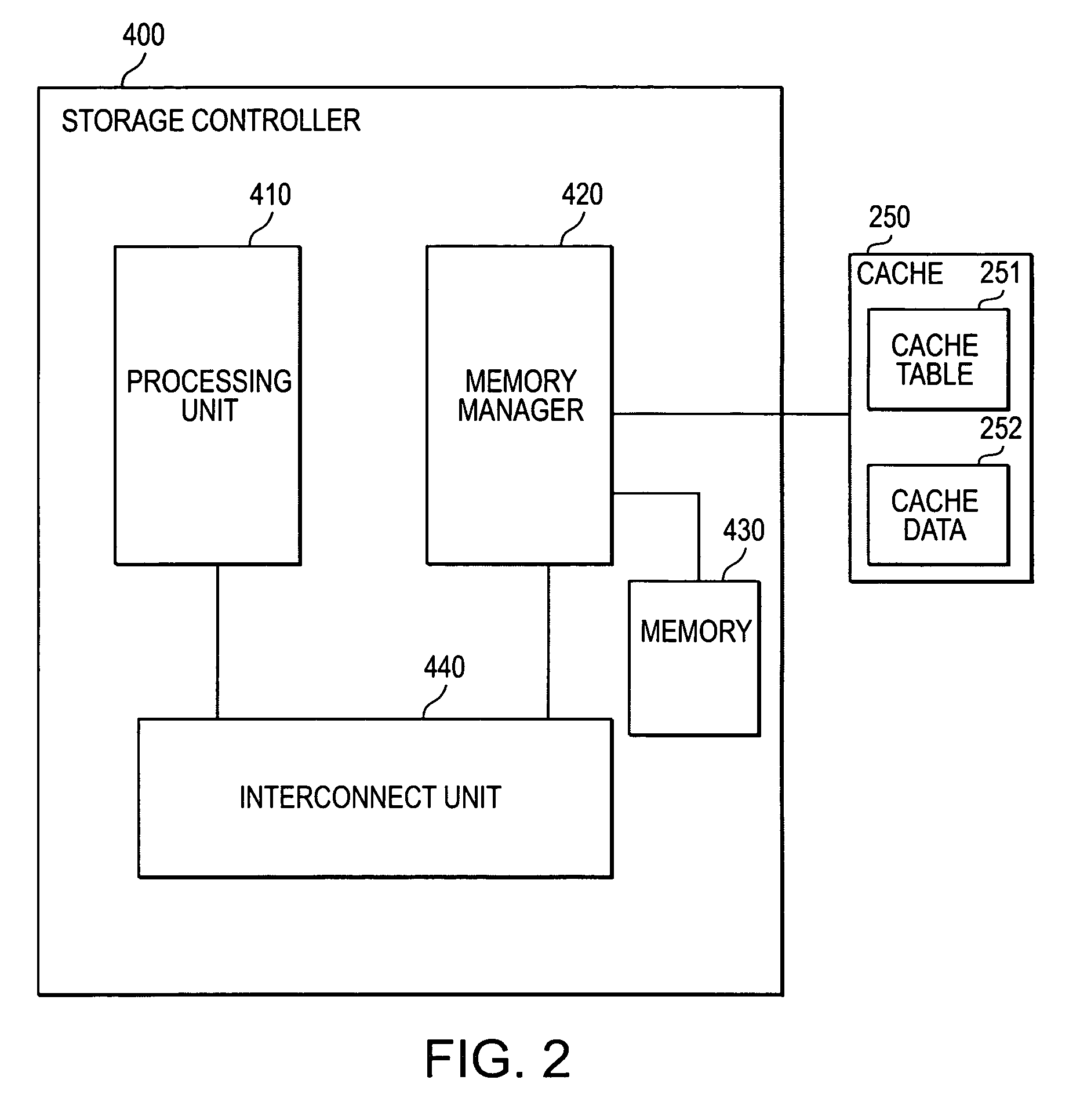

According to an embodiment of the invention, cache management comprises maintaining a cache comprising a hash table including rows of data items in the cache, wherein each row in the hash table is associated with a hash value representing a logical block address (LBA) of each data item in that row. Searching for a target data item in the cache includes calculating a hash value representing an LBA of the target data item, and using the hash value to index into a counting Bloom filter that indicates either that the target data item is not in the cache (a cache miss) or that the target data item may be in the cache. If a cache miss is not indicated, the hash value is used to select a row in the hash table, and a cache miss is indicated if the target data item is not found in the selected row.
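The lookup path described above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the class name `BloomFrontedCache`, the table sizes, and the use of a single SHA-256-derived hash for both the filter and the row index are all assumptions made for the example.

```python
import hashlib


class BloomFrontedCache:
    """Hash-table cache fronted by a counting Bloom filter (illustrative sketch)."""

    def __init__(self, num_rows=64, num_counters=256):
        self.num_rows = num_rows
        self.num_counters = num_counters
        self.rows = [dict() for _ in range(num_rows)]  # each row: {lba: data}
        self.counters = [0] * num_counters             # counting Bloom filter

    def _hash(self, lba):
        # One hash value per LBA, reused for the filter index and the row index.
        digest = hashlib.sha256(str(lba).encode()).digest()
        return int.from_bytes(digest[:8], "big")

    def insert(self, lba, data):
        h = self._hash(lba)
        row = self.rows[h % self.num_rows]
        if lba not in row:
            self.counters[h % self.num_counters] += 1
        row[lba] = data

    def evict(self, lba):
        h = self._hash(lba)
        row = self.rows[h % self.num_rows]
        if lba in row:
            del row[lba]
            # Counters (rather than bits) make removal possible.
            self.counters[h % self.num_counters] -= 1

    def lookup(self, lba):
        h = self._hash(lba)
        if self.counters[h % self.num_counters] == 0:
            return None  # definite miss: the row is never searched
        # "May be in the cache": confirm by searching the selected row.
        return self.rows[h % self.num_rows].get(lba)
```

A zero counter proves the item is absent, so the more expensive row search is skipped on those misses; a non-zero counter only says the item may be present.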

Owner:SAMSUNG ELECTRONICS CO LTD

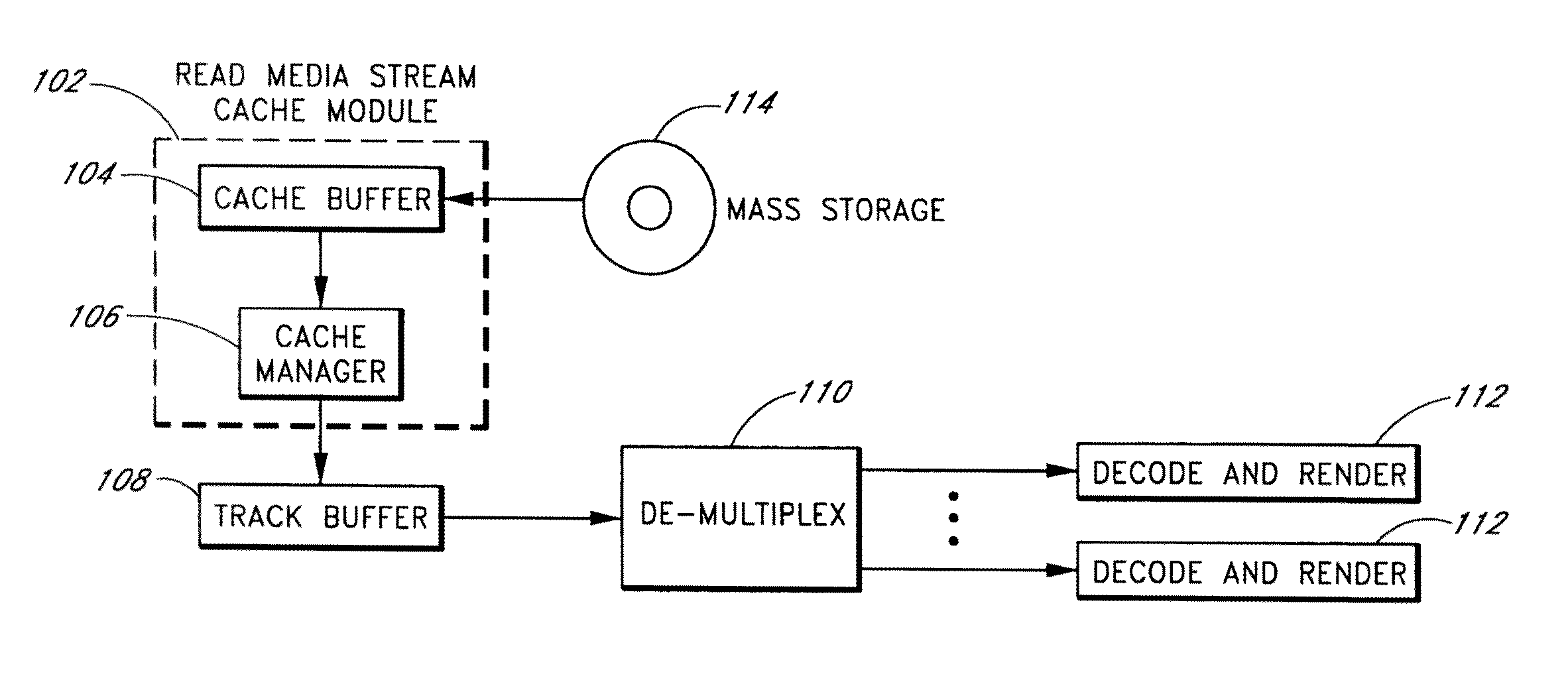

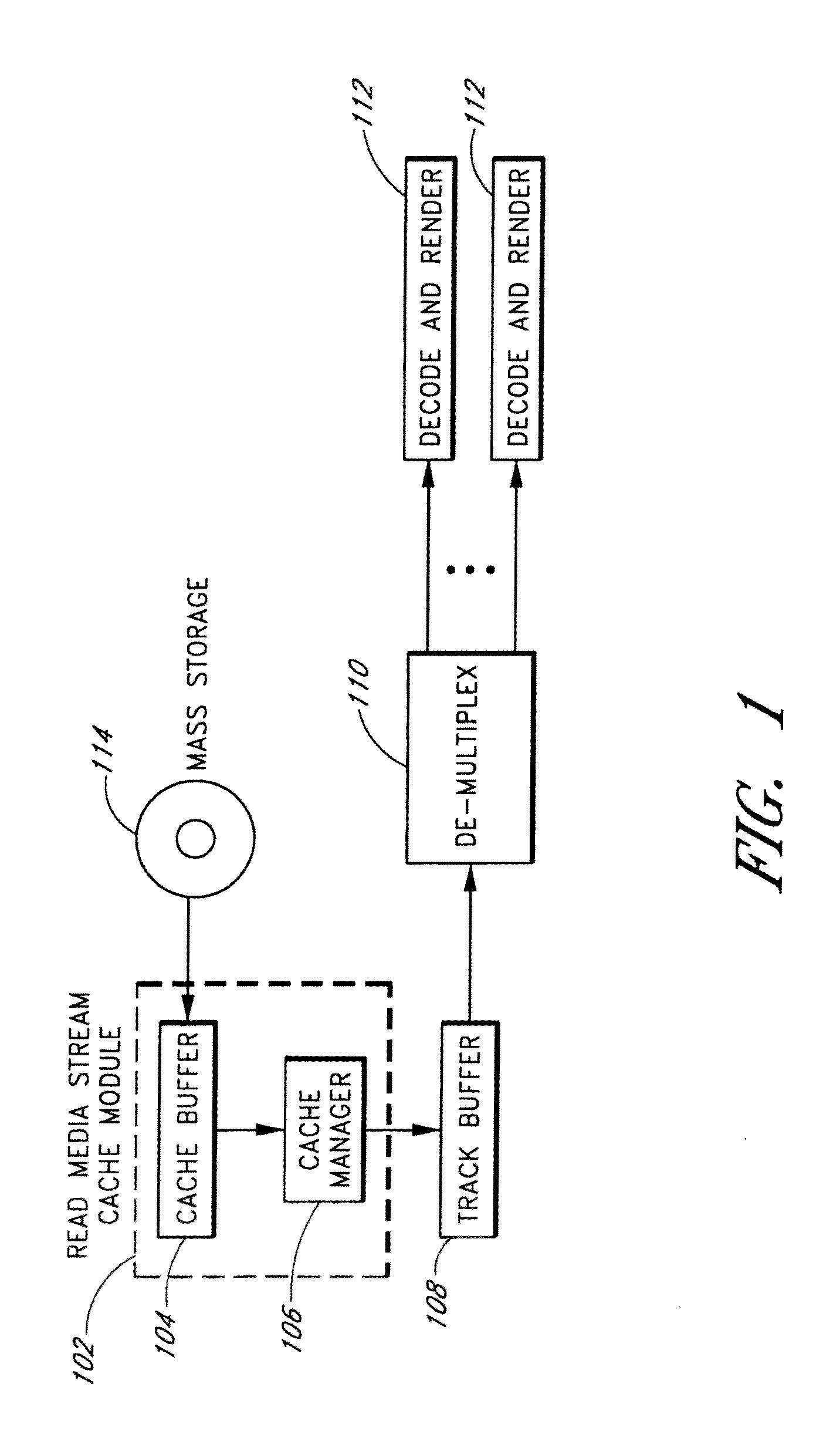

System and Method for Caching Multimedia Data

Inactive | US20100332754A1 | Data augmentation | Improve functionality | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data system

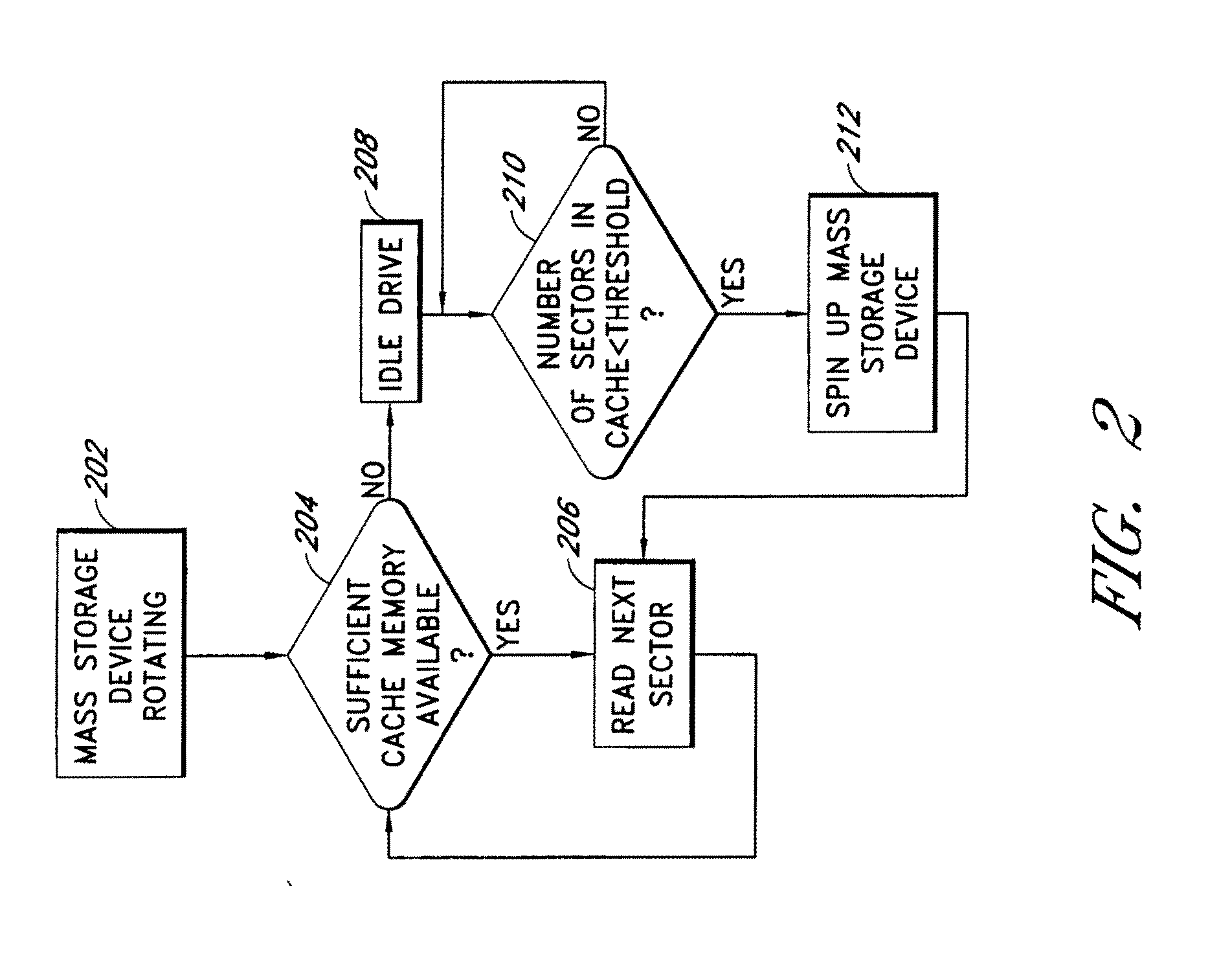

Systems and methods are provided for caching media data to thereby enhance media data read and/or write functionality and performance. A multimedia apparatus comprises a cache buffer configured to be coupled to a storage device, wherein the cache buffer stores multimedia data, including video and audio data, read from the storage device. A cache manager is coupled to the cache buffer, wherein the cache buffer is configured to cause the storage device to enter into a reduced power consumption mode when the amount of data stored in the cache buffer reaches a first level.

Owner:COREL CORP

Client installation and execution system for streamed applications

Inactive | US20020157089A1 | Multiple digital computer combinations | Program loading/initiating | Registry data | File system

Owner:NUMECENT HLDG +1

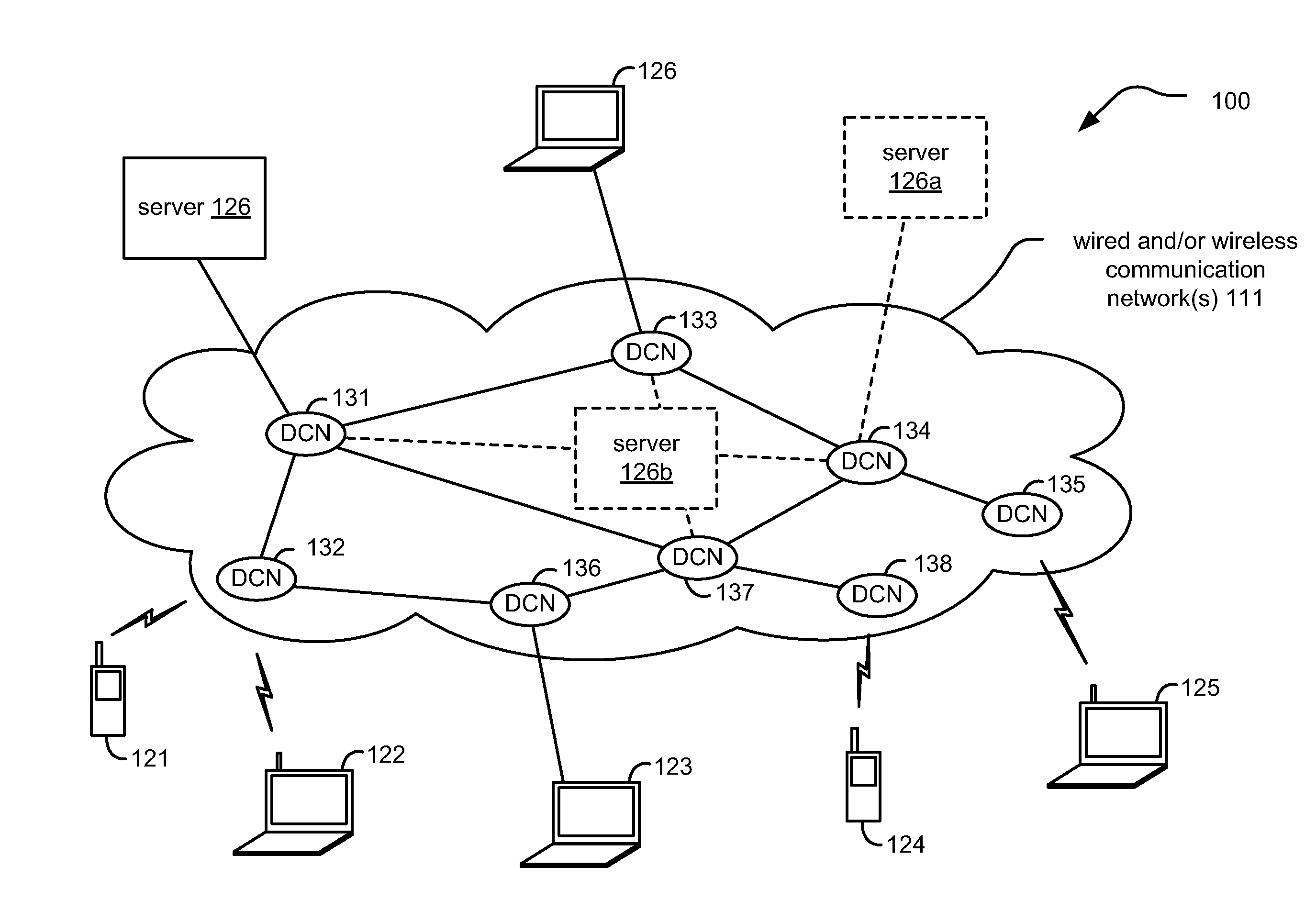

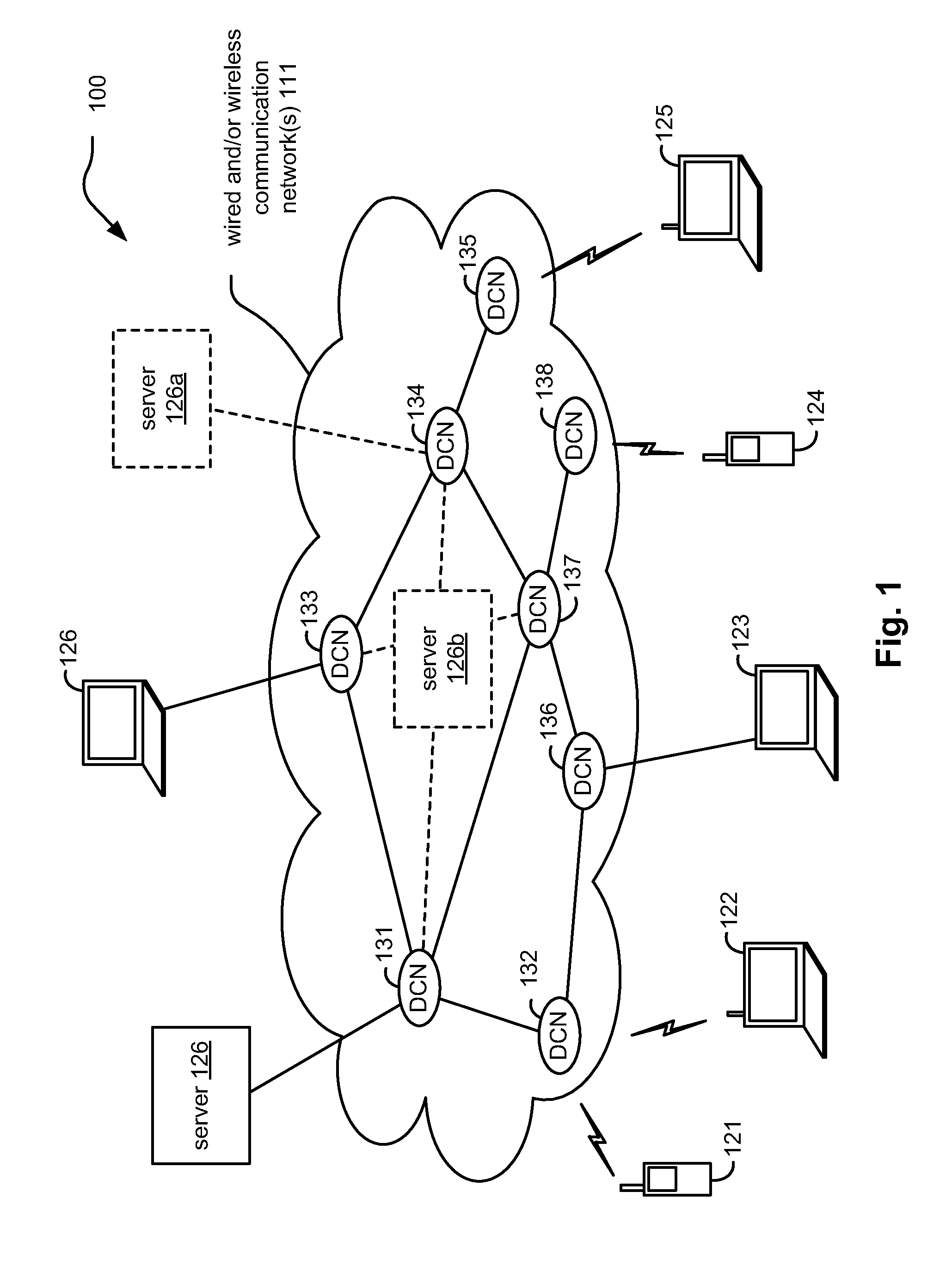

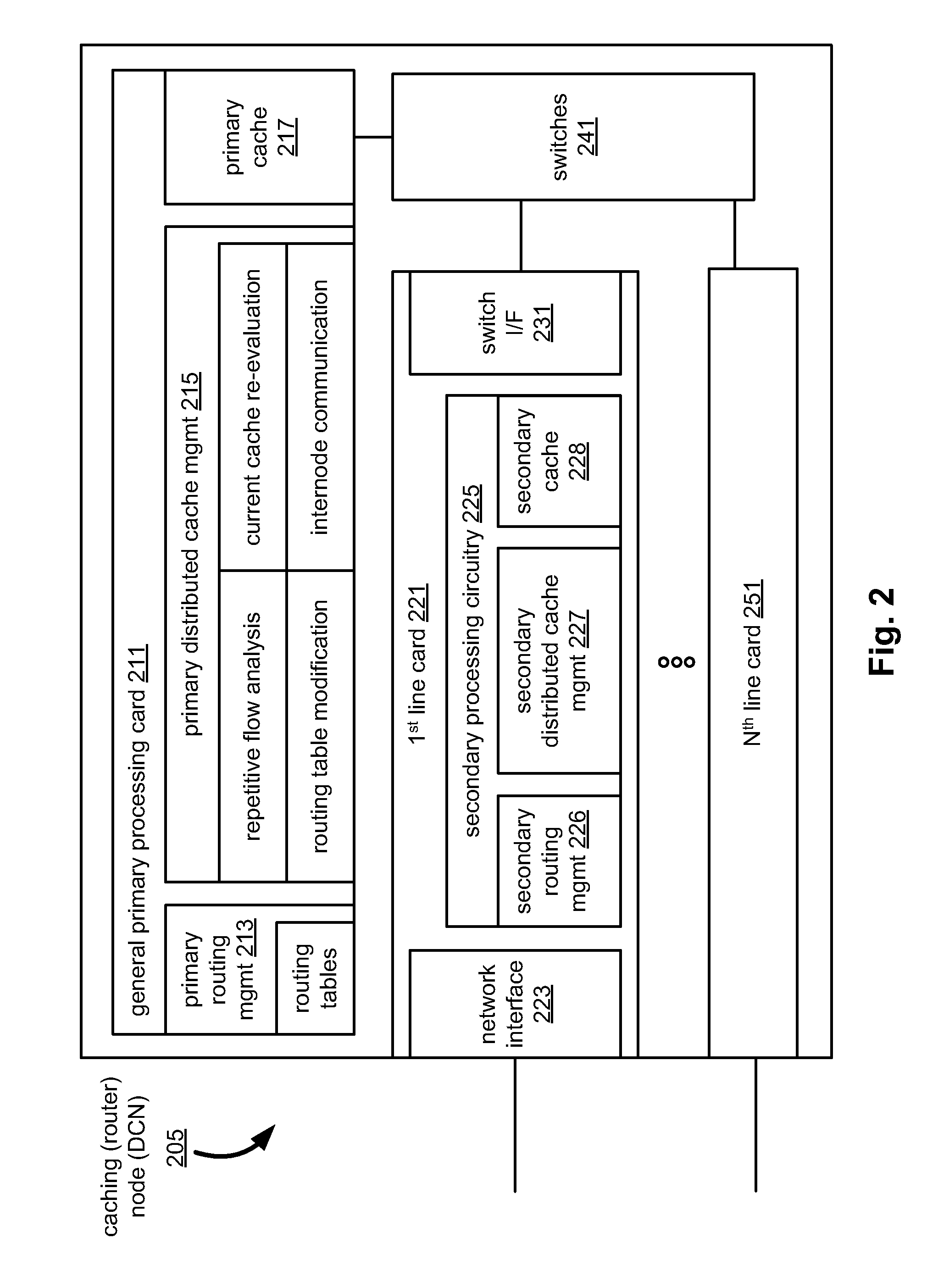

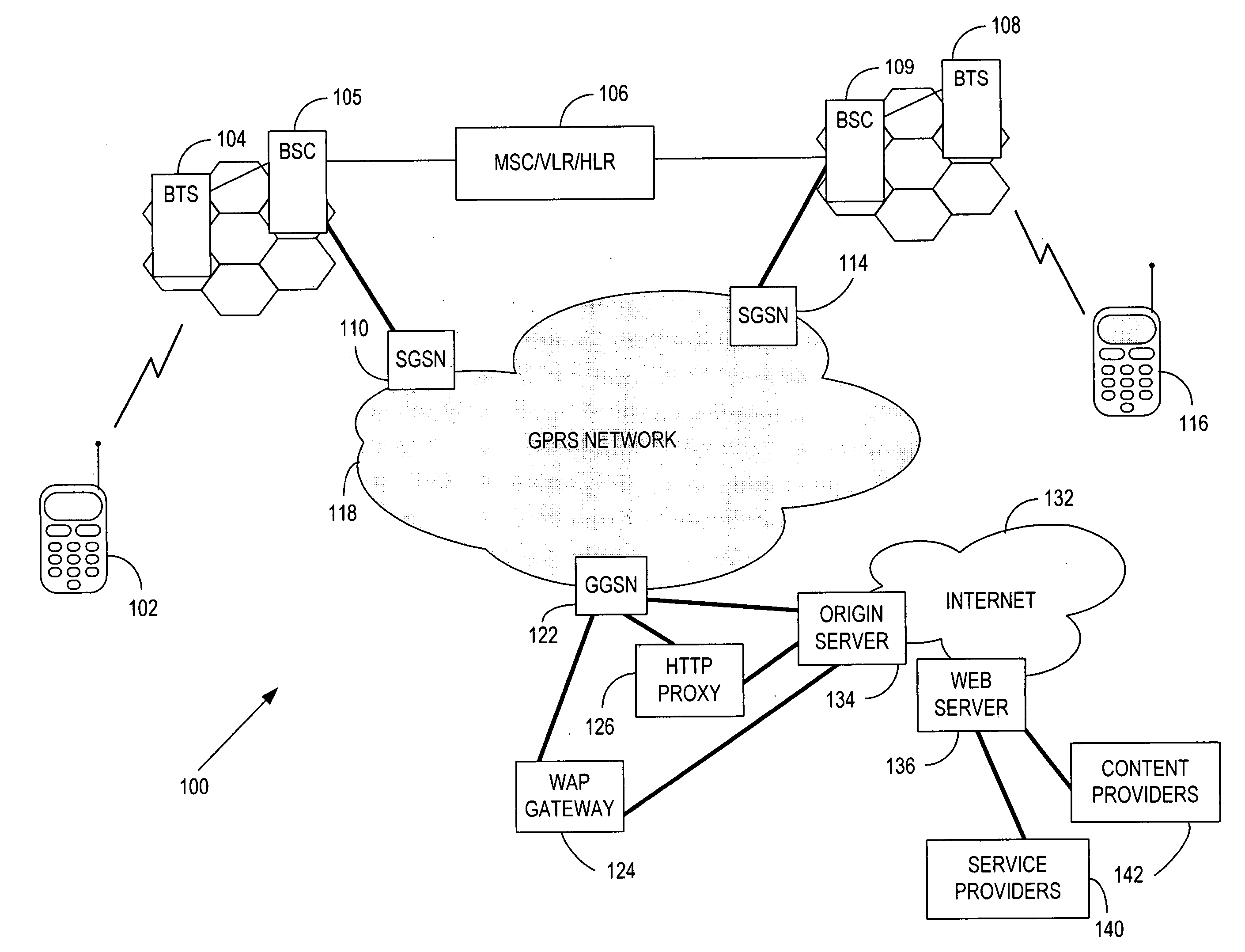

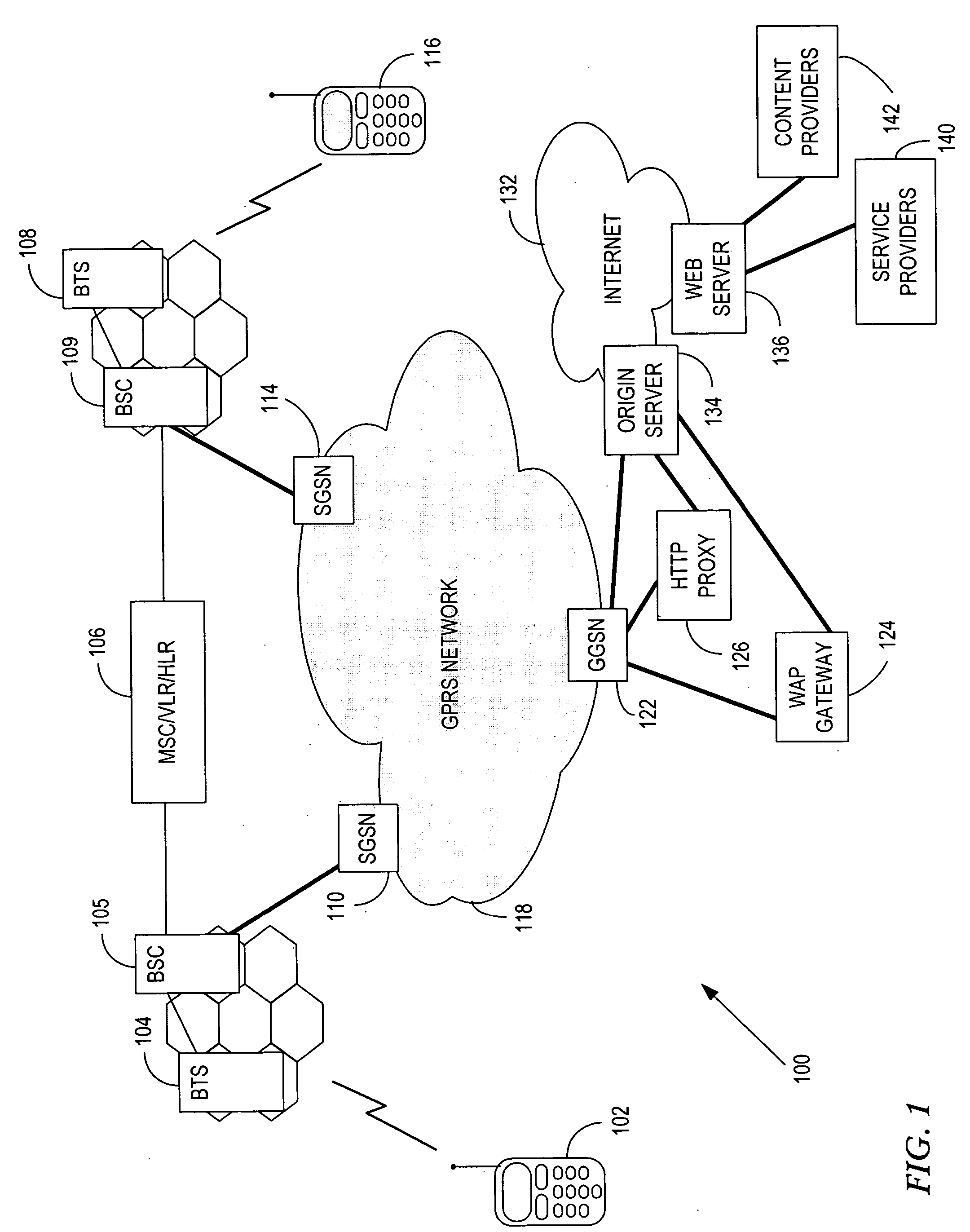

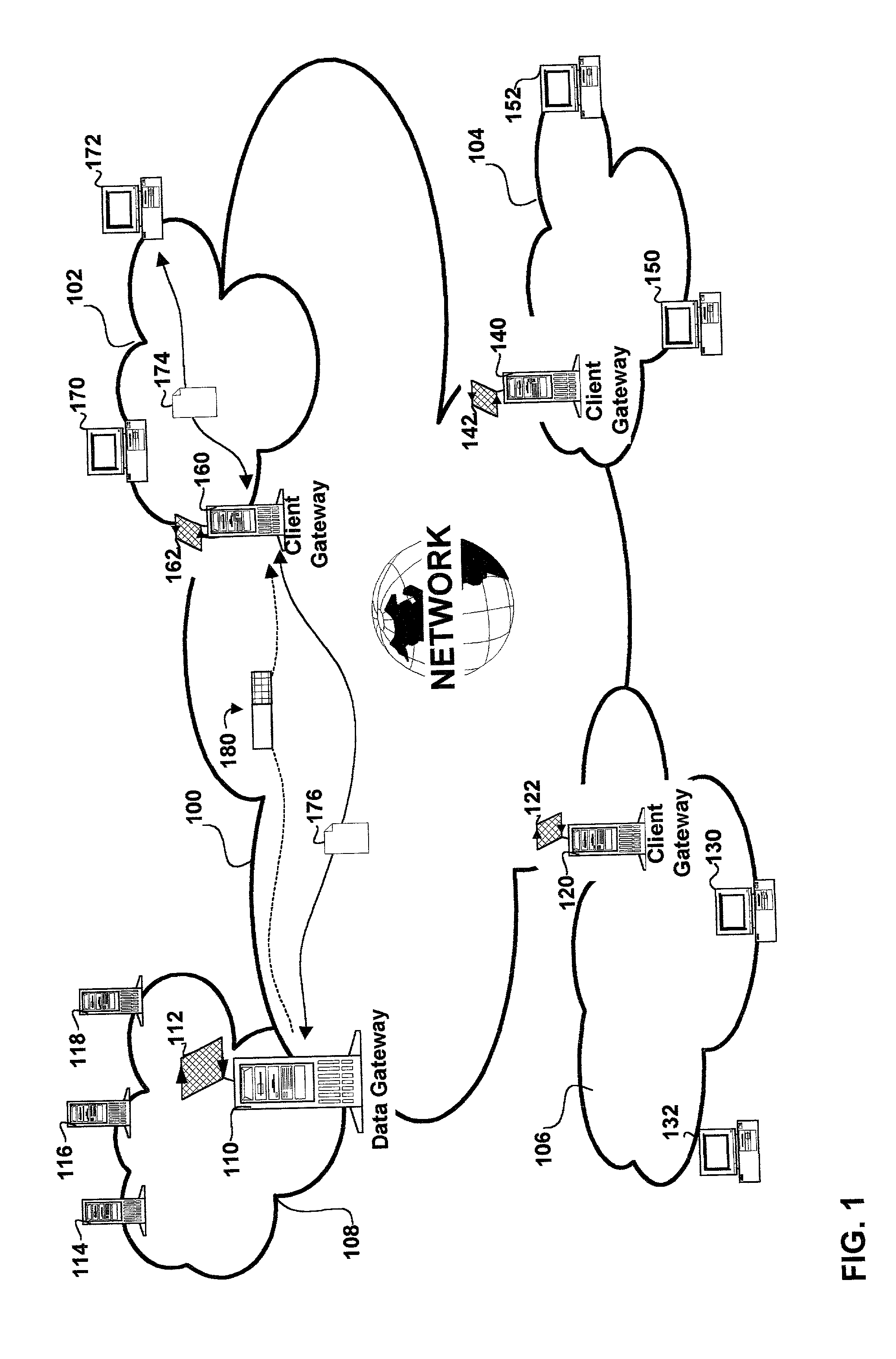

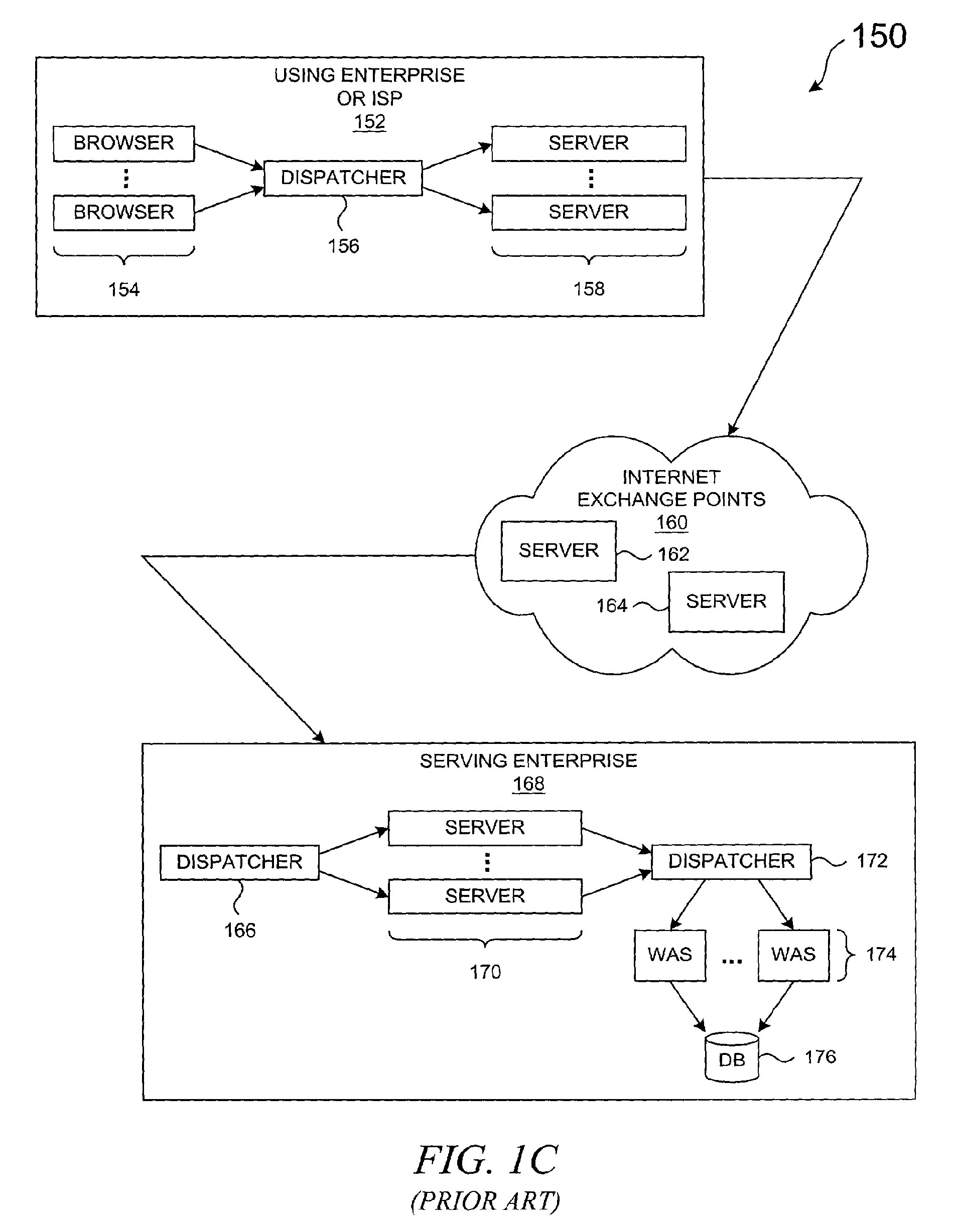

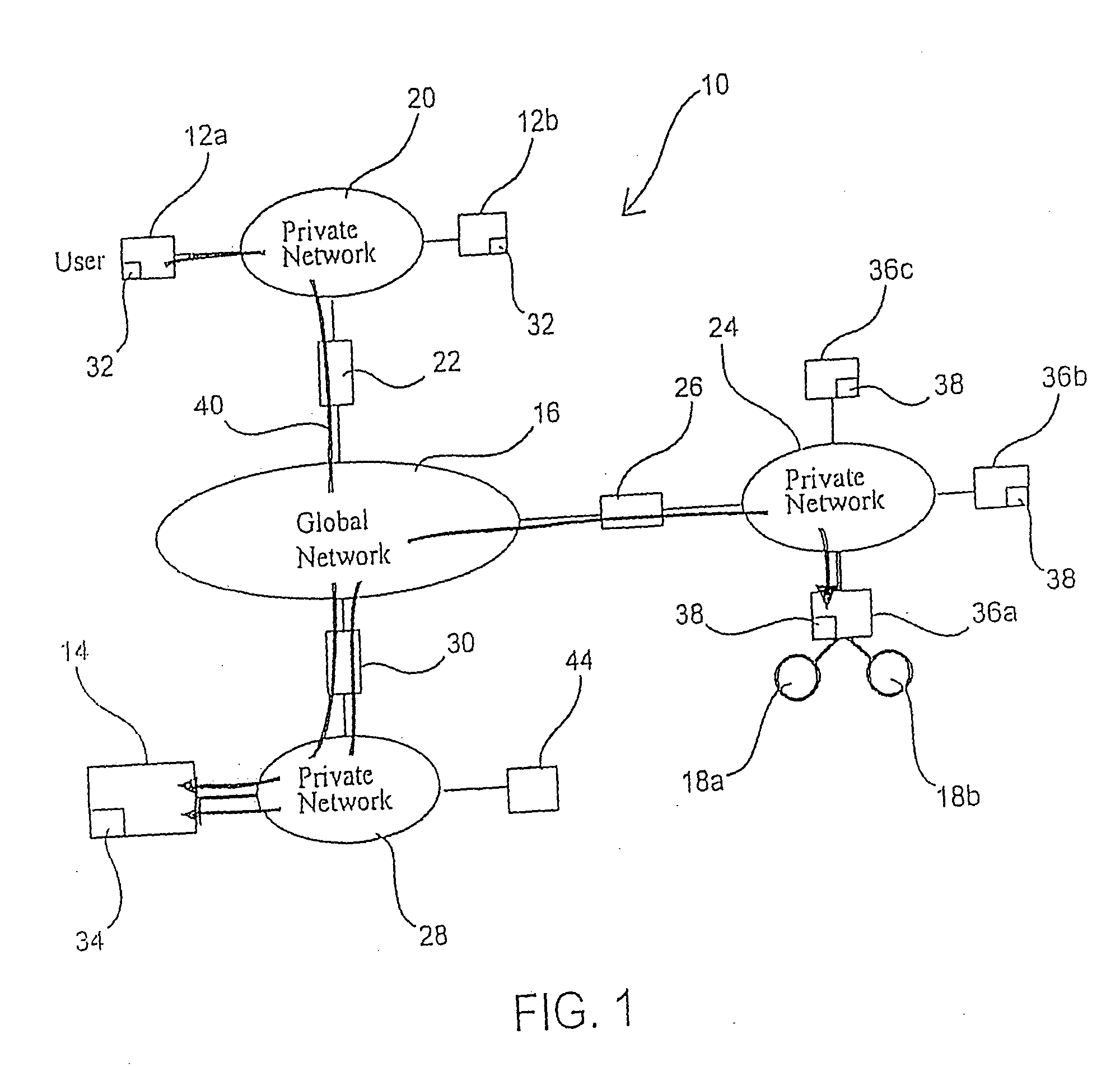

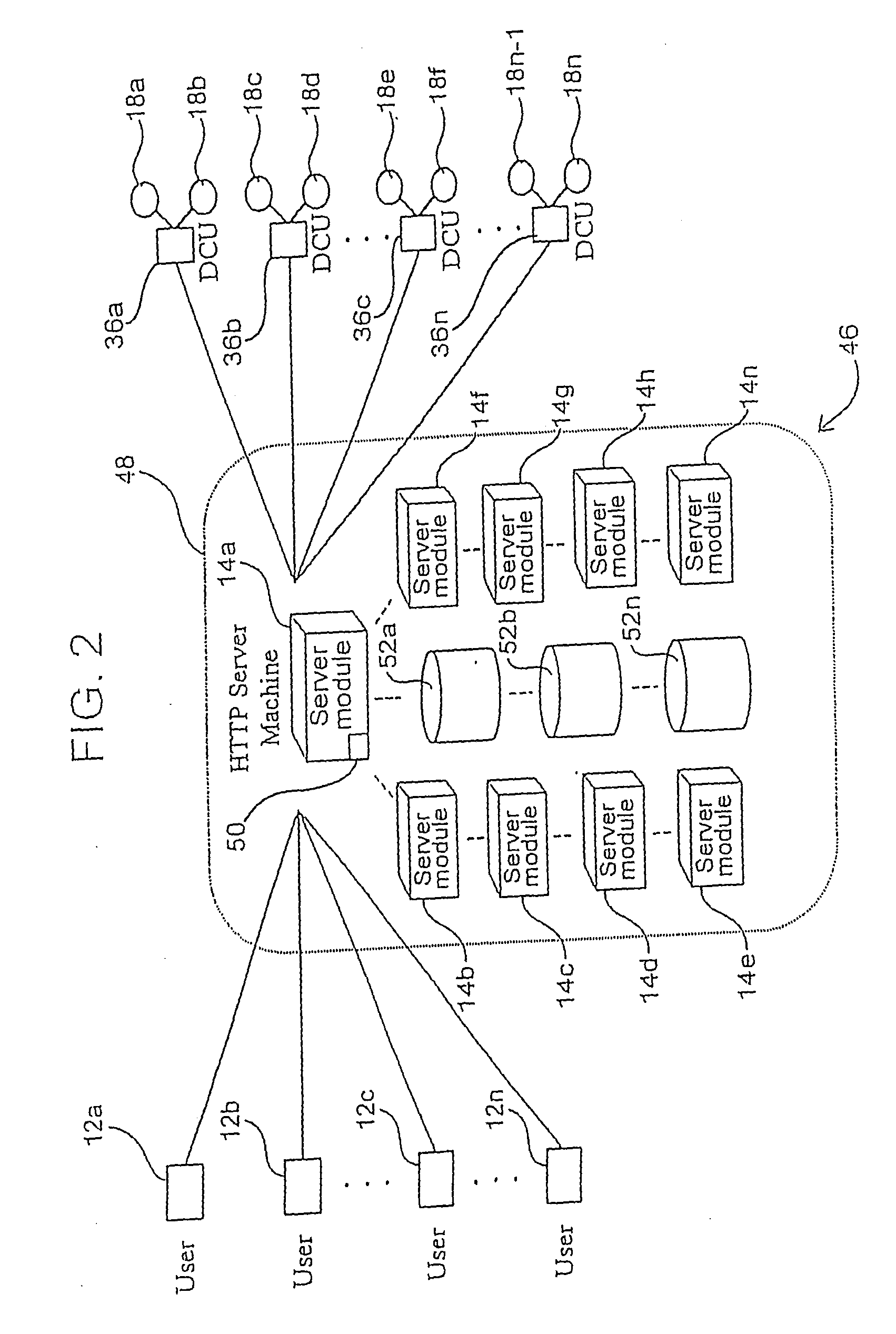

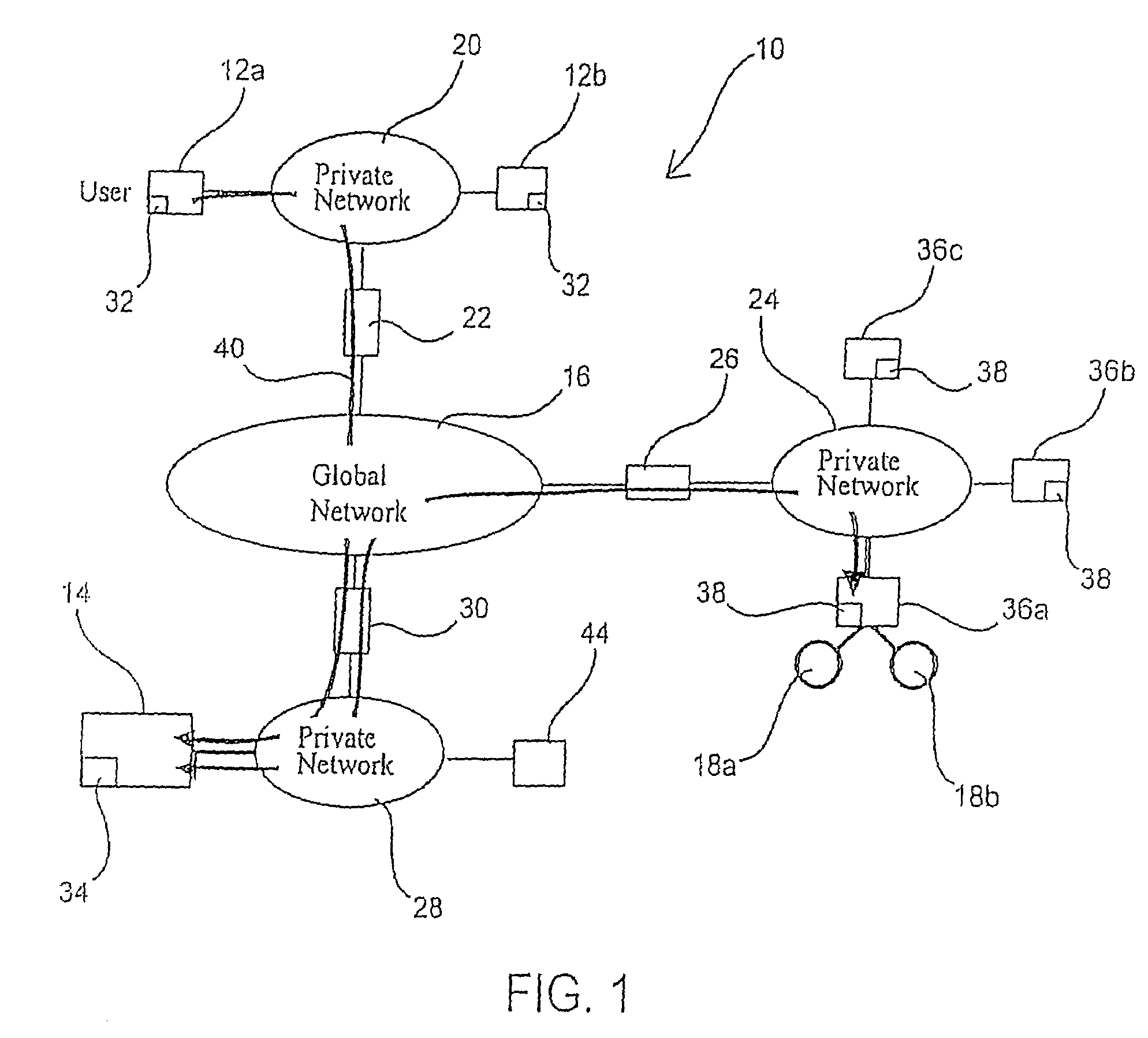

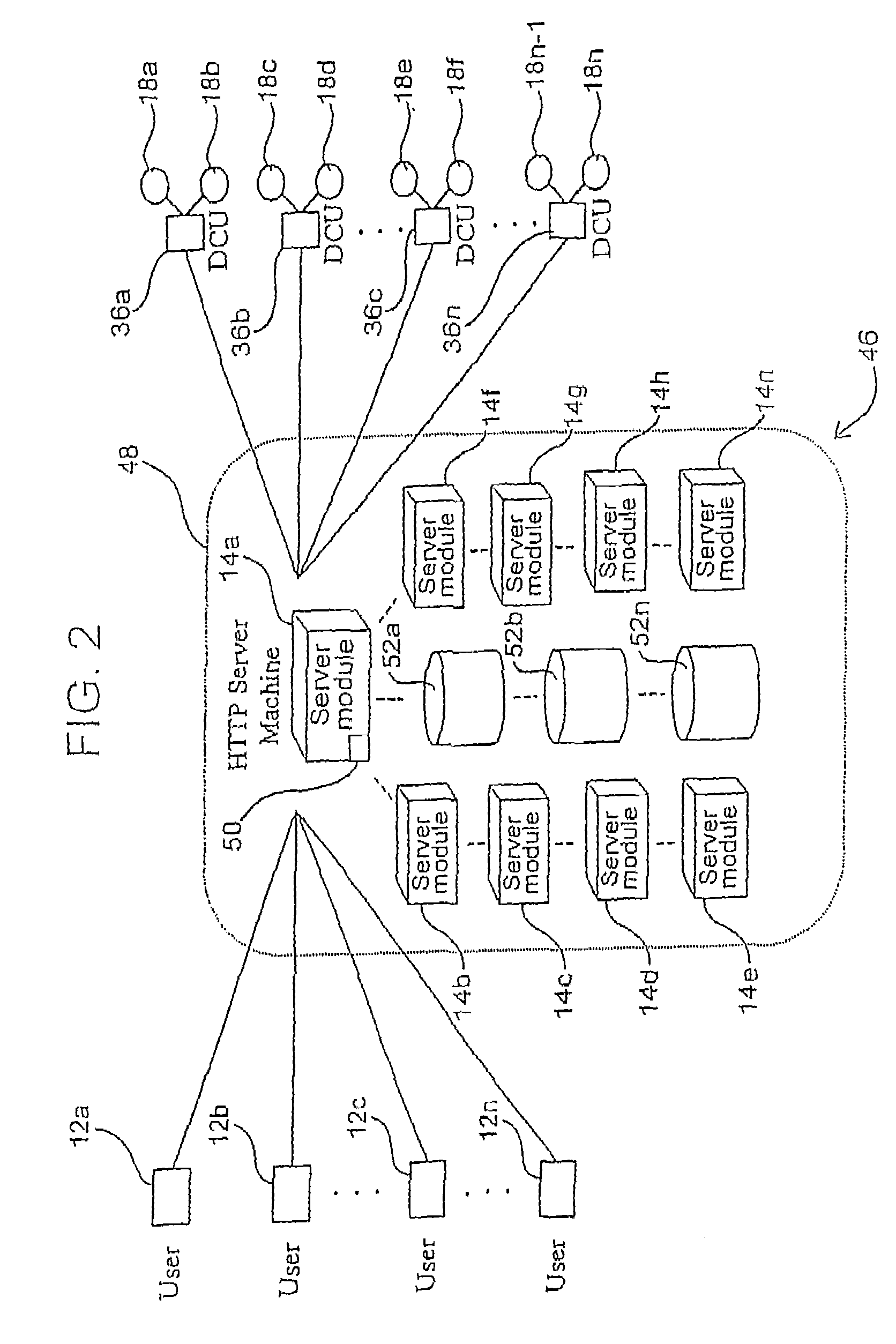

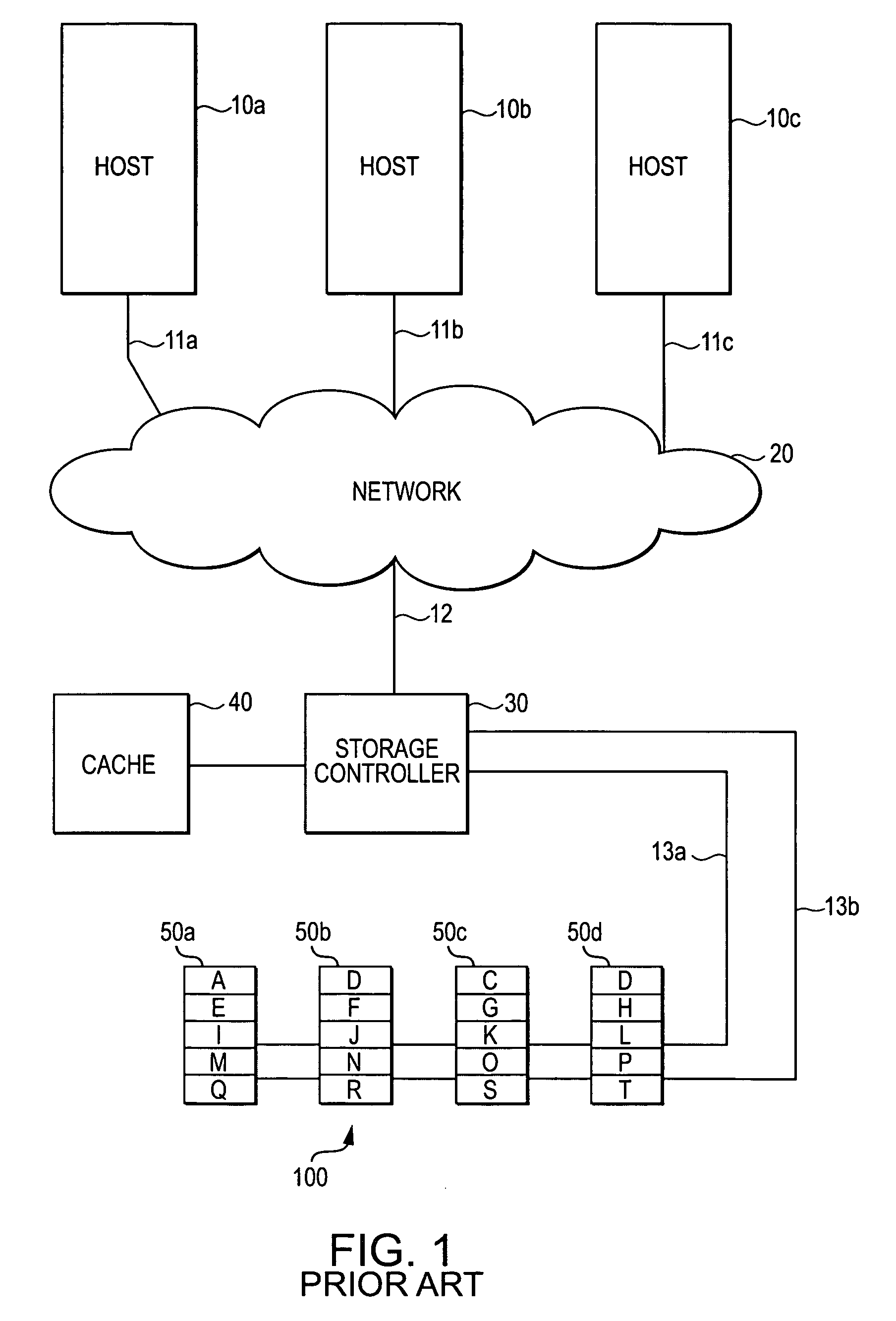

Distributed Internet caching via multiple node caching management

Inactive | US20110040893A1 | Digital computer details | Transmission | Communications system | Telecommunications link

Distributed Internet caching via multiple node caching management. Caching decisions and management are performed based on information corresponding to more than one caching node device (sometimes referred to as a distributed caching node device, distributed Internet caching node device, and/or DCN) within a communication system. The communication system may be composed of one type or multiple types of communication networks that are communicatively coupled to communicate therebetween, and they may be composed of any one or combination of types of communication links therein (wired, wireless, optical, satellite, etc.). In some instances, more than one of these DCNs operate cooperatively to make caching decisions and direct management of content to be stored among the more than one DCNs. In an alternative embodiment, a managing DCN is operative to make caching decisions and direct management of content within more than one DCN of a communication system.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

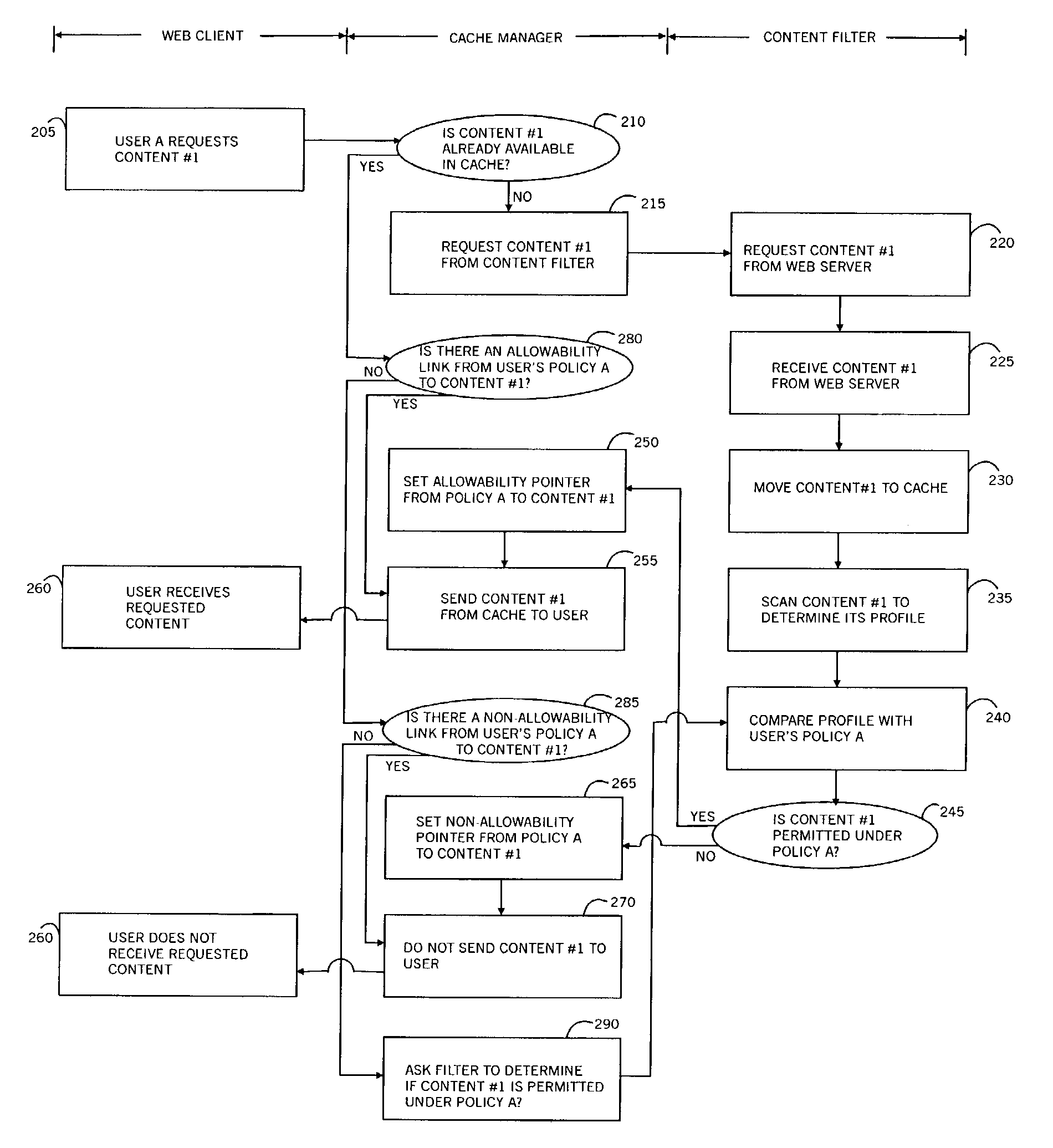

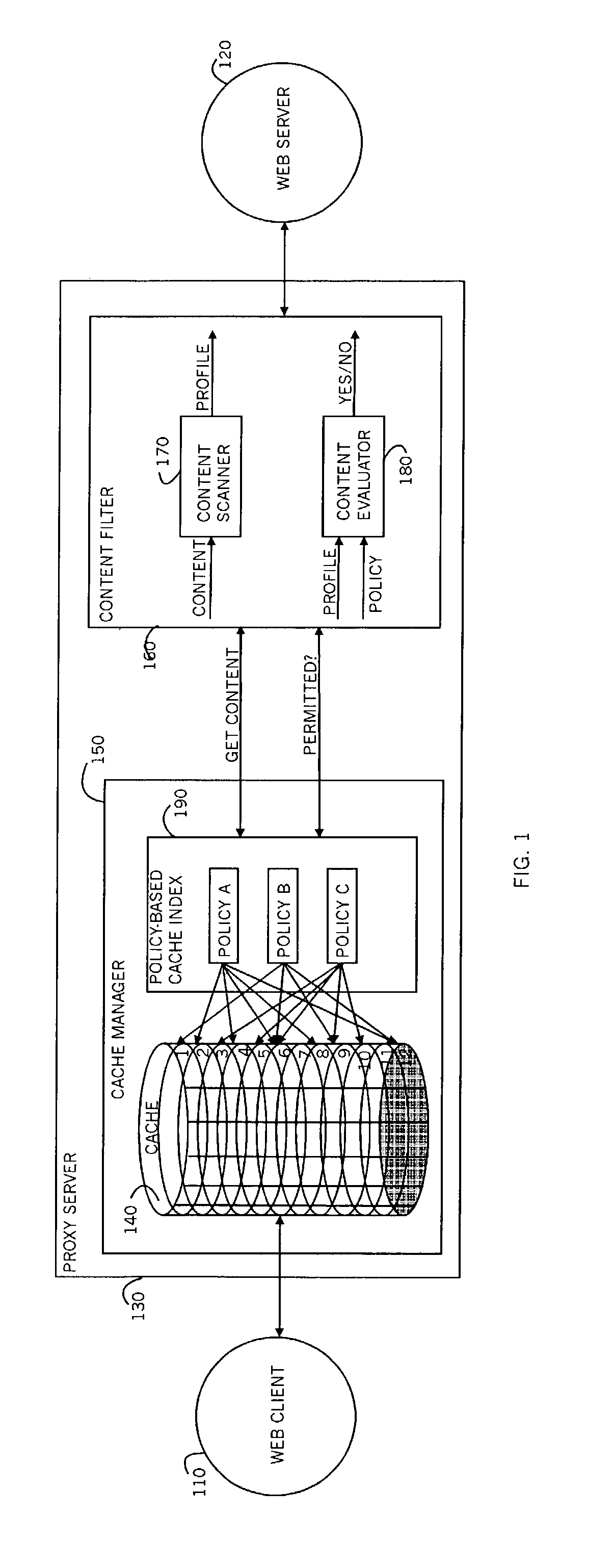

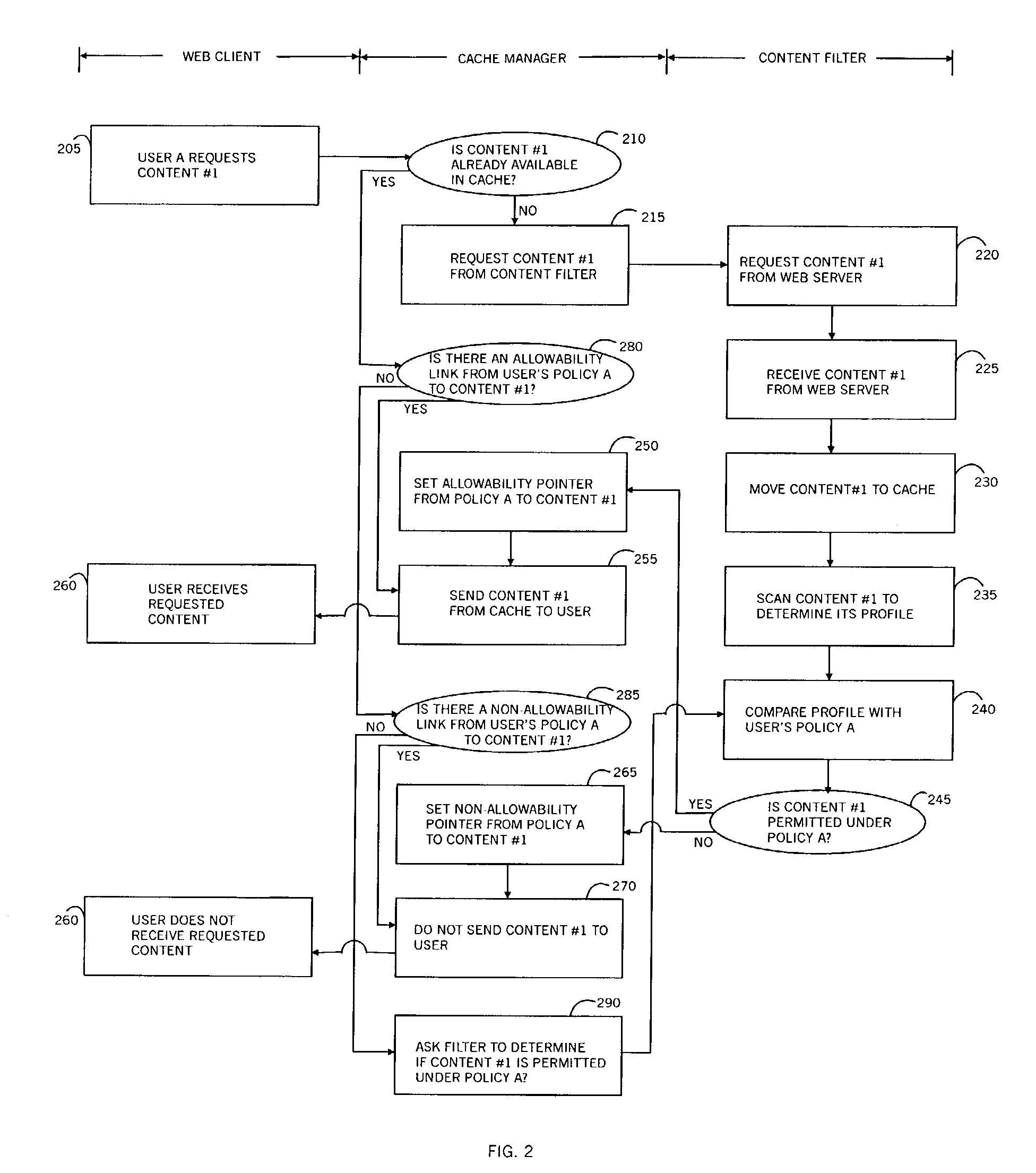

Policy-based caching

Inactive | US6965968B1 | Enhances conventional caching | Block delivery | Digital data information retrieval | Computer security arrangements | Digital content | Number content

A policy-based cache manager, including a memory storing a cache of digital content, a plurality of policies, and a policy index to the cache contents, the policy index indicating allowable cache content for each of a plurality of policies, a content scanner for scanning a digital content received, to derive a corresponding content profile, and a content evaluator for determining whether a given digital content is allowable relative to a given policy, based on the content profile. A method is also described and claimed.

Owner:FINJAN LLC

System and method for smart persistent cache

Inactive | US20060069746A1 | Inhibitory content | Multiple digital computer combinations | Transmission | Parallel computing | Cache management

A system and method for smart, persistent cache management of received content within a terminal. Received content is tagged with a cache directive, allowing cache control to determine which of the cache storage locations to use for storage of the content. Cache control detects the number of instances in which received content correlates to a newer version of purged content and provides the ability to re-classify the cache persistence directive based upon the number of instances.

Owner:NOKIA CORP

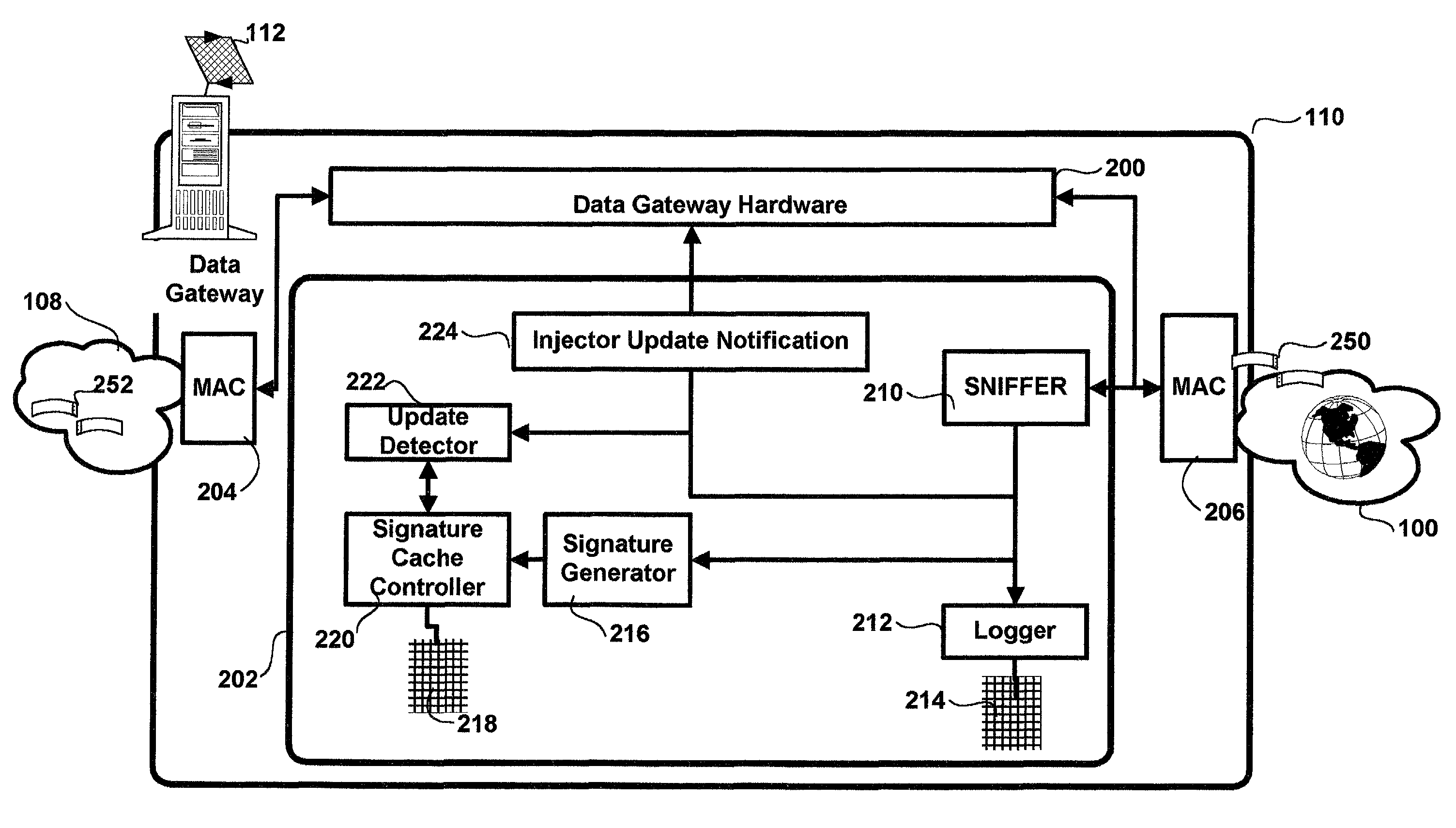

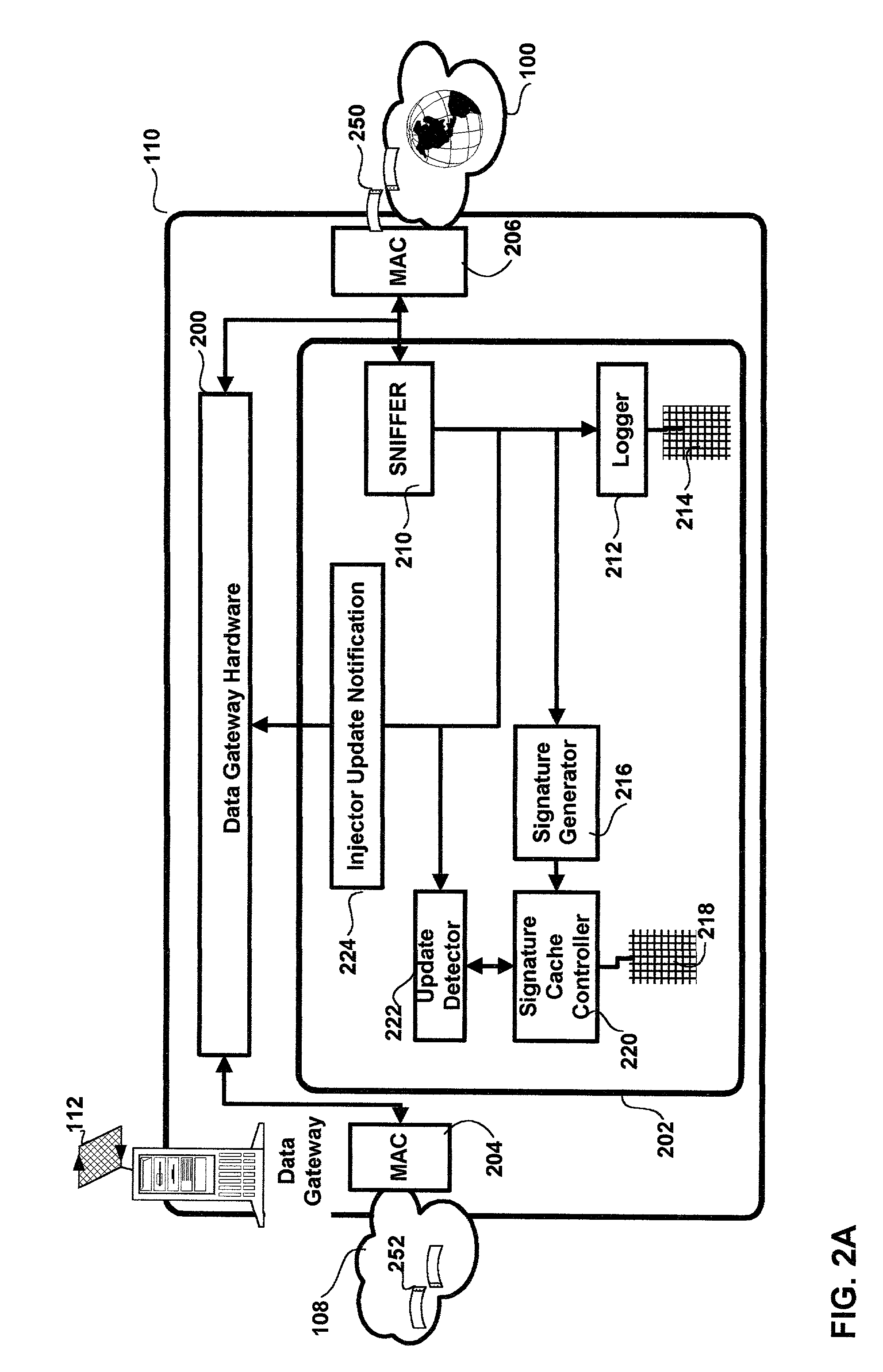

Method and apparatus for web caching

Inactive | US6990526B1 | Reduced activity | Avoid delay | Digital data information retrieval | Multiple digital computer combinations | Hash function | Data access

A method and apparatus for web caching is disclosed. The method and apparatus may be implemented in hardware, software or firmware. Complementary cache management modules, namely a coherency module and one or more cache modules, are installed on complementary gateways for data and for clients, respectively. The coherency management module monitors data access requests and/or responses and determines for each: the uniform resource locator (URL) of the requested web page, the URL of the requestor and a signature. The signature is computed using cryptographic techniques, in particular a hash function whose input is the web page for which the signature is to be generated. The coherency management module caches these signatures and the corresponding URLs and uses the signatures to determine when a page has been updated. When, on the basis of signature comparisons, it is determined that a page has been updated, the coherency management module sends a notification to all complementary cache modules. Each cache module caches web pages requested by the associated client(s) to which it is coupled. The notification from the cache management module results in the cache module(s) that receive a given notice updating their tag table with a stale bit for the associated web page. The cache module(s) use this information in the associated tag tables to determine which pages they need to update. The cache modules initiate this update during intervals of reduced activity in the servers, gateways, routers, or switches of which they are a part. All clients requesting data through the system of which each cache module is a part are provided by the associated cache module with cached copies of requested web pages.
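The signature comparison and stale-bit notification can be sketched as below. This is a simplified, in-process model under stated assumptions: the class names `CoherencyModule` and `CacheModule`, the choice of SHA-256 as the hash function, and the `fetch` callback are hypothetical and are not taken from the patent.

```python
import hashlib


class CacheModule:
    """Client-side cache with a tag table of stale bits (illustrative)."""

    def __init__(self):
        self.pages = {}     # url -> cached page body
        self.stale = set()  # urls whose stale bit is set

    def store(self, url, body):
        self.pages[url] = body
        self.stale.discard(url)

    def mark_stale(self, url):
        if url in self.pages:
            self.stale.add(url)

    def refresh_stale(self, fetch):
        # Intended to run during an interval of reduced activity.
        for url in sorted(self.stale):
            self.pages[url] = fetch(url)
        self.stale.clear()


class CoherencyModule:
    """Data-side module that detects page updates by comparing signatures."""

    def __init__(self):
        self.signatures = {}  # url -> hex digest of the last seen body
        self.cache_modules = []

    def register(self, cache_module):
        self.cache_modules.append(cache_module)

    def observe(self, url, body):
        """Record the signature of a response; notify caches if it changed."""
        signature = hashlib.sha256(body).hexdigest()
        previous = self.signatures.get(url)
        self.signatures[url] = signature
        changed = previous is not None and previous != signature
        if changed:
            for module in self.cache_modules:
                module.mark_stale(url)
        return changed
```

Clients keep receiving the cached copy until `refresh_stale` runs, which matches the abstract's point that updates are deferred to quiet periods.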

Owner:POINTRED TECH

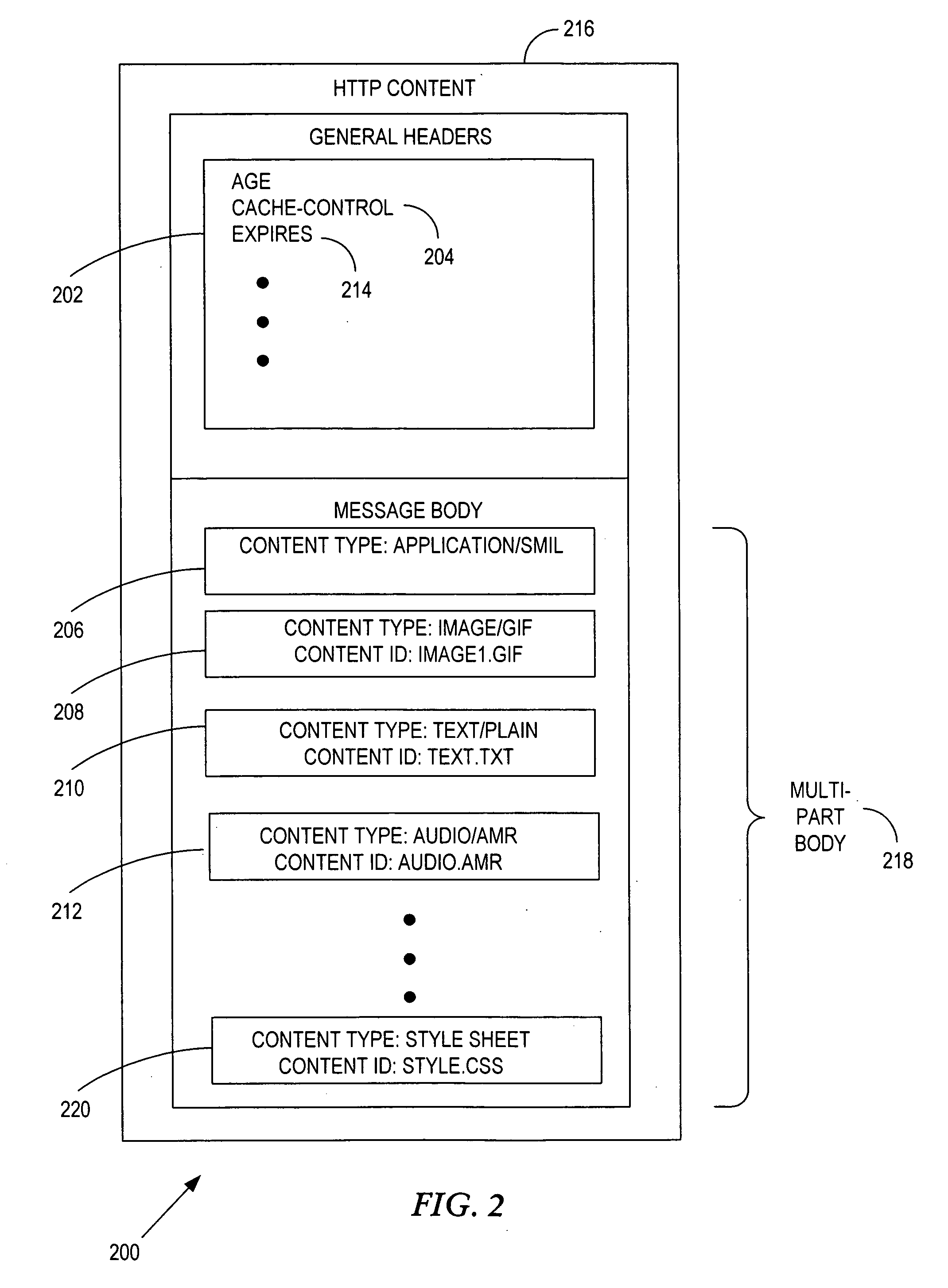

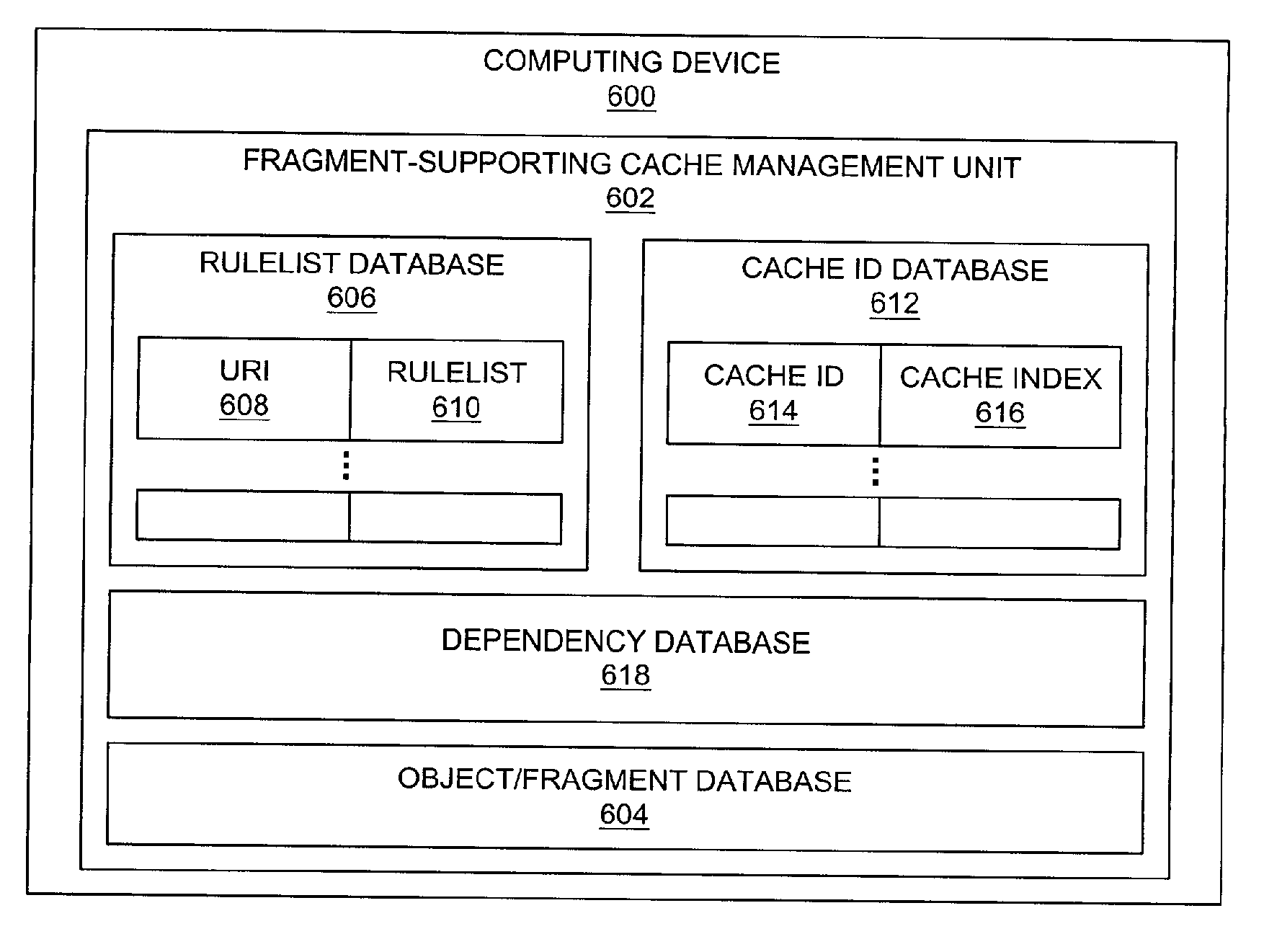

Method and system for caching message fragments using an expansion attribute in a fragment link tag

Active | US20070244964A1 | Digital data information retrieval | Memory addressing/allocation/relocation | Cache management | World Wide Web

A method, a system, an apparatus, and a computer program product are presented for a fragment caching methodology. After a message is received at a computing device that contains a cache management unit, a fragment in the message body of the message is cached. Subsequent requests for the fragment at the cache management unit result in a cache hit. A FRAGMENTLINK tag is used to specify the location in a fragment for an included or linked fragment which is to be inserted into the fragment during fragment or page assembly or page rendering. A FRAGMENTLINK tag may include a FOREACH attribute that is interpreted as indicating that the FRAGMENTLINK tag should be replaced with multiple FRAGMENTLINK tags. The FOREACH attribute has an associated parameter that has multiple values that are used in identifying multiple fragments for the multiple FRAGMENTLINK tags.
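The FOREACH expansion can be illustrated with a small sketch. The abstract does not give the tag syntax, so the example models a FRAGMENTLINK tag as a plain dictionary; the attribute names `src` and `foreach` and the way each value is appended to the source as a query parameter are assumptions made only for illustration.

```python
def expand_fragment_link(tag, params):
    """Replace one FRAGMENTLINK tag that has a FOREACH attribute with
    one tag per value of the named parameter.

    tag:    dict of tag attributes, e.g. {"src": "/quote", "foreach": "symbol"}
    params: dict mapping parameter names to lists of values
    """
    name = tag.get("foreach")
    if name is None:
        return [dict(tag)]  # nothing to expand

    expanded = []
    for value in params[name]:
        # Copy every attribute except FOREACH itself.
        new_tag = {k: v for k, v in tag.items() if k != "foreach"}
        # Hypothetical convention: the value selects the fragment via the URL.
        new_tag["src"] = f"{tag['src']}?{name}={value}"
        expanded.append(new_tag)
    return expanded
```

Each resulting tag identifies its own fragment, so the fragments can be cached and fetched independently during page assembly.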

Owner:SNAP INC

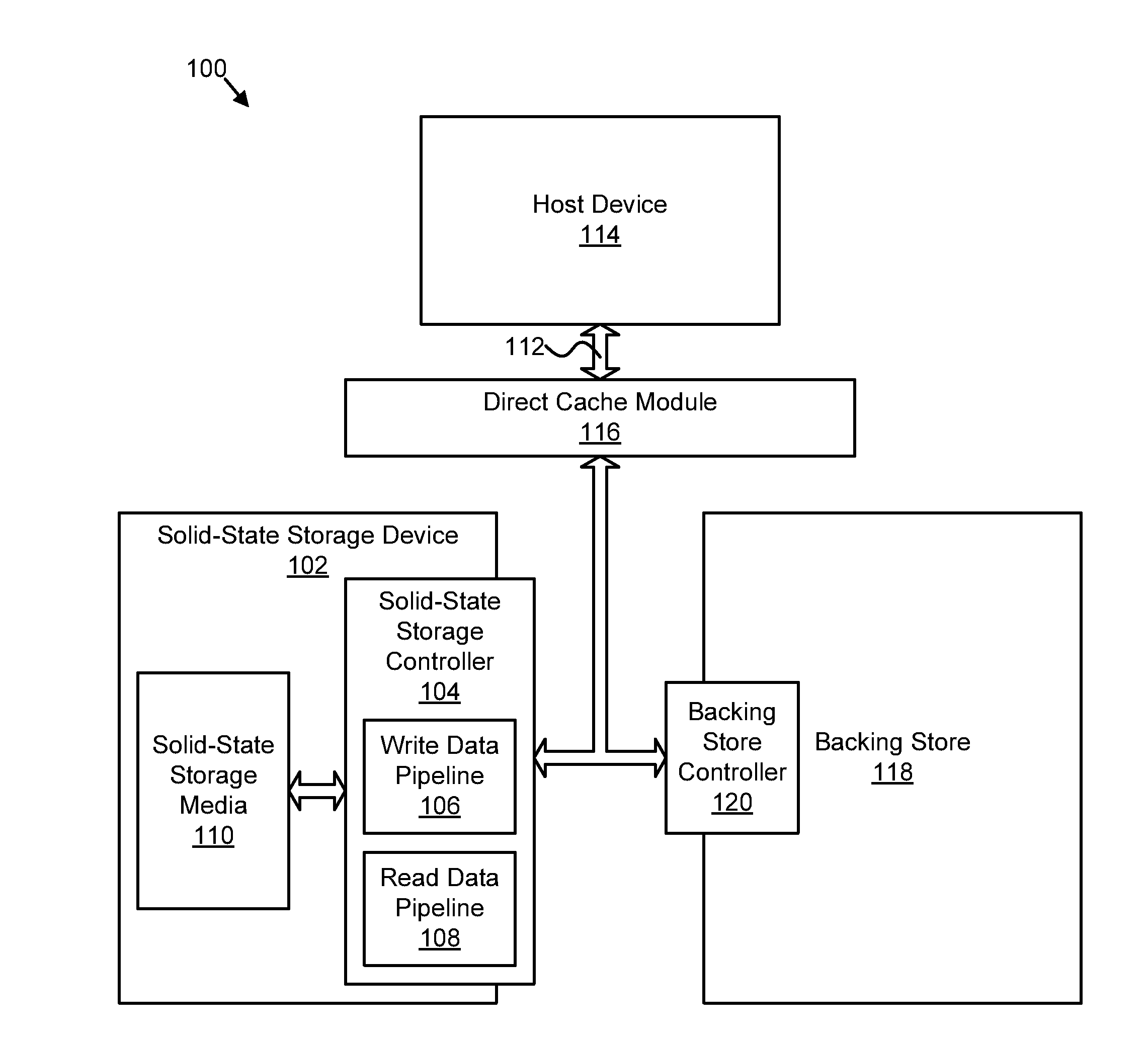

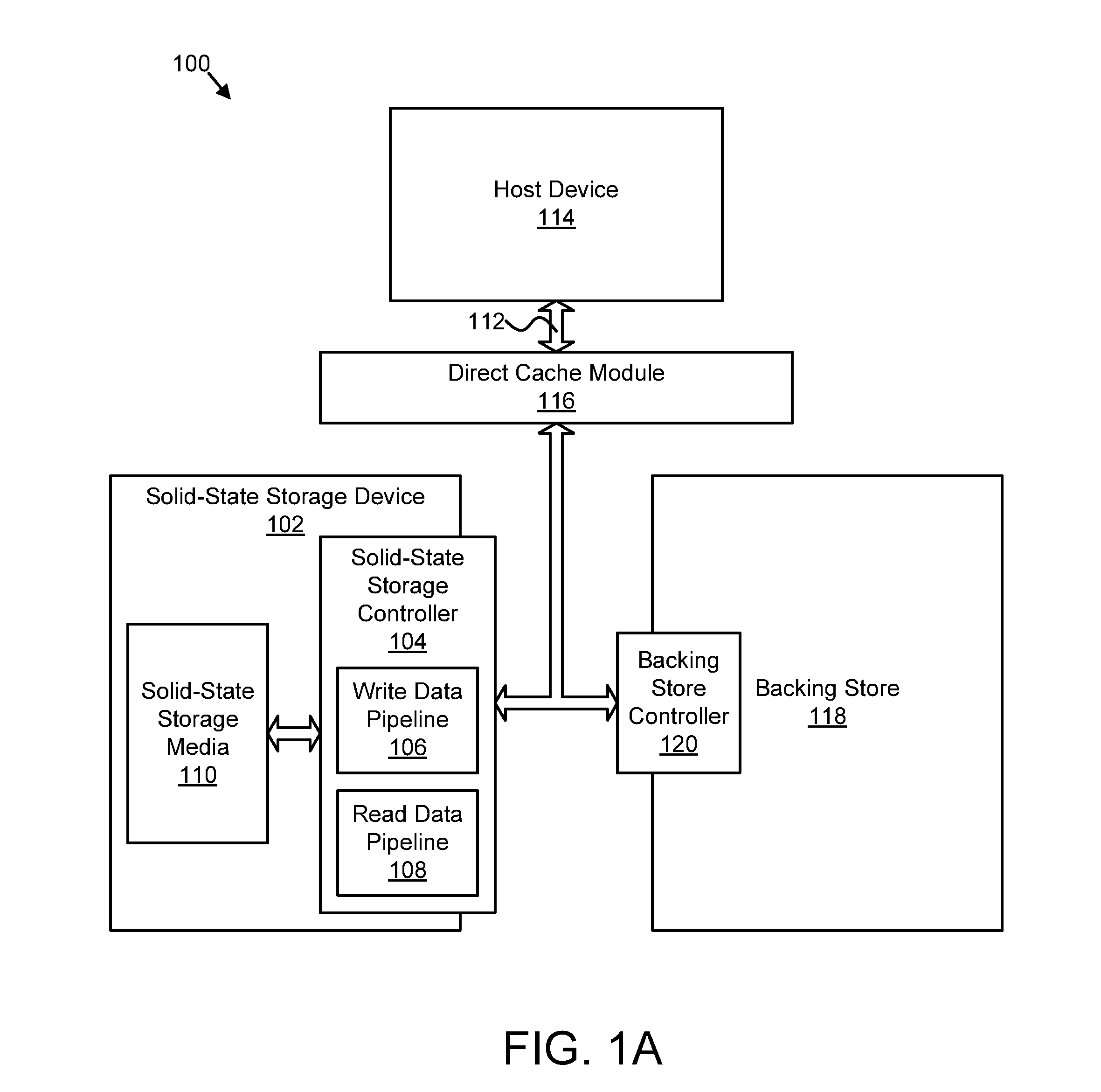

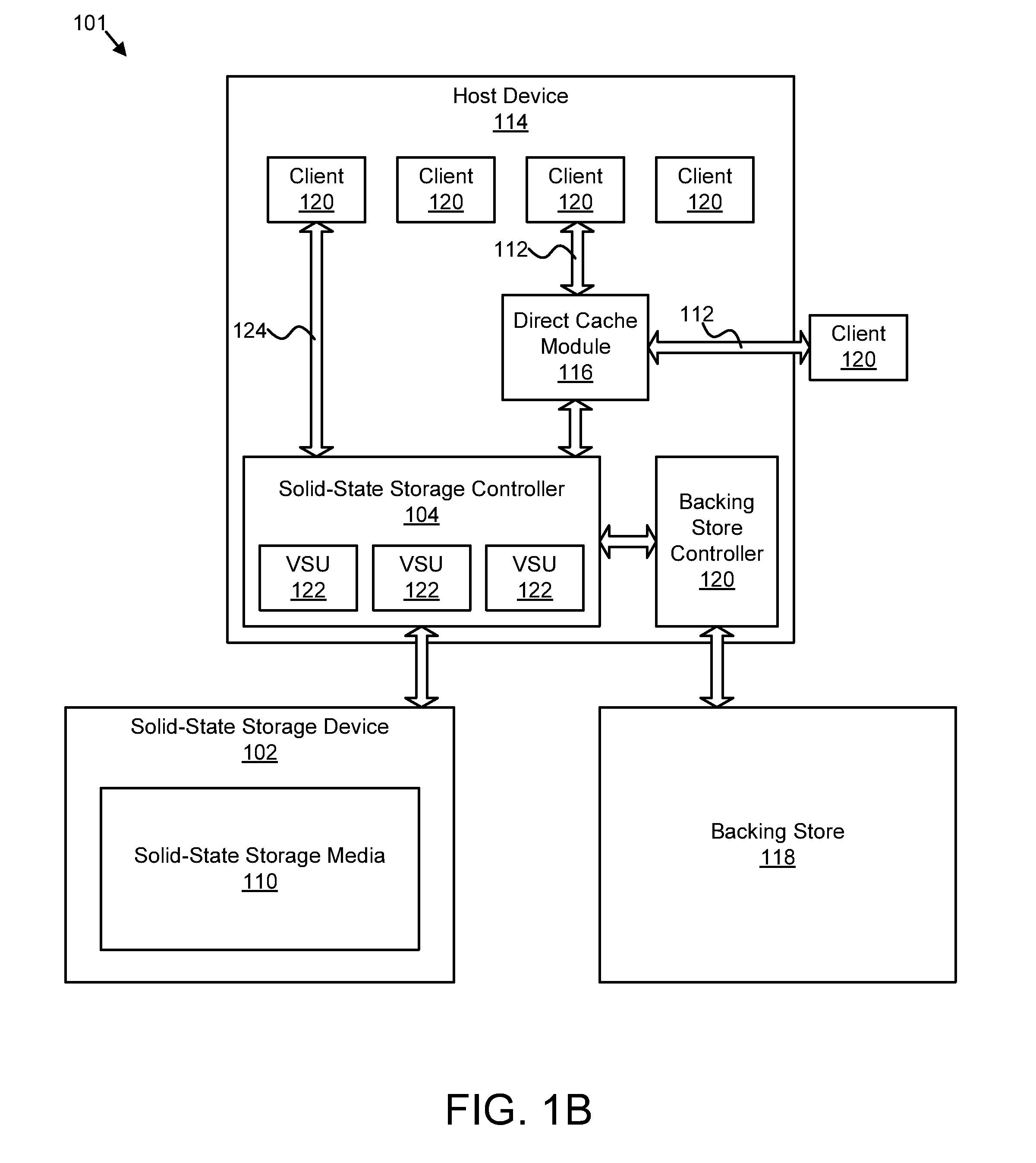

Apparatus, system, and method for managing a cache

Active | US20130191601A1 | Effective caching | Memory addressing/allocation/relocation | Solid-state storage | Parallel computing

An apparatus, system, and method are disclosed for managing a cache. A cache interface module provides access to a plurality of virtual storage units of a solid-state storage device over a cache interface. At least one of the virtual storage units comprises a cache unit. A cache command module exchanges cache management information for the at least one cache unit with one or more cache clients over the cache interface. A cache management module manages the at least one cache unit based on the cache management information exchanged with the one or more cache clients.

Owner:SANDISK TECH LLC

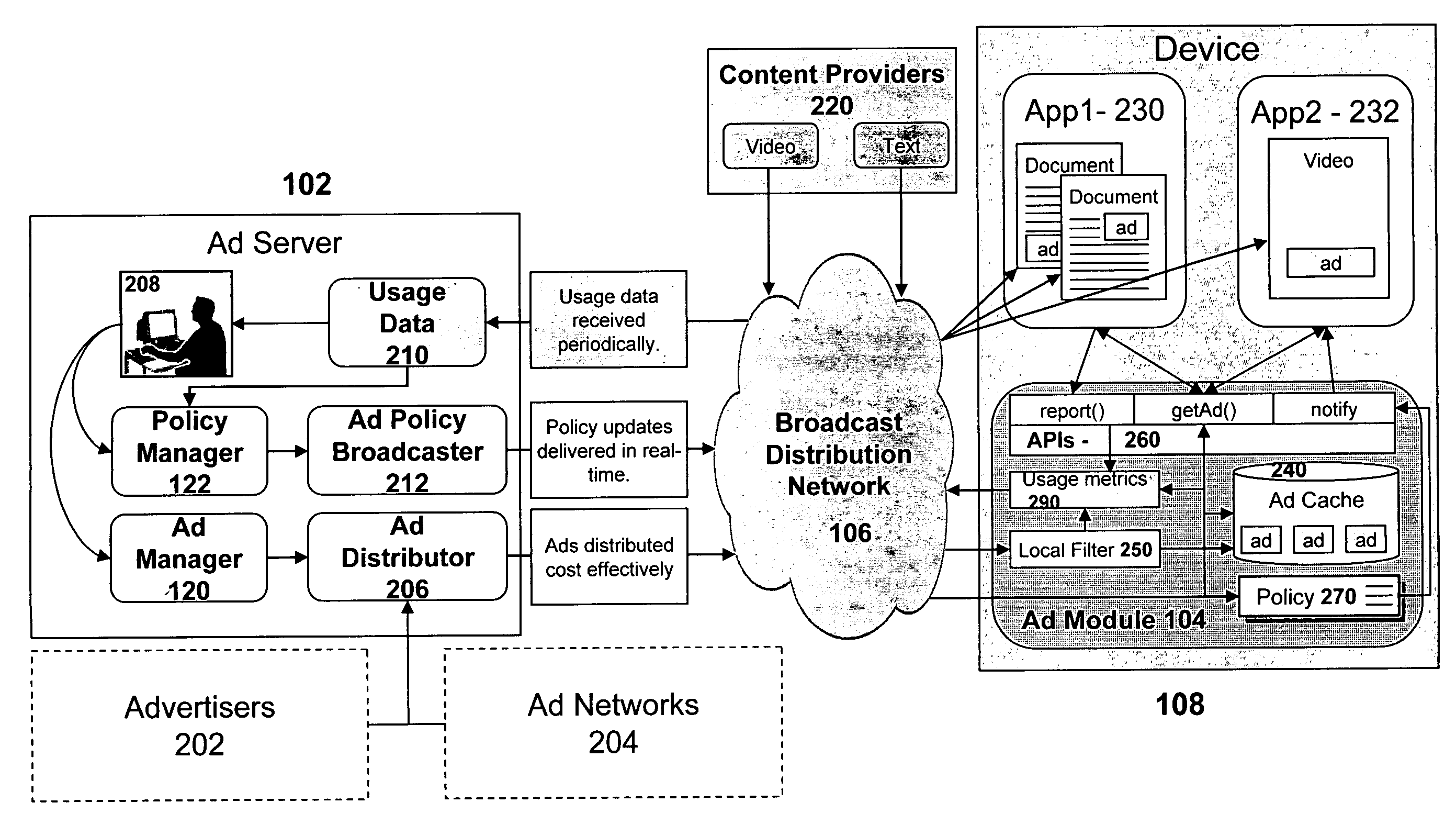

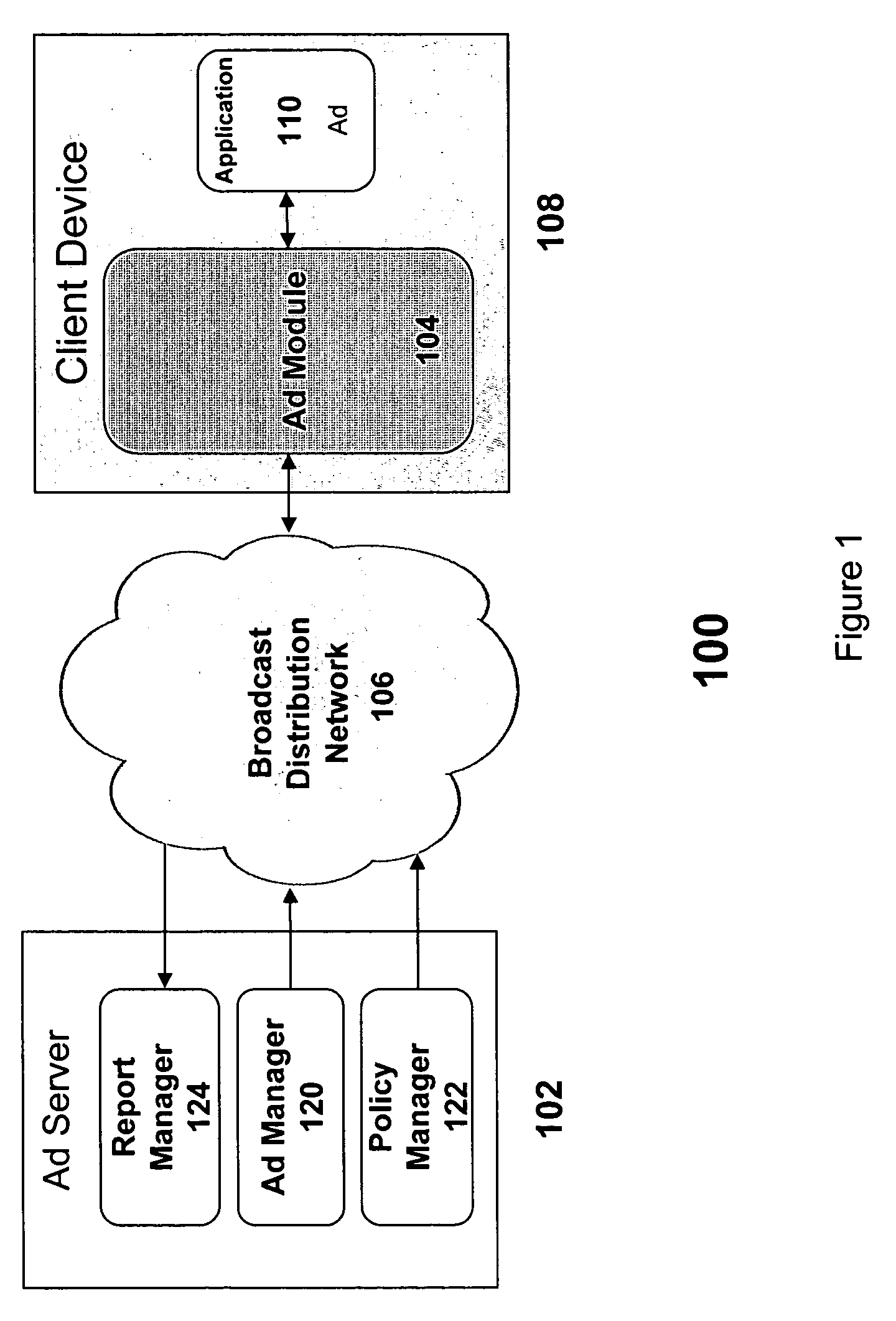

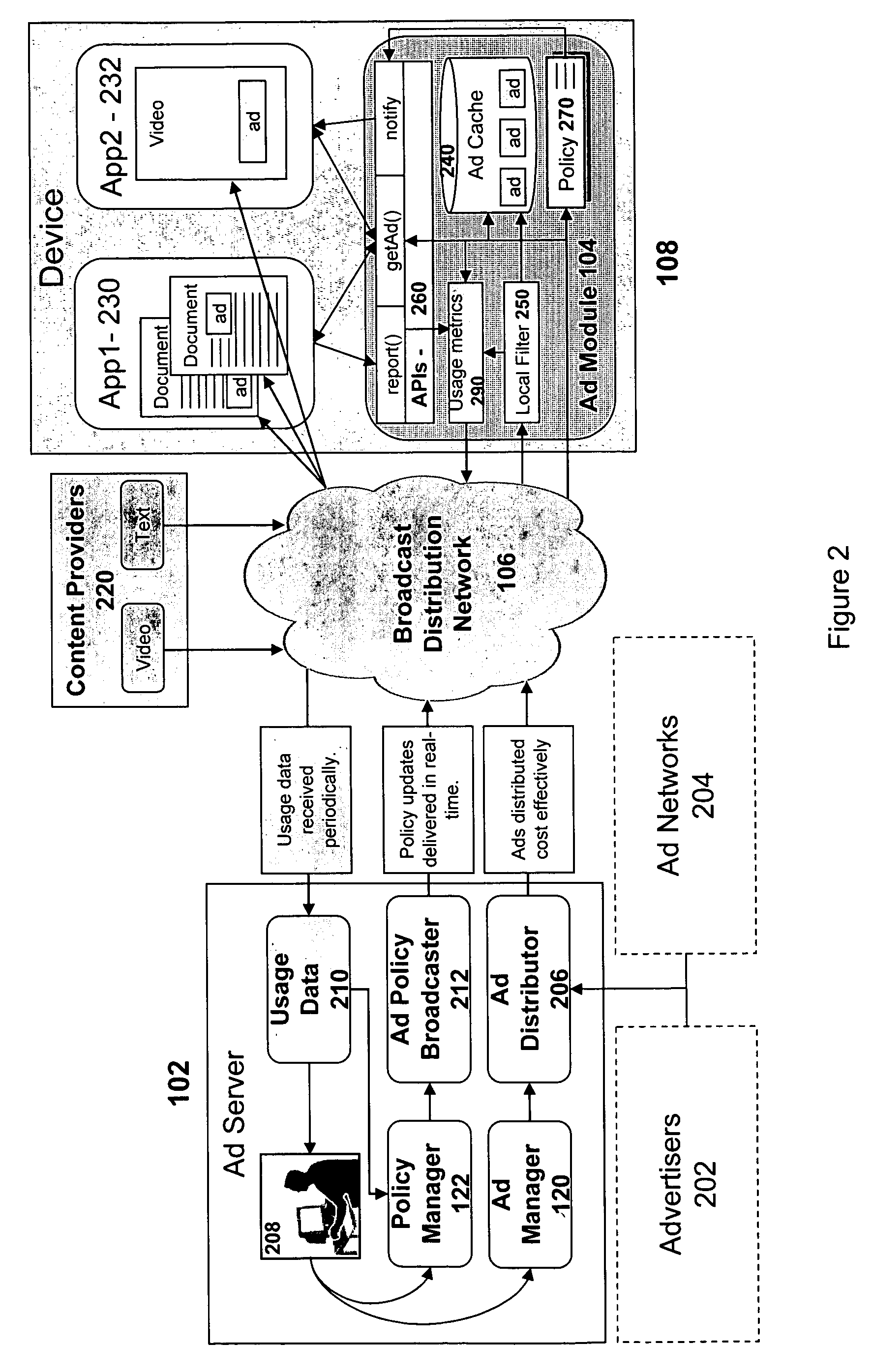

Distribution and display of advertising for devices in a network

Inactive | US20080098420A1 | Good choice | Electrical cable transmission adaptation | Marketing | Cyber operations | Client-side

A system and associated apparatus and methods for the distribution, selection and display of advertising content for devices operating in a network. The devices need not initiate a point-to-point communication with an ad server to provide the ability to filter received advertising content and enable the display of advertisements that are targeted to a device's user. The invention enables a service provider or network operator to control the policy used to specify the selection, timing, and display of an advertisement stored in a cache of a client device. A modification to the policy can be broadcast and implemented in real-time by the device. Advertisements stored in the cache may be filtered both by the service provider or network operator and by the device itself so as to provide the best selection of ads tailored to the user of the device. The device implements cache management processes to determine how best to maintain the advertisements of greatest relevance to the user of the device. The invention also provides mechanisms for the reporting of statistics that can be used for billing purposes and to better filter the selection of advertisements cached at each device.

Owner:ROUNDBOX

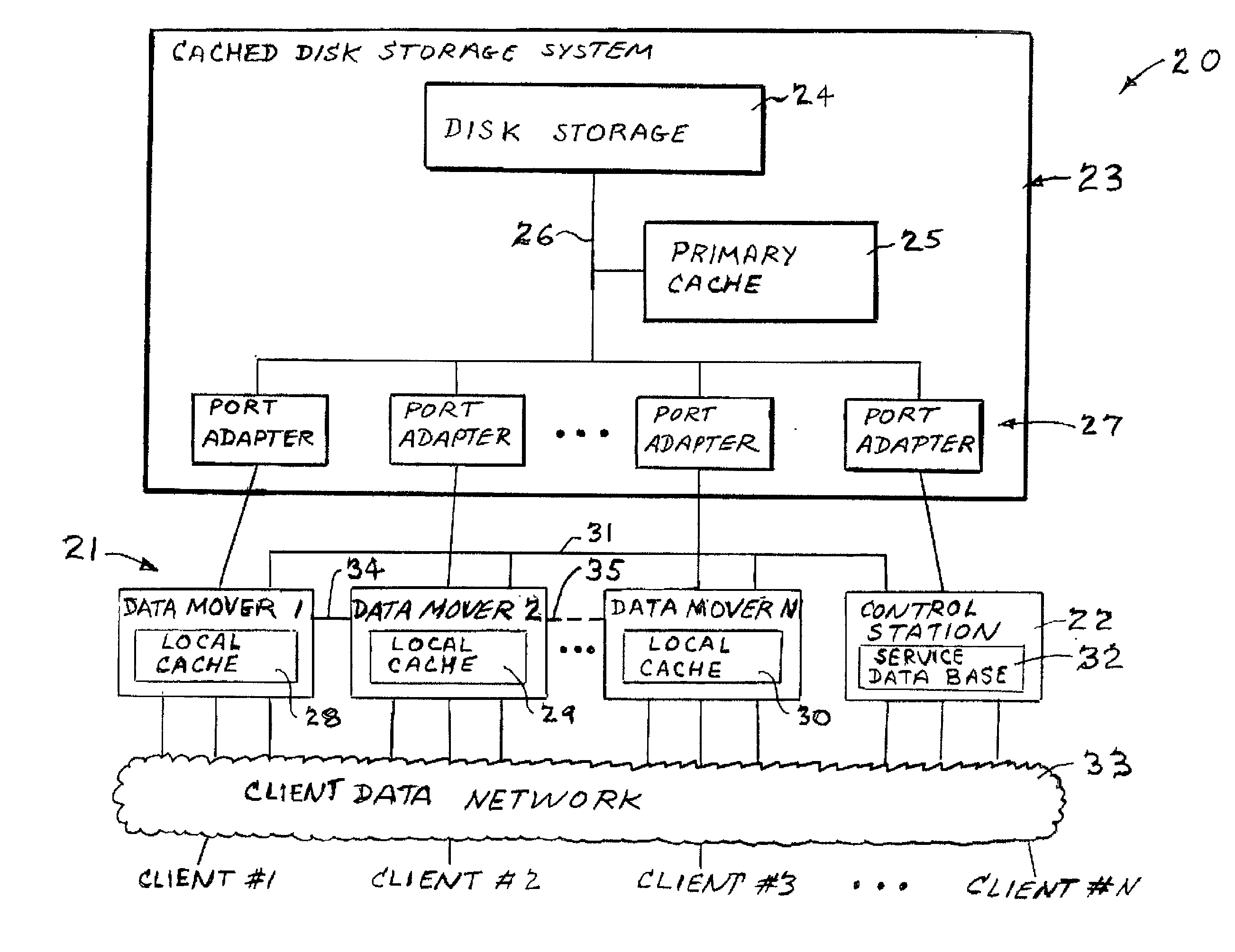

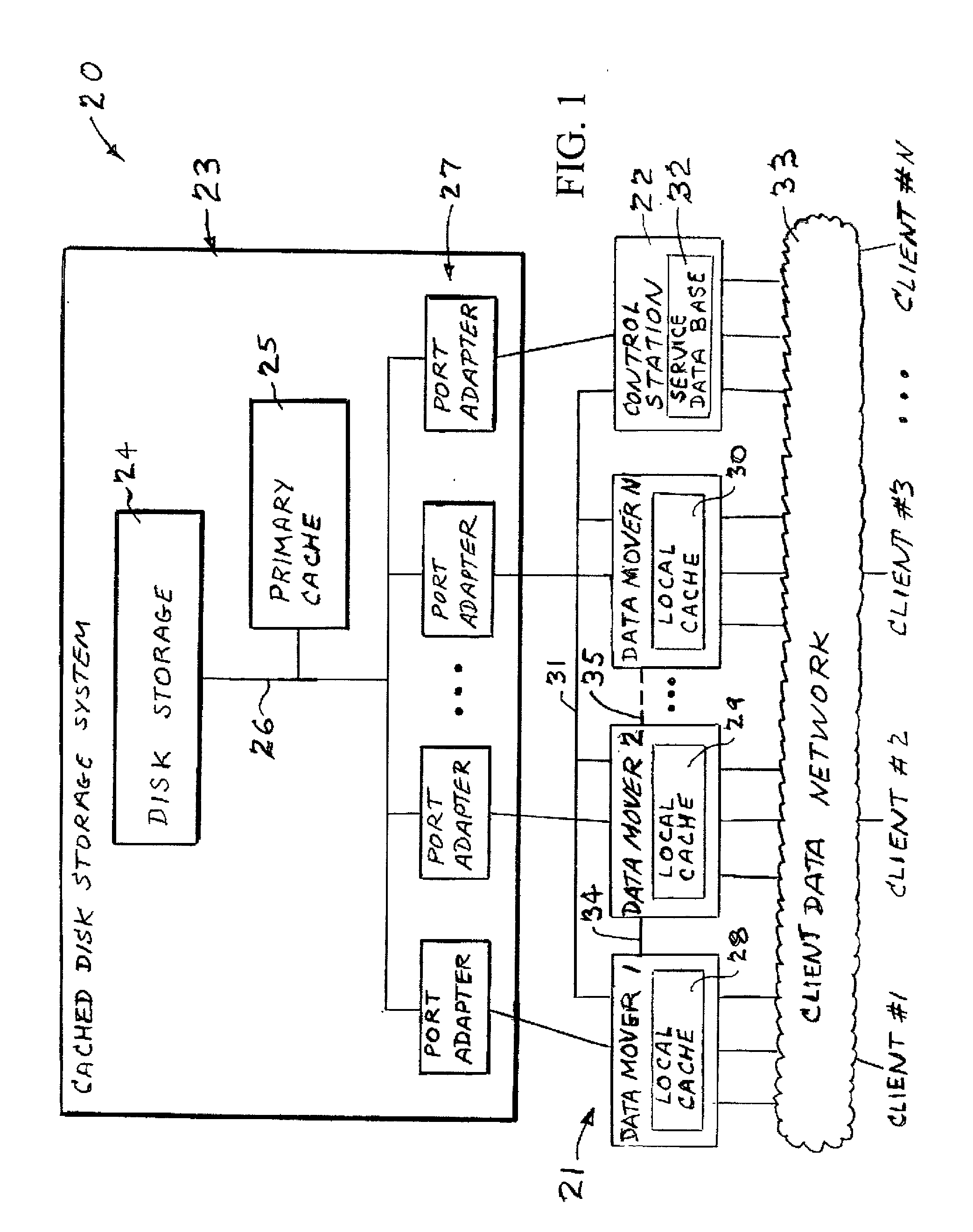

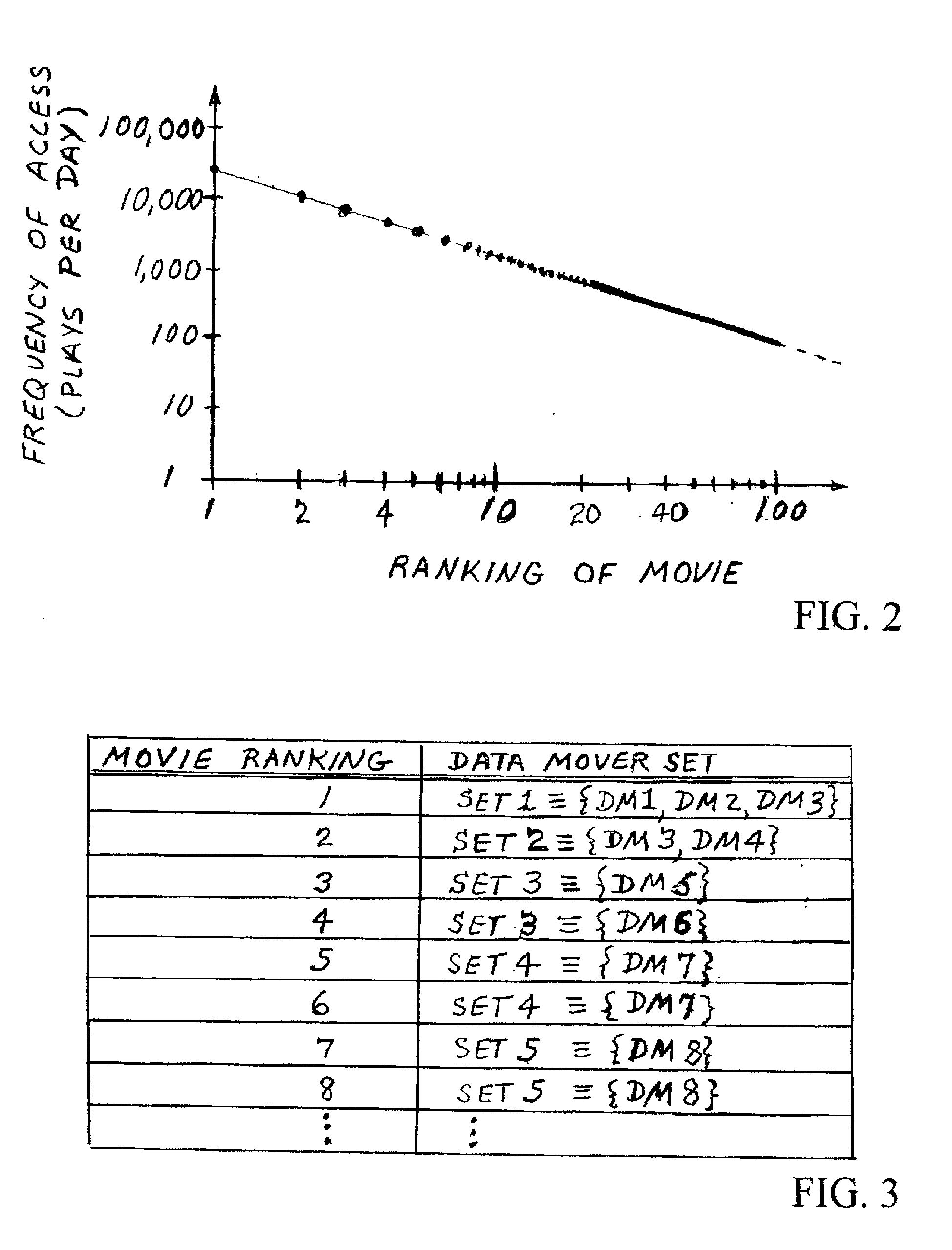

Video file server cache management using movie ratings for reservation of memory and bandwidth resources

Active | US20030005457A1 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Magnetic tape | Ranking

Access to movies ranging from very popular movies to unpopular movies is managed by configuring sets of data movers for associated movie rankings, reserving data mover local cache resources for the most popular movies, reserving a certain number of streams for popular movies, negotiating with a client for selection of available movie titles during peak demand when resources are not available to start any freely-selected movie in disk storage, and managing disk bandwidth and primary and local cache memory and bandwidth resources for popular and unpopular movies. The assignment of resources to movie rankings may remain the same while the rankings of the movies are adjusted, for example, during off-peak hours. A movie locked in primary cache and providing a source for servicing a number of video streams may be demoted from primary cache to disk in favor of servicing one or more streams of a higher-ranking movie.

Owner:EMC IP HLDG CO LLC

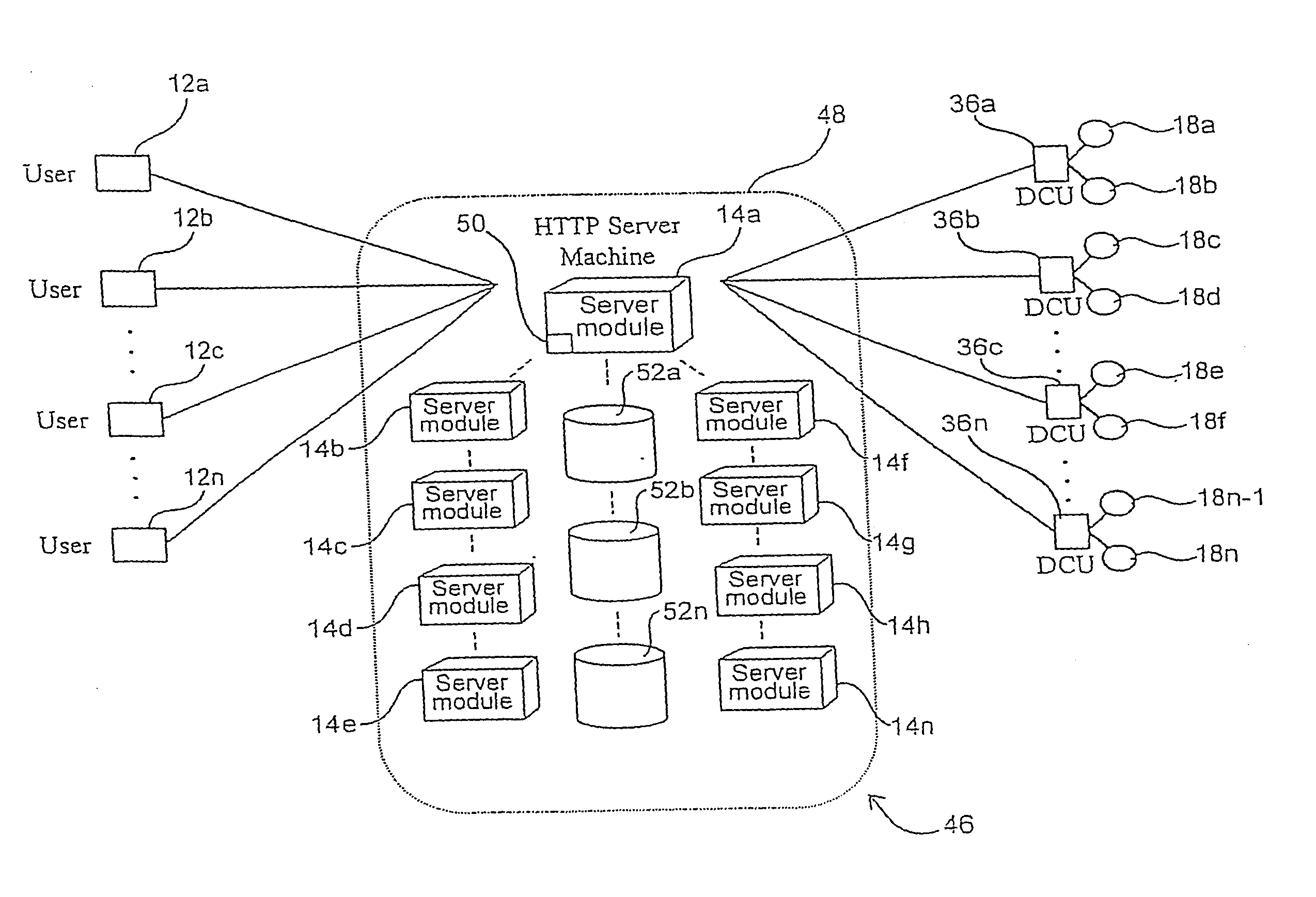

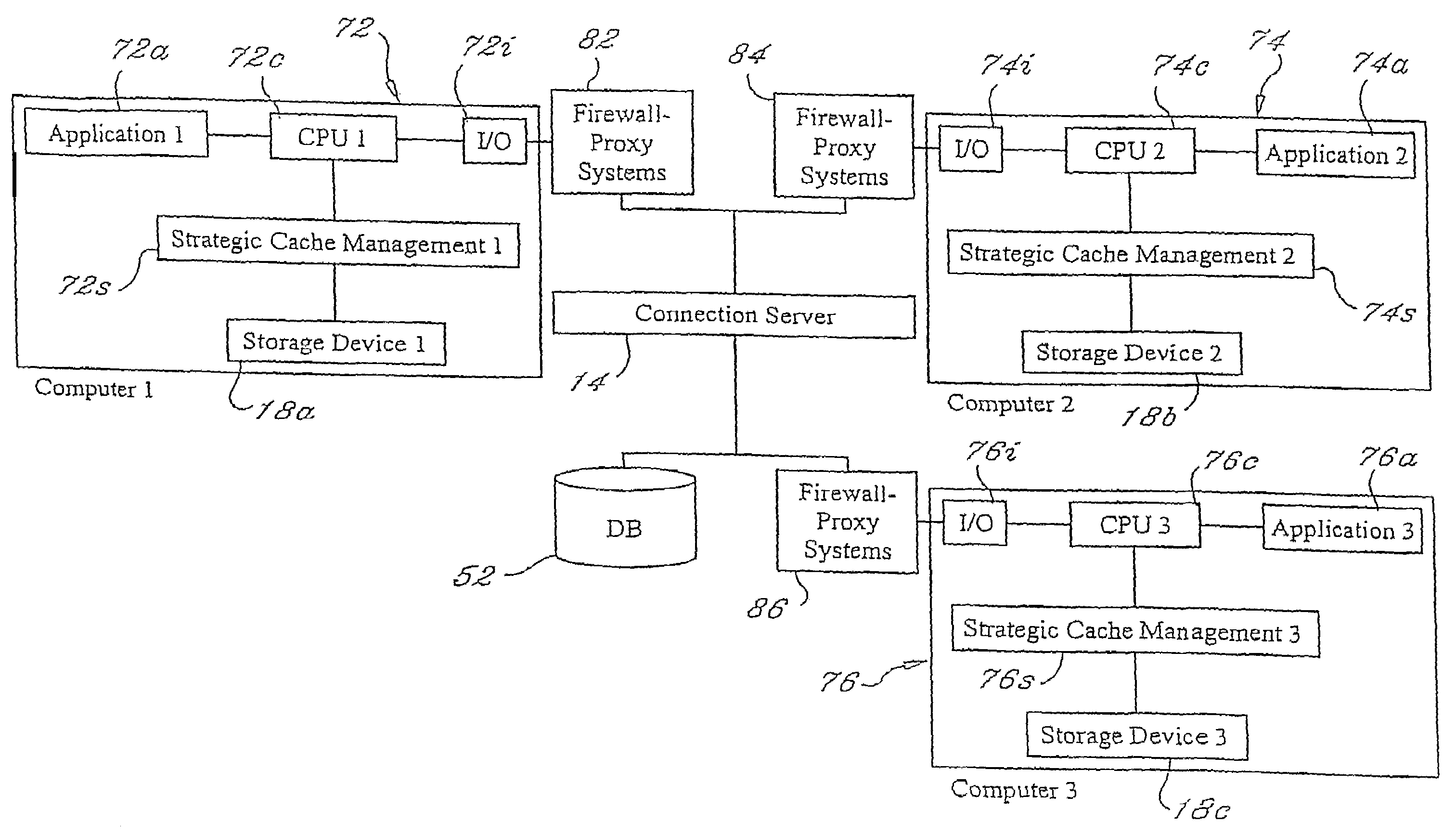

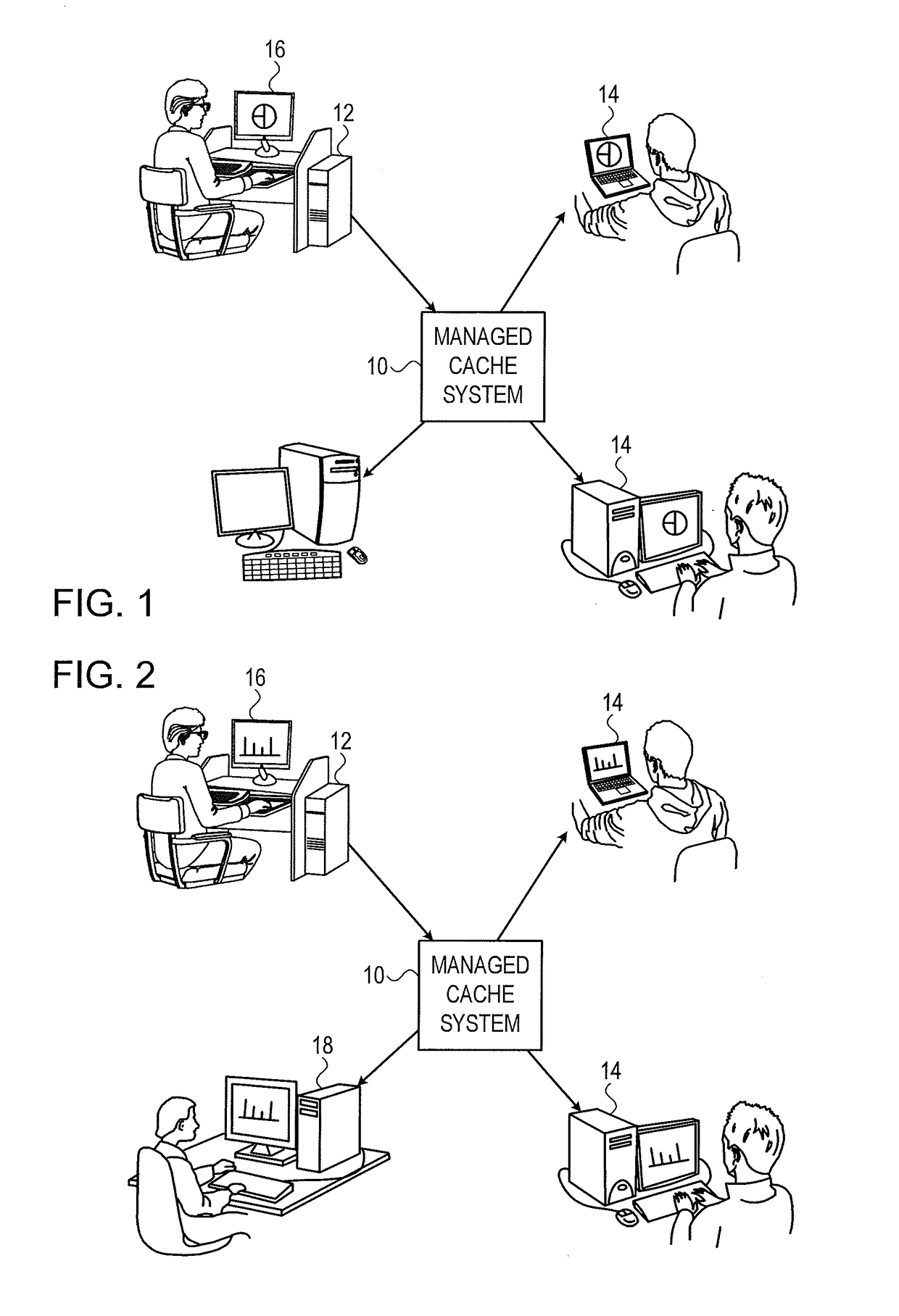

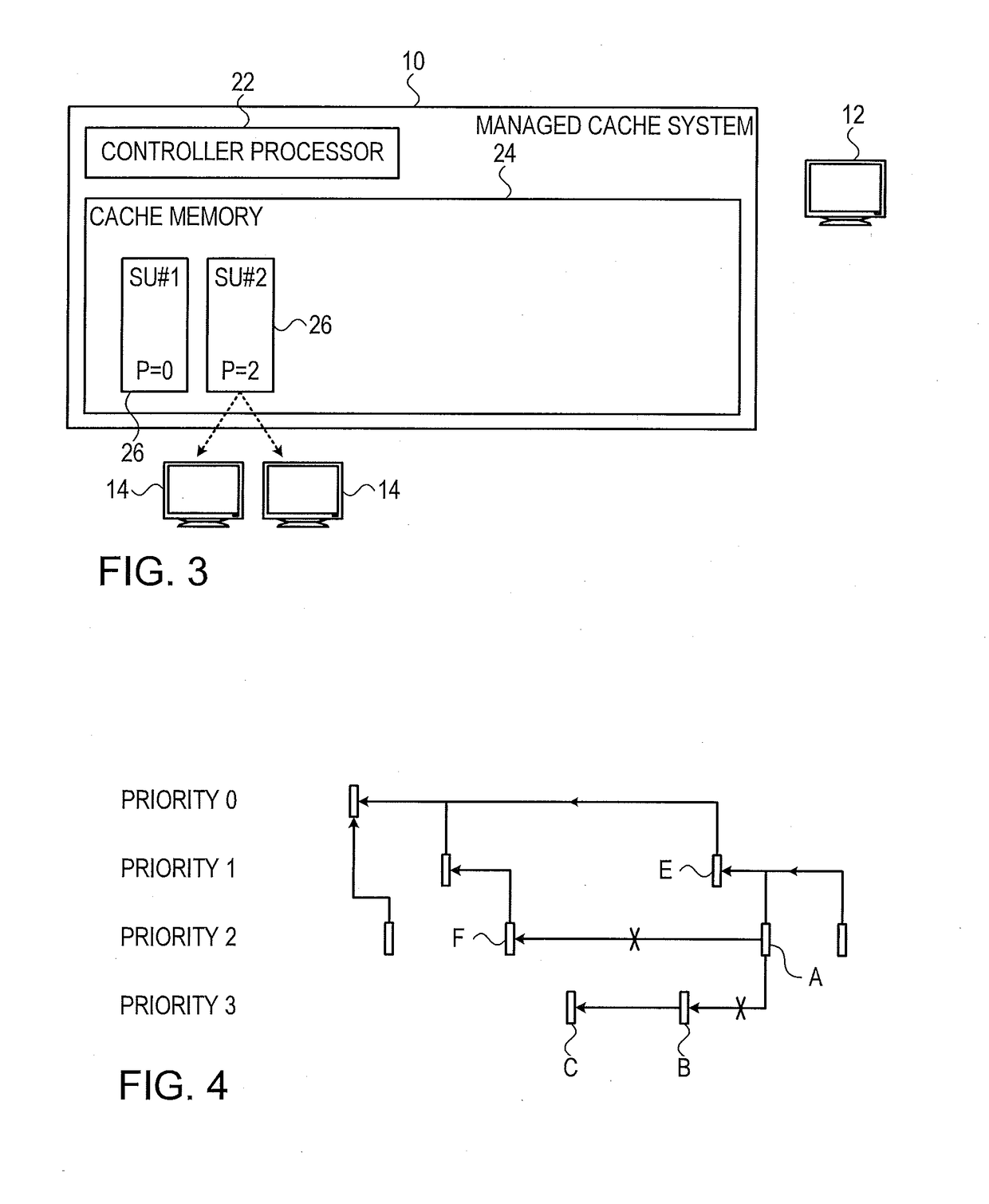

Managed peer-to-peer applications, systems and methods for distributed data access and storage

Inactive | US20050144186A1 | Efficiently accessing and controlling | Minimizes bandwidth requirement | Digital data processing details | Multiple digital computer combinations | Data access | Term memory

Applications, systems and methods for efficiently accessing and controlling data of devices among multiple computers over a network. Strategic cache management processes are provided to manage the data in cache memory of the storage devices involved. Communication of data over the network may be managed by means of one or more connection servers which may also manage any or all of authentication, authorization, security, encryption and point-to-multipoint communications functionalities. Alternatively, computers may be connected over a wide area network without a connection server, and with or without a VPN. Data transmissions may be managed to minimize bandwidth and may be temporally and/or spatially compressed.

Owner:WESTERN DIGITAL TECH INC

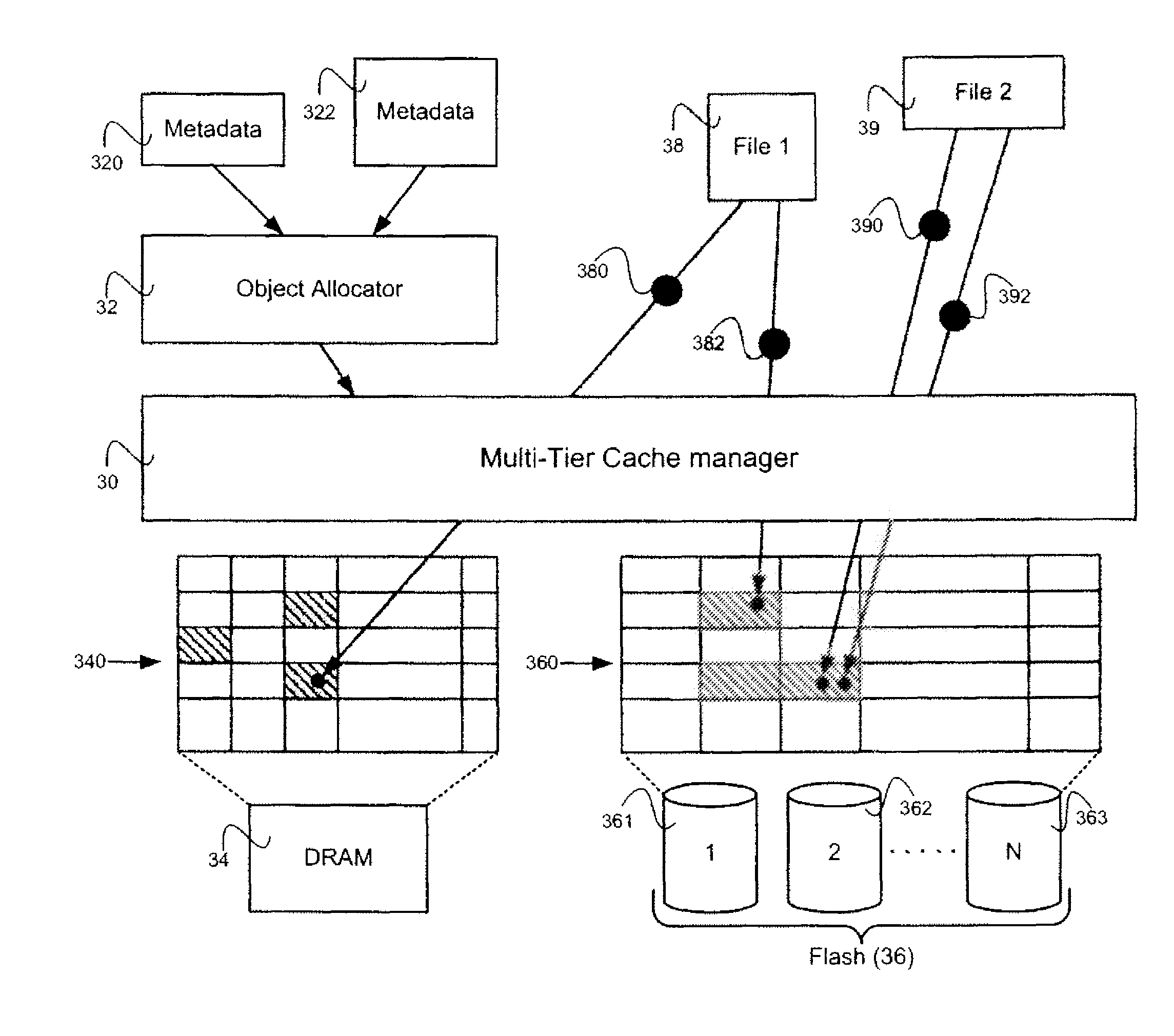

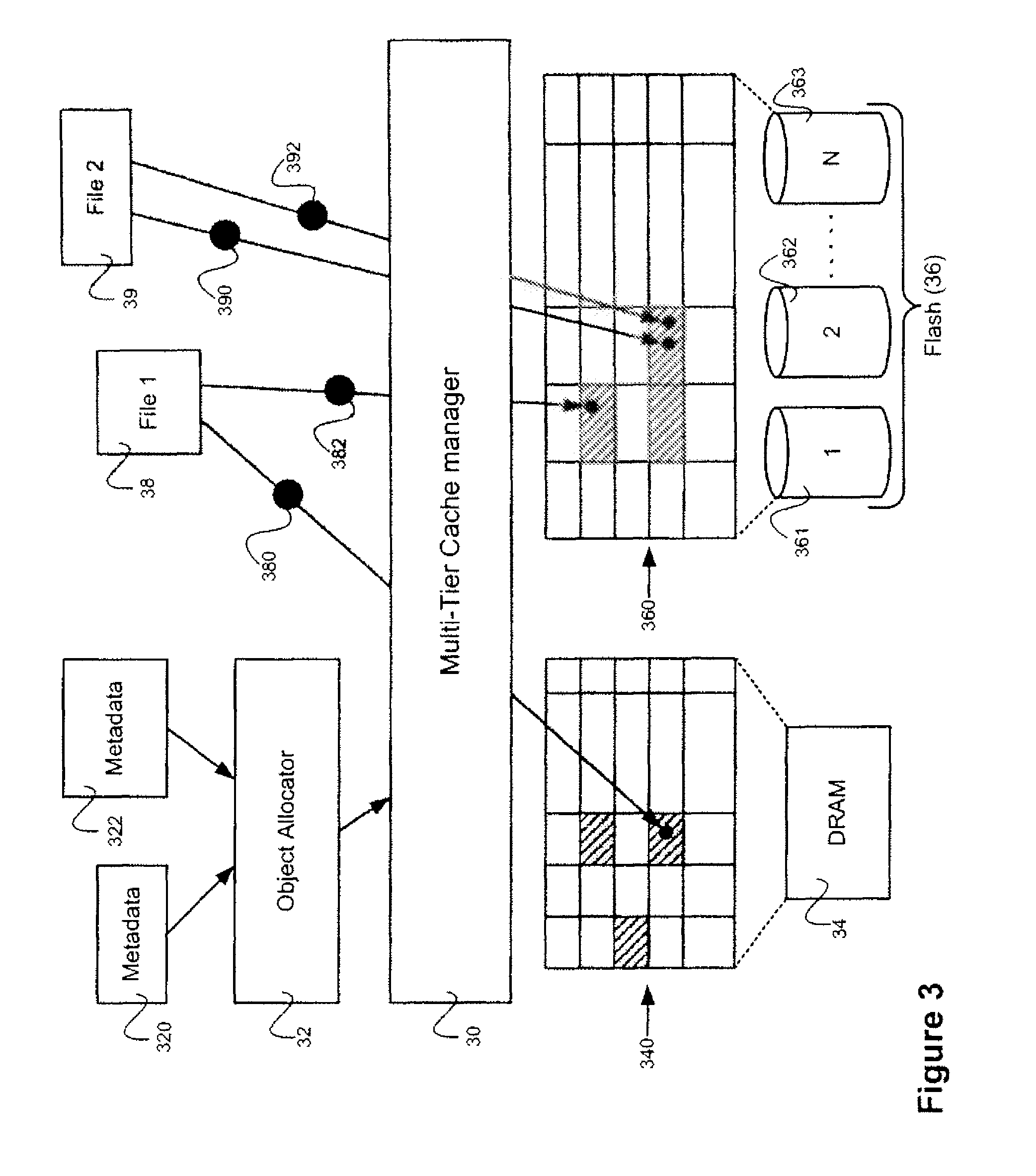

Efficient use of hybrid media in cache architectures

Active | US8397016B2 | Memory architecture accessing/allocation | Program control using stored programs | Parallel computing | Cache management

A multi-tiered cache manager and methods for managing a multi-tiered cache are described. The multi-tiered cache manager causes cached data to be initially stored in the RAM elements and selects portions of the cached data stored in the RAM elements to be moved to the flash elements. Each flash element is organized as a plurality of write blocks having a block size, wherein a predefined maximum number of writes is permitted to each write block. The portions of the cached data may be selected based on a maximum write rate calculated from the maximum number of writes allowed for the flash device and a specified lifetime of the cache system.
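One way to read the write-rate constraint is as simple arithmetic: the total number of bytes the flash can absorb over its life, divided by the target lifetime. The sketch below works under that assumption; the exact formula, the function names, and the hottest-first selection rule are illustrative guesses, since the abstract only states which quantities the rate is calculated from.

```python
def max_write_rate(max_writes_per_block, num_blocks, block_size_bytes,
                   lifetime_seconds):
    """Sustainable flash write rate in bytes per second (assumed formula)."""
    total_writable_bytes = max_writes_per_block * num_blocks * block_size_bytes
    return total_writable_bytes / lifetime_seconds


def select_for_flash(candidates, rate_bytes_per_s, interval_s):
    """Pick RAM-cached items to move to flash without exceeding the budget.

    candidates: list of (key, size_bytes, hit_count) tuples held in RAM.
    Hottest items are considered first; an item is skipped if it no longer
    fits in the write budget for this interval.
    """
    budget = rate_bytes_per_s * interval_s
    chosen = []
    for key, size, _hits in sorted(candidates, key=lambda c: c[2], reverse=True):
        if size <= budget:
            chosen.append(key)
            budget -= size
    return chosen
```

For example, 100 writes per block across 10 blocks of 4 bytes over a 1000-second lifetime gives a budget of 4 bytes per second; real devices differ only in scale.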

Owner:INNOVATIONS IN MEMORY LLC

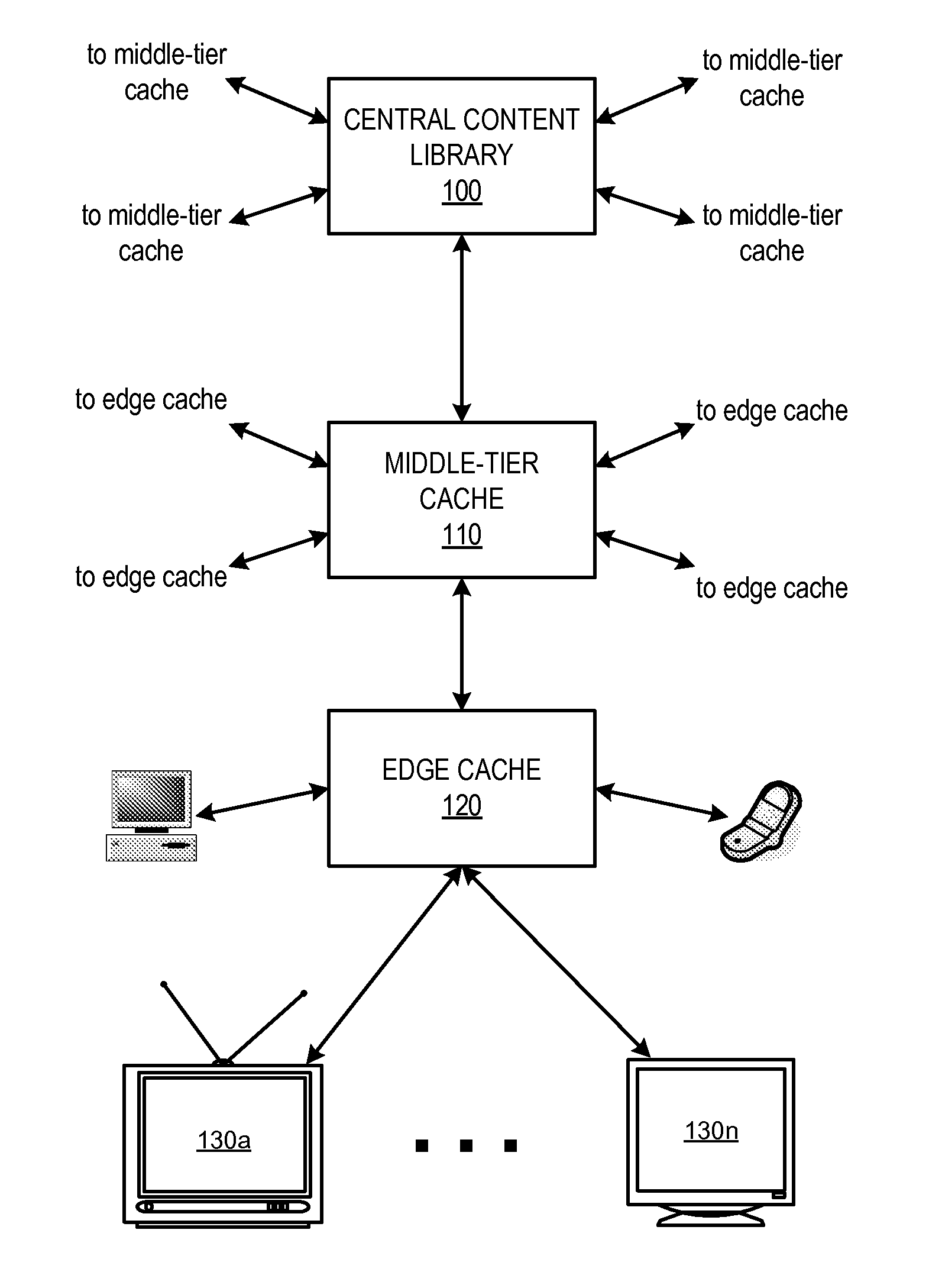

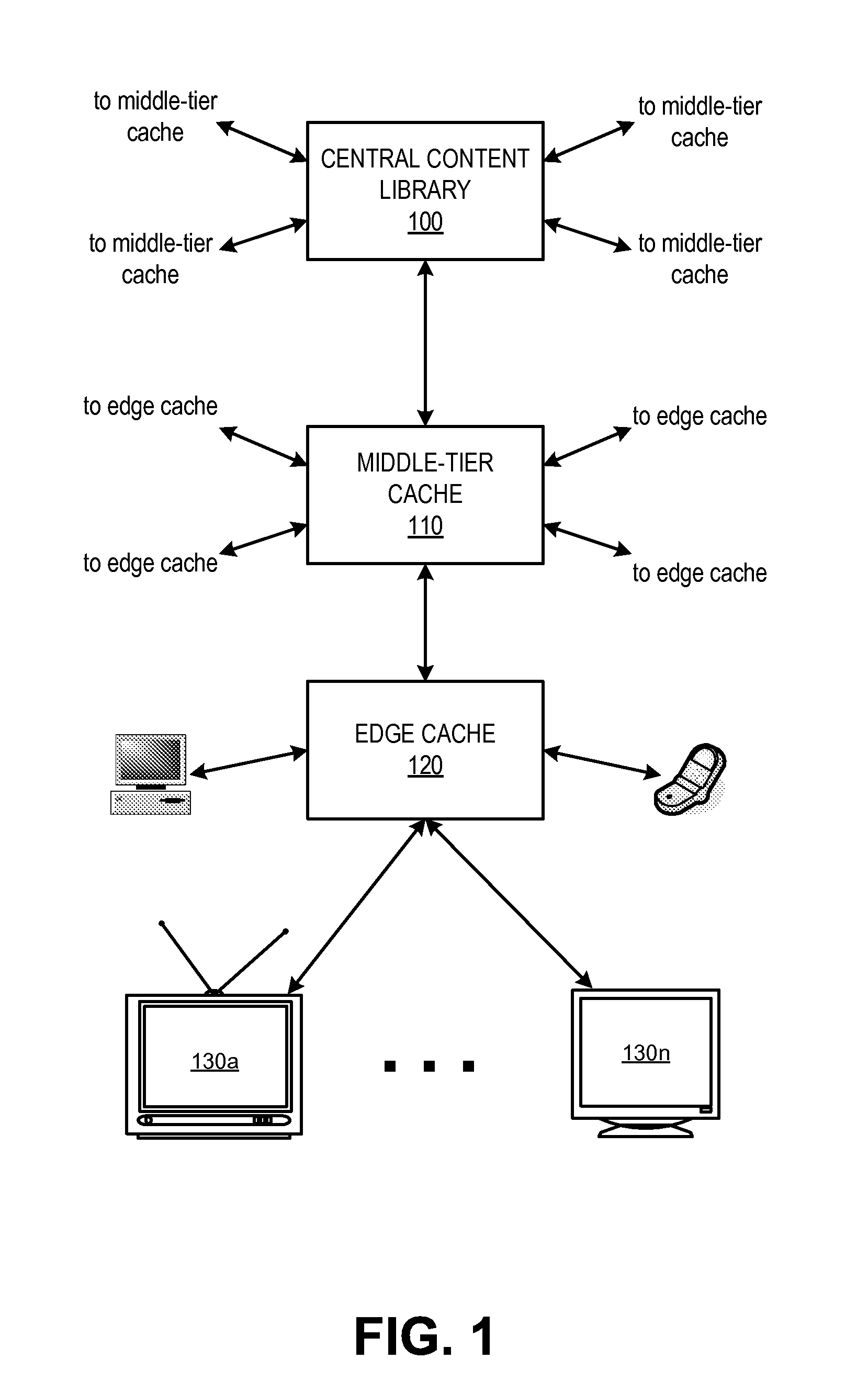

Cache Management In A Video Content Distribution Network

Active | US20120159558A1 | Memory architecture accessing/allocation | Two-way working systems | Content distribution | Page replacement algorithm

Cache management techniques are described for a content distribution network (CDN), for example, a video on demand (VOD) system supporting user requests and delivery of video content. A preferred cache size may be calculated for one or more cache devices in the CDN, for example, based on a maximum cache memory size, a bandwidth availability associated with the CDN, and a title dispersion calculation determined by the user requests within the CDN. After establishing the cache with a set of assets (e.g., video content), an asset replacement algorithm may be executed at one or more cache devices in the CDN. When a determination is made that a new asset should be added to a full cache, a multi-factor comparative analysis may be performed on the assets currently residing in the cache, comparing the popularity and size of assets and combinations of assets, along with other factors to determine which assets should be replaced in the cache device.

Owner:COMCAST CABLE COMM LLC

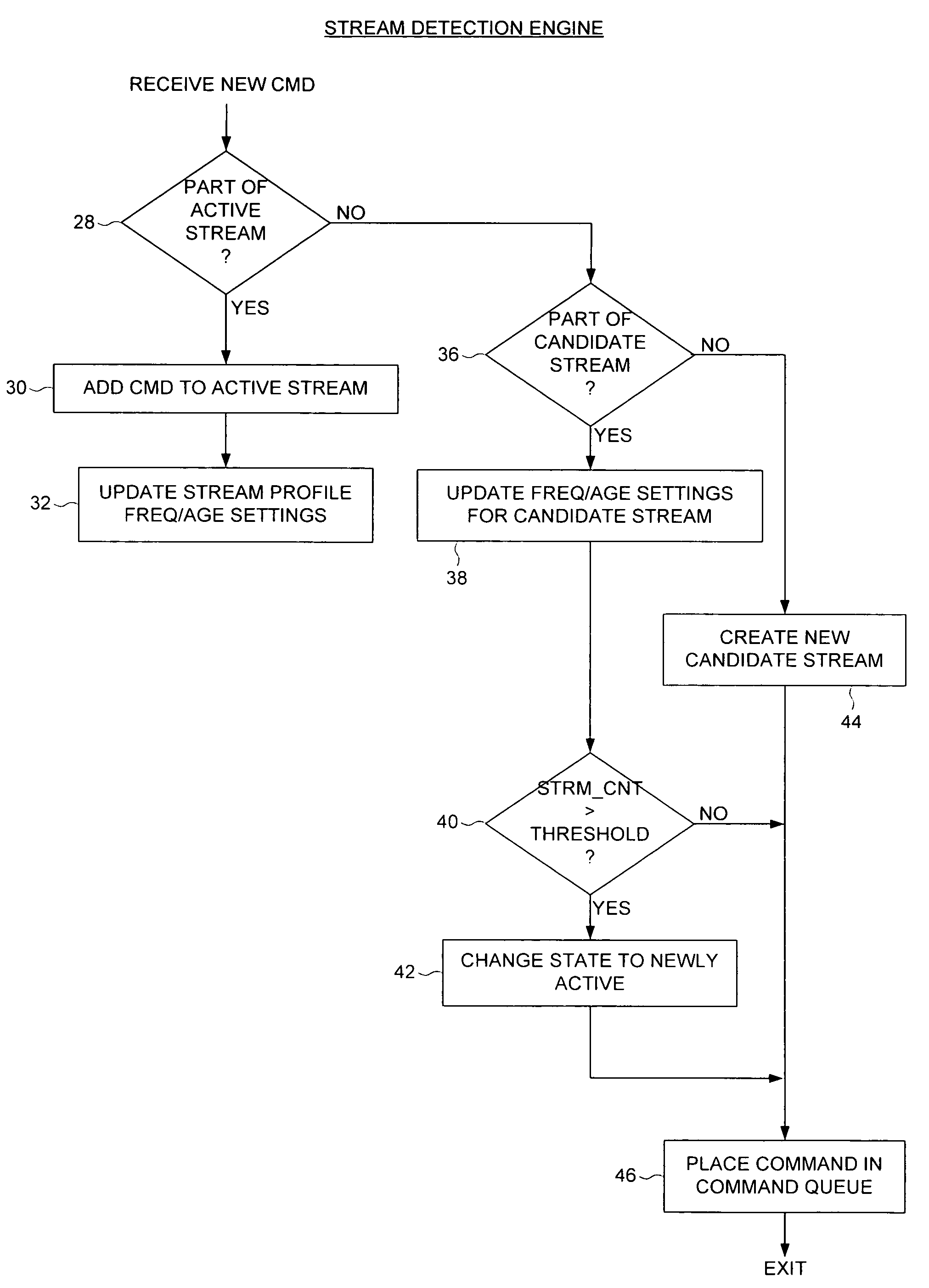

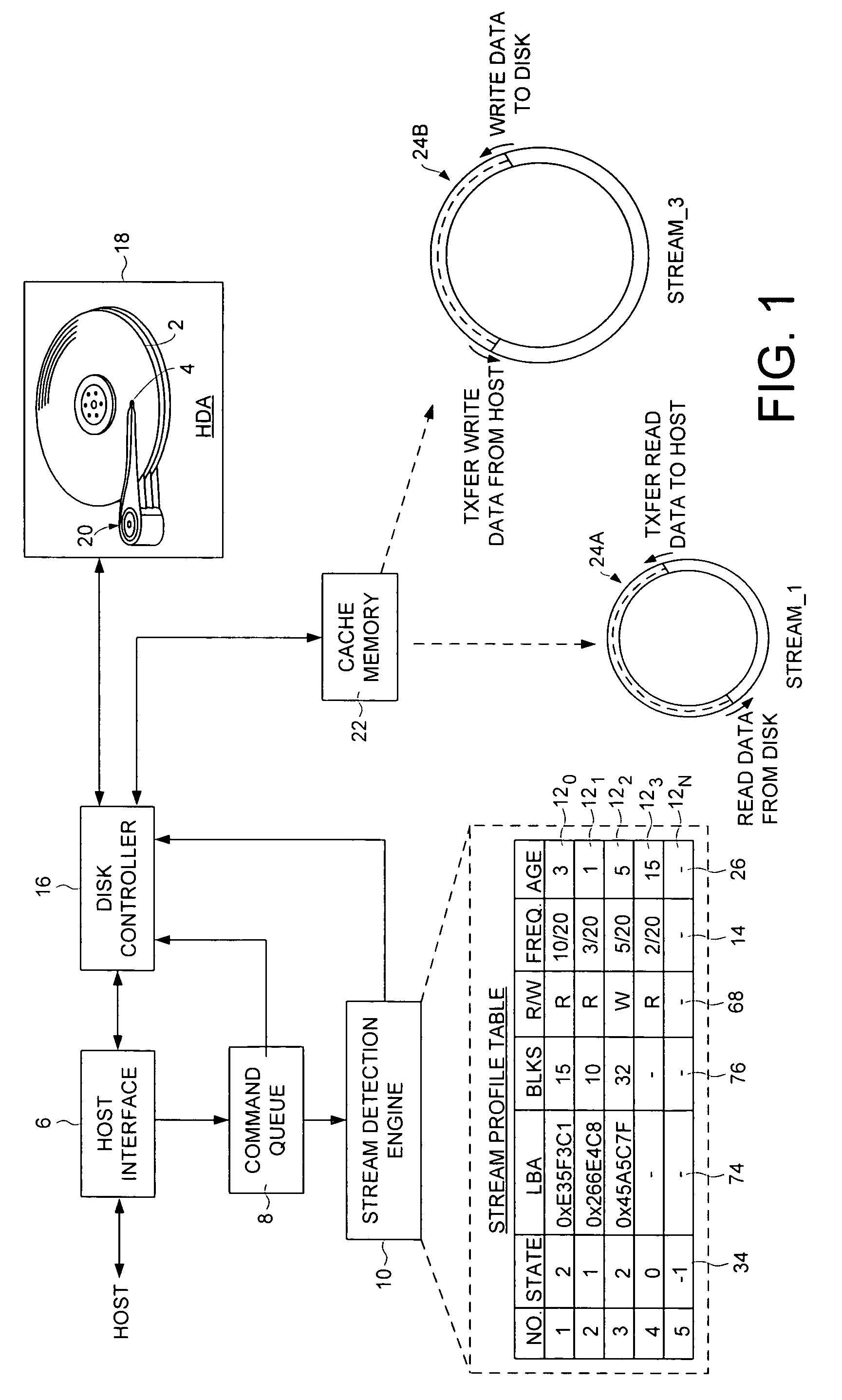

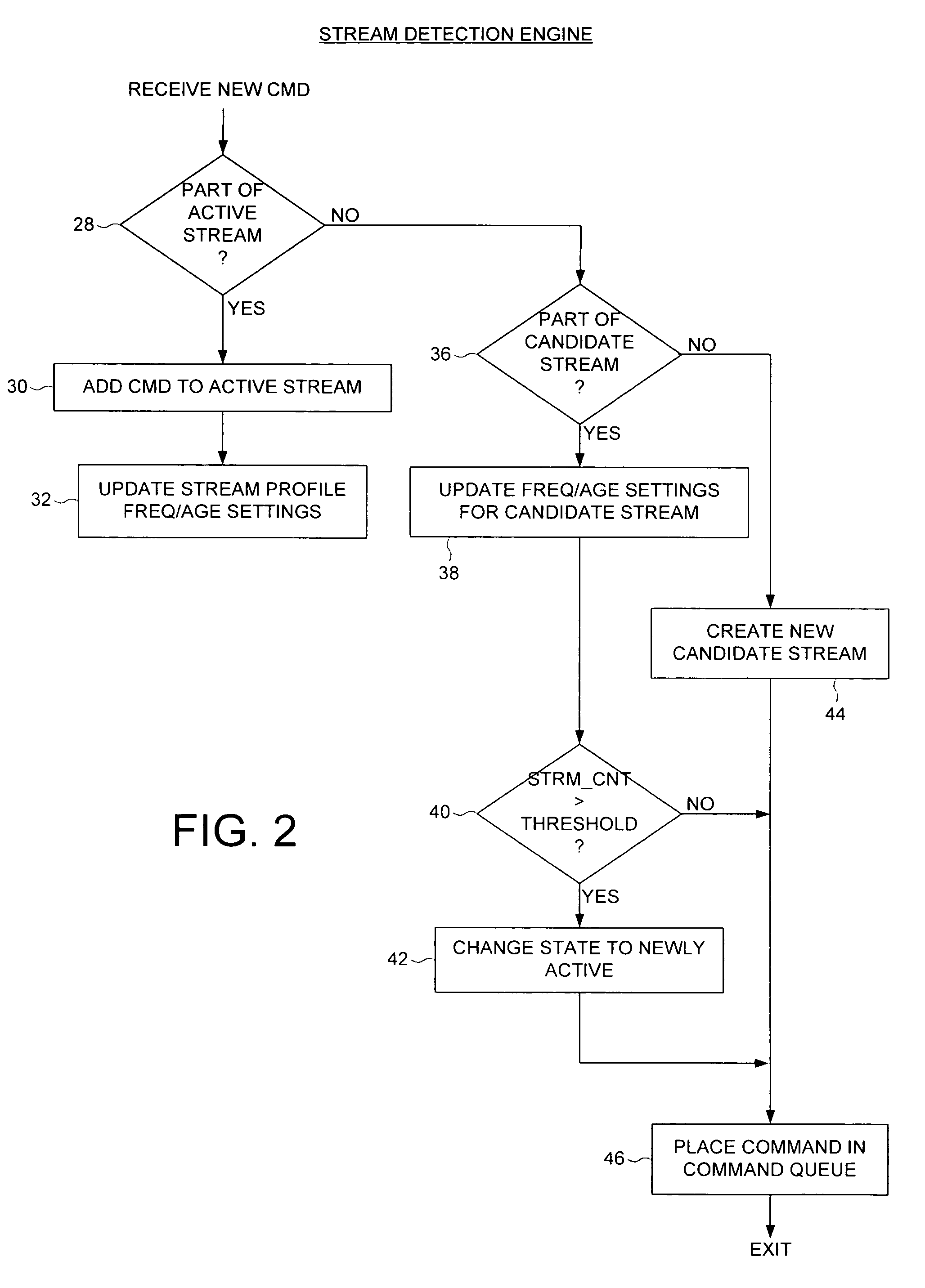

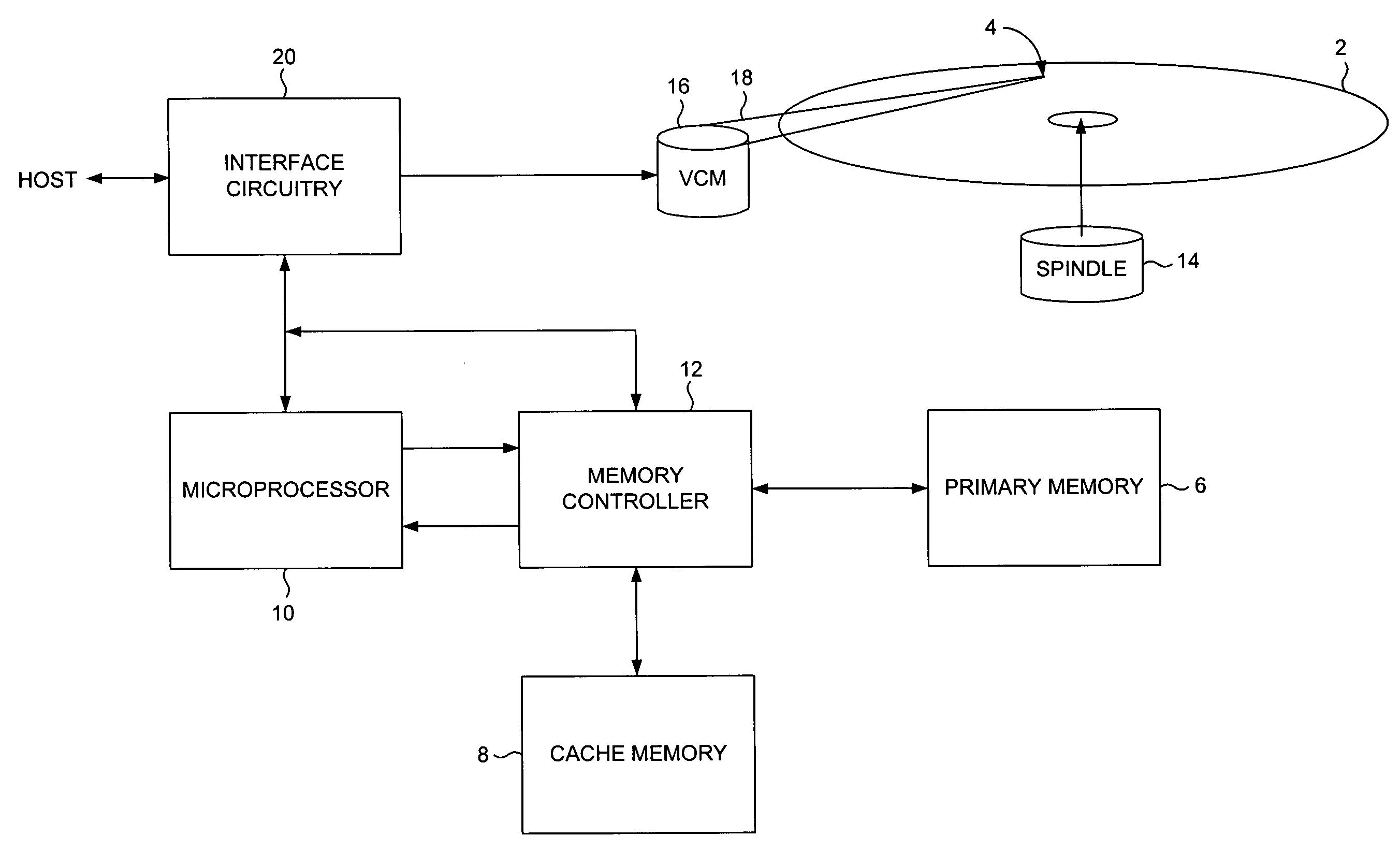

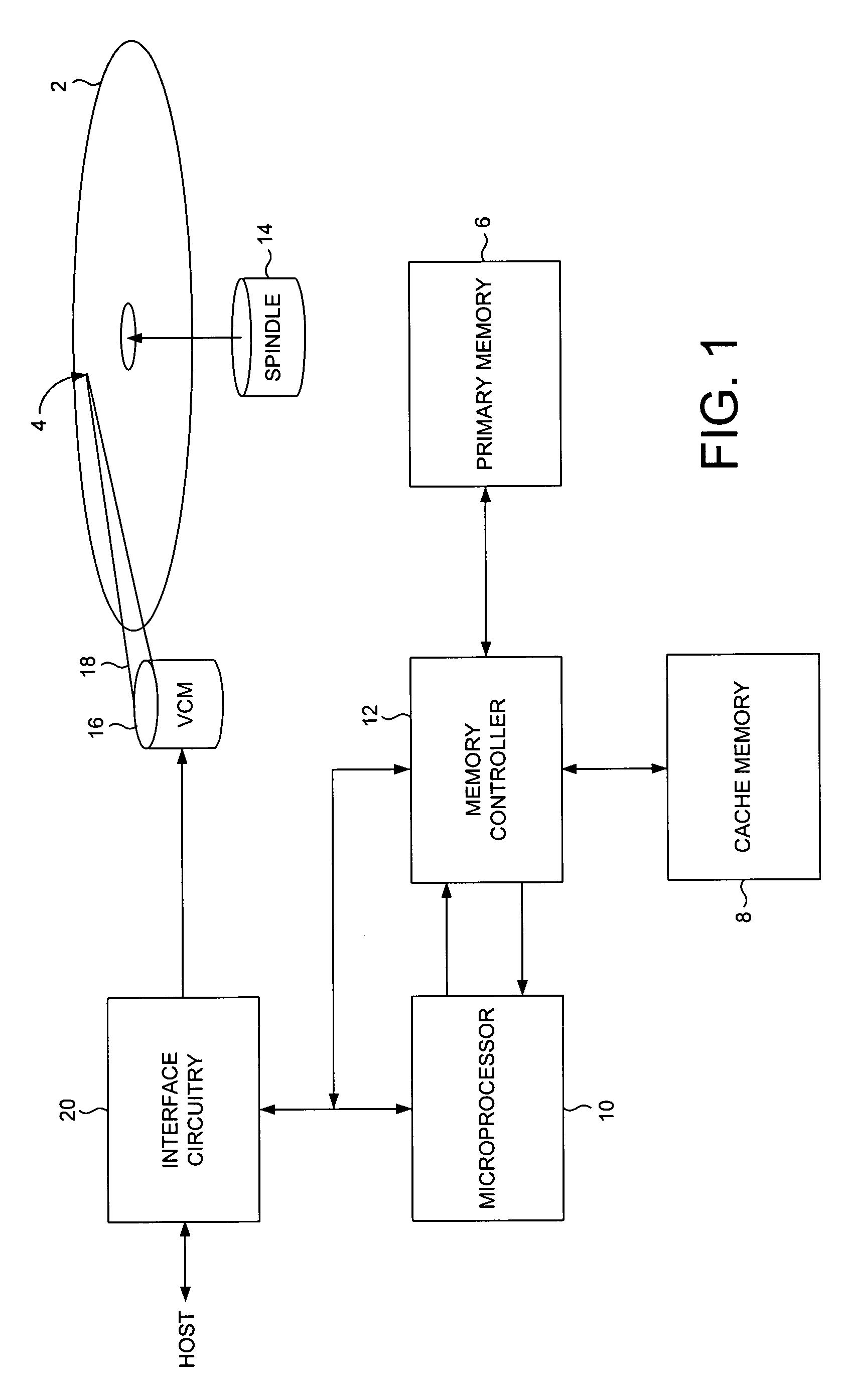

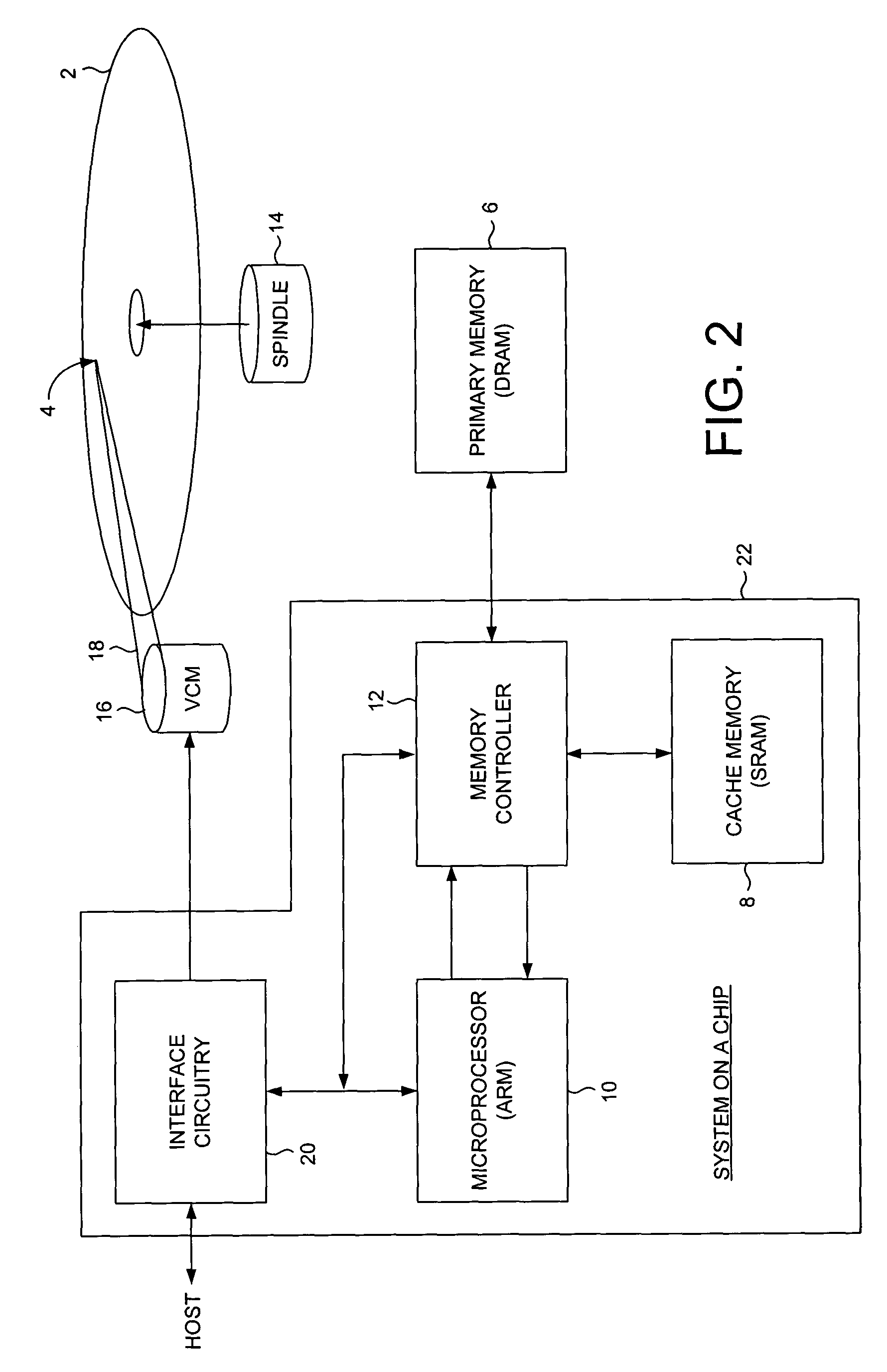

Disk drive employing stream detection engine to enhance cache management policy

A disk drive is disclosed comprising a disk, a head actuated over the disk, a host interface for receiving disk access commands from a host, a command queue for queuing the disk access commands, and a stream detection engine for evaluating the disk access commands to detect a plurality of streams accessed by the host. The stream detection engine maintains a stream data structure for each detected stream, wherein the stream data structure comprises a frequency counter for tracking a number of disk access commands associated with the stream out of a predetermined number of consecutive disk access commands received from the host. A disk controller selects one of the streams for servicing in response to the frequency counters.
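A rough model of the per-stream frequency counter is sketched below. The abstract does not say how commands are assigned to streams, so the sequential-address heuristic (`max_gap`), the window size, and the class name `StreamDetector` are assumptions for illustration only.

```python
from collections import Counter, deque


class StreamDetector:
    """Tracks how many of the last `window` commands belong to each stream."""

    def __init__(self, window=8, max_gap=16):
        self.recent = deque(maxlen=window)  # stream ids of the last N commands
        self.max_gap = max_gap              # assumed tolerance for "sequential"
        self.expected = {}                  # stream id -> next expected LBA
        self.freq = Counter()               # stream id -> frequency counter

    def observe(self, lba, length):
        """Assign a command to a stream and update the frequency counters."""
        stream_id = None
        for sid, next_lba in self.expected.items():
            if 0 <= lba - next_lba <= self.max_gap:
                stream_id = sid
                break
        if stream_id is None:
            stream_id = len(self.expected)  # start a new stream
        self.expected[stream_id] = lba + length

        # Keep the counters limited to the last N consecutive commands.
        if len(self.recent) == self.recent.maxlen:
            self.freq[self.recent[0]] -= 1
        self.recent.append(stream_id)
        self.freq[stream_id] += 1
        return stream_id

    def select_stream(self):
        """Return the stream with the highest frequency counter, if any."""
        if not self.recent:
            return None
        return max(self.freq, key=self.freq.get)
```

A controller could call `select_stream()` when choosing which queued commands to service next, favouring the stream the host is currently reading most heavily.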

Owner:WESTERN DIGITAL TECH INC

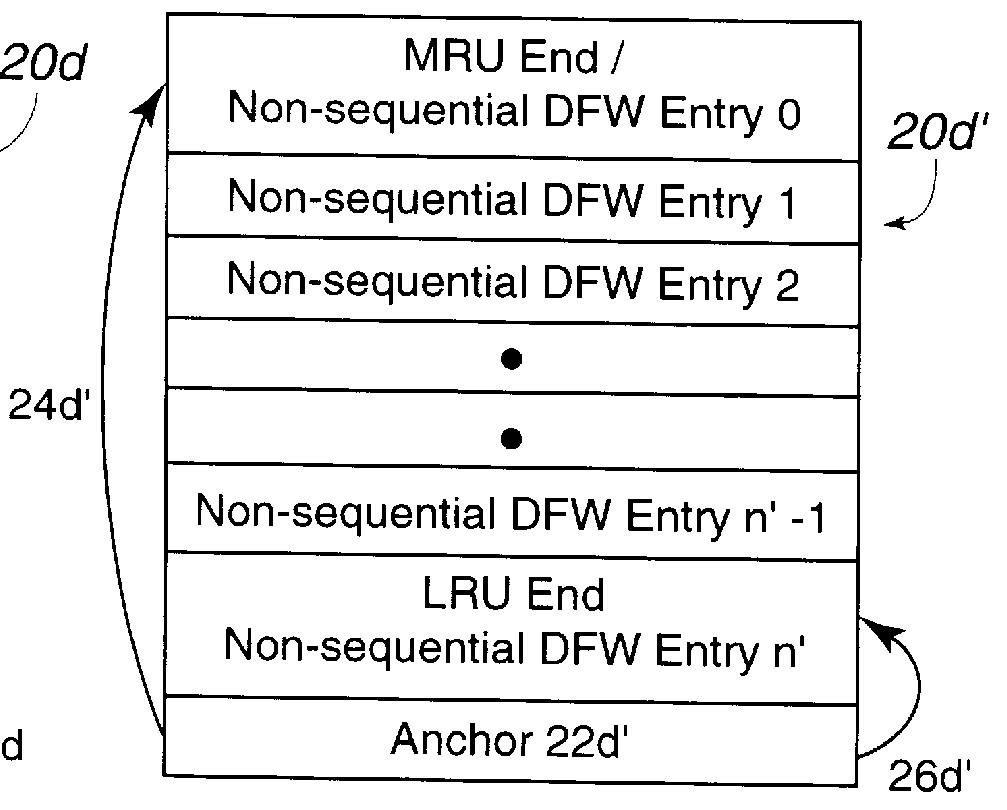

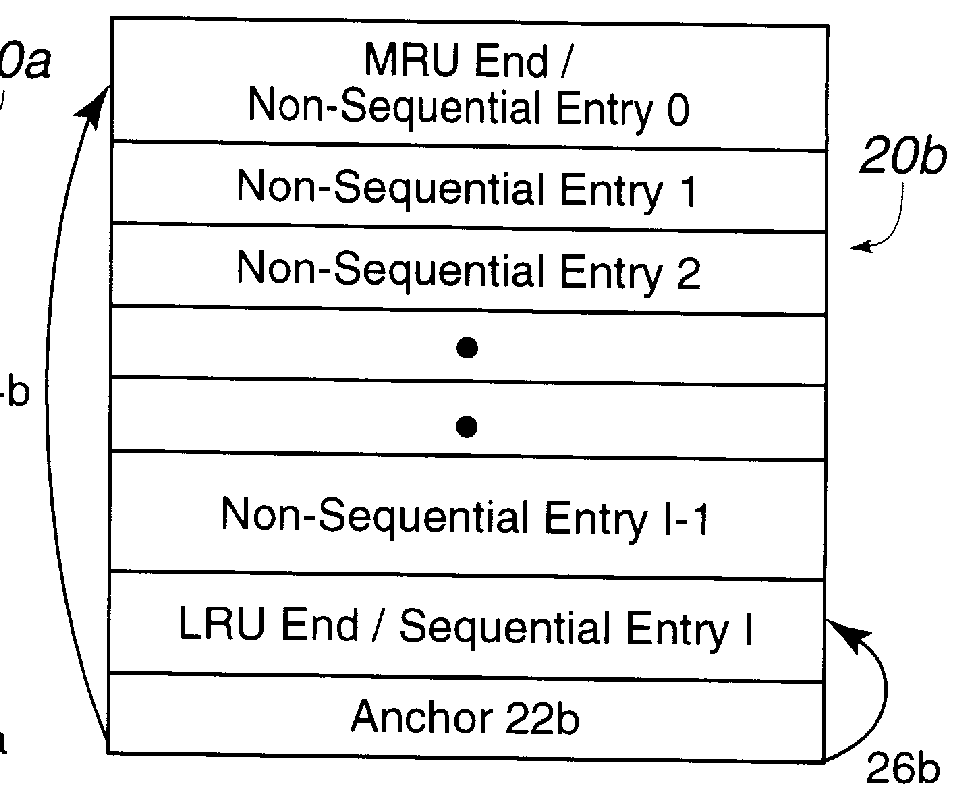

Method and system for managing data in cache using multiple data structures

Inactive | US6141731A | Efficient management | Choose accurately | Memory addressing/allocation/relocation | Least recently frequently used | Cache management

Disclosed is a cache management scheme using multiple data structures. First and second data structures, such as linked lists, indicate data entries in a cache. Each data structure has a most recently used (MRU) entry, a least recently used (LRU) entry, and a time value associated with each data entry indicating a time the data entry was indicated as added to the MRU entry of the data structure. A processing unit receives a new data entry. In response, the processing unit processes the first and second data structures to determine an LRU data entry in each data structure and selects from the determined LRU data entries the LRU data entry that is the least recently used. The processing unit then demotes the selected LRU data entry from the cache and from the data structure including the selected data entry. The processing unit adds the new data entry to the cache and indicates the new data entry as located at the MRU entry of one of the first and second data structures.
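The demotion step can be sketched with two ordered maps standing in for the linked lists. The class name `DualListCache`, the logical counter used in place of a wall-clock time value, and the caller-supplied choice of target list are assumptions for this example.

```python
import itertools
from collections import OrderedDict


class DualListCache:
    """Two LRU lists sharing one cache; the globally oldest LRU entry is demoted."""

    def __init__(self, capacity):
        self.capacity = capacity
        # Each list maps key -> (time value, data); first item is the LRU end.
        self.lists = (OrderedDict(), OrderedDict())
        self.clock = itertools.count()  # logical time instead of wall time

    def __len__(self):
        return sum(len(lst) for lst in self.lists)

    def _demote(self):
        # Look at the LRU entry of each non-empty list and pick the older one.
        candidates = []
        for lst in self.lists:
            if lst:
                key, (stamp, _data) = next(iter(lst.items()))
                candidates.append((stamp, key, lst))
        _stamp, key, lst = min(candidates, key=lambda c: c[0])
        del lst[key]
        return key

    def add(self, key, data, which):
        """Add an entry at the MRU end of list `which` (0 or 1).

        Returns the key demoted to make room, or None.
        """
        for lst in self.lists:
            lst.pop(key, None)  # re-adding moves the entry to the MRU end
        demoted = None
        if len(self) >= self.capacity:
            demoted = self._demote()
        self.lists[which][key] = (next(self.clock), data)
        return demoted
```

Because only the two LRU ends are compared, choosing the victim costs the same regardless of how many entries each list holds.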

Owner:IBM CORP

Managed peer-to-peer applications, systems and methods for distributed data access and storage

Inactive | US7587467B2 | Efficiently accessing and controlling | Minimizes bandwidth requirement | Digital data processing details | Multiple digital computer combinations | Data access | Application software

Applications, systems and methods for efficiently accessing and controlling data of devices among multiple computers over a network. Strategic cache management processes are provided to manage the data in cache memory of the storage devices involved. Communication of data over the network may be managed by means of one or more connection servers which may also manage any or all of authentication, authorization, security, encryption and point-to-multipoint communications functionalities. Alternatively, computers may be connected over a wide area network without a connection server, and with or without a VPN. Data transmissions may be managed to minimize bandwidth and may be temporally and/or spatially compressed.

Owner:WESTERN DIGITAL TECH INC

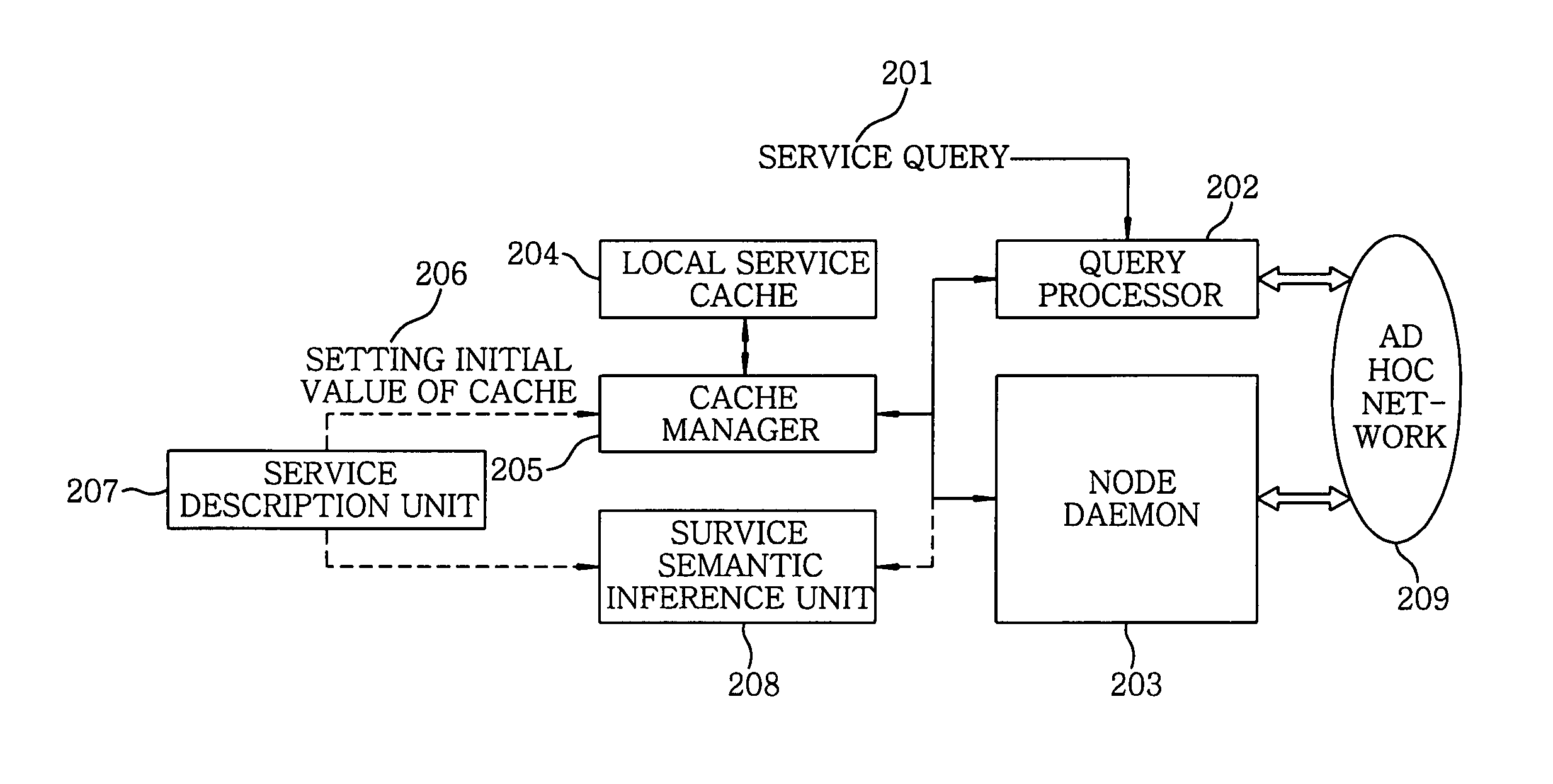

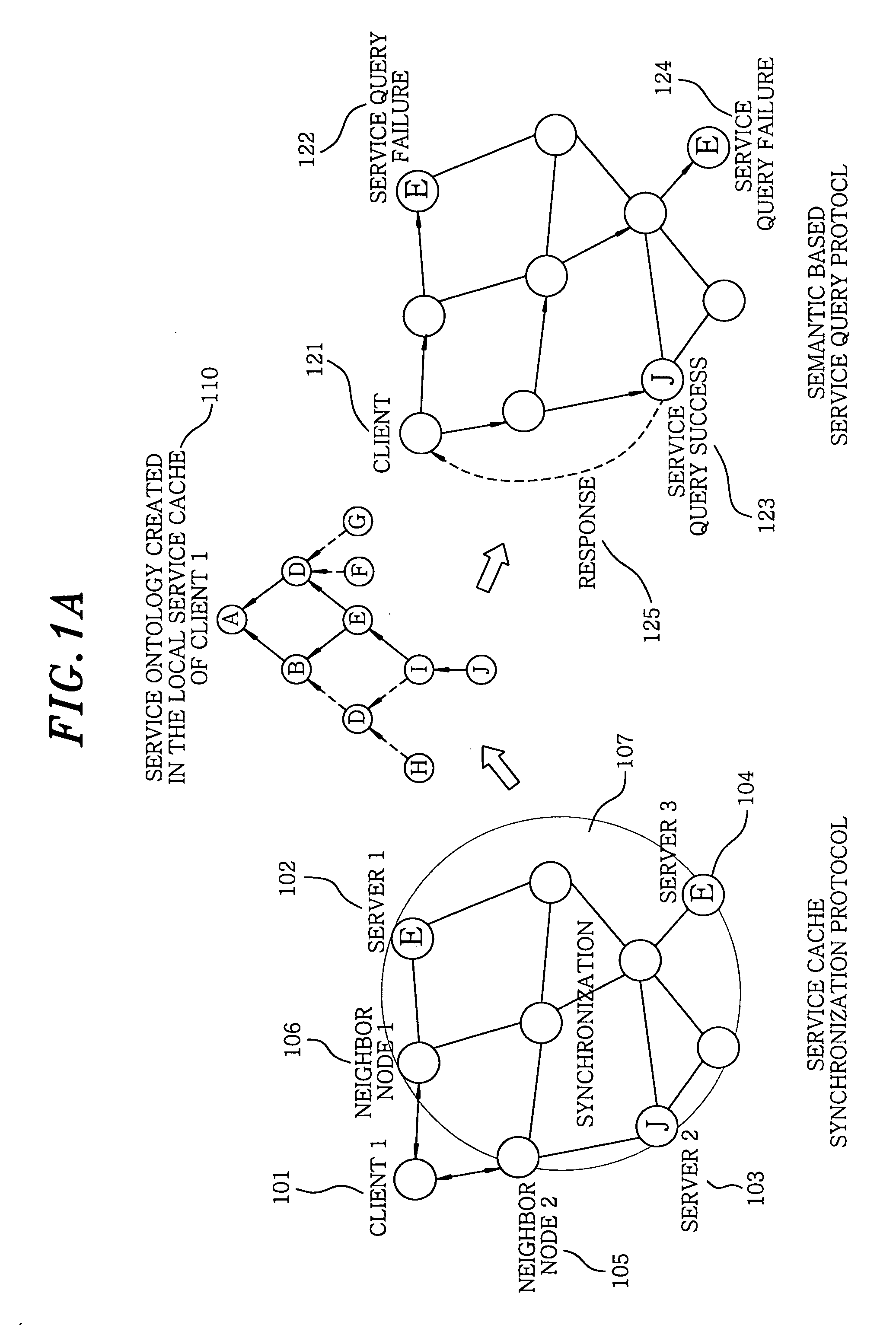

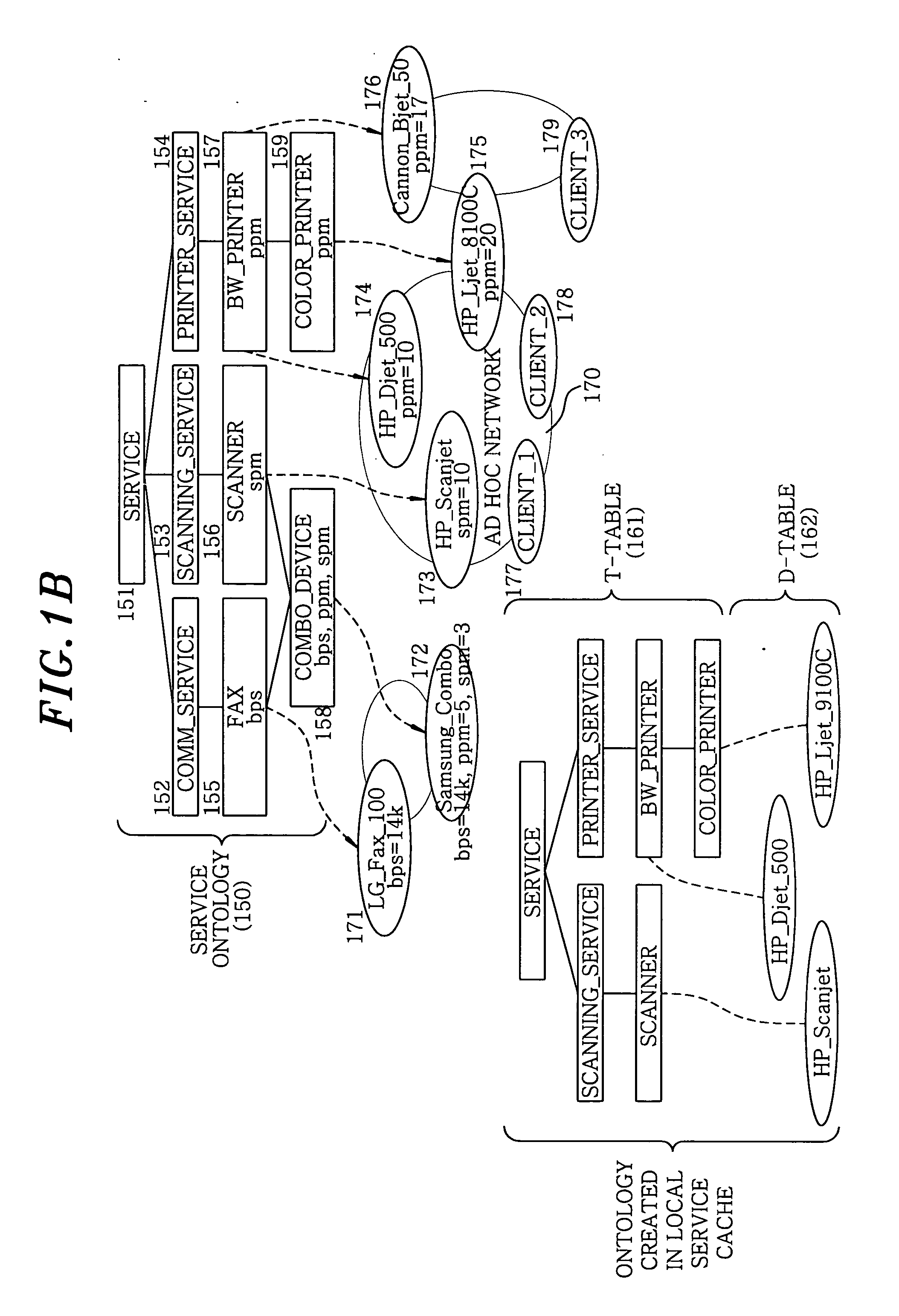

Ontology-based service discovery system and method for ad hoc networks

An ontology-based ad hoc service discovery system includes a local service cache, a cache manager, a service description unit, a query processor, a service semantic inference unit and a node daemon. The local service cache restores a service ontology by collecting class information of all services advertised on an ad hoc network and stores the service ontology. The cache manager manages the local service cache and performs various preset operations on the cache. The service description unit stores a description of a corresponding service for use in initializing the local service cache. The query processor starts performing a semantic based service query protocol by receiving a service query from a user or an application program. The service semantic inference unit inspects whether the service query transmitted from a client is coincident with the content of the service. The node daemon performs a service cache synchronization protocol with neighboring nodes.

Owner:ELECTRONICS & TELECOMM RES INST

Disk drive employing enhanced instruction cache management to facilitate non-sequential immediate operands

Inactive | US7055000B1 | Instruction analysis | Memory addressing/allocation/relocation | Operand | Cache management

A disk drive is disclosed for executing a program comprising a plurality of instructions. The disk drive comprises a primary memory for storing the instructions, and a cache memory for caching the instructions. The cache management is enhanced by not re-filling the cache due to accessing a non-sequential immediate operand.

Owner:WESTERN DIGITAL TECH INC

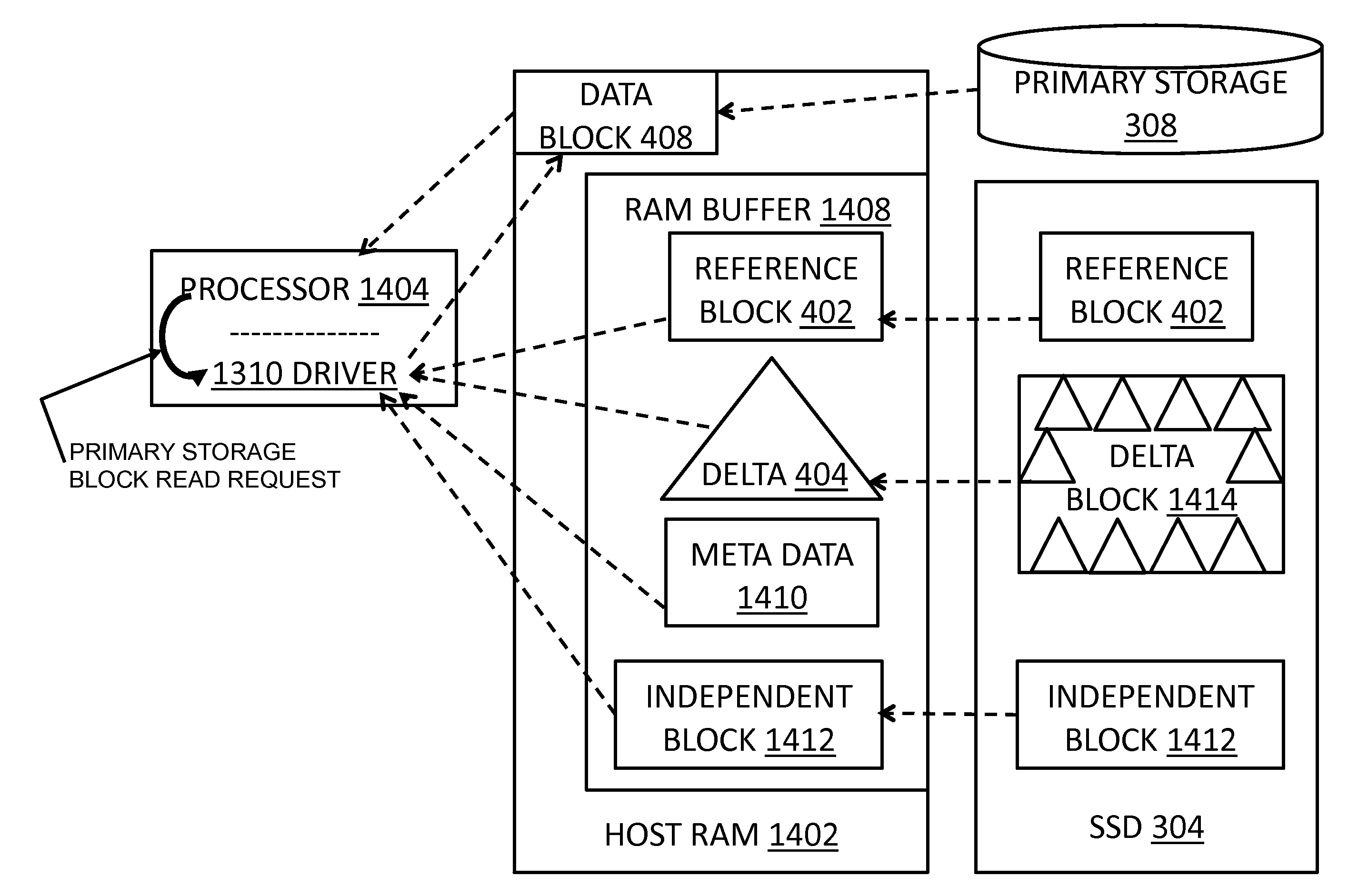

Content locality-based caching in a data storage system

Inactive | US20120137059A1 | Quick upgrade | Low cost | Memory architecture accessing/allocation | Energy efficient ICT | Data access | Least frequently used

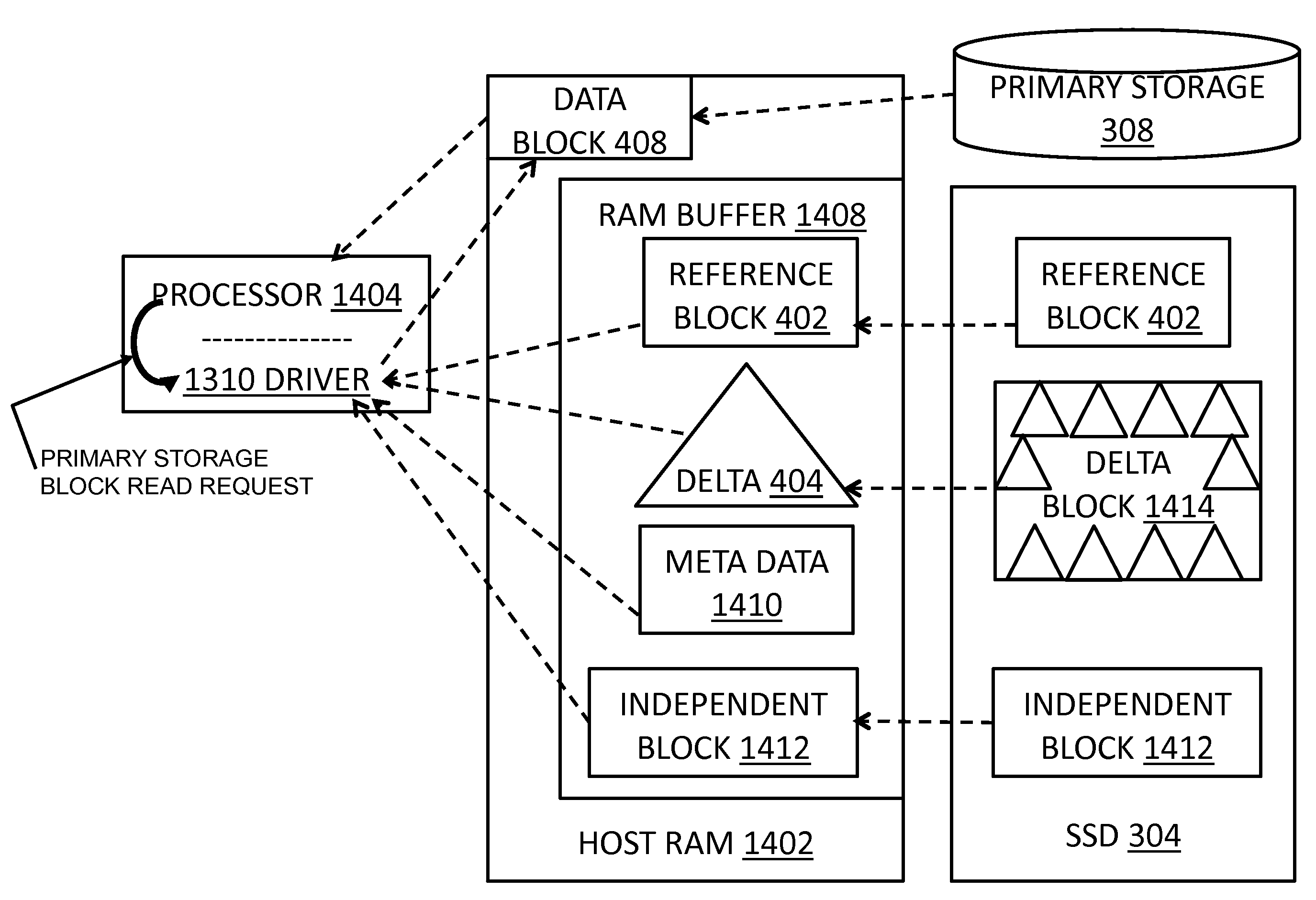

A data storage caching architecture supports using native local memory such as host-based RAM and, if available, Solid State Disk (SSD) memory for storing pre-cache delta-compression based delta, reference, and independent data by exploiting content locality, temporal locality, and spatial locality of data accesses to primary (e.g. disk-based) storage. The architecture makes excellent use of the physical properties of the different types of memory available (fast r/w RAM, low cost fast read SSD, etc.) by applying algorithms to determine what types of data to store in each type of memory. Algorithms include similarity detection, delta compression, least popularly used cache management, conservative insertion and promotion cache replacement, and the like.

Owner:VELOBIT

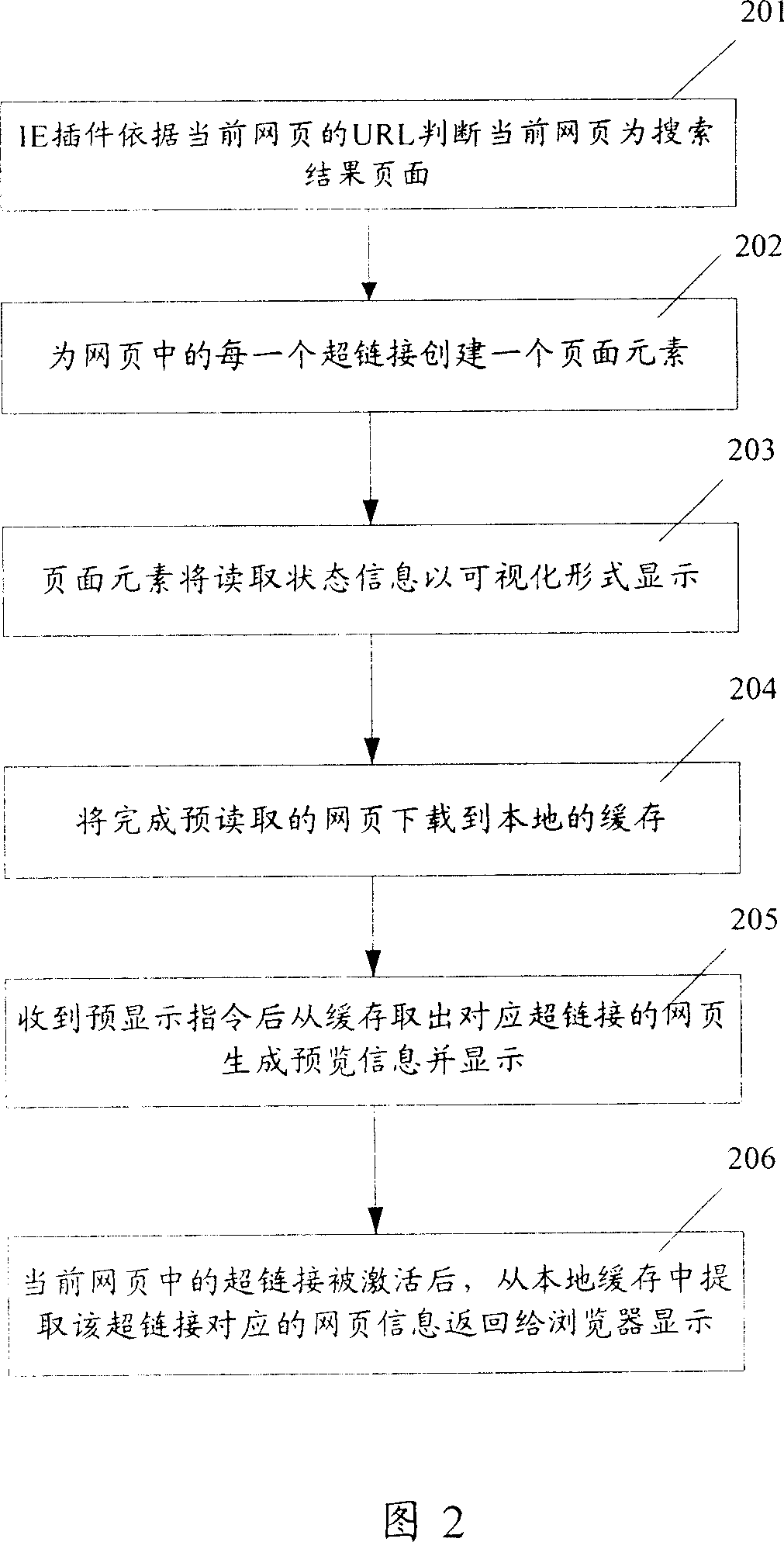

Apparatus and method for accelerating browser webpage display

Inactive | CN101075236A | Improve display speed | Reduce the time waiting for the page to display | Special data processing applications | Hyperlink | Cache management

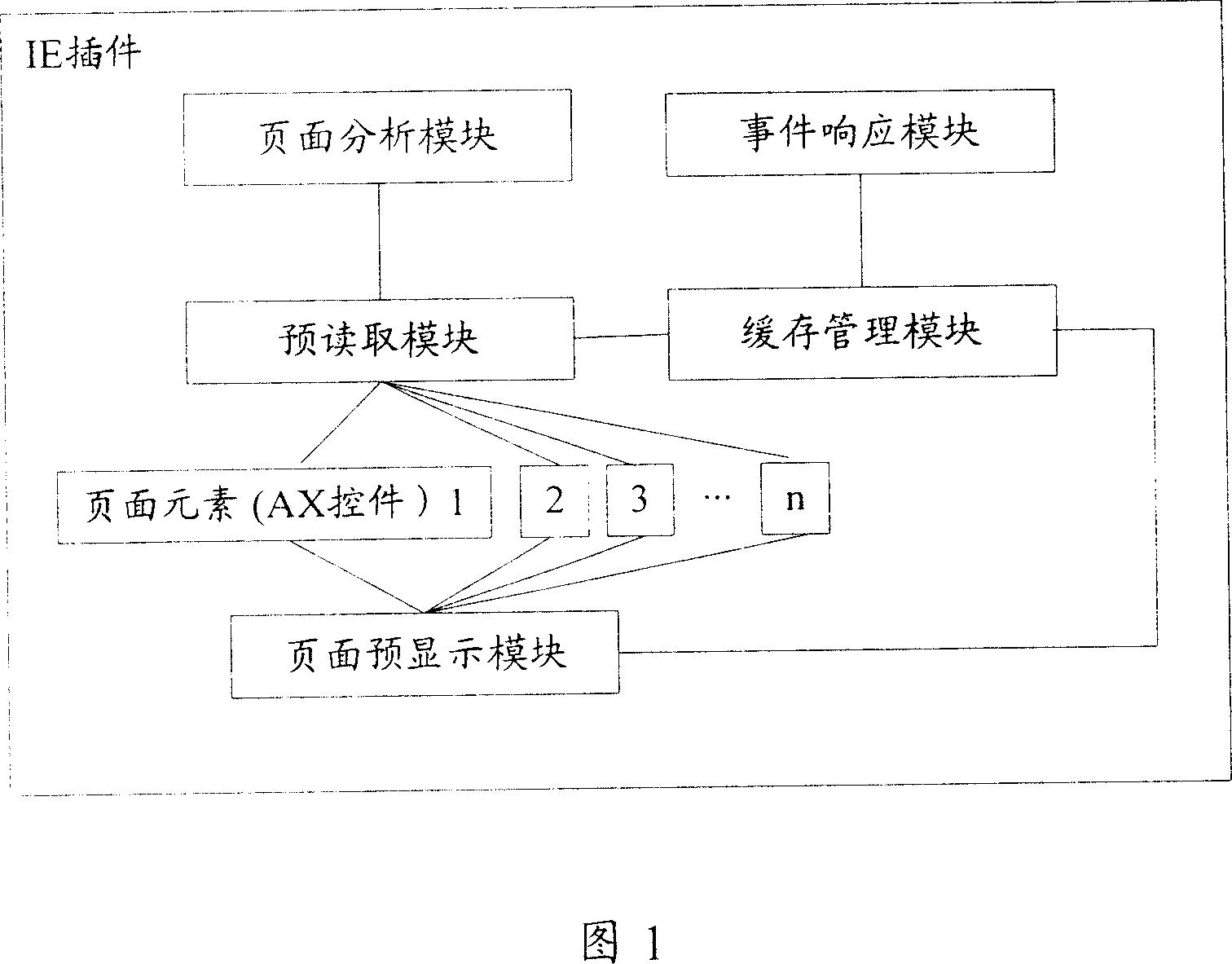

A method for speeding up the display of web pages in a browser includes pre-fetching the web page information corresponding to hyperlinks in the current web page, downloading the fetched web pages to a local cache, and, when a hyperlink in the current web page is activated, retrieving the corresponding web page information from the local cache and displaying it. A device for implementing the method is also disclosed.

Owner:TENCENT TECH (SHENZHEN) CO LTD

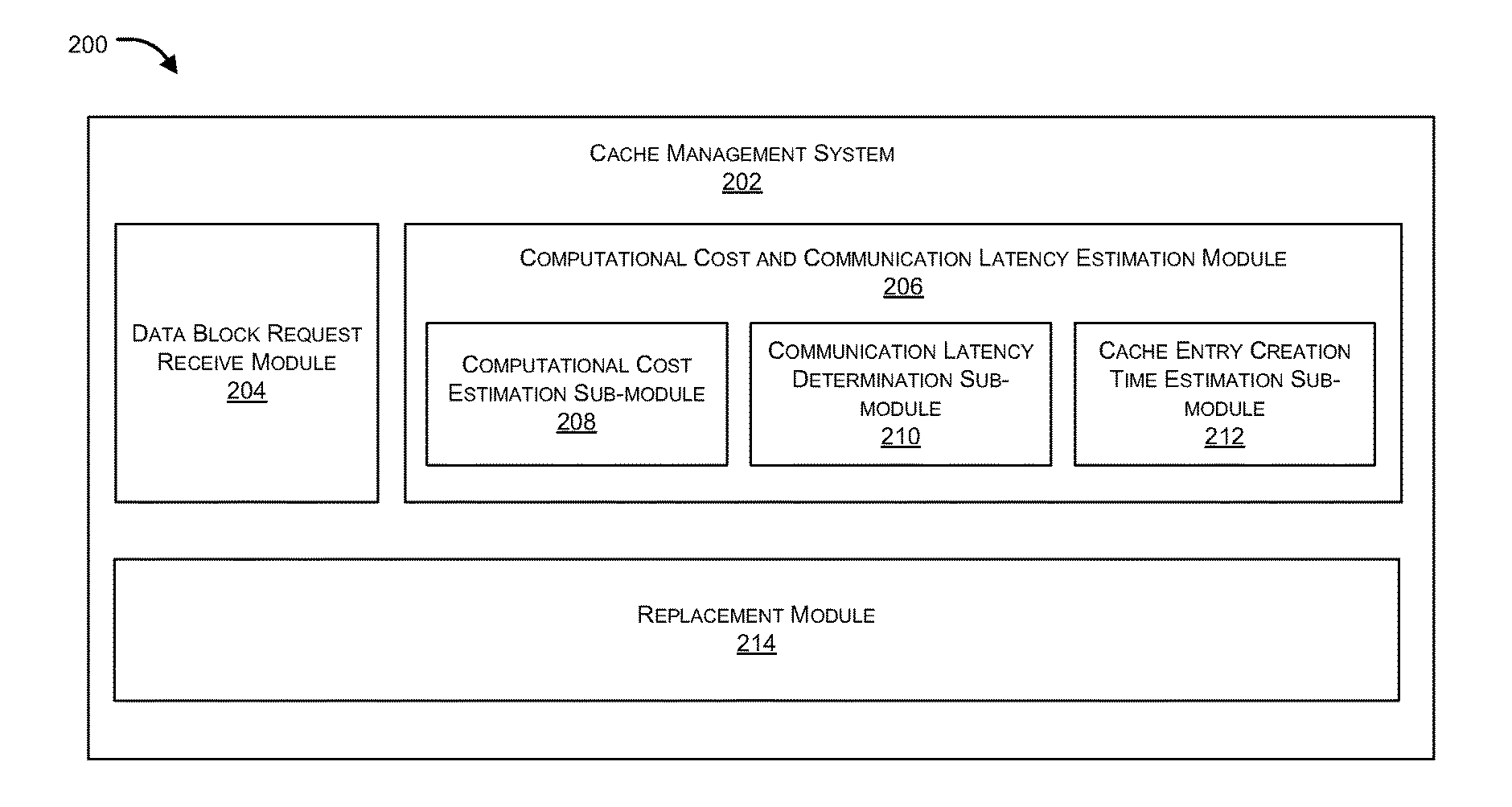

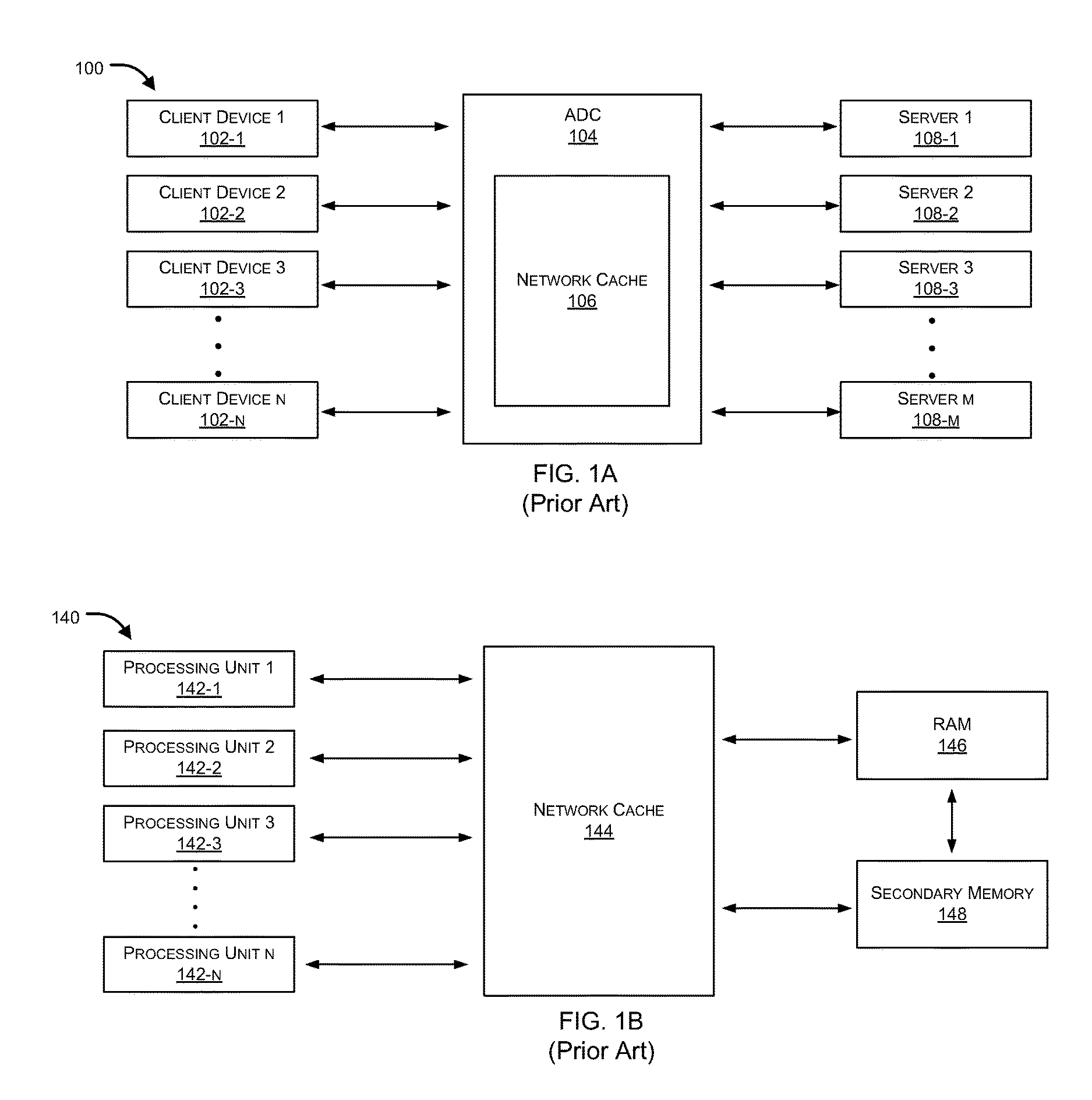

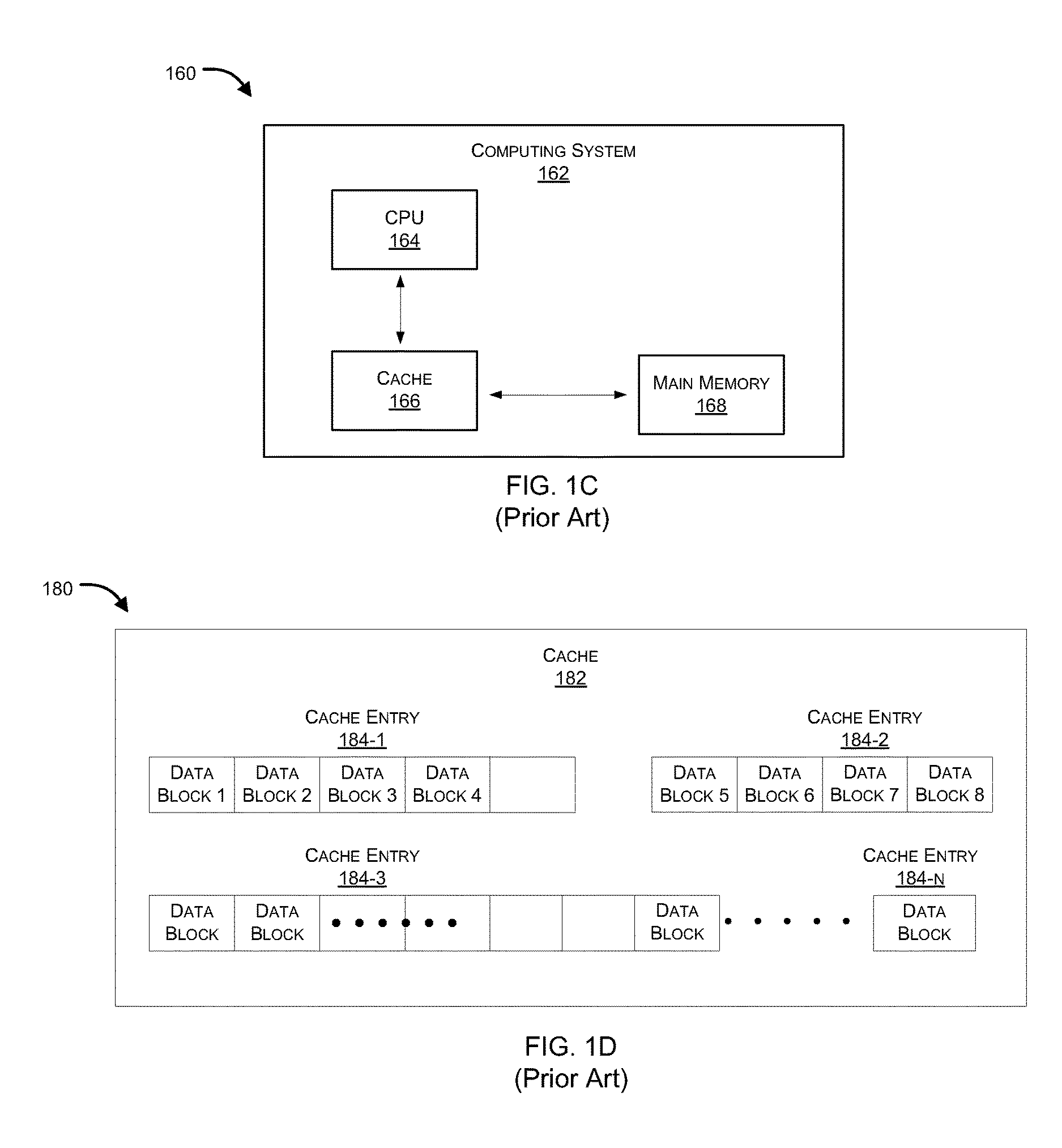

Cache management based on factors relating to replacement cost of data

Active | US20170041428A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Systems management | Data system

Systems and methods for a cache replacement policy that takes into consideration factors relating to the replacement cost of currently cached data and/or the replacement cost of requested data. According to one embodiment, a request for data is received by a network device. A cache management system running on the network device estimates, for each of multiple cache entries of a cache managed by the cache management system, a computational cost of reproducing data cached within each of the cache entries by respective origin storage devices from which the respective cached data originated. The cache management system estimates a communication latency between the cache and the respective origin storage devices. The cache management system enables the cache to replace data cached within a selected cache entry with the requested data based on the estimated computational costs and the estimated communication latencies.
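A cost-aware victim selection along these lines might look like the sketch below. The abstract says only that replacement is based on the estimated computational costs and communication latencies; combining them by simple addition, and the names `Entry`, `choose_victim`, and `admit`, are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    key: str
    data: bytes
    compute_cost_ms: float    # estimated cost for the origin to reproduce the data
    origin_latency_ms: float  # estimated latency between cache and origin

    @property
    def replacement_cost_ms(self):
        # Assumed combination: total time to get the data back if evicted.
        return self.compute_cost_ms + self.origin_latency_ms


def choose_victim(entries):
    """Pick the entry that is cheapest to fetch again."""
    return min(entries, key=lambda e: e.replacement_cost_ms)


def admit(cache, capacity, new_entry):
    """Insert new_entry into cache (a dict keyed by entry key).

    Returns the key of the evicted entry, or None if nothing was evicted.
    """
    evicted = None
    if new_entry.key not in cache and len(cache) >= capacity:
        victim = choose_victim(cache.values())
        del cache[victim.key]
        evicted = victim.key
    cache[new_entry.key] = new_entry
    return evicted
```

Unlike plain LRU, this keeps entries that are expensive to regenerate or far from their origin even if they have not been touched recently.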

Owner:FORTINET

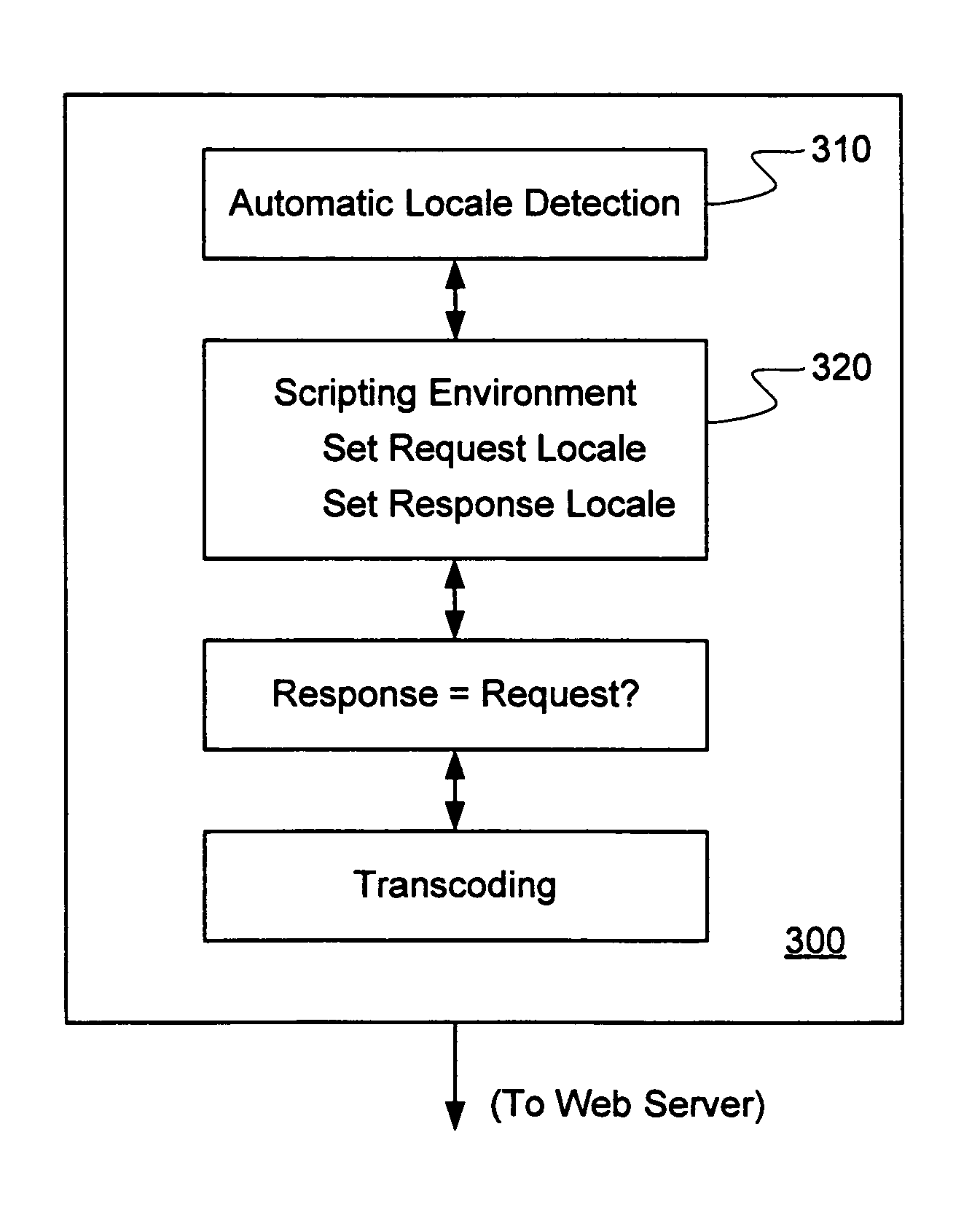

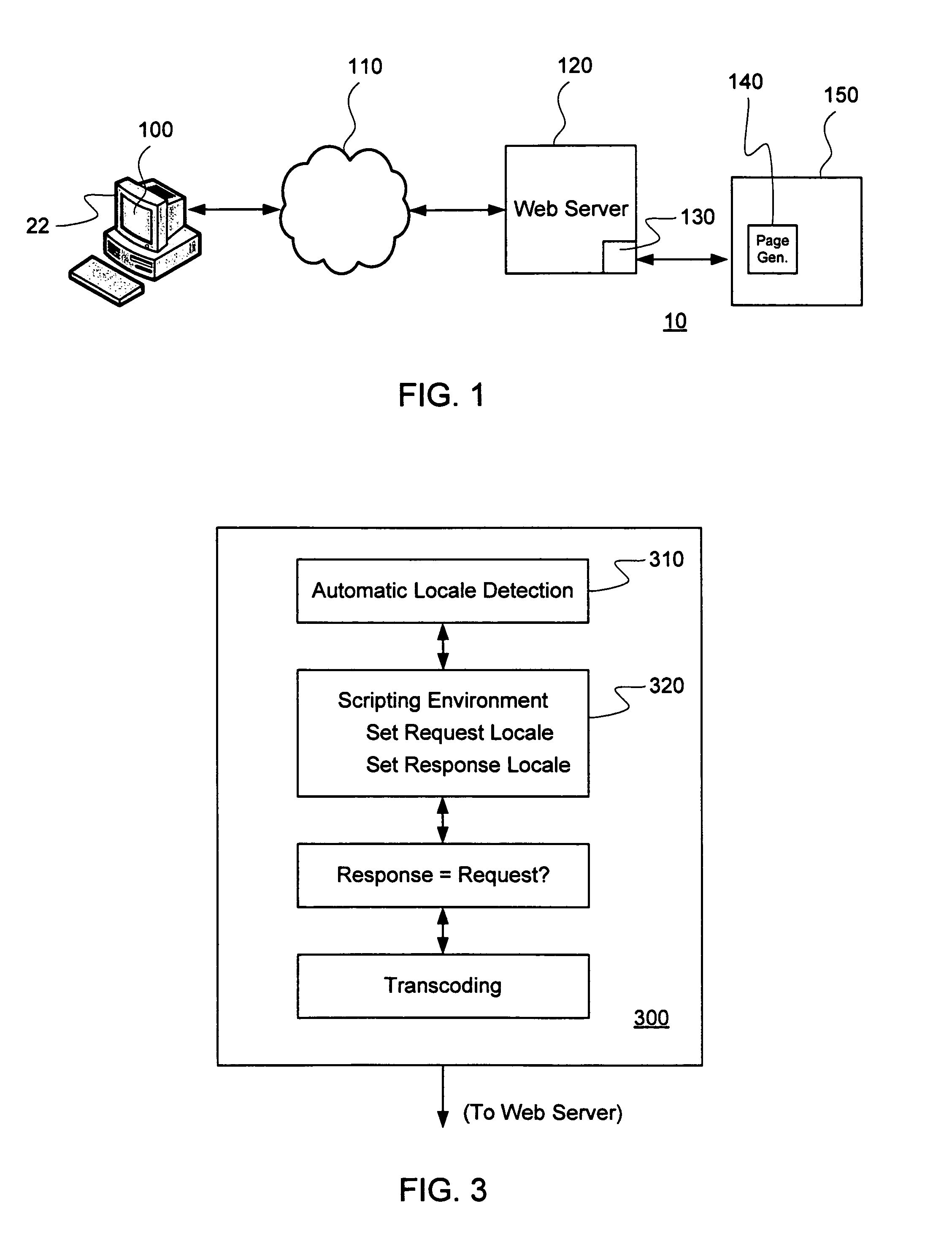

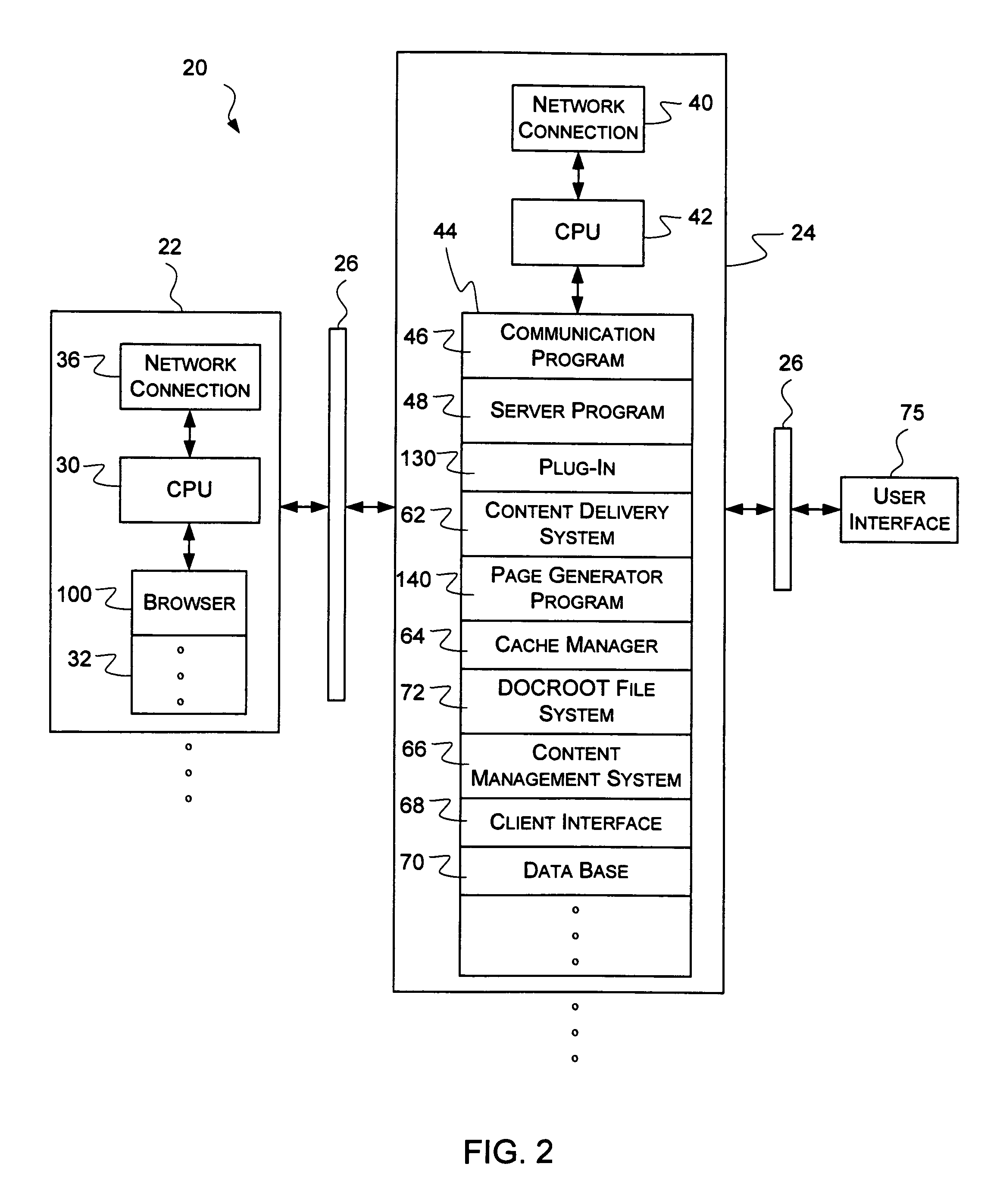

Method and system for cache management of locale-sensitive content

Active | US7194506B1 | Computationally efficient | Multiple digital computer combinations | Website content management | Client-side | Cache management

A method and system are disclosed for cache management and regeneration of dynamically-generated locale-sensitive content (DGLSC) in one or more server computers within a client-server computer network. One embodiment of the method of this invention can comprise receiving a request for content from a user at a client computer and determining the user's locale preference with, for example, an automatic locale detection algorithm. The requested content can be dynamically generated from a template as DGLSC based on the user locale preference. If the template is a cacheable template, a locale-sensitive filename can be generated for the DGLSC based on the user locale preference. The locale-sensitive filename can be associated with the DGLSC. The DGLSC can be cached in a locale-sensitive directory, such that it can be served (and thus avoid duplicative generation of the same content) in response to subsequent requests from users having the same locale preference. The DGLSC is then served to the requesting user at his or her client computer.
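The locale-sensitive filename and directory can be sketched as follows. The naming scheme (locale as a subdirectory plus a locale infix in the filename), the in-memory dictionary standing in for the file cache, and the function names are all hypothetical; the abstract does not specify a format.

```python
from pathlib import PurePosixPath


def locale_cache_path(cache_root, template_name, locale):
    """Build a locale-sensitive cache path such as
    /cache/fr_CA/index.fr_CA.html (assumed naming scheme)."""
    normalized = locale.replace("-", "_")
    stem, dot, ext = template_name.rpartition(".")
    if not dot:  # template name without an extension
        stem, ext = template_name, "html"
    filename = f"{stem}.{normalized}.{ext}"
    return PurePosixPath(cache_root) / normalized / filename


def serve(cache, cache_root, template_name, locale, render):
    """Return cached content for this locale, generating it only once.

    cache:  dict standing in for the on-disk cache
    render: callable (template_name, locale) -> generated content
    """
    path = locale_cache_path(cache_root, template_name, locale)
    if path not in cache:
        cache[path] = render(template_name, locale)
    return cache[path]
```

Two users with the same locale preference resolve to the same path, so the second request is served from the cache without regenerating the page.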

Owner:OPEN TEXT SA ULC

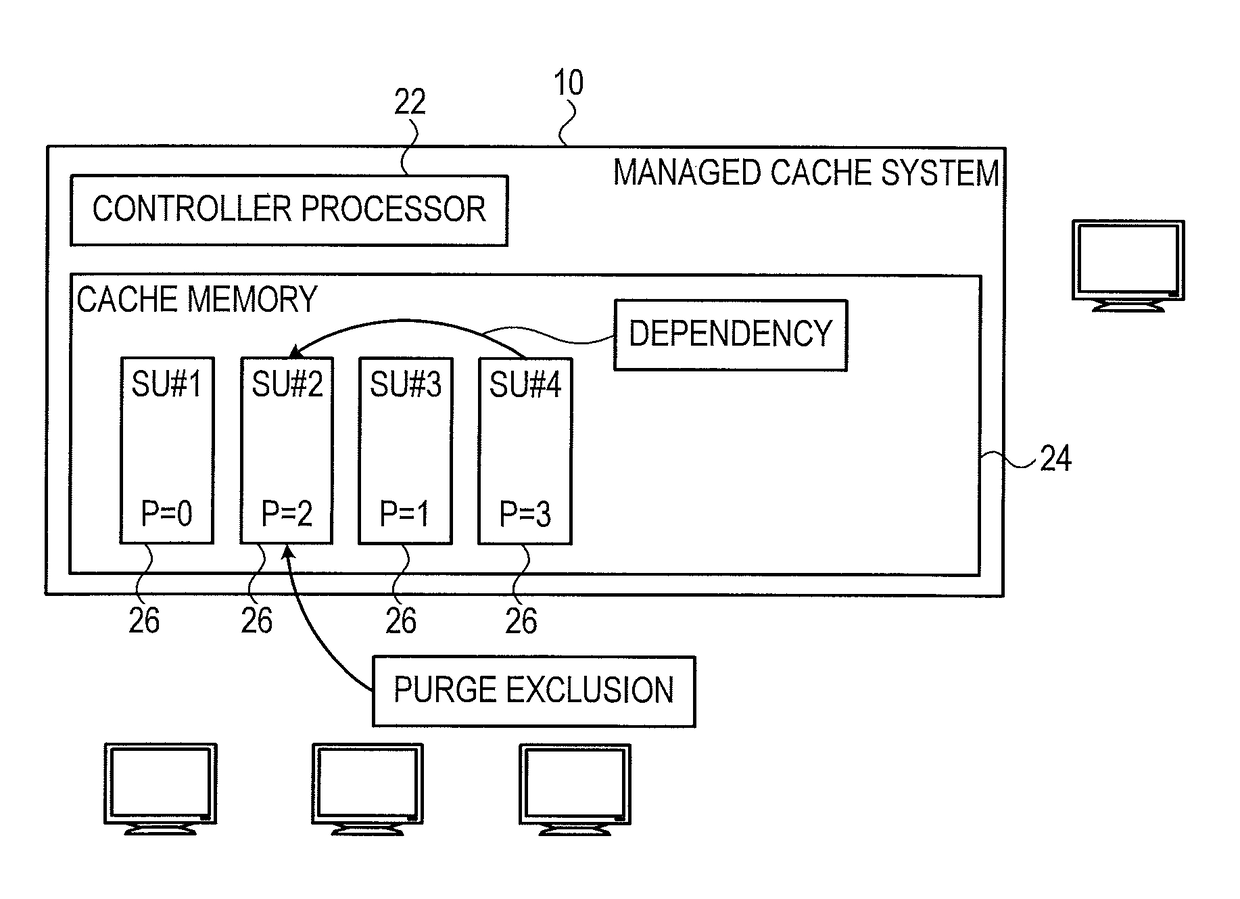

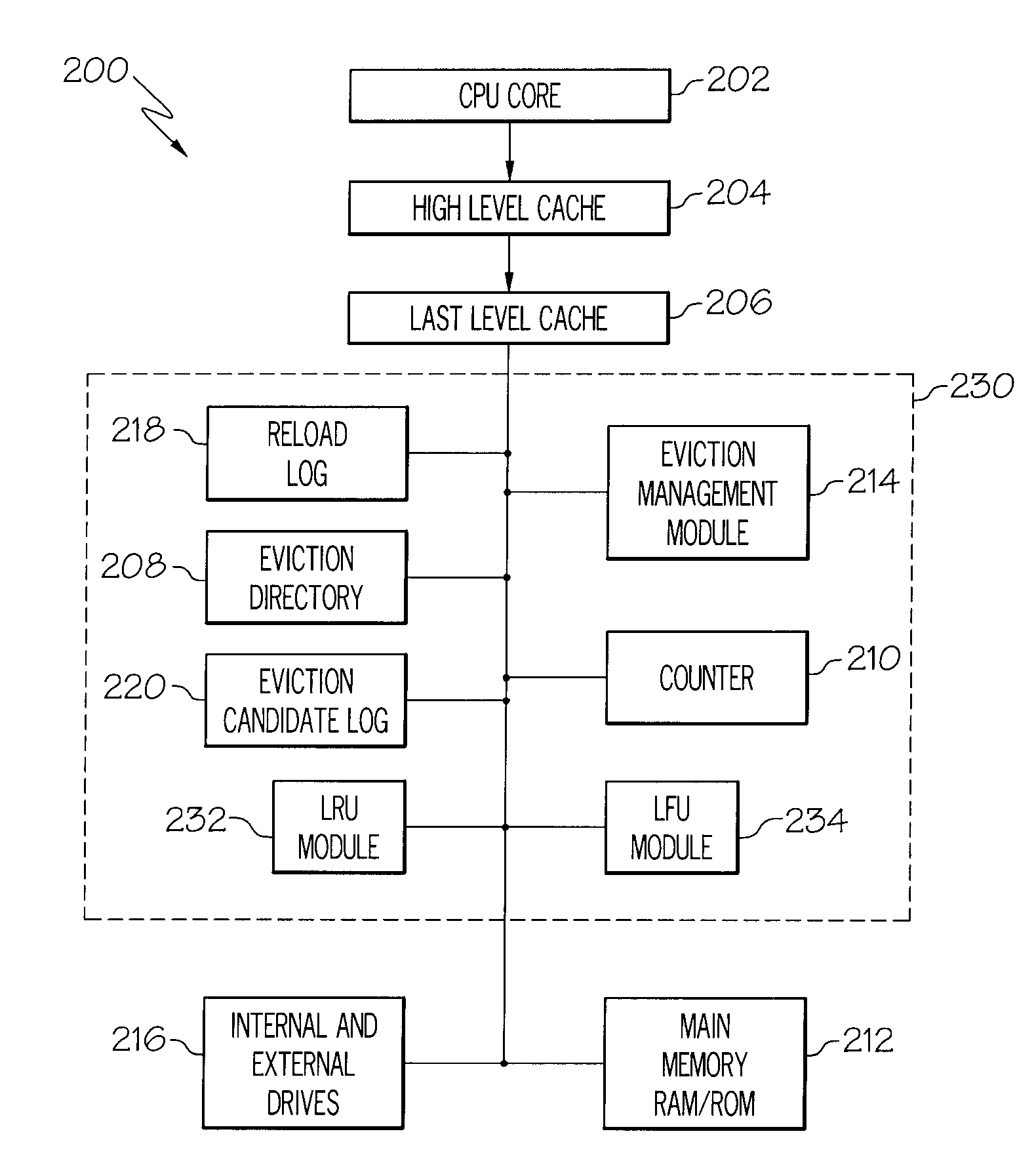

Screen sharing cache management

In one embodiment, a managed cache system includes a cache memory to receive storage units via an uplink from a transmitting client, each storage unit including a decodable video unit, each storage unit having a priority, and enable downloading of the storage units via a plurality of downlinks to receiving clients, and a controller processor to purge the cache memory of one of the storage units when all of the following conditions are satisfied: the one storage unit is not being downloaded to any of the receiving clients, the one storage unit is not currently subject to a purging exclusion, and another one of the storage units now residing in the cache, having a higher priority than the priority of the one storage unit, arrived in the cache after the one storage unit. Related apparatus and methods are also described.
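The three purge conditions translate fairly directly into a predicate. The sketch below is illustrative: the `StorageUnit` fields and the integer arrival counter are assumptions, and a larger `priority` value is taken to mean higher priority.

```python
from dataclasses import dataclass


@dataclass
class StorageUnit:
    unit_id: int
    priority: int            # assumed: larger number = higher priority
    arrival: int             # assumed: monotonically increasing arrival order
    active_downloads: int = 0
    purge_excluded: bool = False


def purgeable(unit, cache):
    """True only when all three conditions from the abstract hold."""
    if unit.active_downloads > 0:
        return False  # still being downloaded by a receiving client
    if unit.purge_excluded:
        return False  # currently subject to a purging exclusion
    # A higher-priority unit that arrived later must be in the cache.
    return any(
        other.priority > unit.priority and other.arrival > unit.arrival
        for other in cache
        if other is not unit
    )


def purge(cache):
    """Return the cache contents with every purgeable unit removed."""
    return [unit for unit in cache if not purgeable(unit, cache)]
```

In a video setting the third condition plausibly corresponds to a newer key frame superseding older dependent frames, though the abstract does not say so explicitly.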

Owner:CISCO TECH INC

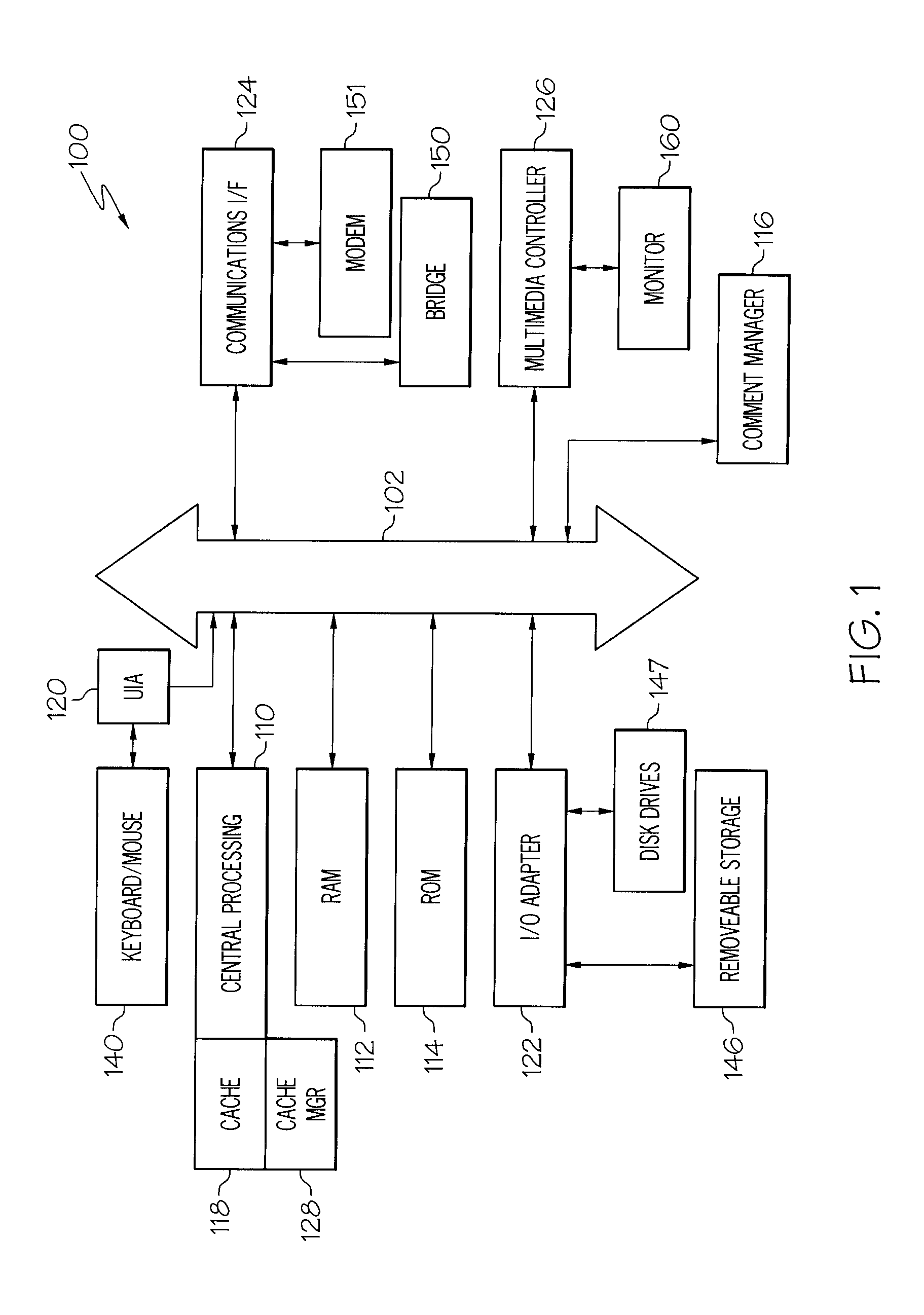

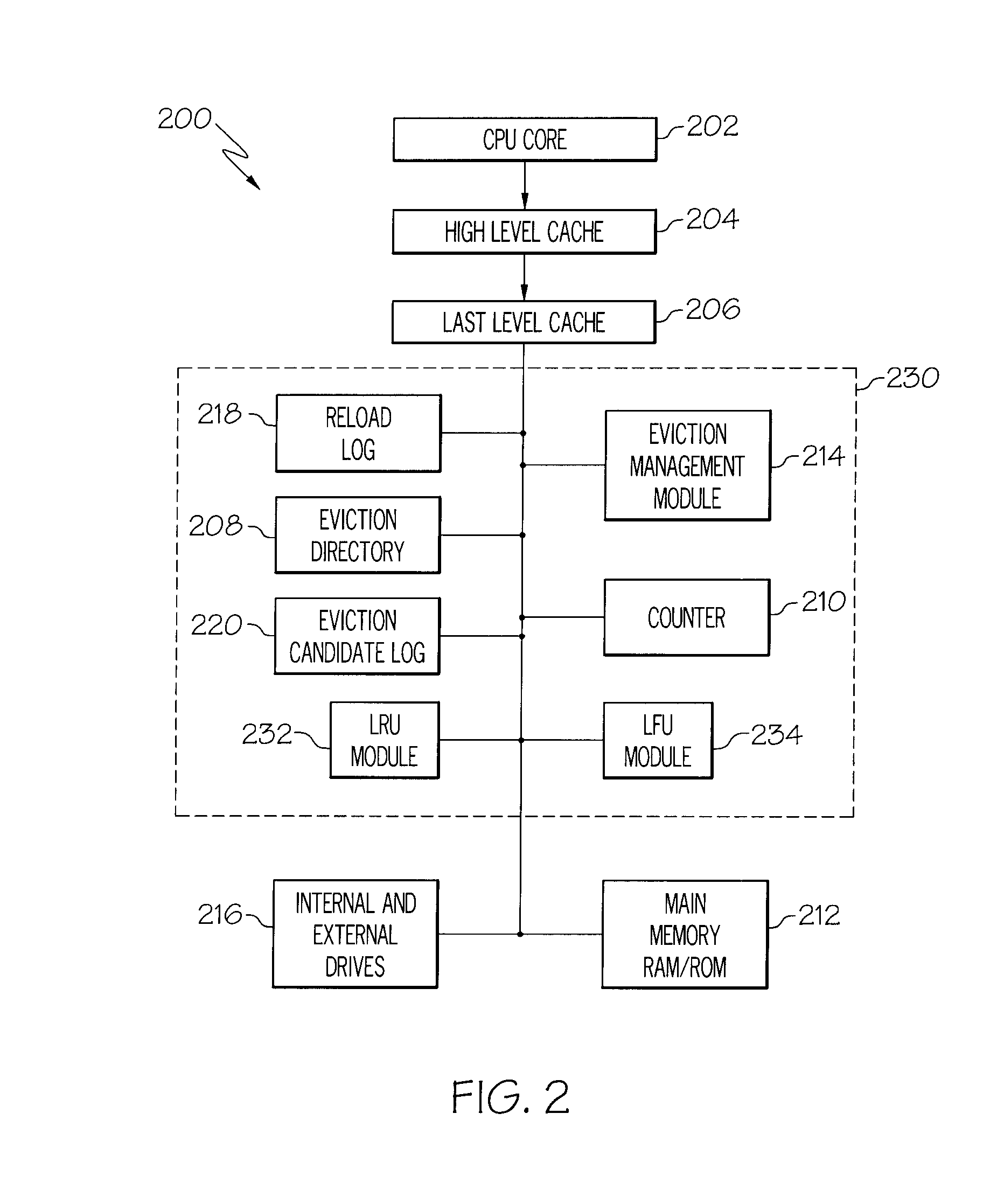

Systems and Arrangements for Cache Management

Inactive | US20080120469A1 | Reduce frequency | Improve system performance | Memory addressing/allocation/relocation | Parallel computing | Cache management

A method for cache management is disclosed. The method can assign or determine identifiers for lines of binary code that are, or will be, stored in cache. The method can create a cache directory that utilizes the identifier to keep an eviction count and/or a reload count for cached lines. Thus, each time a line is entered into, or evicted from, cache, the cache eviction log can be amended accordingly. When a processor receives or creates an instruction that requests that a line be evicted from cache, a cache manager log can identify a line, or lines, of binary code to be evicted based on data by accessing the cache directory and then the line(s) can be evicted.
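A directory that keeps per-line eviction and reload counts could be modelled as below. The abstract does not state how the counts drive victim selection, so the rule used here (prefer the resident line with the fewest reloads) is an assumption, as are the class and method names.

```python
from collections import defaultdict


class CacheDirectory:
    """Keeps eviction and reload counts per line identifier (illustrative)."""

    def __init__(self):
        self.resident = set()
        self.evictions = defaultdict(int)
        self.reloads = defaultdict(int)

    def load(self, line_id):
        """Record that a line entered the cache."""
        if line_id in self.resident:
            return
        if self.evictions[line_id] > 0:
            # The line was evicted earlier and is now being brought back.
            self.reloads[line_id] += 1
        self.resident.add(line_id)

    def evict(self, line_id):
        """Record that a line left the cache."""
        if line_id in self.resident:
            self.resident.remove(line_id)
            self.evictions[line_id] += 1

    def pick_victim(self):
        """Assumed policy: evict the resident line that is reloaded least."""
        if not self.resident:
            return None
        return min(sorted(self.resident), key=lambda line: self.reloads[line])
```

Lines that keep coming back after eviction accumulate a high reload count and are therefore kept, which is one plausible way to reduce eviction frequency for hot lines.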

Owner:IBM CORP

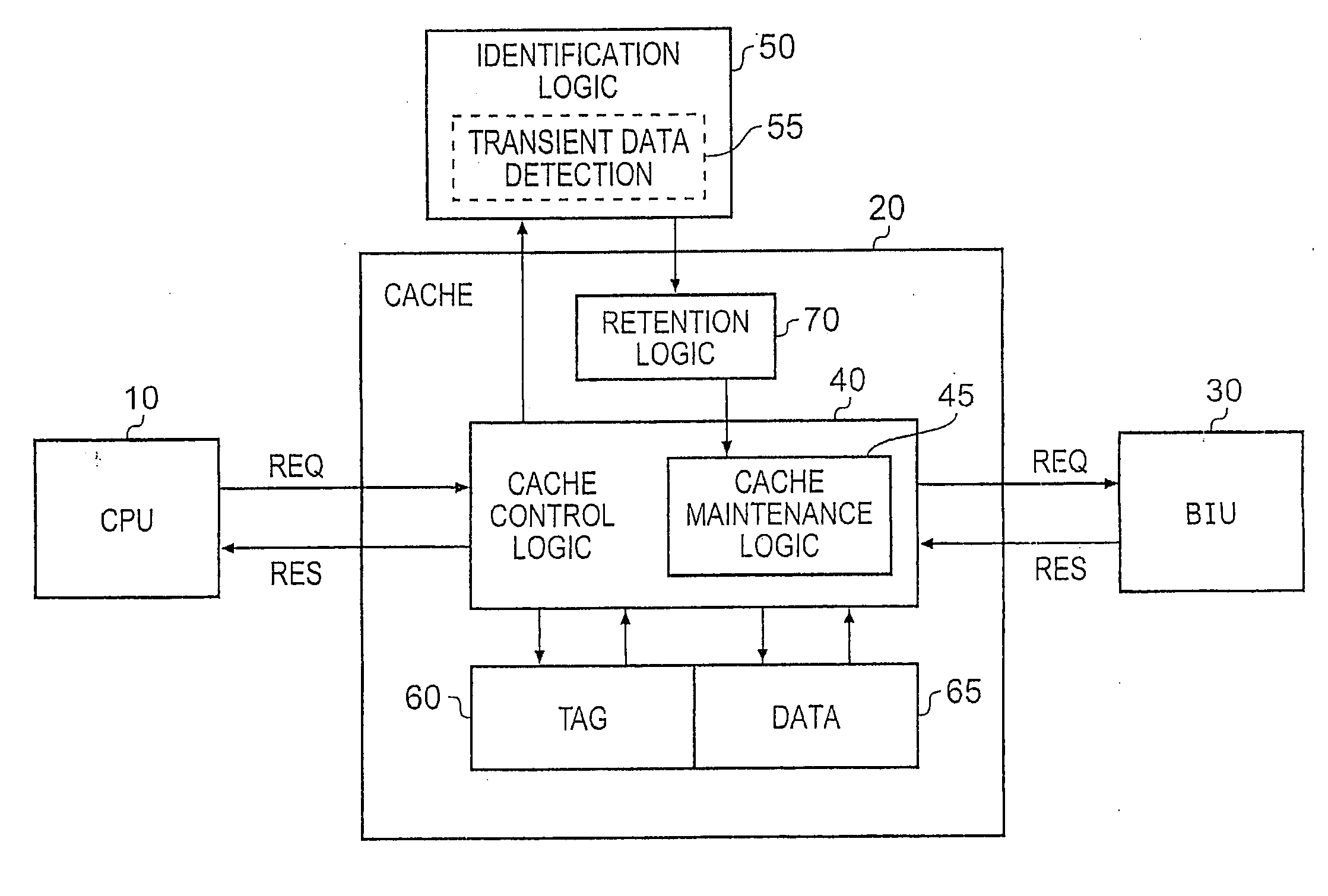

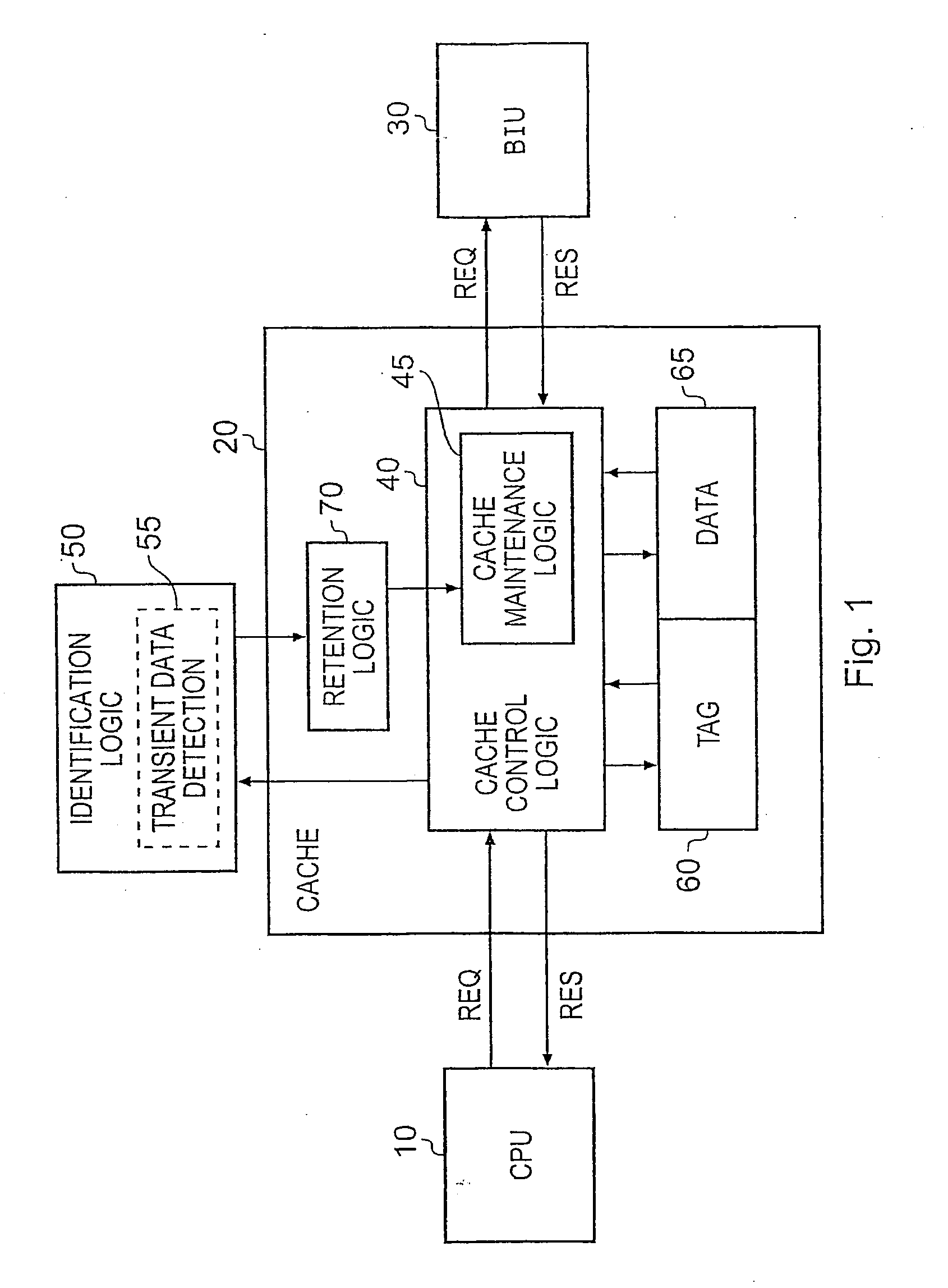

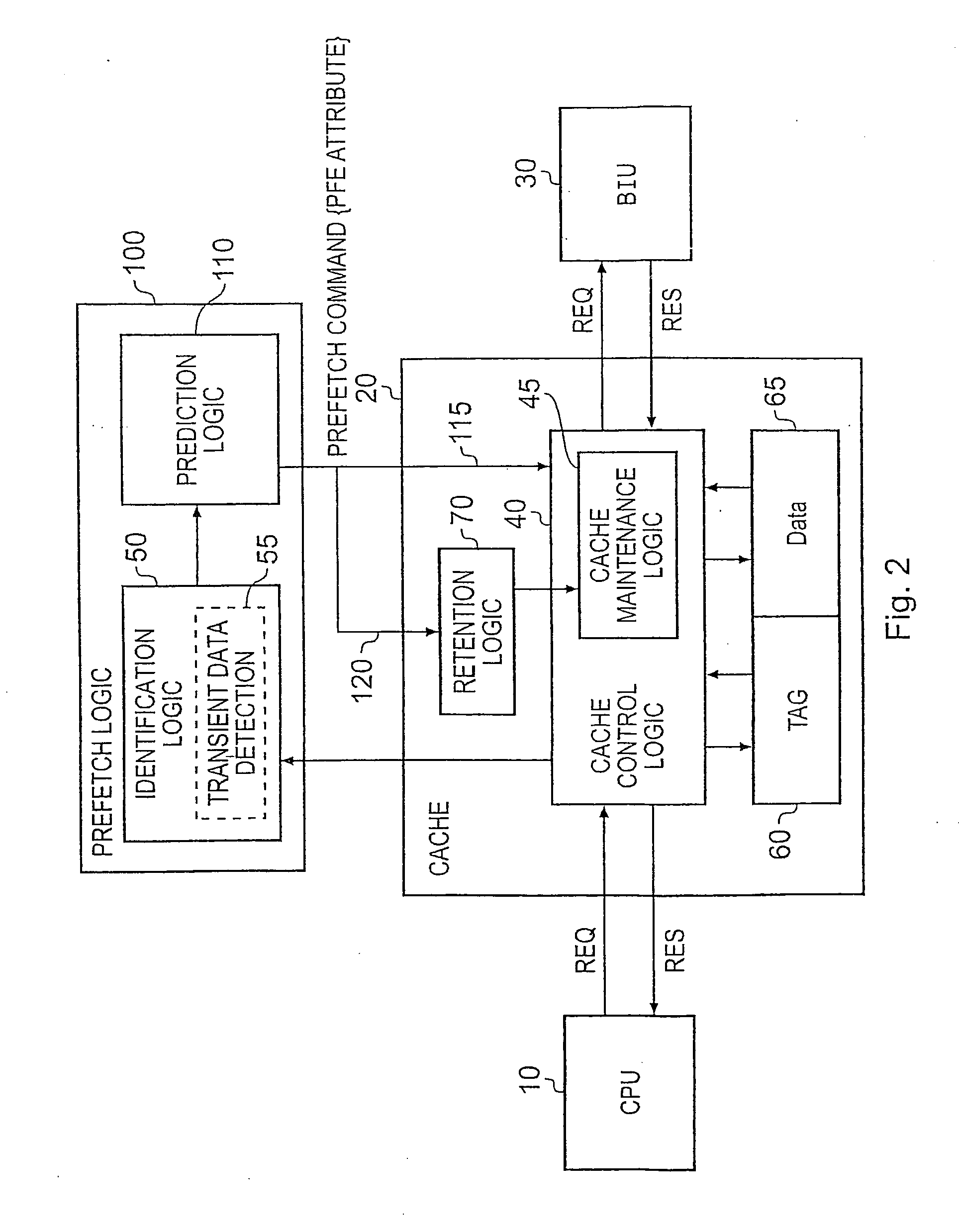

Cache Management Within A Data Processing Apparatus

Active | US20100235579A1 | Optimal utilisation | Provide flexibility | Memory addressing/allocation/relocation | Traffic capacity | Parallel computing

A data processing apparatus, and method of managing at least one cache within such an apparatus, are provided. The data processing apparatus has at least one processing unit for executing a sequence of instructions, with each such processing unit having a cache associated therewith, each cache having a plurality of cache lines for storing data values for access by the associated processing unit when executing the sequence of instructions. Identification logic is provided which, for each cache, monitors data traffic within the data processing apparatus and based thereon generates a preferred for eviction identification identifying one or more of the data values as preferred for eviction. Cache maintenance logic is then arranged, for each cache, to implement a cache maintenance operation during which selection of one or more data values for eviction from that cache is performed having regard to any preferred for eviction identification generated by the identification logic for data values stored in that cache. It has been found that such an approach provides a very flexible technique for seeking to improve cache storage utilisation.

Owner:ARM LTD

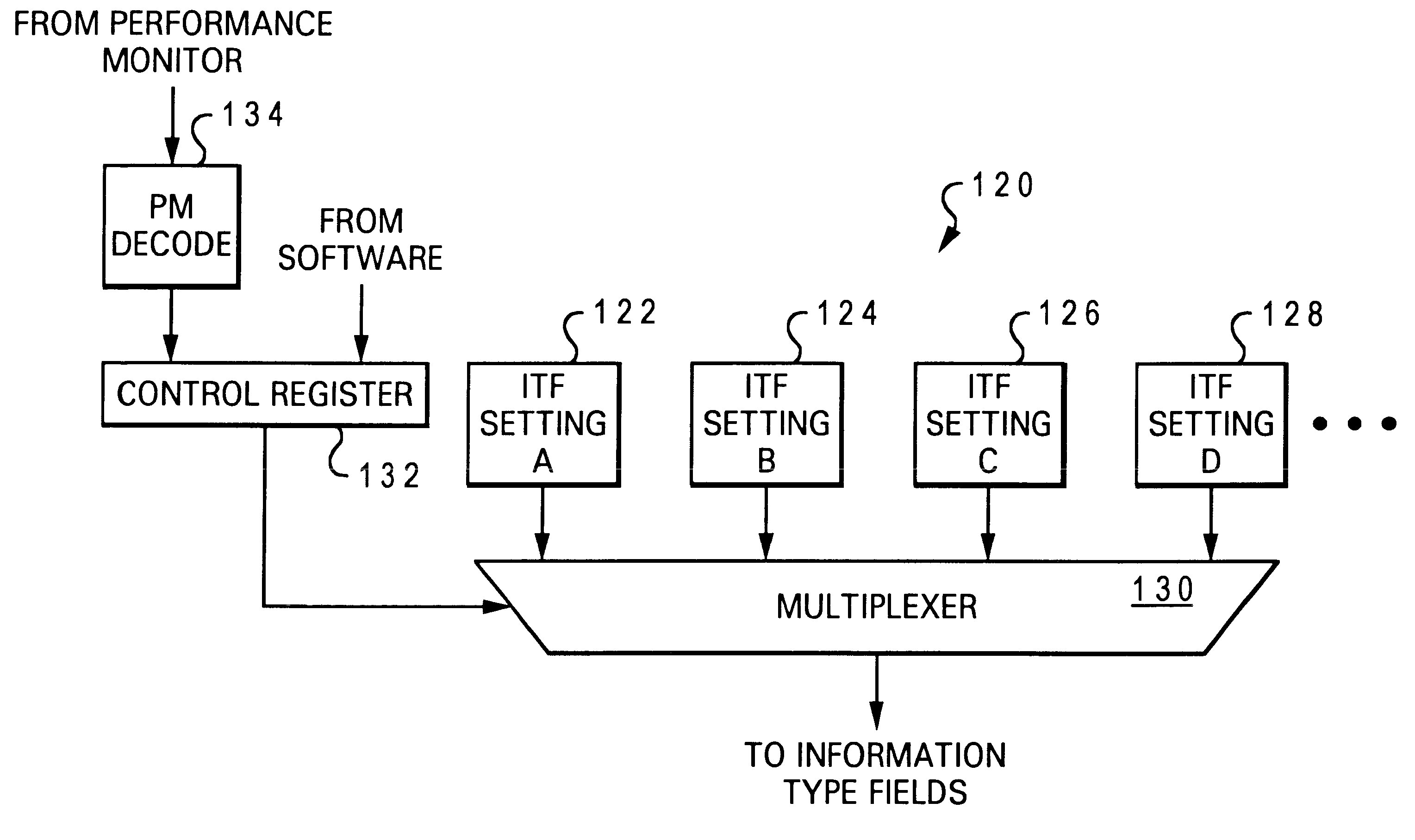

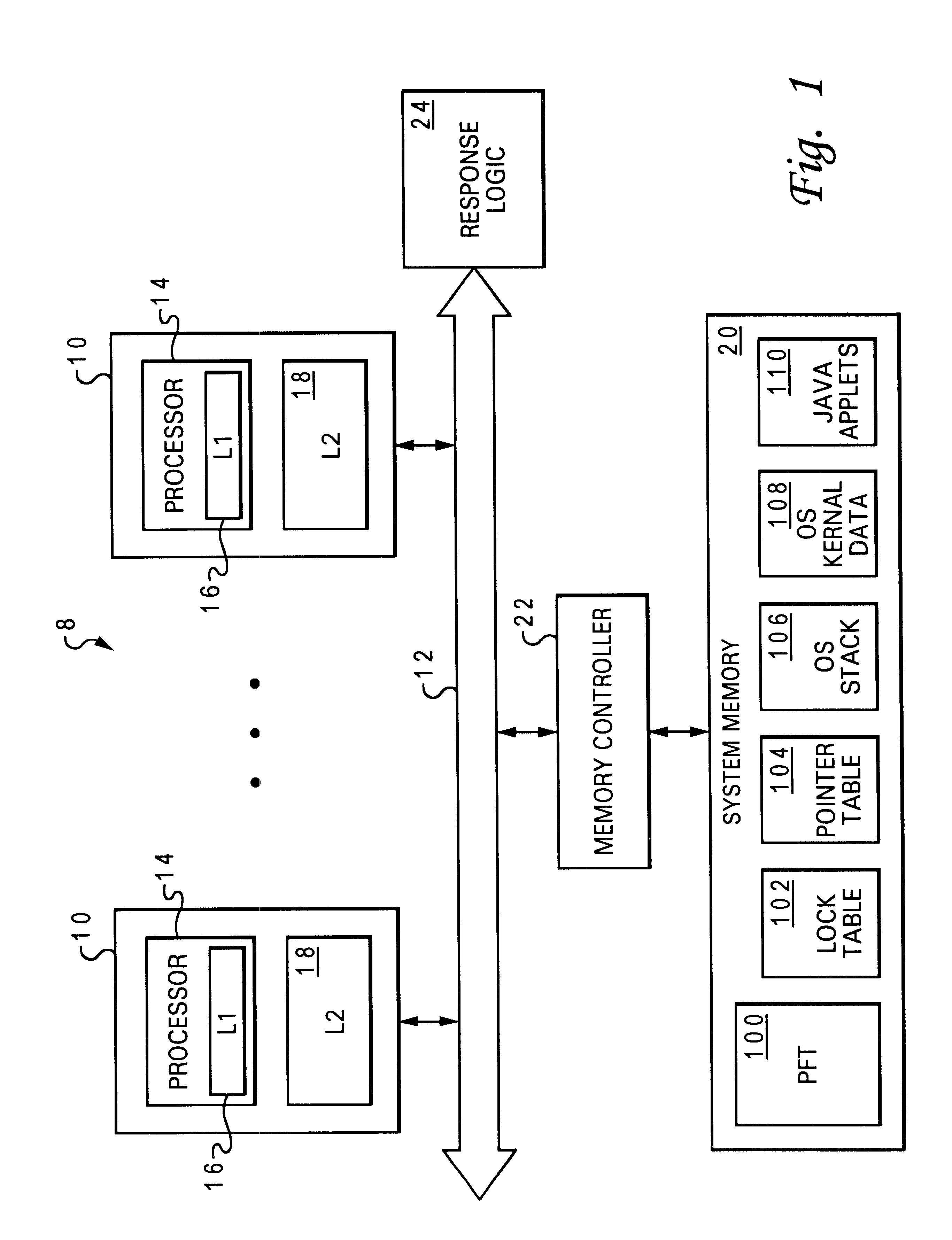

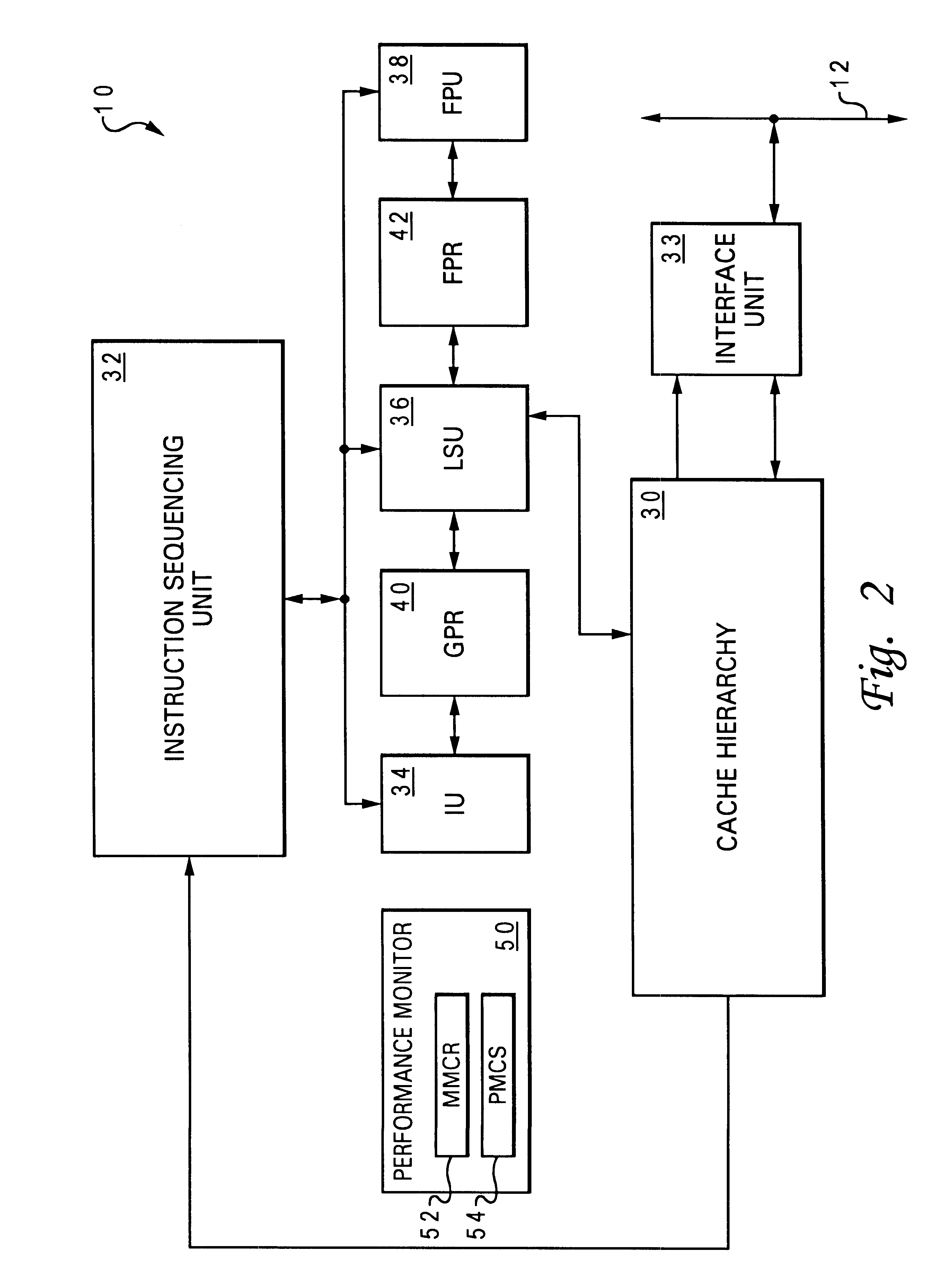

Method of cache management to dynamically update information-type dependent cache policies

A set associative cache includes a cache controller, a directory, and an array including at least one congruence class containing a plurality of sets. The plurality of sets are partitioned into multiple groups according to which of a plurality of information types each set can store. The sets are partitioned so that at least two of the groups include the same set and at least one of the sets can store fewer than all of the information types. To optimize cache operation, the cache controller dynamically modifies a cache policy of a first group while retaining a cache policy of a second group, thus permitting the operation of the cache to be individually optimized for different information types. The dynamic modification of cache policy can be performed in response to either a hardware-generated or software-generated input.

Owner:IBM CORP

Pre-cache similarity-based delta compression for use in a data storage system

Active | US20120137061A1 | Quick upgrade | High-speed random read | Memory architecture accessing/allocation | Energy efficient ICT | Index compression | Least frequently used

A data storage caching architecture supports using native local memory such as host-based RAM and, if available, Solid State Disk (SSD) memory for storing pre-cache delta-compression based delta, reference, and independent data by exploiting content locality, temporal locality, and spatial locality of data accesses to primary (e.g. disk-based) storage. The architecture makes excellent use of the physical properties of the different types of memory available (fast r/w RAM, low cost fast read SSD, etc.) by applying algorithms to determine what types of data to store in each type of memory. Algorithms include similarity detection, delta compression, least popularly used cache management, conservative insertion and promotion cache replacement, and the like.

Owner:WESTERN DIGITAL TECH INC

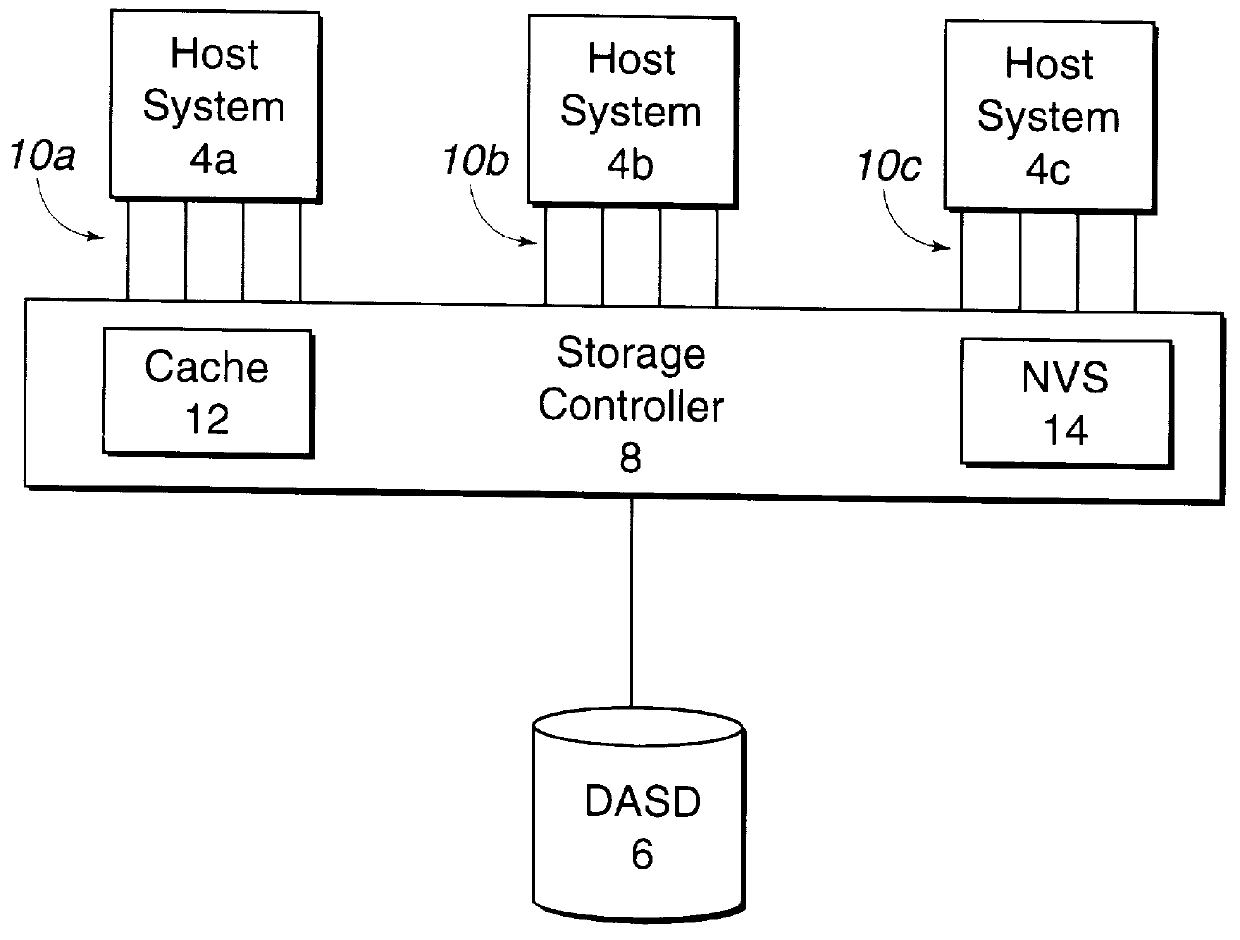

System and method for maintaining cache coherency without external controller intervention

Inactive | US7043610B2 | Efficiently detects | Efficiently resolve | Memory addressing/allocation/relocation | Segment descriptor | Control store

A disk array includes a system and method for cache management and conflict detection. Incoming host commands are processed by a storage controller, which identifies a set of at least one cache segment descriptor (CSD) associated with the requested address range. Command conflict detection can be quickly performed by examining the state information of each CSD associated with the command. The use of CSDs therefore permits the present invention to rapidly and efficiently perform read and write commands and detect conflicts.
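Conflict detection through per-segment state can be sketched as follows. The abstract does not describe the contents of a cache segment descriptor, so the fixed segment size, the `busy_writing` and `readers` fields, and the conflict rules (a write conflicts with any in-flight access, a read conflicts with an in-flight write) are assumptions for this example.

```python
from dataclasses import dataclass

SEGMENT_SIZE = 64  # assumed number of blocks covered by one descriptor


@dataclass
class CSD:
    segment: int
    busy_writing: bool = False
    readers: int = 0


class SegmentTable:
    def __init__(self):
        self.csds = {}  # segment index -> CSD

    def csds_for(self, start, length):
        """Return the descriptors covering the requested address range."""
        first = start // SEGMENT_SIZE
        last = (start + length - 1) // SEGMENT_SIZE
        return [self.csds.setdefault(s, CSD(s)) for s in range(first, last + 1)]

    def conflicts(self, start, length, is_write):
        """Check only the state of the descriptors the command touches."""
        for csd in self.csds_for(start, length):
            if csd.busy_writing or (is_write and csd.readers > 0):
                return True
        return False

    def begin(self, start, length, is_write):
        """Start a command if it does not conflict; return True on success."""
        if self.conflicts(start, length, is_write):
            return False
        for csd in self.csds_for(start, length):
            if is_write:
                csd.busy_writing = True
            else:
                csd.readers += 1
        return True
```

The check touches only the handful of descriptors in the command's range, which is the property the abstract credits for fast conflict detection.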

Owner:ADAPTEC

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

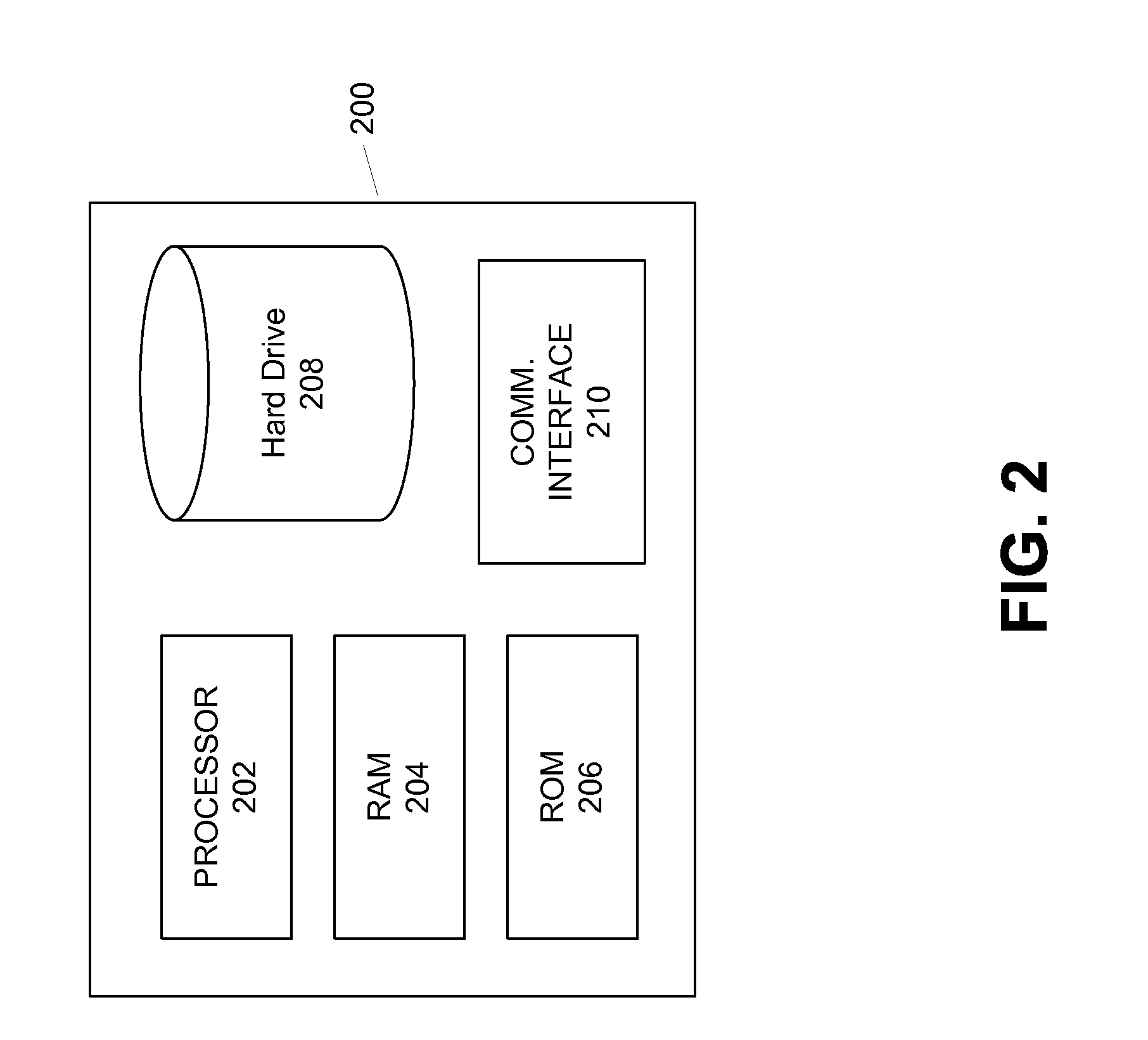

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com