163 results about "Associative cache" patented technology

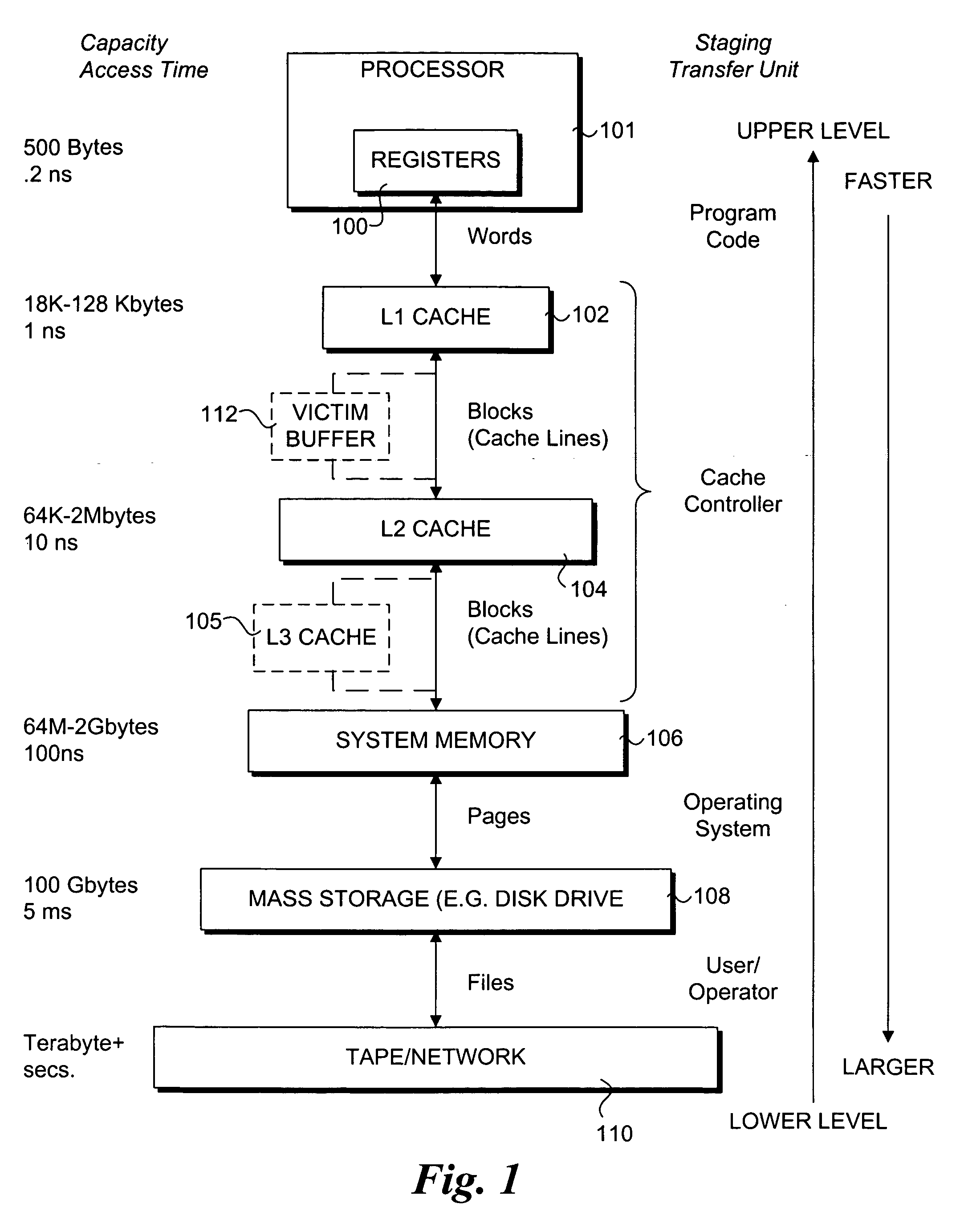

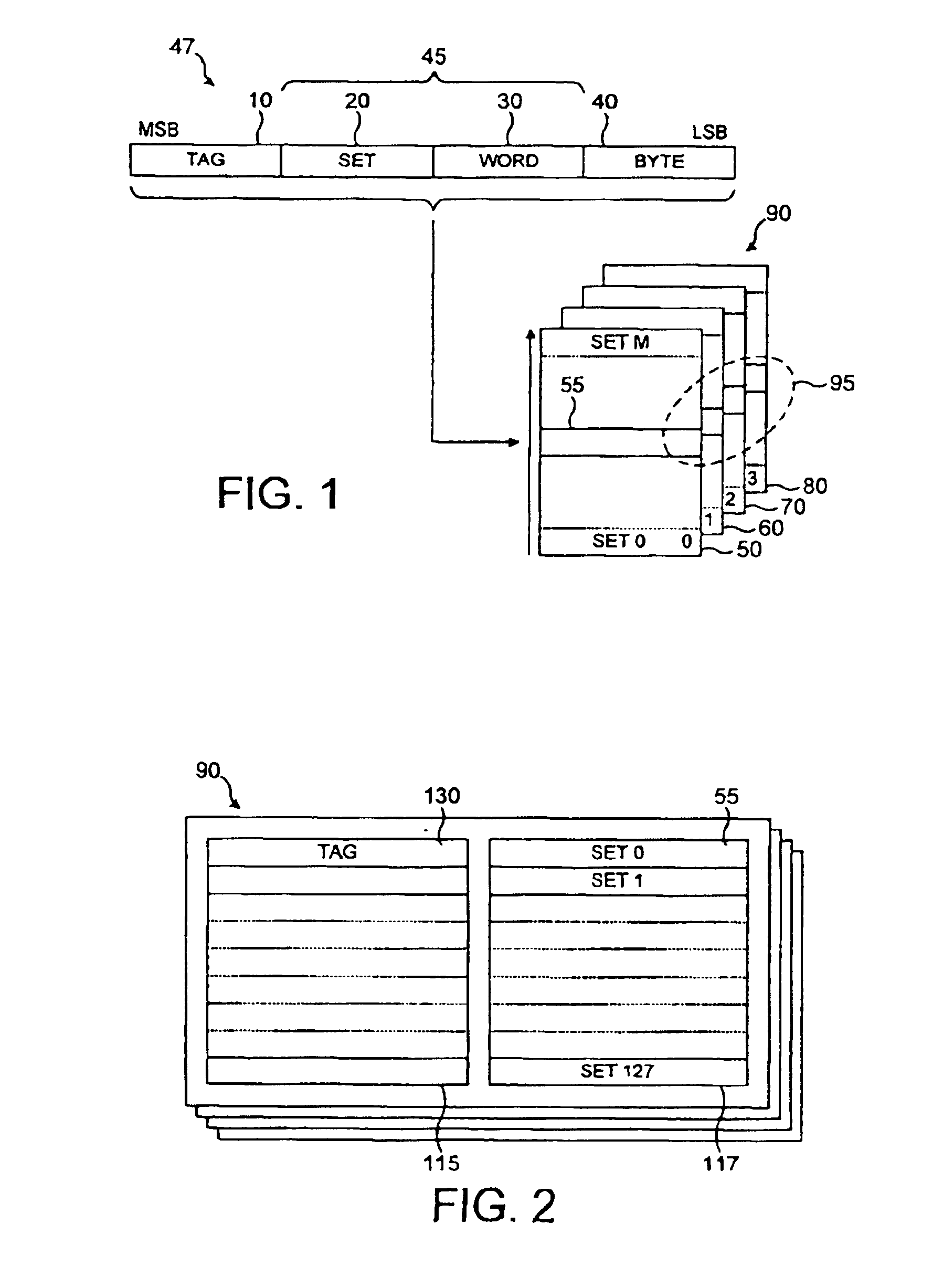

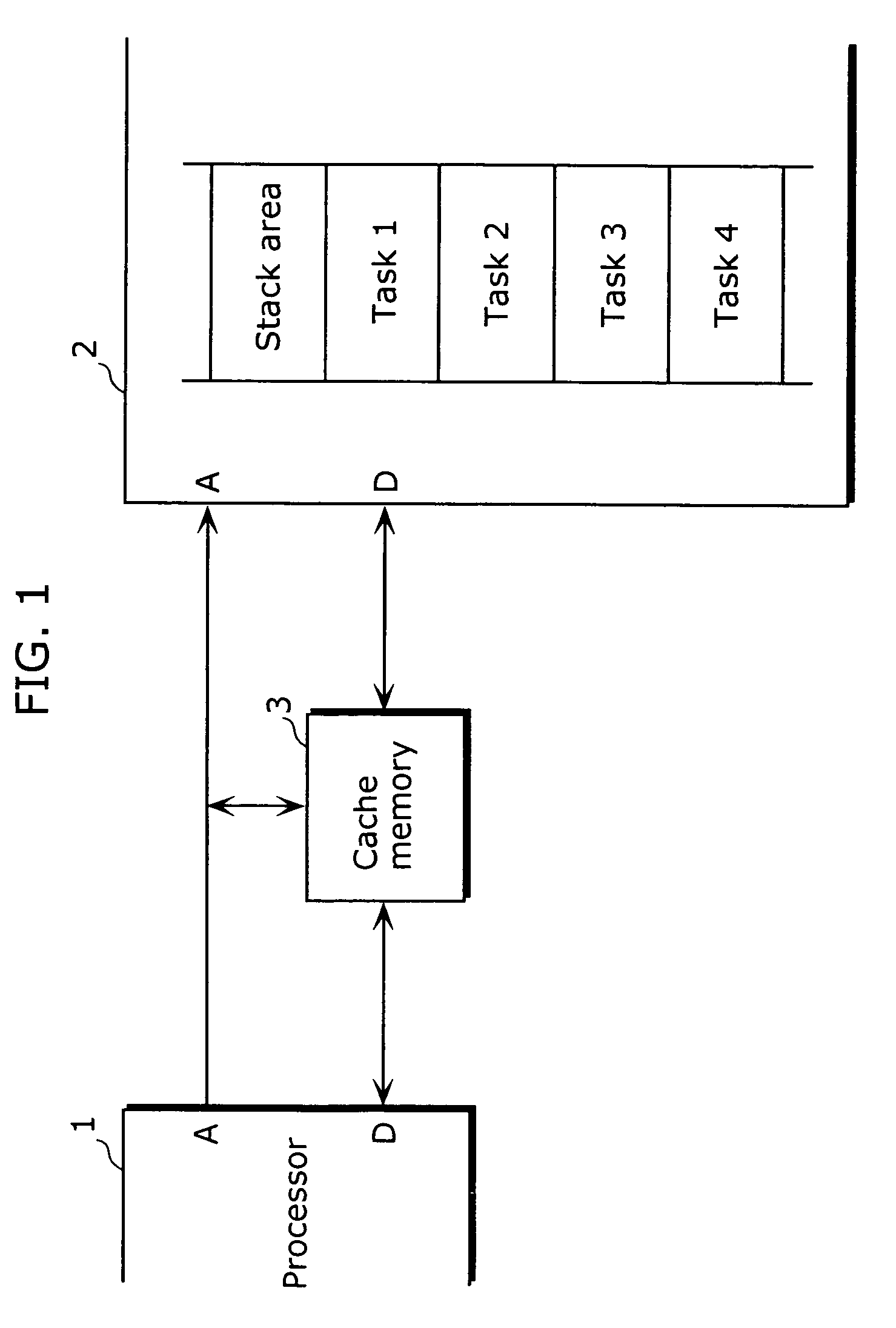

A set-associative cache is a trade-off between a direct-mapped cache and a fully associative cache. It can be pictured as an n × m matrix: the cache is divided into n sets, and each set contains m cache lines. A memory block is first mapped onto a set and can then be placed in any cache line of that set.
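The mapping described above can be sketched in a few lines of Python. This is a minimal illustration, not taken from any of the patents listed here: the class name `SetAssociativeCache`, the 64-byte block size, and the LRU eviction within a set are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class SetAssociativeCache:
    num_sets: int          # n: number of sets
    ways: int              # m: cache lines per set
    block_size: int = 64   # bytes per block (assumed value)
    sets: list = field(init=False)

    def __post_init__(self):
        # Each set holds up to `ways` tags, ordered least recently used first.
        self.sets = [[] for _ in range(self.num_sets)]

    def locate(self, address):
        """Split an address into (set index, tag)."""
        block = address // self.block_size
        return block % self.num_sets, block // self.num_sets

    def access(self, address):
        """Return True on a hit; on a miss, fill the block and return False."""
        index, tag = self.locate(address)
        lines = self.sets[index]
        if tag in lines:
            lines.remove(tag)
            lines.append(tag)      # move to the most recently used position
            return True
        if len(lines) == self.ways:
            lines.pop(0)           # evict the least recently used line (assumed policy)
        lines.append(tag)          # the block may occupy any line of its set
        return False
```

With `num_sets=1` the sketch behaves like a fully associative cache, and with `ways=1` like a direct-mapped cache, which is the trade-off the paragraph refers to.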

Method for programmer-controlled cache line eviction policy

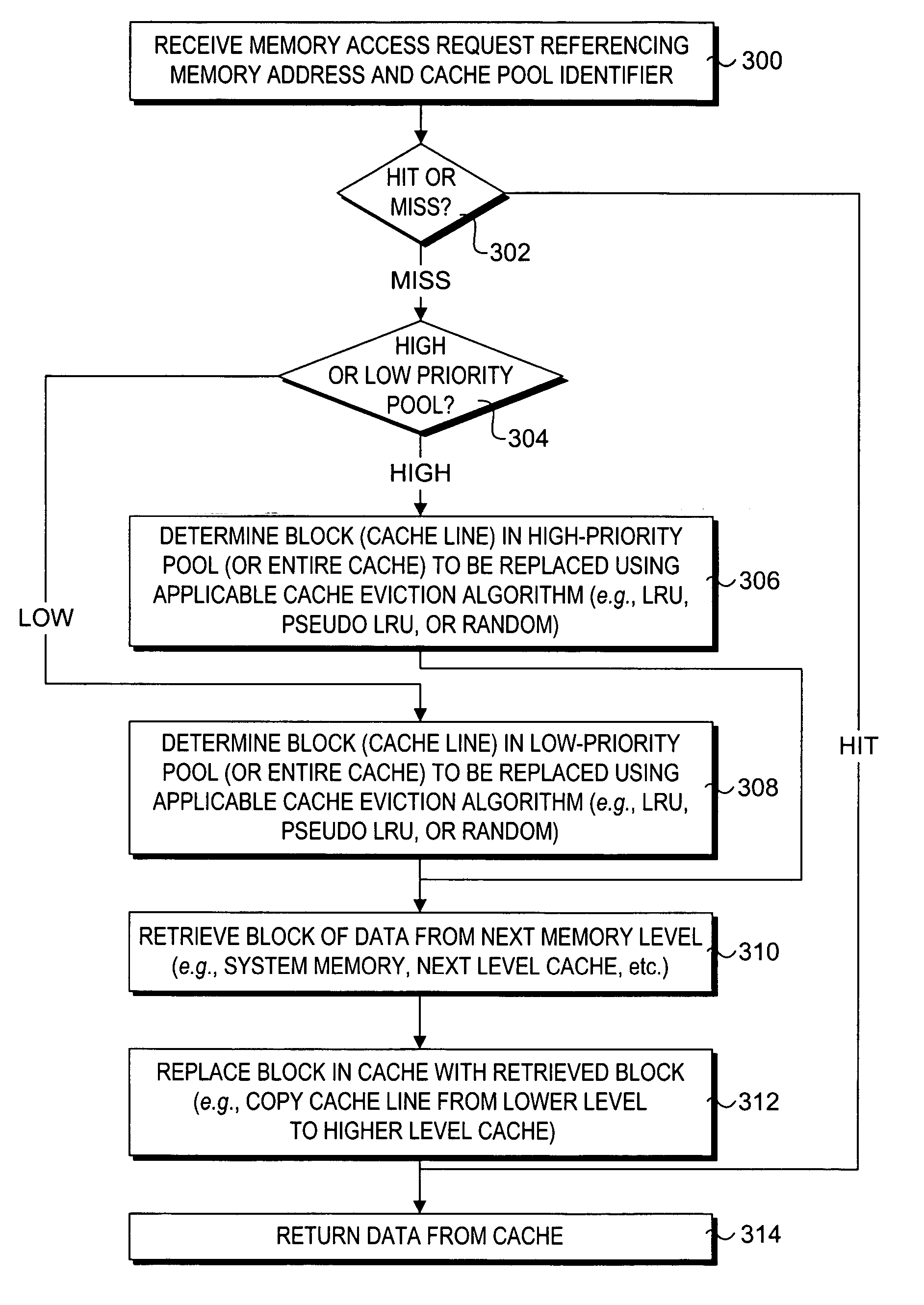

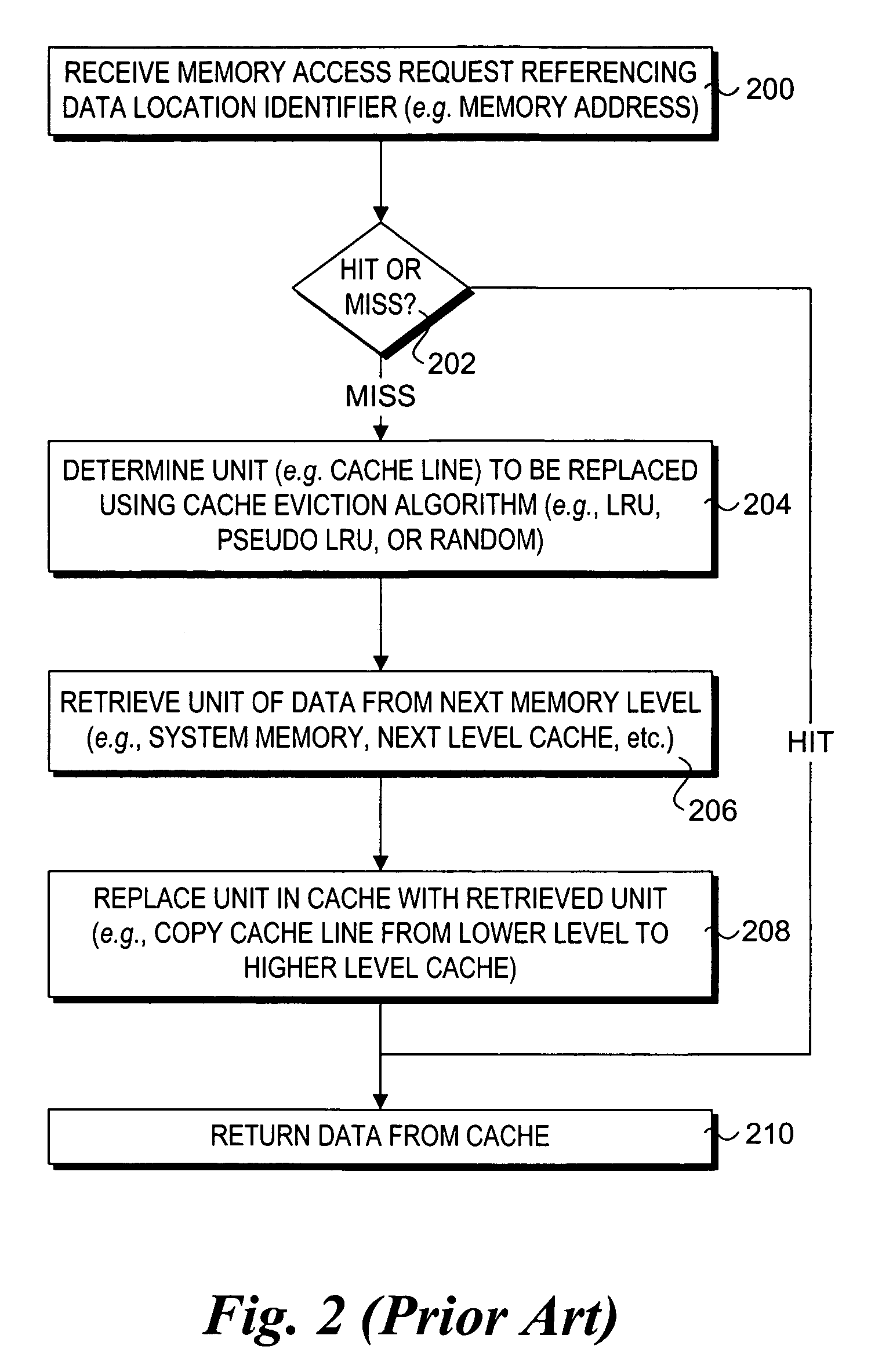

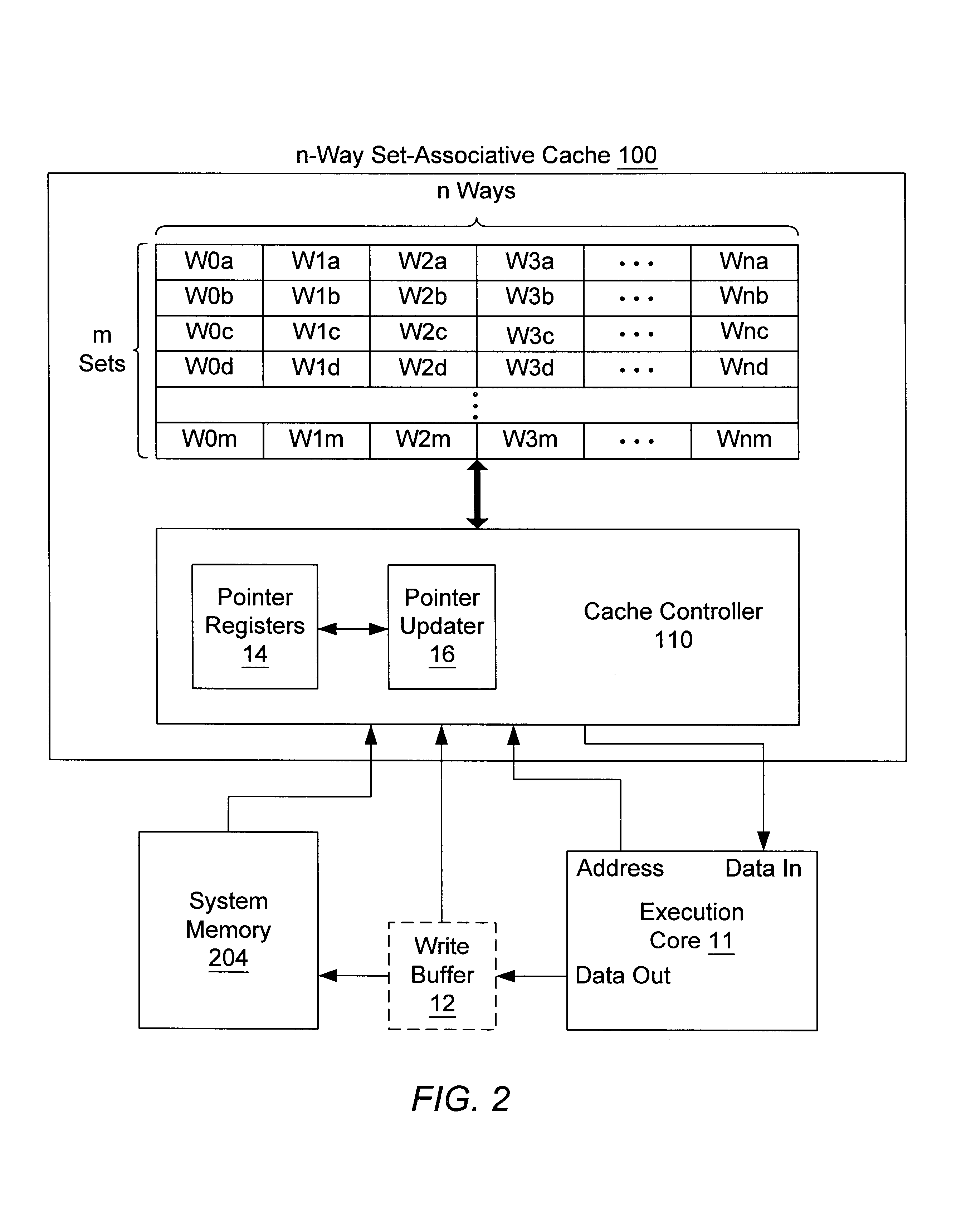

A method and apparatus to enable programmatic control of cache line eviction policies. A mechanism is provided that enables programmers to mark portions of code with different cache priority levels based on anticipated or measured access patterns for those code portions. Corresponding cues to assist in effecting the cache eviction policies associated with given priority levels are embedded in machine code generated from source- and/or assembly-level code. Cache architectures are provided that partition cache space into multiple pools, each pool being assigned a different priority. In response to execution of a memory access instruction, an appropriate cache pool is selected and searched based on information contained in the instruction's cue. On a cache miss, a cache line is selected from that pool to be evicted using a cache eviction policy associated with the pool. Implementations of the mechanism are described for both n-way set associative caches and fully-associative caches.

Owner:INTEL CORP

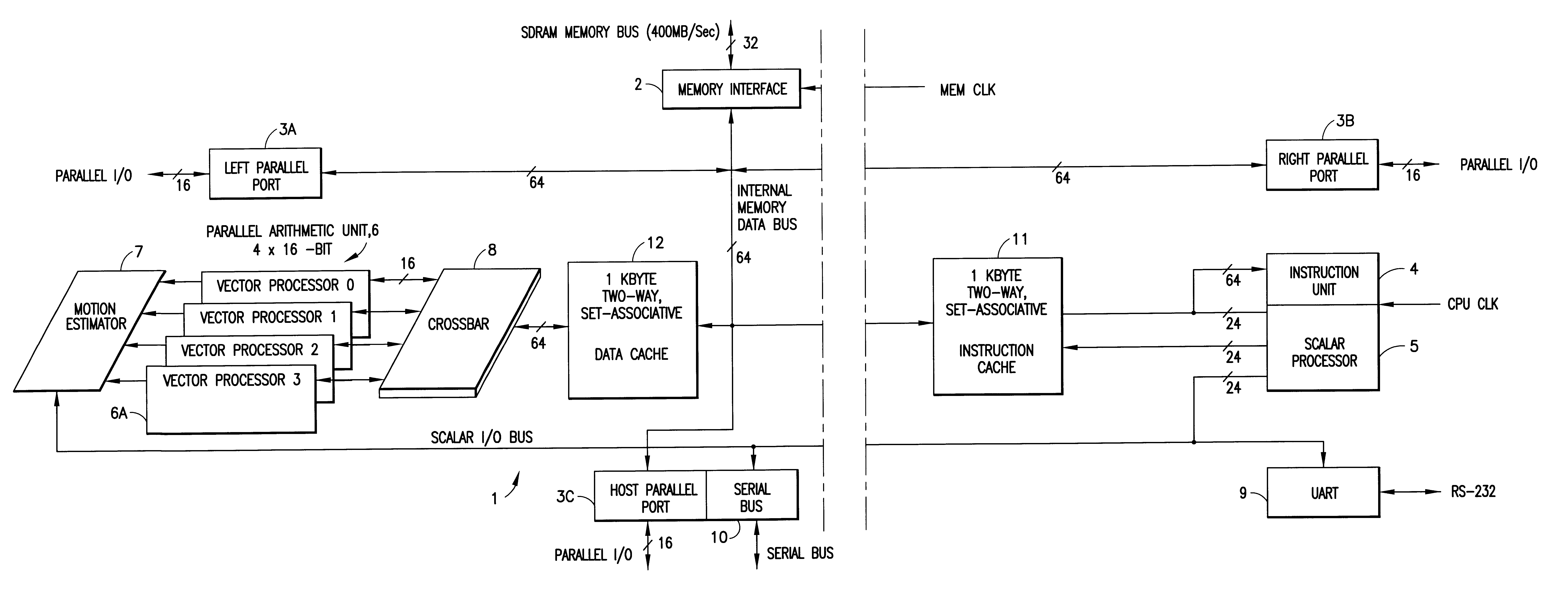

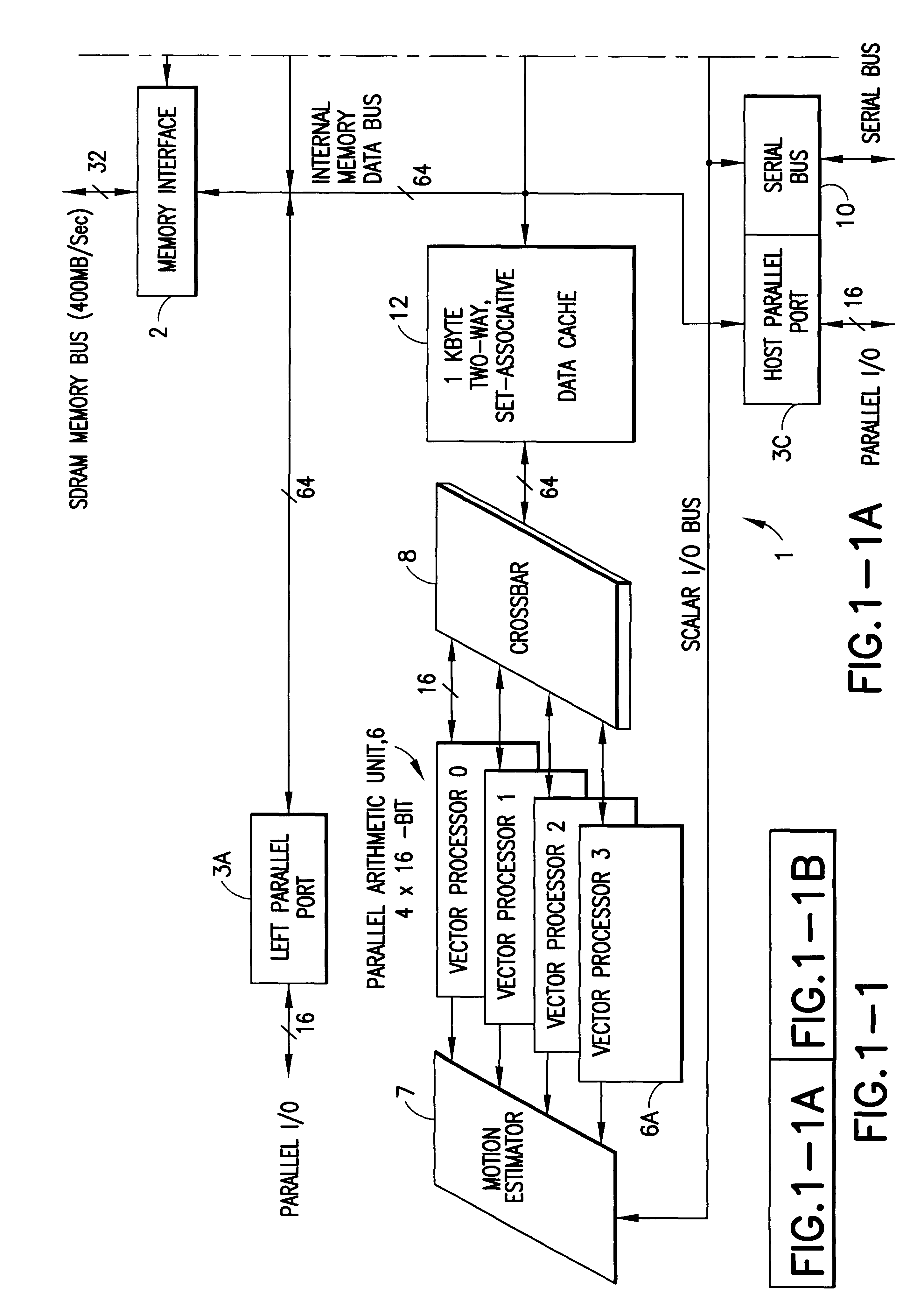

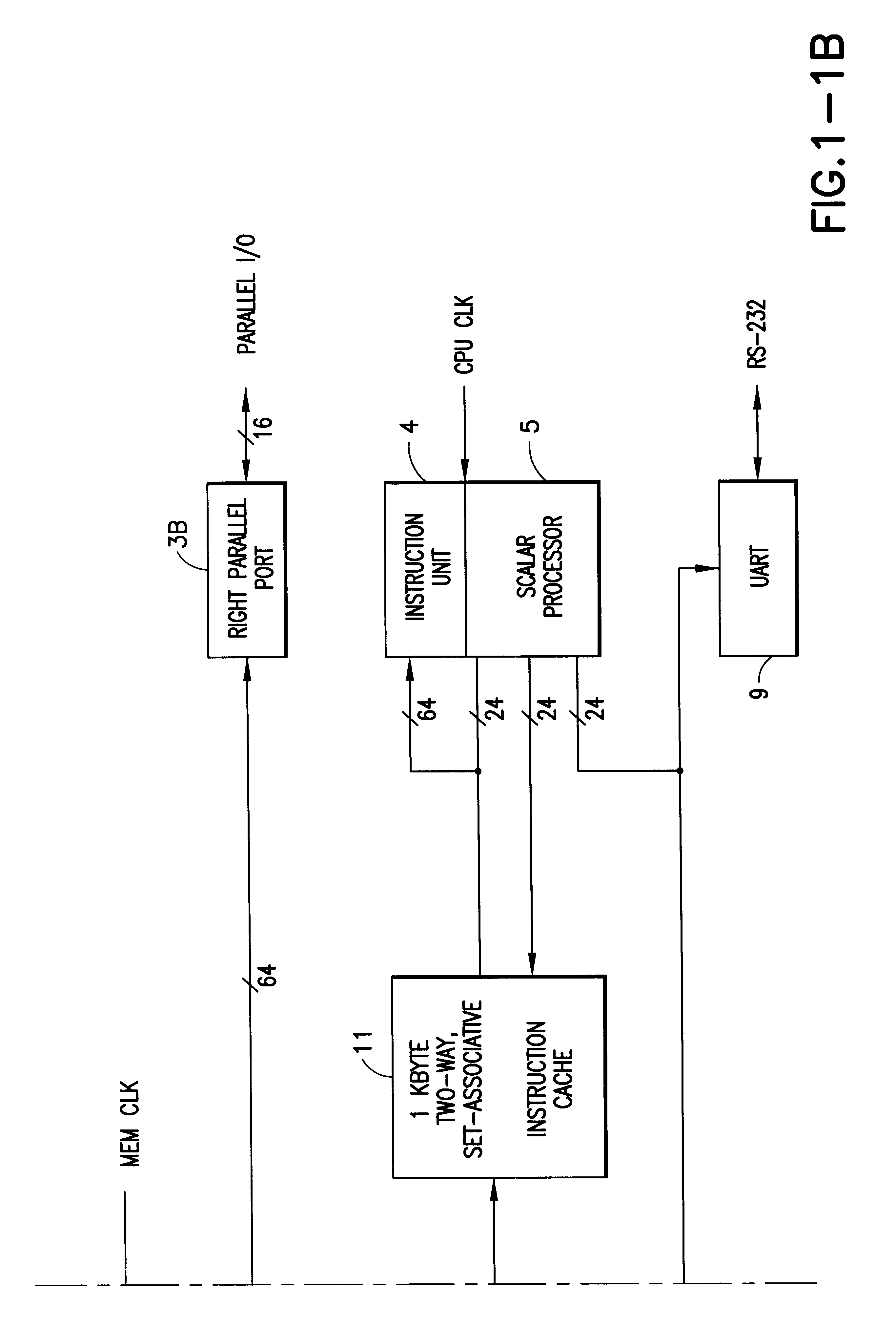

Digital signal processor containing scalar processor and a plurality of vector processors operating from a single instruction

Inactive | US6317819B1 | Register arrangements | Memory addressing/allocation/relocation | Crossbar switch | Digital data

A digital data processor integrated circuit (1) includes a plurality of functionally identical first processor elements (6A) and a second processor element (5). The first processor elements are bidirectionally coupled to a first cache (12) via a crossbar switch matrix (8). The second processor element is coupled to a second cache (11). Each of the first cache and the second cache contains a two-way, set-associative cache memory that uses a least-recently-used (LRU) replacement algorithm and that operates with a use-as-fill mode to minimize the number of wait states the processor elements experience before continuing execution after a cache miss. An operation of each of the first processor elements and an operation of the second processor element are locked together during an execution of a single instruction read from the second cache. The instruction specifies, in a first portion that is coupled in common to each of the plurality of first processor elements, the operation of each of the plurality of first processor elements in parallel. A second portion of the instruction specifies the operation of the second processor element. Also included is a motion estimator (7) and an internal data bus coupling together a first parallel port (3A), a second parallel port (3B), a third parallel port (3C), an external memory interface (2), and a data input/output of the first cache and the second cache.

Owner:CUFER ASSET LTD LLC

Weighted cache line replacement

Inactive | US20040083341A1 | Avoid replacement | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

A method for selecting a line to replace in an inclusive set-associative cache memory system which is based on a least recently used replacement policy but is enhanced to detect and give special treatment to the reloading of a line that has been recently cast out. A line which has been reloaded after having been recently cast out is assigned a special encoding which temporarily gives priority to the line in the cache so that it will not be selected for replacement in the usual least recently used replacement process. This method of line selection for replacement improves system performance by providing better hit rates in the cache hierarchy levels above, by ensuring that heavily used lines in the levels above are not aged out of the levels below due to lack of use.

Owner:IBM CORP
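The idea in the abstract above, LRU replacement that temporarily shields a line reloaded shortly after being cast out, can be sketched as follows. This is a simplified single-set model under stated assumptions, not the patented encoding: the class name `ReloadAwareSet`, the fixed-size history of cast-out tags, and the rule that protection expires after the line has been passed over once are all hypothetical choices.

```python
from collections import deque


class ReloadAwareSet:
    def __init__(self, ways, history=4):
        self.ways = ways
        self.lines = []                               # tags, least recently used first
        self.protected = set()                        # tags with temporary priority
        self.recently_cast_out = deque(maxlen=history)

    def _pick_victim(self):
        # Walk from LRU to MRU and skip protected lines; a skipped line
        # loses its protection (assumed expiry rule).
        for tag in self.lines:
            if tag not in self.protected:
                return tag
            self.protected.discard(tag)
        return self.lines[0]                          # everything was protected: plain LRU

    def access(self, tag):
        """Return True on a hit; on a miss, fill the line and return False."""
        if tag in self.lines:
            self.lines.remove(tag)
            self.lines.append(tag)
            return True
        if len(self.lines) == self.ways:
            victim = self._pick_victim()
            self.lines.remove(victim)
            self.protected.discard(victim)
            self.recently_cast_out.append(victim)
        if tag in self.recently_cast_out:
            # Reload of a recently cast-out line: give it temporary priority.
            self.recently_cast_out.remove(tag)
            self.protected.add(tag)
        self.lines.append(tag)
        return False
```

In this sketch a reloaded line survives one replacement that plain LRU would have applied to it, which is the kind of special treatment the abstract describes.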

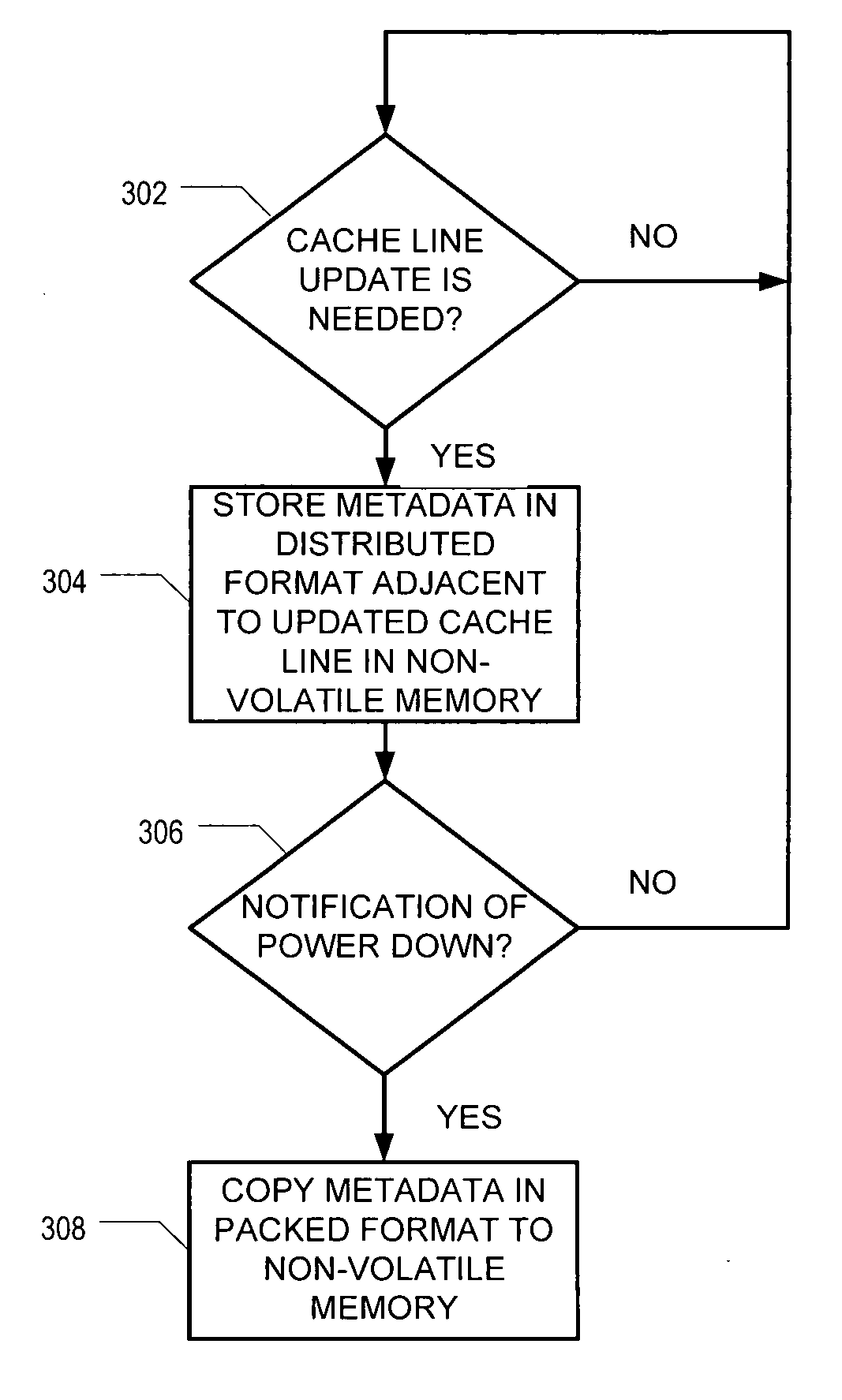

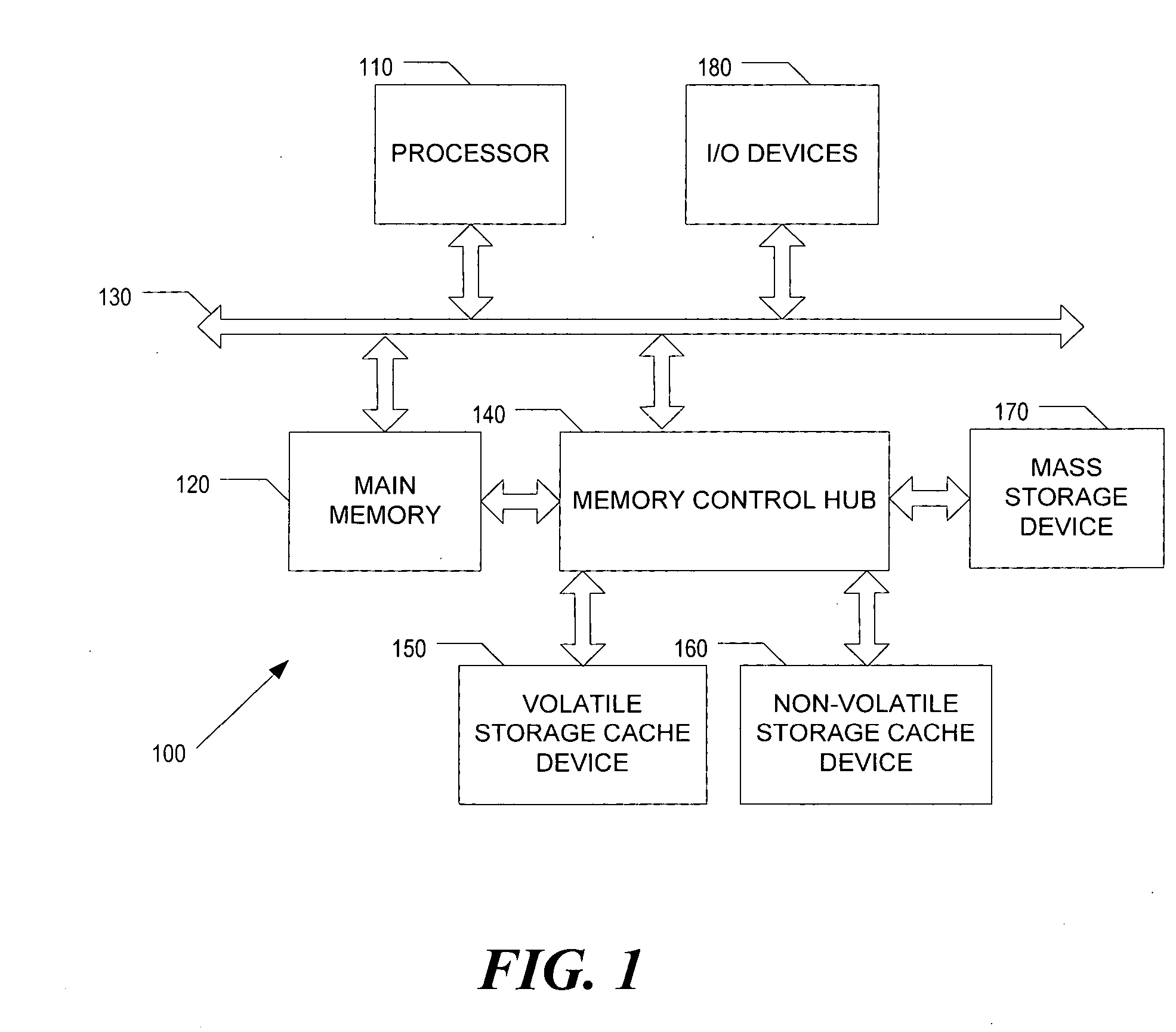

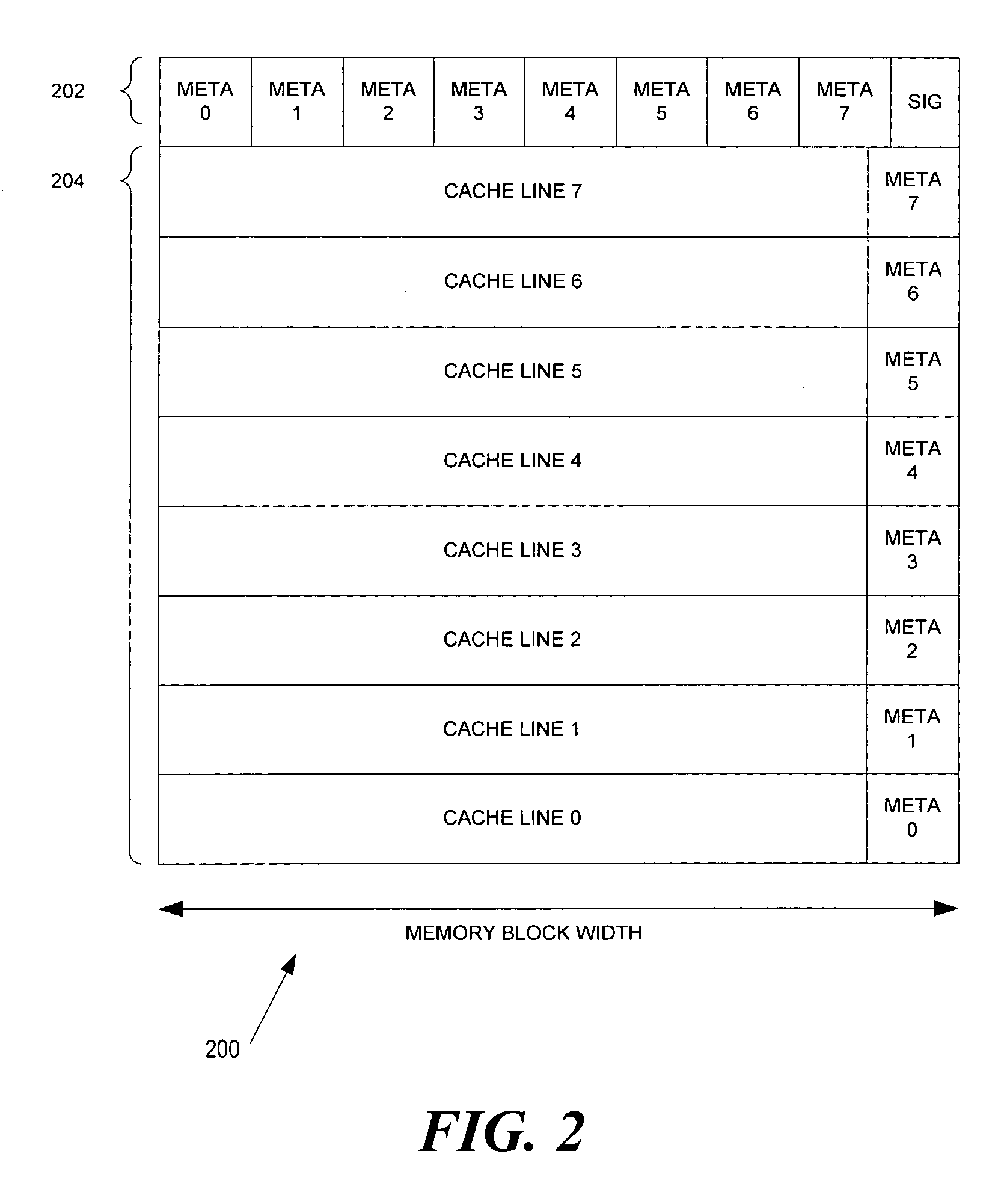

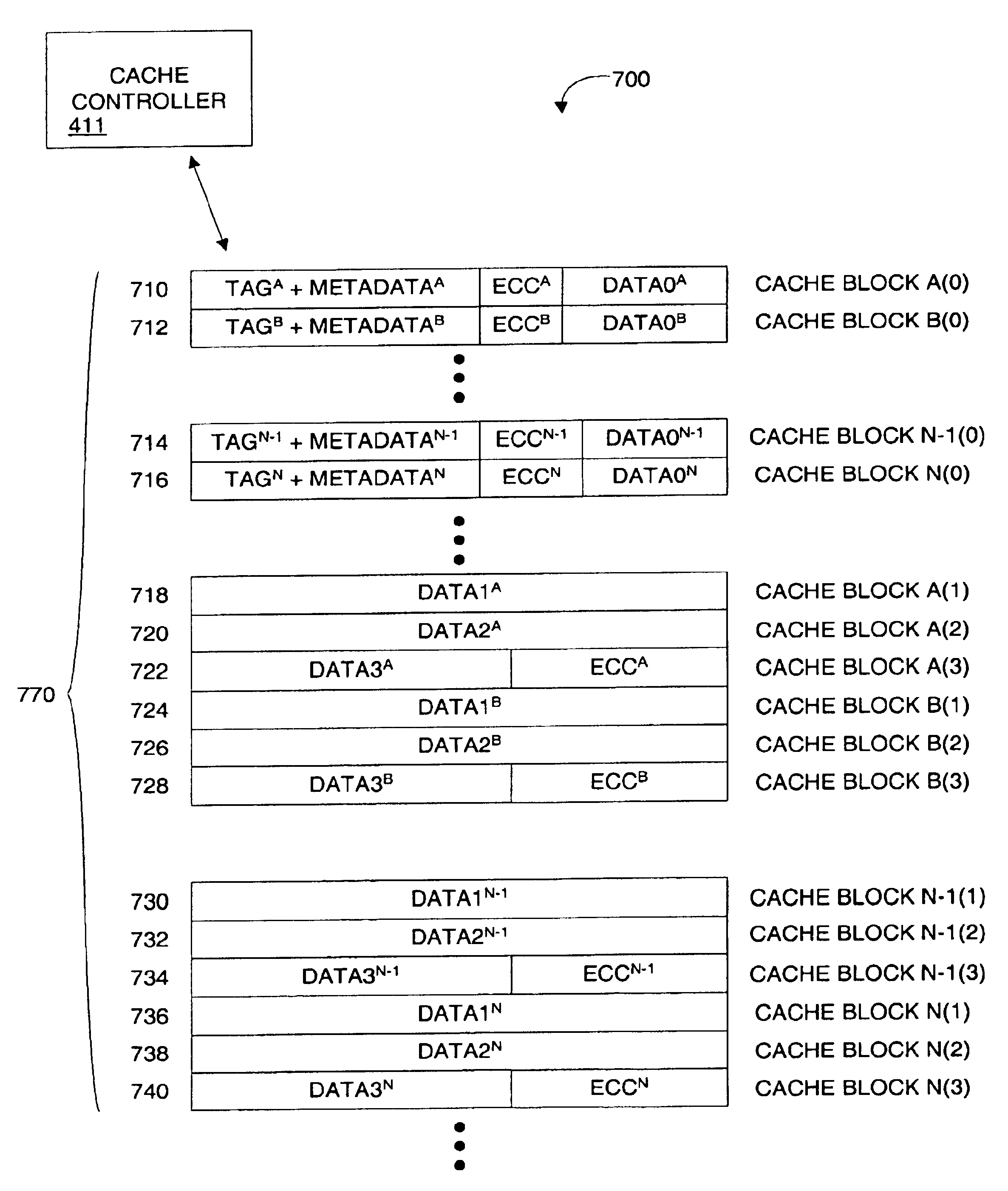

Distributed and packed metadata structure for disk cache

An apparatus and method to reduce the initialization time of a system is disclosed. In one embodiment, upon a cache line update, metadata associated with the cache line is stored in a distributed format in non-volatile memory with its associated cache line. Upon indication of an expected shut down, metadata is copied from volatile memory and stored in non-volatile memory in a packed format. In the packed format, multiple metadata associated with multiple cache lines are stored together in, for example, a single memory block. Thus, upon system power up, if the system was shut down in an expected manner, metadata may be restored in volatile memory from the metadata stored in the packed format, with a significantly reduced boot time over restoring metadata from the metadata stored in the distributed format.

Owner:INTEL NDTM US LLC

Method for way allocation and way locking in a cache

Active | US20100250856A1 | Small size | Energy efficient ICT | Memory addressing/allocation/relocation | Graphics | Graphics processing unit

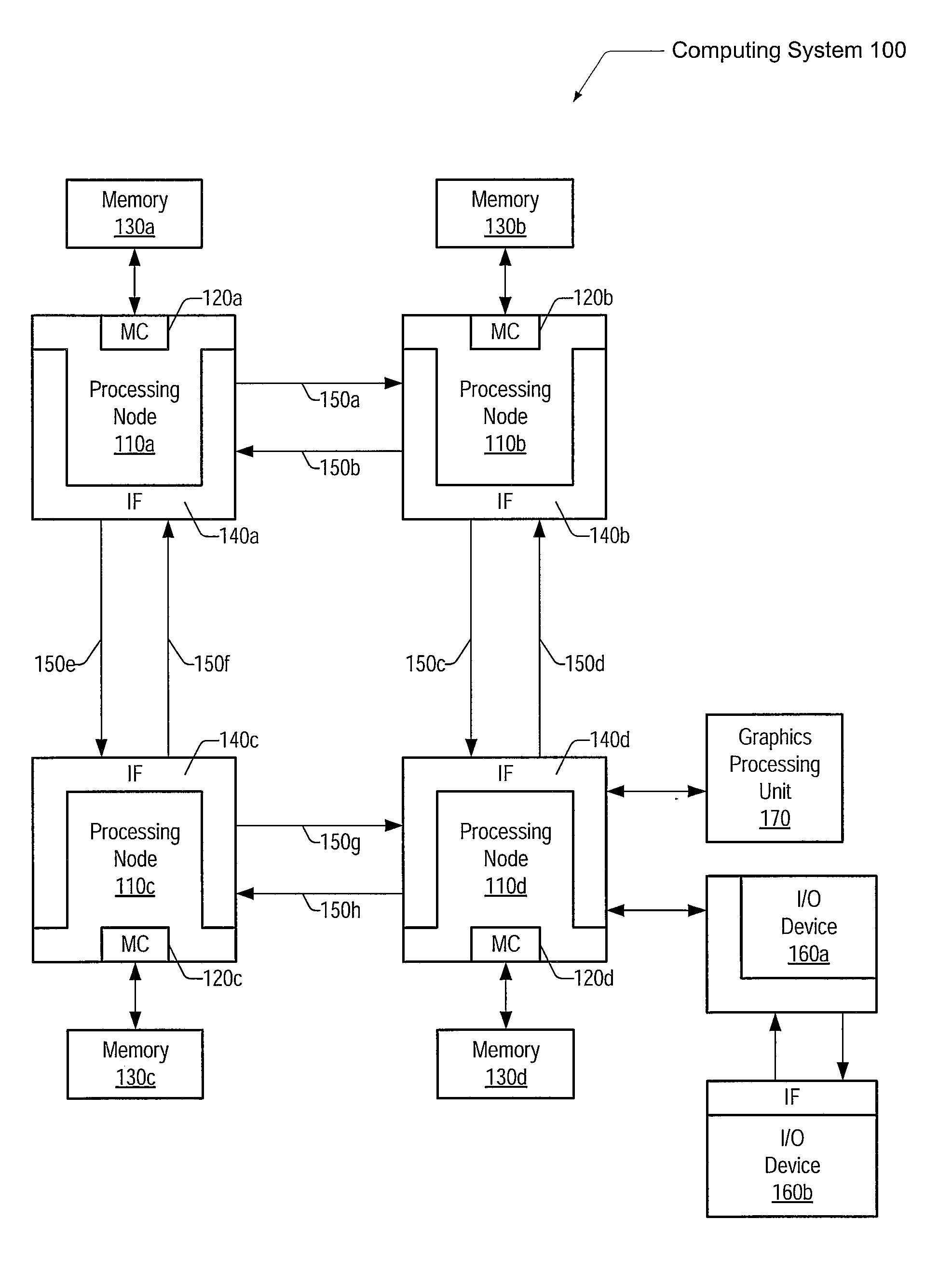

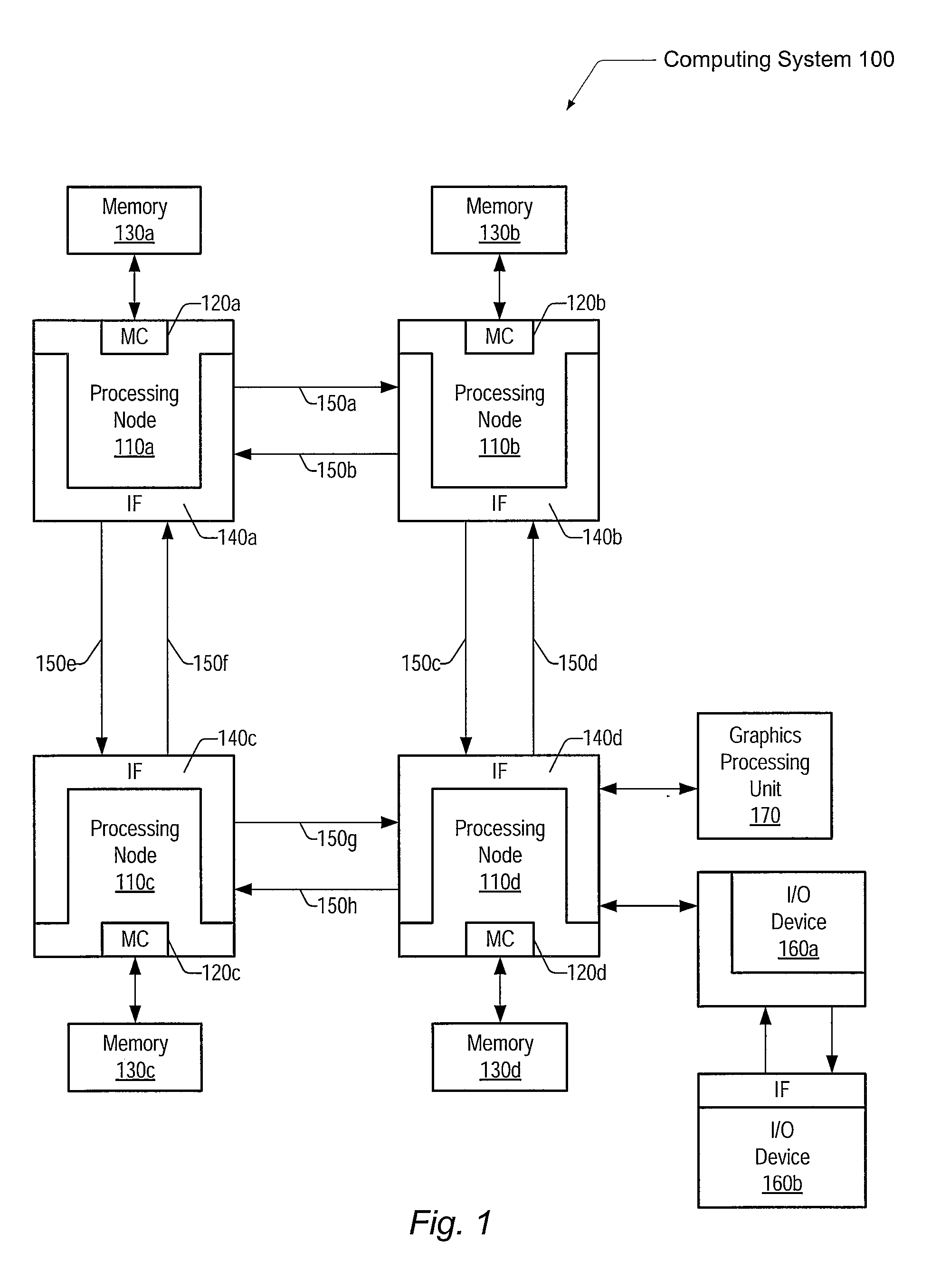

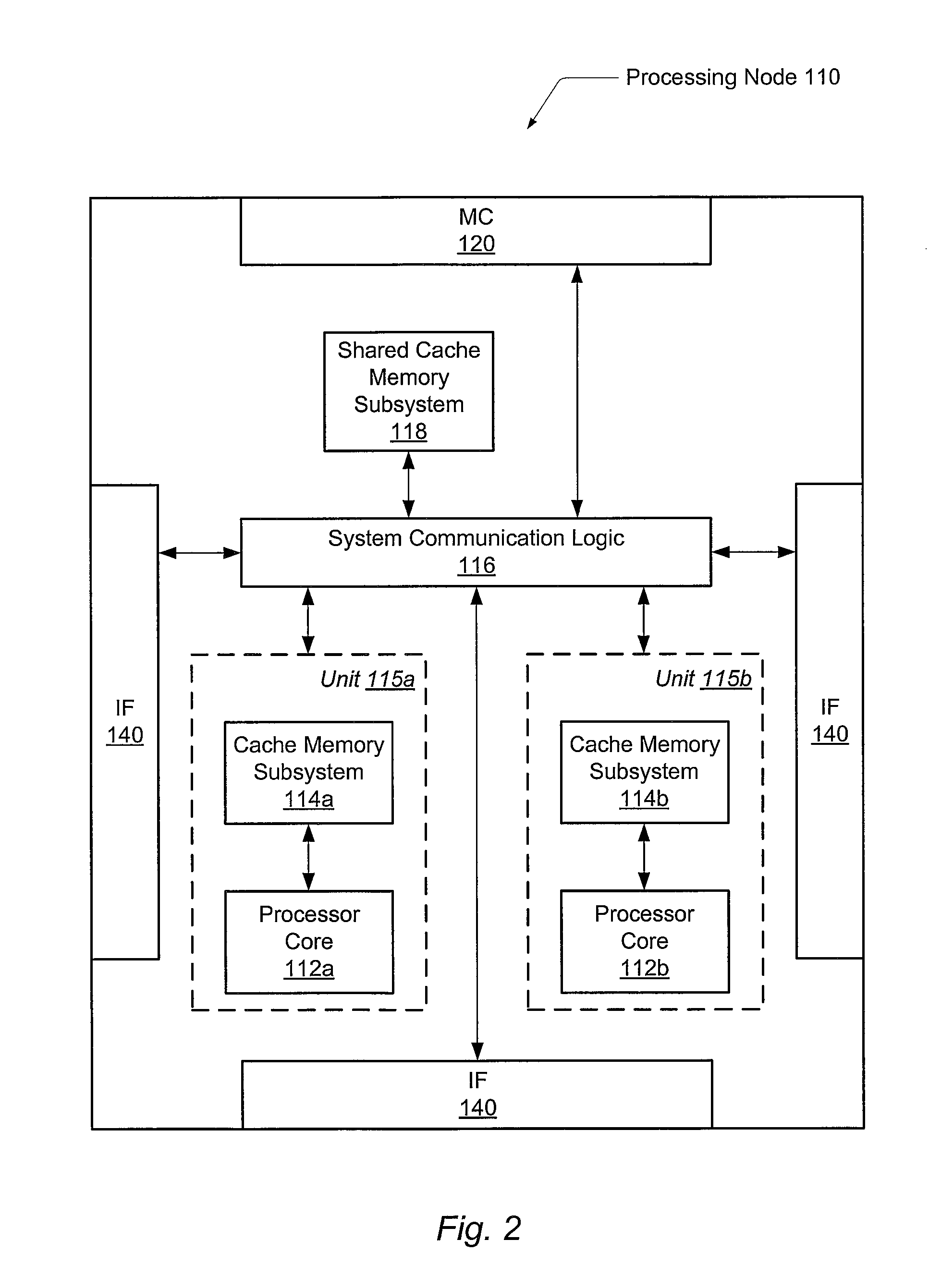

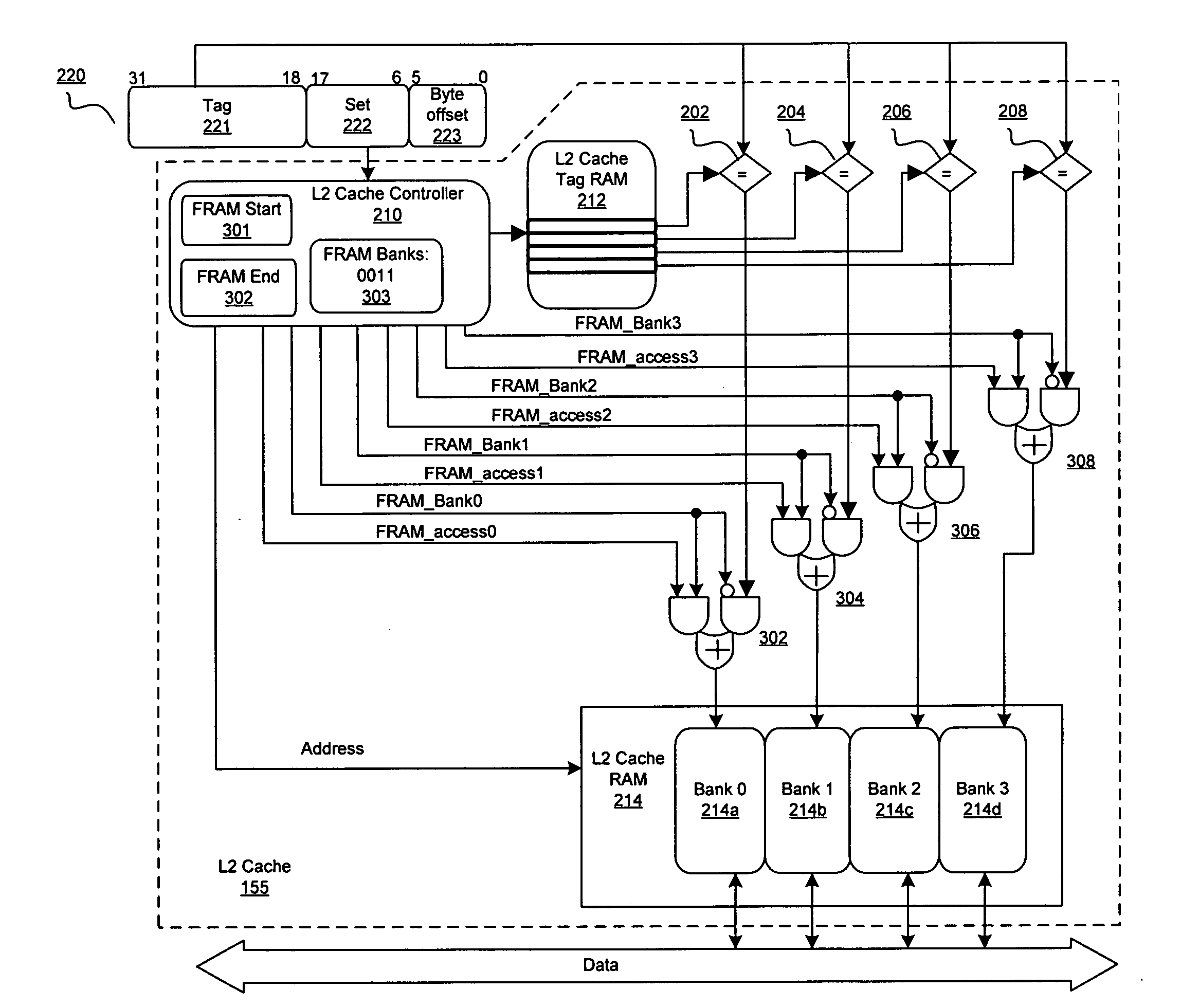

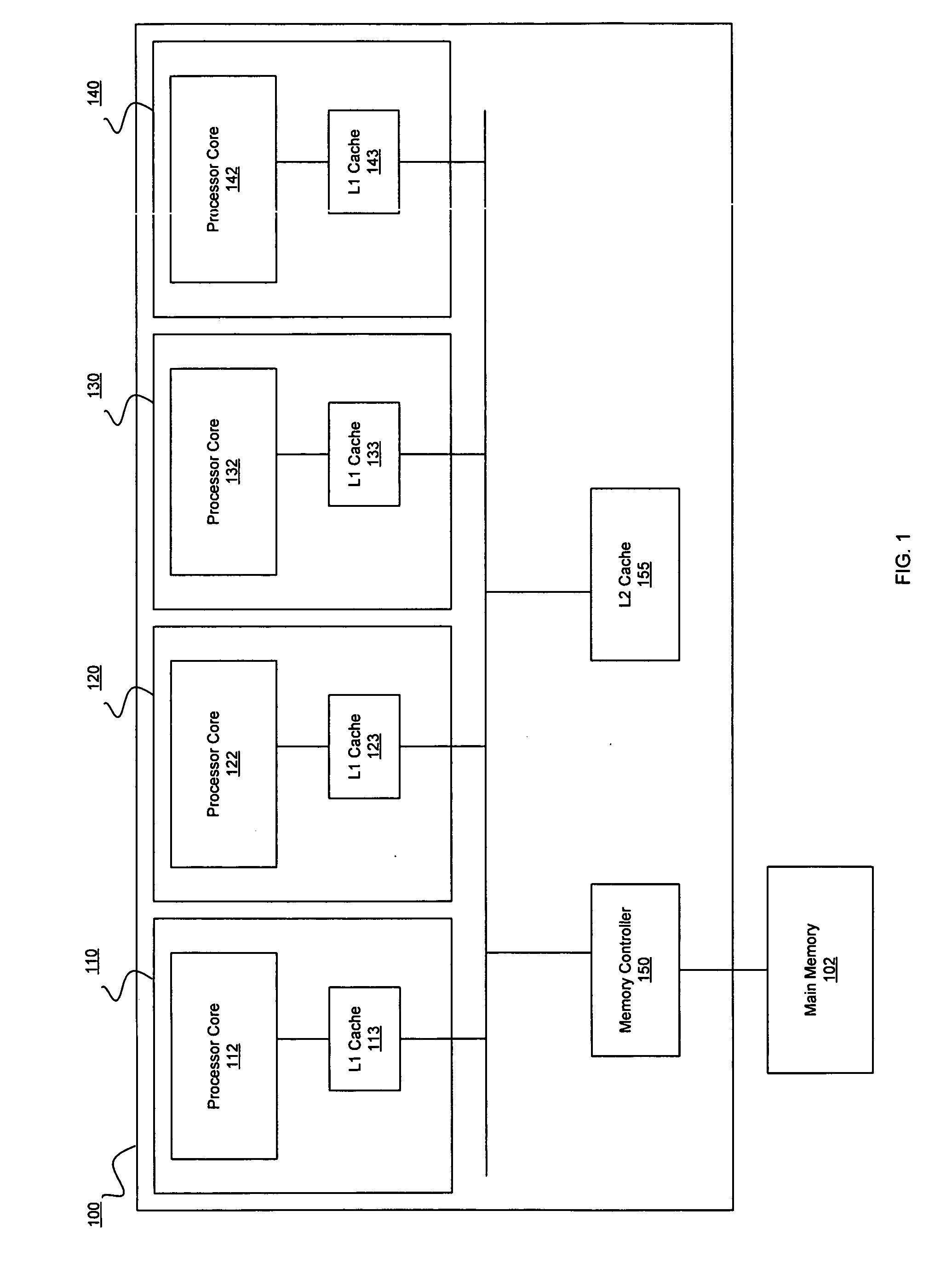

A system and method for data allocation in a shared cache memory of a computing system are contemplated. Each cache way of a shared set-associative cache is accessible to multiple sources, such as one or more processor cores, a graphics processing unit (GPU), an input/output (I/O) device, or multiple different software threads. A shared cache controller enables or disables access separately to each of the cache ways based upon the corresponding source of a received memory request. One or more configuration and status registers (CSRs) store encoded values used to alter accessibility to each of the shared cache ways. The control of the accessibility of the shared cache ways via altering stored values in the CSRs may be used to create a pseudo-RAM structure within the shared cache and to progressively reduce the size of the shared cache during a power-down sequence while the shared cache continues operation.

Owner:ADVANCED MICRO DEVICES INC
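Per-source way enabling of the kind described above can be modelled with one bitmask per requester. The sketch below is illustrative only: the class `SharedCacheWays`, its method names, and the use of a plain dictionary to stand in for the CSRs are assumptions, not details from the patent.

```python
class SharedCacheWays:
    def __init__(self, num_ways):
        self.num_ways = num_ways
        self.csr = {}  # hypothetical CSR model: source id -> bitmask of enabled ways

    def set_mask(self, source, mask):
        # Keep only bits that correspond to real ways.
        self.csr[source] = mask & ((1 << self.num_ways) - 1)

    def allowed_ways(self, source):
        """Ways the given source may access; unknown sources get none."""
        mask = self.csr.get(source, 0)
        return [w for w in range(self.num_ways) if (mask >> w) & 1]

    def disable_way(self, way):
        # Clear one way for every source, e.g. as one step of a
        # progressive power-down sequence.
        for source in self.csr:
            self.csr[source] &= ~(1 << way)
```

Calling `disable_way` repeatedly shrinks the usable cache one way at a time while the remaining ways stay in service, and reserving a way for a single source approximates the pseudo-RAM use the abstract mentions.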

Method and system for on-chip configurable data ram for fast memory and pseudo associative caches

Inactive | US20060277365A1 | Memory architecture accessing/allocation | Energy efficient ICT | Memory address | Memory bank

Aspects of a method and system for an on-chip configurable data RAM for fast memory and pseudo associative caches are provided. Memory banks of configurable data RAM integrated within a chip may be configured to operate as fast on-chip memory or on-chip level 2 cache memory. The set associativity of the on-chip level 2 cache memory may be the same after configuring the memory banks as prior to the configuring. The configuring may occur during initialization of the memory banks, and may adjust the amount of the on-chip level 2 cache. The memory banks configured to operate as on-chip level 2 cache memory or as fast on-chip memory may be dynamically enabled by a memory address.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

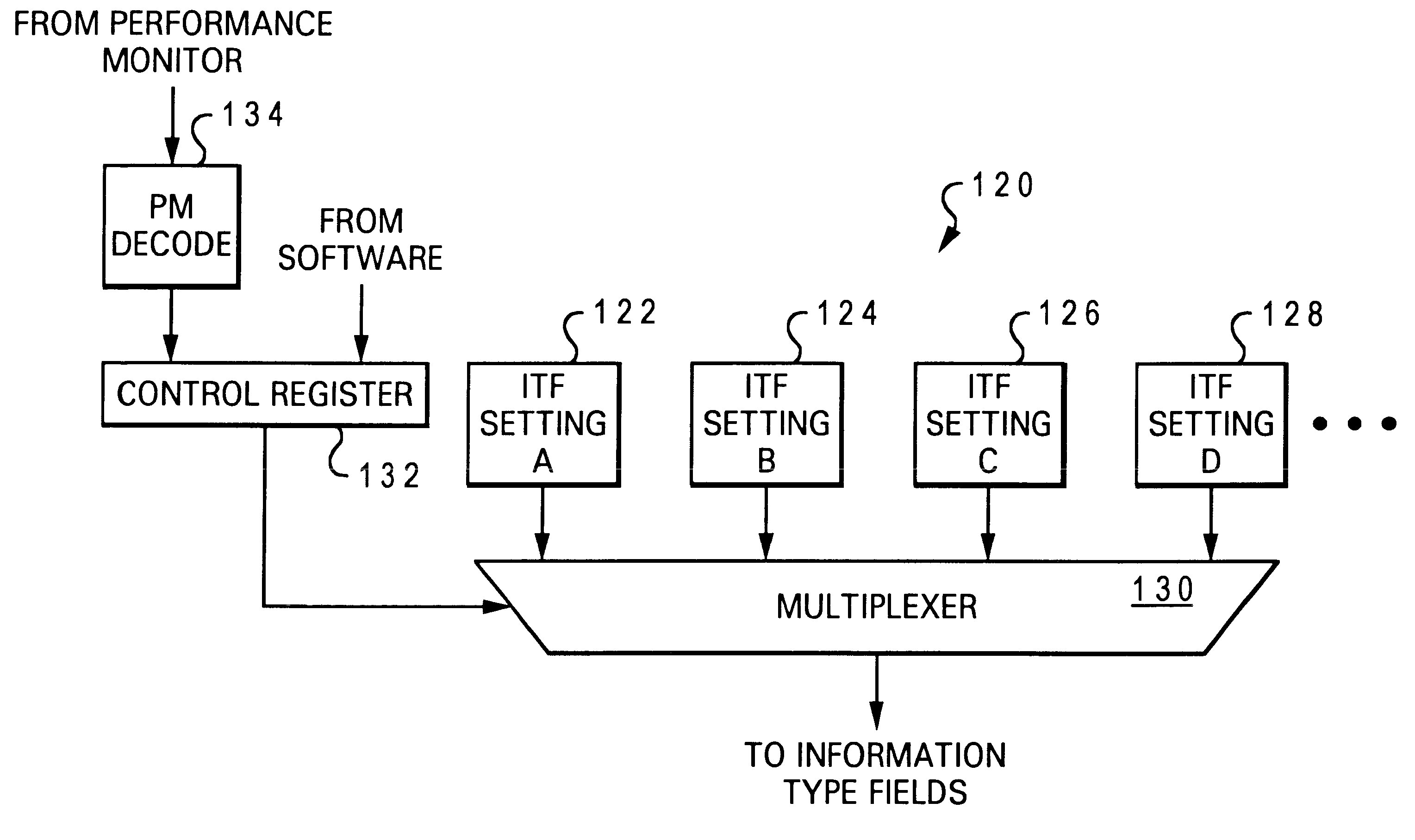

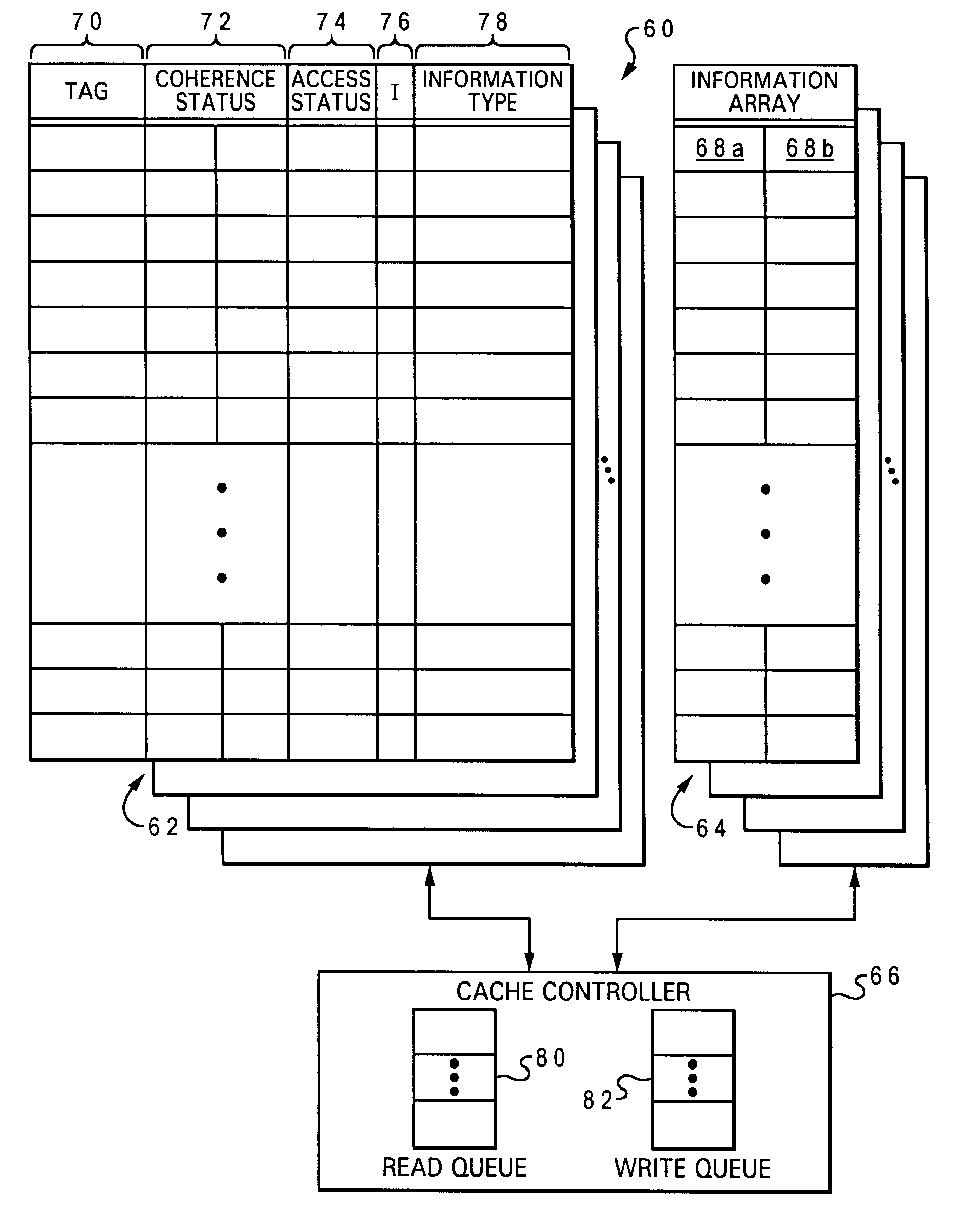

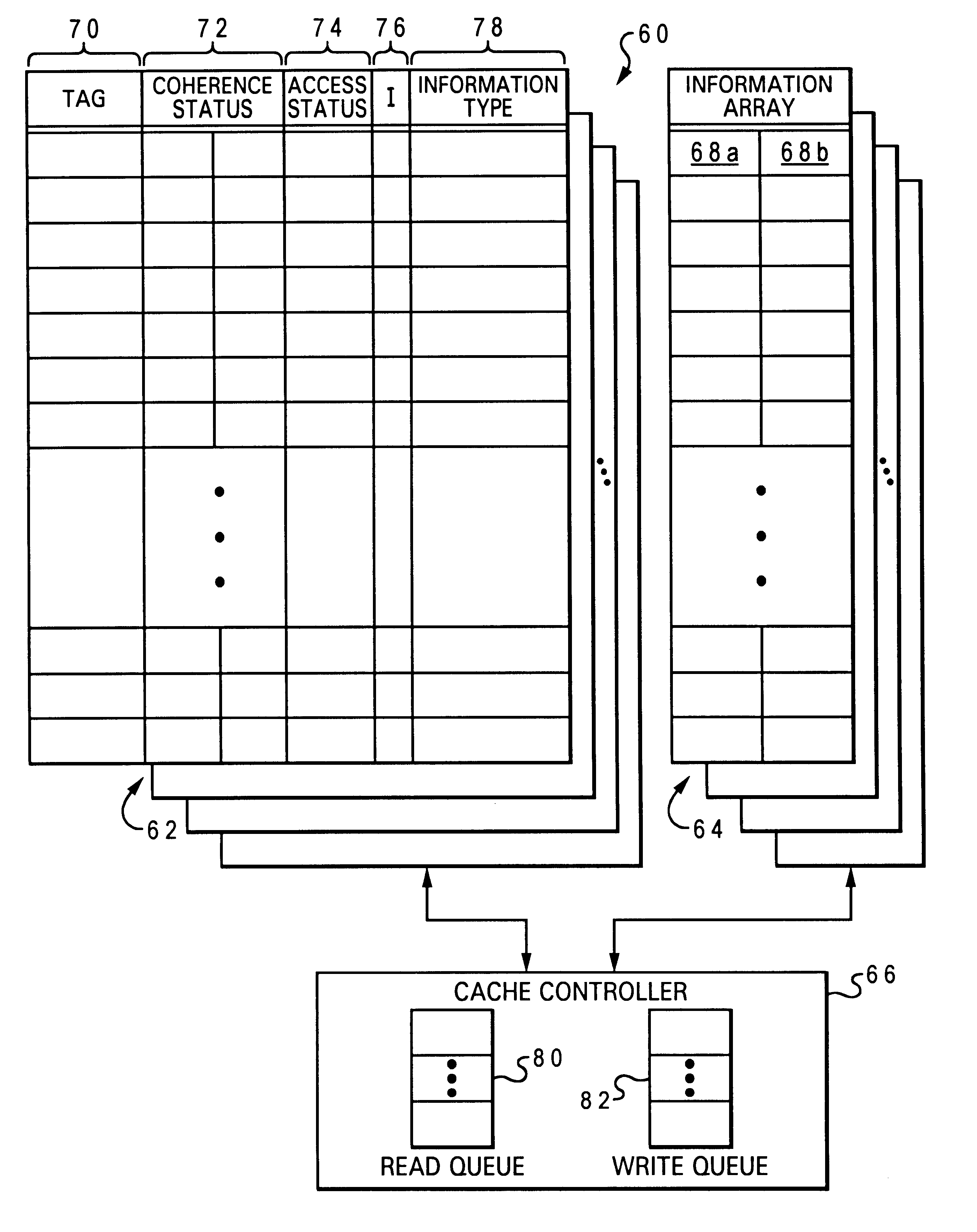

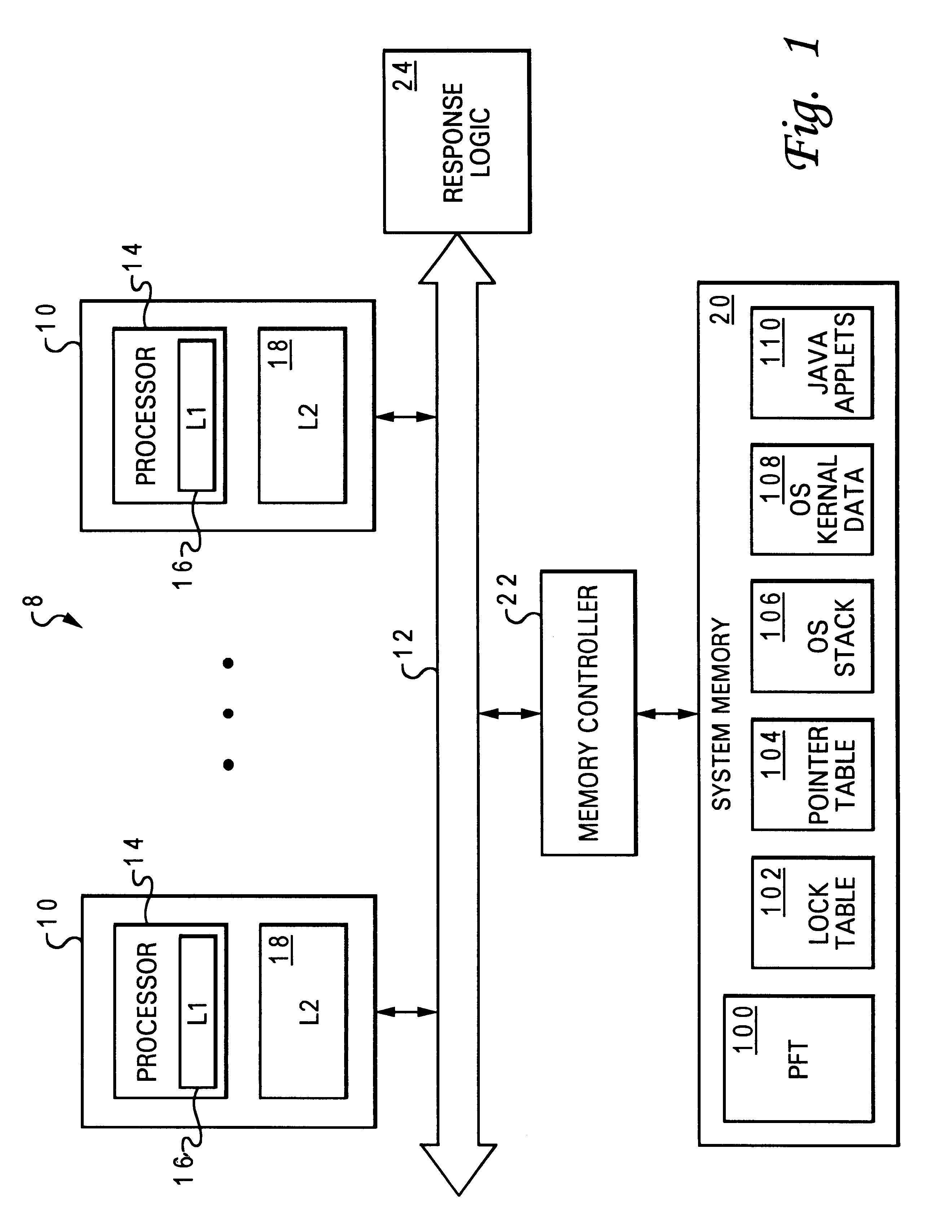

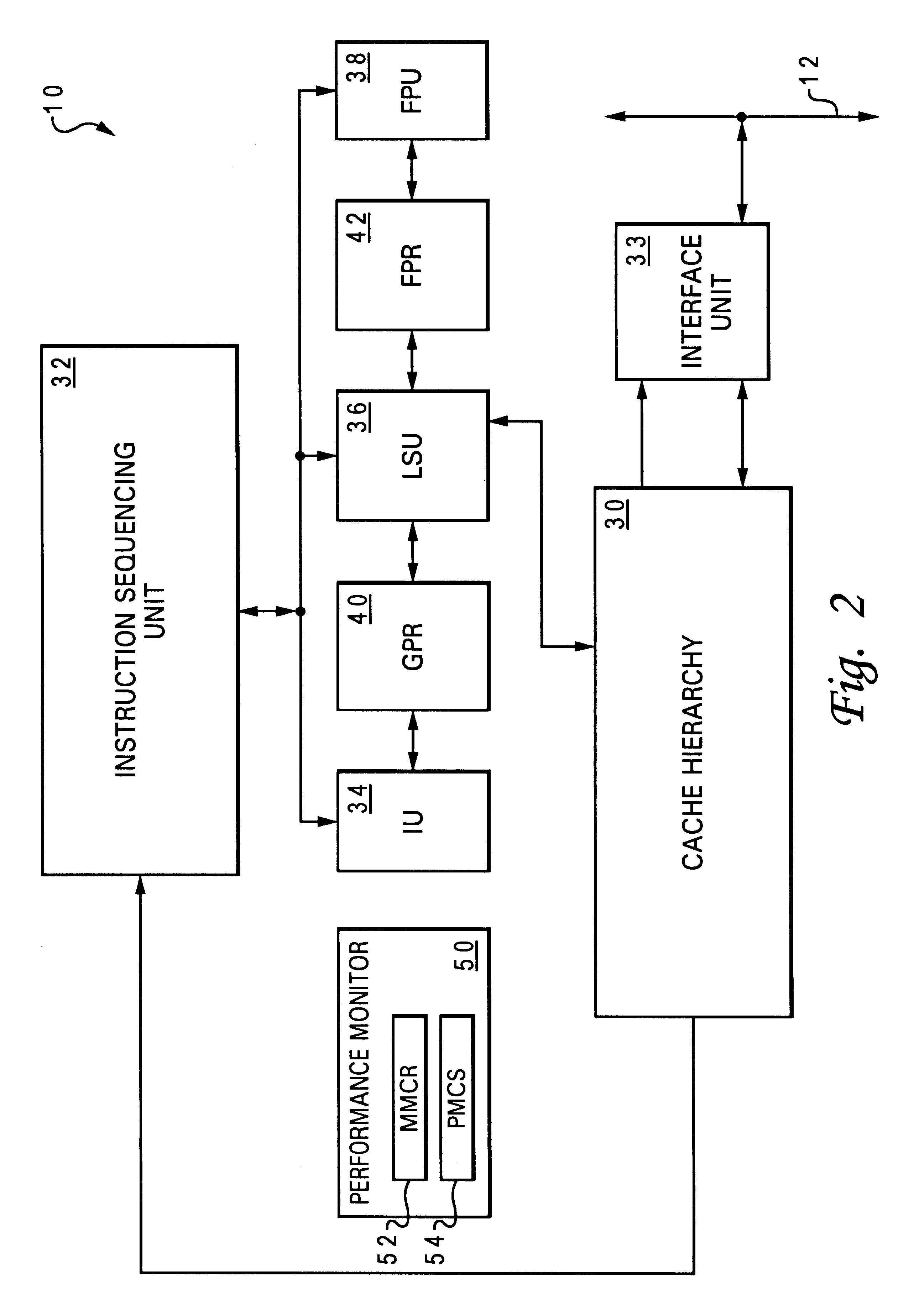

Method of cache management to dynamically update information-type dependent cache policies

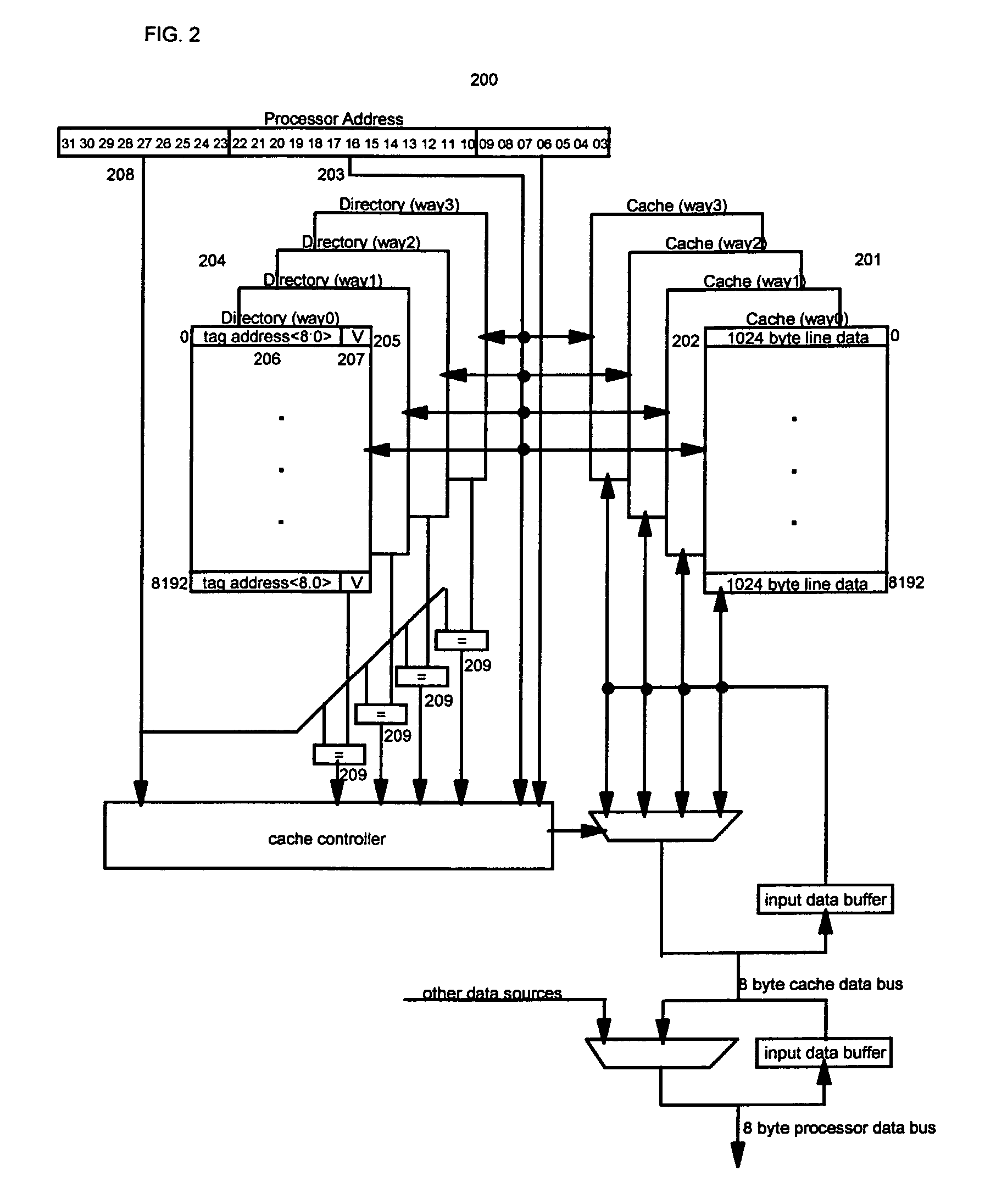

A set associative cache includes a cache controller, a directory, and an array including at least one congruence class containing a plurality of sets. The plurality of sets are partitioned into multiple groups according to which of a plurality of information types each set can store. The sets are partitioned so that at least two of the groups include the same set and at least one of the sets can store fewer than all of the information types. To optimize cache operation, the cache controller dynamically modifies a cache policy of a first group while retaining a cache policy of a second group, thus permitting the operation of the cache to be individually optimized for different information types. The dynamic modification of cache policy can be performed in response to either a hardware-generated or software-generated input.

Owner:IBM CORP

Fabric router with flit caching

Inactive | US20050018609A1 | Overcome limitations | Error prevention | Transmission systems | Array data structure | Parallel computing

In a fabric router, flits are stored on chip in a first set of rapidly accessible flit buffers, and overflow from the first set of flit buffers is stored in a second set of off-chip flit buffers that are accessed more slowly than the first set. The flit buffers may include a buffer pool accessed through a pointer array or a set associative cache. Flow control between network nodes stops the arrival of new flits while transferring flits between the first set of buffers and the second set of buffers.

Owner:SOAPSTONE NETWORKS

Scalable indexing

Active | US20100332846A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Traffic capacity | Translation table

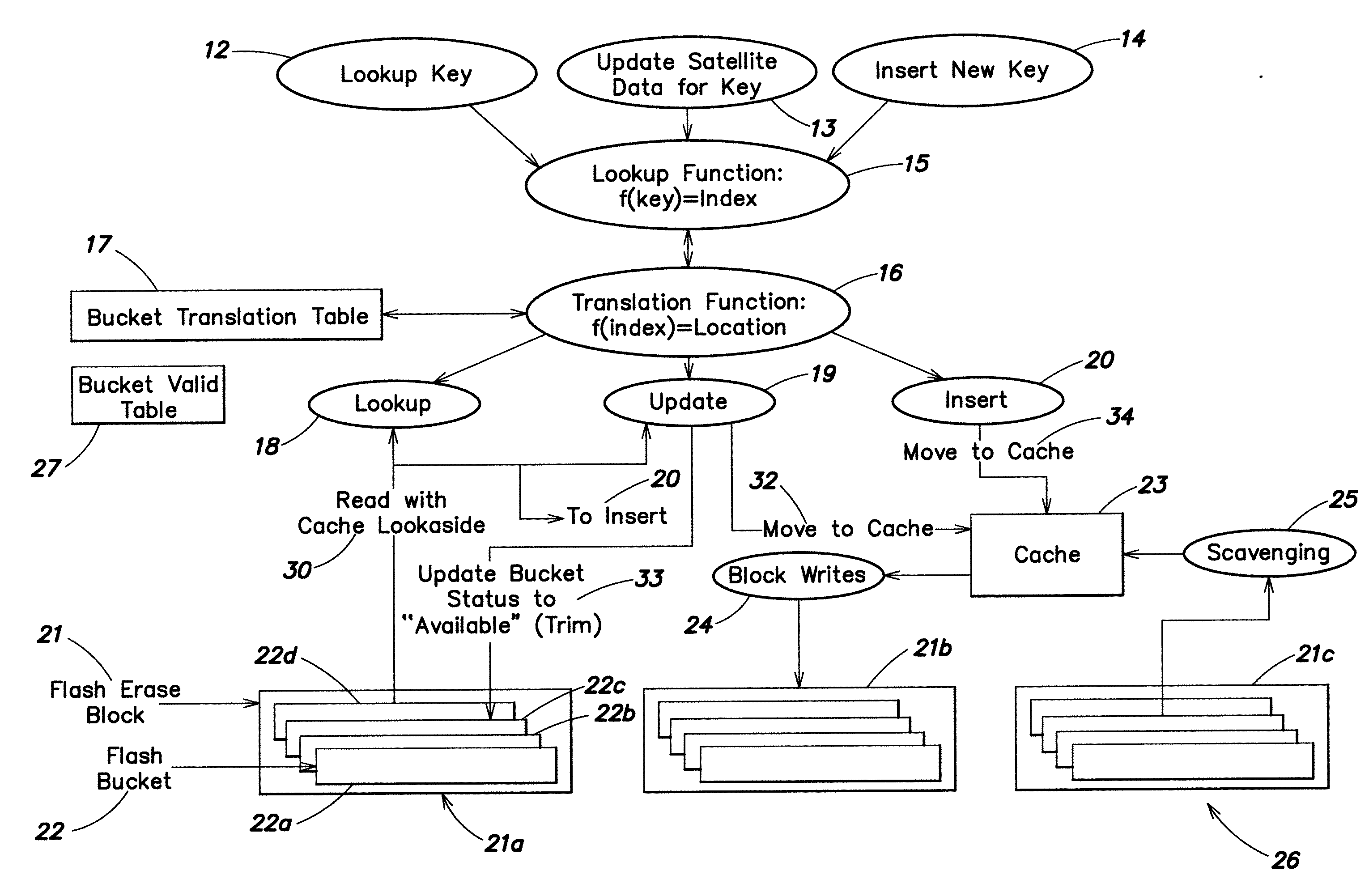

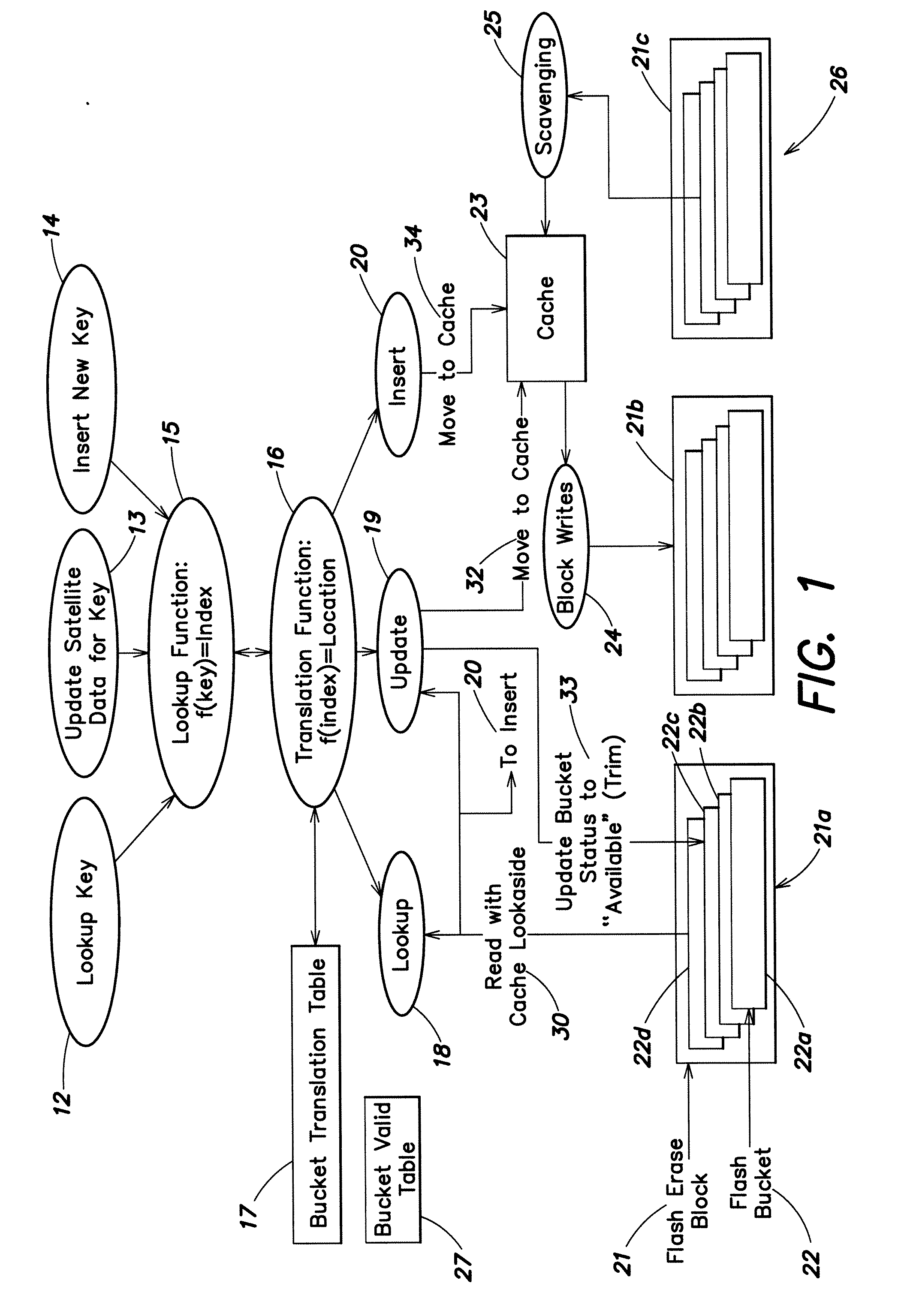

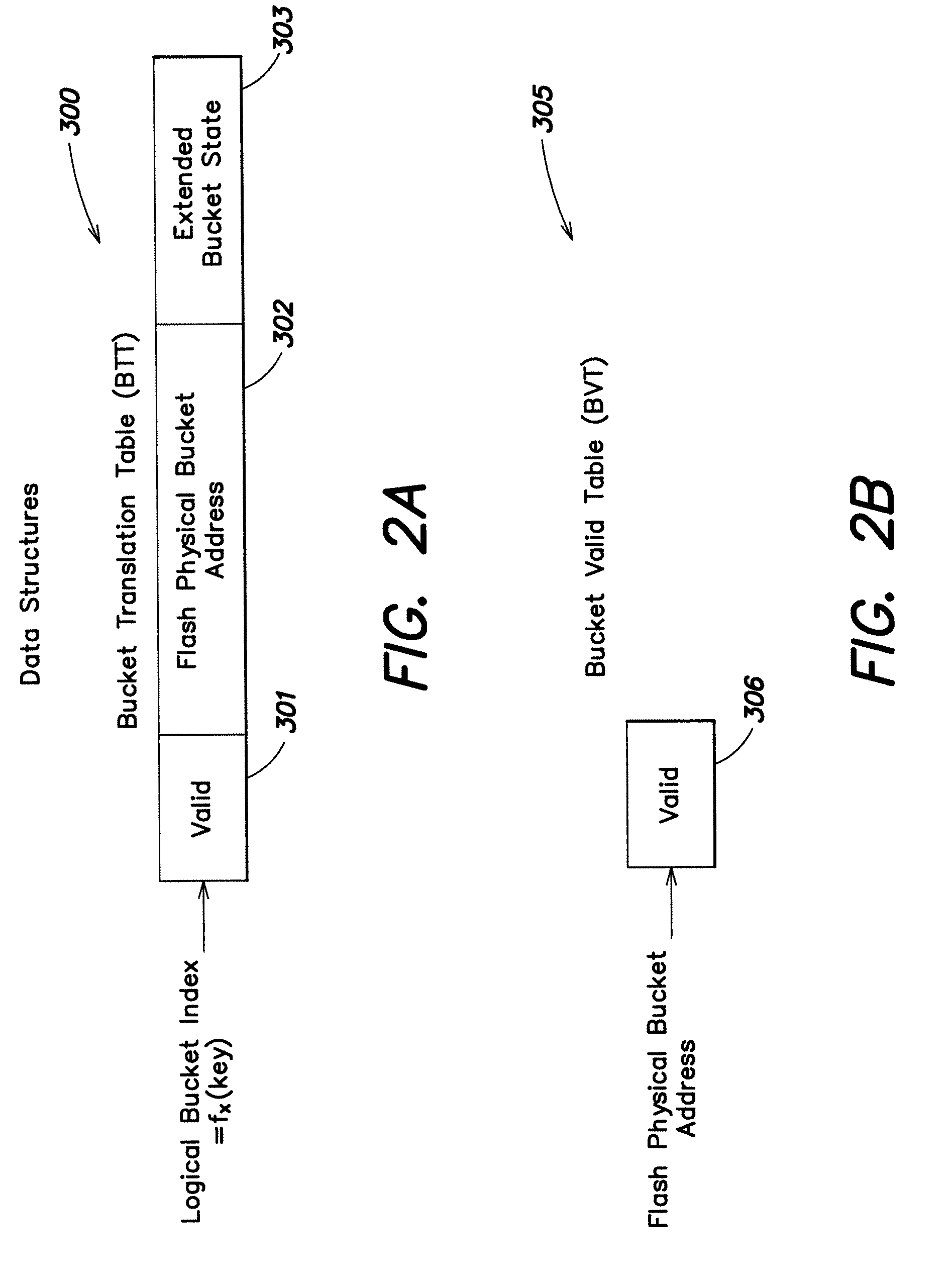

Method and apparatus for constructing an index that scales to a large number of records and provides a high transaction rate. New data structures and methods are provided to ensure that an indexing algorithm performs in a way that is natural (efficient) to the algorithm, while a non-uniform access memory device sees I/O (input/output) traffic that is efficient for the memory device. One data structure, a translation table, is created that maps logical buckets as viewed by the indexing algorithm to physical buckets on the memory device. This mapping is such that write performance to non-uniform access SSD and flash devices is enhanced. Another data structure, an associative cache, is used to collect buckets and write them out sequentially to the memory device as large sequential writes. Methods are used to populate the cache with buckets (of records) that are required by the indexing algorithm. Additional buckets may be read from the memory device to cache during a demand read, or by a scavenging process, to facilitate the generation of free erase blocks.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

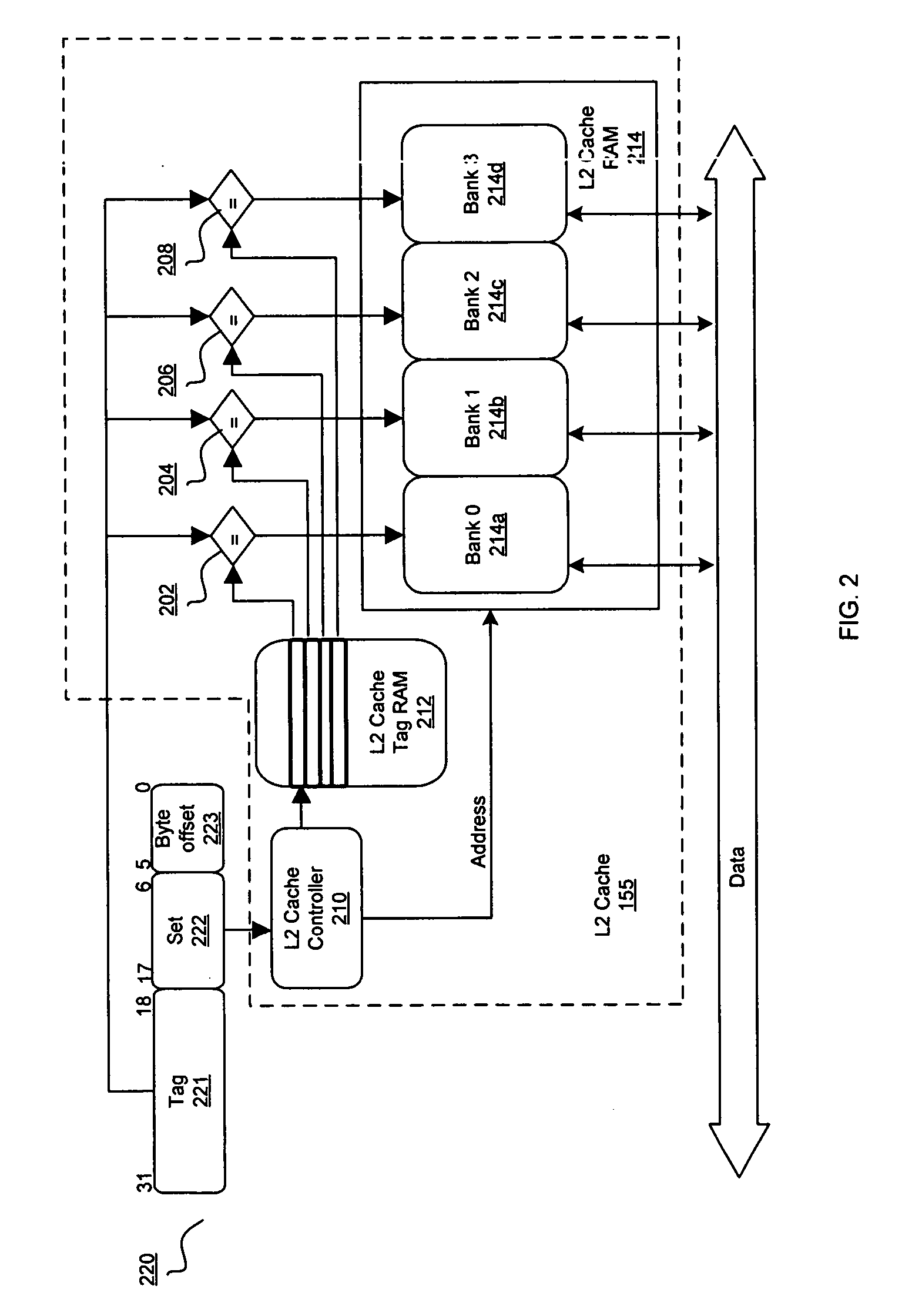

N-way set-associative external cache with standard DDR memory devices

Inactive | US6912628B2 | Increasing snoop bandwidth | High bandwidth | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Double data rate | Burst transmission

A method, cache system, and cache controller are provided. A two-way and n-way cache organization scheme are presented as at least two embodiments of a set-associative external cache that utilizes standard burst memory devices such as DDR (double data rate) memory devices. The set-associative cache organization scheme is designed to fully utilize burst efficiencies during snoop and invalidation operations. Cache lines are interleaved in such a way that a first burst transfer from the cache to the cache controller brings in a plurality of tags.

Owner:ORACLE INT CORP

Sectored least-recently-used cache replacement

Inactive | US6823427B1 | Memory addressing/allocation/relocation | Least recently frequently used | Operating system

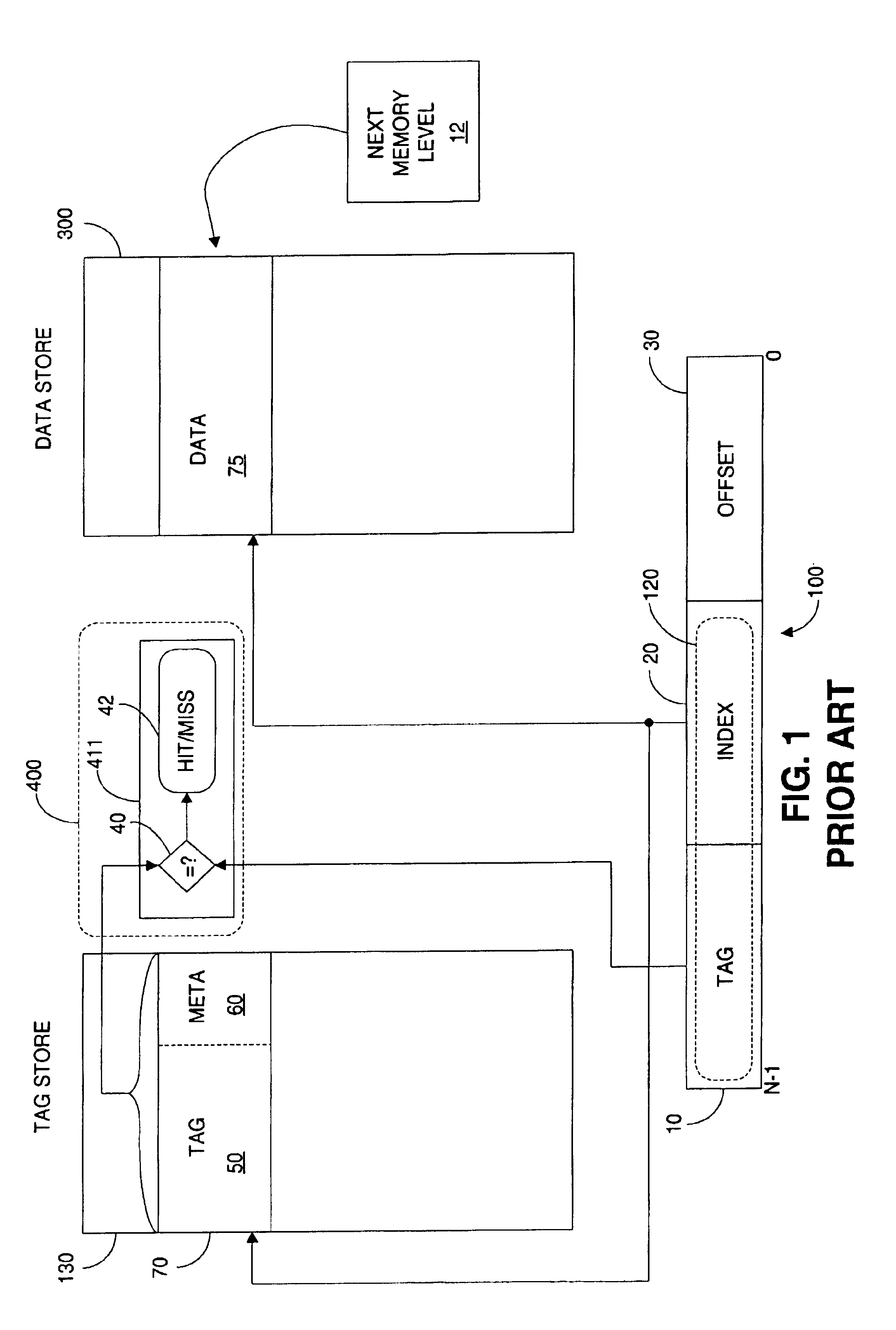

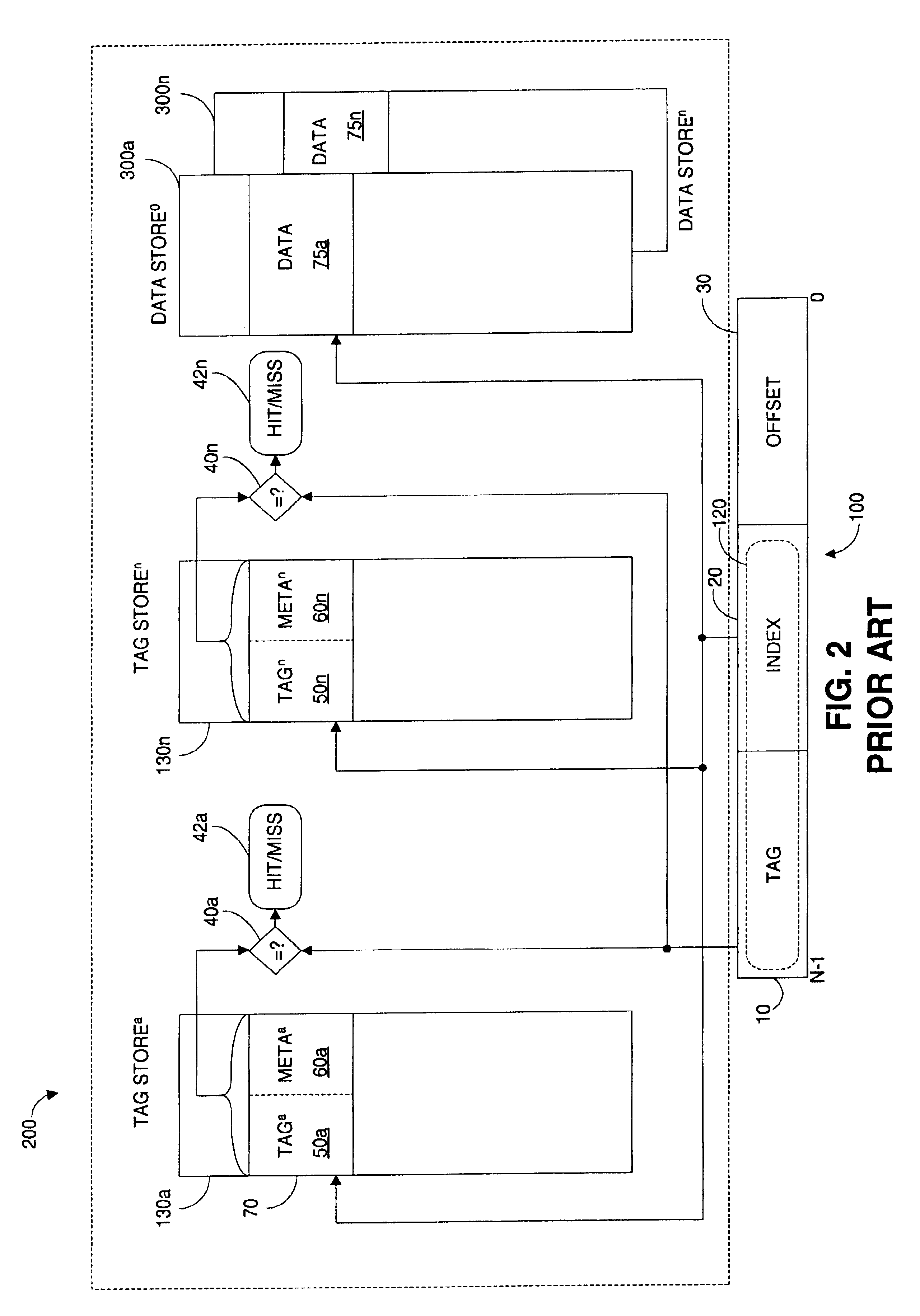

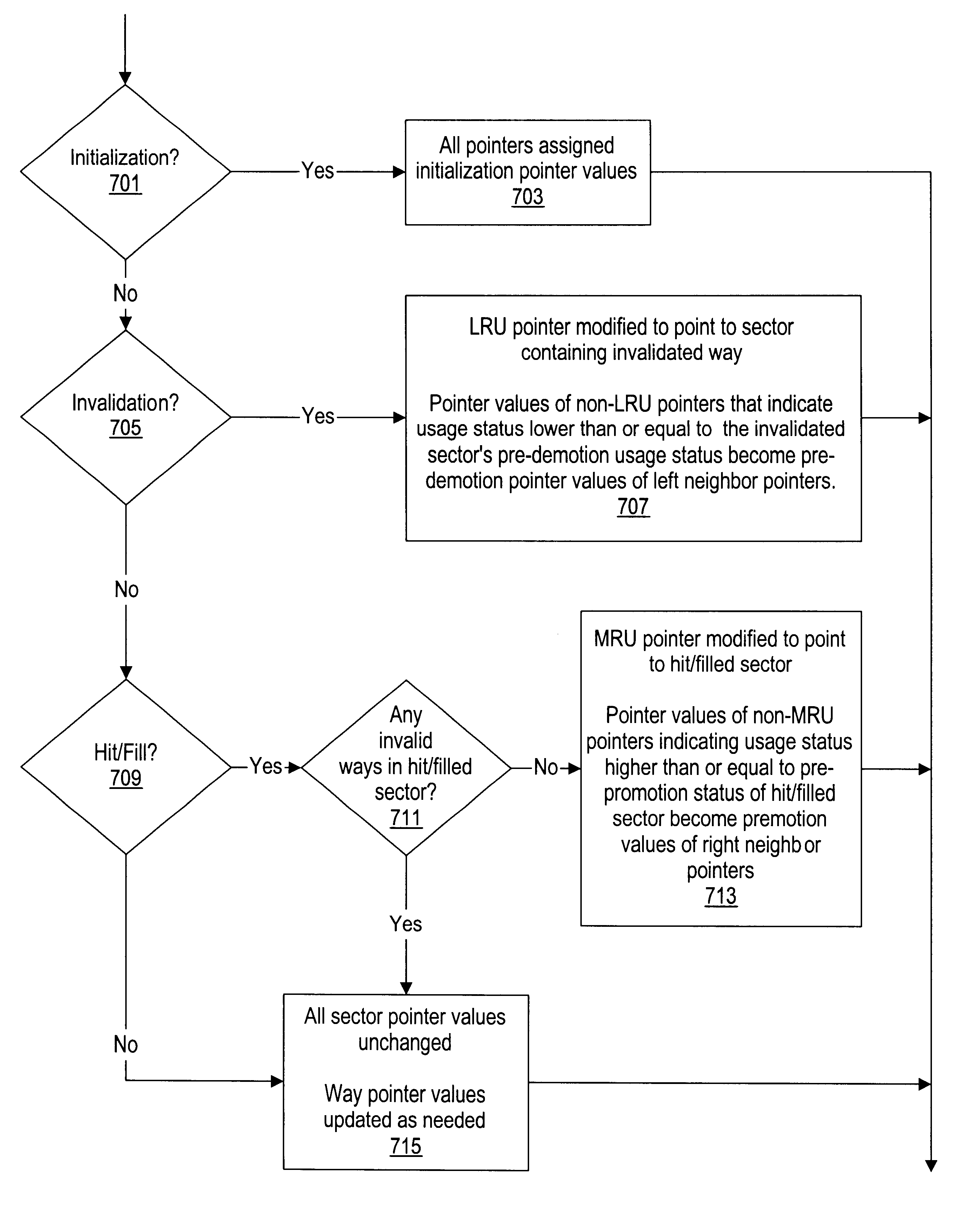

Various methods and systems for implementing a sectored least recently used (LRU) cache replacement algorithm are disclosed. Each set in an N-way set-associative cache is partitioned into several sectors that each include two or more of the N ways. Usage status indicators such as pointers show the relative usage status of the sectors in an associated set. For example, an LRU pointer may point to the LRU sector, an MRU pointer may point to the MRU sector, and so on. When a replacement is performed, a way within the LRU sector identified by the LRU pointer is filled.

Owner:ADVANCED MICRO DEVICES INC
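A rough sketch of sectored LRU for a single set follows. Usage is tracked per sector rather than per way, and a miss fills a way inside the LRU sector. The class name `SectoredLRUSet` and the round-robin choice of way within the sector are assumptions made for the example; the abstract only says that a way within the LRU sector is filled.

```python
class SectoredLRUSet:
    def __init__(self, ways=8, ways_per_sector=2):
        assert ways_per_sector >= 2 and ways % ways_per_sector == 0
        self.ways_per_sector = ways_per_sector
        self.tags = [None] * ways
        num_sectors = ways // ways_per_sector
        # Sector usage order: LRU sector first, MRU sector last.
        self.sector_order = list(range(num_sectors))
        # Next way to fill inside each sector (round-robin is an assumption).
        self.next_way = [0] * num_sectors

    def _touch(self, way):
        sector = way // self.ways_per_sector
        self.sector_order.remove(sector)
        self.sector_order.append(sector)

    def access(self, tag):
        """Return True on a hit; on a miss, fill a way in the LRU sector."""
        if tag in self.tags:
            self._touch(self.tags.index(tag))
            return True
        sector = self.sector_order[0]
        way = sector * self.ways_per_sector + self.next_way[sector]
        self.next_way[sector] = (self.next_way[sector] + 1) % self.ways_per_sector
        self.tags[way] = tag
        self._touch(way)
        return False
```

Tracking order for sectors instead of individual ways needs less state per set than full LRU, at the cost of a coarser approximation of recency.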

Cache management mechanism to enable information-type dependent cache policies

A set associative cache includes a cache controller, a directory, and an array including at least one congruence class containing a plurality of sets. The plurality of sets are partitioned into multiple groups according to which of a plurality of information types each set can store. The sets are partitioned so that at least two of the groups include the same set and at least one of the sets can store fewer than all of the information types. The cache controller then implements different cache policies for at least two of the plurality of groups, thus permitting the operation of the cache to be individually optimized for different information types.

Owner:IBM CORP

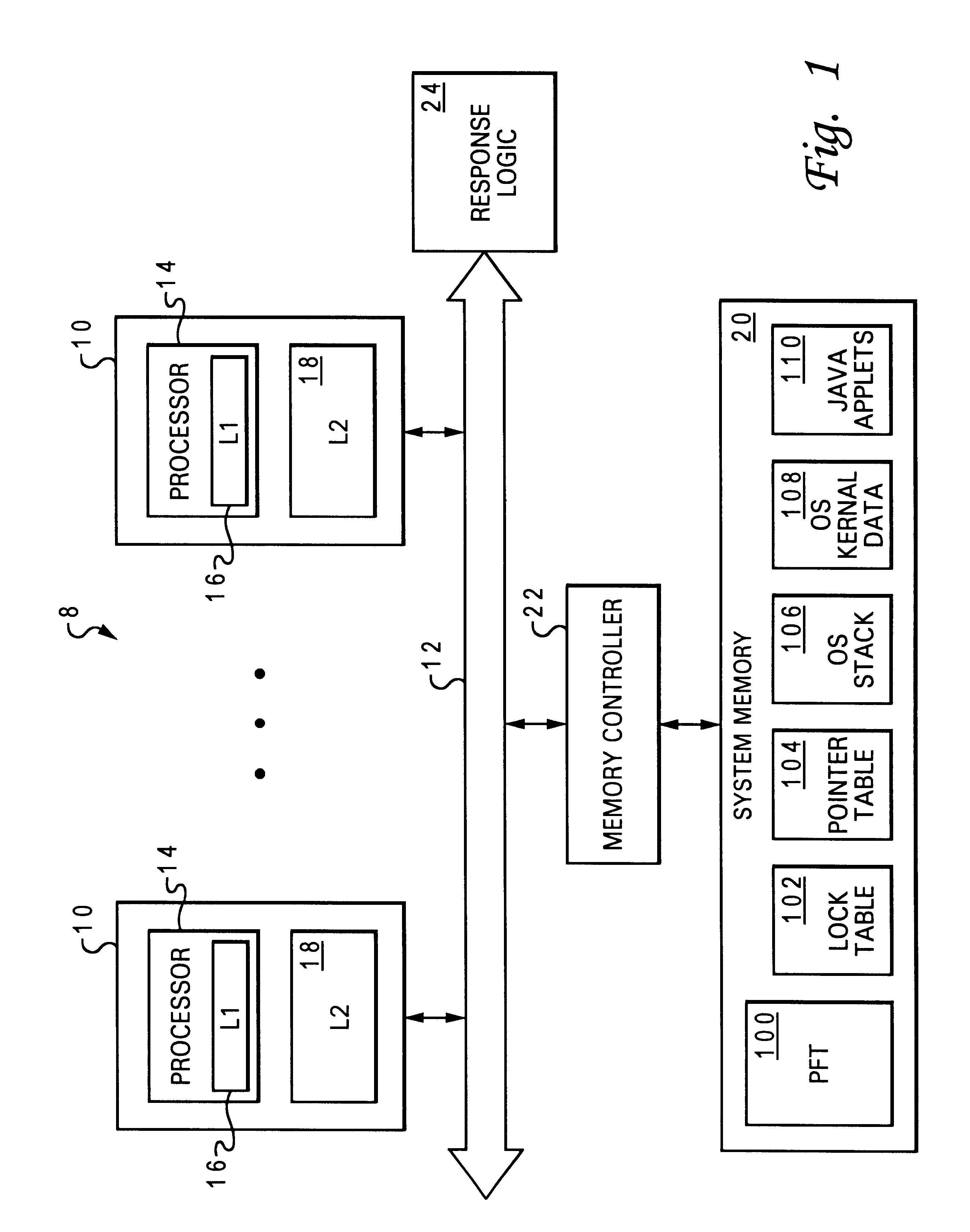

Method of cache management to store information in particular regions of the cache according to information-type

A set associative cache includes a number of congruence classes that each contain a plurality of sets, a directory, and a cache controller. The directory indicates, for each congruence class, which of a plurality of information types each of the plurality of sets can store. At least one set in at least one of the congruence classes is restricted to storing fewer than all of the information types and at least one set can store multiple information types. When the cache receives information to be stored of a particular information type, the cache controller stores the information into one of the plurality of sets indicated by the directory as capable of storing that particular information type. By managing the sets in which information is stored according to information type, an awareness of the characteristics of the various information types can easily be incorporated into the cache's allocation and victim selection policies.

Owner:IBM CORP

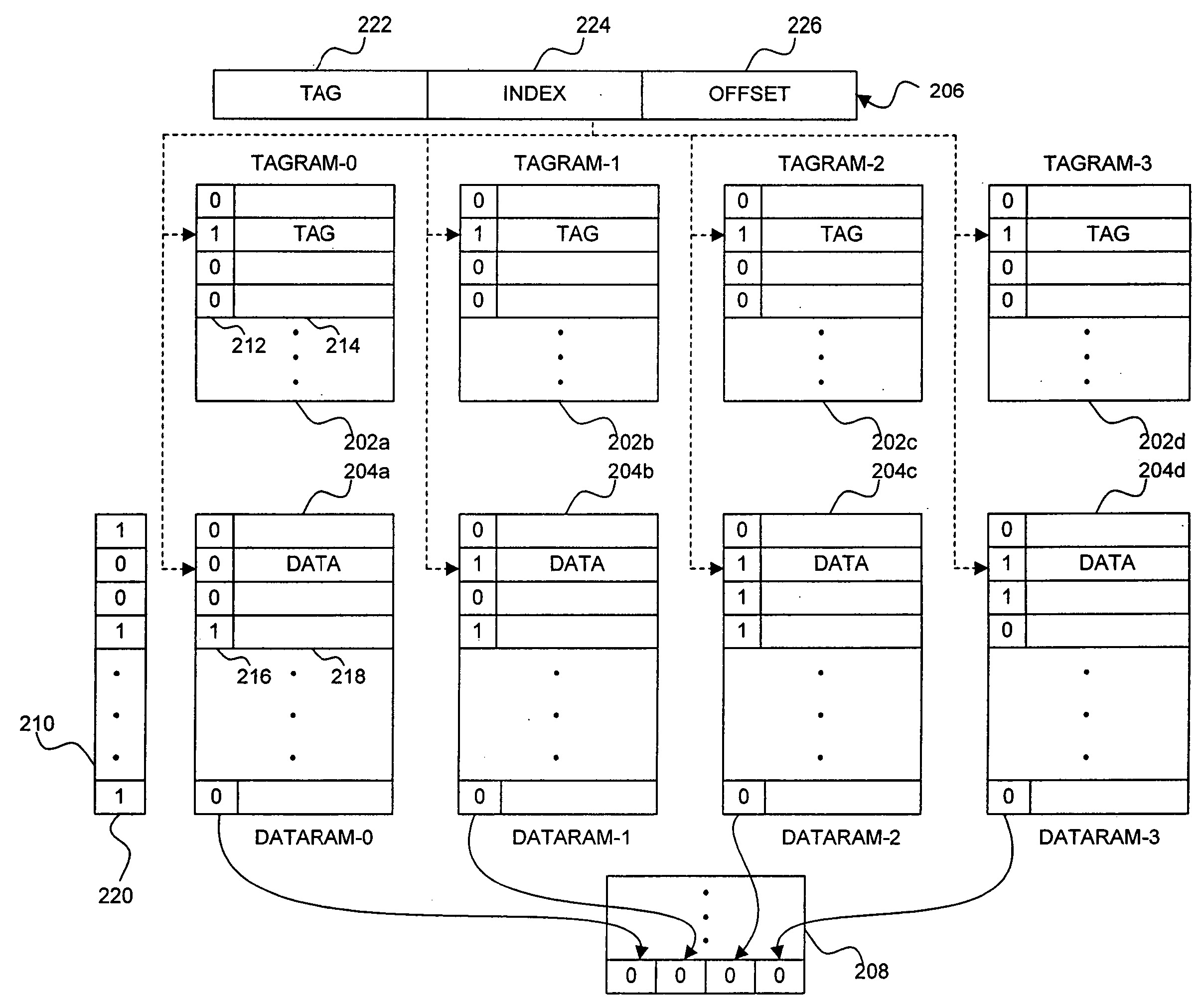

Microprocessor having a power-saving instruction cache way predictor and instruction replacement scheme

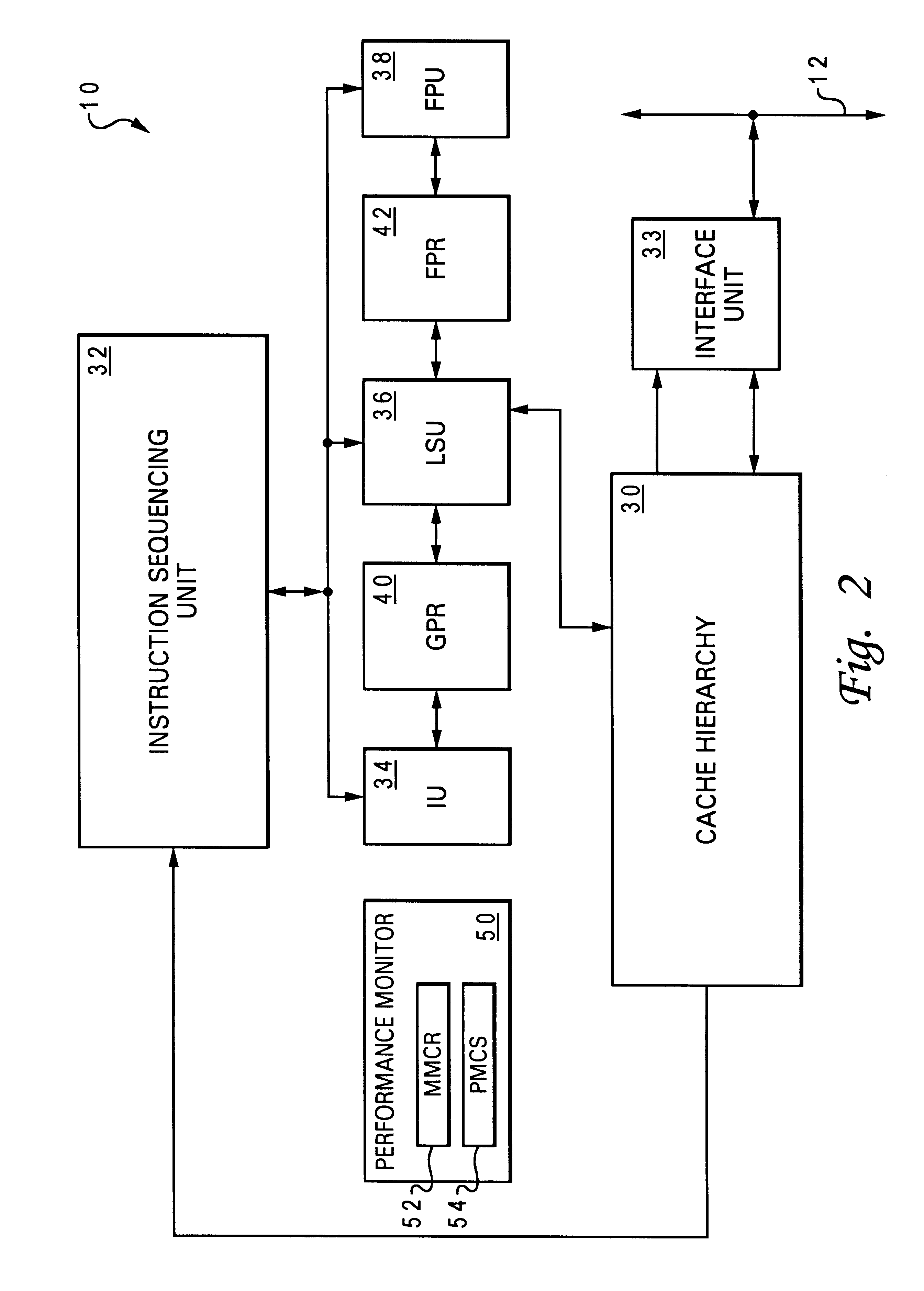

Active | US20070113013A1 | Improve performance | Reduce power consumption | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data field

Microprocessor having a power-saving instruction cache way predictor and instruction replacement scheme. In one embodiment, the processor includes a multi-way set associative cache, a way predictor, a policy counter, and a cache refill circuit. The policy counter provides a signal to the way predictor that determines whether the way predictor operates in a first mode or a second mode. Following a cache miss, the cache refill circuit selects a way of the cache and compares a layer number associated with a dataram field of the way to a way set layer number. The cache refill circuit writes a block of data to the field if the layer number is not equal to the way set layer number. If the layer number is equal to the way set layer number, the cache refill circuit repeats the above steps for additional ways until the block of memory is written to the cache.

Owner:ARM FINANCE OVERSEAS LTD

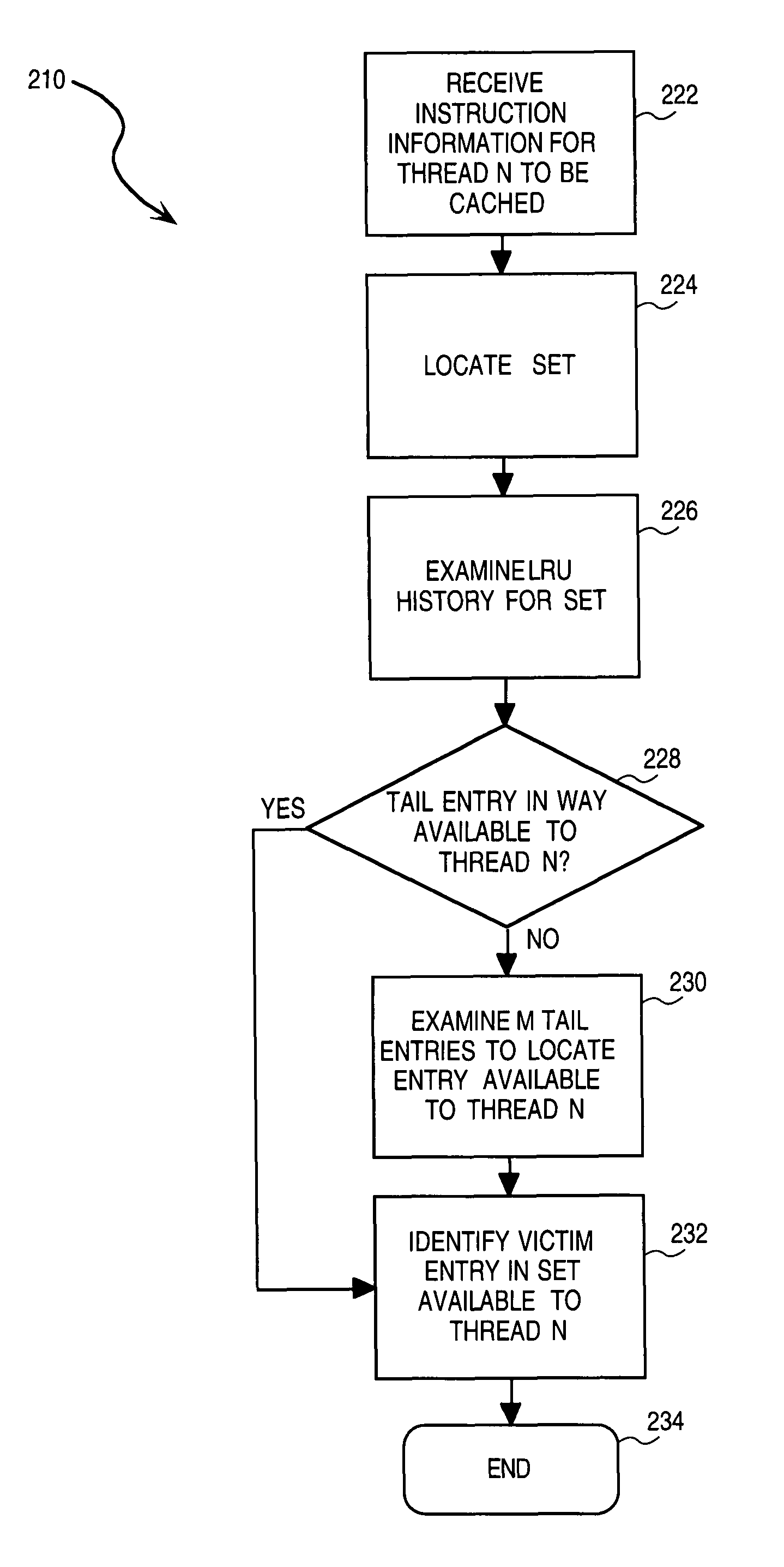

LRU cache replacement for a partitioned set associative cache

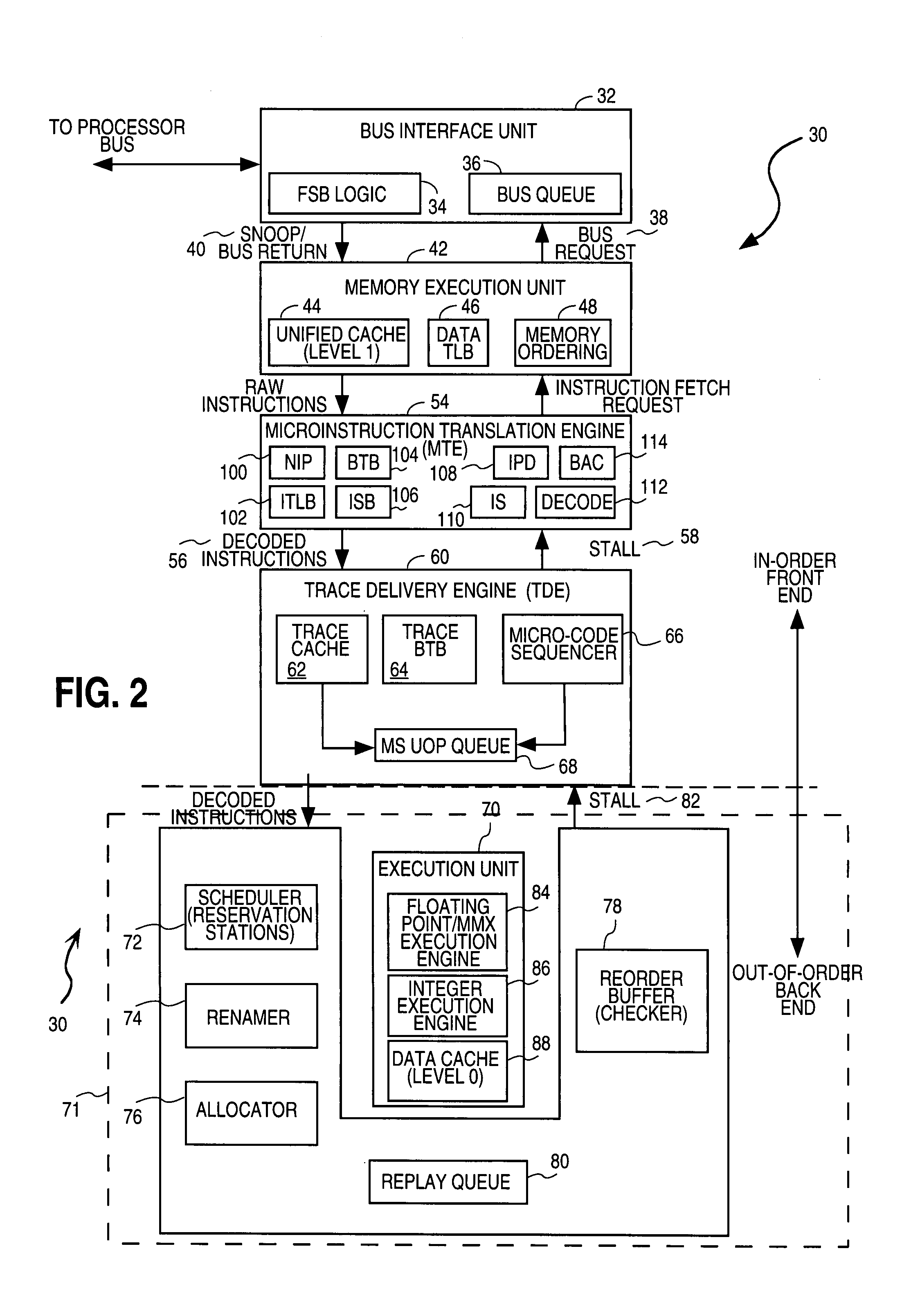

Inactive | US7856633B1 | Efficiently share resource | Resource allocation | Runtime instruction translation | Least recently frequently used | Operating system

A method of partitioning a memory resource, associated with a multi-threaded processor, includes defining the memory resource to include first and second portions that are dedicated to the first and second threads respectively. A third portion of the memory resource is then designated as being shared between the first and second threads. Upon receipt of an information item, (e.g., a microinstruction associated with the first thread and to be stored in the memory resource), a history of Least Recently Used (LRU) portions is examined to identify a location in either the first or the third portion, but not the second portion, as being a least recently used portion. The second portion is excluded from this examination on account of being dedicated to the second thread. The information item is then stored within a location, within either the first or the third portion, identified as having been least recently used.

Owner:INTEL CORP
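The victim selection described above reduces to scanning the LRU history while ignoring the portion dedicated to the other thread. The helper below is a minimal sketch; the function name `pick_victim`, the `"shared"` marker, and the dictionary layout are hypothetical.

```python
def pick_victim(lru_order, owner_of_way, thread):
    """Return the least recently used way that `thread` may replace.

    lru_order    -- ways listed from least to most recently used
    owner_of_way -- maps each way to a thread id or the string "shared"
    thread       -- id of the thread whose item is being stored
    """
    for way in lru_order:
        # Ways dedicated to the other thread are excluded from the examination.
        if owner_of_way[way] in (thread, "shared"):
            return way
    raise ValueError("no way is available to this thread")
```

For example, with way 0 dedicated to thread 0, way 1 to thread 1, and ways 2 and 3 shared, an LRU order of `[1, 2, 0, 3]` yields way 2 for thread 0 even though way 1 is older overall.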

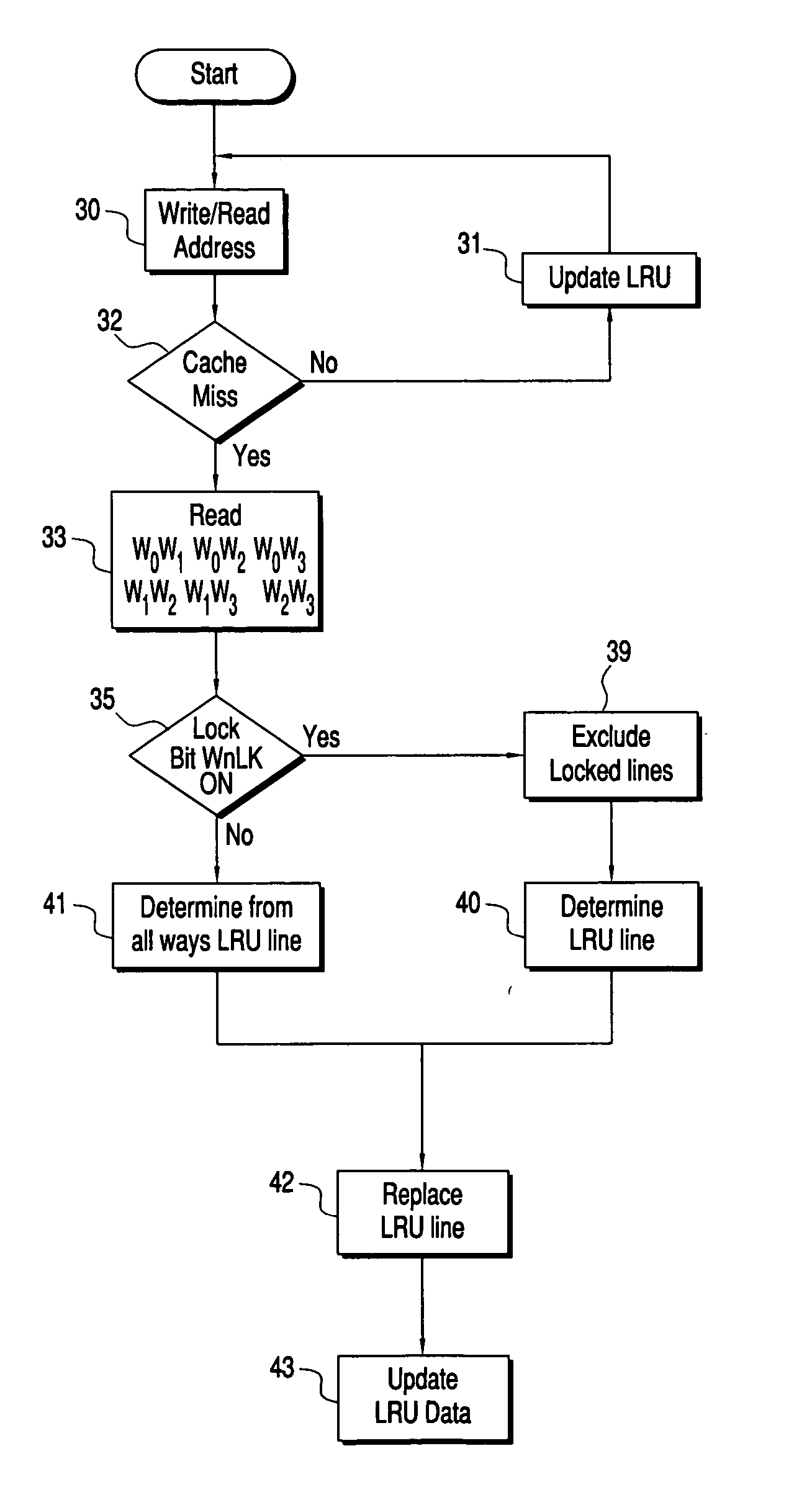

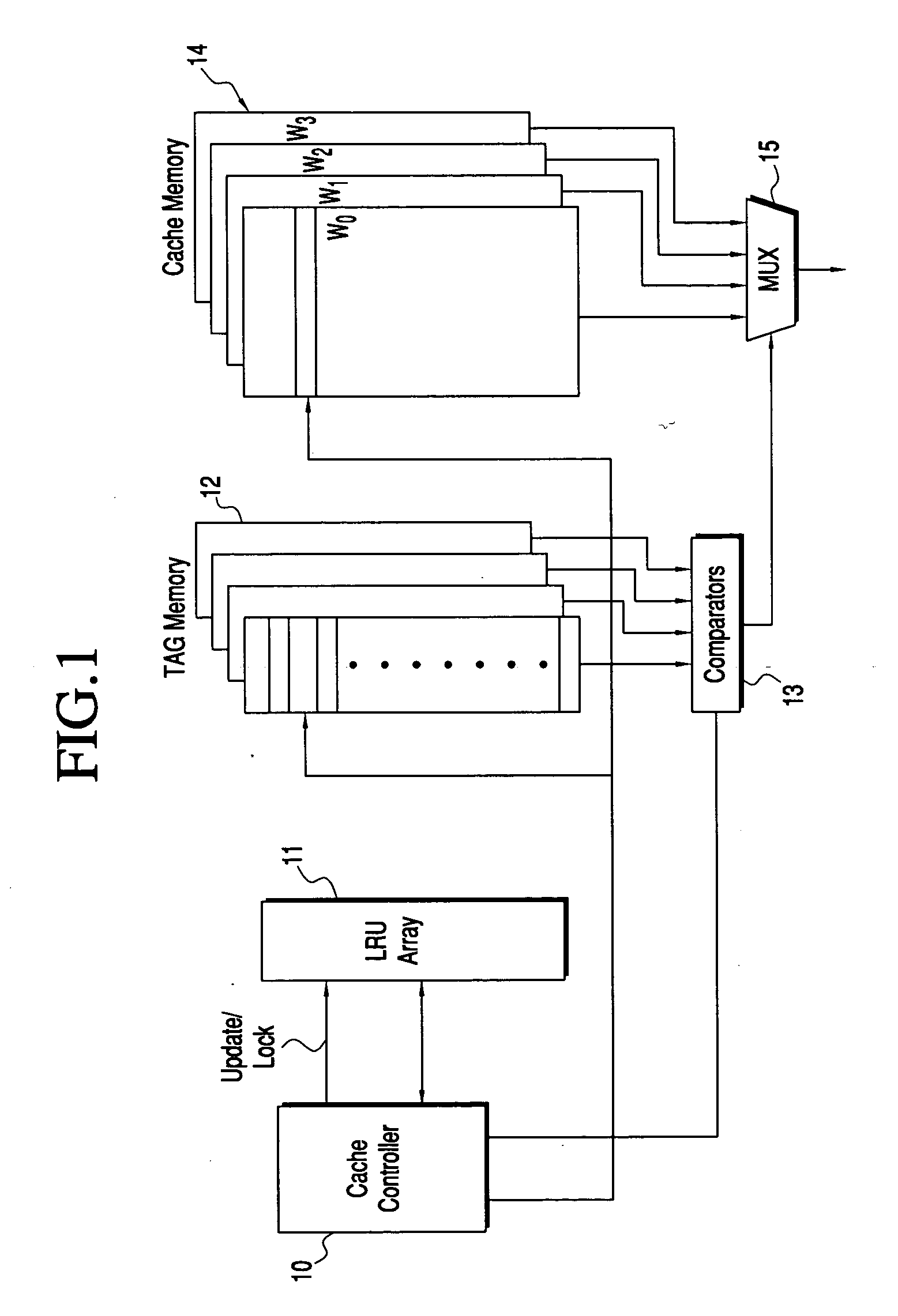

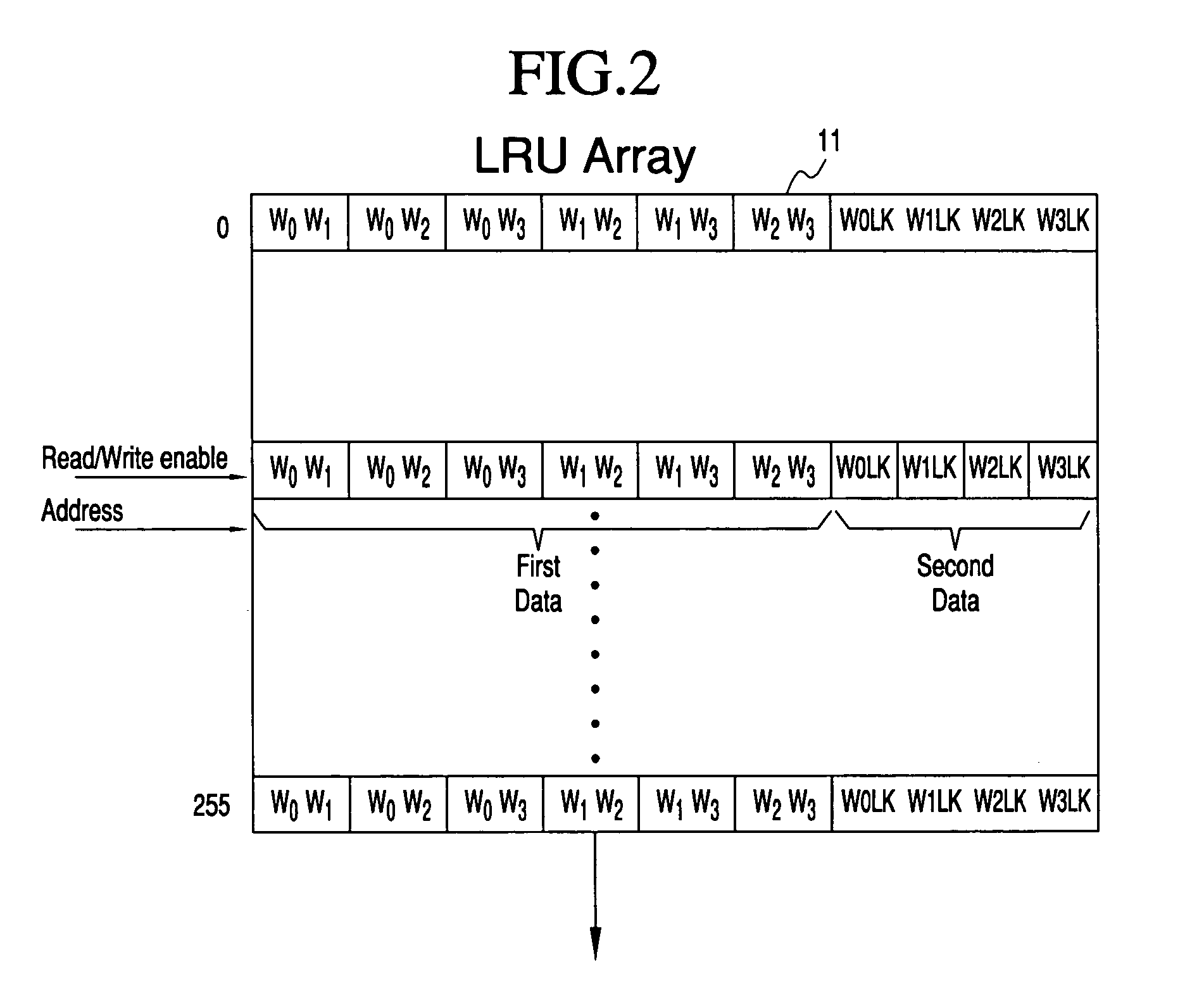

Method for software controllable dynamically lockable cache line replacement system

Inactive | US20060036811A1 | Shorten access time | Speed up access time | Memory systems | Parallel computing | Access line

An LRU array and method for tracking the accessing of lines of an associative cache. The most recently accessed lines of the cache are identified in the table, and cache lines can be blocked from being replaced. The LRU array contains a data array having a row of data representing each line of the associative cache, having a common address portion. A first set of data for the cache line identifies the relative age of the cache line for each way with respect to every other way. A second set of data identifies whether a line of one of the ways is not to be replaced. For cache line replacement, the cache controller will select the least recently accessed line using contents of the LRU array, considering the value of the first set of data, as well as the value of the second set of data indicating whether or not a way is locked. Updates to the LRU occur after each pre-fetch or fetch of a line or when it replaces another line in the cache memory.

Owner:GOOGLE LLC
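One row of such an LRU array can be sketched with pairwise age bits plus a lock bit per way. The layout here is an assumption chosen for clarity (a full matrix of booleans rather than a compact bit encoding), and the names `LockableLRU`, `touch`, and `victim` are hypothetical.

```python
class LockableLRU:
    def __init__(self, ways):
        self.ways = ways
        # newer[i][j] is True when way i was accessed more recently than way j.
        # Initially way 0 is treated as the oldest (arbitrary starting order).
        self.newer = [[i > j for j in range(ways)] for i in range(ways)]
        # locked[w] is True when the line in way w must not be replaced.
        self.locked = [False] * ways

    def touch(self, way):
        """Record a fetch or pre-fetch of `way`: it becomes newer than all others."""
        for other in range(self.ways):
            if other != way:
                self.newer[way][other] = True
                self.newer[other][way] = False

    def victim(self):
        """Return the least recently accessed unlocked way, or None if all are locked."""
        candidates = [w for w in range(self.ways) if not self.locked[w]]
        for w in candidates:
            if all(not self.newer[w][o] for o in candidates if o != w):
                return w
        return None
```

Because the age bits are kept for every pair of ways, locking a way only removes it from the candidates and leaves the relative order of the remaining ways intact.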

Set-associative cache memory having variable time decay rewriting algorithm

Inactive | US6732238B1 | Memory addressing/allocation/relocation | Digital storage | Parallel computing | Variable time

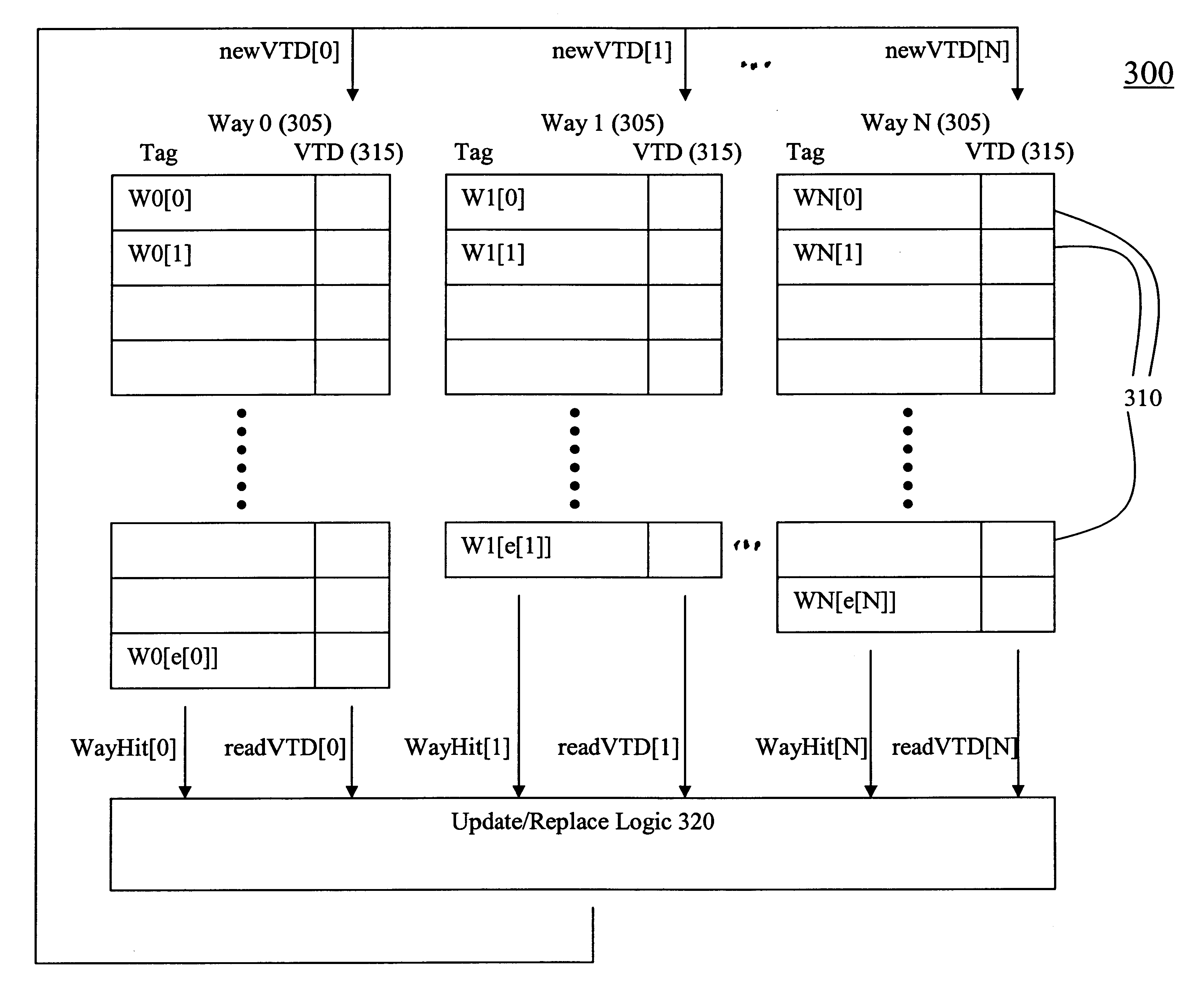

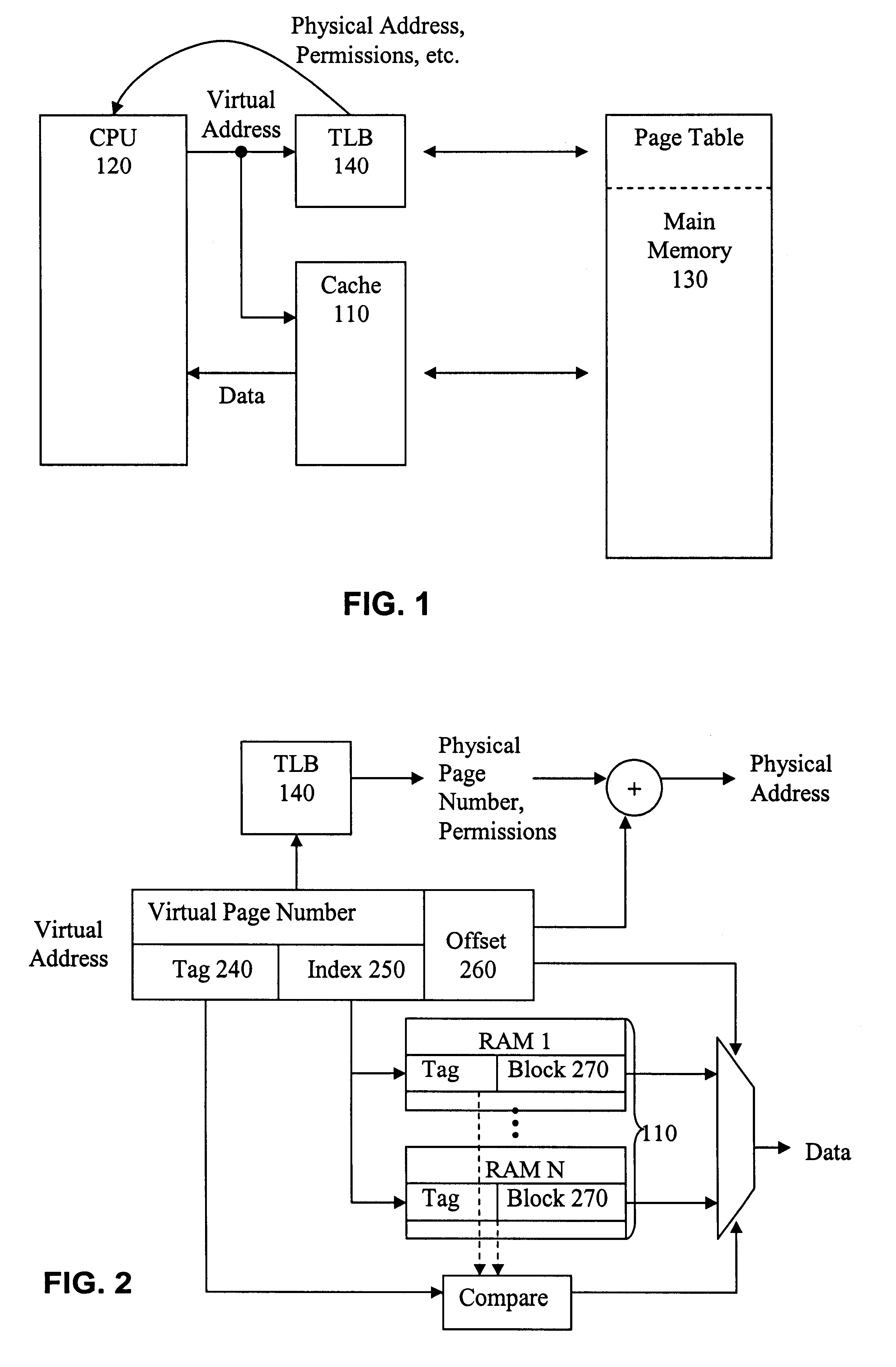

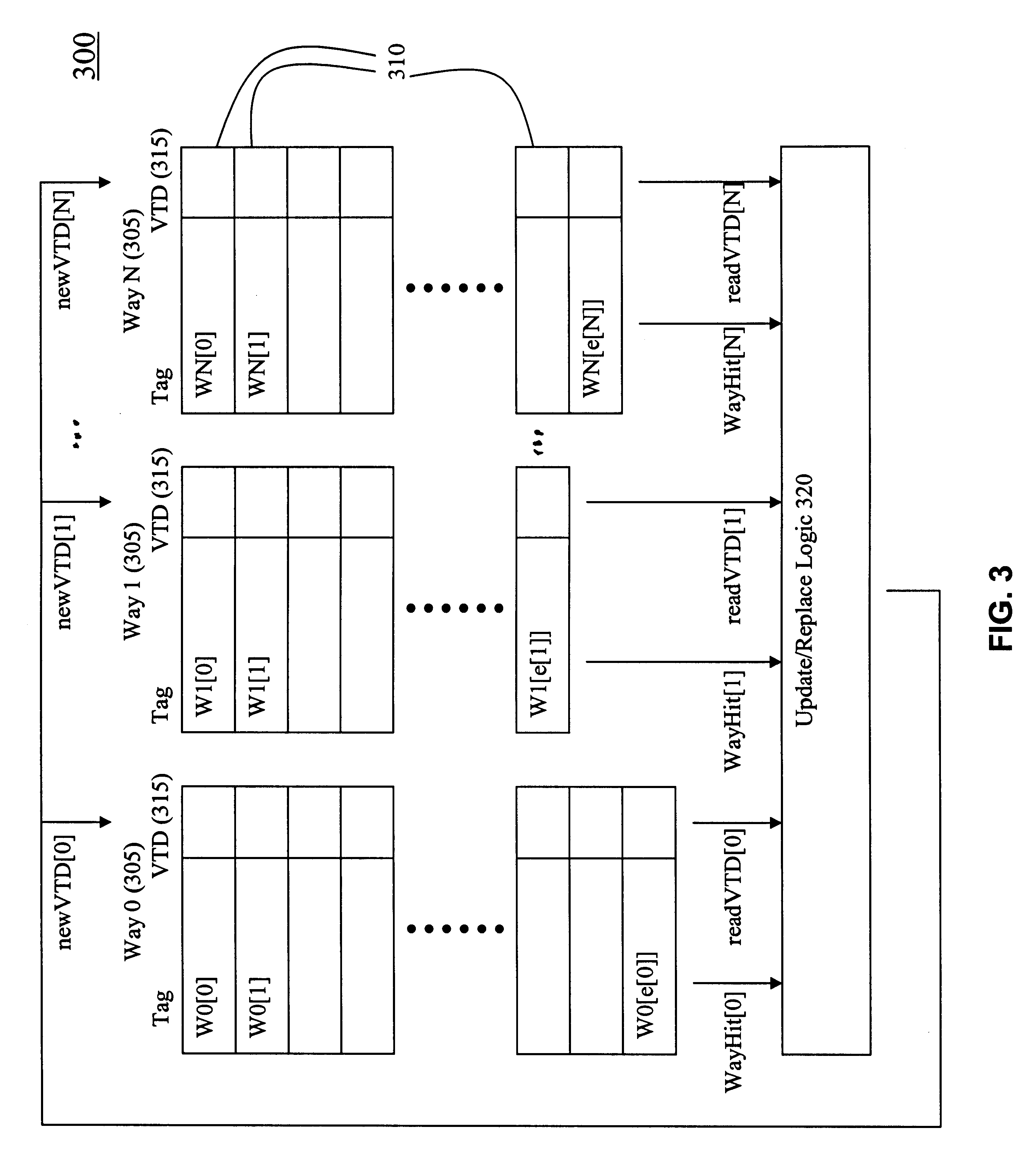

A set-associative structure replacement algorithm is particularly beneficial for irregular set-associative structures which may be affected by different access patterns, and different associativities available to be replaced on any given access. According to certain aspects, methods and apparatuses implement a novel decay replacement algorithm that is particularly beneficial for irregular set-associative structures. An embodiment apparatus includes set-associative structures having decay information stored therein, as well as update/replacement logic to implement replacement algorithms for translation lookup buffers (TLBs) and caches that vary in the number of associativities; have unbalanced associativity sizes, e.g., associativities can have different numbers of indices; and can have varying replacement criteria. The implementation apparatuses and methods provide good performance, on the level of LRU, random and clock algorithms, and are efficient and scalable.

Owner:TENSILICA

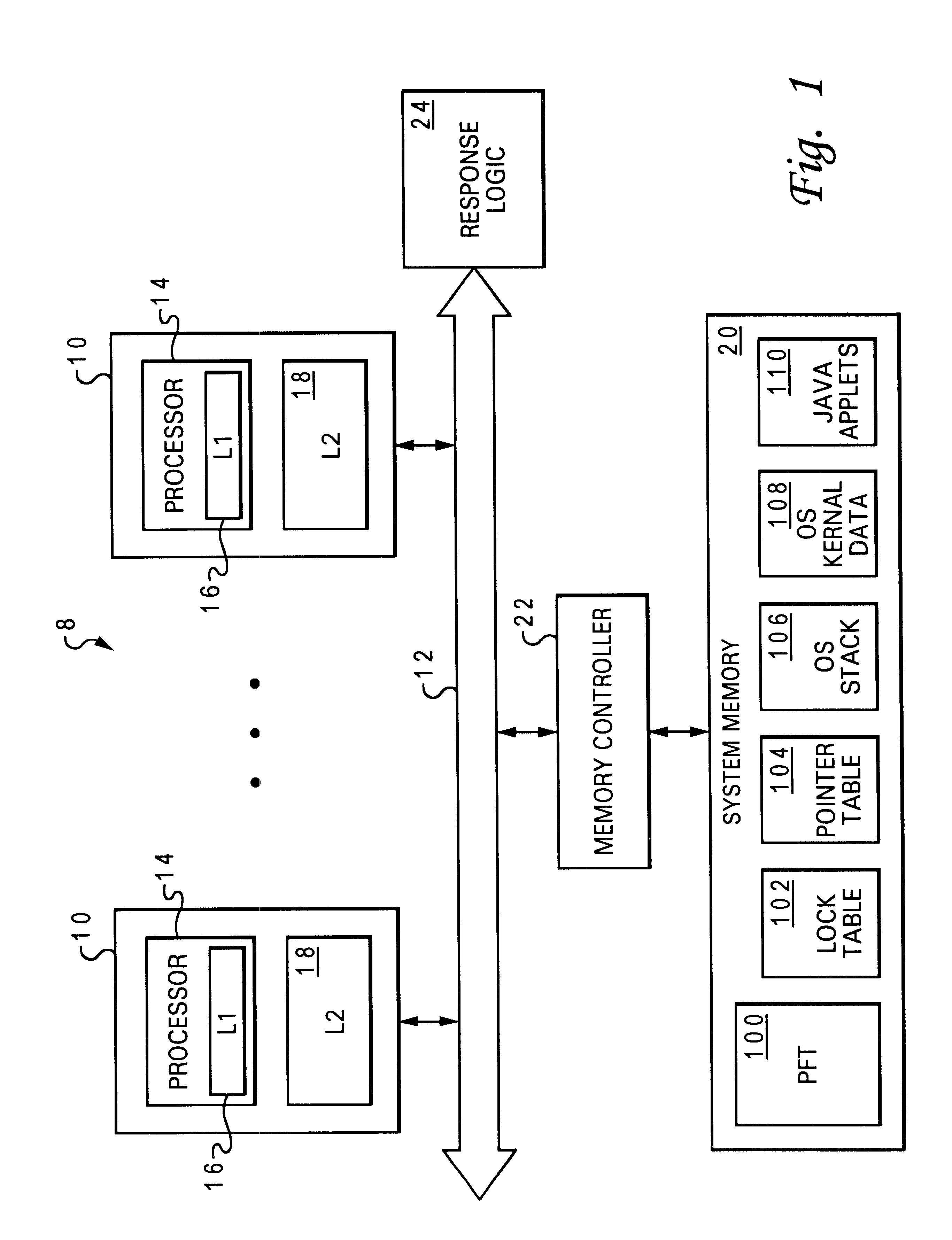

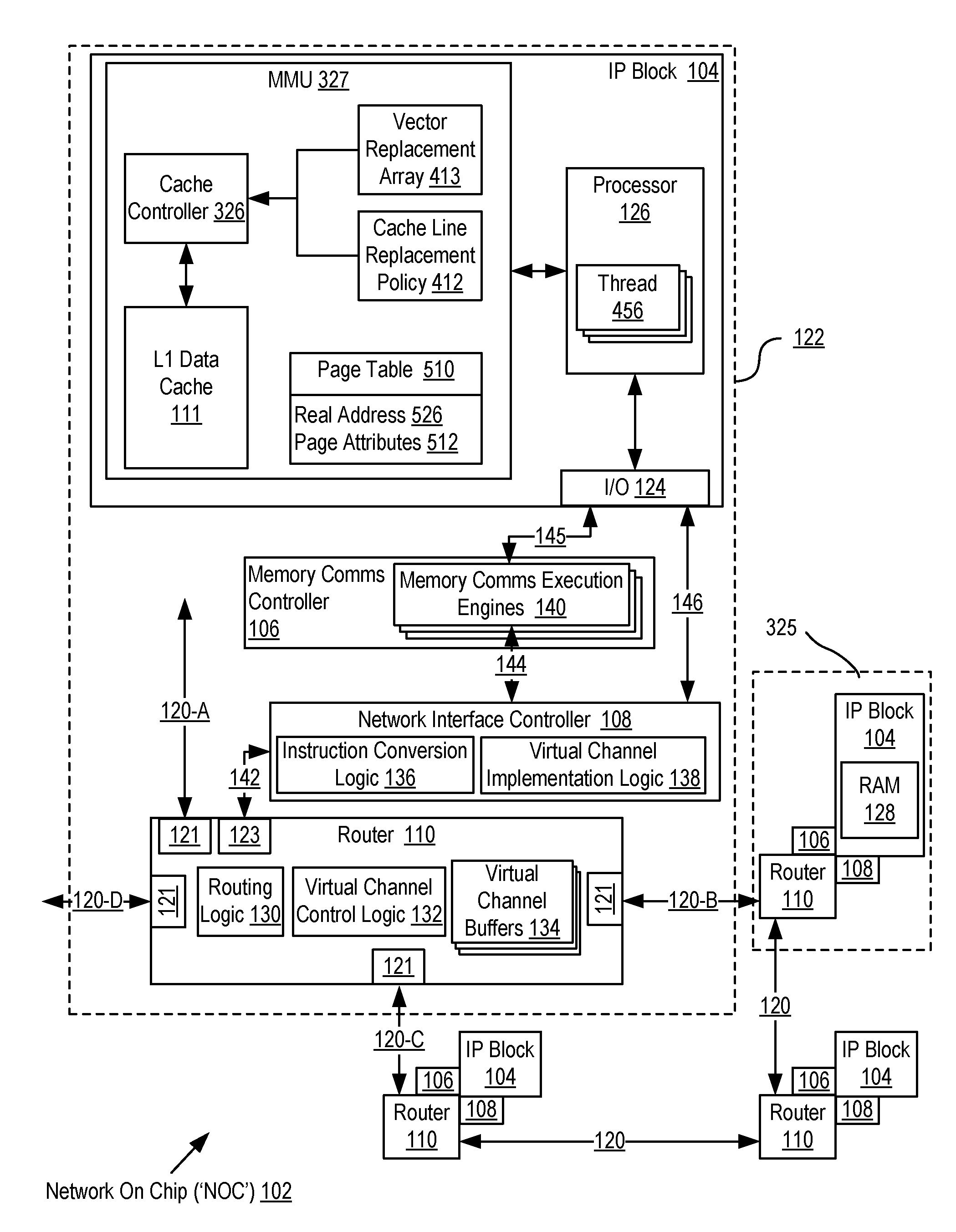

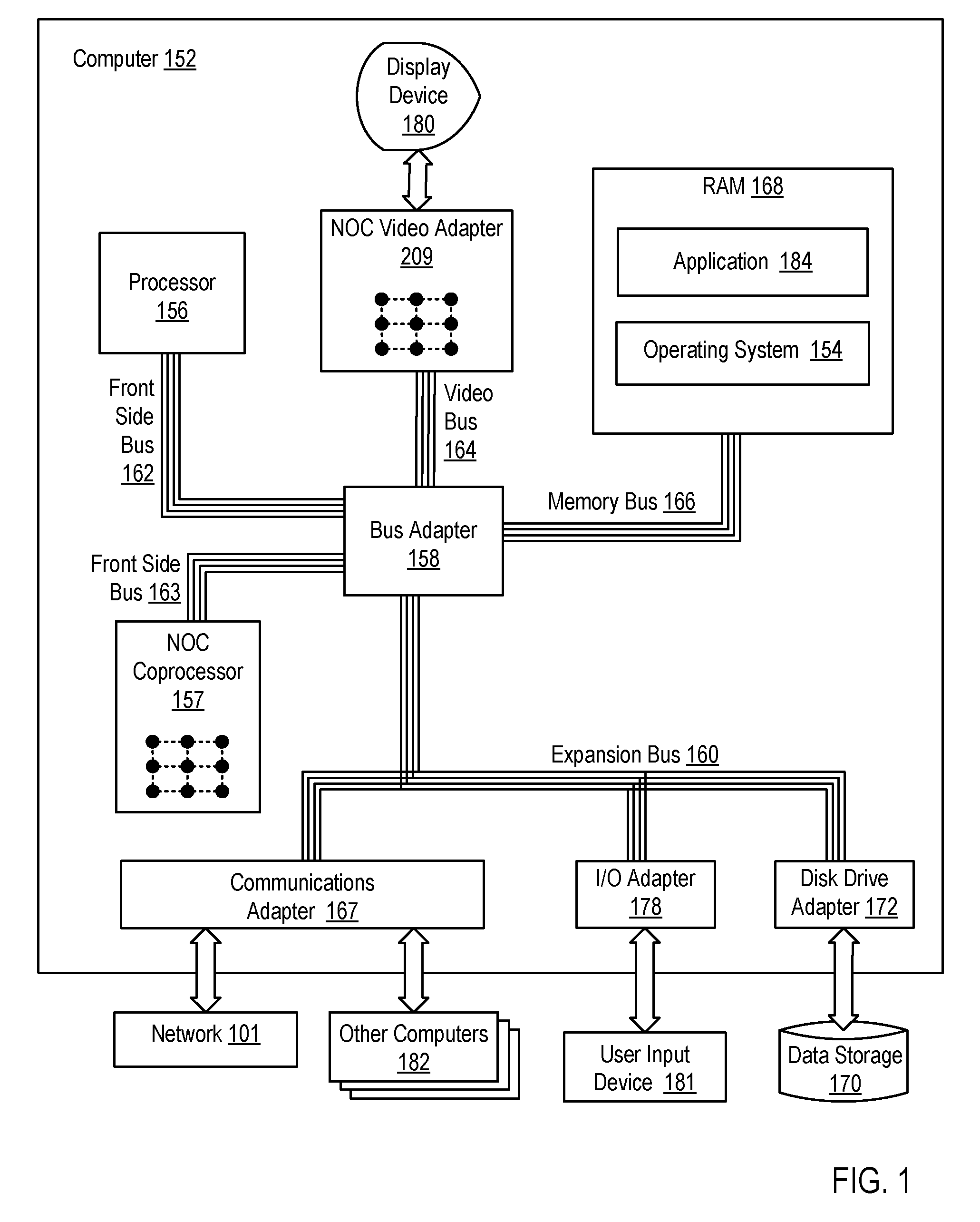

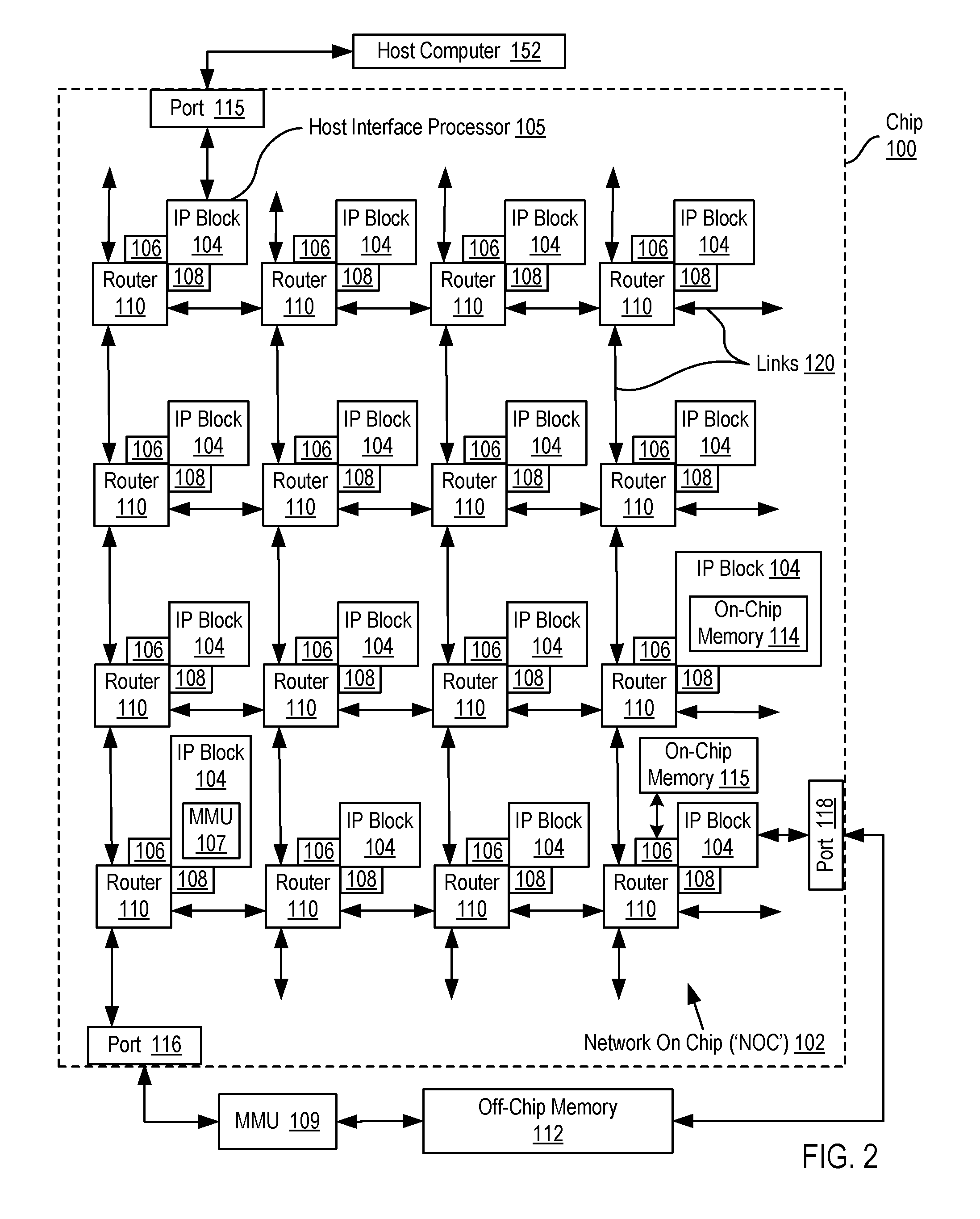

Network On Chip With Caching Restrictions For Pages Of Computer Memory

Inactive | US20100070714A1 | Memory addressing/allocation/relocation | Digital computer details | Hardware thread | Parallel computing

A network on chip (‘NOC’) that includes integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, a multiplicity of computer processors, each computer processor implementing a plurality of hardware threads of execution; and computer memory, the computer memory organized in pages and operatively coupled to one or more of the computer processors, the computer memory including a set associative cache, the cache comprising cache ways organized in sets, the cache being shared among the hardware threads of execution, each page of computer memory restricted for caching by one replacement vector of a class of replacement vectors to particular ways of the cache, each page of memory further restricted for caching by one or more bits of a replacement vector classification to particular sets of ways of the cache.

Owner:IBM CORP

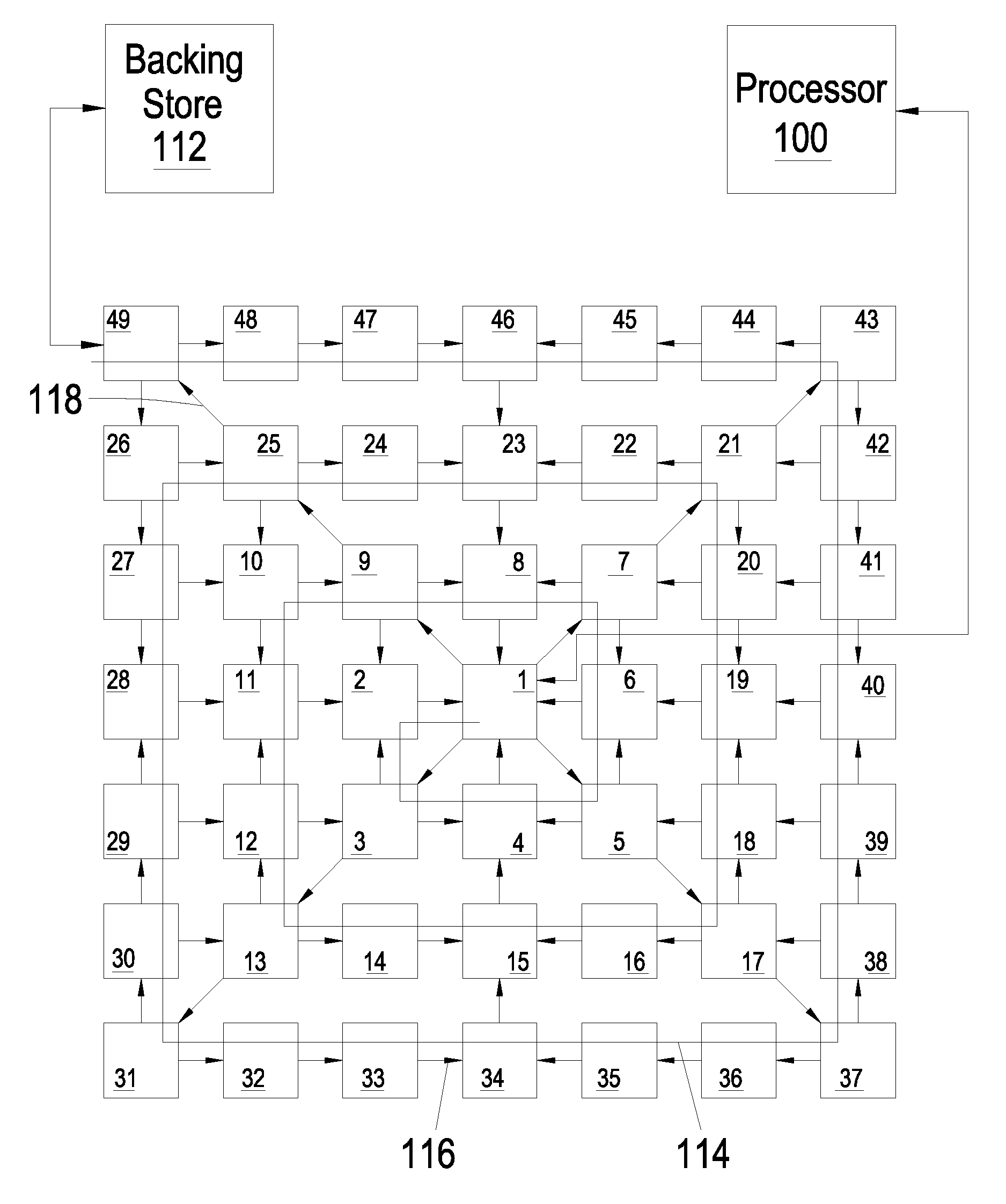

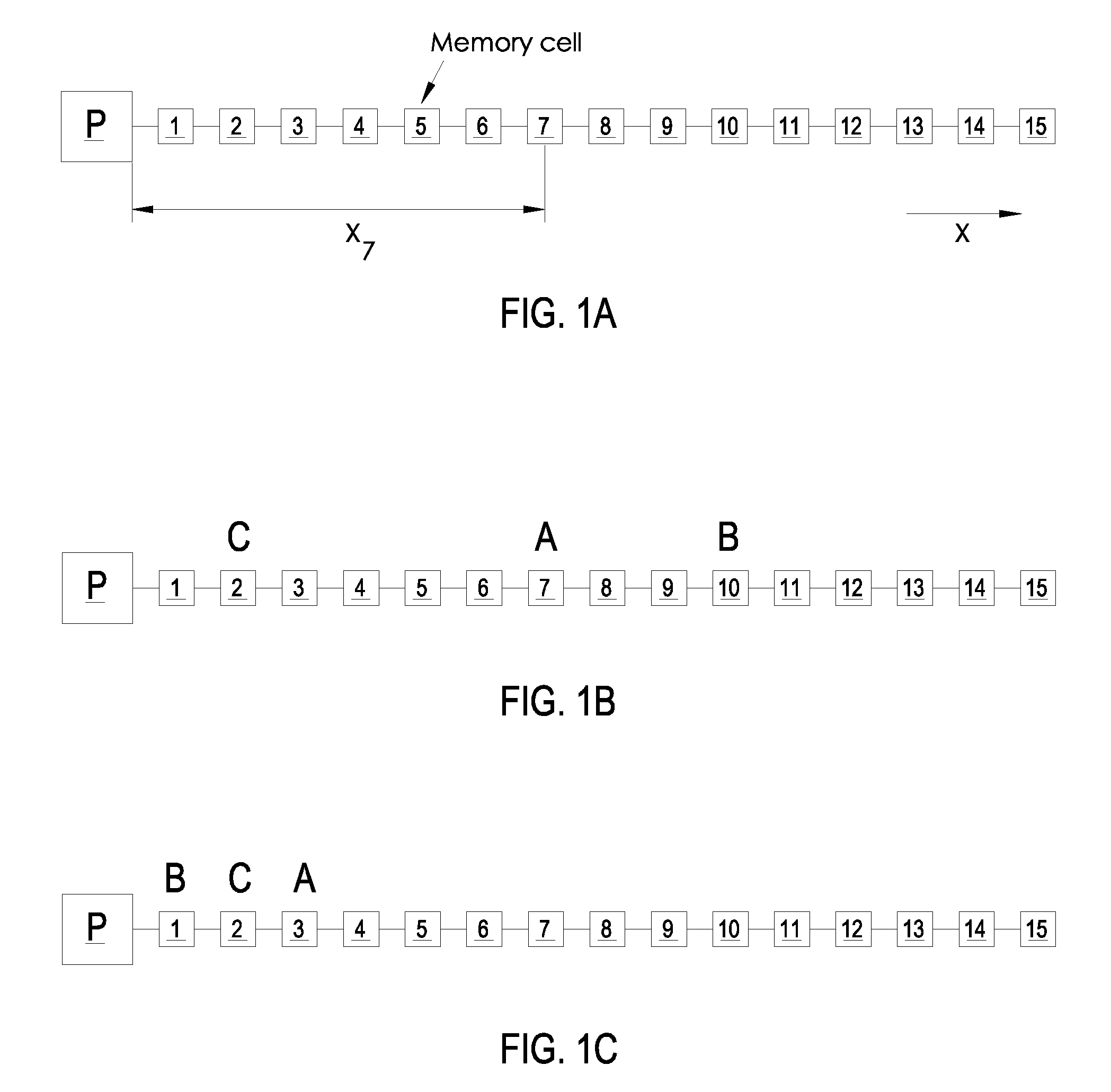

Spiral cache power management, adaptive sizing and interface operations

Inactive | US20100122031A1 | Memory architecture accessing/allocation | Energy efficient ICT | As Directed | Parallel computing

A spiral cache memory provides low access latency for frequently-accessed values by self-organizing to always move a requested value to a front-most storage tile of the spiral. If the spiral cache needs to eject a value to make space for a value moved to the front-most tile, space is made by ejecting a value from the cache to a backing store. A buffer along with flow control logic is used to prevent overflow of writes of ejected values to the generally slow backing store. The tiles in the spiral cache may be single storage locations or be organized as some form of cache memory such as direct-mapped or set-associative caches. Power consumption of the spiral cache can be reduced by dividing the cache into an active and inactive partition, which can be adjusted on a per-tile basis. Tile-generated or global power-down decisions can set the size of the partitions.

Owner:IBM CORP

Latency-aware replacement system and method for cache memories

Active | US20060041720A1 | Low latency access | Memory architecture accessing/allocation | Memory systems | Guideline | Parallel computing

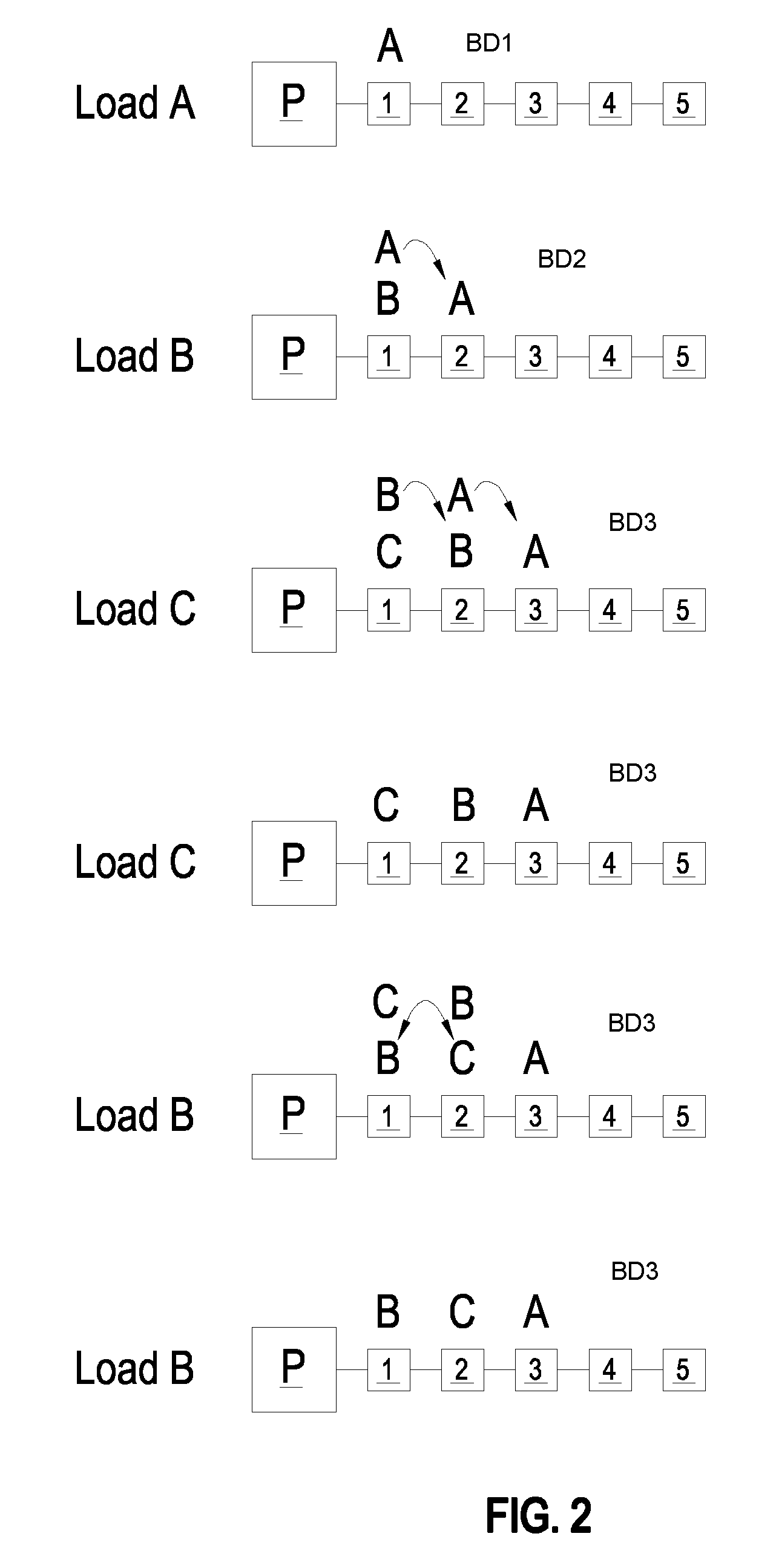

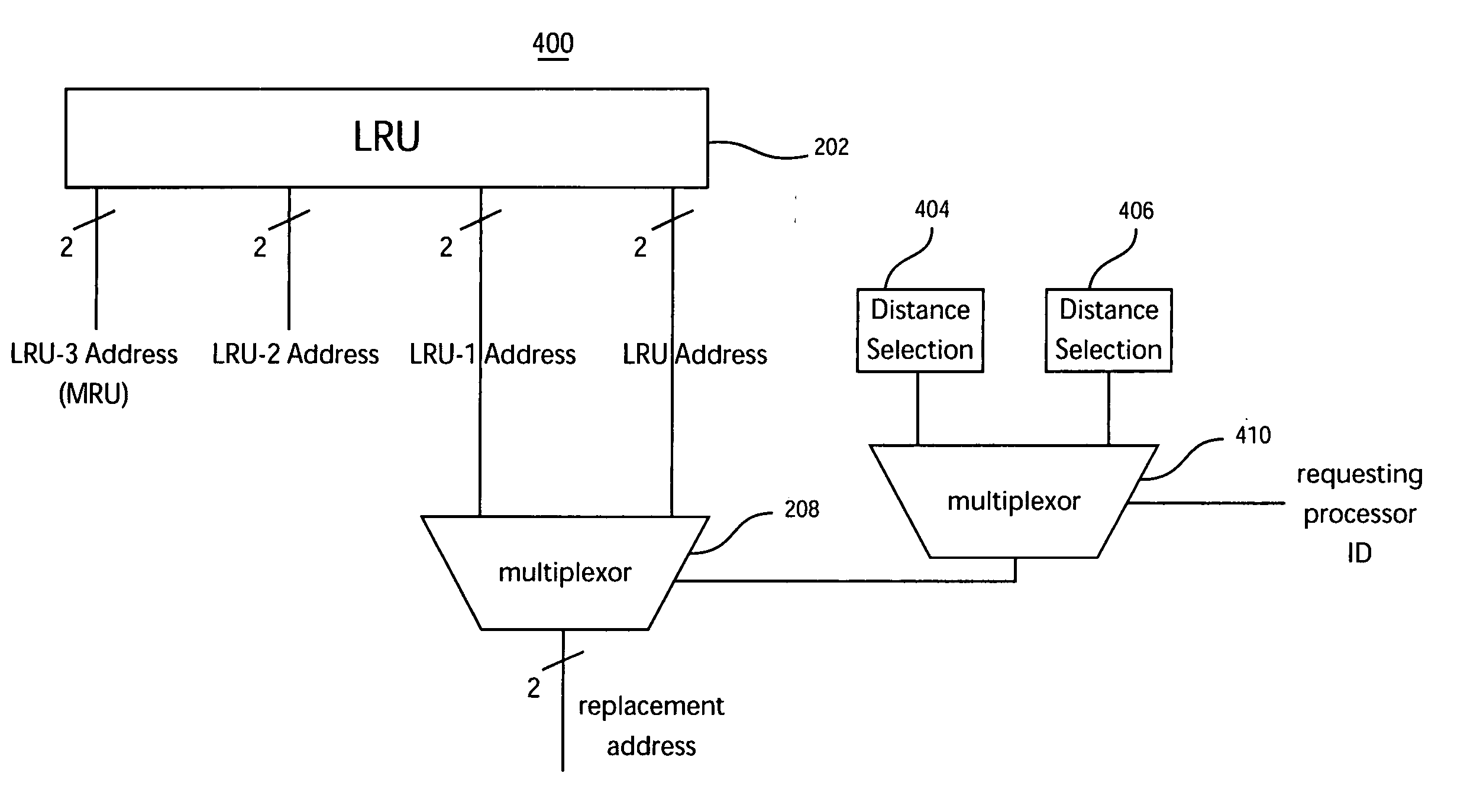

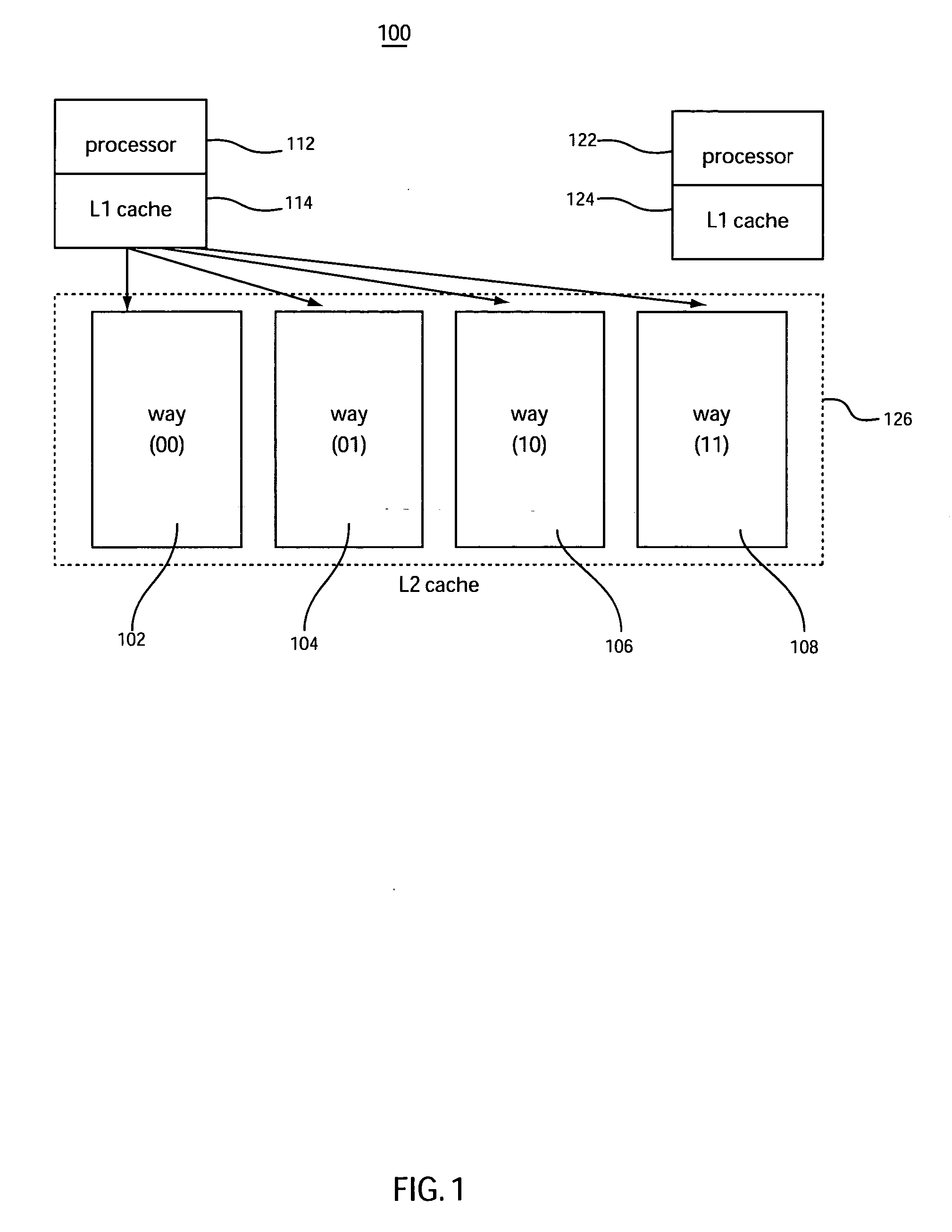

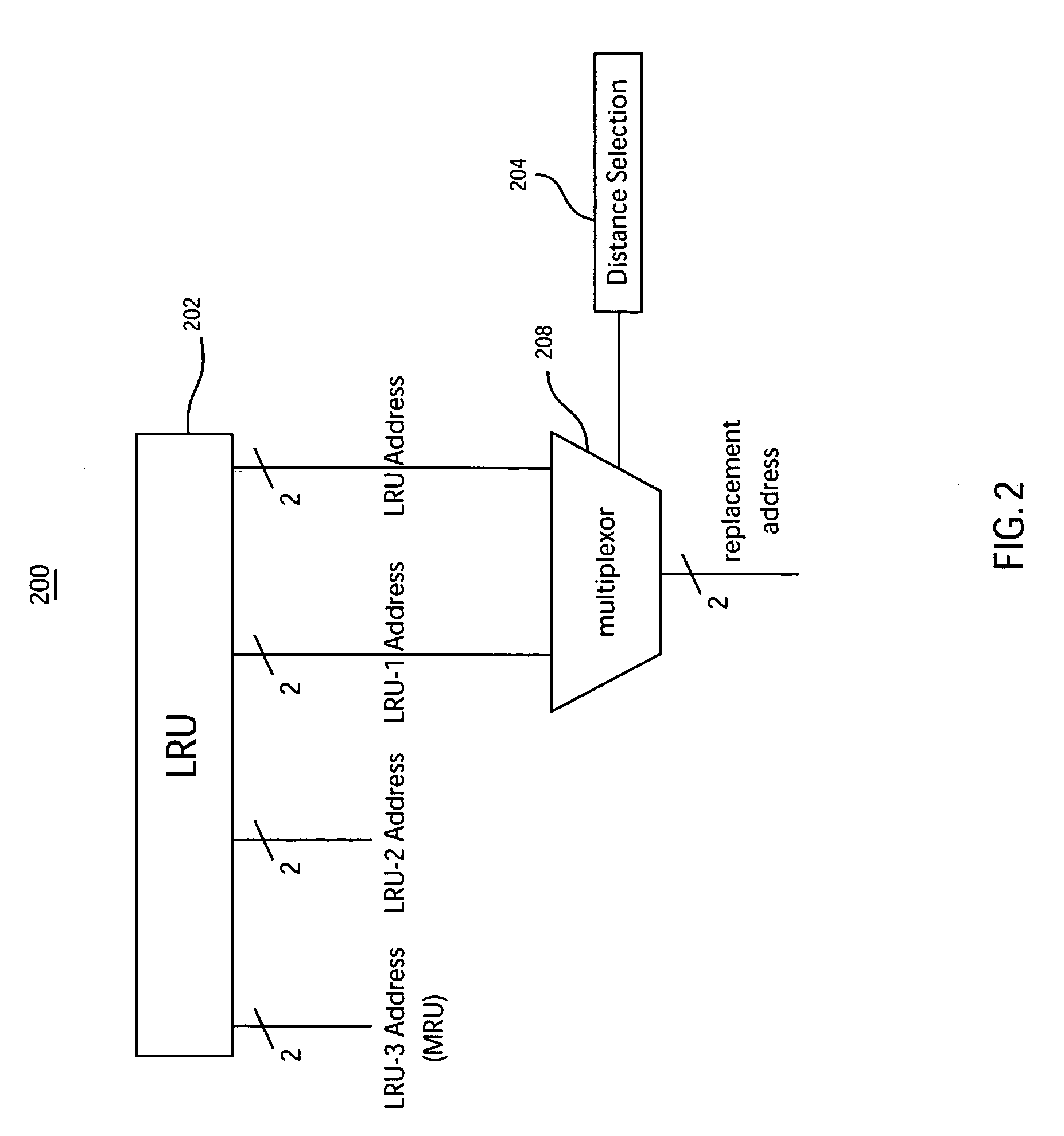

A method for replacing cache lines in a computer system having a non-uniform set associative cache memory is disclosed. The method incorporates access latency as an additional factor into the existing ranking guidelines for replacement of a line: the higher the rank of a line, the sooner it is likely to be evicted from the cache. Among a group of highest ranking cache lines in a cache set, the cache line chosen to be replaced is the one that provides the lowest latency access to a requesting entity, such as a processor. The distance separating the requesting entity from the memory partition where the cache line is stored is the factor that most affects access latency.

Owner:GOOGLE LLC
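The two-step selection above (take the highest-ranked lines, then pick the one closest to the requester) can be sketched as follows. The `Line` tuple, the `group_size` parameter, and the numeric latency values are assumptions for illustration; the abstract does not say how large the group is or how latency is measured.

```python
from collections import namedtuple

# rank: higher means a better eviction candidate under the base policy.
# latency: access latency from the requesting entity to the line's partition.
Line = namedtuple("Line", ["way", "rank", "latency"])


def choose_victim(lines, group_size=2):
    """Among the `group_size` highest-ranked lines, evict the lowest-latency one."""
    top_group = sorted(lines, key=lambda line: line.rank, reverse=True)[:group_size]
    return min(top_group, key=lambda line: line.latency)
```

With `group_size=1` the function degenerates to the base ranking policy; larger groups trade some ranking accuracy for placing the incoming line in a partition nearer the requester.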

Method and architecture for data coherency in set-associative caches including heterogeneous cache sets having different characteristics

A processor architecture and method are shown which involve a cache having heterogeneous cache sets. An address value of a data access request from a CPU is compared to all cache sets within the cache regardless of the type of data and the type of data access indicated by the CPU to create a unitary interface to the memory hierarchy of the architecture. Data is returned to the CPU from the cache set having the shortest line length of the cache sets containing the data corresponding to the address value of the data request. Modified data replaced in a cache set having a line length that is shorter than other cache sets is checked for matching data resident in the cache sets having longer lines and the matching data is replaced with the modified data. All the cache sets at the cache level of the memory hierarchy are accessed in parallel resulting in data being retrieved from the fastest memory source available, thereby improving memory performance. The unitary interface to a memory hierarchy having multiple cache sets maintains data coherency, simplifies code design and increases resilience to coding errors.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Accessing data values in a cache

Active | US6976126B2 | Operation can be suppressed | Reduce power consumption | Energy efficient ICT | Memory addressing/allocation/relocation | Parallel computing | Data value

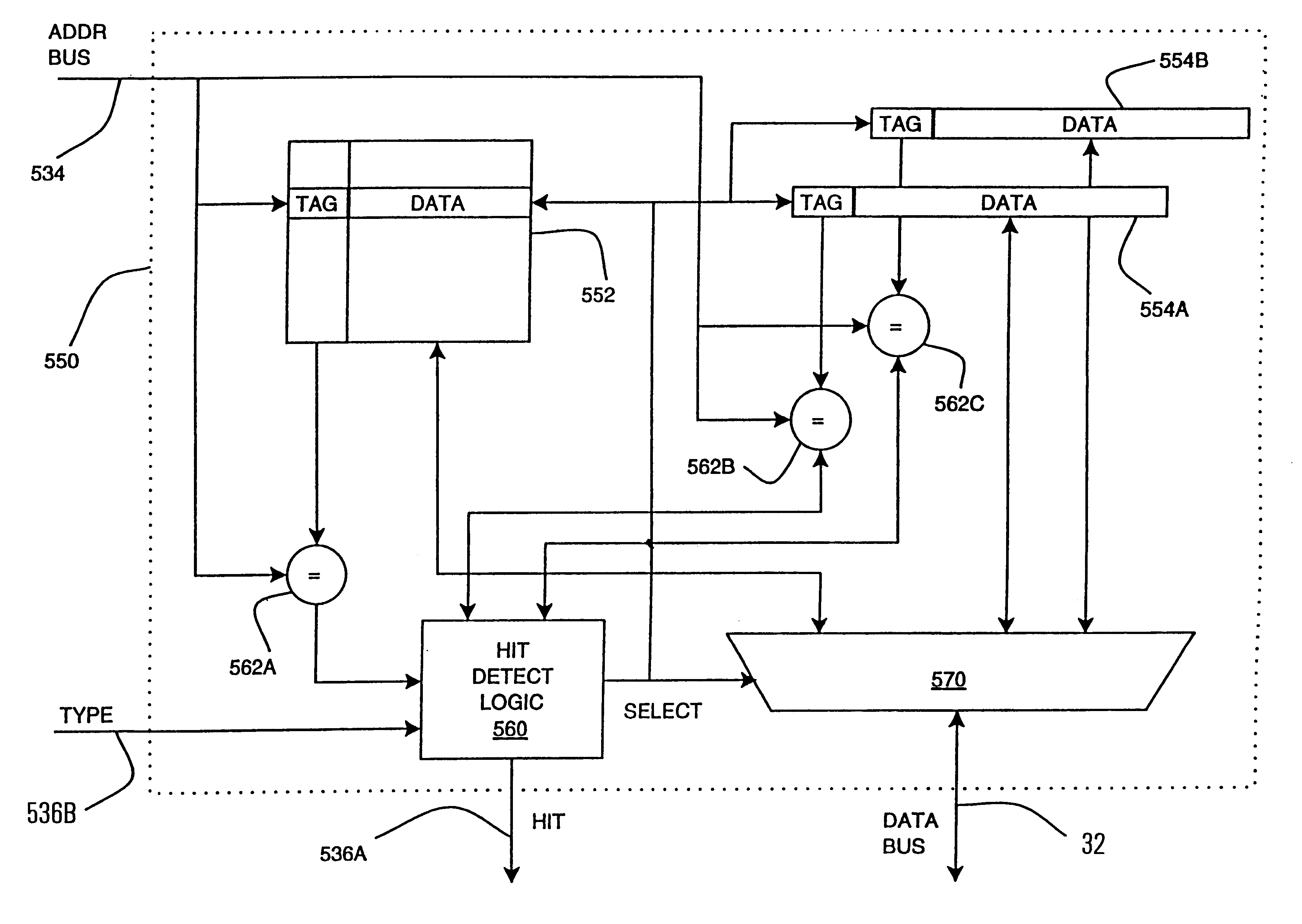

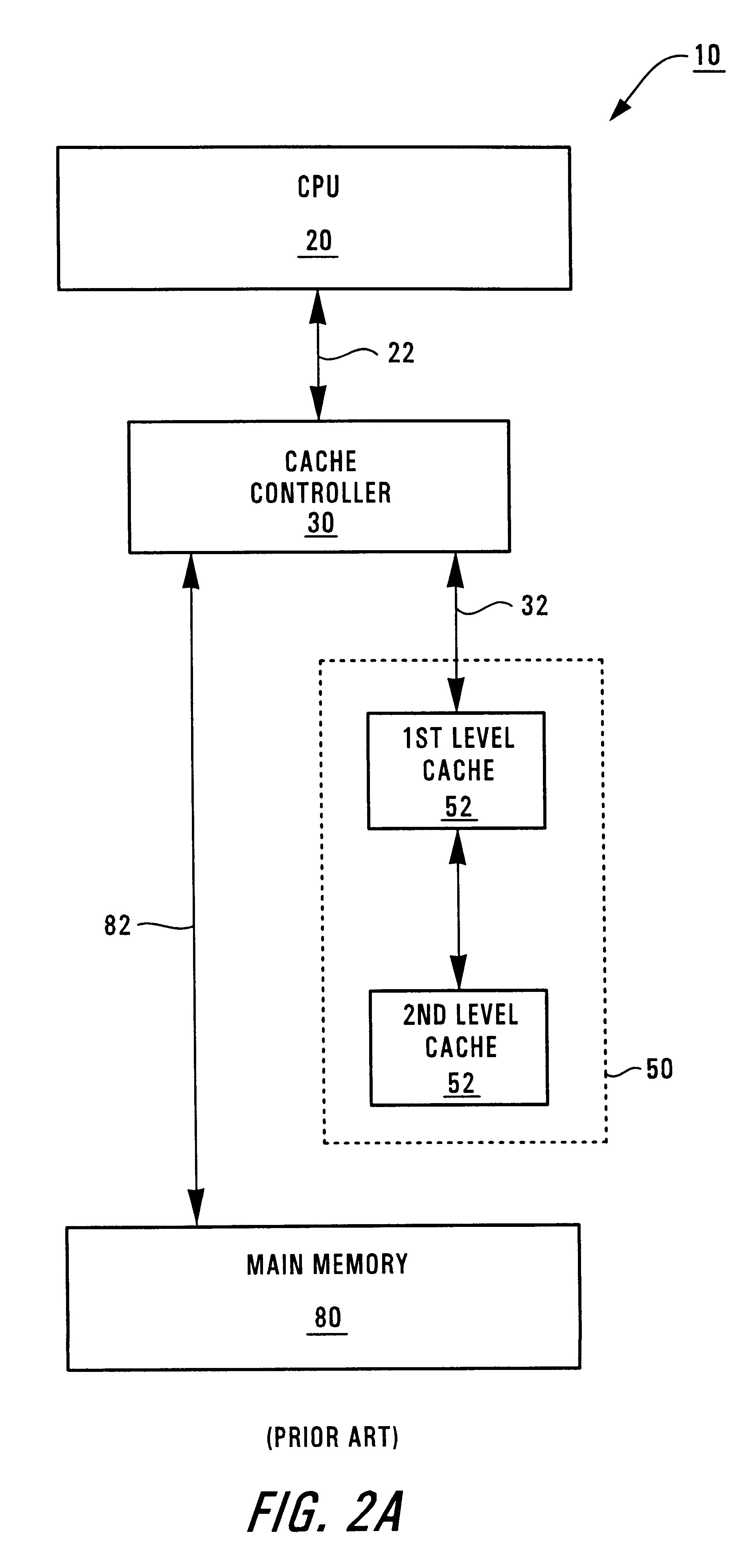

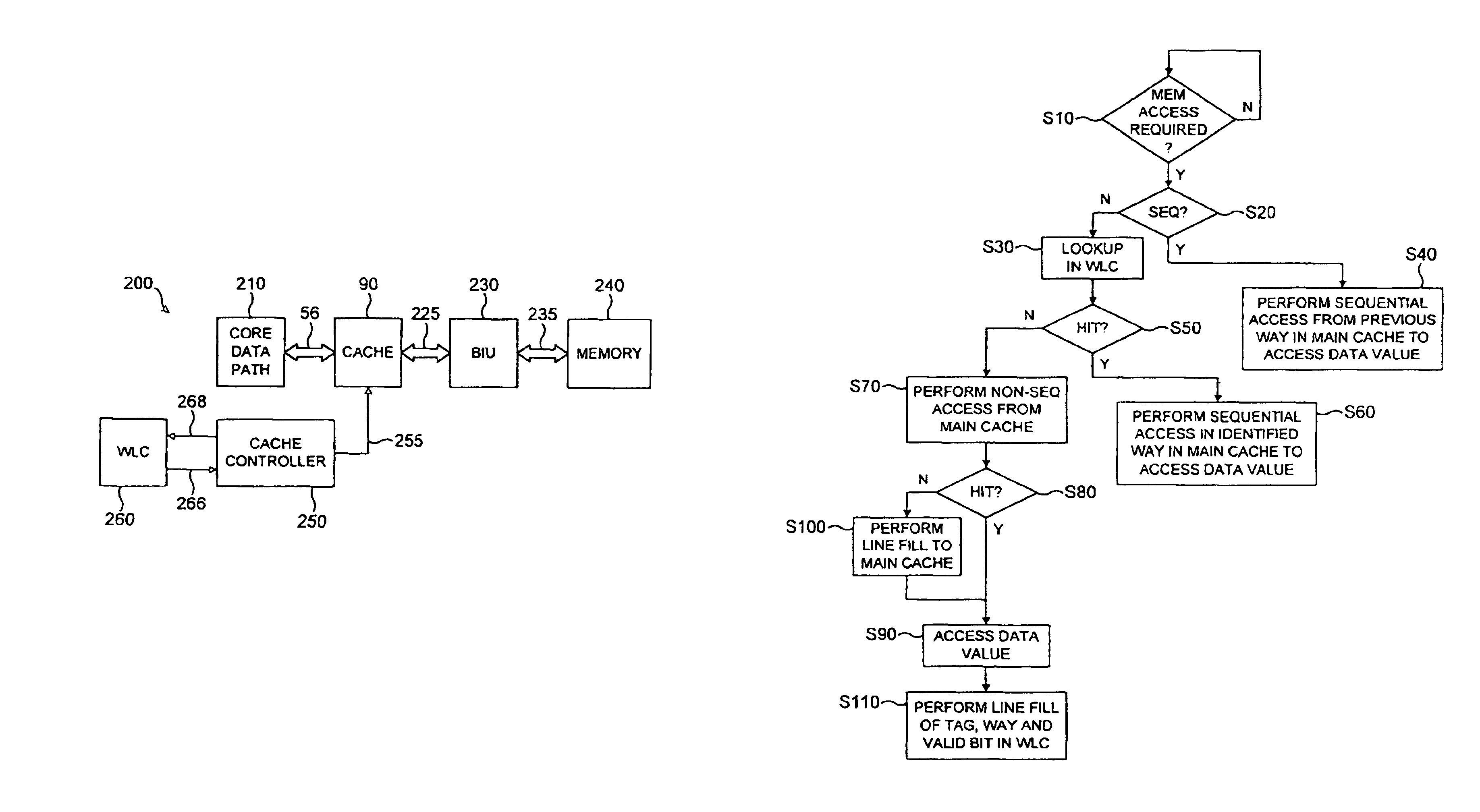

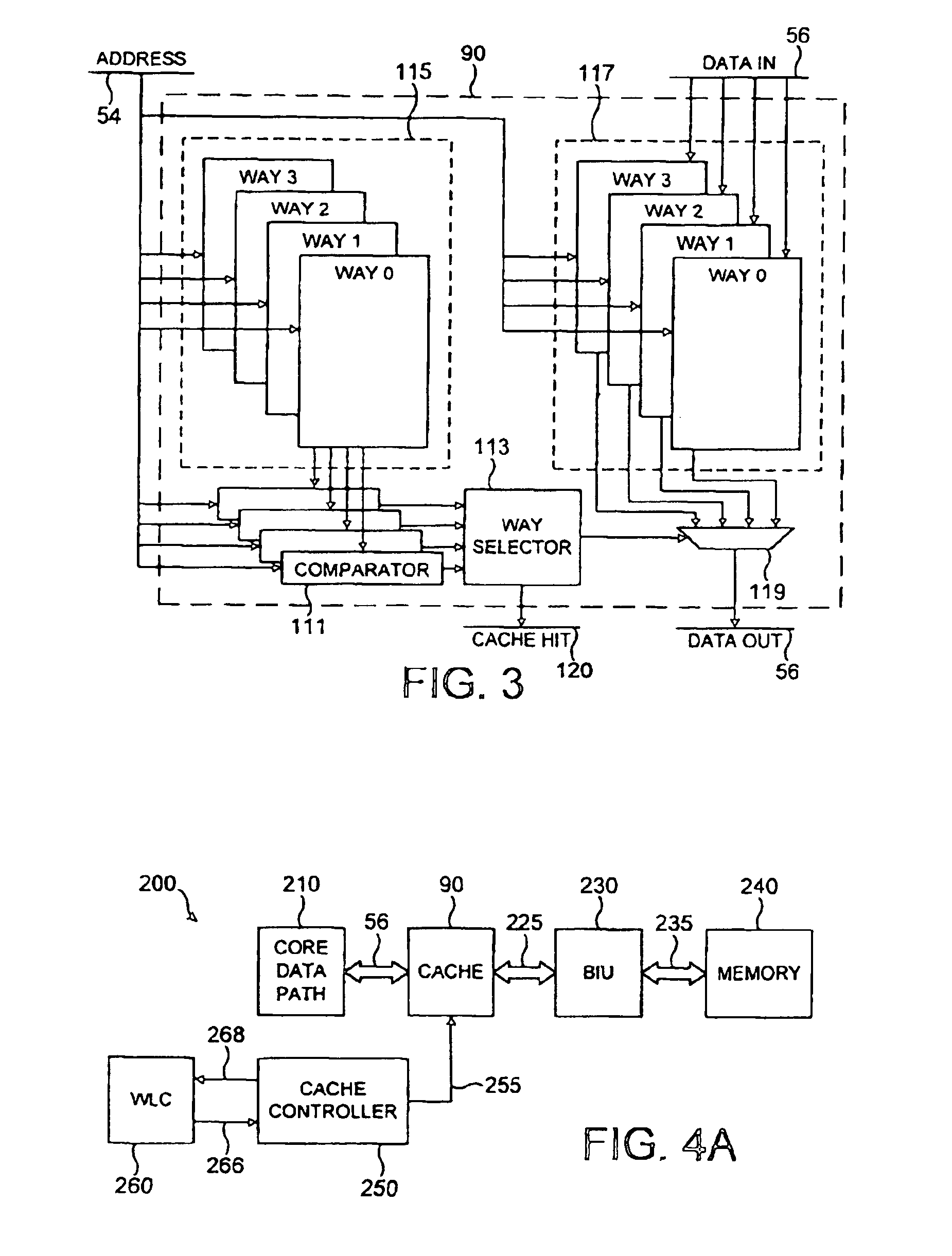

The present invention provides an apparatus and method for accessing data values in a cache and in particular accessing data values in an ‘n’ way set associative cache. A data processing apparatus is provided comprising an ‘n’ way set-associative cache, each cache way having a plurality of entries for storing a corresponding plurality of data values. A cache controller is provided which is operable on receipt of an access request for a data value to determine whether that data value is accessible within the cache, the cache comprising cache access logic operable under the control of the cache controller to determine whether a data value the subject of an access request is accessible in one of the cache ways. Also provided is a way lookup cache arranged to store an indication of the cache way in which a number of the plurality of data values stored in the cache are accessible. The cache controller is operable, when an access request for a data value specifies a non-sequential access, to reference the way lookup cache to determine whether that data value is identified in the way lookup cache and, if so, the cache controller being further operable to suppress the operation of the cache access logic and to cause that data value to be accessed. The provision of a way lookup cache enables the power consumption of the cache to be reduced by enabling the operation of the cache access logic to be suppressed.

Owner:ARM LTD

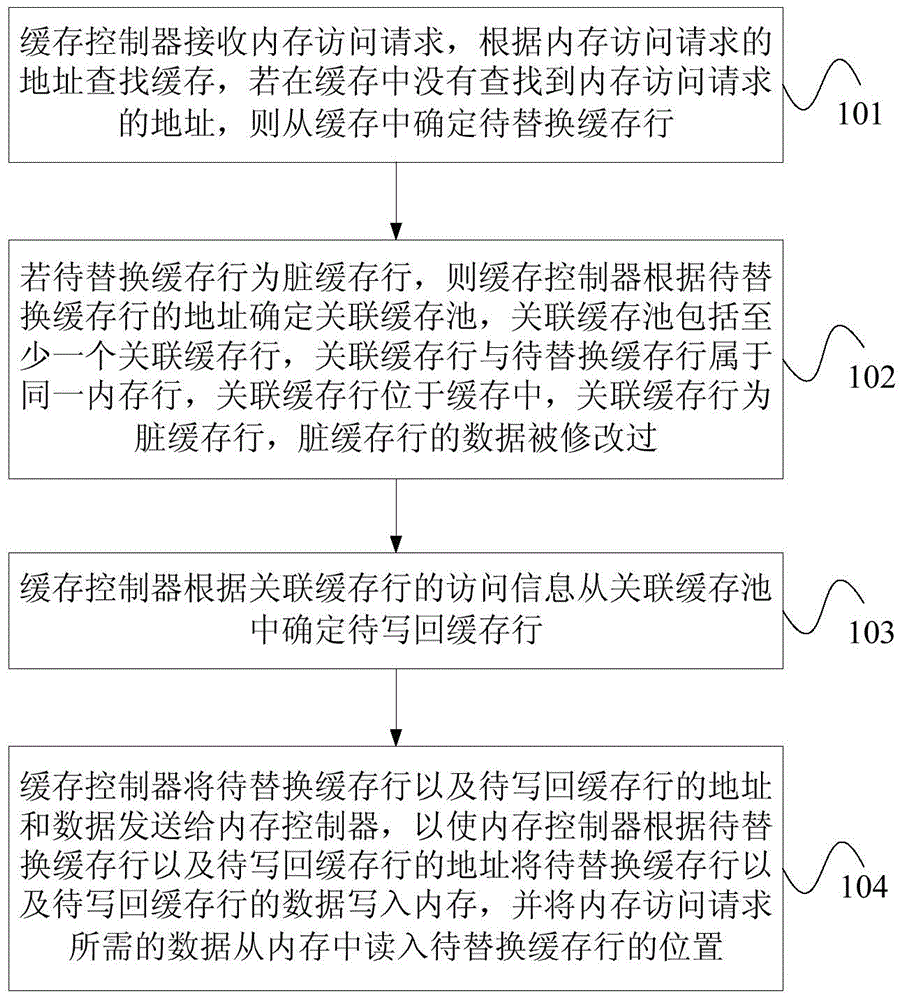

Cache replacing method, cache controller and processor

Active | CN105095116A | Improve access performance | Reduce the number of writes | Memory addressing/allocation/relocation | Parallel computing | Hit ratio

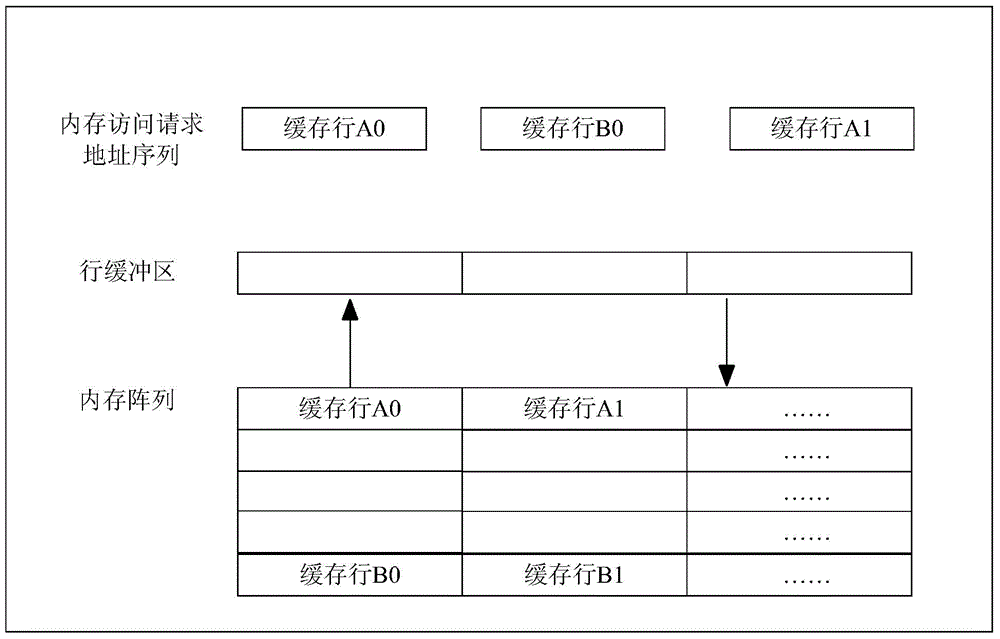

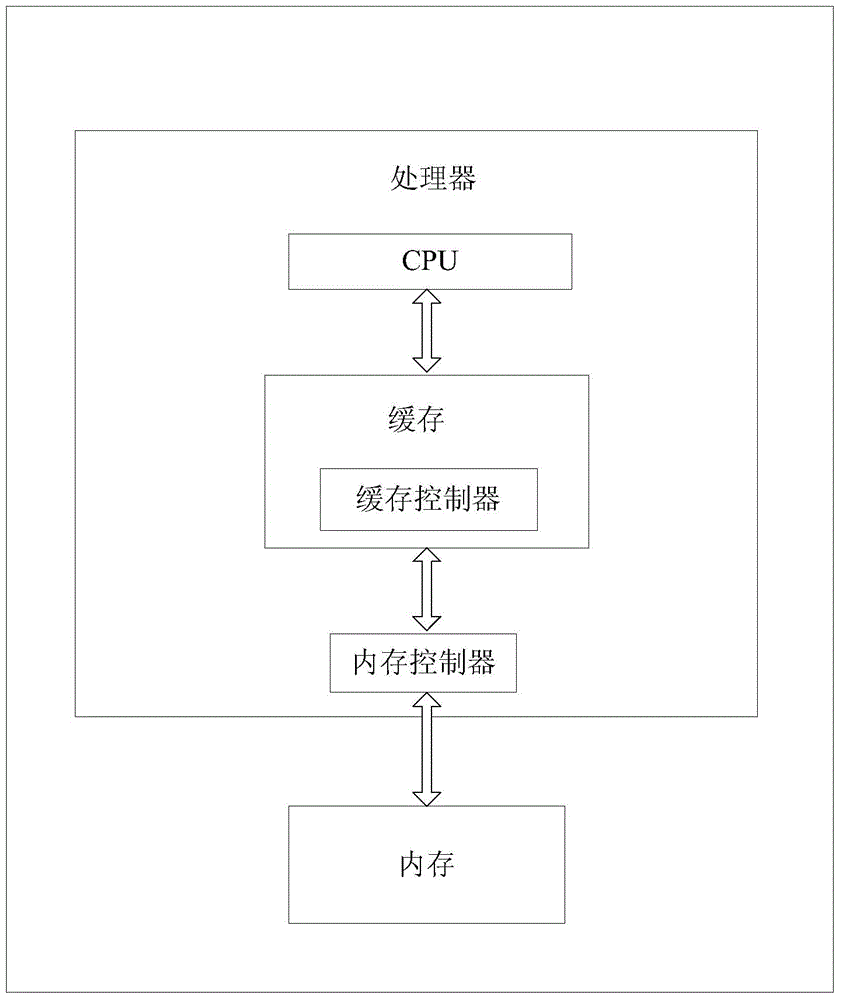

The embodiment of the invention provides a cache replacing method, a cache controller and a processor. The method comprises the following steps: the cache controller determines an associated cache pool of a cache line to be replaced, wherein each associated cache line in the associated cache pool and the cache line to be replaced belong to the same memory row; a cache line to be written back is further determined from the associated cache pool according to the access information of the associated cache lines; and the data in the cache line to be replaced and in the cache line to be written back are written into the memory simultaneously. Because the cache line to be replaced and the cache line to be written back belong to the same memory row, the hit rate of the cache region can be improved, which improves memory access performance. Because the cache controller determines the cache line to be written back according to the access information of the associated cache lines, and only that cache line in the associated cache pool is written into the memory, the number of memory writes can be reduced and the service life of the memory is prolonged.

Owner:HUAWEI TECH CO LTD +1

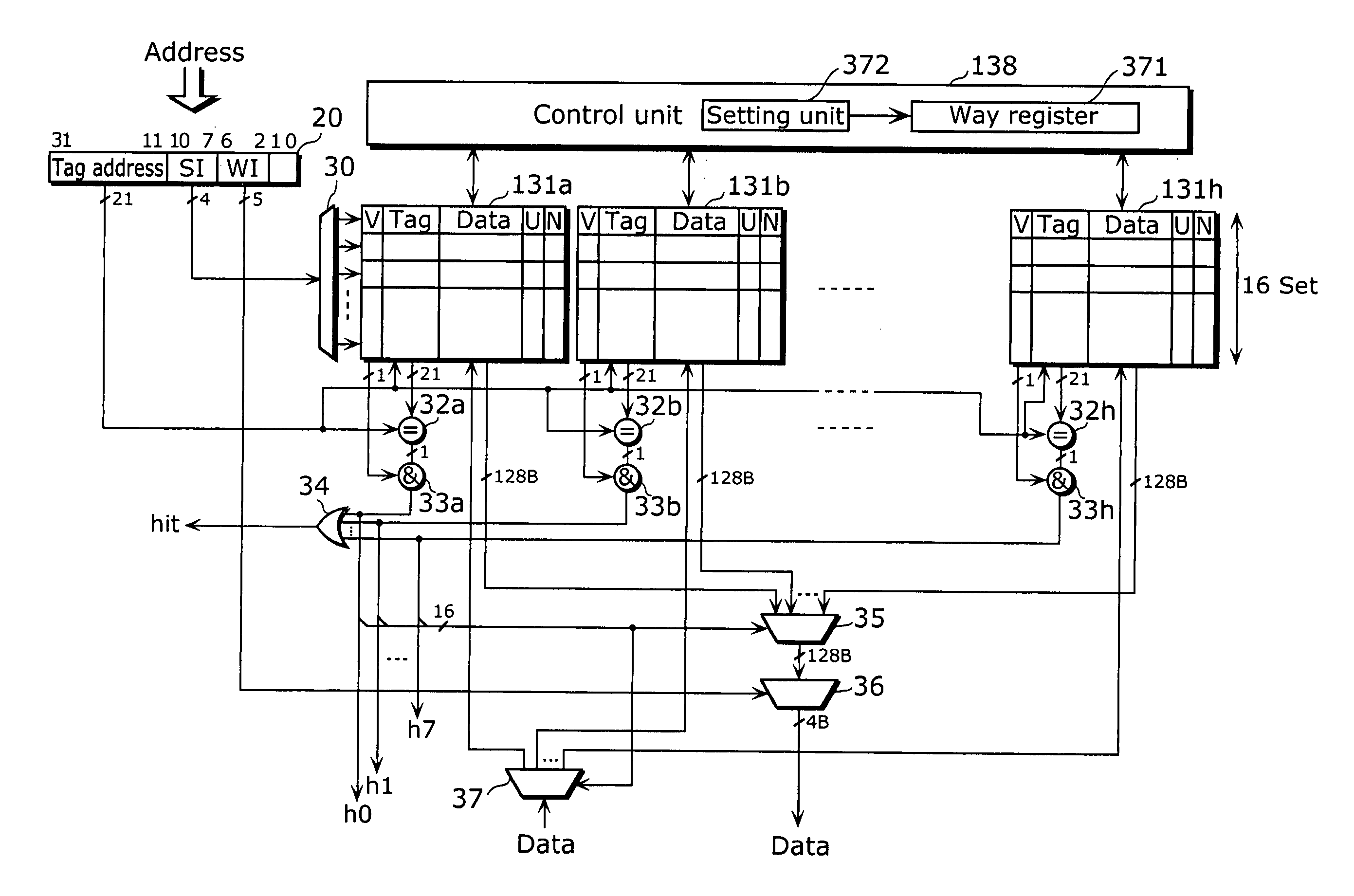

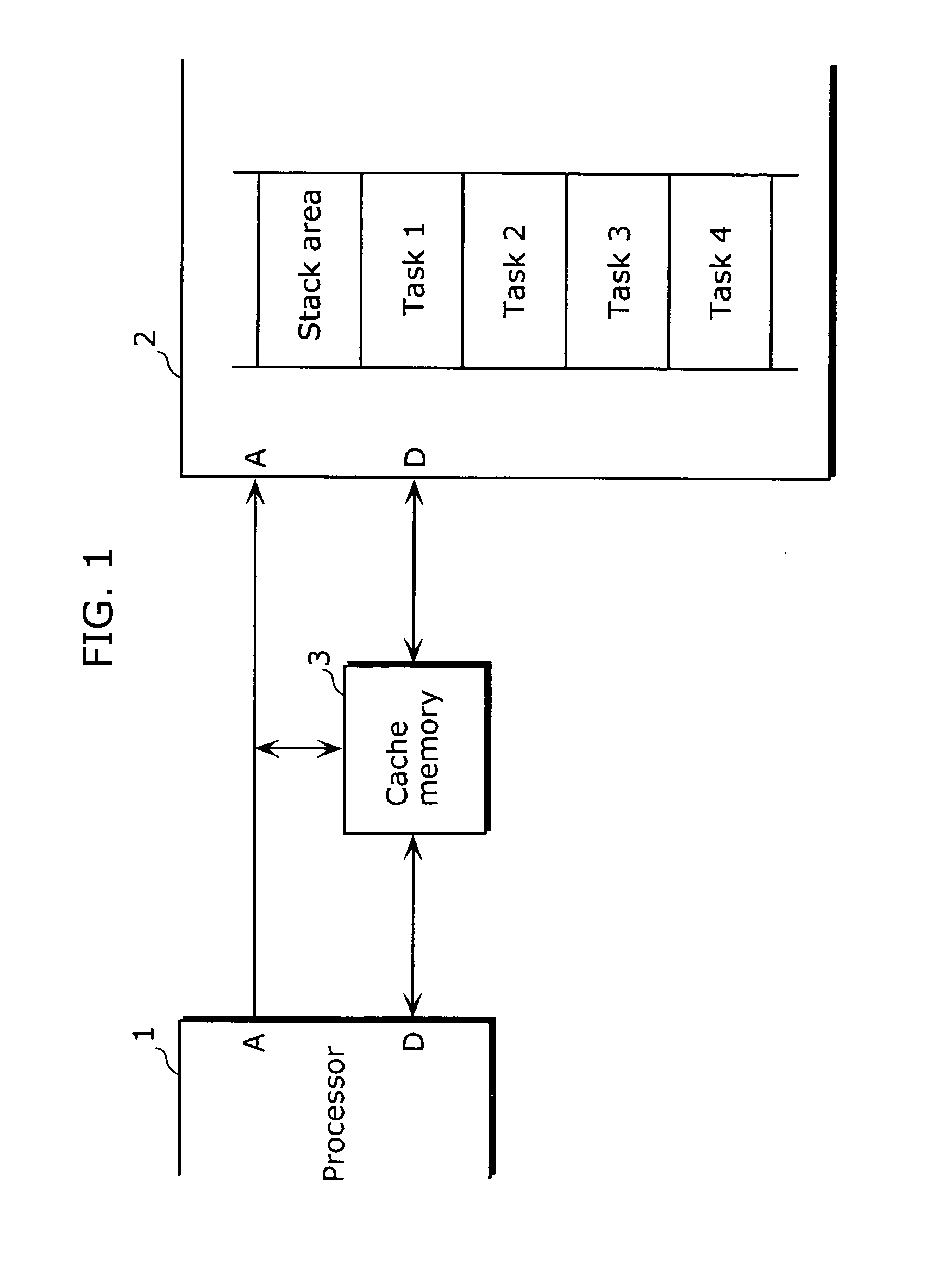

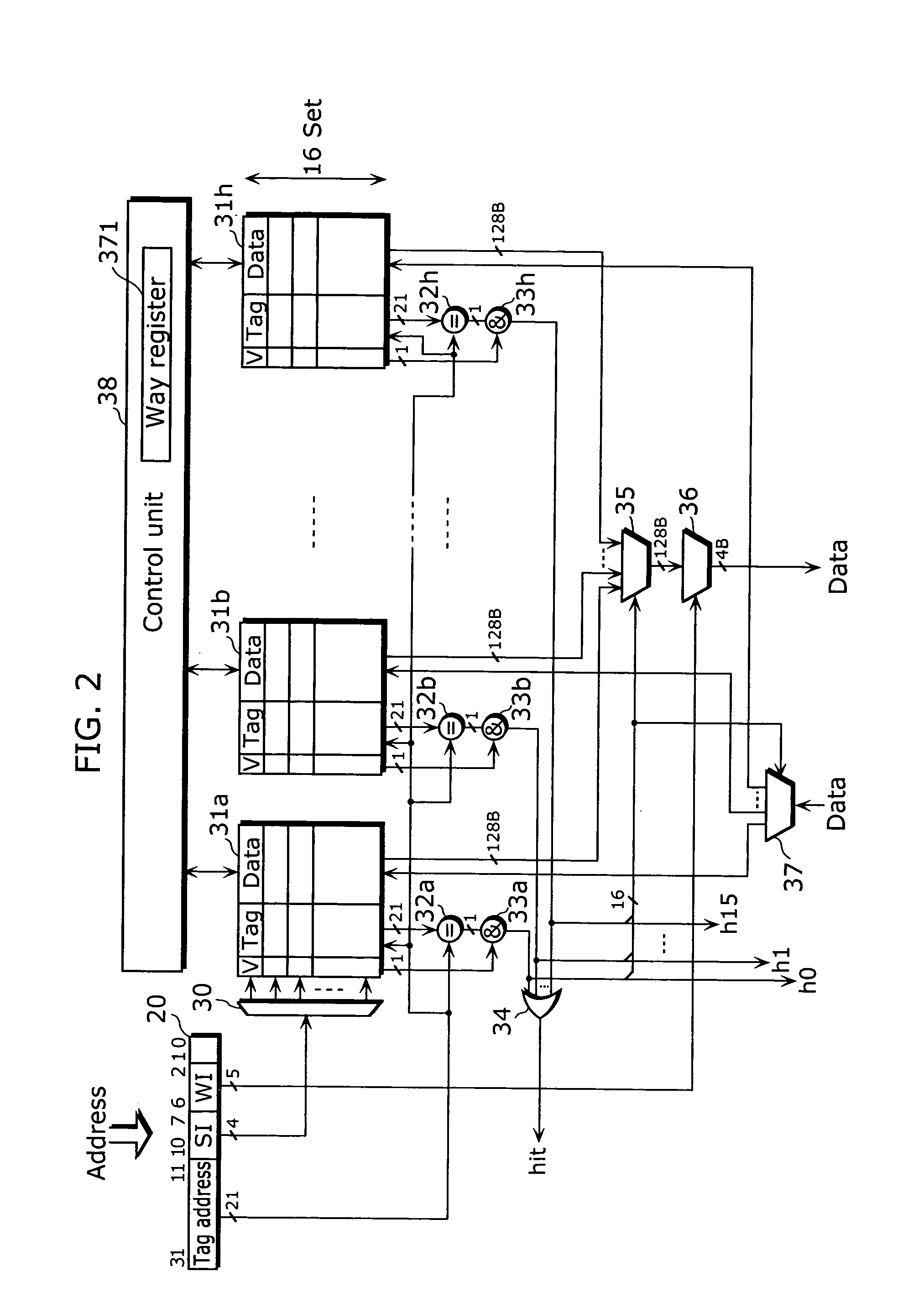

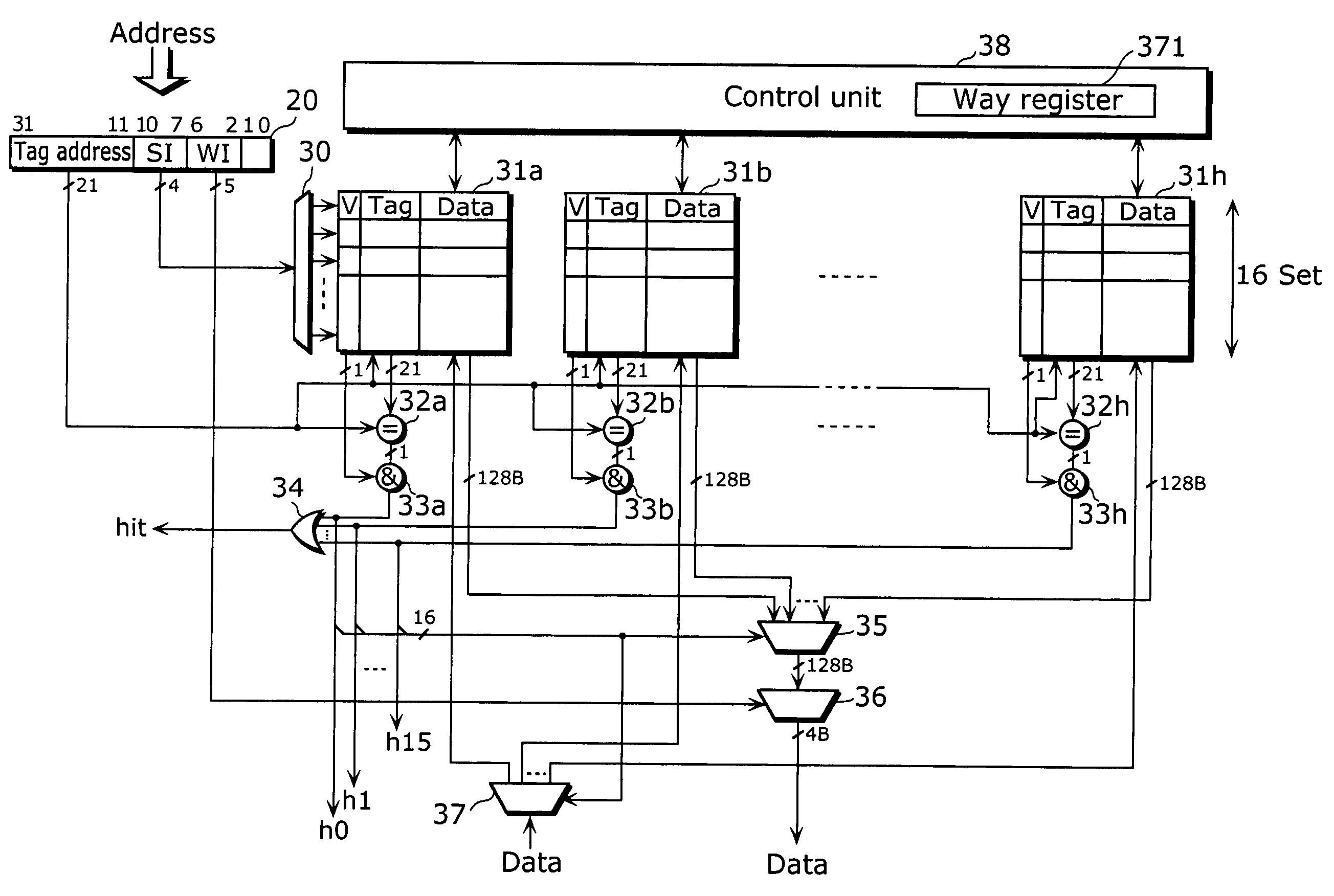

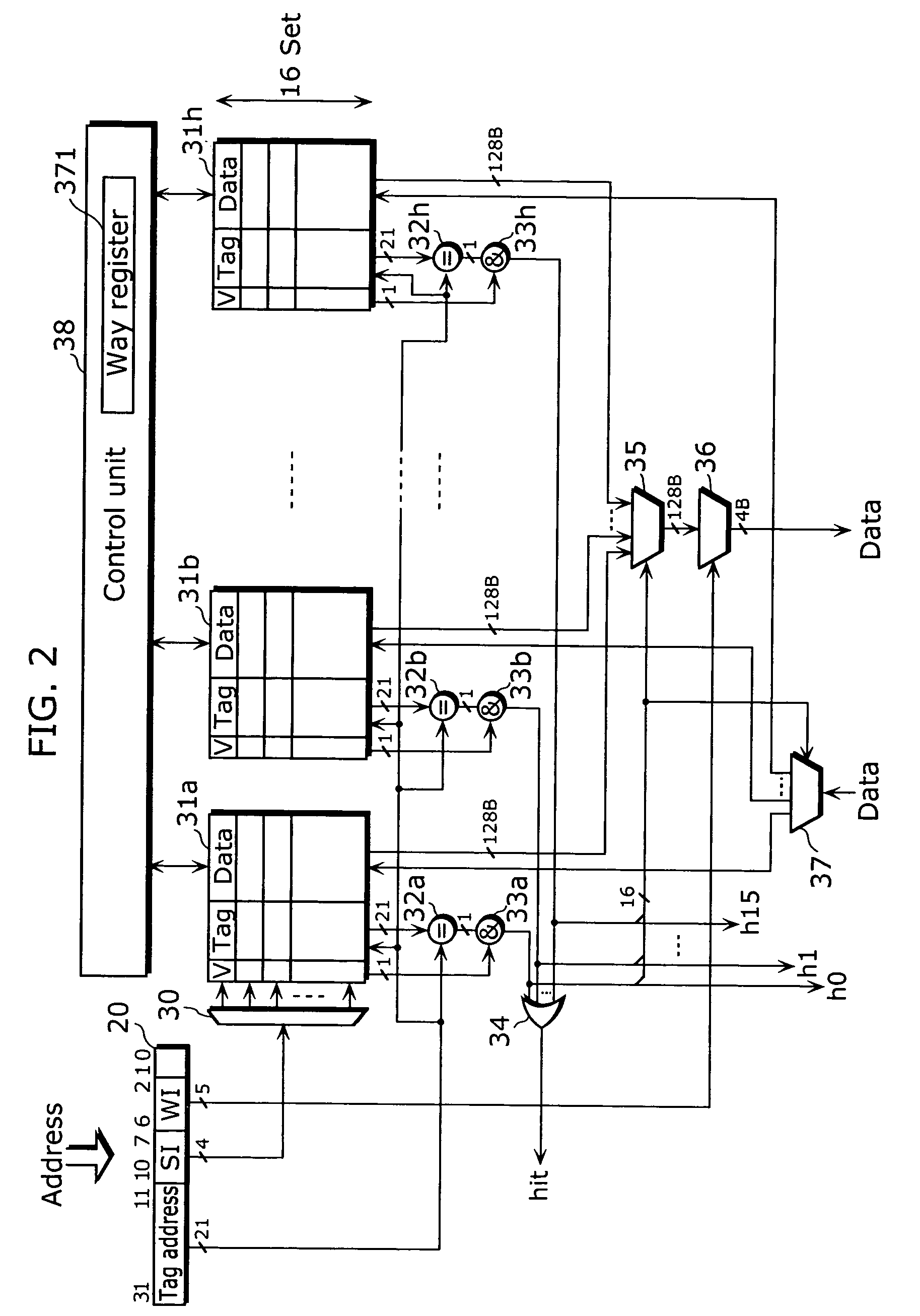

Cache memory and control method thereof

Active | US20070136530A1 | Easy to process | Easily secures real processing time for a task | Memory addressing/allocation/relocation | Processor register | Control register

The cache memory in the present invention is an N-way set-associative cache memory including a control register which indicates one or more ways among N ways, a control unit which activates the way indicated by said control register, and an updating unit which updates contents of said control register. The control unit restricts at least replacement for any way other than the active way indicated by the control register.

Owner:SOCIONEXT INC

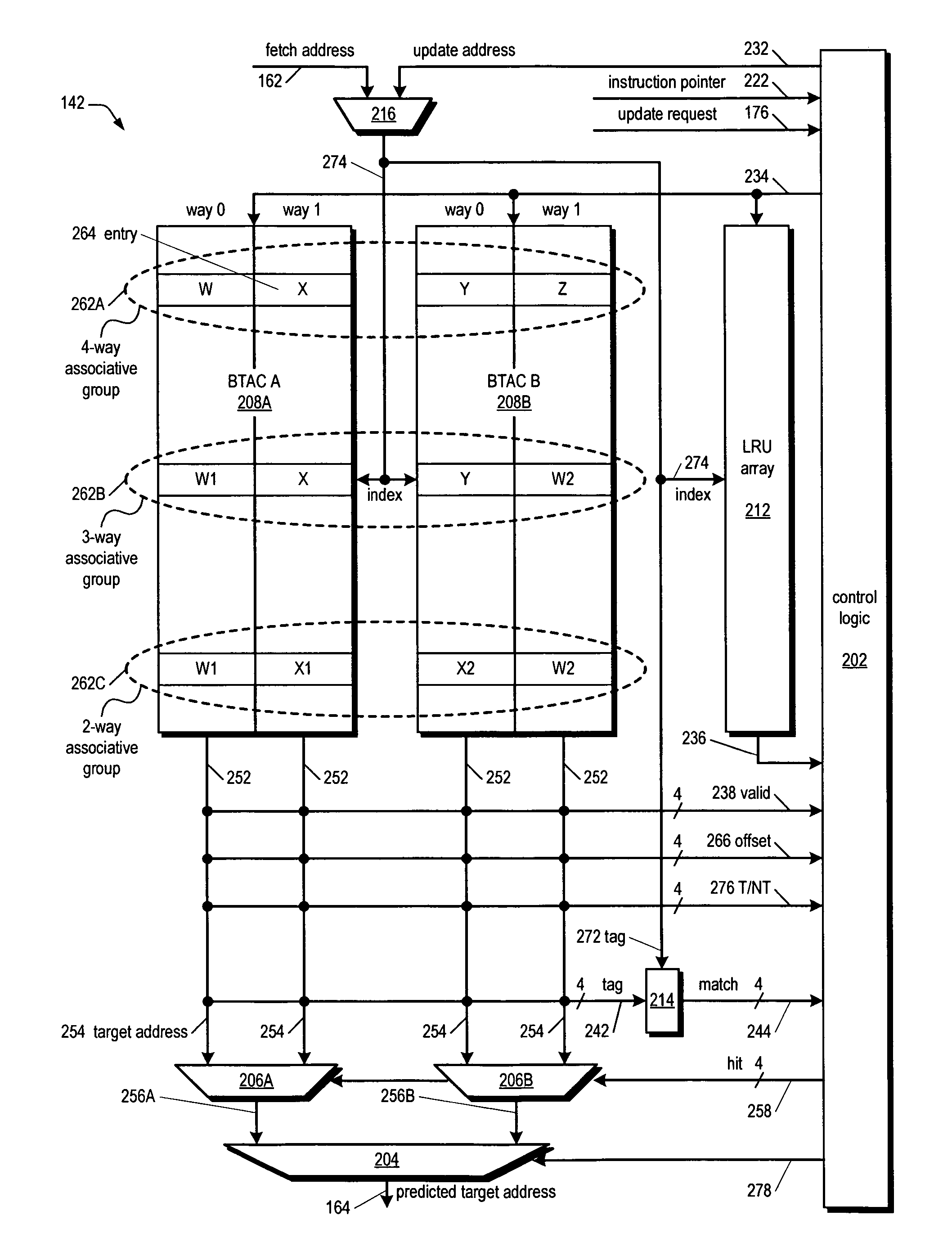

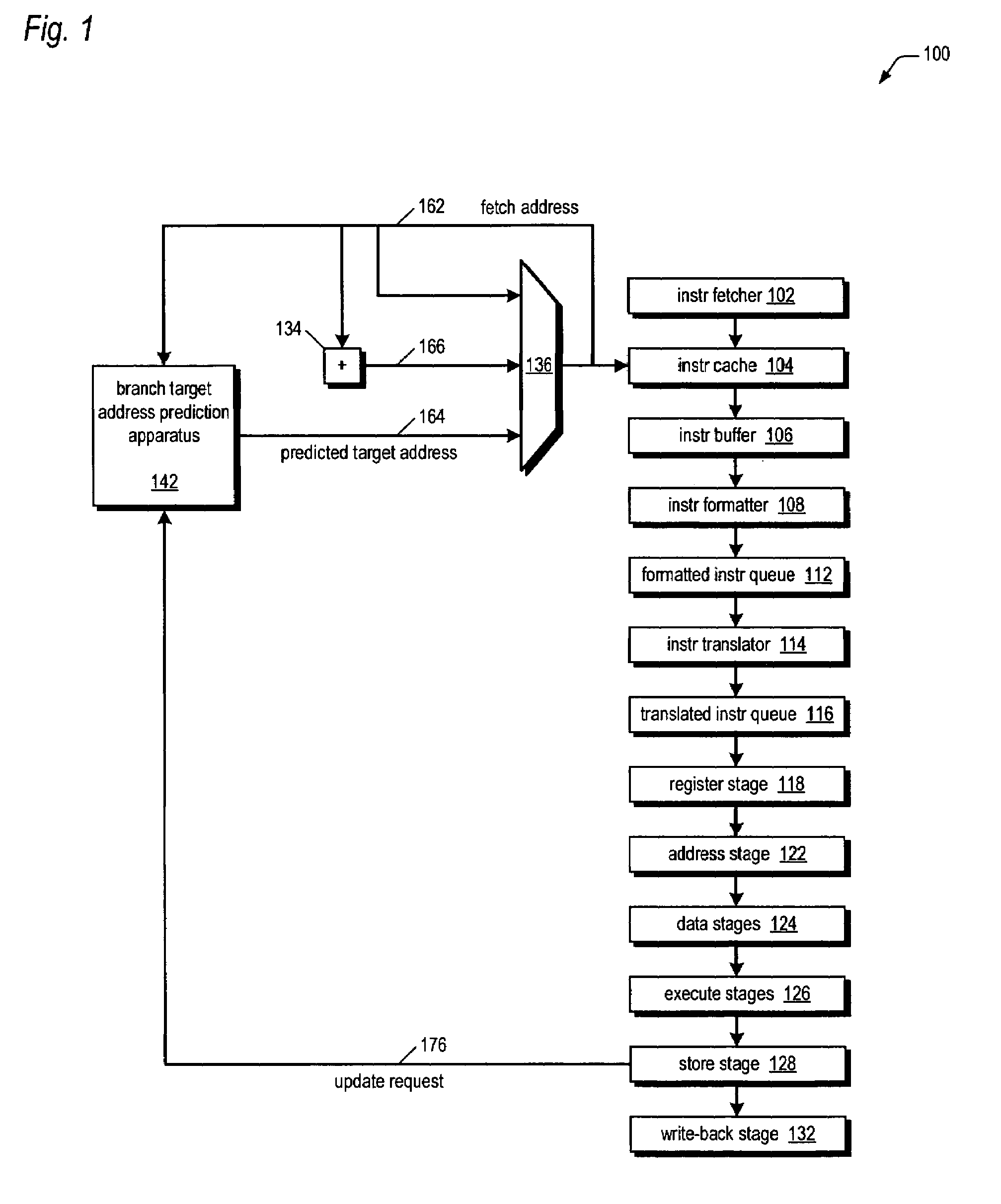

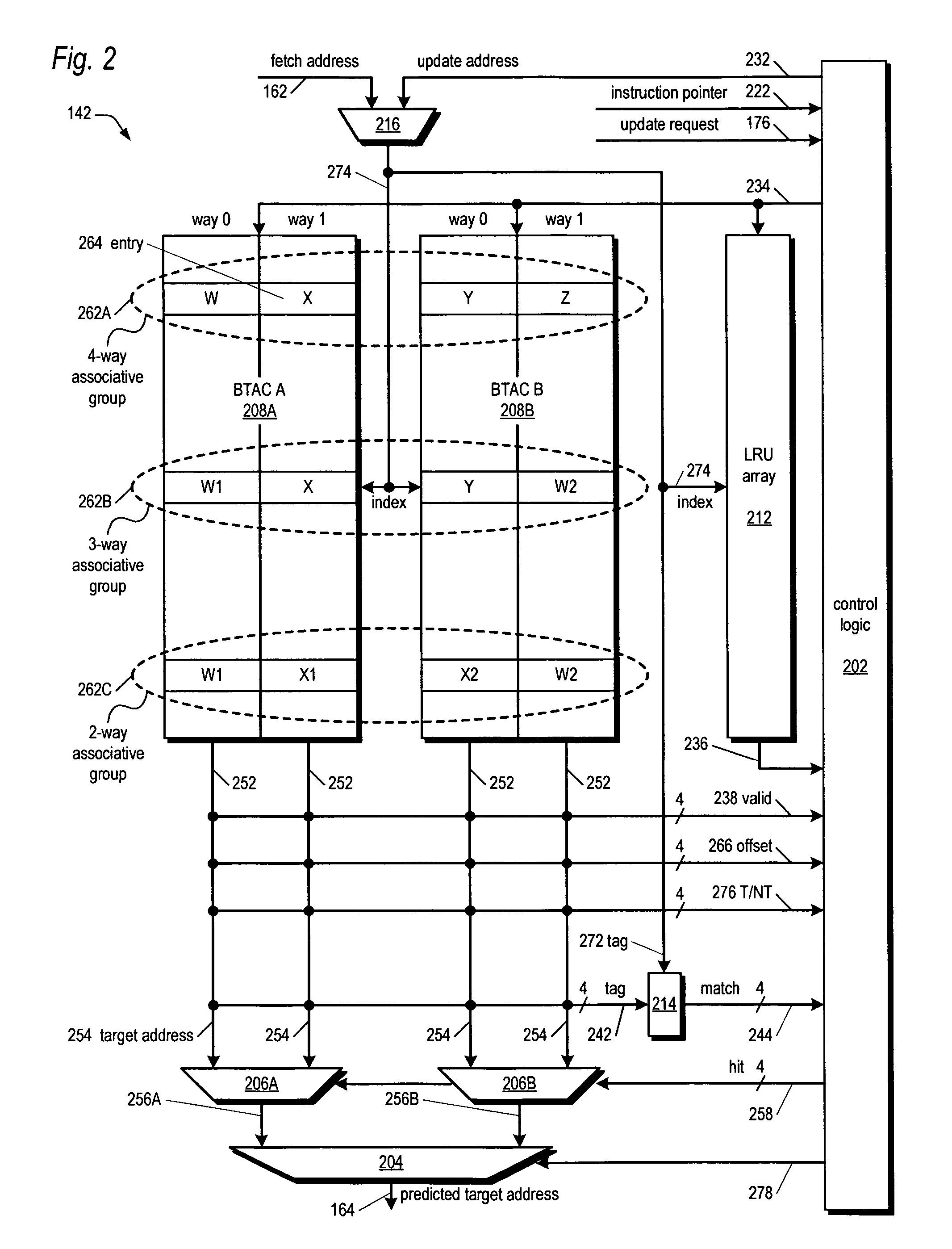

Variable group associativity branch target address cache delivering multiple target addresses per cache line

Active | US7707397B2 | Easy to use | Achieving associativity | Computation using non-contact making devices | Digital computer details | Branch target address cache | Parallel computing

A branch prediction apparatus having two two-way set associative cache memories each indexed by a lower portion of an instruction cache fetch address is disclosed. The index selects a group of four entries, one from each way of each cache. Each entry stores a single target address of a different previously executed branch instruction. For some groups, the four entries cache target addresses for one branch instruction in each of four different cache lines, to obtain four-way group associativity; for other groups, the four entries cache target addresses for one branch instruction in each of two different cache lines and two branch instructions in a third different cache line, to effectively obtain three-way group associativity, depending on the distribution of the branch instructions in the program. The apparatus trades off associativity for number of predictable branches per cache line on an index-by-index basis to efficiently use storage space.

Owner:VIA TECH INC

Prioritizing and locking removed and subsequently reloaded cache lines

Inactive | US6901483B2 | Low cache hit rate | Inefficiency described above will be avoided | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

Owner:INT BUSINESS MASCH CORP

Set-associative cache memory having a built-in set prediction array

Inactive | US6356990B1 | Memory architecture accessing/allocation | Energy efficient ICT | Cache access | Parallel computing

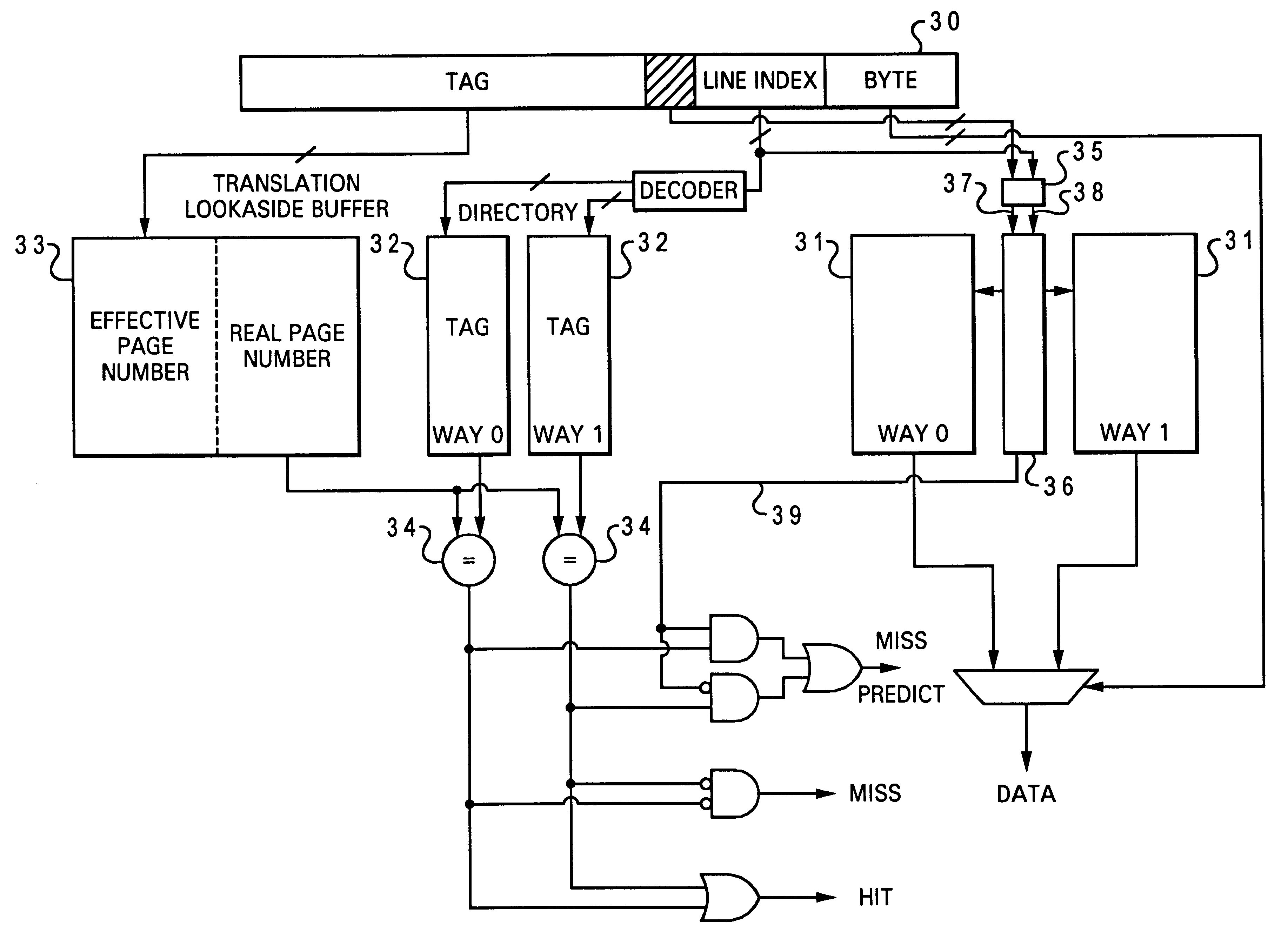

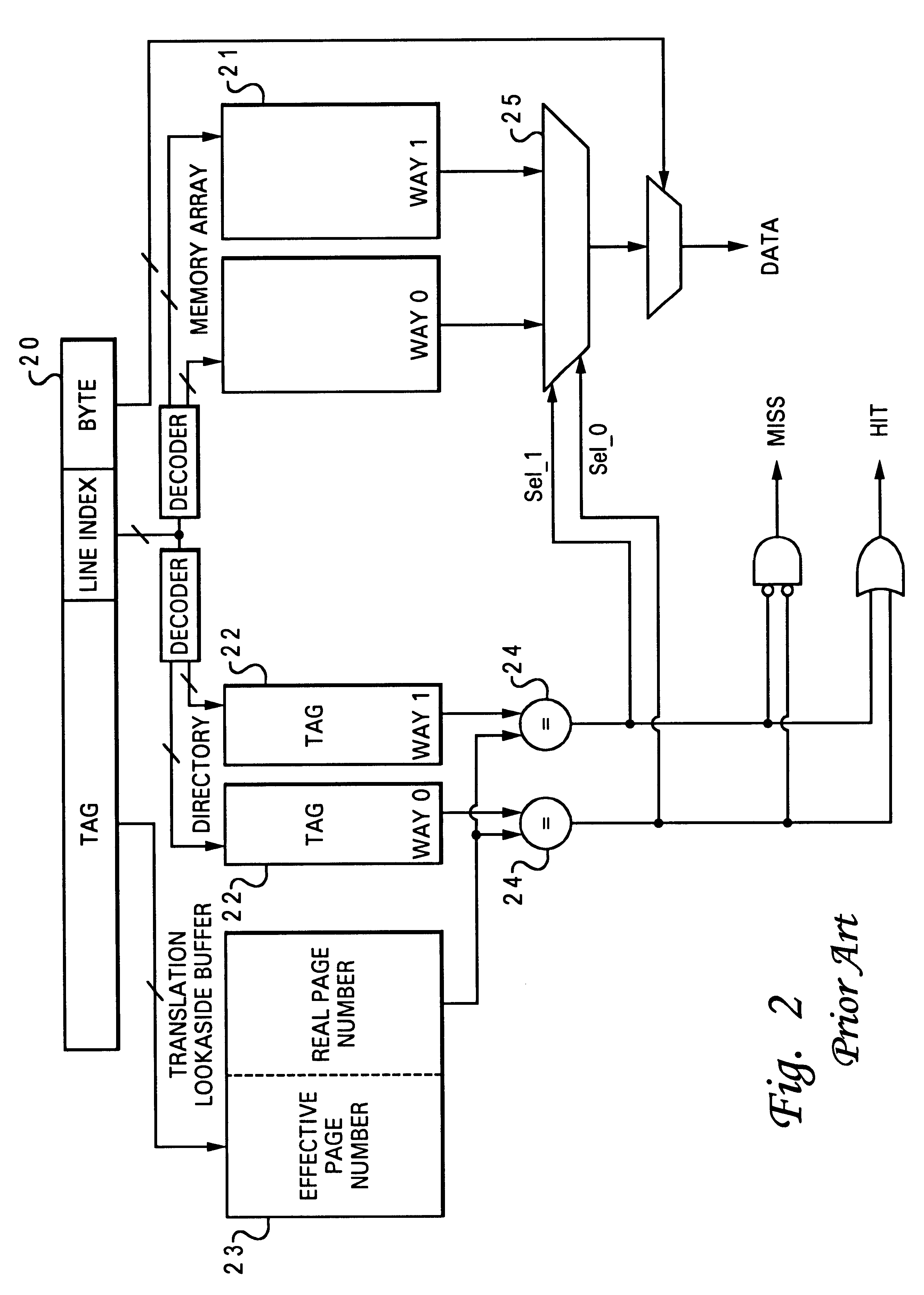

A set-associative cache memory having a built-in set prediction array is disclosed. The cache memory can be accessed via an effective address having a tag field, a line index field, and a byte field. The cache memory includes a directory, a memory array, a translation lookaside buffer, and a set prediction array. The memory array is associated with the directory such that each tag entry within the directory corresponds to a cache line within the memory array. In response to a cache access by an effective address, the translation lookaside buffer determines whether or not the data associated with the effective address is stored within the memory array. The set prediction array is built-in within the memory array such that an access to a line entry within the set prediction array can be performed in a same access cycle as an access to a cache line within the memory array.

Owner:TWITTER INC

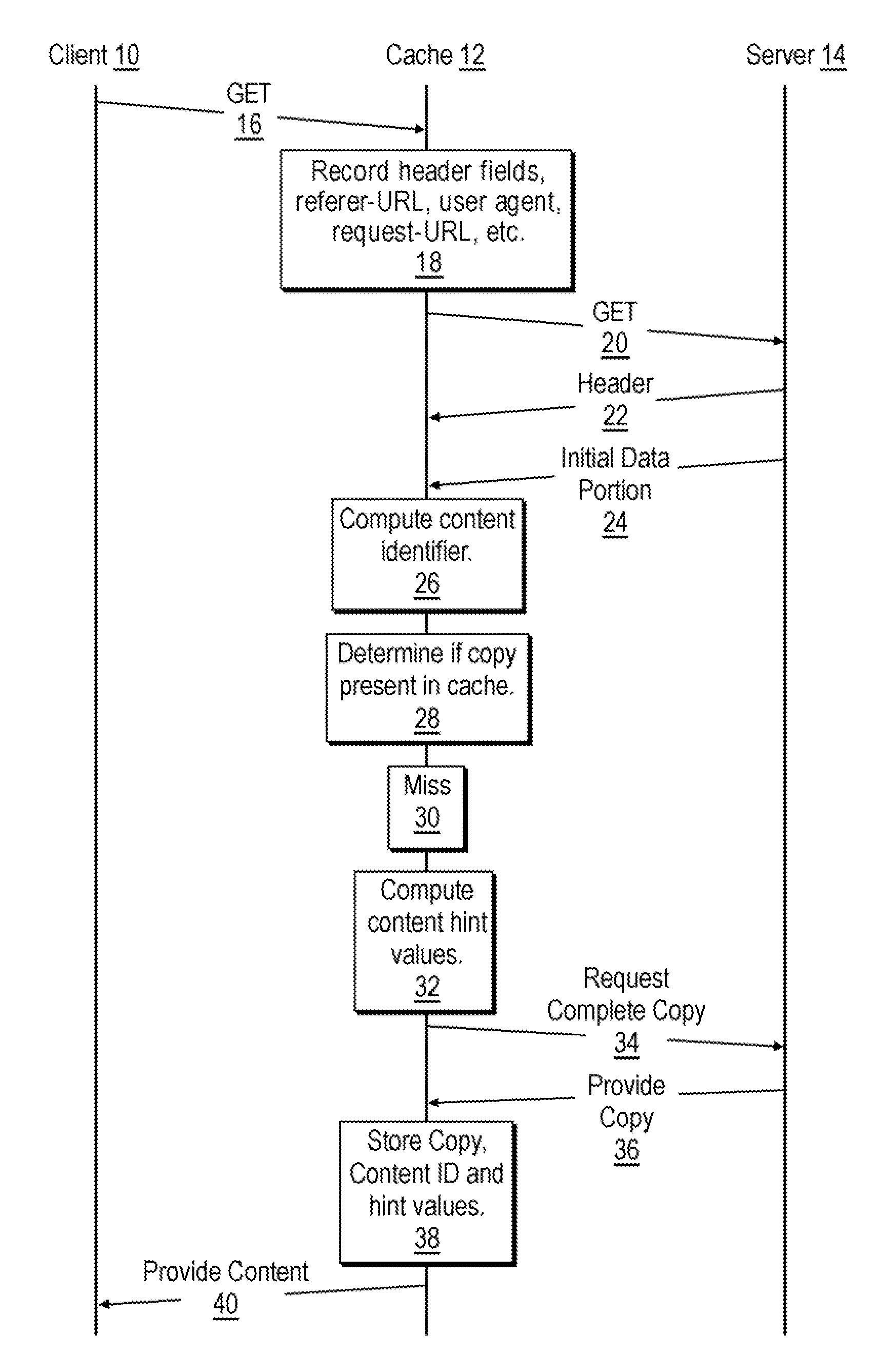

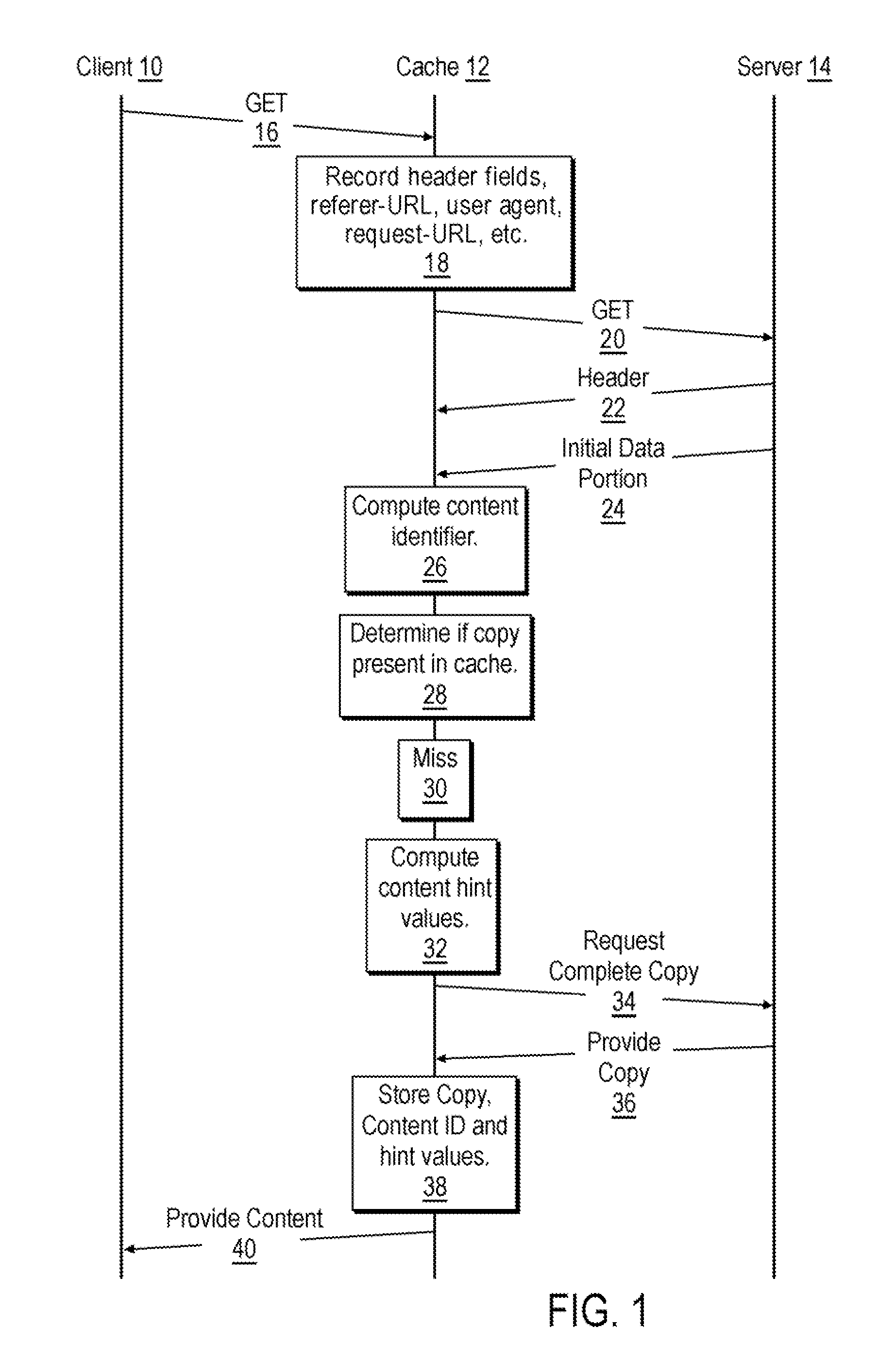

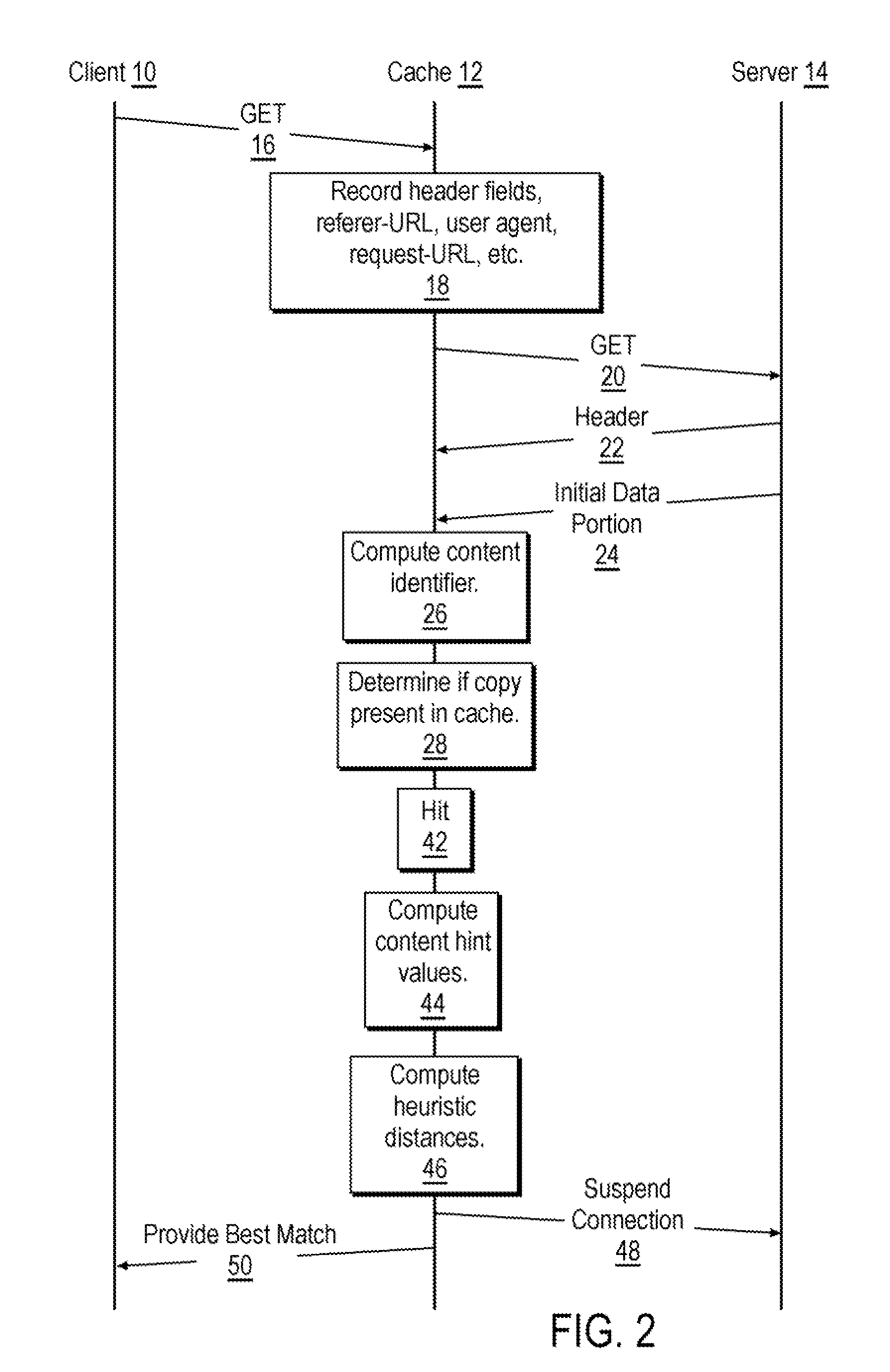

Content associative caching method for web applications

Inactive | US20100191805A1 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Content Identifier | Web application

A cache logically disposed in a communication path between a client and a server receives a request for a content item and, in response thereto, requests from the server header information concerning the content item and an initial portion of data that makes up the content item. The cache then computes a first hashing value from the header information and a second hashing value from the initial portion of data. A content identifier is created by combining the first hashing value and the second hashing value. Using the content identifier, the cache determines whether a copy of the content item is stored by the cache and, if so, provides it to the client. Otherwise, the cache requests the content item from the server and, upon receipt thereof, provides it to the client.

Owner:CA TECH INC
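The lookup flow in the abstract above can be sketched with two hashes joined into one identifier. Everything specific here is an assumption: the patent does not name a hash function, so SHA-256 from Python's `hashlib` is used for illustration, concatenation stands in for "combining", and the `server` object with `probe` and `fetch` methods is a hypothetical interface.

```python
import hashlib


def content_id(header_bytes, initial_data):
    """Combine a hash of the headers with a hash of the first bytes of the body."""
    first = hashlib.sha256(header_bytes).hexdigest()
    second = hashlib.sha256(initial_data).hexdigest()
    return first + second  # concatenation is one possible way to combine them


class ContentCache:
    def __init__(self, server):
        # `server` is a hypothetical object with:
        #   probe(url) -> (header_bytes, initial_data)
        #   fetch(url) -> full content bytes
        self.server = server
        self.store = {}

    def get(self, url):
        headers, prefix = self.server.probe(url)
        cid = content_id(headers, prefix)
        if cid not in self.store:
            # Not cached under this identifier: fetch the full item once.
            self.store[cid] = self.server.fetch(url)
        return self.store[cid]
```

Because the key is derived from the content rather than the URL, two different URLs that serve the same item share one cached copy in this sketch.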

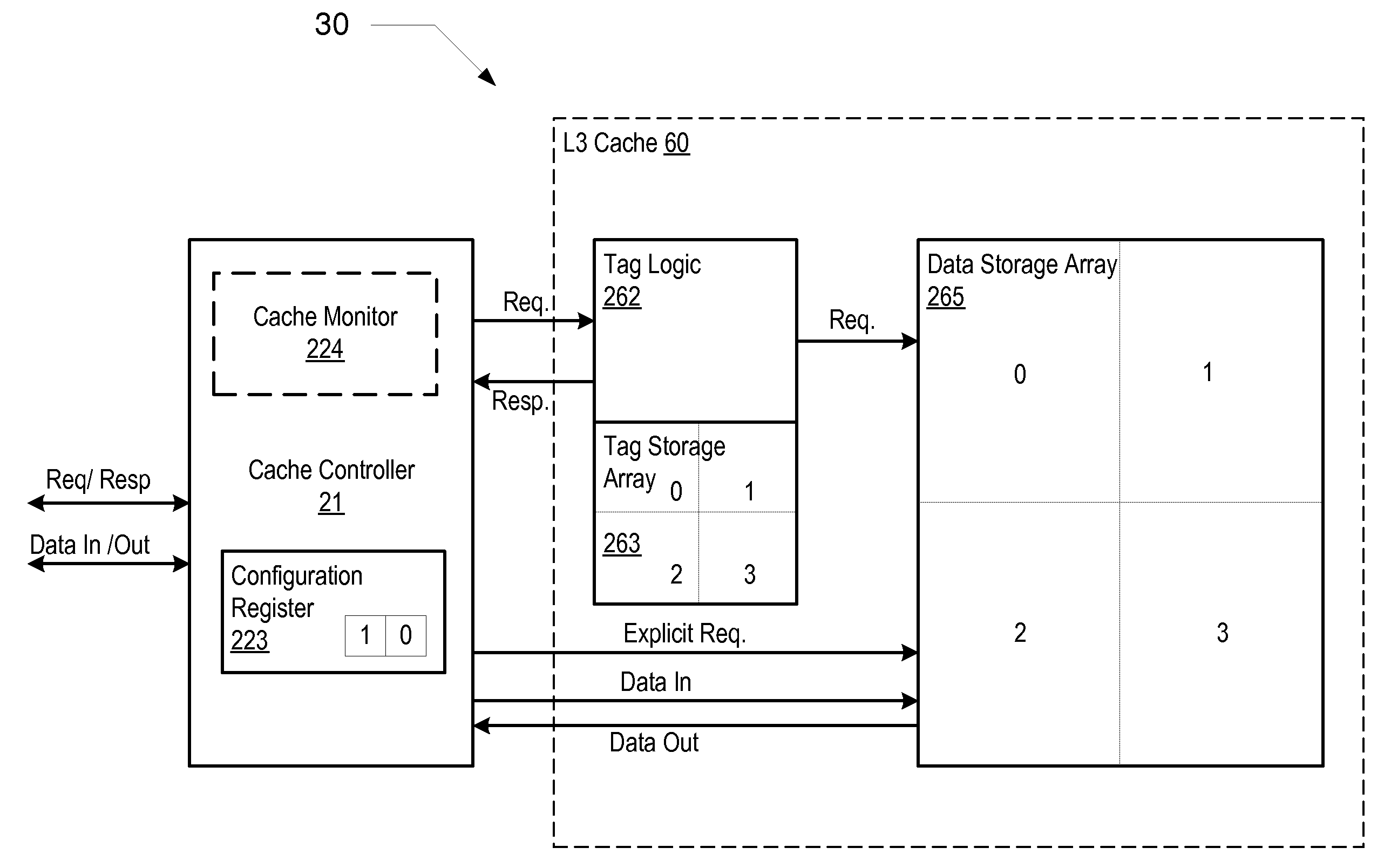

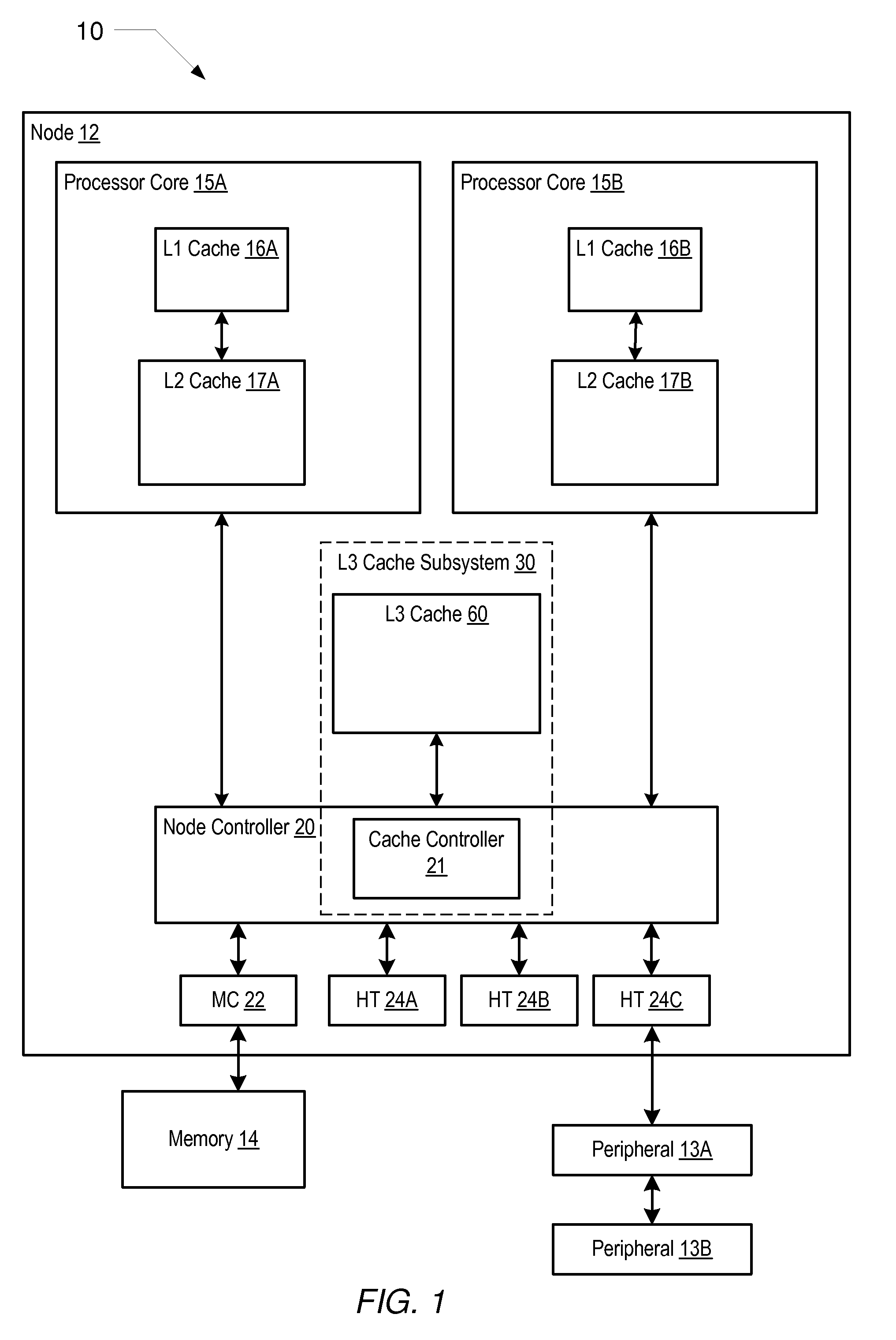

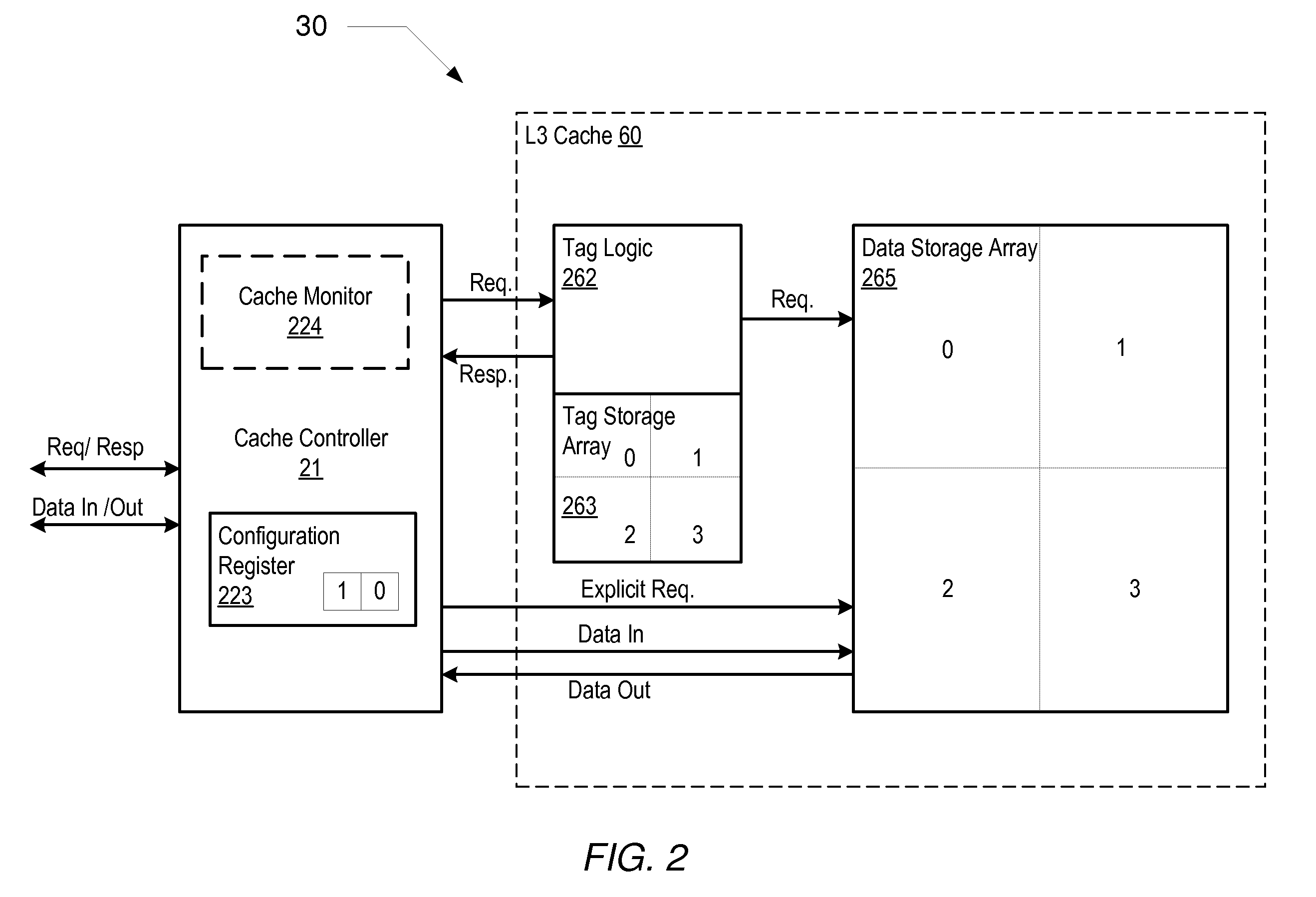

Cache memory having configurable associativity

Inactive | US20090006756A1 | Access be disabled | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data store

A processor cache memory subsystem includes a cache memory having a configurable associativity. The cache memory may operate in a fully associative addressing mode and a direct addressing mode with reduced associativity. The cache memory includes a data storage array including a plurality of independently accessible sub-blocks for storing blocks of data. For example, each of the sub-blocks implements an n-way set associative cache. The cache memory subsystem also includes a cache controller that may programmably select a number of ways of associativity of the cache memory. When programmed to operate in the fully associative addressing mode, the cache controller may disable independent access to each of the independently accessible sub-blocks and enable concurrent tag lookup of all independently accessible sub-blocks, and when programmed to operate in the direct addressing mode, the cache controller may enable independent access to one or more subsets of the independently accessible sub-blocks.

Owner:GLOBALFOUNDRIES INC

N-way set associative cache memory and control method thereof

Active | US7502887B2 | Easily secures real processing time for a task | Memory addressing/allocation/relocation | Processor register | Control register

Owner:SOCIONEXT INC
