37 results for "Low-latency access" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

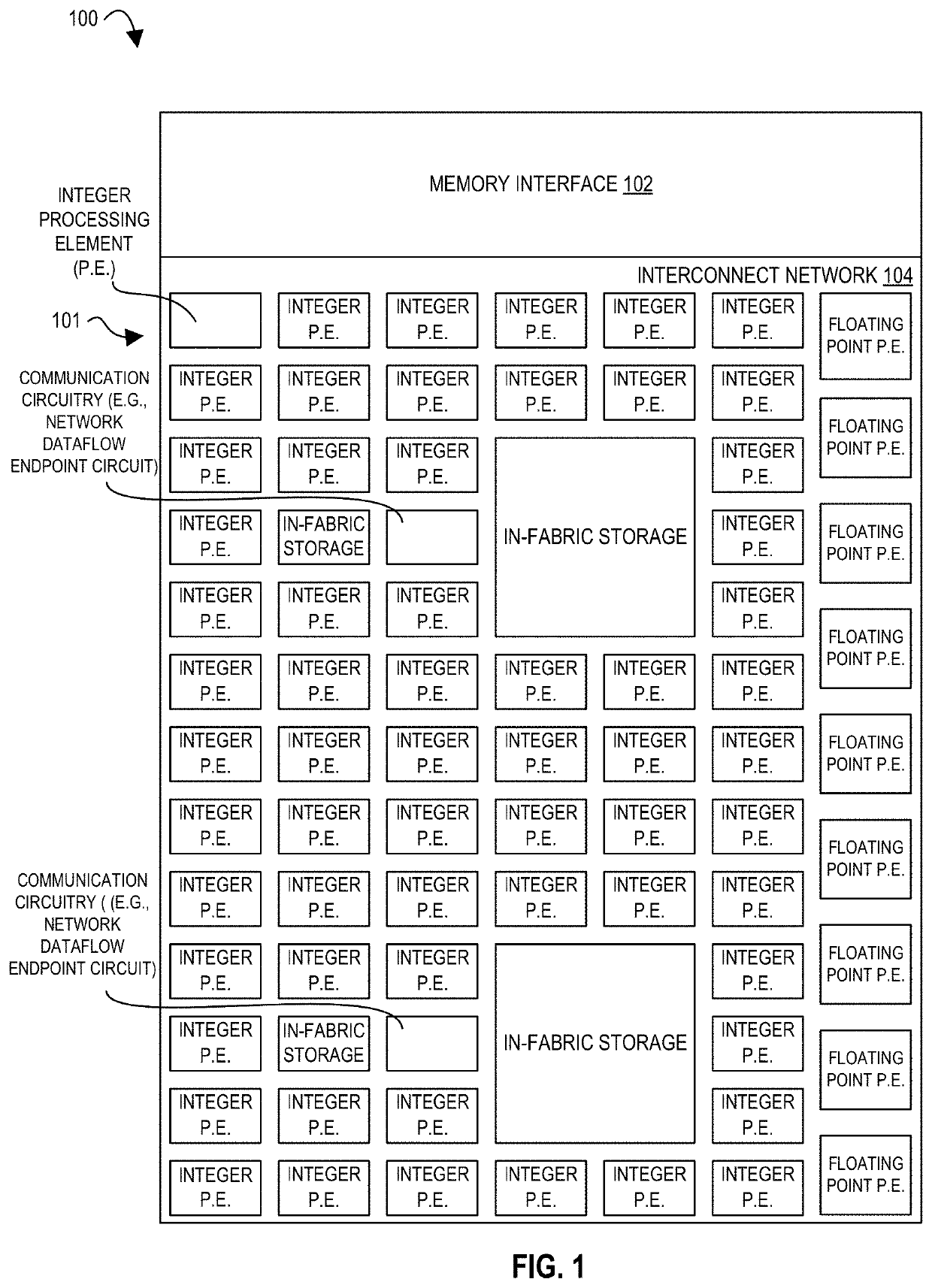

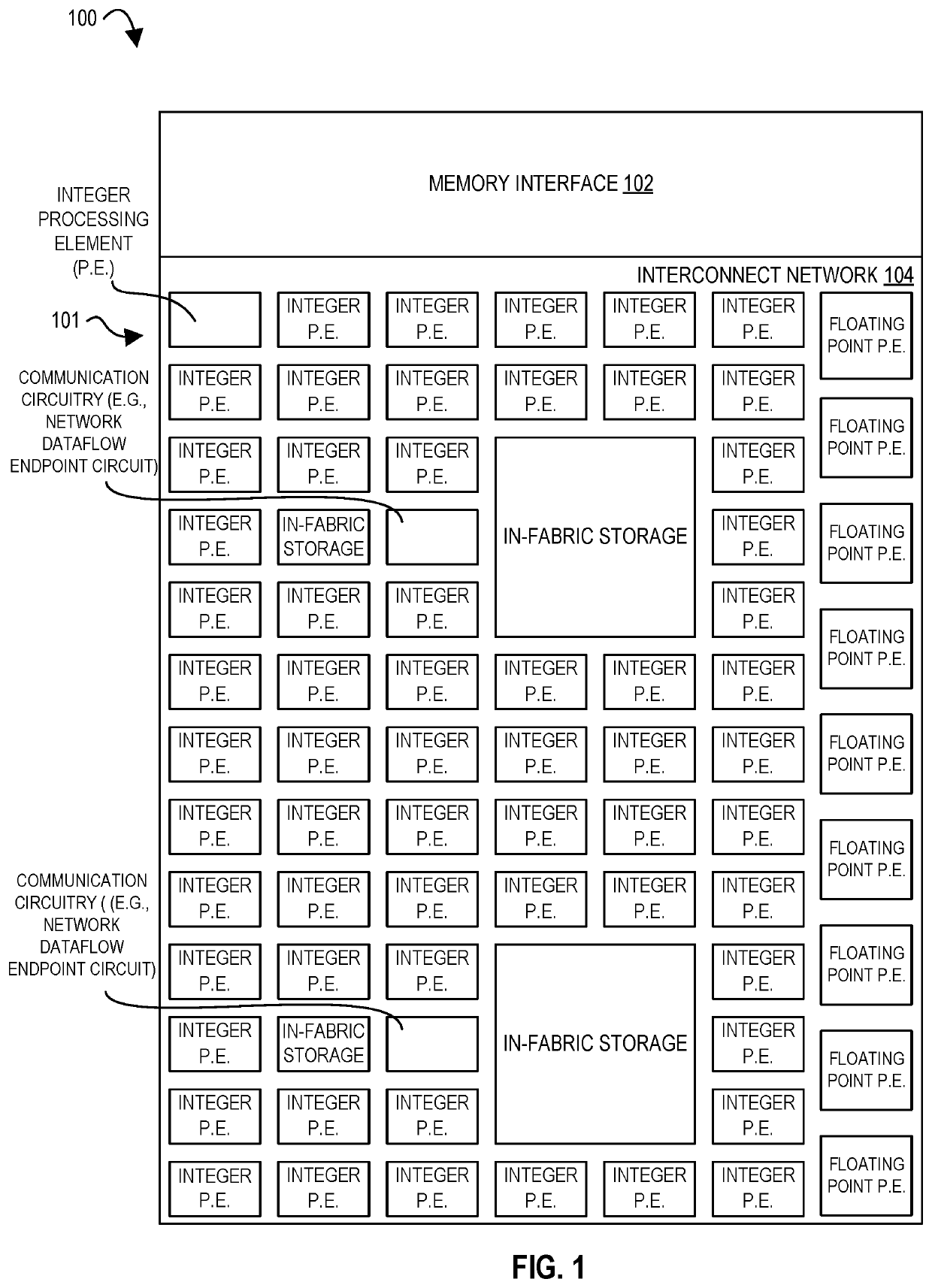

Processors, methods, and systems with a configurable spatial accelerator

Active | US20190018815A1 | Easy to adapt | Improve performance | Dataflow computers | Resource allocation | Computer science | Voltage

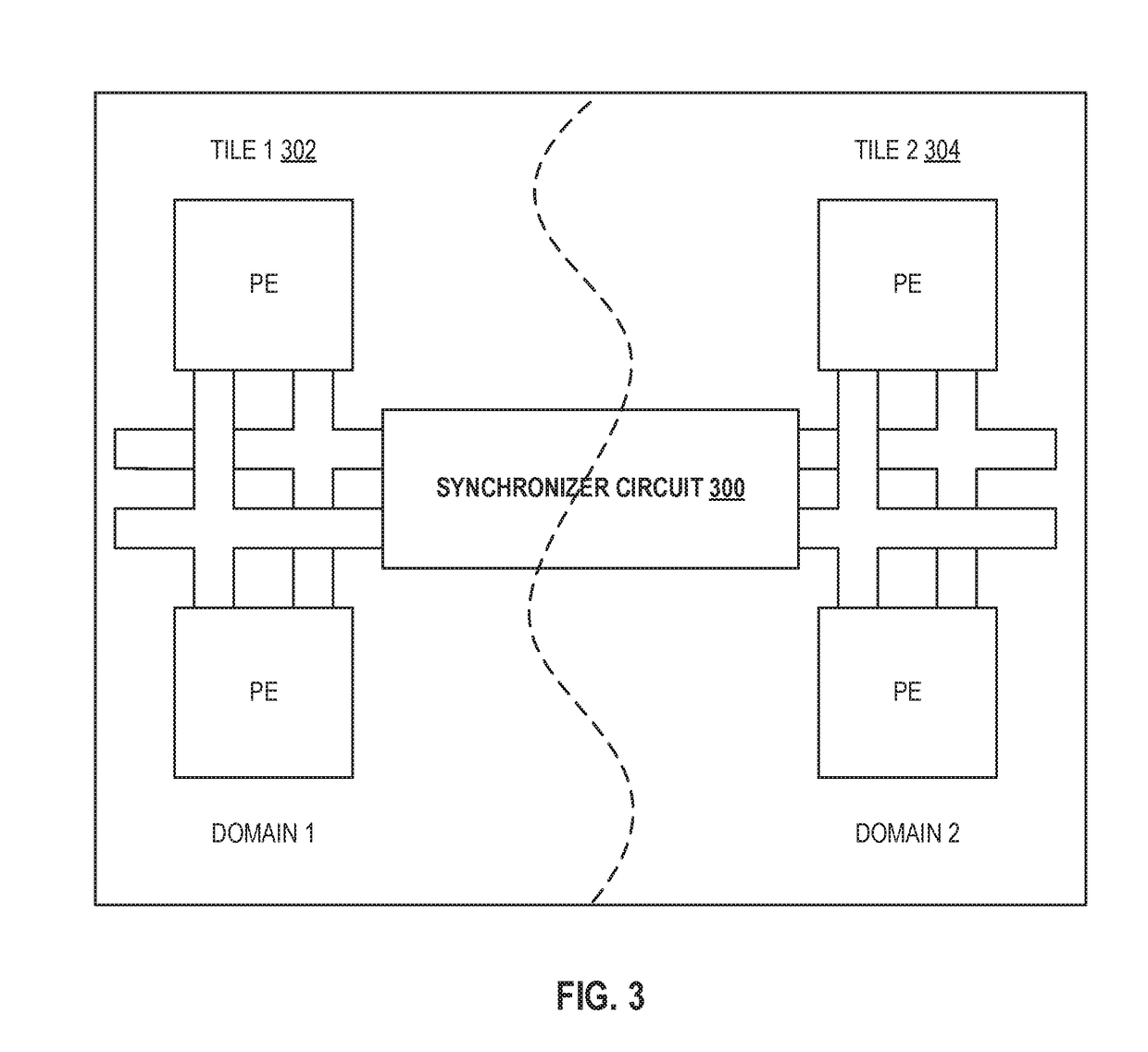

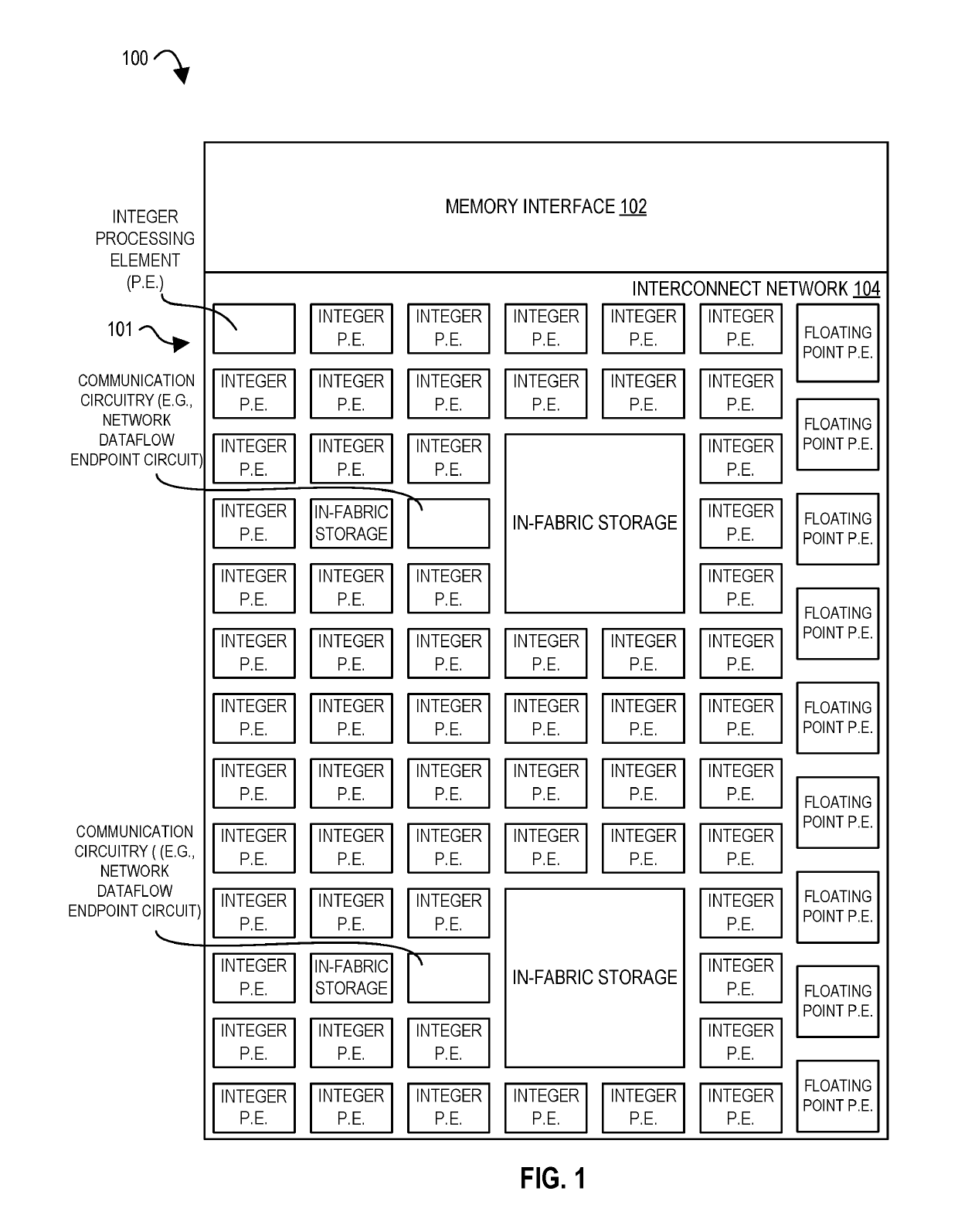

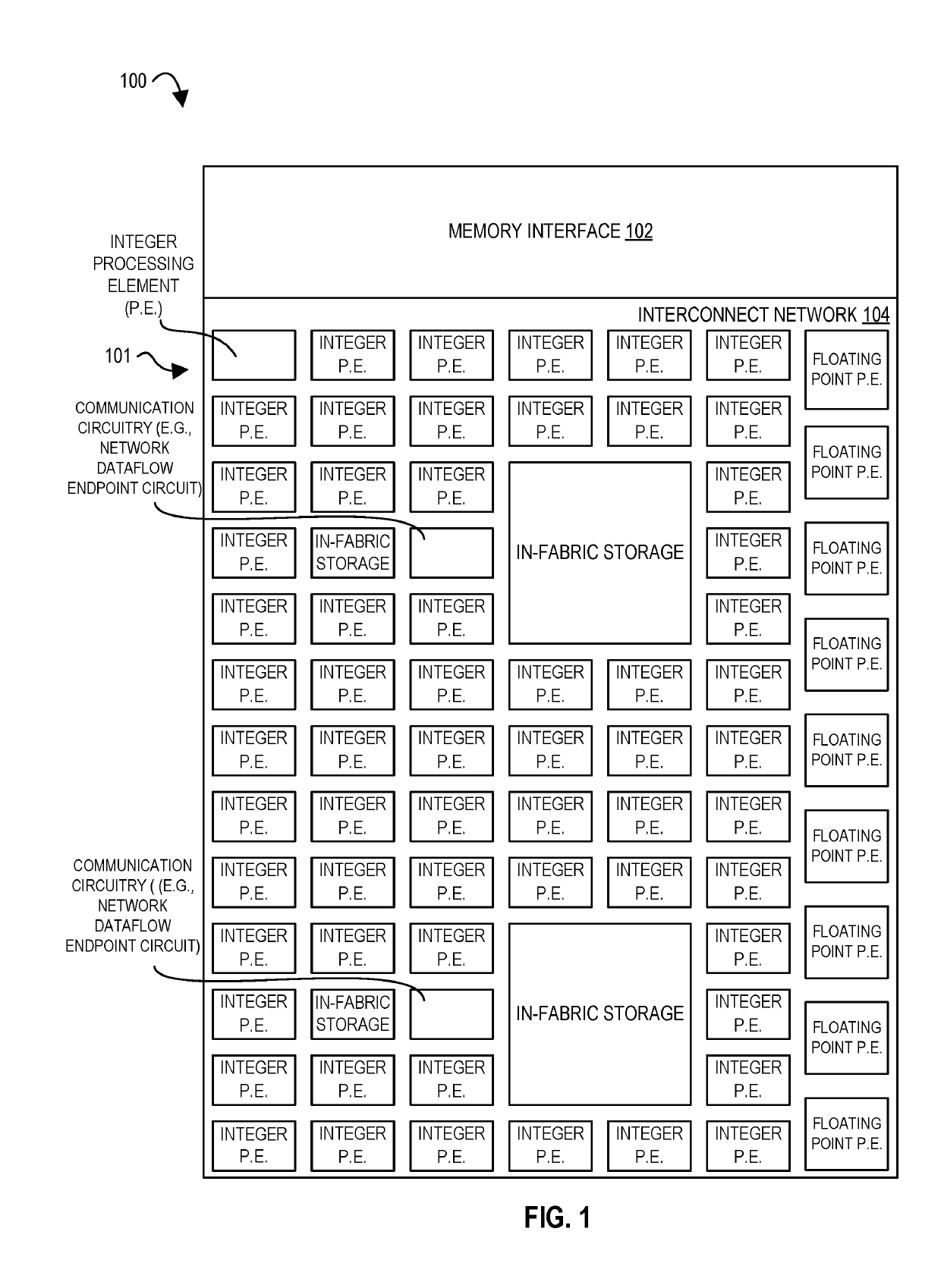

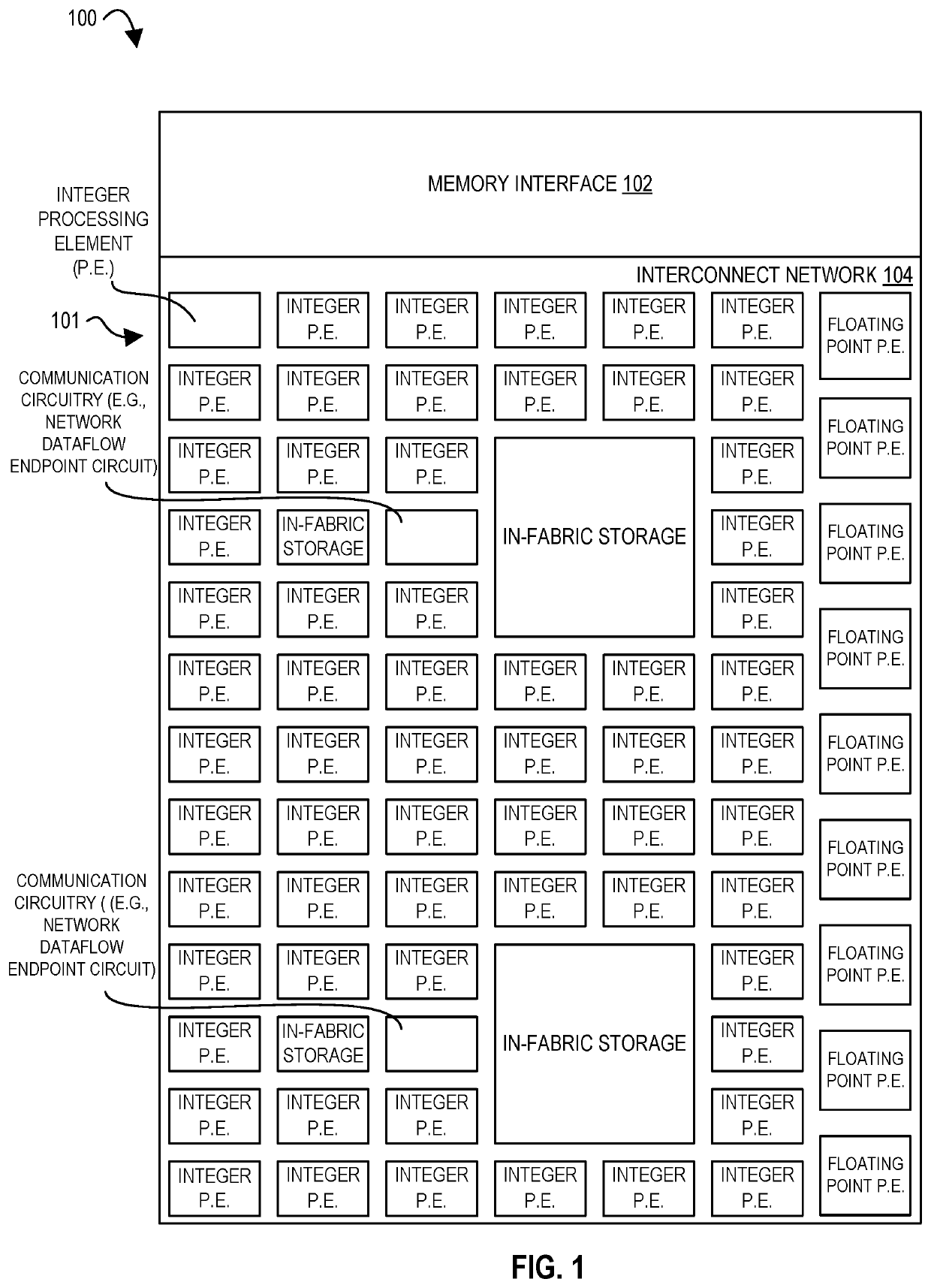

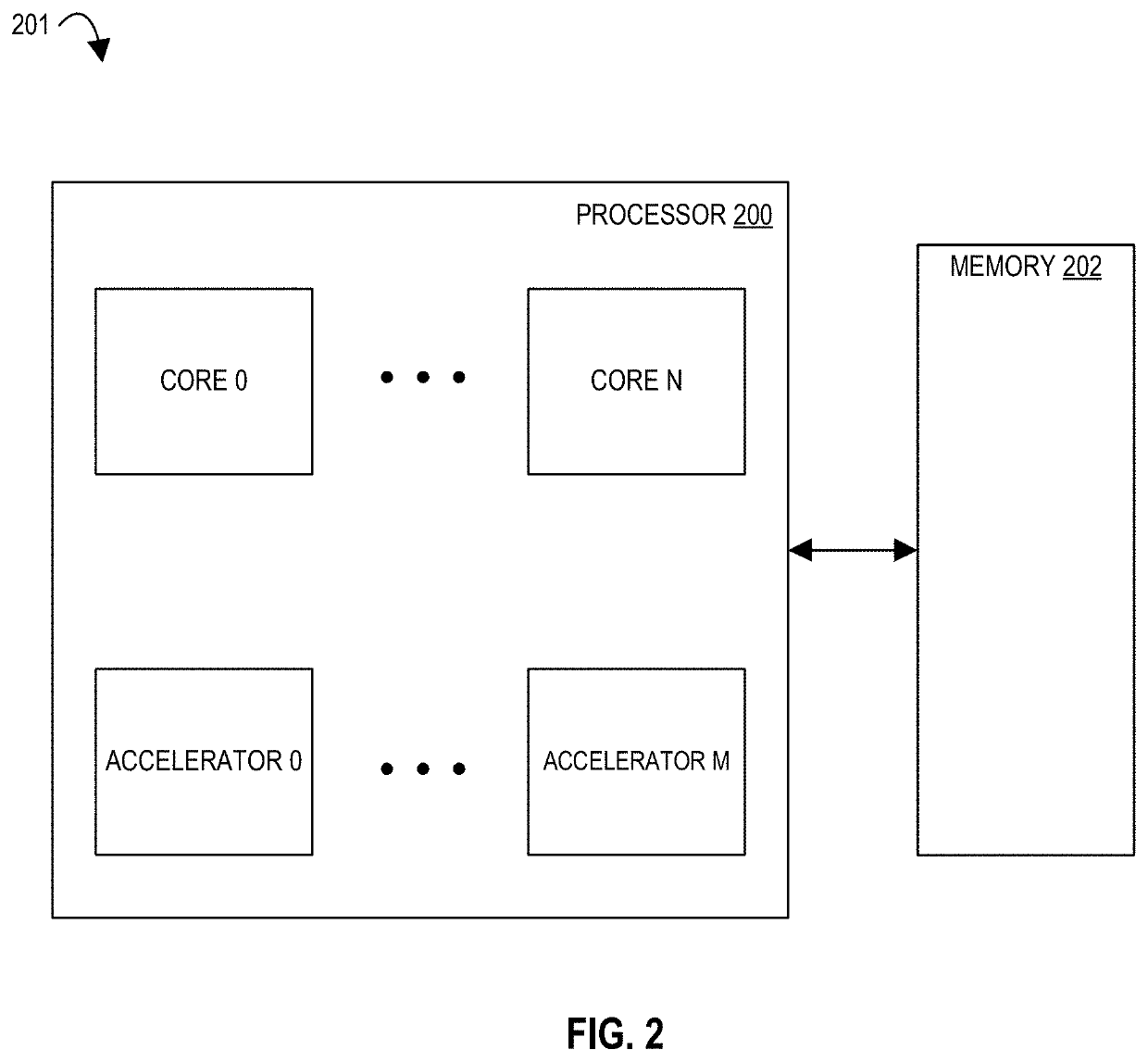

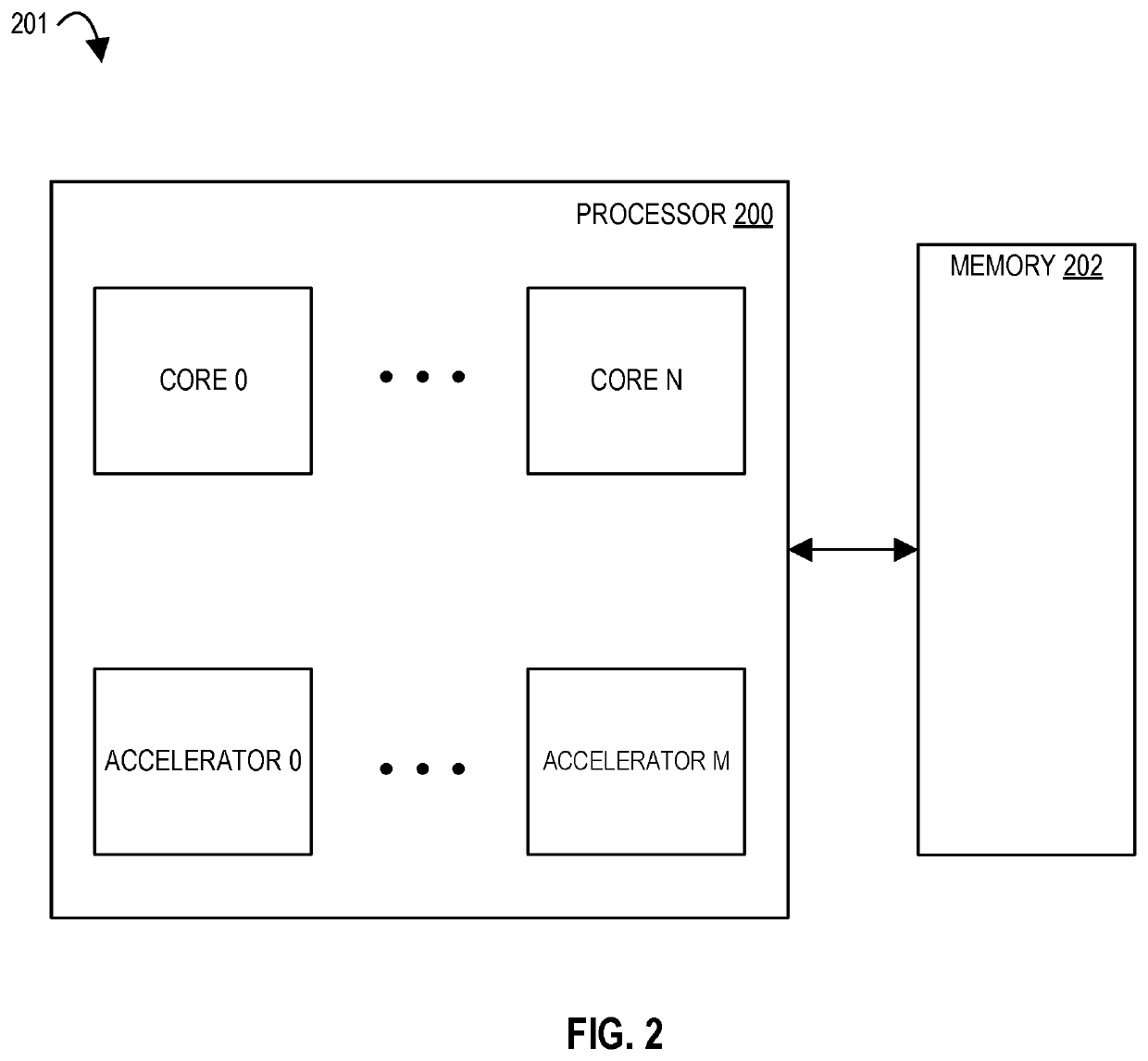

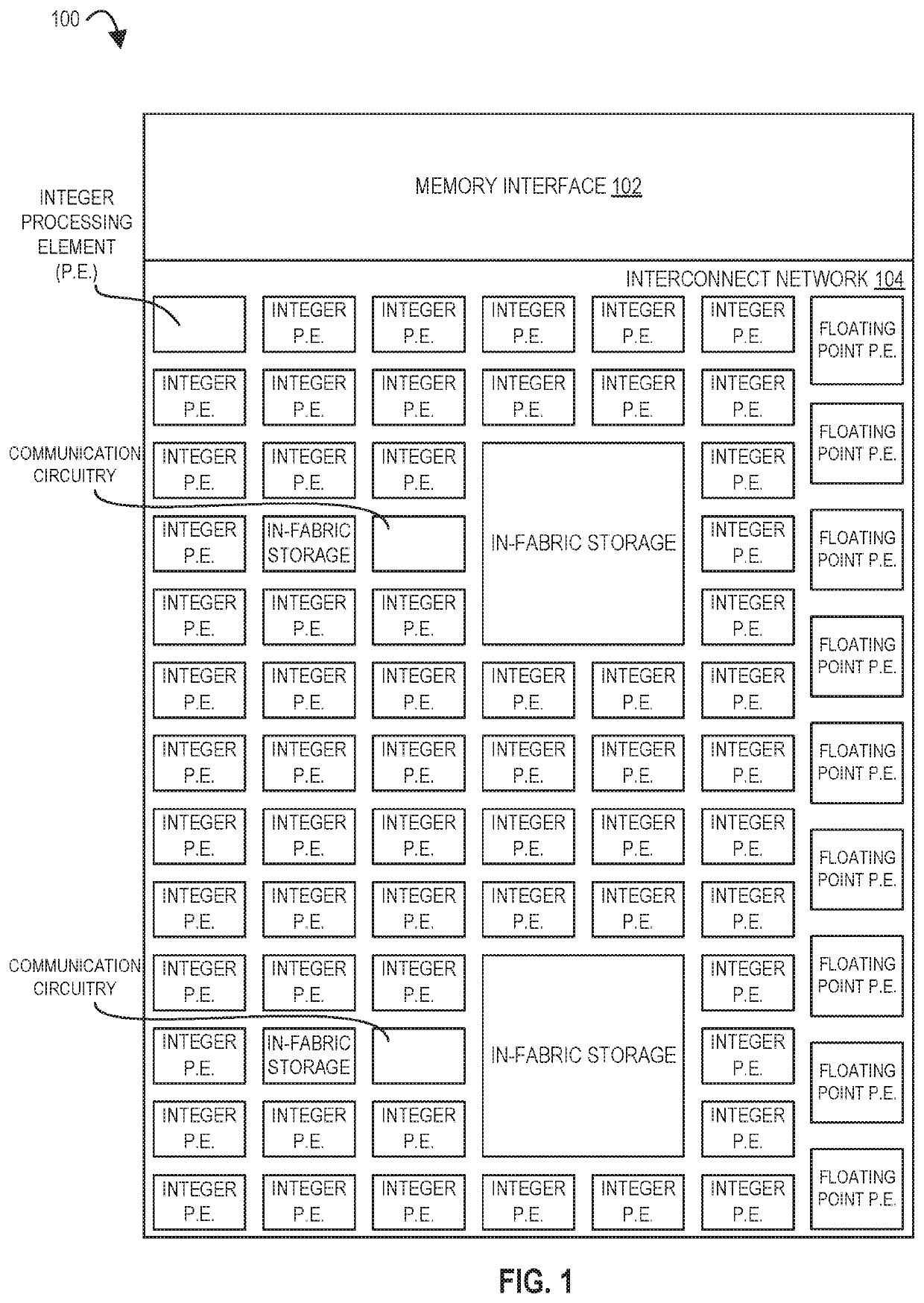

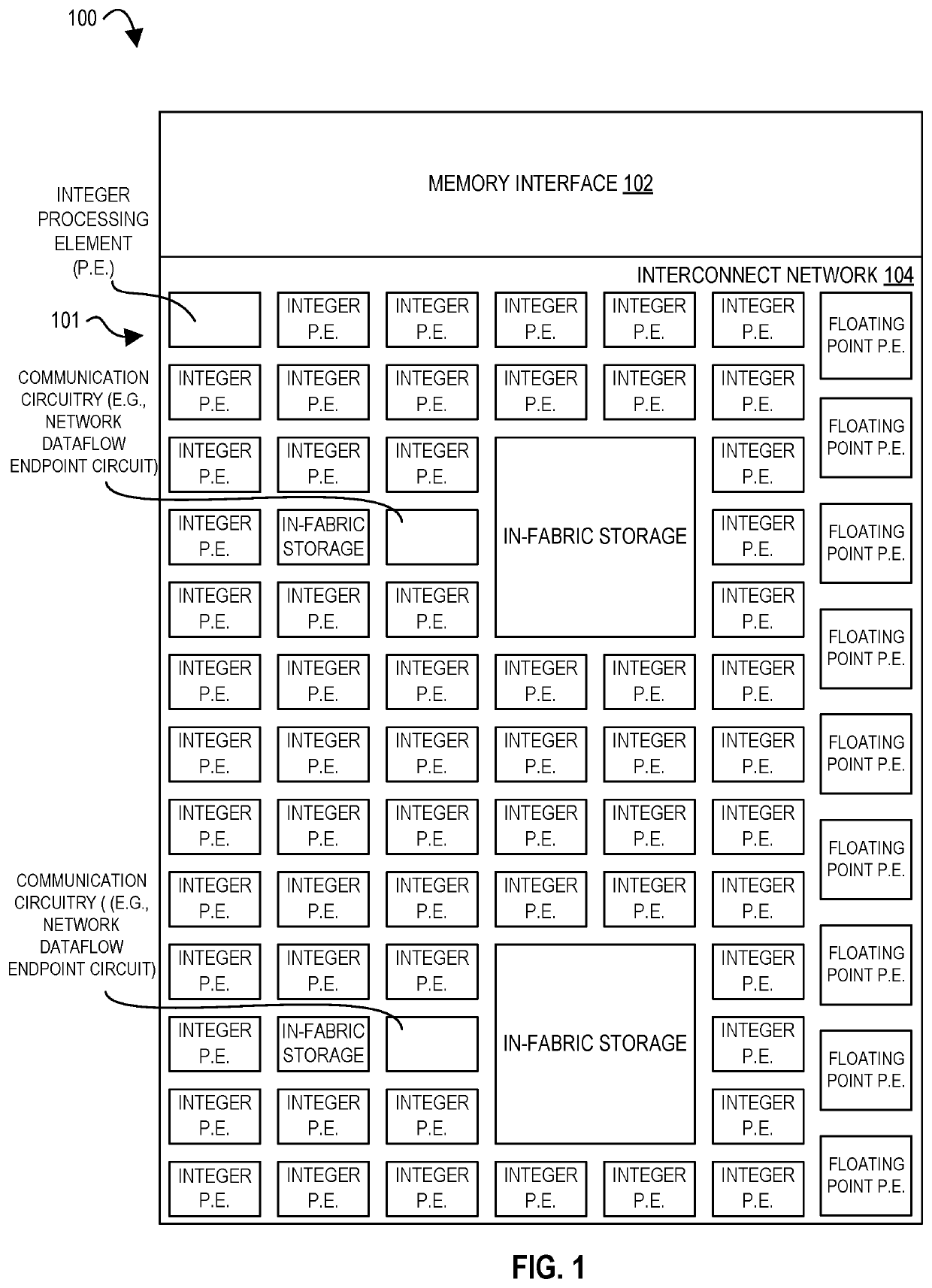

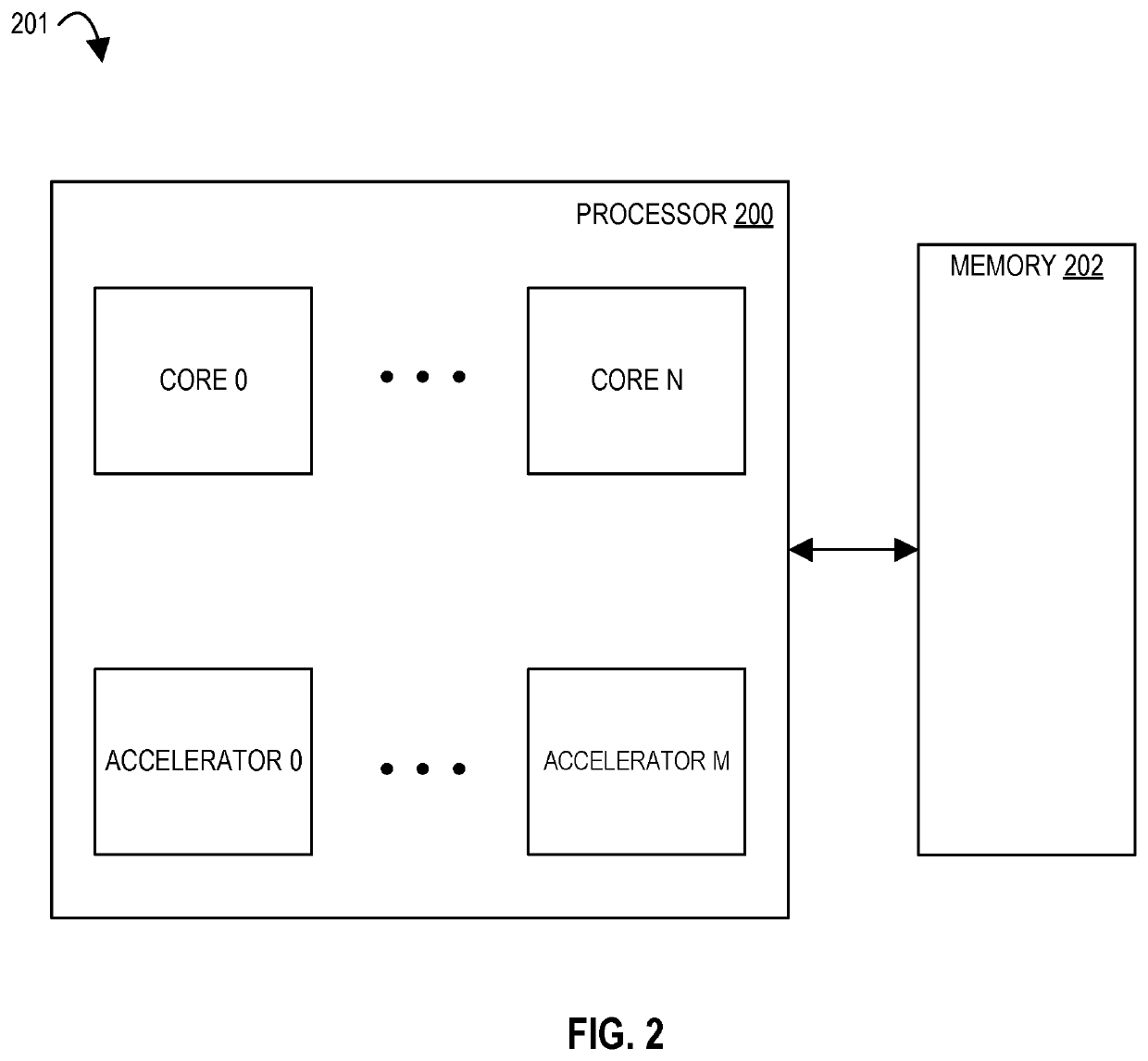

Systems, methods, and apparatuses relating to a configurable spatial accelerator are described. In one embodiment, a processor includes a synchronizer circuit coupled between an interconnect network of a first tile and an interconnect network of a second tile and comprising storage to store data to be sent between the interconnect network of the first tile and the interconnect network of the second tile, the synchronizer circuit to convert the data from the storage between a first voltage or a first frequency of the first tile and a second voltage or a second frequency of the second tile to generate converted data, and send the converted data between the interconnect network of the first tile and the interconnect network of the second tile.

Owner:INTEL CORP

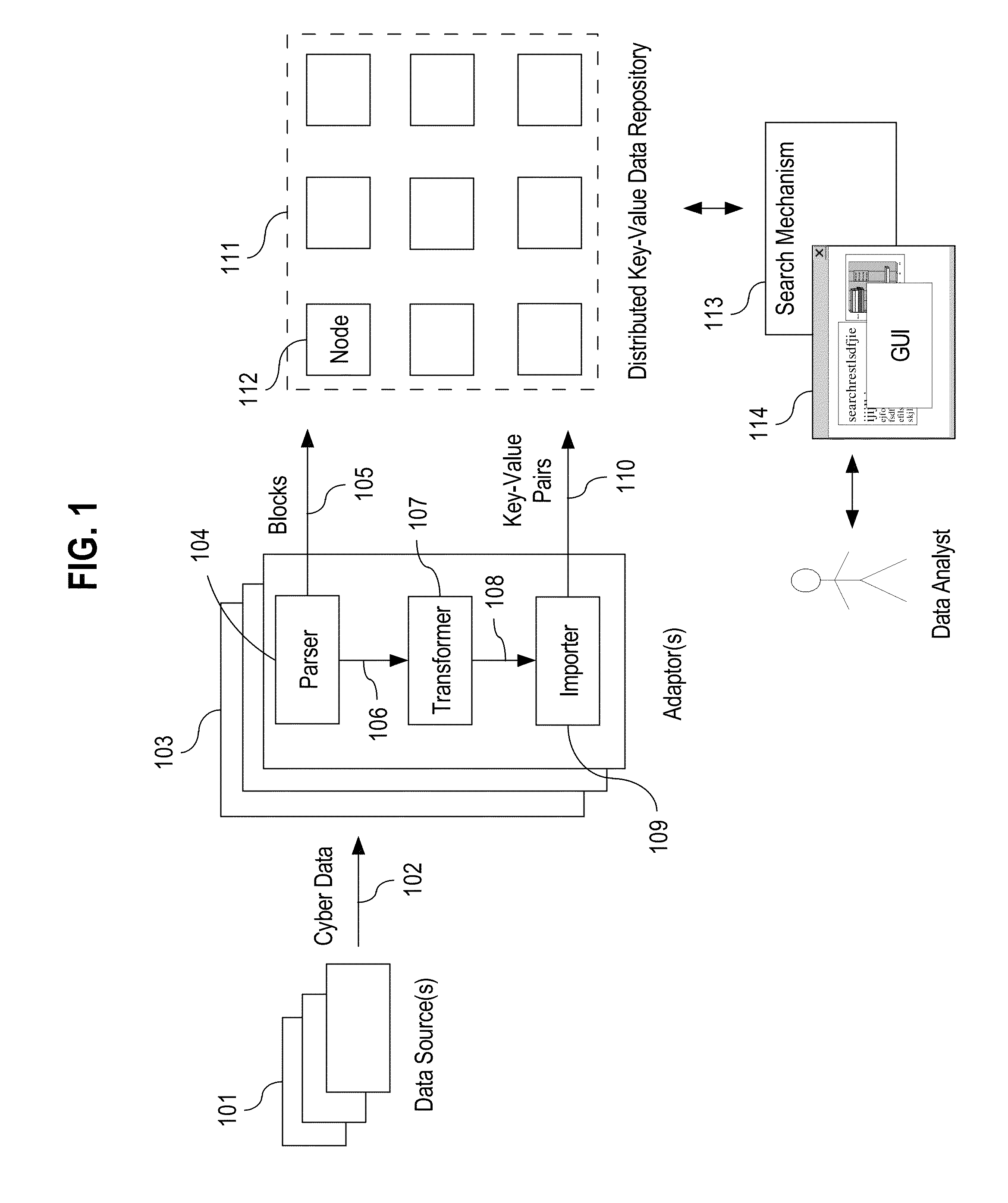

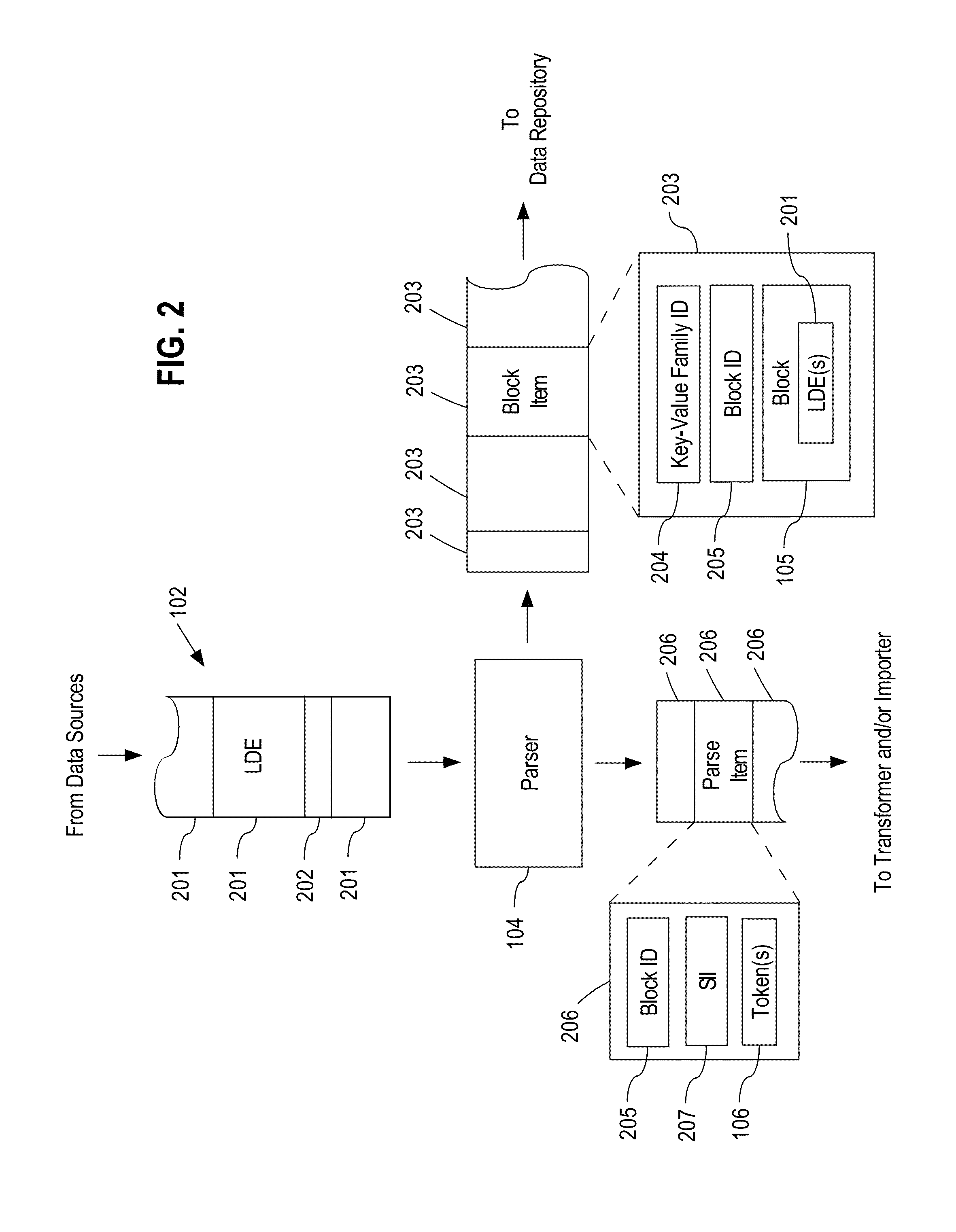

System and method for investigating large amounts of data

Active | US8799240B2 | Minimized storage requirements | Efficient identification | Error detection/correction | Digital data processing details | Data analyst | Data science

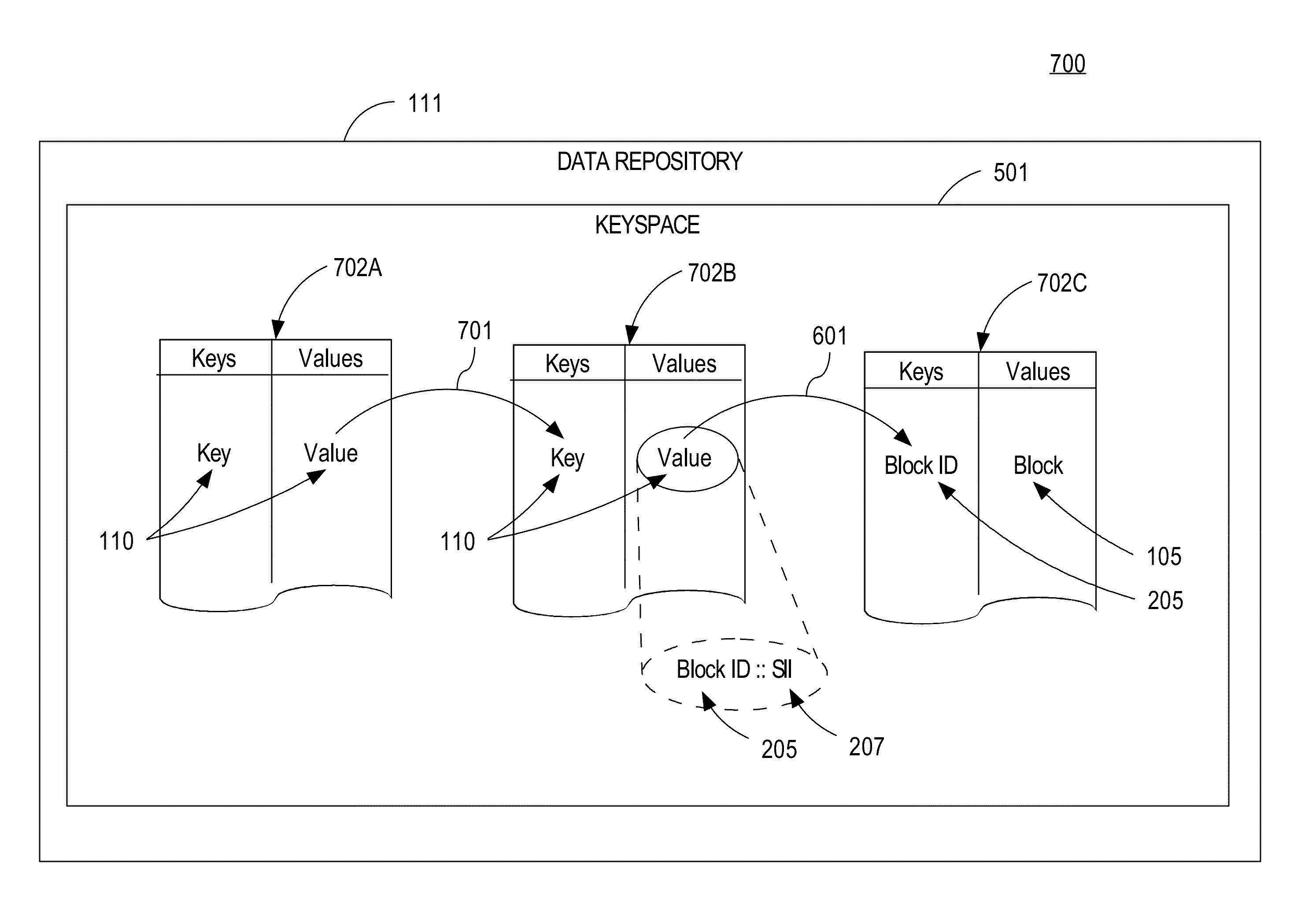

A data analysis system is proposed for providing fine-grained low latency access to high volume input data from possibly multiple heterogeneous input data sources. The input data is parsed, optionally transformed, indexed, and stored in a horizontally-scalable key-value data repository where it may be accessed using low latency searches. The input data may be compressed into blocks before being stored to minimize storage requirements. The results of searches present input data in its original form. The input data may include access logs, call data records (CDRs), e-mail messages, etc. The system allows a data analyst to efficiently identify information of interest in a very large dynamic data set up to multiple petabytes in size. Once information of interest has been identified, that subset of the large data set can be imported into a dedicated or specialized data analysis system for an additional in-depth investigation and contextual analysis.

Owner:PALANTIR TECHNOLOGIES
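
The ingest-and-search flow described in the abstract above (parse, index, compress into blocks, store in a key-value repository, return results in original form) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the `BlockStore` class, the whitespace tokenizer, the block size, the use of `zlib`, and the restriction to single-line records are not taken from the patent, and an in-process dictionary stands in for a horizontally scalable repository.

```python
import zlib
from collections import defaultdict


class BlockStore:
    """Hypothetical toy repository: records are indexed by token and
    stored in compressed blocks; searches return the original text."""

    def __init__(self, block_size=2):
        self.block_size = block_size
        self.blocks = {}                  # block_id -> compressed bytes
        self.index = defaultdict(set)     # token -> {(block_id, offset)}
        self._pending = []                # records waiting for a full block

    def ingest(self, record):
        # Assumption: each record is a single line of text.
        block_id = len(self.blocks)       # id the pending block will receive
        offset = len(self._pending)
        for token in record.lower().split():
            self.index[token].add((block_id, offset))
        self._pending.append(record)
        if len(self._pending) == self.block_size:
            self.flush()

    def flush(self):
        # Compress the pending records into one block.
        if self._pending:
            payload = "\n".join(self._pending).encode("utf-8")
            self.blocks[len(self.blocks)] = zlib.compress(payload)
            self._pending = []

    def search(self, token):
        # Look up block locations, decompress, and return original records.
        self.flush()
        hits = []
        for block_id, offset in sorted(self.index.get(token.lower(), ())):
            lines = zlib.decompress(self.blocks[block_id]).decode("utf-8").split("\n")
            hits.append(lines[offset])
        return hits


store = BlockStore(block_size=2)
store.ingest("GET /index.html 200")
store.ingest("POST /login 403")
store.ingest("GET /about.html 200")
print(store.search("get"))
```

Decompressing a whole block per hit keeps the sketch short; a real system would presumably cache decompressed blocks or batch hits per block.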

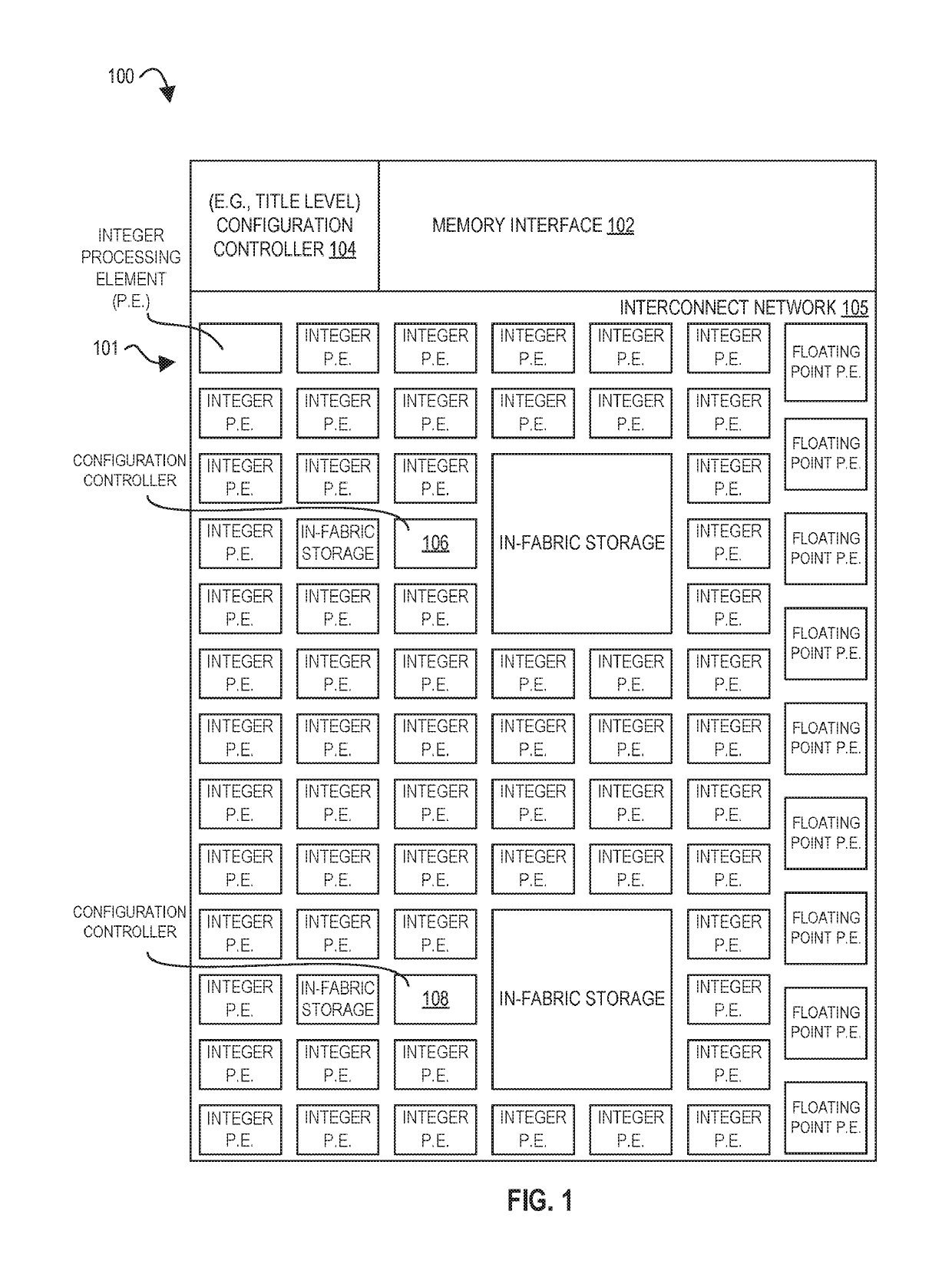

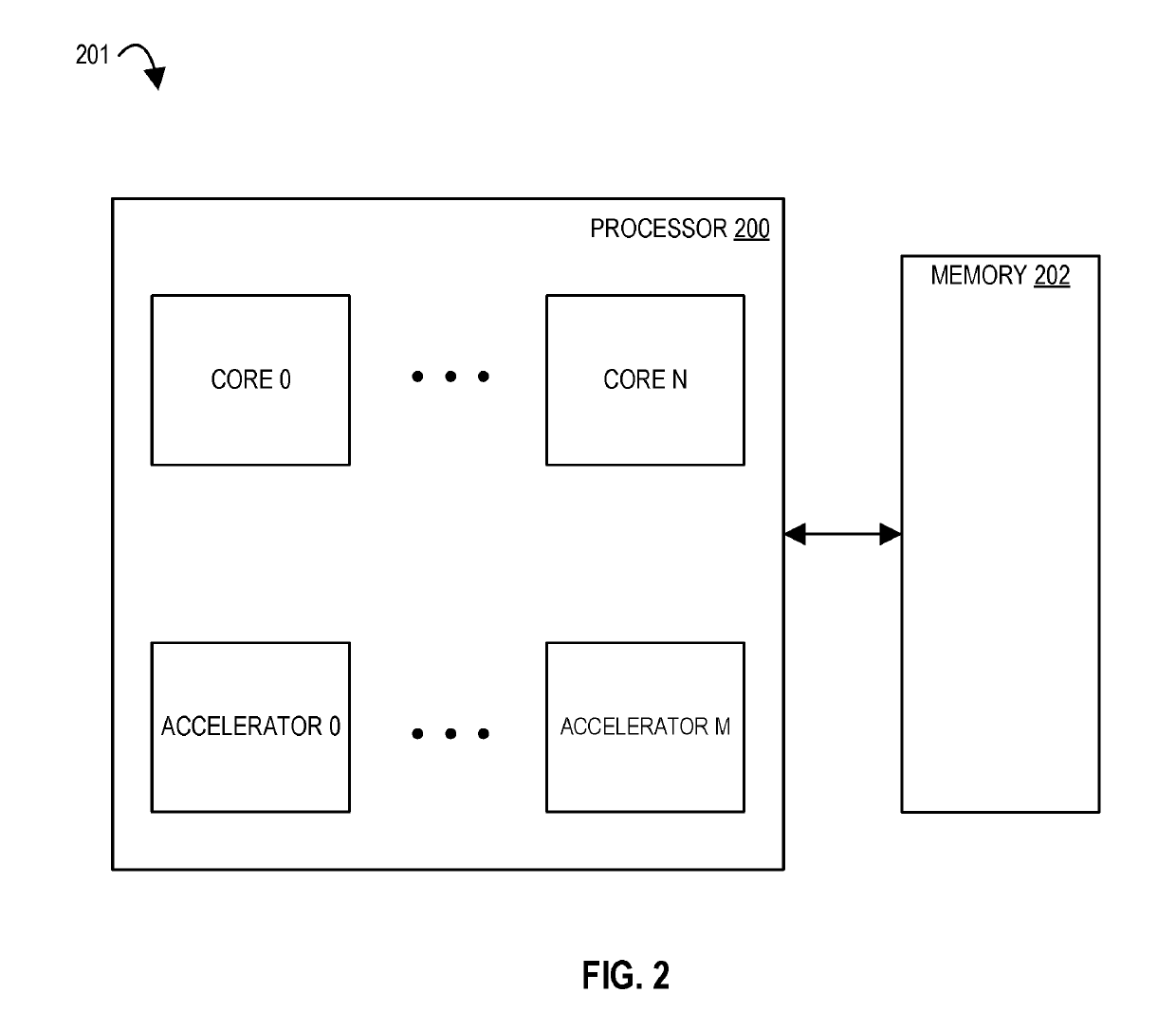

Processors and methods for privileged configuration in a spatial array

Active | US20190102179A1 | Easy to adapt | Improve performance | Resource allocation | Runtime instruction translation | Array data structure | Processing element

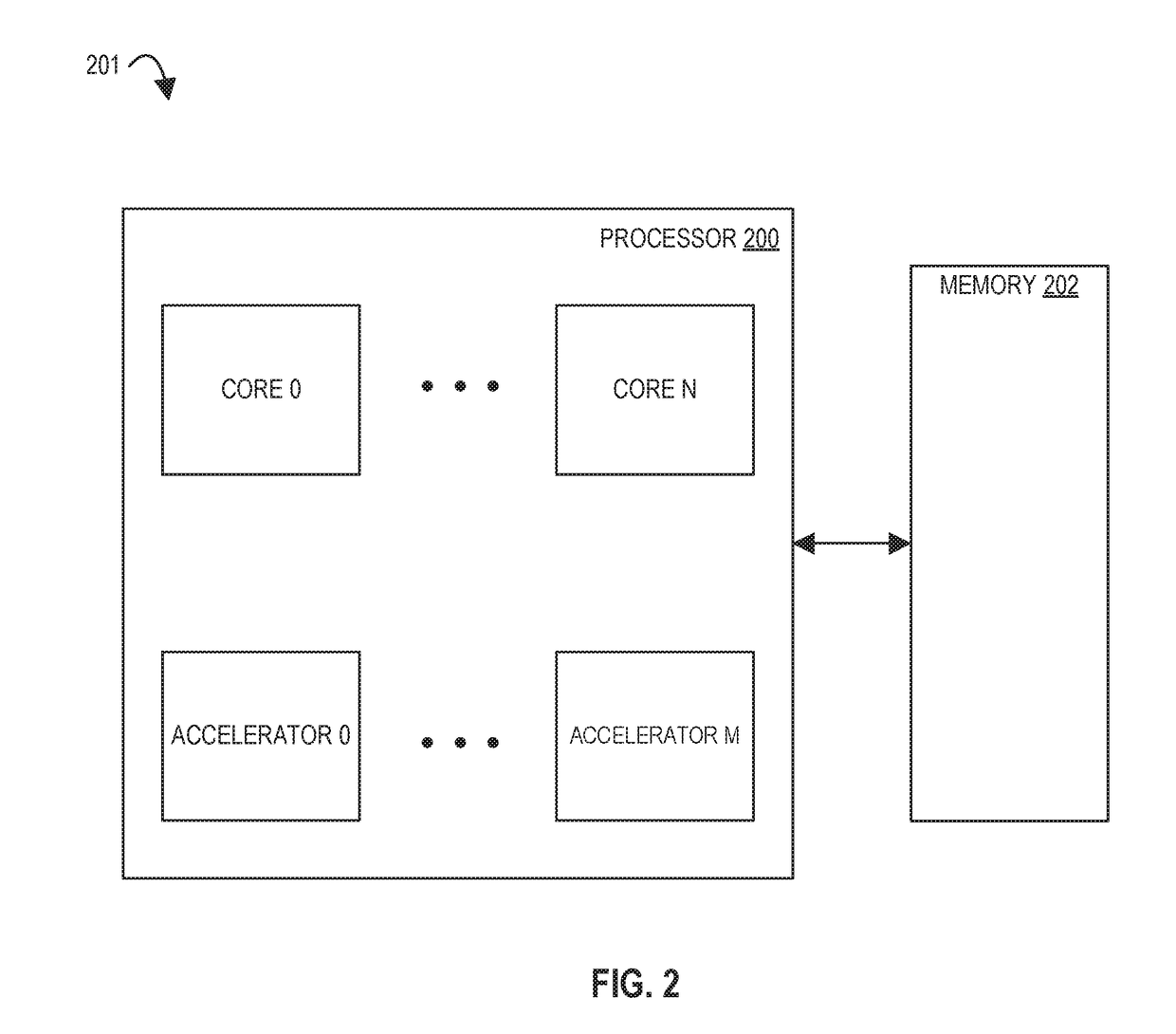

Methods and apparatuses relating to privileged configuration in spatial arrays are described. In one embodiment, a processor includes processing elements; an interconnect network between the processing elements; and a configuration controller coupled to a first subset and a second, different subset of the plurality of processing elements, the first subset having an output coupled to an input of the second, different subset, wherein the configuration controller is to configure the interconnect network between the first subset and the second, different subset of the plurality of processing elements to not allow communication on the interconnect network between the first subset and the second, different subset when a privilege bit is set to a first value and to allow communication on the interconnect network between the first subset and the second, different subset of the plurality of processing elements when the privilege bit is set to a second value.

Owner:INTEL CORP

Apparatus, methods, and systems for remote memory access in a configurable spatial accelerator

Inactive | US20190303297A1 | Easy to adapt | Improve performance | Memory architecture accessing/allocation | Digital computer details | Direct memory access | Remote memory access

Systems, methods, and apparatuses relating to remote memory access in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator includes a first memory interface circuit coupled to a first processing element and a cache, the first memory interface circuit to issue a memory request to the cache, the memory request comprising a field to identify a second memory interface circuit as a receiver of data for the memory request; and the second memory interface circuit coupled to a second processing element and the cache, the second memory interface circuit to send a credit return value to the first memory interface circuit, to cause the first memory interface circuit to mark the memory request as complete, when the data for the memory request arrives at the second memory interface circuit and a completion configuration register of the second memory interface circuit is set to a remote response value.

Owner:INTEL CORP

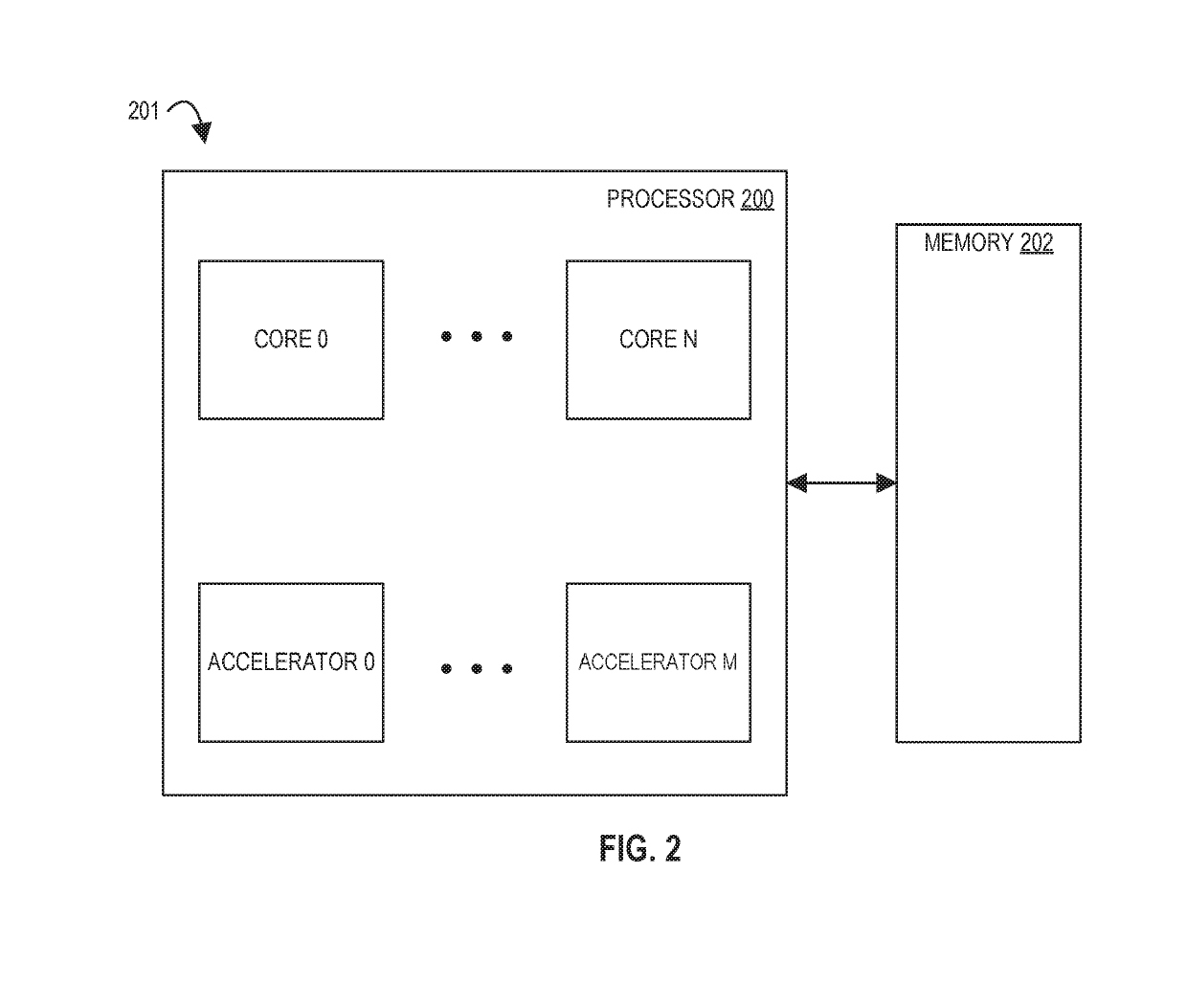

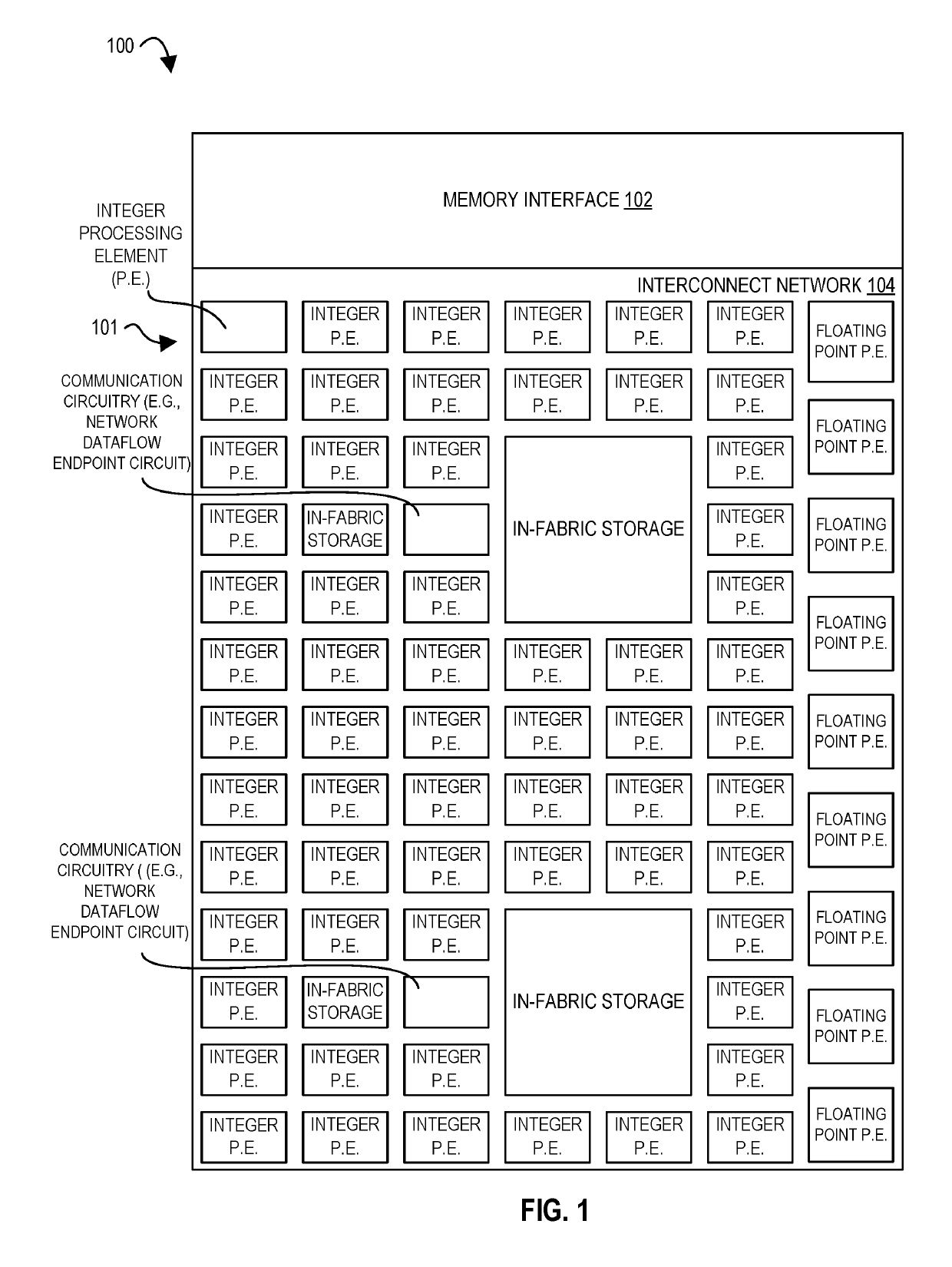

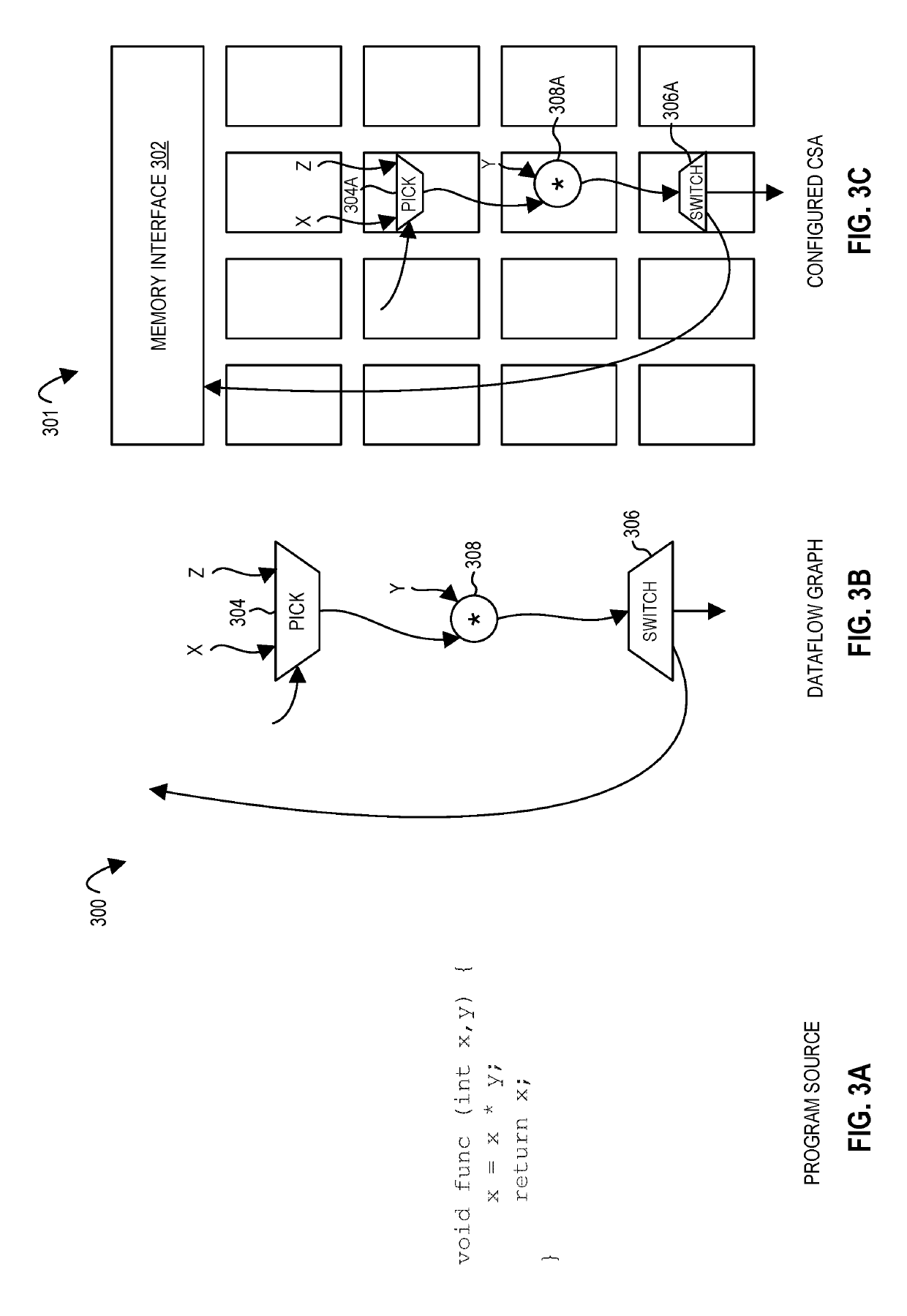

Apparatus, methods, and systems with a configurable spatial accelerator

Active | US20190205263A1 | Easy to adapt | Improve performance | Memory architecture accessing/allocation | Energy efficient computing | Systems approaches | Dataflow

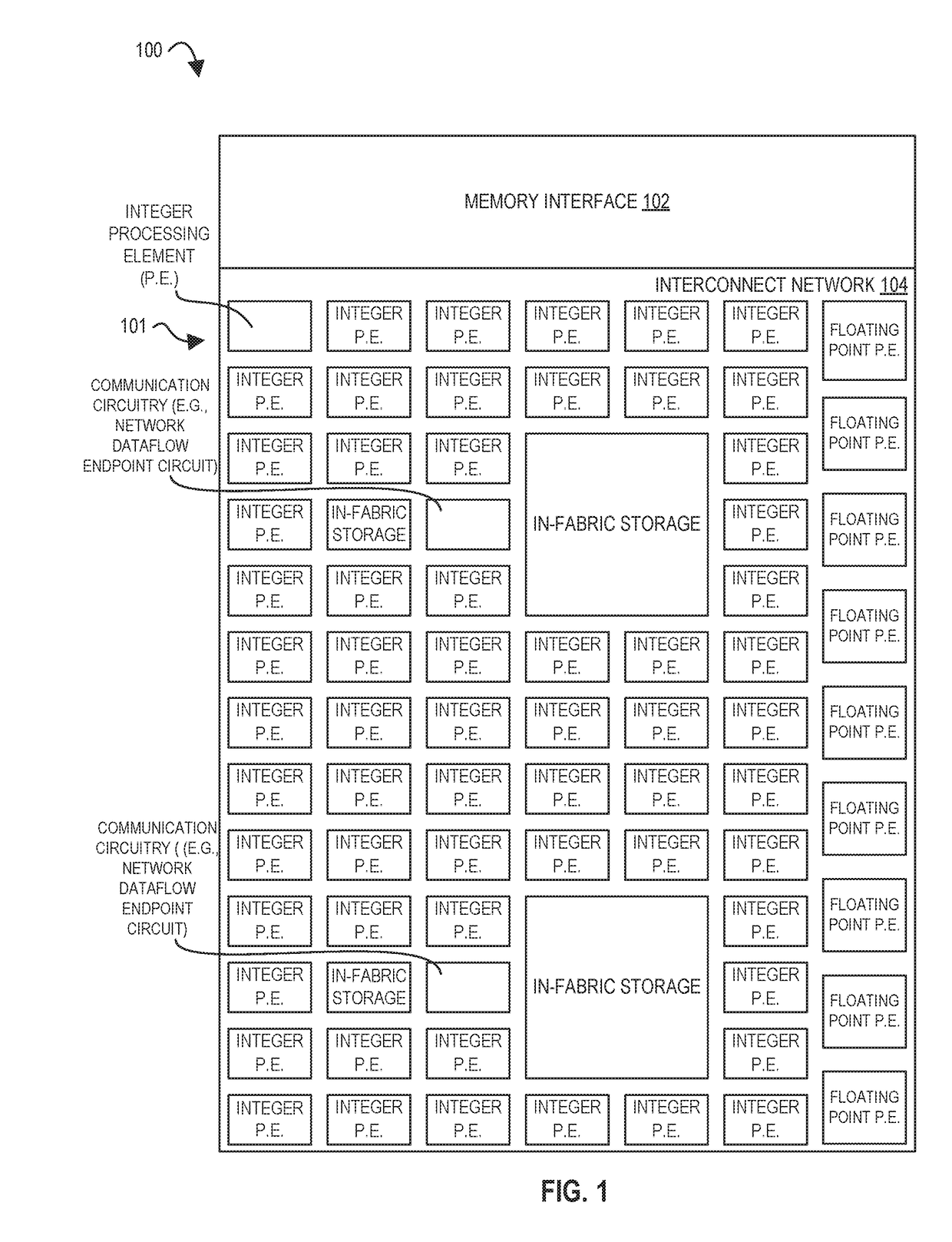

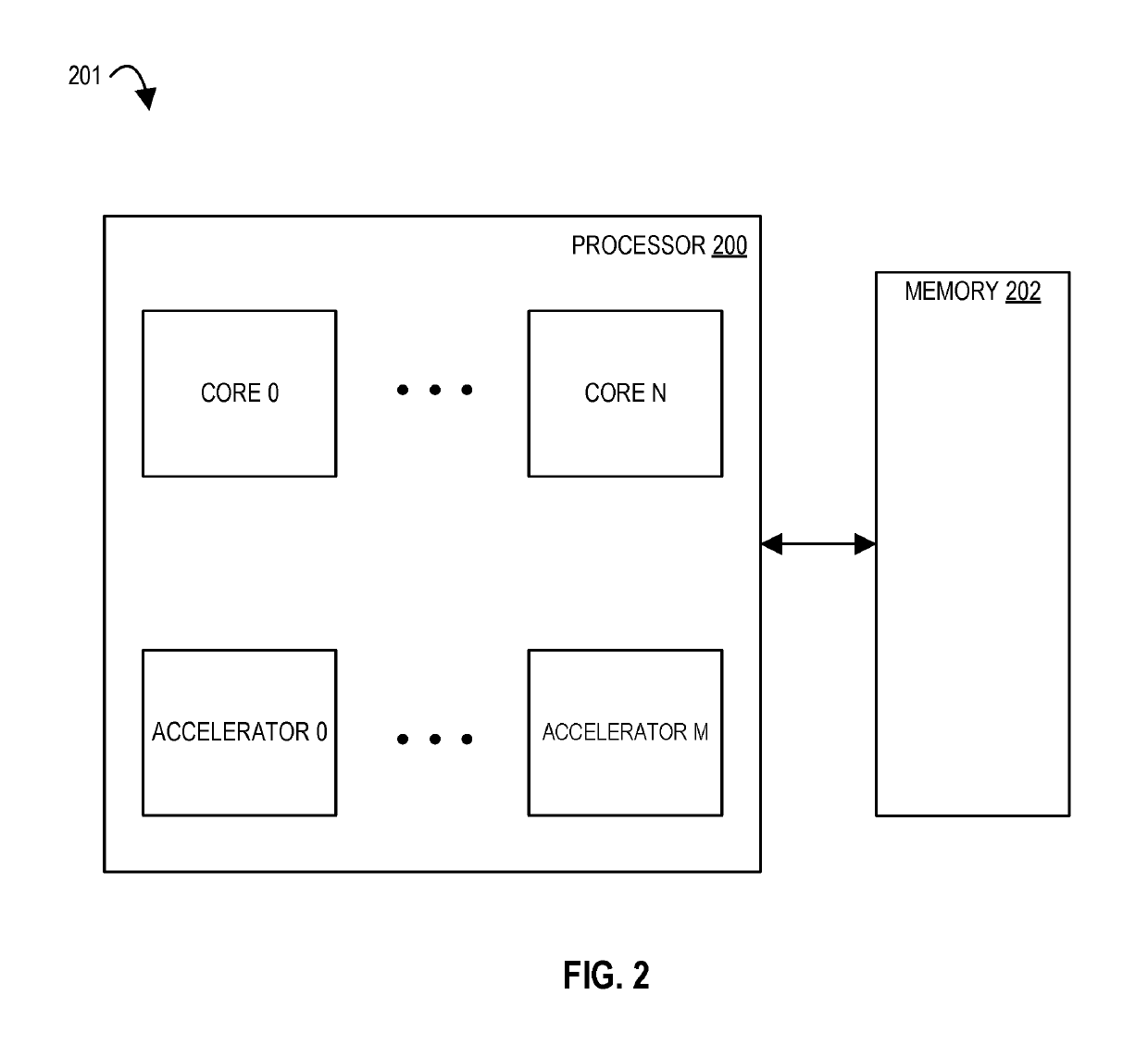

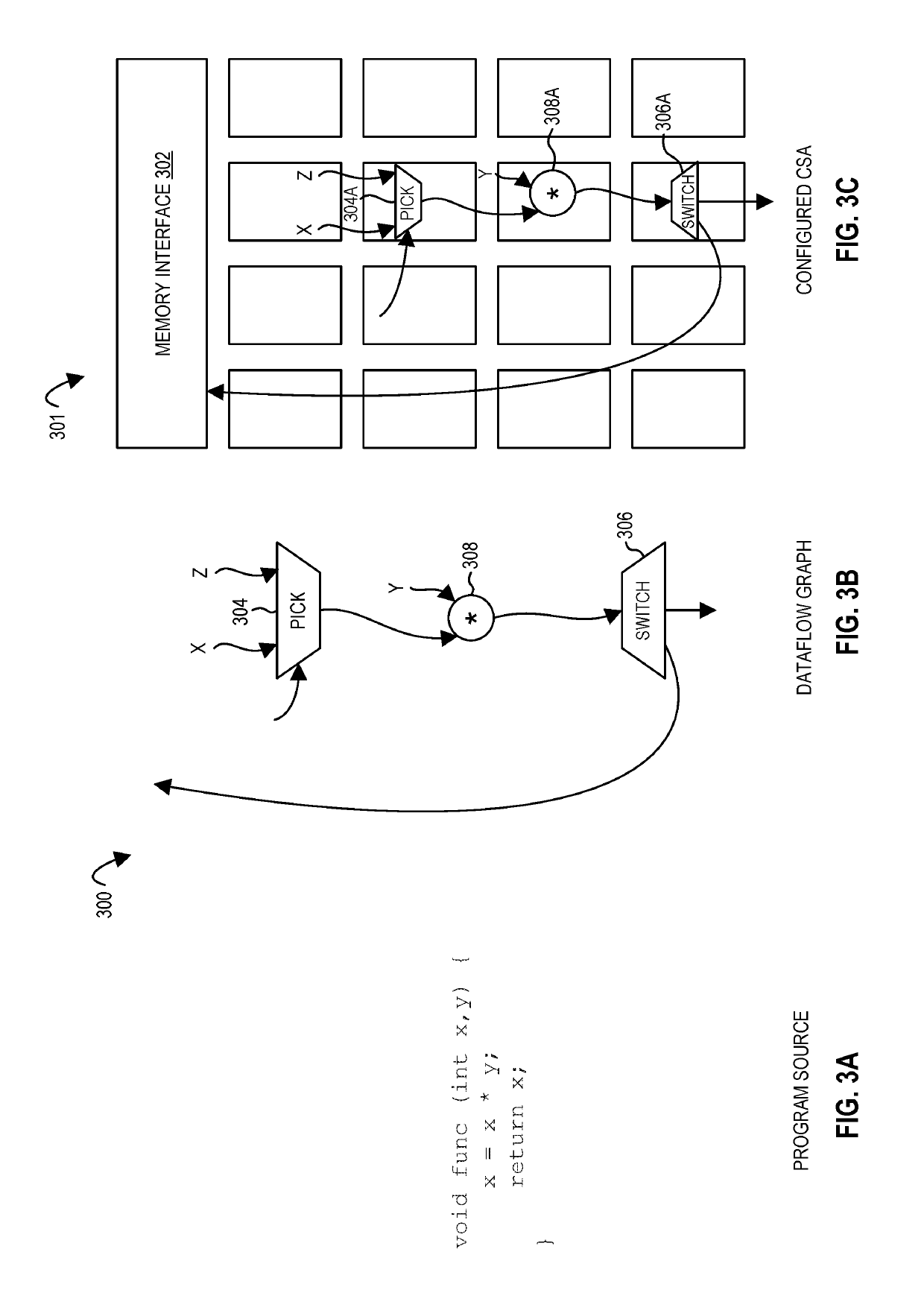

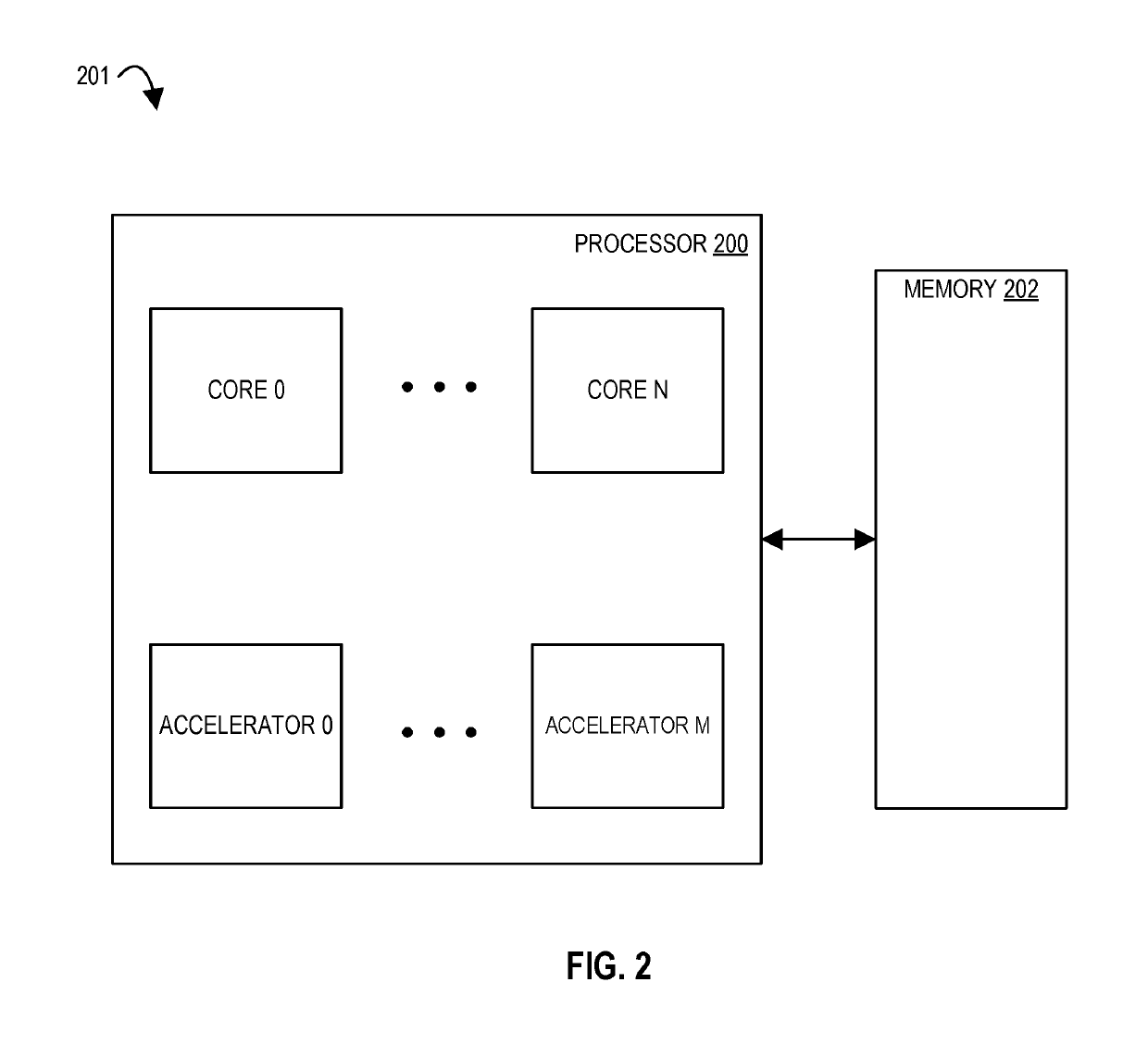

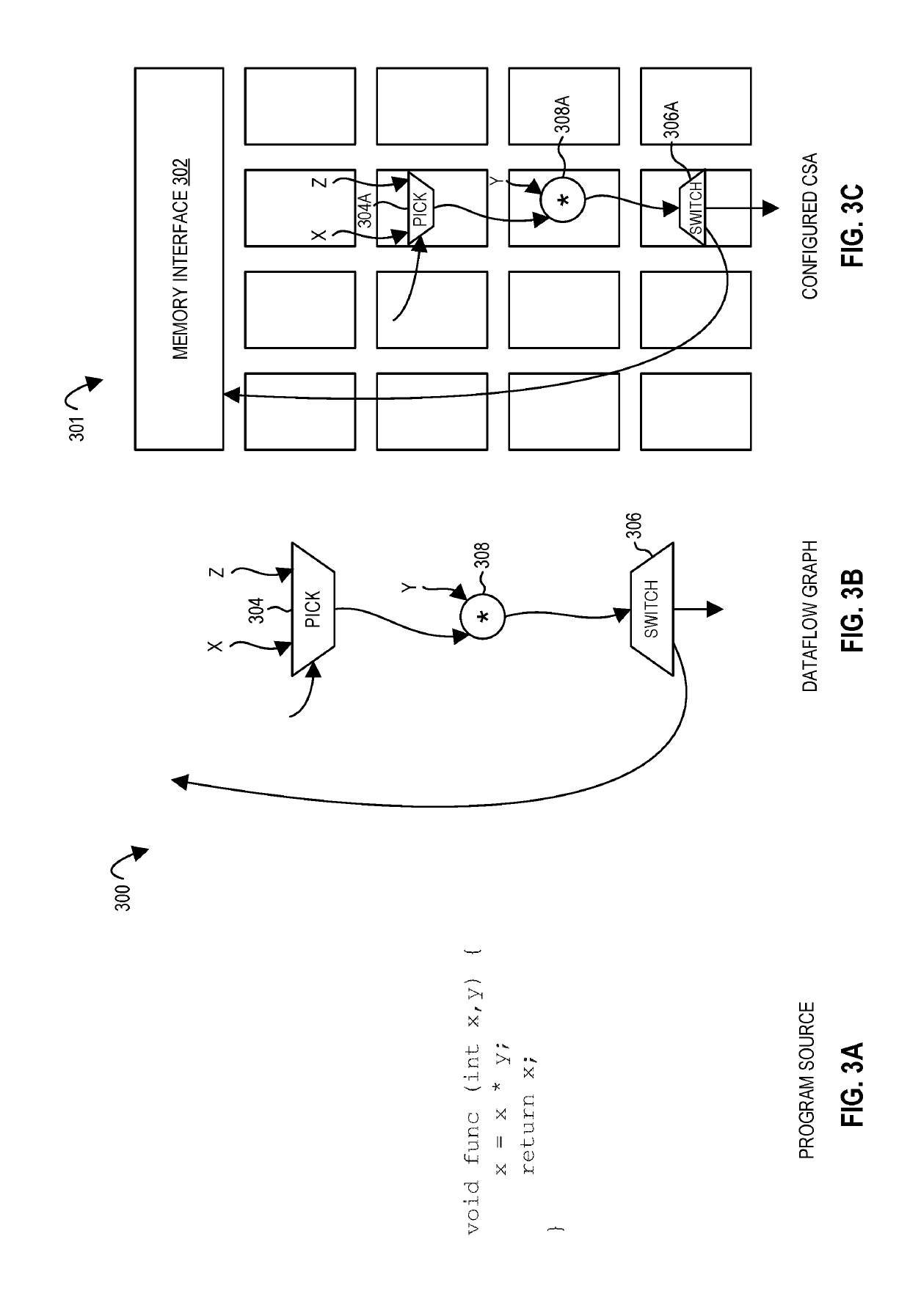

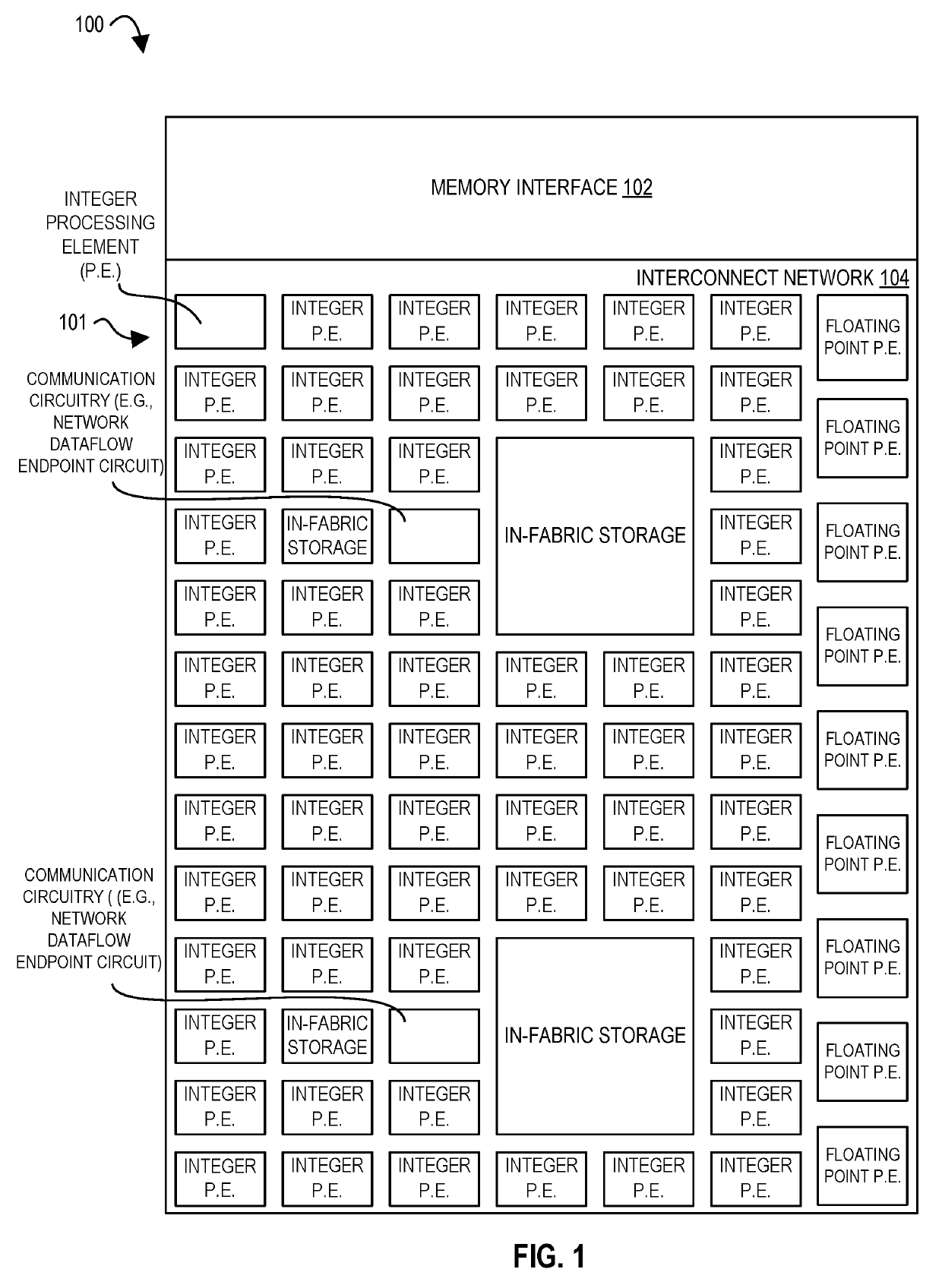

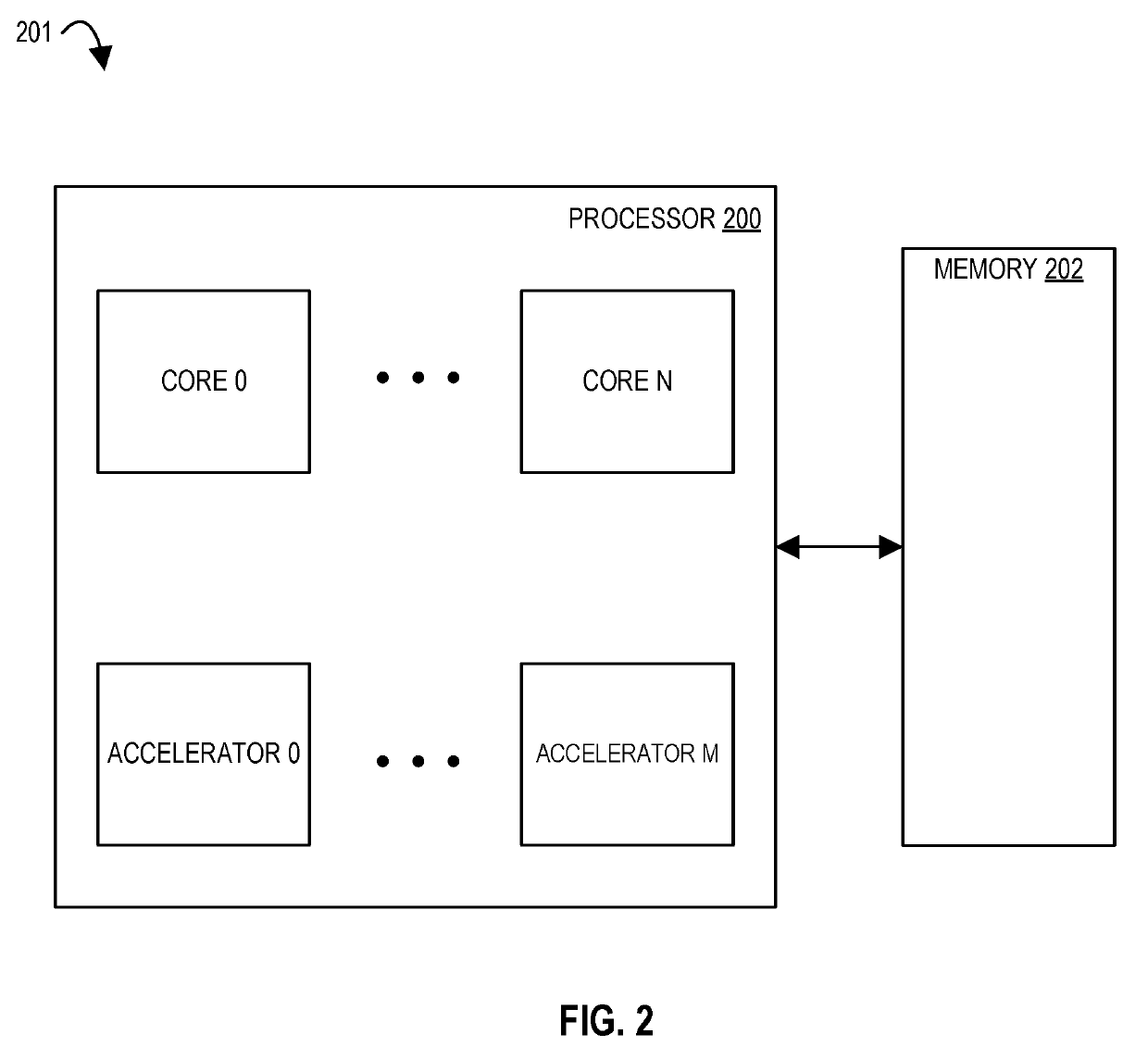

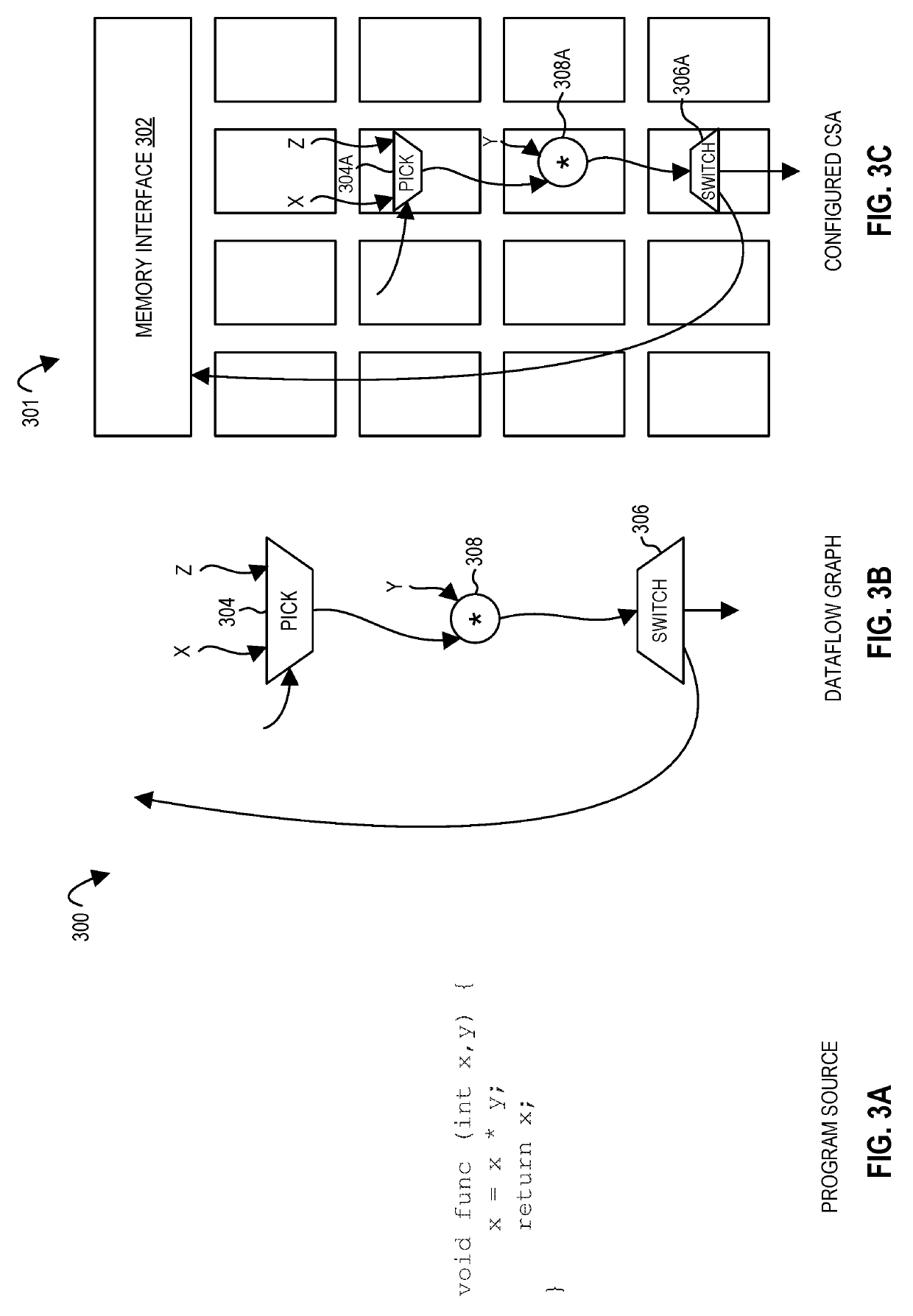

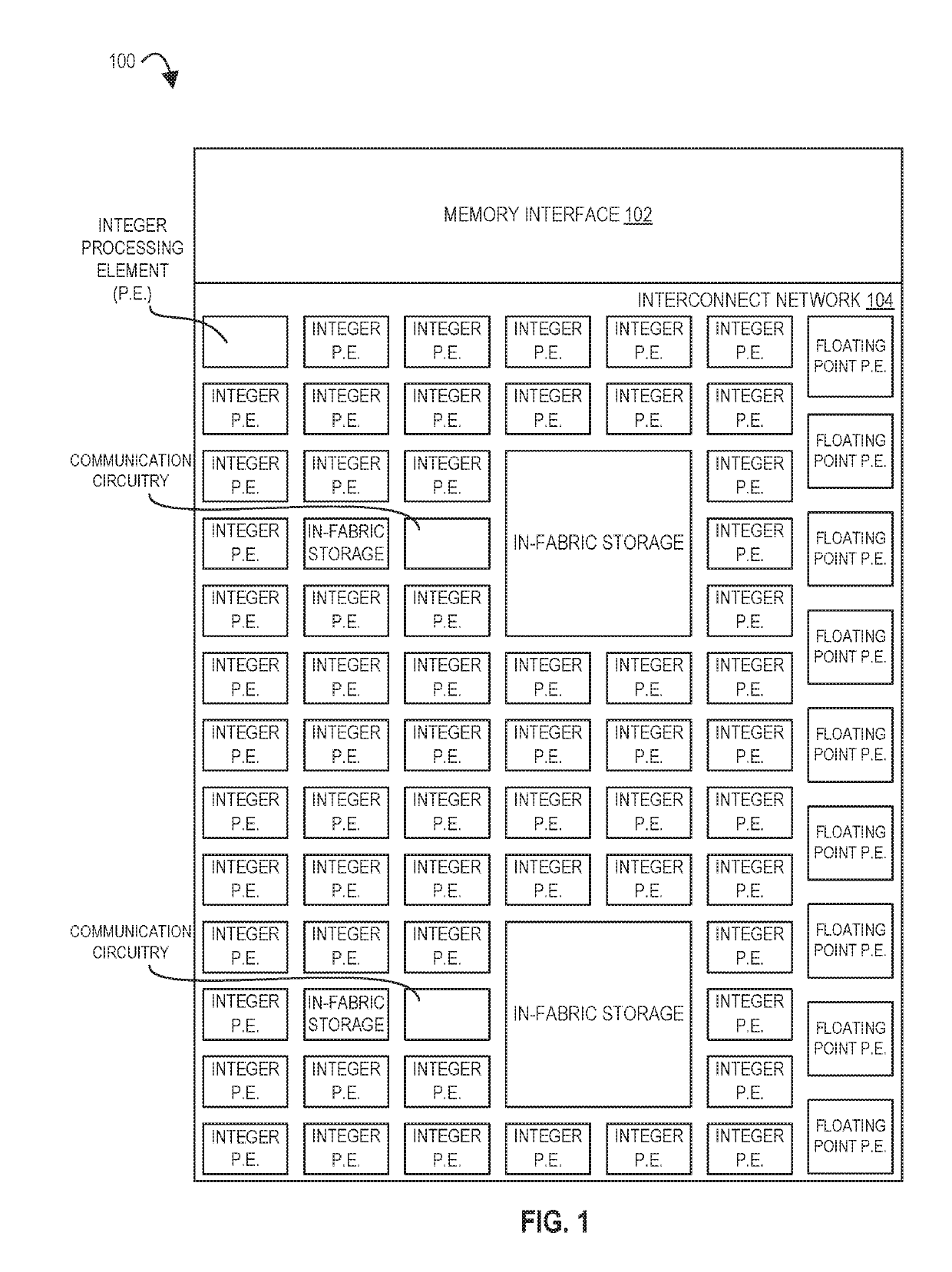

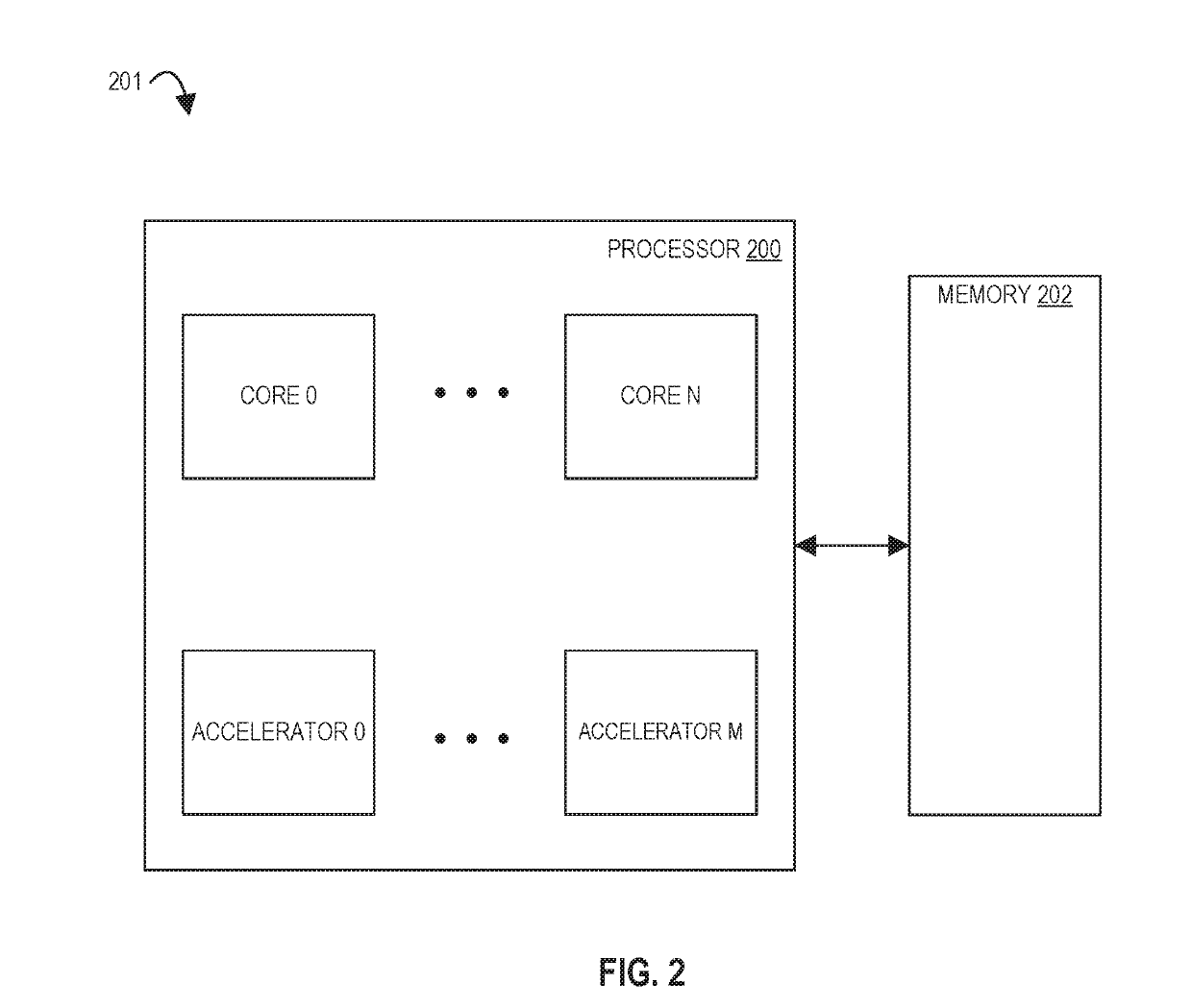

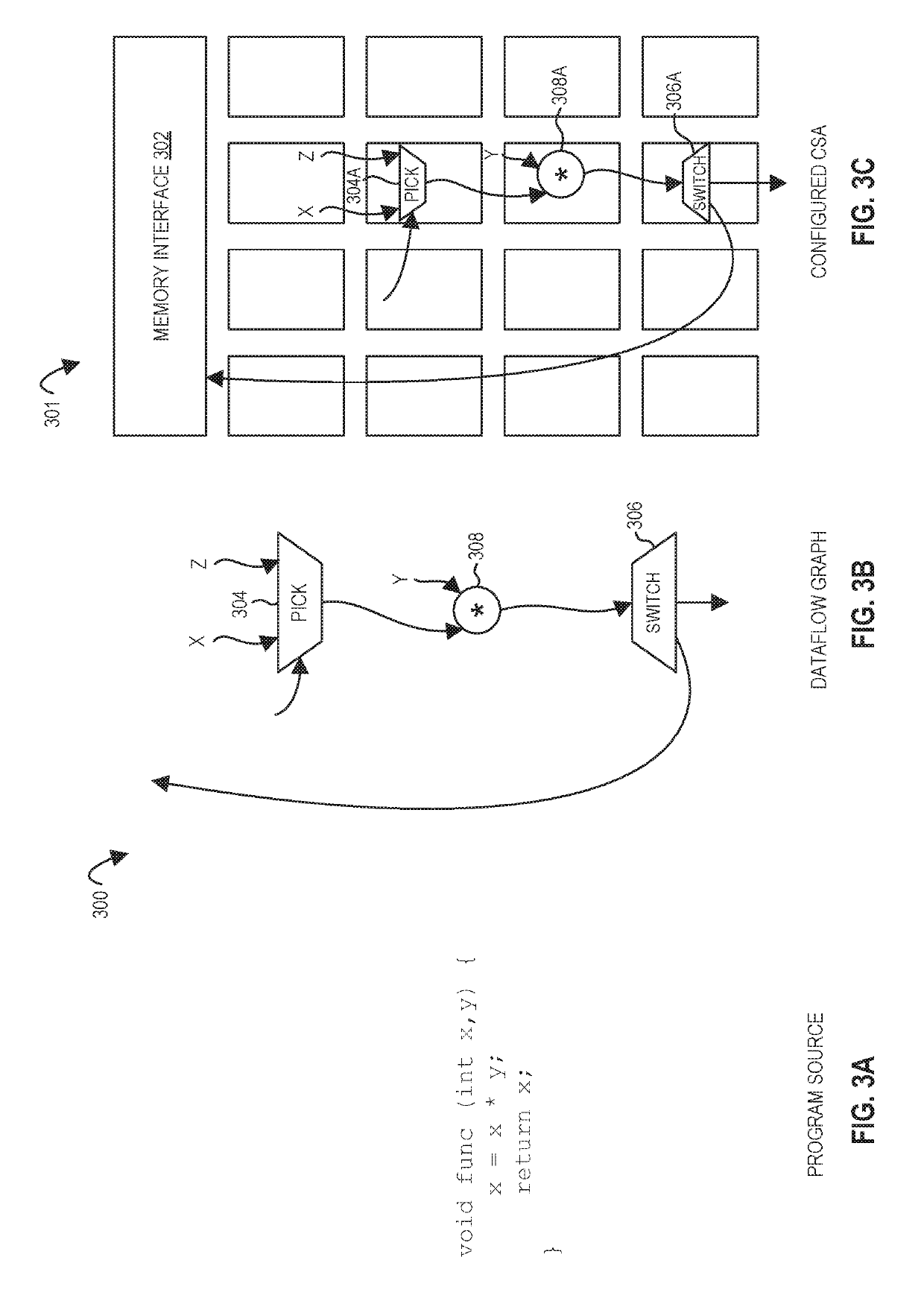

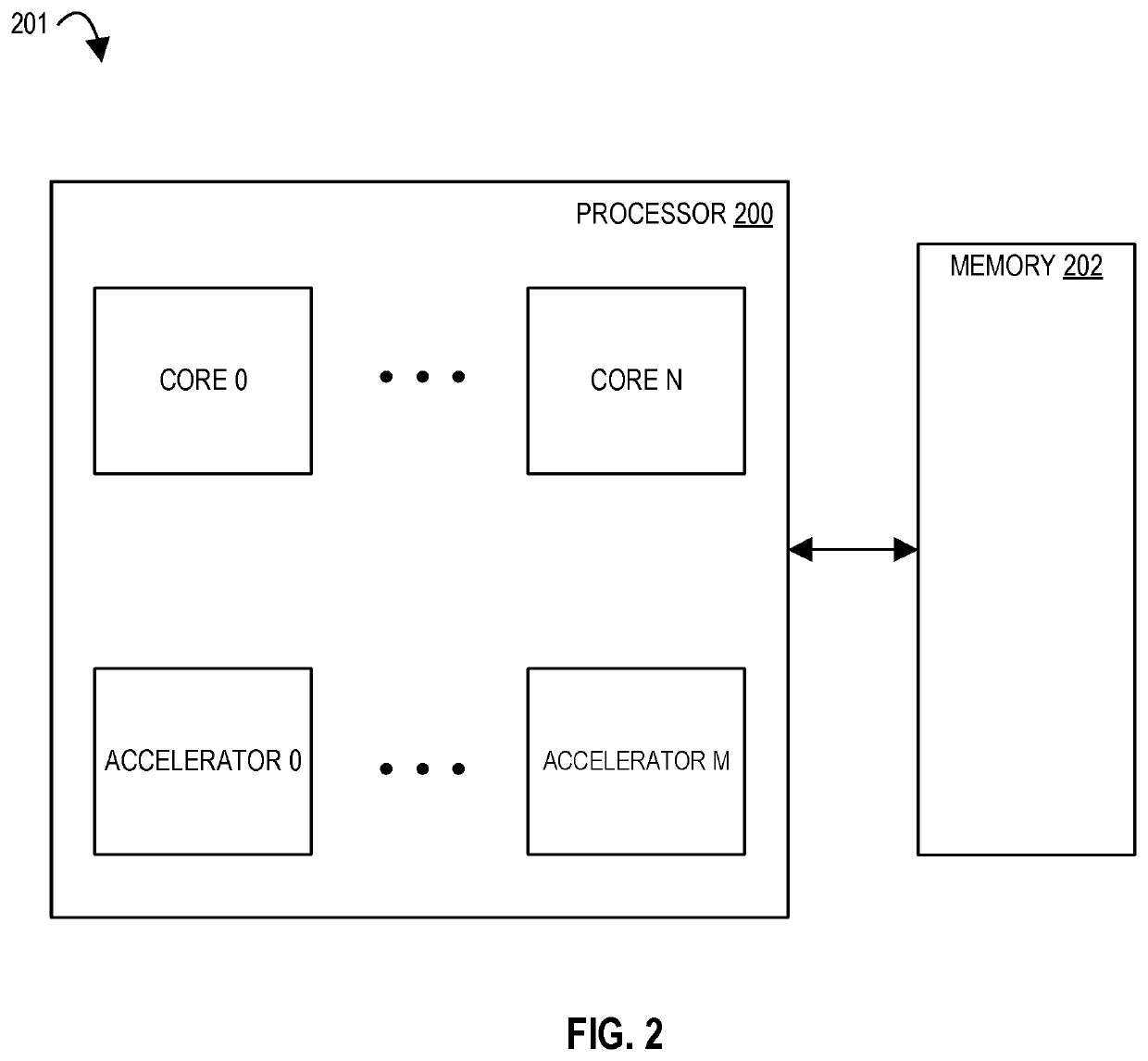

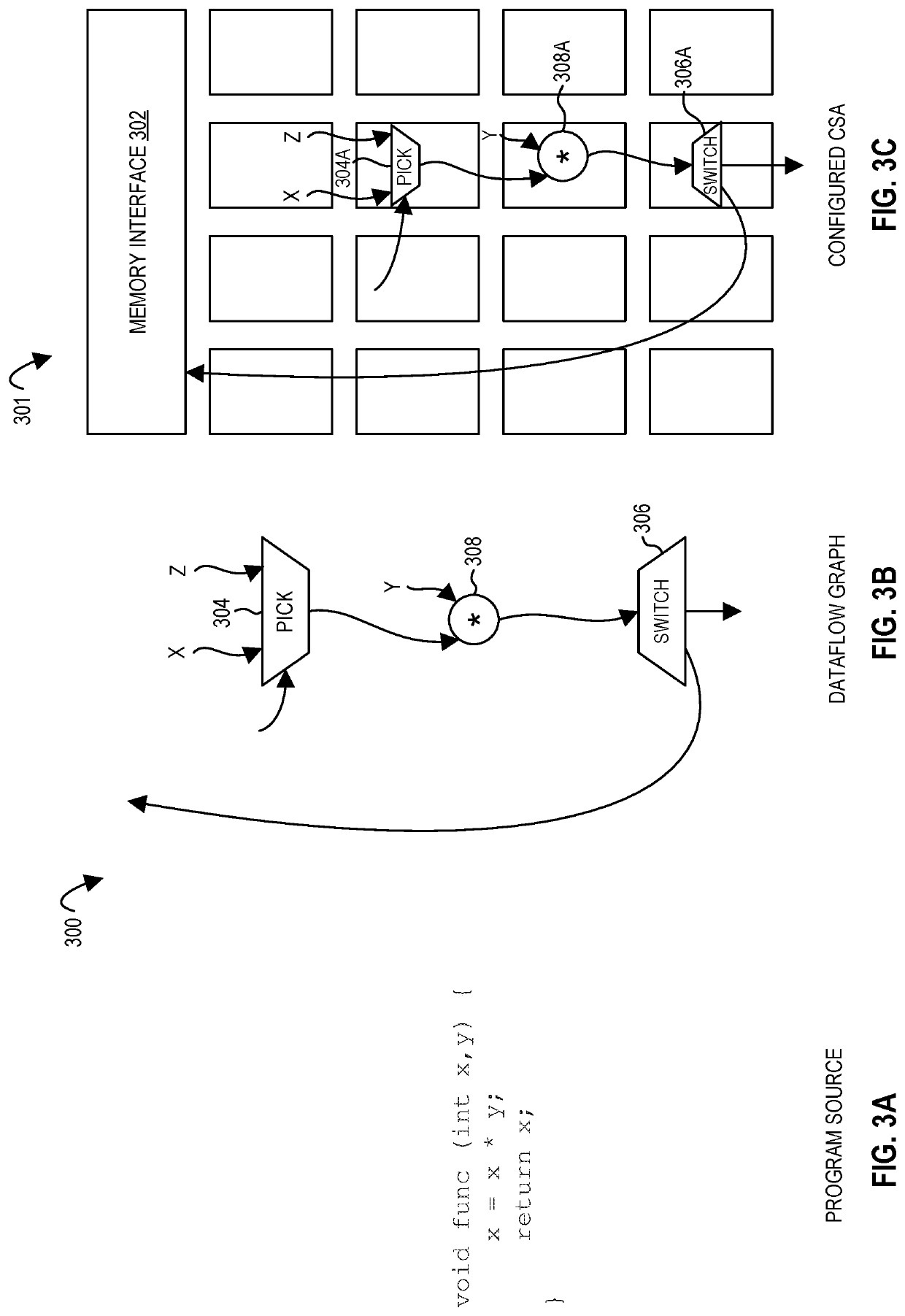

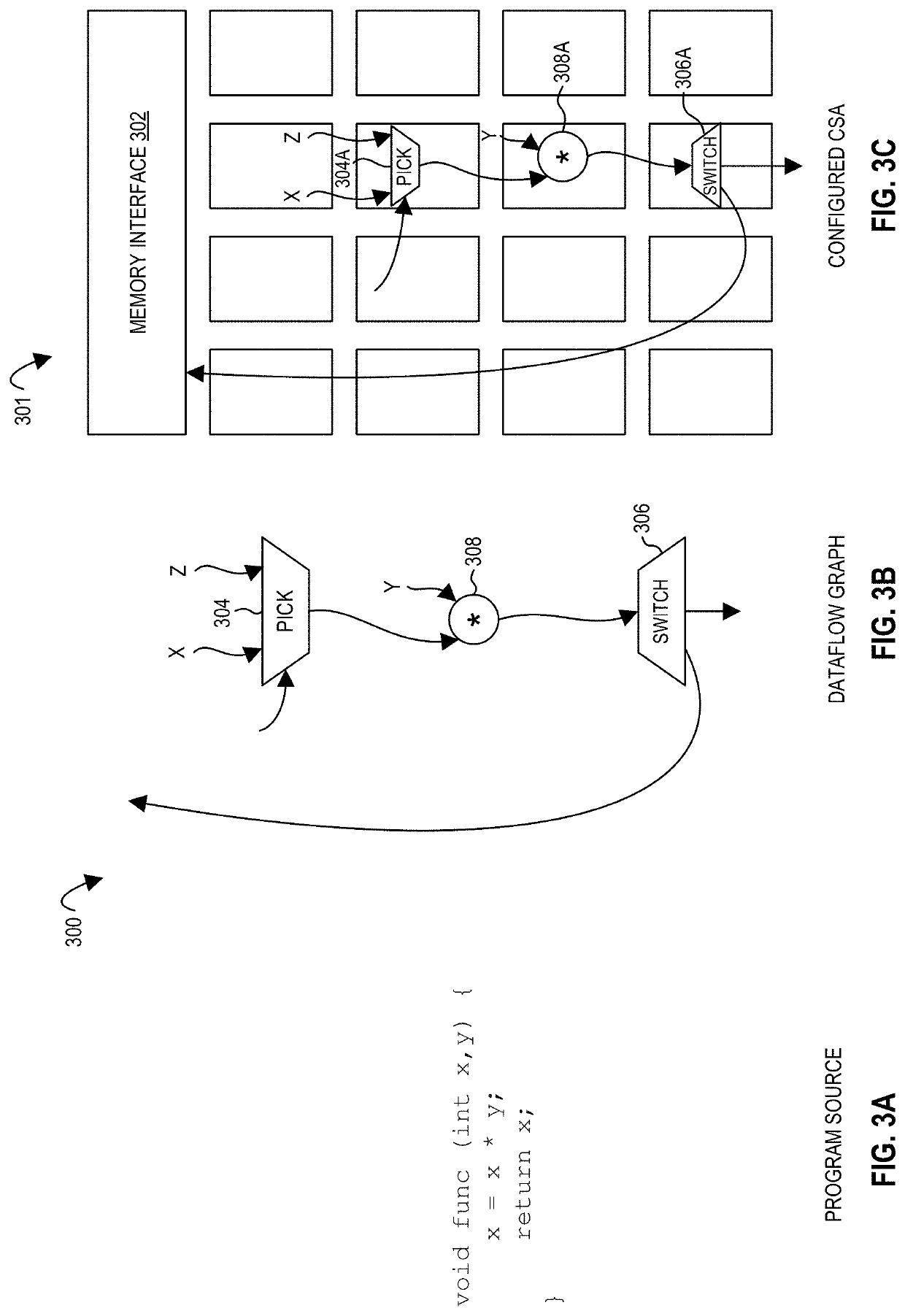

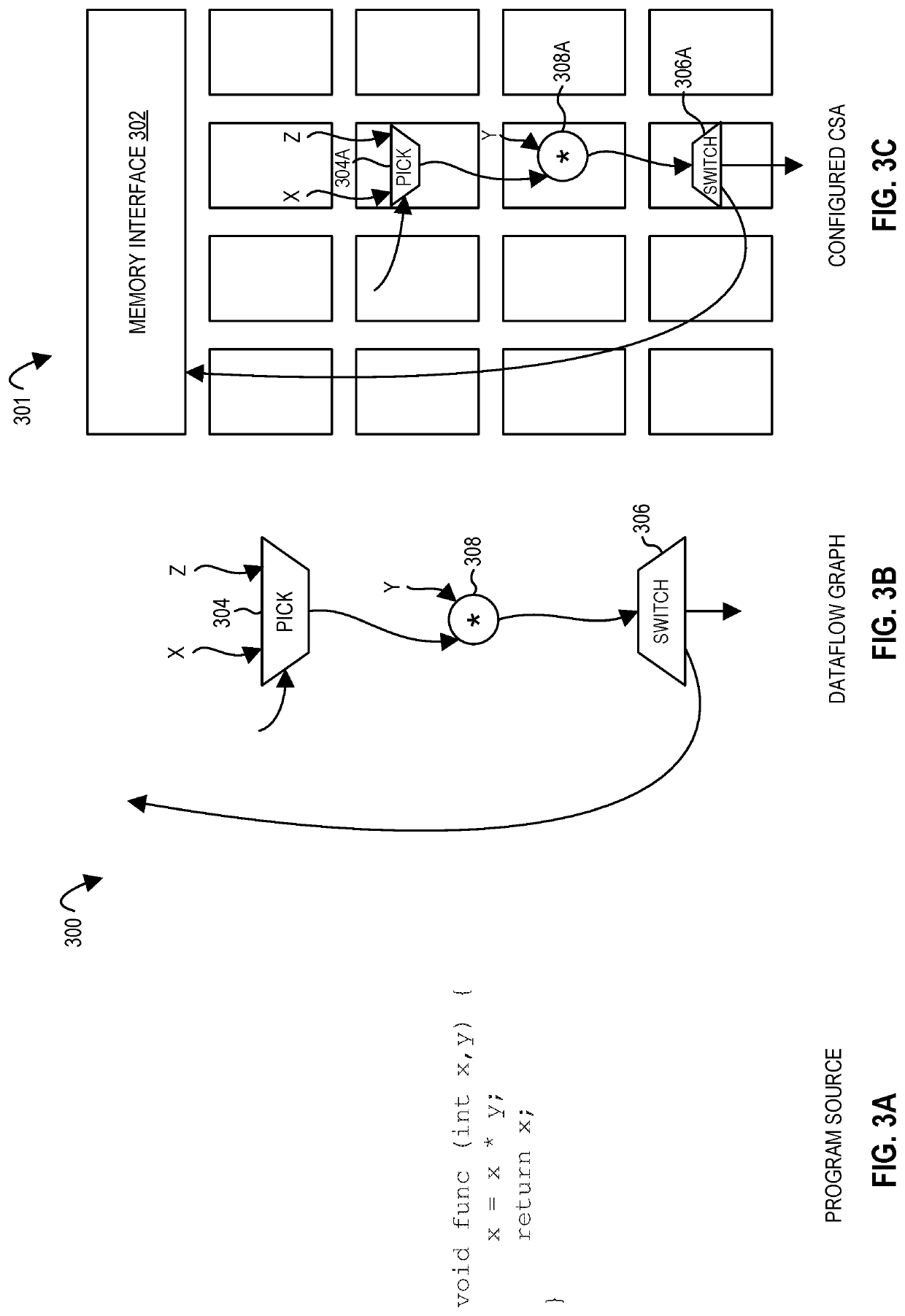

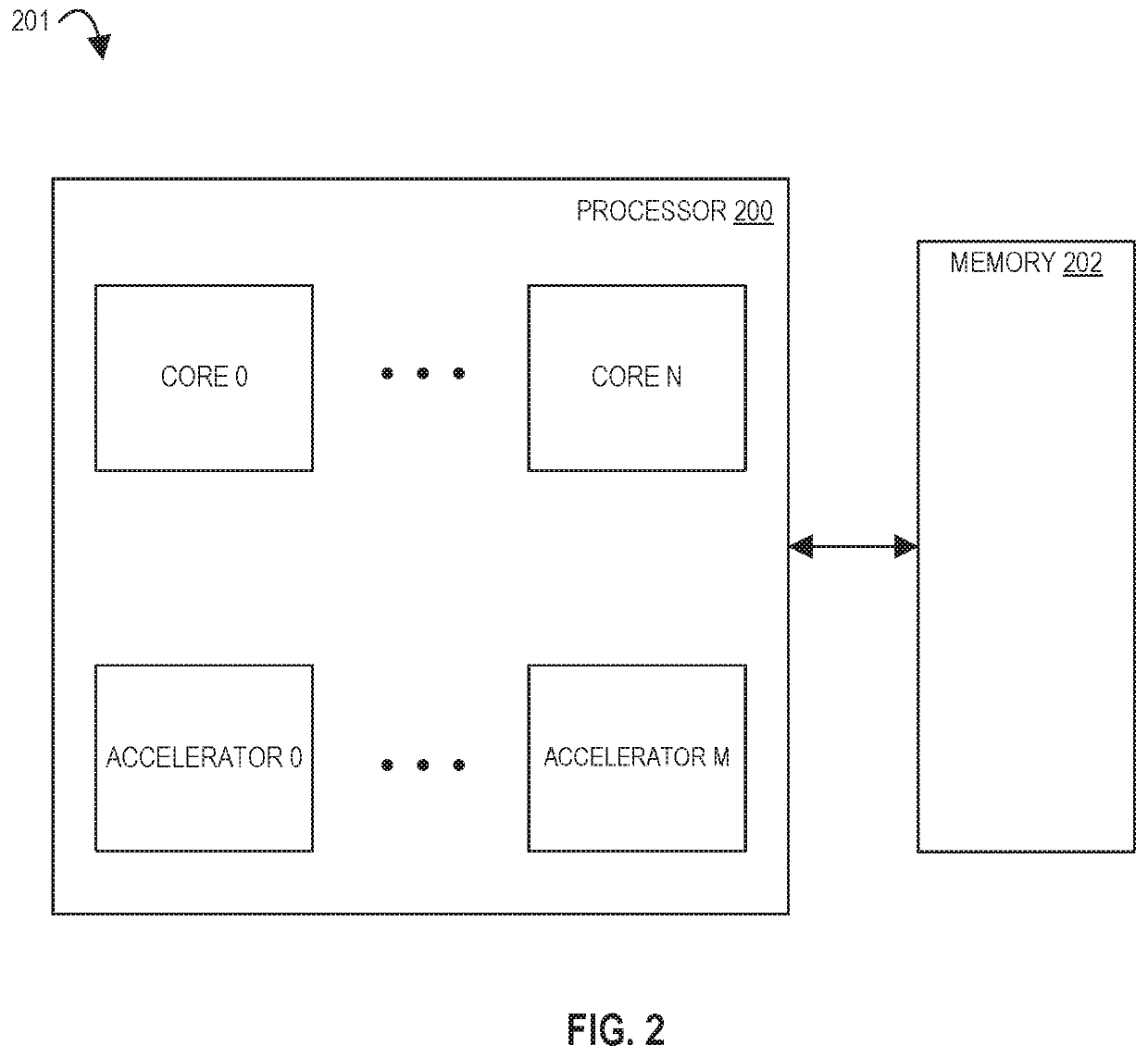

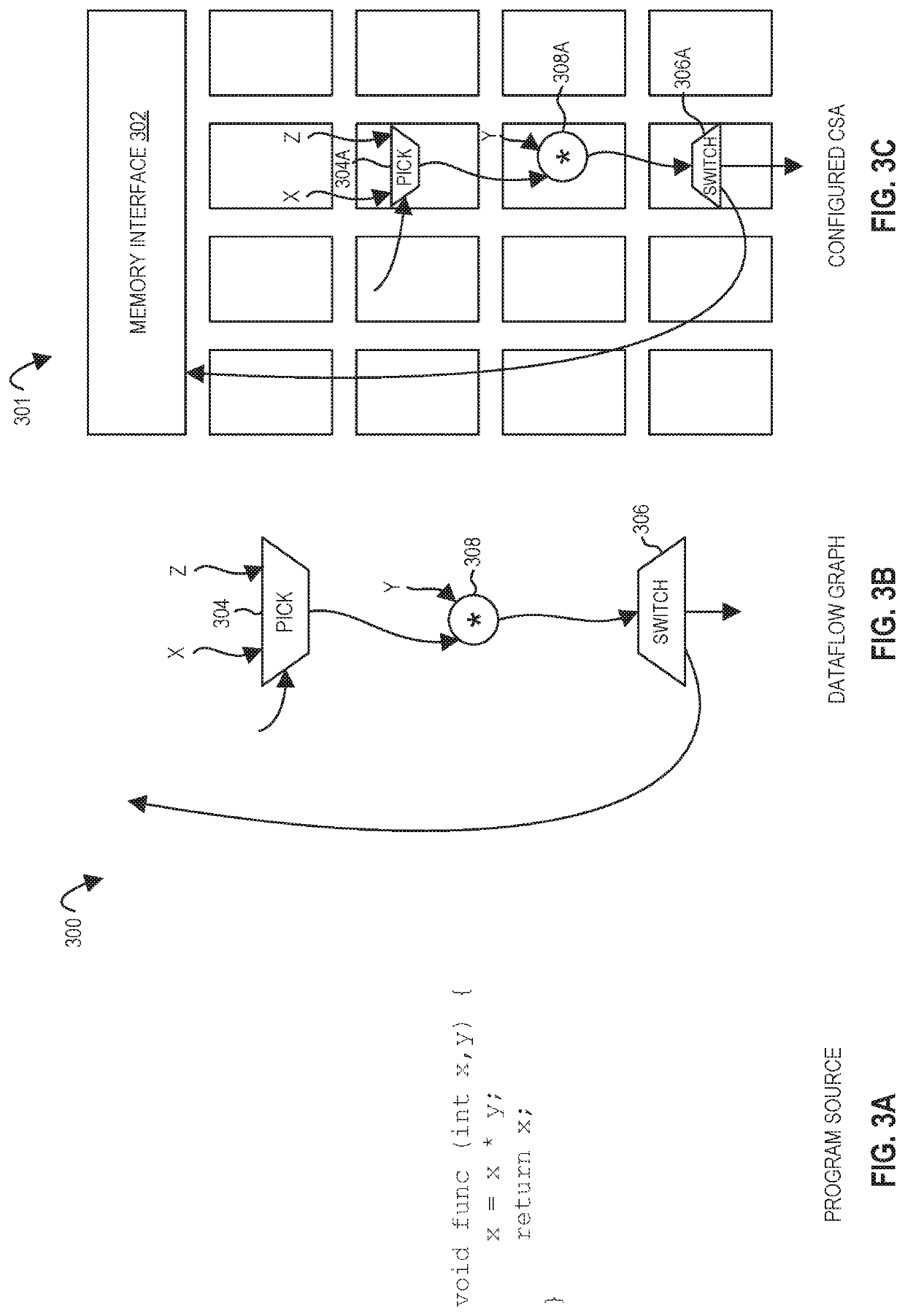

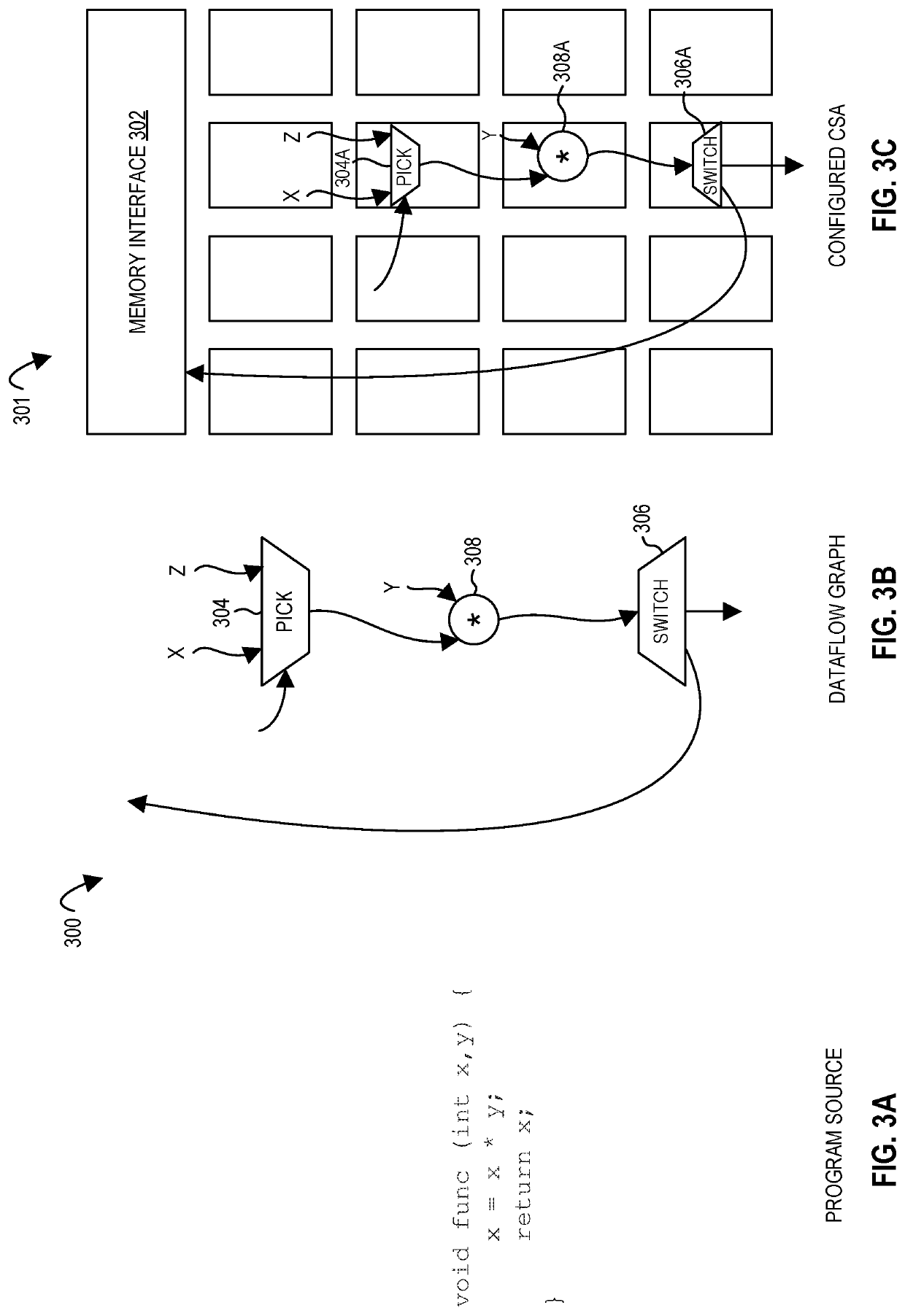

Systems, methods, and apparatuses relating to a configurable spatial accelerator are described. In one embodiment, a processor includes a core with a decoder to decode an instruction into a decoded instruction and an execution unit to execute the decoded instruction to perform a first operation; a plurality of processing elements; and an interconnect network between the plurality of processing elements to receive an input of a dataflow graph comprising a plurality of nodes, wherein the dataflow graph is to be overlaid into the interconnect network and the plurality of processing elements with each node represented as a dataflow operator in the plurality of processing elements, and the plurality of processing elements are to perform a second operation by a respective, incoming operand set arriving at each of the dataflow operators of the plurality of processing elements.

Owner:INTEL CORP
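
The firing rule in the abstract above (each graph node becomes a dataflow operator that performs its operation when its incoming operand set has arrived) can be modeled in software. The following Python sketch is a loose illustration only: `DataflowOperator`, `run`, the per-port queues, and the round-robin polling loop are hypothetical names and choices, and they do not represent the hardware described in the patent. It assumes every operator has at least one input.

```python
import operator
from collections import deque


class DataflowOperator:
    """Hypothetical software model of one node of a dataflow graph."""

    def __init__(self, name, fn, arity, consumers=()):
        self.name = name
        self.fn = fn
        self.inputs = [deque() for _ in range(arity)]  # one queue per operand
        self.consumers = list(consumers)               # (operator, port) pairs
        self.results = []

    def ready(self):
        # Fires only when every input queue holds at least one operand.
        return all(self.inputs)

    def fire(self):
        operands = [queue.popleft() for queue in self.inputs]
        value = self.fn(*operands)
        self.results.append(value)
        for target, port in self.consumers:
            target.inputs[port].append(value)


def run(operators):
    """Keep firing ready operators until no operator can make progress."""
    progressed = True
    while progressed:
        progressed = False
        for op in operators:
            if op.ready():
                op.fire()
                progressed = True


# Graph for (2 + 3) * 4: the adder feeds port 0 of the multiplier.
mul = DataflowOperator("mul", operator.mul, arity=2)
add = DataflowOperator("add", operator.add, arity=2, consumers=[(mul, 0)])
add.inputs[0].append(2)
add.inputs[1].append(3)
mul.inputs[1].append(4)
run([mul, add])
print(mul.results)  # [20]
```

Note that the order in which operators are listed does not affect the result: the multiplier simply waits until the adder has produced its operand.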

Apparatus, methods, and systems for conditional queues in a configurable spatial accelerator

Active | US20190303168A1 | Easy to adapt | Improve performance | Single instruction multiple data multiprocessors | Concurrent instruction execution | Dataflow | Datapath

Systems, methods, and apparatuses relating to conditional queues in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator includes a first output buffer of a first processing element coupled to a first input buffer of a second processing element and a second input buffer of a third processing element via a data path that is to send a dataflow token to the first input buffer of the second processing element and the second input buffer of the third processing element when the dataflow token is received in the first output buffer of the first processing element; a first backpressure path from the first input buffer of the second processing element to the first processing element to indicate to the first processing element when storage is not available in the first input buffer of the second processing element; a second backpressure path from the second input buffer of the third processing element to the first processing element to indicate to the first processing element when storage is not available in the second input buffer of the third processing element; and a scheduler of the second processing element to cause storage of the dataflow token from the data path into the first input buffer of the second processing element when both the first backpressure path indicates storage is available in the first input buffer of the second processing element and a conditional token received in a conditional queue of the second processing element from another processing element is a true conditional token.

Owner:INTEL CORP

Apparatus, methods, and systems for memory consistency in a configurable spatial accelerator

Active | US20190205284A1 | Easy to adapt | Improve performance | Interprogram communication | Digital computer details | Computer science | Procedure sequence

Owner:INTEL CORP

Processors, methods, and systems for debugging a configurable spatial accelerator

Active | US20190095383A1 | Easy to adapt | Improve performance | Associative processors | Dataflow computers | Processing element | Operand

Systems, methods, and apparatuses relating to debugging a configurable spatial accelerator are described. In one embodiment, a processor includes a plurality of processing elements and an interconnect network between the plurality of processing elements to receive an input of a dataflow graph comprising a plurality of nodes, wherein the dataflow graph is to be overlaid into the interconnect network and the plurality of processing elements with each node represented as a dataflow operator in the plurality of processing elements, and the plurality of processing elements are to perform an operation by a respective, incoming operand set arriving at each of the dataflow operators of the plurality of processing elements. At least a first of the plurality of processing elements is to enter a halted state in response to being represented as a first of the plurality of dataflow operators.

Owner:INTEL CORP

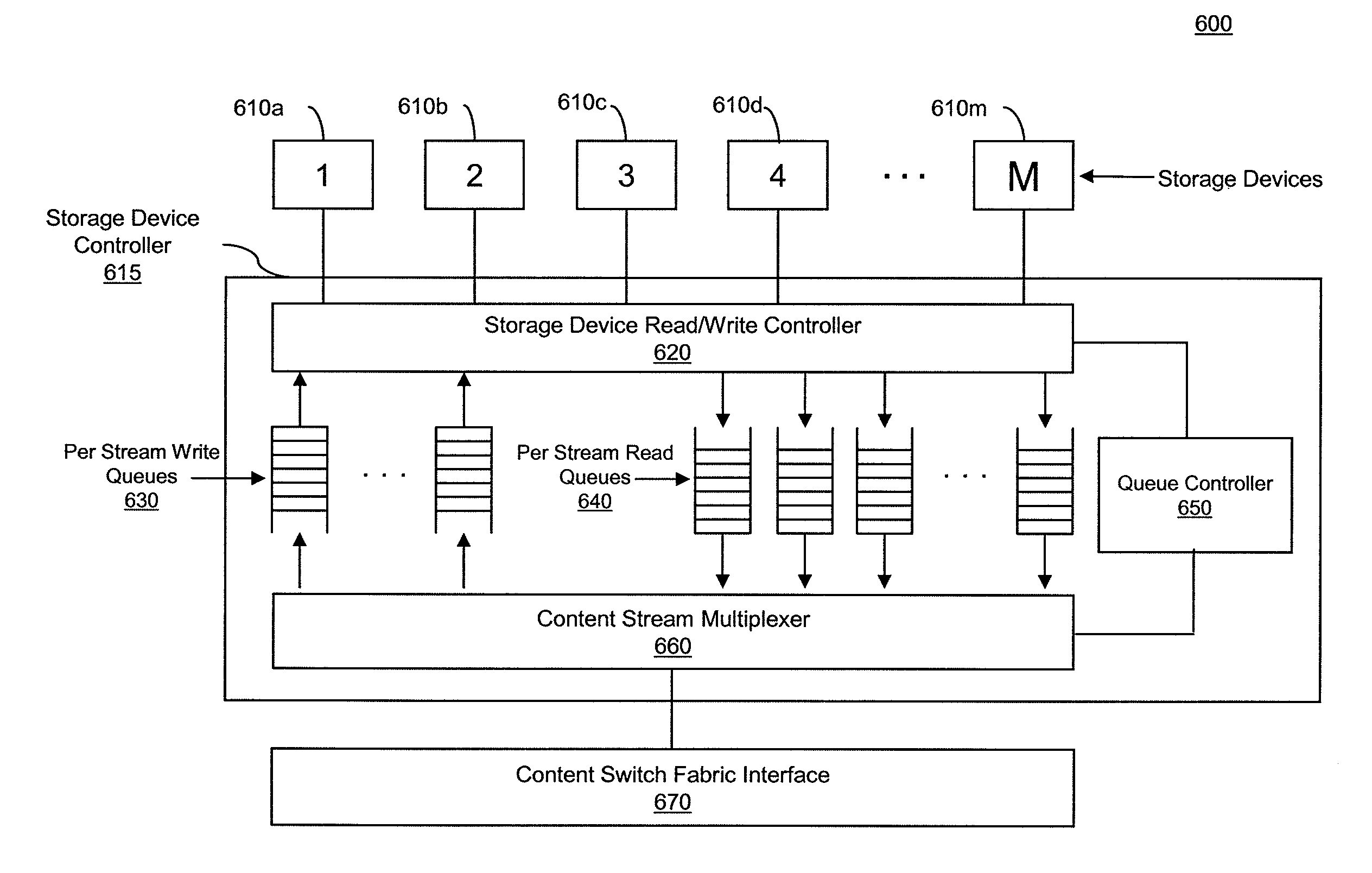

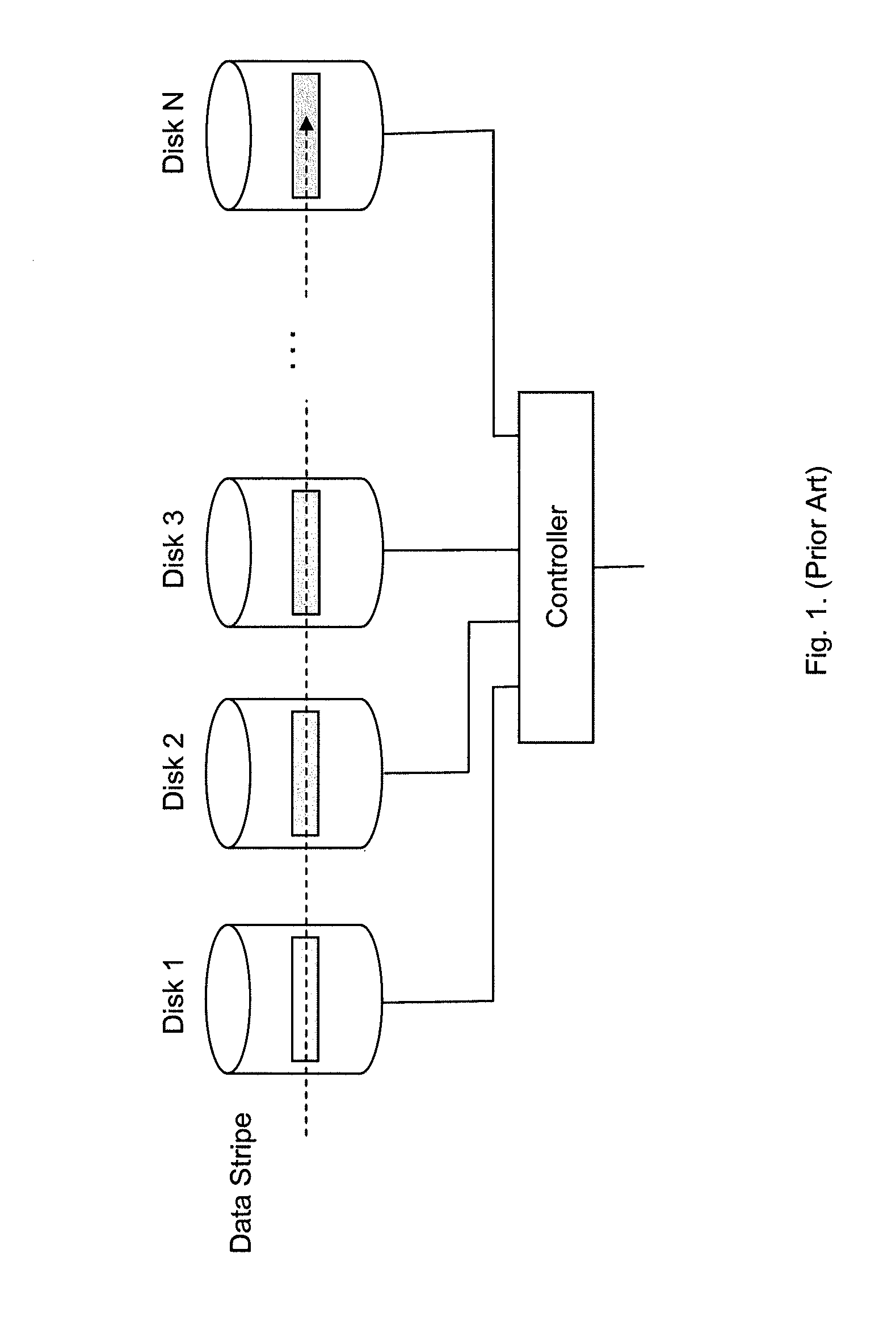

Storage of Data

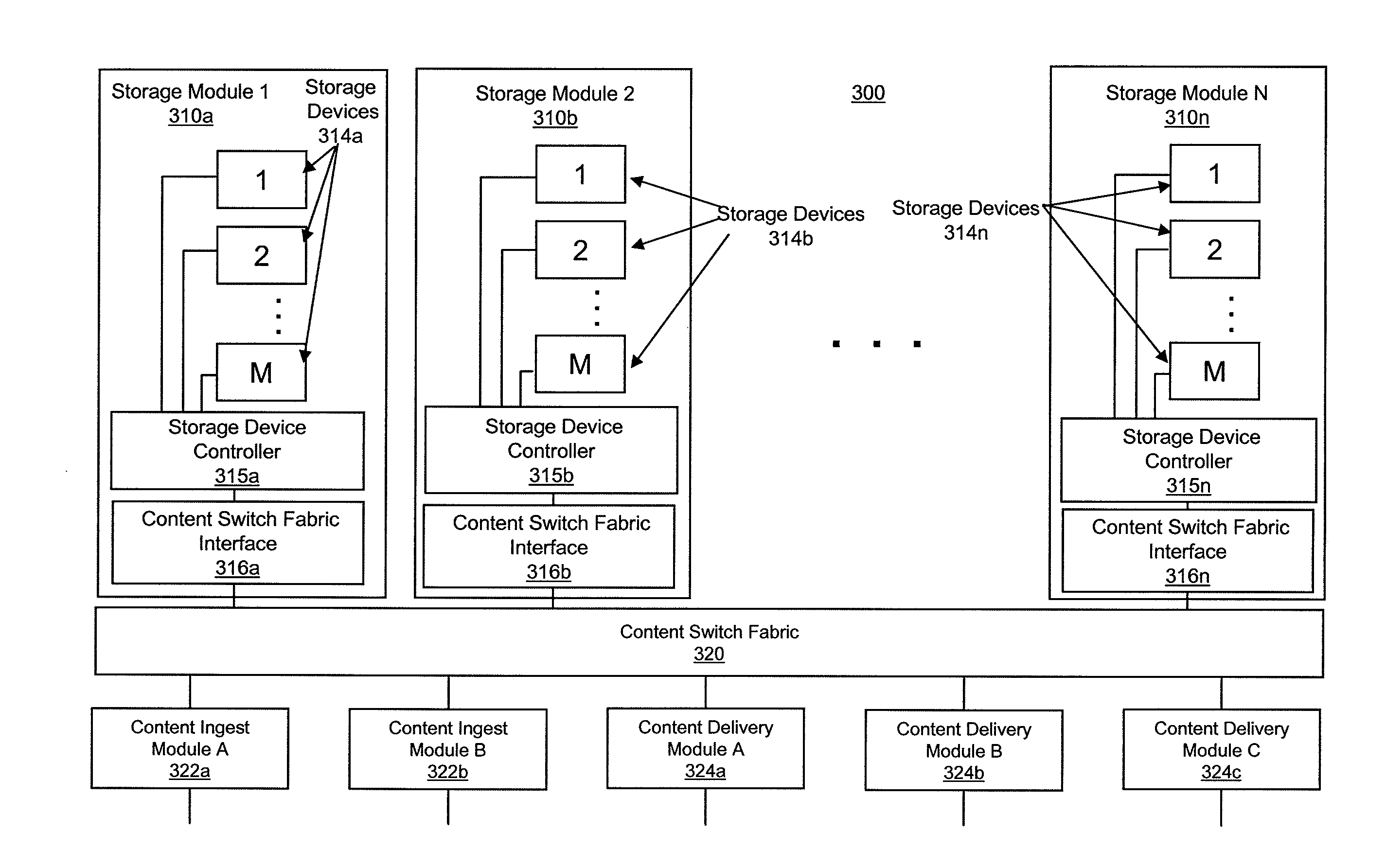

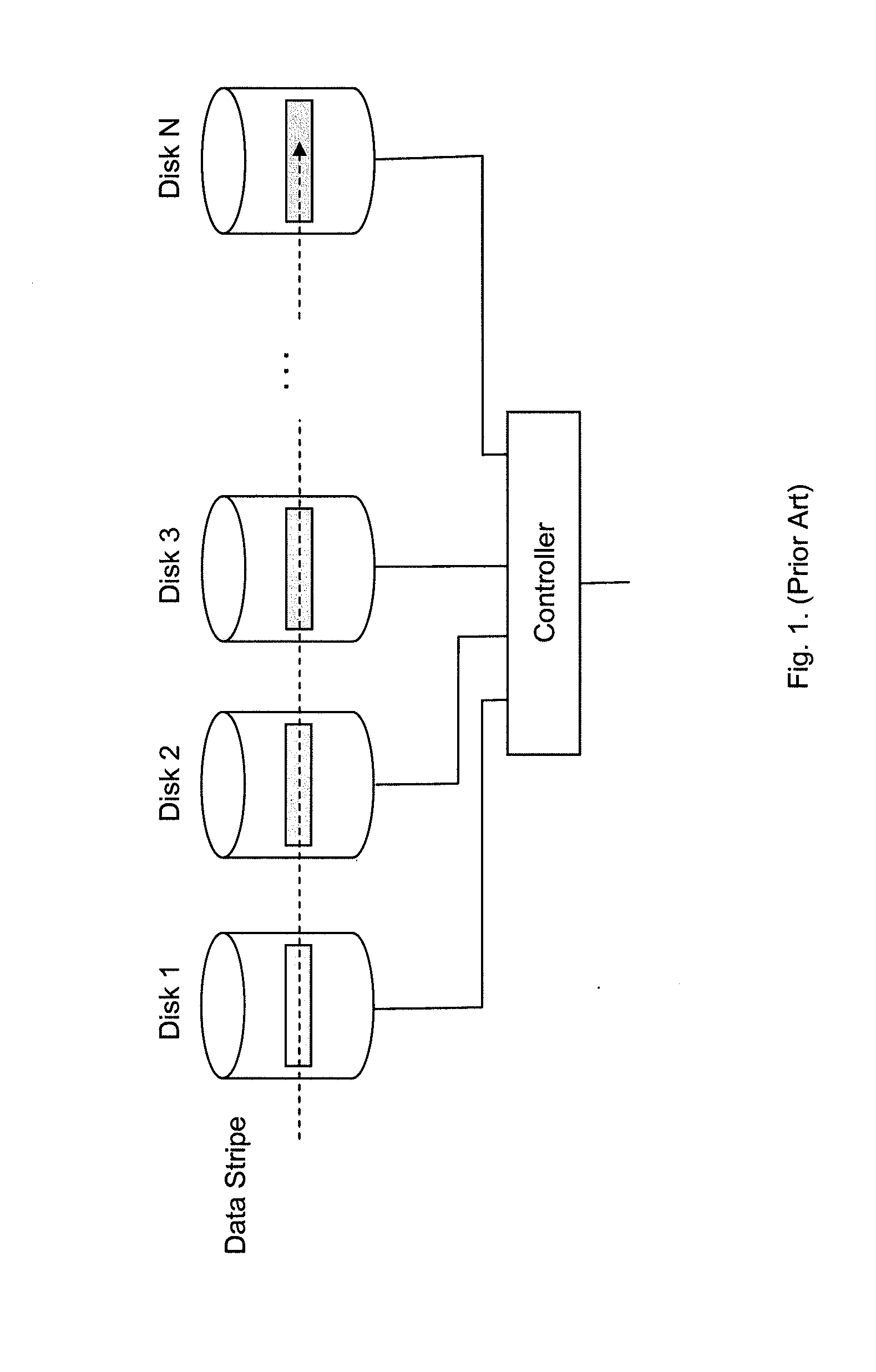

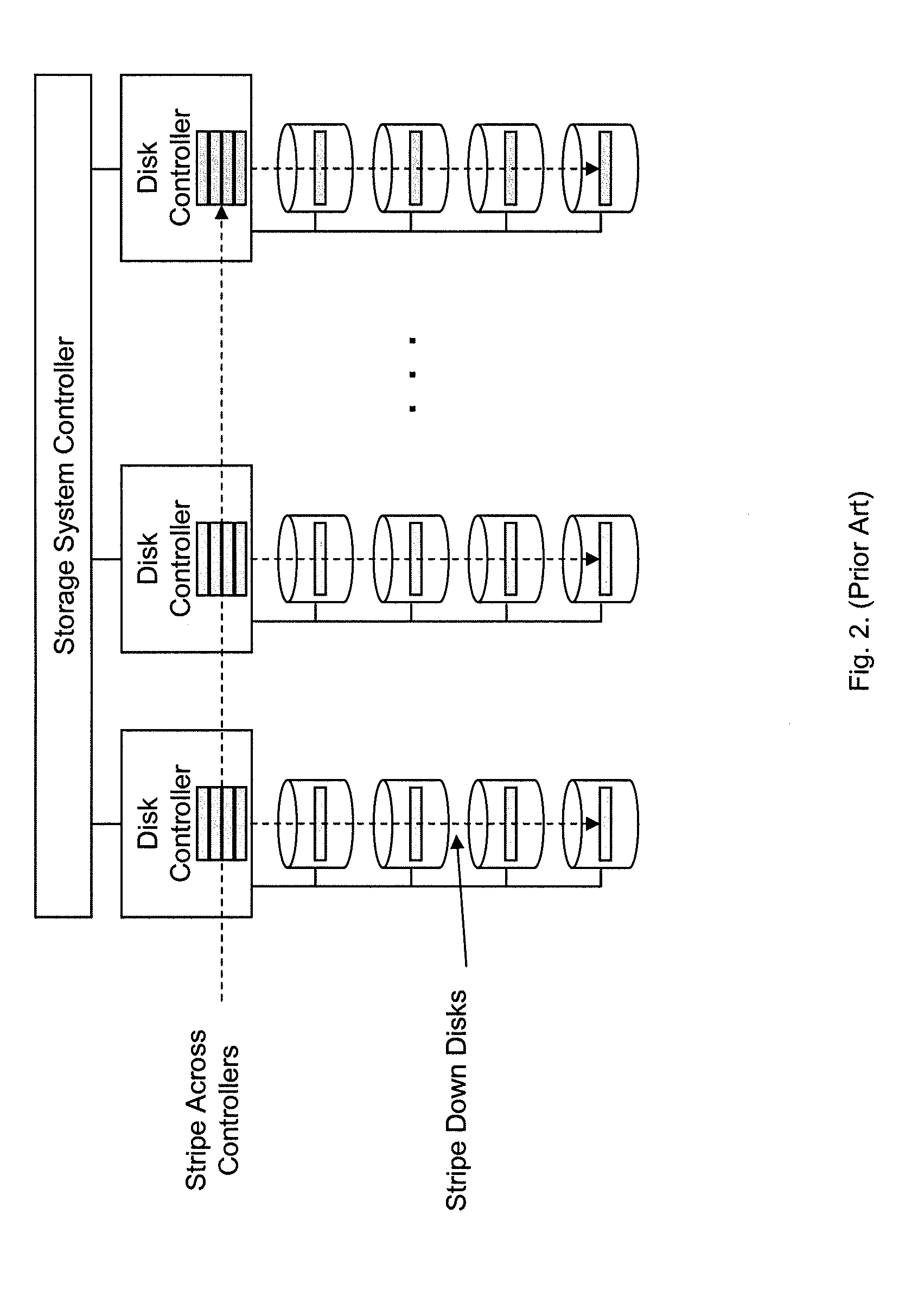

Active | US20090182790A1 | Reduces large streaming buffer | Improve delivery efficiency | Error detection/correction | Memory addressing/allocation/relocation | Data segment | Data rate

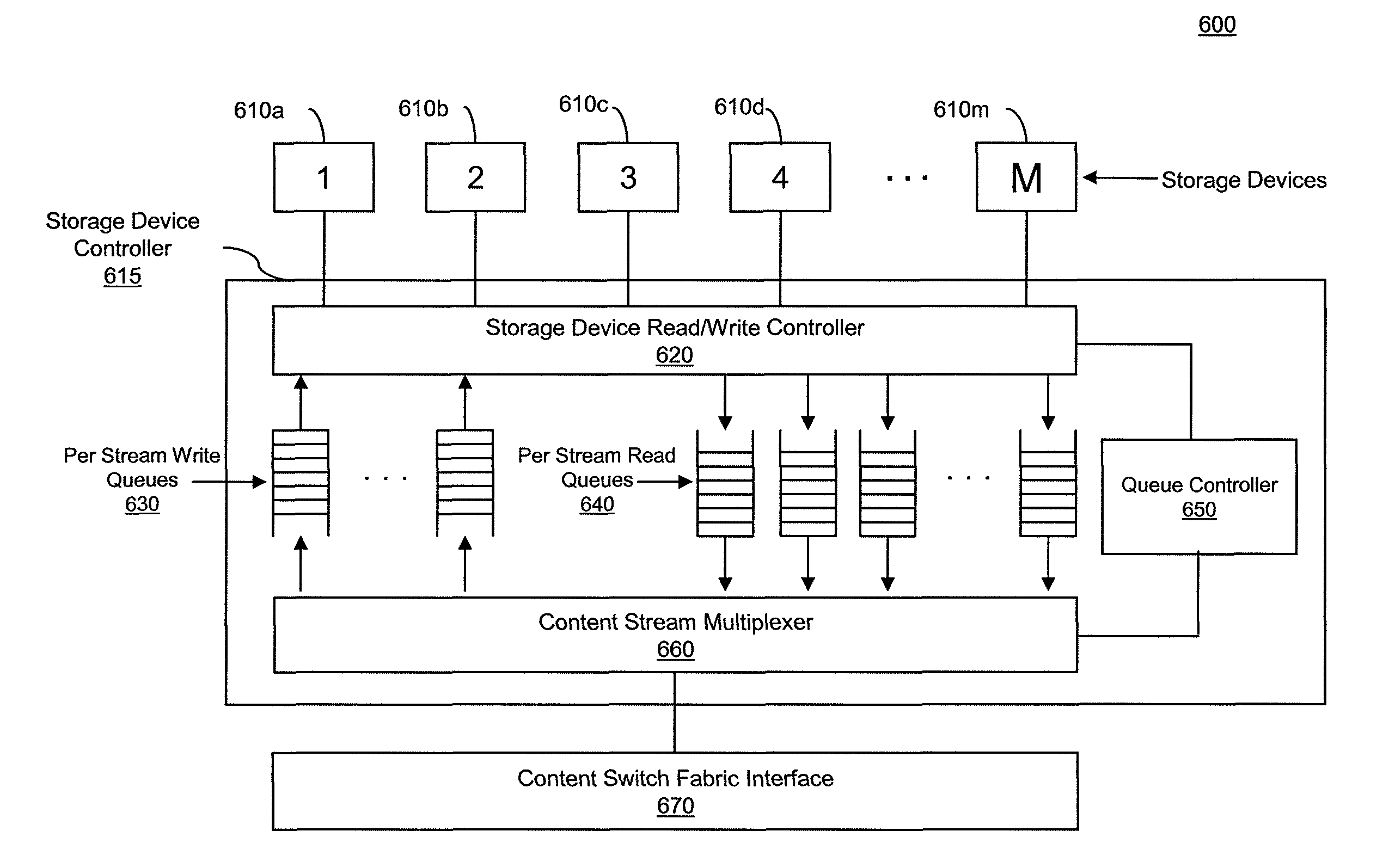

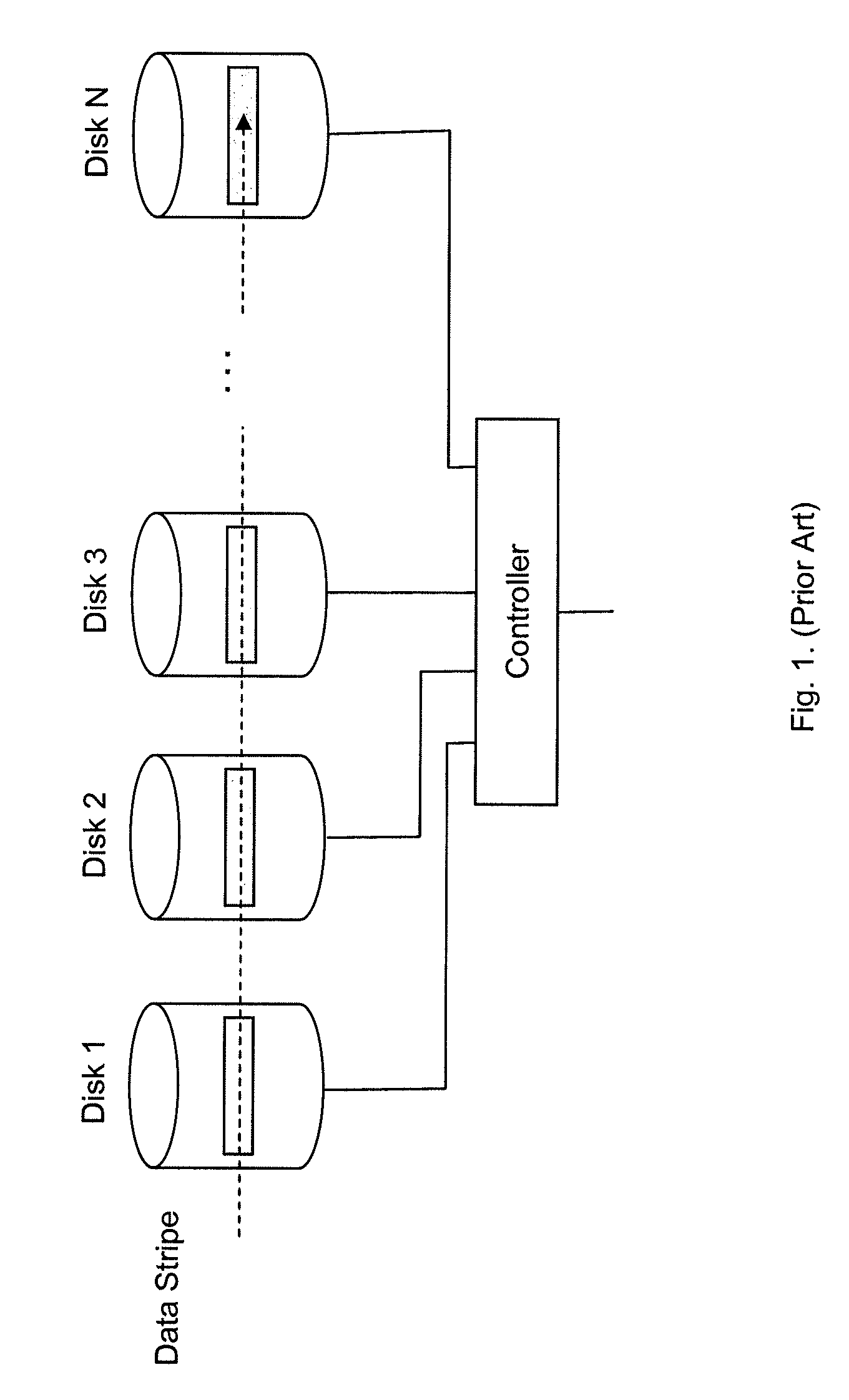

In one example, multimedia content is requested from a plurality of storage modules. Each storage module retrieves the requested parts, which are typically stored on a plurality of storage devices at each storage module. Each storage module determines independently when to retrieve the requested parts of the data file from storage and transmits those parts from storage to a data queue. Based on a capacity of a delivery module and/or the data rate associated with the request, each storage module transmits the parts of the data file to the delivery module. The delivery module generates a sequenced data segment from the parts of the data file received from the plurality of storage modules and transmits the sequenced data segment to the requester.

Owner:AKAMAI TECH INC
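
The final step of the abstract above, where a delivery module builds a sequenced data segment from parts sent independently by several storage modules, can be sketched as a small reordering buffer. This Python sketch rests on assumptions that are not in the abstract: each part carries an integer sequence index, and the hypothetical `DeliveryModule.receive` method returns whatever contiguous run has become deliverable. The capacity and data-rate pacing mentioned in the abstract are left out.

```python
class DeliveryModule:
    """Hypothetical reordering buffer that emits parts in sequence order."""

    def __init__(self):
        self._buffer = {}   # sequence index -> payload received out of order
        self._next = 0      # next sequence index owed to the requester

    def receive(self, index, payload):
        """Accept one part; return the bytes that are now deliverable in order."""
        self._buffer[index] = payload
        out = bytearray()
        while self._next in self._buffer:
            out += self._buffer.pop(self._next)
            self._next += 1
        return bytes(out)


delivery = DeliveryModule()
print(delivery.receive(1, b"lo "))    # b'' because part 0 has not arrived yet
print(delivery.receive(0, b"hel"))    # b'hello '
print(delivery.receive(2, b"world"))  # b'world'
```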

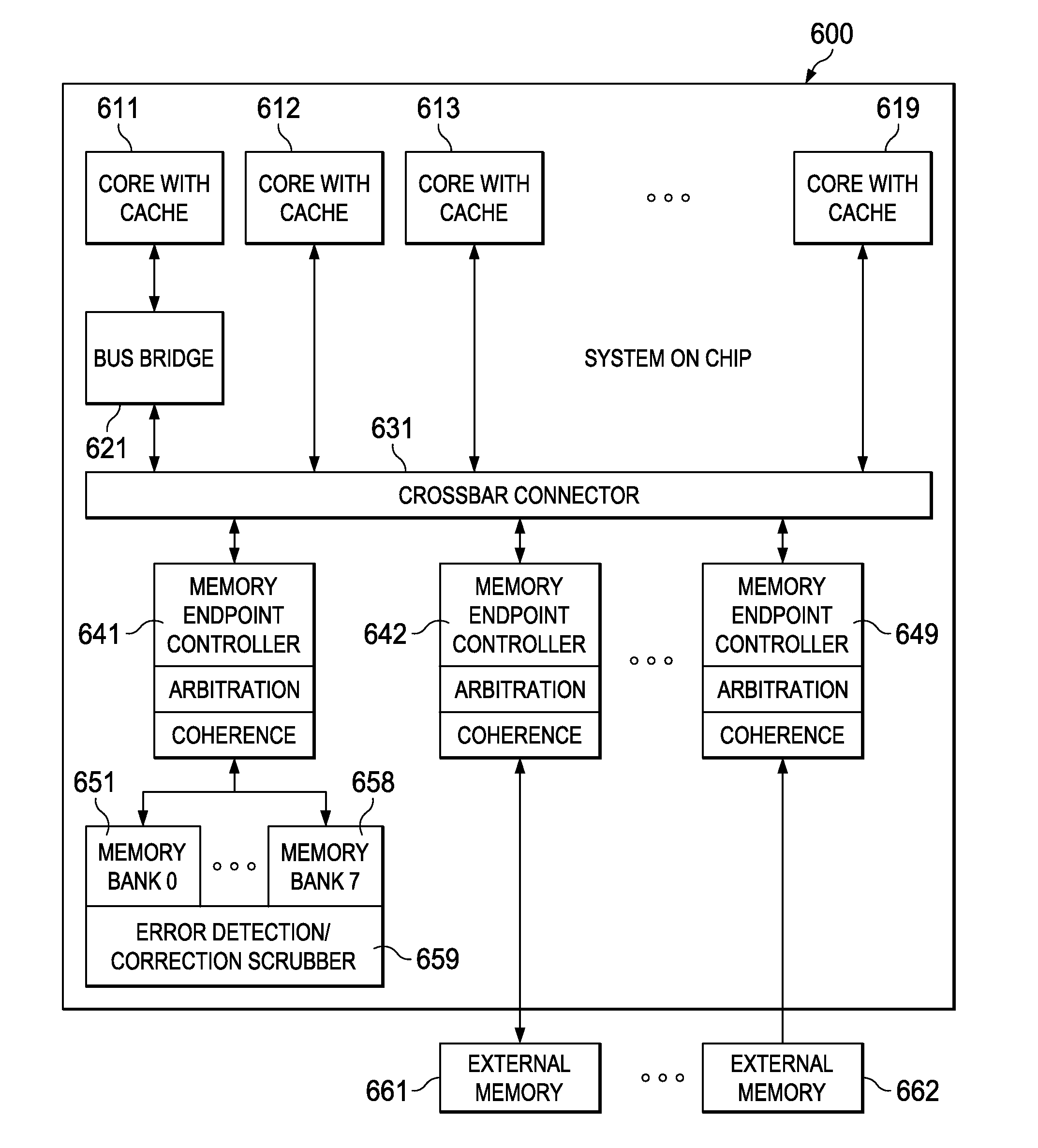

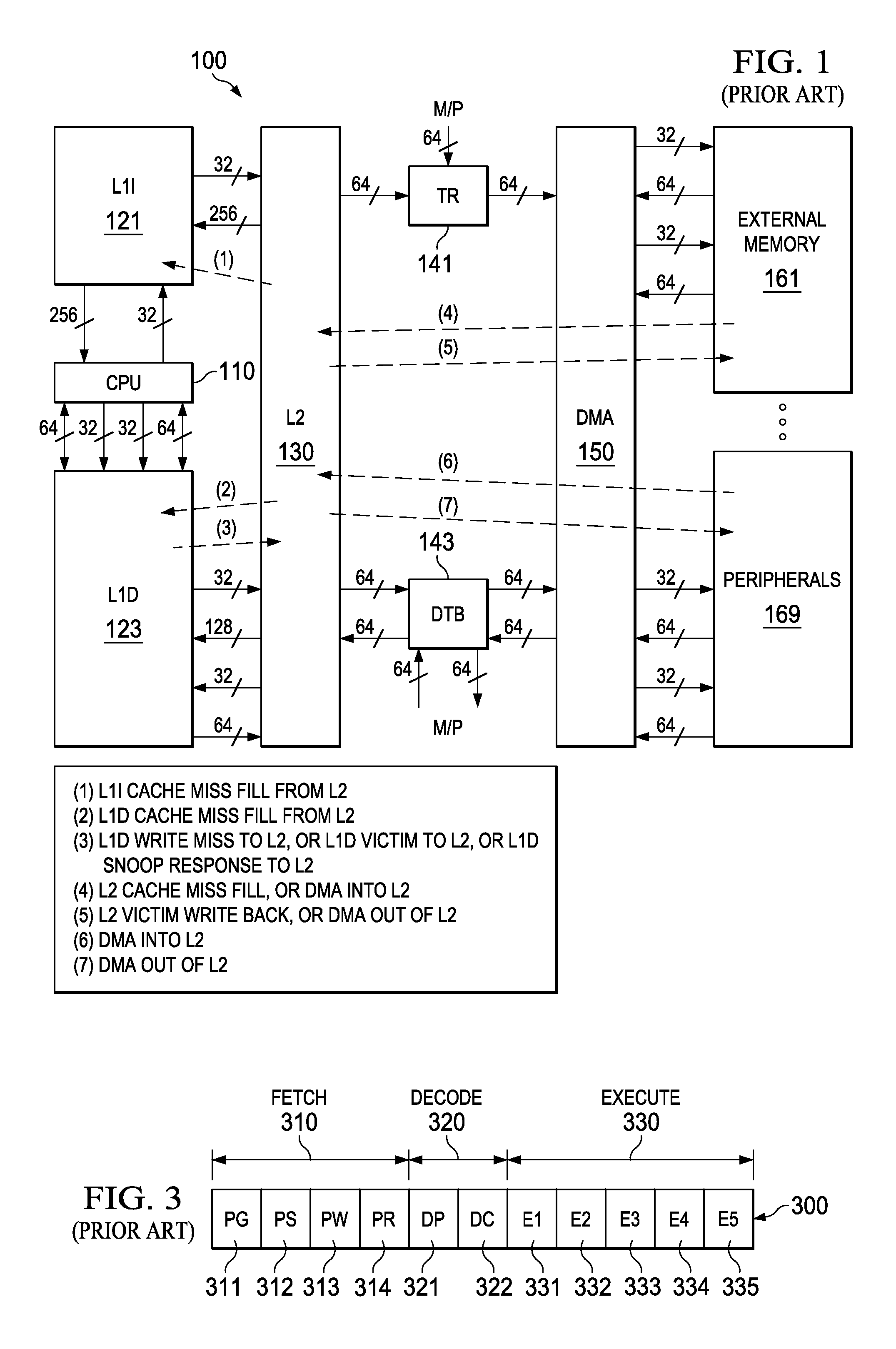

Multi-Master Cache Coherent Speculation Aware Memory Controller with Advanced Arbitration, Virtualization and EDC

Active | US20140115279A1 | Minimizes latency/bandwidth penalty | Low-latency access | Memory architecture accessing/allocation | Internal/peripheral component protection | Virtualization | Computer hardware

This invention is an integrated memory controller/interconnect that provides very high bandwidth access to both on-chip memory and externally connected off-chip memory. This invention includes arbitration for all memory endpoints, including priority, fairness, and starvation bounds; virtualization; and error detection and correction hardware to protect the on-chip SRAM banks, including automated scrubbing.

Owner:TEXAS INSTR INC

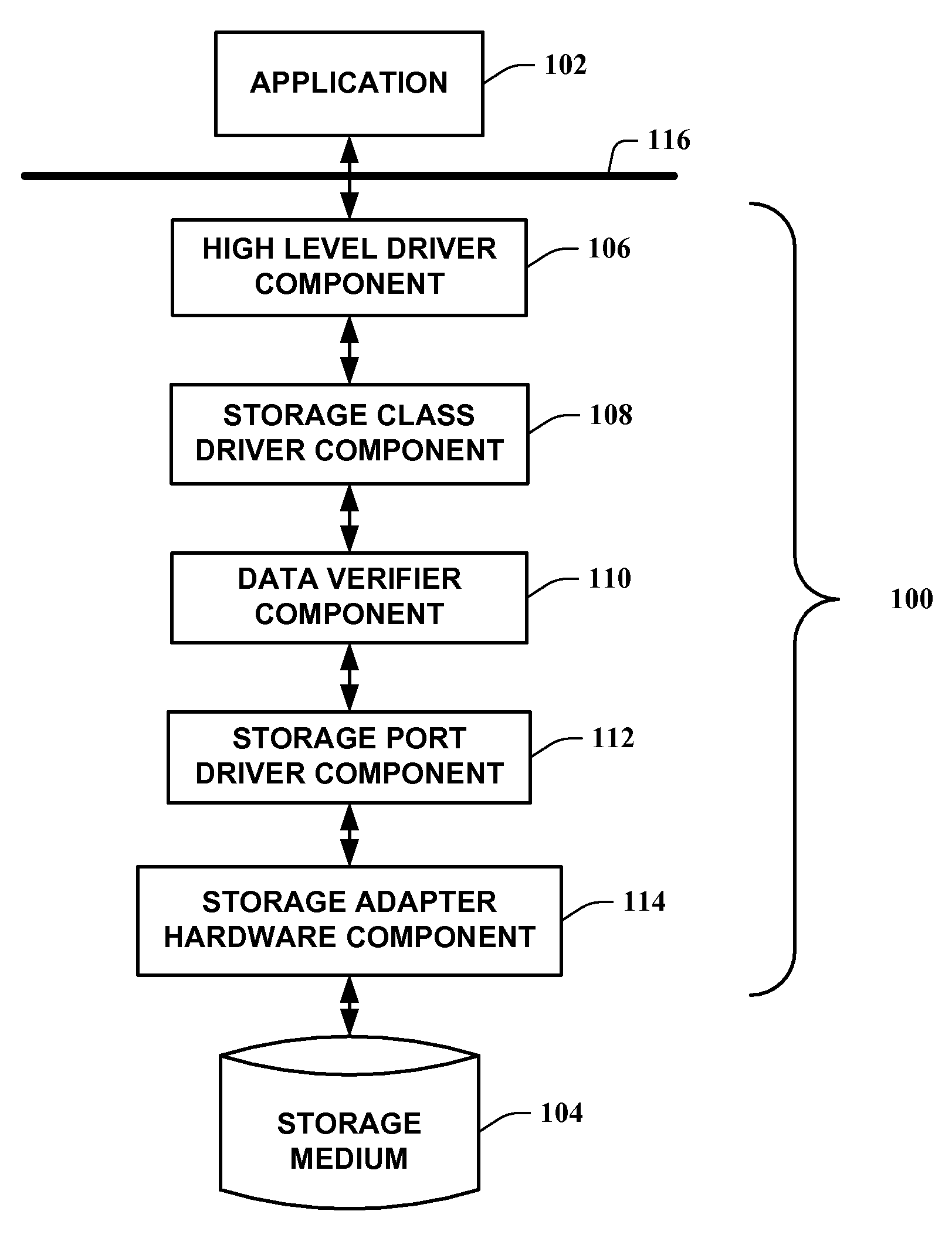

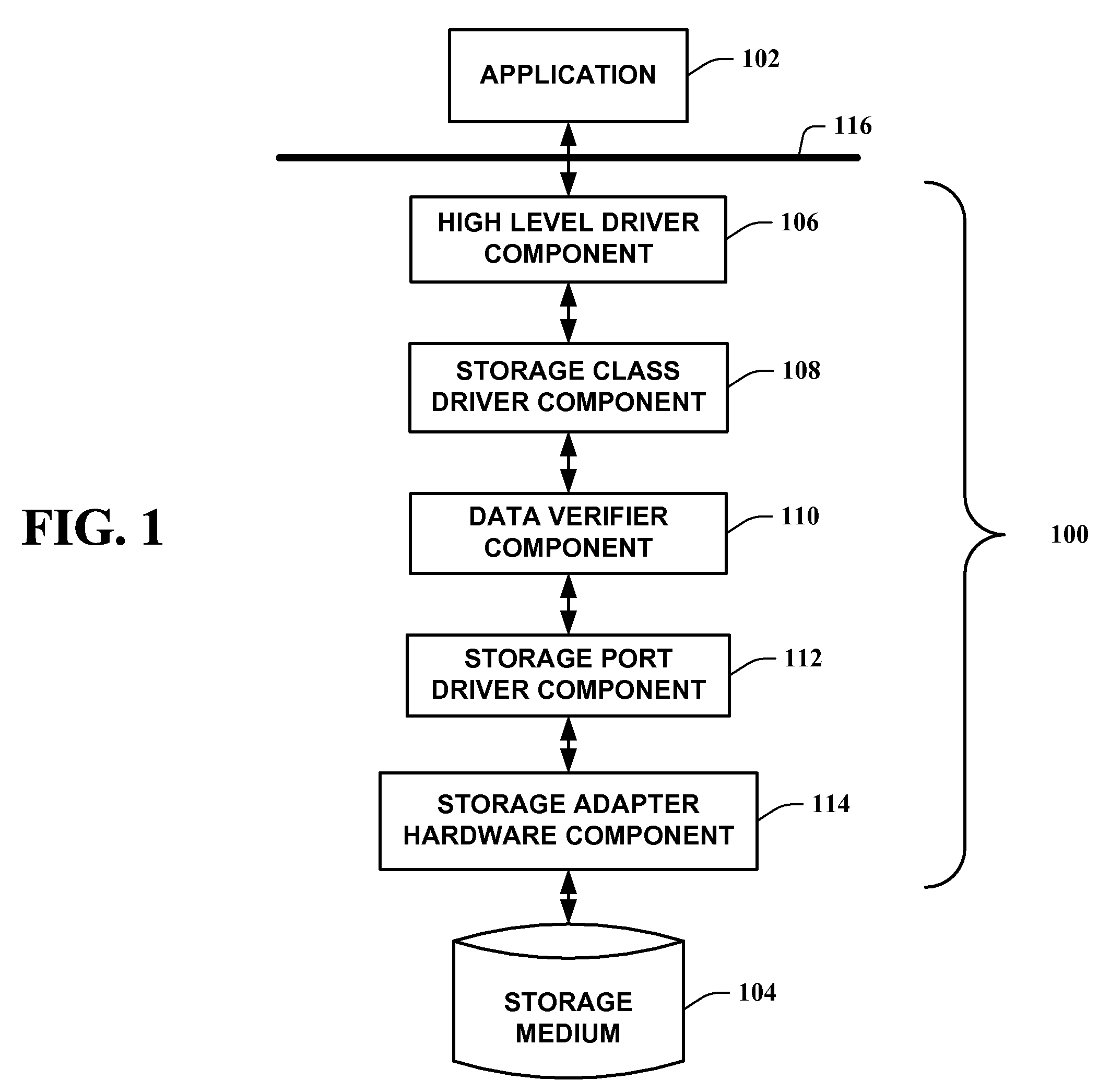

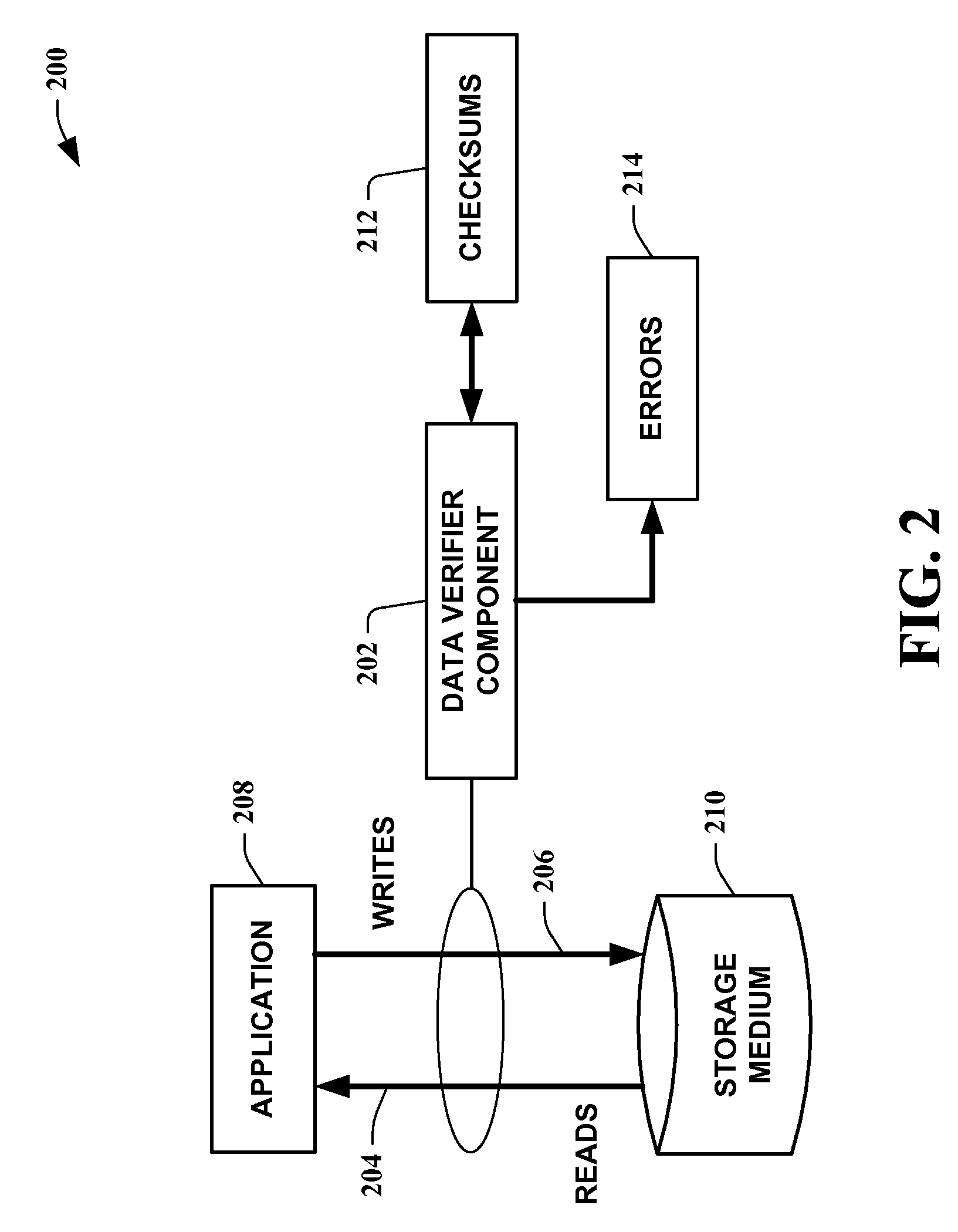

Systems and methods for enhanced stored data verification utilizing pageable pool memory

Inactive | US20070011180A1 | Improve data storage capacity | Data augmentation | Code conversion | Coding details | Virtual memory | Source Data Verification

The present invention utilizes pageable pool memory to provide, via a data verifier component, data verification information for storage mediums. By allowing the utilization of pageable pool memory, overflow from the pageable pool memory is paged and stored in a virtual memory space on a storage medium. Recently accessed verification information is stored in non-pageable memory, permitting low latency access. One instance of the present invention synchronously verifies data when verification information is accessible in physical system memory while deferring processing of data verification when verification information is stored in paged memory. Another instance of the present invention allows access to paged verification information in order to permit synchronous verification of data.

Owner:MICROSOFT TECH LICENSING LLC
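
The split described in the abstract above, where data is verified synchronously when its verification information is resident and deferred when that information has been paged out, can be approximated in user-space Python. This is only a sketch under stated assumptions: the `DataVerifier` class, the CRC32 checksum, the least-recently-used eviction policy, and the two dictionaries standing in for non-pageable and paged memory are all hypothetical and not taken from the patent.

```python
import zlib
from collections import OrderedDict


class DataVerifier:
    """Hypothetical model: recently used checksums stay 'resident',
    older ones are 'paged out' and force deferred verification."""

    def __init__(self, resident_capacity=2):
        self.capacity = resident_capacity
        self.resident = OrderedDict()  # stands in for non-pageable memory
        self.paged = {}                # stands in for paged-out pool memory
        self.deferred = []             # (block_id, data) awaiting verification

    def _touch(self, block_id, checksum):
        # Make the entry resident and evict least recently used entries.
        self.paged.pop(block_id, None)
        self.resident[block_id] = checksum
        self.resident.move_to_end(block_id)
        while len(self.resident) > self.capacity:
            old_id, old_sum = self.resident.popitem(last=False)
            self.paged[old_id] = old_sum

    def record(self, block_id, data):
        self._touch(block_id, zlib.crc32(data))

    def verify(self, block_id, data):
        """Return True/False when verified now, or None when deferred."""
        if block_id in self.resident:
            self.resident.move_to_end(block_id)
            return self.resident[block_id] == zlib.crc32(data)
        if block_id not in self.paged:
            raise KeyError(block_id)
        self.deferred.append((block_id, data))
        return None

    def drain_deferred(self):
        """Process deferred work, 'paging in' the checksums it needs."""
        results = {}
        for block_id, data in self.deferred:
            checksum = self.paged.get(block_id, self.resident.get(block_id))
            results[block_id] = checksum == zlib.crc32(data)
            self._touch(block_id, checksum)
        self.deferred = []
        return results
```

A caller would treat a `None` result from `verify` as "not yet known" and pick up the outcome later from `drain_deferred`.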

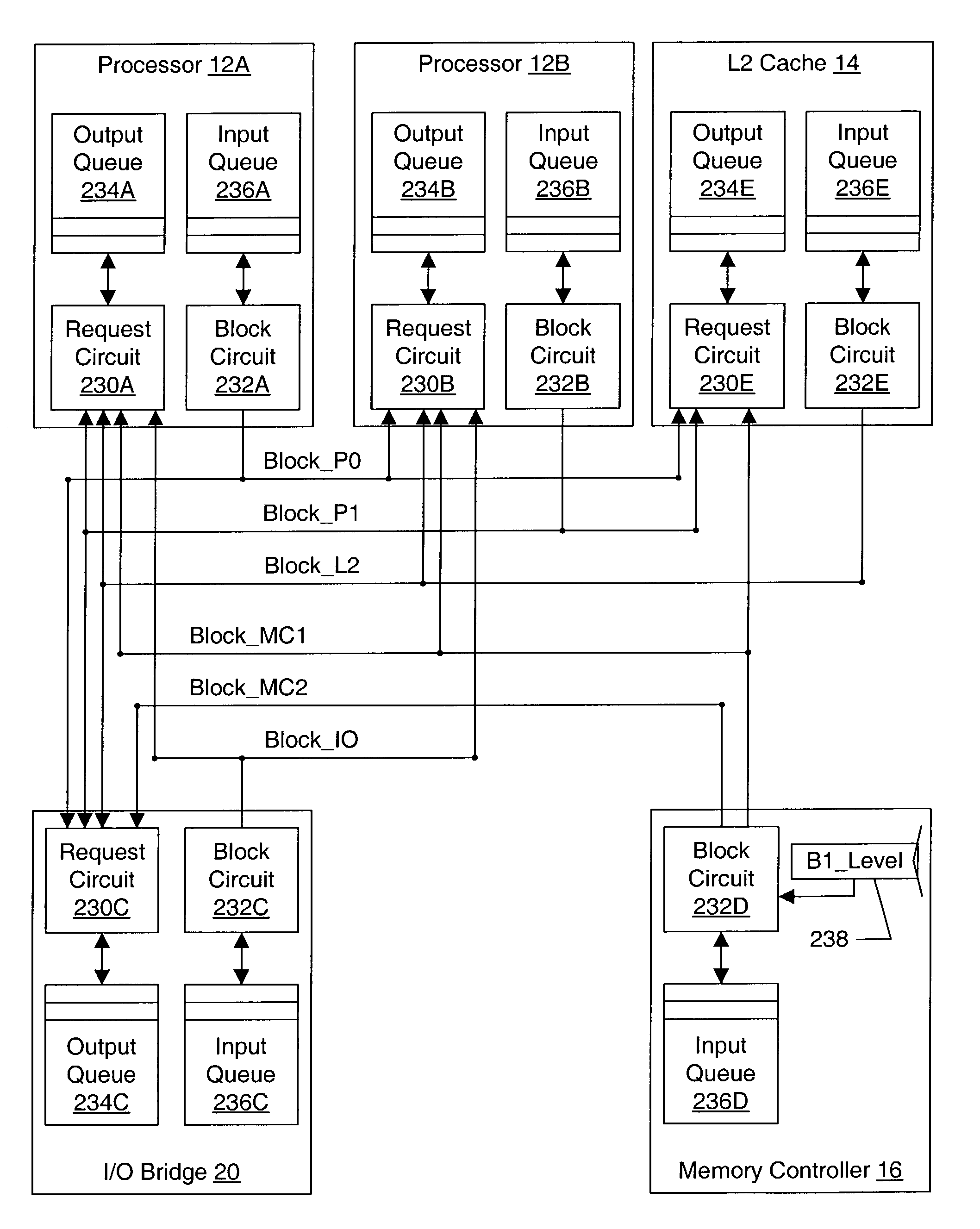

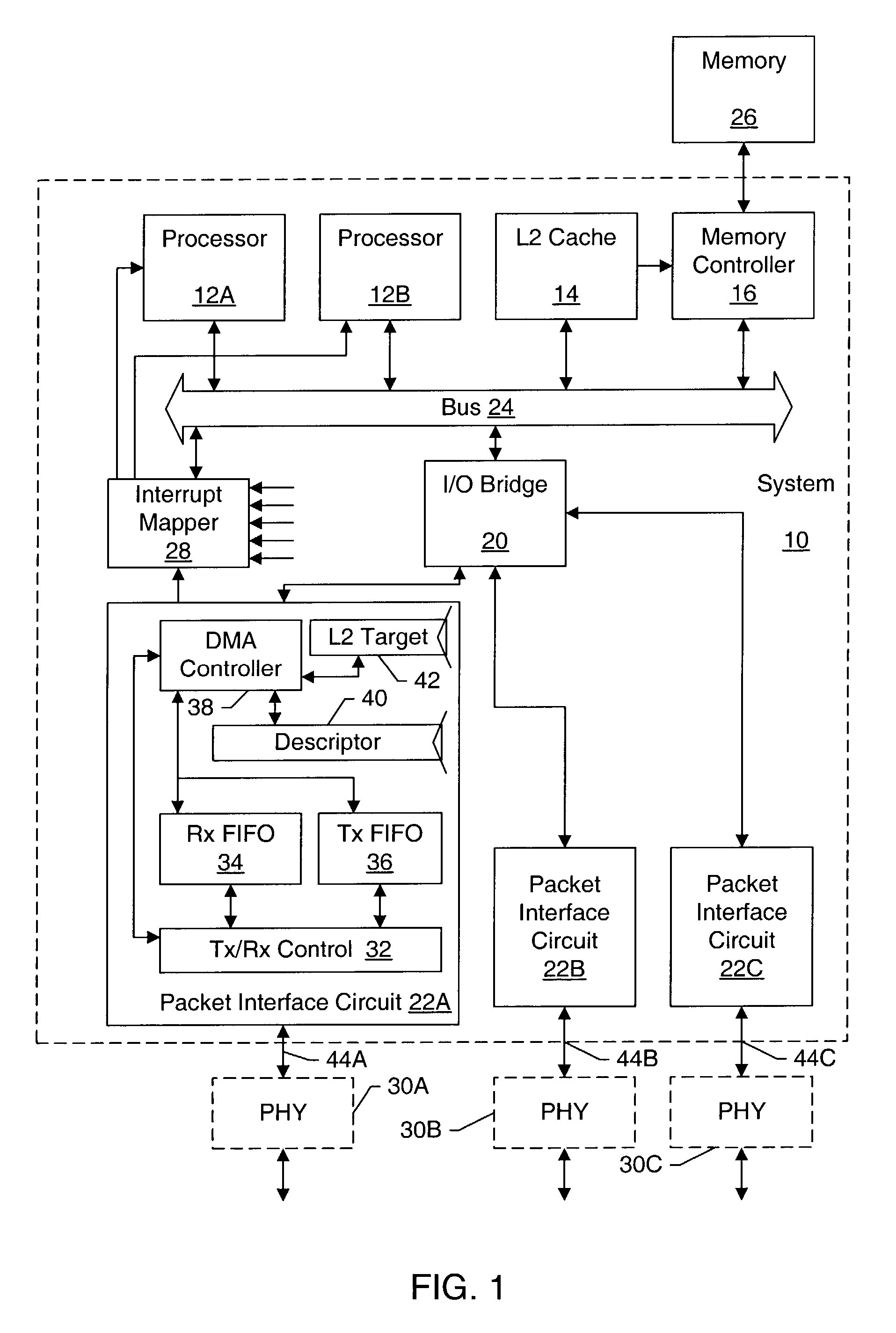

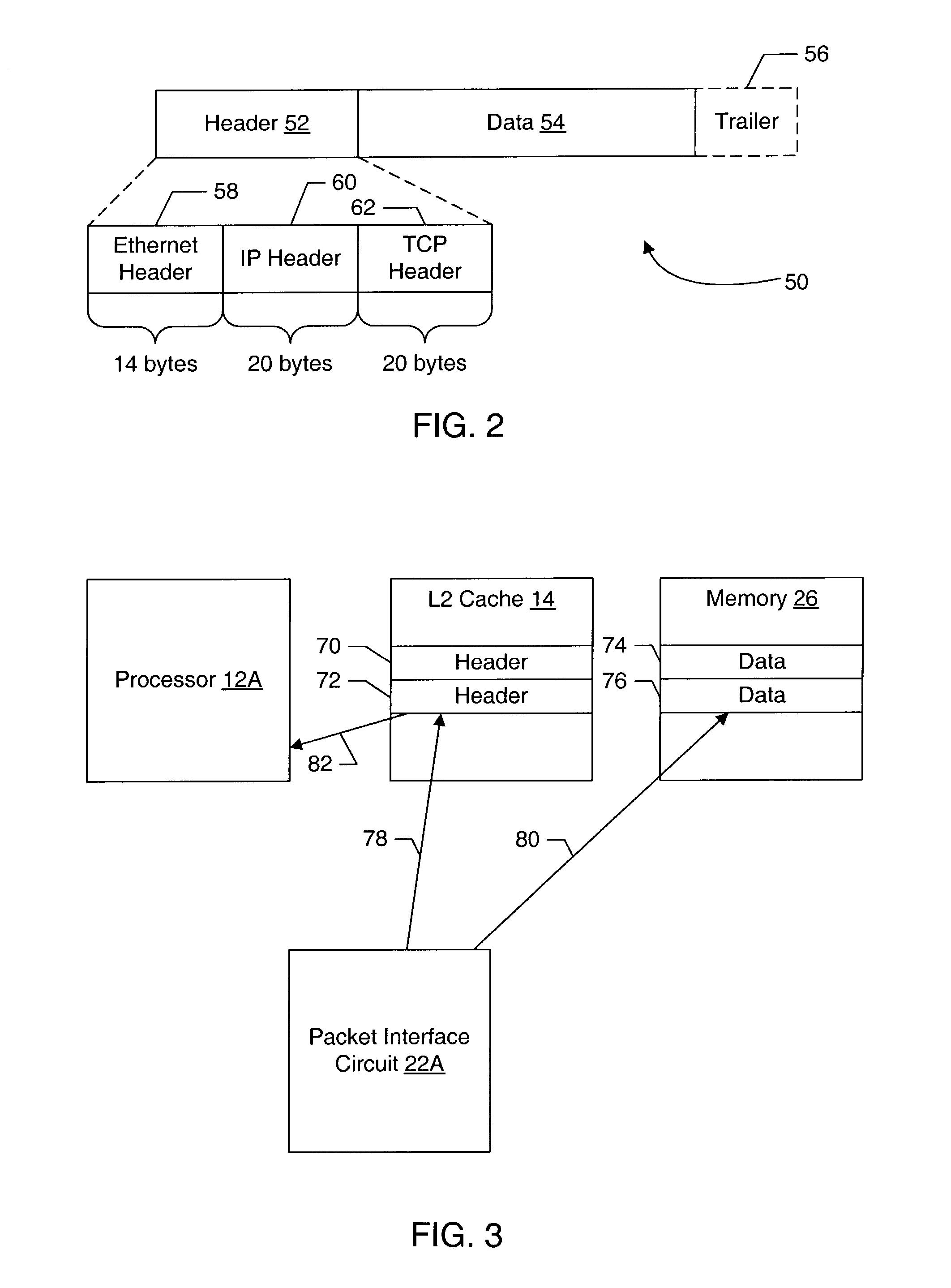

System on a chip for caching of data packets based on a cache miss/hit and a state of a control signal

Inactive | US7320022B2 | Low latency access | Lower latency | Digital computer details | Data switching networks | Control signal | Parallel computing

Owner:AVAGO TECH INT SALES PTE LTD

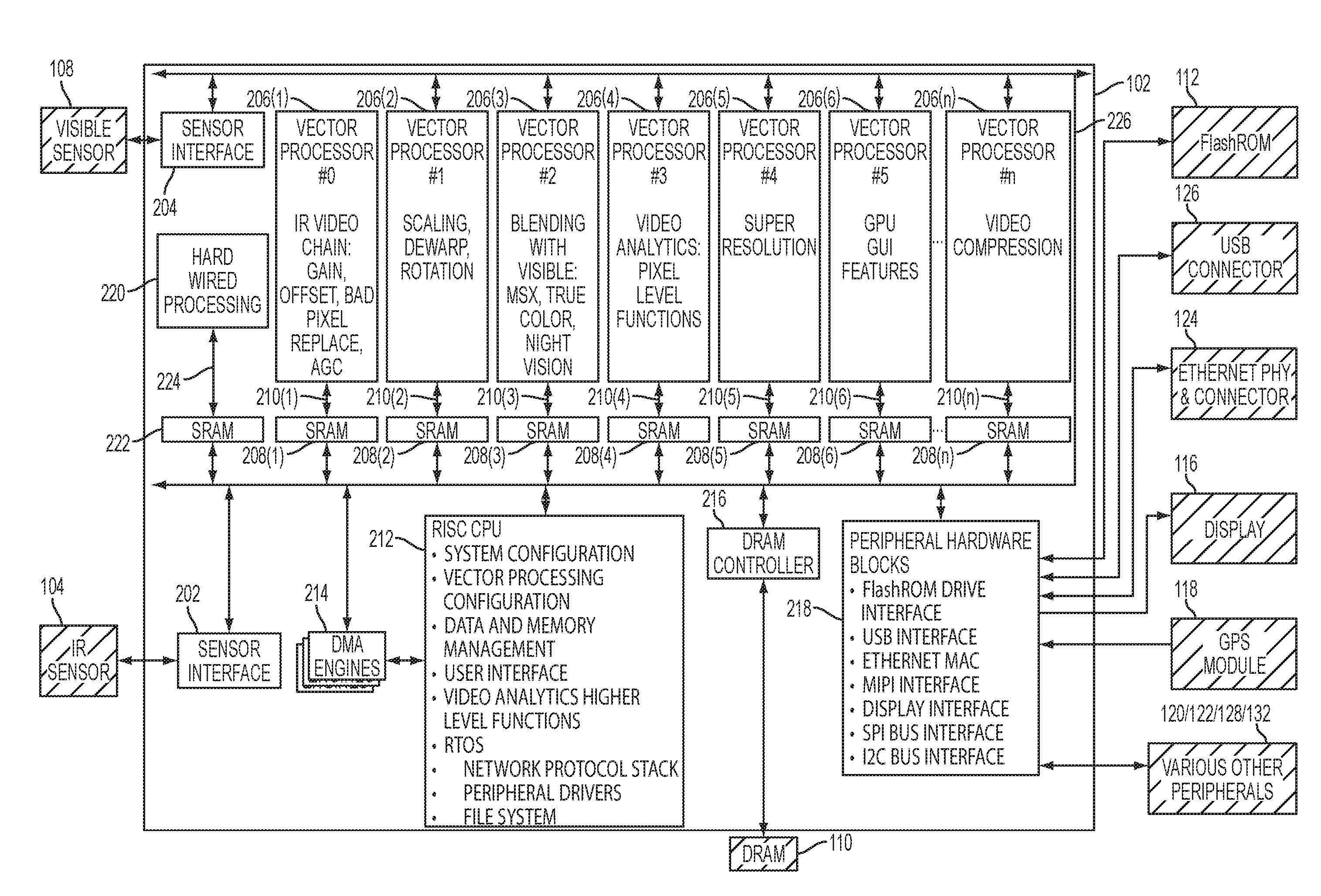

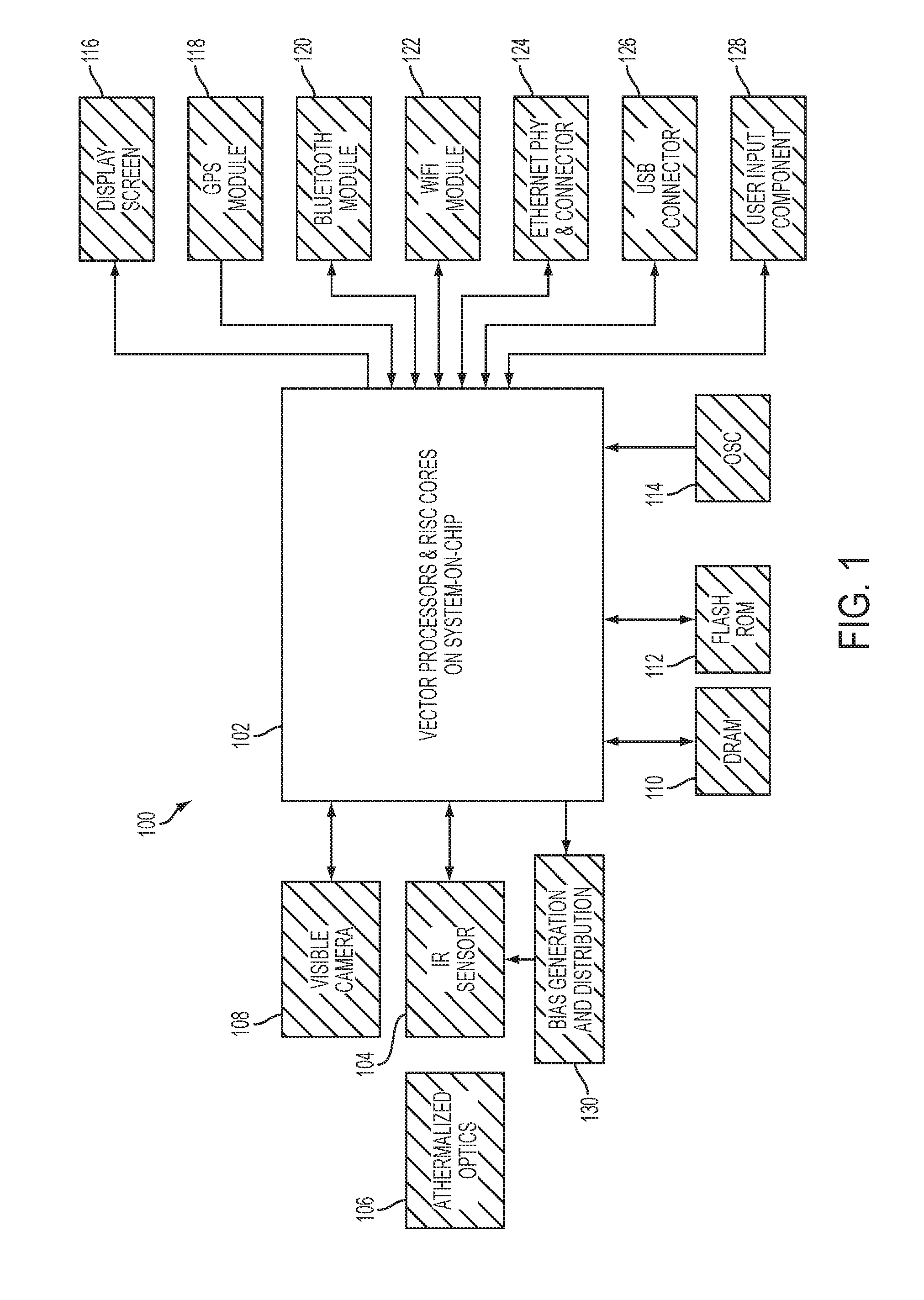

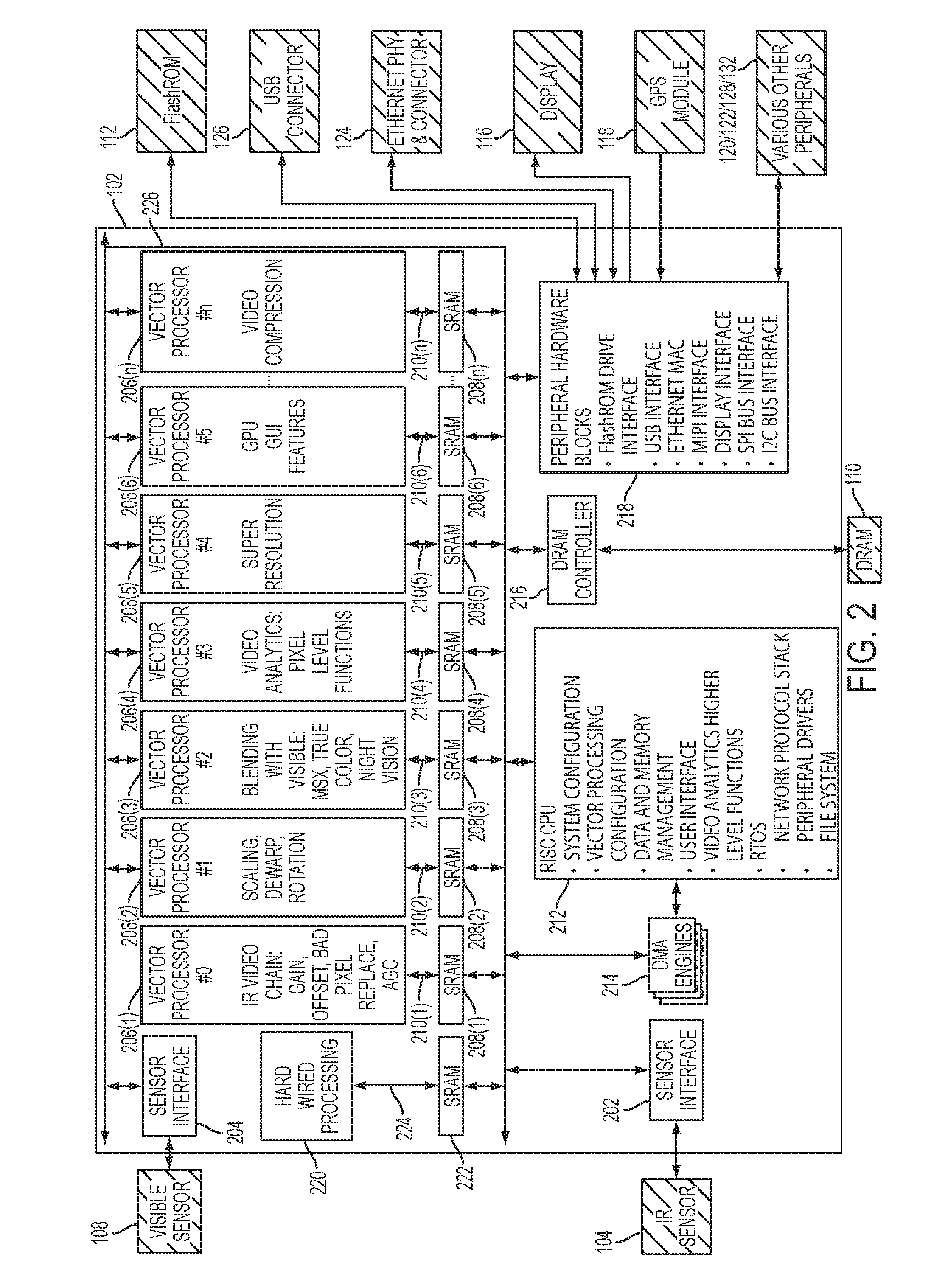

Vector processing architectures for infrared camera electronics

Active | US20160156855A1 | Improved electronics architecture | Efficient processing | Television system details | Color television details | Data stream | Imaging data

Systems and methods are disclosed herein to provide infrared imaging systems with improved electronics architectures. In one embodiment, an infrared imaging system is provided that includes an infrared imaging sensor for capturing infrared image data and a main electronics block for efficiently processing the captured infrared image data. The main electronics block may include a plurality of vector processors each configured to operate on multiple pixels of the infrared image data in parallel to efficiently exploit pixel-level parallelism. Each vector processor may be communicatively coupled to a local memory that provides high bandwidth, low latency access to a portion of the infrared image data for the vector processor to operate on. The main electronics block may also include a general-purpose processor configured to manage data flow to/from the local memories and other system functionalities. The main electronics block may be implemented as a system-on-a-chip.

Owner:TELEDYNE FLIR LLC

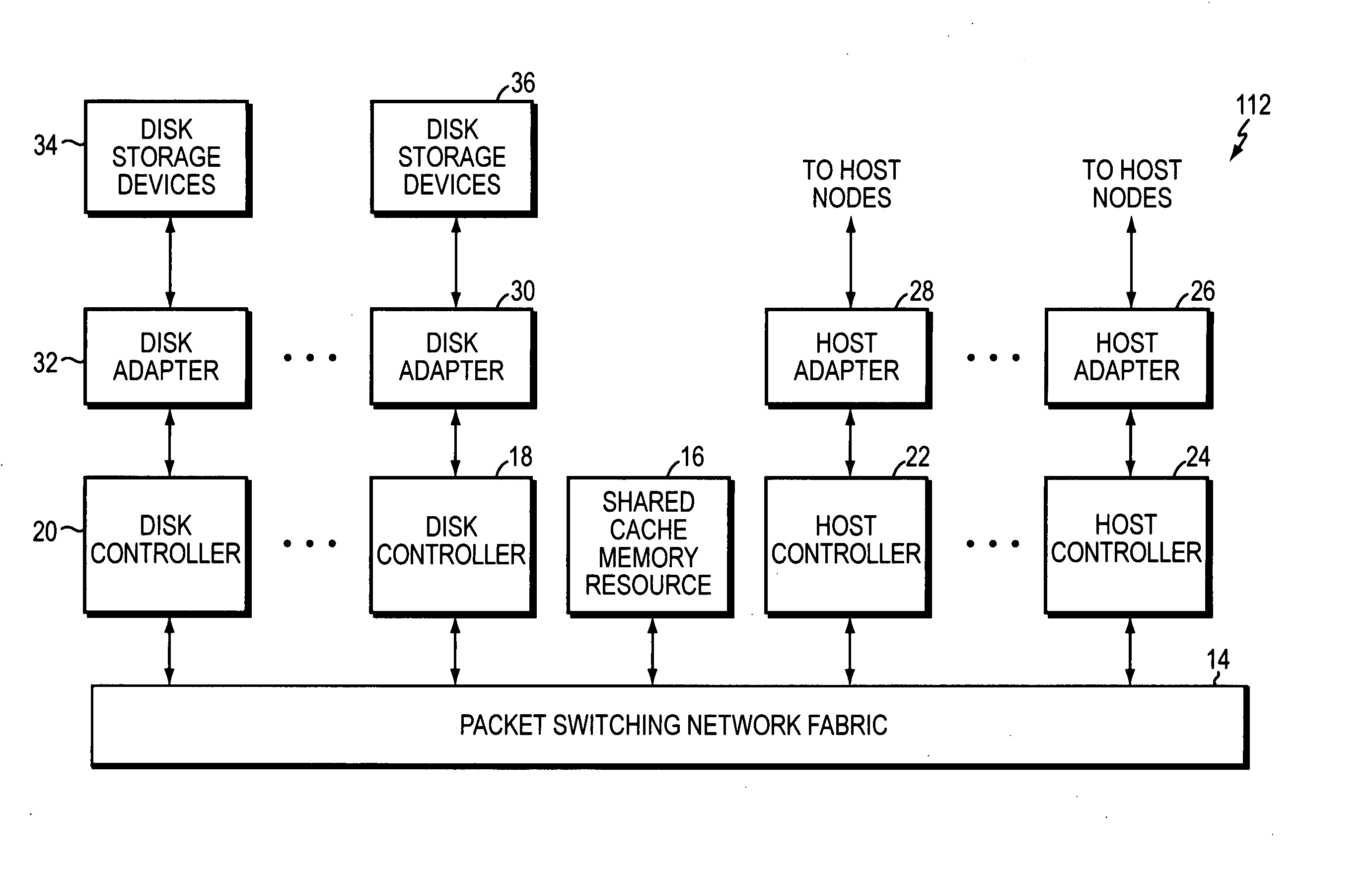

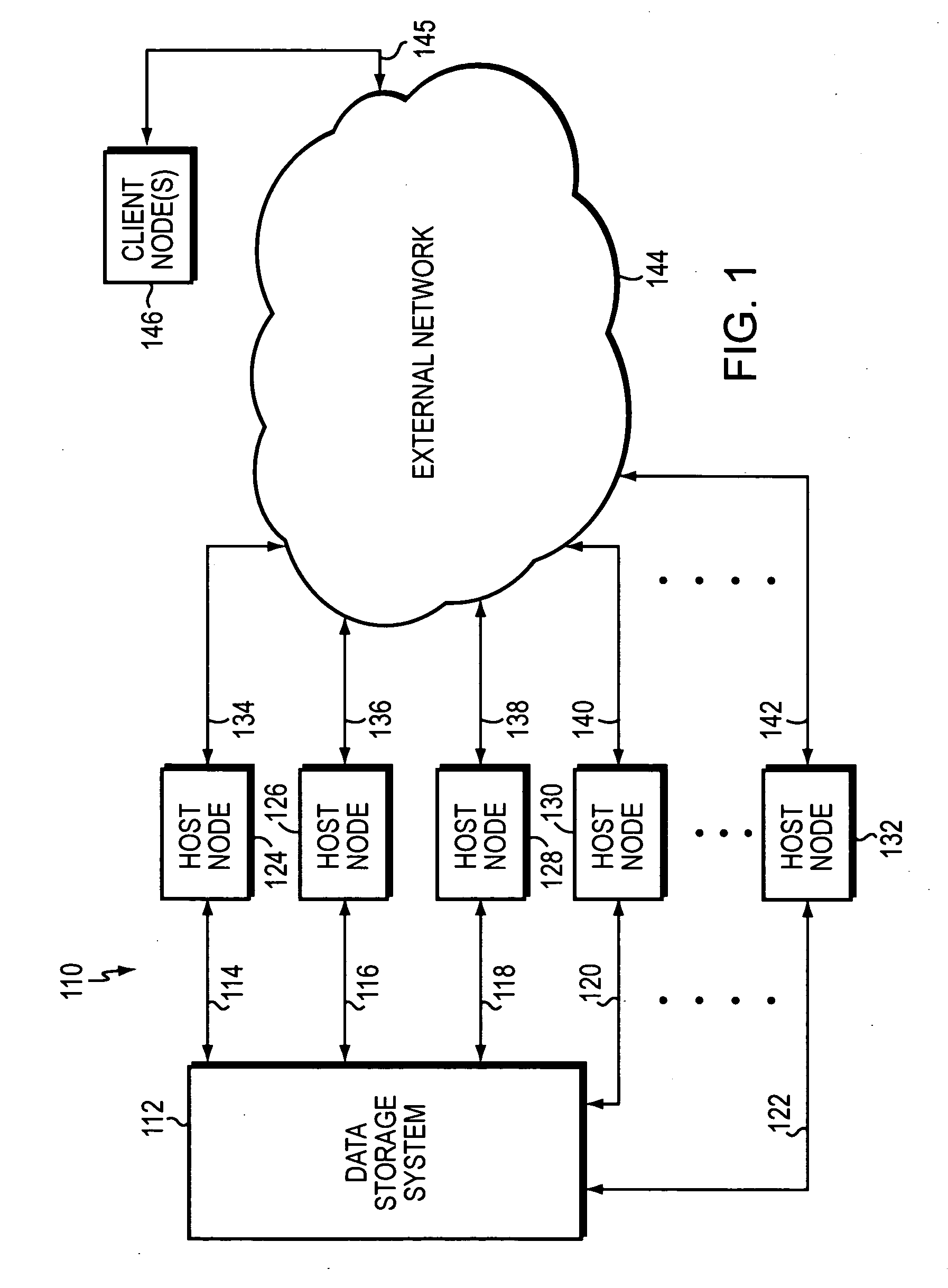

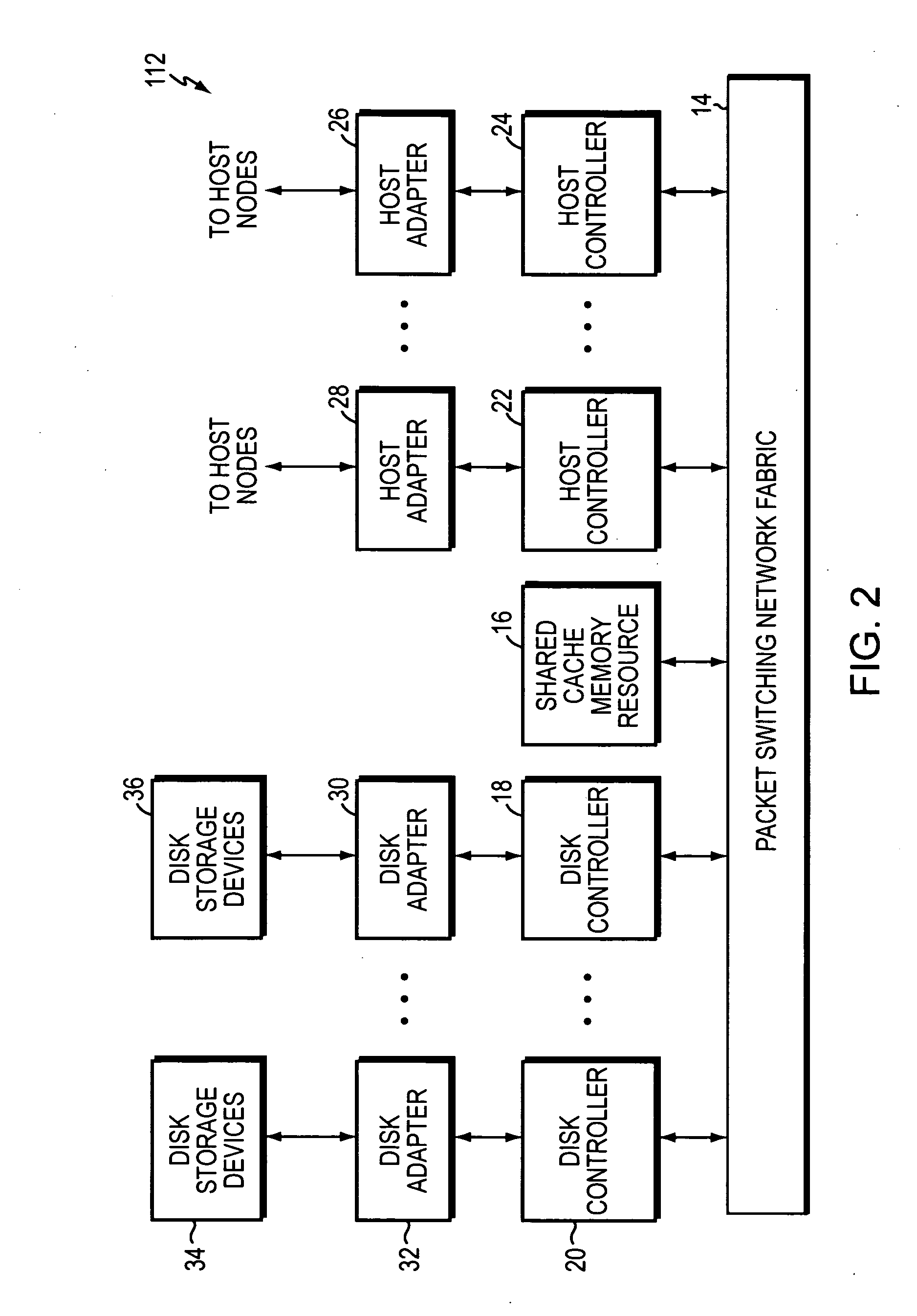

Data storage system having memory controller with embedded CPU

Inactive | US20060236032A1 | Low latency access | Less time | Memory architecture accessing/allocation | Memory systems | Memory controller | Data store

A memory system includes a bank of memory, an interface to a packet switching network, and a memory controller. The memory system is adapted to receive by the interface a packet based command to access the bank of memory. The memory controller is adapted to execute initialization and configuration cycles for the bank of memory. An embedded central processing unit (CPU) is included in the memory controller and is adapted to execute computer executable instructions. The memory controller is adapted to process the packet based command.

Owner:EMC CORP

Storage of data utilizing scheduling queue locations associated with different data rates

Active | US8799535B2 | Reduces large streaming buffer | Improve delivery efficiency | Error detection/correction | Data switching by path configuration | Data segment | Data file

In one example, multimedia content is requested from a plurality of storage modules. Each storage module retrieves the requested parts, which are typically stored on a plurality of storage devices at each storage module. Each storage module determines independently when to retrieve the requested parts of the data file from storage and transmits those parts from storage to a data queue. Based on a capacity of a delivery module and/or the data rate associated with the request, each storage module transmits the parts of the data file to the delivery module. The delivery module generates a sequenced data segment from the parts of the data file received from the plurality of storage modules and transmits the sequenced data segment to the requester.

Owner:AKAMAI TECH INC

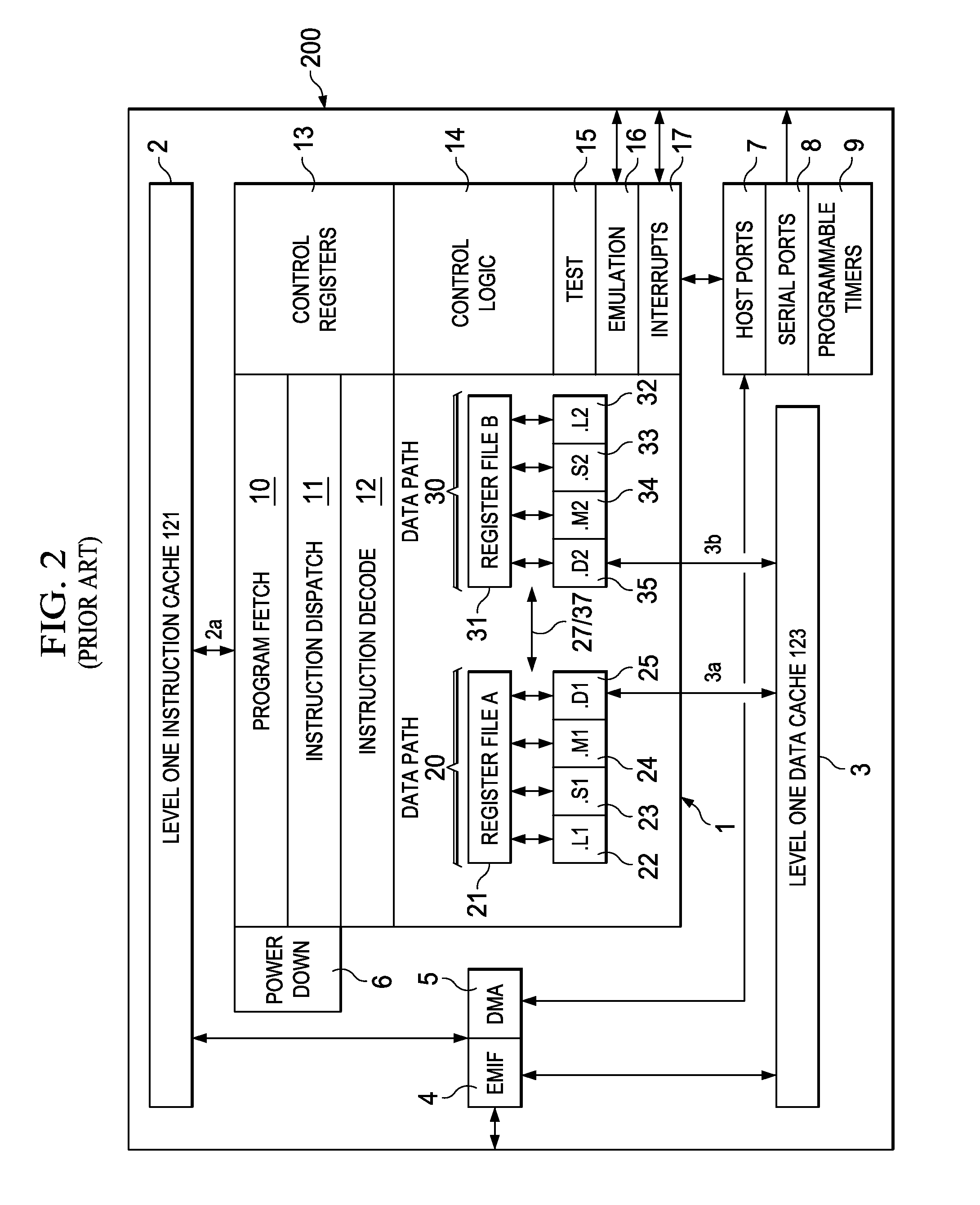

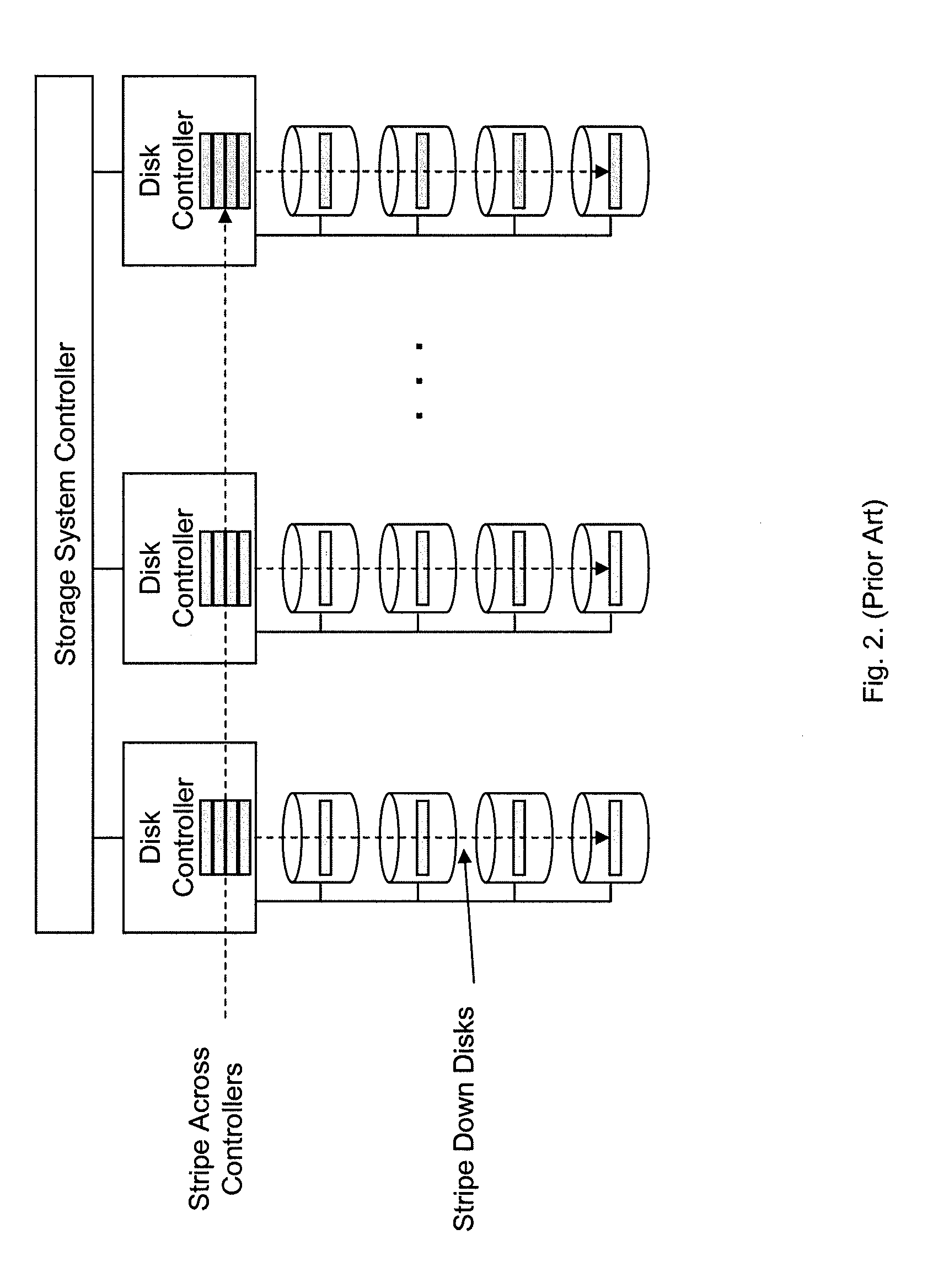

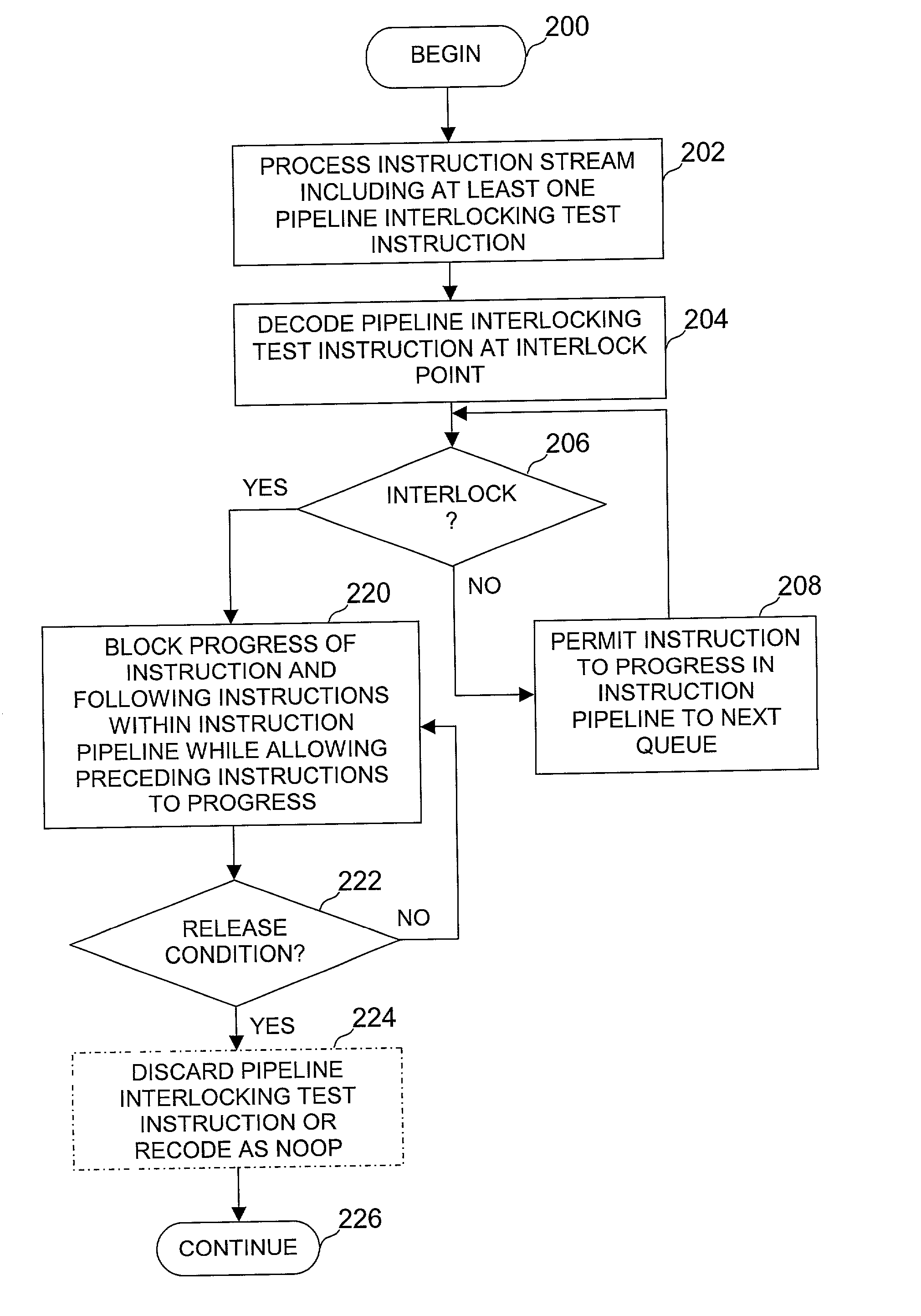

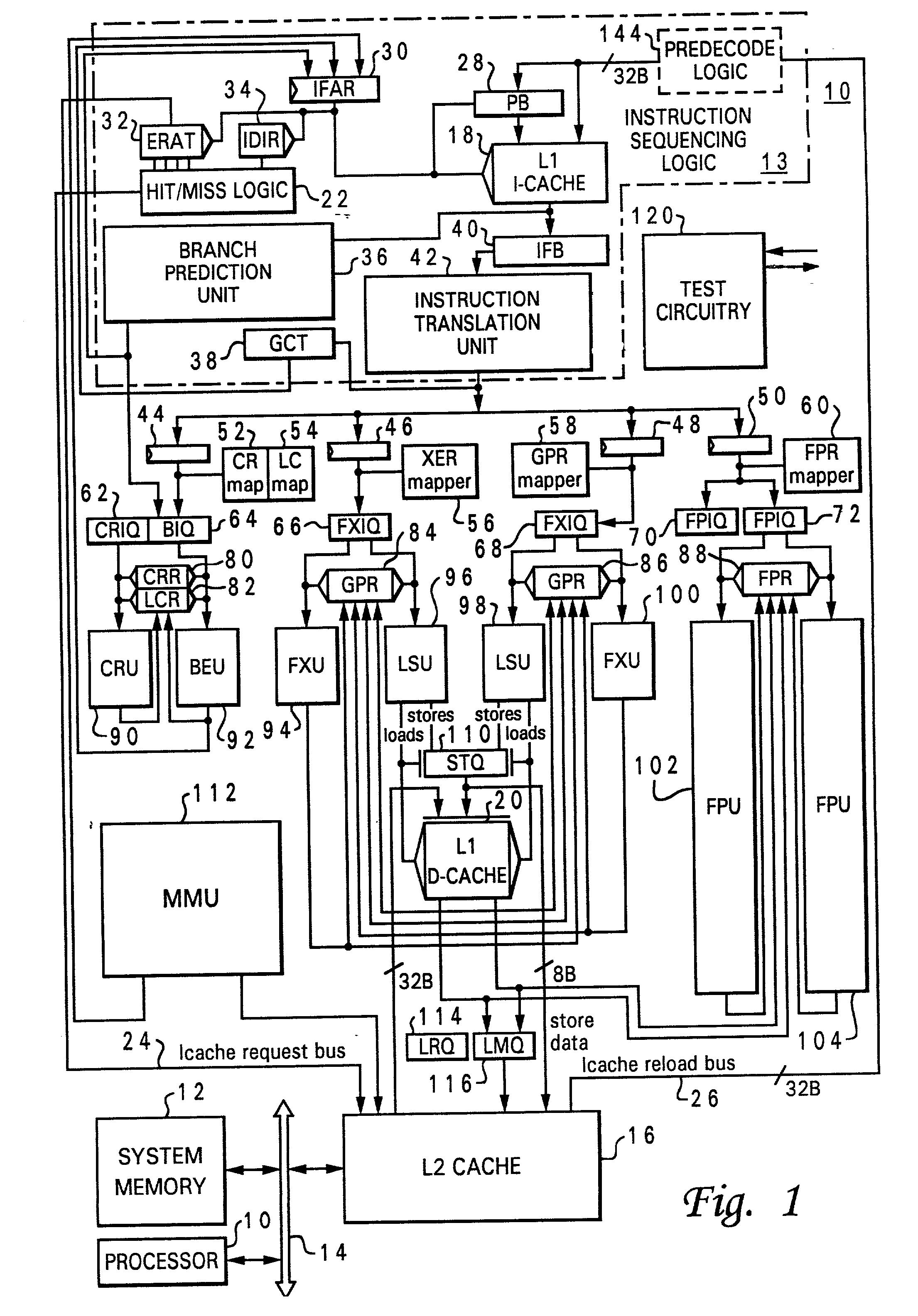

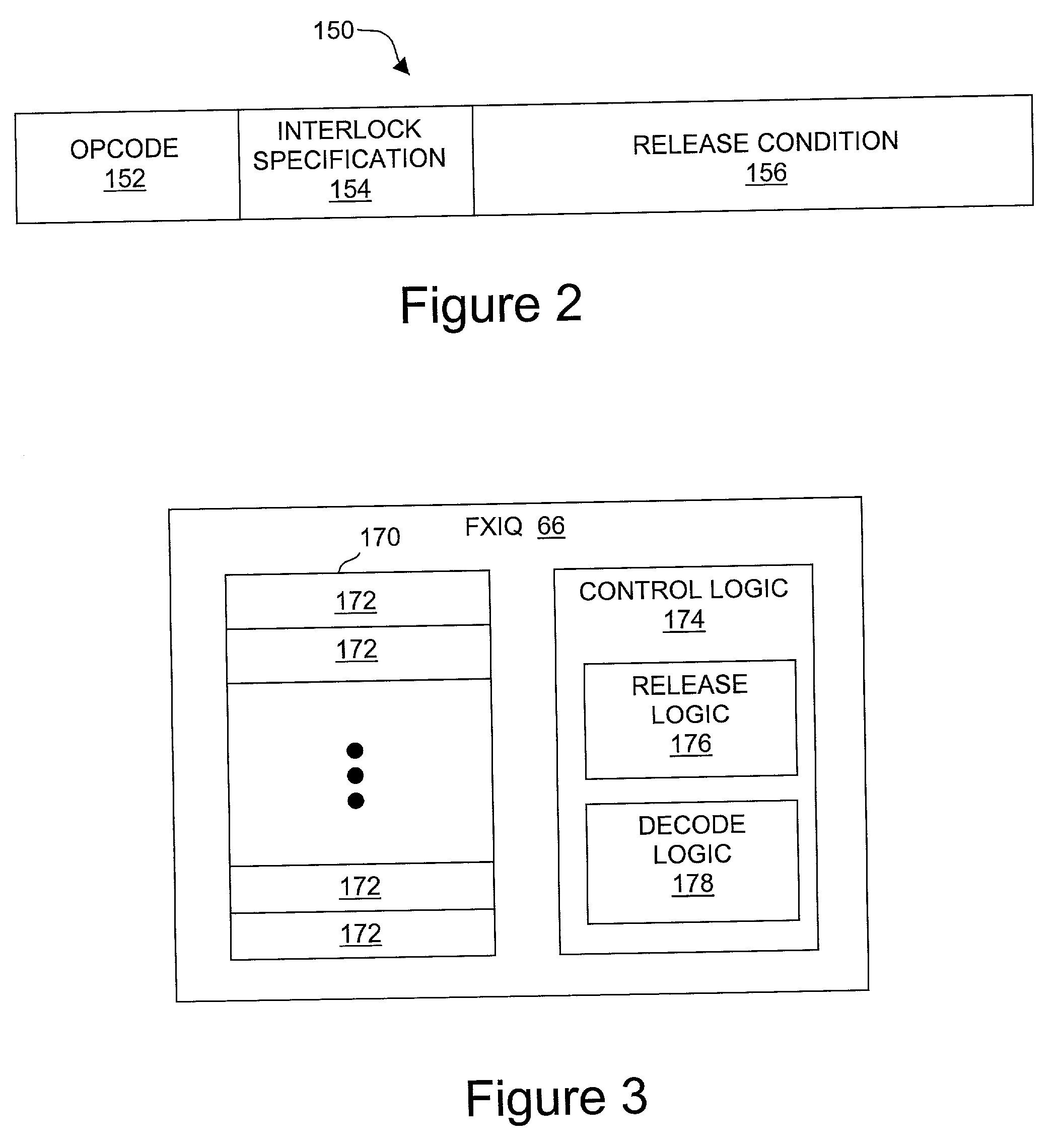

Processor and method of testing a processor for hardware faults utilizing a pipeline interlocking test instruction

Inactive | US20030084273A1 | Low latency access | General purpose stored program computer | Concurrent instruction execution | Instruction pipeline | Control logic

The instruction pipeline of a processor, which includes execution circuitry and instruction sequencing logic, receives a stream of instructions including a pipeline interlocking test instruction. The processor includes pipeline control logic that, responsive to receipt of the test instruction, interlocks the instruction pipeline as specified in the test instruction to prevent advancement of at least one first instruction in the instruction pipeline while permitting advancement of at least one second instruction in the instruction pipeline until occurrence of a release condition also specified by the test instruction. In response to the release condition, the pipeline control logic releases the interlock to enable advancement of said at least one instruction in the instruction pipeline. In this manner, selected instruction pipeline conditions, such as a full queue or synchronization of instructions at certain pipeline stages, which are difficult to induce in a test environment through the execution of conventional instruction sets, can be tested.

Owner:IBM CORP

Apparatuses, methods, and systems for configurable operand size operations in an operation configurable spatial accelerator

Active | US11029958B1 | Easy to adapt | Improve performance | Instruction analysis | Hardware acceleration | Electrical and Electronics engineering

Systems, methods, and apparatuses relating to configurable operand size operation circuitry in an operation configurable spatial accelerator are described. In one embodiment, a hardware accelerator includes a plurality of processing elements, a network between the plurality of processing elements to transfer values between the plurality of processing elements, and a first processing element of the plurality of processing elements including a first plurality of input queues having a multiple bit width coupled to the network, at least one first output queue having the multiple bit width coupled to the network, configurable operand size operation circuitry coupled to the first plurality of input queues, and a configuration register within the first processing element to store a configuration value that causes the configurable operand size operation circuitry to switch to a first mode for a first multiple bit width from a plurality of selectable multiple bit widths of the configurable operand size operation circuitry, perform a selected operation on a plurality of first multiple bit width values from the first plurality of input queues in series to create a resultant value, and store the resultant value in the at least one first output queue.

Owner:INTEL CORP

Systems and methods for hosting an in-memory database

Inactive | CN106164897A | Reduce the burden on | Improve recall | Special data processing applications | In-memory database | Data mining

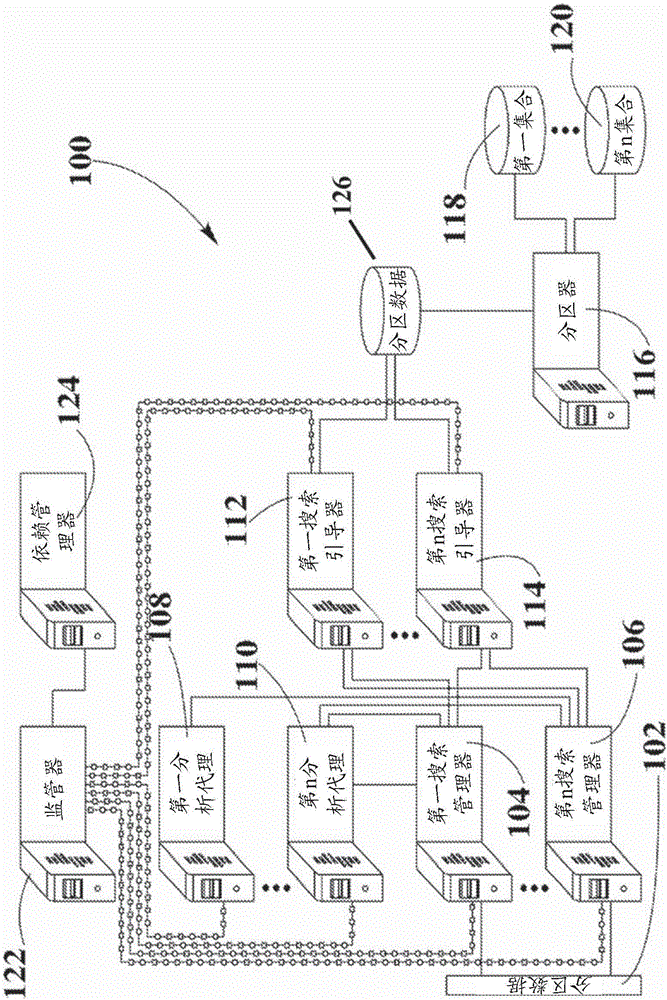

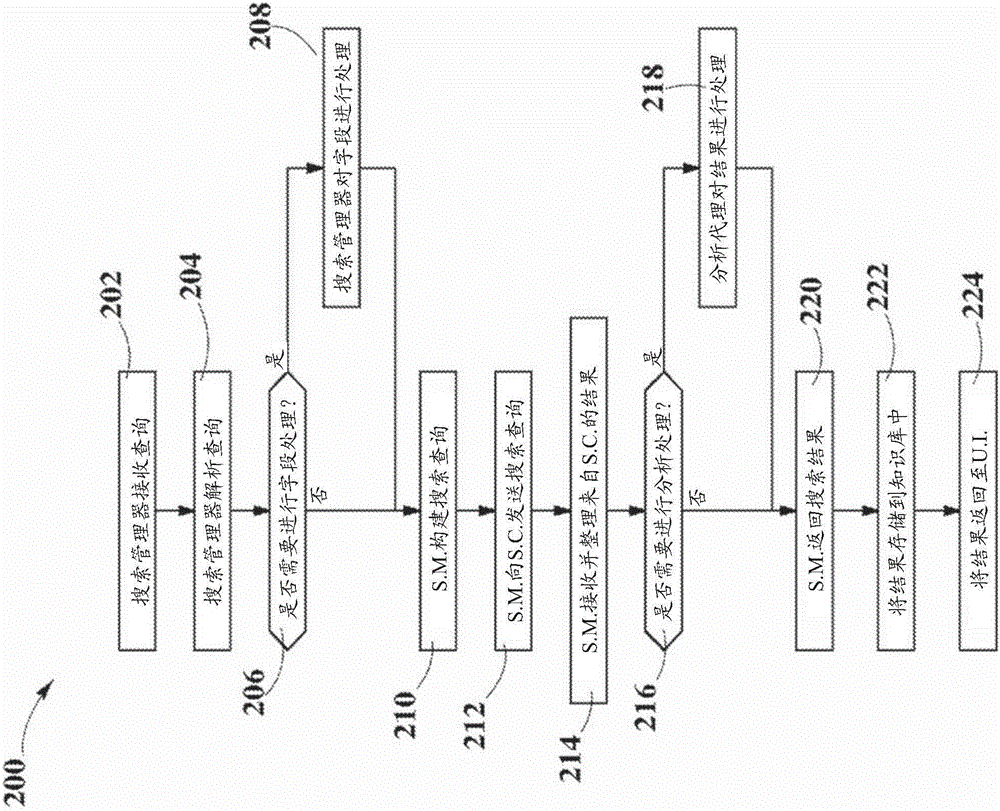

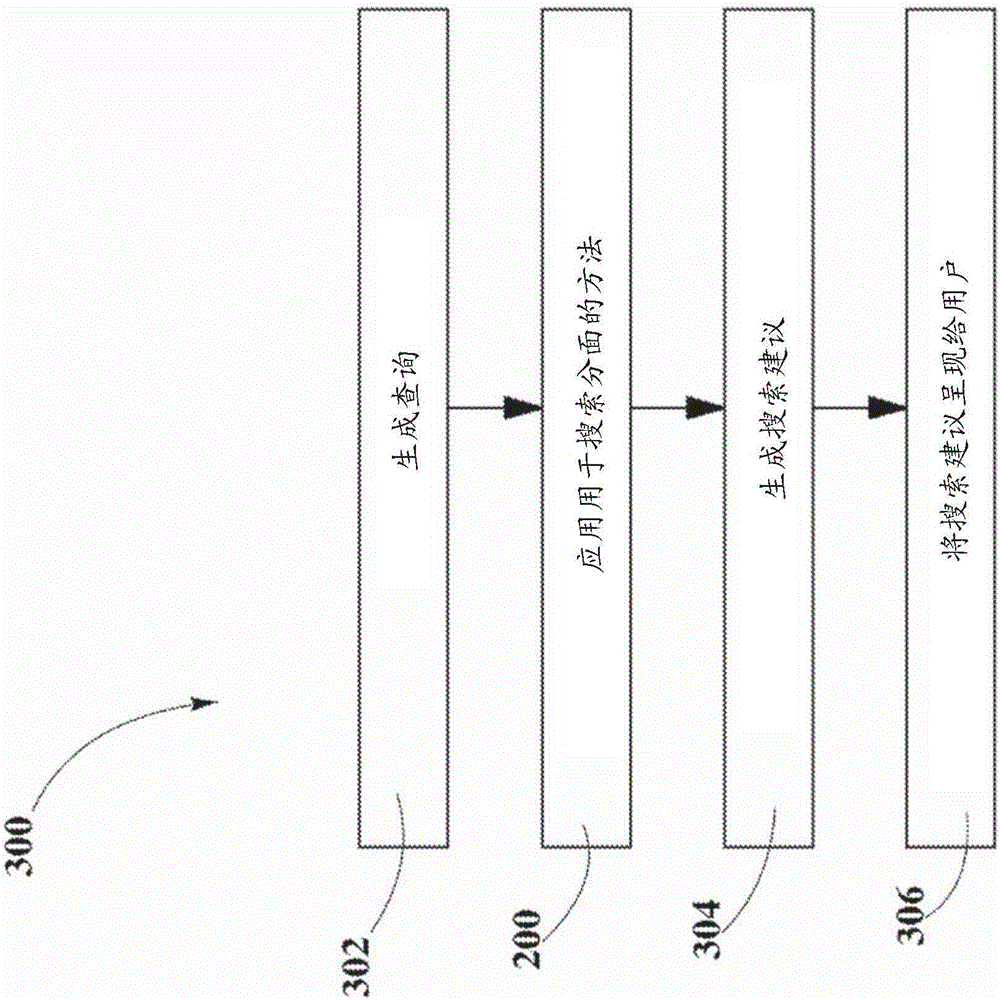

An in-memory database system and method for administrating a distributed in-memory database, comprising one or more nodes having modules configured to store and distribute database partitions of collections partitioned by a partitioner associated with a search conductor, is disclosed. Database collections are partitioned according to a schema. Partitions, collections, and records are updated and removed when requested by a system interface, according to the schema. Supervisors determine a node status based on a heartbeat signal received from each node. Users can send queries through a system interface to search managers. Search managers apply a field processing technique, forward the search query to search conductors, and return a set of result records to the analytics agents. Analytics agents perform analytics processing on candidate result records from a search manager. The search conductors, comprising partitioners associated with a collection, search and score the records in a partition, then return candidate result records.

Owner:QBASE LLC

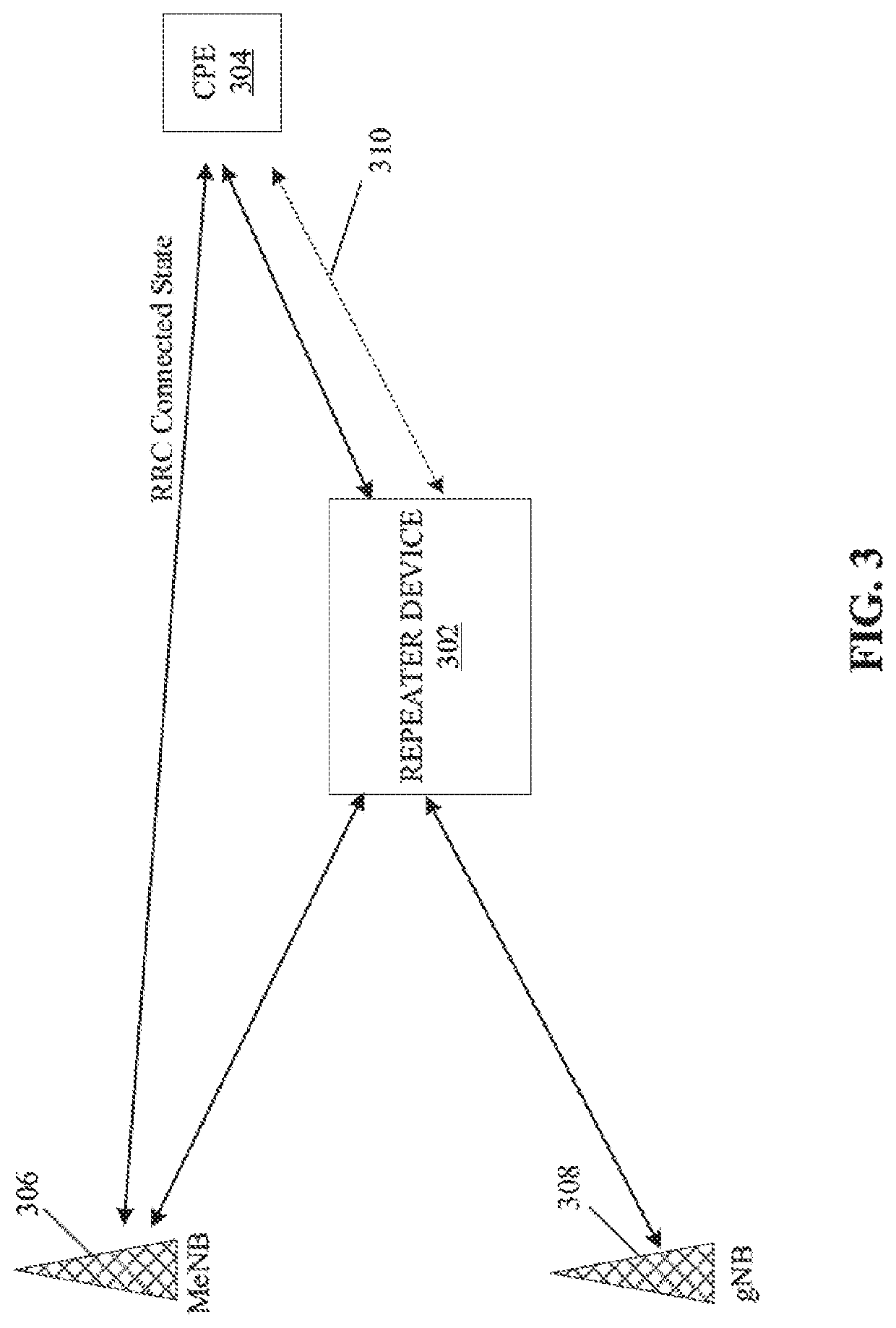

Communication device and method for low-latency initial access to non-standalone 5g new radio network

Active | US20200344739A1 | Low-latency access | Synchronisation arrangement | Transmission path division | Signal wave | Radio networks

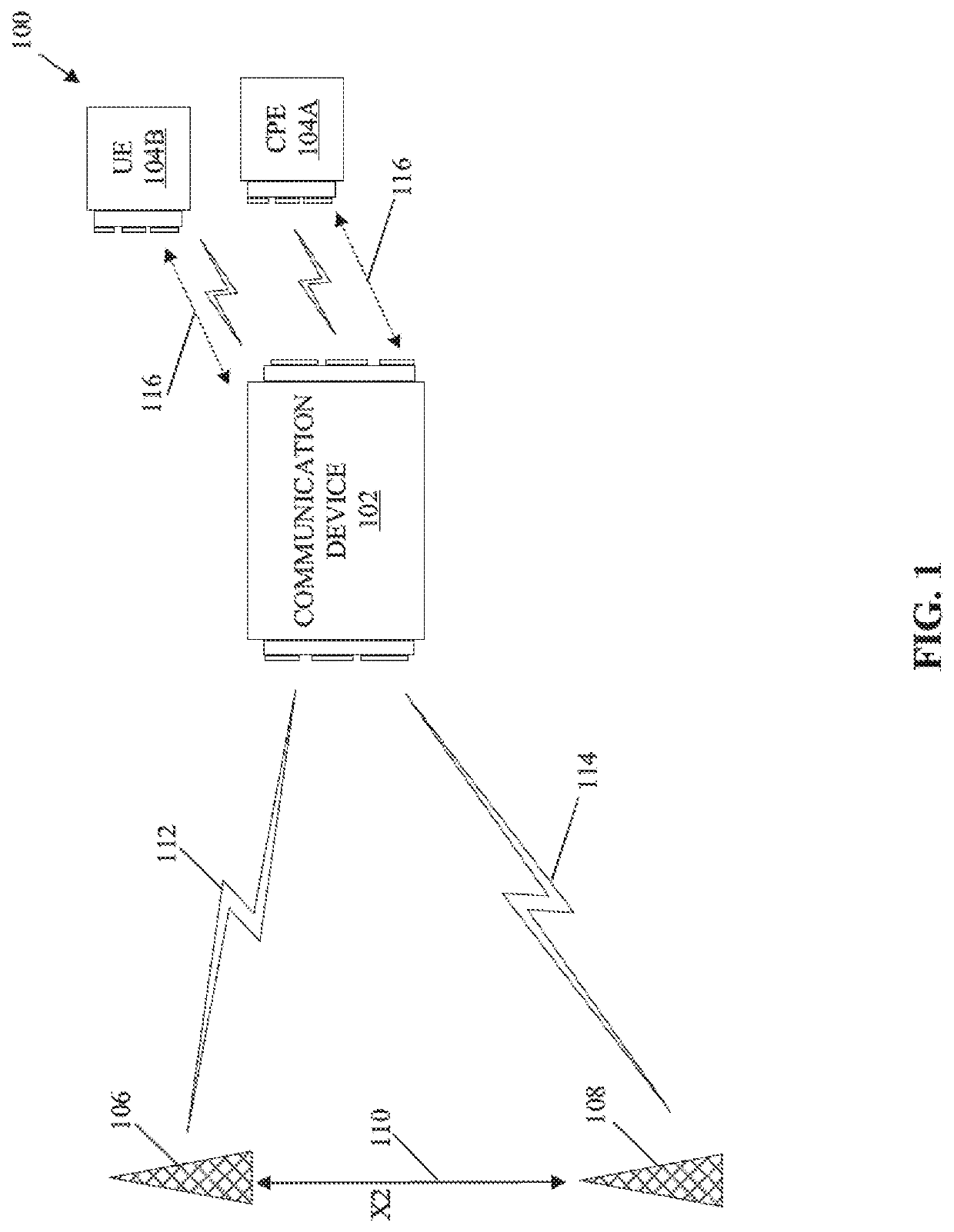

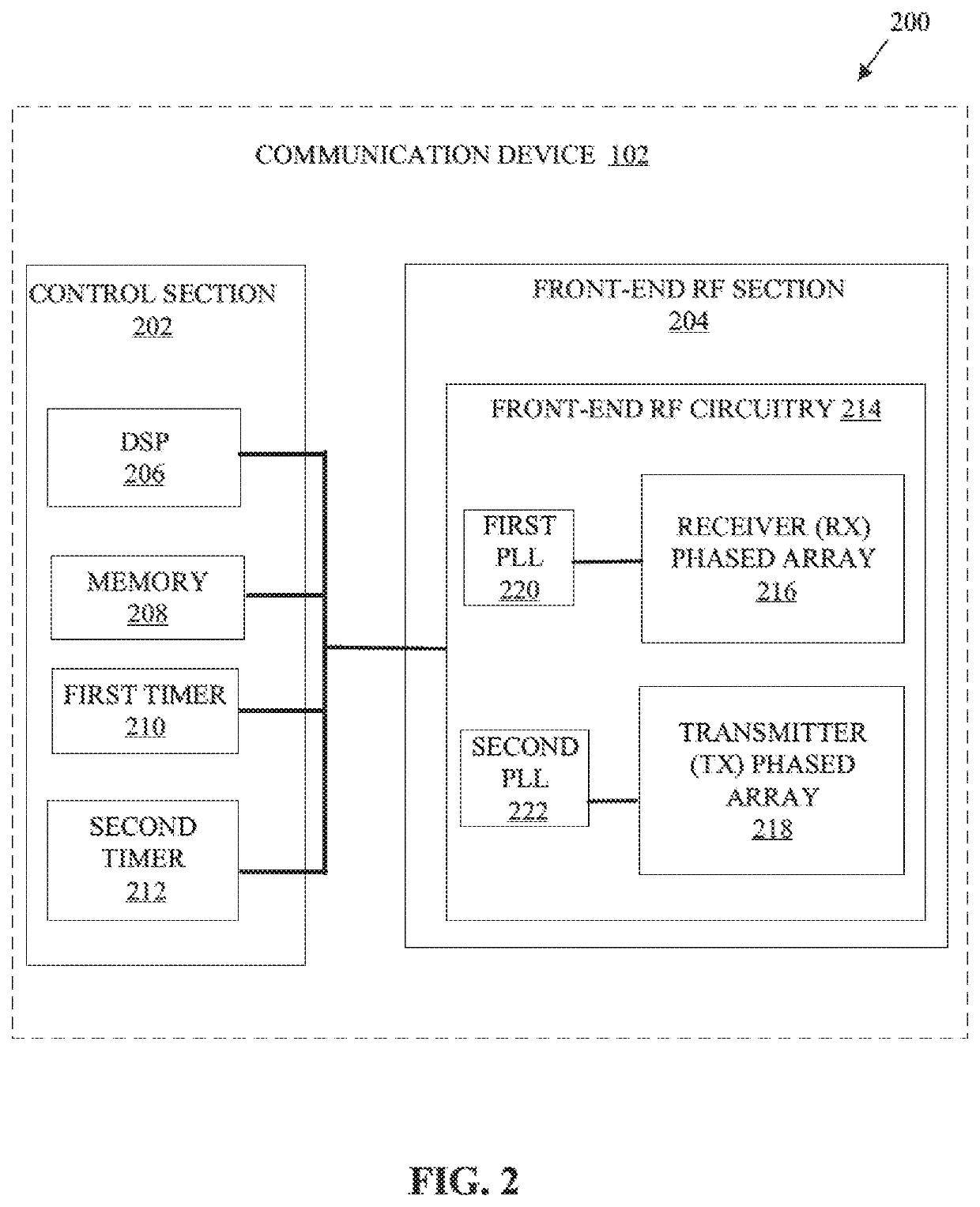

A communication device initiates beam acquisition in a receive-only mode. Beam reception is set to an omni mode in which different beams of RF signals are receivable at the communication device from different directions. A primary signal synchronization (PSS) search is executed from each signal synchronization block location based on control information acquired directly from a first base station over a long-term evolution (LTE) control plane link or from a customer premise equipment (CPE) or user equipment (UE) that is in a radio resource control (RRC) connected state. The communication of the beam of RF signals that has the highest received signal strength is activated in a new radio (NR) frequency to the UE or the CPE based on at least a PSS detected in the PSS search, for low-latency non-standalone access to the beam of RF signals in NR frequency at the UE or the CPE.

Owner:MOVANDI CORP

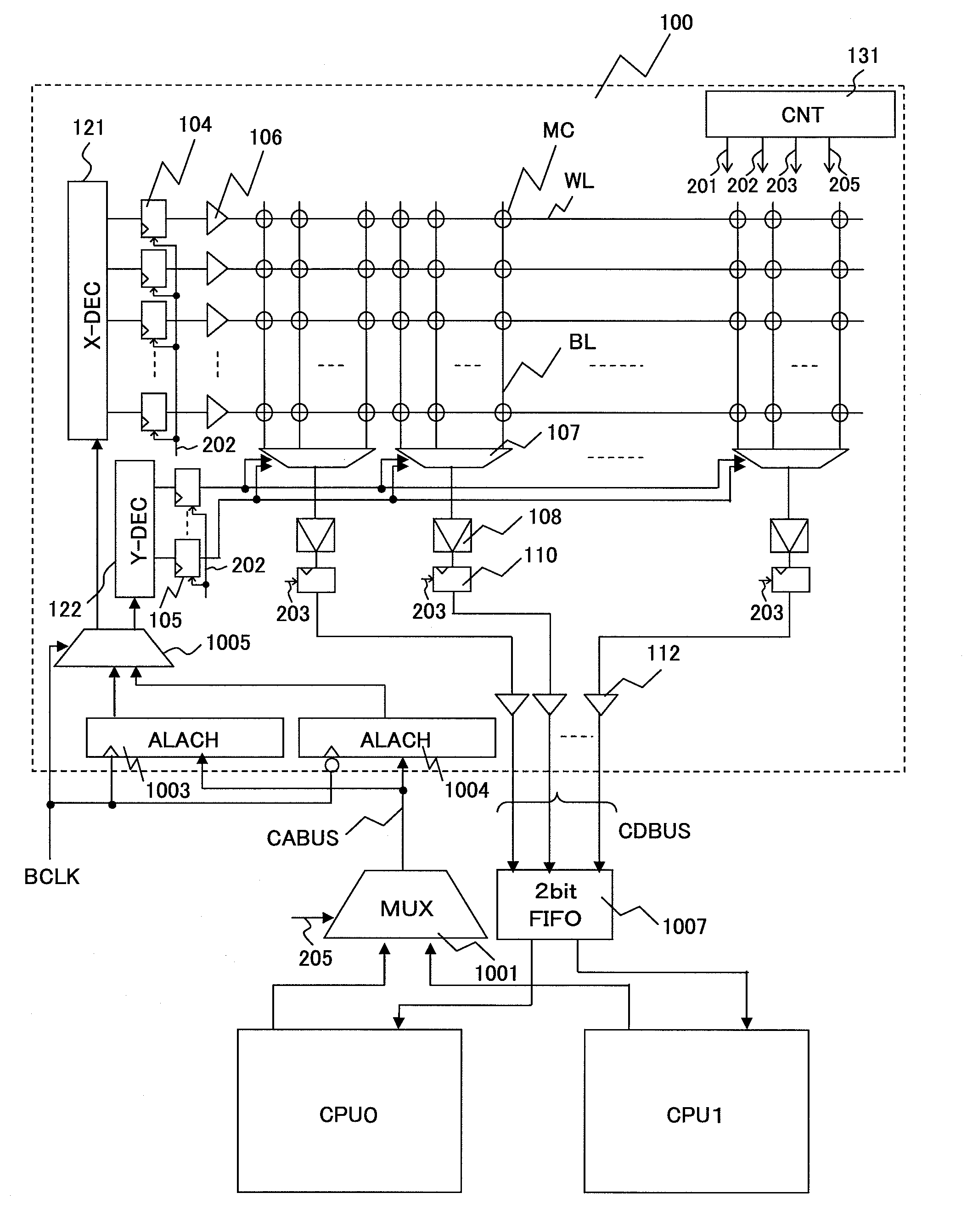

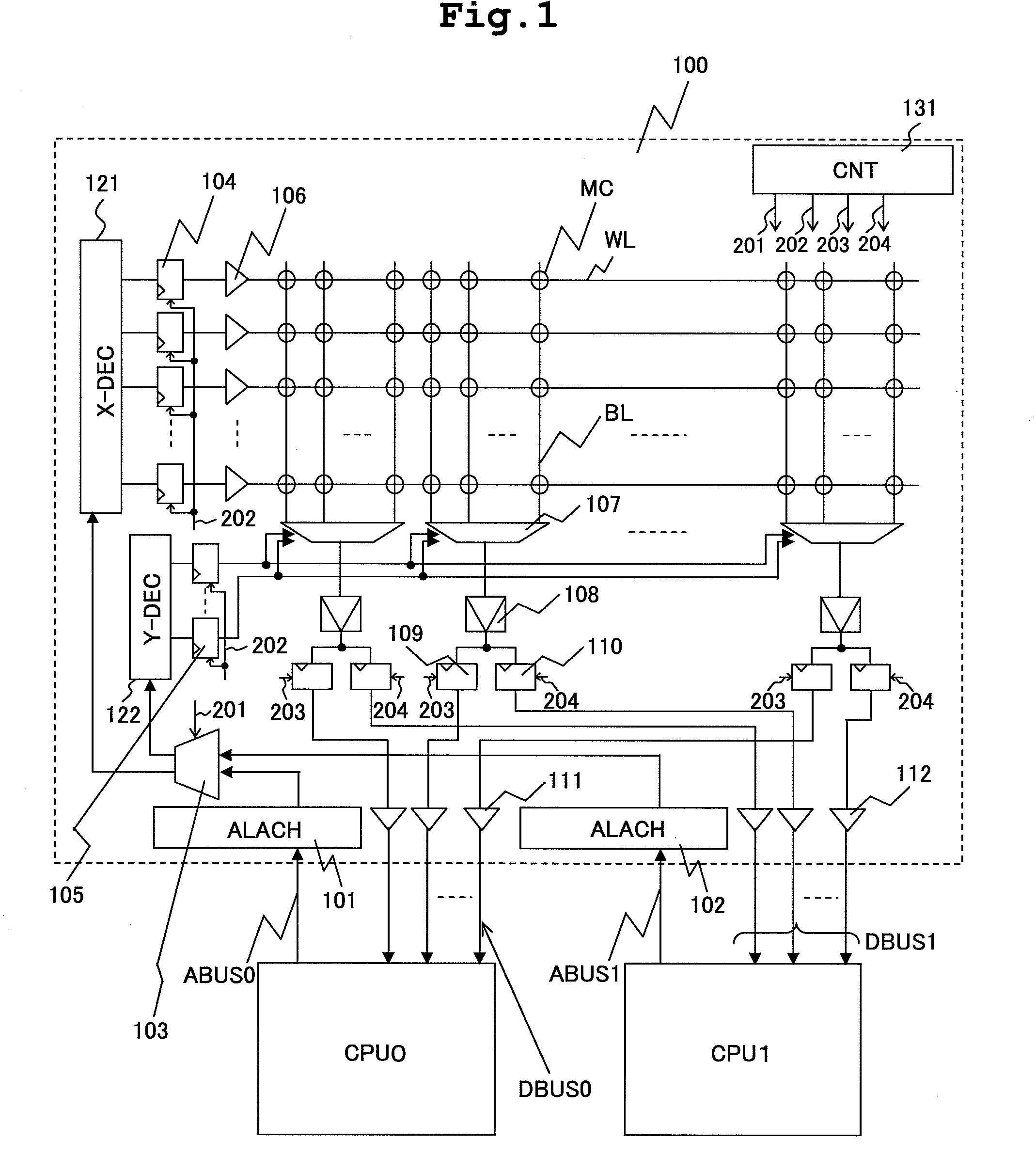

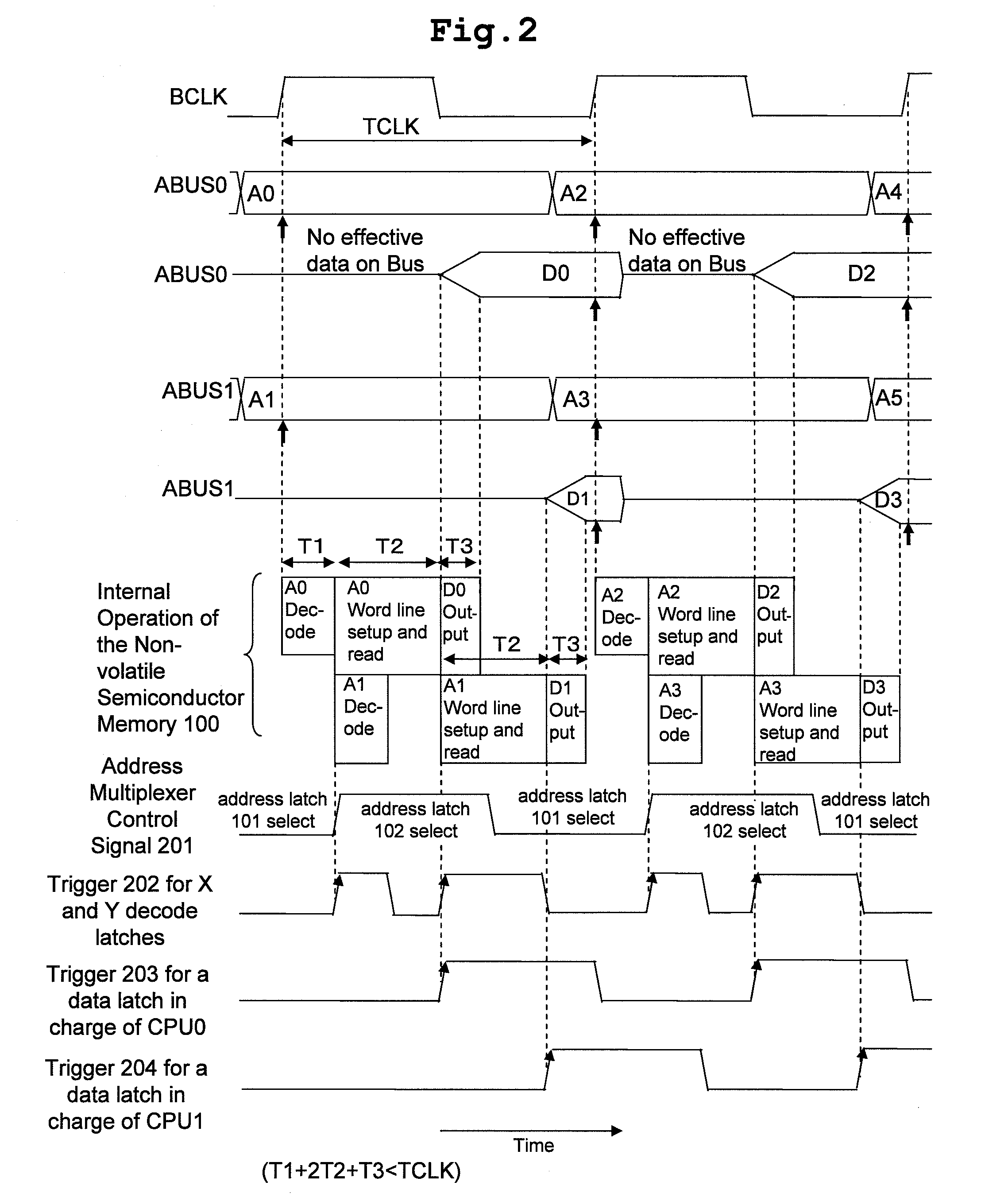

Semiconductor integrated circuit device

Inactive | US20090129173A1 | Low-latency access | Read-only memories | Digital storage | Sense amplifier | Integrated circuit

The semiconductor integrated circuit device includes: a first latch which can hold an output signal of the X decoder and transfer the signal to the word driver in a post stage subsequent to the X decoder; a second latch which can hold an output signal of the Y decoder and transfer the signal to the column multiplexer in the post stage subsequent to the Y decoder; and a third latch which can hold an output signal of the sense amplifier and transfer the signal to the output buffer in the post stage subsequent to the sense amplifier. The structure makes it possible to pipeline-control a series of processes for reading data stored in the non-volatile semiconductor memory, and enables low-latency access even when access requests from CPUs conflict.

Owner:RENESAS ELECTRONICS CORP

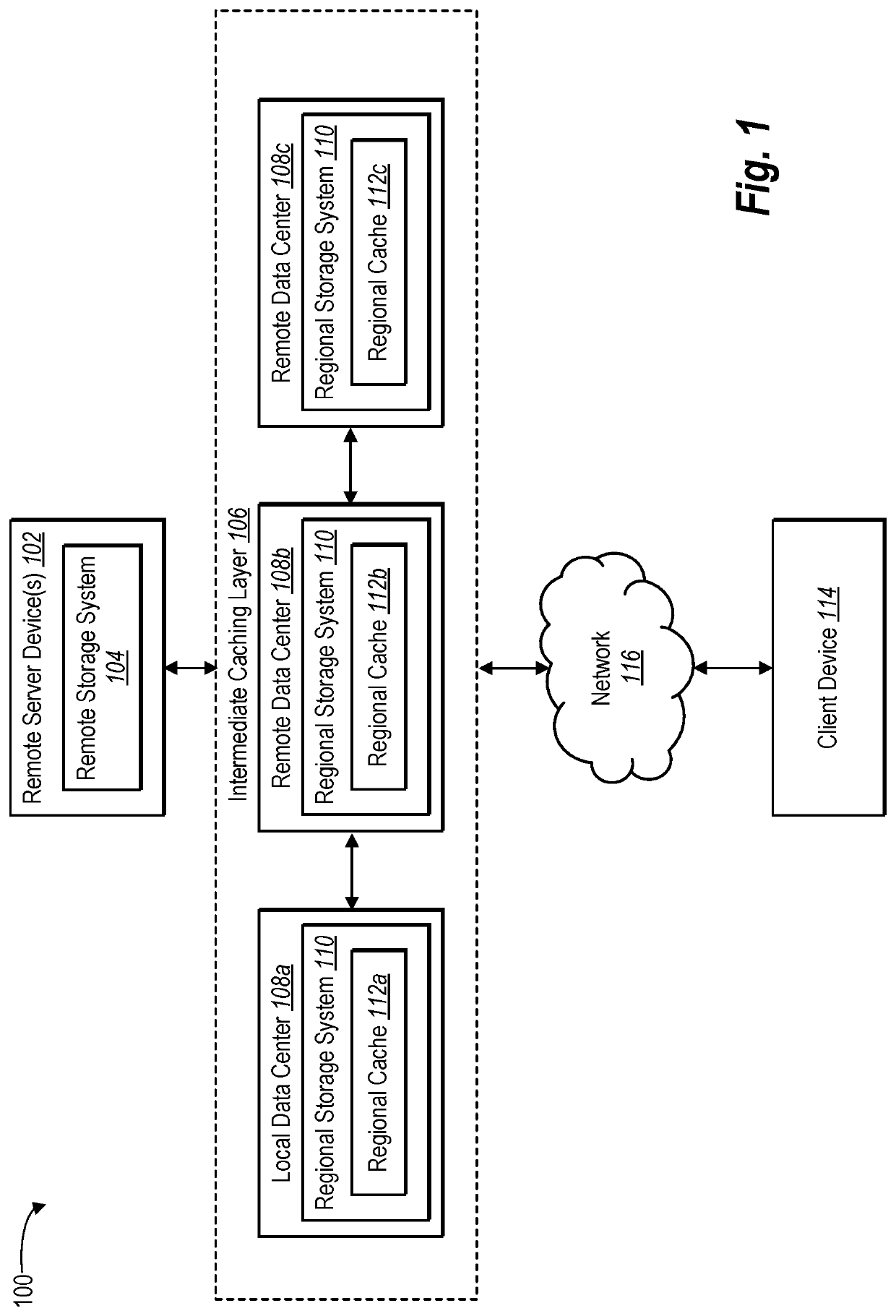

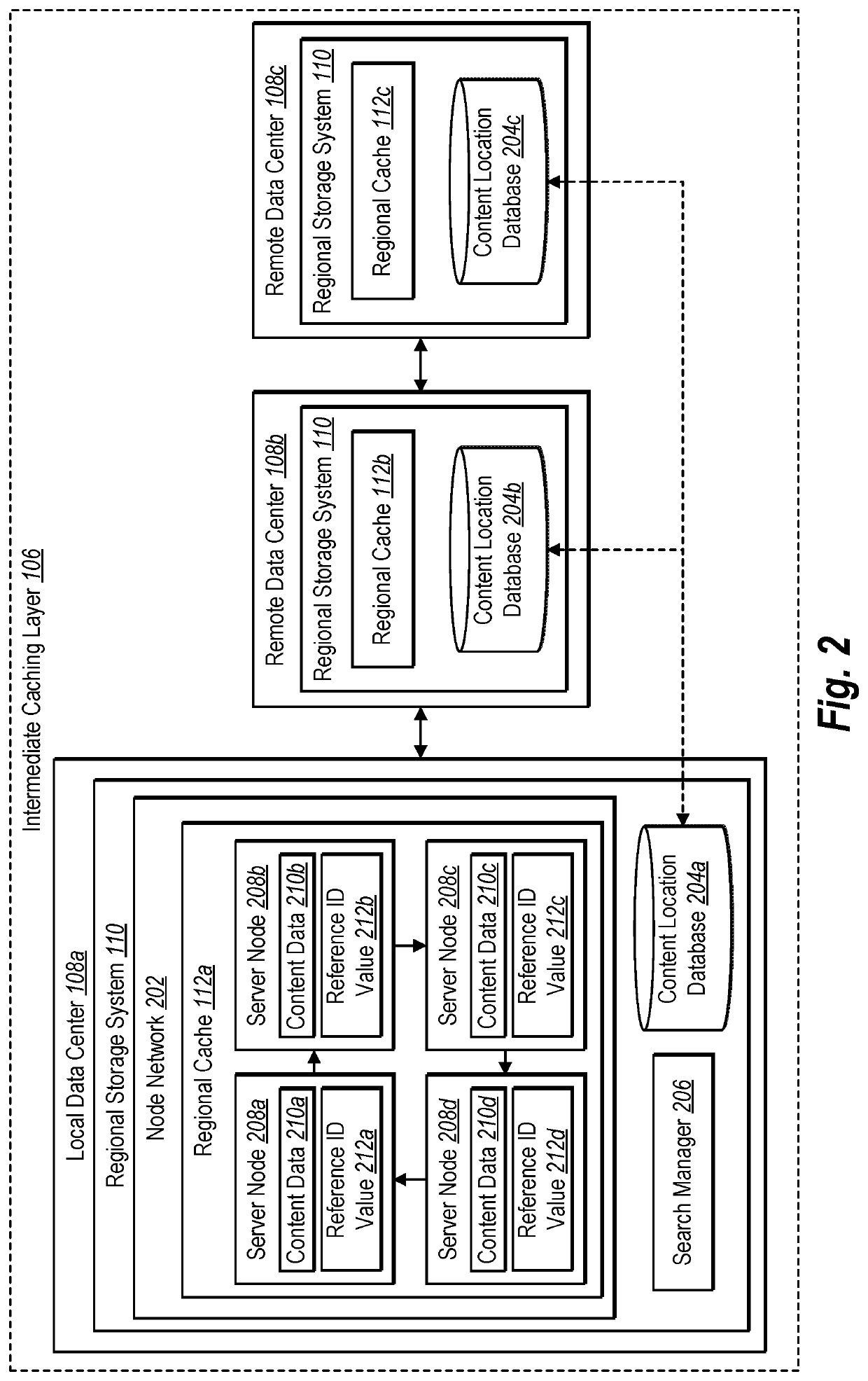

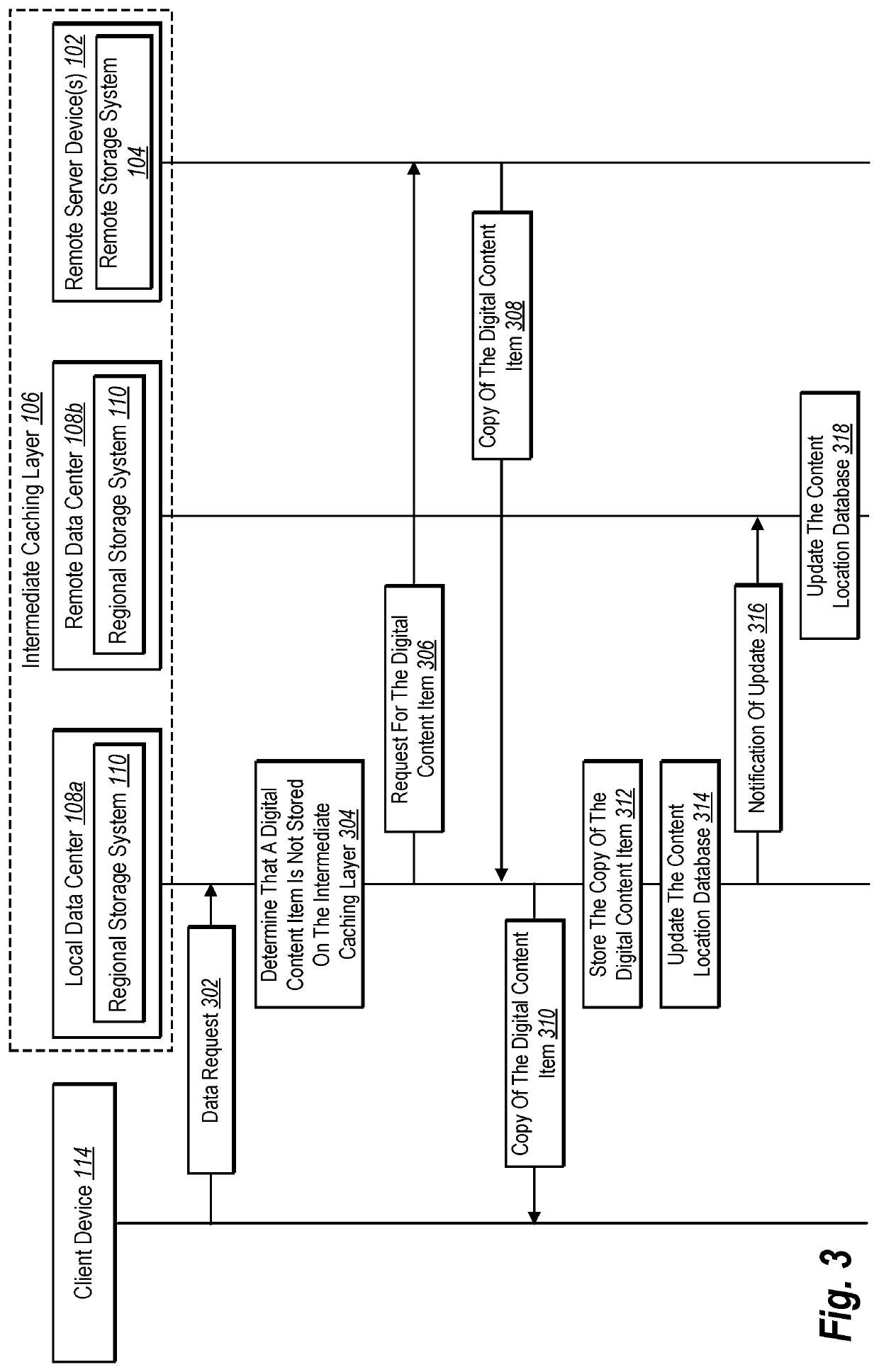

Generating and providing low-latency cached content

Active | US20200034050A1 | Facilitate access | Easy access | Memory architecture accessing/allocation | Input/output to record carriers | Client | Data request

Embodiments of the present disclosure relate to facilitating efficient access to electronic content via an intermediate caching layer between a client device and remote storage system. In particular, systems and methods disclosed herein generate and maintain a regional cache on a data center that includes a subset of digital content items from a collection of digital content items stored on the remote storage system. For example, in response to receiving a data request, the systems and methods disclosed herein determine whether a digital content item corresponding to the data request exists on an intermediate caching layer including both a local data center and one or more remote data centers. The systems and methods facilitate obtaining copies of requested digital content items from the regional caches when available, resulting in faster responses to data requests while decreasing a number of times a client directly accesses the remote storage system.

Owner:QUALTRICS
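
The lookup order implied by the abstract above (the regional cache at the local data center, then caches at remote data centers, and only then the remote storage system) can be sketched as a tiered read path. The Python below is a minimal illustration under assumptions of its own: `TieredCache`, the dictionary-backed tiers, the source labels, and the choice to populate the local cache on every miss are hypothetical and are not described in the abstract.

```python
class TieredCache:
    """Hypothetical read path: local cache, remote caches, then origin storage."""

    def __init__(self, local, remotes, origin):
        self.local = local          # regional cache at the local data center
        self.remotes = remotes      # regional caches at other data centers
        self.origin = origin        # remote storage system holding everything
        self.origin_reads = 0       # how often the origin had to be accessed

    def get(self, key):
        """Return (value, source) where source names the tier that served it."""
        if key in self.local:
            return self.local[key], "local"
        for remote in self.remotes:
            if key in remote:
                # Assumption: copy the item locally so the next read is faster.
                self.local[key] = remote[key]
                return remote[key], "remote-cache"
        self.origin_reads += 1
        value = self.origin[key]
        self.local[key] = value
        return value, "origin"


origin = {"doc1": b"A", "doc2": b"B"}
cache = TieredCache(local={}, remotes=[{"doc1": b"A"}], origin=origin)
print(cache.get("doc1"))   # served by a remote data center cache
print(cache.get("doc1"))   # now served locally
print(cache.get("doc2"))   # falls through to the remote storage system
```

Counting `origin_reads` makes the benefit named in the abstract measurable: fewer direct accesses to the remote storage system as the caches warm up.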

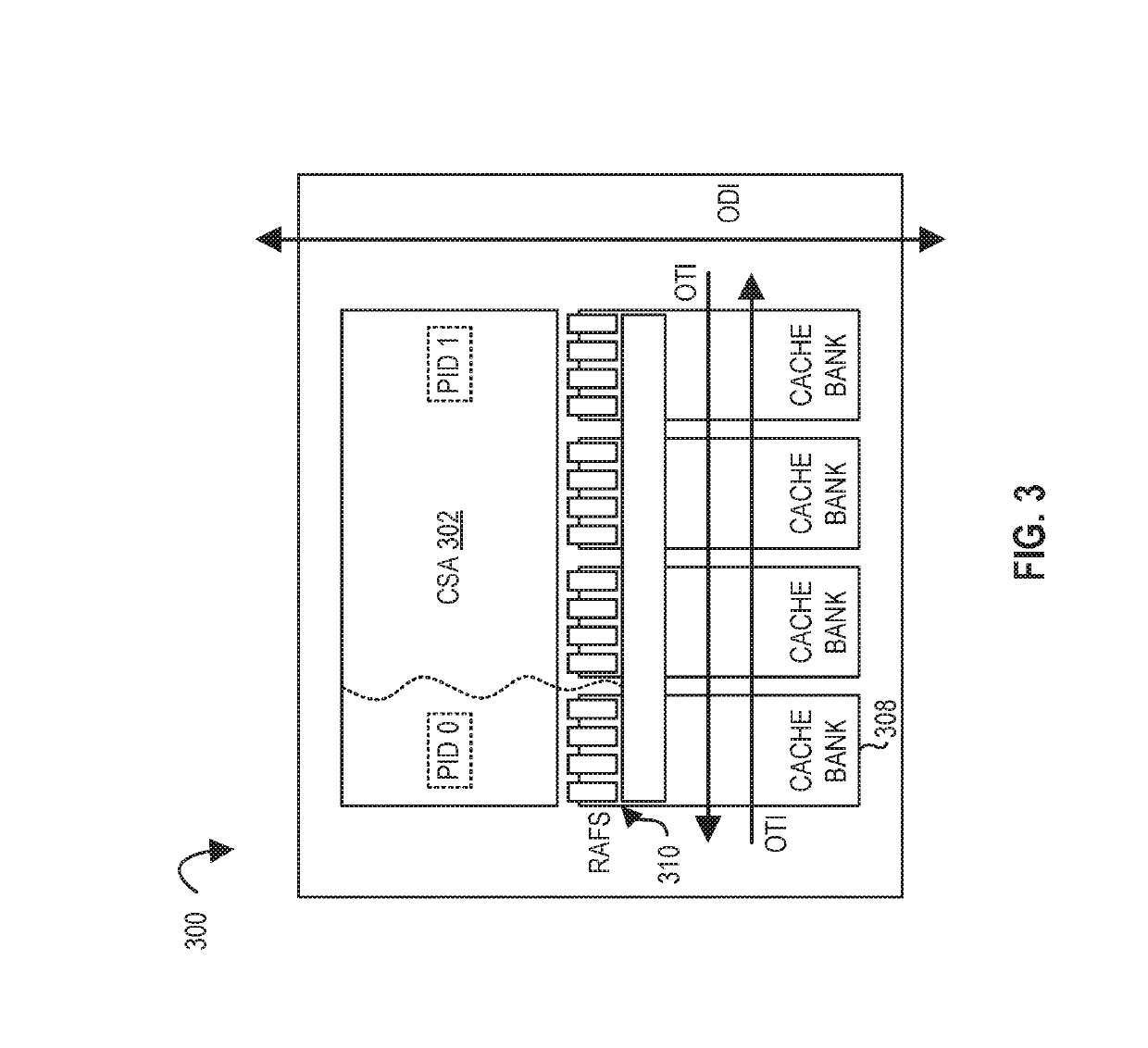

Apparatuses, methods, and systems for a configurable accelerator having dataflow execution circuits

Pending | US20220100680A1 | Easy to adapt | Improve performance | Energy efficient computing | Electric digital data processing | Data stream | Data memory

Systems, methods, and apparatuses relating to a configurable accelerator having dataflow execution circuits are described. In one embodiment, a hardware accelerator includes a plurality of dataflow execution circuits that each comprise a register file, a plurality of execution circuits, and a graph station circuit comprising a plurality of dataflow operation entries that each include a respective ready field that indicates when an input operand for a dataflow operation is available in the register file, and the graph station circuit is to select for execution a first dataflow operation entry when its input operands are available, and clear ready fields of the input operands in the first dataflow operation entry when a result of the execution is stored in the register file; a cross dependence network coupled between the plurality of dataflow execution circuits to send data between the plurality of dataflow execution circuits according to a second dataflow operation entry; and a memory execution interface coupled between the plurality of dataflow execution circuits and a cache bank to send data between the plurality of dataflow execution circuits and the cache bank according to a third dataflow operation entry.

Owner:INTEL CORP

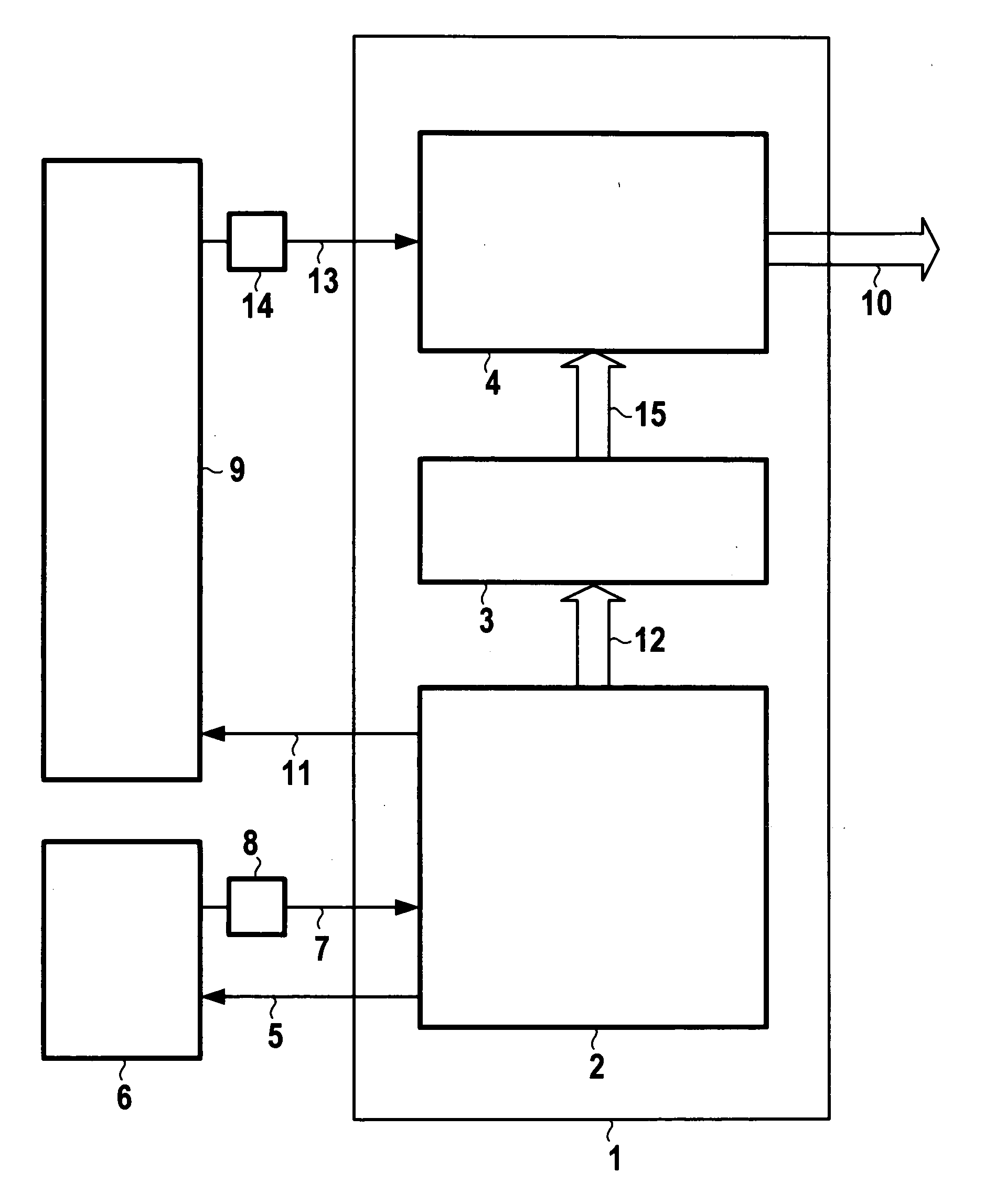

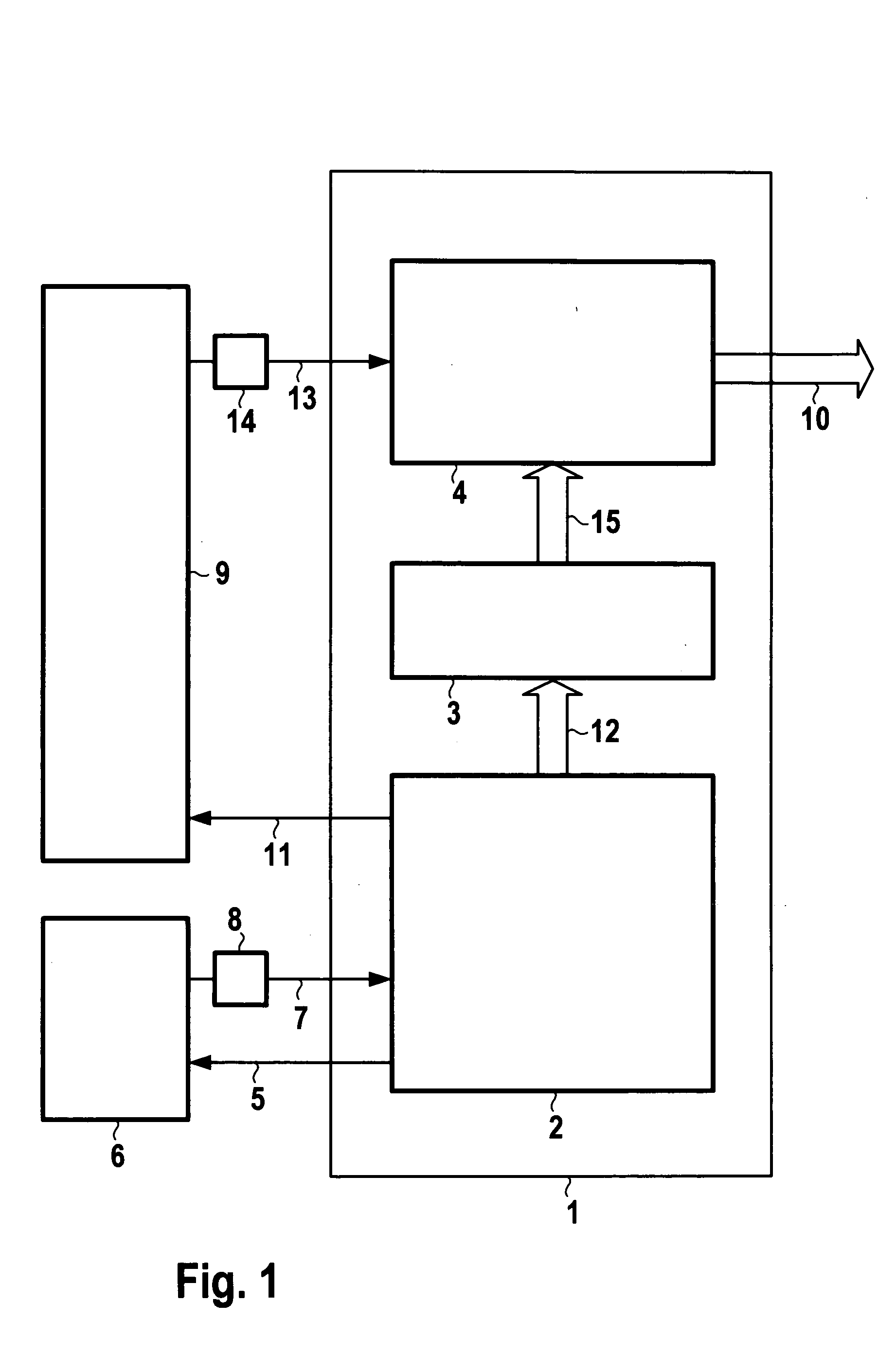

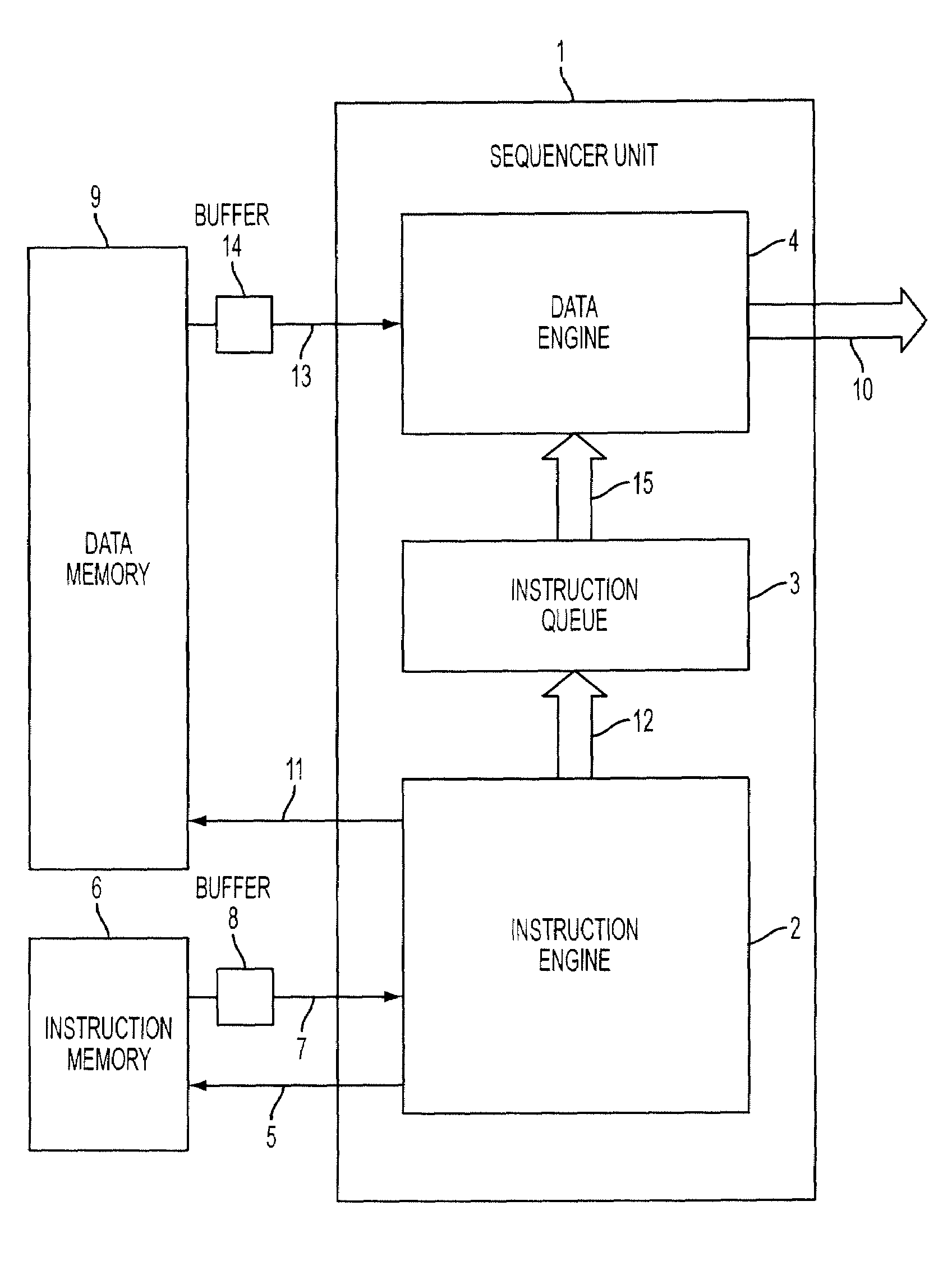

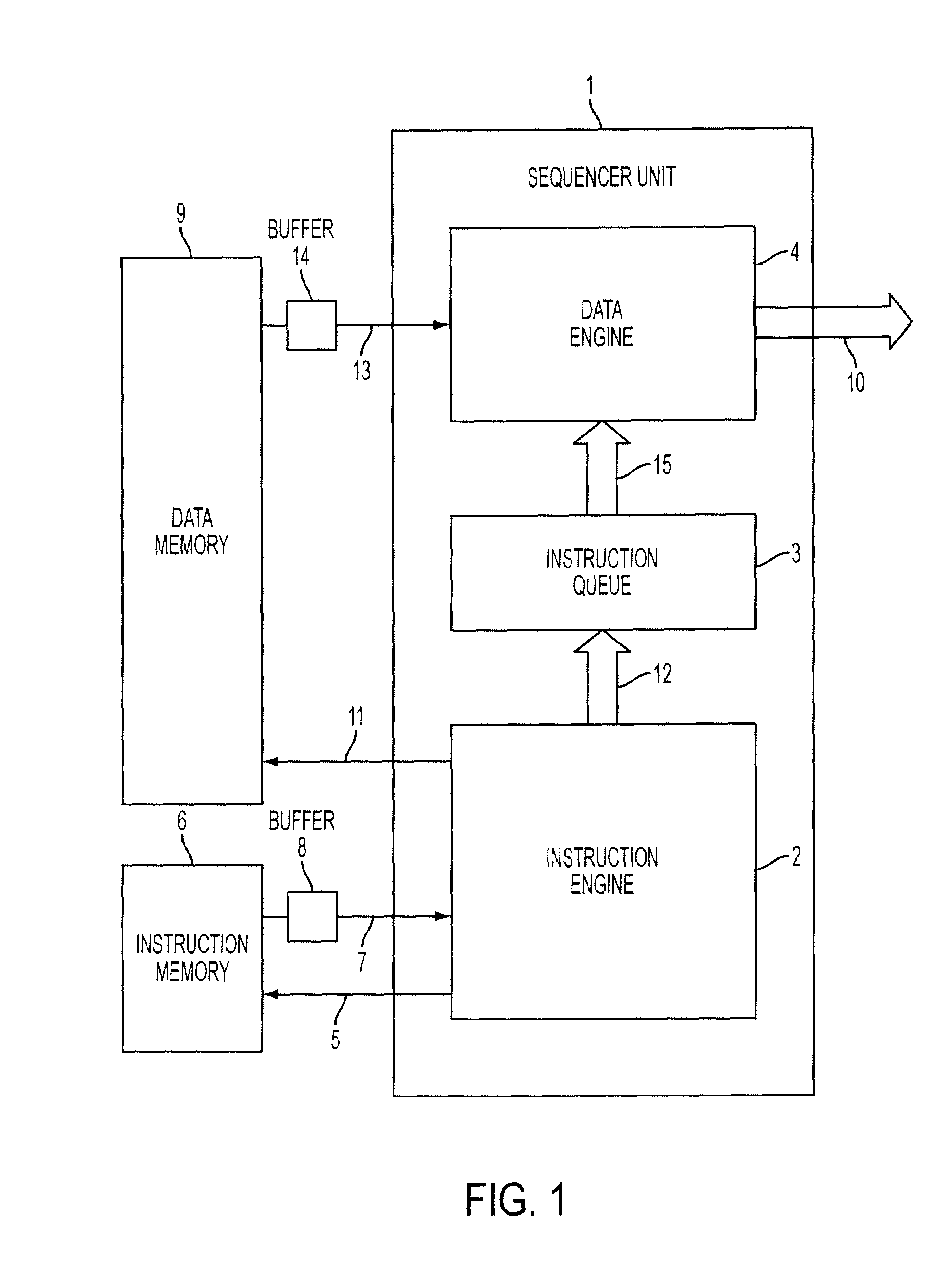

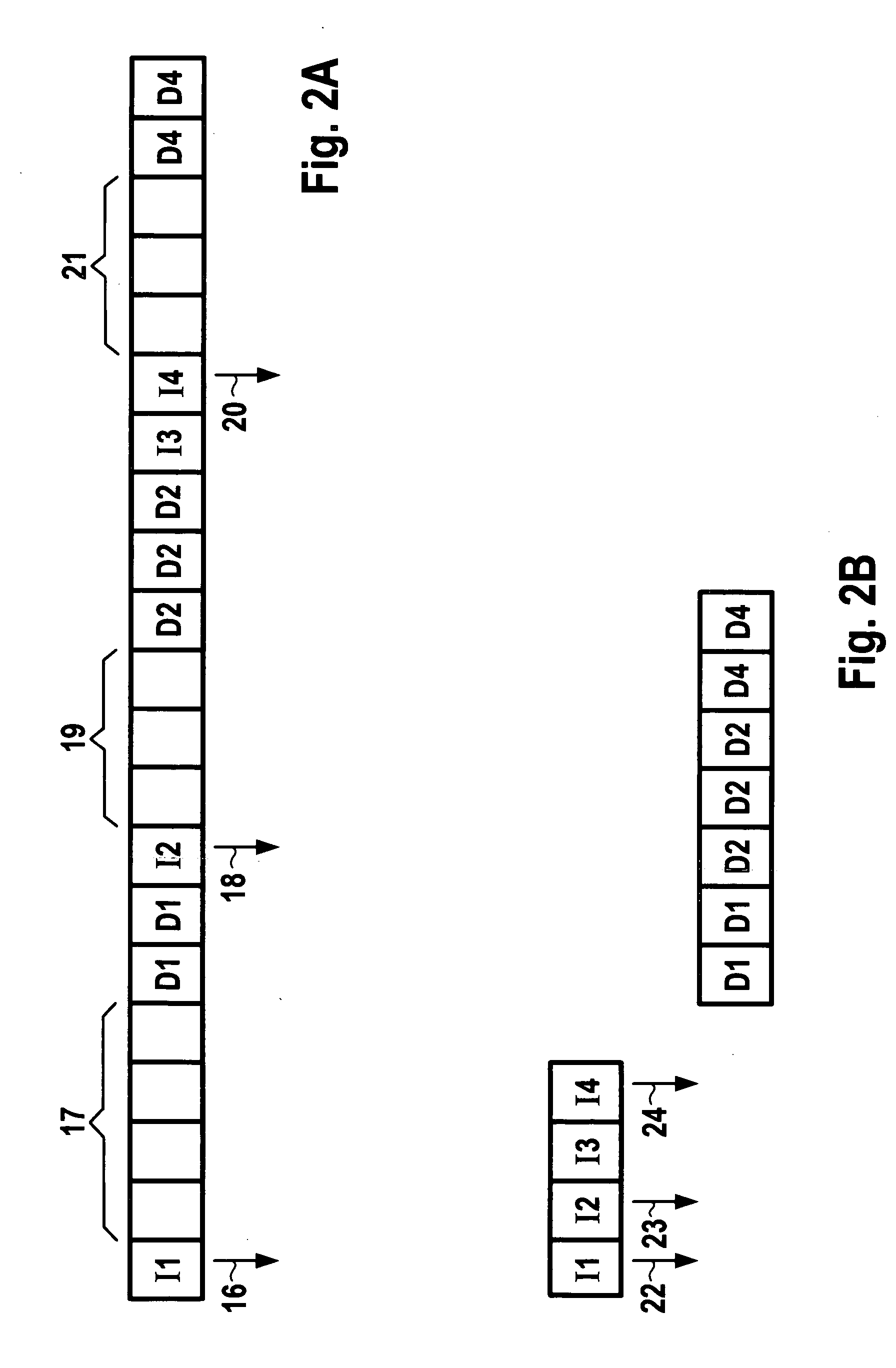

Sequencer unit with instruction buffering

Active | US20050050302A1 | Reduce idle time | Guaranteed stable operation | Digital computer details | Concurrent instruction execution | Processing Instruction | Data stream

A sequencer unit according to embodiments of the present invention comprises a first instruction processing unit, an instruction buffer and a second instruction processing unit. The first instruction processing unit is adapted for receiving and processing a stream of instructions, and for issuing, in case data is required by a certain instruction, a corresponding data read request for fetching said data. Instructions that wait for requested data are buffered in the instruction buffer. The second instruction processing unit is adapted for receiving requested data that corresponds to one of the issued data read requests, for assigning the requested data to the corresponding instructions buffered in the instruction buffer, and for processing said instructions in order to generate an output data stream.

Owner:ADVANTEST CORP

Scale Out Storage Architecture for In-Memory Computing and Related Method for Storing Multiple Petabytes of Data Entirely in System RAM Memory

Inactive | US20170131899A1 | Reduction of latency access | Remove constraints | Memory architecture accessing/allocation | Input/output to record carriers | Computer architecture | High bandwidth

A high performance, linearly scalable, software-defined, RAM-based storage architecture designed for in-memory RAM based petascale systems, including a method to aggregate system RAM memory across multiple clustered nodes. The architecture realizes a parallel storage system where multiple petabytes of data can be hosted entirely in RAM memory. The resulting system eliminates the scalability limitation of any traditional in-memory approach using a file system based scale-out approach with low latency, high bandwidth, and scalable IOPS running entirely in RAM.

Owner:A3CUBE INC

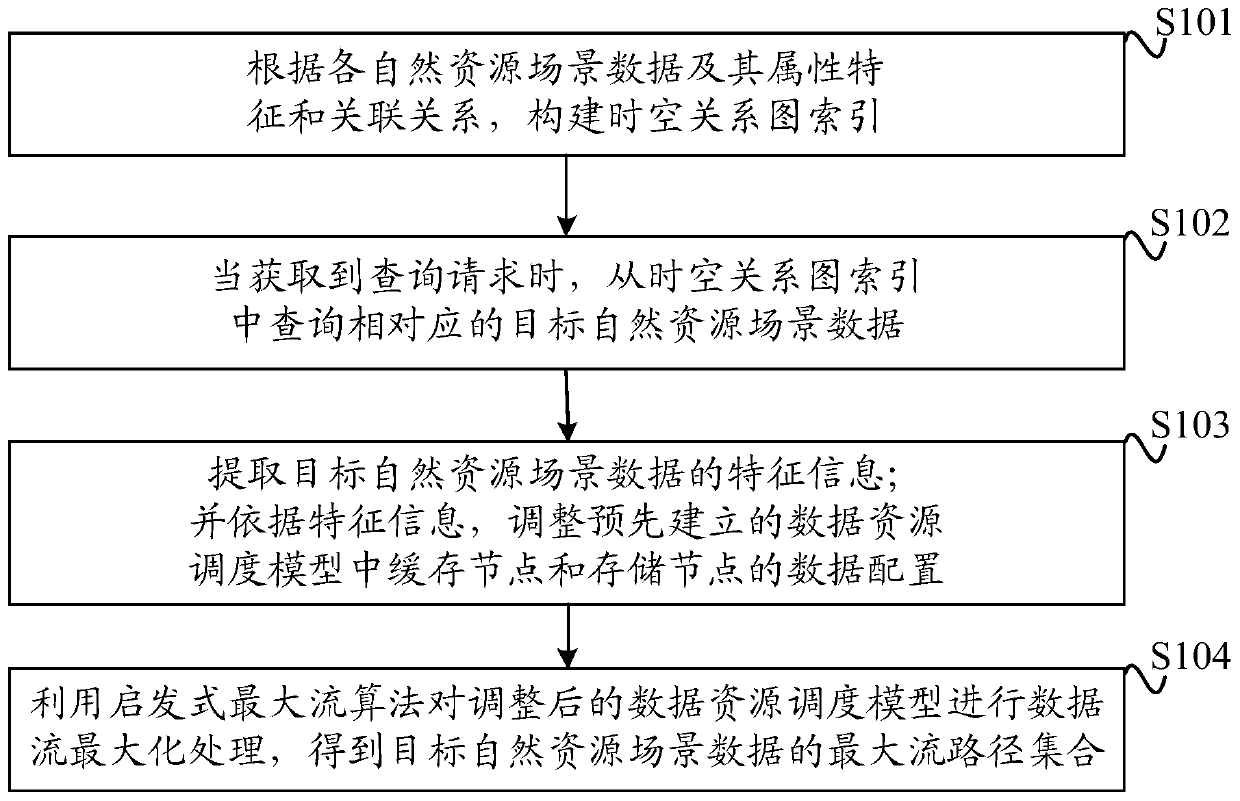

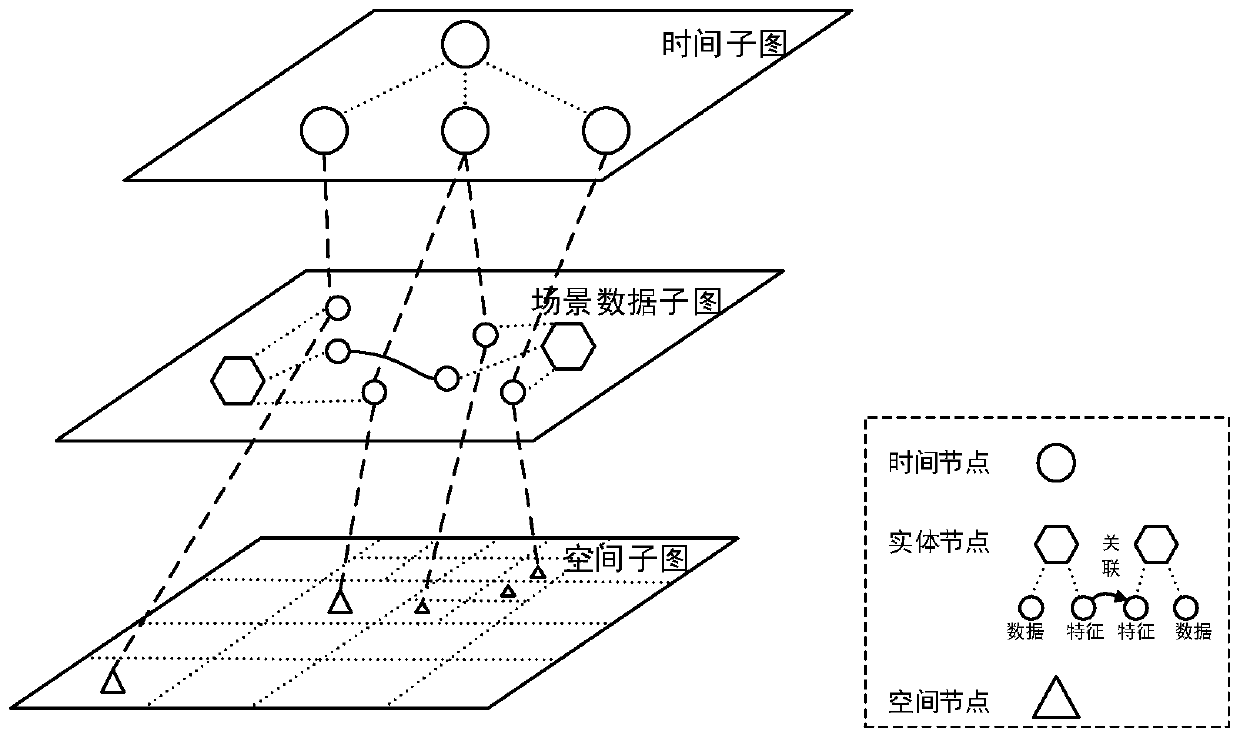

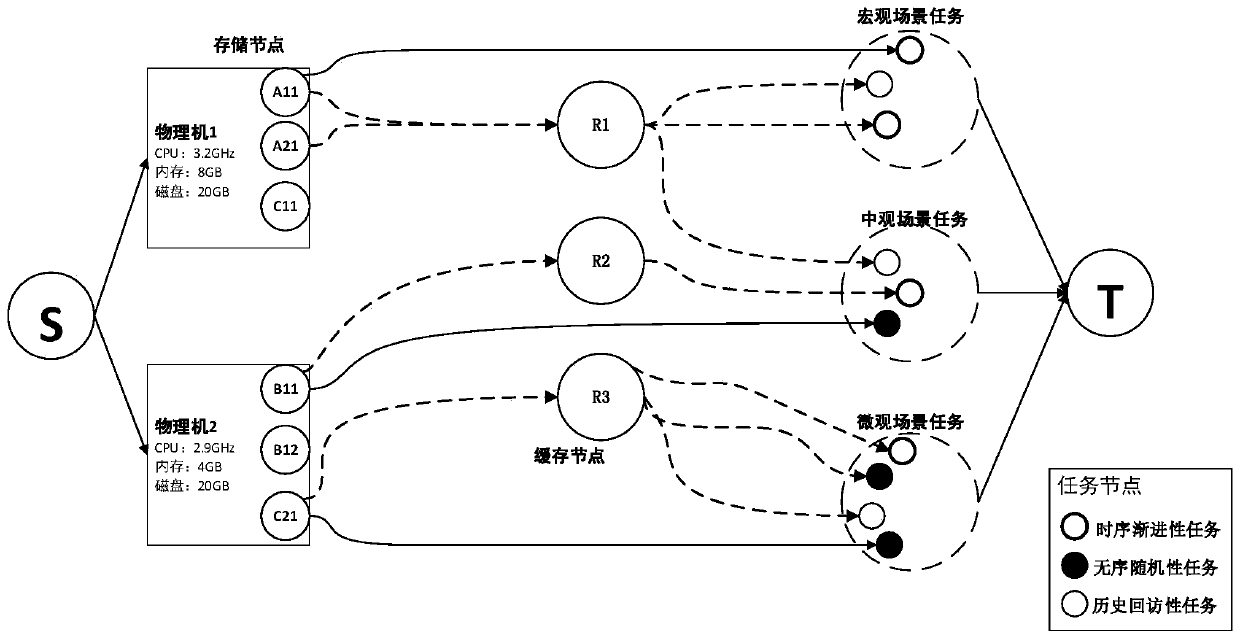

Organization scheduling method and device for natural resource scene data and storage medium

Inactive | CN110516119A | Easy to understand | High performance processing | Other databases indexing | Other databases browsing/visualisation | Natural resource | Traffic capacity

The embodiment of the invention discloses an organization scheduling method and device for natural resource scene data, and a medium, and the method comprises the steps: constructing a space-time relation graph index according to the natural resource scene data and the attribute features and association relation thereof, wherein high-performance processing and low-latency access of natural resource scene data are achieved through the space-time relation graph index; when a query request is obtained, querying corresponding target natural resource scene data from the space-time relationship graph index; adjusting data configuration of a cache node and a storage node in a pre-established data resource scheduling model according to the extracted feature information of the target natural resource scene data; and performing data flow maximization processing on the adjusted data resource scheduling model by using a heuristic maximum flow algorithm to obtain a maximum flow path set of the target natural resource scene data. The data resource scheduling model can adaptively adjust the flow of various types of data, improves the accuracy of data services, and achieves the efficient scheduling of large-scale high-concurrency task data.

Owner:SOUTHWEST JIAOTONG UNIV +1

Apparatuses, methods, and systems for swizzle operations in a configurable spatial accelerator

Active | US20200310797A1 | Easy to adapt | Improve performance | Single instruction multiple data multiprocessors | Instruction analysis | Software engineering | Processing element

Systems, methods, and apparatuses relating to swizzle operations and disable operations in a configurable spatial accelerator (CSA) are described. Certain embodiments herein provide for an encoding system for a specific set of swizzle primitives across a plurality of packed data elements in a CSA. In one embodiment, a CSA includes a plurality of processing elements, a circuit switched interconnect network between the plurality of processing elements, and a configuration register within each processing element to store a configuration value having a first portion that, when set to a first value that indicates a first mode, causes the processing element to pass an input value to operation circuitry of the processing element without modifying the input value, and, when set to a second value that indicates a second mode, causes the processing element to perform a swizzle operation on the input value to form a swizzled input value before sending the swizzled input value to the operation circuitry of the processing element, and a second portion that causes the processing element to perform an operation indicated by the second portion of the configuration value on the input value in the first mode and the swizzled input value in the second mode with the operation circuitry.

Owner:INTEL CORP

Asynchronous and distributed storage of data

Inactive | US8364892B2 | Reduces large streaming buffer | Improve delivery efficiency | Selective content distribution | Memory systems | Data segment | Data rate

In one example, multimedia content is requested from a plurality of storage modules. Each storage module retrieves the requested parts, which are typically stored on a plurality of storage devices at each storage module. Each storage module determines independently when to retrieve the requested parts of the data file from storage and transmits those parts from storage to a data queue. Based on a capacity of a delivery module and/or the data rate associated with the request, each storage module transmits the parts of the data file to the delivery module. The delivery module generates a sequenced data segment from the parts of the data file received from the plurality of storage modules and transmits the sequenced data segment to the requester.

Owner:AKAMAI TECH INC

Sequencer unit with instruction buffering

Active | US7263601B2 | Reduce idle time | Guaranteed stable operation | Error detection/correction | Digital computer details | Processing Instruction | Instruction processing unit

A sequencer unit includes a first instruction processing unit, an instruction buffer and a second instruction processing unit. The first instruction processing unit is adapted for receiving and processing a stream of instructions, and for issuing, in case data is required by a certain instruction, a corresponding data read request for fetching said data. Instructions that wait for requested data are buffered in the instruction buffer. The second instruction processing unit is adapted for receiving requested data that corresponds to one of the issued data read requests, for assigning the requested data to the corresponding instructions buffered in the instruction buffer, and for processing said instructions in order to generate an output data stream.

Owner:ADVANTEST CORP

Processors, methods, and systems for debugging a configurable spatial accelerator

Active | US11086816B2 | Easy to adapt | Improve performance | Associative processors | Dataflow computers | Computer architecture | Data stream

Systems, methods, and apparatuses relating to debugging a configurable spatial accelerator are described. In one embodiment, a processor includes a plurality of processing elements and an interconnect network between the plurality of processing elements to receive an input of a dataflow graph comprising a plurality of nodes, wherein the dataflow graph is to be overlaid into the interconnect network and the plurality of processing elements with each node represented as a dataflow operator in the plurality of processing elements, and the plurality of processing elements are to perform an operation by a respective, incoming operand set arriving at each of the dataflow operators of the plurality of processing elements. At least a first of the plurality of processing elements is to enter a halted state in response to being represented as a first of the plurality of dataflow operators.

Owner:INTEL CORP

Apparatuses, methods, and systems for time-multiplexing in a configurable spatial accelerator

Inactive | US20200409709A1 | Easy to adapt | Improve performance | Dataflow computers | Concurrent instruction execution | Hemt circuits | Processing element

Systems, methods, and apparatuses relating to time-multiplexing circuitry in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator (CSA) includes a plurality of processing elements; and a time-multiplexed, circuit switched interconnect network between the plurality of processing elements. In another embodiment, a configurable spatial accelerator (CSA) includes a plurality of time-multiplexed processing elements; and a time-multiplexed, circuit switched interconnect network between the plurality of time-multiplexed processing elements.

Owner:INTEL CORP
