2235 results about "Direct memory access" patented technology

Direct memory access (DMA) is a feature of computer systems that allows certain hardware subsystems to access main system memory (random-access memory), independent of the central processing unit (CPU).

Logical address direct memory access with multiple concurrent physical ports and internal switching

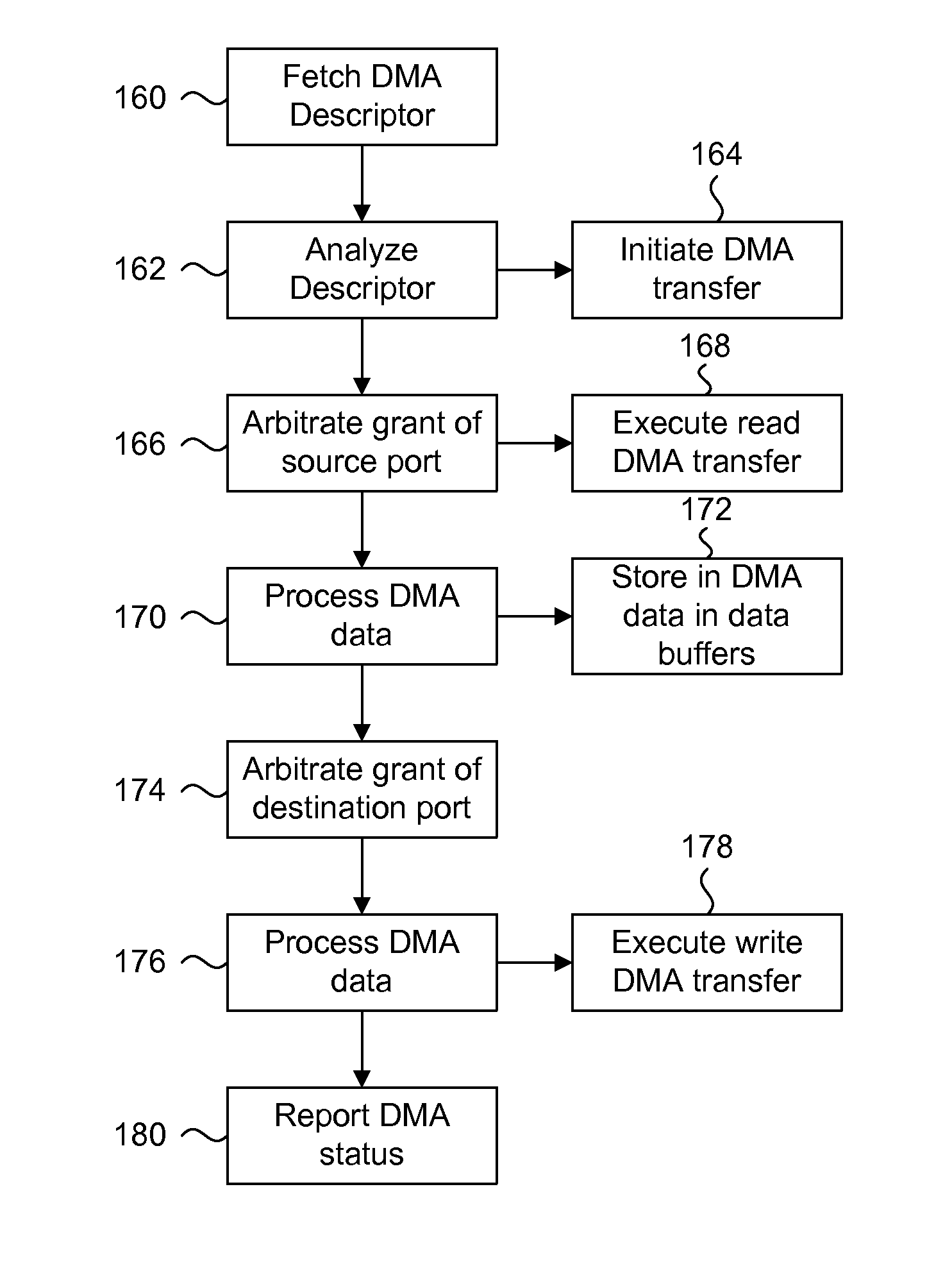

A DMA engine is provided that is suitable for higher performance System On a Chip (SOC) devices that have multiple concurrent on-chip/off-chip memory spaces. The DMA engine operates on either a logical addressing method or a physical addressing method and provides random and sequential mapping functions from logical addresses to physical addresses while supporting frequent context switching among a large number of logical address spaces. Embodiments of the present invention utilize per-direction (source-destination) queuing and an internal switch to support non-blocking concurrent transfer of data in multiple directions. A caching technique can be incorporated to reduce the overhead of address translation.

Owner:MICROSEMI SOLUTIONS (US) INC

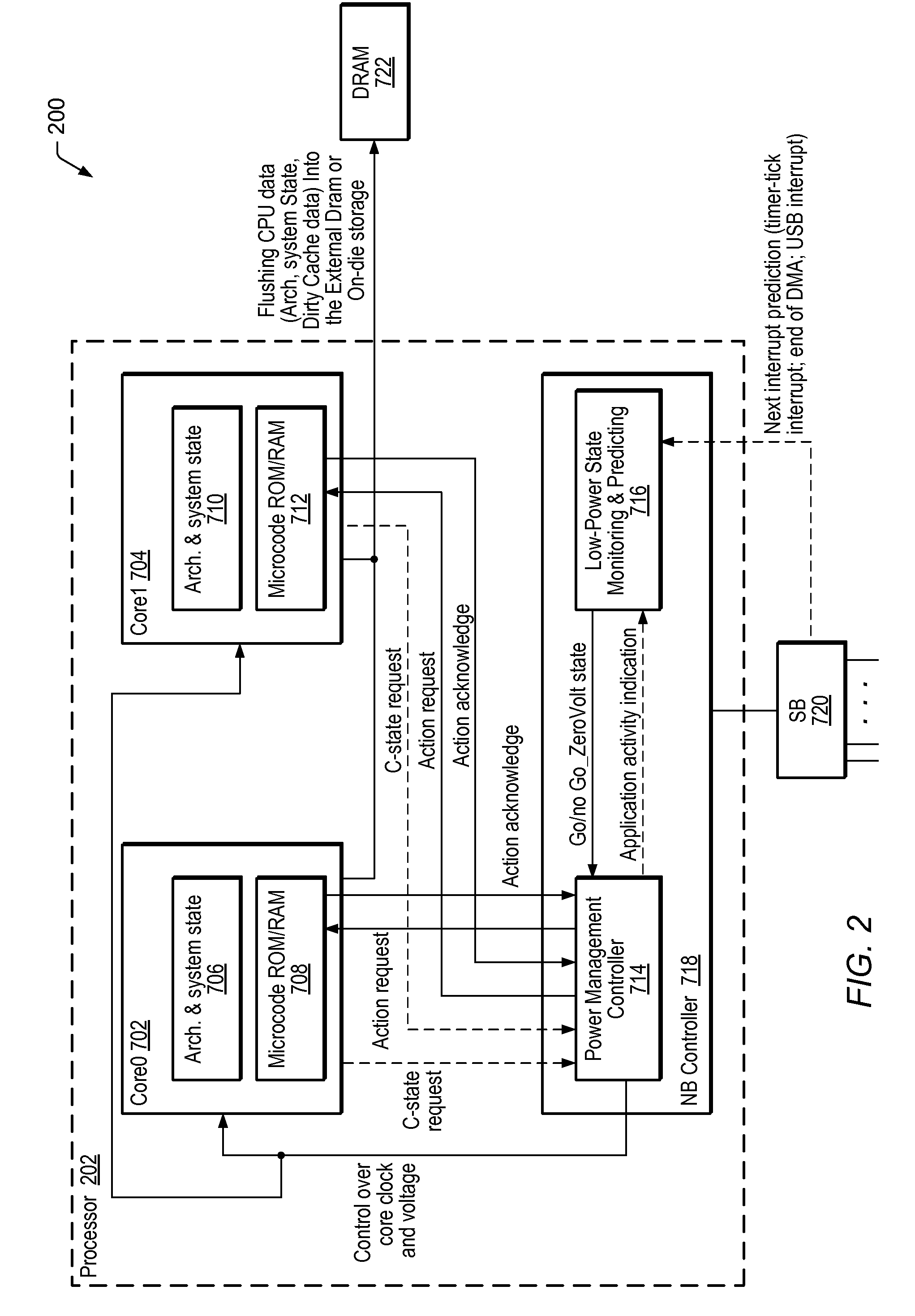

Hardware Monitoring and Decision Making for Transitioning In and Out of Low-Power State

Active | US20090235105A1 | Improve balance, More efficiency, Energy efficient ICT, Volume/mass flow measurement, Direct memory access, Timer

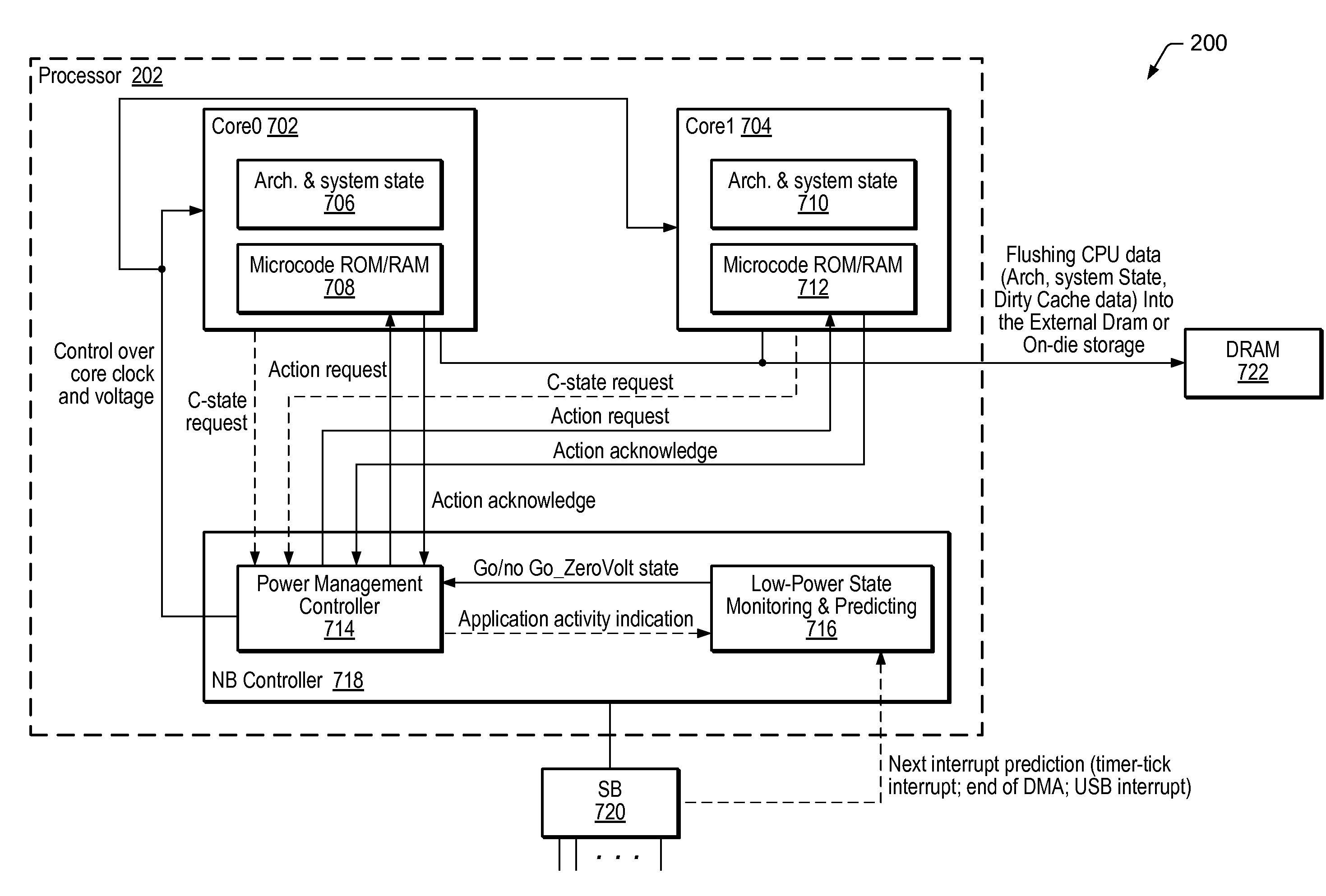

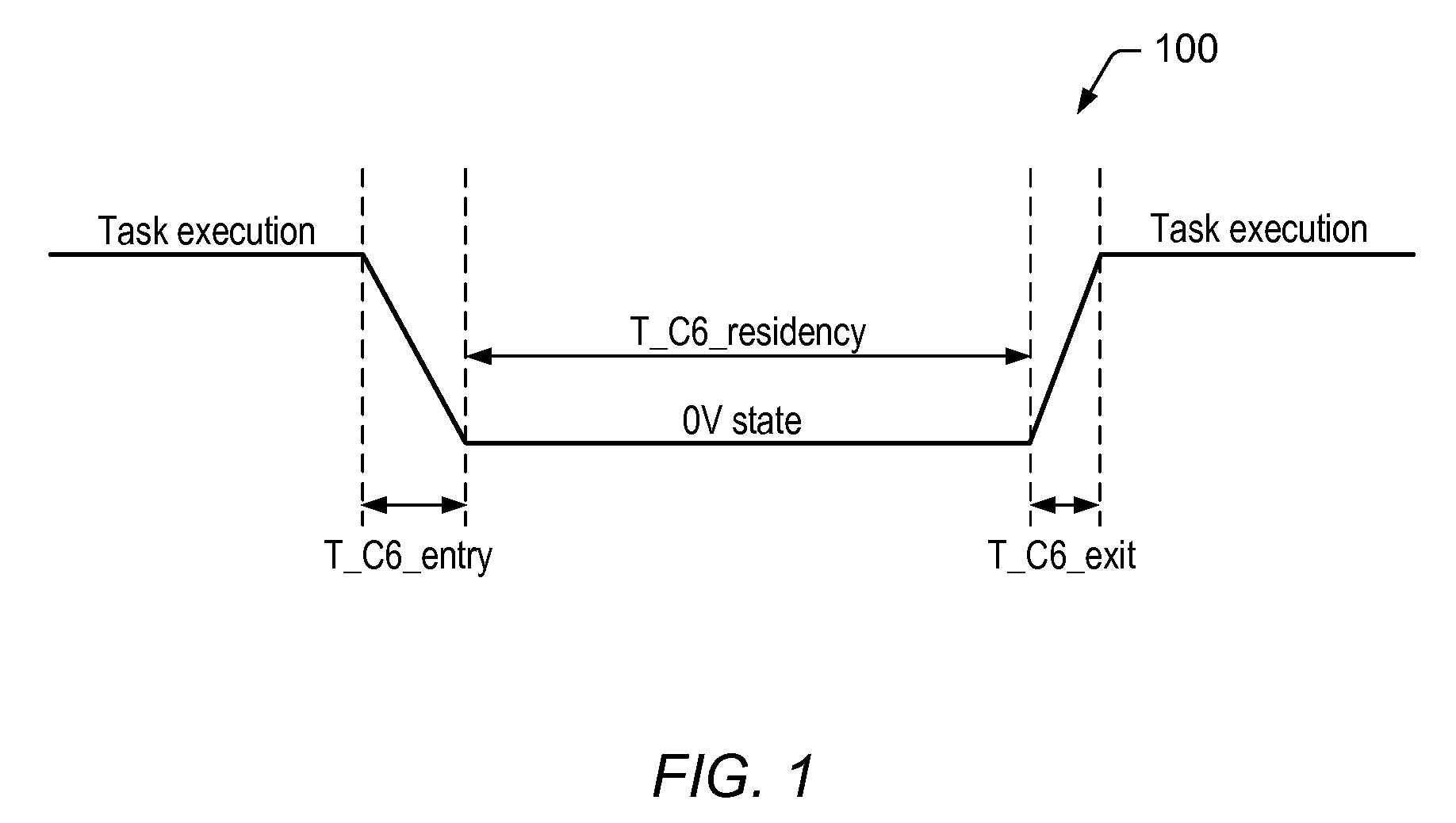

A power management controller (PMC) interfaces with a processor comprising one or more cores. The PMC may be configured to communicate with each respective core, such that microcode executed by the respective processor core may recognize when a request is made to transition the respective core to a target power-state. For each respective core, the state monitor may monitor active-state residency, non-active-state residency, Direct Memory Access (DMA) transfer activity associated with the respective core, Input/Output (I/O) processes associated with the respective core, and the value of a timer-tick (TT) interval associated with the respective core. The status monitor may derive respective status information for the respective core based on the monitoring and indicate whether the respective core should be allowed to transition to the corresponding target power-state. The PMC may transition the respective processor core to the corresponding target power-state accordingly.

Owner:MEDIATEK INC

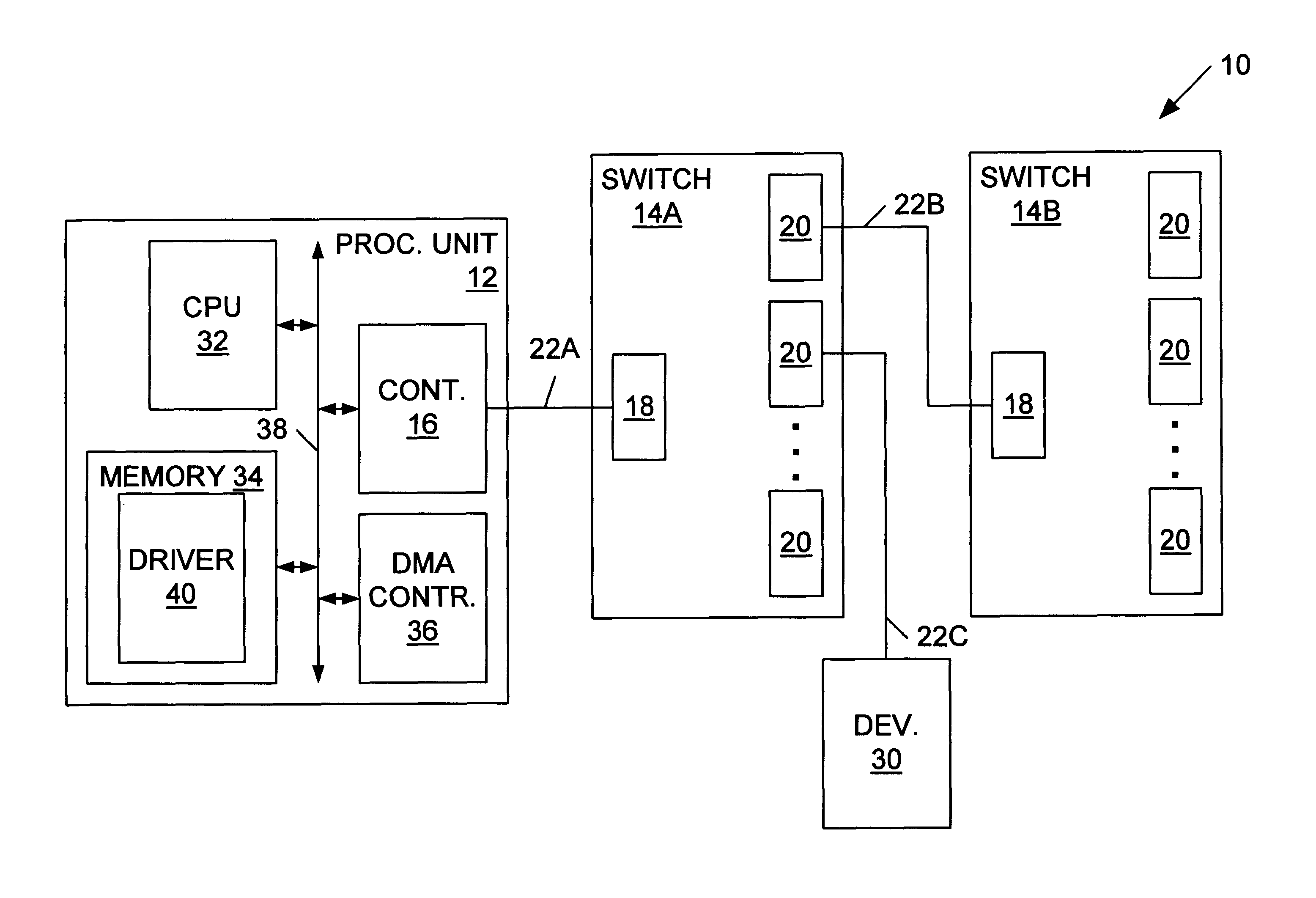

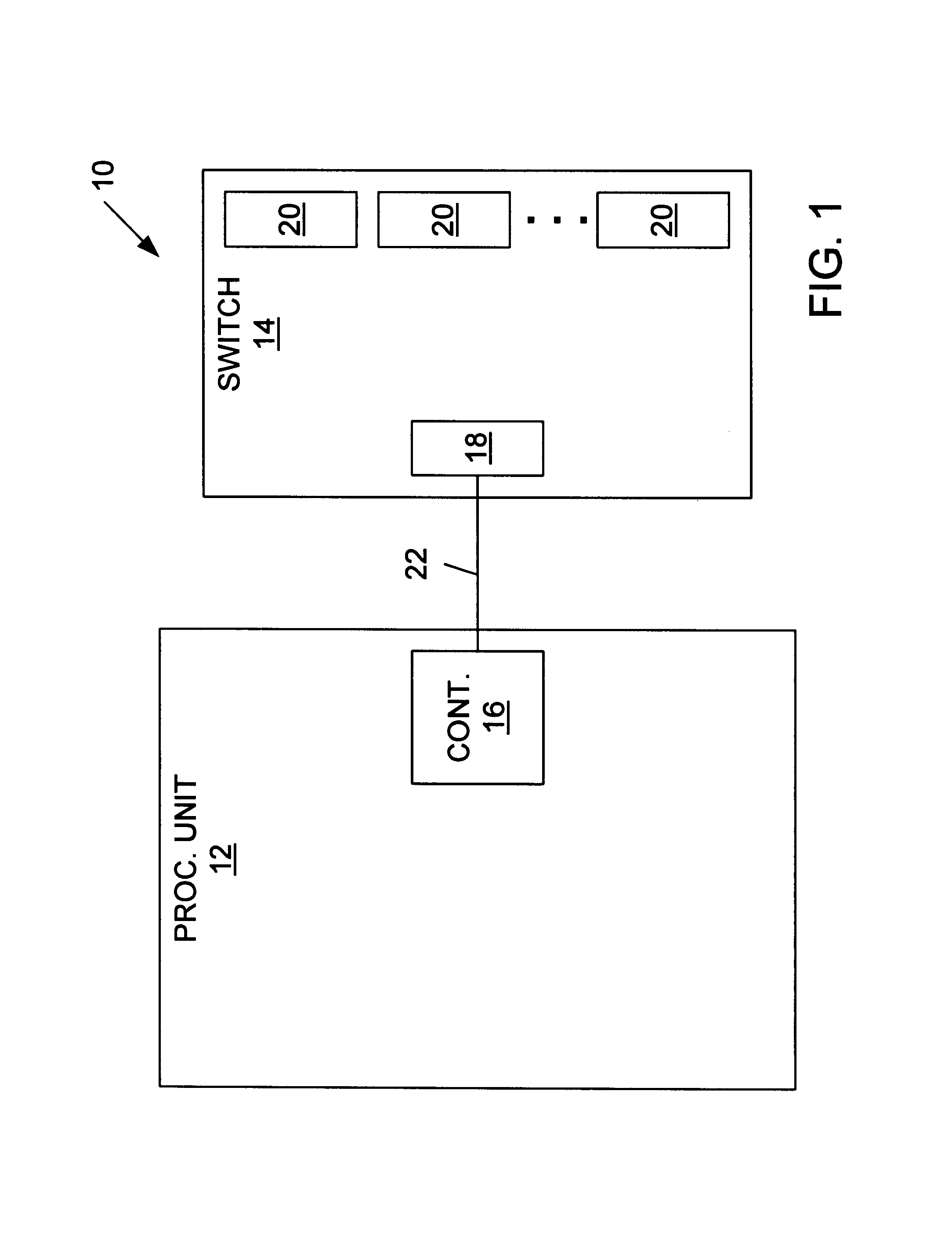

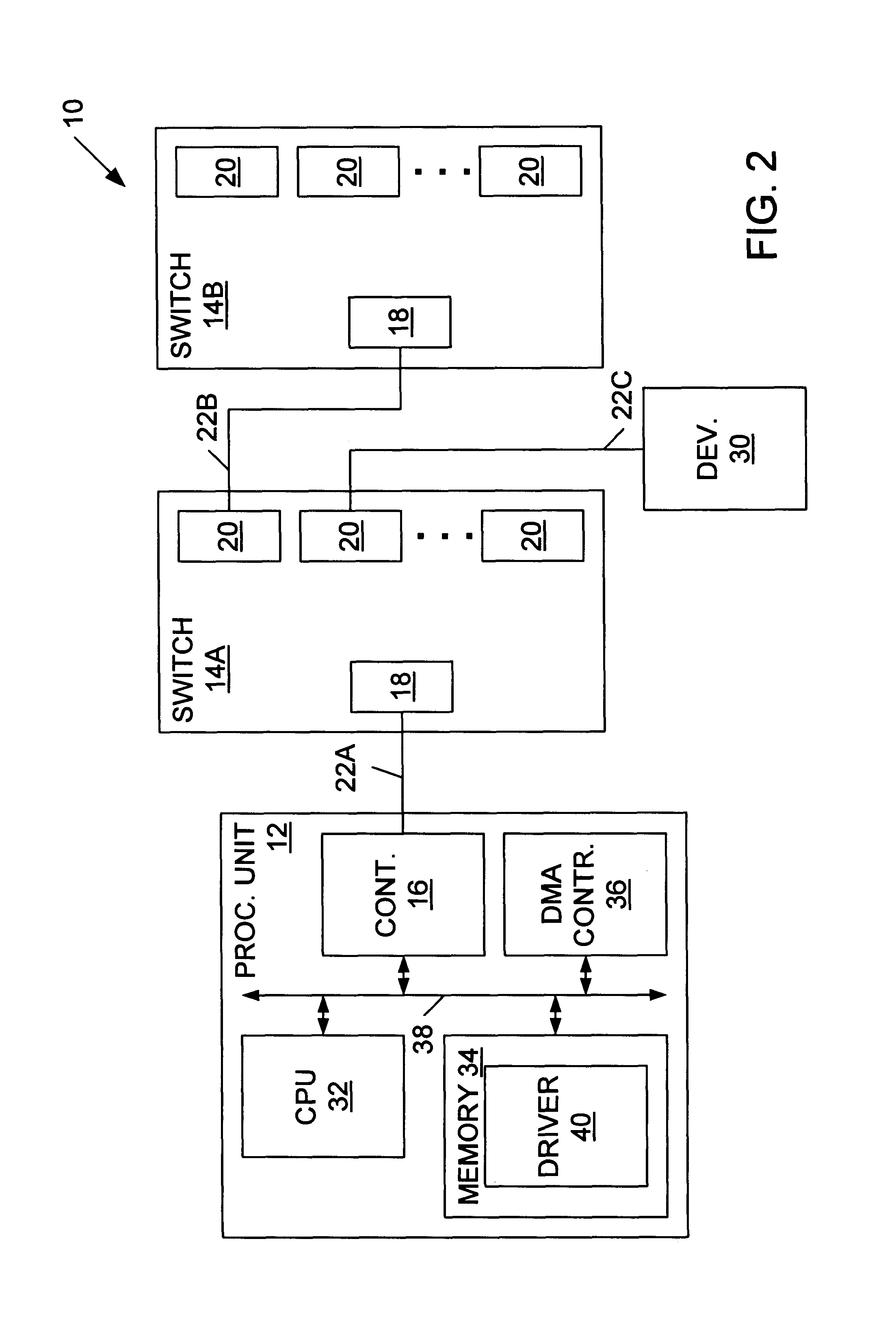

Data exchange methods for a switch which selectively forms a communication channel between a processing unit and multiple devices

Inactive | US6845409B1 | Reduce processing overhead, Reduce overhead, Multiplex system selection arrangements, Input/output to record carriers, Main processing unit, Direct memory access

A switch is presented including a host input/output (I/O) port adapted for coupling to a controller, multiple device I/O ports each adapted for coupling to at least one device, and logic coupled between the host I/O port and the device I/O ports configured to selectively form a communication channel between the host I/O port and one of the device I/O ports. The switch may operate in a connected mode and a disconnected mode. When the switch is in the disconnected mode, the logic may not form a communication channel between the host I/O port and any of the device I/O ports. In an ATA embodiment, the switch may comply with an AT attachment (ATA) standard, and thus be an ATA switch. The host I/O port may be adapted for coupling to an ATA controller, the device I/O ports may be adapted for coupling to at least one ATA device, and the logic may selectively form an ATA communication channel between the host I/O port and one of the device I/O ports. Several methods for exchanging data between a processing unit coupled to the host I/O port of the switch and one or more devices coupled to device I/O ports of the switch are described. Several methods for performing direct memory access (DMA) transfers to move data between a memory of the processing unit and one or more of the devices are also described.

Owner:ORACLE INT CORP

Inverted default semantics for in-speculative-region memory accesses

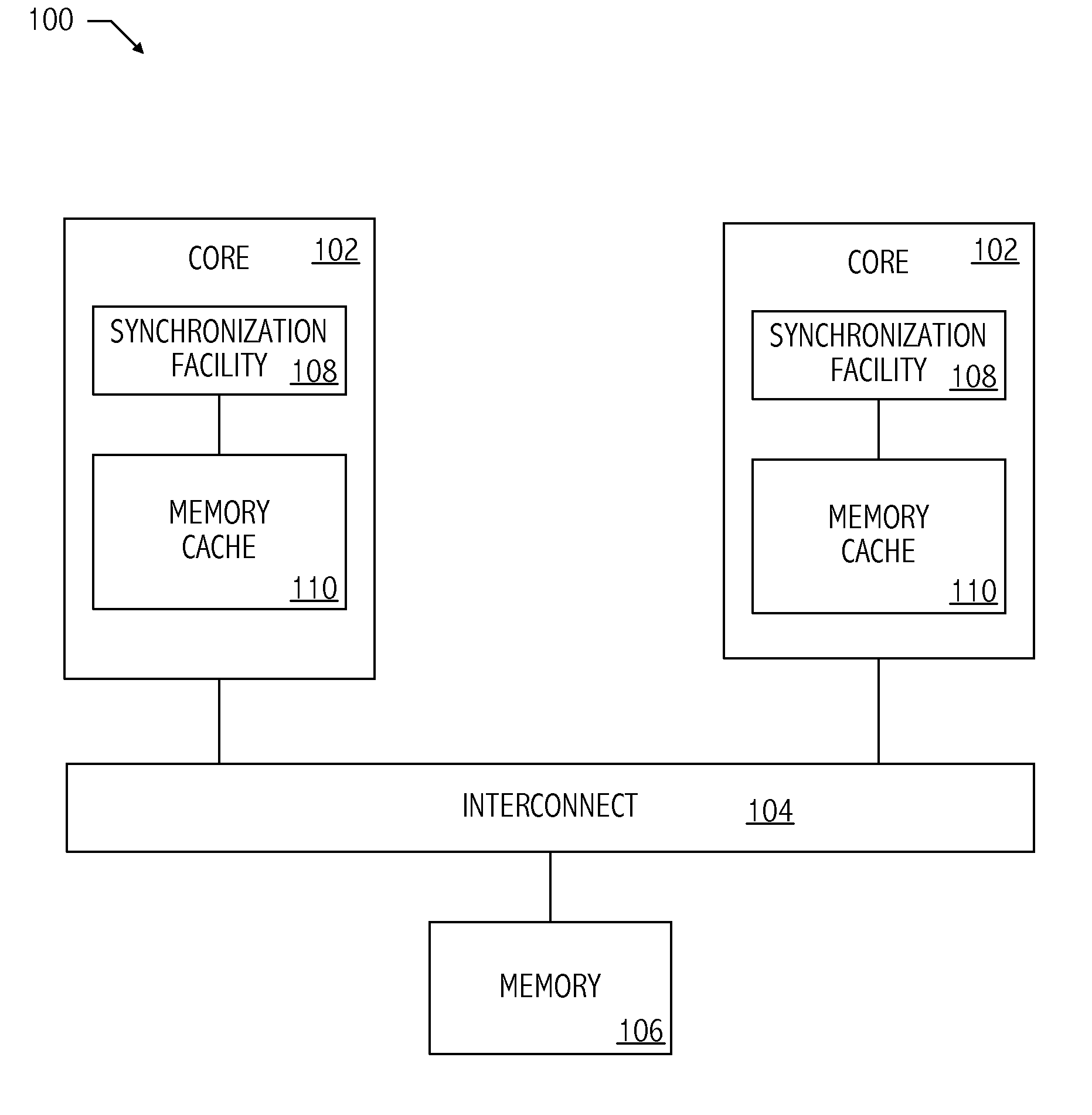

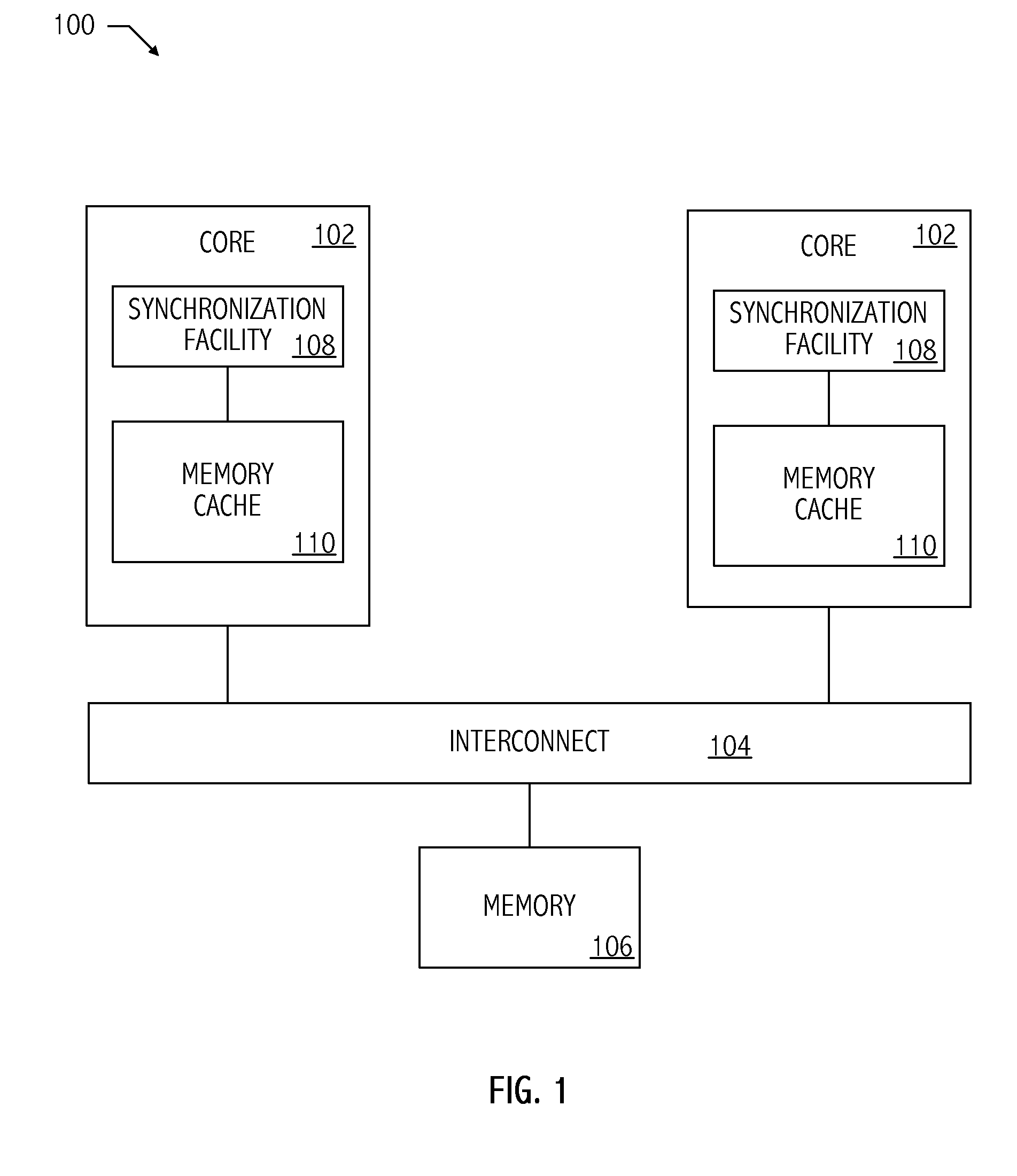

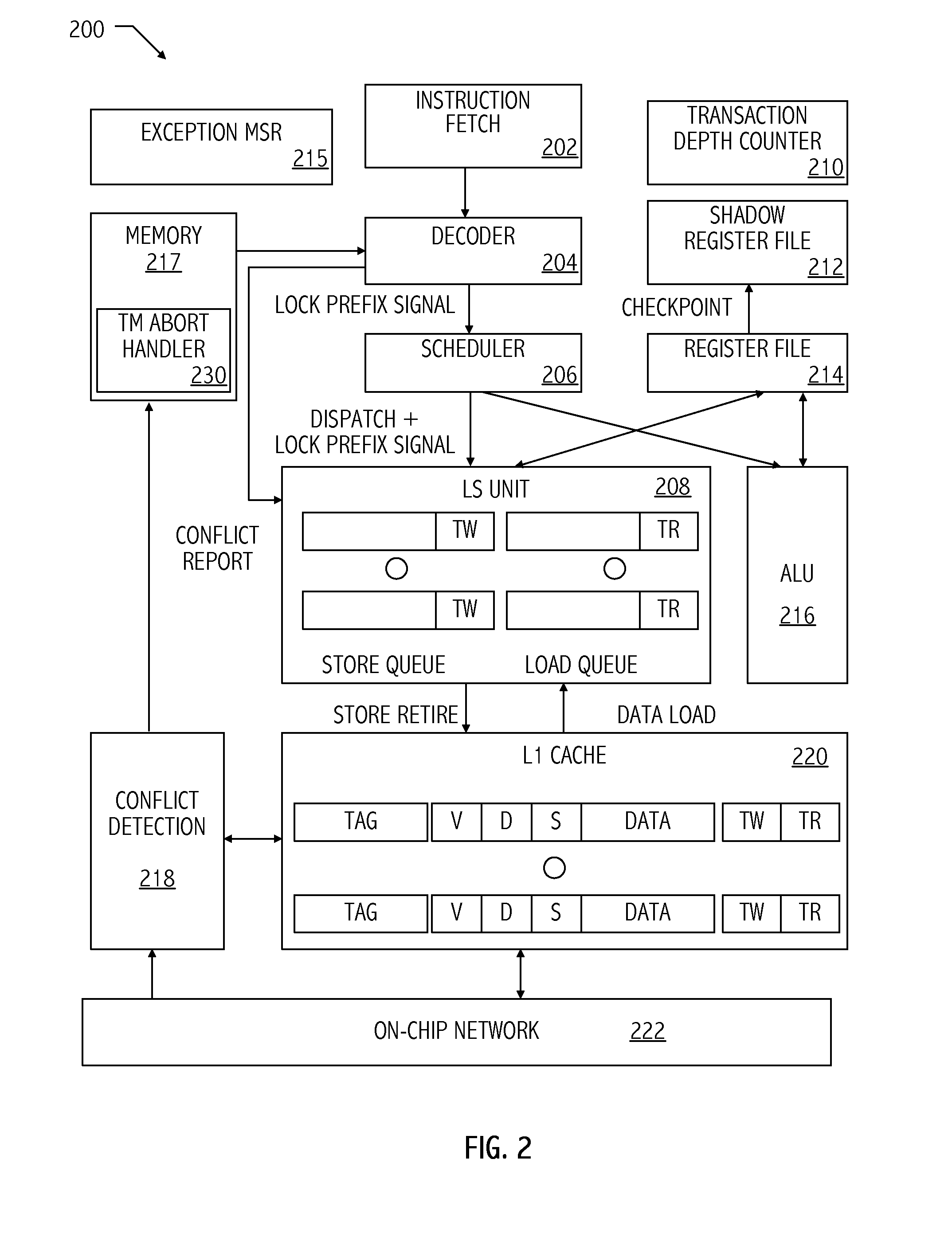

Inactive | US20110208921A1 | Unauthorized memory use protection, Transaction processing, Direct memory access, Multi processor

A method for accessing memory by a first processor of a plurality of processors in a multi-processor system includes, responsive to a memory access instruction within a speculative region of a program, accessing contents of a memory location using a transactional memory access to the memory access instruction unless the memory access instruction indicates a non-transactional memory access. The method may include accessing contents of the memory location using a non-transactional memory access by the first processor according to the memory access instruction responsive to the instruction not being in the speculative region of the program. The method may include updating contents of the memory location responsive to the speculative region of the program executing successfully and the memory access instruction not being annotated to be a non-transactional memory access.

Owner:ADVANCED MICRO DEVICES INC

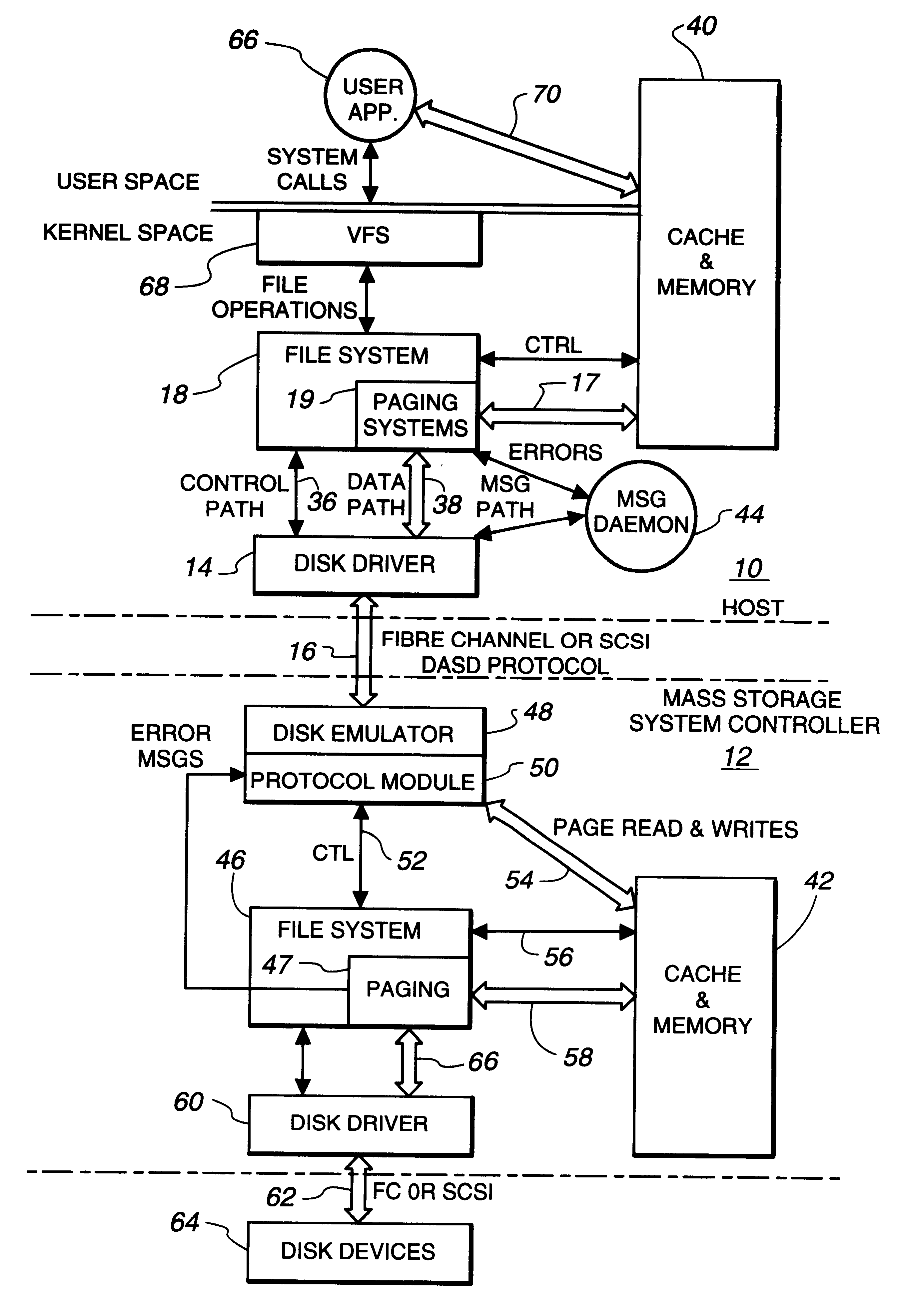

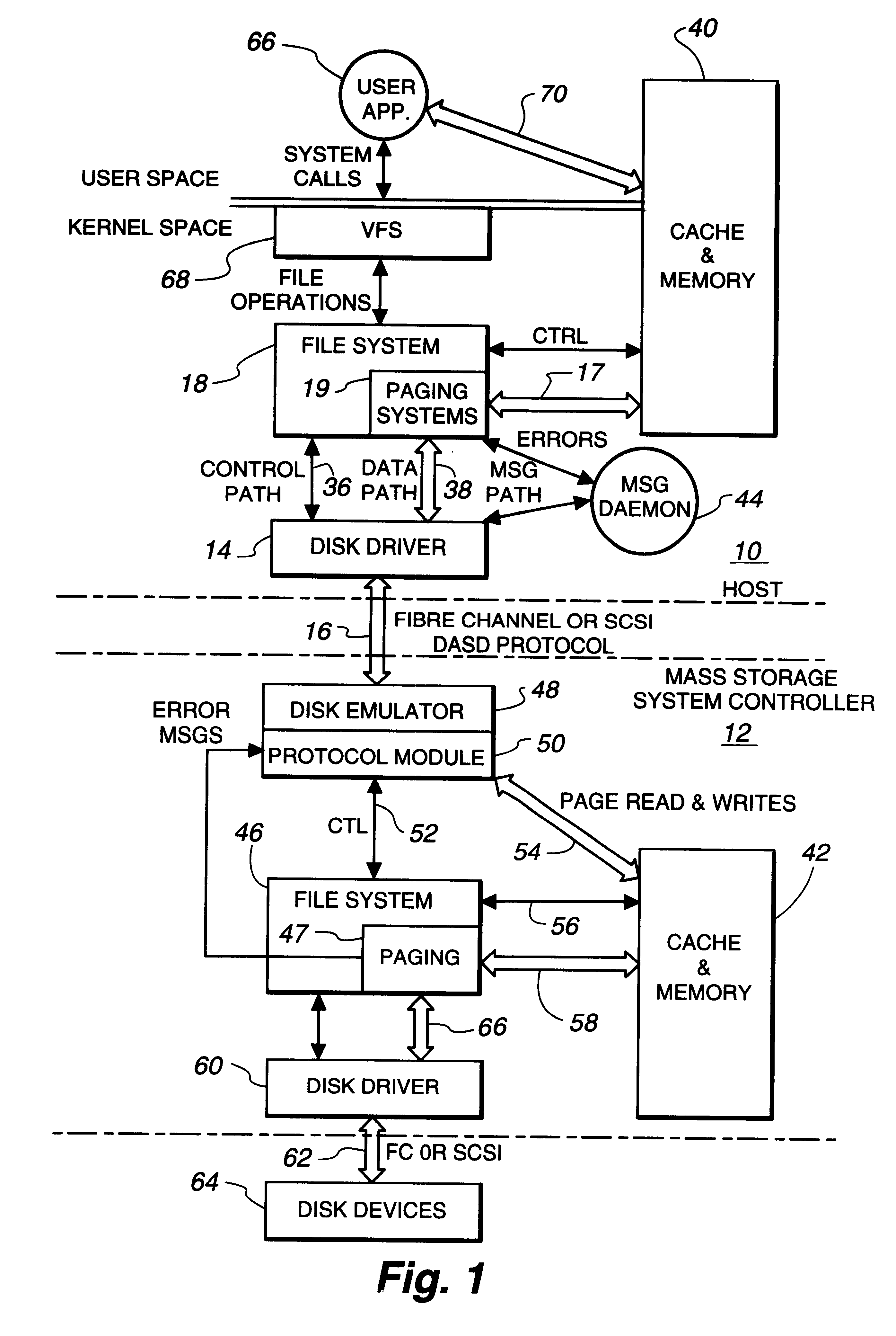

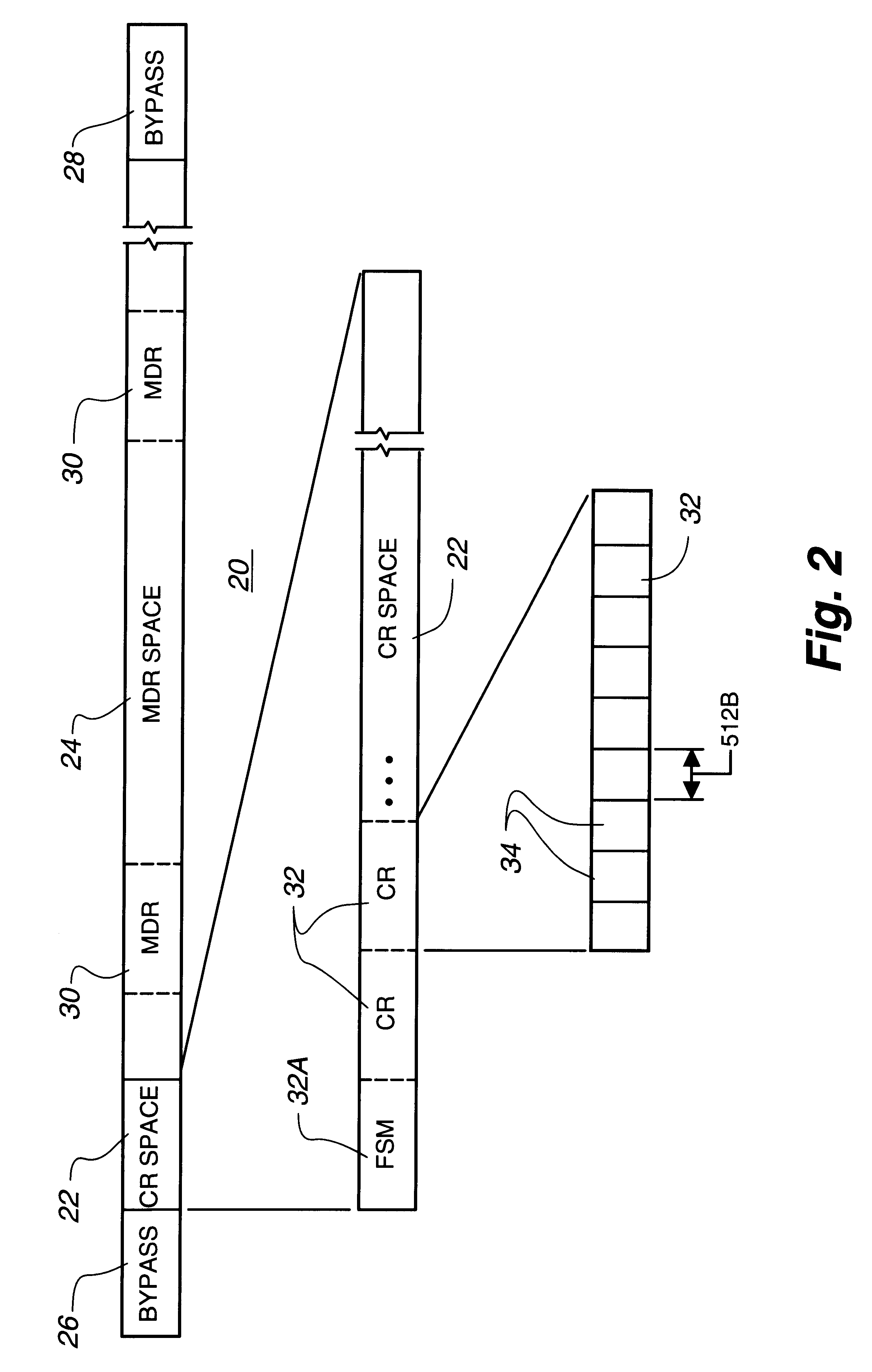

Intelligent controller accessed through addressable virtual space

Inactive | US6493811B1 | Remove restrictions, Input/output to record carriers, Memory addressing/allocation/relocation, Direct memory access, Virtual space

File operations on files in a peripheral system are controlled by an intelligent controller with a file processor. The files are accessed as if the intelligent controller were an addressable virtual storage space. This is accomplished first by communicating controller commands for the intelligent controller through read/write commands addressed to a Command Region of a virtual storage device. The controller commands set up a Mapped Data Region in the virtual storage device for use in controlling data transfer operations to and from the peripheral system. With the Mapped Data Regions set up, blocks of data are transferred between the host and the intelligent controller in response to read/write commands addressed to the Mapped Data Region of the virtual storage device. In an additional feature of the invention, file operations are communicated between host and controller through a device driver at the host and a device emulator at the intelligent controller. If the address in the device write/read command is pointing to the Command Region of the virtual storage device, a Command Region process interprets and implements the controller operation required by the controller command embedded in the device write/read command. One of these controller commands causes the Command Region processor to map a requested file to a Mapped Data Region in the virtual storage device. If the address detected by the detecting operation is in a Mapped Data Region, a Mapped Data Region process is called. The Mapped Data Region process reads or writes requested data of a file mapped to the Mapped Data Region addressed by the read/write command. This mapped file read or write is accomplished as a transfer of data over a data path separate from a control path. In an additional feature of the invention, the data transfer between host system and intelligent controller is accomplished by performing a direct memory access transfer of data.

Owner:CA TECH INC

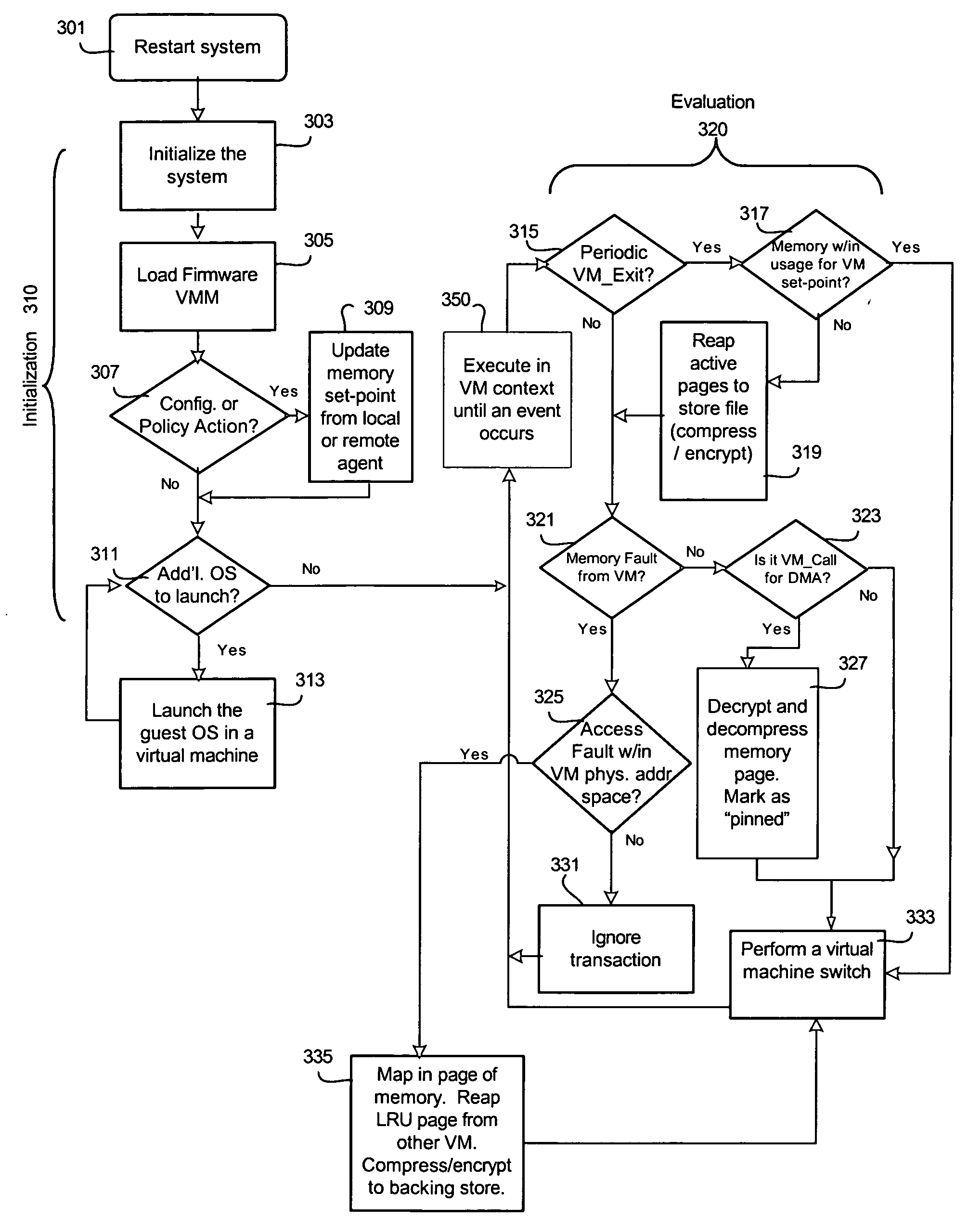

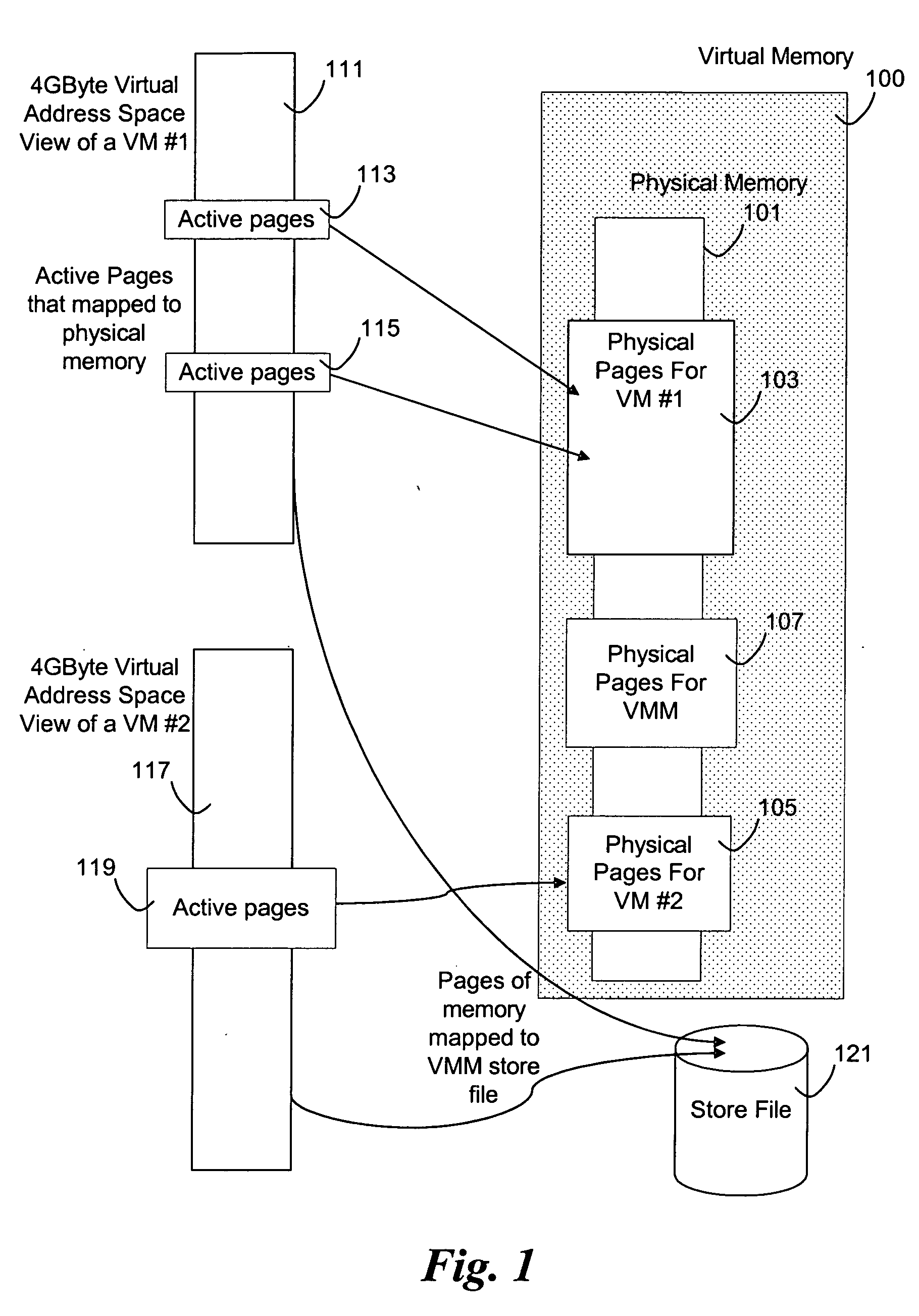

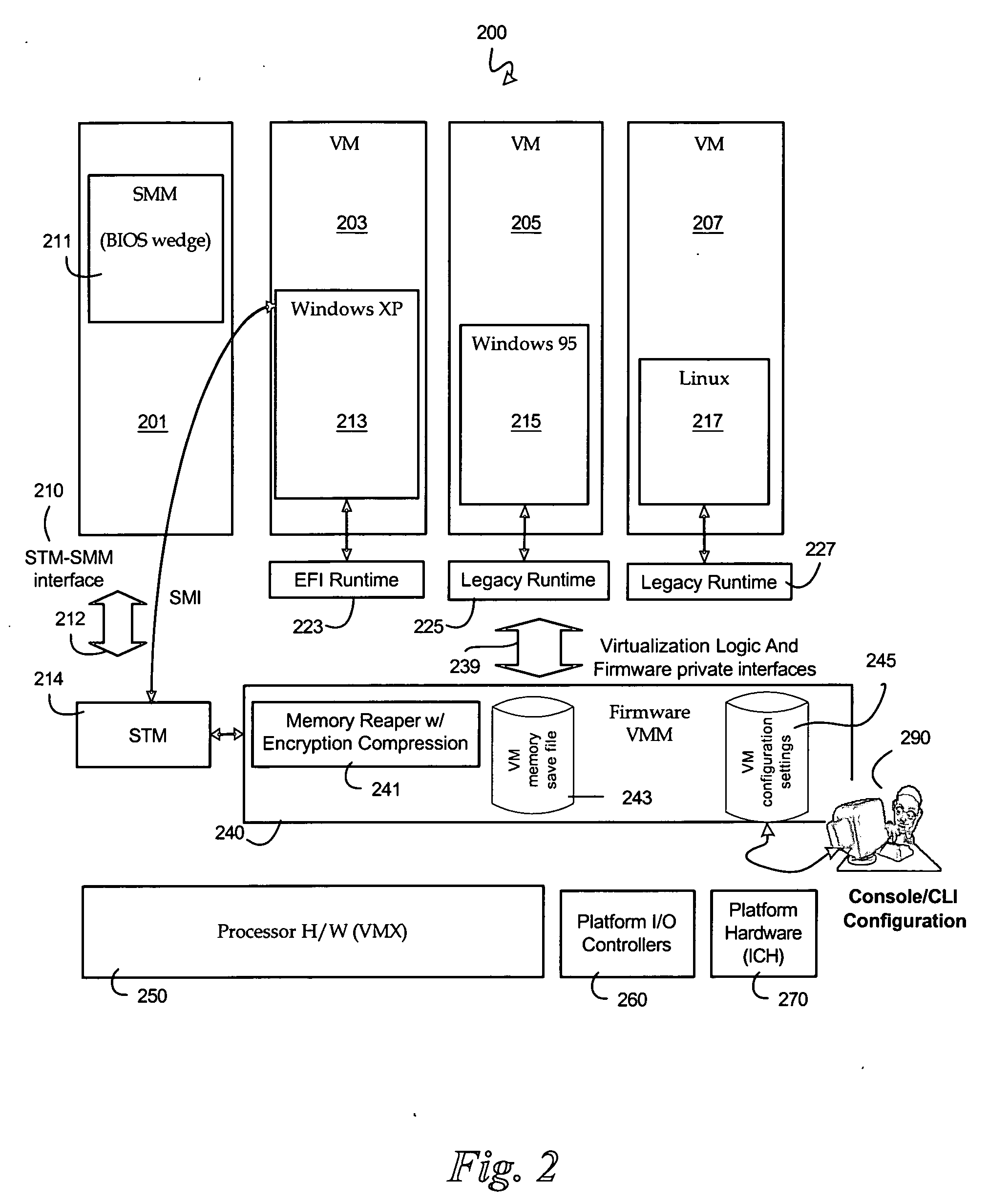

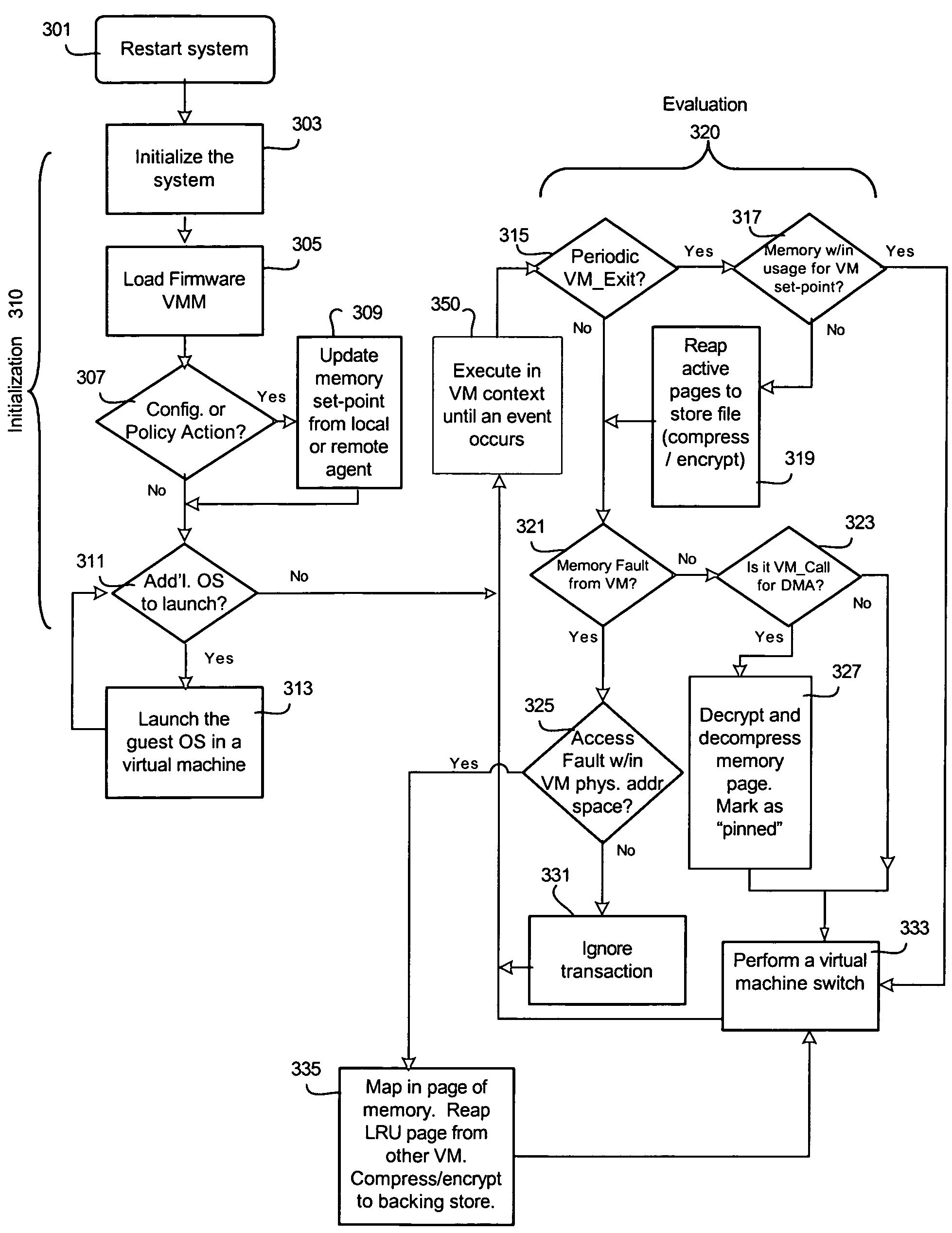

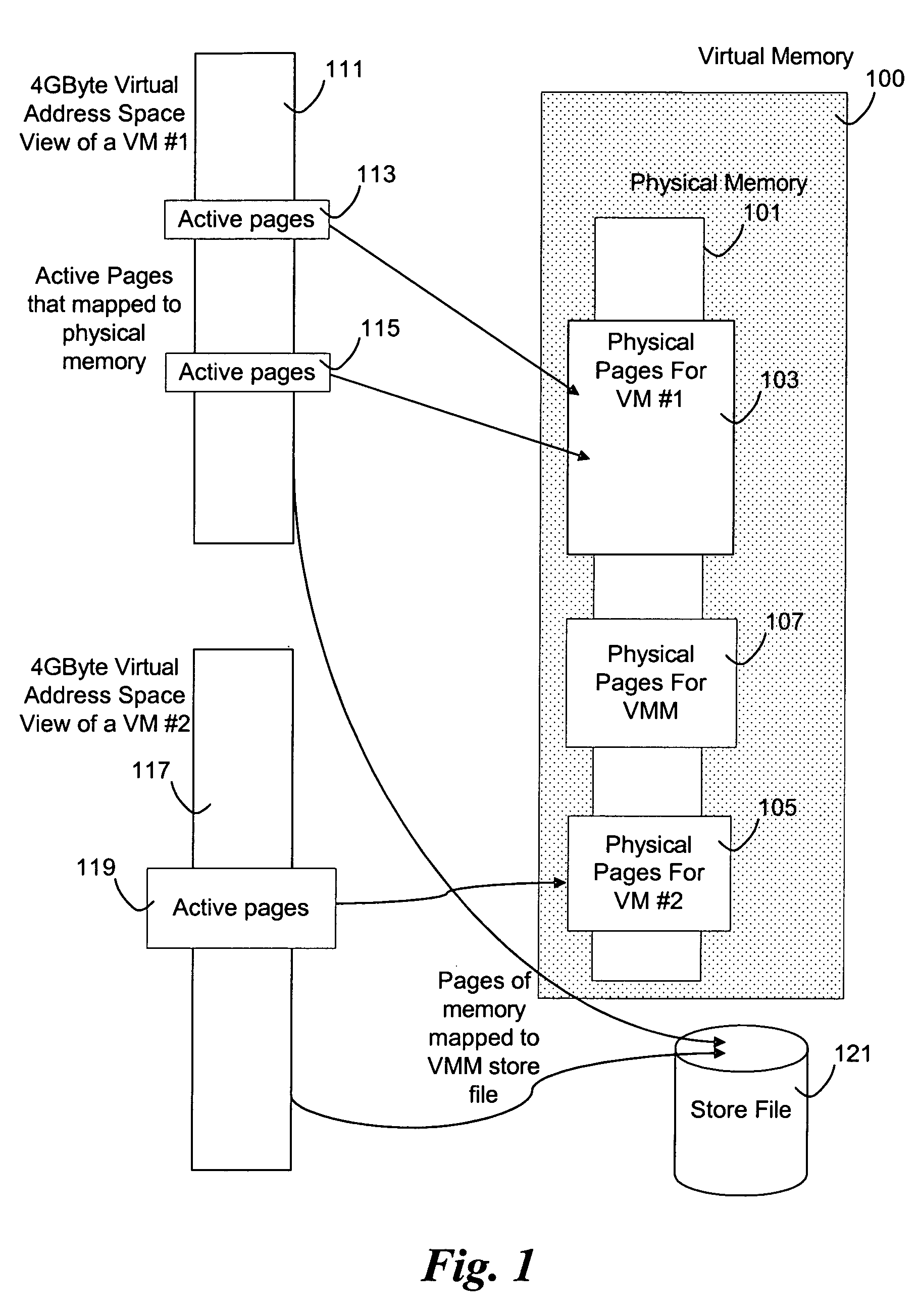

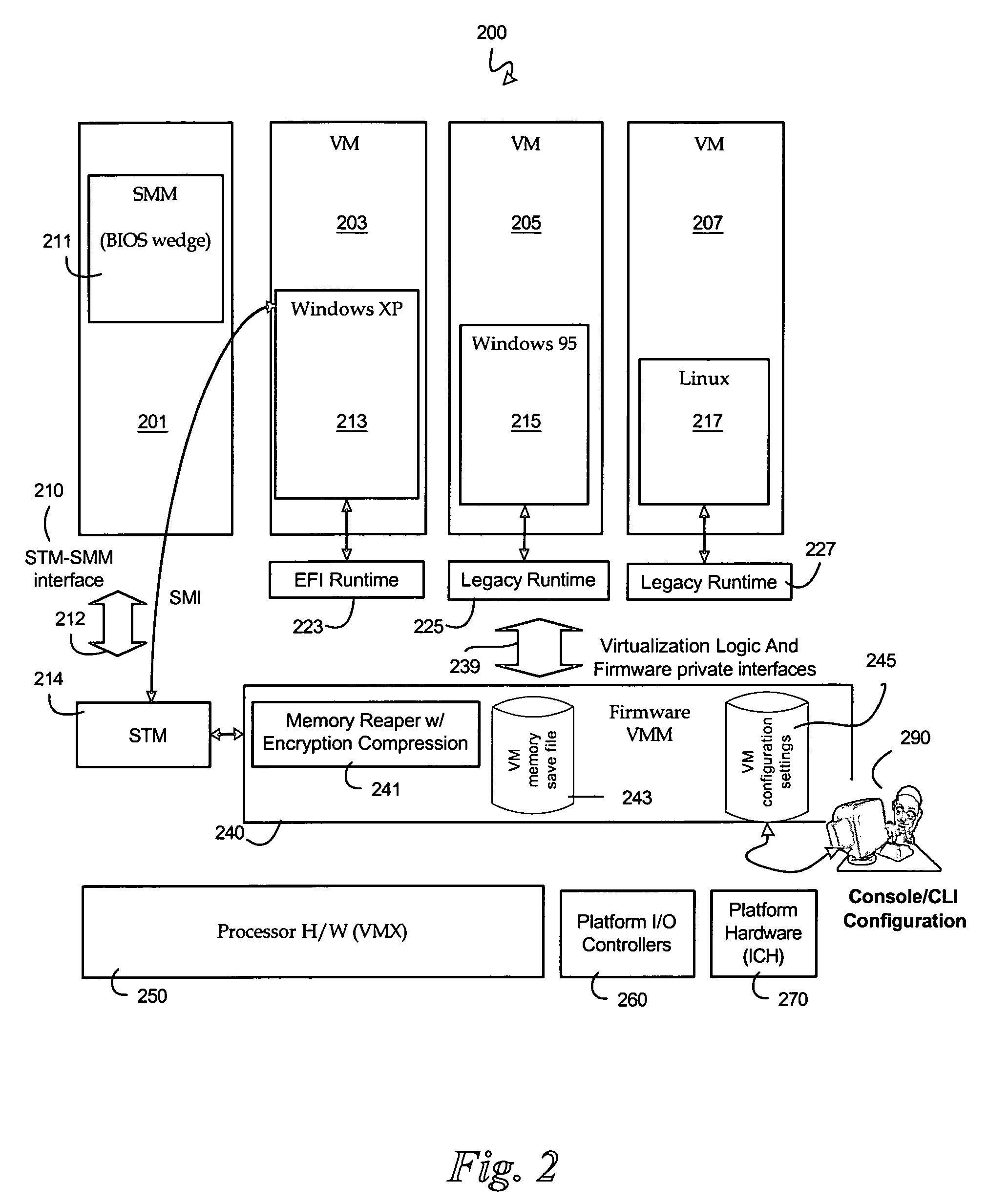

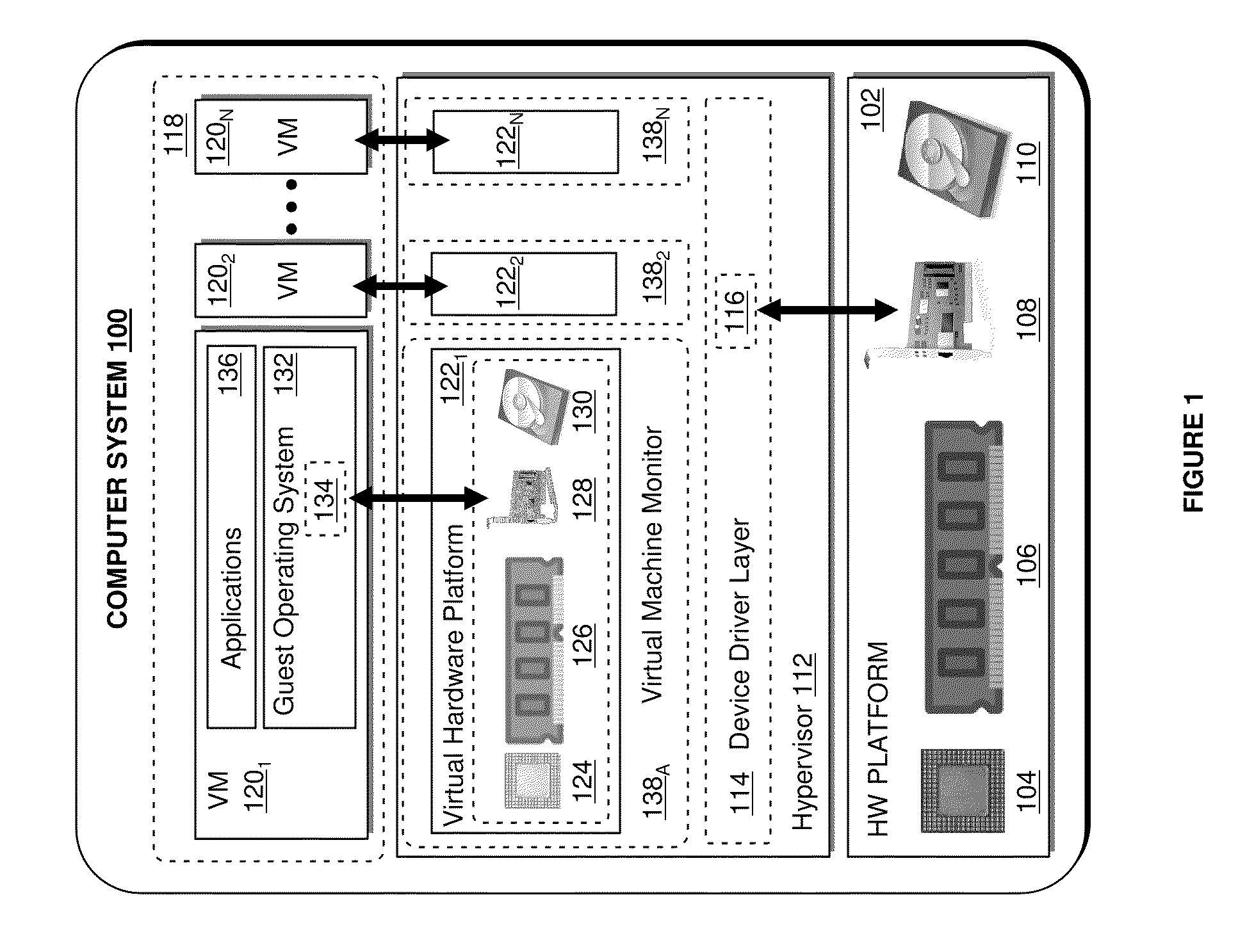

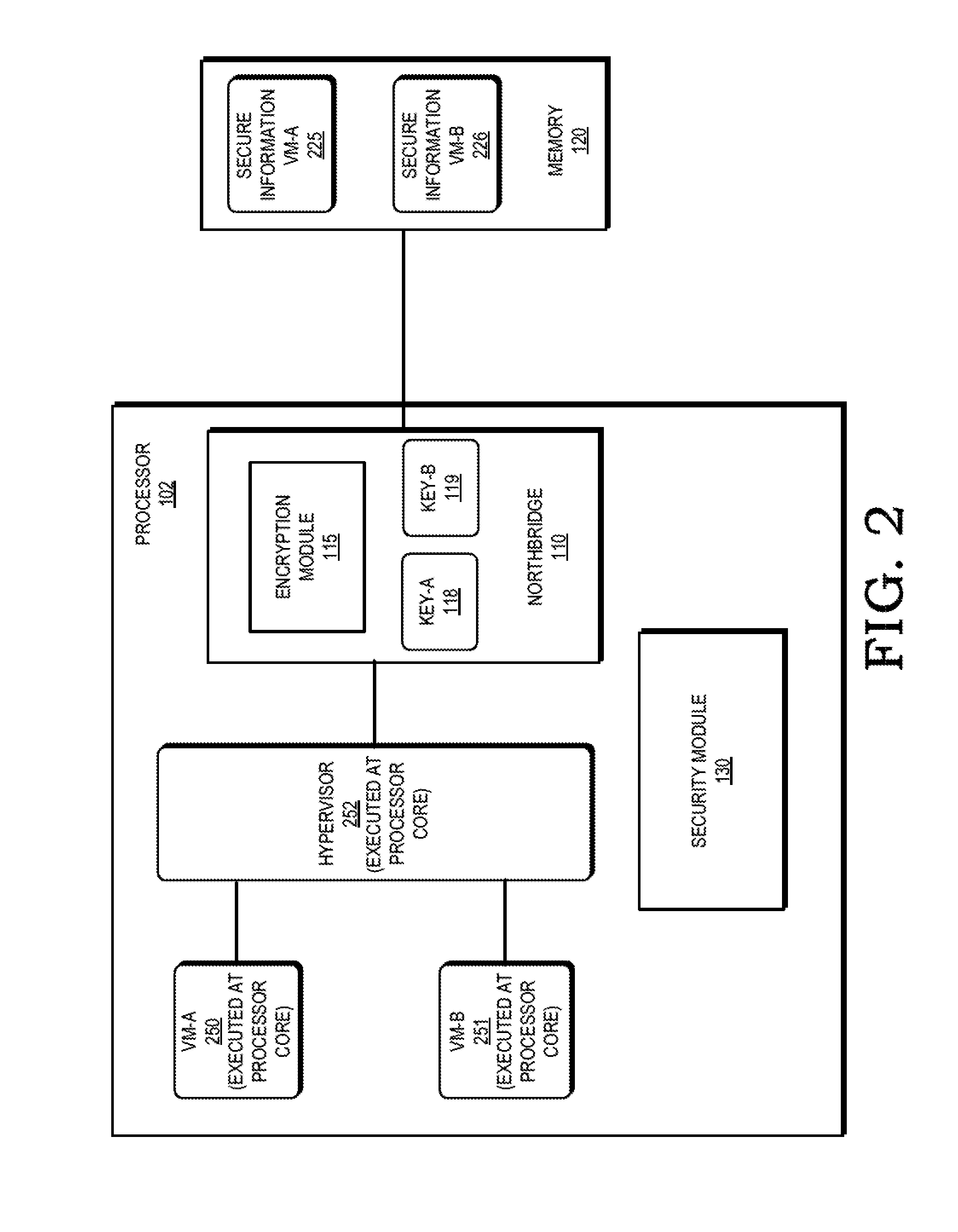

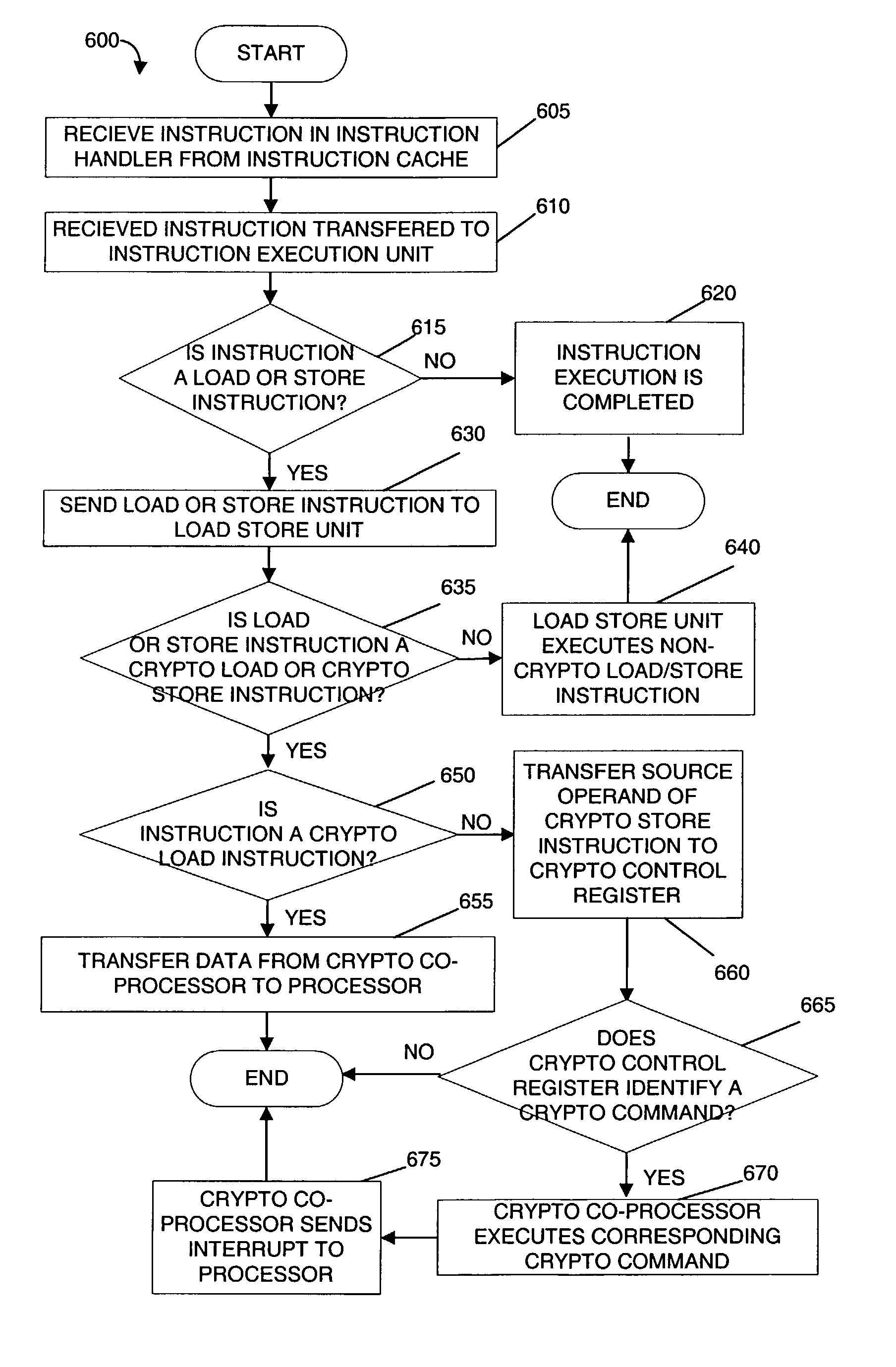

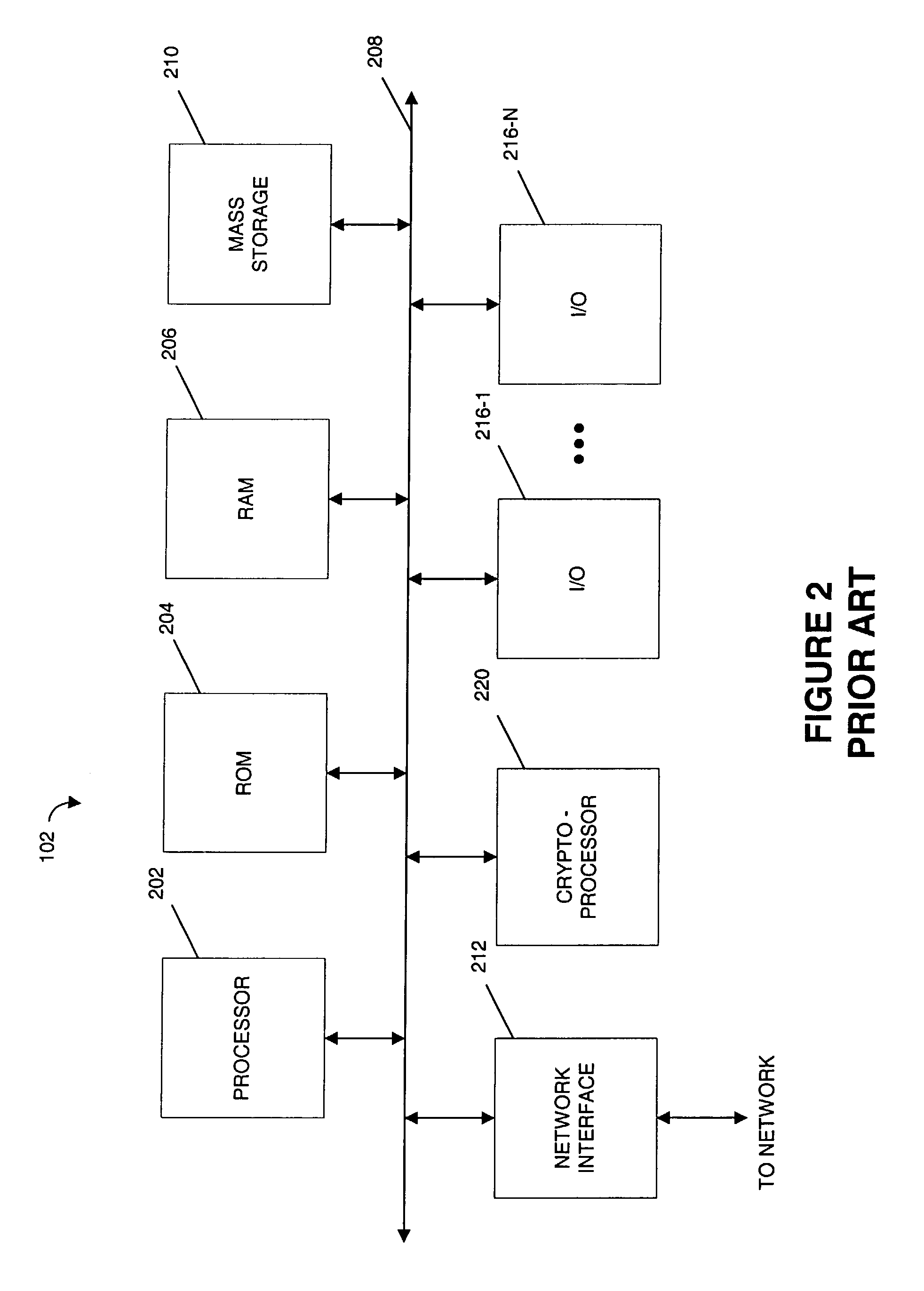

Stream processor with cryptographic co-processor

Inactive | US20030084309A1 | Energy efficient ICT, Static indicating devices, Processing core, Direct memory access

A microprocessor includes a first processing core, a first cryptographic co-processor and an integer multiplier unit that is coupled to the first processing core and the first cryptographic co-processor. The first processing core includes an instruction decode unit, an instruction execution unit, and a load/store unit. The first cryptographic co-processor is located on a first die with the first processing core. The first cryptographic co-processor includes a cryptographic control register, a direct memory access engine that is coupled to the load/store unit in the first processing core, and a cryptographic memory.

Owner:SUN MICROSYSTEMS INC

Method to manage memory in a platform with virtual machines

Active | US7421533B2 | Resource allocation, Computer security arrangements, Virtual memory, Direct memory access

An embodiment of the present invention enables the virtualizing of virtual memory in a virtual machine environment within a virtual machine monitor (VMM). Memory required for direct memory access (DMA) for device drivers, for example, is pinned by the VMM and prevented from being swapped out. The VMM may dynamically allocate memory resources to various virtual machines running in the platform. Other embodiments may be described and claimed.

Owner:TAHOE RES LTD

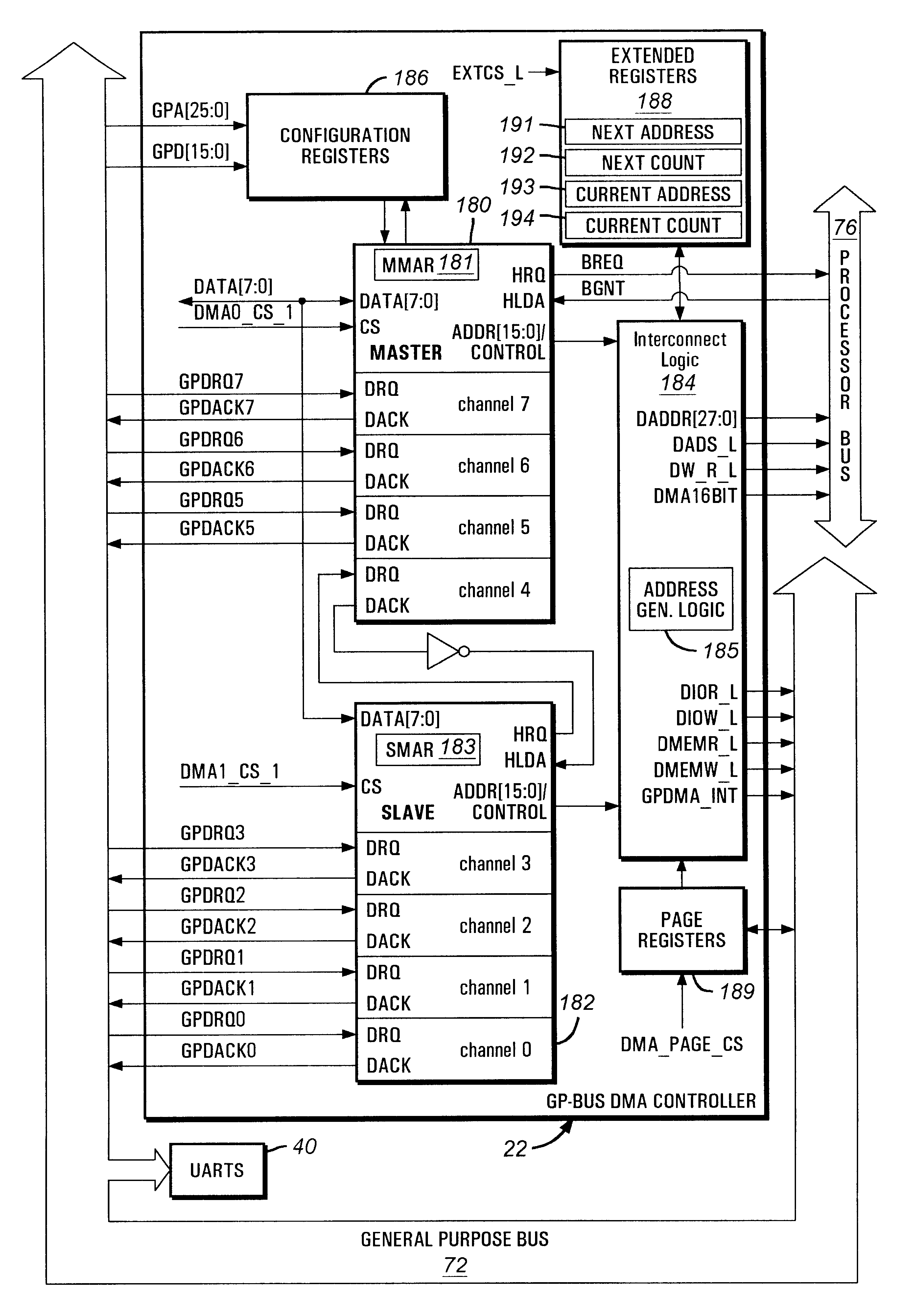

Direct memory access controller with channel width configurability support

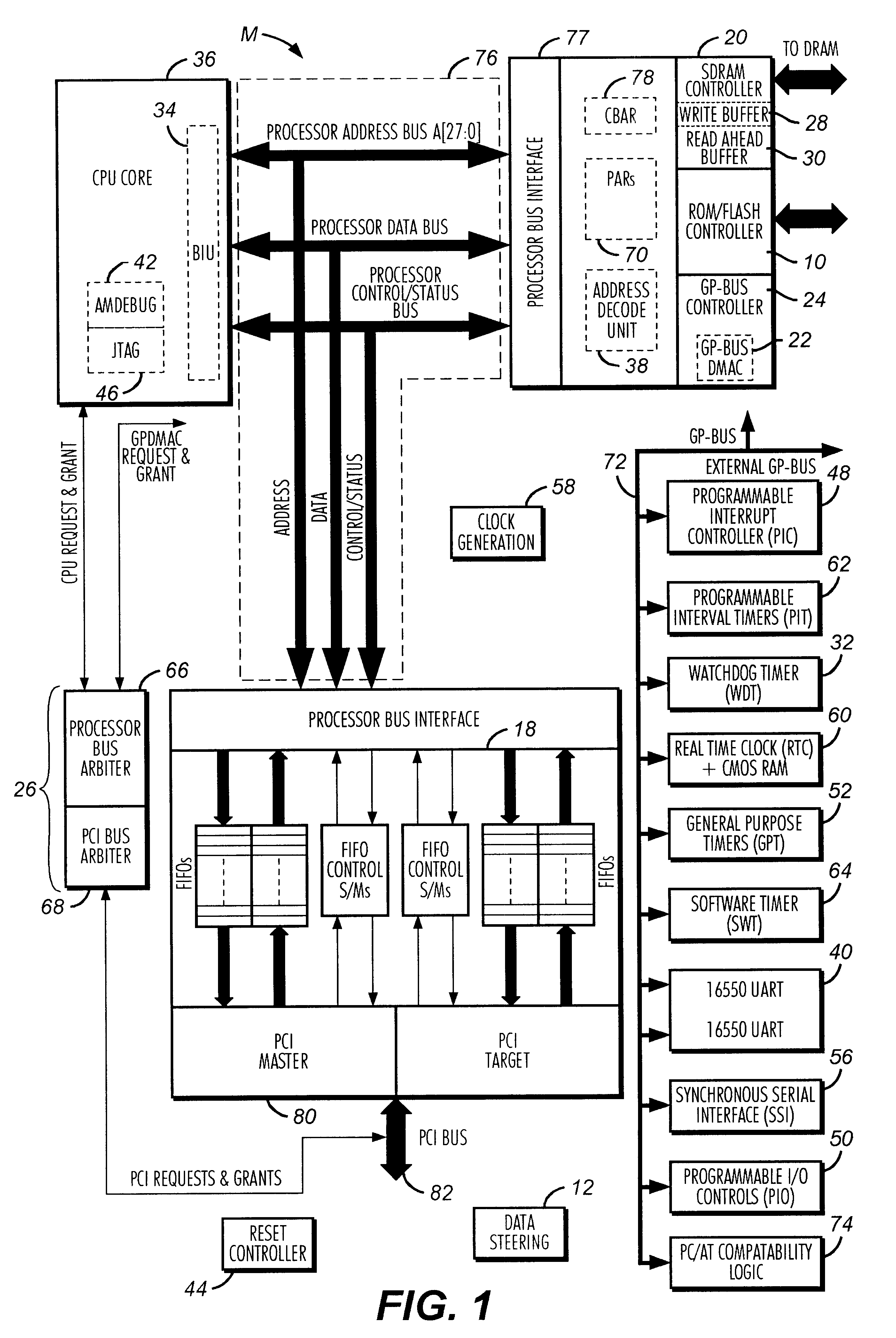

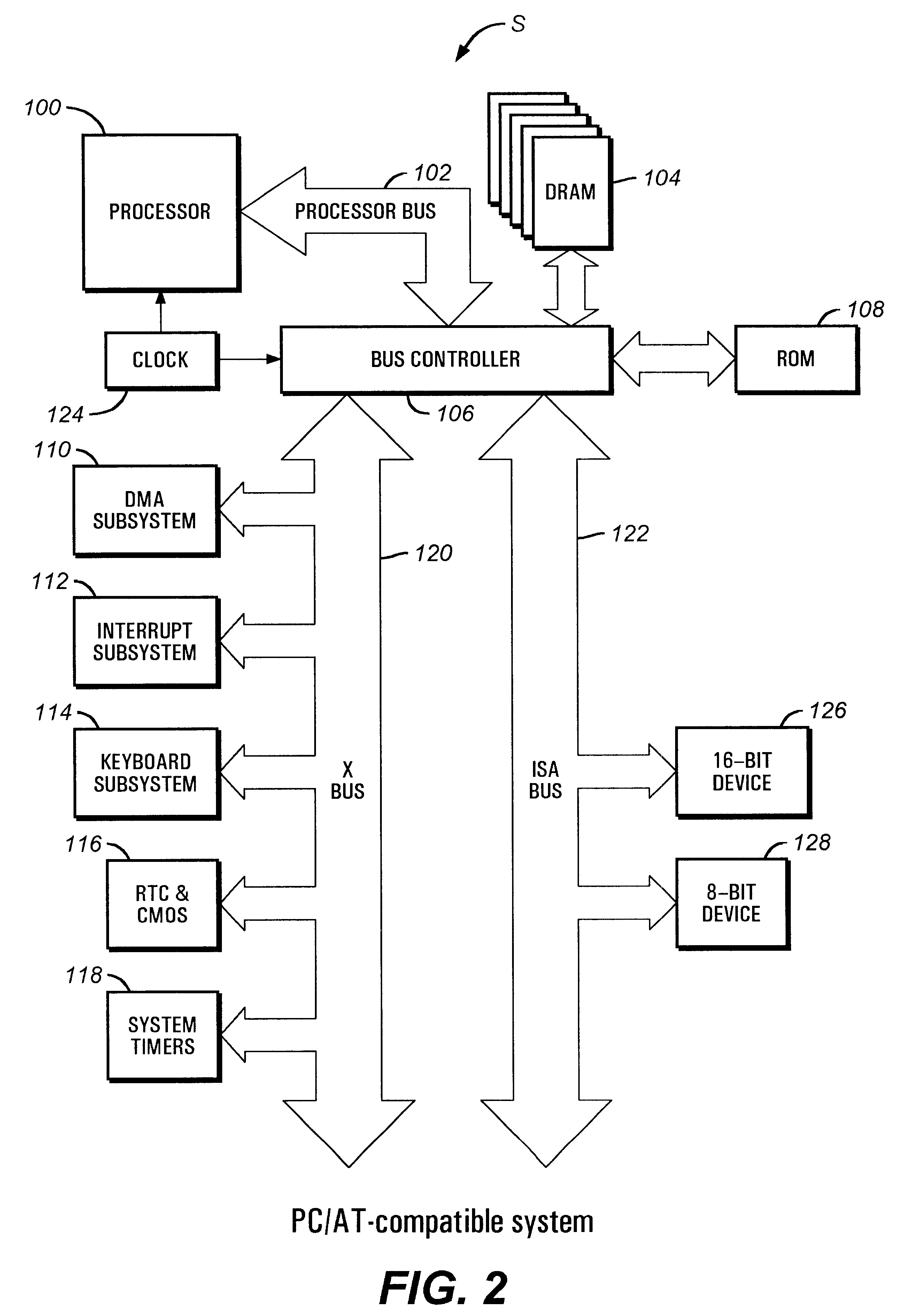

A direct memory access (DMA) controller provides seven DMA channels configurable for a PC/AT compatible mode or an enhanced mode. In an enhanced mode of the DMA controller, three DMA master channels on a master DMA controller and a DMA channel on a slave DMA controller are individually configurable to be either 8-bit or 16-bit DMA channels. In addition, in the enhanced mode, a memory address can increment or decrement across a memory page boundary. The DMA controller includes a transfer count register selectively configured for 16-bit operation or 24-bit operation. The DMA controller also includes address generation logic selectively configured for 24-bit operation or 28-bit operation. In the PC/AT compatible mode, the DMA controller supports three 16-bit channels and four 8-bit channels. The DMA controller thus provides DMA channel width configurability.

Owner:GLOBALFOUNDRIES INC

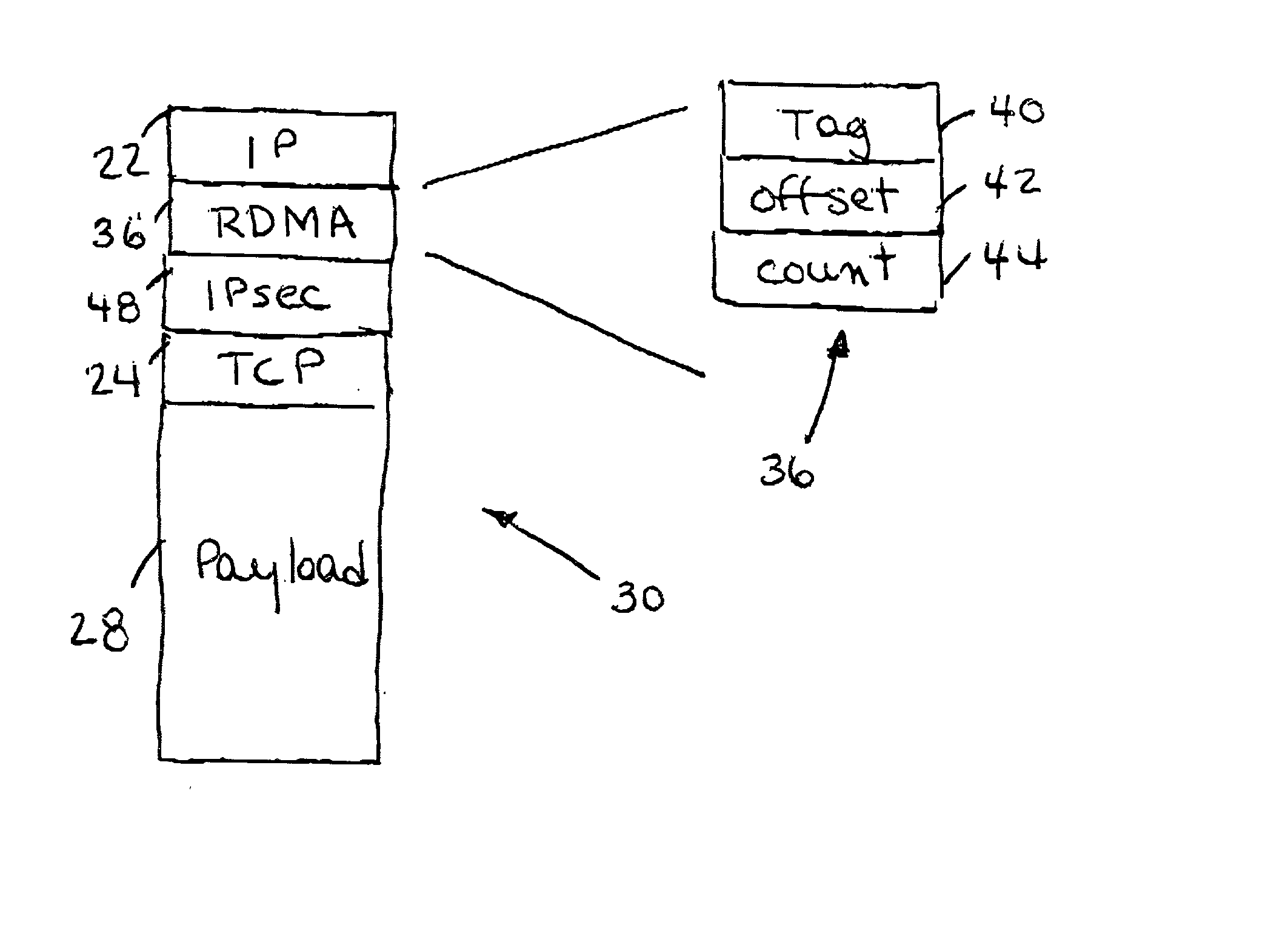

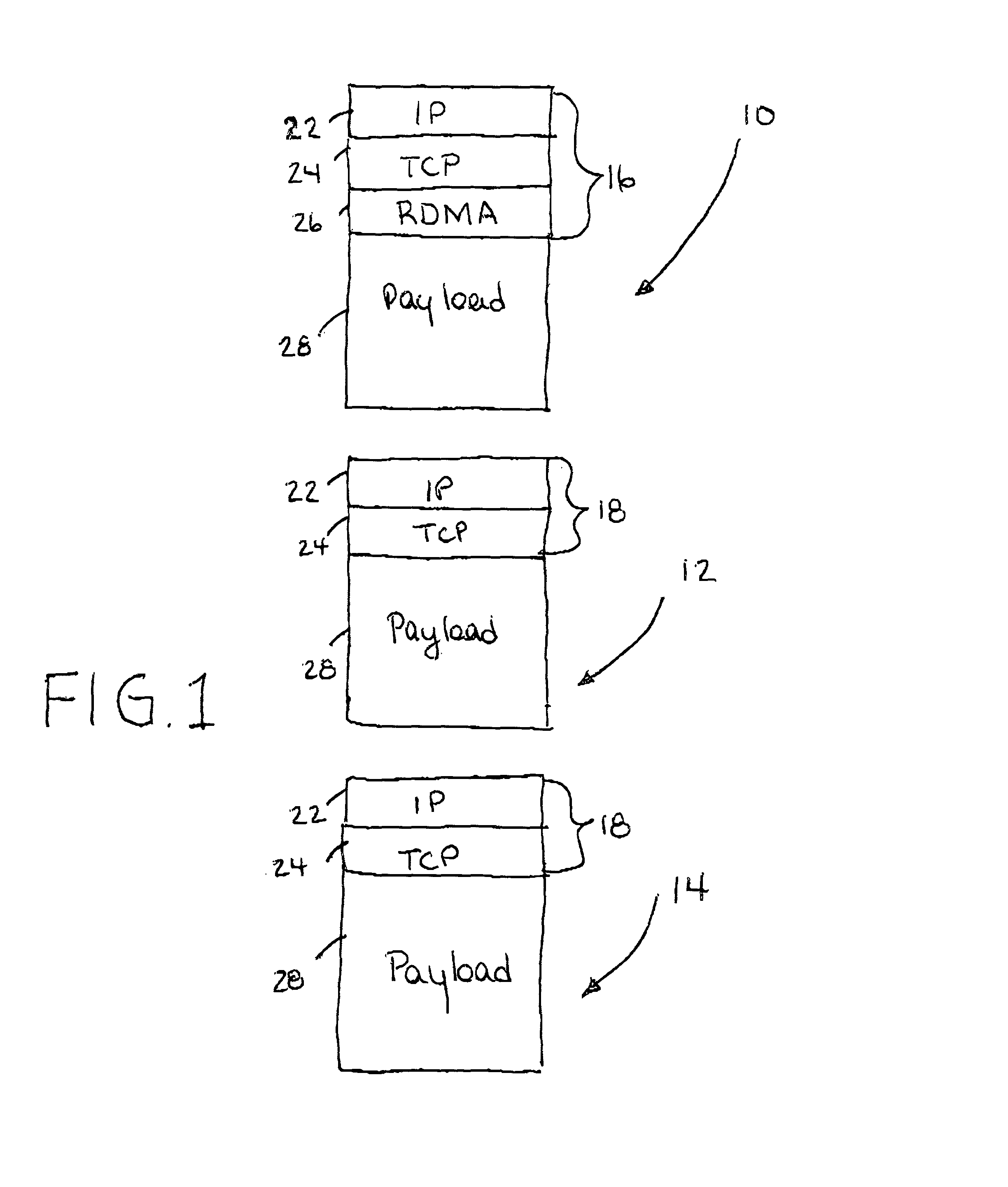

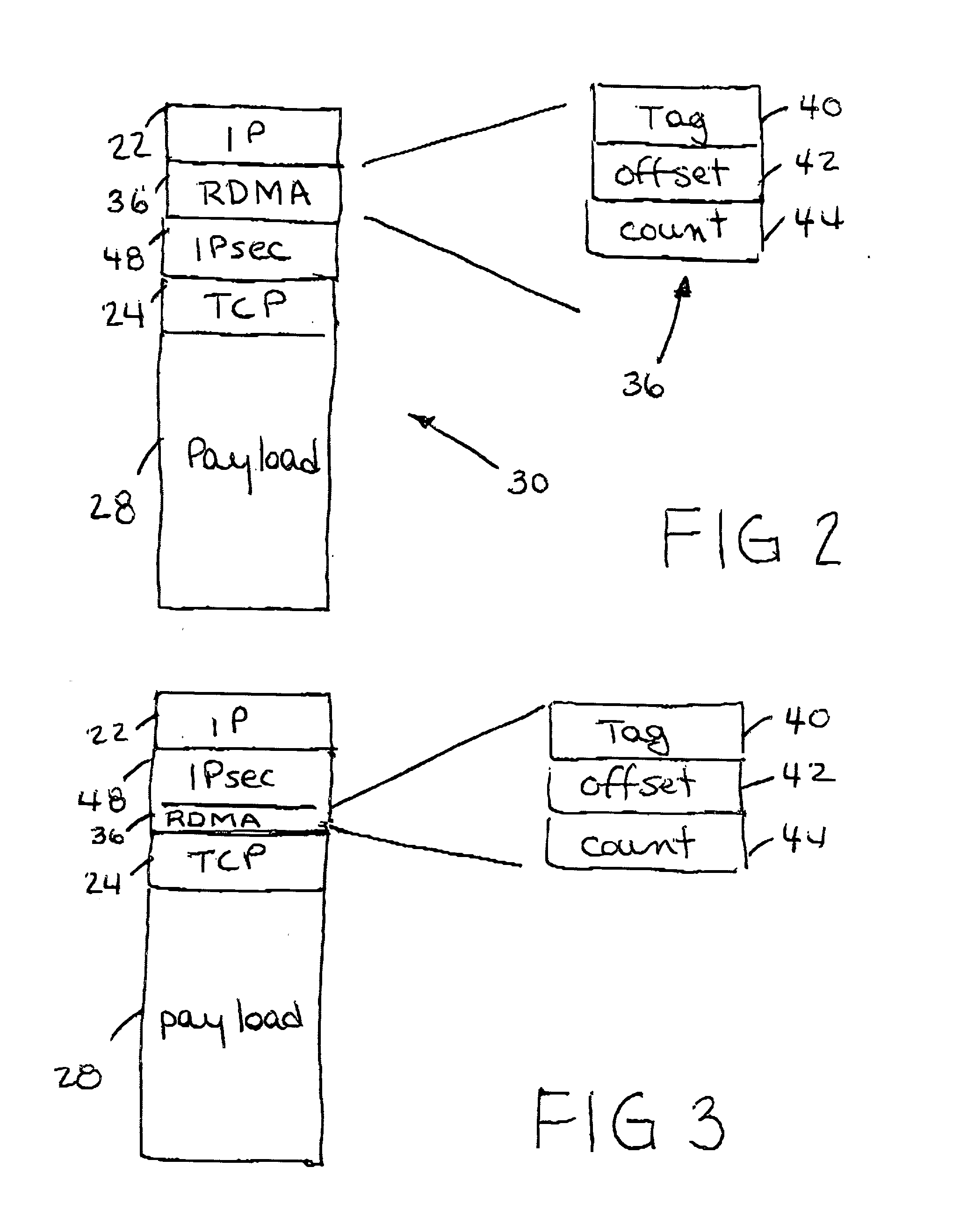

IP headers for remote direct memory access and upper level protocol framing

Inactive | US20020085562A1 | Time-division multiplex, Data switching by path configuration, Direct memory access, Remote direct memory access

A data packet header including an internet protocol (IP) header, a remote direct memory access (RDMA) header, and a transmission control protocol (TCP) header, wherein the RDMA header is between the IP header and the TCP header.

Owner:IBM CORP

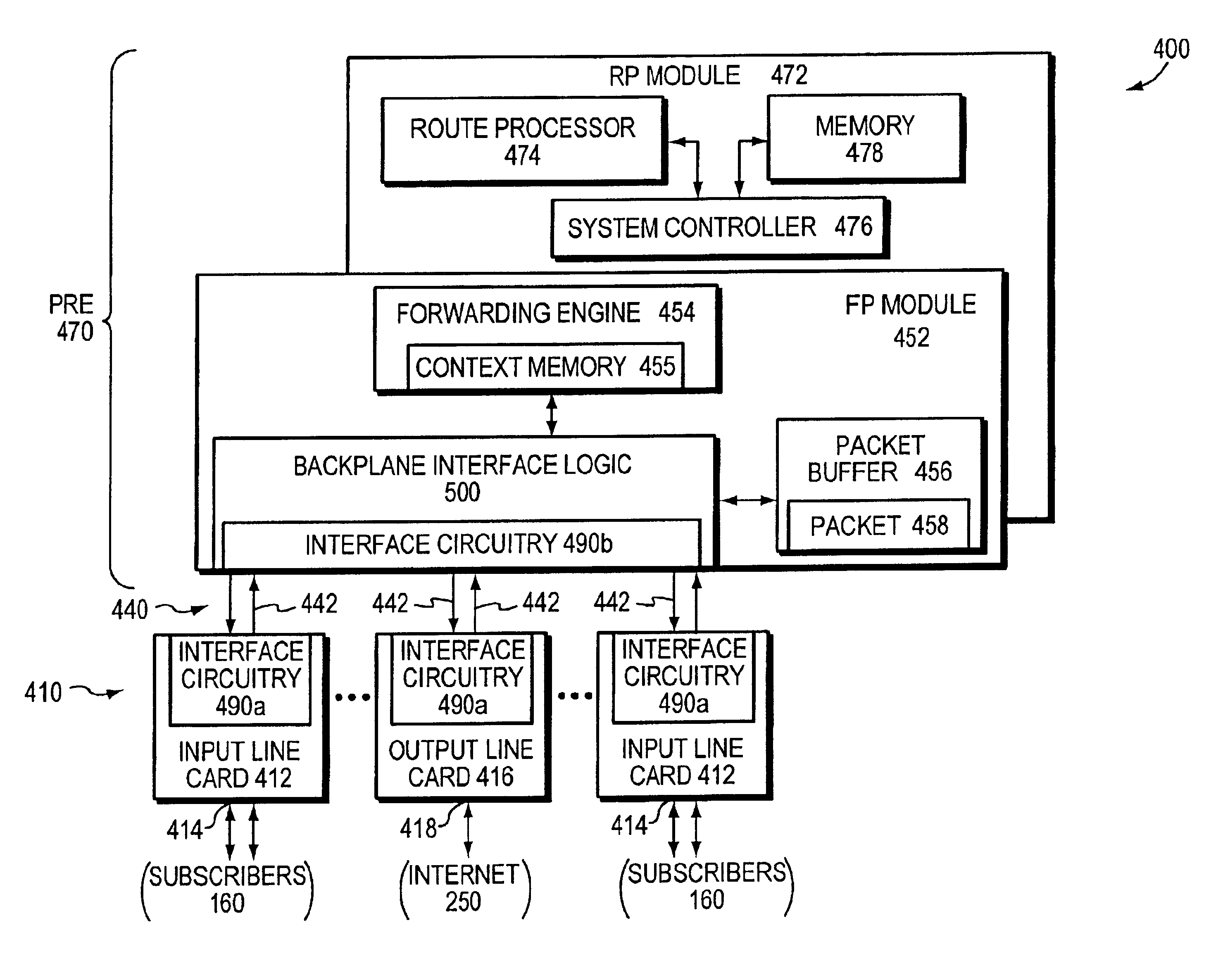

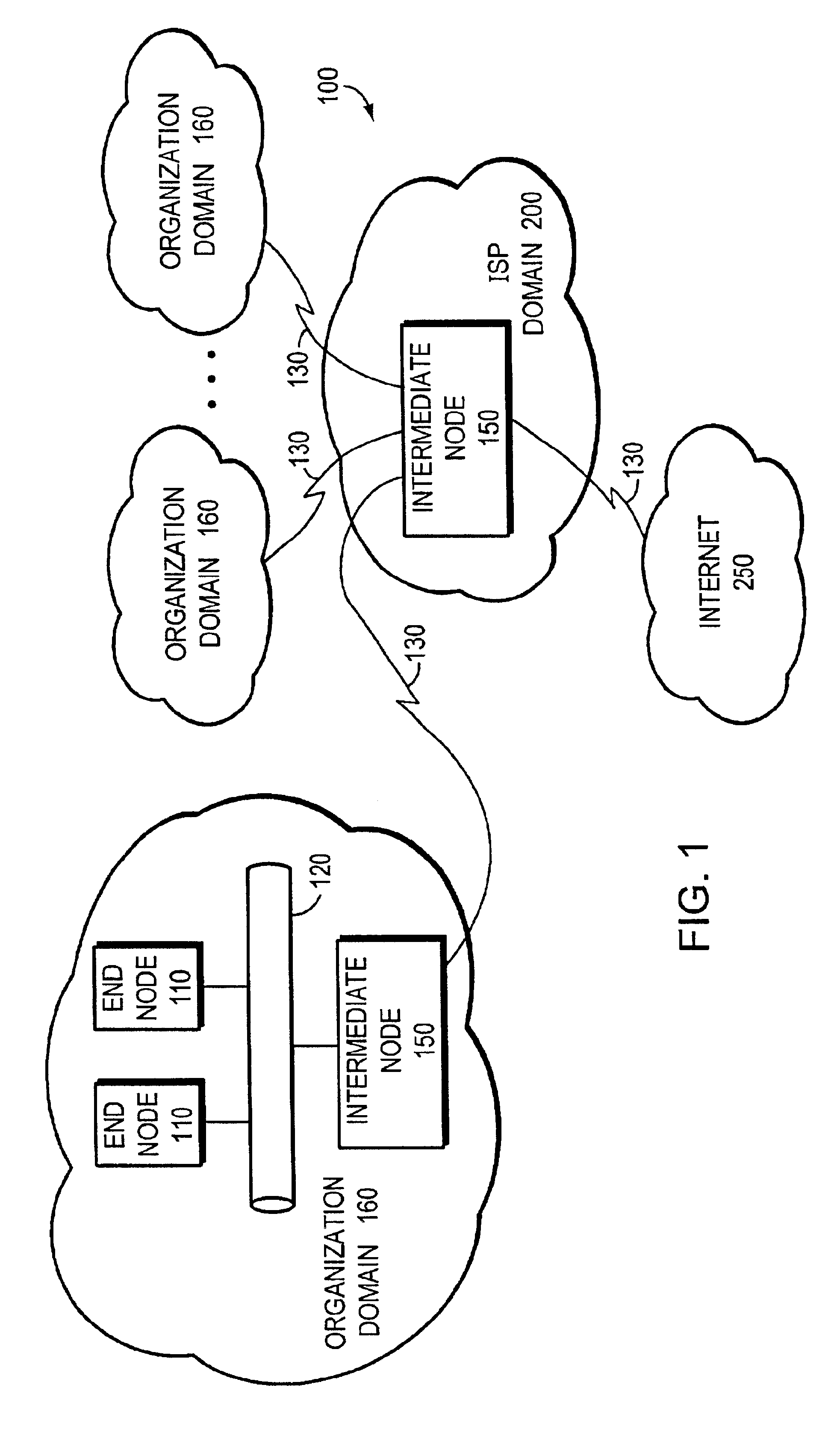

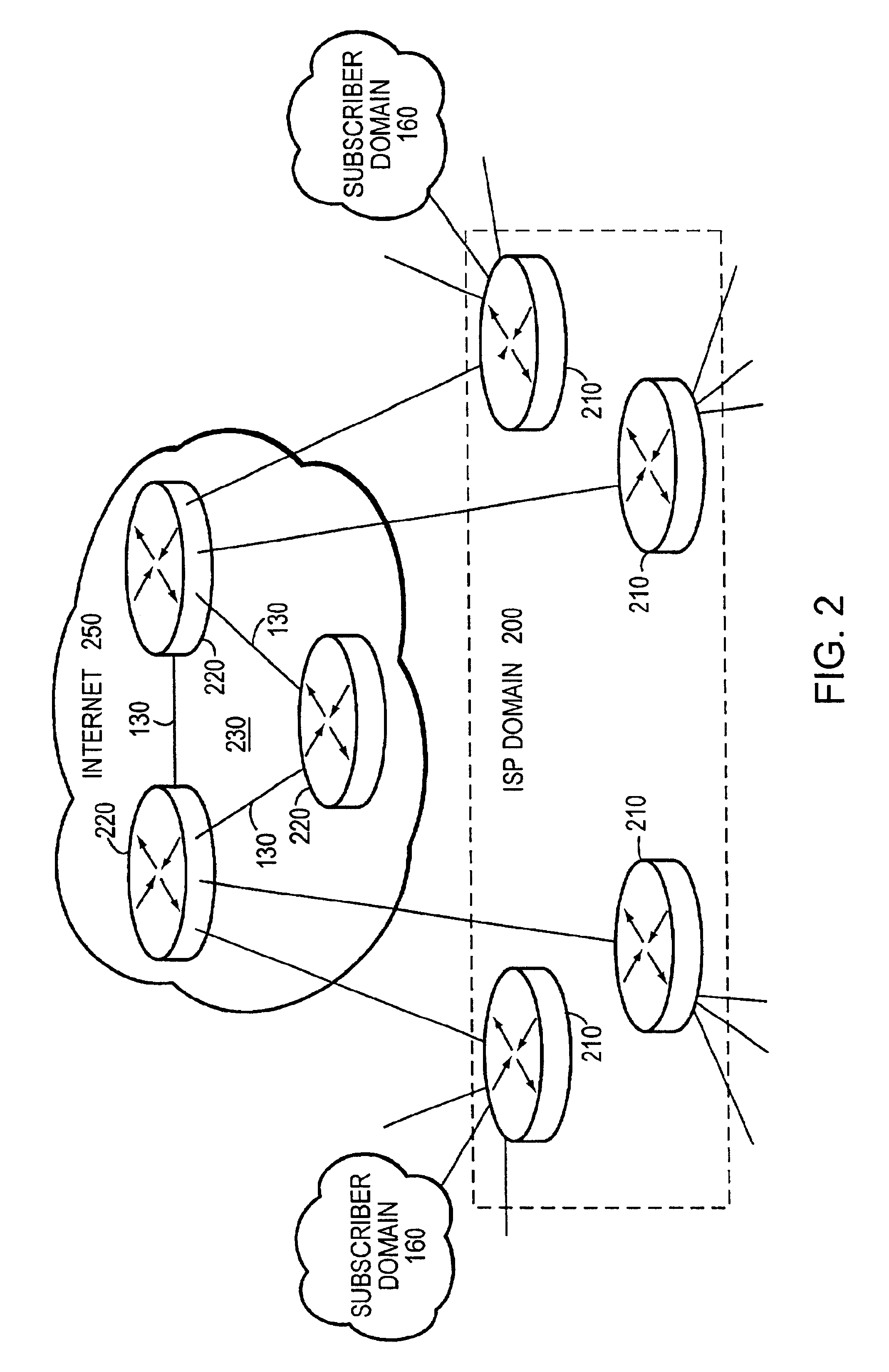

Method and apparatus for controlling packet header buffer wrap around in a forwarding engine of an intermediate network node

Active | US6847645B1 | Limited amount, Manipulation is limited, Digital computer details, Data switching by path configuration, Direct memory access, Computer science

A method and apparatus manages packet header buffers of a forwarding engine contained within an intermediate node, such as an aggregation router, of a computer network. Processors of the forwarding engine add and remove headers from packets using a packet header buffer, i.e., context memory, associated with each processor. Addition and removal of the headers occurs while preserving a portion of the “on-chip” context memory for passing state information to and between processors of a pipeline, and also for passing move commands to direct memory access (DMA) logic external to the forwarding engine. A wrap control function capability within the move command works in conjunction with the ability of the DMA logic to detect the end of the context and wrap to a specified offset within the context. That is, rather than wrapping to the beginning of a context, the wrap control capability specifies a predetermined offset within the context at which the wrap point occurs.

Owner:CISCO TECH INC
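The wrap control idea in the abstract above (wrapping to a specified offset inside the context instead of to its beginning) can be illustrated with a minimal Python sketch. The function name `wrap_copy`, its parameters, and the byte-at-a-time copy are assumptions made for illustration; they are not taken from the patent.

```python
def wrap_copy(context: bytearray, start: int, data: bytes, wrap_offset: int) -> int:
    """Copy data into context beginning at start.

    When the write position reaches the end of the context, it continues
    at wrap_offset rather than at index 0, so the region below
    wrap_offset is preserved (for example, for state passed between
    processors). Returns the next write position.
    """
    size = len(context)
    if not 0 <= wrap_offset < size:
        raise ValueError("wrap_offset must lie inside the context")
    if not 0 <= start < size:
        raise ValueError("start must lie inside the context")

    pos = start
    for byte in data:
        context[pos] = byte
        pos += 1
        if pos == size:
            pos = wrap_offset  # wrap to the configured offset, not to 0
    return pos
```

For example, with an 8-byte context, `start=6`, and `wrap_offset=2`, a 4-byte write fills indices 6, 7, 2, and 3 and leaves indices 0 and 1 untouched.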

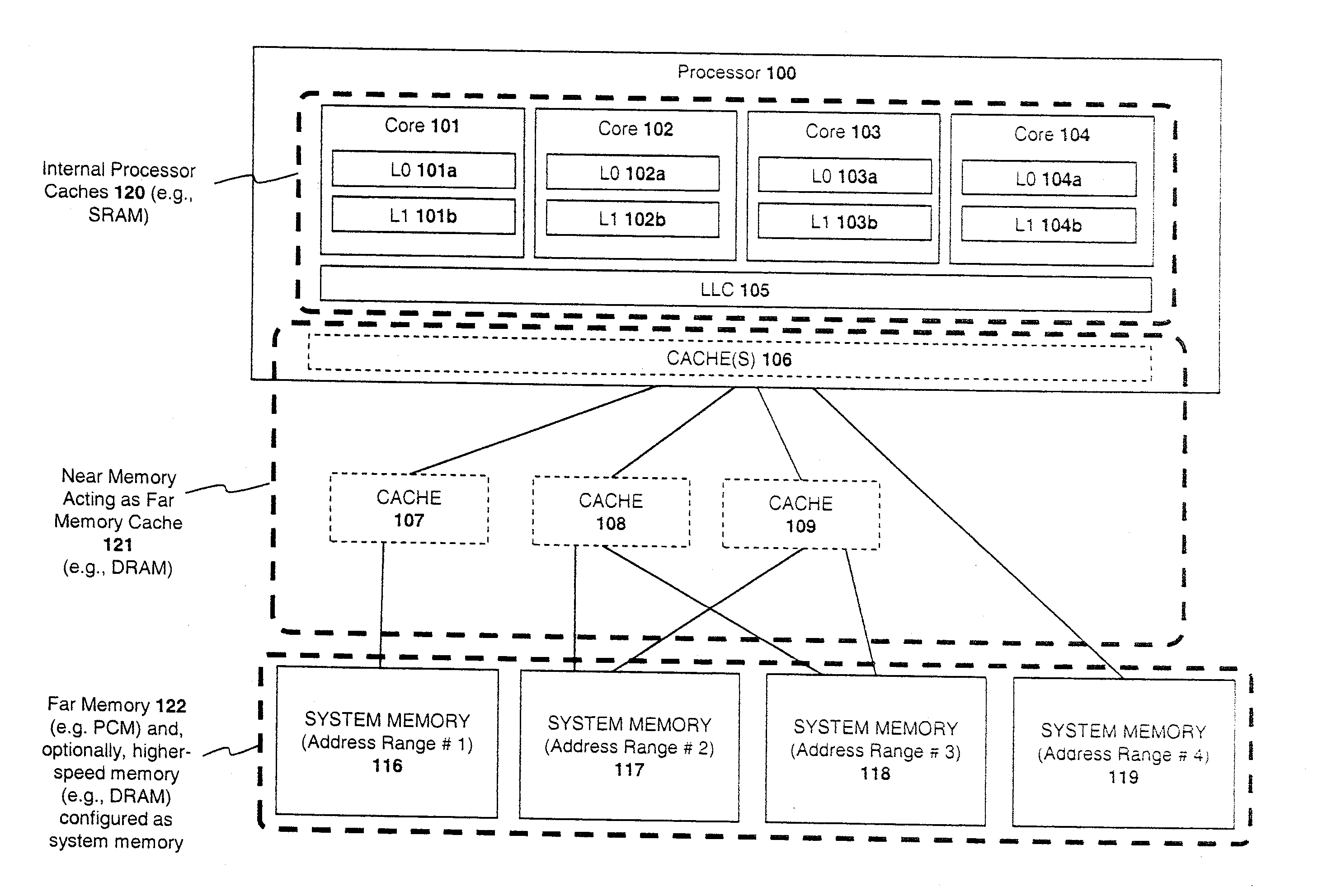

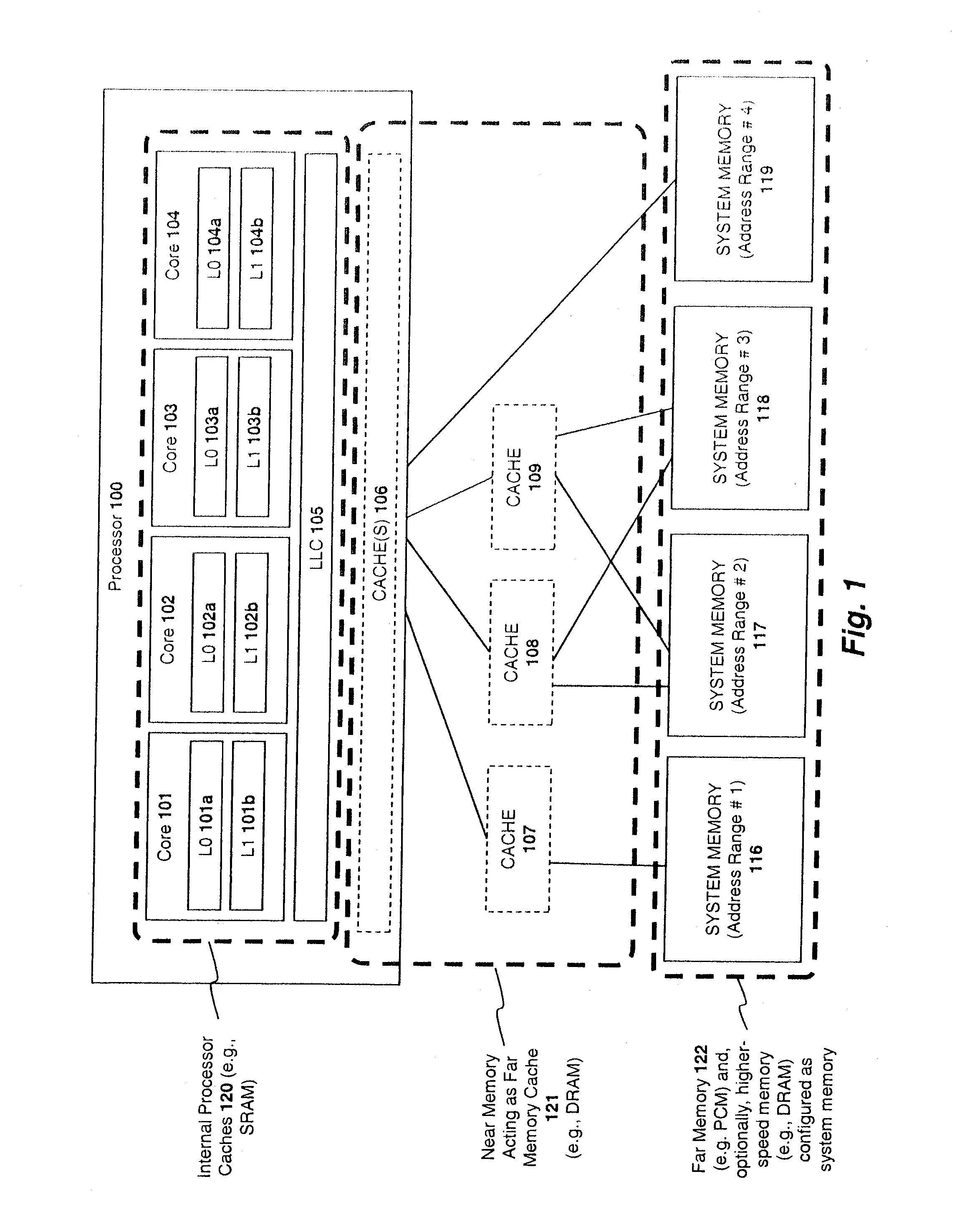

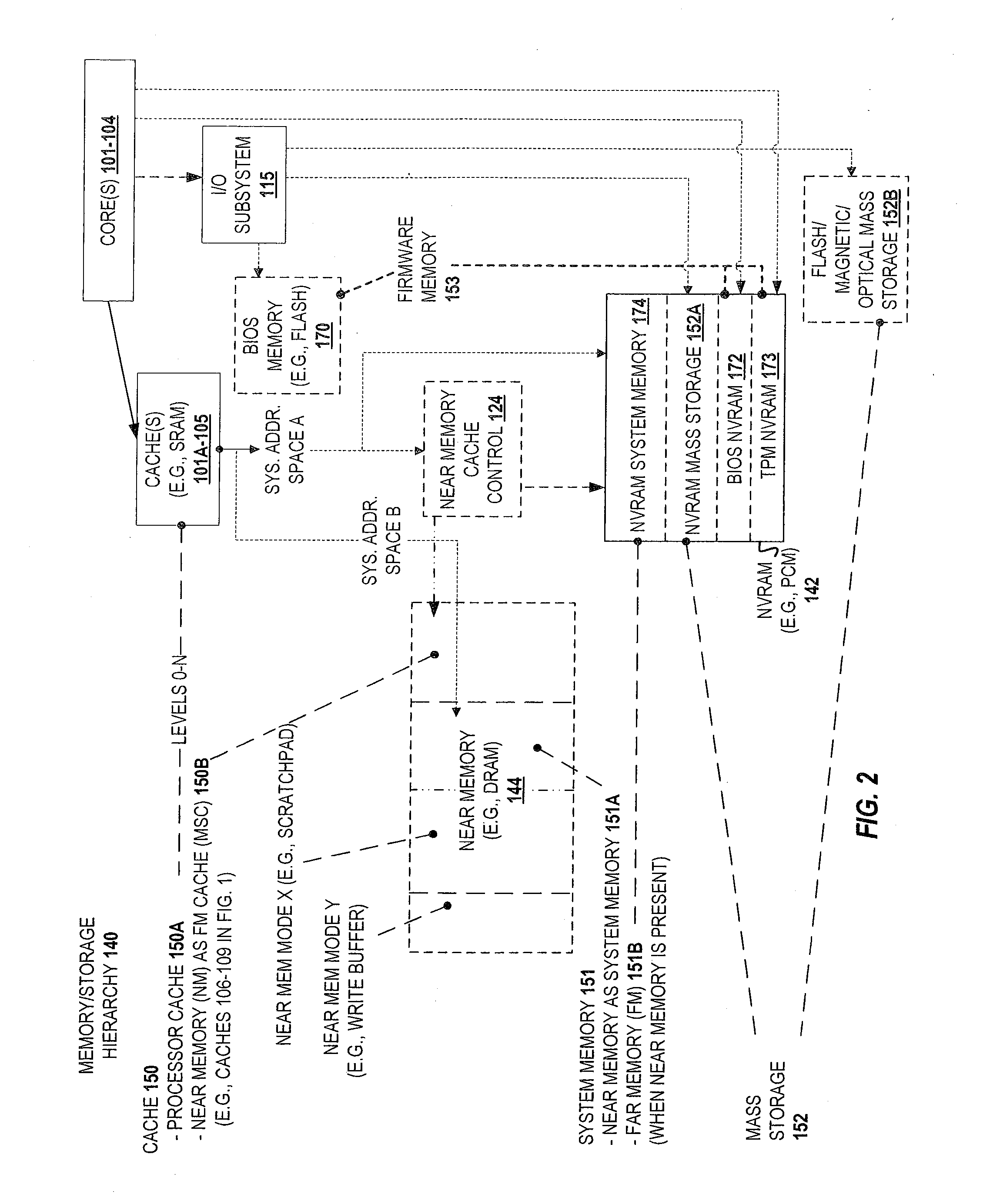

Memory channel that supports near memory and far memory access

Active | US20140040550A1 | Memory architecture accessing/allocation, Error detection/correction, Uniform memory access, Direct memory access

A semiconductor chip comprising memory controller circuitry having interface circuitry to couple to a memory channel. The memory controller includes first logic circuitry to implement a first memory channel protocol on the memory channel. The first memory channel protocol is specific to a first volatile system memory technology. The interface also includes second logic circuitry to implement a second memory channel protocol on the memory channel. The second memory channel protocol is specific to a second non-volatile system memory technology. The second memory channel protocol is a transactional protocol.

Owner:TAHOE RES LTD

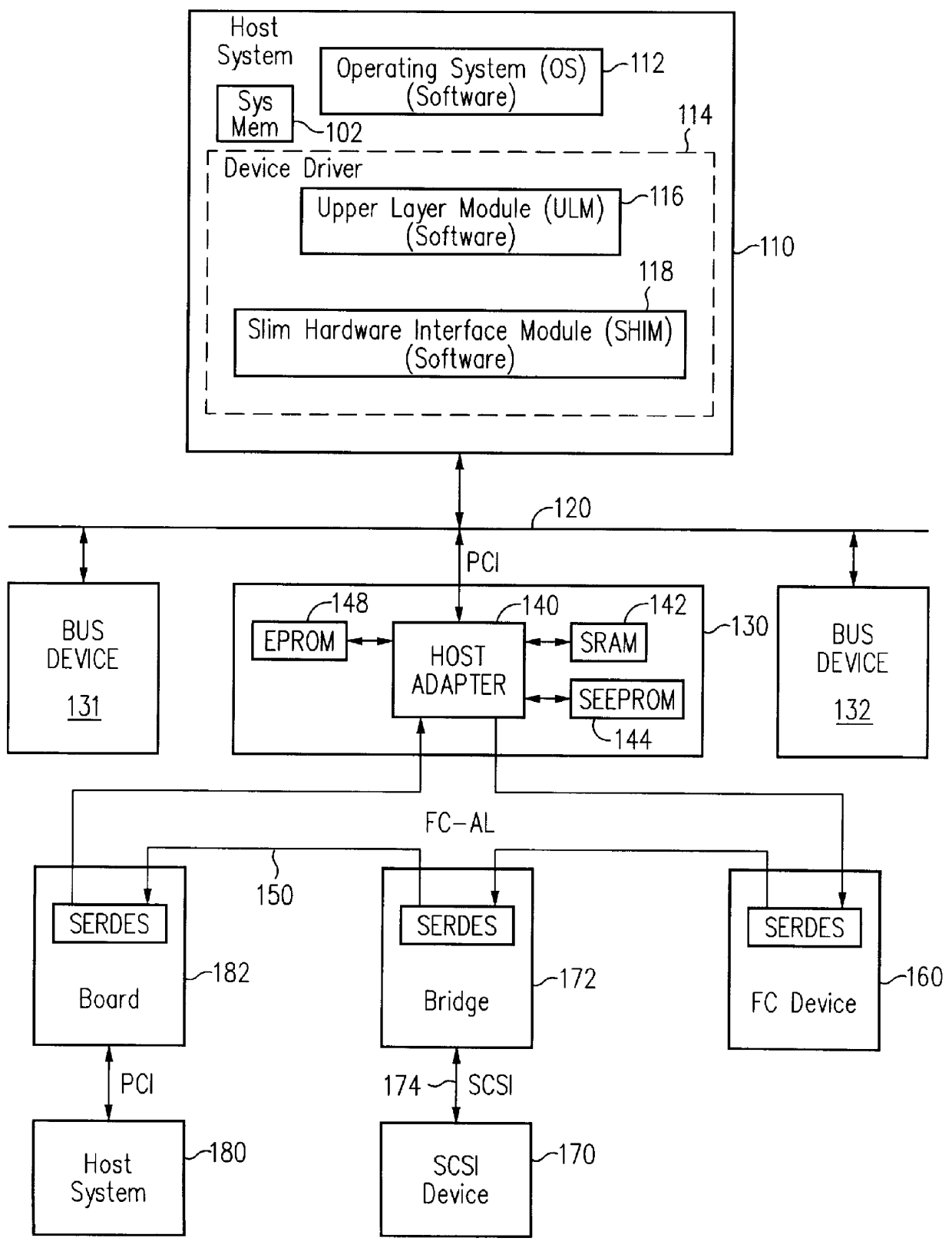

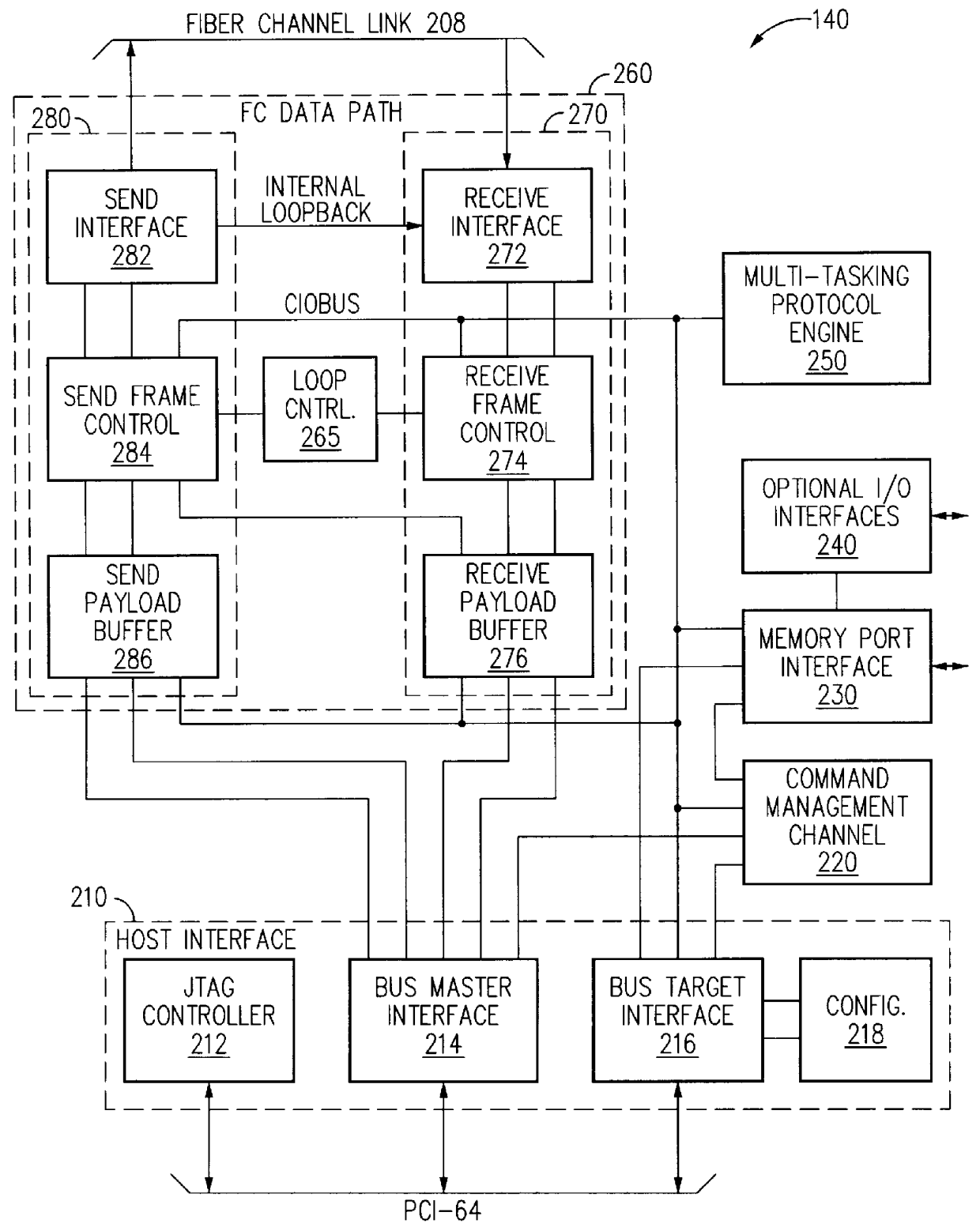

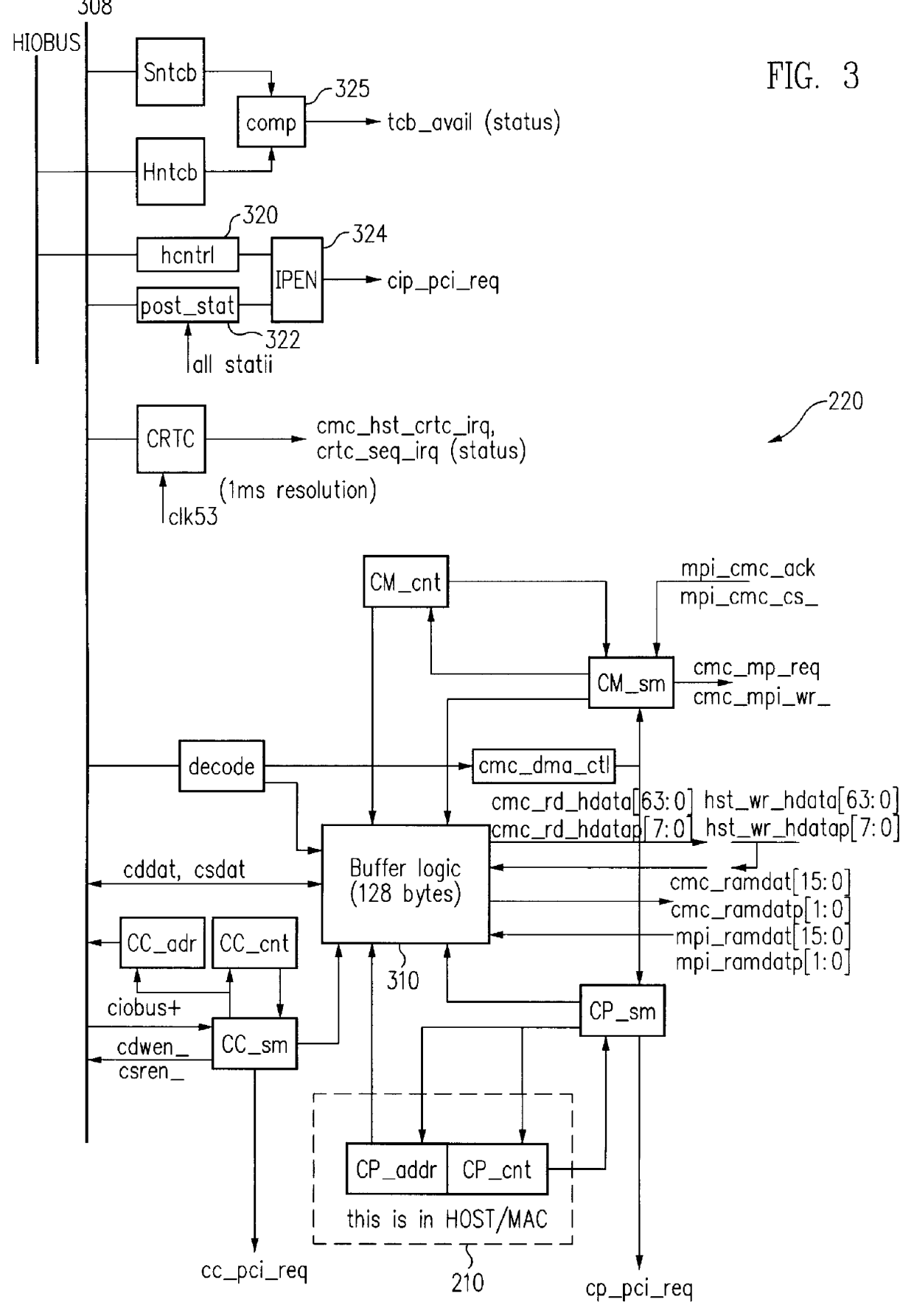

Communications interface adapter for a computer system including posting of system interrupt status

Inactive | US6085278A | Eliminates read access, Program initiation/switching, Communication interface, Direct memory access

To facilitate access to interrupt status information, interrupt posting status POST-STAT registers are readable by a host driver routine to quickly supply information relating to a functional block which has given rise to an interrupt status condition. The interrupt posting status POST-STAT registers contain a summary of interrupt status information. The host driver may then read the interrupt posting status POST-STAT register corresponding to the functional block to further investigate the cause of the interrupt status. System memory includes a mirror storage of the interrupt posting status POST-STAT registers that is transferred to the mirror storage by a direct memory access (DMA) operation. Values in the system mirror storage are updated automatically when a change occurs in a value within the interrupt posting status POST-STAT registers. A host system software driver accesses the interrupt posting status POST-STAT registers via a bus access operation, changes a bit in a POST-STAT register, and monitors the result of the access and bit change in the mirror of the POST-STAT register in the system memory without a further bus read access. Advantageously, multiple accesses through the bus to verify when the written value status is correct are eliminated.

Owner:ADAPTEC +1

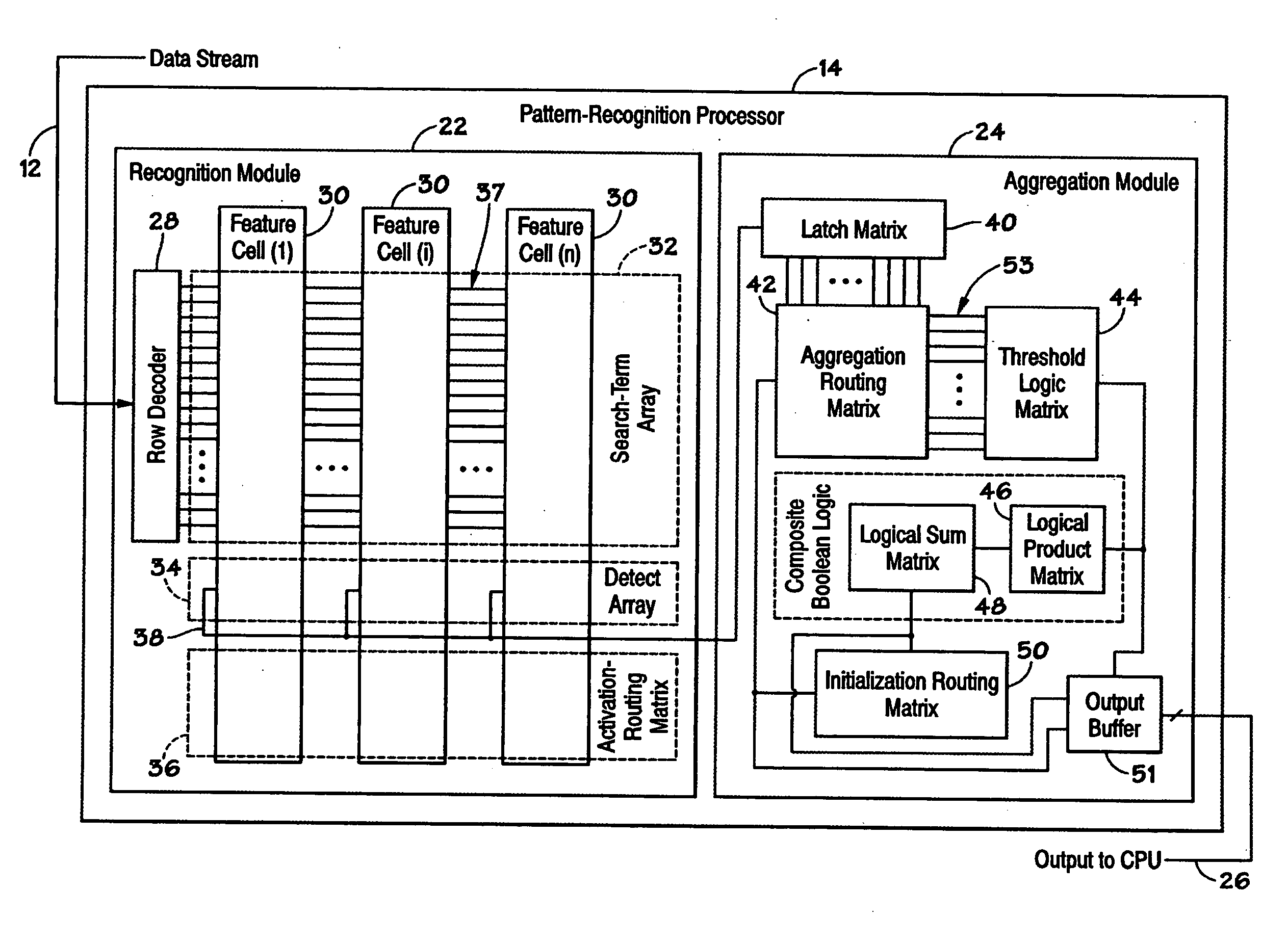

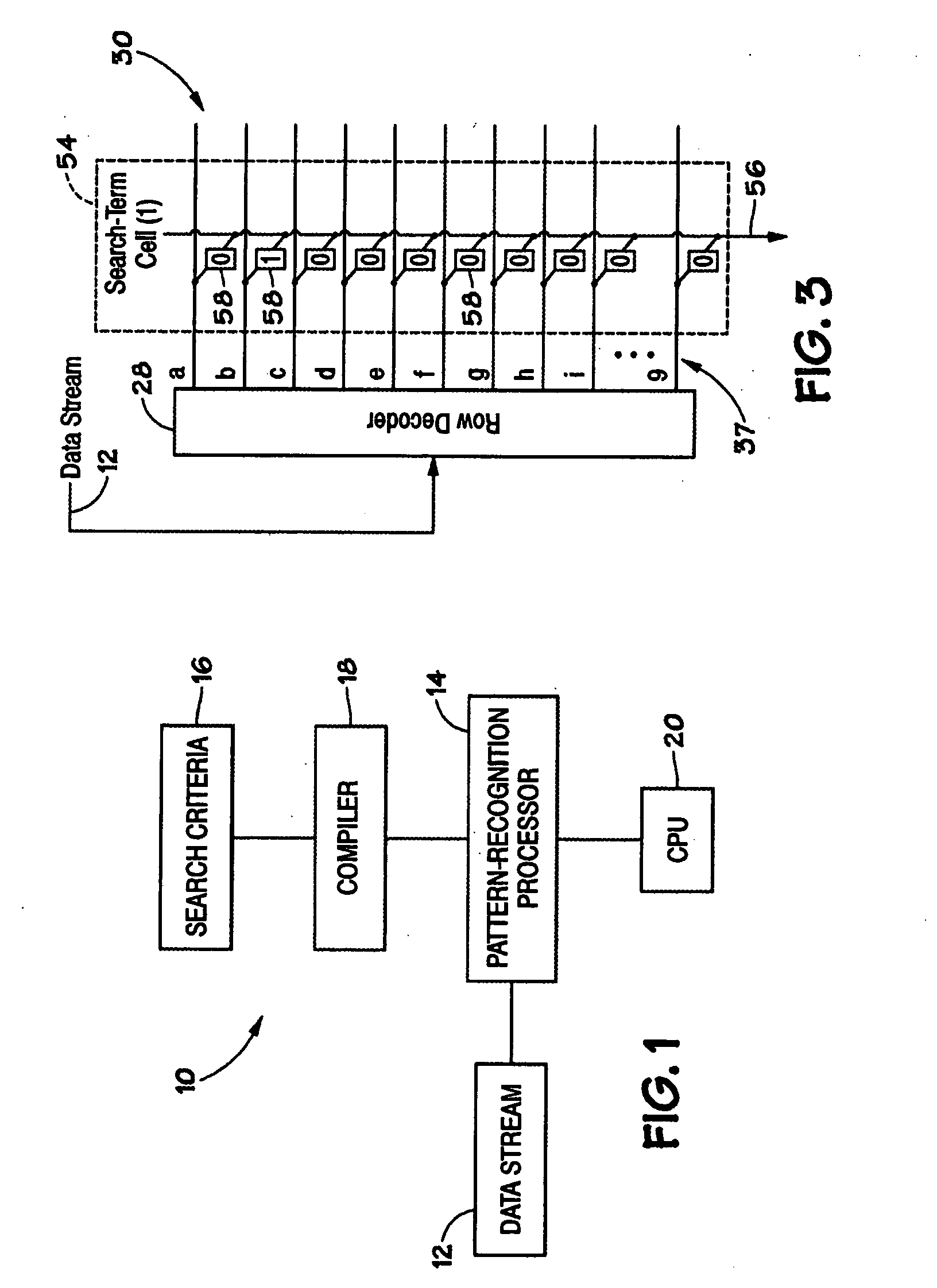

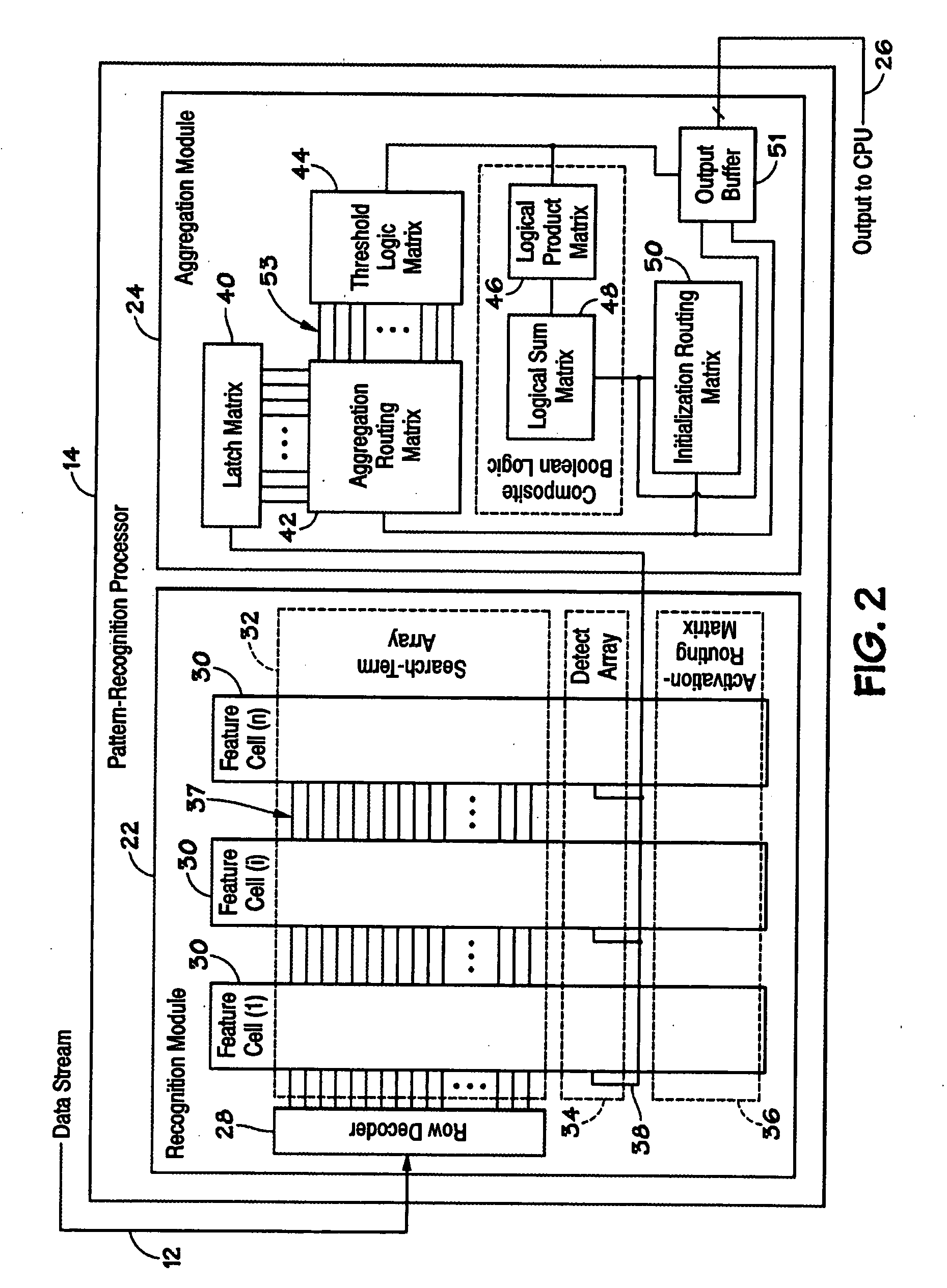

Devices, systems, and methods to synchronize simultaneous DMA parallel processing of a single data stream by multiple devices

Disclosed are methods and devices, among which is a system that includes a device that includes one or more pattern-recognition processors in a pattern-recognition cluster, for example. One of the one or more pattern-recognition processors may be initialized to perform as a direct memory access master device able to control the remaining pattern-recognition processors for synchronized processing of a data stream.

Owner:MICRON TECH INC

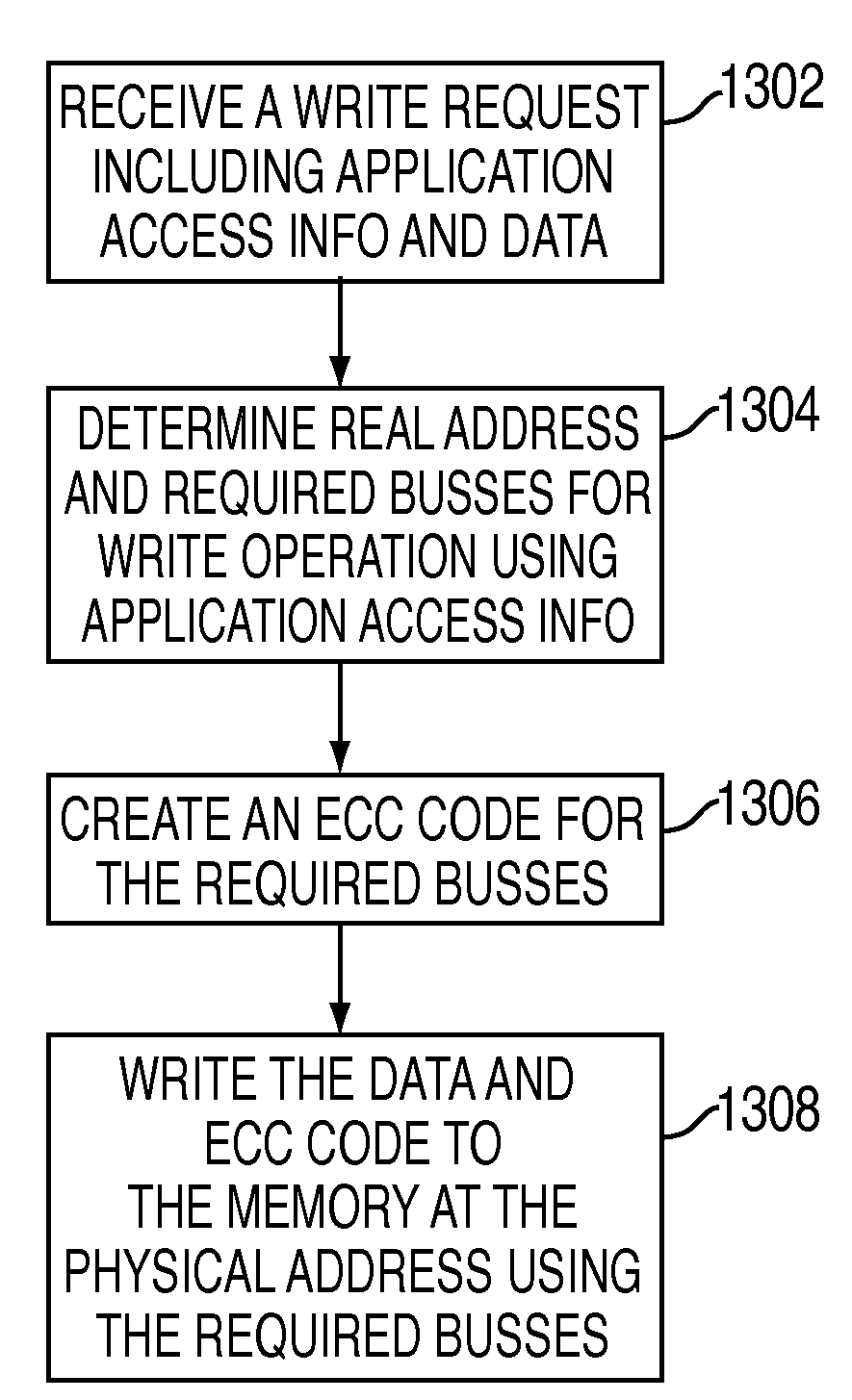

Systems and methods for program directed memory access patterns

Inactive | US20080046666A1 | Memory addressing/allocation/relocation, Micro-instruction address formation, Direct memory access, Virtual memory management

Systems and methods for program directed memory access patterns including a memory system with a memory, a memory controller and a virtual memory management system. The memory includes a plurality of memory devices organized into one or more physical groups accessible via associated busses for transferring data and control information. The memory controller receives and responds to memory access requests that contain application access information to control access pattern and data organization within the memory. Responding to memory access request includes accessing one or more memory devices. The virtual memory management system includes: a plurality of page table entries for mapping virtual memory addresses to real addresses in the memory; a hint state responsive to application access information for indicating how real memory for associated pages is to be physically organized within the memory; and a means for conveying the hint state to the memory controller.

Owner:IBM CORP

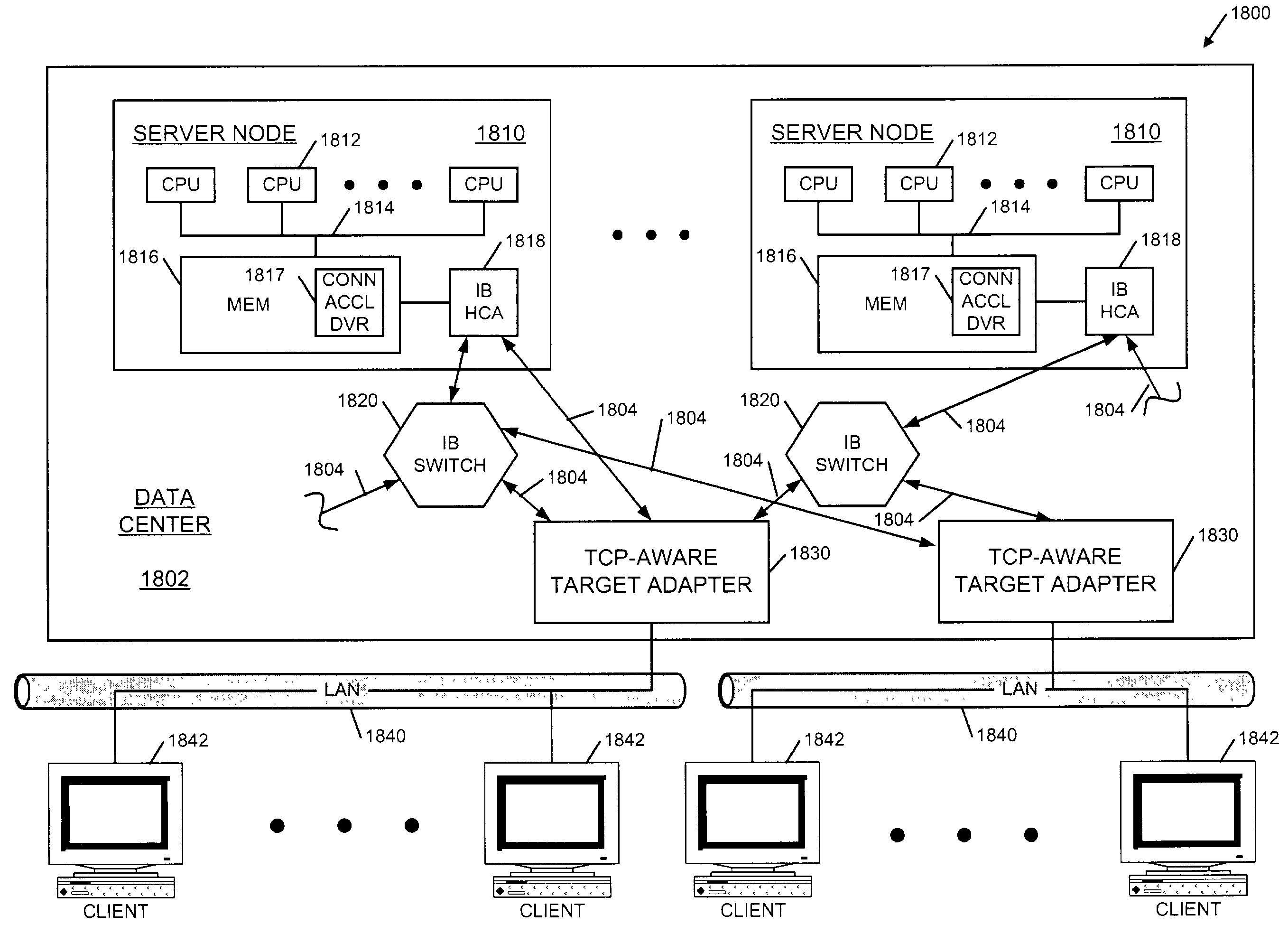

Infiniband™ work queue to TCP/IP translation

Inactive | US7149817B2 | Increase capacity, Fast data transfer, Multiple digital computer combinations, Memory systems, Direct memory access, IP processing

A TCP-aware target adapter for accelerating TCP/IP connections between clients and servers, where the servers are interconnected over an Infiniband™ fabric and the clients are interconnected over a TCP/IP-based network. The TCP-aware target adapter includes an accelerated connection processor and a target channel adapter. The accelerated connection processor bridges TCP/IP transactions between the clients and the servers. The accelerated connection processor accelerates the TCP/IP connections by prescribing Infiniband remote direct memory access operations to retrieve/provide transaction data from/to the servers. The target channel adapter is coupled to the accelerated connection processor. The target channel adapter supports Infiniband operations with the servers, including execution of the remote direct memory access operations to retrieve/provide the transaction data. The TCP/IP connections are accelerated by offloading TCP/IP processing otherwise performed by the servers to retrieve/provide said transaction data.

Owner:INTEL CORP

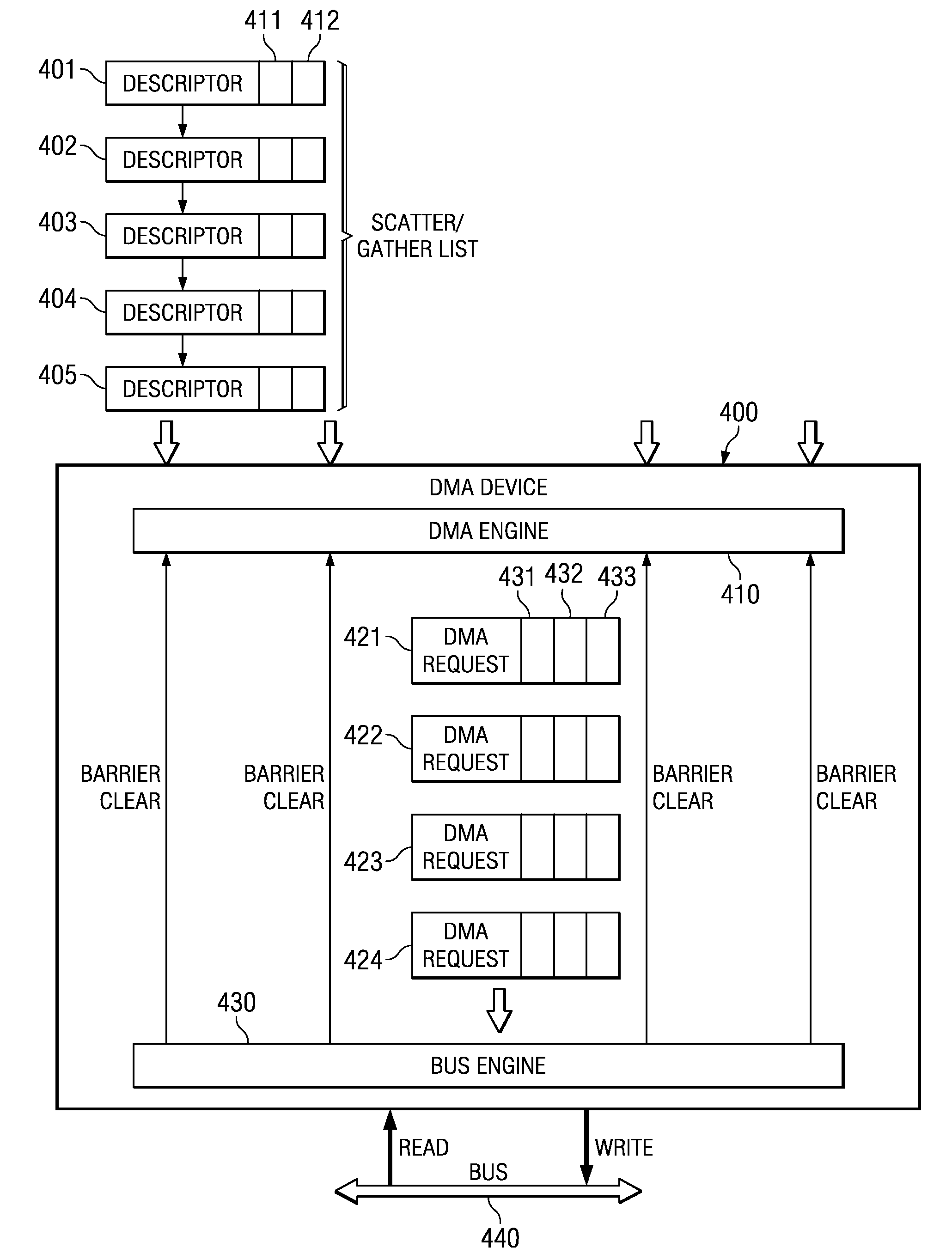

Barrier and Interrupt Mechanism for High Latency and Out of Order DMA Device

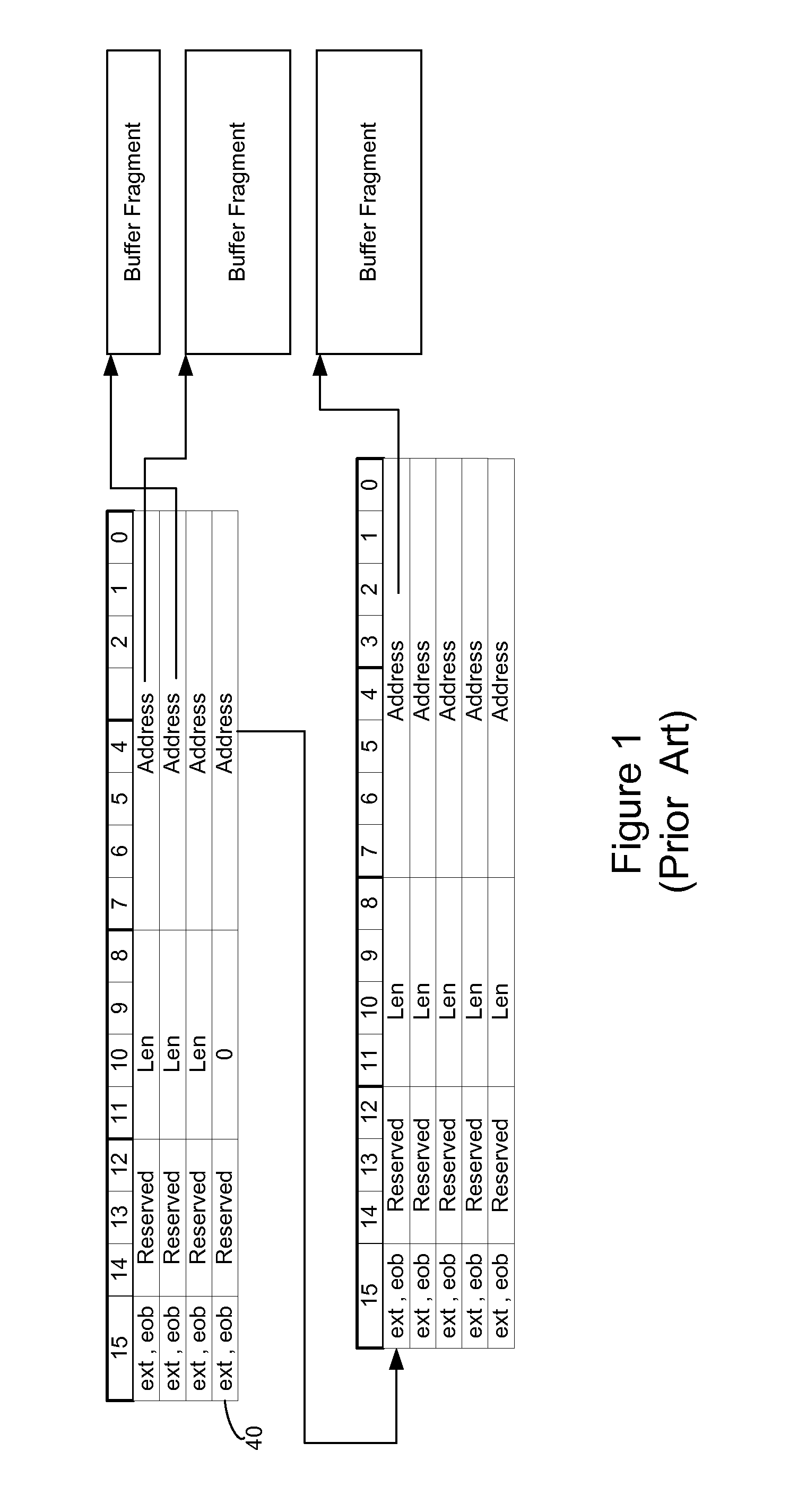

A direct memory access (DMA) device includes a barrier and interrupt mechanism that allows interrupt and mailbox operations to occur in such a way that ensures correct operation, but still allows for high performance out-of-order data moves to occur whenever possible. Certain descriptors are defined to be “barrier descriptors.” When the DMA device encounters a barrier descriptor, it ensures that all of the previous descriptors complete before the barrier descriptor completes. The DMA device further ensures that any interrupt generated by a barrier descriptor will not assert until the data move associated with the barrier descriptor completes. The DMA controller only permits interrupts to be generated by barrier descriptors. The barrier descriptor concept also allows software to embed mailbox completion messages into the scatter/gather linked list of descriptors.

Owner:IBM CORP
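The ordering rule for barrier descriptors in the abstract above can be sketched as a small timing model in Python. Everything here is a simplifying assumption for illustration: the `Descriptor` fields, the idea that all moves start at time 0, and the integer `latency` are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Descriptor:
    name: str
    latency: int            # hypothetical duration of the data move
    barrier: bool = False   # barrier descriptors wait for all earlier ones
    interrupt: bool = False # only barrier descriptors may request this


def completion_times(descriptors: List[Descriptor]) -> Dict[str, int]:
    """Return the completion time of each descriptor.

    Ordinary descriptors complete as soon as their own move finishes, so
    they may complete out of order. A barrier descriptor completes no
    earlier than every descriptor that precedes it in the list.
    """
    done: Dict[str, int] = {}
    latest_so_far = 0
    for d in descriptors:
        if d.interrupt and not d.barrier:
            raise ValueError("only barrier descriptors may raise interrupts")
        finish = d.latency
        if d.barrier:
            finish = max(finish, latest_so_far)
        done[d.name] = finish
        latest_so_far = max(latest_so_far, finish)
    return done
```

In this model, an interrupt attached to a barrier descriptor would be asserted at that descriptor's completion time, which by construction is never earlier than any preceding data move. Descriptors listed after the barrier are not held back by it.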

Hardware-based multi-threading for packet processing

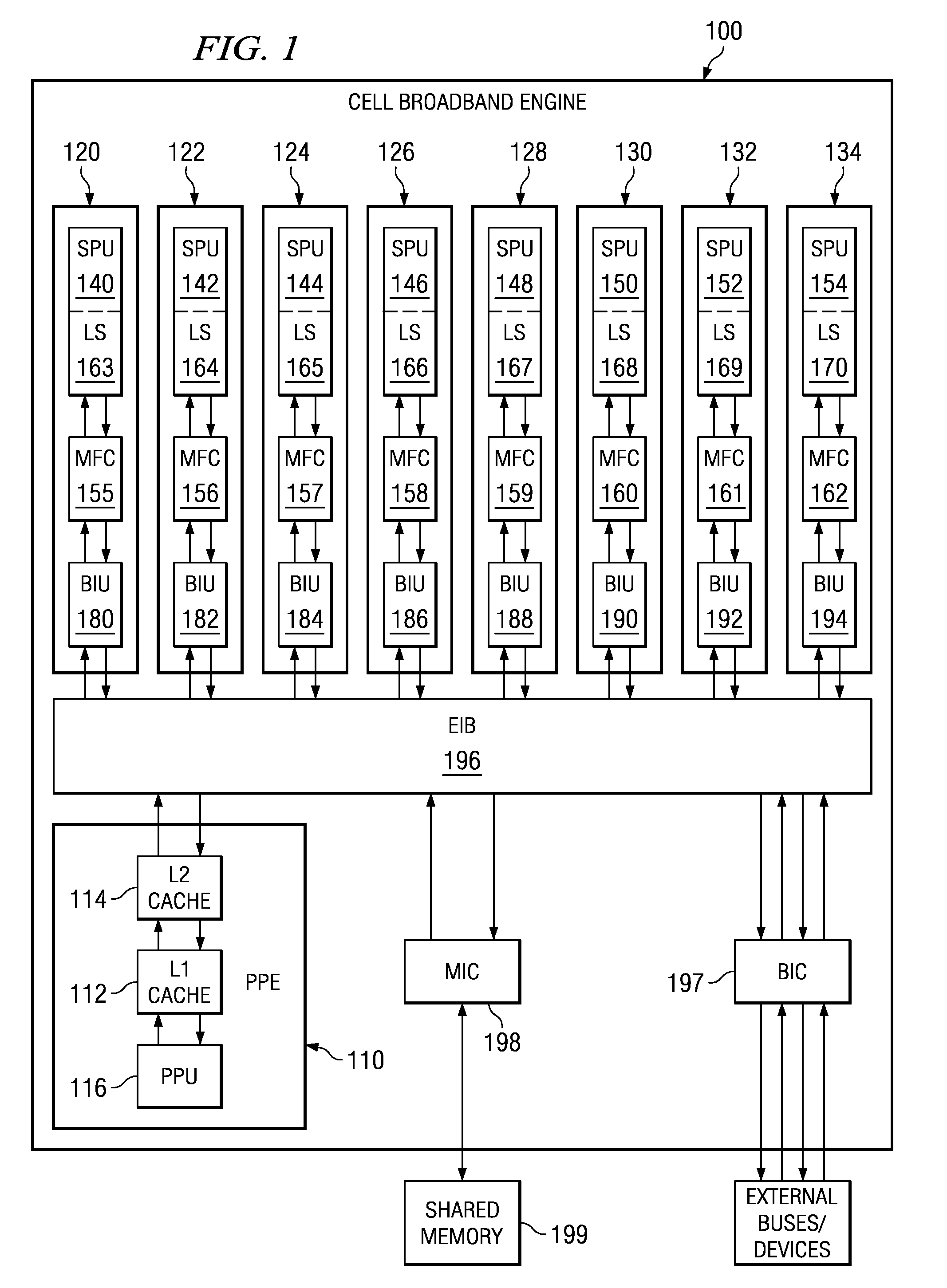

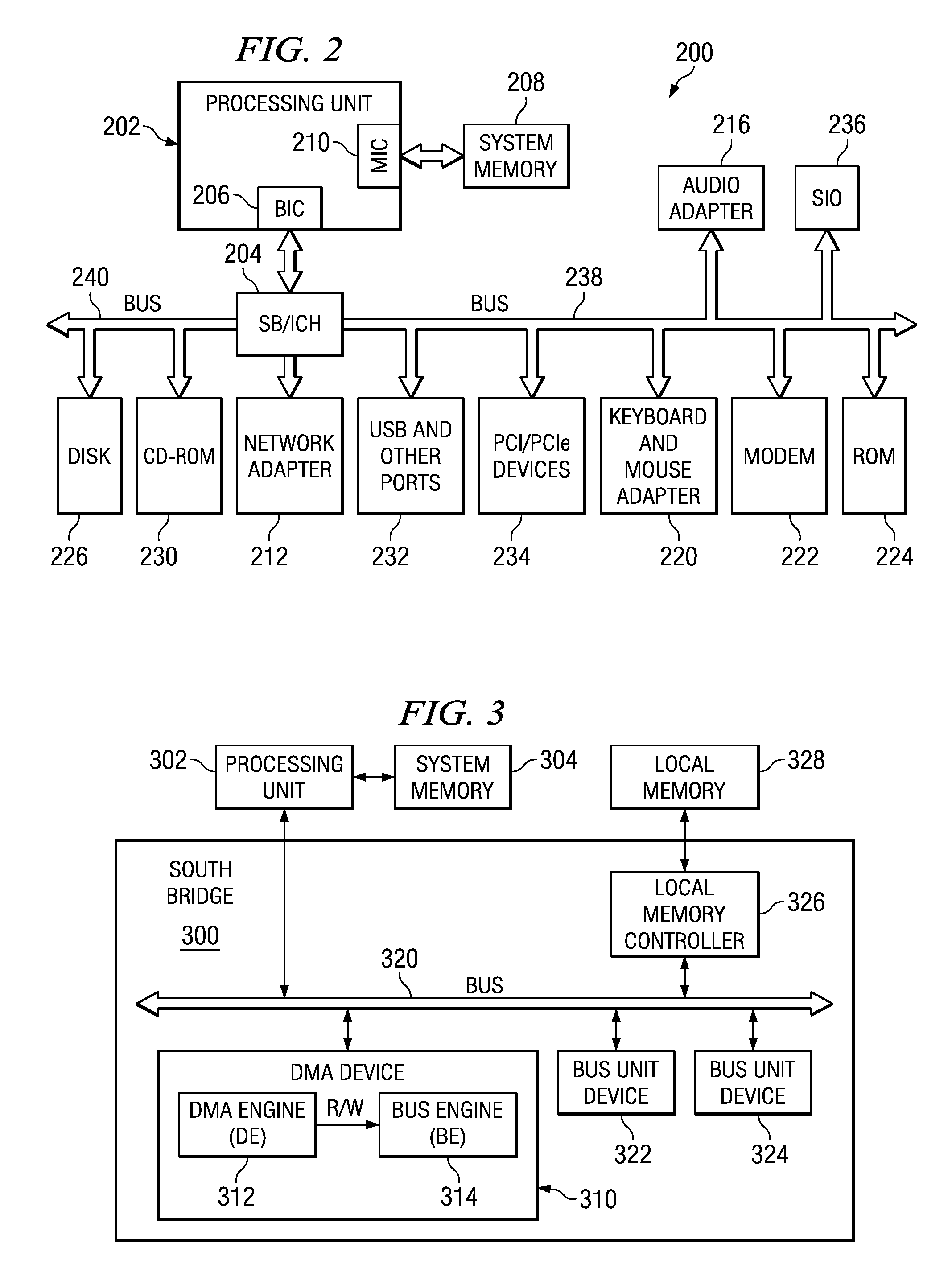

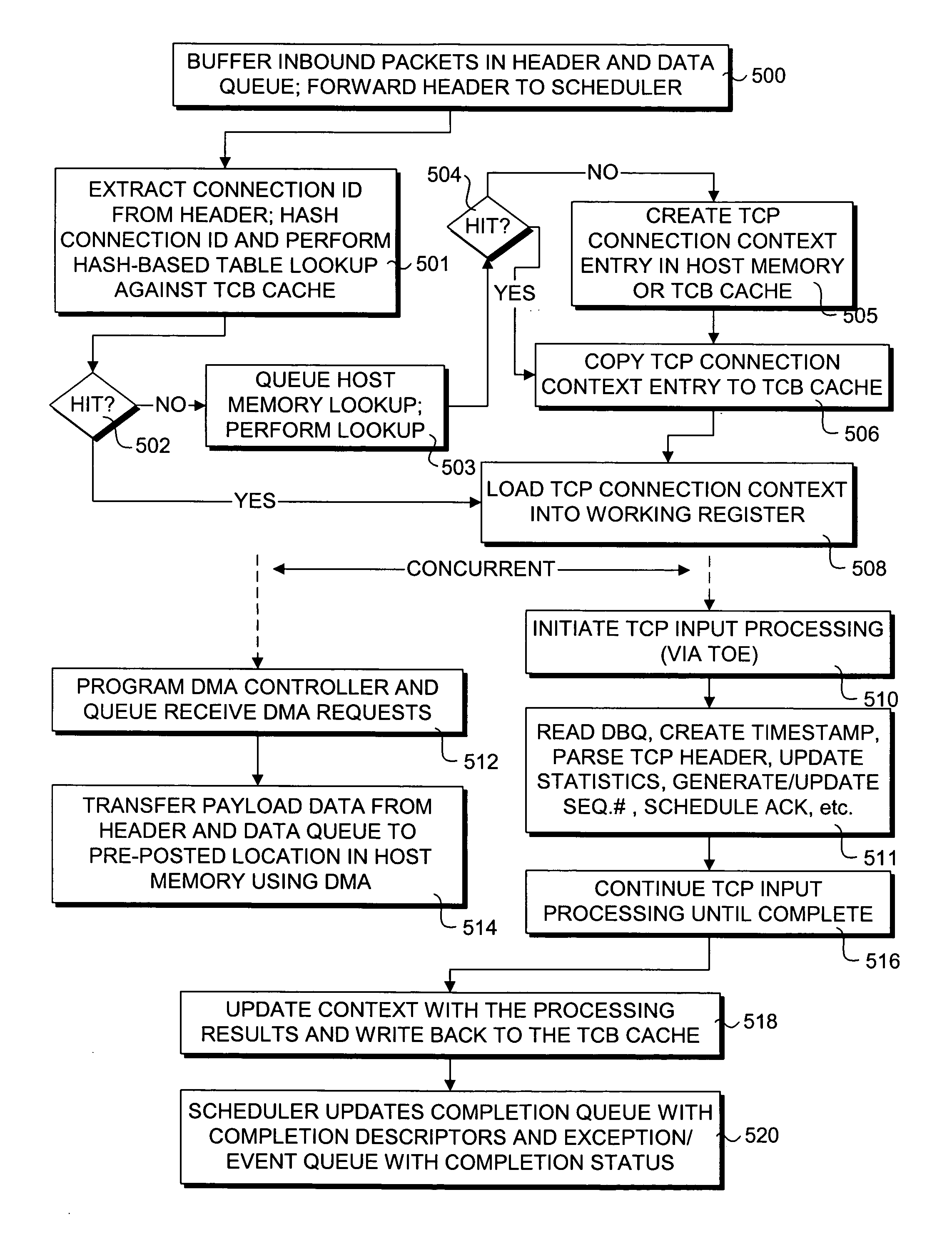

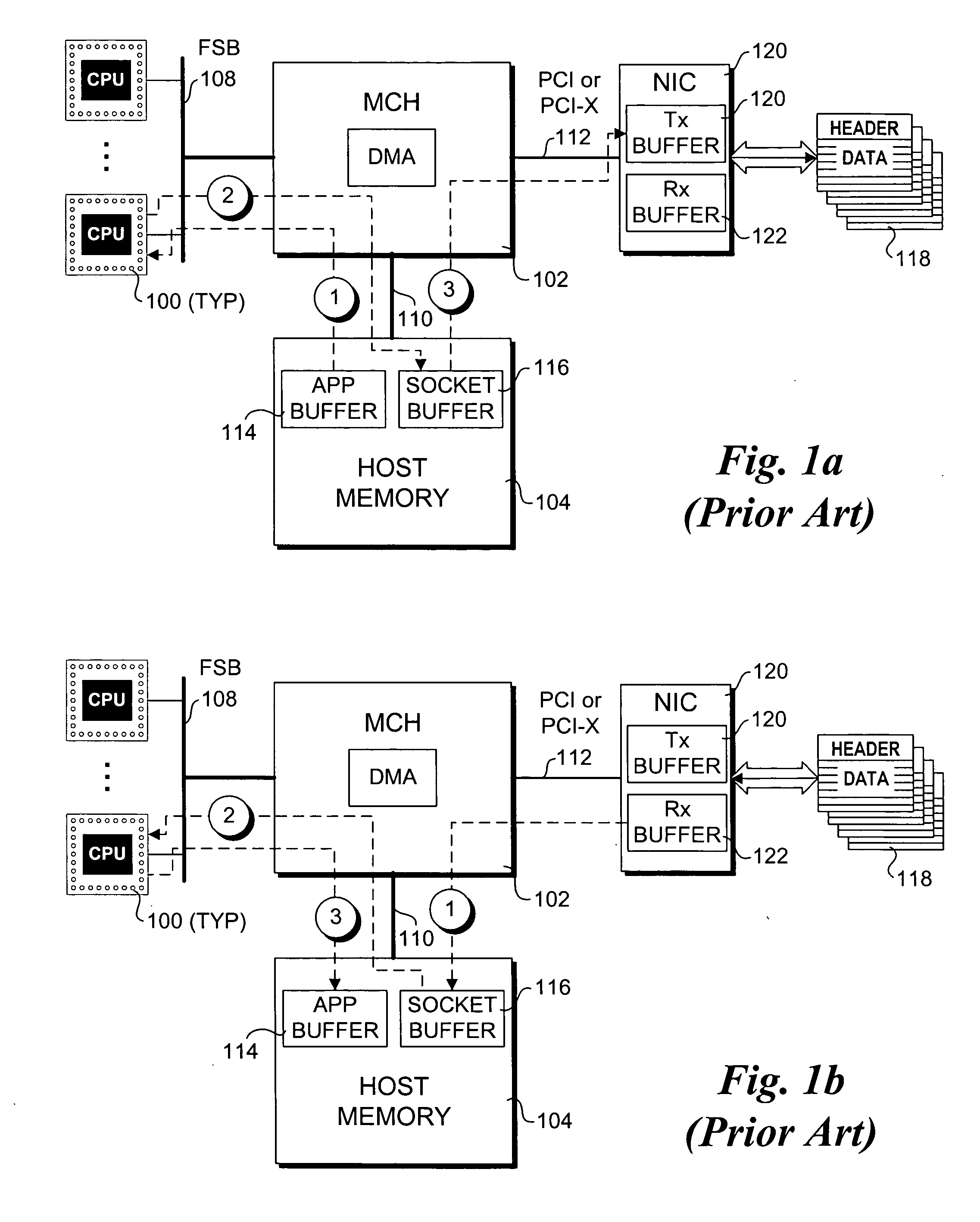

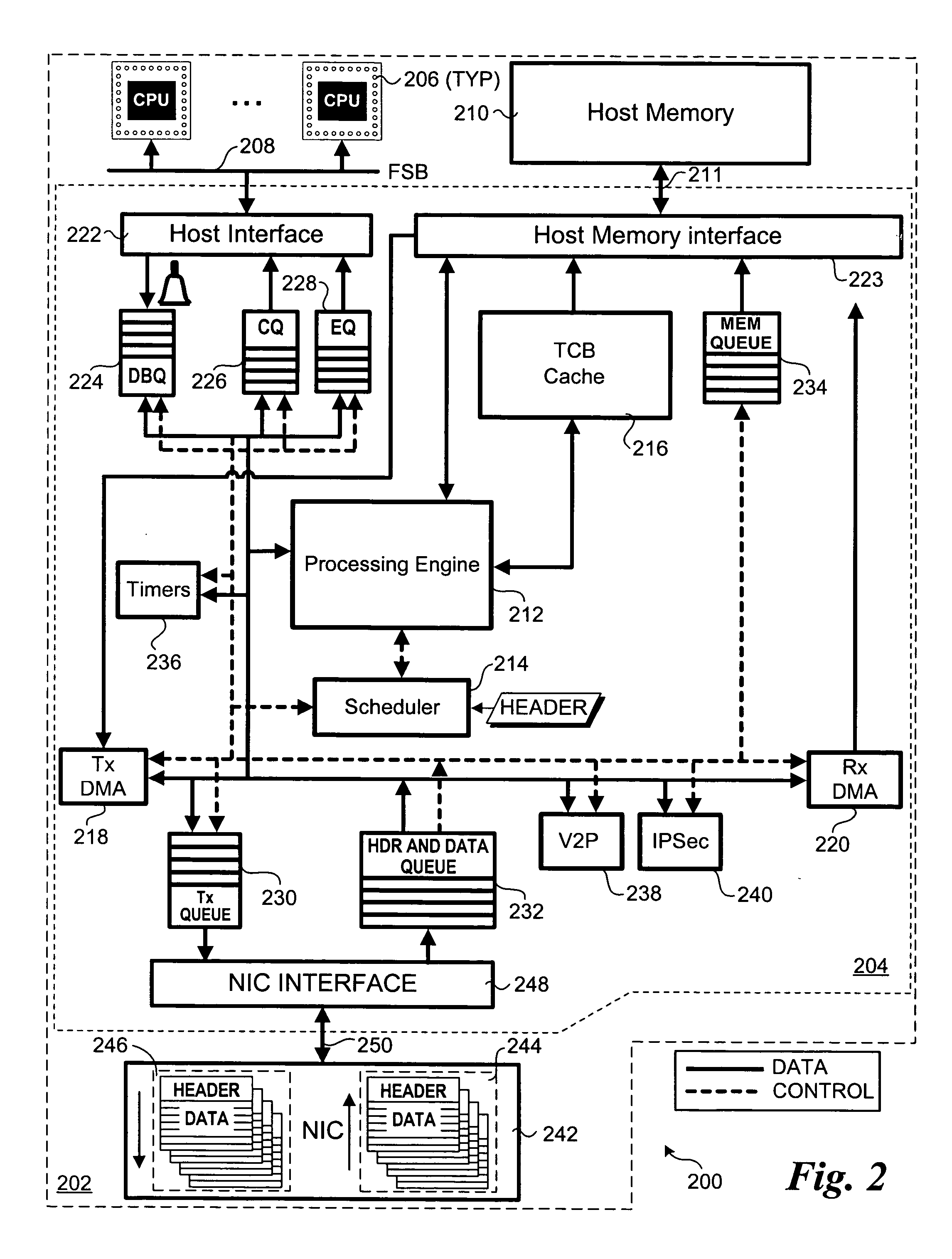

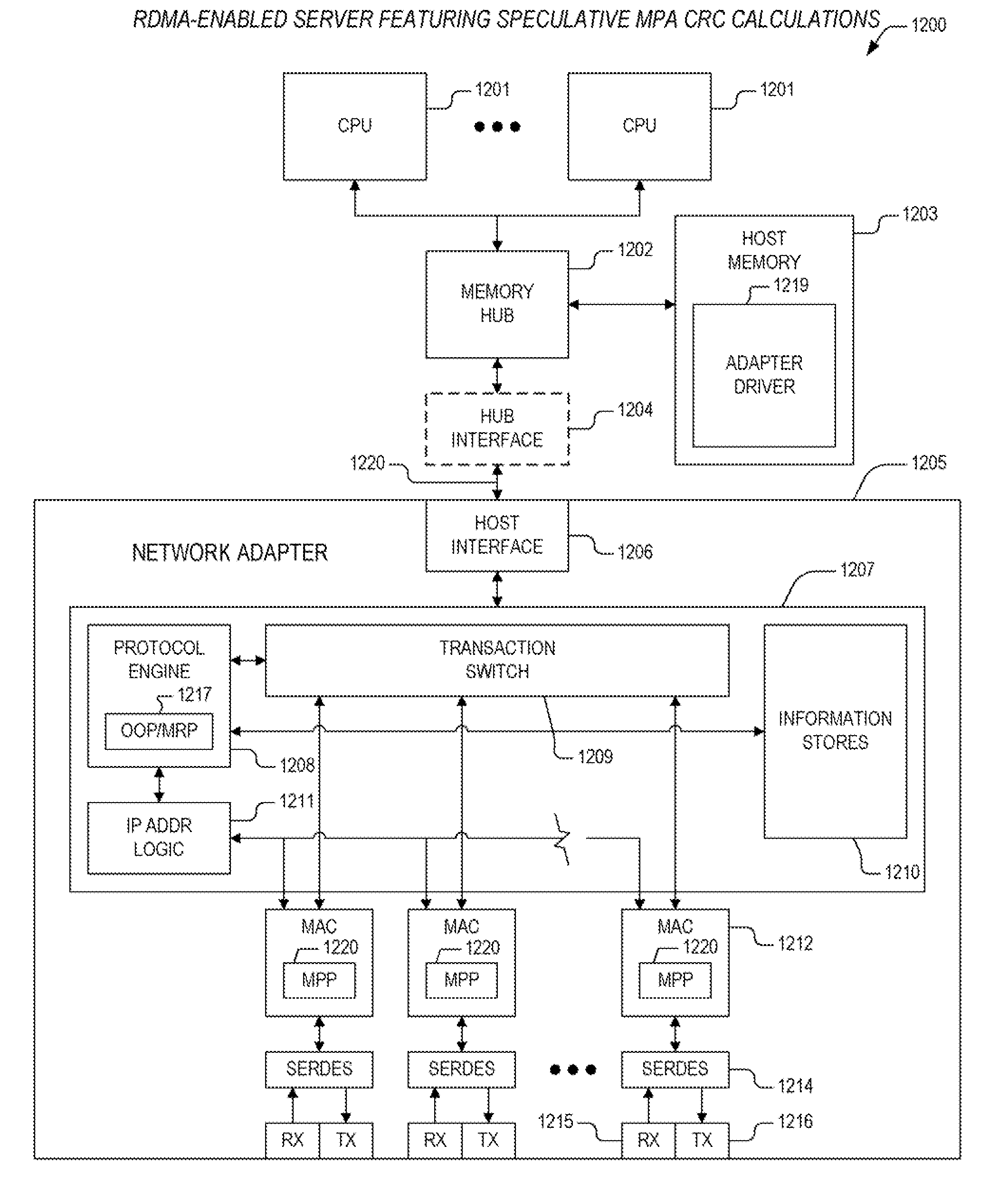

Methods and apparatus for processing transmission control protocol (TCP) packets using hardware-based multi-threading techniques. Inbound and outbound TCP packets are processed using a multi-threaded TCP offload engine (TOE). The TOE includes an execution core comprising a processing engine, a scheduler, an on-chip cache, a host memory interface, a host interface, and a network interface controller (NIC) interface. In one embodiment, the TOE is embodied as a memory controller hub (MCH) component of a platform chipset. The TOE may further include an integrated direct memory access (DMA) controller, or the DMA controller may be embodied as separate circuitry on the MCH. In one embodiment, inbound packets are queued in an input buffer, the headers are provided to the scheduler, and the scheduler arbitrates thread execution on the processing engine. Concurrently, DMA payload data transfers are queued and asynchronously performed in a manner that hides memory latencies. In one embodiment, the technique can process typical-size TCP packets at 10 Gbps or greater line speeds.

Owner:TAHOE RES LTD

Apparatus and method for in-line insertion and removal of markers

Active | US20080043750A1 | Efficient and effective insertion/removal, Data switching by path configuration, Direct memory access, Host memory

An apparatus is provided for performing a direct memory access (DMA) operation between a host memory in a first server and a network adapter. The apparatus includes a host frame parser and a protocol engine. The host frame parser is configured to receive data corresponding to the DMA operation from a host interface, and is configured to insert markers on-the-fly into the data at a prescribed interval and to provide marked data for transmission to a second server over a network fabric. The protocol engine is coupled to the host frame parser. The protocol engine is configured to direct the host frame parser to insert the markers, and is configured to specify a first marker value and an offset value, whereby the host frame parser is enabled to locate and insert a first marker into the data.

Owner:INTEL CORP

Integrated processor platform supporting wireless handheld multi-media devices

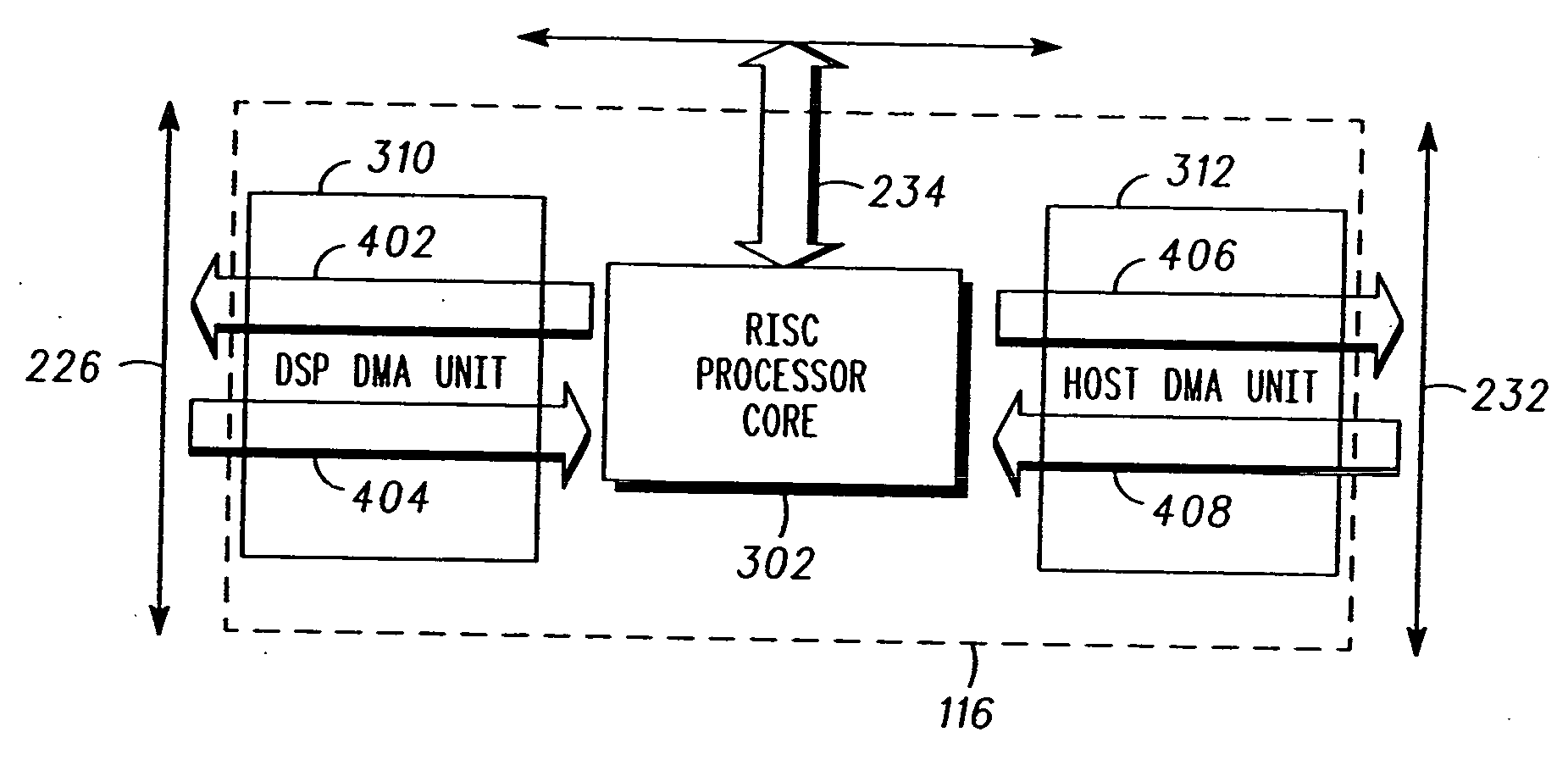

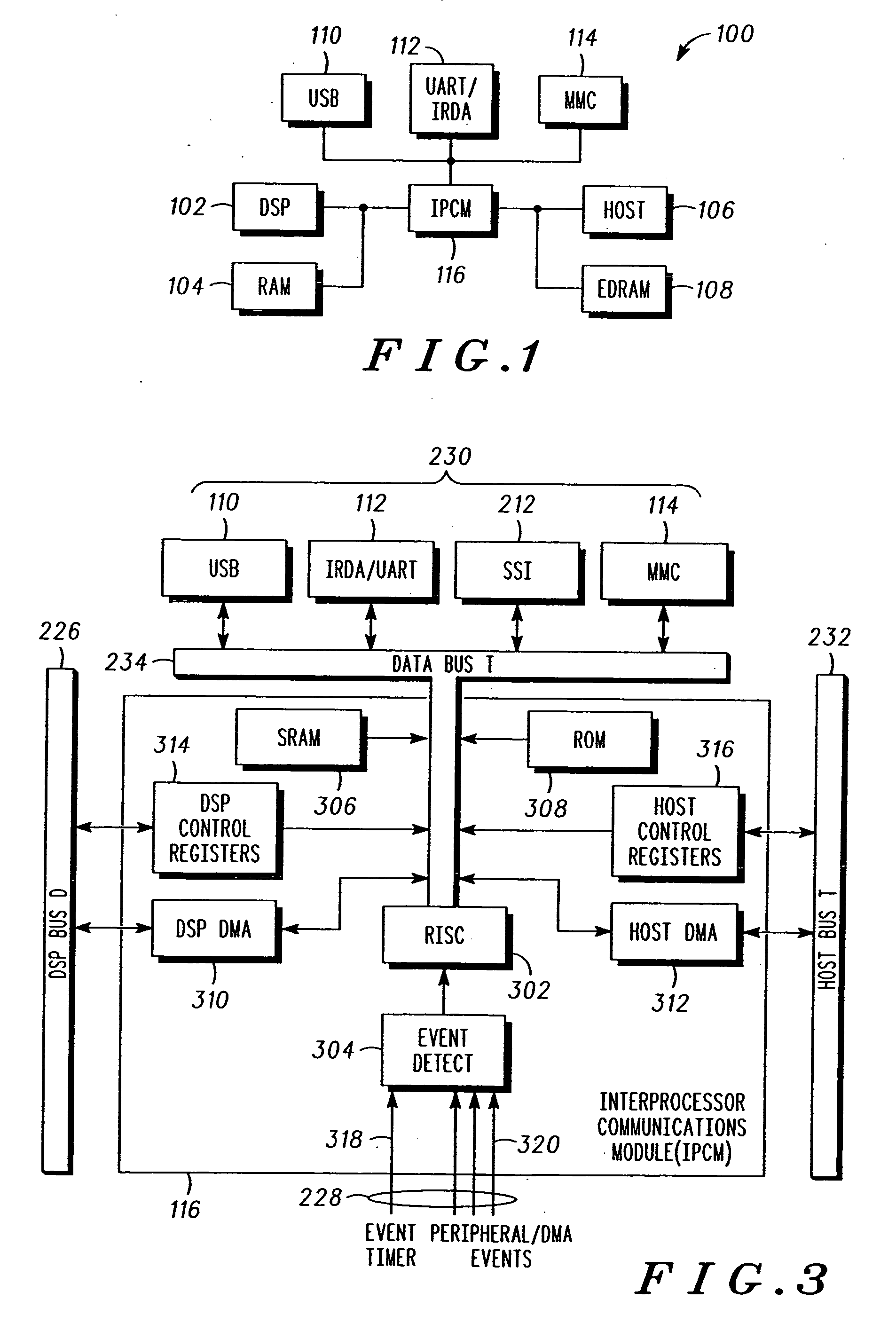

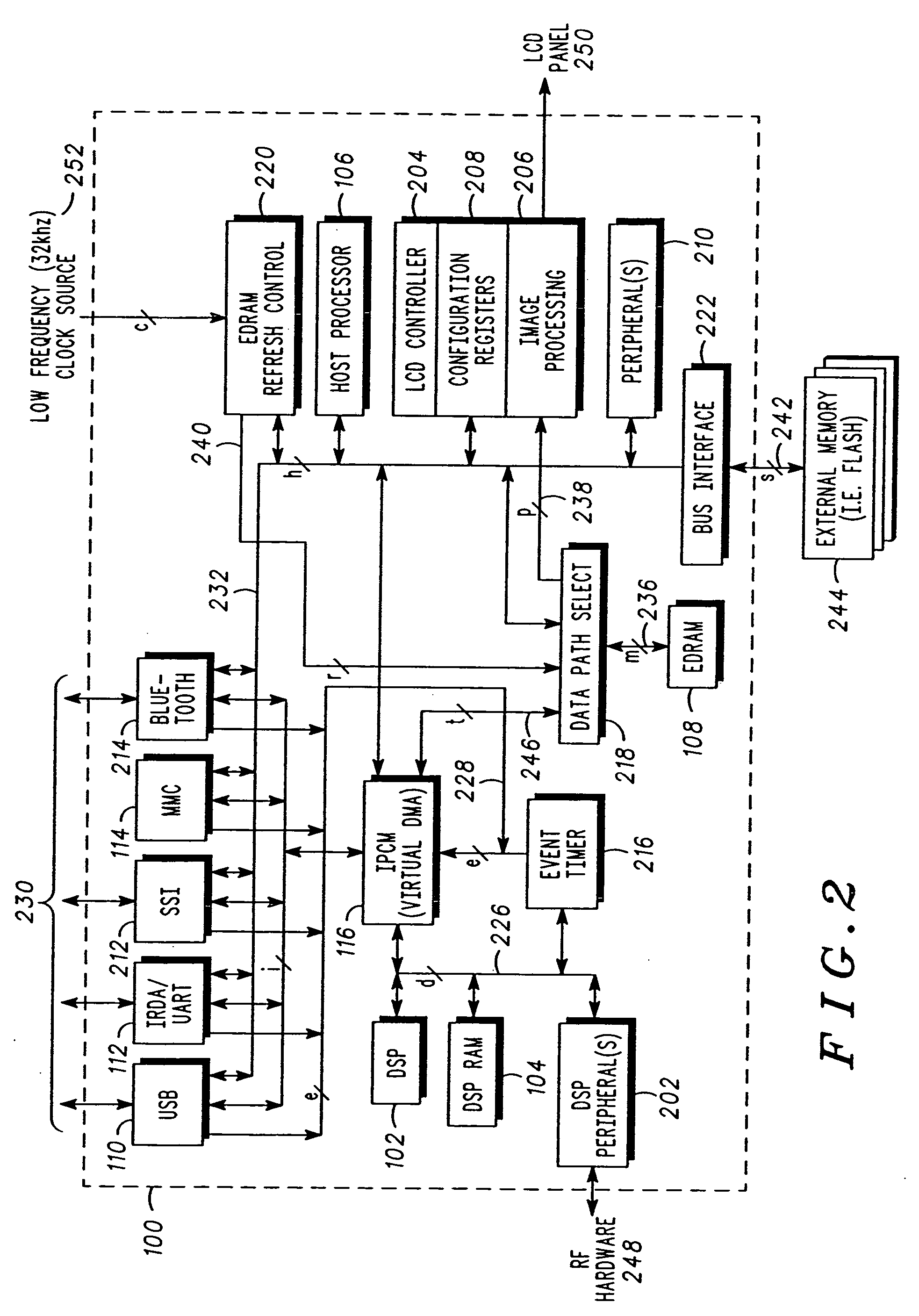

Inactive | US20060010264A1 | Energy efficient ICT, Building constructions, Direct memory access, Media processor

A direct memory access system consists of a direct memory access controller establishing a direct memory access data channel and including a first interface for coupling to a memory. A second interface is for coupling to a plurality of nodes. And a processor is coupled to the direct memory access controller and coupled to the second interface, wherein the processor configures the direct memory access data channel to transfer data between a programmably selectable respective one or more of the plurality of nodes and the memory. In some embodiments, the plurality of nodes are a digital signal processor memory and a host processor memory of a multi-media processor platform to be implemented in a wireless multi-media handheld telephone.

Owner:GOOGLE TECH HLDG LLC

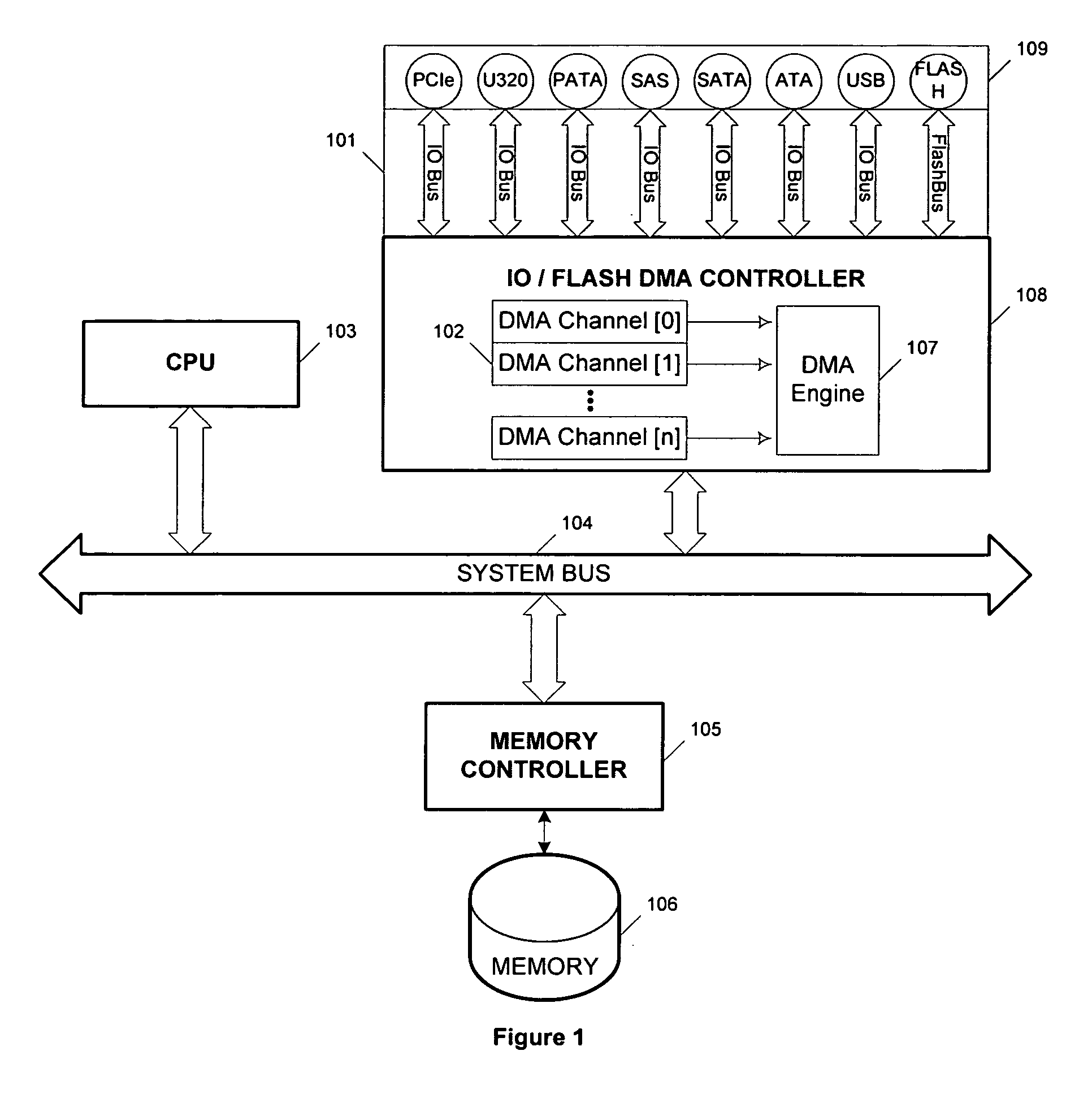

Direct memory access controller with encryption and decryption for non-blocking high bandwidth I/O transactions

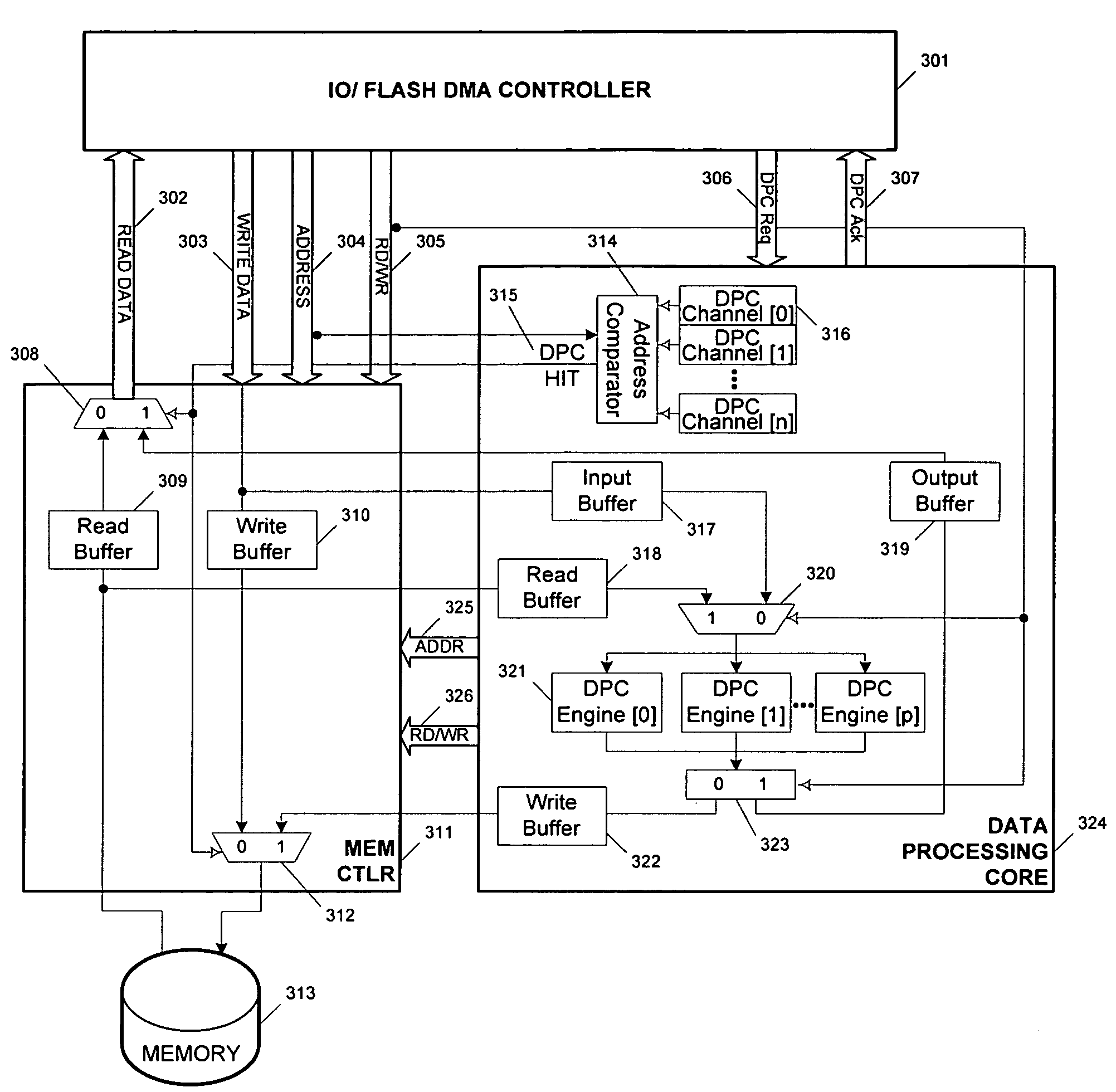

Active | US7716389B1 | Time-division multiplex, Data switching by path configuration, High bandwidth, Direct memory access

Due to the integration of multiple I/O device controllers in a storage controller and the need to provide secure and fast data transfers between the I/O devices and the storage controller, an architecture that can perform multiple encrypt/decrypt operations simultaneously is needed to service multiple transfer requests without a negative impact on the speed of transfer and processing. The present invention relates to enhancing Direct Memory Access (DMA) operations between multiple I/O devices and a storage controller by adding a Data Processing Core. Exemplary implementations are provided to illustrate the background mechanism used by a DMA controller that minimizes central-processing-unit (CPU) intervention and the multi-channel architecture which allows multiple I/O requests to be serviced simultaneously.

Owner:BITMICRO LLC

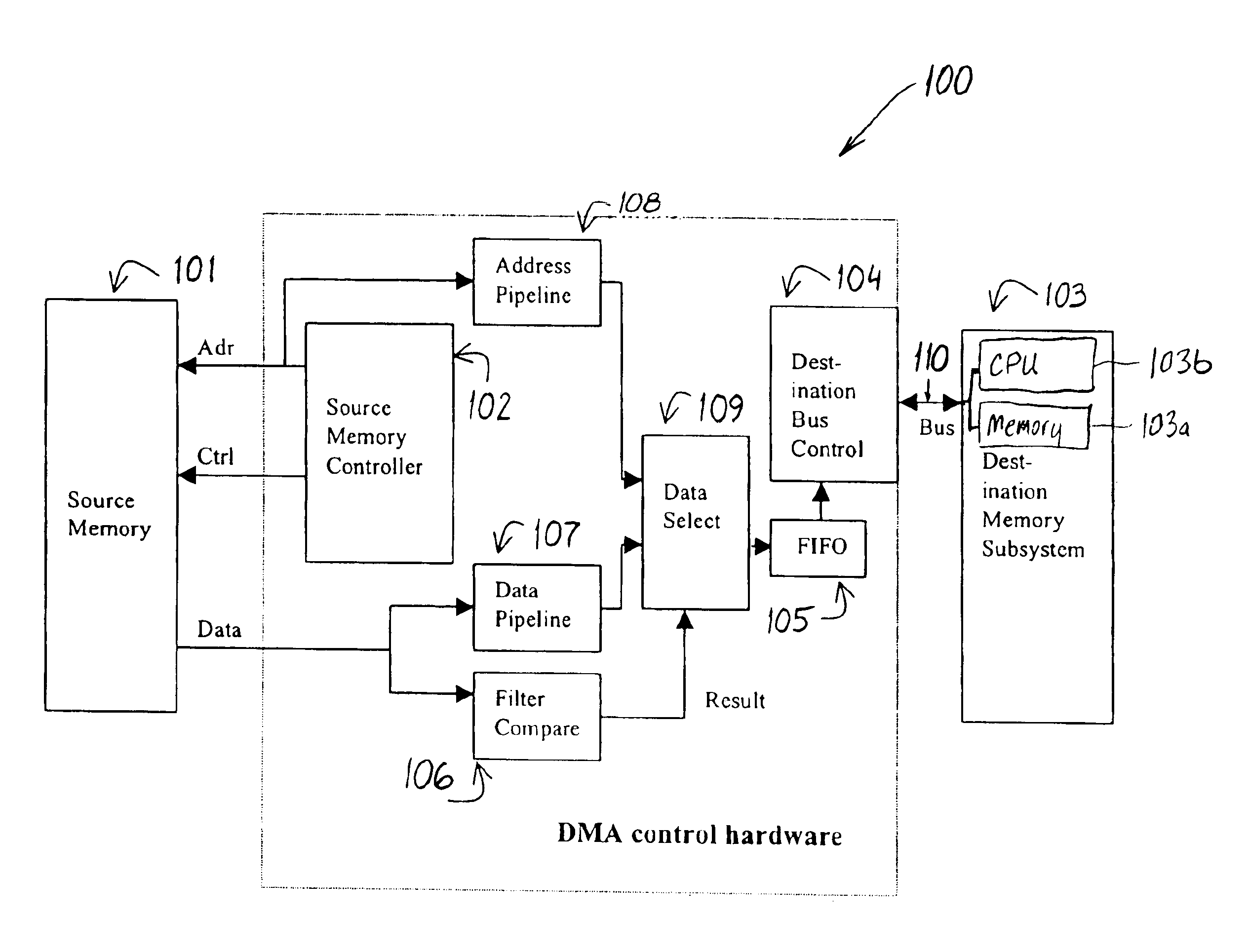

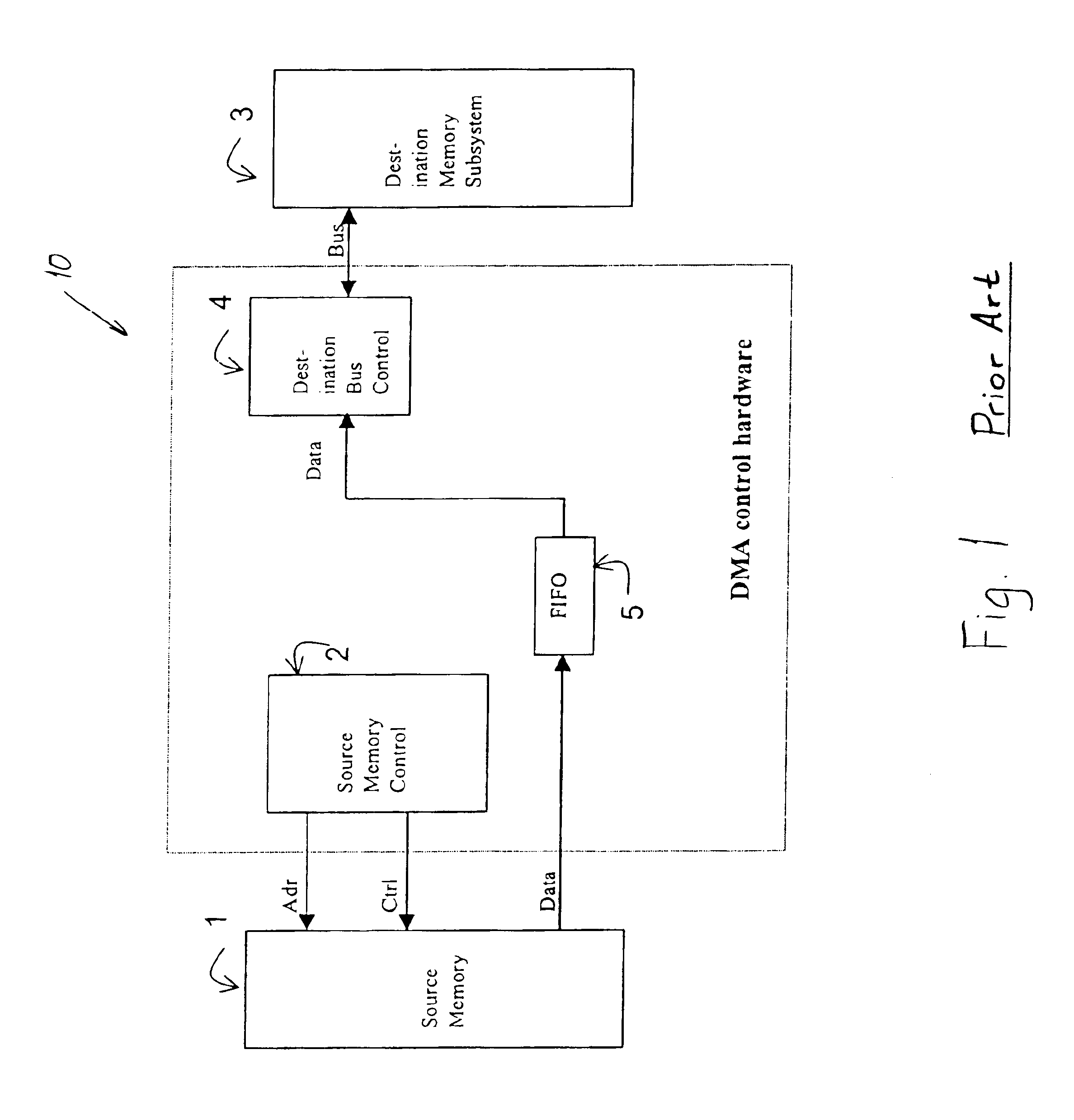

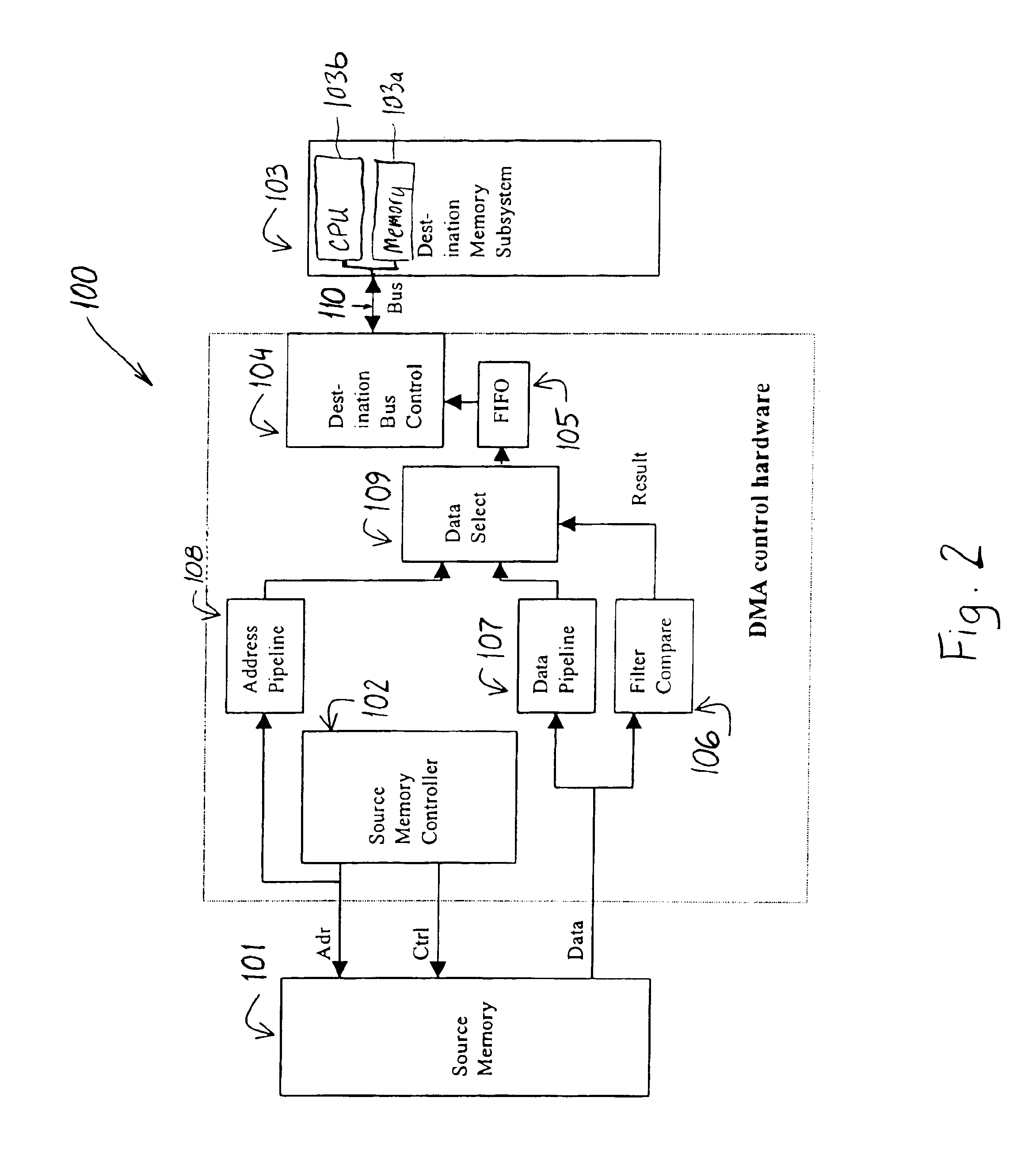

Direct memory access controller and method of filtering data during data transfer from a source memory to a destination memory

Inactive | US6904473B1 | Convenient and efficient transfer, Efficient transfer, Data processing applications, Electric digital data processing, Direct memory access, Memory controller

A direct memory access controller includes a source memory controller for controlling a source memory, a destination bus controller for controlling the transfer of data to a destination memory, a first-in-first-out memory buffer for receiving data from the source memory, and a filter connected upstream of the first-in-first-out memory buffer for comparing the source memory data to a filter criterion and passing to the first-in-first-out memory buffer only that data which matches the filter criterion.

Owner:XYRATEX TECH LTD
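The data path in the abstract above (source memory, then a filter, then a first-in-first-out buffer, then the destination) can be modelled in a few lines of Python. This is only a software sketch under stated assumptions: the function name `filtered_transfer`, the `matches` callback, and the `fifo_depth` drain policy are hypothetical and are not described in the patent.

```python
from collections import deque
from typing import Callable, Iterable, List


def filtered_transfer(
    source: Iterable[int],
    matches: Callable[[int], bool],
    fifo_depth: int = 4,
) -> List[int]:
    """Move words from source to a destination list through a FIFO.

    The filter sits upstream of the FIFO, so words that fail the filter
    criterion never occupy FIFO space. The FIFO is drained to the
    destination whenever it fills, and once more at the end.
    """
    if fifo_depth < 1:
        raise ValueError("fifo_depth must be at least 1")

    fifo: deque = deque()
    destination: List[int] = []

    def drain() -> None:
        while fifo:
            destination.append(fifo.popleft())

    for word in source:
        if not matches(word):
            continue  # rejected before reaching the FIFO
        fifo.append(word)
        if len(fifo) == fifo_depth:
            drain()
    drain()
    return destination
```

Placing the filter before the buffer, as the abstract describes, means buffer capacity and destination bus bandwidth are spent only on data that matches the criterion.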

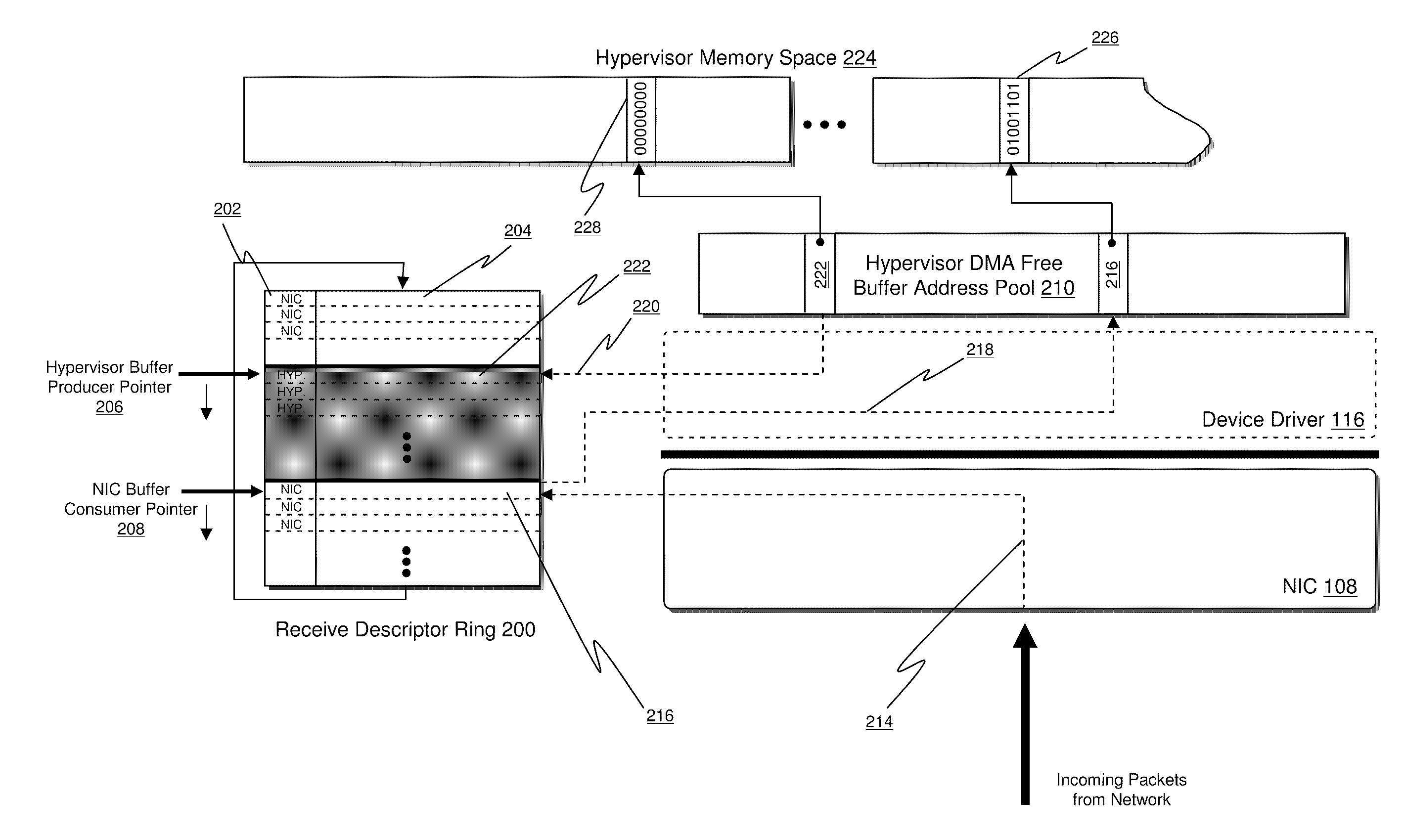

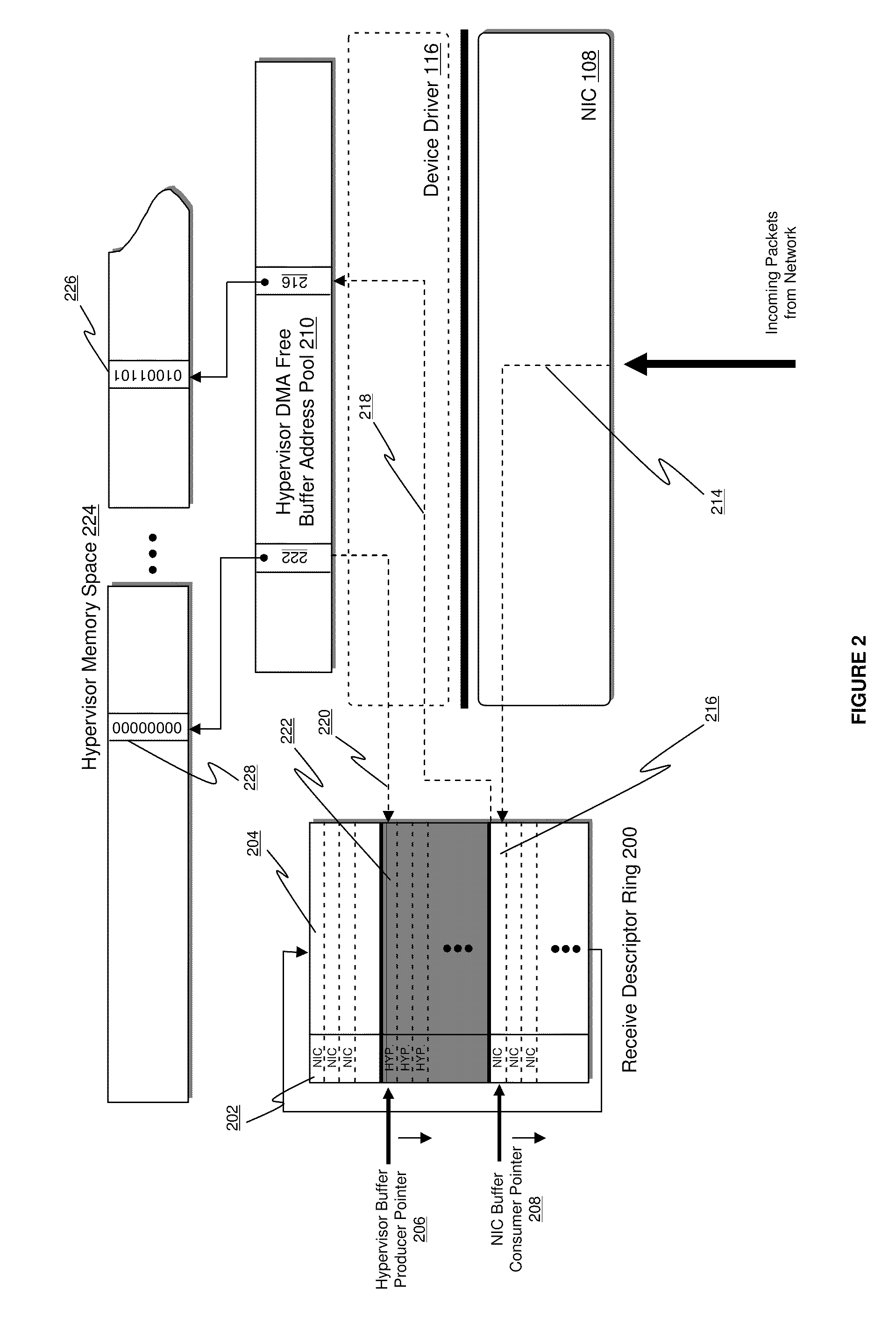

System and Method for Reducing Communication Overhead Between Network Interface Controllers and Virtual Machines

Active | US20100070677A1 | Reduce overhead, Meet cutting requirements, Memory addressing/allocation/relocation, Computer security arrangements, Direct memory access, Operational system

Available buffers in the memory space of a guest operating system of a virtual machine are provided to a network interface controller (NIC) for use during direct memory access (DMA), and the guest operating system is notified accordingly when data is written into such available buffers. These capabilities obviate the requirement of using hypervisor memory as a staging area to determine which virtual machine to forward incoming data to.

Owner:VMWARE INC

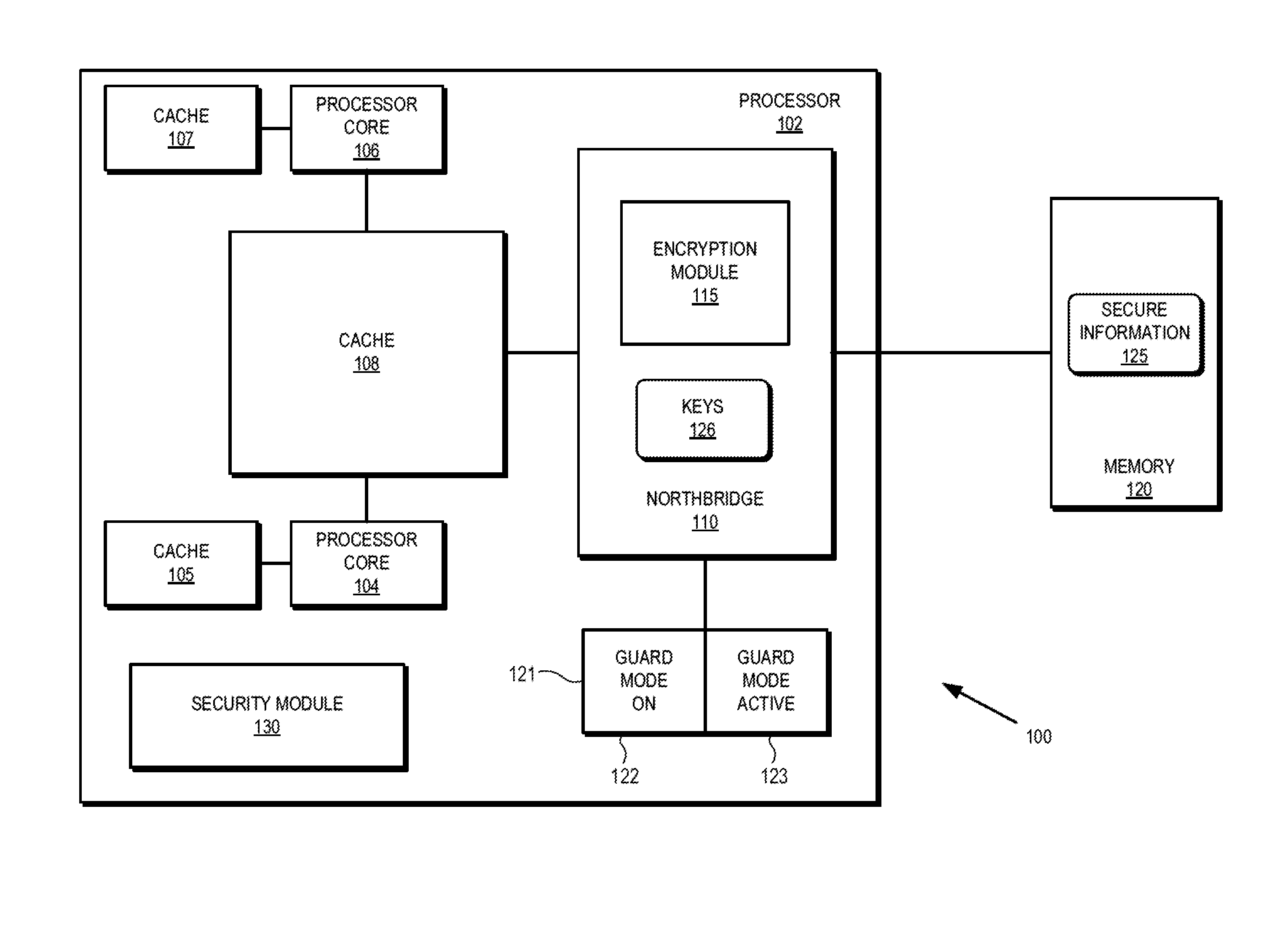

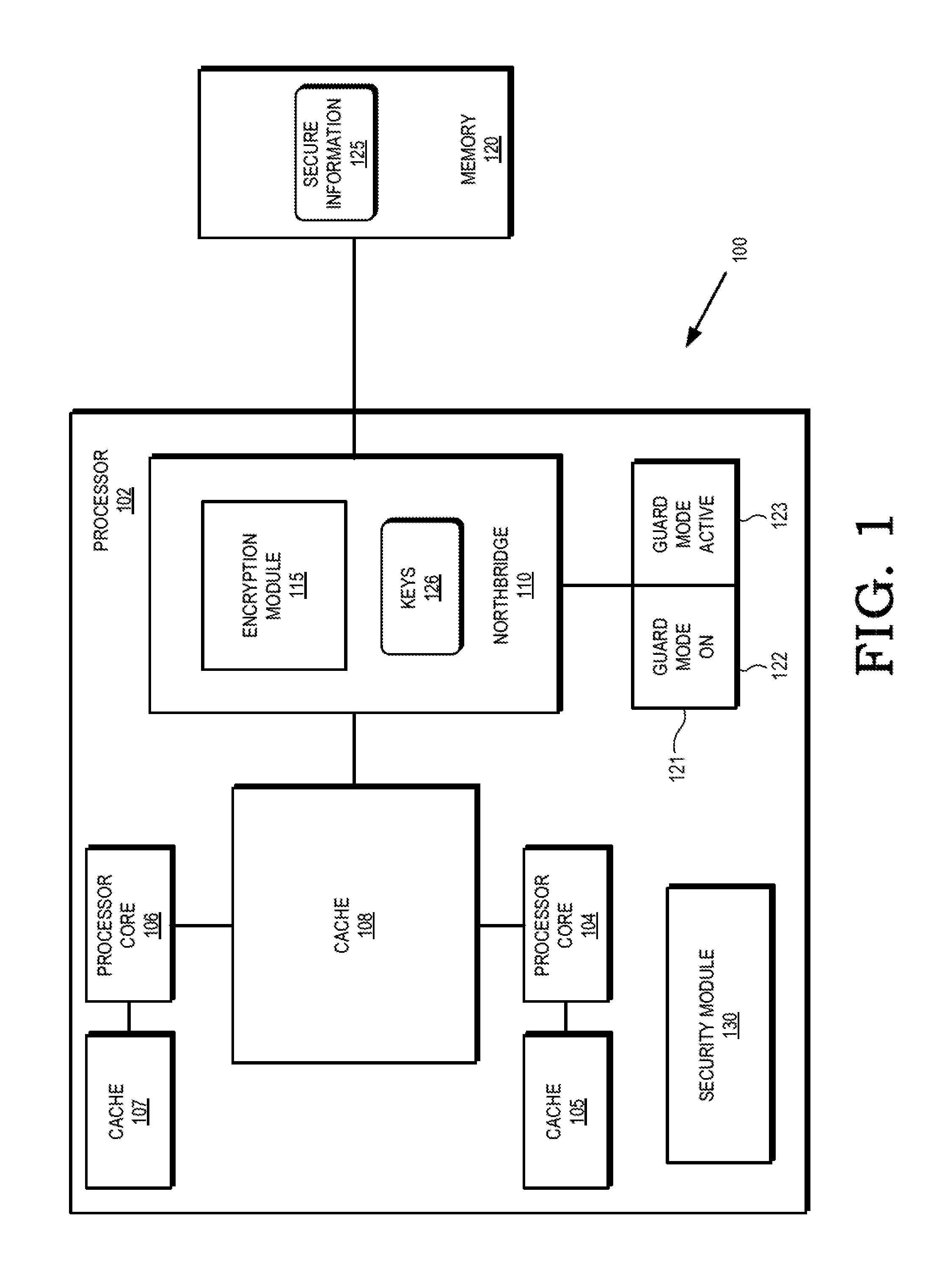

Cryptographic protection of information in a processing system

Active | US20150248357A1 | Memory architecture accessing/allocation, Unauthorized memory use protection, Computer hardware, Direct memory access

A processor employs a hardware encryption module in the processor's memory access path to cryptographically isolate secure information. In some embodiments, the encryption module is located at a memory controller (e.g. northbridge) of the processor, and each memory access provided to the memory controller indicates whether the access is a secure memory access, indicating the data associated with the memory access is designated for cryptographic protection, or a non-secure memory access. For secure memory accesses, the encryption module performs encryption (for write accesses) or decryption (for read accesses) of the data associated with the memory access.

Owner:ADVANCED MICRO DEVICES INC

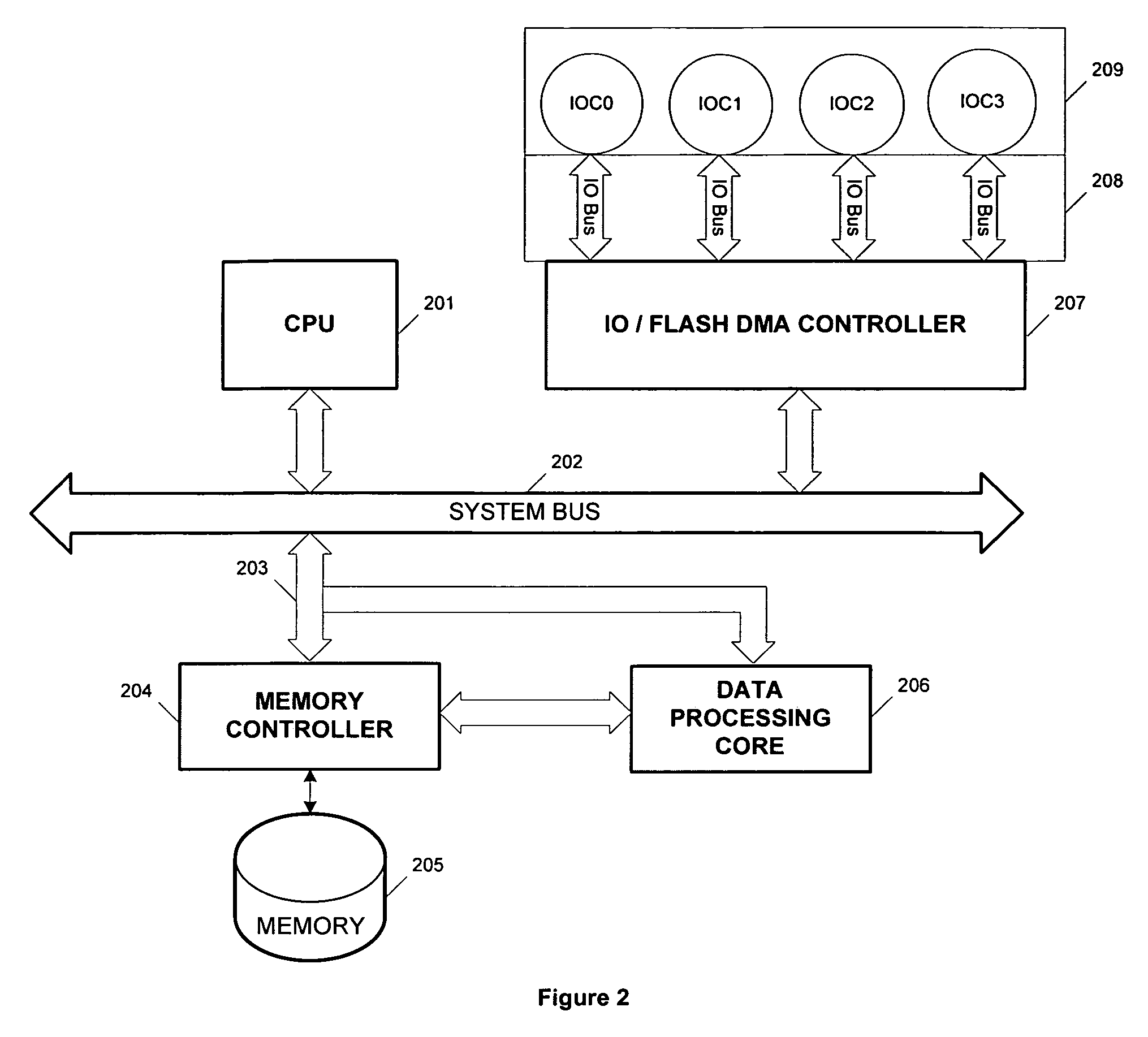

System and Method for Multicore Communication Processing

Inactive | US20080181245A1 | Effective latency, Sustaining media speed, Time-division multiplex, Data switching by path configuration, Direct memory access, Processing core

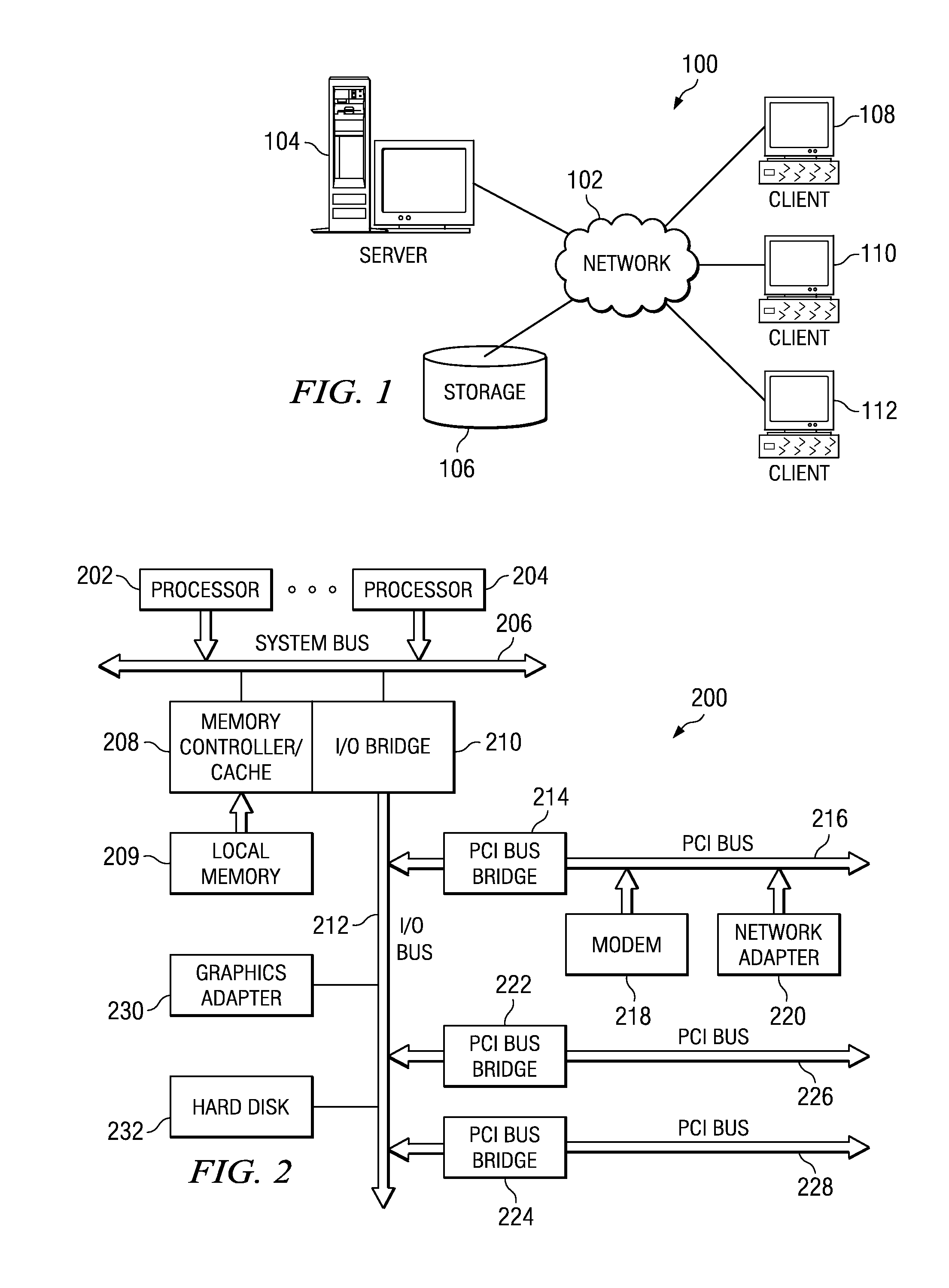

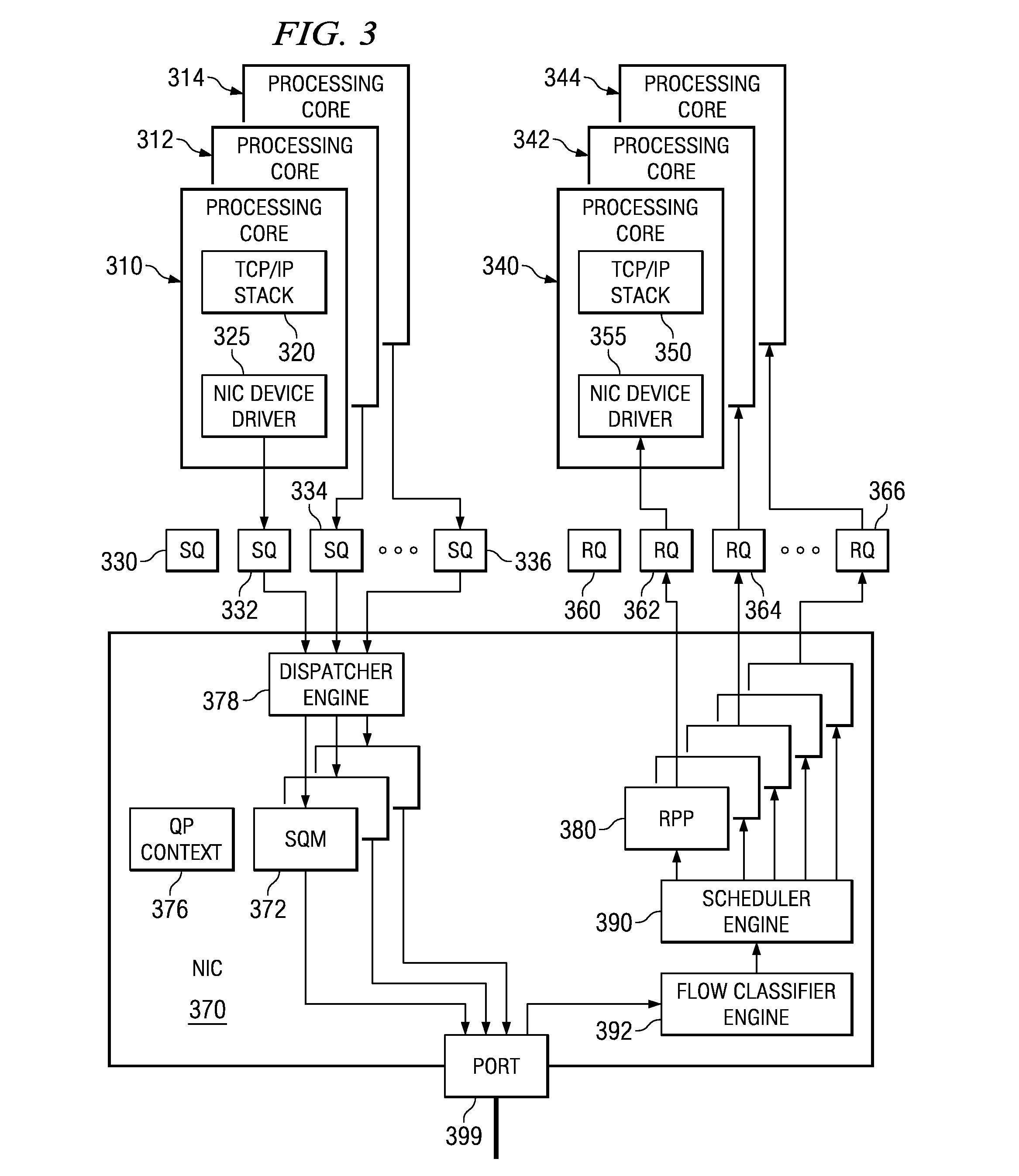

A system and method for multicore processing of communications between data processing devices are provided. With the mechanisms of the illustrative embodiments, a set of techniques that enables sustaining media speed by distributing transmit and receive-side processing over multiple processing cores is provided. In addition, these techniques also enable designing multi-threaded network interface controller (NIC) hardware that efficiently hides the latency of direct memory access (DMA) operations associated with data packet transfers over an input/output (I/O) bus. Multiple processing cores may operate concurrently using separate instances of a communication protocol stack and device drivers to process data packets for transmission with separate hardware implemented send queue managers in a network adapter processing these data packets for transmission. Multiple hardware receive packet processors in the network adapter may be used, along with a flow classification engine, to route received data packets to appropriate receive queues and processing cores for processing.

Owner:IBM CORP

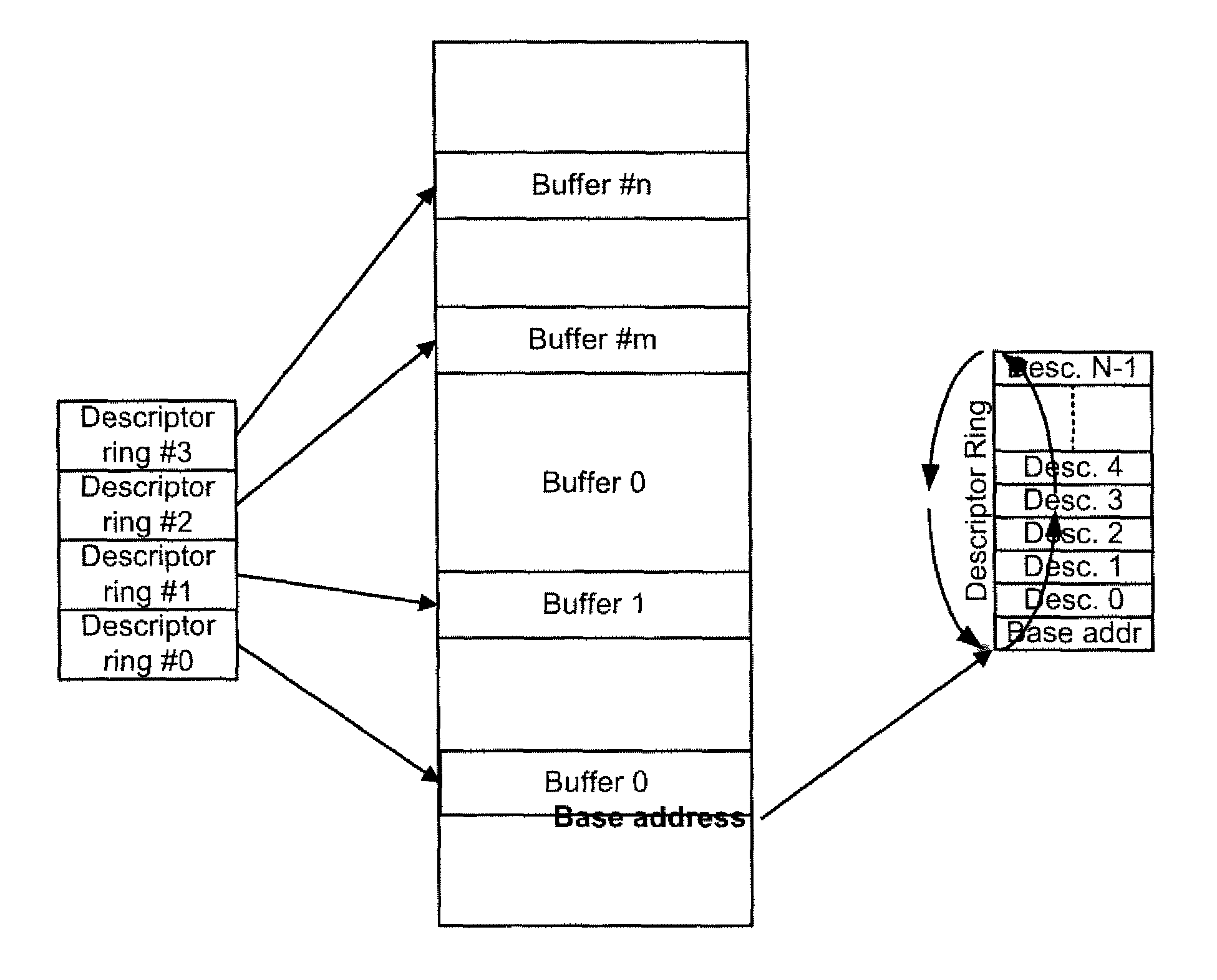

Packet buffer apparatus and method

In managing and buffering packet data for transmission out of a host, descriptor ring data is pushed in from a host memory into a descriptor ring cache and cached therein. The descriptor ring data is processed to read a data packet descriptor, and a direct memory access is initiated to the host to read the data packet corresponding to the read data packet descriptor to a data transmission buffer. The data packet is written by the direct memory access into the data transmission buffer and cached therein. A return pointer is written to the host memory by the direct memory access indicating that the data packet descriptor has been read and the corresponding data packet has been transmitted. In managing and buffering packet data for transmission to a host, descriptor ring data is pushed in from a host memory into a descriptor ring cache and cached therein. Data packets for transmission to the host memory are received and cached in a data reception buffer. Data is read from the data reception buffer according to a data packet descriptor retrieved from the descriptor ring cache, and the data packet is written to a data reception queue within the host memory by a direct memory access. A return pointer is written to the host memory by the direct memory access indicating that the data packet has been written.

Owner:MARVELL ASIA PTE LTD

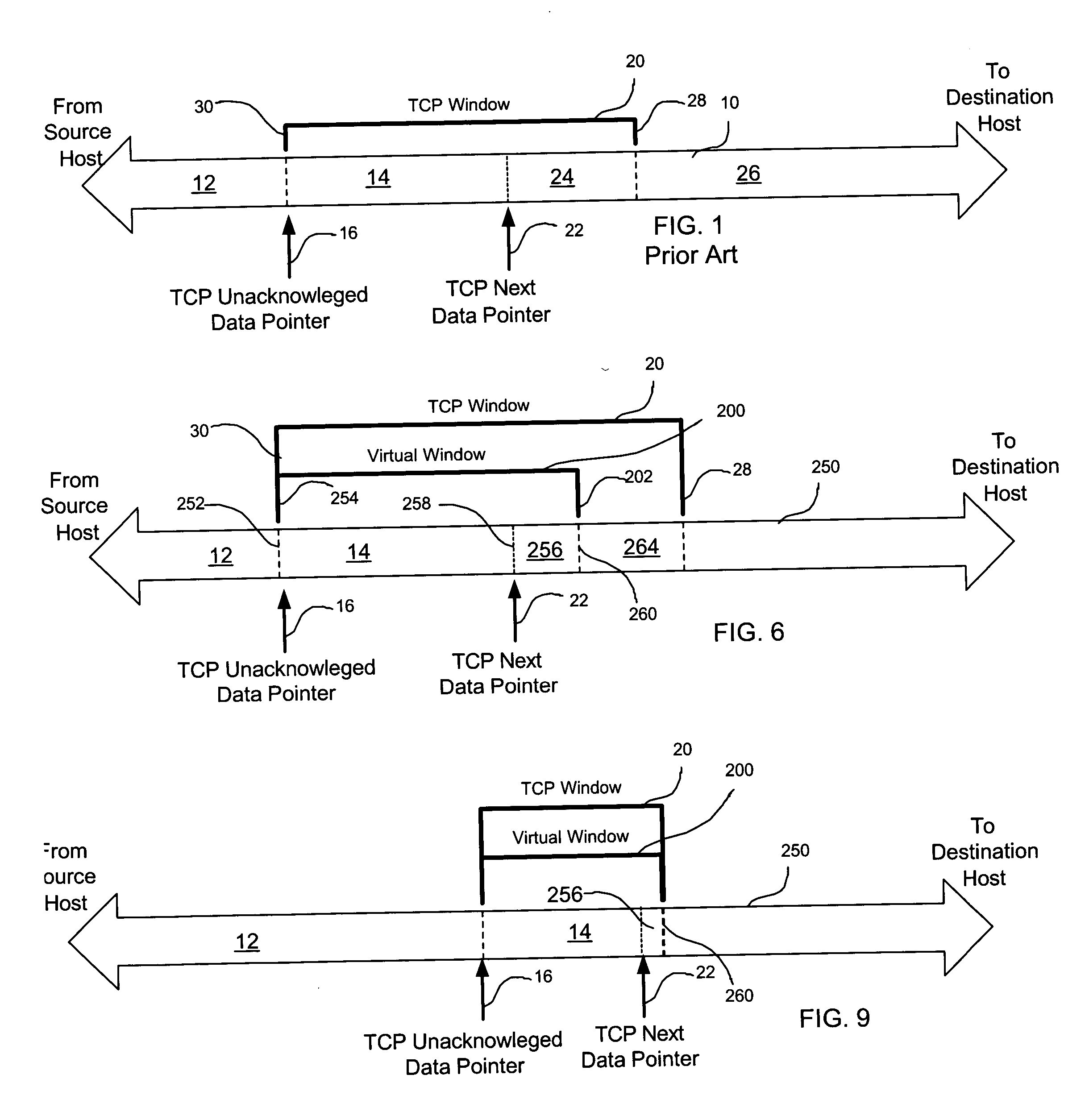

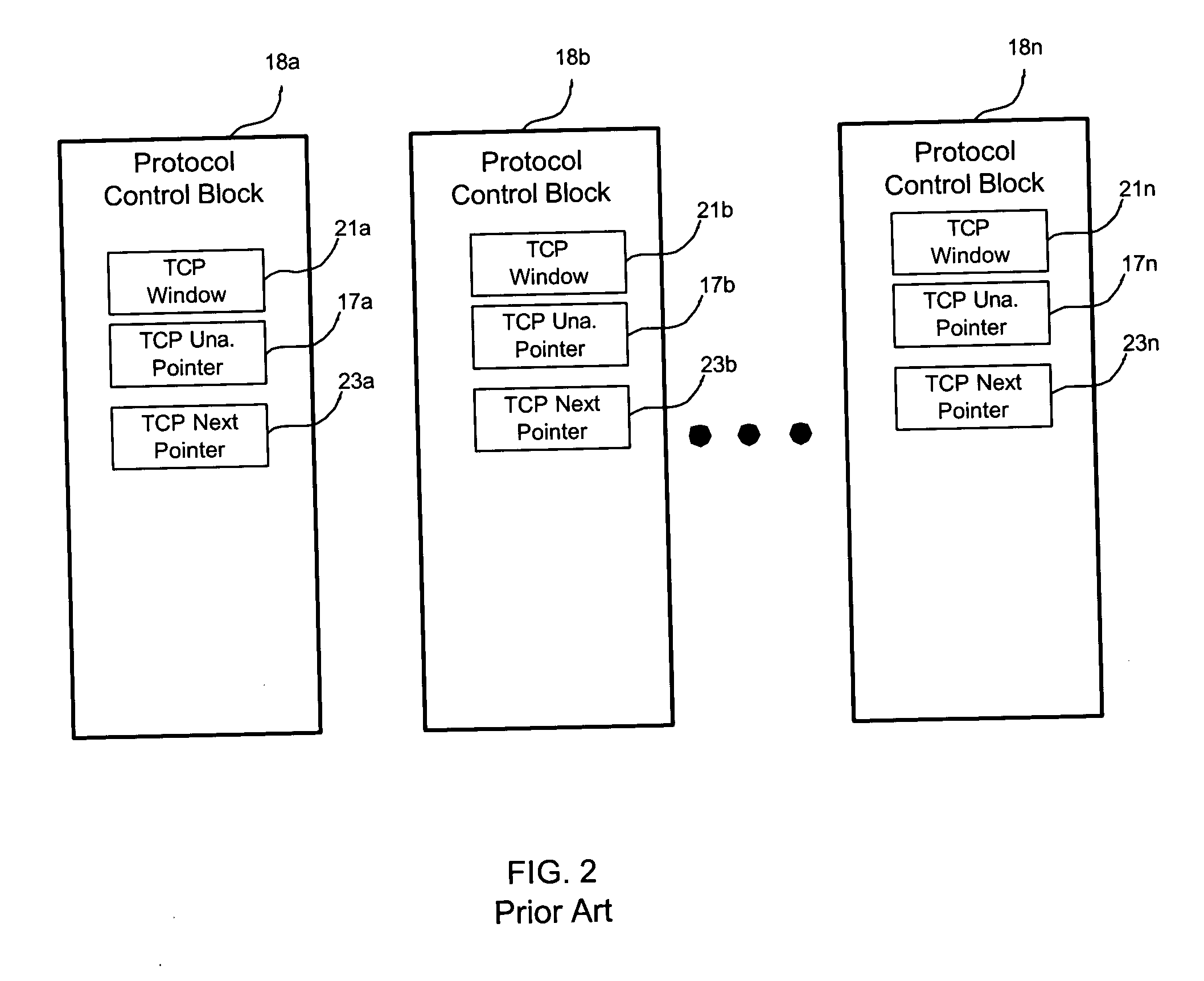

Method, system, and program for managing data transmission through a network

Inactive | US20050060442A1 | Data switching networks, Electric digital data processing, Direct memory access, Data transmission

Provided are a method, system, and program for managing data transmission from a source to a destination through a network. The destination imposes a window value on the source which limits the quantity of data packets which can be sent from the source to the destination without receiving an acknowledgment of being received by the destination. In one embodiment, the source imposes a second window value, smaller than the destination window value, which limits even further the quantity of data packets which can be sent from the source to the destination without receiving an acknowledgment of being received by the destination. In another embodiment, a plurality of direct memory access connections are established between the source and a plurality of specified memory locations of a plurality of destinations. The source imposes a plurality of message limits, each message limit imposing a separate limit for each direct memory access connection on the quantity of messages sent from the source to the specified memory location of the direct memory access connection associated with the message limit and lacking a message acknowledgment of being received by the destination of the direct memory access connection associated with the message limit.

Owner:INTEL CORP
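The two embodiments in the abstract above (a source-imposed window smaller than the destination's window, and a separate message limit per direct memory access connection) can be sketched in Python as follows. The names `sendable_packets` and `MessageLimiter`, and the use of string connection identifiers, are assumptions for illustration rather than anything specified by the patent.

```python
from typing import Dict


def sendable_packets(dest_window: int, source_window: int, unacked: int) -> int:
    """Packets the source may still send without a new acknowledgment.

    The effective window is the smaller of the destination-imposed and
    source-imposed windows.
    """
    effective = min(dest_window, source_window)
    return max(0, effective - unacked)


class MessageLimiter:
    """Tracks unacknowledged messages against a separate limit per connection."""

    def __init__(self, limits: Dict[str, int]) -> None:
        self.limits = dict(limits)
        self.unacked = {conn: 0 for conn in limits}

    def try_send(self, conn: str) -> bool:
        """Record a send if the connection is under its limit."""
        if self.unacked[conn] >= self.limits[conn]:
            return False
        self.unacked[conn] += 1
        return True

    def acknowledge(self, conn: str) -> None:
        """Record one acknowledgment, freeing a slot on that connection."""
        if self.unacked[conn] > 0:
            self.unacked[conn] -= 1
```

With a destination window of 16, a source window of 8, and 5 packets outstanding, `sendable_packets` allows 3 more. Because limits are tracked per connection, one saturated connection does not block sends on another.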

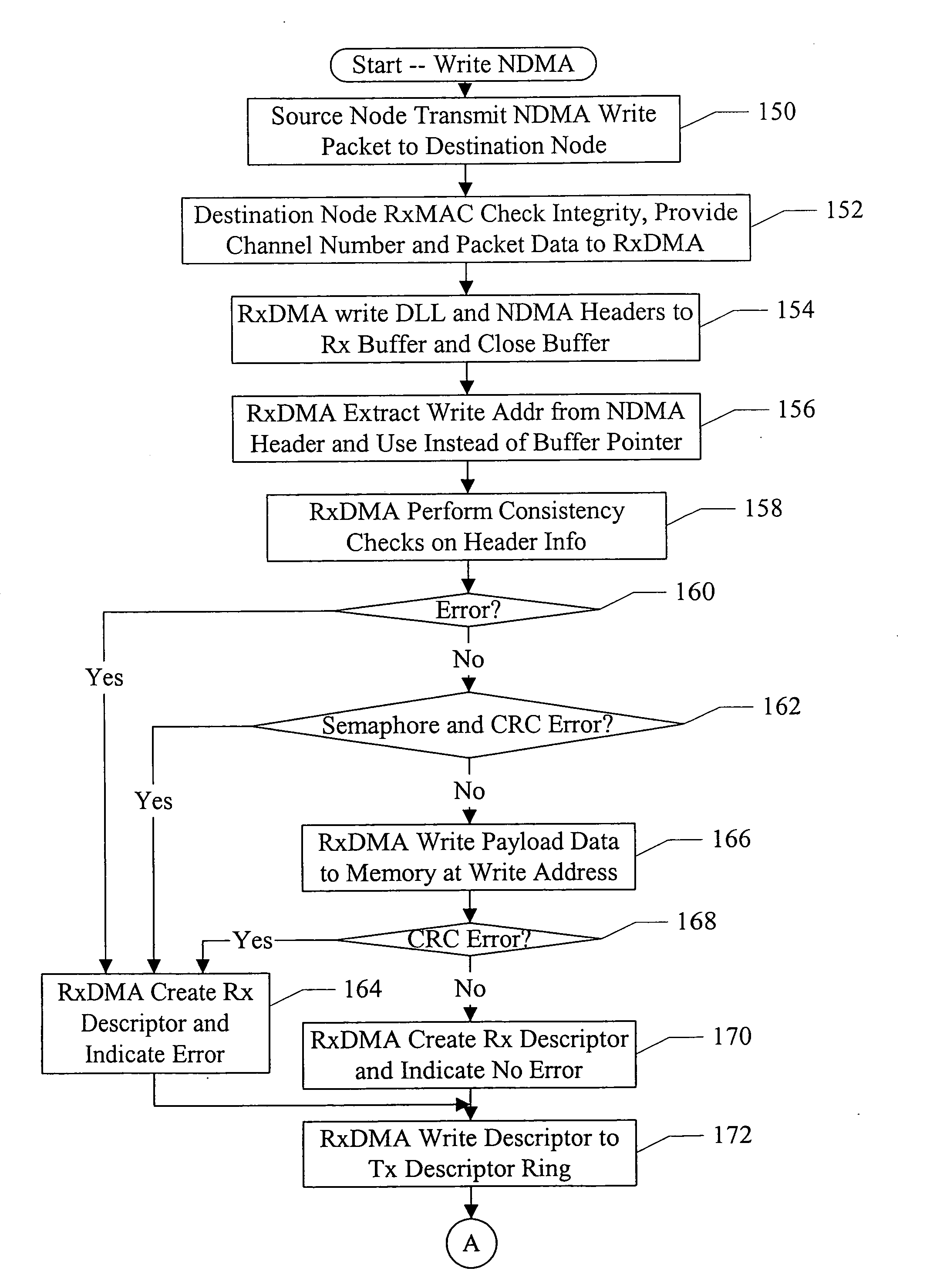

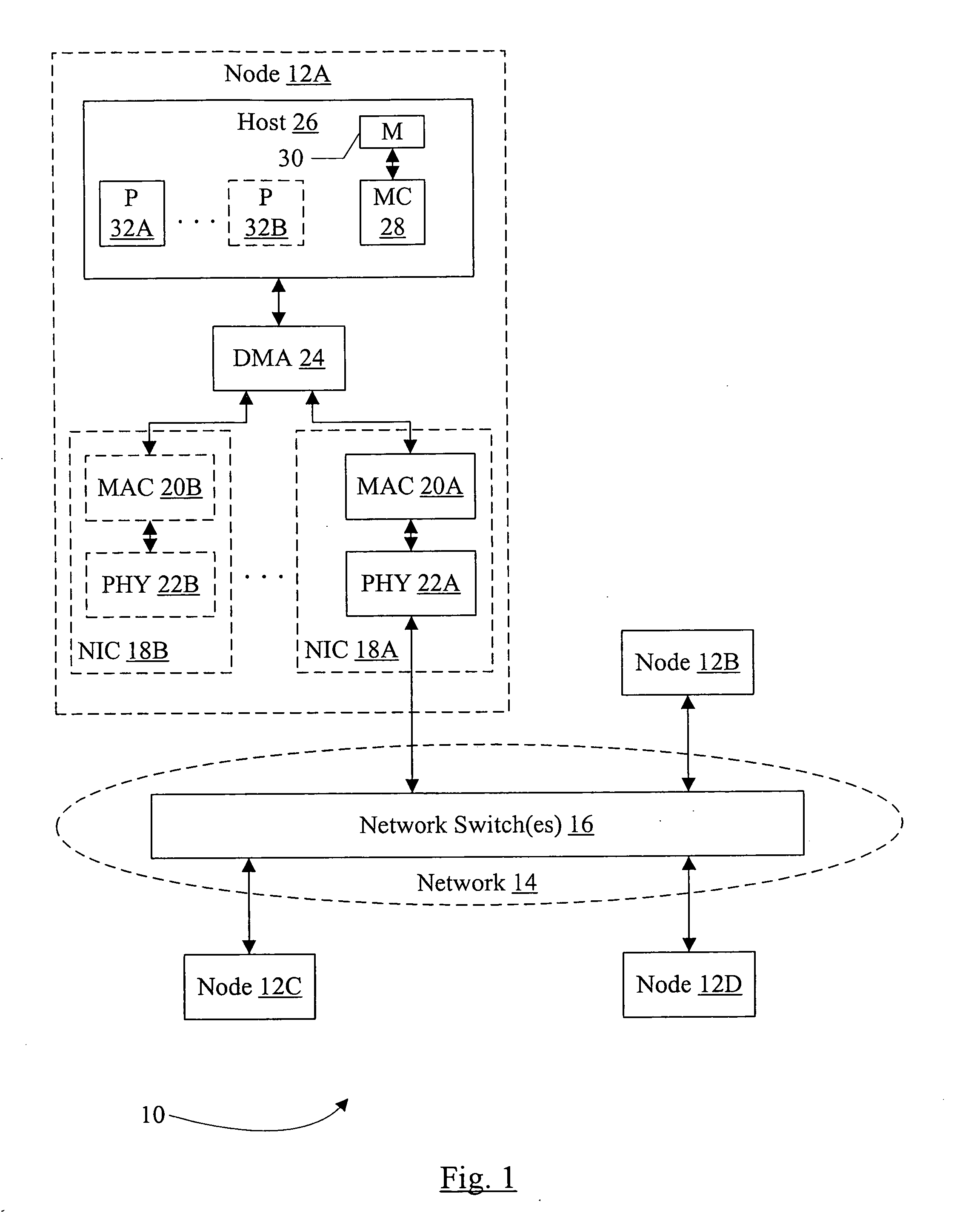

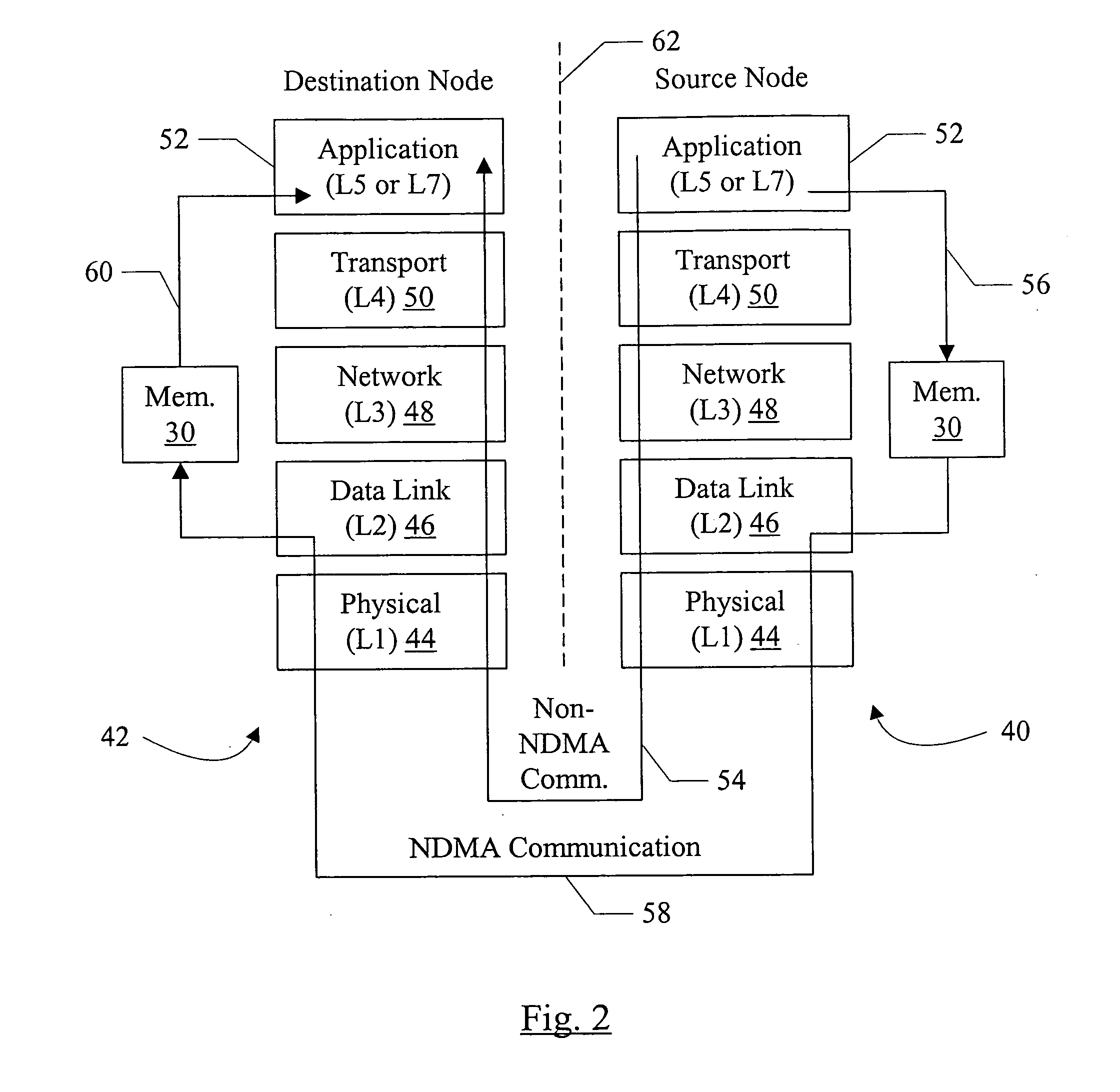

Network direct memory access

Inactive | US20080043732A1 | Data switching by path configuration, Multiple digital computer combinations, Direct memory access, Remote direct memory access

In one embodiment, a system comprises at least a first node and a second node coupled to a network. The second node comprises a local memory and a direct memory access (DMA) controller coupled to the local memory. The first node is configured to transmit at least a first packet to the second node to access data in the local memory and at least one other packet that is not coded to access the local memory. The second node is configured to capture the packet from a data link layer of a protocol stack, and wherein the DMA controller is configured to perform one or more transfers with the local memory to access the data specified by the first packet responsive to the first packet received from the data link layer. The second node is configured to process the other packet to a top of the protocol stack.

Owner:APPLE INC

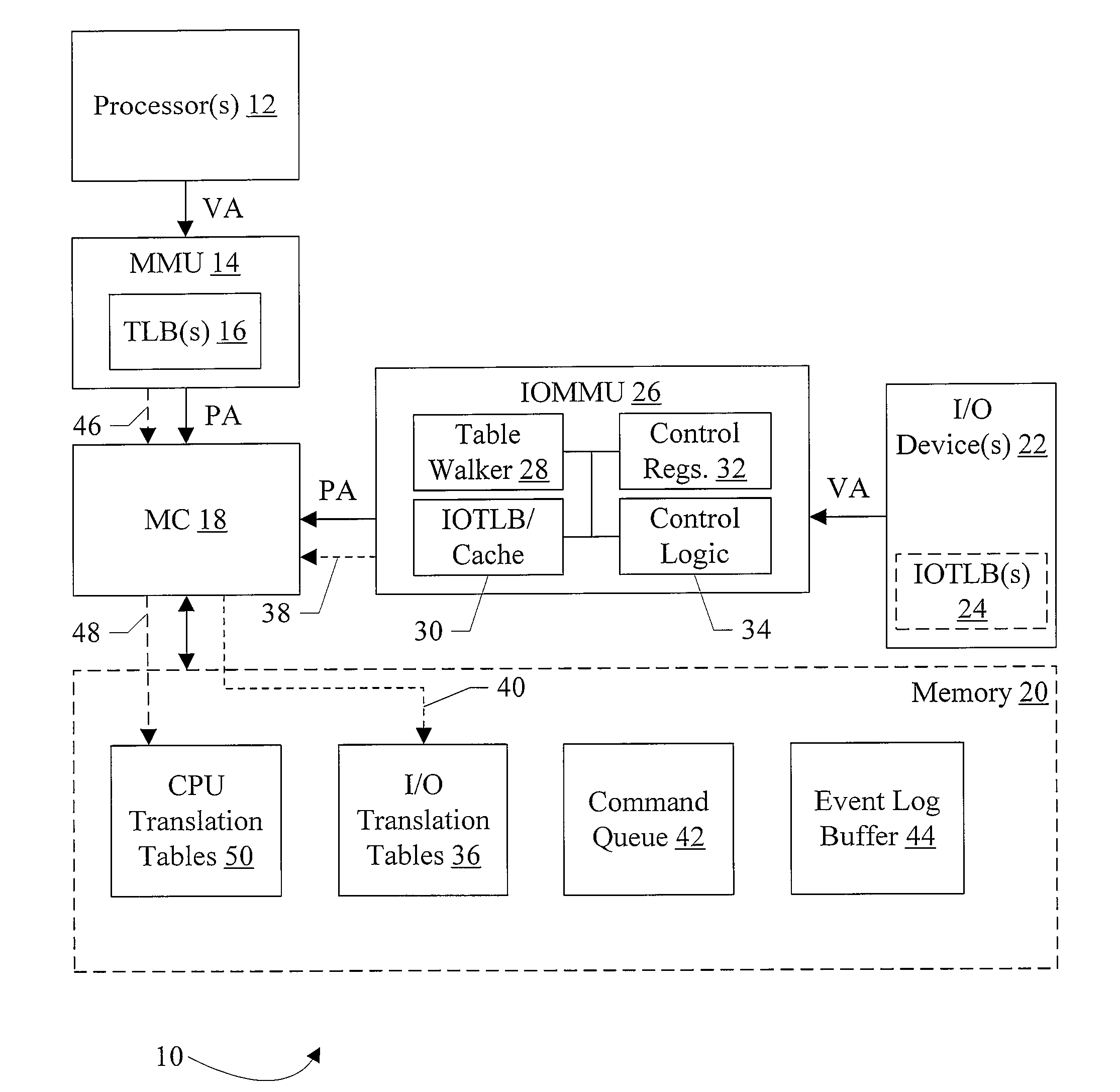

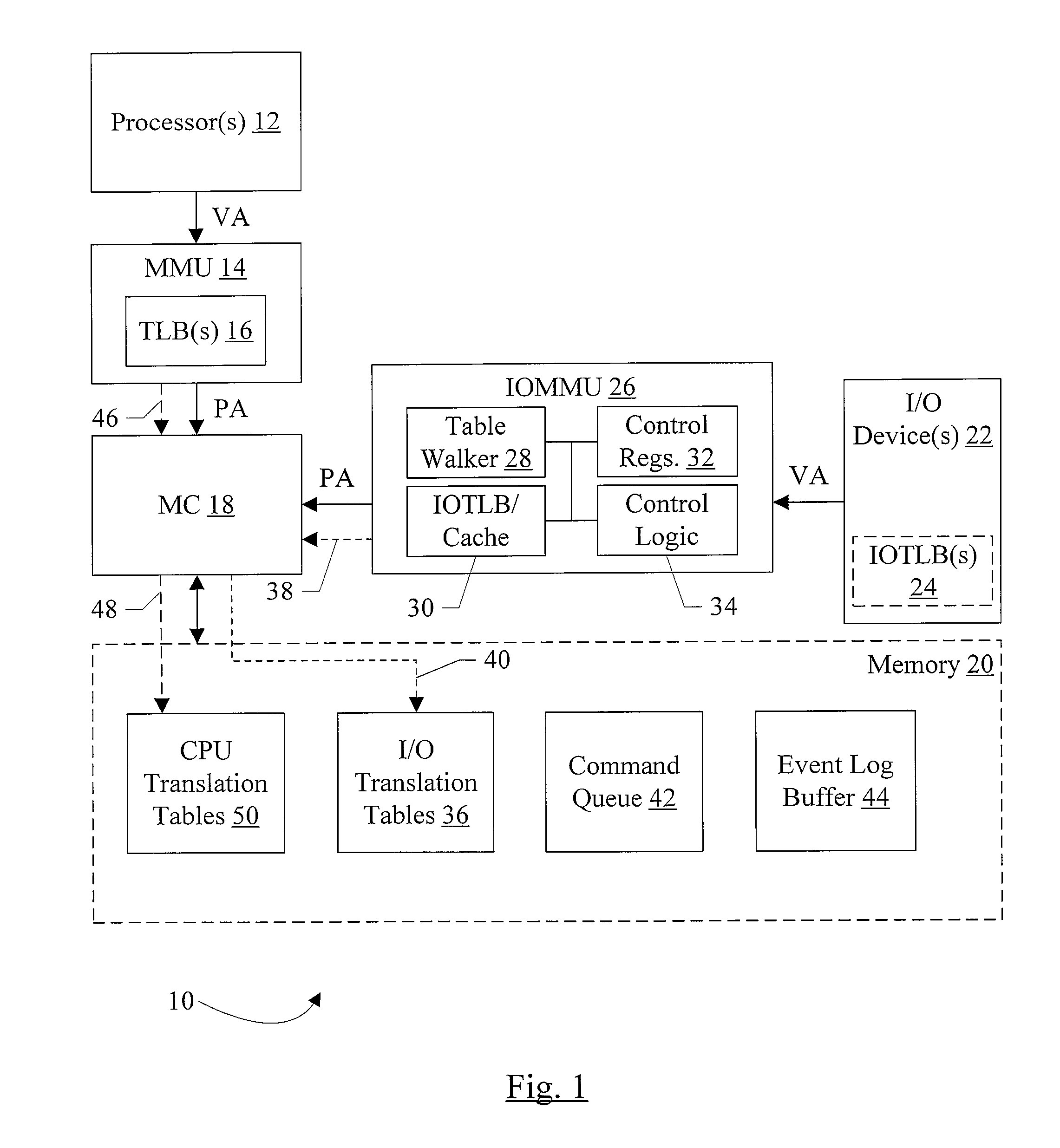

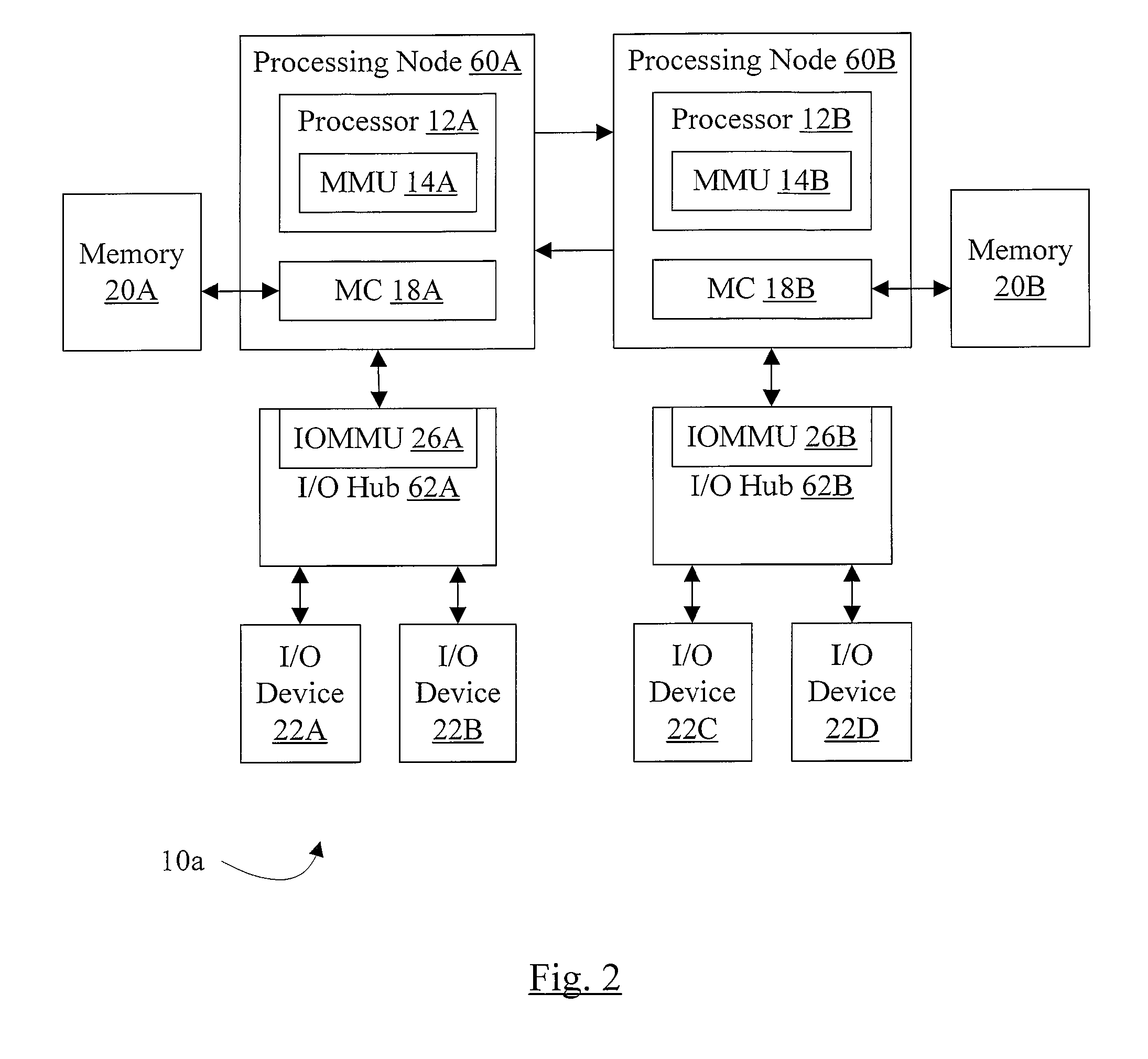

Translation Data Prefetch in an IOMMU

Active | US20080209130A1 | Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Direct memory access, Management unit

In an embodiment, a system memory stores a set of input/output (I/O) translation tables. One or more I/O devices initiate direct memory access (DMA) requests including virtual addresses. An I/O memory management unit (IOMMU) is coupled to the I/O devices and the system memory, wherein the IOMMU is configured to translate the virtual addresses in the DMA requests to physical addresses to access the system memory according to an I/O translation mechanism implemented by the IOMMU. The IOMMU comprises one or more caches, and is configured to read translation data from the I/O translation tables responsive to a prefetch command that specifies a first virtual address. The reads are responsive to the first virtual address and the I/O translation mechanism, and the IOMMU is configured to store data in the caches responsive to the read translation data.

Owner:GLOBALFOUNDRIES US INC
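The prefetch behaviour in the IOMMU abstract above can be illustrated with a toy Python model. It assumes a single-level translation table, 4 KiB pages, and an unbounded cache; these choices, along with the class name `Iommu` and its methods, are hypothetical simplifications and not the mechanism claimed in the patent.

```python
from typing import Dict

PAGE_SIZE = 4096  # assumed page size for this sketch


class Iommu:
    """Toy IOMMU: translates device virtual addresses via a cached table."""

    def __init__(self, translation_table: Dict[int, int]) -> None:
        # Maps virtual page number -> physical page number (lives in
        # "system memory" in this model).
        self.table = translation_table
        self.cache: Dict[int, int] = {}
        self.table_reads = 0  # counts reads of the in-memory table

    def _fill(self, vpn: int) -> None:
        """Read translation data from the table into the cache if absent."""
        if vpn not in self.cache:
            self.table_reads += 1
            self.cache[vpn] = self.table[vpn]

    def prefetch(self, vaddr: int) -> None:
        """Prefetch command: load the translation for vaddr ahead of use."""
        self._fill(vaddr // PAGE_SIZE)

    def translate(self, vaddr: int) -> int:
        """Translate a DMA virtual address to a physical address."""
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        self._fill(vpn)
        return self.cache[vpn] * PAGE_SIZE + offset
```

If software issues `prefetch` for an address before the device's DMA request arrives, the later `translate` call hits the cache and does not read the table again, which is the latency saving the abstract is aiming for.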

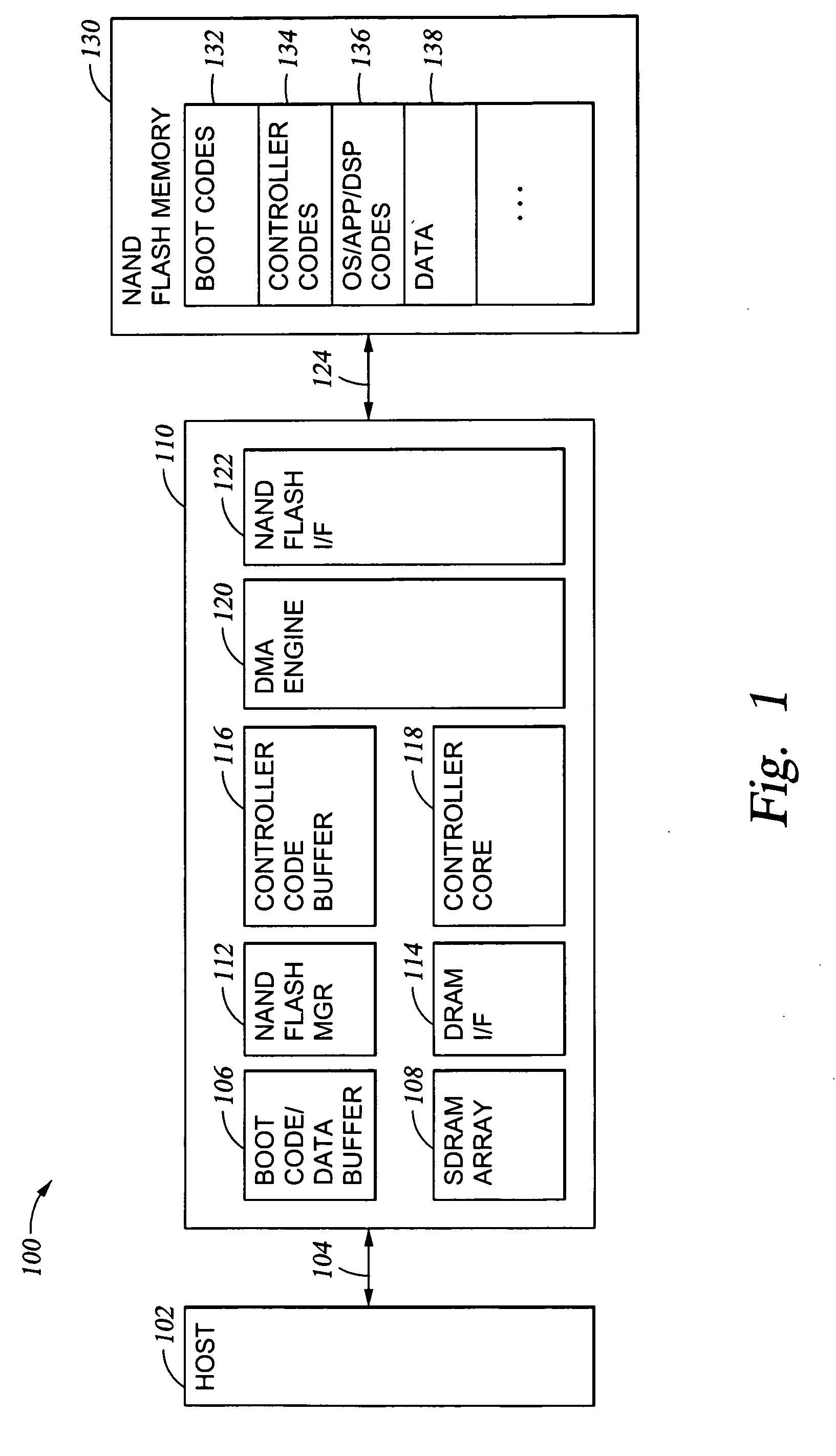

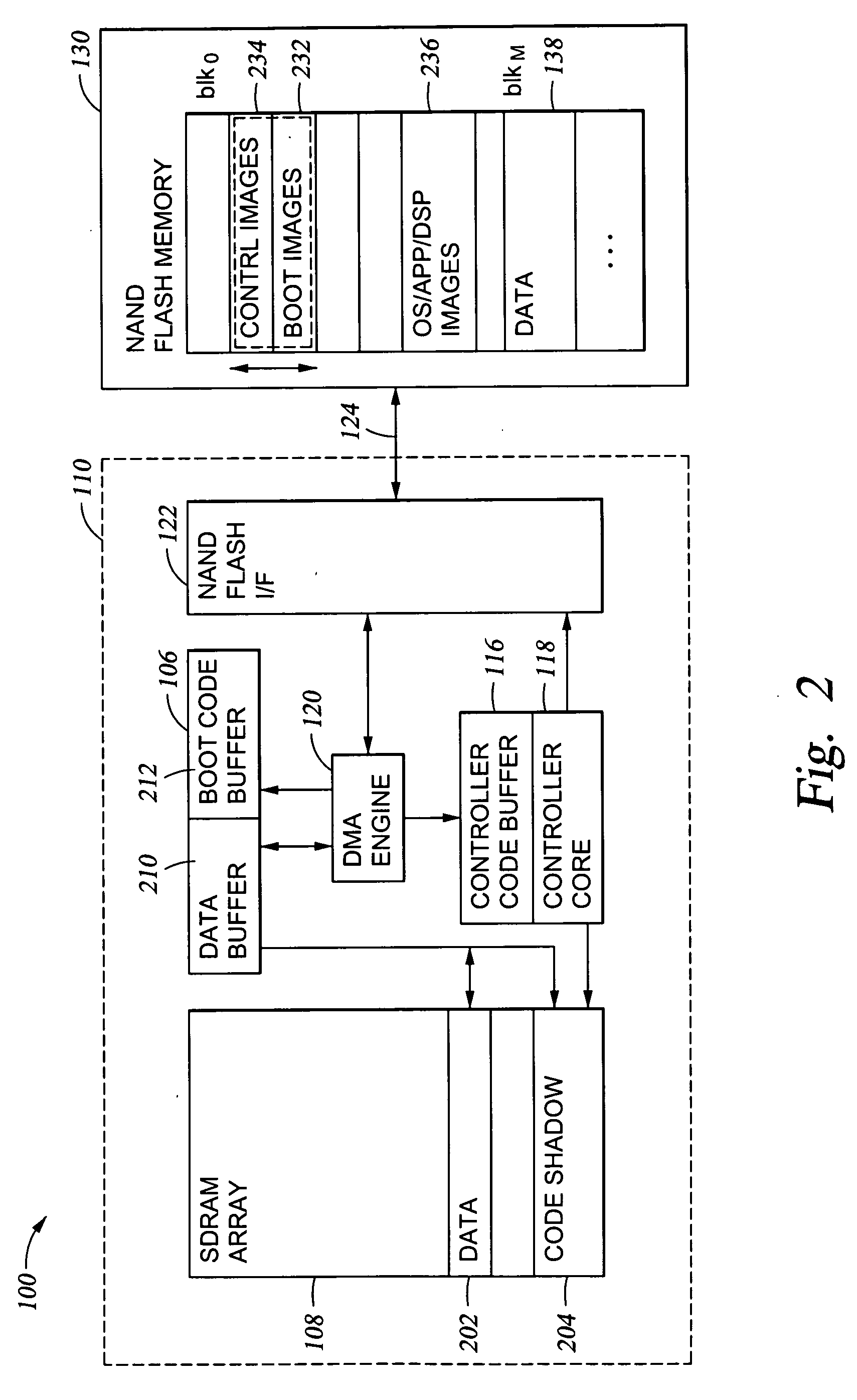

Method of system booting with a direct memory access in a new memory architecture

Embodiments of the invention provide a method and apparatus for initializing a computer system, wherein the computer system includes a processor, a volatile memory, and a non-volatile memory. In one embodiment, the method includes, when the computer system is initialized, automatically copying initialization code stored in the non-volatile memory to the volatile memory, wherein circuitry in the volatile memory automatically creates the copy, and executing, by the processor, the copy of the initialization code from the volatile memory.

Owner:POLARIS INNOVATIONS LTD
