53 results about "TCP offload engine" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

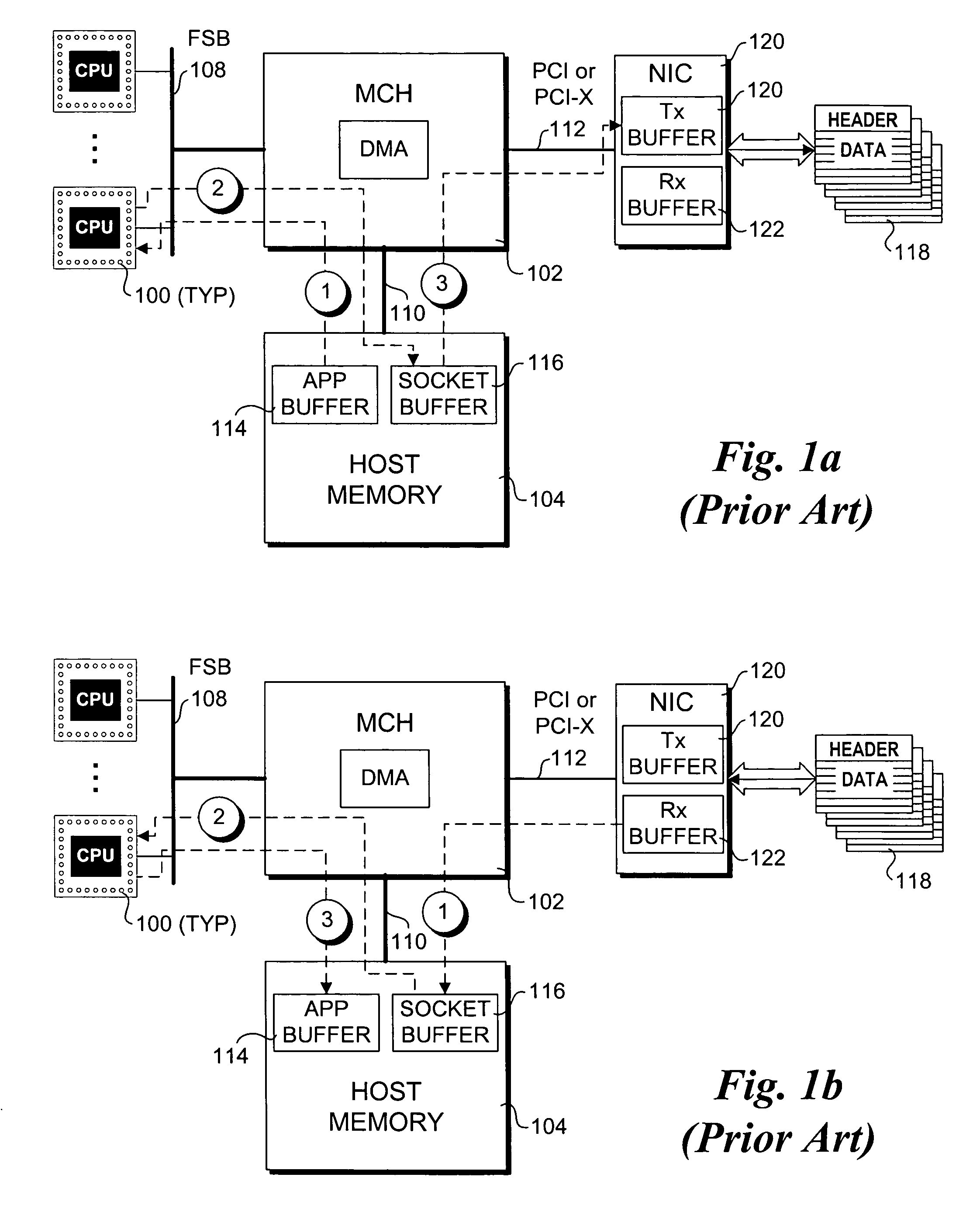

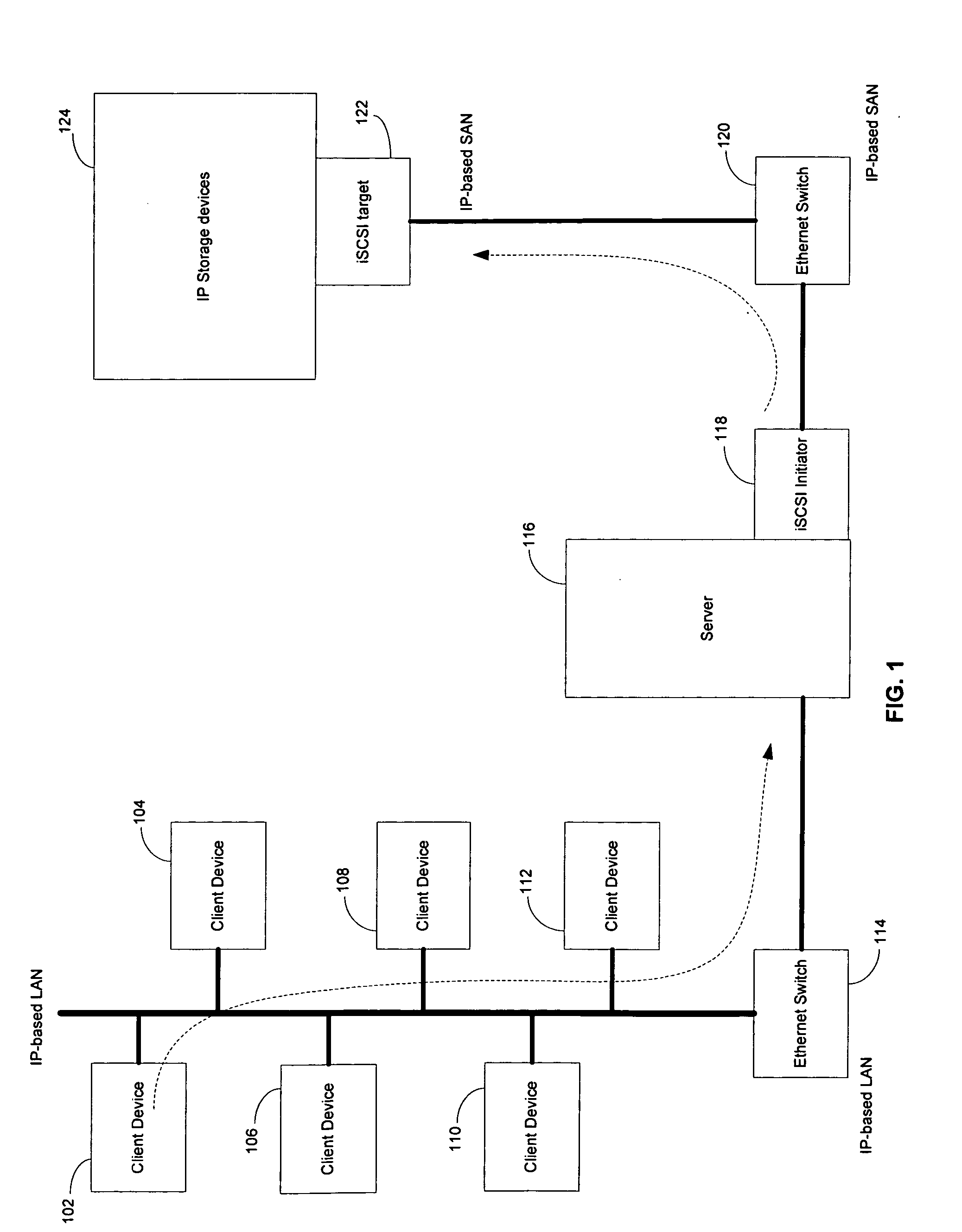

TCP offload engine (TOE) is a technology used in network interface cards (NIC) to offload processing of the entire TCP/IP stack to the network controller. It is primarily used with high-speed network interfaces, such as gigabit Ethernet and 10 Gigabit Ethernet, where processing overhead of the network stack becomes significant.

TCP/IP offload device with reduced sequential processing

Status: Active | Publication: US6996070B2 | Tags: Block value, Narrow structure, Error prevention, Transmission systems, Protocol processing, State variable

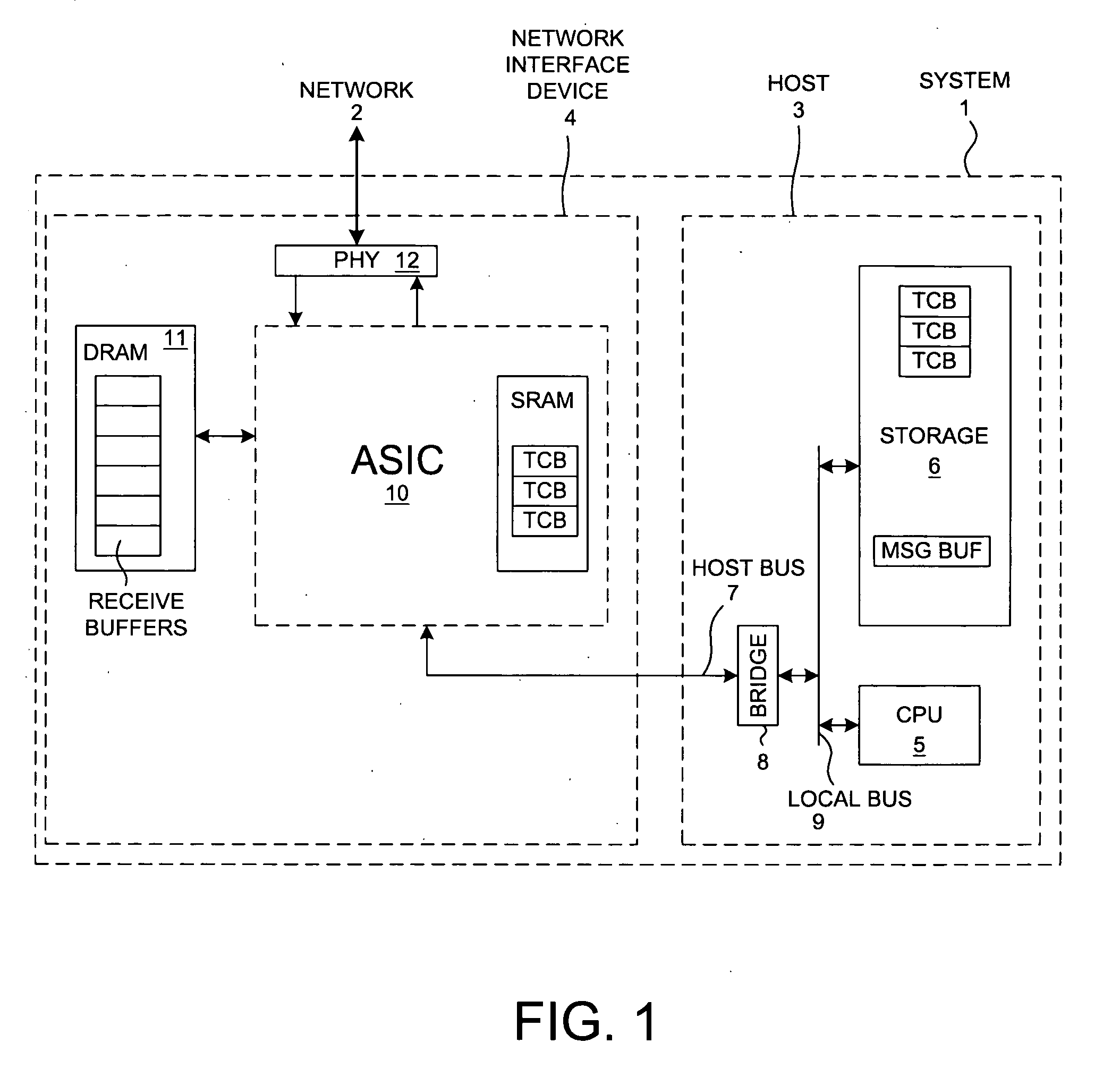

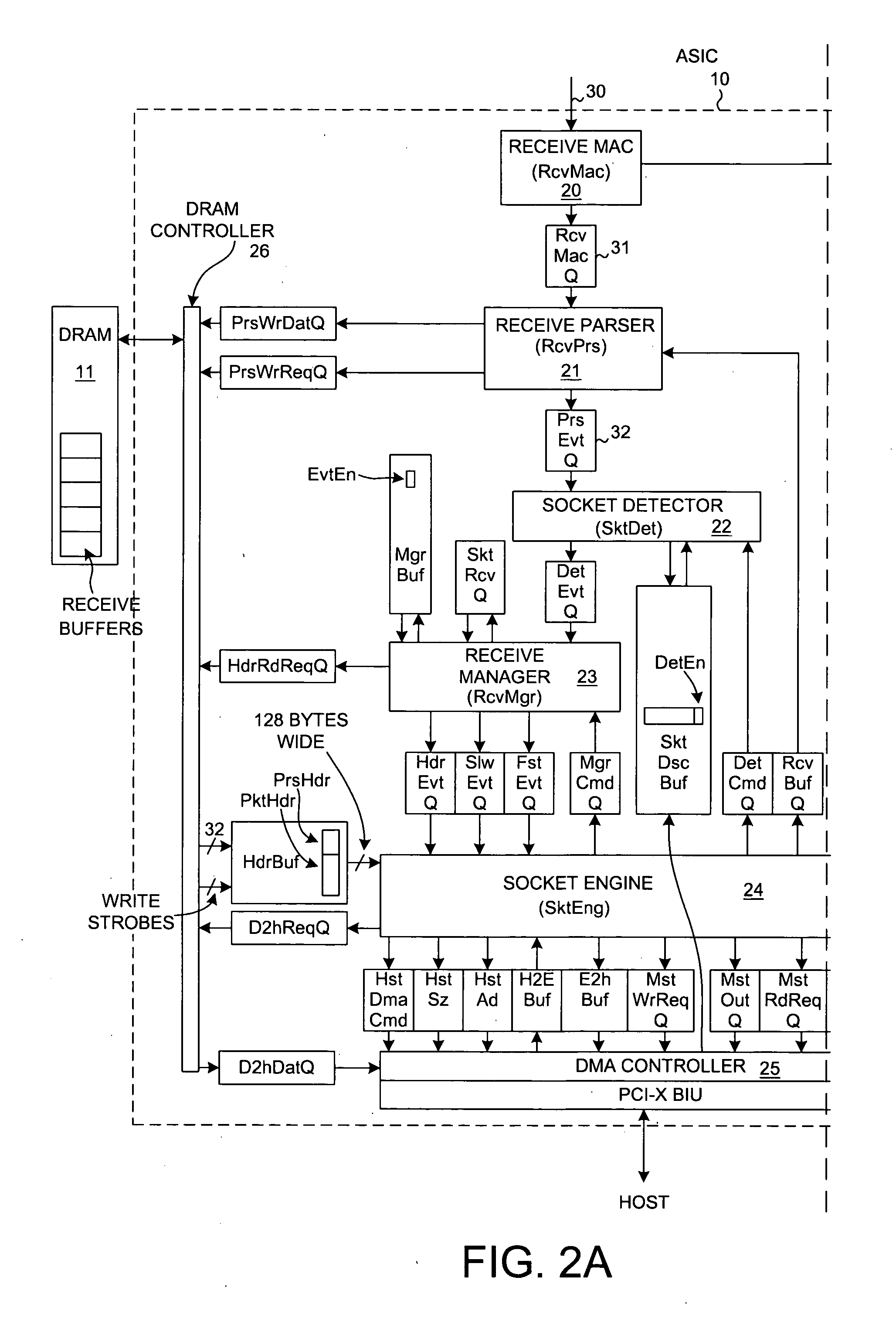

A TCP Offload Engine (TOE) device includes a state machine that performs TCP/IP protocol processing operations in parallel. In a first aspect, the state machine includes a first memory, a second memory, and combinatorial logic. The first memory stores and simultaneously outputs multiple TCP state variables. The second memory stores and simultaneously outputs multiple header values. In contrast to a sequential processor technique, the combinatorial logic generates a flush detect signal from the TCP state variables and header values without performing sequential processor instructions or sequential memory accesses. In a second aspect, a TOE includes a state machine that performs an update of multiple TCP state variables in a TCB buffer all simultaneously, thereby avoiding multiple sequential writes to the TCB buffer memory. In a third aspect, a TOE involves a state machine that sets up a DMA move in a single state machine clock cycle.

Owner:ALACRITECH
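
The parallel flush check in the first aspect can be modelled in software as one boolean expression over state variables and header fields that are all available at once, with the TCB update producing every changed field as a single record. This is a minimal Python sketch, not Alacritech's design: the field names (`rcv_nxt`, `snd_una`, `snd_nxt`) and the specific flush conditions (SYN/FIN/RST/URG flags, an out-of-sequence segment, an out-of-range ACK) are assumptions chosen for illustration.

```python
from dataclasses import dataclass, replace

# TCP header flag bits as laid out in the TCP header.
FIN, SYN, RST, ACK, URG = 0x01, 0x02, 0x04, 0x10, 0x20

@dataclass(frozen=True)
class TcbState:
    """Assumed subset of TCP state variables read from the first memory."""
    rcv_nxt: int
    snd_una: int
    snd_nxt: int

@dataclass(frozen=True)
class Header:
    """Assumed subset of header values read from the second memory."""
    seq: int
    ack: int
    flags: int

def flush_detect(tcb: TcbState, hdr: Header) -> bool:
    # Every term depends only on values that are already available, so in
    # hardware the three terms are evaluated concurrently and OR-ed together.
    # The conditions are illustrative assumptions; wraparound is ignored.
    exceptional_flags = bool(hdr.flags & (FIN | SYN | RST | URG))
    out_of_sequence = hdr.seq != tcb.rcv_nxt
    bad_ack = not (tcb.snd_una <= hdr.ack <= tcb.snd_nxt)
    return exceptional_flags or out_of_sequence or bad_ack

def update_tcb(tcb: TcbState, hdr: Header, payload_len: int) -> TcbState:
    # All changed state variables are produced together and written back as
    # one record, modelling a single simultaneous TCB write.
    return replace(tcb, rcv_nxt=tcb.rcv_nxt + payload_len, snd_una=hdr.ack)
```

On the fast path, a segment for which `flush_detect` returns `False` goes straight to `update_tcb`; anything else would be handed back to the host stack.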

TCP/IP offload device with reduced sequential processing

Status: Active | Publication: US20050122986A1 | Tags: Reduce power consumption, Less-expensive memory, Error prevention, Transmission systems, Protocol processing, State variable

A TCP Offload Engine (TOE) device includes a state machine that performs TCP/IP protocol processing operations in parallel. In a first aspect, the state machine includes a first memory, a second memory, and combinatorial logic. The first memory stores and simultaneously outputs multiple TCP state variables. The second memory stores and simultaneously outputs multiple header values. In contrast to a sequential processor technique, the combinatorial logic generates a flush detect signal from the TCP state variables and header values without performing sequential processor instructions or sequential memory accesses. In a second aspect, a TOE includes a state machine that performs an update of multiple TCP state variables in a TCB buffer all simultaneously, thereby avoiding multiple sequential writes to the TCB buffer memory. In a third aspect, a TOE involves a state machine that sets up a DMA move in a single state machine clock cycle.

Owner:ALACRITECH

Hardware-based multi-threading for packet processing

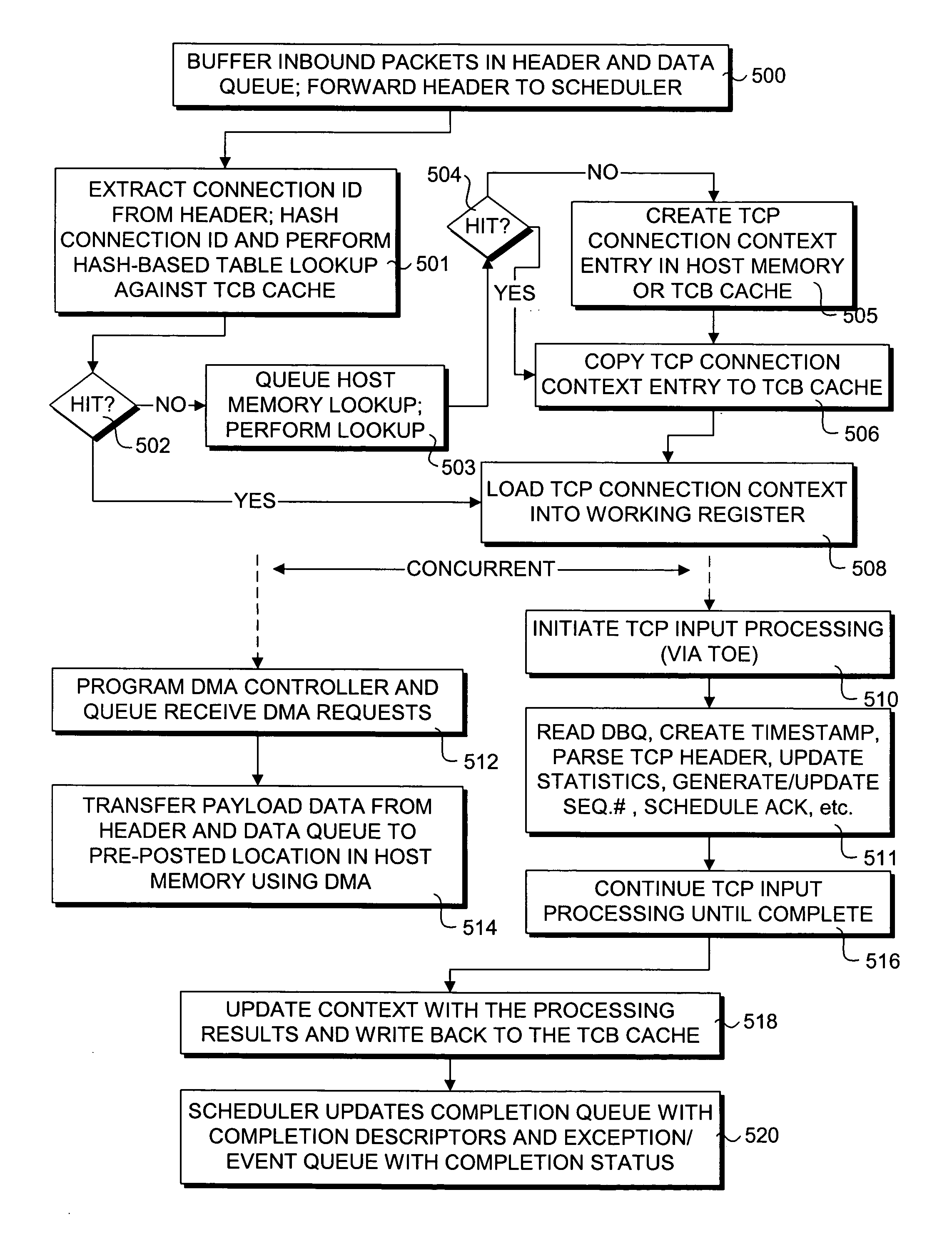

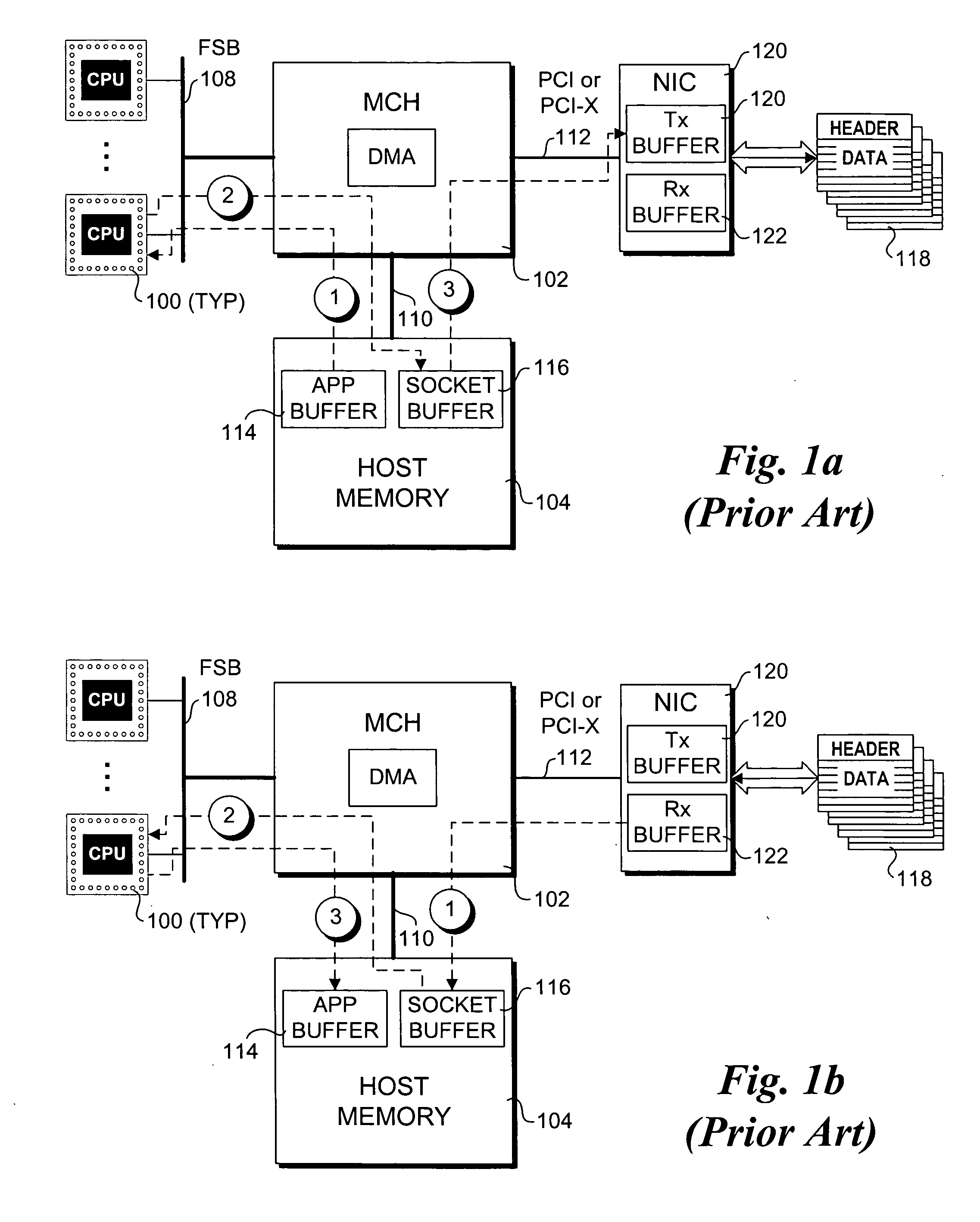

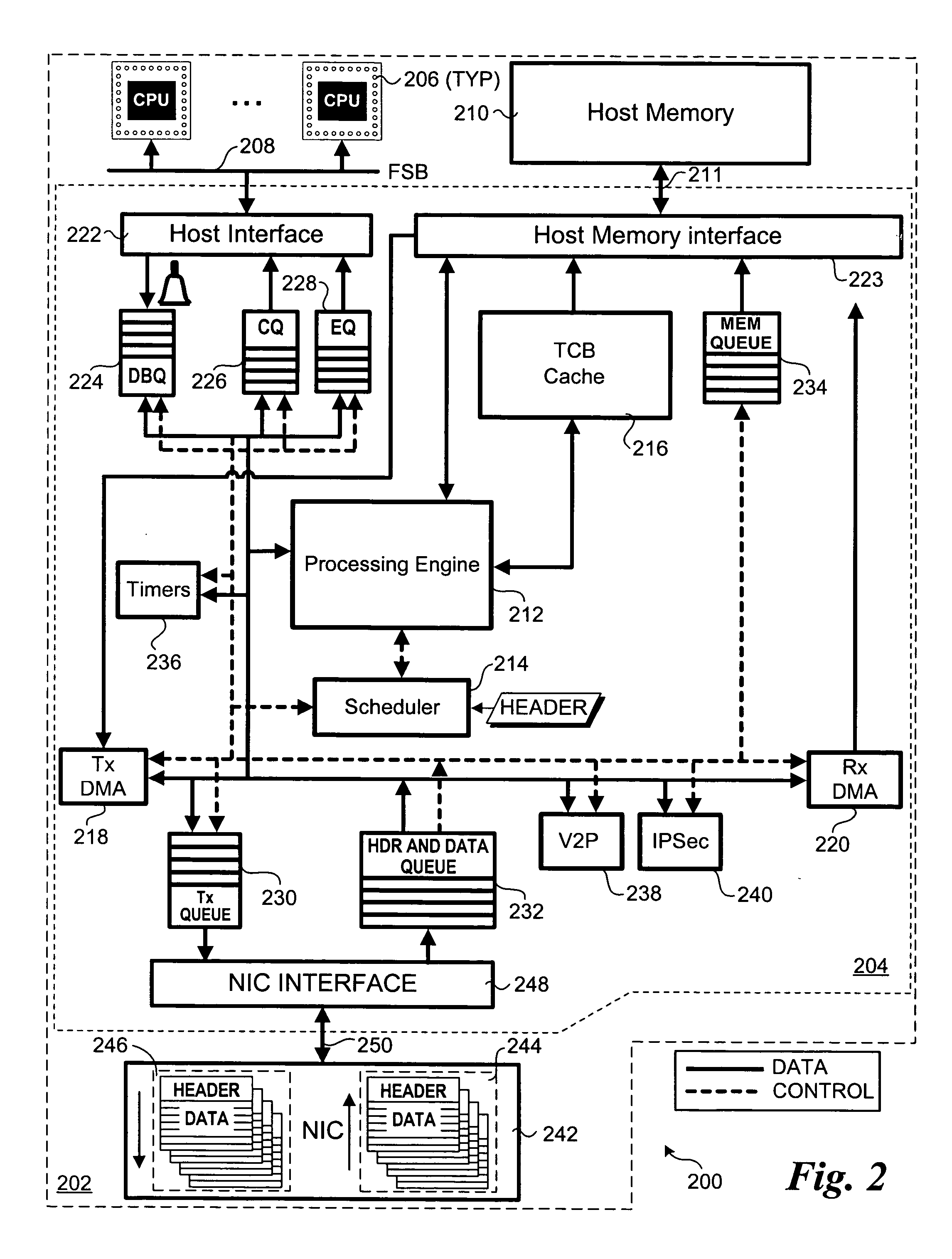

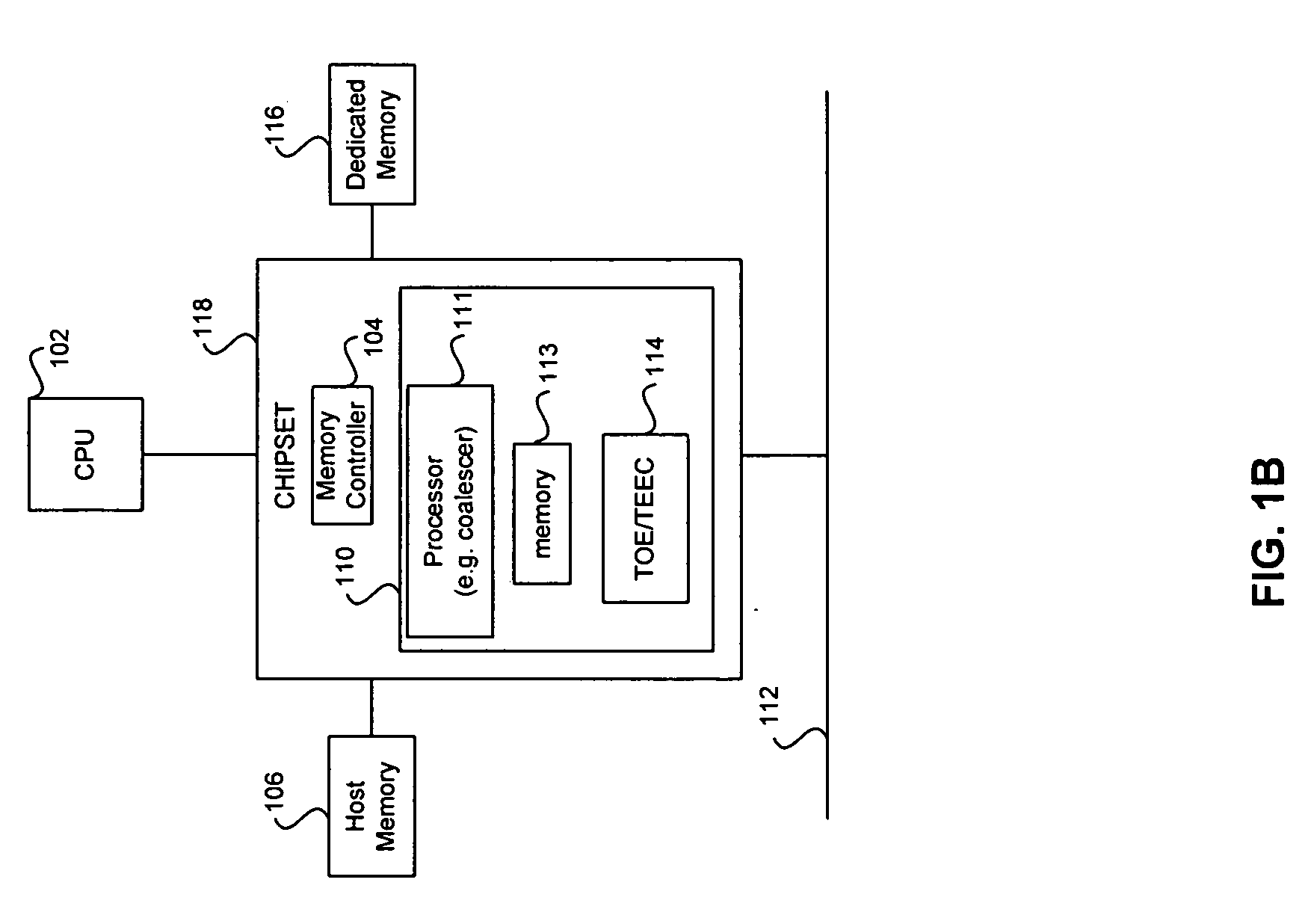

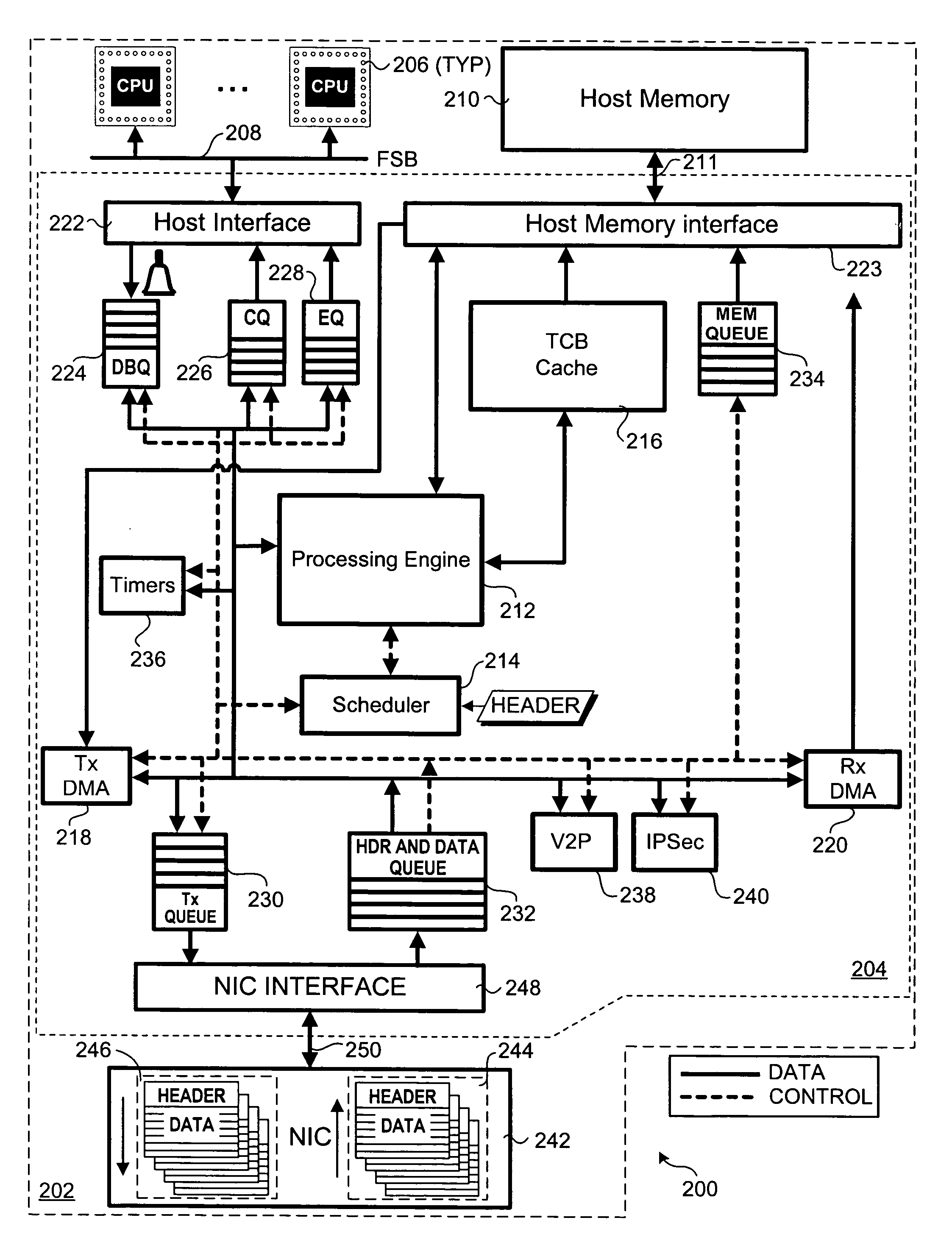

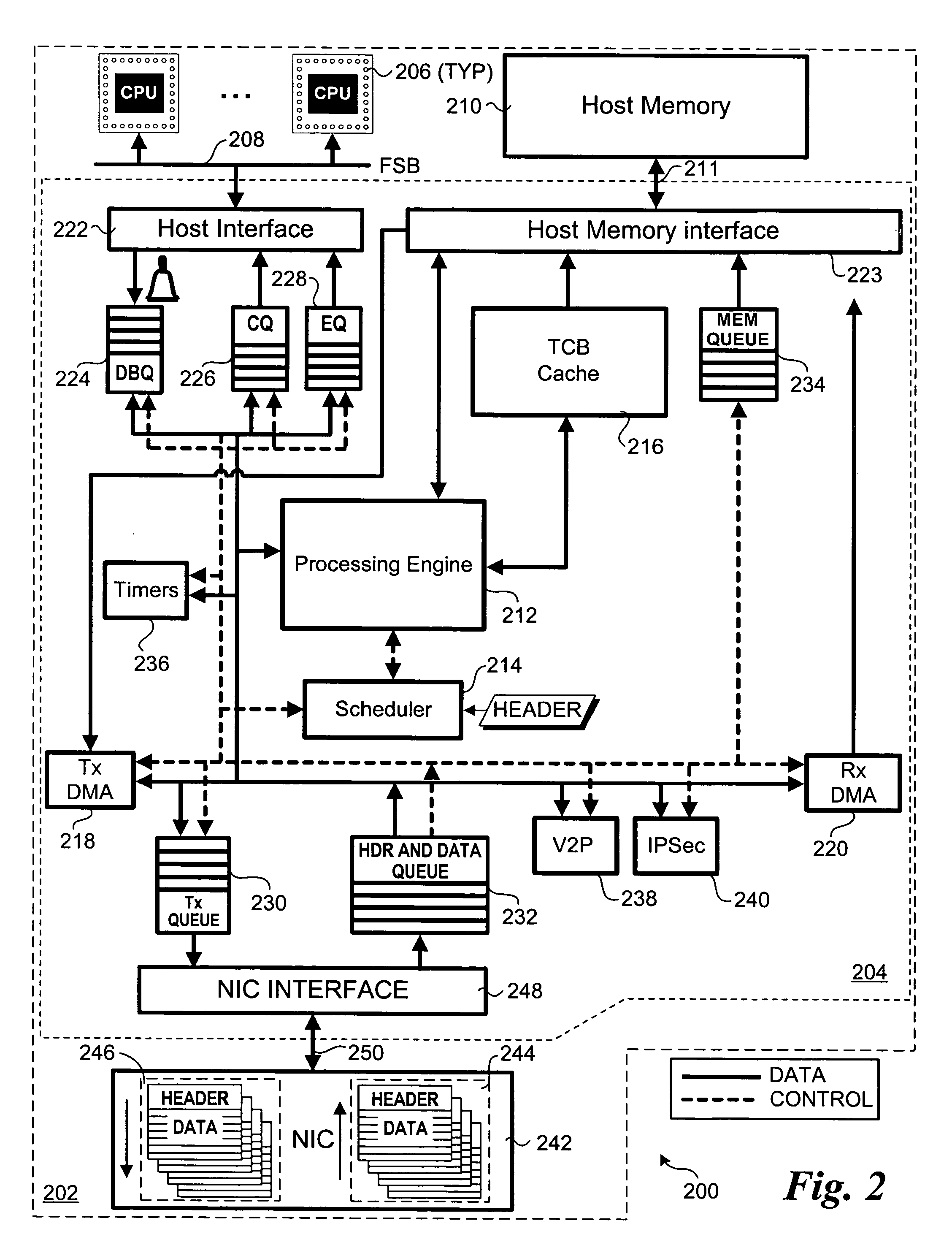

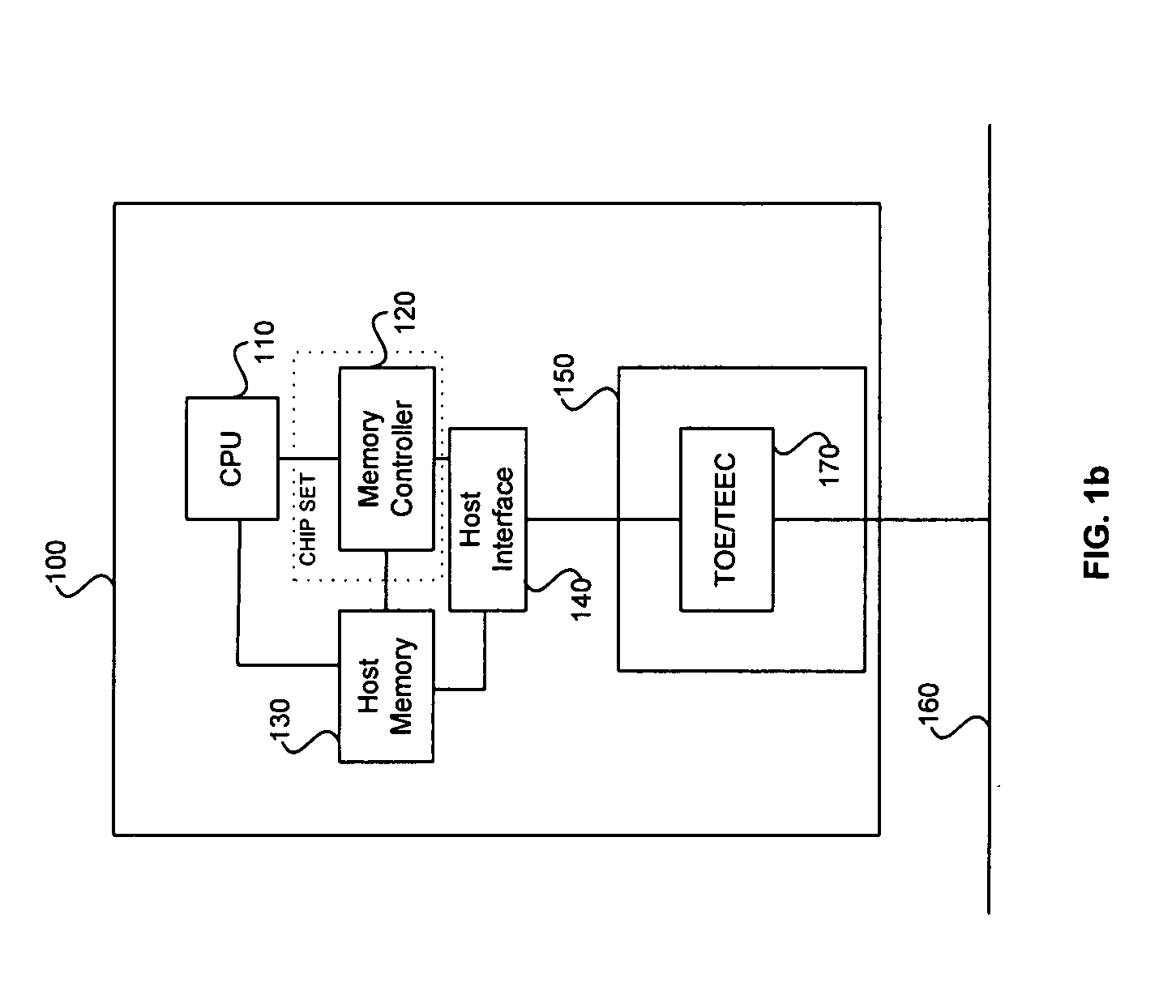

Methods and apparatus for processing transmission control protocol (TCP) packets using hardware-based multi-threading techniques. Inbound and outbound TCP packets are processed using a multi-threaded TCP offload engine (TOE). The TOE includes an execution core comprising a processing engine, a scheduler, an on-chip cache, a host memory interface, a host interface, and a network interface controller (NIC) interface. In one embodiment, the TOE is embodied as a memory controller hub (MCH) component of a platform chipset. The TOE may further include an integrated direct memory access (DMA) controller, or the DMA controller may be embodied as separate circuitry on the MCH. In one embodiment, inbound packets are queued in an input buffer, the headers are provided to the scheduler, and the scheduler arbitrates thread execution on the processing engine. Concurrently, DMA payload data transfers are queued and performed asynchronously in a manner that hides memory latencies. In one embodiment, the technique can process typical-size TCP packets at line speeds of 10 Gbps or greater.

Owner:TAHOE RES LTD

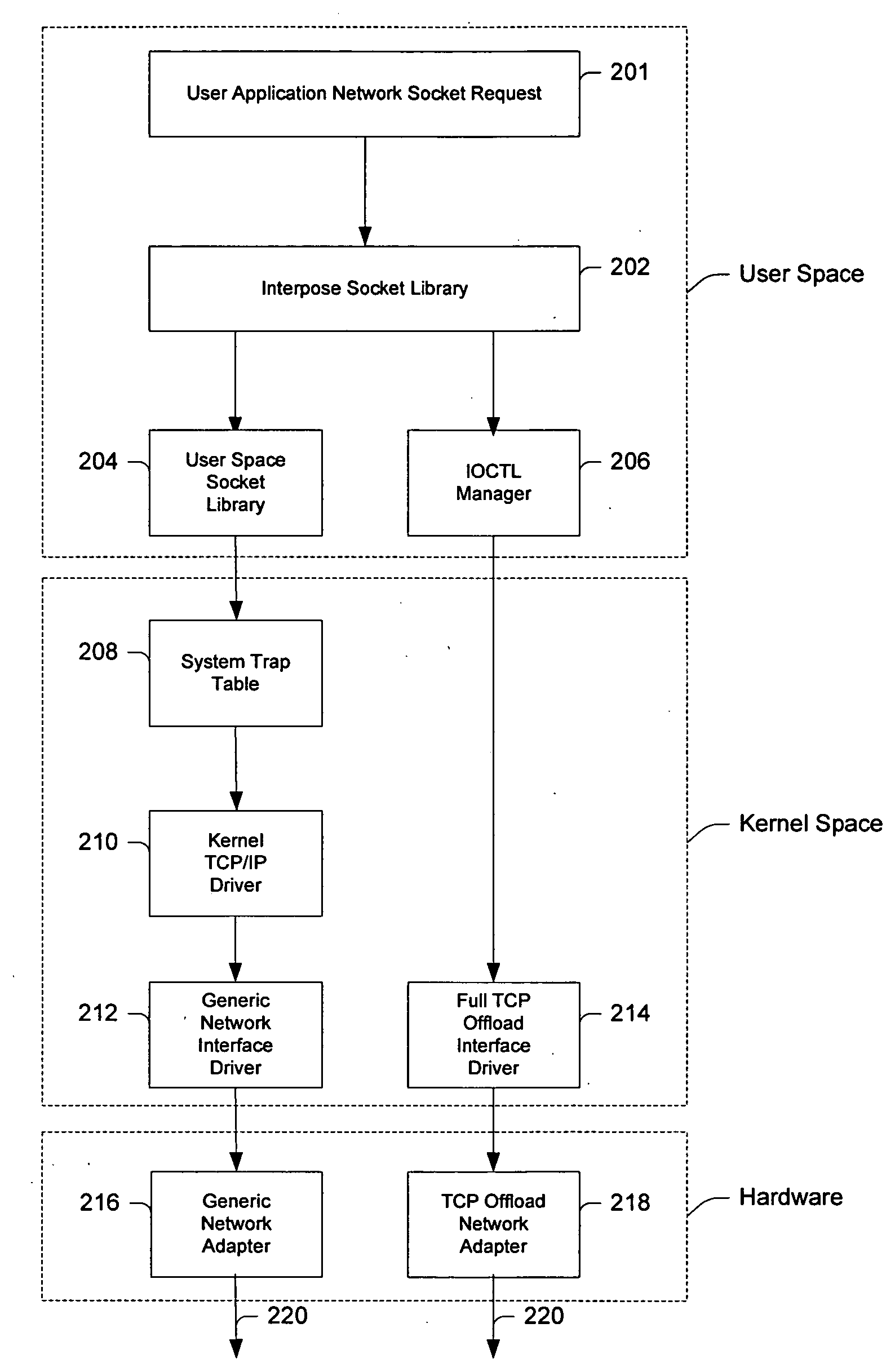

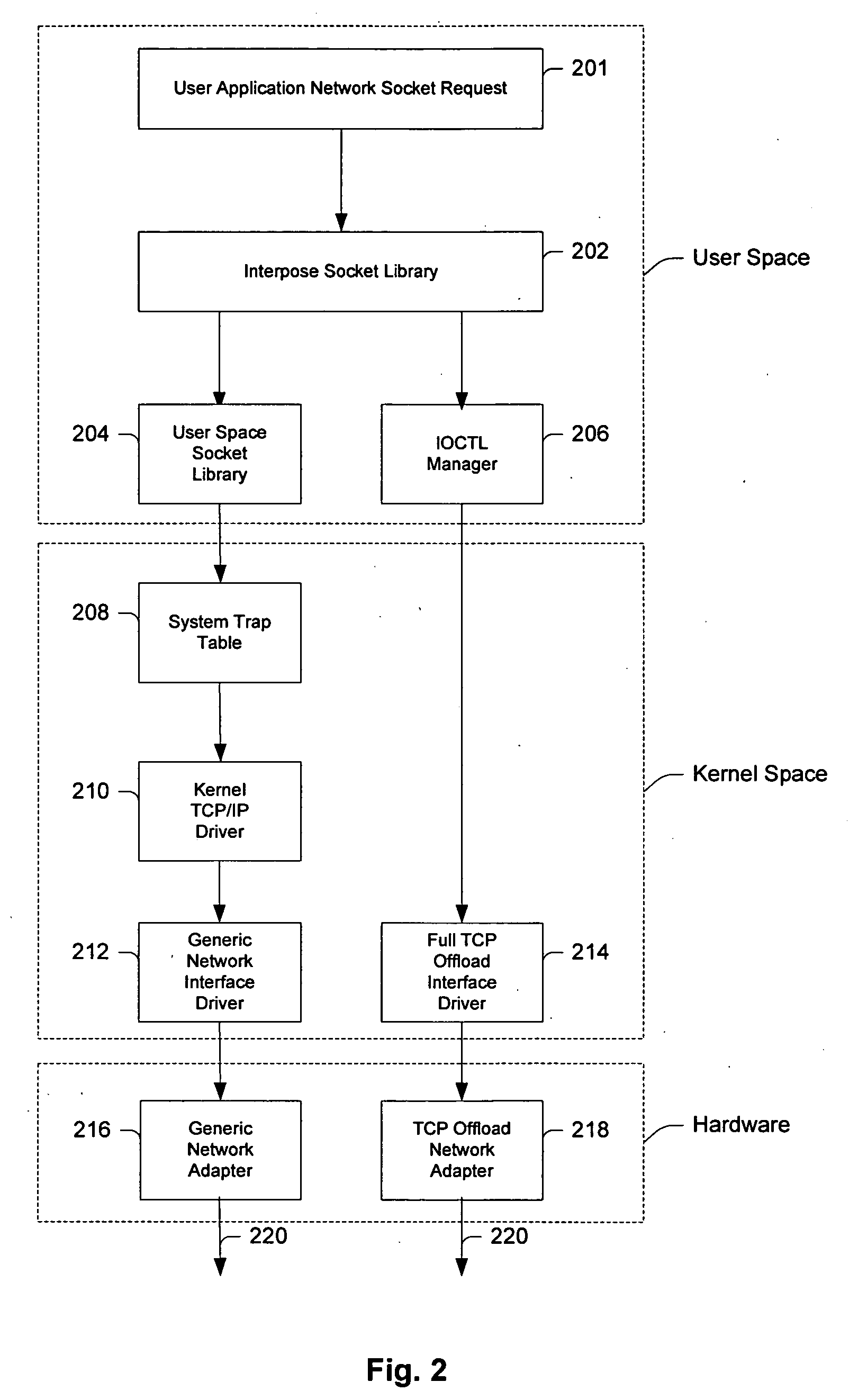

System and method for interfacing TCP offload engines using an interposed socket library

Status: Inactive | Publication: US20050021680A1 | Tags: Improve system performance, Reduce CPU utilization, Multiple digital computer combinations, Program control, Operational system, Network socket

Owner:CENATA NETWORKS

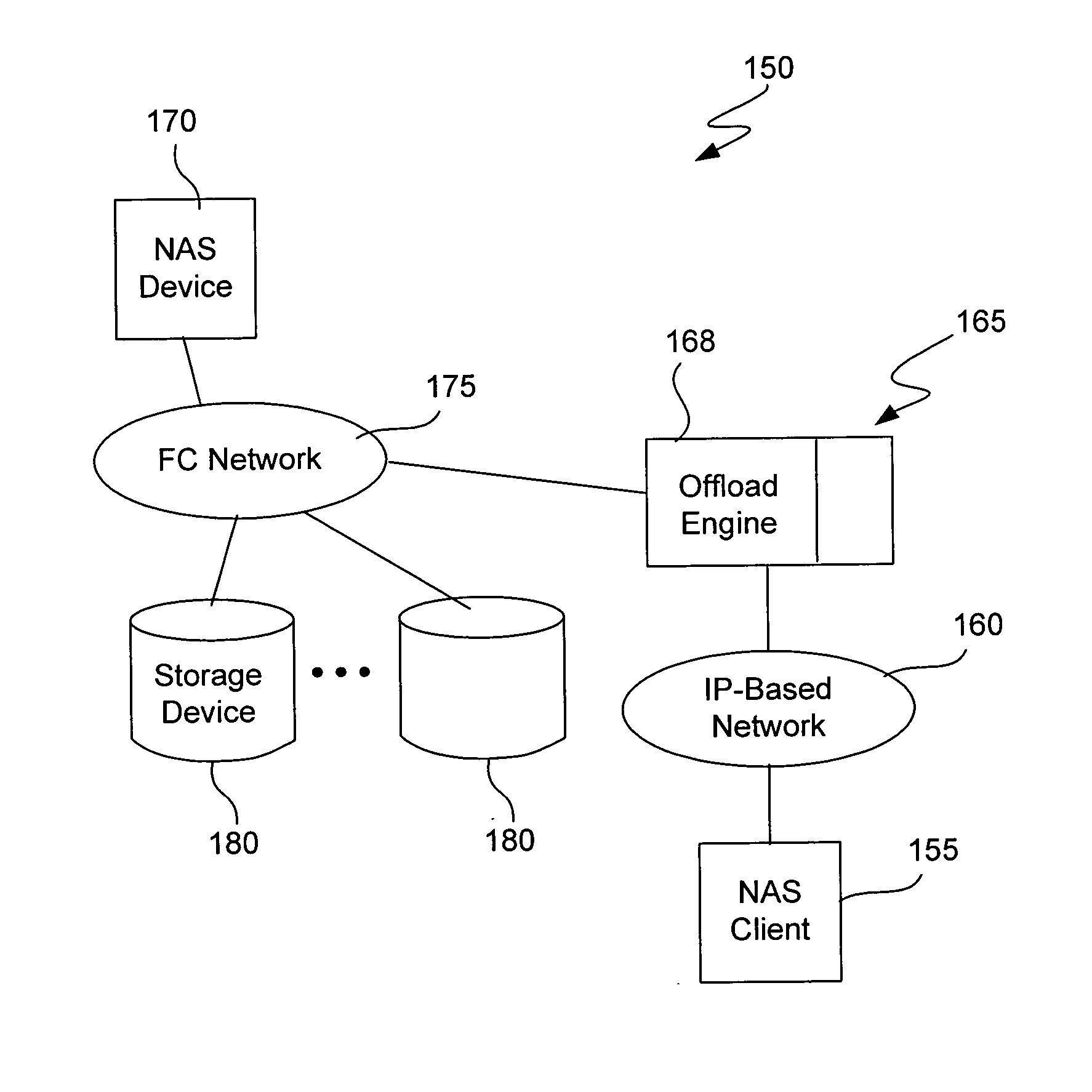

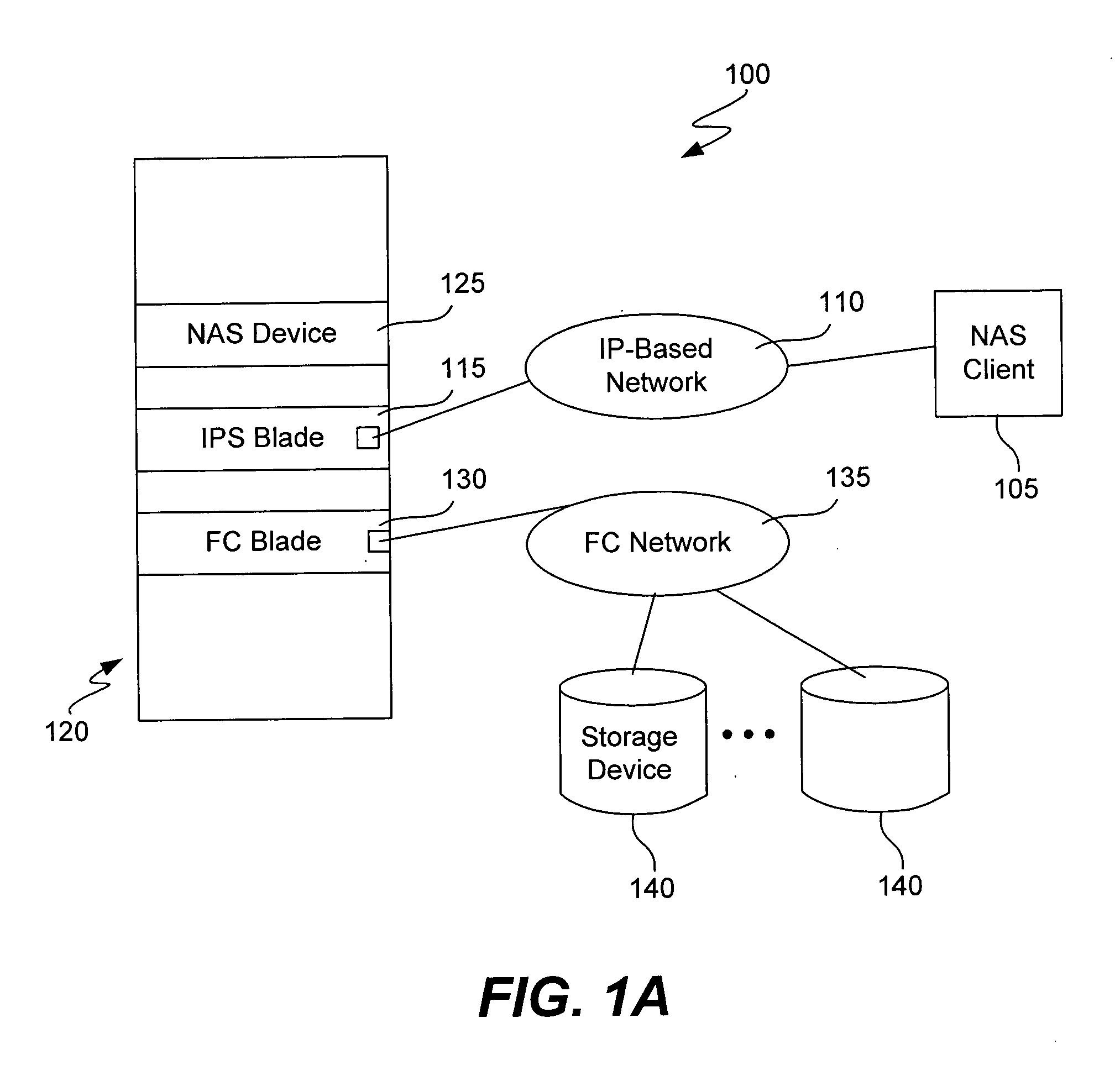

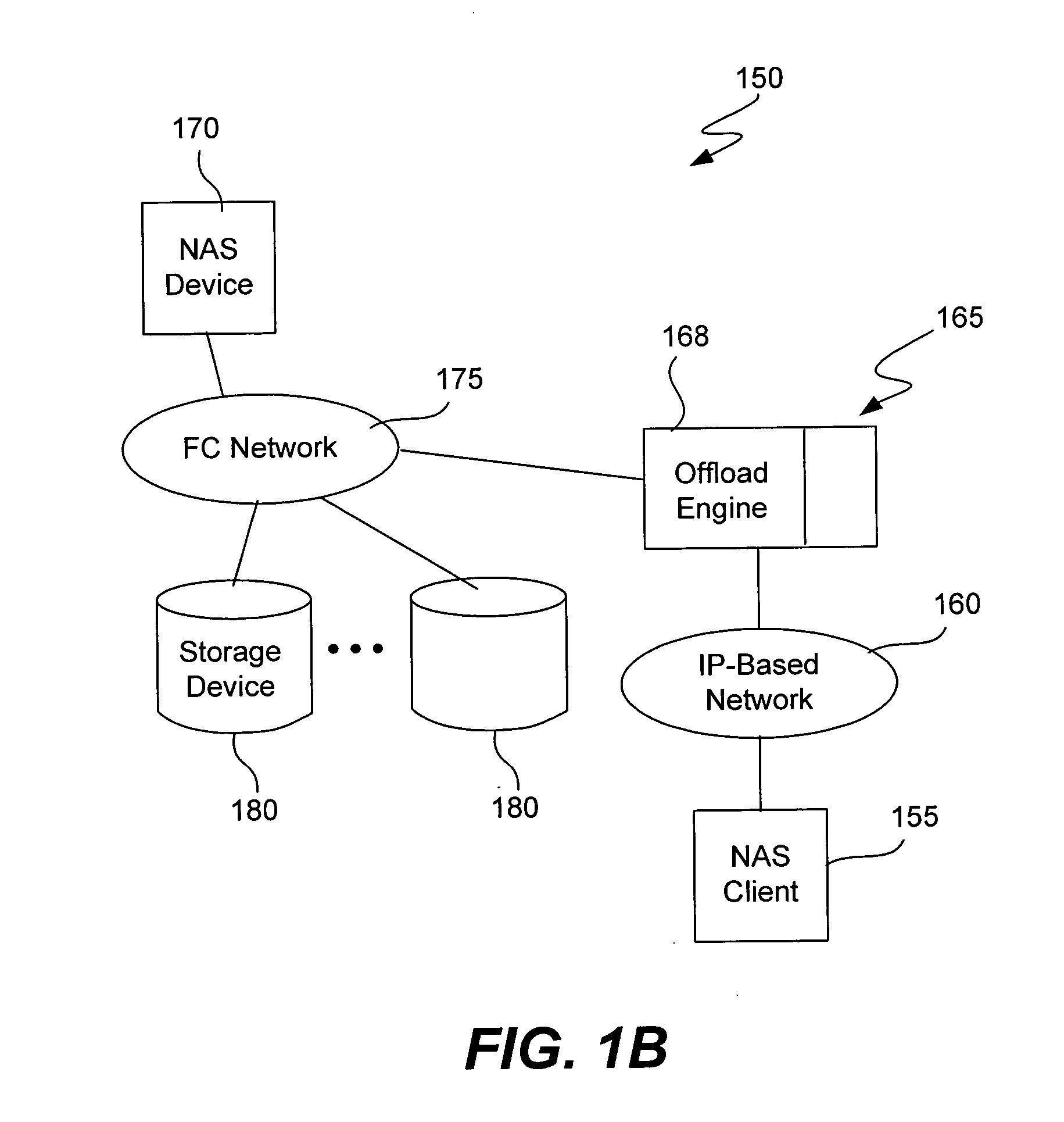

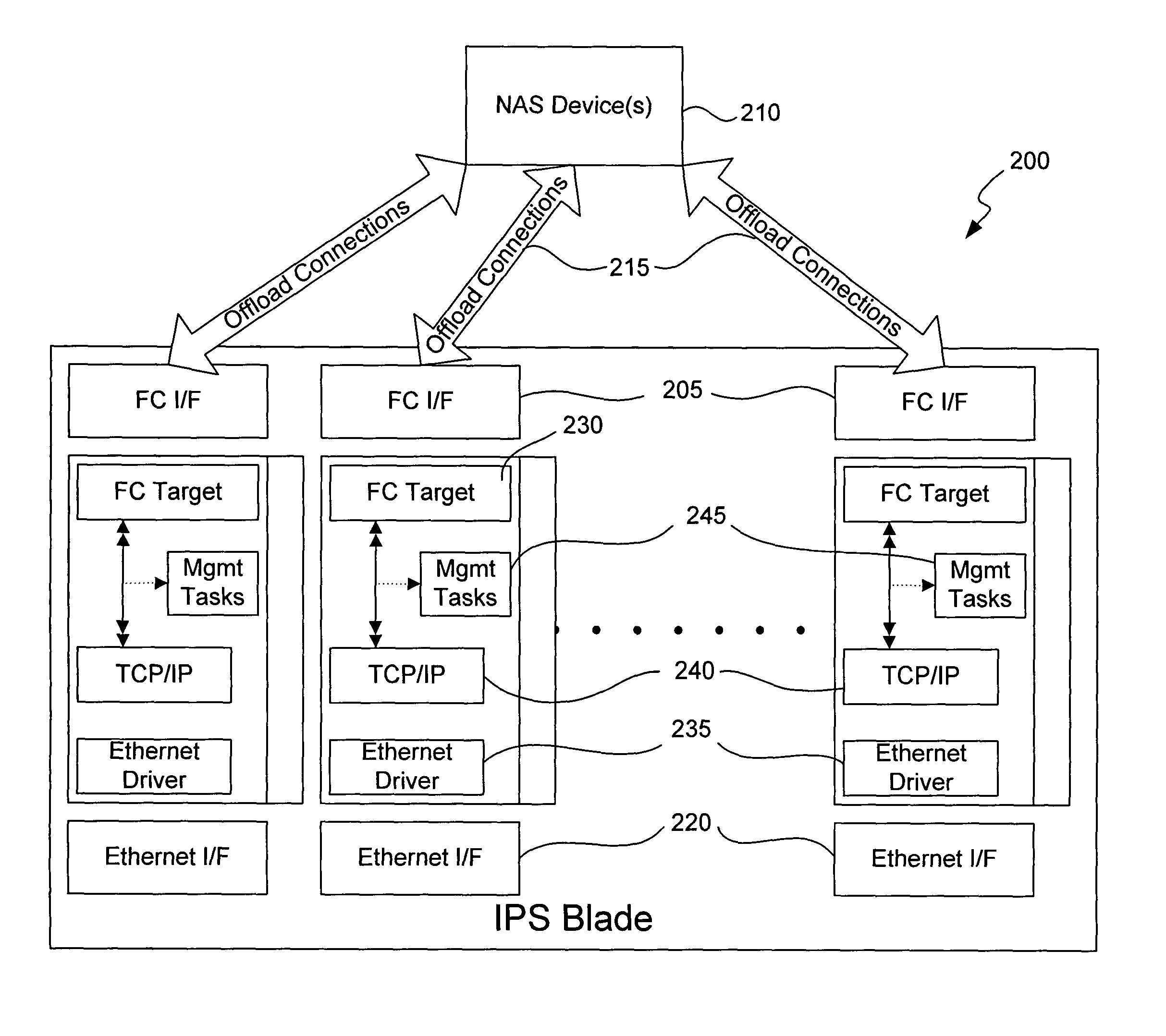

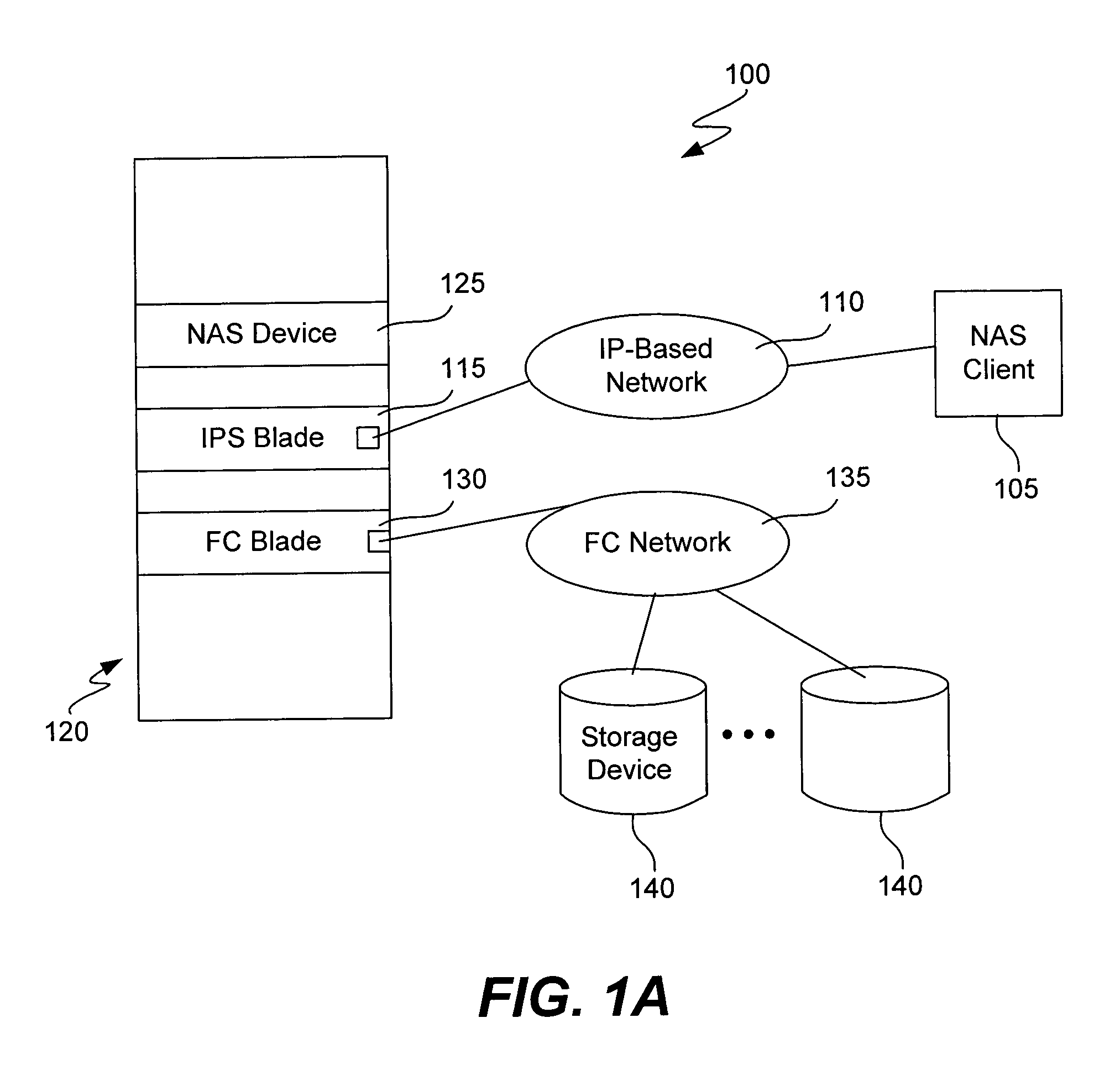

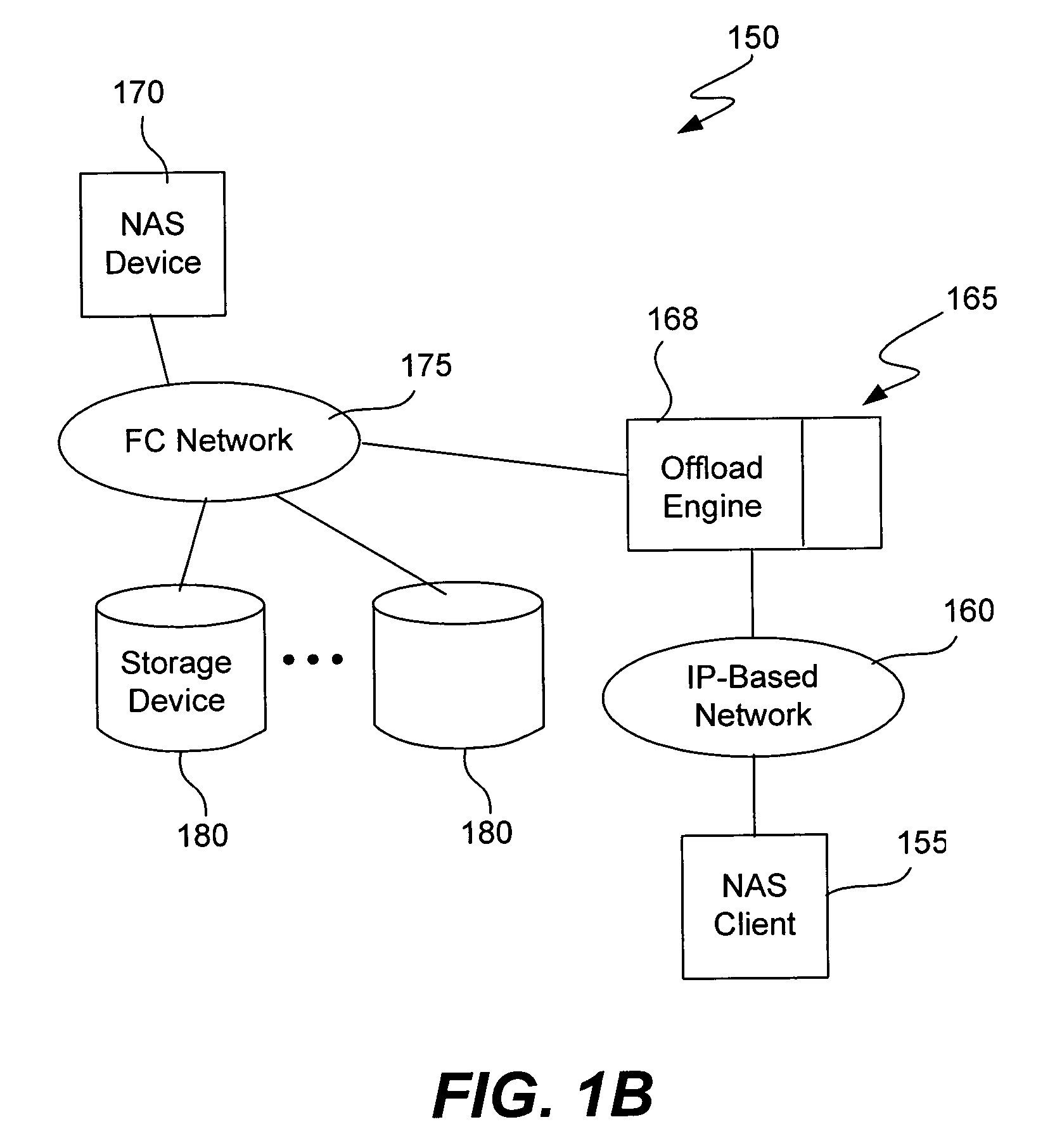

Encoding a TCP offload engine within FCP

Status: Active | Publication: US20050190787A1 | Tags: Time-division multiplex, Store-and-forward switching systems, Fibre Channel, TCP offload engine

The present invention defines a new protocol for communicating with an offload engine that provides Transmission Control Protocol (“TCP”) termination over a Fibre Channel (“FC”) fabric. The offload engine terminates all protocols up to and including TCP and performs the processing associated with those layers. The offload protocol guarantees delivery and is encapsulated within FCP-formatted frames. Thus, the TCP streams are reliably passed to the host. Additionally, using this scheme, the offload engine can provide parsing of the TCP stream to further assist the host. The present invention also provides network devices (and components thereof) that are configured to perform the foregoing methods. The invention further defines how network attached storage (“NAS”) protocol data units (“PDUs”) are parsed and delivered.

Owner:CISCO TECH INC

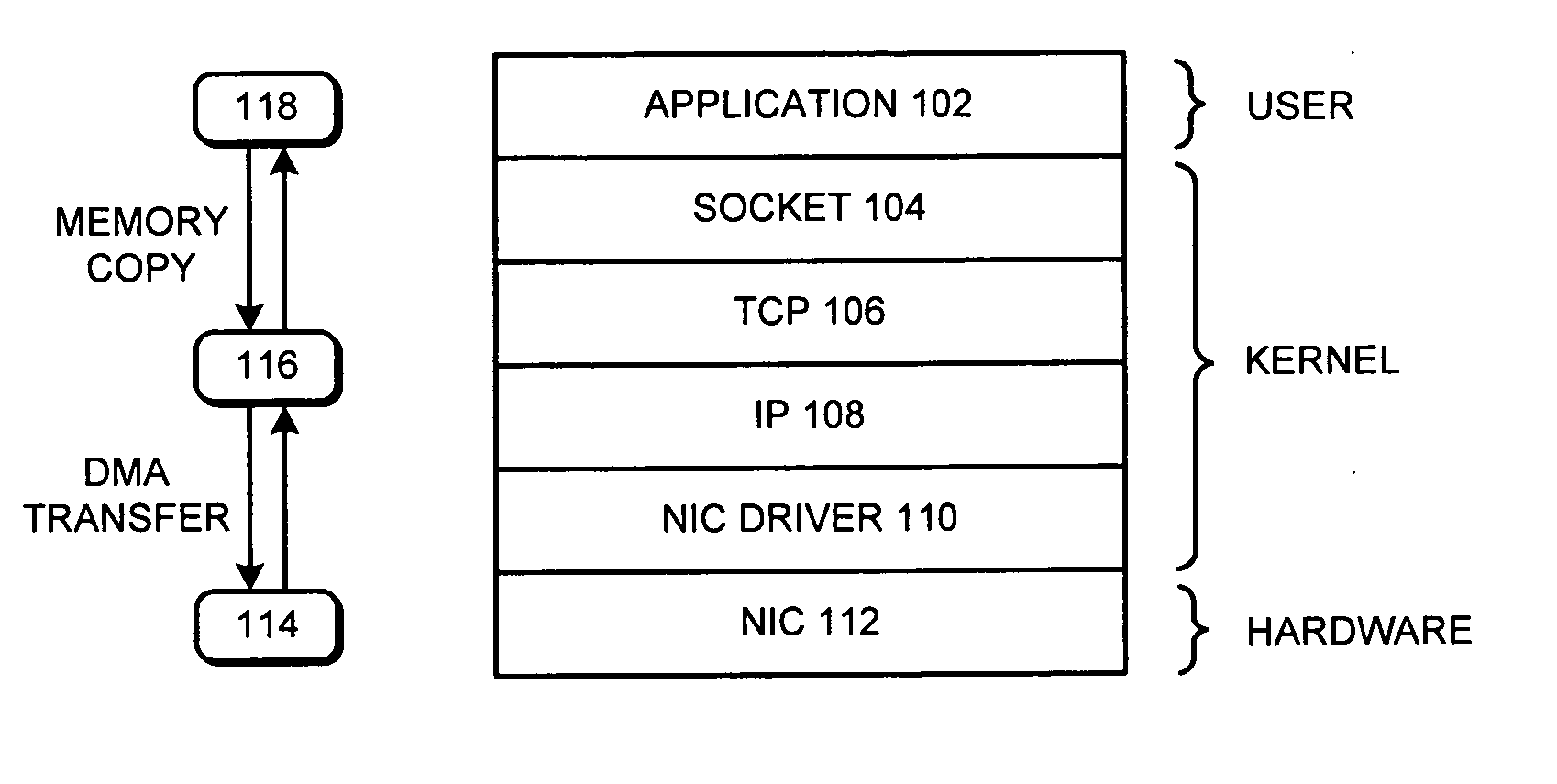

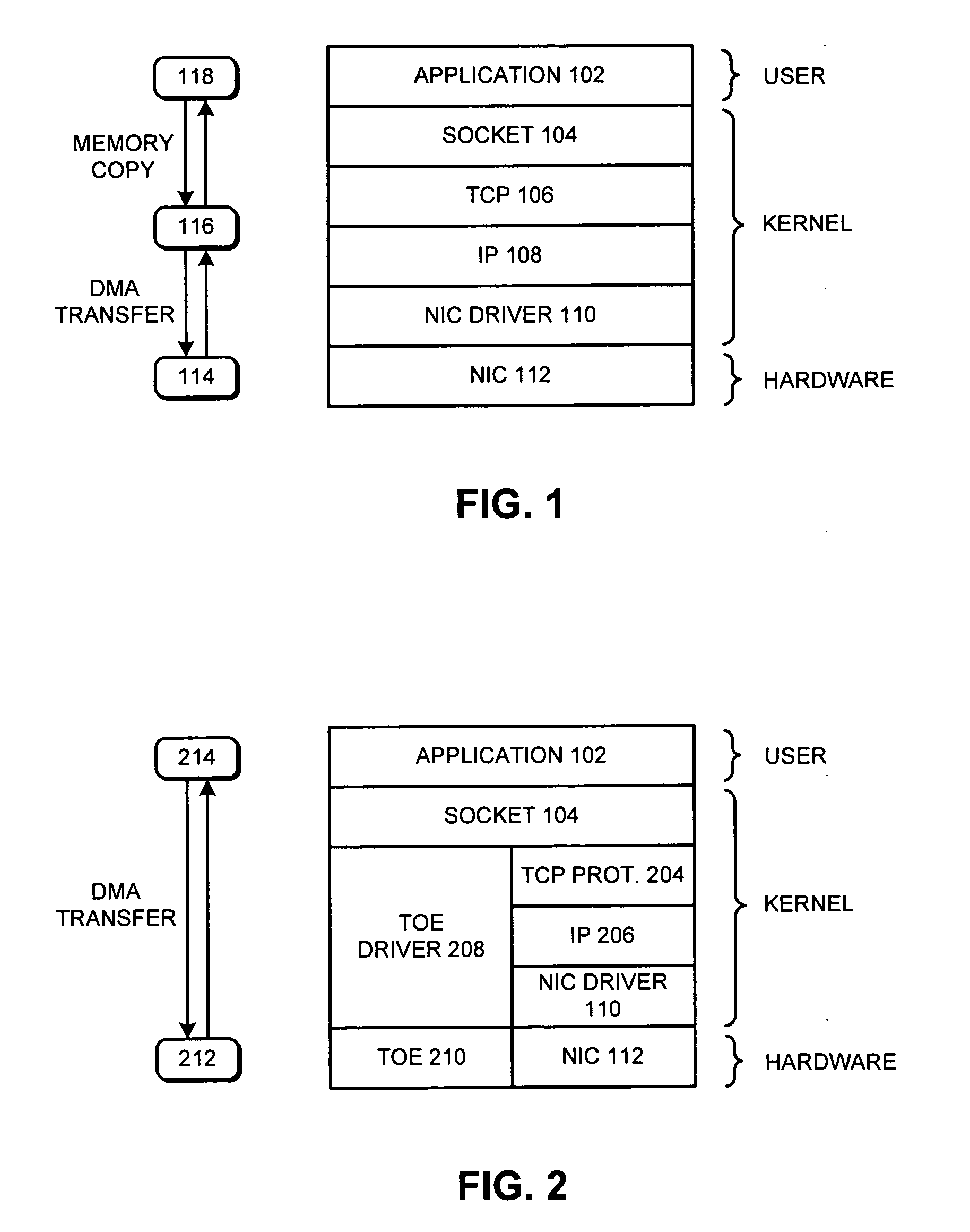

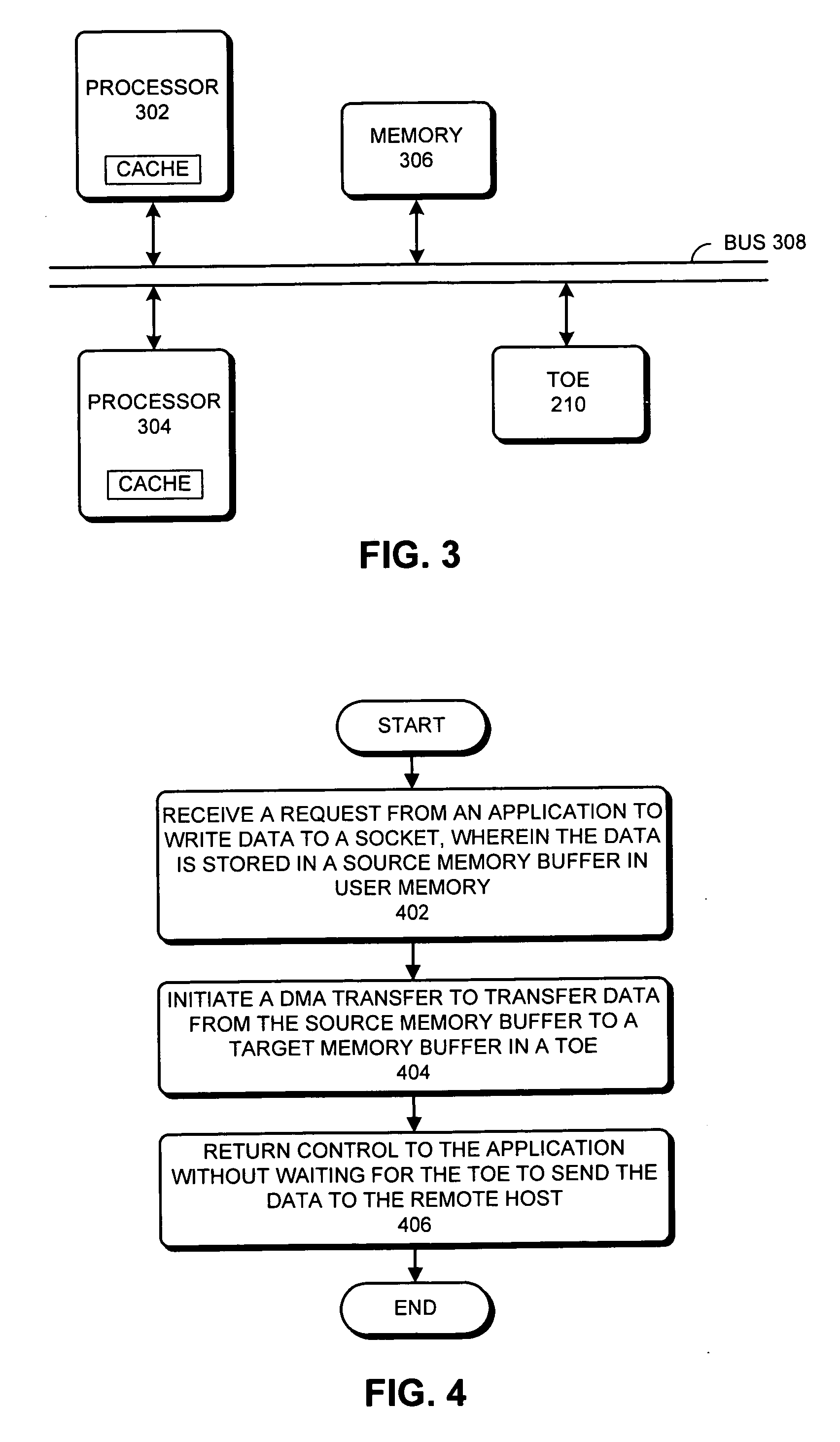

TCP-offload-engine based zero-copy sockets

One embodiment of the present invention provides a system for sending data to a remote host using a socket. During operation the system receives a request from an application to write data to the socket, wherein the data is stored in a source memory buffer in user memory. Next, the system initiates a DMA (Direct Memory Access) transfer to transfer the data from the source memory buffer to a target memory buffer in a TCP (Transmission Control Protocol) Offload Engine. The system then returns control to the application without waiting for the TCP Offload Engine to send the data to the remote host.

Owner:SUN MICROSYSTEMS INC
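
The asynchronous write path can be sketched with a worker thread standing in for the DMA engine: `write()` starts the transfer and returns a future immediately instead of waiting for the data to reach the remote host. The class and method names are hypothetical, and the Python byte copy only models what a DMA engine would do without involving the host CPU.

```python
import threading
from concurrent.futures import Future, ThreadPoolExecutor

class ToeSocketModel:
    """Hypothetical socket whose write() hands user data to a TOE by DMA."""

    def __init__(self) -> None:
        # A single worker thread stands in for the DMA engine.
        self._dma = ThreadPoolExecutor(max_workers=1)
        self.toe_buffer = bytearray()   # target memory buffer inside the TOE
        self._lock = threading.Lock()

    def _dma_transfer(self, src: memoryview) -> int:
        # Copy from the user's source buffer into the TOE buffer.
        with self._lock:
            self.toe_buffer += src
        return len(src)

    def write(self, user_buf: bytearray) -> Future:
        # Initiate the transfer and return control to the application at once.
        return self._dma.submit(self._dma_transfer, memoryview(user_buf))

    def close(self) -> None:
        self._dma.shutdown(wait=True)
```

Because the source buffer is read after `write()` has already returned, an application using this pattern has to leave the buffer untouched until the returned future completes.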

Method and system for a user space TCP offload engine (TOE)

Status: Inactive | Publication: US20070255866A1 | Tags: Transmission, Input/output processes for data processing, TCP offload engine, Application software

Certain aspects of a method and system for user space TCP offload are disclosed. Aspects of a method may include offloading transmission control protocol (TCP) processing of received data to an on-chip processor. The received data may be posted directly to hardware, bypassing kernel processing of the received data, utilizing a user space library. If the received data is not cached in memory, an application buffer comprising the received data may be registered by the user space library. The application buffer may be pinned and posted to the hardware.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE +1

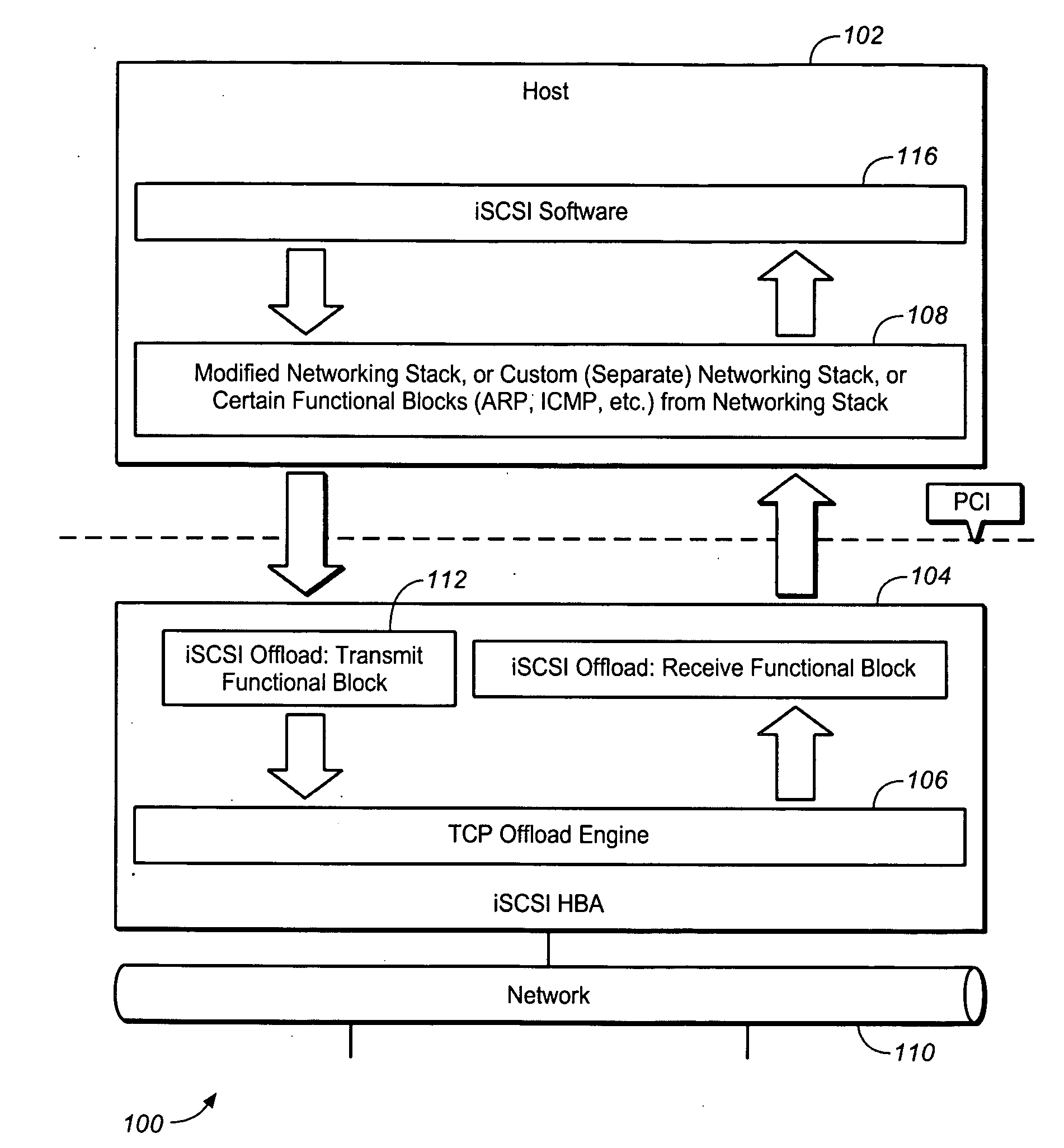

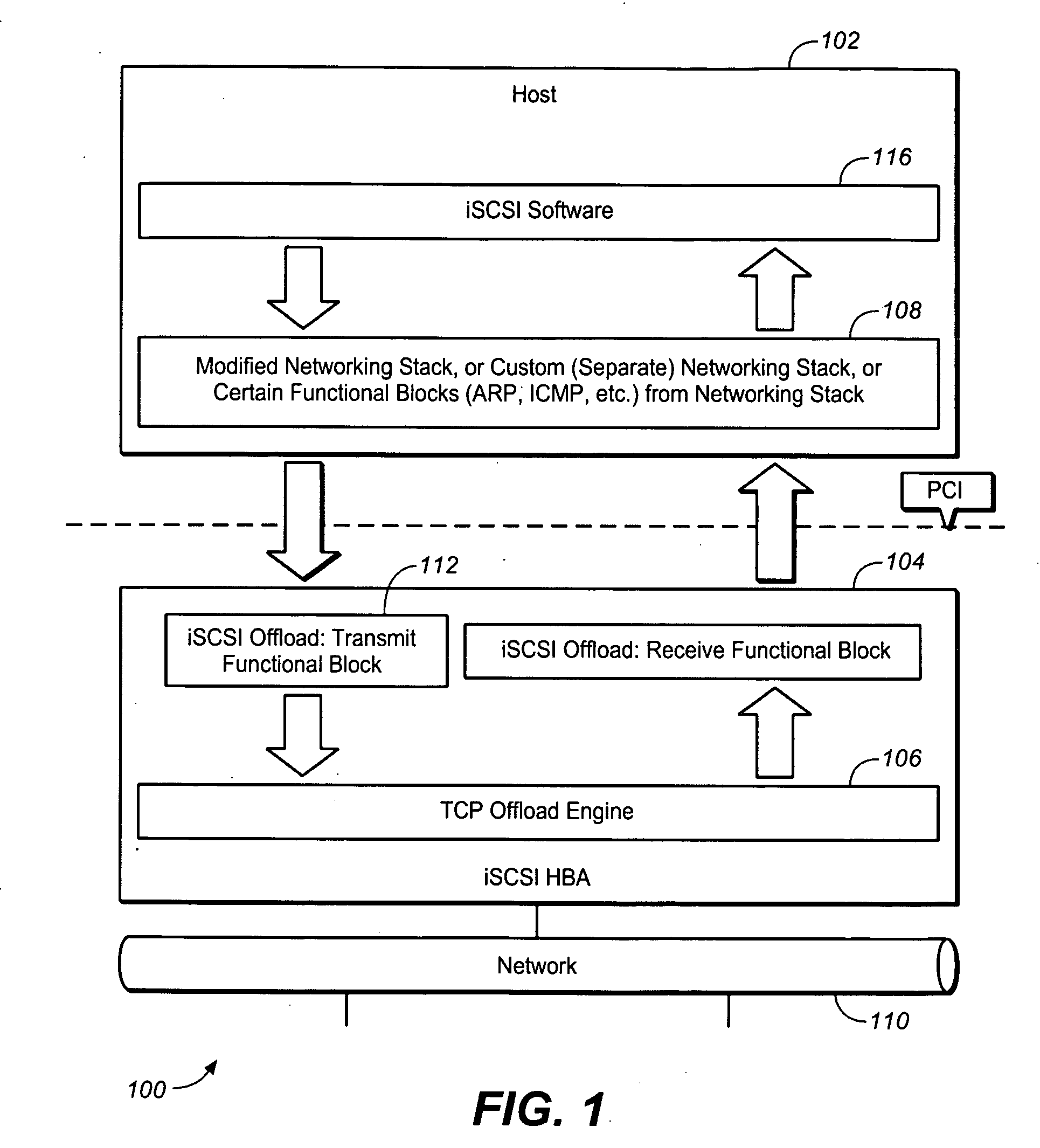

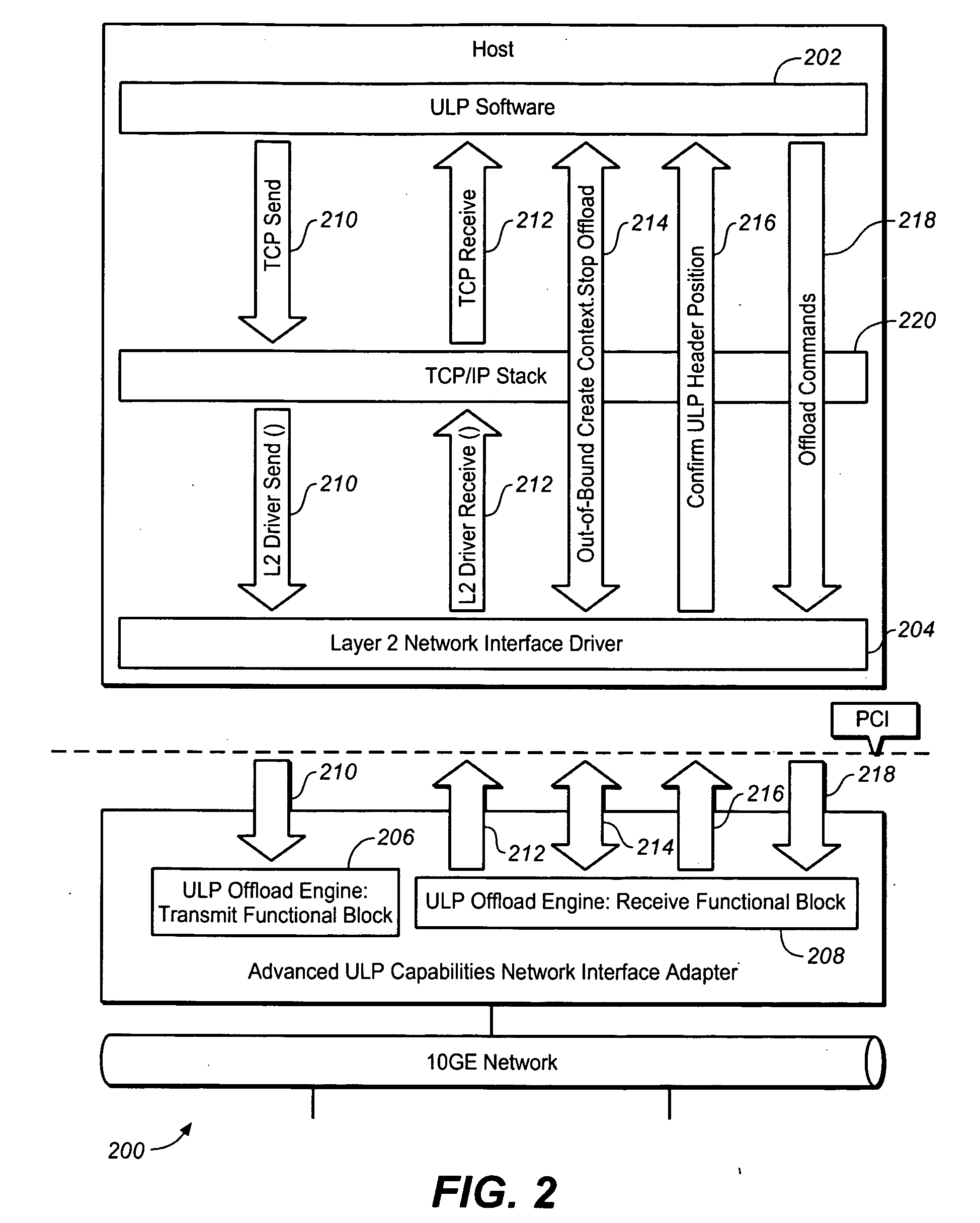

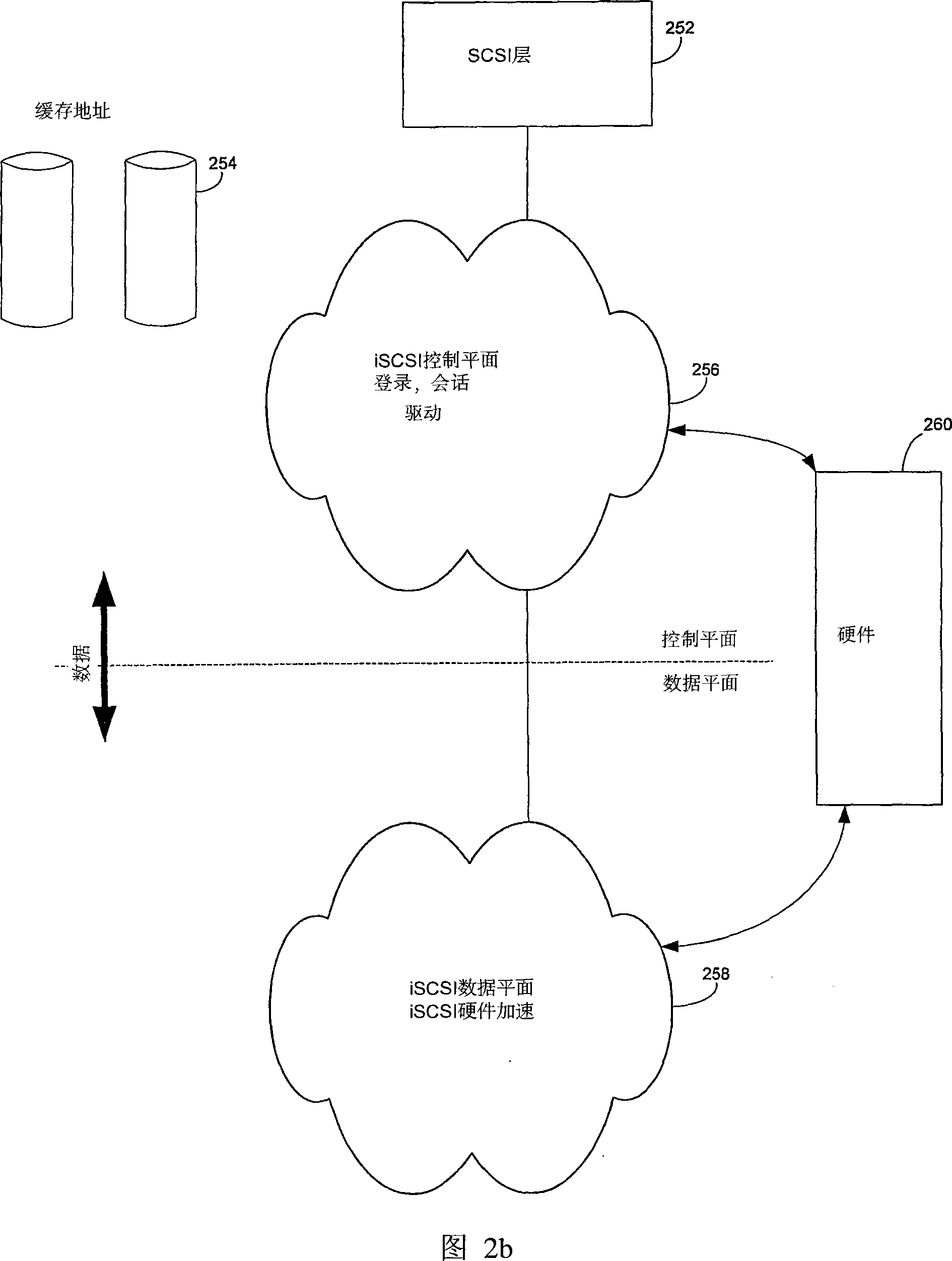

Offloading iSCSI without TOE

Status: Inactive | Publication: US20090183057A1 | Tags: Reduce complexity, Data representation error detection/correction, Code conversion, Engineering, TCP offload engine

A ULP offload engine system, method and associated data structure are provided for performing protocol offloads without requiring a TCP offload engine (TOE). In an embodiment, the ULP offload engine provides iSCSI offload services.

Owner:INTEL CORP

Connection establishment on a TCP offload engine

Status: Inactive | Publication: US20060221946A1 | Tags: Firmly connected, Process control, Data switching by path configuration, TCP offload engine, Real-time computing

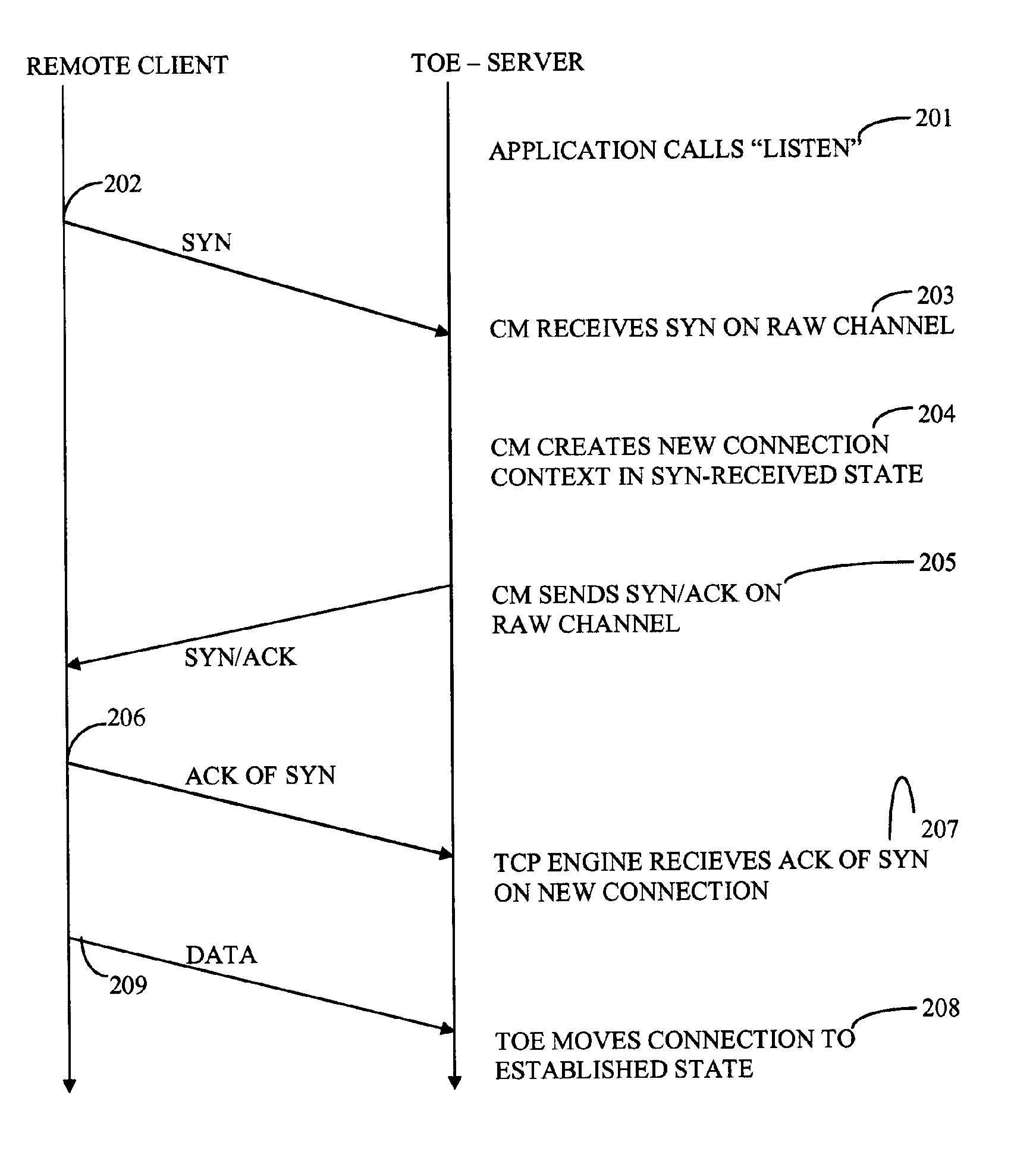

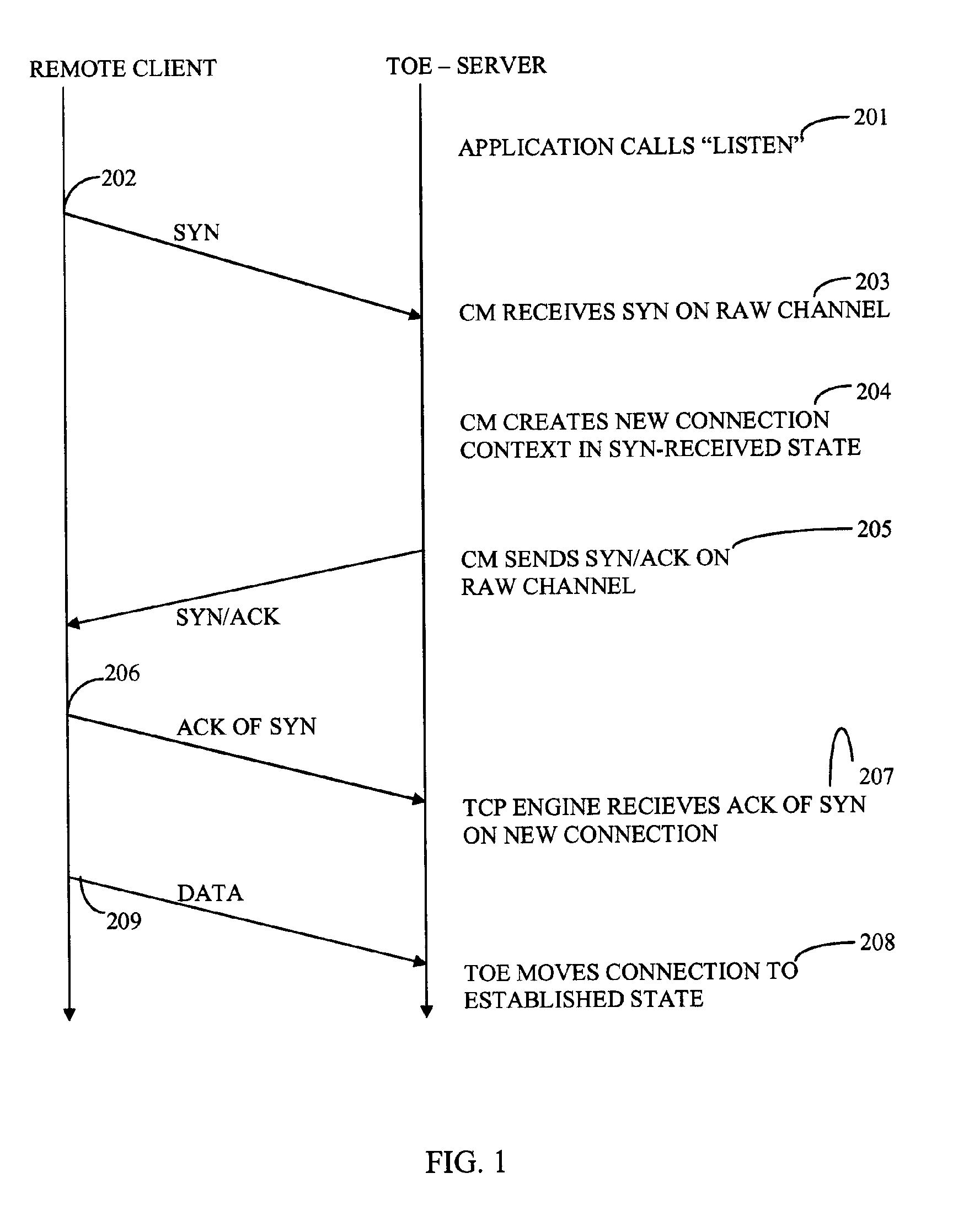

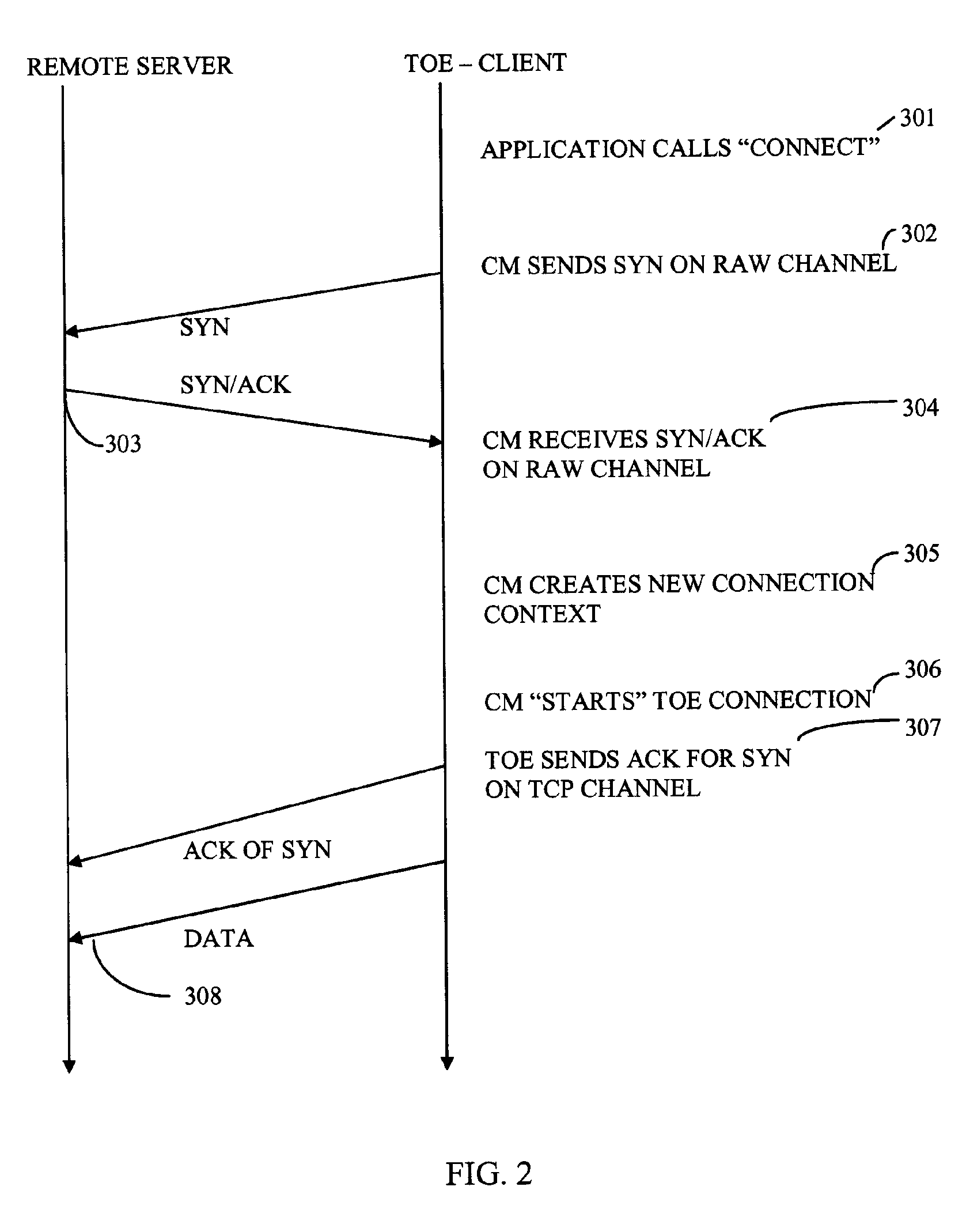

A method for performing connection establishment in TCP (transmission control protocol), the method including sending a SYN segment from a sender to a TCP offload engine (TOE), the SYN segment comprising a TCP packet adapted to synchronize sequence numbers on connecting computers, creating a connection context, acknowledging receipt of the SYN segment by sending a SYN/ACK segment to the sender, and sending an ACK segment from the sender to the TOE to acknowledge receipt of the SYN/ACK segment. Alternatively, the method may include sending a SYN segment from a sender to a computer, acknowledging receipt of the SYN segment by sending a SYN/ACK segment to the TOE, creating a connection context, and sending an ACK segment from the TOE to acknowledge receipt of the SYN/ACK segment.

Owner:IBM CORP
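
The first variant (the sender's SYN arriving at the TOE) can be sketched as a small state machine that creates the connection context on SYN, answers with SYN/ACK, and marks the connection established when the matching ACK arrives. The class names, the fixed initial sequence number, and the `(segment, seq, ack)` return tuple are illustrative assumptions; retransmission, timers, and option negotiation are omitted.

```python
from dataclasses import dataclass
from enum import Enum, auto

class State(Enum):
    SYN_RCVD = auto()
    ESTABLISHED = auto()

@dataclass
class ConnectionContext:
    """Hypothetical per-connection context kept by the TOE."""
    peer: tuple
    irs: int                       # initial receive sequence number (from the SYN)
    iss: int                       # initial send sequence number chosen by the TOE
    state: State = State.SYN_RCVD

class ToeListener:
    def __init__(self, iss: int) -> None:
        # Fixed for reproducibility here; real TCP stacks pick the ISS unpredictably.
        self.iss = iss
        self.contexts: dict[tuple, ConnectionContext] = {}

    def on_syn(self, peer: tuple, seq: int) -> tuple[str, int, int]:
        # Create the connection context for the incoming SYN ...
        ctx = ConnectionContext(peer=peer, irs=seq, iss=self.iss)
        self.contexts[peer] = ctx
        # ... then acknowledge it with a SYN/ACK: (segment, seq, ack).
        return ("SYN/ACK", ctx.iss, (seq + 1) % 2**32)

    def on_ack(self, peer: tuple, ack: int) -> bool:
        # The sender's ACK must acknowledge our SYN, i.e. carry ISS + 1.
        ctx = self.contexts.get(peer)
        if ctx is None or ctx.state is not State.SYN_RCVD:
            return False
        if ack != (ctx.iss + 1) % 2**32:
            return False
        ctx.state = State.ESTABLISHED
        return True
```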

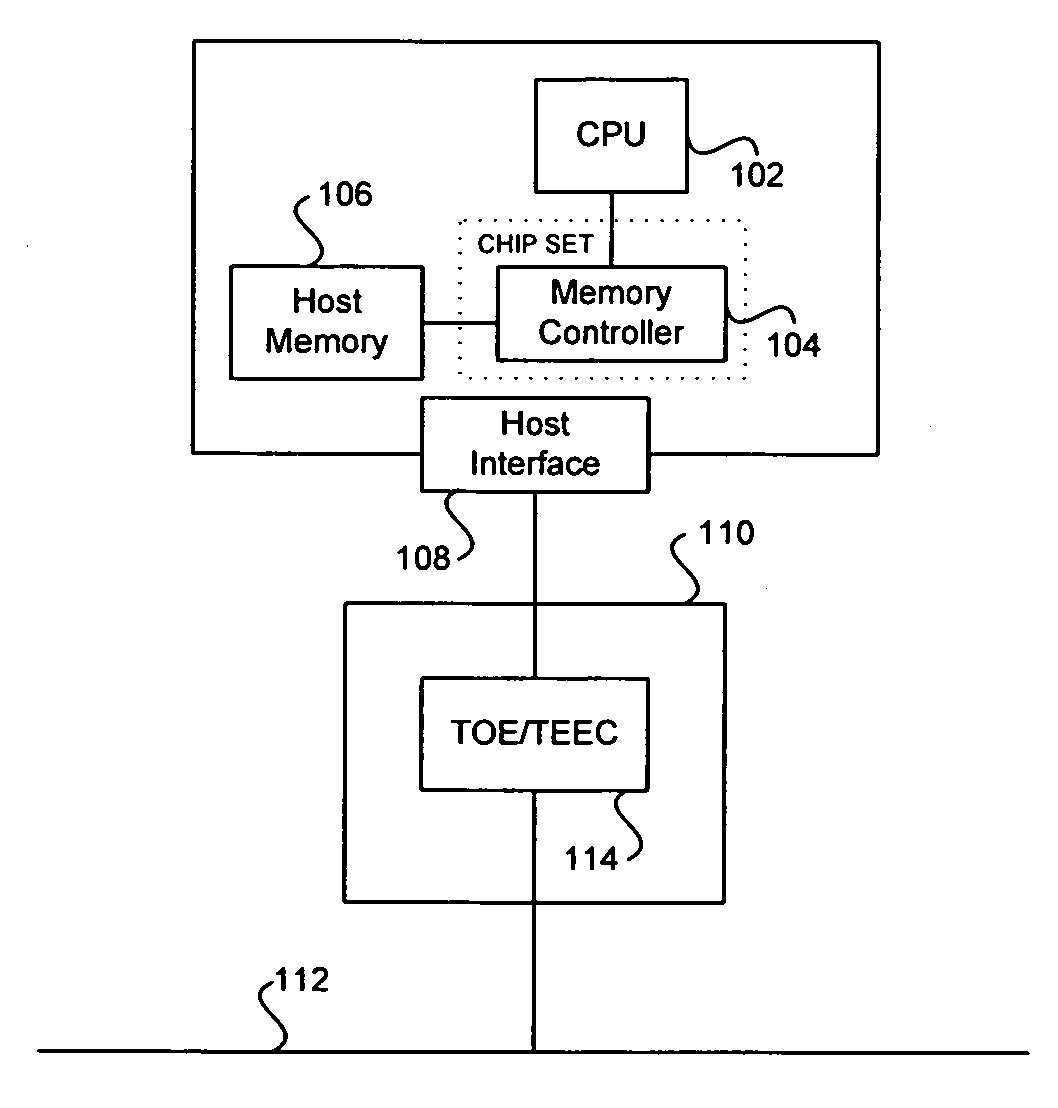

Hardware-based multi-threading for packet processing

Status: Active | Publication: US7668165B2 | Tags: Multiprogramming arrangements, Data switching by path configuration, Chipset, Host memory

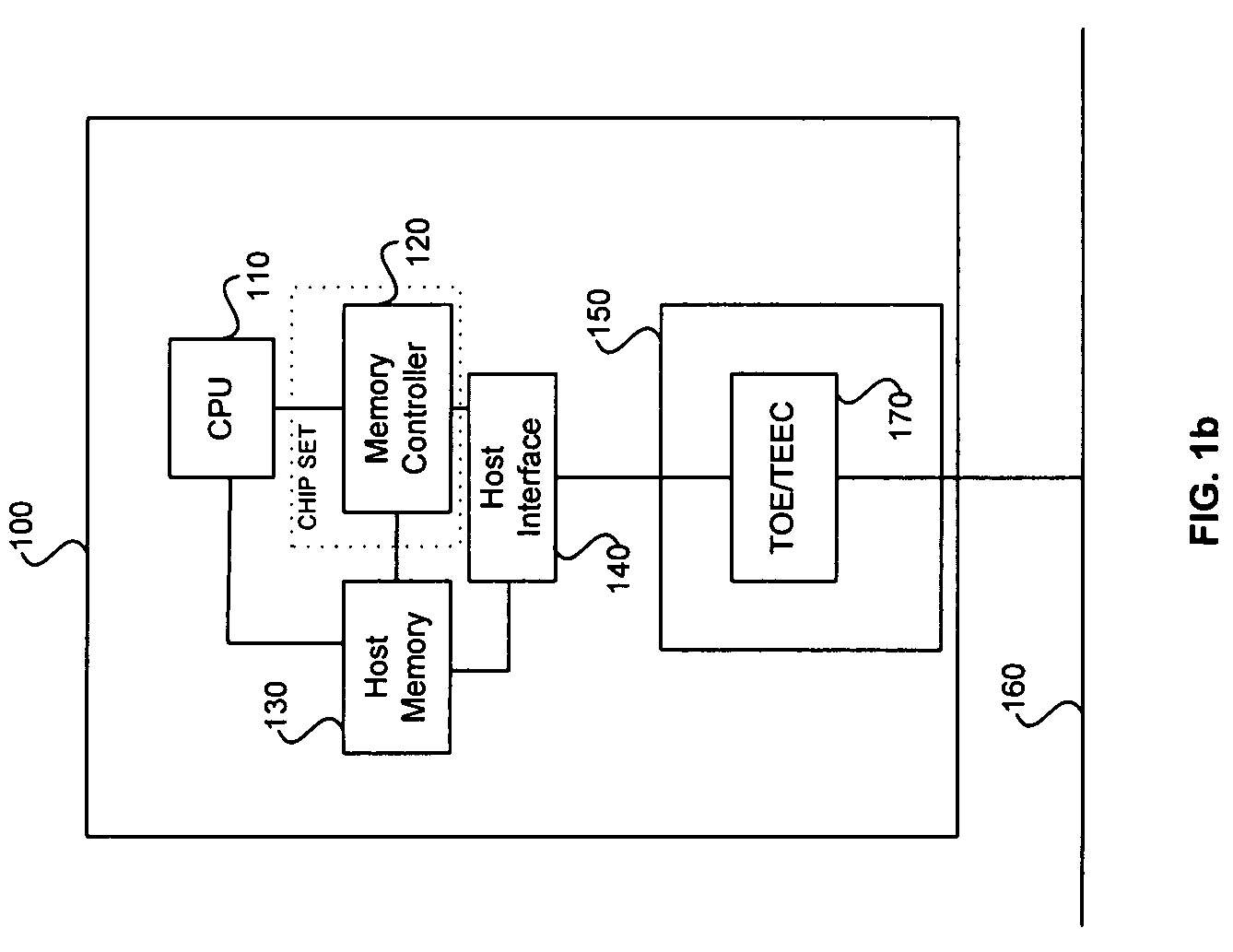

Methods and apparatus for processing transmission control protocol (TCP) packets using hardware-based multi-threading techniques. Inbound and outbound TCP packets are processed using a multi-threaded TCP offload engine (TOE). The TOE includes an execution core comprising a processing engine, a scheduler, an on-chip cache, a host memory interface, a host interface, and a network interface controller (NIC) interface. In one embodiment, the TOE is embodied as a memory controller hub (MCH) component of a platform chipset. The TOE may further include an integrated direct memory access (DMA) controller, or the DMA controller may be embodied as separate circuitry on the MCH. In one embodiment, inbound packets are queued in an input buffer, the headers are provided to the scheduler, and the scheduler arbitrates thread execution on the processing engine. Concurrently, DMA payload data transfers are queued and performed asynchronously in a manner that hides memory latencies. In one embodiment, the technique can process typical-size TCP packets at line speeds of 10 Gbps or greater.

Owner:TAHOE RES LTD

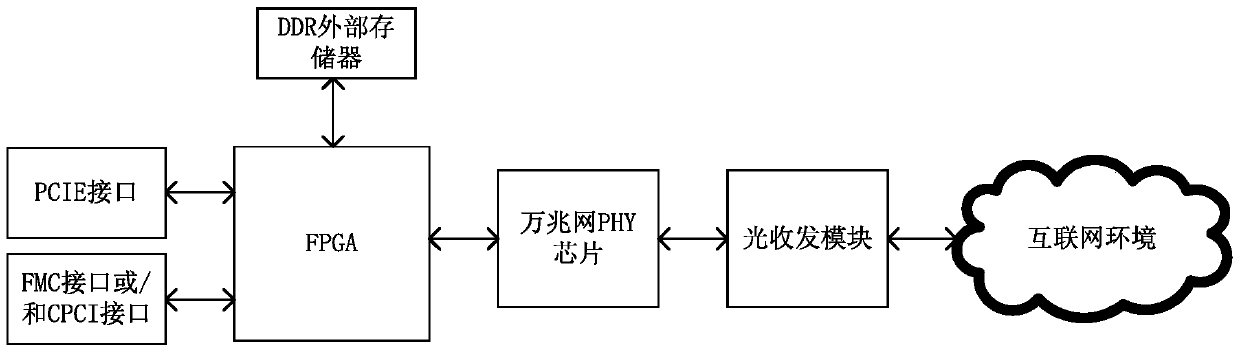

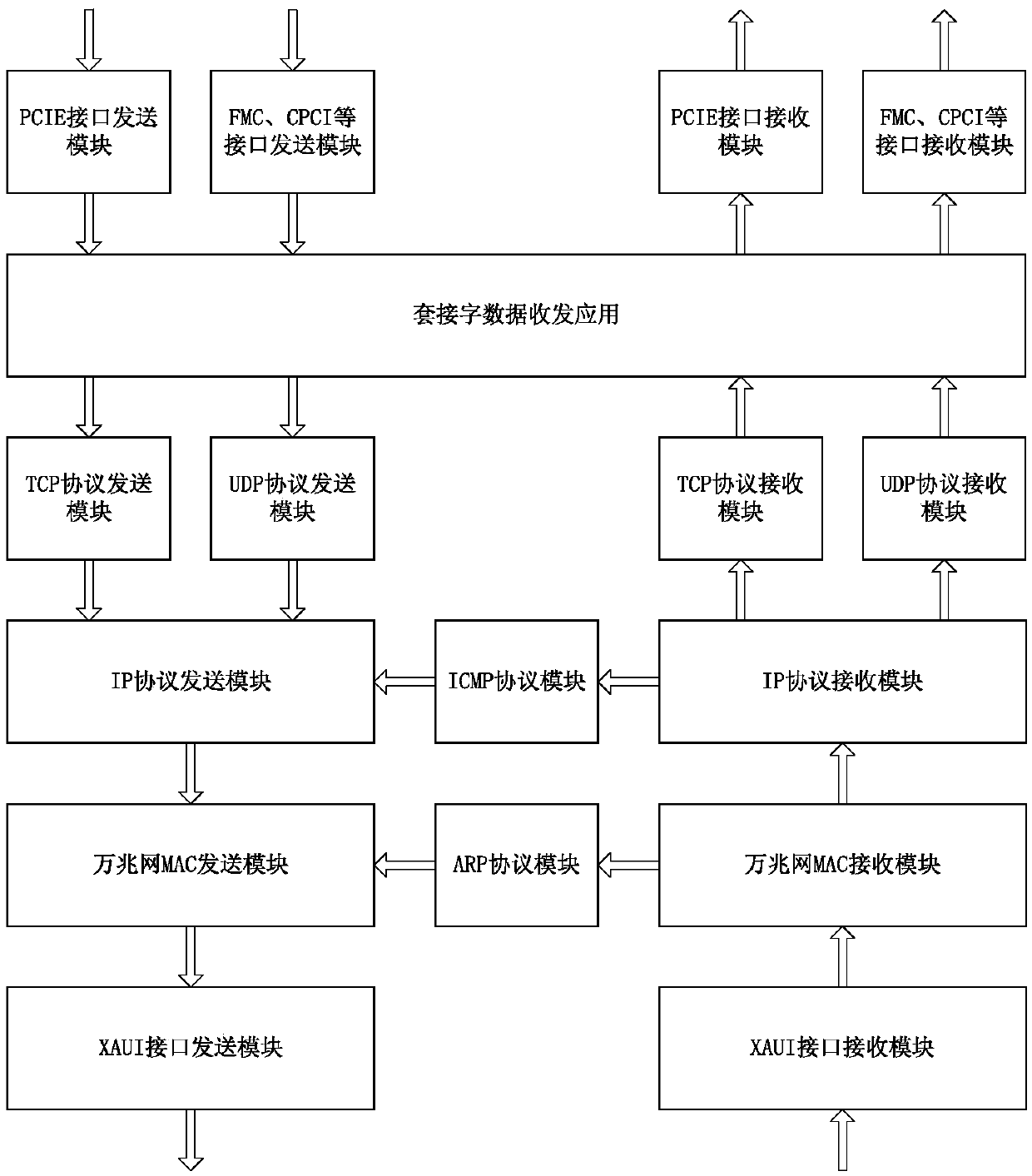

10-gigabit Ethernet TCP offload engine (TOE) system realized based on FPGA

Status: Active | Publication: CN105516191A | Tags: Avoid homeostasis, Improve the status quo of high load, Transmission, Transceiver, Networked Transport of RTCM via Internet Protocol

The invention discloses an FPGA-based 10-gigabit Ethernet TCP offload engine (TOE) system, comprising: an FPGA that implements the TCP/IP protocol stack and the 10-gigabit Ethernet MAC layer; a 10-gigabit Ethernet PHY chip connected to the FPGA; a 10G optical transceiver module connected to the FPGA that serves as the 10-gigabit Ethernet transmission medium; and a DDR external memory connected to the FPGA for data caching. The FPGA implements the TCP/IP protocol stack in hardware, replacing the conventional software TCP/IP stack run by a processor and an operating system. The system effectively increases the processing speed of the TCP/IP protocol stack, achieves smooth and balanced 10-gigabit Ethernet transmission and, most importantly, separates computer application processing from network protocol processing.

Owner:CHENGDU ZHIXUN LIANCHUANG TECH

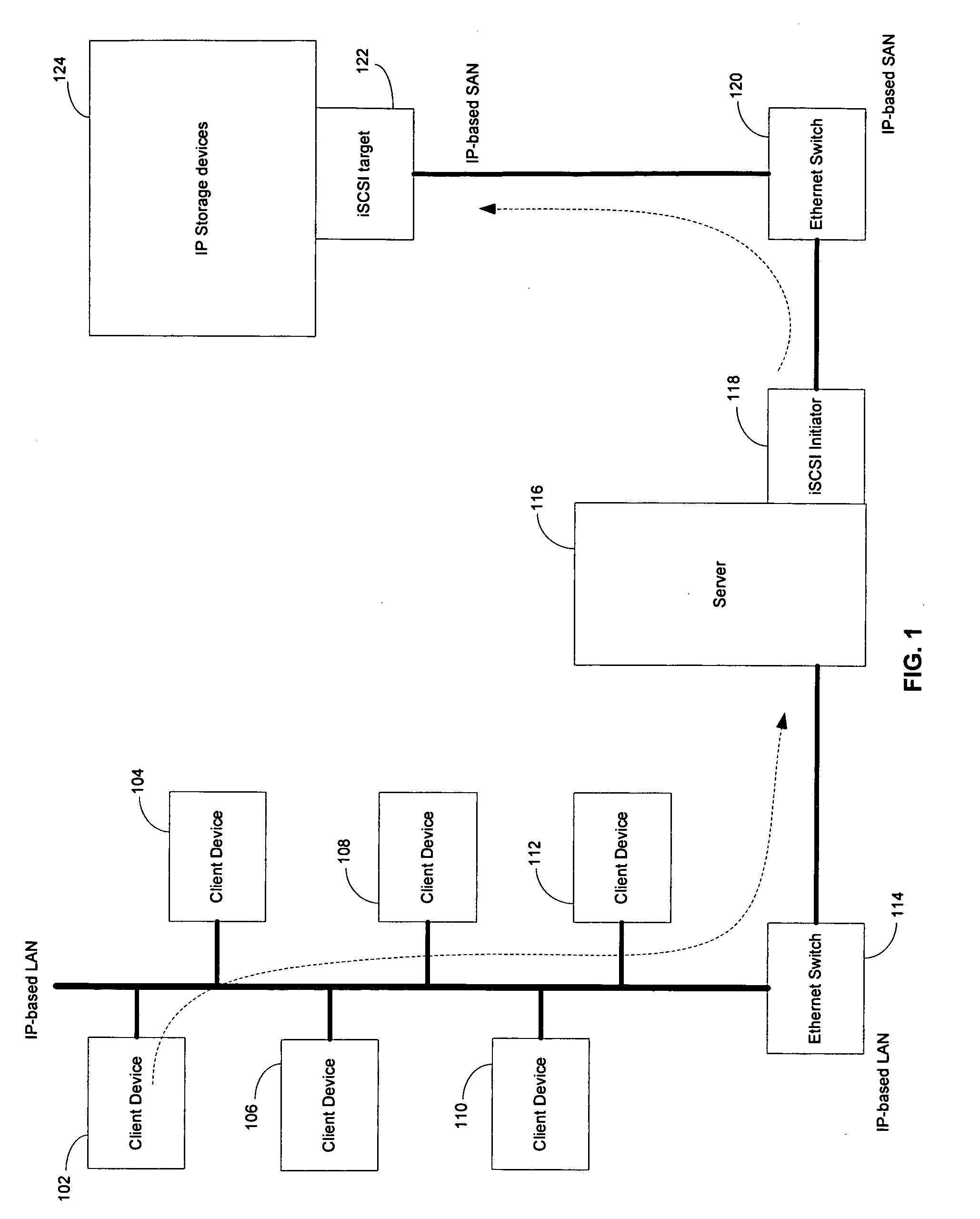

System and method for conducting direct data placement (DDP) using a TOE (TCP offload engine) capable network interface card

Status: Active | Publication: US7523179B1 | Tags: Data switching by path configuration, Multiple digital computer combinations, TCP offload engine, Data placement

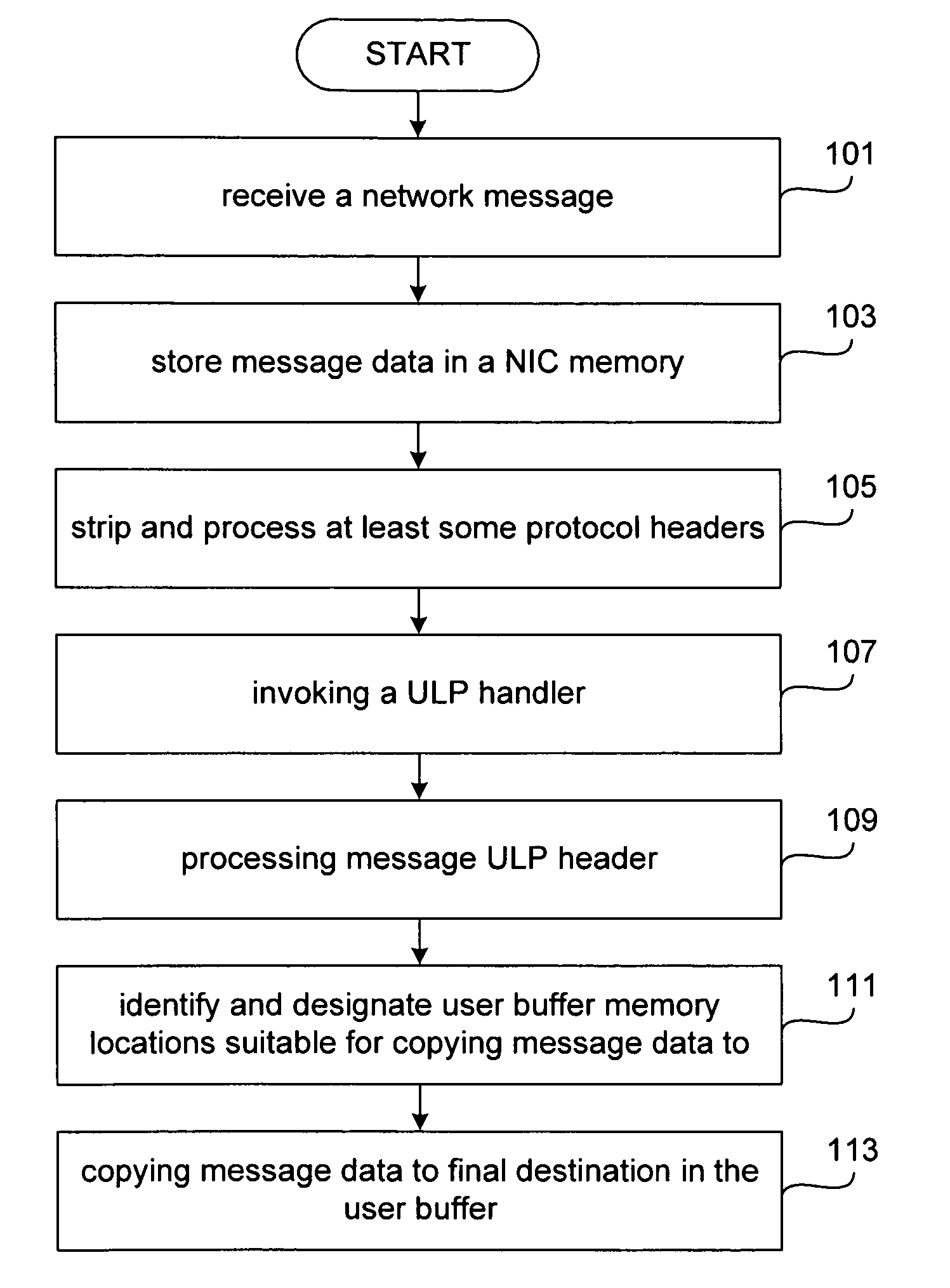

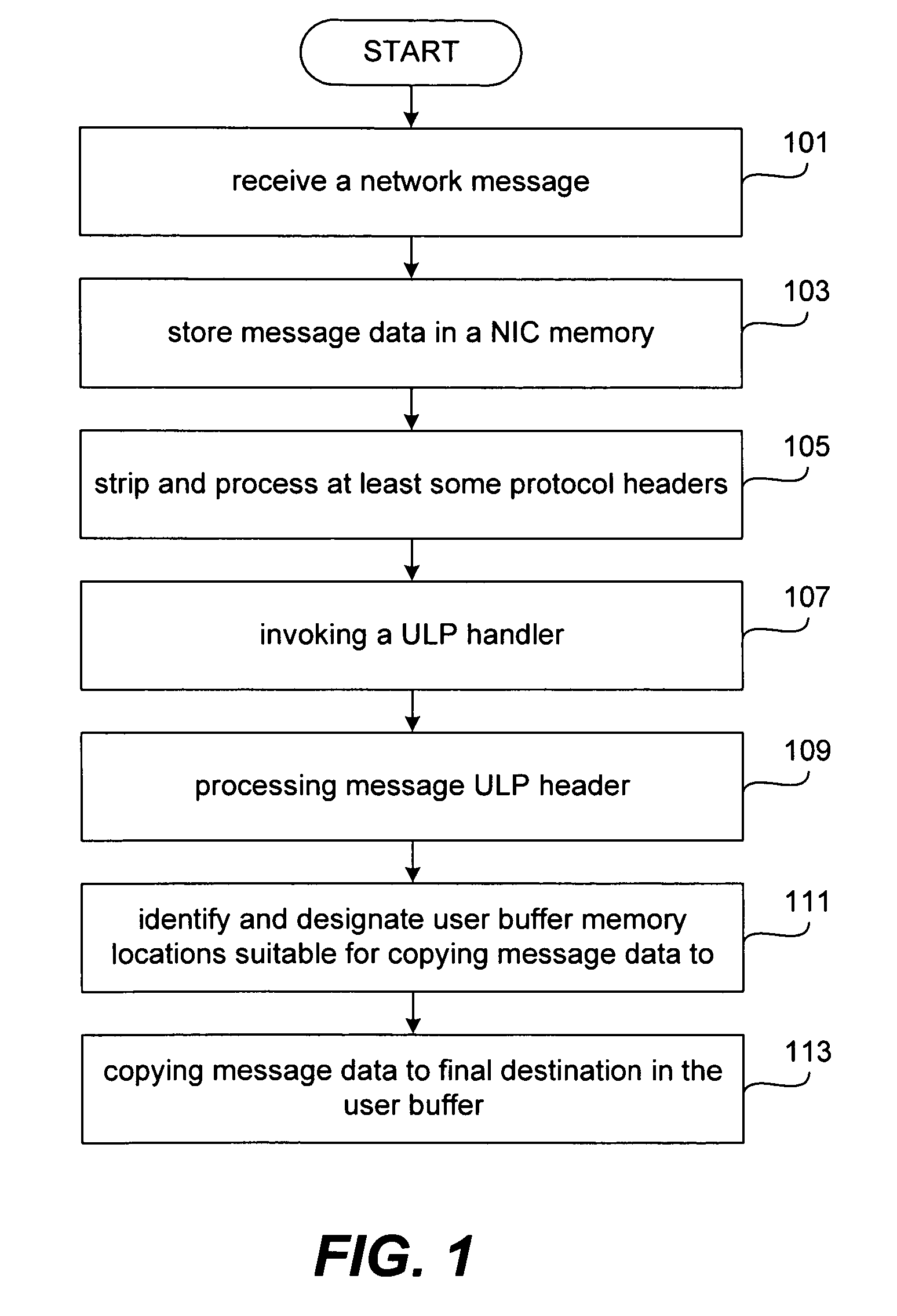

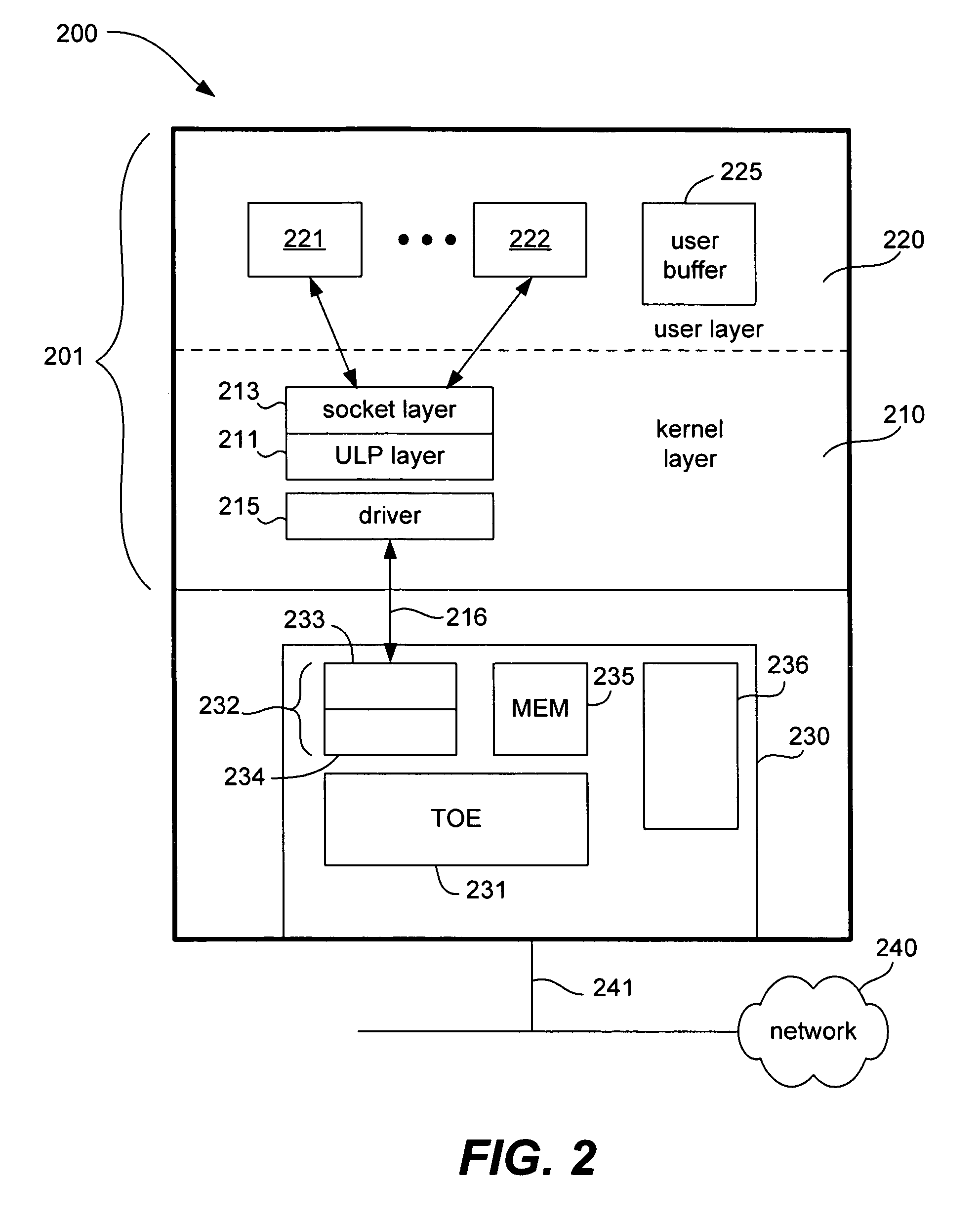

Techniques, systems, and apparatus for conducting direct data placement of network message data to a final destination in a user buffer are disclosed. Generally, the invention is configured to conduct direct data copying from a NIC memory to a final destination user buffer location without any intermediate copying to a kernel buffer. The invention includes a method that involves receiving network delivered messages by a NIC of a local computer. The message is stored in the memory of the NIC. The headers are stripped from the message and processed. A ULP handler of the local computer is invoked to process the ULP header of the network message. Using information obtained from the processed ULP header, suitable memory locations in a user buffer are identified and designated for saving associated message data. The message data is then directly placed from the NIC memory to the designated memory location in the user buffer without intermediate copy steps like DMA.

Owner:ORACLE INT CORP
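
The placement step can be sketched as follows, assuming a hypothetical ULP header that carries a buffer identifier, an offset, and a length, and assuming the transport headers have already been stripped. `DirectPlacer` and the `!III` header layout are inventions for this example; real upper-layer protocols define their own header formats.

```python
import struct

# Hypothetical ULP header: buffer id, offset, payload length (three big-endian u32).
ULP_HDR = struct.Struct("!III")

class DirectPlacer:
    def __init__(self) -> None:
        self.user_buffers: dict[int, bytearray] = {}

    def register(self, buf_id: int, size: int) -> bytearray:
        # The application's final destination buffer.
        buf = bytearray(size)
        self.user_buffers[buf_id] = buf
        return buf

    def place(self, nic_message: bytes) -> None:
        # Process the ULP header to find where the payload belongs.
        buf_id, offset, length = ULP_HDR.unpack_from(nic_message, 0)
        payload = memoryview(nic_message)[ULP_HDR.size:ULP_HDR.size + length]
        if len(payload) != length:
            raise ValueError("message shorter than its ULP header claims")
        target = self.user_buffers[buf_id]
        if offset + length > len(target):
            raise ValueError("placement would overrun the user buffer")
        # Copy once, straight into the designated user-buffer location,
        # with no intermediate kernel buffer.
        target[offset:offset + length] = payload
```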

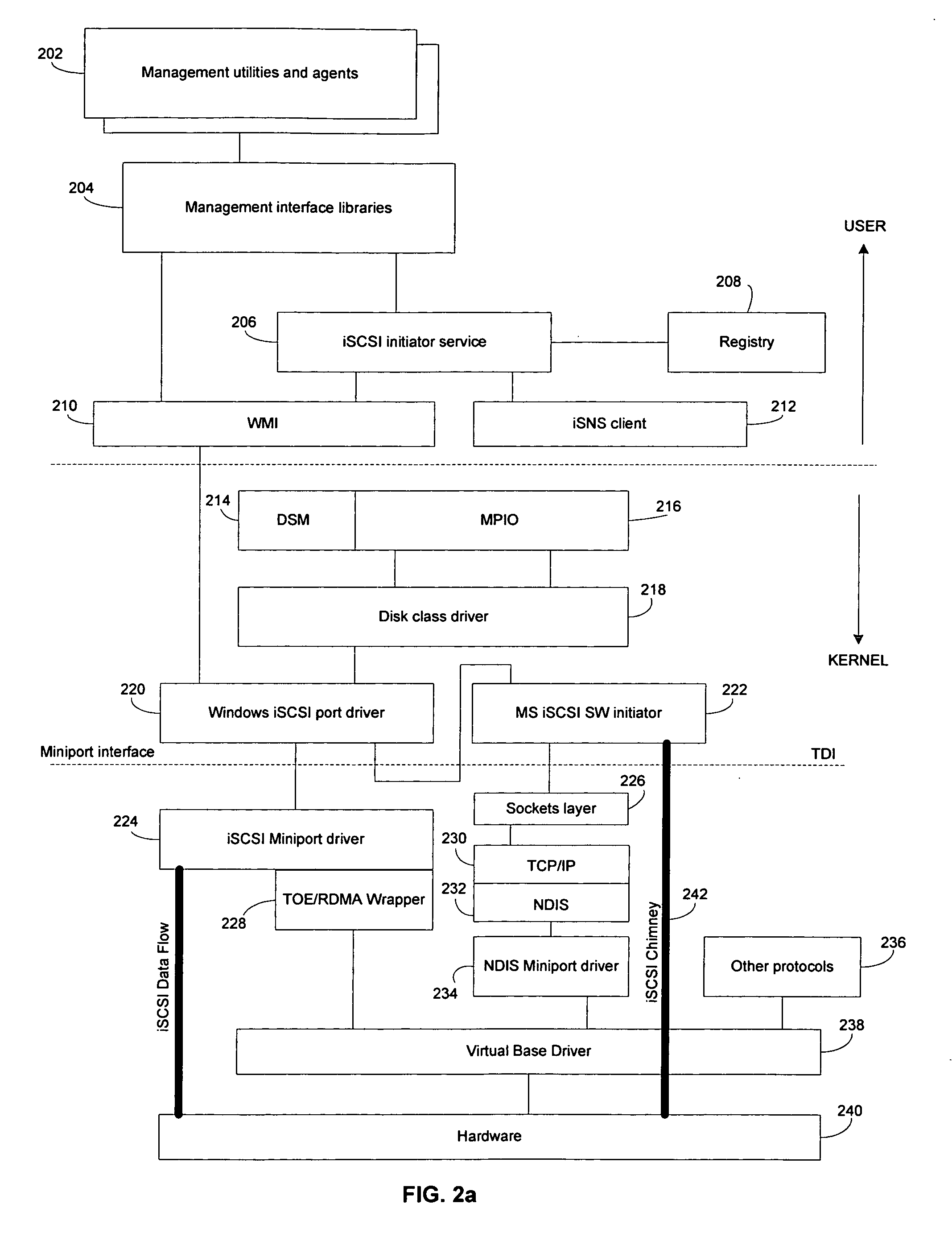

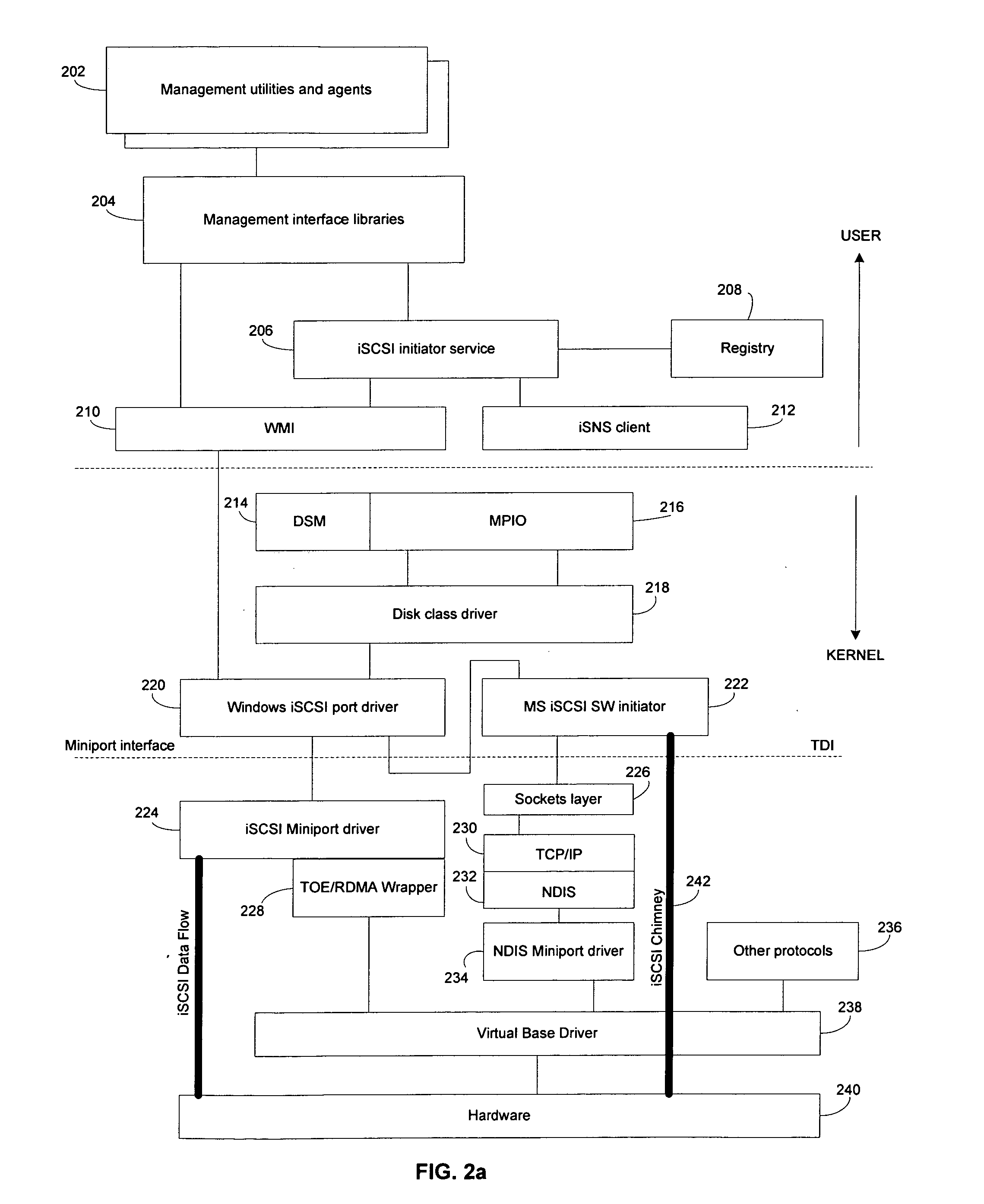

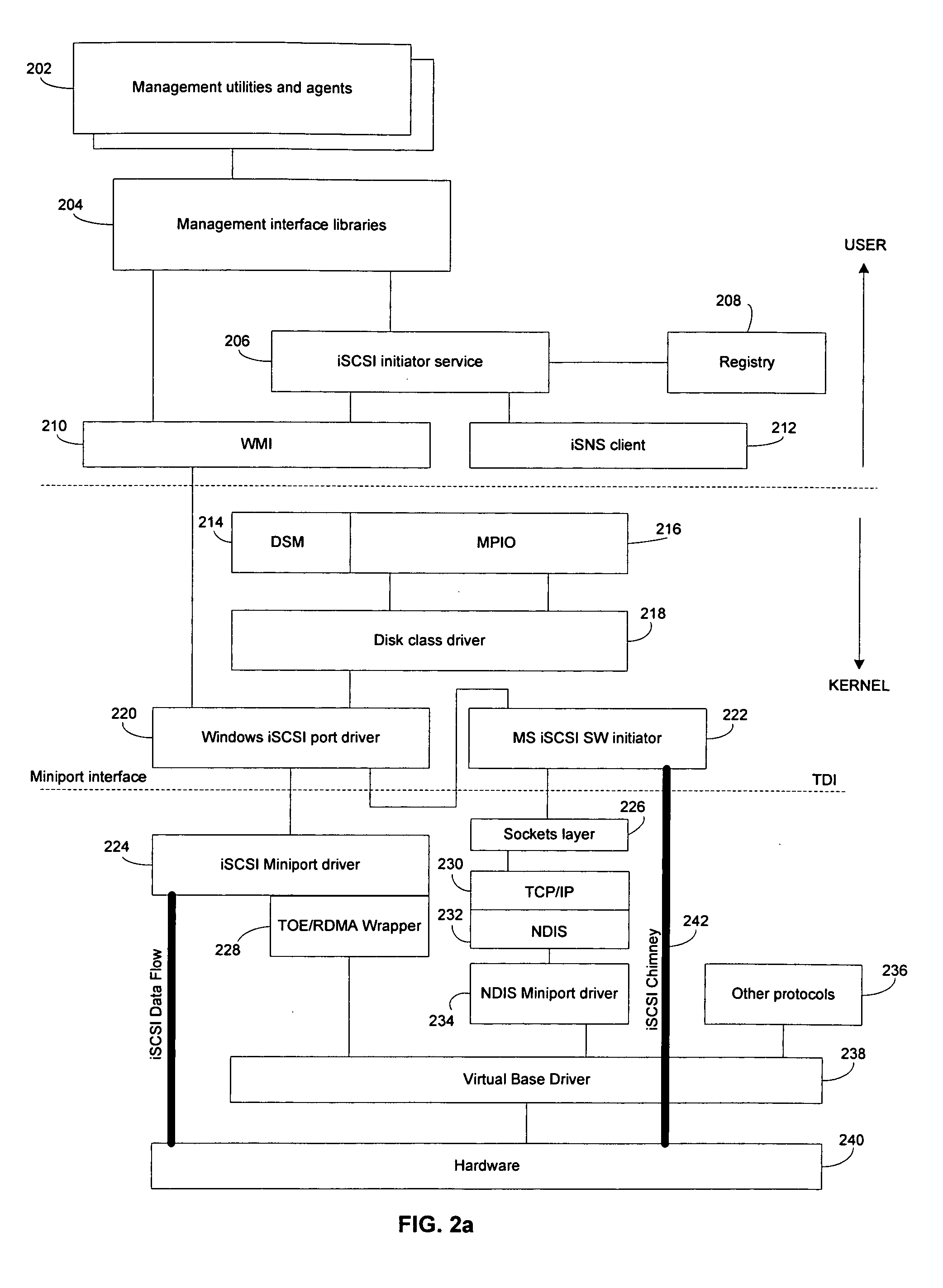

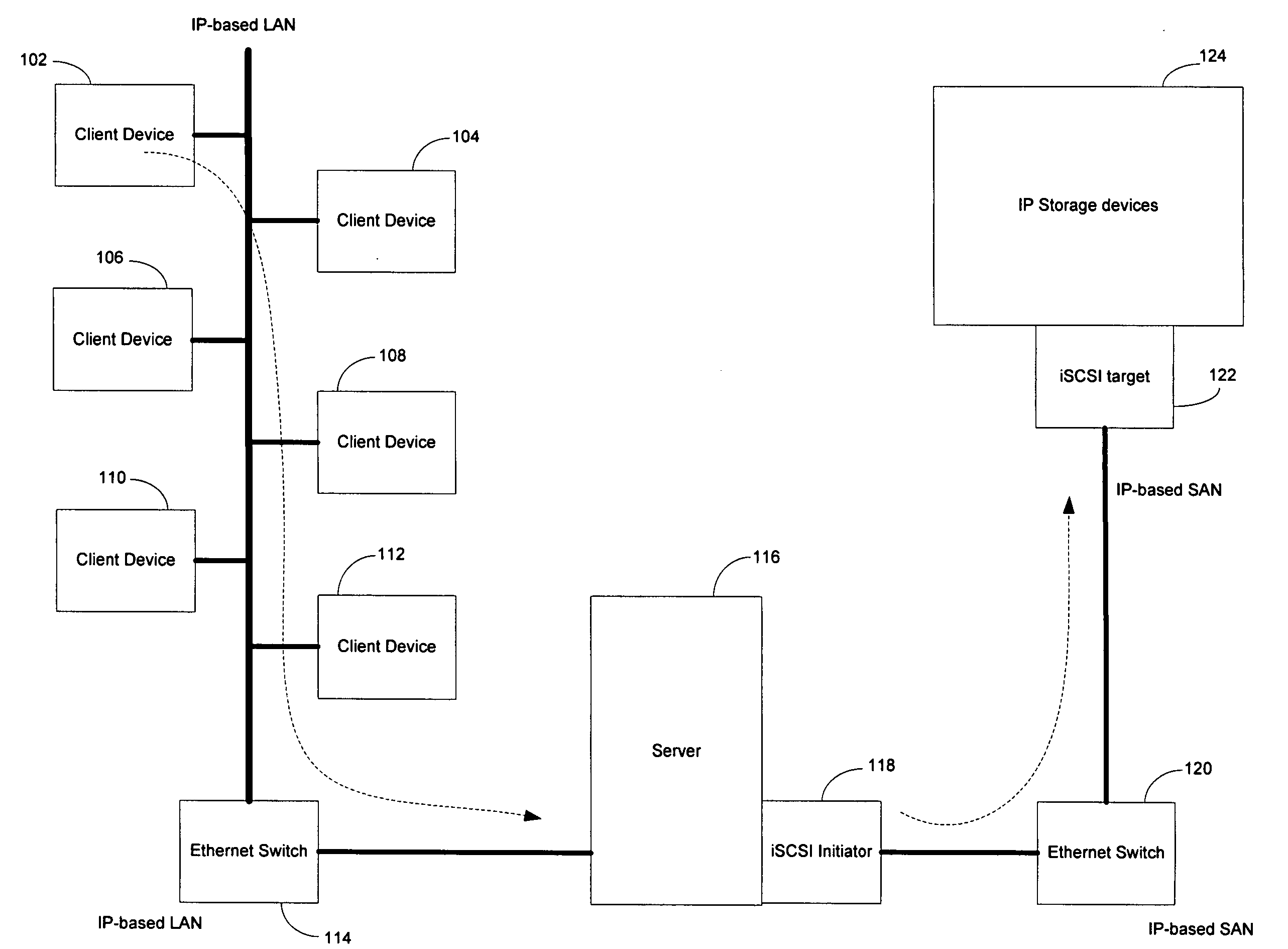

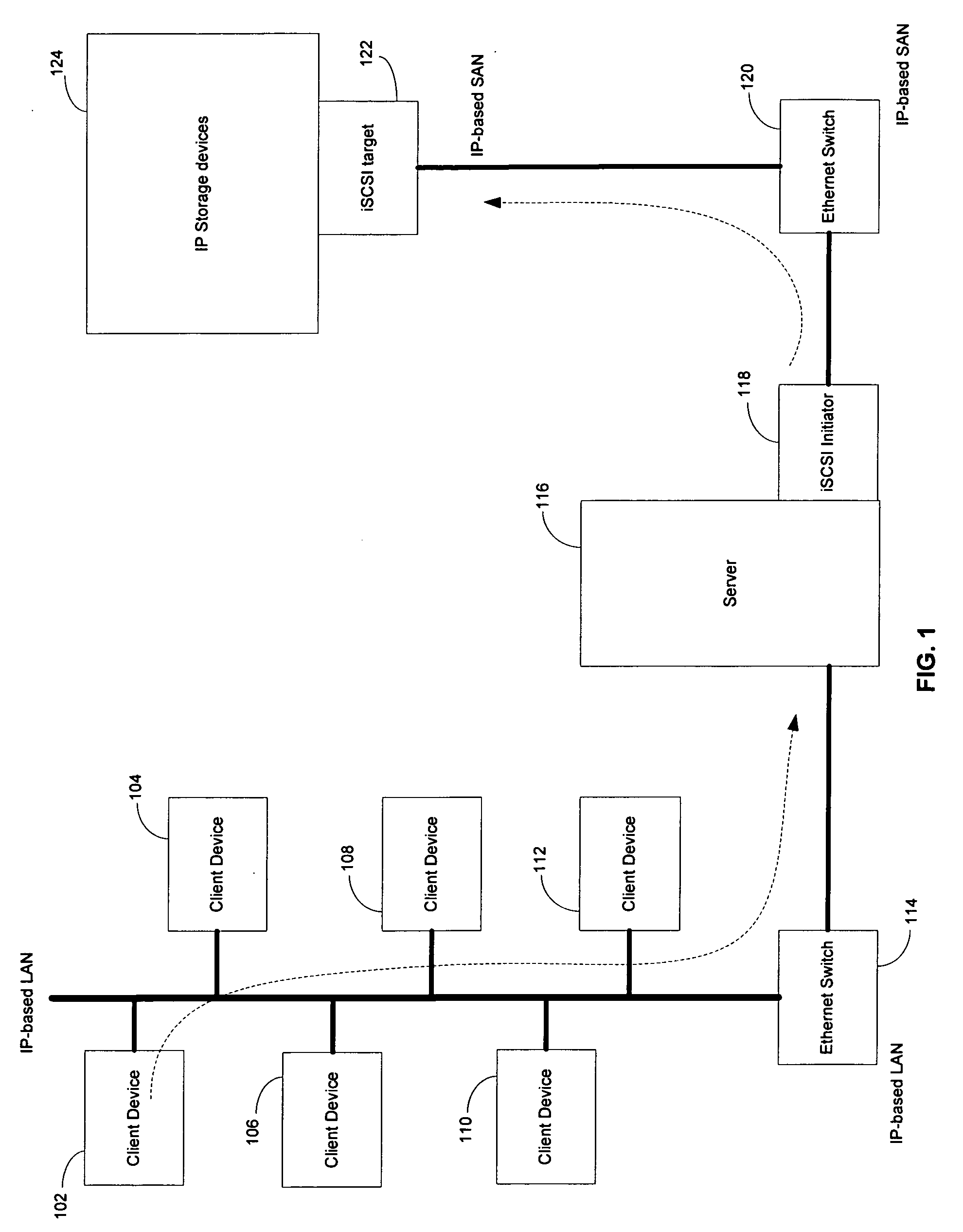

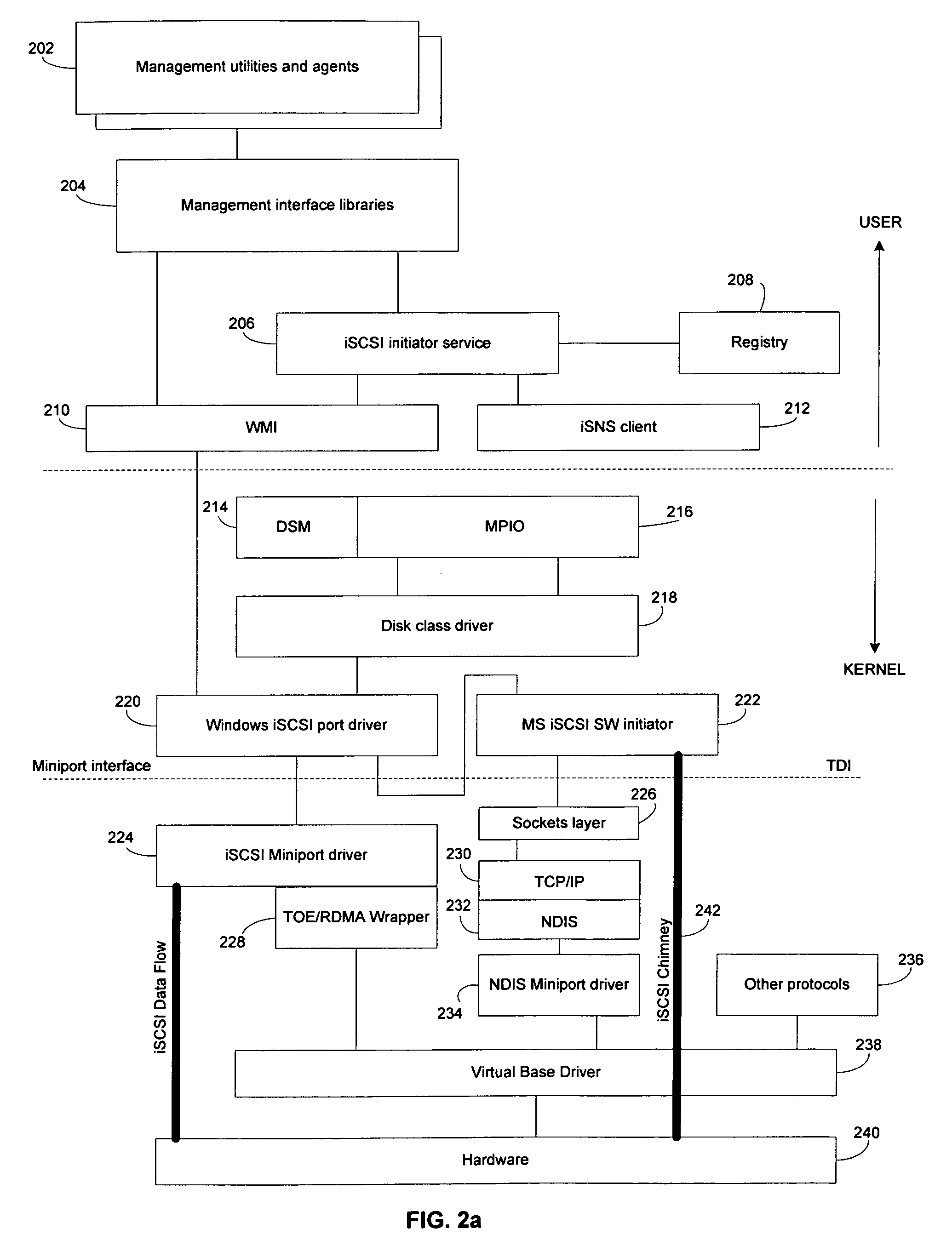

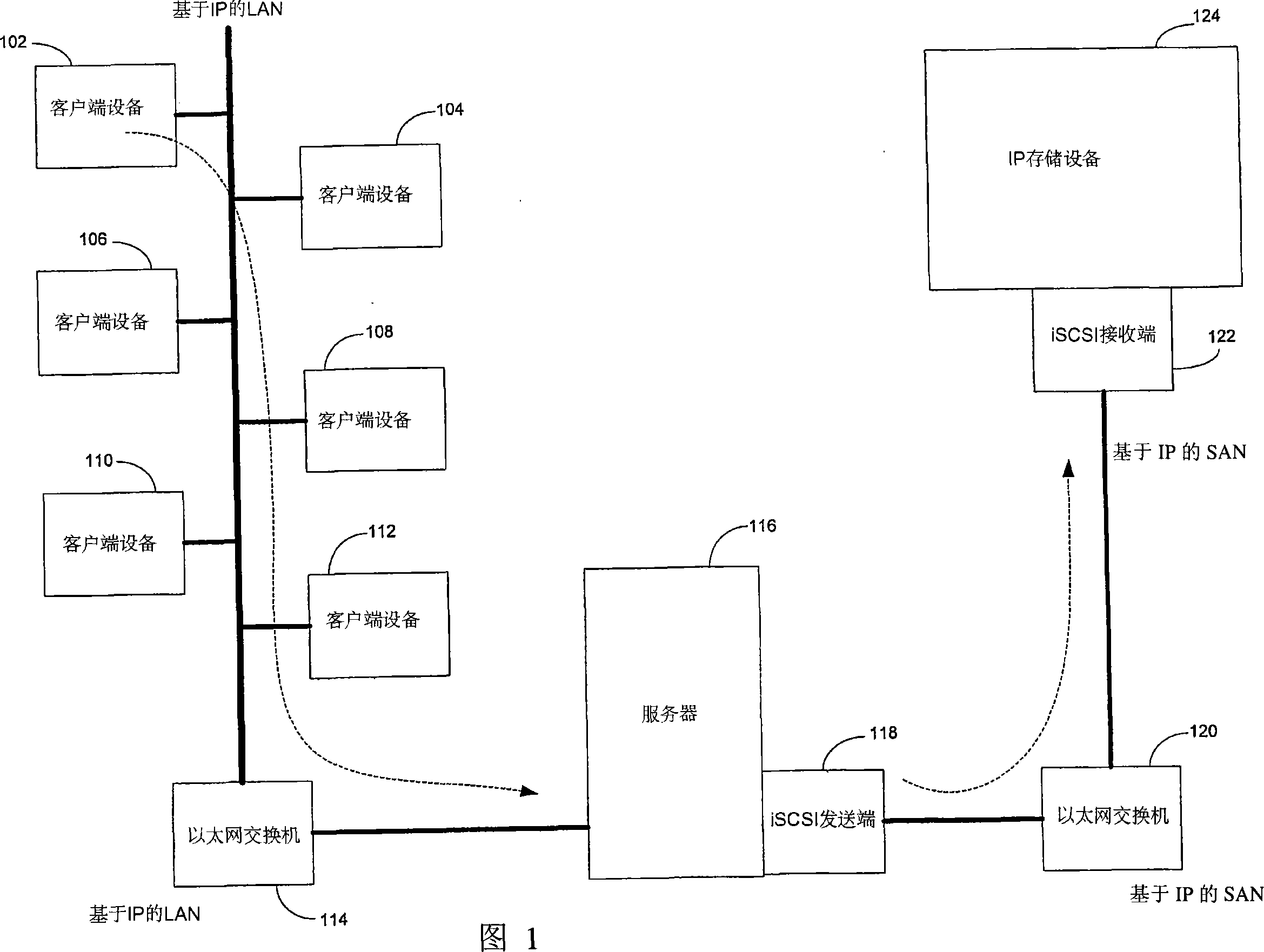

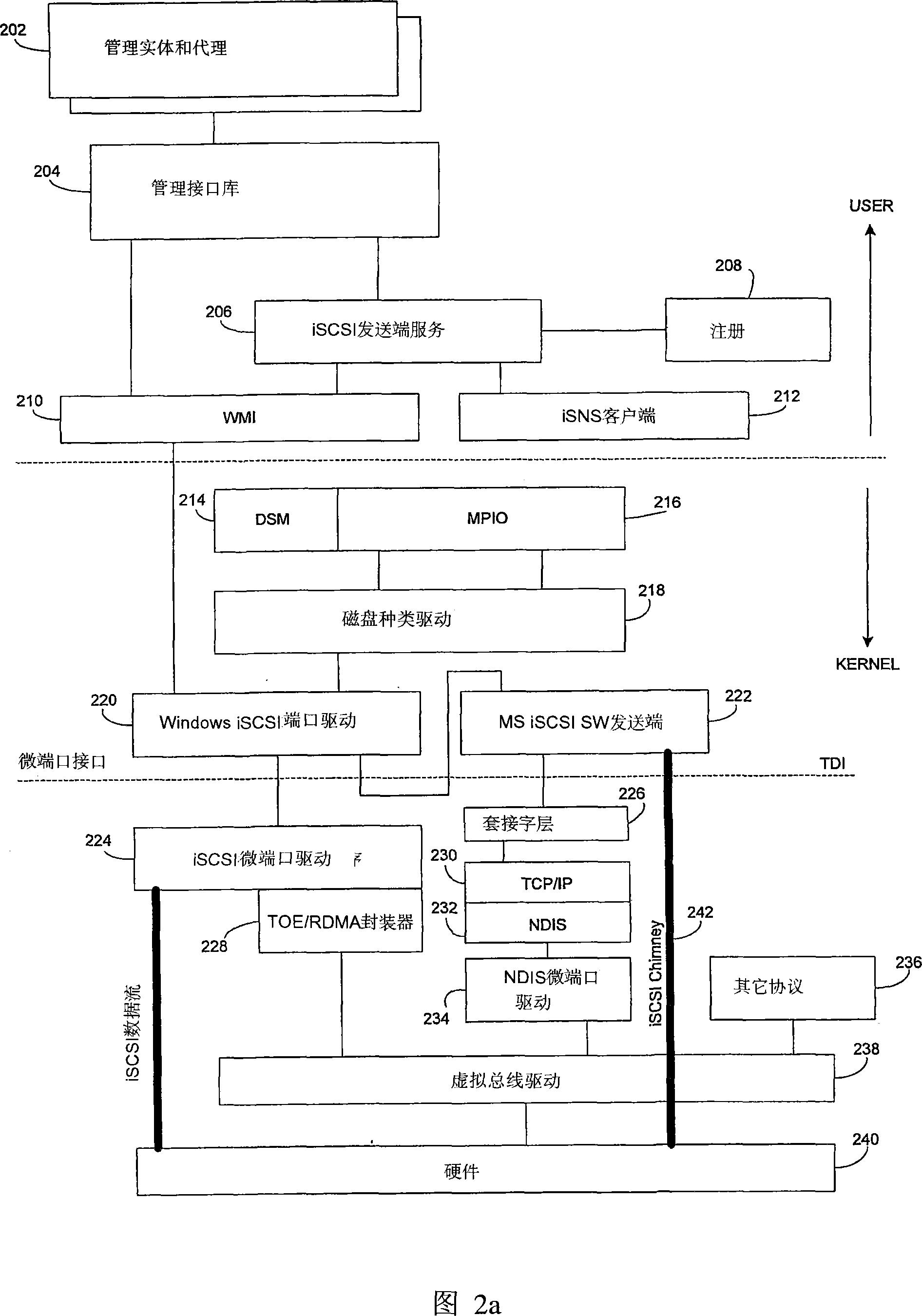

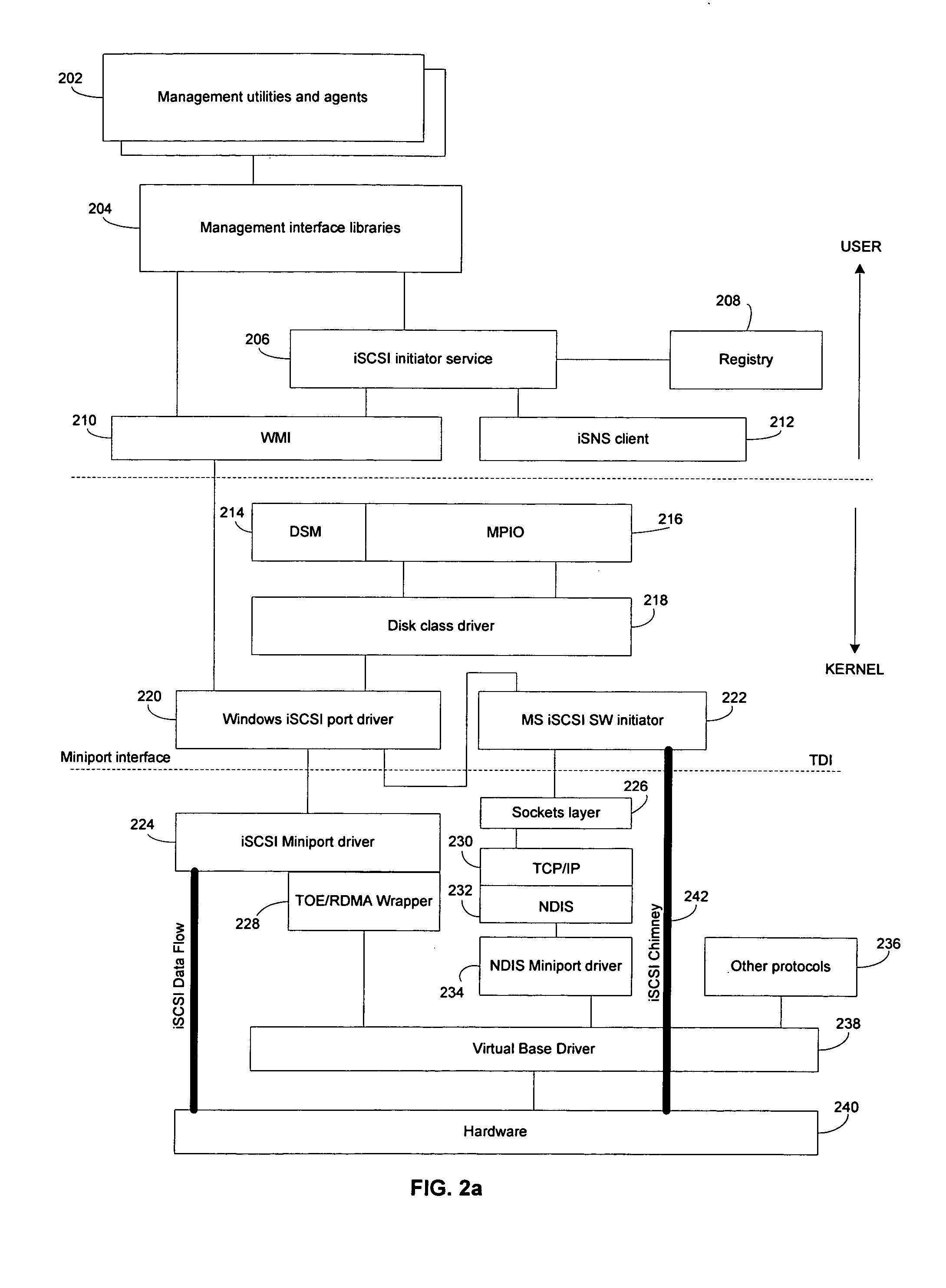

Method and system for supporting hardware acceleration for iSCSI read and write operations and iSCSI chimney

Status: Active | Publication: US20050281280A1 | Tags: Time-division multiplex, Data switching by path configuration, Zero-copy, TCP offload engine

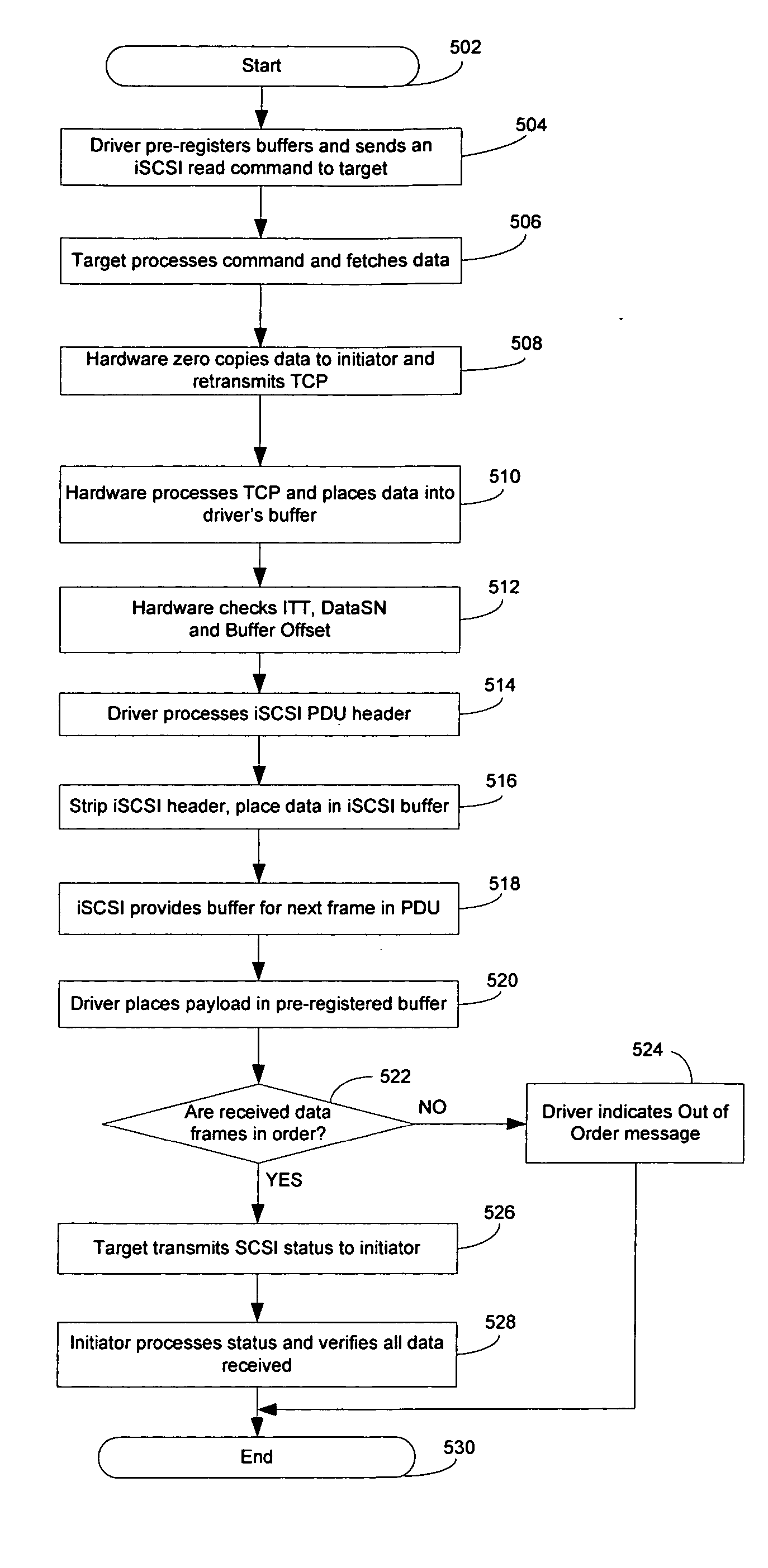

Certain aspects of a method and system for supporting hardware acceleration for iSCSI read and write operations via a TCP offload engine may comprise pre-registering at least one buffer with hardware. An iSCSI command may be received from an initiator. An initiator test tag value, a data sequence value and/or a buffer offset value of an iSCSI buffer may be compared with the pre-registered buffer. Data may be fetched from the pre-registered buffer based on comparing the initiator test tag value, the data sequence value and/or the buffer offset value of the iSCSI buffer with the pre-registered buffer. The fetched data may be zero copied from the pre-registered buffer to the initiator.

Owner:AVAGO TECH INT SALES PTE LTD

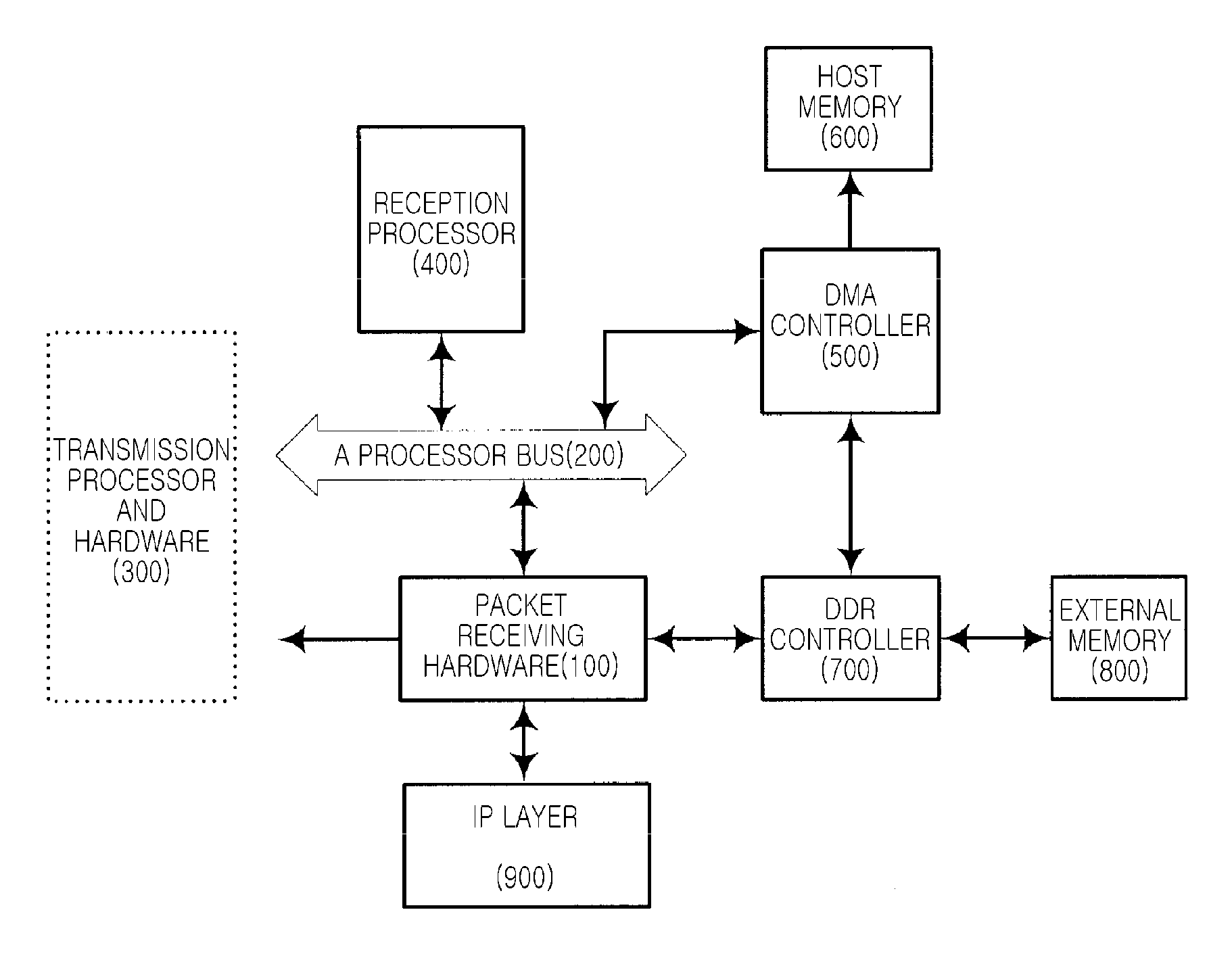

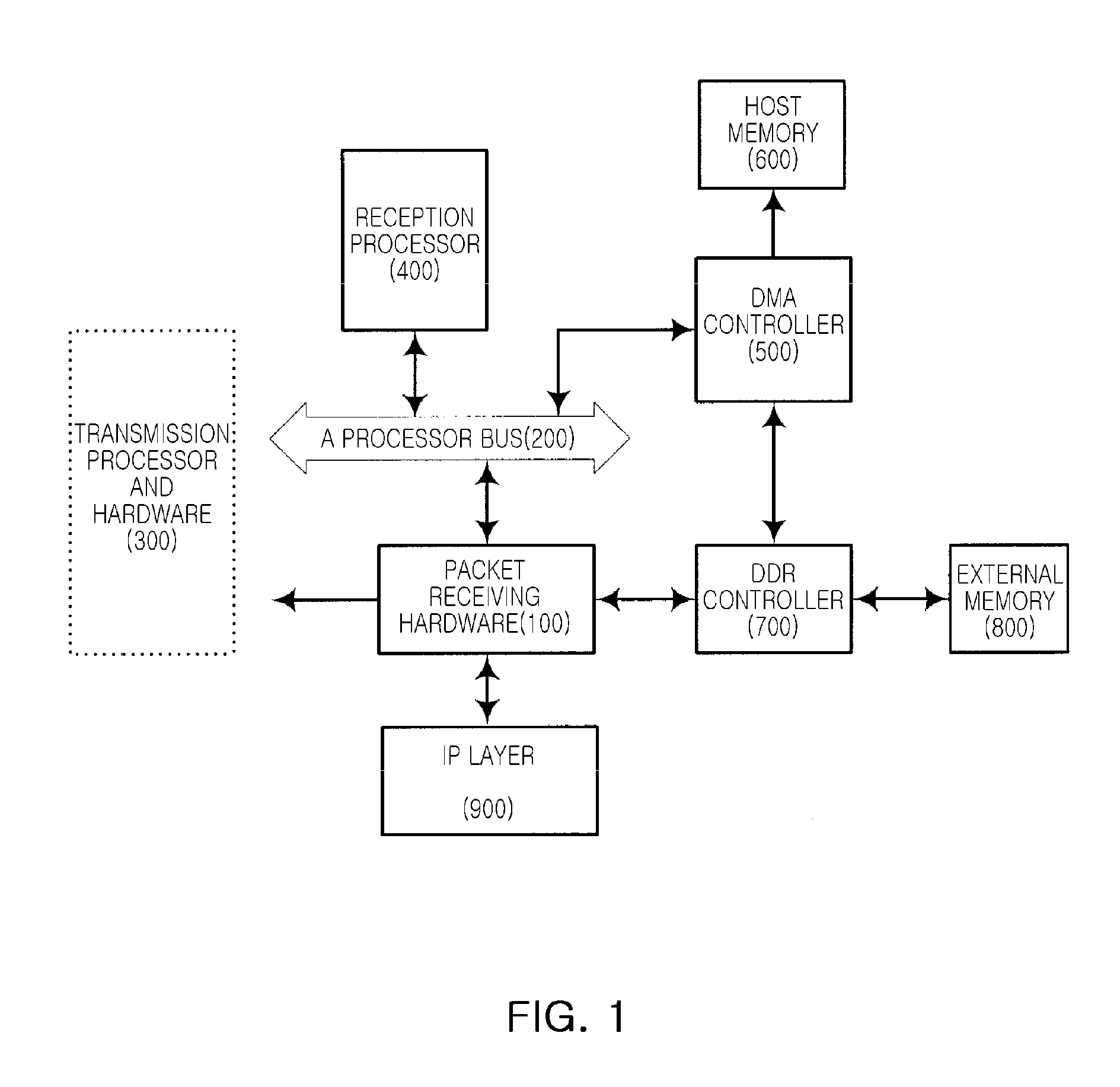

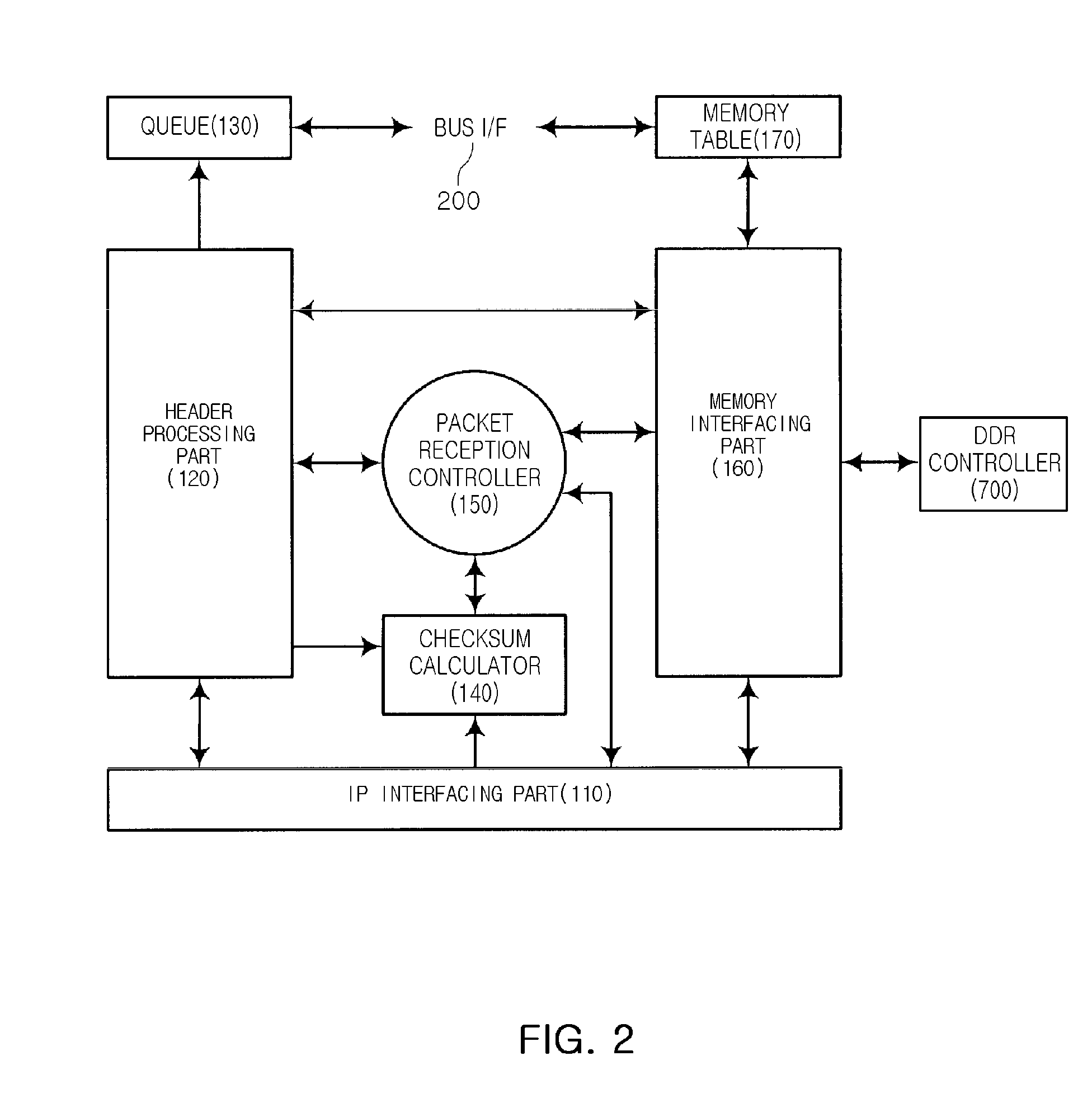

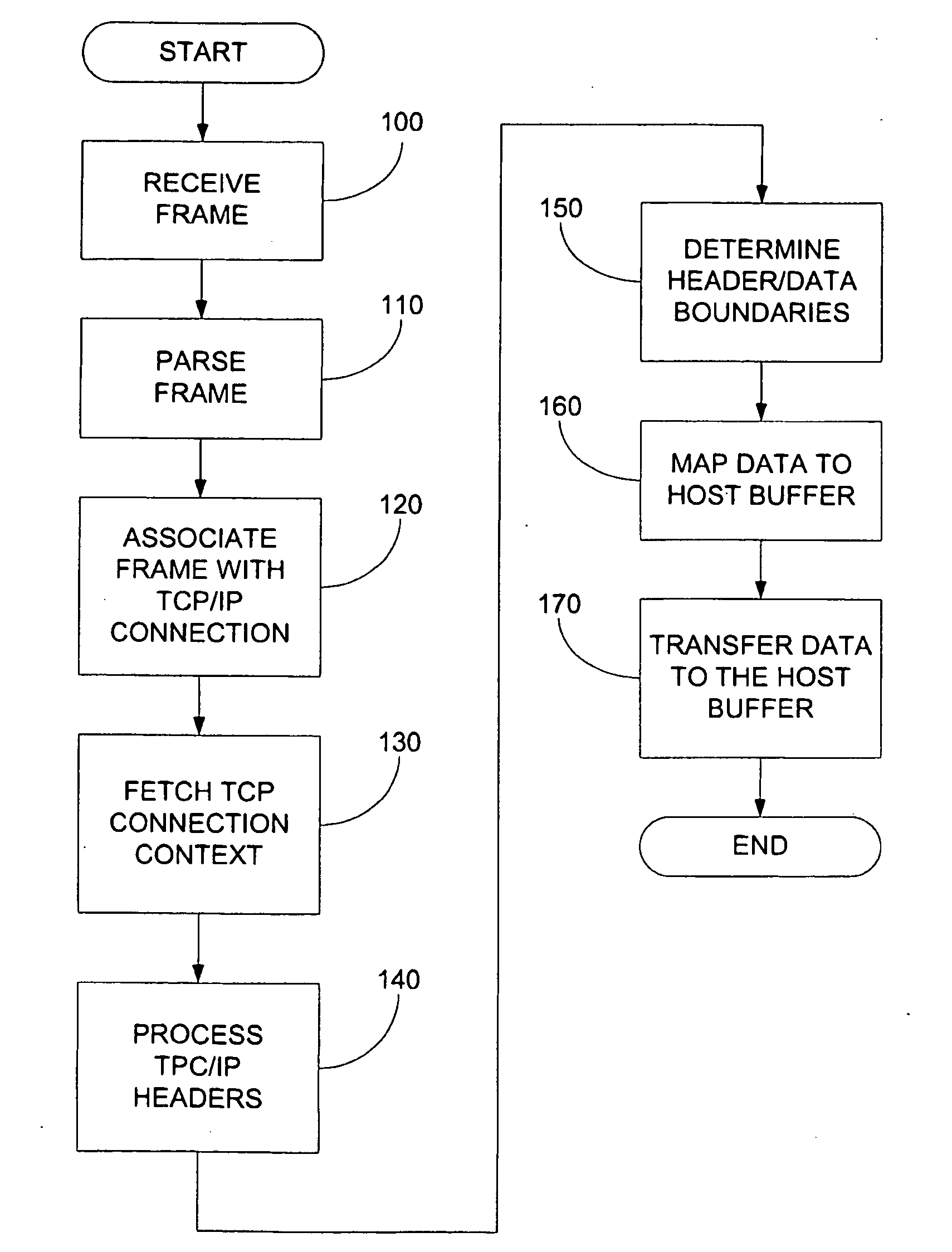

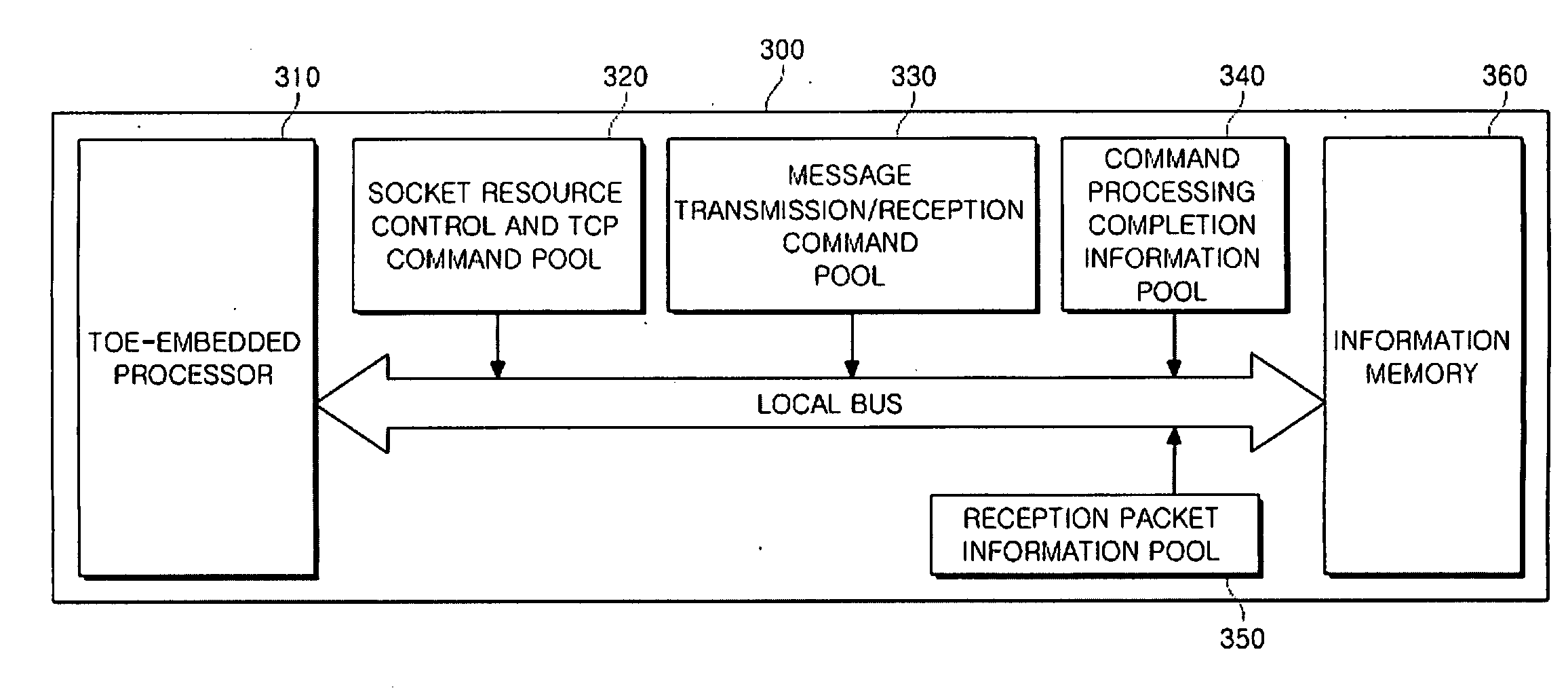

Packet receiving hardware apparatus for TCP offload engine and receiving system and method using the same

Status: Inactive | Publication: US20080133798A1 | Tags: Reduce overhead, Quick management, Transmission, Input/output processes for data processing, Protocol processing, External storage

A hardware apparatus for receiving a packet for a TCP offload engine (TOE), and a receiving system and method using the same, are provided. Specifically, the information required for protocol processing by a processor is stored in an internal queue in the packet receiving hardware. Data to be stored in host memory is first stored in an external memory and, after the processor has performed protocol processing, is transmitted to the host memory. With these techniques, the processor can operate asynchronously with respect to the actual packet arrival time, and the overhead of the processor handling unnecessary information can be reduced.

Owner:ELECTRONICS & TELECOMM RES INST
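
The split between the internal queue and external memory can be modelled as below: packet arrival only parks the payload and enqueues what protocol processing needs, while a separate `process_one()` call, run whenever the processor is free, moves the payload on to host memory. The fixed header length, slot numbering, and method names are assumptions for this sketch.

```python
from collections import deque

class ReceiveHardwareModel:
    """Hypothetical split of each received packet between an internal queue and external memory."""

    def __init__(self, header_len: int) -> None:
        self.header_len = header_len                 # assumed fixed header length
        self.internal_queue: deque = deque()         # entries the protocol processor reads
        self.external_memory: dict[int, bytes] = {}  # payloads parked off-chip
        self._next_slot = 0

    def receive(self, packet: bytes) -> None:
        # Runs at packet arrival: park the payload, queue only the header and
        # the payload's location.
        slot = self._next_slot
        self._next_slot += 1
        self.external_memory[slot] = packet[self.header_len:]
        self.internal_queue.append((packet[:self.header_len], slot))

    def process_one(self, host_memory: list) -> bool:
        # Runs whenever the processor is free, independent of arrival times.
        if not self.internal_queue:
            return False
        header, slot = self.internal_queue.popleft()
        # Protocol processing of `header` would happen here.
        host_memory.append(self.external_memory.pop(slot))
        return True
```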

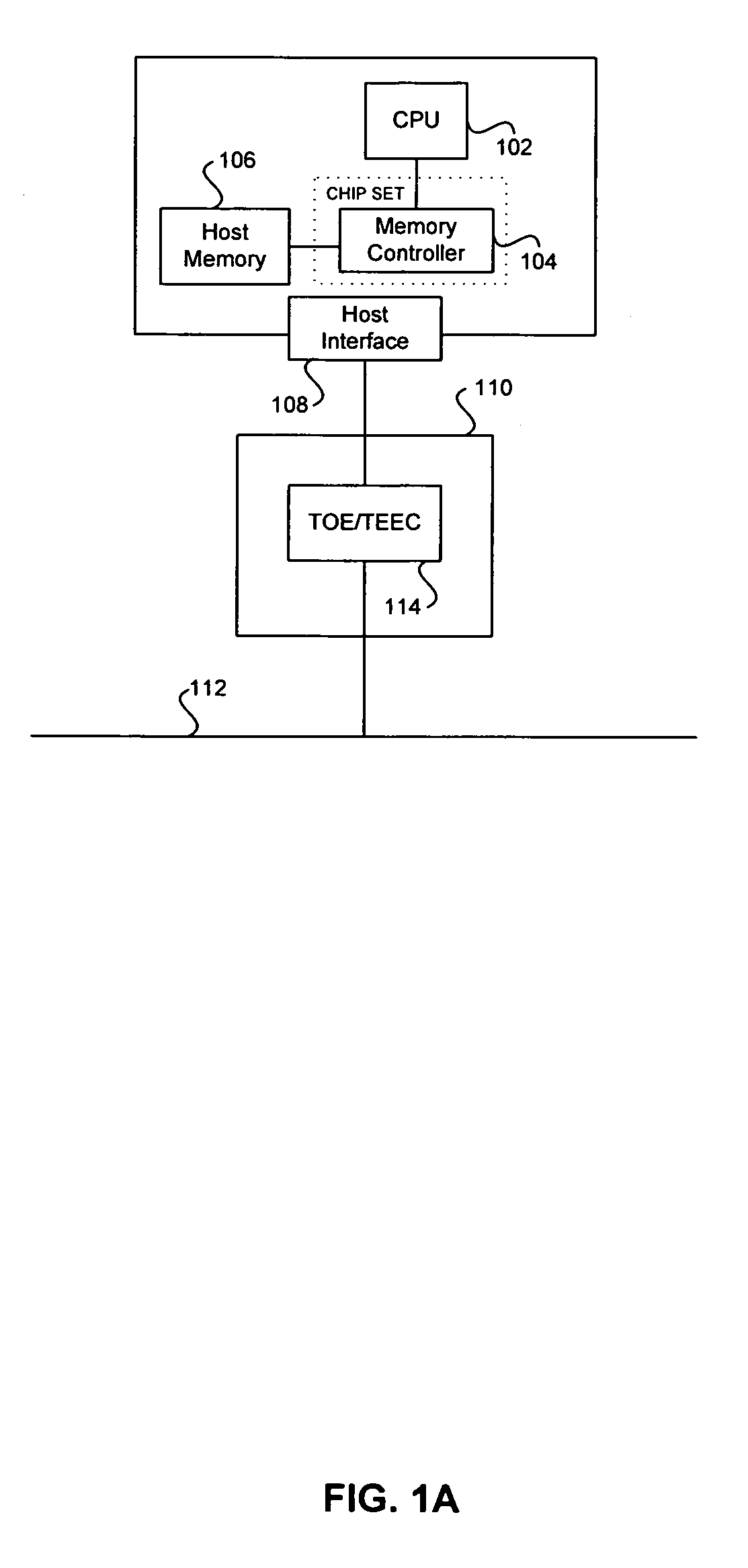

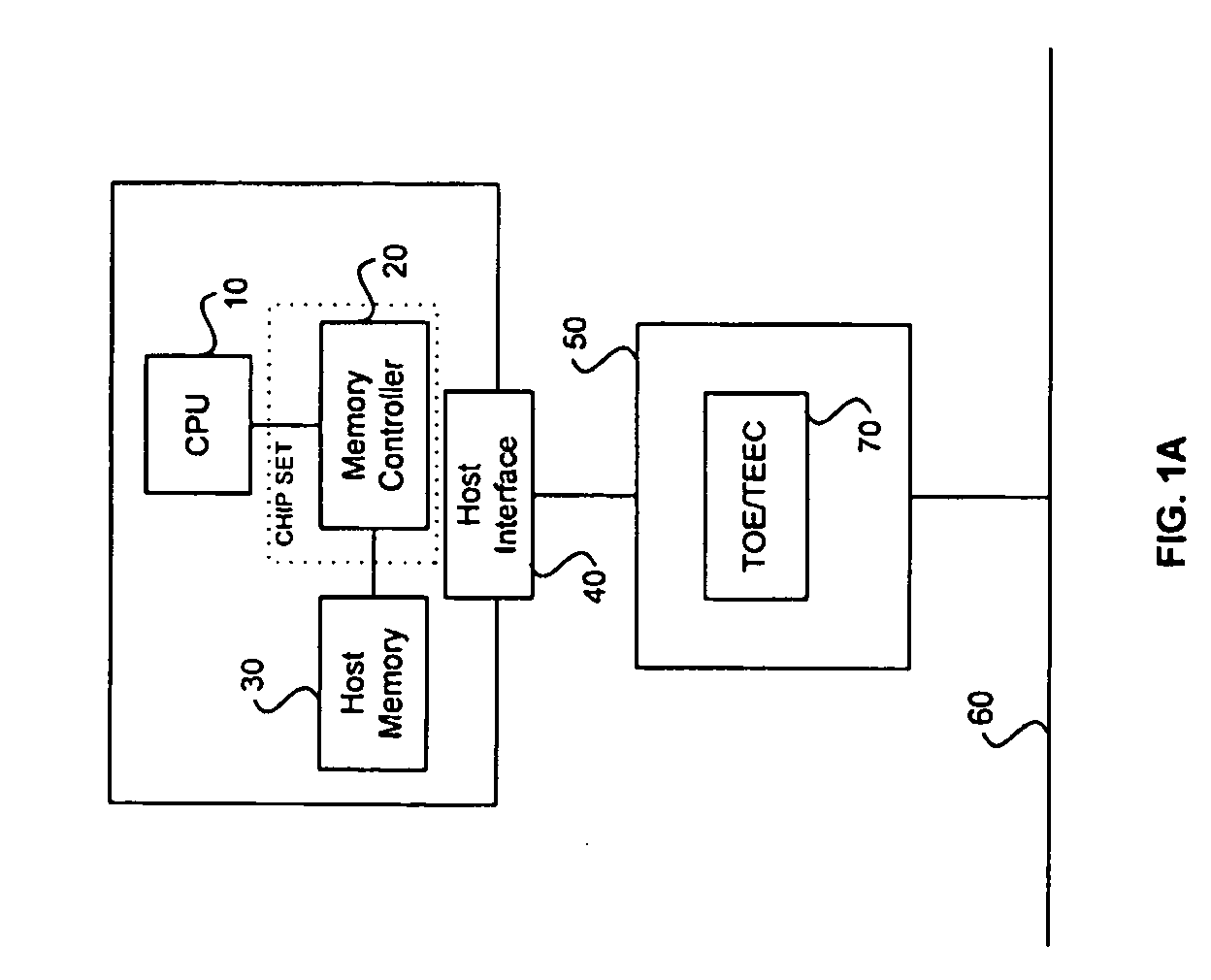

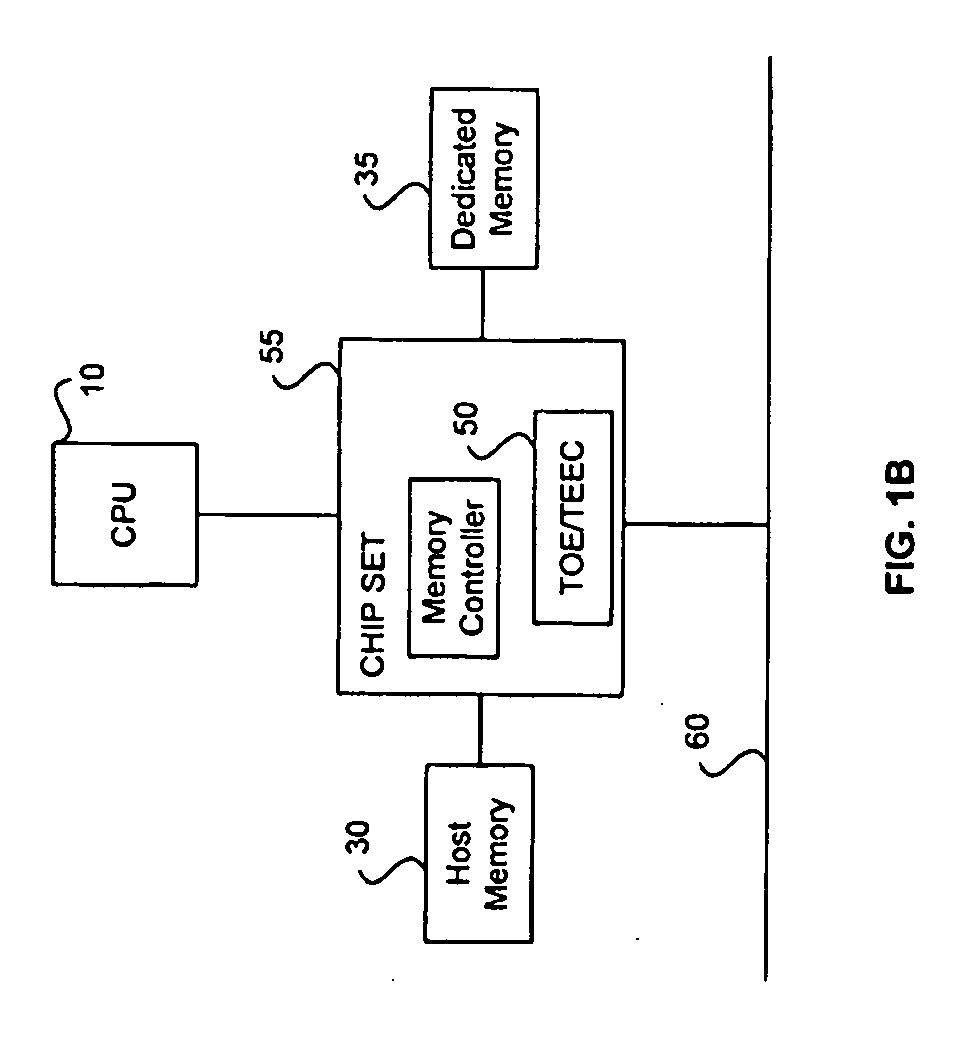

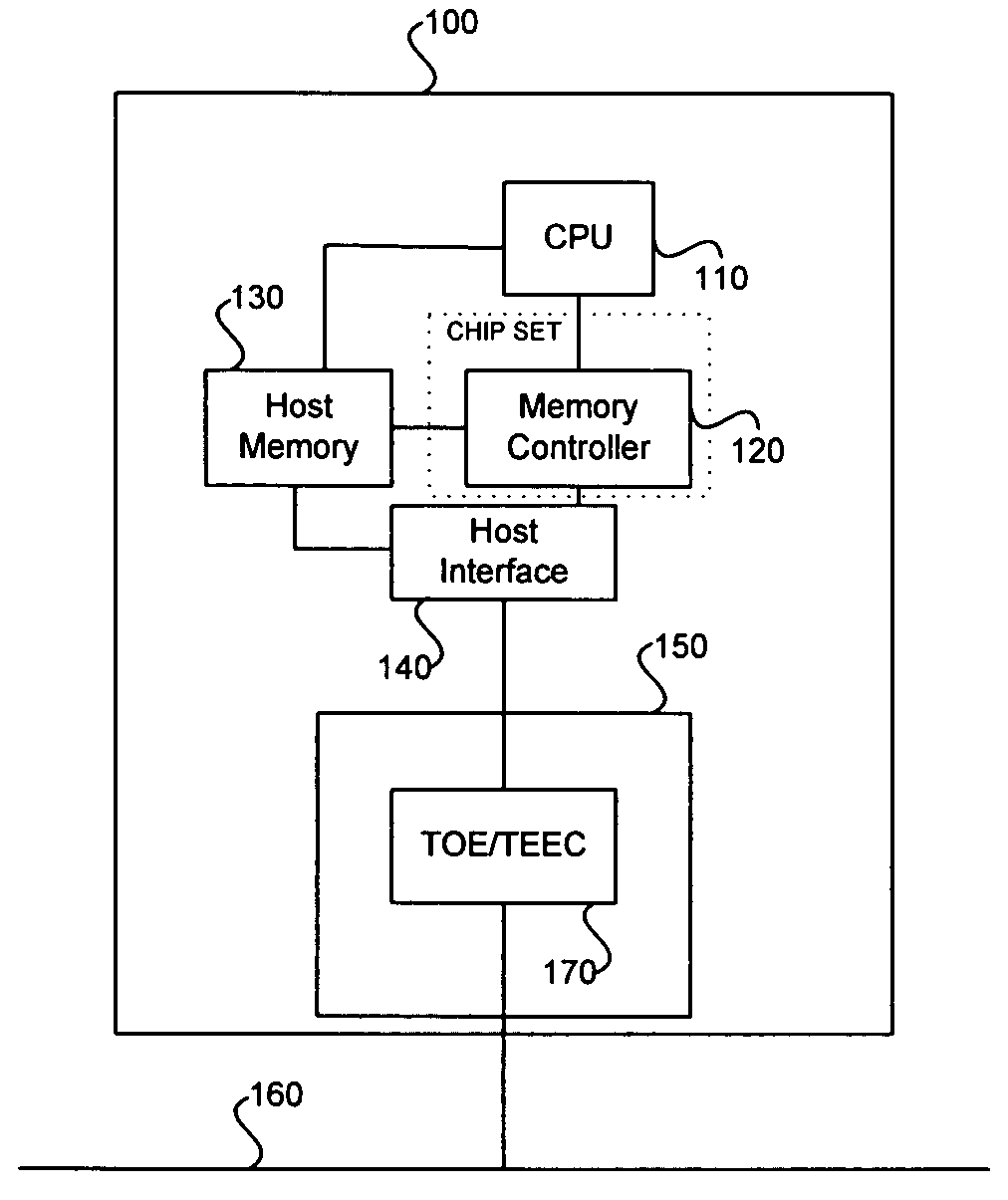

System and method for handling out-of-order frames

Status: Inactive | Publication: US20080298369A1 | Tags: Networks interconnection, Securing communication, TCP offload engine, Data placement

A system for handling out-of-order frames may include one or more processors that enable receiving of an out-of-order frame via a network subsystem. The one or more processors may enable placing data of the out-of-order frame in a host memory, and managing information relating to one or more holes resulting from the out-of-order frame in a receive window. The one or more processors may enable setting a programmable limit with respect to a number of holes allowed in the receive window. The out-of-order frame is received via a TCP offload engine (TOE) of the network subsystem or a TCP-enabled Ethernet controller (TEEC) of the network subsystem. The network subsystem may not store the out-of-order frame on an onboard memory, and may not store one or more missing frames relating to the out-of-order frame. The network subsystem may include a network interface card (NIC).

Owner:AVAGO TECH INT SALES PTE LTD
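
Hole management with a programmable limit can be sketched by keeping the received byte ranges of the window merged and counting the gaps between them. Data is written straight into a buffer standing in for host memory, and no frame is held in separate storage. Dropping a frame that would push the hole count past the limit is an assumed policy; the abstract only says the limit is programmable. All names are hypothetical.

```python
class OutOfOrderTracker:
    """Receive-window hole tracking with a programmable hole limit (hypothetical model)."""

    def __init__(self, base_seq: int, window: int, max_holes: int) -> None:
        self.base = base_seq                   # sequence number of host_memory[0]
        self.window = window
        self.max_holes = max_holes             # the programmable limit
        self.host_memory = bytearray(window)   # stands in for the host receive buffer
        self.ranges: list[list[int]] = []      # merged [start, end) ranges received

    @staticmethod
    def _merge(ranges: list[list[int]]) -> list[list[int]]:
        out: list[list[int]] = []
        for start, end in sorted(ranges):
            if out and start <= out[-1][1]:
                out[-1][1] = max(out[-1][1], end)
            else:
                out.append([start, end])       # fresh list, so inputs are never mutated
        return out

    def _count_holes(self, ranges: list[list[int]]) -> int:
        if not ranges:
            return 0
        leading_gap = 1 if ranges[0][0] > self.base else 0
        return leading_gap + len(ranges) - 1

    @property
    def rcv_nxt(self) -> int:
        # In-order edge: end of the range that starts at the window base, if any.
        if self.ranges and self.ranges[0][0] == self.base:
            return self.ranges[0][1]
        return self.base

    def holes(self) -> int:
        return self._count_holes(self.ranges)

    def receive(self, seq: int, data: bytes) -> bool:
        if not data:
            return True
        end = seq + len(data)
        if seq < self.base or end > self.base + self.window:
            return False                       # outside the receive window
        candidate = self._merge(self.ranges + [[seq, end]])
        if self._count_holes(candidate) > self.max_holes:
            return False                       # assumed policy: drop, do not buffer
        # Place the data directly at its final position in host memory.
        self.host_memory[seq - self.base:end - self.base] = data
        self.ranges = candidate
        return True
```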

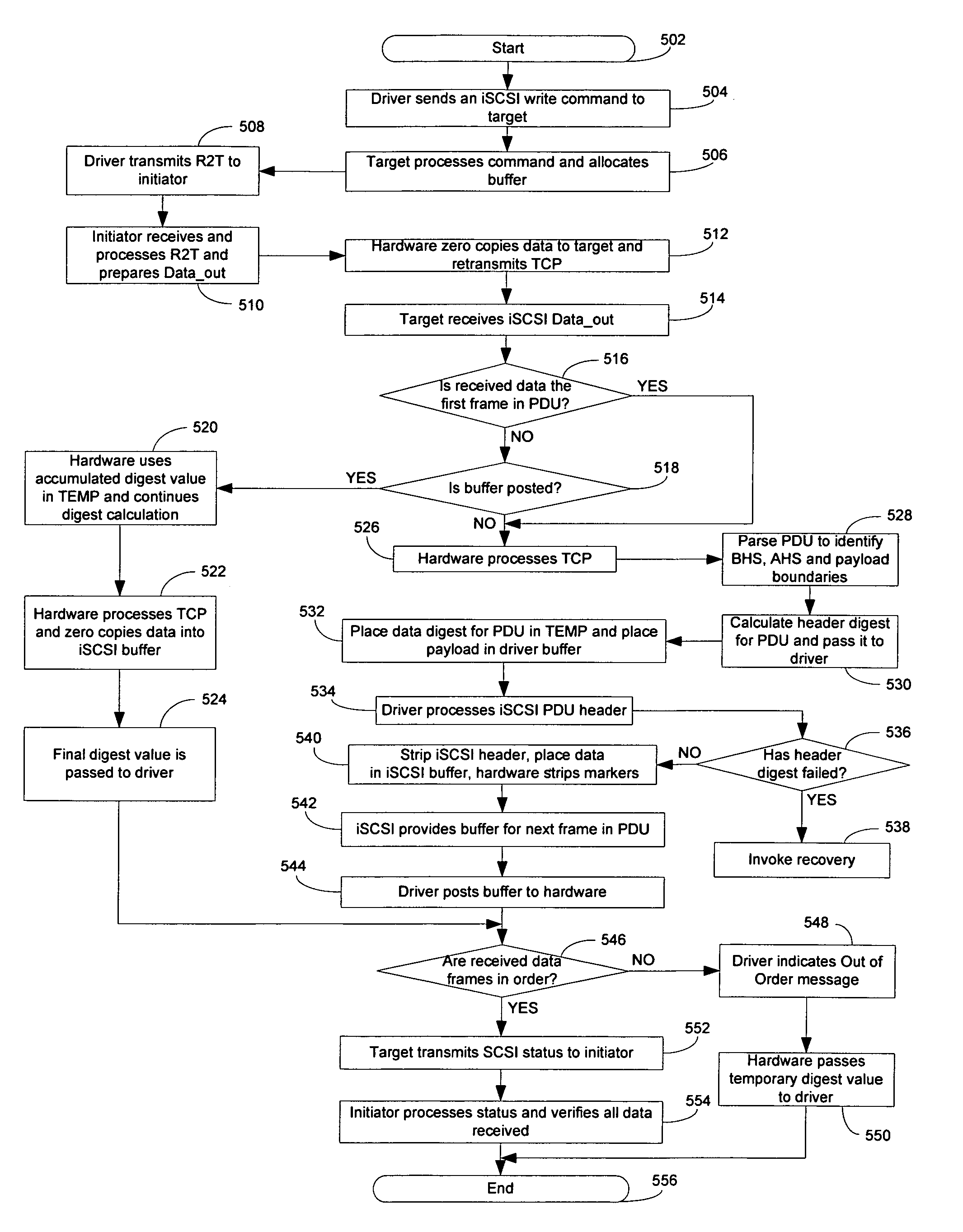

Method and system for supporting write operations with CRC for iSCSI and iSCSI chimney

A method and system are provided for handling data by a TCP offload engine. The TCP offload engine may be adapted to perform SCSI write operations, and the method may comprise receiving an iSCSI write command from an iSCSI port driver. At least one buffer may be allocated for handling data associated with the received iSCSI write command from the iSCSI port driver. The received iSCSI write command may be formatted into at least one TCP segment, which may be transmitted to a target. A request to transmit (R2T) signal may be communicated from the target to an initiator. The write data may be zero copied from the at least one allocated buffer in a server to the initiator. A digest value may be calculated and appended to the TCP segment communicated by the initiator to the target.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Method and system for supporting read operations with CRC for iSCSI and iSCSI chimney

Certain embodiments of the invention may be found in a method and system for performing SCSI read operations with a CRC via a TCP offload engine. Aspects of the method may comprise receiving an iSCSI read command from an initiator. Data may be fetched from a buffer based on the received iSCSI read command. The fetched data may be zero copied from the buffer to the initiator and a TCP sequence may be retransmitted to the initiator. A digest value may be calculated, which may be communicated to the initiator. An accumulated digest value stored in a temporary buffer may be utilized to calculate a final digest value, if the buffer is posted. The retransmitted TCP sequence may be processed and the fetched data may be zero copied into an iSCSI buffer, if the buffer is posted. The calculated final digest value may be communicated to the initiator.

Owner:AVAGO TECH INT SALES PTE LTD
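
iSCSI header and data digests use CRC32C (the Castagnoli polynomial), and a CRC can be carried forward from one segment to the next, which is what makes an accumulated partial digest in a temporary buffer useful. This is a plain bitwise sketch for clarity, assuming software computation; hardware would normally use a table-driven or dedicated circuit. `crc32c_update` is a hypothetical name, and `0xE3069283` is the standard CRC32C check value for the ASCII string `123456789`.

```python
# Castagnoli polynomial 0x1EDC6F41 in bit-reflected form, as used by iSCSI digests.
CRC32C_POLY_REFLECTED = 0x82F63B78

def crc32c_update(crc: int, data: bytes) -> int:
    """Continue a CRC32C over `data`; pass 0 to start, or a previous result to resume."""
    # Gives the initial value 0xFFFFFFFF when starting from 0, and undoes the
    # final XOR of the previous call when resuming.
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ (CRC32C_POLY_REFLECTED if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF                 # final XOR

# Accumulate one digest across two TCP segments of the same data.
partial = crc32c_update(0, b"1234")        # accumulated value kept between segments
final = crc32c_update(partial, b"56789")   # equals crc32c_update(0, b"123456789")
```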

Method and system for transmission control protocol (TCP) traffic smoothing

Various aspects of a method and system for transmission control protocol (TCP) traffic smoothing are presented. Traffic smoothing may comprise a method for controlling data transmission in a communications system, which comprises scheduling the timing of transmission of information from a TCP offload engine (TOE) based on a traffic profile. Traffic smoothing may comprise transmitting information from a TOE at a rate that is greater than, approximately equal to, or less than the rate at which the information was generated. Some conventional network interface cards (NICs) that utilize TOEs may not provide a mechanism for traffic shaping; without one, packets are more likely to be lost in the network.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
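
One common way to express a traffic profile is a token bucket (an average rate plus a burst allowance). The abstract does not say which profile the TOE uses, so this is an assumed stand-in. The sketch returns the earliest time each frame may leave the TOE, keeping frames in order and spacing them out once the burst allowance is spent; the class and parameter names are hypothetical.

```python
class TokenBucketScheduler:
    """Assumed traffic profile: average rate plus burst allowance (a token bucket)."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float) -> None:
        self.rate = rate_bytes_per_s
        self.burst = burst_bytes
        self.tokens = burst_bytes   # start with a full burst allowance
        self.last = 0.0             # time at which `tokens` was last valid

    def schedule(self, now: float, size: int) -> float:
        """Return the earliest time a frame of `size` bytes may leave the TOE."""
        # Frames stay in order: never schedule before the previous decision.
        t = max(now, self.last)
        self.tokens = min(self.burst, self.tokens + (t - self.last) * self.rate)
        self.last = t
        if self.tokens >= size:
            self.tokens -= size
            return t
        # Not enough credit: wait until the deficit has been earned back.
        wait = (size - self.tokens) / self.rate
        self.tokens = 0.0
        self.last = t + wait
        return self.last
```

Setting `rate_bytes_per_s` above, near, or below the application's generation rate corresponds to the three cases the abstract lists.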

Method and system for supporting write operations for iSCSI and iSCSI chimney

Certain embodiments of the invention may be found in a method and system for performing SCSI write operations via a TCP offload engine. Aspects of the method may comprise receiving an iSCSI write command from an initiator. At least one buffer may be allocated for handling data associated with the received iSCSI write command from the initiator. A request to transmit (R2T) signal transmitted by the initiator may be received. The data may be zero copied from the at least one allocated buffer to the initiator.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

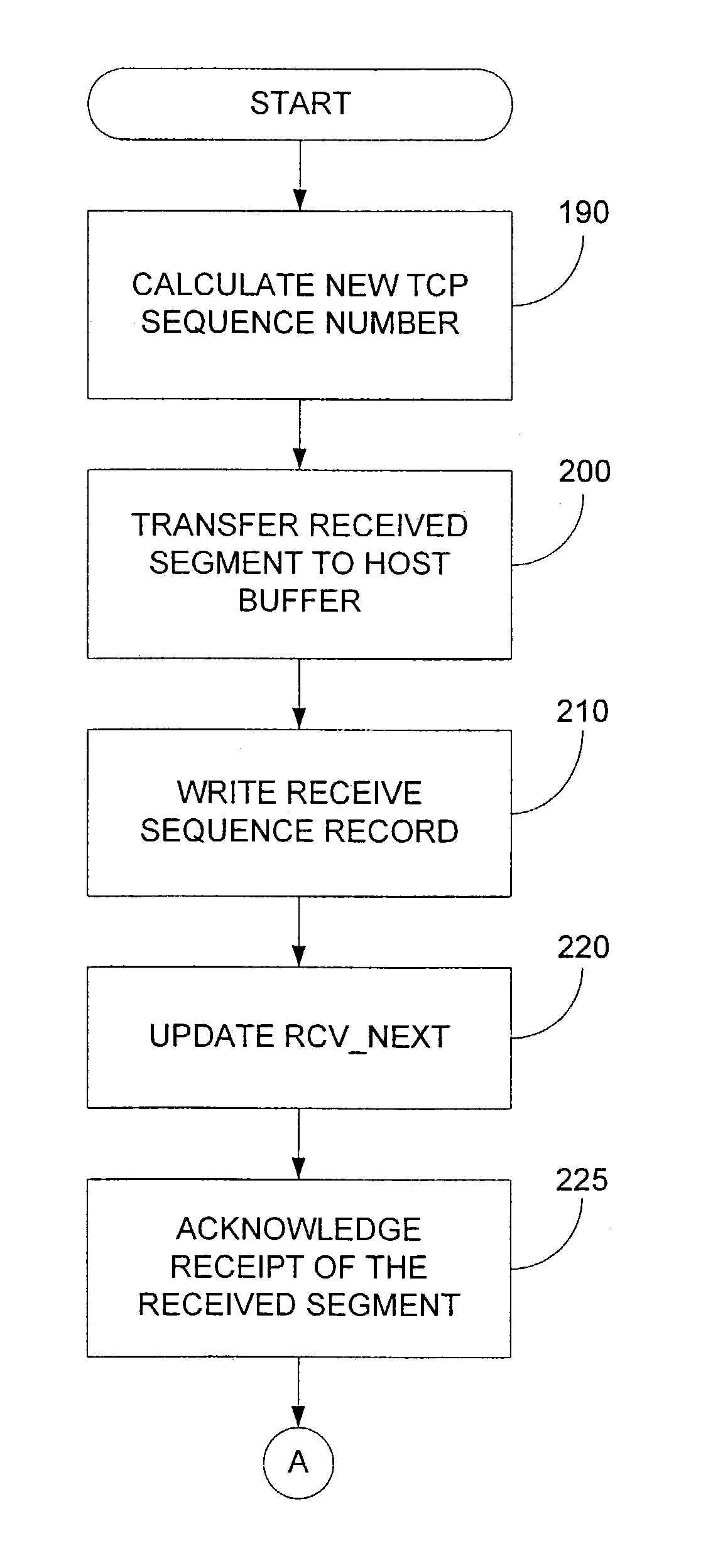

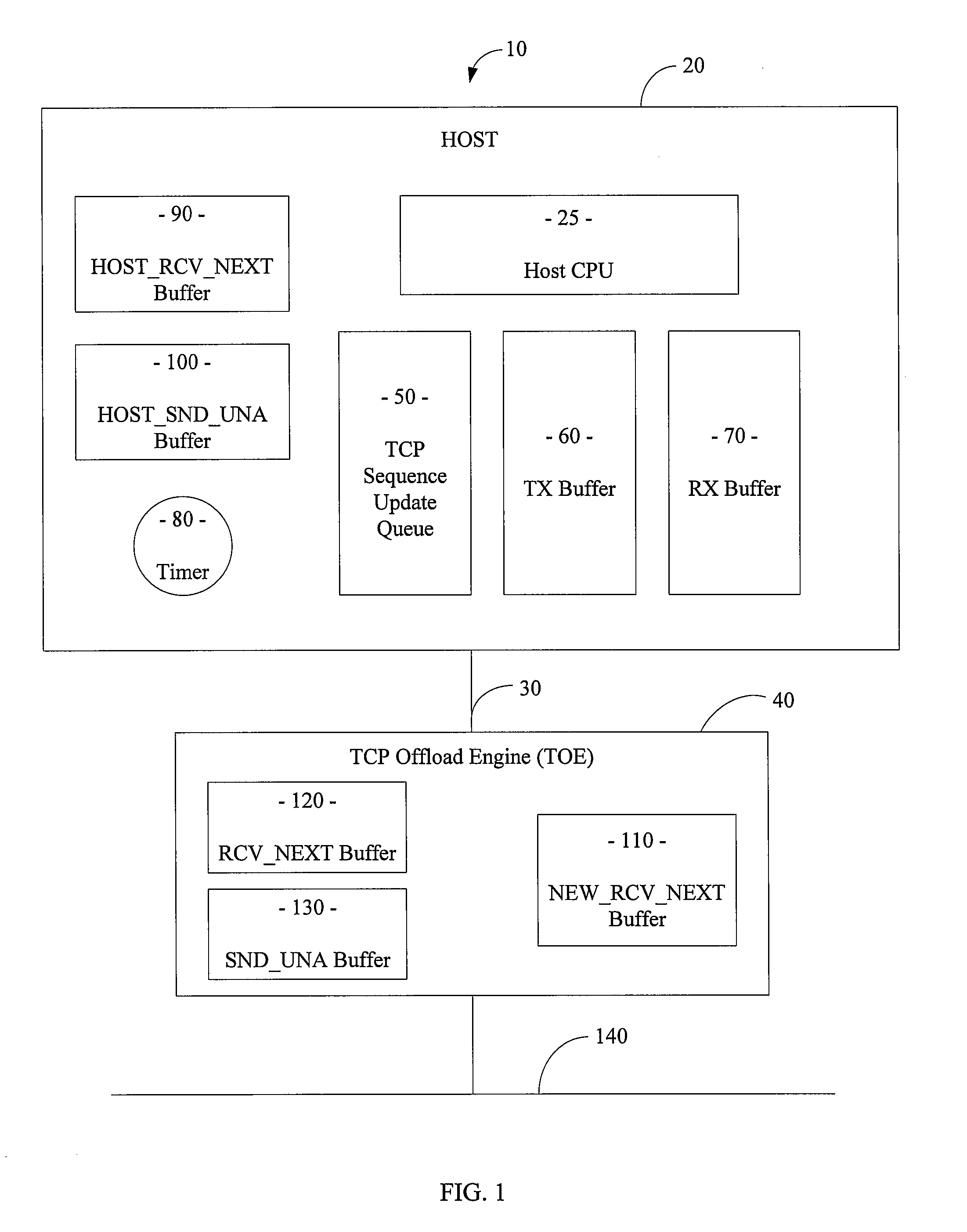

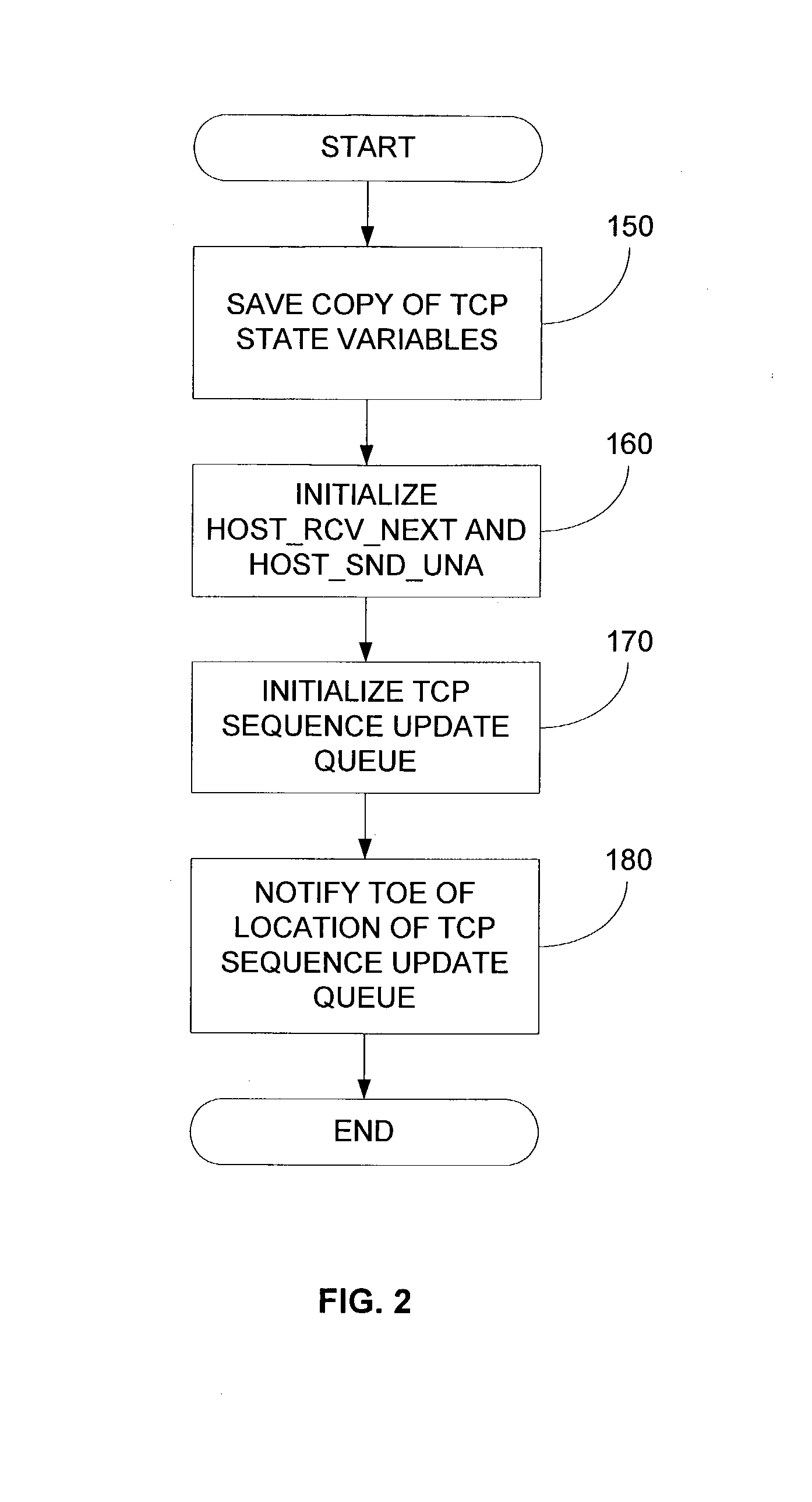

System and method for fault tolerant TCP offload

Status: Inactive | Publication: US7224692B2 | Tags: Fault tolerance, Error prevention, Frequency-division multiplex details, TCP offload engine, Real-time computing

Systems and methods that provide fault tolerant transmission control protocol (TCP) offloading are provided. In one example, a method that provides fault tolerant TCP offloading is provided. The method may include one or more of the following steps: receiving a TCP segment via a TCP offload engine (TOE); calculating a TCP sequence number; writing a receive sequence record, based upon at least the calculated TCP sequence number, to a TCP sequence update queue in a host; and updating a first host variable with a value from the written receive sequence record.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
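
The listed steps can be sketched as below: the TOE computes the next expected receive sequence number in 32-bit arithmetic, posts a record to a queue in host memory, and the host later applies those records to its own copy of the variable. The class names and the choice of `rcv_nxt` as the "first host variable" are assumptions; how the host uses this copy to take over after a TOE failure is not shown.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class ReceiveSequenceRecord:
    conn_id: int
    rcv_nxt: int   # next expected receive sequence number after this segment

class HostShadow:
    """Host-side copy of TOE receive state (hypothetical)."""

    def __init__(self) -> None:
        self.update_queue: deque = deque()   # TCP sequence update queue in host memory
        self.rcv_nxt: dict[int, int] = {}    # the assumed "first host variable", per connection

    def drain(self) -> None:
        # Apply every posted record to the host variable, oldest first.
        while self.update_queue:
            rec = self.update_queue.popleft()
            self.rcv_nxt[rec.conn_id] = rec.rcv_nxt

def toe_receive(host: HostShadow, conn_id: int, seq: int, payload_len: int) -> int:
    # TOE side: calculate the sequence number (32-bit wraparound) ...
    rcv_nxt = (seq + payload_len) % 2**32
    # ... and write a receive sequence record to the host's update queue.
    host.update_queue.append(ReceiveSequenceRecord(conn_id, rcv_nxt))
    return rcv_nxt
```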

Encoding a TCP offload engine within FCP

The present invention defines a new protocol for communicating with an offload engine that provides Transmission Control Protocol (“TCP”) termination over a Fibre Channel (“FC”) fabric. The offload engine terminates all protocols up to and including TCP and performs the processing associated with those layers. The offload protocol guarantees delivery and is encapsulated within FCP-formatted frames. Thus, the TCP streams are reliably passed to the host. Additionally, using this scheme, the offload engine can provide parsing of the TCP stream to further assist the host. The present invention also provides network devices (and components thereof) that are configured to perform the foregoing methods. The invention further defines how network attached storage (“NAS”) protocol data units (“PDUs”) are parsed and delivered.

Owner:CISCO TECH INC

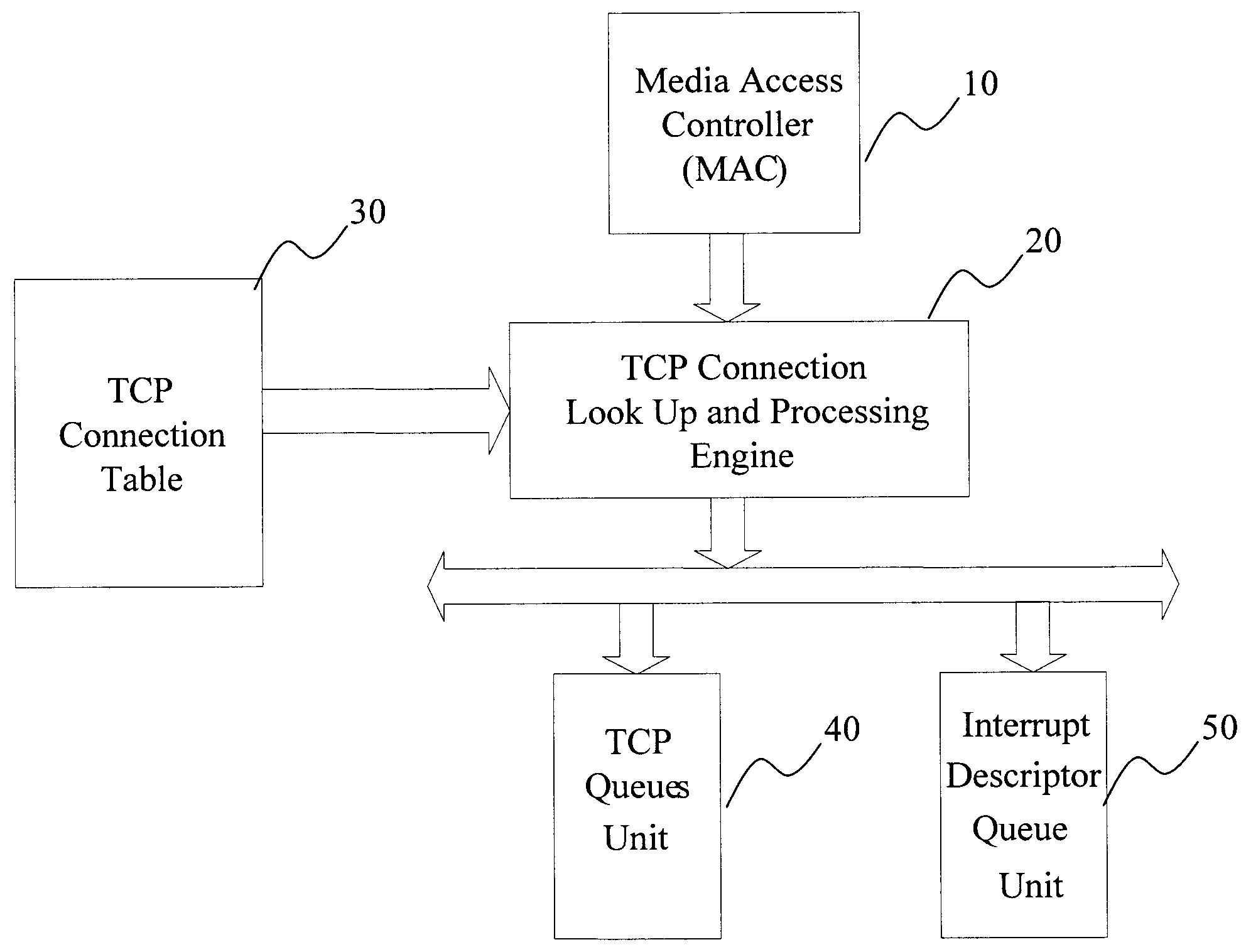

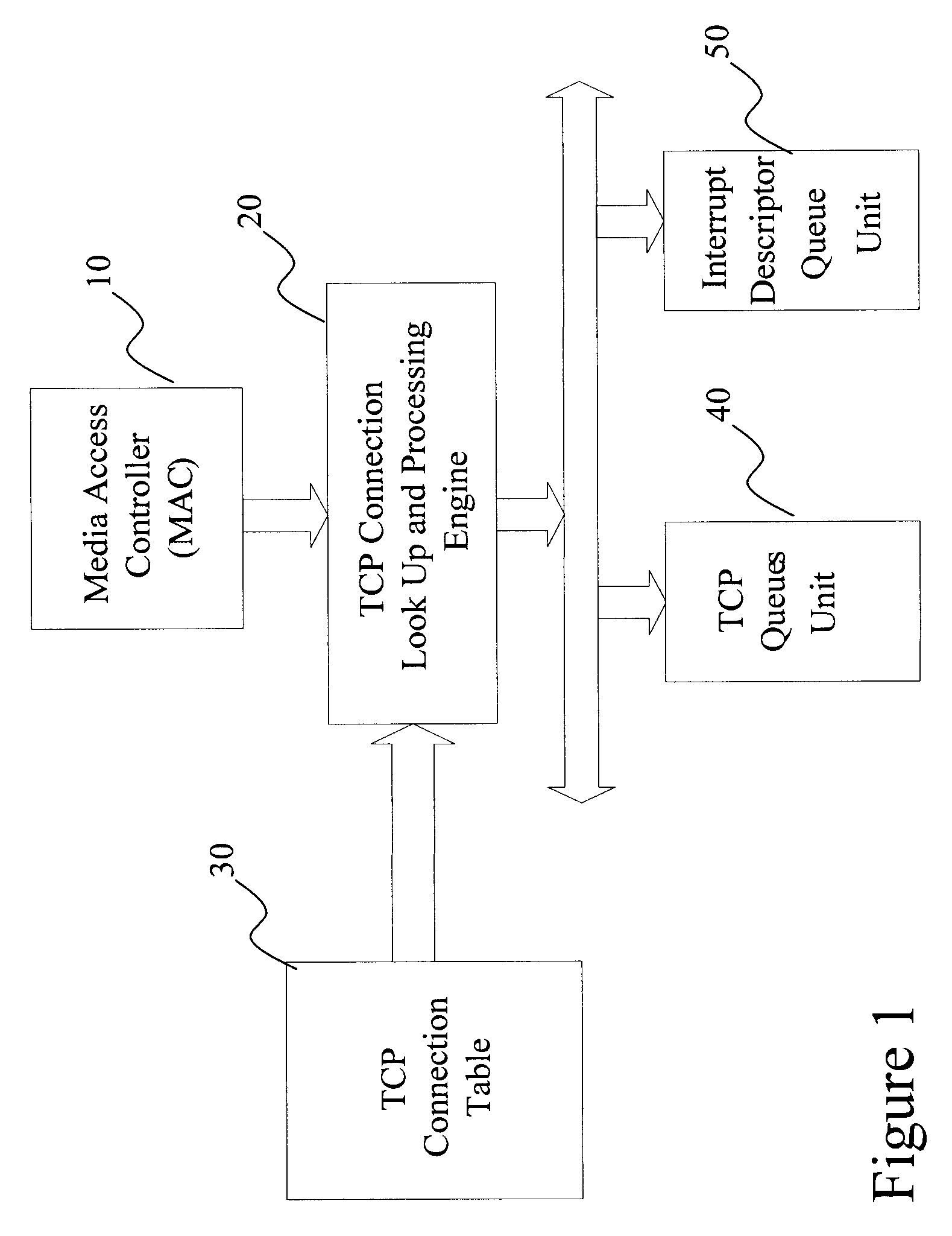

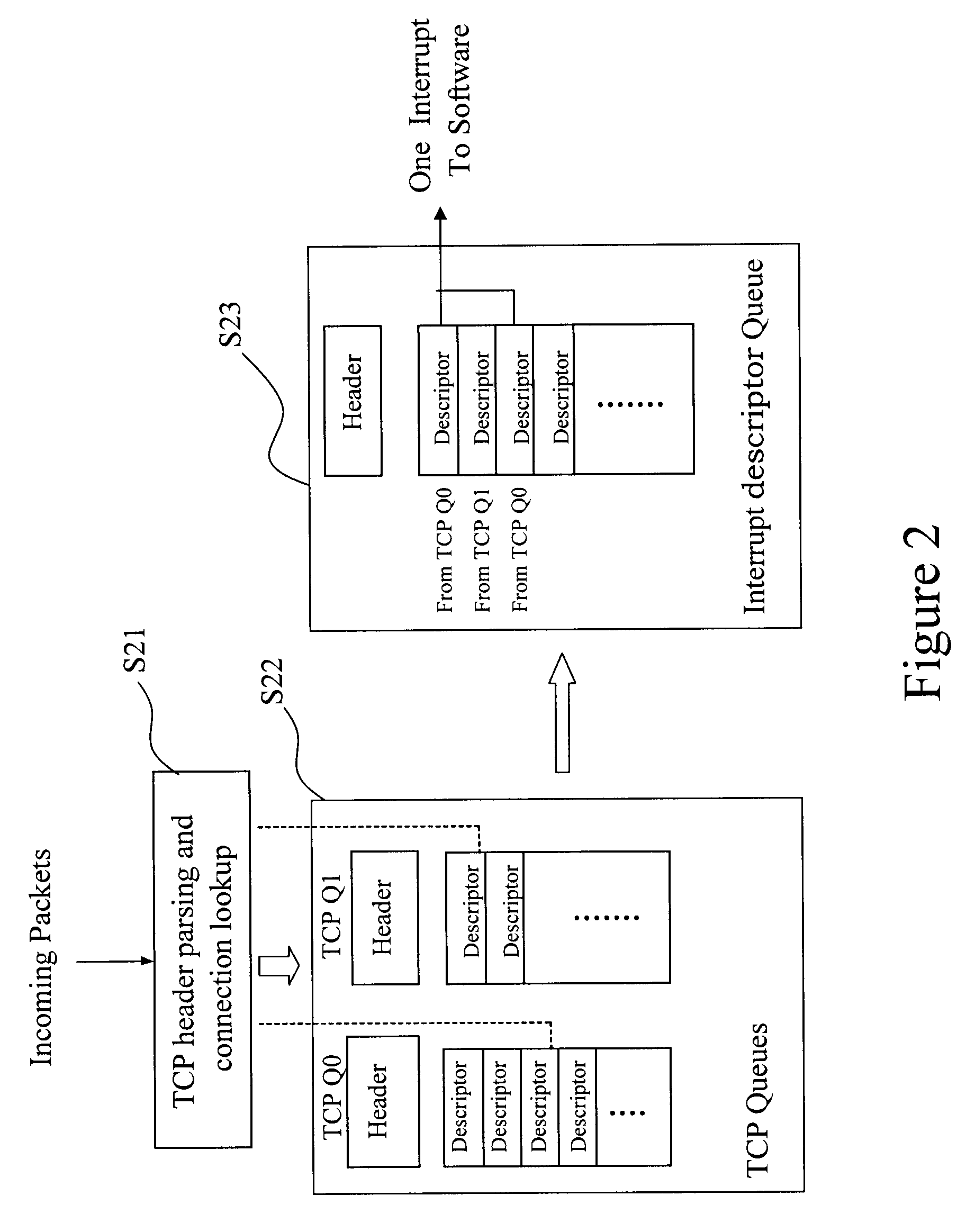

Interrupt coalescing scheme for high throughput TCP offload engine

Status: Inactive | Publication: US20090022171A1 | Tags: Improve throughput, Improve performance, Data switching by path configuration, Interrupt coalescing, TCP offload engine

An interrupt coalescing scheme for a high-throughput TCP offload engine, and a method thereof, are disclosed. An interrupt descriptor queue is used: for each interrupt, the TCP offload engine saves TCP connection information and interrupt information in an interrupt event descriptor. Meanwhile, the software processes interrupts by reading interrupt event descriptors asynchronously, and it may process multiple interrupt event descriptors in one interrupt context.

Owner:INPHI
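
The descriptor queue can be sketched as follows: the TOE appends one descriptor per event and raises an interrupt only when none is already pending, and the handler drains up to `budget` descriptors in one interrupt context. The descriptor fields, event names, pending-interrupt flag, and `budget` parameter are illustrative assumptions, not details from the abstract.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class InterruptEventDescriptor:
    conn_id: int   # TCP connection the event belongs to
    event: str     # illustrative event name, e.g. "rx_data"

class InterruptDescriptorQueue:
    def __init__(self, budget: int) -> None:
        self.queue: deque = deque()
        self.budget = budget            # max descriptors handled per interrupt context
        self.interrupts_raised = 0
        self._irq_pending = False

    def toe_post(self, desc: InterruptEventDescriptor) -> None:
        # TOE side: one descriptor per event; an interrupt is raised only if
        # none is already pending, so a burst of events shares one interrupt.
        self.queue.append(desc)
        if not self._irq_pending:
            self._irq_pending = True
            self.interrupts_raised += 1

    def handle_interrupt(self) -> list:
        # Software side: drain several descriptors in one interrupt context.
        handled = []
        while self.queue and len(handled) < self.budget:
            handled.append(self.queue.popleft())
        self._irq_pending = False
        if self.queue:                  # leftover work: request another interrupt
            self._irq_pending = True
            self.interrupts_raised += 1
        return handled
```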

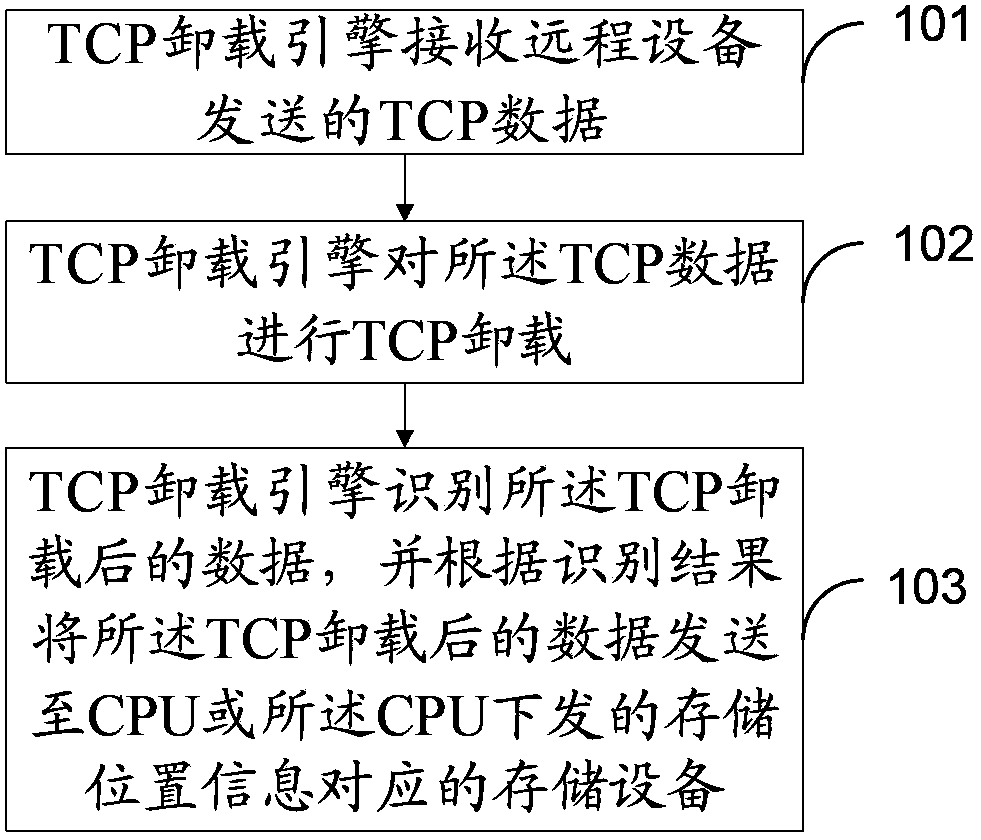

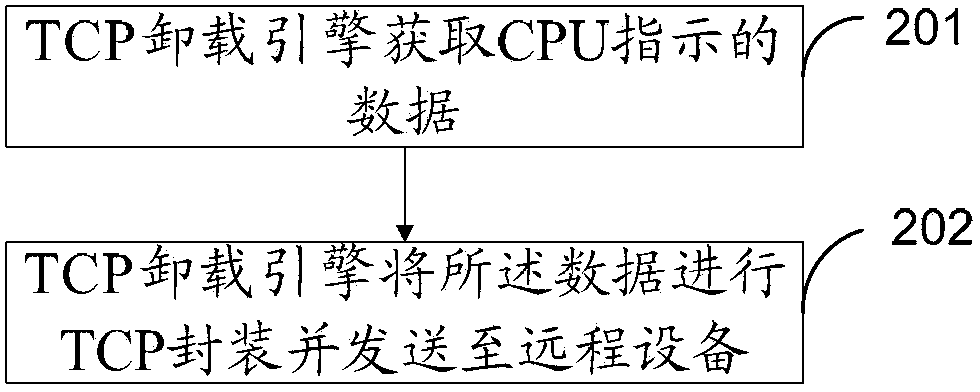

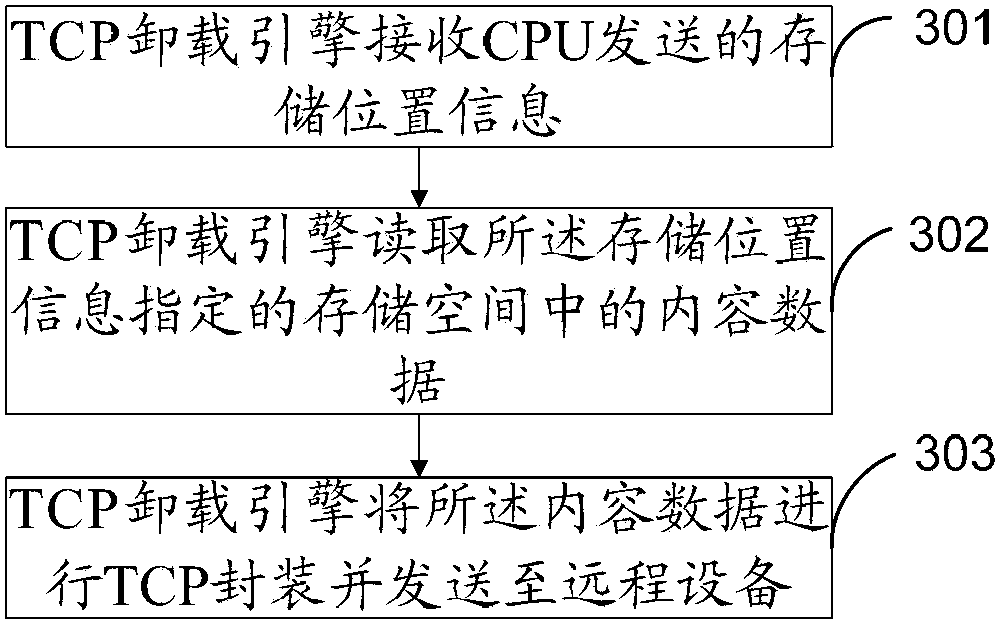

TCP (transmission control protocol) data transmission method, TCP offload engine, and system

Status: Inactive | Publication: CN103546424A | Tags: Reduce parsing work, Reduce data movement, Transmission, Transport control protocol, TCP offload engine

Embodiments of the present invention provide a transmission control protocol (TCP) data transmission method, a TCP offload engine, and a system, which relate to the communications field. They can reduce data movement between the TCP offload engine and a CPU while also reducing the CPU's work in parsing data, thereby reducing the CPU resources spent on processing TCP/IP data and reducing transmission delay. The method comprises: a TCP offload engine receiving TCP data sent by a remote device; performing TCP offload processing on the TCP data; and identifying the offloaded data and, according to the identification result, sending it either to the CPU or to the storage device corresponding to storage location information delivered by the CPU. The embodiments of the present invention are applicable to TCP data transmission.

Owner:HUAWEI TECH CO LTD

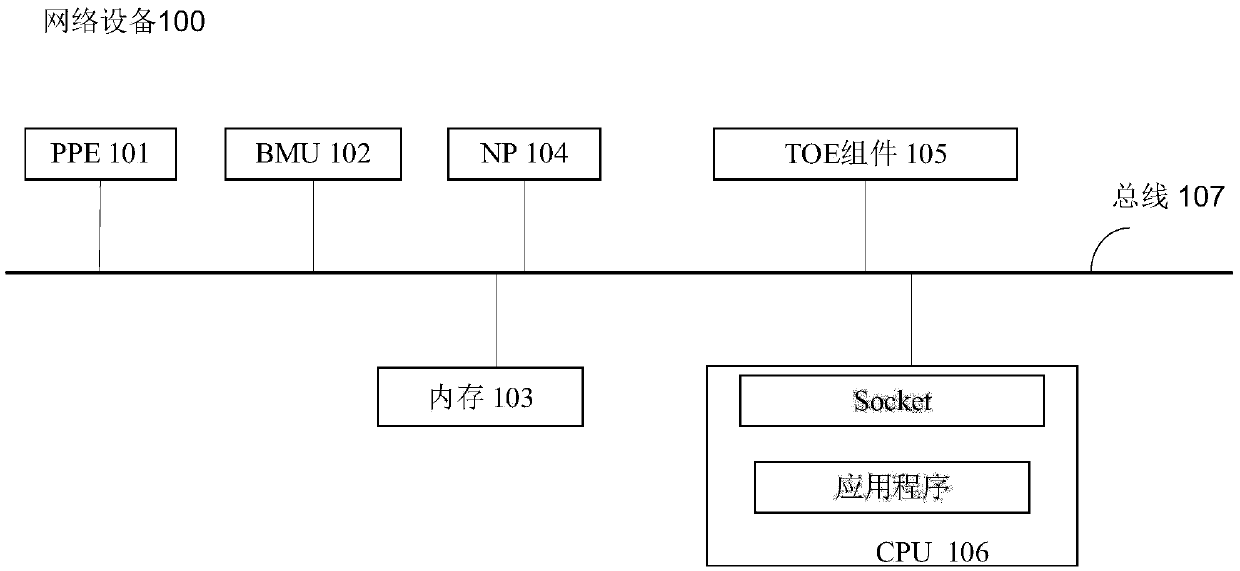

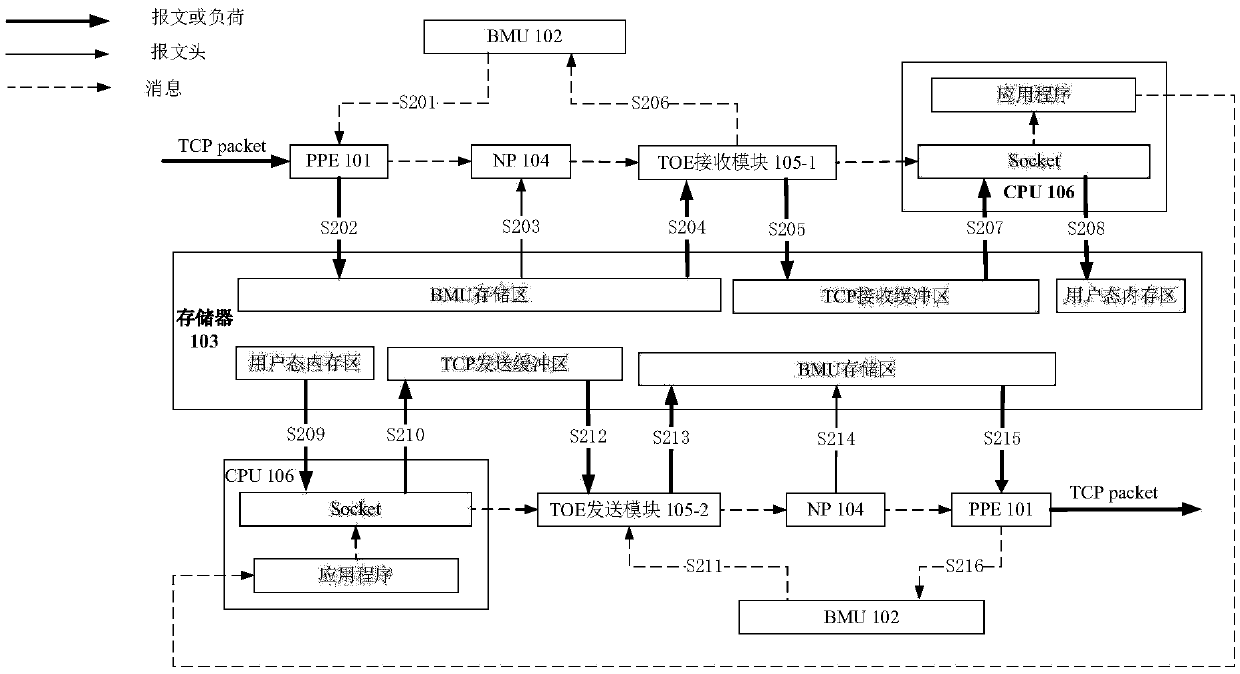

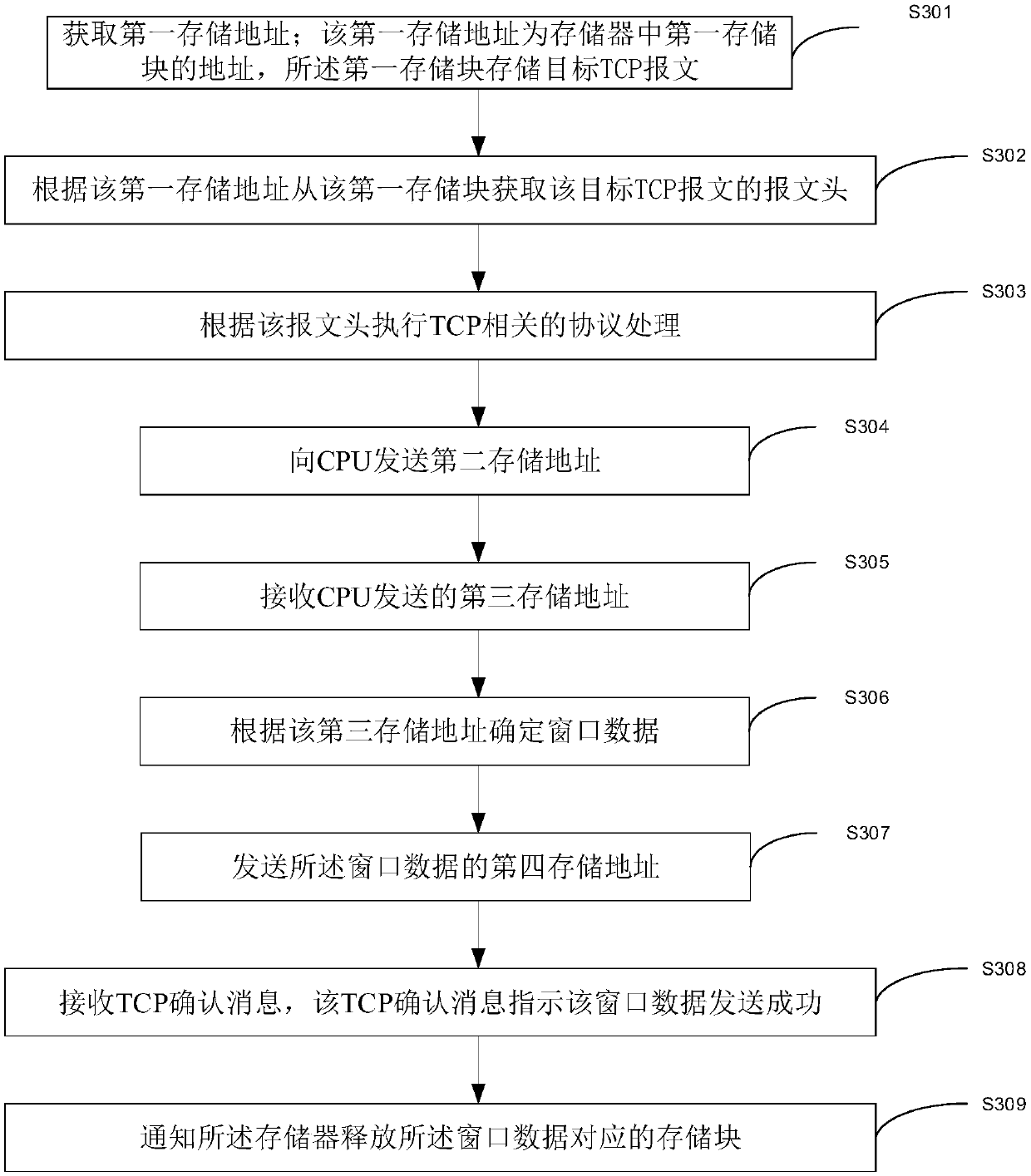

TCP message processing method, TOE component and network equipment

The invention discloses a method for processing a transmission control protocol (TCP) message, a TOE component, and network equipment comprising the TOE component. The method, the TOE component, and the network equipment are used to reduce the burden on the central processing unit (CPU) of the network equipment and to save storage resources of the network equipment when a TCP message is processed. A TCP offload engine (TOE) component acquires a first storage address, which is the address of a first storage block in a memory; the first storage block stores a target TCP message comprising a message header and a TCP payload. The TOE component acquires the message header from the first storage block according to the first storage address and executes TCP-related protocol processing according to the message header. While the TOE component executes this protocol processing, it does not read the TCP payload out of the first storage block.

Owner:HUAWEI TECH CO LTD

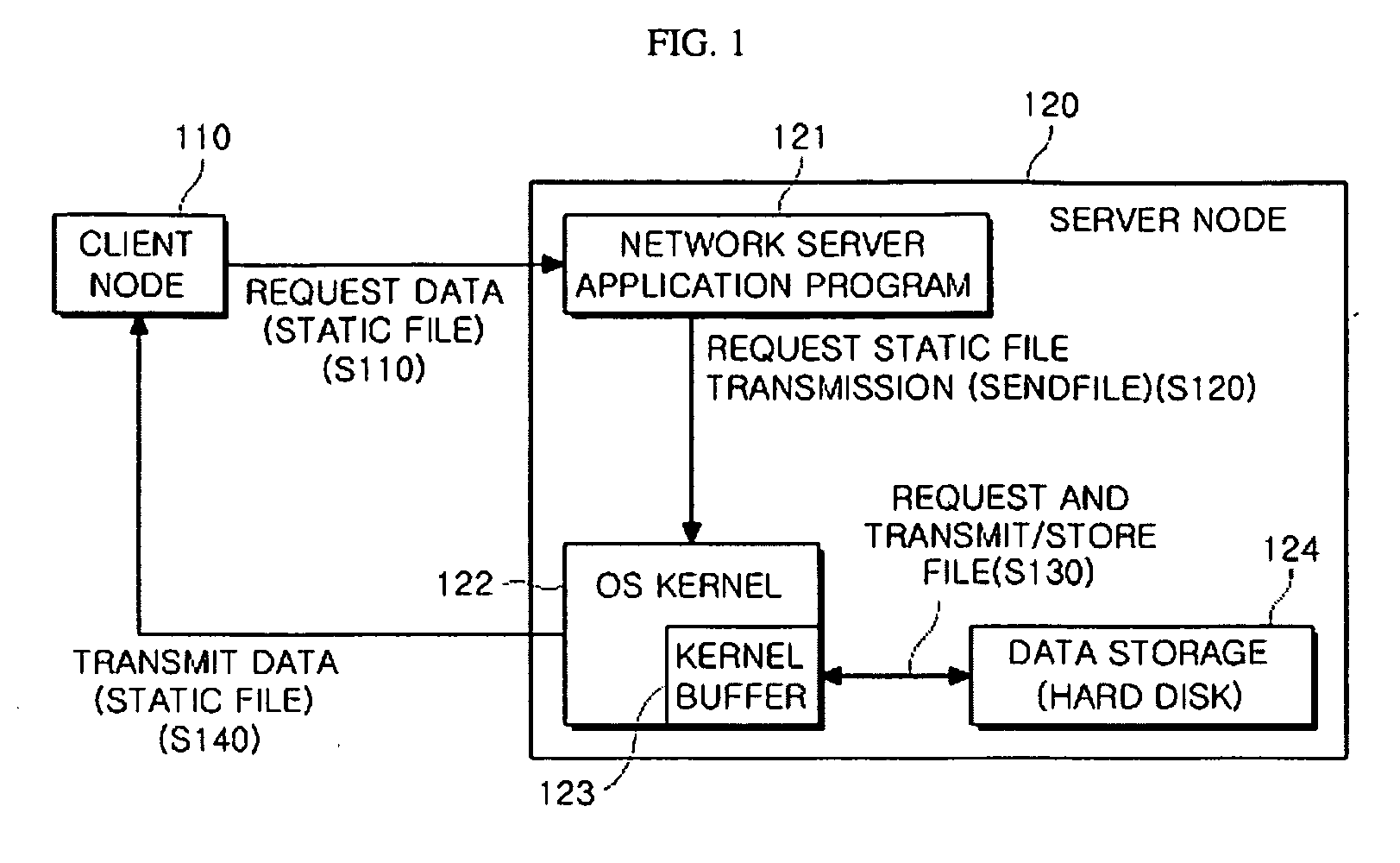

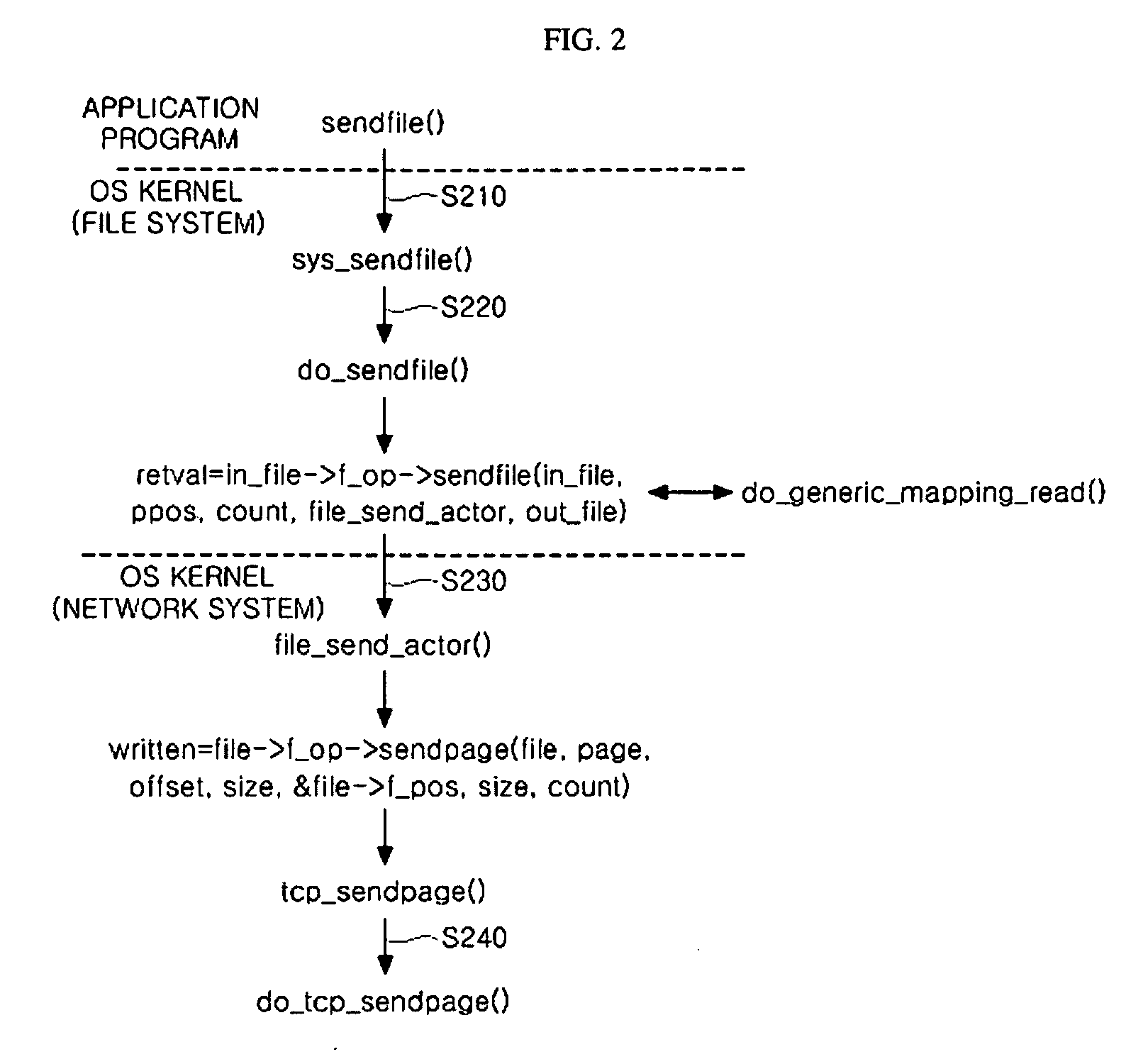

TCP offload engine apparatus and method for system call processing for static file transmission

Status: Inactive | Publication: US20090157896A1 | Tags: Improve system performance, Reduce system load, Link editing, Data switching by path configuration, Computer hardware, File transmission

Provided are a TCP offload engine (TOE) apparatus and method for static file transmission. An apparatus for system call processing for static file transmission includes: an application program block that generates a file transmission command upon a user's file transmission request; a BSD socket module that converts the file-level transmission command into division transmission commands, each transmitting a unit of a certain size; a TOE kernel module that receives each division transmission command and converts it into a TOE control command; and a TOE apparatus module that, in response to the TOE control command, generates a data packet of that size for network transmission and transmits it to the node that requested the file transmission.

Owner:ELECTRONICS & TELECOMM RES INST
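
The BSD socket module's division step can be sketched as a generator that turns one file-sized request into fixed-size commands. `ToeControlCommand` and its `(file_offset, length)` fields are hypothetical, and the later conversion into a TOE control command is collapsed into the same object for brevity.

```python
from dataclasses import dataclass
from typing import Iterator

@dataclass(frozen=True)
class ToeControlCommand:
    """Hypothetical command describing one fixed-size piece of a file to send."""
    file_offset: int
    length: int

def divide_file_transmission(file_size: int, unit: int) -> Iterator[ToeControlCommand]:
    """Split one file-level send request into per-unit transmission commands."""
    if unit <= 0:
        raise ValueError("unit must be positive")
    for offset in range(0, file_size, unit):
        # The last piece may be shorter than `unit`.
        yield ToeControlCommand(offset, min(unit, file_size - offset))
```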

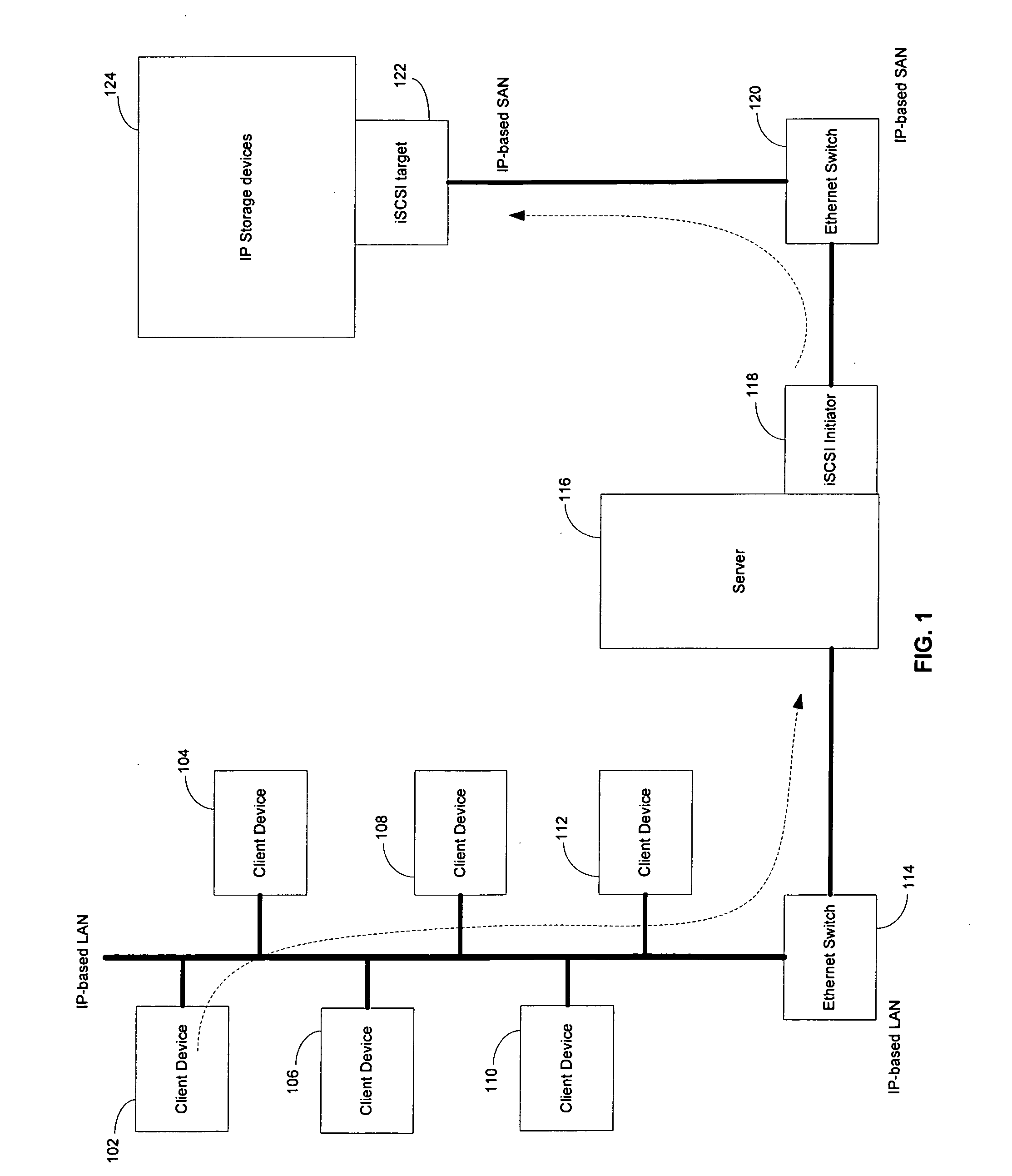

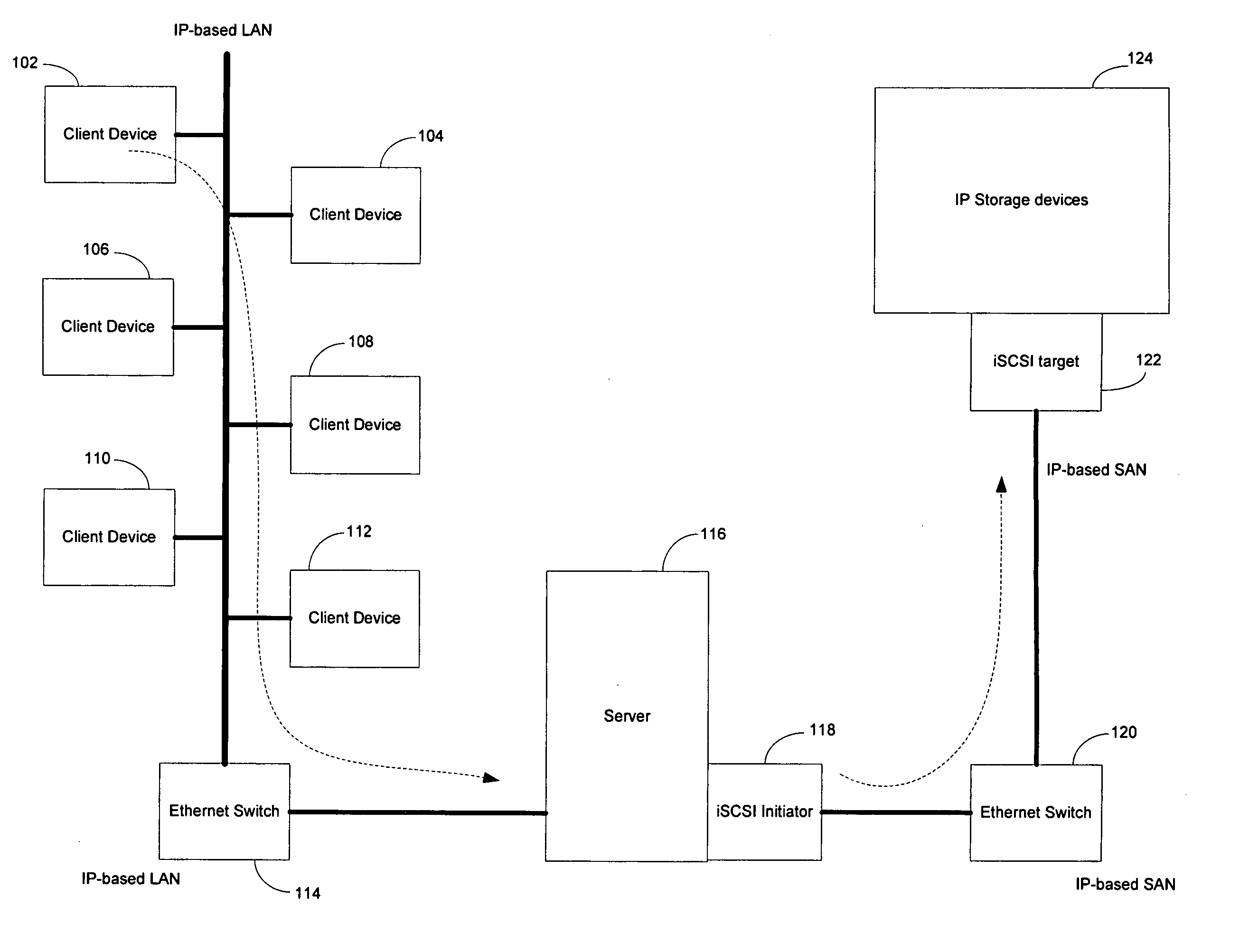

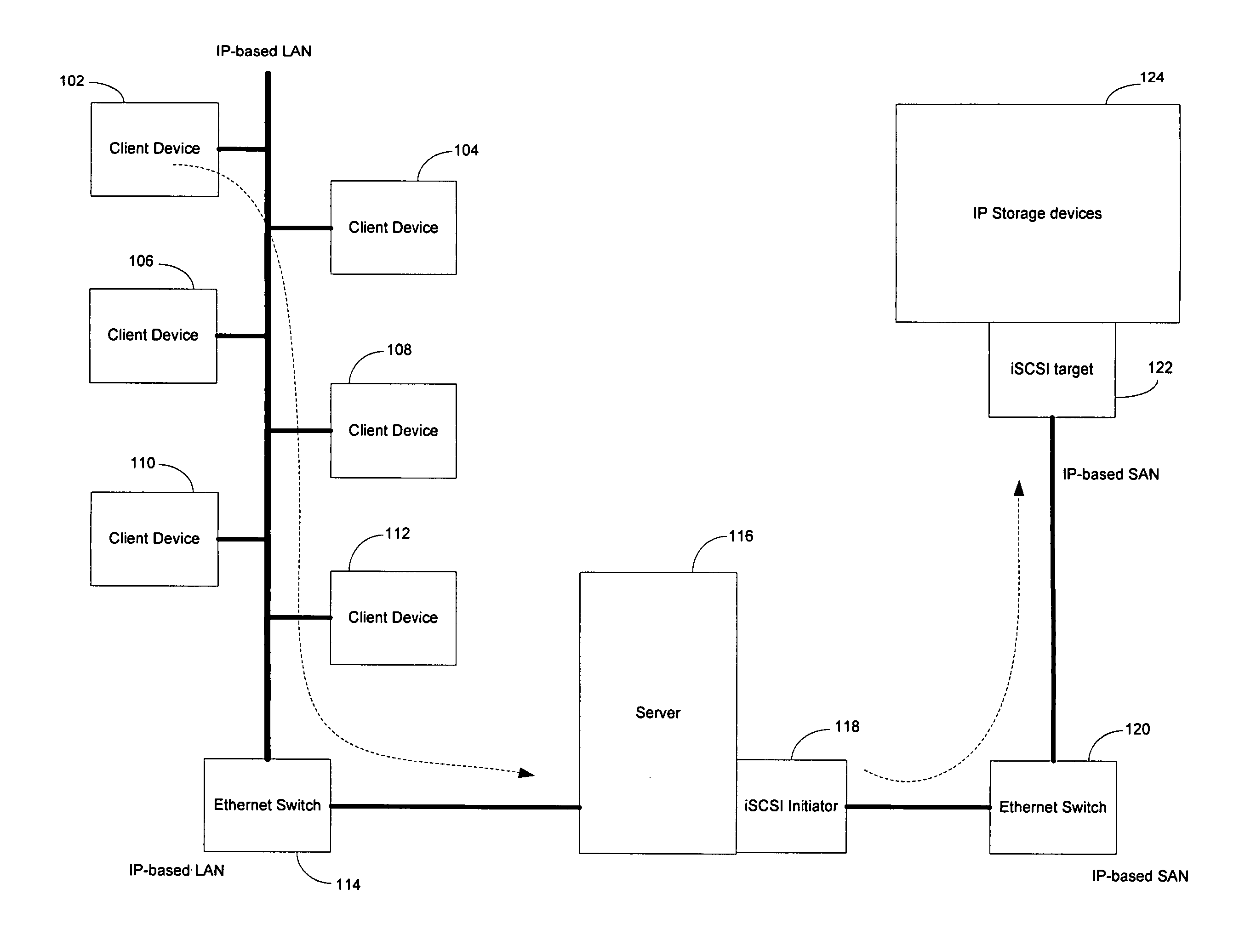

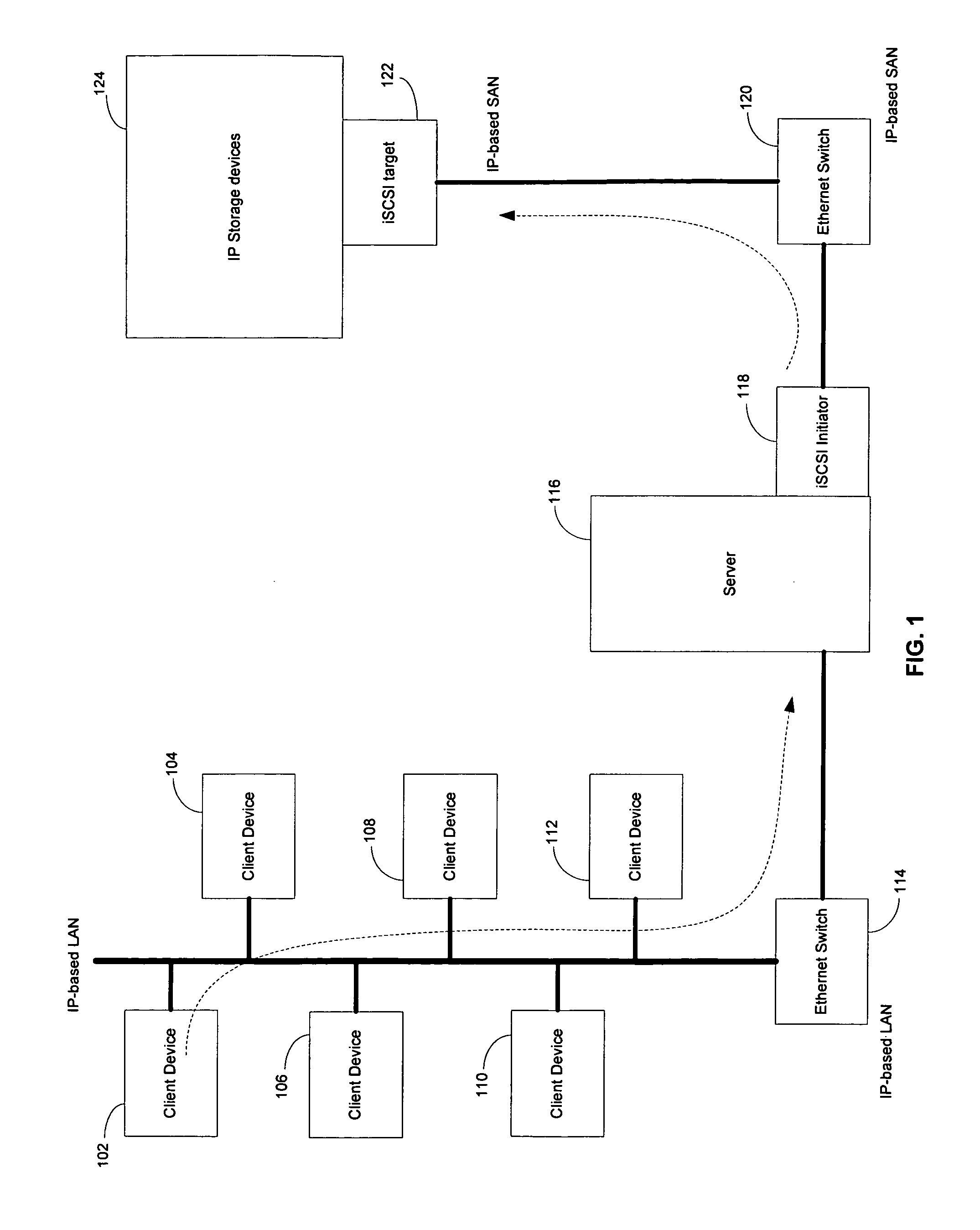

Method and system for supporting iSCSI read operations and iSCSI chimney

Certain embodiments of the invention may be found in a method and system for performing a SCSI read operation via a TCP offload engine. Aspects of the method may comprise receiving an iSCSI read command from an initiator (118). Data may be fetched from a buffer based on the received iSCSI read command. The fetched data may be zero copied from the buffer to the initiator (118) and a TCP sequence may be retransmitted to the initiator. The method may further comprise checking if the zero copied fetched data is a first frame in an iSCSI protocol data unit and if the buffer is posted. The retransmitted TCP sequence may be processed and the fetched data may be zero copied into an iSCSI buffer, if the buffer is posted.

Owner:BROADCOM CORP

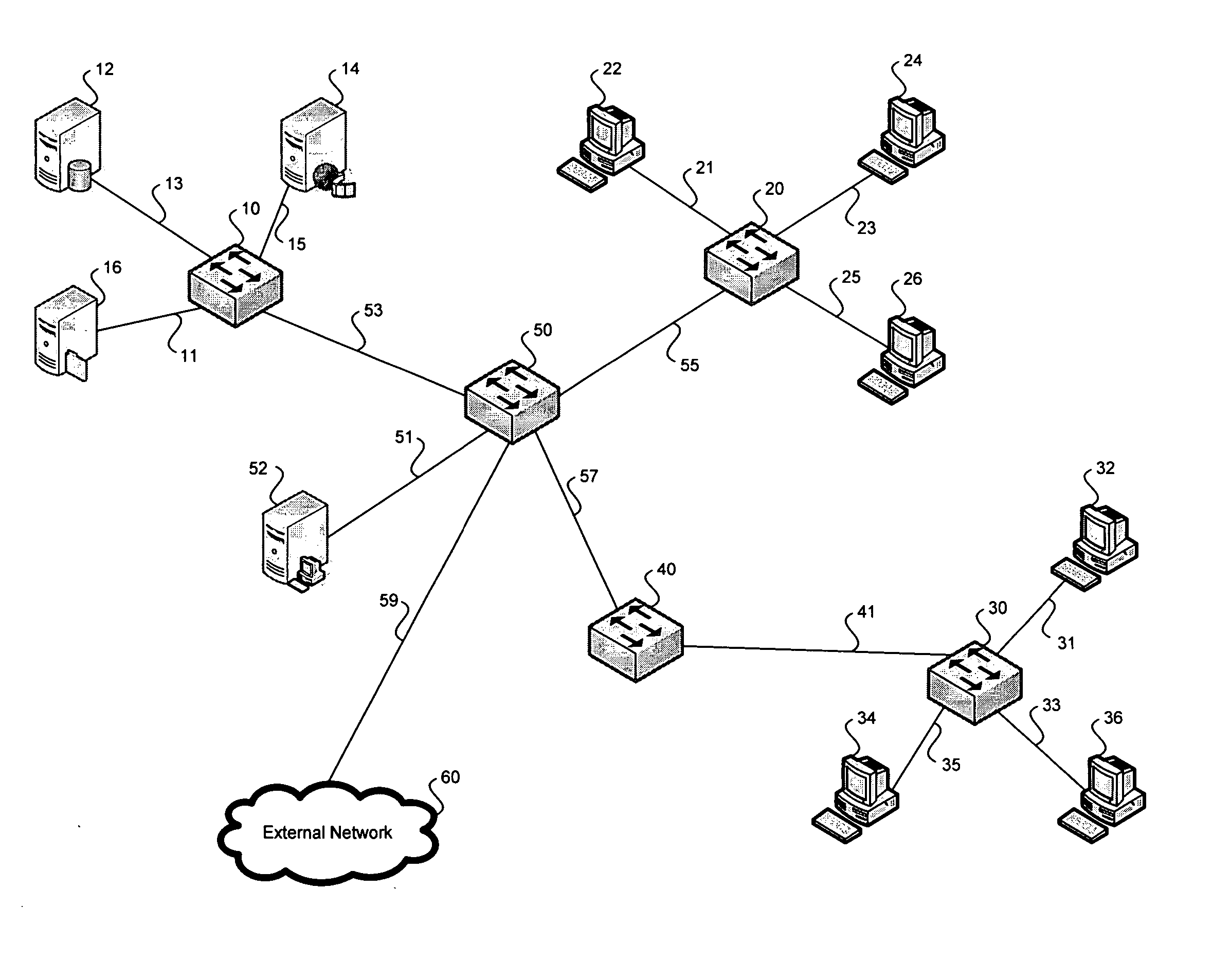

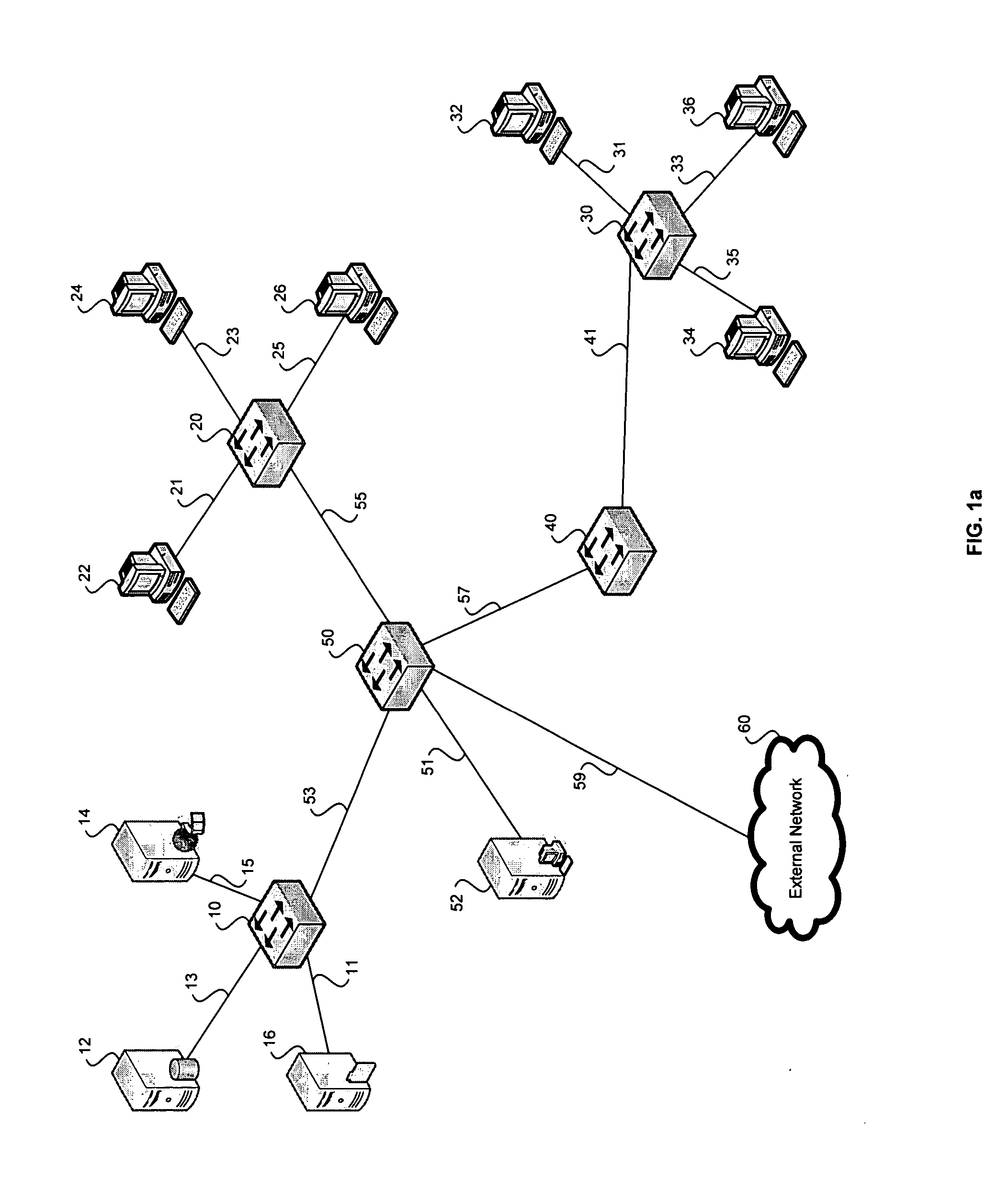

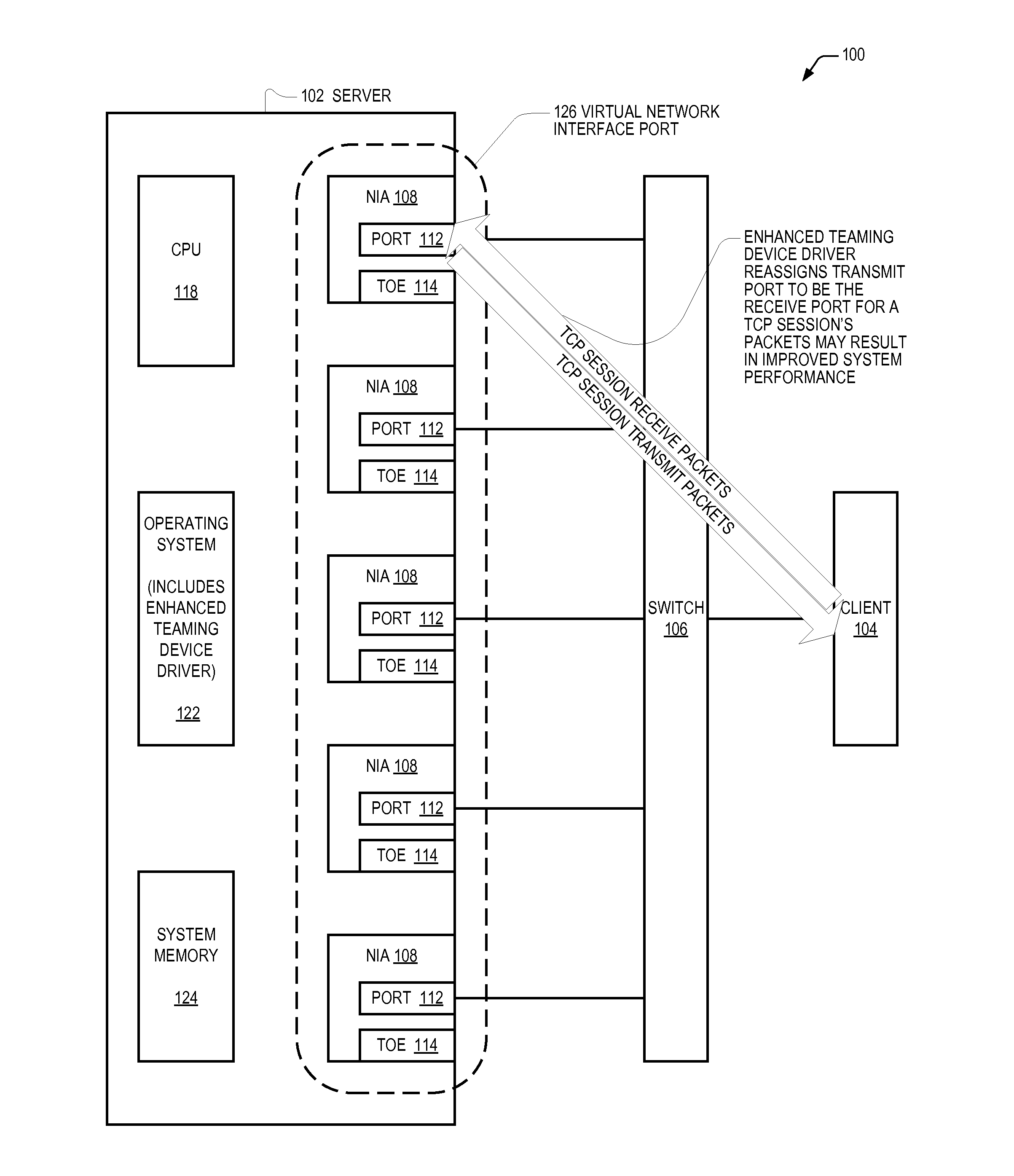

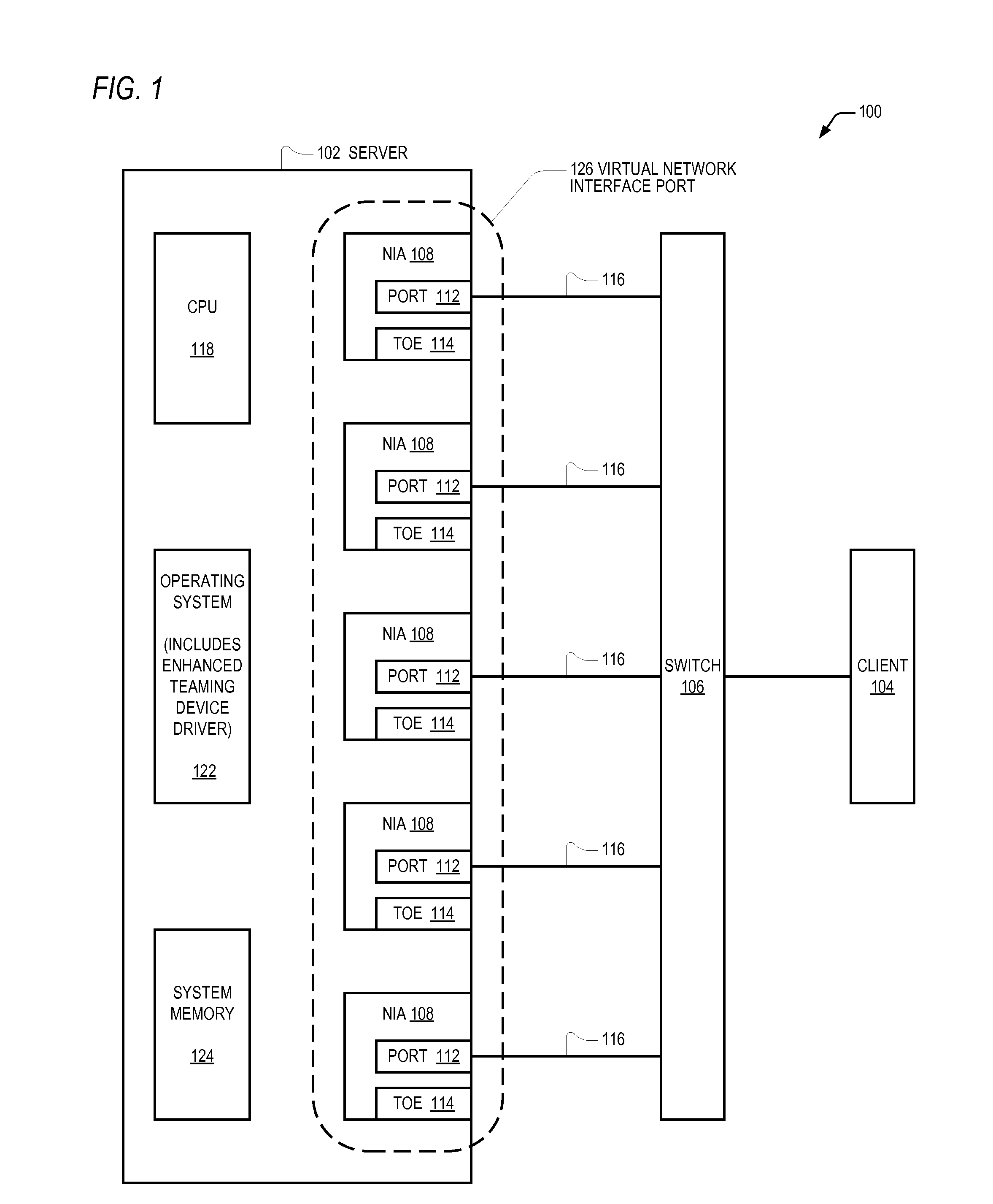

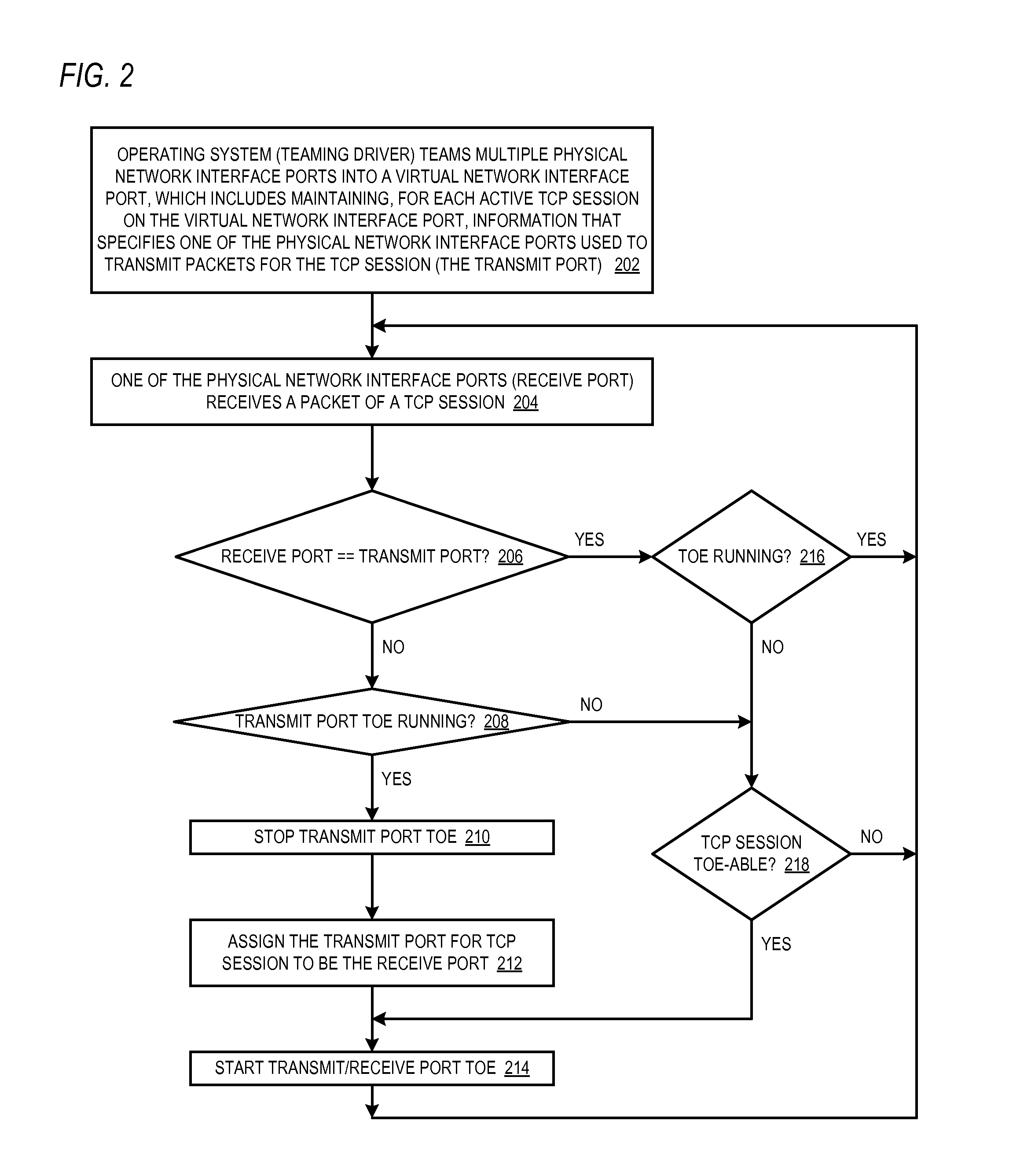

Adaptive receive path learning to facilitate combining TCP offloading and network adapter teaming

Status: Active | Publication: US20140185623A1 | Tags: Without negatively impacting the overall performance, Data switching by path configuration, High level techniques, TCP offload engine, Self adaptive

One or more modules include a first portion that teams together multiple physical network interface ports of a computing system so that they appear as a single virtual network interface port to the switch to which the physical ports are linked. A second portion determines the receive port on which a packet of a TCP session was received. A third portion assigns the transmit port, which the computing system uses to transmit packets of the TCP session, to be that receive port. The third portion makes this assignment before a TCP offload engine (TOE) is enabled to offload, from the system CPU, the processing of packets of the TCP session transceived on the assigned transmit/receive port. If a subsequent packet for the TCP session is received on a different, second port, the transmit port is reassigned to be the second port.

Owner:AVAGO TECH INT SALES PTE LTD
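
The learning rule (transmit on whichever port the session was last received on, and assign that port before enabling offload) can be sketched as a small per-session map. The session key format, the class name, and the exception are assumptions; migrating an already offloaded connection between ports is not modelled.

```python
class TeamedToePortMap:
    """Hypothetical per-session transmit-port assignment for a NIC team with TOE."""

    def __init__(self) -> None:
        self.tx_port: dict[tuple, int] = {}
        self.offloaded: set = set()

    def on_receive(self, session: tuple, rx_port: int) -> bool:
        """Learn the receive port; return True if the transmit port was reassigned."""
        previous = self.tx_port.get(session)
        self.tx_port[session] = rx_port       # transmit on the port we receive on
        return previous is not None and previous != rx_port

    def enable_offload(self, session: tuple) -> None:
        # The transmit port must be assigned before the TOE takes the session.
        if session not in self.tx_port:
            raise RuntimeError("no transmit port assigned for this session yet")
        self.offloaded.add(session)
```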

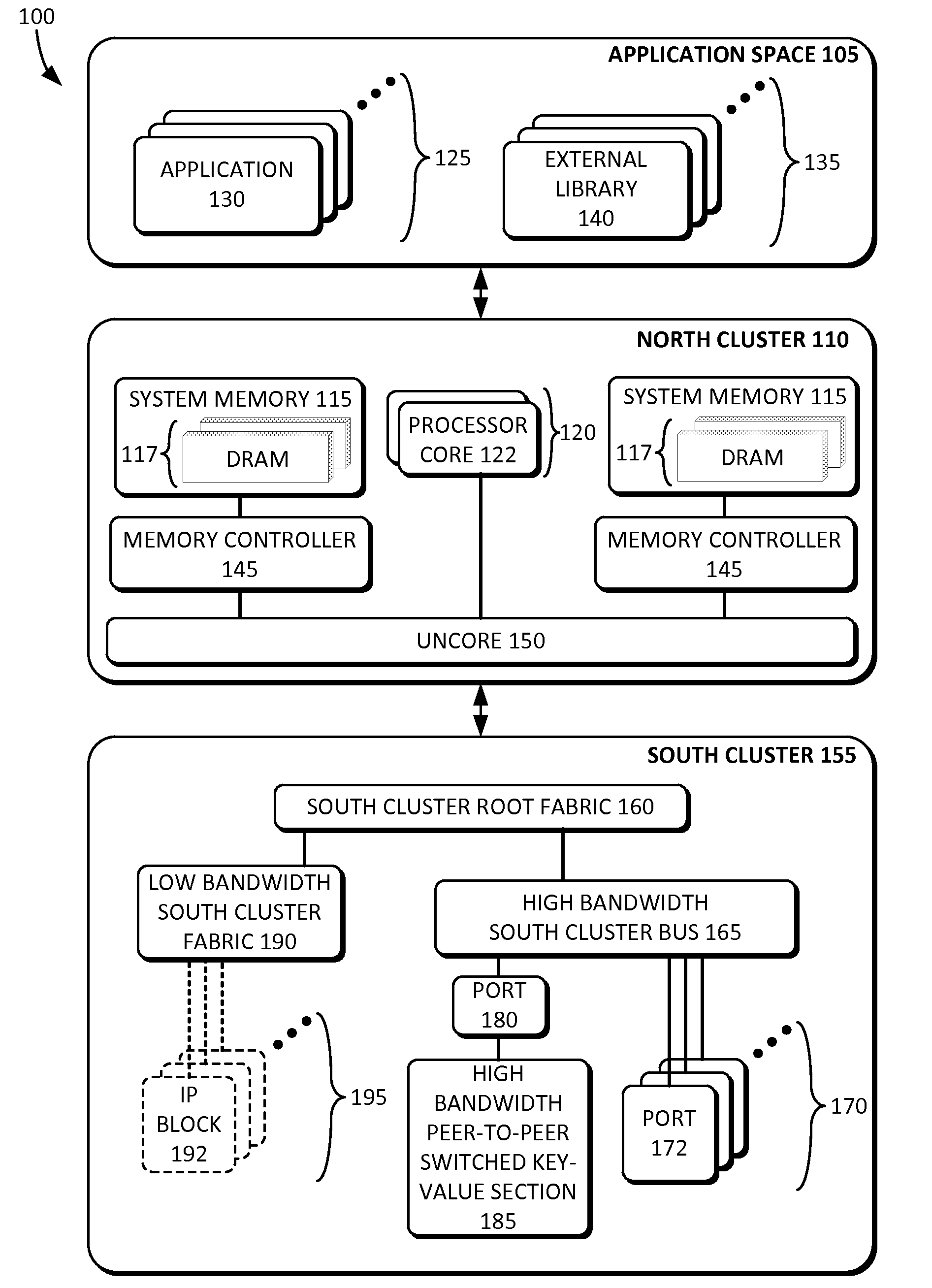

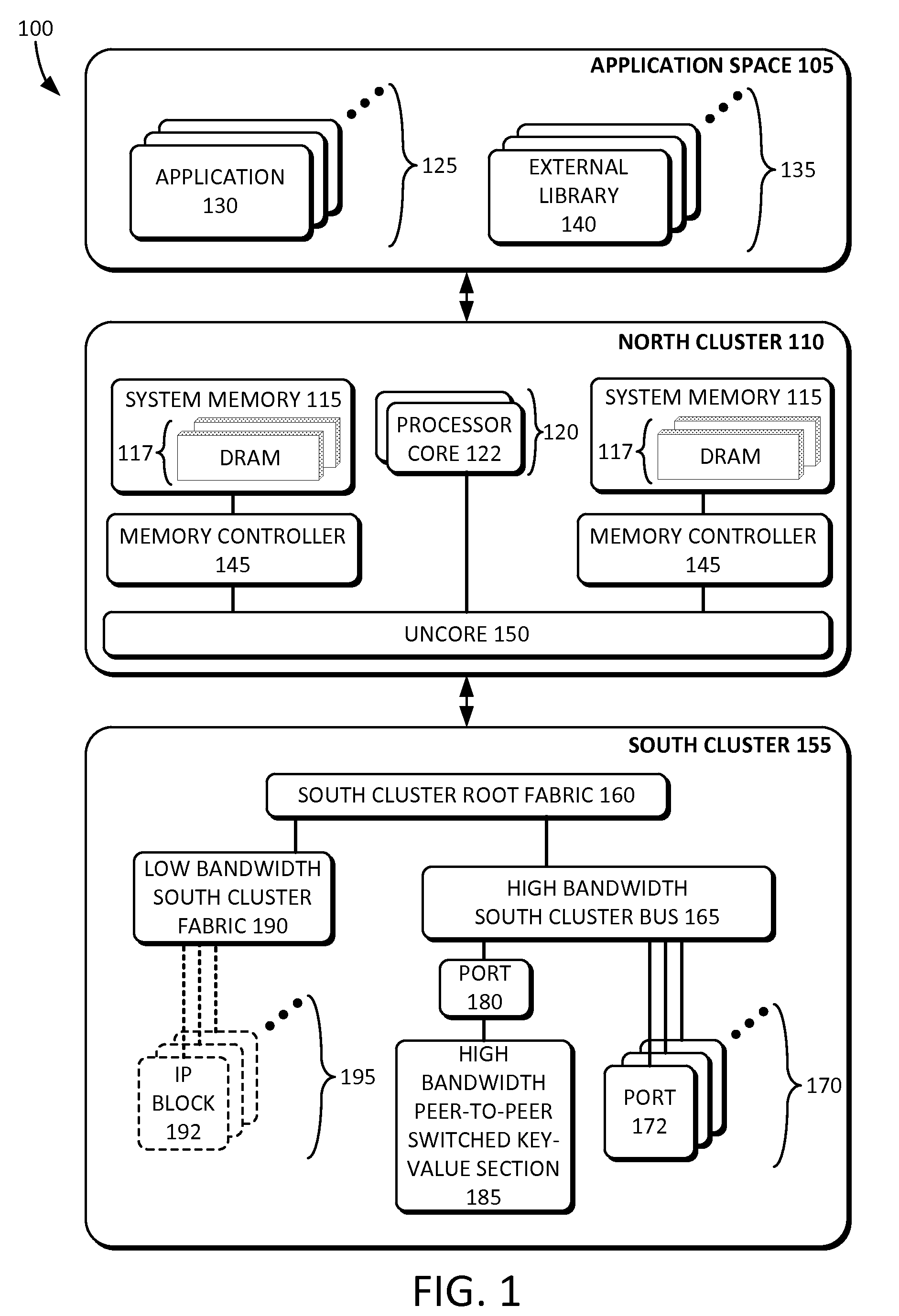

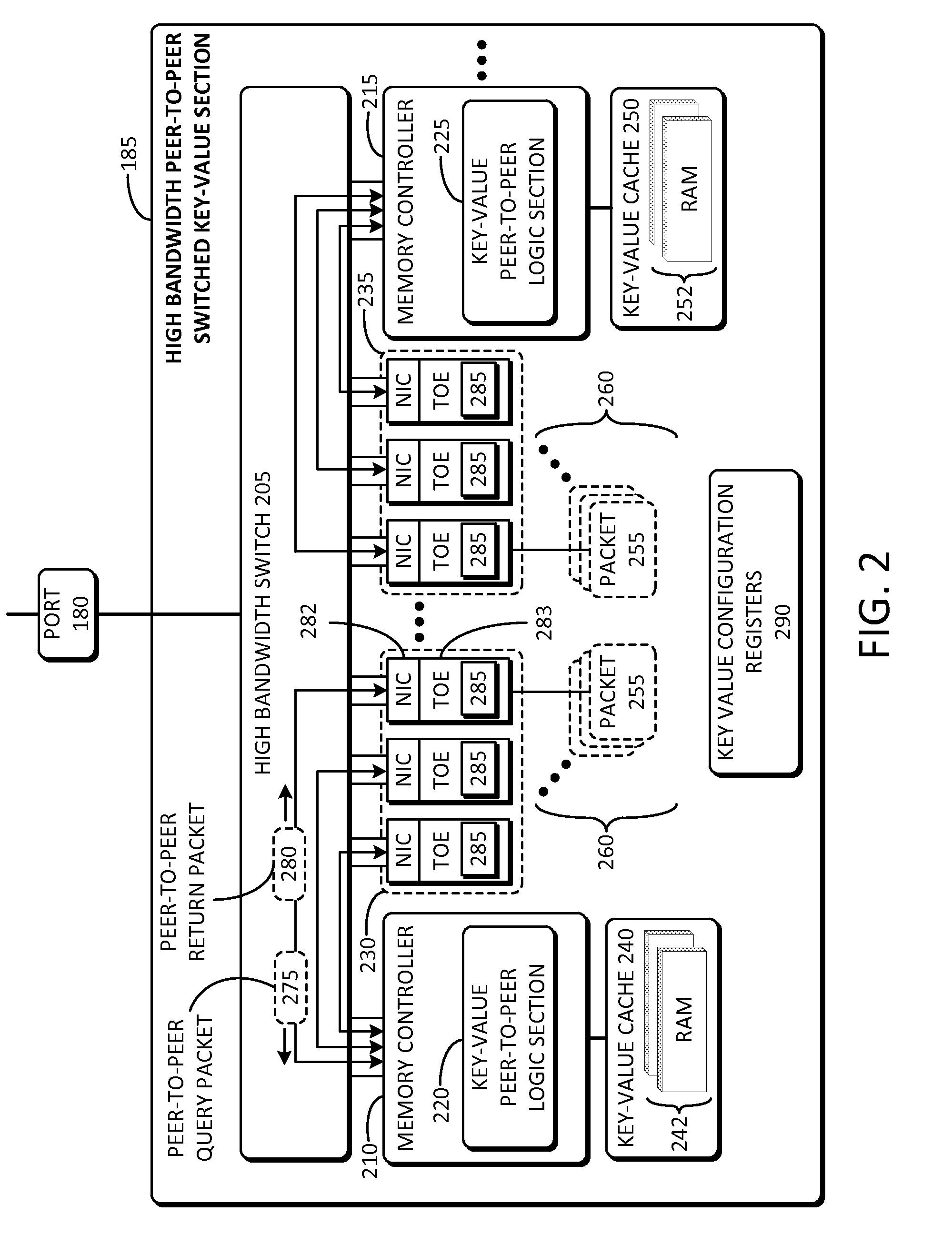

High bandwidth peer-to-peer switched key-value caching

Status: Inactive | Publication: US20160094638A1 | Tags: Multiple digital computer combinations, Transmission, Computer architecture, High bandwidth

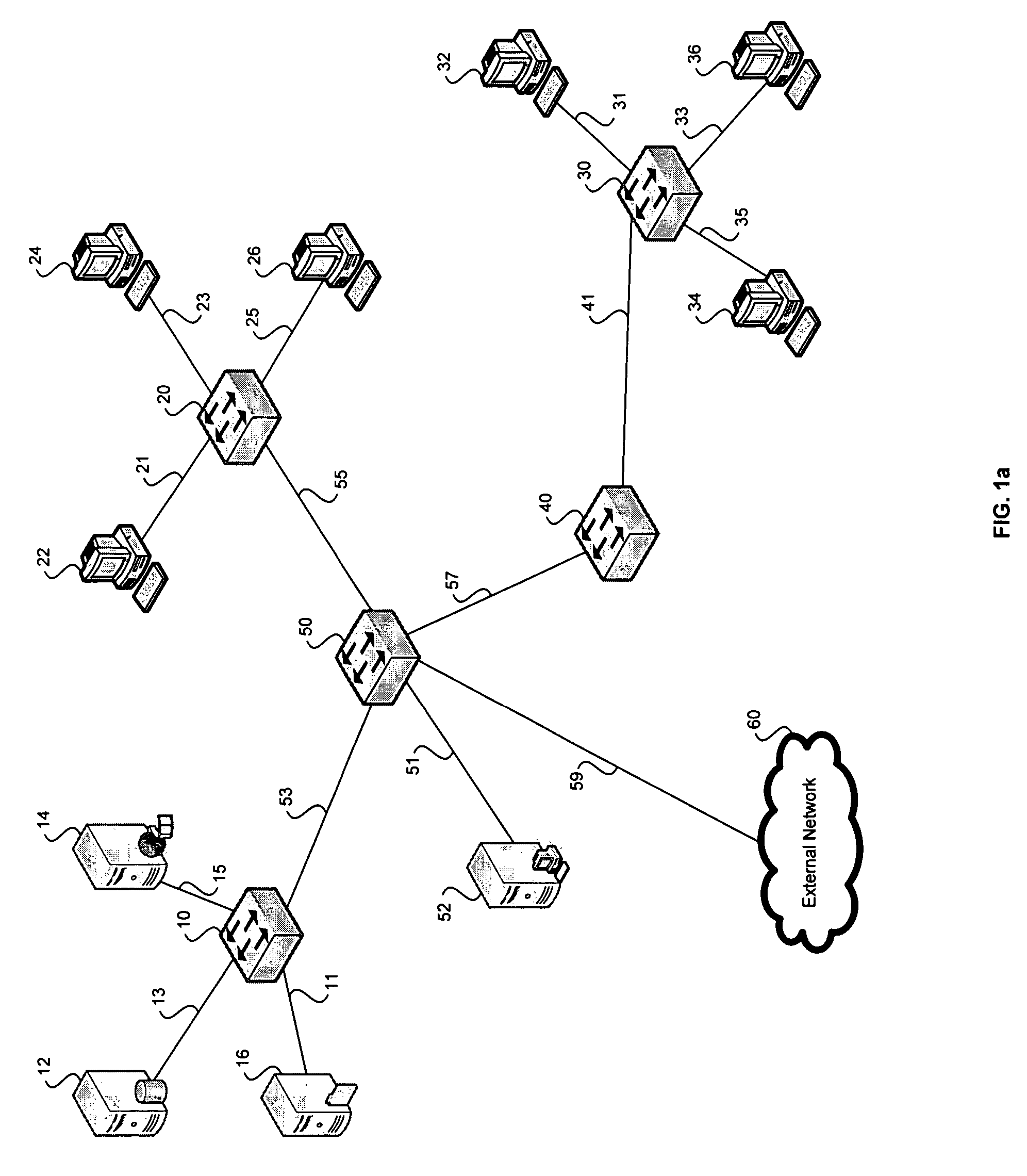

Inventive aspects include a high bandwidth peer-to-peer switched key-value system, method, and section. The system can include a high bandwidth switch, multiple network interface cards communicatively coupled to the switch, one or more key-value caches to store a plurality of key-values, and one or more memory controllers communicatively coupled to the key-value caches and to the network interface cards. The memory controllers can include a key-value peer-to-peer logic section that can coordinate peer-to-peer communication between the memory controllers and the multiple network interface cards through the switch. The system can further include multiple transmission control protocol (TCP) offload engines that are each communicatively coupled to a corresponding one of the network interface cards. Each of the TCP offload engines can include a packet peer-to-peer logic section that can coordinate the peer-to-peer communication between the memory controllers and the network interface cards through the switch.

Owner:SAMSUNG ELECTRONICS CO LTD

Method and system for transmission control protocol (TCP) traffic smoothing

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Method and system for supporting read operations for iSCSI and iSCSI chimney

Status: Inactive | Publication: US20050281262A1 | Tags: Data switching by path configuration, Input/output processes for data processing, SCSI, Zero-copy

Certain embodiments of the invention may be found in a method and system for performing a SCSI read operation via a TCP offload engine. Aspects of the method may comprise receiving an iSCSI read command from an initiator. Data may be fetched from a buffer based on the received iSCSI read command. The fetched data may be zero copied from the buffer to the initiator and a TCP sequence may be retransmitted to the initiator. The method may further comprise checking if the zero copied fetched data is a first frame in an iSCSI protocol data unit and if the buffer is posted. The retransmitted TCP sequence may be processed and the fetched data may be zero copied into an iSCSI buffer, if the buffer is posted.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

© 2025 PatSnap. All rights reserved.