Patents

Literature

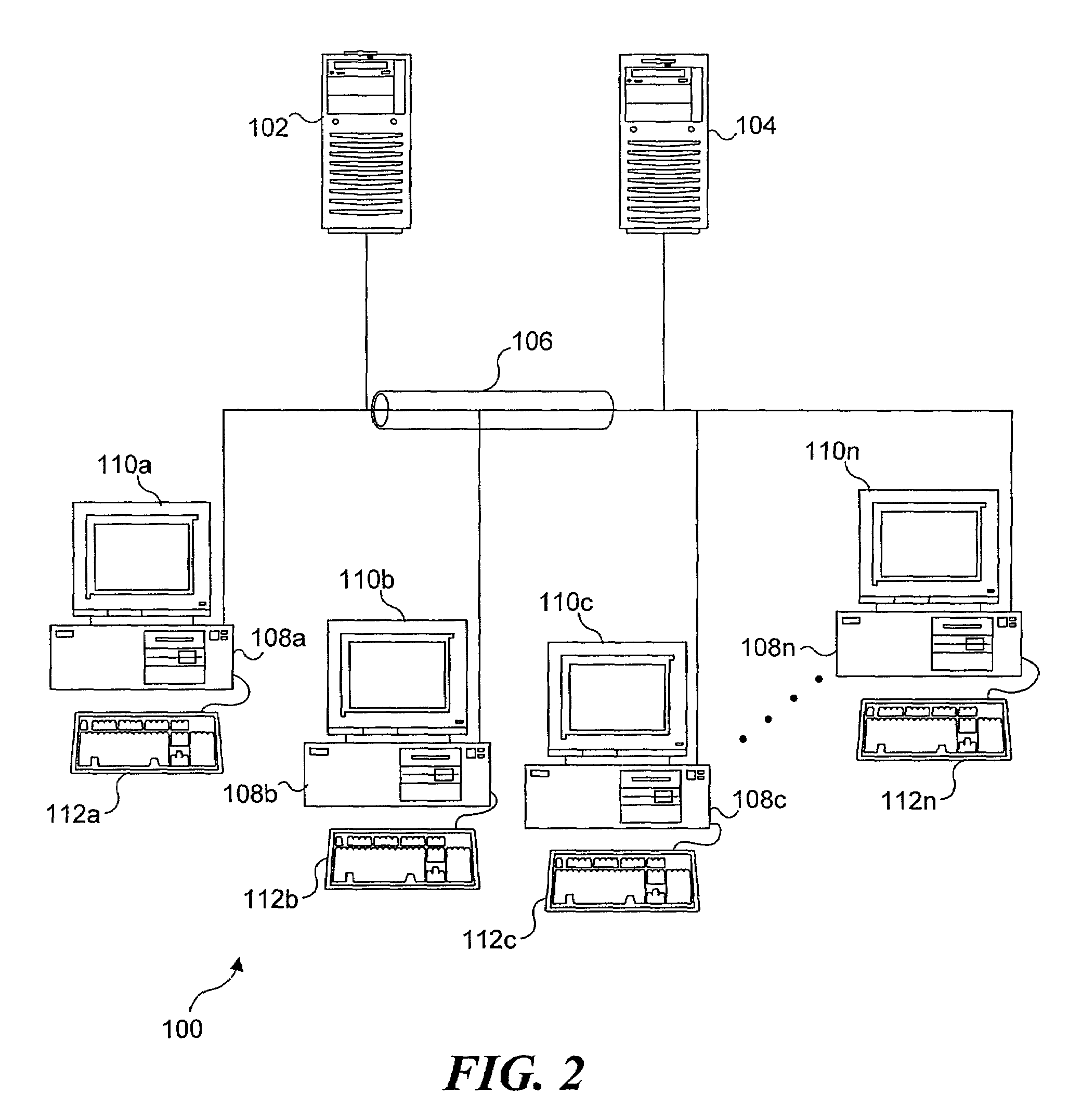

7755 results about "Queue" patented technology

In computer science, a queue is a collection in which the entities in the collection are kept in order and the principal (or only) operations on the collection are the addition of entities to the rear terminal position, known as enqueue, and removal of entities from the front terminal position, known as dequeue. This makes the queue a First-In-First-Out (FIFO) data structure. In a FIFO data structure, the first element added to the queue will be the first one to be removed. This is equivalent to the requirement that once a new element is added, all elements that were added before have to be removed before the new element can be removed. Often a peek or front operation is also provided, returning the value of the front element without dequeuing it. A queue is an example of a linear data structure, or more abstractly a sequential collection.
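
A minimal Python sketch of the FIFO behaviour just described. It assumes `collections.deque` as the backing store because it supports constant-time appends at the rear and removals at the front; the class and method names simply mirror the terms in the definition and are otherwise illustrative.

```python
from collections import deque


class Queue:
    """FIFO queue: enqueue at the rear, dequeue from the front."""

    def __init__(self):
        self._items = deque()

    def enqueue(self, item):
        # Add the item at the rear terminal position.
        self._items.append(item)

    def dequeue(self):
        # Remove and return the front element.
        if not self._items:
            raise IndexError("dequeue from empty queue")
        return self._items.popleft()

    def peek(self):
        # Return the front element without removing it.
        if not self._items:
            raise IndexError("peek at empty queue")
        return self._items[0]

    def __len__(self):
        return len(self._items)


q = Queue()
for x in ("a", "b", "c"):
    q.enqueue(x)
print(q.peek())     # a
print(q.dequeue())  # a
print(q.dequeue())  # b
print(len(q))       # 1
```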

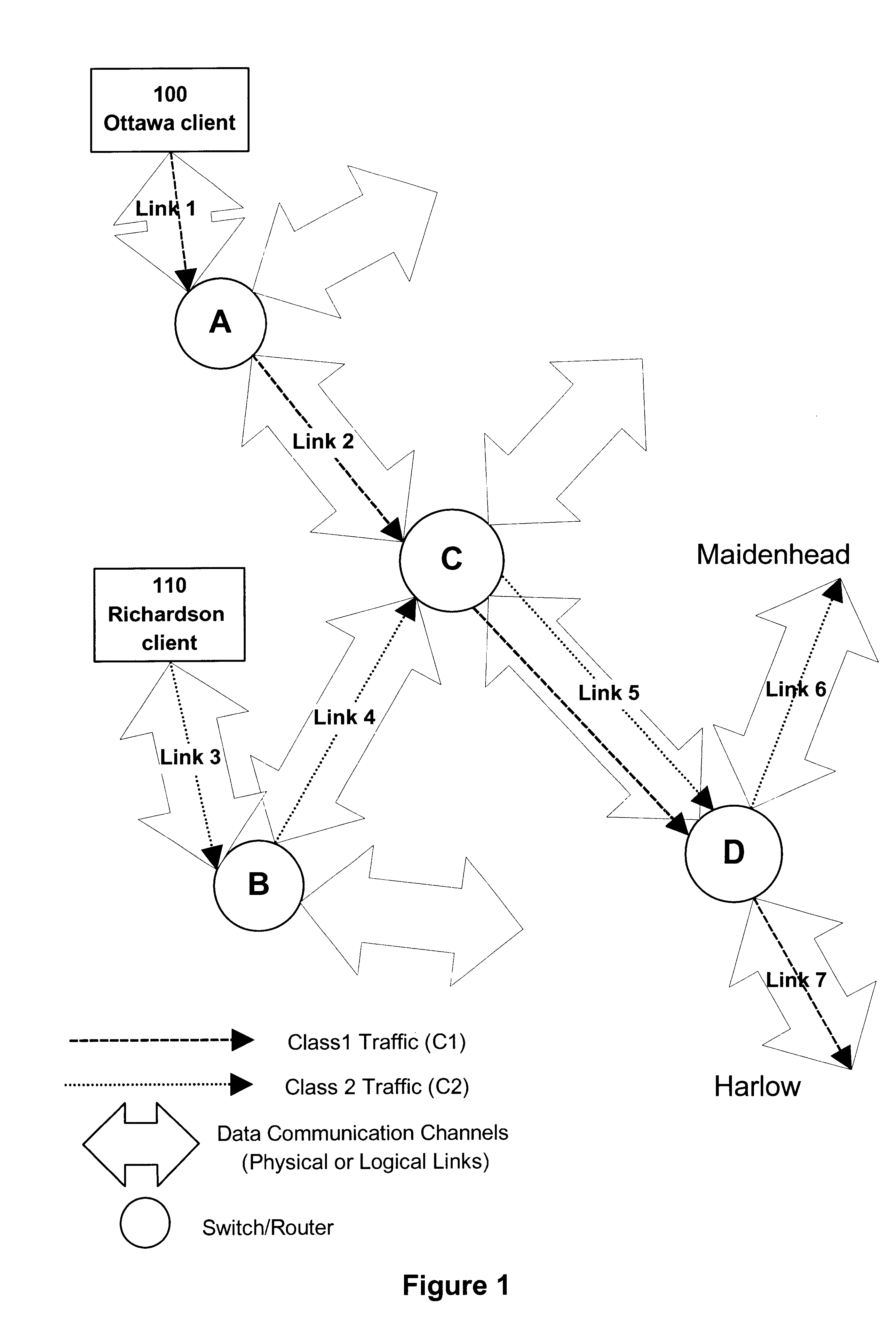

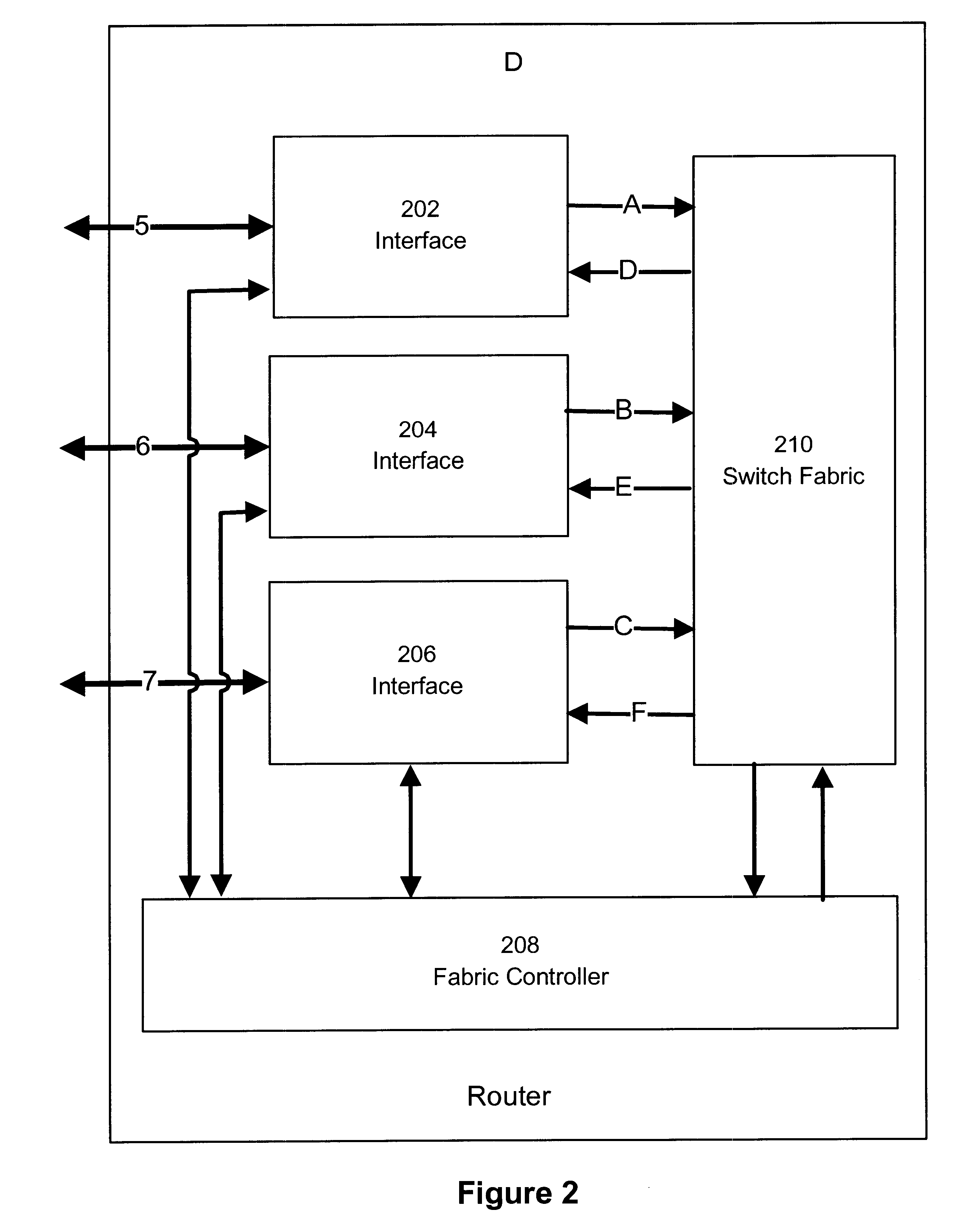

Policy-based synchronization of per-class resources between routers in a data network

Status: Active · US20020194369A1 · Tags: Multiple digital computer combinations; Program control; Communication interface; Data stream

A data network may include an upstream router having one or more data handling queues, a downstream router, and a policy server. In one embodiment, the policy server includes processing resources, a communication interface in communication with the processing resources, and data storage that stores a configuration manager executable by the processing resources. The configuration manager configures data handling queues of the upstream router to provide a selected bandwidth to one or more of a plurality of service classes of data flows. In addition, the configuration manager transmits to the downstream router one or more virtual pool capacities, each corresponding to a bandwidth at the upstream router for one or more associated service classes among the plurality of service classes. In one embodiment, the configuration manager configures the data handling queues on the upstream router only in response to acknowledgment that one or more virtual pool capacities transmitted to the downstream router were successfully installed.

Owner:VERIZON PATENT & LICENSING INC

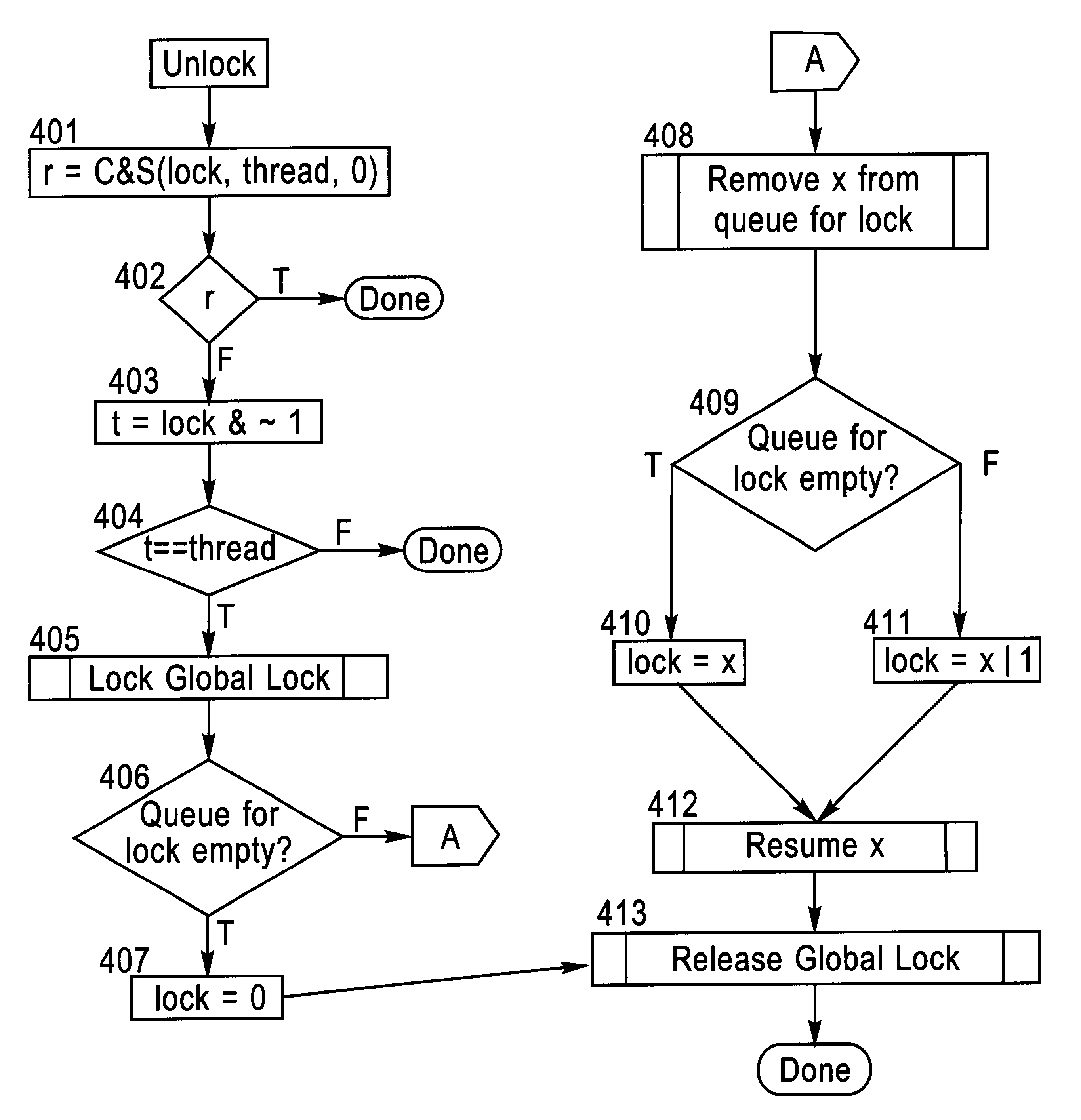

Locking and unlocking mechanism for controlling concurrent access to objects

Status: Inactive · US6247025B1 · Tags: Data processing applications; Digital data information retrieval; Normal case; Spin locks

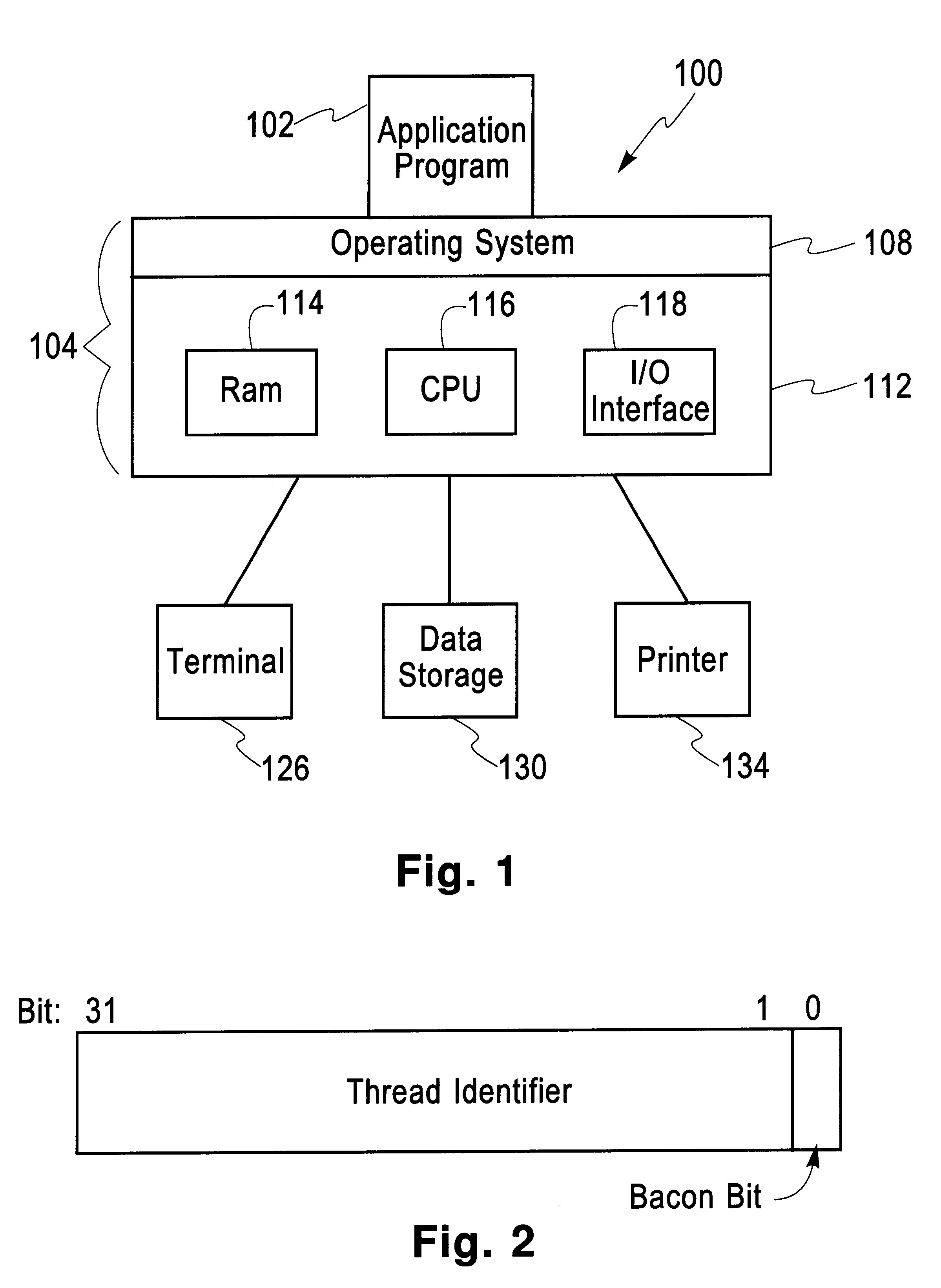

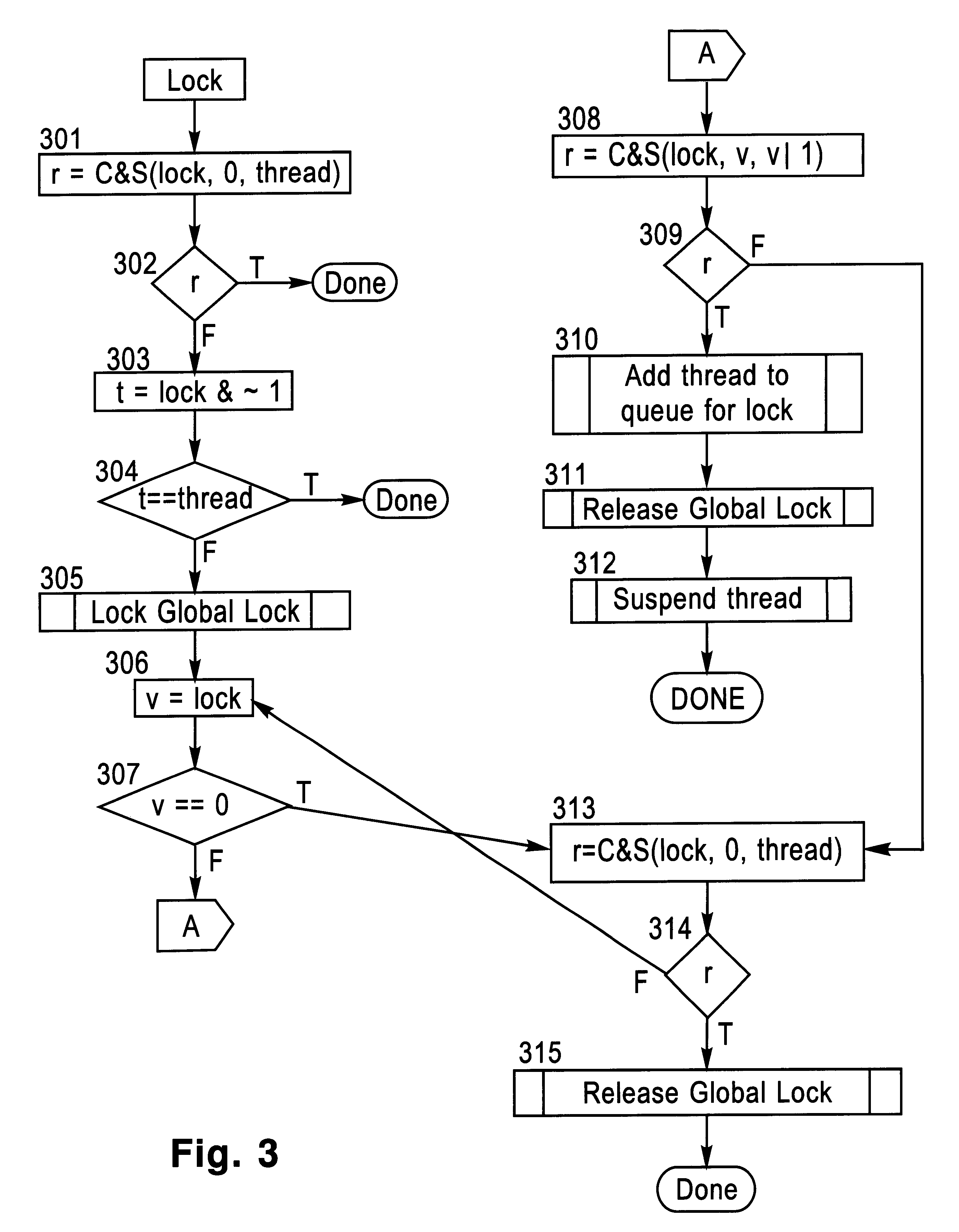

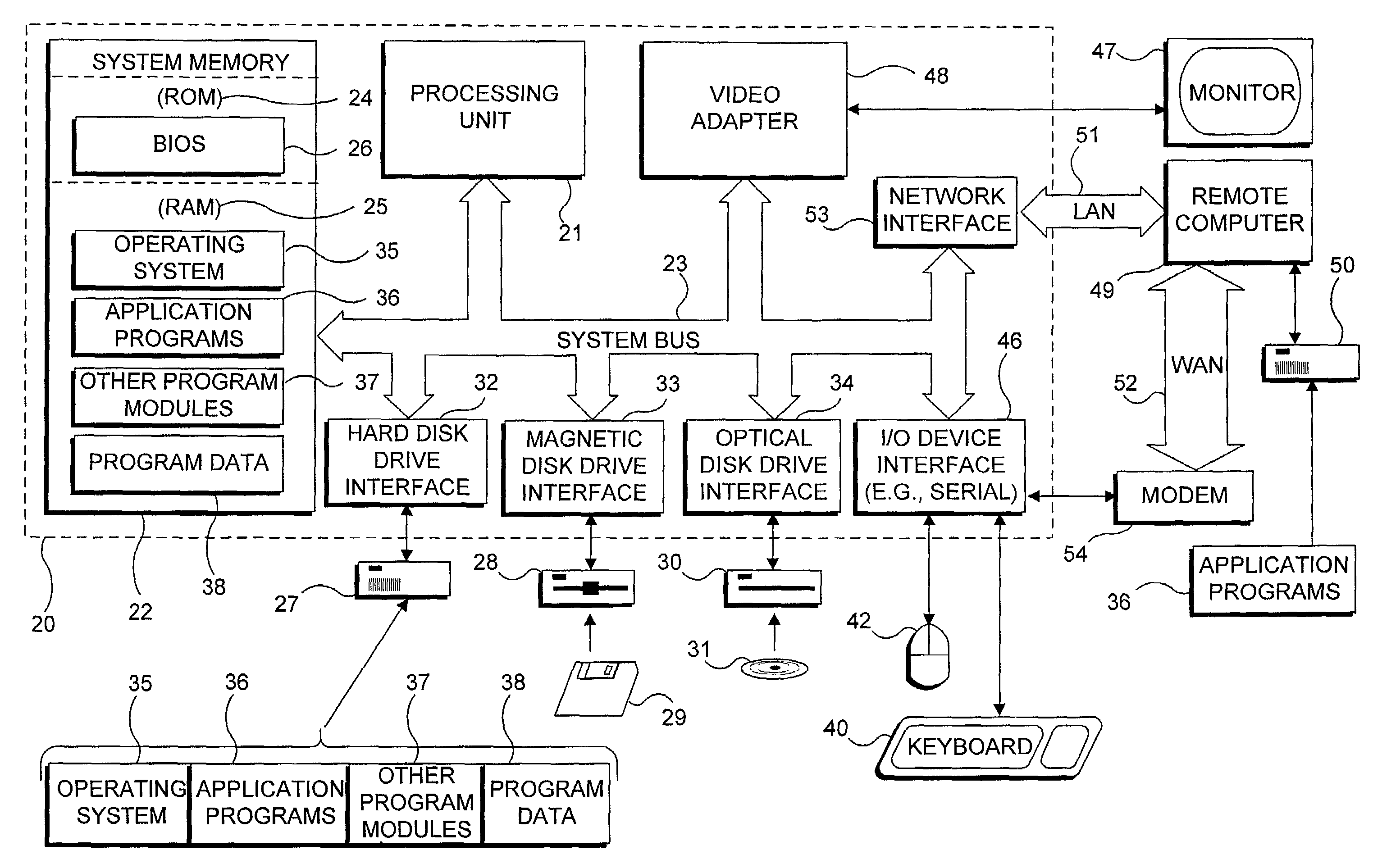

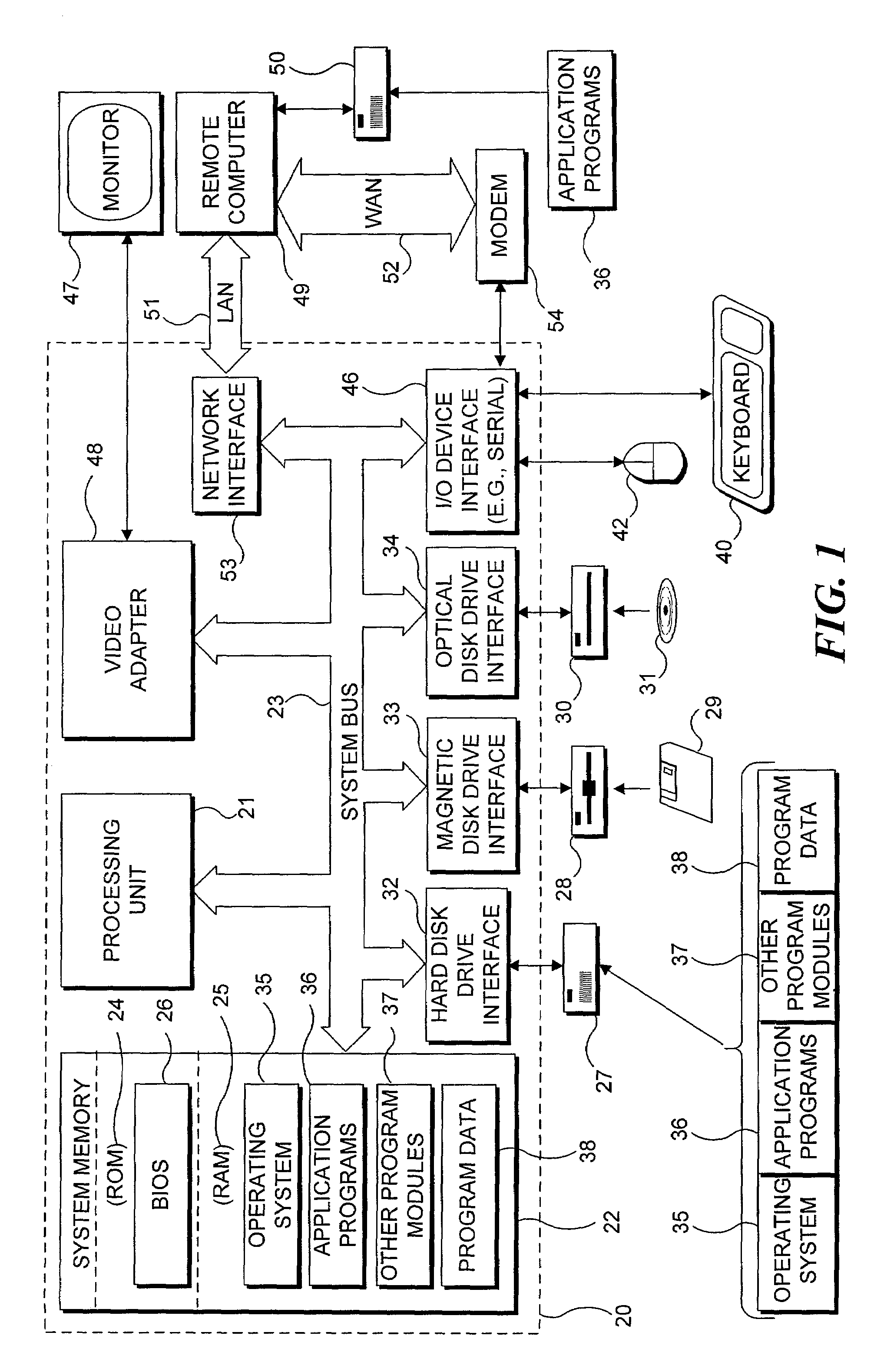

A lock/unlock mechanism to control concurrent access to objects in a multi-threaded computer processing system comprises two parts: a thread pointer (or thread identifier) and a one-bit flag called a "Bacon bit". Preferably, when an object is not locked (i.e., no thread has been granted access to the object), the thread identifier and Bacon bit are set to 0. When an object is locked by a particular thread (i.e., the thread has been granted access to the object), the thread identifier is set to a value that identifies that thread; if no other threads are waiting to lock the object, the Bacon bit is set to 0; however, if other threads are waiting to lock the object, the Bacon bit is set to "1", which indicates that there is a queue of waiting threads associated with the object. To lock an object, a single CompareAndSwap operation is preferably used, much like with spin locks; if the lock is already held by another thread, enqueueing is handled in out-of-line code. To unlock an object, in the normal case, a single CompareAndSwap operation may be used. This single operation atomically tests that the current thread owns the lock and that no other threads are waiting for the object (i.e., the Bacon bit is "0"). A global lock is preferably used to change the Bacon bit of the lock. This provides a lock/unlock mechanism that combines many of the desirable features of both spin locking and queued locking, and can be used as the basis for a very fast implementation of the synchronization facilities of the Java language.

Owner:IBM CORP
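
The fast paths of the locking scheme in US6247025B1 above can be sketched in Python as follows. This is an illustration under stated assumptions, not IBM's implementation: the lock word is encoded as `(thread_id << 1) | bacon_bit` with non-zero thread ids, Python has no hardware CompareAndSwap so `_cas` emulates one with a private mutex, `_GLOBAL_BACON_LOCK` is a hypothetical stand-in for the global lock mentioned in the abstract, and the out-of-line queue of waiting threads is not modelled.

```python
import threading

# Hypothetical global lock guarding changes to the Bacon bit.
_GLOBAL_BACON_LOCK = threading.Lock()


class ThinLock:
    """Lock word = (thread_id << 1) | bacon_bit; 0 means unlocked, no waiters."""

    def __init__(self):
        self._word = 0
        self._cas_guard = threading.Lock()  # emulates atomic CompareAndSwap

    def _cas(self, expected, new):
        # Atomically: if the word equals `expected`, replace it with `new`.
        with self._cas_guard:
            if self._word == expected:
                self._word = new
                return True
            return False

    def try_lock_fast(self, tid):
        # One CAS: unlocked -> owned by tid with the Bacon bit clear.
        return self._cas(0, tid << 1)

    def unlock_fast(self, tid):
        # One CAS checks both ownership and "no waiters" (Bacon bit 0).
        # False means out-of-line code must hand the lock to a waiter.
        return self._cas(tid << 1, 0)

    def mark_waiters(self, tid):
        # Slow-path helper: a contending thread sets the Bacon bit on a
        # lock currently owned by `tid`, under the global lock.
        with _GLOBAL_BACON_LOCK:
            return self._cas(tid << 1, (tid << 1) | 1)


lock = ThinLock()
assert lock.try_lock_fast(1)      # uncontended: a single CAS succeeds
assert not lock.try_lock_fast(2)  # held by thread 1: thread 2 goes out of line
assert lock.mark_waiters(1)       # thread 2 records that a waiter is queued
assert not lock.unlock_fast(1)    # waiters present: fast unlock is refused
```

Packing owner and flag into one word is what lets a single CAS test "I own it and nobody is waiting" at once, which is the property the abstract relies on for the fast unlock.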

Distributed computing of a job corresponding to a plurality of predefined tasks

Status: Active · US6988139B1 · Tags: Efficient processing; Digital computer details; Multiprogramming arrangements; Distributed Computing Environment; Data bank

In a distributed computing environment, a queue of jobs is maintained on a job database, along with parameters for each of the computing devices available to process the jobs. A task model defining the job is provided for generating a plurality of tasks comprising each job. The tasks are maintained in a tuple database, along with the status of each task, indicating when each task is ready for processing. As a computing device becomes available to process a task, its capabilities are matched with those required to complete tasks that are ready for processing and the highest priority task meeting those requirements is assigned to the computing device to be processed. These steps are repeated until all the tasks required for the job have been processed, or the job is otherwise terminated.

Owner:MICROSOFT TECH LICENSING LLC
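
The matching step in US6988139B1 above (an available device receives the highest-priority ready task whose requirements it meets) can be sketched in Python. The `Task` and `Device` types, the capability sets, and the "larger number means more urgent" convention are hypothetical; the job database and task model are not modelled.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int          # larger value = more urgent (assumed convention)
    requires: frozenset    # capabilities the task needs
    ready: bool = True     # status kept alongside the task


@dataclass
class Device:
    name: str
    capabilities: frozenset


def assign_next(tasks, device):
    """Give an available device the highest-priority ready task it can run."""
    eligible = [t for t in tasks if t.ready and t.requires <= device.capabilities]
    if not eligible:
        return None
    best = max(eligible, key=lambda t: t.priority)
    best.ready = False     # dispatched: no longer ready for other devices
    return best


tasks = [
    Task("render", 1, frozenset({"cpu"})),
    Task("train", 5, frozenset({"gpu"})),
    Task("index", 3, frozenset({"cpu"})),
]
cpu_box = Device("node-a", frozenset({"cpu"}))
print(assign_next(tasks, cpu_box).name)  # index
print(assign_next(tasks, cpu_box).name)  # render
print(assign_next(tasks, cpu_box))       # None
```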

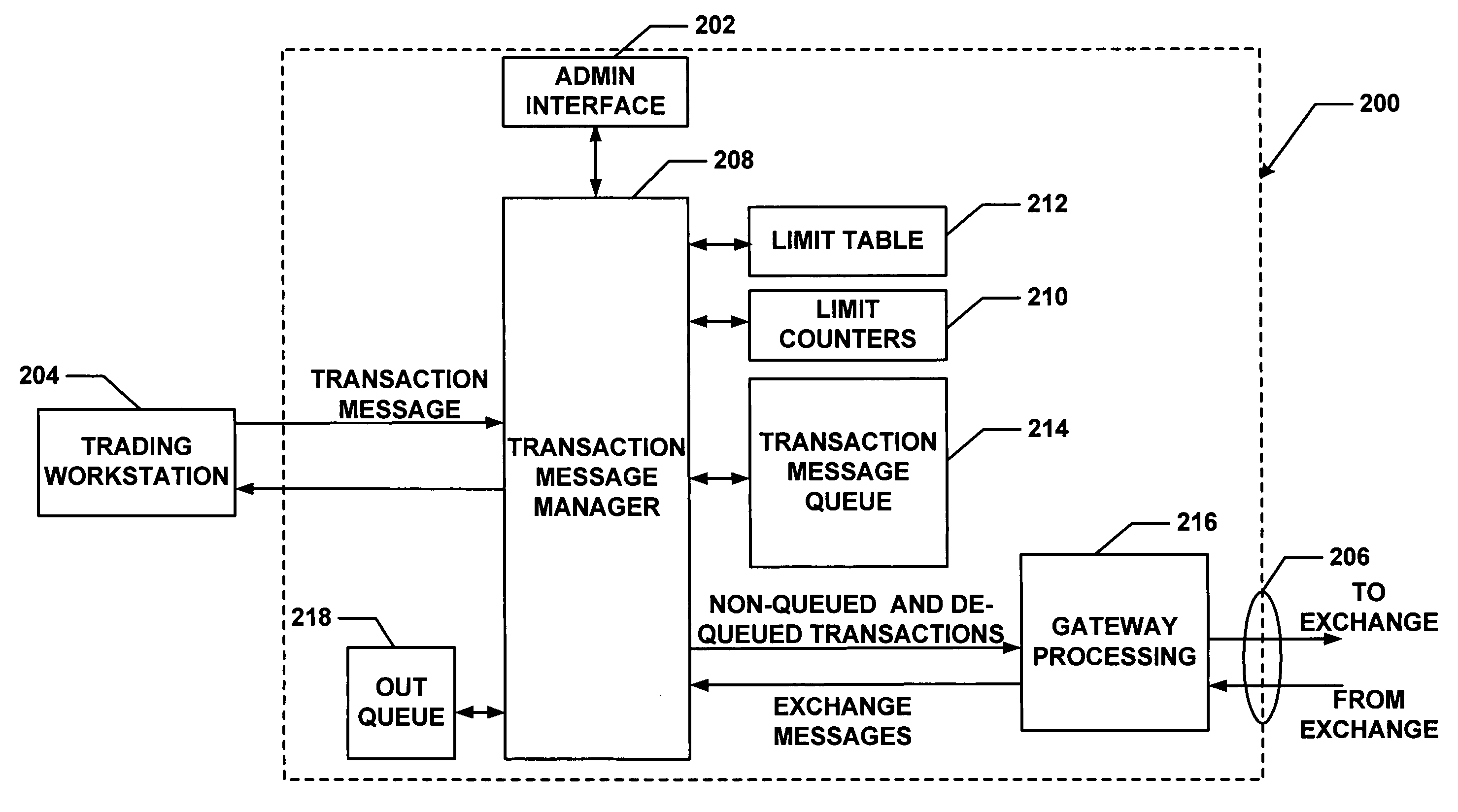

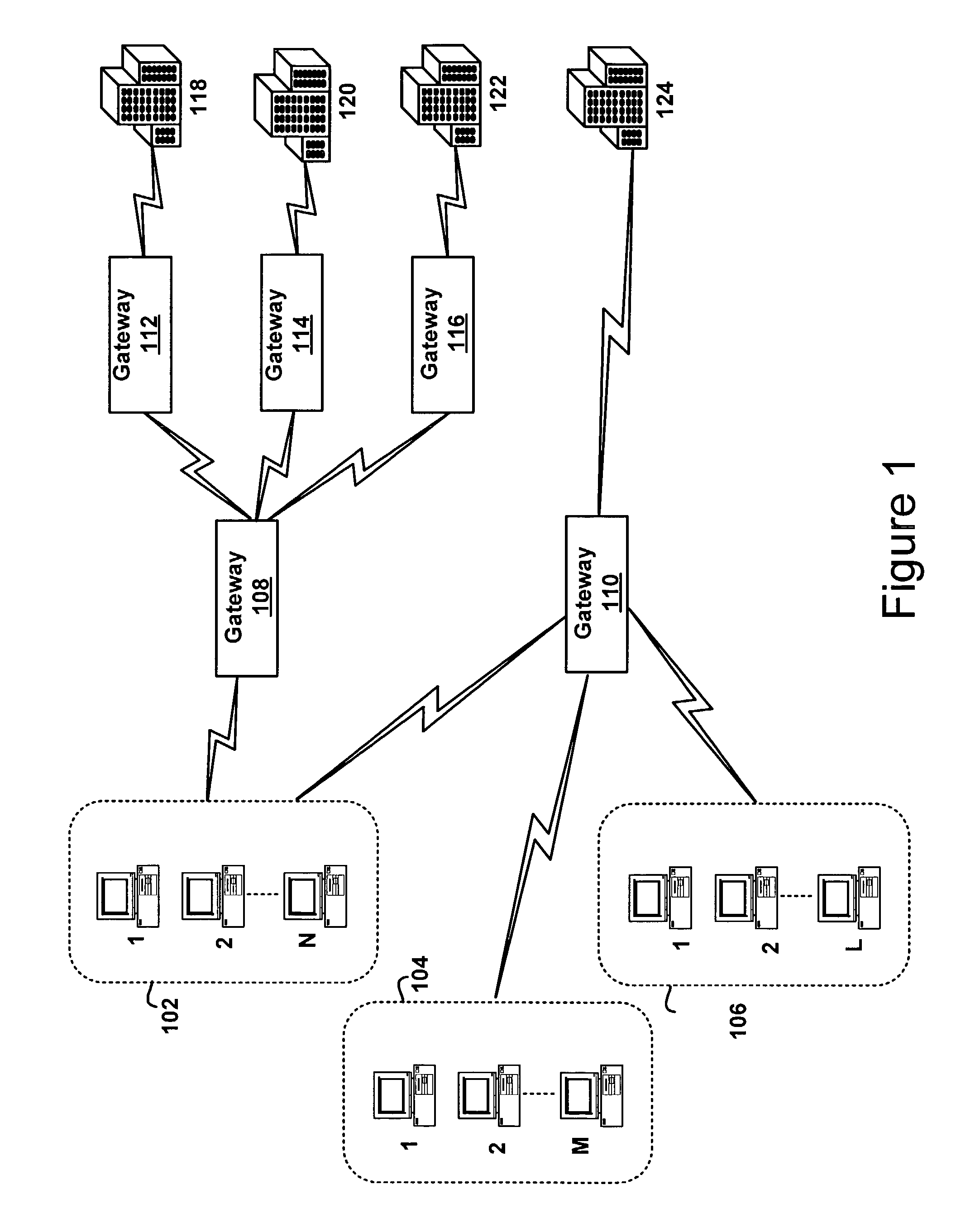

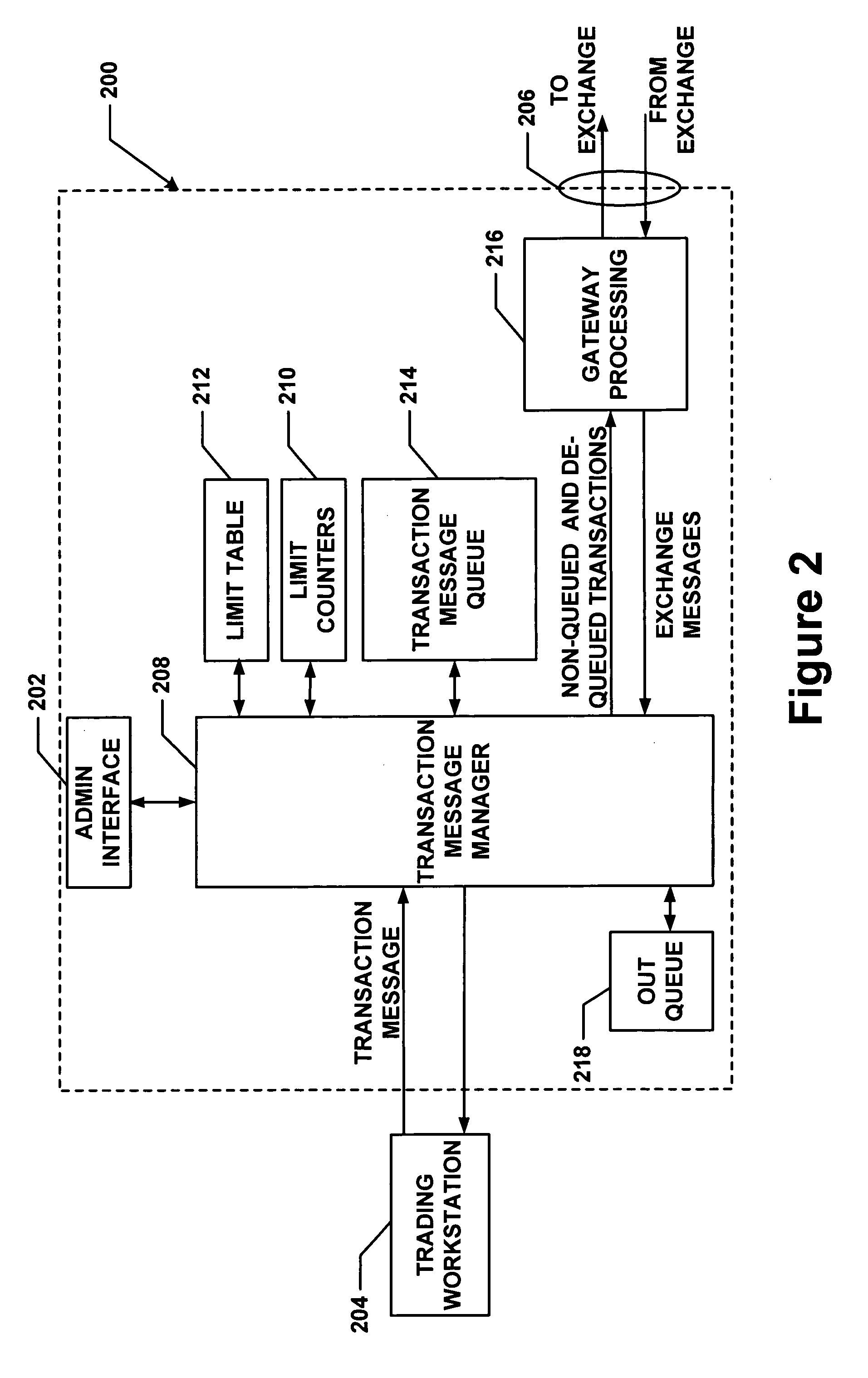

Method and apparatus for message flow and transaction queue management

Management of transaction message flow utilizing a transaction message queue. The system and method are for use in financial transaction messaging systems. The system is designed to enable an administrator to monitor, distribute, control and receive alerts on the use and status of limited network and exchange resources. Users are grouped in a hierarchical manner, preferably including user level and group level, as well as possible additional levels such as account, tradable object, membership, and gateway levels. The message thresholds may be specified for each level to ensure that transmission of a given transaction does not exceed the number of messages permitted for the user, group, account, etc.

Owner:TRADING TECH INT INC
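
The per-level message thresholds described in the Trading Technologies abstract above can be illustrated with a small Python check: a message is allowed only if every level it is charged to (user, group, account, and so on) stays within its limit. The dictionary layout and the level/id names are hypothetical.

```python
def may_send(counts, limits, path):
    """True if one more message keeps every level on `path` within its threshold.

    `path` lists the (level, id) pairs a message is charged to, for example
    [("user", "u1"), ("group", "g1"), ("account", "A1")]. Levels without a
    configured limit are treated as unrestricted.
    """
    return all(
        counts.get(key, 0) + 1 <= limits[key]
        for key in path
        if key in limits
    )


def record_send(counts, path):
    # Charge the message to every level it passes through.
    for key in path:
        counts[key] = counts.get(key, 0) + 1


limits = {("user", "u1"): 2, ("group", "g1"): 3}
counts = {}
alice = [("user", "u1"), ("group", "g1")]
for _ in range(3):
    if may_send(counts, limits, alice):
        record_send(counts, alice)
print(counts[("user", "u1")])  # 2: the user-level threshold blocked the third message
```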

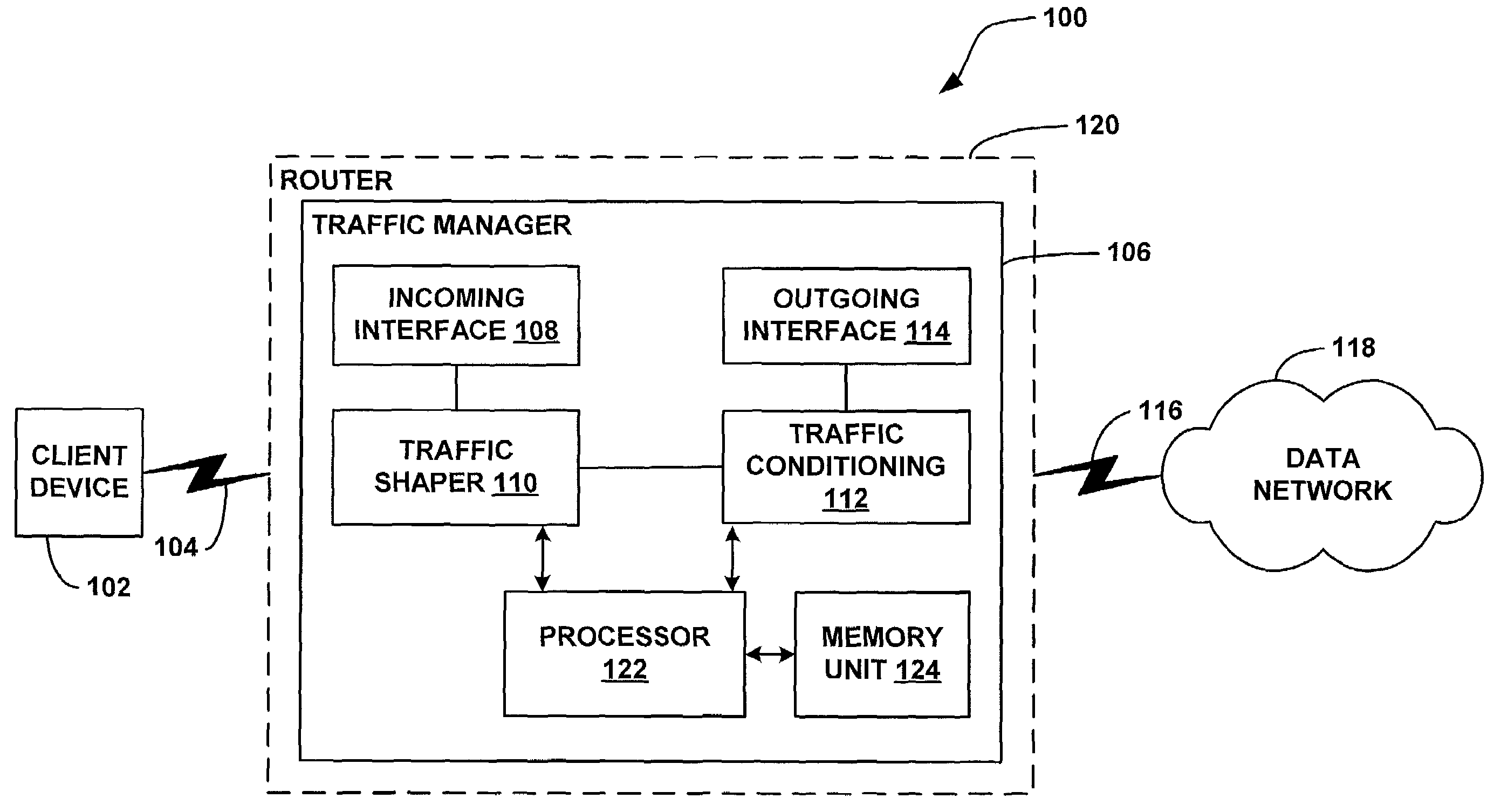

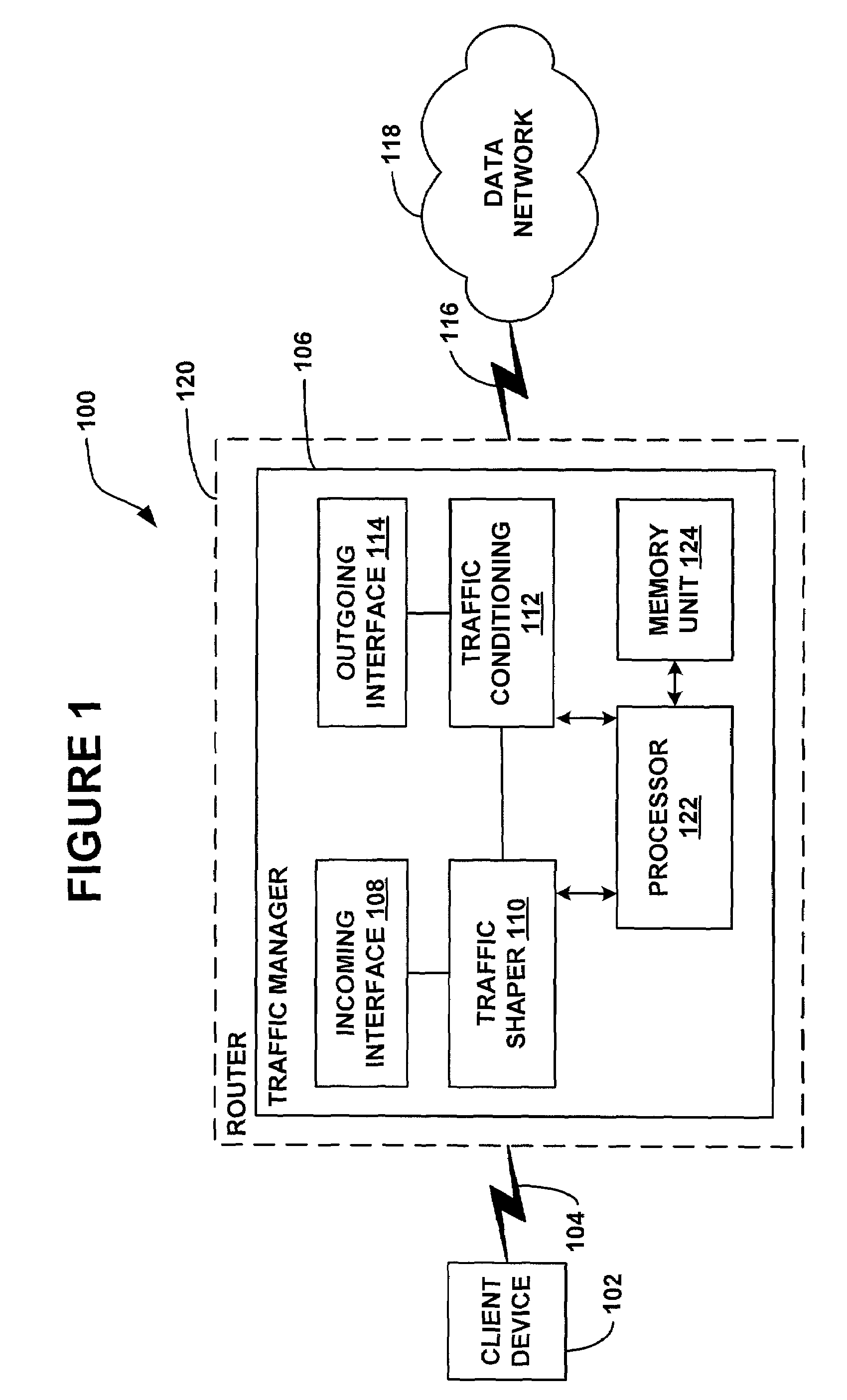

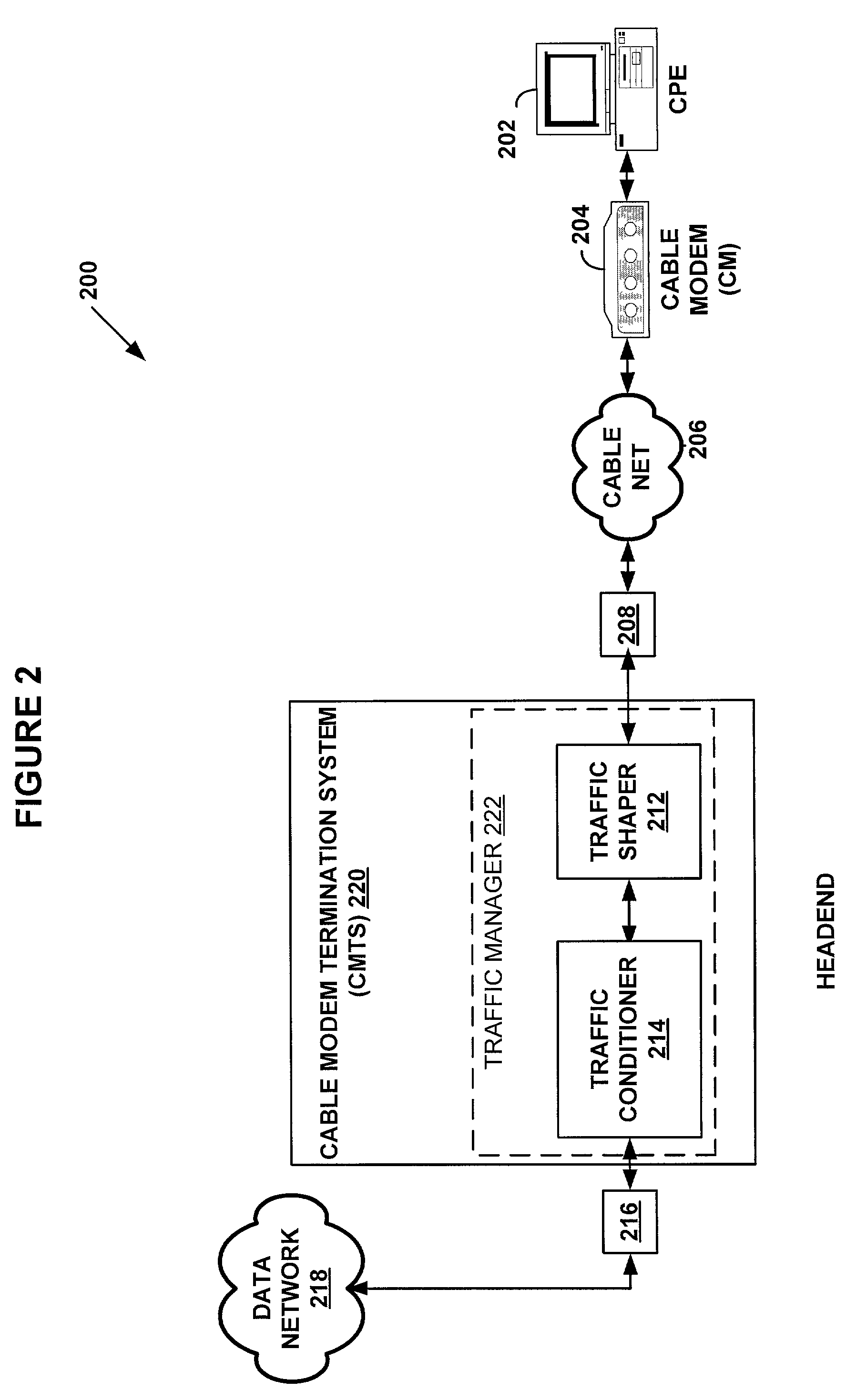

System and method for traffic shaping based on generalized congestion and flow control

Status: Active · US7088678B1 · Tags: Overcome problems; Error prevention; Frequency-division multiplex details; Packet arrival; Modem device

A system and methods are described for traffic shaping and congestion avoidance in a computer network such as a data-over-cable network. A headend of the data-over-cable system includes a traffic shaper configured to calculate a packet arrival rate from a cable modem and a traffic conditioner configured to calculate an average queue size on an output interface to an external network. For example, the traffic shaper compares the packet arrival rate to three packet arrival thresholds: a committed rate threshold, a control rate threshold and a peak rate threshold. If the calculated packet arrival rate falls between the committed rate threshold and the control rate threshold, the traffic shaper applies a link layer mechanism, such as a MAP bandwidth allocation mechanism, to lower the transmission rate from the cable modem.

Owner:VL COLLECTIVE IP LLC
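
The threshold comparison in US7088678B1 above can be sketched in Python. Assumptions: the thresholds satisfy committed < control < peak, each upper bound is treated as inclusive, and the arrival rate is measured over a window of timestamps. Only the committed-to-control band has an action stated in the abstract; the other band names are placeholders.

```python
def classify_arrival_rate(rate, committed, control, peak):
    """Return which threshold band a measured packet arrival rate falls into."""
    if not committed < control < peak:
        raise ValueError("expected committed < control < peak")
    if rate <= committed:
        return "within_committed"
    if rate <= control:
        return "throttle_via_link_layer"   # e.g. MAP bandwidth allocation
    if rate <= peak:
        return "control_to_peak"
    return "above_peak"


def arrival_rate(timestamps):
    # Packets per second over the observed window (needs two or more arrivals).
    if len(timestamps) < 2:
        raise ValueError("need at least two arrivals")
    return (len(timestamps) - 1) / (timestamps[-1] - timestamps[0])


rate = arrival_rate([0.0, 0.05, 0.10, 0.15, 0.20])   # 4 intervals in 0.2 s
print(classify_arrival_rate(rate, committed=10, control=30, peak=50))
# throttle_via_link_layer
```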

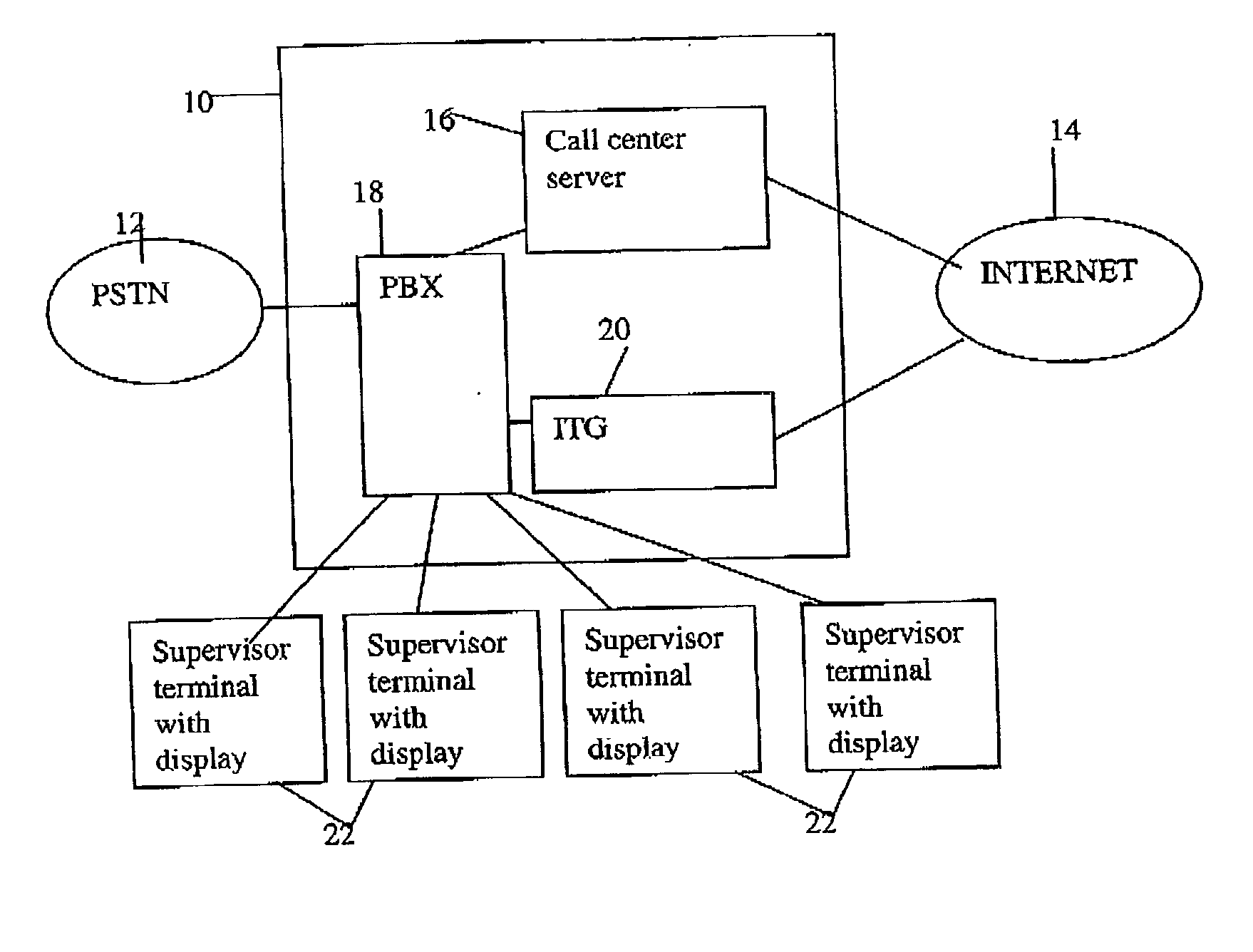

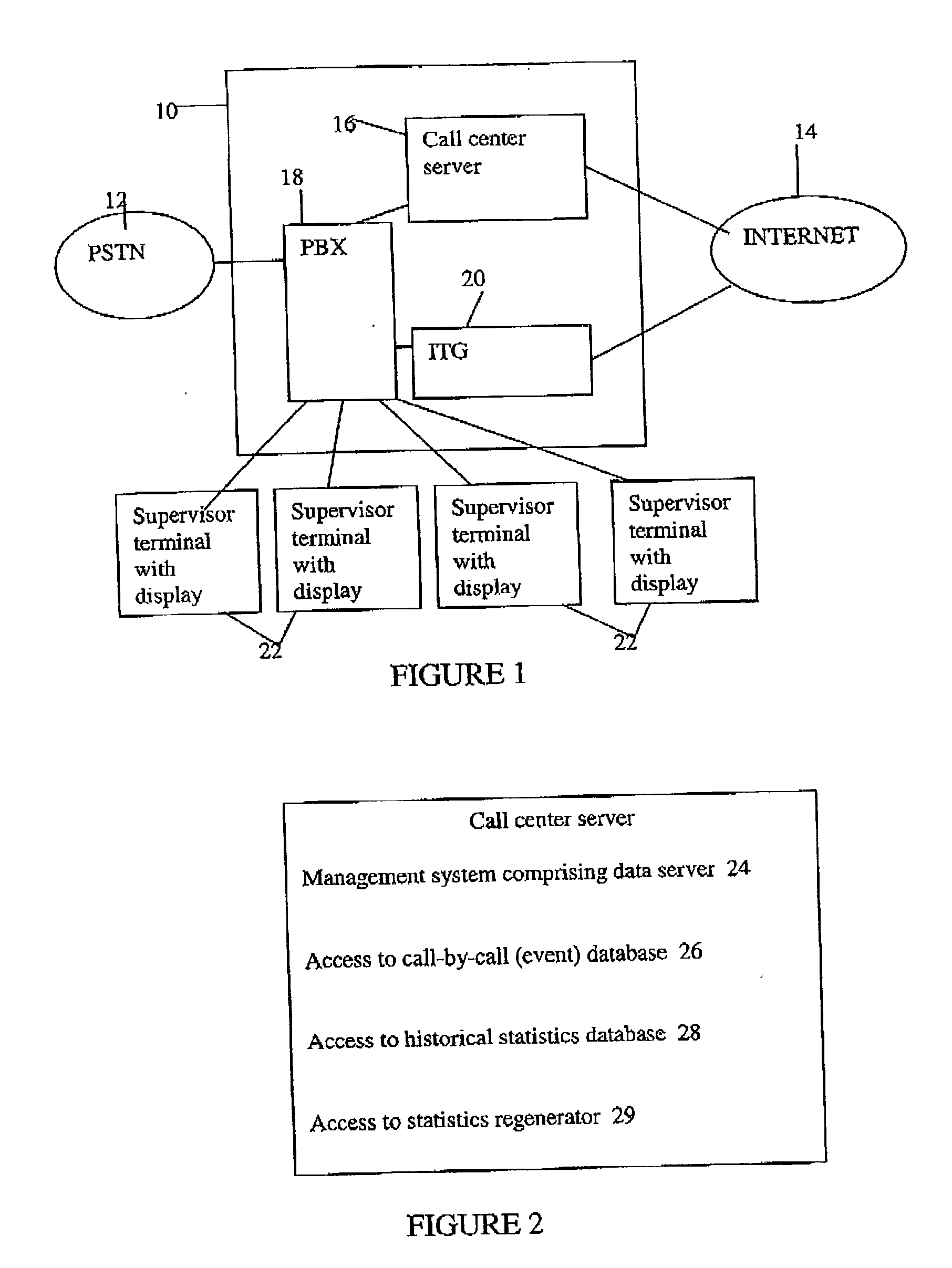

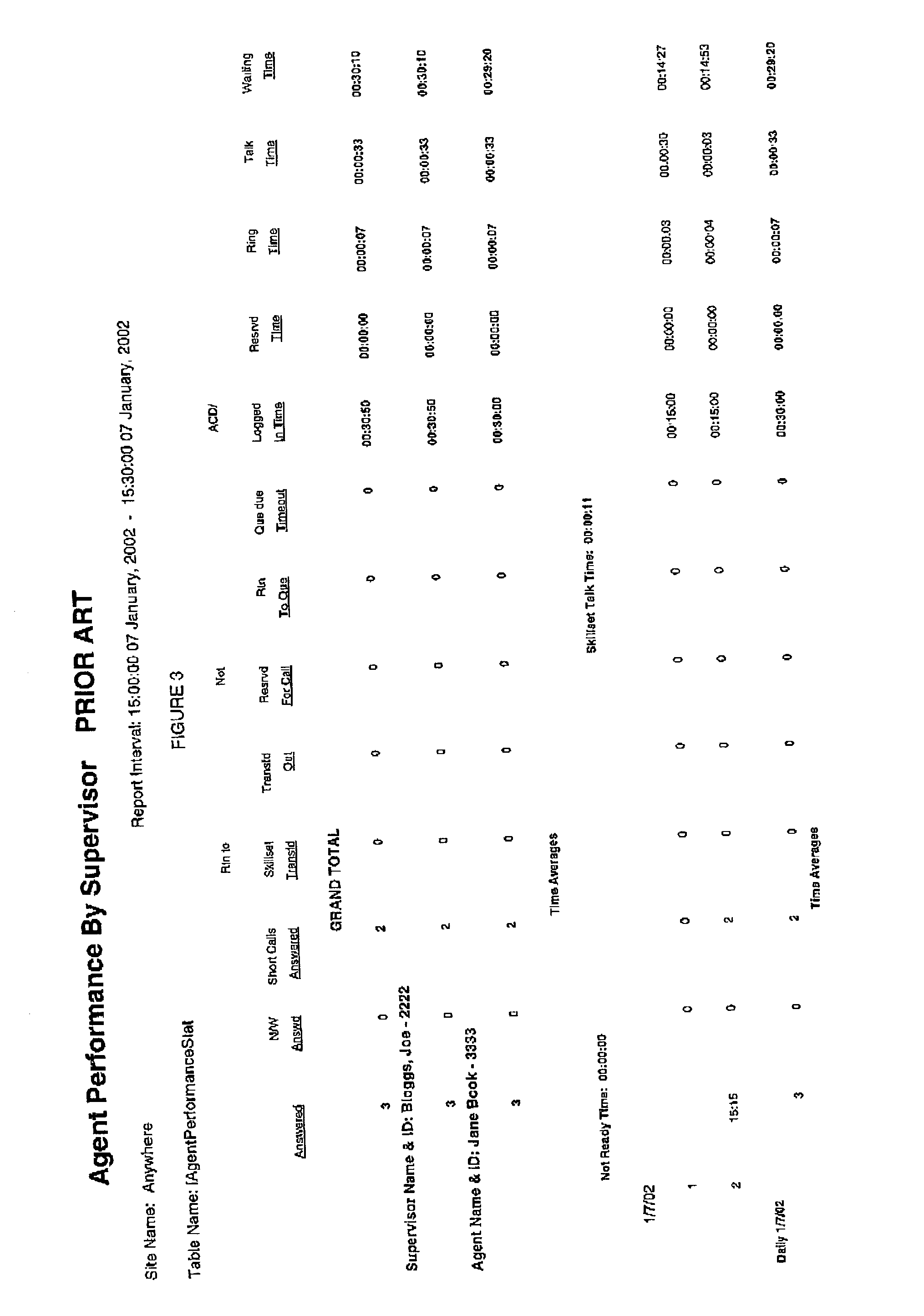

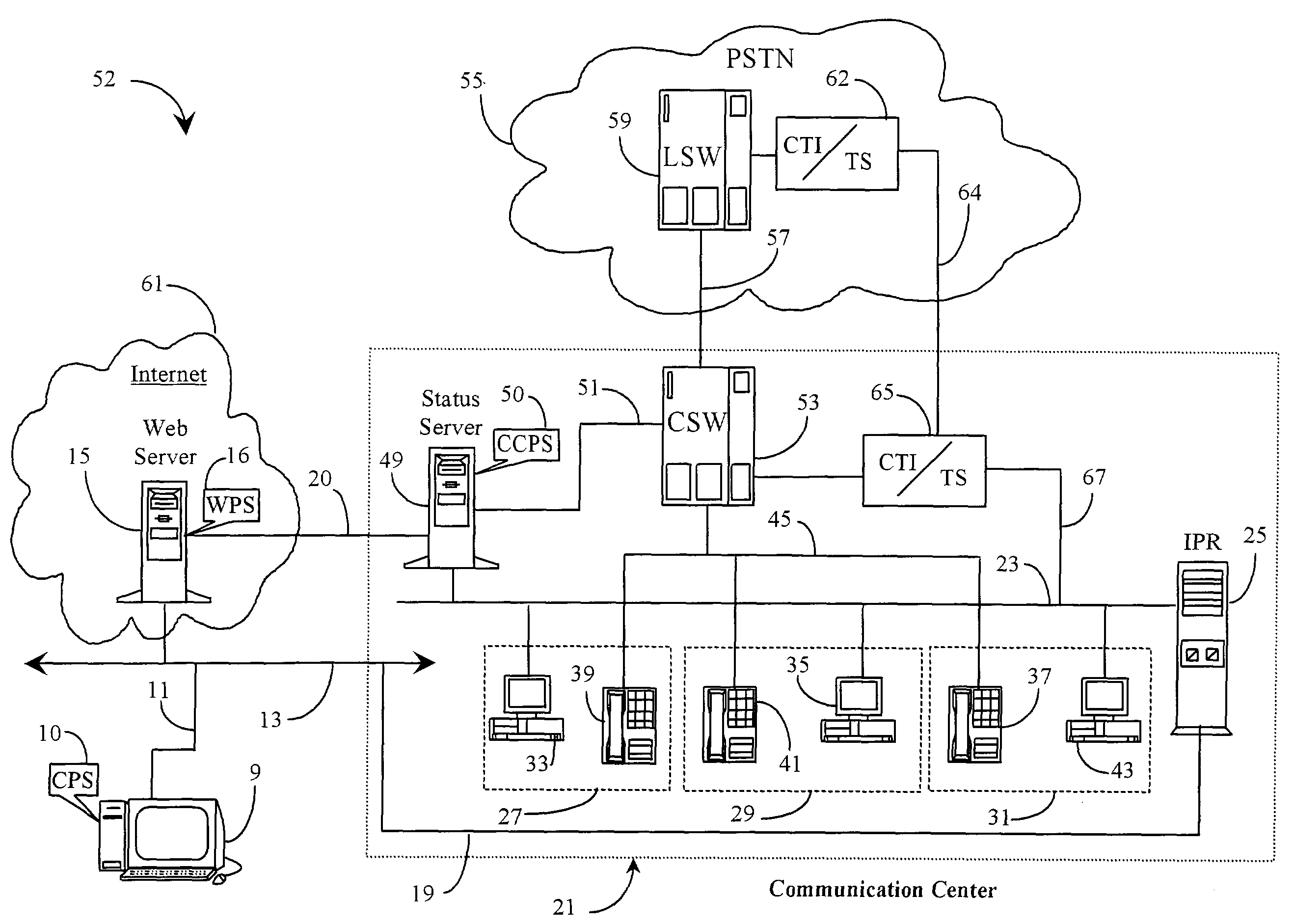

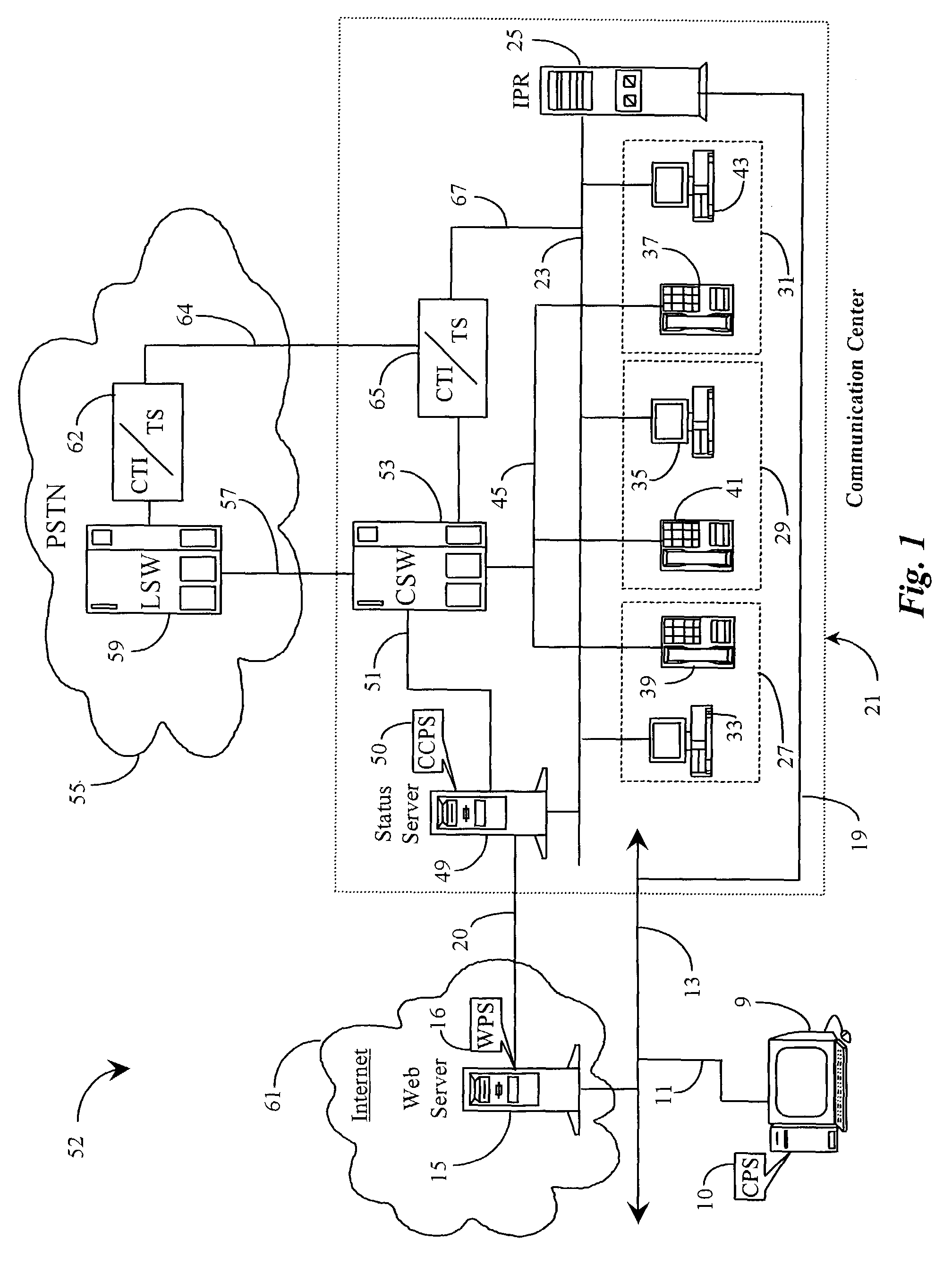

Determining statistics about the behaviour of a call center at a past time instant

Status: Inactive · US20040057416A1 · Tags: Processing speed; The process is simple and effective; Special service for subscribers; Data switching by path configuration; Active agent; Data science

Call center supervisors require information about the performance of call center agents in order to manage them appropriately. Previously, this information has been provided in two general forms: current information, in the form of statistics such as queue lengths, numbers of active agents, etc., and historical information, in the form of aggregate data such as the average waiting time over a 15-minute period. At present, supervisors have no means of accessing information about the state of a call center at a particular past time instant. The present invention provides this information by using a pegging engine to extrapolate from statistics about the behaviour of the call center at a past time instant to values of those statistics at a required past time instant. Information about events occurring in the call center is used to carry out this extrapolation.

Owner:AVAYA INC

Method and apparatus for optimizing response time to events in queue

Status: Active · US7929562B2 · Tags: Short response time; Quick cure; Error prevention; Frequency-division multiplex details; Application software; Process time

A system for optimizing response time to events, or representations thereof, waiting in a queue has a first server having access to the queue; a software application running on the first server; and a second server accessible from the first server, the second server containing rules governing the optimization. In a preferred embodiment, the software application at least periodically accesses the queue, parses certain of the events or tokens in the queue, and compares the parsed results against rules accessed from the second server to determine a measure of disposal time for each parsed event. If the determined measure is sufficiently low for one or more of the parsed events, those events are modified to reflect a higher priority state than originally assigned, enabling faster treatment of those events and relieving the load they place on the queue system.

Owner:GENESYS TELECOMMUNICATIONS LABORATORIES INC
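
The re-prioritization step in US7929562B2 above can be sketched in Python: events whose estimated disposal time is at or below a threshold get a priority boost. The `Event` type, the rule table, and the `threshold` and `boost` parameters are hypothetical stand-ins for the rules held on the second server.

```python
from dataclasses import dataclass


@dataclass
class Event:
    kind: str
    priority: int


def promote_quick_events(queue, estimate_disposal_time, threshold, boost=1):
    """Raise the priority of queued events expected to be disposed of quickly."""
    promoted = []
    for event in queue:
        if estimate_disposal_time(event) <= threshold:
            event.priority += boost
            promoted.append(event)
    return promoted


# Hypothetical rule table: expected handling time in seconds per event kind.
rules = {"password_reset": 30, "billing_dispute": 600}
queue = [Event("billing_dispute", 1), Event("password_reset", 1)]
promote_quick_events(queue, lambda e: rules[e.kind], threshold=60)
print([(e.kind, e.priority) for e in queue])
# [('billing_dispute', 1), ('password_reset', 2)]
```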

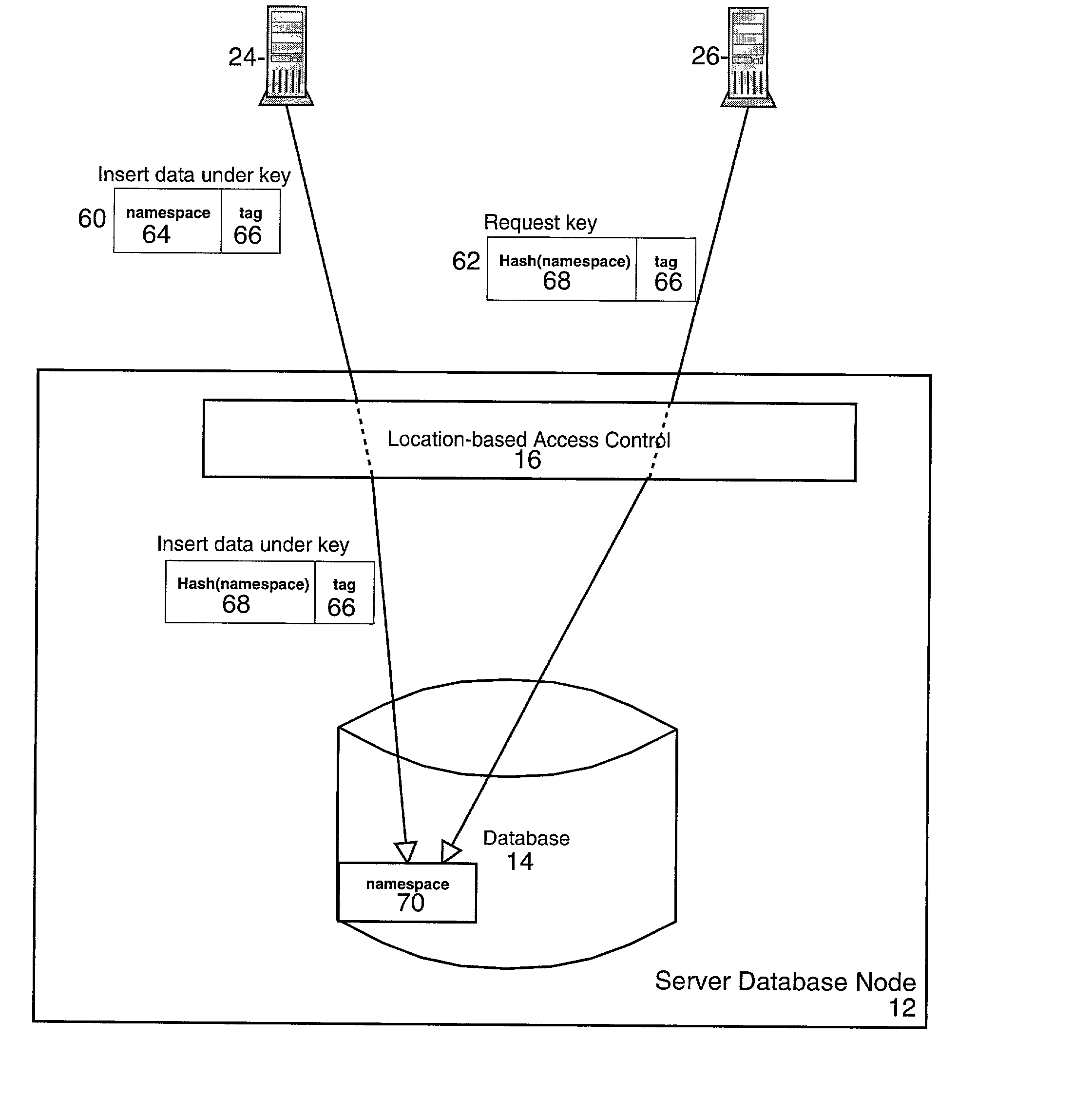

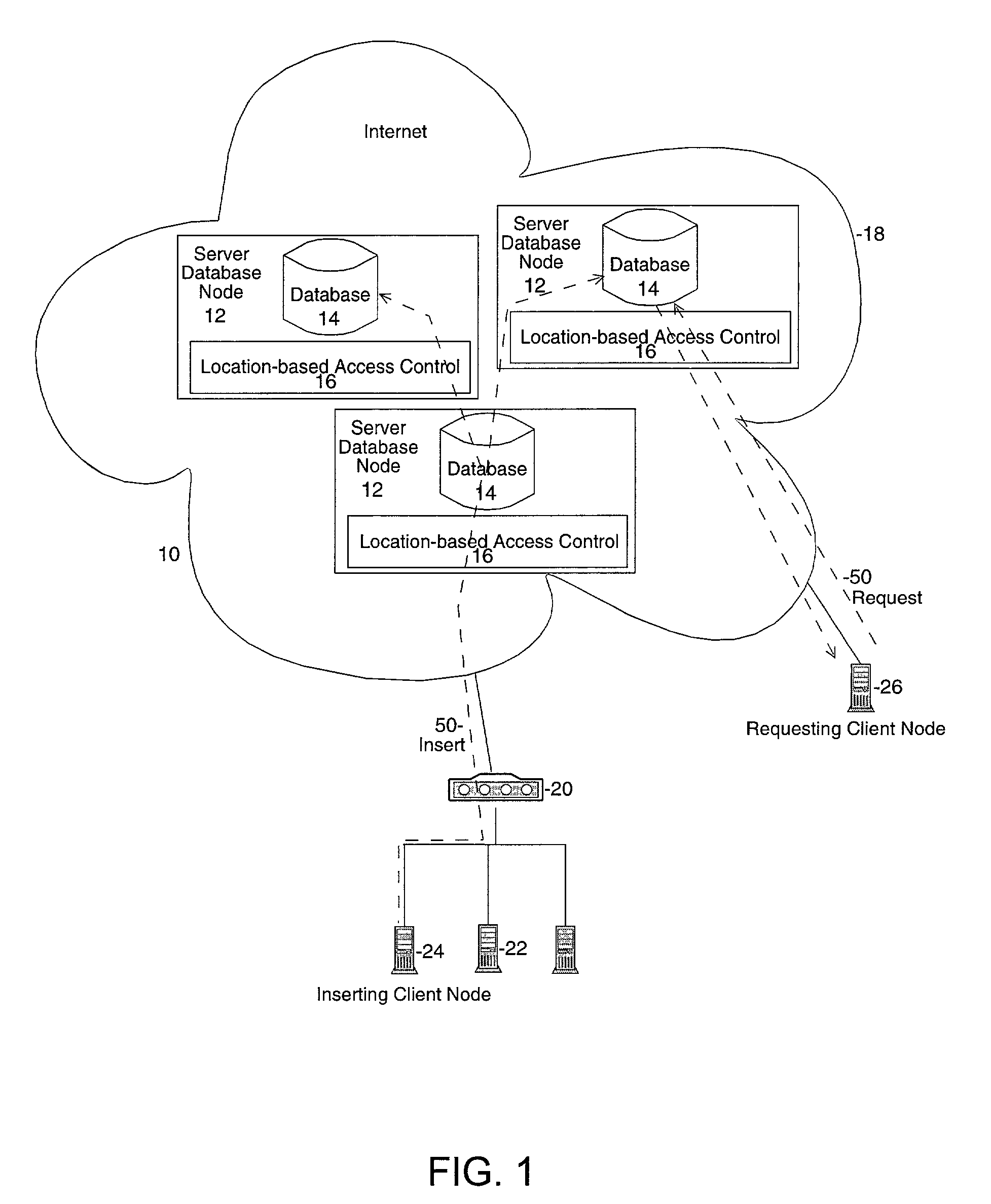

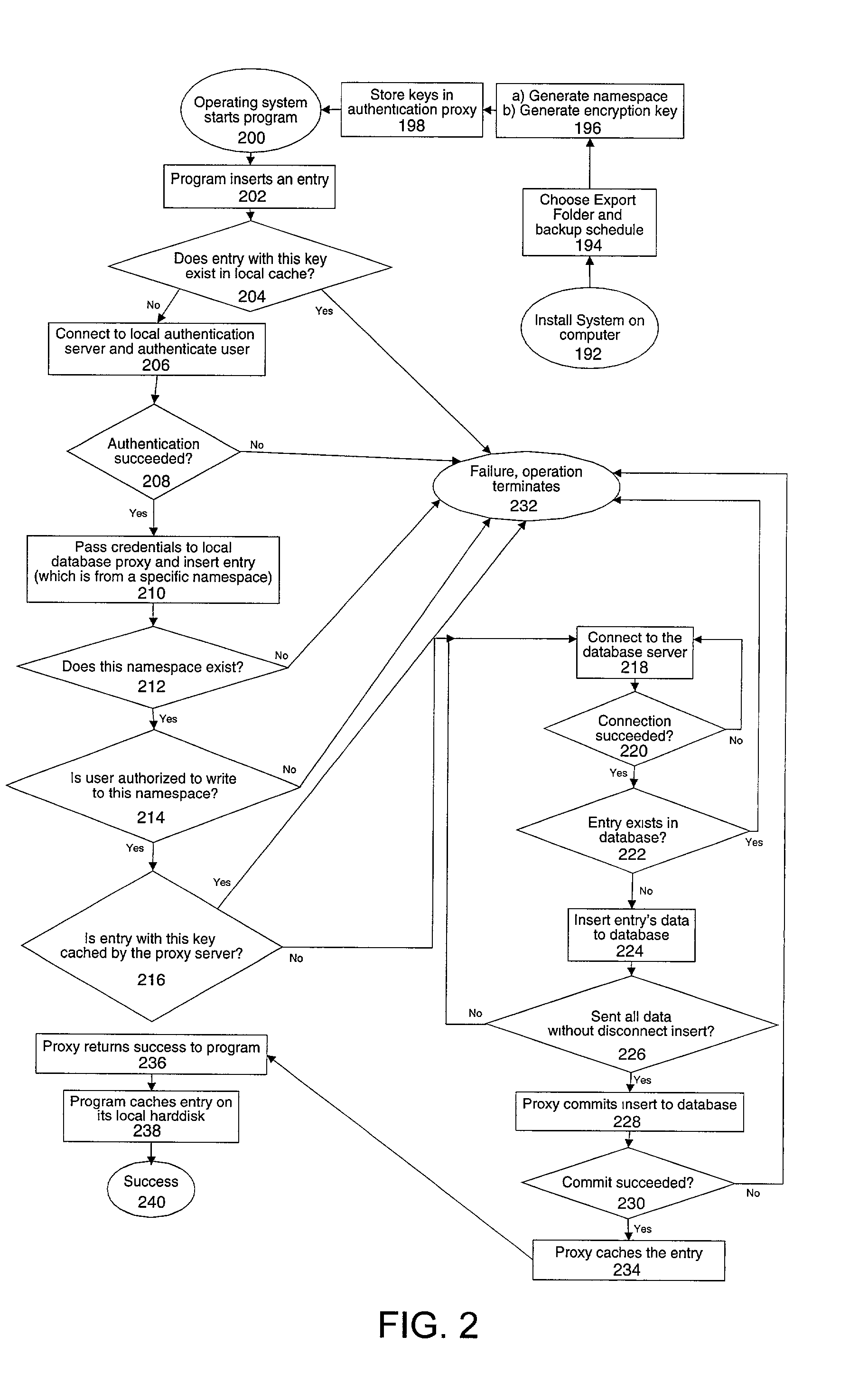

Method and system for asynchronous transmission, backup, distribution of data and file sharing

Status: Inactive · US20030046260A1 · Tags: High degree of consistency; Save bandwidth; Digital data information retrieval; Data processing applications; File sharing; Operating system

The instant invention provides a system and method for asynchronously sharing, backing up, and distributing data based on immutable entries. The system, which is layered upon an existing operating system, automatically stores, queues and distributes requested data among users.

Owner:ZOTECA

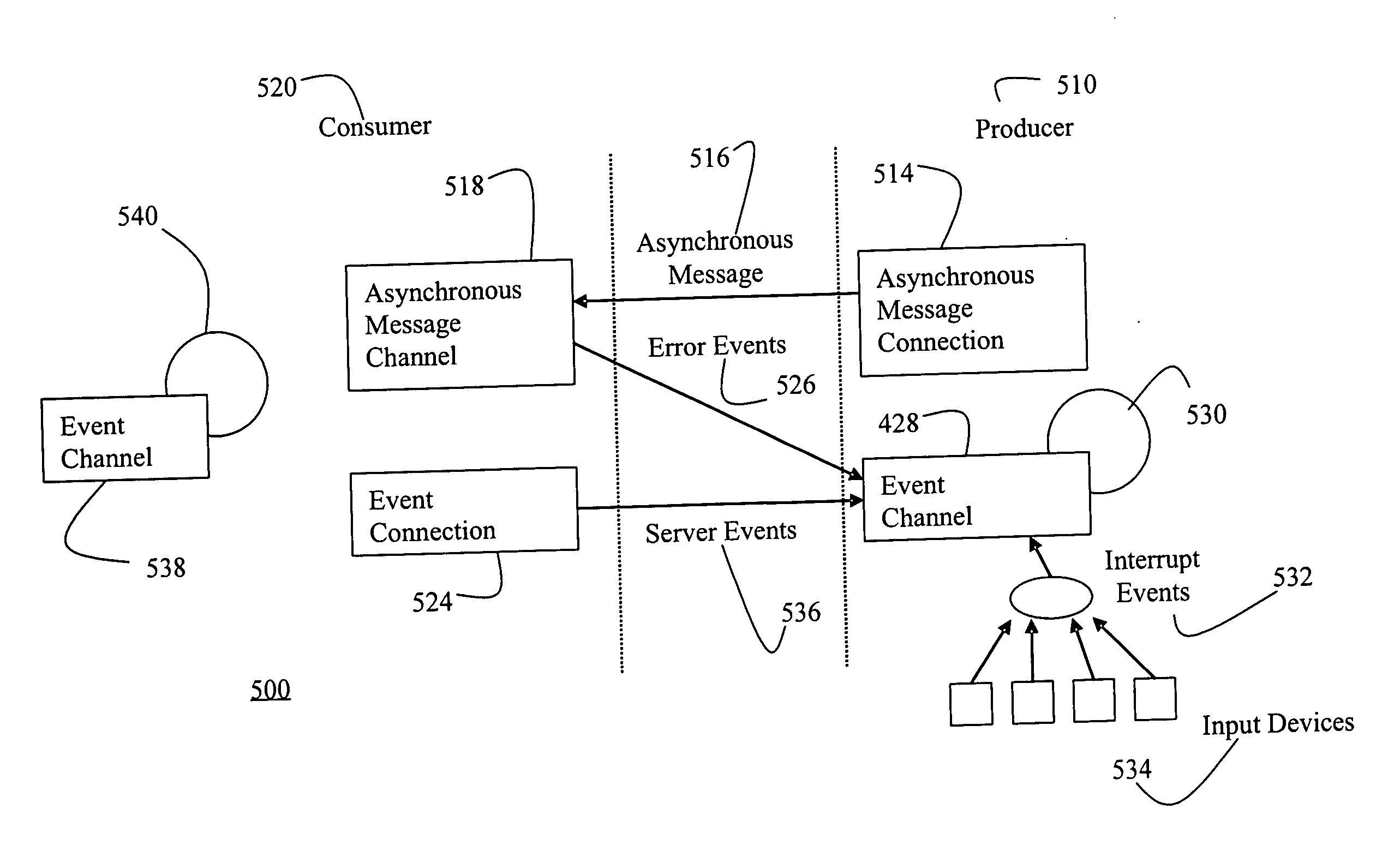

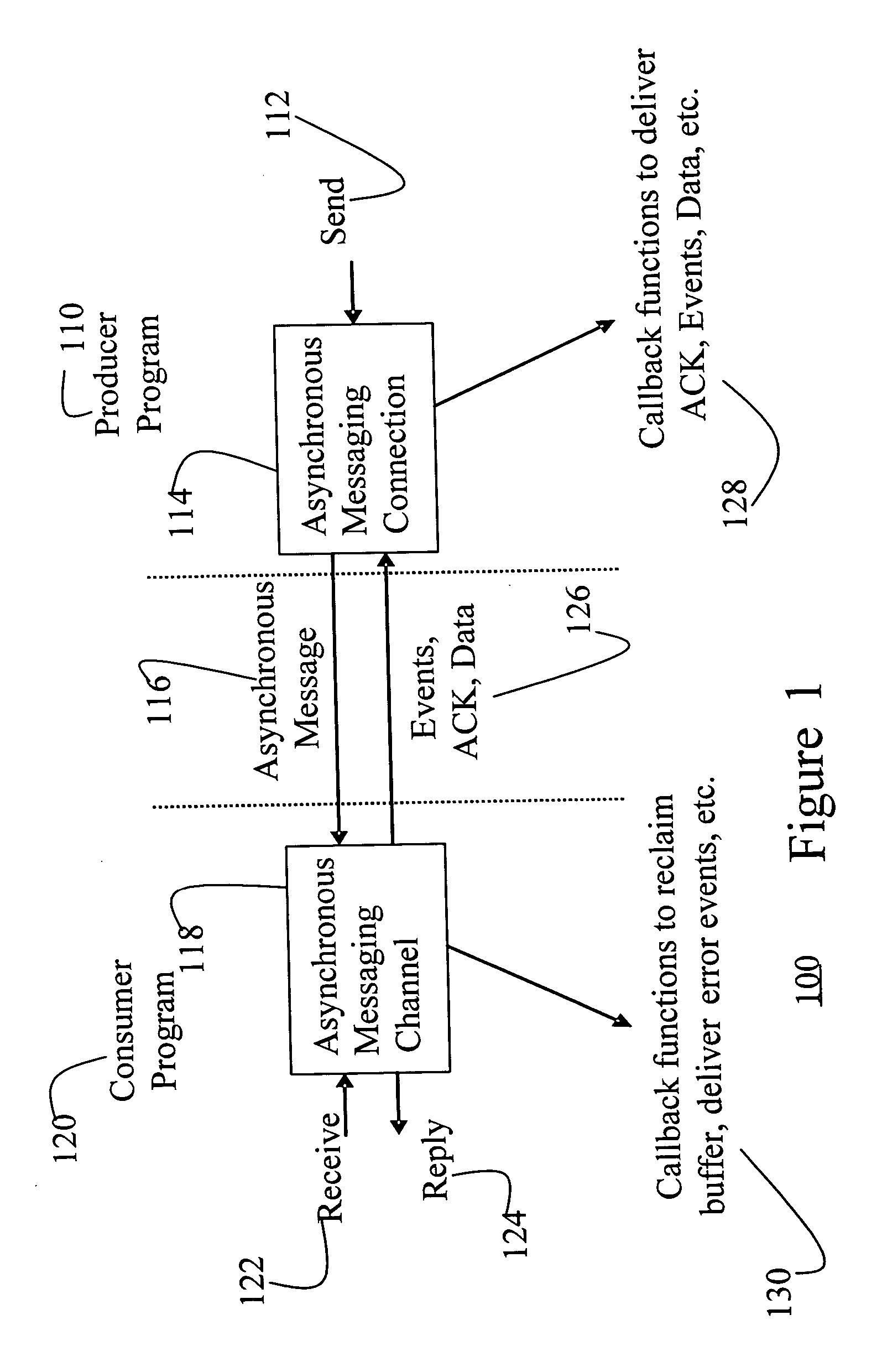

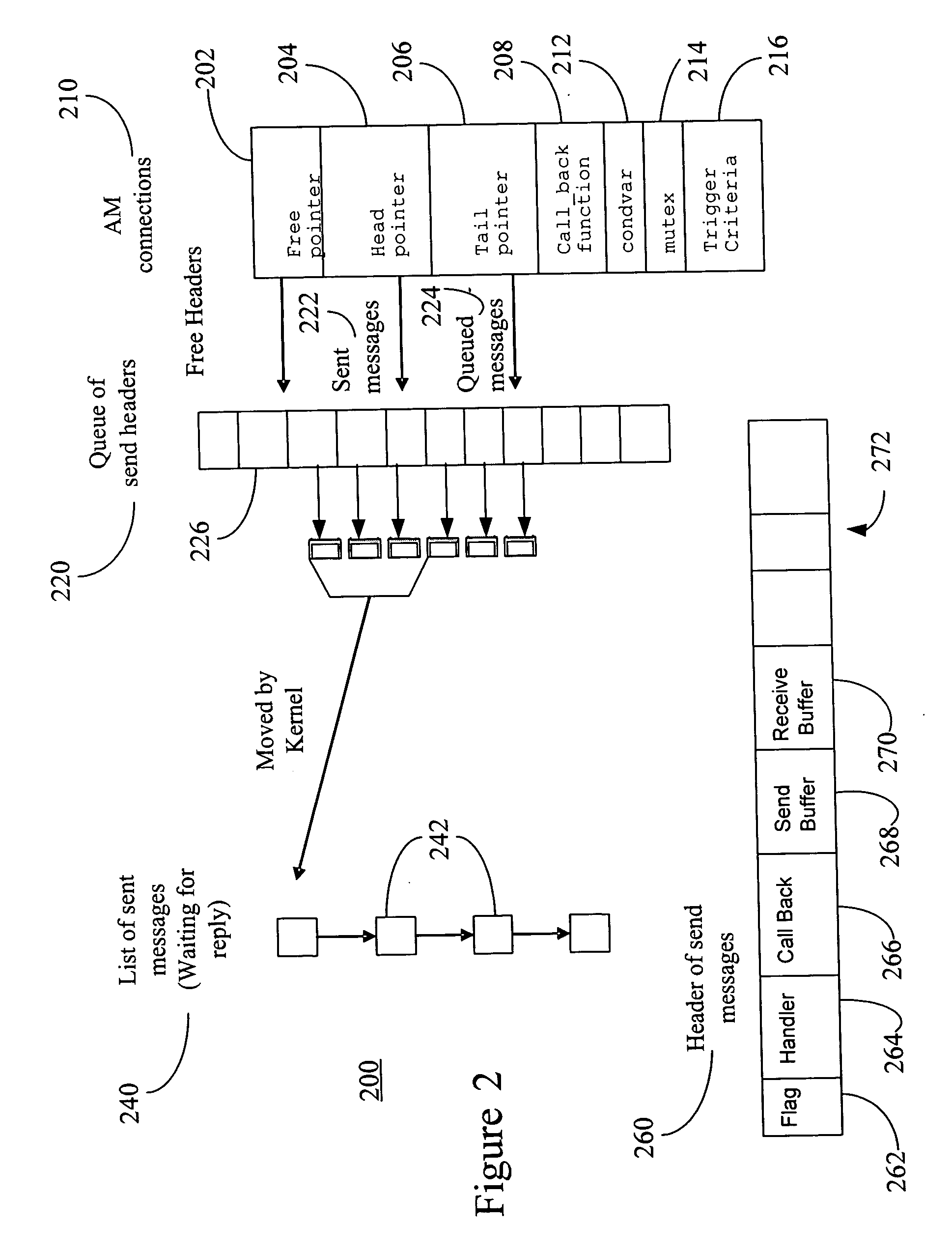

Fast and memory protected asynchronous message scheme in a multi-process and multi-thread environment

Status: Active · US20060182137A1 · Tags: Speeding up queue operation; Slow performance; Time-division multiplex; Store-and-forward switching systems; Computer hardware; Data transmission

An asynchronous message passing mechanism that allows for multiple messages to be batched for delivery between processes, while allowing for full memory protection during data transfers and a lockless mechanism for speeding up queue operation and queuing and delivering messages simultaneously.

Owner:MALIKIE INNOVATIONS LTD

Data access, replication or communication system comprising a distributed software application

Status: Active · US20070130255A1 · Tags: Improve availability; High bandwidth; Multiple digital computer combinations; Data switching networks; Message queue; Communications system

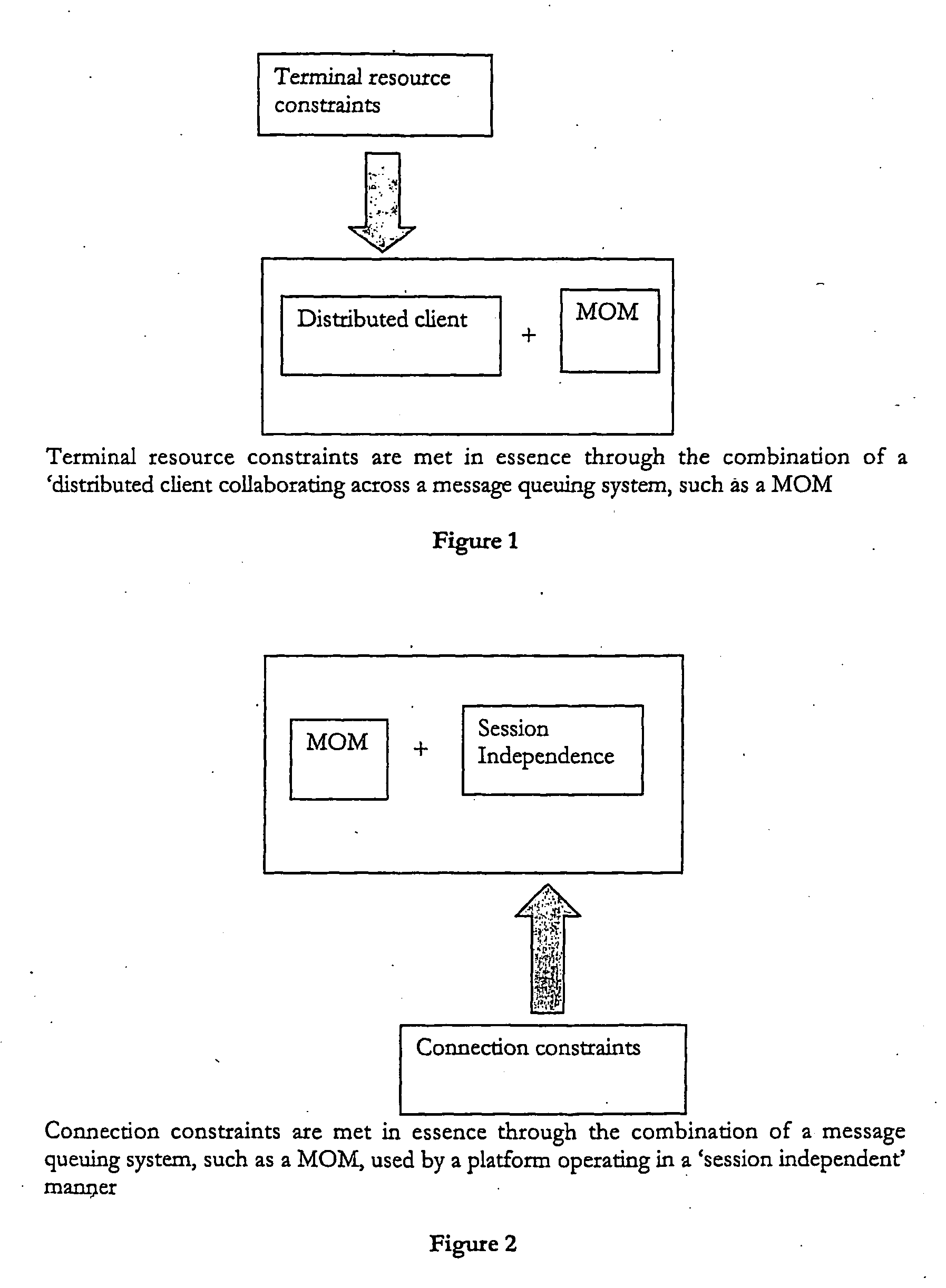

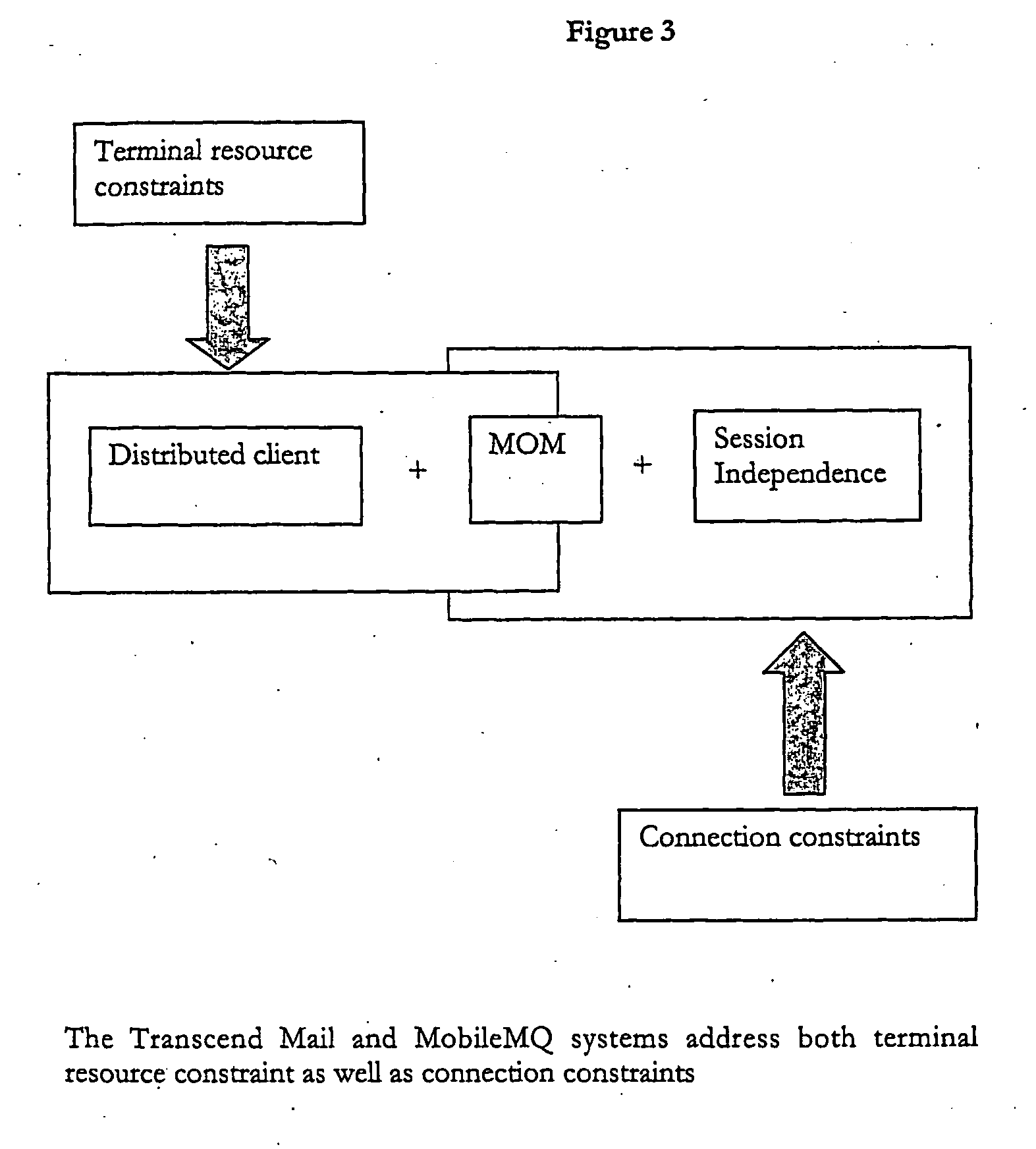

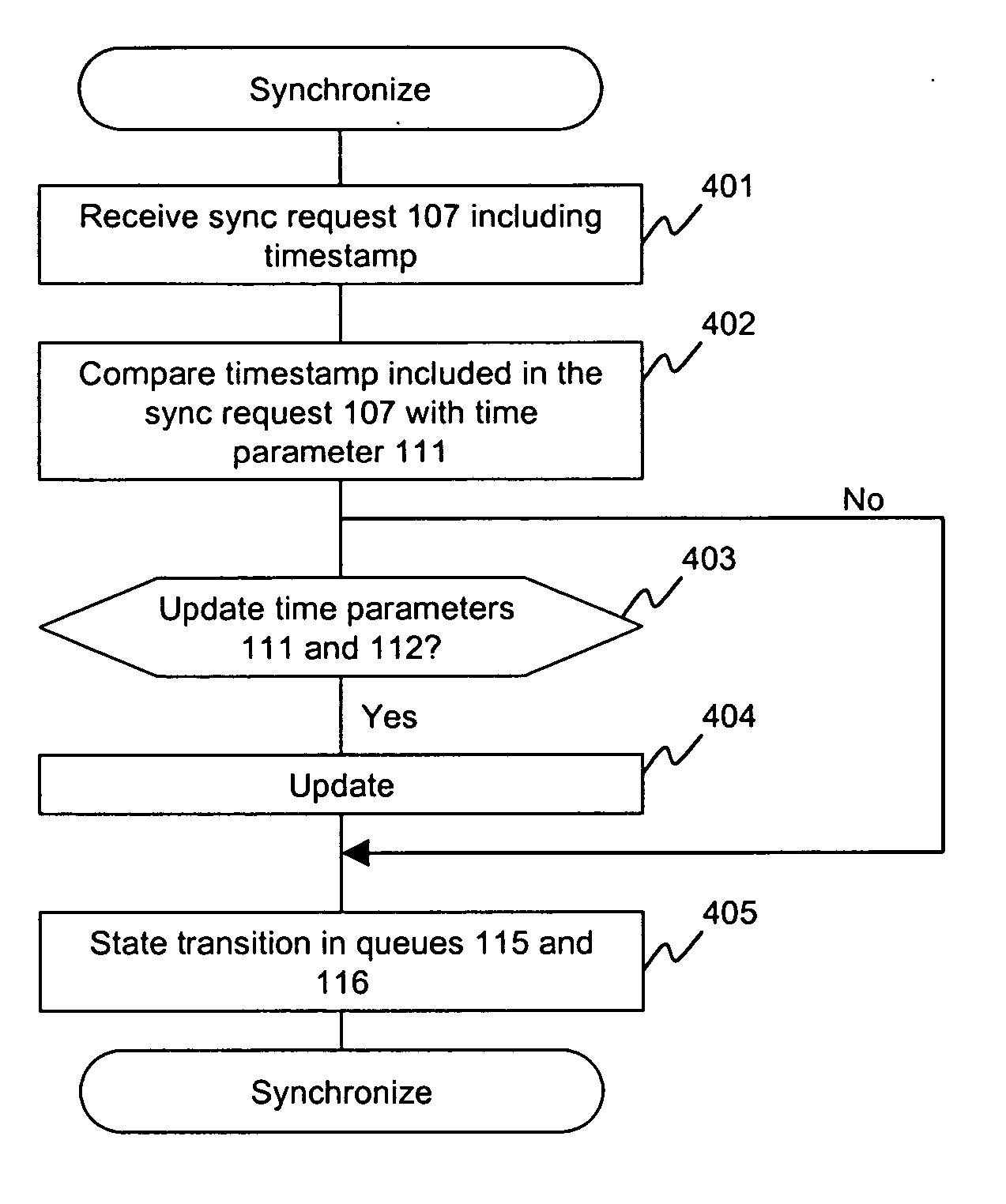

The present invention envisages a data access, replication or communications system comprising a software application that is distributed across a terminal-side component running on a terminal and a server-side component, in which the terminal-side component and the server-side component (i) together constitute a client to a server and (ii) collaborate by sending messages using a message queuing system over a network. Hence, we split (i.e. distribute) the functionality of an application that serves as the client in a client-server configuration into component parts that run on two or more physical devices that communicate with each other over a network connection using a message queuing system, such as message-oriented middleware. The component parts collectively act as a client in a larger client-server arrangement, with the server being, for example, a mail server. We call this a ‘Distributed Client’ model. A core advantage of the Distributed Client model is that it allows a terminal, such as a mobile device with limited processing capacity, power, and connectivity, to enjoy full-featured client access to a server environment while using minimal resources on the mobile device, by distributing some of the functionality normally associated with the client onto the server side, which is not so resource-constrained.

Owner:MALIKIE INNOVATIONS LTD

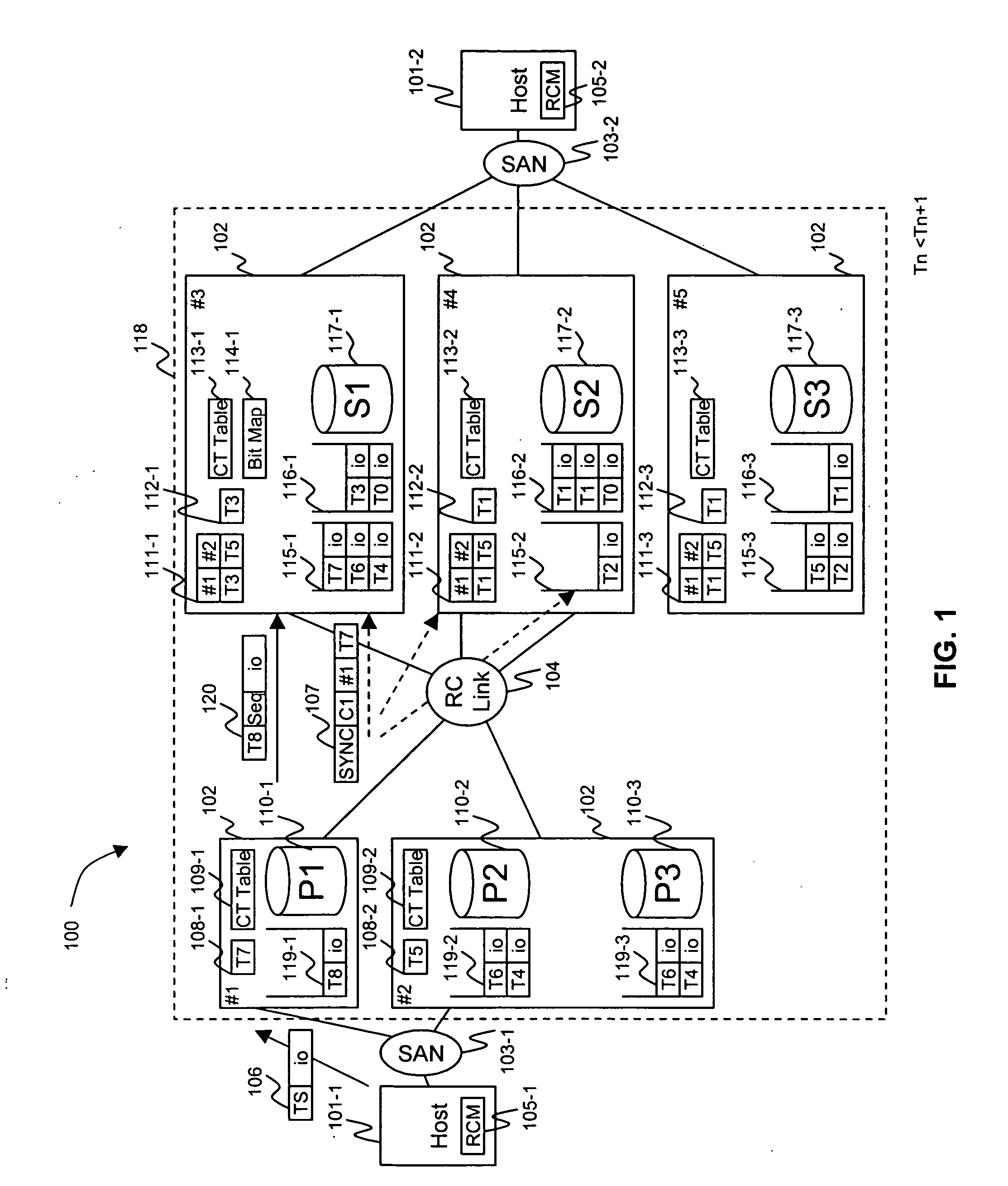

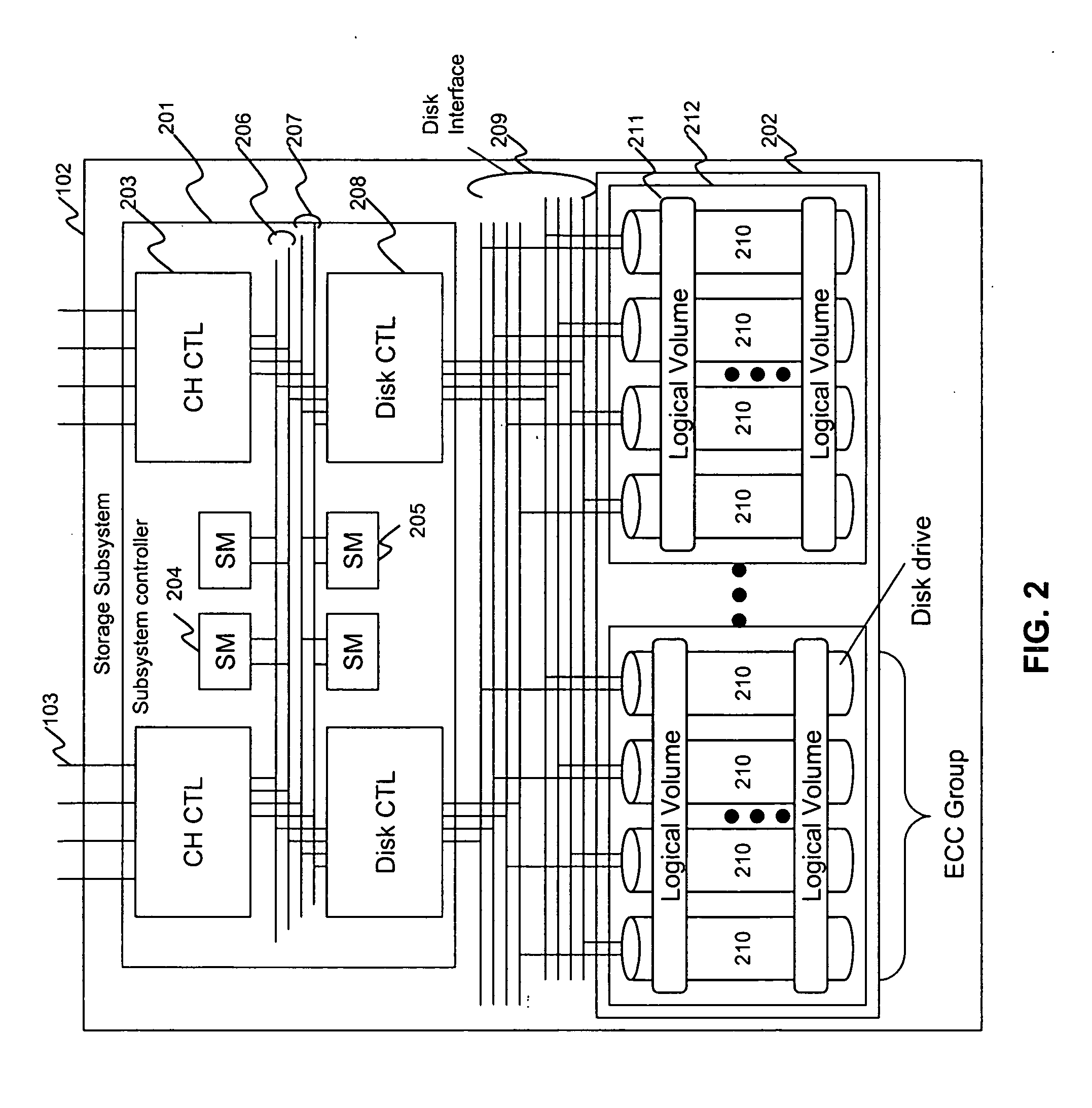

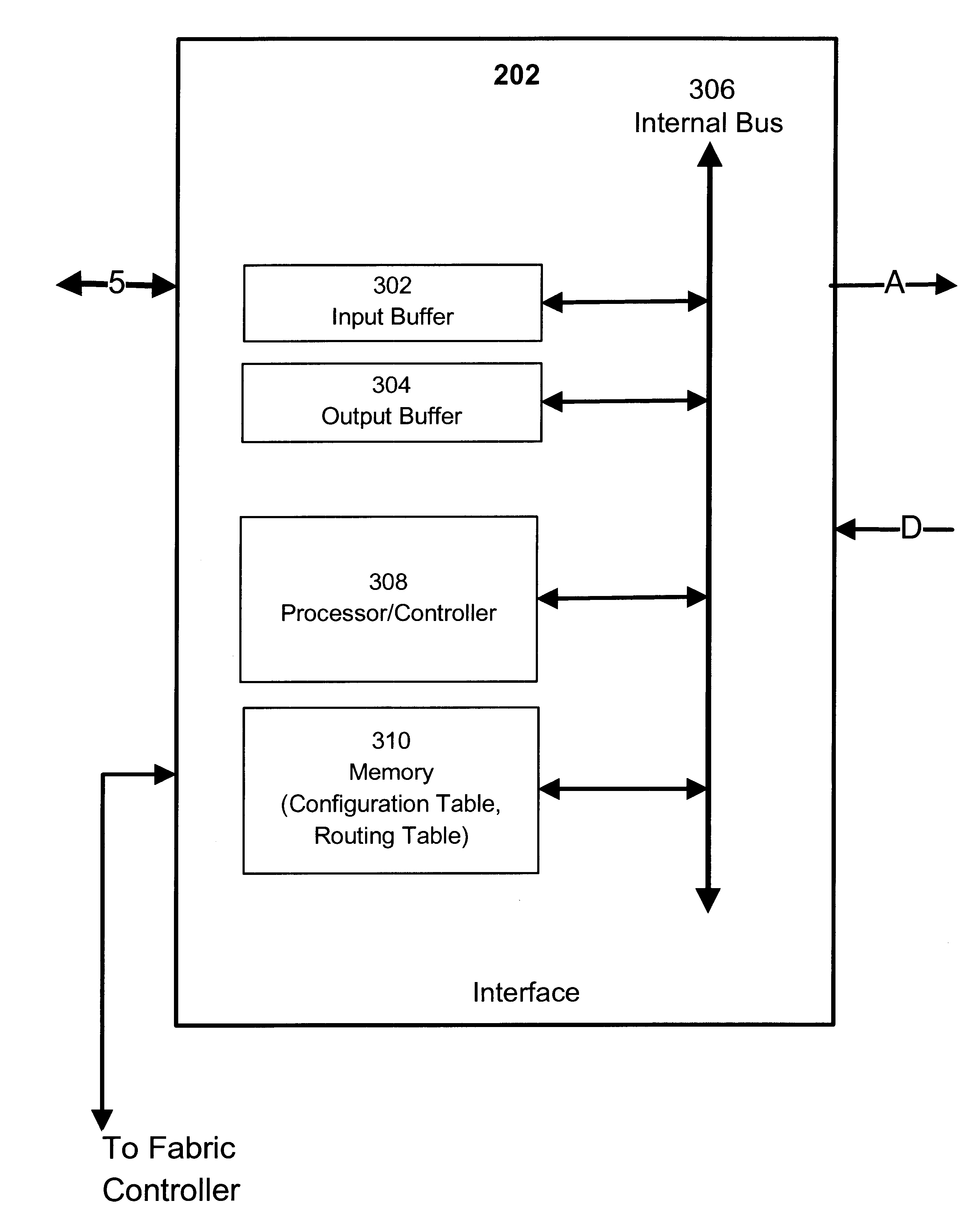

Method and apparatus of remote copy for multiple storage subsystems

A system and method for synchronizing remote copies within a multiple storage network apparatus incorporates the steps of receiving a plurality of timestamps, comparing the timestamps with a plurality of timestamps stored in a remote copy table, updating a synchronized time value stored in a synchronized time parameter, and receiving a synchronized timestamp, wherein the value associated with the received timestamp is later than the value of the synchronized time stored in the synchronized time parameter. Further, a system and method for synchronizing secondary storage subsystems incorporates the steps of collecting a plurality of synchronous timestamps from a plurality of secondary storage subsystems, comparing the plurality of collected synchronous timestamps with a synchronized time parameter, updating a remote copy time table, issuing a remote copy queue request, receiving status information about a secondary storage subsystem starting host, and synchronizing the secondary storage subsystem.

Owner:GOOGLE LLC

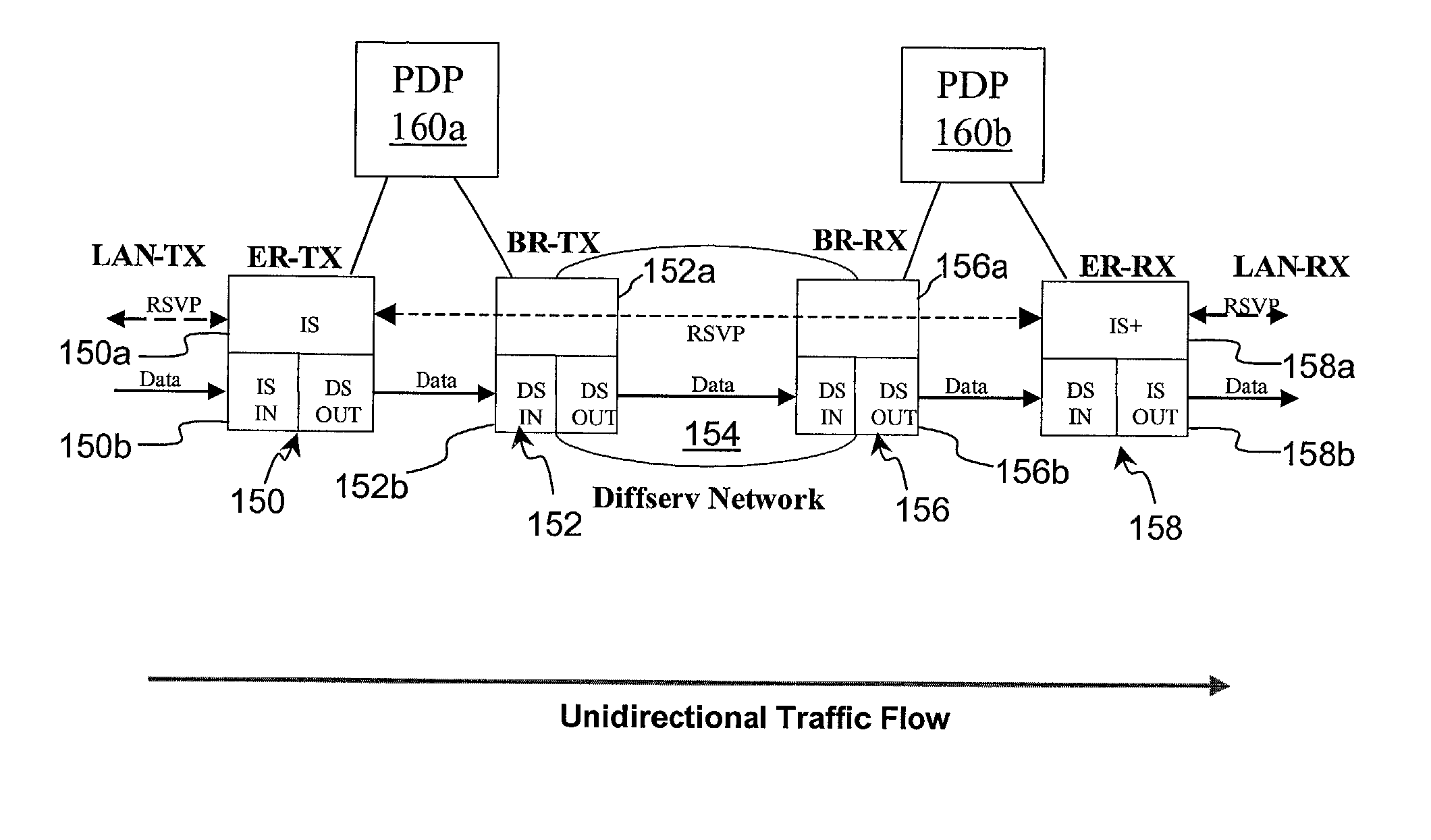

Method and apparatus for simple IP-layer bandwidth allocation using ingress control of egress bandwidth

Status: Inactive · US6628609B2 · Tags: Reduce the possibility; Minimum bandwidth; Error prevention; Transmission systems; Dynamic bandwidth allocation; Data transmission

Owner:NORTEL NETWORKS LTD

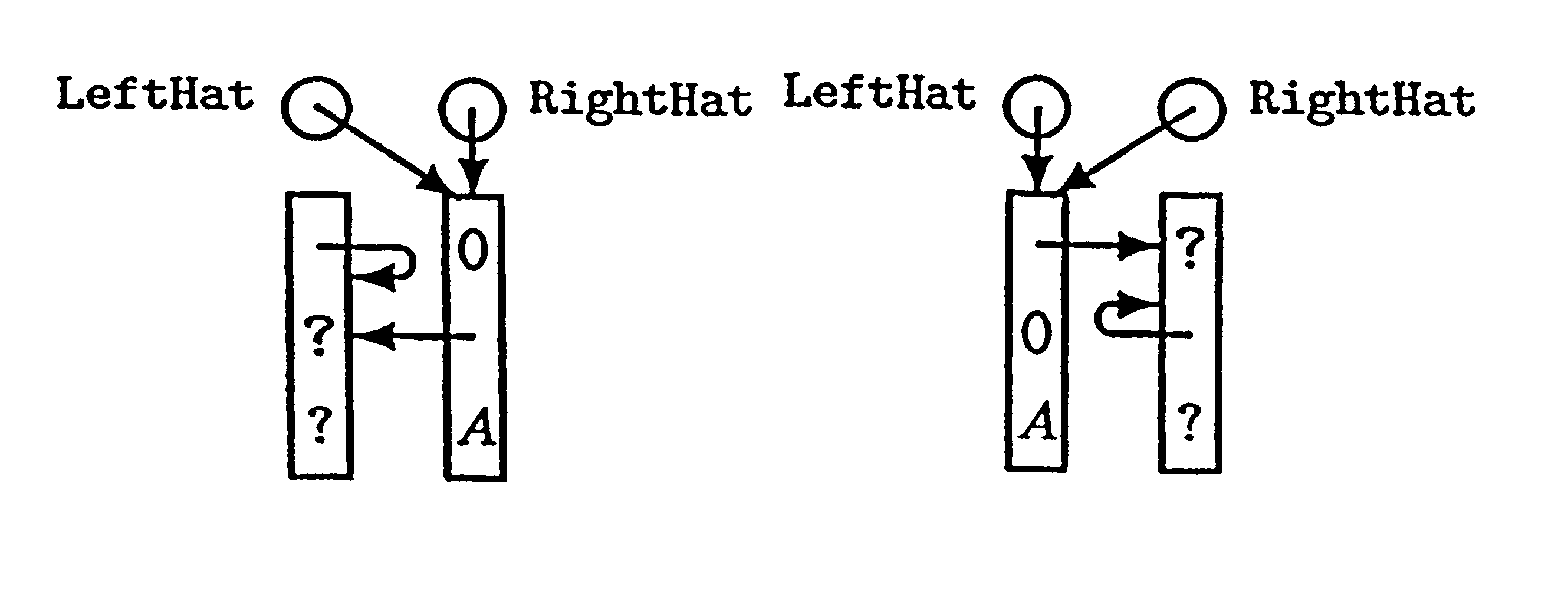

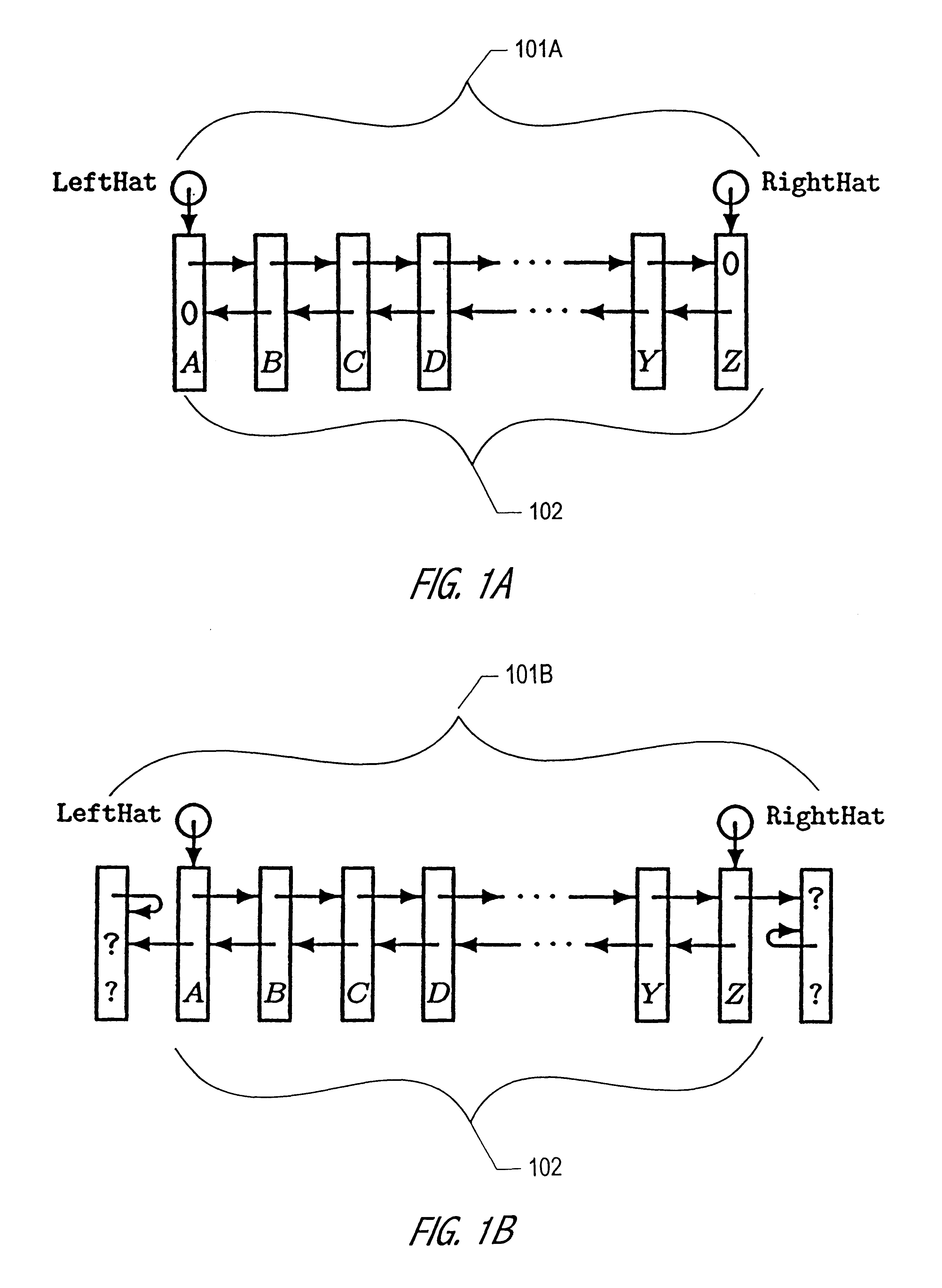

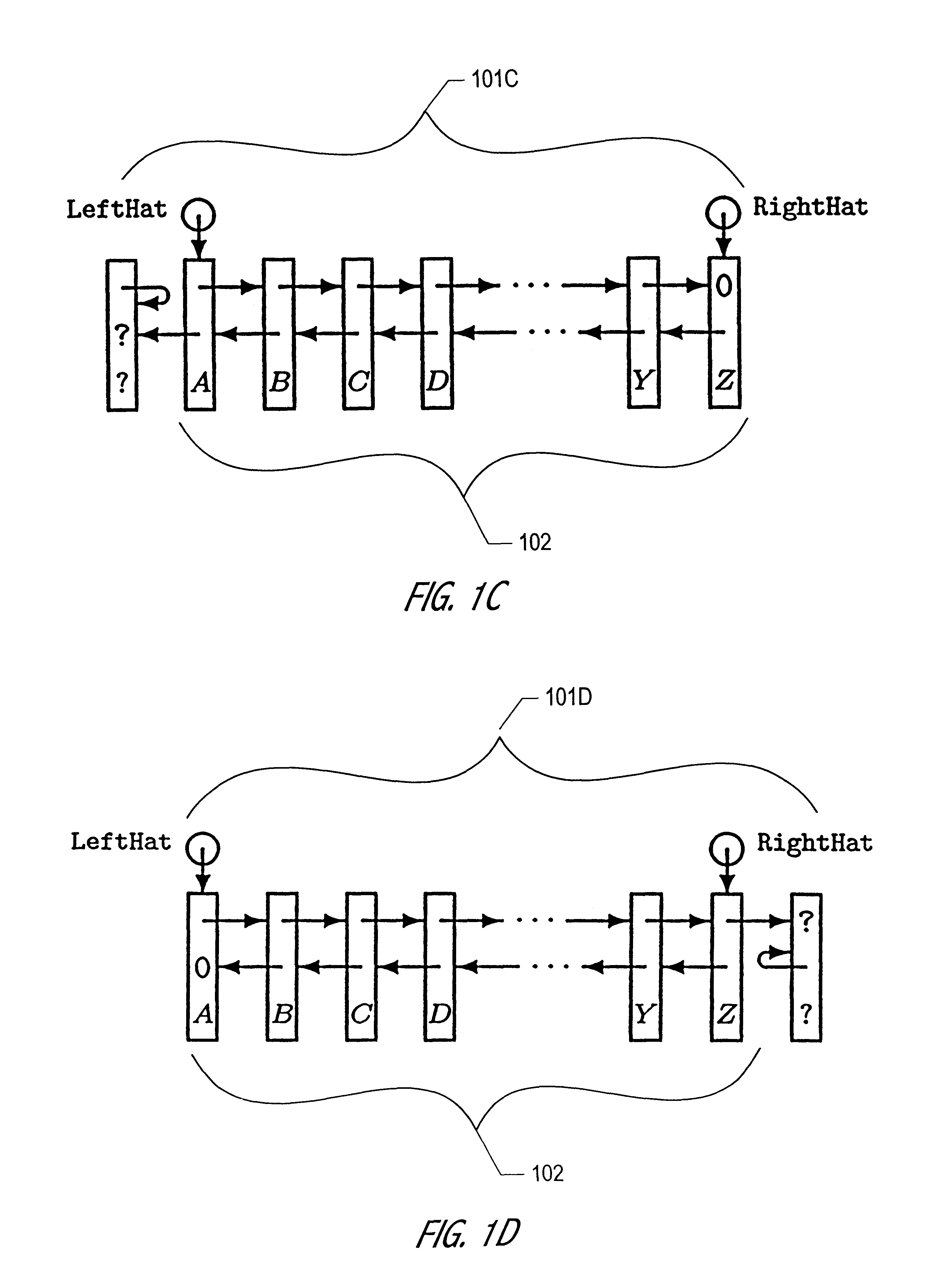

Lock-free implementation of concurrent shared object with dynamic node allocation and distinguishing pointer value

A novel linked-list-based concurrent shared object implementation has been developed that provides non-blocking and linearizable access to the concurrent shared object. In an application of the underlying techniques to a deque, non-blocking completion of access operations is achieved without restricting concurrency in accessing the deque's two ends. In various realizations in accordance with the present invention, the set of values that may be pushed onto a shared object is not constrained by use of distinguishing values. In addition, an explicit reclamation embodiment facilitates use in environments or applications where automatic reclamation of storage is unavailable or impractical.

Owner:ORACLE INT CORP

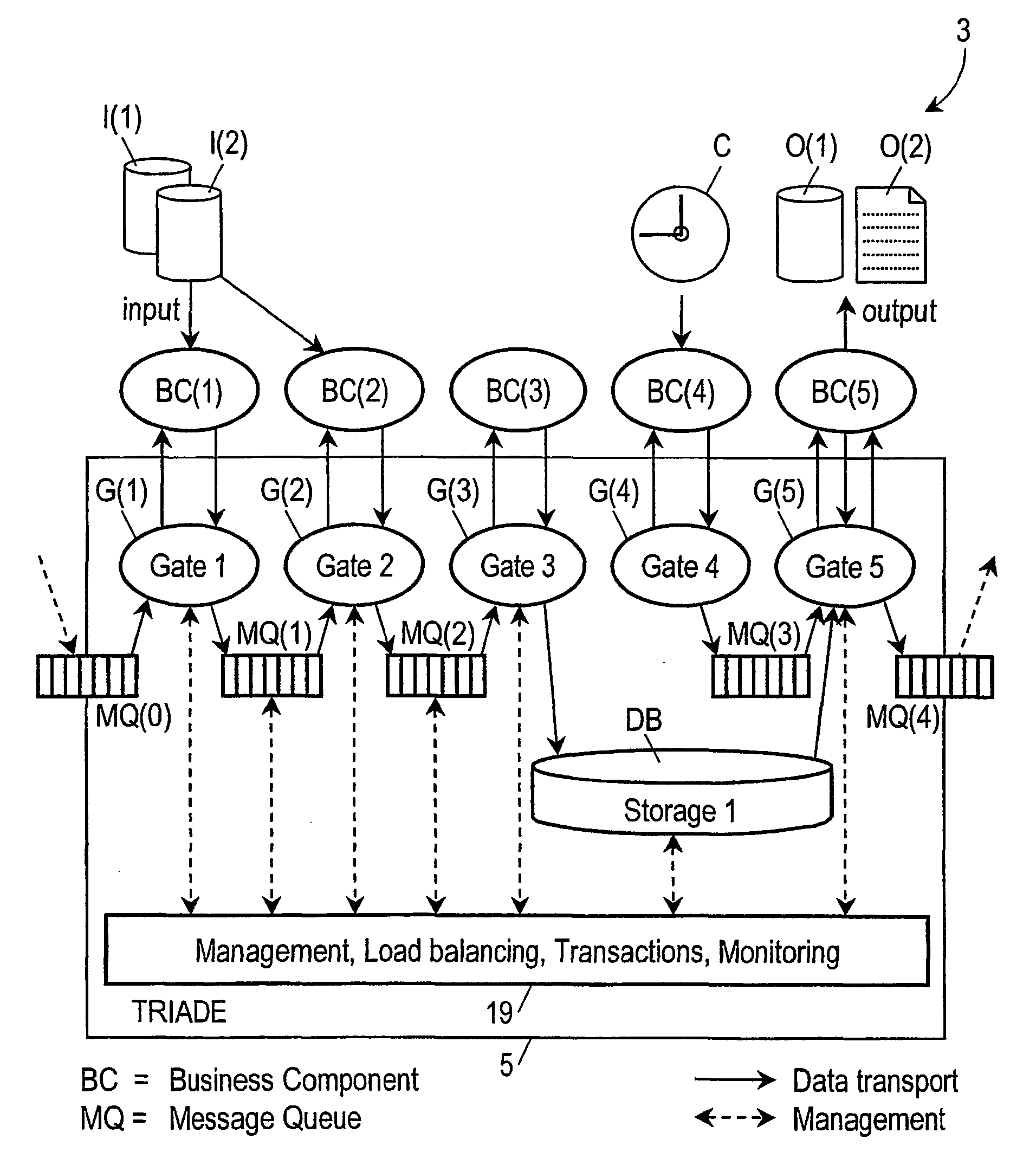

System and method for processing transaction data

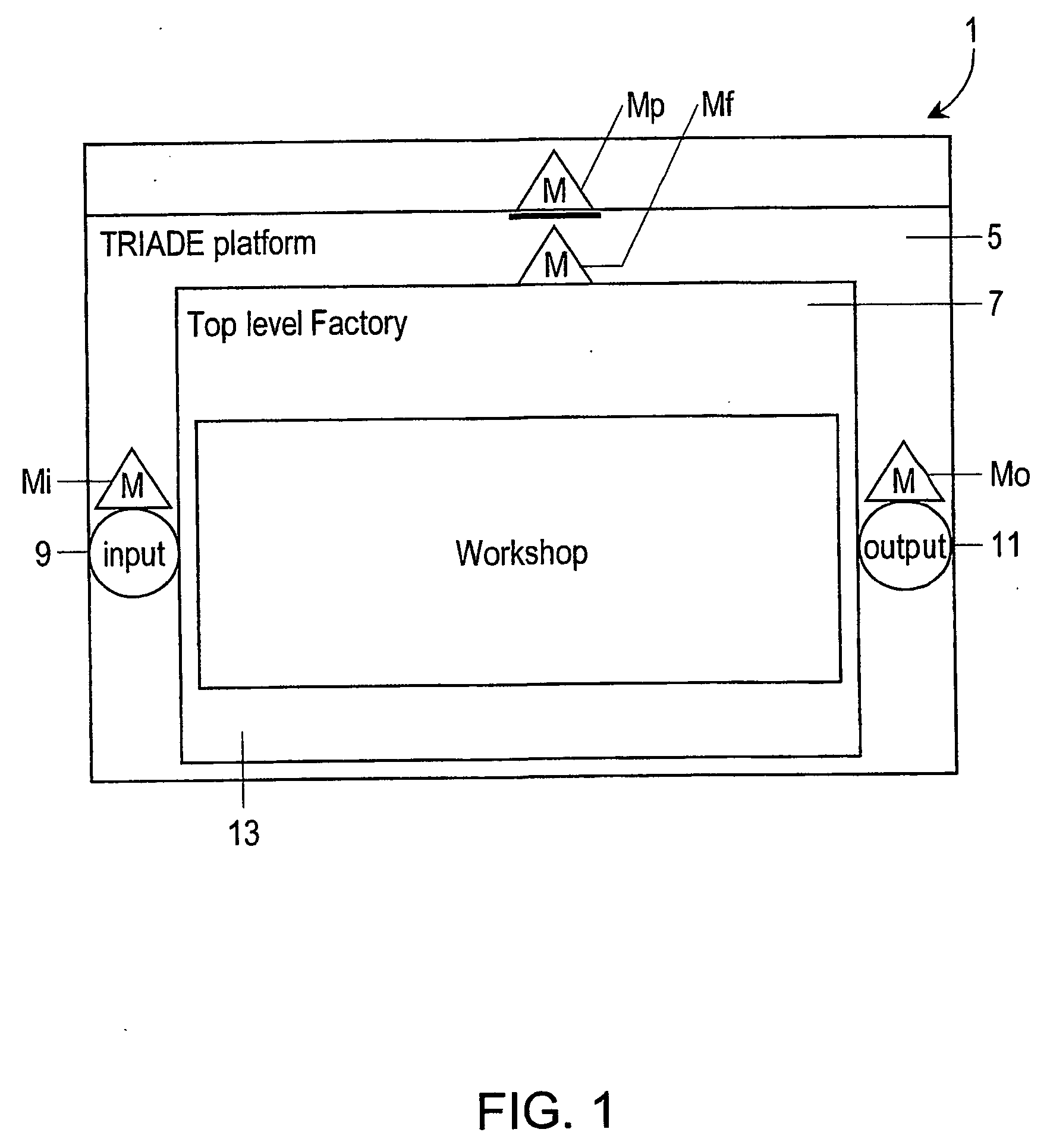

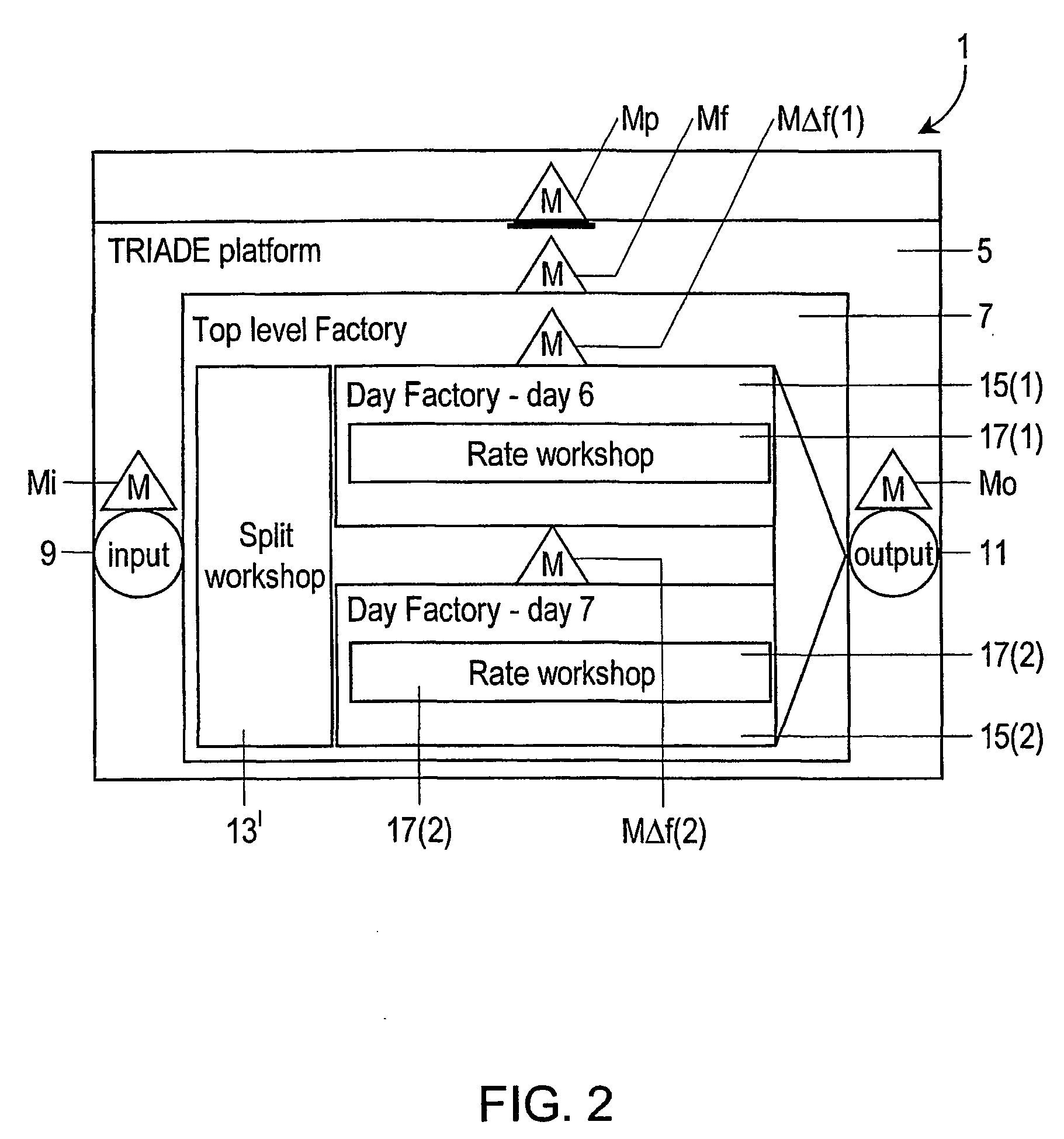

Status: Inactive · US20040024626A1 · Tags: Finance; Multiple digital computer combinations; Message queue; Software architecture

Computer system provided with a software architecture for processing transaction data which comprises a platform (5) with at least one logical processing unit (21) comprising the following components: a plurality of gates (G(k)); one or more message queues (MQ(k)), these being memories for temporary storage of data; one or more databases (DB); a hierarchical structure of managers in the form of software modules for the control of the gates (G(j)), the message queues (MQ(k)), the one or more databases, the at least one logical processing unit (21) and the platform (5), wherein the gates are defined as software modules with the task of communicating with corresponding business components (BC(j)) located outside the platform (5), which are defined as software modules for carrying out a predetermined transformation on a received set of data.

Owner:NEDERLANDSE ORG VOOR TOEGEPAST-NATUURWETENSCHAPPELIJK ONDERZOEK (TNO)

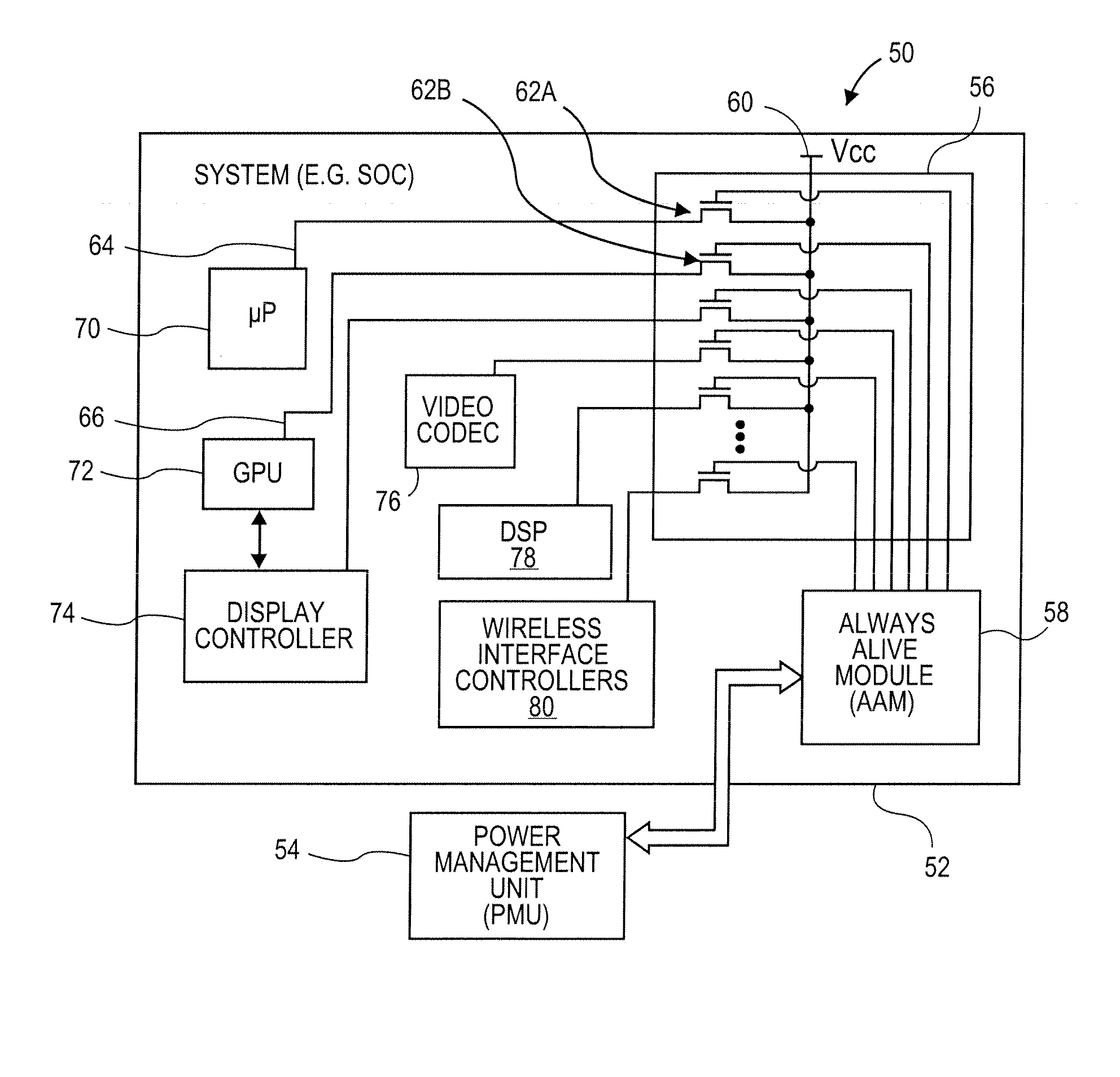

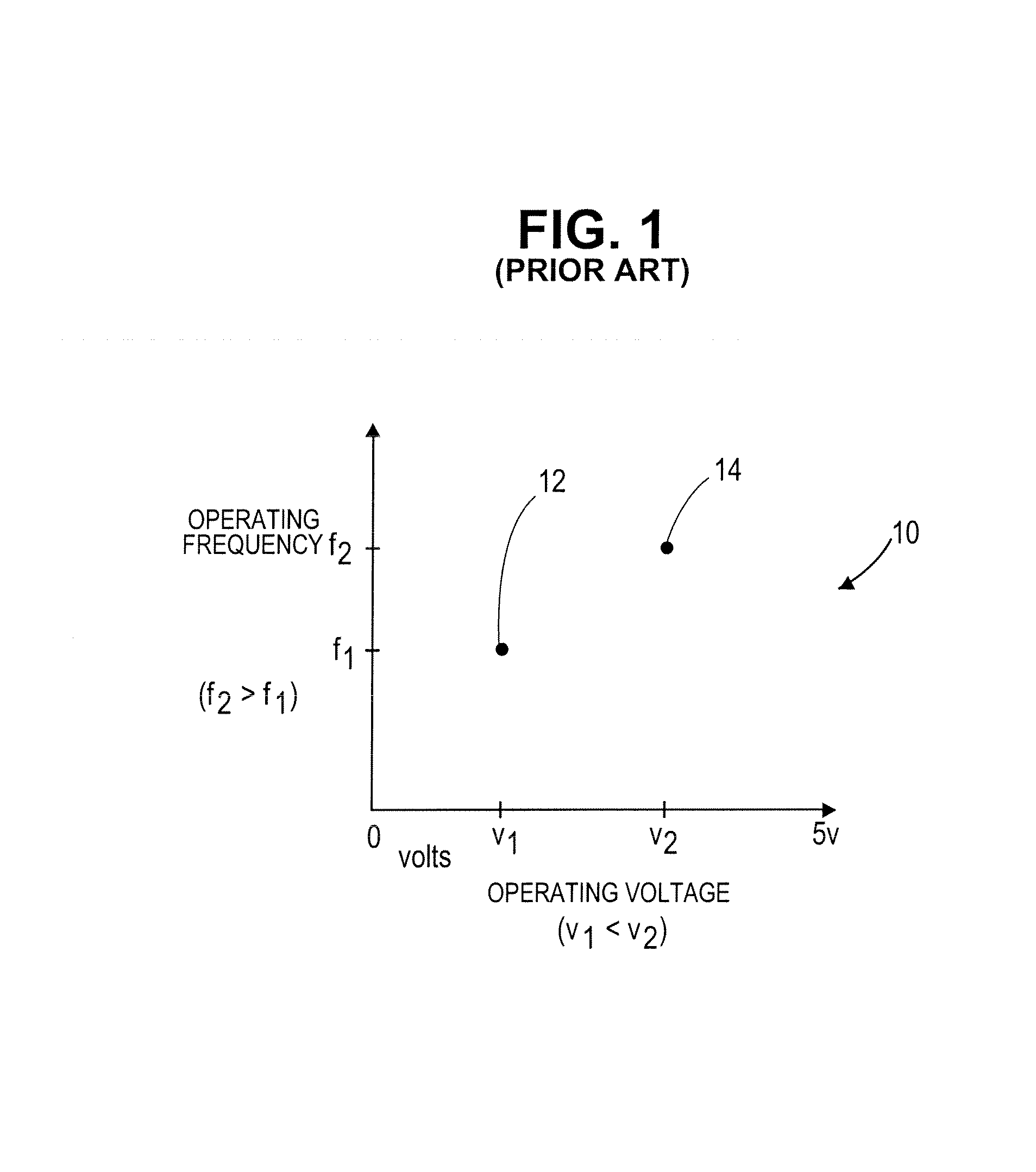

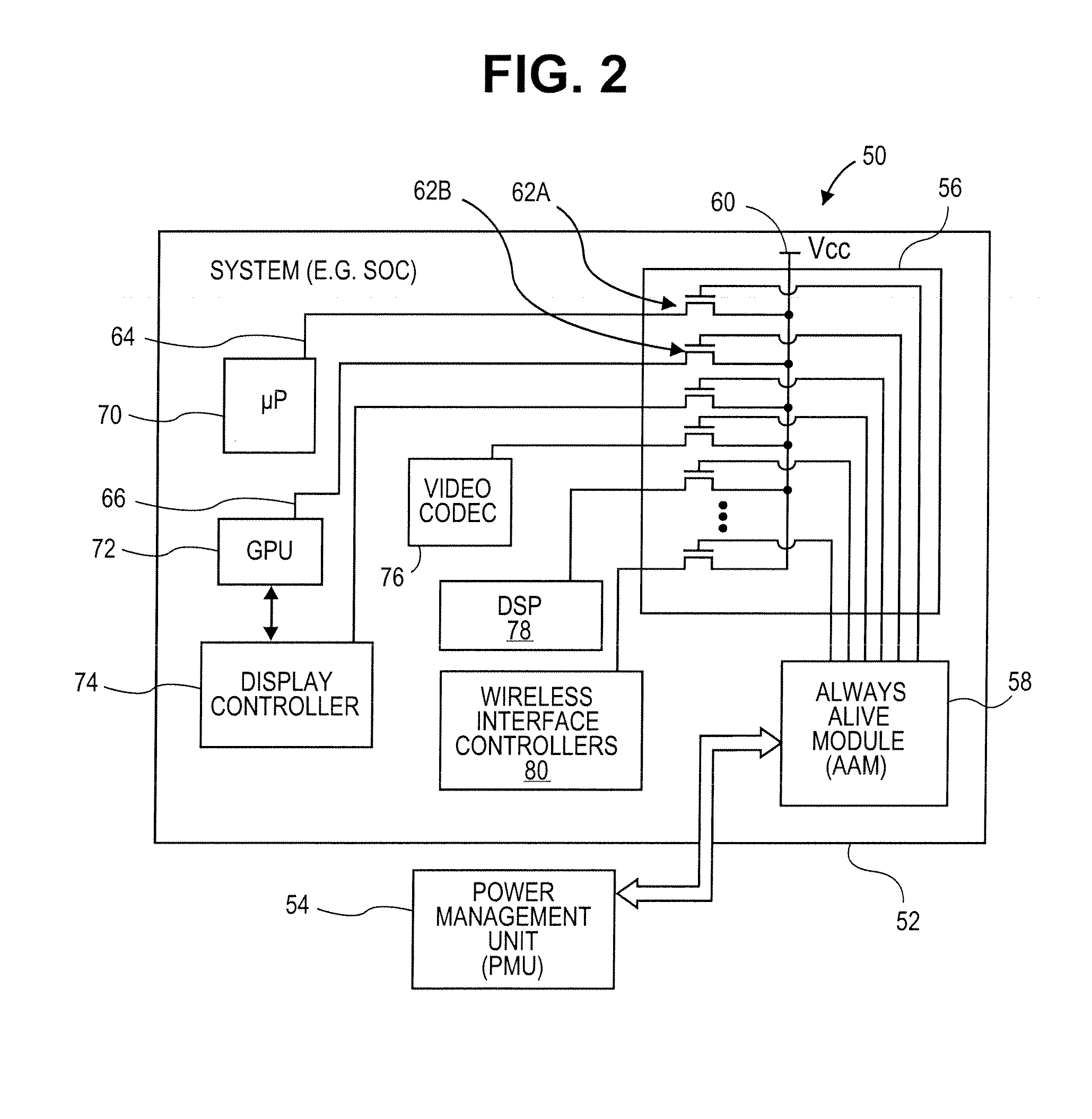

Methods and Systems for Power Management in a Data Processing System

Status: Active · US20080168285A1 · Tags: Lower latency; Reduce total power; Energy efficient ICT; Volume/mass flow measurement; Data processing system; General purpose

Methods and systems for managing power consumption in data processing systems are described. In one embodiment, a data processing system includes a general purpose processing unit, a graphics processing unit (GPU), at least one peripheral interface controller, at least one bus coupled to the general purpose processing unit, and a power controller coupled to at least the general purpose processing unit and the GPU. The power controller is configured to turn power off for the general purpose processing unit in response to a first state of an instruction queue of the general purpose processing unit and is configured to turn power off for the GPU in response to a second state of an instruction queue of the GPU. The first state and the second state represent an instruction queue having either no instructions or instructions for only future events or actions.

Owner:APPLE INC
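
The power-off condition in US20080168285A1 above (an instruction queue that is empty or holds only instructions for future events) can be expressed as a short Python predicate. The `(scheduled_time, instruction)` entry layout and the `power_decisions` helper are hypothetical representations, not taken from the patent.

```python
def should_power_off(instruction_queue, now):
    """True if the queue is empty or holds only instructions for future events."""
    # all() over an empty queue is True, covering the "no instructions" case.
    return all(scheduled > now for scheduled, _ in instruction_queue)


def power_decisions(cpu_queue, gpu_queue, now):
    # The controller evaluates each unit's instruction queue independently.
    return {
        "cpu_off": should_power_off(cpu_queue, now),
        "gpu_off": should_power_off(gpu_queue, now),
    }


print(power_decisions([], [(0.5, "draw"), (2.0, "present")], now=1.0))
# {'cpu_off': True, 'gpu_off': False}
```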

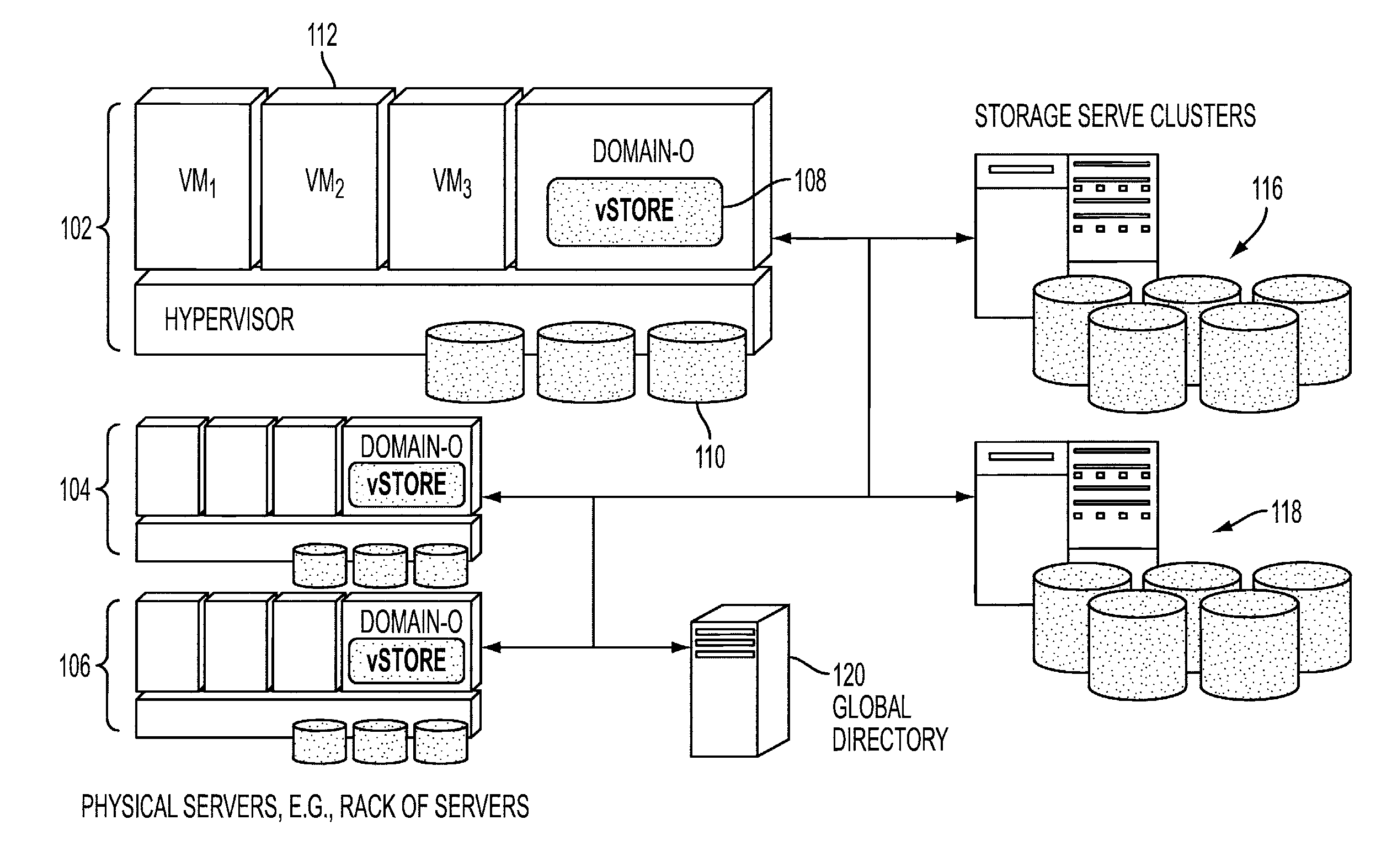

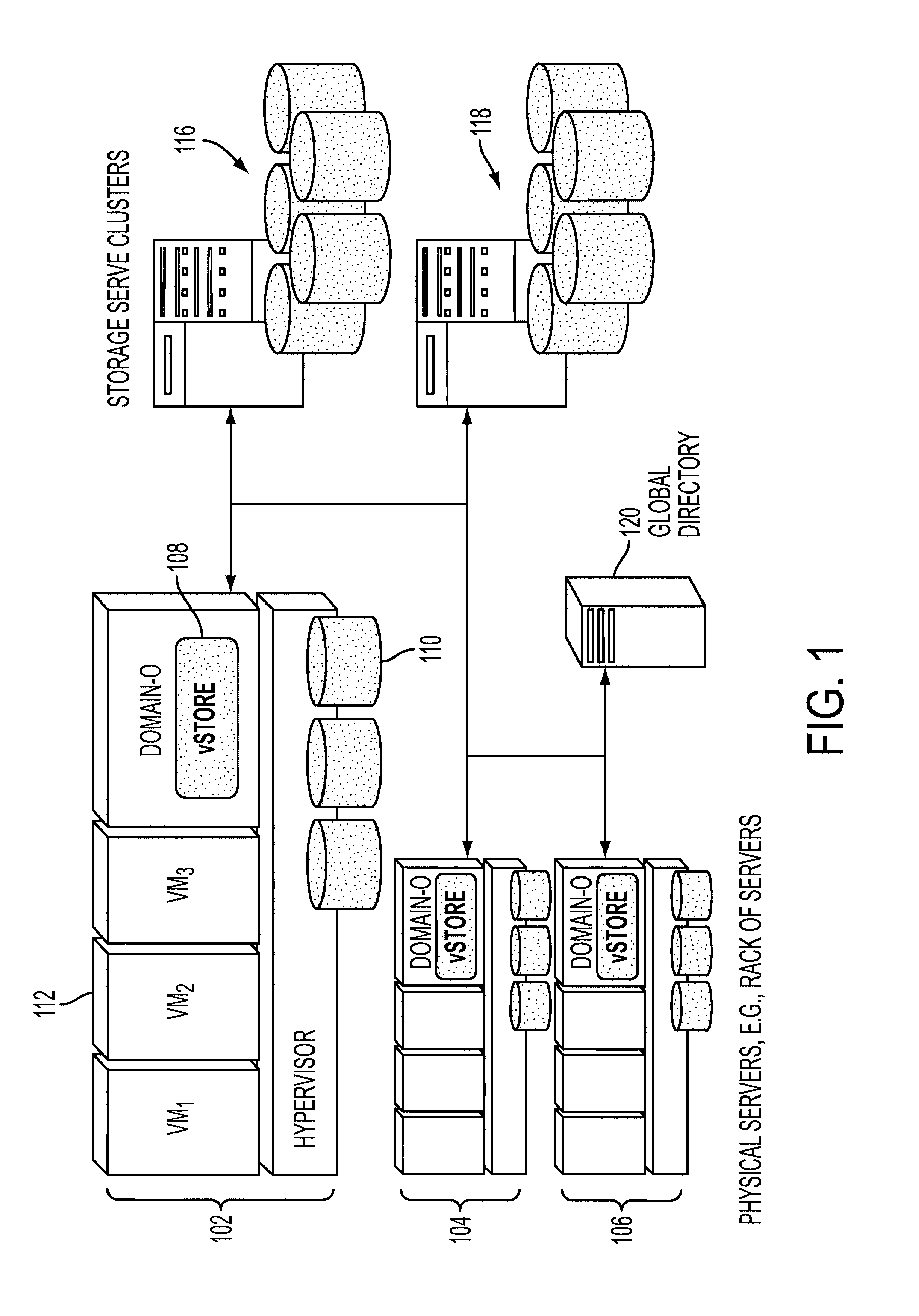

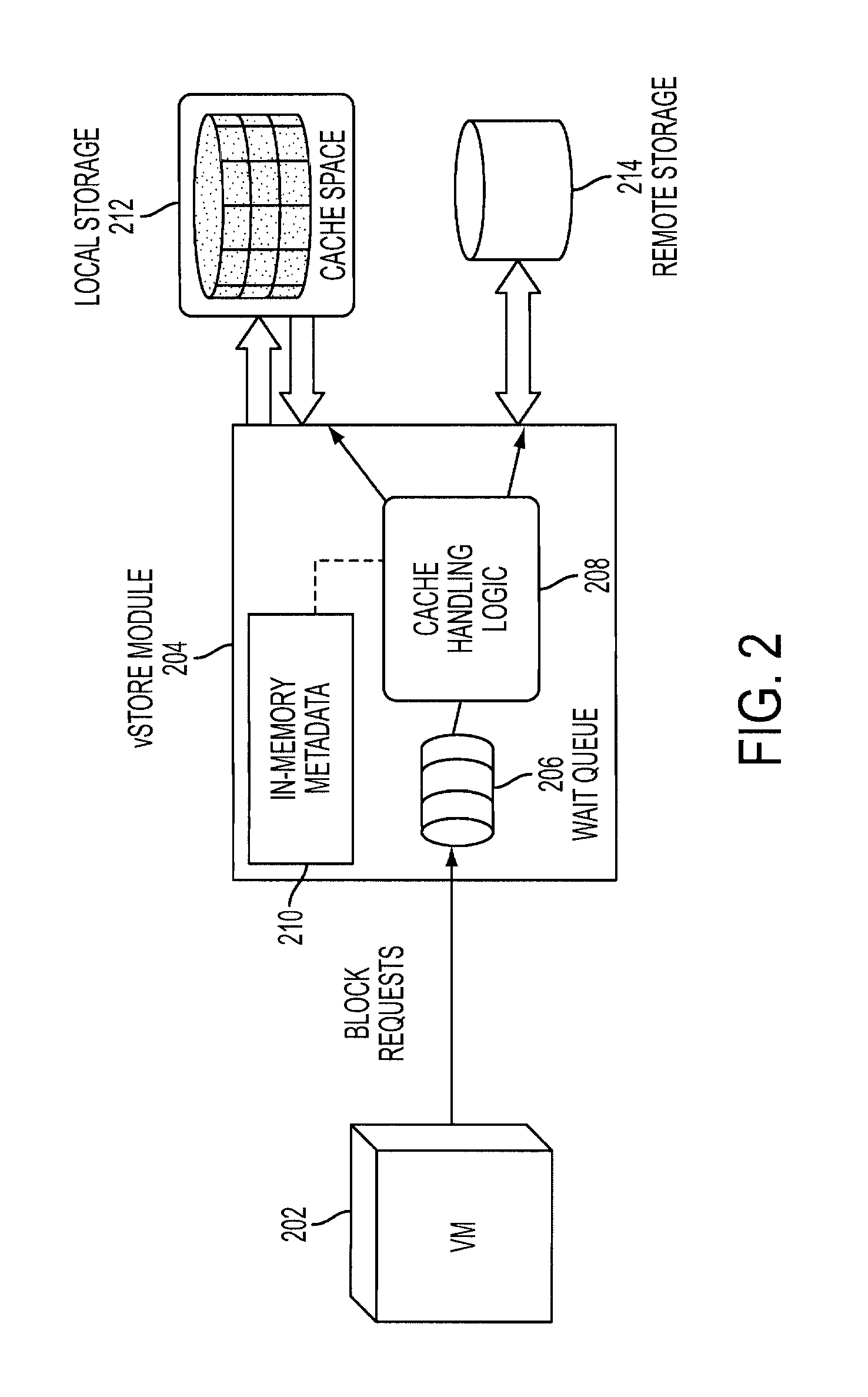

Scalable cloud storage architecture

Status: Inactive · US20120179874A1 · Tags: Memory architecture accessing/allocation; Error detection/correction; Term memory; Cloud storage

A virtual storage module operable to run in a virtual machine monitor may include a wait-queue operable to store incoming block-level data requests from one or more virtual machines. In-memory metadata may store information associated with data stored in local persistent storage that is local to a host computer hosting the virtual machines. The data stored in local persistent storage replicates a subset of data in one or more virtual disks provided to the virtual machines. The virtual disks are mapped to remote storage accessible via a network connecting the virtual machines and the remote storage. Cache handling logic may be operable to handle the block-level data requests by obtaining the information in the in-memory metadata and making I/O requests to the local persistent storage, the remote storage, or a combination of both to service the block-level data requests.

Owner:IBM CORP

Multitask process monitoring method and system in distributed system environment

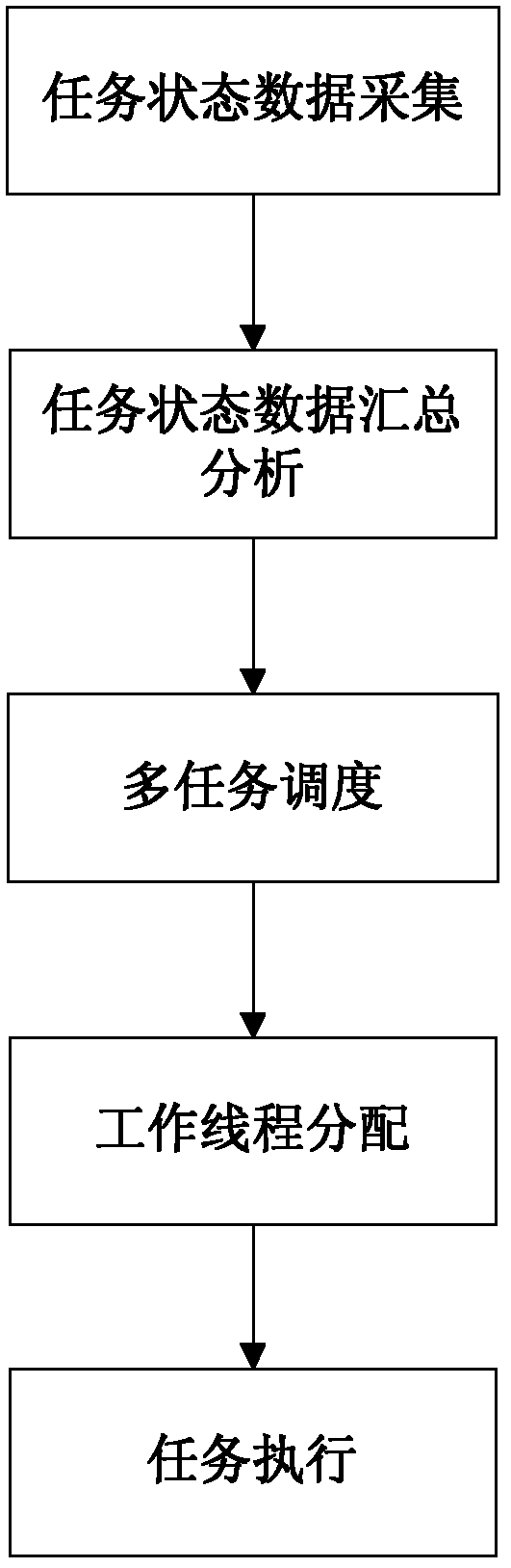

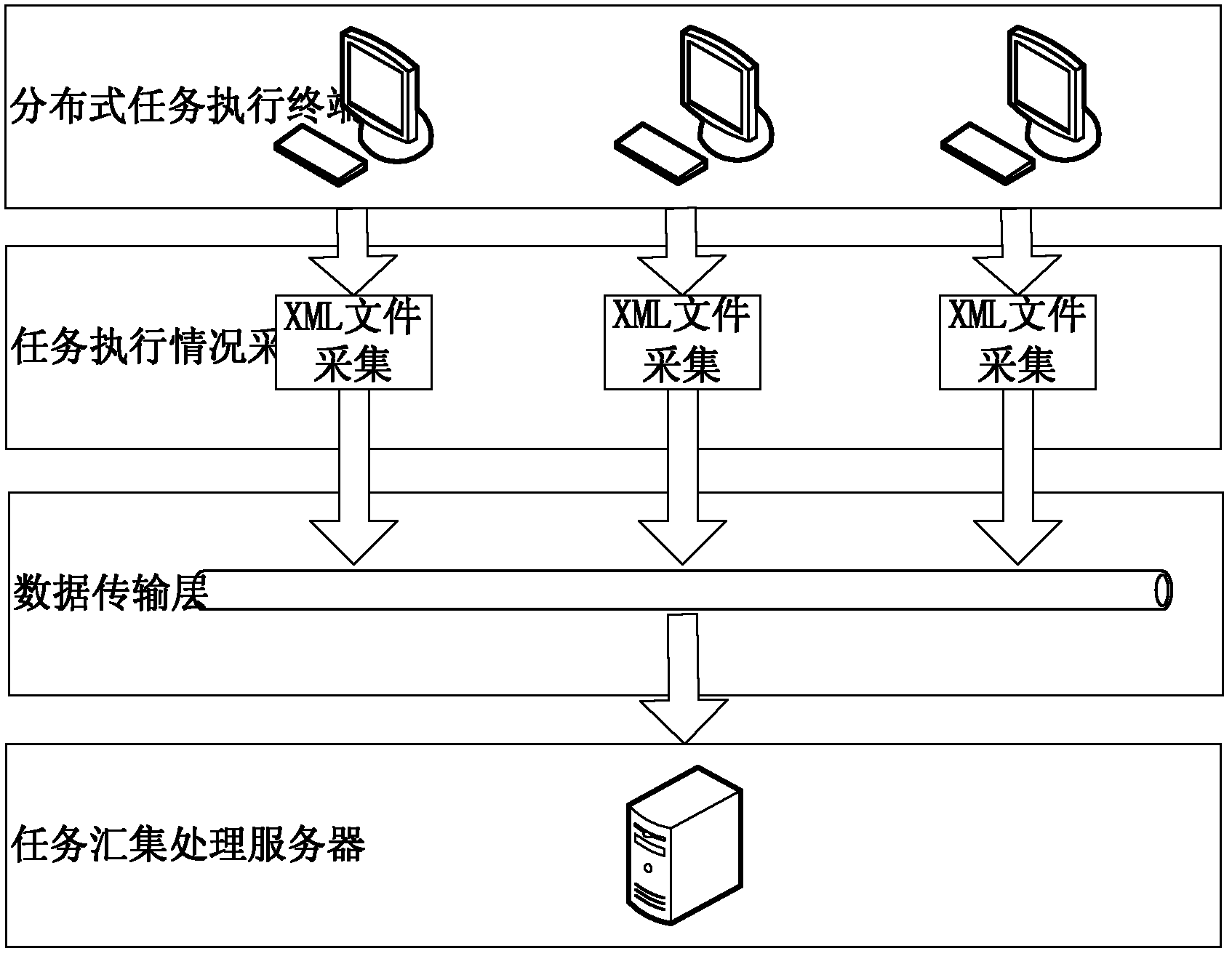

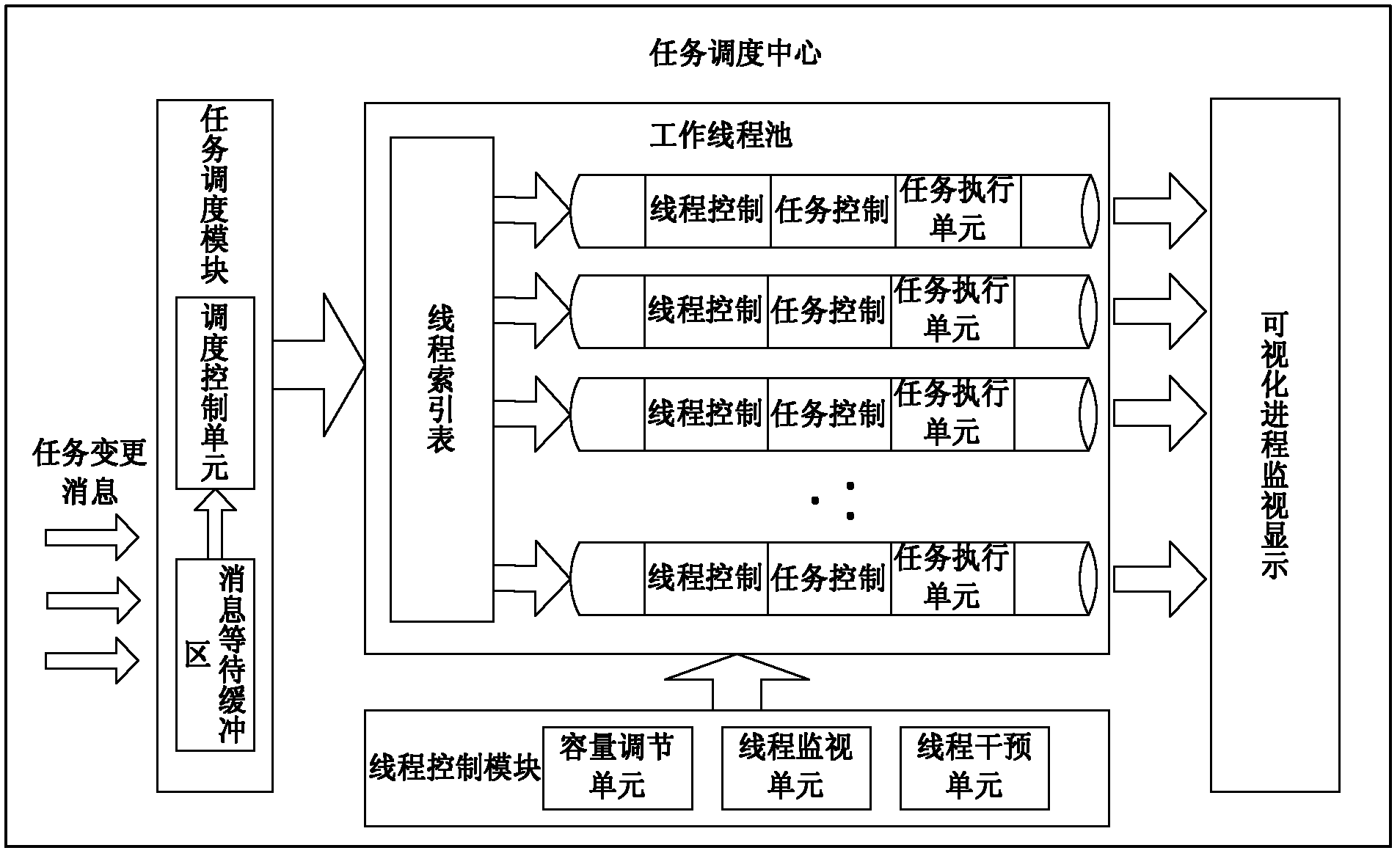

Status: Active · CN102360310A · Tags: Efficient parallel processing; Good for load balancing; Resource allocation; Filtration; Monitoring system

The invention discloses a multitask process monitoring method in a distributed system environment. The method comprises the following steps: five states of the task execution process of each task execution terminal in the distributed system environment are monitored; an XML (eXtensible Markup Language) description file is transmitted to a task collecting and processing server, the filtered task execution conditions are written into a database, and task change information is simultaneously sent to notify a task scheduling center; after receiving the task change information, the task scheduling center submits it directly to a task scheduling module, which adds the received information to waiting queues; a scheduling control unit searches a thread index table for execution threads for the task and hands the task to those threads for execution; and a thread control module monitors a plurality of threads in a worker thread pool in real time while the system is running. The invention also discloses a multitask process monitoring system comprising a plurality of distributed task execution terminals, the task collecting and processing server and the task scheduling center.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

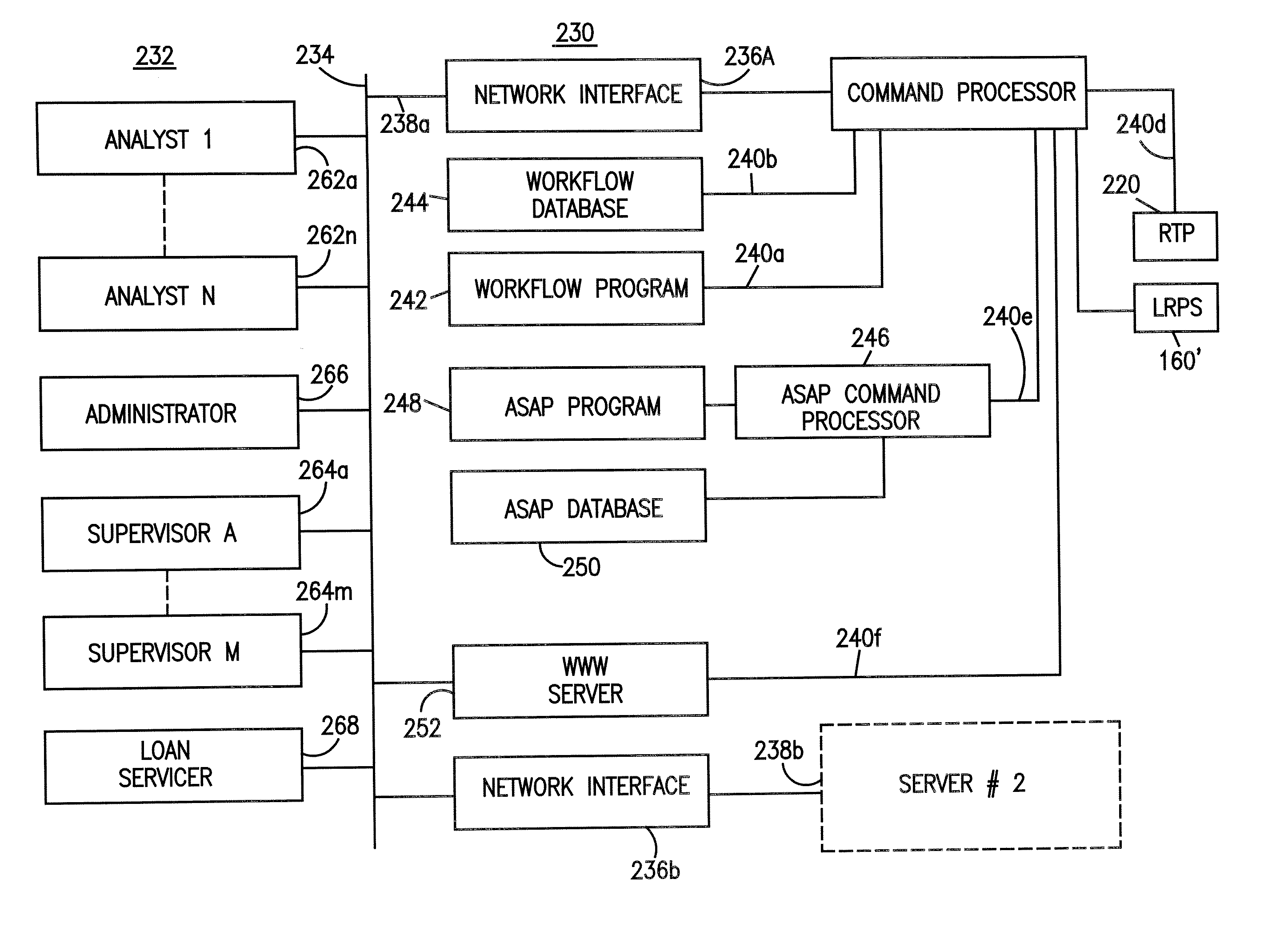

Workflow management system and method

Status: Inactive · US20070208606A1 · Tags: Easy to edit; Facilitates economy of scale and processing efficiency; Finance; Payment architecture; Stream management; Systems management

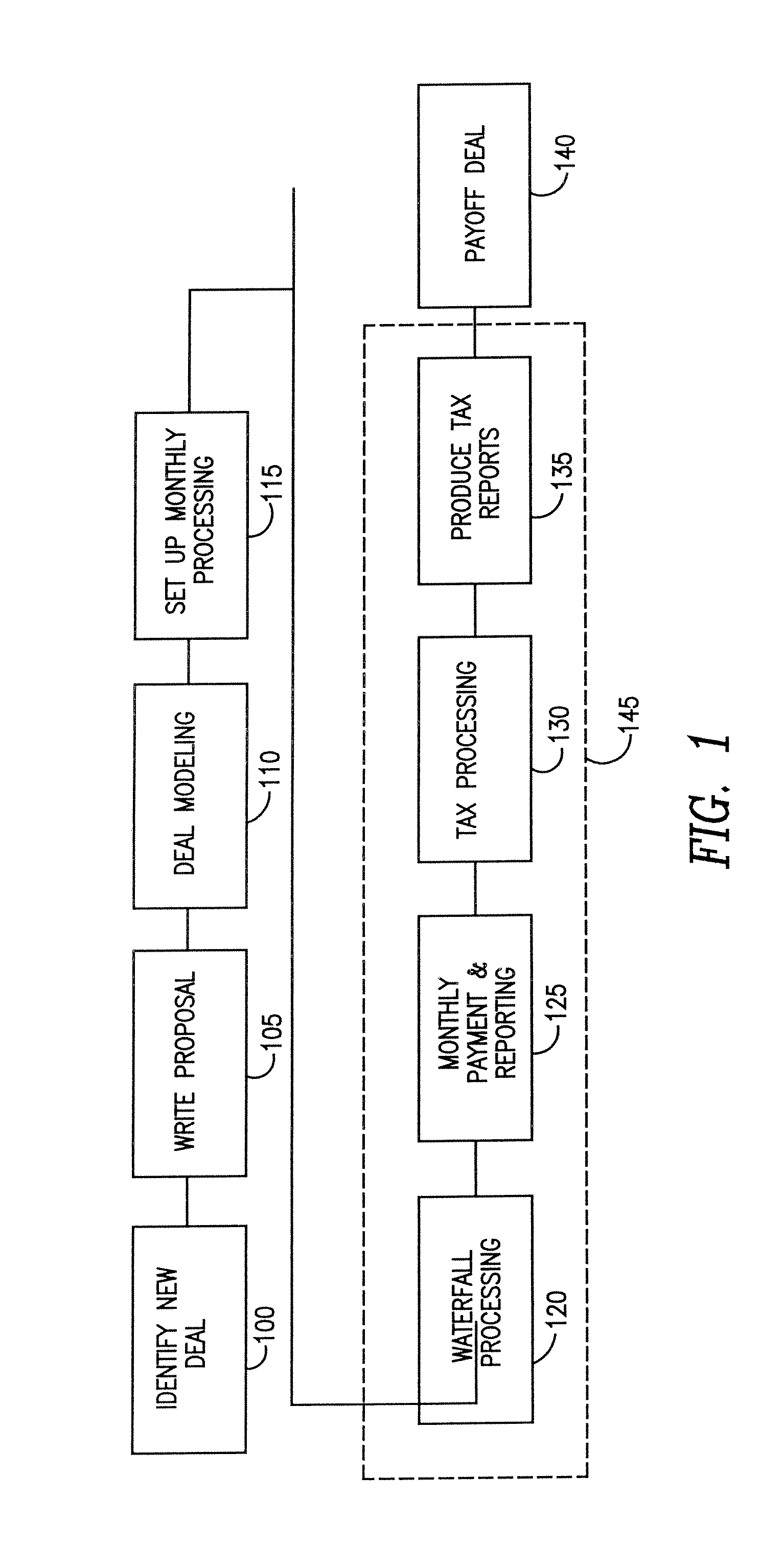

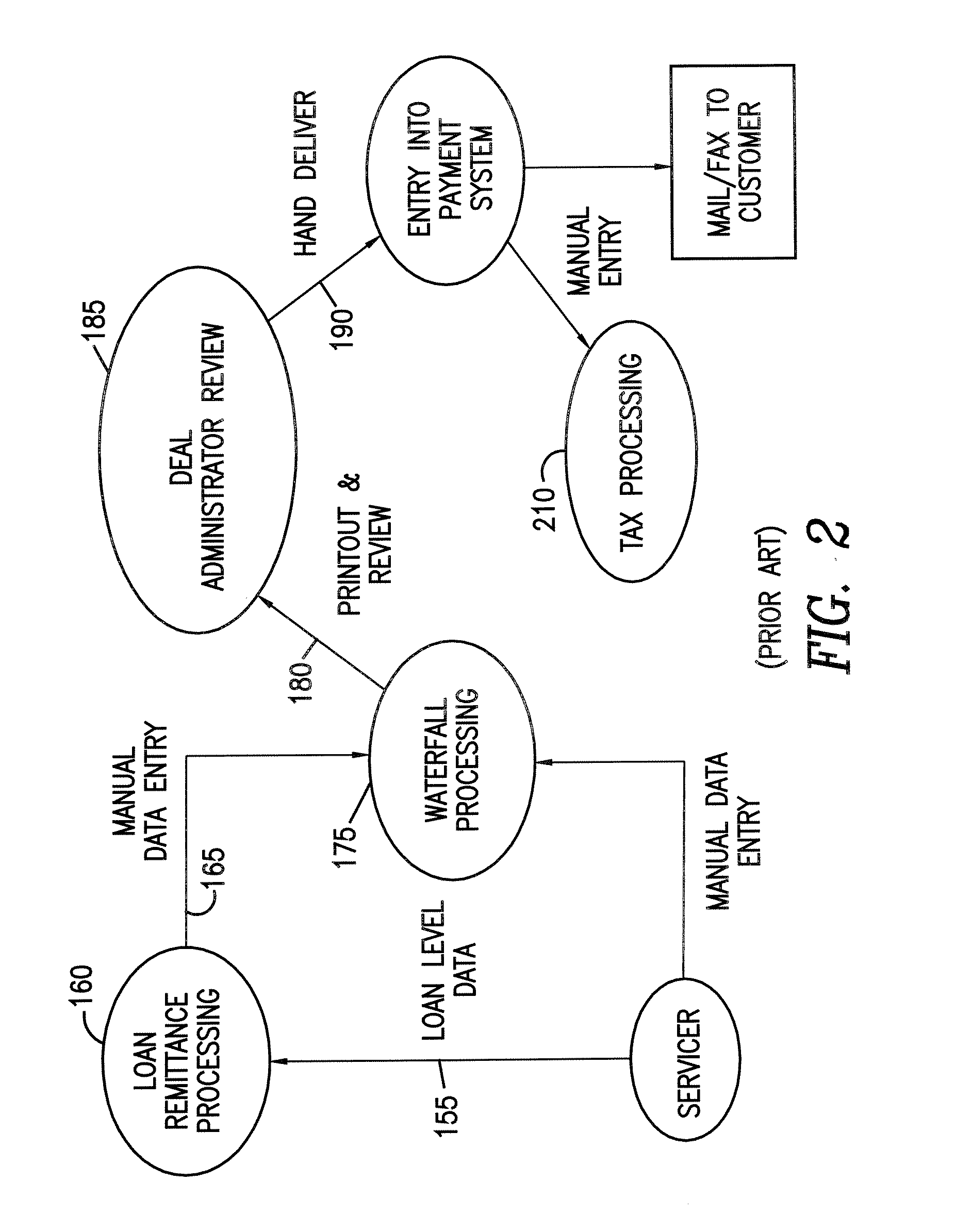

A computerized workflow management method and system to provide operational support for complex multi-step processes, having particular utility in supporting operations involving securitizations for which periodic valuation and distribution computations, disbursements and reporting must be set up and executed. The invention permits unification of manual operations and operations performed by legacy software, even if implemented with database structures different from the workflow management system, as well as automated quality control, workflow status display and automatic updating of workflow status records. The method of workflow management involves creating an underlying database structure for recording the processing steps and other information required for each transaction, entering the necessary setup information by selection from lists of pre-stored information about processing functions, associated workflow events and milestones for the queues, mapping the data structures of the subsystem databases and the workflow management database to provide transparent interfacing and convenient manual entry of data where necessary, displaying for the user the workflow status of all transactions for which he or she is responsible, permitting menu-driven initiation of required actions, and automatically updating the database records for the universe of deals being managed by the system.

Owner:JPMORGAN CHASE BANK NA

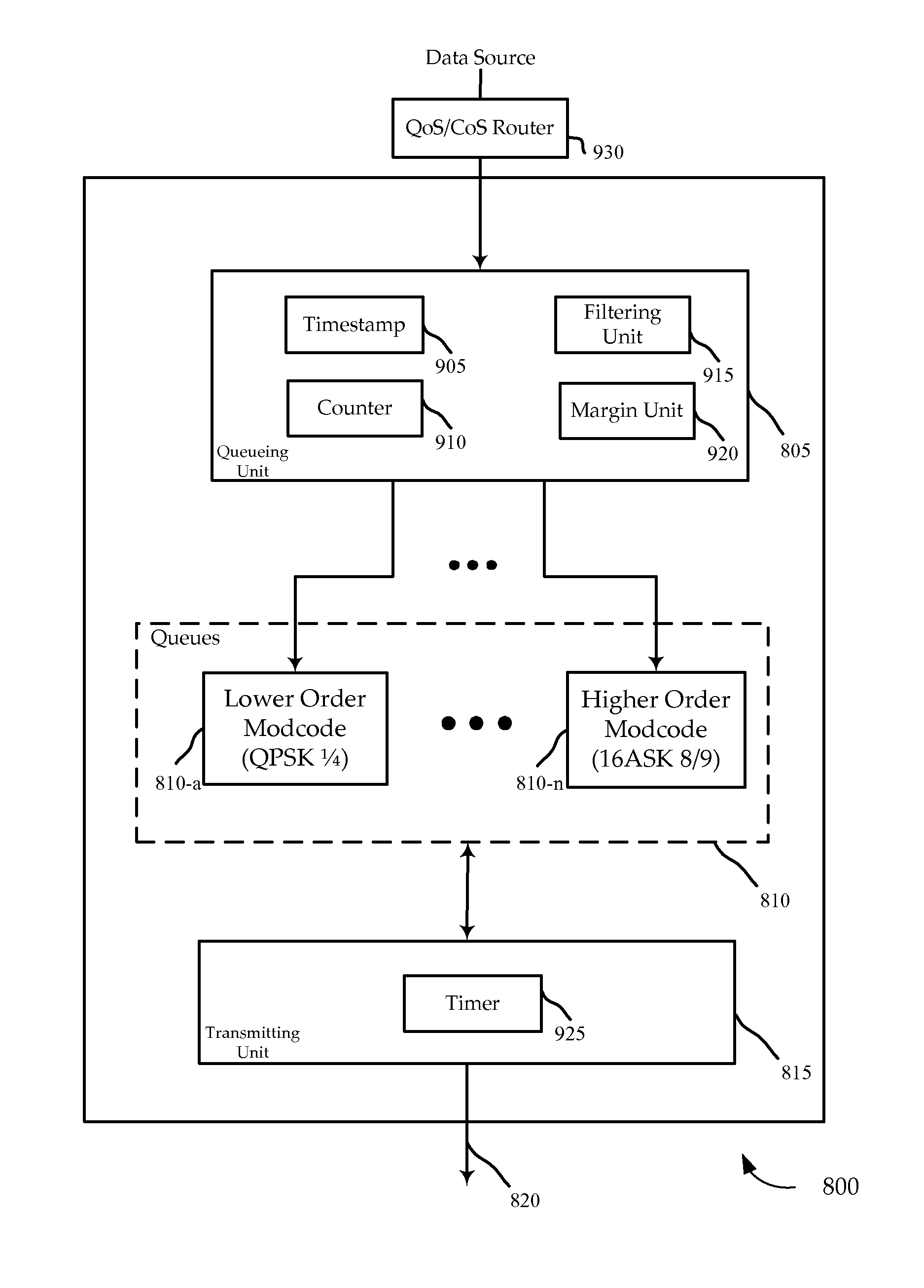

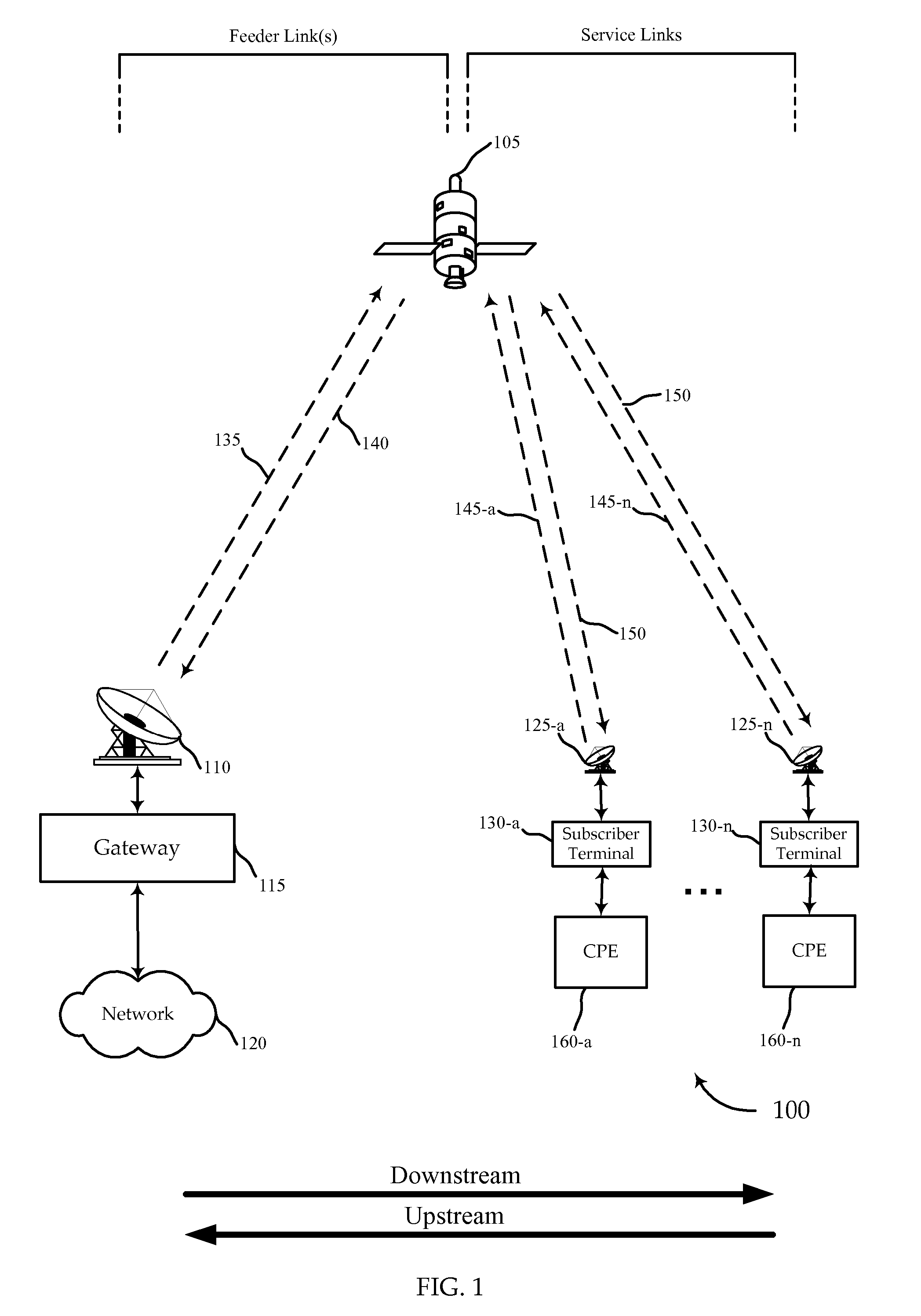

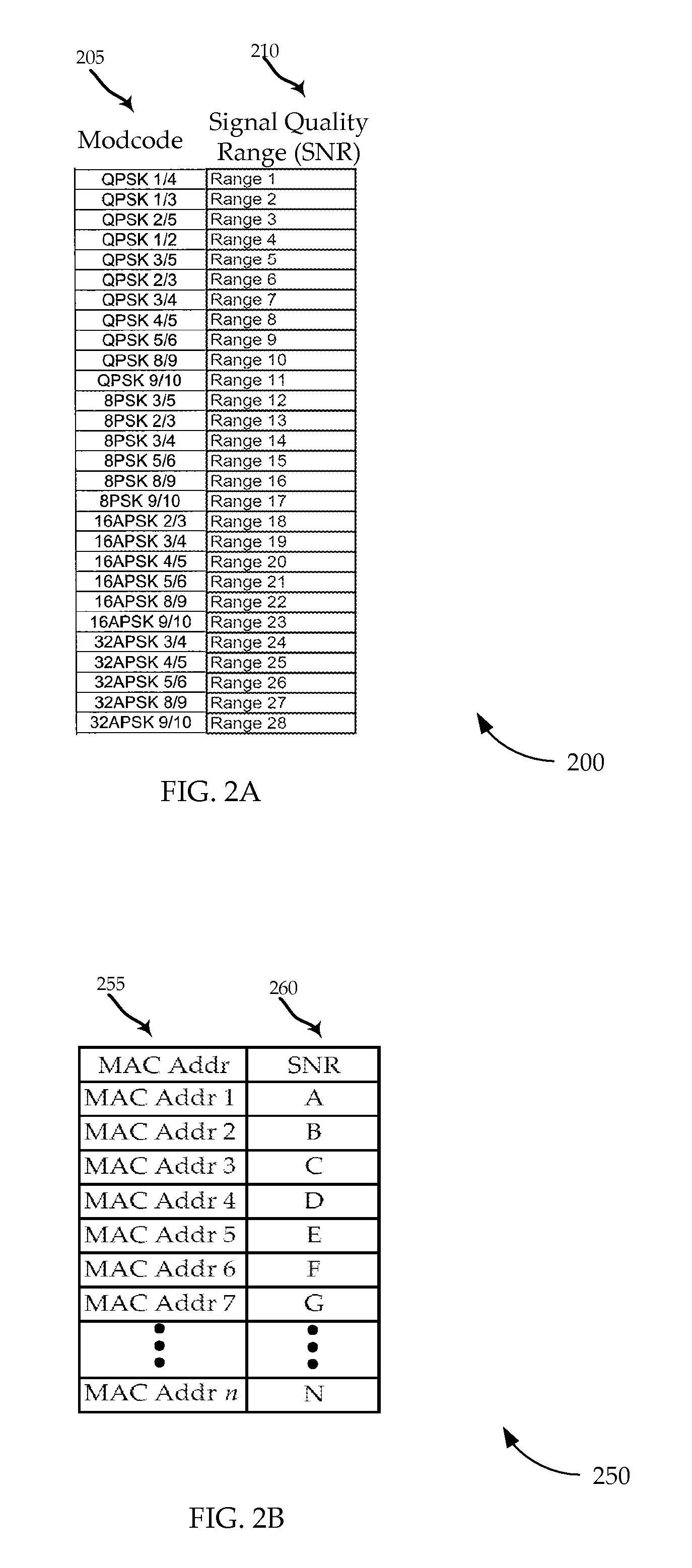

Adaptive coding and modulation queuing methods and devices

Status: Active · US20070116152A1 · Tags: Data switching by path configuration; Multiple modulation transmitter/receiver arrangements; Traffic capacity; Signal quality

A process is described to build physical layer frames with a modcode adapted to the signal quality of a destination terminal. Data packets assigned to the same modcode may be sent in the same frame, although packets associated with higher modcodes may be used to complete a frame before switching to the applicable higher modcode for construction of subsequent frames. After an interval, the order of progression is restarted with an out of order packet above a threshold age. Flow control filtering mechanisms and a variable reliability margin may be used to adapt dynamically to the current data traffic conditions.

Owner:VIASAT INC
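
The frame-building idea in US20070116152A1 above (fill a frame with packets of one modcode, then complete it with higher-modcode packets before moving on) can be sketched in Python. Assumptions: a packet may be sent at its own modcode or any lower, more robust one; capacity is counted in packets rather than bits; the age-based restart and flow-control filtering are not modelled.

```python
def build_frames(packets, capacity):
    """Group (packet_id, modcode) pairs into frames, lowest modcode first."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    # Stable sort: packets keep their arrival order within each modcode.
    pending = sorted(packets, key=lambda p: p[1])
    frames = []
    while pending:
        frame, pending = pending[:capacity], pending[capacity:]
        # The first packet has the lowest modcode in the frame, so every packet
        # in it can be decoded at that modcode; higher-modcode packets top it up.
        frames.append((frame[0][1], [pid for pid, _ in frame]))
    return frames


print(build_frames([("a", 2), ("b", 1), ("c", 1), ("d", 3)], capacity=3))
# [(1, ['b', 'c', 'a']), (3, ['d'])]
```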

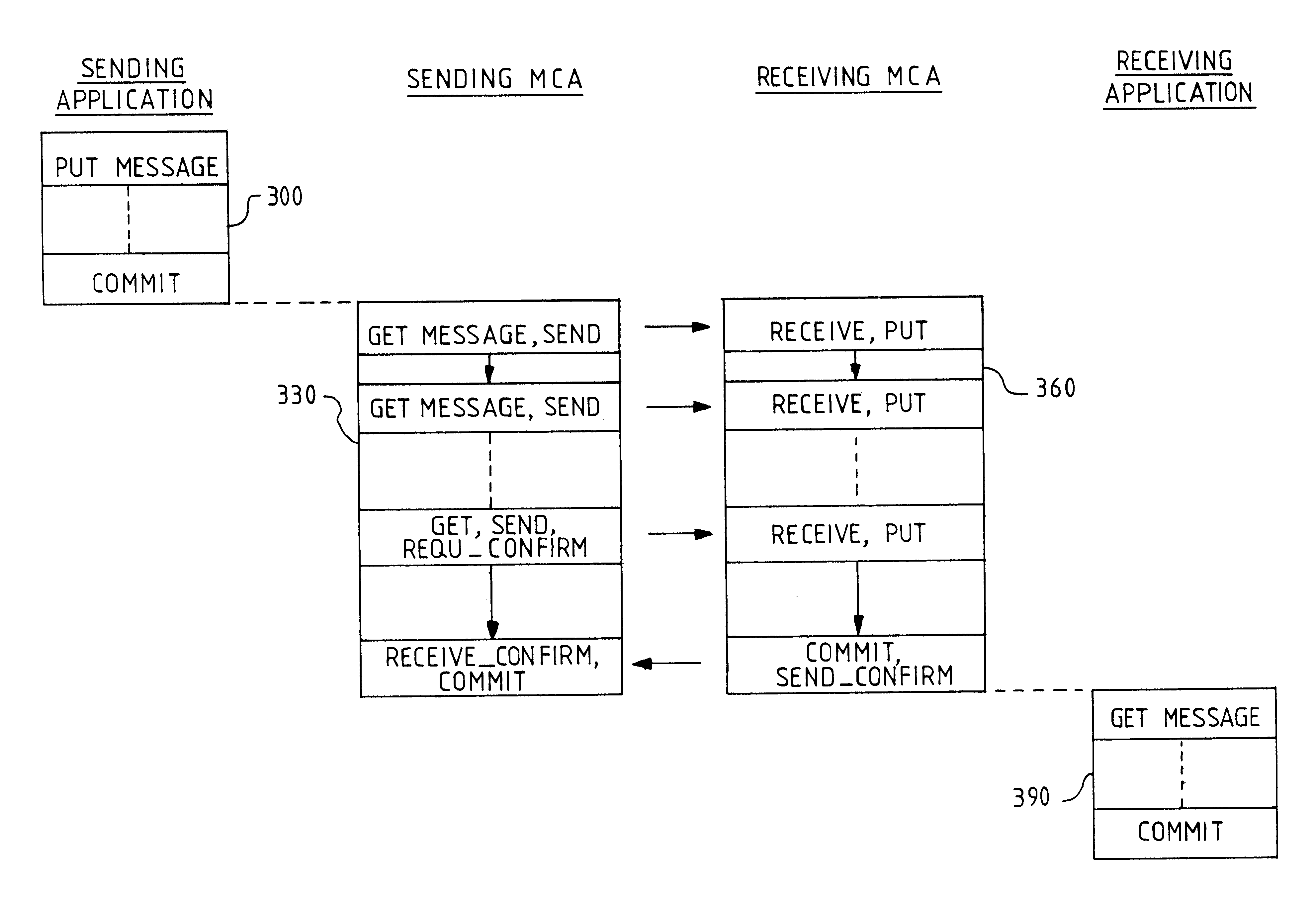

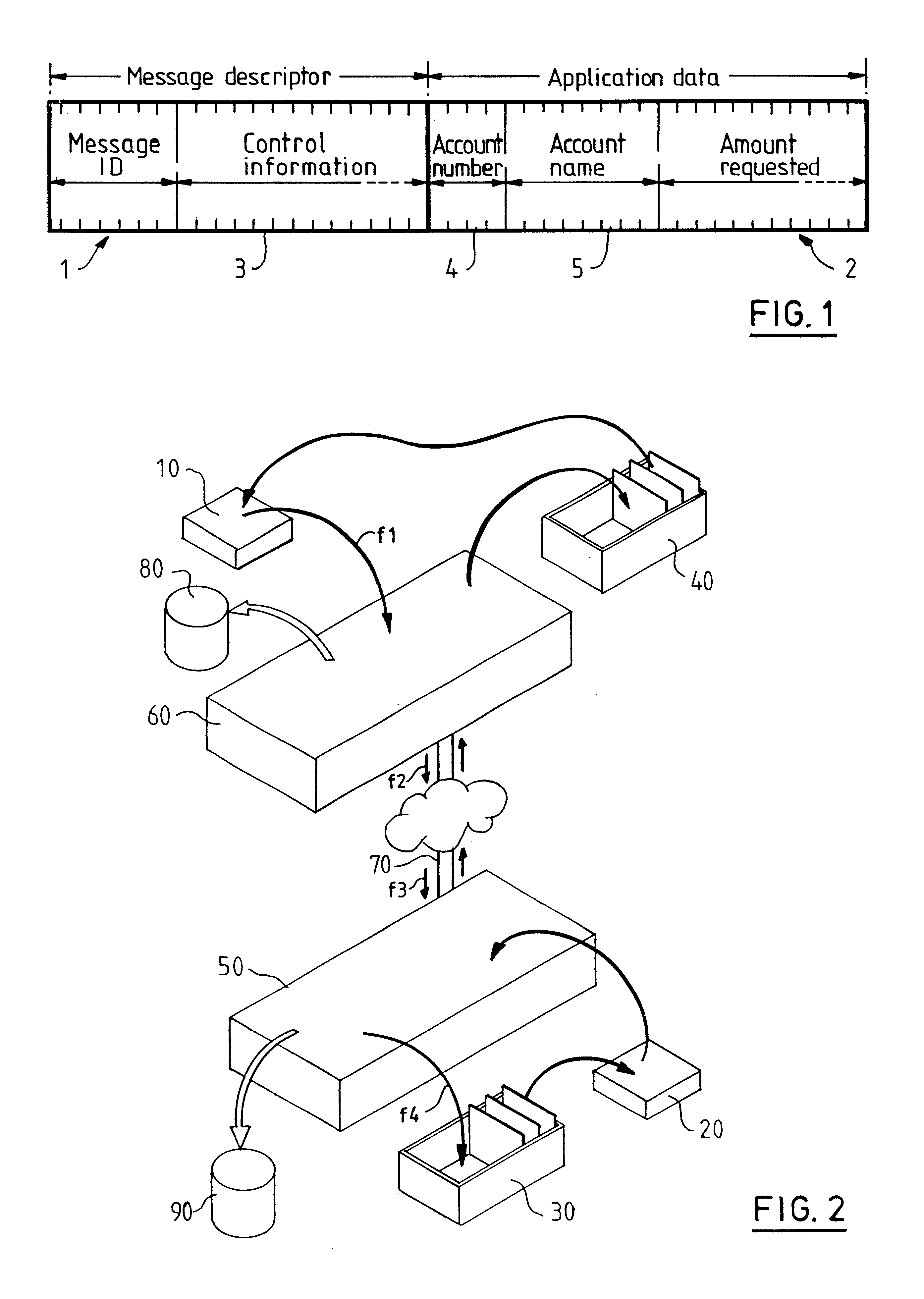

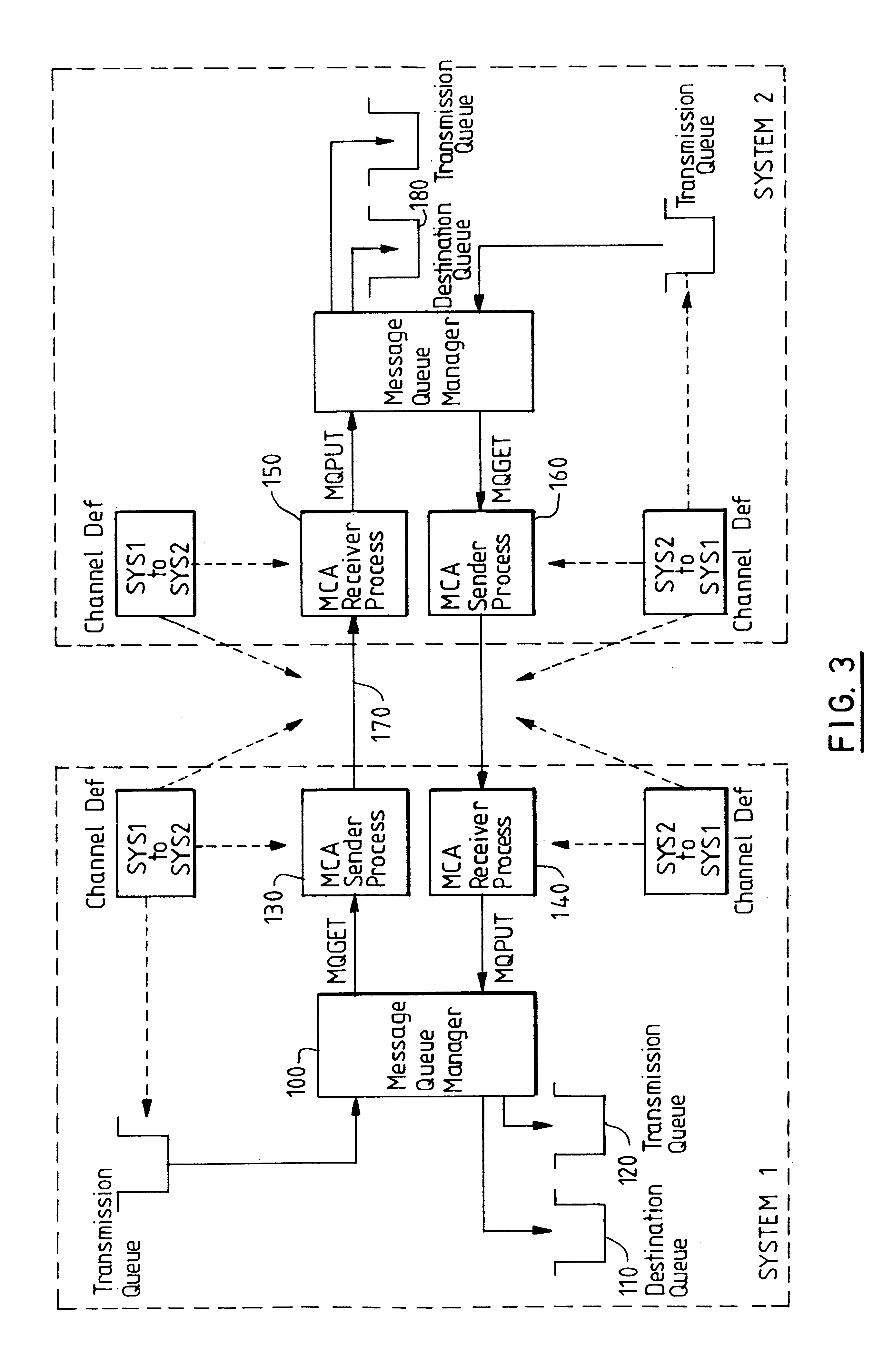

Method of transferring messages between computer programs across a network

A method of delivering messages between application programs is provided which ensures that no messages are lost and none are delivered more than once. The method uses asynchronous message queuing. One or more queue manager programs (100) are located at each computer of a network for controlling the transmission of messages to and from that computer. Messages to be transmitted to a different queue manager are put onto special transmission queues (120). Transmission to an adjacent queue manager comprises a sending process (130) on the local queue manager (100) getting messages from a transmission queue and sending them as a batch of messages within a syncpoint-manager-controlled unit of work. A receiving process (150) on the receiving queue manager receives the messages and puts them, within a second syncpoint-manager-controlled unit of work, to queues (180) that are under the control of the receiving queue manager. Commitment of the batch is coordinated by the sender transmitting a request for commitment and for confirmation of commitment with the last message of the batch, commit at the sender then being triggered by the confirmation that is sent by the receiver in response to the request. The invention avoids the additional message flow that is a feature of two-phase commit procedures, avoiding the need for resource managers to synchronise with each other. It further reduces the commit flows by permitting batching of a number of messages.

Owner:IBM CORP
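
The batch-and-confirm flow in the message-transfer abstract above can be simulated in Python to show why no message is lost or duplicated when a confirmation goes missing. This is a simplified sketch: the two syncpoint-controlled units of work are collapsed into plain list operations, and the per-link batch number that lets the receiver recognise a re-sent batch is an assumption, since the abstract does not say how that case is detected.

```python
from collections import deque


class ReceivingQueueManager:
    def __init__(self):
        self.target_queue = []   # queue under the receiver's control
        self.last_batch = 0      # highest batch number committed here

    def receive(self, batch_no, messages):
        # Put and commit in one local unit of work; a re-sent batch that was
        # already committed is confirmed again but not re-queued.
        if batch_no > self.last_batch:
            self.target_queue.extend(messages)
            self.last_batch = batch_no
        return batch_no          # confirmation of commitment


class SendingQueueManager:
    def __init__(self, receiver, batch_size):
        self.transmission_queue = deque()
        self.receiver = receiver
        self.batch_size = batch_size
        self.committed = 0       # batches confirmed and removed locally

    def send_batch(self, lose_confirmation=False):
        batch = list(self.transmission_queue)[: self.batch_size]
        if not batch:
            return False
        batch_no = self.committed + 1        # reused if the batch is re-sent
        confirmation = self.receiver.receive(batch_no, batch)
        if lose_confirmation:                # simulate a lost reply
            return False
        if confirmation == batch_no:         # commit at the sender
            for _ in batch:
                self.transmission_queue.popleft()
            self.committed = batch_no
        return True


receiver = ReceivingQueueManager()
sender = SendingQueueManager(receiver, batch_size=2)
sender.transmission_queue.extend(["m1", "m2", "m3"])
sender.send_batch(lose_confirmation=True)  # receiver commits, sender does not
sender.send_batch()                        # re-send: confirmed, not duplicated
sender.send_batch()
print(receiver.target_queue)               # ['m1', 'm2', 'm3']
```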

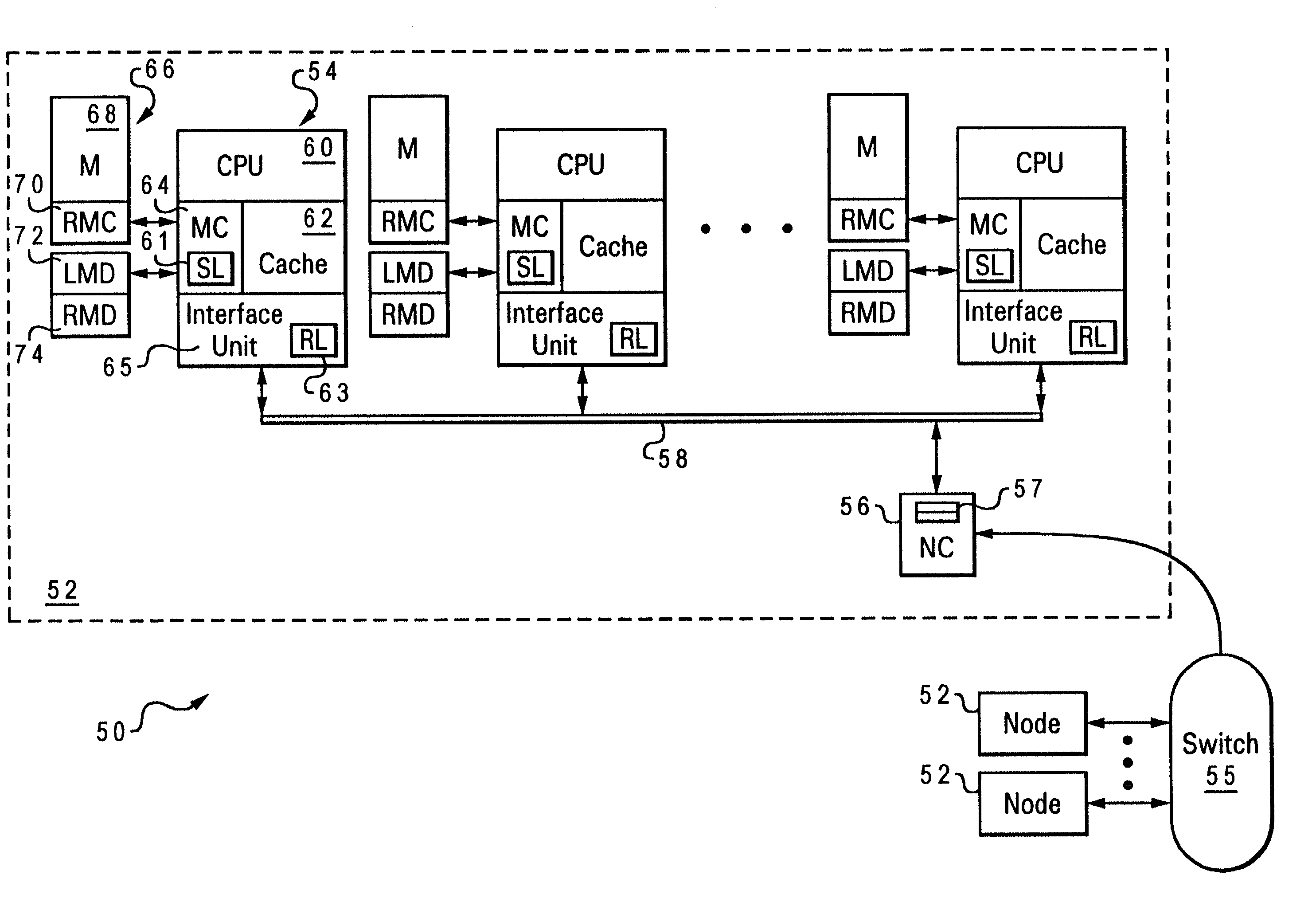

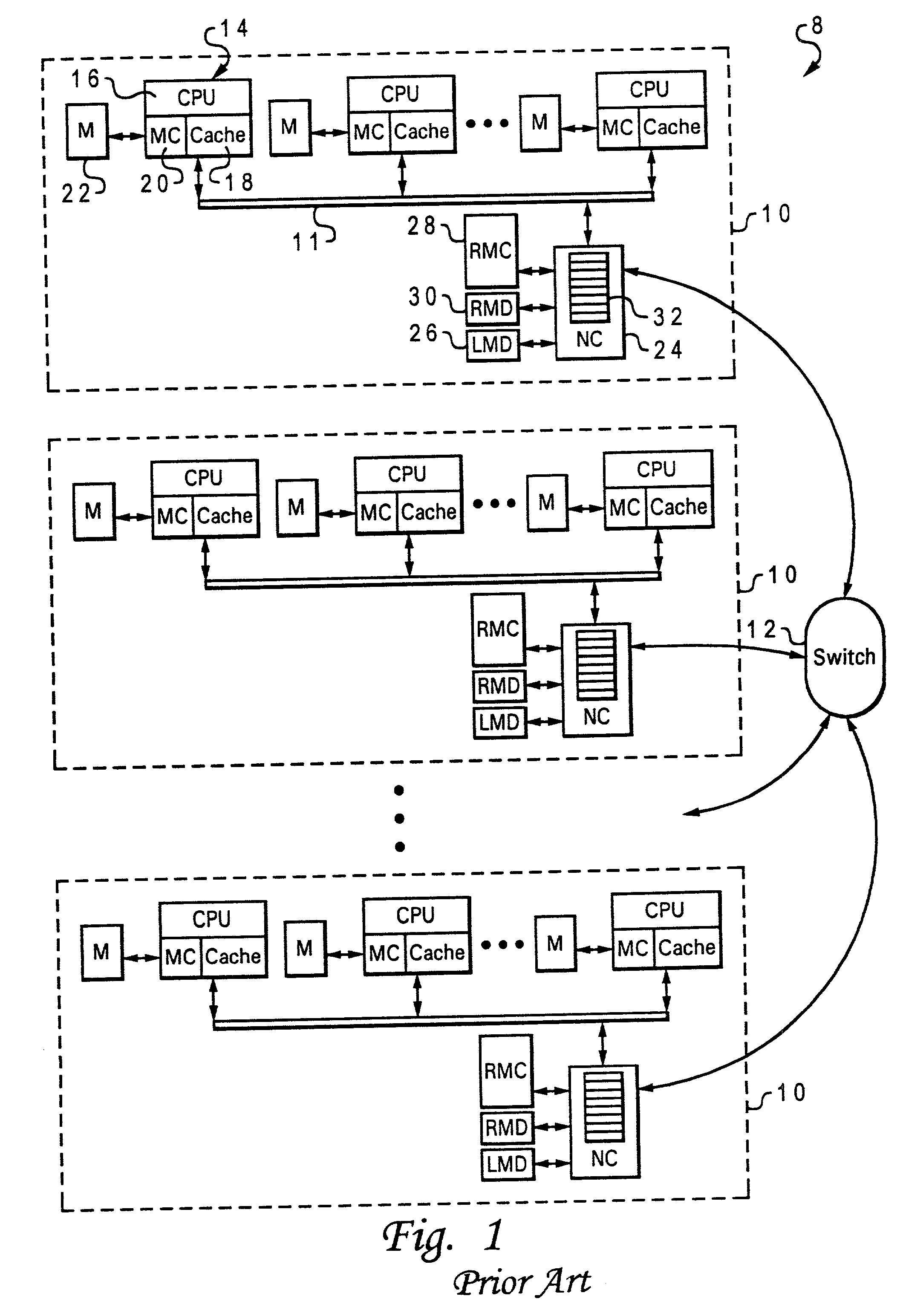

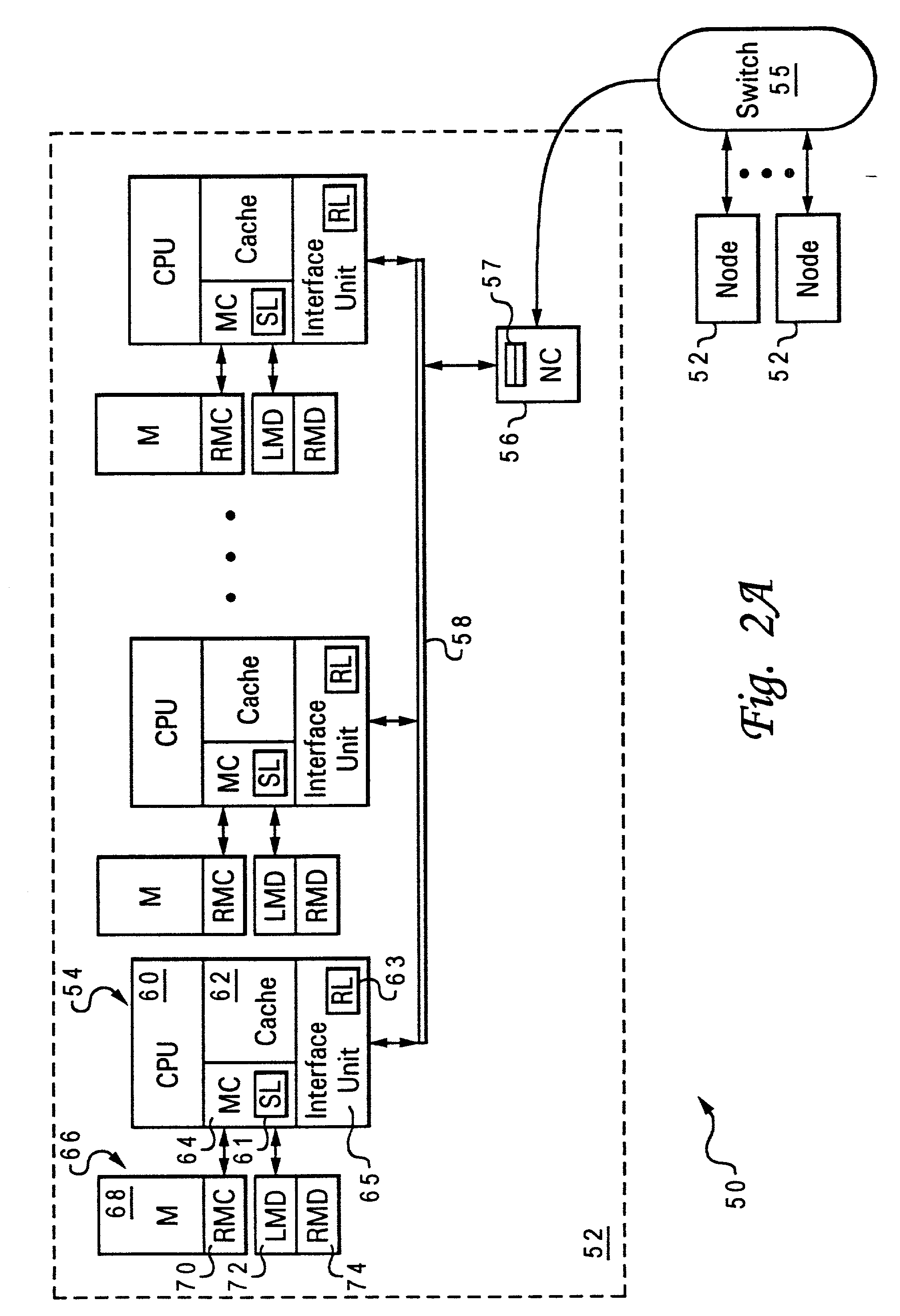

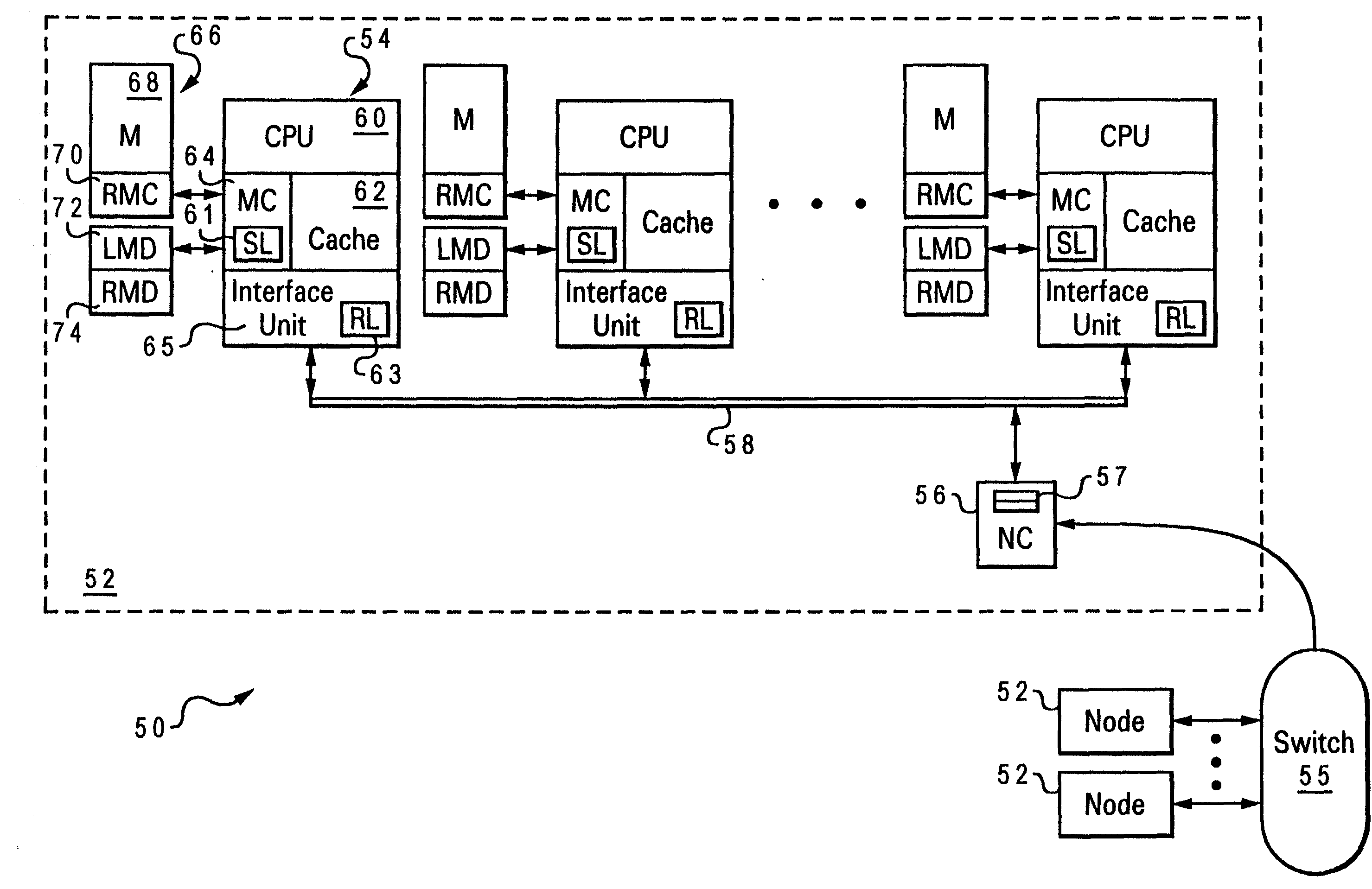

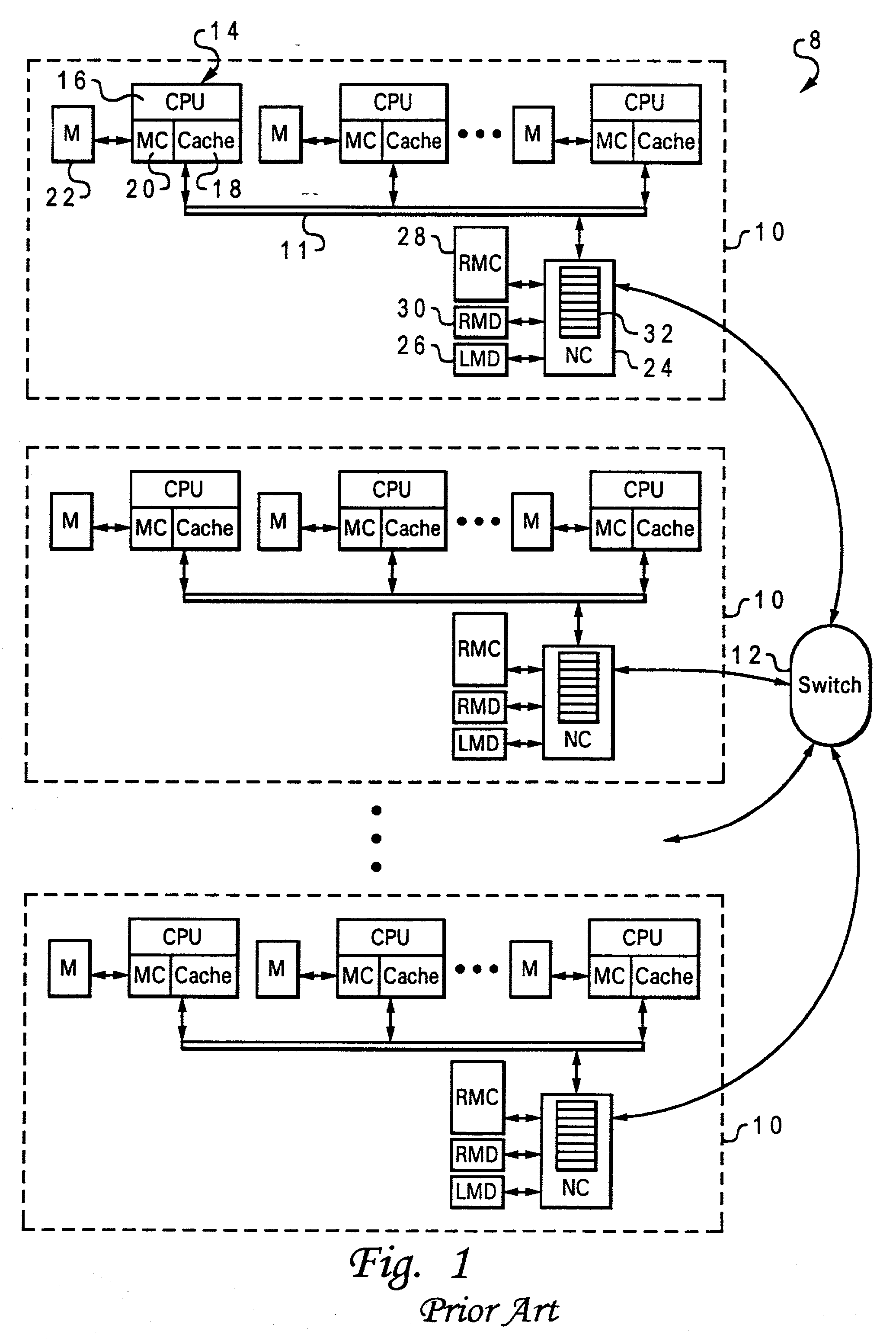

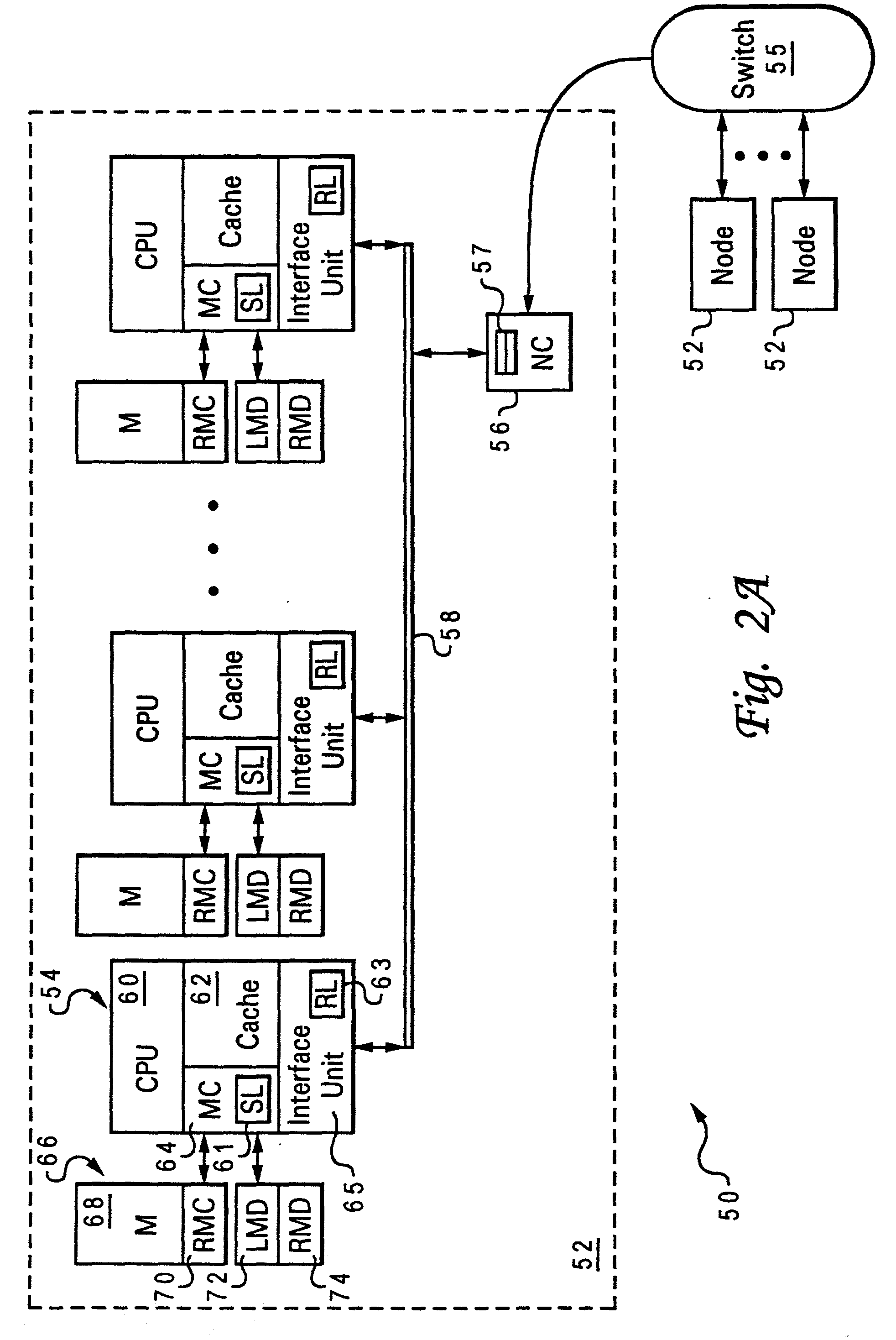

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Status: Inactive · US20030009643A1 · Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Remote memory; Receipt

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.

Owner:IBM CORP

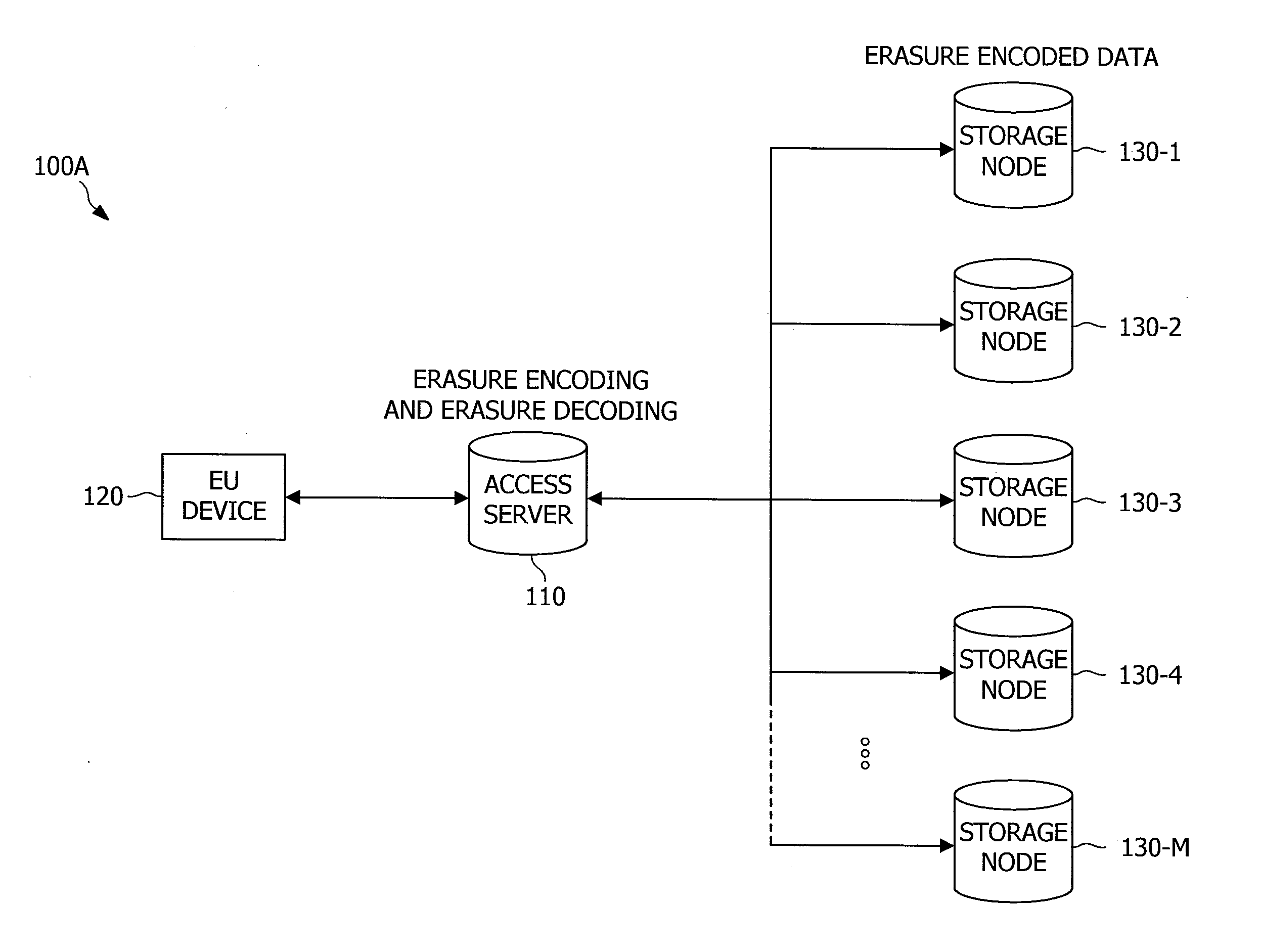

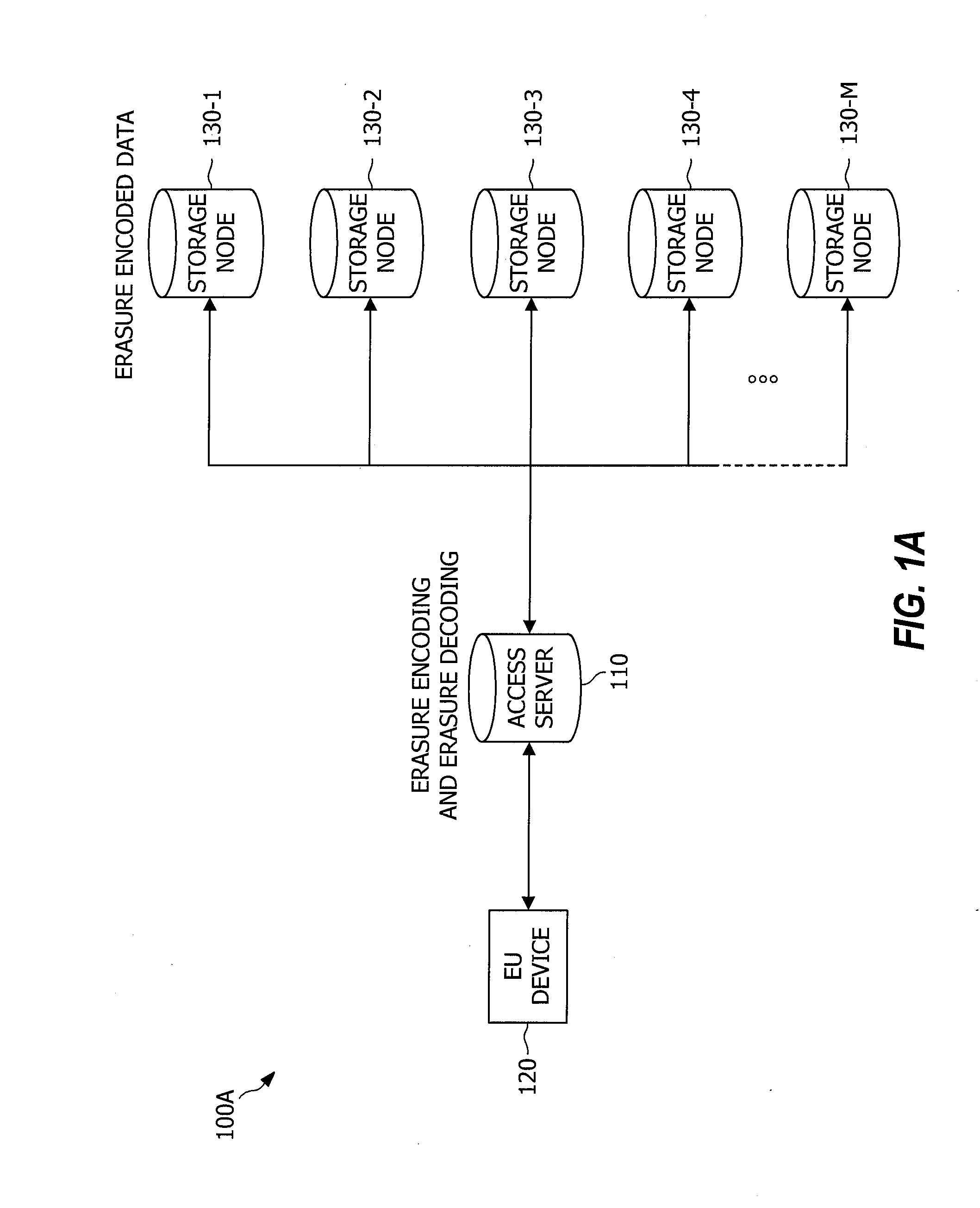

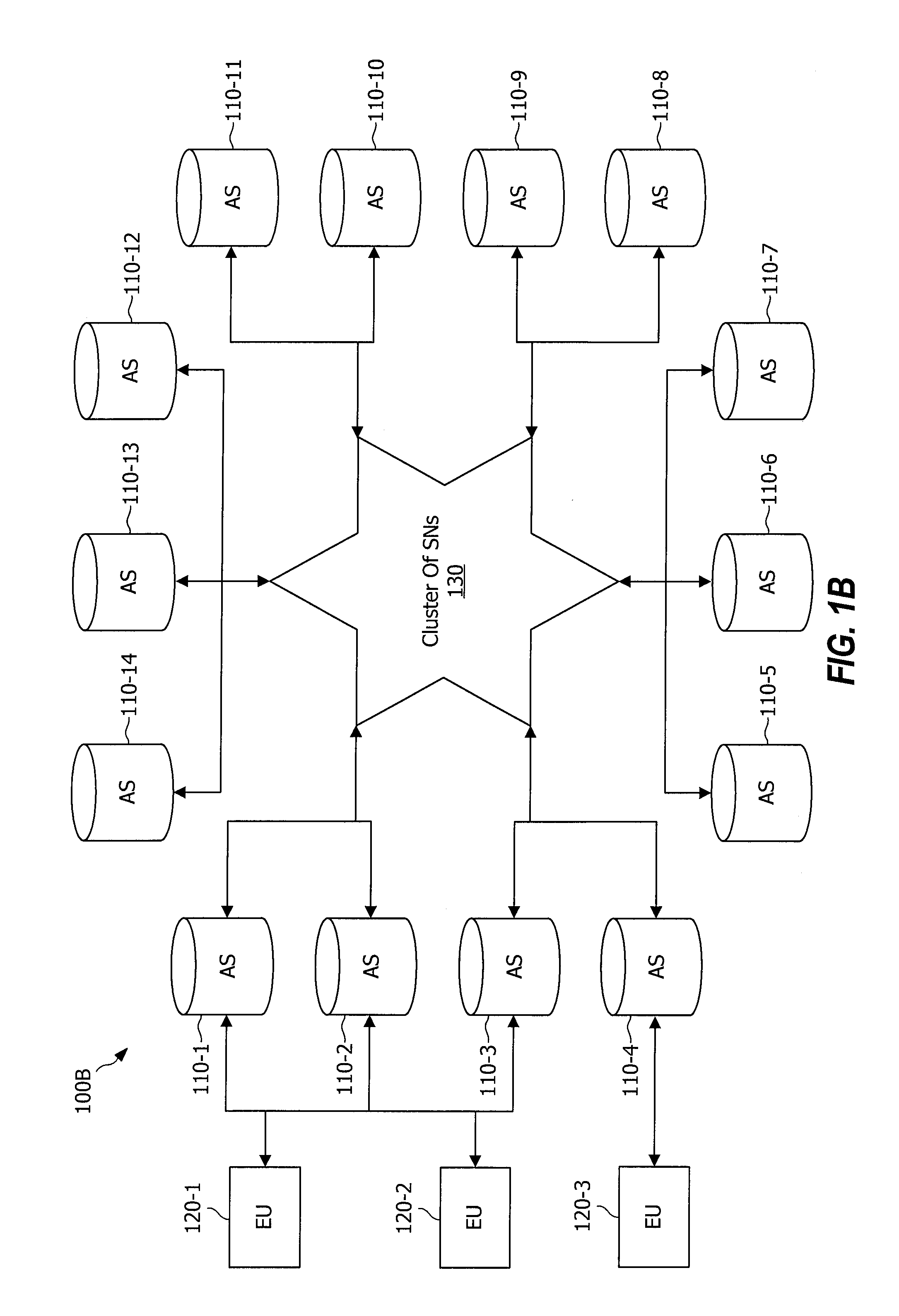

Systems and methods for reliably storing data using liquid distributed storage

Status: Inactive · US20160011936A1 · Tags: Input/output to record carriers; Redundant data error correction; Streaming data; Data stream

Embodiments provide methodologies for reliably storing data within a storage system using liquid distributed storage control. Such liquid distributed storage control operates to compress repair bandwidth utilized within a storage system for data repair processing to the point of operating in a liquid regime. Liquid distributed storage control logic of embodiments may employ a lazy repair policy, repair bandwidth control, a large erasure code, and / or a repair queue. Embodiments of liquid distributed storage control logic may additionally or alternatively implement a data organization adapted to allow the repair policy to avoid handling large objects, instead streaming data into the storage nodes at a very fine granularity.

Owner:QUALCOMM INC

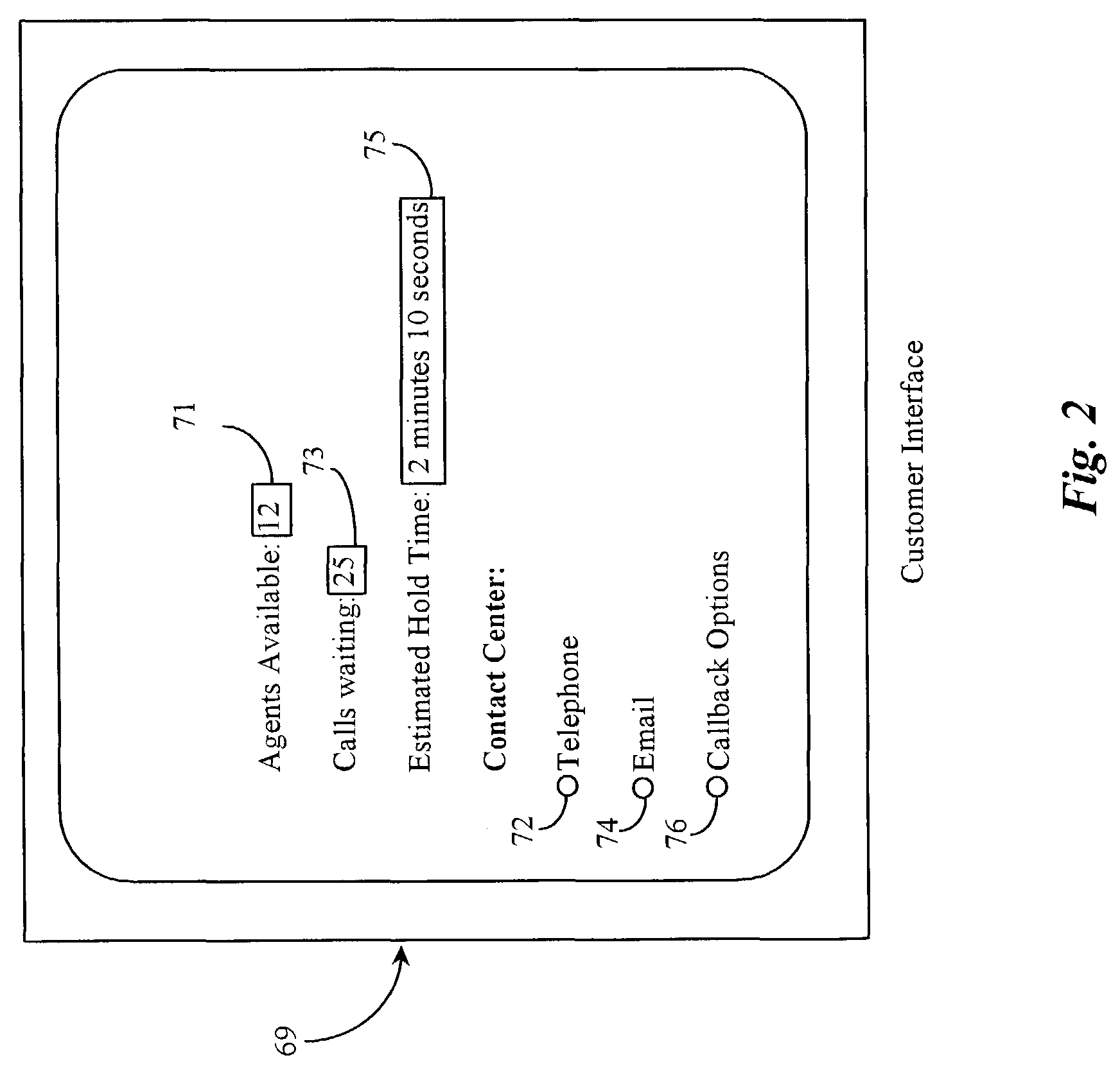

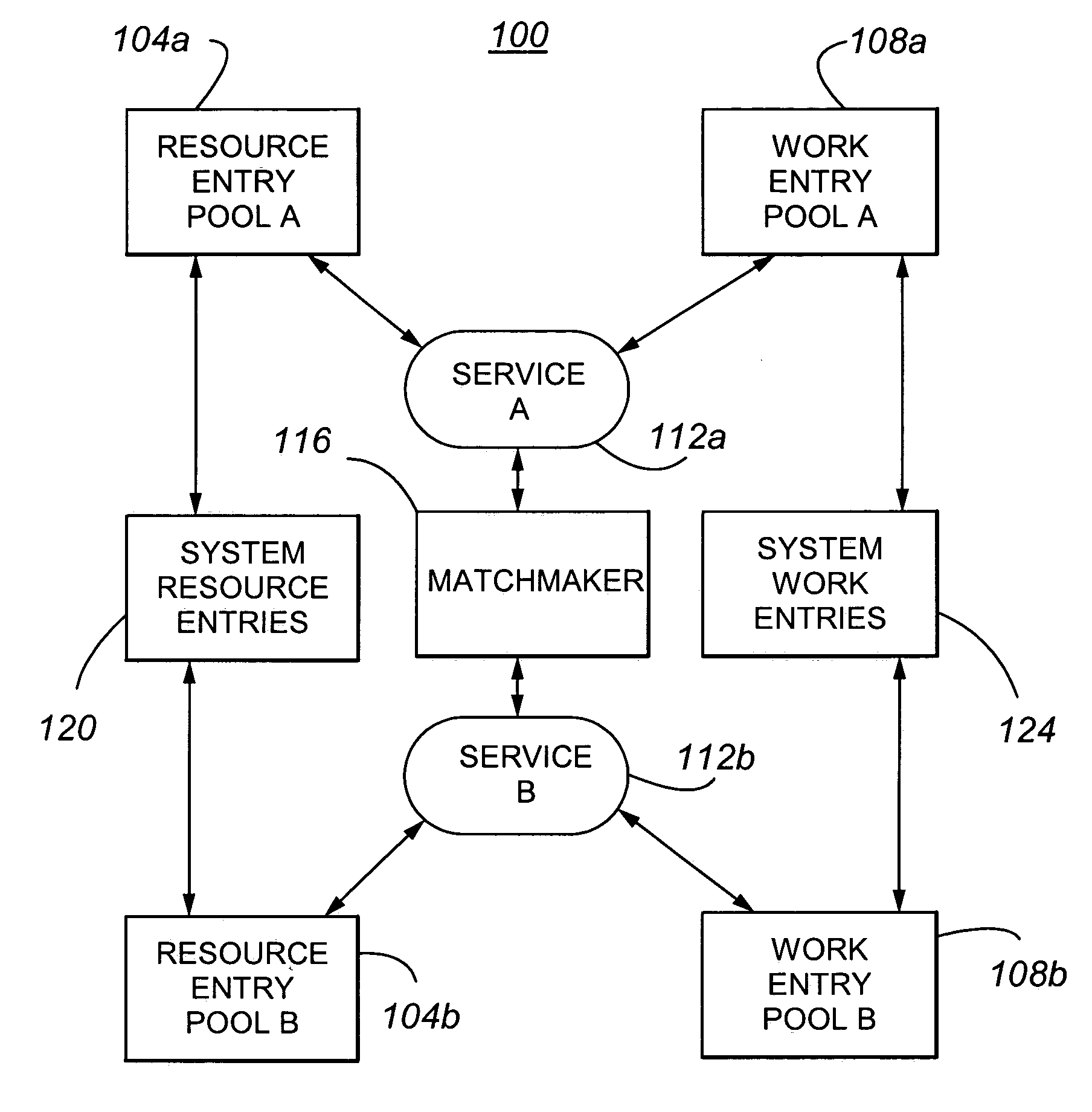

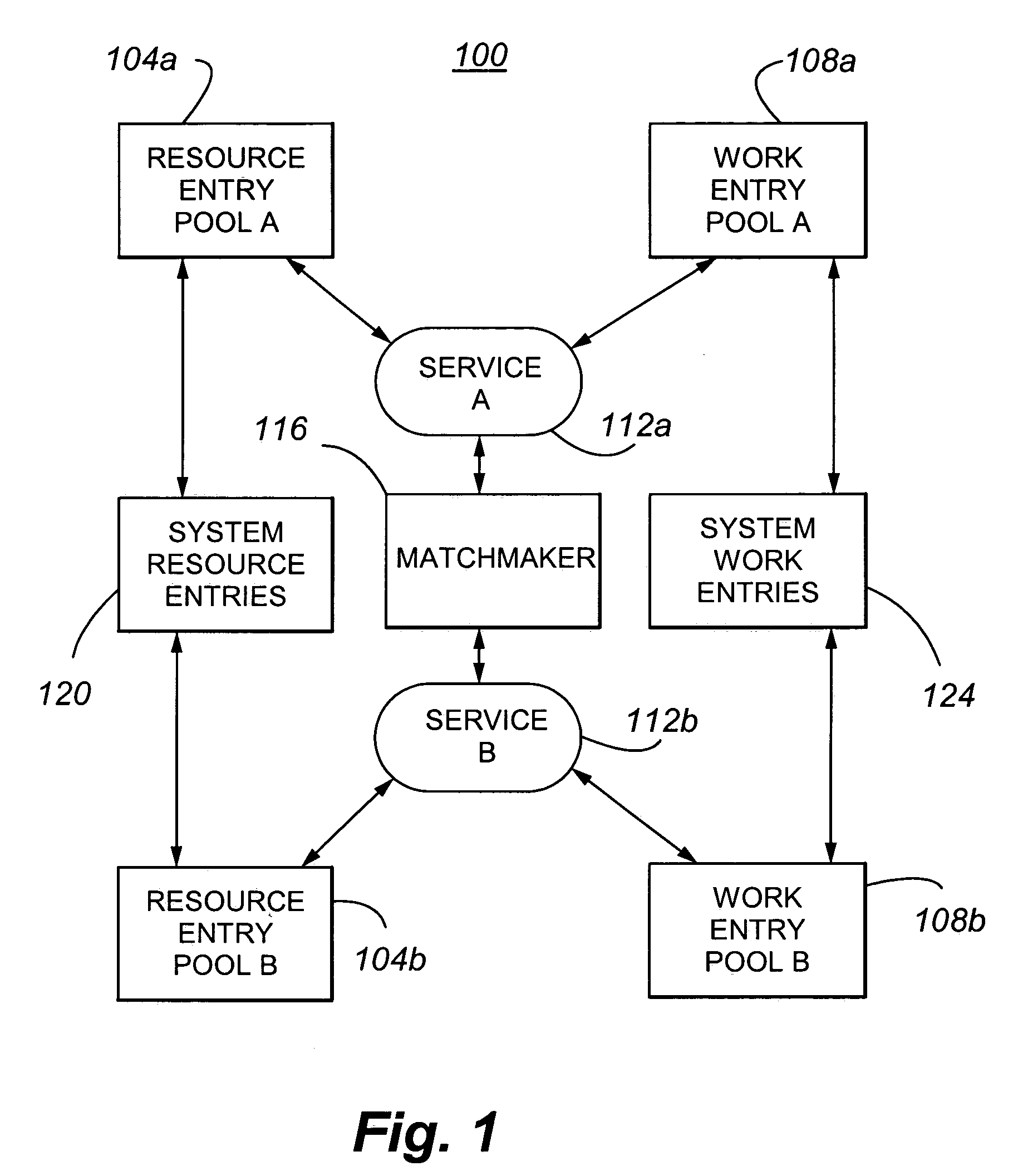

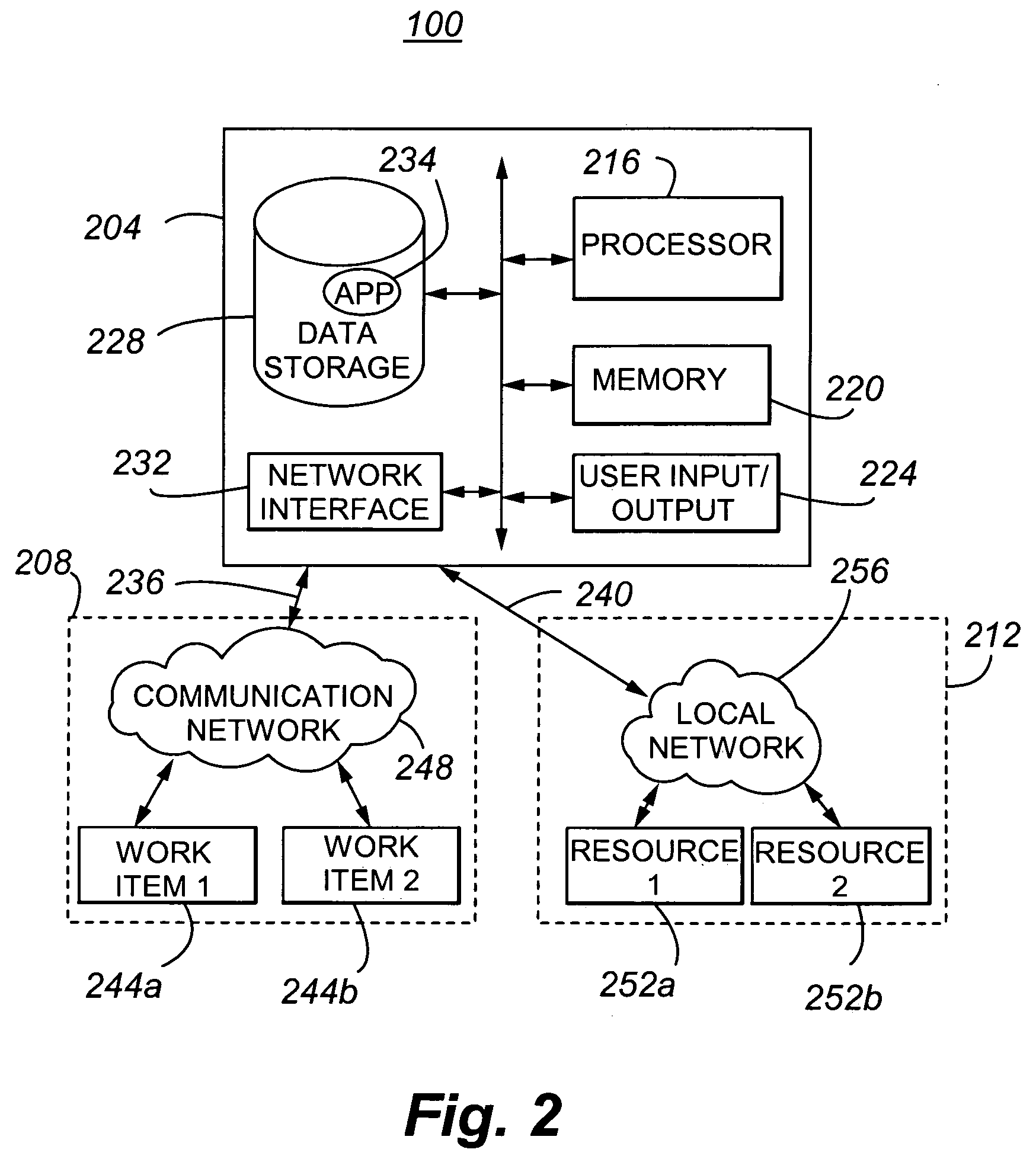

Method and apparatus for supporting individualized selection rules for resource allocation

The present invention relates to the matching of resources to work entries. In particular, the present invention allows work items to be assigned to a particular resource based on the characteristics of the work item and on the qualifications and preferences of the resource. Furthermore, the present invention does not rely on queues, thereby allowing characteristics of a work item other than or in addition to the amount of time that a work item has been waiting for service to be considered in assigning the work item to a resource. The types of work items that may be validly assigned to a resource, or the preference rules used to select a valid work item for handling by a resource, may be altered by altering the validation rules and preference rules associated with the resource and / or work item. Accordingly, the rules for allocating work may be adjusted easily and quickly, including at run-time, and may represent any attribute desired for use in allocating work.

Owner:AVAYA INC

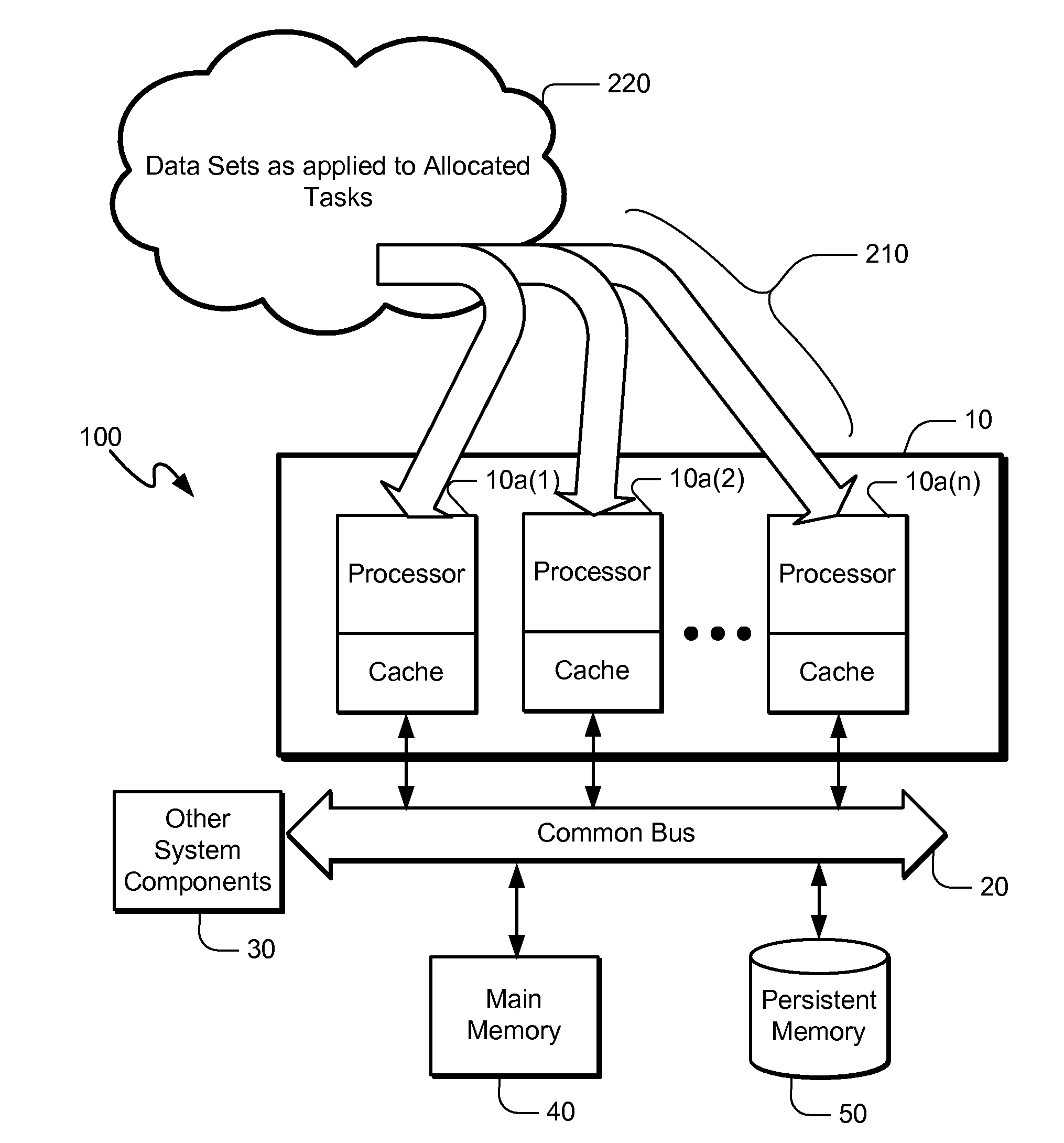

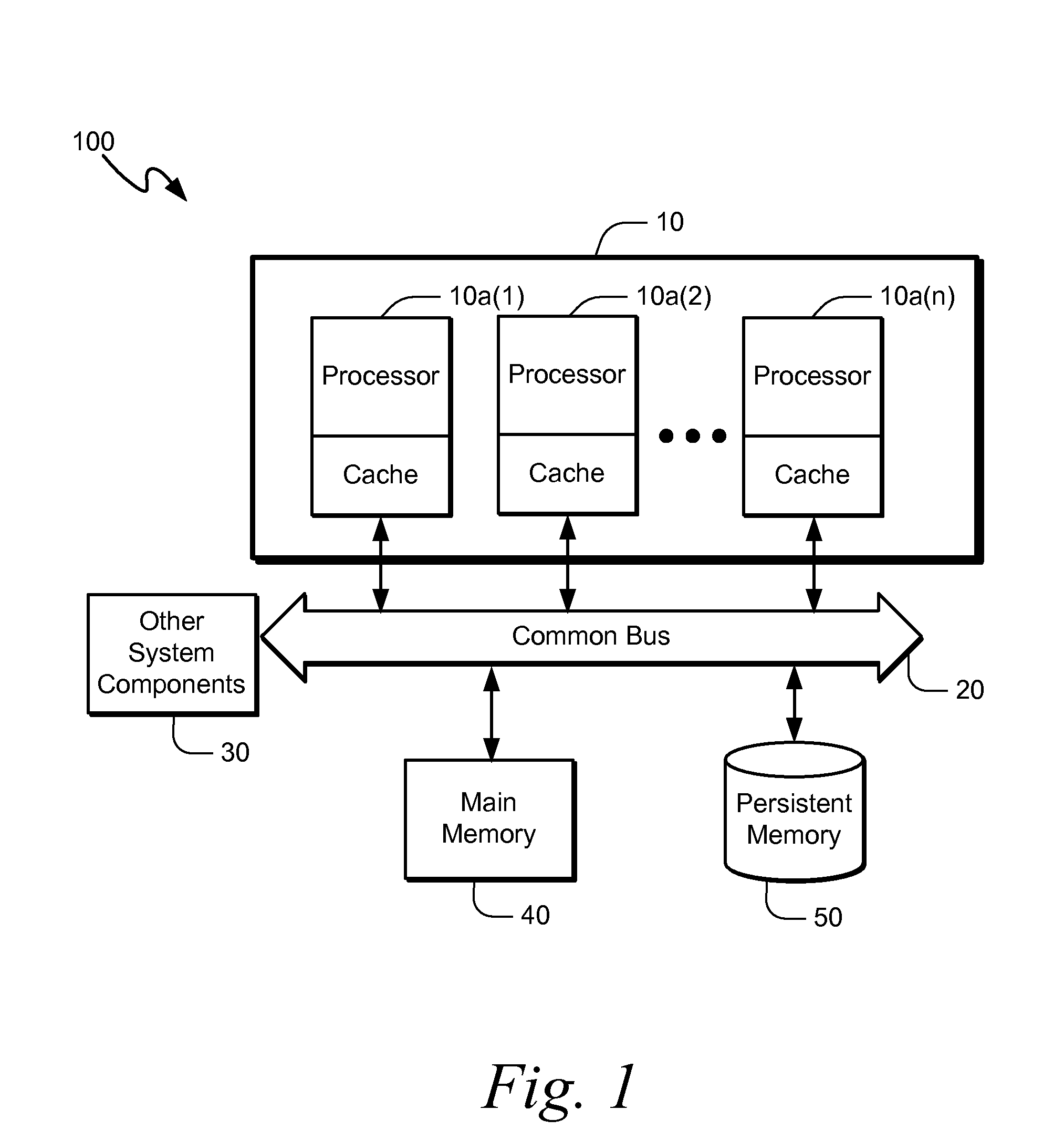

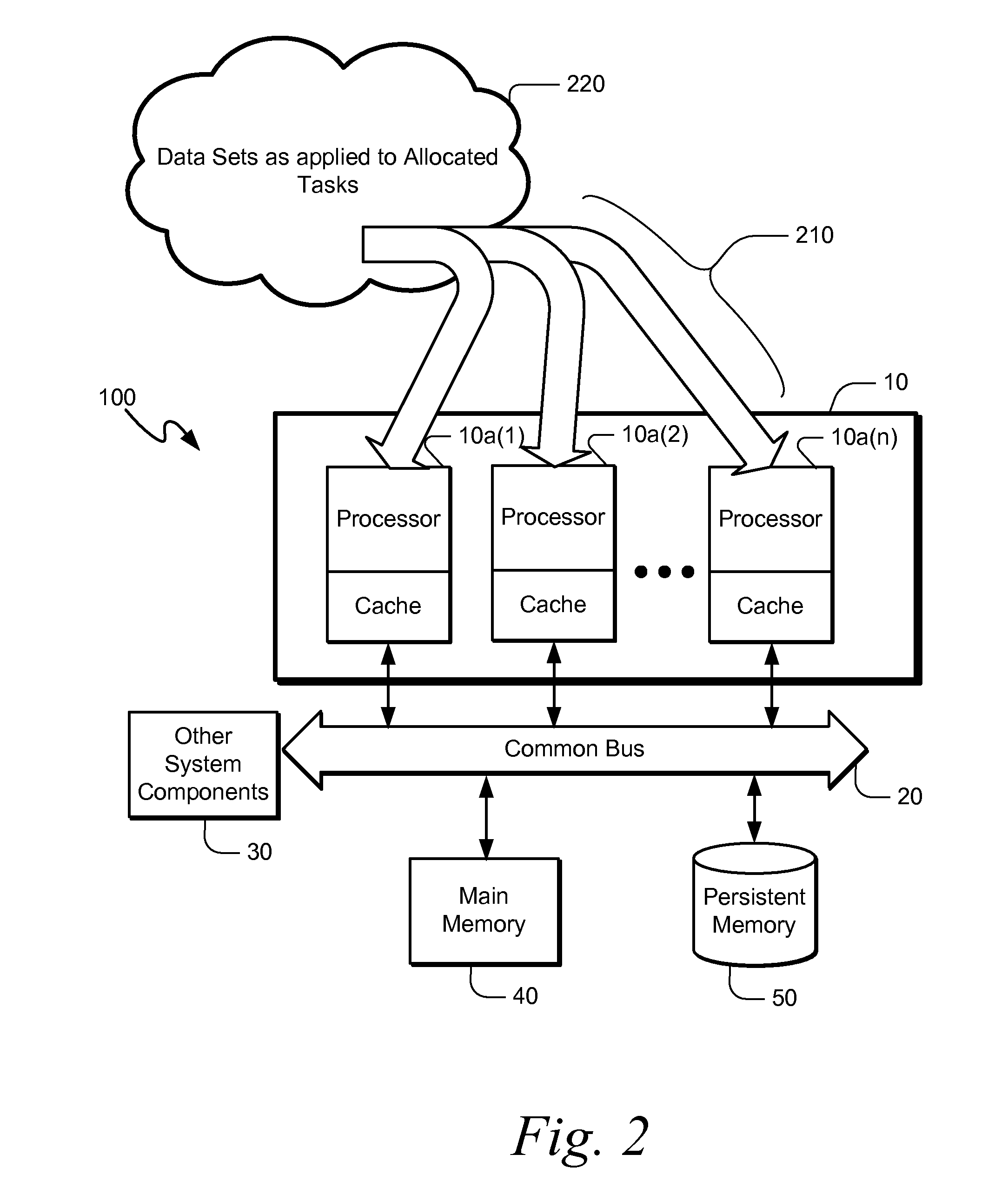

System and method for optimizing data analysis

Status: Active · US20090007127A1 · Tags: Reduce time; Reduce analysis processing time; Analysis by electrical excitation; Multiprogramming arrangements; Fluorescence; Data analysis

There is provided an adaptive semi-synchronous parallel processing system and method, which may be adapted to various data analysis applications such as flow cytometry systems. By identifying the relationship and memory dependencies between tasks that are necessary to complete an analysis, it is possible to significantly reduce the analysis processing time by selectively executing tasks after careful assignment of tasks to one or more processor queues, where the queue assignment is based on an optimal execution strategy. Further strategies are disclosed to address optimal processing once a task undergoes computation by a computational element in a multiprocessor system. Also disclosed is a technique to perform fluorescence compensation to correct spectral overlap between different detectors in a flow cytometry system due to emission characteristics of various fluorescent dyes.

Owner:SOFTLIFE PROJECTS LIMITED DOING BUSINESS AS APPLIED CYTOMETRY SYST

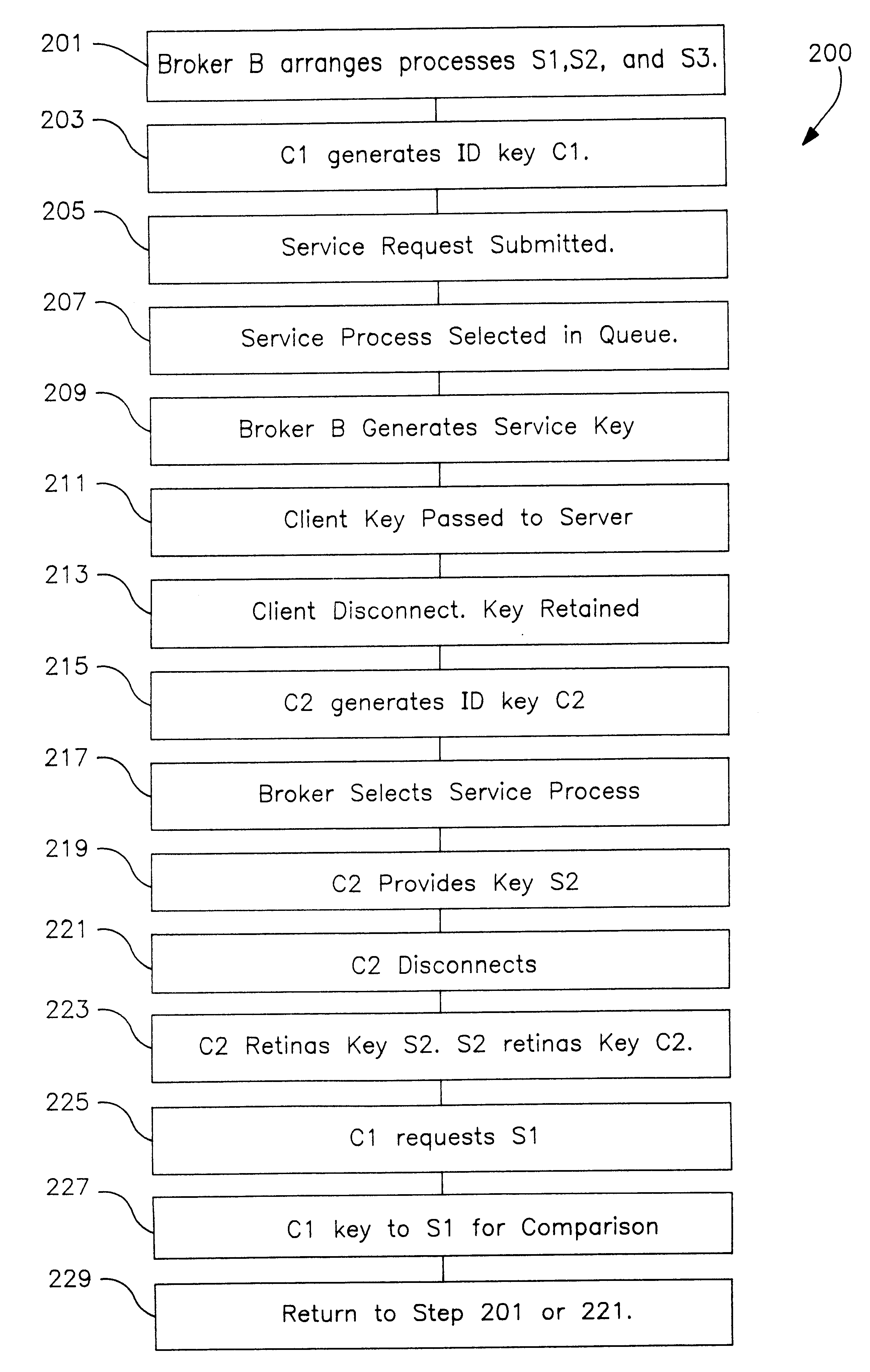

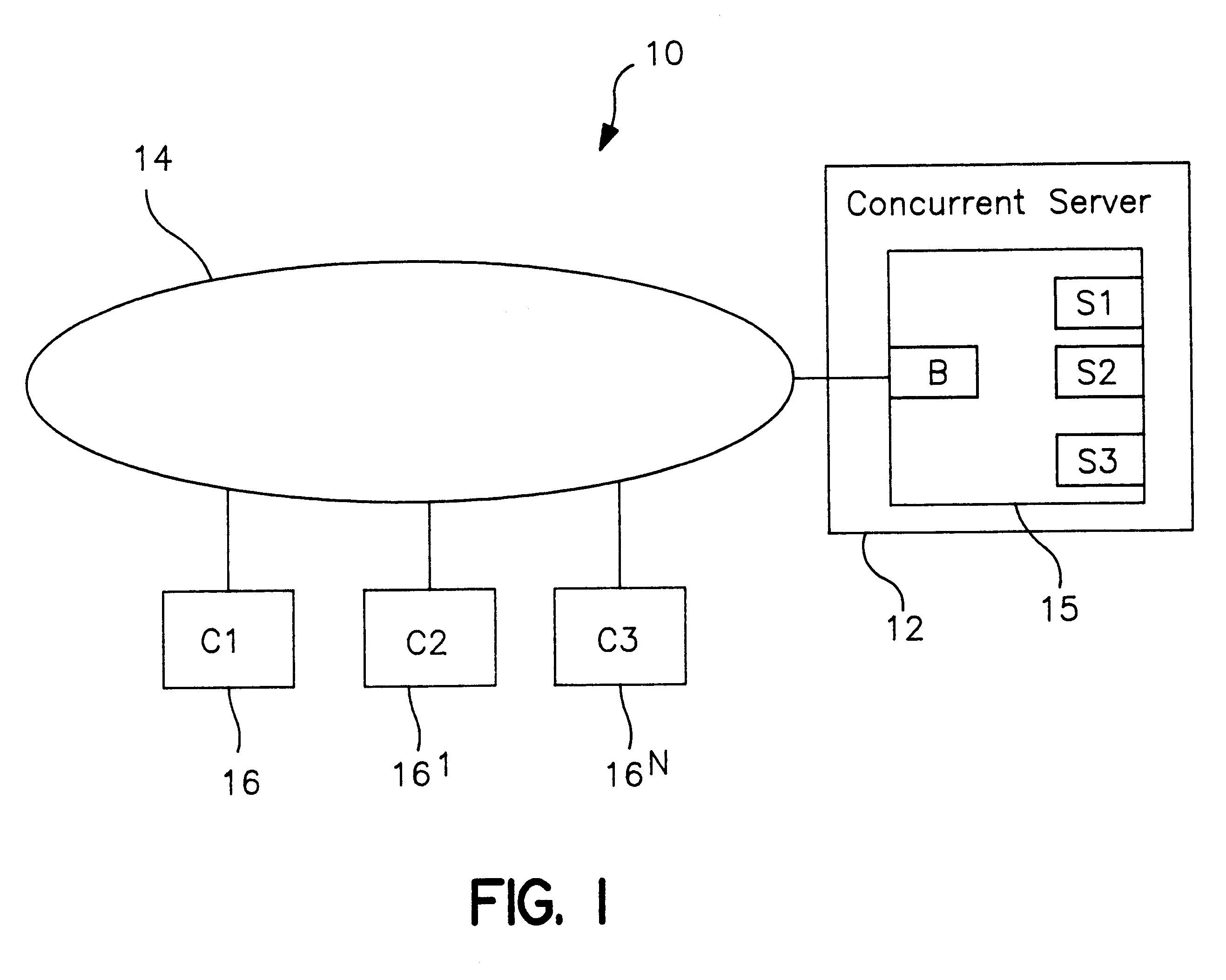

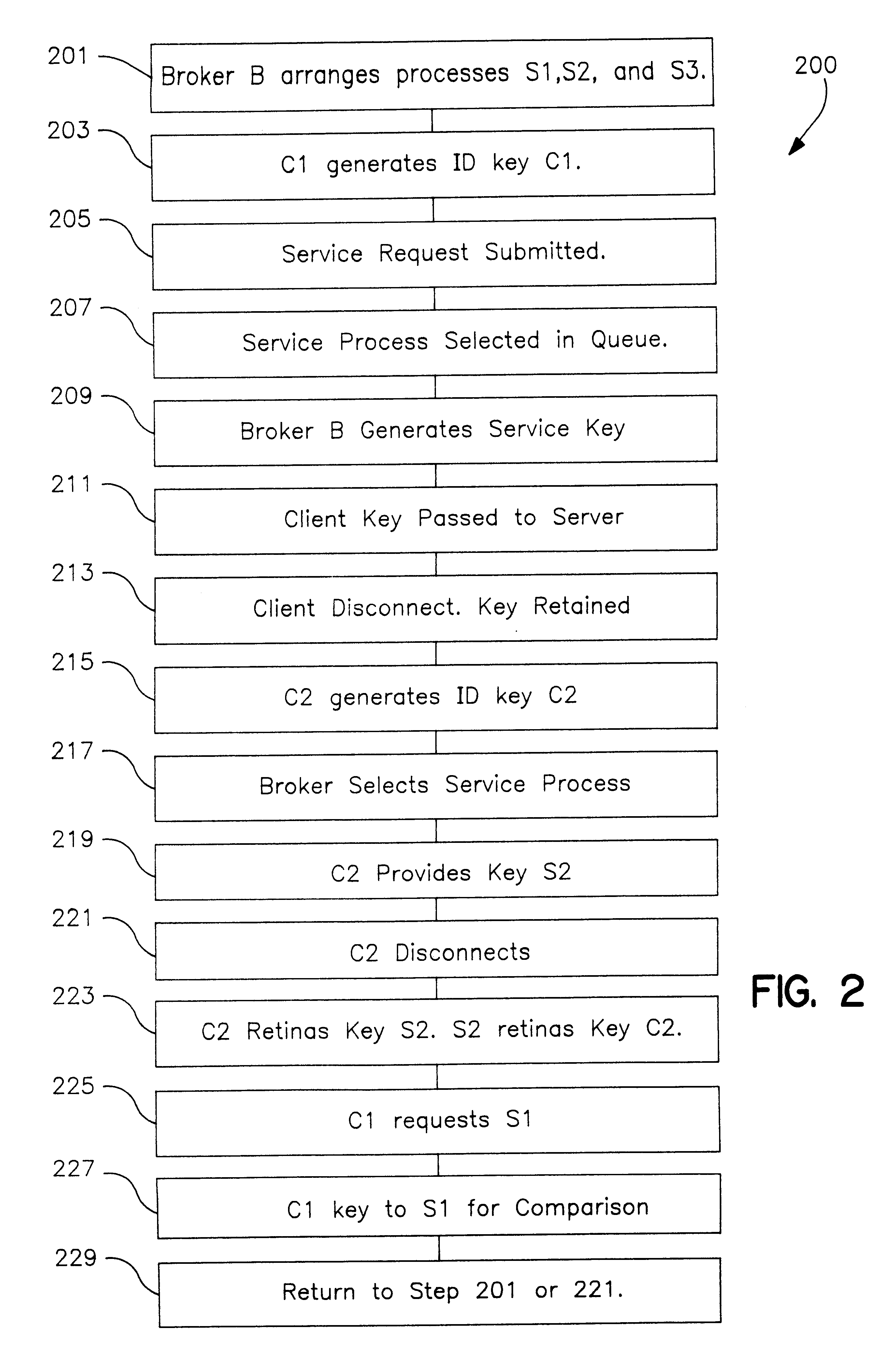

Concurrent server and method of operation having client-server affinity using exchanged client and server keys

Status: Inactive · US6195682B1 · Tags: Eliminate need; Multiple digital computer combinations; Payment architecture; S/KEY; Information networks

In a distributed information network, a broker server is coupled to a plurality of child servers and to a plurality of clients in the network. The broker server connects clients to child servers held in a queue on a FIFO basis and provides each client with a key identifying its child server. The client provides the server with a copy of its key at the time of the initial service request. Both the child server and the client retain a copy of the other's key upon disconnect. The child server returns to the bottom of the queue after disconnect. On a subsequent service request, the client includes the child server key in the request, and the broker automatically reconnects the client to that child server wherever it may be in the queue, provided the child server is not busy serving other clients. When reconnected, the client sends its key to the child server, which compares the key to the retained copy of the client key. If the keys match, the child server does not refresh and reload the client state data, which improves server performance. If the child server is not available, the broker assigns the client to the child server at the top of the queue. The client may also be an intermediate server for other, first-tier clients, in which case the intermediate server forwards the server keys to the first-tier clients for service requests to the child server.

Owner:IBM CORP
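
The broker behaviour in US6195682B1 above (FIFO assignment, servers rejoining at the bottom of the queue, and reconnection to a remembered server when it is idle) can be sketched in Python. Assumptions: the server name doubles as the key handed to the client, and the key exchange and client-state reuse are not modelled.

```python
from collections import deque


class Broker:
    """FIFO assignment of child servers with client affinity."""

    def __init__(self, servers):
        self.idle = deque(servers)   # head = "top" of the queue
        self.busy = set()

    def connect(self, remembered=None):
        if remembered is not None and remembered in self.idle:
            # Affinity: reuse the remembered server wherever it sits in the queue.
            self.idle.remove(remembered)
            server = remembered
        else:
            # Otherwise take the server at the top; raises IndexError if none is idle.
            server = self.idle.popleft()
        self.busy.add(server)
        return server

    def disconnect(self, server):
        self.busy.discard(server)
        self.idle.append(server)     # returns to the bottom of the queue


broker = Broker(["S1", "S2", "S3"])
key = broker.connect()                 # S1
broker.disconnect(key)                 # queue is now S2, S3, S1
print(broker.connect(remembered=key))  # S1, reconnected despite being last
print(broker.connect(remembered=key))  # S2, because S1 is busy
```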

Decentralized global coherency management in a multi-node computer system

Status: Inactive · US20030009637A1 · Tags: Memory addressing/allocation/relocation; Digital computer details; Computerized system; Consistency management

A non-uniform memory access (NUMA) computer system includes a first node and a second node coupled by a node interconnect. The second node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, and a controller coupled to the local interconnect. In response to snooping an operation from the first node issued on the local interconnect by the node controller, the controller signals acceptance of responsibility for coherency management activities related to the operation in the second node, performs coherency management activities in the second node required by the operation, and thereafter provides notification of performance of the coherency management activities. To promote efficient utilization of queues within the node controller, the node controller preferably allocates a queue to the operation in response to receipt of the operation from the node interconnect and then deallocates the queue in response to transferring responsibility for coherency management activities to the controller.

Owner:IBM CORP
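For the NUMA abstract above, the queue-allocation policy in the node controller can be sketched in Python. This is a simplified software model under the assumption that the controller always accepts responsibility. `NodeController`, `Controller`, `receive`, `snoop`, and `finish` are hypothetical names. The point shown is that the node controller's queue is released as soon as responsibility is handed off, not when the coherency work completes.

```python
from typing import Dict, List


class Controller:
    """Hypothetical controller on the second node's local interconnect."""

    def __init__(self) -> None:
        self.pending: List[str] = []        # operations it has taken responsibility for
        self.notifications: List[str] = []  # completion notices it has issued

    def snoop(self, op_id: str) -> bool:
        self.pending.append(op_id)
        return True  # signal acceptance of coherency-management responsibility

    def finish(self, op_id: str) -> None:
        self.pending.remove(op_id)         # coherency activities performed in this node
        self.notifications.append(op_id)   # notify that they have been performed


class NodeController:
    """Hypothetical node controller with a small pool of operation queues."""

    def __init__(self, num_queues: int, controller: Controller) -> None:
        self.free_queues: List[int] = list(range(num_queues))
        self.in_use: Dict[str, int] = {}
        self.controller = controller

    def receive(self, op_id: str) -> None:
        # Allocate a queue when the operation arrives from the node interconnect.
        self.in_use[op_id] = self.free_queues.pop()
        # Issue it on the local interconnect; once the controller accepts
        # responsibility, deallocate the queue immediately.
        if self.controller.snoop(op_id):
            self.free_queues.append(self.in_use.pop(op_id))
```

With a single queue, the node controller can accept a second operation while the first is still pending at the controller, which is the utilization benefit the abstract describes.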

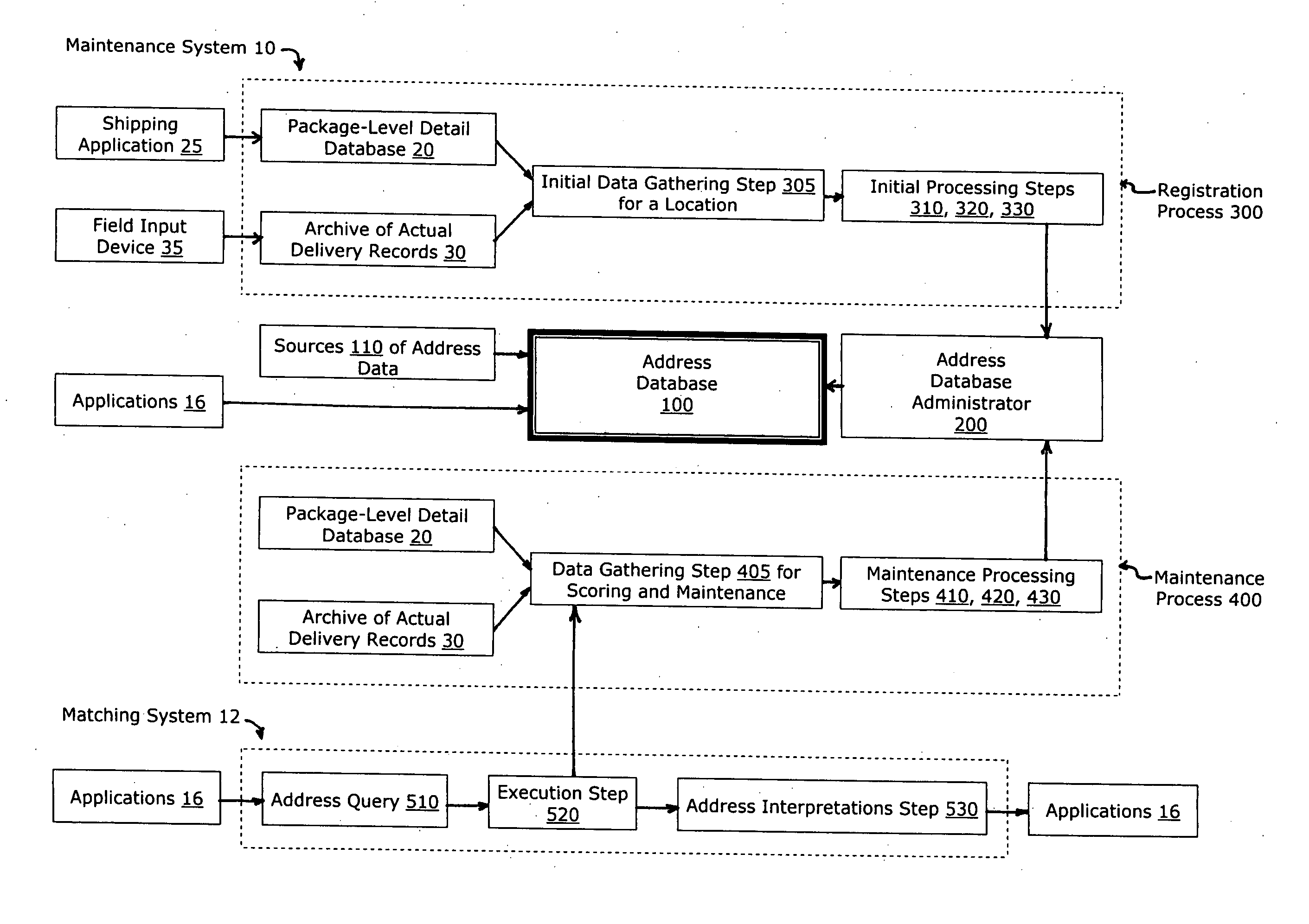

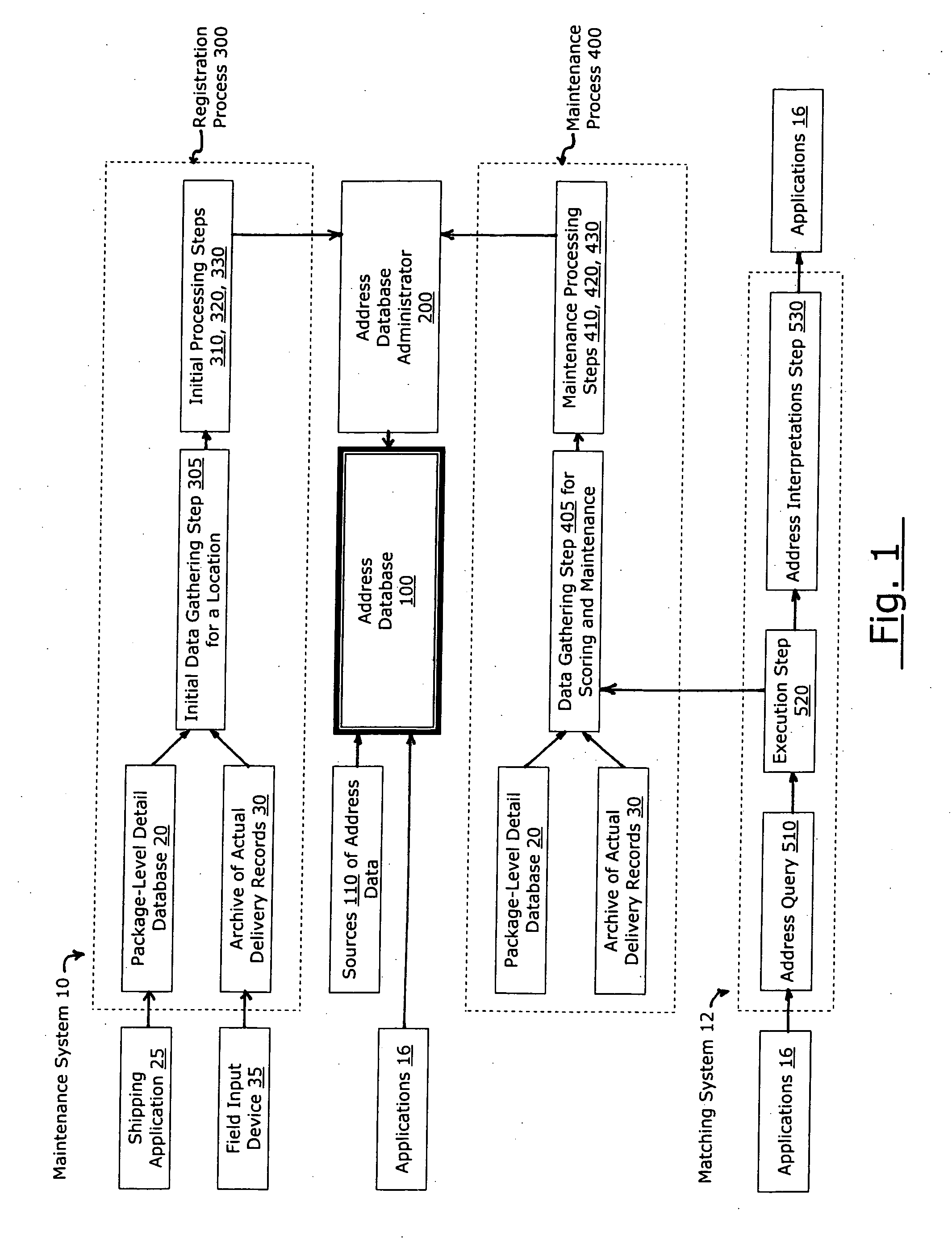

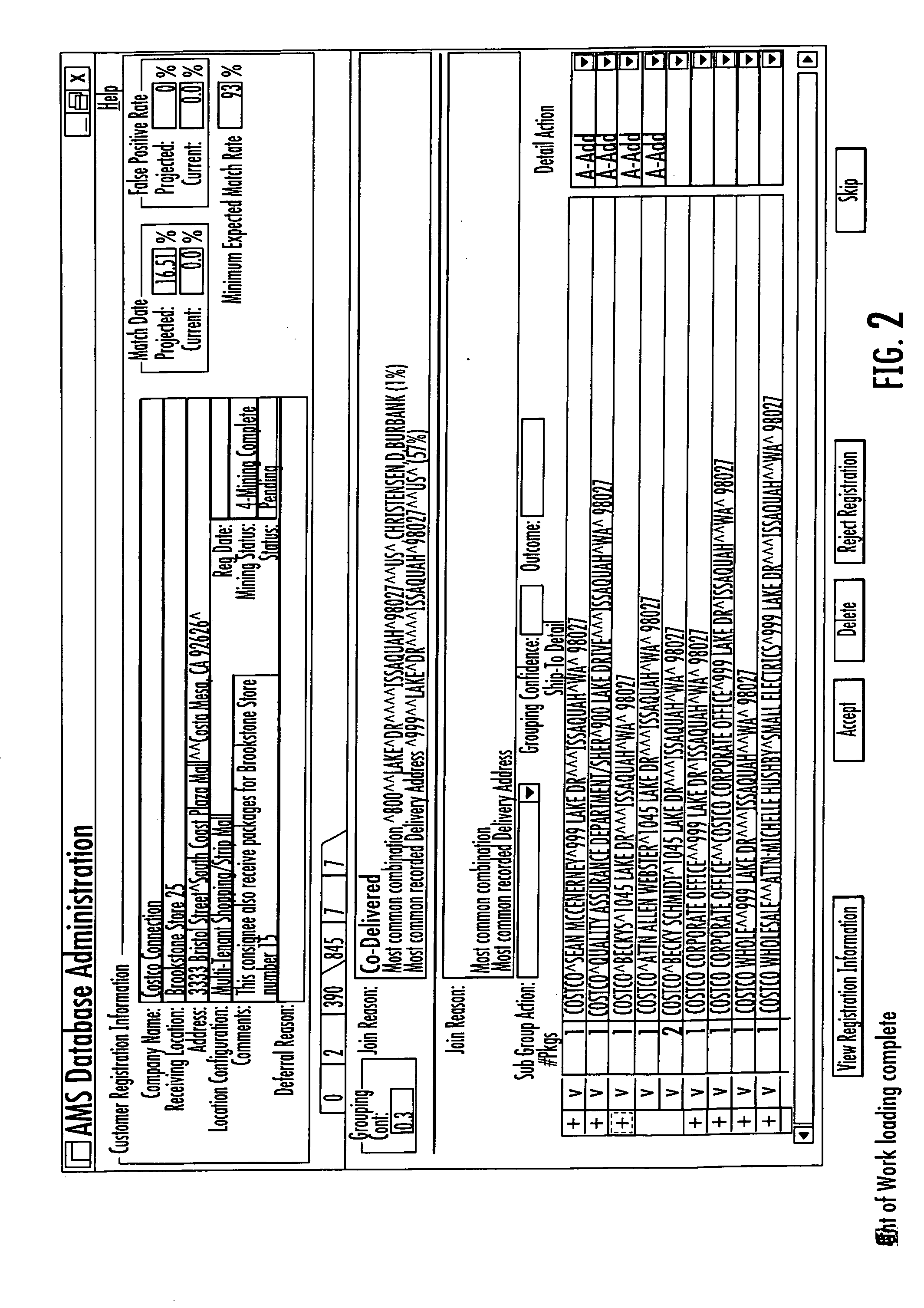

Registration and maintenance of address data for each service point in a territory

A computer system and method are disclosed for mining current and archived address data in order to identify a preferred address for each service point in a territory. The data mining system may start in response to the presentation of a candidate address for matching. The mined data may be prioritized by clustering like characteristics, building similarity matrices, and constructing dendrograms whose nodes are joined according to common characteristics. A computer system and method for maintaining a central database of preferred addresses is also disclosed. Selected address data gathered in a queue may be scored by characteristic, grouped by consignee location, and staged for processing. The scored queue of data may then be prioritized in the same way: by clustering like characteristics, building similarity matrices, and constructing dendrograms.

Owner:UNITED PARCEL SERVICE OF AMERICAN INC
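For the address-mining abstract above, the similarity-matrix and dendrogram steps can be illustrated with a short Python sketch. The abstract does not say how similarity is measured or how clusters are linked. This sketch swaps in `difflib.SequenceMatcher` string similarity and single-linkage agglomerative clustering as stand-ins, and `build_dendrogram` and `leaves` are hypothetical helpers.

```python
from difflib import SequenceMatcher
from itertools import combinations
from typing import List, Tuple, Union

# A dendrogram node is either a leaf address or (left, right, merge_similarity).
Tree = Union[str, Tuple["Tree", "Tree", float]]


def similarity(a: str, b: str) -> float:
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def leaves(t: Tree) -> List[str]:
    return [t] if isinstance(t, str) else leaves(t[0]) + leaves(t[1])


def build_dendrogram(addresses: List[str]) -> Tree:
    """Single-linkage clustering over a pairwise similarity matrix (non-empty input)."""
    sim = [[similarity(a, b) for b in addresses] for a in addresses]
    # Each cluster pairs its dendrogram subtree with the indices of its members.
    clusters = [(addr, [i]) for i, addr in enumerate(addresses)]

    def link(p: Tuple[int, int]) -> float:
        # Single linkage: the best pairwise similarity between the two clusters.
        return max(sim[i][j] for i in clusters[p[0]][1] for j in clusters[p[1]][1])

    while len(clusters) > 1:
        x, y = max(combinations(range(len(clusters)), 2), key=link)
        merged = ((clusters[x][0], clusters[y][0], link((x, y))),
                  clusters[x][1] + clusters[y][1])
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)] + [merged]
    return clusters[0][0]
```

For example, `build_dendrogram(["12 Main St", "12 Main Street", "99 Oak Ave"])` joins the two Main Street variants first, so they share a subtree beneath the root. How a preferred address is then picked from a cluster is not described in the abstract and is left out here.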

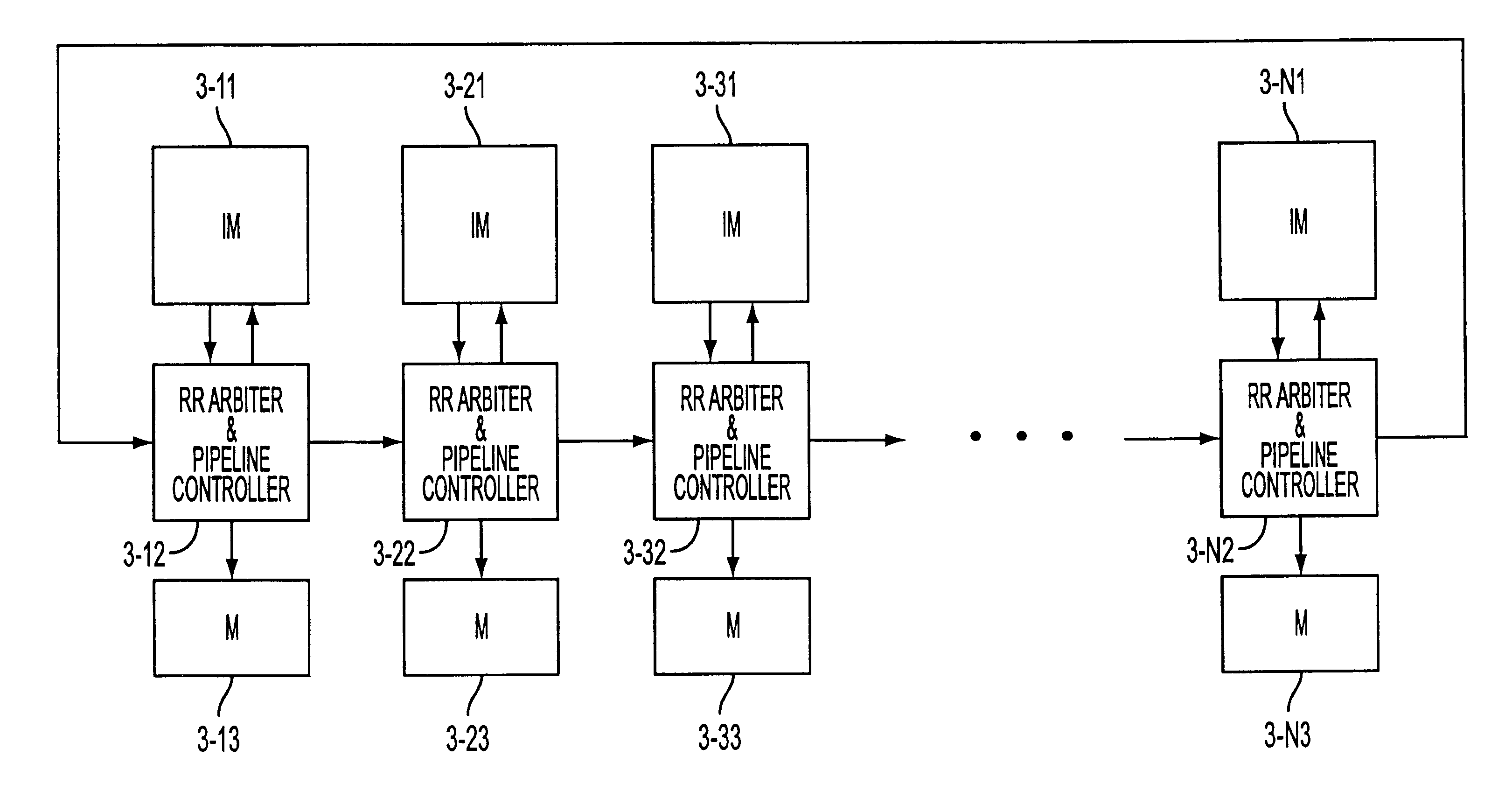

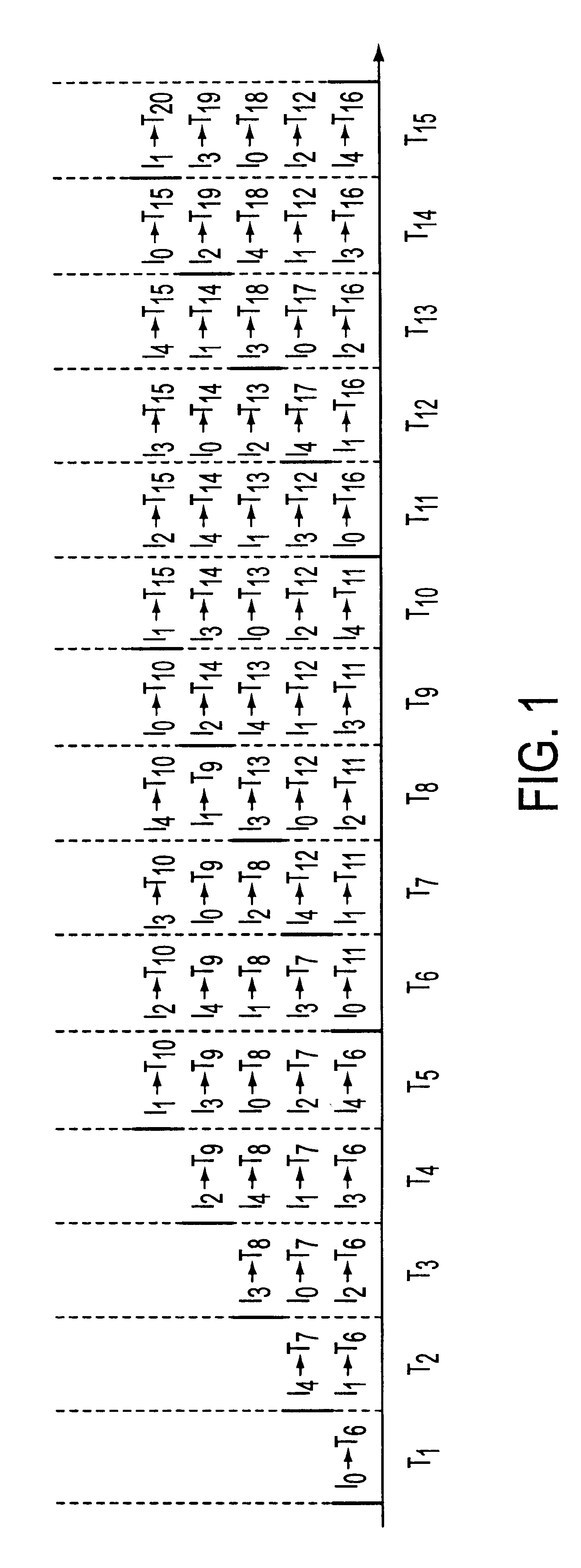

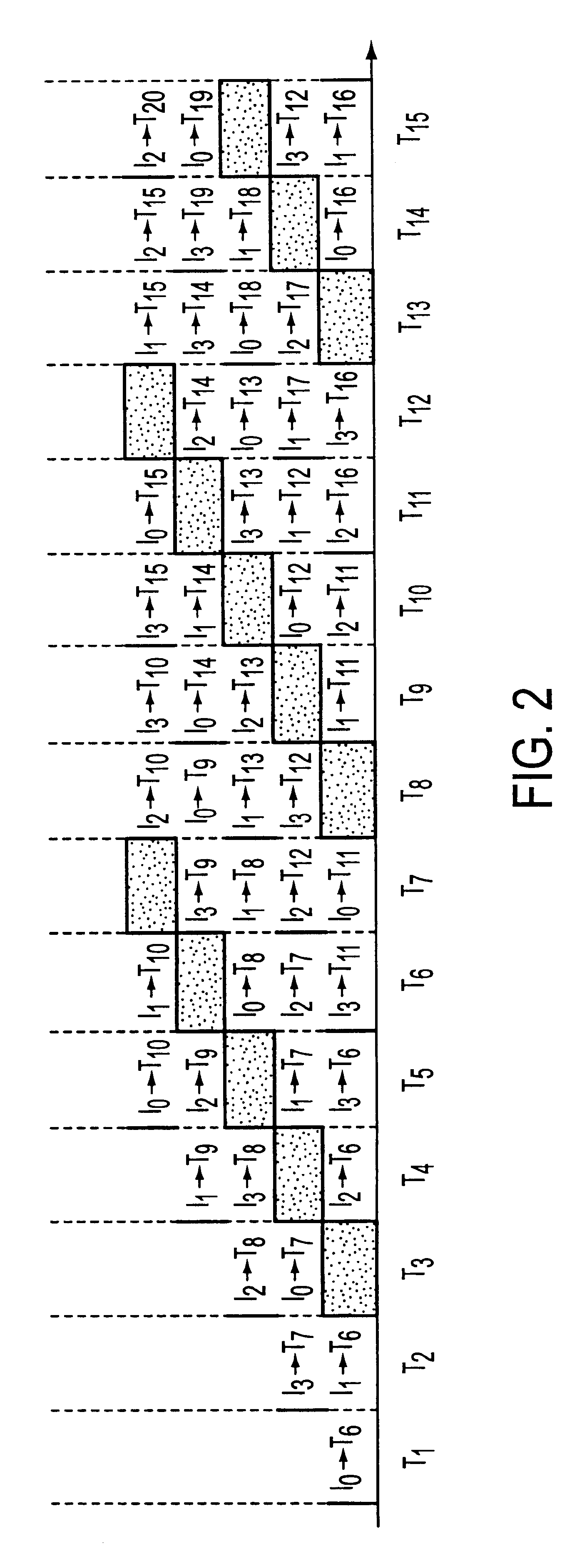

RRGS-round-robin greedy scheduling for input/output terabit switches

Inactive | US6618379B1 | Easy to use, Improve performance, Time-division multiplex, Loop networks, Crossbar switch, Optimal scheduling

A novel protocol for scheduling packets in high-speed cell-based switches is provided. The switch is assumed to use a logical crossbar fabric with input buffers. The scheduler may be used in optical as well as electronic switches with terabit capacity. The proposed round-robin greedy scheduling (RRGS) achieves optimal scheduling at terabit throughput by using a pipeline technique, which avoids the need for internal speedup of the switching fabric to achieve high utilization. The claimed method determines a time slot in an N×N crossbar switch under the RRGS protocol, with N logical queues at each input corresponding to the N output ports; the protocol's input is the state of all input-output queues, and its output is a schedule. The method comprises:

- choosing the input corresponding to i = (constant - k - 1) mod N;
- stopping if there are no more inputs; otherwise, choosing the next input in round-robin fashion, determined by i = (i + 1) mod N;
- choosing an output j such that the pair (i, j) belongs to the set C = {(i, j) | there is at least one packet from i to j}, if such a pair exists;
- if the pair (i, j) does not exist, removing i from the set of inputs and repeating the steps;
- otherwise, removing i from the set of inputs and j from the set of outputs; and
- adding the pair (i, j) to the schedule and repeating the steps.

Owner:NEC CORP
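For the RRGS claim above, one time slot of the greedy round-robin matching can be sketched in Python. `rrgs_schedule` and `voq` are hypothetical names. The starting input is passed in directly rather than computed from the claim's `constant` and `k`. The claim does not say which eligible output is chosen, so this sketch assumes the lowest-indexed free output. The pipelining across time slots is not modeled.

```python
from typing import List, Tuple


def rrgs_schedule(voq: List[List[int]], start: int) -> List[Tuple[int, int]]:
    """Greedy round-robin matching for a single time slot.

    voq[i][j] is the number of packets queued at input i for output j.
    start is the first input served, i.e. (constant - k - 1) mod N in the claim.
    """
    n = len(voq)
    free_outputs = set(range(n))
    schedule: List[Tuple[int, int]] = []
    i = start % n
    for _ in range(n):  # each input is removed from the input set after one visit
        # Assumption: take the lowest-indexed free output j with (i, j) in C.
        for j in sorted(free_outputs):
            if voq[i][j] > 0:
                schedule.append((i, j))
                free_outputs.remove(j)  # j leaves the set of outputs
                break
        i = (i + 1) % n  # next input in round-robin order
    return schedule
```

Changing `start` changes which input gets first pick: with `voq = [[1, 1, 0], [1, 0, 0], [0, 1, 1]]`, a start of 0 yields `[(0, 0), (2, 1)]`, while a start of 1 yields `[(1, 0), (2, 1)]`.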

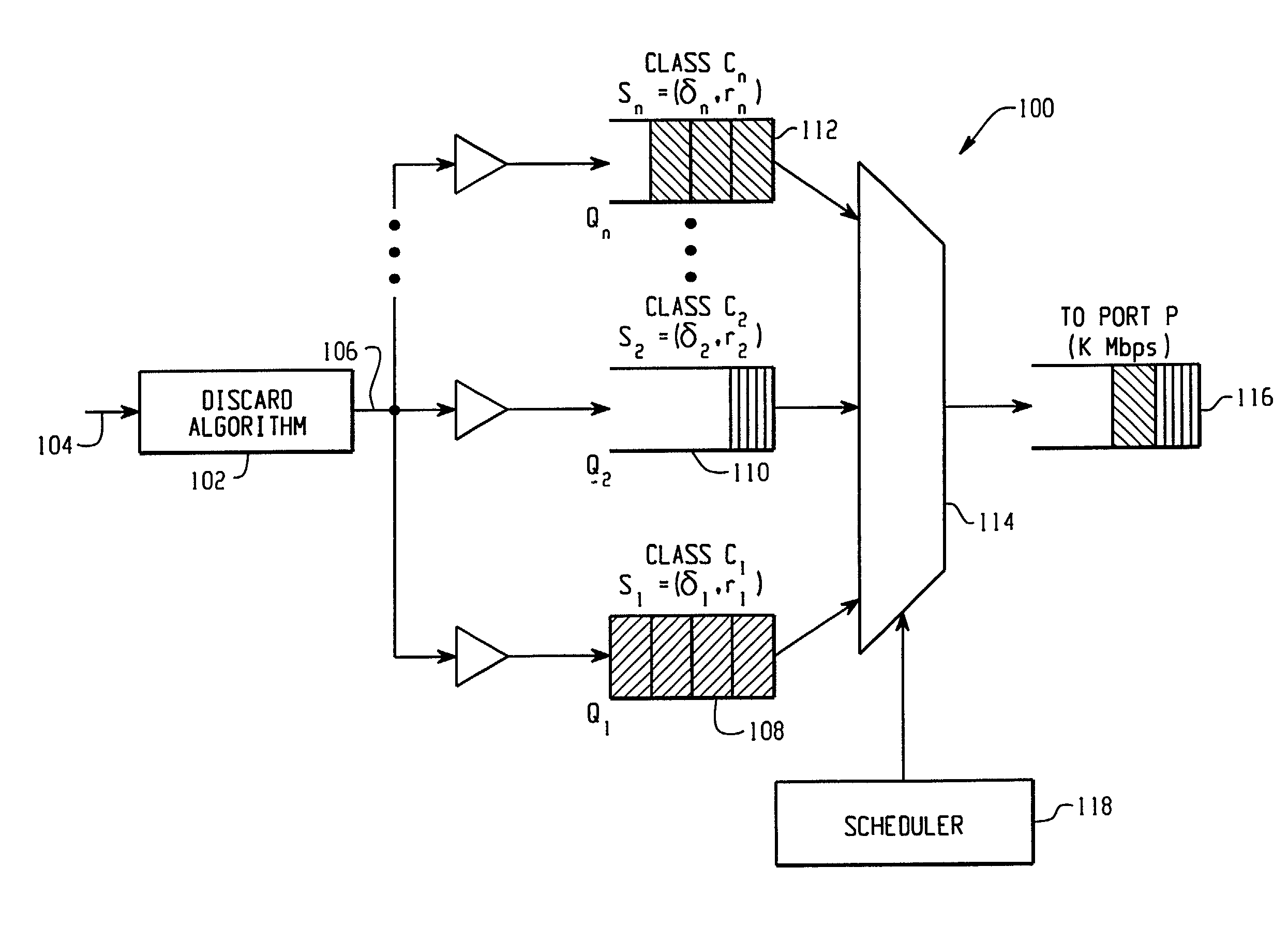

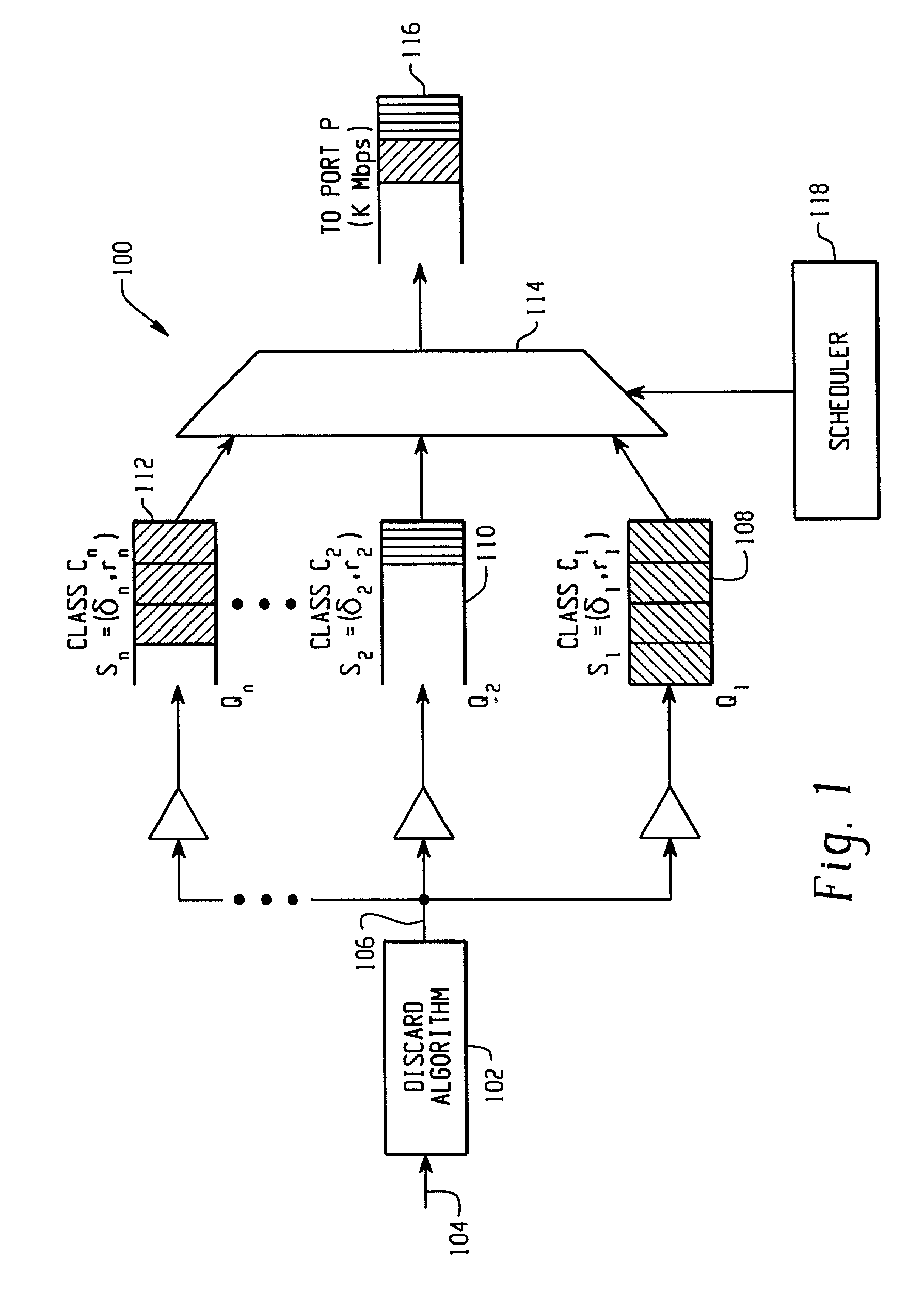

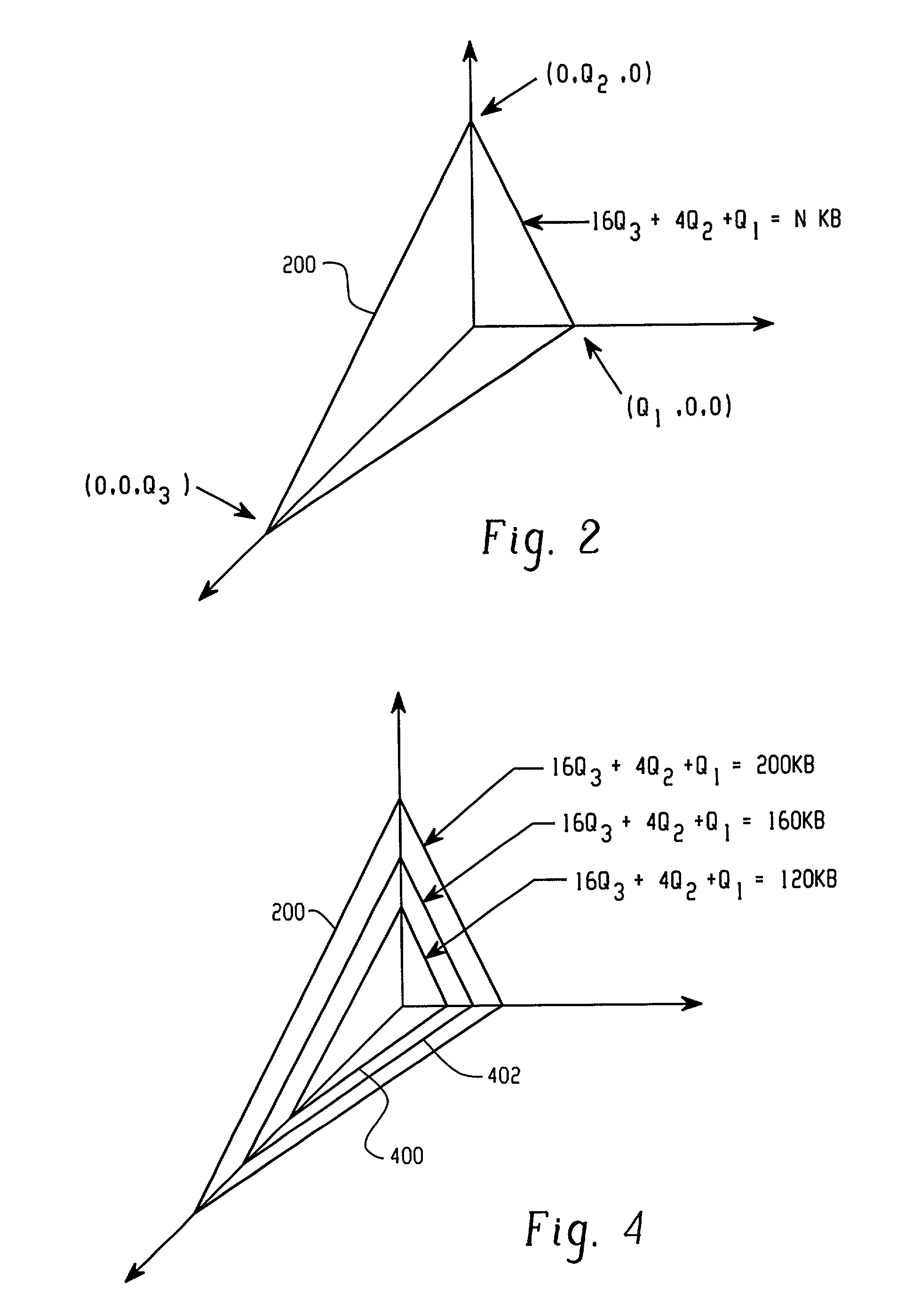

Unified algorithm for frame scheduling and buffer management in differentiated services networks

Inactive | US6990529B2 | Multiple digital computer combinations, Data switching networks, Differentiated services, Differentiated service

A frame forwarding and discard architecture for a Differentiated Services network environment. The architecture comprises discard logic that discards a frame from a stream of incoming frames in accordance with a discard algorithm: the frame is discarded if a predetermined congestion level in the network environment has been reached and a predetermined backlog limit of the queue associated with the frame has also been reached. Scheduling logic is also provided for scheduling the order in which one or more enqueued frames are transmitted.

Owner:SYNAPTICS INC
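For the Differentiated Services abstract above, the two-condition discard rule can be sketched in Python. The class name `DiffServPort`, the use of total buffered frames as the "congestion level", and strict-priority dequeuing are all assumptions for illustration. The abstract does not specify the scheduling discipline.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class DiffServPort:
    """Hypothetical output port with one FIFO queue per service class."""

    def __init__(self, congestion_limit: int, backlog_limits: Dict[str, int],
                 priority: List[str]) -> None:
        self.congestion_limit = congestion_limit  # assumed: total frames buffered
        self.backlog_limits = backlog_limits      # per-queue backlog limits
        self.priority = priority                  # class names, highest first
        self.queues: Dict[str, Deque[object]] = {c: deque() for c in priority}

    def total_backlog(self) -> int:
        return sum(len(q) for q in self.queues.values())

    def enqueue(self, cls: str, frame: object) -> bool:
        congested = self.total_backlog() >= self.congestion_limit
        over_backlog = len(self.queues[cls]) >= self.backlog_limits[cls]
        if congested and over_backlog:
            return False  # discard only when BOTH limits have been reached
        self.queues[cls].append(frame)
        return True

    def dequeue(self) -> Optional[object]:
        # Assumed scheduling discipline: strict priority across classes.
        for cls in self.priority:
            if self.queues[cls]:
                return self.queues[cls].popleft()
        return None
```

Because both conditions must hold, a class may exceed its backlog limit while the port is uncongested, and a class under its limit is still admitted during congestion.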

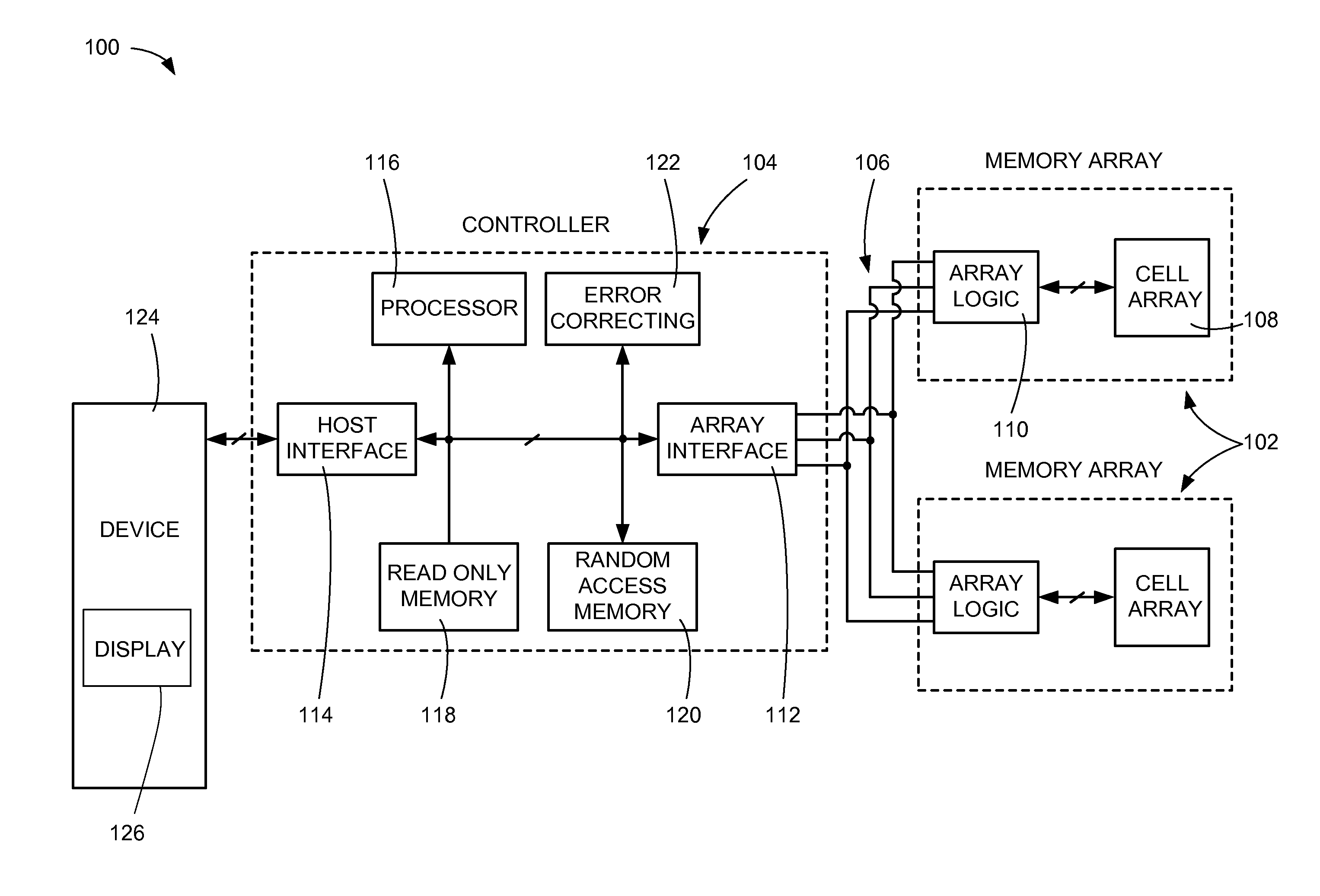

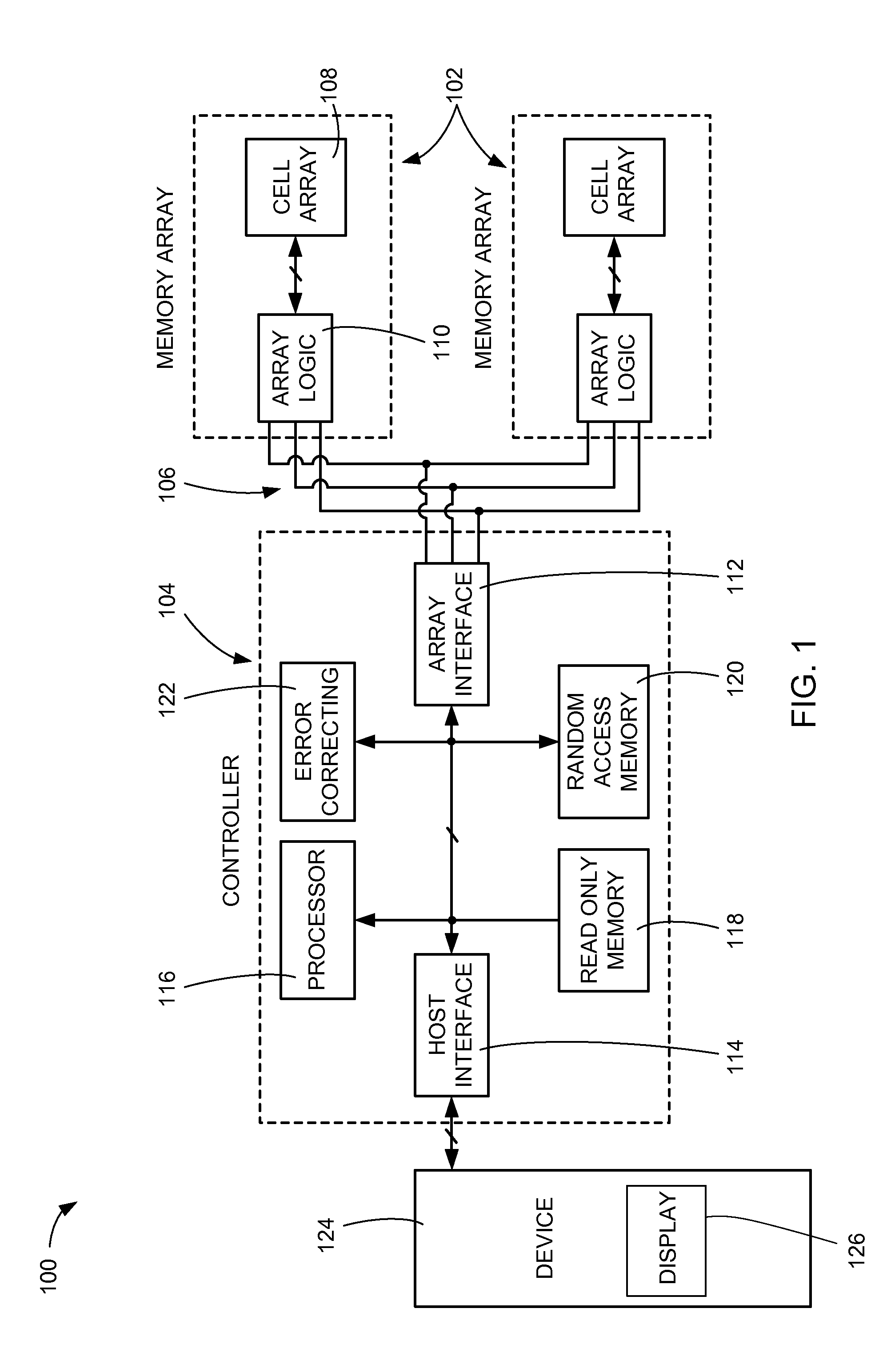

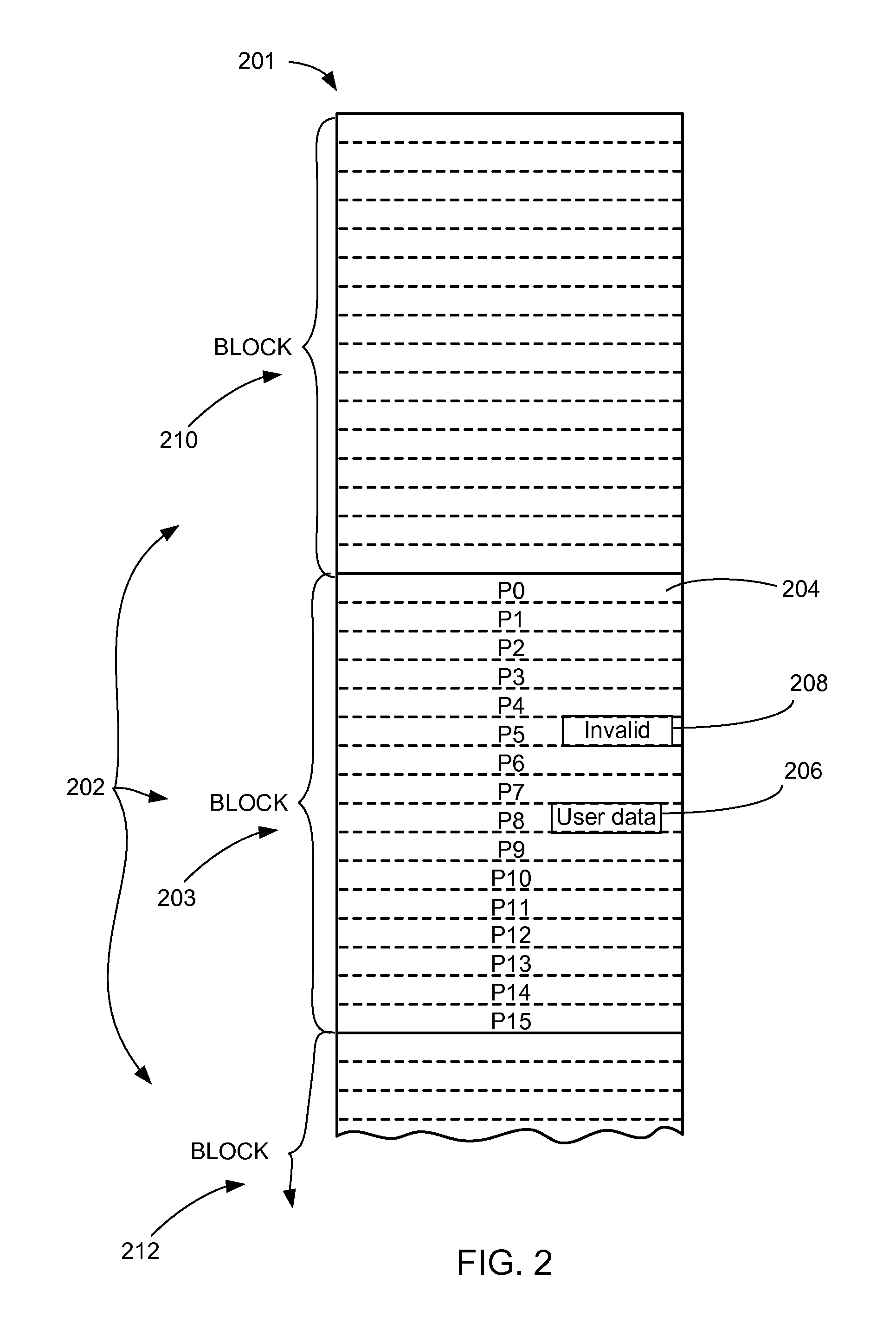

Memory system with tiered queuing and method of operation thereof

Inactive | US20120203993A1 | Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Locality of reference, Parallel computing

A method of operation of a memory system includes: providing a memory array having a dynamic queue and a static queue; and grouping user data by temporal locality of reference, with more frequently handled data placed in the dynamic queue and less frequently handled data in the static queue.

Owner:SANDISK TECH LLC
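For the tiered-queuing abstract above, the hot/cold grouping can be sketched in Python. This sketch assumes "frequently handled" means a per-address write count at or above a fixed threshold. `TieredQueues`, `hot_threshold`, and the logical-block-address keys are hypothetical, and the patent's actual placement rule may differ.

```python
from collections import Counter, OrderedDict


class TieredQueues:
    """Hypothetical model of a dynamic (hot) queue and a static (cold) queue."""

    def __init__(self, hot_threshold: int) -> None:
        self.hot_threshold = hot_threshold
        self.access_counts: Counter = Counter()
        # OrderedDicts keep insertion order, so each behaves like a FIFO queue.
        self.dynamic: "OrderedDict[int, bytes]" = OrderedDict()  # frequently handled data
        self.static: "OrderedDict[int, bytes]" = OrderedDict()   # less frequently handled data

    def write(self, lba: int, data: bytes) -> None:
        self.access_counts[lba] += 1
        # Remove any older copy so each address lives in exactly one queue.
        self.dynamic.pop(lba, None)
        self.static.pop(lba, None)
        if self.access_counts[lba] >= self.hot_threshold:
            self.dynamic[lba] = data  # re-inserted at the tail of the dynamic queue
        else:
            self.static[lba] = data
```

With a threshold of 2, an address written twice moves from the static queue to the dynamic queue, while an address written once stays static.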

© 2025 PatSnap. All rights reserved.