234 results for "Queue manager" patented technology

Computerized person-to-person payment system and method without use of currency

Inactive · US20080177668A1 · Maximum protection of privacy · Maximum protection · Synchronising transmission/receiving encryption devices · Finance · Bank account · Client-side

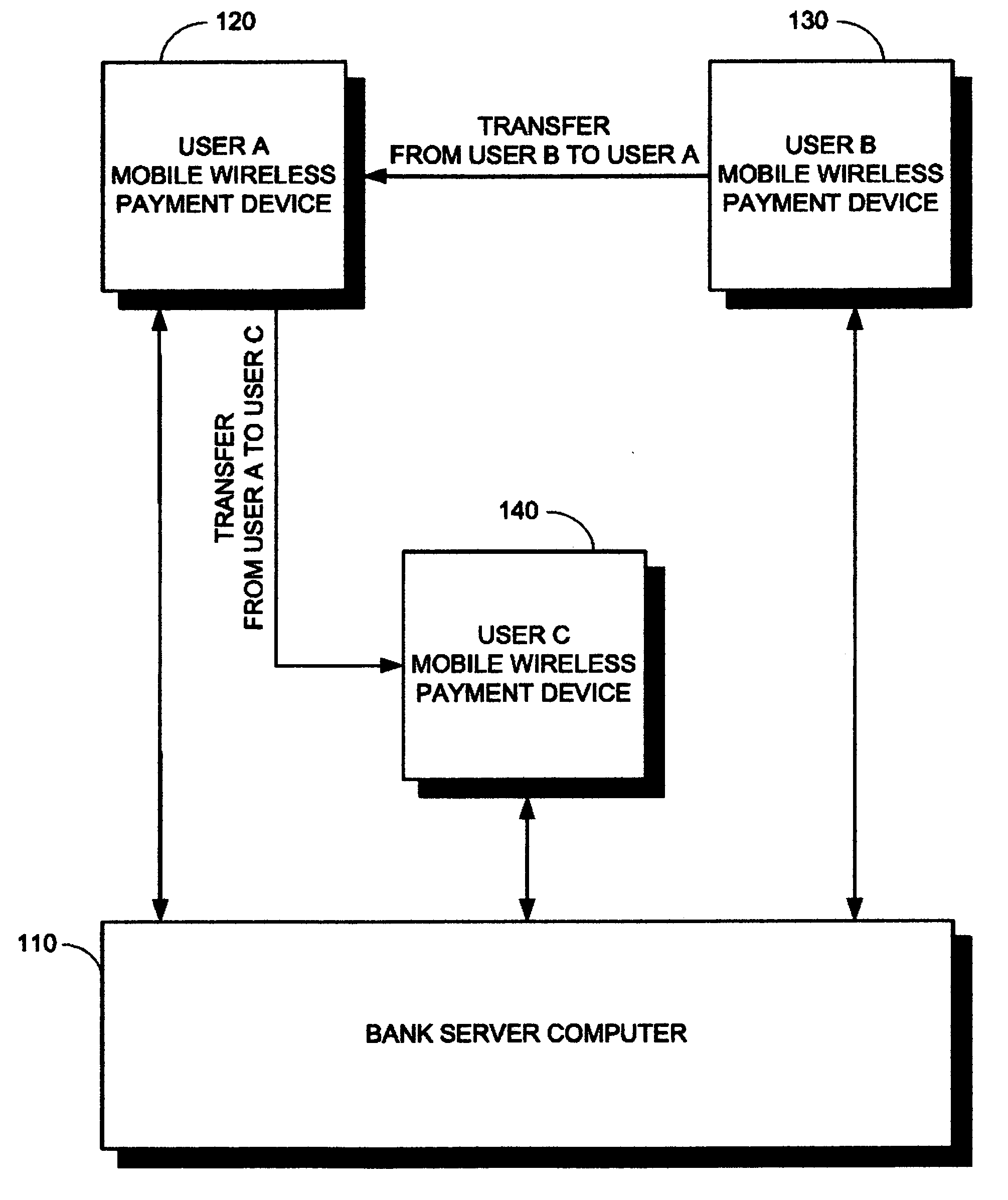

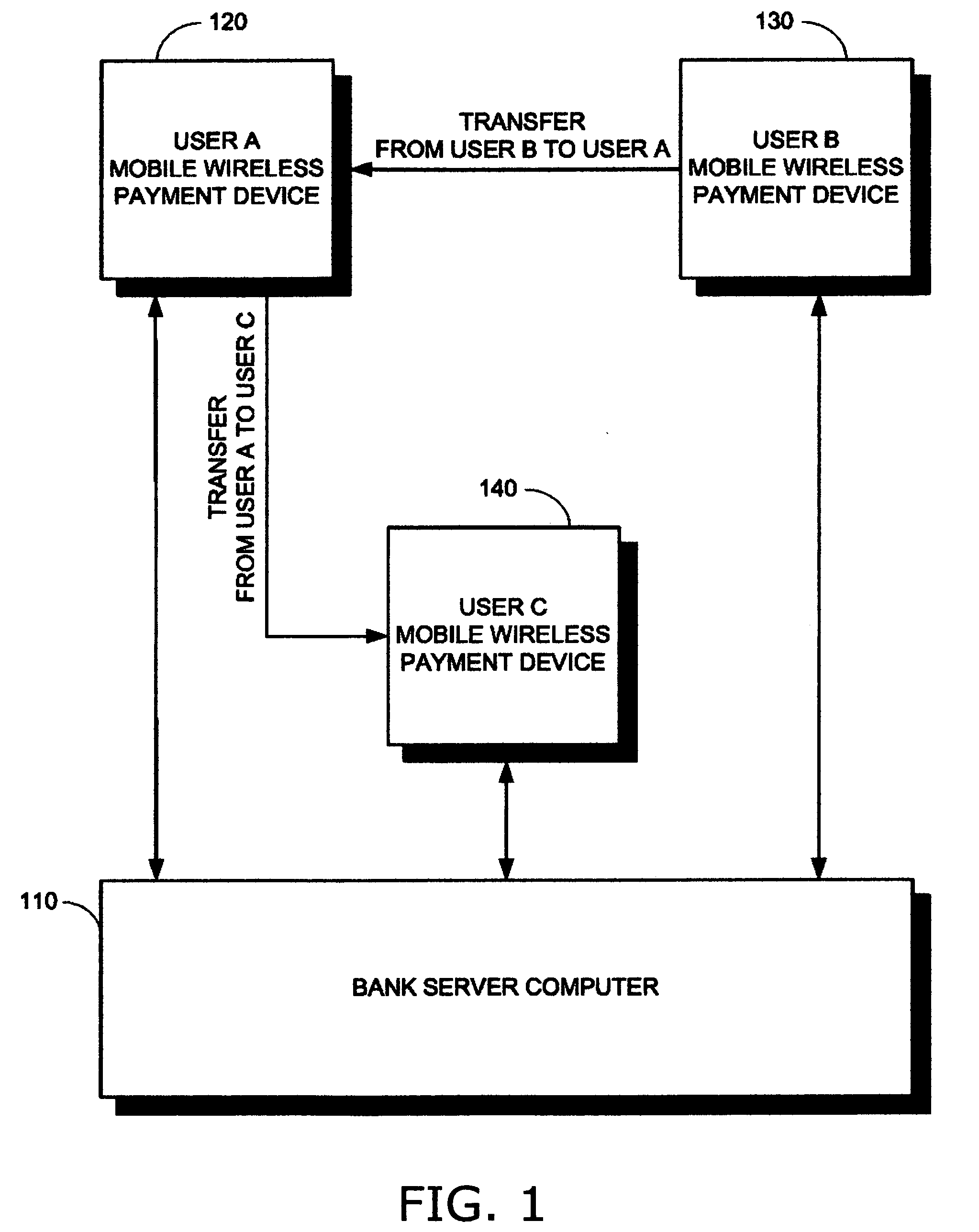

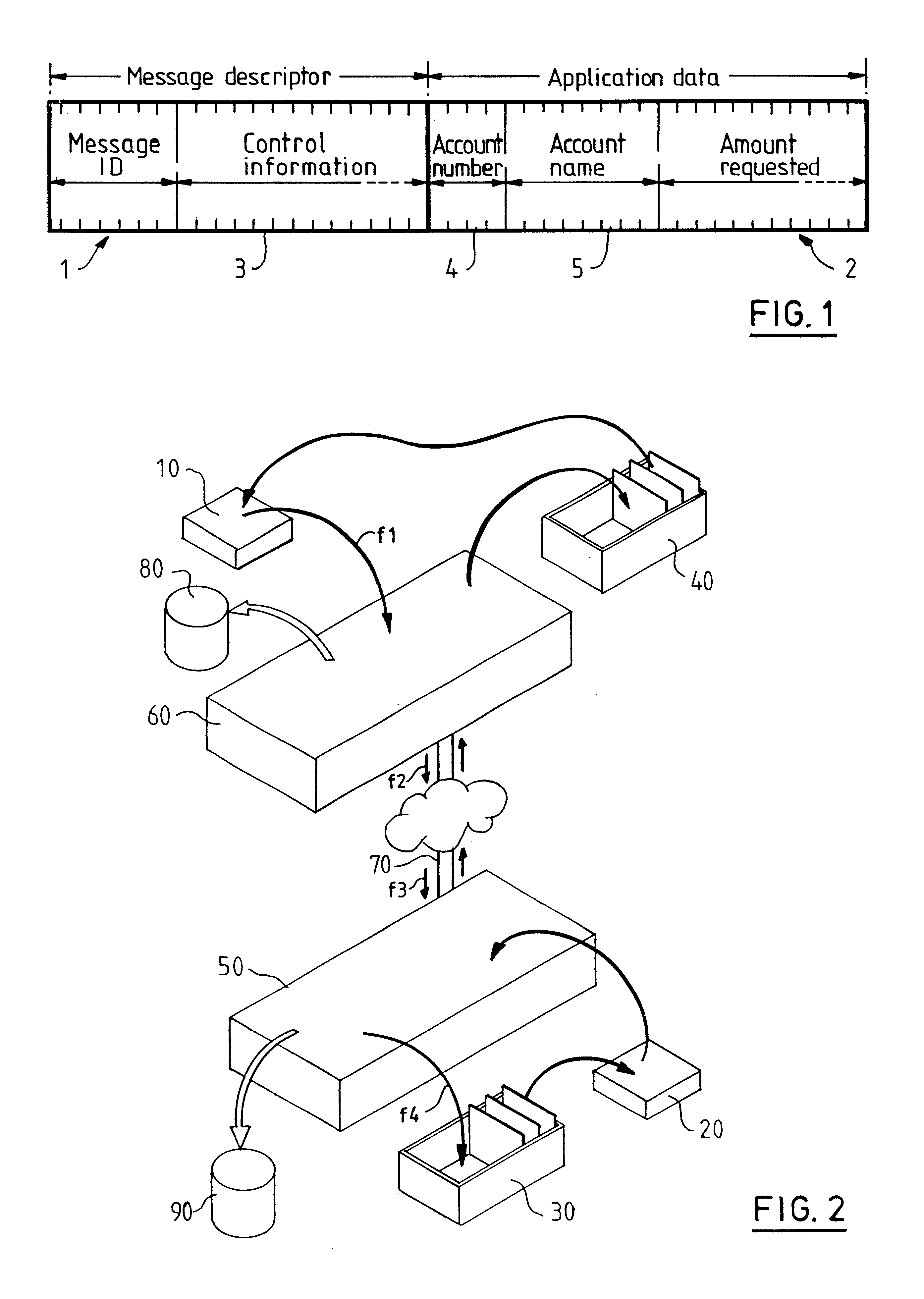

An electronic funds system, including a plurality of payment devices, each payment device including a payment application for (i) transferring funds to another payment device, (ii) receiving funds from another payment device, and (iii) synchronizing transactions with a bank server computer, a queue manager for queuing transactions for synchronization with the bank server computer, an encoder for encrypting transaction information, a proximity communication module for wirelessly communicating with another of the plurality of payment devices over a short range, a wireless communication module for communicating with a client computer and with the bank server computer over a long range, a plurality of client computers, each client computer including a payment device manager for (i) transmitting funds to a payment device, (ii) receiving funds from a payment device, and (iii) setting payment device parameters, and a wireless communication module for communicating with at least one of the plurality of payment devices and with a bank server computer over a long range, and at least one bank server computer, each bank server computer including an account manager for (i) managing at least one bank account associated with at least one of the payment devices, and (ii) processing transactions received from the plurality of payment devices, a decoder for decrypting encrypted transaction information, and a wireless communication module for communicating with the plurality of payment devices and with the plurality of client computers over a long range. A method and a computer-readable storage medium are also described and claimed.

Owner:DELEAN BRUNO

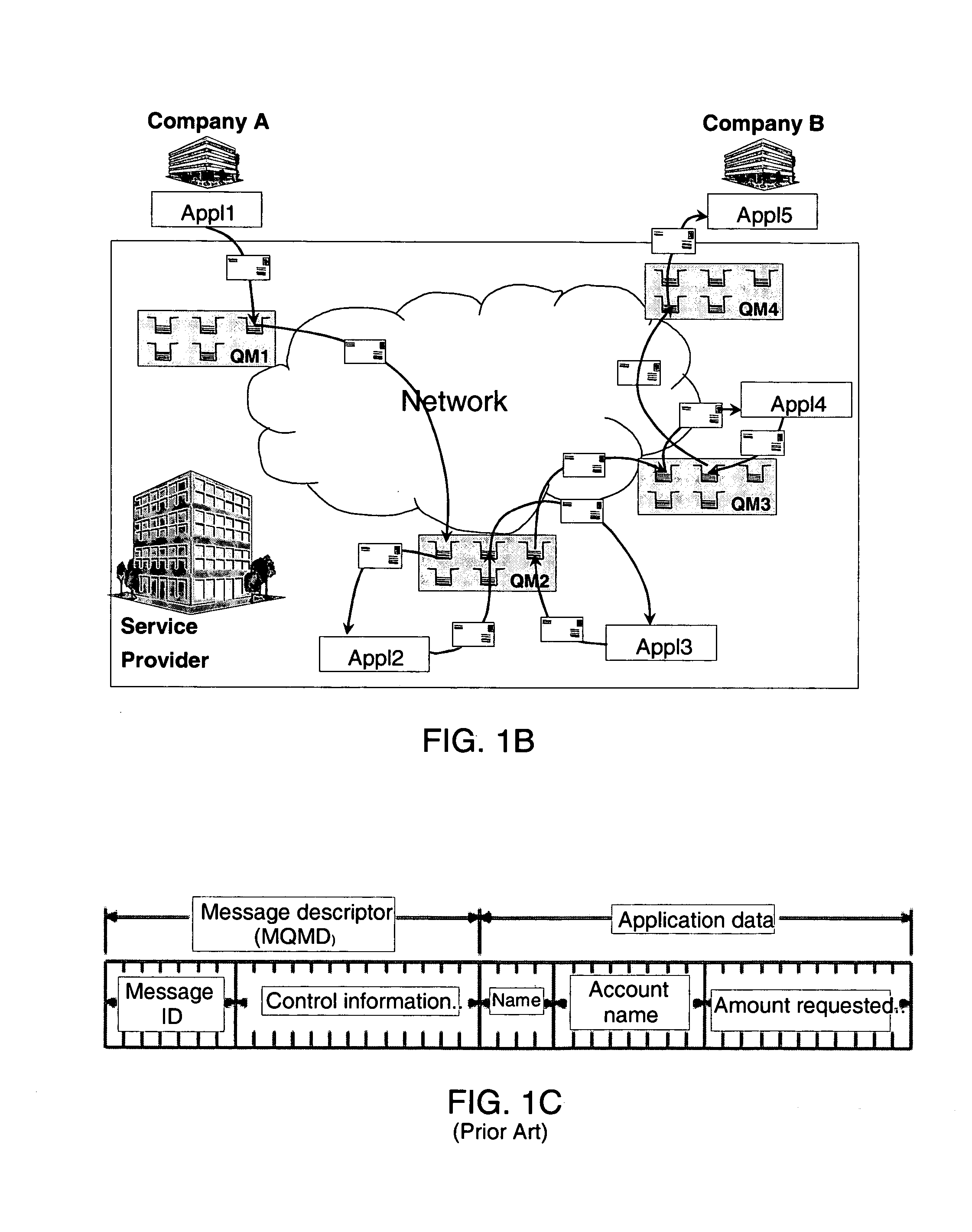

Method of transferring messages between computer programs across a network

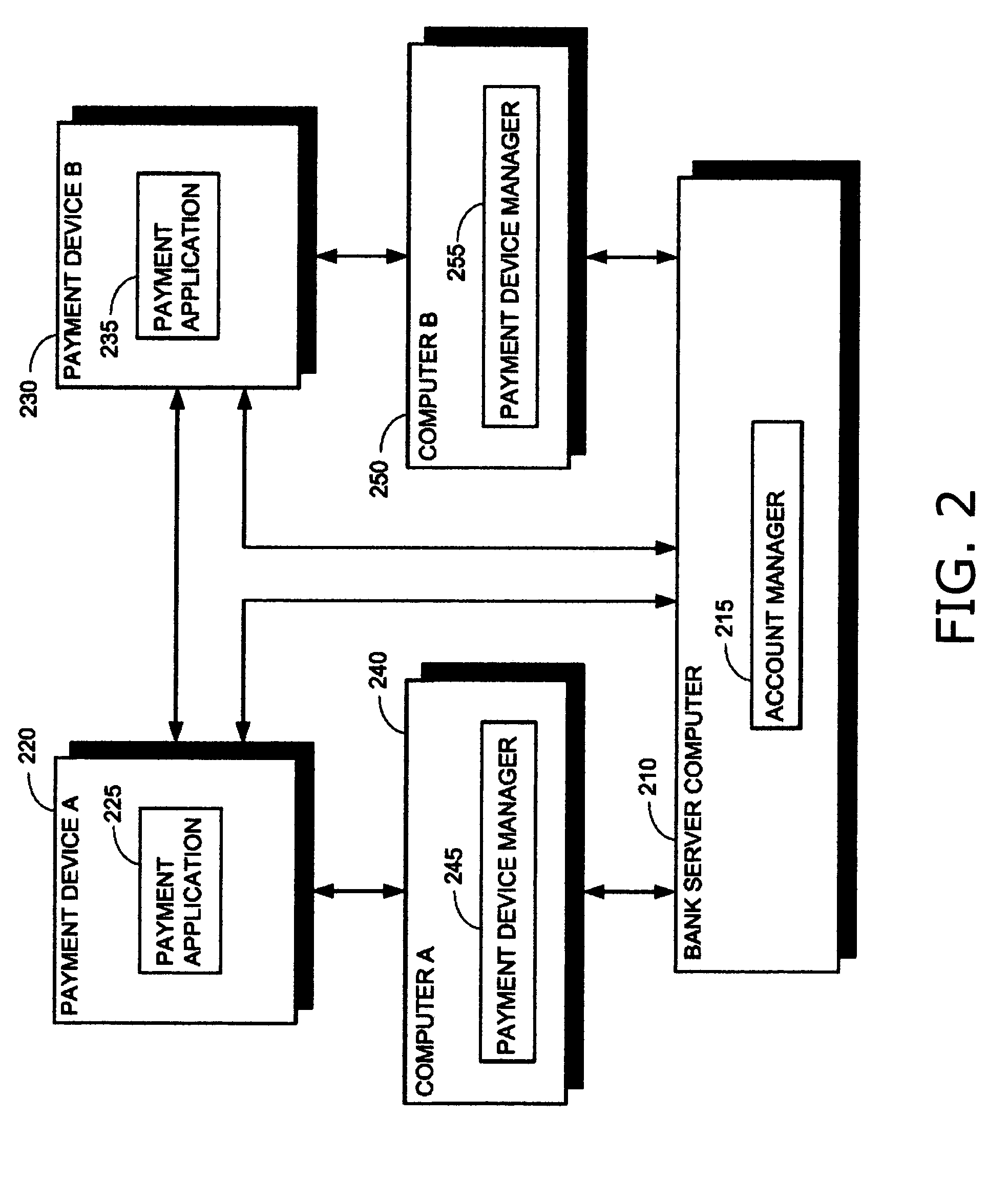

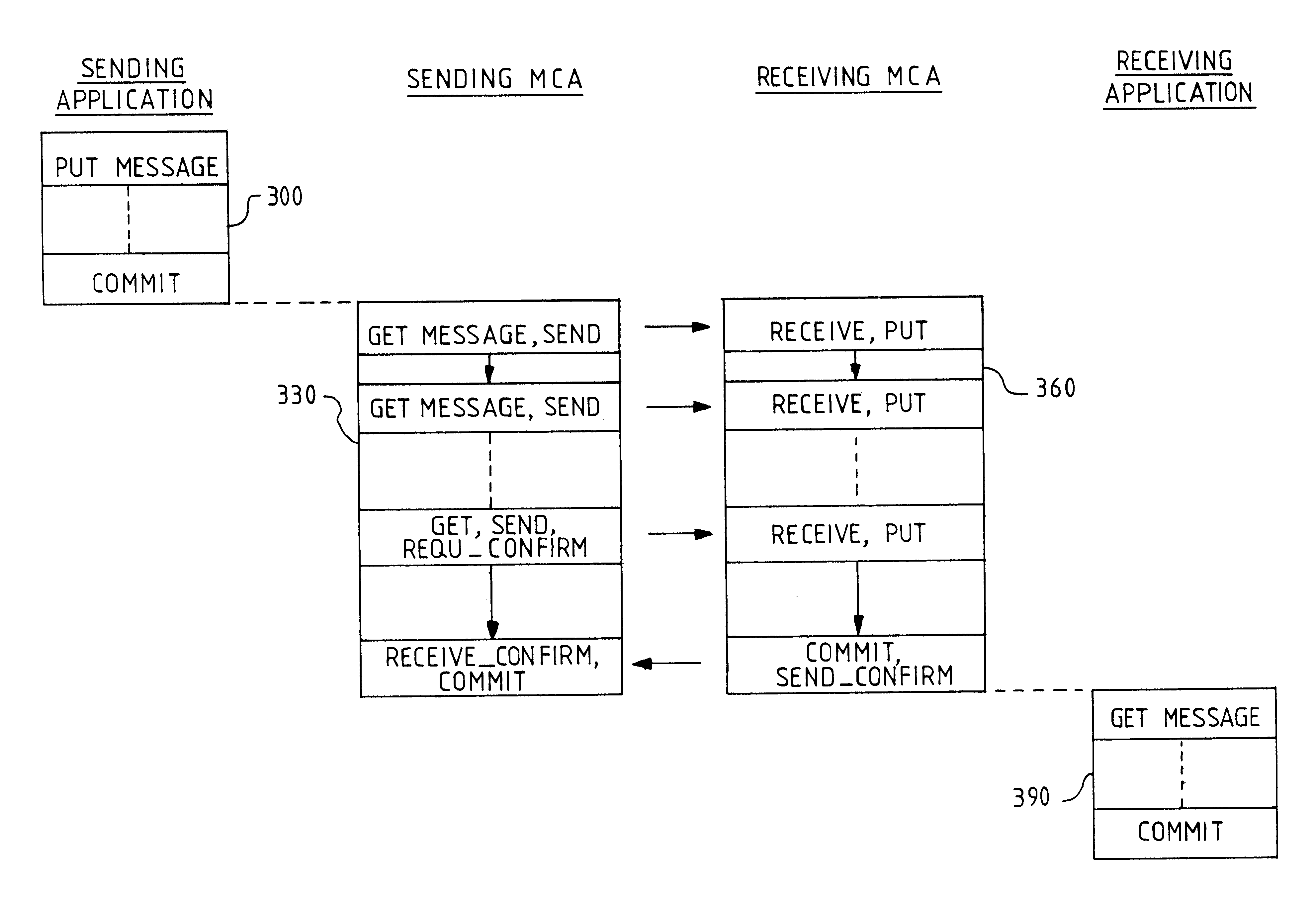

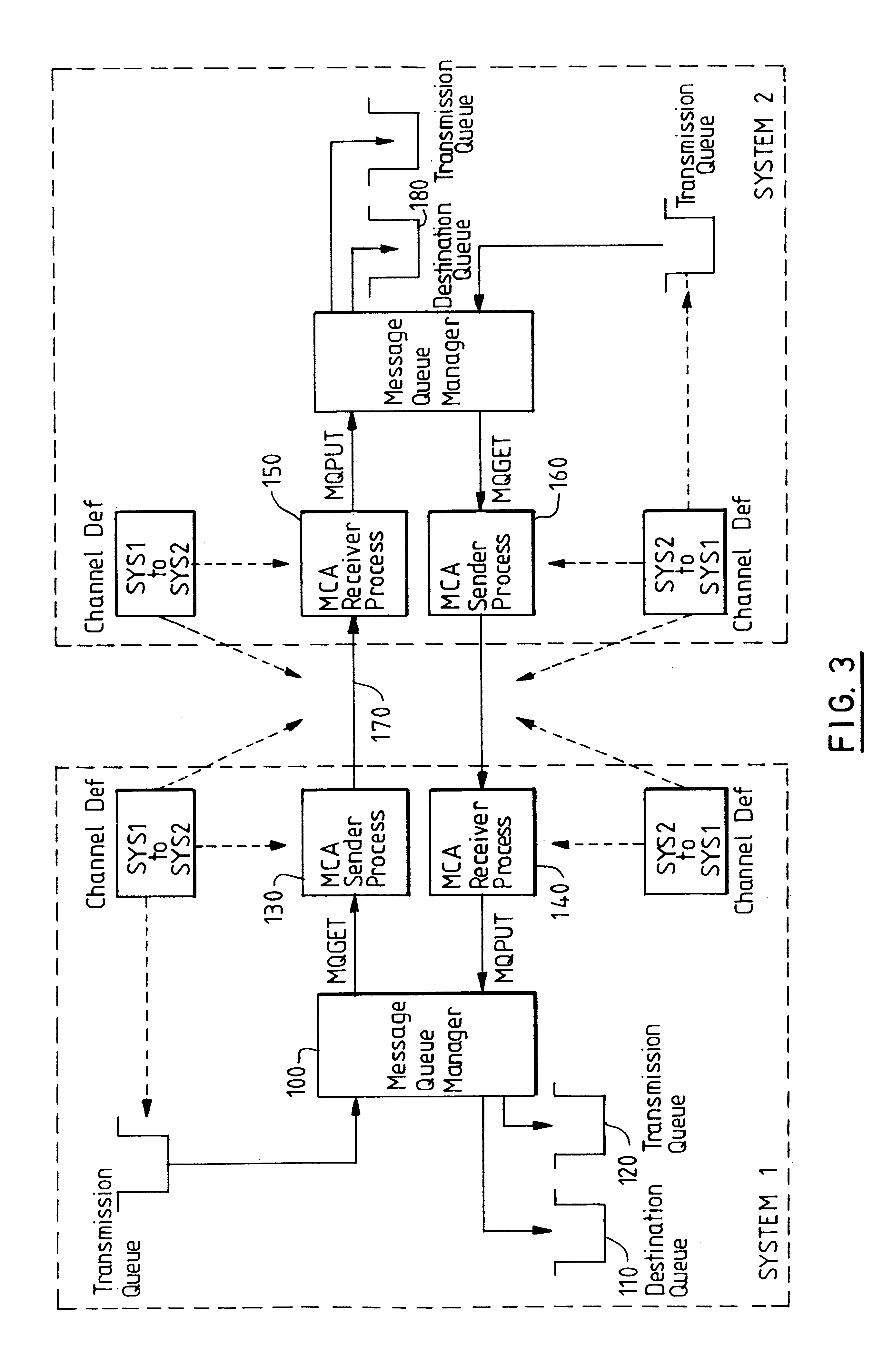

A method of delivering messages between application programs is provided which ensures that no messages are lost and none are delivered more than once. The method uses asynchronous message queuing. One or more queue manager programs (100) are located at each computer of a network to control the transmission of messages to and from that computer. Messages to be transmitted to a different queue manager are put onto special transmission queues (120). Transmission to an adjacent queue manager comprises a sending process (130) on the local queue manager (100) getting messages from a transmission queue and sending them as a batch within a syncpoint-manager-controlled unit of work. A receiving process (150) on the receiving queue manager receives the messages and, within a second syncpoint-manager-controlled unit of work, puts them to queues (180) that are under the control of the receiving queue manager. Commitment of the batch is coordinated by the sender transmitting, with the last message of the batch, a request for commitment and for confirmation of commitment; commit at the sender is then triggered by the confirmation that the receiver sends in response to the request. The invention avoids the additional message flow that is a feature of two-phase commit procedures, avoiding the need for resource managers to synchronise with each other. It further reduces the commit flows by permitting batching of a number of messages.
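The commit ordering described above (receiver commits its puts and then confirms; sender commits its gets only on that confirmation) can be sketched in Python as below. This is a minimal in-memory illustration under stated assumptions: `UnitOfWork`, `receive_batch`, and `send_batch` are hypothetical names, the unit of work simply stages queue operations until `commit()`, and nothing here models persistence, logging, or in-doubt recovery as a real queue manager would.

```python
from collections import deque


class UnitOfWork:
    """Hypothetical stand-in for a syncpoint-manager-controlled unit of work:
    queue operations are staged and take effect only on commit()."""

    def __init__(self):
        self.gets = []    # (queue, count): messages to remove on commit
        self.puts = []    # (queue, message): messages to add on commit

    def commit(self):
        for queue, count in self.gets:
            for _ in range(count):
                queue.popleft()
        for queue, message in self.puts:
            queue.append(message)
        self.rollback()   # clear the staged operations once applied

    def rollback(self):
        self.gets.clear()
        self.puts.clear()


def receive_batch(batch, target_queue):
    """Receiving process: put the whole batch under one unit of work, commit
    it, then confirm, because the last message asked for confirmation."""
    uow = UnitOfWork()
    for message in batch:
        uow.puts.append((target_queue, message))
    uow.commit()
    return True


def send_batch(transmission_queue, target_queue, batch_size, receiver=receive_batch):
    """Sending process: take up to batch_size messages under a unit of work,
    send them, and commit their removal only after the receiver confirms."""
    uow = UnitOfWork()
    batch = list(transmission_queue)[:batch_size]
    uow.gets.append((transmission_queue, len(batch)))
    if receiver(batch, target_queue):
        uow.commit()      # the batch leaves the transmission queue only now
        return len(batch)
    uow.rollback()        # no confirmation: the batch stays queued for retry
    return 0
```

Only one confirmation flows per batch, which is the saving over a per-message two-phase commit that the abstract points to; the `receiver` parameter exists only so a failed confirmation can be simulated.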

Owner:IBM CORP

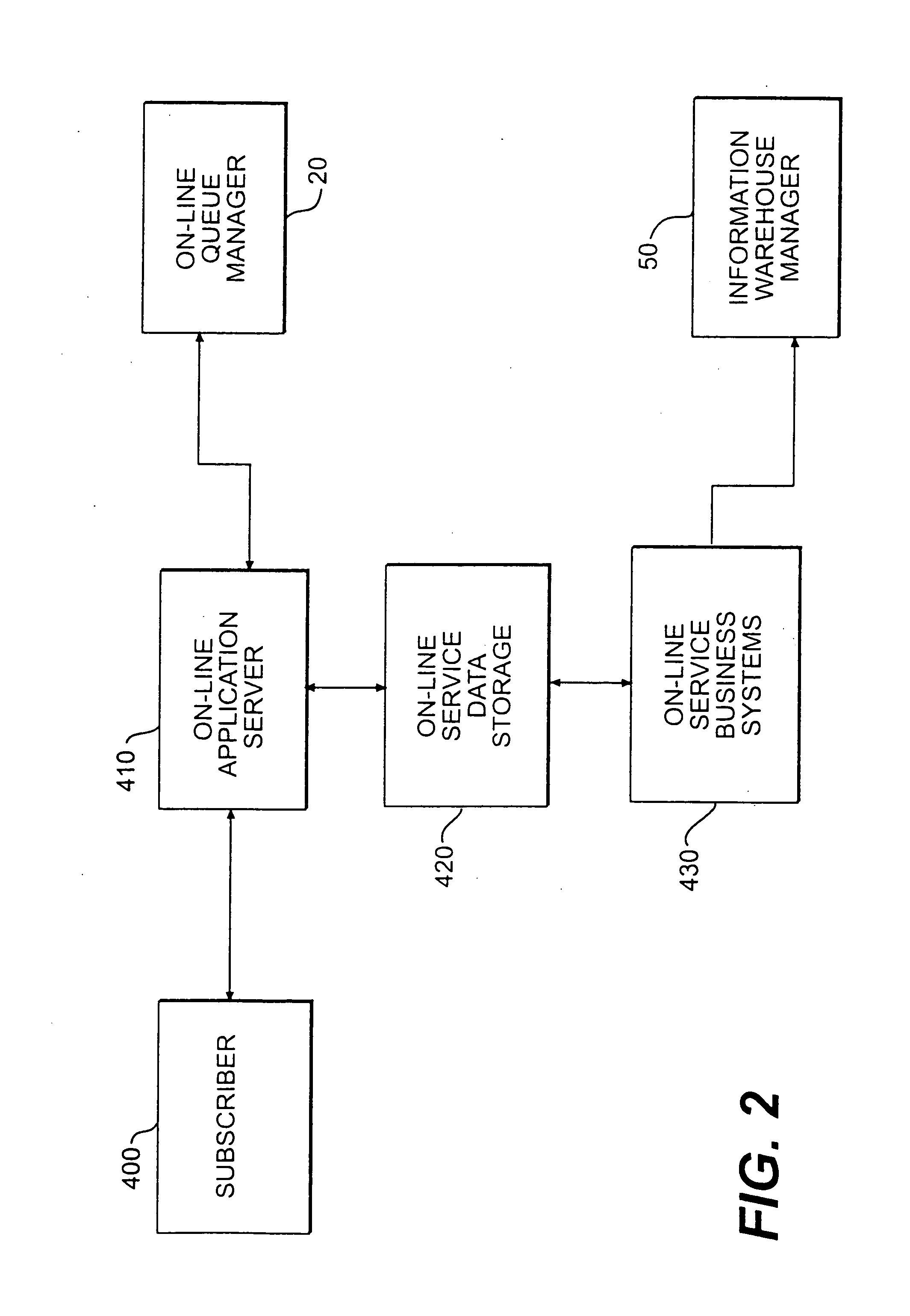

On-line interactive system and method for providing content and advertising information to a targeted set of viewers

Inactive · US20060122882A1 · Advertisements · Fundraising management · Third party · Computer-mediated communication

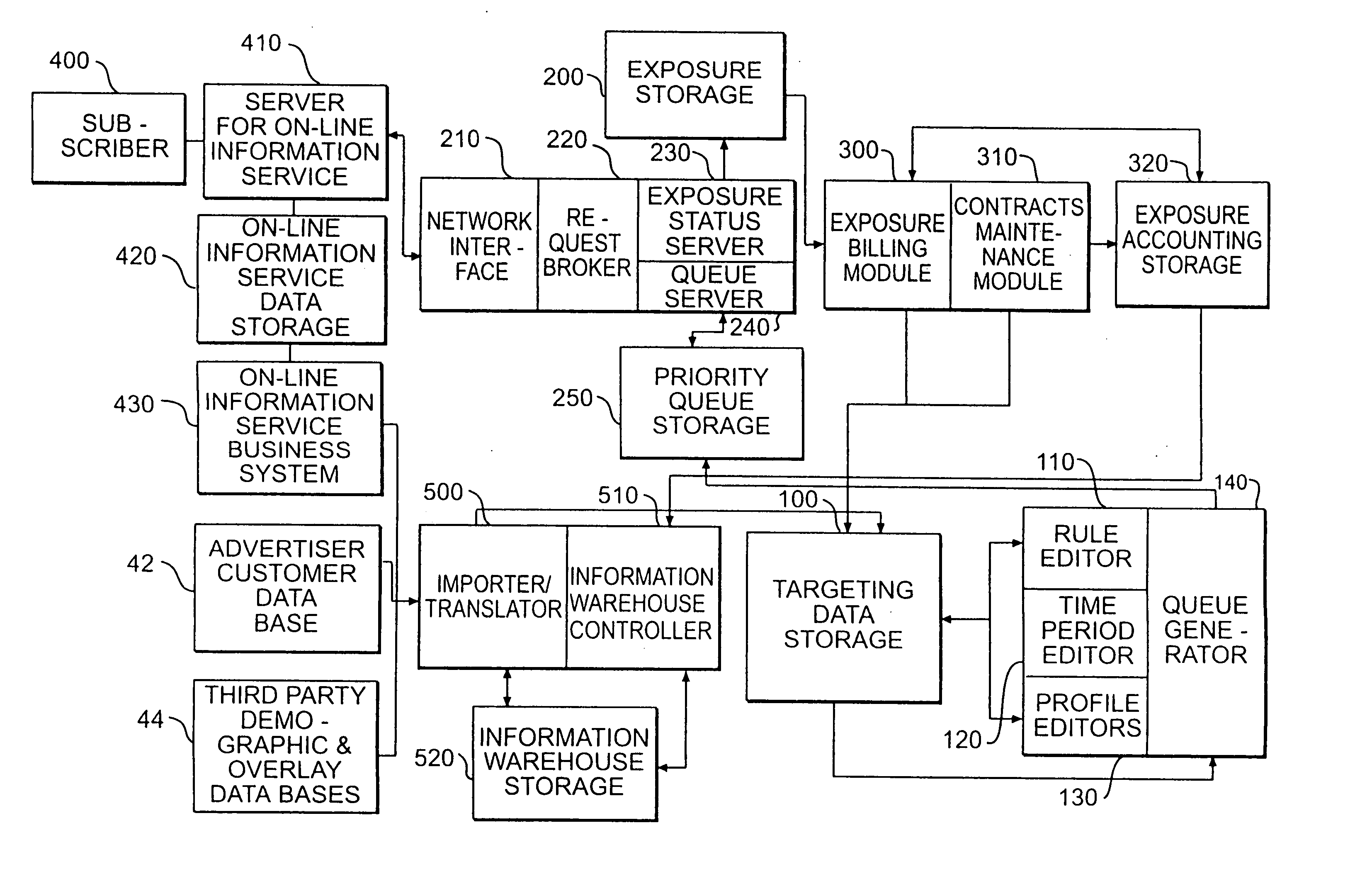

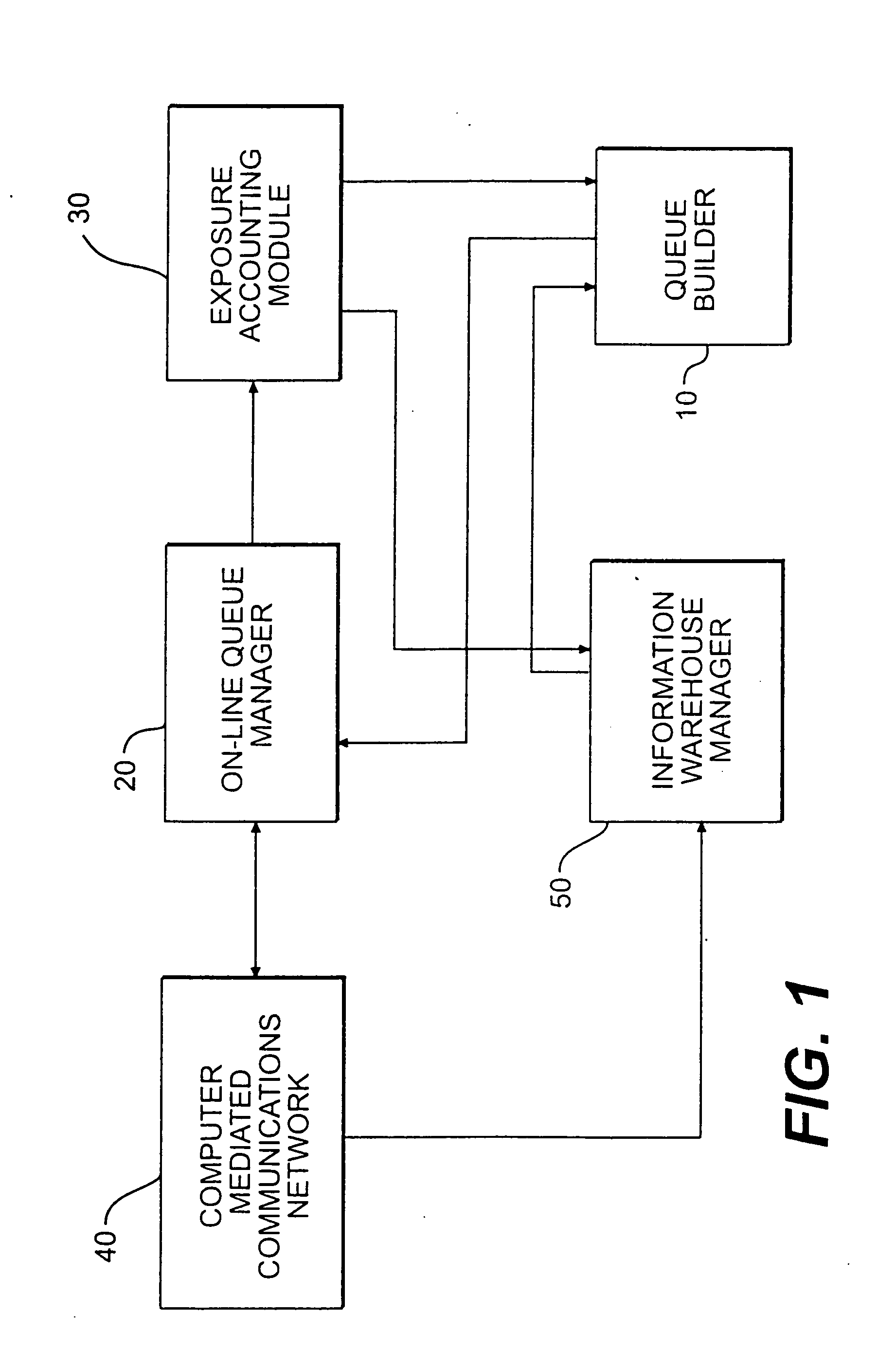

Prioritized queues of advertising and content data are generated by a queue builder and sent to an on-line queue manager. A computer mediated communications network provides content and subscriber data to the queue builder and receives content segment play lists from the on-line queue manager. An exposure accounting module calculates and stores information about the number of exposures of targeted material received by subscribers and generates billing information. An information warehouse manager is employed to receive data from advertisers' data bases and third party sources as well as from the computer mediated communications network.

Owner:MICROSOFT TECH LICENSING LLC +1

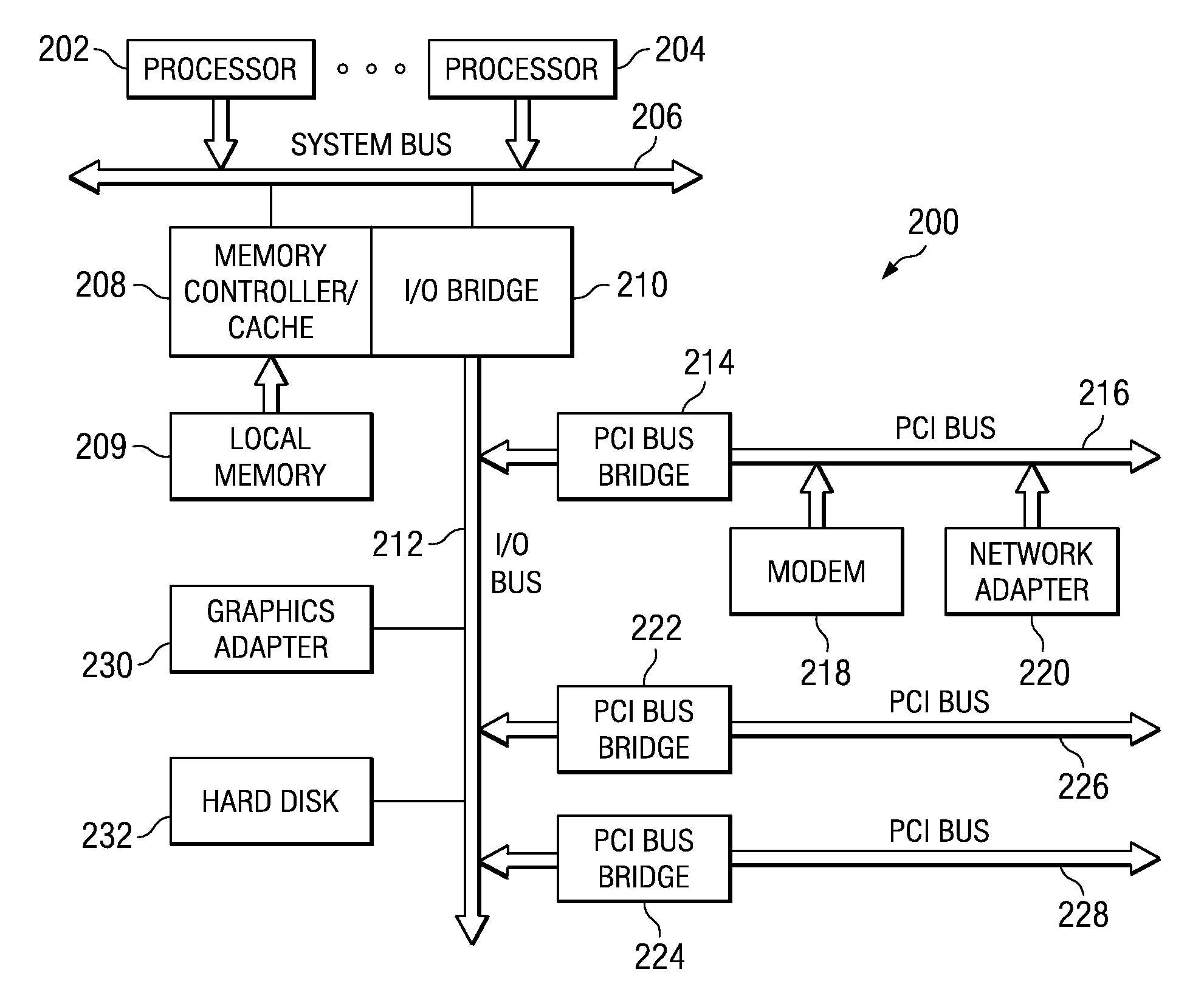

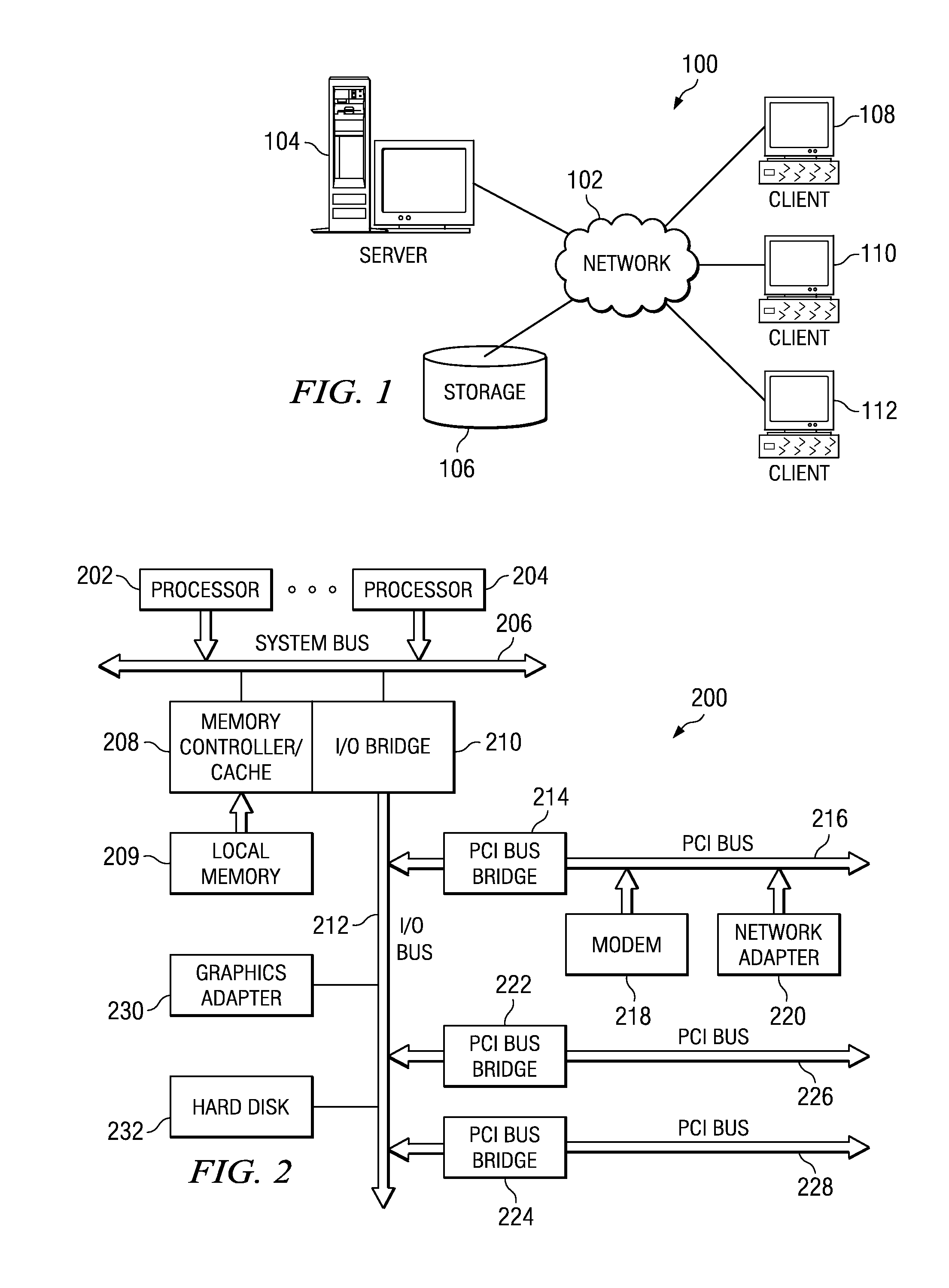

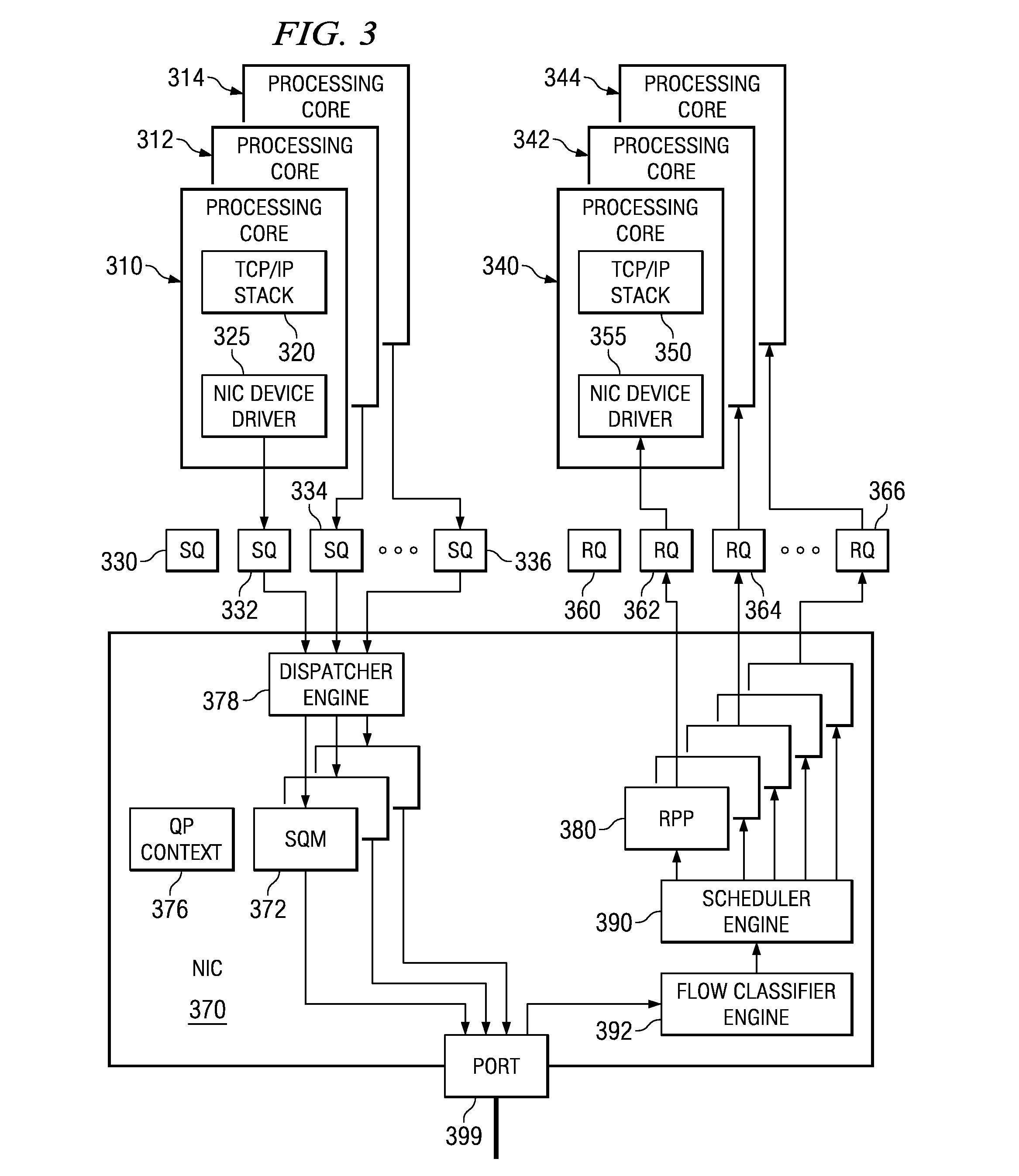

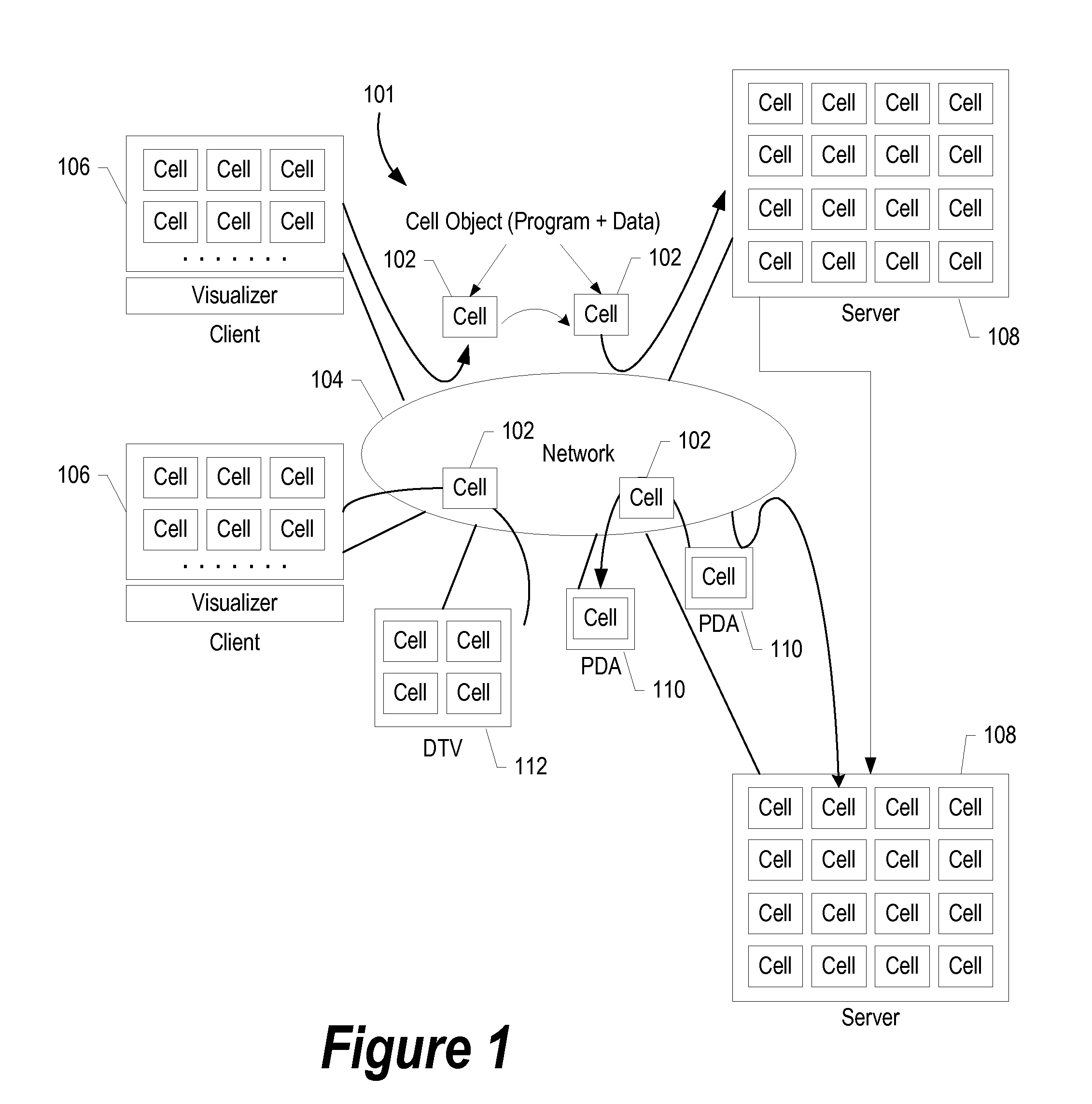

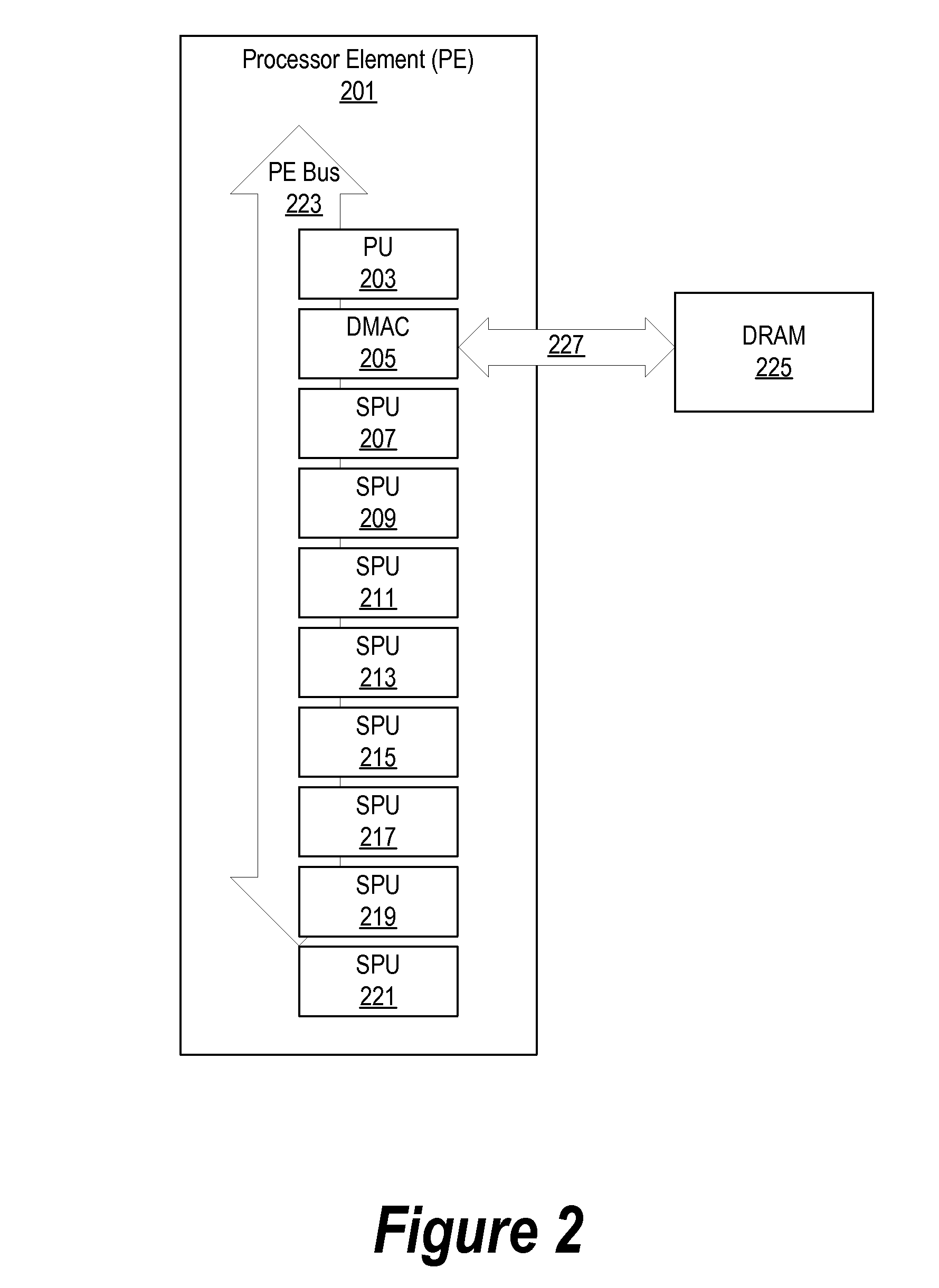

System and Method for Multicore Communication Processing

Inactive · US20080181245A1 · Effective latency · Sustaining media speed · Time-division multiplex · Data switching by path configuration · Direct memory access · Processing core

A system and method for multicore processing of communications between data processing devices are provided. With the mechanisms of the illustrative embodiments, a set of techniques is provided that sustains media speed by distributing transmit-side and receive-side processing over multiple processing cores. In addition, these techniques enable the design of multi-threaded network interface controller (NIC) hardware that efficiently hides the latency of direct memory access (DMA) operations associated with data packet transfers over an input/output (I/O) bus. Multiple processing cores may operate concurrently, using separate instances of a communication protocol stack and device drivers to process data packets for transmission, with separate hardware-implemented send queue managers in a network adapter processing these data packets for transmission. Multiple hardware receive packet processors in the network adapter may be used, along with a flow classification engine, to route received data packets to the appropriate receive queues and processing cores for processing.
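One common way a flow classification engine can route received packets is to hash each packet's flow key so that every packet of a flow lands on the same receive queue (and therefore the same core), preserving per-flow order. The Python sketch below assumes a 5-tuple flow key and uses CRC32 as an illustrative hash; `FlowKey`, `classify`, and `dispatch` are hypothetical names, and the abstract does not say which fields or hash the adapter actually uses.

```python
import zlib
from collections import namedtuple

# Hypothetical flow key: the classic 5-tuple.
FlowKey = namedtuple("FlowKey", "src_ip dst_ip src_port dst_port proto")


def classify(flow, num_queues):
    """Map a flow key to a receive queue index. CRC32 is only an
    illustrative, deterministic hash, not the adapter's actual function."""
    key = f"{flow.src_ip}|{flow.dst_ip}|{flow.src_port}|{flow.dst_port}|{flow.proto}"
    return zlib.crc32(key.encode()) % num_queues


def dispatch(packets, num_queues):
    """Route (flow, payload) pairs into per-core receive queues."""
    queues = [[] for _ in range(num_queues)]
    for flow, payload in packets:
        queues[classify(flow, num_queues)].append(payload)
    return queues
```

Because the hash depends only on the flow key, two packets of the same connection always reach the same queue in arrival order, so each core can run its own protocol-stack instance without cross-core reordering.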

Owner:IBM CORP

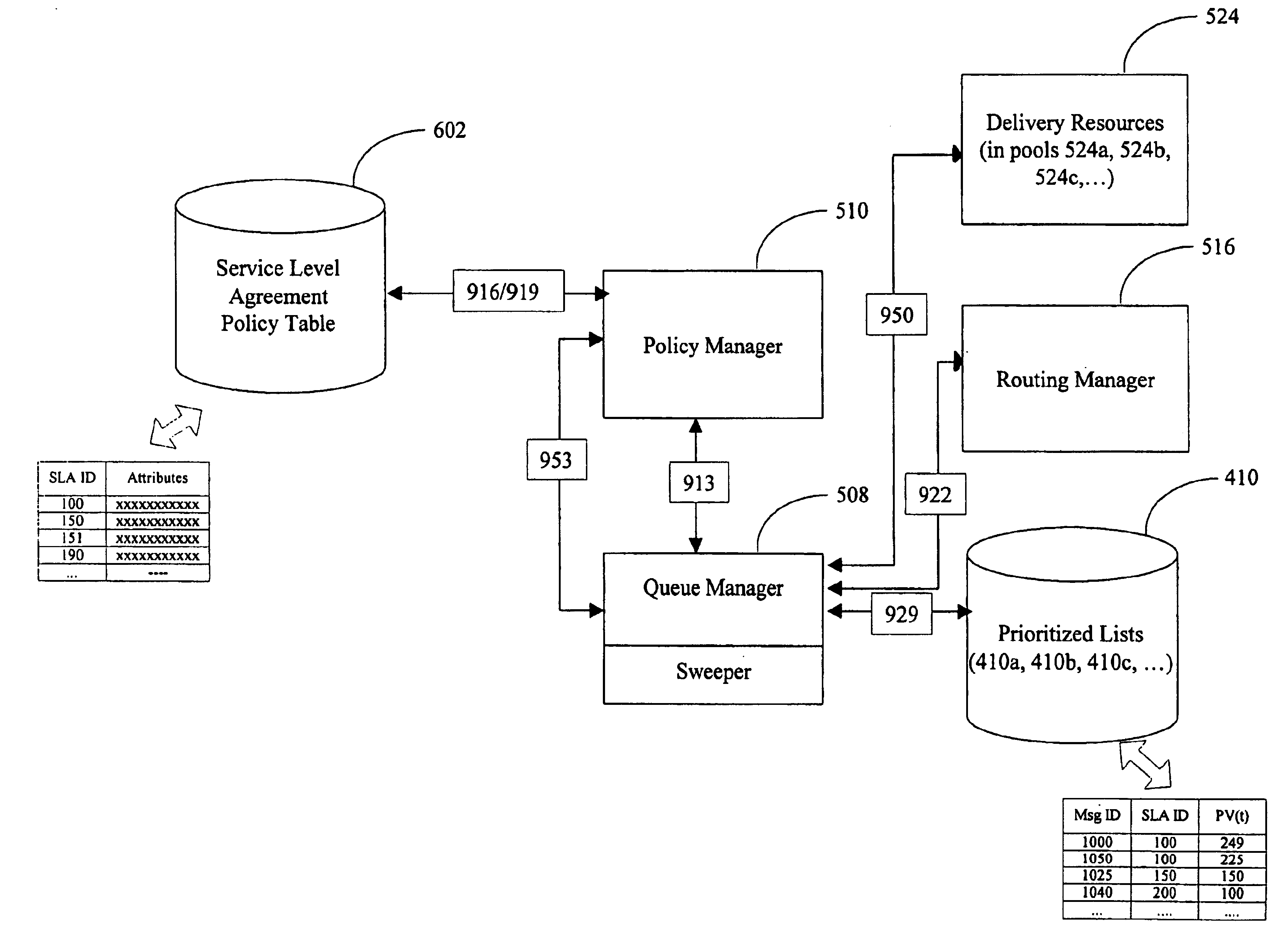

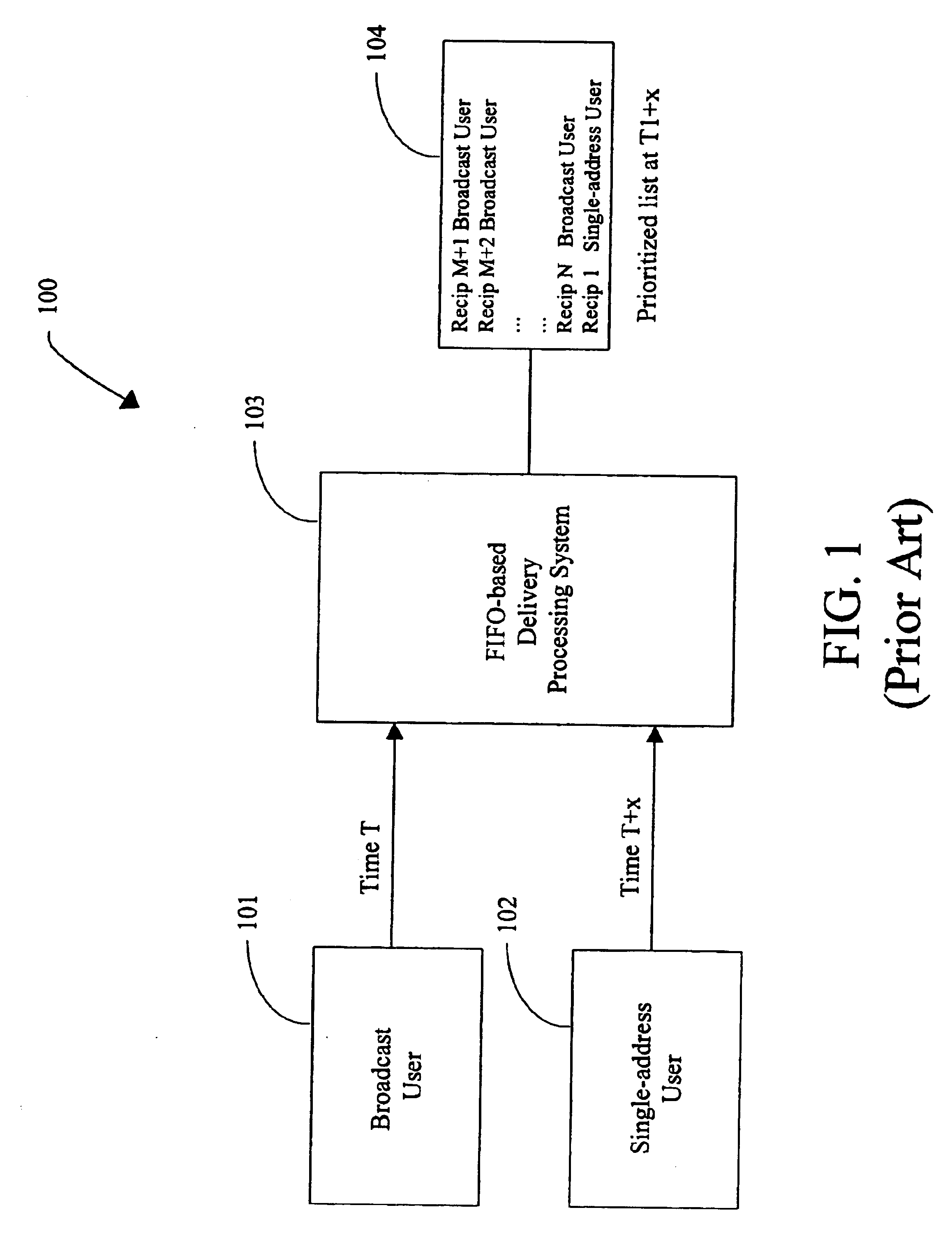

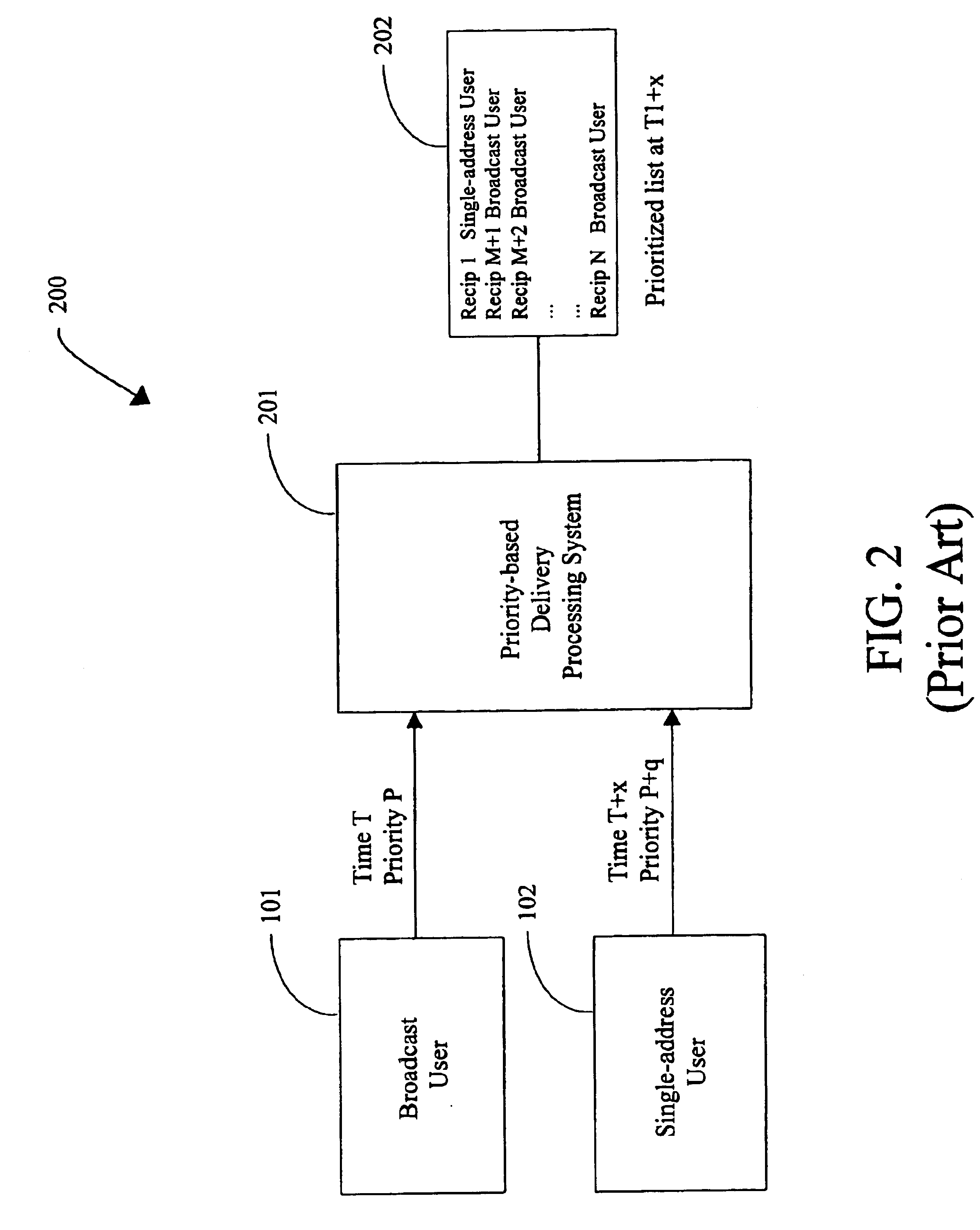

System and method for managing compliance with service level agreements

Inactive · US6917979B1 · Complete efficiently · Guaranteed level · Multiple digital computer combinations · Data switching networks · Service-level agreement · Service level requirement

Compliance with subscriber job delivery requirements is managed and tracked. Job requirements set forth in service level agreements are stored electronically as delivery parameters. A queue manager creates a prioritized list of delivery jobs. A delivery manager delivers the jobs in accordance with the prioritized list. A routing manager determines optimal routes, e.g., the least-cost route, for delivery of each job. Jobs that are not successfully delivered can be retried. Delivery job sources include broadcast subscribers, high- and low-priority single-address subscribers, free subscribers, and off-peak subscribers.
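The queue-manager and delivery-manager roles above can be sketched with Python's `heapq`. This is a minimal illustration, not the patented system: the numeric ranking in `SOURCE_RANK`, the retry limit, and the names `QueueManager` and `run_delivery` are assumptions, and the routing manager is left out entirely.

```python
import heapq
import itertools

# Hypothetical ranking of the job sources named above (lower = delivered sooner).
SOURCE_RANK = {
    "broadcast": 0,
    "high_priority": 1,
    "low_priority": 2,
    "free": 3,
    "off_peak": 4,
}


class QueueManager:
    """Prioritized list of delivery jobs: min-heap on rank, FIFO within a rank."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order per rank

    def add(self, job_id, source, attempts=0):
        rank = SOURCE_RANK[source]
        heapq.heappush(self._heap, (rank, next(self._counter), job_id, source, attempts))

    def pop(self):
        _, _, job_id, source, attempts = heapq.heappop(self._heap)
        return job_id, source, attempts

    def __len__(self):
        return len(self._heap)


def run_delivery(queue, deliver, max_retries=2):
    """Delivery manager: deliver jobs in priority order and re-queue failures
    until max_retries extra attempts are used. Returns (delivered, failed)."""
    delivered, failed = [], []
    while queue:
        job_id, source, attempts = queue.pop()
        if deliver(job_id):
            delivered.append(job_id)
        elif attempts < max_retries:
            queue.add(job_id, source, attempts + 1)   # retry later
        else:
            failed.append(job_id)
    return delivered, failed
```

In this sketch a retried job keeps its original rank, so it is retried before lower-ranked work; a real SLA policy might instead back off or demote repeated failures.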

Owner:NET2PHONE

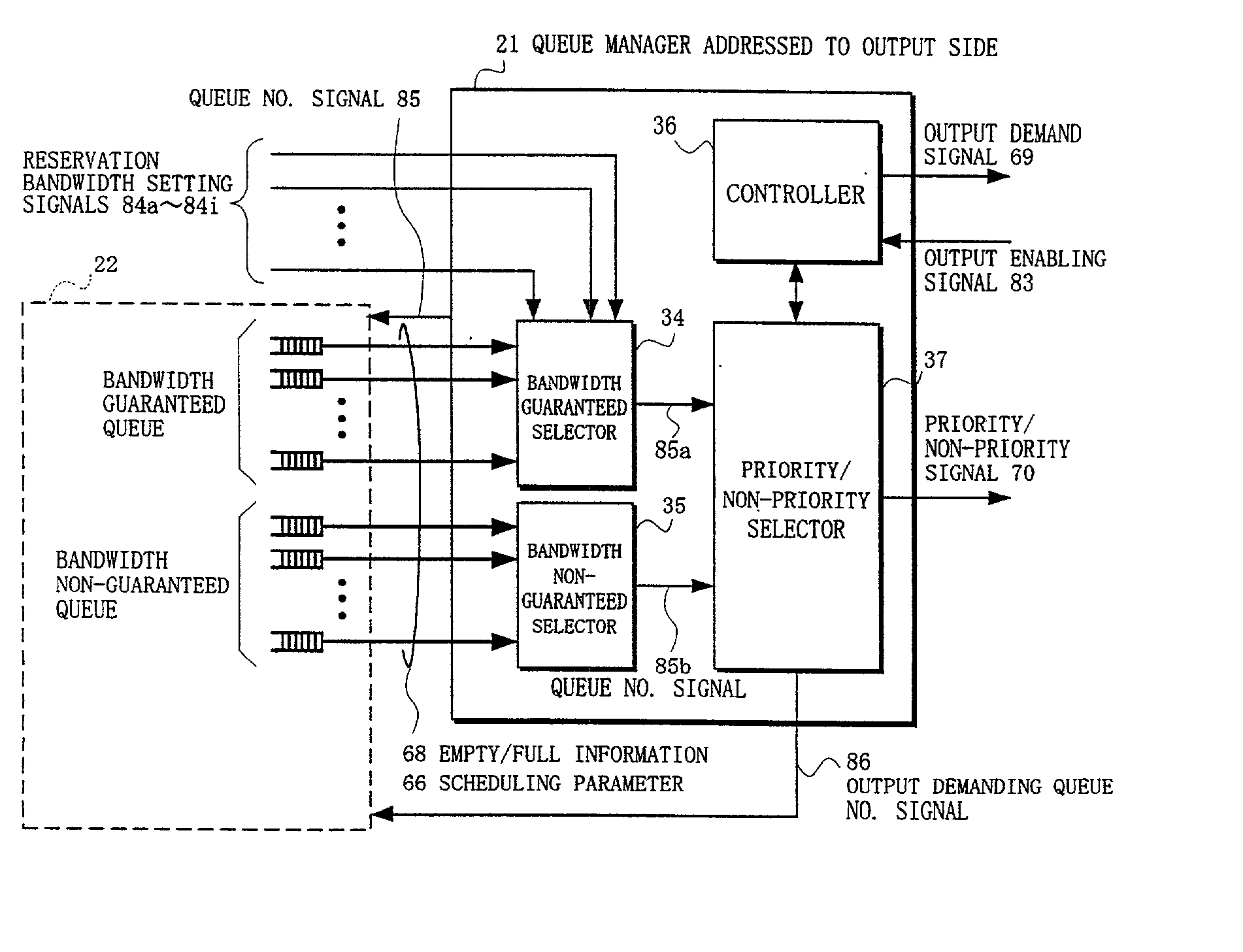

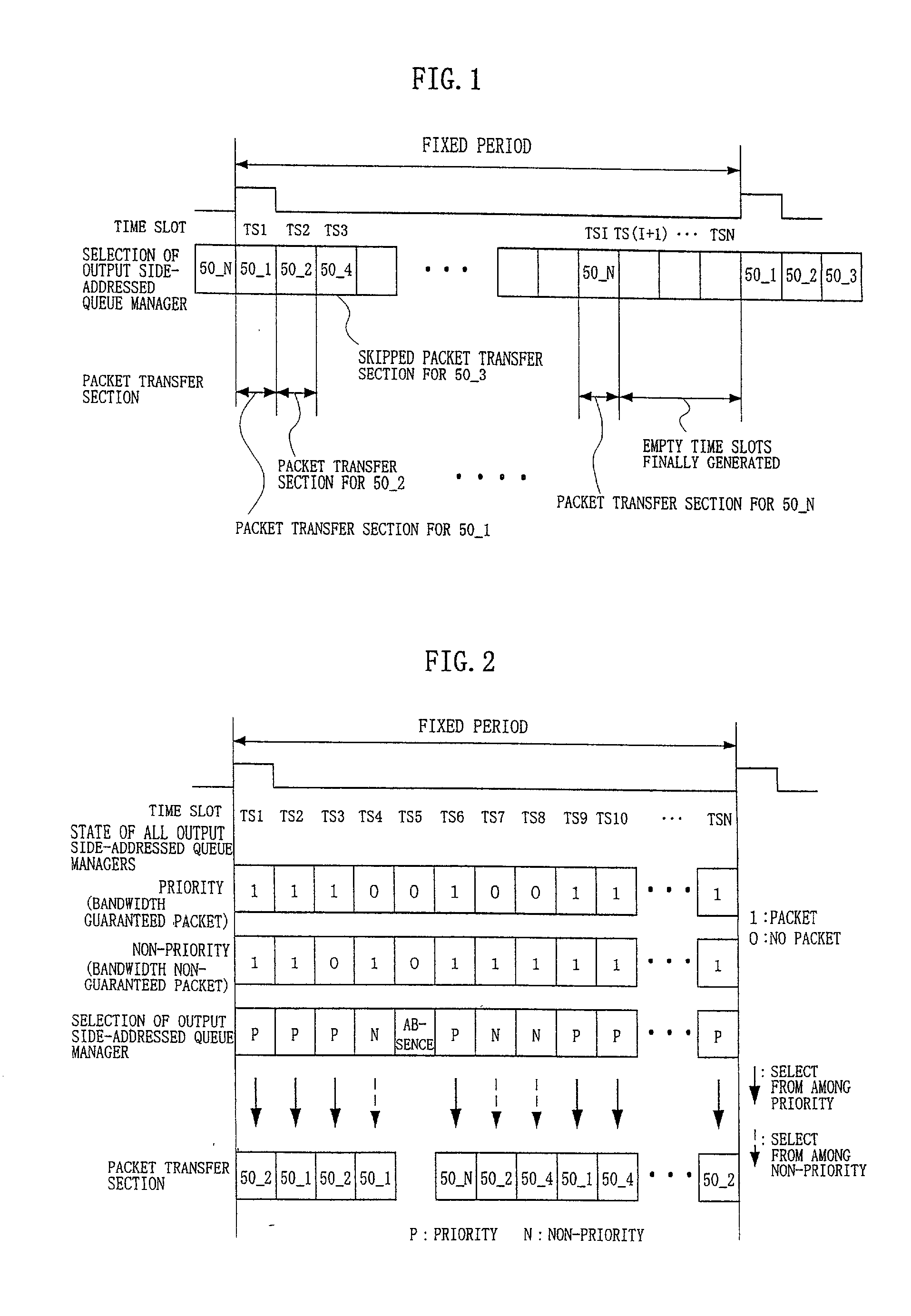

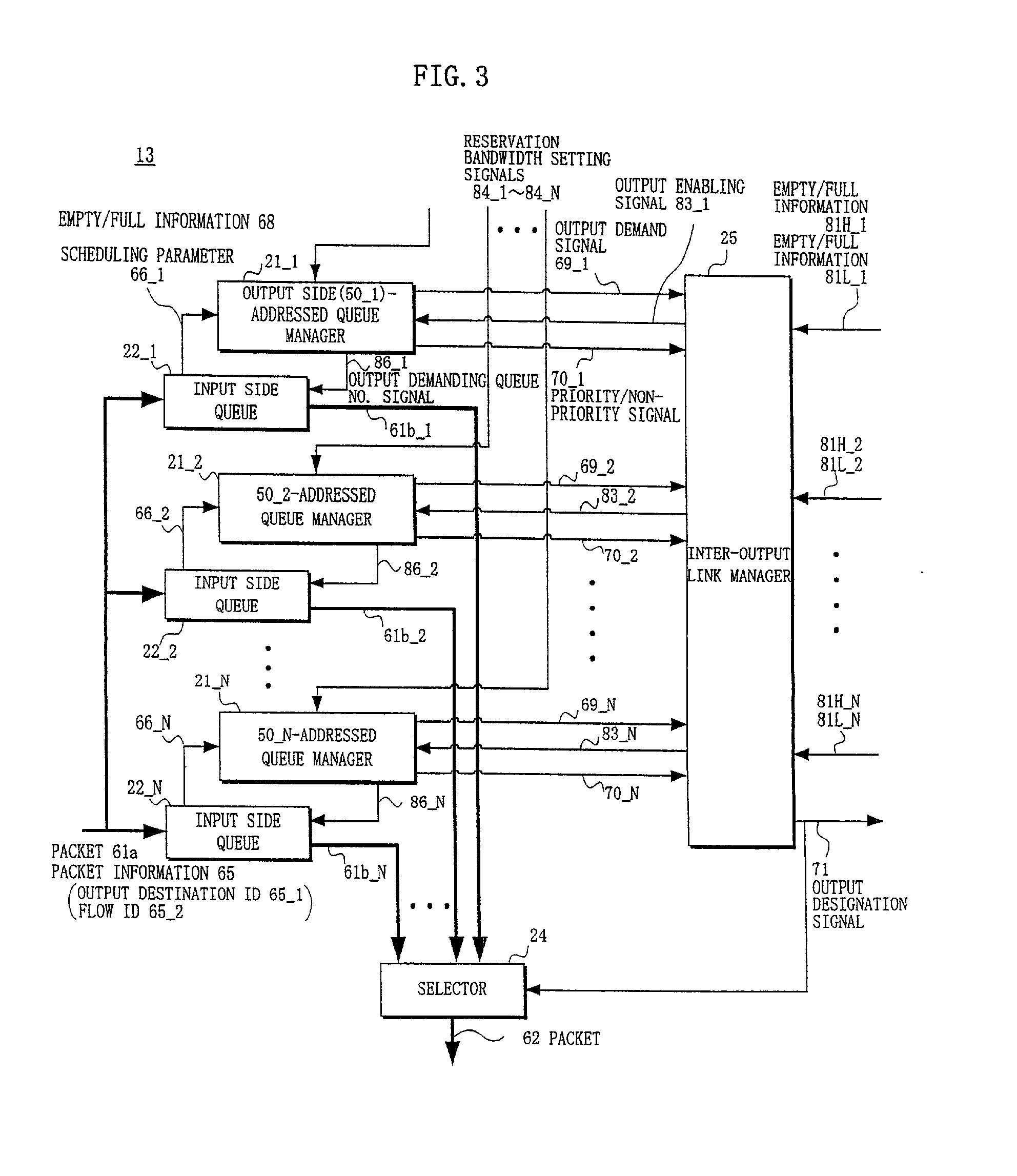

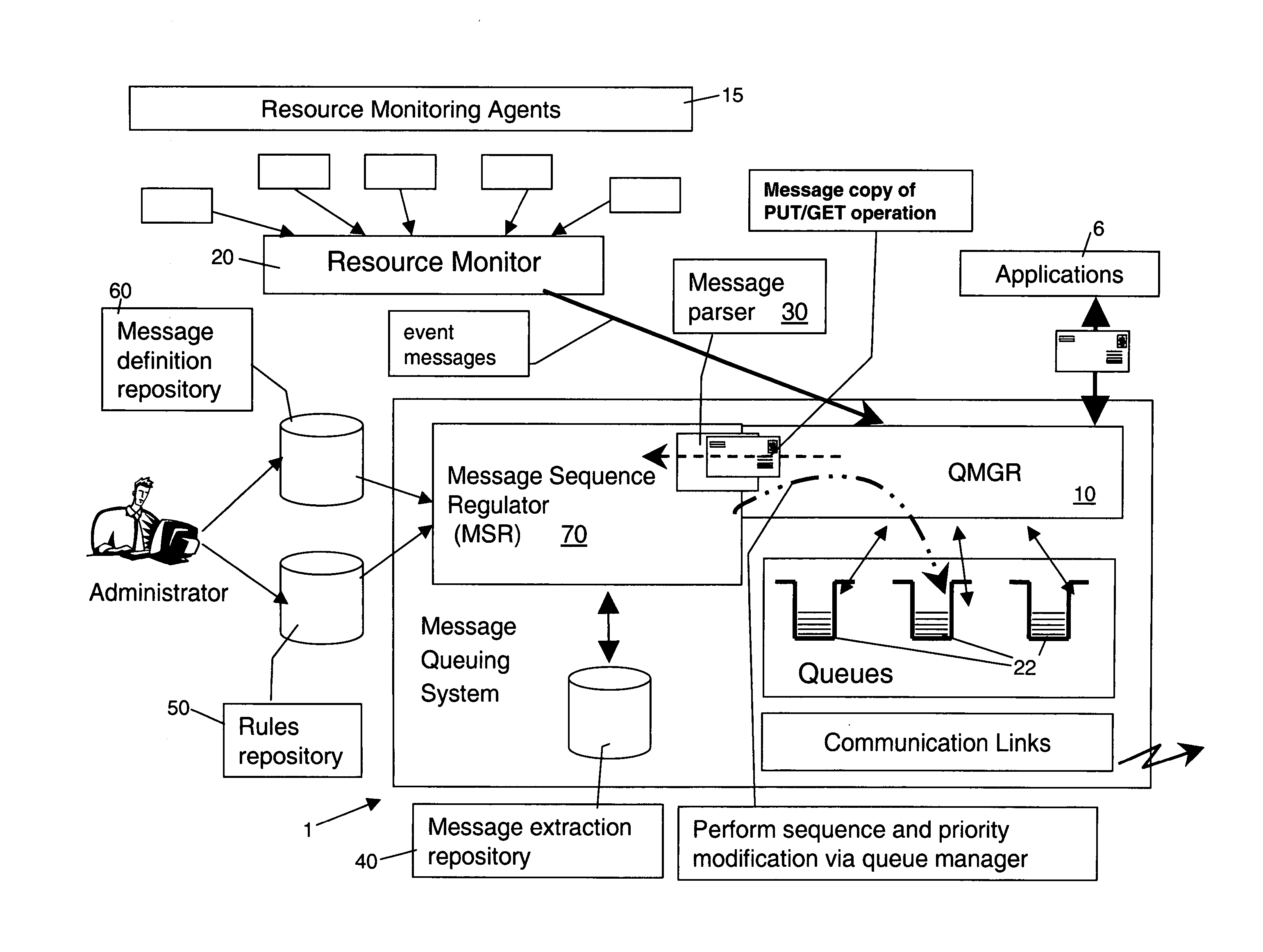

Relaying apparatus

Inactive · US20020061027A1 · Time-division multiplex · Data switching by path configuration · Engineering · Queue manager

In a relaying apparatus comprising input side accommodating portions including input side queues, a plurality of output side accommodating portions including output side queues, and a switch fabric that connects the input side queues and the output side queues in a mesh, the output side-addressed queue managers corresponding to the output side accommodating portions operate in time slots of a fixed period. In each time slot they select a bandwidth-guaranteed packet so as to guarantee bandwidth, output priority/non-priority signals indicating whether a bandwidth-guaranteed or a bandwidth-non-guaranteed packet has been selected, and output demand signals for that packet. An inter-output link manager then selects, among the packets demanded for output, a packet whose destination output side accommodating portion has an empty area in its priority or non-priority queue.

Owner:FUJITSU LTD

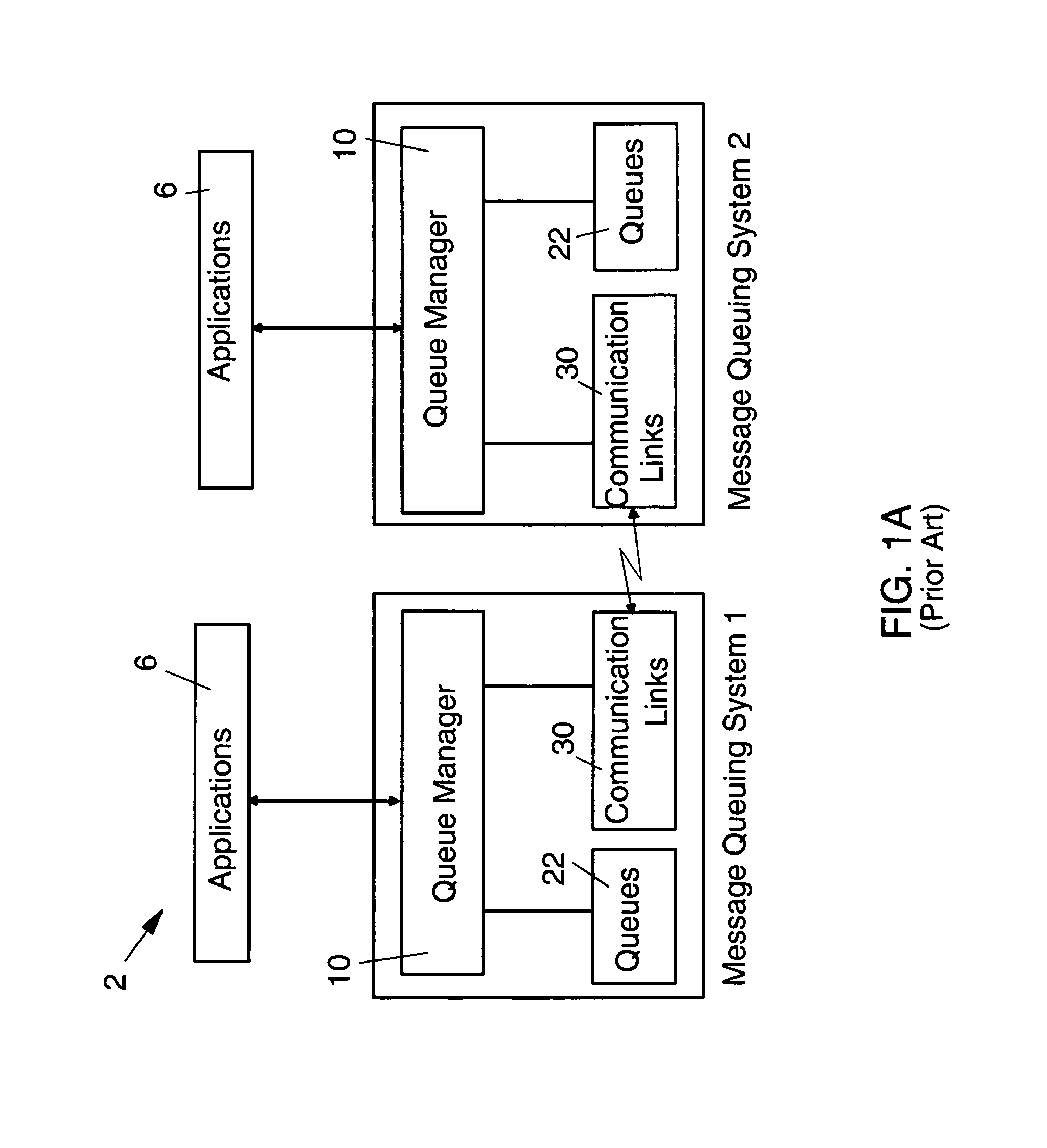

System, method and computer program product for dynamically changing message priority or message sequence number in a message queuing system based on processing conditions

A message sequence number or message priority level stored in a queue maintained by a message queuing system may be dynamically changed based on processing conditions by the use of a message sequence regulator (MSR) system. The MSR system includes a message parser, a message extraction repository, a rules repository, a message definition repository, an interface to a resource monitor for monitoring system resources by single resource monitoring agents, and a notification component for identifying sequence regulation operations that cannot be executed. The MSR system receives copies of messages loaded into or retrieved from queues by a queue manager, as well as event messages from the resource monitor. The message parser extracts parts of the message using message structures defined in the rules repository and stores the extracted parts in the message extraction repository. If a defined condition is found to exist, the MSR system initiates calculation of an appropriate message sequence number or message priority level. The queue manager updates the message record without removing the message from the queue.
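The key behaviour above, changing a queued message's priority without removing it from the queue, can be sketched with a binary heap whose entries are updated in place and then re-heapified. In this Python sketch the regulator's rules repository and resource monitor are collapsed into a single predicate passed to the hypothetical `apply_rule` method; `MessageQueue` and its methods are illustrative names, not an actual message queuing API.

```python
import heapq


class MessageQueue:
    """Priority queue whose entries can be re-prioritized while still queued."""

    def __init__(self):
        self._heap = []   # entries are mutable lists: [-priority, seq, body]
        self._seq = 0     # arrival order breaks ties between equal priorities

    def put(self, body, priority):
        heapq.heappush(self._heap, [-priority, self._seq, body])
        self._seq += 1

    def get(self):
        neg_priority, _, body = heapq.heappop(self._heap)
        return body, -neg_priority

    def apply_rule(self, condition, new_priority):
        """Hypothetical regulator hook: give every queued message whose body
        satisfies `condition` a new priority in place, then restore the heap
        invariant. Returns how many messages were changed."""
        changed = 0
        for entry in self._heap:
            if condition(entry[2]):
                entry[0] = -new_priority
                changed += 1
        if changed:
            heapq.heapify(self._heap)
        return changed
```

For example, after `put("order:1", 1)`, `put("alert:disk", 1)`, and `put("order:2", 5)`, calling `apply_rule(lambda b: b.startswith("alert"), 9)` makes `"alert:disk"` the next message returned by `get()`, although it was never dequeued.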

Owner:IBM CORP

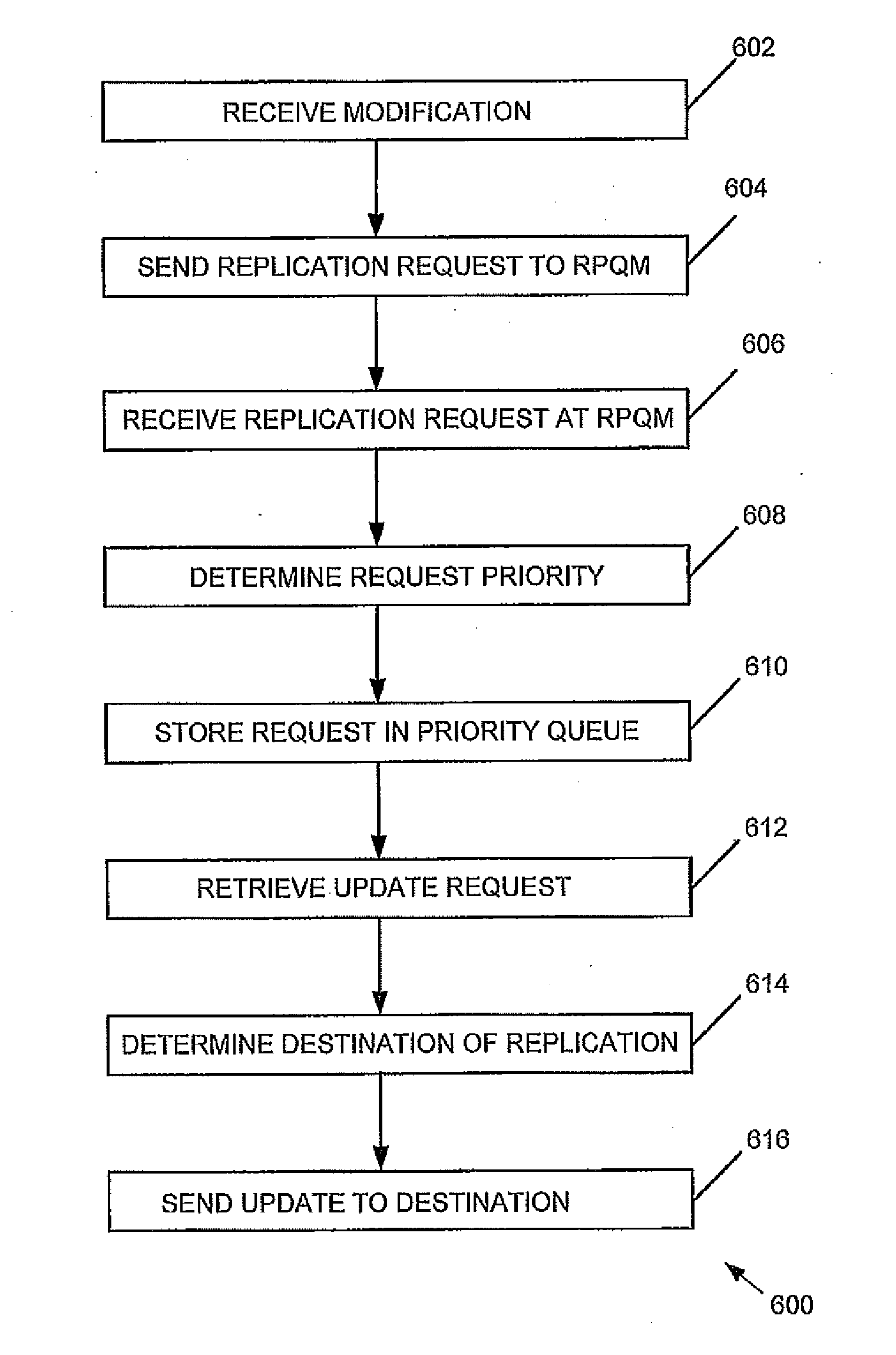

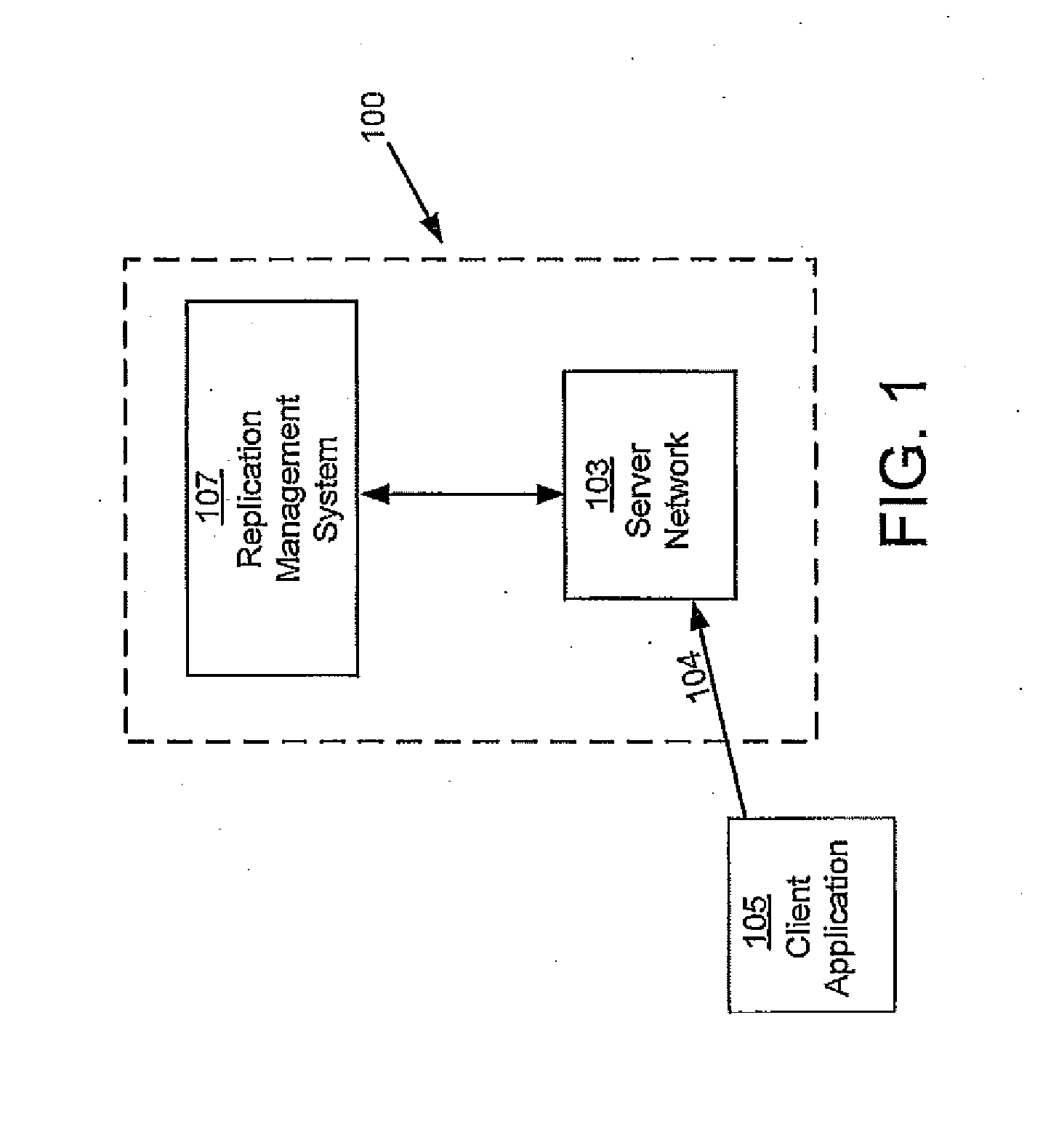

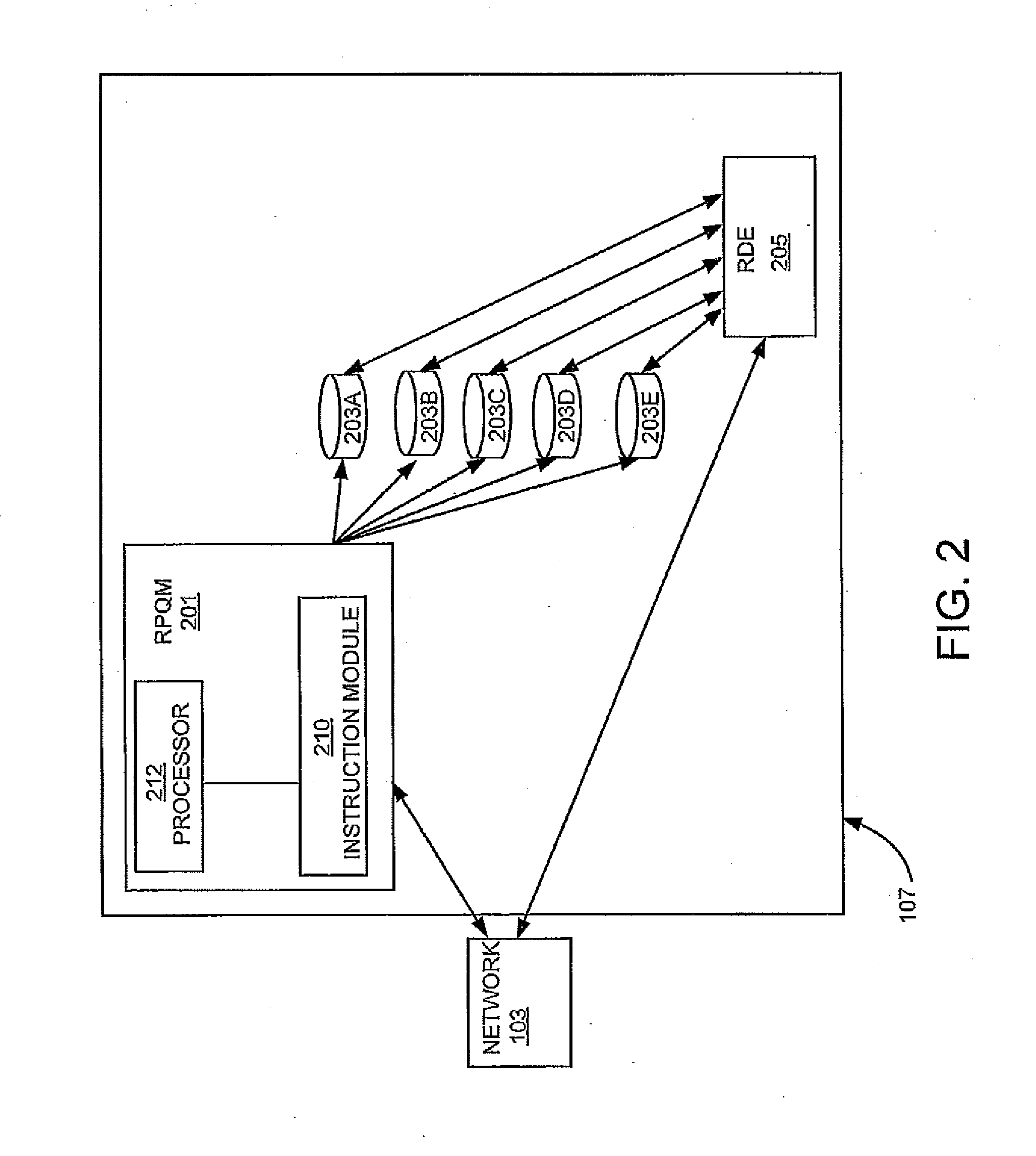

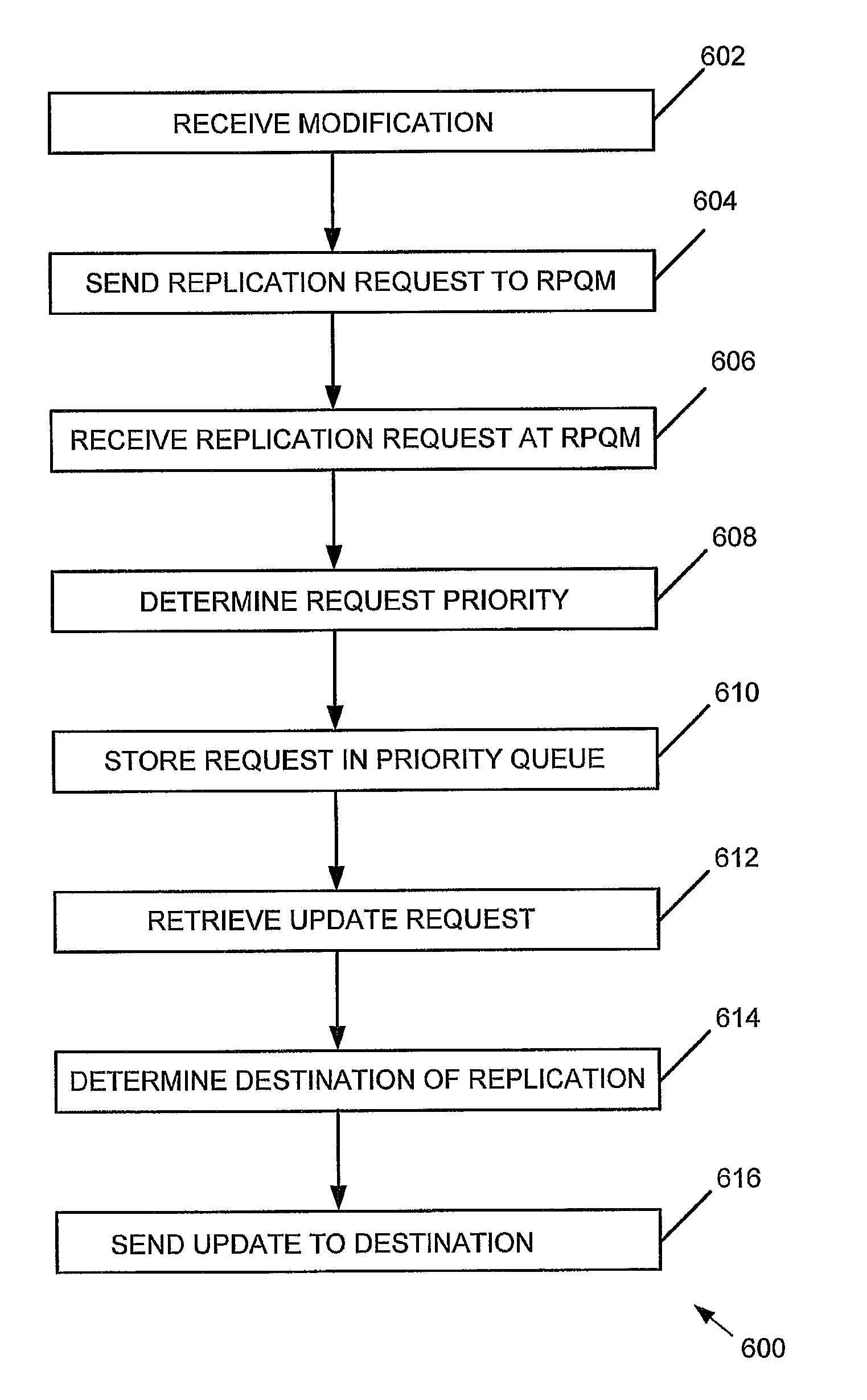

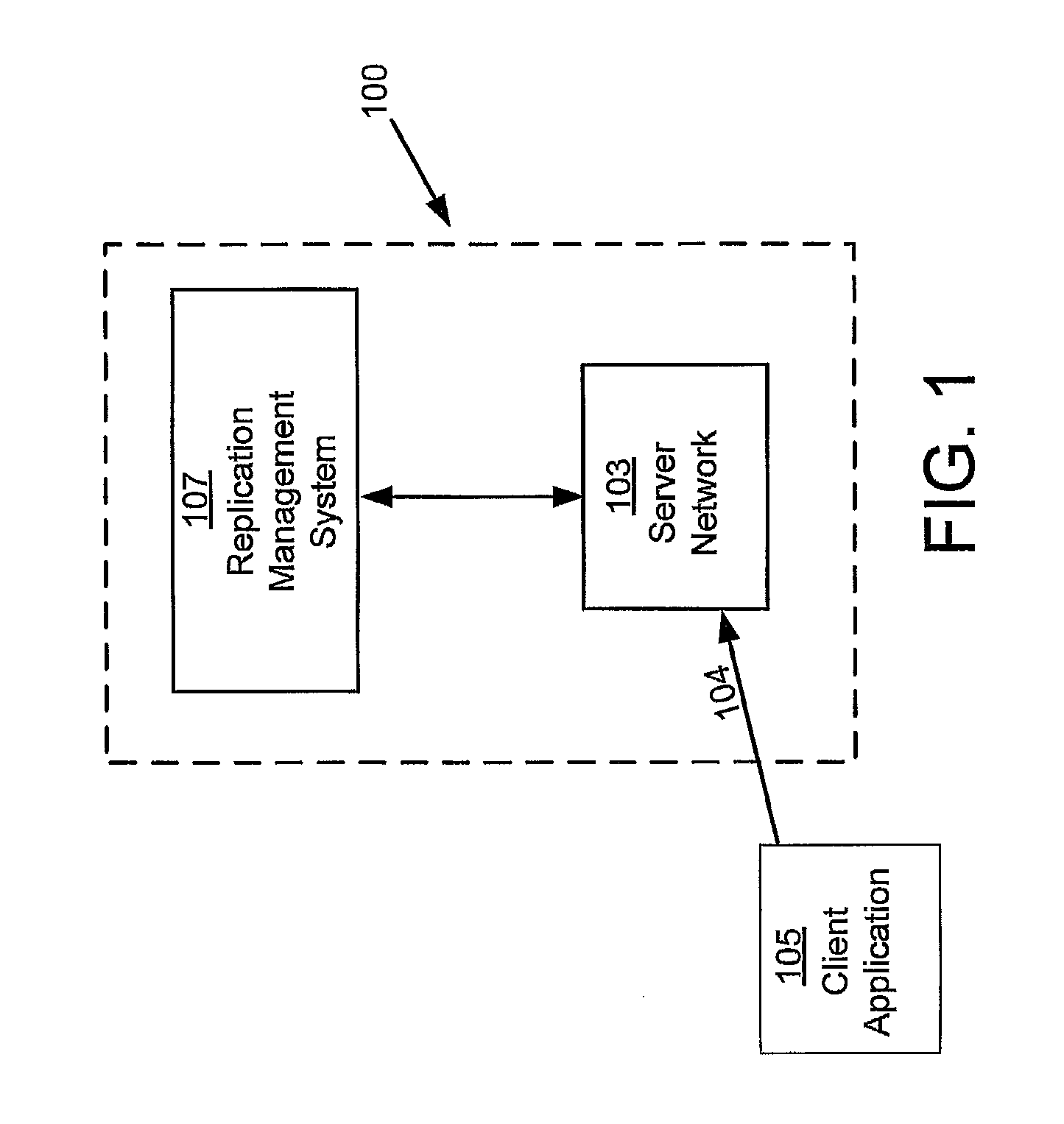

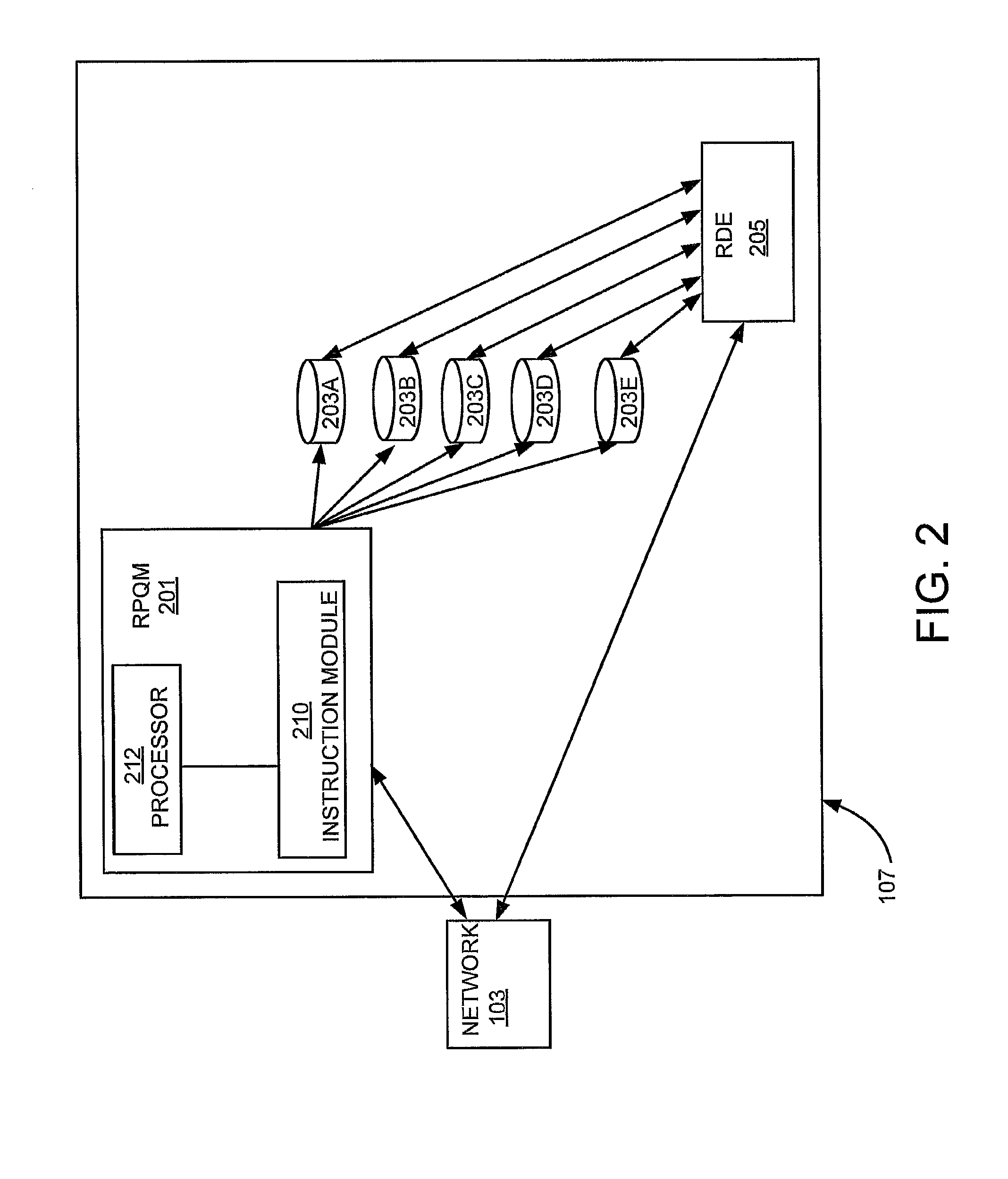

LDAP Replication Priority Queuing Mechanism

A replication priority queuing system prioritizes replication requests in accordance with a predetermined scheme. An exemplary system includes a Replication Priority Queue Manager that receives update requests and assigns a priority based upon business rules and stores the requests in associated storage means. A Replication Decision Engine retrieves the requests from storage and determines a destination for the update based upon predetermined replication rules, and sends the update to the destination.

Owner:CINGULAR WIRELESS II LLC

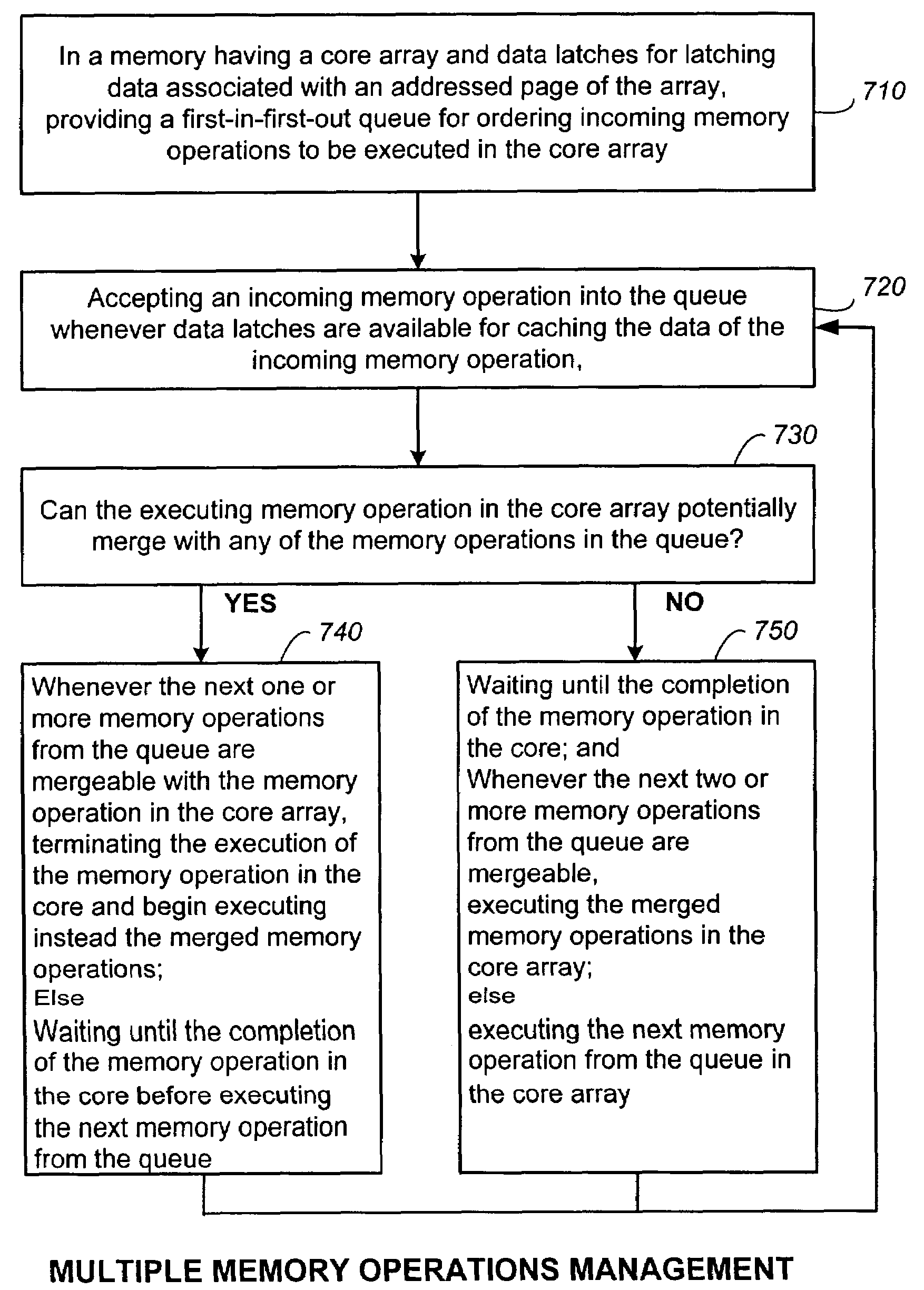

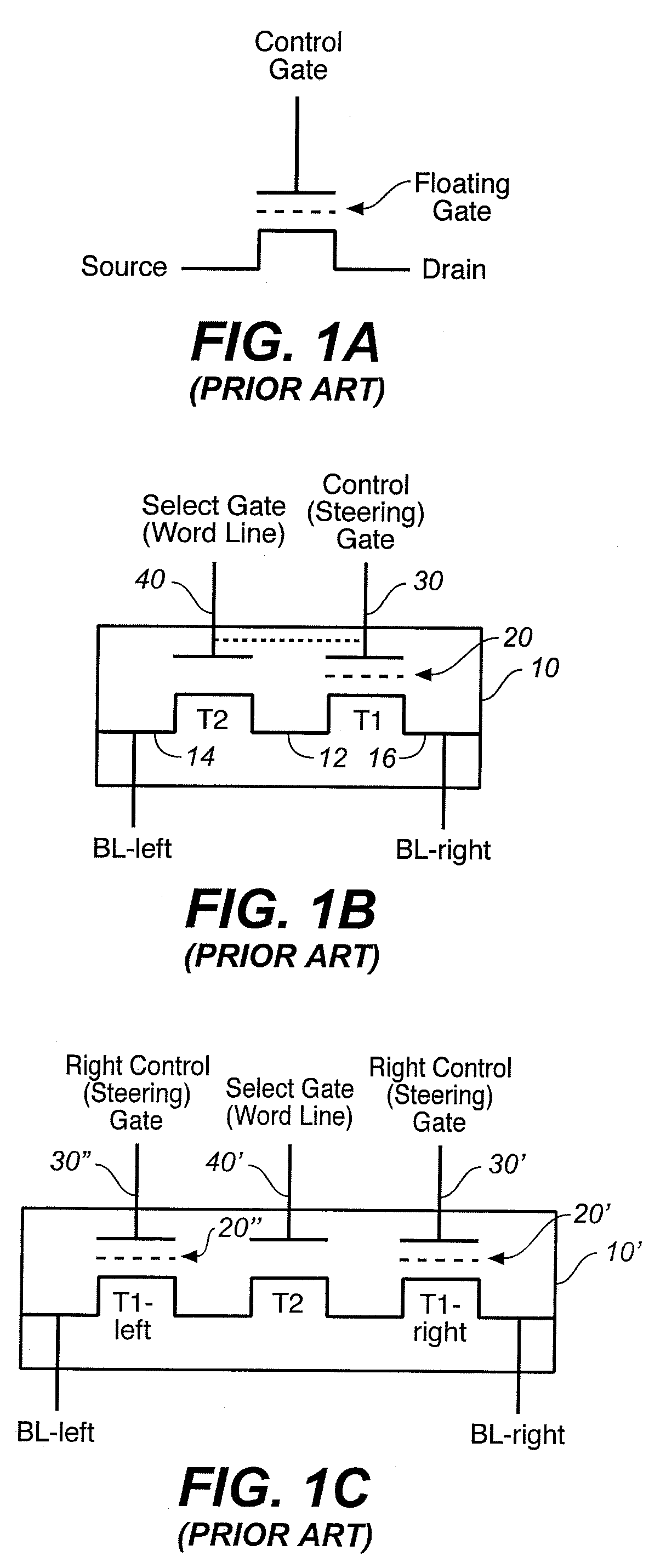

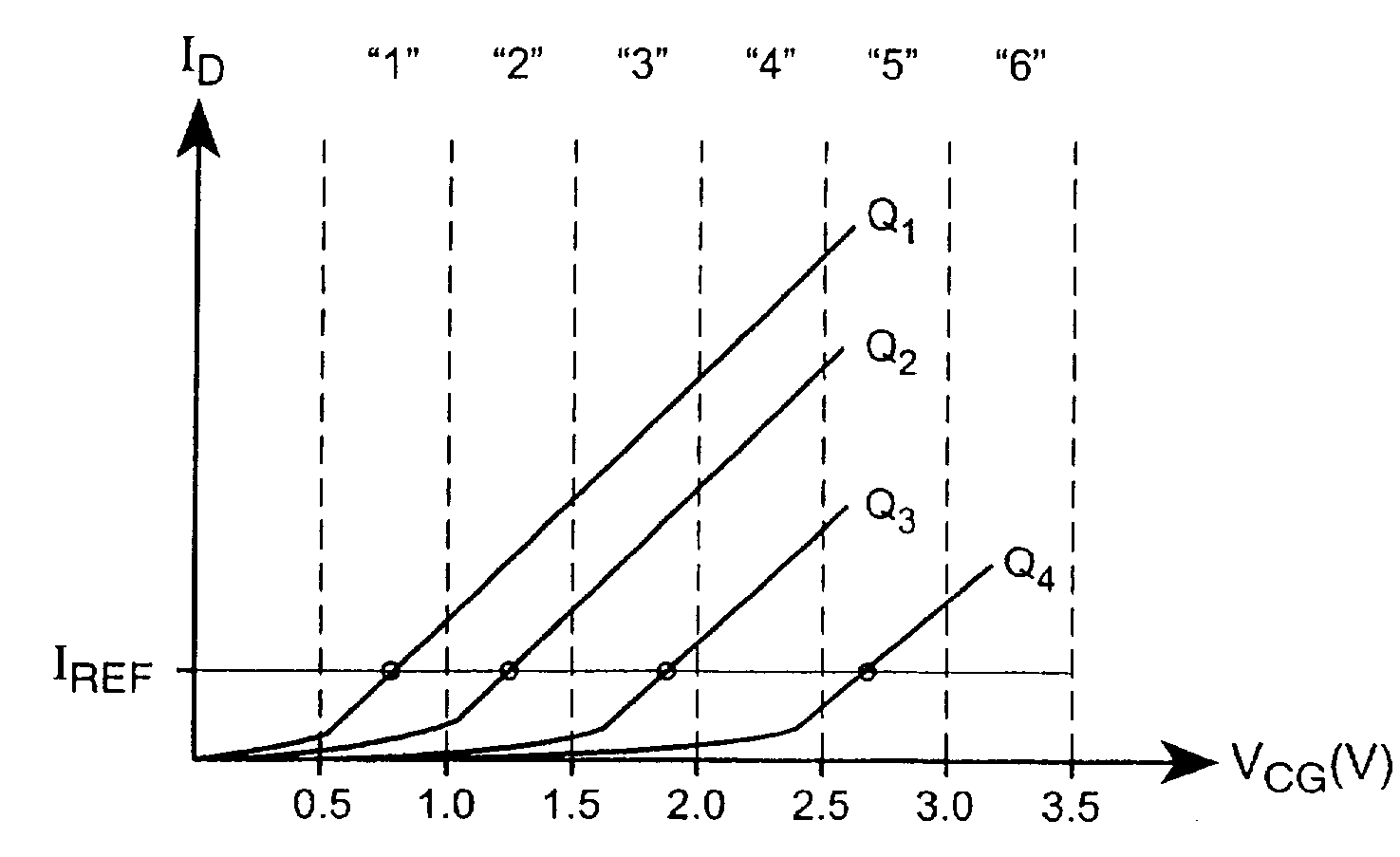

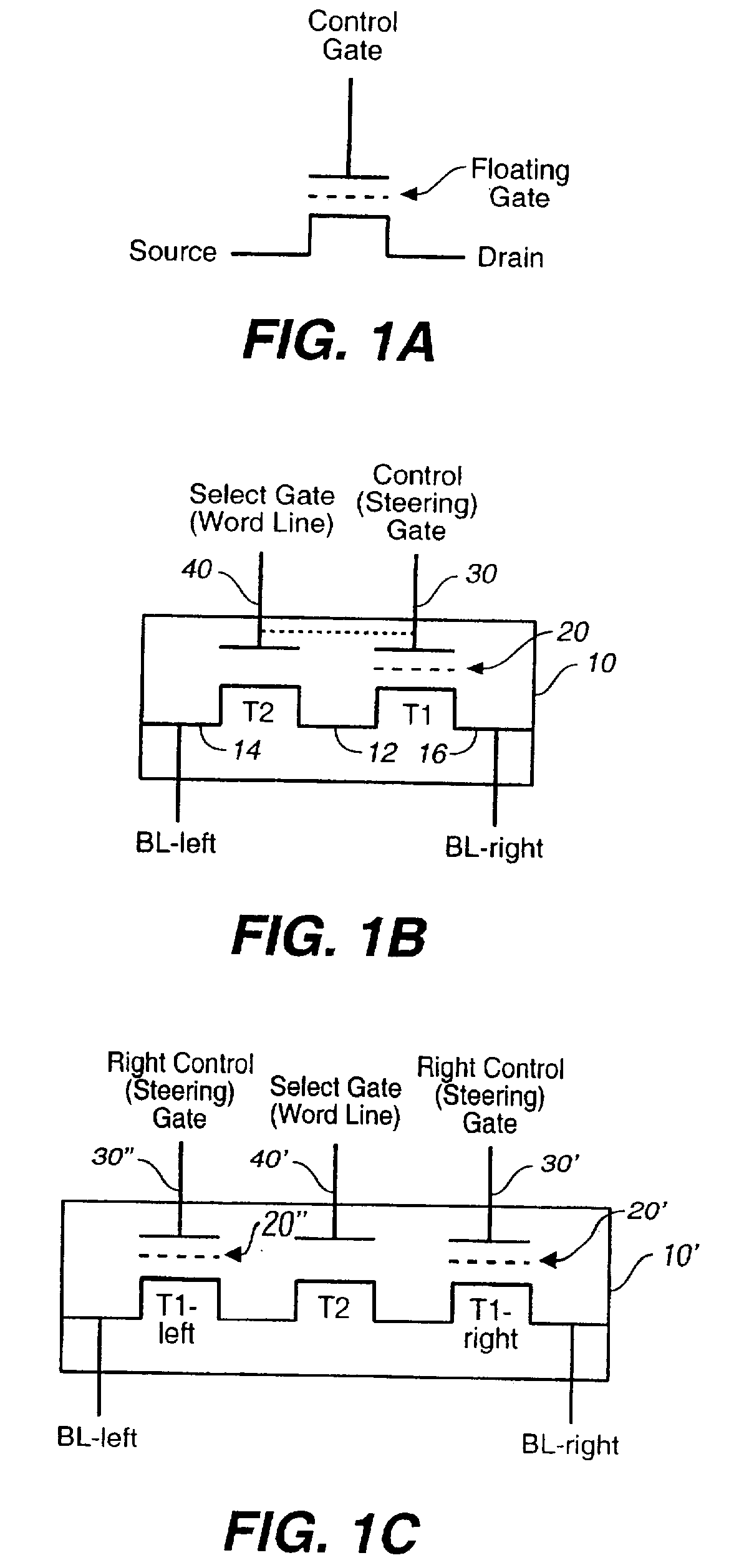

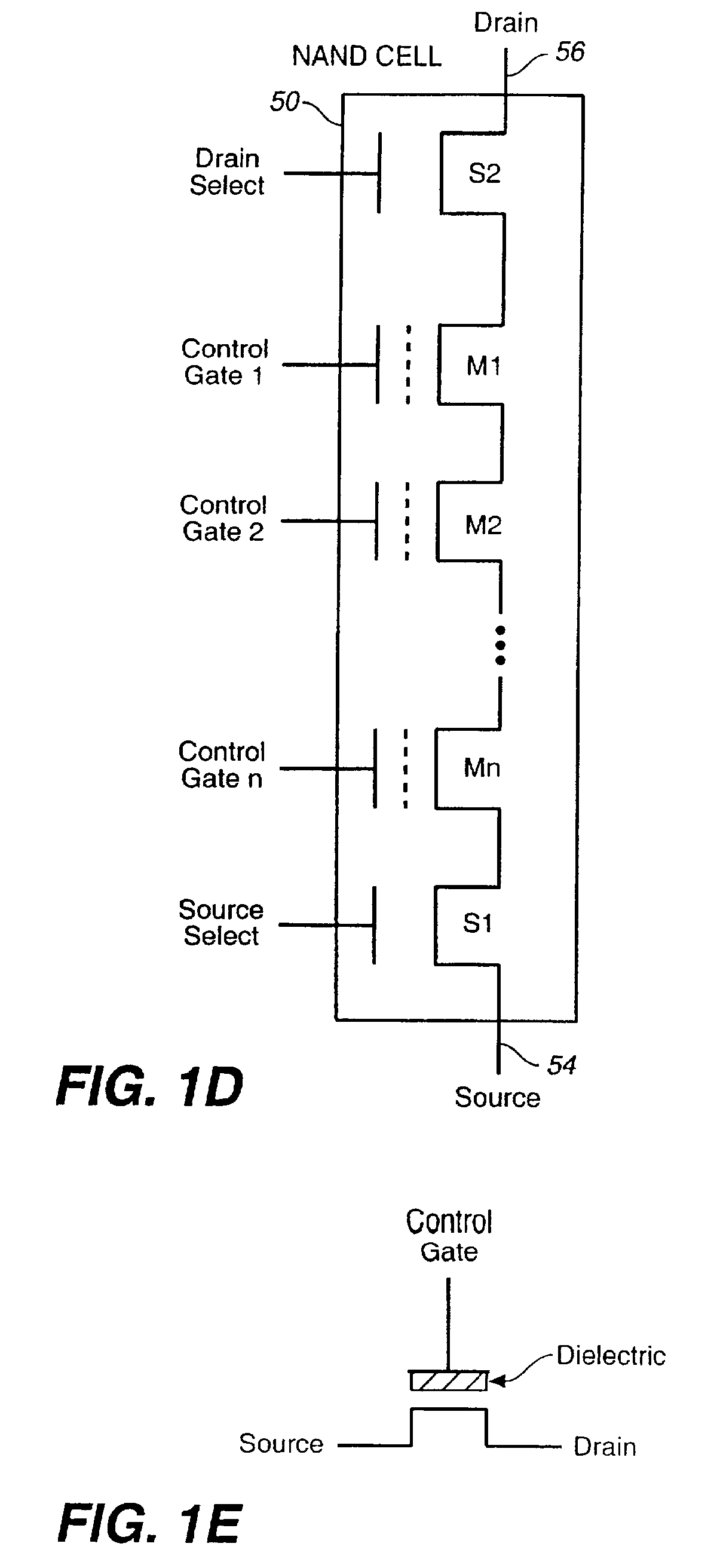

Method for non-volatile memory with managed execution of cached data

Active · US7463521B2 · Save transfer time · Improve performance · Memory architecture accessing/allocation · Read-only memories · Parallel computing · Control memory

Methods and circuitry are presented for executing a current memory operation while multiple other pending memory operations are queued. Furthermore, when certain conditions are satisfied, some of these memory operations can be combined or merged for improved efficiency and other benefits. The multiple memory operations are managed by providing a memory operation queue controlled by a memory operation queue manager. The memory operation queue manager is preferably implemented as a module in the state machine that controls the execution of a memory operation in the memory array.

Owner:SANDISK TECH LLC

LDAP replication priority queuing mechanism

A replication priority queuing system prioritizes replication requests in accordance with a predetermined scheme. An exemplary system includes a Replication Priority Queue Manager that receives update requests and assigns a priority based upon business rules and stores the requests in associated storage means. A Replication Decision Engine retrieves the requests from storage and determines a destination for the update based upon predetermined replication rules, and sends the update to the destination.

Owner:CINGULAR WIRELESS II LLC

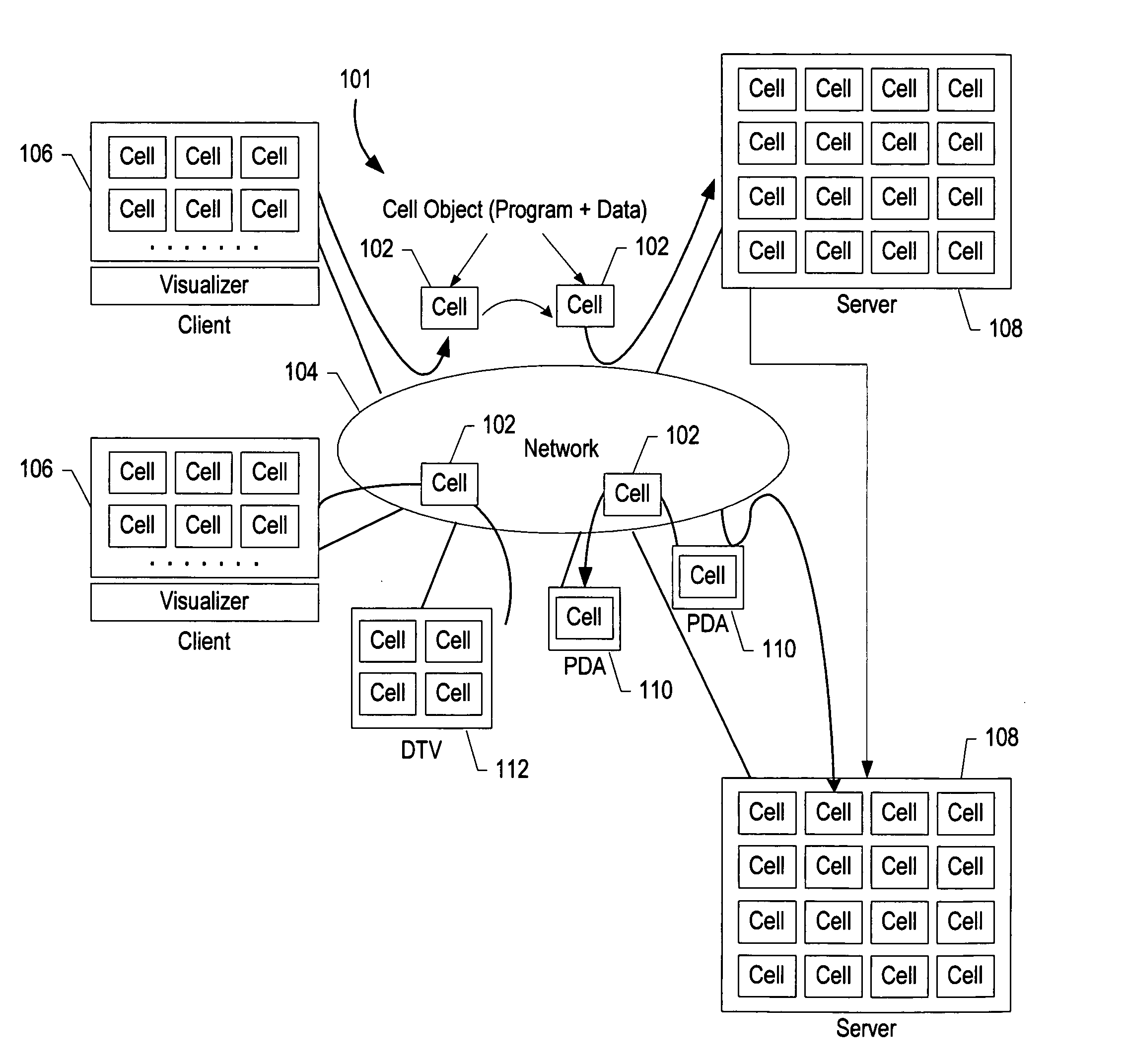

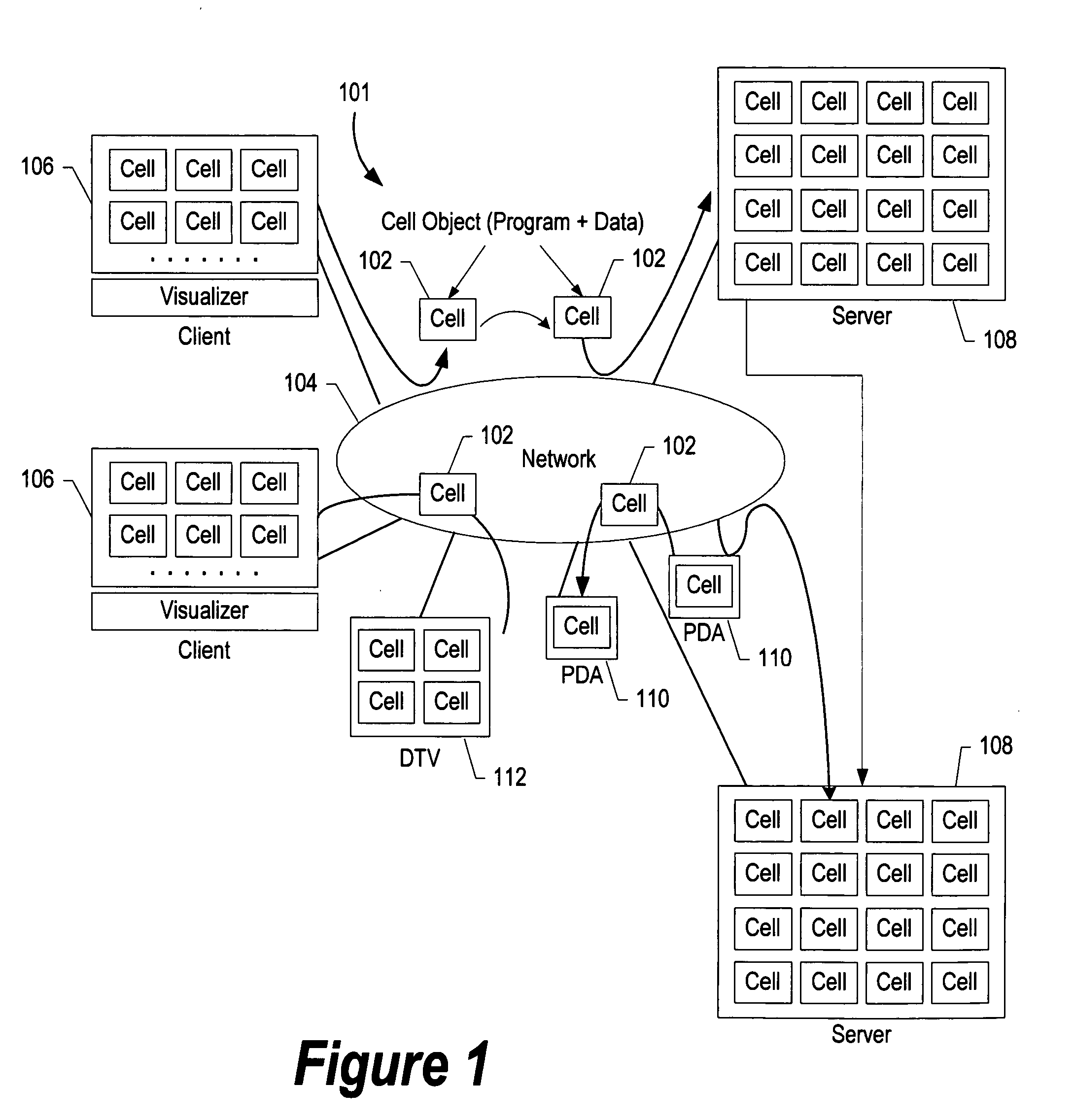

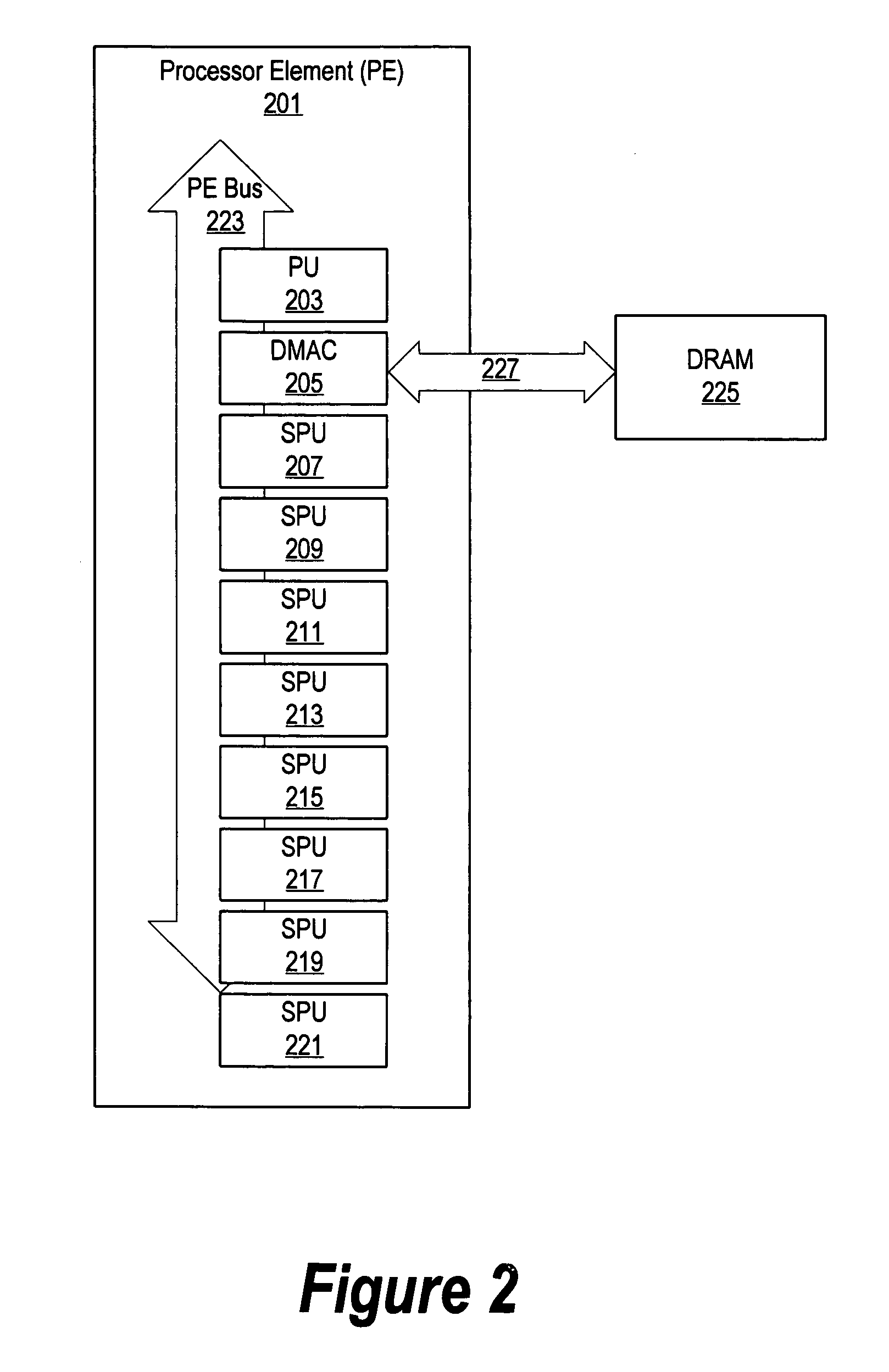

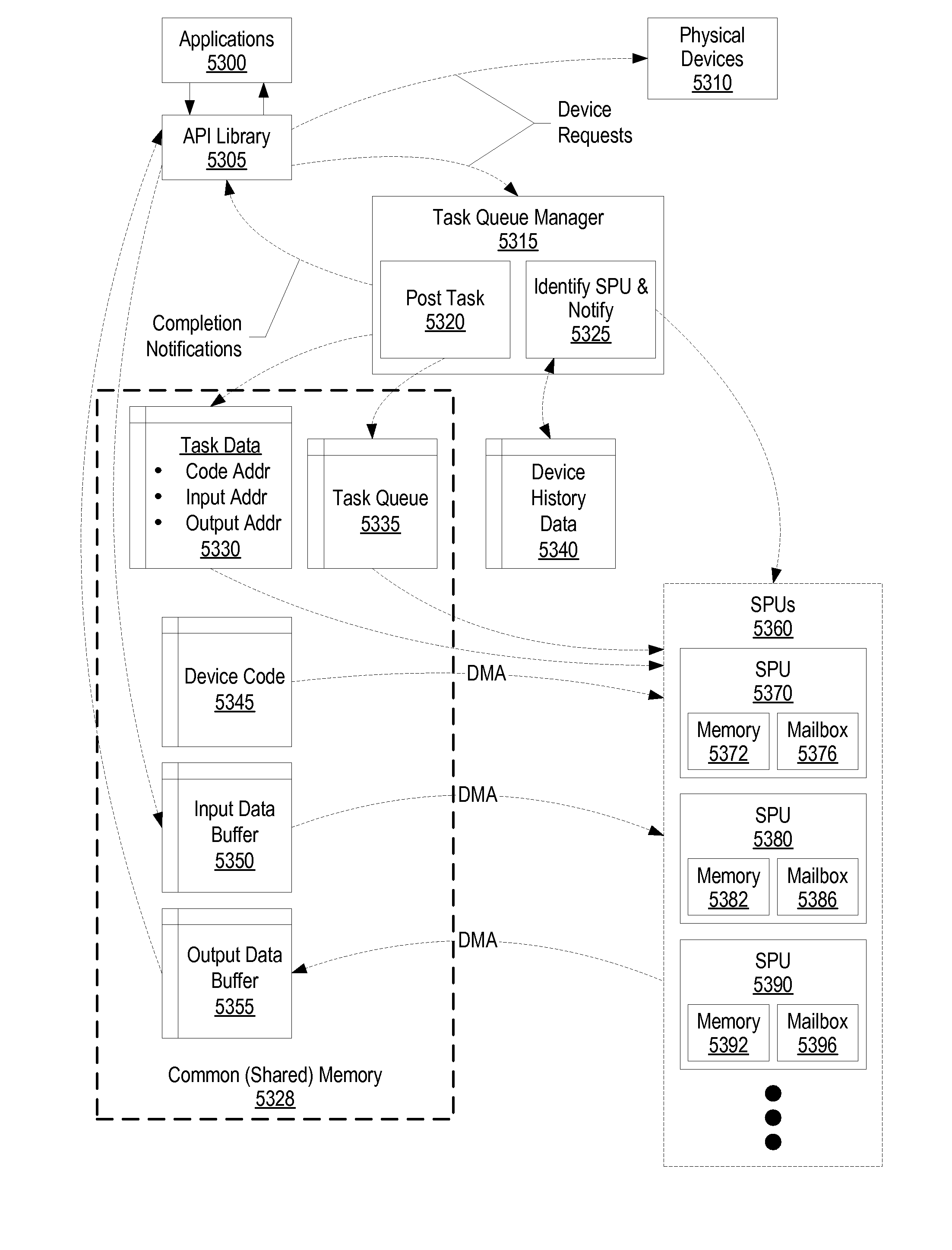

System and method for task queue management of virtual devices using a plurality of processors

A task queue manager manages the task queues corresponding to virtual devices. When a virtual device function is requested, the task queue manager determines whether an SPU is currently assigned to the virtual device task. If an SPU is already assigned, the request is queued in a task queue being read by the SPU. If an SPU has not been assigned, the task queue manager assigns one of the SPUs to the task queue. The queue manager assigns the task based upon which SPU is least busy as well as whether one of the SPUs recently performed the virtual device function. If an SPU recently performed the virtual device function, it is more likely that the code used to perform the function is still in the SPU's local memory and will not have to be retrieved from shared common memory using DMA operations.
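The SPU-selection rule above can be sketched as follows. This Python sketch assumes one particular way of combining the two criteria (prefer an SPU that ran the function recently, and fall back to the least busy SPU otherwise); the abstract only says both factors are considered. `SPU`, `TaskQueueManager`, the `load` counter, and the `history` window are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SPU:
    name: str
    load: int = 0                                   # queued tasks, a proxy for "busy"
    recent_functions: list = field(default_factory=list)


class TaskQueueManager:
    """Maps each virtual-device function to the SPU whose task queue serves it."""

    def __init__(self, spus, history=4):
        self.spus = spus
        self.history = history      # how many recent functions count as "recent"
        self.assigned = {}          # function name -> SPU currently reading its queue

    def submit(self, function):
        spu = self.assigned.get(function)
        if spu is None:
            # Prefer an SPU that ran this function recently (its code is likely
            # still in local memory, avoiding a DMA fetch); otherwise take the
            # least busy SPU.
            warm = [s for s in self.spus if function in s.recent_functions]
            spu = min(warm or self.spus, key=lambda s: s.load)
            self.assigned[function] = spu
        spu.load += 1               # the request joins that SPU's task queue
        spu.recent_functions = (spu.recent_functions + [function])[-self.history:]
        return spu.name
```

With `spu0` busy but holding `"fft"` code and `spu1` idle, a new `"fft"` request goes to `spu0` while a new `"crc"` request goes to `spu1`; later `"fft"` requests reuse the existing assignment.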

Owner:IBM CORP

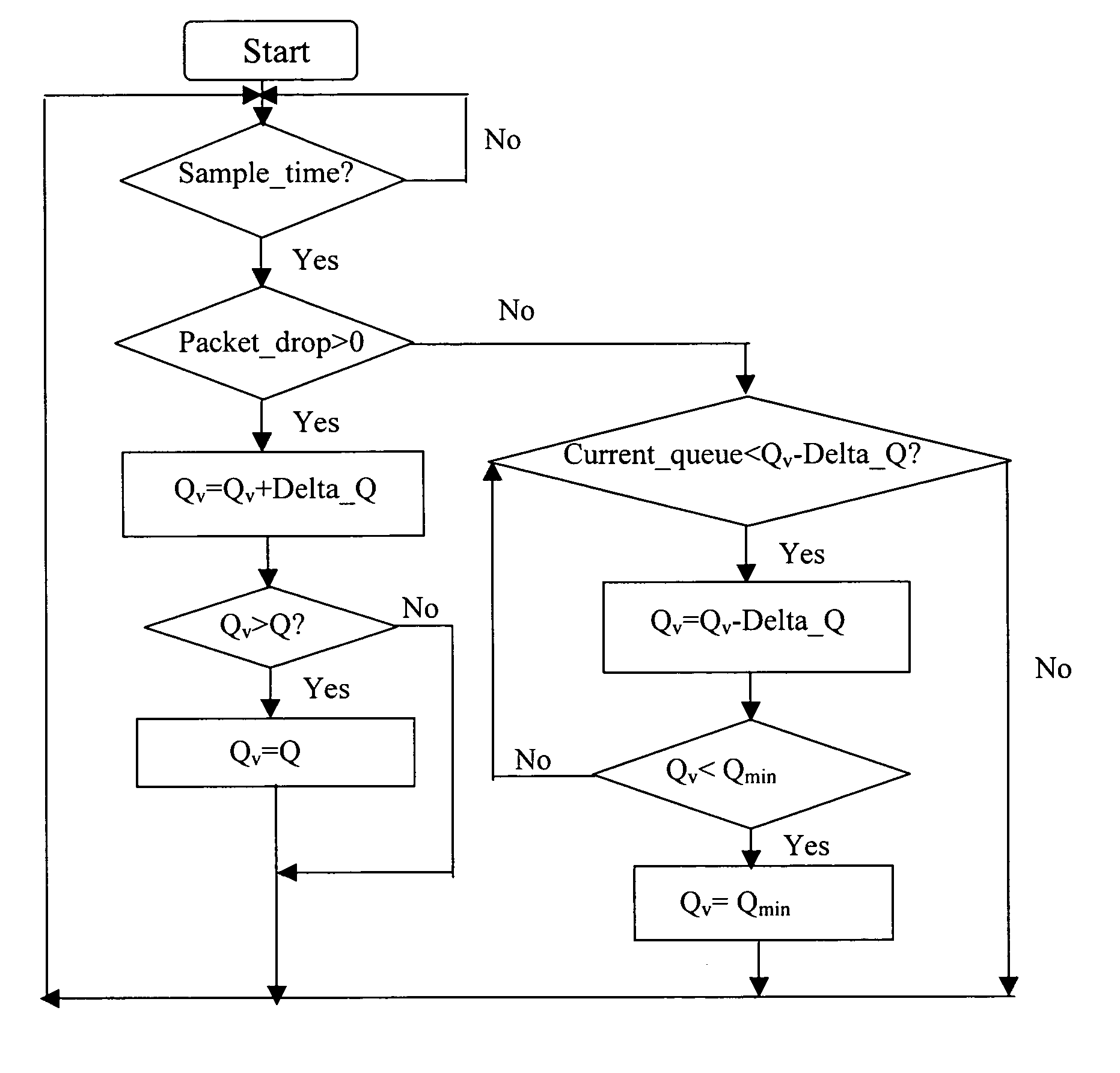

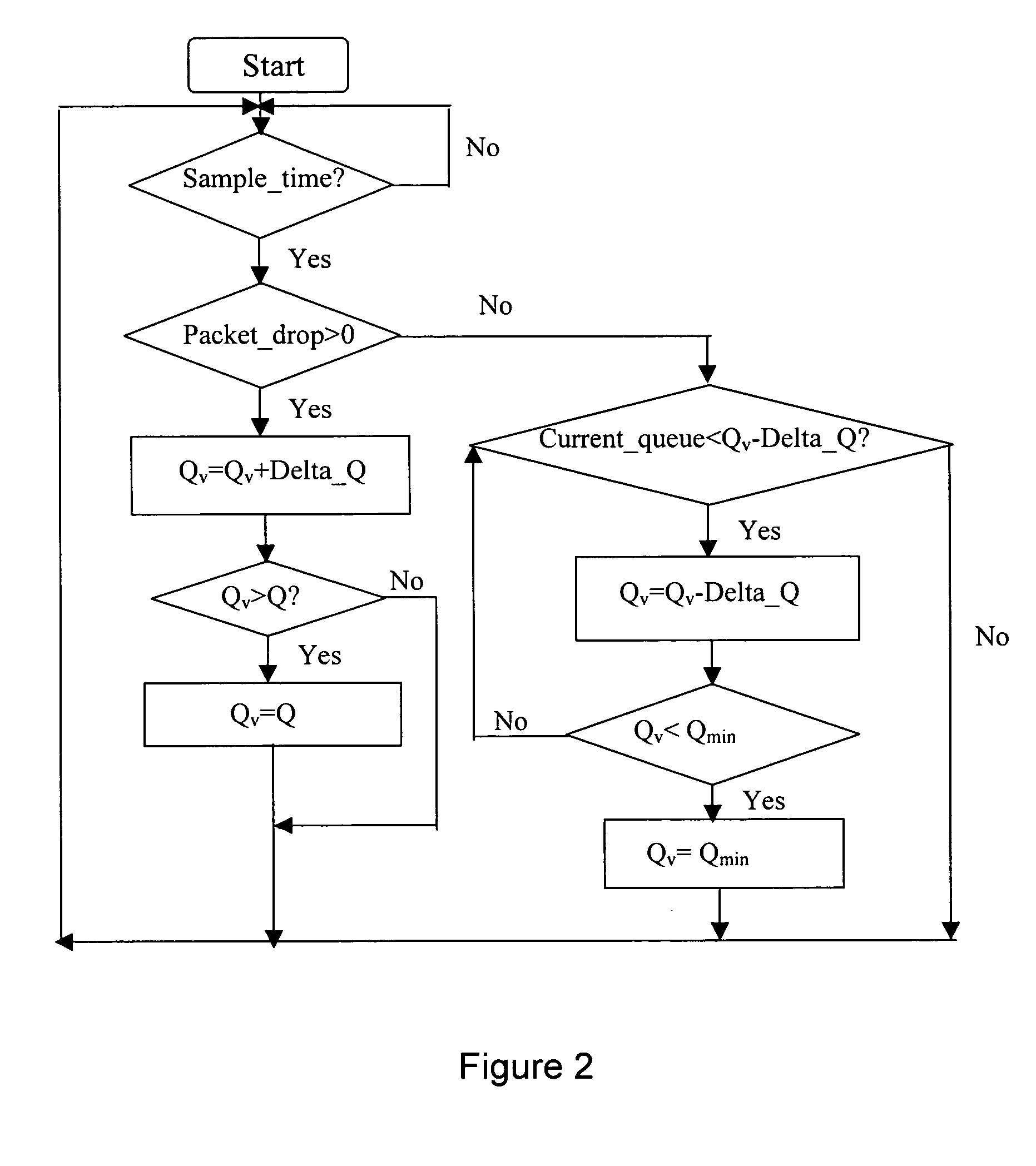

Queue-based active queue management process

Active · US20060045008A1 · More buffer capacity · Increasing queue size · Error prevention · Transmission systems · Network communication · Active queue management

An active queue management (AQM) process for network communications equipment. The AQM process is queue based: it applies congestion notification, via packet dropping, to communications packets in a link's queue when the queue reaches a queue size threshold, and it adjusts that threshold on the basis of the congestion level. The AQM process releases more buffer capacity to accommodate more incoming packets by increasing the queue size threshold when congestion increases, and reduces buffer capacity by lowering the threshold when congestion decreases. Network communications equipment includes a switch component for switching communications packets between input ports and output ports, packet queues for at least the output ports, and an active queue manager for applying congestion notification to communications packets in the output-port queues via packet dropping. The congestion notification is applied at a respective queue size threshold for each queue, and each threshold is adjusted on the basis of the congestion level of its output-port queue.
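A queue-based AQM with a congestion-driven threshold can be sketched as a queue that drops arrivals at or above the current threshold and moves that threshold up or down on each control interval. In this Python sketch the congestion measure (for example a recent drop fraction), the fixed `step`, the `target` level, and the class name `AdaptiveThresholdQueue` are assumptions; the abstract does not specify how congestion is measured or by how much the threshold moves.

```python
from collections import deque


class AdaptiveThresholdQueue:
    """Drop-based AQM sketch: arrivals at or above the current threshold are
    dropped (the congestion notification), and the threshold is raised when
    congestion grows and lowered when it eases."""

    def __init__(self, buffer_size, min_threshold, step):
        self.buffer_size = buffer_size      # hard limit of the physical buffer
        self.min_threshold = min_threshold
        self.threshold = min_threshold
        self.step = step
        self.packets = deque()
        self.drops = 0

    def enqueue(self, packet):
        if len(self.packets) >= self.threshold:
            self.drops += 1                 # notify congestion by dropping
            return False
        self.packets.append(packet)
        return True

    def dequeue(self):
        return self.packets.popleft() if self.packets else None

    def adjust(self, congestion_level, target):
        """Call once per control interval with a congestion measure."""
        if congestion_level > target:
            # More congestion: release buffer capacity by raising the threshold.
            self.threshold = min(self.buffer_size, self.threshold + self.step)
        elif congestion_level < target:
            # Less congestion: reclaim buffer capacity by lowering the threshold.
            self.threshold = max(self.min_threshold, self.threshold - self.step)
```

For the multi-port equipment described above, one such object per output-port queue gives each queue its own threshold, adjusted from that queue's own congestion level.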

Owner:INTELLECTUAL VENTURES II

Methods, systems, and media to enhance persistence of a message

Inactive · US20050060374A1 · Multiprogramming arrangements · Multiple digital computer combinations · Middleware · Queue manager

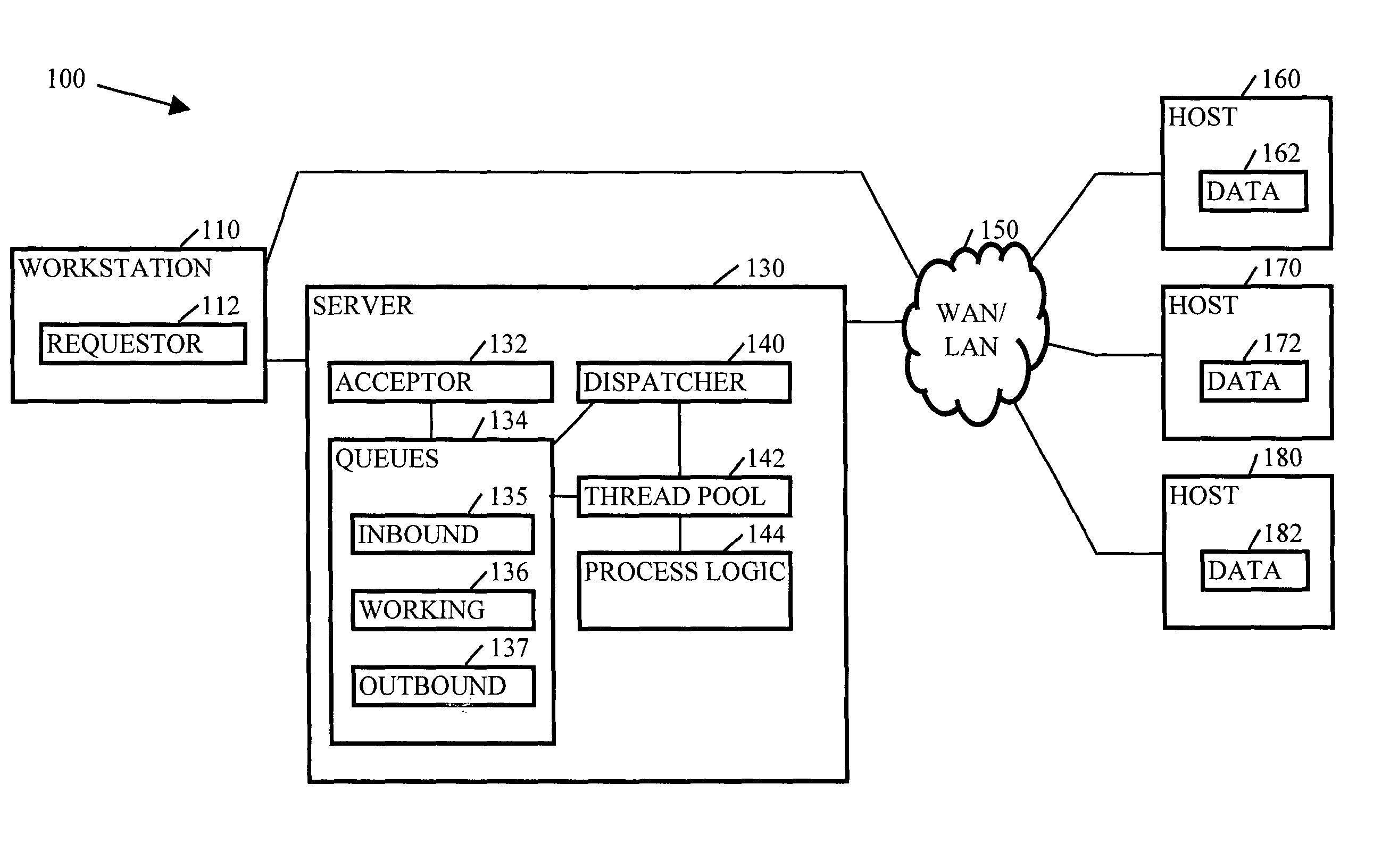

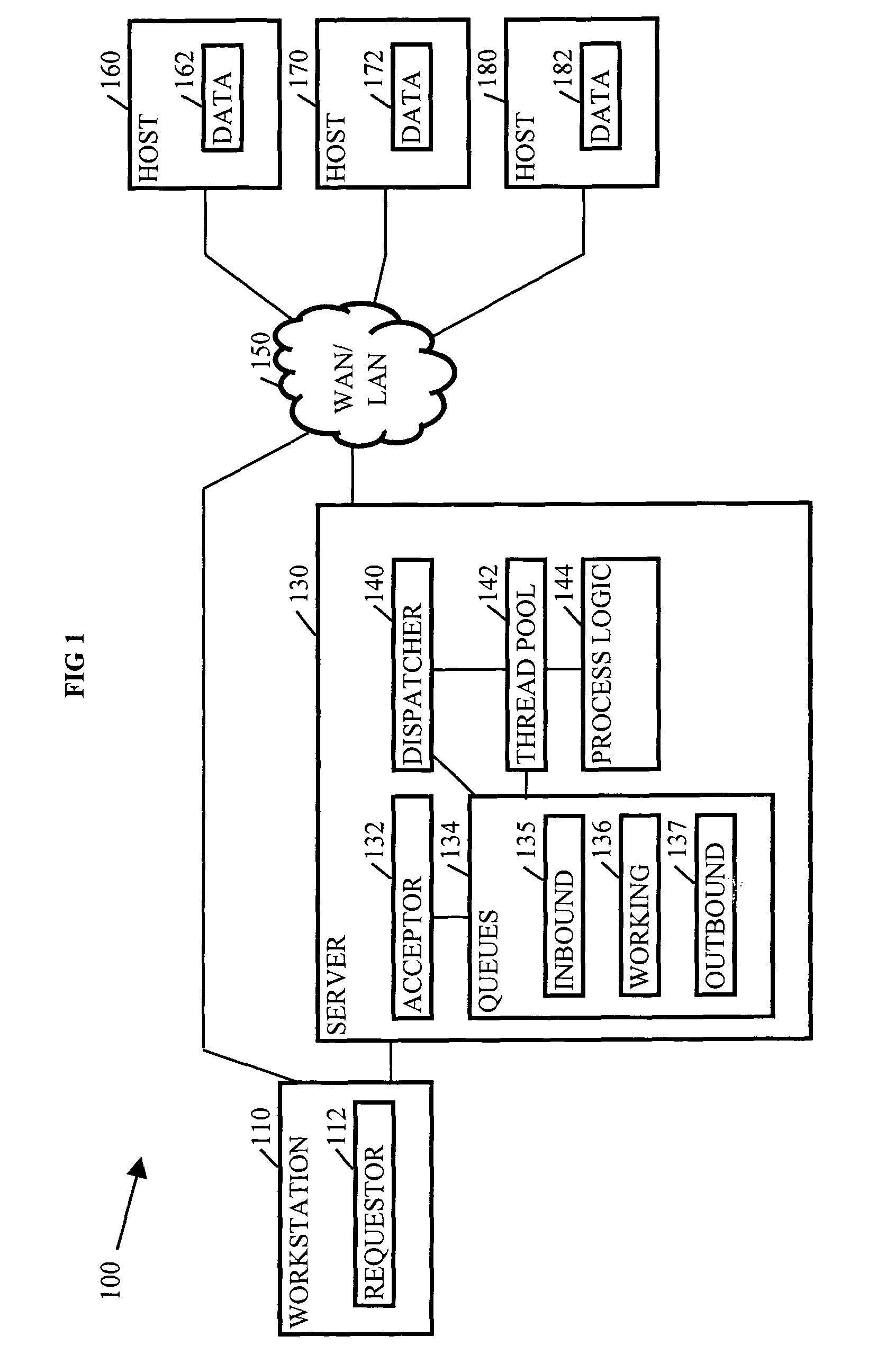

Methods, systems, and media for enhancing persistence of a message are disclosed. Embodiments include hardware and/or software for storing a message in an inbound queue, copying the message to a working queue before removing it from the inbound queue, processing the message based upon the copy in the working queue, and storing a committed reply for the message in an outbound queue. Embodiments may also include a queue manager that persists the message and the committed reply after receipt of the message, to close or substantially close gaps in persistence. Several embodiments include a dispatcher that browses the inbound queue to listen for messages to process, copies each message to the working queue, and assigns the message to a thread that performs the associated processing. Further embodiments include persistence functionality in middleware, relieving applications such as upperware of the burden of persisting messages.
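The ordering of steps above (copy to the working queue first, remove from the inbound queue second, process from the copy, then store the reply) can be sketched with in-memory deques. This Python sketch is only an assumption-laden illustration: `PersistentProcessor` and `dispatch_one` are hypothetical names, the queues are not actually persisted, and the dispatcher's hand-off to a worker thread is omitted.

```python
from collections import deque


class PersistentProcessor:
    """In-memory stand-ins for the inbound, working, and outbound queues."""

    def __init__(self):
        self.inbound = deque()
        self.working = deque()
        self.outbound = deque()

    def dispatch_one(self, handler):
        """Process one message so that, at every step, the message or its
        committed reply is held by at least one queue. Returns False when
        the inbound queue is empty."""
        if not self.inbound:
            return False
        message = self.inbound[0]          # browse: look without removing
        self.working.append(message)       # 1. copy into the working queue
        self.inbound.popleft()             # 2. only then remove it from inbound
        reply = handler(self.working[-1])  # 3. process based on the working copy
        self.outbound.append(reply)        # 4. store the committed reply
        self.working.pop()                 # 5. the working copy is no longer needed
        return True
```

If processing fails between steps 2 and 4, the message is still in the working queue, which is the gap in persistence the abstract describes closing.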

Owner:SNAP INC

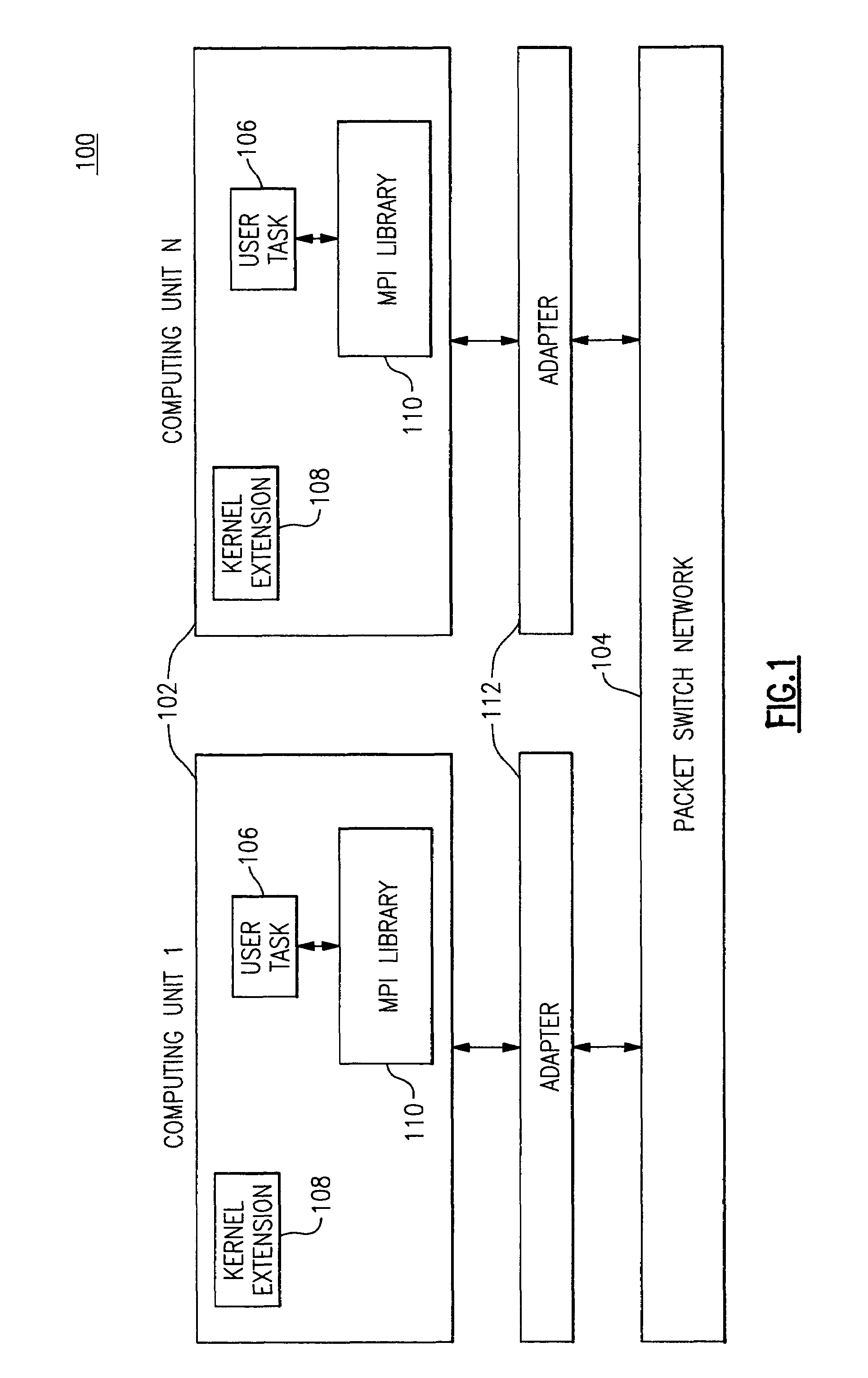

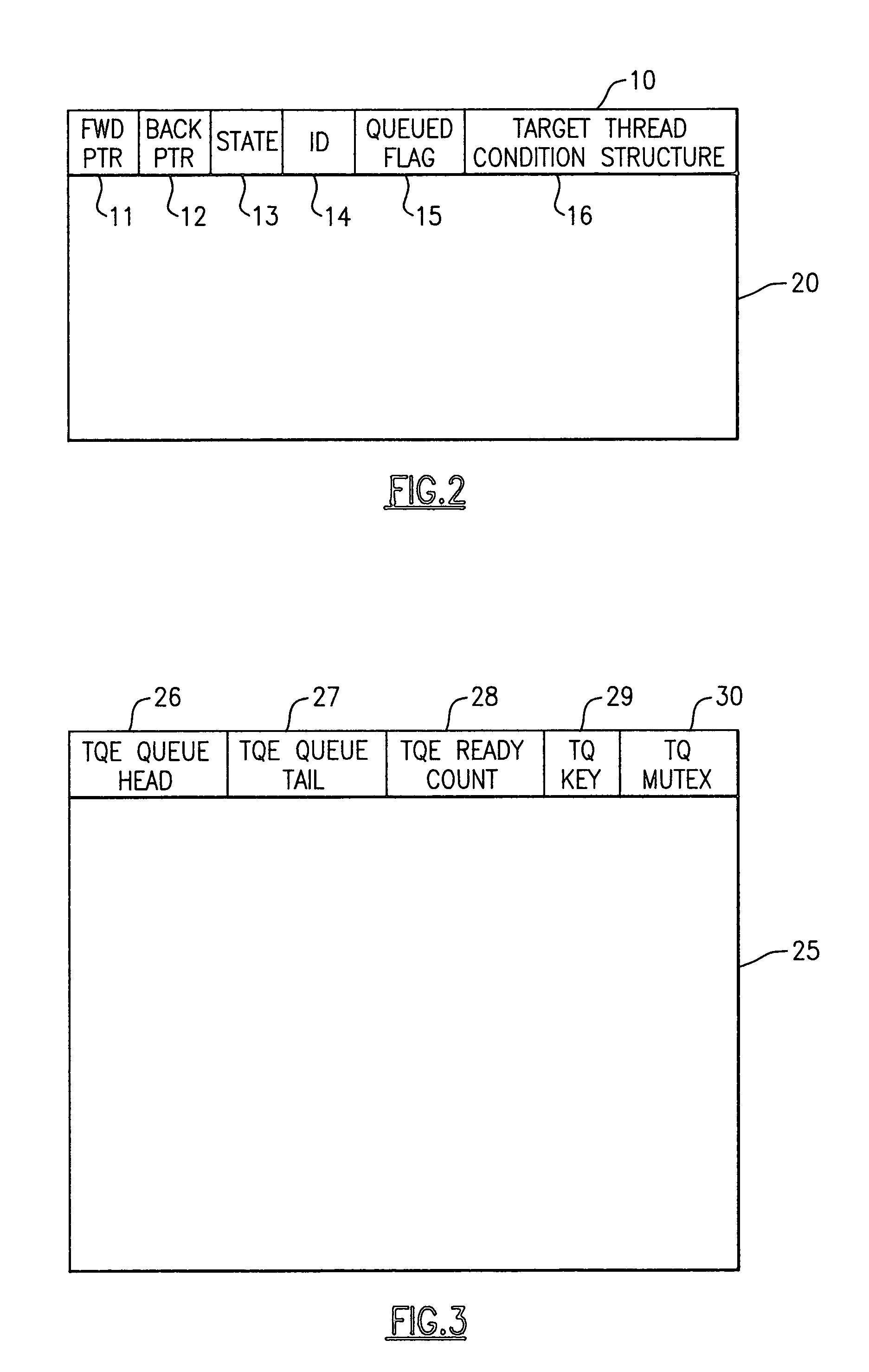

Thread dispatcher for multi-threaded communication library

Inactive · US6934950B1 · Improve efficiency · Raise priority · Multiprogramming arrangements · Memory systems · POSIX Threads · Thread scheduling

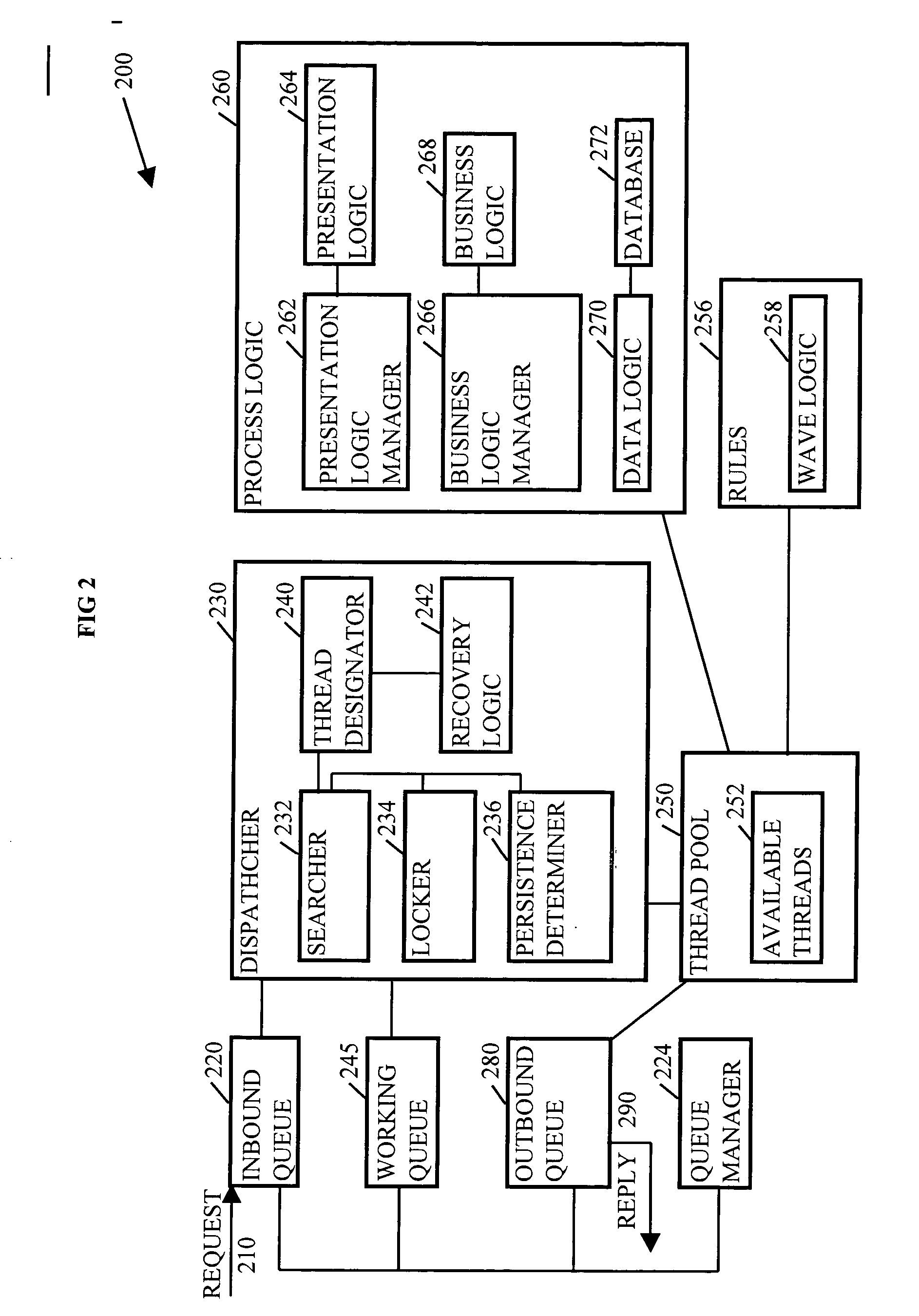

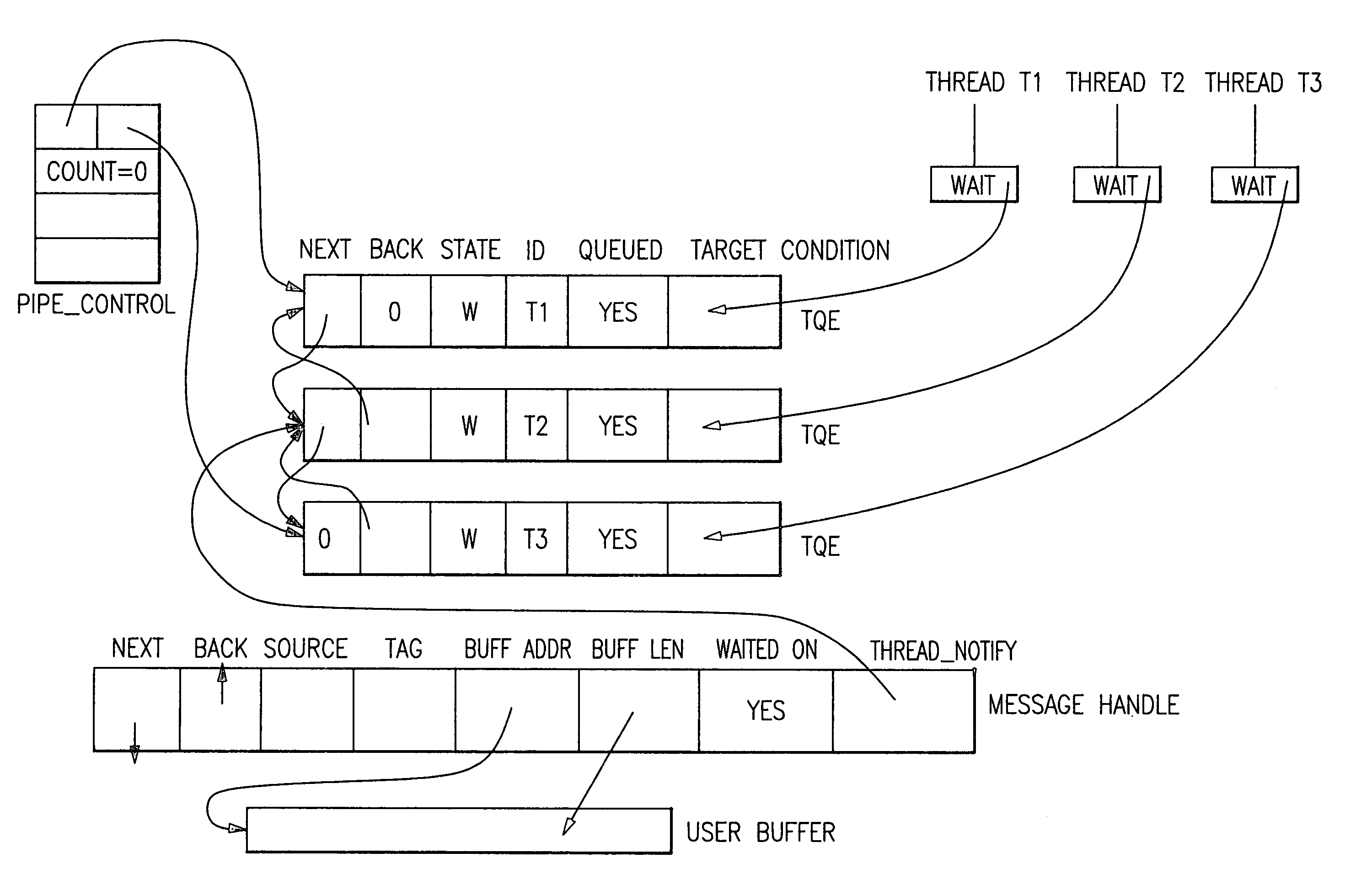

Method, computer program product, and apparatus for efficiently dispatching threads in a multi-threaded communication library that become runnable on completion of an event. Each thread has a thread-specific structure containing a "ready flag" and a POSIX thread condition variable unique to that thread. Each message is assigned a "handle". When a thread waits for a message, its thread-specific structure is attached to the handle of the message being waited on, and the thread is enqueued, waiting for its condition variable to be signaled. When a message completes, the message matching logic sets the ready flag to READY and causes the queue to be examined. The queue manager scans the queue of waiting threads and sends a thread-awakening condition signal to one of the threads whose ready flag is set to READY. The queue manager can implement any desired policy, including First-In-First-Out (FIFO), Last-In-First-Out (LIFO), or some other thread priority scheduling policy. This ensures that the awakened thread has the highest-priority message to be processed, and enhances the efficiency of message delivery. The priority of the message to be processed is computed based on the overall state of the communication subsystem.
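The per-thread ready flag and condition variable scheme can be sketched with Python's `threading.Condition`, which plays the role of the POSIX condition variable here. This is a hypothetical sketch, not the library's code: `WaitRecord`, `Dispatcher`, `enqueue`, `wait`, and `complete` are illustrative names, the queue manager uses a FIFO policy, and the extra `awakened` flag is an added assumption that prevents a signal from being lost if it arrives before the thread starts waiting.

```python
import threading


class WaitRecord:
    """Thread-specific structure: a ready flag plus a condition variable
    that belongs to this one waiting thread."""

    def __init__(self, handle):
        self.handle = handle
        self.ready = False       # set by the message matching logic
        self.awakened = False    # set by the queue manager when it signals
        self.cond = threading.Condition()


class Dispatcher:
    def __init__(self):
        self.lock = threading.Lock()
        self.waiting = []        # FIFO queue of WaitRecords
        self.by_handle = {}      # message handle -> attached WaitRecord

    def enqueue(self, handle):
        """Attach a new WaitRecord to `handle` and queue it as waiting."""
        rec = WaitRecord(handle)
        with self.lock:
            self.by_handle[handle] = rec
            self.waiting.append(rec)
        return rec

    def wait(self, rec, timeout=None):
        """Block the calling thread until the queue manager signals it."""
        with rec.cond:
            return rec.cond.wait_for(lambda: rec.awakened, timeout)

    def complete(self, handle):
        """Message completed: mark its waiter READY, then scan the queue."""
        with self.lock:
            rec = self.by_handle.pop(handle, None)
            if rec is not None:
                rec.ready = True
        return self._scan()

    def _scan(self):
        """Queue manager with a FIFO policy: wake the oldest READY waiter."""
        with self.lock:
            chosen = next((r for r in self.waiting if r.ready), None)
            if chosen is None:
                return None
            self.waiting.remove(chosen)
        with chosen.cond:
            chosen.awakened = True
            chosen.cond.notify()     # signal only this thread's condition
        return chosen.handle
```

Because each thread waits on its own condition, only the chosen thread wakes; a LIFO or priority policy would only change how `_scan` picks `chosen`.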

Owner:IBM CORP

Method for Non-Volatile Memory with Managed Execution of Cached Data

Active · US20060239080A1 · Improve programming performance · More program data · Memory architecture accessing/allocation · Read-only memories · Control memory · Operating system

Methods and circuitry are presented for executing a current memory operation while multiple other pending memory operations are queued. Furthermore, when certain conditions are satisfied, some of these memory operations can be combined or merged for improved efficiency and other benefits. The multiple memory operations are managed by providing a memory operation queue controlled by a memory operation queue manager. The memory operation queue manager is preferably implemented as a module in the state machine that controls the execution of a memory operation in the memory array.

Owner:SANDISK TECH LLC

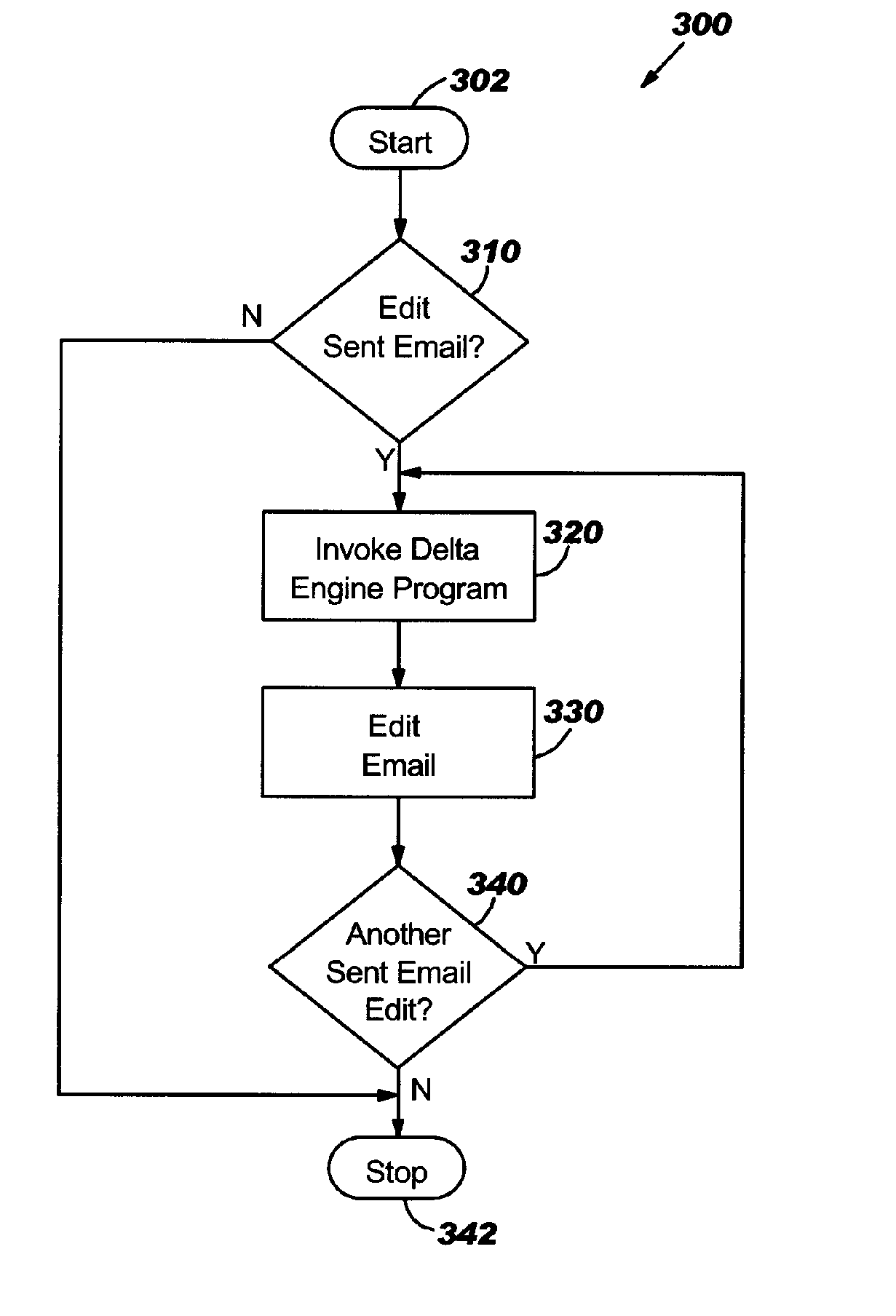

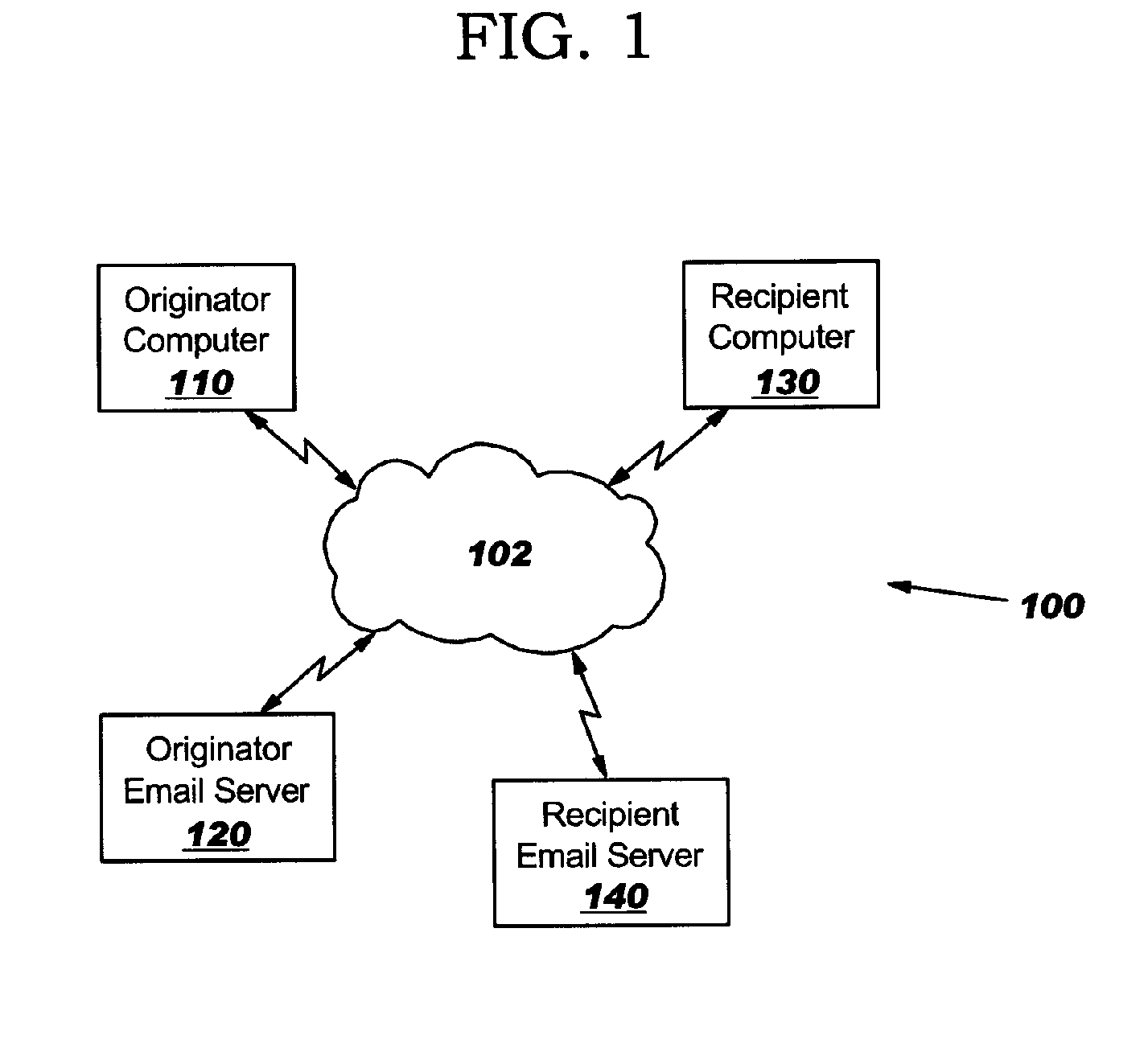

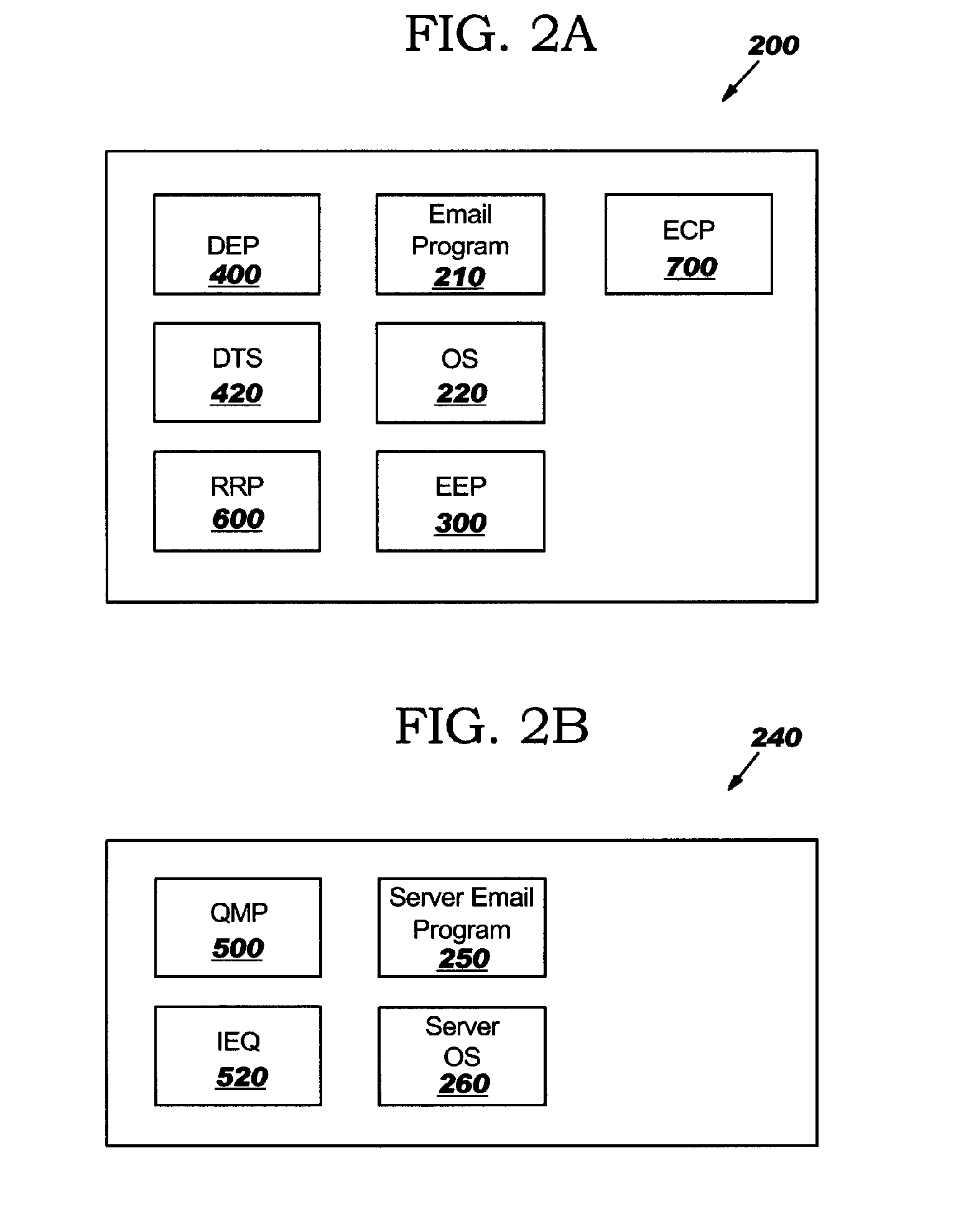

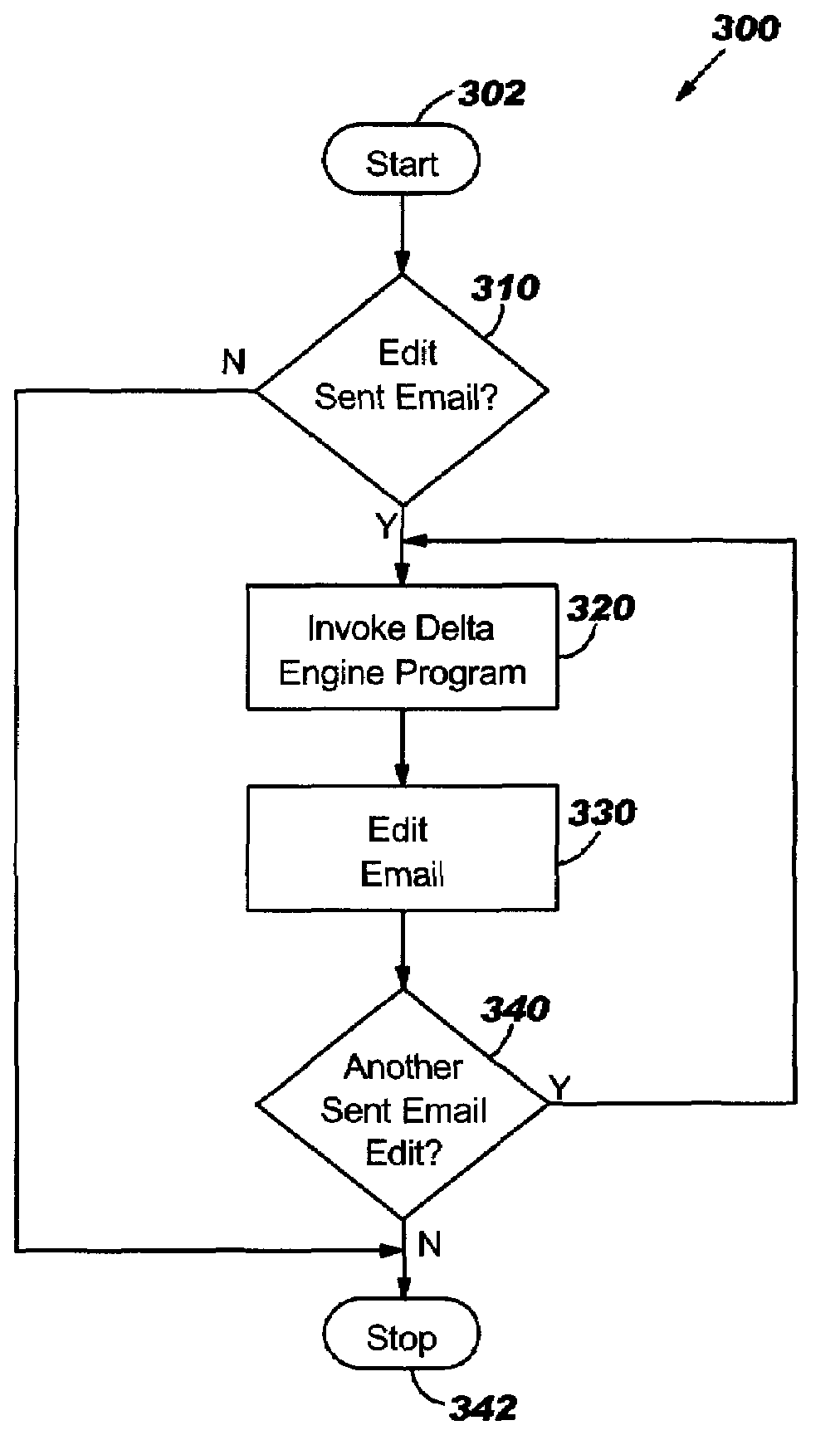

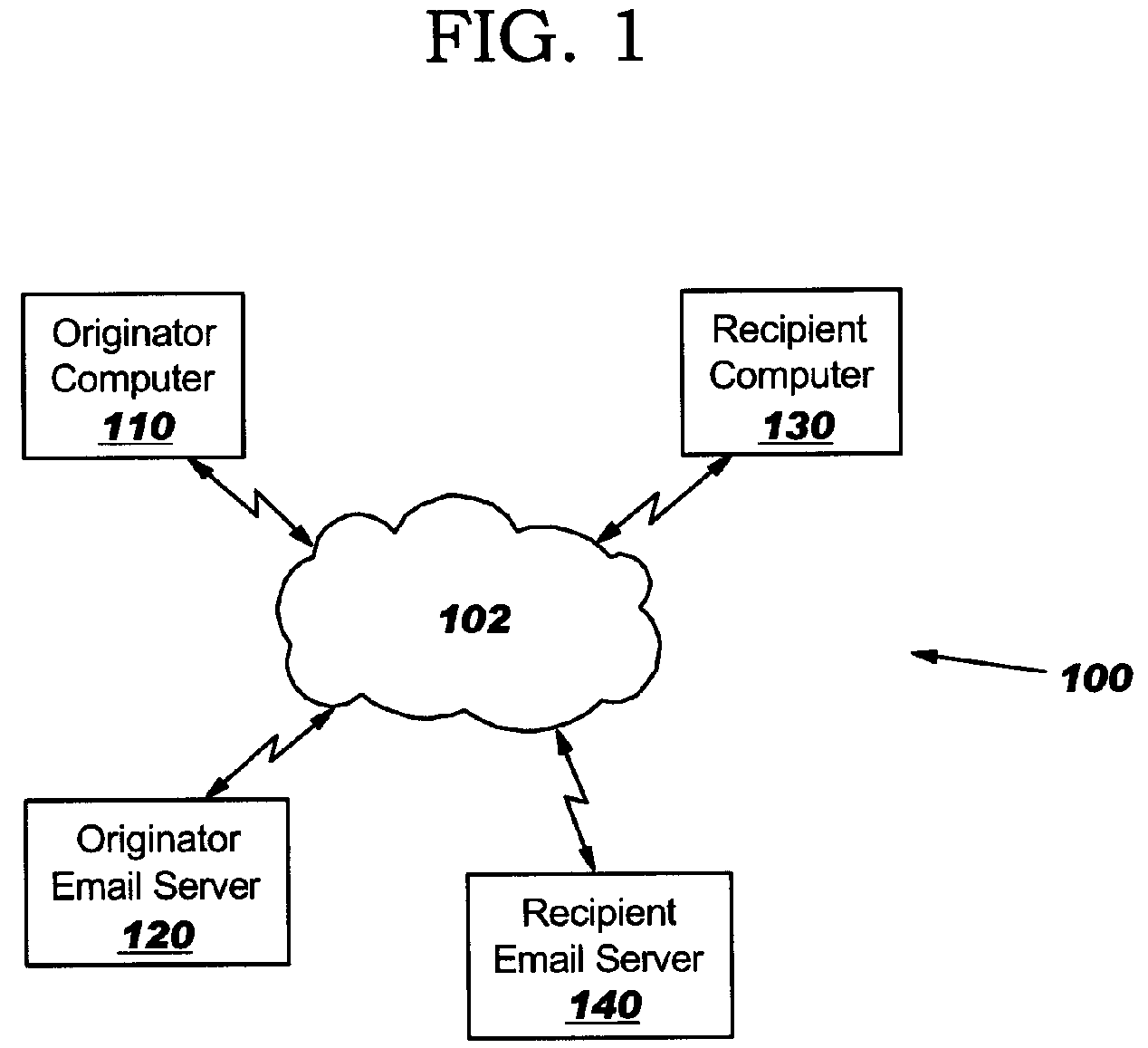

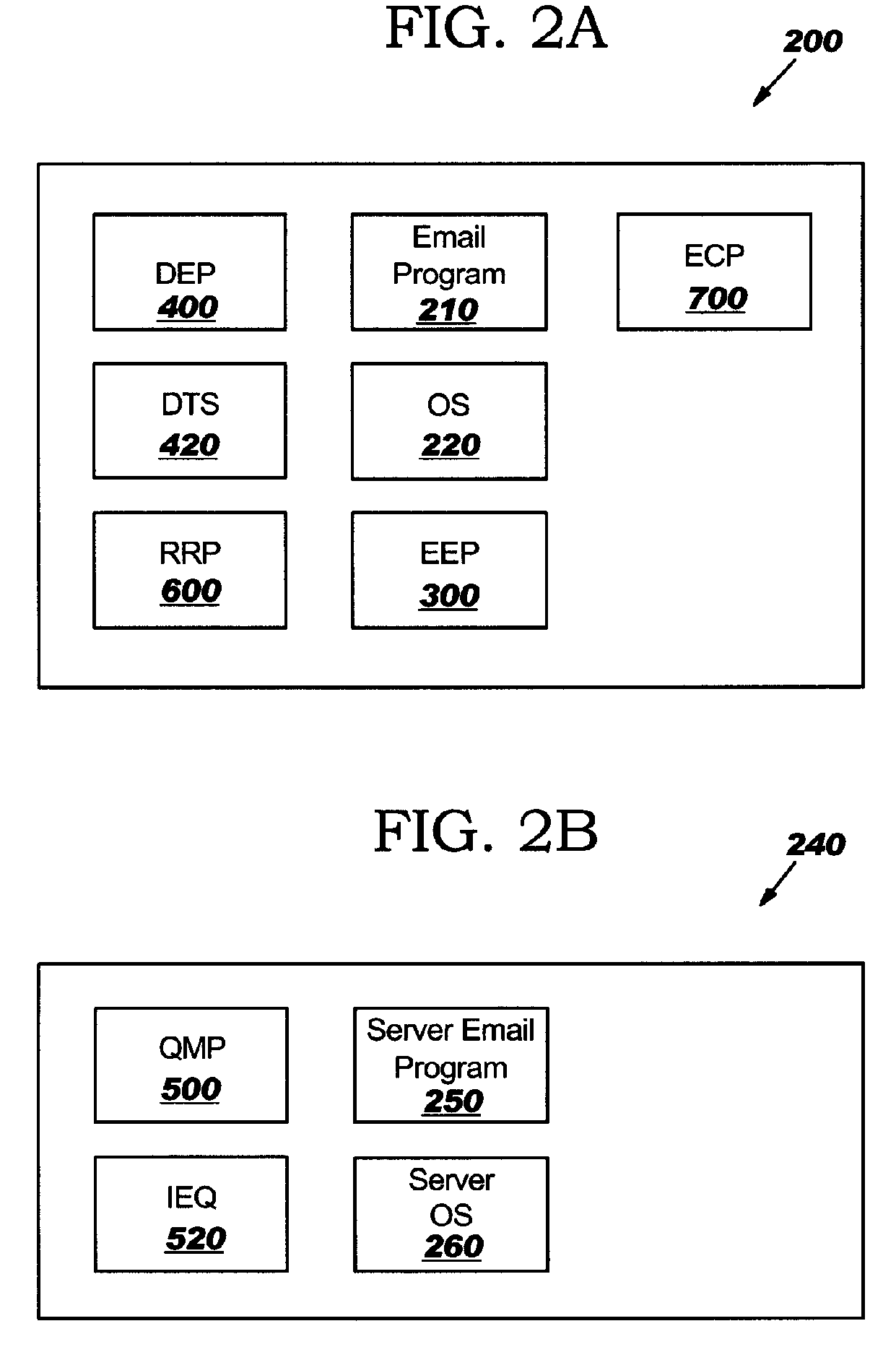

Dynamic Email Content Update Process

Inactive · US20060168346A1 · Natural language data processing · Multiple digital computer combinations · World Wide Web · Electronic mail

An email update system dynamically updates the content of an email when the originator of an email has sent the email, and the originator later determines that the email requires editing. The updating may take place transparent to the recipient and without the introduction of duplicative content into the recipient's email program. The email update system comprises a delta engine program and a delta temporary storage in a sender's computer, a queue manager program and an intermediate email queue in a server computer, and a recipient email retrieval program in a recipient's computer.

Owner:SNAPCHAT

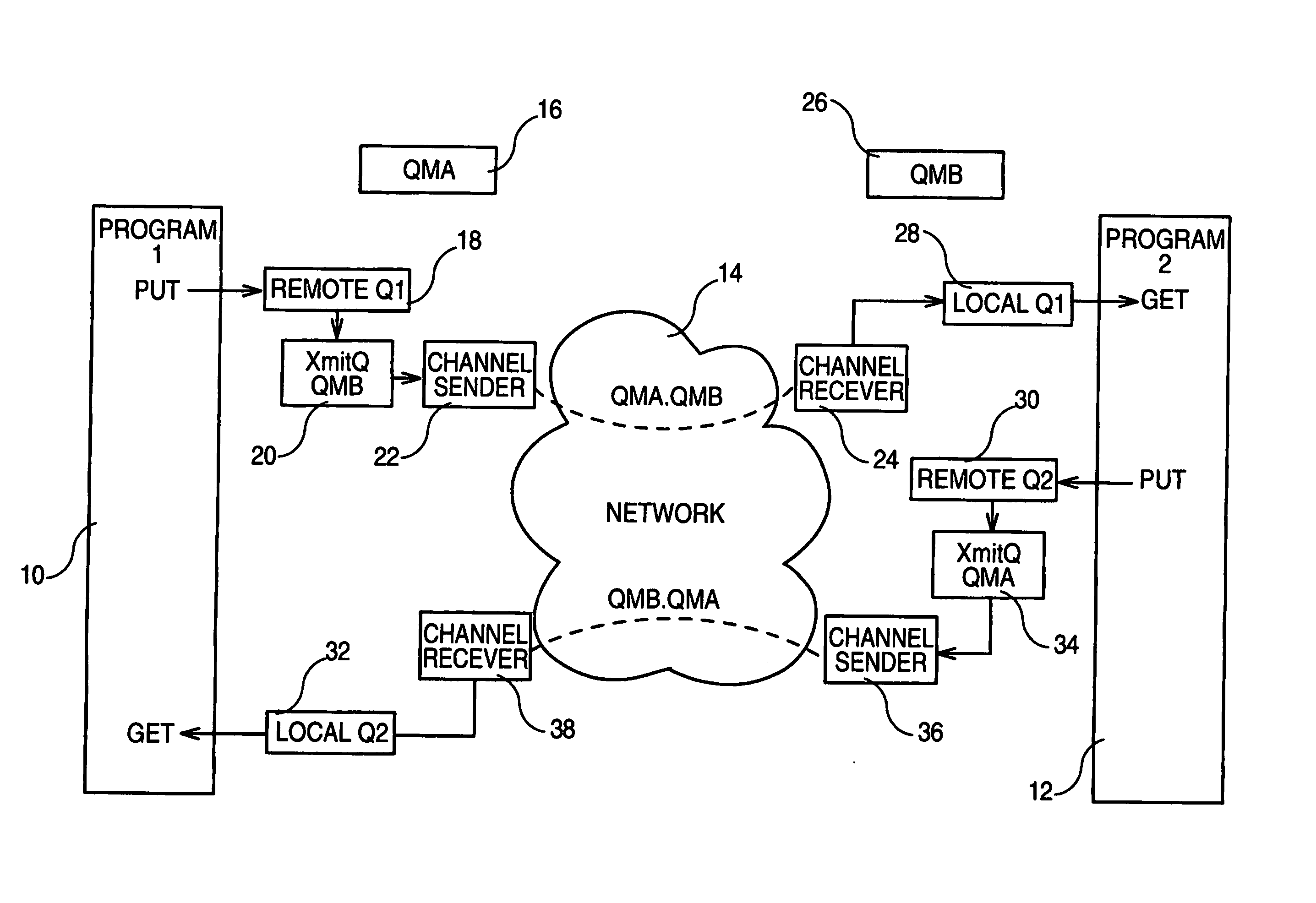

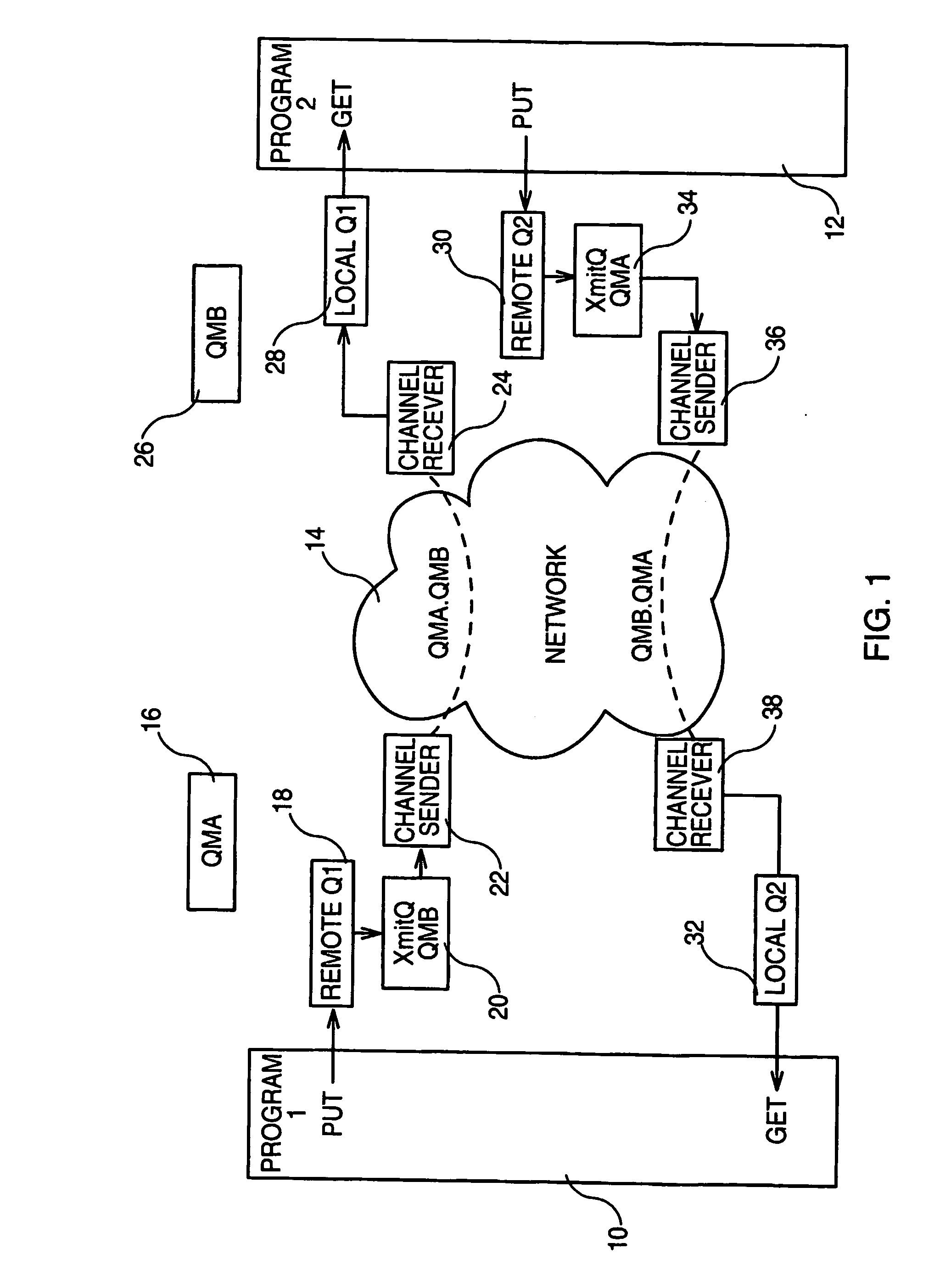

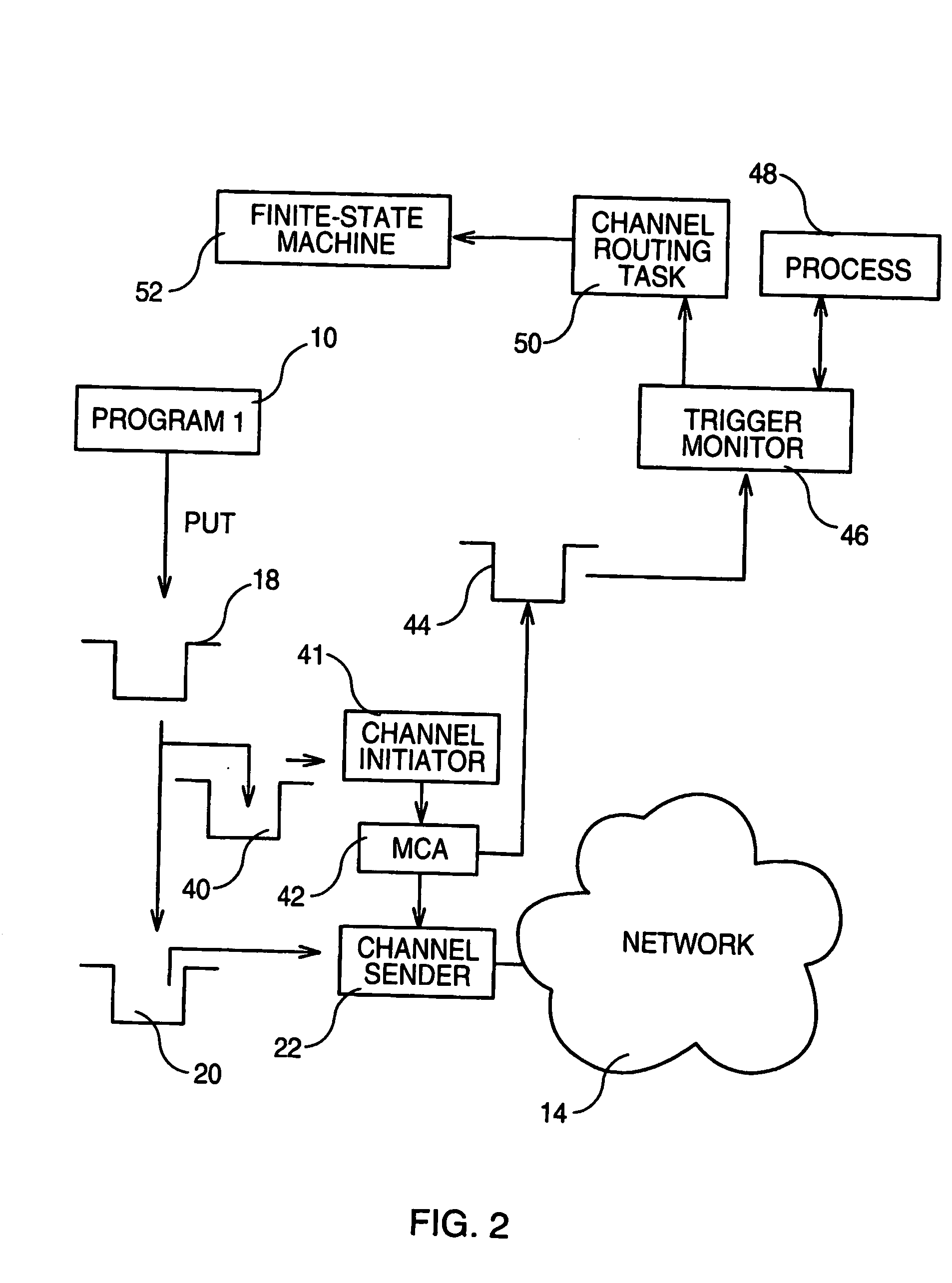

System for defining an alternate channel routing mechanism in a messaging middleware environment

Inactive · US20070078995A1 · Multiprogramming arrangements · Multiple digital computer combinations · Message queue · Event type

A method and system for routing channels in which messages are transmitted from a source application to a destination application. The system includes a finite-state machine, source message queues, and a source queue manager for managing the source message queues. The source message queues include a transmission queue for holding a first message for subsequent transmission of the first message from the transmission queue over a first channel to a local queue of the destination application. The method includes: activating the finite-state machine; and performing or not performing a channel routing action, by the finite-state machine, depending on: a channel event having caused the first channel to be started or stopped, a channel sender set for the first channel, and an event type characterizing the channel event as normal or abnormal. The channel routing action is a function of the channel event, the channel sender, and the event type.

Owner:KYNDRYL INC

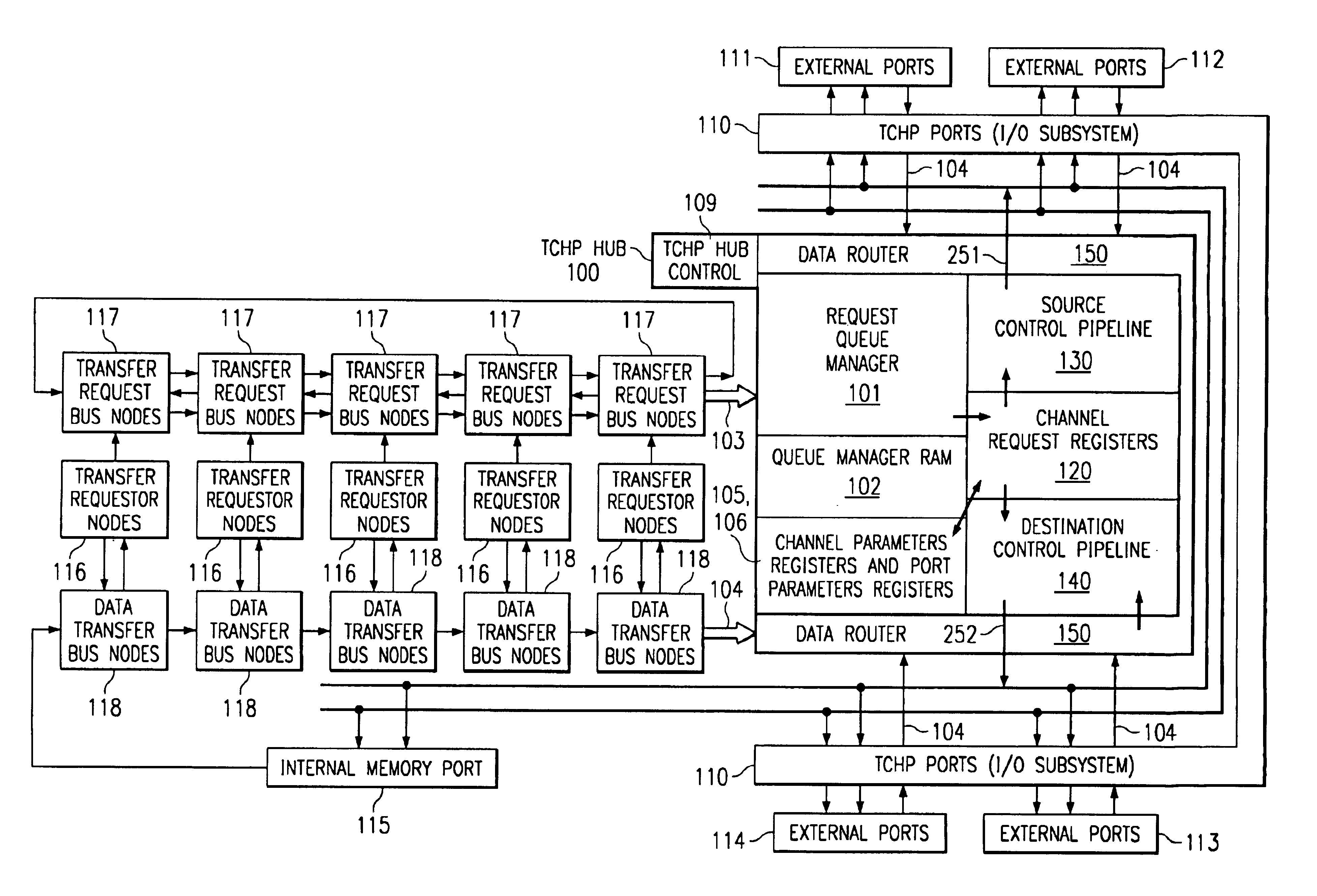

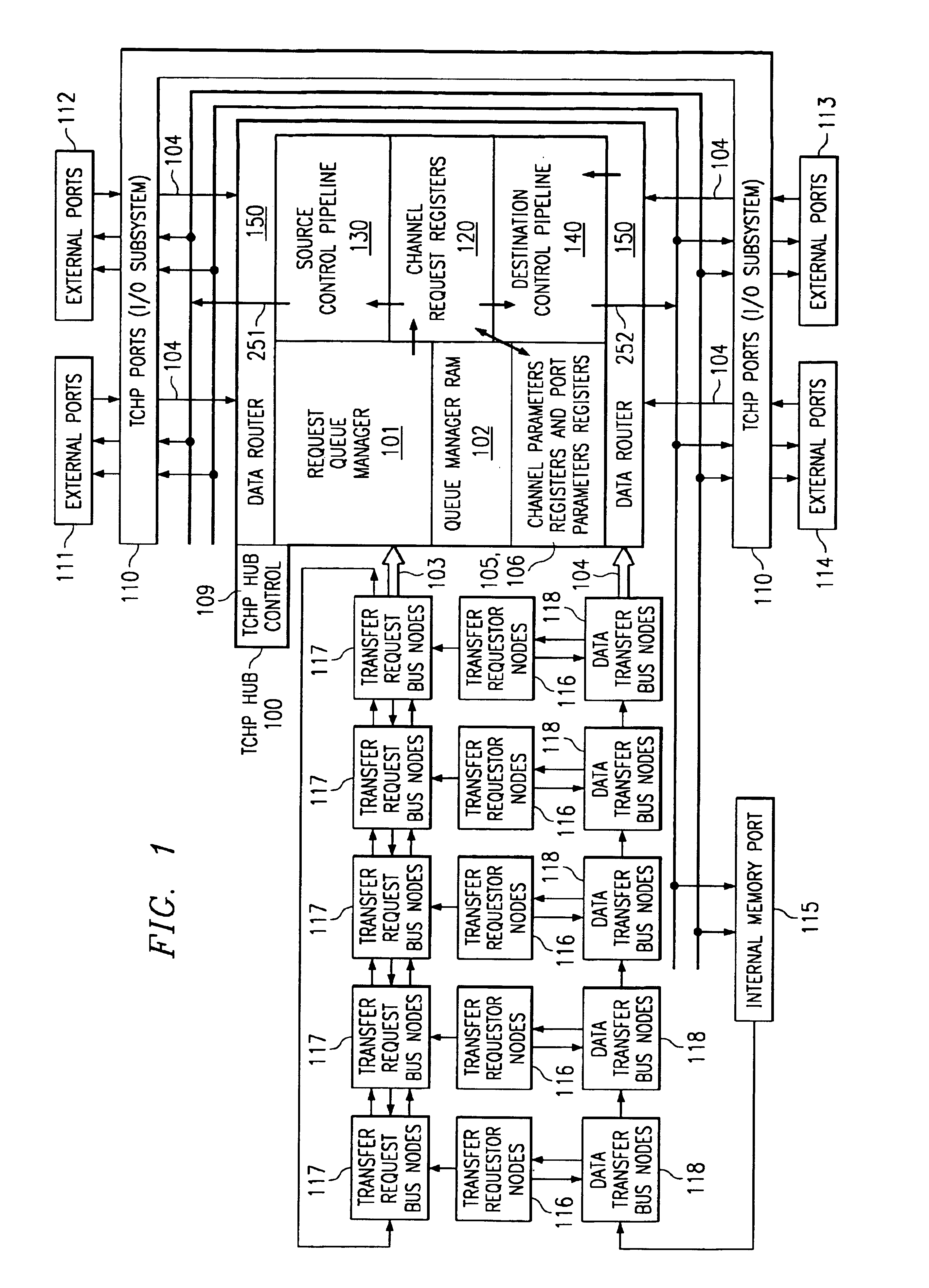

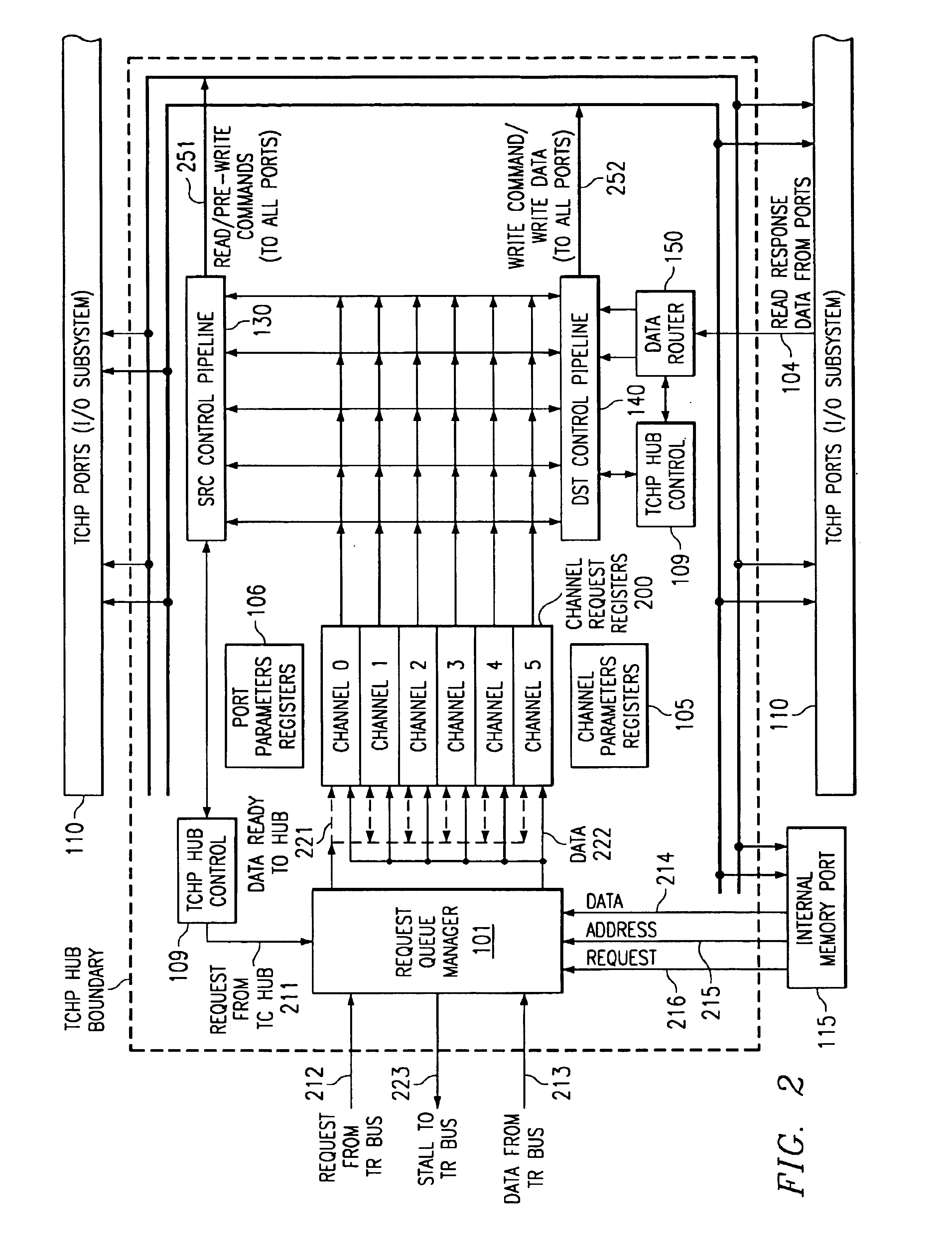

Request queue manager in transfer controller with hub and ports

Owner:TEXAS INSTR INC

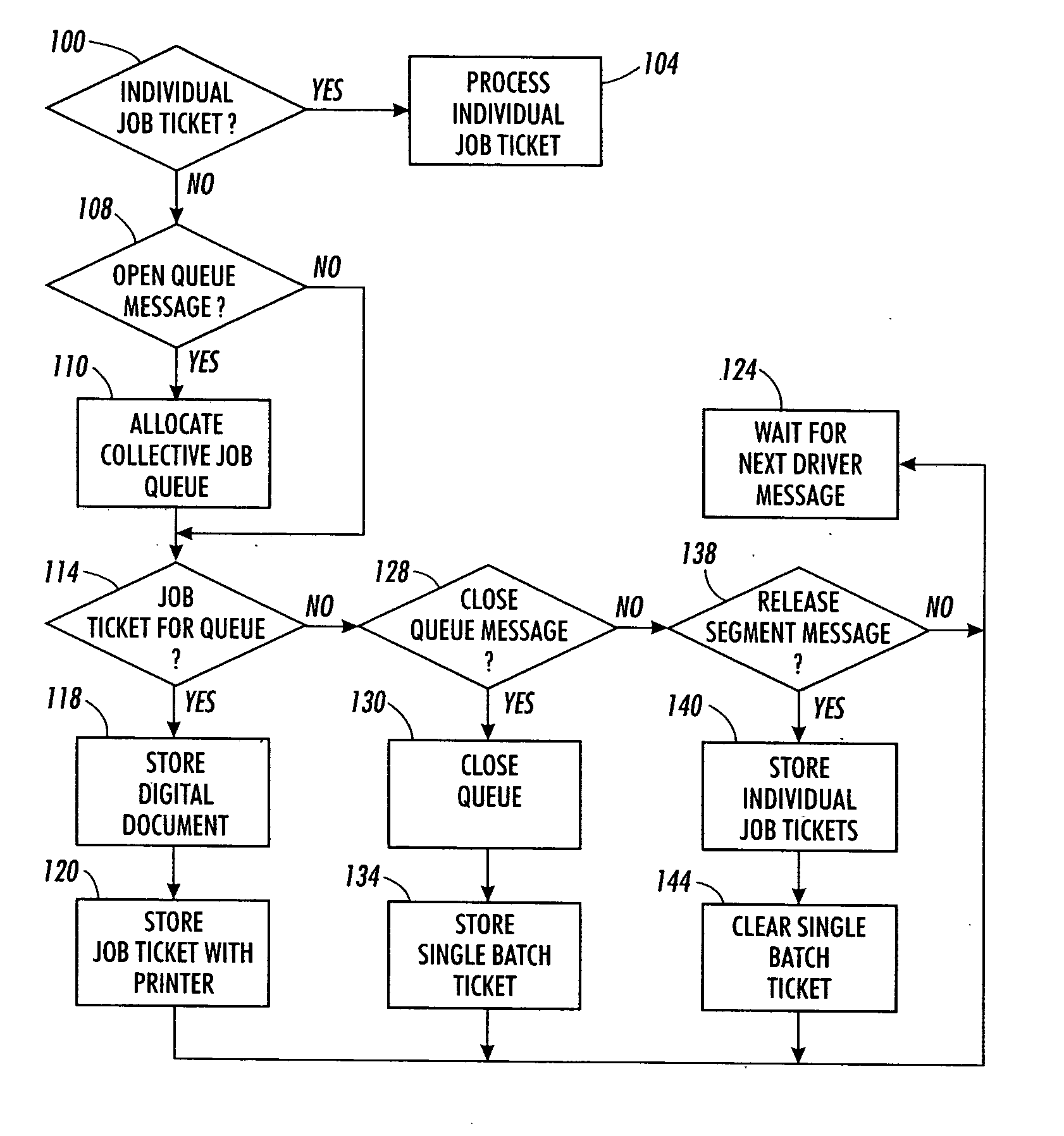

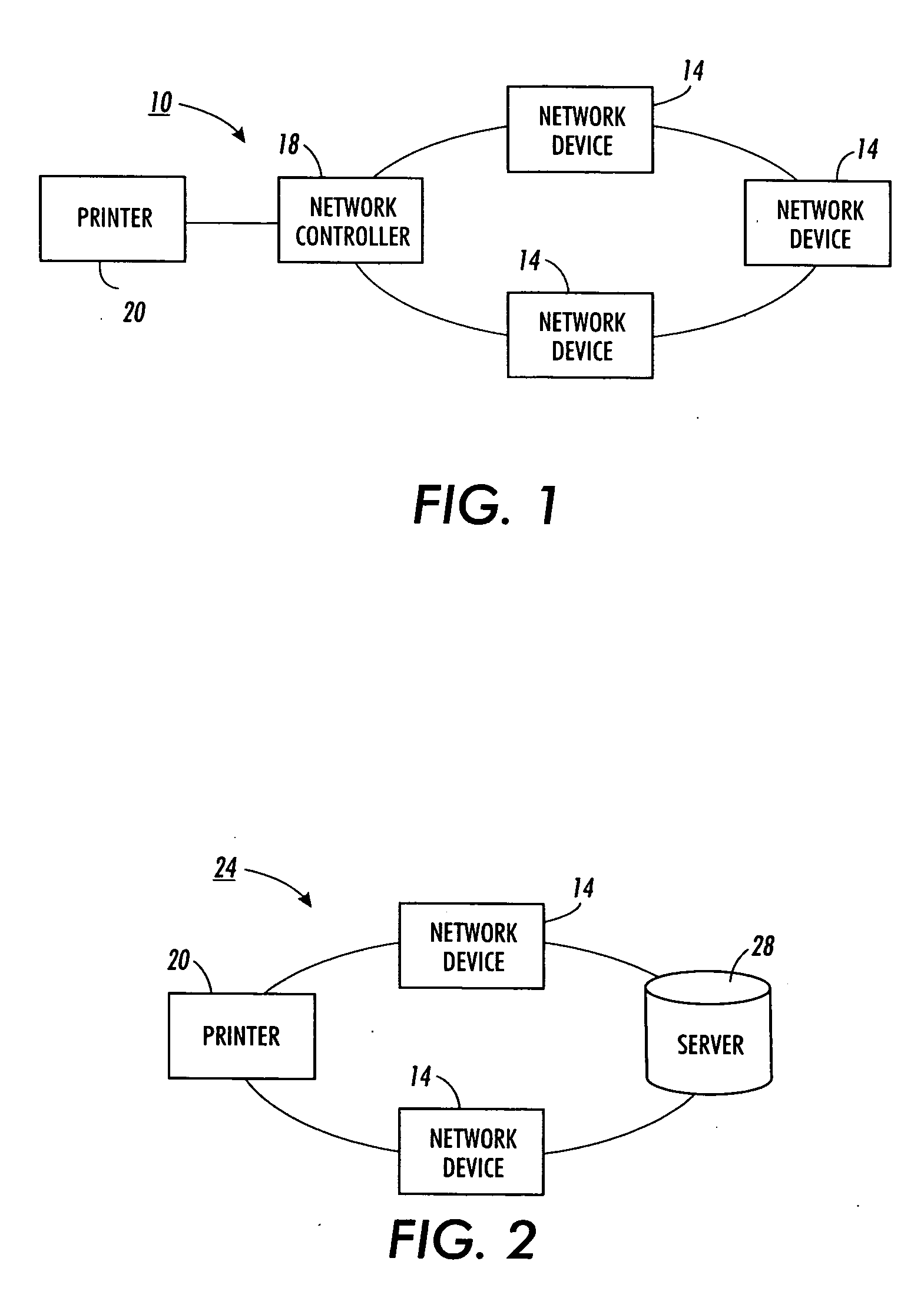

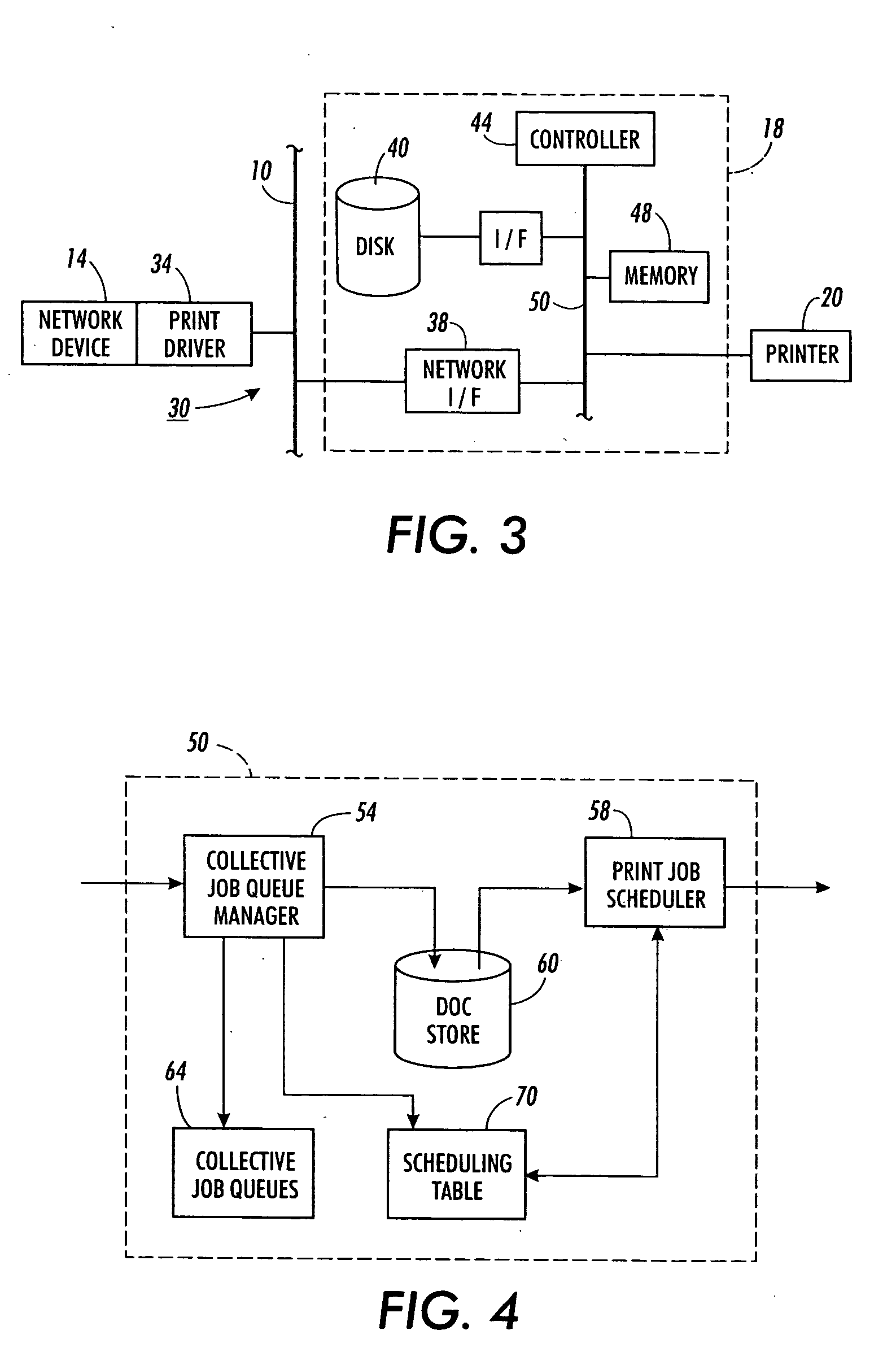

Method and system for managing print job files for a shared printer

Inactive · US20060033958A1 · Promote recovery · Efficient printing · Digitally marking record carriers · Visual presentation using printers · Computer science · Queue manager

A system and method enable a user to generate a single batch job ticket for a plurality of print job tickets. The system includes a print driver, a print job manager, and a print engine. The print driver enables a user to request generation of a collective job queue and to provide a plurality of job tickets for the job queue. The print job manager includes a collective job queue manager and a print job scheduler. The collective job queue manager collects job tickets for a job queue and generates a single batch job ticket for the print job scheduling table when the job queue is closed. The print job scheduler selects single batch job tickets in accordance with various criteria and releases the job segments to a print engine for contiguous printing of the job segments.

Owner:XEROX CORP

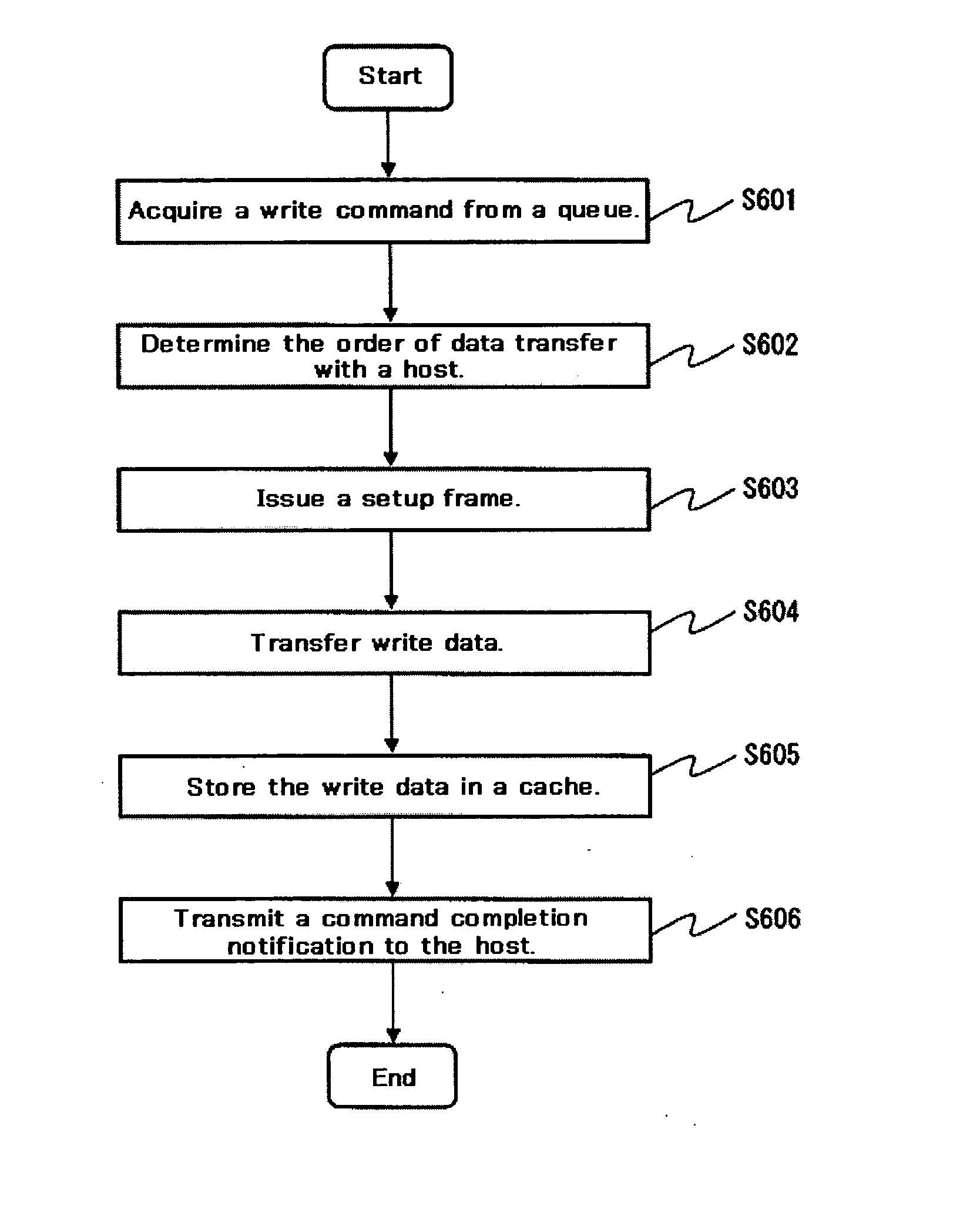

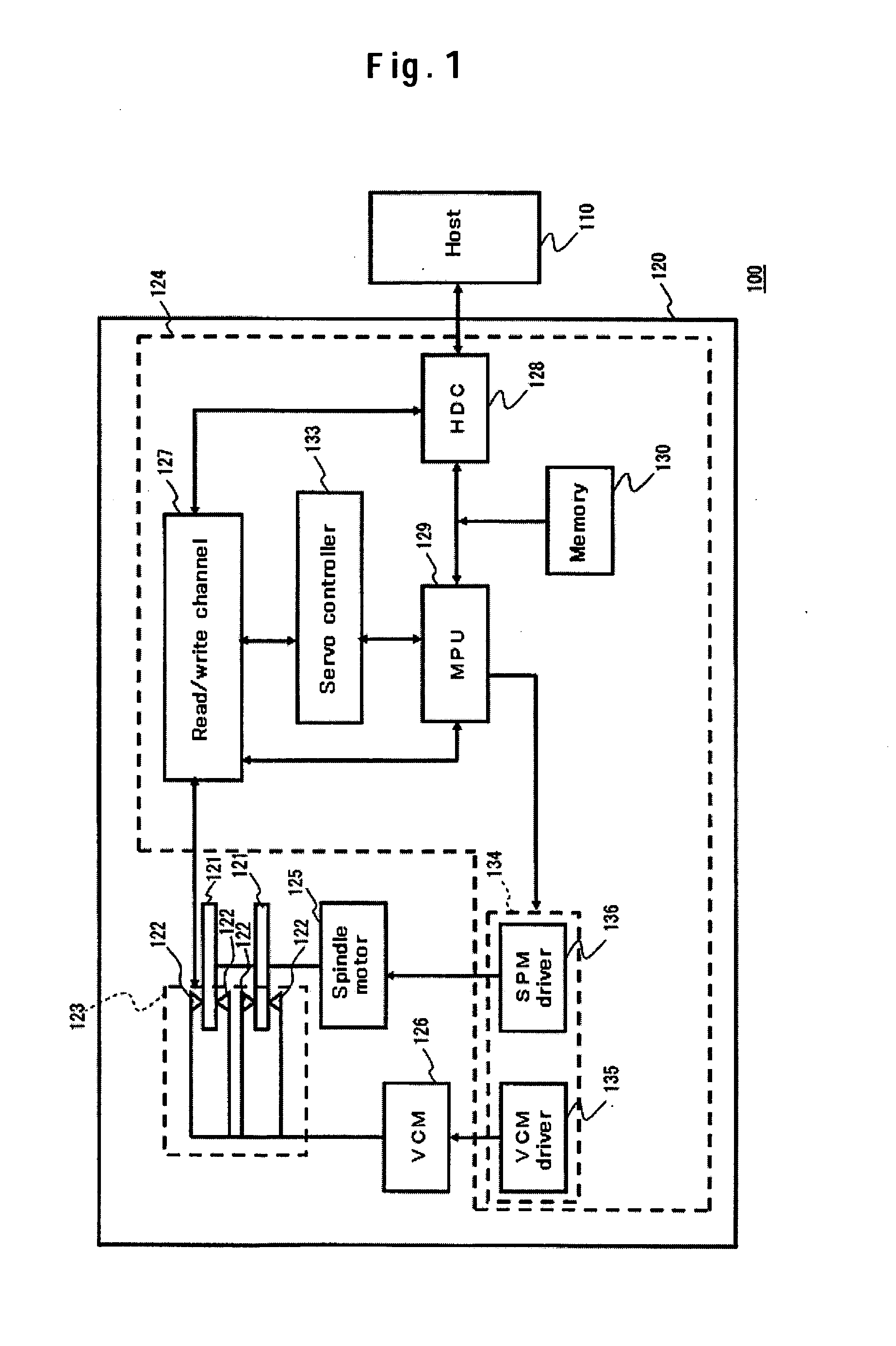

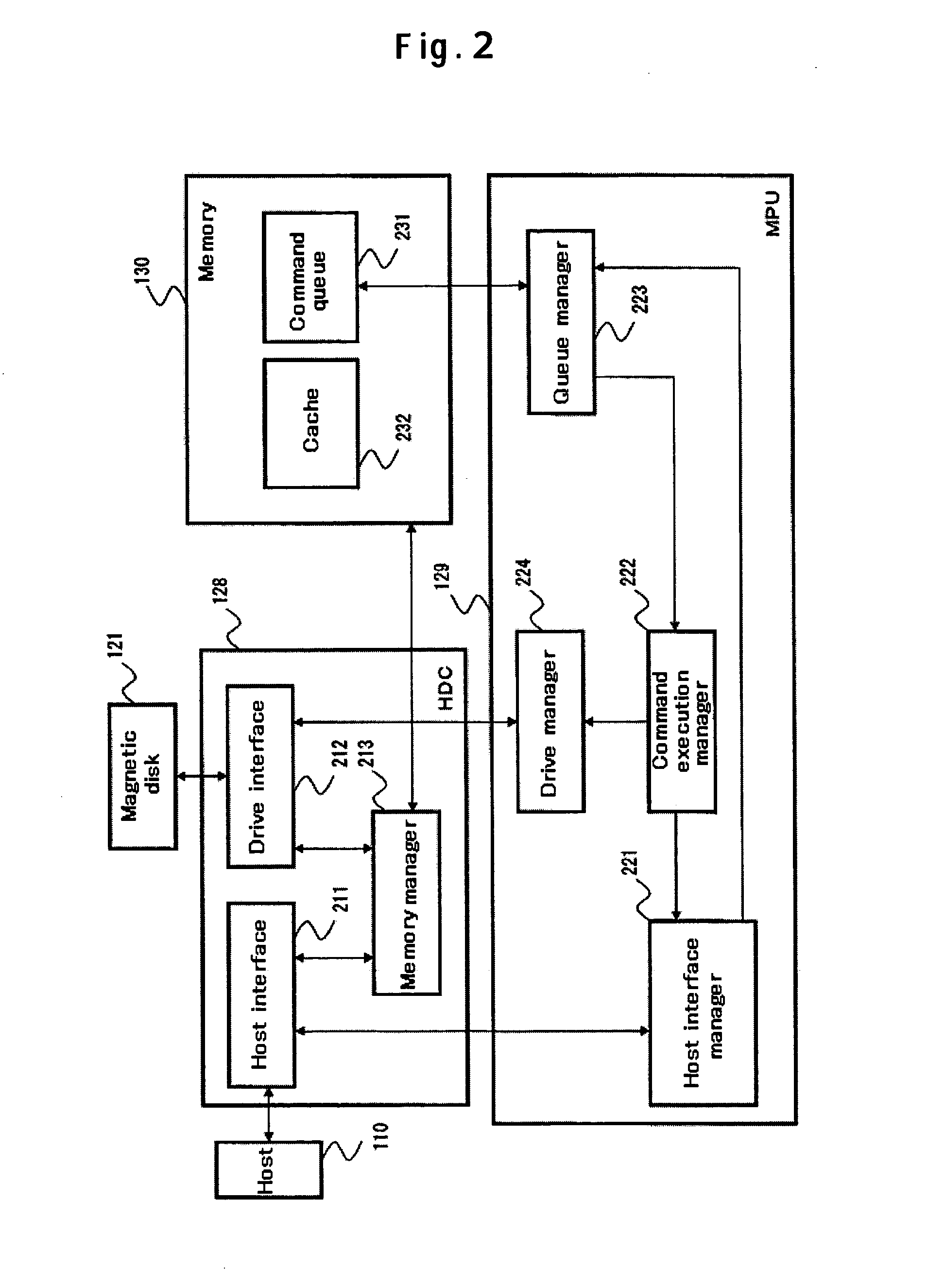

Media drive and command execution method thereof

Inactive · US20060106980A1 · Low cost · Performance of hard · Memory systems · Input/output processes for data processing · Data transmission · Operating system

Embodiments of the present invention provide a media drive that improves command processing performance when a plurality of commands is queued, by shortening seek time and rotational latency and by making effective use of the time saved. In one embodiment, an HDD includes a queue capable of storing a plurality of commands, and a queue manager that optimizes the execution order of the commands on the basis of whether or not executing each command requires access to the medium. The queue manager determines the execution order so that medium access processing (accessing the disk to execute a command) and data transfer processing (transferring data between the HDD and a host) are executed in parallel. For example, read processing and transfer processing are executed in parallel: read processing reads the data for a read command whose data is not in the cache from the disk into the cache, while transfer processing transfers the data for a read command whose data is already in the cache to the host.

Owner:HITACHI GLOBAL STORAGE TECH NETHERLANDS BV

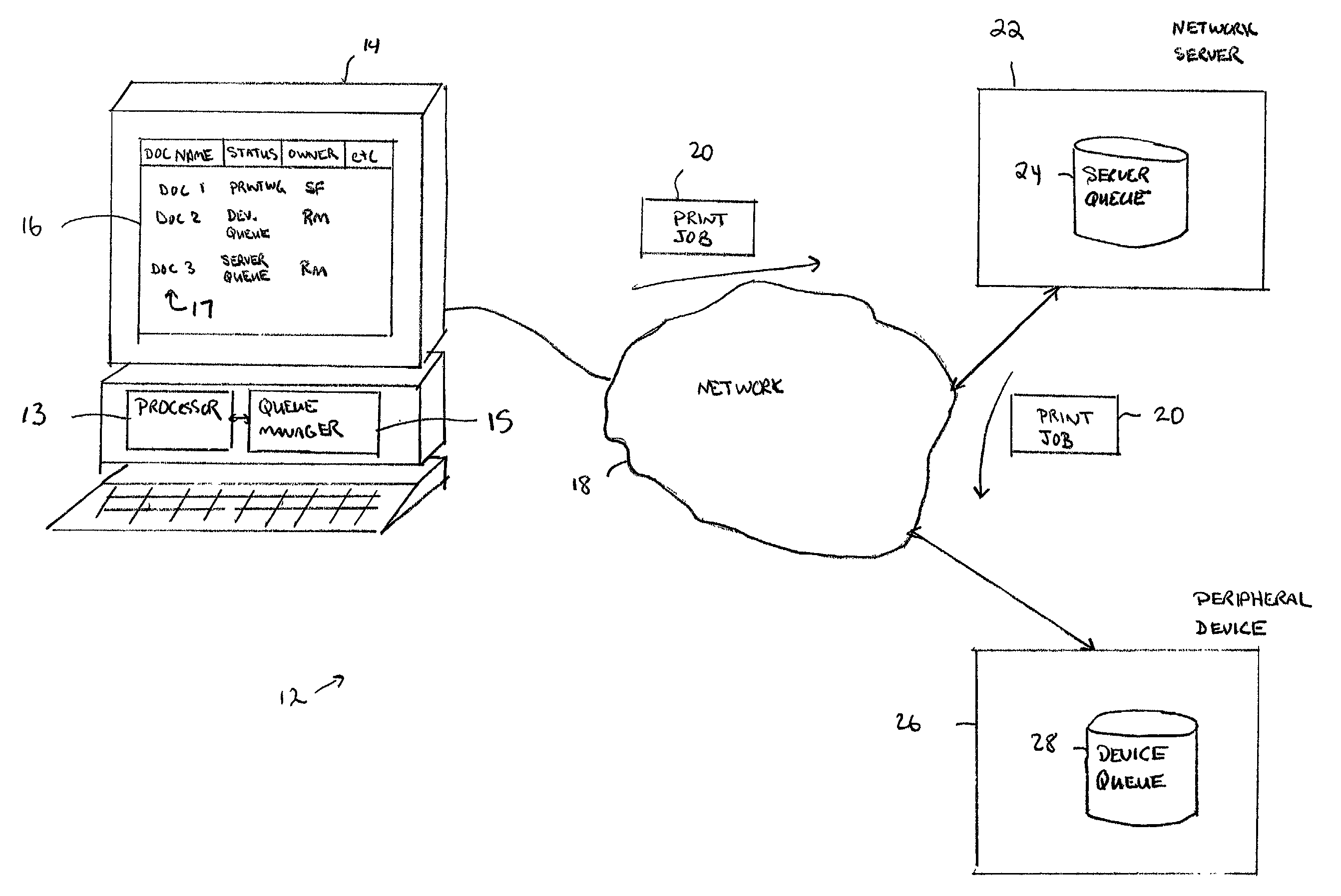

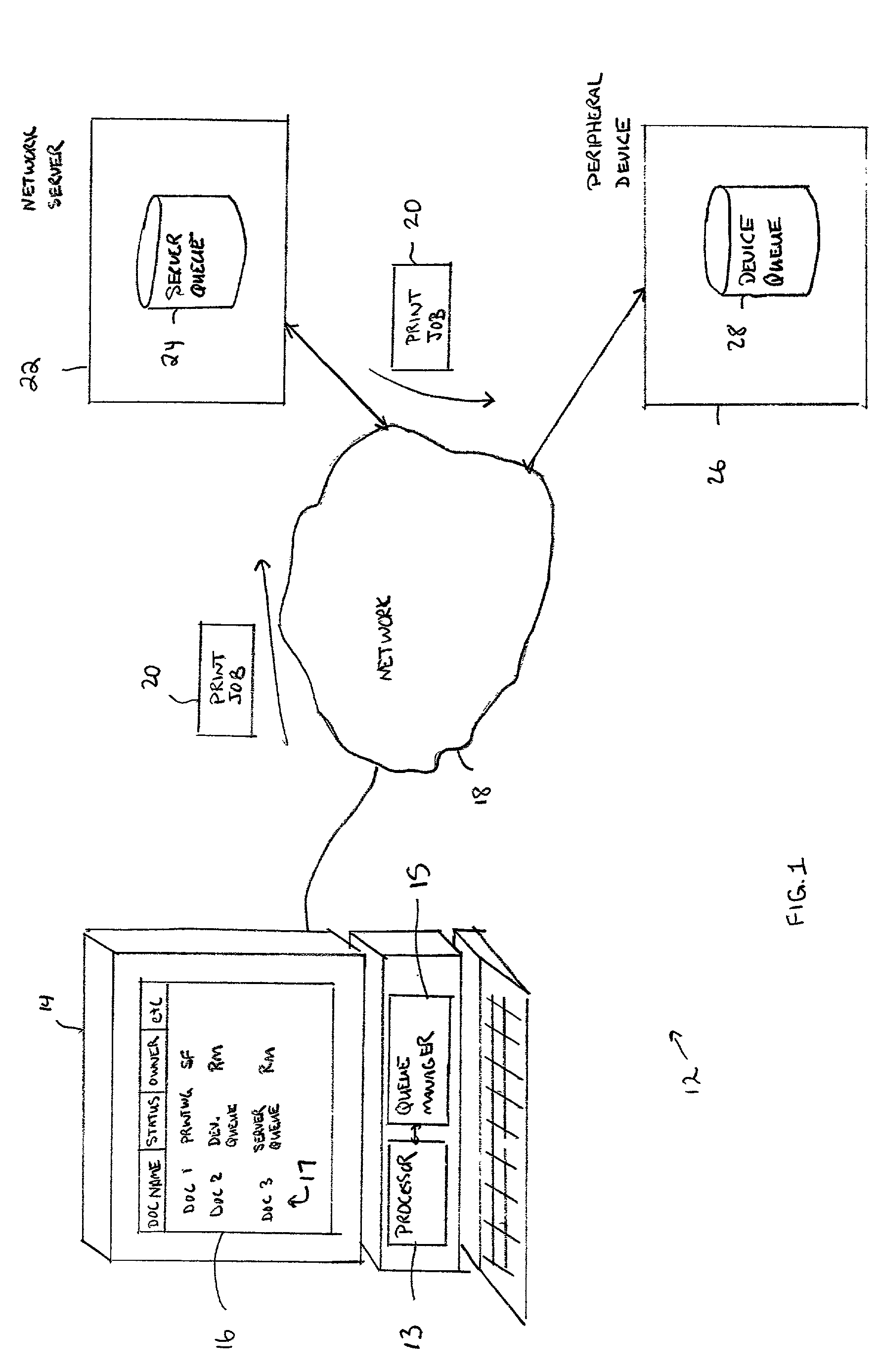

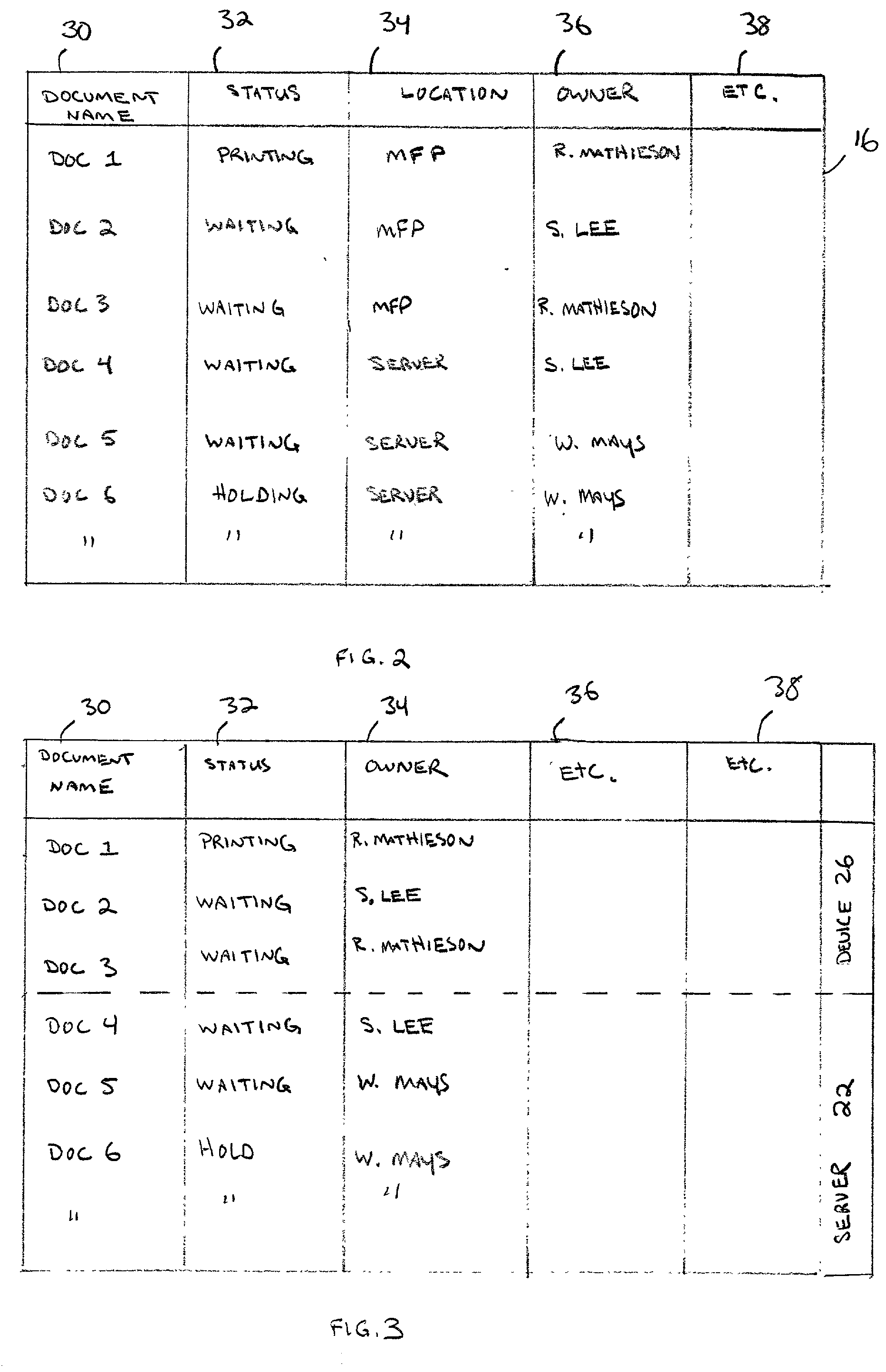

Method and apparatus for managing job queues

A queue manager monitors status of a server queue in a network server and status of a device queue in a peripheral device at the same time. A user interface displays the status of jobs in the server queue and device queue on the same display and allows a user to manipulate any of the jobs on either queue using the same user interface.

Owner:SHARP KK

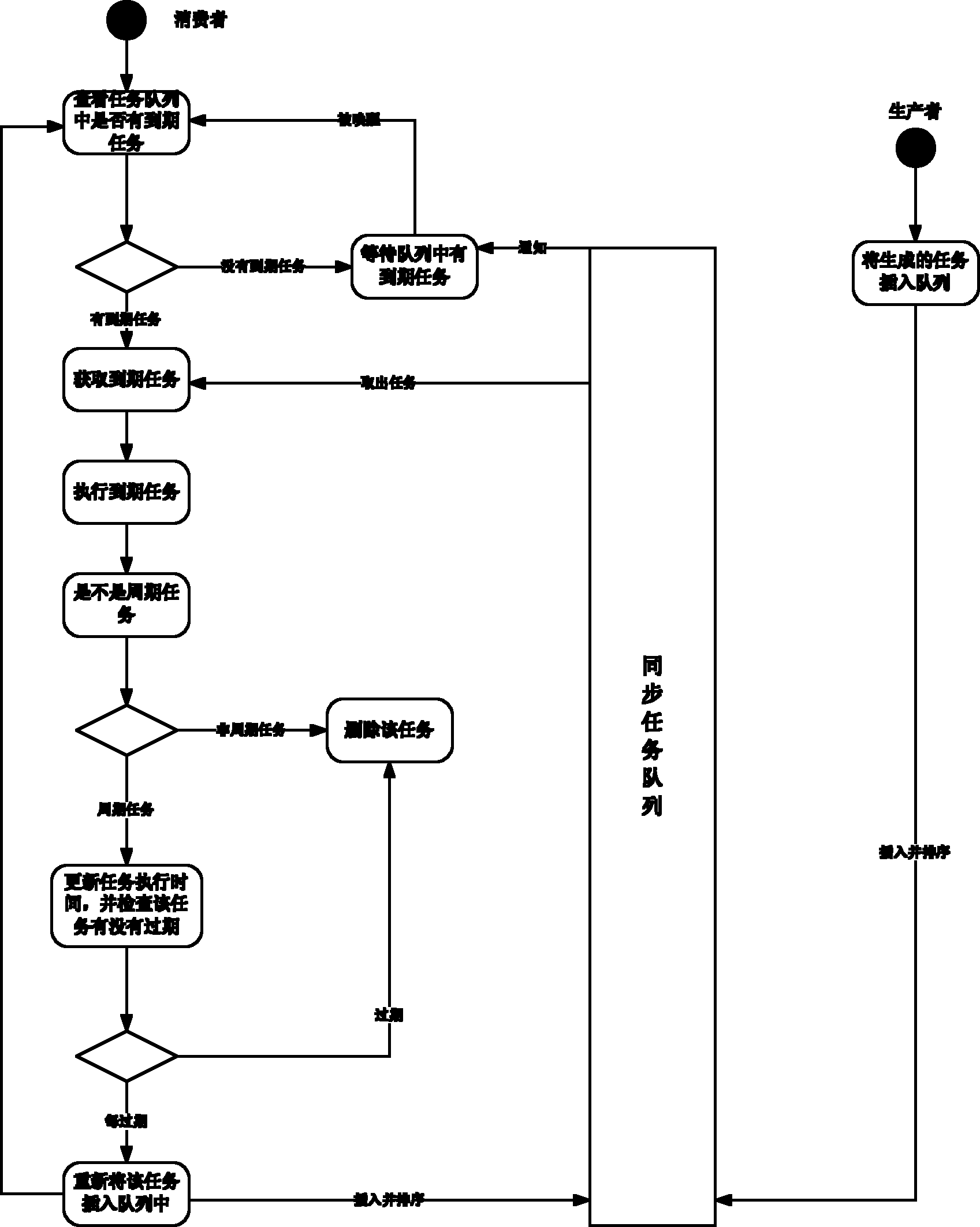

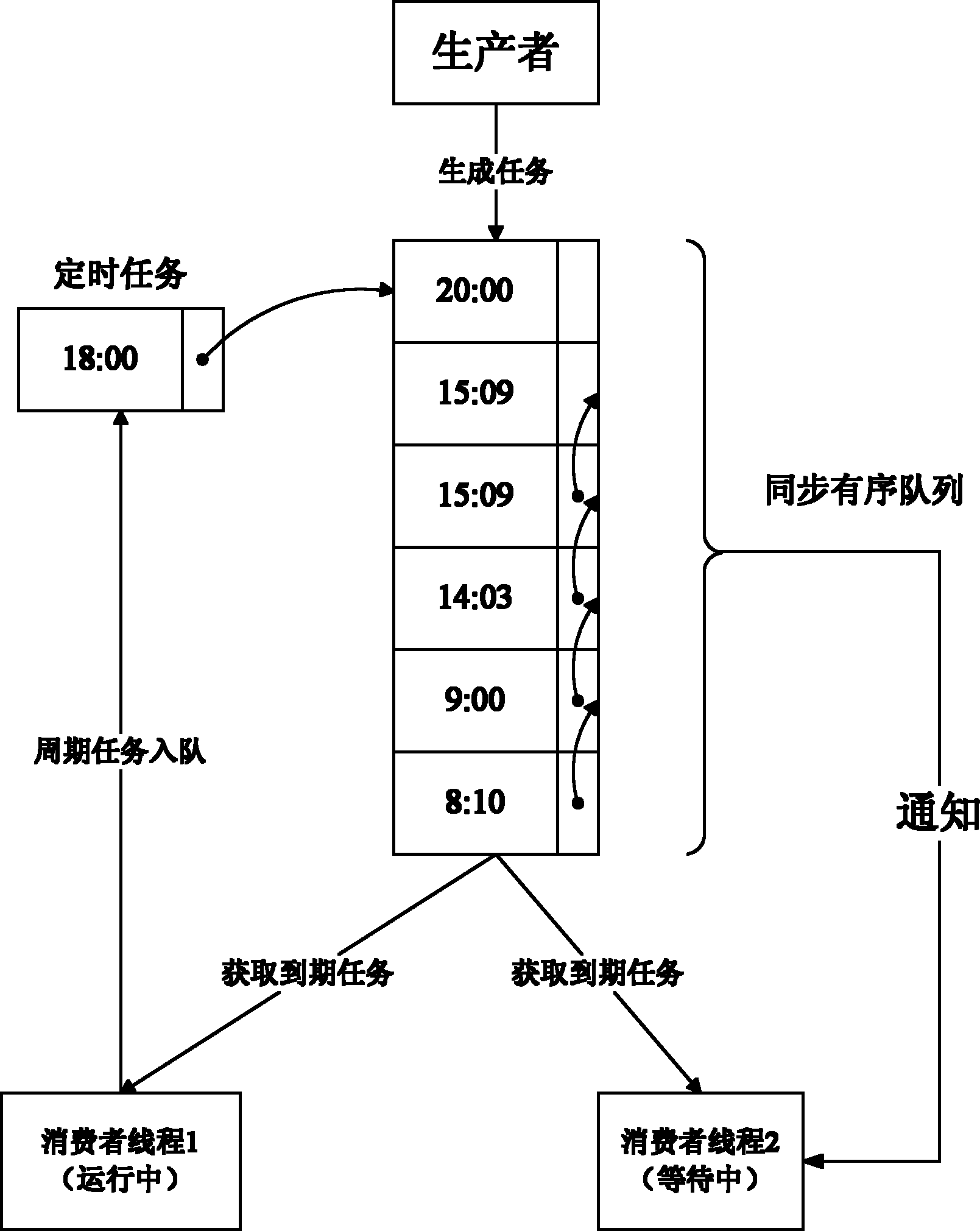

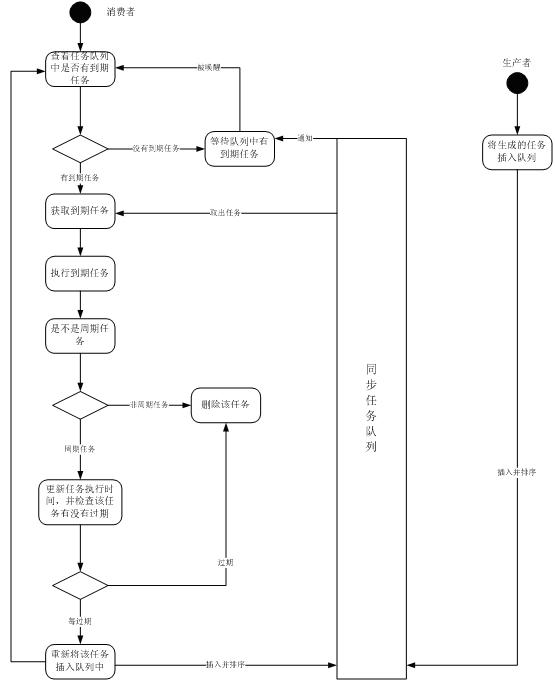

Universal timed task management realization method

Inactive · CN102129393A · Easy to operate · Easy to integrate · Program initiation/switching · Task management · Insertion sort

The invention discloses a universal timed task management realization method comprising three steps. In the first step, a manager consisting of a synchronized ordered queue, a plurality of generator threads, and a plurality of consumer threads is set up. In the second step, the queue manager manages the different timed tasks through the synchronized ordered queue: it sorts the tasks in the queue in ascending order of execution time, triggers a notification every time a task is added to the queue, and keeps the queue ordered by insertion sort. In the third step, when a consumer thread starts, it checks whether the queue contains periodic tasks. If it does not, the thread blocks, is woken by the notification when a waiting task is inserted, and rechecks the queue. If it does, the thread checks whether the tasks have expired; for tasks that have not yet expired, it calculates the expiry time, blocks, and waits for a limited time. The thread rechecks the queue when the limited wait ends or when it is woken by the notification. Expired tasks found during any check of the queue are executed immediately, and periodic tasks have their start time updated after each execution and are inserted into the queue again to be called and executed. The method is characterized by universality and high efficiency.
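The ordered-queue and consumer-loop steps above can be sketched in Python with `bisect.insort` (insertion into a sorted list) and a `threading.Condition` for the notification and the limited wait. This is a sketch under assumptions: `TimedTaskQueue`, `add`, and `run_next` are hypothetical names, the consumer's checks are read as applying to any queued task, and delays are in seconds on the monotonic clock.

```python
import bisect
import itertools
import threading
import time


class TimedTaskQueue:
    """Synchronized queue kept in ascending order of execution time."""

    def __init__(self):
        self._tasks = []                    # sorted list of (run_at, seq, fn, period)
        self._seq = itertools.count()       # tie-breaker so functions are never compared
        self._cond = threading.Condition()

    def add(self, fn, delay, period=None):
        """Producer side: insert in order and notify a waiting consumer."""
        with self._cond:
            entry = (time.monotonic() + delay, next(self._seq), fn, period)
            bisect.insort(self._tasks, entry)    # insertion keeps the queue sorted
            self._cond.notify()

    def run_next(self):
        """Consumer step: block until the earliest task is due, run it,
        and re-insert it with a new start time if it is periodic."""
        with self._cond:
            while True:
                if not self._tasks:
                    self._cond.wait()            # empty: block until notified
                    continue
                run_at, _, fn, period = self._tasks[0]
                remaining = run_at - time.monotonic()
                if remaining <= 0:
                    self._tasks.pop(0)           # expired: take it and run it
                    break
                self._cond.wait(remaining)       # limited wait, or early wake on add
        result = fn()                            # execute outside the lock
        if period is not None:
            self.add(fn, period, period)         # next run time for periodic tasks
        return result
```

Several consumer threads can call `run_next()` in a loop; the `while True` re-check after every wake-up handles both a newly inserted earlier task and spurious wake-ups.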

Owner:NANJING NRIET IND CORP

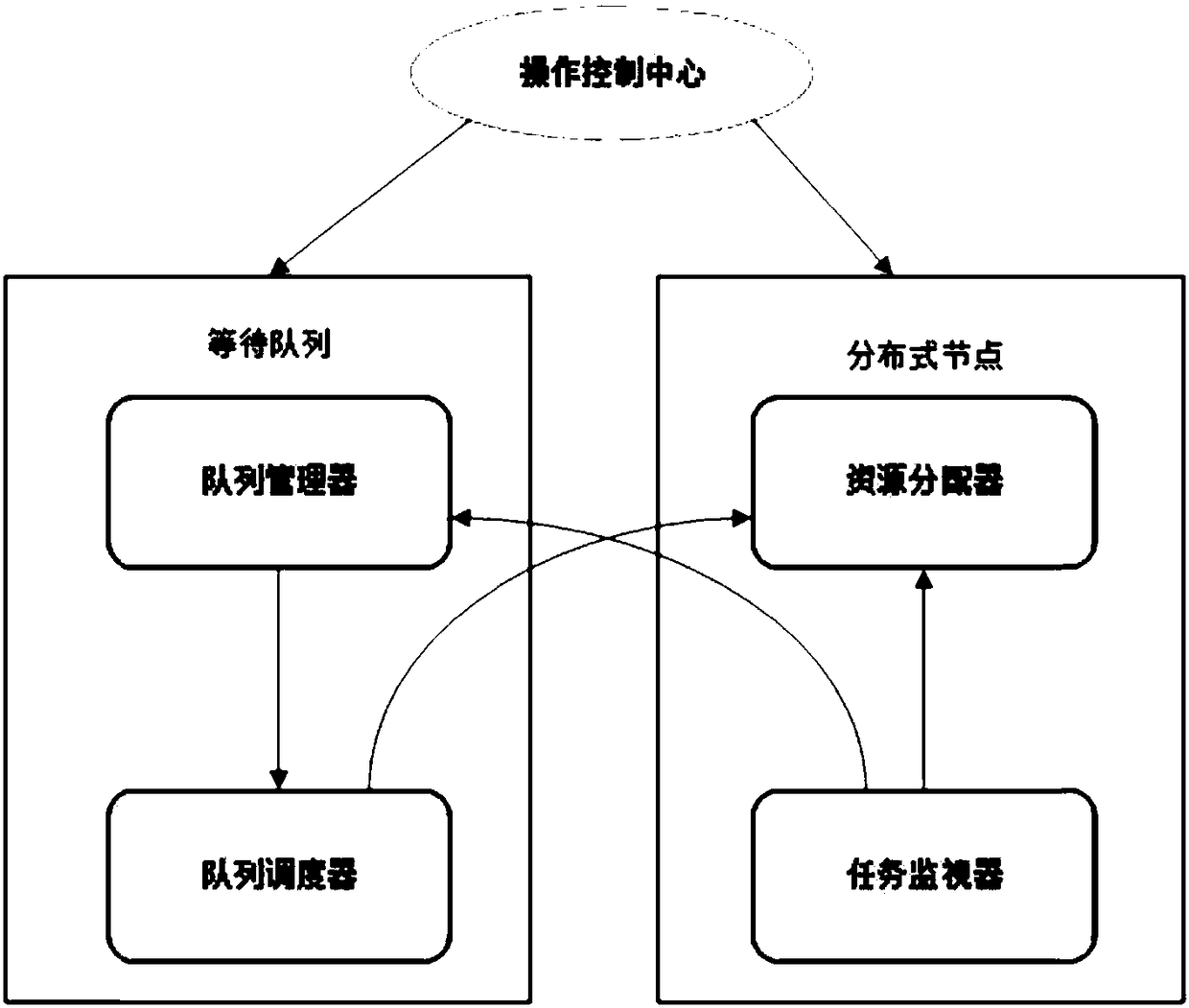

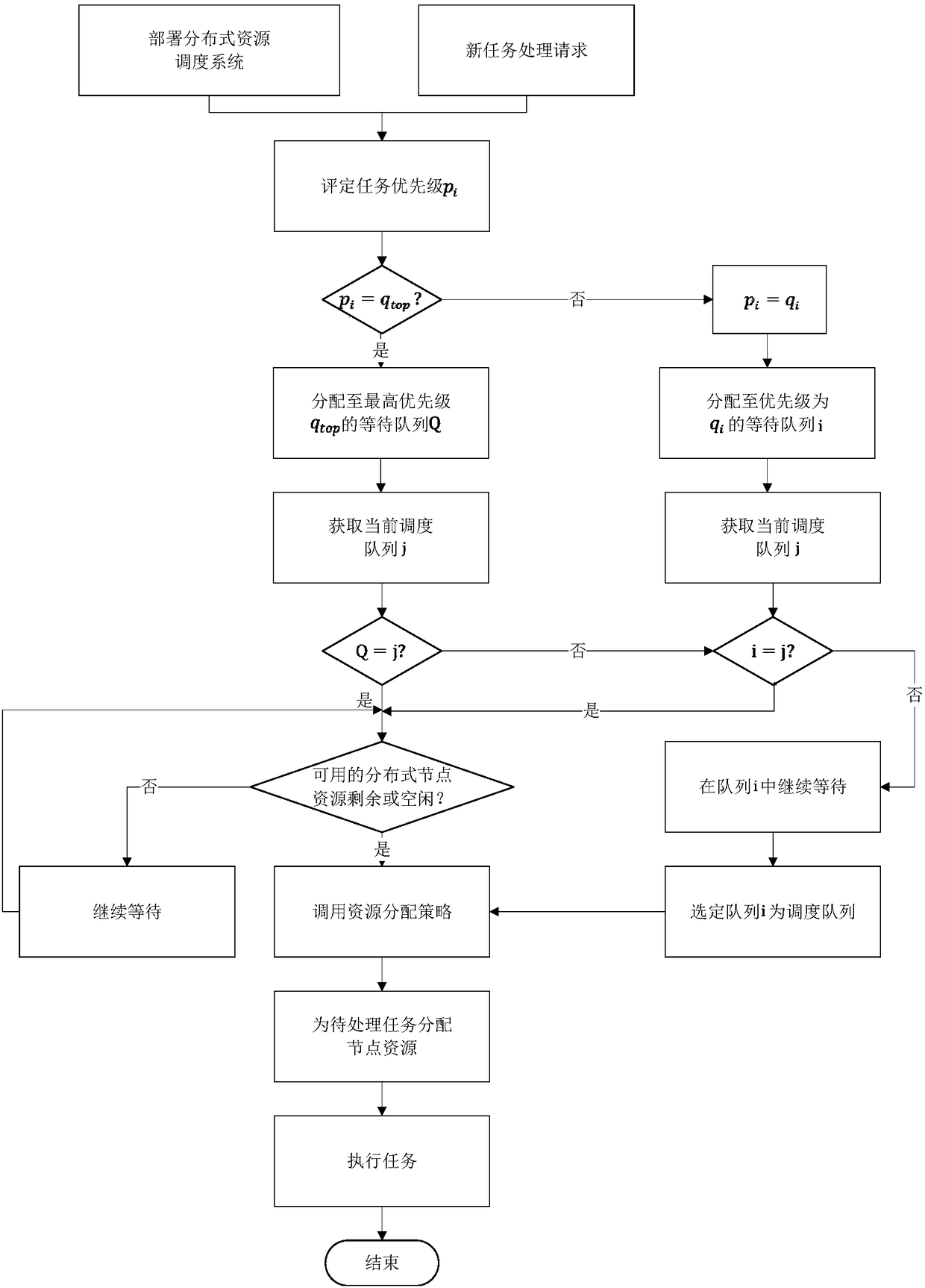

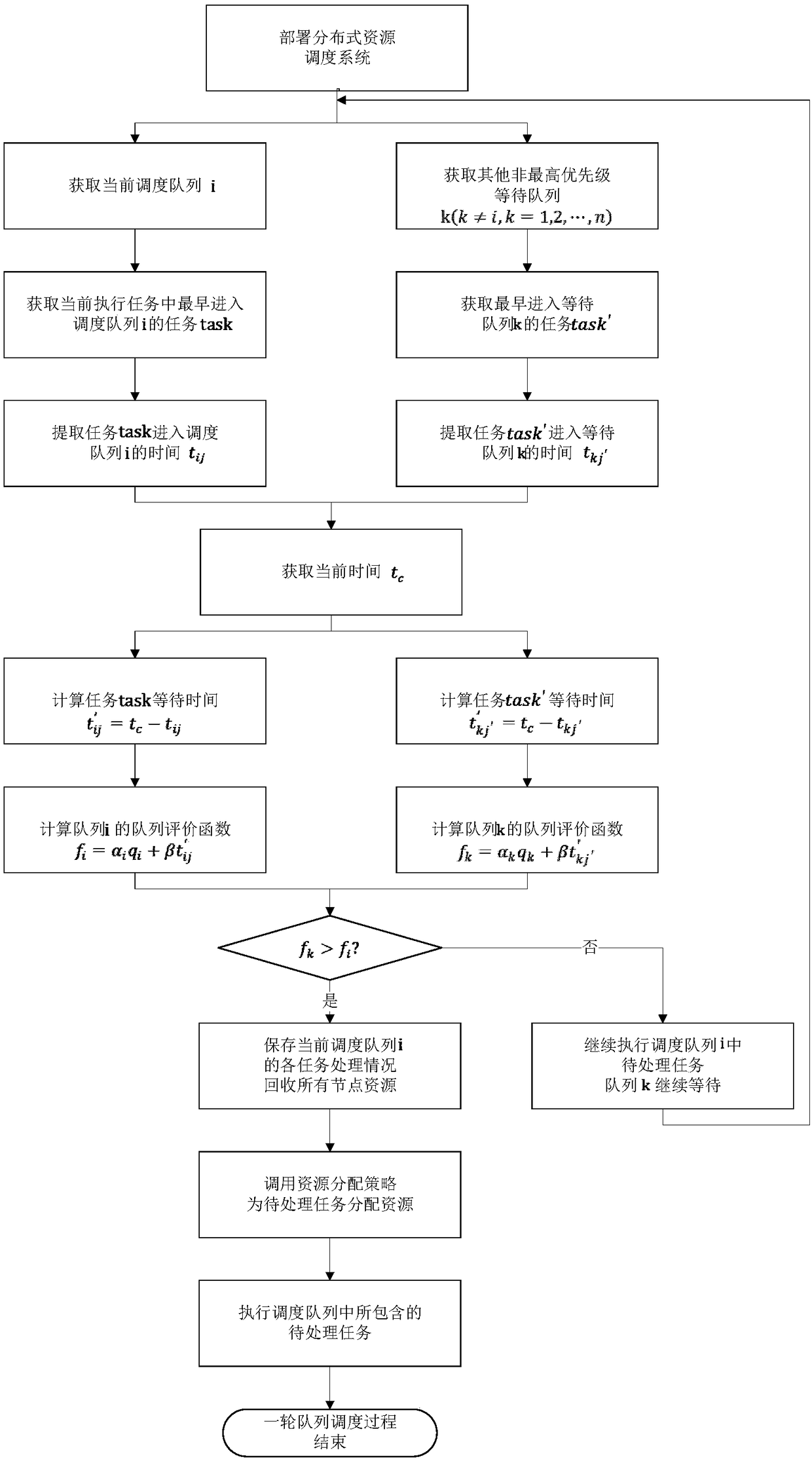

Distributed resource scheduling method and system

Active · CN108345501A · Improve satisfaction · Improve resource utilization · Program initiation/switching · Resource allocation · Task completion · Resource utilization

The invention provides a distributed resource scheduling method and system. The method comprises allocating tasks to the corresponding priority waiting queues according to the assessed priorities of new task processing requests; selecting a current scheduling queue from the priority waiting queues that have obtained tasks; and, according to the selected scheduling queue, invoking a resource allocation policy to allocate available distributed node resources. The system comprises a queue manager, a queue scheduler, a task monitor, and a resource allocator. With the technical scheme provided by the method and system, the total task completion time cost and the total task completion cost are effectively balanced according to user demand, resource utilization is improved, task execution efficiency is improved, quality of service is guaranteed, and user satisfaction is improved.
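The three steps above (queue by assessed priority, pick a current scheduling queue, allocate node resources) can be sketched as follows. The Python sketch assumes strict priority between waiting queues and a best-fit allocation policy over free CPU cores; the abstract names neither policy, and `Scheduler`, `submit`, and `schedule_one` are hypothetical. The task monitor is omitted.

```python
from collections import deque


class Scheduler:
    """Queue manager, queue scheduler, and resource allocator, much simplified."""

    def __init__(self, levels, nodes):
        self.queues = [deque() for _ in range(levels)]   # index 0 = highest priority
        self.nodes = dict(nodes)                          # node name -> free cores

    def submit(self, task, cores, priority):
        # Queue manager: place the task in the waiting queue for its assessed priority.
        self.queues[priority].append((task, cores))

    def schedule_one(self):
        # Queue scheduler: the current scheduling queue is the highest-priority
        # non-empty one (strict priority, an assumption).
        queue = next((q for q in self.queues if q), None)
        if queue is None:
            return None
        task, cores = queue[0]
        # Resource allocator (best fit, an assumption): the node with the least
        # free capacity that can still hold the task.
        fits = [n for n, free in self.nodes.items() if free >= cores]
        if not fits:
            return None                                   # wait for resources to free up
        node = min(fits, key=lambda n: self.nodes[n])
        self.nodes[node] -= cores
        queue.popleft()
        return task, node
```

Best fit keeps larger nodes free for bigger tasks, which is one way to raise resource utilization; a cost-aware policy that weighs completion time against cost, as the abstract describes, would replace the `min(...)` key.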

Owner:GLOBAL ENERGY INTERCONNECTION RES INST CO LTD +3

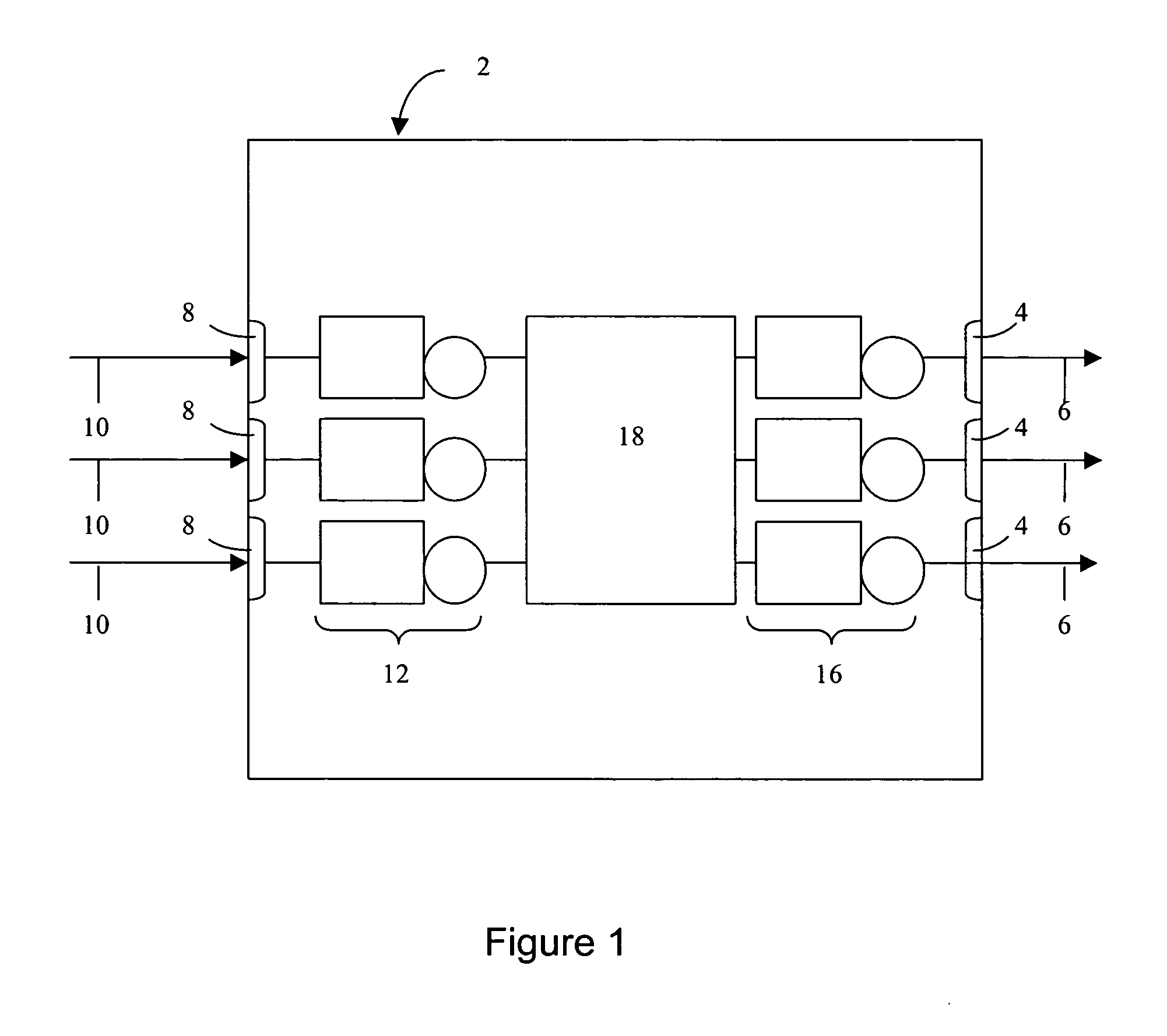

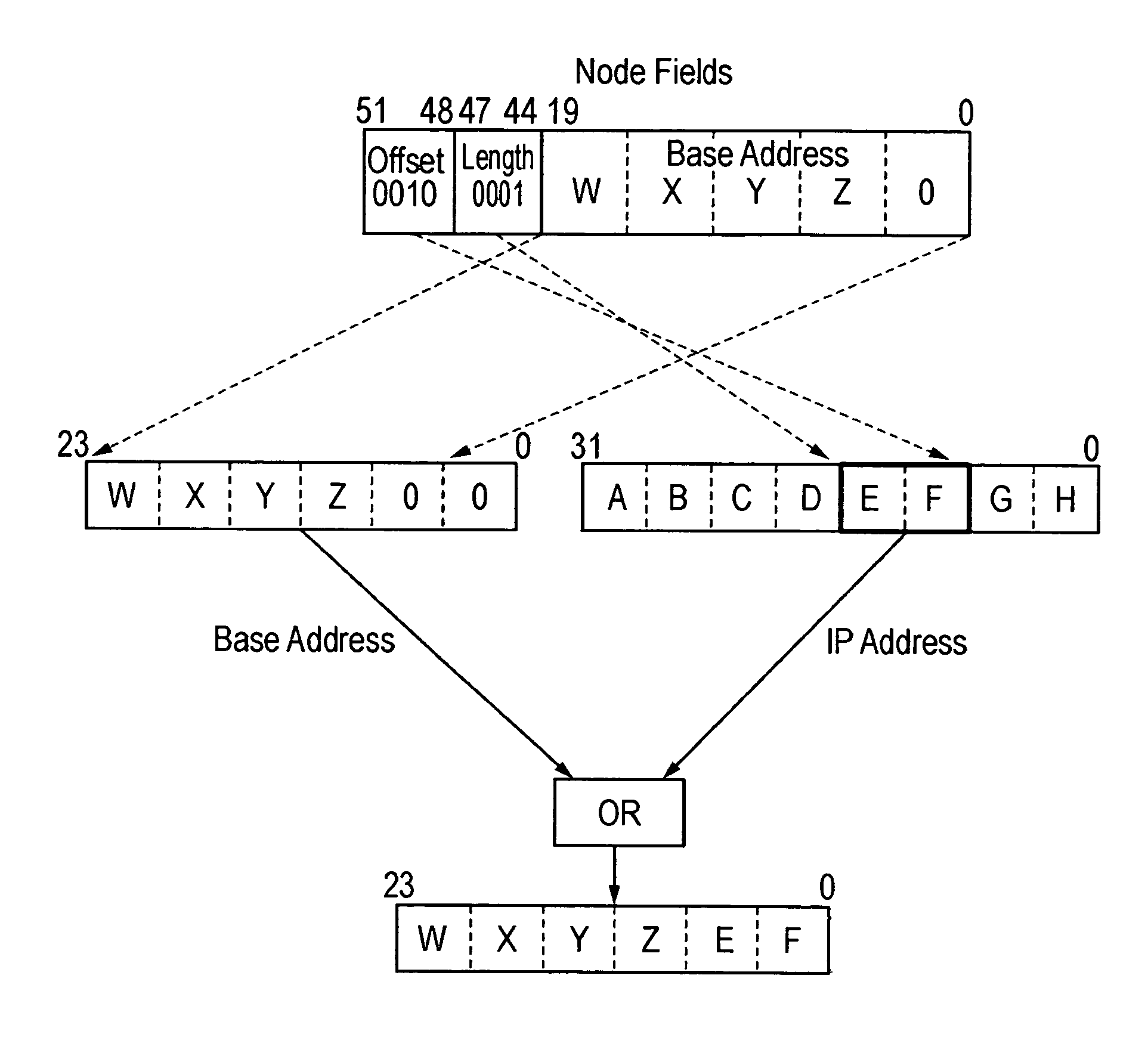

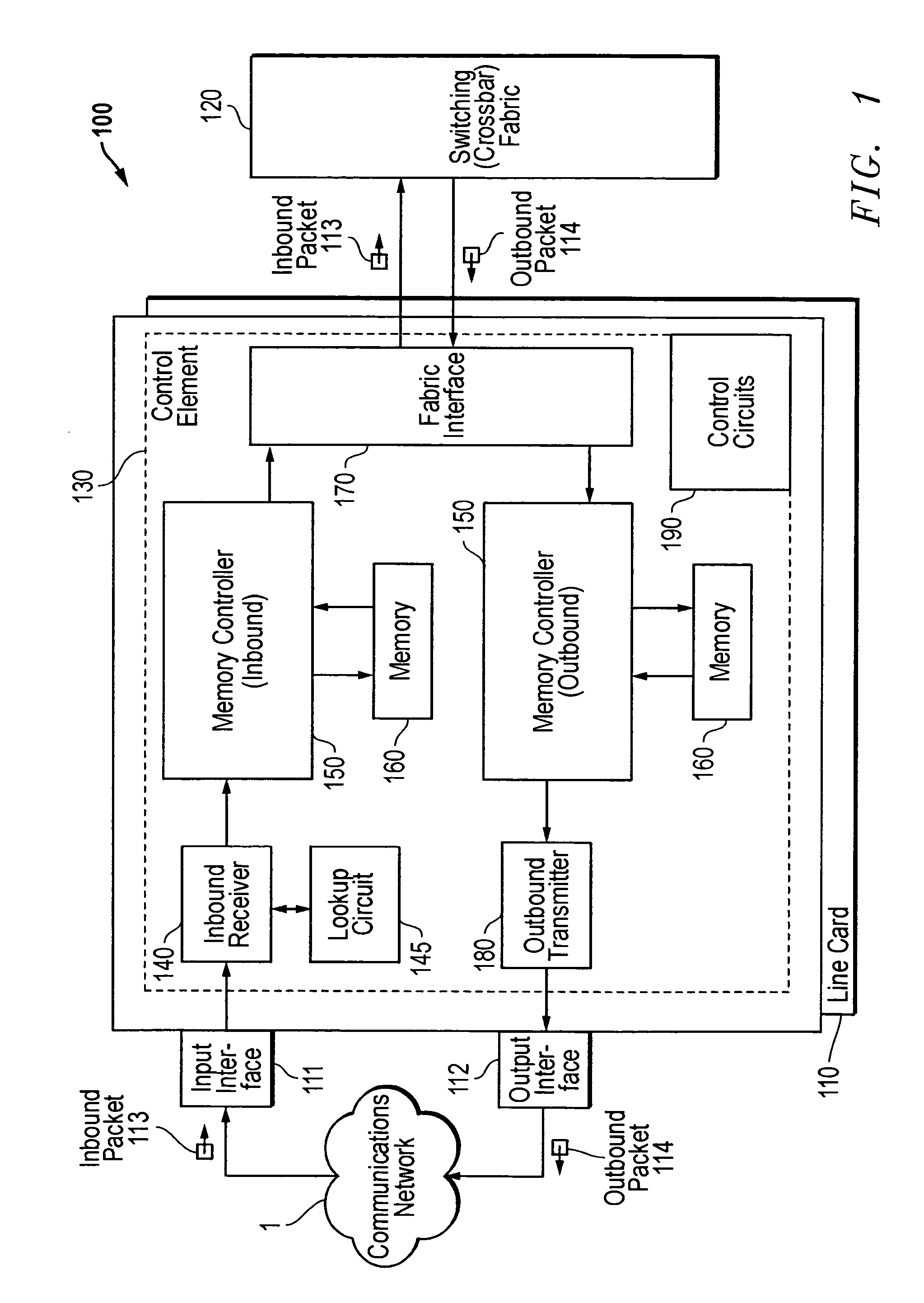

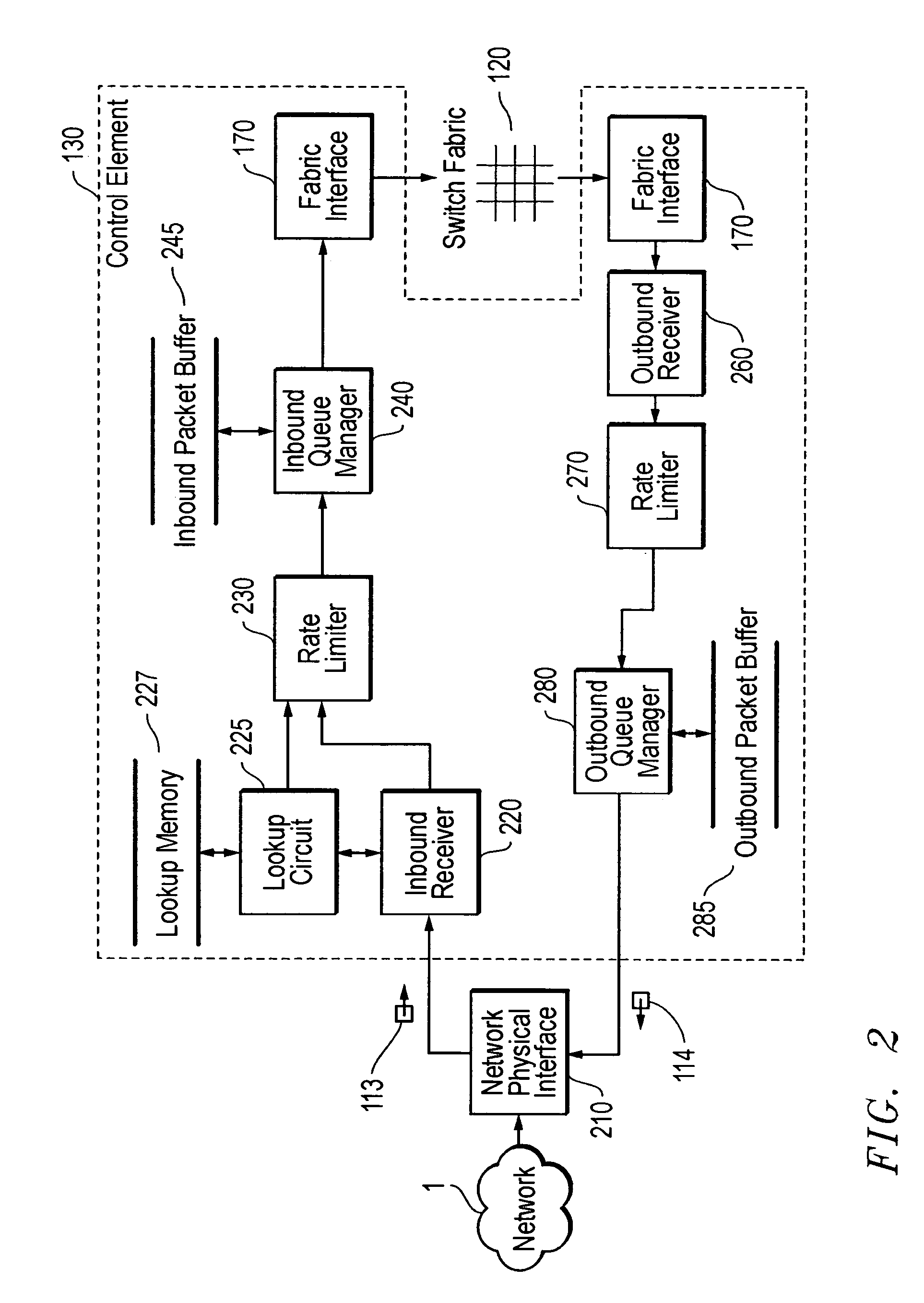

Architecture for high speed class of service enabled linecard

Inactive · US7558270B1 · High speed routing · Minimal packet delay · Special service provision for substation · Data switching by path configuration · Wire speed · Data stream

A linecard architecture for high speed routing of data in a communications device. This architecture provides low latency routing based on packet priority: packet routing and processing occurs at line rate (wire speed) for most operations. A packet data stream is input to the inbound receiver, which uses a small packet FIFO to rapidly accumulate packet bytes. Once the header portion of the packet is received, the header alone is used to perform a high speed routing lookup and packet header modification. The queue manager then uses the class of service information in the packet header to enqueue the packet according to the required priority. Enqueued packets are buffered in a large memory space holding multiple packets prior to transmission across the device's switch fabric to the outbound linecard. On arrival at the outbound linecard, the packet is enqueued in the outbound transmitter portion of the linecard architecture. Another large, multi-packet memory structure, as employed in the inbound queue manager, provides buffering prior to transmission onto the network.

Owner:CISCO TECH INC

Task Queue Management of Virtual Devices Using a Plurality of Processors

Inactive · US20080162834A1 · General purpose stored program computer · Multiprogramming arrangements · Virtual device · Computer science

A task queue manager manages the task queues corresponding to virtual devices. When a virtual device function is requested, the task queue manager determines whether an SPU is currently assigned to the virtual device task. If an SPU is already assigned, the request is queued in a task queue being read by the SPU. If an SPU has not been assigned, the task queue manager assigns one of the SPUs to the task queue. The queue manager assigns the task based upon which SPU is least busy as well as whether one of the SPUs recently performed the virtual device function. If an SPU recently performed the virtual device function, it is more likely that the code used to perform the function is still in the SPU's local memory and will not have to be retrieved from shared common memory using DMA operations.

Owner:BROKENSHIRE DANIEL ALAN +4

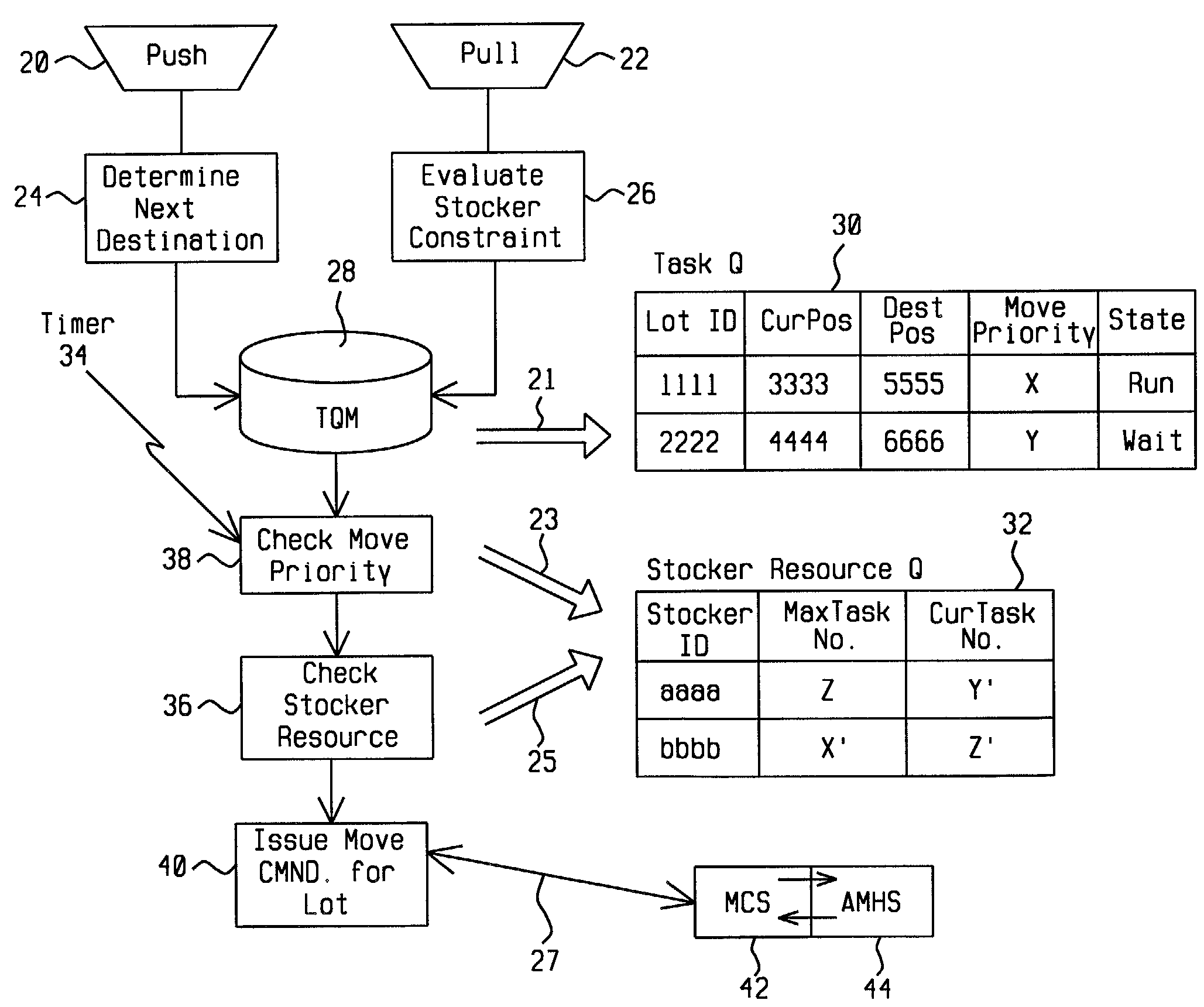

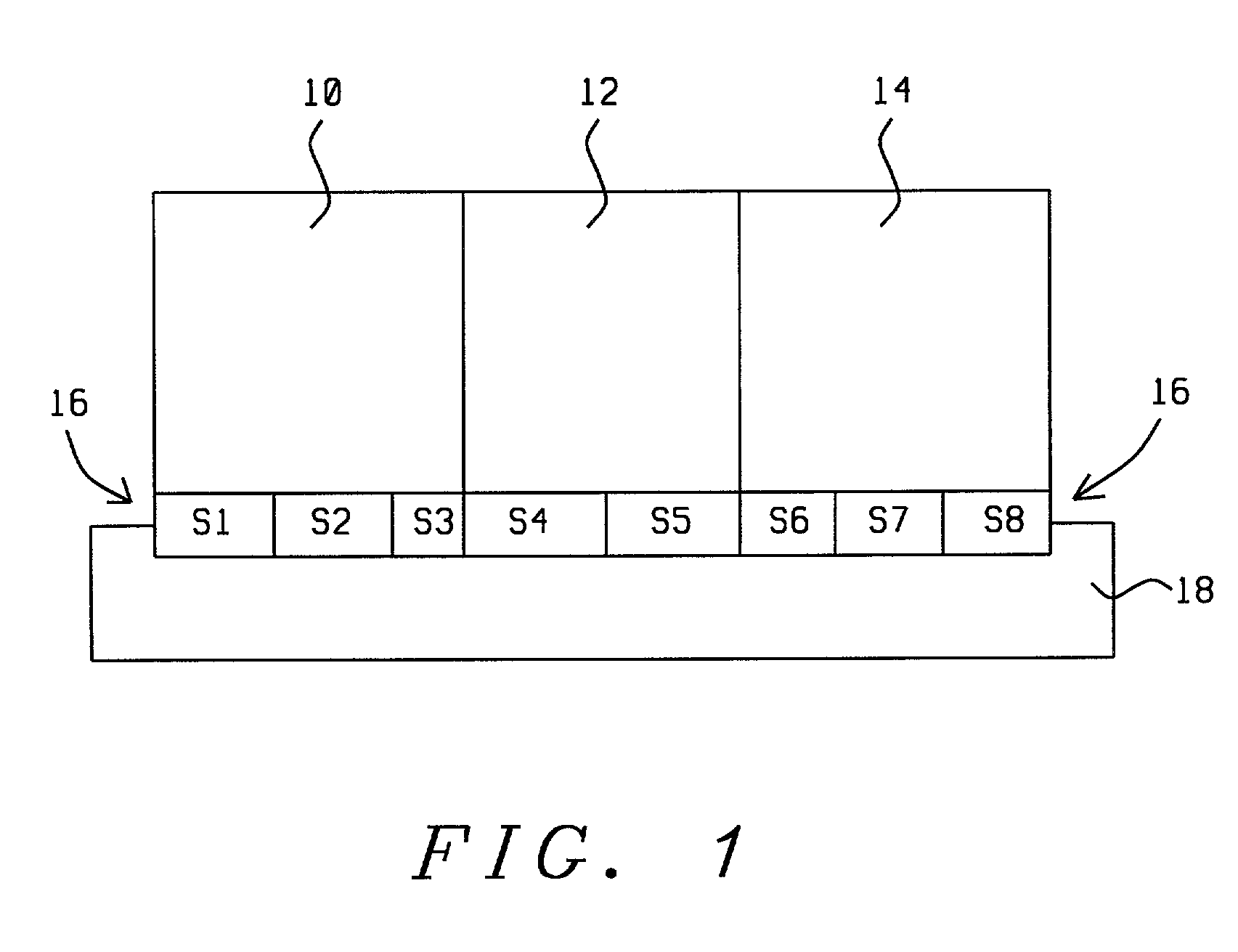

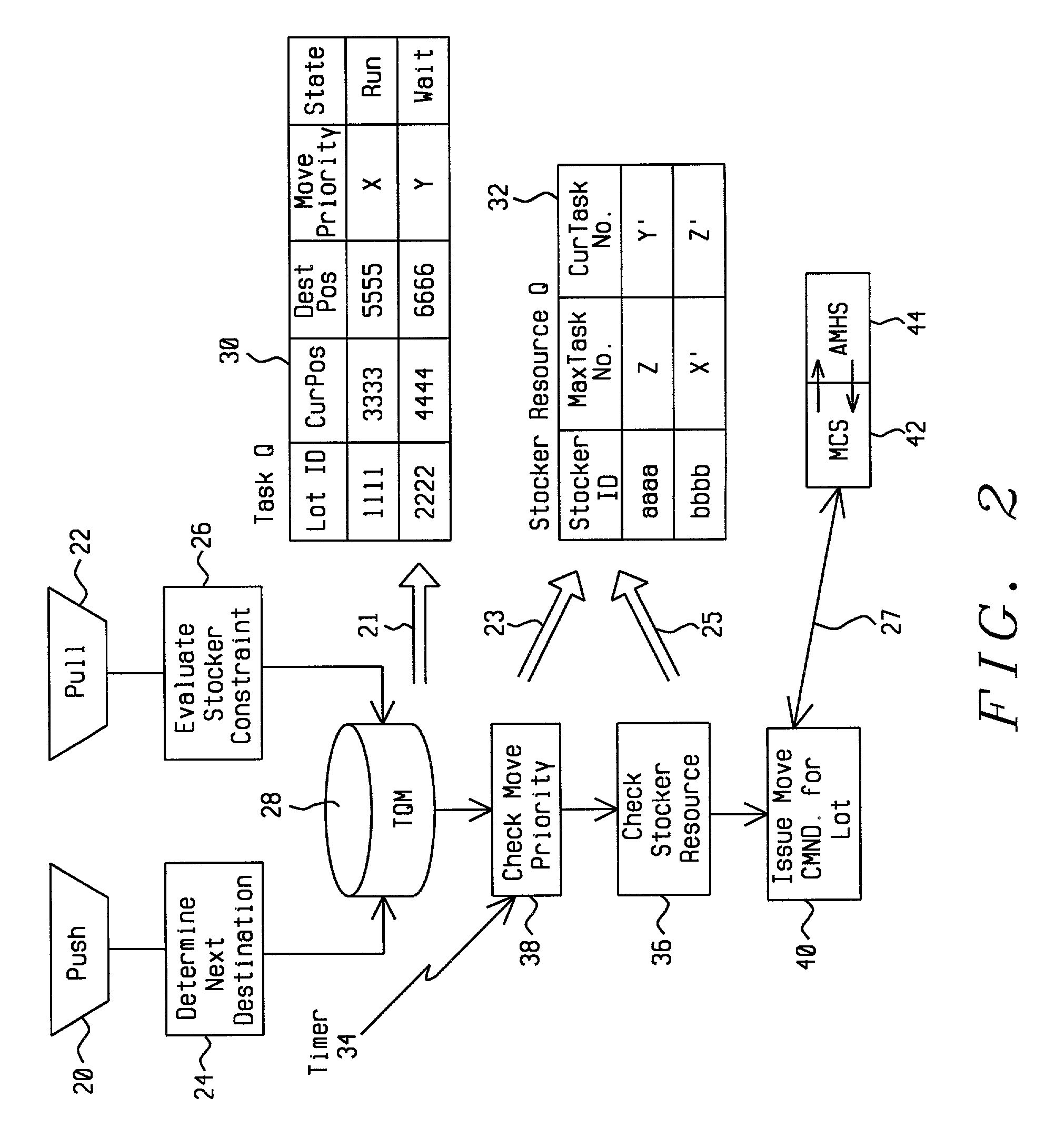

Task queuing methodology for reducing traffic jam and to control transmission priority in an automatic material handling system

Inactive · US7664561B1 · Ease traffic congestion · Programme control · Total factory control · Traffic congestion · Handling system

A new Task Queue Methodology (TQM) is provided for reducing traffic jams and for controlling transportation priority in an Automatic Material Handling System (AMHS). A Stocker Resource Q, under control of TQM, maintains records of the stockers that are under control of the AMHS and their availability. For available stockers, a Task-Q under control of a Queue Manager (TQM) is accessed, and the records that match the available stocker resources are extracted from it. The tasks scheduled against these resources are sorted by priority and by longest wait time, resulting in one selected task. For the task selected in this manner, the TQM issues a Move command to the Automatic Material Handling System.
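The selection step above (keep only tasks whose stocker is available, then order by priority and by longest wait) reduces to a filter plus a two-part sort key. In this Python sketch the `Task` fields, the convention that a larger number means higher priority, and the function name `select_task` are assumptions; issuing the Move command is left to the caller.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    stocker: str
    priority: int        # larger value = more urgent (an assumption)
    queued_at: float     # time the task entered the Task-Q


def select_task(task_q, stocker_available):
    """Return the one task to issue a Move command for, or None.

    task_q: list of Task records (the Task-Q).
    stocker_available: dict of stocker name -> bool (the Stocker Resource Q).
    """
    candidates = [t for t in task_q if stocker_available.get(t.stocker, False)]
    if not candidates:
        return None
    # Highest priority first; among equal priorities, the longest-waiting task.
    return min(candidates, key=lambda t: (-t.priority, t.queued_at))
```

Skipping tasks whose stocker is unavailable is what keeps moves from being dispatched toward a congested stocker, which is the traffic-jam reduction the abstract claims.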

Owner:TAIWAN SEMICON MFG CO LTD

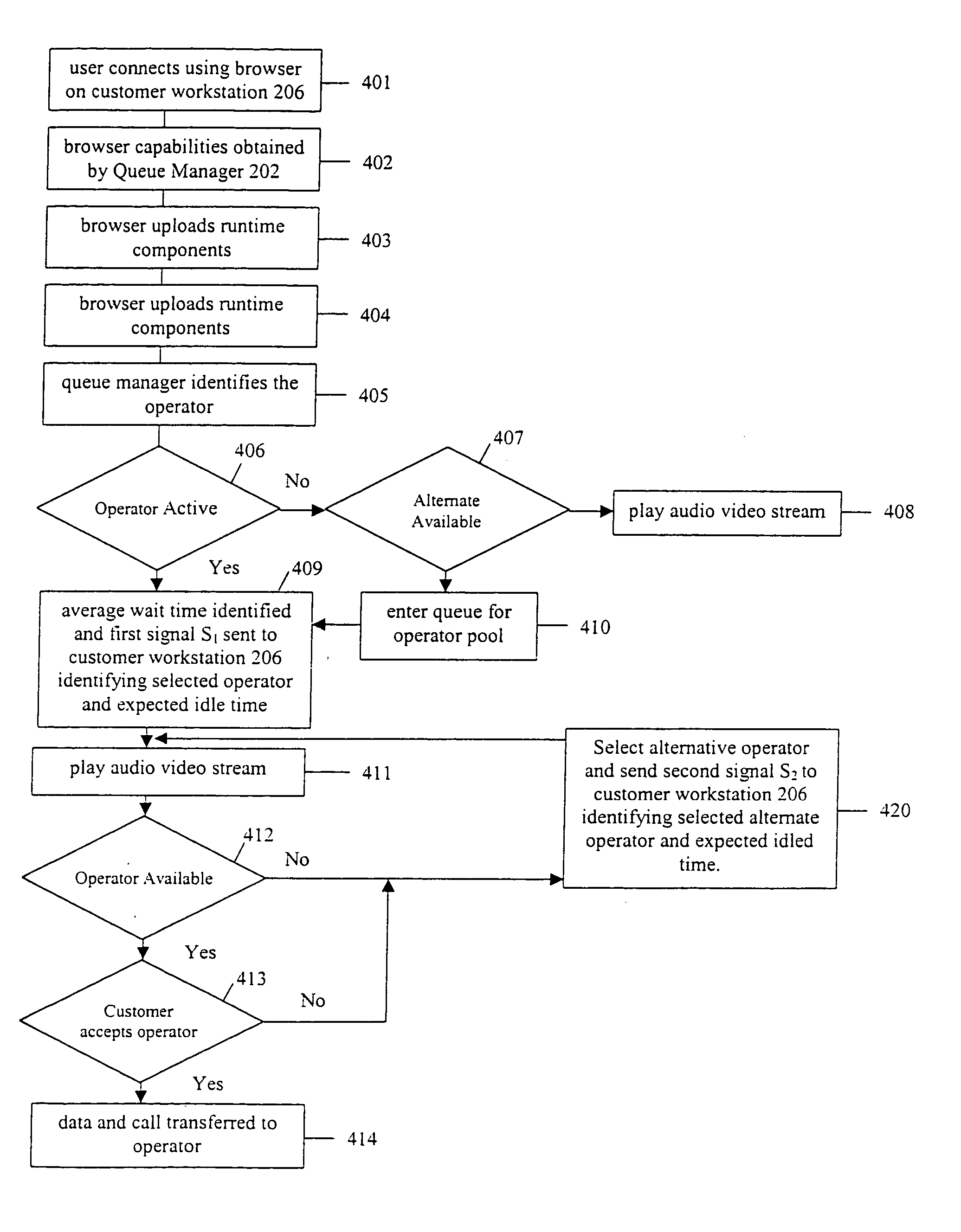

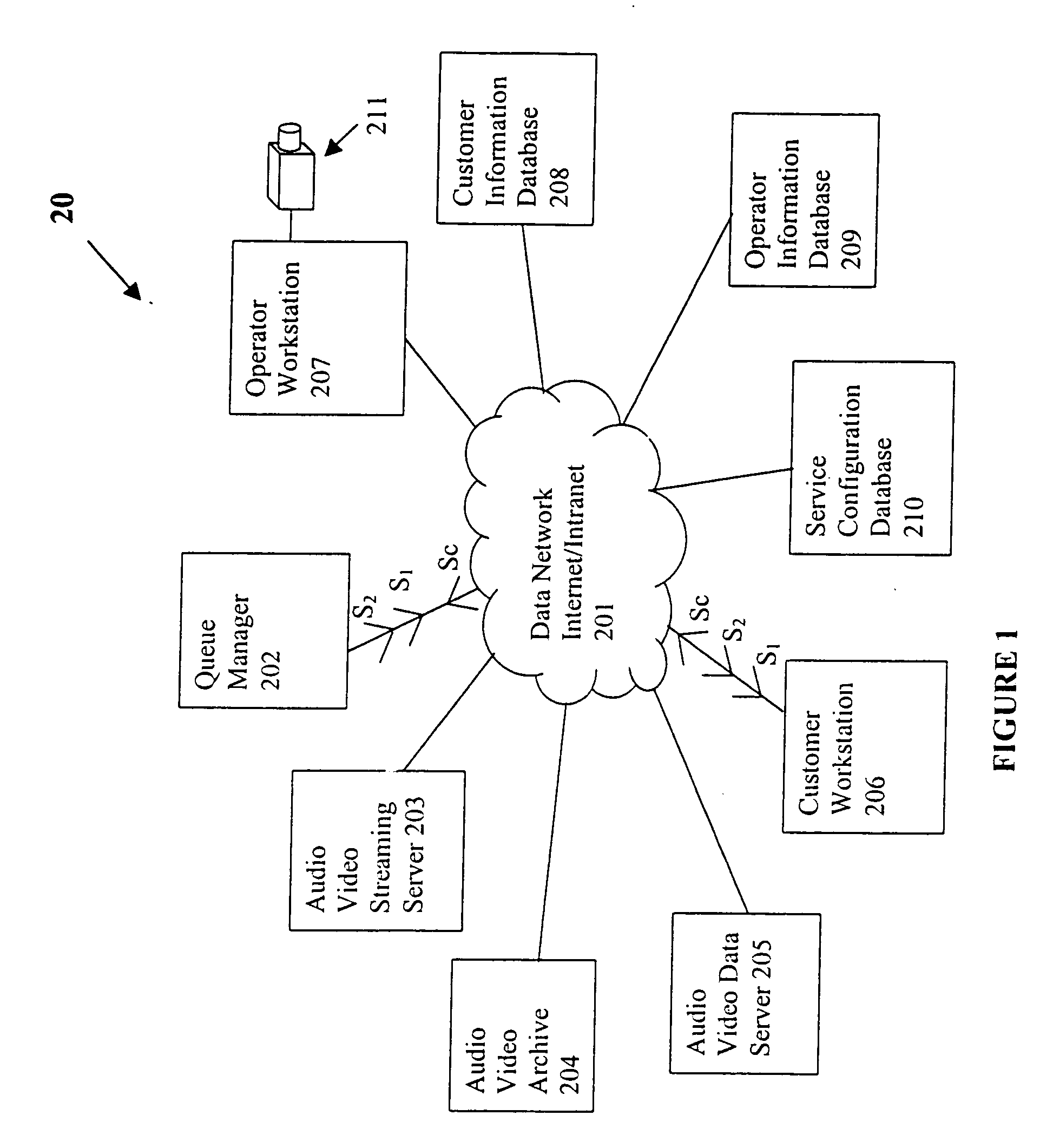

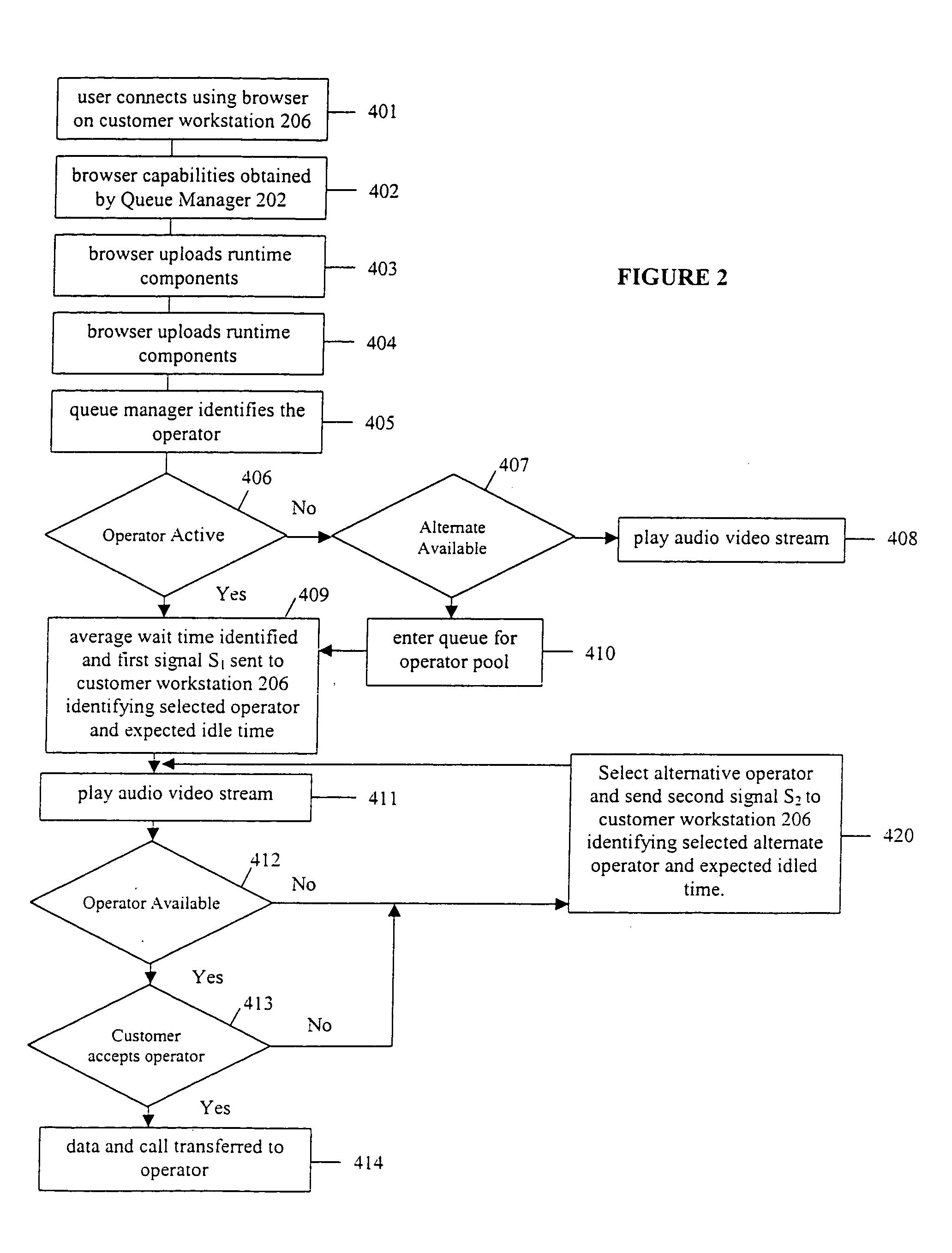

Device and system to facilitate remote customer-service

Inactive · US20050100160A1 · Operator-client rapport can be improved · Facilitating customer service · Special service for subscribers · Manual exchanges · Idle time · Computer network

A device and system for providing customer service over the Internet via remote browser control, text communication, and a two-way audio/one-way video link is disclosed. A user at a customer workstation accesses the system through a network, such as the Internet. The user is then identified by a queue manager, which queries a customer database to determine which operators previously communicated with the user and the nature of the information previously requested. The queue manager then connects the user with the operators with whom the user previously communicated. The user communicates with the operators by sending text, audio, and/or video. Generally, video signals are sent only one way, from the operator to the customer. During idle time while the user is waiting for an operator, the queue manager sends the user text, audio, and/or video relating to information the user previously requested. The call can also be transferred from one operator to another over the data network.

Owner:HELPCASTER TECH

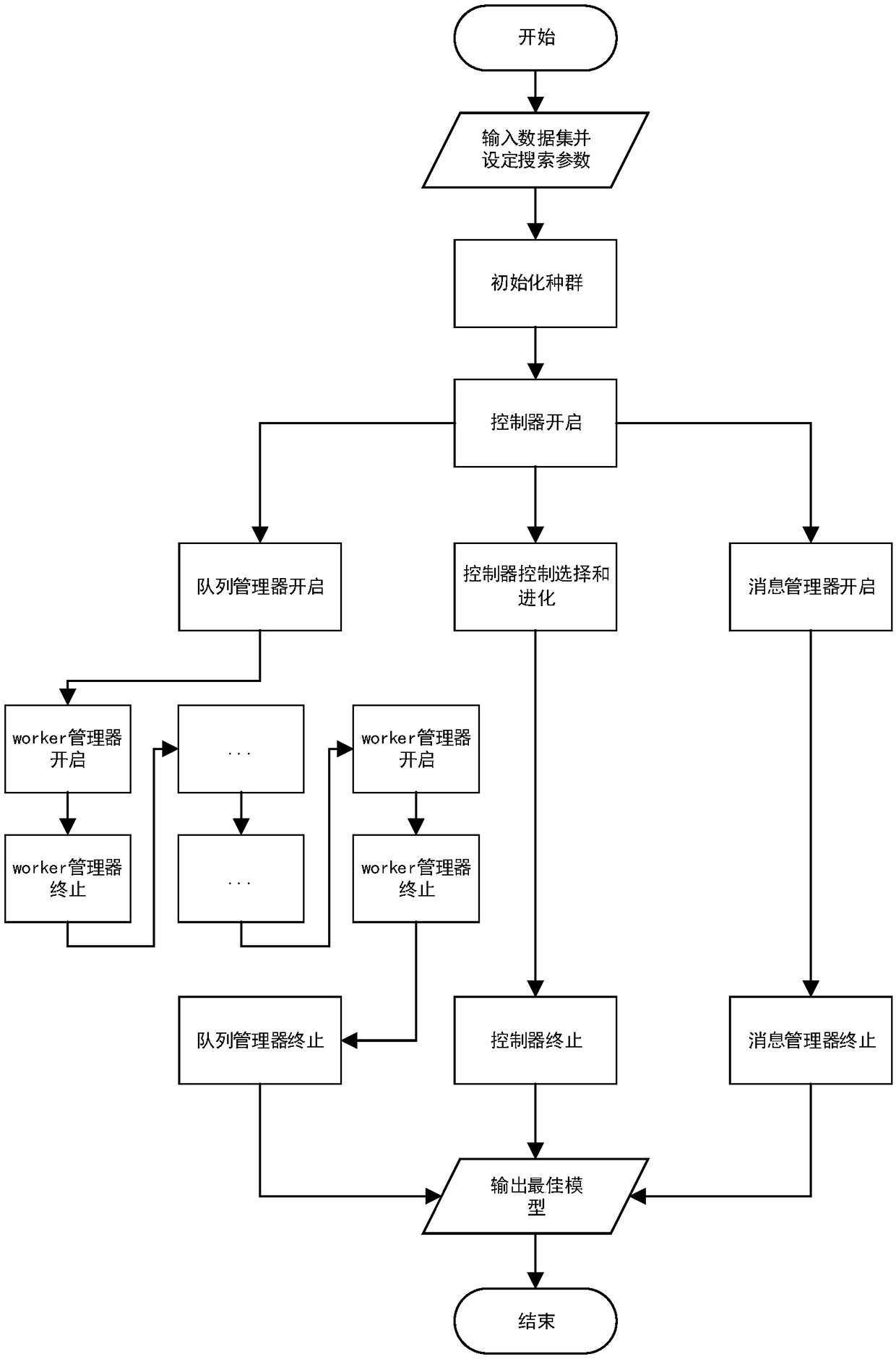

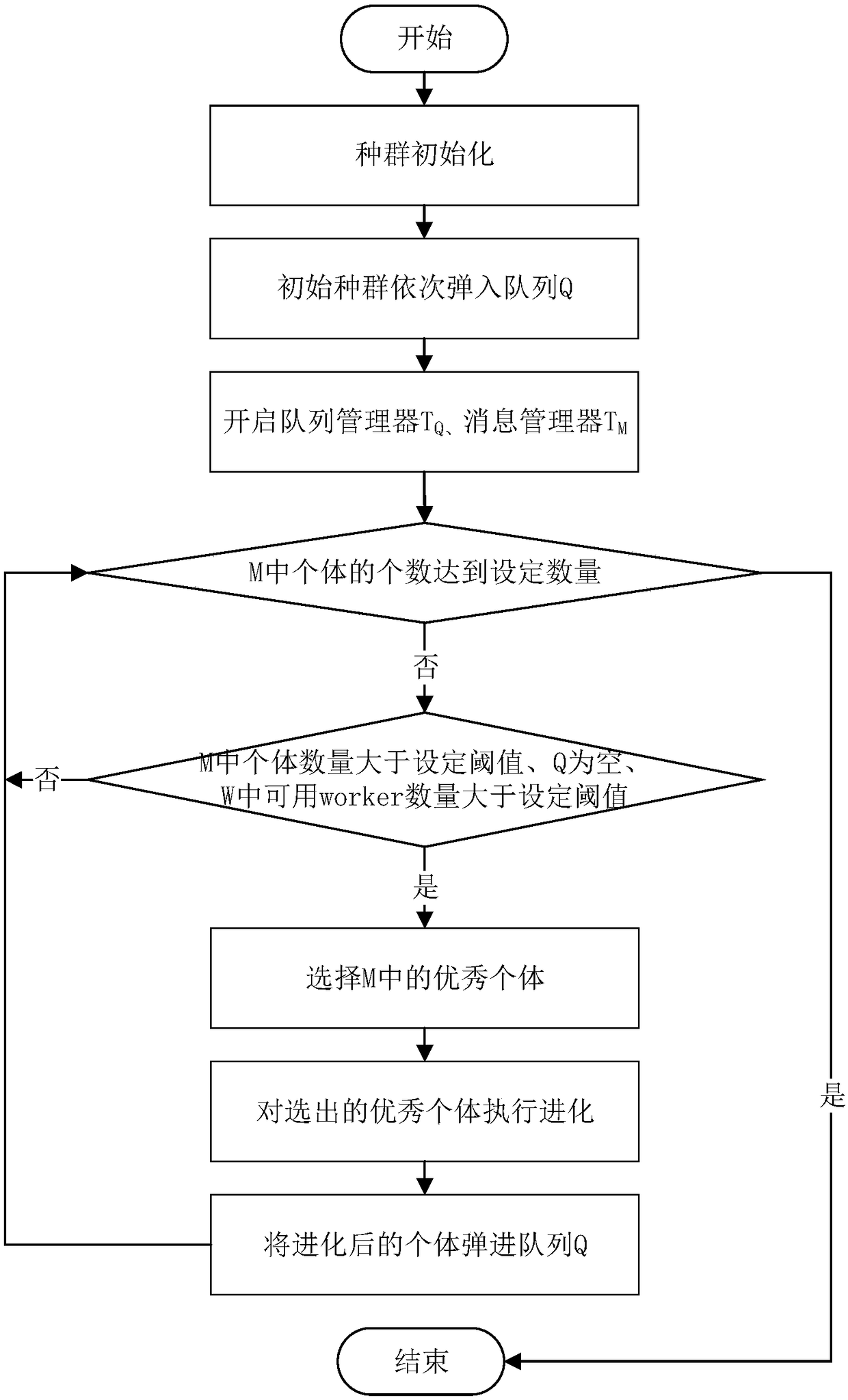

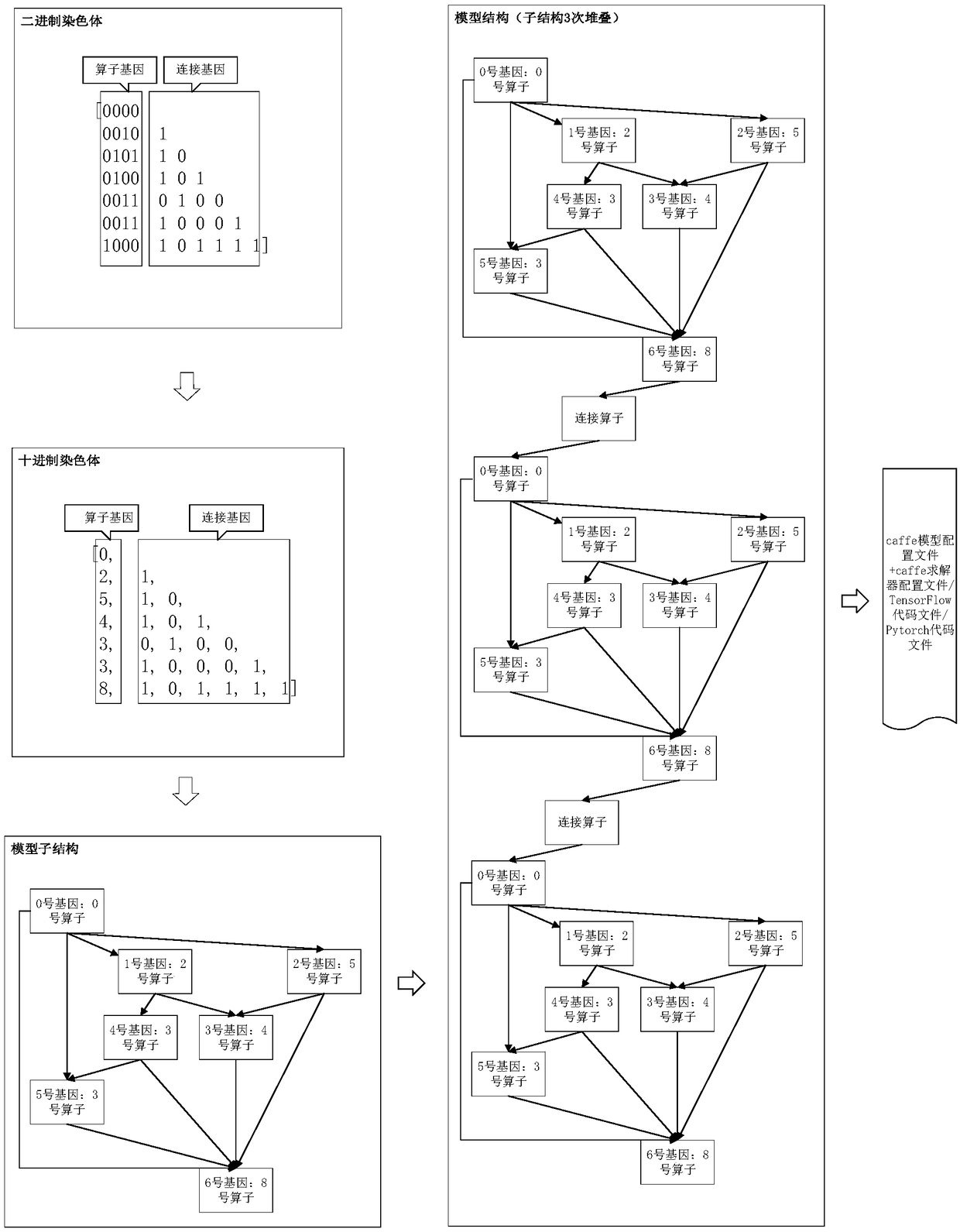

A structure search method and system of convolution neural network based on evolutionary algorithm

Active · CN109299142A · Scale · Processed · Digital data information retrieval · Artificial life · Computer cluster · Data set

The invention discloses a method and system for searching convolutional neural network structures based on an evolutionary algorithm. The method comprises inputting a data set and setting preset parameters to obtain an initial population. The controller TC, acting as the main thread, pushes the initial population into the queue Q and starts the queue manager TQ and the message manager TM. Once started, the queue manager TQ pops untrained chromosomes from the queue Q, decodes them, and starts a worker manager TW as an independent temporary thread that computes their fitness through training. Through the cooperation of the controller TC, queue manager TQ, worker manager TW, and message manager TM, the parallel evolutionary search of convolutional neural network structures is completed and the best model is output. The invention can perform automatic modeling, parameter tuning, and training for a given data set, has the advantages of high performance, large scale, flow-chart operation, and good certainty, and is particularly suitable for deployment on high-performance computer clusters.

Owner:SUN YAT SEN UNIV

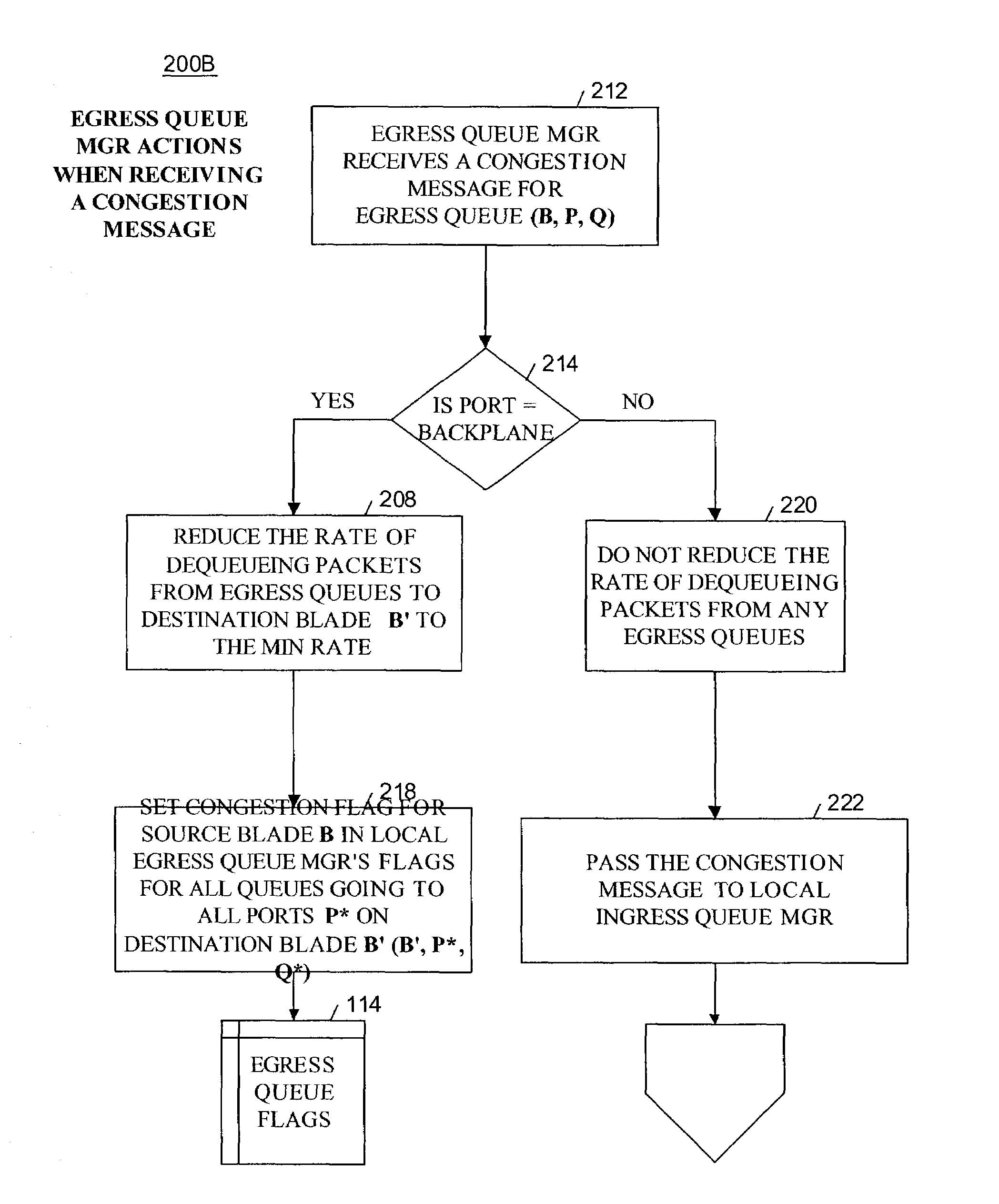

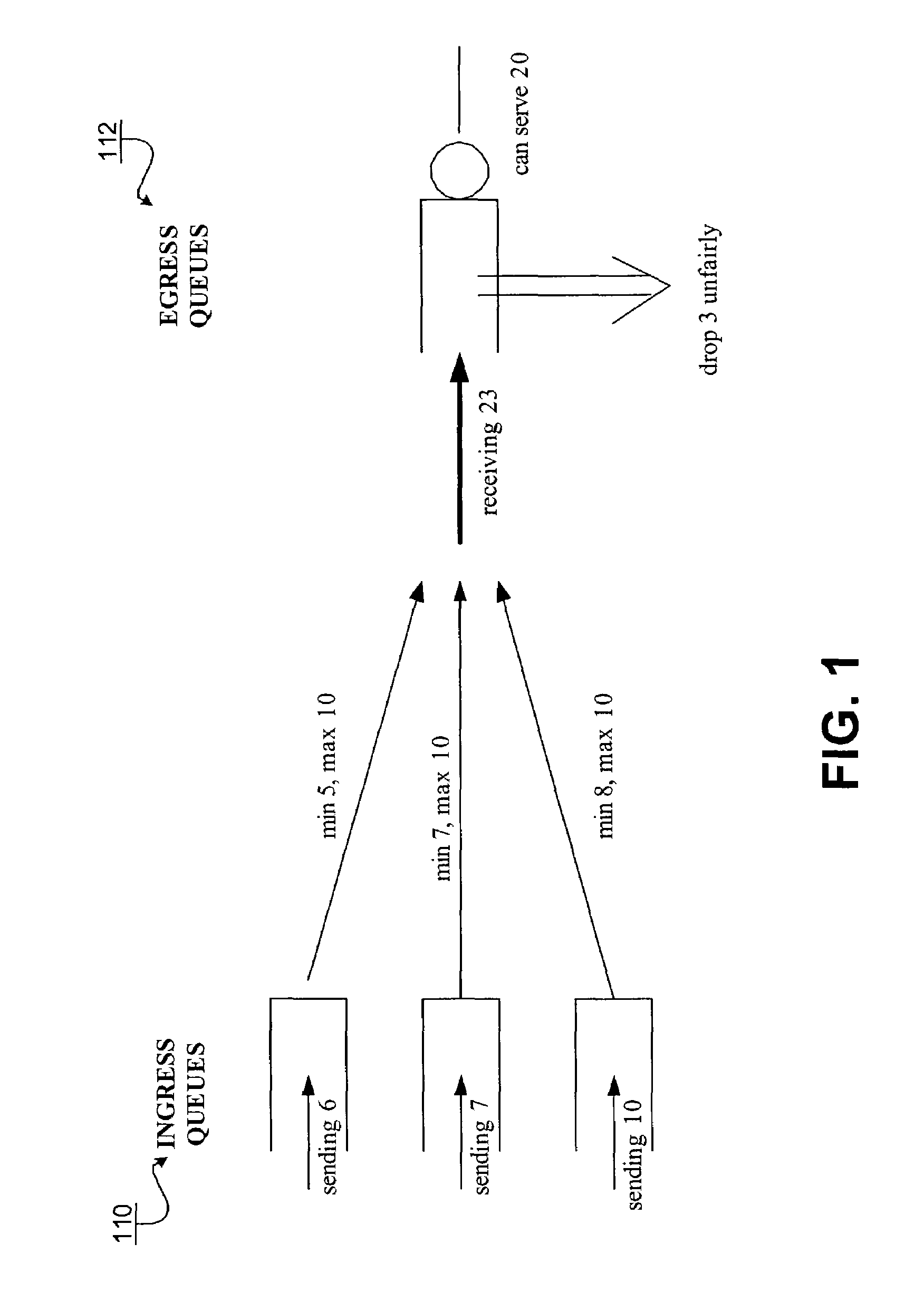

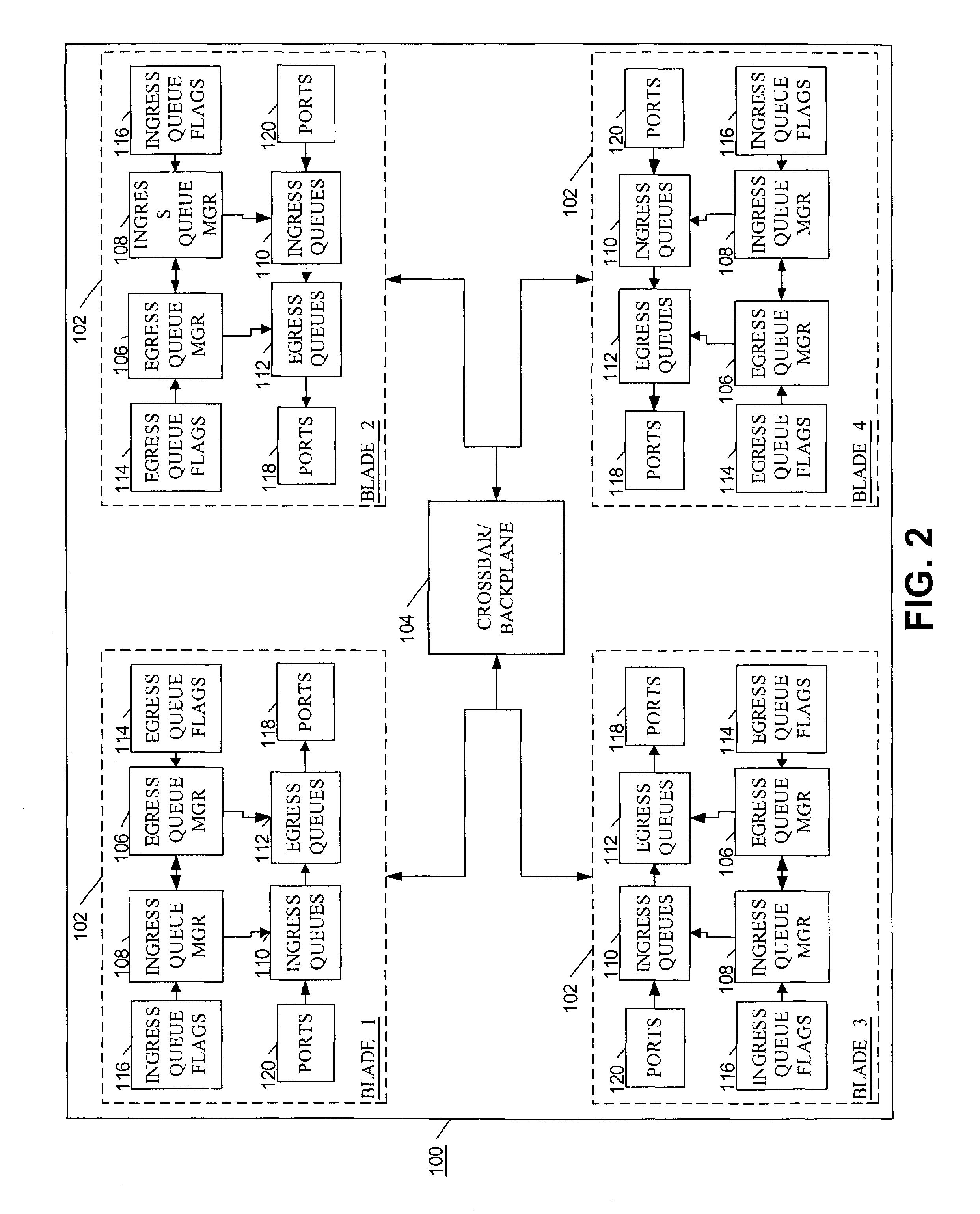

Method and apparatus for providing quality of service across a switched backplane for multicast packets

Active · US7286552B1 · Improve robustness · Reduce probability · Error prevention · Frequency-division multiplex details · Traffic capacity · Quality of service

A method and system is provided to enable quality of service across a backplane switch for multicast packets. For multicast traffic, an egress queue manager manages congestion control in accordance with multicast scheduling flags. A multicast scheduling flag is associated with each egress queue capable of receiving a packet from a multicast ingress queue. When the multicast scheduling flag is set and the congested egress queue is an outer queue, the egress queue manager refrains from dequeueing any marked multicast packets to the destination ports associated with the congested outer queue until the congestion subsides. When the congested egress queue is a backplane queue, the egress queue manager refrains from dequeuing any marked multicast packets to the destination ports on the destination blade associated with the congested backplane queue until the congestion subsides.

Owner:EXTREME NETWORKS INC

Dynamic email content update process

Inactive · US7478132B2 · Natural language data processing · Multiple digital computer combinations · Duplicate content · Computer science

An email update system dynamically updates the content of an email when the originator of an email has sent the email, and the originator later determines that the email requires editing. The updating may take place transparent to the recipient and without the introduction of duplicative content into the recipient's email program. The email update system comprises a delta engine program and a delta temporary storage in a sender's computer, a queue manager program and an intermediate email queue in a server computer, and a recipient email retrieval program in a recipient's computer.

Owner:SNAPCHAT
