29258 results about "Convolution" patented technology

In mathematics (in particular, functional analysis), convolution is an operation on two functions (f and g) that produces a third function expressing how the shape of one is modified by the other. The term convolution refers both to the resulting function and to the process of computing it. It is defined as the integral of the product of the two functions after one is reversed and shifted.
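For reference, the definition in the paragraph above is conventionally written as follows. The symbol names (τ for the integration variable, t for the shift, n and k for the discrete indices) are the usual textbook choices and are not taken from this page.

```latex
(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau
\qquad\text{and, for sequences,}\qquad
(f * g)[n] = \sum_{k=-\infty}^{\infty} f[k]\, g[n - k]
```

Here g(t − τ) is the "reversed and shifted" copy of g, and the integral (or sum) accumulates its product with f.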

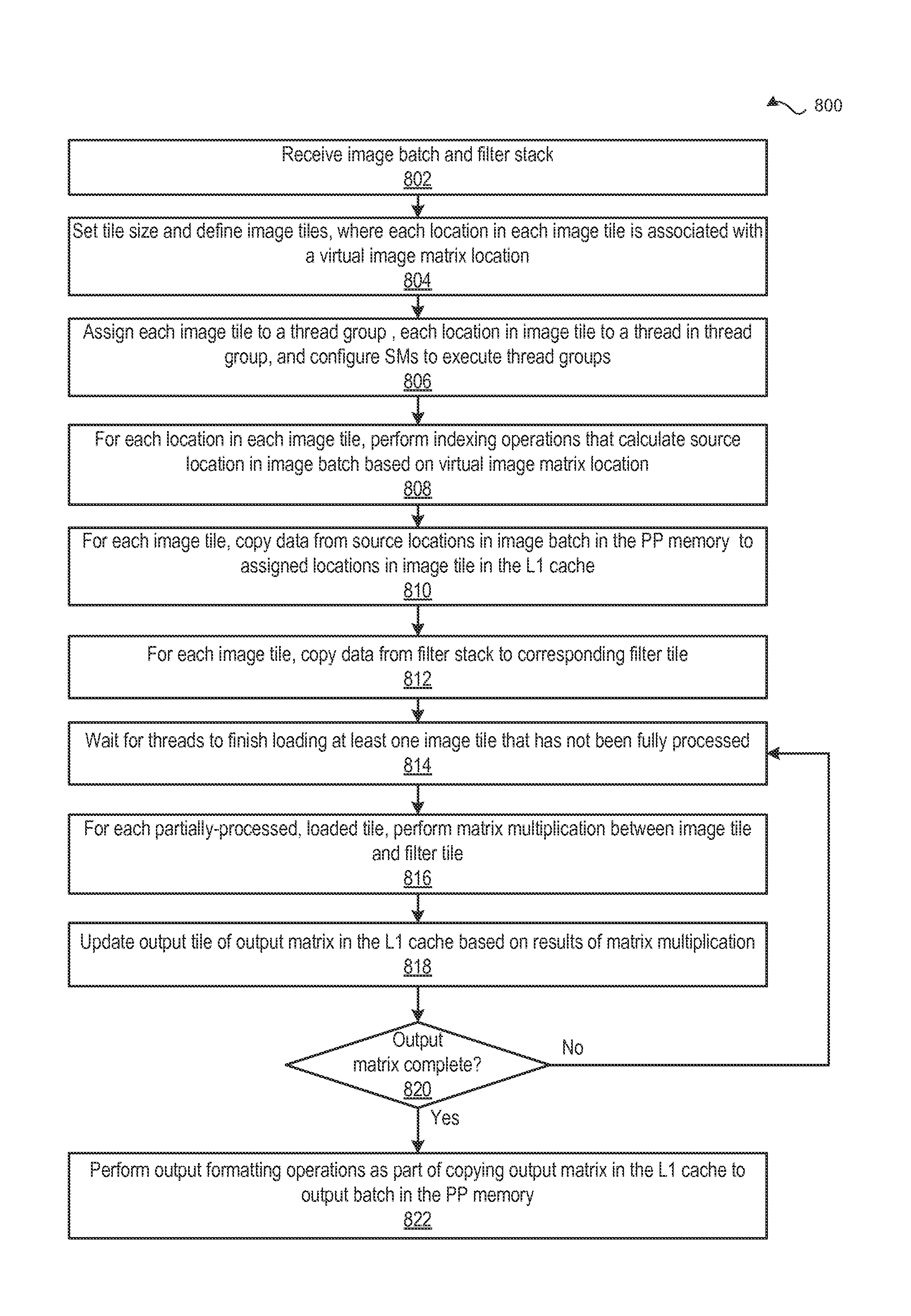

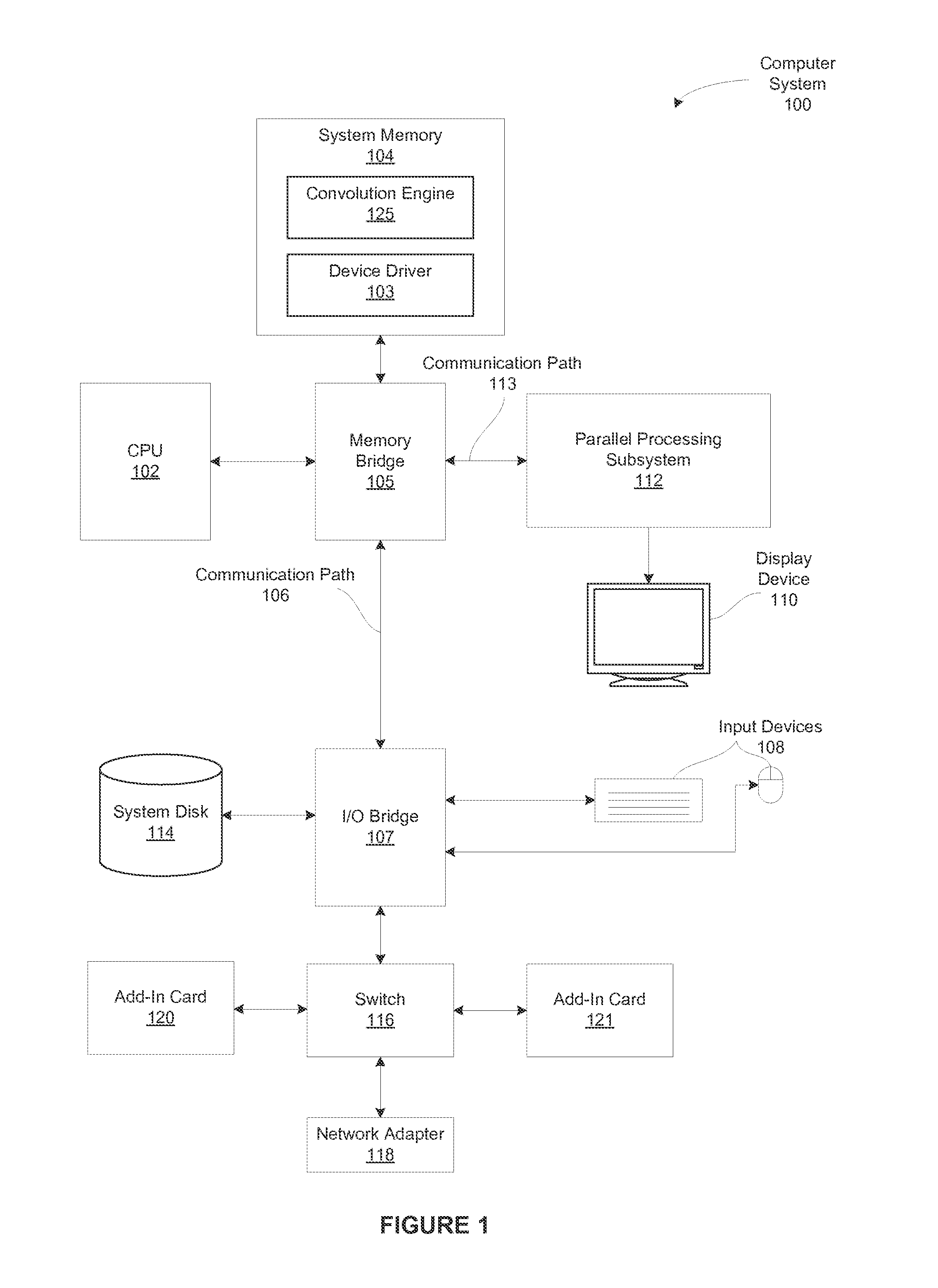

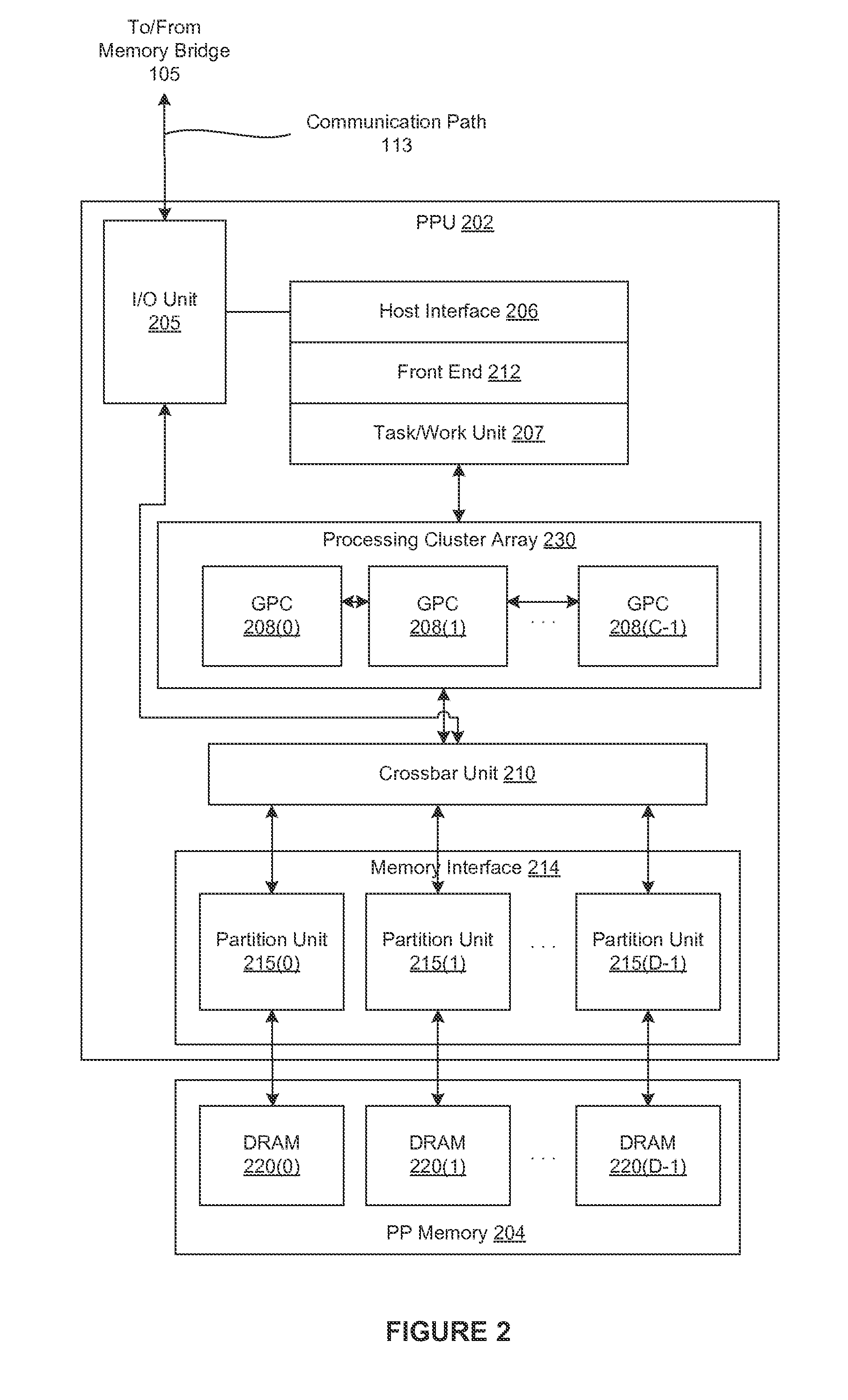

Performing multi-convolution operations in a parallel processing system

Active | US20160062947A1 | Easy to operate | Optimizing on-chip memory usage | Biological models | Complex mathematical operations | Line tubing | Parallel processing

In one embodiment of the present invention a convolution engine configures a parallel processing pipeline to perform multi-convolution operations. More specifically, the convolution engine configures the parallel processing pipeline to independently generate and process individual image tiles. In operation, for each image tile, the pipeline calculates source locations included in an input image batch. Notably, the source locations reflect the contribution of the image tile to an output tile of an output matrix—the result of the multi-convolution operation. Subsequently, the pipeline copies data from the source locations to the image tile. Similarly, the pipeline copies data from a filter stack to a filter tile. The pipeline then performs matrix multiplication operations between the image tile and the filter tile to generate data included in the corresponding output tile. To optimize both on-chip memory usage and execution time, the pipeline creates each image tile in on-chip memory as-needed.
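The idea of copying image data into tiles and then running a matrix multiplication against a filter tile can be illustrated with a small pure-Python sketch. This is not the patented pipeline: it builds the whole patch matrix at once instead of creating tiles on demand in on-chip memory, it handles a single 2-D image without batching, and the function names `im2col`, `matmul` and `conv2d_via_matmul` are hypothetical. Like most neural-network code, it does not flip the filter (cross-correlation).

```python
def im2col(image, kh, kw):
    """Copy every kh x kw patch of a 2-D image into one row of a matrix."""
    h, w = len(image), len(image[0])
    rows = []
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            rows.append([image[i + di][j + dj]
                         for di in range(kh) for dj in range(kw)])
    return rows


def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def conv2d_via_matmul(image, filters):
    """Apply several equally sized 2-D filters with one matrix multiplication.

    Returns a matrix with one row per output position and one column
    per filter (an assumption of this sketch, not of the patent).
    """
    kh, kw = len(filters[0]), len(filters[0][0])
    patches = im2col(image, kh, kw)                    # (positions, kh*kw)
    flat = [[f[di][dj] for di in range(kh) for dj in range(kw)]
            for f in filters]                          # (filters, kh*kw)
    filter_matrix = [list(col) for col in zip(*flat)]  # (kh*kw, filters)
    return matmul(patches, filter_matrix)


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
filters = [[[1, 0], [0, 1]],   # sums the main diagonal of each patch
           [[1, 1], [1, 1]]]   # sums the whole patch
result = conv2d_via_matmul(image, filters)
```

Each row of `result` corresponds to one 2×2 window of the image, scanned left to right and top to bottom.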

Owner:NVIDIA CORP

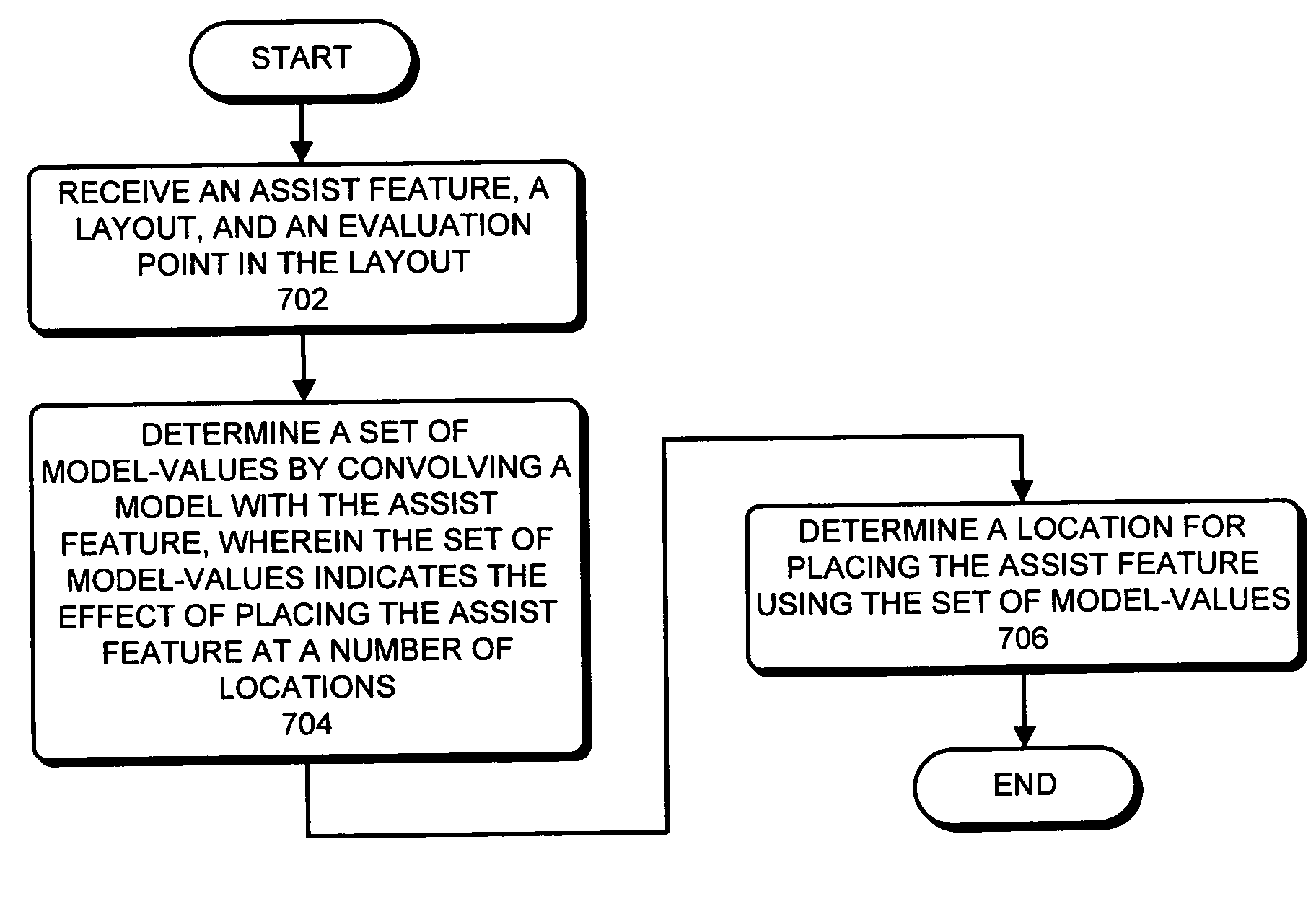

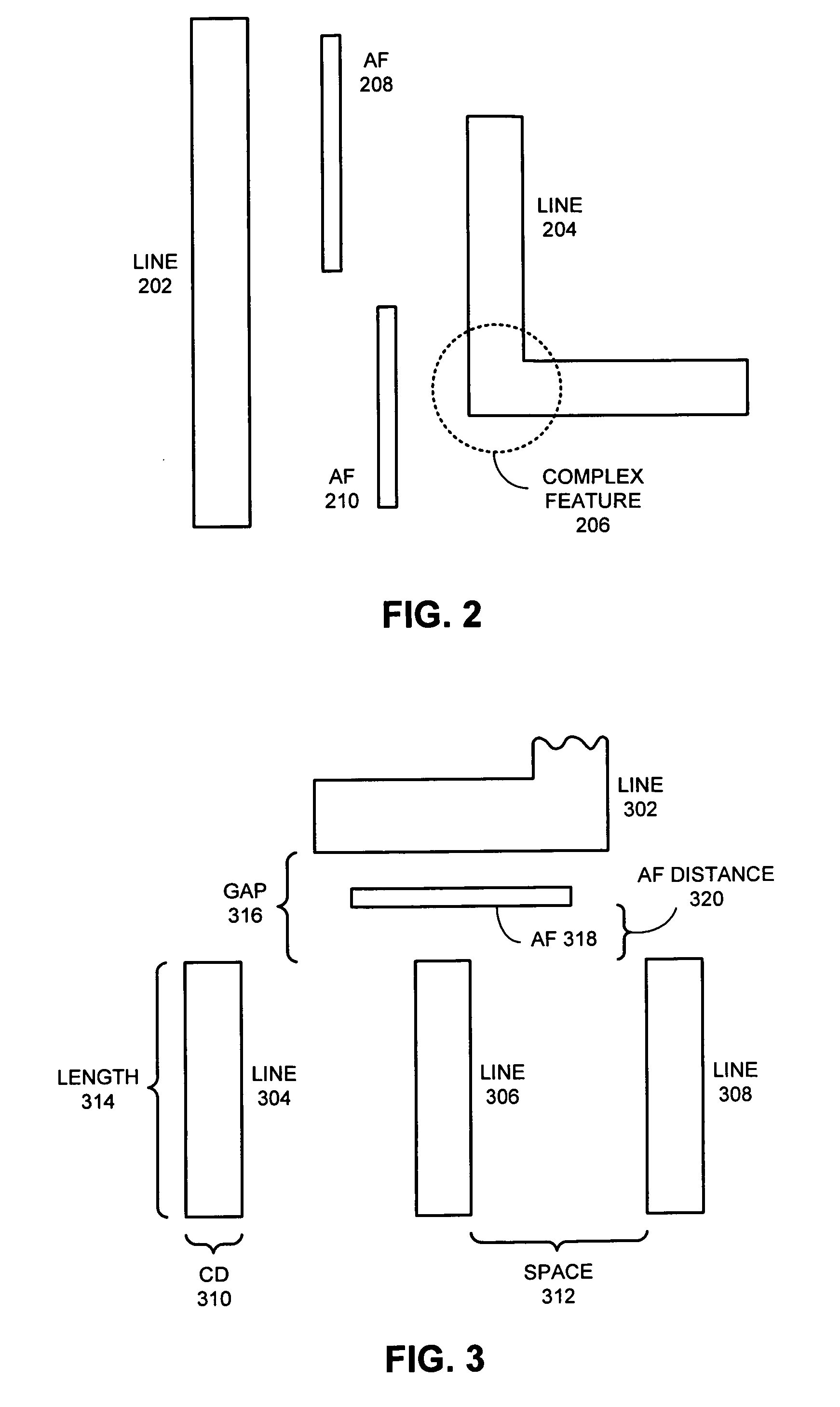

Method and apparatus for quickly determining the effect of placing an assist feature at a location in a layout

Active | US20070038973A1 | Quick effect | Optimize location | Photomechanical apparatus | CAD circuit design | Engineering | System usage

One embodiment of the present invention determines the effect of placing an assist feature at a location in a layout. During operation, the system receives a first value which was pre-computed by convolving a model with a layout at an evaluation point, wherein the model models semiconductor manufacturing processes. Next, the system determines a second value by convolving the model with an assist feature, which is assumed to be located at a first location which is in proximity to the evaluation point. The system then determines the effect of placing an assist feature using the first value and the second value. An embodiment of the present invention can be used to determine a substantially optimal location for placing an assist feature in a layout.
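One reason a pre-computed value can be reused is that convolution is linear: the response to "layout plus assist feature" equals the sum of the two separate responses. The sketch below checks this on a 1-D toy example. The abstract does not state how the first and second values are combined, so adding them is an assumption of this sketch, and the kernel, layout and assist arrays are made-up illustration data.

```python
def conv_at(kernel, signal, x):
    """Value of the discrete convolution (kernel * signal) at index x."""
    return sum(kernel[k] * signal[x - k]
               for k in range(len(kernel))
               if 0 <= x - k < len(signal))


kernel = [0.25, 0.5, 0.25]            # hypothetical process model
layout = [0, 0, 1, 1, 1, 0, 0, 0]     # hypothetical existing layout
assist = [0, 0, 0, 0, 0, 1, 0, 0]     # hypothetical assist feature near x
x = 5                                 # evaluation point

base = conv_at(kernel, layout, x)     # "first value", computable ahead of time
delta = conv_at(kernel, assist, x)    # "second value", cheap to compute
combined = conv_at(kernel, [a + b for a, b in zip(layout, assist)], x)
```

Because `base + delta` matches `combined`, only the small assist-feature term has to be recomputed for each candidate location.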

Owner:SYNOPSYS INC

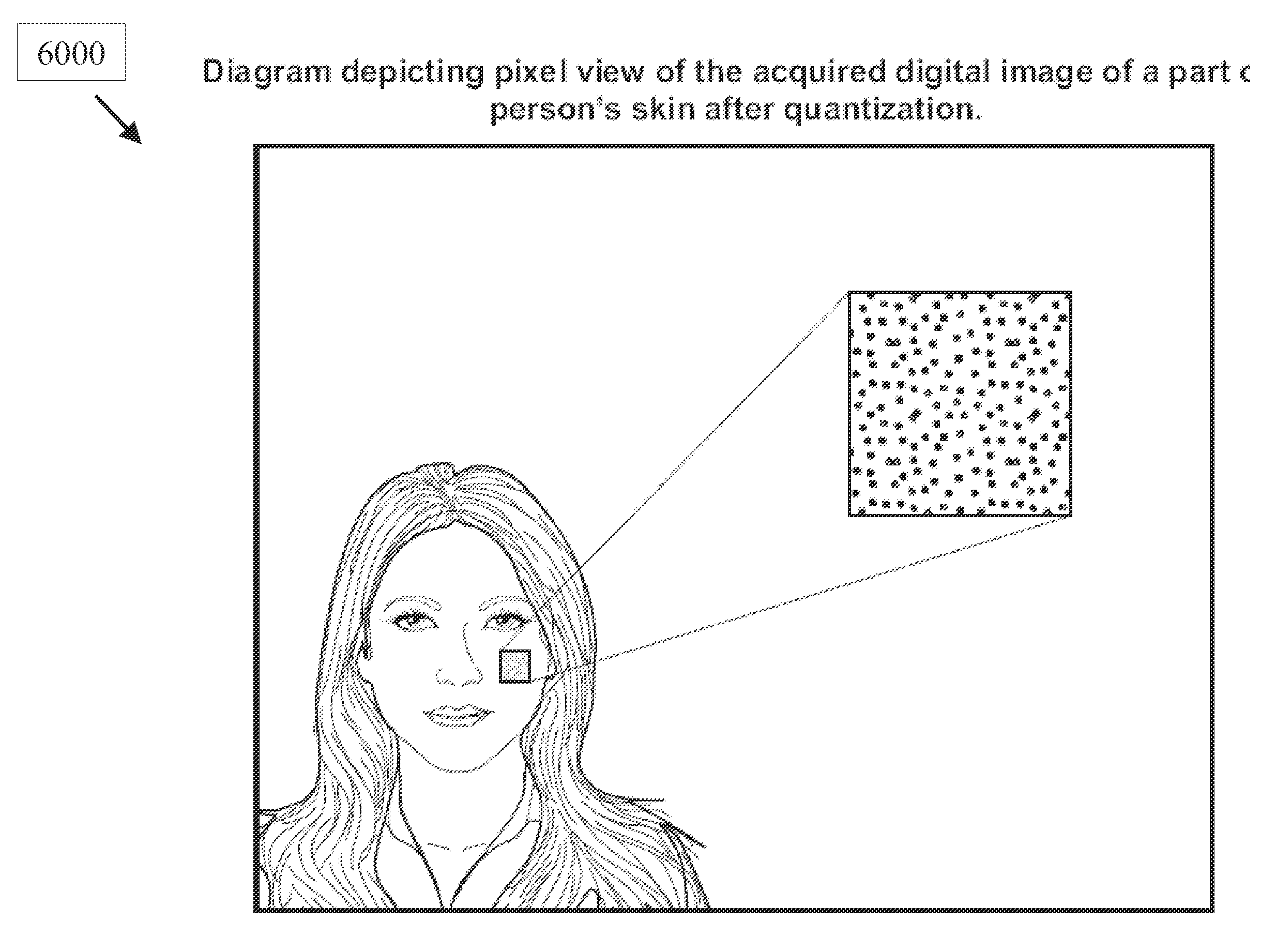

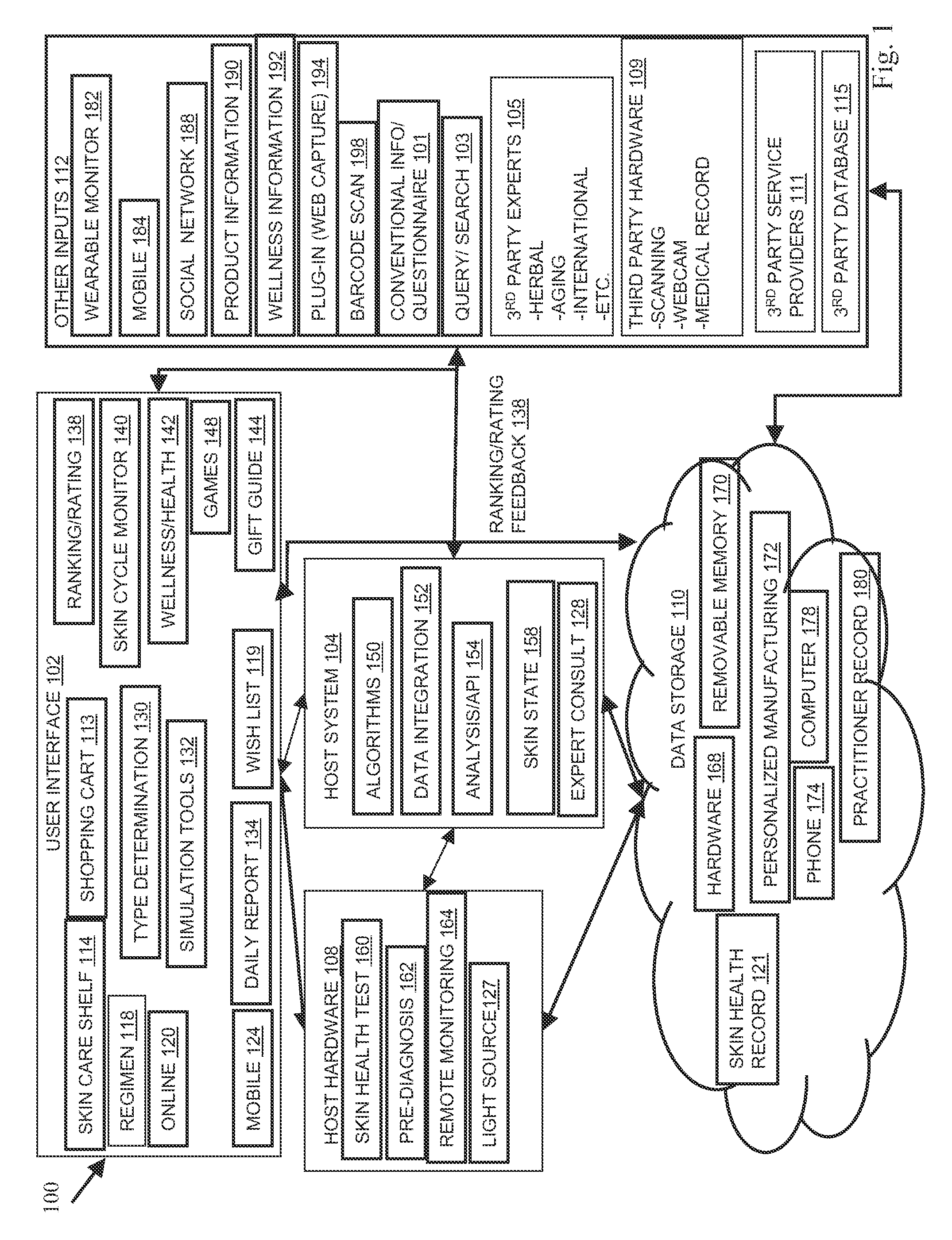

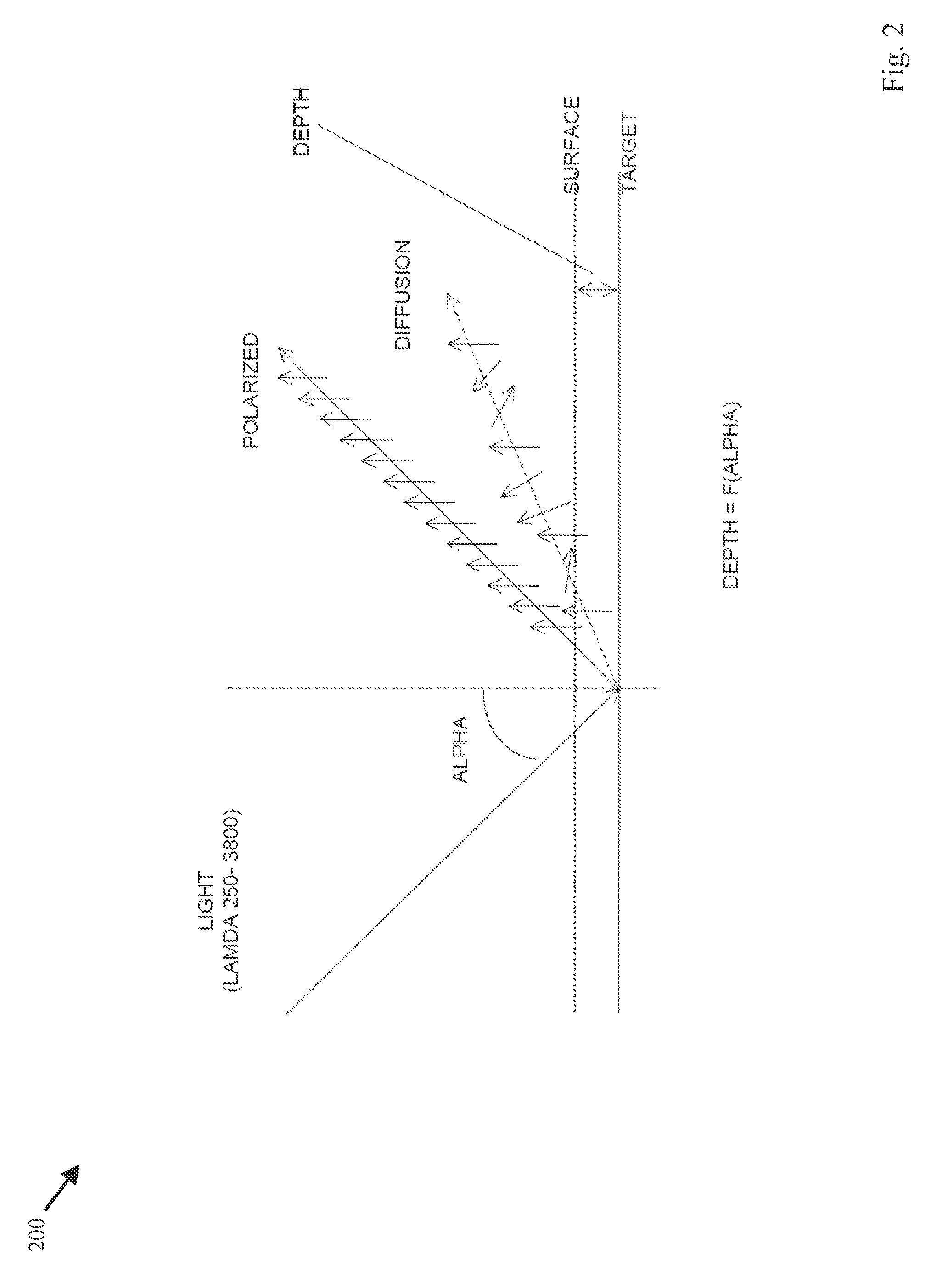

System and method for analysis of light-matter interaction based on spectral convolution

Inactive | US20090245603A1 | Facilitates timely skin condition assessment | Facilitates skin regimen recommendation | Image analysis | Character and pattern recognition | Pattern recognition | Digital image

In embodiments of the present invention, systems and methods create a unique spectral fingerprint based on the convolution of RGB color channel spectral plots generated from digital images that have captured single- and/or multi-wavelength light-matter interaction.

Owner:MYSKIN

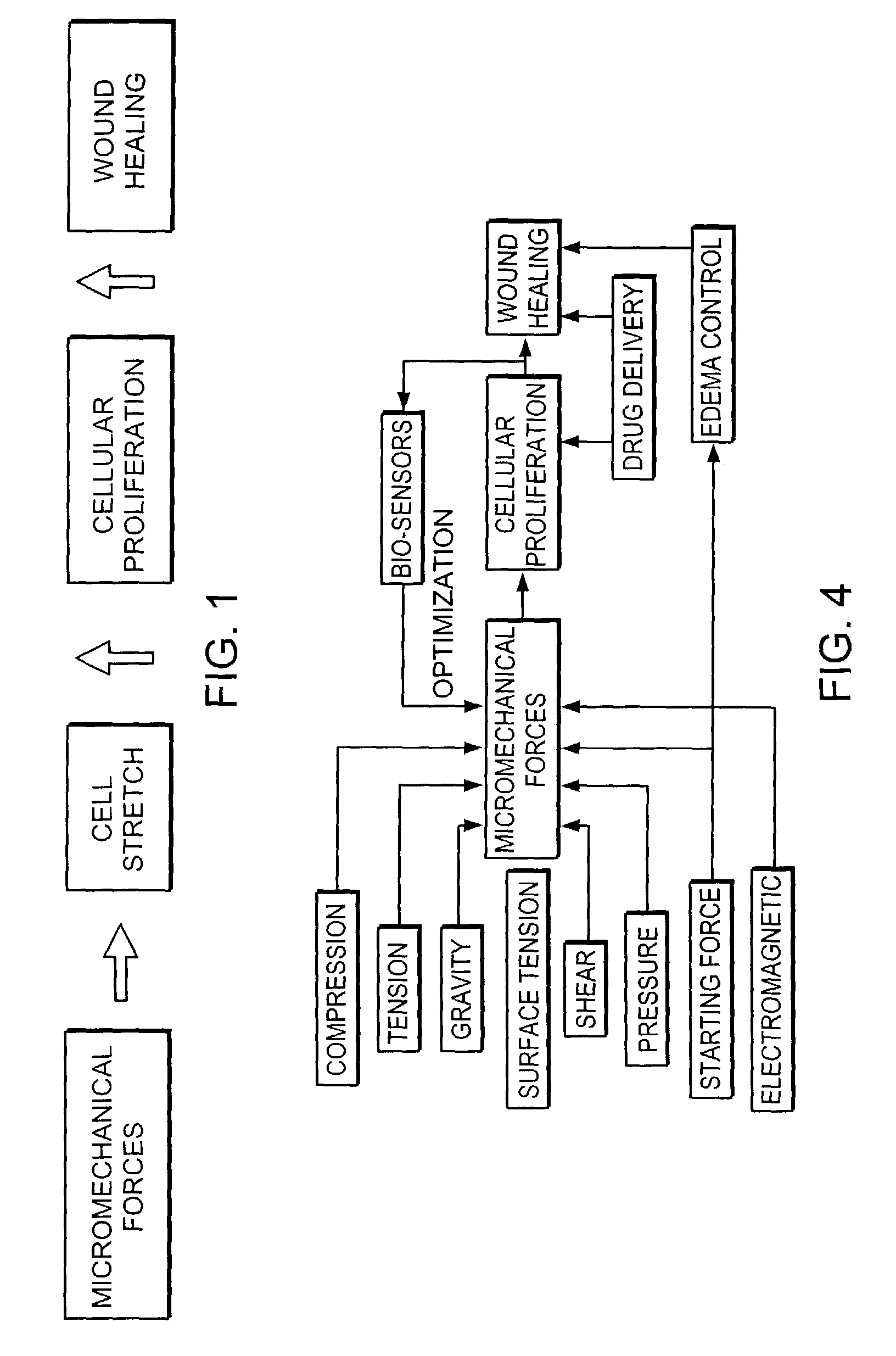

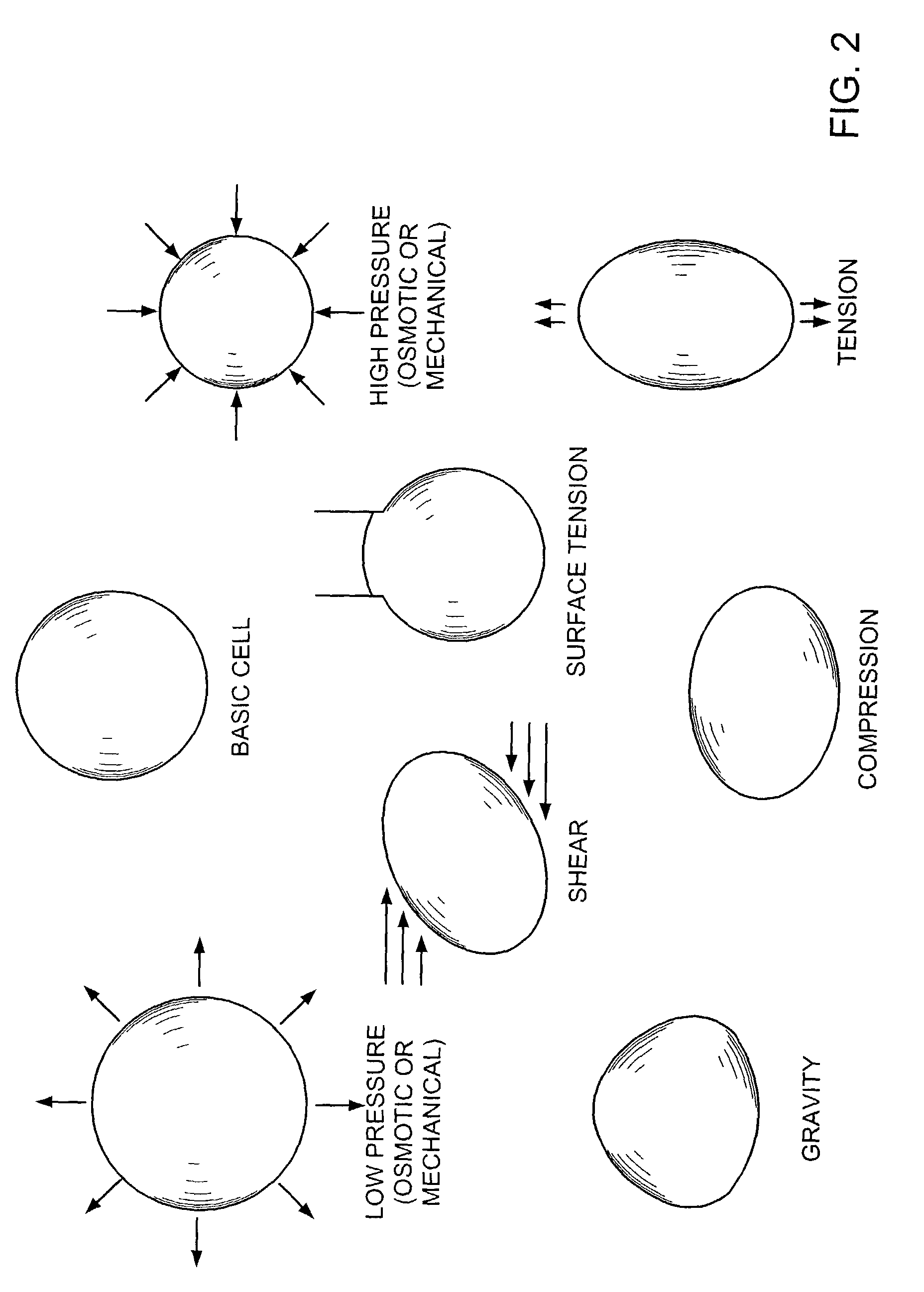

Methods and apparatus for application of micro-mechanical forces to tissues

Inactive | US7494482B2 | Accelerate tissue ingrowth | Enhancing tissue repair | Non-adhesive dressings | Bone implant | Micron scale | Cell-Extracellular Matrix

Methods and devices for transmitting micromechanical forces locally to induce surface convolutions into tissues on the millimeter to micron scale for promoting wound healing are presented. These convolutions induce a moderate stretching of individual cells, stimulating cellular proliferation and elaboration of natural growth factors without increasing the size of the wound. Micromechanical forces can be applied directly to tissue, through biomolecules or the extracellular matrix. This invention can be used with biosensors, biodegradable materials and drug delivery systems. This invention will also be useful in pre-conditioned tissue-engineering constructs in vitro. Application of this invention will shorten healing times for wounds and reduce the need for invasive surgery.

Owner:MASSACHUSETTS INST OF TECH +2

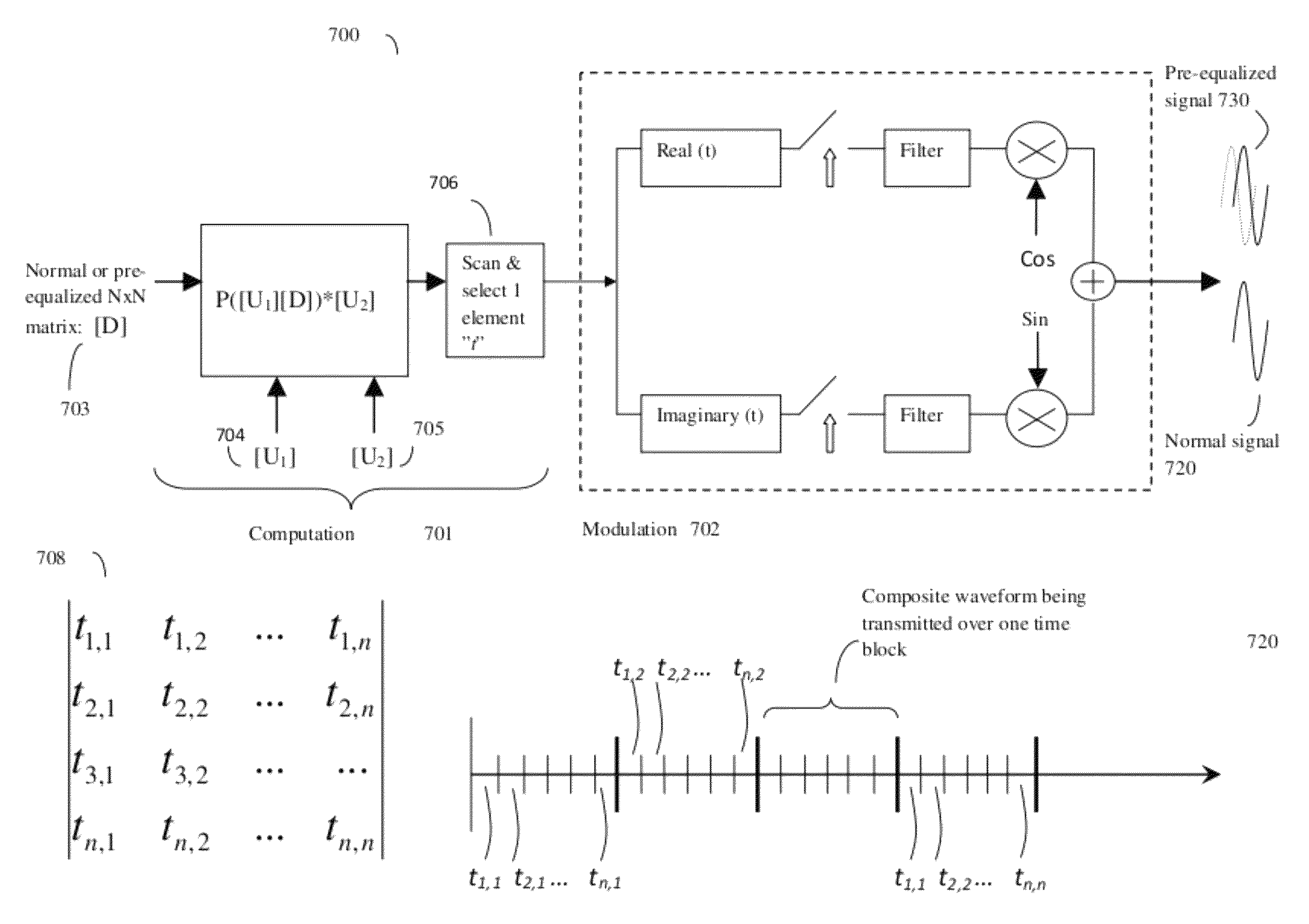

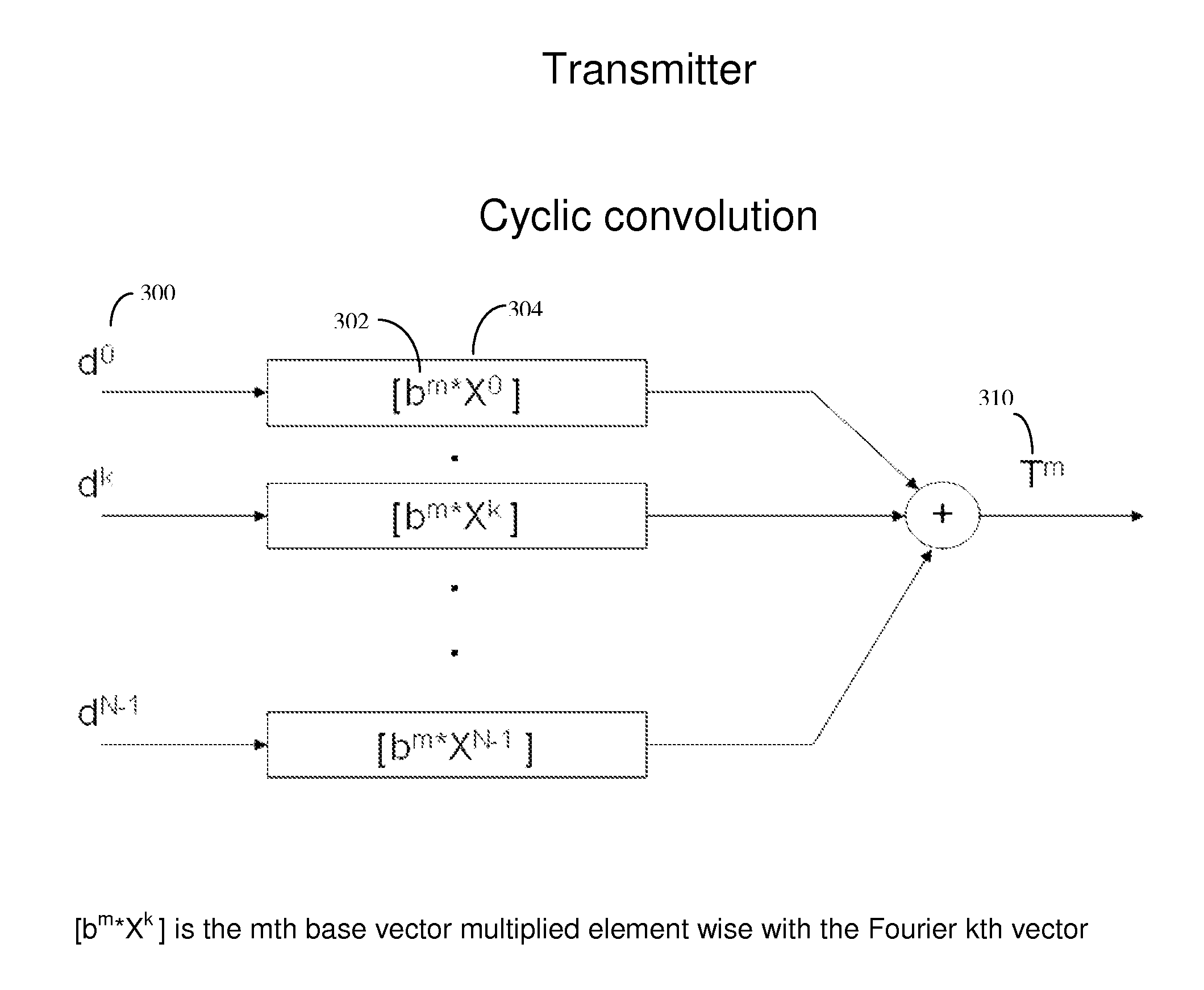

Signal modulation method resistant to echo reflections and frequency offsets

Active | US9083595B2 | Improve performance | Promote decomposition | Network traffic/resource management | Multi-frequency code systems | High rate | Engineering

A method of modulating communications signals, such as optical fiber, wired electronic, or wireless signals in a manner that facilitates automatic correction for the signal distortion effects of echoes and frequency shifts, while still allowing high rates of data transmission. Data symbols intended for transmission are distributed into N×N matrices, and used to weigh or modulate a family of cyclically time shifted and cyclically frequency shifted waveforms. Although these waveforms may then be distorted during transmission, their basic cyclic time and frequency repeating structure facilitates use of improved receivers with deconvolution devices that can utilize the repeating patterns to correct for these distortions. The various waveforms may be sent in N time blocks at various time spacing and frequency spacing combinations in a manner that can allow interleaving of blocks from different transmitters. Applications to channel sounding / characterization, system optimization, and also radar are also discussed.

Owner:COHERE TECH

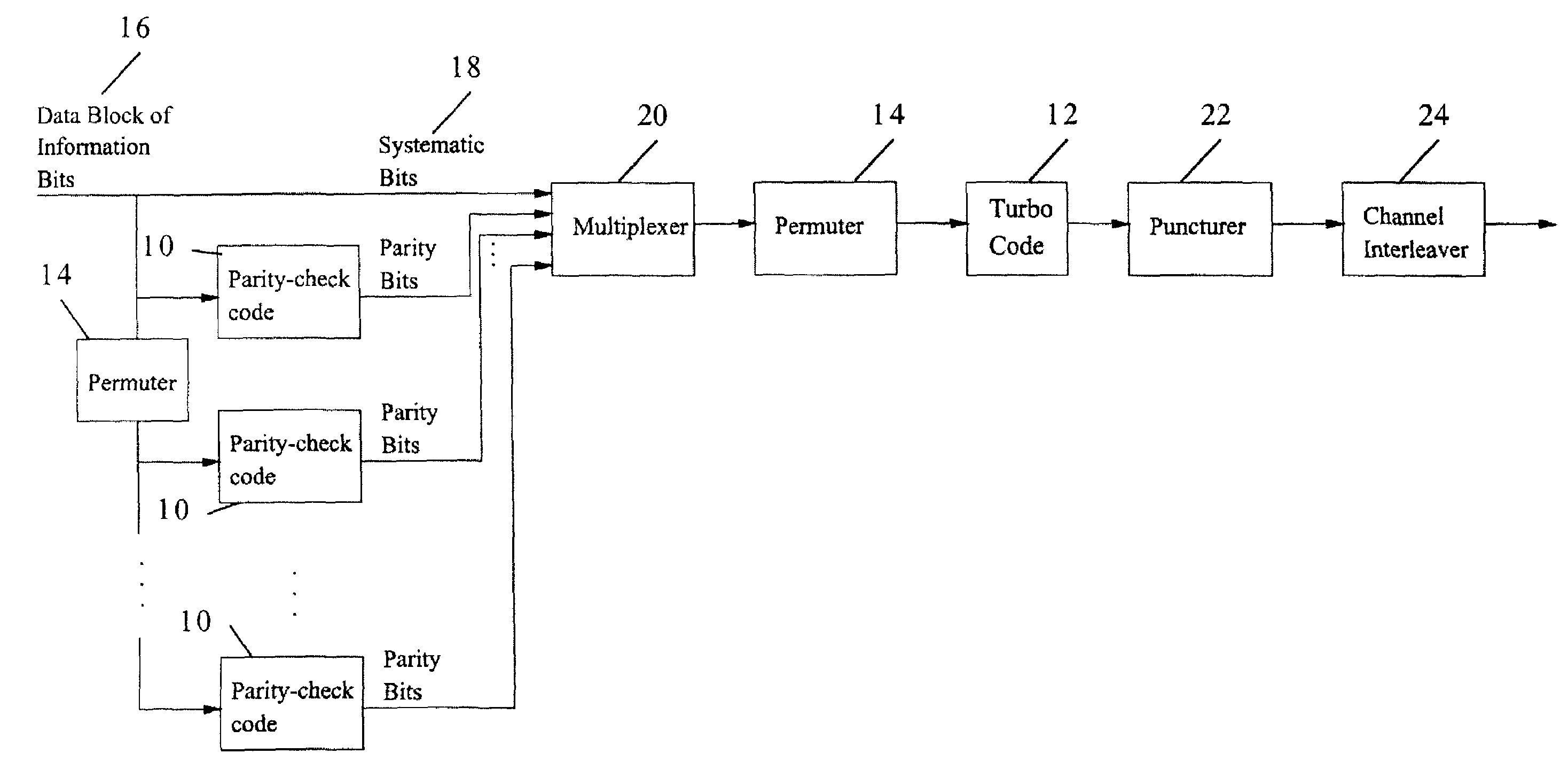

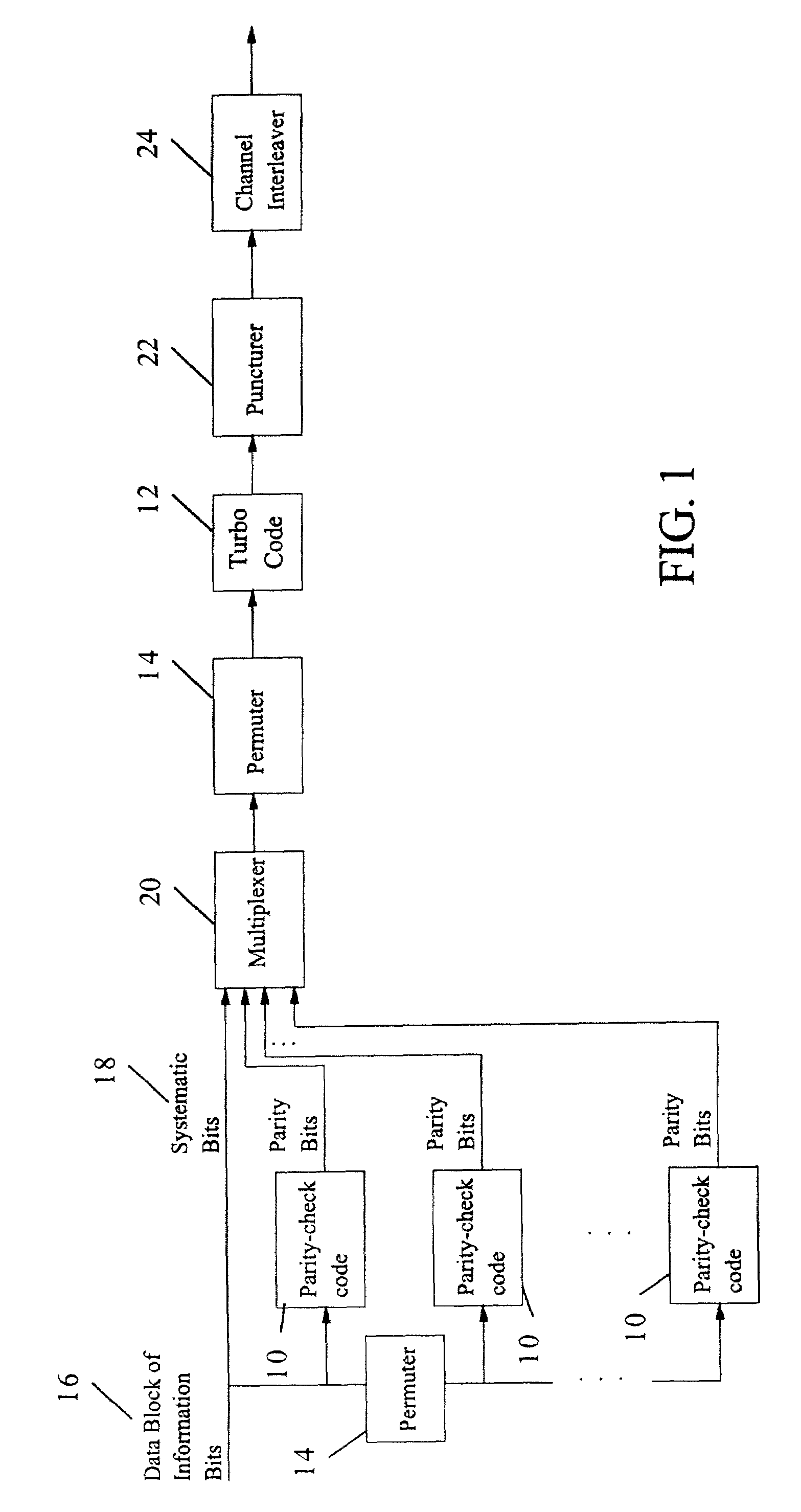

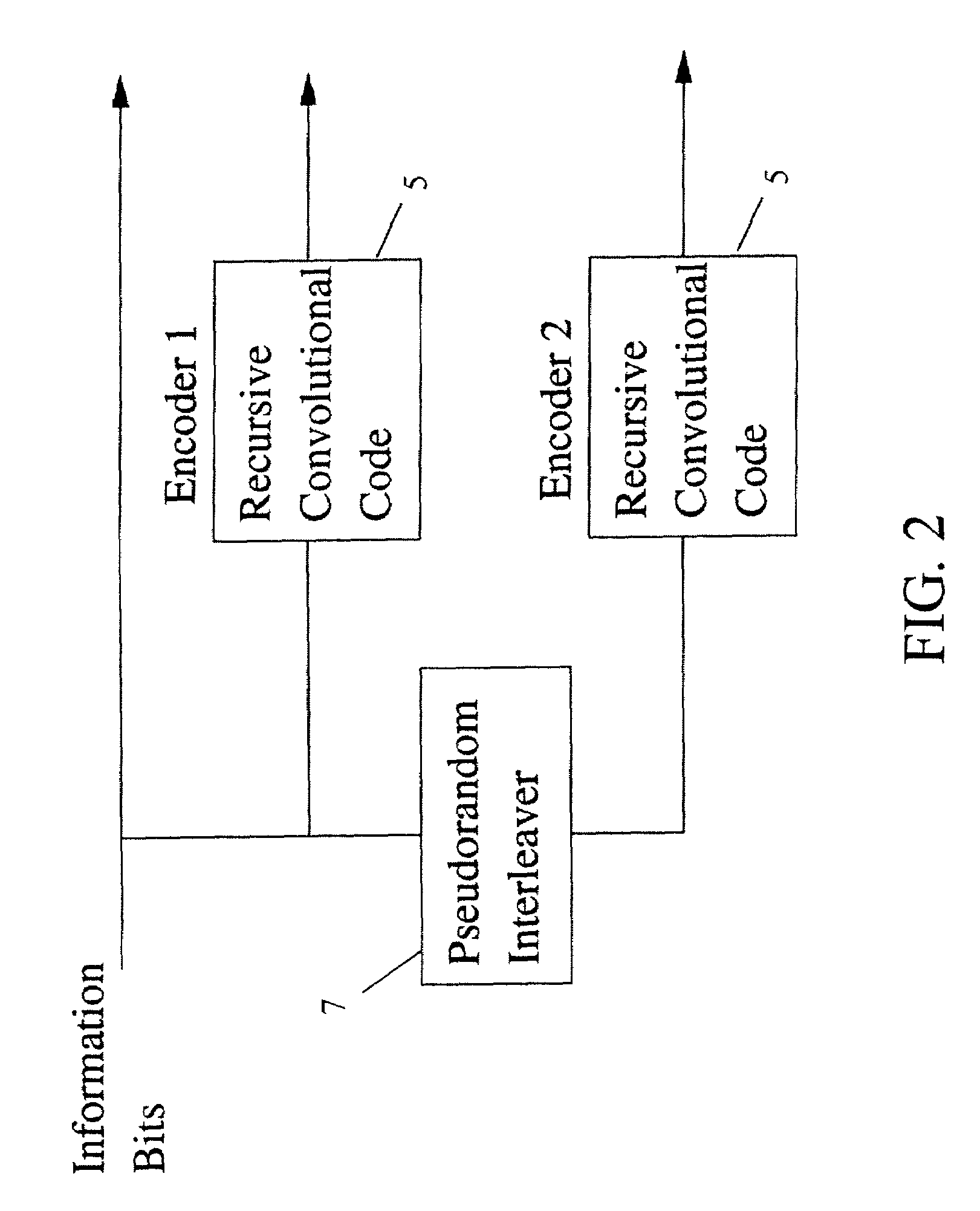

Method and coding means for error-correction utilizing concatenated parity and turbo codes

Inactive | US7093179B2 | Reduction in rate | Improve overall utilization | Error prevention | Error detection/correction | Parallel computing | Turbo coded

A method and apparatus for encoding and decoding data using an overall code comprising an outer parity-check code and an inner parallel concatenated convolutional, or turbo, code. The overall code provides error probabilities that are significantly lower than can be achieved by using turbo codes alone. The output of the inner code can be punctured to maintain the same turbo code rate as the turbo code encoding without the outer code. Multiple parity-check codes can be concatenated either serially or in parallel as outer codes. Decoding can be performed with iterative a posteriori probability (APP) decoders or with other decoders, depending on the requirements of the system. The parity-check code can be applied to a subset of the bits to achieve unequal error protection. Moreover, the techniques presented can be mapped to higher order modulation schemes to achieve improved power and bandwidth efficiency.
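The encoder side of this structure (an outer parity check feeding an inner parallel concatenated convolutional code) can be sketched in a few lines. Everything specific here is an assumption for illustration and not taken from the patent: a single even-parity bit as the outer code, recursive systematic constituent encoders with feedback 1 + D + D² and feedforward 1 + D², a caller-supplied interleaver permutation, and no puncturing or trellis termination. The function names are hypothetical.

```python
def add_parity(bits):
    """Outer code (assumed): append one even-parity bit."""
    parity = 0
    for b in bits:
        parity ^= b
    return list(bits) + [parity]


def rsc_parity(bits):
    """Parity stream of a recursive systematic convolutional encoder.

    Assumed polynomials: feedback 1 + D + D^2, feedforward 1 + D^2.
    """
    s1 = s2 = 0                  # shift-register contents
    out = []
    for u in bits:
        a = u ^ s1 ^ s2          # feedback sum entering the register
        out.append(a ^ s2)       # feedforward taps: 1 and D^2
        s1, s2 = a, s1
    return out


def turbo_encode(bits, permutation):
    """Inner code: two RSC encoders, the second fed through an interleaver."""
    interleaved = [bits[i] for i in permutation]
    return list(bits), rsc_parity(bits), rsc_parity(interleaved)


message = [1, 0, 1, 1]
outer = add_parity(message)
systematic, parity1, parity2 = turbo_encode(outer, [3, 0, 4, 1, 2])
```

The three returned streams together form an unpunctured rate-1/3 inner codeword; puncturing, as mentioned in the abstract, would drop some of the parity bits.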

Owner:FLORIDA UNIV OF A FLORIDA +1

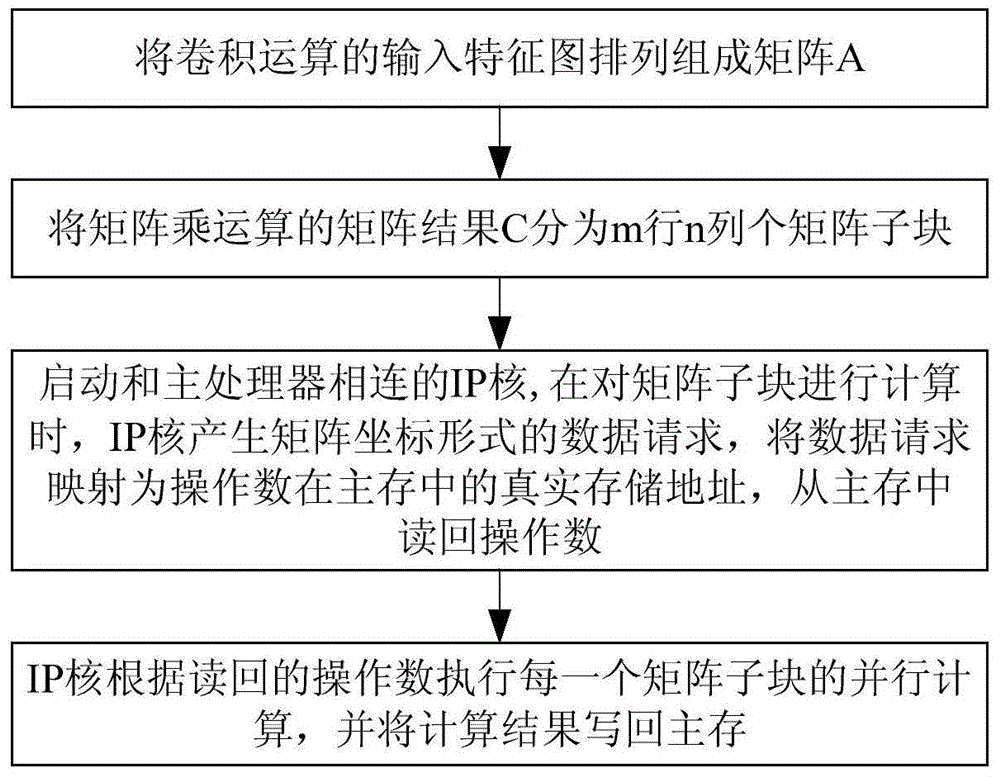

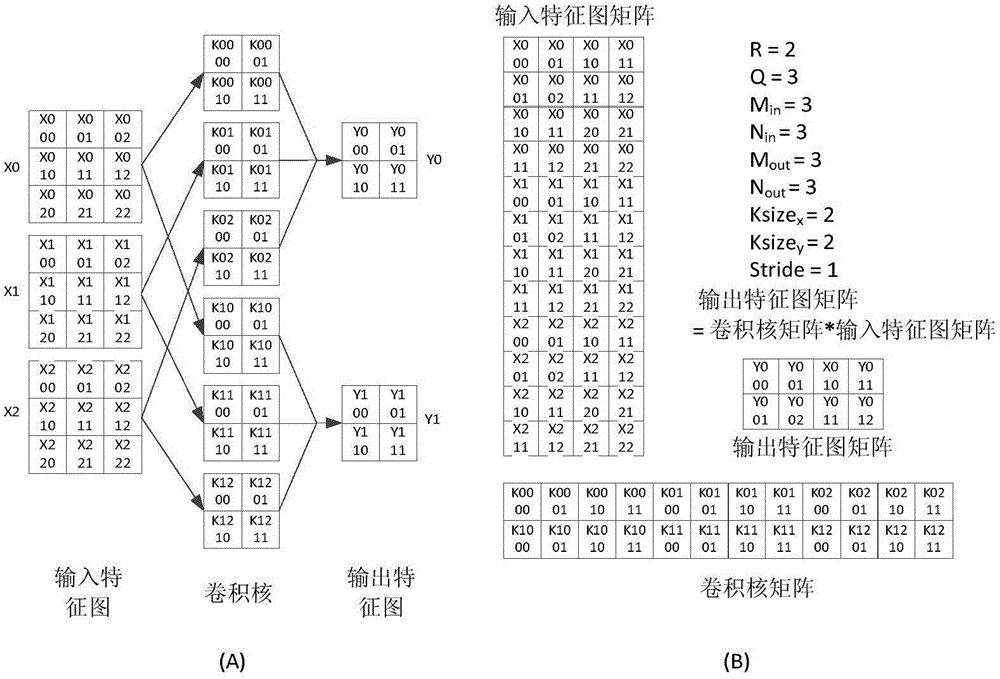

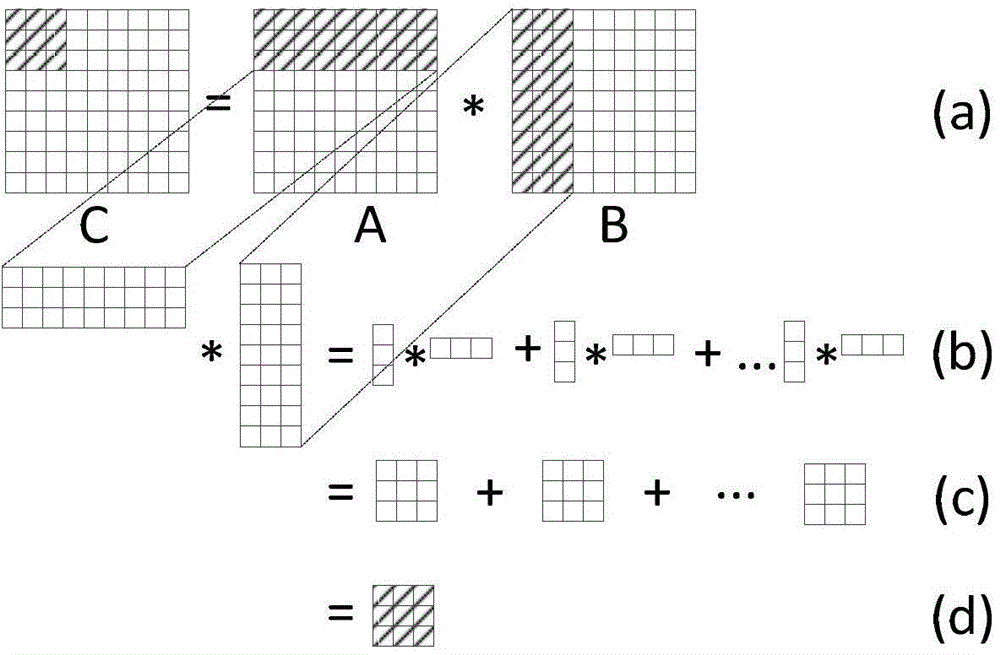

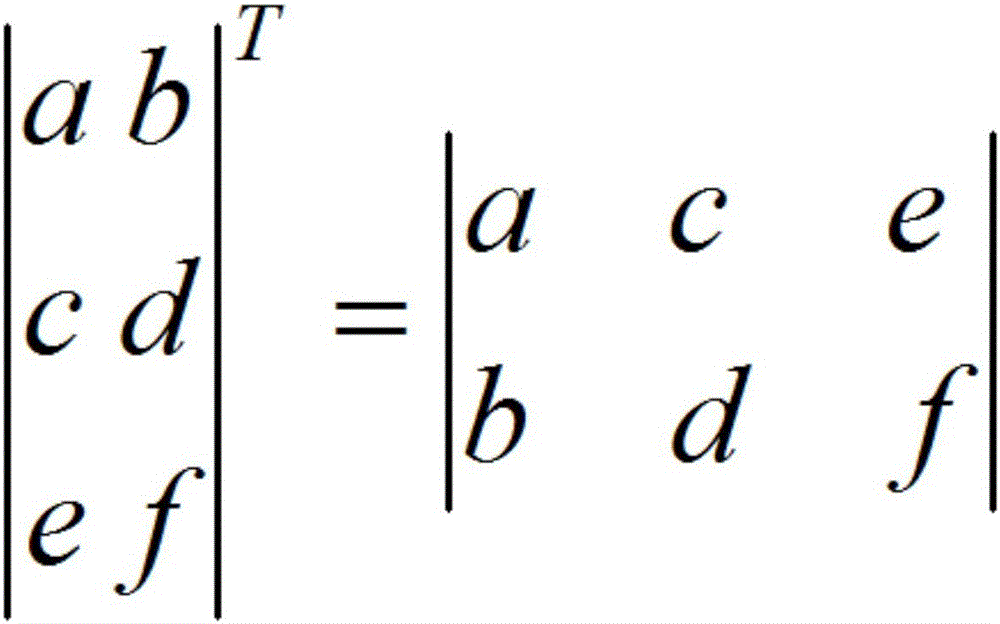

Method for accelerating convolutional neural network hardware and AXI bus IP core thereof

Active | CN104915322A | Improve adaptability | Increase flexibility | Resource allocation | Multiple digital computer combinations | Chain structure | Matrix multiplier

The invention discloses a method for accelerating convolutional neural network hardware and an AXI bus IP core thereof. The method comprises a first step of converting the operation of a convolution layer into the multiplication of a matrix A with m rows and K columns by a matrix B with K rows and n columns; a second step of dividing the result matrix into matrix sub-blocks with m rows and n columns; a third step of starting a matrix multiplier to prefetch the operands of the matrix sub-blocks; and a fourth step of having the matrix multiplier compute the matrix sub-blocks and write the results back to main memory. The IP core comprises an AXI bus interface module, a prefetching unit, a flow mapper and a matrix multiplier. The matrix multiplier comprises a chained DMA and a processing unit array; the processing unit array is composed of a plurality of processing units arranged in a chain structure, and the processing unit at the head of the chain is connected to the chained DMA. The method can support various convolutional neural network structures and has the advantages of high calculation efficiency and performance, low requirements for on-chip storage resources and off-chip storage bandwidth, small communication overhead, convenient upgrading and improvement of unit components, and good universality.
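The sub-block scheme in the four steps above can be mimicked in software as a blocked matrix multiplication: the result is split into tiles, the operands for one tile are gathered first, and the tile is then computed and written back. This is only a software analogy under assumed names (`blocked_matmul`, `block_rows`, `block_cols`); the DMA chain, the prefetching unit and the processing-unit array of the IP core are not modelled.

```python
def blocked_matmul(a, b, block_rows, block_cols):
    """Multiply a (m x K) by b (K x n), one result sub-block at a time."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    for r0 in range(0, m, block_rows):
        for c0 in range(0, n, block_cols):
            # "Prefetch": gather the rows of A and the columns of B that
            # this sub-block of the result depends on.
            a_rows = a[r0:r0 + block_rows]
            b_cols = [[b[t][j] for t in range(k)]
                      for j in range(c0, min(c0 + block_cols, n))]
            # Compute the sub-block and write it back to the result.
            for i, row in enumerate(a_rows):
                for j, col in enumerate(b_cols):
                    c[r0 + i][c0 + j] = sum(x * y for x, y in zip(row, col))
    return c


a = [[1, 2], [3, 4], [5, 6]]        # 3 x 2
b = [[1, 0, 2], [0, 1, 3]]          # 2 x 3
product = blocked_matmul(a, b, block_rows=2, block_cols=2)
```

Because each sub-block needs only a band of A and a band of B, the working set per step stays small, which is consistent with the abstract's claim of low on-chip storage requirements.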

Owner:NAT UNIV OF DEFENSE TECH

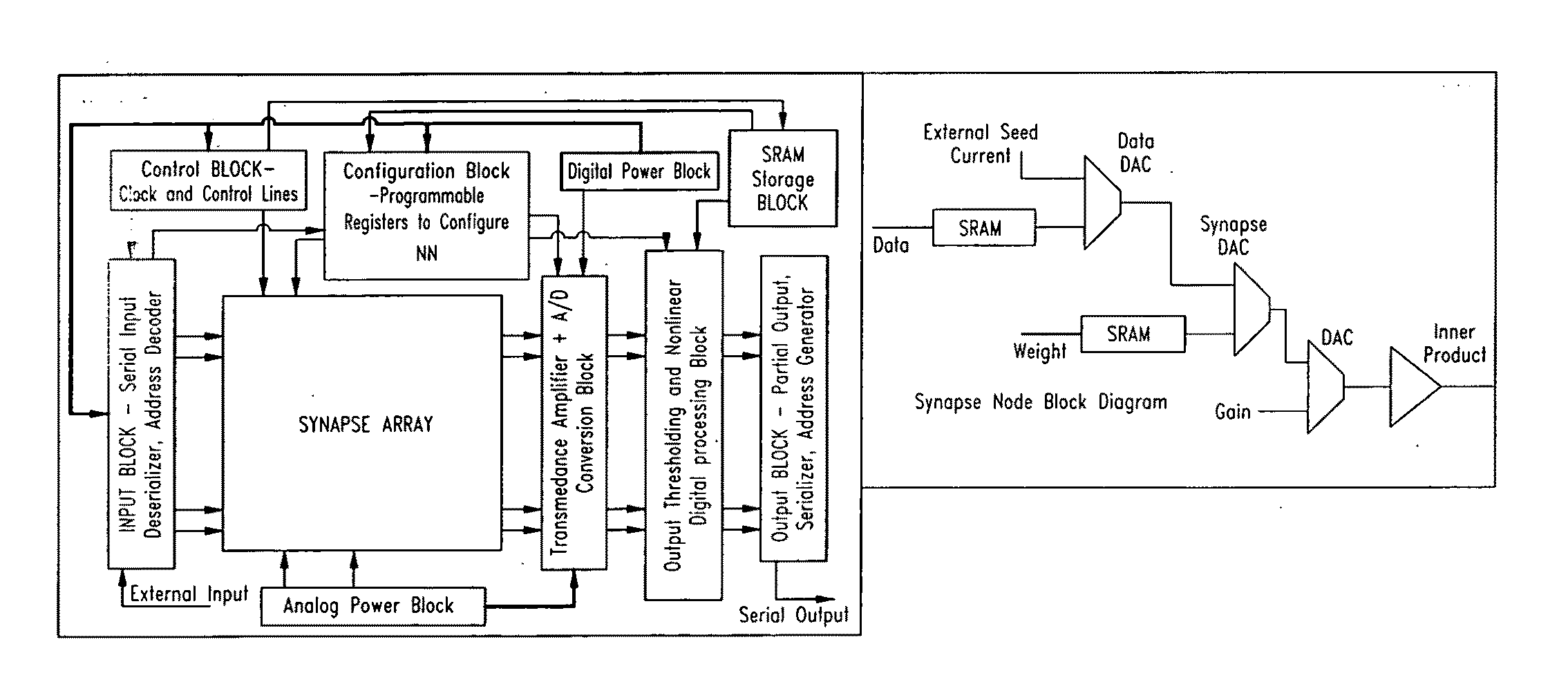

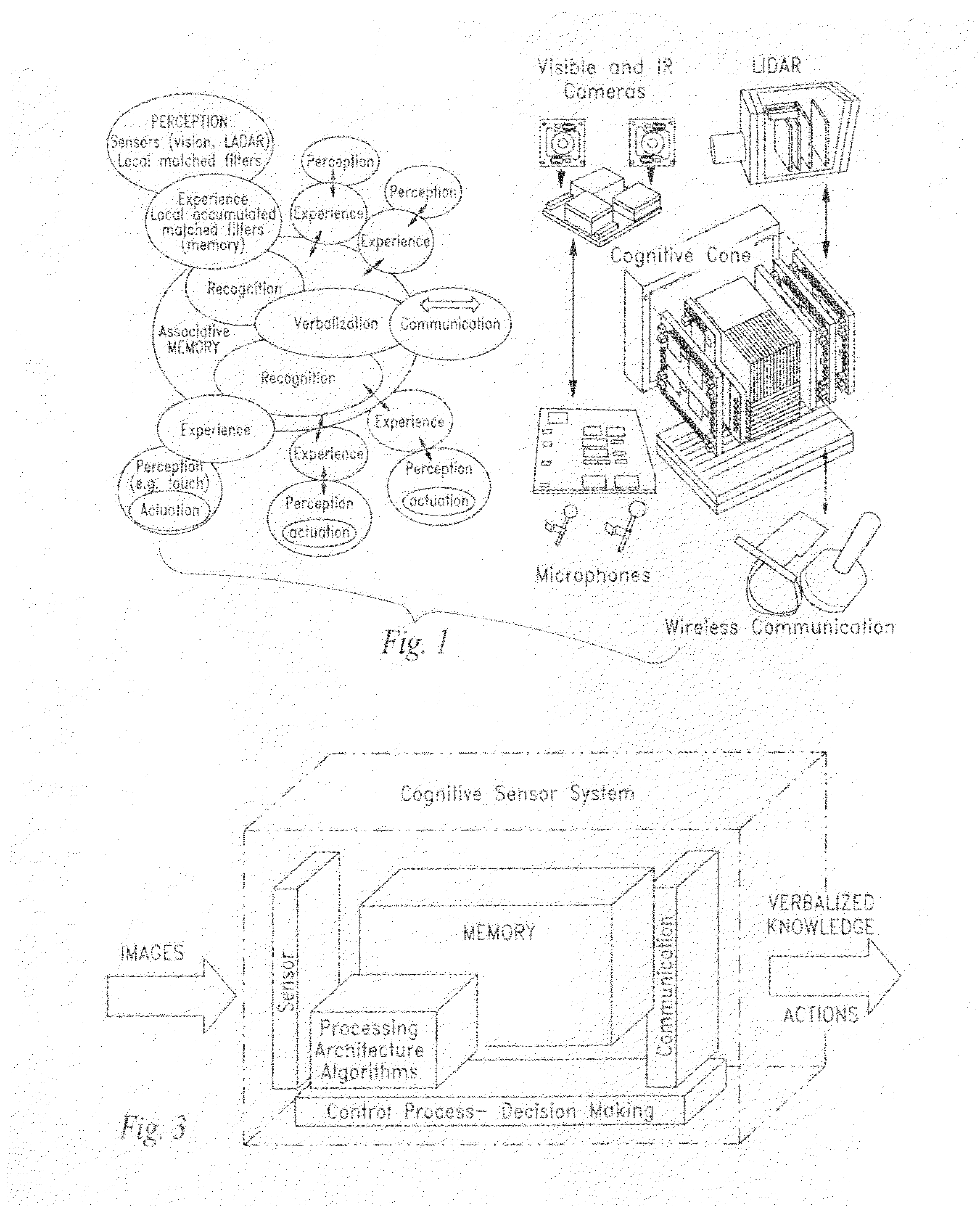

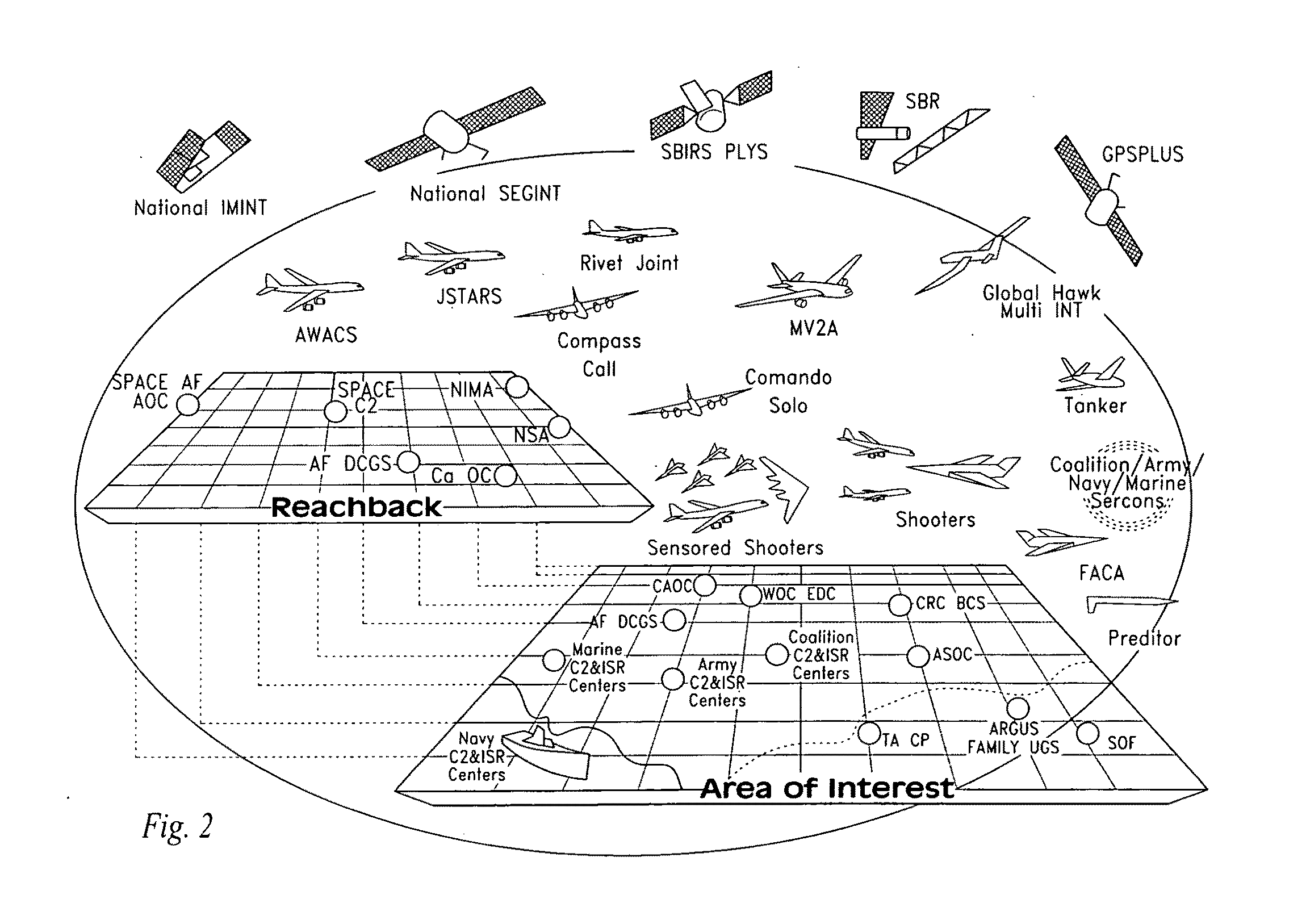

Apparatus comprising artificial neuronal assembly

Active | US20100241601A1 | Augment sensory awareness | Digital computer details | Digital data | Visual cortex | Retina

An artificial synapse array and virtual neural space are disclosed. More specifically, a cognitive sensor system and method are disclosed comprising a massively parallel convolution processor capable of, for instance, situationally dependent identification of salient features in a scene of interest by emulating the cortical hierarchy found in the human retina and visual cortex.

Owner:PFG IP +1

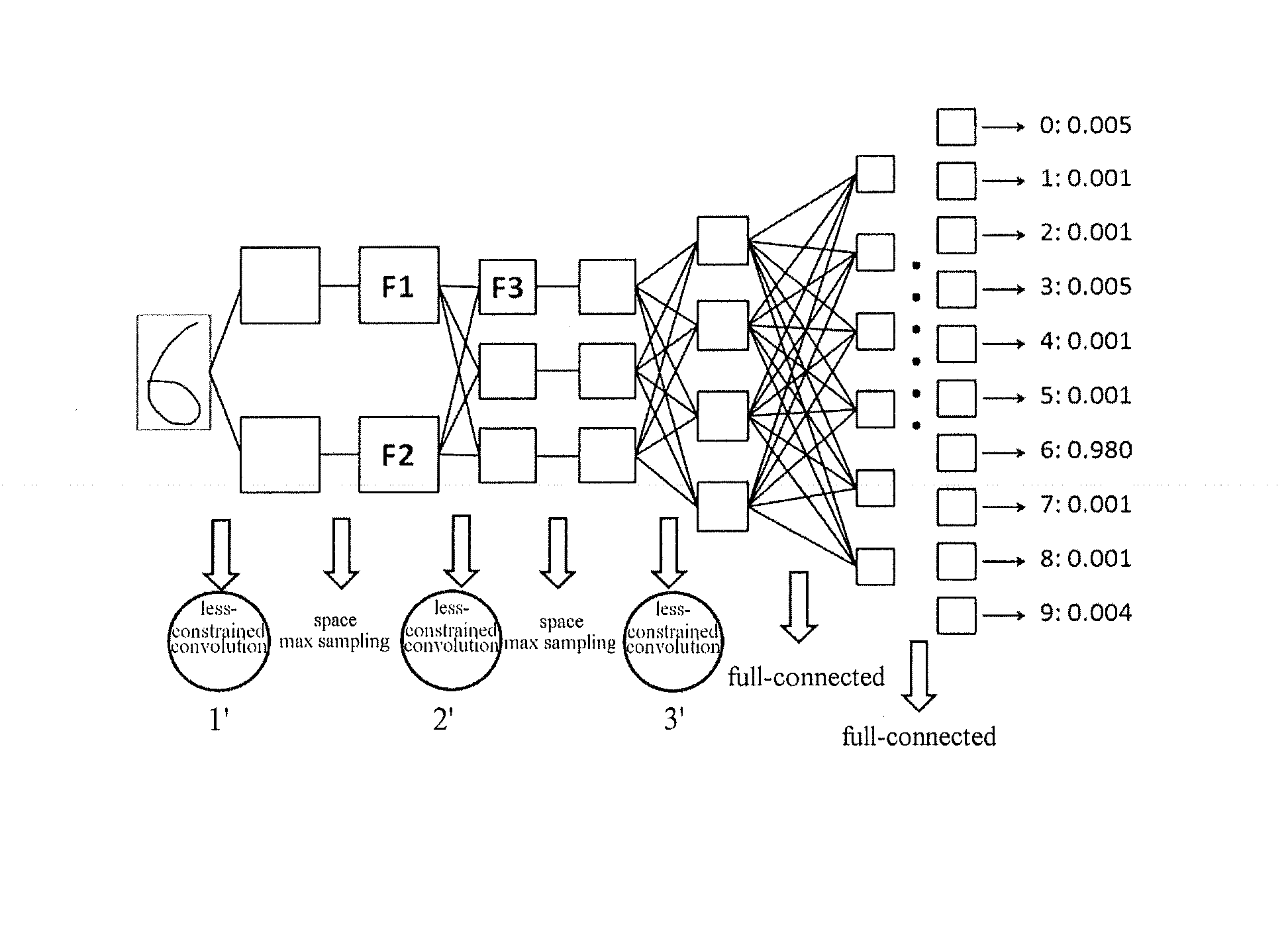

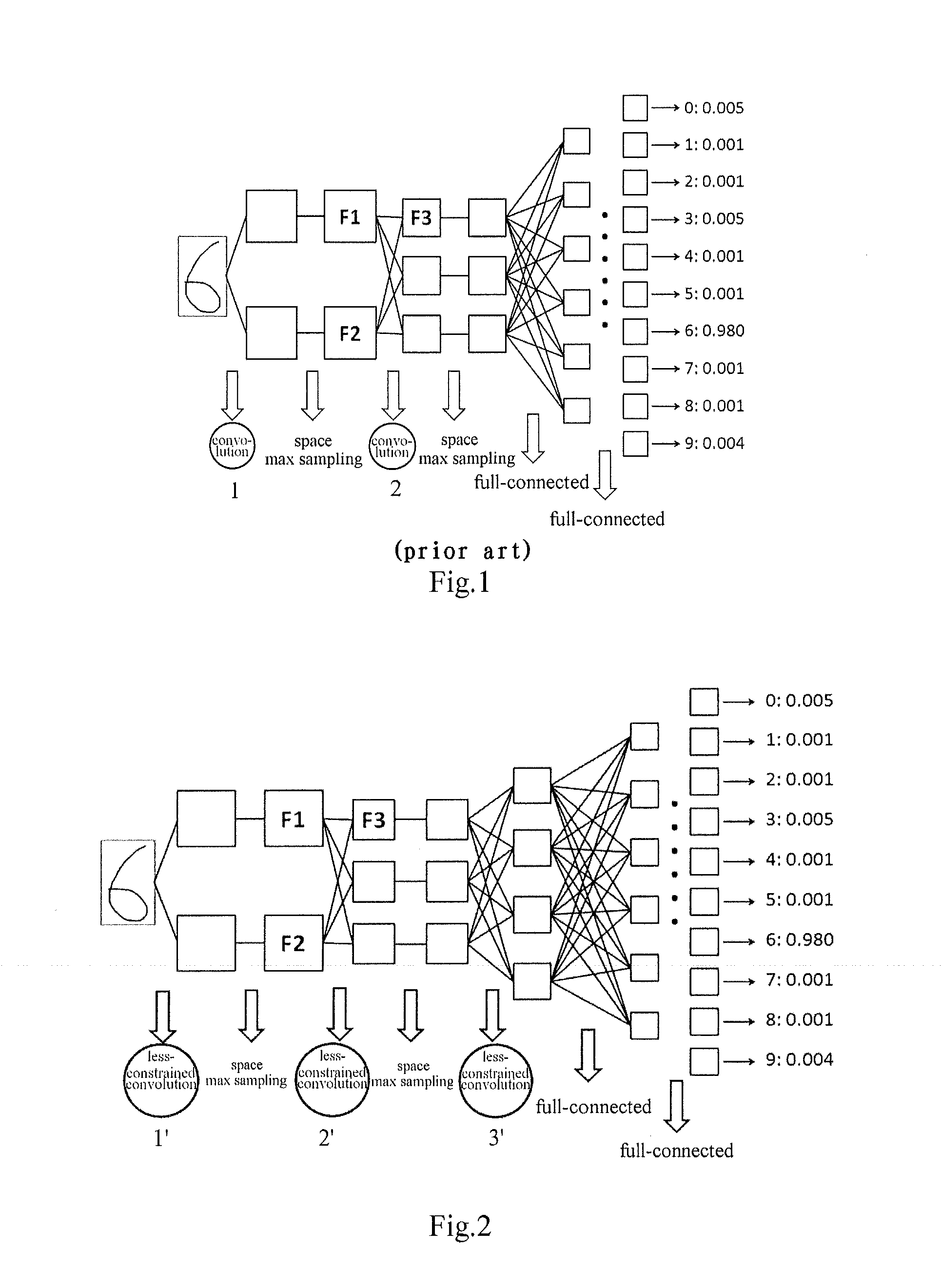

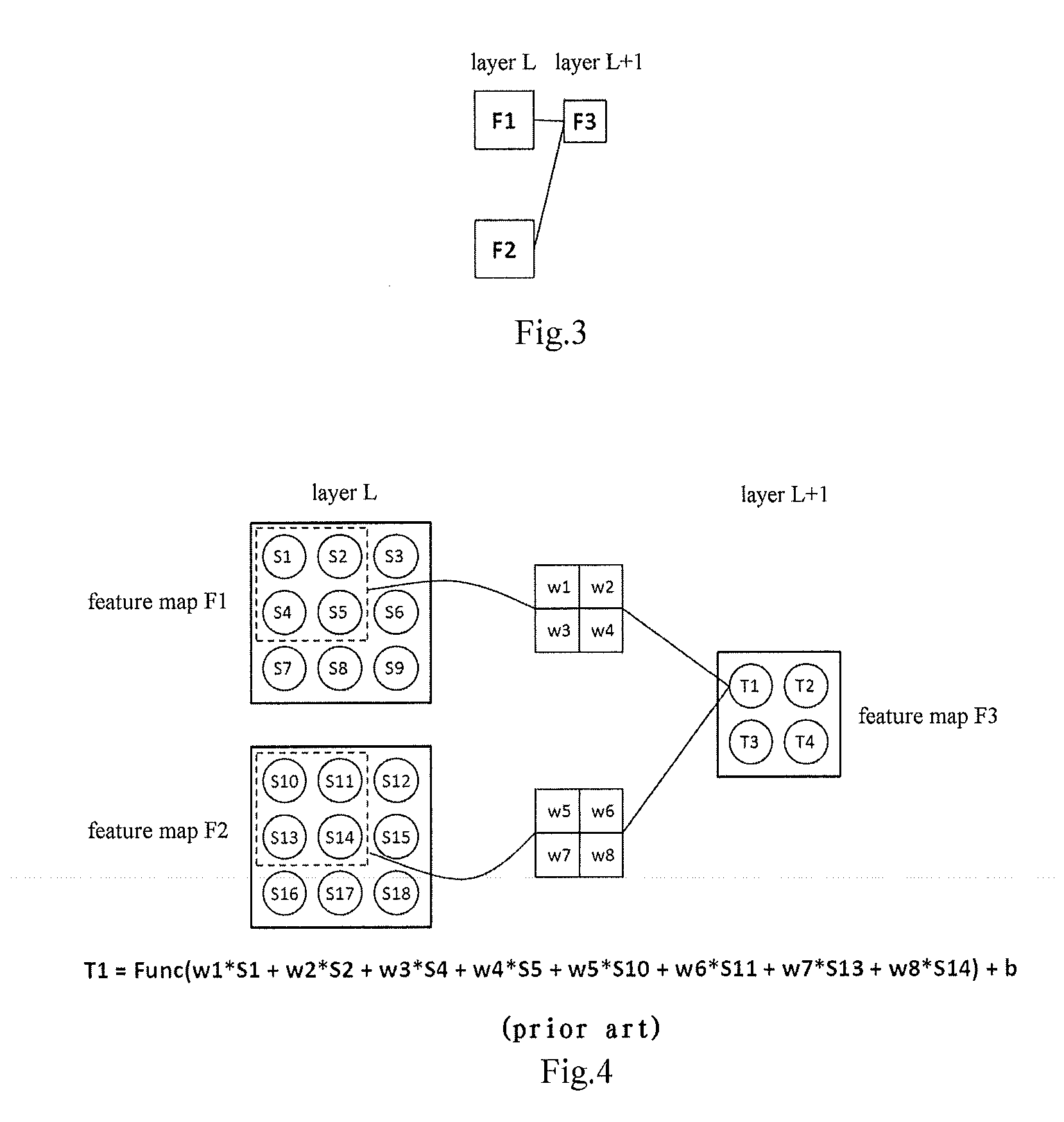

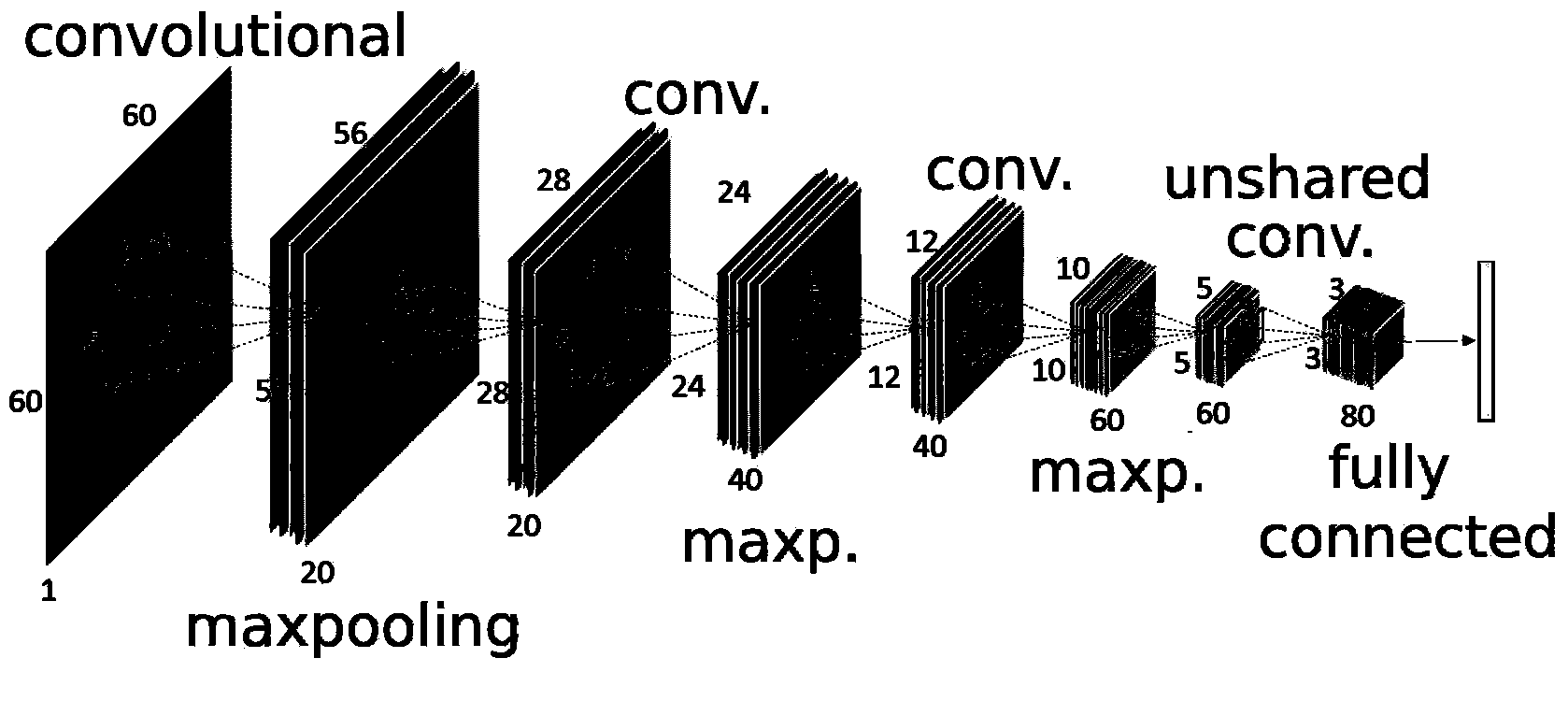

Convolutional-neural-network-based classifier and classifying method and training methods for the same

Inactive | US20150036920A1 | Character and pattern recognition | Neural learning methods | Classification methods | Neuron

The present invention relates to a convolutional-neural-network-based classifier, a classifying method by using a convolutional-neural-network-based classifier and a method for training the convolutional-neural-network-based classifier. The convolutional-neural-network-based classifier comprises: a plurality of feature map layers, at least one feature map in at least one of the plurality of feature map layers being divided into a plurality of regions; and a plurality of convolutional templates corresponding to the plurality of regions respectively, each of the convolutional templates being used for obtaining a response value of a neuron in the corresponding region.

Owner:FUJITSU LTD

Human face super-resolution reconstruction method based on generative adversarial network and sub-pixel convolution

Active | CN107154023A | Improve recognition accuracy | Clear outline of the face | Geometric image transformation | Neural architectures | Data set | Image resolution

The invention discloses a human face super-resolution reconstruction method based on a generative adversarial network and sub-pixel convolution, and the method comprises the steps: A, carrying out the preprocessing through a normally used public human face data set, and making a low-resolution human face image and a corresponding high-resolution human face image training set; B, constructing the generative adversarial network for training, adding a sub-pixel convolution to the generative adversarial network to achieve the generation of a super-resolution image and introduce a weighted type loss function comprising feature loss; C, sequentially inputting a training set obtained at step A into a generative adversarial network model for modeling training, adjusting the parameters, and achieving the convergence; D, carrying out the preprocessing of a to-be-processed low-resolution human face image, inputting the image into the generative adversarial network model, and obtaining a high-resolution image after super-resolution reconstruction. The method can achieve the generation of a corresponding high-resolution image which is clearer in human face contour, is more specific in detail and is invariable in features. The method improves the human face recognition accuracy, and is better in human face super-resolution reconstruction effect.
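The upscaling step of a sub-pixel convolution is a pure rearrangement: a convolution first produces C·r² low-resolution channels, which are then interleaved into C channels that are r times larger in each direction. The sketch below shows only that rearrangement in plain Python. The function name `pixel_shuffle` and the channel ordering are assumptions borrowed from common deep-learning practice, not details given in the abstract.

```python
def pixel_shuffle(channels, r):
    """Rearrange C*r*r maps of size H x W into C maps of size (H*r) x (W*r).

    `channels` is a list of 2-D lists; r is the upscaling factor.
    """
    h, w = len(channels[0]), len(channels[0][0])
    c_out = len(channels) // (r * r)
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for y in range(h * r):
            for x in range(w * r):
                # Which low-resolution channel supplies this output pixel.
                src = c * r * r + (y % r) * r + (x % r)
                out[c][y][x] = channels[src][y // r][x // r]
    return out


# Four 1x1 channels become one 2x2 channel when r = 2.
low_res = [[[1]], [[2]], [[3]], [[4]]]
high_res = pixel_shuffle(low_res, 2)
```

No arithmetic happens in this step; all learned parameters live in the convolution that produces the low-resolution channels.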

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

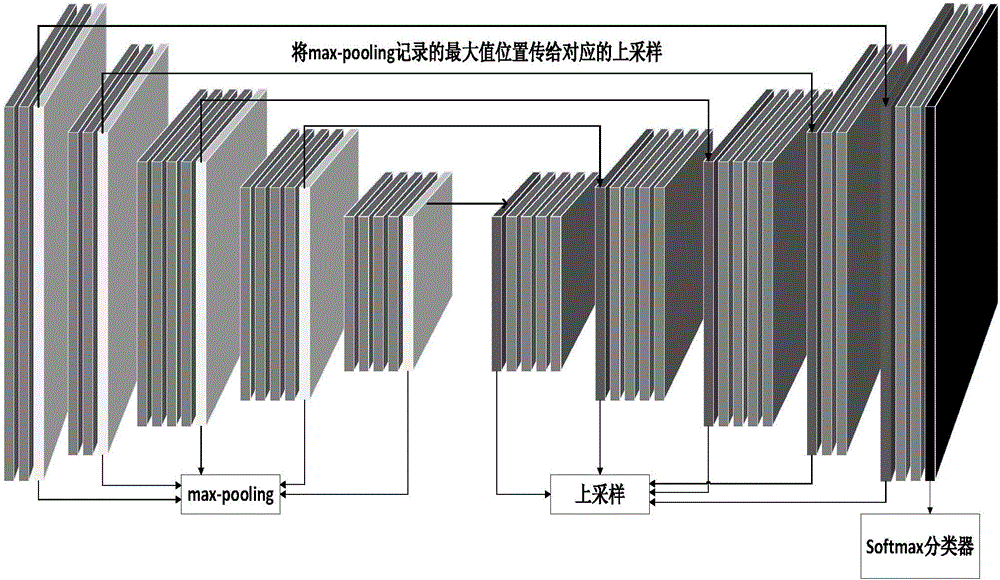

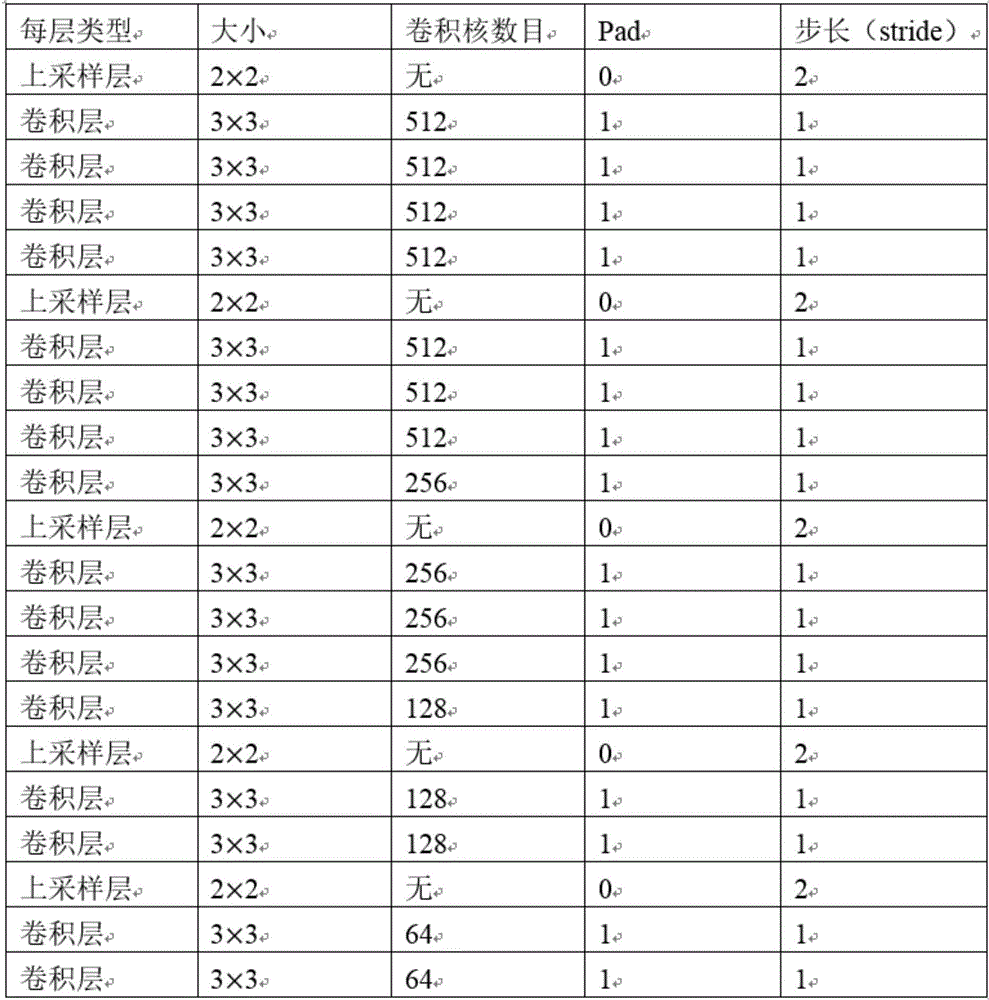

Fundus image retinal vessel segmentation method and system based on deep learning

Active | CN106408562A | Easy to classify | Improve accuracy | Image enhancement | Image analysis | Segmentation system | Blood vessel

The invention discloses a fundus image retinal vessel segmentation method and system based on deep learning. The method comprises the steps of performing data augmentation on a training set, enhancing the images, training a convolutional neural network with the training set, segmenting the image with the convolutional neural network segmentation model to obtain one segmentation result, training a random forest classifier on features of the convolutional neural network, extracting the output of the last convolutional layer of the network and using it as input to the random forest classifier for pixel classification to obtain another segmentation result, and fusing the two segmentation results to obtain the final segmentation image. Compared with traditional vessel segmentation methods, this method uses a deep convolutional neural network for feature extraction, so the extracted features are richer and the segmentation precision and efficiency are higher.

Owner:SOUTH CHINA UNIV OF TECH

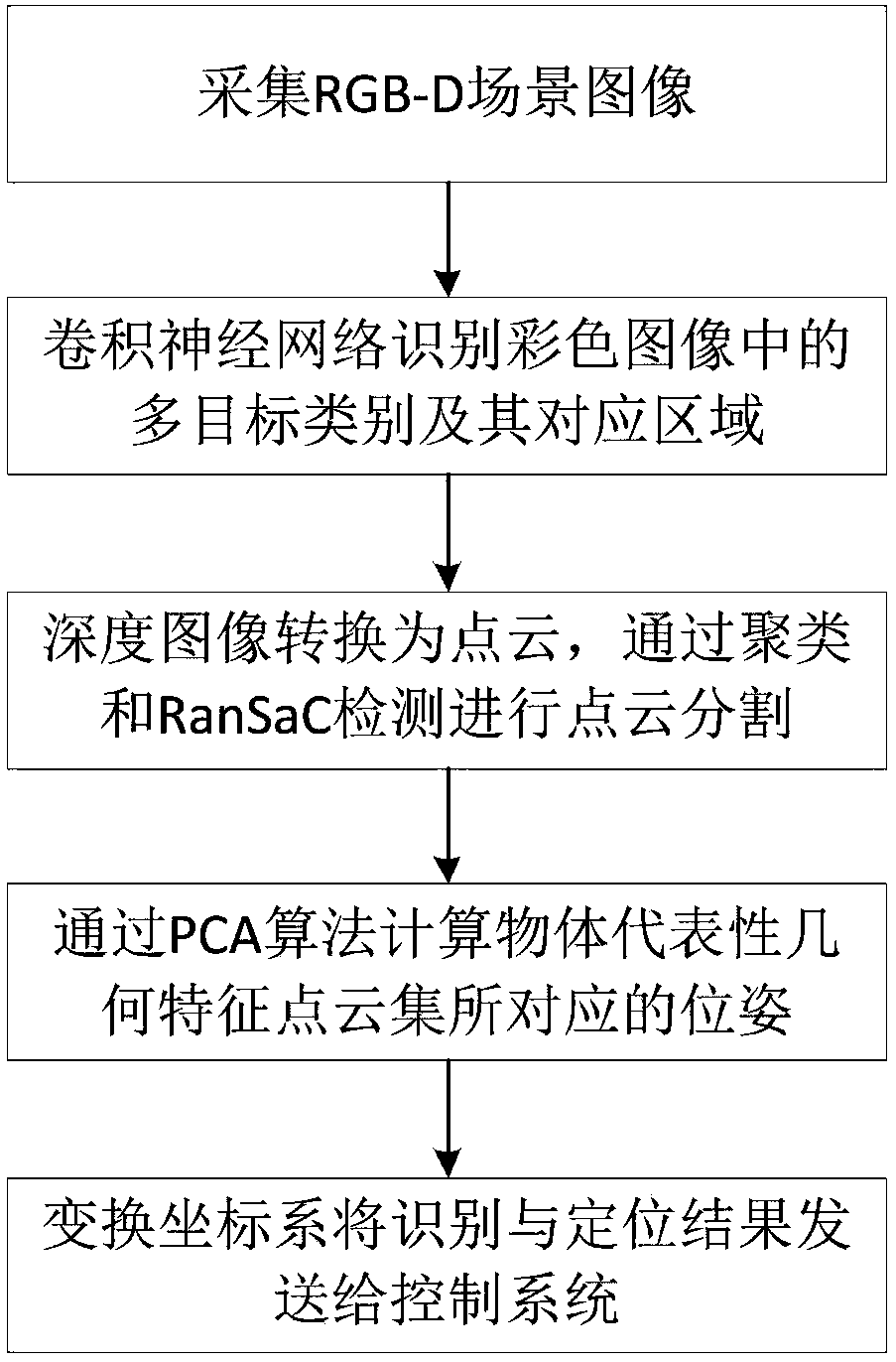

Visual recognition and positioning method for robot intelligent capture application

The invention relates to a visual recognition and positioning method for robot intelligent capture application. According to the method, an RGB-D scene image is collected, a deep convolutional neural network trained under supervision is used to recognize the category of each target contained in the color image and its corresponding position region, the pose of the target is analyzed in combination with the depth image, the pose information needed by the controller is obtained through coordinate transformation, and visual recognition and positioning are completed. With this method, both recognition and positioning can be achieved with a single visual sensor, the existing target detection process is simplified, and application cost is reduced. Meanwhile, because a deep convolutional neural network learns the image features, the method is highly robust to many kinds of environmental interference, such as random target placement, changes in viewing angle, and illumination and background interference, and recognition and positioning accuracy under complicated working conditions is improved. In addition, the positioning method can obtain exact pose information once the spatial position distribution of the object has been determined, which supports strategy planning for intelligent capture.

Owner:合肥哈工慧拣智能科技有限公司

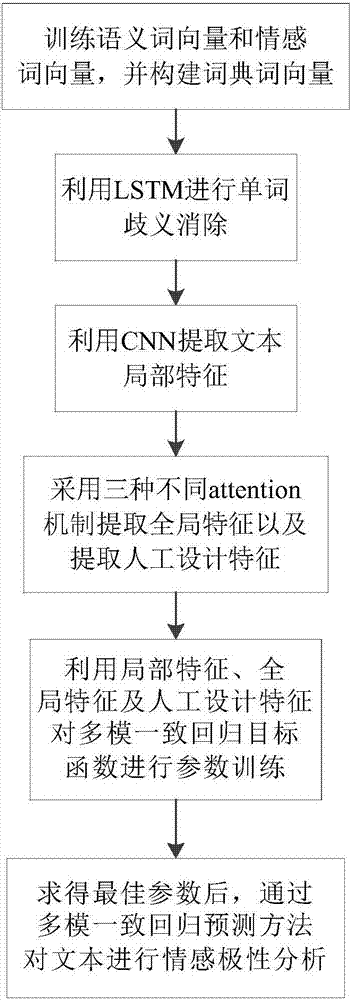

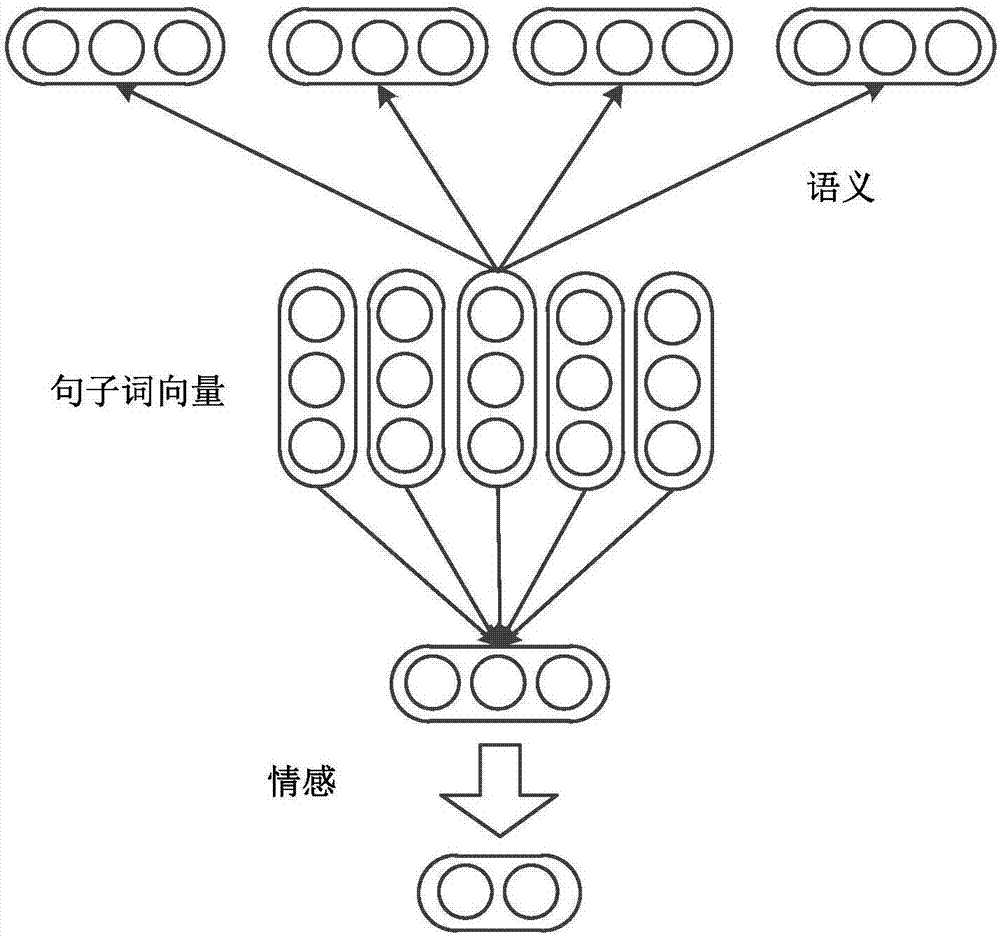

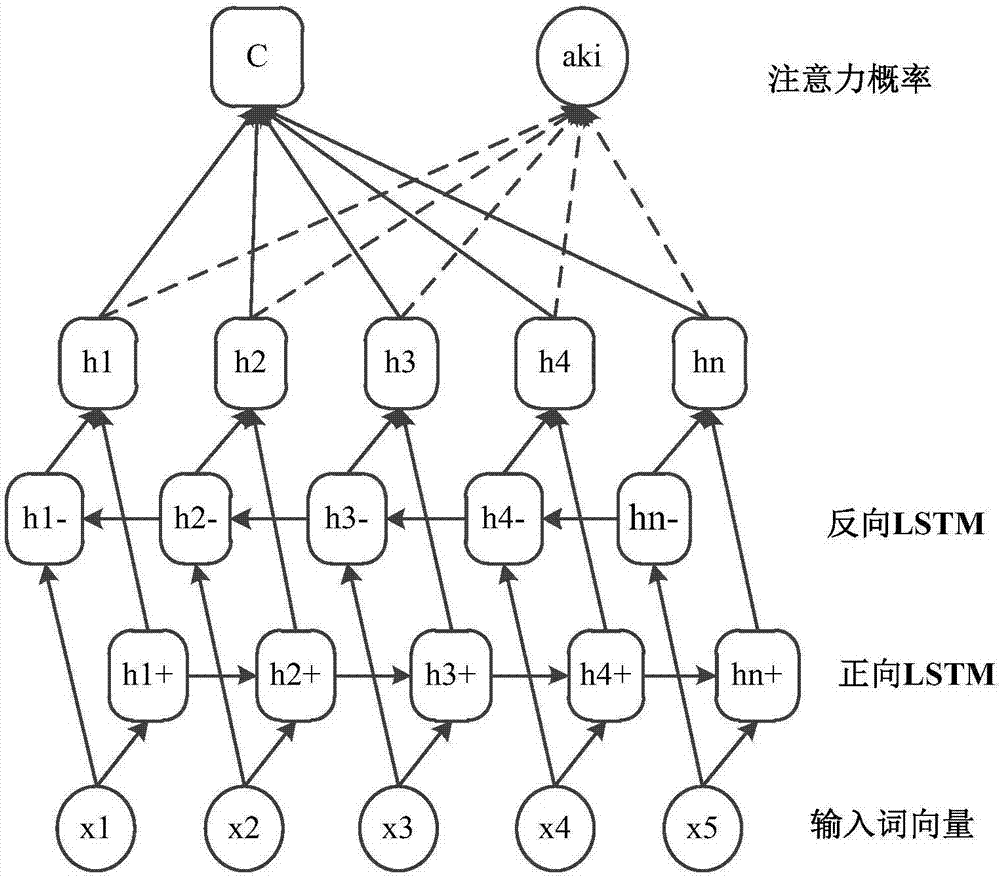

Attention CNNs and CCR-based text sentiment analysis method

Active | CN107092596A | High precision | Improve classification accuracy | Semantic analysis | Neural architectures | Feature extraction | Ambiguity

The invention discloses an attention CNNs and CCR-based text sentiment analysis method and belongs to the field of natural language processing. The method comprises the following steps of 1, training a semantic word vector and a sentiment word vector by utilizing original text data and performing dictionary word vector establishment by utilizing a collected sentiment dictionary; 2, capturing context semantics of words by utilizing a long-short-term memory (LSTM) network to eliminate ambiguity; 3, extracting local features of a text in combination with convolution kernels with different filtering lengths by utilizing a convolutional neural network; 4, extracting global features by utilizing three different attention mechanisms; 5, performing artificial feature extraction on the original text data; 6, training a multimodal uniform regression target function by utilizing the local features, the global features and artificial features; and 7, performing sentiment polarity prediction by utilizing a multimodal uniform regression prediction method. Compared with a method adopting a single word vector, a method only extracting the local features of the text, or the like, the text sentiment analysis method can further improve the sentiment classification precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

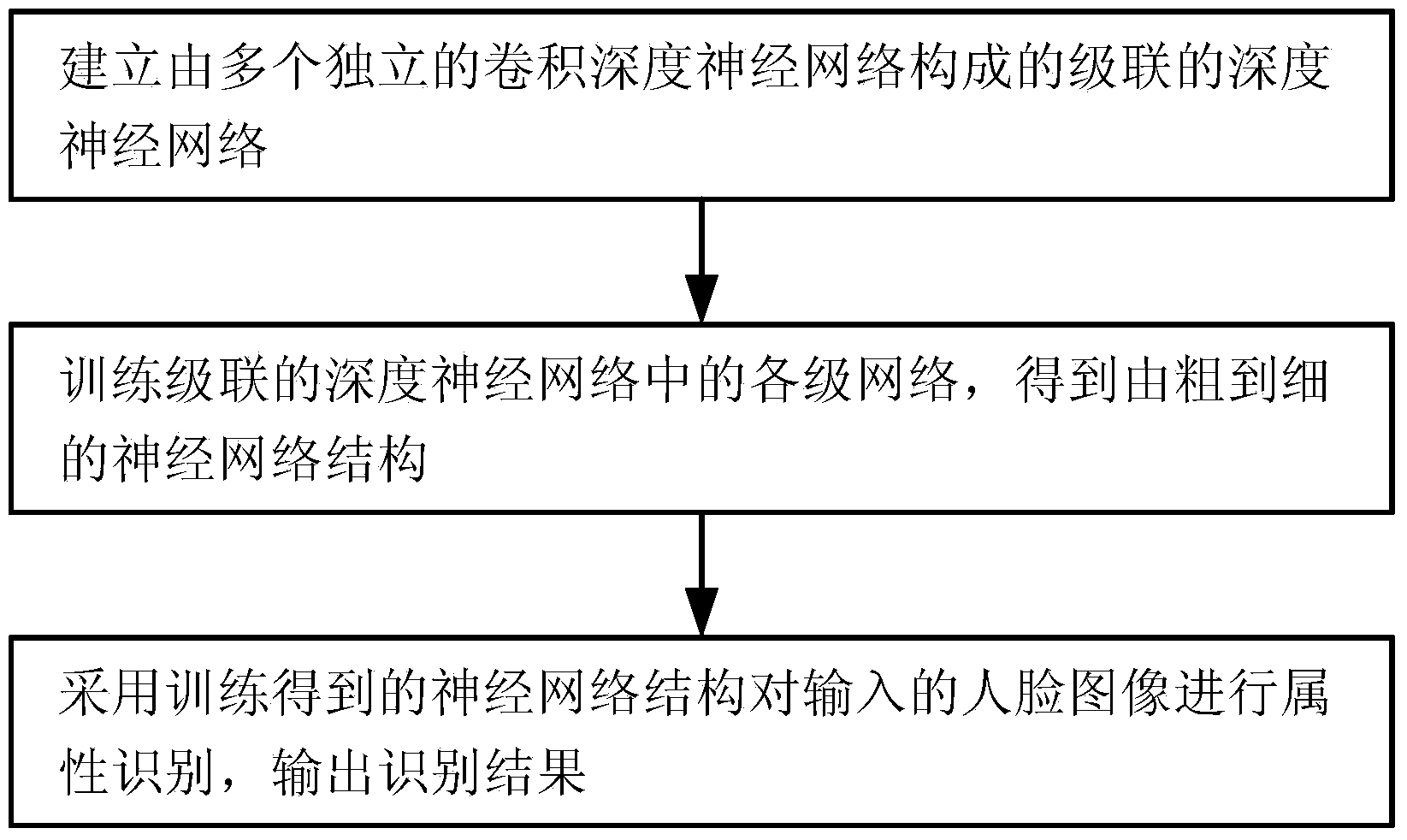

Cascaded depth neural network-based face attribute recognition method

Active | CN103824054A | High speed | Improve accuracy | Character and pattern recognition | Neural learning methods | Pattern recognition | Network structure

The invention relates to a face attribute recognition method based on a cascaded deep neural network. The method includes the following steps: 1) a cascaded deep neural network composed of a plurality of independent deep convolutional neural networks is constructed; 2) a large amount of face image data is used to train the networks at all levels of the cascade level by level, with the output of each earlier level used as the input of the next level, so that a coarse-to-fine neural network structure is obtained; and 3) the coarse-to-fine neural network structure is used to recognize the attributes of an input face image, and the final recognition results are output. Because the method adopts a cascade algorithm based on deep learning, training can be accelerated; and because the cascade realizes coarse-to-fine processing in which each level uses information from the preceding levels to improve the performance of the final network, the speed and accuracy of face attribute recognition can be effectively improved.

Owner:BEIJING KUANGSHI TECH

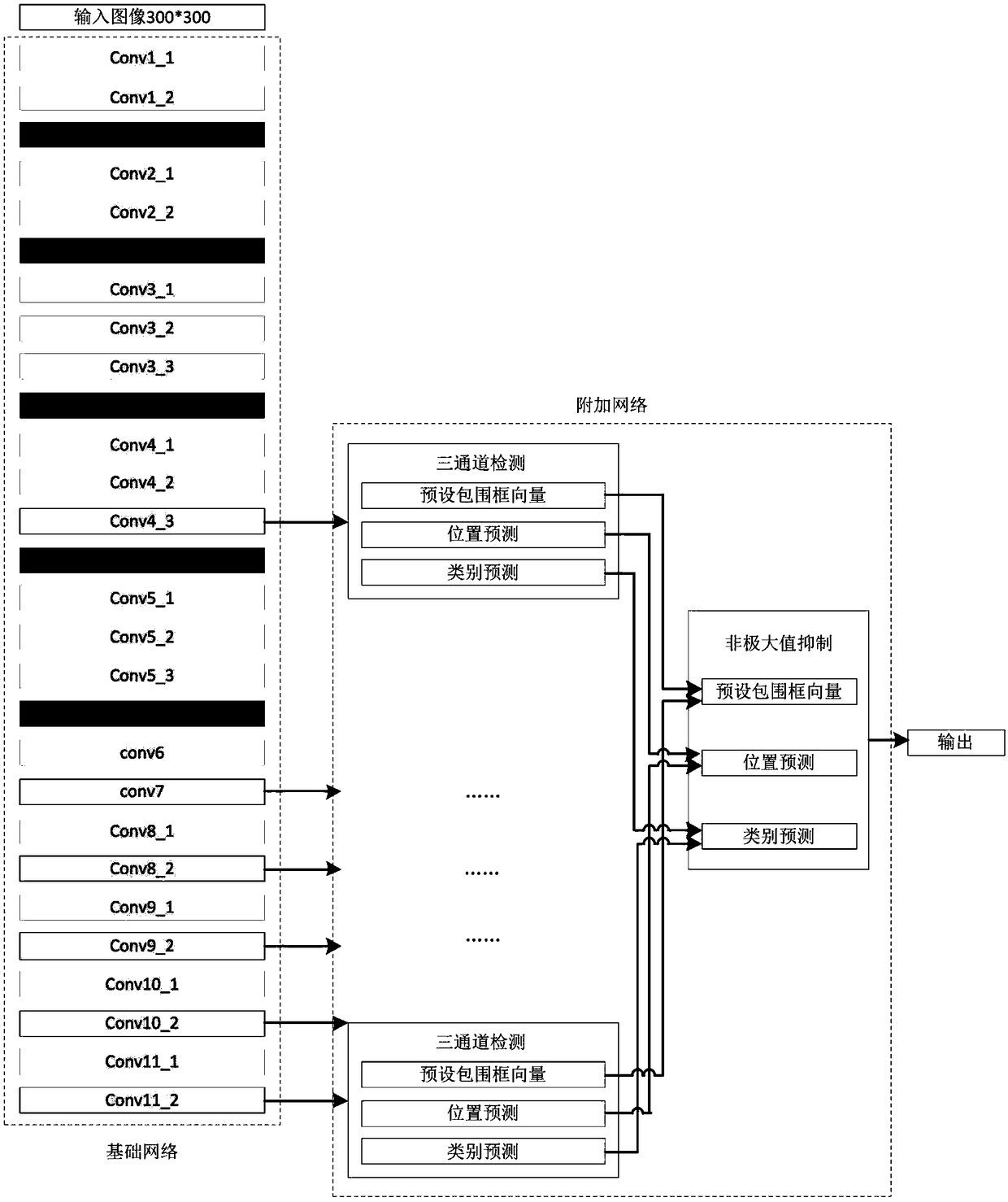

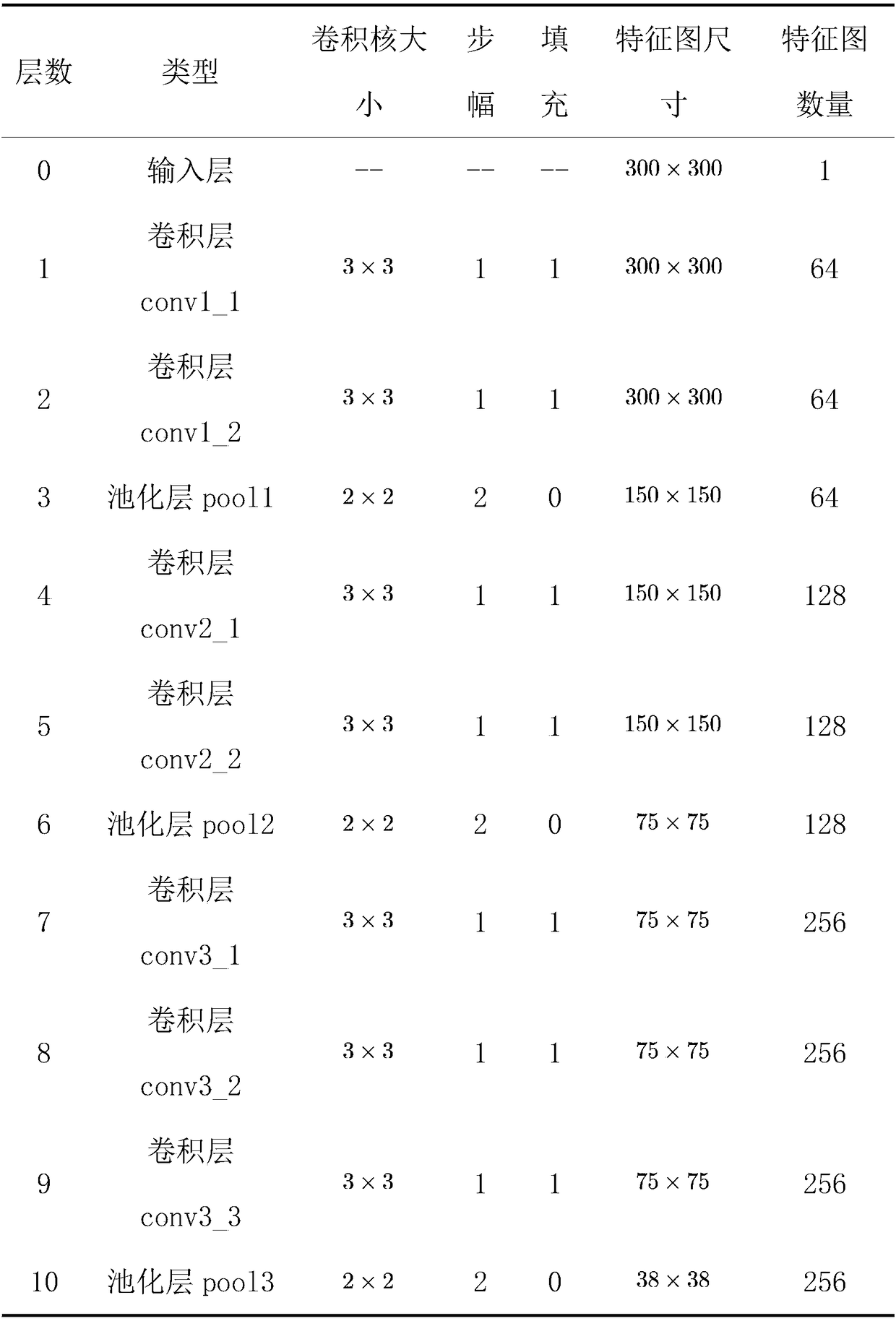

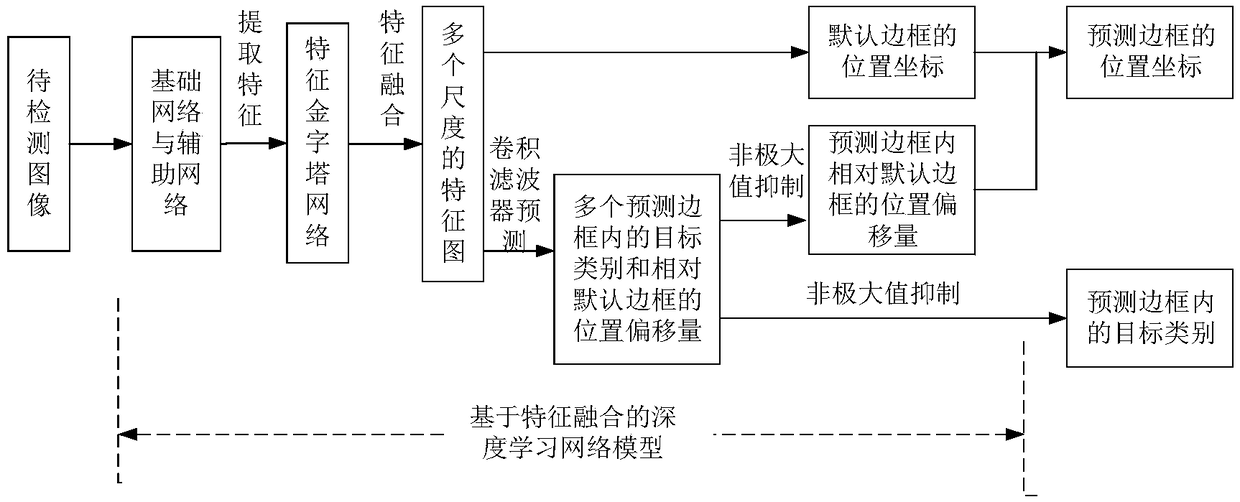

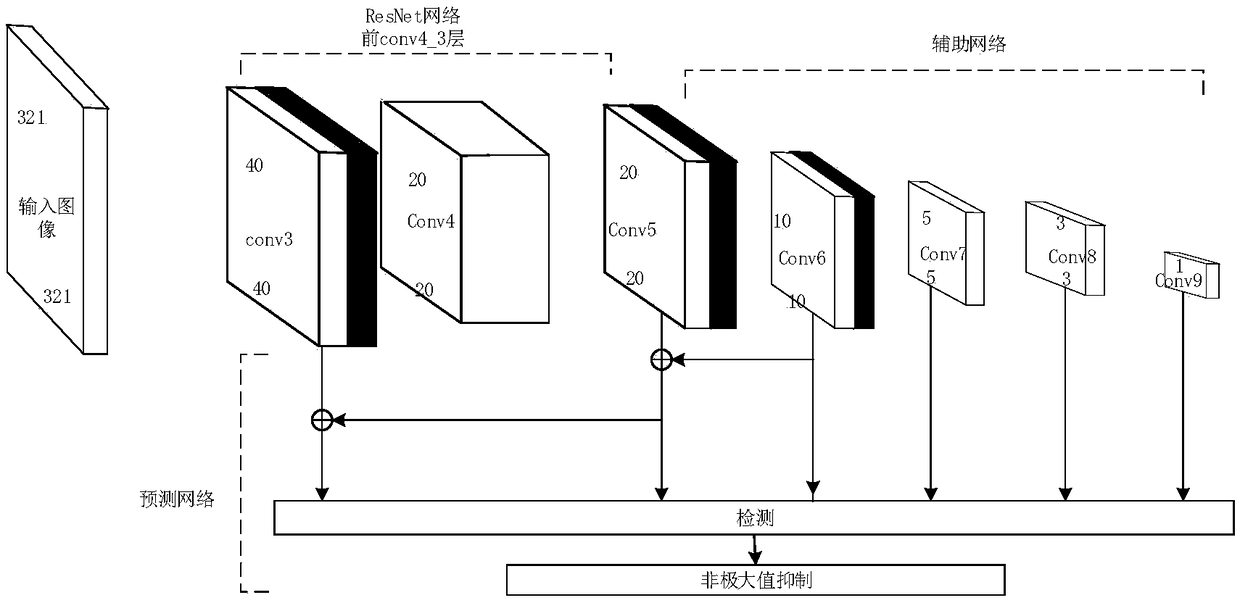

Small target detection method based on feature fusion and depth learning

Inactive | CN109344821A | Scaling | Rich information features | Character and pattern recognition | Network model | Feature fusion

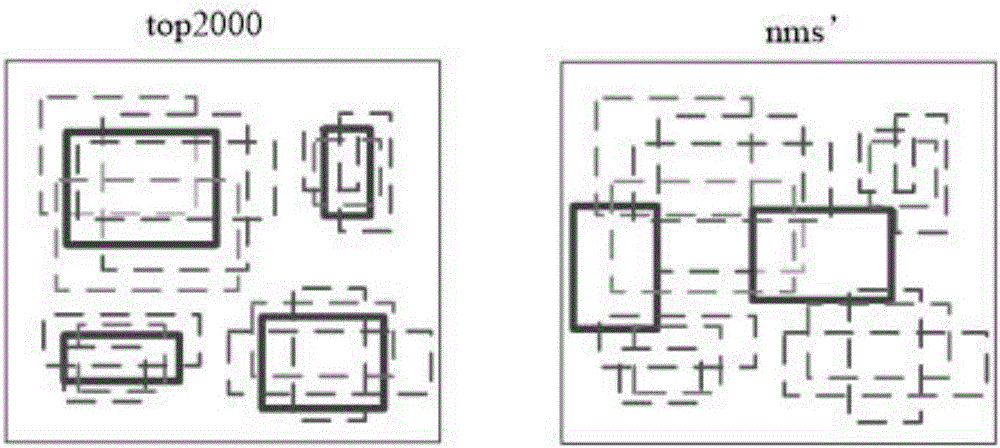

The invention discloses a small target detection method based on feature fusion and deep learning, which addresses the poor detection accuracy and real-time performance for small targets. The implementation scheme is as follows: extracting a high-resolution feature map through the deeper ResNet-101 network model; extracting five successively smaller low-resolution feature maps from auxiliary convolution layers to expand the range of feature-map scales; obtaining the multi-scale feature maps through a feature pyramid network, in whose structure deconvolution is adopted to fuse the feature-map information of the high-level semantic layers with that of the shallow layers; performing target prediction using the feature maps with different scales and fused features; and applying non-maximum suppression to the scores of the multiple predicted bounding boxes and categories, so as to obtain the bounding-box position and category information of the final targets. The invention ensures high precision of small target detection while meeting real-time requirements, can quickly and accurately detect small targets in images, and can be used for real-time detection of targets in aerial photographs taken by unmanned aerial vehicles.
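The last step of the scheme, suppressing overlapping predicted boxes so that only the best-scoring one per object survives, is commonly implemented as greedy non-maximum suppression. A minimal sketch follows. The box format `(x1, y1, x2, y2)`, the 0.5 overlap threshold and the function names are assumptions; the abstract does not specify them.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a better box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

In this example the second box overlaps the first with an IoU of about 0.68 and is discarded, while the third box is far away and kept.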

Owner:XIDIAN UNIV

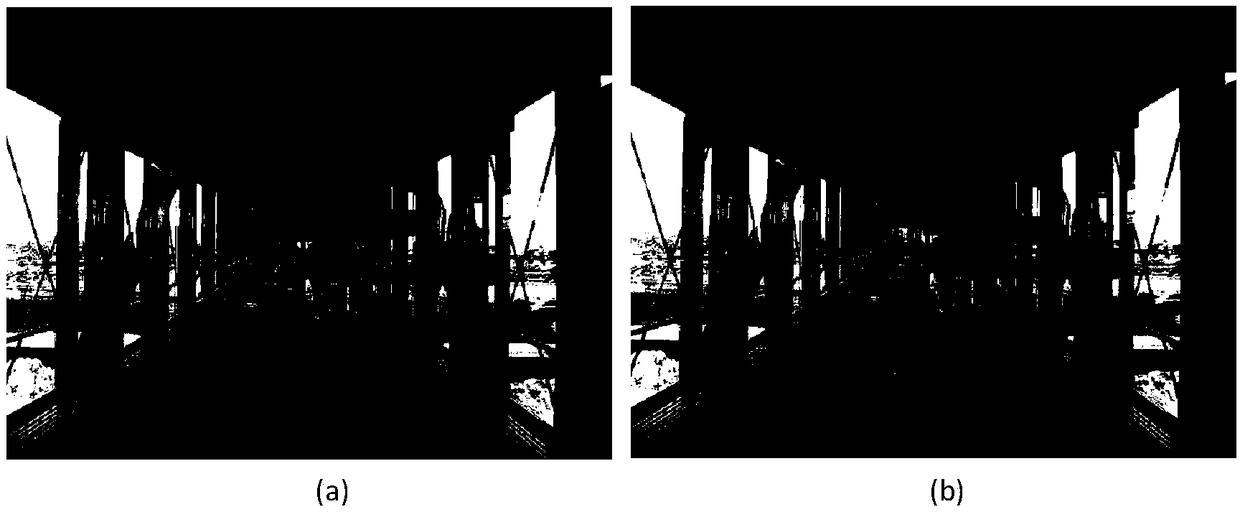

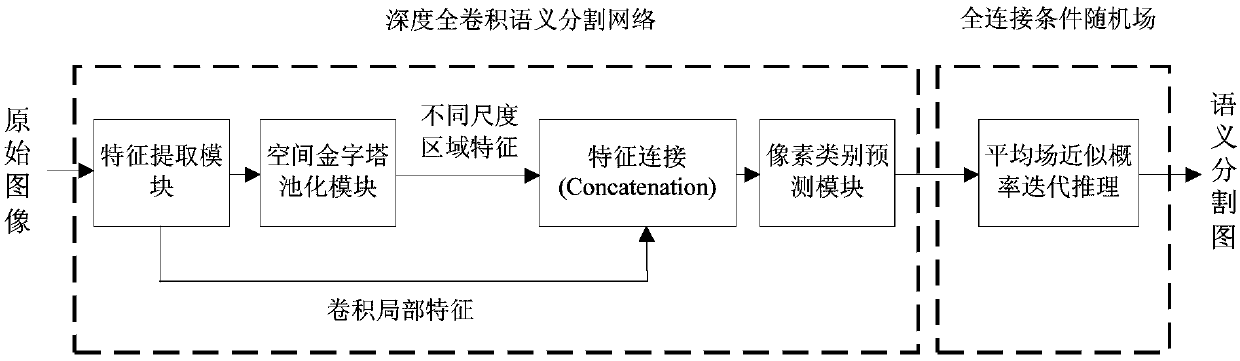

Image semantic division method based on depth full convolution network and condition random field

Inactive | CN108062756A | Does not reduce dimensionality | High-resolution | Image enhancement | Image analysis | Conditional random field | Image resolution

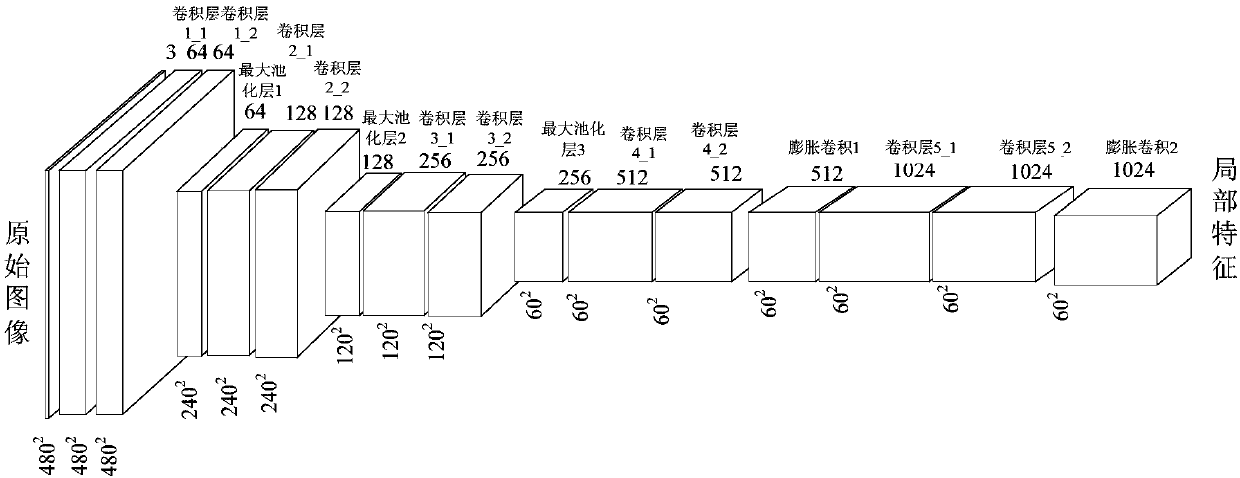

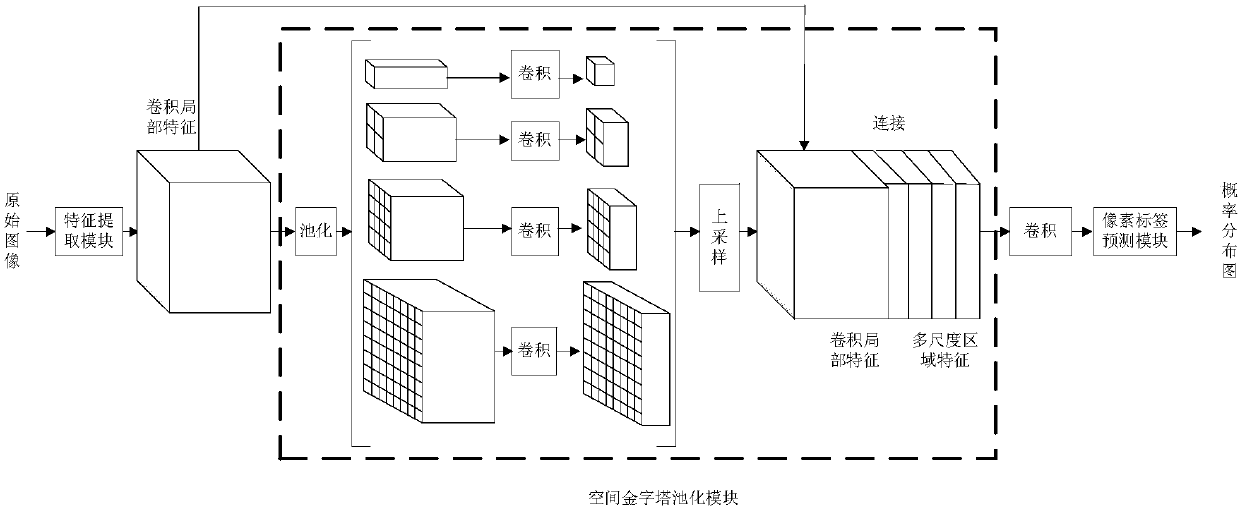

The invention provides an image semantic segmentation method based on a deep fully convolutional network and a conditional random field. The method comprises the following steps: establishing a deep fully convolutional semantic segmentation network model; carrying out structured prediction of pixel labels based on a fully connected conditional random field; and carrying out model training, parameter learning and image semantic segmentation. In this method, dilated convolution and a spatial pyramid pooling module are introduced into the deep fully convolutional network, and the label prediction map output by the network is further refined using the conditional random field. The dilated convolution enlarges the receptive field while keeping the resolution of the feature map unchanged; the spatial pyramid pooling module extracts contextual features of regions at different scales from the local convolutional feature map, providing the label prediction with the relationships between different objects and the connections between objects and region features at different scales; the fully connected conditional random field further optimizes the pixel labels according to the similarity of pixel intensity and position, so that a semantic segmentation map with high resolution, accurate boundaries and good spatial continuity is generated.
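The claim that this kind of convolution (usually called dilated or atrous convolution) enlarges the receptive field without changing the feature-map resolution can be checked on a 1-D toy case. The sketch assumes zero padding, an odd kernel length and no kernel flip; the function names are hypothetical.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Same-length 1-D dilated convolution (no kernel flip, zero padding)."""
    n, k = len(signal), len(kernel)
    pad = (k - 1) * dilation // 2      # centres the kernel for odd k
    out = []
    for i in range(n):
        acc = 0
        for t in range(k):
            idx = i - pad + t * dilation   # taps are `dilation` apart
            if 0 <= idx < n:
                acc += kernel[t] * signal[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size, dilation):
    """Number of input samples spanned by one output sample."""
    return (kernel_size - 1) * dilation + 1


signal = [1, 2, 3, 4, 5]
dense = dilated_conv1d(signal, [1, 1, 1], dilation=1)
dilated = dilated_conv1d(signal, [1, 1, 1], dilation=2)
```

Both outputs have the same length as the input, but with `dilation=2` each output sample sees a window of five input samples instead of three, using the same three weights.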

Owner:CHONGQING UNIV OF TECH

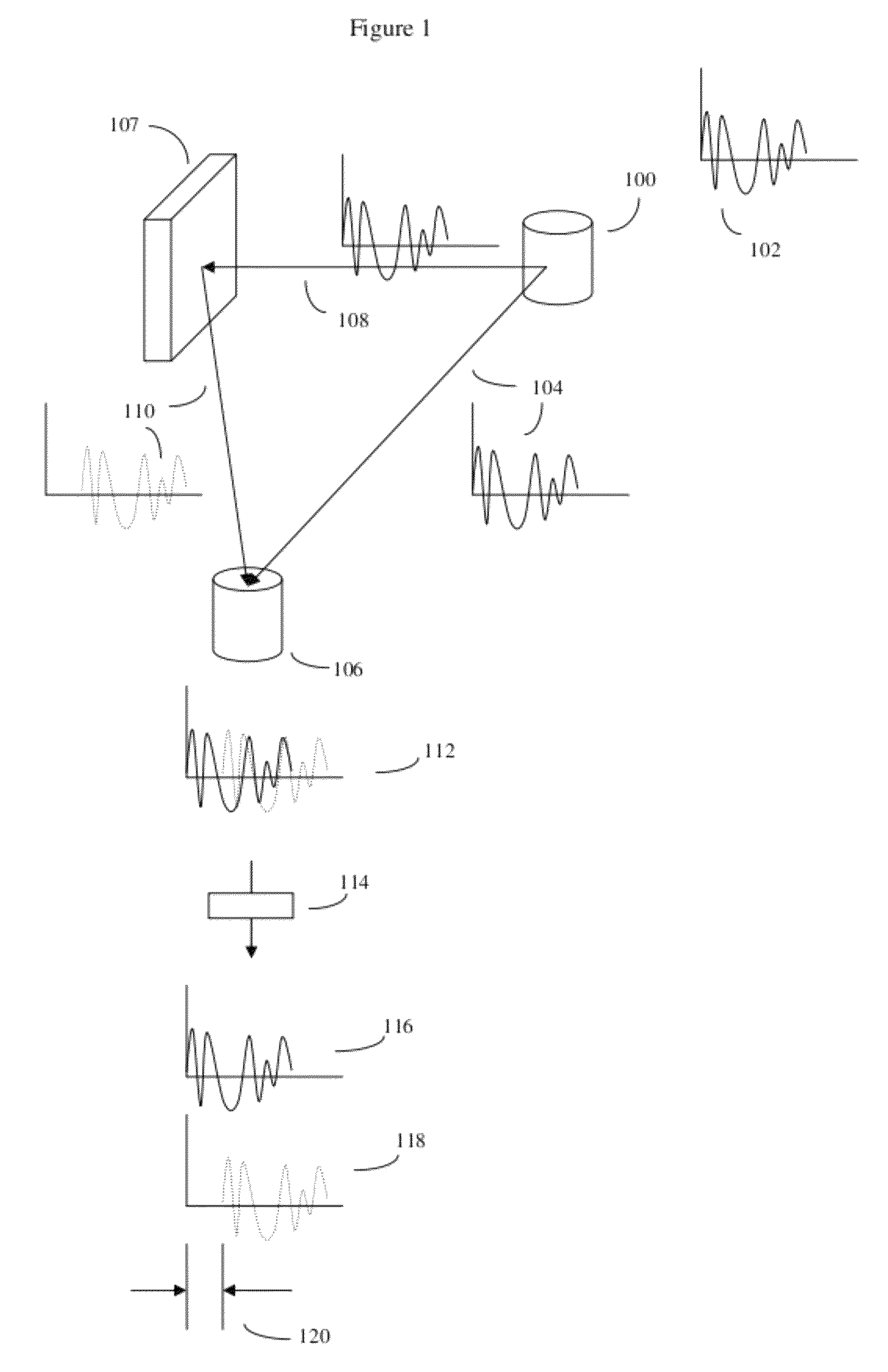

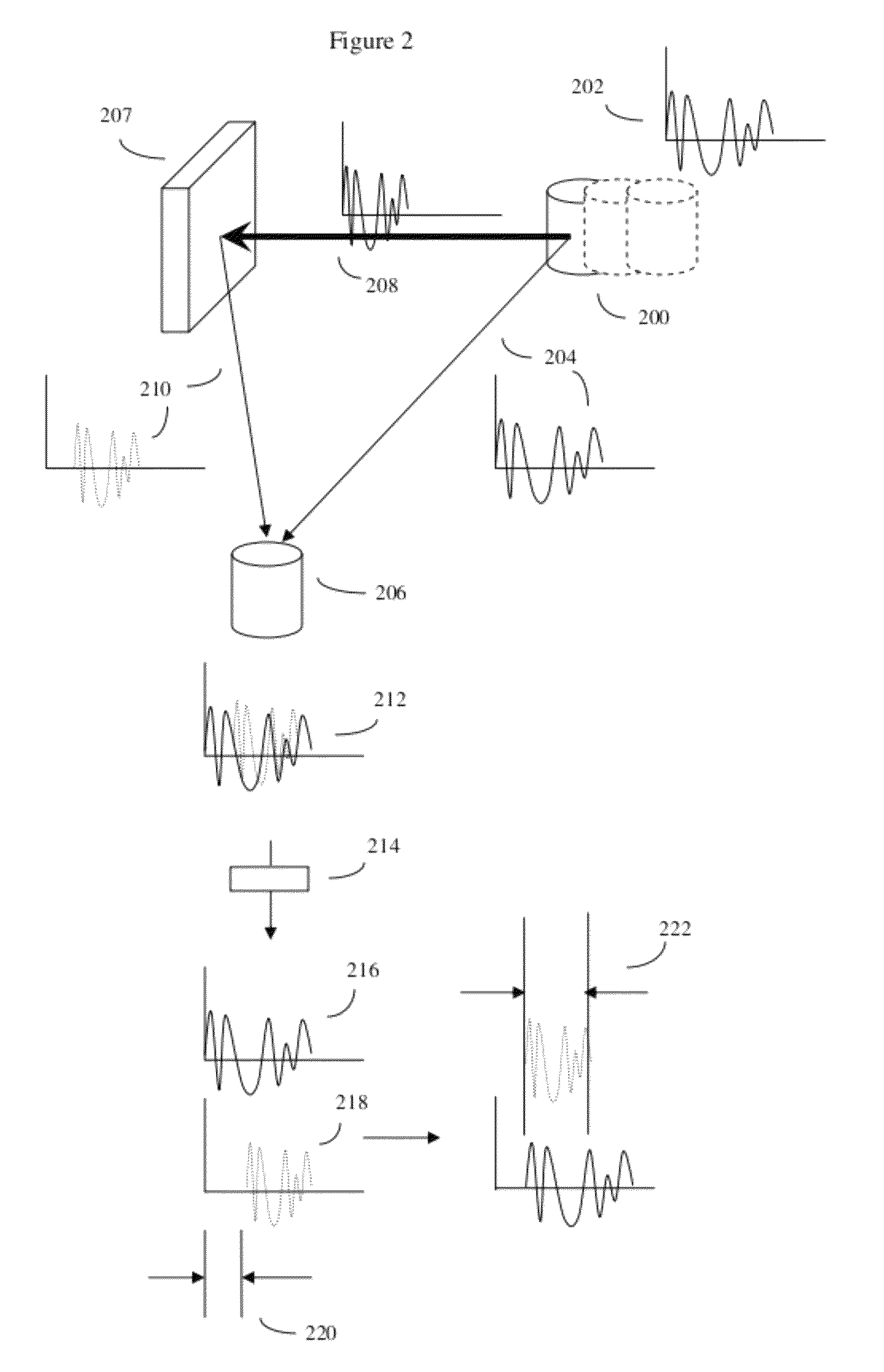

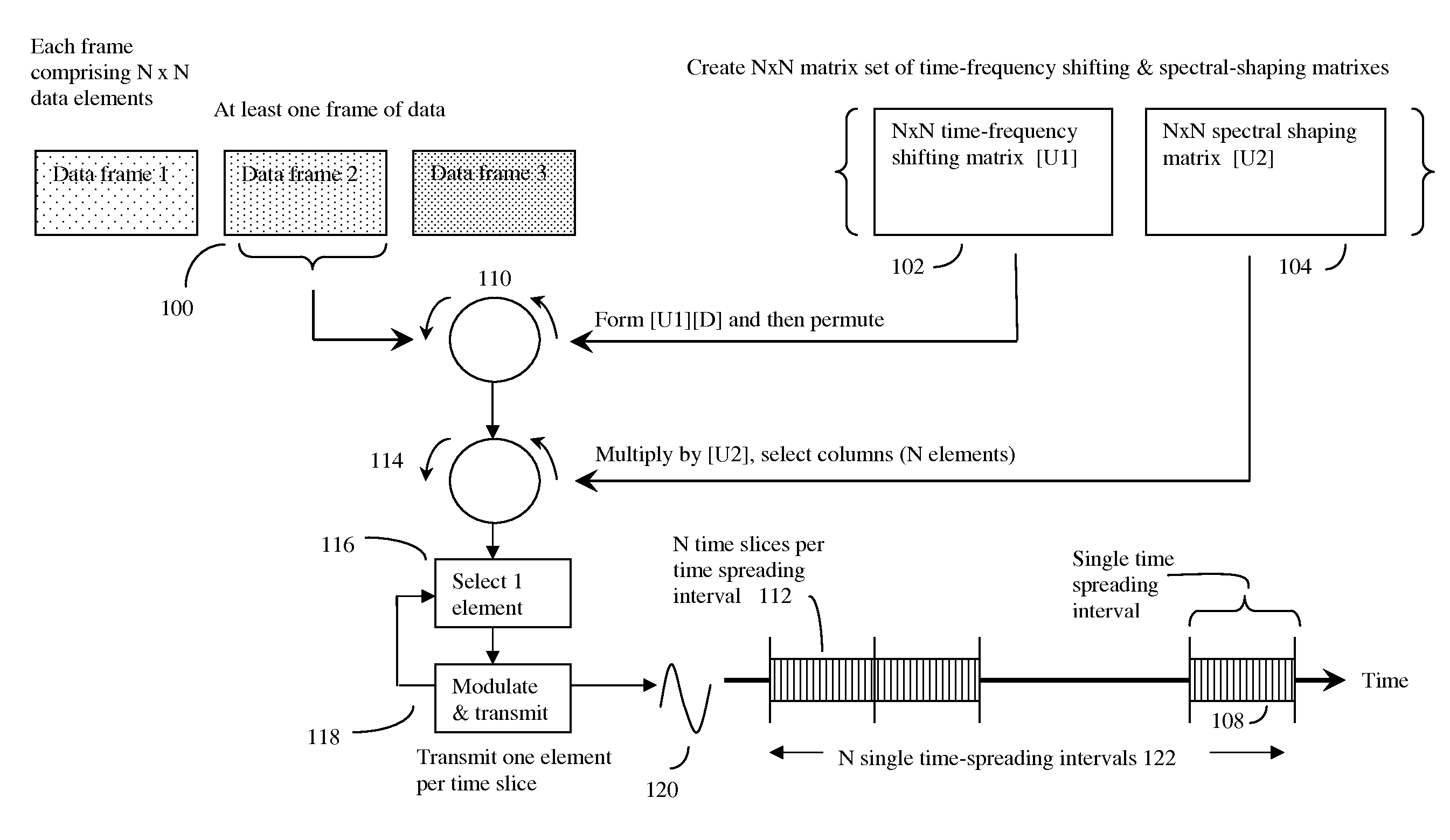

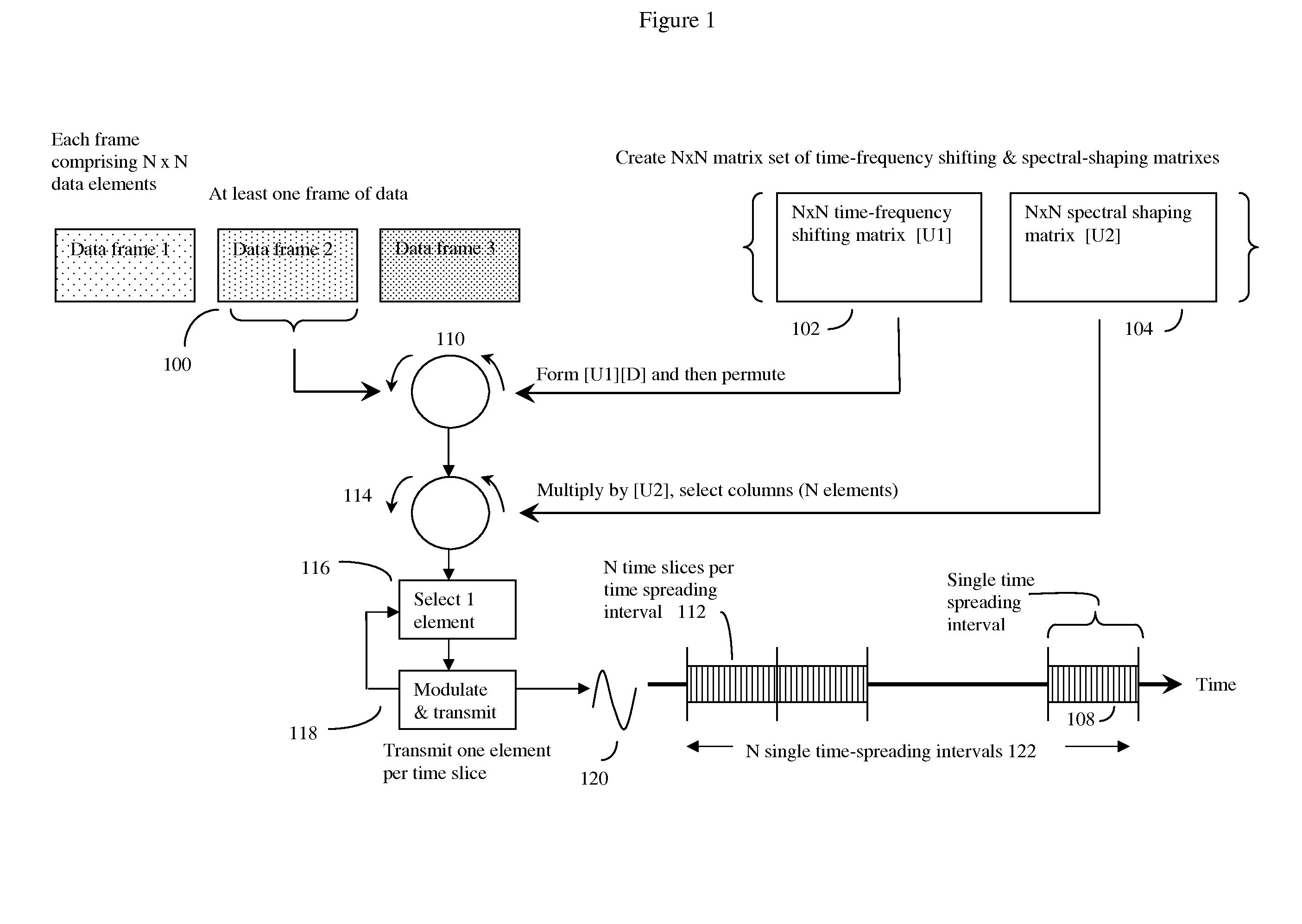

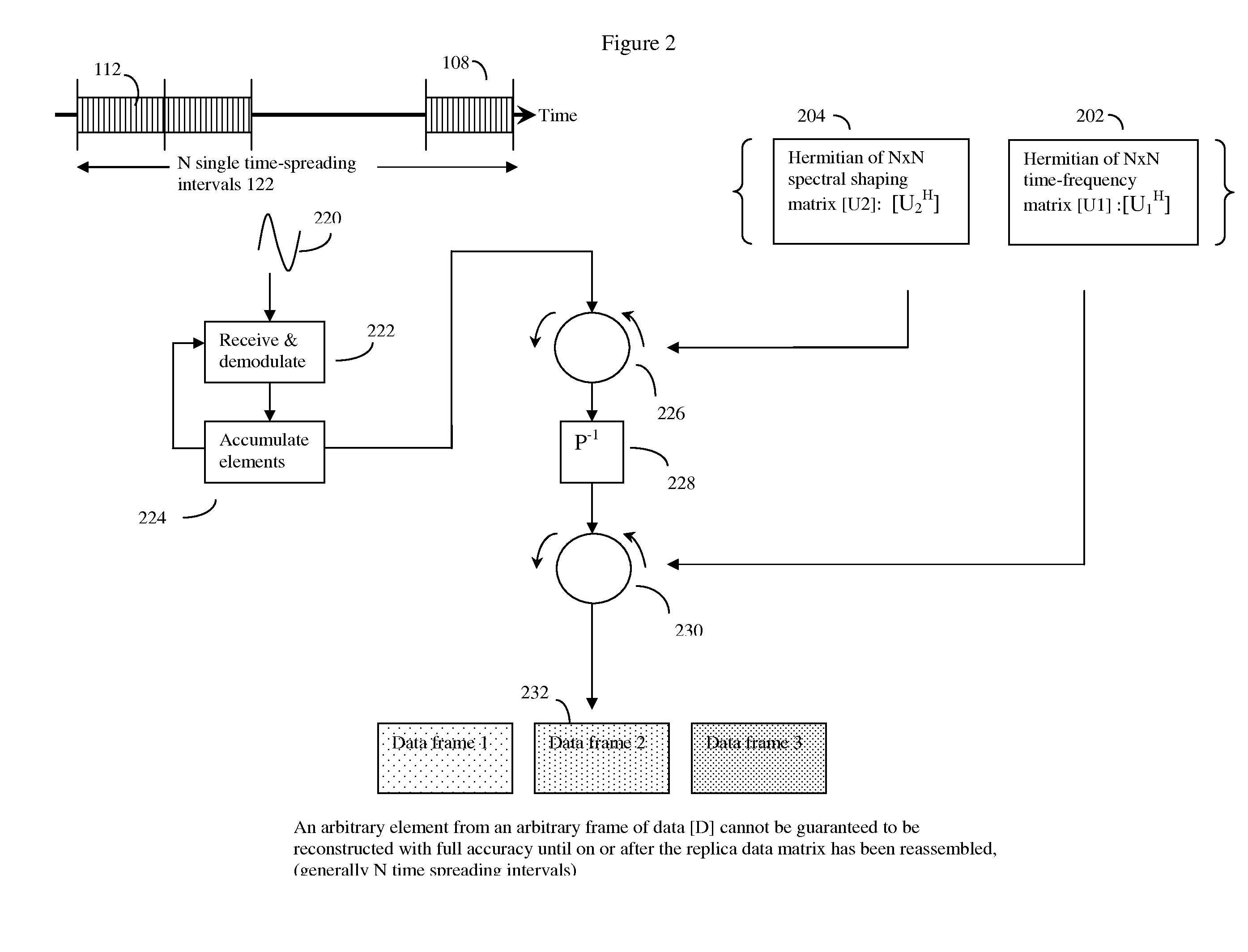

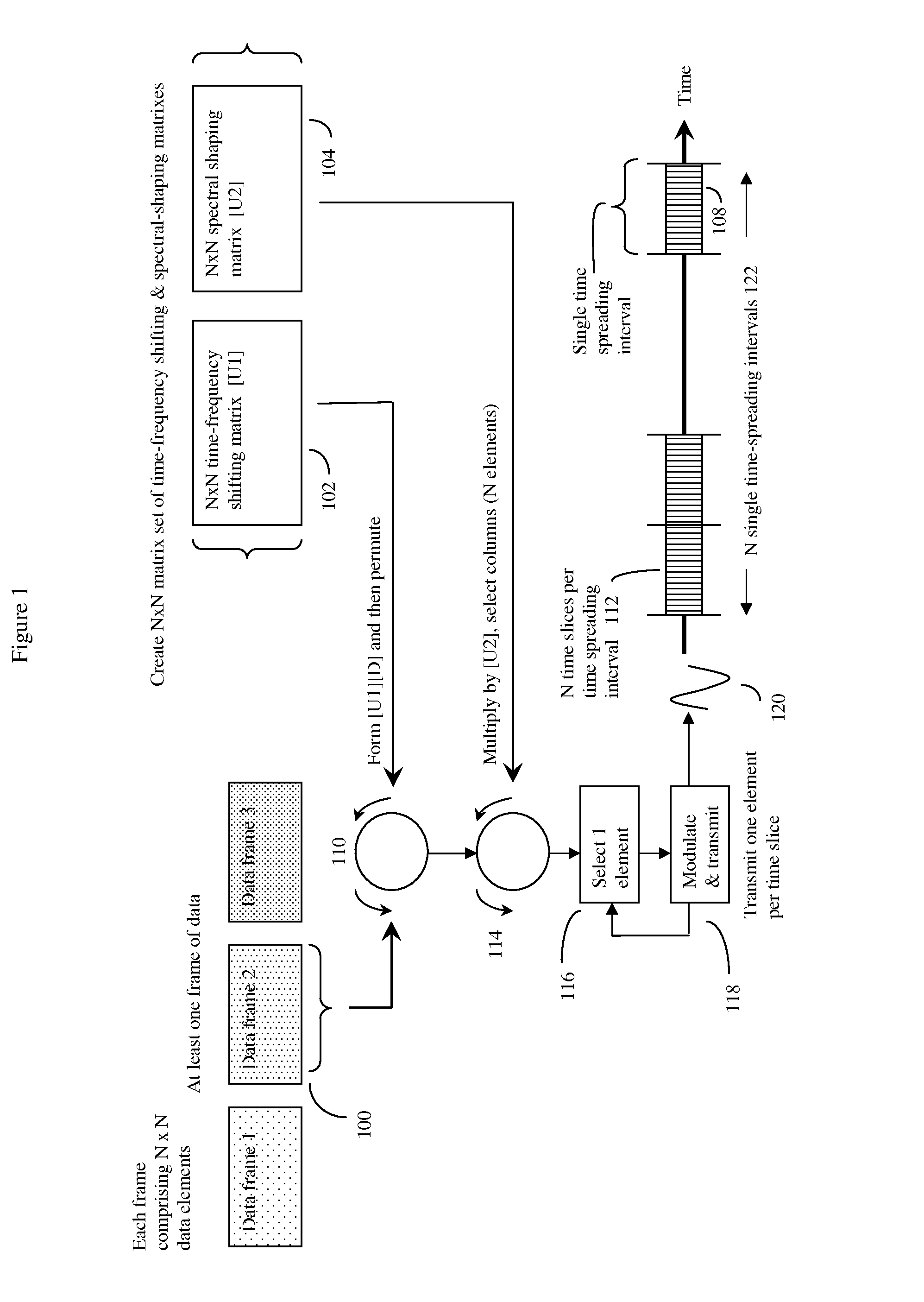

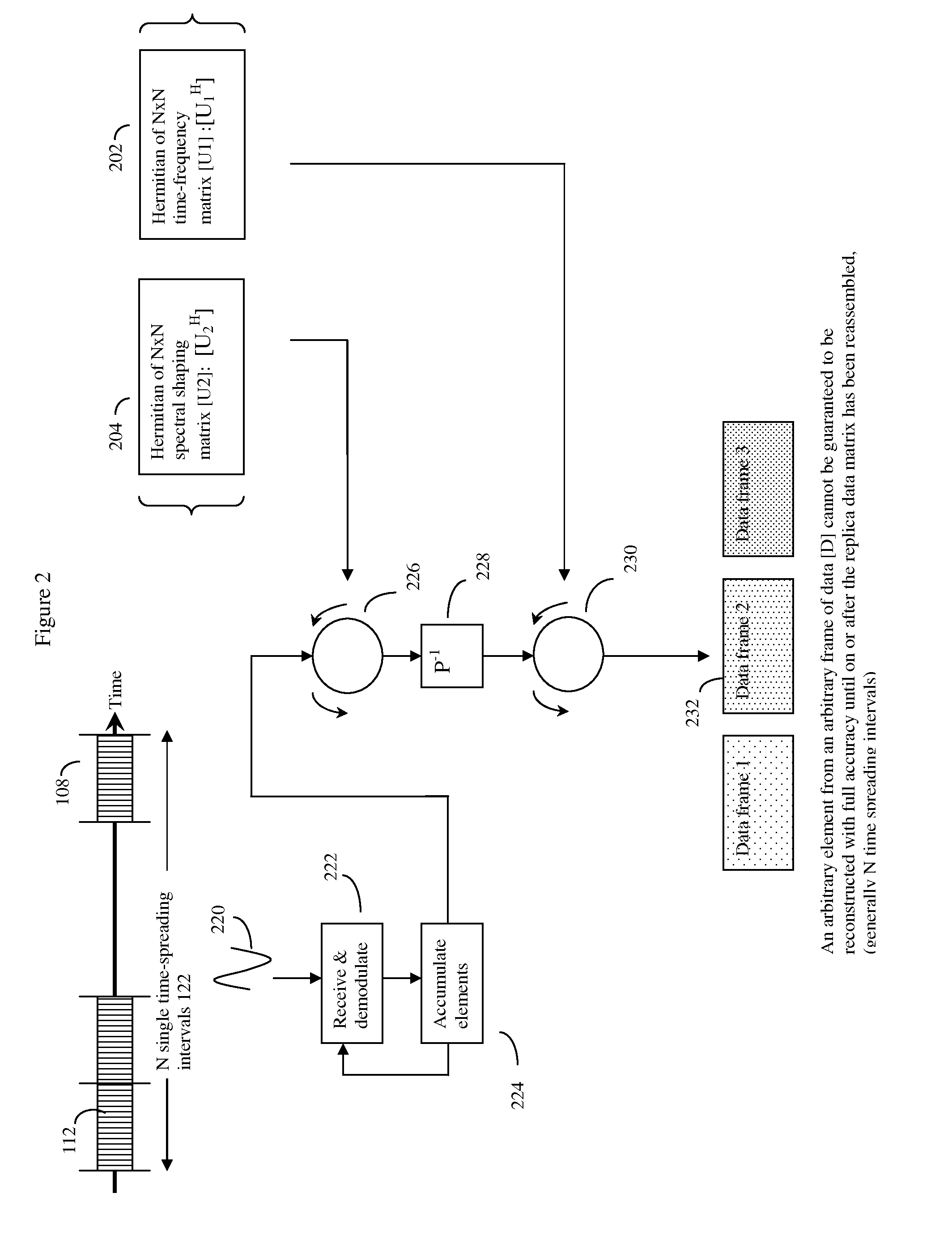

Communications method employing orthonormal time-frequency shifting and spectral shaping

Active | US20110292971A1 | Long to transmit | Good compensation | Network traffic/resource management | Modulated-carrier systems | Frequency spectrum | Engineering

A wireless combination time, frequency and spectral shaping communications method that transmits data in convolution unit matrices (data frames) of N×N (N²), where generally either all N² data symbols or elements are received over N spreading time intervals (each composed of N time slices), or none are. To transmit, each data element is assigned a unique waveform which is derived from a basic waveform of duration N time slices over one spreading time interval, where each basic waveform has a data element specific combination of a time and frequency cyclic shift. At the receiver, the received signal is correlated with the set of all N² waveforms previously assigned to each data element by a transmitter for that specific time spreading interval, producing a unique correlation score for each one of the N² data elements. The scores are summed over each data element, and this summation reproduces the data frame.

Owner:COHERE TECH

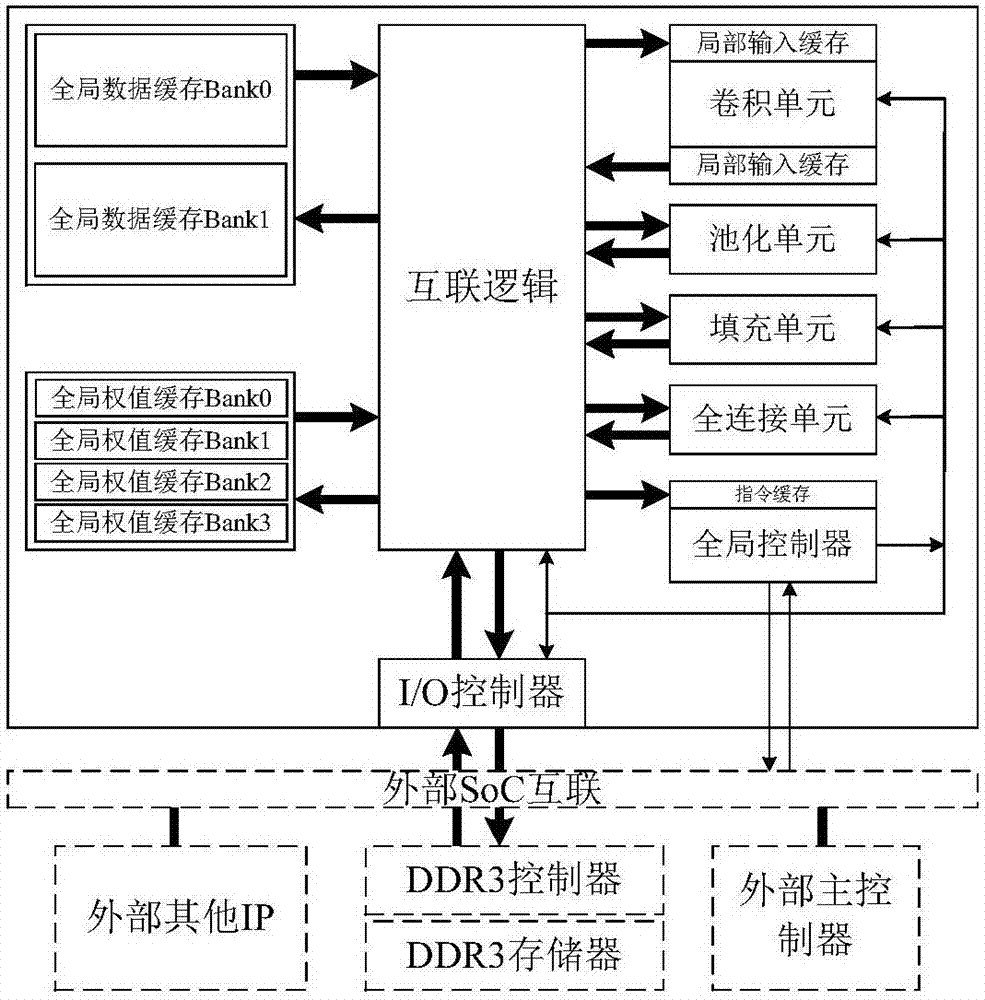

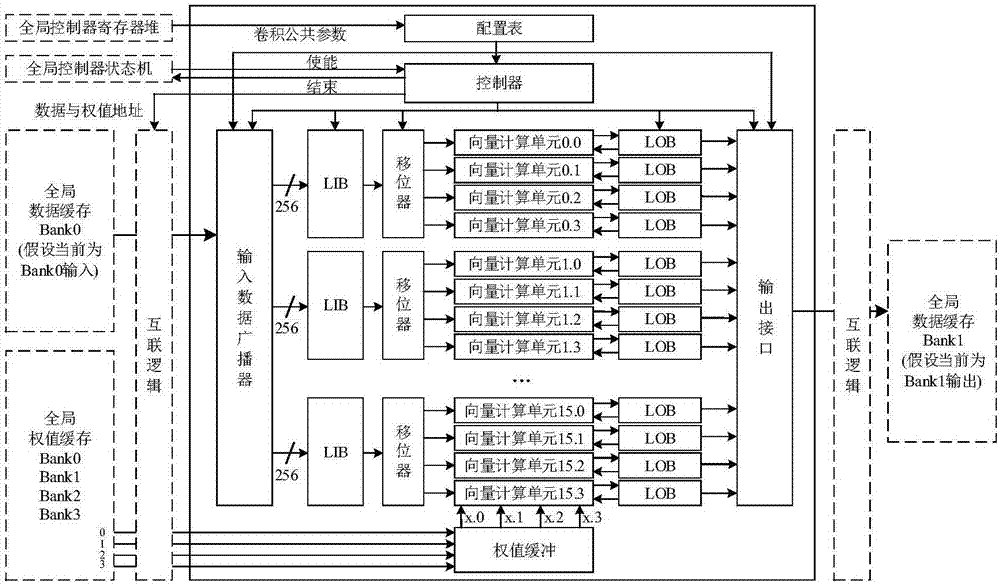

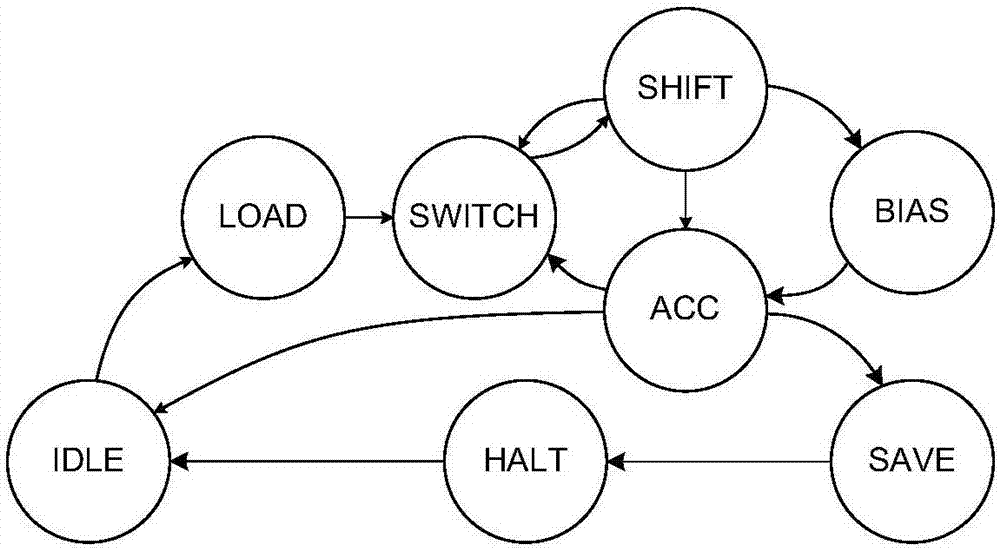

Co-processor IP core of programmable convolutional neural network

Active | CN106940815A | Reduce frequency | Reduce bandwidth pressure | Neural architectures | Physical realisation | Hardware structure | Instruction set design

The present invention discloses a co-processor IP core of a programmable convolutional neural network. The invention aims to realize the arithmetic acceleration of the convolutional neural network on a digital chip (FPGA or ASIC). The co-processor IP core specifically comprises a global controller, an I / O controller, a multi-level cache system, a convolution unit, a pooling unit, a filling unit, a full-connection unit, an internal interconnection logical unit, and an instruction set designed for the co-processor IP. The proposed hardware structure supports the complete flows of convolutional neural networks diversified in scale. The hardware-level parallelism is fully utilized and the multi-level cache system is designed. As a result, the characteristics of high performance, low power consumption and the like are realized. The operation flow is controlled through instructions, so that the programmability and the configurability are realized. The co-processor IP core can be easily applied to different application scenes.

Owner:XI AN JIAOTONG UNIV

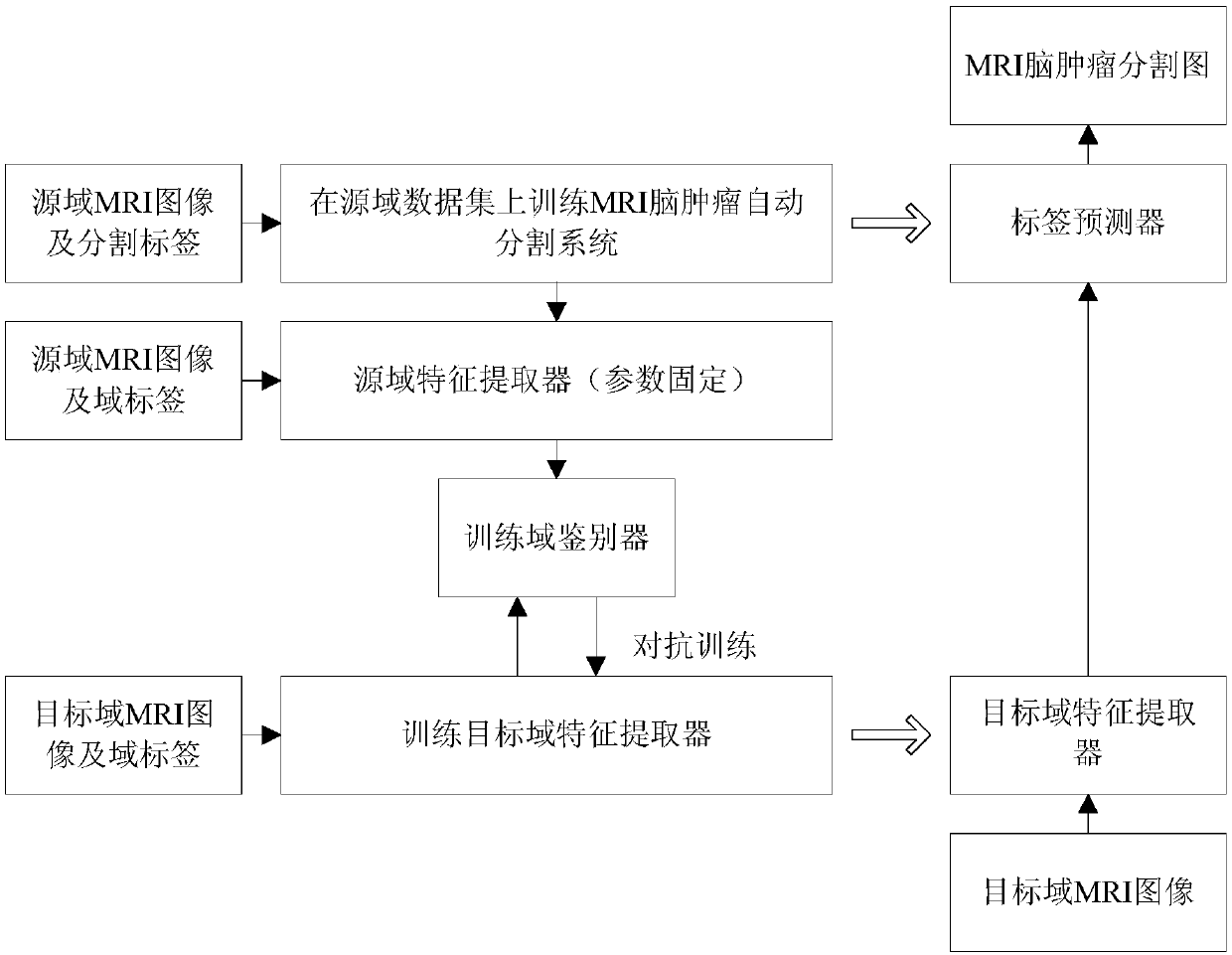

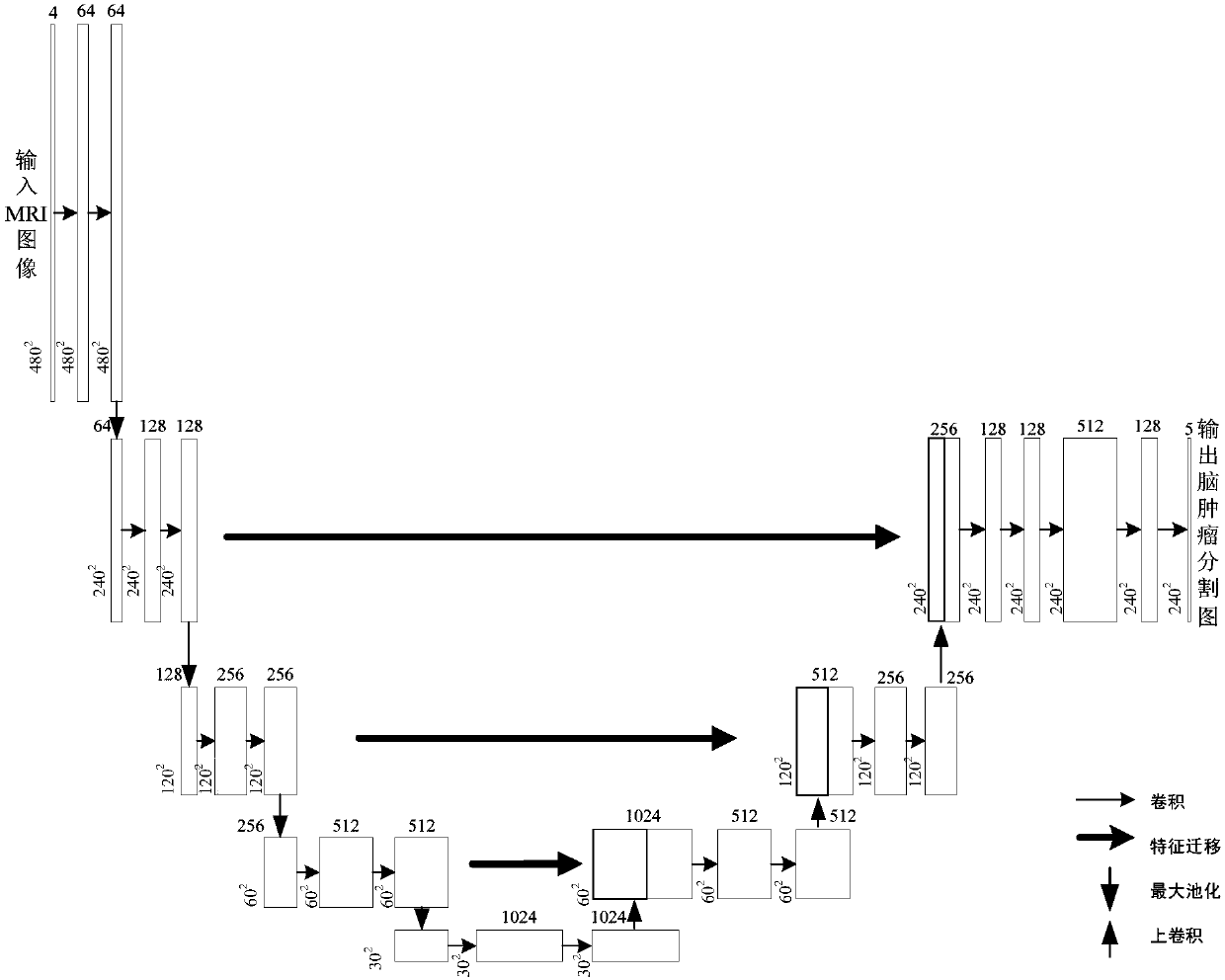

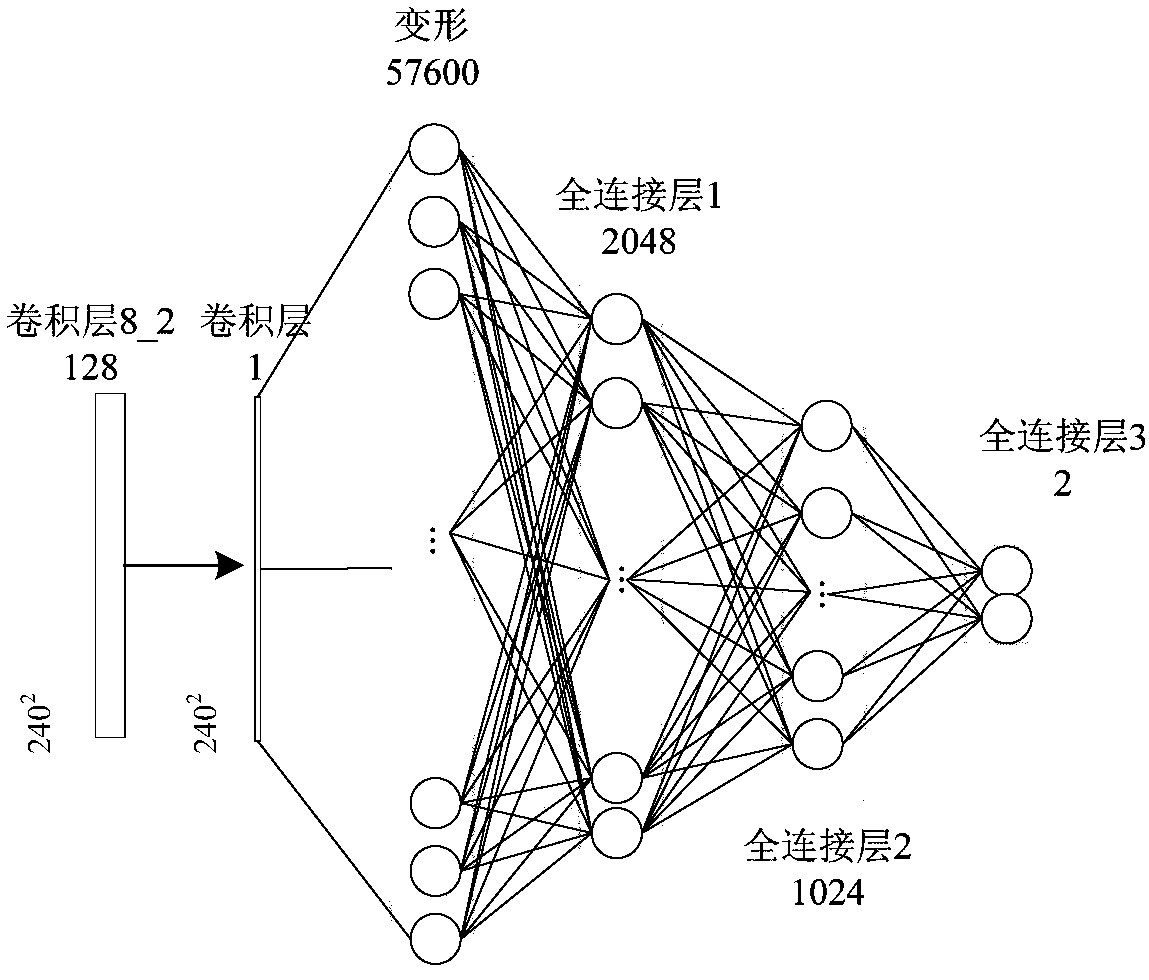

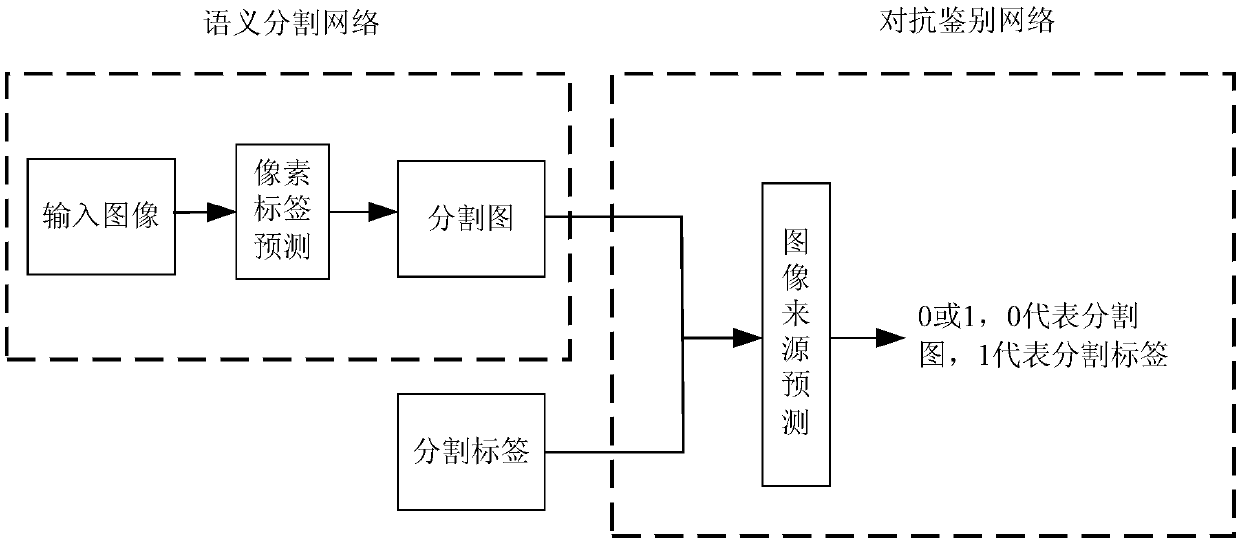

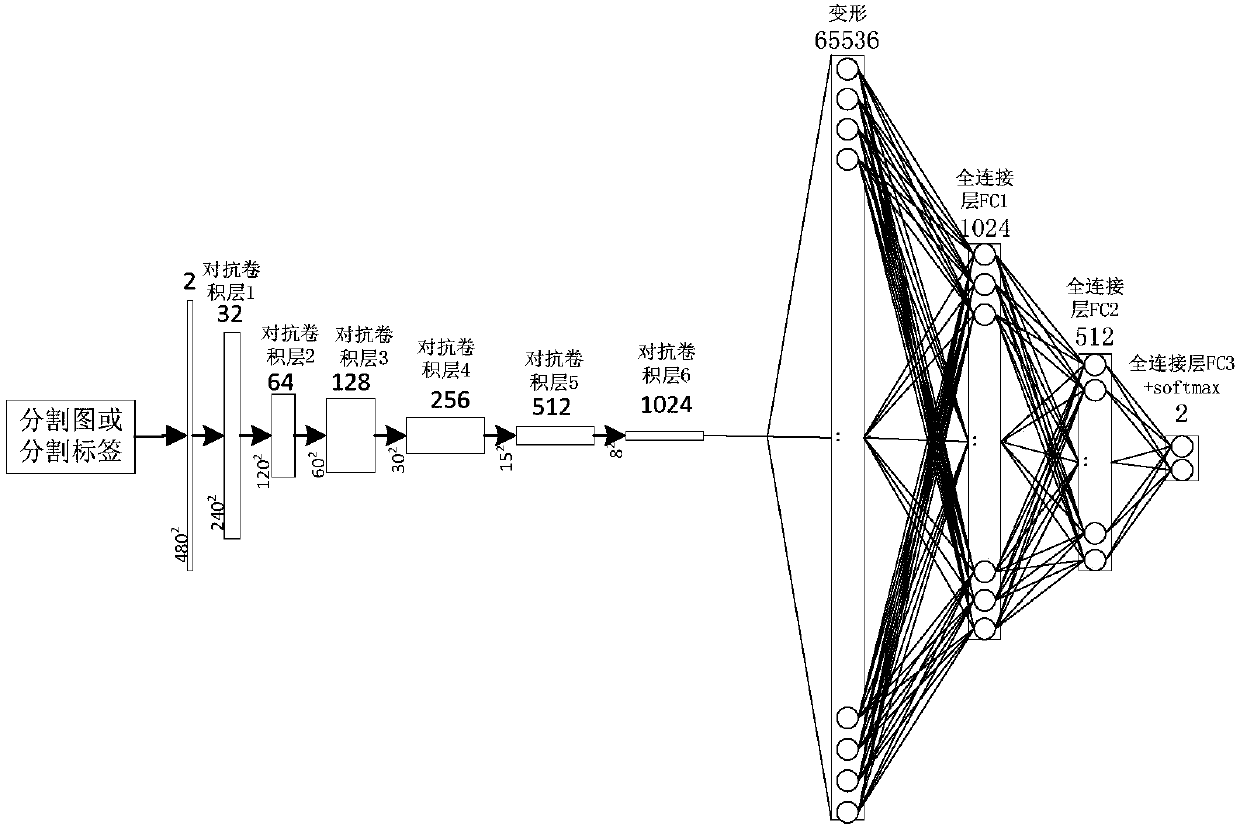

Unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning

Inactive | CN108062753A | Accurate prediction | Easy to train | Image enhancement | Image analysis | Discriminator | Network model

The invention provides an unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning. The method comprises the steps of deep coding-decoding full-convolution network segmentation system model setup, domain discriminator network model setup, segmentation system pre-training and parameter optimization, adversarial training and target domain feature extractor parameter optimization and target domain MRI brain tumor automatic semantic segmentation. According to the method, high-level semantic features and low-level detailed features are utilized to jointly predict pixel tags by the adoption of a deep coding-decoding full-convolution network modeling segmentation system, a domain discriminator network is adopted to guide a segmentation model to learn domain-invariable features and a strong generalization segmentation function through adversarial learning, a data distribution difference between a source domain and a target domain is minimized indirectly, and a learned segmentation system has the same segmentation precision in the target domain as in the source domain. Therefore, the cross-domain generalization performance of the MRI brain tumor full-automatic semantic segmentation method is improved, and unsupervised cross-domain adaptive MRI brain tumor precise segmentation is realized.

Owner:CHONGQING UNIV OF TECH

Communications method employing orthonormal time-frequency shifting and spectral shaping

Active | US8547988B2 | Inhibition rate | Long to transmit | Modulated-carrier systems | Network traffic/resource management | Frequency spectrum | Data element

Owner:COHERE TECH

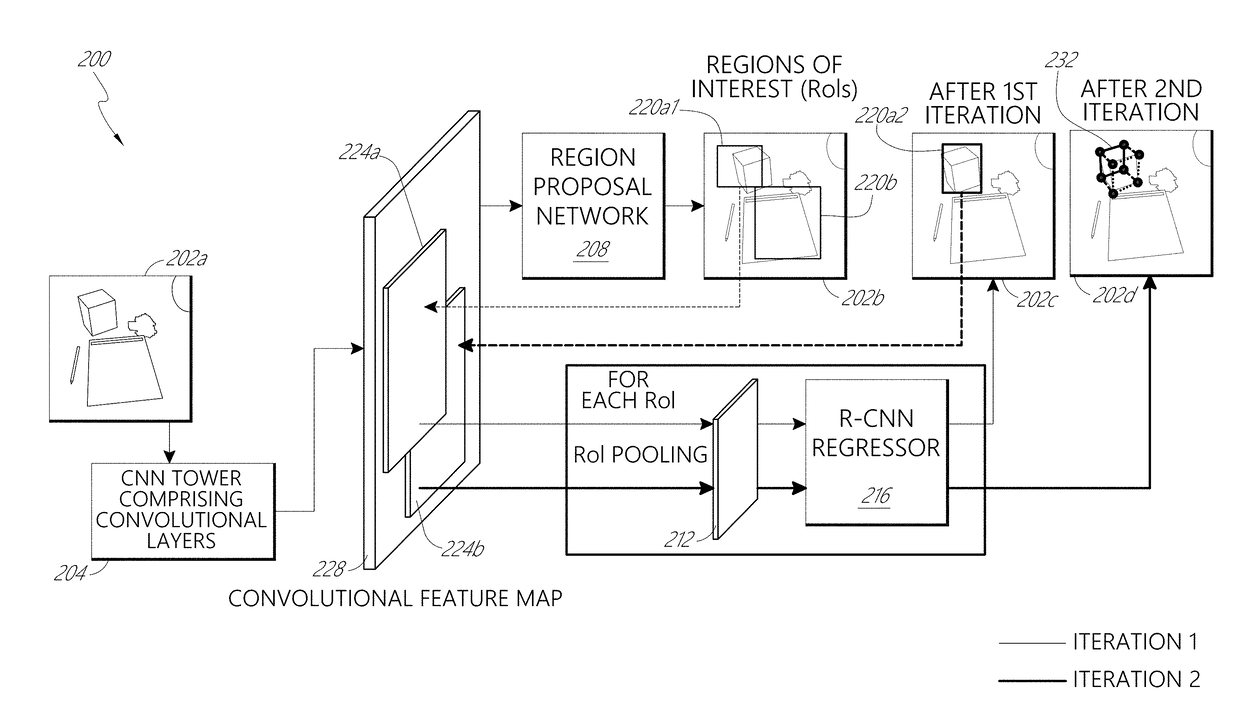

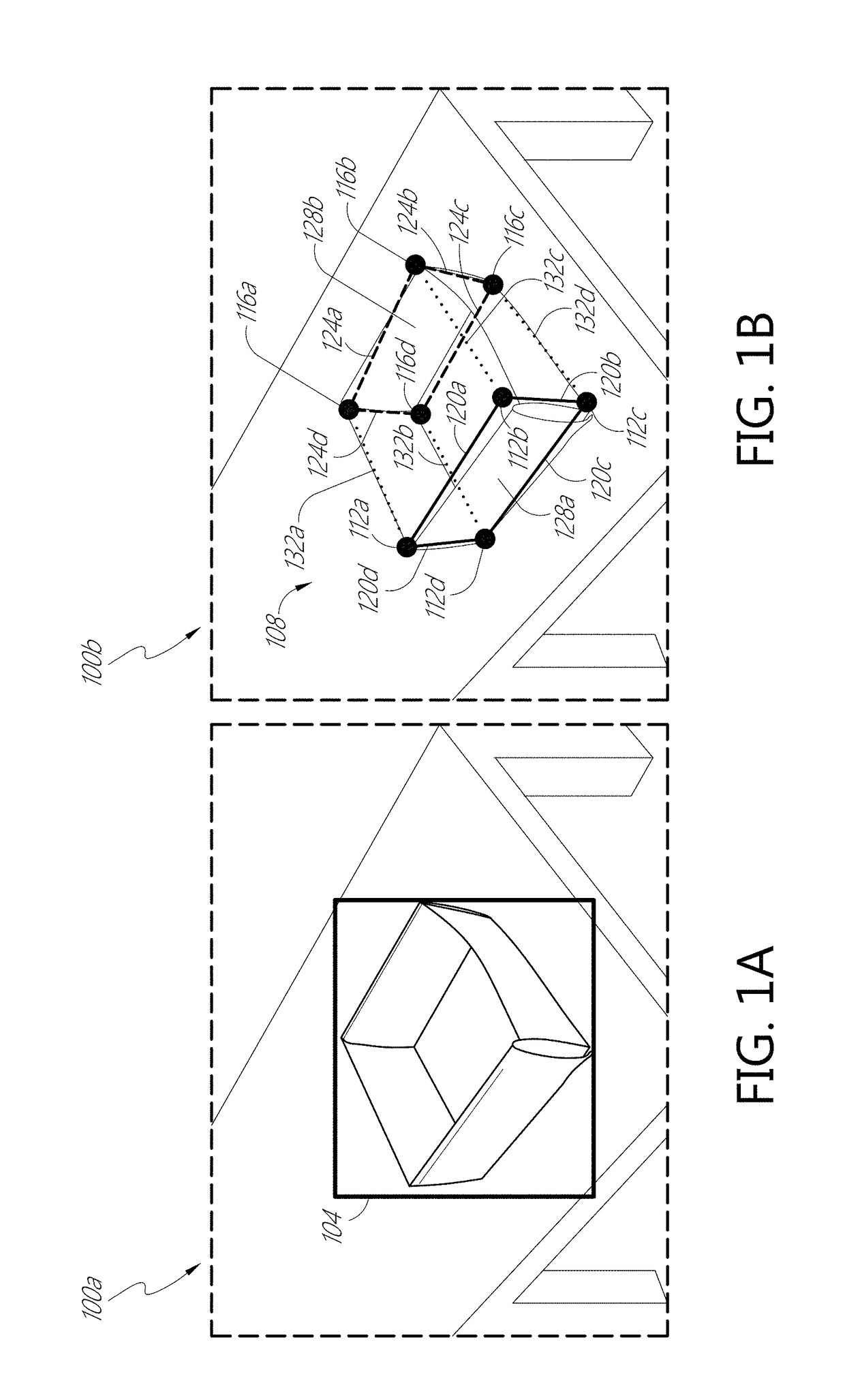

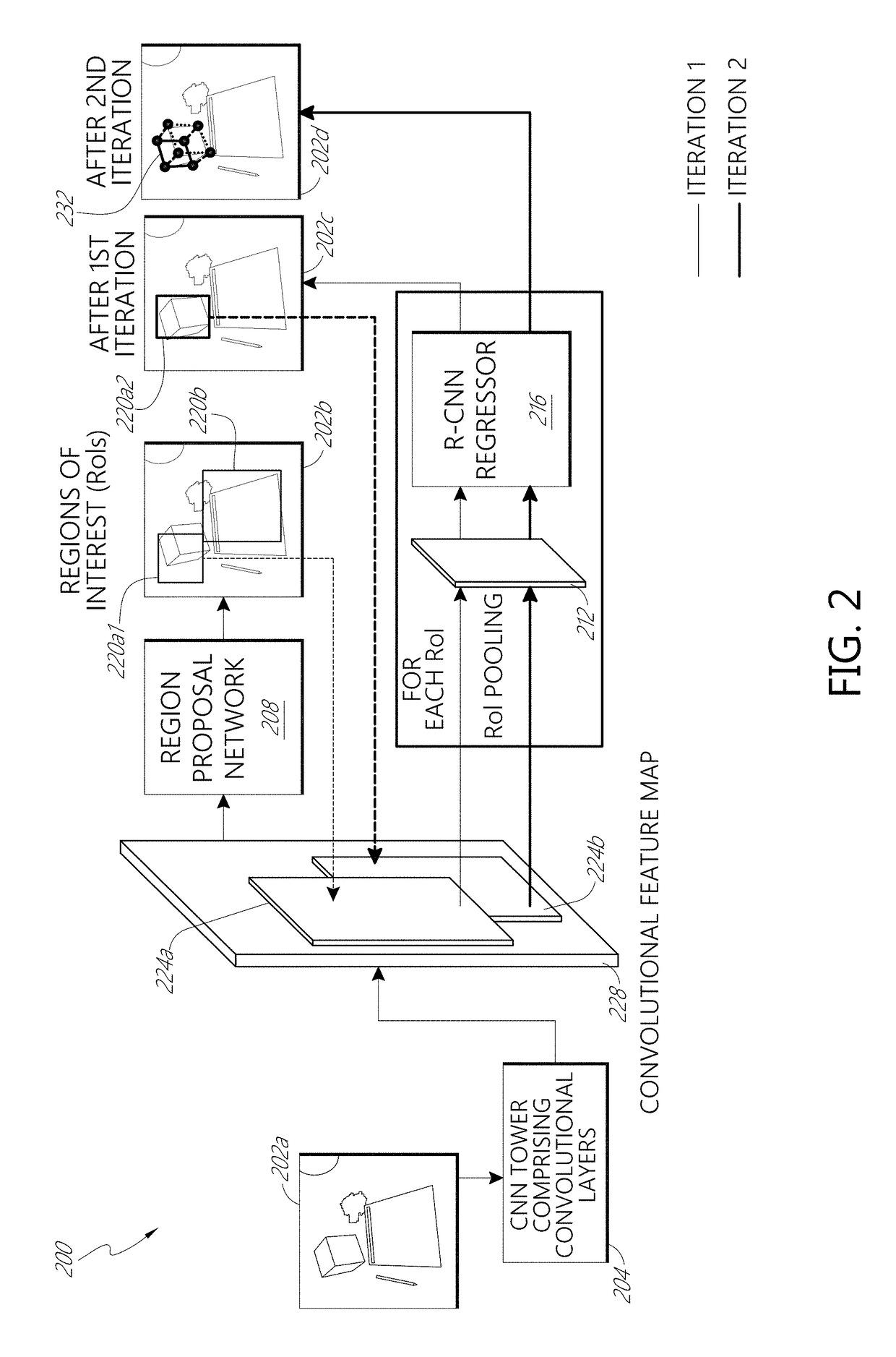

Deep learning system for cuboid detection

Systems and methods for cuboid detection and keypoint localization in images are disclosed. In one aspect, a deep cuboid detector can be used for simultaneous cuboid detection and keypoint localization in monocular images. The deep cuboid detector can include a plurality of convolutional layers and non-convolutional layers of a trained convolution neural network for determining a convolutional feature map from an input image. A region proposal network of the deep cuboid detector can determine a bounding box surrounding a cuboid in the image using the convolutional feature map. The pooling layer and regressor layers of the deep cuboid detector can implement iterative feature pooling for determining a refined bounding box and a parameterized representation of the cuboid.

Owner:MAGIC LEAP INC

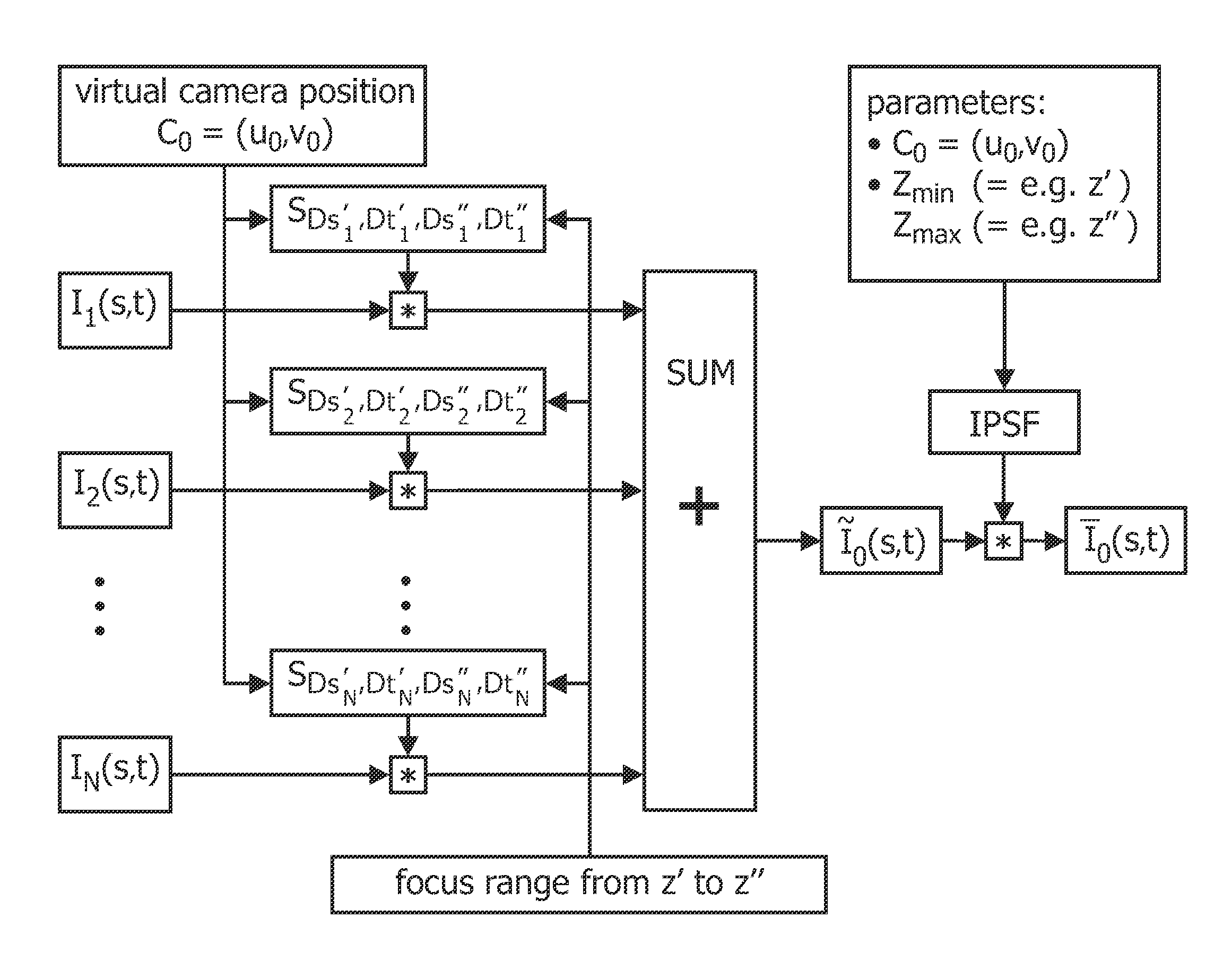

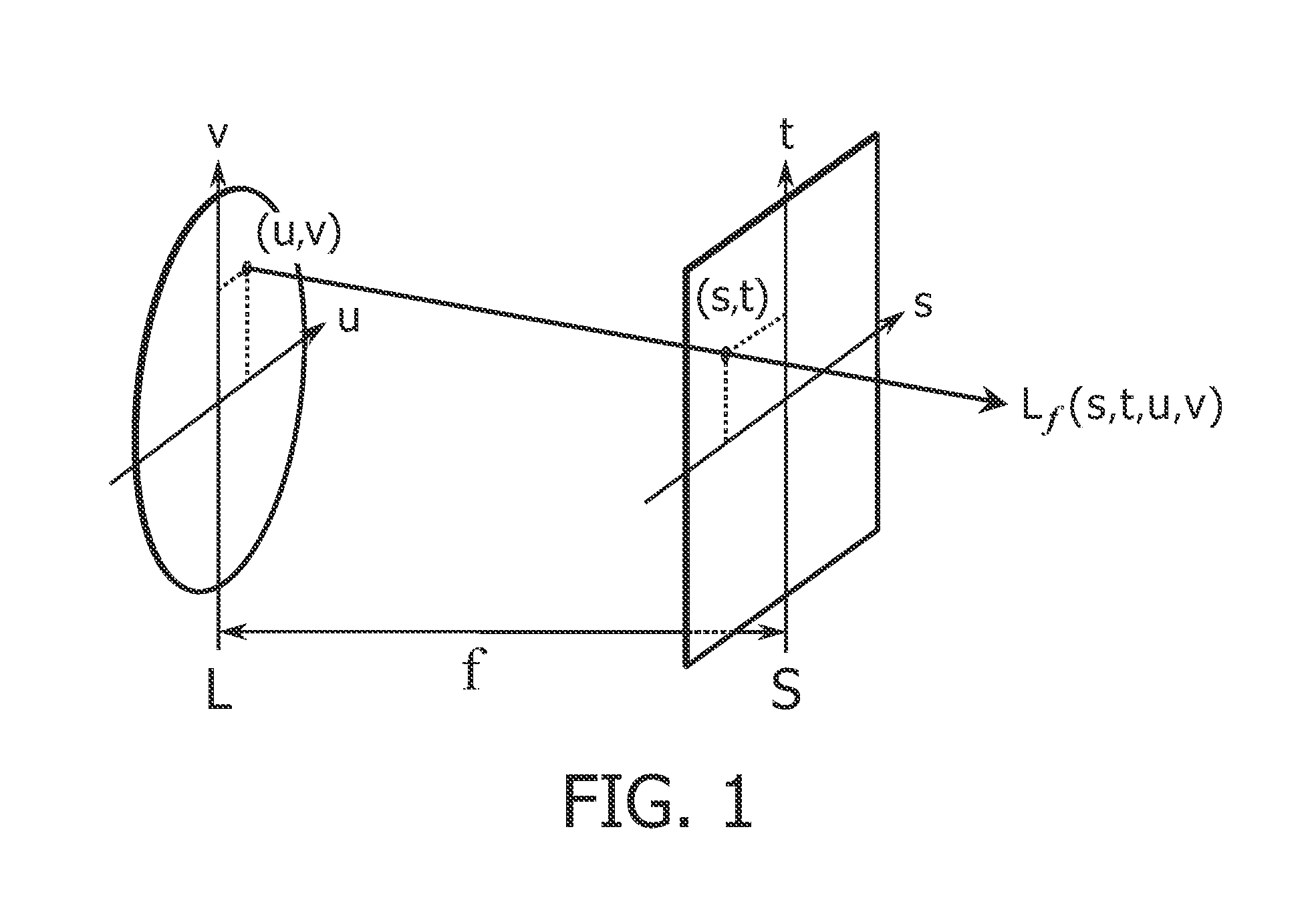

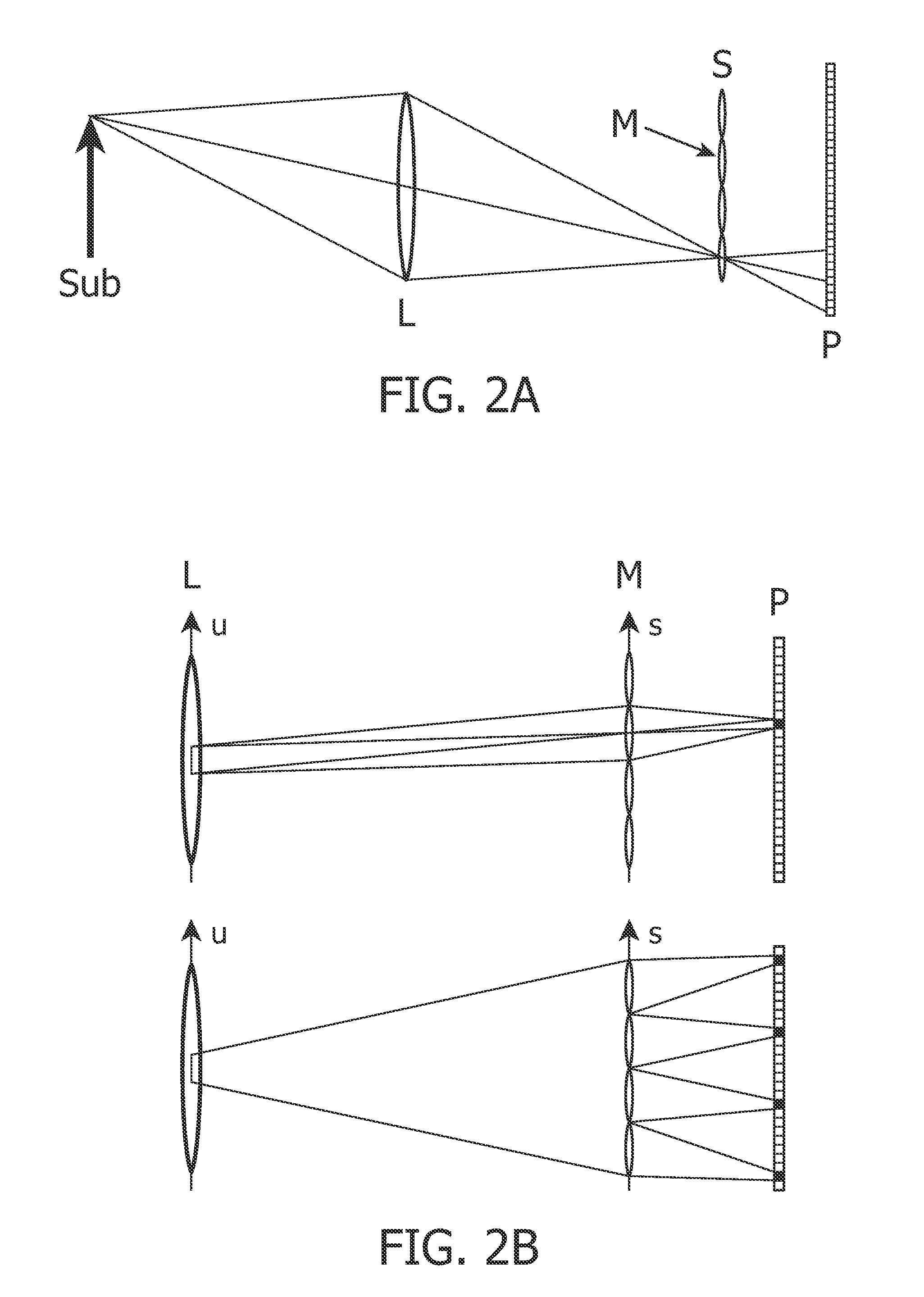

Method and system for producing a virtual output image from data obtained by an array of image capturing devices

Active | US20130088489A1 | Raise the possibility | Image enhancement | Television system details | Point spread function | Imaging data

In a method and system for providing virtual output images from an array of image capturing devices, image data (I1(s,t), I2(s,t)) is taken from the devices (C1, C2). This image data is processed by convolving it with a function, e.g. the path (S), and thereafter deconvolving it, either after or before summation (SUM), with an inverse point spread function (IPSF) or a filter (HP) equivalent thereto to produce all-focus image data (I0(s,t)).

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

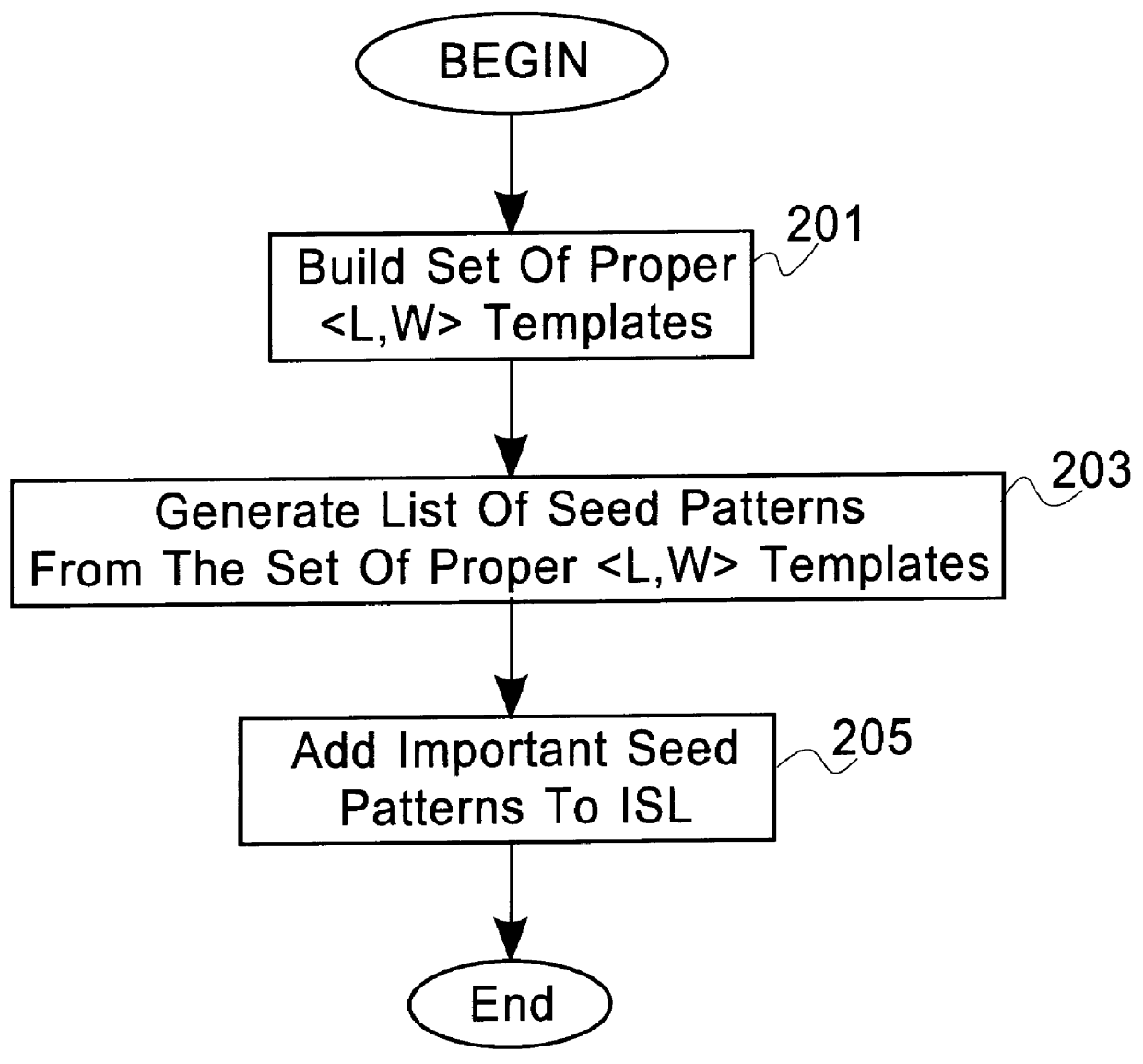

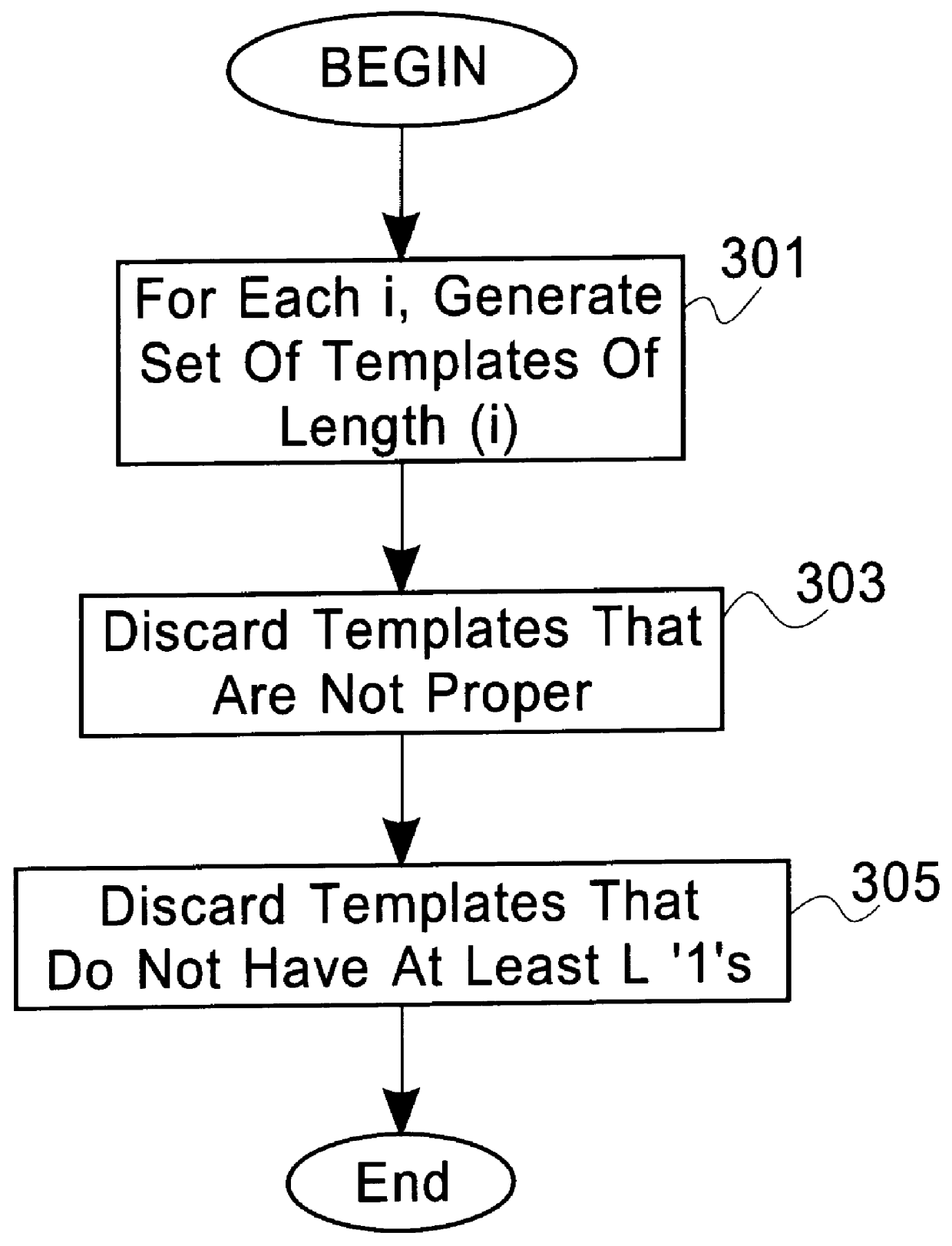

Method and apparatus for discovery, clustering and classification of patterns in 1-dimensional event streams

Inactive | US6092065A | Data processing applications | Digital data information retrieval | Data mining | Bioinformatics

The present invention groups character sequences by identifying a sequence of characters. A set of internal repeats in said sequence of characters is identified by a pattern discovery technique. For at least one internal repeat belonging to the set of internal repeats, it is determined whether the internal repeat corresponds to a group of character sequences. If so, first data that identifies the sequence of characters and second data that associates the sequence of characters with the group of character sequences are stored in persistent memory. The pattern discovery mechanism discovers patterns in a sequence of characters in two phases. In a sampling phase, preferably proper templates corresponding to the sequence of characters are generated. Patterns are then generated corresponding to the templates and stored in memory. In a convolution phase, the patterns stored in memory are combined to identify a set of maximal patterns.

Owner:IBM CORP

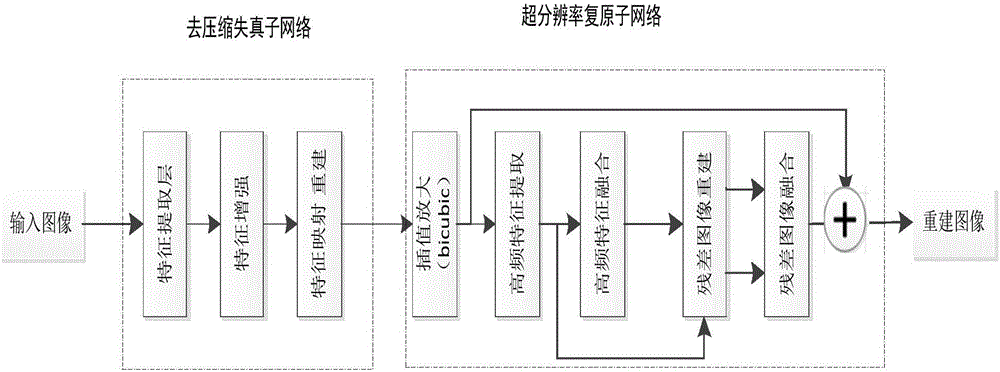

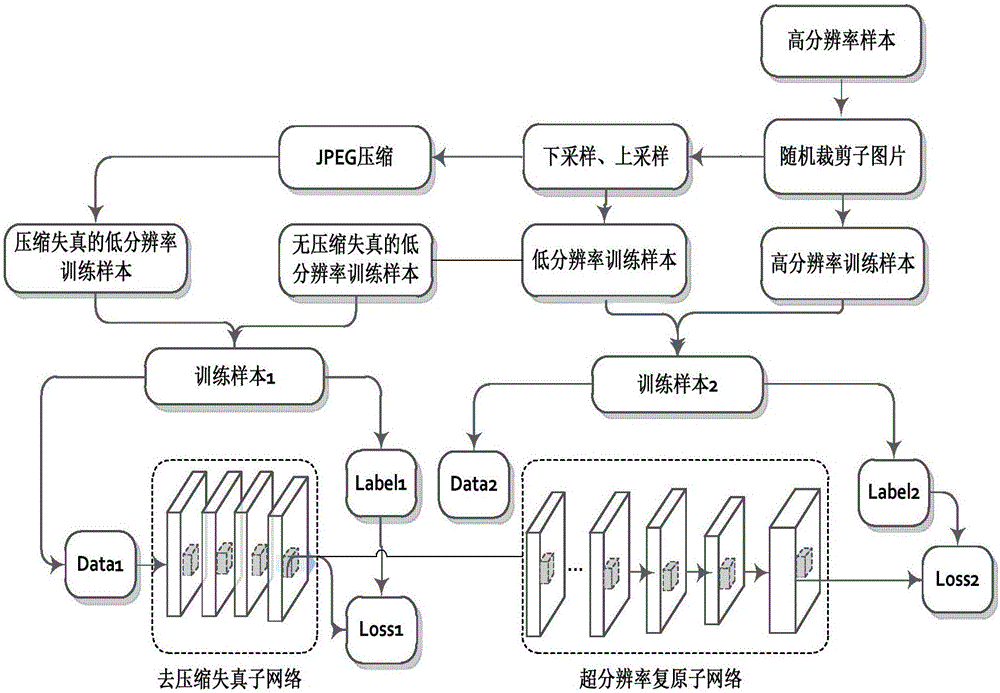

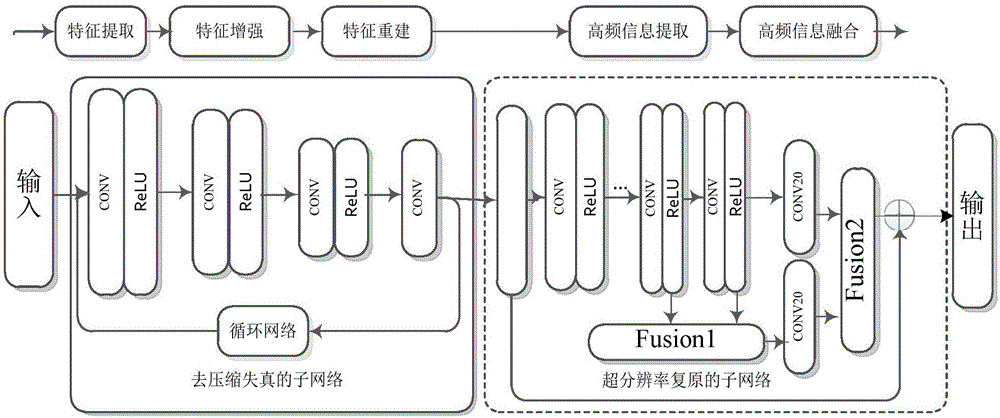

Compressed low-resolution image restoration method based on combined deep network

The present invention provides a compressed low-resolution image restoration method based on a combined deep network, belonging to the field of digital image/video signal processing. The method treats compression artifacts and downsampling jointly in order to restore degraded images that combine compression artifacts and low resolution in arbitrary proportions. The network comprises 28 convolution layers forming a narrow, deep network structure; following the idea of transfer learning, a pre-trained model is fine-tuned so that training of the very deep network converges, which addresses the problems of vanishing and exploding gradients. The parameters of the network model are set with the help of feature visualization, and end-to-end learning of the relation between degraded features and ideal features eliminates the need for preprocessing and postprocessing. Finally, three important fusions are performed, namely the fusion of feature maps of the same size, the fusion of residual images, and the fusion of the high-frequency information with the initial high-frequency estimation map, so that the method can solve the super-resolution restoration problem for low-resolution images with compression artifacts.

Owner:BEIJING UNIV OF TECH

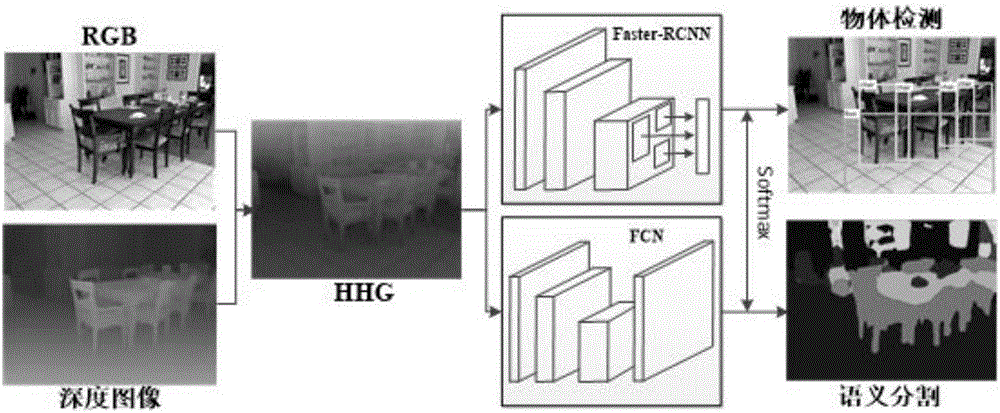

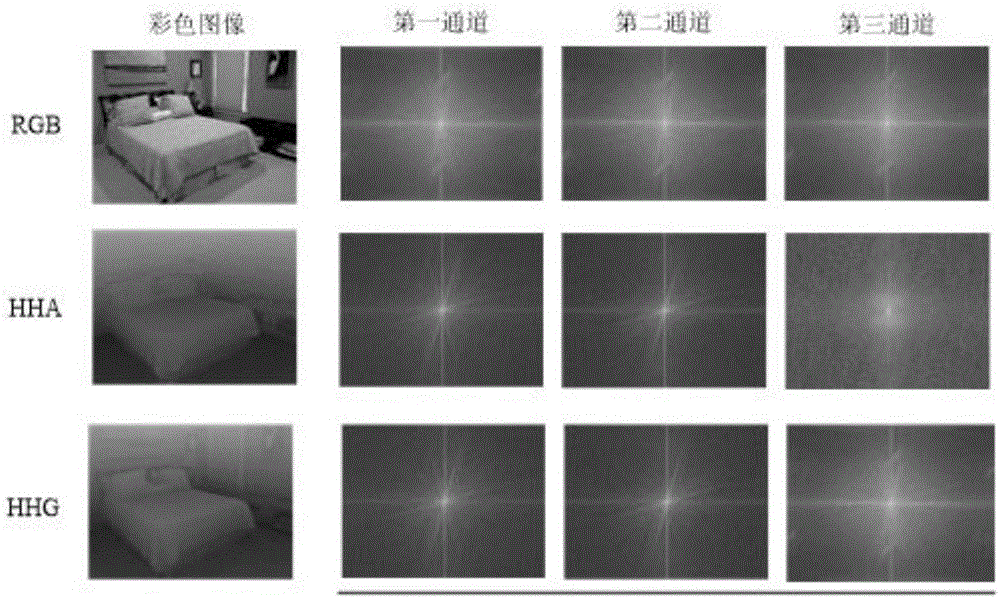

RGB-D image object detection and semantic segmentation method based on deep convolution network

Active | CN106709568A | Character and pattern recognition | Neural architectures | Machine vision | Visual perception

The invention discloses an RGB-D image object detection and semantic segmentation method based on a deep convolutional network, which belongs to the field of deep learning and machine vision. In the technical scheme of the invention, Faster-RCNN replaces the original, slower RCNN; Faster-RCNN uses the GPU, which makes feature extraction fast, and generates region proposals within the network itself; the whole model is trained end to end; FCN is used to carry out RGB-D image semantic segmentation; FCN uses a GPU and the deep convolutional network to rapidly extract the deep features of an image; deconvolution is used to fuse the deep and shallow convolutional features of the image; and the local semantic information of the image is integrated into the global semantic information.

Owner:深圳市小枫科技有限公司

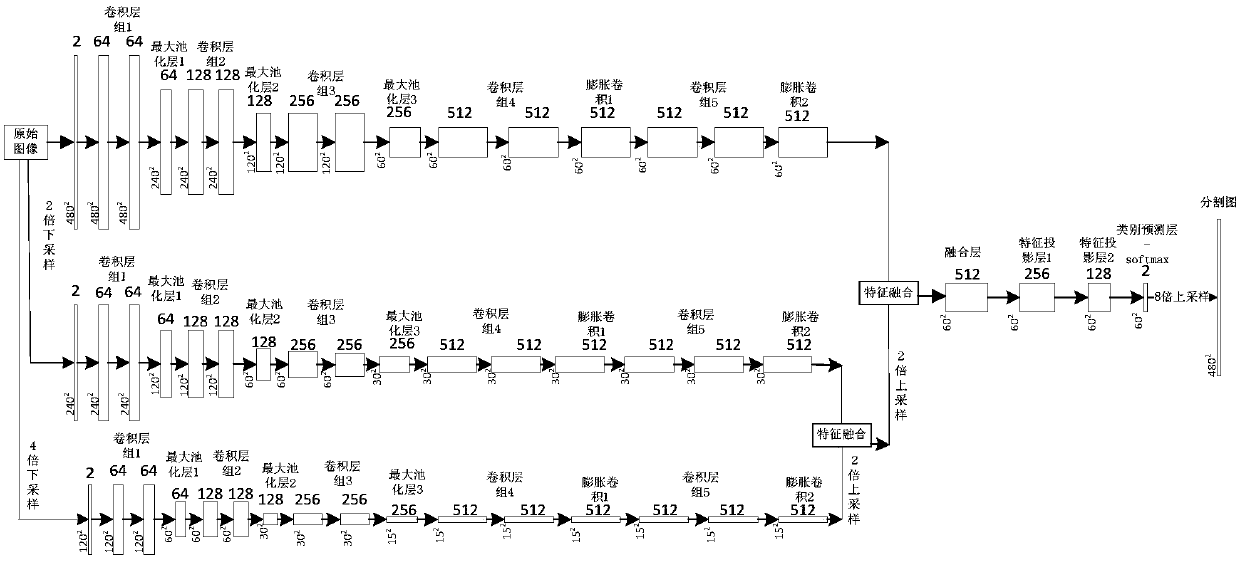
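
The deconvolution-based fusion of deep and shallow features described in the RGB-D segmentation abstract above can be illustrated with a small sketch: a coarse deep feature map is upsampled by a transposed convolution and added to a finer shallow map. This is an assumption-laden toy, not the patented pipeline. The names `transposed_conv2d` and `fuse_deep_and_shallow`, the fixed all-ones kernel, and the element-wise sum are illustrative choices, and in a real FCN the kernel weights would be learned.

```python
def transposed_conv2d(x, kernel, stride):
    """Transposed convolution ("deconvolution") of a 2D map, no padding."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h = (h - 1) * stride + kh
    out_w = (w - 1) * stride + kw
    out = [[0.0] * out_w for _ in range(out_h)]
    # Each input value scatters a scaled copy of the kernel into the output.
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    out[i * stride + a][j * stride + b] += x[i][j] * kernel[a][b]
    return out


def fuse_deep_and_shallow(deep, shallow, kernel, stride=2):
    """Upsample the deep map and add it to the shallow map element-wise."""
    up = transposed_conv2d(deep, kernel, stride)
    if len(up) != len(shallow) or len(up[0]) != len(shallow[0]):
        raise ValueError("upsampled deep map and shallow map differ in shape")
    return [[u + s for u, s in zip(ru, rs)] for ru, rs in zip(up, shallow)]


if __name__ == "__main__":
    deep = [[1.0, 2.0]]                      # coarse 1x2 map
    shallow = [[0.5] * 4 for _ in range(2)]  # finer 2x4 map
    ones = [[1.0, 1.0], [1.0, 1.0]]          # assumed fixed kernel
    print(fuse_deep_and_shallow(deep, shallow, ones))
```

With a 2x2 all-ones kernel and stride 2, the transposed convolution reduces to nearest-neighbour upsampling, which makes the result easy to check by hand.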

Multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning

Active · CN108268870A · Improve forecast accuracy · Few parameters · Neural architectures · Recognition of medical/anatomical patterns · Pattern recognition · Automatic segmentation

The invention provides a multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning. The method comprises the following steps: building a multi-scale feature fusion semantic segmentation network model, building an adversarial discrimination network model, carrying out adversarial training and model parameter learning, and carrying out automatic segmentation of a breast lesion. The method predicts pixel classes from the multi-scale features of input images at different resolutions, which improves the accuracy of pixel class label prediction. It employs dilated (expanding) convolution in place of part of the pooling so as to improve the resolution of the segmented image. The adversarial discrimination network guides the segmentation network so that the generated segmented image cannot be distinguished from a segmentation label, which ensures good appearance and spatial continuity of the segmented image and yields a more precise high-resolution segmentation of ultrasonic breast lesions.

Owner:CHONGQING NORMAL UNIVERSITY

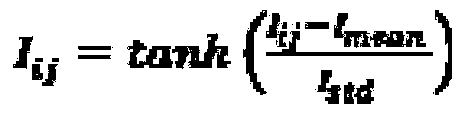

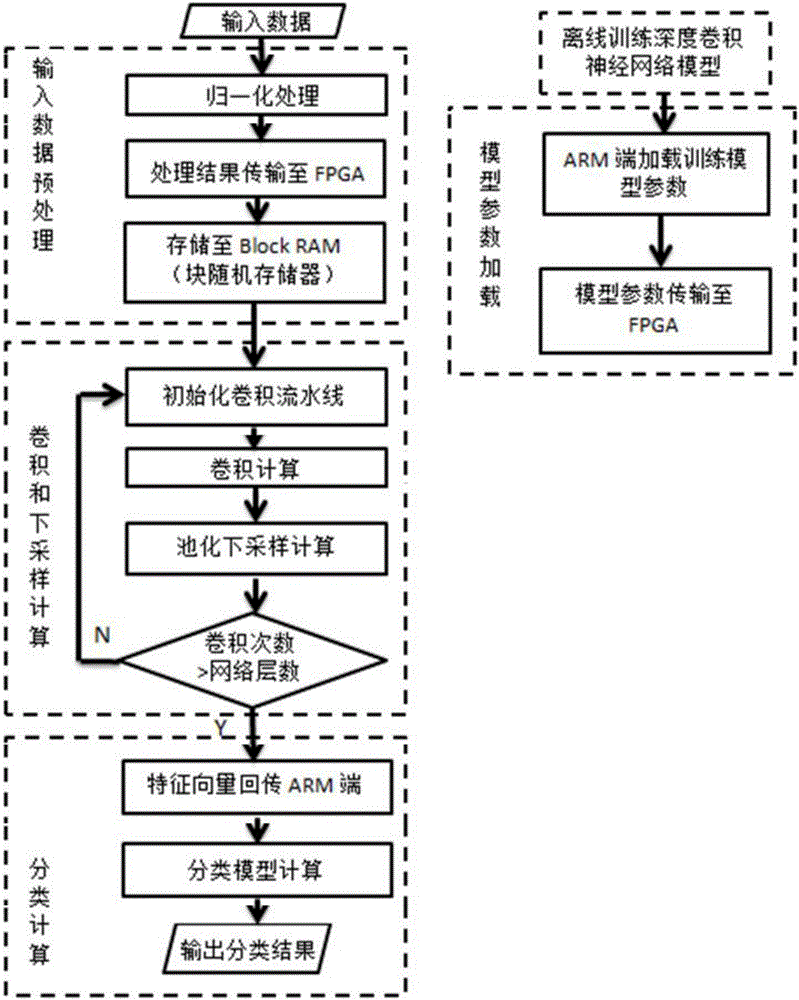

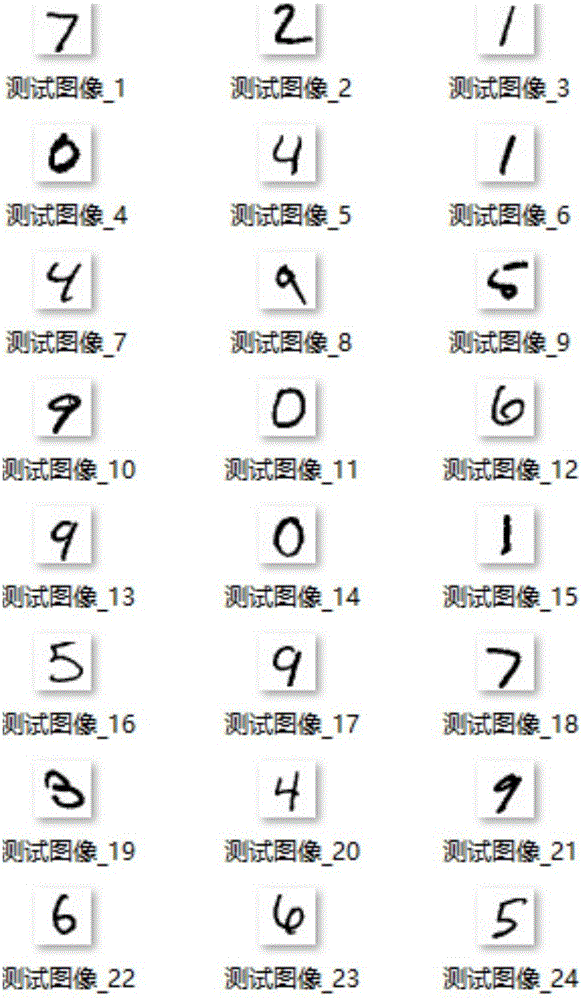
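
The ultrasonic segmentation abstract above replaces part of the pooling with dilated (expanding) convolution so that resolution is preserved. As a rough one-dimensional sketch of why that works, the hypothetical helper `dilated_conv1d` below spaces the kernel taps `dilation` samples apart and zero-pads the input, so the output keeps the input length while each output sees a wider window. The zero padding and the cross-correlation convention are assumptions chosen for simplicity.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """1D dilated convolution (cross-correlation form) with zero padding.

    The output has the same length as the input, unlike strided pooling.
    """
    span = (len(kernel) - 1) * dilation  # distance between first and last tap
    pad_left = span // 2
    padded = [0.0] * pad_left + list(signal) + [0.0] * (span - pad_left)
    return [
        sum(kernel[k] * padded[i + k * dilation] for k in range(len(kernel)))
        for i in range(len(signal))
    ]


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0, 5.0]
    k = [1.0, 1.0, 1.0]
    print(dilated_conv1d(x, k, dilation=1))  # each output covers 3 samples
    print(dilated_conv1d(x, k, dilation=2))  # each output covers 5 samples
```

A three-tap kernel with dilation 2 covers five input samples without adding parameters and without shrinking the output, which is the property the abstract relies on.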

FPGA-based deep convolution neural network realizing method

Active · CN106228240A · Simple design · Reduce resource consumption · Character and pattern recognition · Speech recognition · Feature vector · Algorithm

The invention belongs to the technical field of digital image processing and pattern recognition, and specifically relates to an FPGA-based method for implementing a deep convolution neural network. The hardware platform is a Xilinx ZYNQ-7030 programmable system-on-chip (SoC), which integrates an FPGA and an ARM Cortex-A9 processor. Trained network model parameters are loaded onto the FPGA side, input data is preprocessed on the ARM side, and the result is transmitted to the FPGA side. The convolution calculation and down-sampling of the deep convolution neural network are carried out on the FPGA side to form feature vectors, which are transmitted to the ARM side, where the feature classification calculation is completed. The fast parallel processing and very low-power, high-performance computing characteristics of the FPGA are used for the convolution calculation, which has the highest complexity in a deep convolution neural network model. Algorithm efficiency is greatly improved and power consumption is reduced while algorithm accuracy is maintained.

Owner:FUDAN UNIV

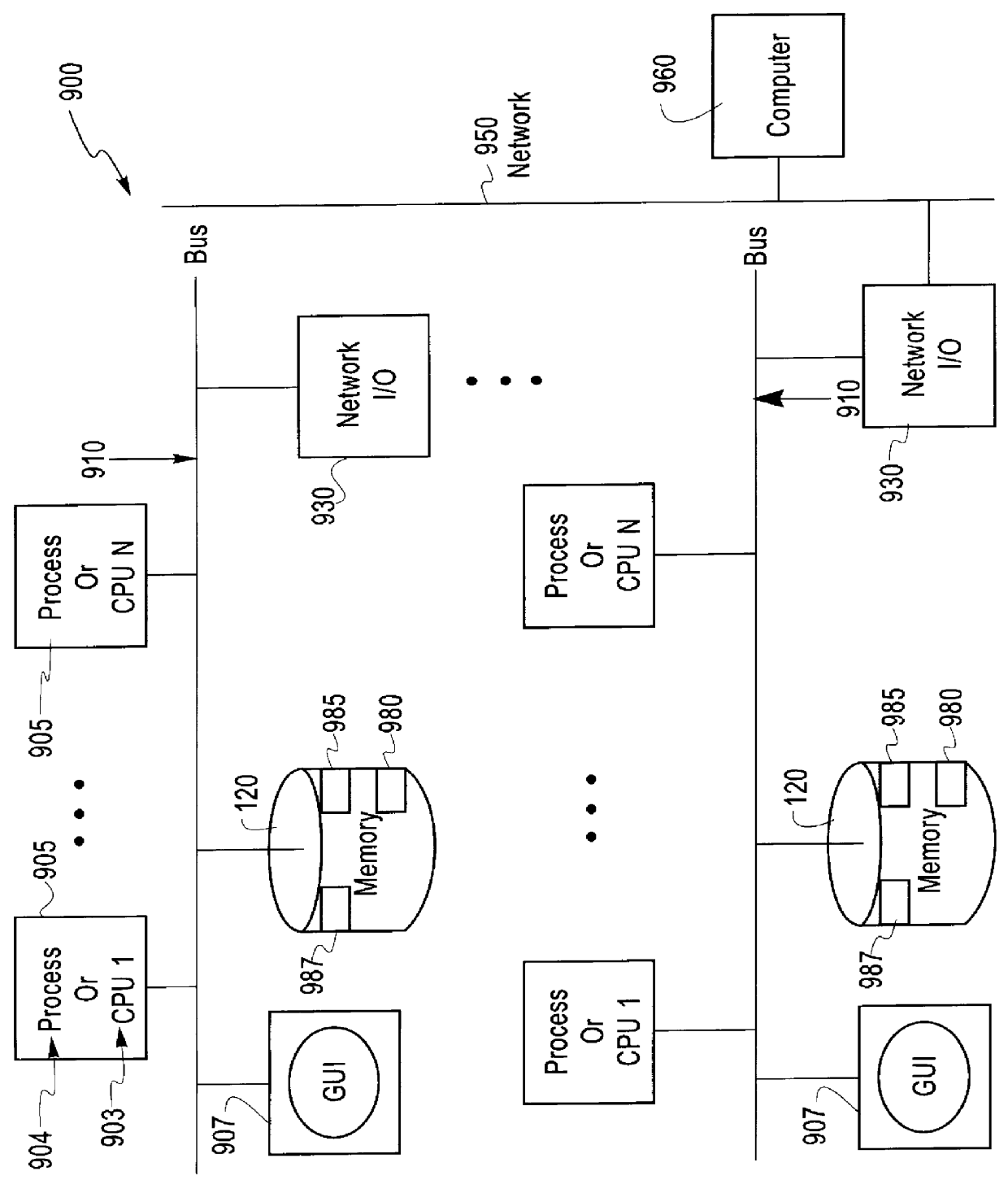

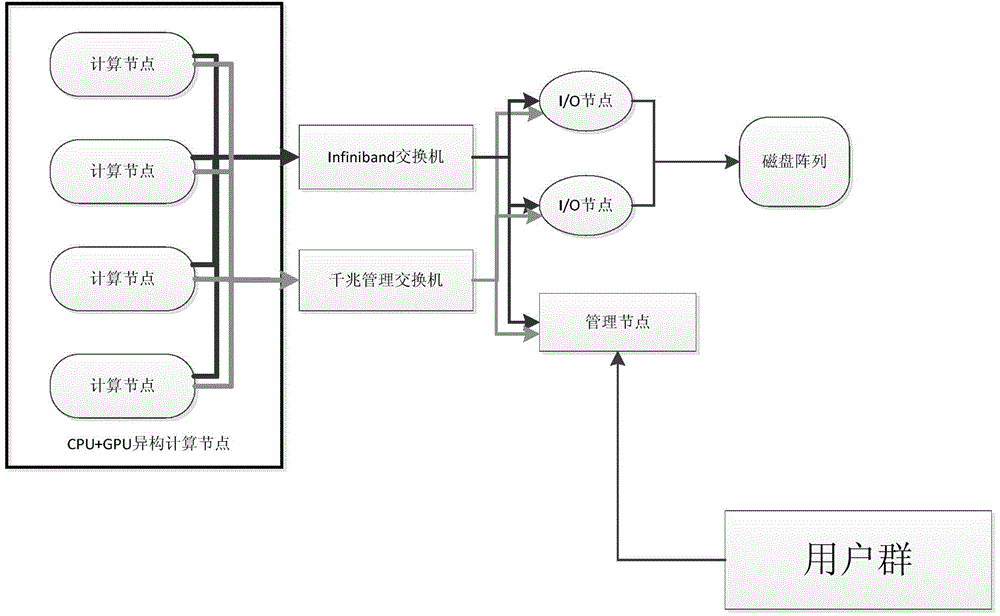

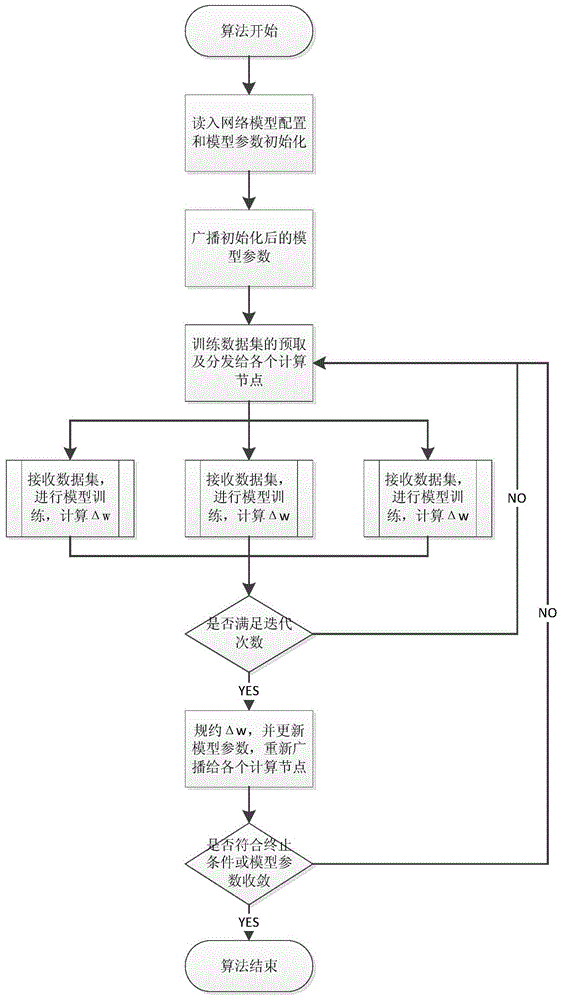
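
The FPGA abstract above assigns convolution and down-sampling to the FPGA side and classification to the ARM side. The sketch below shows, in plain software, the computation that would be offloaded: a valid 2D convolution, a 2x2 max-pooling step, and flattening into a feature vector. It says nothing about the actual hardware design. The function names, the single kernel, and the choice of max pooling as the down-sampling operator are assumptions.

```python
def conv2d_valid(image, kernel):
    """2D convolution (cross-correlation form) without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2x2(fmap):
    """Down-sample by taking the maximum of each non-overlapping 2x2 block."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def feature_vector(image, kernel):
    """Convolve, pool, and flatten: the part assumed to run on the FPGA."""
    pooled = max_pool2x2(conv2d_valid(image, kernel))
    return [value for row in pooled for value in row]


if __name__ == "__main__":
    image = [[float(5 * i + j) for j in range(5)] for i in range(5)]
    kernel = [[1.0, 0.0], [0.0, 1.0]]
    print(feature_vector(image, kernel))
```

The nested sums in `conv2d_valid` are independent across output positions, which is why this stage maps well onto parallel hardware.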

Convolution neural network parallel processing method based on large-scale high-performance cluster

Inactive · CN104463324A · Reduce training time · Improve computing efficiency · Biological neural network models · Concurrent instruction execution · NODAL · Algorithm

The invention discloses a convolution neural network parallel processing method based on a large-scale high-performance cluster. The method comprises the following steps: (1) a plurality of copies of the network model to be trained are constructed, the model parameters of all copies are identical, the number of copies equals the number of nodes in the high-performance cluster, each node holds one model copy, and one node is selected as the main node, which is responsible for broadcasting and collecting the model parameters; (2) the training set is divided into a plurality of subsets, and in each round the training subsets are issued to the sub nodes (all nodes except the main node), which compute parameter gradients in parallel; the gradient values are accumulated, the accumulated value is used to update the model parameters of the main node, and the updated model parameters are broadcast to all sub nodes, until model training ends. The method achieves parallelization, improves the efficiency of model training, and shortens the training time.

Owner:CHANGSHA MASHA ELECTRONICS TECH

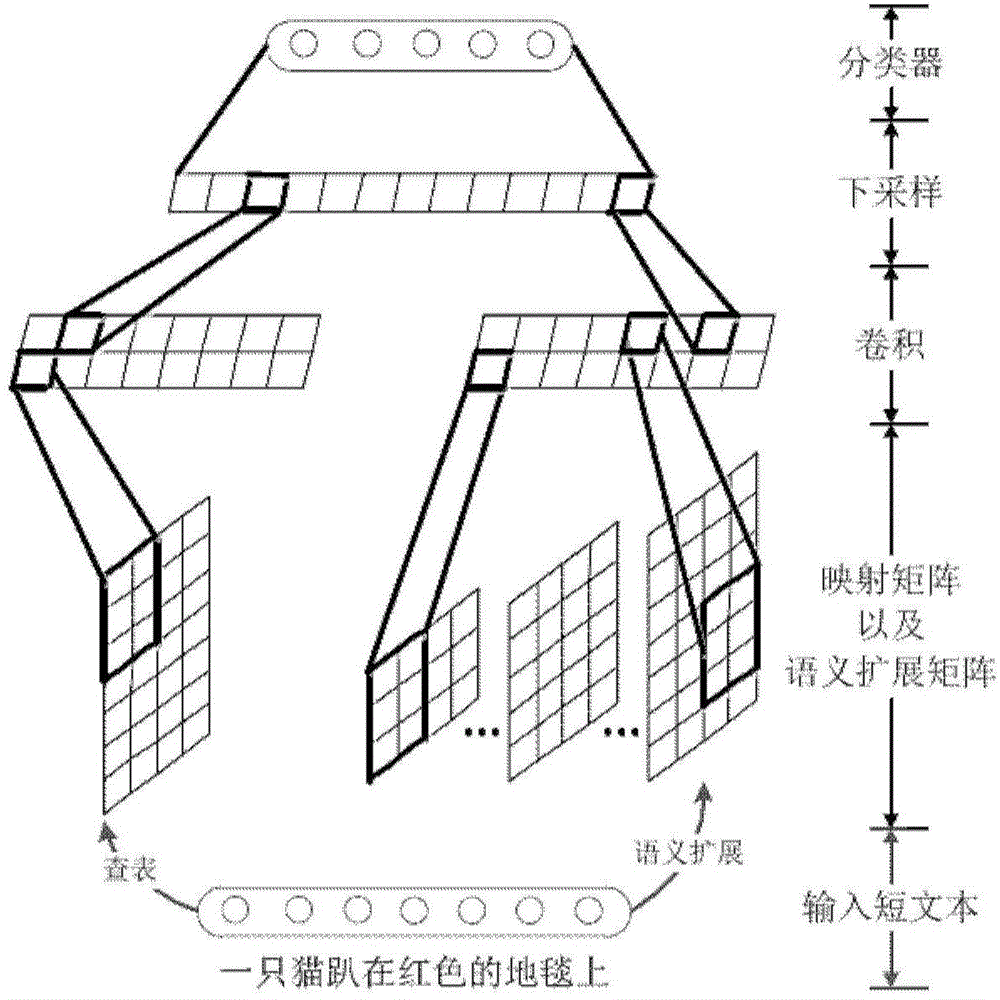

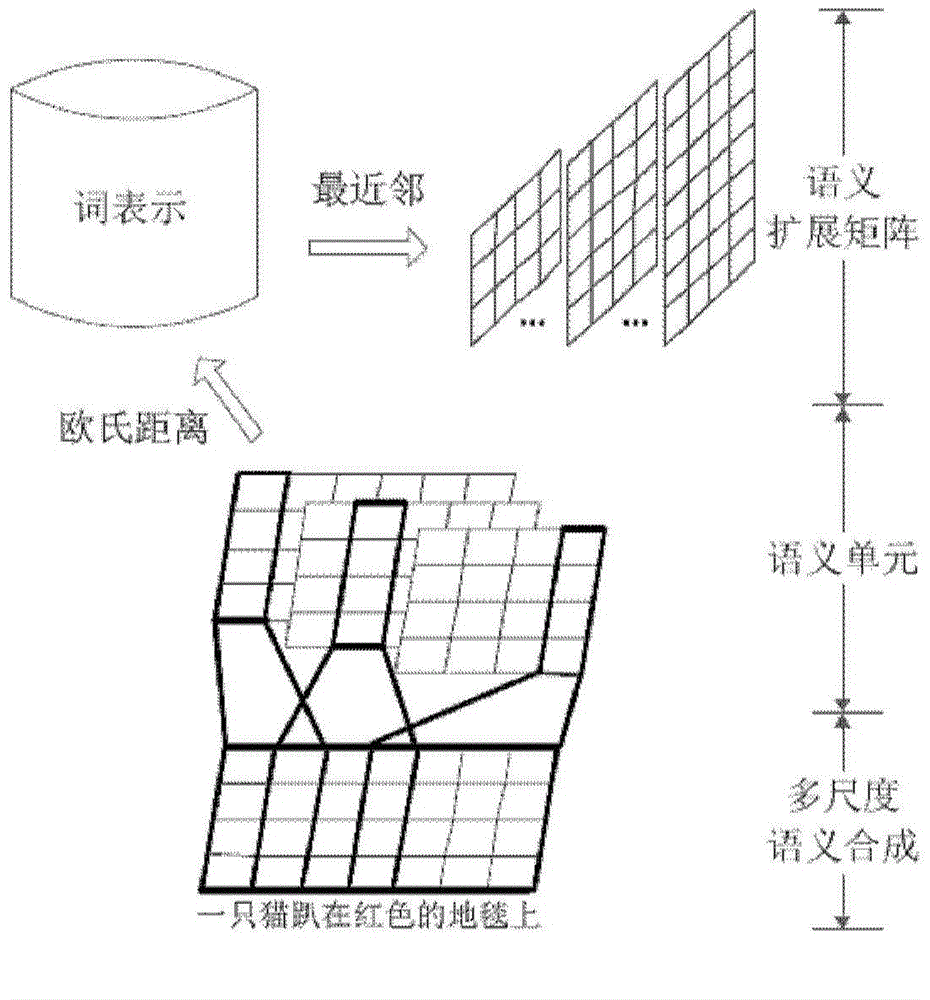

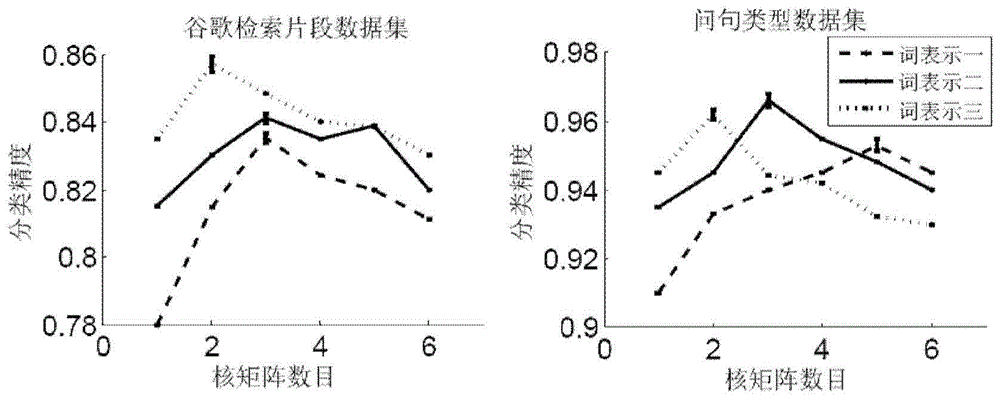
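
The two steps of the cluster training abstract above (replicate the model, split the data, accumulate gradients on the main node, broadcast the update) can be simulated in a single process. The sketch below is a toy under stated assumptions: the "model" is a single weight `w` fitted to `y = w * x` with a squared-error loss, the workers run sequentially rather than on cluster nodes, and the names `shard_gradient` and `train` are hypothetical.

```python
def shard_gradient(w, shard):
    """Gradient of 0.5 * (w * x - y) ** 2 summed over one worker's shard."""
    return sum((w * x - y) * x for x, y in shard)


def train(data, num_workers, lr, epochs):
    """Simulate synchronous data-parallel training with one main node."""
    # Step 2 of the abstract: divide the training set into subsets.
    shards = [data[i::num_workers] for i in range(num_workers)]
    main_w = 0.0
    for _ in range(epochs):
        # Broadcast: every worker starts the round with the main node's weight.
        replicas = [main_w] * num_workers
        # Each worker computes a gradient on its own shard (sequential here).
        grads = [shard_gradient(w, s) for w, s in zip(replicas, shards)]
        # The main node accumulates the gradients and updates its parameters.
        main_w -= lr * sum(grads) / len(data)
    return main_w


if __name__ == "__main__":
    samples = [(float(x), 3.0 * x) for x in range(1, 5)]
    print(train(samples, num_workers=4, lr=0.1, epochs=200))
```

Because the gradients are summed before the update, the result matches single-worker training on the full data set up to floating-point rounding; the benefit on a real cluster comes from computing the shard gradients concurrently.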

Short text classification method based on convolution neural network

Active · CN104834747A · Improve semantic sensitivity issues · Improve classification performance · Input/output for user-computer interaction · Biological neural network models · Near neighbor · Classification methods

The invention discloses a short text classification method based on a convolution neural network. The convolution neural network comprises five layers. On the first layer, multi-scale candidate semantic units in a short text are obtained. On the second layer, the Euclidean distances between each candidate semantic unit and all word representation vectors in a vector space are calculated, the nearest-neighbor word representations are found, and all nearest-neighbor word representations that meet a preset Euclidean distance threshold are selected to construct a semantic expanding matrix. On the third layer, multiple kernel matrices of different widths and different weight values are used to perform two-dimensional convolution on the mapping matrix and the semantic expanding matrix of the short text, extracting local convolution features and generating a multi-layer local convolution feature matrix. On the fourth layer, down-sampling is performed on the multi-layer local convolution feature matrix to obtain a multi-layer global feature matrix, a nonlinear tangent transformation is applied to the global feature matrix, and the transformed global feature matrix is converted into a fixed-length semantic feature vector. On the fifth layer, the semantic feature vector is fed to a classifier to predict the category of the short text.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

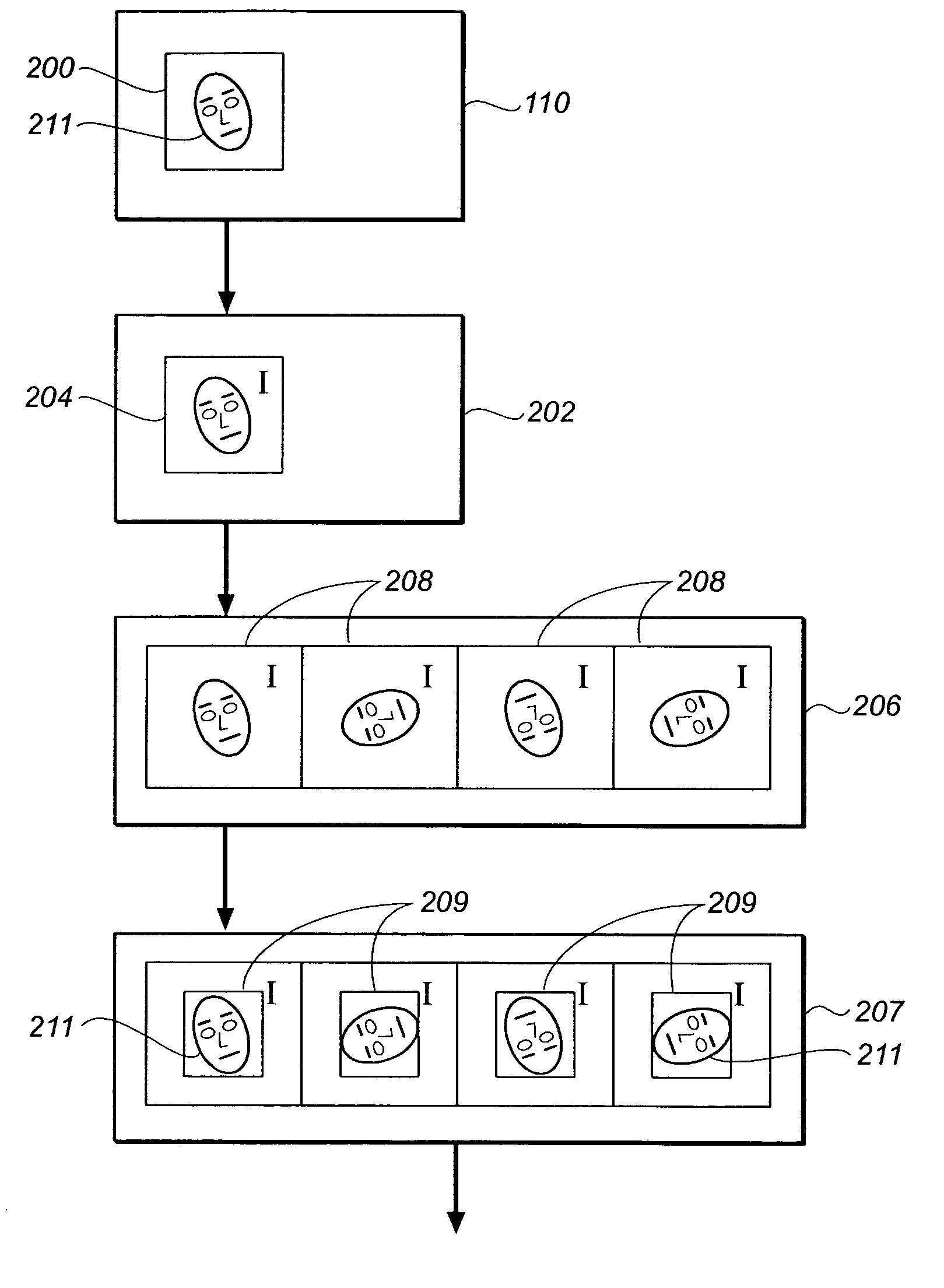

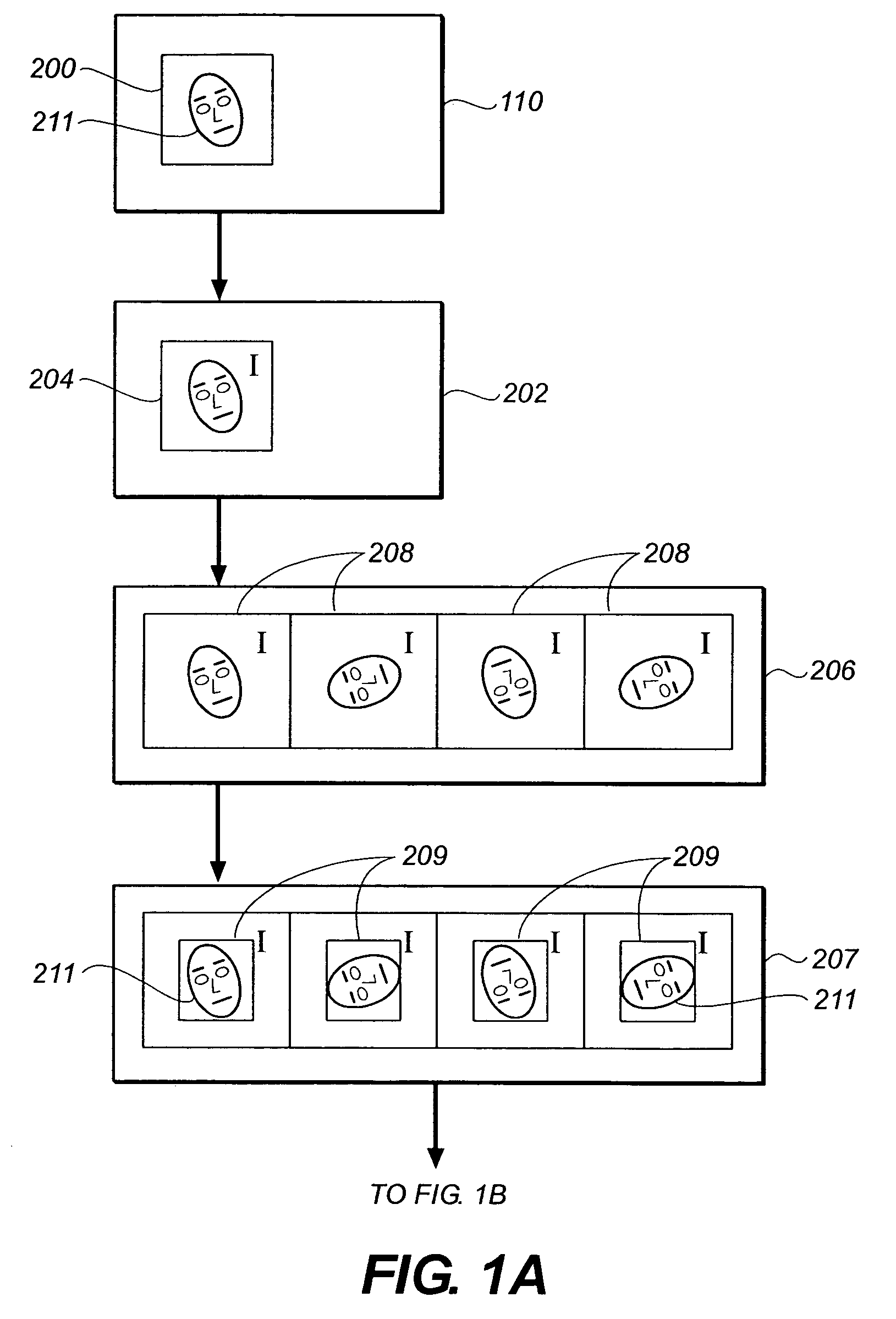

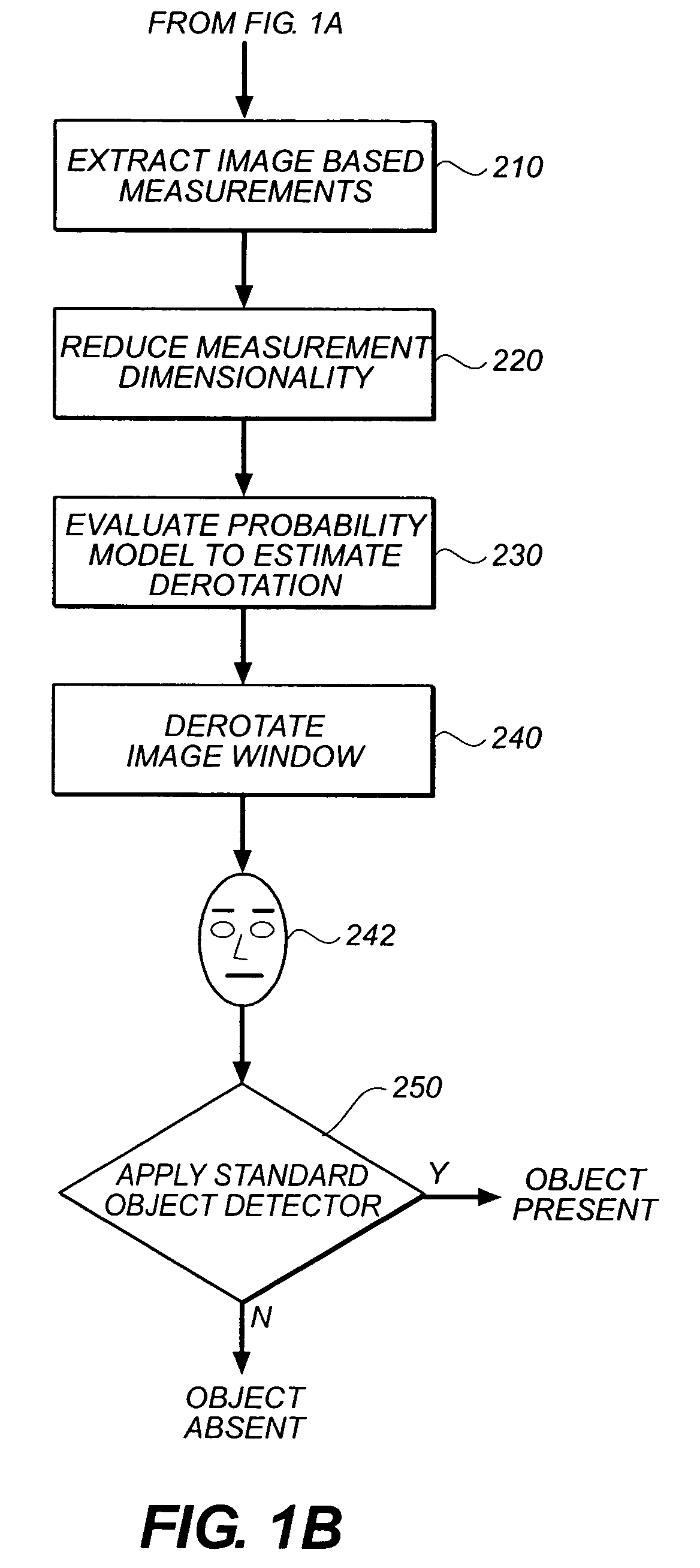
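
The second layer of the short text classification network described above selects nearest-neighbour word representations that fall within a Euclidean distance threshold. A minimal sketch of that selection step follows. The toy two-dimensional vocabulary, the function names `euclidean` and `semantic_expansion`, and the choice to keep only the single nearest word per candidate unit are assumptions; the abstract does not specify these details.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def semantic_expansion(units, vocab, threshold):
    """For each candidate unit, keep its nearest word if it is close enough.

    units: list of candidate semantic unit vectors.
    vocab: dict mapping word -> representation vector.
    Returns the (word, vector) rows of the expansion matrix.
    """
    rows = []
    for unit in units:
        word, vec = min(vocab.items(), key=lambda kv: euclidean(unit, kv[1]))
        if euclidean(unit, vec) <= threshold:
            rows.append((word, vec))
    return rows


if __name__ == "__main__":
    vocab = {"cat": [1.0, 0.0], "dog": [0.0, 1.0]}
    units = [[0.9, 0.1], [5.0, 5.0]]  # the second unit is far from every word
    print(semantic_expansion(units, vocab, threshold=0.5))
```

The threshold filters out candidate units that have no sufficiently similar word, so the expansion matrix only adds context that is plausibly related to the short text.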

In-plane rotation invariant object detection in digitized images

In methods, systems, and computer program products for locating a regularly configured object within a digital image, a plurality of primary rotated integral images of the digital image are computed. Each primary rotated integral image has a different in-plane rotation. A set of secondary rotated integral images is derived from each of the primary rotated integral images. The secondary rotated integral images have further in-plane rotations relative to the respective primary rotated integral image. A window is defined within the digital image, along with corresponding windows in the rotated integral images. For each of the rotated integral images, the convolution sums of a predetermined set of feature boxes within the window are extracted. The dimensionality of the convolution sums is reduced to provide a set of reduced sums. A probability model is applied to the reduced sums to provide a best estimated derotated image of the window.

Owner:MONUMENT PEAK VENTURES LLC
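
The rotation-invariant detection abstract above extracts sums over feature boxes from integral images. The sketch below covers only the unrotated base case, as an illustration of why integral images make box sums cheap: after one pass over the image, the sum over any axis-aligned box needs four table lookups. The function names and the half-open box convention are assumptions, and the rotated variants in the abstract are not reproduced here.

```python
def integral_image(img):
    """Summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    table = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def box_sum(table, top, left, bottom, right):
    """Sum of pixels in rows top..bottom-1 and columns left..right-1."""
    return (table[bottom][right] - table[top][right]
            - table[bottom][left] + table[top][left])


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    table = integral_image(img)
    print(box_sum(table, 1, 1, 3, 3))  # sum of the lower-right 2x2 block
```

Since each box sum costs the same regardless of box size, evaluating many feature boxes per window stays inexpensive.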

