6294 patent results for "Feature fusion"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

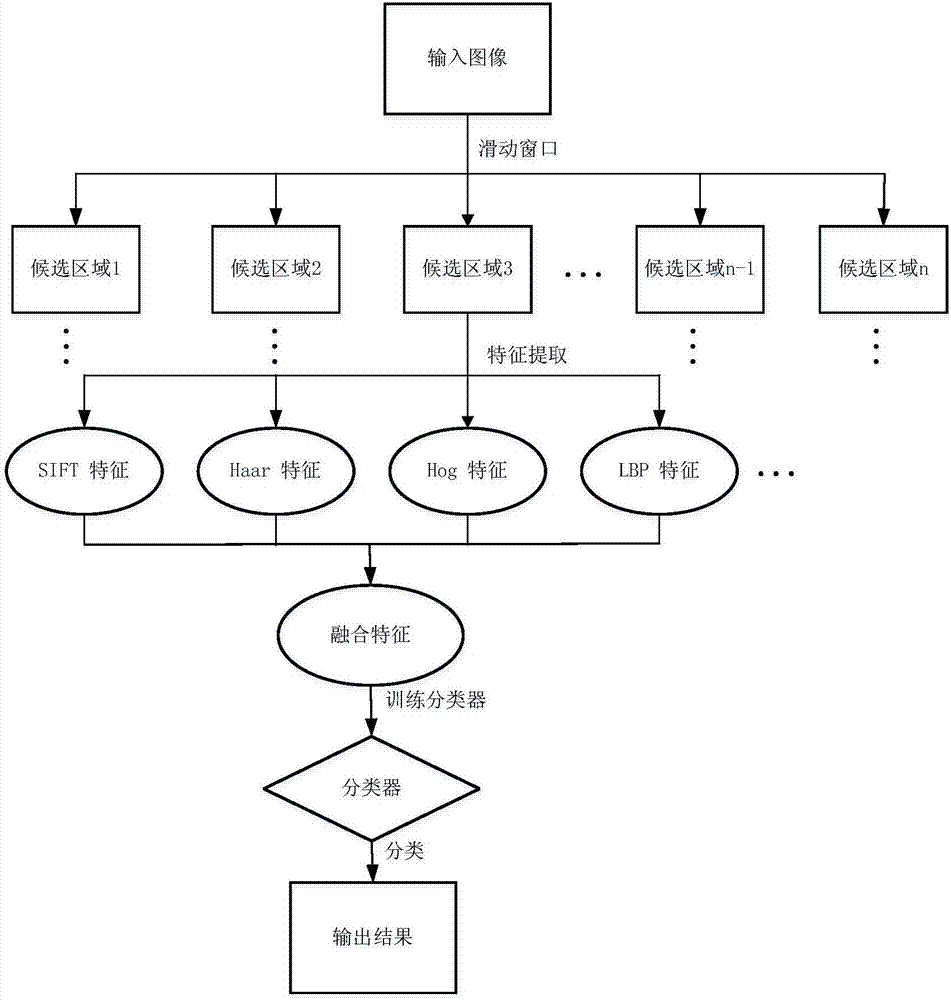

Feature fusion is the process of combining two or more feature vectors into a single feature vector that is intended to be more discriminative than any of the input feature vectors.
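
Two common fusion operators are concatenation (the dimensions add up) and an element-wise weighted sum (the dimensions must match). The minimal sketch below assumes plain Python lists as feature vectors; the helper names and the toy color/texture vectors are hypothetical. Neither operator guarantees a more discriminative result on its own; that depends on the features and the downstream model.

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit L2 norm so that no single source dominates by magnitude."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def concat_fusion(*vectors: Sequence[float]) -> List[float]:
    """Feature-level fusion: normalize each input, then concatenate (dimensions add up)."""
    fused: List[float] = []
    for v in vectors:
        fused.extend(l2_normalize(v))
    return fused


def weighted_sum_fusion(a: Sequence[float], b: Sequence[float], alpha: float = 0.5) -> List[float]:
    """Element-wise convex combination alpha * a + (1 - alpha) * b (dimensions must match)."""
    if len(a) != len(b):
        raise ValueError("weighted-sum fusion needs vectors of equal length")
    return [alpha * x + (1 - alpha) * y for x, y in zip(a, b)]


color_hist = [3.0, 4.0]    # hypothetical 2-bin color feature
texture = [1.0, 0.0, 0.0]  # hypothetical 3-dim texture feature
print(concat_fusion(color_hist, texture))  # [0.6, 0.8, 1.0, 0.0, 0.0]
```

Normalizing before concatenation is a design choice: without it, a source with large raw values would dominate any distance computed on the fused vector.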

Small target detection method based on feature fusion and depth learning

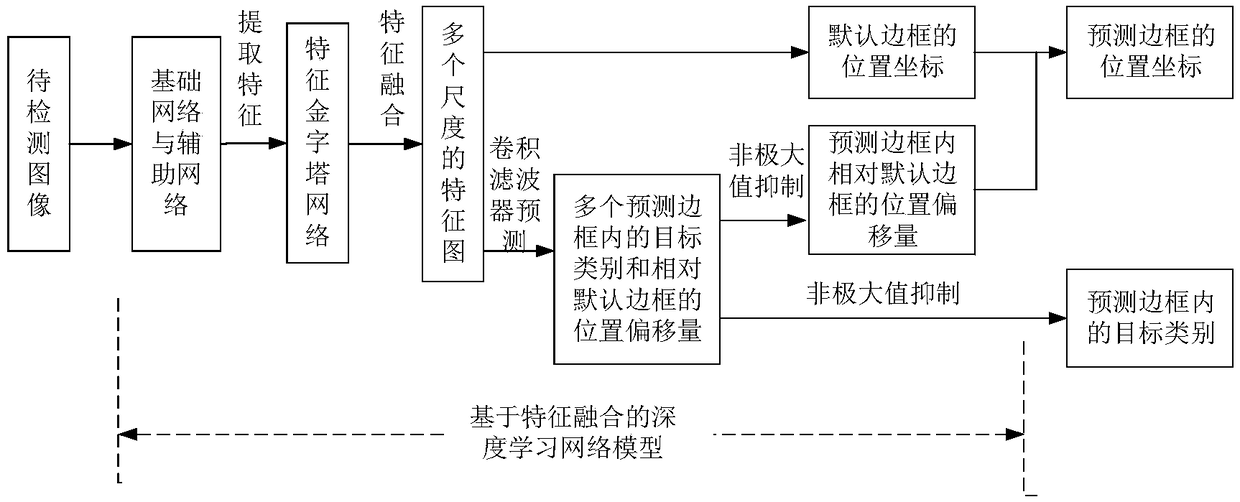

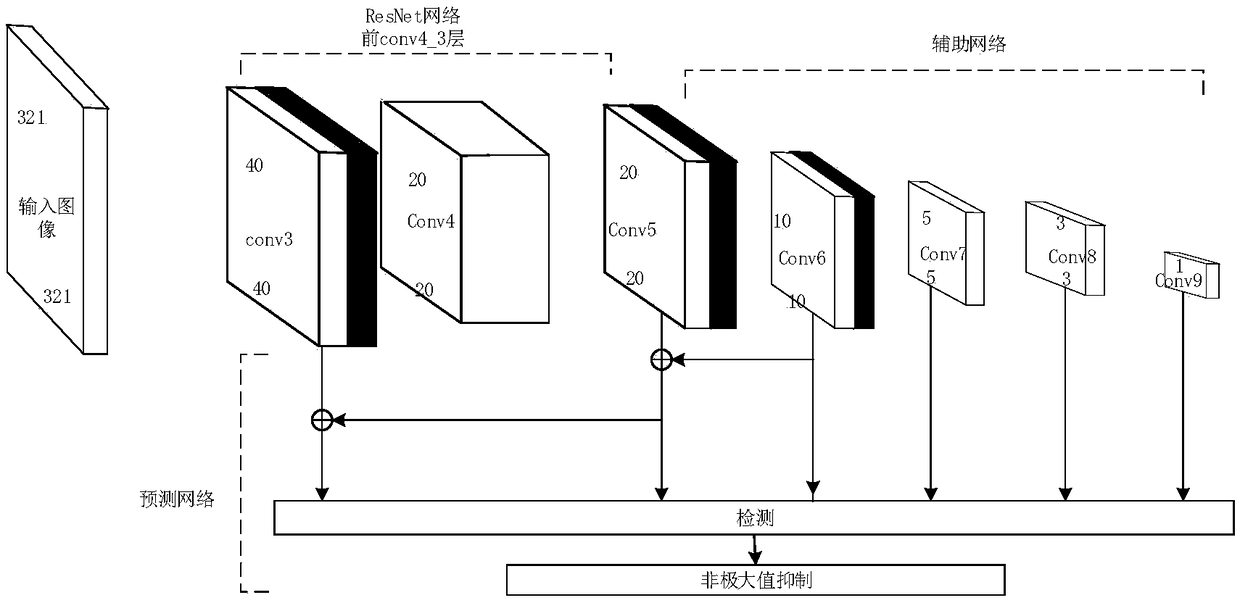

Inactive | CN109344821A | Scaling; Rich information features; Character and pattern recognition; Network model; Feature fusion

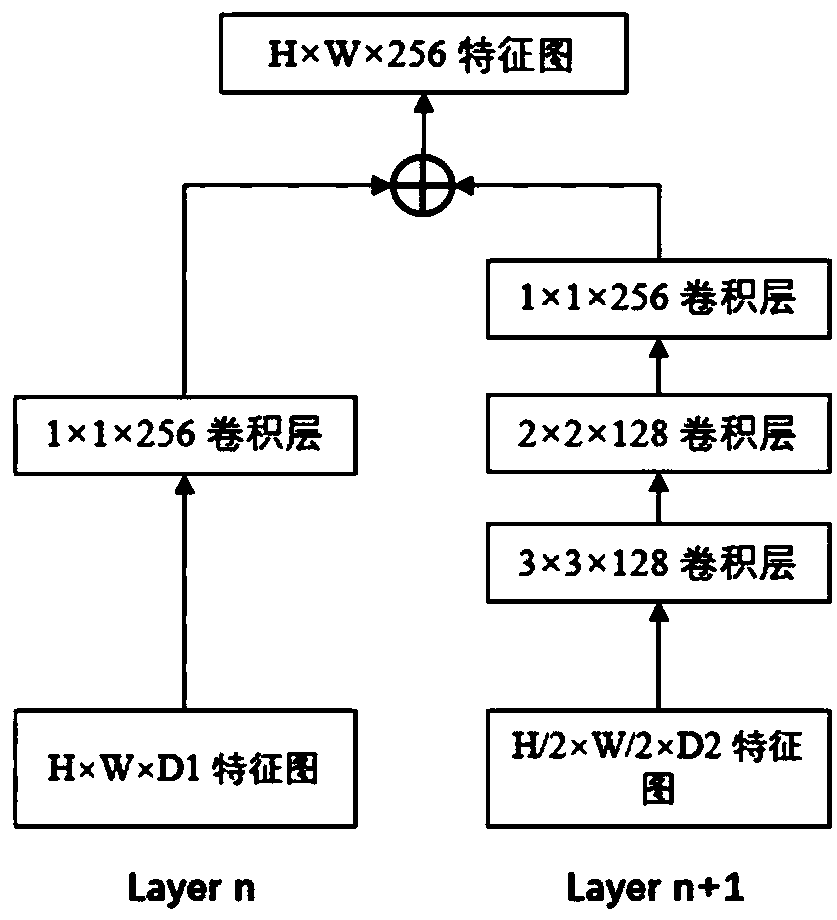

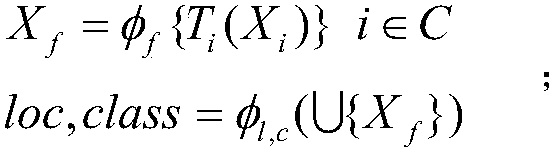

The invention discloses a small target detection method based on feature fusion and deep learning, which addresses the poor detection accuracy and real-time performance for small targets. The implementation scheme is as follows: a high-resolution feature map is extracted with the deeper, better-performing ResNet-101 network model; five successively smaller, lower-resolution feature maps are extracted from auxiliary convolution layers to extend the range of feature-map scales; and a multi-scale feature map is obtained through a feature pyramid network, within which deconvolution is used to fuse the feature-map information of high-level semantic layers with that of shallow layers. Target prediction is then performed on feature maps of different scales carrying the fused features, and non-maximum suppression is applied to the scores of the multiple predicted bounding boxes and categories to obtain the bounding-box position and category of each final target. The invention maintains high small-target detection precision while meeting real-time requirements, can quickly and accurately detect small targets in images, and can be used for real-time target detection in aerial images captured by unmanned aerial vehicles.

Owner:XIDIAN UNIV
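
The final step of this method applies non-maximum suppression (NMS) to the predicted boxes. A minimal, class-agnostic sketch of greedy NMS follows, assuming boxes given as hypothetical (x1, y1, x2, y2) corner tuples and an illustrative IoU threshold of 0.5; the abstract does not state its box format or threshold, and detectors usually run NMS separately per category.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```

The second box overlaps the first with IoU 81/119 (about 0.68), so it is suppressed; the third box does not overlap and is kept.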

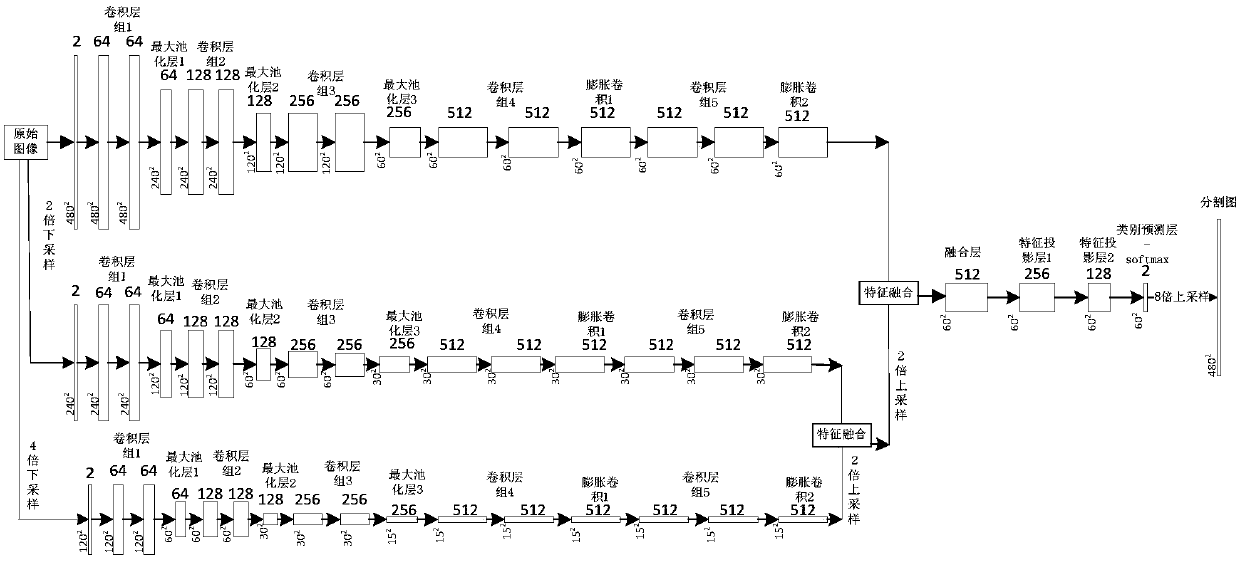

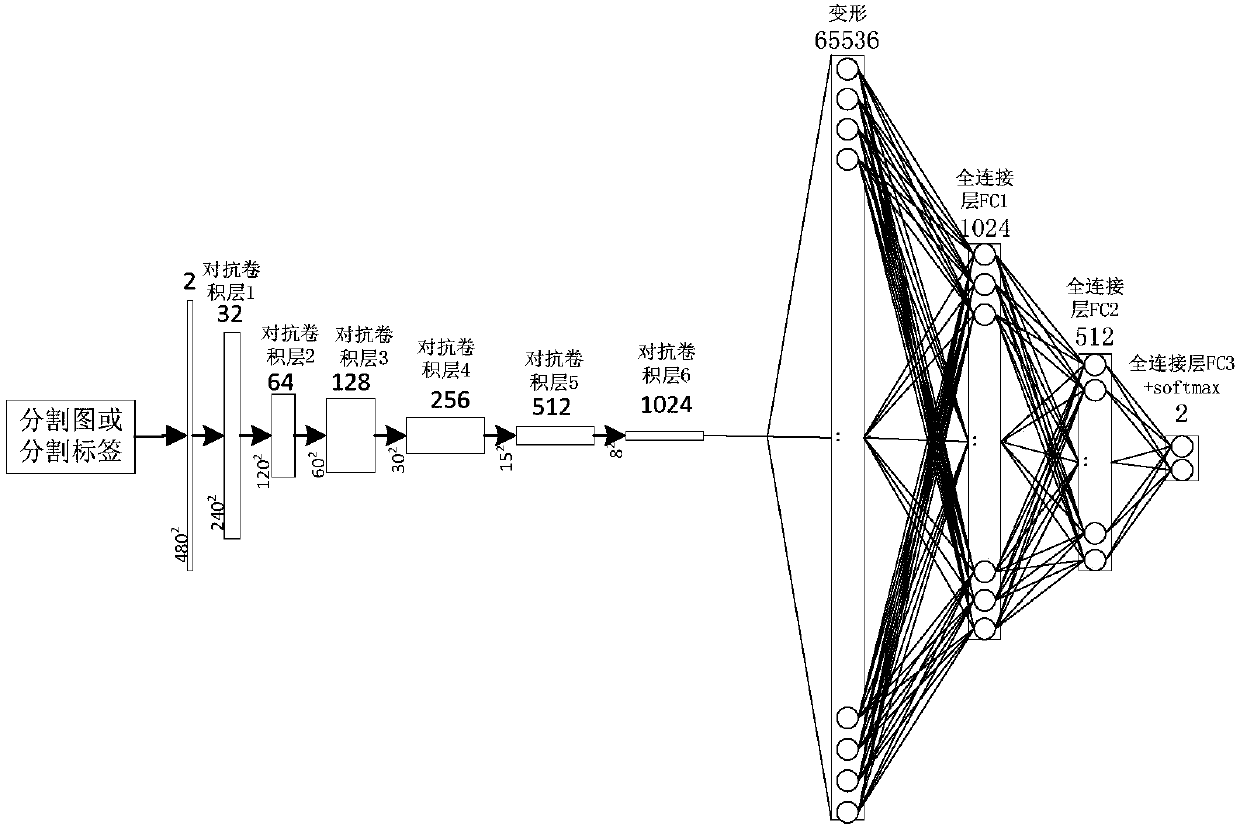

Multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning

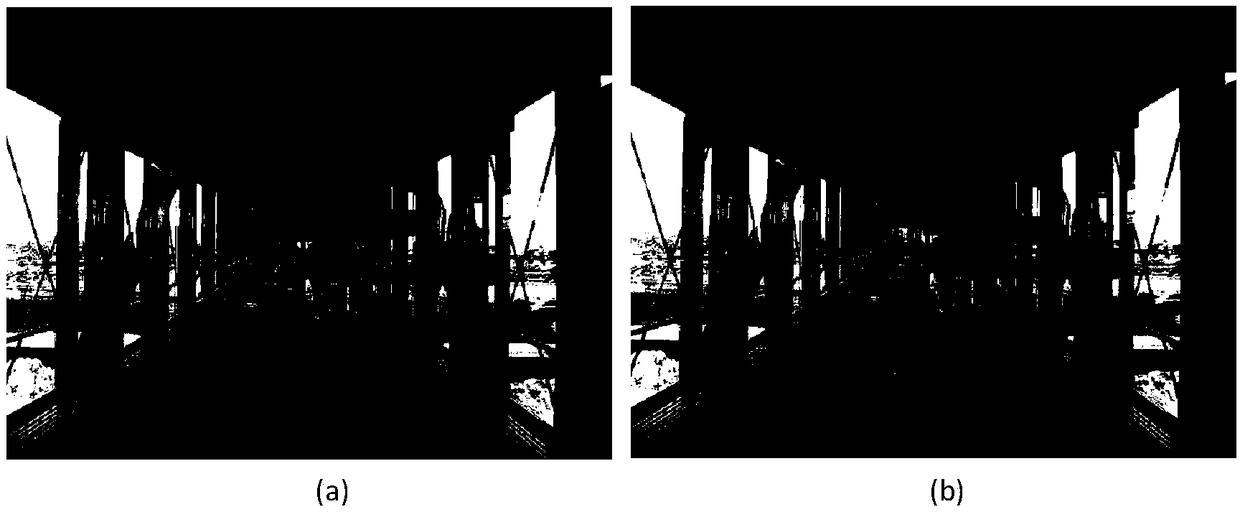

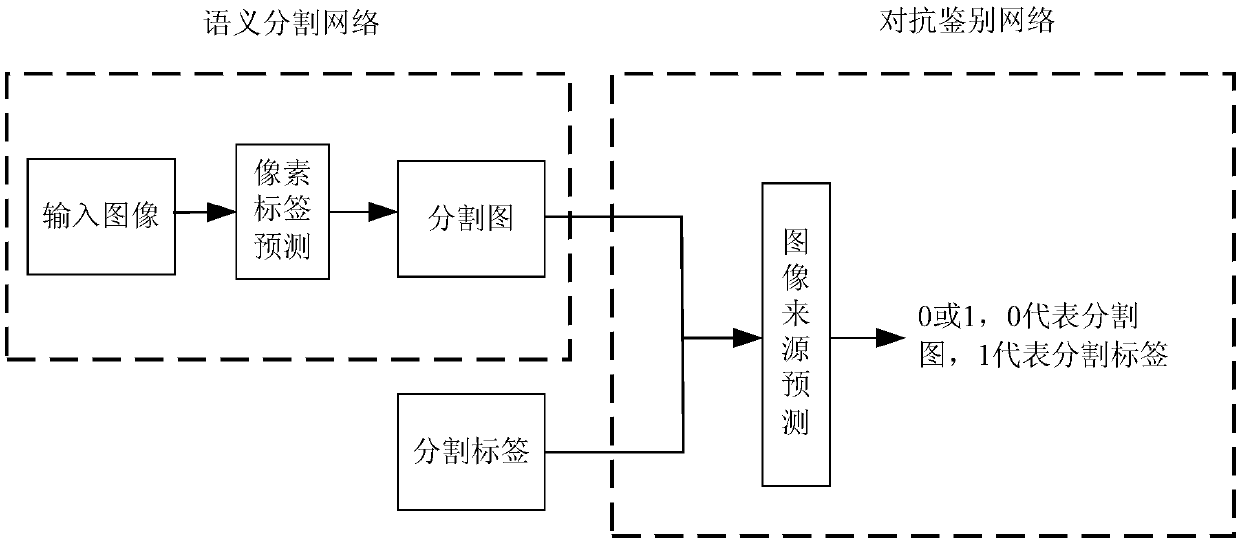

Active | CN108268870A | Improve forecast accuracy; Few parameters; Neural architectures; Recognition of medical/anatomical patterns; Pattern recognition; Automatic segmentation

The invention provides a multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning. The method comprises the following steps: building a multi-scale feature fusion semantic segmentation network model, building an adversarial discrimination network model, performing adversarial training and model parameter learning, and automatically segmenting breast lesions. The method predicts the class of each pixel from multi-scale features of input images at different resolutions, which improves pixel class label prediction accuracy, and uses dilated convolution in place of some pooling layers to increase the resolution of the segmented image. Guided by the adversarial discrimination network, the segmentation network generates segmented images that cannot be distinguished from the segmentation labels, which ensures good appearance and spatial continuity of the segmented image and yields a more precise, high-resolution segmentation of ultrasonic breast lesions.

Owner:CHONGQING NORMAL UNIVERSITY
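
This method replaces some pooling with dilated (expanding) convolution to keep segmentation resolution. A minimal 1-D sketch, assuming a single channel, zero padding and an odd-length kernel, shows why: a dilated kernel covers a wider span of the input, while the output keeps the input length, whereas pooling would shrink it. The function name is hypothetical.

```python
from typing import List, Sequence


def dilated_conv1d(x: Sequence[float], kernel: Sequence[float], dilation: int) -> List[float]:
    """'Same'-padded 1-D dilated convolution (cross-correlation, as in CNN libraries).

    Taps are spaced `dilation` samples apart, so a k-tap kernel spans
    (k - 1) * dilation + 1 inputs, while the output length equals the input length.
    """
    span = (len(kernel) - 1) * dilation
    pad_left = span // 2
    out: List[float] = []
    for i in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = i - pad_left + j * dilation
            if 0 <= idx < len(x):  # zero padding outside the signal
                acc += w * x[idx]
        out.append(acc)
    return out


impulse = [0, 0, 0, 1, 0, 0, 0]
print(dilated_conv1d(impulse, [1, 1, 1], dilation=1))  # [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print(dilated_conv1d(impulse, [1, 1, 1], dilation=2))  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
```

With dilation 2 the same three weights reach samples two positions apart, so the receptive field grows from 3 to 5 samples at no extra parameter cost.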

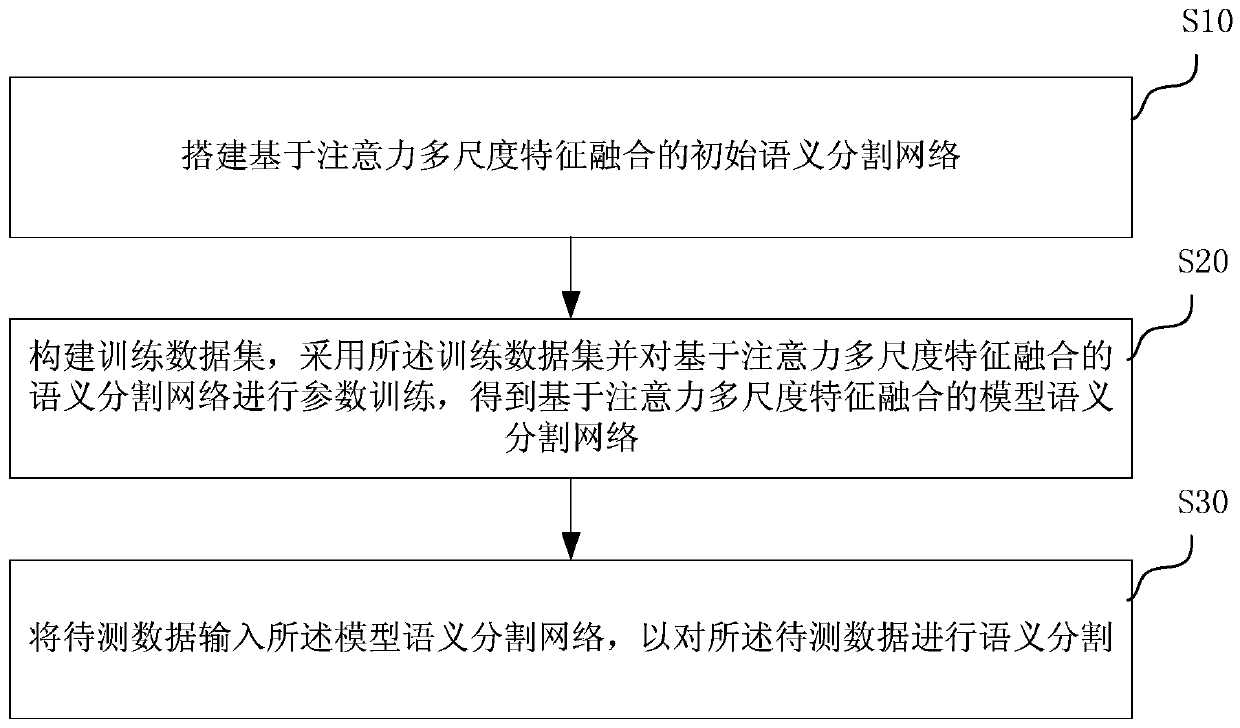

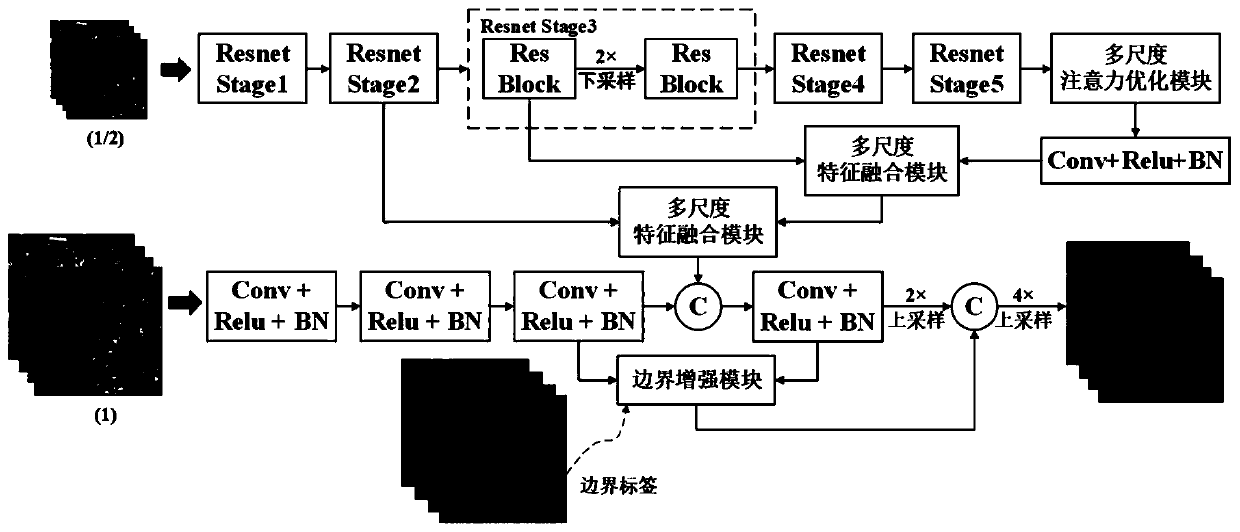

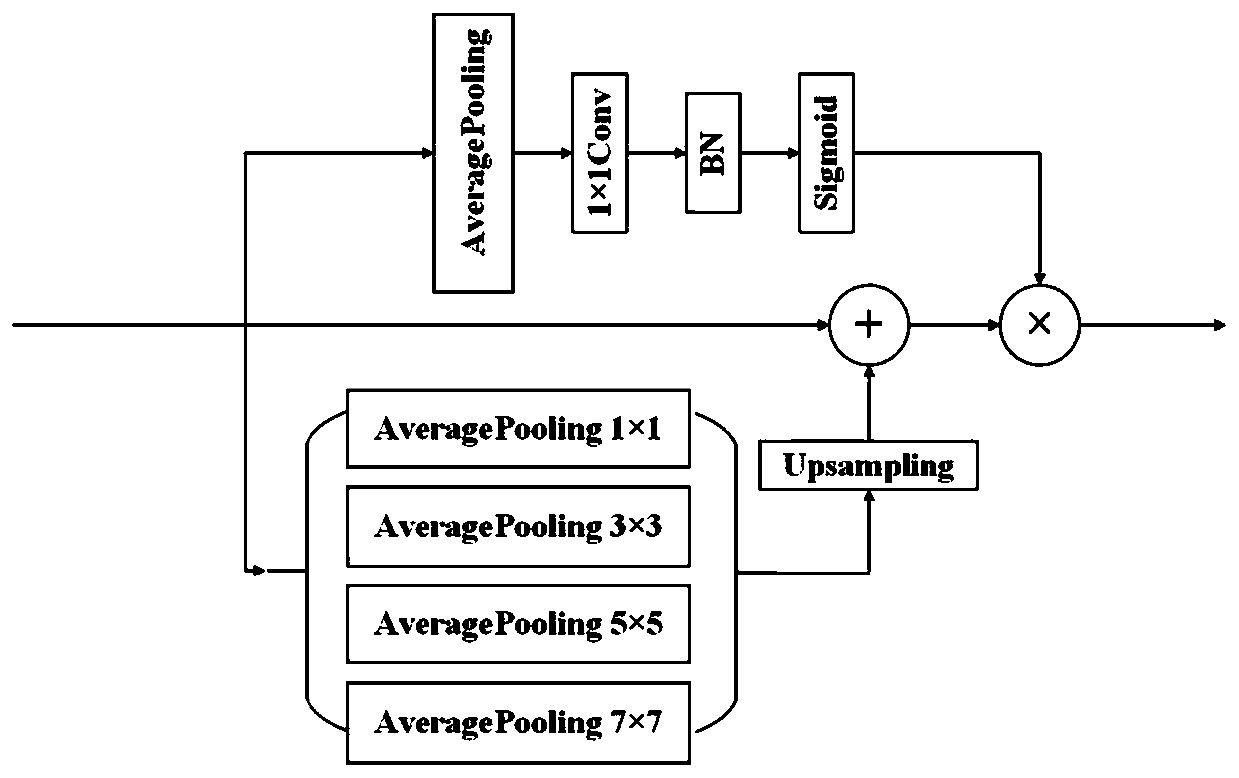

Remote sensing image semantic segmentation method based on attention multi-scale feature fusion

Pending | CN111127493A | Improve performance; Reduce the amount of parameters; Image enhancement; Image analysis; Pattern recognition; Encoder decoder

The invention discloses a remote sensing image semantic segmentation method based on attention multi-scale feature fusion. The method comprises establishing a semantic segmentation network based on attention multi-scale feature fusion, building a training data set, and training the network parameters with that data set; at test time, the trained network performs semantic segmentation on the data to be tested. The network is a lightweight encoder-decoder structure that adopts the idea of an image cascade network. Encoding and decoding features are refined with an attention mechanism: a multi-scale attention optimization module, a multi-scale feature fusion module and a boundary enhancement module are constructed, feature maps of different scales are extracted and fused, and training is guided by multi-scale semantic labels and boundary labels, so that remote sensing images can be segmented effectively.

Owner:CHINA UNIV OF MINING & TECH

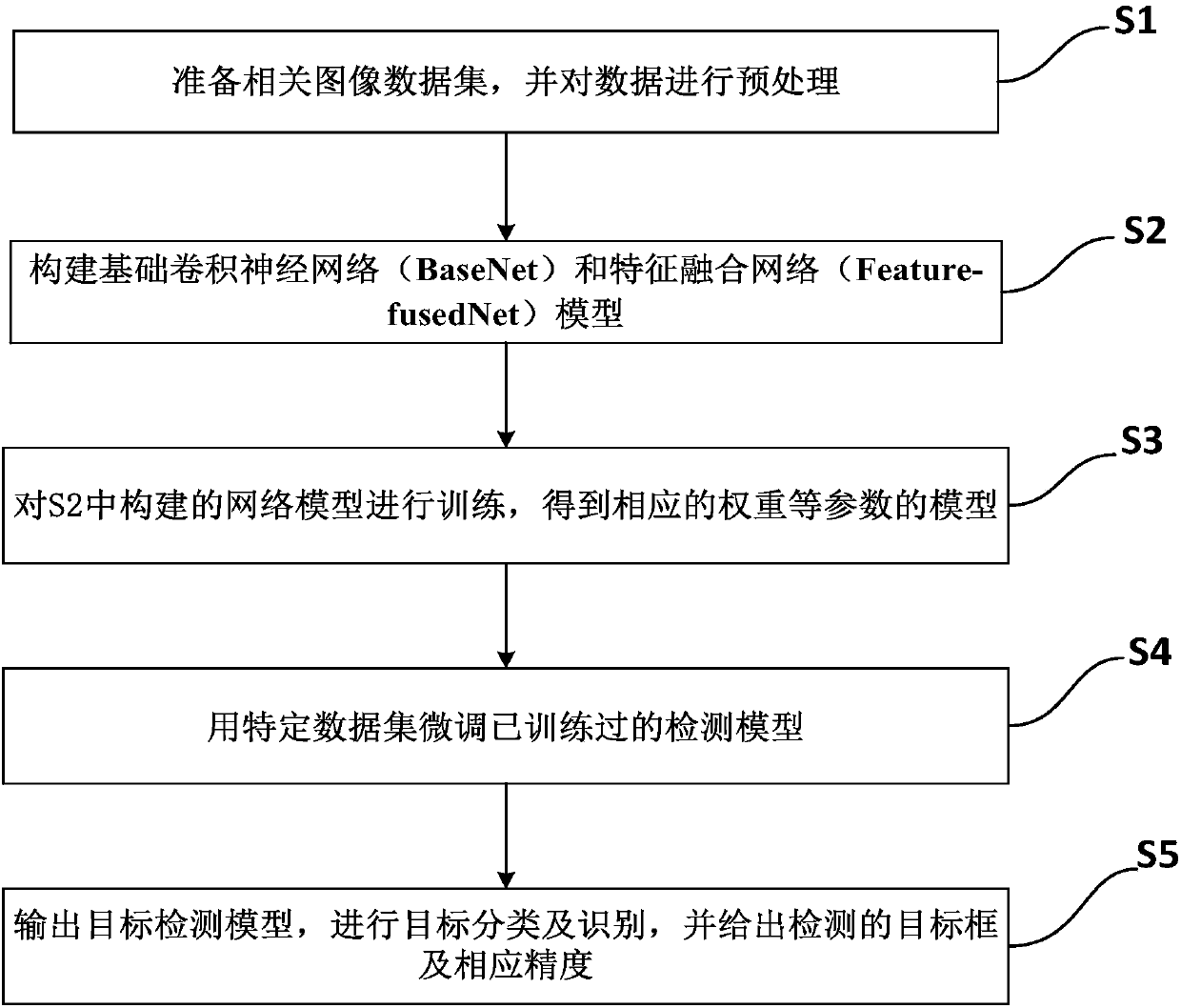

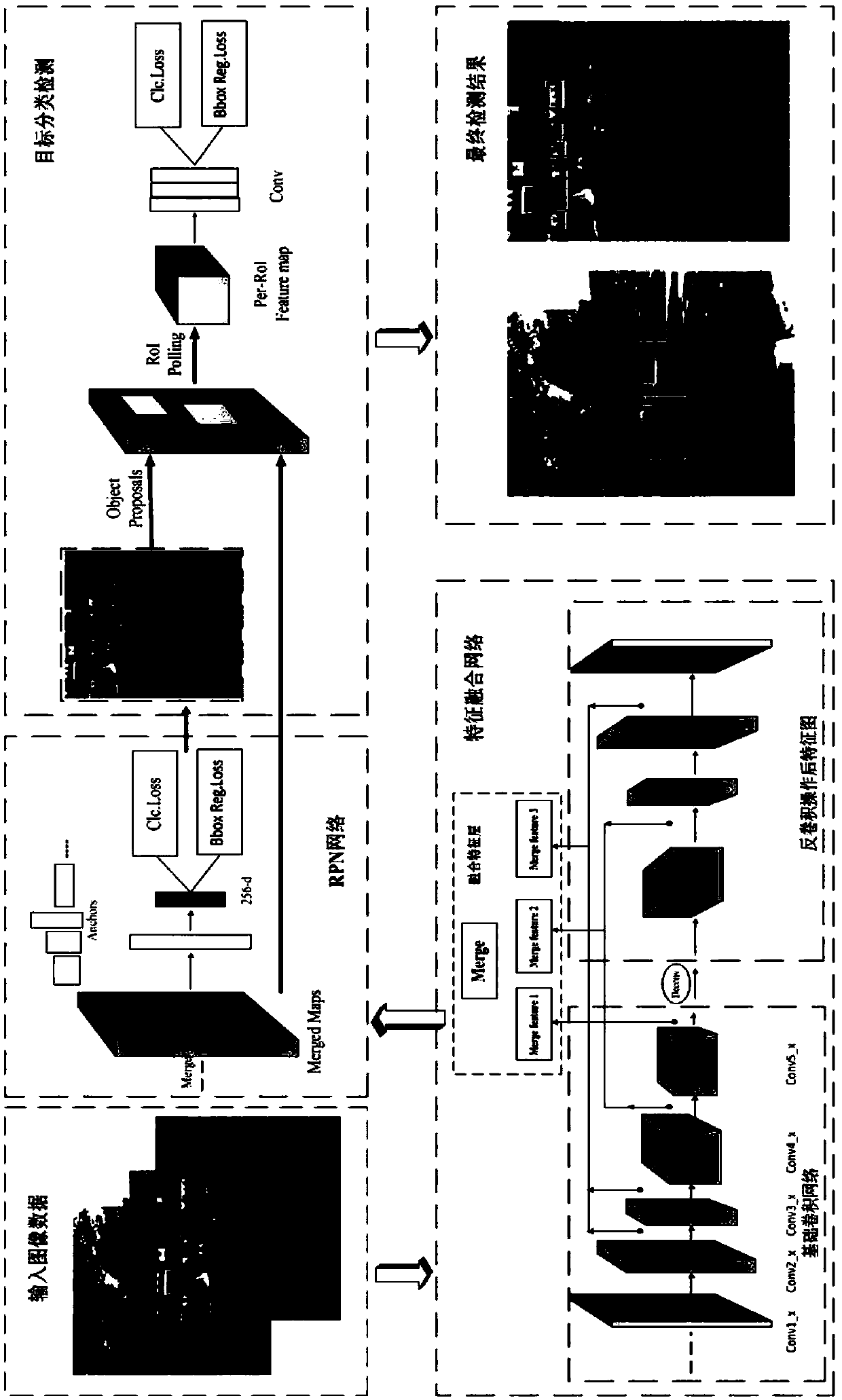

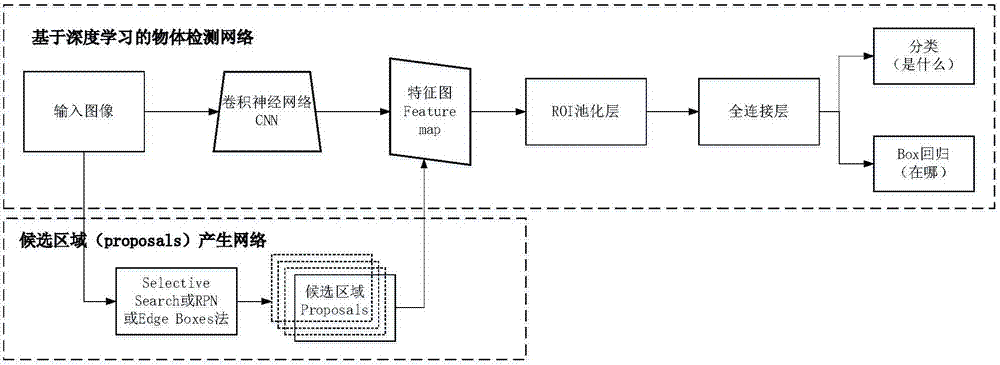

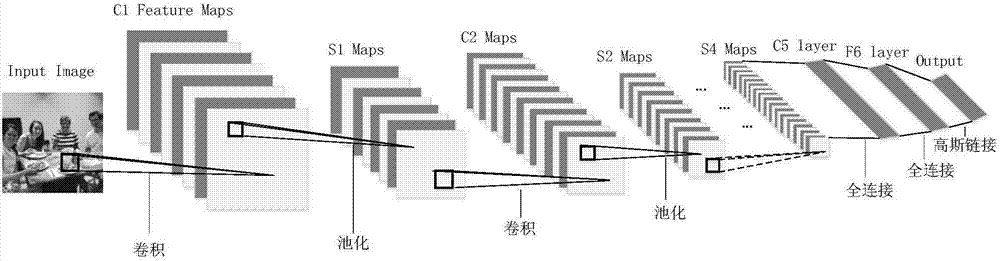

Multistage target detection method and model based on CNN multistage feature fusion

Active | CN108509978A | Improve accuracy; Optimize network structure; Character and pattern recognition; Neural architectures; Data set; Nerve network

The invention discloses a multi-stage target detection method and model based on CNN multi-stage feature fusion. The method mainly comprises the following steps: preparing a relevant image data set and processing the data; building a base convolutional neural network (BaseNet) and a Feature-fused Net model; training the network model built in the previous step to obtain a model with the corresponding weight parameters; fine-tuning the trained detection model on a dedicated data set; and outputting a target detection model that performs target classification and identification and provides the detected target boxes with their corresponding precision. In addition, the invention provides a multi-class target detection model structure based on CNN multi-stage feature fusion, which improves overall detection accuracy, optimizes the model parameter values, and provides a more reasonable model structure.

Owner:CENT SOUTH UNIV

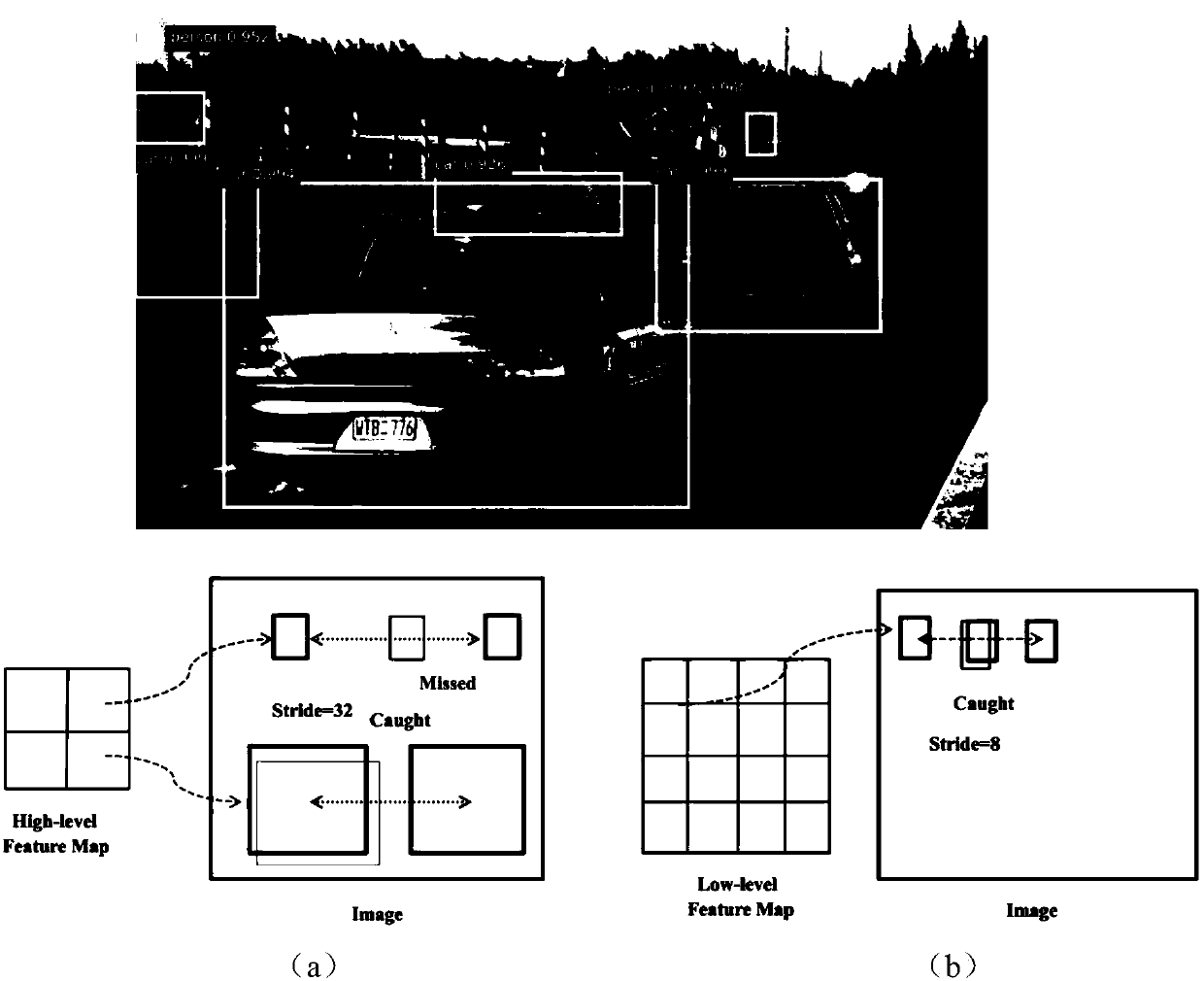

Multi-scale small object detection method based on deep-learning hierarchical feature fusion

Active | CN107341517A | Realize detection; Easy to identify; Character and pattern recognition; Machine vision; Research Object

The invention relates to object recognition technology in the machine vision field, and in particular to a multi-scale small object detection method based on deep-learning hierarchical feature fusion. To address the shortcomings of existing object detection, namely low precision in real scenes, dependence on object scale, and difficulty with small objects, the invention proposes a multi-scale small object detection method based on deep-learning hierarchical feature fusion. The method comprises the following steps: taking images of real scenes as the research object; extracting features of the input image with a constructed convolutional neural network; generating a small number of candidate regions with a candidate region generation network; mapping each candidate region onto the feature map produced by the convolutional neural network to obtain the features of that region; passing these through a pooling layer to obtain features of fixed size and dimension, which are fed into a fully connected layer; and two branches after the fully connected layer output the recognized class and the regressed position, respectively. The method is suitable for object recognition in the machine vision field.

Owner:HARBIN INST OF TECH
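
The step that turns each candidate region into a feature of fixed size is region-of-interest (RoI) pooling. A minimal single-channel sketch follows, assuming integer region coordinates already expressed in feature-map cells and max pooling (the abstract does not name the pooling type); the function name and toy feature map are hypothetical.

```python
from typing import List, Tuple

FeatureMap = List[List[float]]


def roi_max_pool(fm: FeatureMap, roi: Tuple[int, int, int, int], out_size: int) -> FeatureMap:
    """Max-pool region roi = (x1, y1, x2, y2) of a single-channel feature map
    (x2, y2 exclusive, in feature-map cells) into an out_size x out_size grid,
    so regions of any size yield features of the same fixed size."""
    x1, y1, x2, y2 = roi
    h, w = y2 - y1, x2 - x1
    if h < out_size or w < out_size:
        raise ValueError("this simple sketch needs the RoI to be at least out_size cells wide")
    pooled: FeatureMap = []
    for i in range(out_size):
        rows = range(y1 + i * h // out_size, y1 + (i + 1) * h // out_size)
        out_row = []
        for j in range(out_size):
            cols = range(x1 + j * w // out_size, x1 + (j + 1) * w // out_size)
            out_row.append(max(fm[y][x] for y in rows for x in cols))
        pooled.append(out_row)
    return pooled


fm = [[y * 4 + x for x in range(4)] for y in range(4)]  # toy 4x4 feature map
print(roi_max_pool(fm, (0, 0, 4, 4), 2))  # [[5, 7], [13, 15]]
print(roi_max_pool(fm, (1, 1, 4, 4), 2))  # [[5, 7], [13, 15]]
```

A 4x4 region and a 3x3 region both produce a 2x2 output, which is what allows a fully connected layer with a fixed input size to follow.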

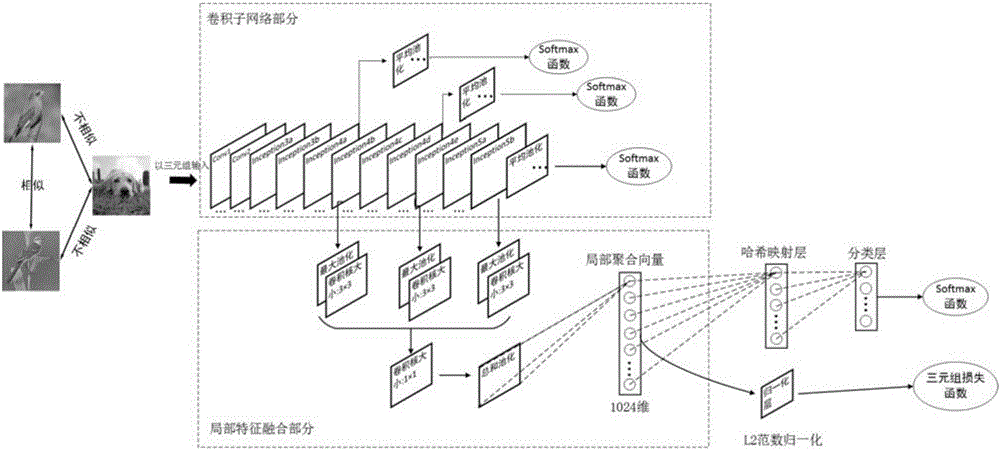

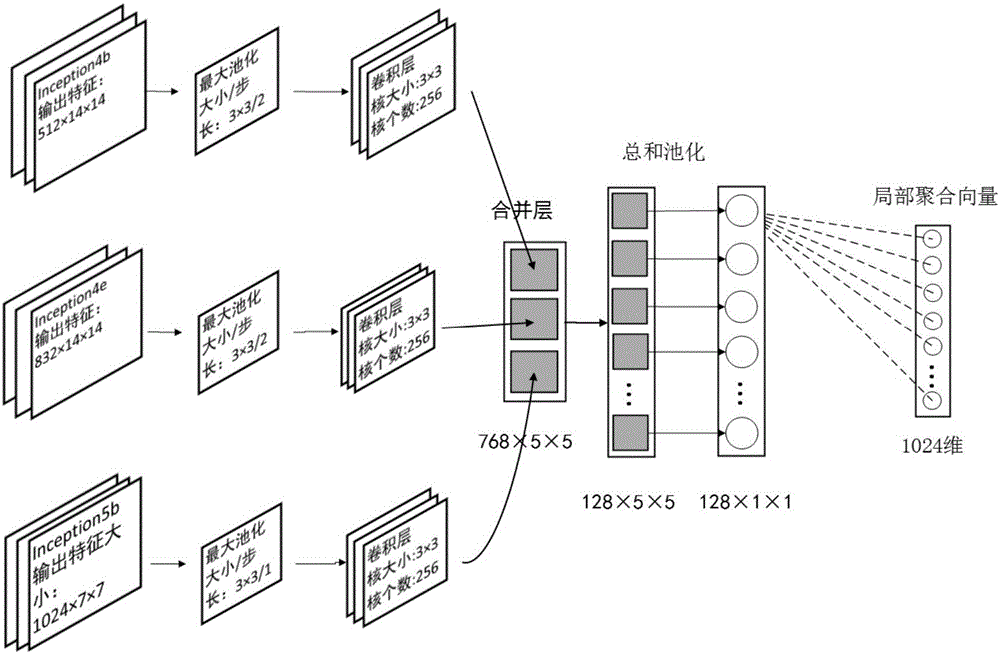

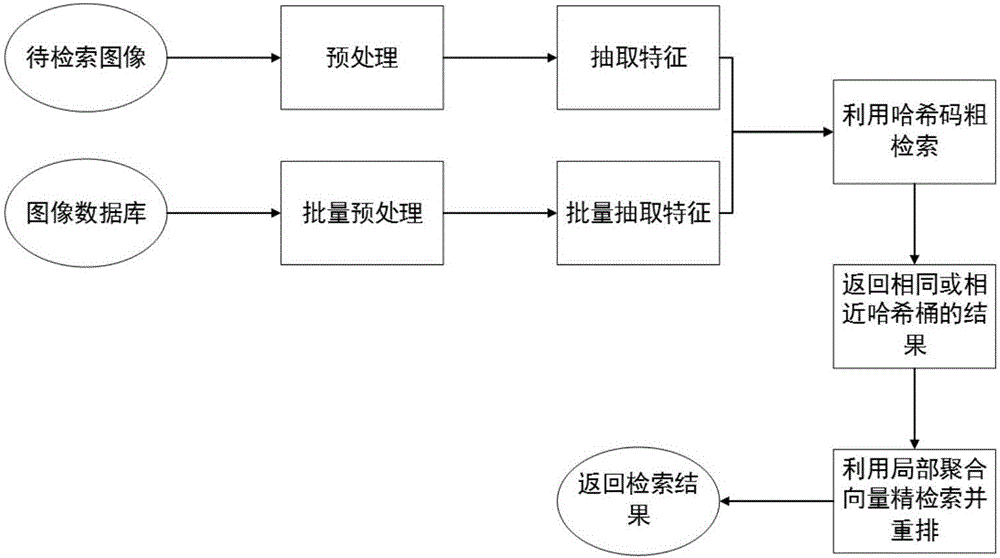

Method for Hash image retrieval based on deep learning and local feature fusion

Active | CN106682233A | Fast and Efficient Image Retrieval Tasks; Character and pattern recognition; Special data processing applications; Floating point; Image retrieval

The invention relates to a method for hash-based image retrieval based on deep learning and local feature fusion. The method comprises: (1) preprocessing the images; (2) training a convolutional neural network on images with category labels; (3) generating hash codes of the images by binarization and extracting 1024-dimensional floating-point local aggregation vectors; (4) performing coarse retrieval with the hash codes; and (5) performing fine retrieval with the local aggregation vectors. After the two kinds of features are extracted, an approximate nearest neighbor search strategy is used for image retrieval, giving high retrieval accuracy and fast retrieval speed.

Owner:HUAQIAO UNIVERSITY
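
The coarse-to-fine retrieval in steps (3) to (5) can be sketched as follows, assuming sigmoid activations thresholded at 0.5 for the hash bits and squared Euclidean distance for the fine stage. The tiny database and all helper names are hypothetical, and the fine stage here is an exhaustive re-rank of a shortlist rather than the approximate nearest neighbor search the abstract mentions.

```python
from typing import Dict, List, Sequence, Tuple


def binarize(activations: Sequence[float], threshold: float = 0.5) -> int:
    """Turn hash-layer activations (assumed sigmoid outputs) into bits packed in an int."""
    code = 0
    for bit, value in enumerate(activations):
        if value >= threshold:
            code |= 1 << bit
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes."""
    return bin(a ^ b).count("1")


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def two_stage_search(query_acts: Sequence[float], query_local: Sequence[float],
                     db: Dict[str, Tuple[int, List[float]]],
                     shortlist: int = 2, top_k: int = 1) -> List[str]:
    """Coarse: rank by Hamming distance of hash codes; fine: re-rank the shortlist
    by distance between the float local-feature vectors."""
    q_code = binarize(query_acts)
    coarse = sorted(db, key=lambda name: hamming(q_code, db[name][0]))[:shortlist]
    fine = sorted(coarse, key=lambda name: squared_l2(query_local, db[name][1]))
    return fine[:top_k]


# Hypothetical database: name -> (hash code, local float vector).
db = {
    "cat1": (binarize([0.9, 0.8, 0.1, 0.2]), [1.0, 0.0, 0.0]),
    "cat2": (binarize([0.7, 0.9, 0.2, 0.1]), [0.9, 0.1, 0.0]),
    "car": (binarize([0.1, 0.2, 0.9, 0.8]), [0.0, 0.0, 1.0]),
}
print(two_stage_search([0.8, 0.6, 0.3, 0.1], [0.9, 0.1, 0.0], db))  # ['cat2']
```

Both cat images share the same hash code, so the cheap Hamming stage cannot separate them; the float vectors in the fine stage break the tie.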

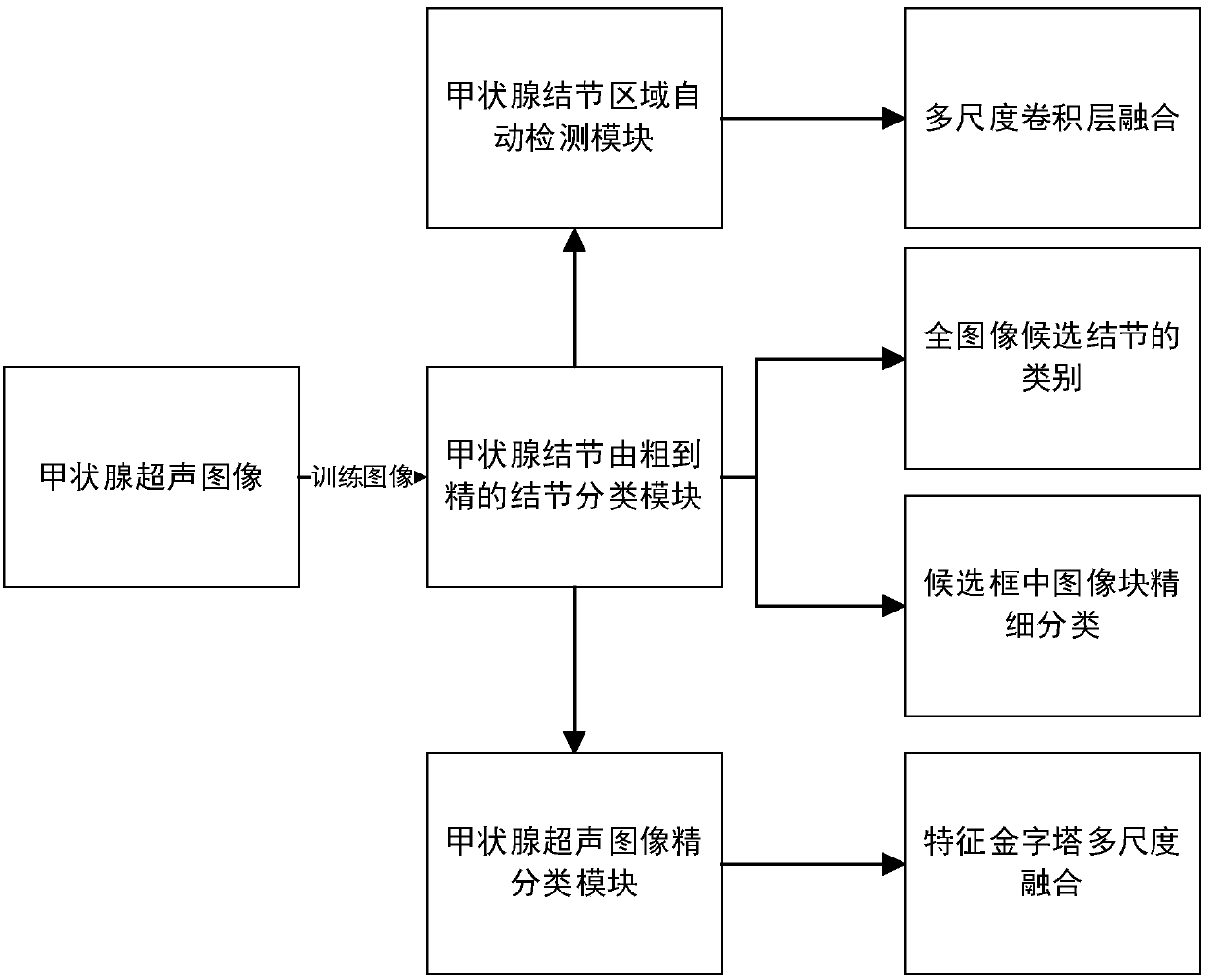

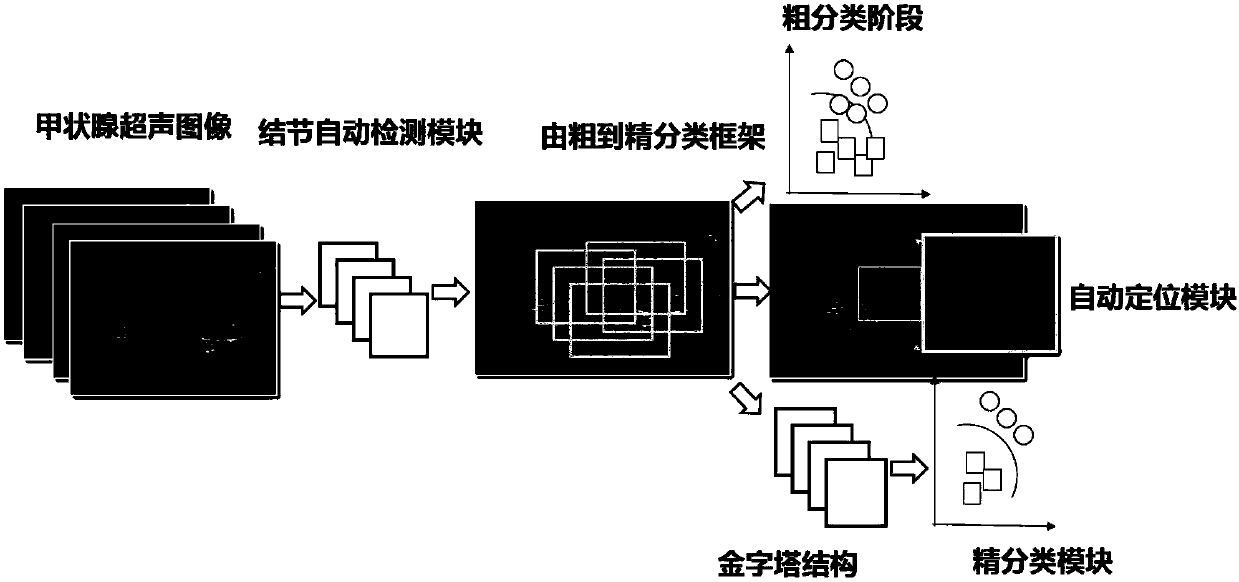

Thyroid ultrasound image nodule automatic diagnosis system based on multi-scale convolutional neural network

Active | CN107680678A | Accurate detection; Adapt to polymorphic automatic detection; Image enhancement; Image analysis; Semantic feature; Global information

The invention provides a thyroid ultrasound image nodule automatic diagnosis system based on a multi-scale convolutional neural network. The system includes a thyroid nodule coarse-to-fine classification module, a thyroid nodule region automatic detection module, and a thyroid nodule fine classification module. A multi-scale feature fusion convolutional neural network extracts features from receptive fields of different sizes; the contextual semantic features of a thyroid nodule are then extracted from local and global information, and the nodule is located automatically. Through neural-network-based multi-scale coarse-to-fine feature extraction and a multi-scale fine-classification AlexNet with a pyramid structure, the position of a lesion and the probability that it is benign or malignant can be predicted accurately, which assists doctors in diagnosing thyroid lesions and improves the objectivity of diagnosis. The system offers good real-time performance and high accuracy.

Owner:BEIHANG UNIV

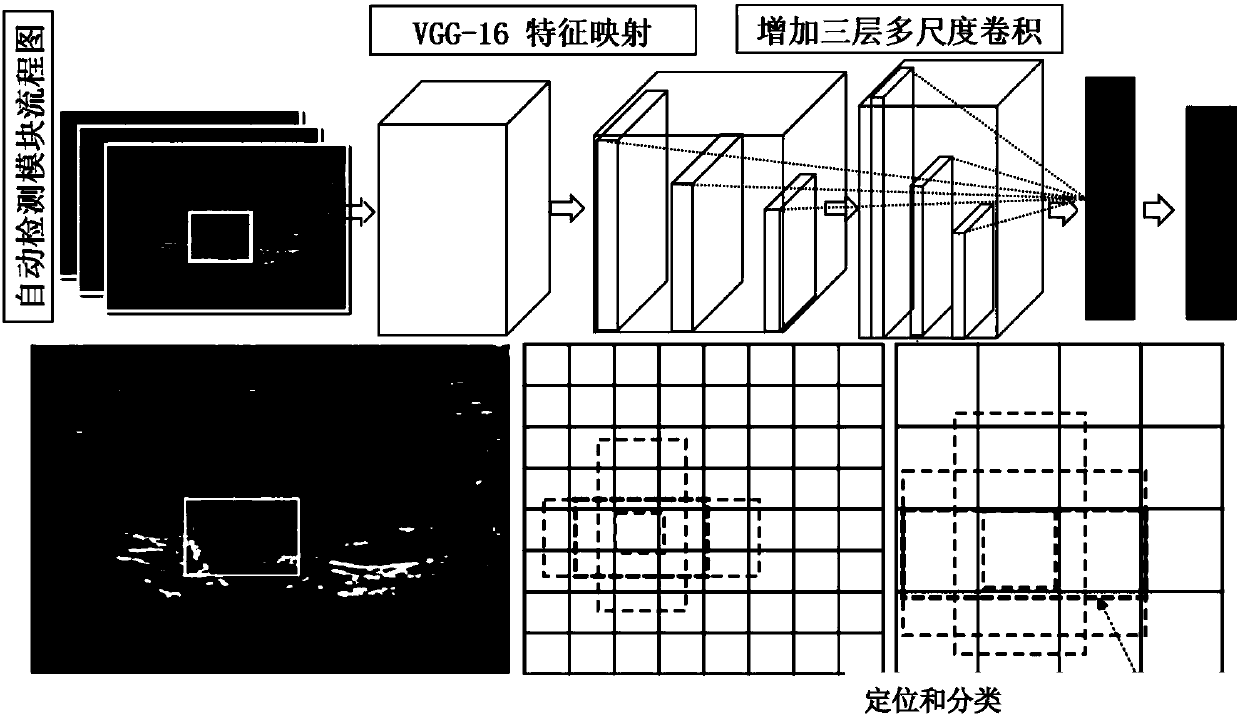

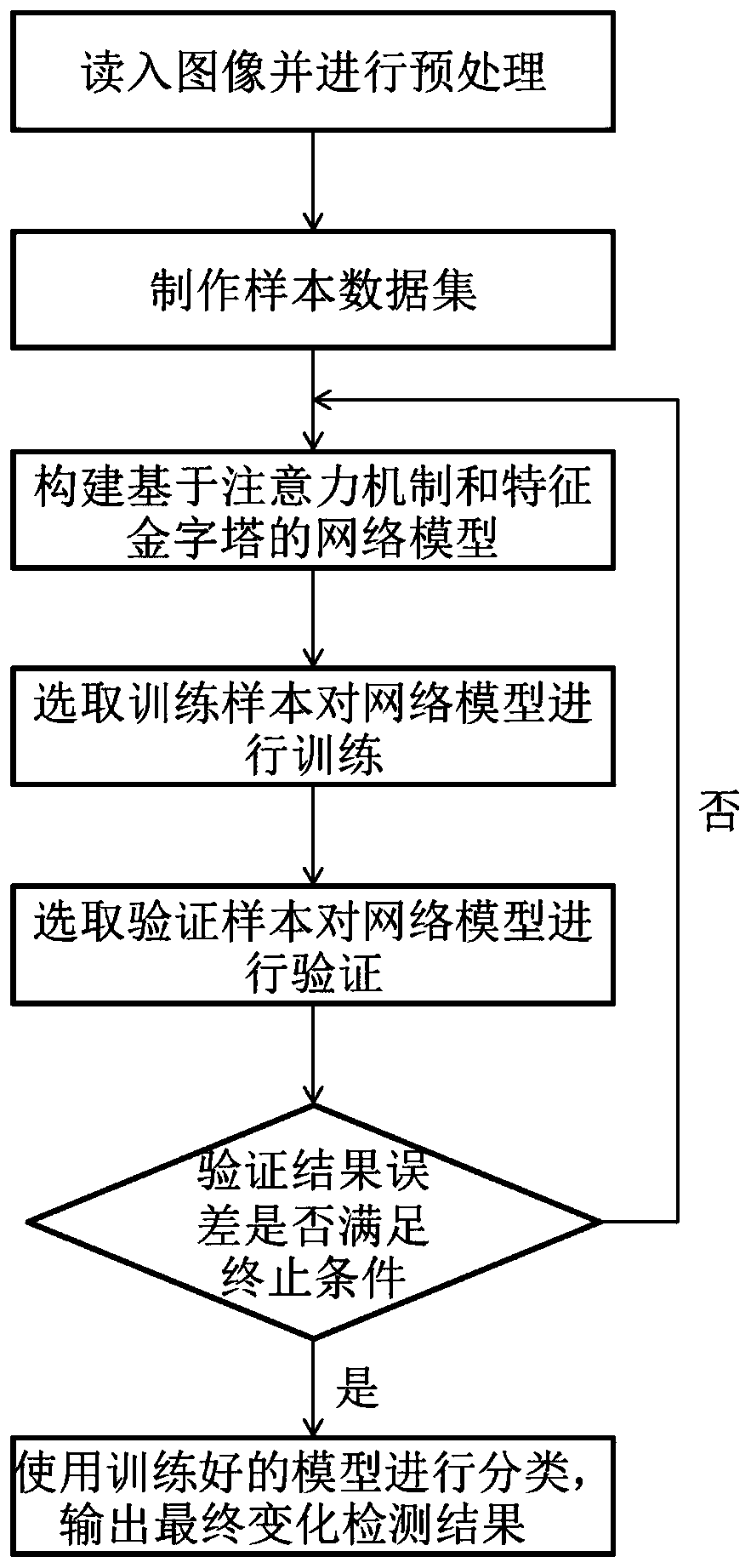

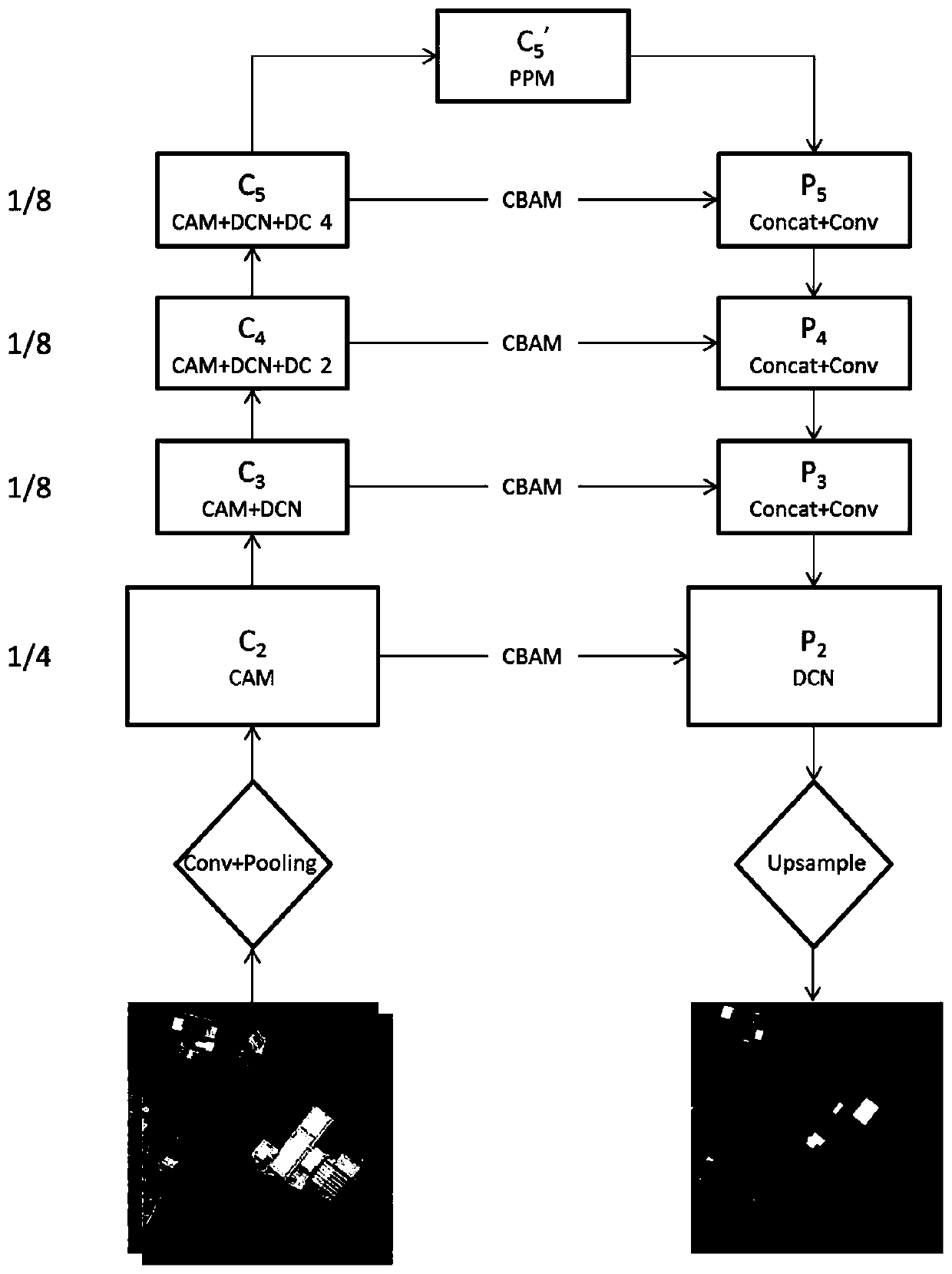

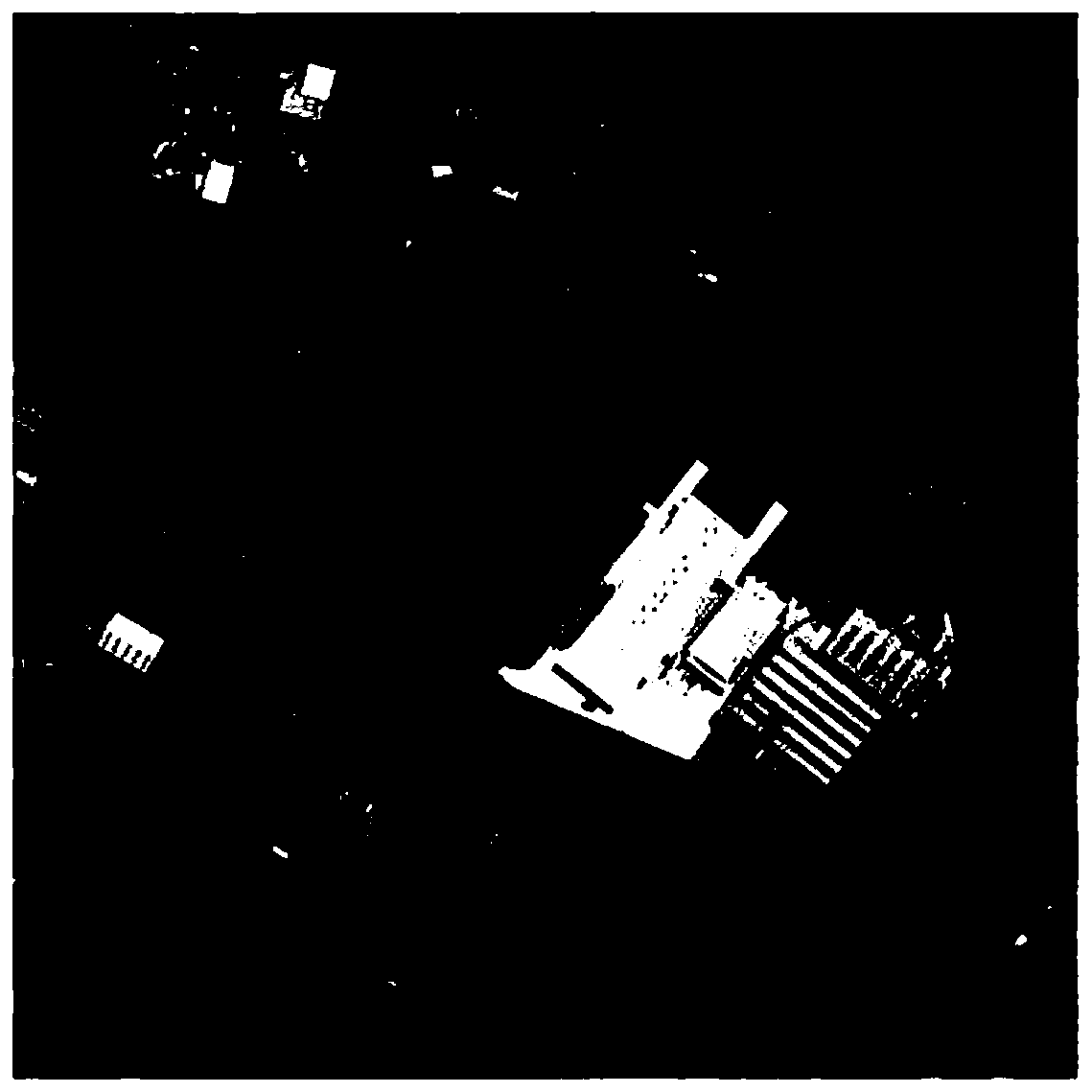

Remote-sensing image building change detection method

Pending | CN110705457A | Easy to detect; Reduce false alarm rate; Character and pattern recognition; Neural architectures; Pattern recognition; Data set

The invention belongs to the technical field of remote sensing image processing, and particularly relates to a remote sensing image building change detection method comprising the following steps: (1) reading and preprocessing an image; (2) making a sample data set; (3) constructing a network model based on an attention mechanism and a feature pyramid; (4) selecting training samples to train the network model; (5) selecting validation samples to validate the network model; and (6) classifying with the trained model and outputting the final change detection result. The method introduces a feature pyramid network and applies an attention mechanism during multi-scale feature fusion at every level, enhancing the features layer by layer for detecting targets of different scales. Through deformable convolution and dilated (atrous) convolution, the network learns feature representations that adapt automatically to object deformation and enlarges the receptive field while preserving feature-map size, thereby capturing multi-scale information, effectively reducing the false alarm rate, and improving detection precision.

Owner:BEIJING RES INST OF URANIUM GEOLOGY

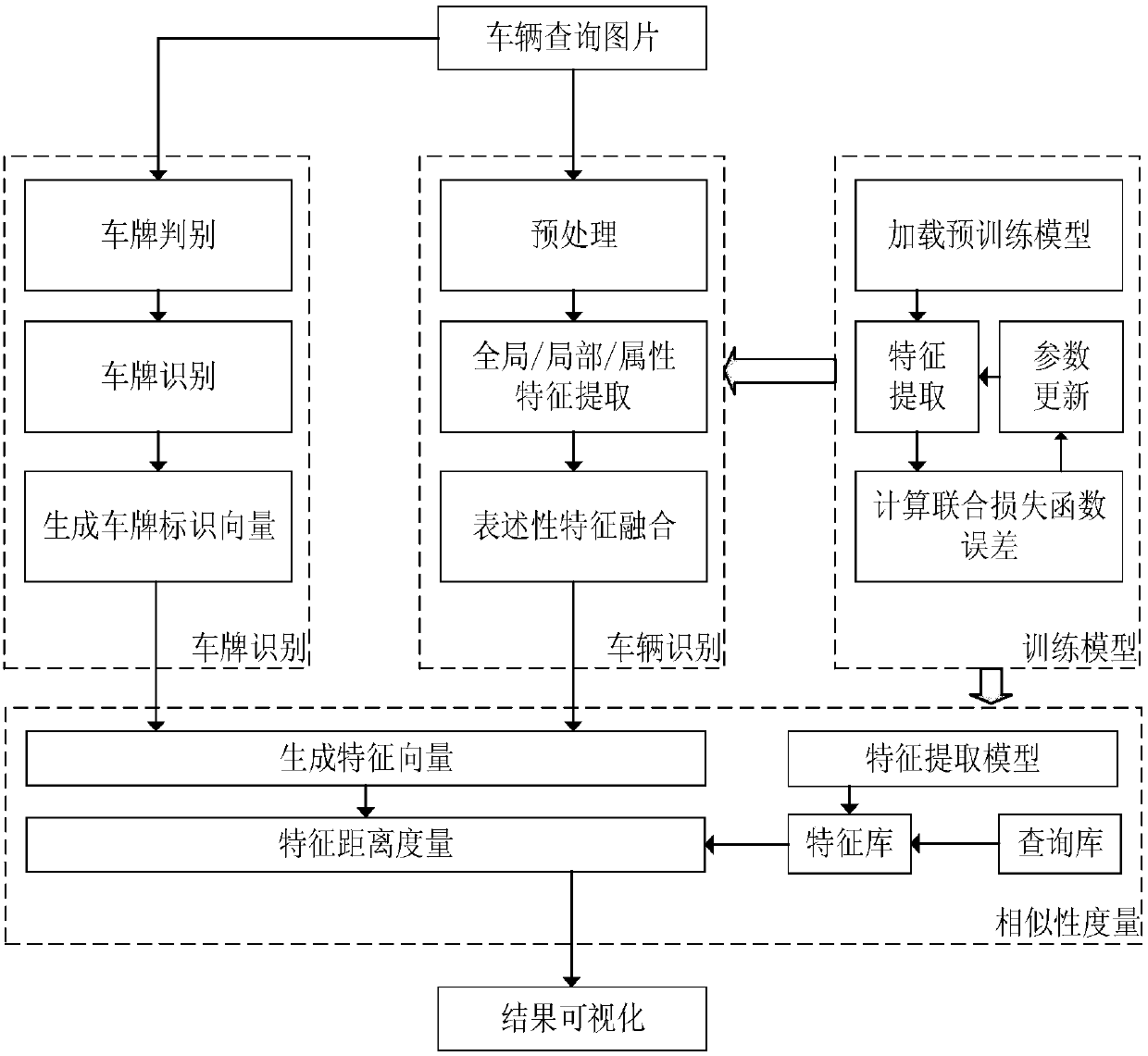

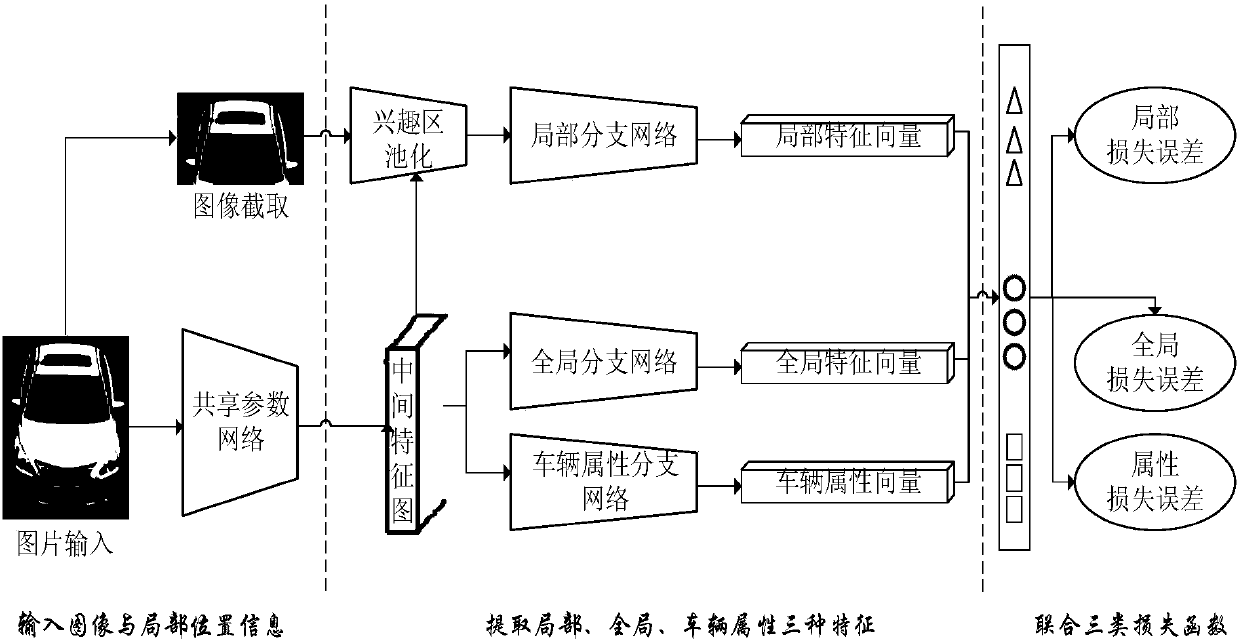

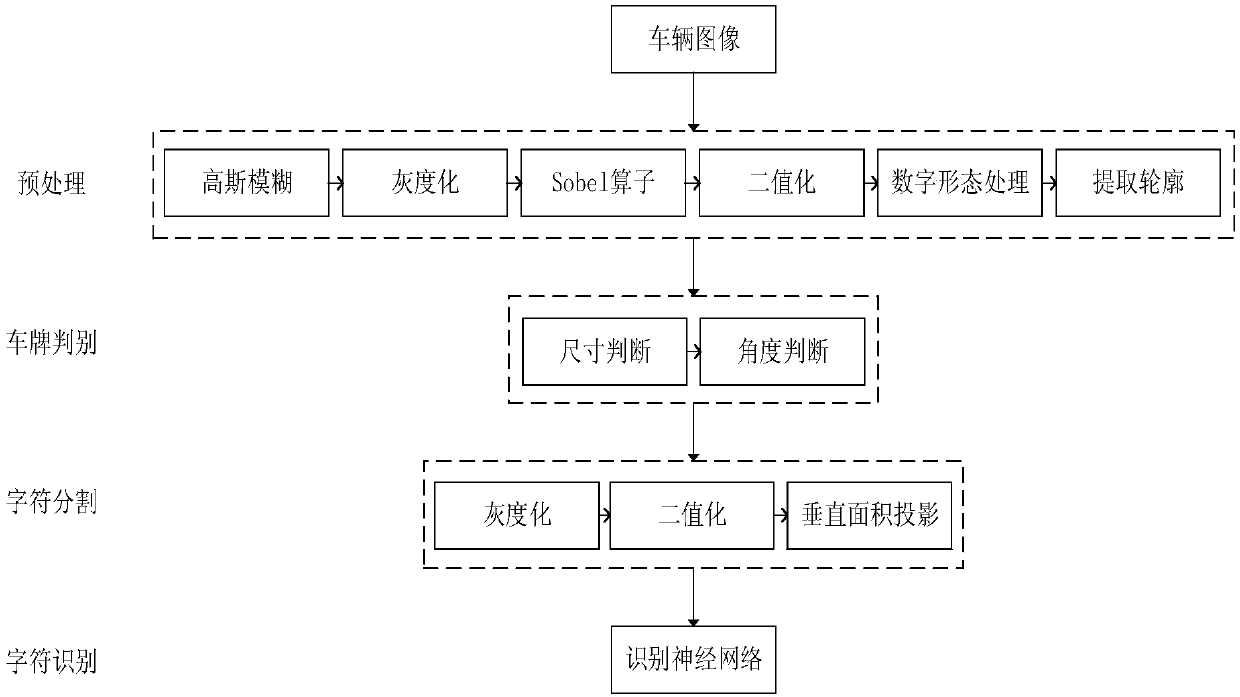

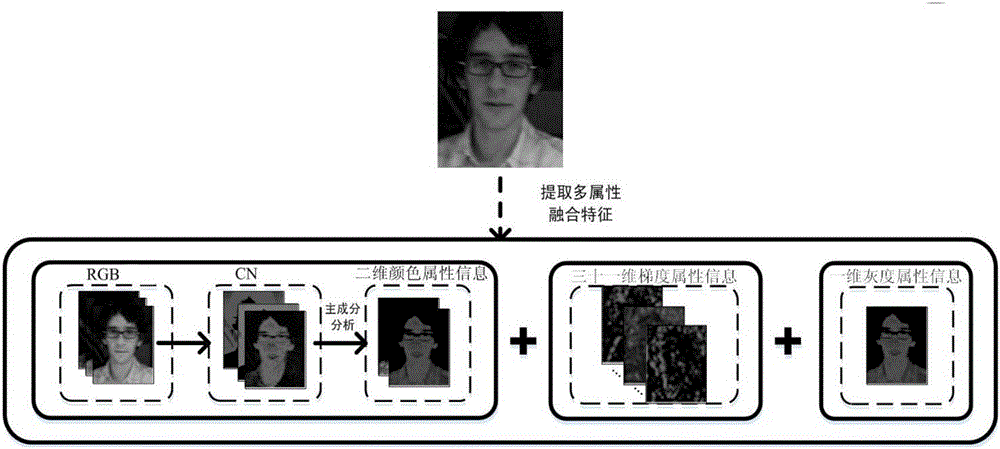

Multi-feature fusion vehicle re-identification method based on deep learning

Active | CN107729818A | Improve feature extraction; Good presentation skills; Character and pattern recognition; Feature vector; Data set

The invention discloses a multi-feature fusion vehicle re-identification method based on deep learning. The method comprises five parts: model training, license plate recognition, vehicle recognition, similarity measurement, and visualization. A large-scale vehicle data set is used for model training, with a phased joint training strategy over multiple loss functions. License plate recognition is performed on each vehicle image, and a license plate identification feature vector is generated according to the recognition result. The trained model extracts the vehicle descriptive features and vehicle attribute features of the image to be analyzed and of the images in the query library, and the vehicle descriptive features and the license plate identification vector are combined into a unique re-identification feature vector for each vehicle image. In the similarity measurement stage, the re-identification feature vector of the image to be analyzed is compared with those of the images in the query library, and search results that meet the requirements are retrieved and visualized.

Owner:BEIHANG UNIV
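
The re-identification stage concatenates several feature types into one vector per image and ranks the query library by similarity. A minimal sketch follows, assuming cosine similarity (the abstract does not name its metric) and hypothetical low-dimensional features; `plate_weight` is a hypothetical knob, not something the patent describes.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def reid_vector(appearance: Sequence[float], attributes: Sequence[float],
                plate: Sequence[float], plate_weight: float = 1.0) -> List[float]:
    """Concatenate appearance, attribute and license-plate features into one vector.
    plate_weight is a hypothetical knob, e.g. 0.0 when no plate could be read."""
    return list(appearance) + list(attributes) + [plate_weight * p for p in plate]


def rank_gallery(query: Sequence[float], gallery: Dict[str, List[float]]) -> List[str]:
    """Gallery ids sorted from most to least similar to the query."""
    return sorted(gallery, key=lambda gid: cosine(query, gallery[gid]), reverse=True)


query = reid_vector([1.0, 0.0], [1.0], [0.0, 1.0])
gallery = {
    "A": reid_vector([0.9, 0.1], [1.0], [0.0, 1.0]),  # similar look, same plate
    "B": reid_vector([1.0, 0.0], [1.0], [1.0, 0.0]),  # identical look, different plate
}
print(rank_gallery(query, gallery))  # ['A', 'B']
```

Vehicle B matches the query's appearance exactly, but the fused plate component ranks A first (similarity about 0.997 versus about 0.667).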

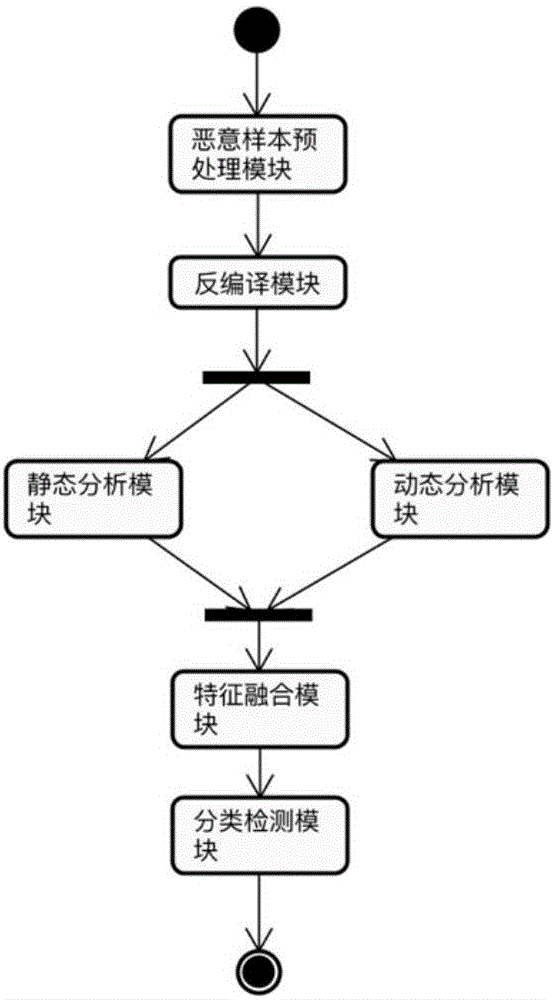

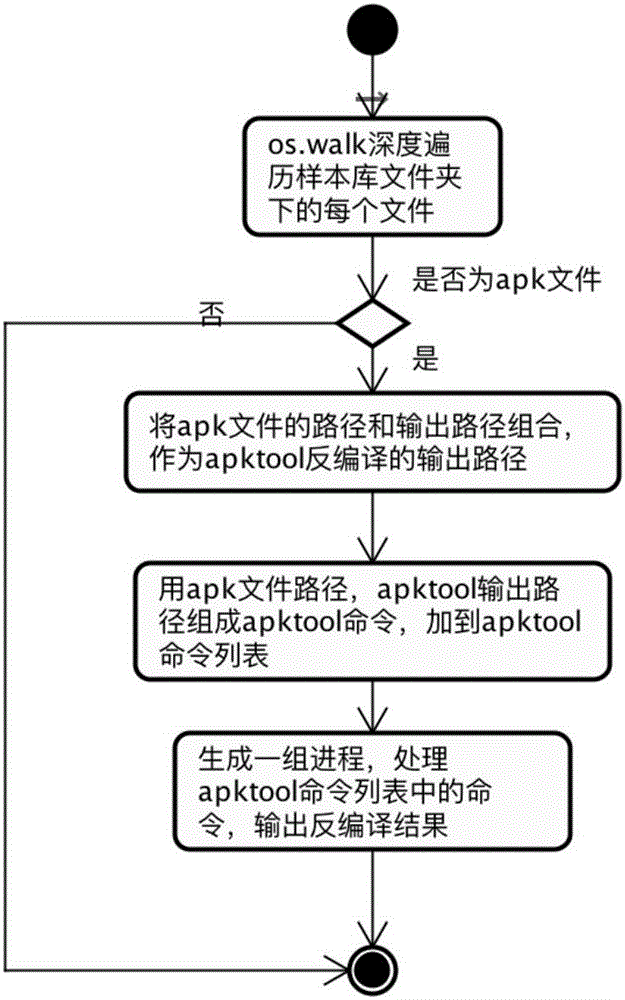

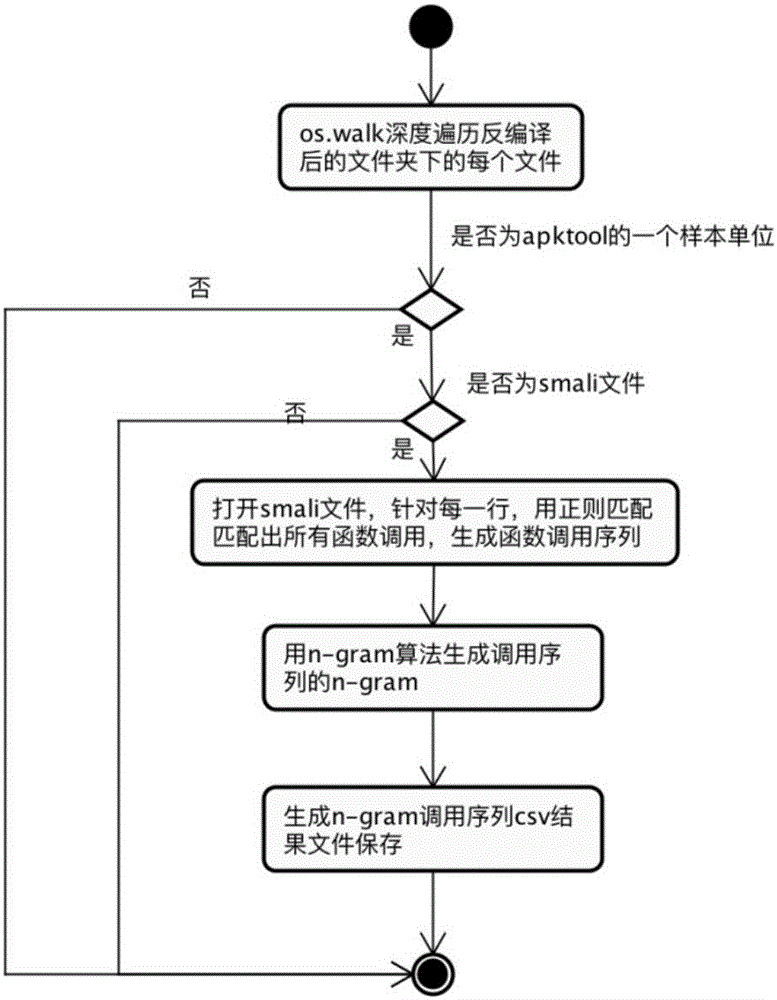

Android malicious application detection method and system based on multi-feature fusion

Active | CN107180192A | Improve detection accuracy; Reflect malicious apps; Character and pattern recognition; Platform integrity maintenance; Feature vector; Analytical problem

The invention discloses an Android malicious application detection method and system based on multi-feature fusion. The method comprises the following steps: decompiling an Android application sample to obtain decompiled files; extracting static features from the decompiled files; running the Android application sample in an Android emulator to extract dynamic features; mapping the static and dynamic features into a low-dimensional feature space with the text-hashing component of a locality-sensitive hashing algorithm to obtain a fused feature vector; and training a classifier on the fused feature vectors with a machine learning classification algorithm, then using the classifier for classification and detection. The method addresses the problem of analyzing high-dimensional features for rare malware sample families and improves detection accuracy.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
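
The Android malware method fuses static and dynamic features by hashing them into a low-dimensional space. The sketch below substitutes the simpler signed "hashing trick" (feature hashing) for the patent's locality-sensitive text hashing, whose details the abstract does not give; the feature strings, the source prefixes and the 16-dimensional output are hypothetical.

```python
import hashlib
from typing import Iterable, List, Tuple


def bucket_and_sign(token: str, dim: int) -> Tuple[int, float]:
    """Deterministically hash a feature string to a bucket index and a +/-1 sign."""
    value = int.from_bytes(hashlib.sha256(token.encode("utf-8")).digest()[:8], "little")
    bucket = value % dim
    sign = 1.0 if ((value >> 63) & 1) == 0 else -1.0
    return bucket, sign


def hash_features(tokens: Iterable[str], dim: int = 16) -> List[float]:
    """Signed feature hashing: map any number of string features into a dim-sized vector."""
    vec = [0.0] * dim
    for tok in tokens:
        bucket, sign = bucket_and_sign(tok, dim)
        vec[bucket] += sign
    return vec


# Hypothetical features; prefixes keep static and dynamic features with equal names apart.
static_feats = ["perm:SEND_SMS", "api:getDeviceId", "perm:INTERNET"]
dynamic_feats = ["net:connect", "file:write:/sdcard"]
fused = hash_features(["static/" + t for t in static_feats] +
                      ["dynamic/" + t for t in dynamic_feats])
print(len(fused))  # 16
```

A cryptographic hash is used only because Python's built-in `hash()` for strings is randomized per process, which would make the fused vectors irreproducible across runs.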

Remote sensing image classification method based on multi-feature fusion

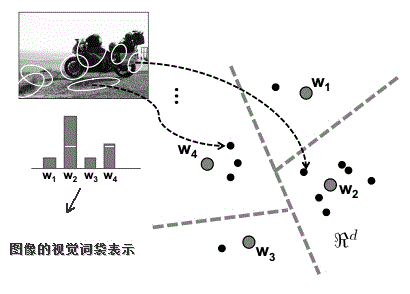

Active | CN102622607A | Improve classification accuracy; Enhanced Feature Representation; Character and pattern recognition; Synthesis methods; Classification methods

The invention discloses a remote sensing image classification method based on multi-feature fusion, comprising the following steps: A, extracting bag-of-visual-words features, color histogram features and texture features from the remote sensing images of the training set; B, training a support vector machine on each of these three feature types to obtain three different support vector machine classifiers; and C, extracting bag-of-visual-words, color histogram and texture features from unknown test samples, predicting categories with the corresponding support vector machine classifiers from step B to obtain three groups of category predictions, and combining the three groups with a weighted synthesis method to obtain the final classification result. The method further uses an improved bag-of-words model to extract the bag-of-visual-words features. Compared with the prior art, it can obtain more accurate classification results.

Owner:HOHAI UNIV
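
Step C combines the three classifiers' predictions by weighted synthesis. A minimal sketch of such decision-level fusion follows, assuming each classifier outputs per-class scores (for example SVM probability estimates); the scores, class names and weights are hypothetical, since the abstract does not give its weighting scheme.

```python
from typing import Dict, Sequence


def weighted_vote(predictions: Sequence[Dict[str, float]], weights: Sequence[float]) -> str:
    """Combine per-classifier class scores with fixed weights; return the top class."""
    if len(predictions) != len(weights):
        raise ValueError("one weight per classifier")
    totals: Dict[str, float] = {}
    for scores, w in zip(predictions, weights):
        for label, s in scores.items():
            totals[label] = totals.get(label, 0.0) + w * s
    return max(totals, key=totals.get)


bovw = {"forest": 0.6, "farmland": 0.4}      # bag-of-visual-words SVM
color = {"forest": 0.3, "farmland": 0.7}     # color-histogram SVM
texture = {"forest": 0.55, "farmland": 0.45}  # texture SVM
print(weighted_vote([bovw, color, texture], [0.5, 0.2, 0.3]))  # forest
```

With equal weights the same scores would yield "farmland" instead, which is why the choice of weights matters in this kind of fusion.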

Rail surface defect detection method based on depth learning network

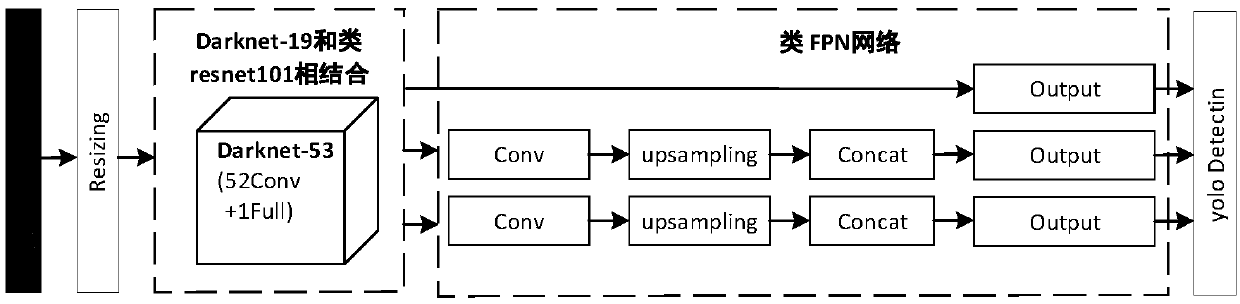

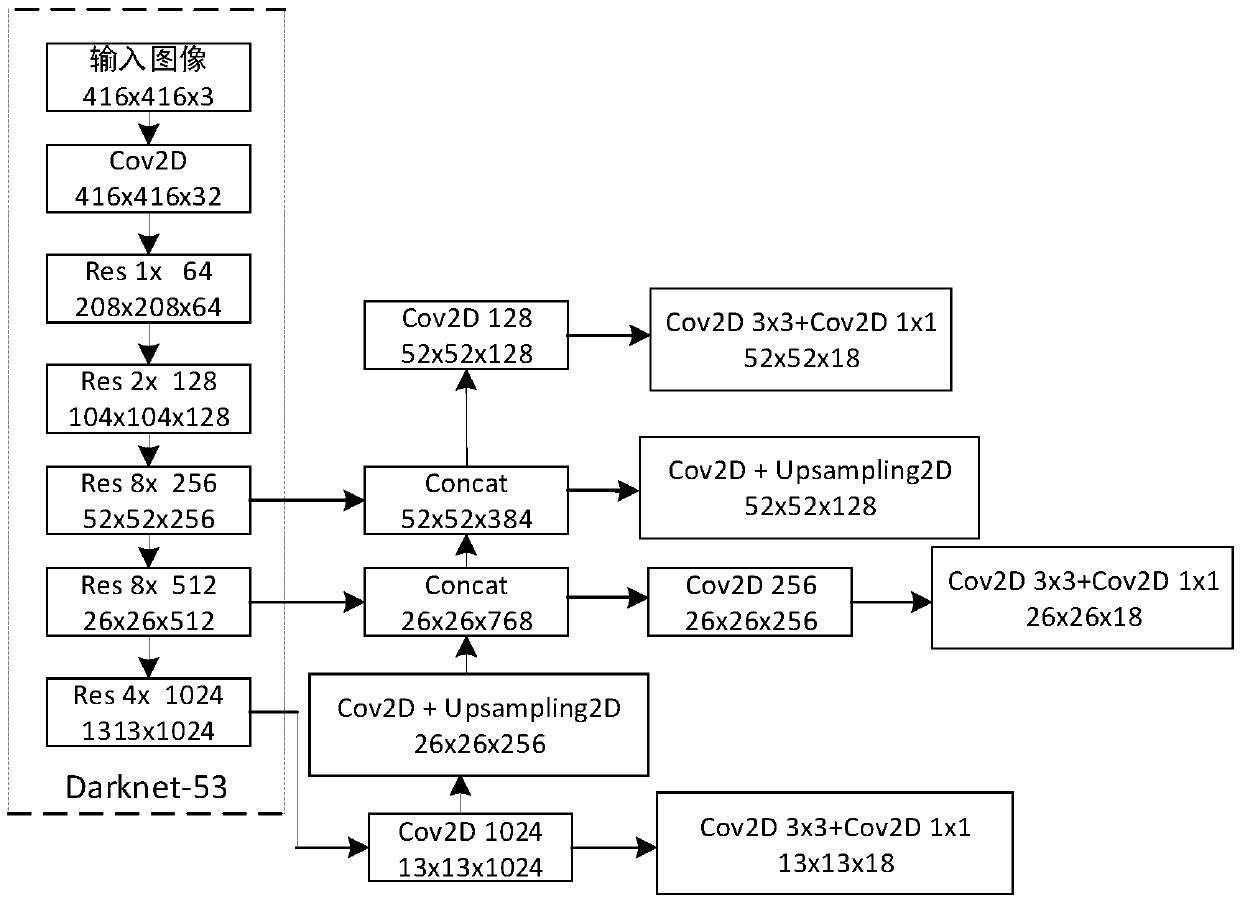

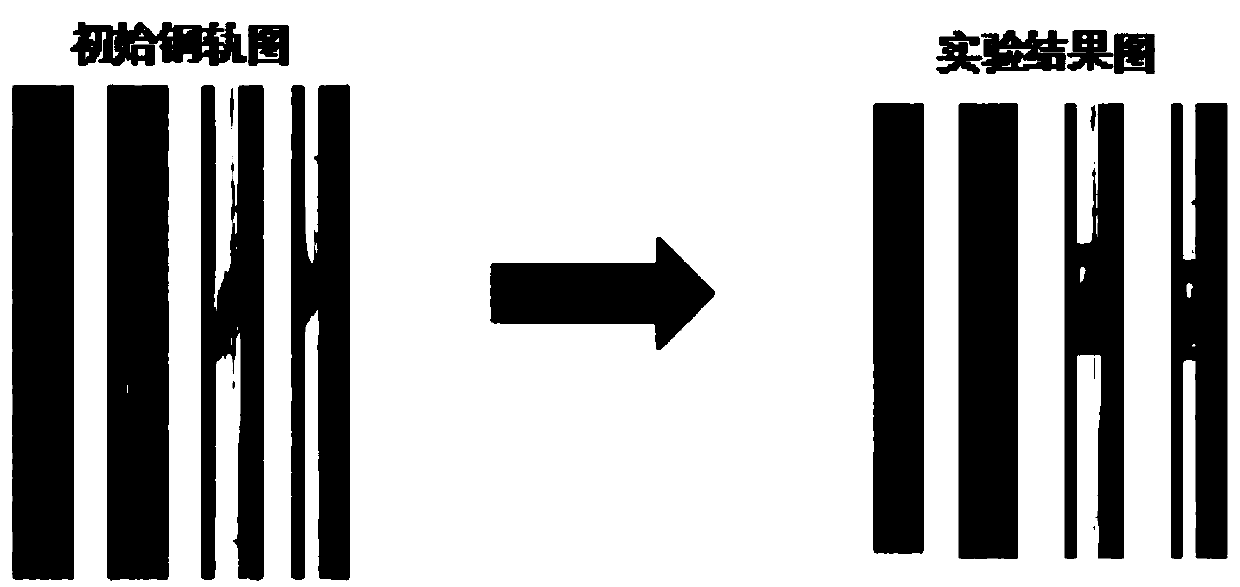

Inactive | CN109064461A | Resolve overlap; Targeting is accurate; Image analysis; Optically investigating flaws/contamination; Image extraction; Network model

The invention belongs to the field of deep learning and provides a rail surface defect detection method based on a deep learning network, aiming to solve various problems of prior rail detection methods. The method first automatically resizes the input rail image to 416 × 416 and then performs feature extraction and processing. Feature extraction is mainly performed by the Darknet-53 model, and output processing mainly by an FPN-like network model. The rail image is first divided into grid cells; according to the positions of the defects in the cells, the width, height and center-point coordinates of the defects are calculated with a dimension clustering method, and the coordinates are normalized. Meanwhile, logistic regression is used to predict the objectness score of each bounding box, binary cross-entropy loss is used to predict the categories contained in each bounding box, and the confidence is calculated; the output is then processed by convolution, upsampling and network feature fusion to obtain the prediction results. The invention can accurately identify defects and effectively improves the detection and identification rate of rail surface defects.

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY
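
In YOLOv2/YOLOv3-style detectors (the Darknet-53 backbone and logistic objectness in this abstract suggest that family), "dimension clustering" usually means running k-means on ground-truth box widths and heights with 1 - IoU as the distance, to choose anchor sizes. A minimal sketch under that assumption follows; the defect sizes are hypothetical, and the deterministic first-k initialization is chosen only to keep the example reproducible.

```python
from typing import List, Tuple

WH = Tuple[float, float]  # (width, height), e.g. normalized by the 416 x 416 input size


def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes placed at a common corner, so only their sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(sizes: List[WH], k: int, iters: int = 50) -> List[WH]:
    """k-means over box sizes using 1 - IoU as the distance ('dimension clustering')."""
    centers = list(sizes[:k])  # simple deterministic initialization: the first k boxes
    for _ in range(iters):
        clusters: List[List[WH]] = [[] for _ in range(k)]
        for s in sizes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            nearest = max(range(k), key=lambda c: wh_iou(s, centers[c]))
            clusters[nearest].append(s)
        new_centers = [
            (sum(w for w, _ in m) / len(m), sum(h for _, h in m) / len(m)) if m else centers[c]
            for c, m in enumerate(clusters)
        ]
        if new_centers == centers:  # assignments stopped changing
            break
        centers = new_centers
    return centers


defect_sizes = [(0.10, 0.10), (0.50, 0.50), (0.12, 0.10), (0.48, 0.52)]  # hypothetical
print(kmeans_anchors(defect_sizes, k=2))
```

Using 1 - IoU instead of Euclidean distance keeps large boxes from dominating the clustering, which is the usual motivation for this distance.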

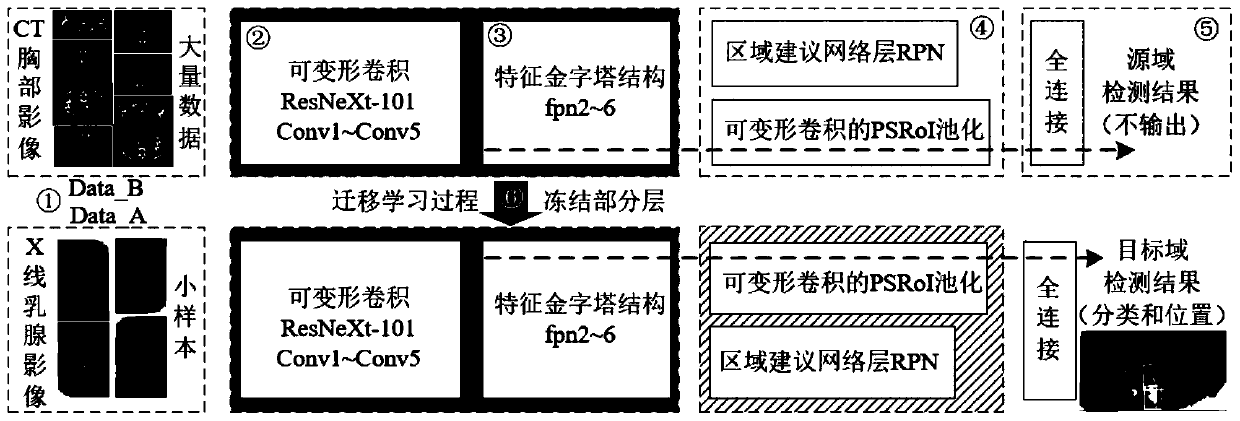

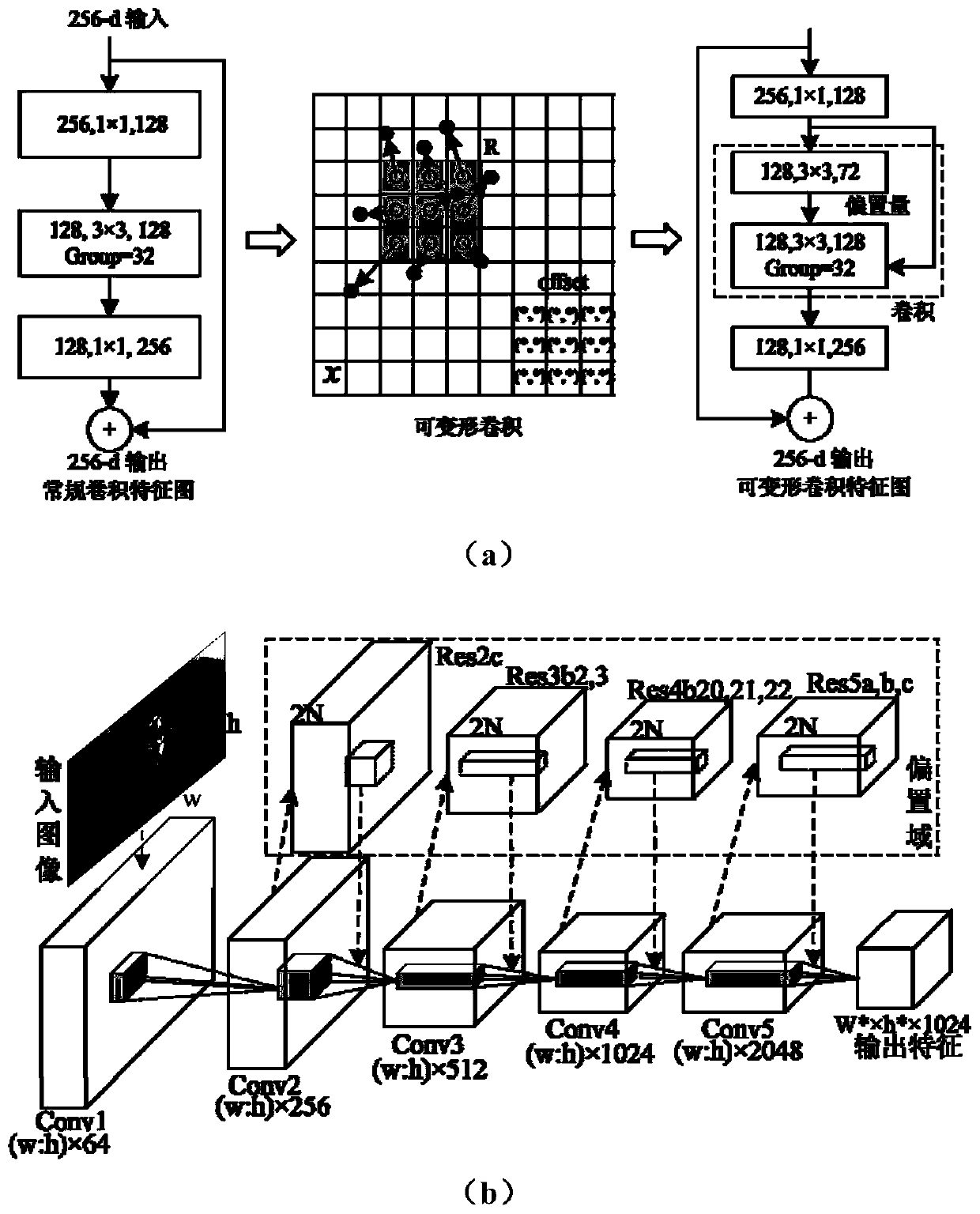

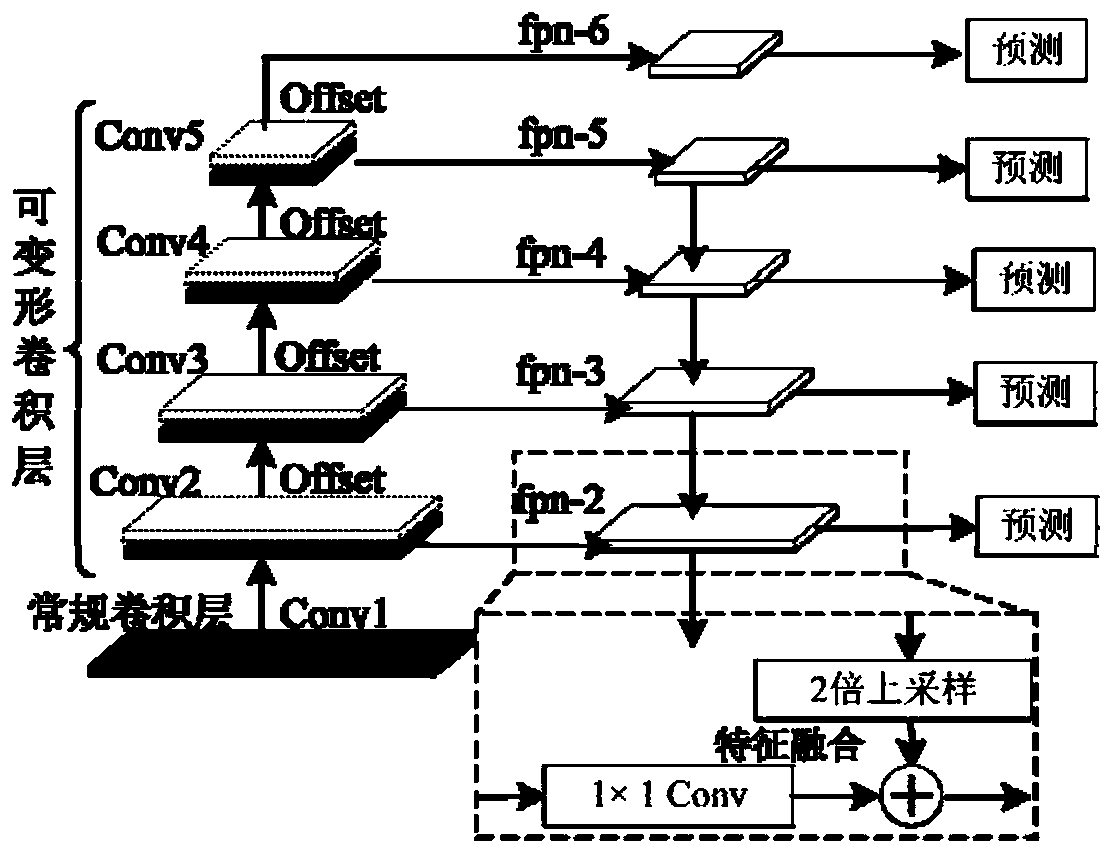

Method for detecting X-ray mammary gland lesion image based on feature pyramid network under transfer learning

Active | CN110674866A | Improve robustness; Improve the extraction effect; Image enhancement; Image analysis; Data set; Image detection

The invention provides a method for detecting lesions in X-ray mammary gland images based on a feature pyramid network under transfer learning. The method comprises the following steps: 1, establishing a source domain data set and a target domain data set; 2, building a deformable convolution residual network layer from deformable convolution and an extended residual network module; 3, building a multi-scale feature extraction sub-network with a feature pyramid structure through feature-map upsampling and feature fusion, combined with the deformable convolution residual network layer; 4, building a deformable pooling sub-network that is sensitive to lesion position; 5, building a post-processing network layer to optimize the prediction results and the loss function; and 6, transferring the trained model to a small-sample molybdenum-target X-ray (mammography) breast lesion detection task to improve the network model's detection precision on lesions in small-sample images. By incorporating a transfer learning strategy, the method enables lesion image processing on small-sample medical images.

Owner:LANZHOU UNIVERSITY OF TECHNOLOGY

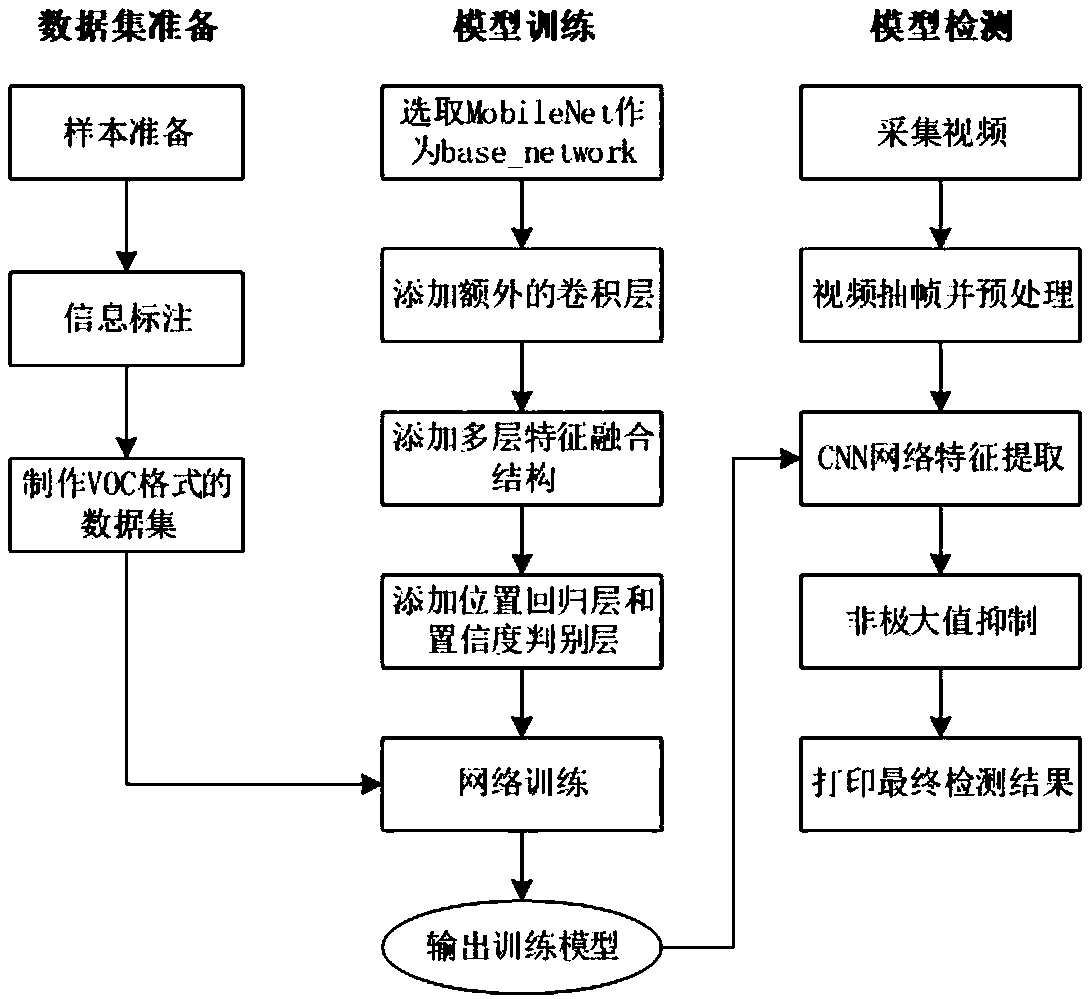

Driving scene target detection method based on deep learning and multi-layer feature fusion

Inactive | CN108875595A | High quality feature extraction; Feature extraction quality improvement; Character and pattern recognition; Neural architectures; Data set; Imaging processing

The invention relates to the technical field of traffic image processing and discloses a driving scene target detection method based on deep learning and multi-layer feature fusion. The method comprises the following steps: 1) collecting video images as a training data set and preprocessing them; 2) building a training network; 3) initializing the training network to obtain a pre-trained model; 4) training on the training data set with the pre-trained model from step 3) to obtain a trained model; and 5) capturing images of the scene ahead with a vehicle-mounted camera and feeding them into the trained model from step 4) to obtain detection results. A multi-layer feature fusion method based on a feature pyramid is used to enrich the semantic information of low-level feature maps, which improves the network's feature extraction quality and yields higher detection precision.

Owner:CHONGQING UNIV
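
The feature-pyramid fusion that enriches low-level maps with semantic information can be sketched as an FPN-style top-down pass. This minimal version assumes single-channel maps whose sizes halve from one level to the next, nearest-neighbour upsampling and element-wise addition; the 1x1 lateral and 3x3 smoothing convolutions of a full FPN are omitted, and the helper names are hypothetical.

```python
from typing import List

FeatureMap = List[List[float]]  # single-channel H x W map


def upsample2x(fm: FeatureMap) -> FeatureMap:
    """Nearest-neighbour 2x upsampling: repeat every value along both axes."""
    out: FeatureMap = []
    for row in fm:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def add_maps(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down_fuse(pyramid: List[FeatureMap]) -> List[FeatureMap]:
    """FPN-style top-down pass. pyramid[0] is the finest (low-level) map and each
    next level is half the size. Every level is summed with the upsampled, already
    fused level above it, so semantic information flows down to the fine maps."""
    fused = [pyramid[-1]]
    for lateral in reversed(pyramid[:-1]):
        fused.append(add_maps(lateral, upsample2x(fused[-1])))
    return list(reversed(fused))


fine = [[0.0, 0.5], [0.25, 0.0]]
coarse = [[1.0]]
print(top_down_fuse([fine, coarse]))  # [[[1.0, 1.5], [1.25, 1.0]], [[1.0]]]
```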

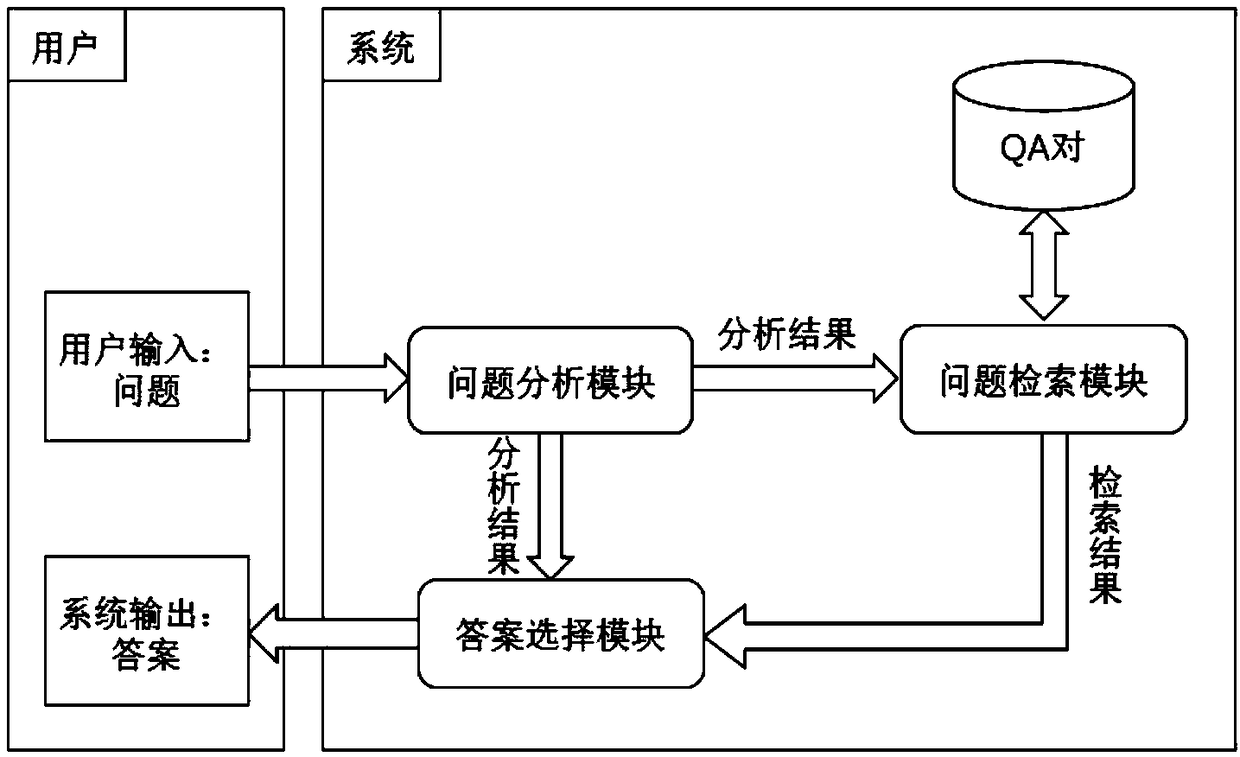

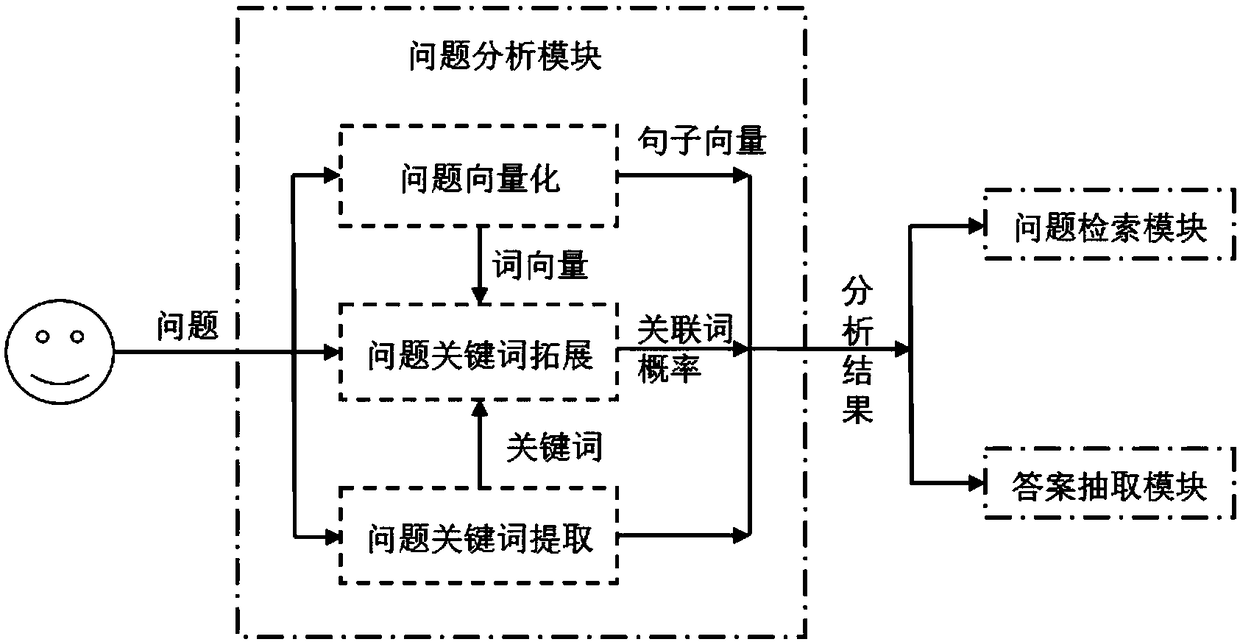

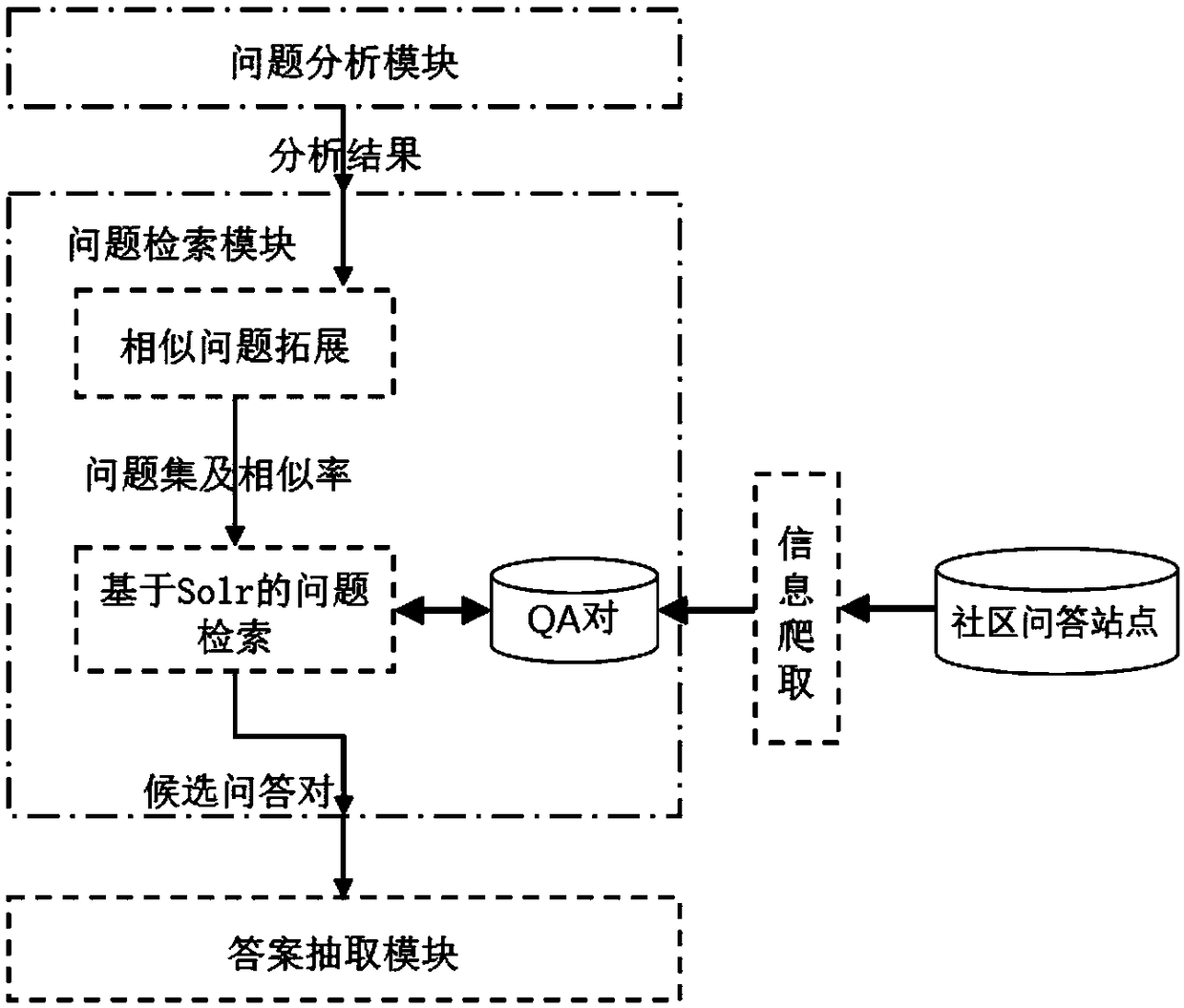

A method for implementing a question answering system based on a question-answer pair

Active | CN109271505A | Improve accuracy; Text database querying; Special data processing applications; Sorting algorithm; Single best answer

A method for implementing a question answering system based on question-answer pairs comprises the following steps: question analysis, question retrieval, and answer selection. After a user submits a question expressed in natural language, the question answering system uses question vectorization, keyword extraction, keyword expansion and other natural language processing techniques to understand the user's intent, then uses a search engine over the question-answer pair database to obtain a set of candidate question-answer pairs related to the question, and applies a matching algorithm and a ranking algorithm to select the best answer from the candidate set. By combining different algorithms and models, the invention learns a function that scores the degree of match between a question and an answer, realizes the selection of the best answer from the candidate question-answer pairs, and completes an answer selection method based on the fusion of convolutional neural network and XGBoost features, providing a better approach to answer selection in question answering systems.

Owner:Shenzhen Zhineng Sichuang Technology Co., Ltd. (深圳智能思创科技有限公司)

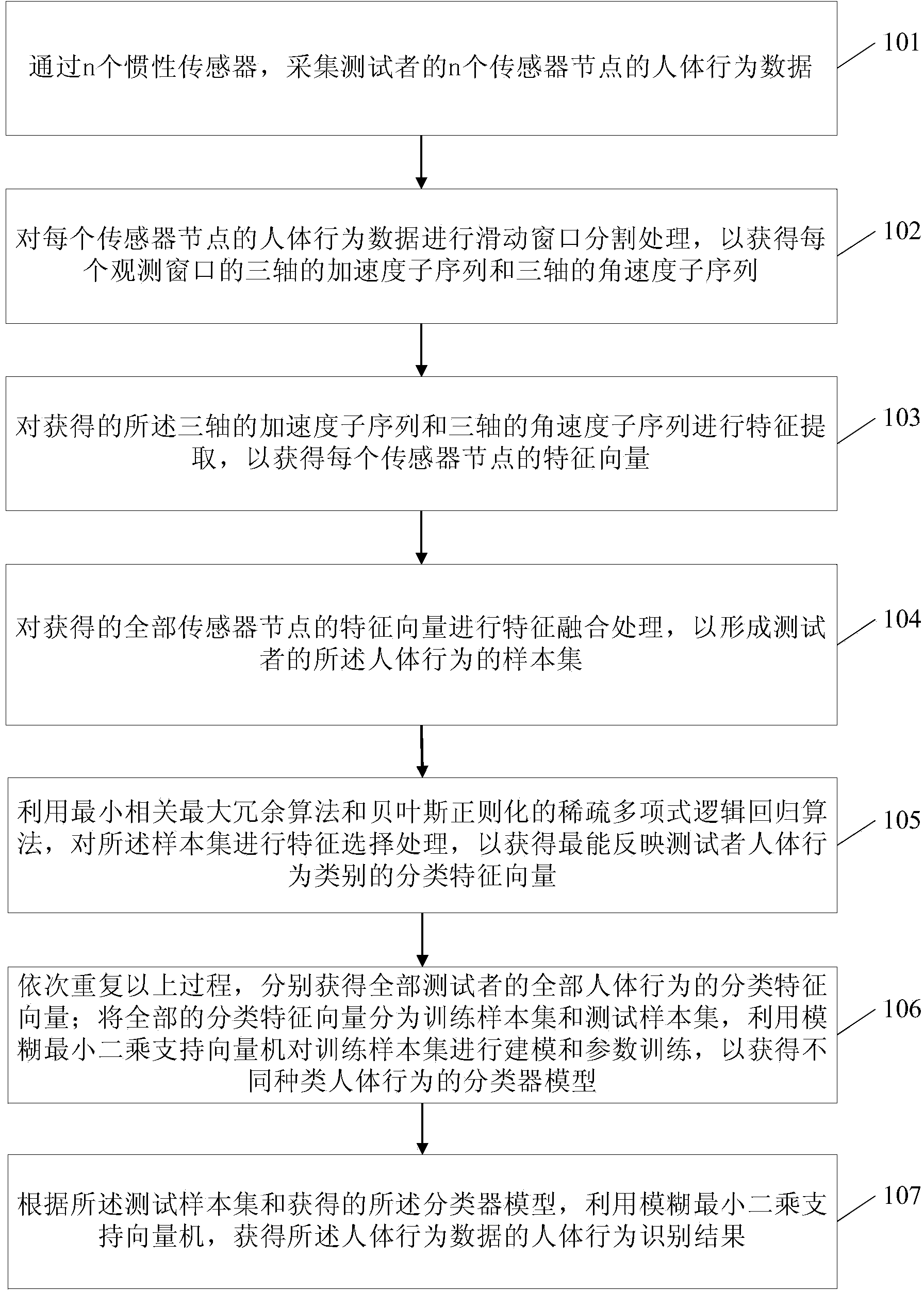

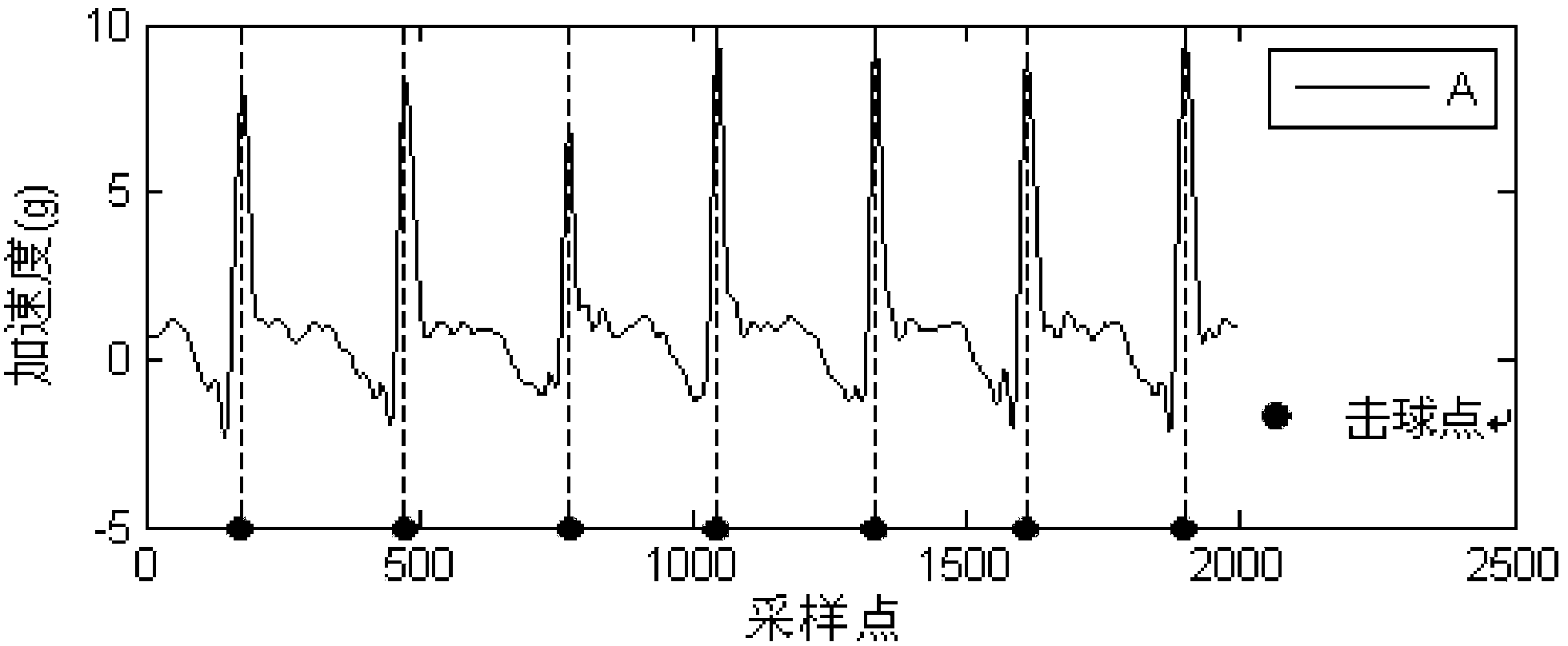

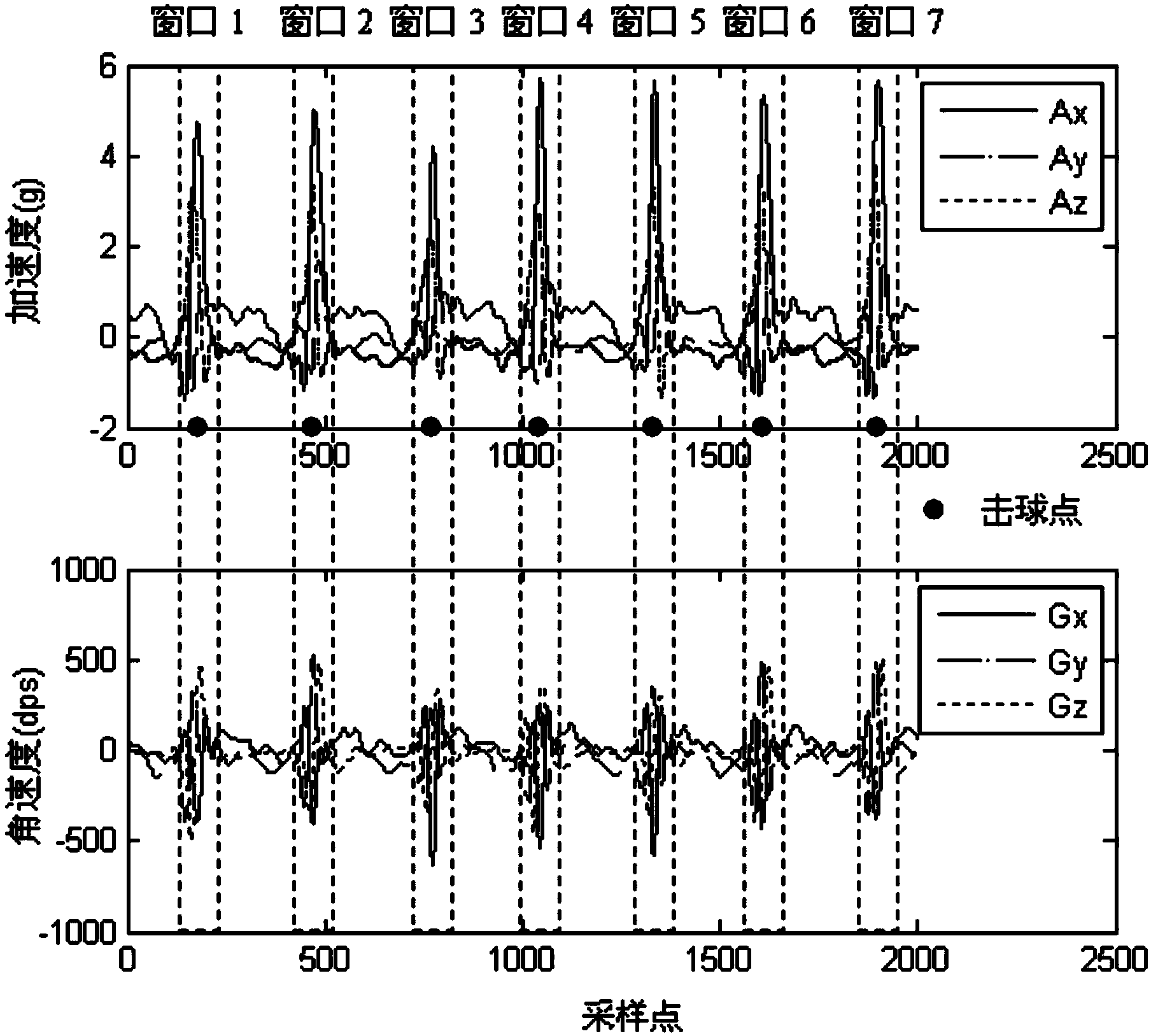

Human body behavior identification method based on inertial sensor

The invention provides a human body behavior identification method based on an inertial sensor. The method comprises: acquiring human behavior data from test subjects with the inertial sensor; segmenting the acquired data with a sliding window; extracting features from the three-axis acceleration and three-axis angular velocity subsequences obtained by the sliding-window segmentation; fusing the feature vectors to form a sample set of the subjects' behaviors; performing feature selection on the sample set with the minimum-redundancy maximum-relevance (mRMR) algorithm and a Bayesian-regularized sparse multinomial logistic regression algorithm to obtain the classification feature vectors of every behavior of every subject; training a classifier model for each behavior with a fuzzy least-squares support vector machine; and classifying test behavior data with the fuzzy least-squares support vector machine to obtain the behavior identification result. The method improves adaptability and identification efficiency.

Owner:DALIAN UNIV OF TECH
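
This pipeline segments the sensor streams with a sliding window, extracts features per axis, and fuses them into one sample vector per window. A minimal sketch follows, assuming four simple time-domain features (mean, standard deviation, minimum, maximum) that the abstract does not specify, plus hypothetical window and step sizes and toy signals.

```python
import math
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], size: int, step: int) -> List[List[float]]:
    """Cut a 1-D signal into windows of `size` samples, starting every `step` samples."""
    return [list(signal[i:i + size]) for i in range(0, len(signal) - size + 1, step)]


def window_features(w: Sequence[float]) -> List[float]:
    """Simple time-domain features of one window: mean, std, min, max."""
    mean = sum(w) / len(w)
    std = math.sqrt(sum((x - mean) ** 2 for x in w) / len(w))
    return [mean, std, min(w), max(w)]


def fuse_axes(axes: Sequence[Sequence[float]], size: int, step: int) -> List[List[float]]:
    """One fused sample per window position: per-axis features concatenated
    (e.g. 3 accelerometer axes + 3 gyroscope axes -> 6 x 4 = 24 values)."""
    per_axis = [sliding_windows(a, size, step) for a in axes]
    n = min(len(p) for p in per_axis)
    return [[f for p in per_axis for f in window_features(p[i])] for i in range(n)]


acc_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical accelerometer axis
gyro_z = [1.0] * 6                       # hypothetical gyroscope axis
samples = fuse_axes([acc_x, gyro_z], size=4, step=2)
print(len(samples), len(samples[0]))  # 2 8
```

Overlapping windows (step smaller than size) are a common choice because they yield more training samples and reduce the chance of cutting a movement in half.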

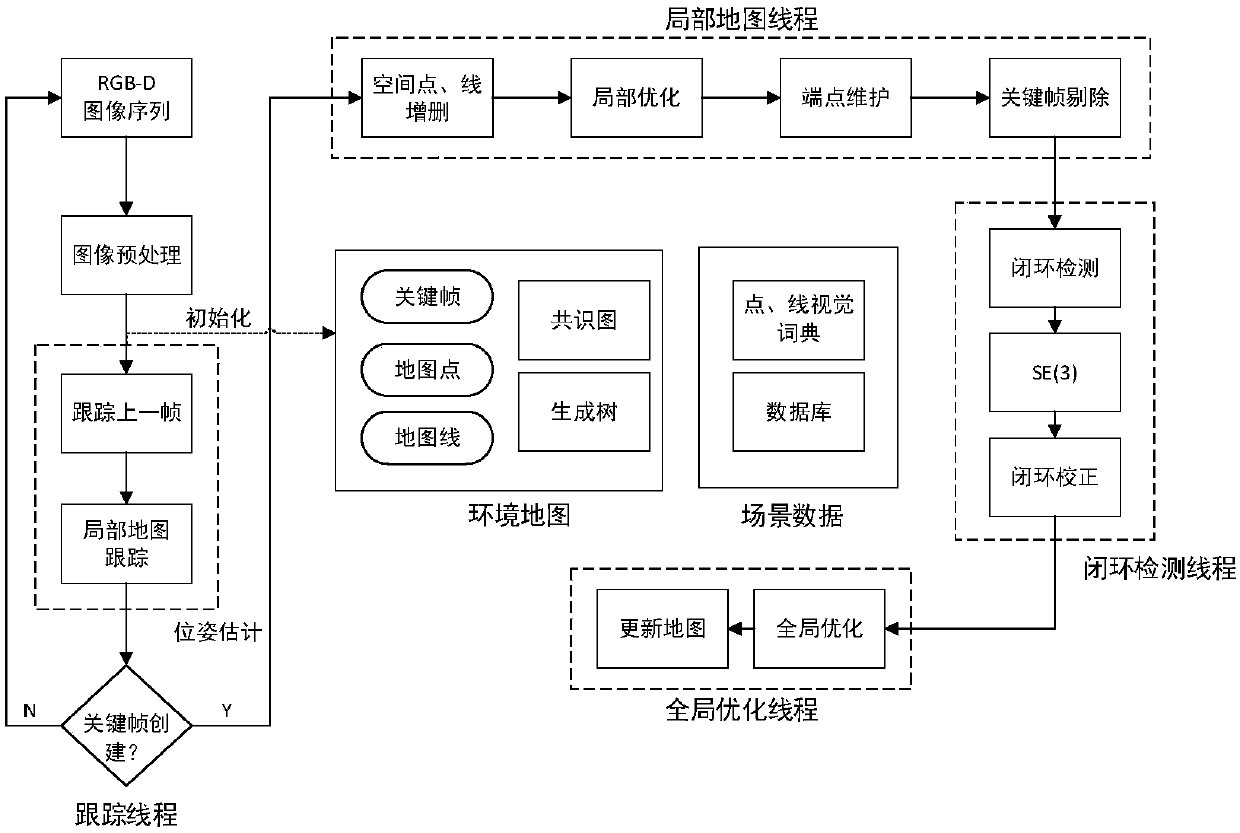

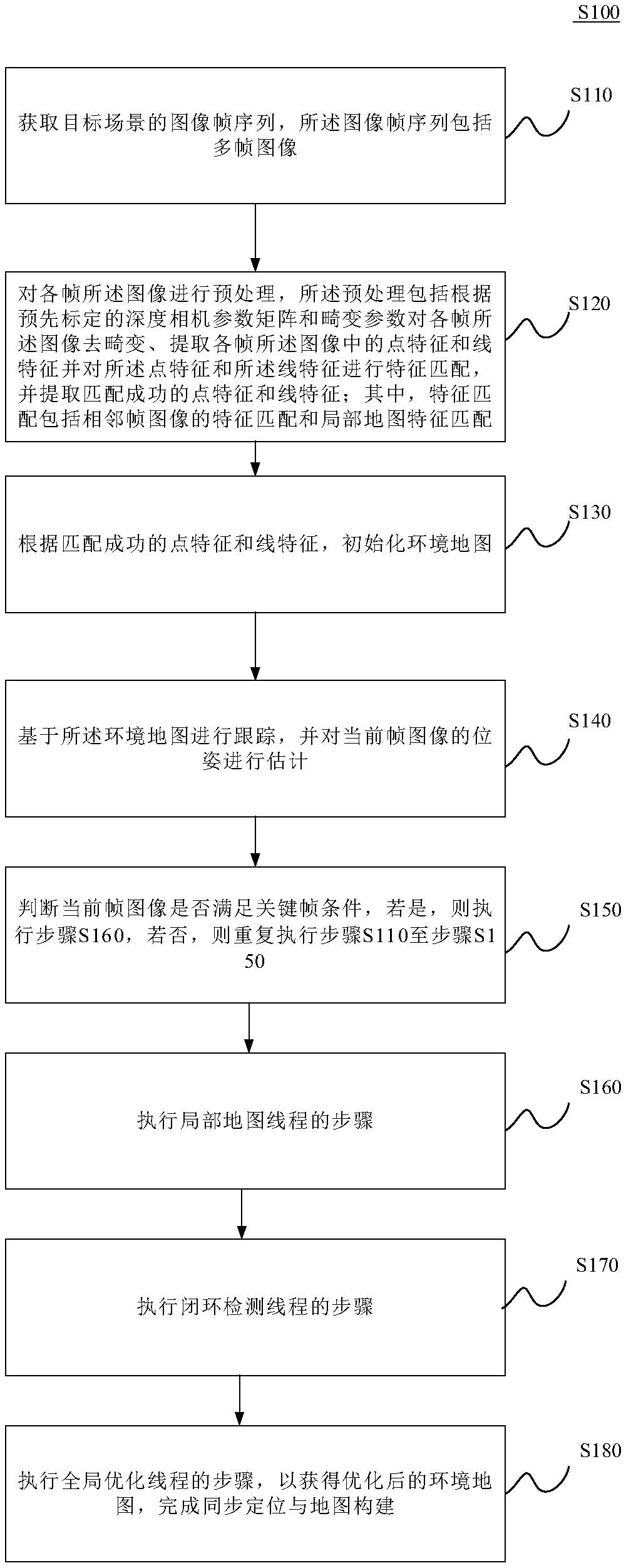

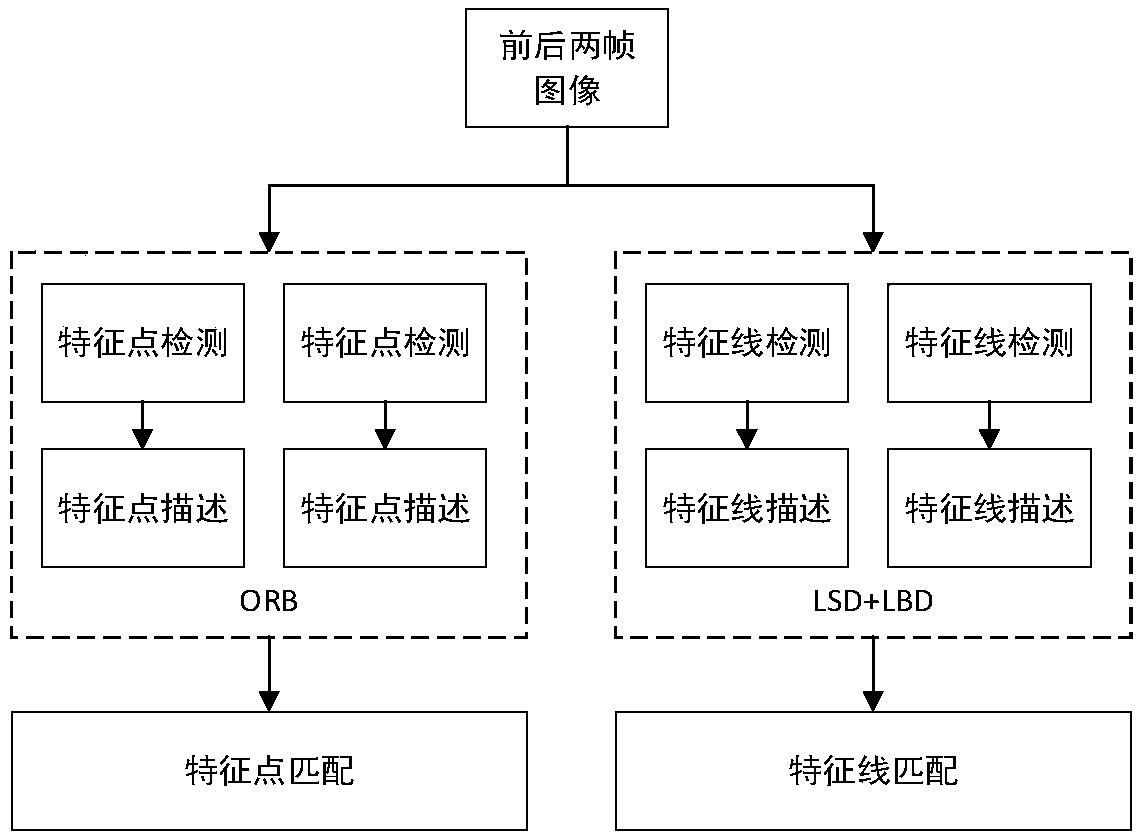

vSLAM implementation method and system based on point and line feature fusion

Inactive | CN108682027A | Improve accuracy; Reliable loopback detection function; Image enhancement; Image analysis; Simultaneous localization and mapping; Closed loop

The invention discloses a vSLAM implementation method and system based on point and line feature fusion. The method includes: Step S110, obtaining an image frame sequence of a target scene; Step S120, preprocessing each image frame; Step S130, initializing an environment map from successfully matched point features and line features; Step S140, tracking against the environment map and estimating the pose of the current frame; Step S150, judging whether the current frame meets the keyframe condition, and if so executing Step S160, otherwise repeating Steps S110 to S150; Step S160, executing the local mapping thread; Step S170, executing the loop closure detection thread; and Step S180, executing the global optimization thread to obtain an optimized environment map and complete simultaneous localization and mapping. The extraction and matching of line features are improved to increase the accuracy of front-end data association, which effectively overcomes the shortcomings of vSLAM in complex, low-texture scenes.

Owner:BEIJING HUAJIE IMI TECH CO LTD

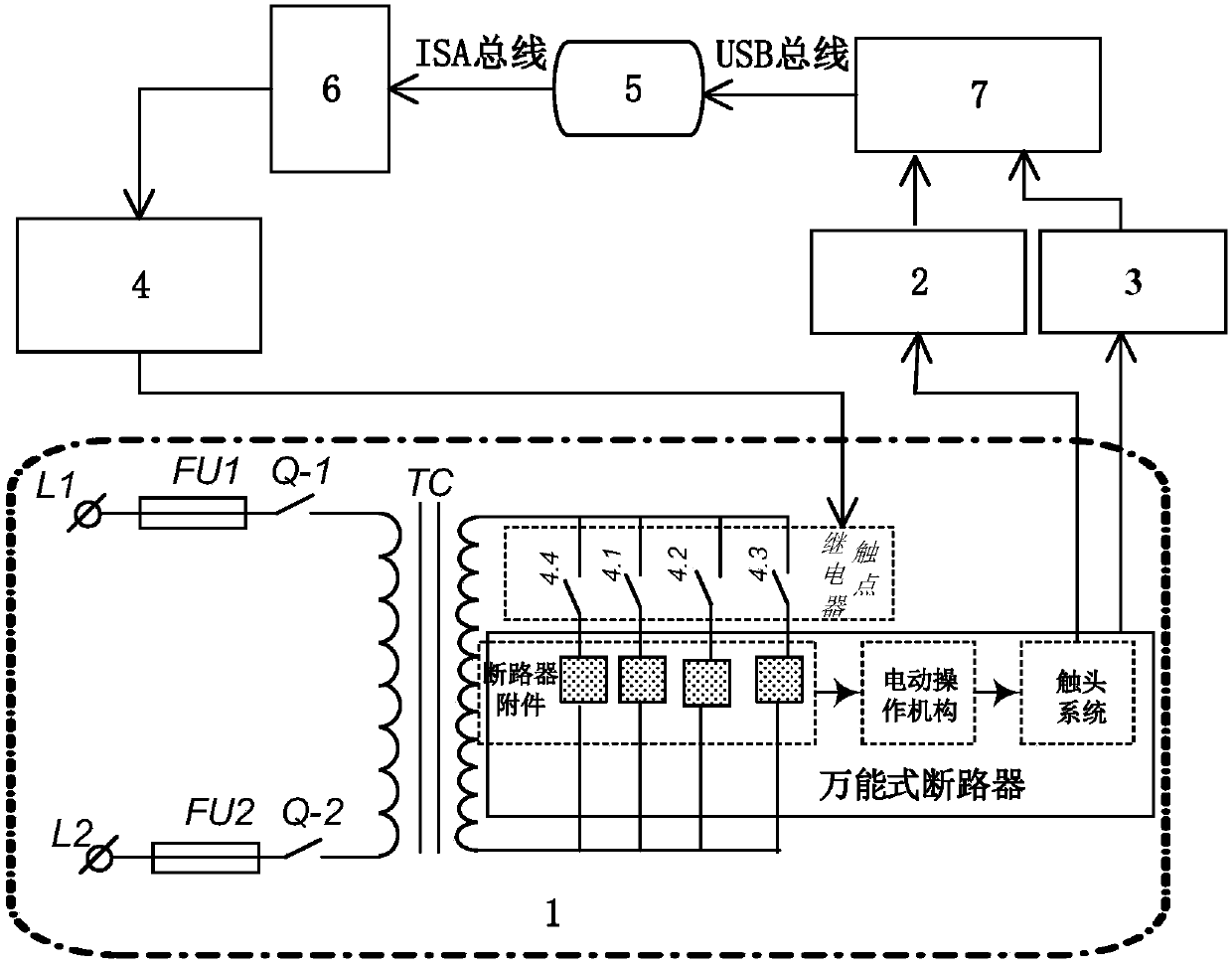

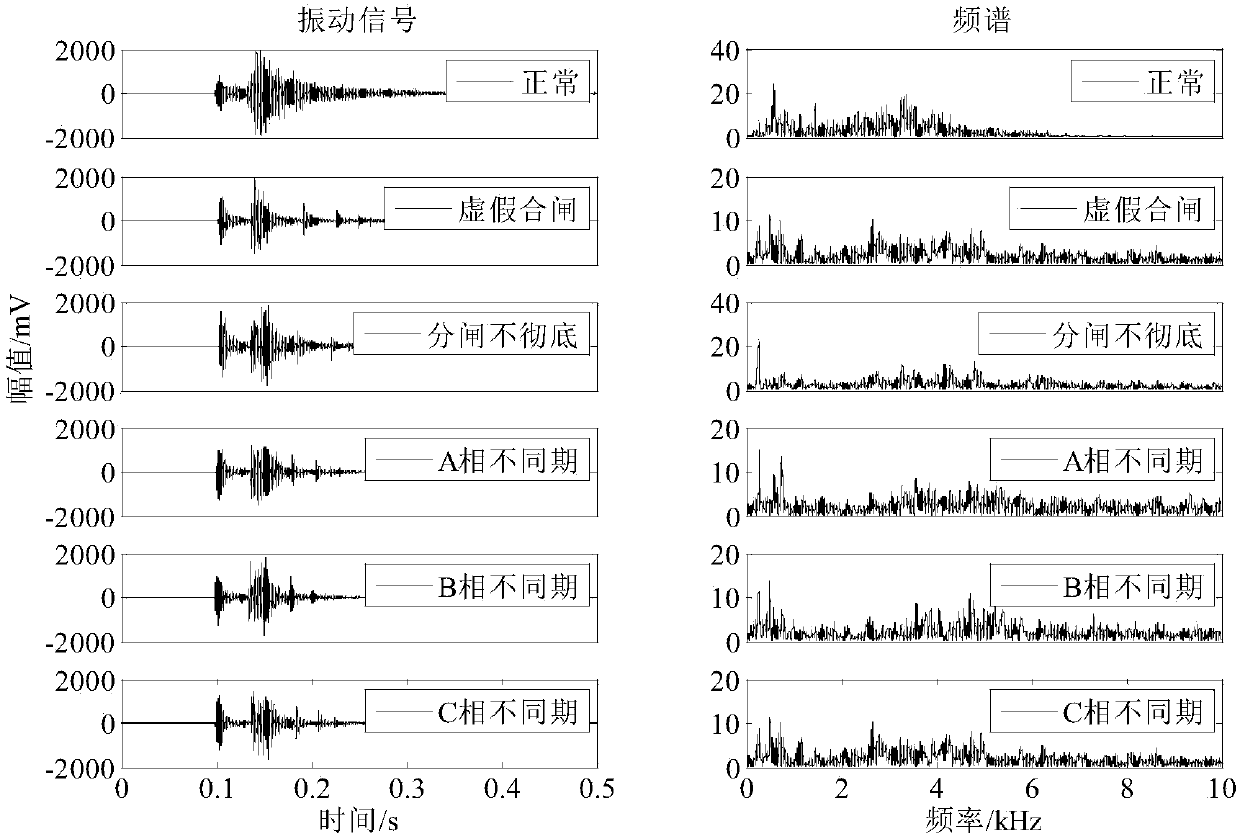

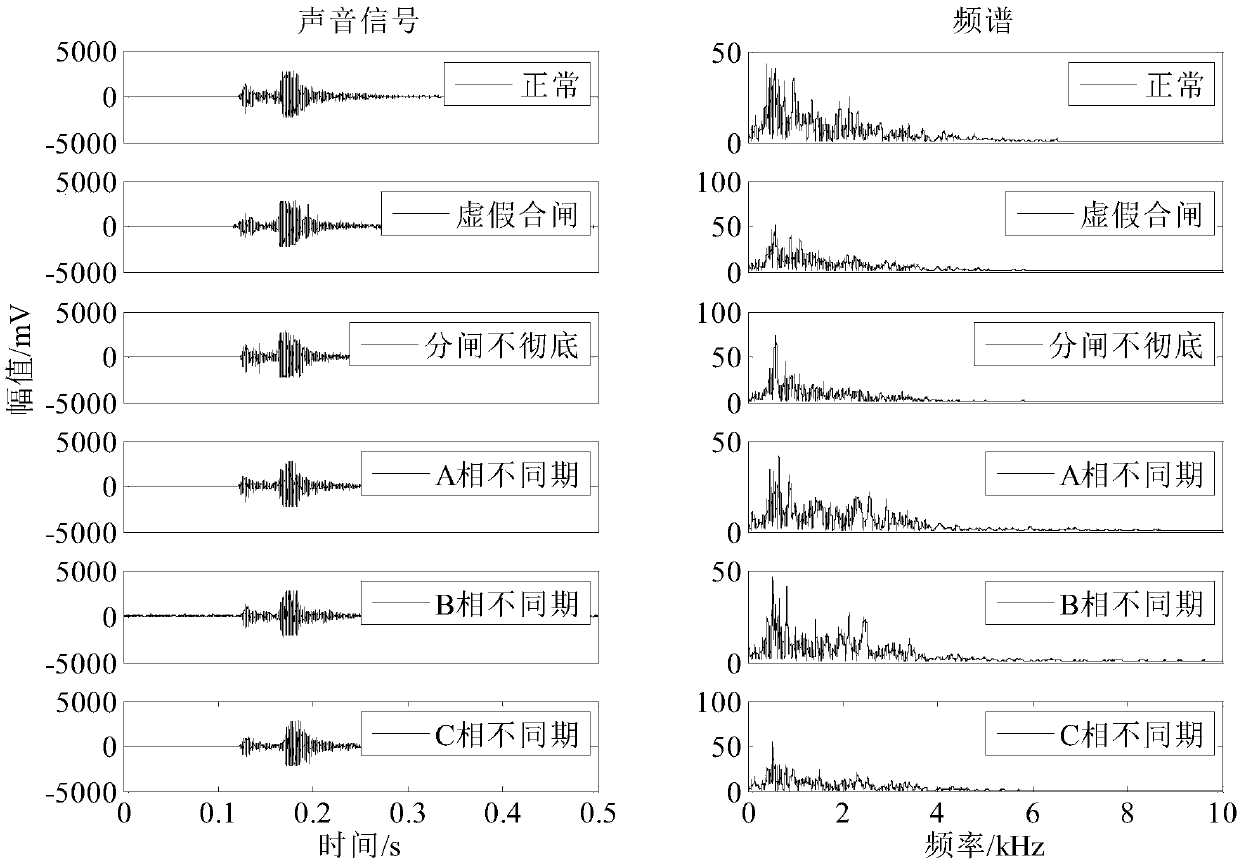

Universal circuit breaker mechanical fault diagnosis method based on feature fusion of vibration and sound signals

Inactive | CN106017879A | Improve reliability; The detection method is simple; Machine part testing; Circuit interrupters testing; Diagnostic Radiology Modality; Machining vibrations

The invention provides a universal circuit breaker mechanical fault diagnosis method based on feature fusion of vibration and sound signals. The method includes the following steps: 1, collecting mechanical vibration signals and sound signals during the closing and opening operations of a universal circuit breaker; 2, denoising them with an improved wavelet packet threshold denoising algorithm; 3, applying a complementary ensemble empirical mode decomposition algorithm to extract, from the denoised signals, a number of intrinsic mode function components that reflect the state of the breaker's closing and opening actions; 4, determining the number Z of intrinsic mode function components; 5, calculating the energy ratio, the sample ratio and the power spectrum entropy as three types of features; 6, reducing the dimensionality of a feature sample that combines the three types of features from both the vibration and sound signals with a combined-kernel principal component analysis method, obtaining M principal components; and 7, building a sequential binary-tree multi-class classifier model based on the relevance vector machine.

Owner:HEBEI UNIV OF TECH
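
Step 5 of this method computes energy-ratio and power-spectrum-entropy features. A minimal sketch of those two features follows, assuming the decomposed mode function components are already available as lists; the decomposition itself and the sample-ratio feature are not implemented, the test signals are hypothetical, and the naive O(n²) DFT is used only to keep the example dependency-free.

```python
import cmath
import math
from typing import List, Sequence


def energy_ratios(components: Sequence[Sequence[float]]) -> List[float]:
    """Energy of each decomposed component as a fraction of the total energy."""
    energies = [sum(x * x for x in c) for c in components]
    total = sum(energies)
    return [e / total for e in energies]


def power_spectrum_entropy(signal: Sequence[float]) -> float:
    """Shannon entropy (bits) of the normalized one-sided power spectrum (naive DFT)."""
    n = len(signal)
    power = []
    for k in range(n // 2 + 1):  # non-negative frequencies of a real signal
        coeff = sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power.append(abs(coeff) ** 2)
    total = sum(power)
    return -sum((p / total) * math.log2(p / total) for p in power if p > 0)


impulse = [1.0] + [0.0] * 7                                   # flat spectrum: high entropy
tone = [math.cos(2 * math.pi * 2 * t / 8) for t in range(8)]  # one frequency: near zero
print(round(power_spectrum_entropy(impulse), 4))  # 2.3219
print(energy_ratios([[1.0, 1.0], [1.0, 0.0]]))
```

A broadband signal spreads power over many frequencies and gets a high entropy, while a signal dominated by one frequency scores near zero, which is what makes this feature sensitive to changes in a breaker's vibration pattern.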

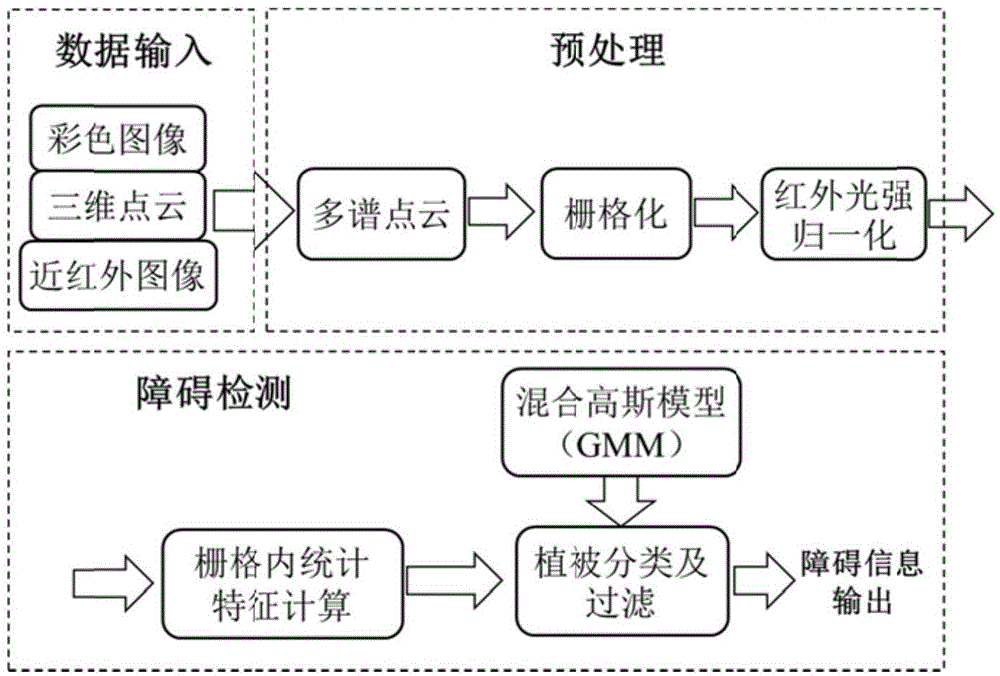

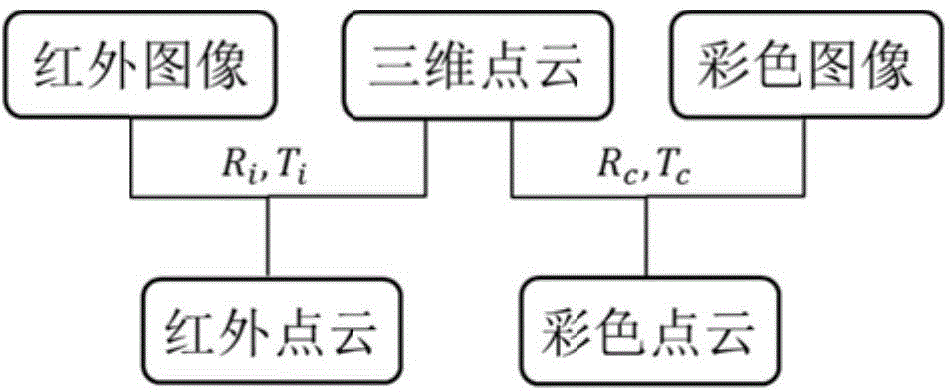

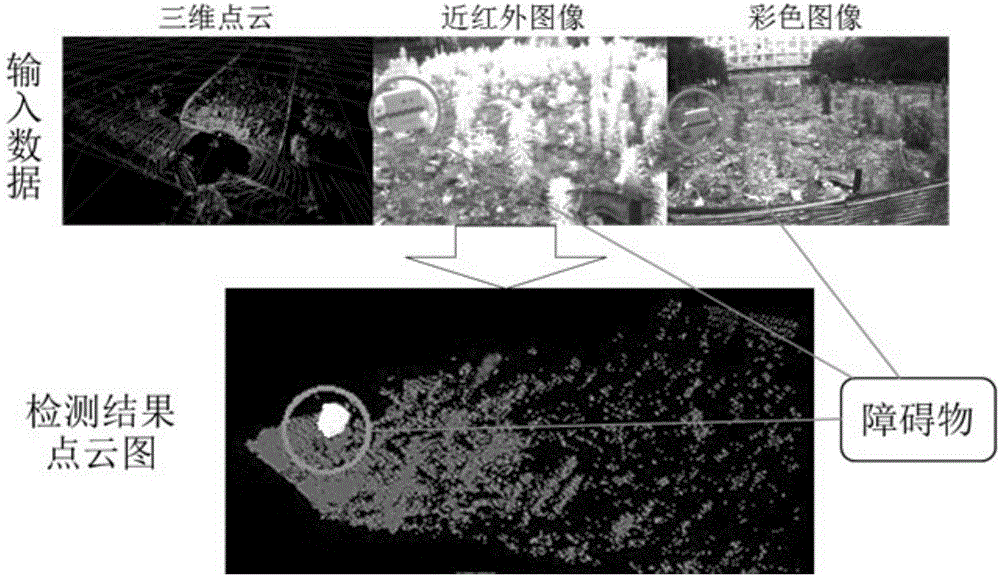

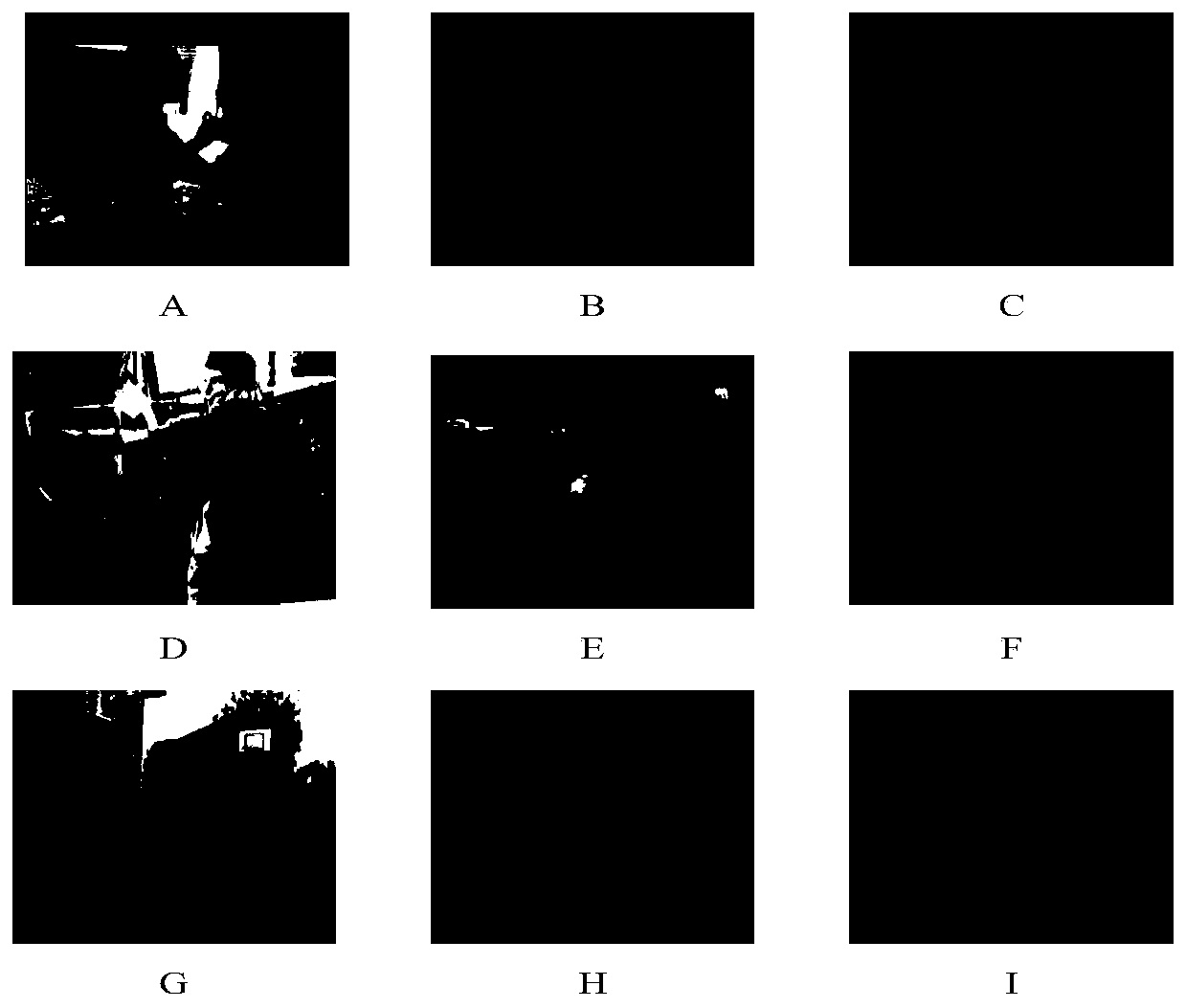

Barrier detection method in vegetation environment based on multispectral and 3D feature fusion

Inactive | CN104933708A | Effective filtering; Improve detection rate; Image enhancement; Image analysis; Vegetation; Point cloud

The invention discloses an obstacle detection method for vegetated environments based on multispectral and 3D feature fusion. 3D point cloud data are collected together with color and near-infrared images; the 3D point cloud is registered with the multispectral data to obtain a multispectral 3D point cloud; a grid map is built, and candidate obstacle cells are obtained with a height threshold; the scale of the near-infrared image is adjusted to normalize the infrared intensity; the near-infrared intensity and the visible-light intensity from the RGB color information within each cell are combined as feature information, from which a 2D feature is calculated; and the candidate obstacle cells are filtered with a Gaussian mixture model to obtain the final obstacle detection result. Normalizing the infrared intensity reduces the influence of changing illumination, and the multispectral 3D features enable effective obstacle detection.

Owner:ZHEJIANG UNIV

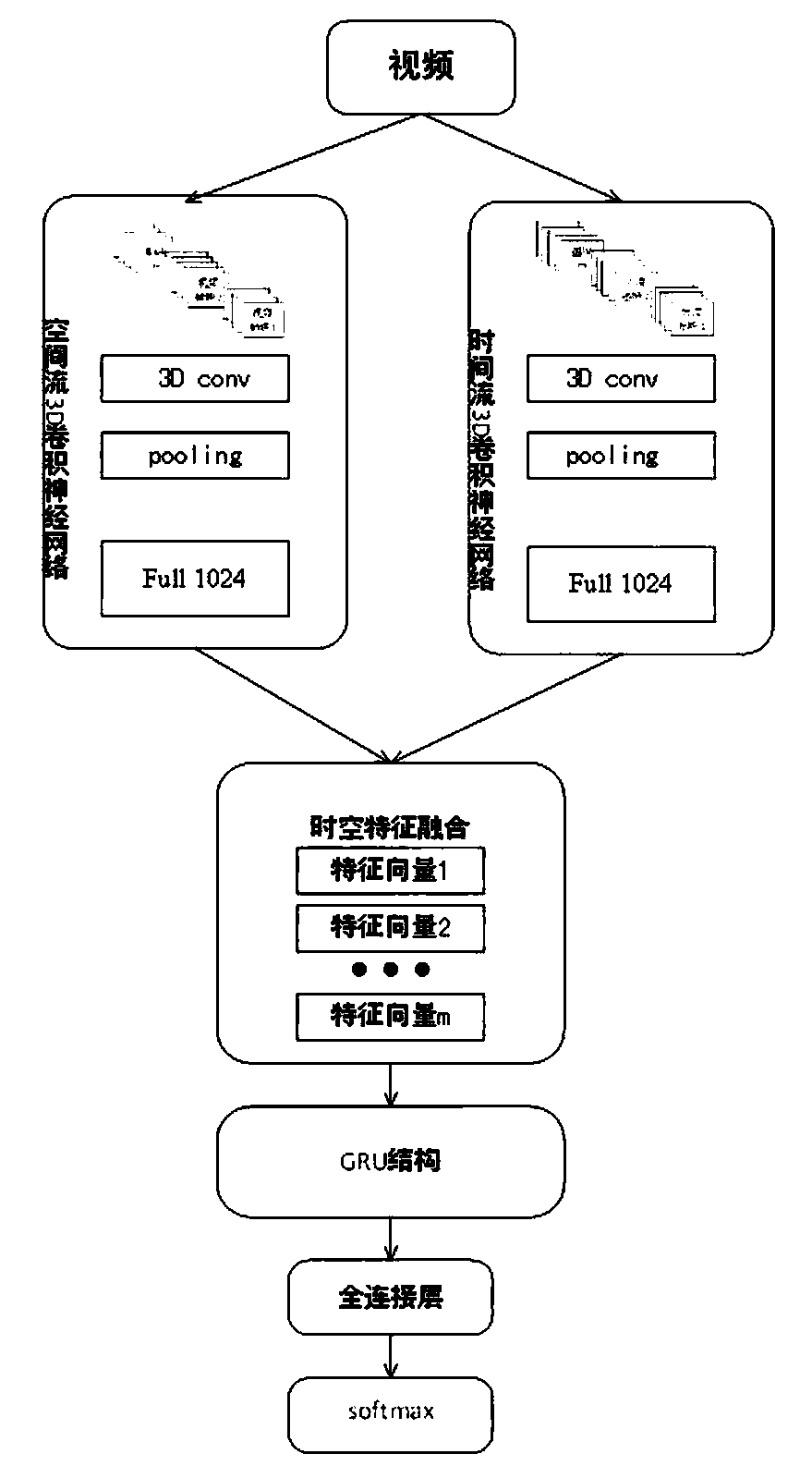

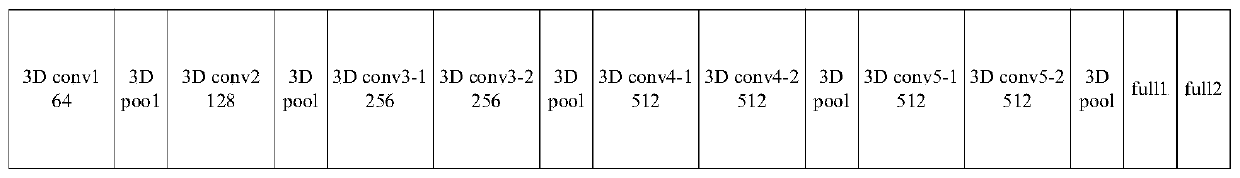

Behavior recognition technical method based on deep learning

Pending | CN110188637A | Good feature expression ability; Easy to use; Character and pattern recognition; Neural architectures; Video monitoring; Network model

The invention discloses a behavior recognition method based on deep learning, addressing the need for more intelligent video surveillance systems in the prior art. The method comprises the following steps: constructing a deeper spatio-temporal two-stream CNN-GRU neural network model by combining a two-stream convolutional neural network with a GRU network; extracting the temporal and spatial features of the video; using the GRU network's ability to retain information to extract long-term sequential characteristics from the spatio-temporal feature sequence, and performing behavior recognition on the video with a softmax classifier; proposing a new correntropy-based loss function; and, drawing on the way human visual attention handles large amounts of information, introducing an attention mechanism before the spatio-temporal two-stream CNN-GRU model performs spatio-temporal feature fusion. The proposed model achieves an accuracy of 61.5%, improving the recognition rate to some extent compared with an algorithm based on a two-stream convolutional neural network.

Owner:XIDIAN UNIV

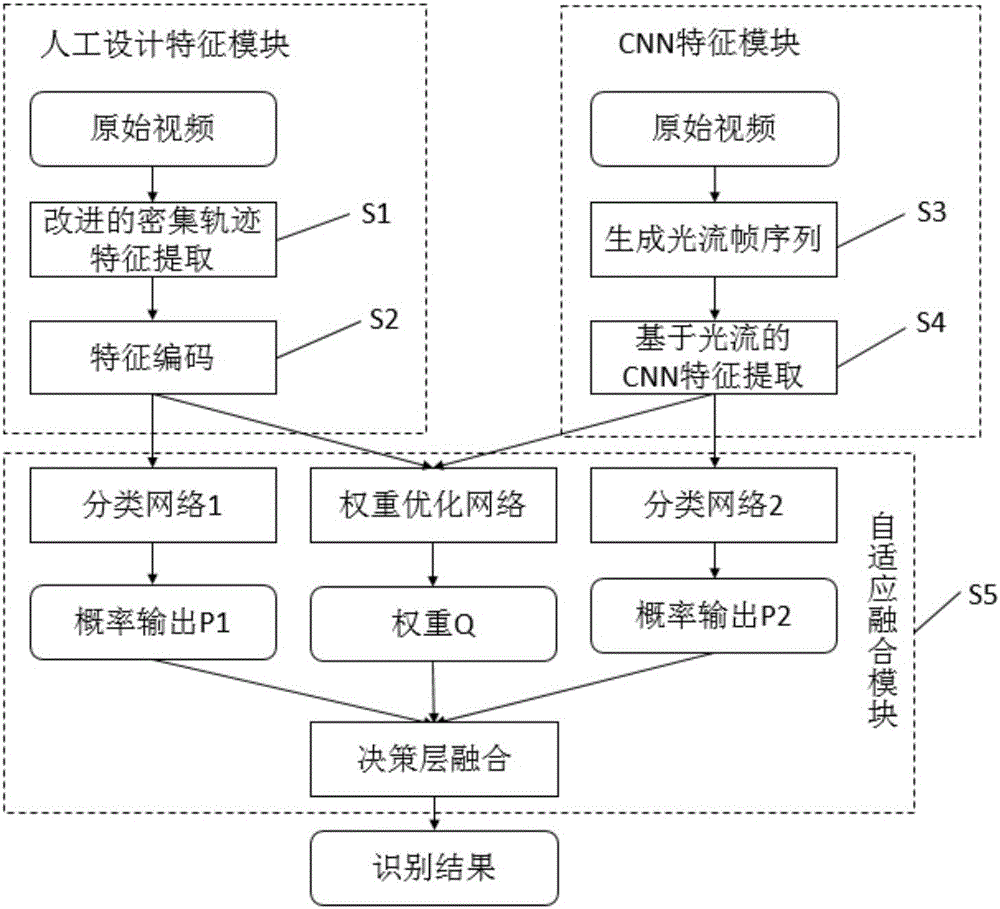

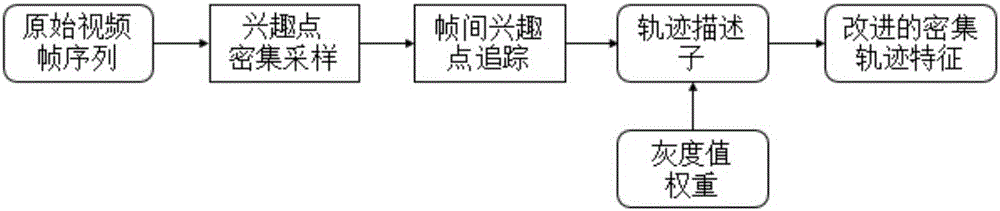

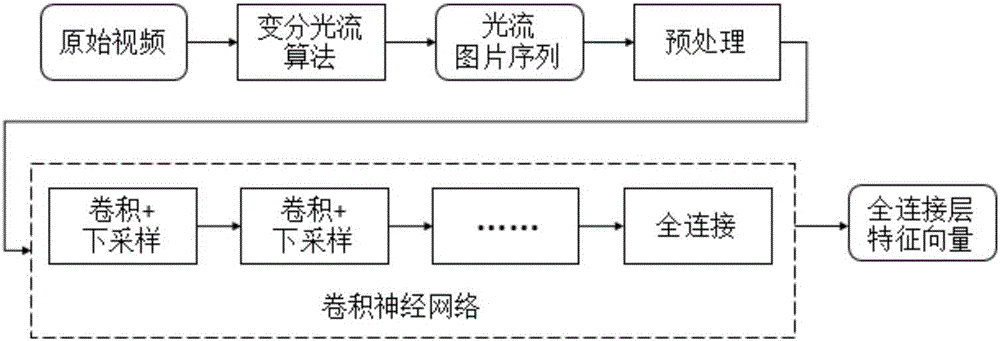

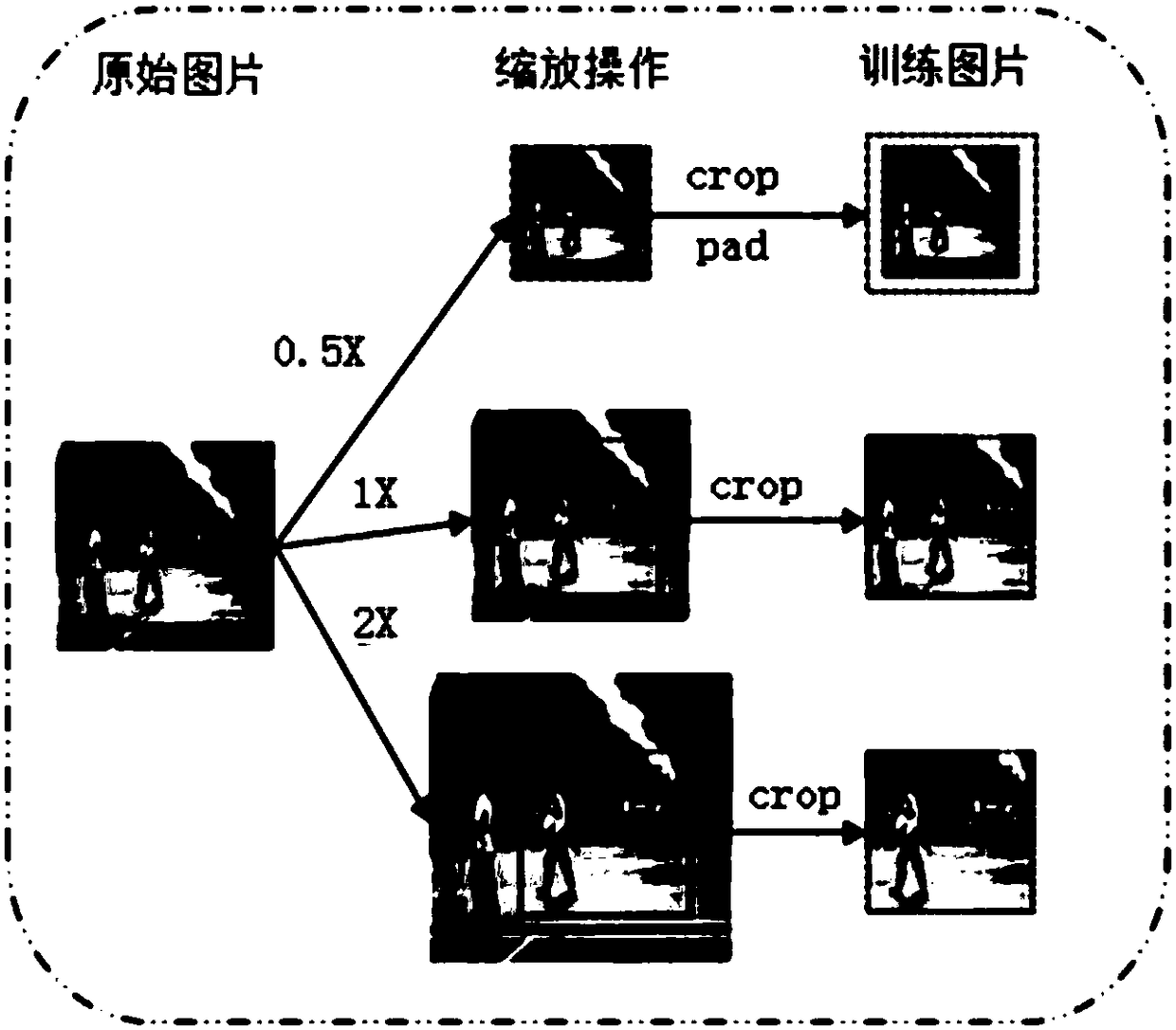

Infrared behavior identification method based on adaptive fusion of artificial design feature and depth learning feature

Active | CN105787458A | Improve reliability; Innovative feature fusion; Character and pattern recognition; Neural learning methods; Data set; Feature coding

The invention relates to an infrared behavior identification method based on adaptive fusion of hand-crafted features and deep learning features. The method comprises: S1, extracting improved dense trajectory features from the original video with a hand-crafted feature module; S2, encoding the extracted hand-crafted features; S3, in a CNN feature module, extracting optical flow from the original video image sequence with a variational optical flow algorithm to obtain the corresponding optical flow image sequence; S4, extracting CNN features from the optical flow sequence obtained in S3 with a convolutional neural network; and S5, dividing the data set into a training set and a test set, learning weights on the training set with a weight optimization network, using the learned weights to fuse the probability outputs of the CNN feature classification network and the hand-crafted feature classification network, selecting the optimal weight by comparing identification results, and applying it to classify the test set. The method provides a novel way of fusing features, improves the reliability of behavior identification in infrared video, and is significant for subsequent video analysis.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
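
This method learns a weight for fusing two classifiers' probability outputs with a "weight optimization network". As a simpler stand-in (not the patent's method), the sketch below grid-searches a single weight w on labelled data and keeps the value with the best accuracy; the probabilities, labels and helper names are hypothetical.

```python
from typing import List, Sequence


def fuse_probs(p_cnn: Sequence[float], p_hand: Sequence[float], w: float) -> List[float]:
    """Late (score-level) fusion: w * CNN probabilities + (1 - w) * hand-crafted ones."""
    return [w * a + (1 - w) * b for a, b in zip(p_cnn, p_hand)]


def argmax(v: Sequence[float]) -> int:
    return max(range(len(v)), key=v.__getitem__)


def accuracy(cnn_probs, hand_probs, labels, w: float) -> float:
    hits = sum(argmax(fuse_probs(a, b, w)) == y
               for a, b, y in zip(cnn_probs, hand_probs, labels))
    return hits / len(labels)


def best_weight(cnn_probs, hand_probs, labels, steps: int = 10) -> float:
    """Grid-search w in [0, 1] on labelled data; ties resolve to the smallest w."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda w: accuracy(cnn_probs, hand_probs, labels, w))


# Hypothetical 2-class outputs: each classifier alone gets one of the two samples wrong.
cnn = [[0.45, 0.55], [0.1, 0.9]]
hand = [[0.9, 0.1], [0.65, 0.35]]
labels = [0, 1]
print(best_weight(cnn, hand, labels))  # 0.3
```

Each classifier alone reaches only 50% accuracy here, while the fused output classifies both samples correctly for w = 0.3.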

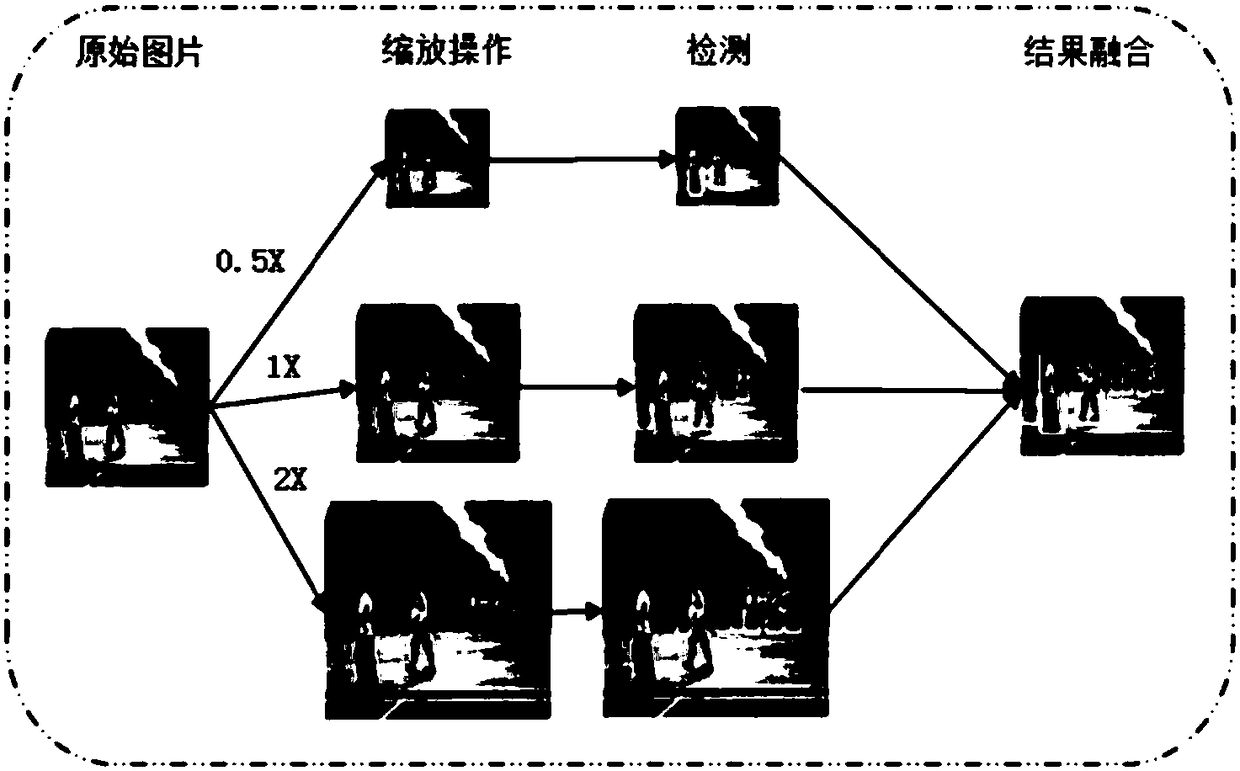

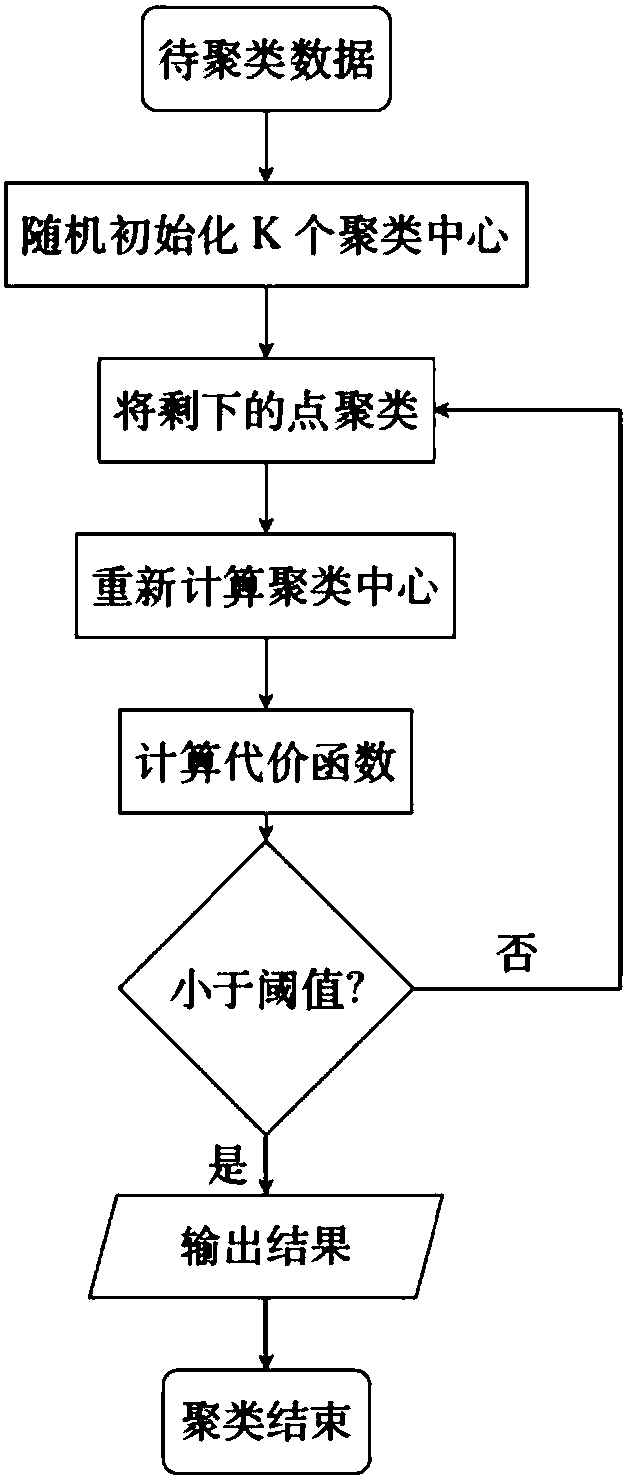

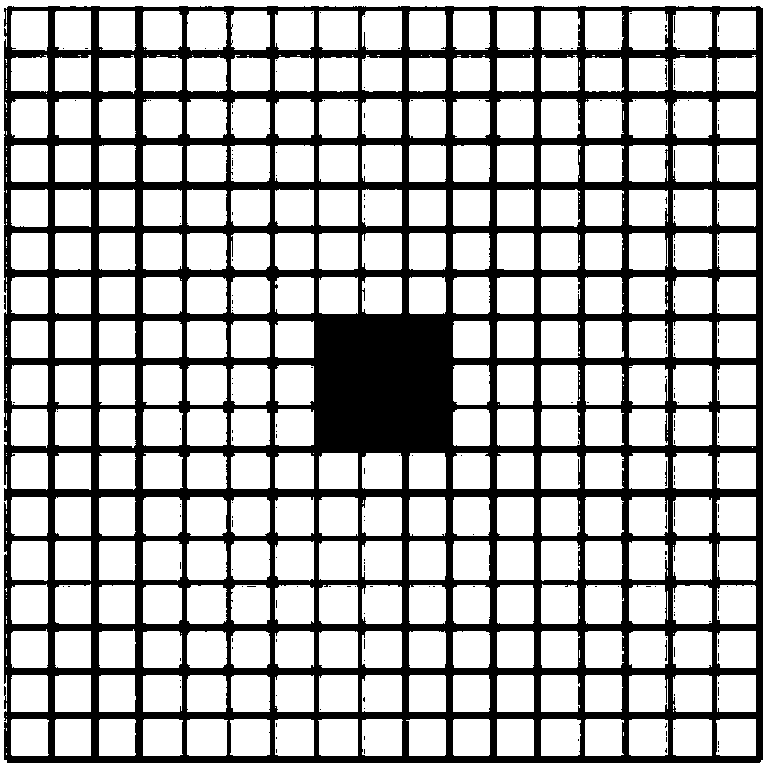

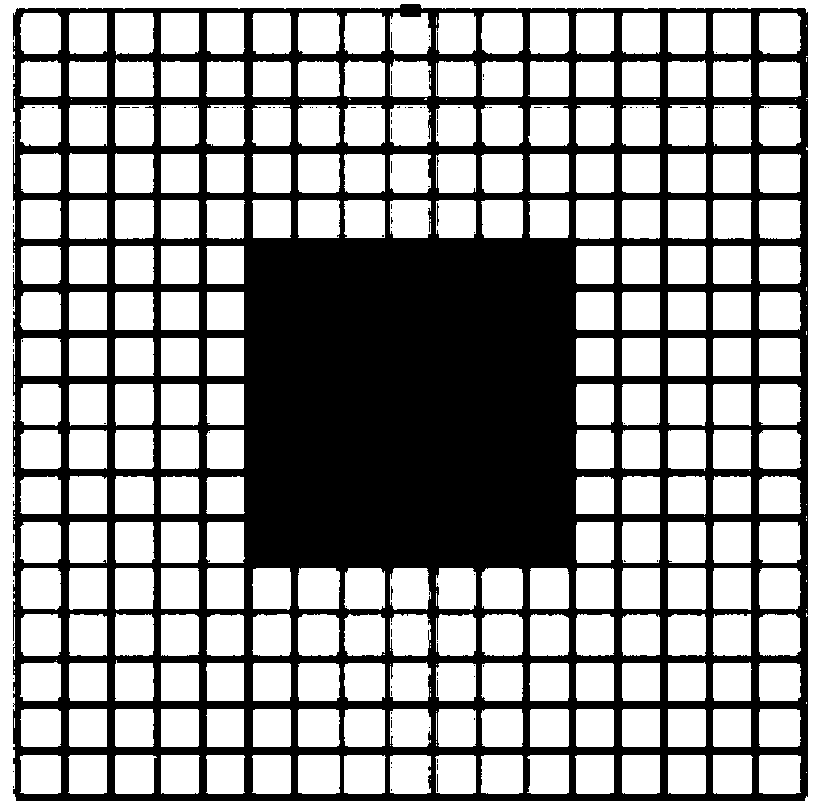

Target detection method and system based on multi-scale feature fusion in image

Inactive | CN108460403A | Meet the detection effect; Improve detection accuracy; Character and pattern recognition; Pattern recognition; Multi resolution

The invention discloses a target detection method and system based on multi-scale feature fusion in an image. The method comprises the following steps: first, the image to be detected is rescaled to different scales to construct an image pyramid; second, a set of multi-scale detection templates covering most sample sizes is obtained with a statistical clustering method; third, scale-adaptive target context is constructed based on the multi-scale detection templates; fourth, multi-scale deep features are fused; and fifth, non-maximum suppression based on soft decisions is performed. The advantage of the invention is that, by constructing a multi-resolution sparse image pyramid, multi-scale detection templates, template-scale-adaptive context, multi-scale deep feature fusion and the like, it fully mines and fuses deep features and can improve target detection performance.

Owner:SHANGHAI JIAO TONG UNIV
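The fifth step, non-maximum suppression "based on soft judgment", plausibly corresponds to soft-NMS, although the abstract does not say so explicitly. The sketch below assumes the Gaussian-decay variant of soft-NMS with `sigma = 0.5` and a small score floor; both values and all names are illustrative assumptions, not the patent's settings.

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes: List[Box], scores: List[float], sigma: float = 0.5,
             score_thresh: float = 0.001) -> List[Tuple[Box, float]]:
    """Gaussian soft-NMS: decay overlapping scores instead of deleting boxes."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # Take the current highest-scoring box.
        best_idx = max(range(len(remaining)), key=lambda i: remaining[i][1])
        best_box, best_score = remaining.pop(best_idx)
        kept.append((best_box, best_score))
        # Decay the other scores by exp(-IoU^2 / sigma); drop near-zero ones.
        decayed = []
        for box, score in remaining:
            new_score = score * math.exp(-(iou(best_box, box) ** 2) / sigma)
            if new_score >= score_thresh:
                decayed.append((box, new_score))
        remaining = decayed
    return kept


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    for box, score in soft_nms(boxes, [0.9, 0.8, 0.7]):
        print(box, round(score, 3))
```

In this toy input, hard NMS at an IoU threshold of 0.5 would delete the second box outright; soft-NMS keeps it with its score reduced to about 0.32, which helps when nearby objects genuinely overlap.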

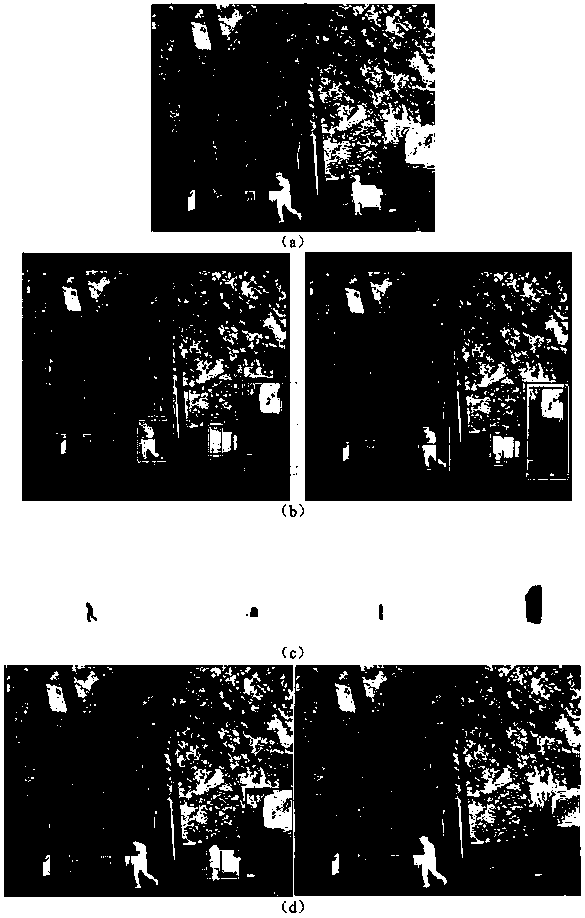

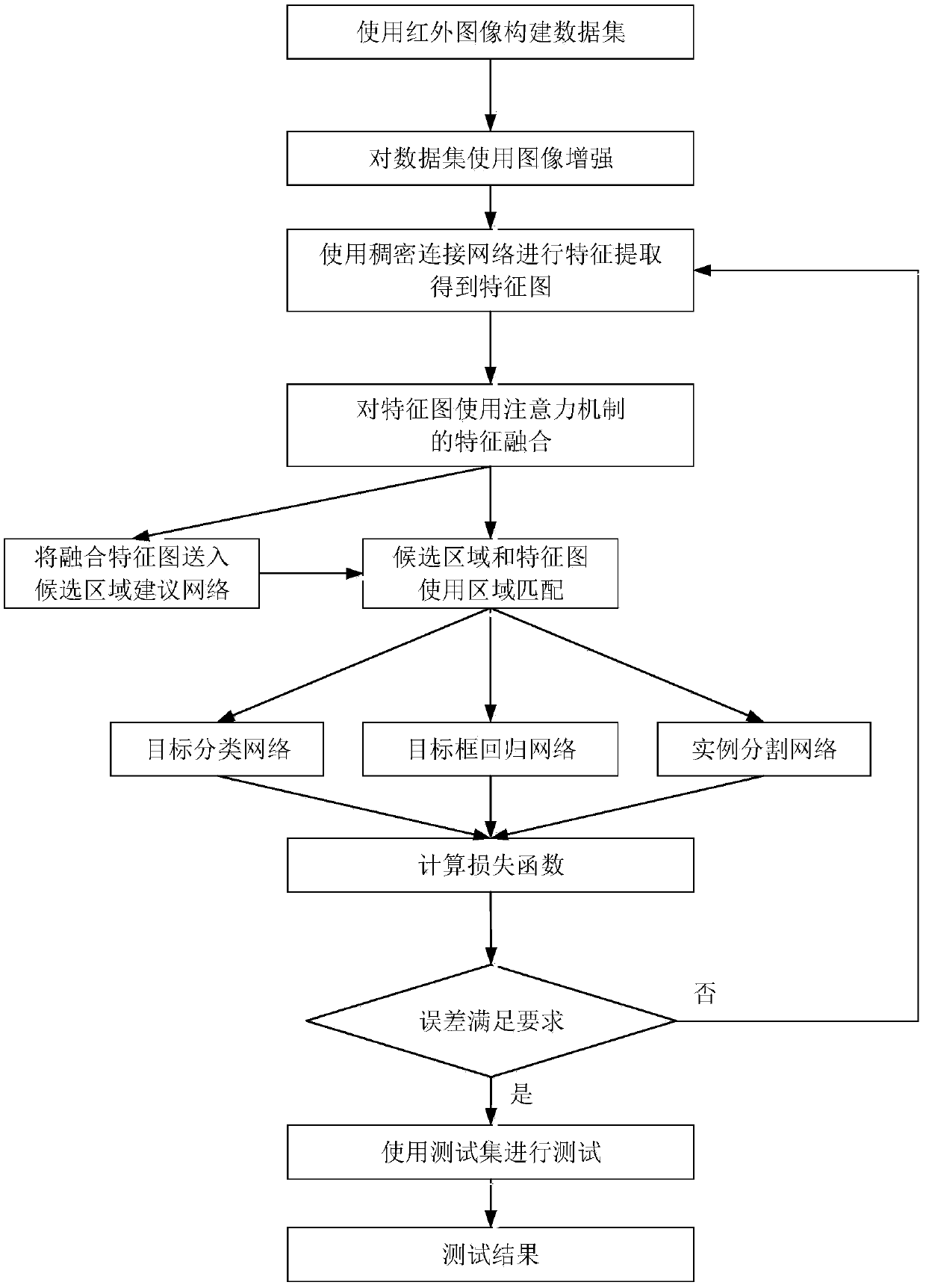

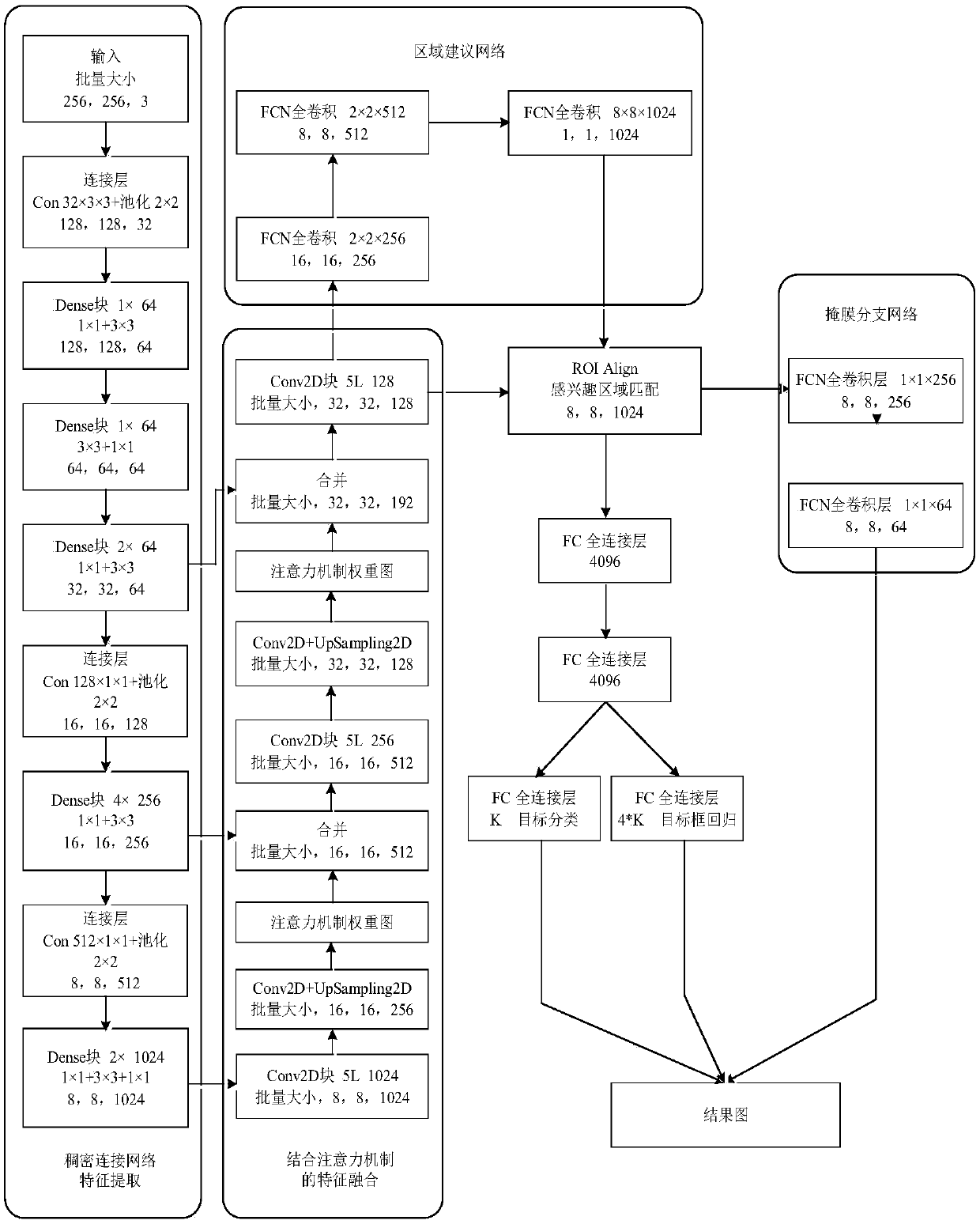

Infrared target instance segmentation method based on feature fusion and a dense connection network

Pending | CN109584248A | Solving the gradient explosion/gradient disappearance problem; Strengthen detection and segmentation capabilities; Image enhancement; Image analysis; Data set; Feature fusion

The invention discloses an infrared target instance segmentation method based on feature fusion and a densely connected network. The method comprises the following steps: collecting and constructing the infrared image data set required for instance segmentation, and obtaining the corresponding ground-truth infrared label images; performing image-enhancement preprocessing on the infrared image data set; processing the preprocessed training set to obtain classification results, bounding-box regression results, and instance segmentation mask maps; performing backpropagation through the convolutional neural network with stochastic gradient descent according to the prediction loss function, and updating the network parameters; feeding a fixed number of infrared training images into the network at each step and iteratively updating the parameters until the maximum number of iterations is reached and training is complete; and processing the test-set images to obtain the average precision and running time of instance segmentation, together with the final instance segmentation result maps.

Owner:XIDIAN UNIV

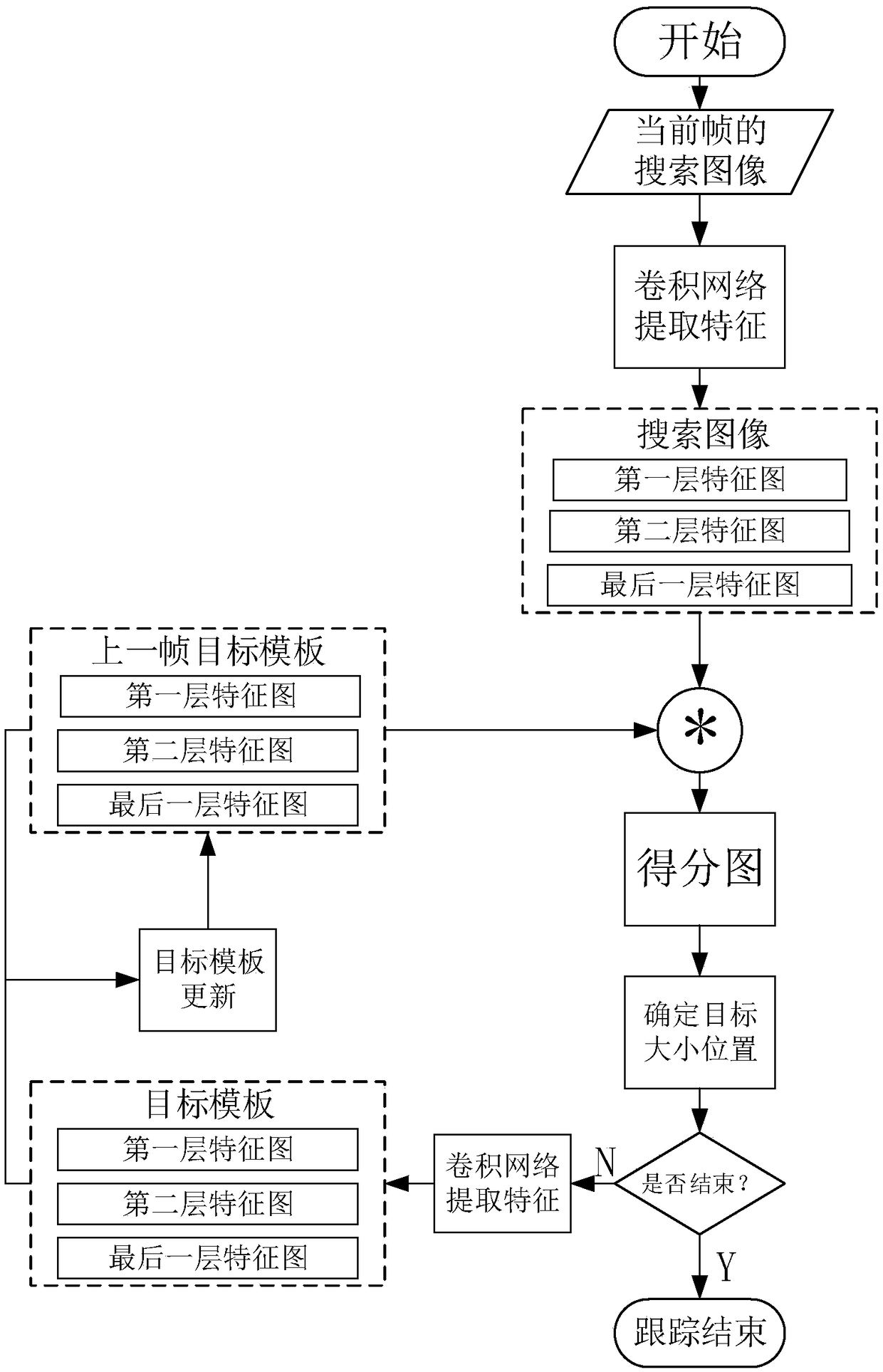

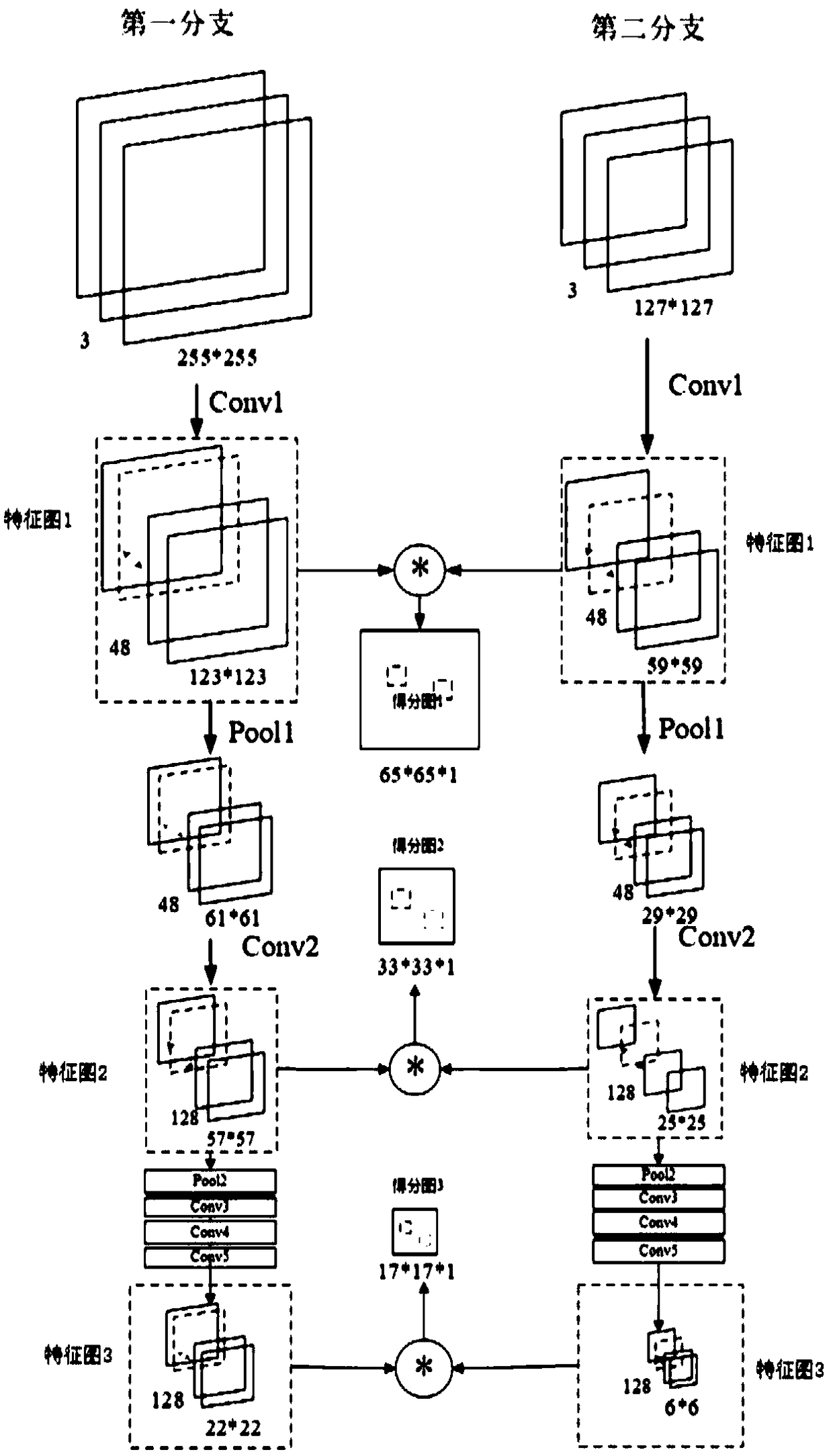

Target tracking method and system of full convolution twin network based on multi-layer feature fusion

Inactive | CN109191491A | Differentiate interference; Avoid Gradient Diffusion; Image enhancement; Image analysis; Data set; Semantic feature

The invention discloses a target tracking method and system using a convolutional Siamese (twin) network based on multi-layer feature fusion. The method comprises the following steps: according to the target position and size in each image, cropping a target template image and a search-region image from every image in the training image sequences, and forming a training data set from pairs of template and search-region images; constructing a convolutional Siamese network based on multi-layer feature fusion; training this network on the training data set; and using the trained network for target tracking. During tracking, the invention fuses the scores of different layers, combining high-level semantic features with low-level detail features, which better suppresses interference from similar-looking objects and prevents target drift and target loss.

Owner:HUAZHONG UNIV OF SCI & TECH
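The idea of fusing scores from different layers can be sketched in plain Python. The sketch assumes one single-channel feature map per layer for both template and search region, response maps of equal size at every layer, and fixed fusion weights; the patent does not state its layers, channel counts, or weights, and all names here are hypothetical.

```python
from typing import List, Sequence, Tuple

Map = List[List[float]]


def xcorr2d(search: Map, template: Map) -> Map:
    """'Valid' 2-D cross-correlation of a template over a search region."""
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    return [[sum(template[u][v] * search[i + u][j + v]
                 for u in range(th) for v in range(tw))
             for j in range(sw - tw + 1)]
            for i in range(sh - th + 1)]


def fuse_responses(responses: Sequence[Map], weights: Sequence[float]) -> Map:
    """Weighted sum of same-sized score maps produced at different layers."""
    h, w = len(responses[0]), len(responses[0][0])
    return [[sum(wt * r[i][j] for r, wt in zip(responses, weights))
             for j in range(w)]
            for i in range(h)]


def peak(score: Map) -> Tuple[int, int]:
    """Row/column of the highest score (first in row-major order on ties)."""
    cells = [(i, j) for i in range(len(score)) for j in range(len(score[0]))]
    return max(cells, key=lambda ij: score[ij[0]][ij[1]])


if __name__ == "__main__":
    t = [[1.0, 0.0], [0.0, 1.0]]
    deep = [[0.0] * 5 for _ in range(5)]     # semantic layer: sees the target
    deep[2][1] = deep[3][2] = 1.0
    shallow = [[0.0] * 5 for _ in range(5)]  # detail layer: fooled by a distractor
    shallow[0][3] = shallow[1][4] = shallow[2][1] = 1.0
    fused = fuse_responses([xcorr2d(deep, t), xcorr2d(shallow, t)], [0.7, 0.3])
    print("fused peak:", peak(fused))
```

Here the detail layer alone peaks on a distractor, while the weighted fusion still peaks on the target, which mirrors the motivation stated in the abstract.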

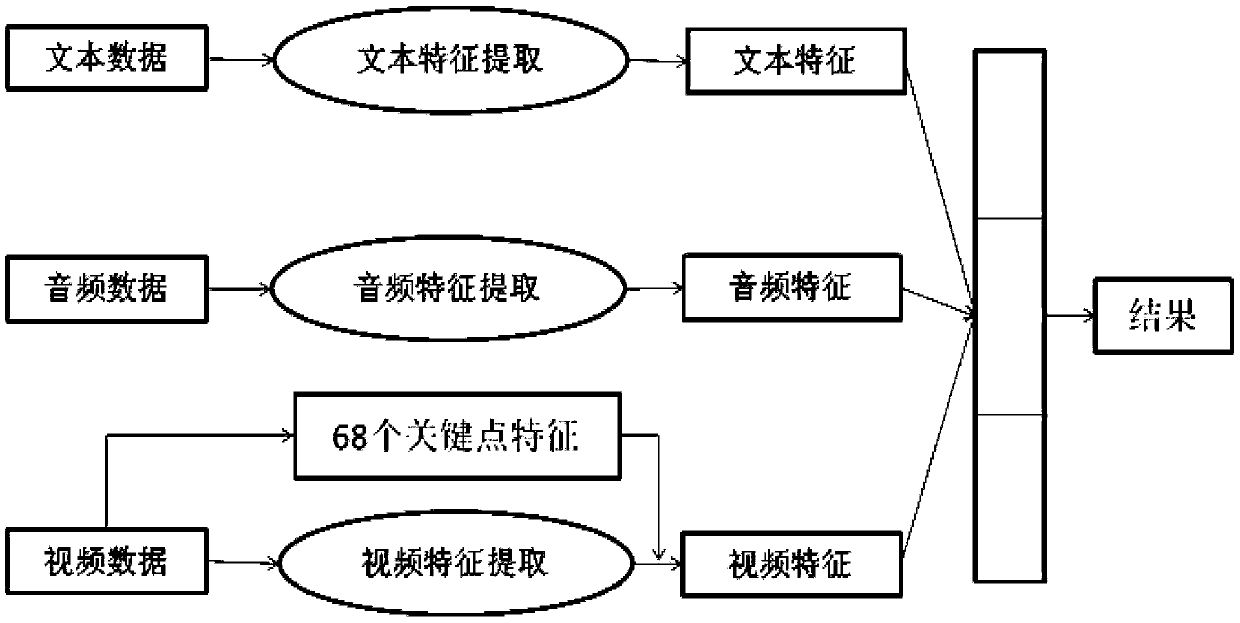

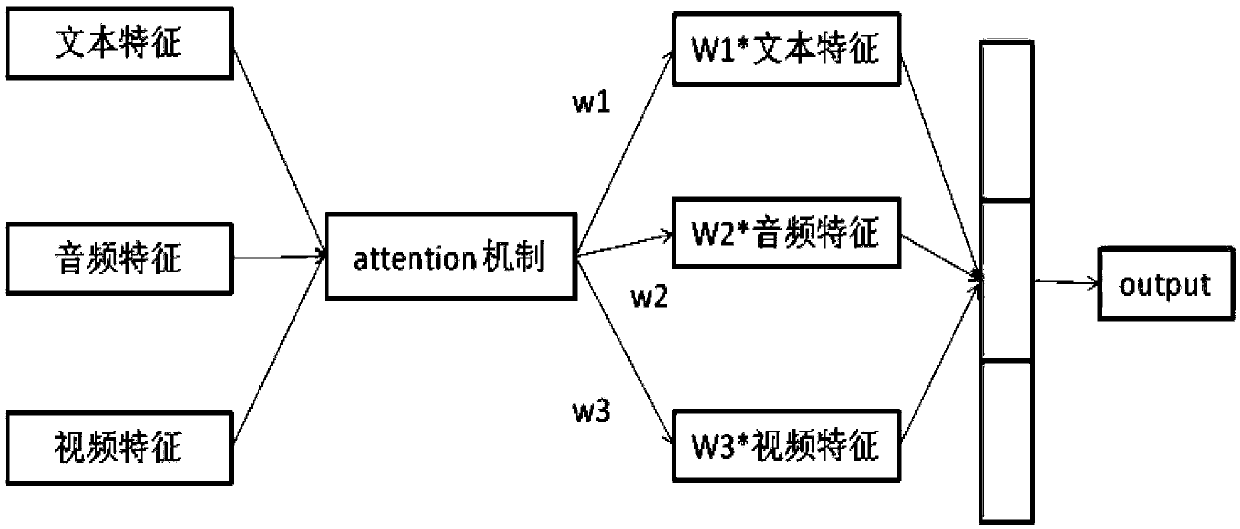

Multi-modal emotion recognition method based on attention feature fusion

Inactive | CN109614895A | Maximize Utilization; Fully reflect the degree of influence; Character and pattern recognition; Neural architectures; Speech sound; Computer science

The invention relates to a multi-modal emotion recognition method based on attention feature fusion, which performs the final emotion recognition mainly from data in three modalities: text, speech, and video. The method comprises the following steps: first, features are extracted from each modality; a bidirectional LSTM extracts text features, a convolutional neural network extracts speech features, and a three-dimensional convolutional neural network extracts video features. Then, the features of the three modalities are fused with an attention-based feature-level fusion scheme. Unlike conventional feature-level fusion, this scheme fully exploits the complementary information between modalities by assigning a weight to each modality's features; the weights are learned jointly with the network during training, so the model better fits the overall data distribution and the final recognition performance is improved.

Owner:SHANDONG UNIV
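One common way to realize attention-weighted feature-level fusion is to score each modality's feature vector against a learned query, normalize the scores with a softmax, and take the weighted sum. The sketch below assumes that form, assumes the text, speech, and video features have already been projected to a common dimension, and uses a fixed `query` vector as a stand-in for trained parameters; the patent's exact attention formula is not given.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weight each modality's feature vector by softmax(query . feature) and sum.

    `features` holds one vector per modality (e.g. text, speech, video), all of
    the same length; `query` stands in for parameters that would be learned
    jointly with the rest of the network.
    """
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    weights = softmax(scores)
    dim = len(features[0])
    fused = [sum(w * feat[d] for w, feat in zip(weights, features))
             for d in range(dim)]
    return fused, weights


if __name__ == "__main__":
    text, speech, video = [1.0, 0.0], [0.0, 1.0], [0.0, 0.0]
    fused, weights = attention_fuse([text, speech, video], query=[2.0, 0.0])
    print("modality weights:", [round(w, 3) for w in weights])
    print("fused feature:", [round(v, 3) for v in fused])
```

Because the weights come from a softmax they always sum to one, so the fused vector stays on the same scale as the inputs while emphasizing the most informative modality.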

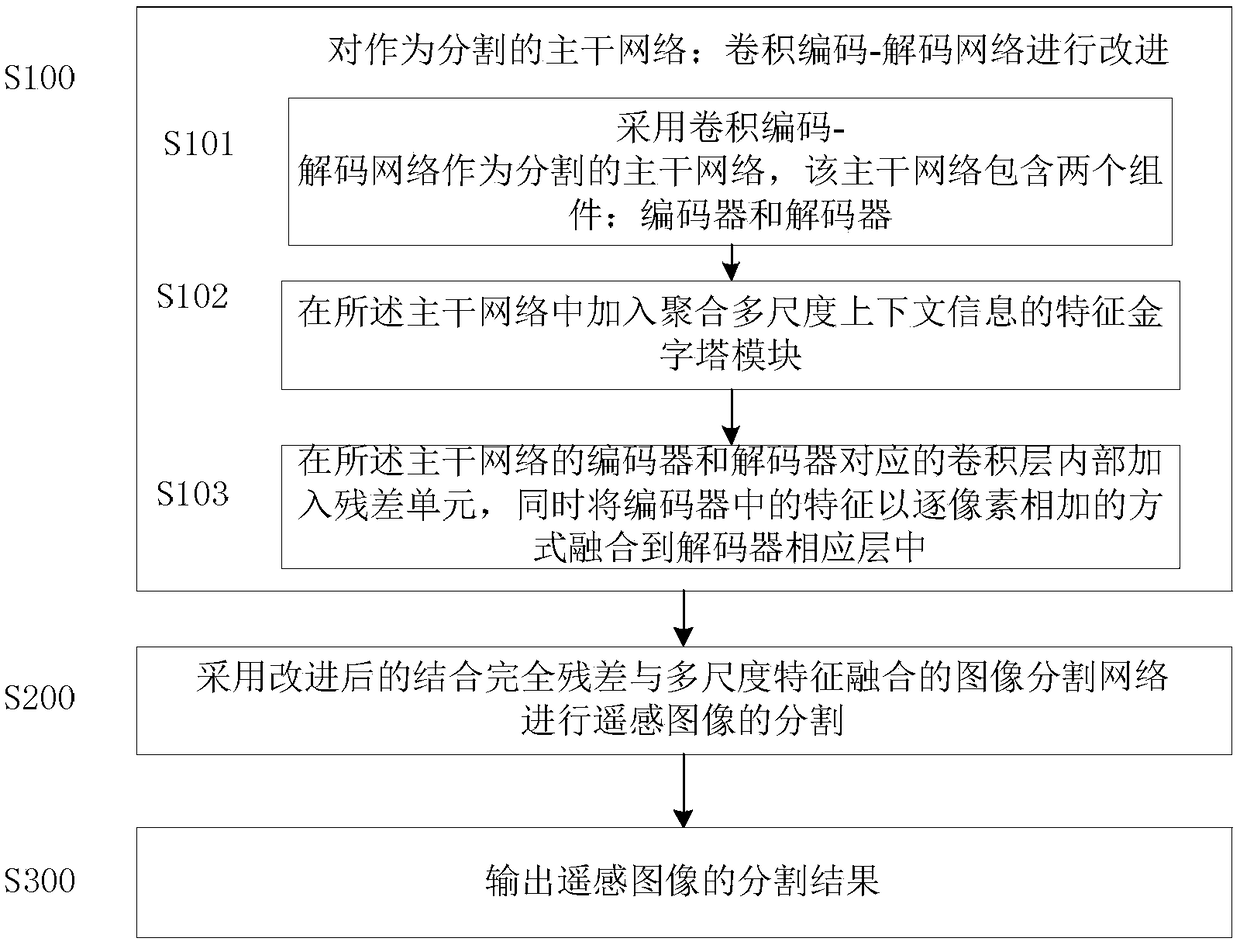

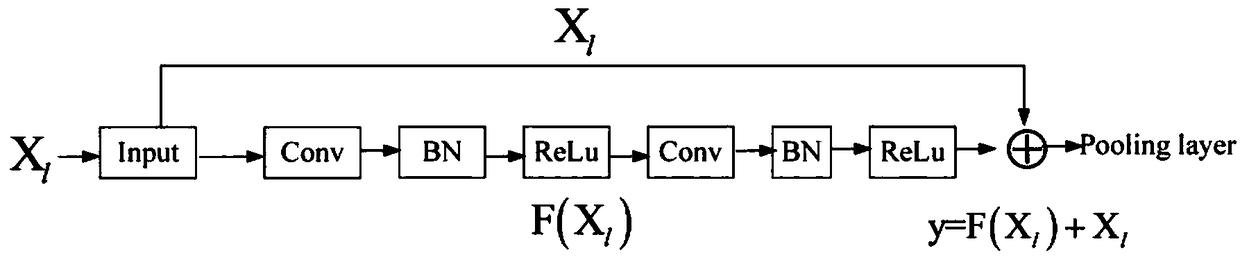

Remote sensing image segmentation method combining complete residual and multi-scale feature fusion

Active | CN109447994A | Enhanced Feature Fusion; Simplify the training process; Image enhancement; Image analysis; Pattern recognition; Coding decoding

A remote sensing image segmentation method combining complete residual connections and multi-scale feature fusion includes: S100, improving a convolutional encoder-decoder network to serve as the segmentation backbone, which comprises S101, using the convolutional encoder-decoder network as the segmentation backbone; S102, adding a feature pyramid module to the backbone to aggregate multi-scale context information; and S103, adding residual units to the corresponding convolutional layers of the backbone's encoder and decoder while fusing encoder features into the corresponding decoder layers pixel by pixel; S200, using the improved segmentation network, which combines complete residual connections with multi-scale feature fusion, to segment remote sensing images; and S300, outputting the segmentation result. The method simplifies the training of the deep network and strengthens feature fusion; it also lets the network extract rich context information, cope with changes in target scale, and improve segmentation performance.

Owner:SHAANXI NORMAL UNIV
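Step S103 pairs residual units with pixel-by-pixel fusion of encoder features into the decoder. A minimal sketch, assuming single-channel maps stored as nested lists, element-wise addition as the pixel-wise fusion (concatenation would be another option), and a hypothetical `transform` standing in for the convolutional layers inside a residual unit:

```python
from typing import Callable, List

Map = List[List[float]]


def add_maps(a: Map, b: Map) -> Map:
    """Pixel-by-pixel (element-wise) addition of two same-sized feature maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def relu(m: Map) -> Map:
    return [[max(0.0, x) for x in row] for row in m]


def residual_unit(x: Map, transform: Callable[[Map], Map]) -> Map:
    """y = ReLU(x + F(x)); the identity shortcut eases training of deep stacks."""
    return relu(add_maps(x, transform(x)))


def fuse_skip(decoder_feat: Map, encoder_feat: Map) -> Map:
    """Fuse an encoder feature map into the matching decoder layer pixel by pixel."""
    return add_maps(decoder_feat, encoder_feat)


if __name__ == "__main__":
    def half(m: Map) -> Map:  # hypothetical stand-in for conv layers
        return [[0.5 * v for v in row] for row in m]

    enc = residual_unit([[1.0, -2.0], [0.5, 0.0]], half)
    dec = [[1.0, 2.0], [3.0, 4.0]]
    print(fuse_skip(dec, enc))
```

The shortcut means each unit only has to learn a correction `F(x)` to its input, which is the usual argument for why residual connections simplify training of deep encoder-decoder networks.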

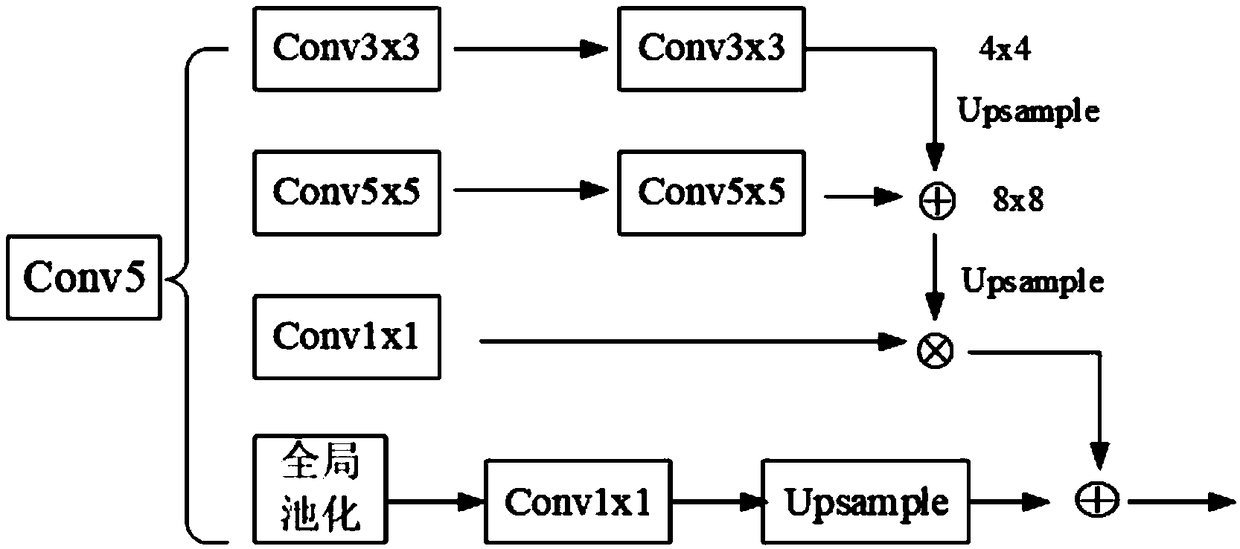

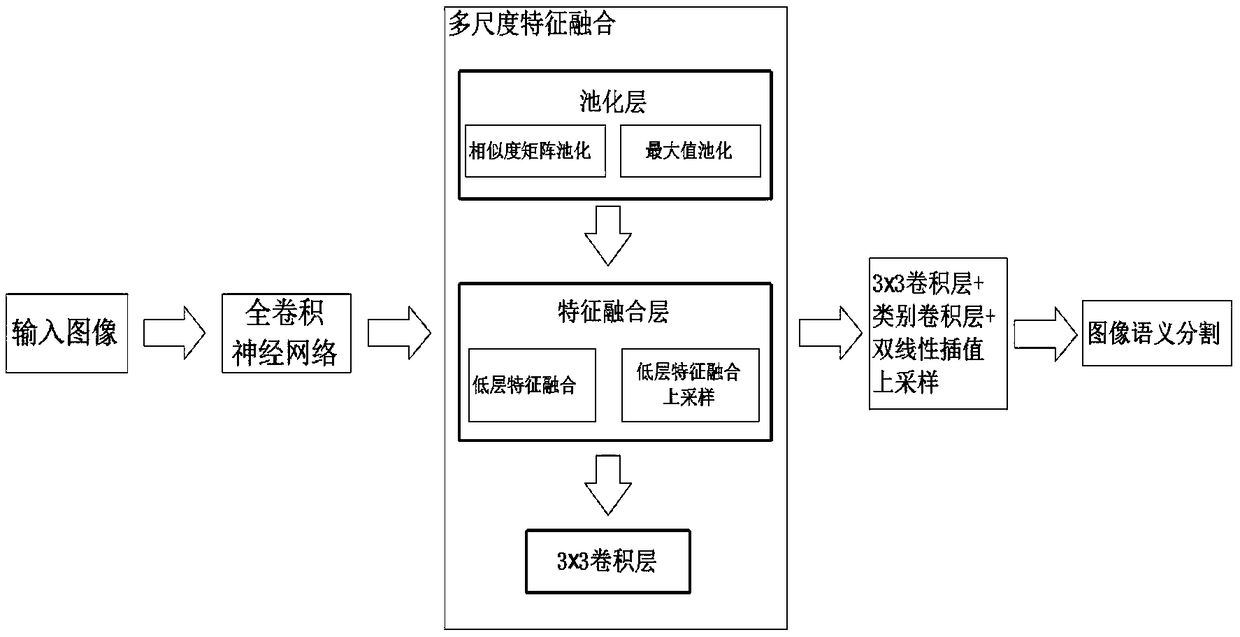

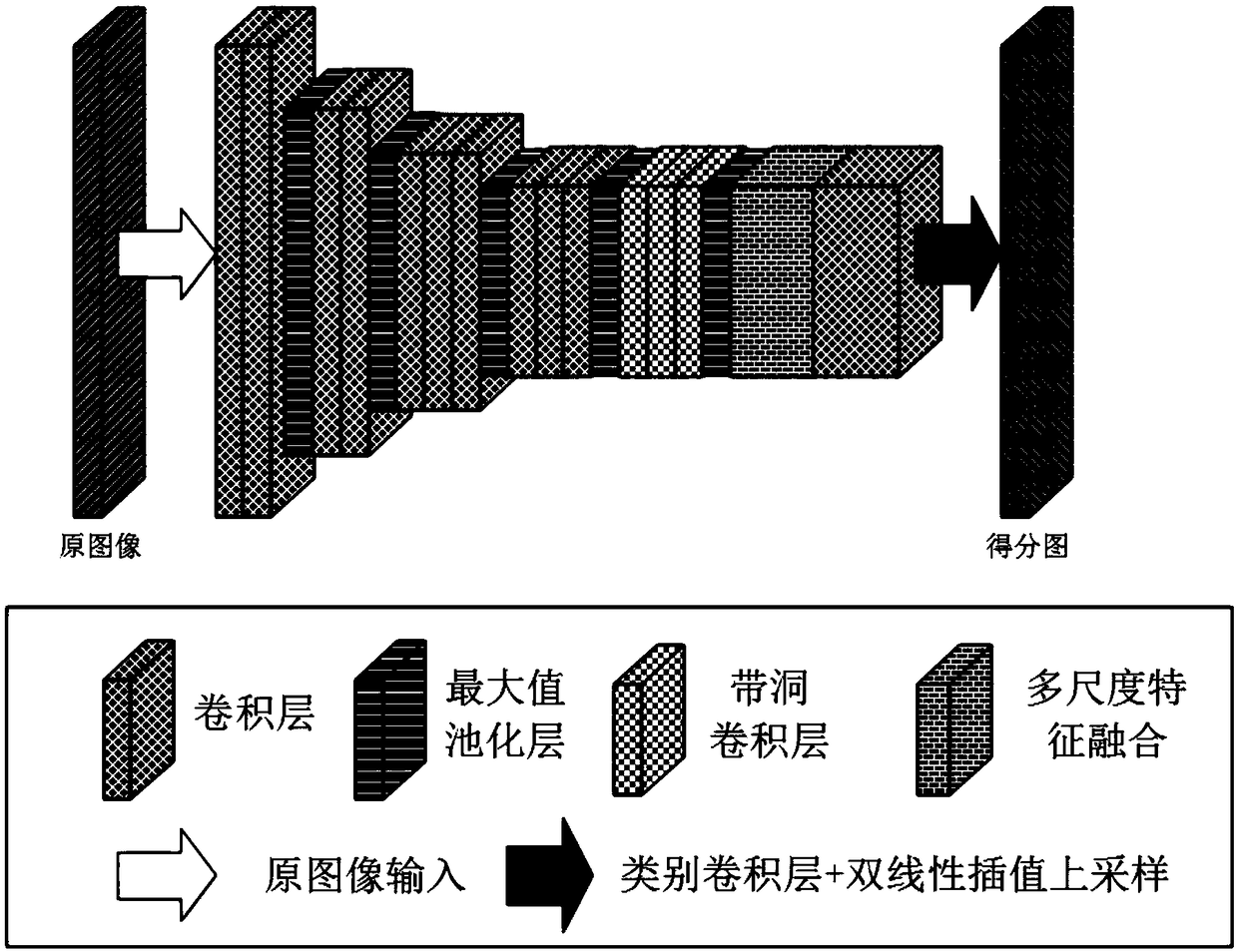

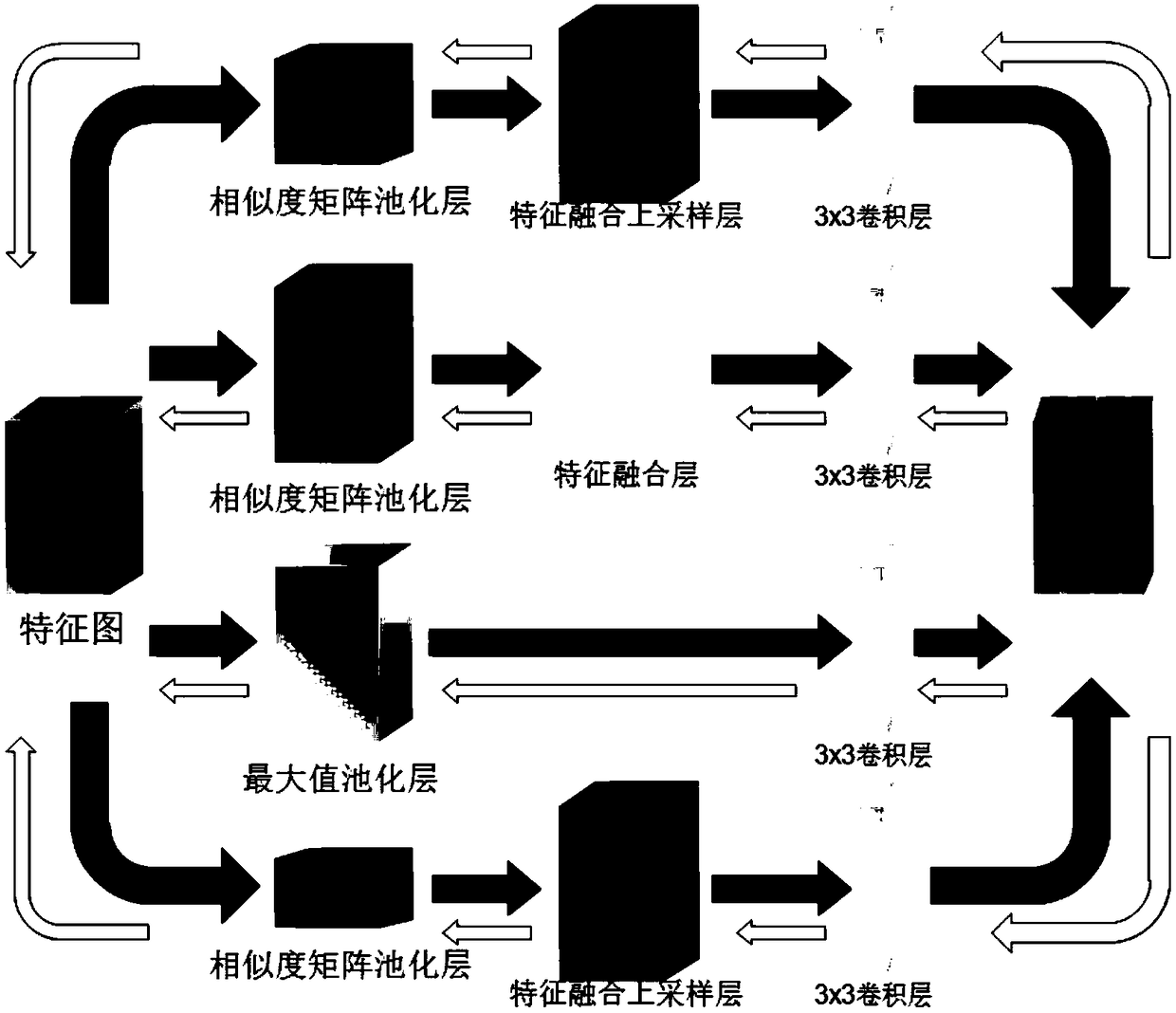

Full convolutional network semantic segmentation method based on multi-scale low-level feature fusion

Active | CN108830855A | Easy to identify; Multi-global feature information; Image analysis; Character and pattern recognition; Scale down; Deep level

The invention discloses a fully convolutional network (FCN) semantic segmentation method based on multi-scale low-level feature fusion. The method first extracts dense features of an input image with the FCN and then applies multi-scale feature fusion to the extracted feature maps: the input feature maps undergo multi-scale pooling to form several processing branches; in each branch, low-level feature fusion is performed on the scale-invariant pooled feature maps, and low-level feature fusion with upsampling is performed on the downscaled pooled feature maps; the resulting feature maps pass through a 3×3 convolutional layer that learns deeper features and reduces the number of output channels; the output feature maps of all branches are then concatenated along the channel dimension, and a classification convolutional layer followed by bilinear upsampling yields a score map of the same size as the original image. By combining local low-level feature information with global multi-scale image information, the method markedly improves semantic segmentation.

Owner:SOUTH CHINA UNIV OF TECH
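The pool, upsample, and concatenate pattern described above can be sketched for the simplest case. The sketch assumes average pooling into square bins, feature-map sides divisible by every bin size, and nearest-neighbour upsampling instead of the bilinear interpolation the abstract mentions; the per-branch low-level fusion and the 3×3 convolution are omitted, and all names are hypothetical.

```python
from typing import List, Sequence

Map = List[List[float]]


def adaptive_avg_pool(m: Map, bins: int) -> Map:
    """Average-pool an HxW map into bins x bins cells (H and W divisible by bins)."""
    h, w = len(m), len(m[0])
    ch, cw = h // bins, w // bins
    return [[sum(m[i][j] for i in range(bi * ch, (bi + 1) * ch)
                 for j in range(bj * cw, (bj + 1) * cw)) / (ch * cw)
             for bj in range(bins)]
            for bi in range(bins)]


def upsample_nearest(m: Map, h: int, w: int) -> Map:
    """Nearest-neighbour upsampling to h x w (a simplification of bilinear)."""
    sh, sw = len(m), len(m[0])
    return [[m[i * sh // h][j * sw // w] for j in range(w)] for i in range(h)]


def pyramid_pool(channels: Sequence[Map], bin_sizes: Sequence[int]) -> List[Map]:
    """Pool every channel at several scales, upsample back, concatenate channels."""
    h, w = len(channels[0]), len(channels[0][0])
    out: List[Map] = [list(map(list, c)) for c in channels]  # keep the input features
    for b in bin_sizes:
        for c in channels:
            out.append(upsample_nearest(adaptive_avg_pool(c, b), h, w))
    return out


if __name__ == "__main__":
    feat = [[1, 1, 3, 3], [1, 1, 3, 3], [5, 5, 7, 7], [5, 5, 7, 7]]
    stacked = pyramid_pool([feat], bin_sizes=[1, 2])
    print(len(stacked), "channels; global branch:", stacked[1][0])
```

The 1×1 bin branch carries global context (here the image mean) to every pixel, while finer bins keep more local structure; channel-wise concatenation lets later layers weigh them.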

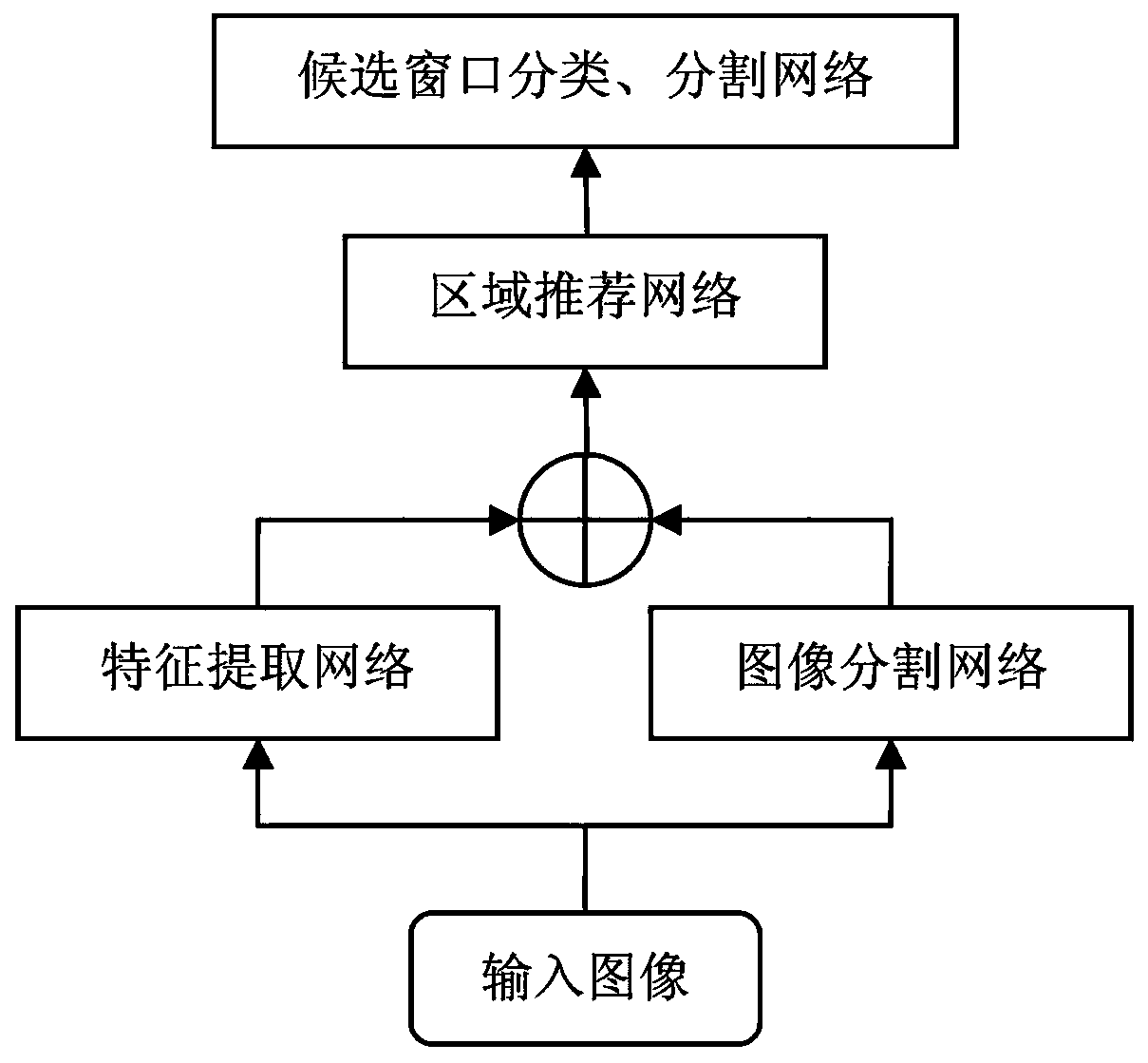

Object detection network design method based on image segmentation feature fusion

Inactive | CN109145769A | Great effect on large targets; Image analysis; Biometric pattern recognition; Image segmentation algorithm; Image segmentation

The invention discloses a design method for a target detection network that fuses image segmentation features and is effective for large targets. Building on the general-purpose detection framework Mask R-CNN, the target segmentation features and a ResNet-101 convolutional network are integrated into the RPN module, the RoI Pooling module, and the RoI Align module. Experiments show that the method is effective for large targets, and performance on small targets could also be improved if the image segmentation results are sufficiently accurate.

Owner:LIAONING UNIVERSITY OF TECHNOLOGY

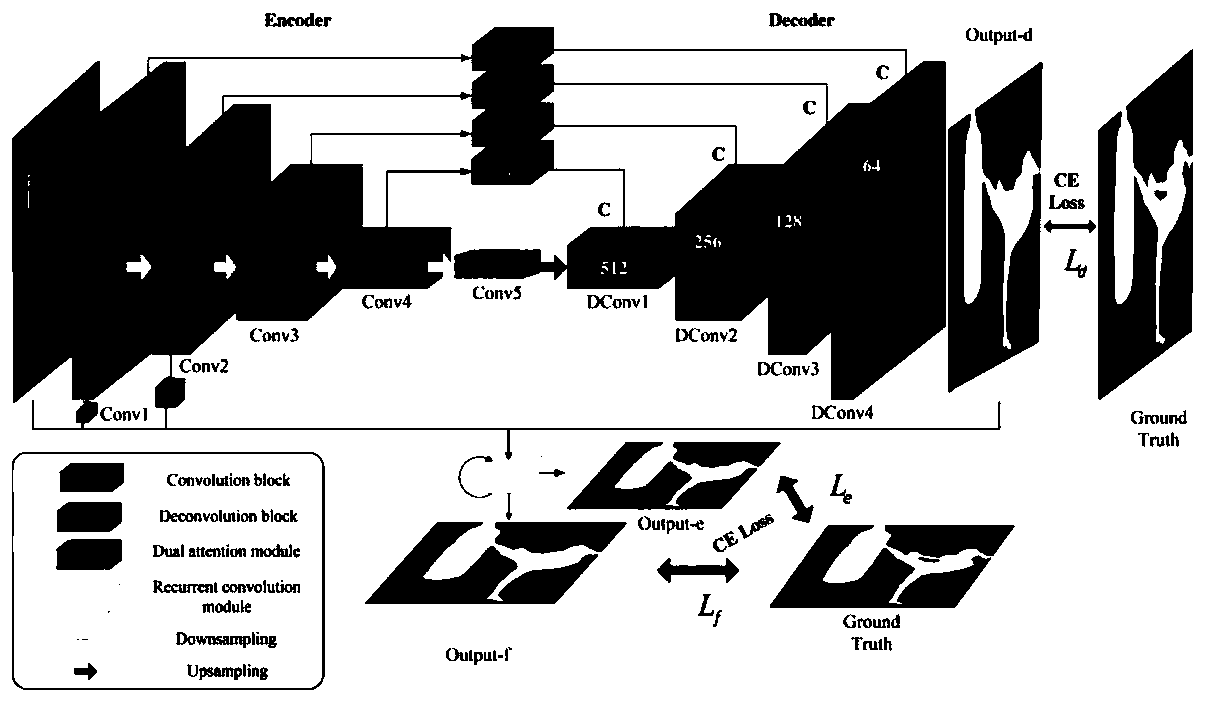

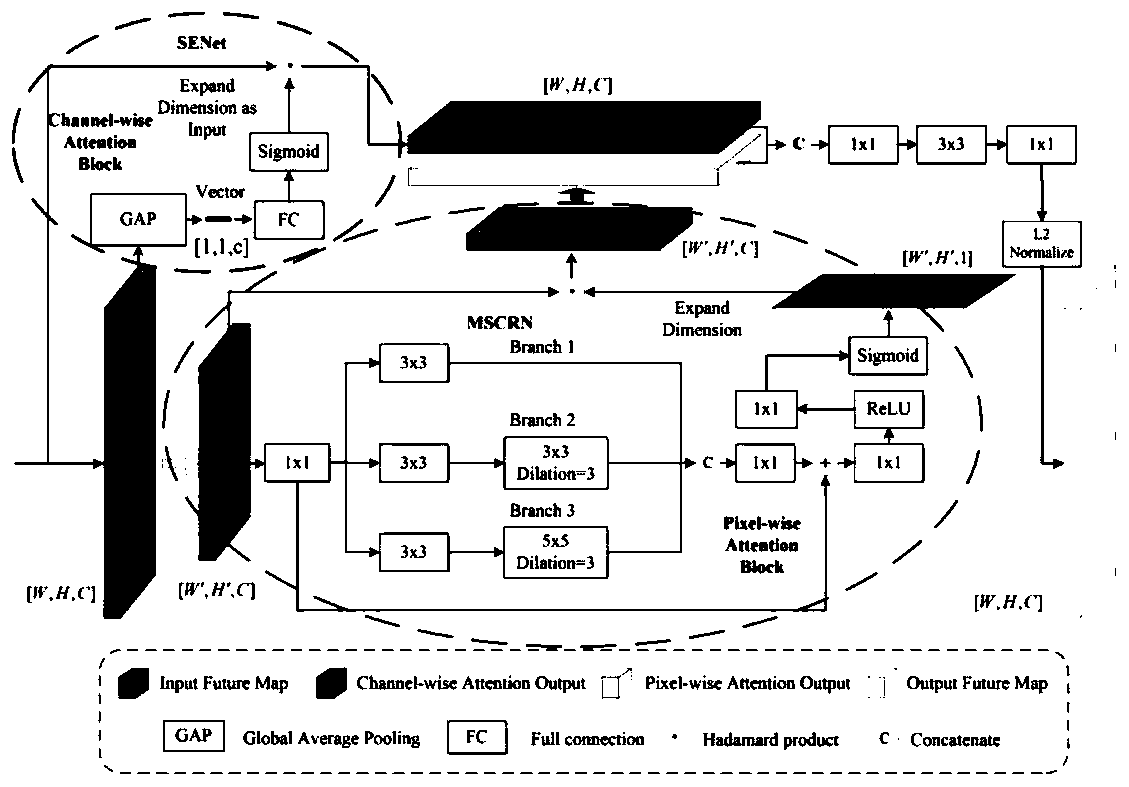

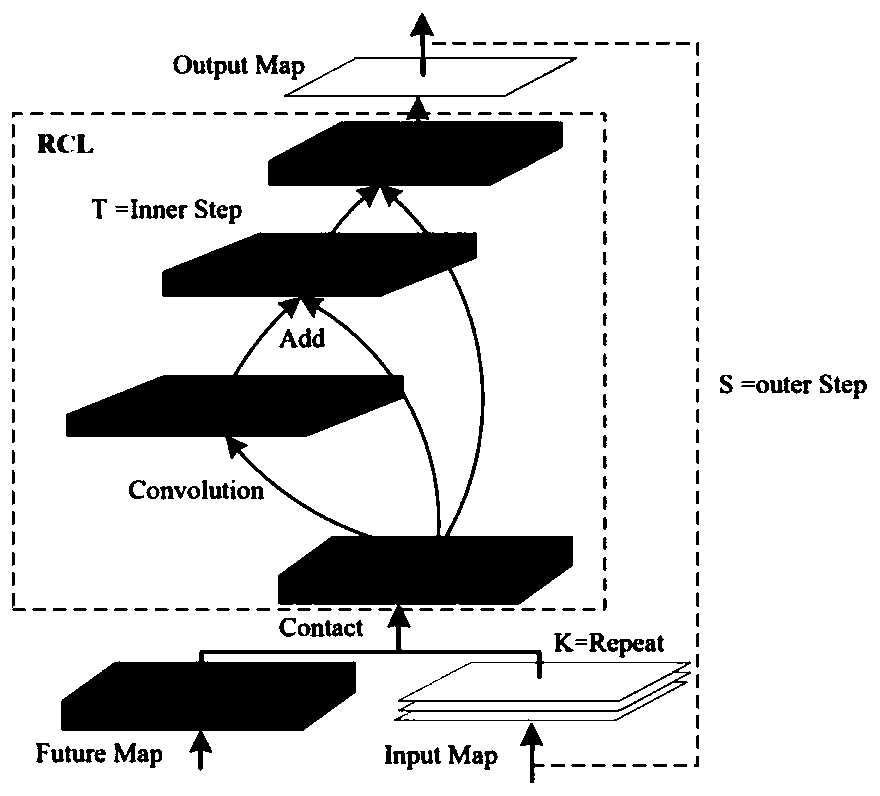

Multi-feature cyclic convolution saliency target detection method based on attention mechanism

Inactive | CN110648334A | Find out quickly; Find out exactly; Image enhancement; Image analysis; Encoder decoder; Feature fusion

The invention discloses a multi-feature cyclic convolution salient target detection method based on an attention mechanism. The method comprises the following steps: 1) analyzing the common characteristics of salient targets in natural images, including spatial distribution and contrast, and using an improved U-Net fully convolutional neural network with an encoder-decoder structure to make pixel-by-pixel predictions, with multi-level, multi-scale feature fusion between the encoder and decoder through cross-layer connections; 2) because concatenating encoder-side and decoder-side features can introduce considerable clutter that interferes with the final prediction map, introducing an attention module that recalibrates the weights of all pixels both across channels and across pixels, strengthening task-relevant pixel weights and weakening the influence of background and noise; and 3) using a multi-feature cyclic convolution module as a post-processing step that iteratively enhances spatial resolution, further refines region edges in the image, and yields a finer salient target mask.

Owner:PLA ROCKET FORCE UNIVERSITY OF ENGINEERING
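The recalibration in step 2 weights features "between channels and between pixels". The sketch below shows the general shape with parameter-free gates: each channel is scaled by the sigmoid of its global mean, then each pixel by the sigmoid of its cross-channel mean. In a real attention module these gates come from learned layers; the gating functions and all names here are illustrative assumptions, not the patent's design.

```python
import math
from typing import List

Map = List[List[float]]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(feats: List[Map]) -> List[Map]:
    """Rescale each channel by sigmoid(global average of that channel)."""
    out = []
    for ch in feats:
        mean = sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        gate = sigmoid(mean)
        out.append([[gate * v for v in row] for row in ch])
    return out


def spatial_attention(feats: List[Map]) -> List[Map]:
    """Rescale every pixel by sigmoid(mean over channels at that pixel)."""
    c, h, w = len(feats), len(feats[0]), len(feats[0][0])
    gate = [[sigmoid(sum(f[i][j] for f in feats) / c) for j in range(w)]
            for i in range(h)]
    return [[[gate[i][j] * f[i][j] for j in range(w)] for i in range(h)]
            for f in feats]


def recalibrate(encoder_feats: List[Map], decoder_feats: List[Map]) -> List[Map]:
    """Concatenate encoder and decoder channels, then apply both attentions."""
    return spatial_attention(channel_attention(encoder_feats + decoder_feats))


if __name__ == "__main__":
    enc = [[[2.0, 2.0], [2.0, 2.0]]]
    dec = [[[-2.0, -2.0], [-2.0, -2.0]]]
    for ch in recalibrate(enc, dec):
        print([[round(v, 3) for v in row] for row in ch])
```

Applying the channel gate first and the spatial gate second is one possible ordering; the abstract does not specify which comes first.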

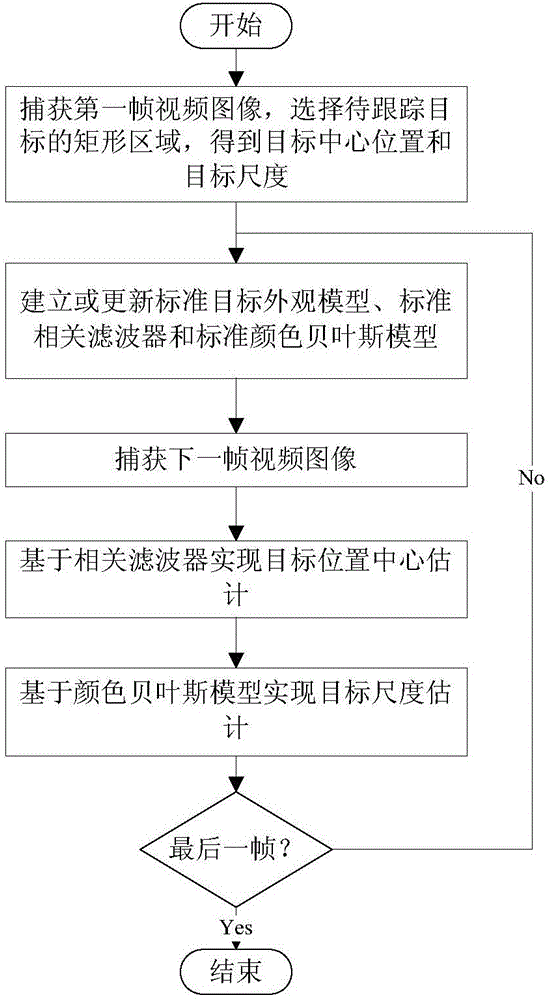

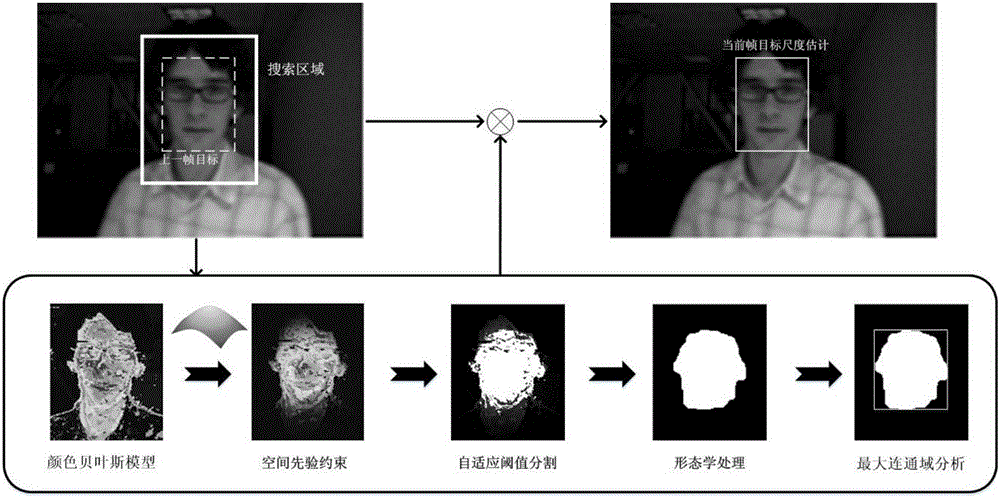

Kernel correlation filtering target tracking method based on feature fusion and Bayesian classification

Active | CN106570486A | Fully characterize the target; Accurate Target Center Position Estimation; Character and pattern recognition; Moving average; Scale estimation

The invention provides a kernel correlation filtering target tracking method based on feature fusion and Bayesian classification. The method comprises the following steps: first, the position and scale of the target in the initial frame are given; then, a standard target appearance model, a standard correlation filter, and a standard color Bayesian model are built or updated; a search region is extracted around the target center from the previous frame; a correlation filter with a Gaussian kernel estimates the target displacement, the color Bayesian model estimates the target scale, and the tracking result for the current frame is obtained. Processing each video frame in sequence in this way tracks the moving target through the video. The method accurately locates moving targets in video and estimates their scale; it tracks targets effectively in a variety of challenging environments, is reasonably robust, and improves tracking precision.

Owner:SOUTH CHINA UNIV OF TECH
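The displacement step can be illustrated with a 1-D, single-channel sketch of a Gaussian-kernel correlation filter in the usual kernelized correlation filter formulation: train with alpha_hat = y_hat / (k_hat_xx + lambda), then detect with response = IDFT(k_hat_xz * alpha_hat). The naive O(n^2) DFT keeps the example dependency-free; `sigma`, `lam`, the normalization by `n`, and all names are assumptions, and the patent's fused features and color Bayesian scale model are not modelled.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X: Sequence[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def gaussian_correlation_f(x: Sequence[float], z: Sequence[float],
                           sigma: float) -> List[complex]:
    """DFT of the Gaussian kernel between z and every cyclic shift of x."""
    n = len(x)
    xx = sum(v * v for v in x)
    zz = sum(v * v for v in z)
    # cross[i] = sum_j x[j] * z[(j + i) % n], computed in the Fourier domain.
    cross = idft([a.conjugate() * b for a, b in zip(dft(x), dft(z))])
    k = [math.exp(-max(0.0, xx + zz - 2.0 * c.real) / (sigma ** 2 * n))
         for c in cross]
    return dft(k)


def train(x: Sequence[float], y: Sequence[float],
          sigma: float = 0.5, lam: float = 1e-4) -> List[complex]:
    """Kernel ridge regression over all cyclic shifts of the template x."""
    kf = gaussian_correlation_f(x, x, sigma)
    return [a / (b + lam) for a, b in zip(dft(y), kf)]


def detect(alpha_f: Sequence[complex], x: Sequence[float], z: Sequence[float],
           sigma: float = 0.5) -> int:
    """Return the cyclic displacement of the target in z relative to x."""
    kzf = gaussian_correlation_f(x, z, sigma)
    response = [r.real for r in idft([a * b for a, b in zip(kzf, alpha_f)])]
    return max(range(len(response)), key=lambda i: response[i])


if __name__ == "__main__":
    n = 16
    x = [math.sin(0.7 * t) + 0.3 * t for t in range(n)]            # template patch
    y = [math.exp(-min(t, n - t) ** 2 / 2.0) for t in range(n)]   # Gaussian label
    alpha_f = train(x, y)
    z = [x[(j - 3) % n] for j in range(n)]  # new frame: target moved by 3 samples
    print("estimated displacement:", detect(alpha_f, x, z))
```

Working in the Fourier domain is what makes this family of trackers fast: training and detection over every cyclic shift reduce to element-wise products, and a production tracker would use an FFT over 2-D multi-channel features.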


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com