Infrared behavior recognition method based on adaptive fusion of hand-crafted features and deep learning features

A deep learning based recognition method, applied in the field of image processing and computer vision, that can address the failure of optical video surveillance and improve reliability.

Embodiment Construction

[0039] The preferred embodiments of the present invention will be described in detail below with reference to the accompanying drawings.

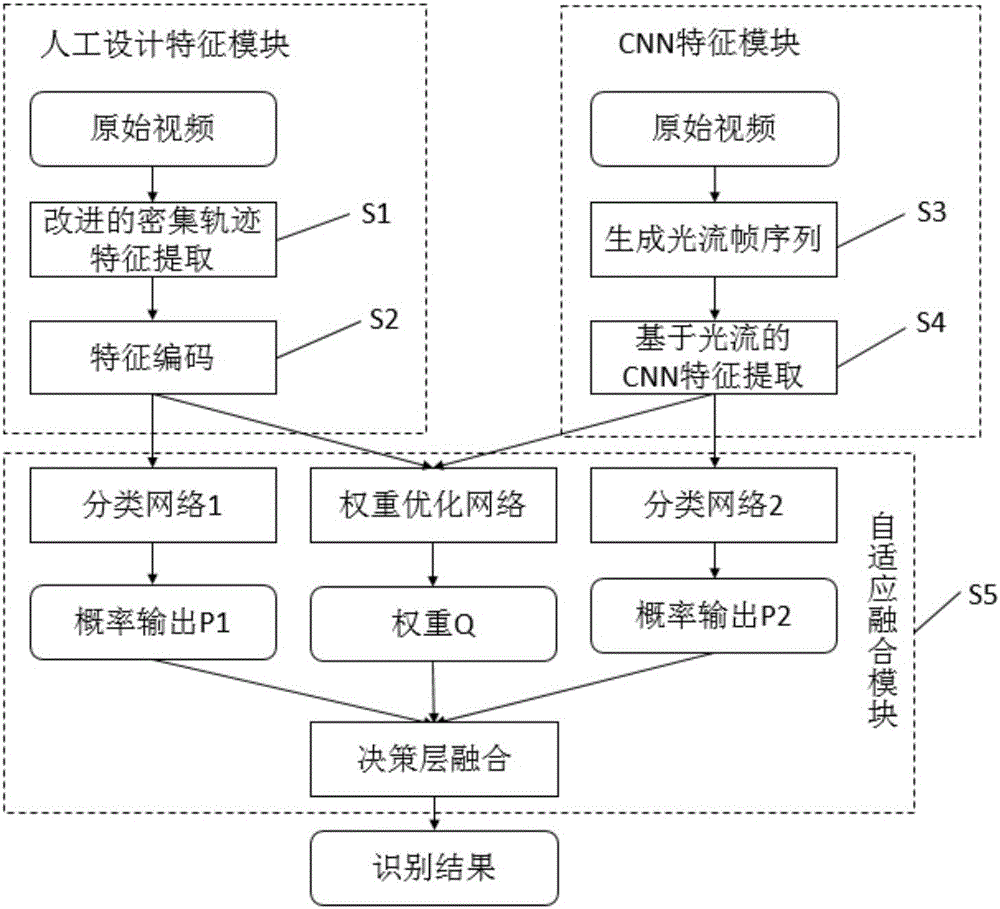

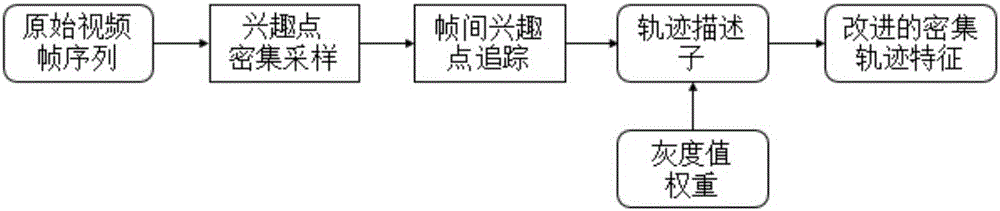

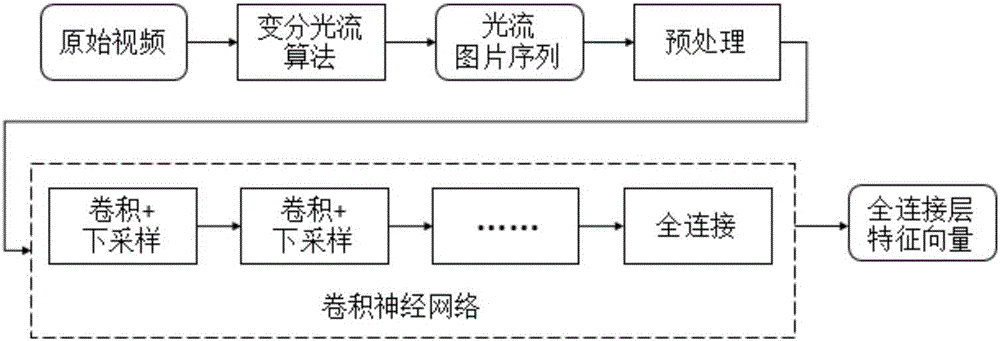

[0040] In the present invention, the hand-crafted feature module extracts improved dense trajectory features from the original video and encodes the extracted features. The improved dense trajectory feature adds a gray-value weight to the descriptors of the original dense trajectory feature; it mainly captures the spatiotemporal information of the video image sequence and highlights the foreground motion information in that sequence. The CNN feature module uses a variational optical flow algorithm to extract optical flow information from the original infrared video image sequence, forming an optical flow image sequence. The extracted optical flow images are used as the input of the convolutional neural network, and the features of the fully connected layer of the conv...
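The gray-value weighting of a trajectory descriptor described above can be illustrated with a minimal sketch. The text does not state the exact weighting formula, so the following is an assumption: each pixel votes into a histogram of optical flow orientations with its flow magnitude multiplied by its gray value normalized to [0, 1], so that bright (warm) foreground pixels in an infrared frame contribute more than dark background pixels. The function name `gray_weighted_flow_histogram`, the 8-bit gray range, and the bin count are hypothetical choices made for illustration only.

```python
import math


def gray_weighted_flow_histogram(flow, gray, bins=8):
    """Orientation histogram of a flow field with gray-value weighted votes.

    flow: 2-D list of (dx, dy) displacement tuples, one per pixel.
    gray: 2-D list of 8-bit gray values (0..255) with the same shape.
    ASSUMPTION: the vote is magnitude * (gray / 255); the source does not
    give the actual weighting rule.
    """
    hist = [0.0] * bins
    for flow_row, gray_row in zip(flow, gray):
        for (dx, dy), g in zip(flow_row, gray_row):
            magnitude = math.hypot(dx, dy)
            if magnitude == 0.0:
                continue  # static pixels carry no motion information
            # Map the flow direction to [0, 2*pi) and then to a bin index.
            angle = math.atan2(dy, dx) % (2 * math.pi)
            index = min(int(angle / (2 * math.pi) * bins), bins - 1)
            hist[index] += magnitude * (g / 255.0)
    total = sum(hist)
    # L1-normalize so descriptors from different patches are comparable.
    return [h / total for h in hist] if total > 0 else hist


# Toy 2x2 patch: a bright pixel moving right, a dark pixel moving up,
# a static pixel, and a bright pixel moving left.
flow = [[(1, 0), (0, 1)],
        [(0, 0), (-1, 0)]]
gray = [[255, 0],
        [128, 255]]
descriptor = gray_weighted_flow_histogram(flow, gray, bins=4)
```

In this toy patch the dark pixel moving up receives zero weight, so only the two bright moving pixels shape the descriptor. This is the sense in which a gray-value weight can emphasize foreground motion in an infrared sequence.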
