5971 results about "Information extraction" patented technology

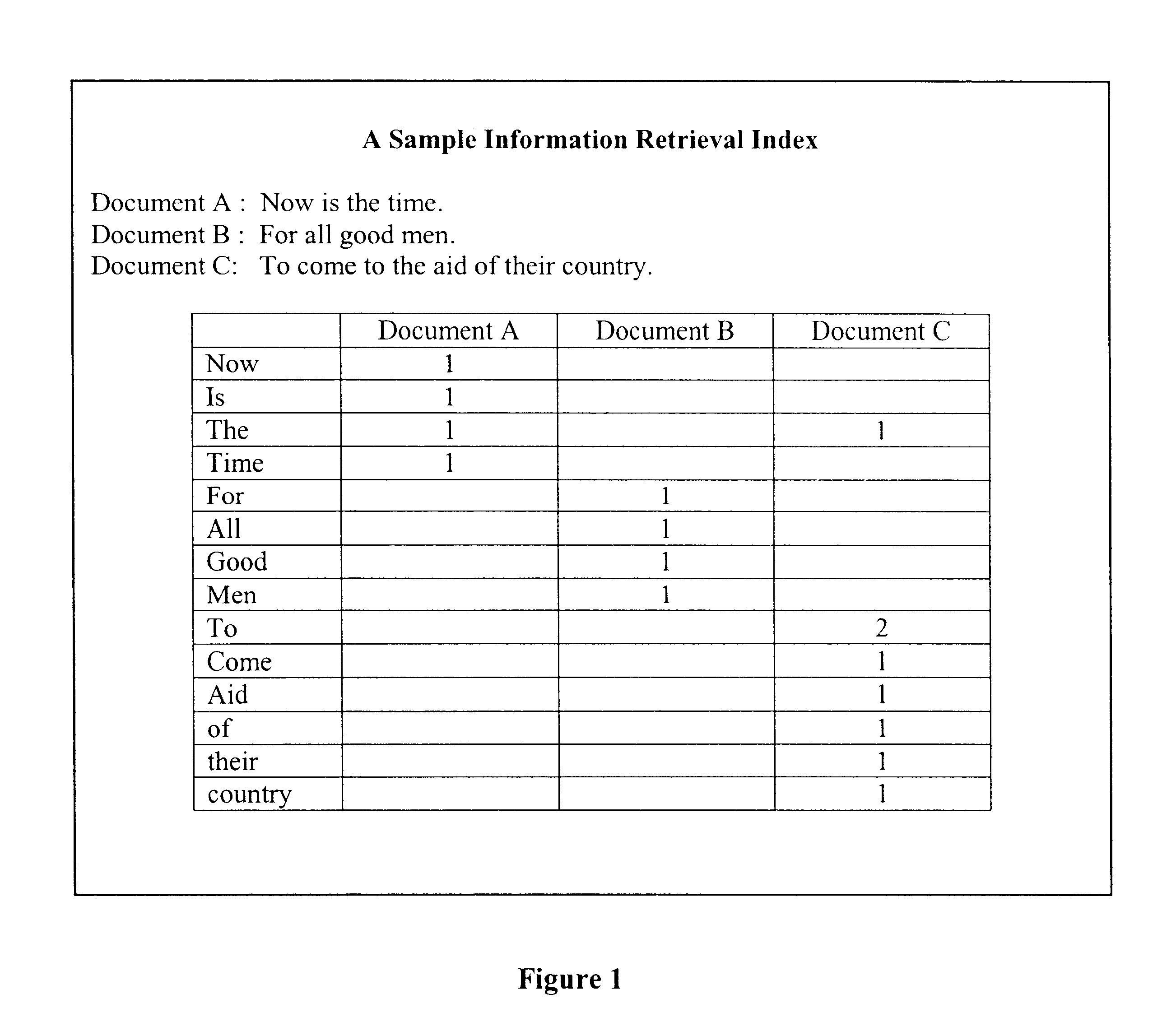

Information extraction (IE) is the task of automatically extracting structured information from unstructured and/or semi-structured machine-readable documents. In most cases this involves processing human-language texts by means of natural language processing (NLP). Recent work in multimedia document processing, such as automatic annotation and content extraction from images, audio, video, and documents, can also be seen as information extraction. Due to the difficulty of the problem, current approaches to IE focus on narrowly restricted domains.

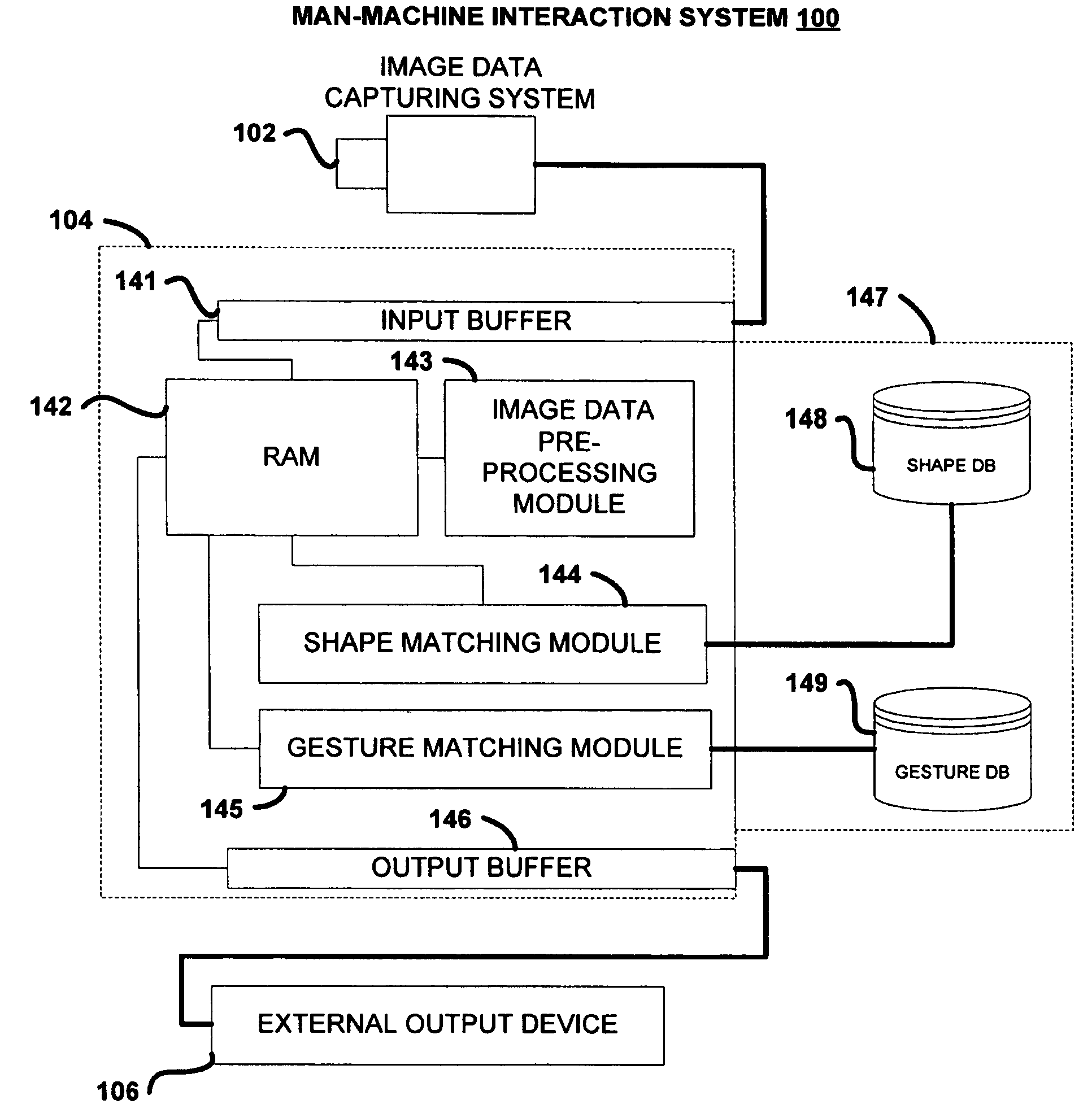

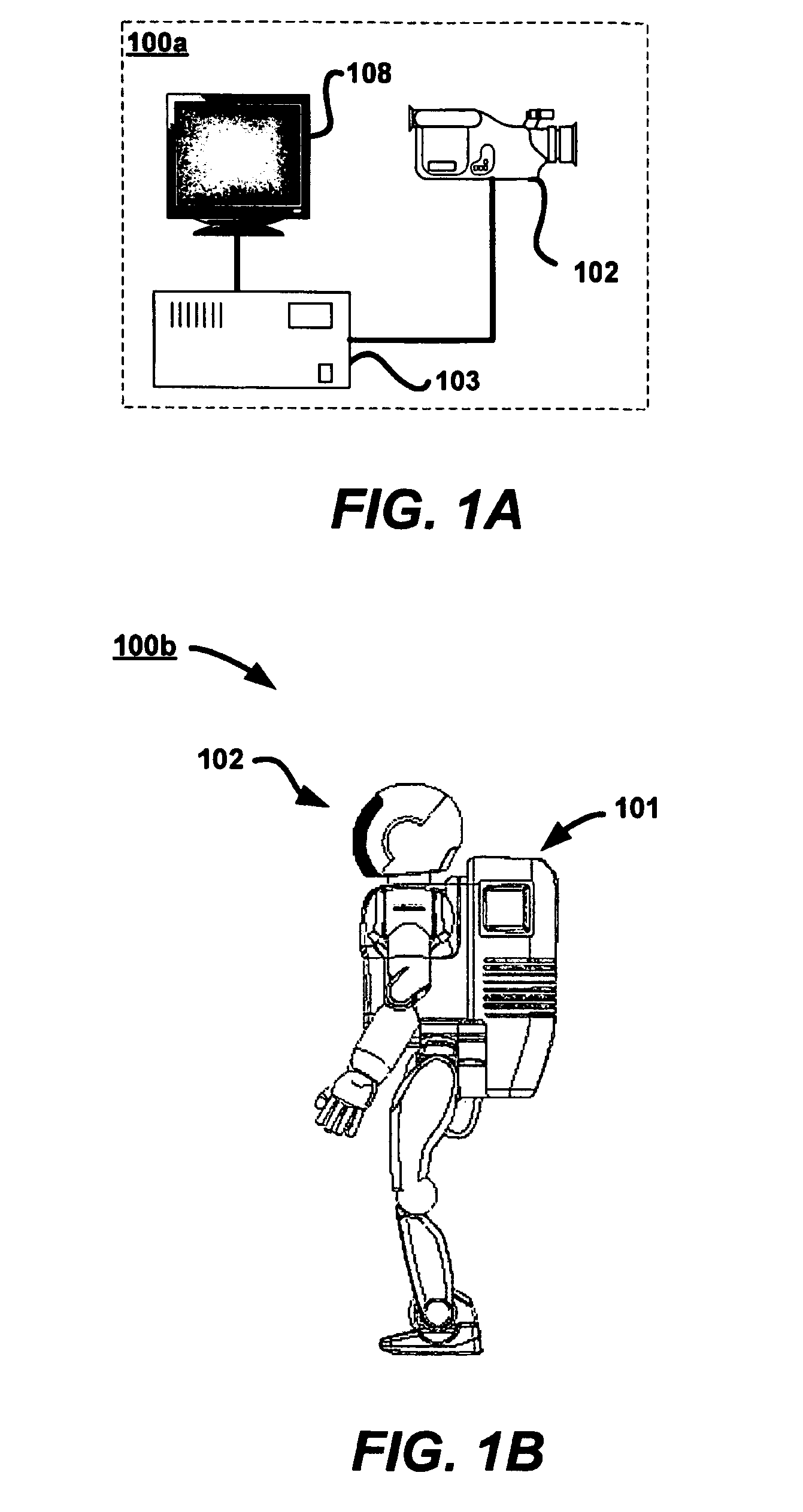

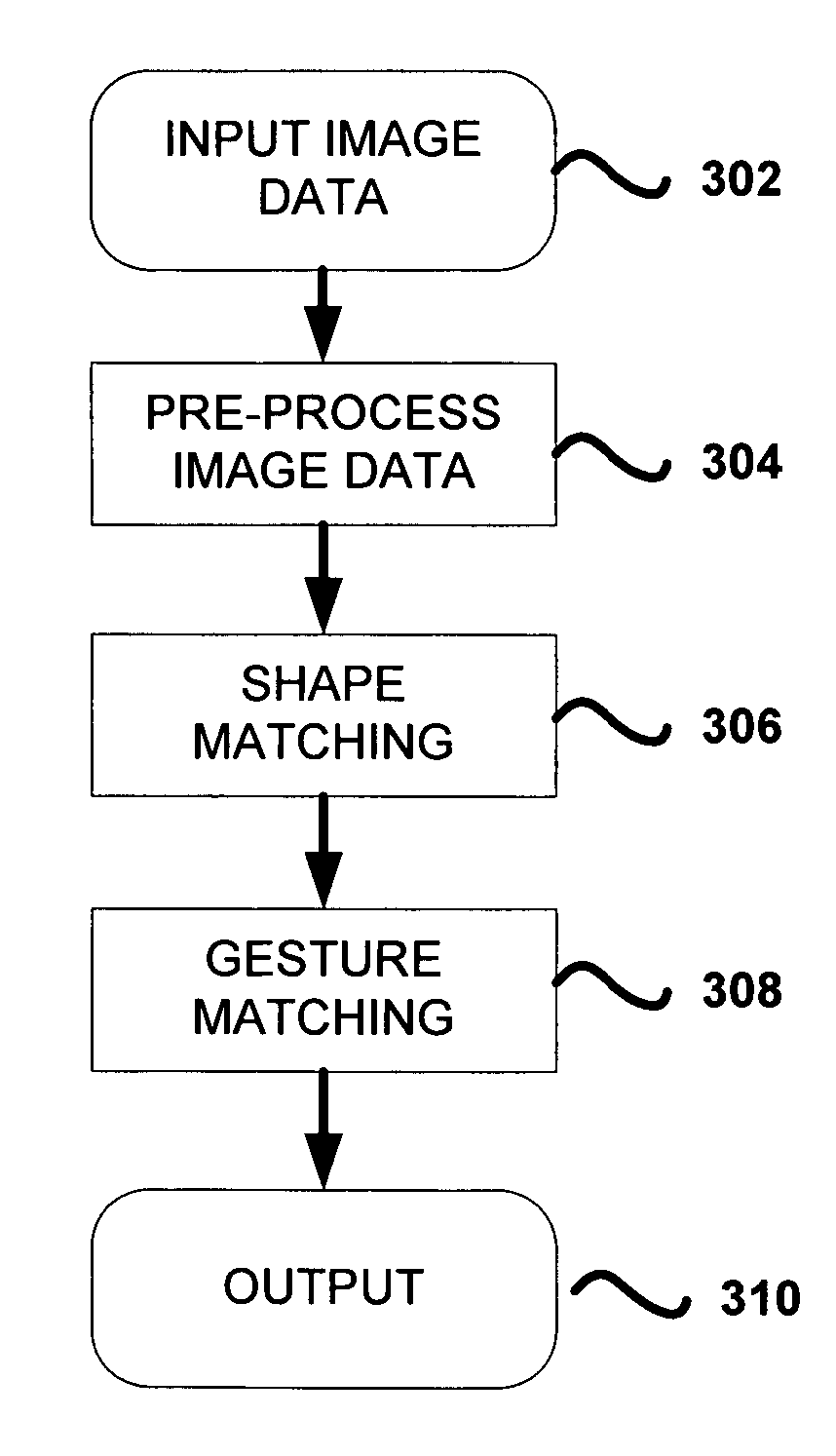

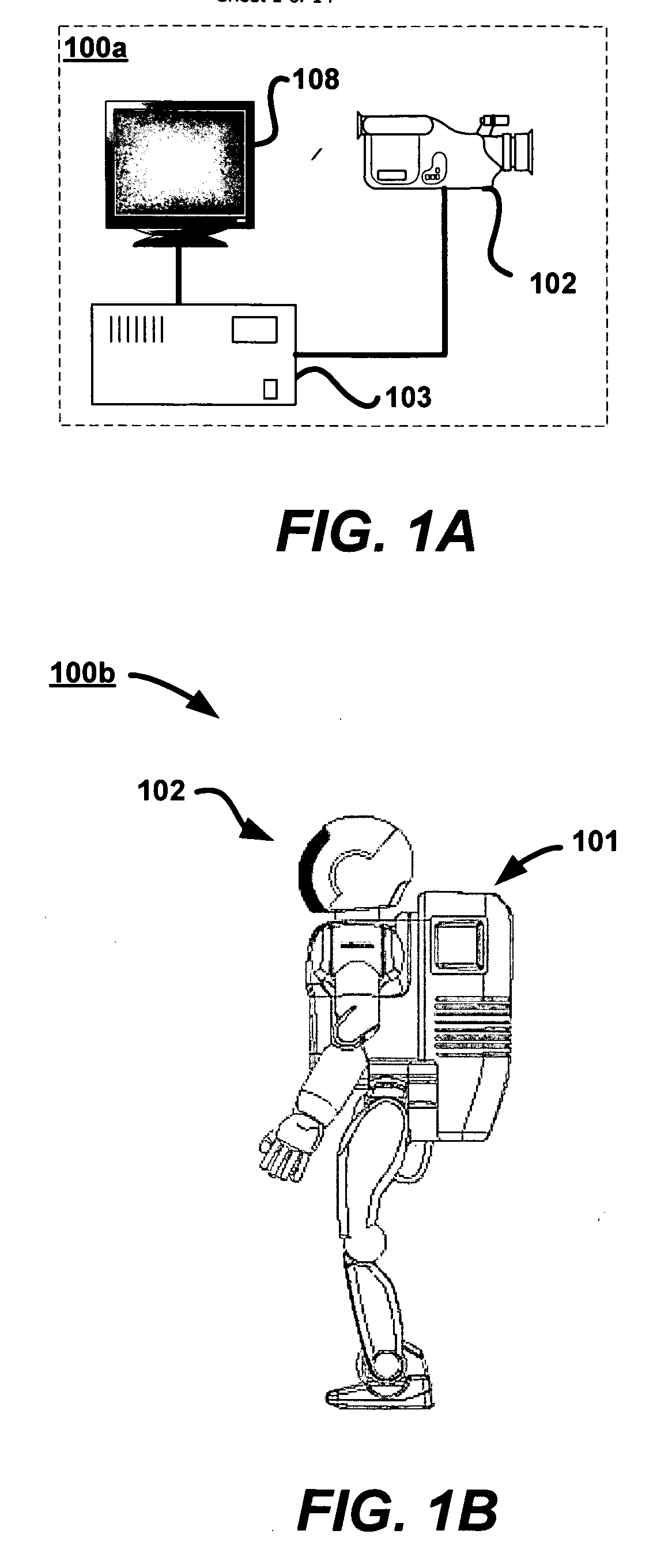

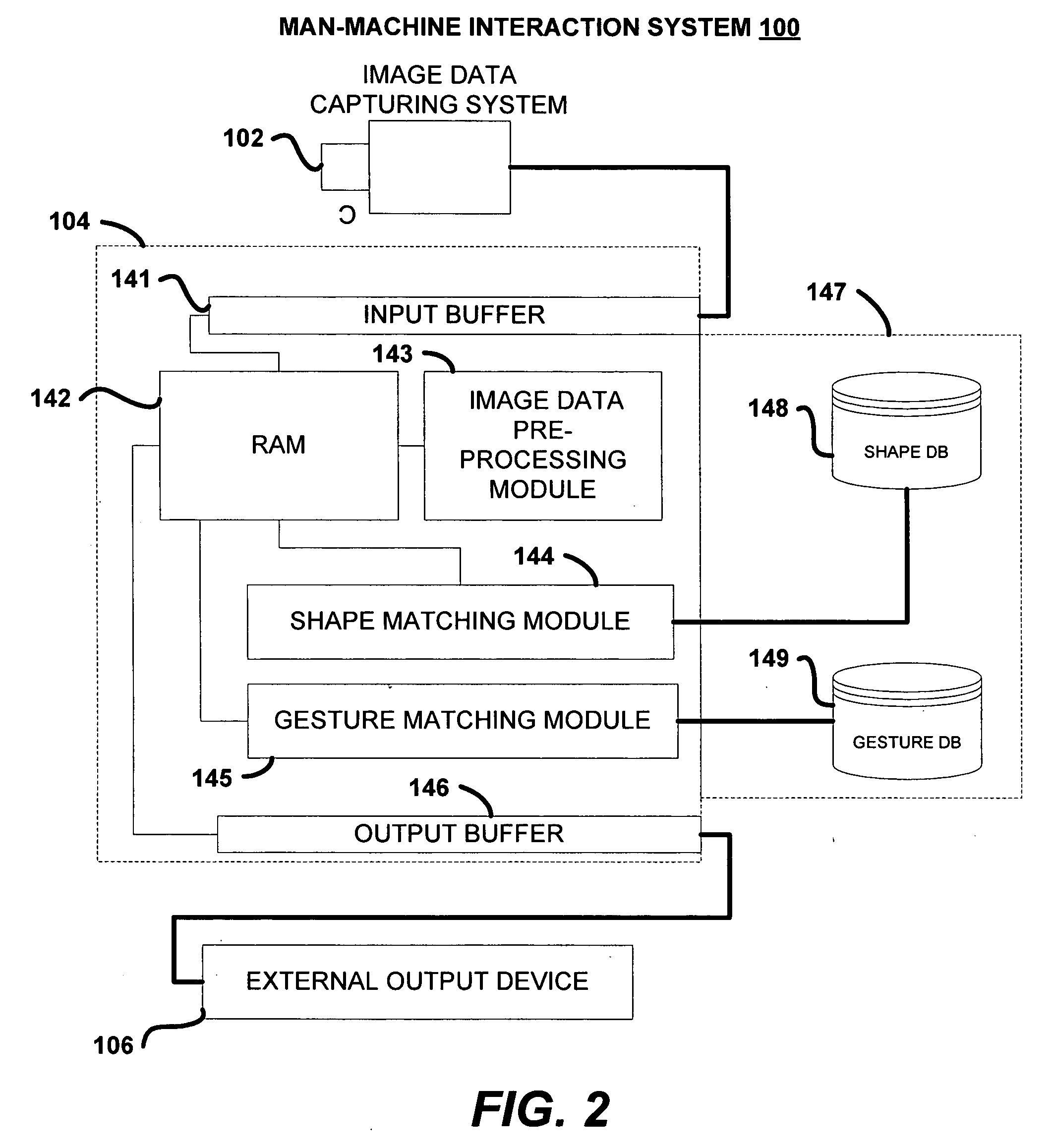

Sign based human-machine interaction

Communication is an important issue in man-to-robot interaction. Signs can be used to interact with machines by providing user instructions or commands. Embodiments of the present invention include human detection, human body parts detection, hand shape analysis, trajectory analysis, orientation determination, gesture matching, and the like. Many types of shapes and gestures are recognized in a non-intrusive manner based on computer vision. This sign-understanding technology makes a number of applications feasible, including remote control of home devices, mouse-less (and touch-less) operation of computer consoles, gaming, and man-robot communication to give instructions, among others. Active sensing hardware is used to capture a stream of depth images at a video rate, which is subsequently analyzed for information extraction.

Owner:THE OHIO STATE UNIV RES FOUND +1

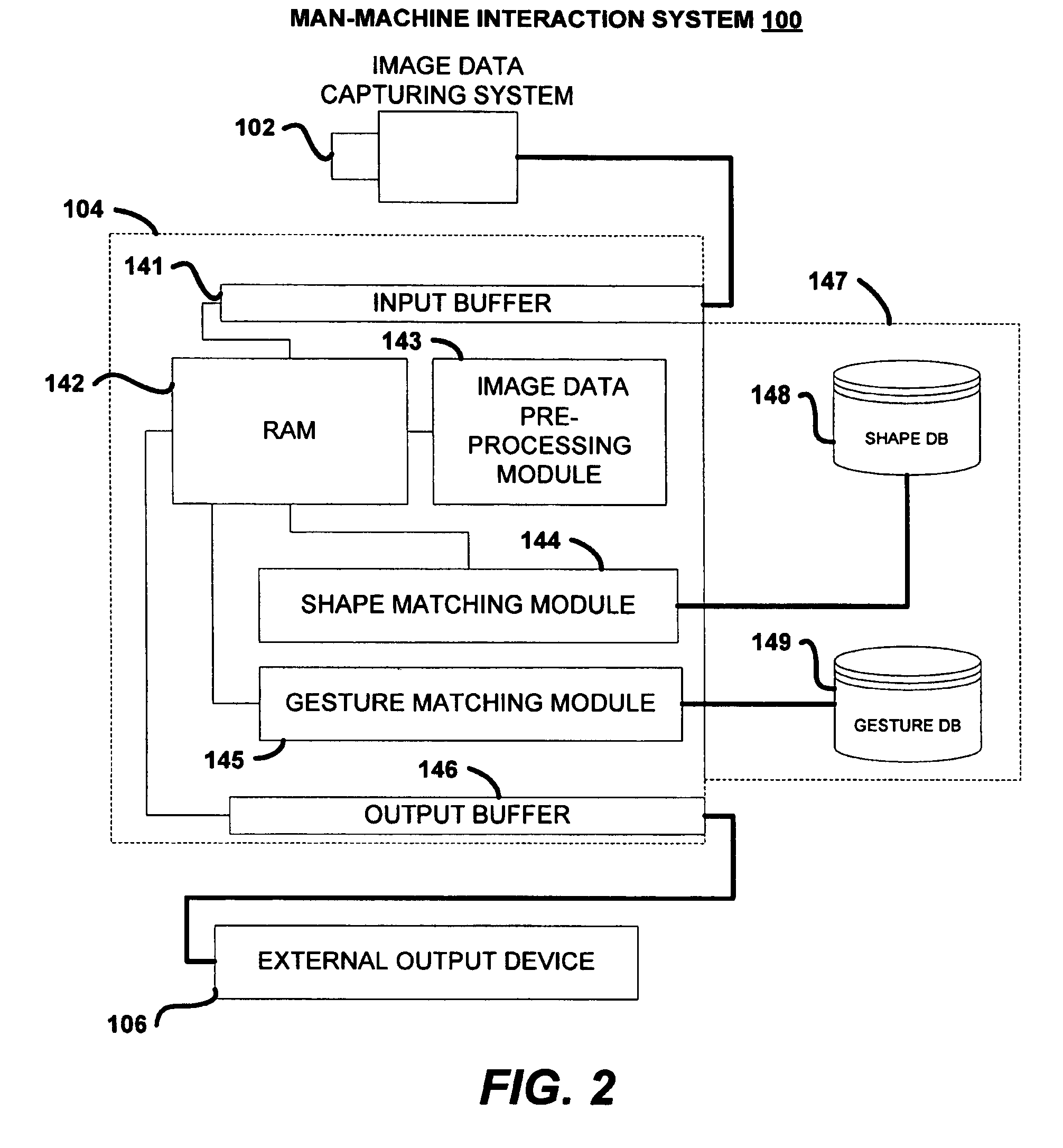

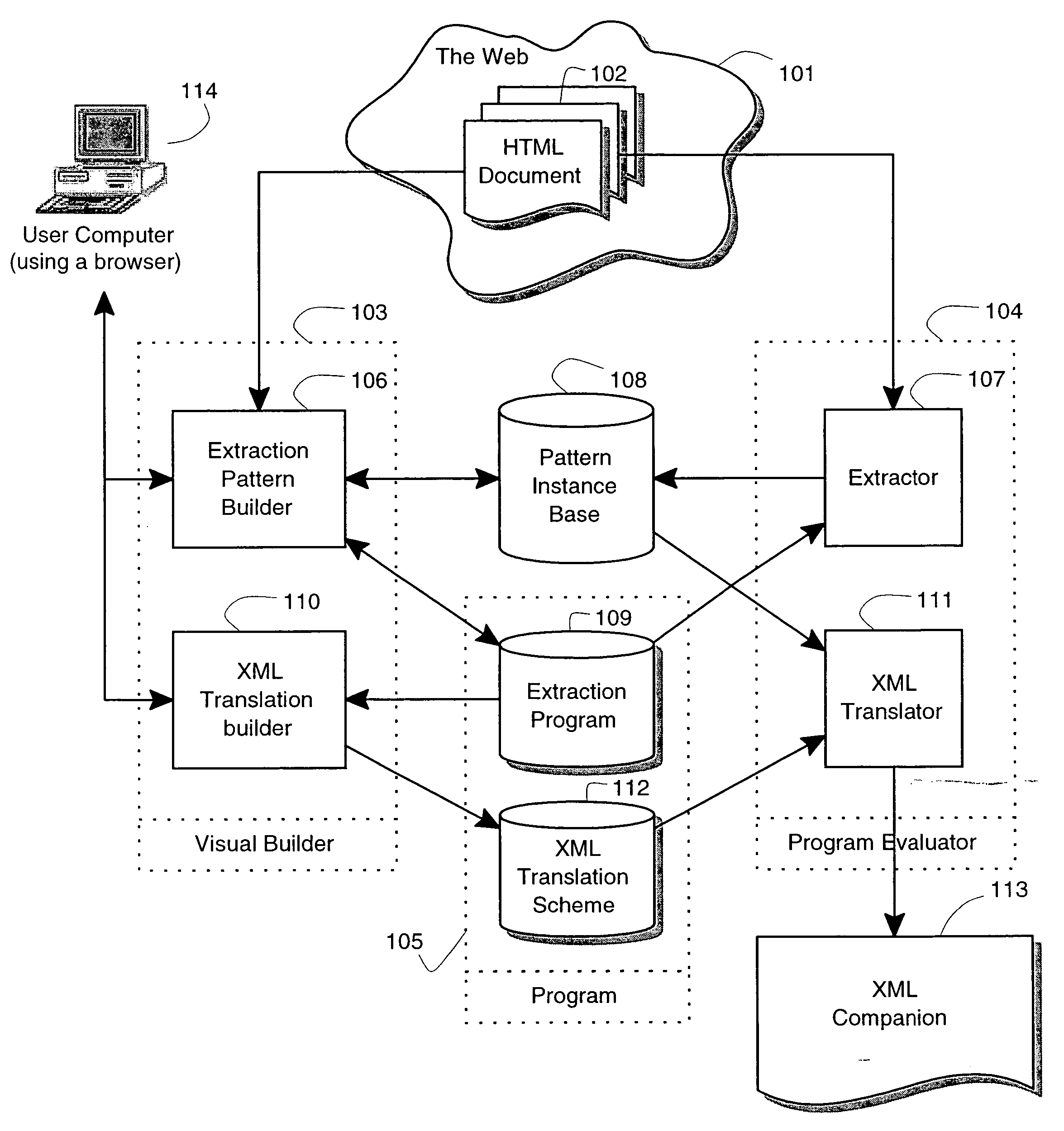

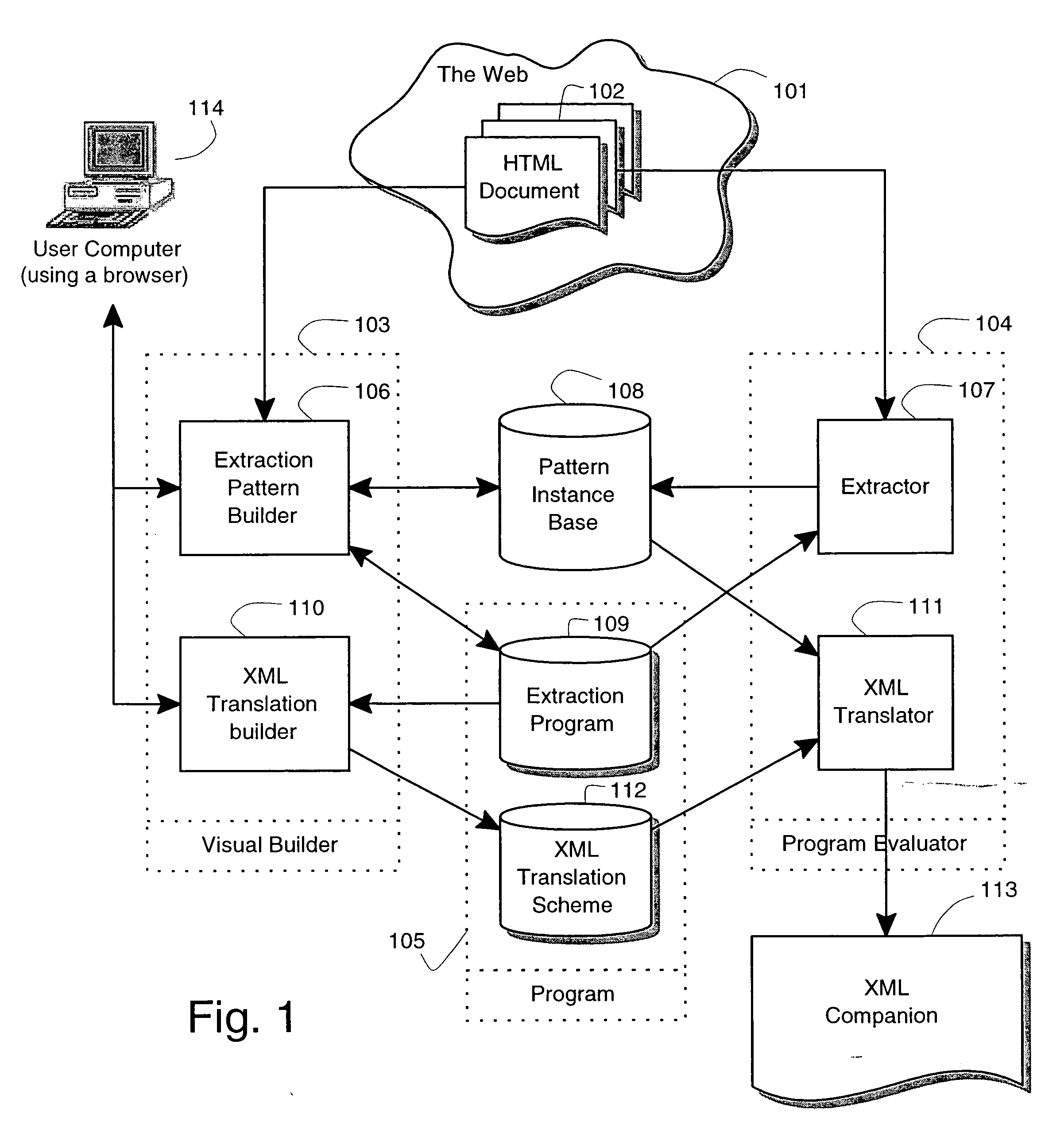

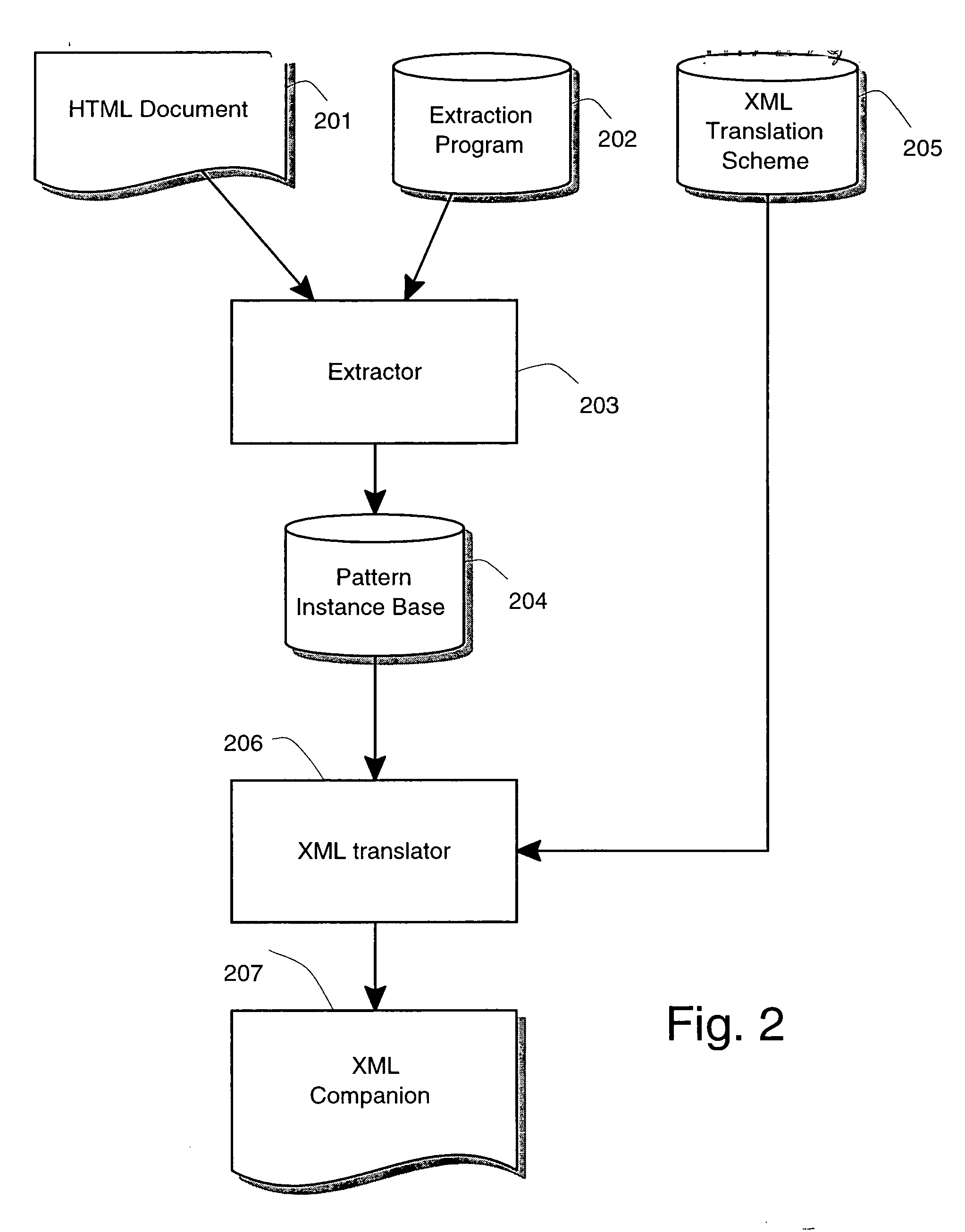

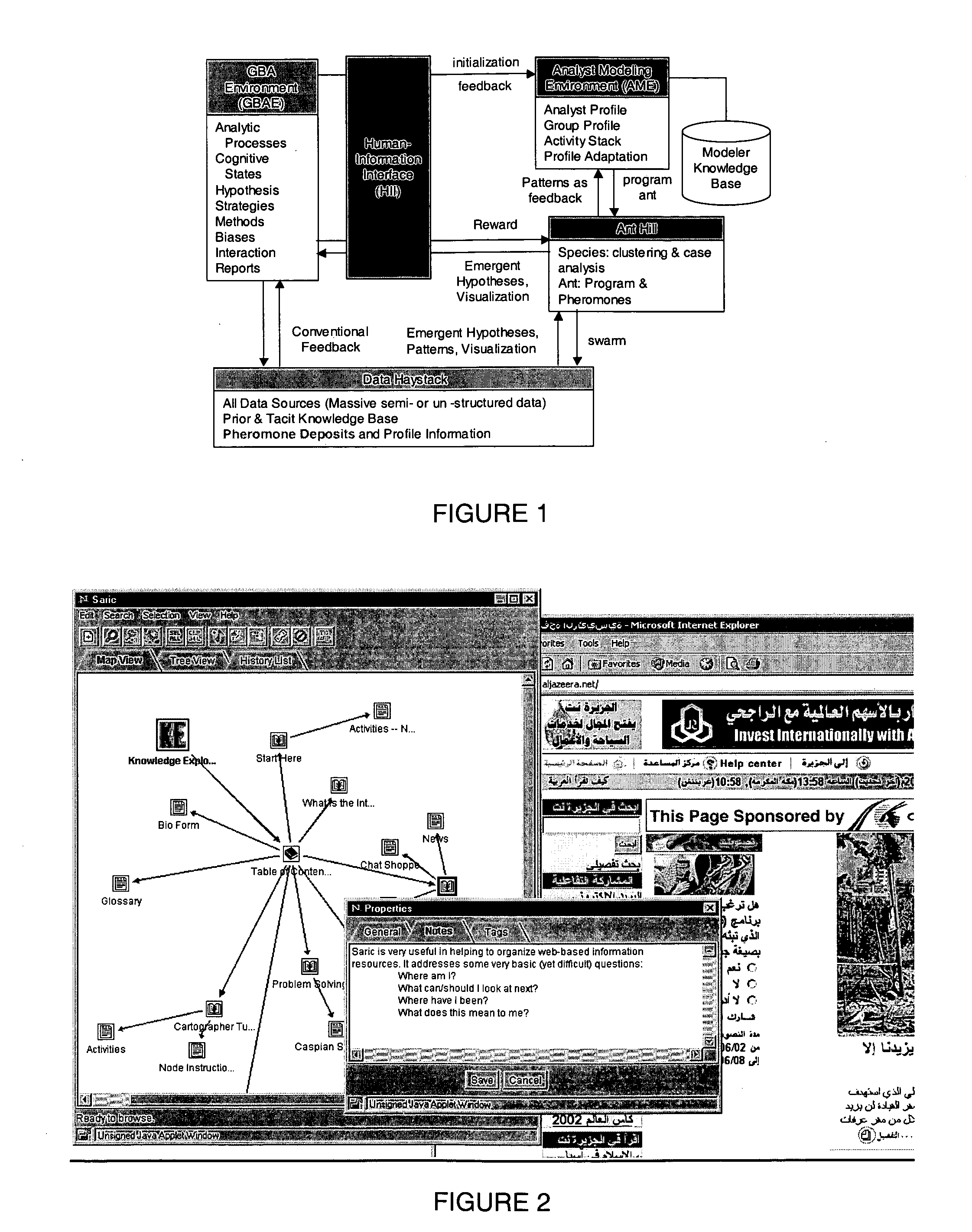

Visual and interactive wrapper generation, automated information extraction from web pages, and translation into xml

Inactive | US20050022115A1 | Data efficient | Efficient coding | Digital computer details | Semi-structured data indexing | Data transformation | Engineering

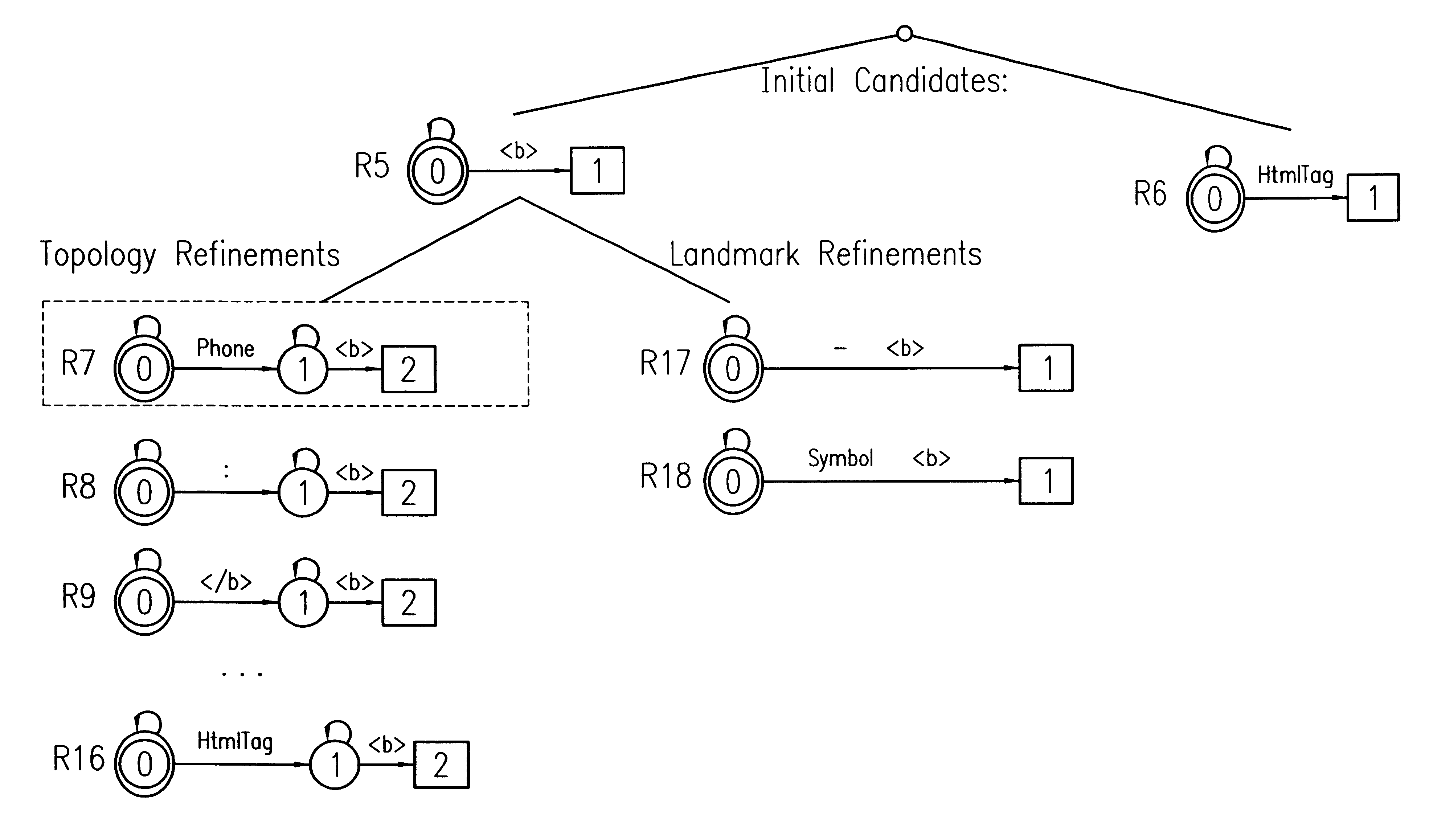

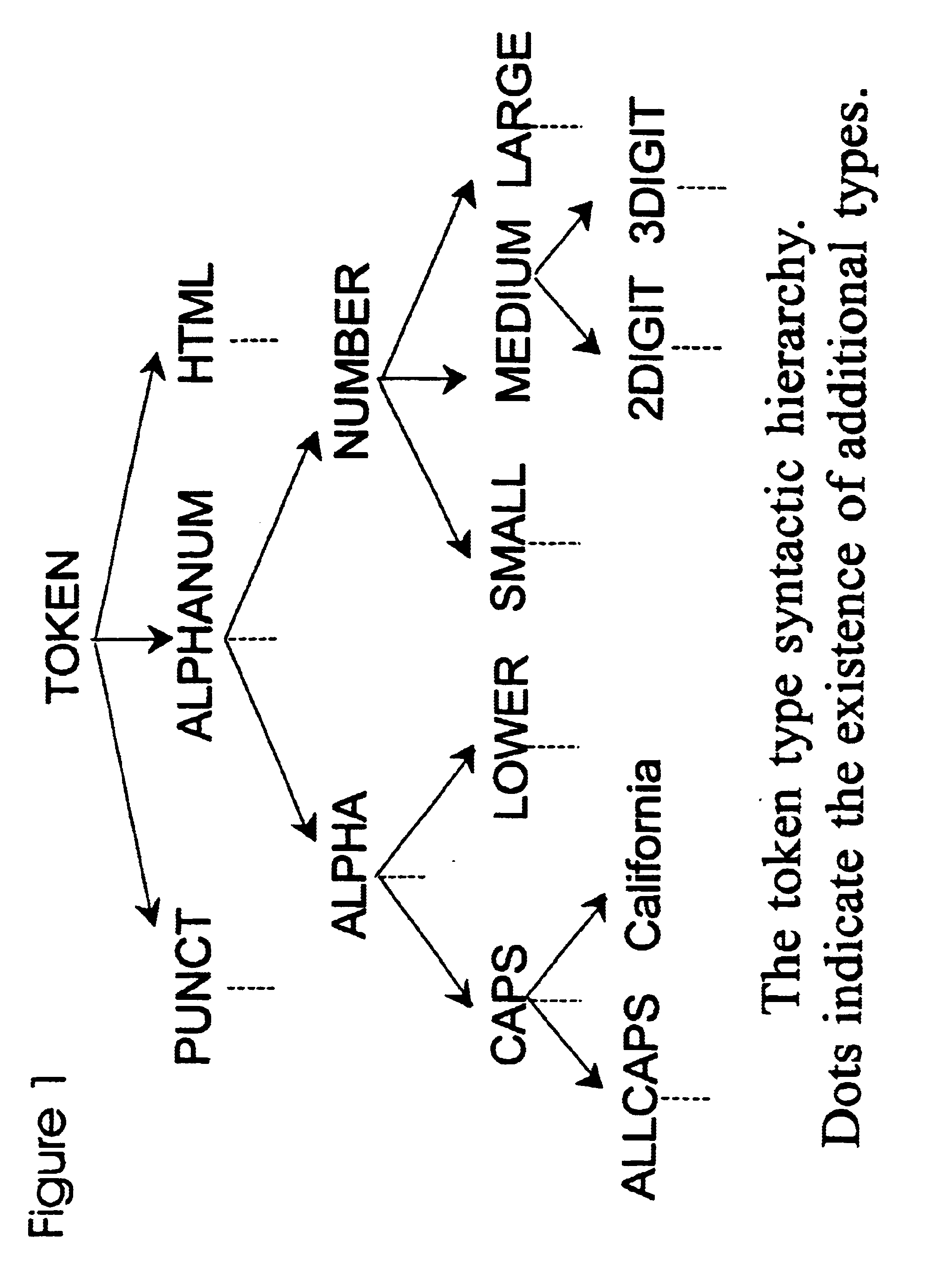

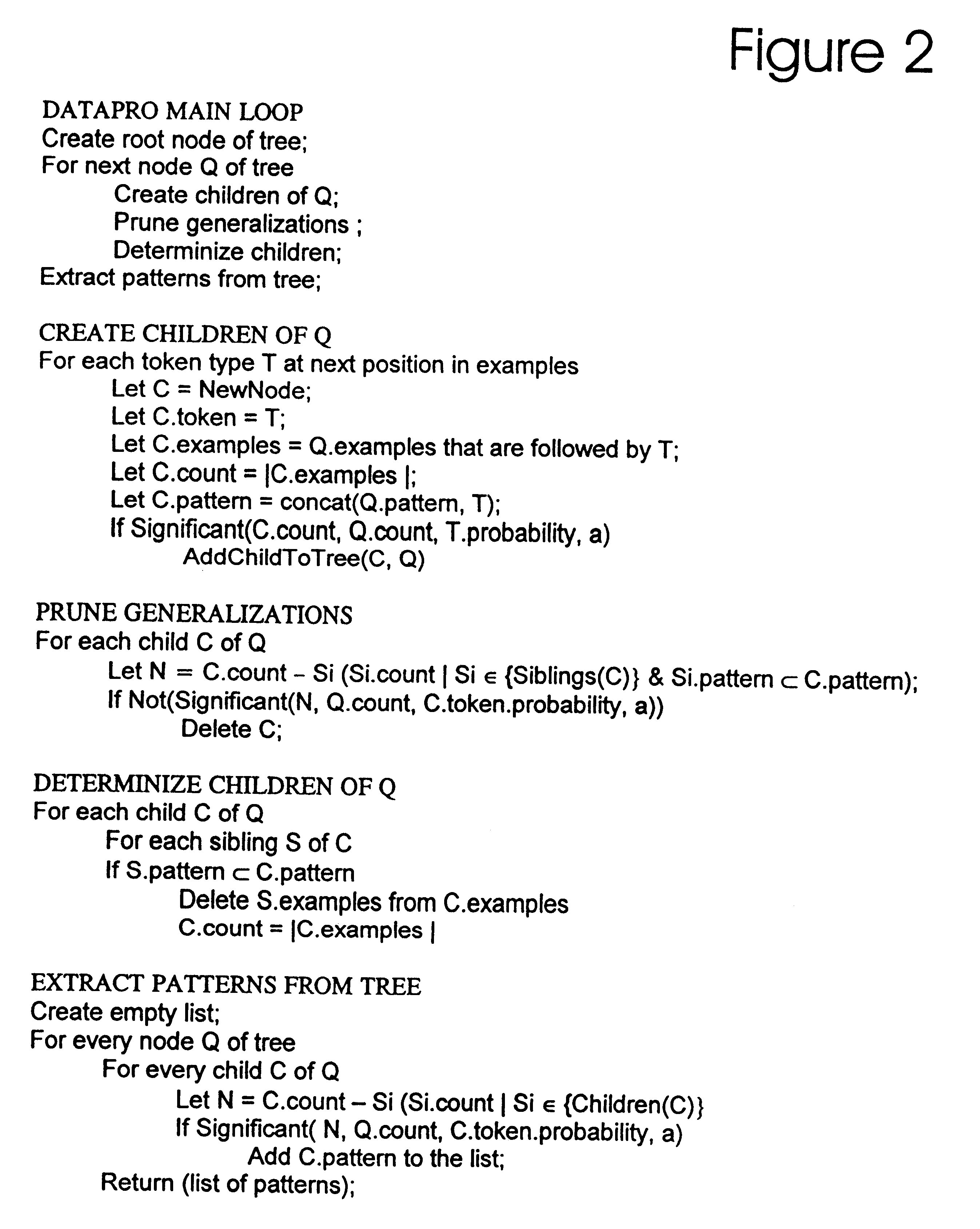

A method and a system for information extraction from Web pages formatted with markup languages such as HTML [8]. A method and system for interactively and visually describing information patterns of interest based on visualized sample Web pages [5,6,16-29]. A method and data structure for representing and storing these patterns [1]. A method and system for extracting information corresponding to a set of previously defined patterns from Web pages [2], and a method for transforming the extracted data into XML, are described. Each pattern is defined via the (interactive) specification of one or more filters. Two or more filters for the same pattern contribute disjunctively to the pattern definition [3]; that is, an actual pattern describes the set of all targets specified by any of its filters. A method and system for extracting relevant elements from Web pages by interpreting and executing a previously defined wrapper program of the above form on an input Web page [9-14] and producing as output the extracted elements represented in a suitable data structure. A method and system for automatically translating said output into XML format by exploiting the hierarchical structure of the patterns and by using pattern names as XML tags is described.

Owner:LIXTO SOFTWARE
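
The abstract above describes patterns built from one or more filters that combine disjunctively, with pattern names reused as XML tags. A minimal sketch of that idea follows. It is not the patented wrapper language: the `PATTERNS` table, the use of regular expressions as filters, and the function names are all assumptions made for illustration, and the hierarchical nesting of patterns is left out.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical pattern table: each pattern name maps to one or more filters.
# A "filter" here is simply a regular expression with one capture group.
PATTERNS = {
    "title": [r"<h1>(.*?)</h1>", r'<h2 class="title">(.*?)</h2>'],
    "price": [r'<span class="price">(.*?)</span>'],
}


def extract(page, patterns):
    """Return, per pattern, the union of targets matched by any of its filters."""
    results = {}
    for name, filters in patterns.items():
        targets = []
        for regex in filters:
            for match in re.findall(regex, page, flags=re.S):
                if match not in targets:  # disjunction: keep each target once
                    targets.append(match)
        results[name] = targets
    return results


def to_xml(results, root_tag="document"):
    """Serialize extracted targets, using each pattern name as the XML tag."""
    root = ET.Element(root_tag)
    for name, targets in results.items():
        for target in targets:
            ET.SubElement(root, name).text = target
    return ET.tostring(root, encoding="unicode")


page = '<h1>Widget</h1><span class="price">9.99</span><h2 class="title">Gadget</h2>'
xml_output = to_xml(extract(page, PATTERNS))
```

Both `title` filters contribute targets to the same pattern, so the output contains two `<title>` elements followed by one `<price>` element.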

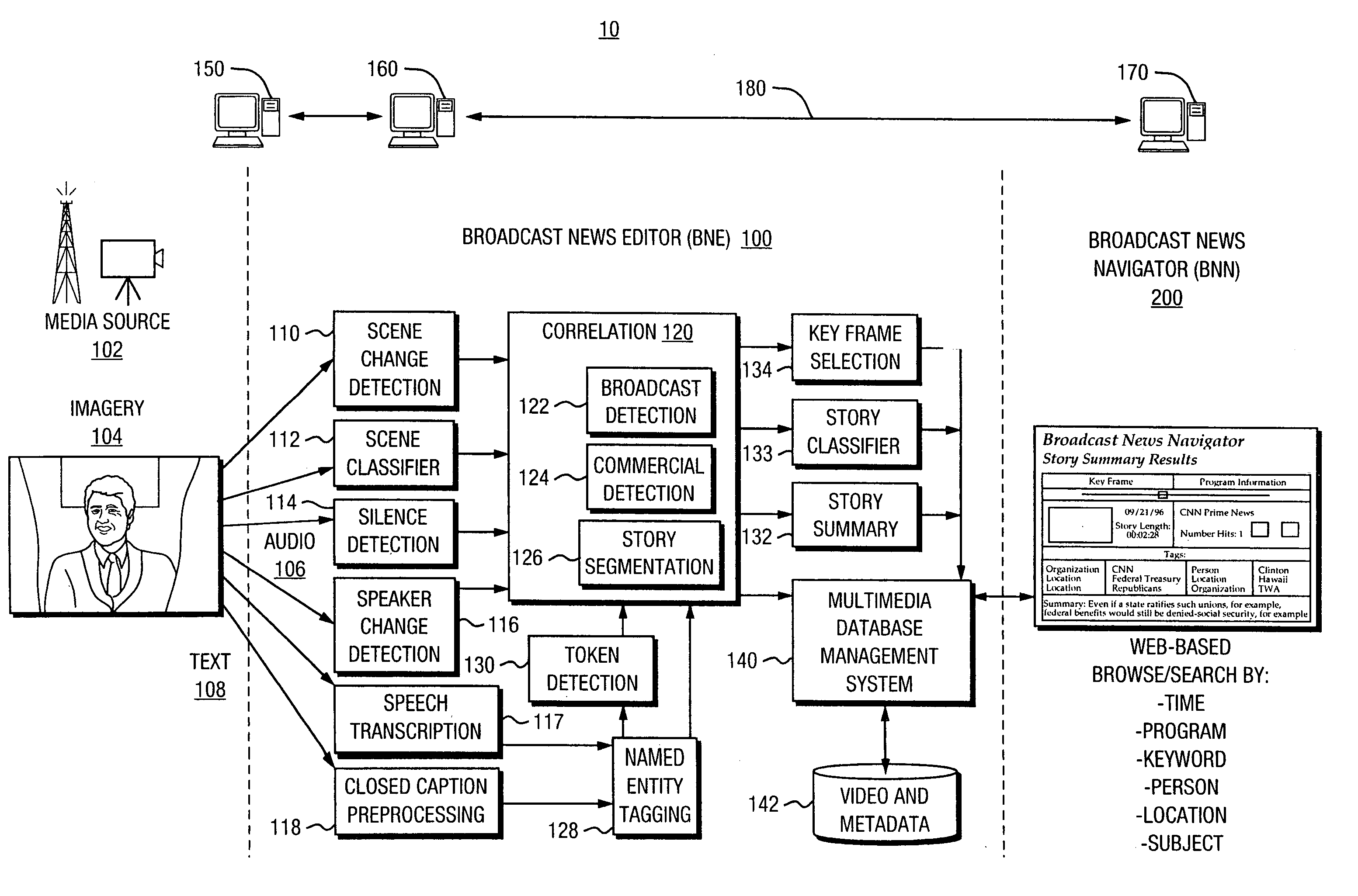

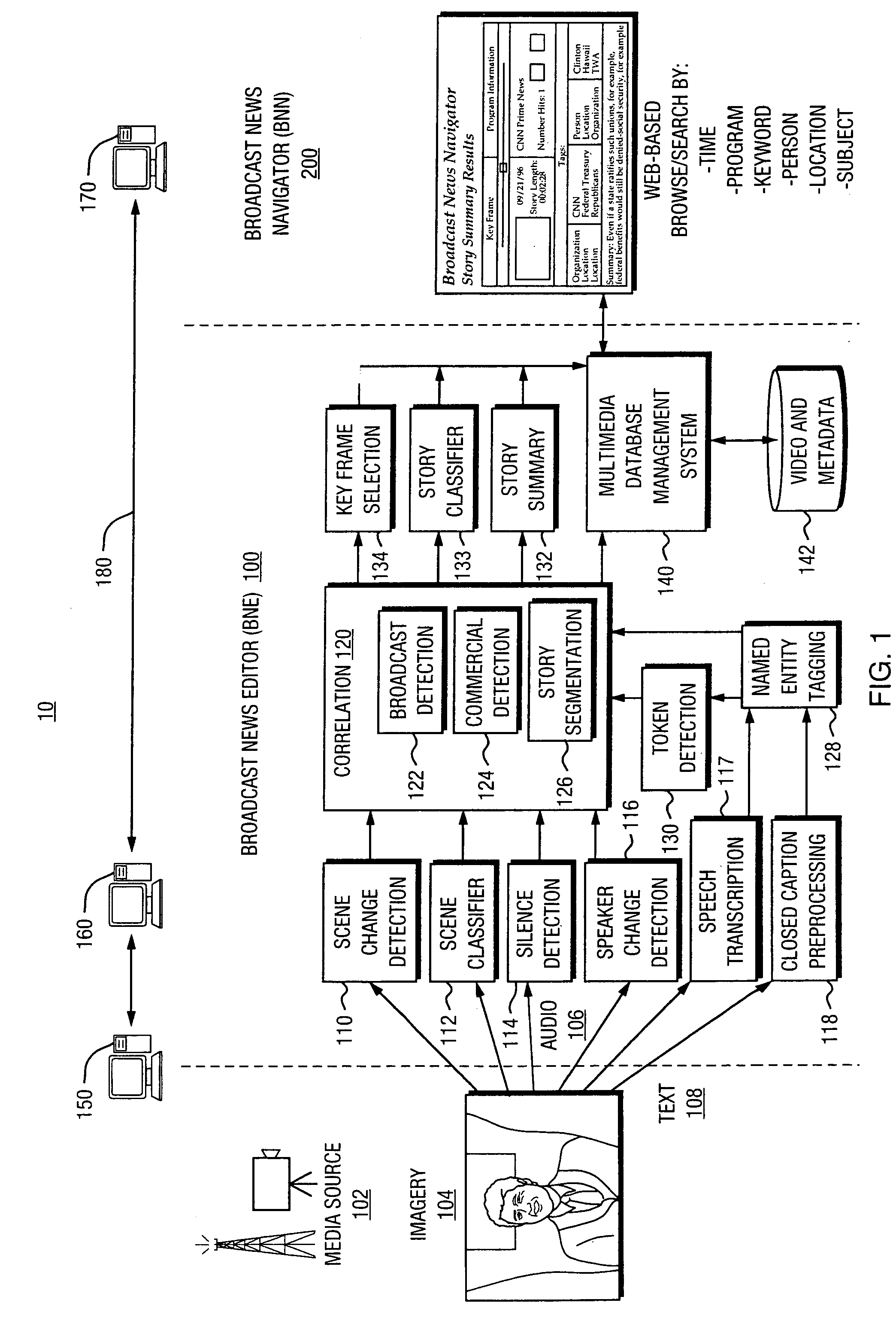

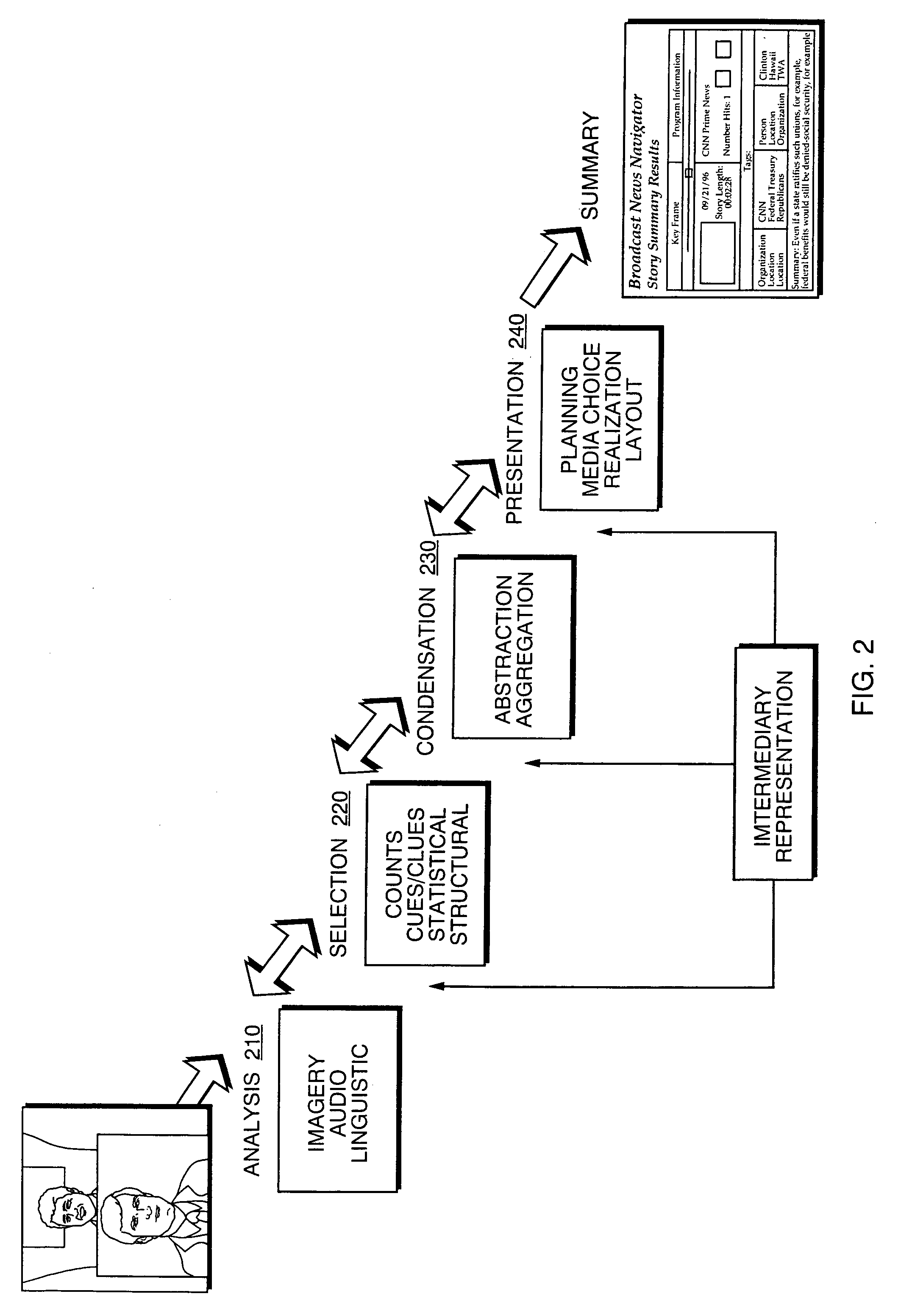

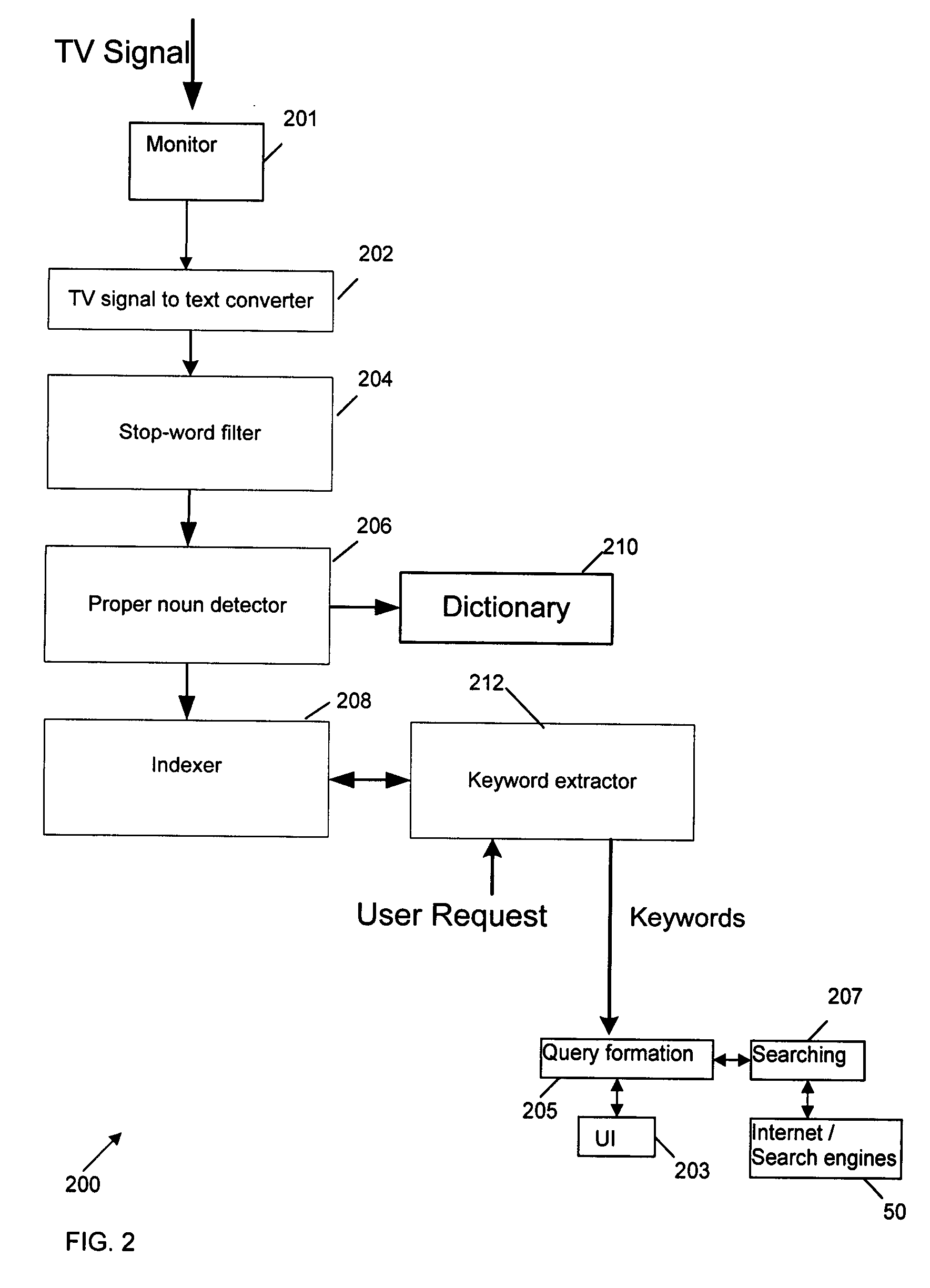

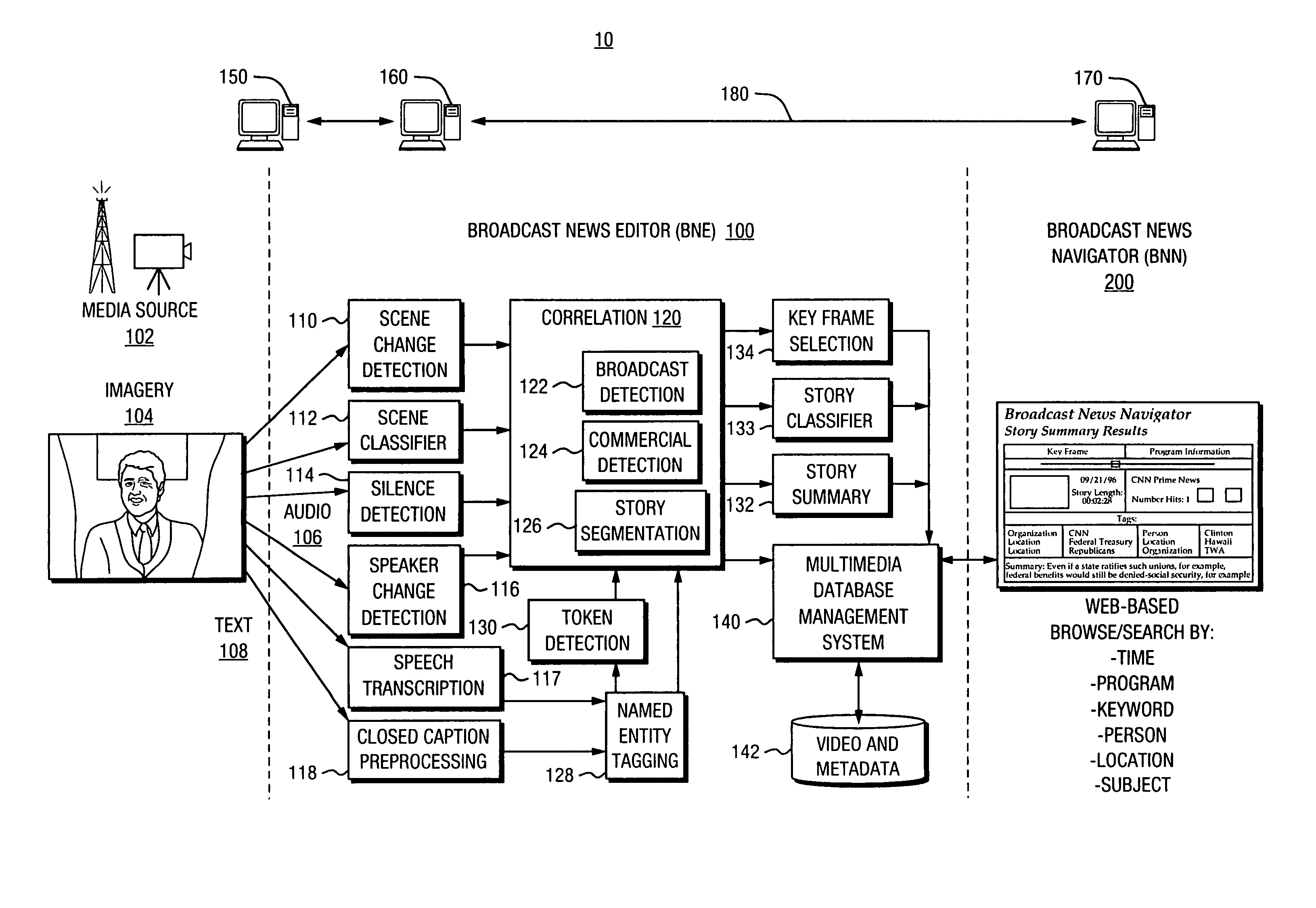

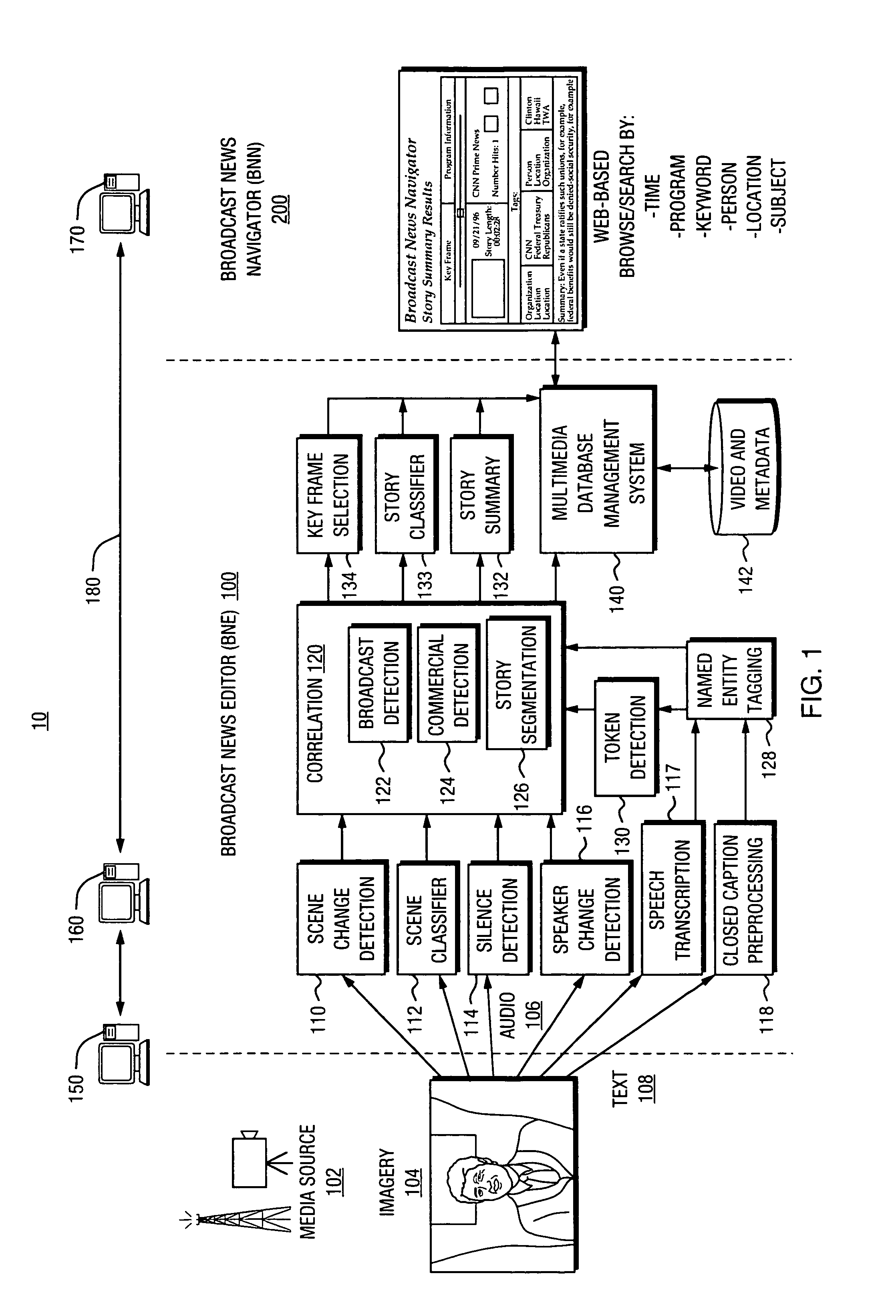

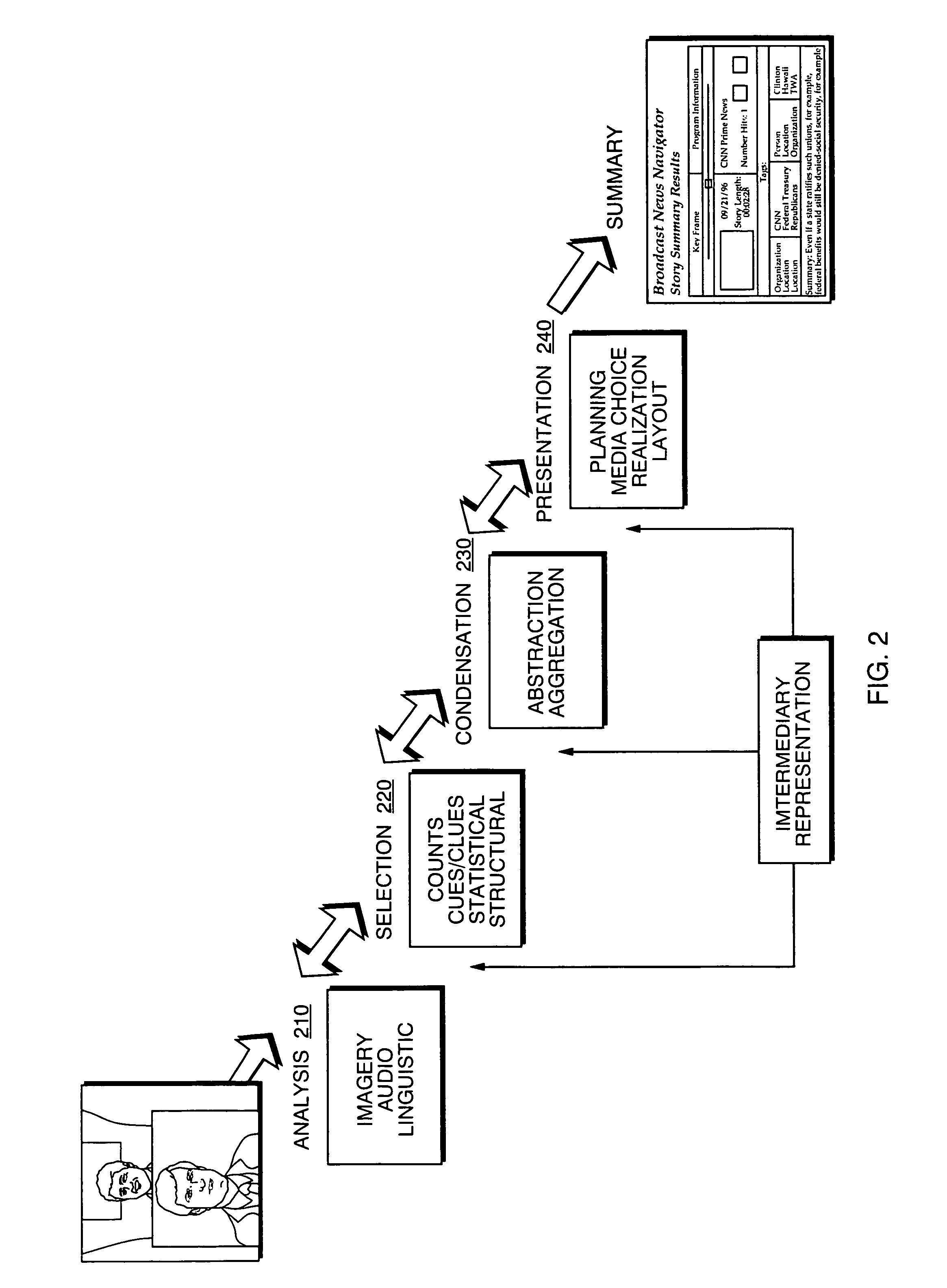

Automated segmentation, information extraction, summarization, and presentation of broadcast news

Inactive | US6961954B1 | More efficient | More timely | Television system details | Data processing applications | Systems analysis | Web browser

A technique for automated analysis of multimedia, such as, for example, a news broadcast. A Broadcast News Editor and Broadcast News Navigator system analyze, select, condense, and then present news summaries. The system enables not only viewing a hierarchical table of contents of the news, but also summaries tailored to individual needs. This is accomplished through story segmentation and proper name extraction which enables the use of common information retrieval methodologies, such as Web browsers. Robust segmentation processing is provided using multistream analysis on imagery, audio, and closed captioned stream cue events.

Owner:OAKHAM TECH

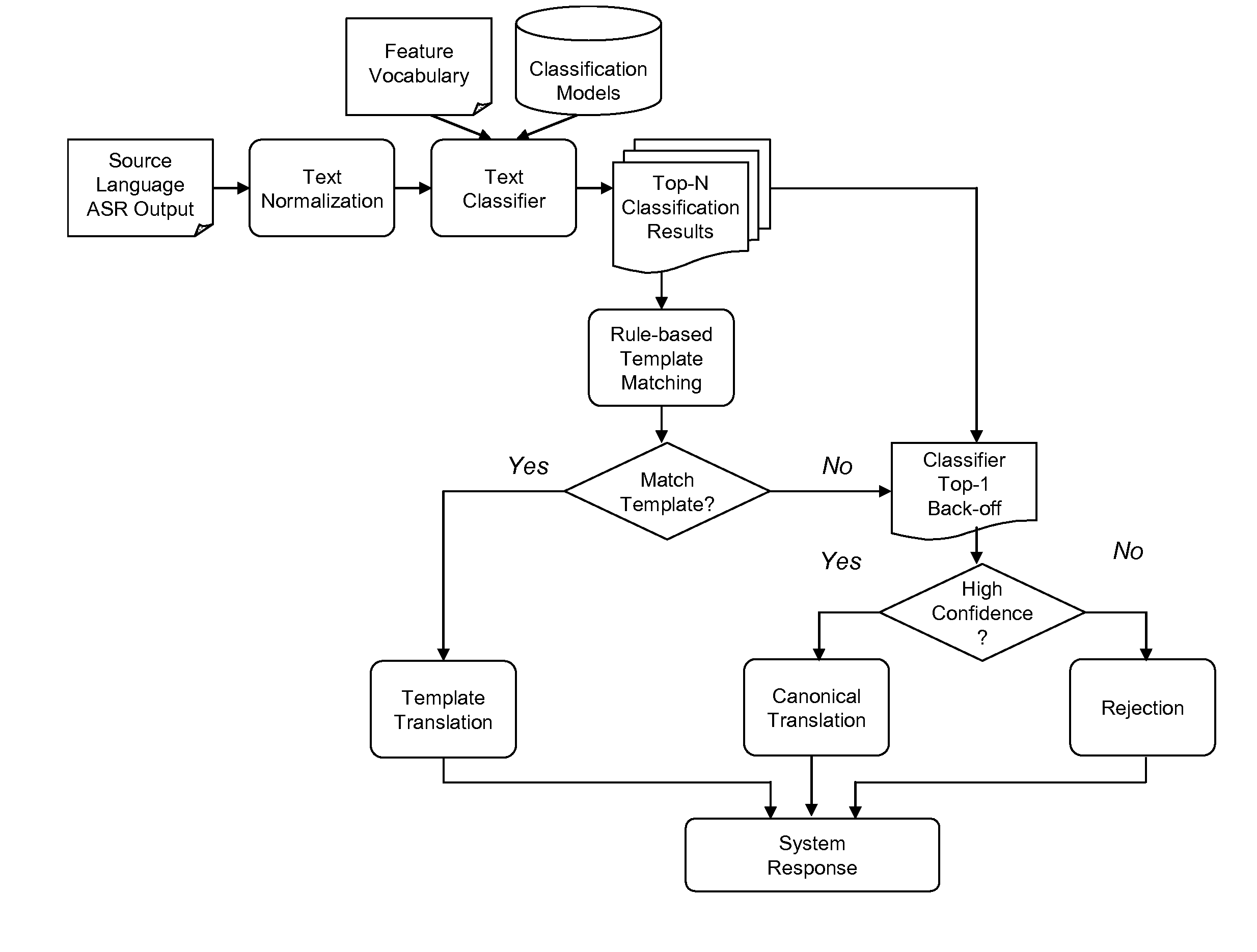

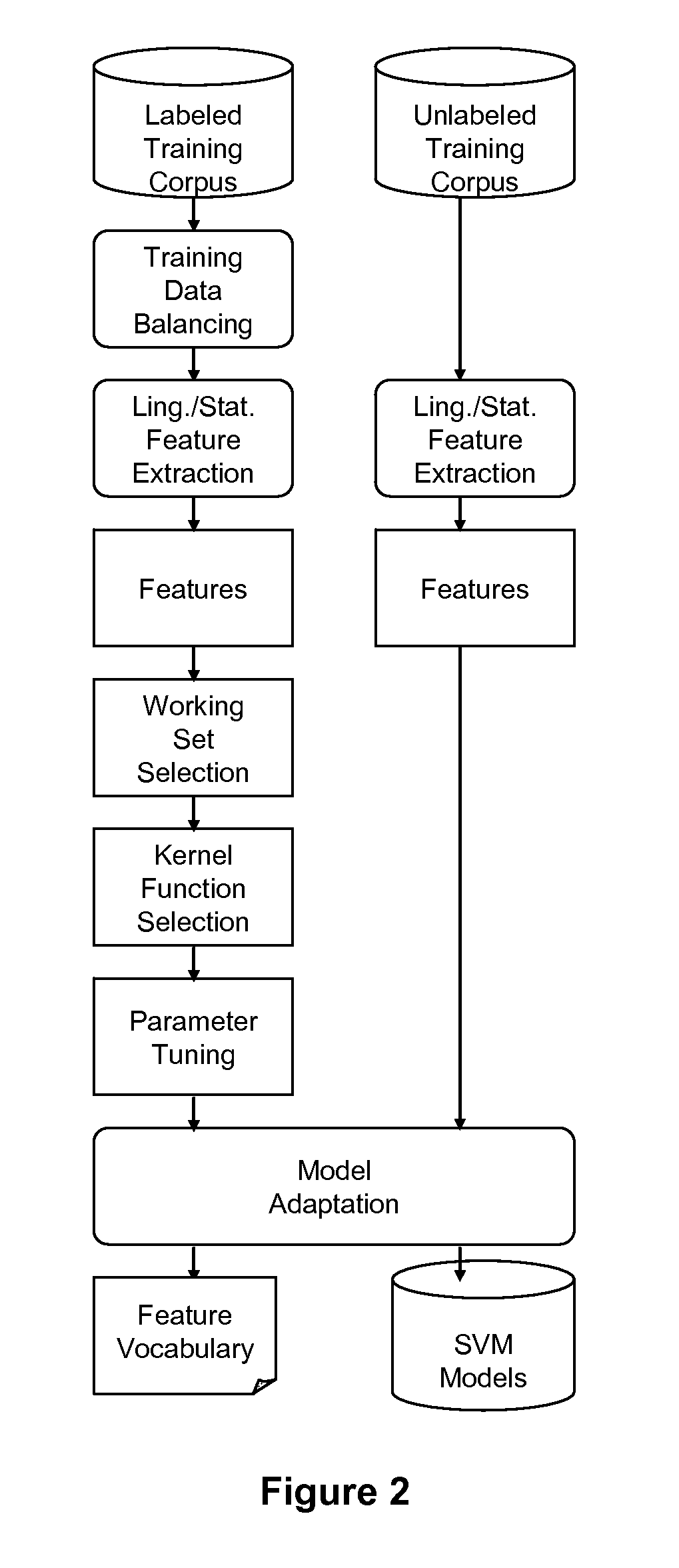

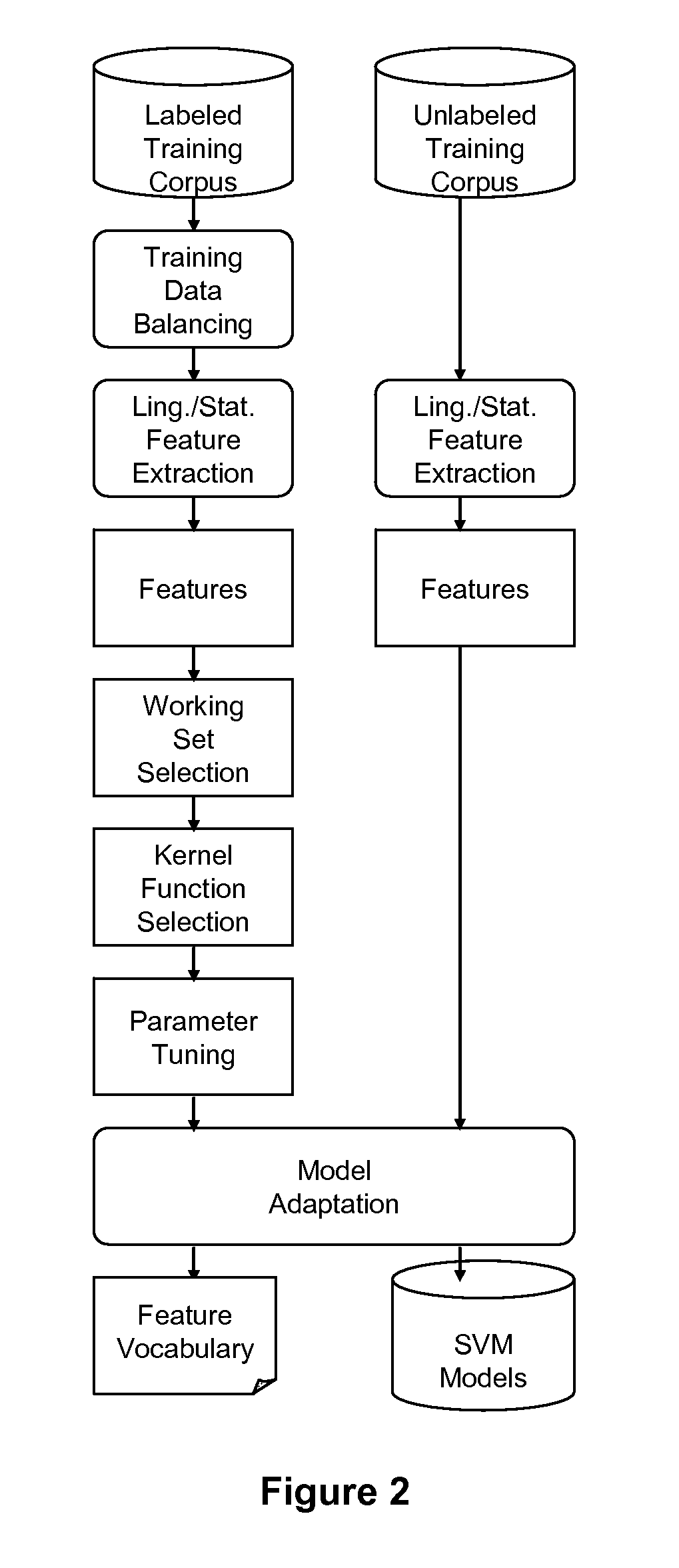

Robust information extraction from utterances

Active | US8583416B2 | Increase cross-entropy | High precision | Speech recognition | Special data processing applications | Feature extraction | Text categorization

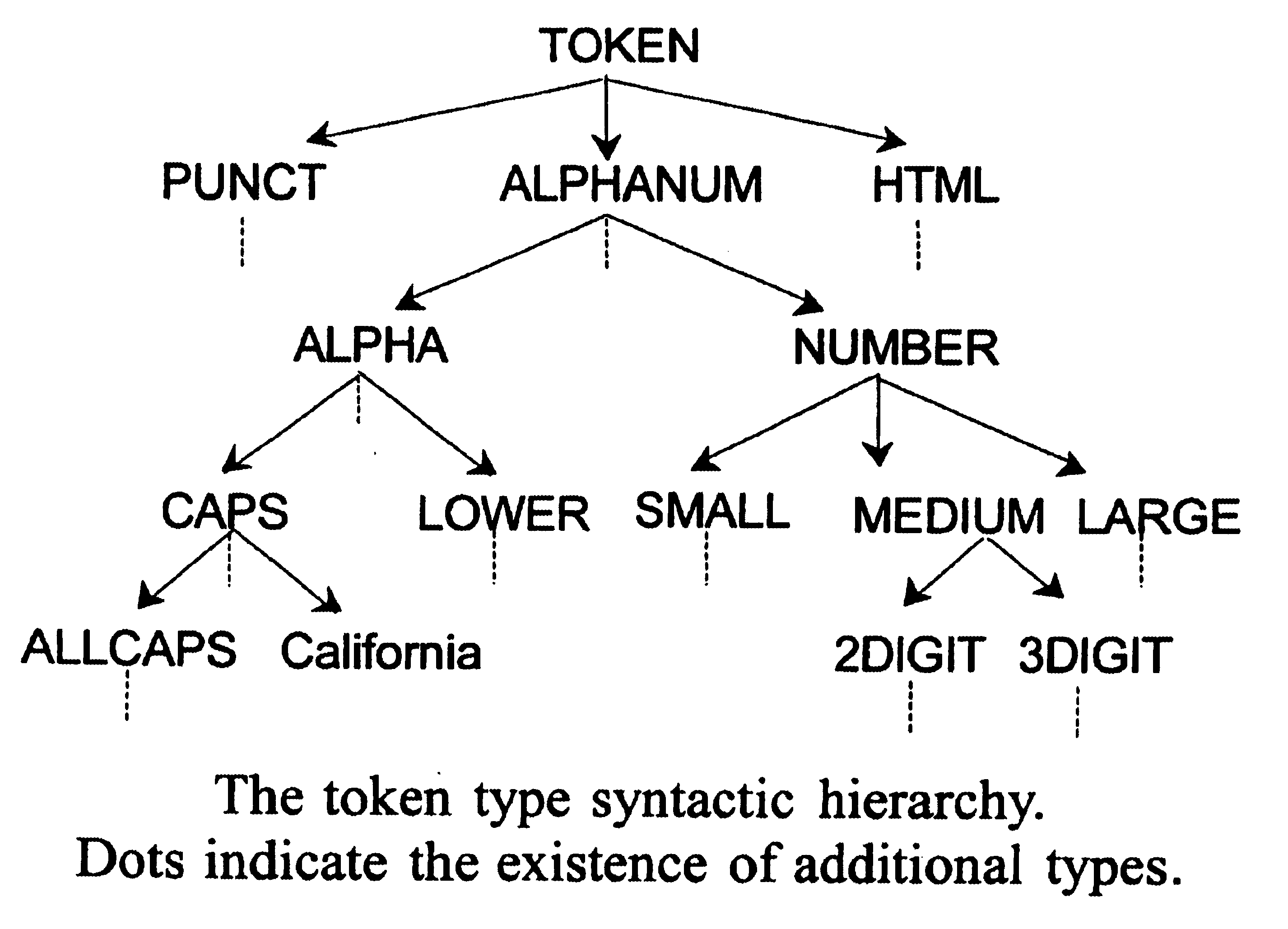

The performance of traditional speech recognition systems (as applied to information extraction or translation) decreases significantly with larger domain size and scarce training data, as well as under noisy environmental conditions. This invention mitigates these problems through the introduction of a novel predictive feature extraction method which combines linguistic and statistical information for representation of information embedded in a noisy source language. The predictive features are combined with text classifiers to map the noisy text to one of the semantically or functionally similar groups. The features used by the classifier can be syntactic, semantic, and statistical.

Owner:NANT HLDG IP LLC

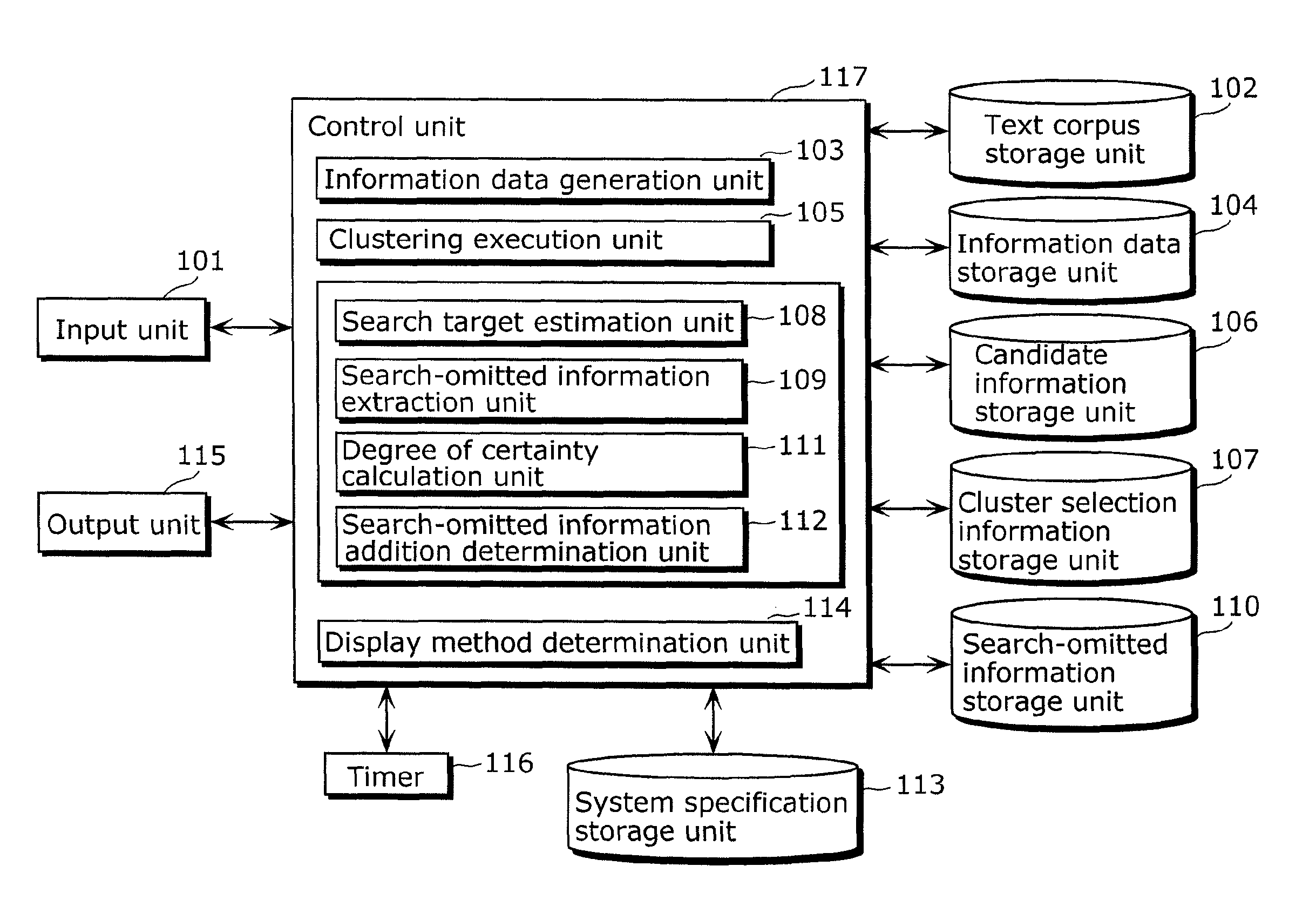

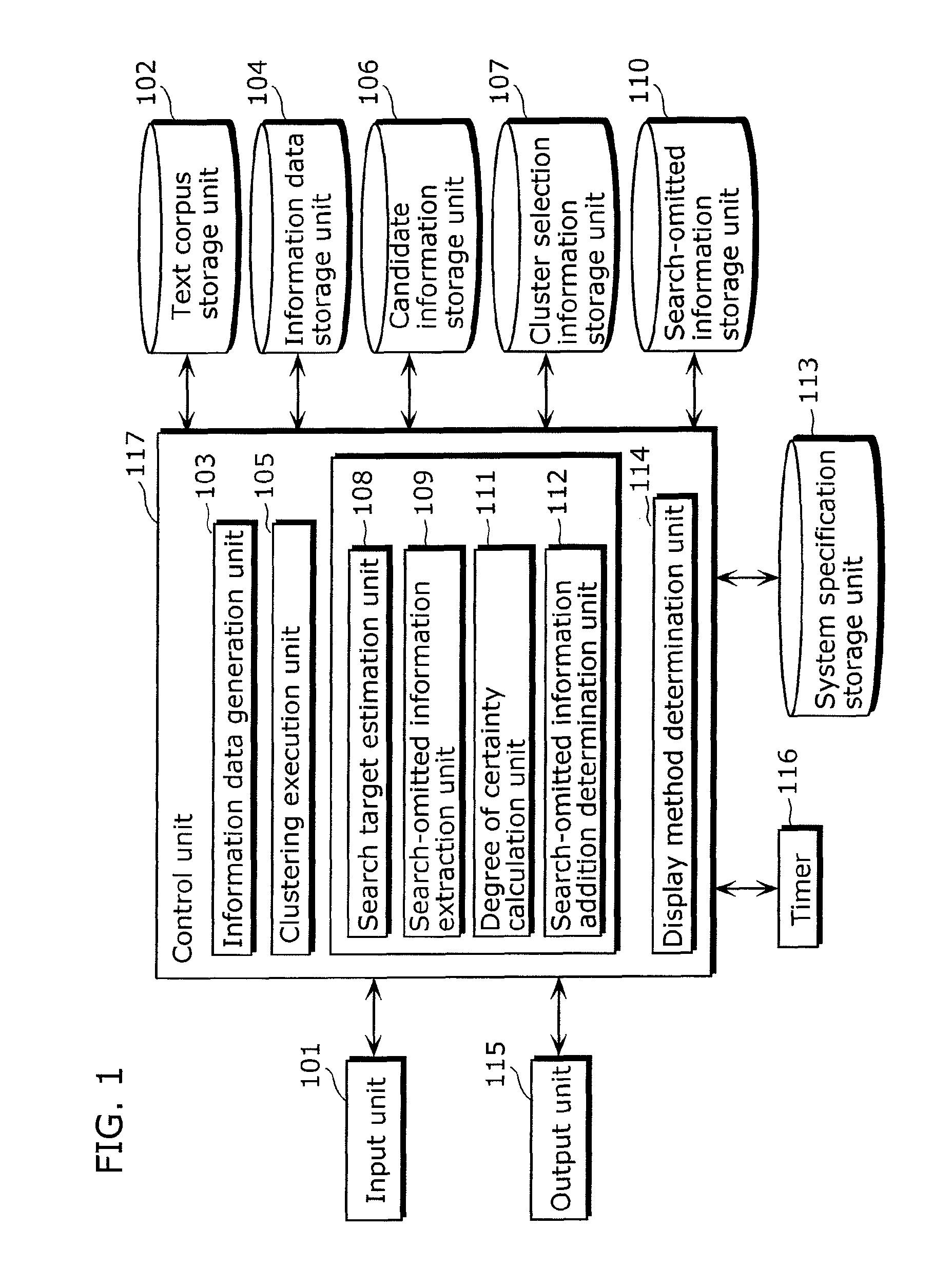

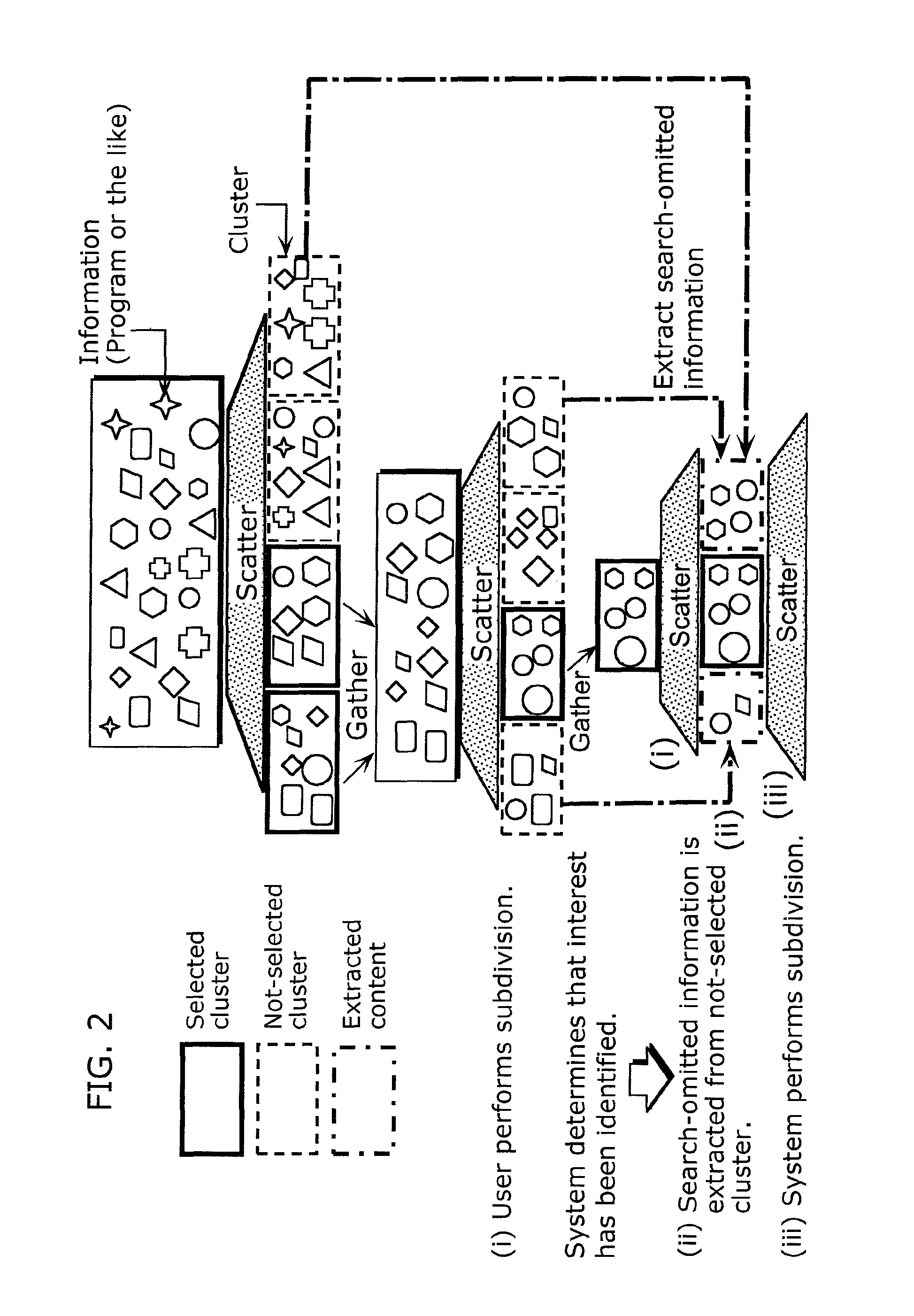

Information search support method and information search support device

Inactive | US8099418B2 | Enhanced information | Digital data information retrieval | Digital data processing details | Degree of certainty | Information searching

An information search support device includes: a cluster selection history information accumulation unit which accumulates content information of each cluster and cluster selection history information indicating a cluster selected by a user; a degree of certainty calculation unit which calculates a degree of certainty indicating a degree to which a vague search target of the user has been identified, based on the cluster selection history information accumulated; a search target estimation unit which obtains a condition for estimating the search target; a search-omitted information extraction unit which extracts search-omitted information that is included in the cluster that is not selected and is estimated from the obtained condition; and a search-omitted information addition unit which adds, to the cluster selected by the user, the extracted search-omitted information, in the case where the degree of certainty is equal to or higher than a predetermined threshold.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

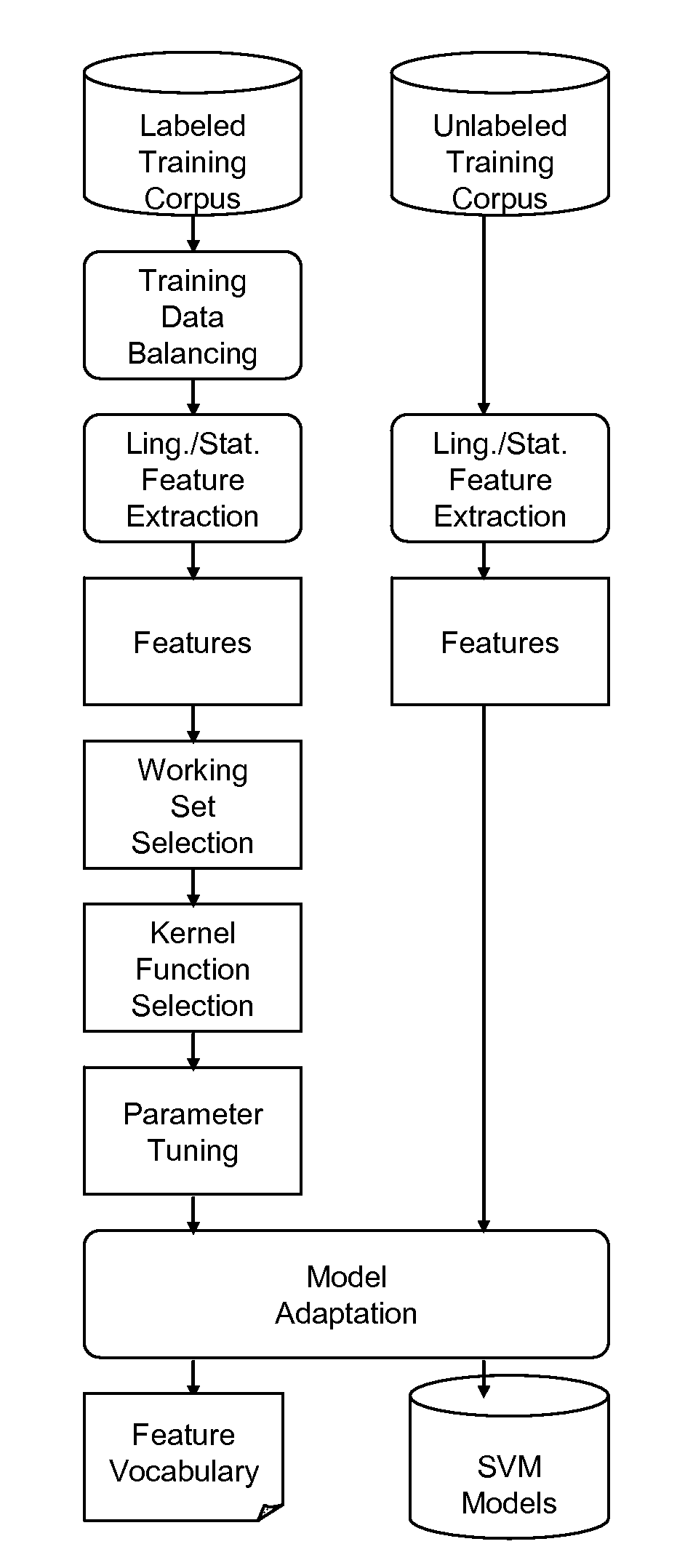

Robust Information Extraction from Utterances

Active | US20090171662A1 | Increase cross-entropy | Improve precision | Speech recognition | Special data processing applications | Feature extraction | Text categorization

The performance of traditional speech recognition systems (as applied to information extraction or translation) decreases significantly with larger domain size and scarce training data, as well as under noisy environmental conditions. This invention mitigates these problems through the introduction of a novel predictive feature extraction method which combines linguistic and statistical information for representation of information embedded in a noisy source language. The predictive features are combined with text classifiers to map the noisy text to one of the semantically or functionally similar groups. The features used by the classifier can be syntactic, semantic, and statistical.

Owner:NANT HLDG IP LLC

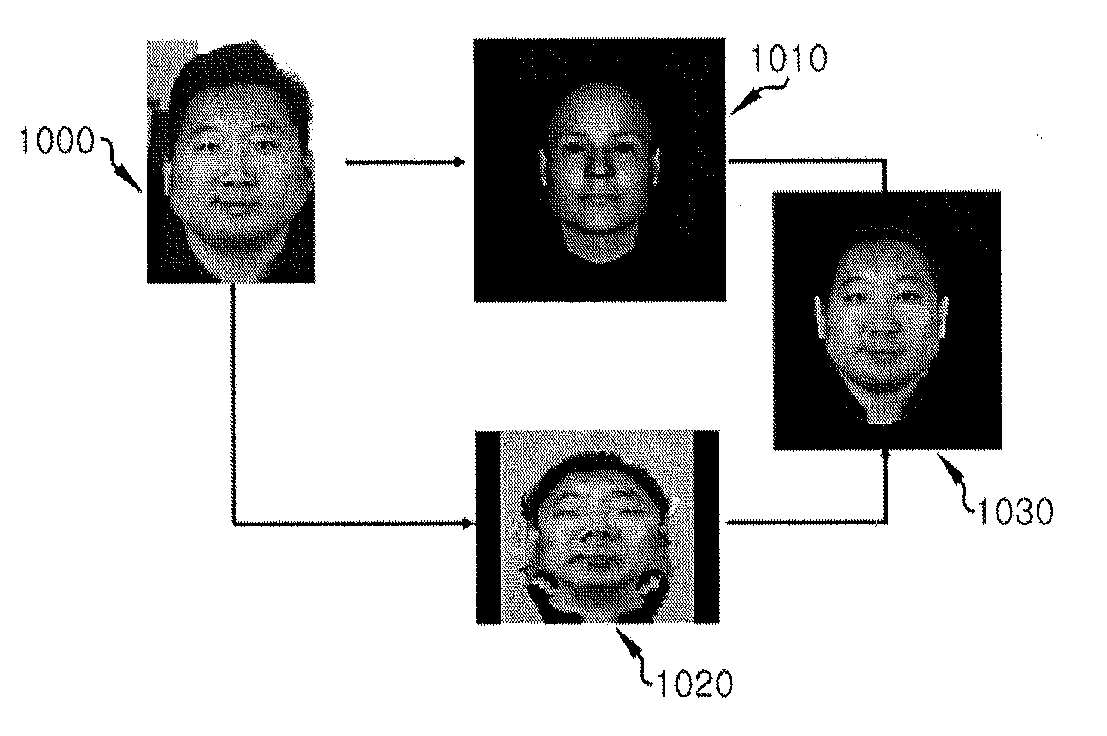

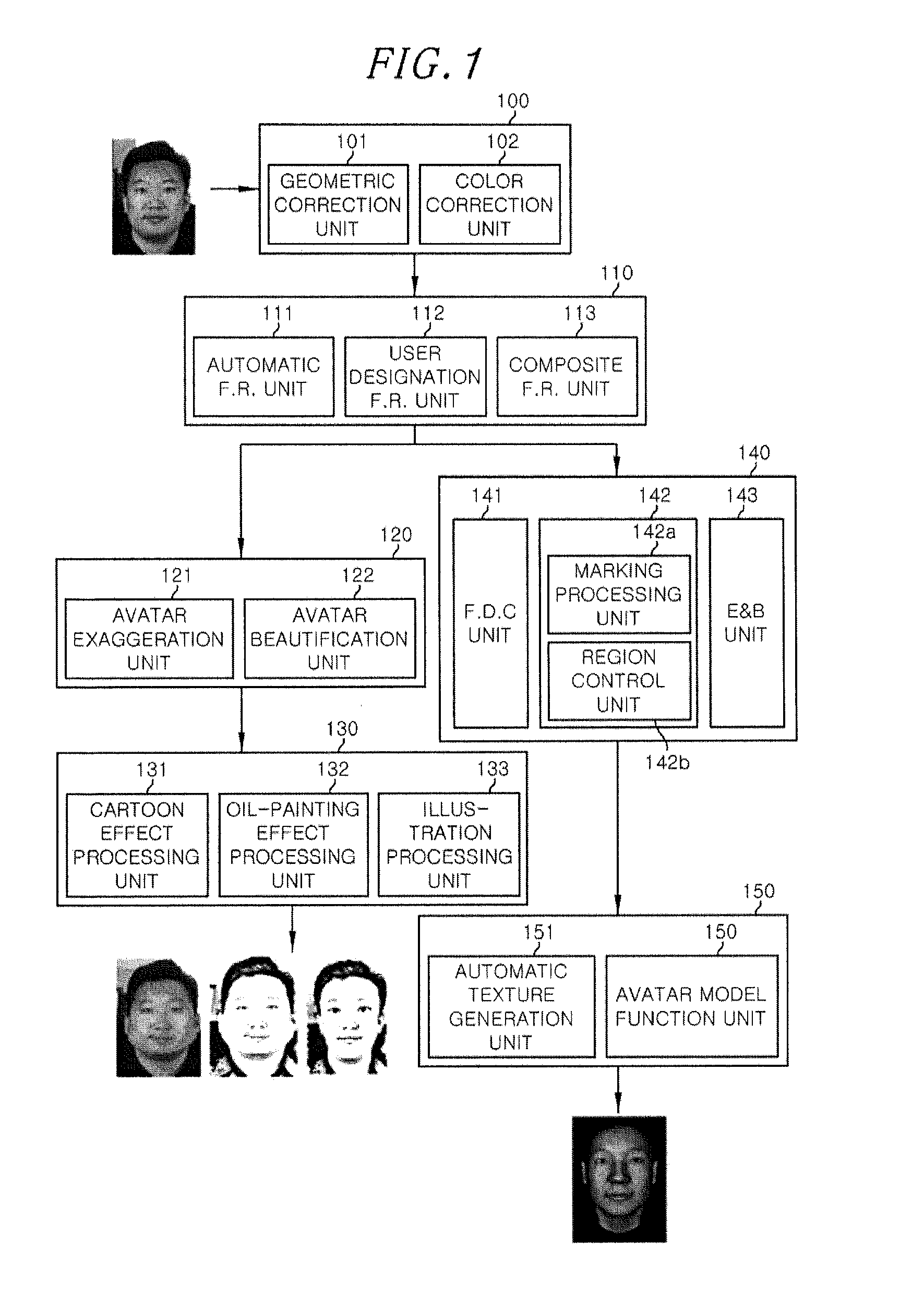

Method and apparatus for generating face avatar

A face avatar generating apparatus includes: a face feature information extraction unit for receiving a face photo and extracting face feature information from the face photo and a two-dimensional (2D) avatar generation unit for selecting at least one region from the face photo based on the face feature information, and exaggerating or beautifying the selected region to create a 2D avatar image. The apparatus further includes a 3D avatar generation unit for modifying a standard 3D face model through a comparison with the standard 3D model based on the face feature information and pre-stored standard information to create a 3D avatar image.

Owner:ELECTRONICS & TELECOMM RES INST

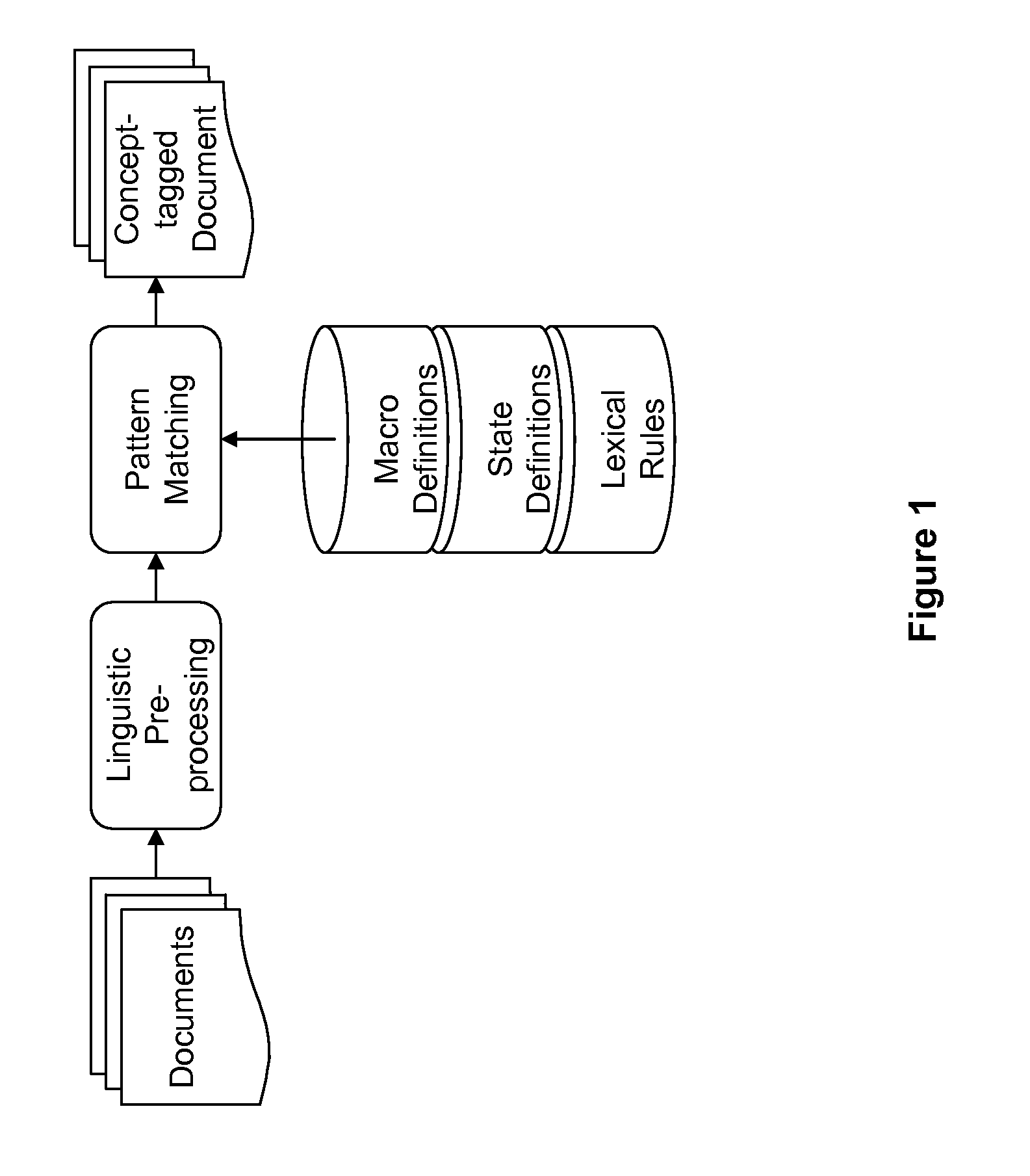

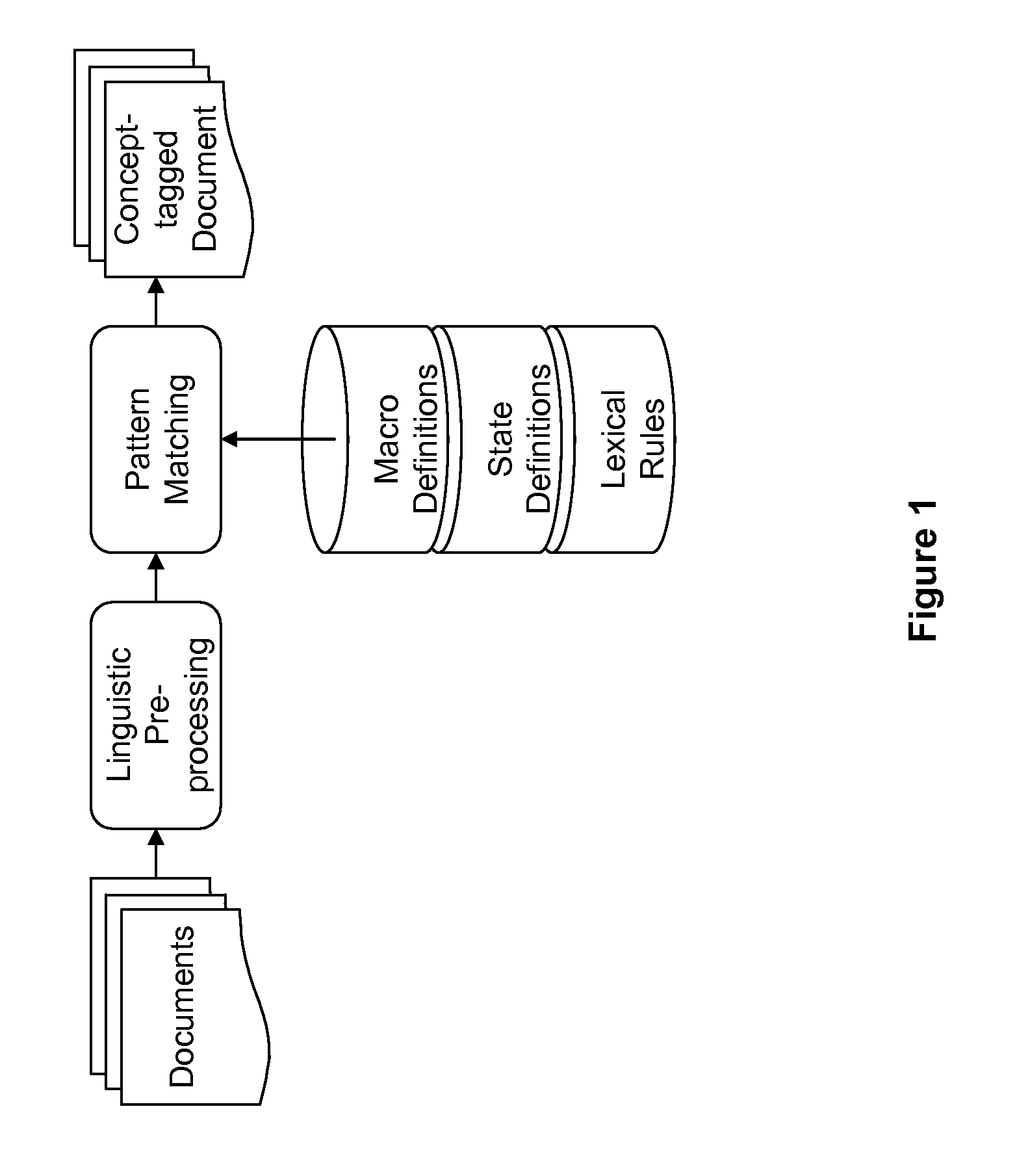

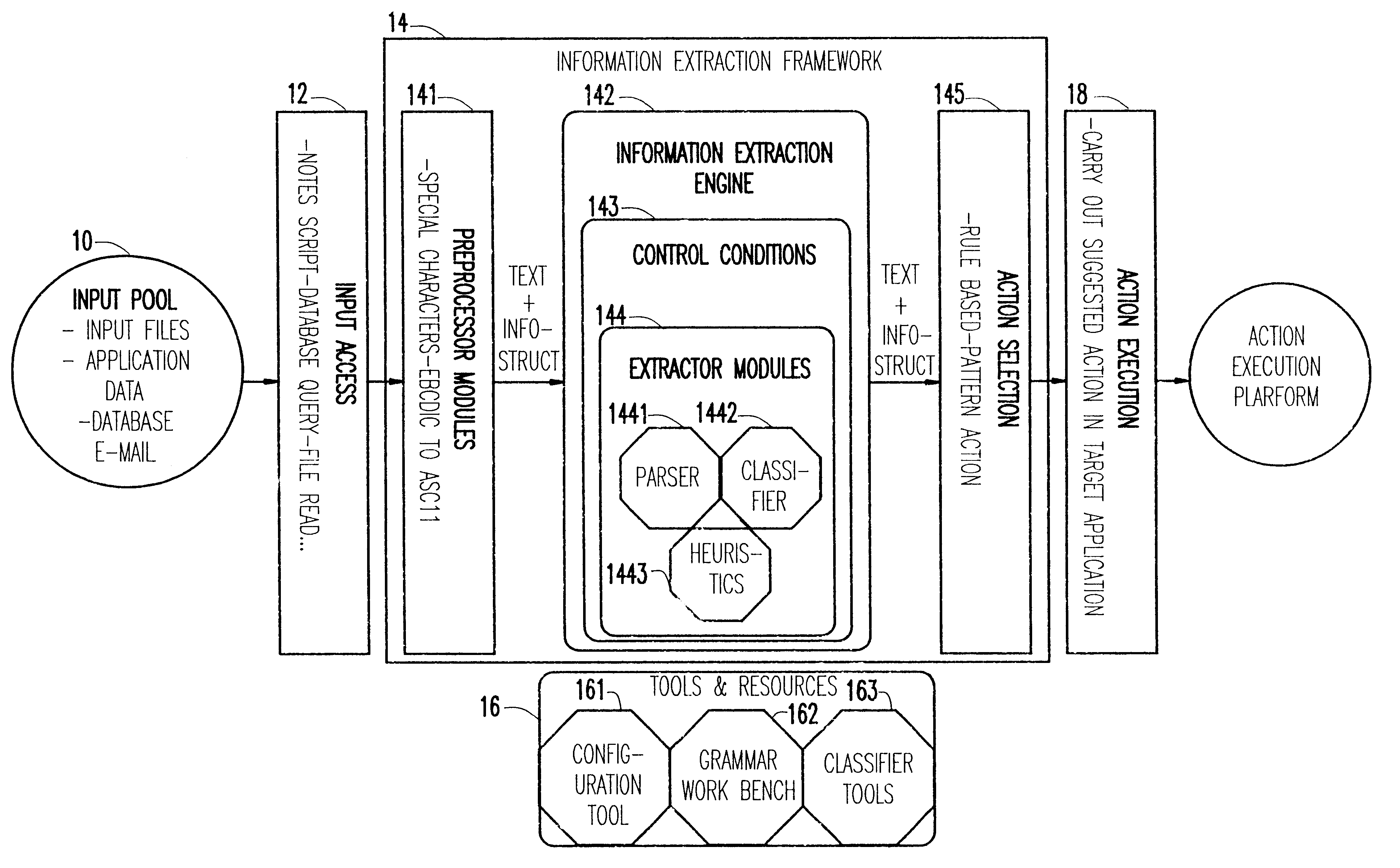

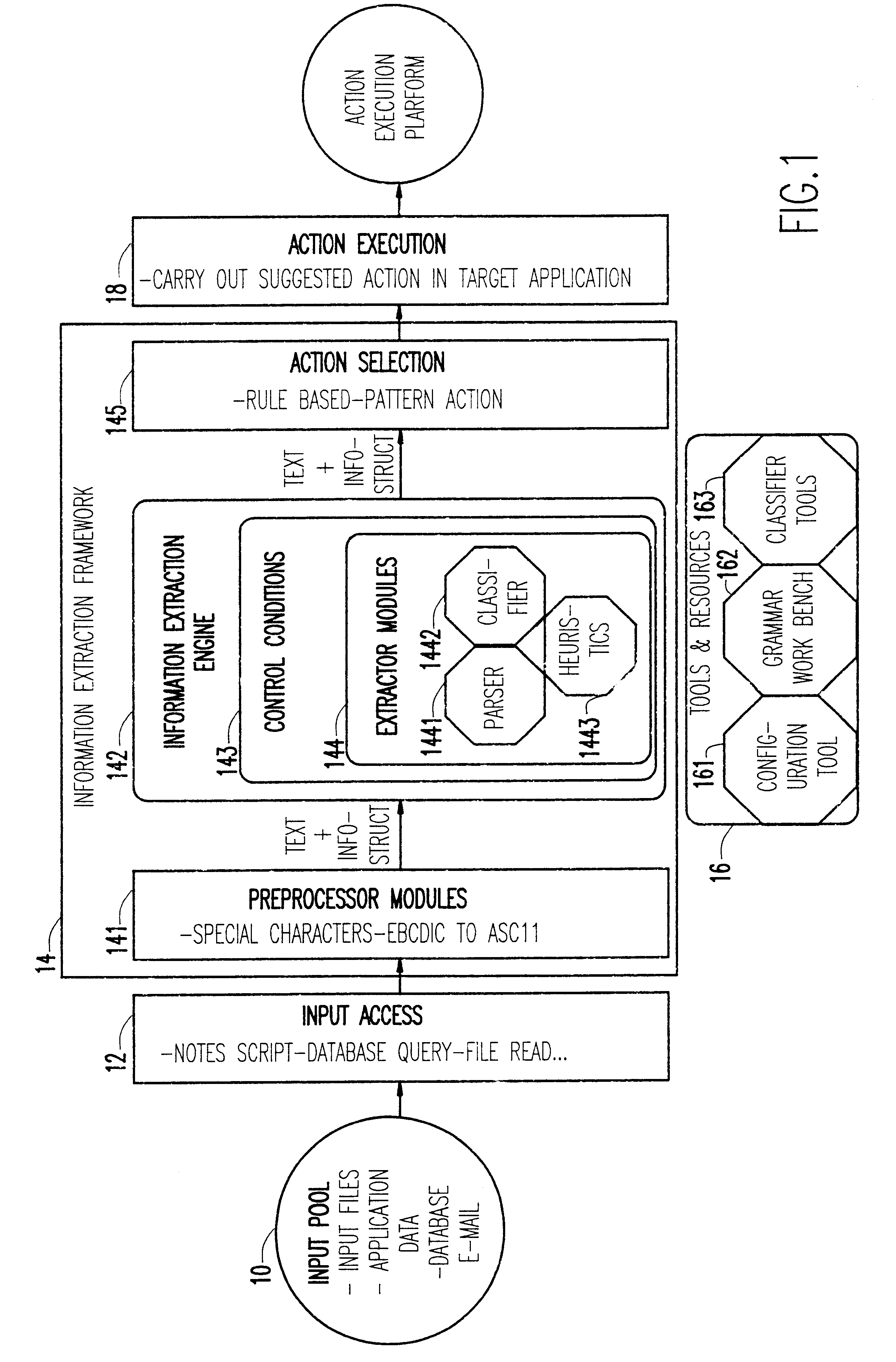

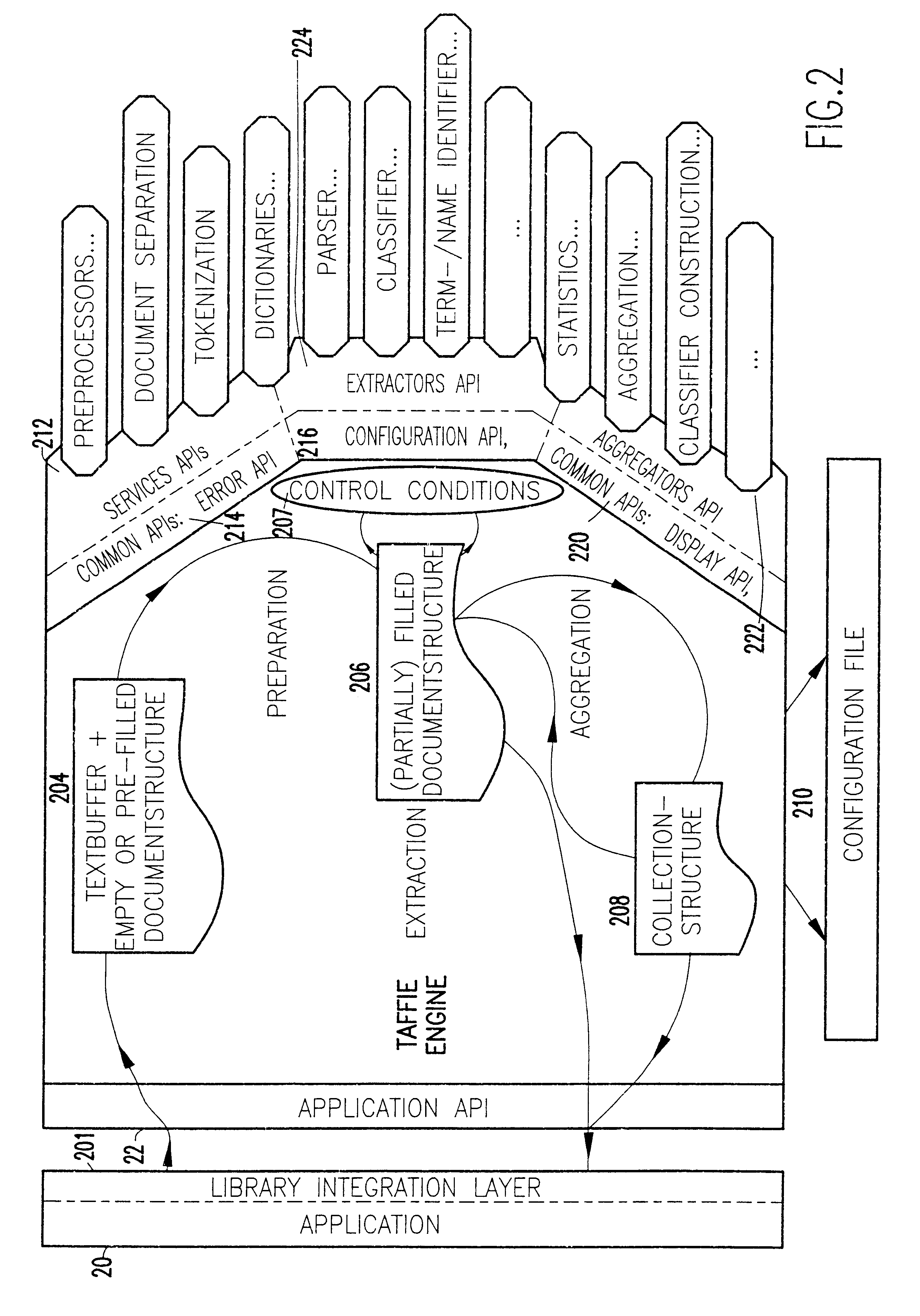

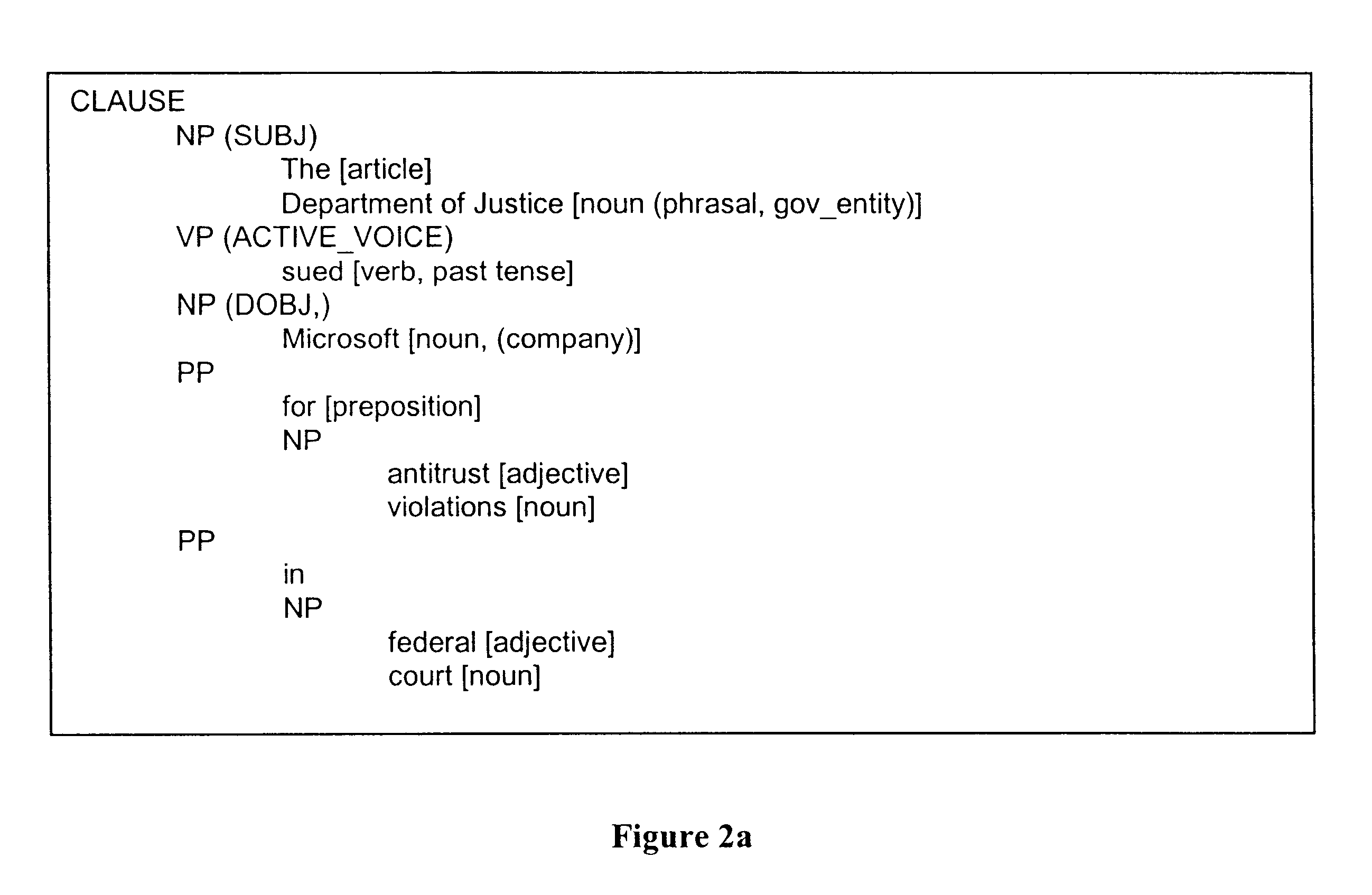

Architecture of a framework for information extraction from natural language documents

Inactive | US6553385B2 | Data processing applications | Natural language data processing | Documentation procedure | Document preparation

A framework for information extraction from natural language documents is application independent and provides a high degree of reusability. The framework integrates different natural language and machine learning techniques, such as parsing and classification. The architecture of the framework is integrated in an easy-to-use access layer. The framework performs general information extraction, classification/categorization of natural language documents, automated electronic data transmission (e.g., e-mail and facsimile) processing and routing, and plain parsing. Inside the framework, requests for information extraction are passed to the actual extractors. The framework can handle both pre- and post-processing of the application data, control the extractors, and enrich the information they extract. The framework can also suggest necessary actions the application should take on the data. To achieve the goal of easy integration and extension, the framework provides an integration (outside) application program interface (API) and an extractor (inside) API.

Owner:INT BUSINESS MASCH CORP

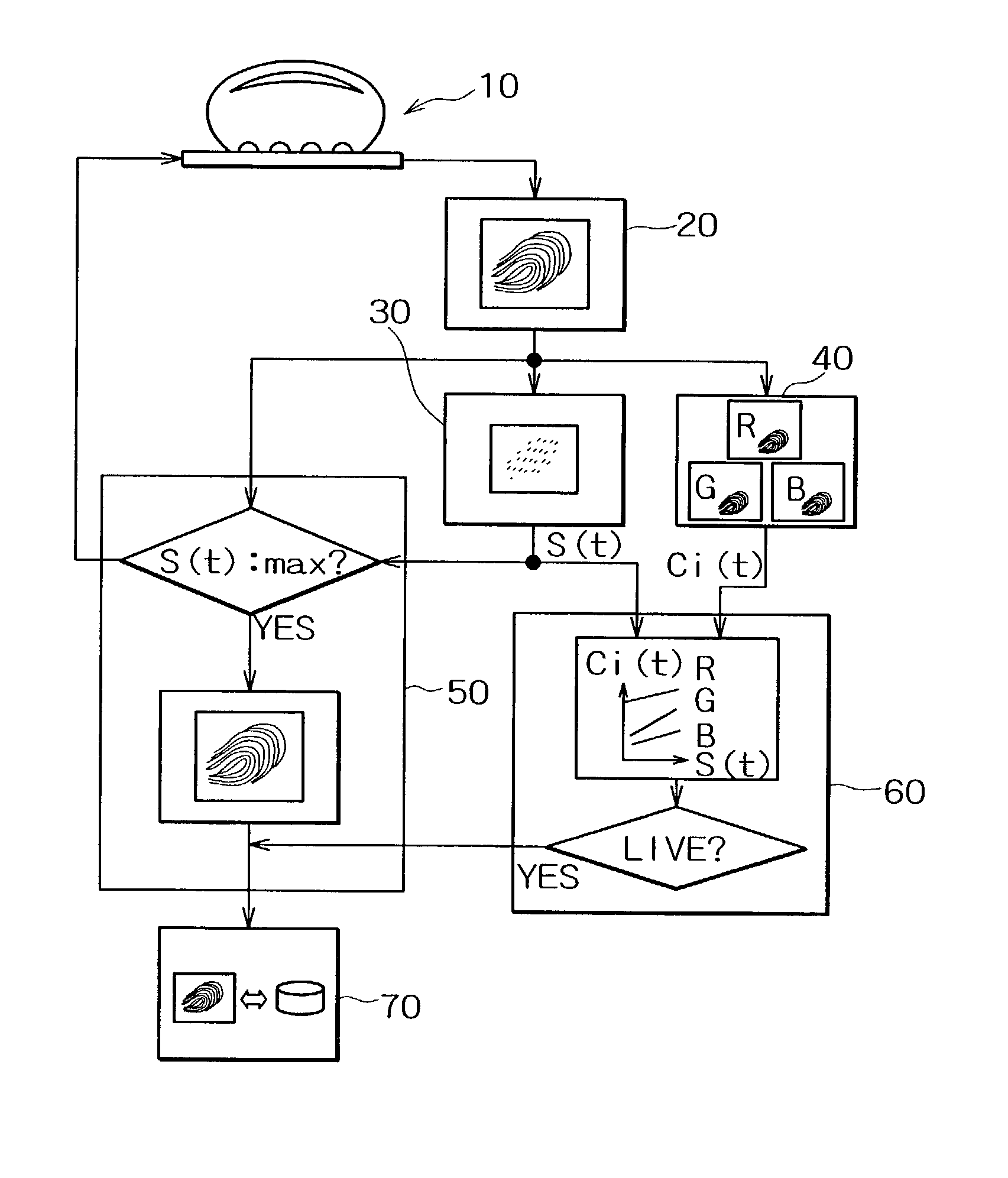

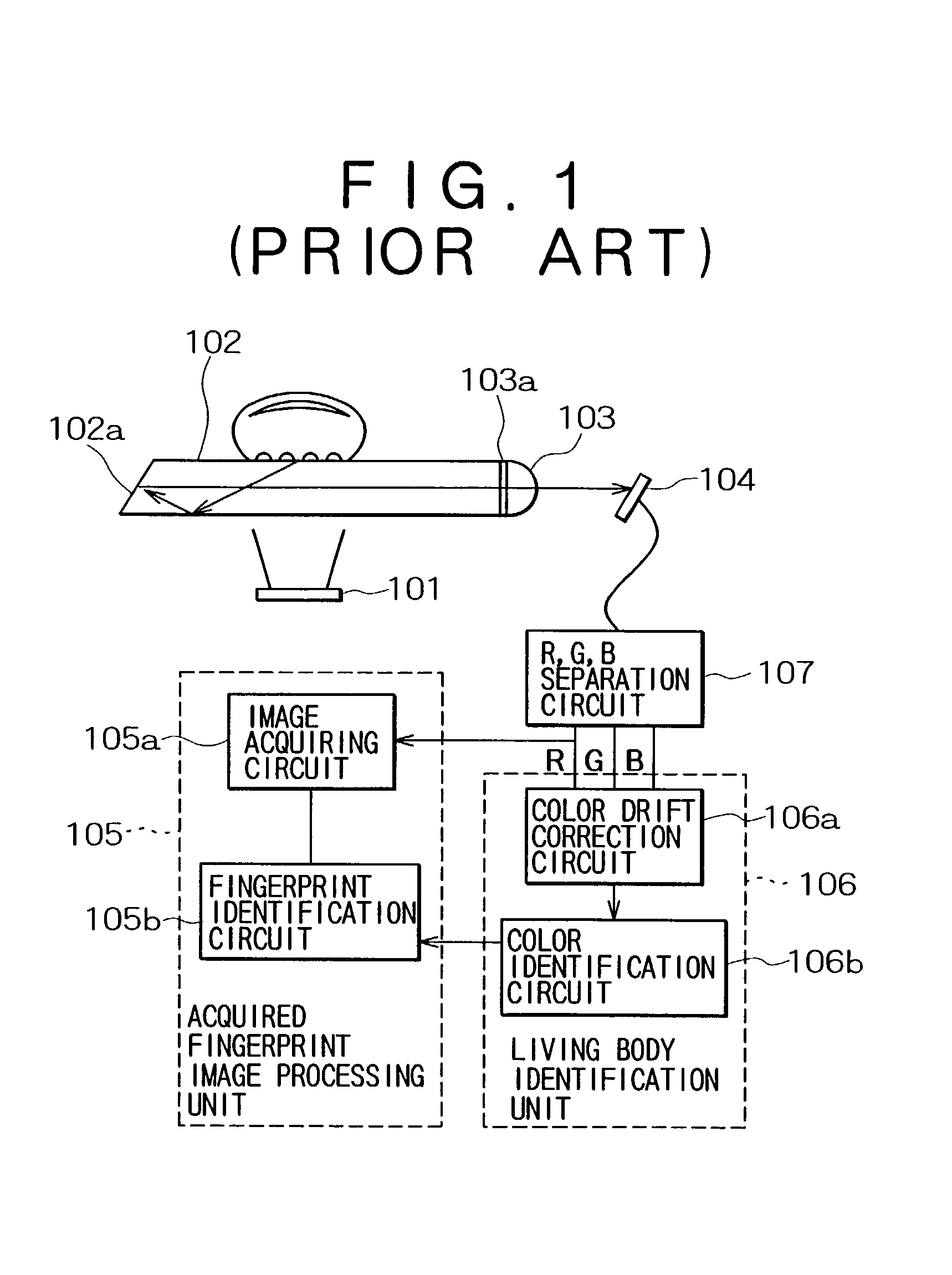

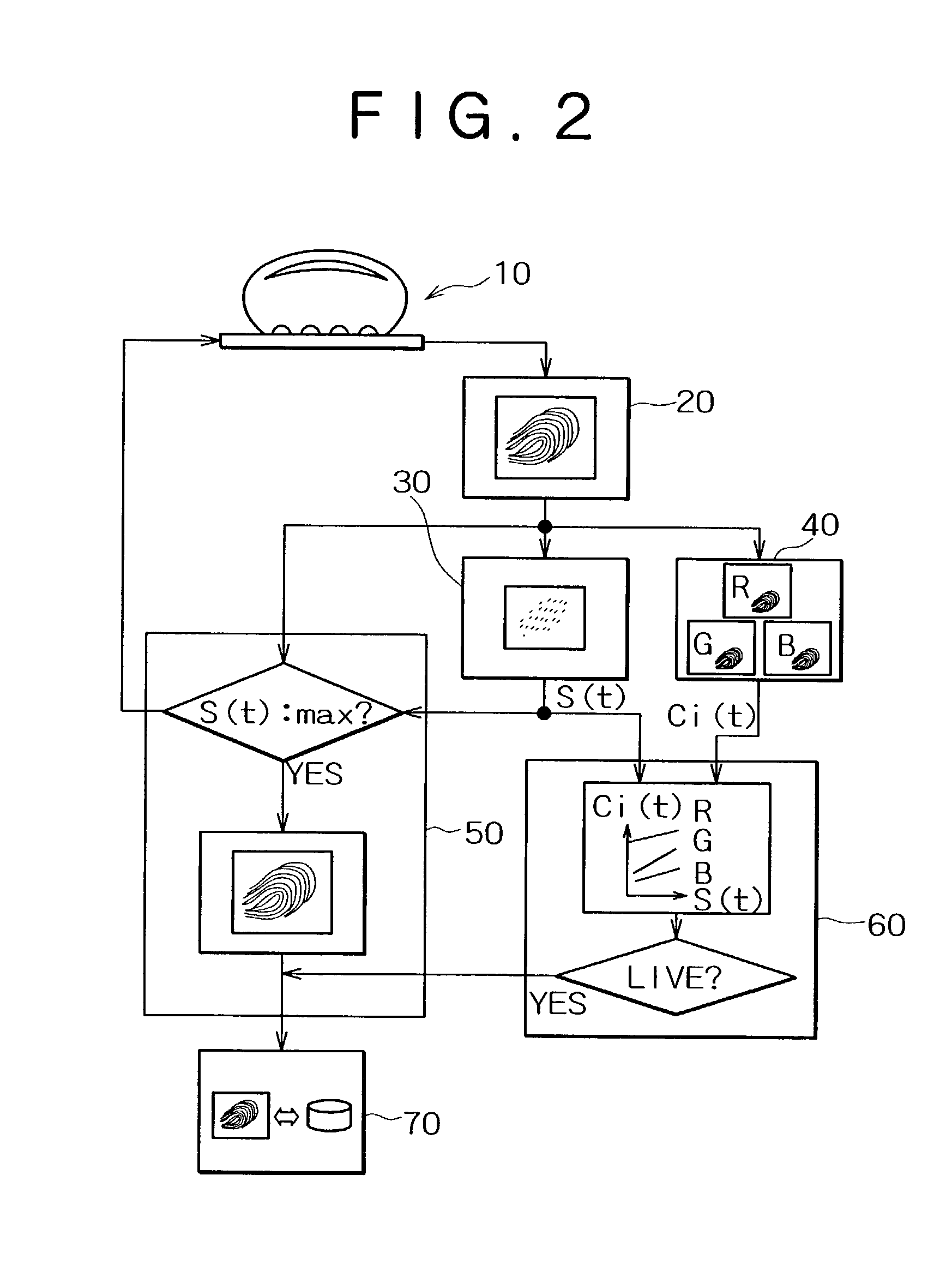

Fingerprint image input device and living body identification method using fingerprint image

Inactive | US20030044051A1 | Improve reliability | Electric signal transmission systems | Image analysis | Pattern recognition | Color image

A color image sensor sequentially acquires a plurality of fingerprint images when a finger is pressed against the detector surface. A color information extraction unit detects the finger color in synchronization with the input of the plurality of fingerprint images. An areal information extraction unit detects a physical quantity representing the pressure applied by the finger to the color image sensor when the plurality of fingerprint images are acquired, in particular a quantity related to the area of the finger in contact with the detector surface. A living body identification unit determines whether the finger is live or dead by analyzing the correlation between the physical quantity and the finger color. According to this configuration, even if the finger color does not change much, it is possible to distinguish living bodies from dead ones if there is a sufficient correlation with information such as the area of the fingerprint that reflects the finger pressure. The thickness of the fingerprint input unit is approximately 1-2 mm, determined by the sum of the thickness of the planar light source and that of the color image sensor.

Owner:VISTA PEAK VENTURES LLC
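
The liveness check described above rests on a correlation between contact area (a proxy for finger pressure) and finger color across a sequence of frames. The sketch below illustrates one way such a test could look. The use of the Pearson coefficient, the absolute-value comparison, the `threshold` of 0.8, and the sample readings are all assumptions; the abstract does not state which correlation measure or cutoff is used.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation information
    return cov / sqrt(var_x * var_y)


def is_live_finger(contact_areas, color_values, threshold=0.8):
    """Treat the finger as live when color tracks contact area closely.

    The threshold is a hypothetical value chosen for this illustration.
    """
    return abs(pearson(contact_areas, color_values)) >= threshold


# Made-up readings over five frames as the finger presses down.
areas = [10.0, 14.0, 19.0, 25.0, 30.0]
live_colors = [0.62, 0.58, 0.51, 0.45, 0.40]   # color shifts steadily with pressure
fake_colors = [0.50, 0.51, 0.50, 0.49, 0.50]   # color barely responds to pressure
```

With these readings, `is_live_finger(areas, live_colors)` is true and `is_live_finger(areas, fake_colors)` is false, because only the first series changes in step with the contact area.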

Wrapper induction by hierarchical data analysis

Inactive | US6606625B1 | Easy to use | Accurate extraction | Data processing applications | Web data indexing | Inductive algorithm | Documentation

An inductive algorithm, denominated STALKER, generates high-accuracy extraction rules based on user-labeled training examples. With the tremendous amount of information that becomes available on the Web on a daily basis, the ability to quickly develop information agents has become a crucial problem. A vital component of any Web-based information agent is a set of wrappers that can extract the relevant data from semistructured information sources. The novel approach to wrapper induction provided herein is based on the idea of hierarchical information extraction, which turns the hard problem of extracting data from an arbitrarily complex document into a series of easier extraction tasks. Labeling the training data represents the major bottleneck in using wrapper induction techniques, and experimental results show that STALKER performs significantly better than other approaches: on one hand, STALKER requires up to two orders of magnitude fewer examples than other algorithms, while on the other hand it can handle information sources that could not be wrapped by prior techniques. STALKER uses an embedded catalog formalism to parse the information source and render a predictable structure from which information may be extracted or by which such information extraction may be facilitated.

Owner:UNIV OF SOUTHERN CALIFORNIA +1

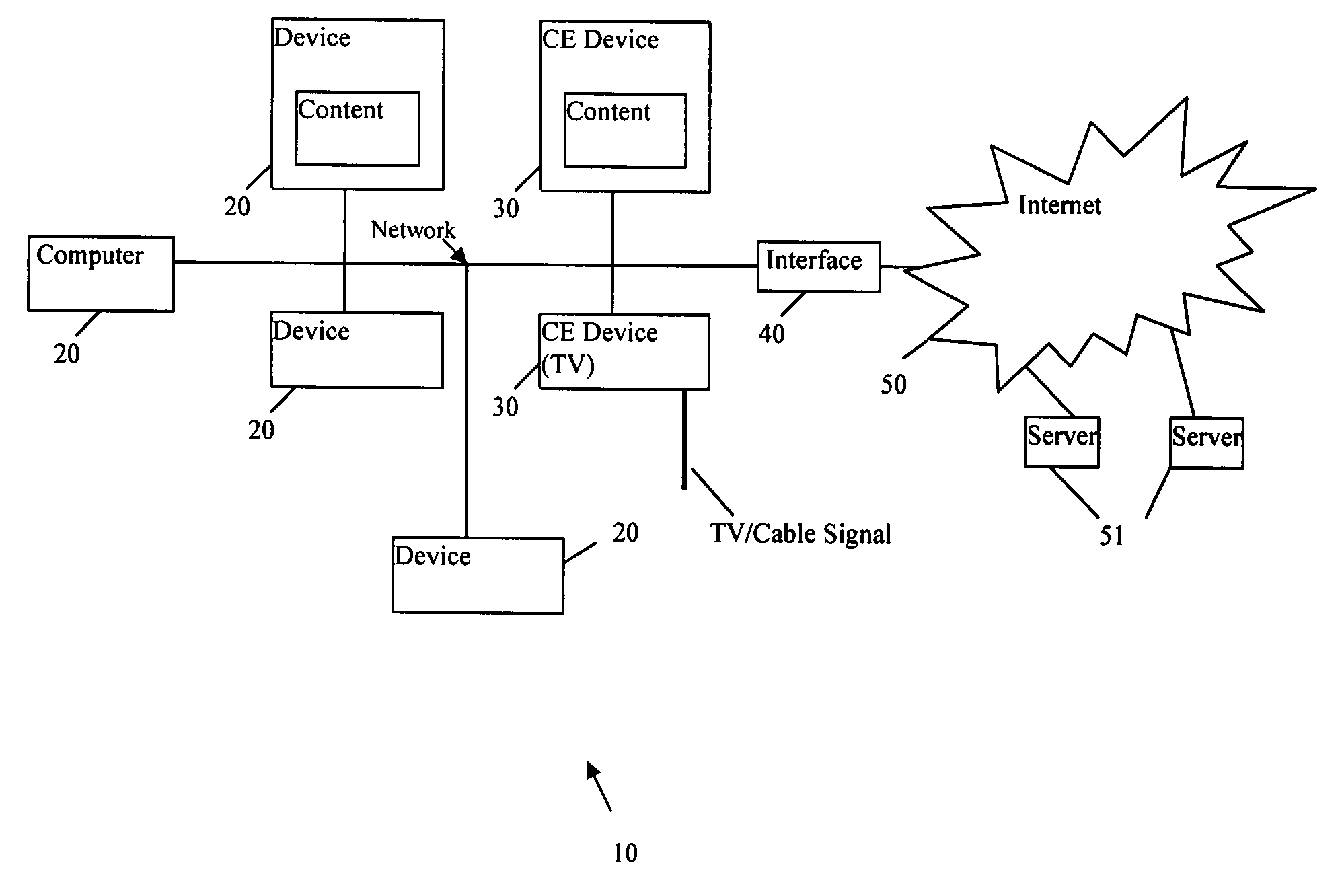

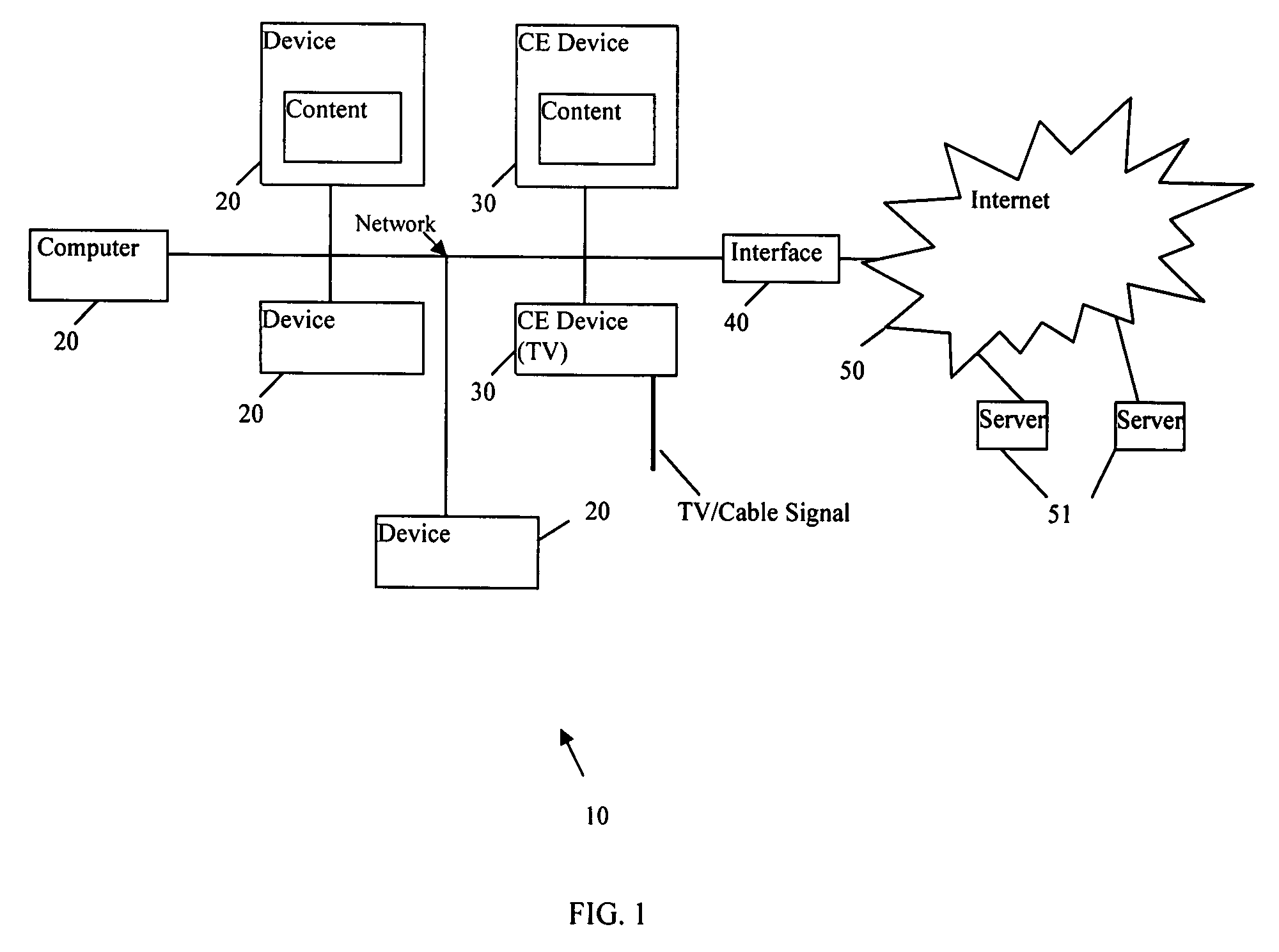

Method and system for extracting relevant information from content metadata

Inactive | US20080204595A1 | Digital data information retrieval | Picture reproducers using cathode ray tubes | Relevant information | Content type

A method and system for extracting relevant information from content metadata is provided. User access to content is monitored. A set of extraction rules for information extraction is selected. Key information is extracted from metadata for the content based on the selected extraction rules. Additionally, a type for the content can be determined, and a set of extraction rules is selected based on the content type. The key information is used in queries for searching information of potential interest to the user, related to the content accessed.

Owner:SAMSUNG ELECTRONICS CO LTD
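
The steps in the abstract above (determine the content type, select a rule set, extract key information from metadata, use it in a query) can be sketched as follows. The rule table, field names, and function names are hypothetical; the abstract does not specify what an extraction rule looks like, so a rule is modeled here as nothing more than a metadata field to keep.

```python
# Hypothetical rule sets: for each content type, the metadata fields worth keeping.
EXTRACTION_RULES = {
    "movie": ["title", "director", "cast"],
    "music": ["title", "artist", "album"],
}
DEFAULT_RULES = ["title"]  # fallback when the content type is unknown


def extract_key_info(metadata, content_type):
    """Select the rule set for the content type and pull matching fields."""
    rules = EXTRACTION_RULES.get(content_type, DEFAULT_RULES)
    return {field: metadata[field] for field in rules if field in metadata}


def build_query(key_info):
    """Join the extracted values into a search query string."""
    return " ".join(str(value) for value in key_info.values())
```

For example, metadata `{"title": "Example Song", "artist": "Example Artist", "year": 2001}` with content type `"music"` yields the key information `title` and `artist`, and the query `"Example Song Example Artist"`; the `year` field is ignored because no rule selects it.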

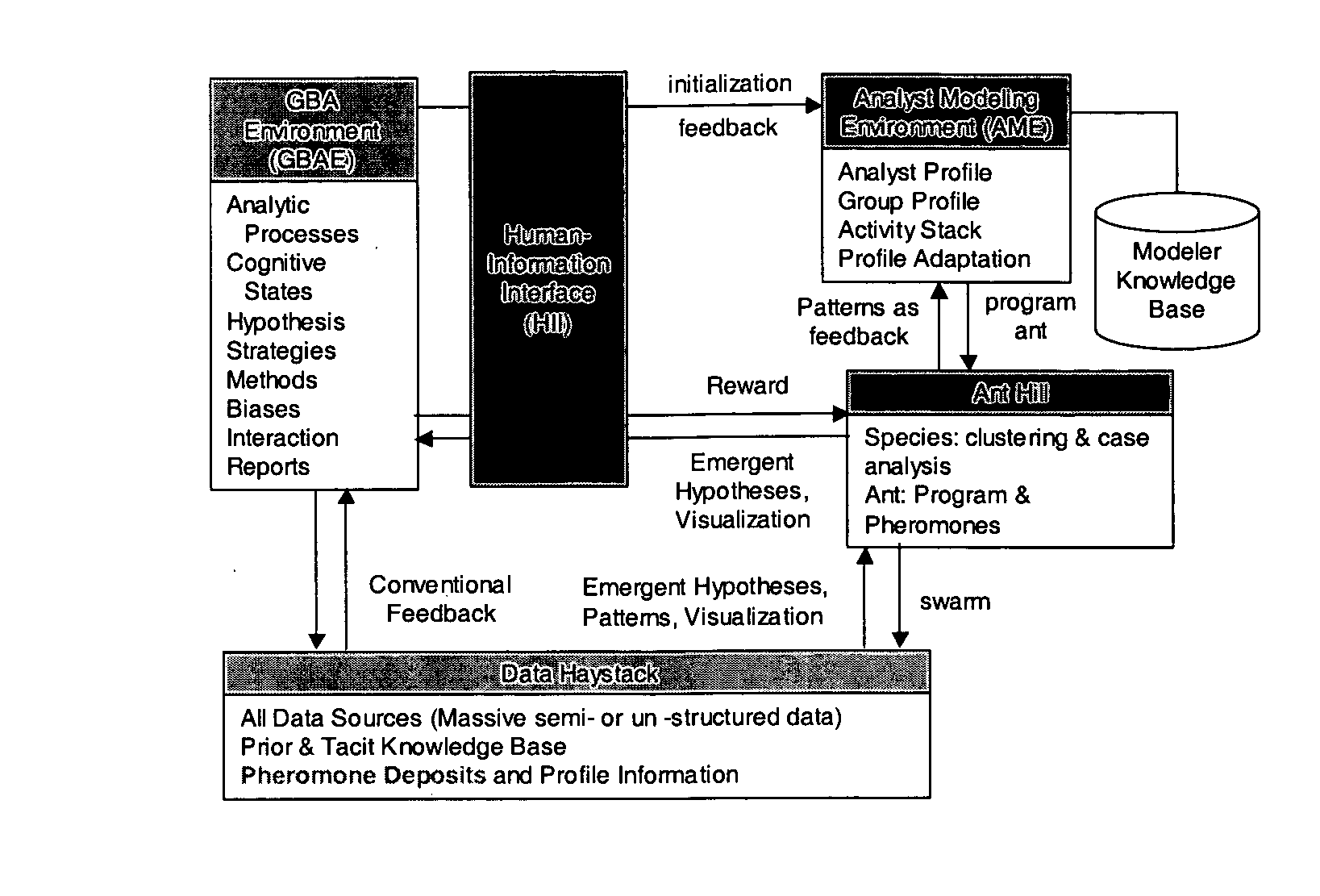

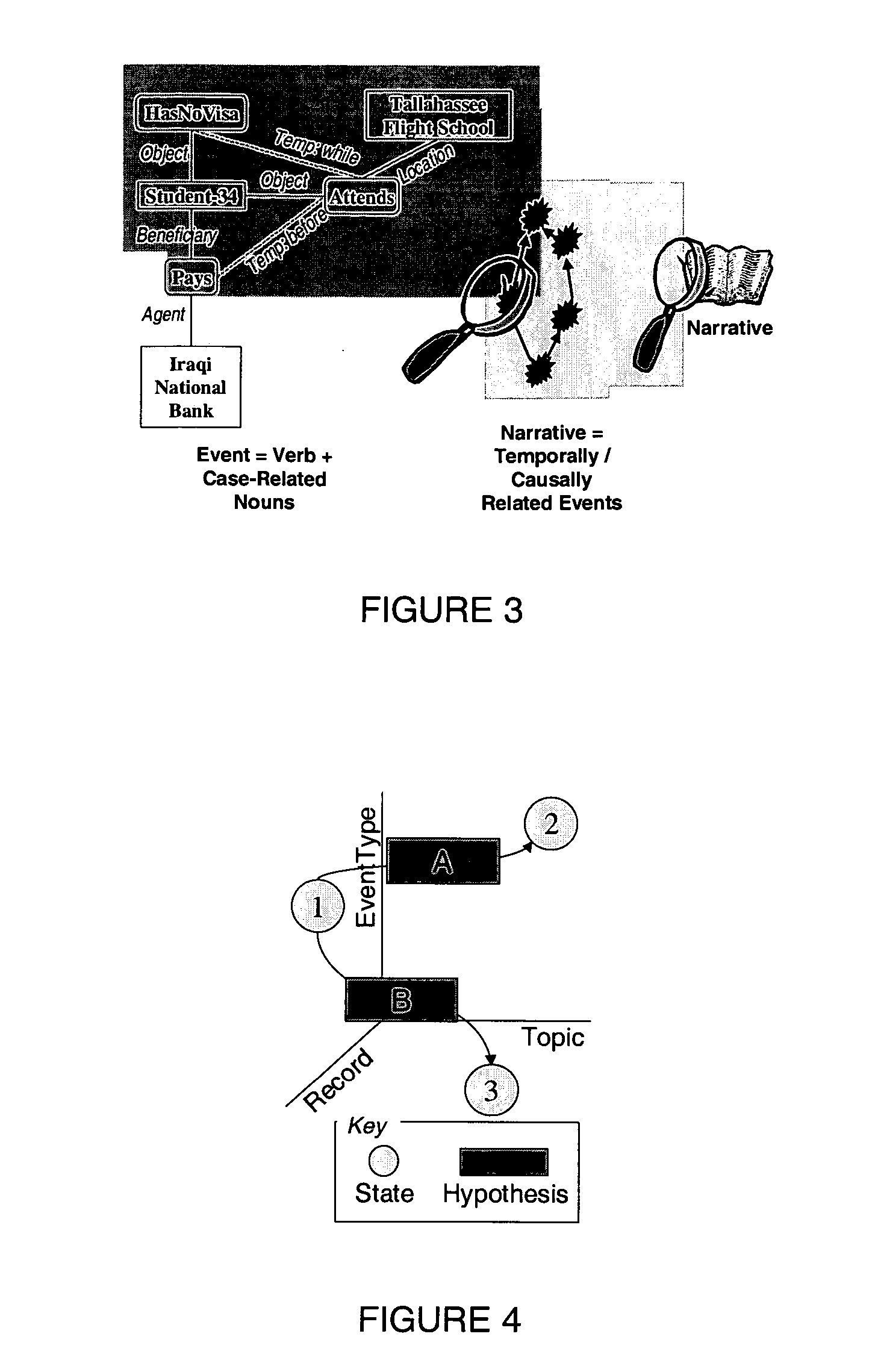

Dynamic information extraction with self-organizing evidence construction

Inactive | US20050154701A1 | Digital data processing details | Special data processing applications | Paper document | Data analysis system

A data analysis system with dynamic information extraction and self-organizing evidence construction finds numerous applications in information gathering and analysis, including the extraction of targeted information from voluminous textual resources. One disclosed method involves matching text with a concept map to identify evidence relations, and organizing the evidence relations into one or more evidence structures that represent the ways in which the concept map is instantiated in the evidence relations. The text may be contained in one or more documents in electronic form, and the documents may be indexed on a paragraph level of granularity. The evidence relations may self-organize into the evidence structures, with feedback provided to the user to guide the identification of evidence relations and their self-organization into evidence structures. A method of extracting information from one or more documents in electronic form includes the steps of clustering the document into clustered text; identifying patterns in the clustered text; and matching the patterns with the concept map to identify evidence relations such that the evidence relations self-organize into evidence structures that represent the ways in which the concept map is instantiated in the evidence relations.

Owner:TECHTEAM GOVERNMENT SOLUTIONS

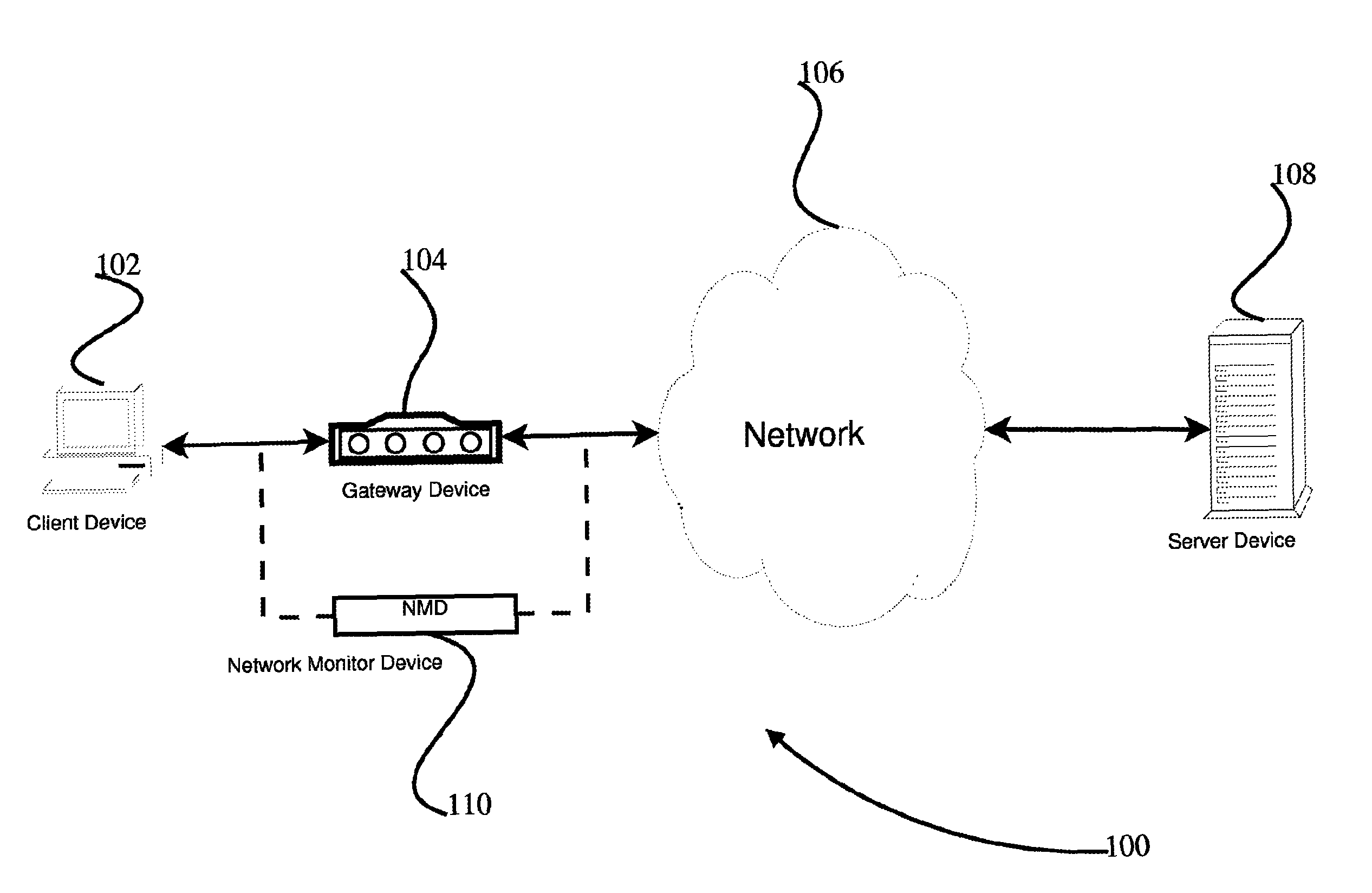

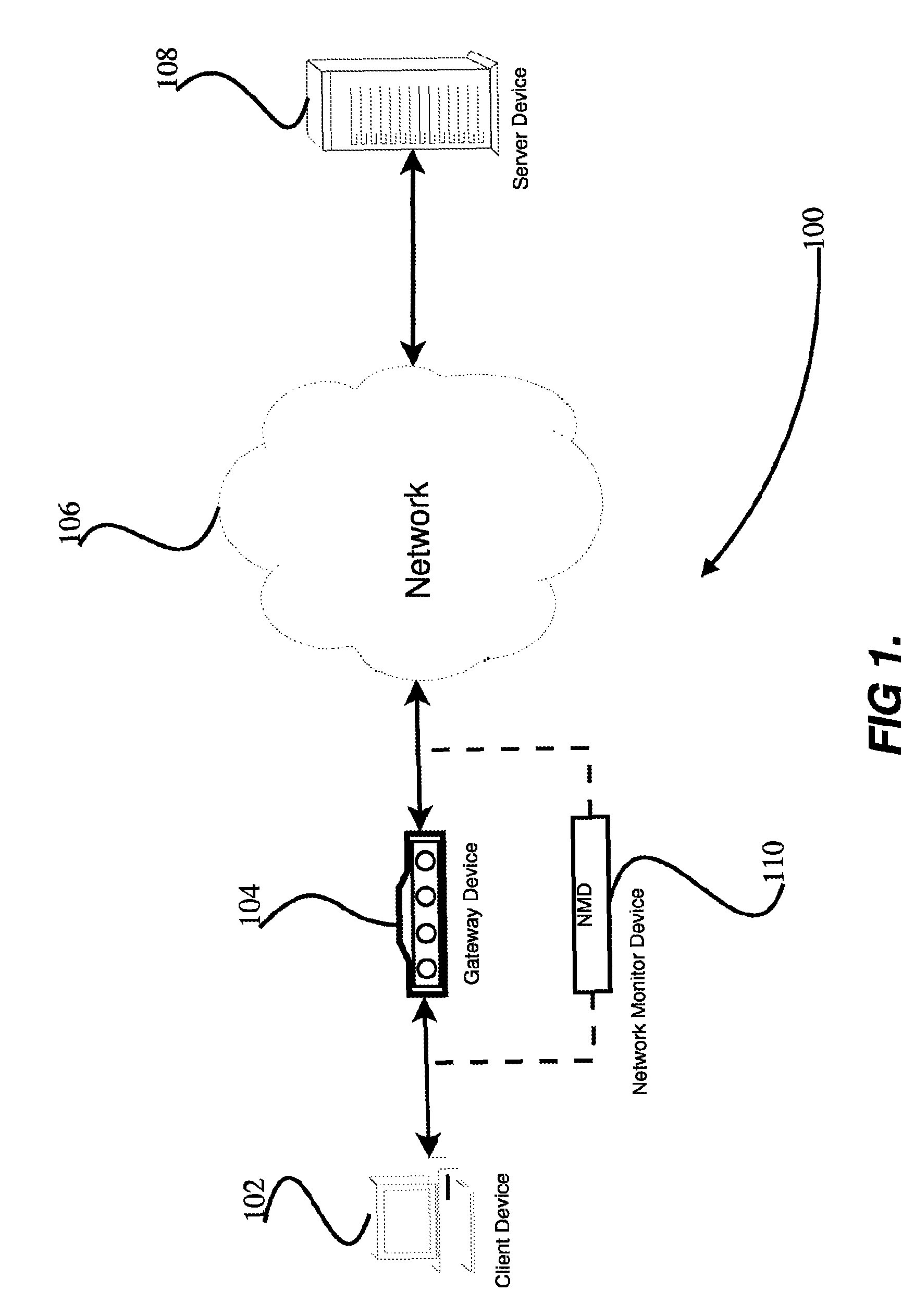

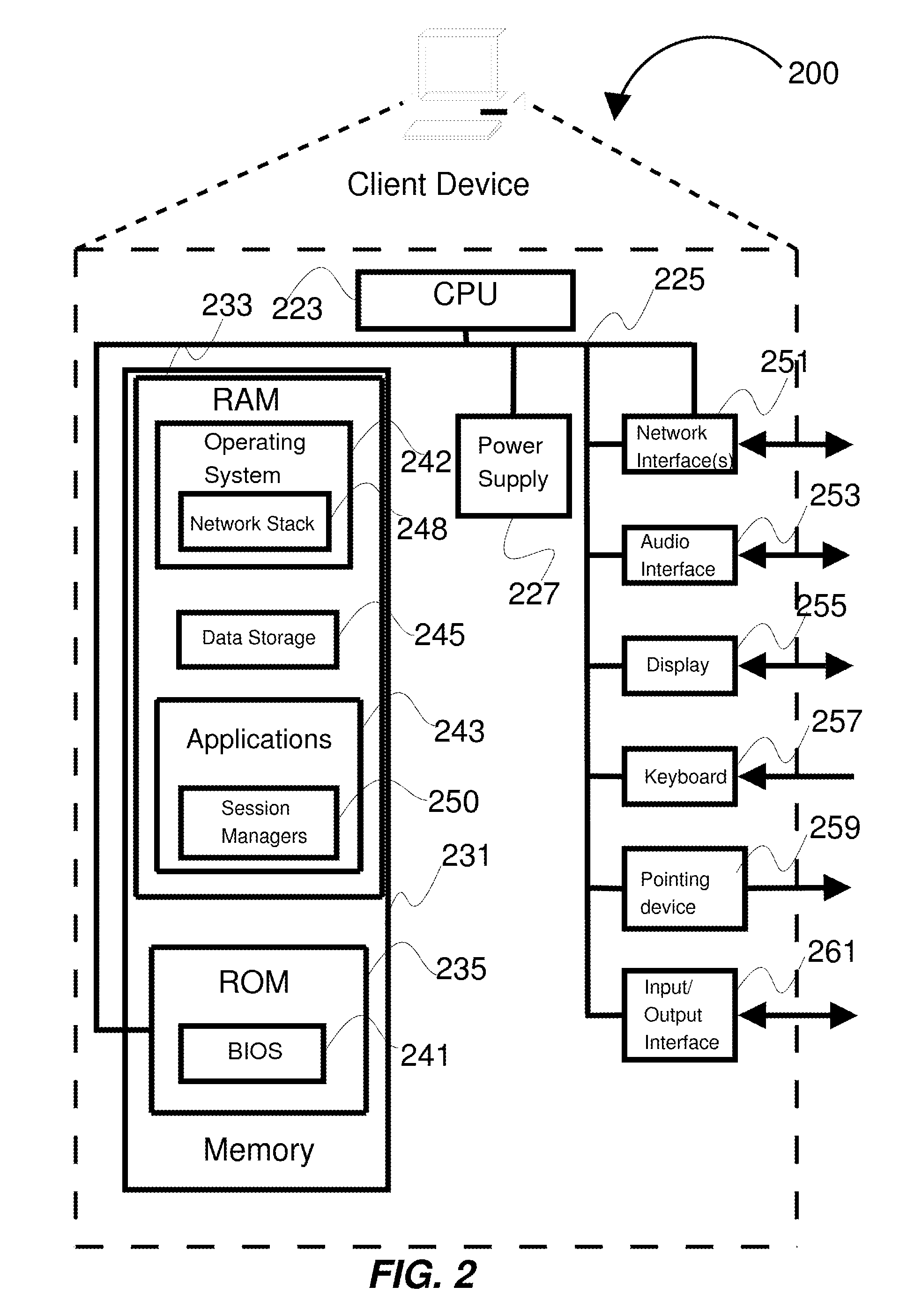

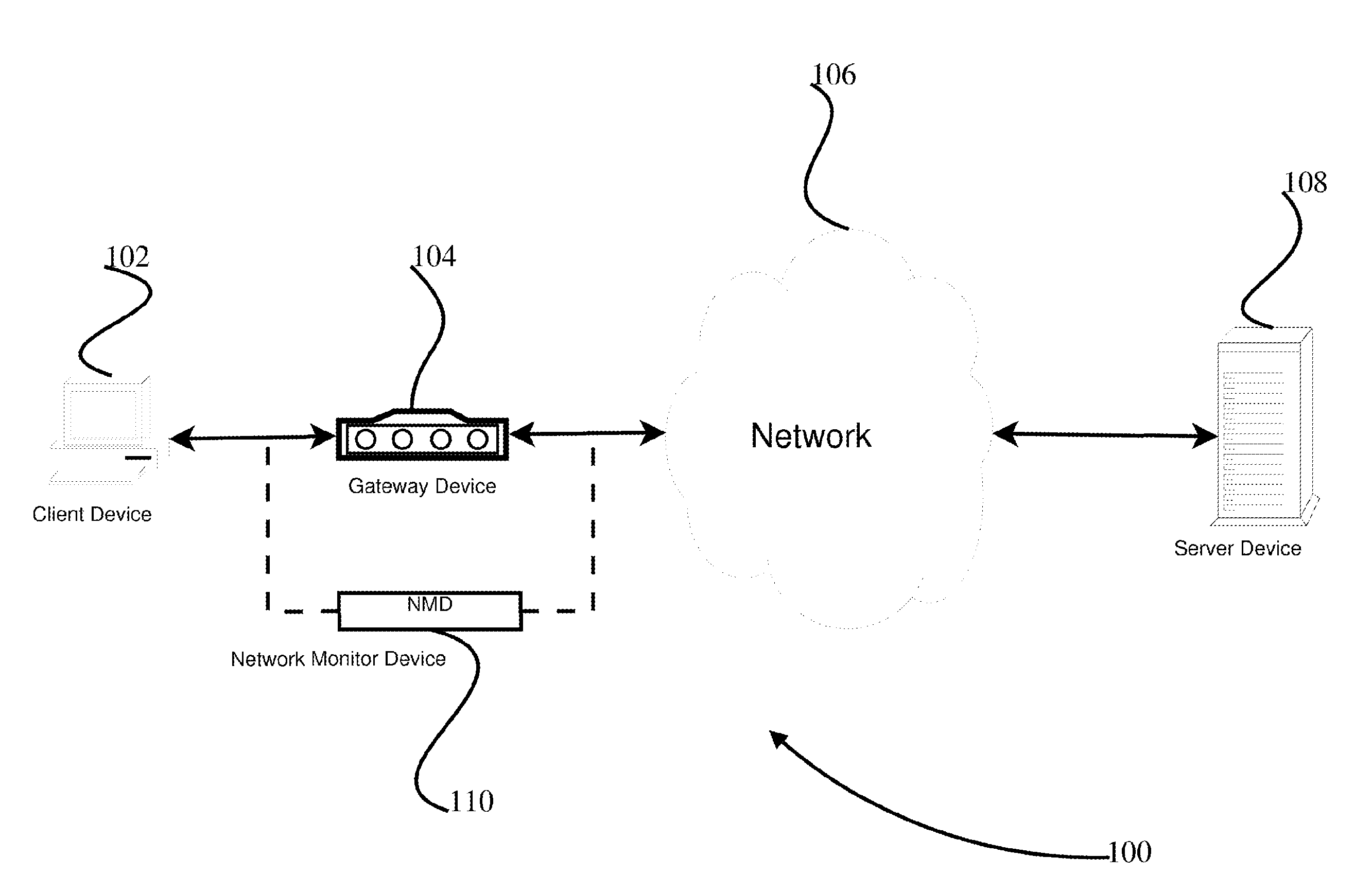

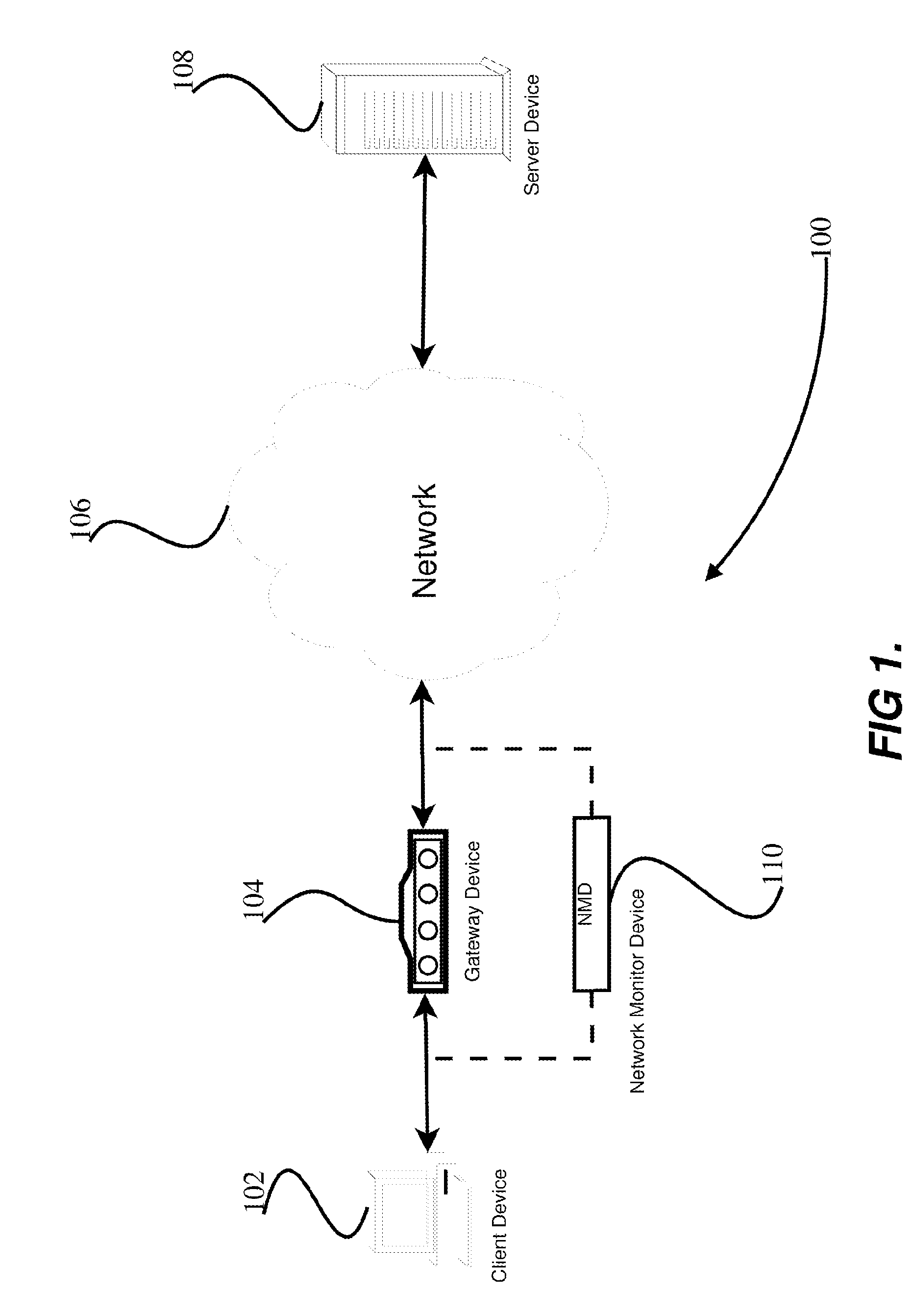

Detecting anomalous network application behavior

Active | US8185953B2 | Memory loss protection | Error detection/correction | Information analysis | Internet traffic

System and Method for detecting anomalous network application behavior. Network traffic between at least one client and one or more servers may be monitored. The client and the one or more servers may communicate using one or more application protocols. The network traffic may be analyzed at the application-protocol level to determine anomalous network application behavior. Analyzing the network traffic may include determining, for one or more communications involving the client, if the client has previously stored or received an identifier corresponding to the one or more communications. If no such identifier has been observed in a previous communication, then the one or more communications involving the client may be determined to be anomalous. A network monitoring device may perform one or more of the network monitoring, the information extraction, or the information analysis.

Owner:EXTRAHOP NETWORKS
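
The core check in the abstract above is whether a client uses an identifier it has never previously stored or received. A minimal sketch of that bookkeeping is shown below. The class name, method names, and the idea of keying on a client address string are assumptions for illustration; real application-protocol parsing is out of scope here.

```python
class IdentifierTracker:
    """Remembers which identifiers each client has stored or received."""

    def __init__(self):
        self._seen = {}  # client -> set of identifiers observed so far

    def observe(self, client, identifier):
        """Record an identifier delivered to the client, e.g. in a server response."""
        self._seen.setdefault(client, set()).add(identifier)

    def is_anomalous(self, client, identifier):
        """A communication is flagged when its identifier was never observed."""
        return identifier not in self._seen.get(client, set())


tracker = IdentifierTracker()
tracker.observe("10.0.0.5", "session-abc")
```

After this setup, a request from `10.0.0.5` carrying `session-abc` is not flagged, while the same client presenting `session-xyz`, or a different client presenting `session-abc`, is flagged as anomalous.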

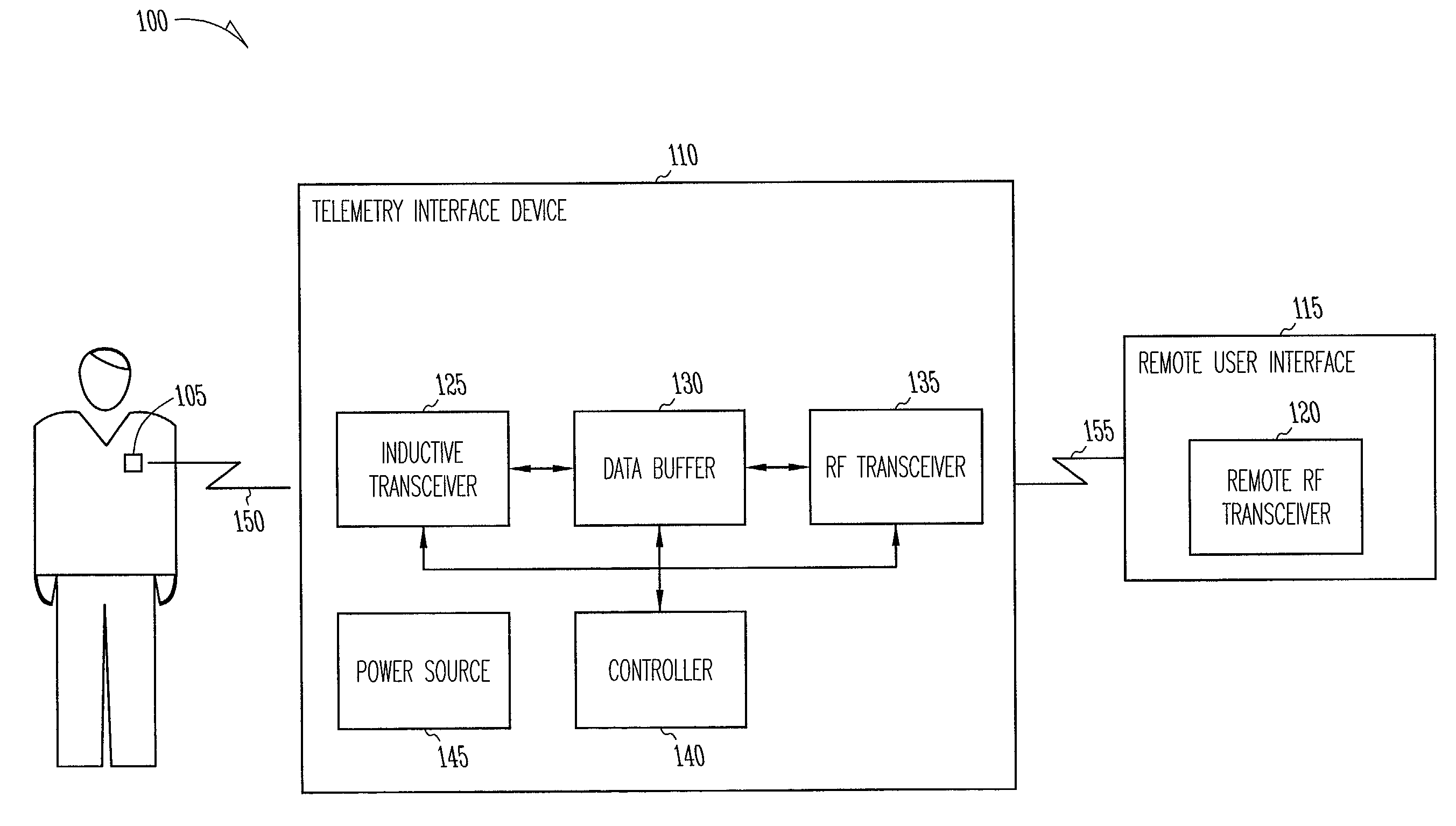

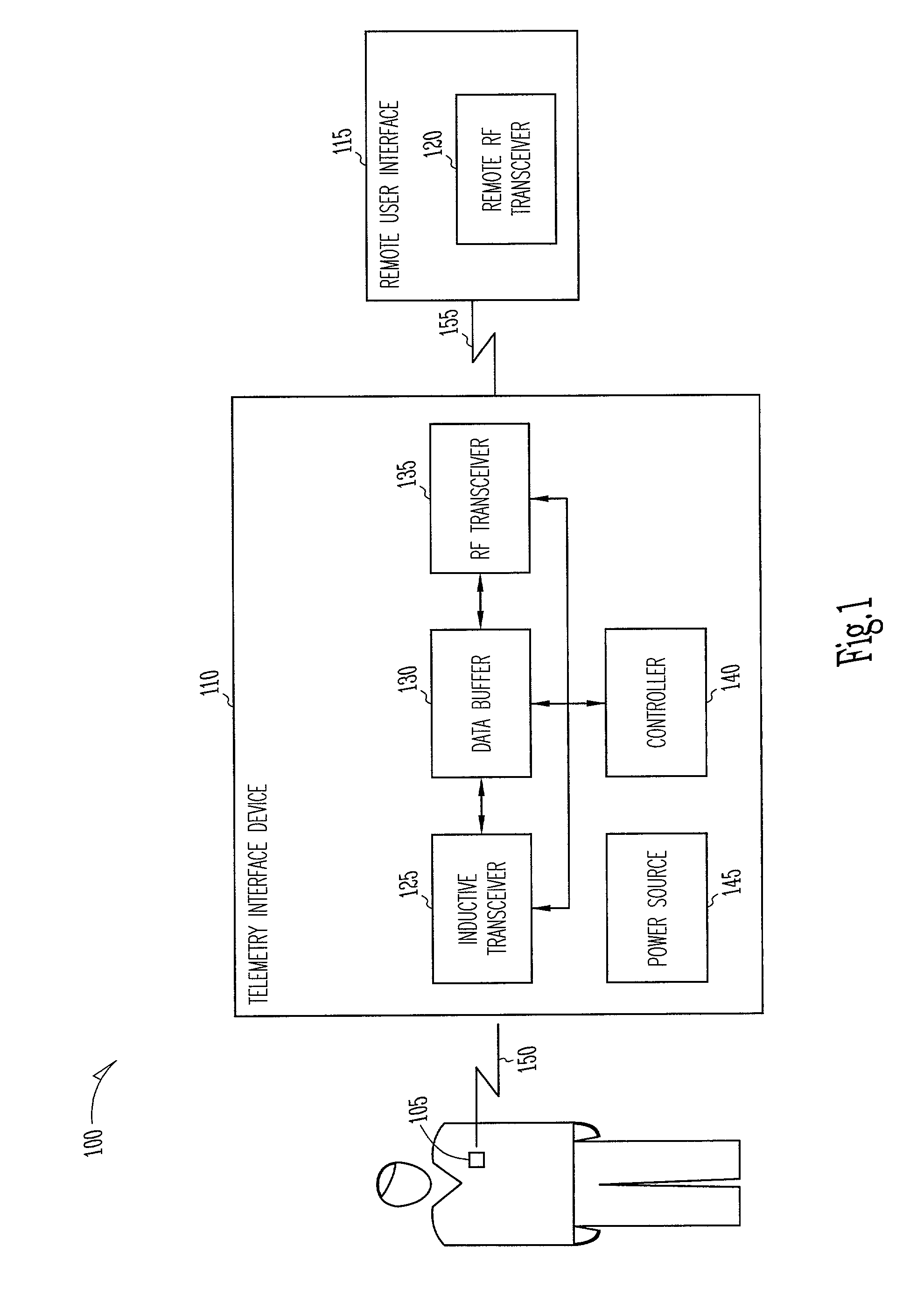

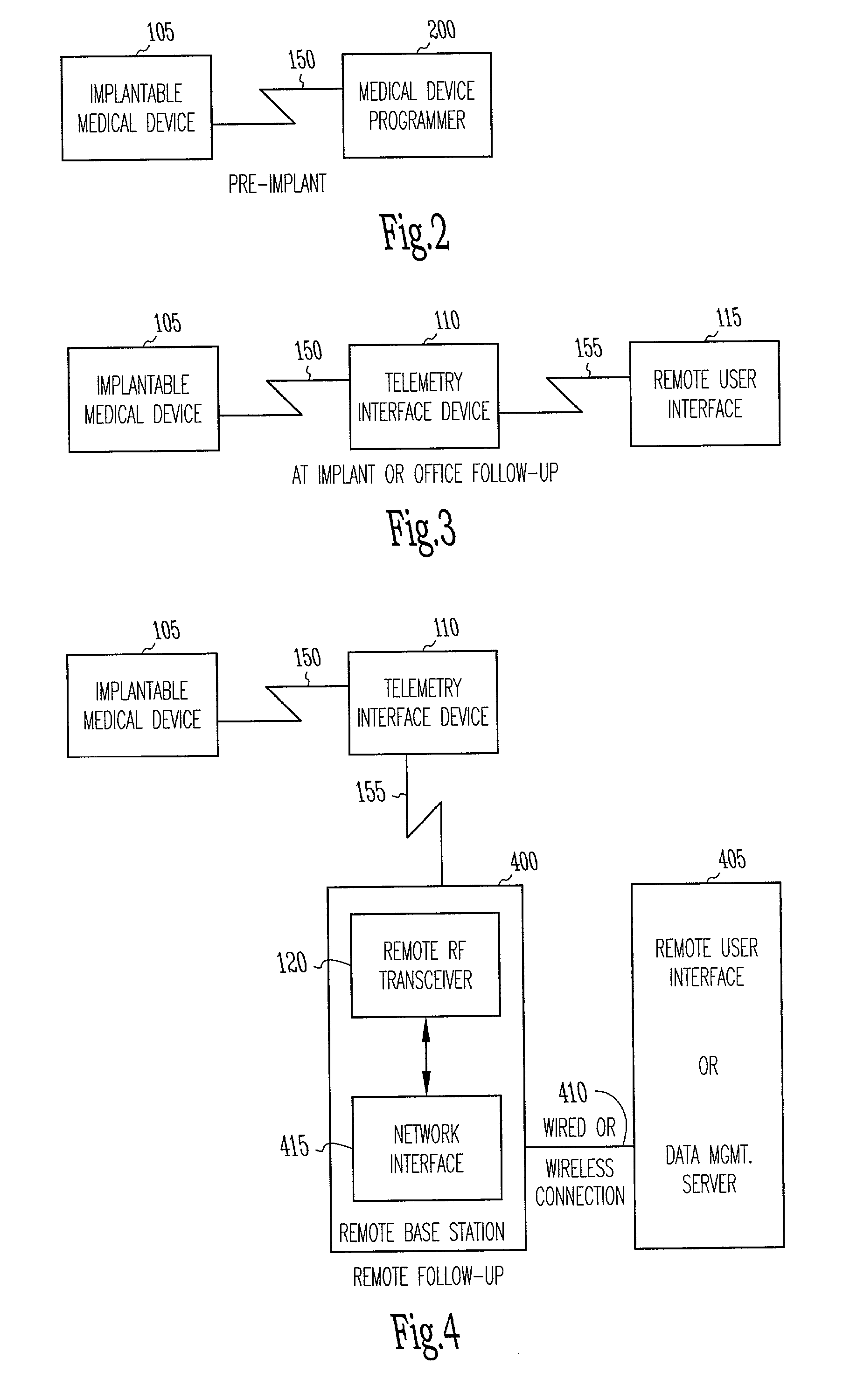

Two-hop telemetry interface for medical device

This document discusses a system that includes an intermediary telemetry interface device for communicating between a cardiac rhythm management system or other implantable medical device and a programmer or other remote device. One example provides an inductive near-field communication link between the telemetry interface and the implantable medical device, and a radio-frequency (RF) far-field communication link between the telemetry interface device and the remote device. The telemetry interface device provides data buffering. In another example, the telemetry interface device includes a data processing module for compressing and/or decompressing data, or for extracting information from data. Such information extraction may include obtaining heart rate, interval, and/or depolarization morphology information from an electrogram signal received from the implantable medical device.

Owner:CARDIAC PACEMAKERS INC

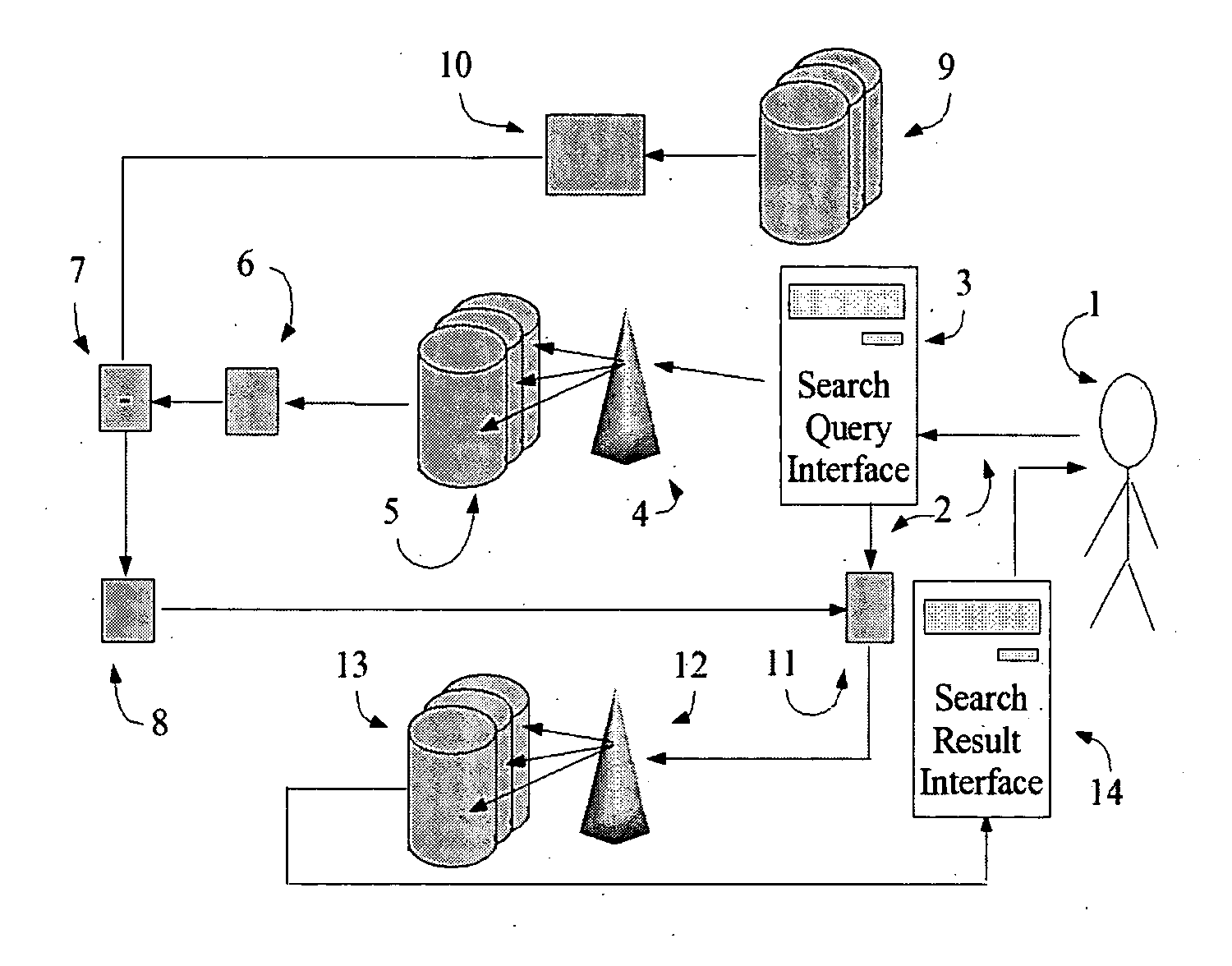

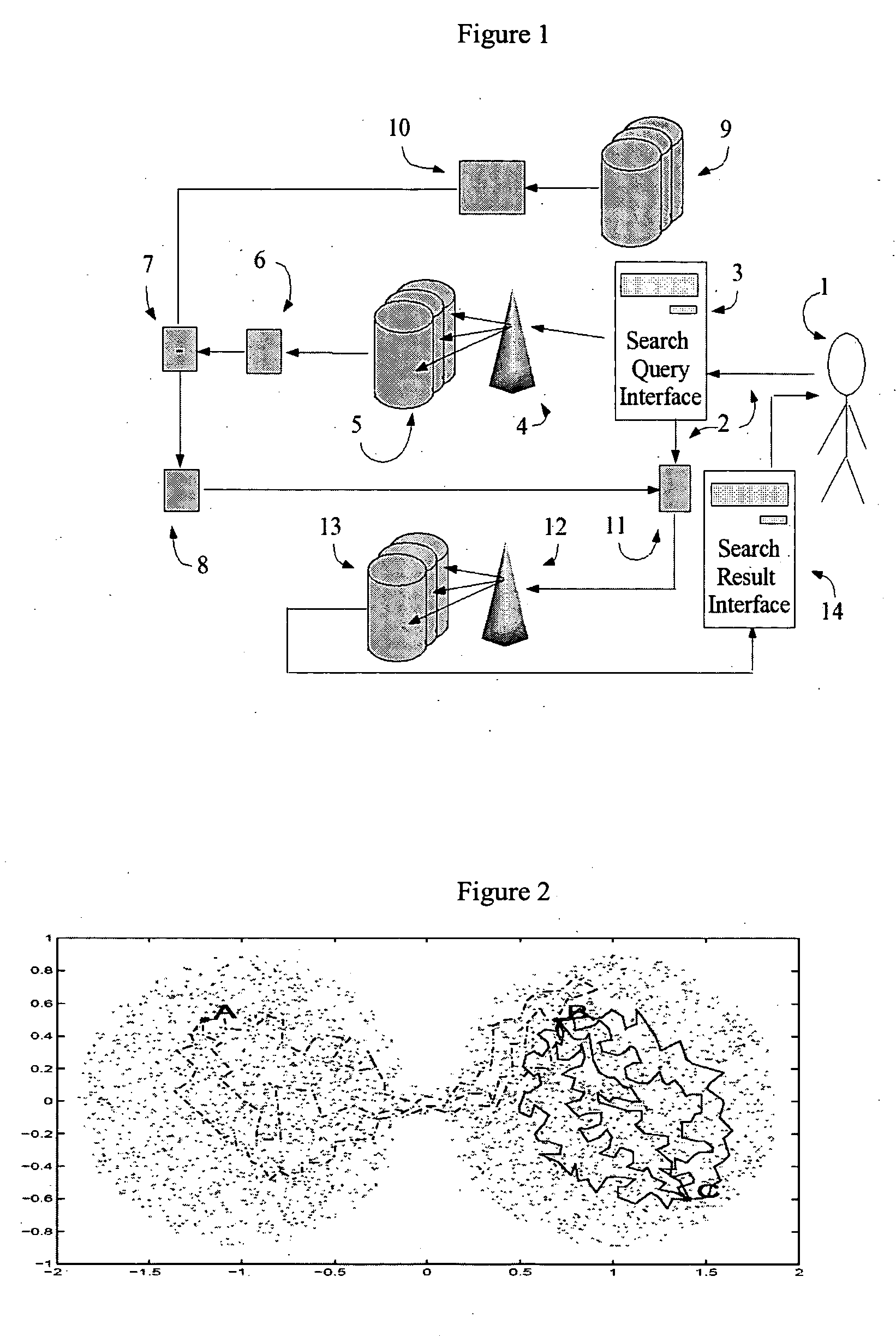

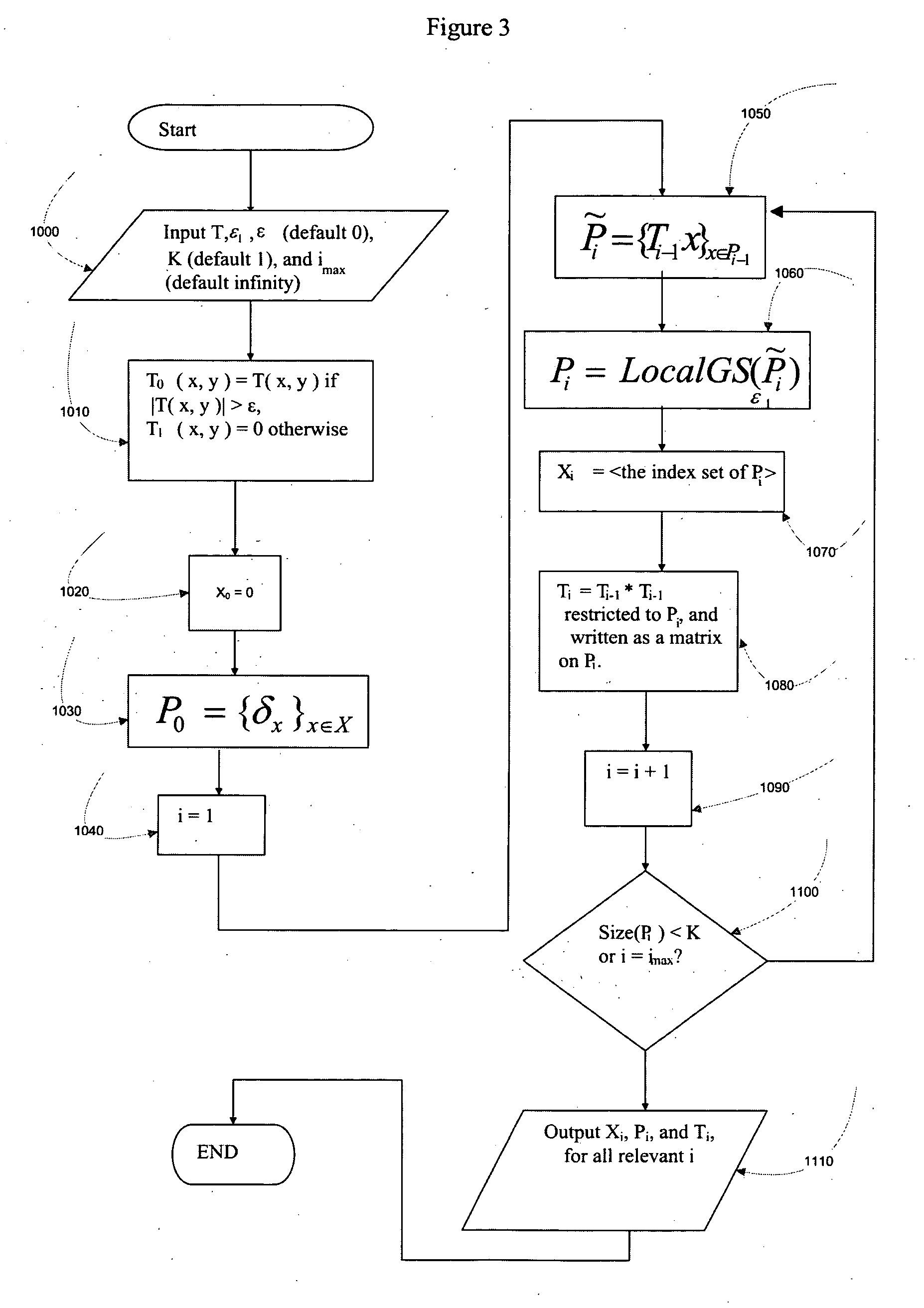

System and method for document analysis, processing and information extraction

Inactive | US20060155751A1 | Increase in amount of traffic | Easy retrieval | Web data indexing | Special data processing applications | Document analysis | Data element

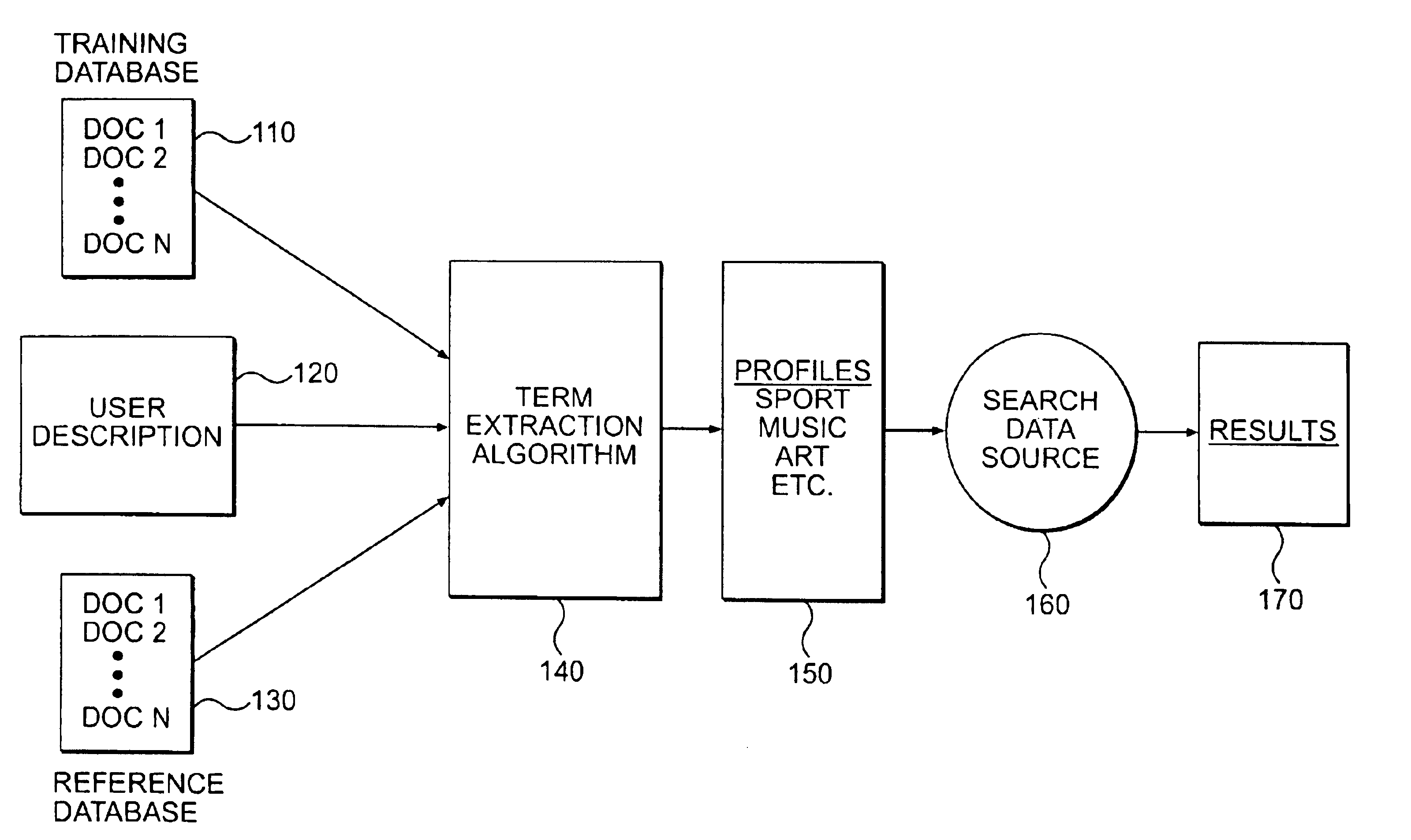

A method and system for retrieving information in response to an information retrieval request comprises extracting additional information from a first corpus of data elements based on the request. The request is modified based on the additional information to refine the scope of information to be retrieved from a second corpus of data elements. The information is retrieved from the second corpus of data elements based on the modified request.

Owner:PLAIN SIGHT SYST

Apparatus and method for creating 3D content for oriental painting

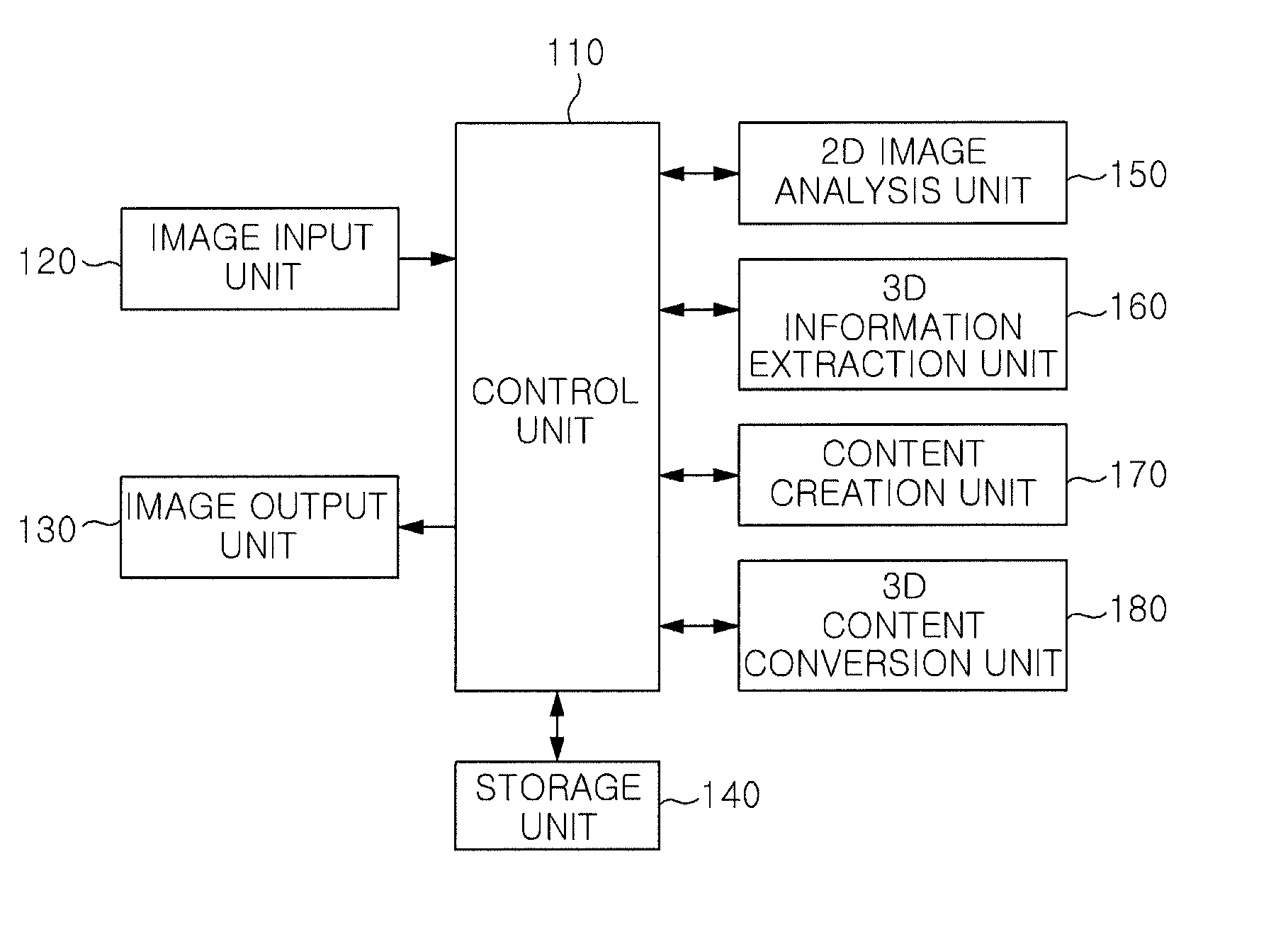

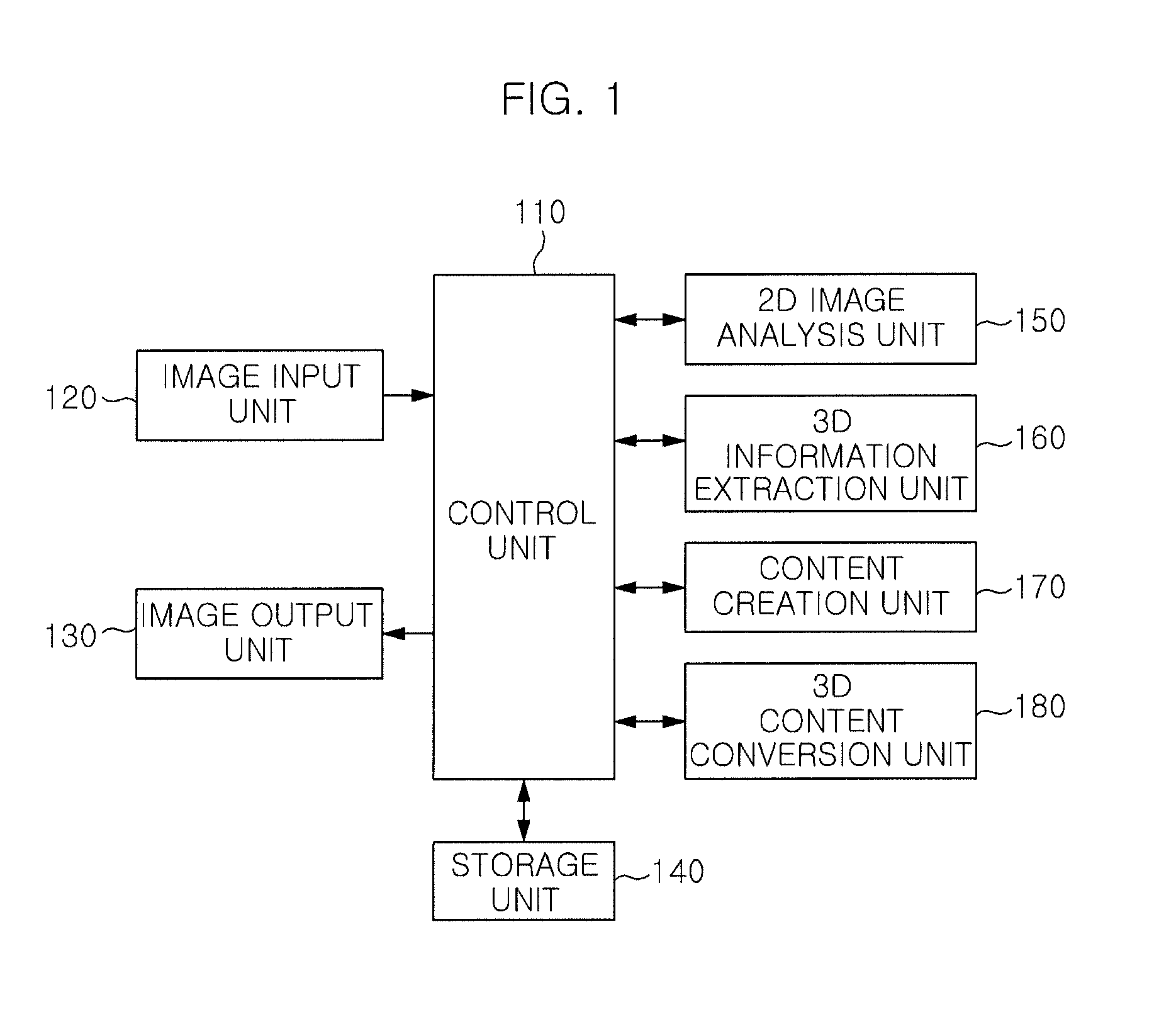

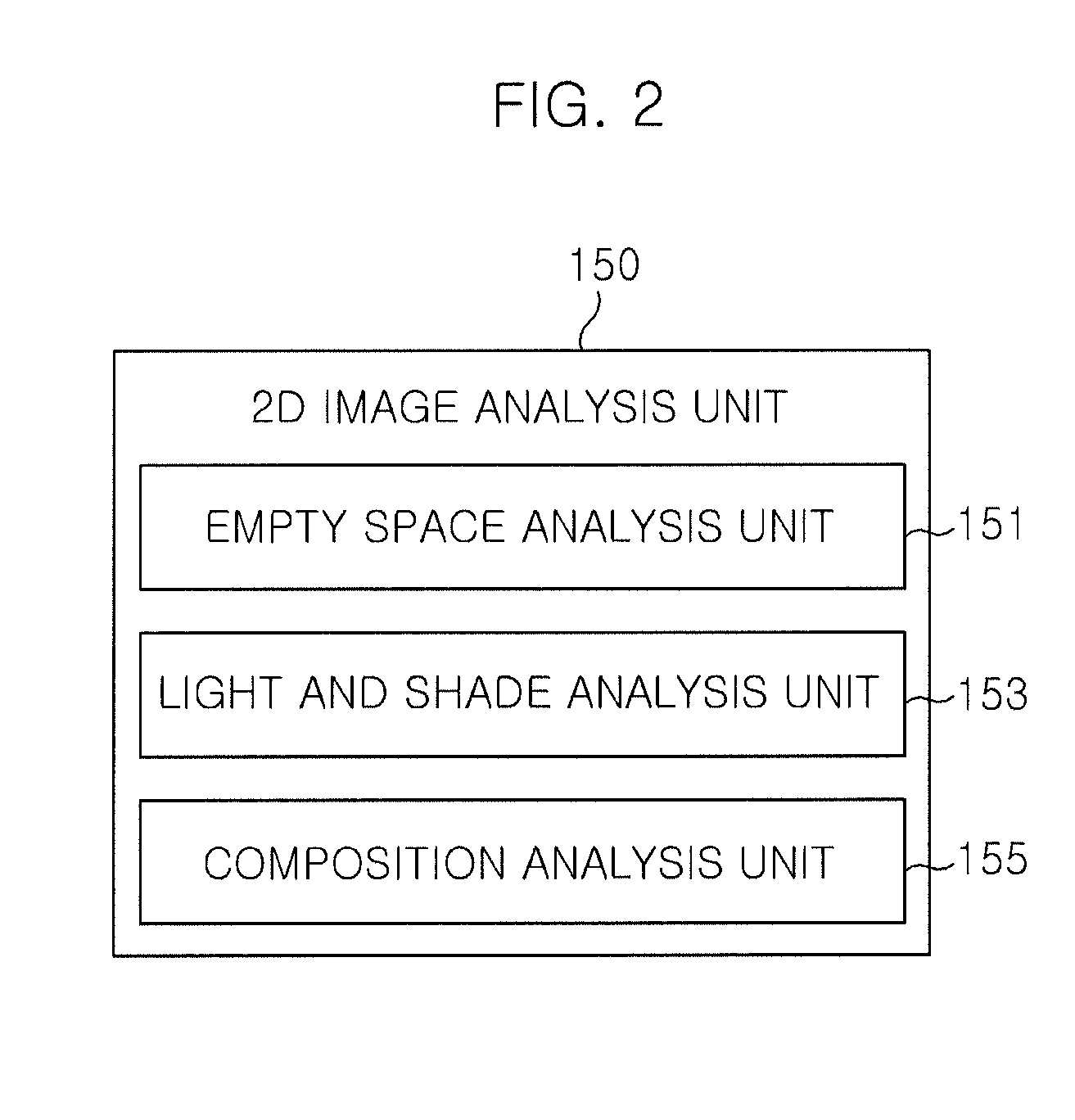

Disclosed herein are an apparatus and method for creating 3D content for an Oriental painting. The apparatus includes a 2D image analysis unit, a 3D information extraction unit, a content creation unit, and a 3D content conversion unit. The 2D image analysis unit receives previous knowledge information, and analyzes 2D information about at least one of an empty space, light and shading, and a composition of an image of the Oriental painting based on the previous knowledge information. The 3D information extraction unit extracts 3D information about at least one of a distance, a depth, a viewpoint, and a focus of the Oriental painting image. The content creation unit creates content for the Oriental painting image based on the analysis information and the 3D information. The 3D content conversion unit converts the content for the Oriental painting image into 3D content based on the 3D information.

Owner:ELECTRONICS & TELECOMM RES INST

Automated segmentation and information extraction of broadcast news via finite state presentation model

Inactive | US7765574B1 | More efficient | More timely | Television system details | Digital data information retrieval | Web browser | Public information

Owner:OAKHAM TECH

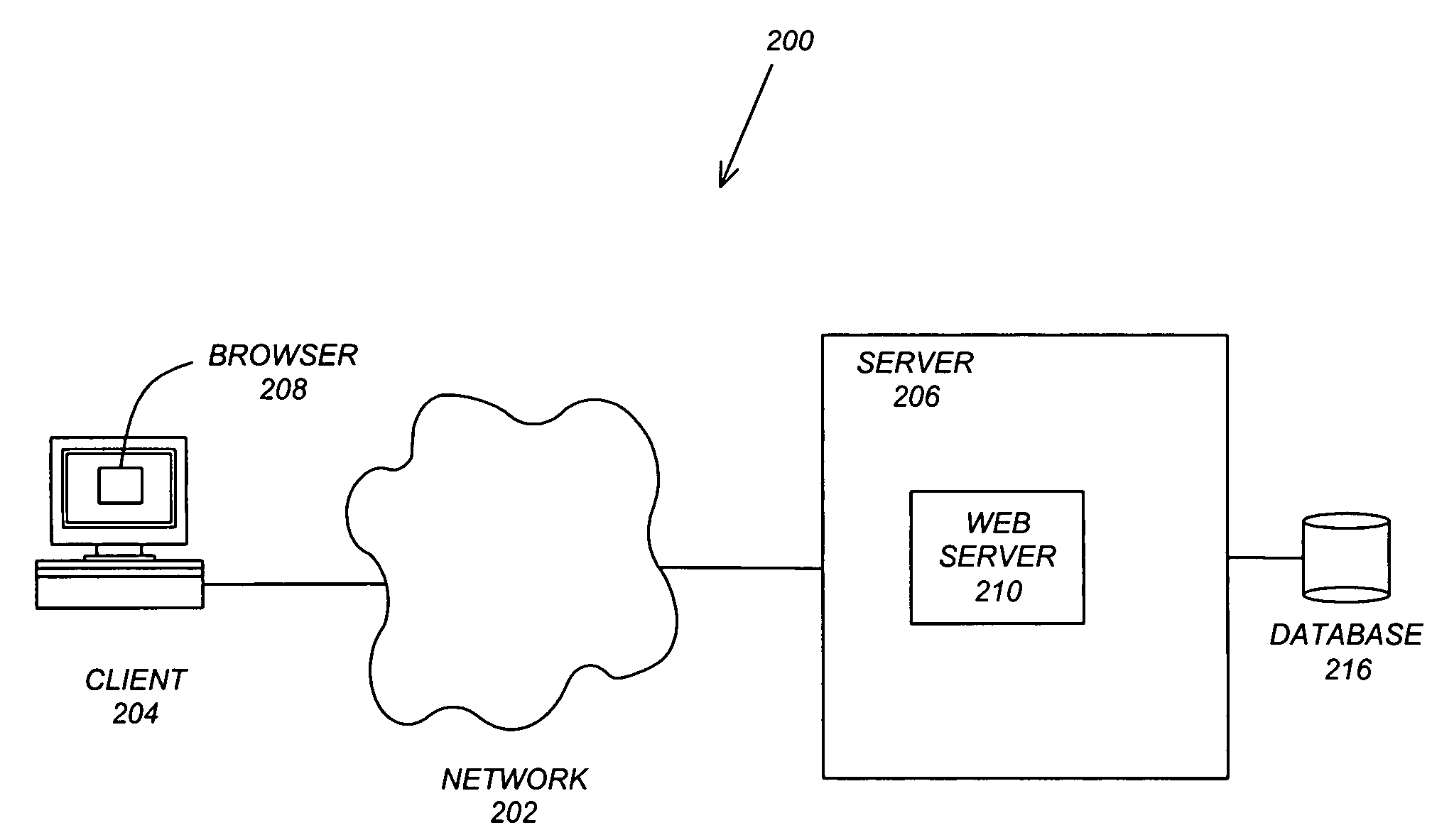

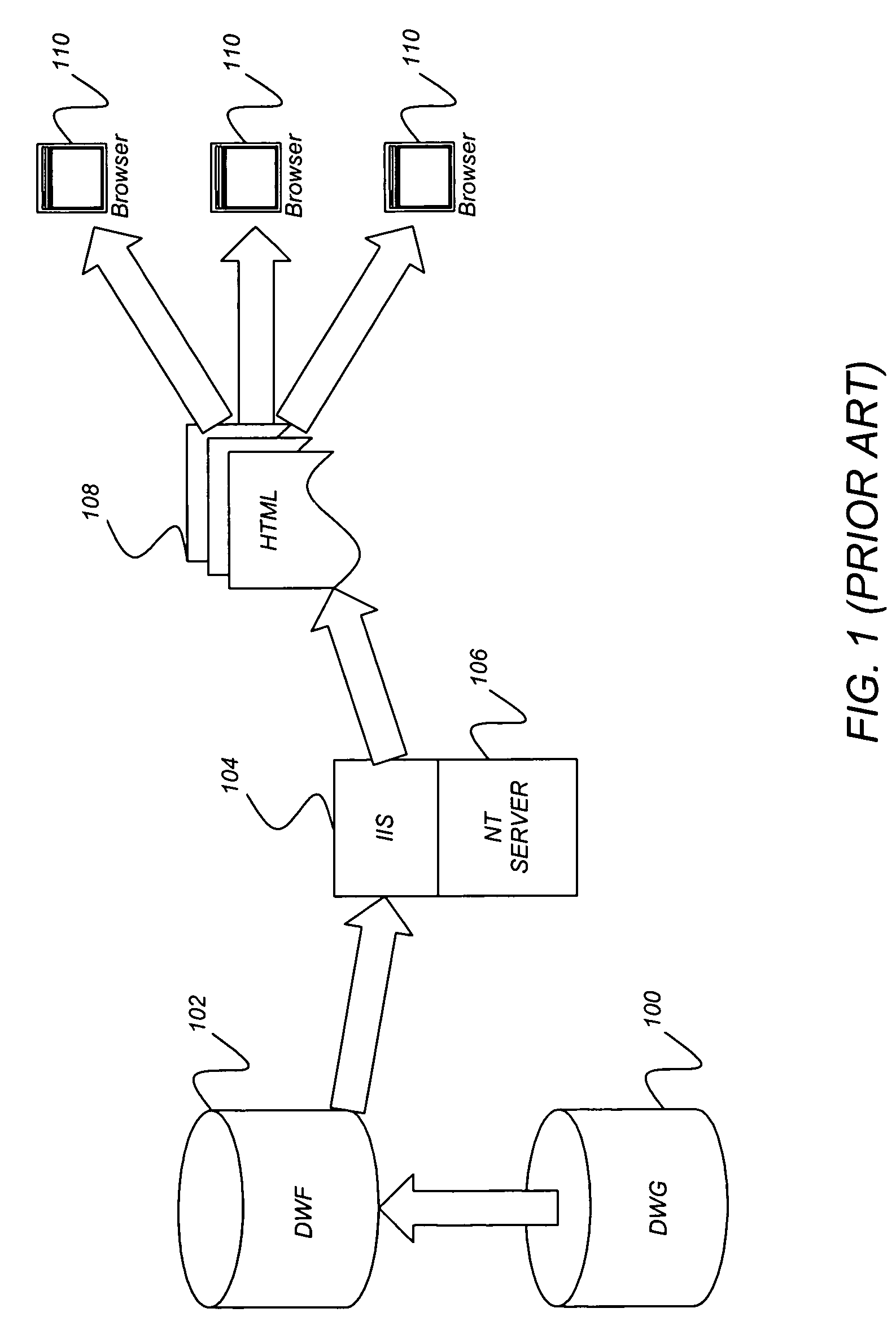

Method and apparatus for providing access to drawing information

Inactive | US7047180B1 | Improve automation | Improve the level of | Data processing applications | Digital data processing details | Web site | Web browser

A method, apparatus, and article of manufacture for automating the finding and serving of CAD design data is disclosed. One or more embodiments of the invention increase the level of access and automation possible with design data. One or more embodiments of the invention provide a server comprised of various components including an information extraction component, a search component, and a conversion component. The various components provide users with enhanced access to drawing and design data. One or more embodiments of the invention also provide programmable, scriptable components that can query, filter, manipulate, merge, and translate design drawing data using the Web browser interface. Further, Web site administrators can use the present invention to dynamically index and publish design drawing data.

Owner:AUTODESK INC

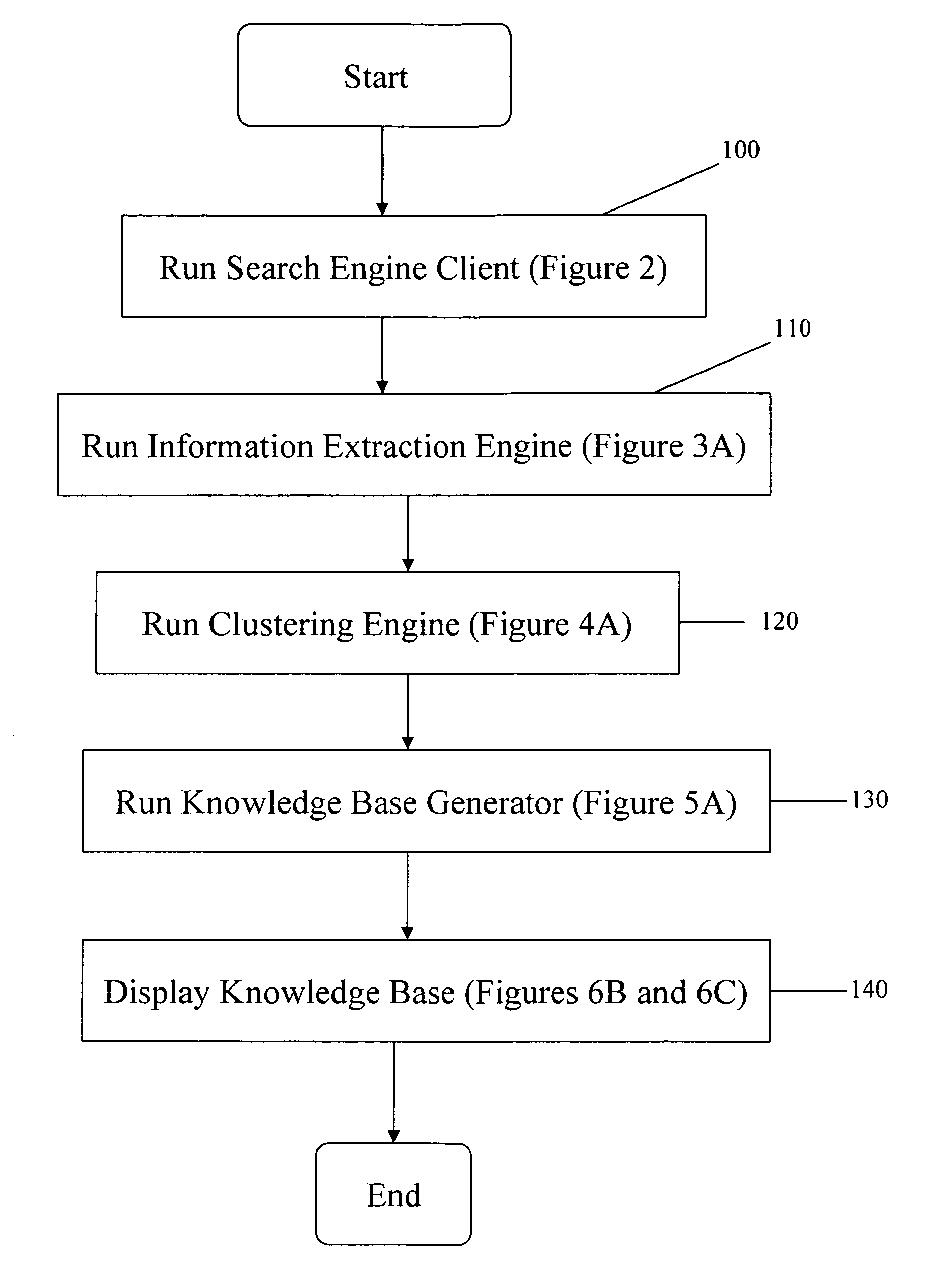

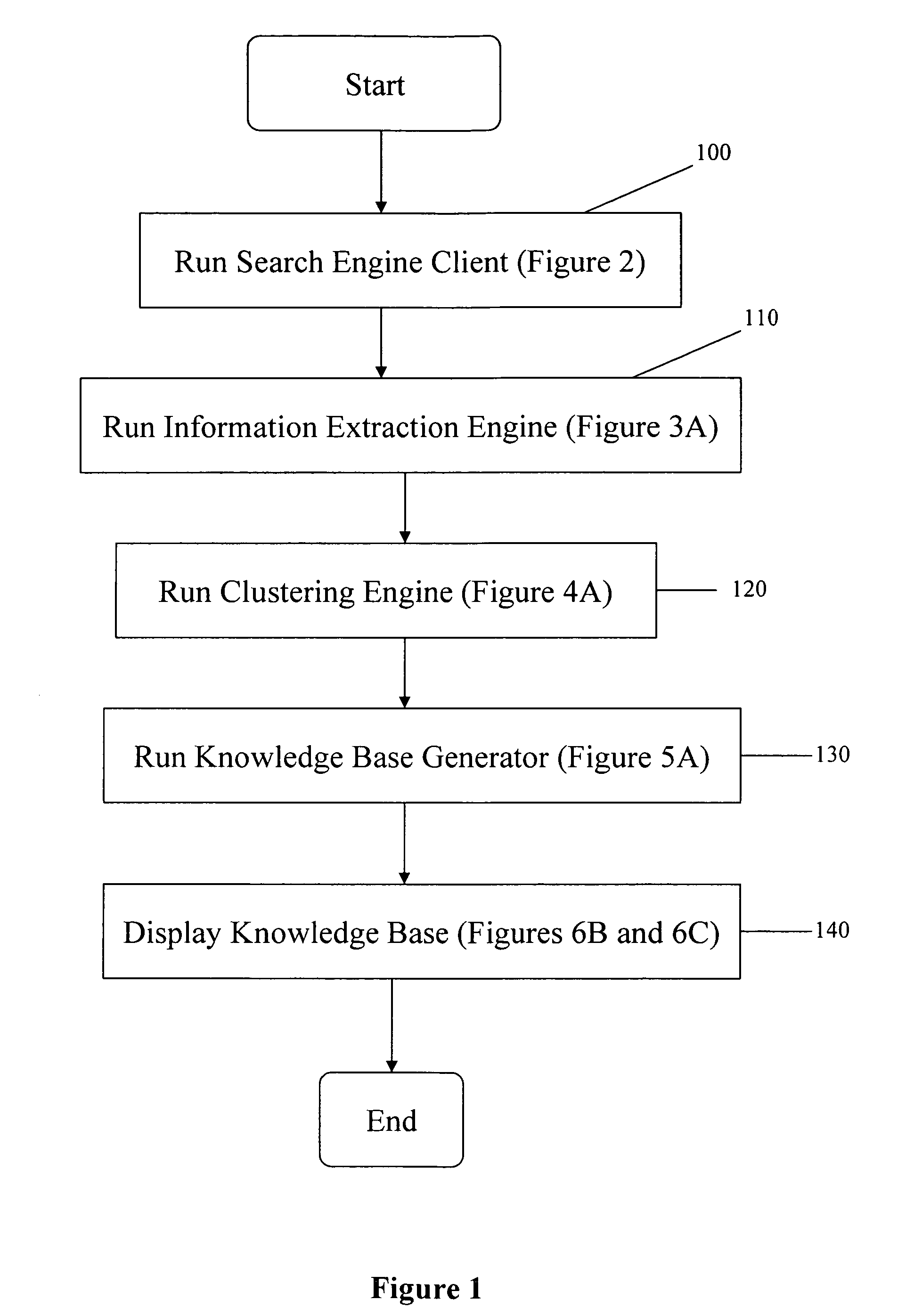

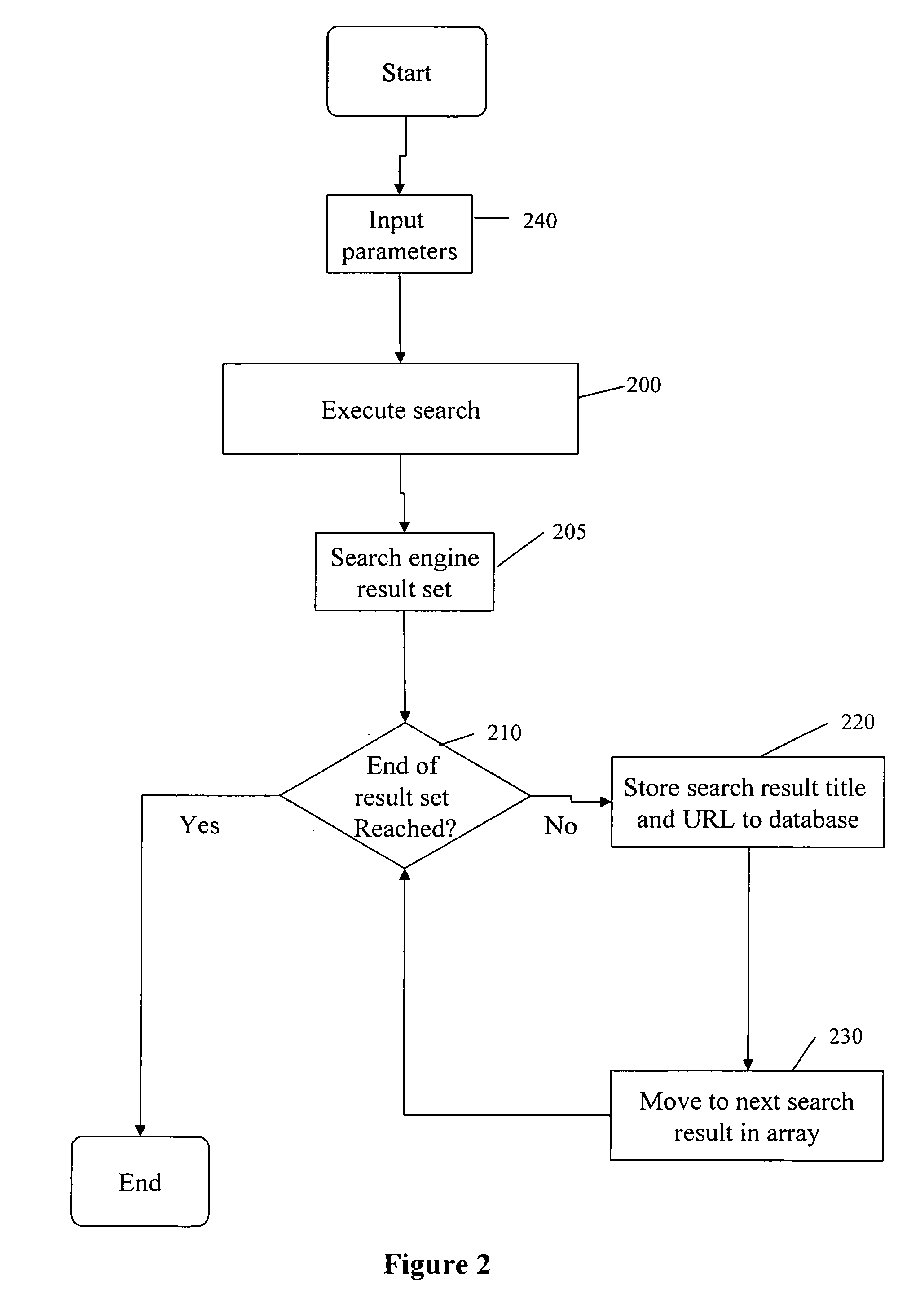

Method and system for automated knowledge extraction and organization

Inactive | US20070078889A1 | Satisfies need | Digital data processing details | Special data processing applications | Information resource | Knowledge extraction

A method and system for automated knowledge extraction and organization, which uses information retrieval services to identify text documents related to a specific topic, to identify and extract trends and patterns from the identified documents, and to transform those trends and patterns into an understandable, useful and organized information resource. An information extraction engine extracts concepts and associated text passages from the identified text documents. A clustering engine organizes the most significant concepts in a hierarchical taxonomy. A hypertext knowledge base generator generates a knowledge base by organizing the extracted concepts and associated text passages according to the hierarchical taxonomy.

Owner:HOSKINSON RONALD ANDREW

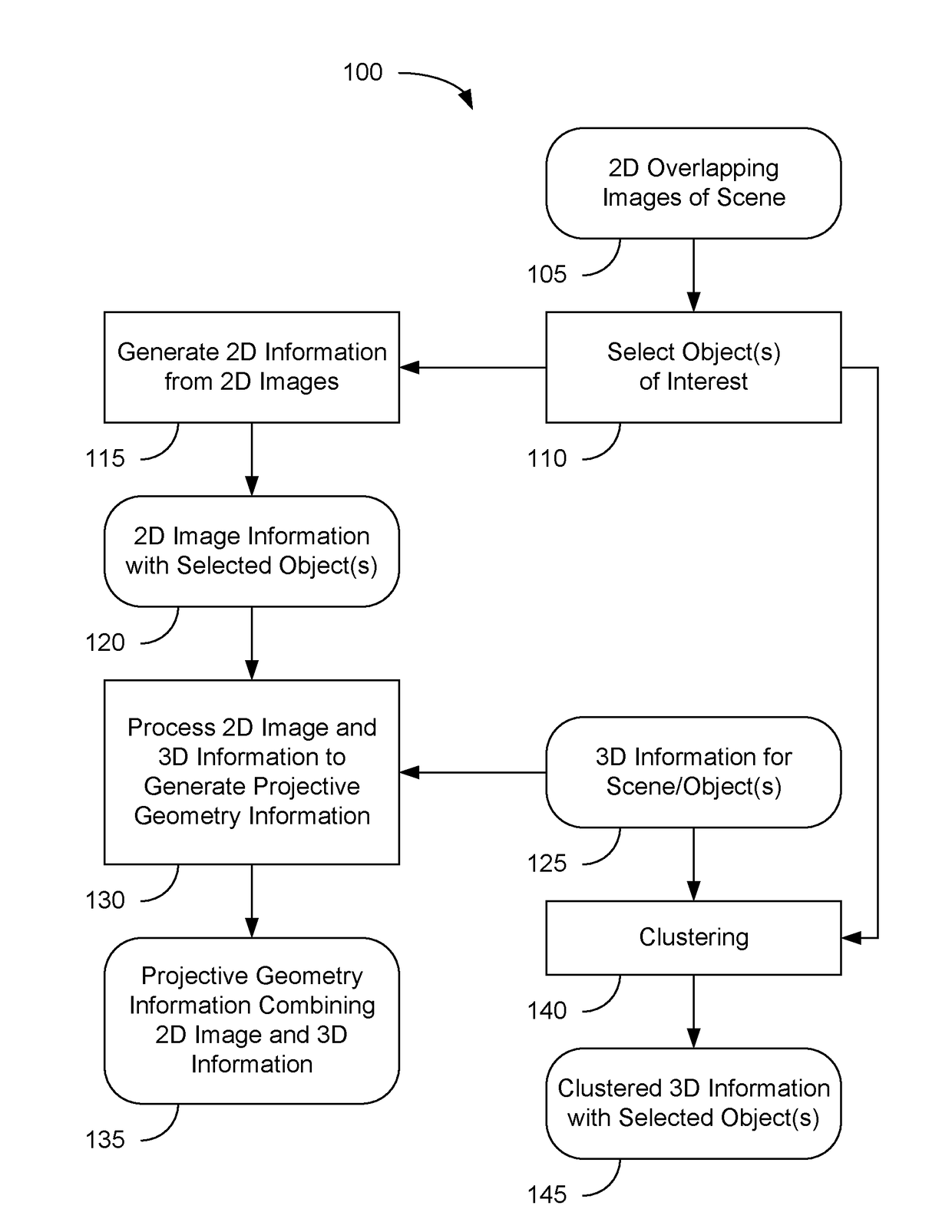

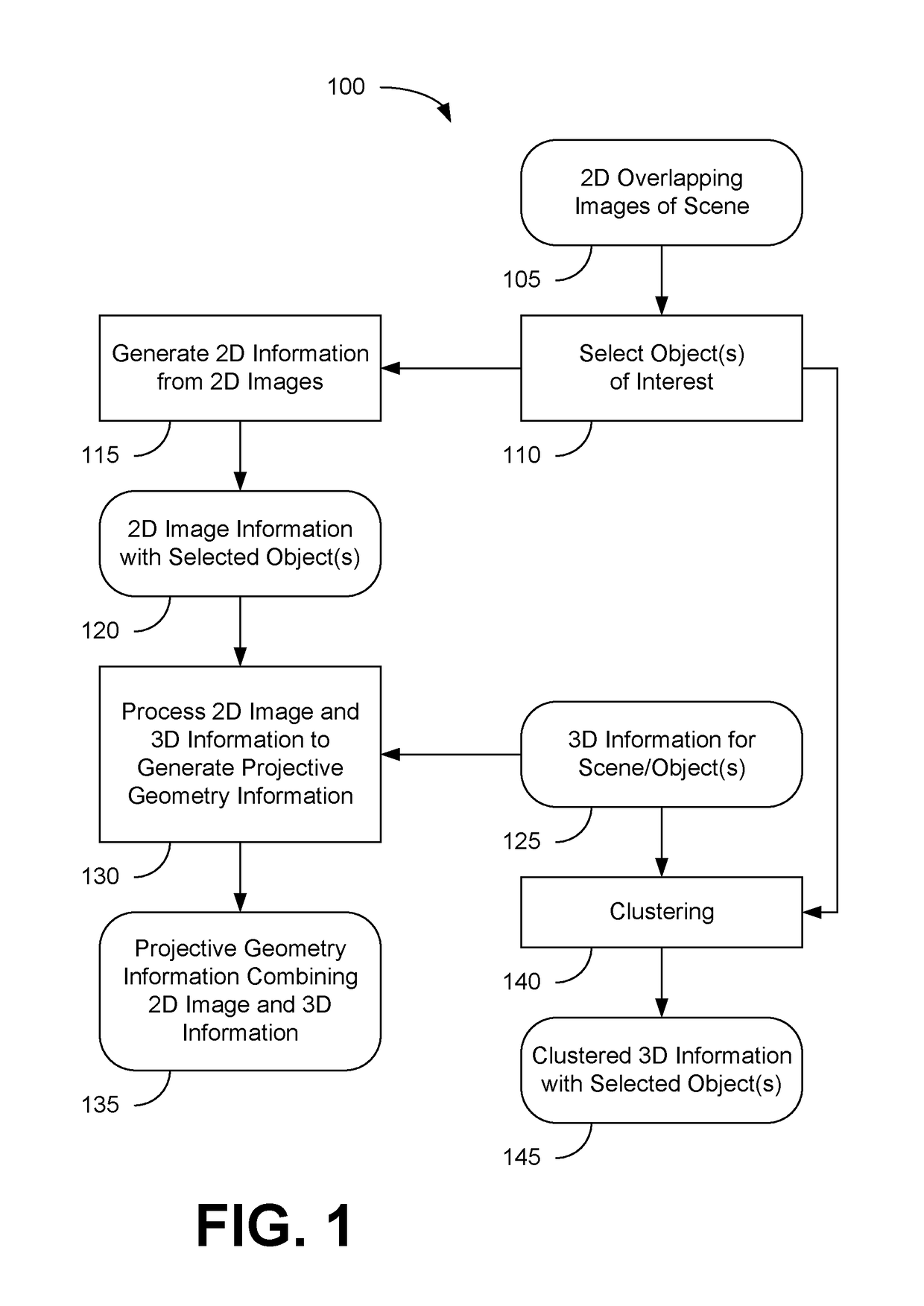

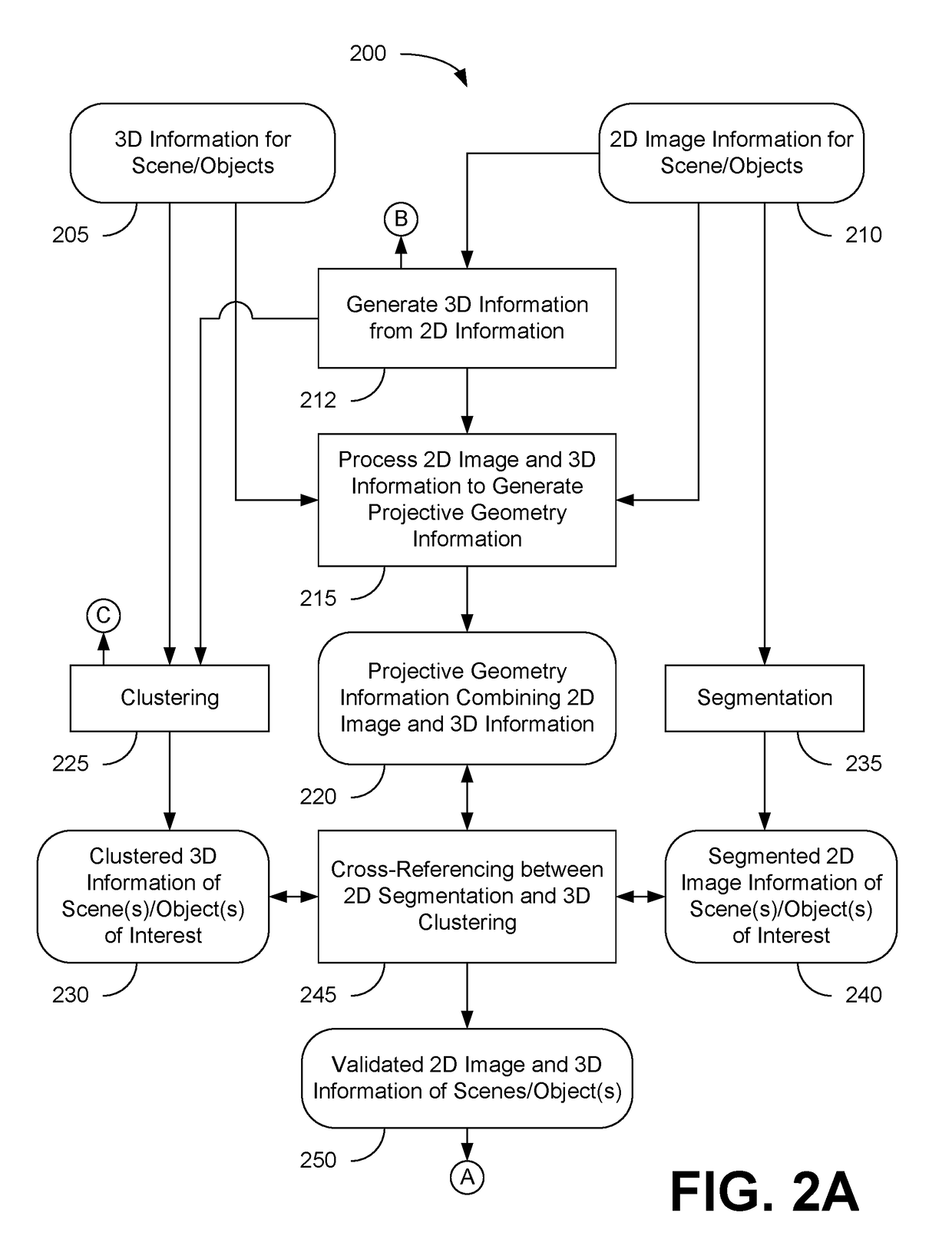

Systems and methods for extracting information about objects from scene information

Examples of various methods and systems are provided for information extraction from scene information. 2D image information can be generated from 2D images of the scene that are overlapping at least part of one or more object(s). The 2D image information can be combined with 3D information about the scene incorporating at least part of the object(s) to generate projective geometry information. Clustered 3D information associated with the object(s) can be generated by partitioning and grouping 3D data points present in the 3D information. The clustered 3D information can be used to provide, e.g., measurement information, dimensions, geometric information, and/or topological information about the object(s). Segmented 2D information can also be generated from the 2D image information. Validated 2D and 3D information can be produced by cross-referencing between the projective geometry information, clustered 3D information, and/or segmented 2D image information, and used to label the object(s) in the scene.

Owner:POINTIVO

Learning data prototypes for information extraction

Inactive | US6714941B1 | Facilitate wrapper generation | Data processing applications | Web data indexing | Learning data | Data mining

Owner:IMPORT IO GLOBAL INC +1

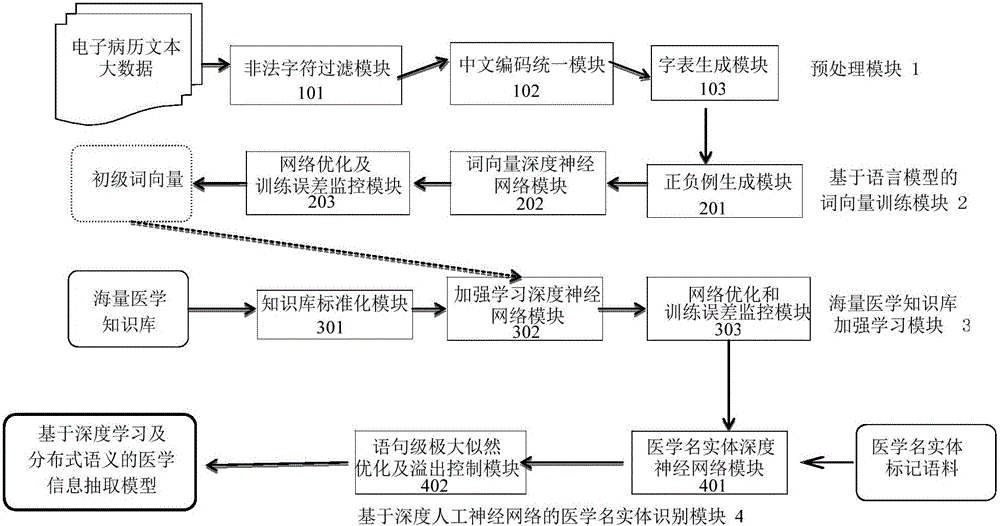

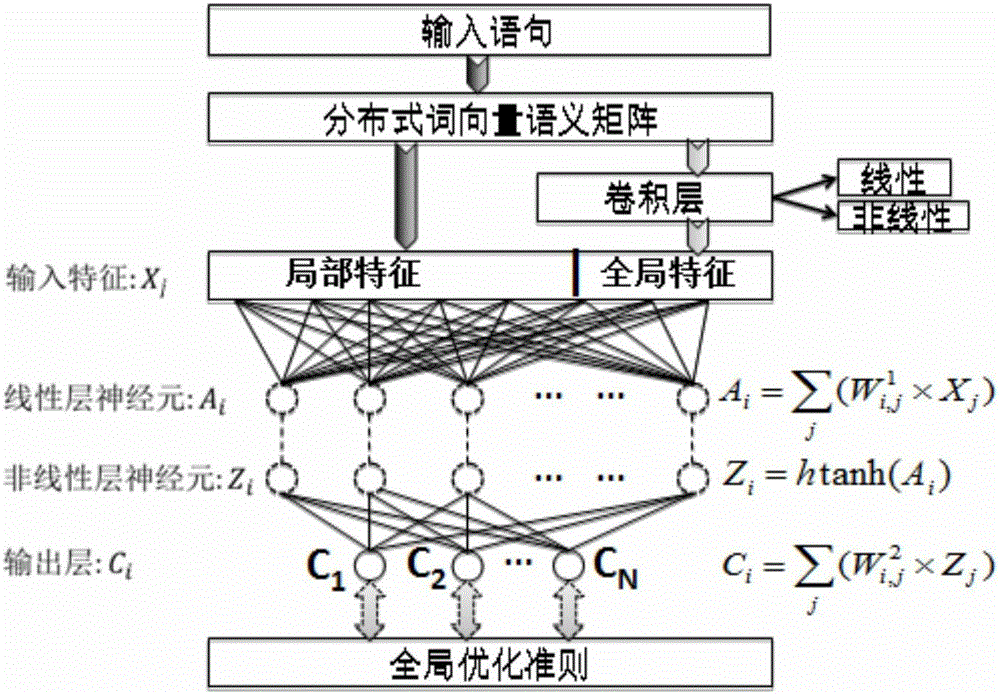

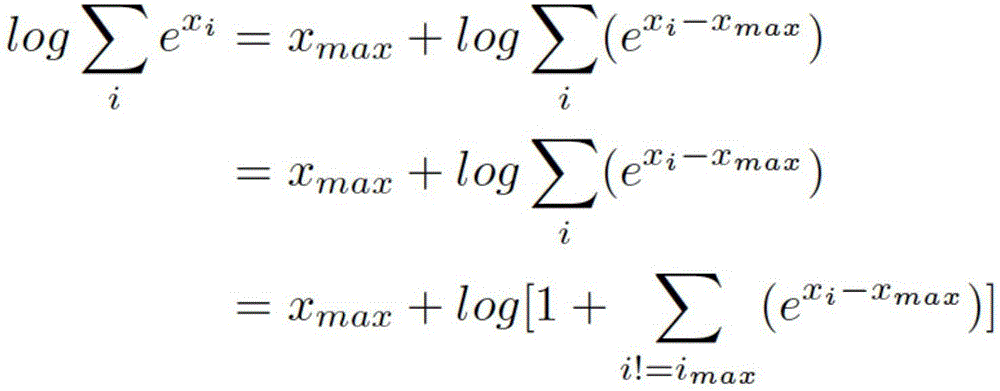

Medical information extraction system and method based on depth learning and distributed semantic features

Active | CN105894088A | Avoid floating point overflow problems | High precision | Neural learning methods | Nerve network | Study methods

The invention discloses a medical information extraction system and method based on depth learning and distributed semantic features. The system is composed of a pretreatment module, a linguistic-model-based word vector training module, a massive medical knowledge base reinforced learning module, and a depth-artificial-neural-network-based medical term entity identification module. With a depth learning method, generation of the probability of a linguistic model is used as an optimization objective, and a primary word vector is trained by using medical text big data. On the basis of the massive medical knowledge base, a second depth artificial neural network is trained, and the massive knowledge base is combined into the feature learning process of depth learning based on depth reinforced learning, so that distributed semantic features for the medical field are obtained. Chinese medical term entity identification is then carried out by using the depth learning method based on the optimized statement-level maximum likelihood probability. Because the word vector is generated from large amounts of unmarked linguistic data, the tedious feature selection and optimization adjustment process in medical natural language processing can be avoided.

Owner:神州医疗科技股份有限公司 +1

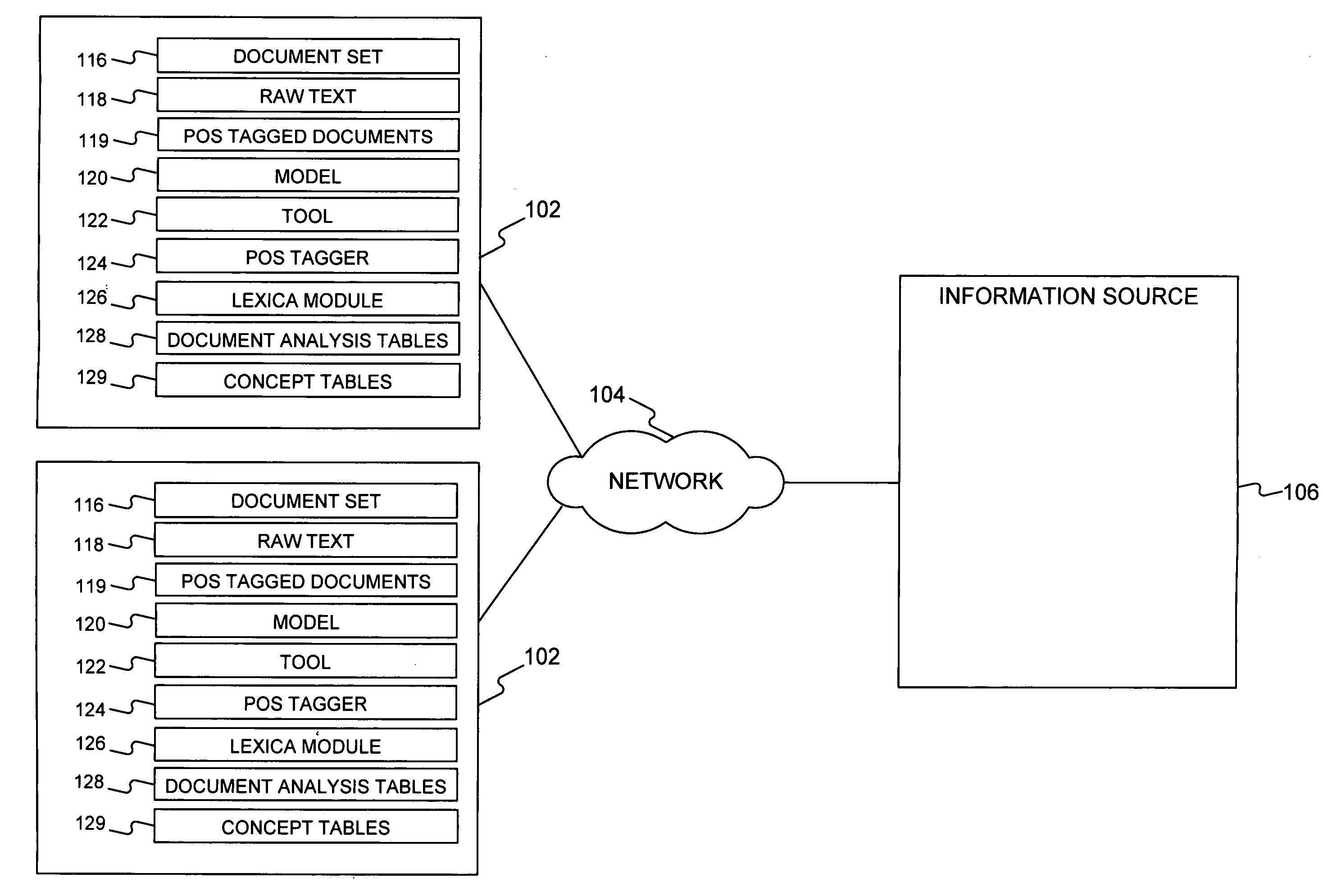

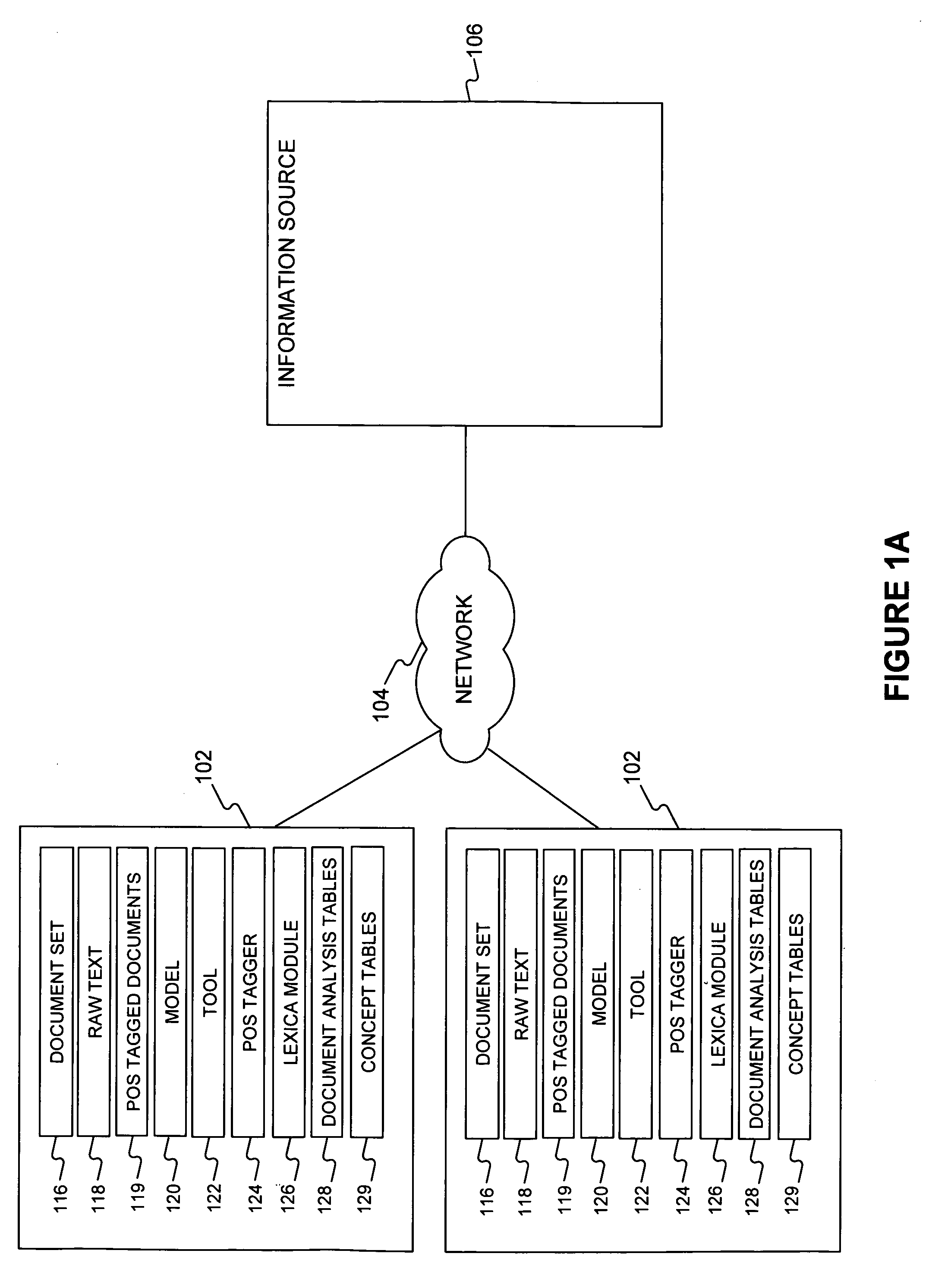

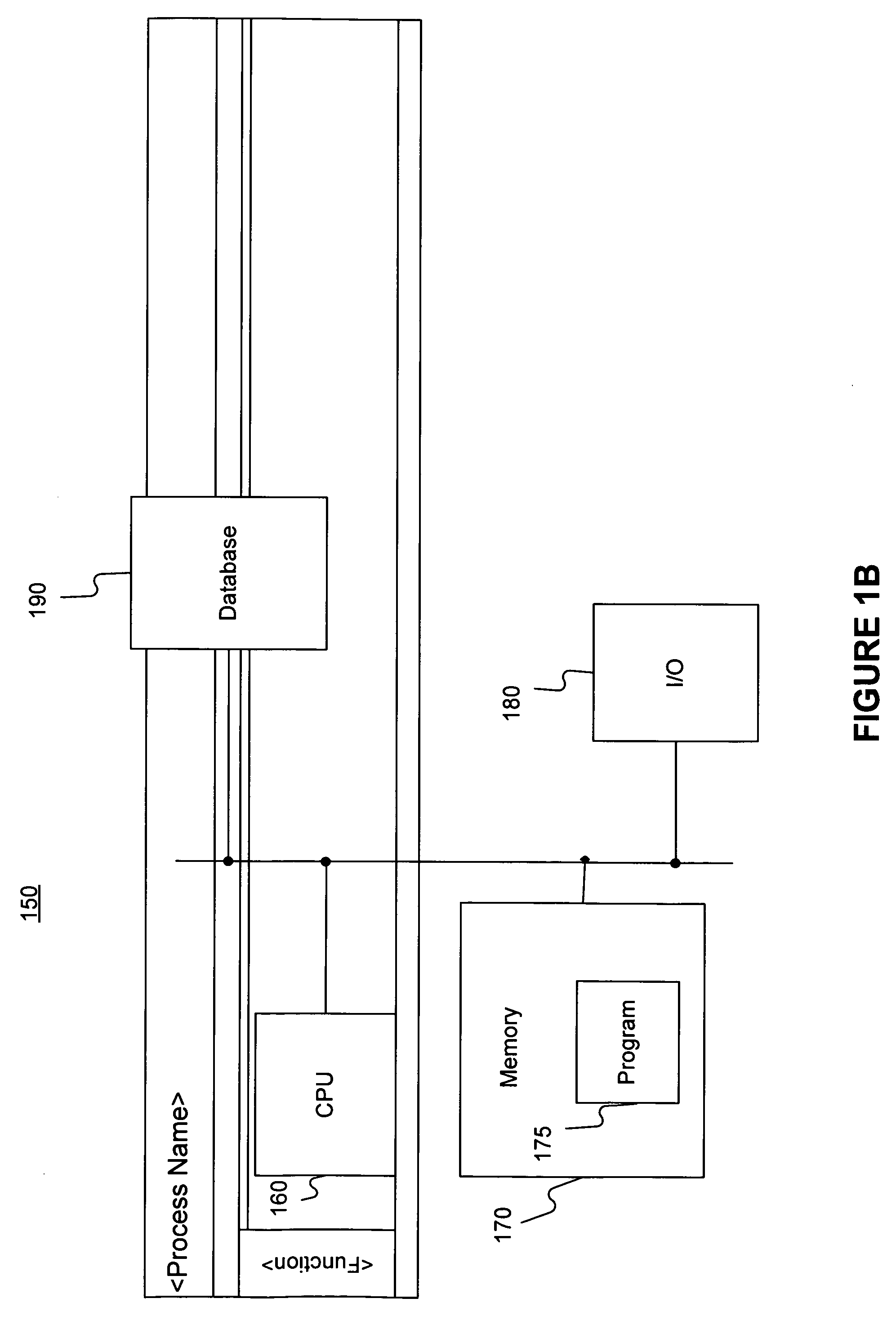

Method and system for information extraction and modeling

Inactive | US20100169299A1 | Data processing applications | Digital data processing details | Document preparation | Documentation

Systems and methods for modeling information from a set of documents are disclosed. A tool allows a user to extract and model concepts of interest and relations among the concepts from a set of documents. The tool automatically configures a database of the model so that the model and extracted concepts from the documents may be customized, modified, and shared.

Owner:NOBLIS

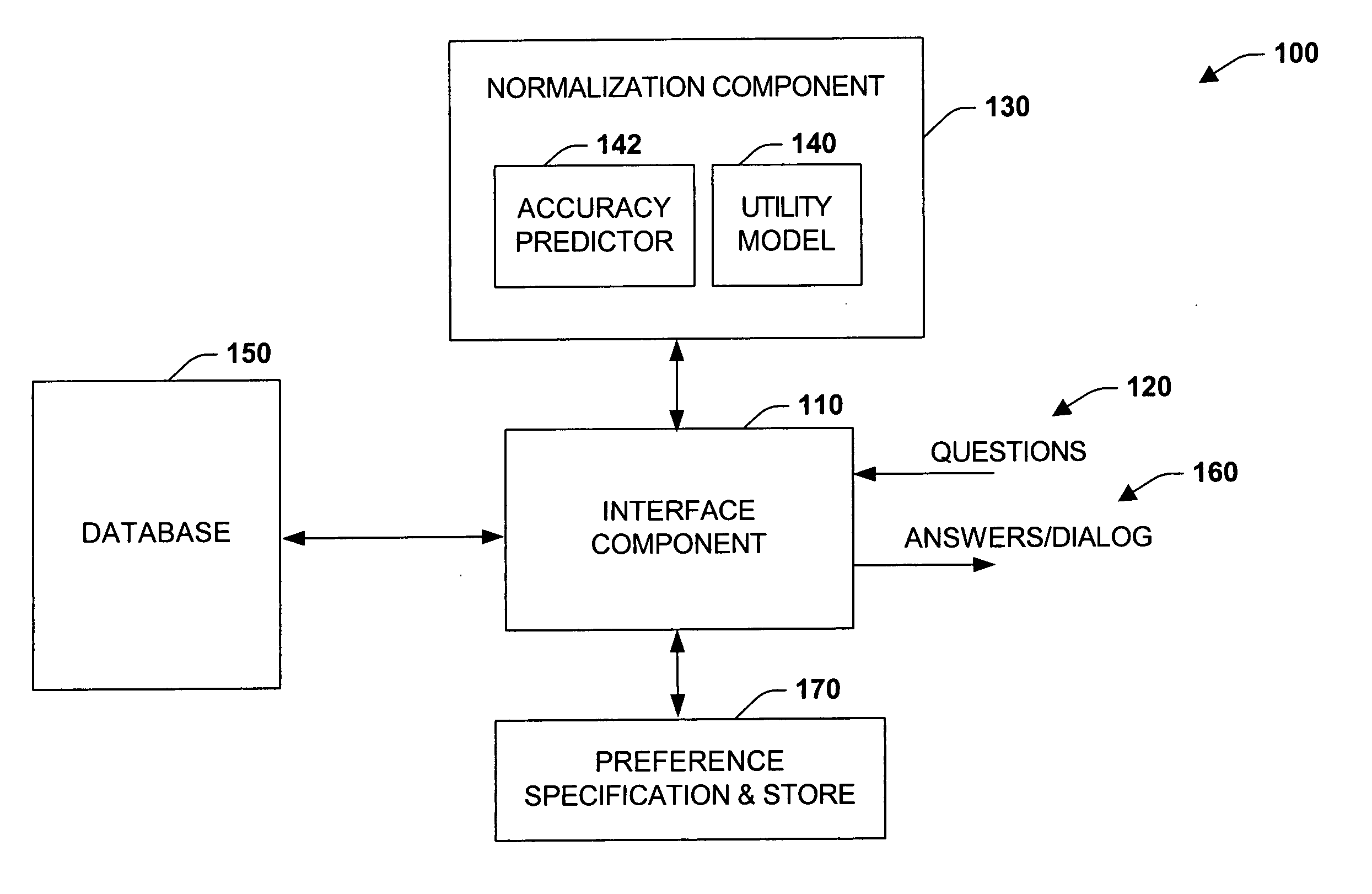

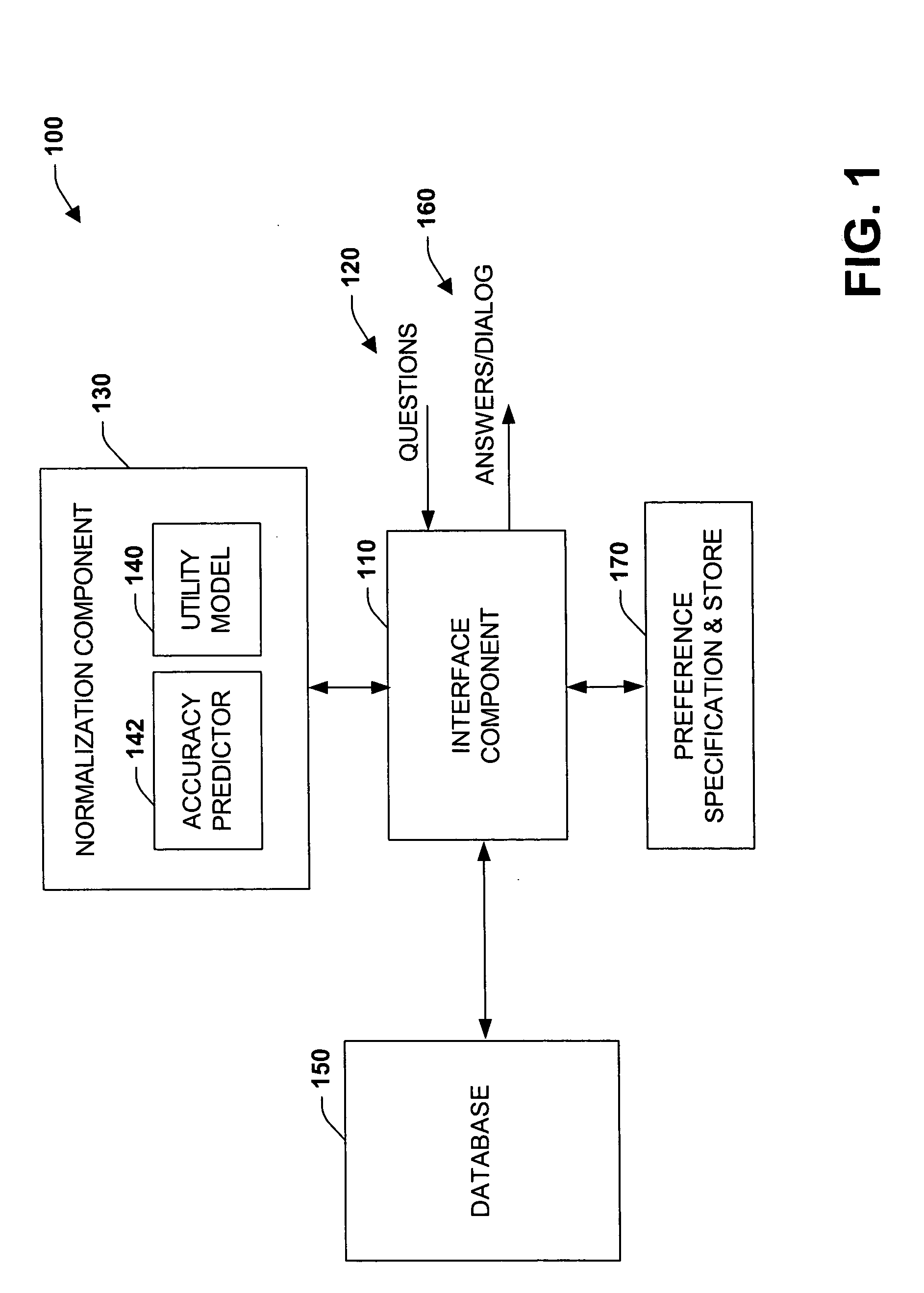

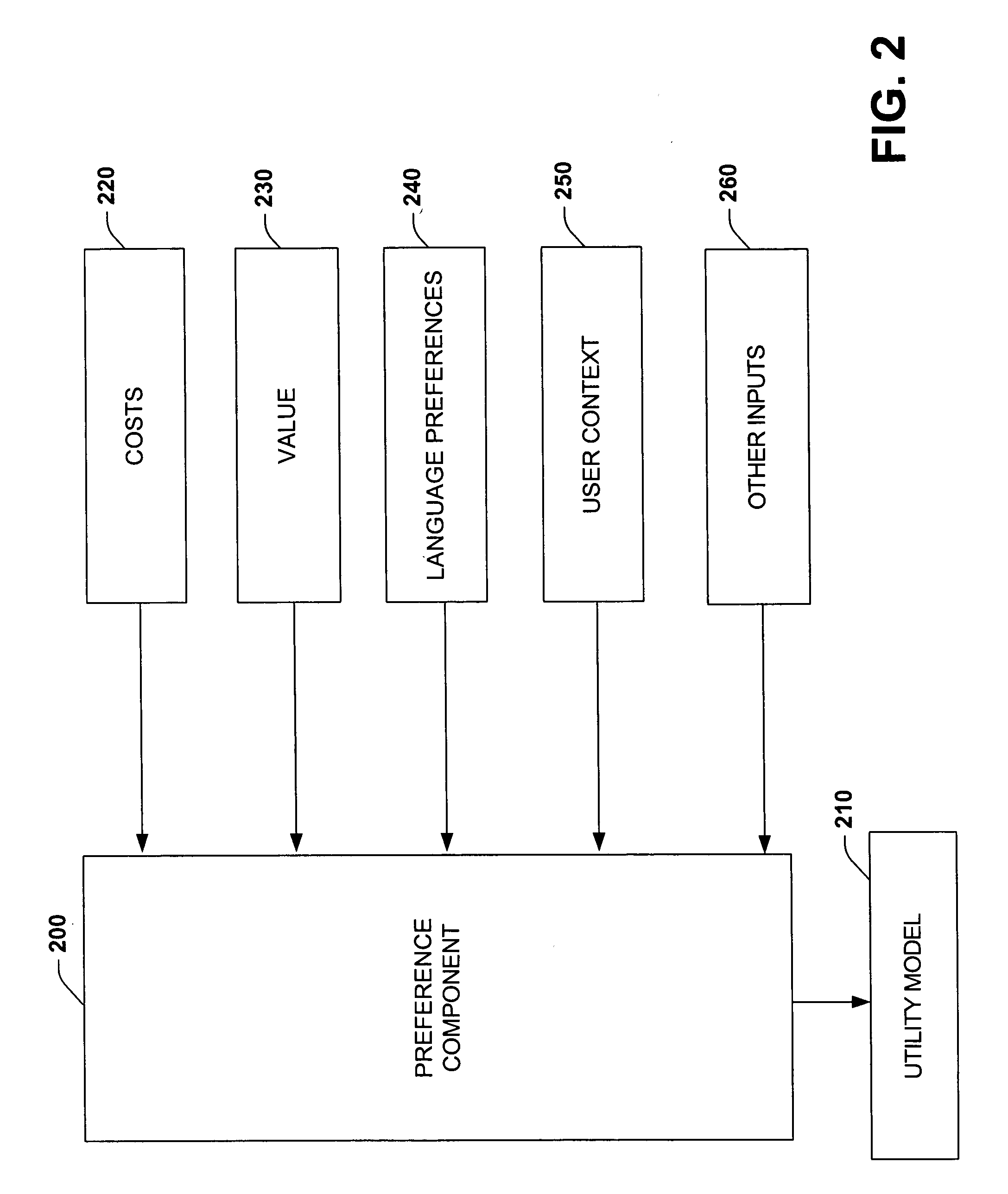

Cost-benefit approach to automatically composing answers to questions by extracting information from large unstructured corpora

Inactive | US20050033711A1 | Simple model | Improve predictive performance | Data processing applications | Digital data information retrieval | Probit model | Benefit analysis

The present invention relates to a system and methodology to facilitate extraction of information from large unstructured corpora such as the World Wide Web and/or other unstructured sources. Information in the form of answers to questions can be automatically composed from such sources via probabilistic models and cost-benefit analyses to guide resource-intensive information-extraction procedures employed by a knowledge-based question answering system. The analyses can leverage predictions of the ultimate quality of answers generated by the system provided by Bayesian or other statistical models. Such predictions, when coupled with a utility model, can provide the system with the ability to make decisions about the number of queries issued to a search engine (or engines), given the cost of queries and the expected value of query results in refining an ultimate answer. Given a preference model, information extraction actions can be taken with the highest expected utility. In this manner, the accuracy of answers to questions can be balanced with the cost of information extraction and analysis to compose the answers.

Owner:MICROSOFT TECH LICENSING LLC

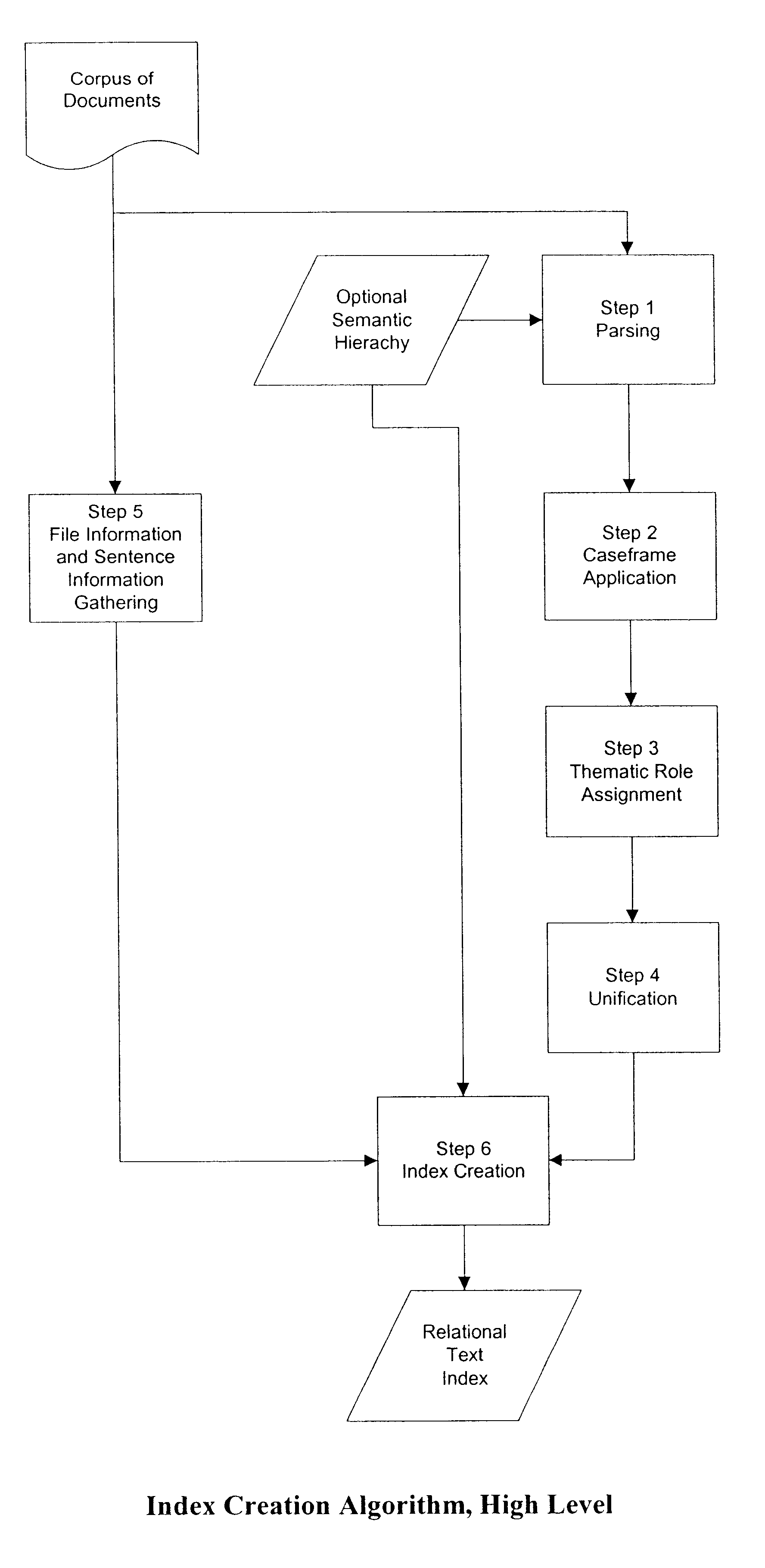

Relational text index creation and searching

Inactive | US6738765B1 | Easy to read | Data processing applications | Relational databases | Database | Information extraction

In an environment where it is desired to perform information extraction over a large quantity of textual data, methods, tools and structures are provided for building a relational text index from the textual data and performing searches using the relational text index.

Owner:ATTENSITY CORP

Detecting Anomalous Network Application Behavior

Active | US20080222717A1 | Memory loss protection | Error detection/correction | Traffic capacity | Information analysis

System and Method for detecting anomalous network application behavior. Network traffic between at least one client and one or more servers may be monitored. The client and the one or more servers may communicate using one or more application protocols. The network traffic may be analyzed at the application-protocol level to determine anomalous network application behavior. Analyzing the network traffic may include determining, for one or more communications involving the client, if the client has previously stored or received an identifier corresponding to the one or more communications. If no such identifier has been observed in a previous communication, then the one or more communications involving the client may be determined to be anomalous. A network monitoring device may perform one or more of the network monitoring, the information extraction, or the information analysis.

Owner:EXTRAHOP NETWORKS

Text categorization toolkit

Inactive | US6212532B1 | Data processing applications | Digital data information retrieval | Feature extraction | Text categorization

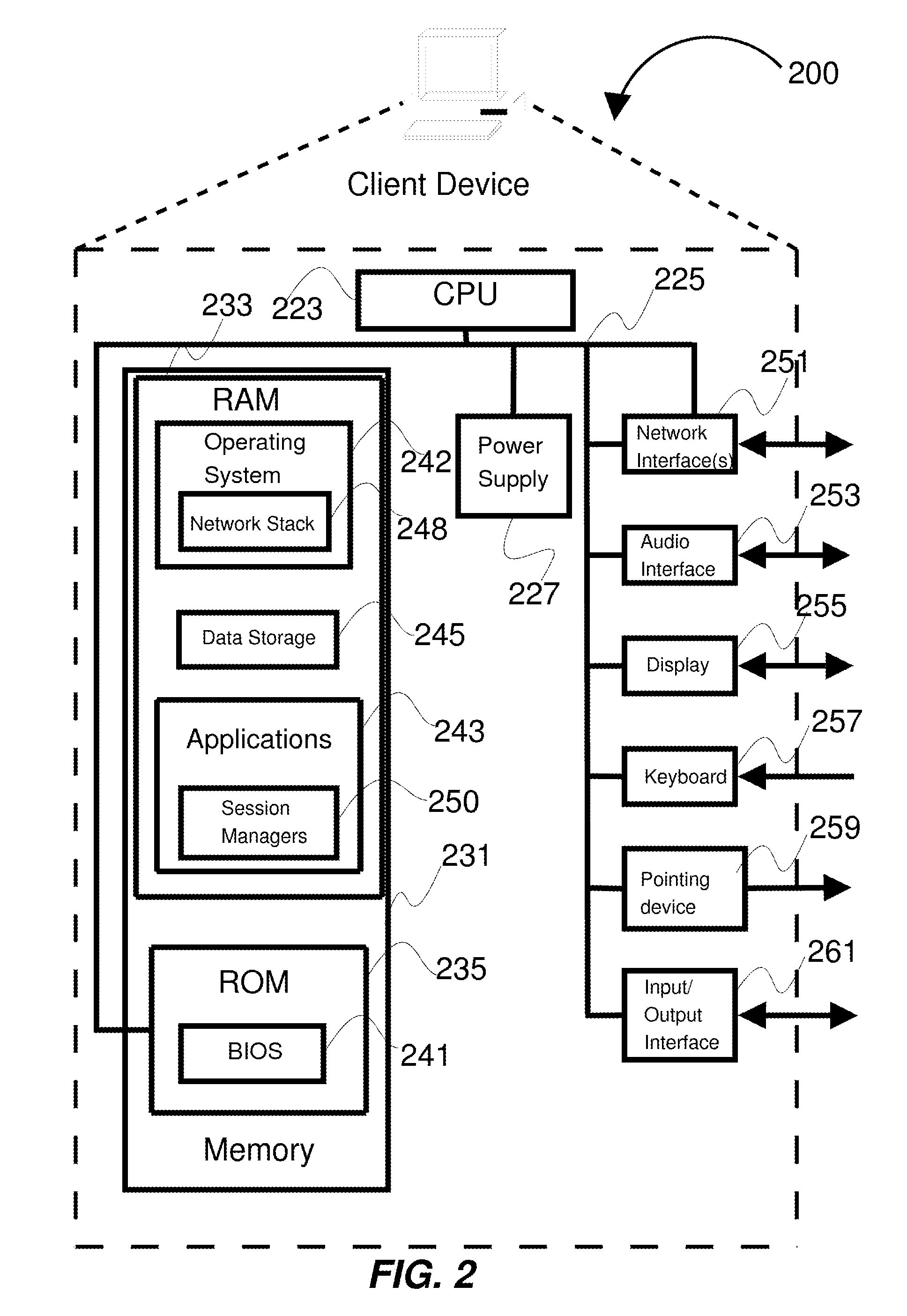

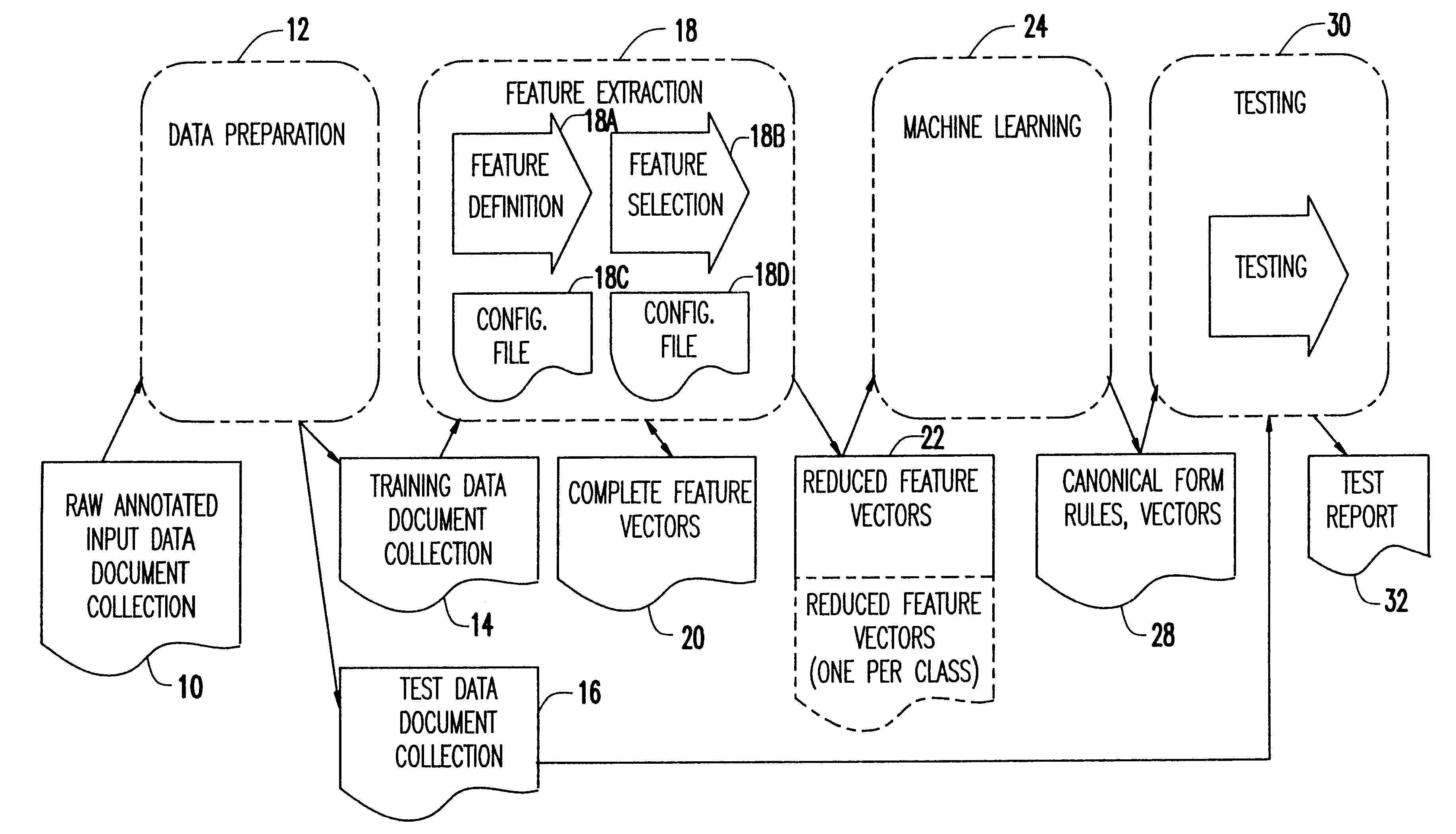

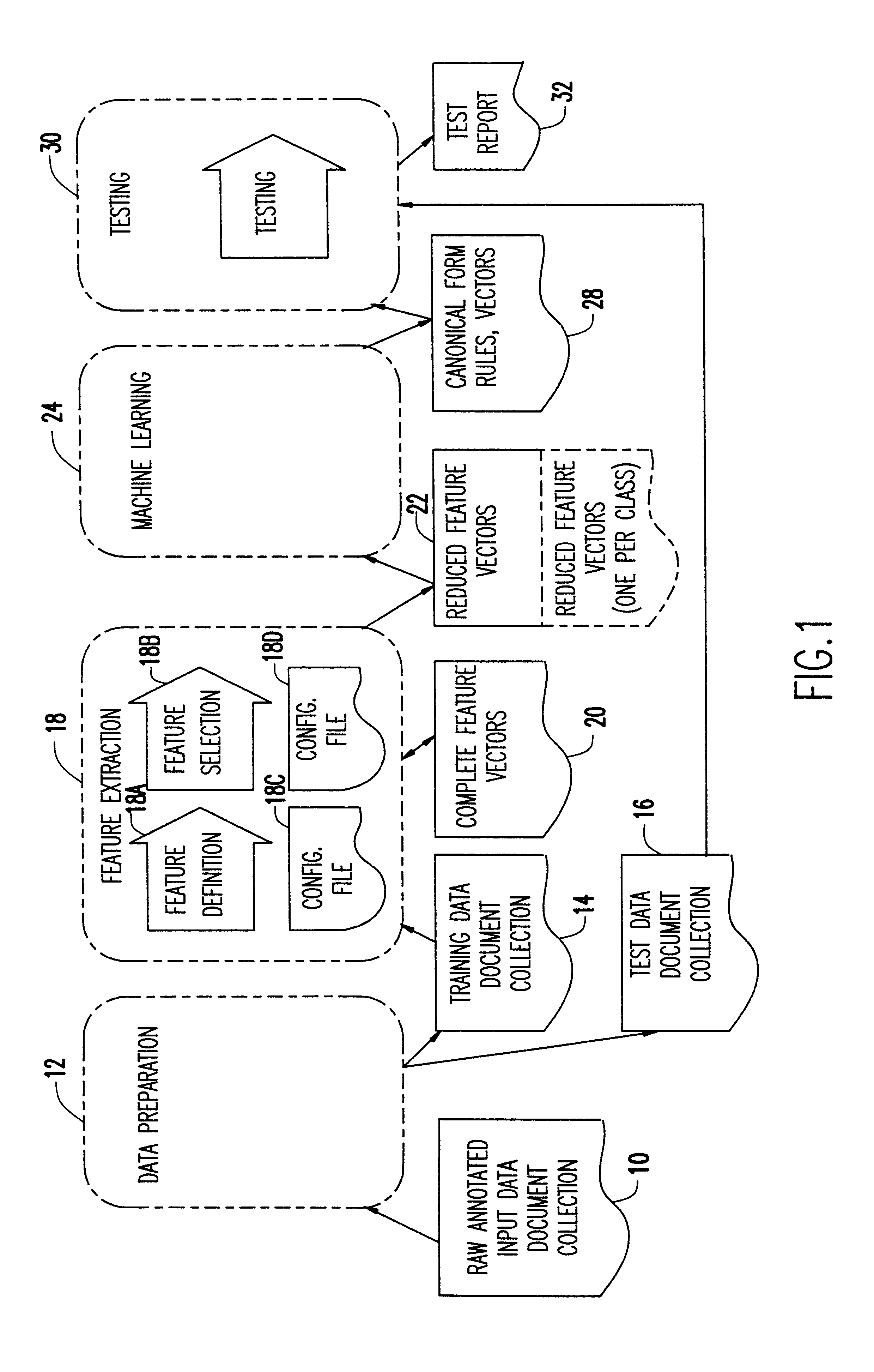

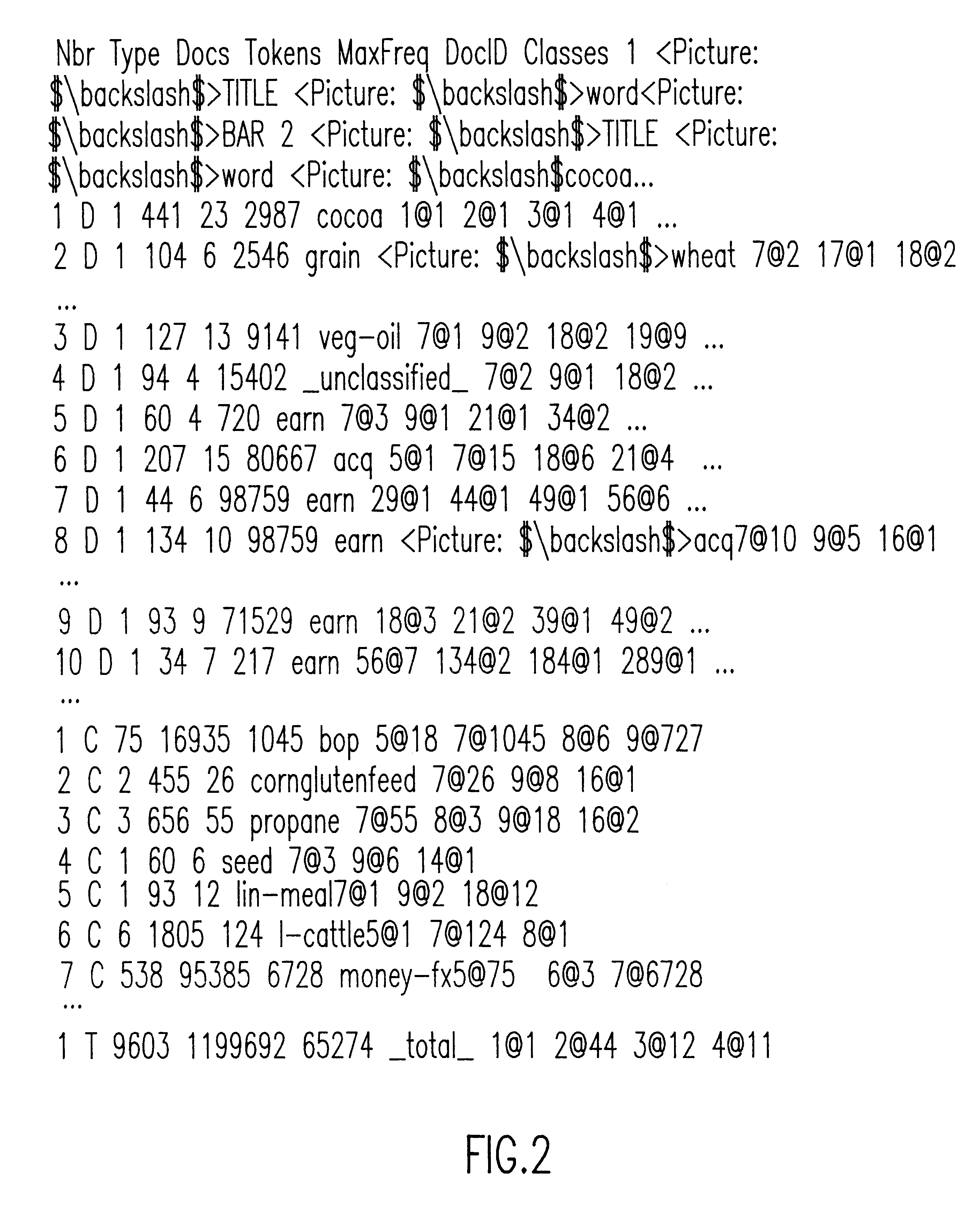

A modular information extraction system capable of extracting information from natural language documents. The system includes a plurality of interchangeable modules including a data preparation module for preparing a first set of raw data having class labels to be tested, the data preparation module being selected from a first type of the interchangeable modules. The system further includes a feature extraction module for extracting features from the raw data received from the data preparation module and storing the features in a vector format, the feature extraction module being selected from a second type of the interchangeable modules. A core classification module is also provided for applying a learning algorithm to the stored vector format and producing therefrom a resulting classifier, the core classification module being selected from a third type of the interchangeable modules. A testing module compares the resulting classifier to a set of preassigned classes, where the testing module is selected from a fourth type of the interchangeable modules, and where the testing module tests a second set of raw data having class labels received by the data preparation module to determine the degree to which the class labels of the second set of raw data approximately correspond to the resulting classifier.

Owner:IBM CORP

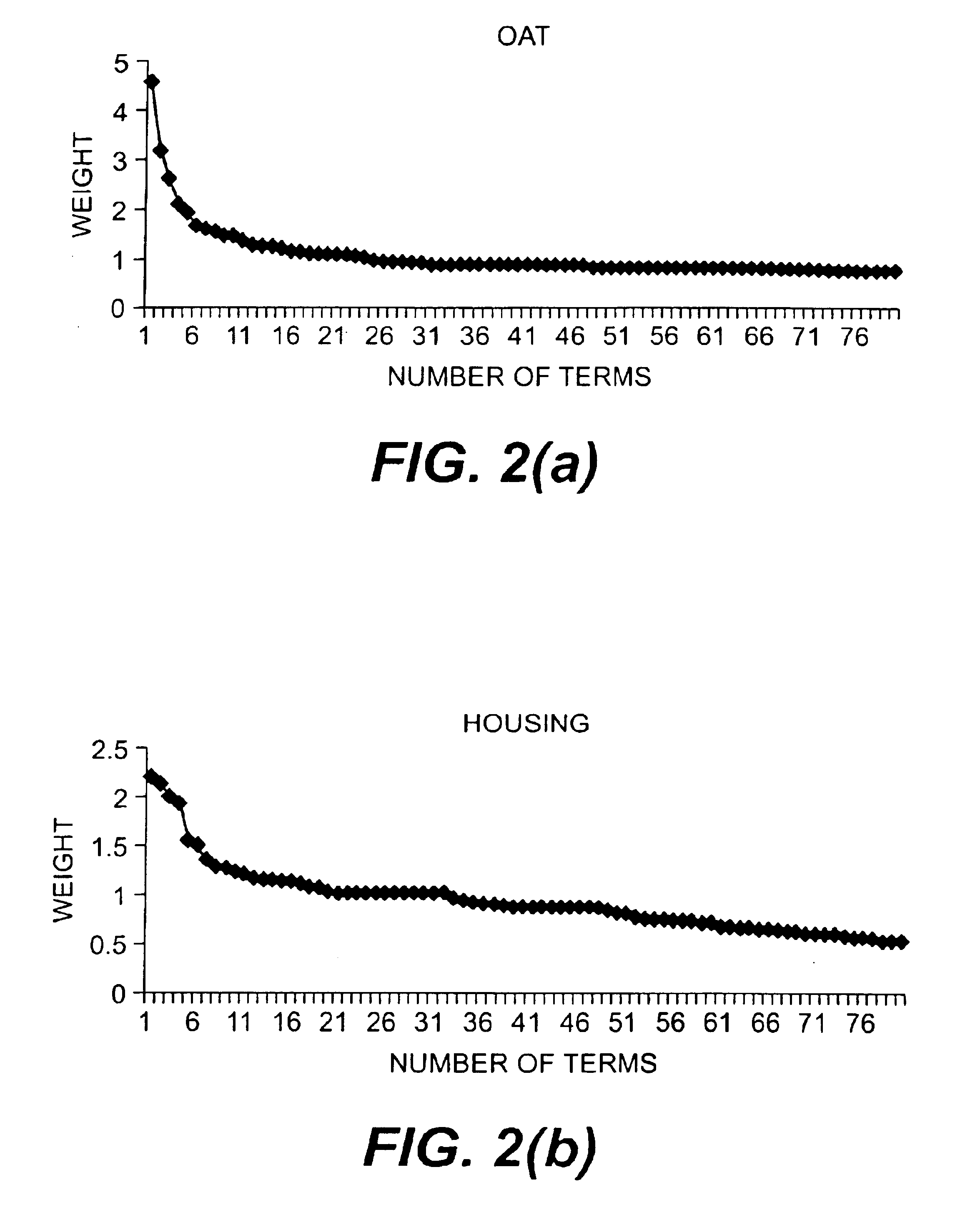

Method and apparatus for database retrieval utilizing vector optimization

Inactive | US6728701B1 | Enhanced information | Minimize the number | Data processing applications | Digital data information retrieval | Weighting curve | Vector optimization

A technique for optimizing the number of terms in a profile used for information extraction. This optimization is performed by estimating the number of terms which will substantively affect the information extraction process. That is, the technique estimates the point in a term weight curve where that curve becomes flat. A term generally is important and remains part of the profile as long as its weight and the weight of the next term may be differentiated. When terms' weights are not differentiable, then they are not significant and may be cut off. Reducing the number of terms used in a profile increases the efficiency and effectiveness of the information retrieval process.

Owner:JUSTSYST EVANS RES
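
The cutoff rule in the abstract above keeps a term as long as its weight can be told apart from the weight of the next term, and stops where the weight curve goes flat. The sketch below shows one literal reading of that rule. The function name, the `min_gap` parameter that decides when two weights count as differentiable, and the sample weights are assumptions; the abstract does not say how flatness is estimated.

```python
def truncate_profile(term_weights, min_gap=0.05):
    """Keep top-ranked terms until consecutive weights stop being distinguishable.

    term_weights: dict mapping term -> weight.
    min_gap: hypothetical minimum difference for two weights to count as distinct.
    """
    ranked = sorted(term_weights.items(), key=lambda item: item[1], reverse=True)
    kept = []
    for i, (term, weight) in enumerate(ranked):
        has_next = i + 1 < len(ranked)
        if has_next and weight - ranked[i + 1][1] < min_gap:
            break  # the curve is flat from here on; cut off the remaining terms
        kept.append(term)
    return kept


# Made-up weights: three informative terms followed by a flat tail.
weights = {"extraction": 0.9, "wrapper": 0.6, "parser": 0.3,
           "the": 0.1, "of": 0.09, "a": 0.08}
profile = truncate_profile(weights)
```

Here the profile keeps `extraction`, `wrapper`, and `parser`, and drops the flat tail starting at `the`, whose weight differs from the next term by less than `min_gap`.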

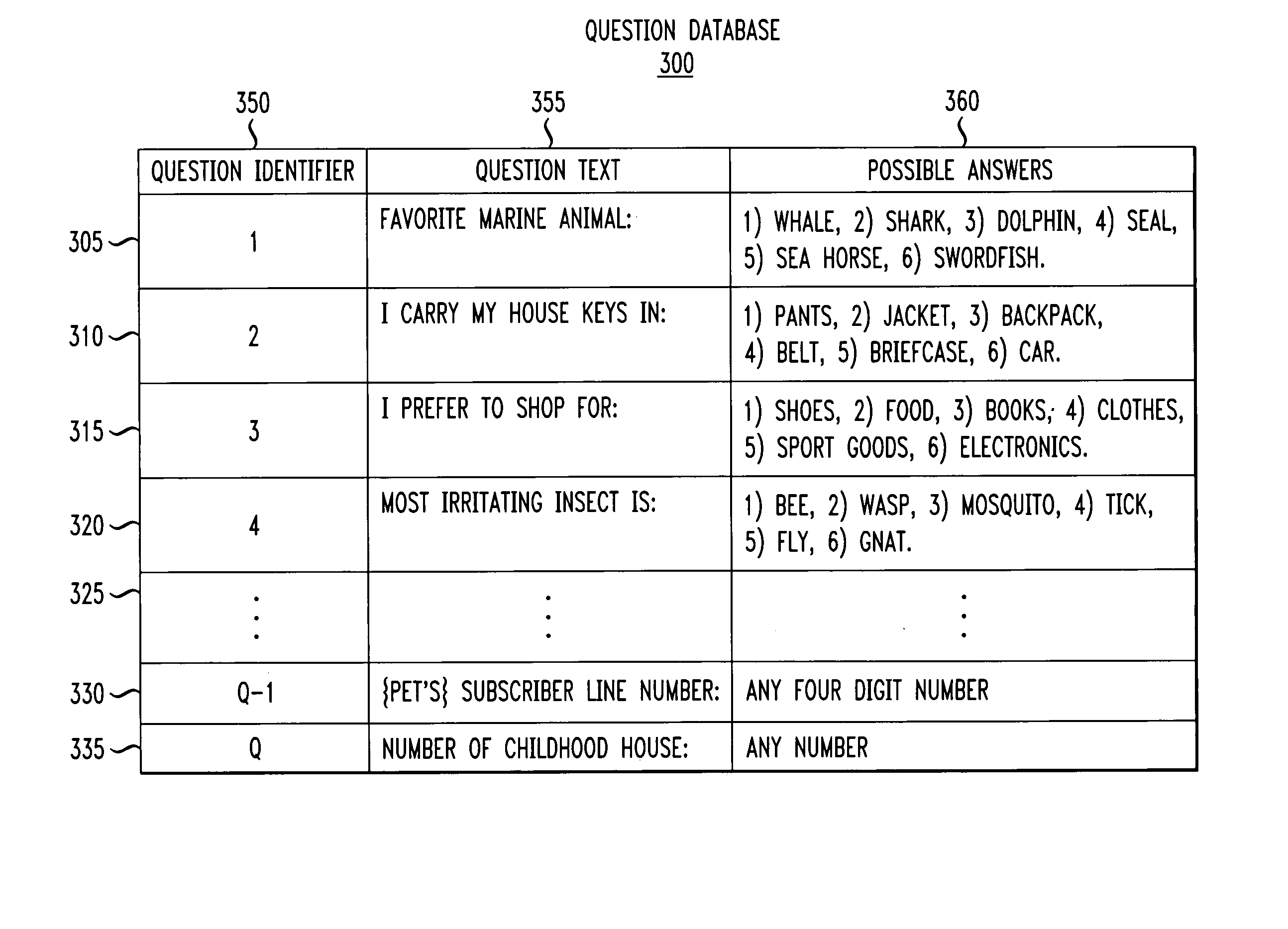

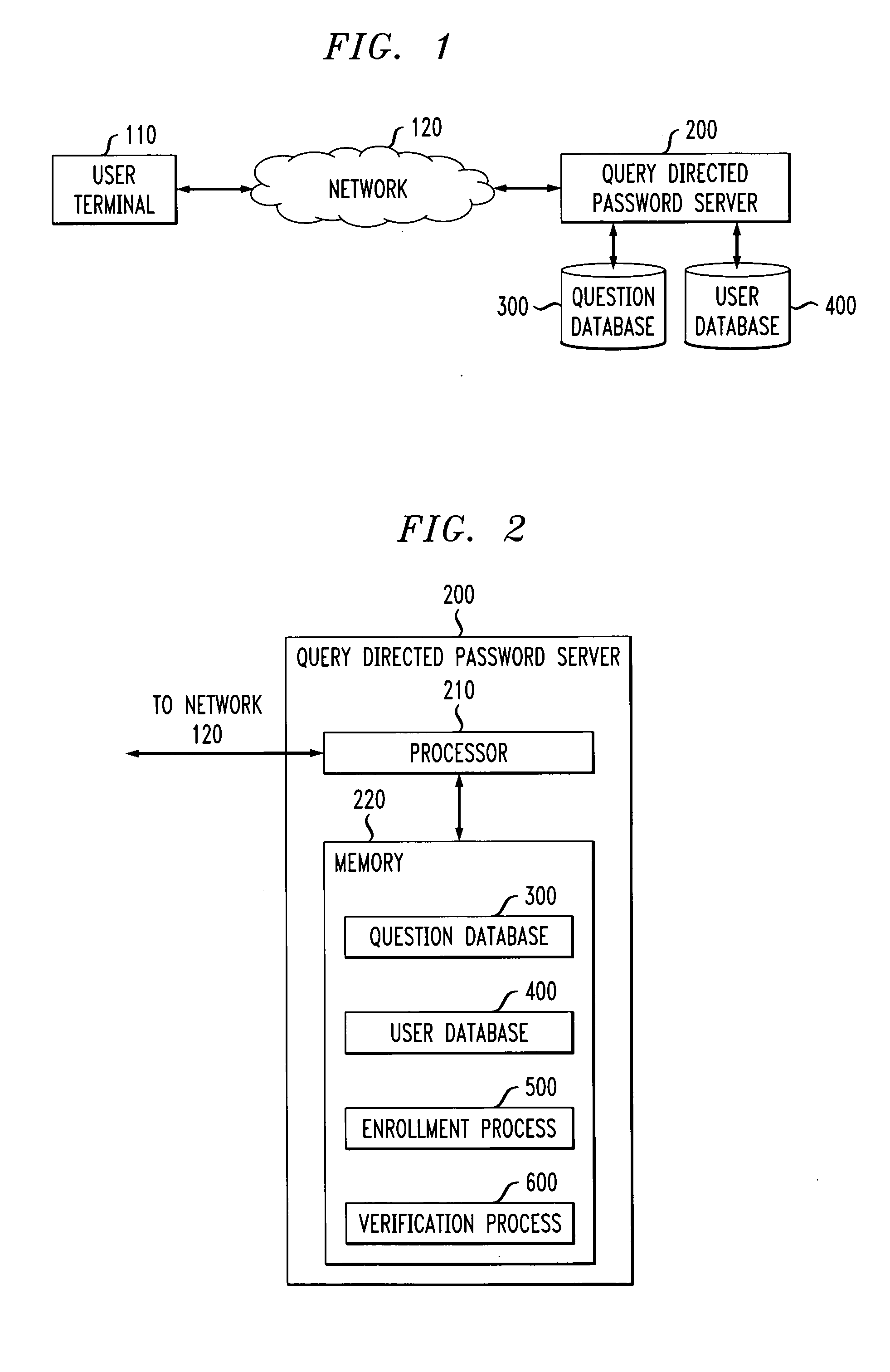

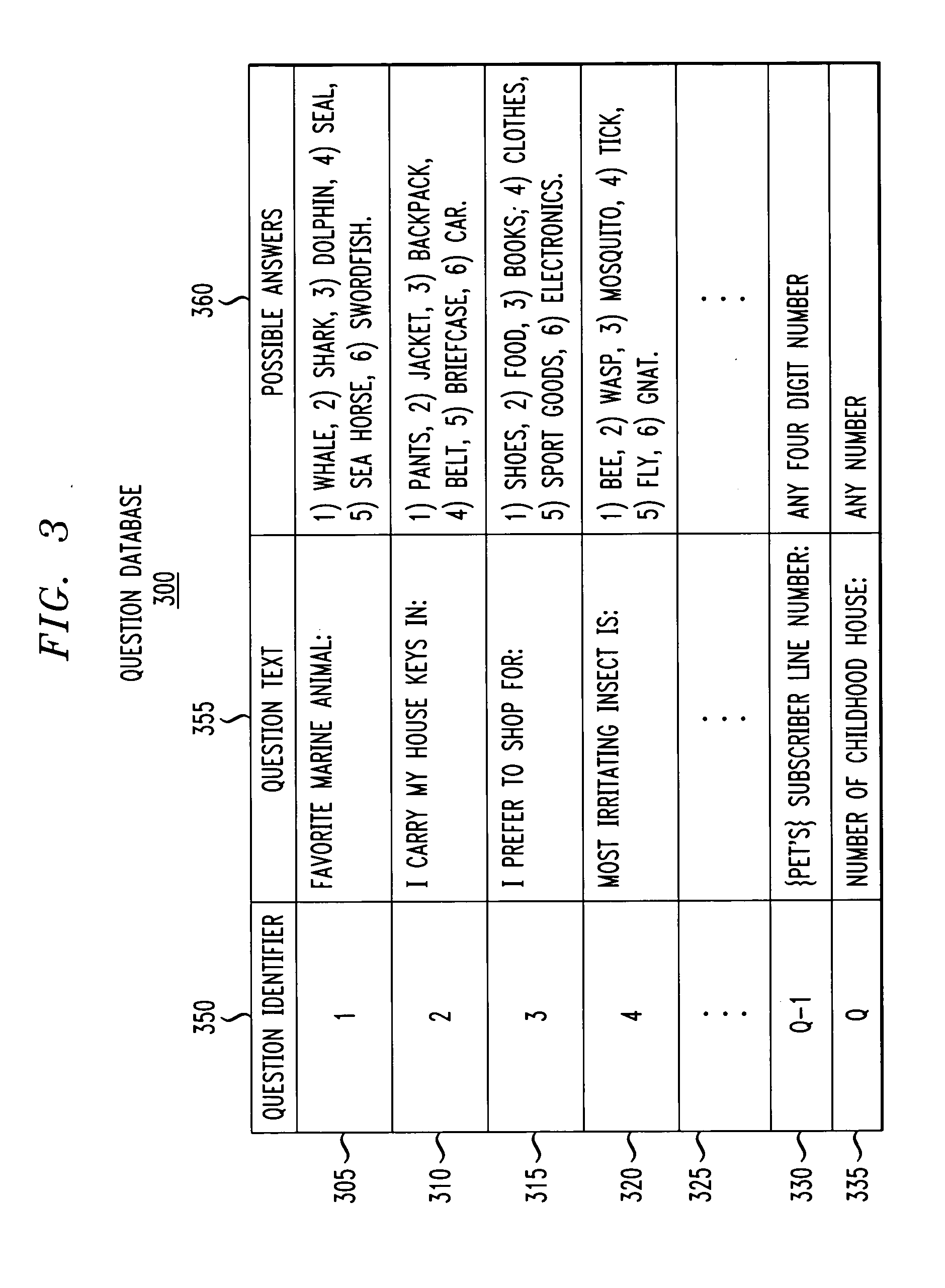

Method and apparatus for authenticating a user using query directed passwords

Inactive | US20050039057A1 | Met criterion | Improve security | Digital data processing details | Unauthorized memory use protection | Password | Factoid

A query directed password scheme is disclosed that employs attack-resistant questions having answers that generally cannot be correlated with the user using online searching techniques, such as user opinions, trivial facts, or indirect facts. During an enrollment phase, the user is presented with a pool of questions from which the user must select a subset of such questions to answer. Information extraction techniques optionally ensure that the selected questions and answers cannot be correlated with the user. A security weight can optionally be assigned to each selected question. The selected questions should optionally meet predefined criteria for topic distribution. During a verification phase, the user is challenged with a random subset of the questions that the user has previously answered and answers these questions until a level of security for a given application is exceeded as measured by the number of correct questions out of the number of questions asked. Security may be further improved by combining the query directed password protocol with one or more additional factors such as Caller ID that assure that the questions are likely asked only to the registered user.

Owner:AVAYA TECH CORP
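
The verification phase described above challenges the user with a random subset of previously answered questions until a security level is reached. A rough sketch follows. The stopping rule used here (a required count of correct answers within a maximum number of questions), the case-insensitive comparison, and all names are assumptions; the abstract only says the level is measured by correct answers out of questions asked, and per-question security weights are left out.

```python
import random


def verify(enrolled, ask, required_correct=3, max_questions=5, rng=None):
    """Challenge the user with randomly chosen enrolled questions.

    enrolled: dict mapping question -> answer given at enrollment.
    ask: callable taking a question and returning the user's answer.
    required_correct / max_questions: hypothetical security-level parameters.
    """
    rng = rng or random.Random()
    count = min(max_questions, len(enrolled))
    questions = rng.sample(list(enrolled), k=count)
    correct = 0
    for asked, question in enumerate(questions, start=1):
        answer = ask(question)
        if answer.strip().lower() == enrolled[question].strip().lower():
            correct += 1
        if correct >= required_correct:
            return True  # security level reached; stop asking
        if correct + (len(questions) - asked) < required_correct:
            return False  # level can no longer be reached
    return False


# Placeholder enrollment data for illustration only.
enrolled_answers = {
    "Preferred pizza topping?": "mushroom",
    "Least favorite season?": "winter",
    "Color of first bicycle?": "green",
    "Tea or coffee?": "tea",
}
```

A user who answers from `enrolled_answers` passes after three correct answers, while a user who answers incorrectly every time is rejected after the second question, since three correct answers are then out of reach.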
