29670 results about "3D-image rendering" patented technology

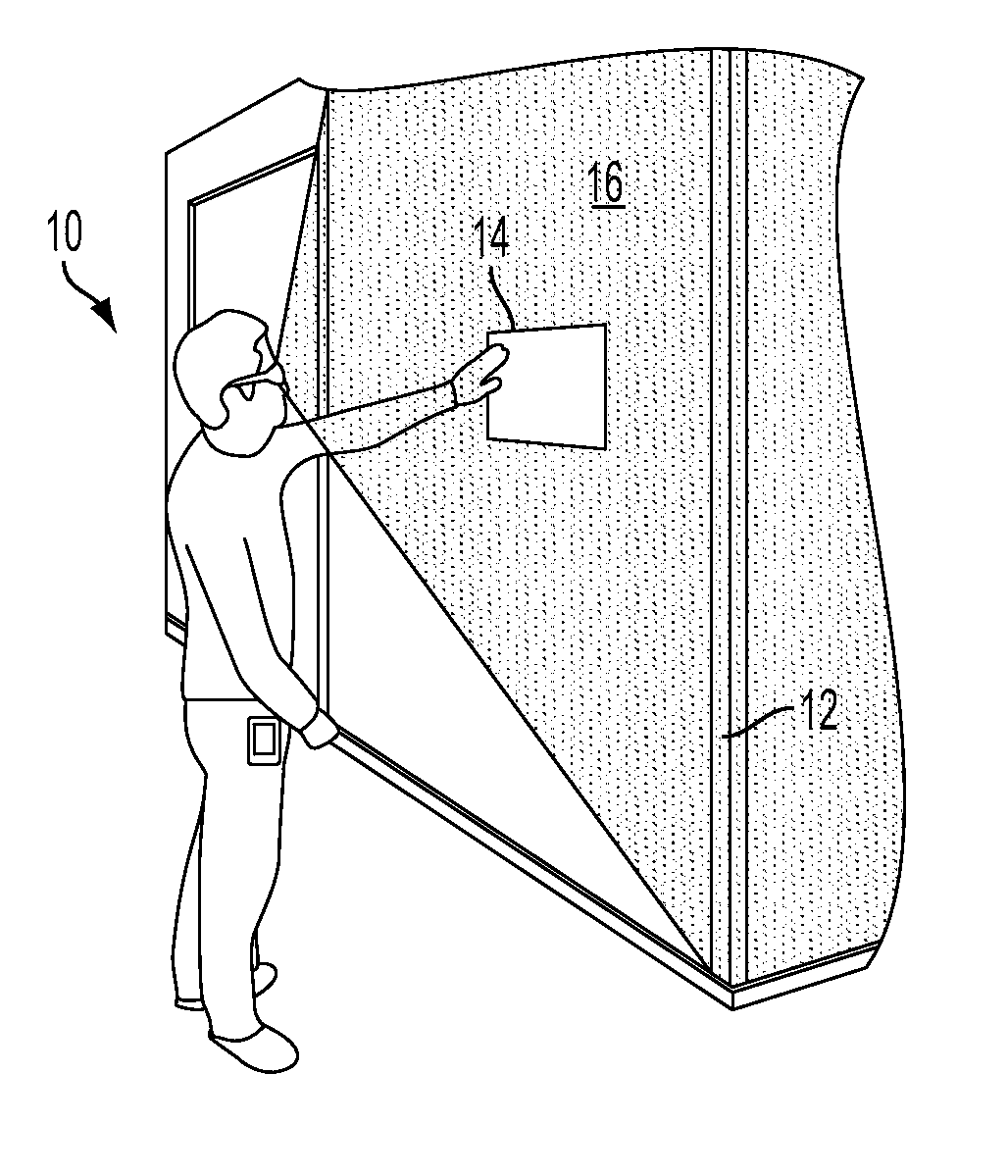

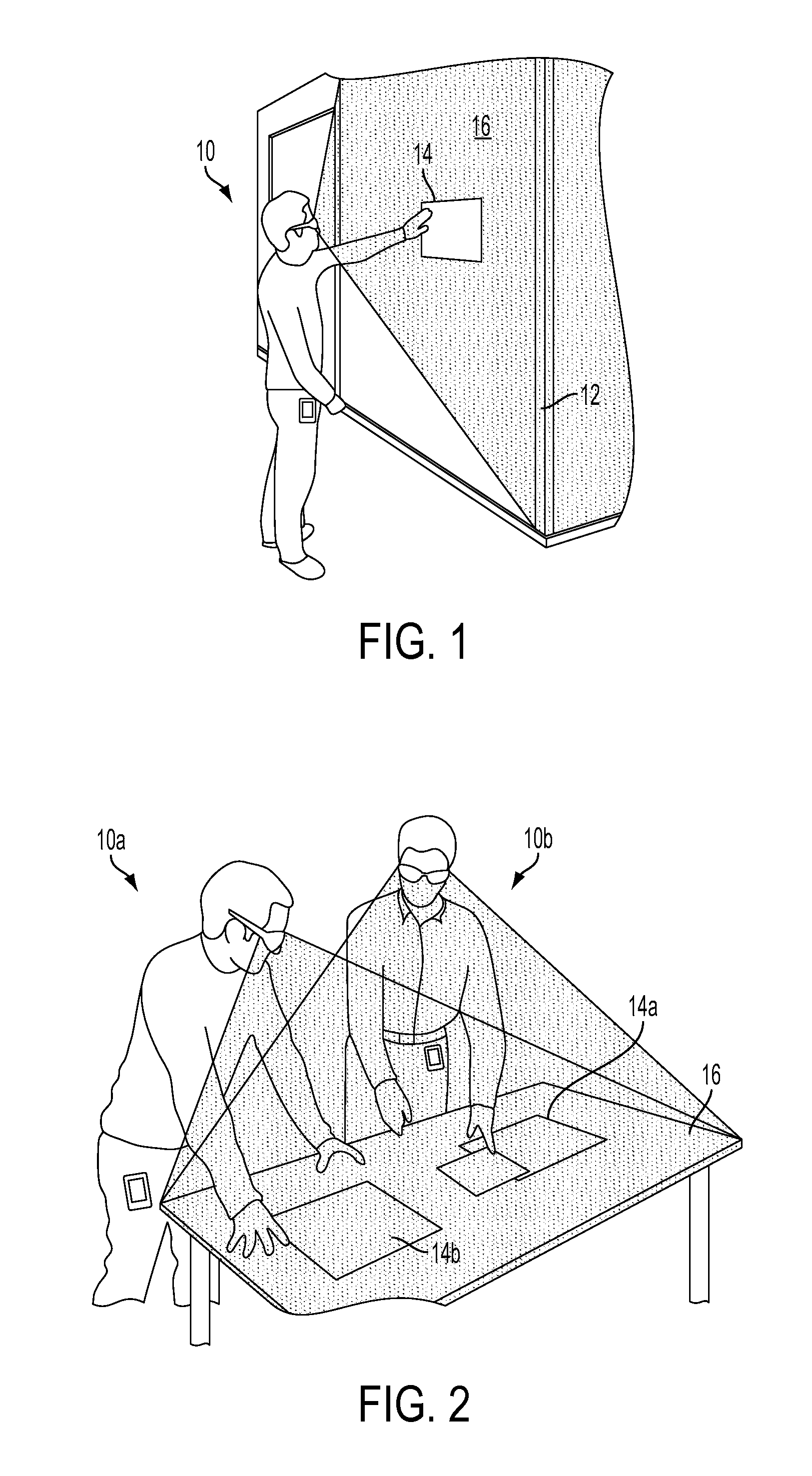

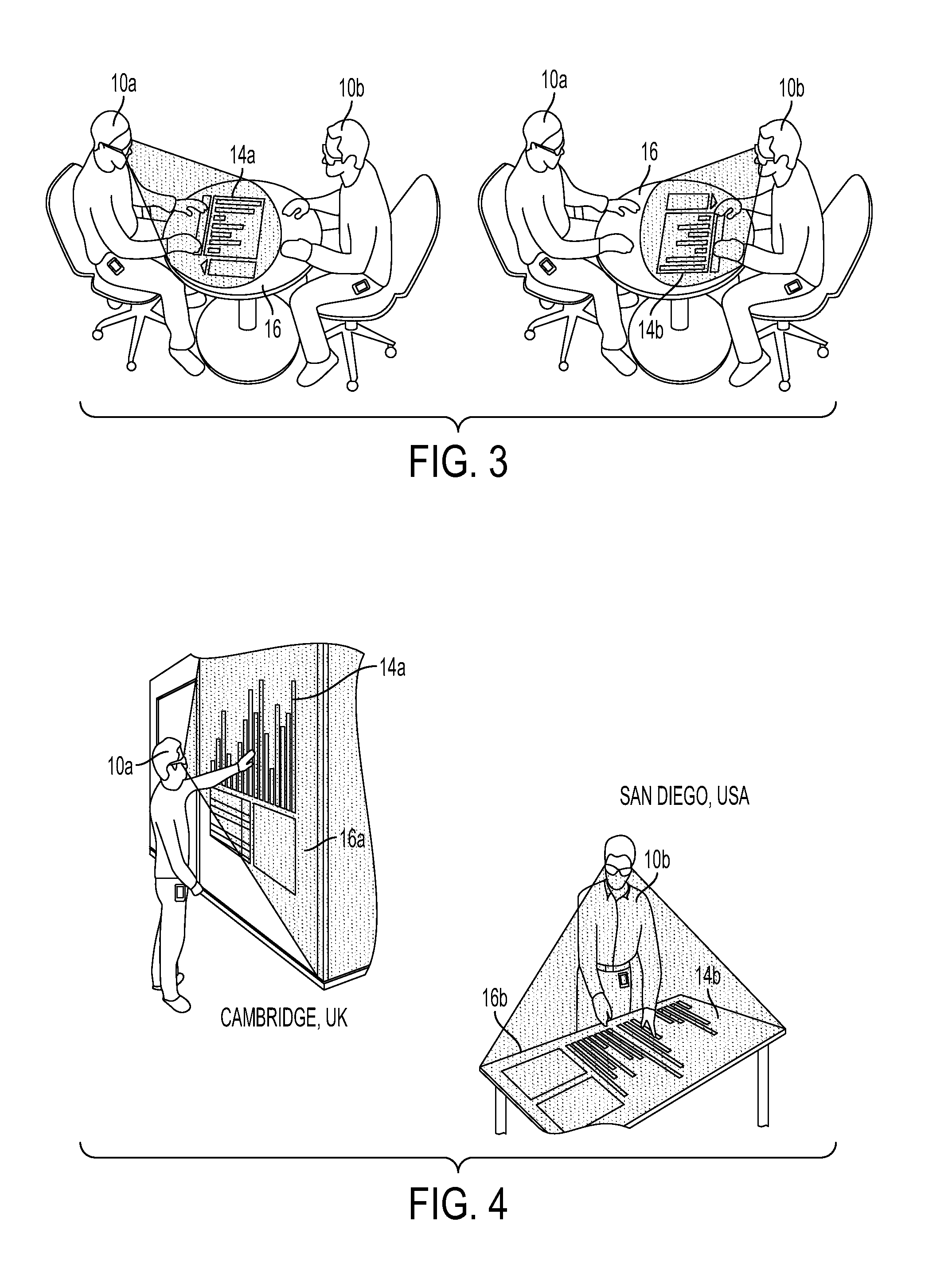

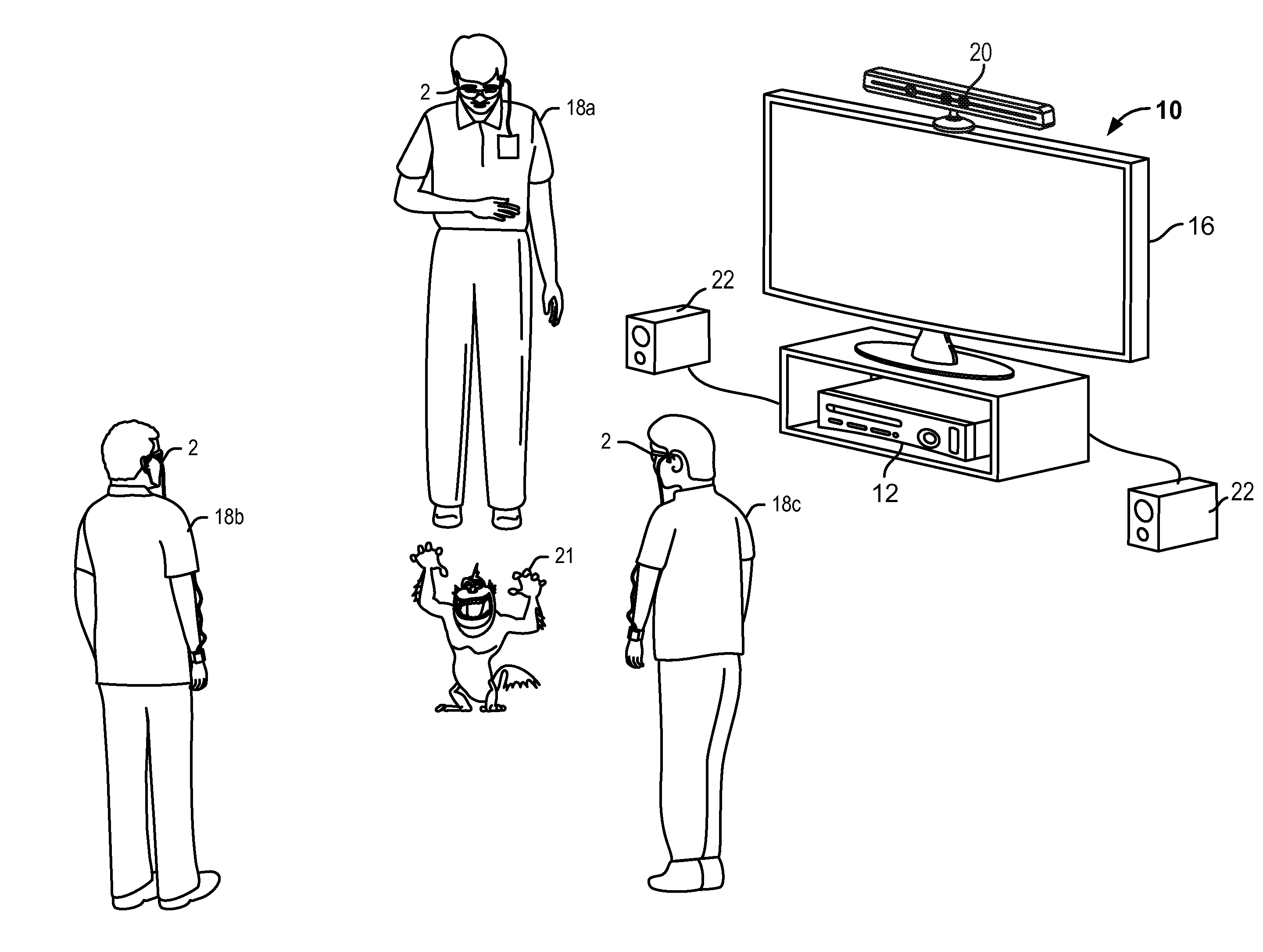

Anchoring virtual images to real world surfaces in augmented reality systems

Active | US20120249741A1 | Television system details | Color television details | Sensor array | Augmented reality systems

A head mounted device provides an immersive virtual or augmented reality experience for viewing data and enabling collaboration among multiple users. Rendering images in a virtual or augmented reality system may include capturing an image and spatial data with a body mounted camera and sensor array, receiving an input indicating a first anchor surface, calculating parameters with respect to the body mounted camera and displaying a virtual object such that the virtual object appears anchored to the selected first anchor surface. Further operations may include receiving a second input indicating a second anchor surface within the captured image that is different from the first anchor surface, calculating parameters with respect to the second anchor surface and displaying the virtual object such that the virtual object appears anchored to the selected second anchor surface and moved from the first anchor surface.

Owner: QUALCOMM INC

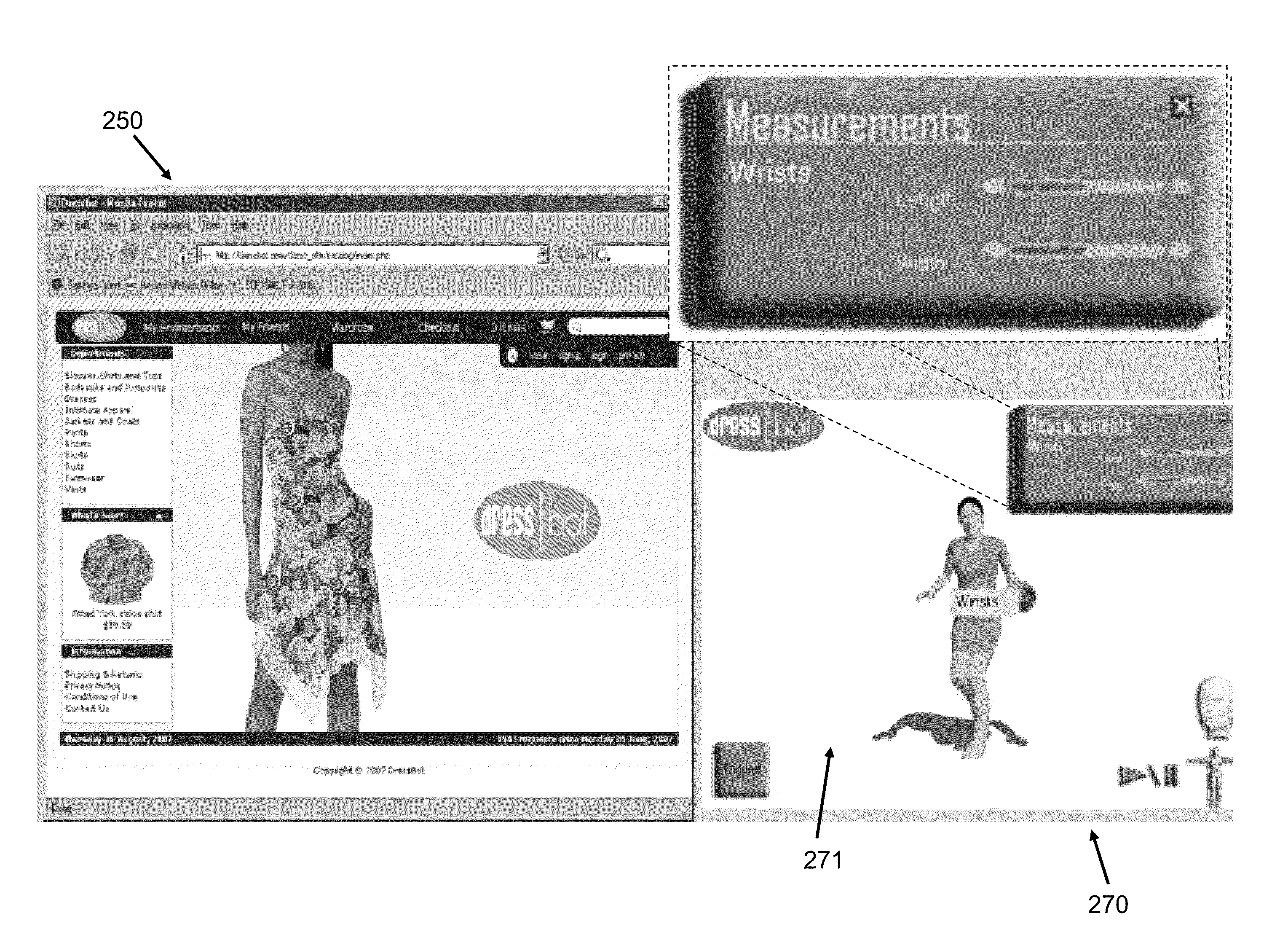

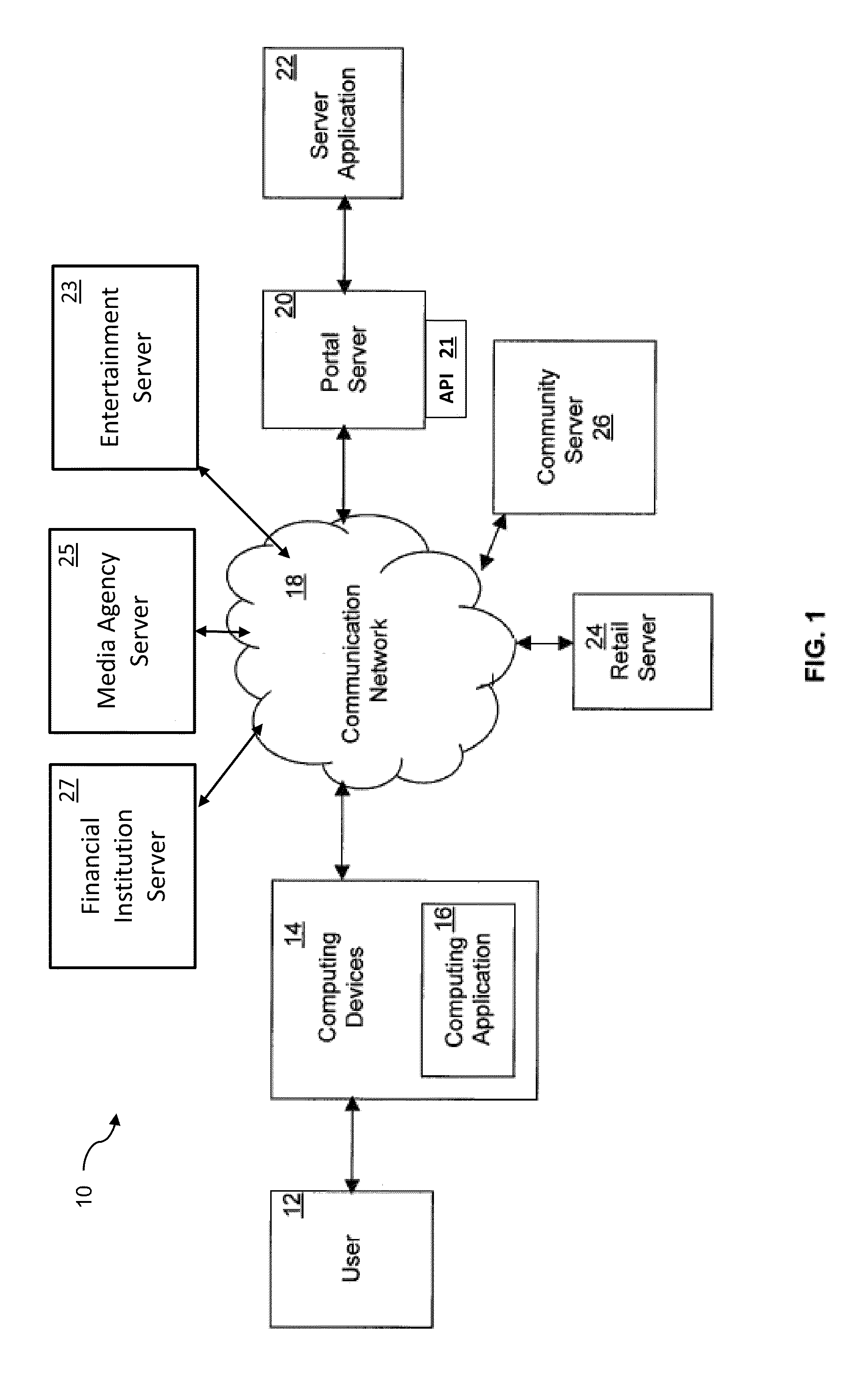

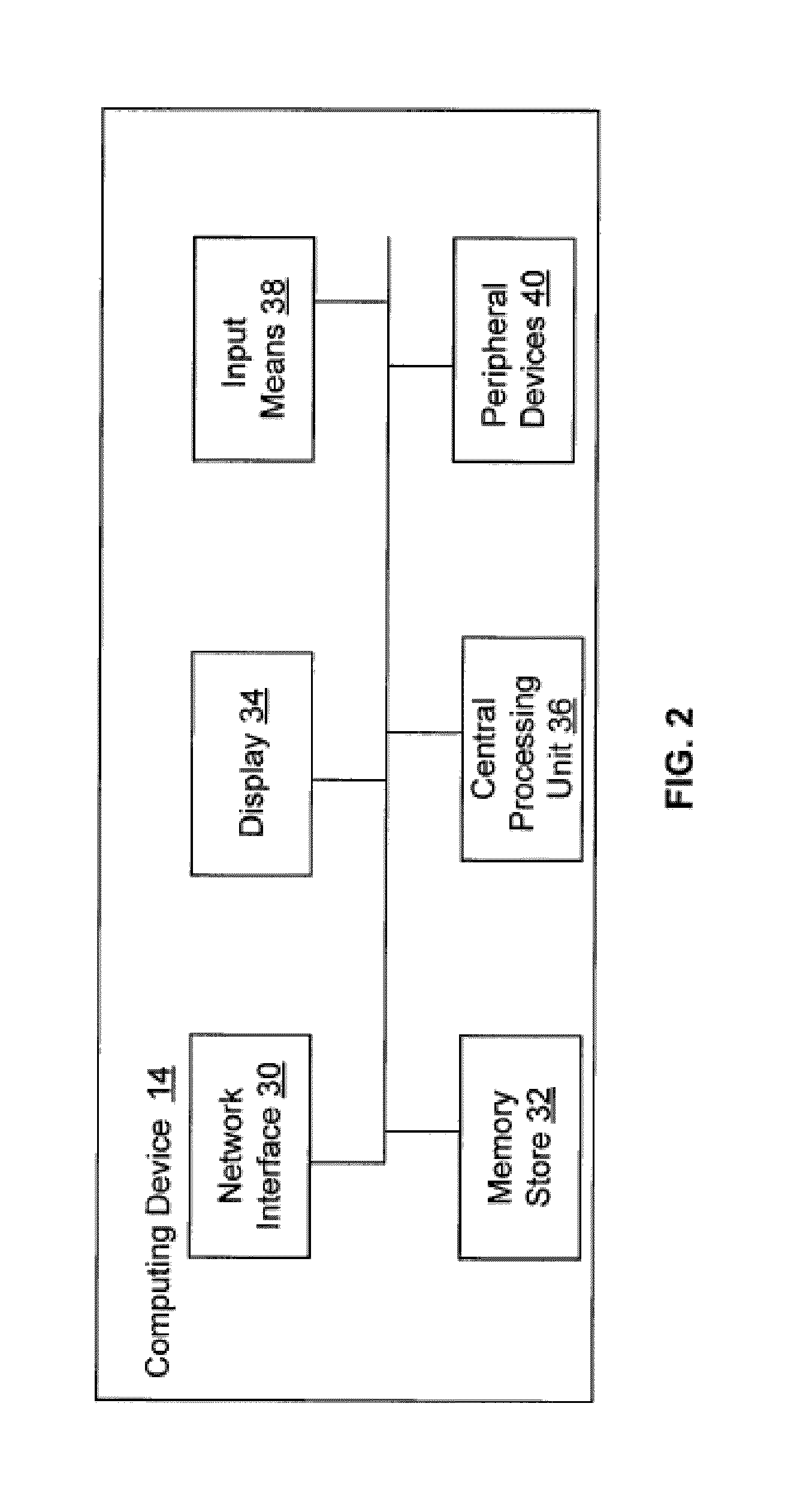

System and Method for Collaborative Shopping, Business and Entertainment

The methods and systems described herein relate to online methods of collaboration in community environments. The methods and systems are related to an online apparel modeling system that allows users to have three-dimensional models of their physical profile created. Users may purchase various goods and/or services and collaborate with other users in the online environment.

Owner: DRESSBOT

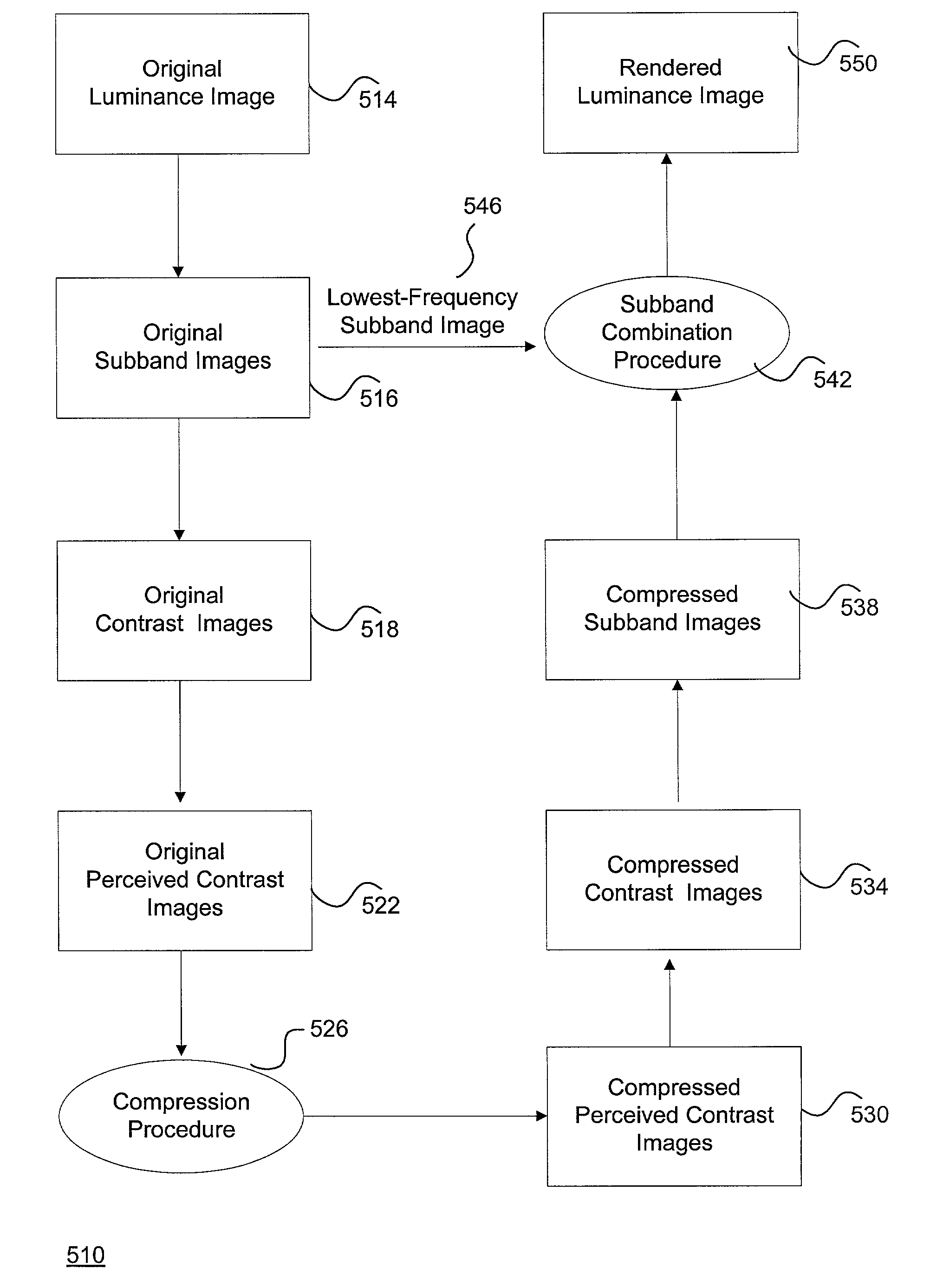

System and method for effectively rendering high dynamic range images

Inactive | US6993200B2 | High imaging | Effectively rendering | Image enhancement | Image analysis | Computer graphics (images) | Image conversion

A system and method for rendering high dynamic range images includes a rendering manager that divides an original luminance image into a plurality of original subband images. The rendering manager converts the original subband images into original contrast images which are converted into original perceived contrast images. The rendering manager performs a compression procedure upon the original perceived contrast images to produce compressed perceived contrast images. The rendering manager converts the compressed perceived contrast images into compressed contrast images which are converted into compressed subband images. The rendering manager performs a subband combination procedure for combining the compressed subband images together with a lowest-frequency subband image to generate a rendered luminance image. The rendering manager may combine the rendered luminance image with corresponding chrominance information to generate a rendered composite image.

Owner: SONY CORP +1

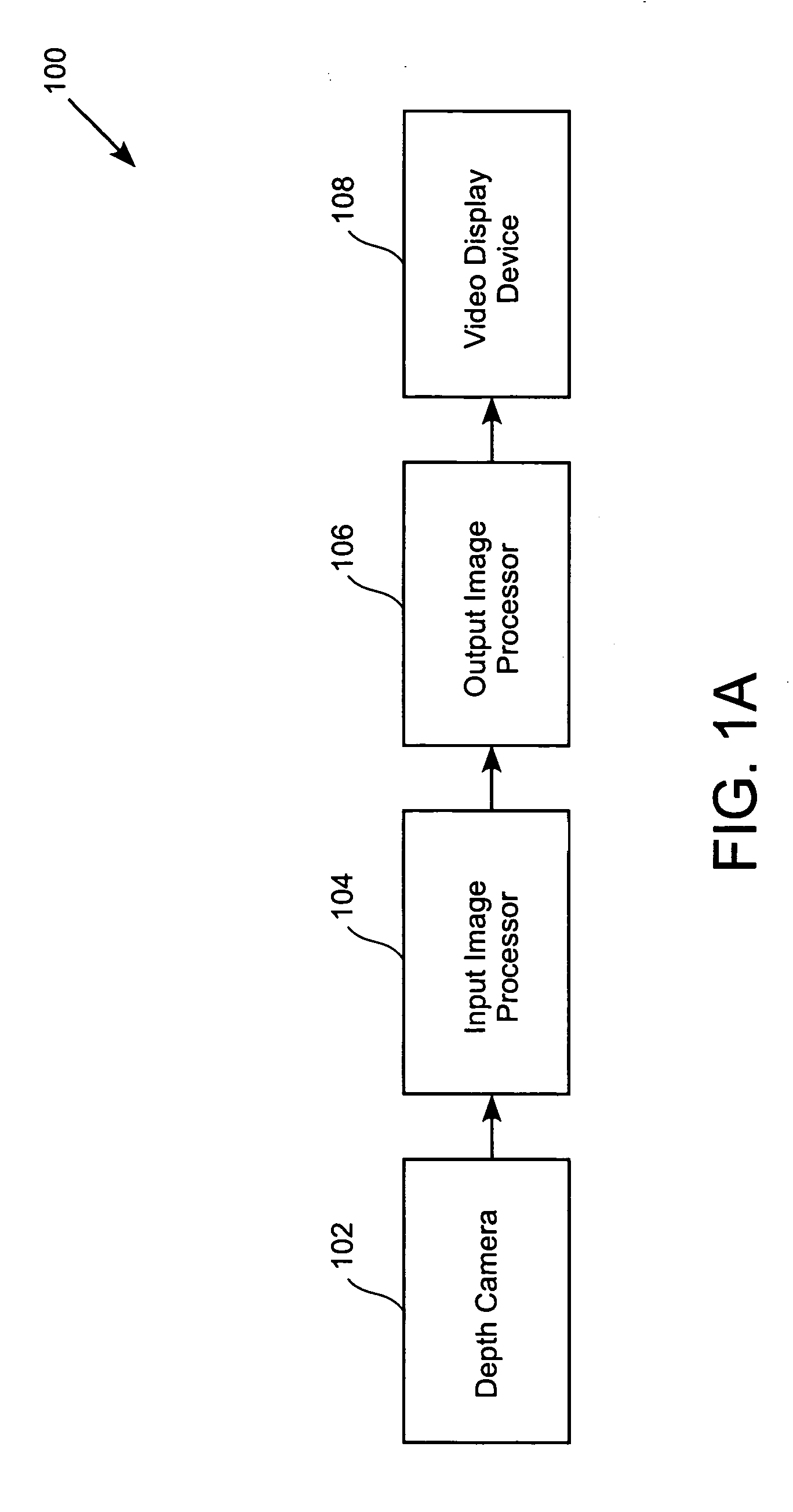

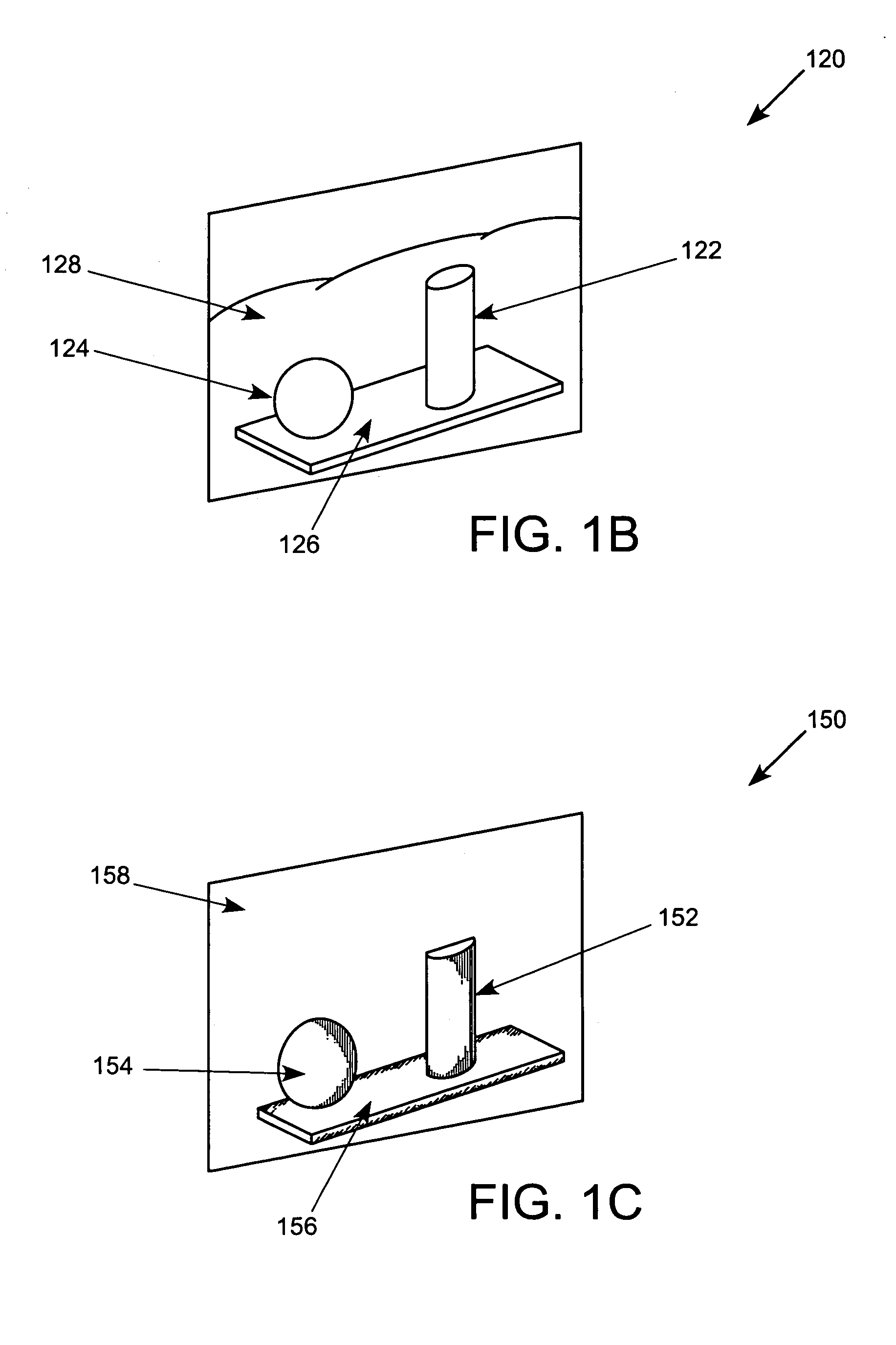

System, method and a computer readable medium for providing an output image

Inactive | US8718333B2 | Electric signal transmission systems | Geometric image transformation | Computer vision | Computer science

A method for providing an output image, the method includes: determining an importance value for each input pixel out of multiple input pixels of an input image; applying on each of the multiple input pixels a conversion process that is responsive to the importance value of the input pixel to provide multiple output pixels that form the output image; wherein the input image differs from the output image.

Owner: RAMOT AT TEL AVIV UNIV LTD

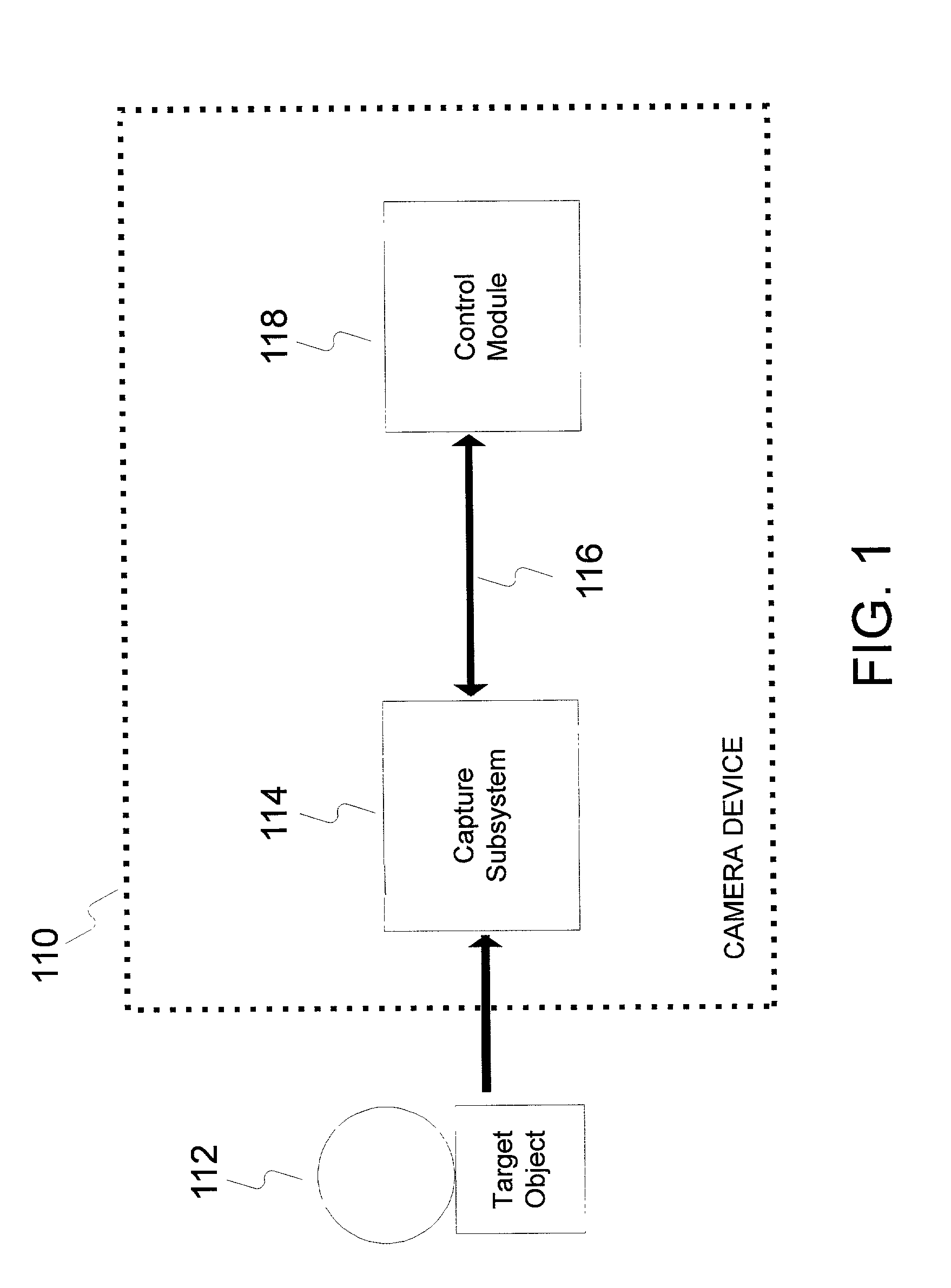

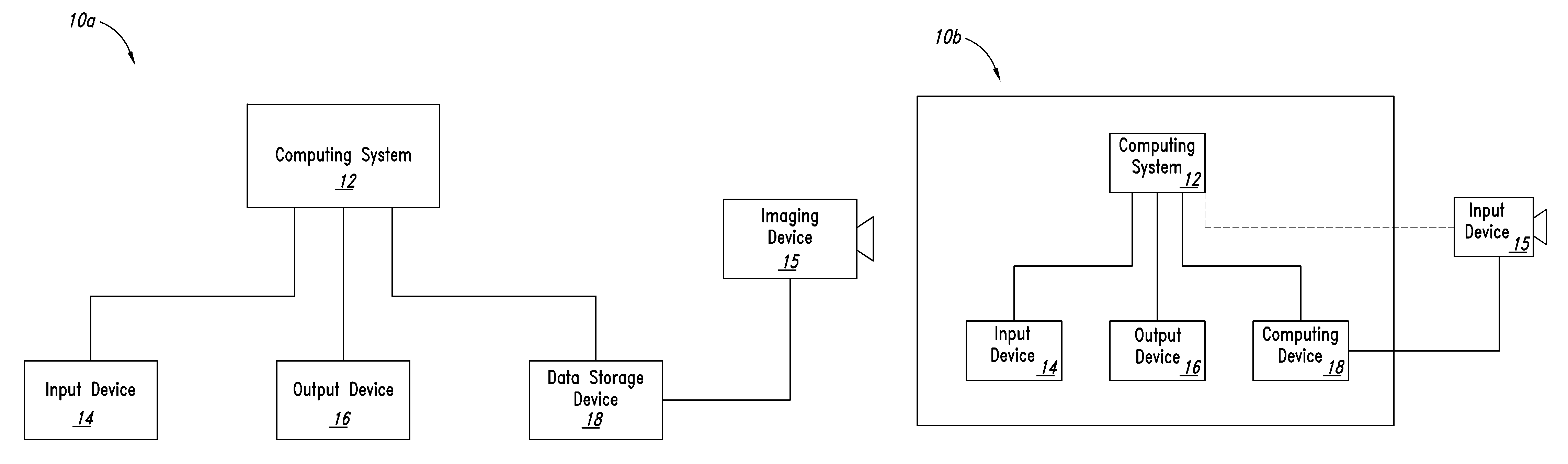

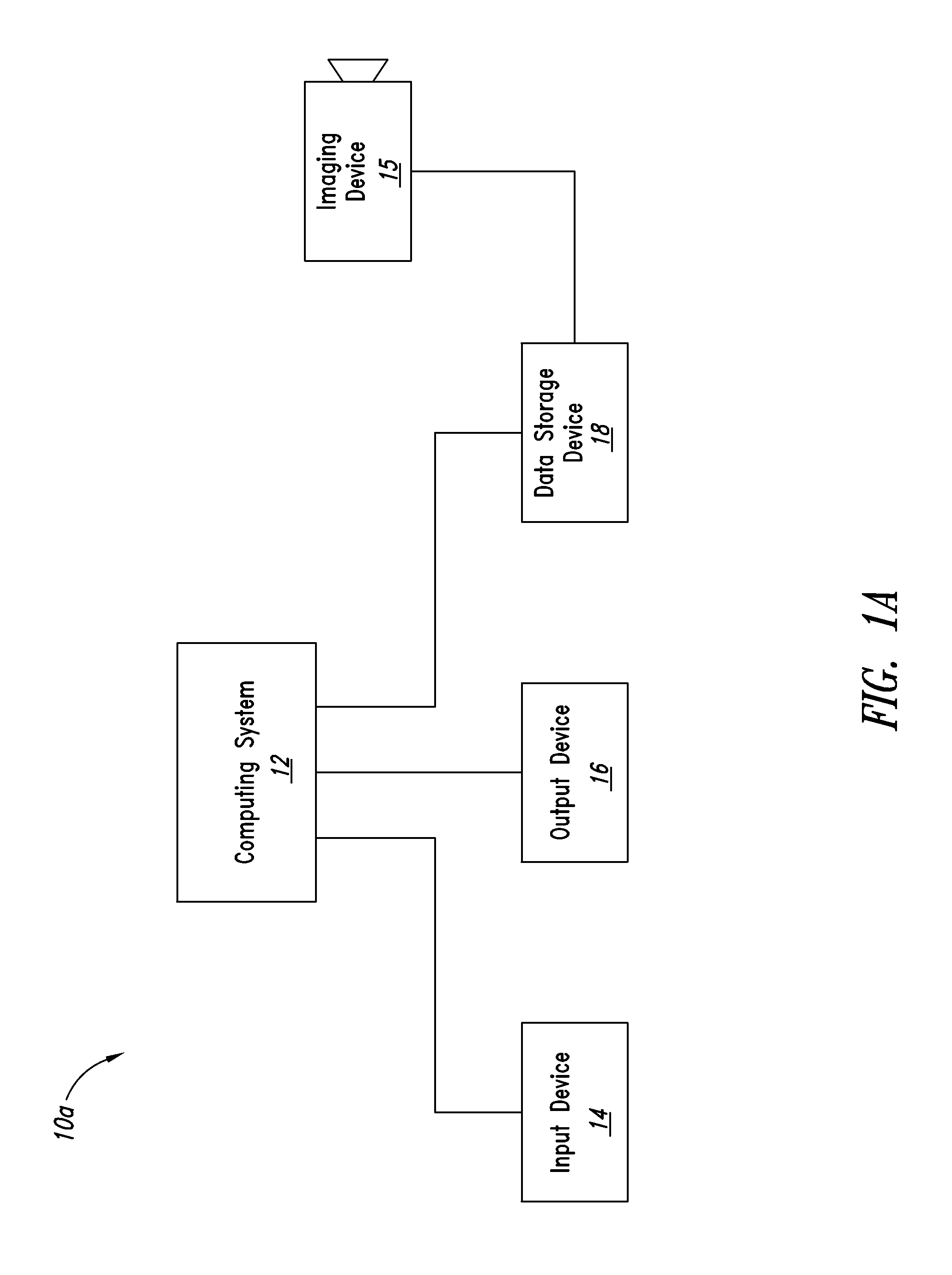

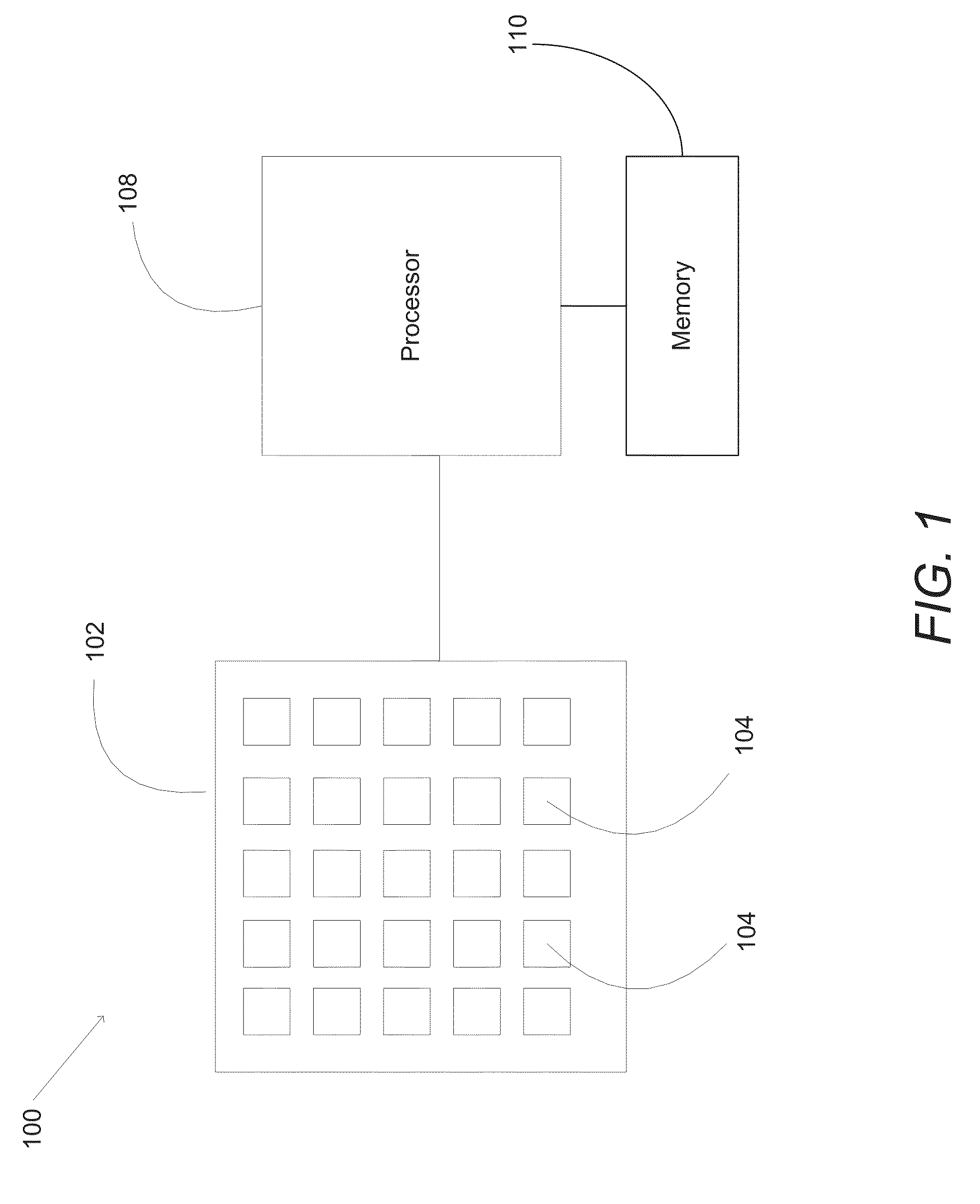

Semi-automatic dimensioning with imager on a portable device

A method of operating a dimensioning system to determine dimensional information for objects is disclosed. A number of images are acquired. Objects in at least one of the acquired images are computationally identified. One object represented in the at least one of the acquired images is computationally initially selected as a candidate for processing. An indication of the initially selected object is provided to a user. At least one user input indicative of an object selected for processing is received. Dimensional data for the object indicated by the received user input is computationally determined.

Owner: INTERMEC IP CORP

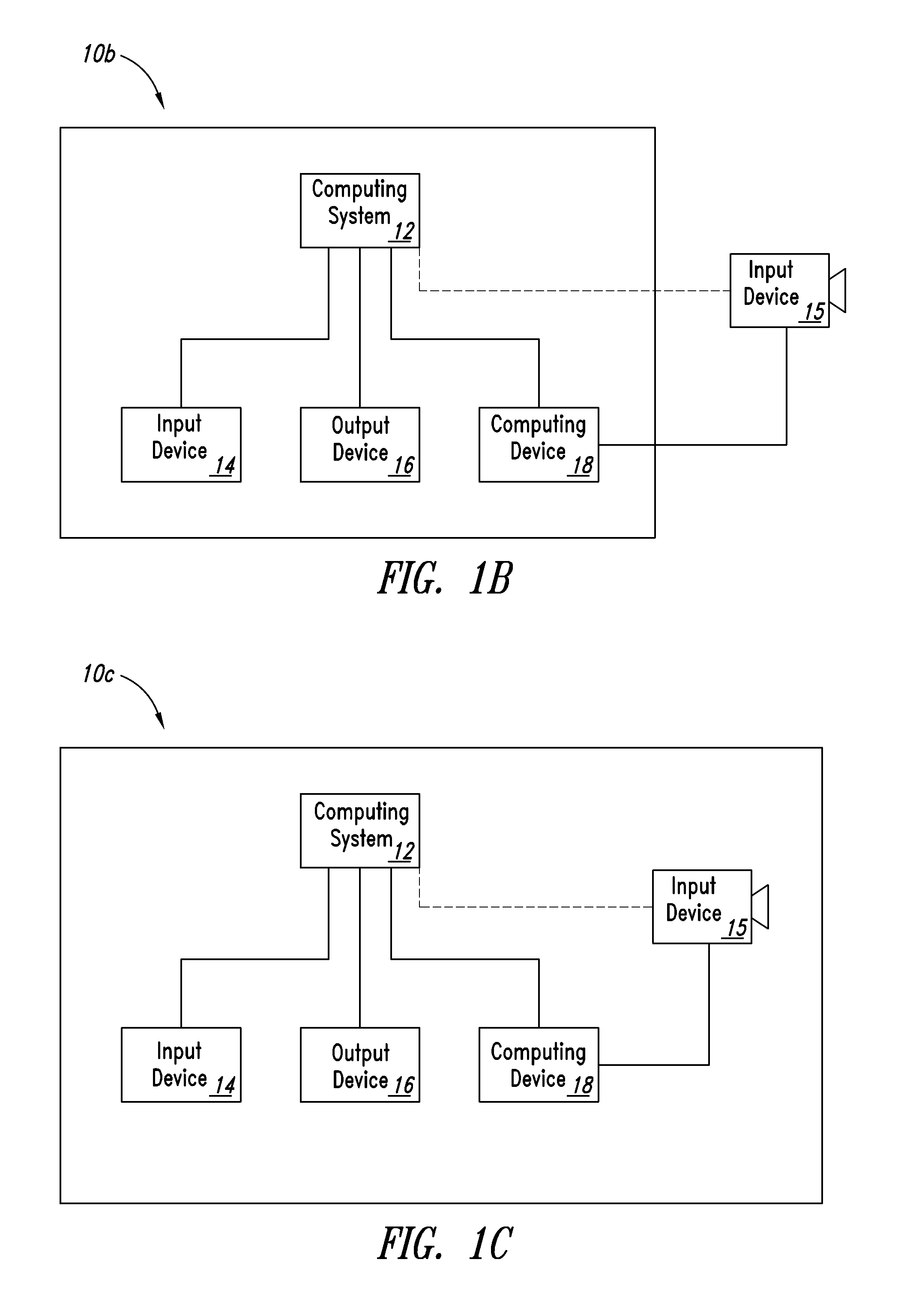

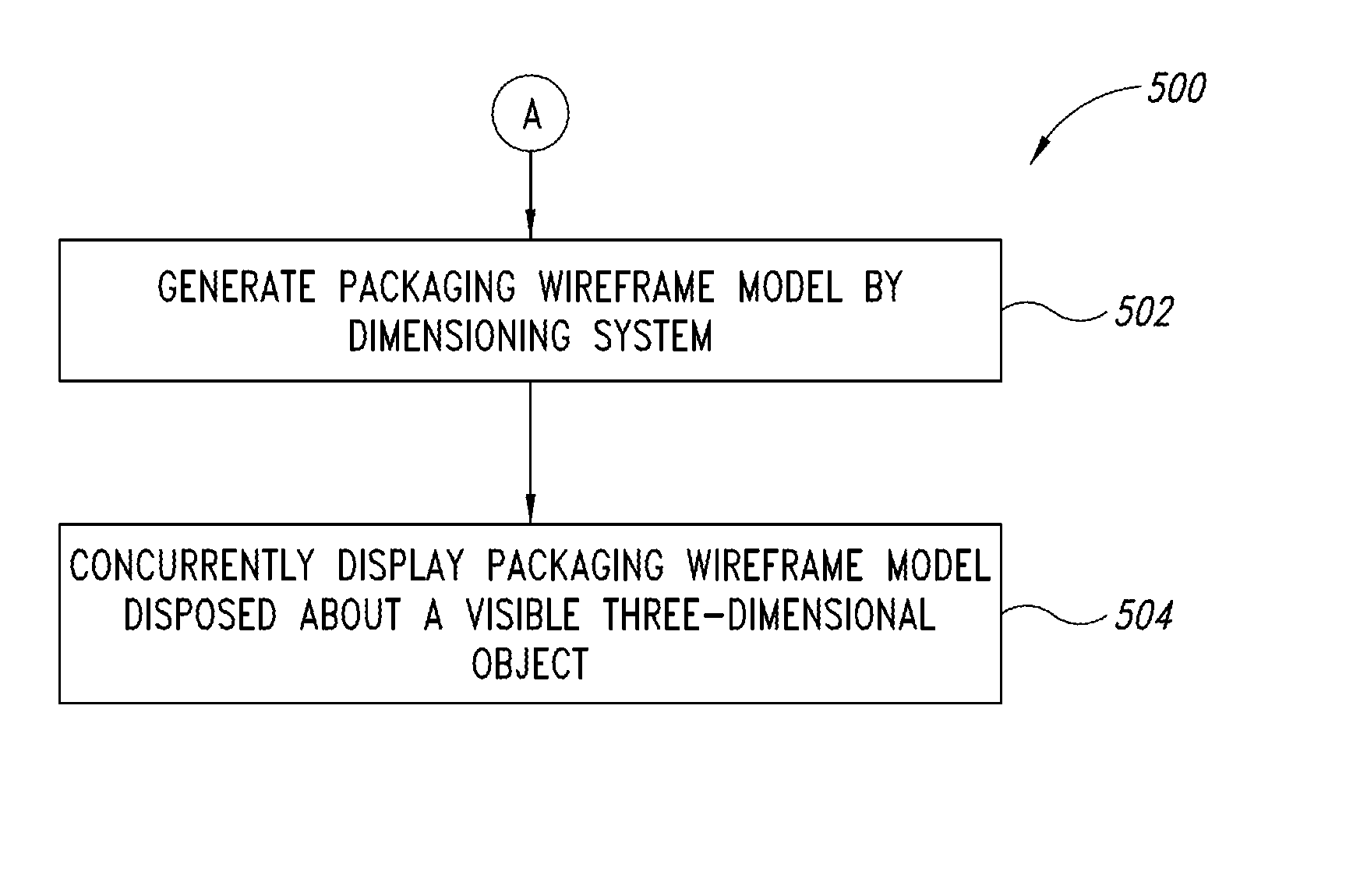

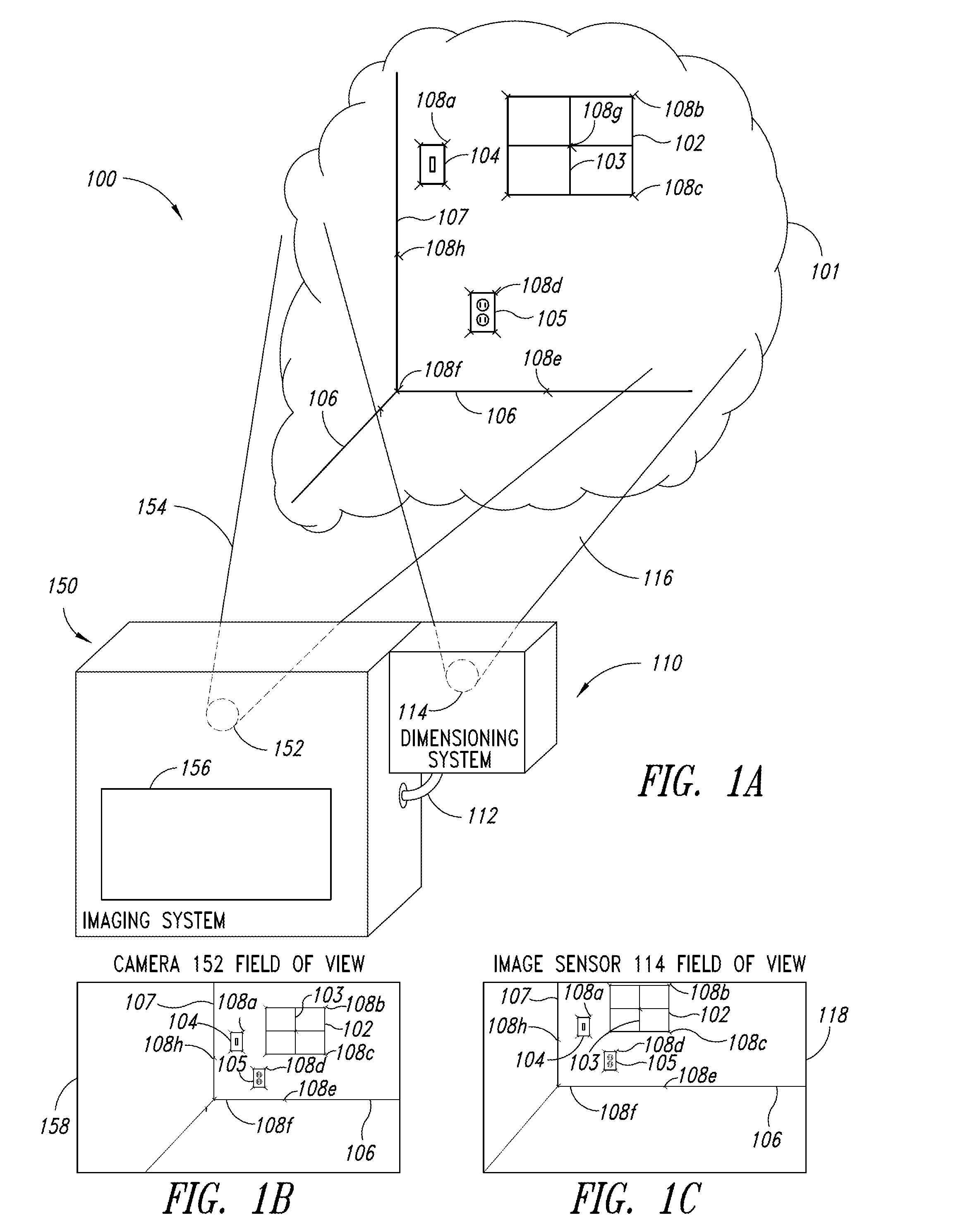

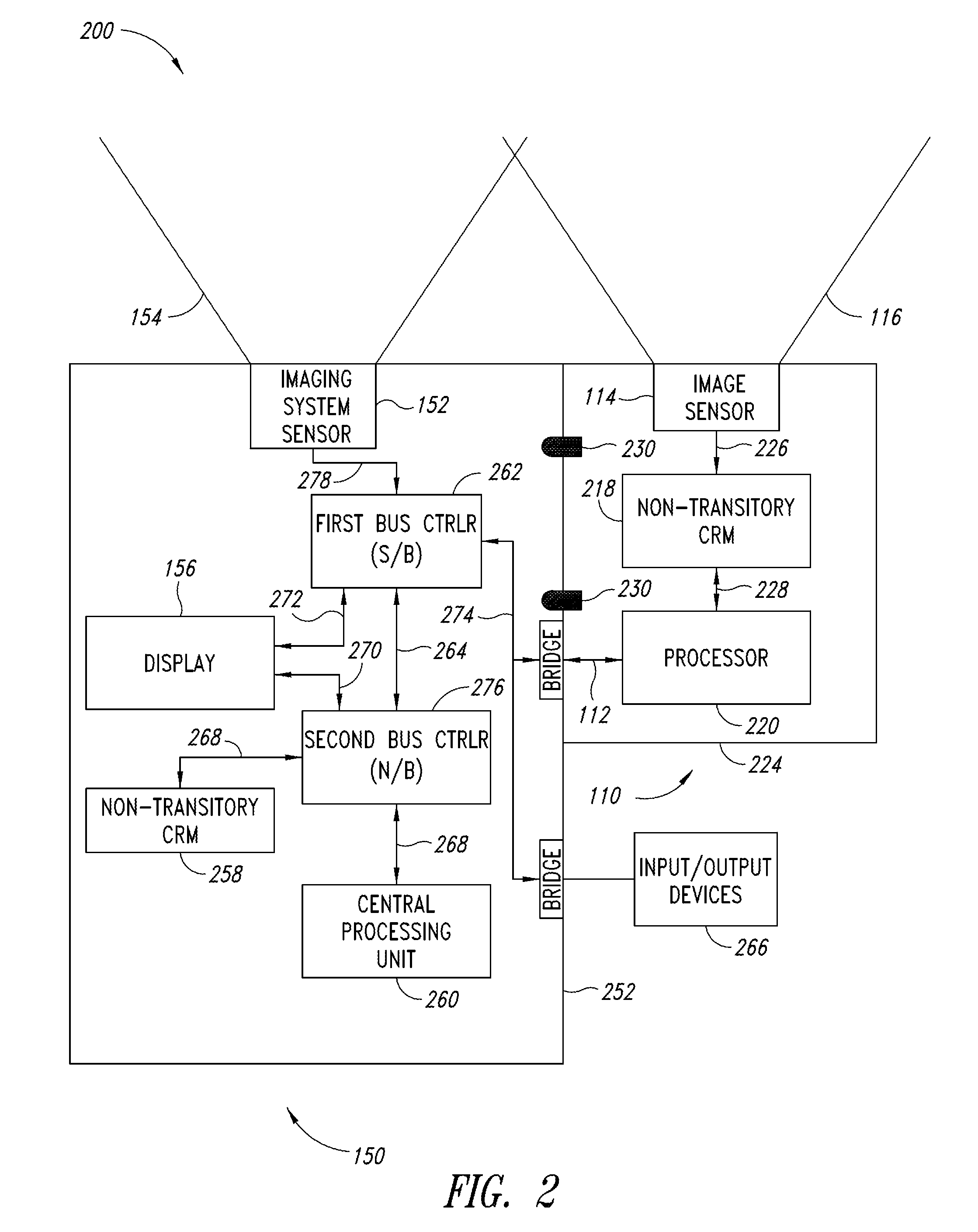

Dimensioning system calibration systems and methods

Systems and methods of determining the volume and dimensions of a three-dimensional object using a dimensioning system are provided. The dimensioning system can include an image sensor, non-transitory, machine-readable storage, and a processor. The dimensioning system can select and fit a three-dimensional packaging wireframe model about each three-dimensional object located within a first point of view of the image sensor. Calibration is performed to calibrate between image sensors of the dimensioning system and those of the imaging system. Calibration may occur pre-run time, in a calibration mode or period. Calibration may occur during a routine. Calibration may be automatically triggered on detection of a coupling between the dimensioning and the imaging systems.

Owner: INTERMEC IP

An articulated structured light based-laparoscope

The present invention provides a structured-light based system for providing a 3D image of at least one object within a field of view within a body cavity, comprising: a. An endoscope; b. at least one camera located in the endoscope's proximal end, configured to real-time provide at least one 2D image of at least a portion of said field of view by means of said at least one lens; c. a light source, configured to real-time illuminate at least a portion of said at least one object within at least a portion of said field of view with at least one time and space varying predetermined light pattern; and, d. a sensor configured to detect light reflected from said field of view; e. a computer program which, when executed by data processing apparatus, is configured to generate a 3D image of said field of view.

Owner: TRANSENTERIX EURO SARL

Generating a depth map from a two-dimensional source image for stereoscopic and multiview imaging

Inactive | US20070024614A1 | Saving in bandwidth requirement | Increase width | Image enhancement | Image analysis | Viewpoints | Image pair

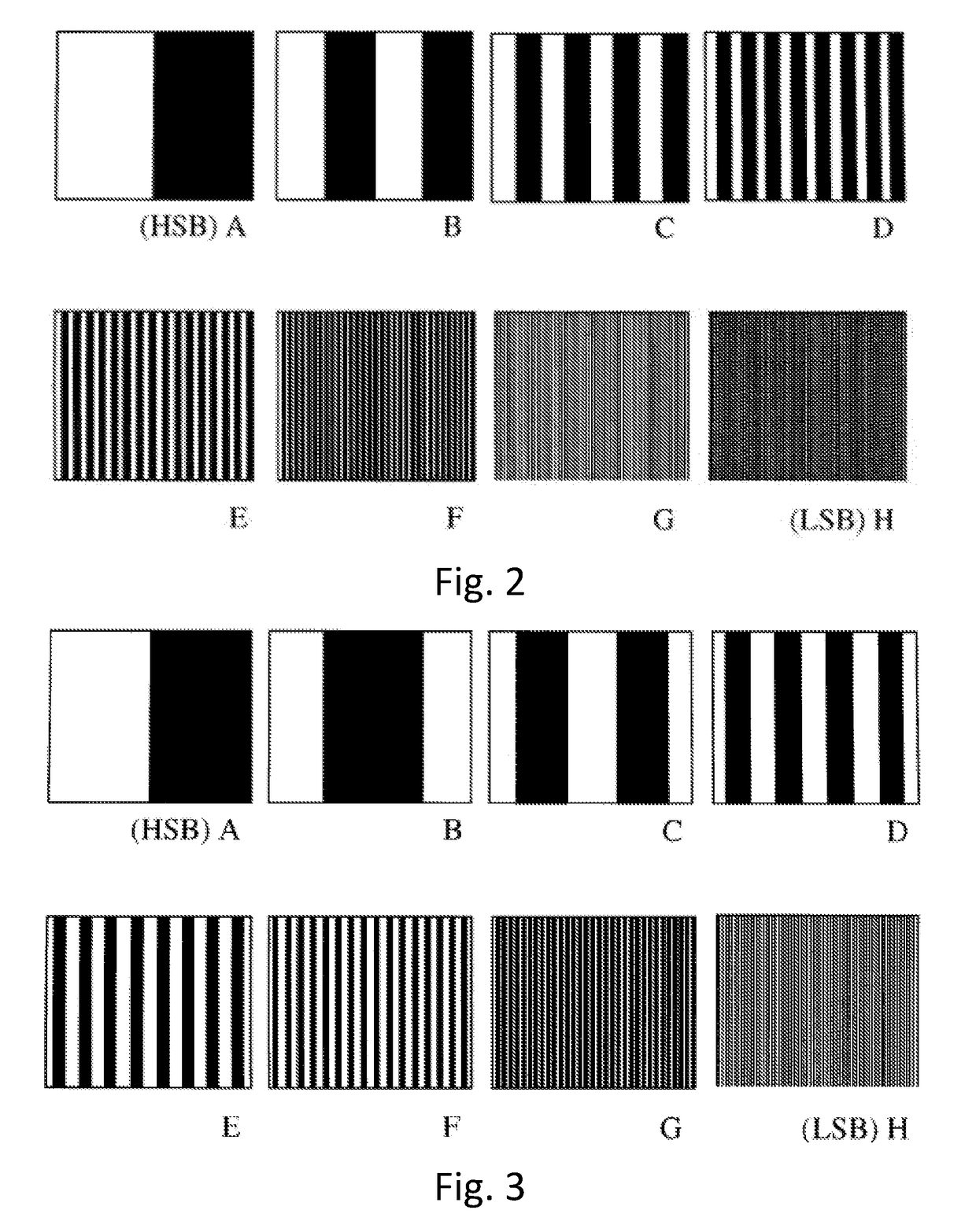

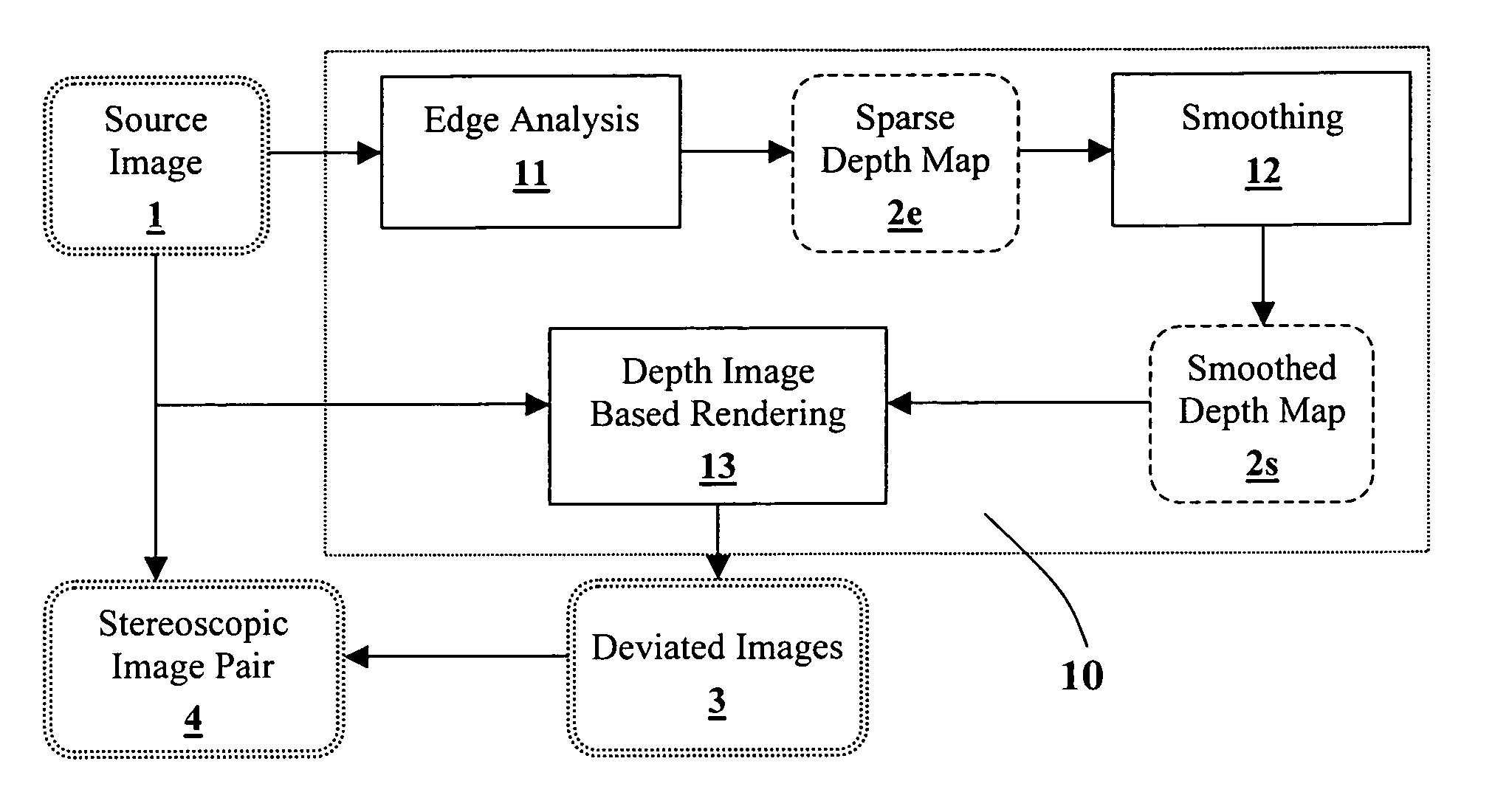

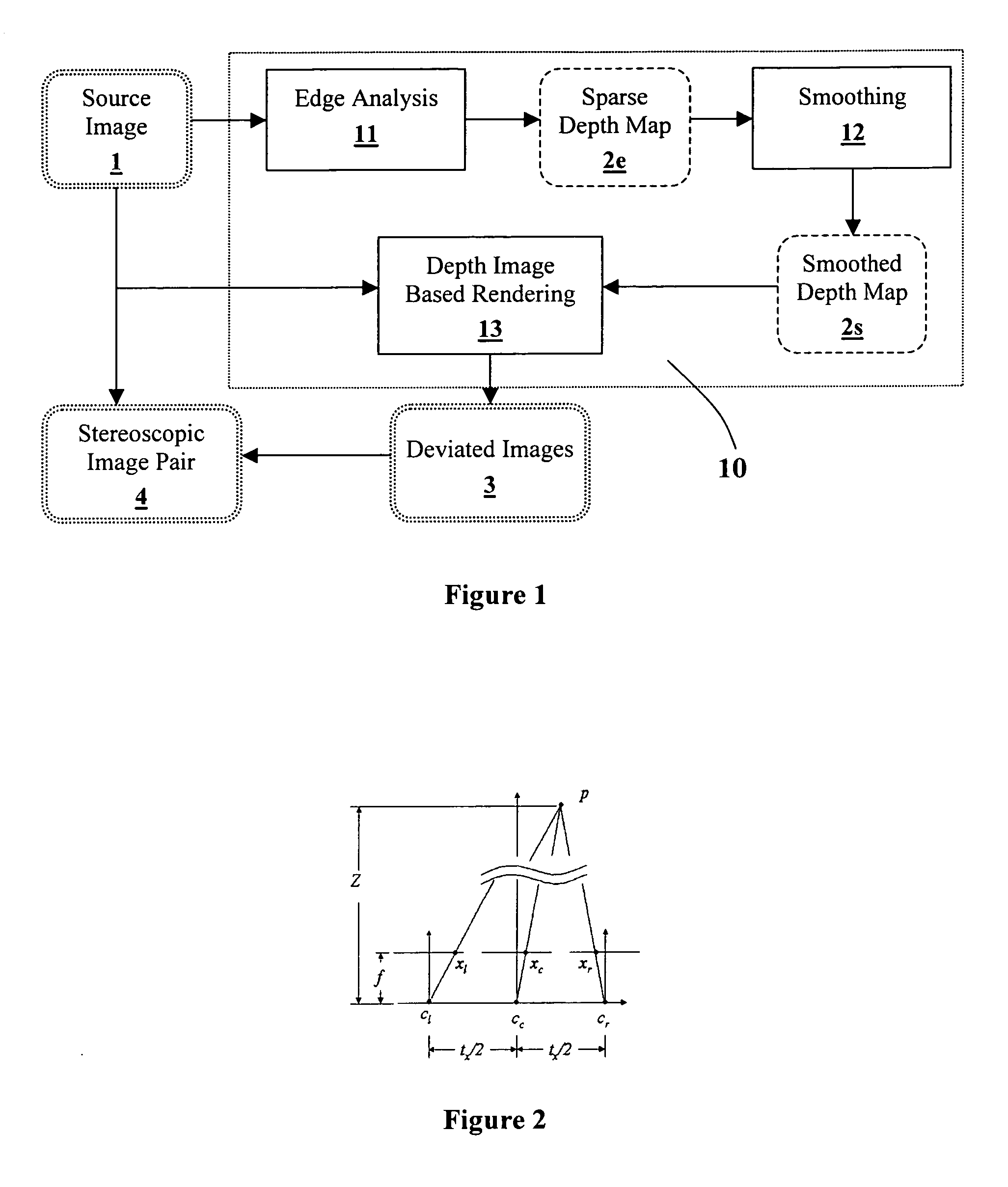

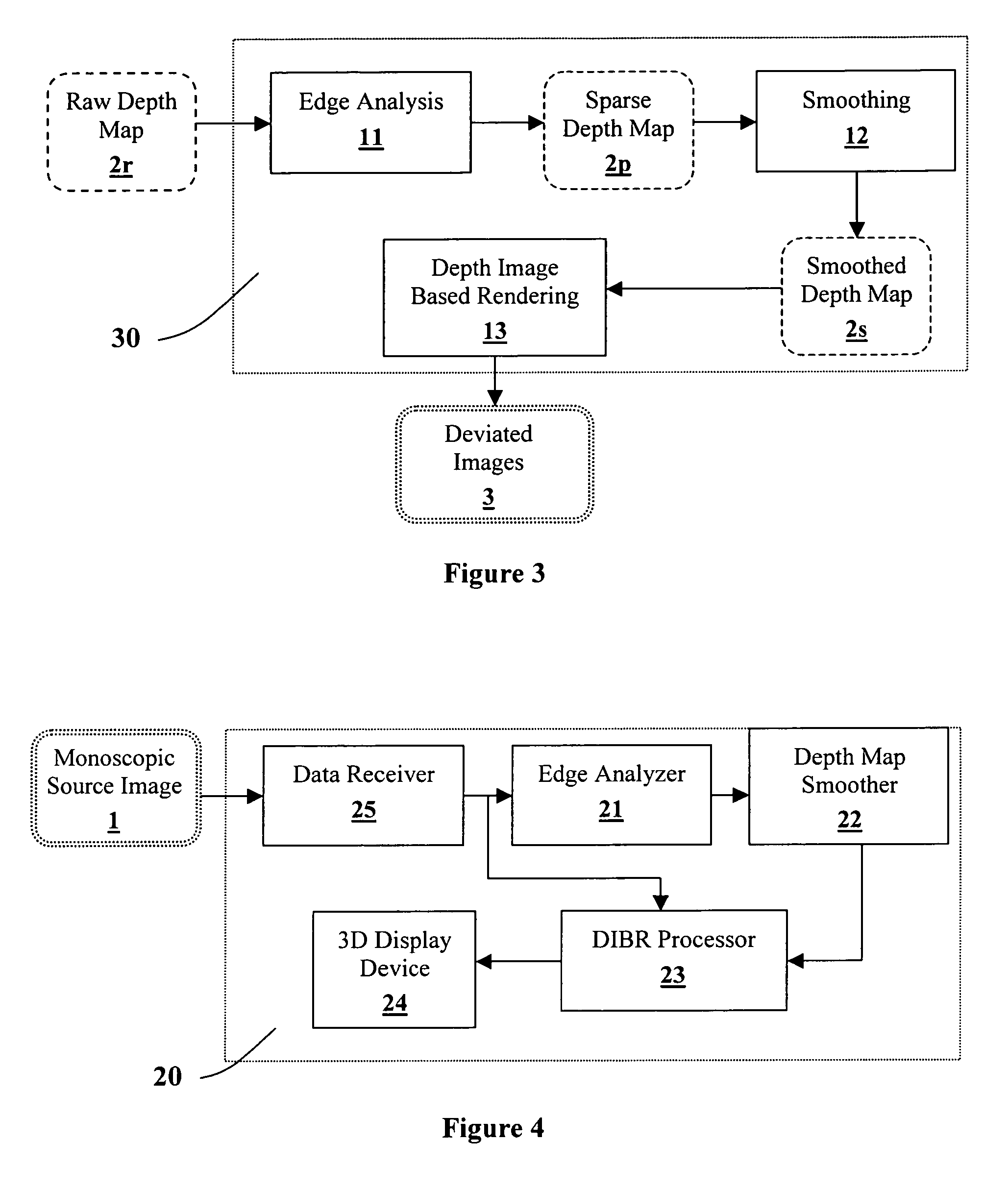

Depth maps are generated from monoscopic source images and asymmetrically smoothed to a near-saturation level. Each depth map contains depth values focused on edges of local regions in the source image. Each edge is defined by a predetermined image parameter having an estimated value exceeding a predefined threshold. The depth values are based on the corresponding estimated values of the image parameter. The depth map is used to process the source image by a depth image based rendering algorithm to create at least one deviated image, which forms with the source image a set of monoscopic images. At least one stereoscopic image pair is selected from such a set for use in generating different viewpoints for multiview and stereoscopic purposes, including still and moving images.
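The abstract does not say which depth image based rendering algorithm is used. As a rough illustration only, the sketch below shows one common approach: shifting each pixel of a scanline horizontally by a disparity proportional to its depth value, then filling the holes that open up. The function name, the convention that larger depth values mean nearer surfaces, and the `max_disparity` parameter are all assumptions made for this example, not details from the patent.

```python
def render_deviated_row(row, depth_row, max_disparity):
    """Create one scanline of a deviated view by depth-dependent pixel shifts.

    row           -- pixel values for one scanline of the source image
    depth_row     -- depth values in [0, 255]; larger means nearer (assumed)
    max_disparity -- shift in pixels applied at depth 255 (assumed parameter)
    """
    width = len(row)
    out = [None] * width
    out_depth = [-1] * width  # depth of the pixel currently written at each target

    for x in range(width):
        shift = round(depth_row[x] / 255 * max_disparity)
        tx = x + shift
        # When two source pixels land on the same target, the nearer one wins.
        if 0 <= tx < width and depth_row[x] > out_depth[tx]:
            out[tx] = row[x]
            out_depth[tx] = depth_row[x]

    # Fill holes (disoccluded pixels) from the nearest filled pixel on the left.
    last = None
    for x in range(width):
        if out[x] is None:
            out[x] = last
        else:
            last = out[x]

    # Any holes at the start of the row take the first filled value.
    first = next((v for v in out if v is not None), None)
    return [first if v is None else v for v in out]


if __name__ == "__main__":
    source = [10, 20, 30, 40, 50]
    depth = [0, 0, 255, 0, 0]  # only the middle pixel is near the camera
    print(render_deviated_row(source, depth, max_disparity=1))
```

Pairing the source row with the deviated row gives a crude stereoscopic pair. A practical renderer would typically also smooth the depth map first, as the abstract describes, to reduce the size of the holes.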

Owner: HER MAJESTY THE QUEEN & RIGHT OF CANADA REPRESENTED BY THE MIN OF IND THROUGH THE COMM RES CENT

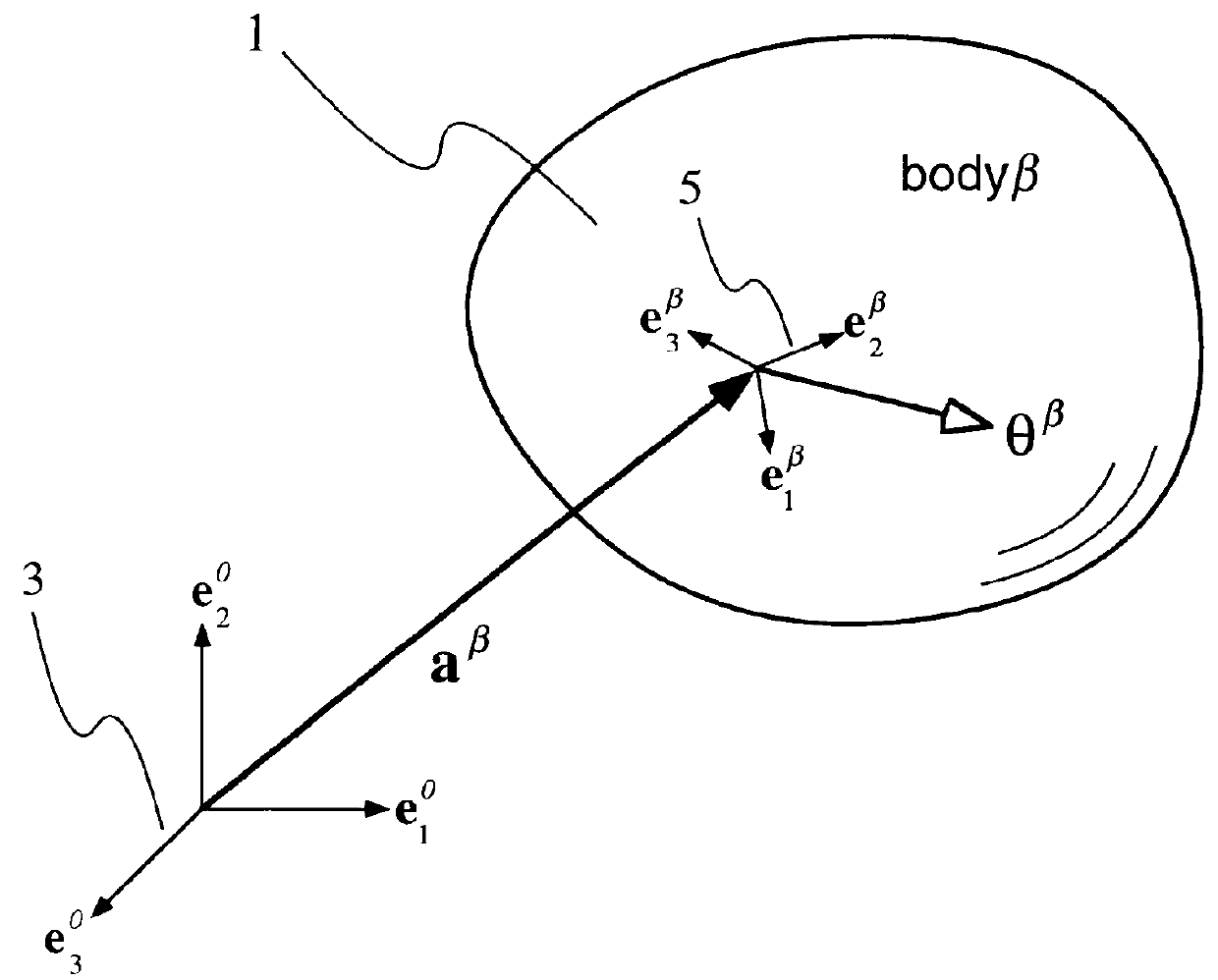

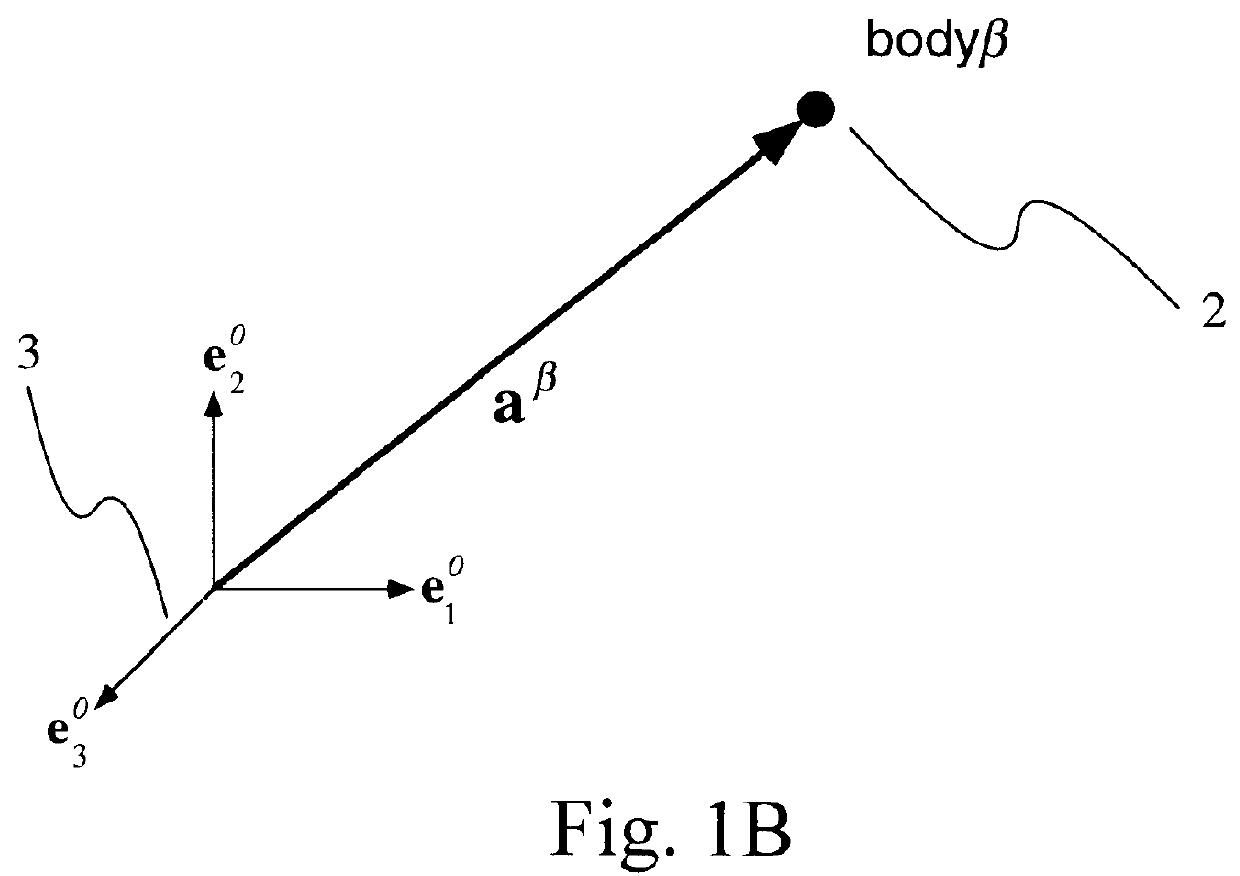

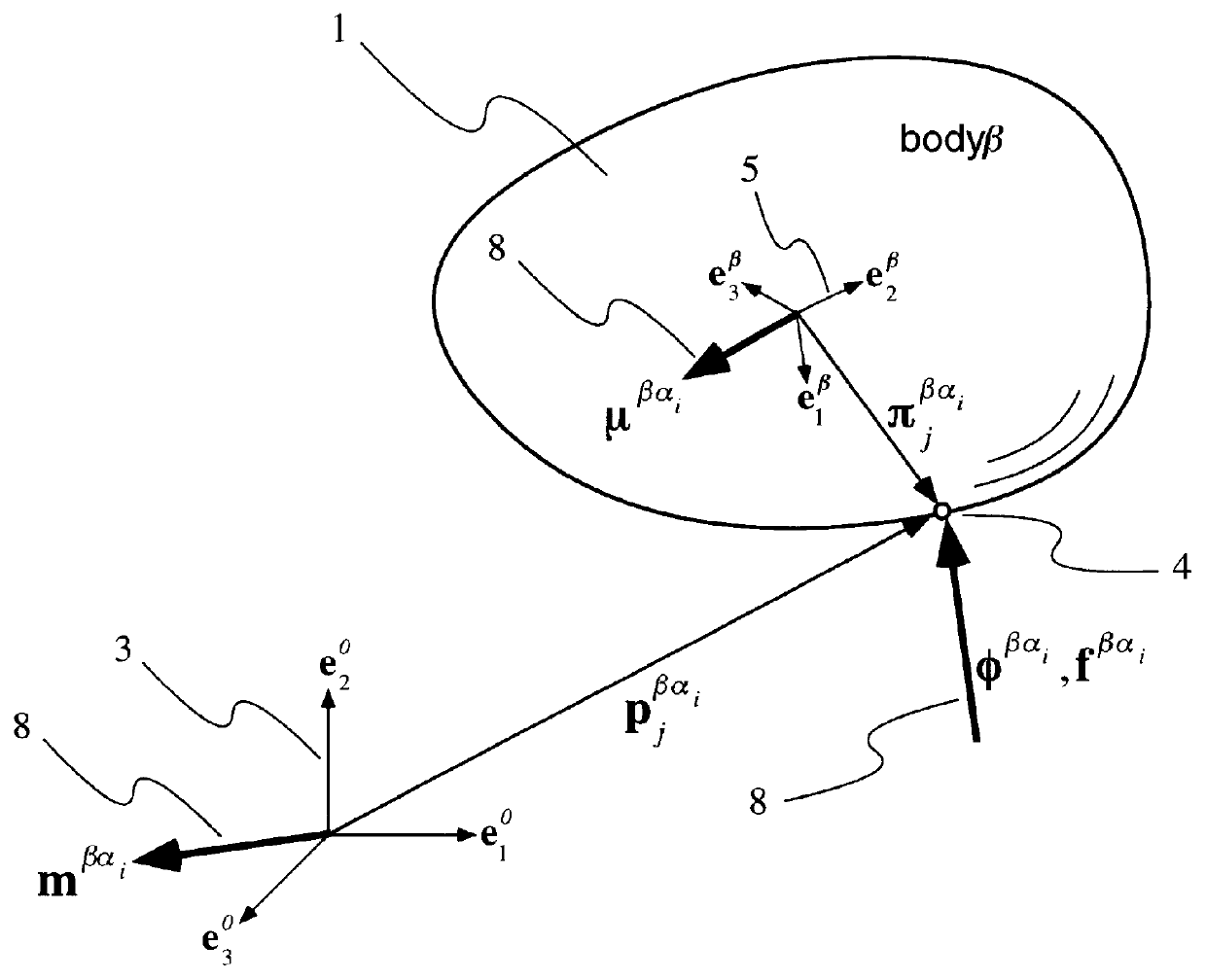

Three dimensional multibody modeling of anatomical joints

Inactive | US6161080A | Easy to modify | Person identification | Analogue computers for chemical processes | Data selection | Dimensional modeling

The present invention relates to a method of generating a three dimensional representation of one or more anatomical joints, wherein the representation comprises two or more movable bodies and one or more links, comprising the steps of inputting anatomically representative data of two or more movable bodies of the selected joint or joints; selecting one or more link types responsive to the representative data of the bodies; selecting link characteristics responsive to each selected link type; generating an equilibrium condition responsive to interaction between the bodies and the links; and displaying a three dimensional representation of the selected joint or joints responsive to the data generated from the equilibrium condition of the anatomical joint or joints. The present invention further relates to a system for generating a three dimensional representation of one or more anatomical joints, and a method of planning surgery of one or more anatomical joints.

Owner: THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

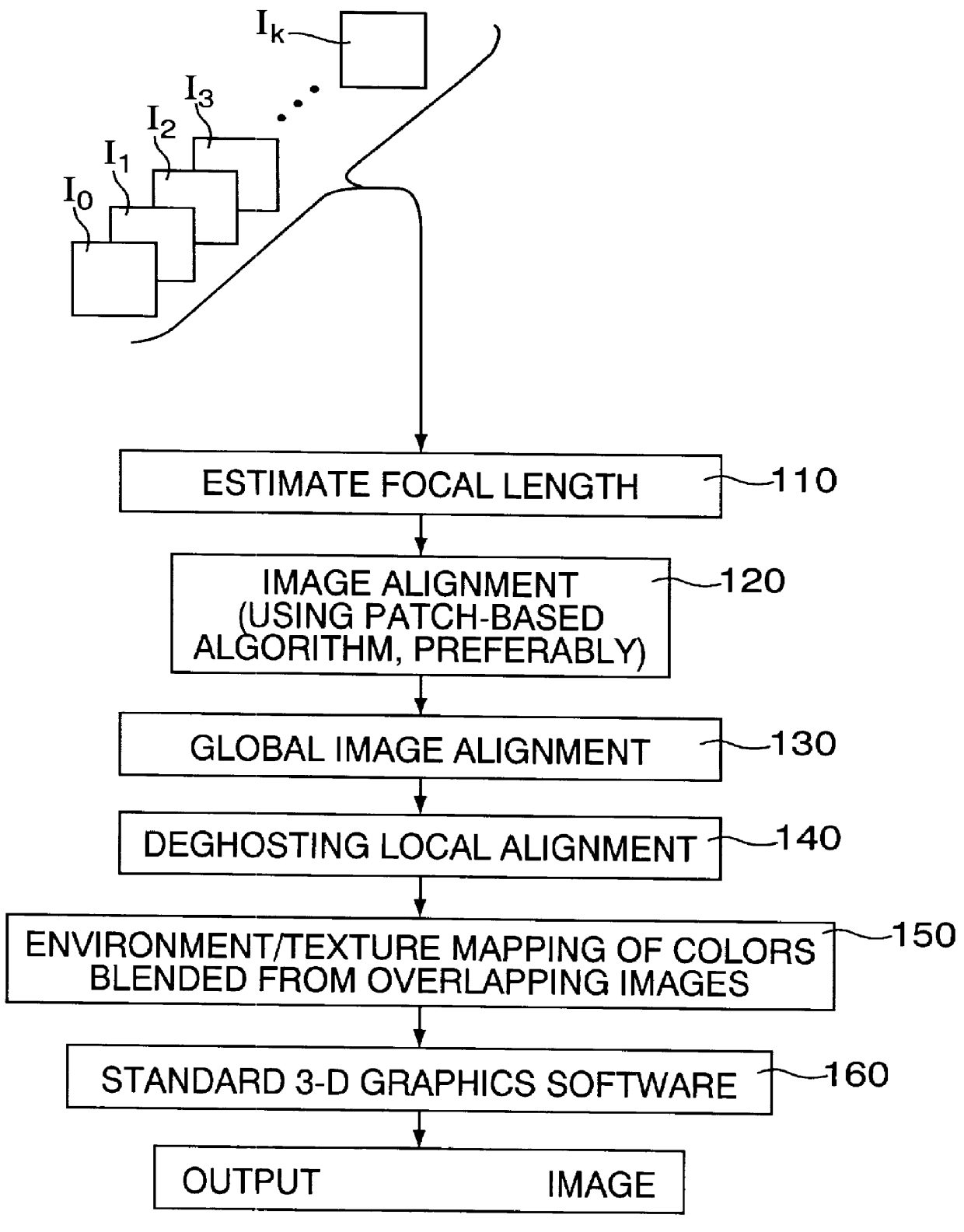

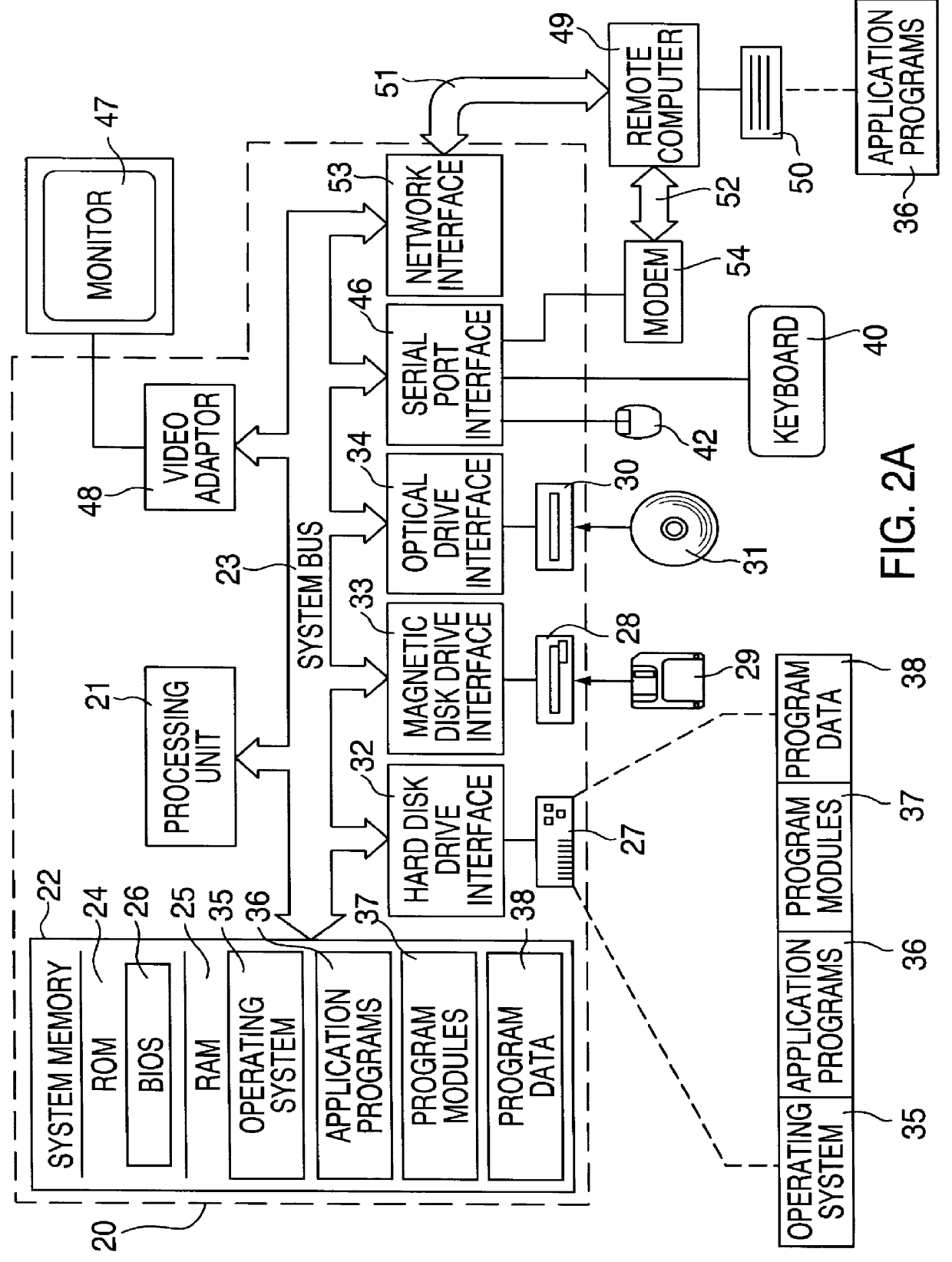

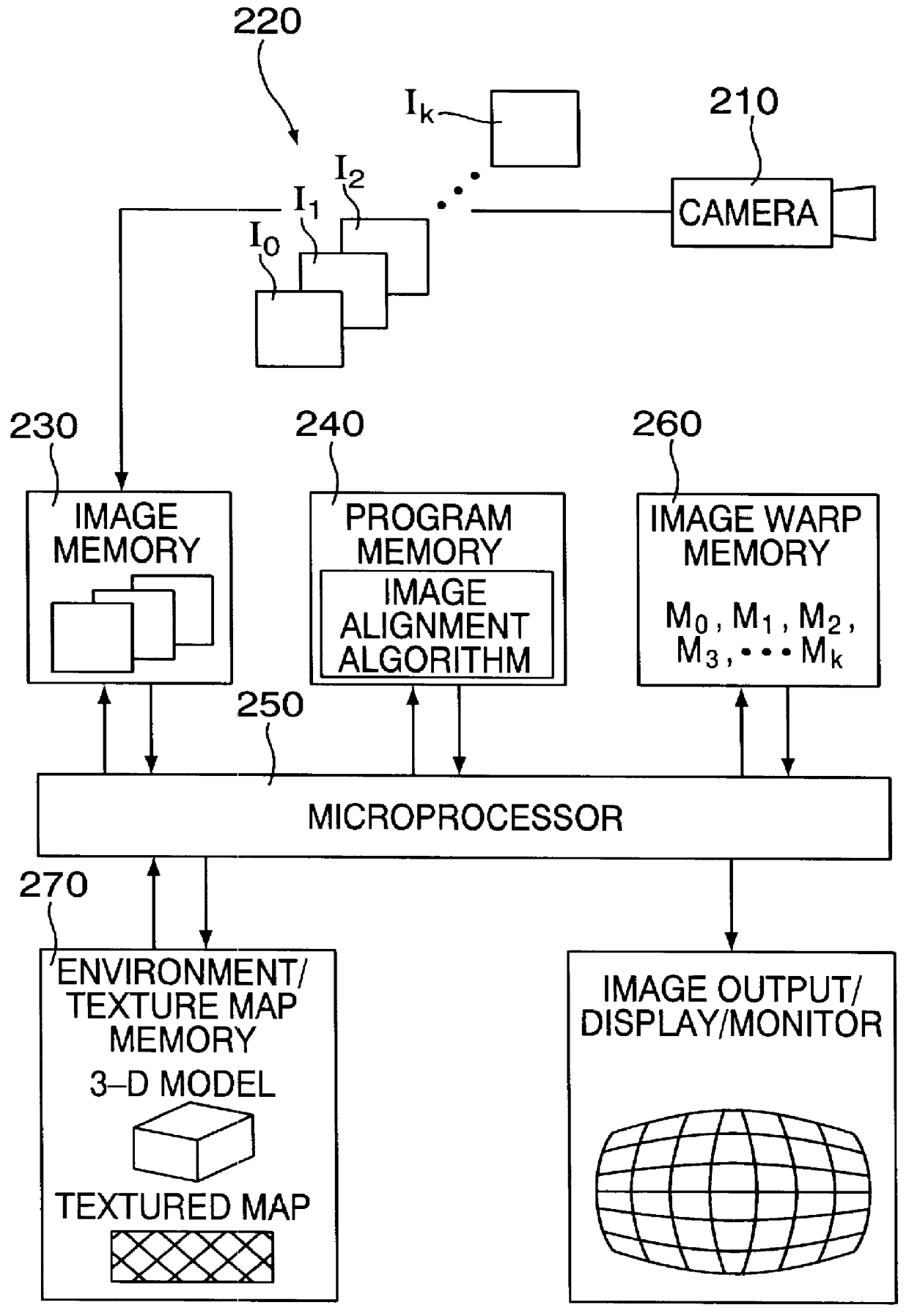

3-dimensional image rotation method and apparatus for producing image mosaics

Inactive | US6157747A | Quality improvement | Efficient solution | Geometric image transformation | Character and pattern recognition | Pattern recognition | Computer graphics (images)

The invention aligns a set of plural images to construct a mosaic image. At least different pairs of the images overlap partially (or fully), and typically are images captured by a camera looking at the same scene but oriented at different angles from approximately the same location or similar locations. In order to align one of the images with another one of the images, the following steps are carried out: (a) determining a difference error between the one image and the other image; (b) computing an incremental rotation of the one image relative to a 3-dimensional coordinate system through an incremental angle which tends to reduce the difference error; and (c) rotating the one image in accordance with the incremental rotation to produce an incrementally warped version of the one image. As long as the difference error remains significant, the method continues by re-performing the foregoing determining, computing and rotating steps but this time with the incrementally warped version of the one image.
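Steps (a) to (c) describe an iterative loop: measure the error, compute a small corrective rotation, apply it, and repeat. The sketch below illustrates that loop in a heavily simplified setting. It is an assumption-laden example, not the patented method: it aligns matched 2D feature points with a single in-plane rotation angle instead of warping whole images through a 3D rotation, and it uses a Gauss-Newton step to choose the incremental angle. All names are hypothetical.

```python
import math


def align_by_incremental_rotation(points, targets, tol=1e-12, max_iters=50):
    """Estimate the angle (radians) that rotates `points` onto `targets`.

    points, targets -- equal-length lists of matched (x, y) pairs
    tol             -- stop once the summed squared error drops below this
    """
    angle = 0.0
    for _ in range(max_iters):
        c, s = math.cos(angle), math.sin(angle)
        rotated = [(c * x - s * y, s * x + c * y) for x, y in points]

        # (a) difference error between the rotated points and the targets
        error = sum((rx - tx) ** 2 + (ry - ty) ** 2
                    for (rx, ry), (tx, ty) in zip(rotated, targets))
        if error < tol:
            break

        # (b) incremental angle that tends to reduce the error (Gauss-Newton)
        num = 0.0
        den = 0.0
        for (rx, ry), (tx, ty) in zip(rotated, targets):
            jx, jy = -ry, rx  # derivative of the rotated point w.r.t. the angle
            num += (rx - tx) * jx + (ry - ty) * jy
            den += jx * jx + jy * jy
        if den == 0.0:
            break  # all points sit at the origin; rotation is unobservable

        # (c) apply the incremental rotation and go around again
        angle -= num / den
    return angle


if __name__ == "__main__":
    true_angle = 0.3
    pts = [(1.0, 0.0), (0.0, 2.0), (-1.0, 1.0)]
    tgt = [(math.cos(true_angle) * x - math.sin(true_angle) * y,
            math.sin(true_angle) * x + math.cos(true_angle) * y) for x, y in pts]
    print(align_by_incremental_rotation(pts, tgt))
```

The loop mirrors the abstract's structure: it keeps re-running the determine, compute, and rotate steps on the already-rotated data for as long as the error remains significant.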

Owner: MICROSOFT TECH LICENSING LLC

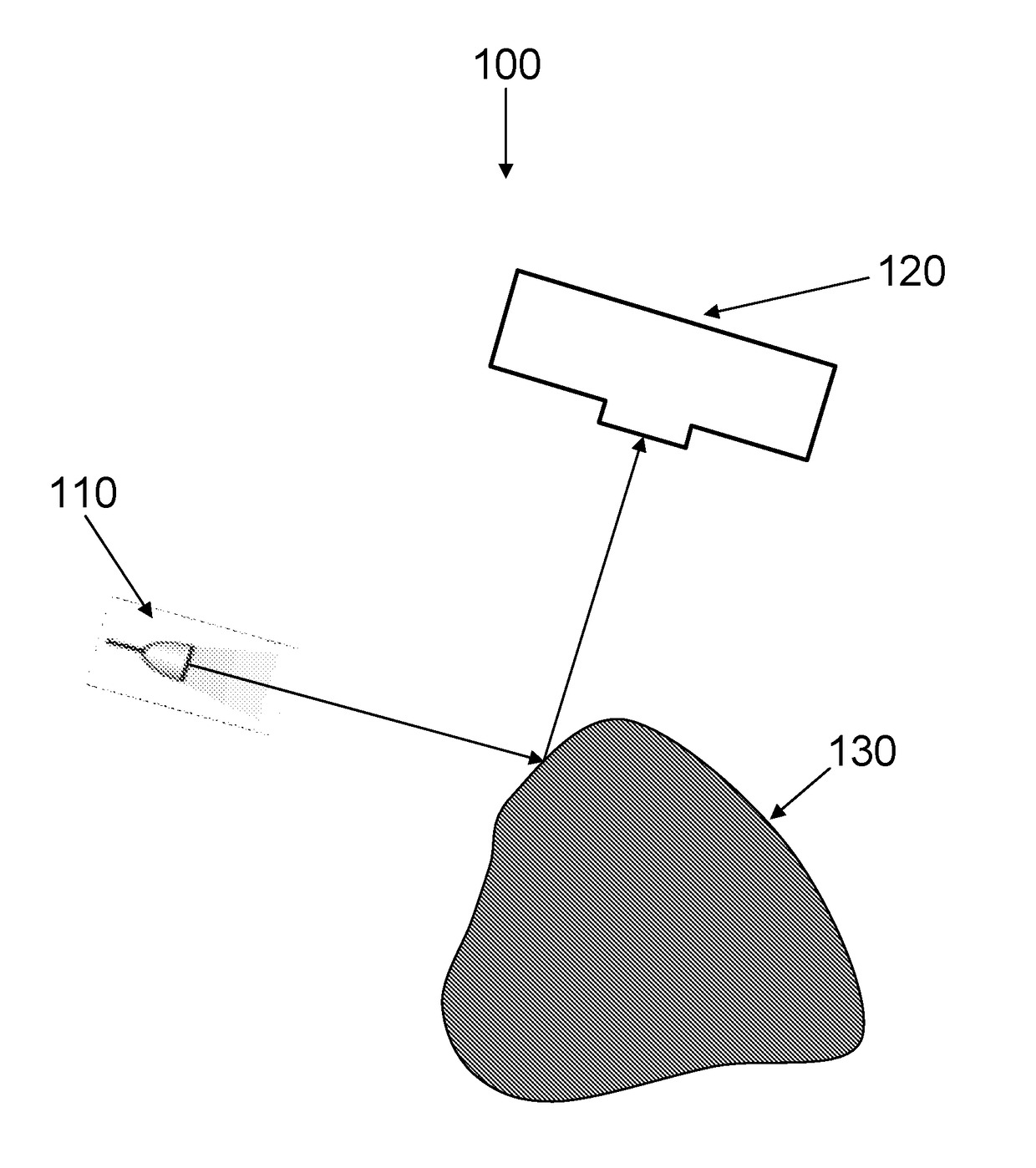

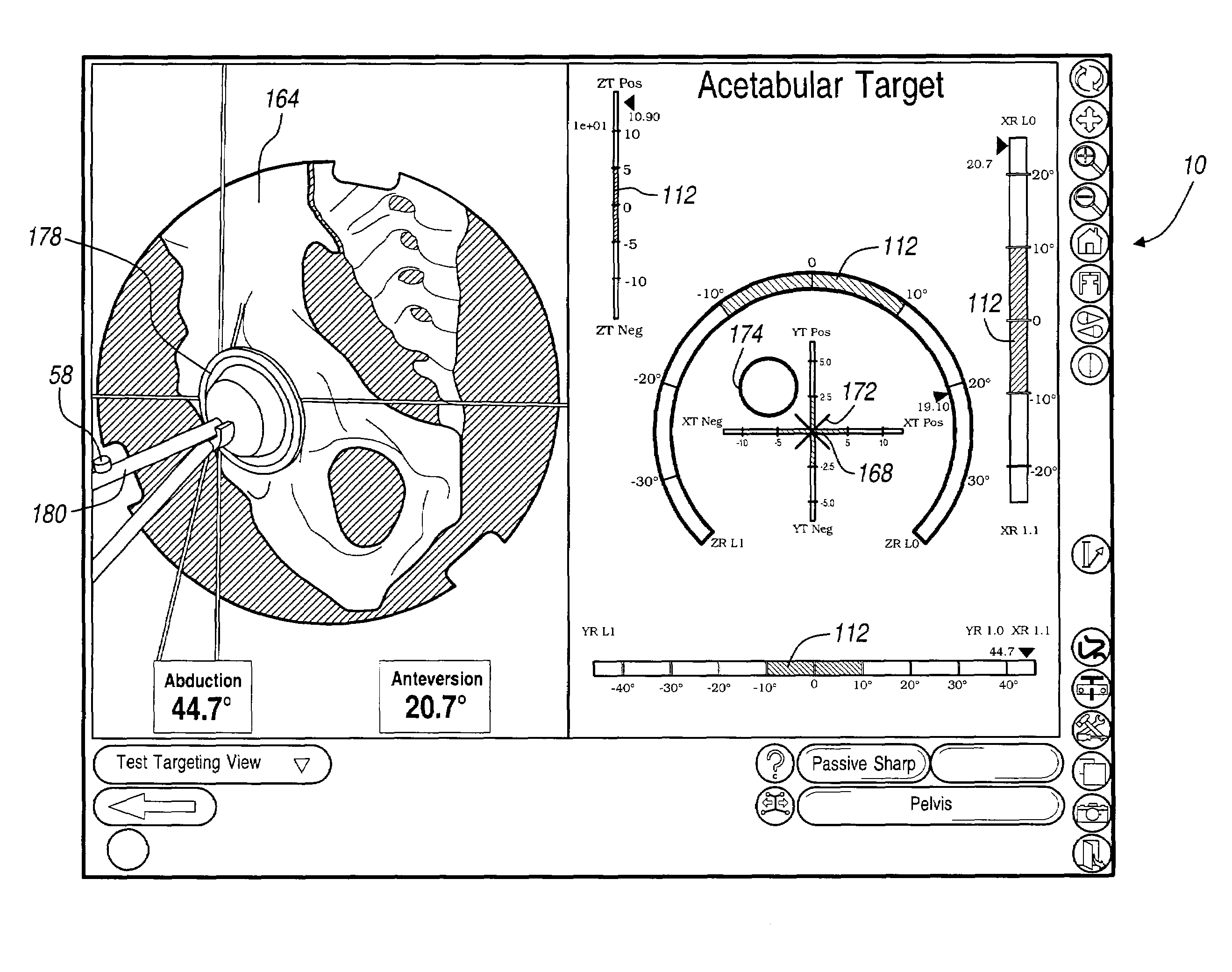

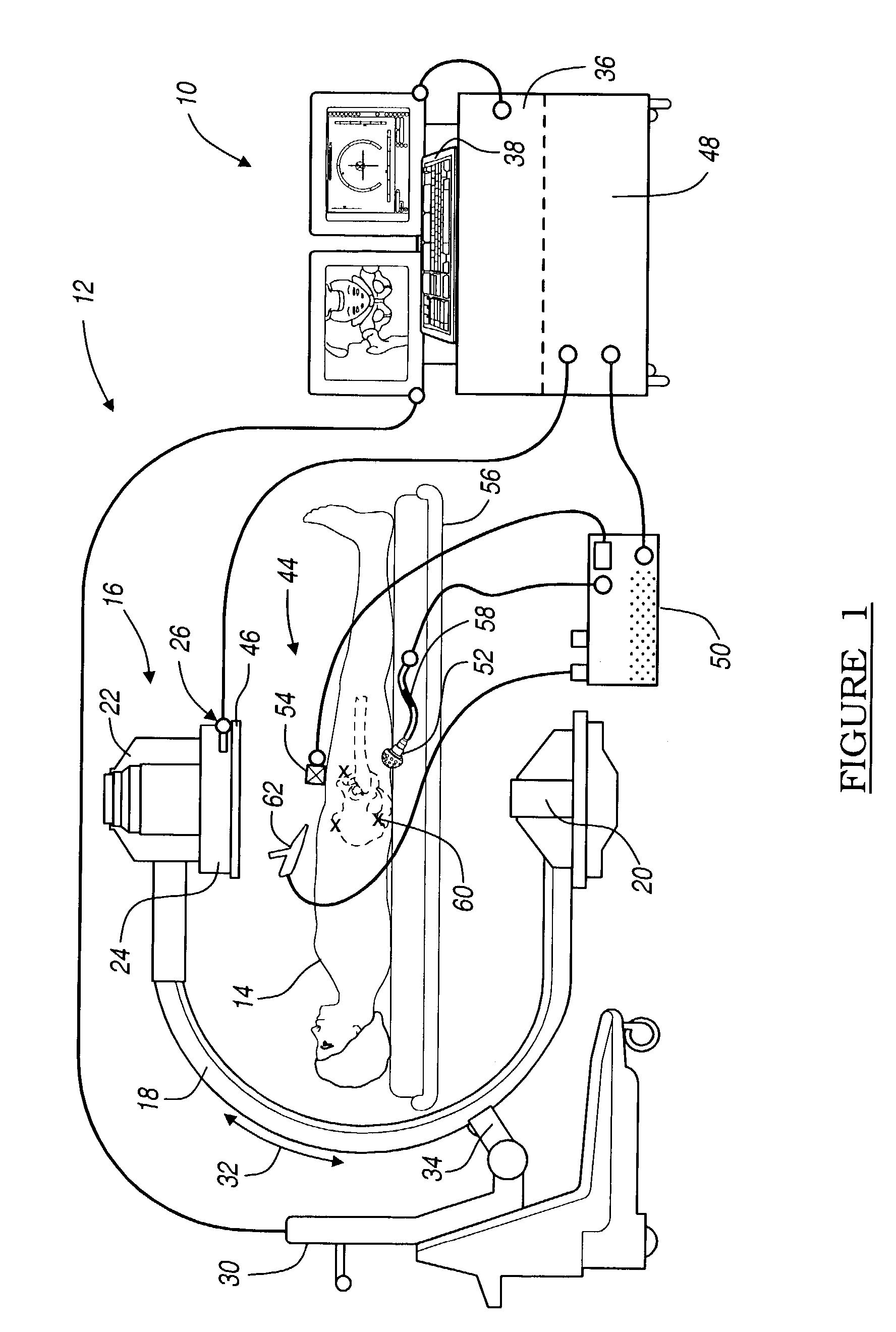

Six degree of freedom alignment display for medical procedures

A display and navigation system for use in guiding a medical device to a target in a patient includes a tracking sensor, a tracking device and display. The tracking sensor is associated with the medical device and is used to track the medical device. The tracking device tracks the medical device with the tracking sensor. The display includes indicia illustrating at least five degree of freedom information and indicia of the medical device in relation to the five degree of freedom information.

Owner: SURGICAL NAVIGATION TECH

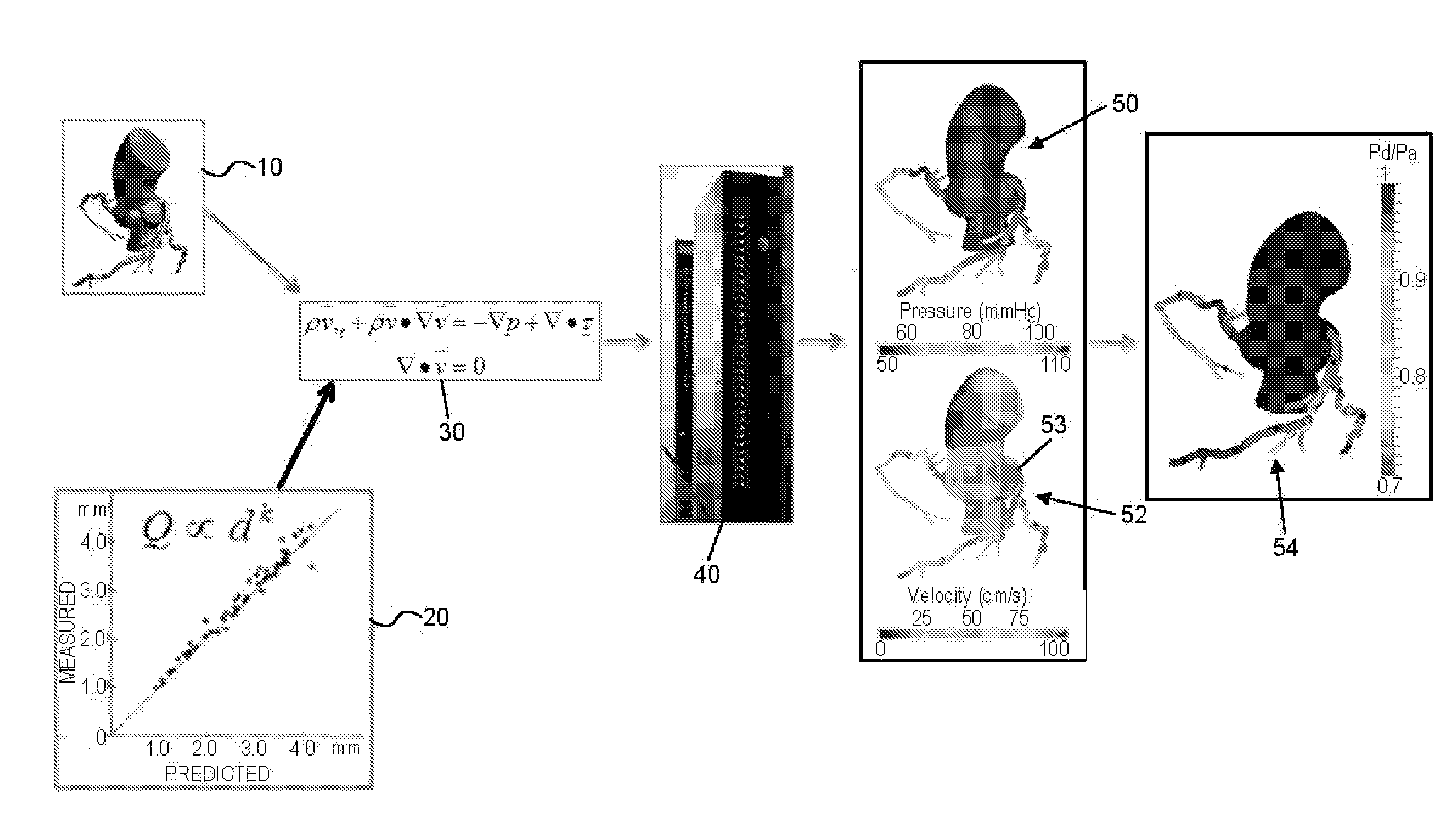

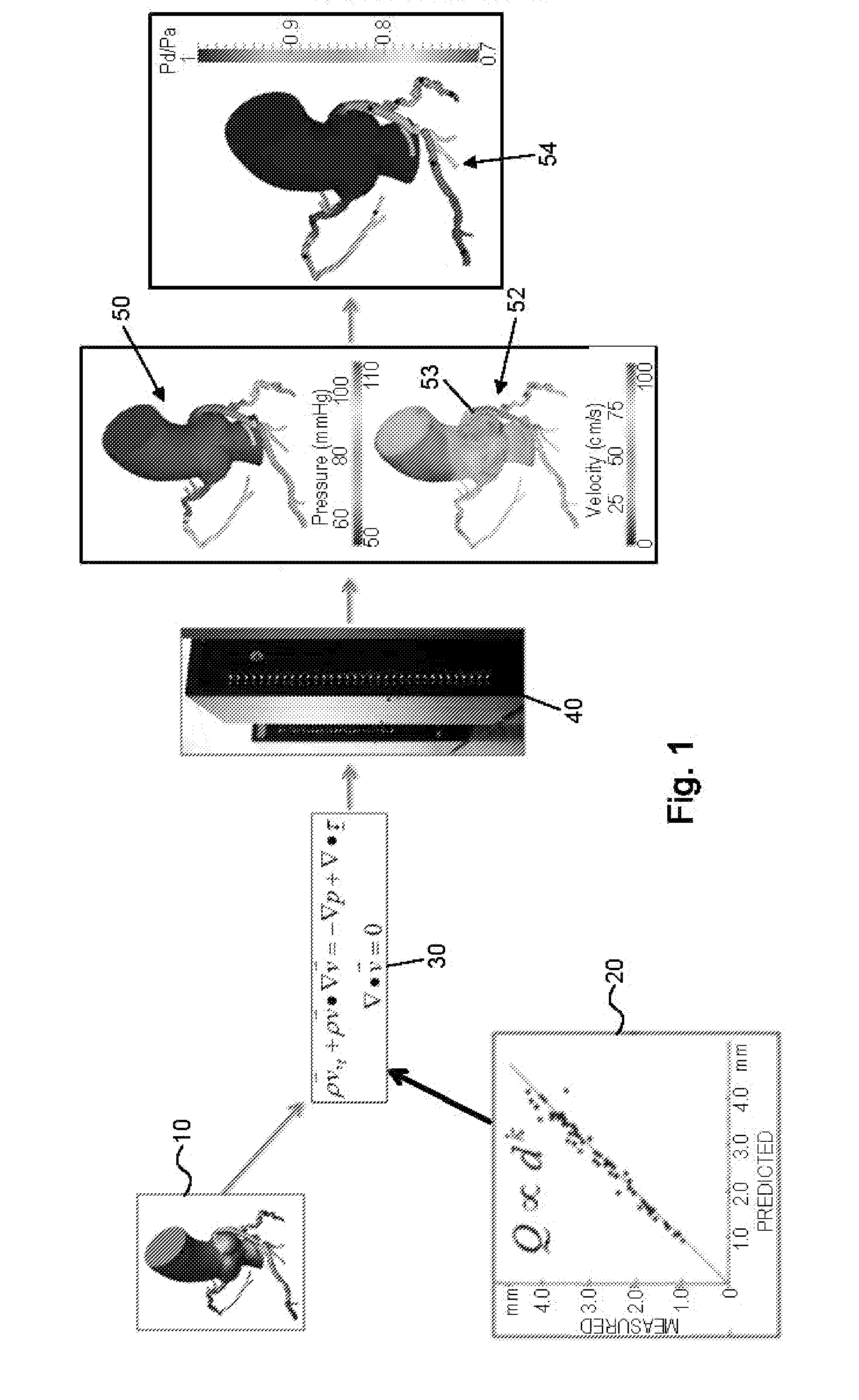

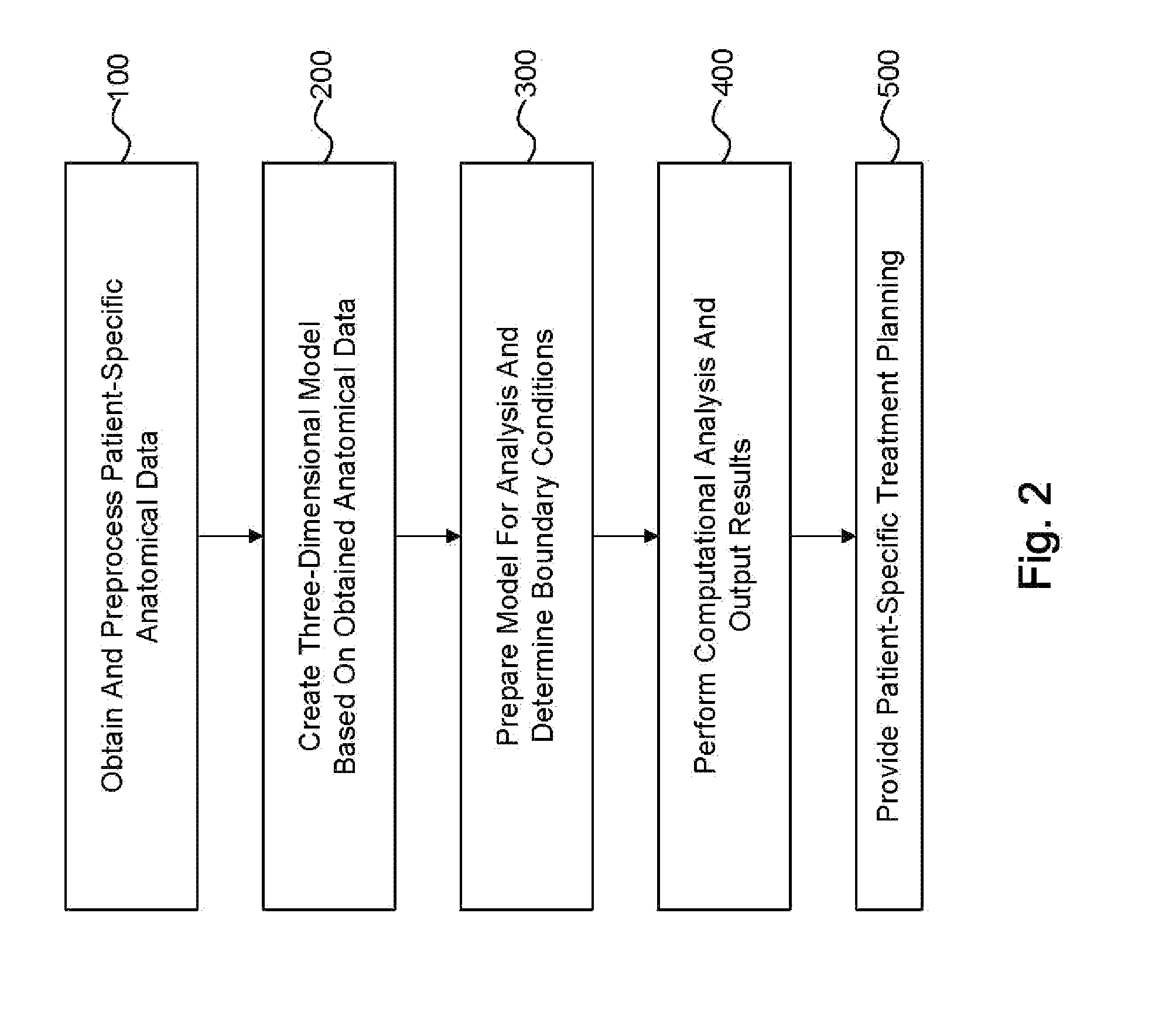

Method and system for patient-specific modeling of blood flow

Embodiments include a system for determining cardiovascular information for a patient. The system may include at least one computer system configured to receive patient-specific data regarding a geometry of the patient's heart, and create a three-dimensional model representing at least a portion of the patient's heart based on the patient-specific data. The at least one computer system may be further configured to create a physics-based model relating to a blood flow characteristic of the patient's heart and determine a fractional flow reserve within the patient's heart based on the three-dimensional model and the physics-based model.

Owner: HEARTFLOW

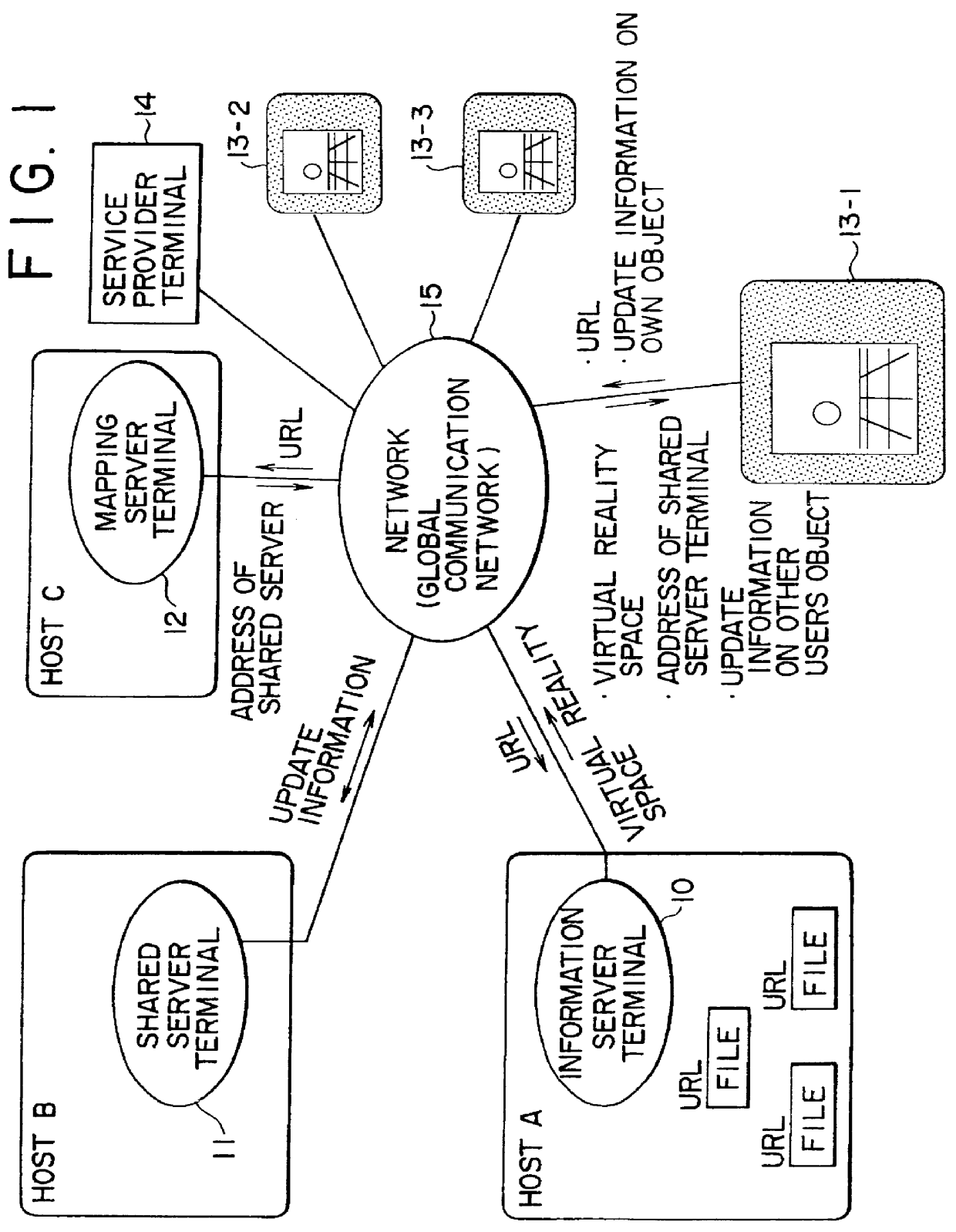

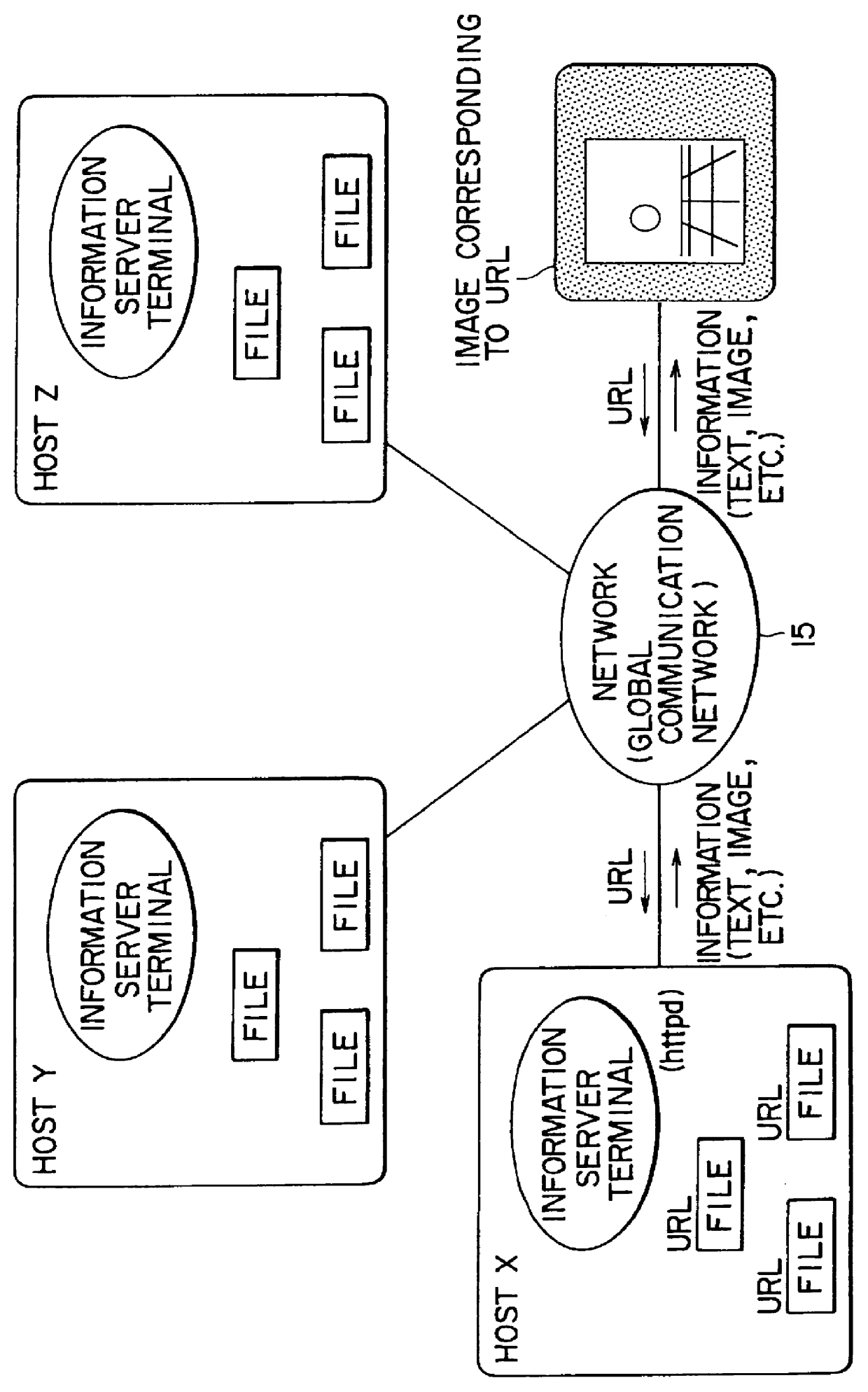

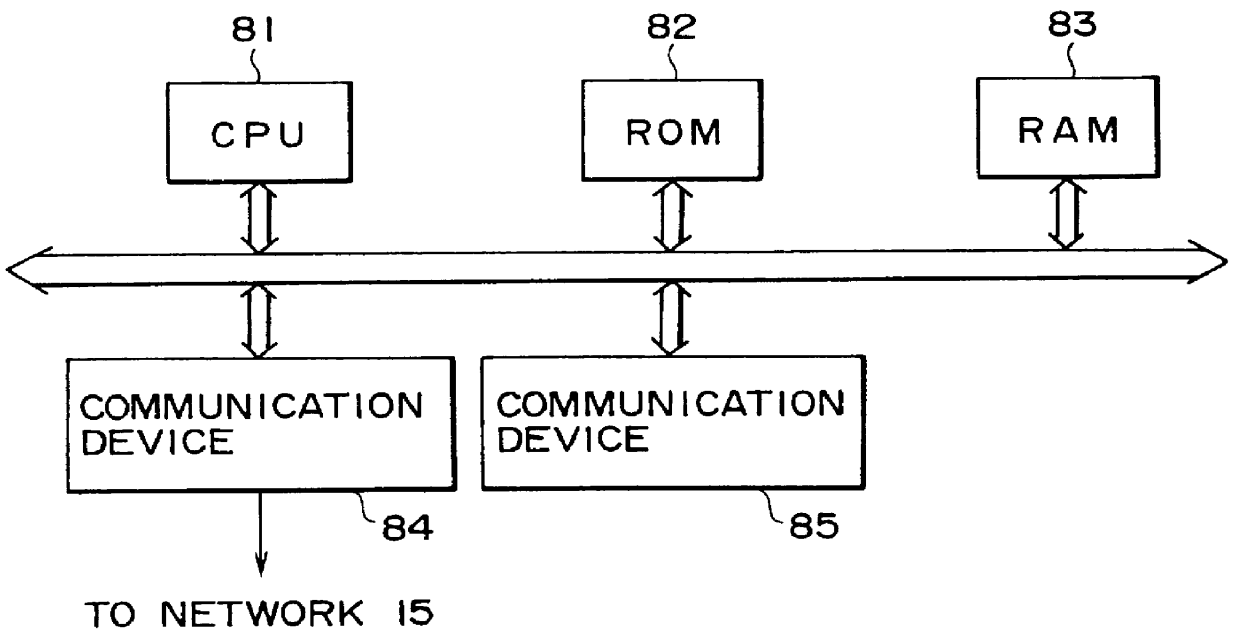

3D virtual reality multi-user interaction with superimposed positional information display for each user

Positions of users other than a particular user in a virtual reality space shared by many users can be recognized with ease and in a minimum display space. The center (or intersection) of the cross of a radar map corresponds to the particular user, and the positions of the other users (specifically, their avatars) around the particular user are indicated in the radar map by dots or squares colored, for example, red. This radar map is superimposed on the virtual reality space image.
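The radar map amounts to a change of coordinates: each other user's world position is expressed relative to the particular user and scaled into the map, whose center stands for that user. The sketch below illustrates this under several assumptions that are not in the abstract: positions are 2D ground-plane `(x, z)` pairs, the map is a square of `map_size` pixels, users farther than `view_radius` are omitted, and the particular user's heading is ignored (the map is not rotated). All names are hypothetical.

```python
def radar_dots(me, others, view_radius, map_size):
    """Map other users' world positions to pixel positions on a square radar map.

    me          -- (x, z) world position of the particular user (map center)
    others      -- list of (x, z) world positions of the other users' avatars
    view_radius -- world distance covered from the center to the map edge
    map_size    -- width and height of the radar map in pixels
    """
    center = map_size / 2
    scale = center / view_radius  # pixels per world unit
    dots = []
    for x, z in others:
        dx, dz = x - me[0], z - me[1]
        # Skip avatars beyond the radar range.
        if dx * dx + dz * dz <= view_radius * view_radius:
            # Screen y grows downward, so "ahead" (+z) is drawn toward the top.
            dots.append((center + dx * scale, center - dz * scale))
    return dots


if __name__ == "__main__":
    print(radar_dots(me=(10, 10),
                     others=[(15, 10), (10, 20), (100, 100)],
                     view_radius=20, map_size=100))
```

The returned pixel positions would then be drawn as colored dots or squares over the rendered scene.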

Owner: SONY CORP

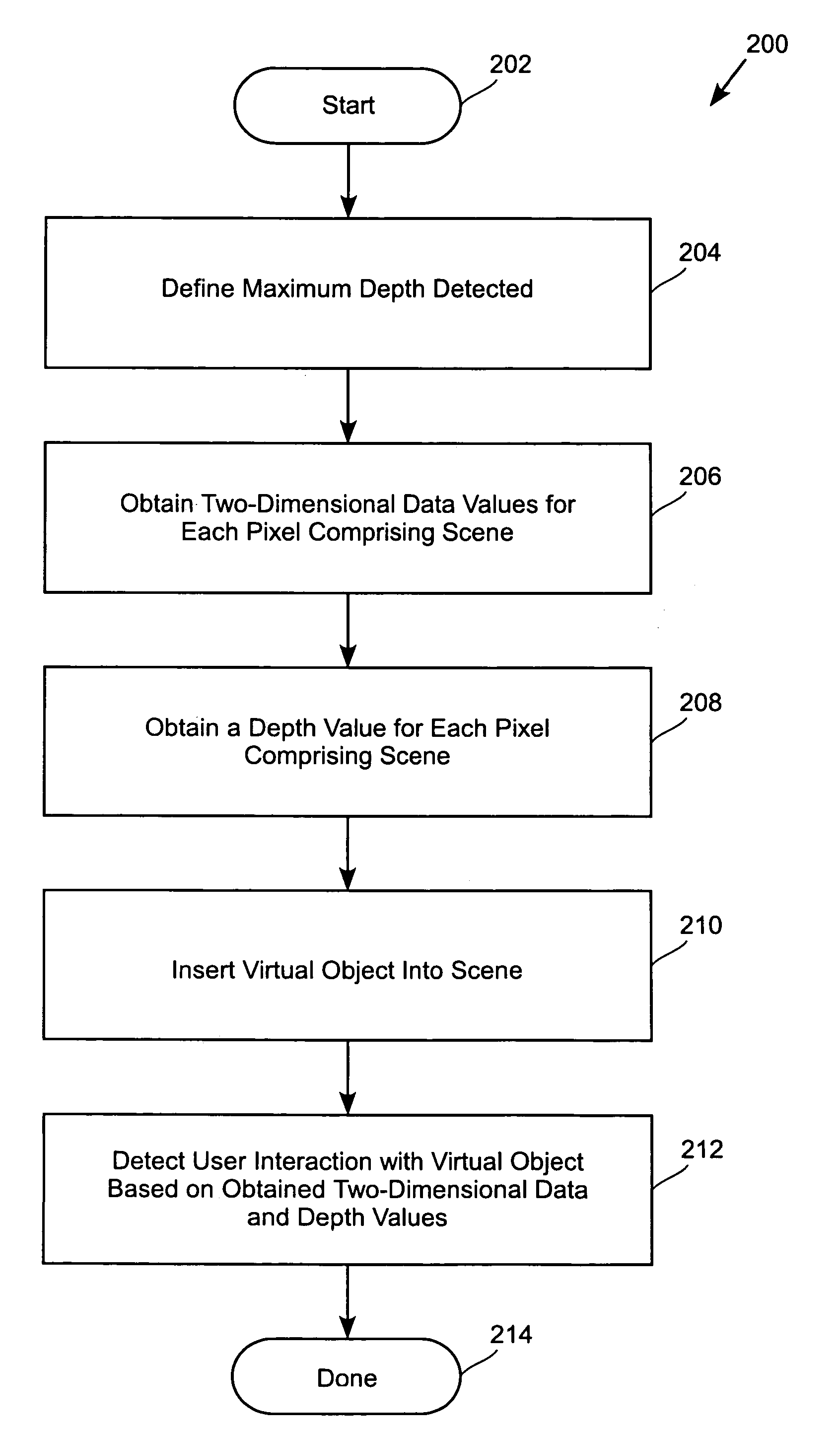

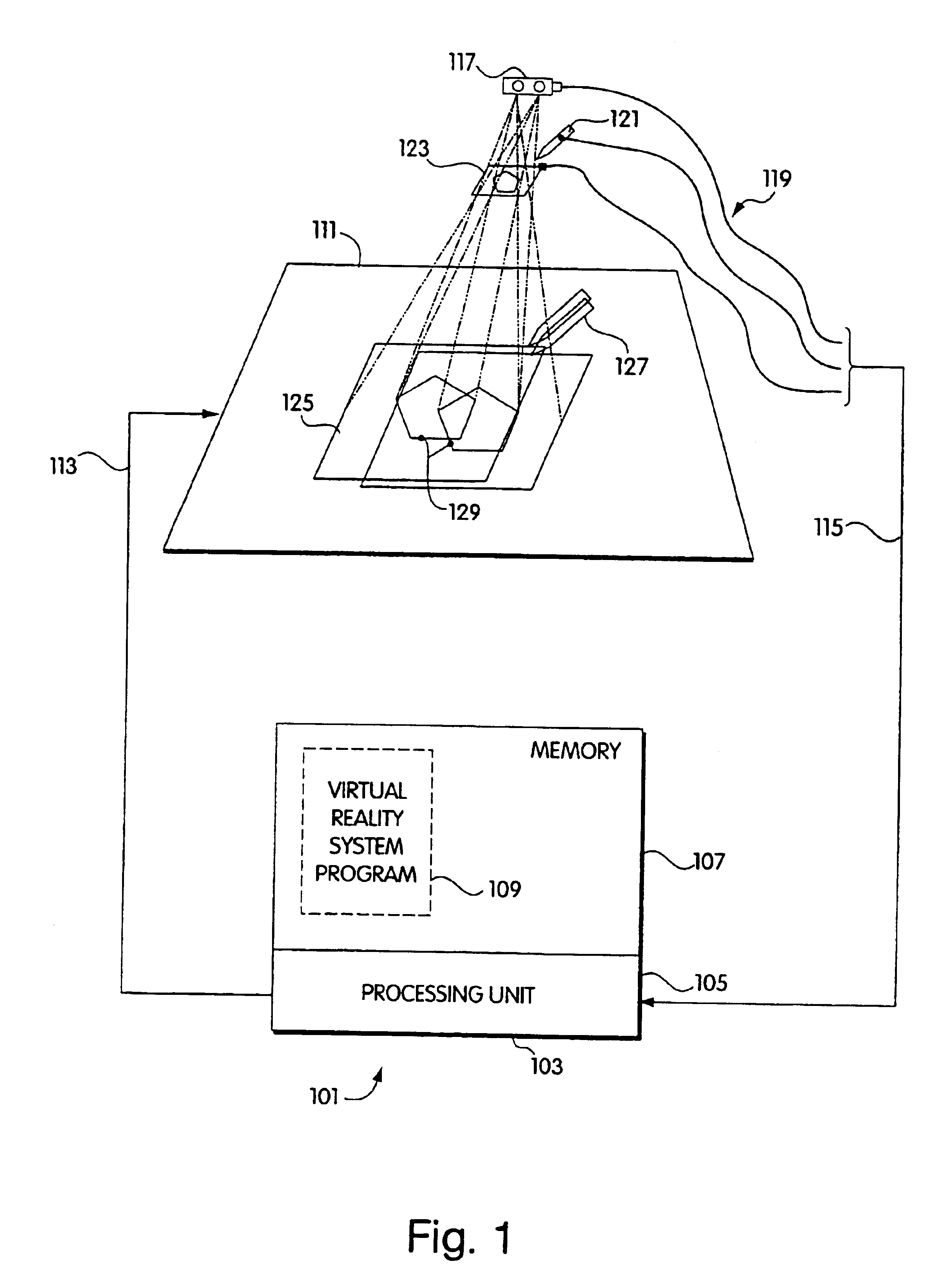

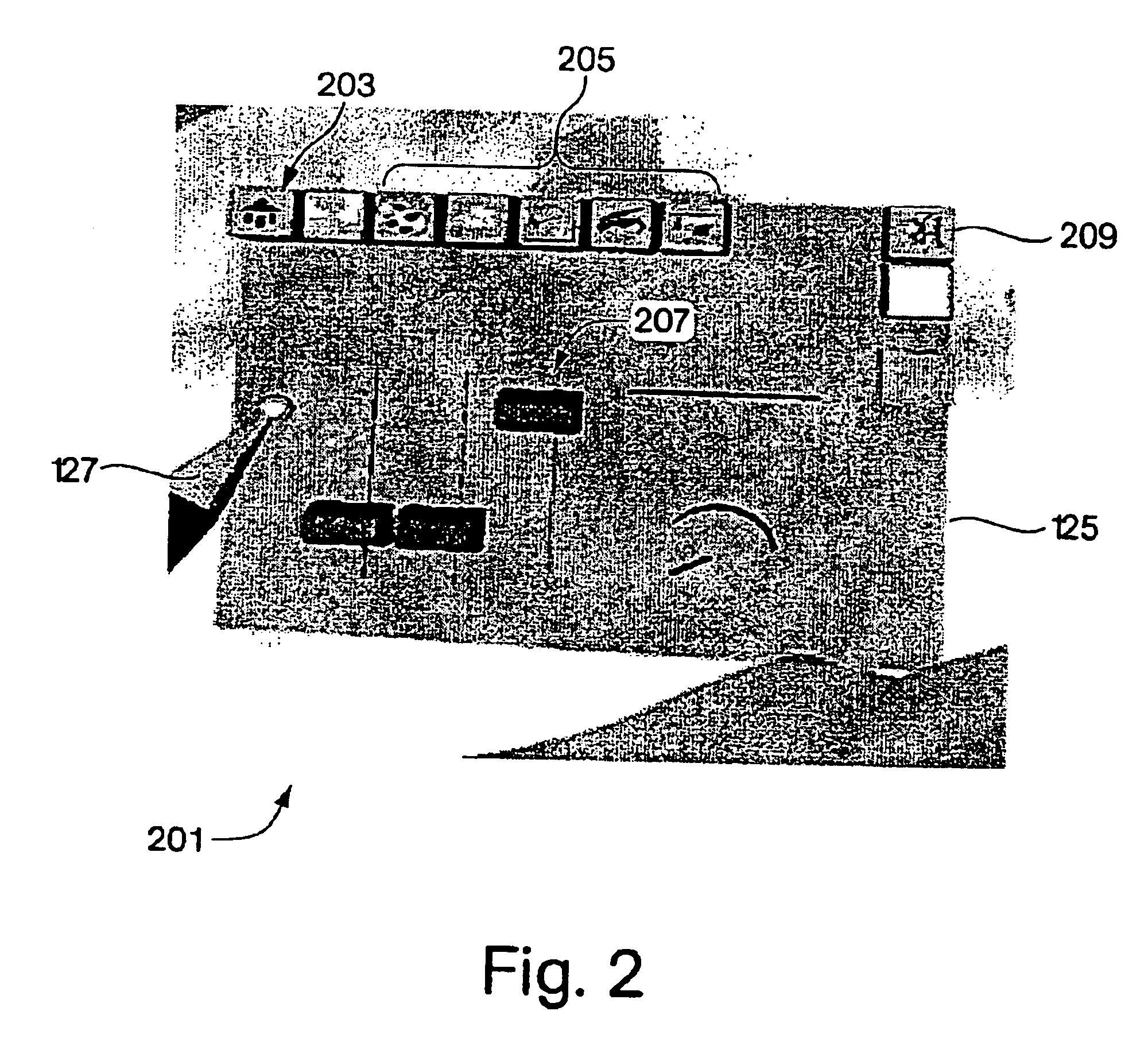

System and method for providing a real-time three-dimensional interactive environment

Active | US8072470B2 | Altering its appearance | Appearance of a physical object in the scene can be visually altered | Cathode-ray tube indicators | Animation | Computer graphics (images) | Data value

Owner: SONY COMPUTER ENTERTAINMENT INC

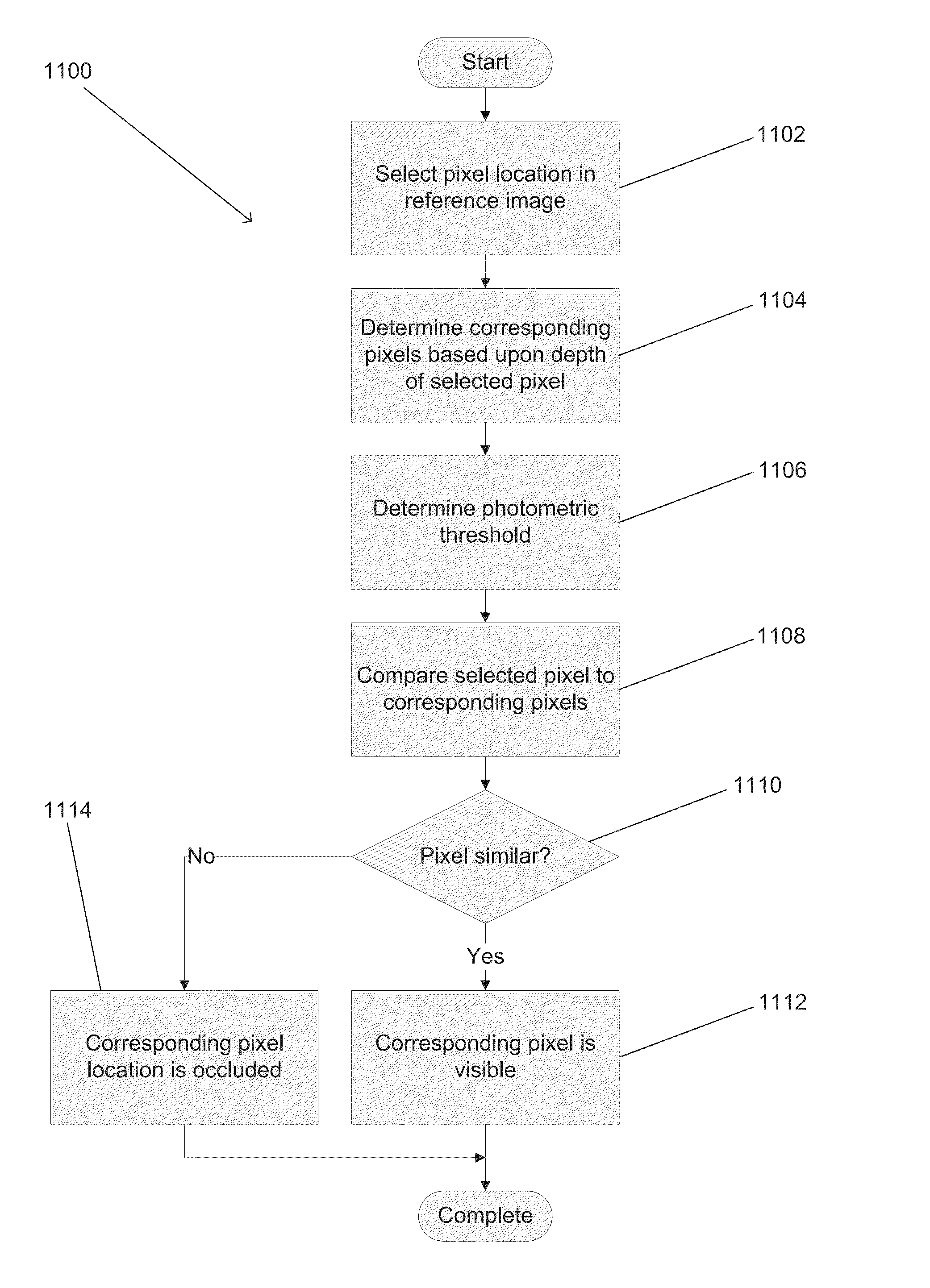

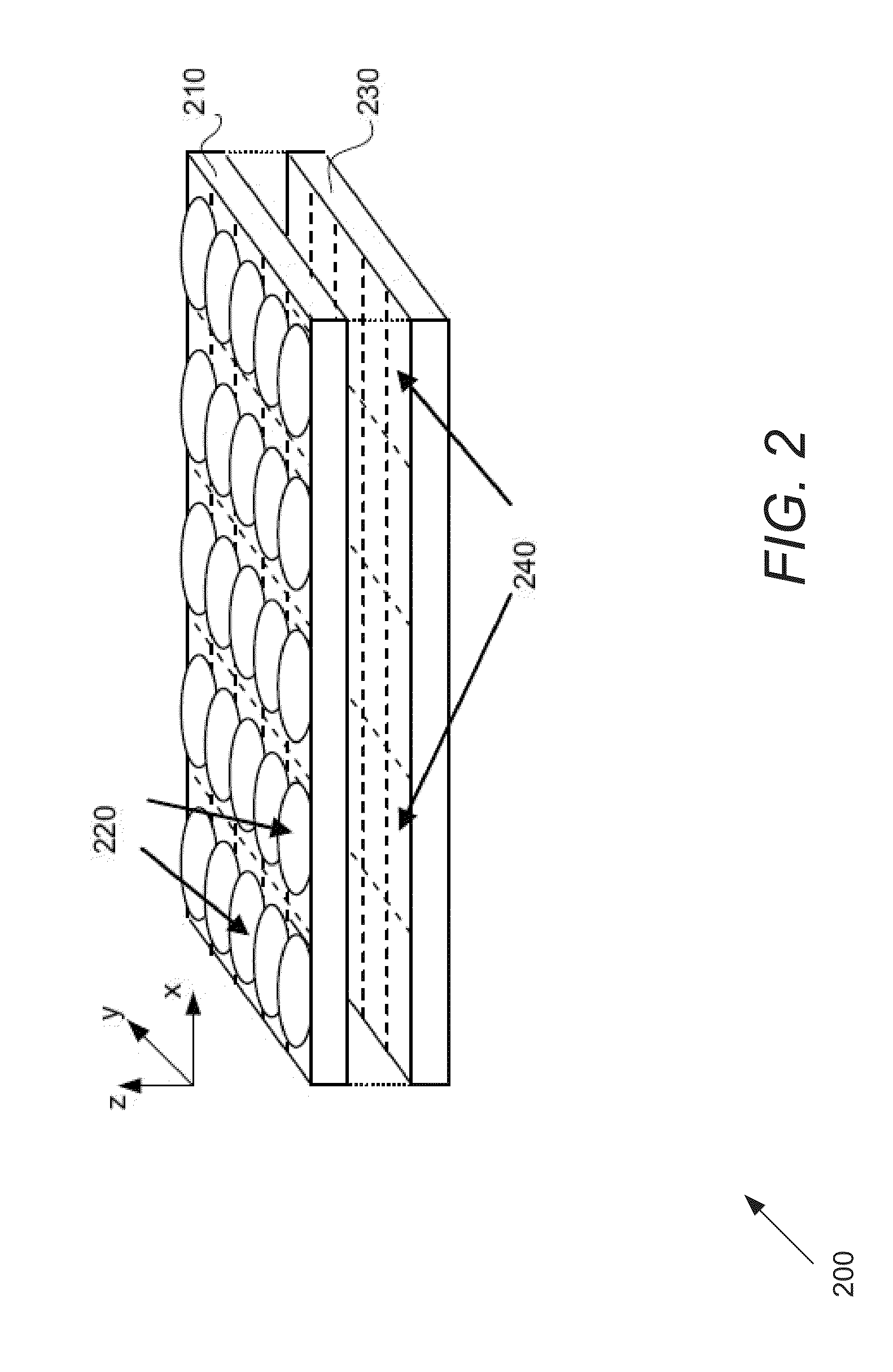

Systems and methods for parallax detection and correction in images captured using array cameras that contain occlusions using subsets of images to perform depth estimation

Active | US8619082B1 | Reduce the weighting applied | Minimal cost | Image enhancement | Television system details | Parallax | Viewpoints

Systems in accordance with embodiments of the invention can perform parallax detection and correction in images captured using array cameras. Due to the different viewpoints of the cameras, parallax results in variations in the position of objects within the captured images of the scene. Methods in accordance with embodiments of the invention provide an accurate account of the pixel disparity due to parallax between the different cameras in the array, so that appropriate scene-dependent geometric shifts can be applied to the pixels of the captured images when performing super-resolution processing. In several embodiments, detecting parallax involves using competing subsets of images to estimate the depth of a pixel location in an image from a reference viewpoint. In a number of embodiments, generating depth estimates considers the similarity of pixels in multiple spectral channels. In certain embodiments, generating depth estimates involves generating a confidence map indicating the reliability of depth estimates.

Owner: FOTONATION LTD

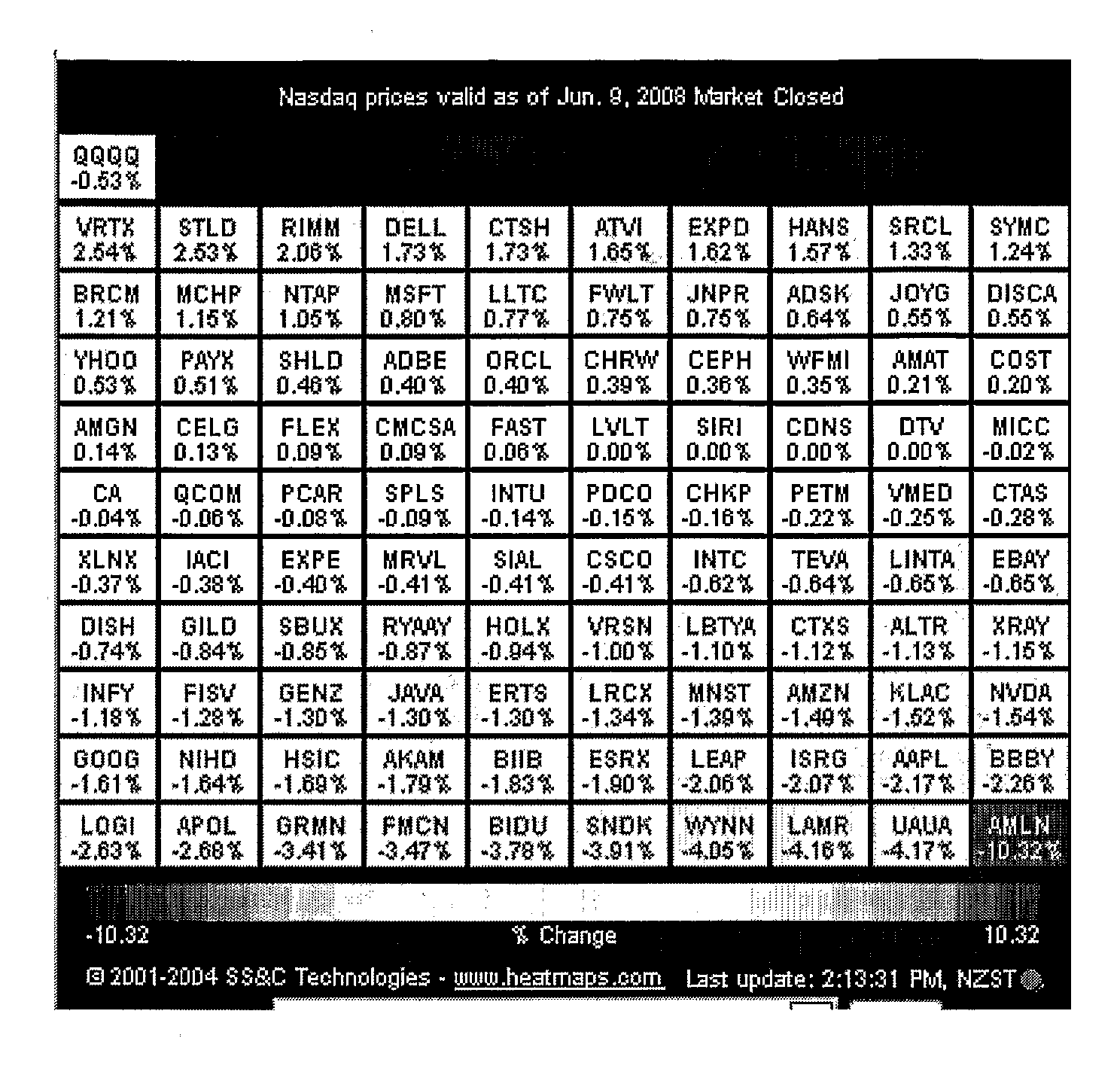

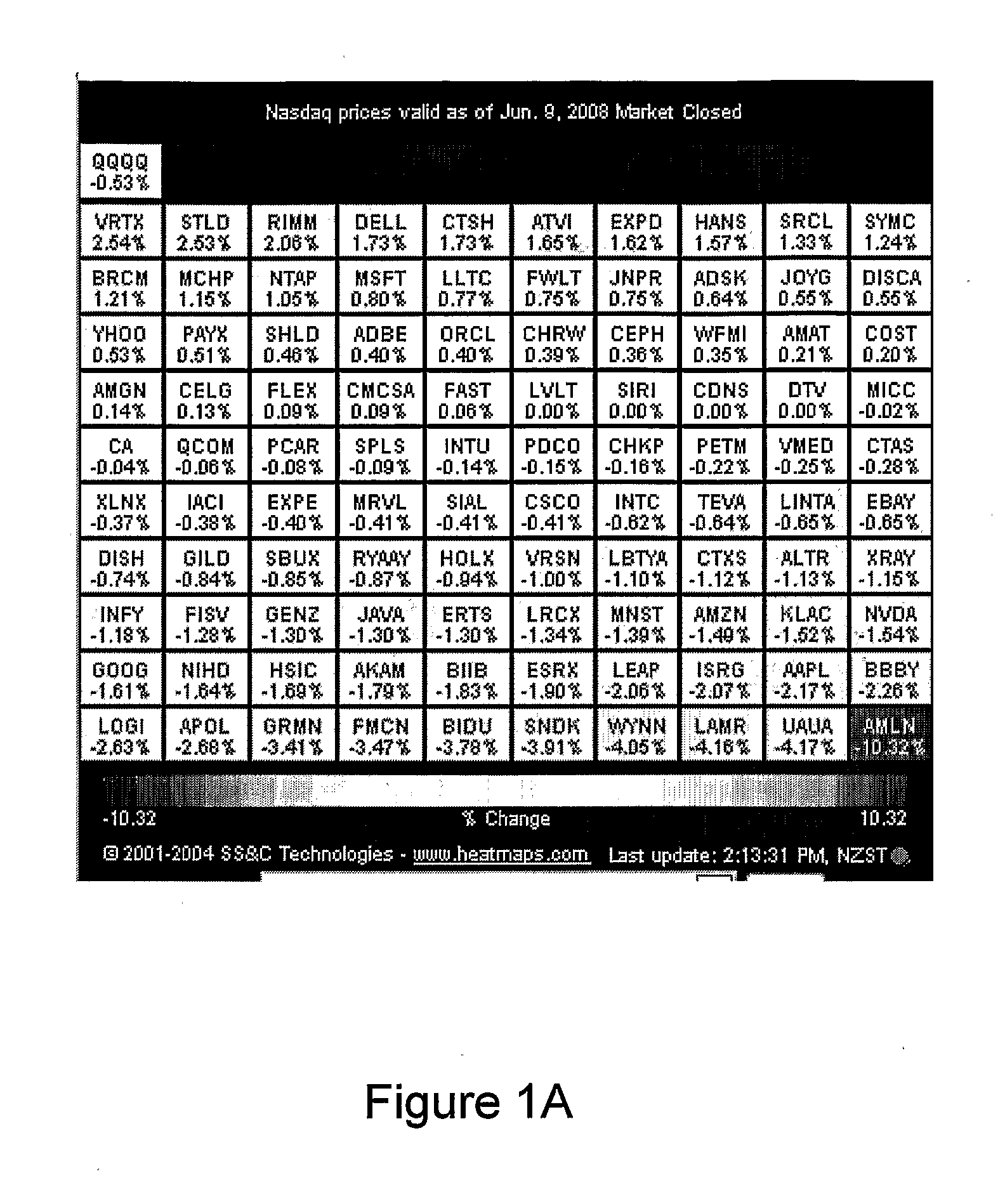

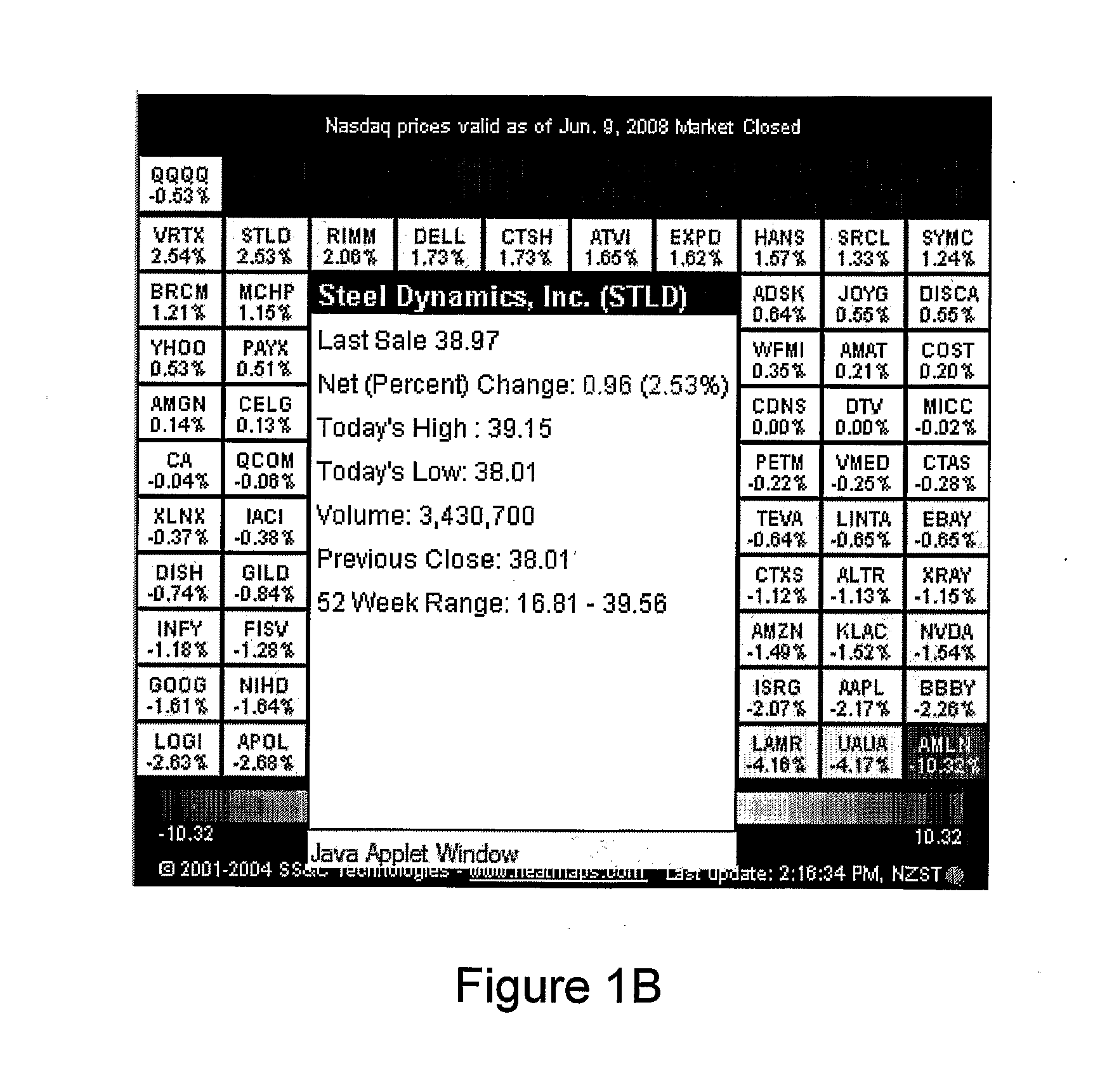

Methods, apparatus and systems for data visualization and related applications

In a data visualization system, a method of creating a visual representation of data, the method including the steps of providing instructions to an end user to assist the end user in: constructing multiple graphical representations of data, where each graphical representation is one of a predefined type and includes multiple layers of elements that contribute to the end user's understanding of the data; arranging multiple graphical representations of different types within the visual representation in a manner that enables the end user to understand and focus on the data being represented; and displaying the visual representation.

Owner: NEW BIS SAFE LUXCO S A R L

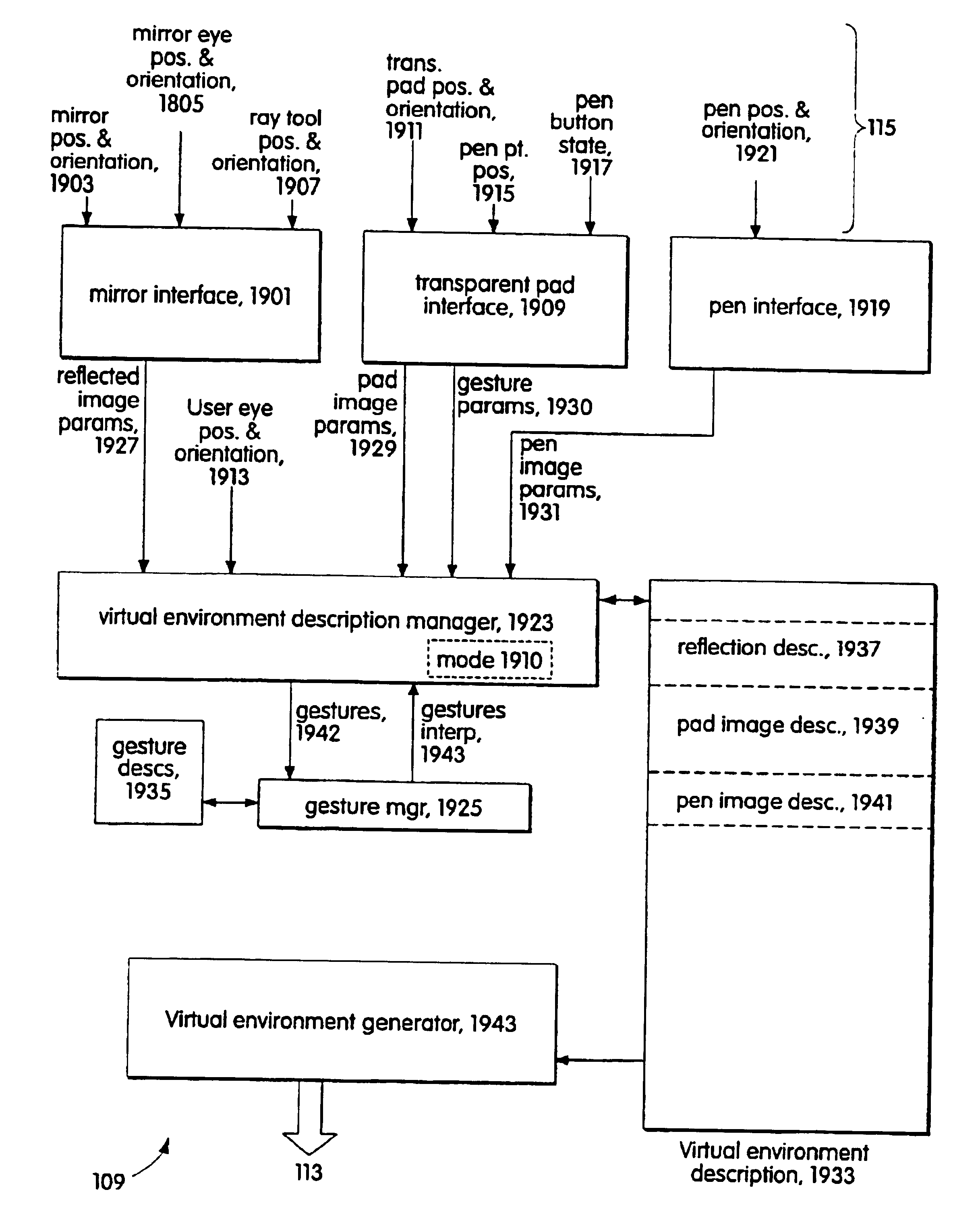

Tools for interacting with virtual environments

Inactive | US6842175B1 | Input/output for user-computer interaction | Graph reading | Computer graphics (images) | Magic lens

The tools include components which are images produced by the system (103) that creates the virtual environment in response to inputs specifying the tool's location and the point of view of the tool's user. One class of the tools includes transparent components (121, 123); the image component of the tool is produced at a location in the virtual environment determined by the transparent component's location and the point of view of the tool's user. Tools in this class include a transparent pad (121) and a transparent stylus (123). The transparent pad (121) has an image component that may be used as a palette, as a magic lens, and as a device for selecting and transporting components of the virtual environment or even a portion of the virtual environment itself.

Owner: FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

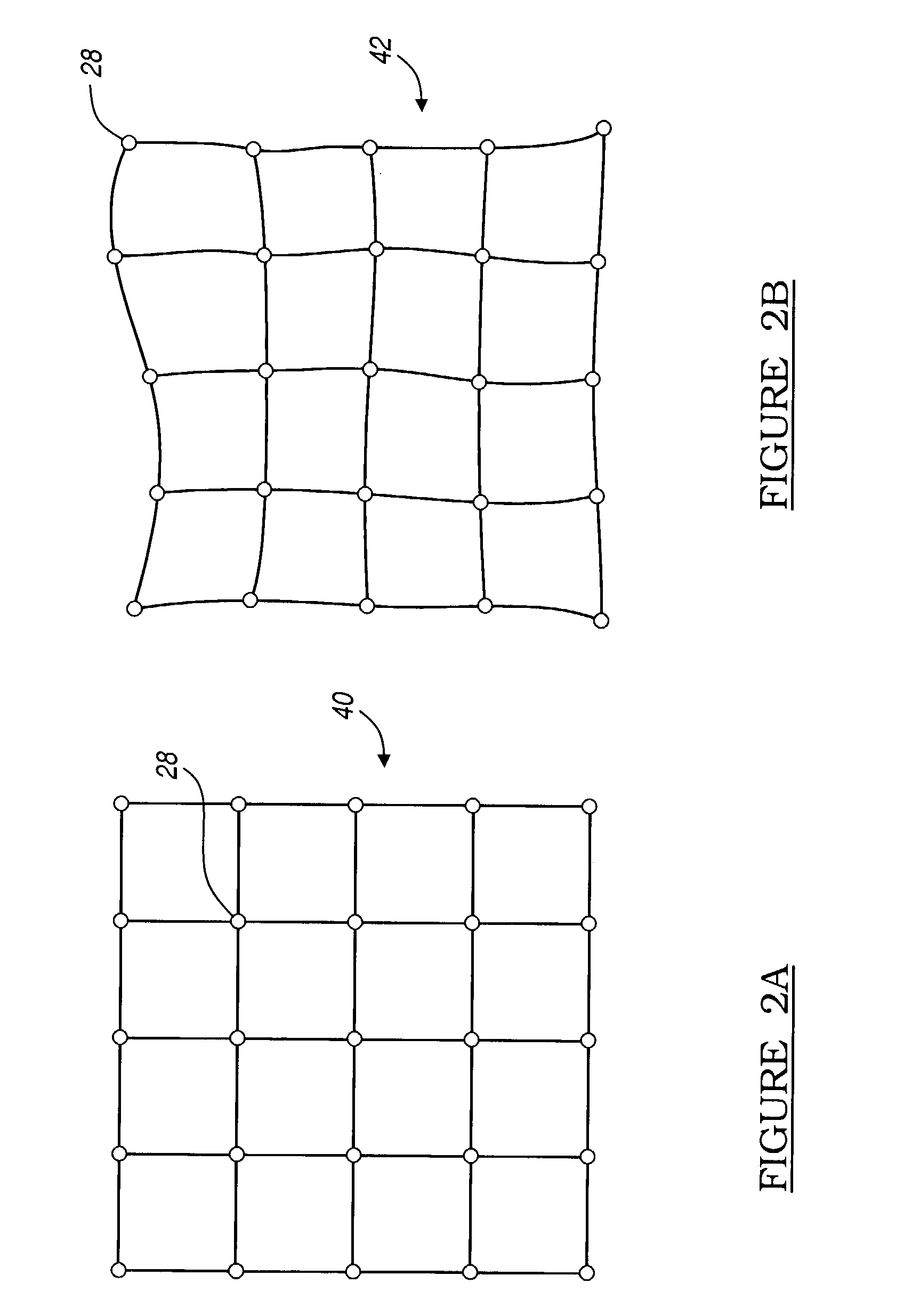

Simulation gridding method and apparatus including a structured areal gridder adapted for use by a reservoir simulator

Inactive | US6106561A | High simulation | Simulation results are accurate | Electric/magnetic detection for well-logging | Computation using non-denominational number representation | Horizon | Triangulation

A Flogrid Simulation Gridding Program includes a Flogrid structured gridder. The structured gridder includes a structured areal gridder and a block gridder. The structured areal gridder will build an areal grid on an uppermost horizon of an earth formation by performing the following steps: (1) building a boundary enclosing one or more fault intersection lines on the horizon, and building a triangulation that absorbs the boundary and the faults; (2) building a vector field on the triangulation; (3) building a web of control lines and additional lines inside the boundary which have a direction that corresponds to the direction of the vector field on the triangulation, thereby producing an areal grid; and (4) post-processing the areal grid so that the control lines and additional lines are equi-spaced or smoothly distributed. The block gridder of the structured gridder will drop coordinate lines down from the nodes of the areal grid to complete the construction of a three dimensional structured grid. A reservoir simulator will receive the structured grid and generate a set of simulation results which are displayed on a 3D Viewer for observation by a workstation operator.

Owner: SCHLUMBERGER TECH CORP

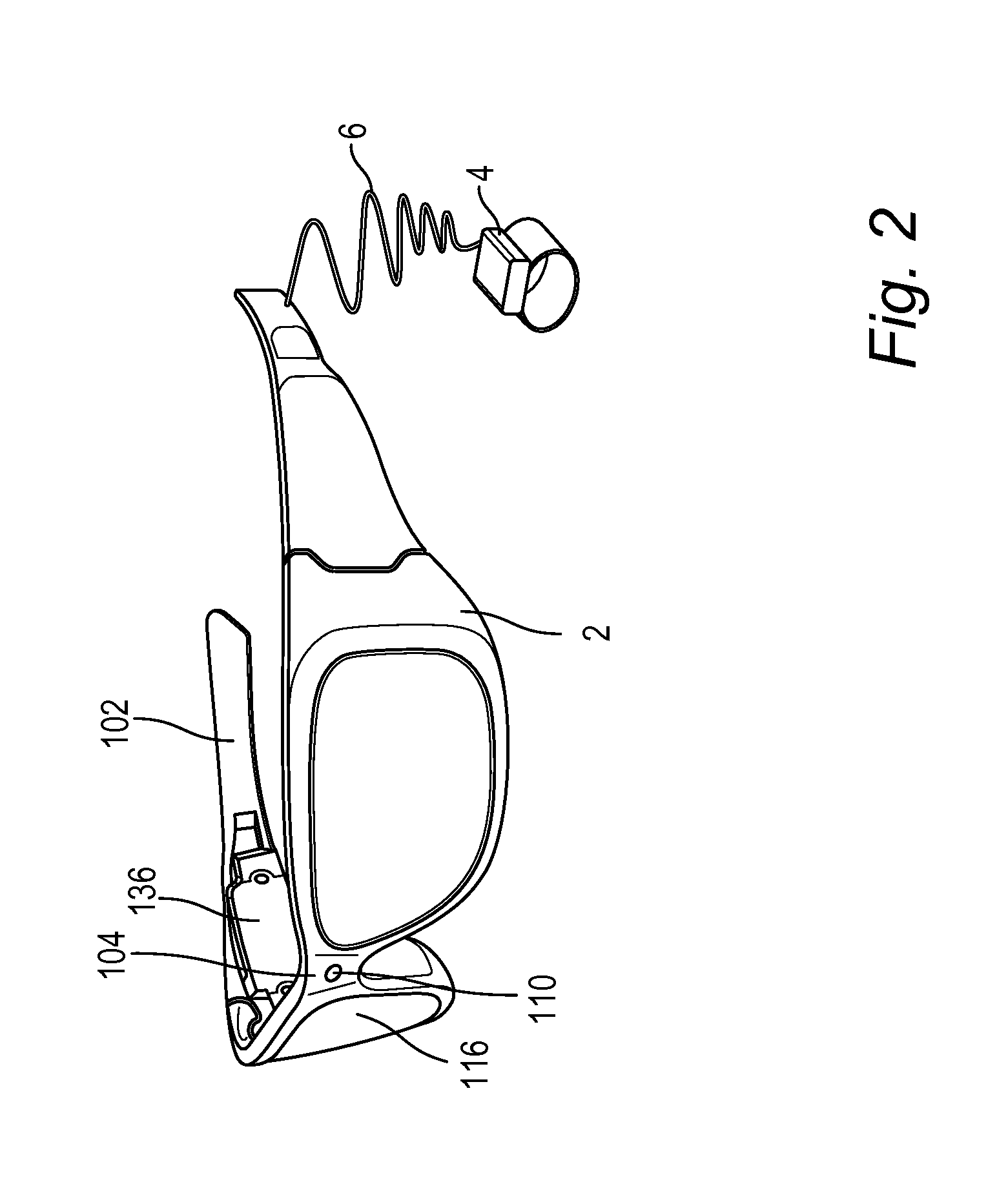

Low-latency fusing of virtual and real content

Active | US20120105473A1 | Introduce inherent latency | Image analysis | Cathode-ray tube indicators | Mixed reality | Latency (engineering)

A system that includes a head mounted display device and a processing unit connected to the head mounted display device is used to fuse virtual content into real content. In one embodiment, the processing unit is in communication with a hub computing device. The processing unit and hub may collaboratively determine a map of the mixed reality environment. Further, state data may be extrapolated to predict a field of view for a user in the future at a time when the mixed reality is to be displayed to the user. This extrapolation can remove latency from the system.
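The abstract does not specify how the state data is extrapolated. One simple possibility, shown here purely as an illustration, is to assume constant angular velocity between the last two head-tracking samples and project the yaw forward to the moment the frame will actually be displayed. The function name, the use of yaw in degrees, and the timestamps in seconds are assumptions for this sketch; angle wrap-around is ignored.

```python
def predict_yaw(t0, yaw0, t1, yaw1, t_display):
    """Linearly extrapolate head yaw (degrees) to the expected display time.

    (t0, yaw0), (t1, yaw1) -- the two most recent tracking samples, t1 > t0
    t_display              -- time at which the rendered frame will be shown
    """
    if t1 == t0:
        return yaw1  # no velocity estimate available; hold the last value
    rate = (yaw1 - yaw0) / (t1 - t0)  # degrees per second
    return yaw1 + rate * (t_display - t1)


if __name__ == "__main__":
    # Samples 10 ms apart; the frame reaches the display 20 ms after the last one.
    print(predict_yaw(0.00, 10.0, 0.01, 12.0, 0.03))
```

Rendering for the predicted yaw instead of the last measured one is what lets the virtual content appear aligned with the real scene despite the rendering delay.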

Owner: MICROSOFT TECH LICENSING LLC

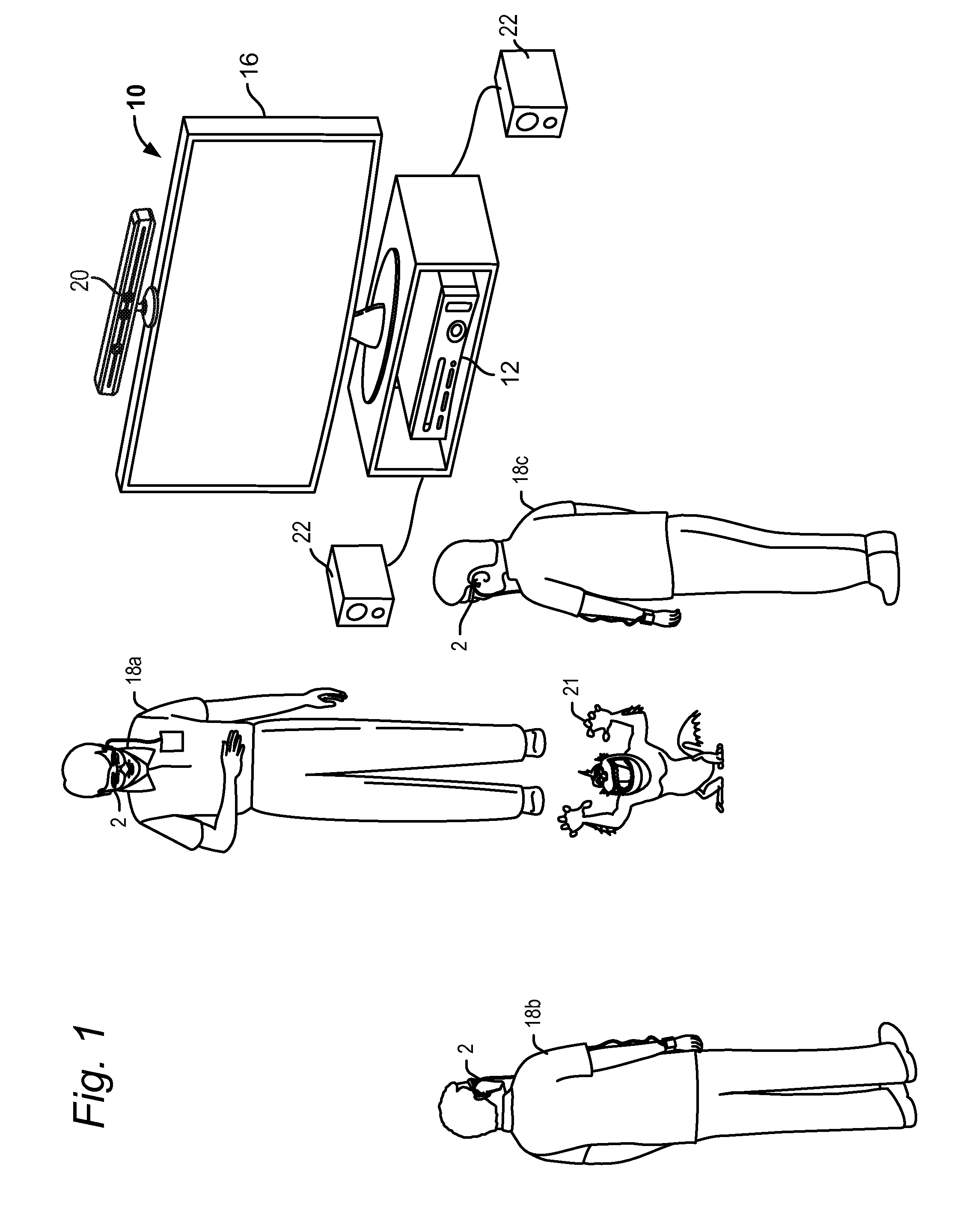

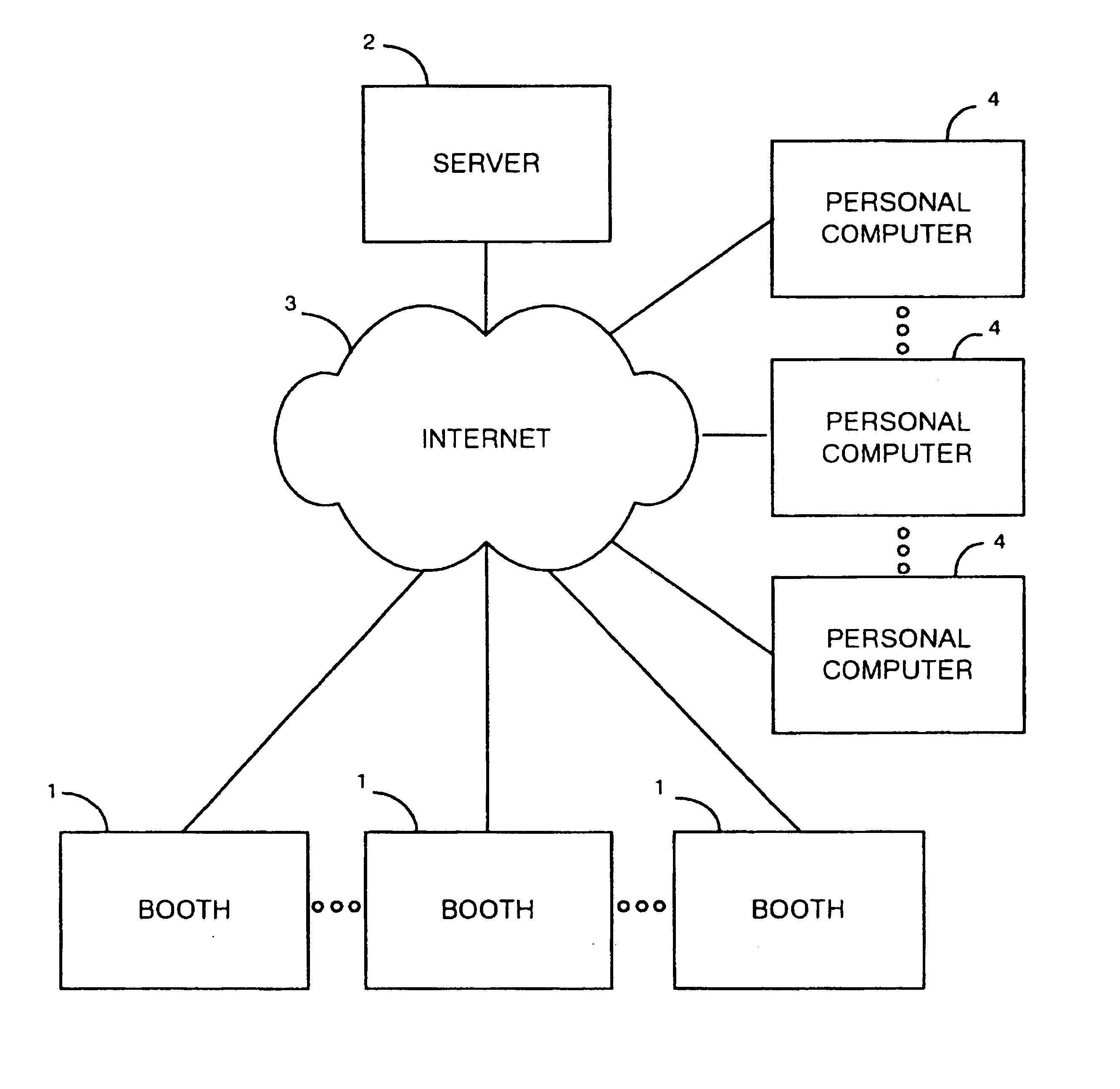

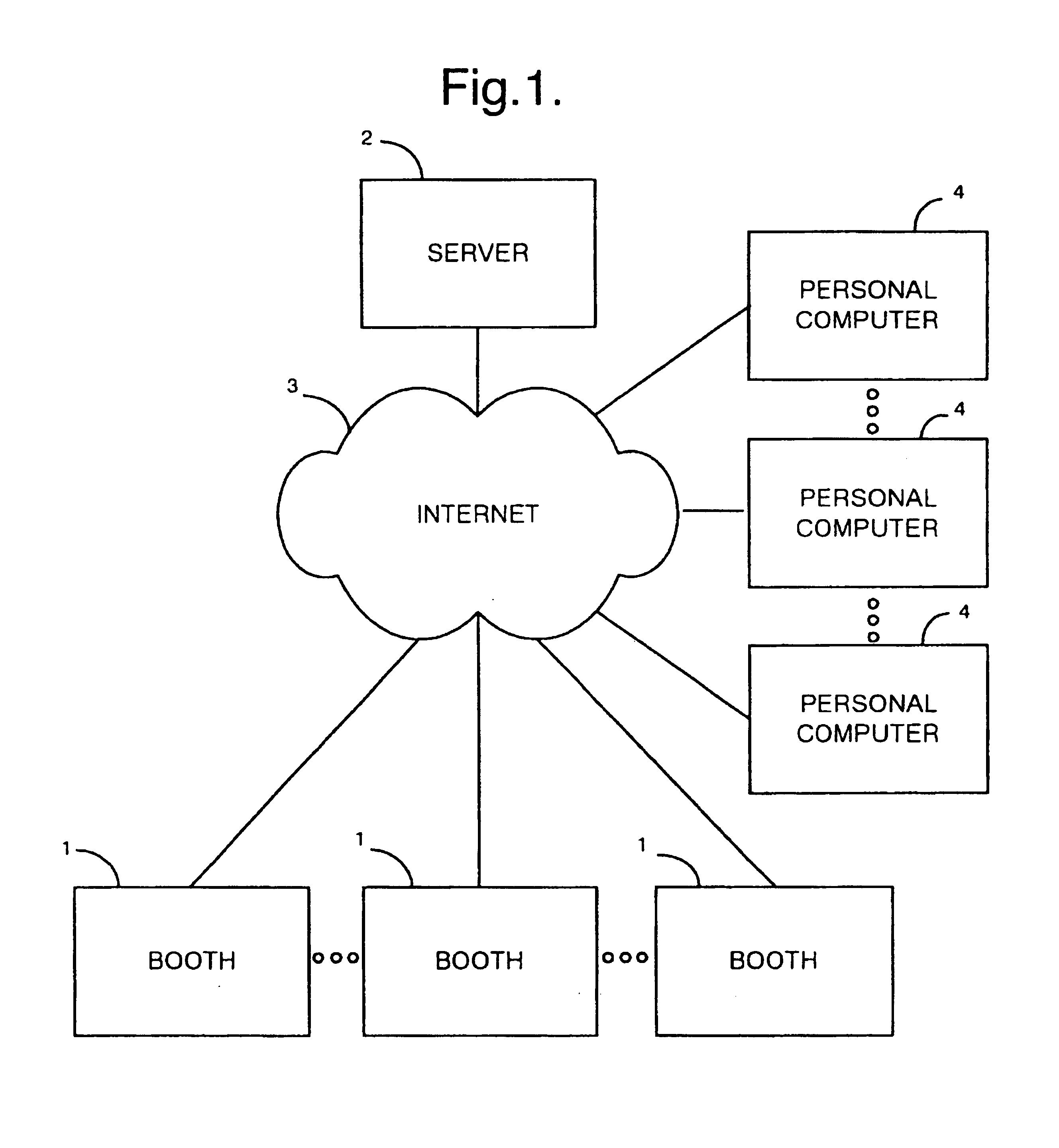

Method and apparatus for the generation of computer graphic representations of individuals

Inactive | US7184047B1 | Color signal processing circuits | Character and pattern recognition | Graphics | The Internet

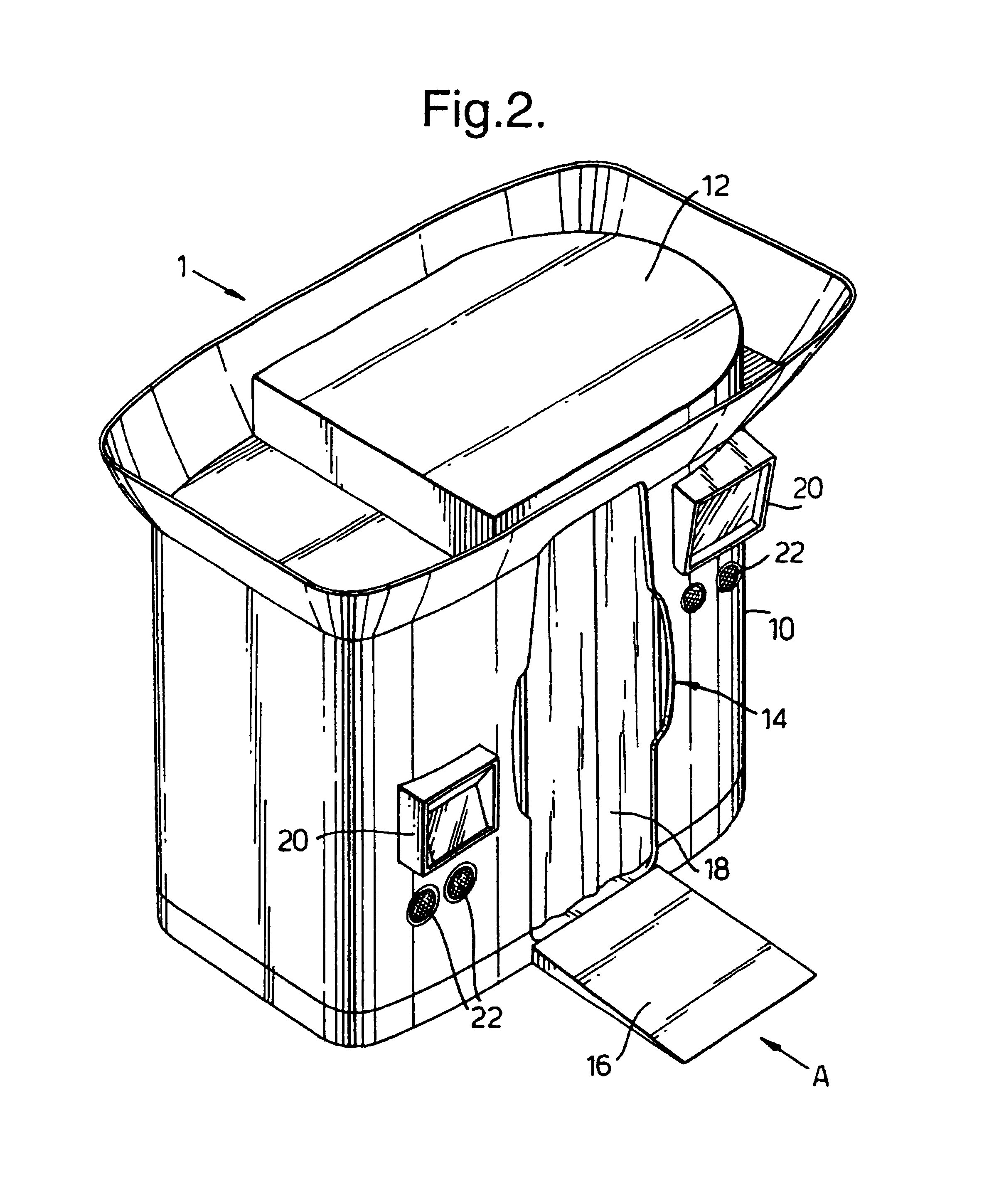

Apparatus for generating computer models of individuals is provided comprising a booth (1) that is connected to a server (2) via the Internet (3). Image data of an individual is captured using the booth (1) and a computer model corresponding to the individual is then generated by comparing the captured image data relative to a stored generic model. Data representative of a generated model is then transmitted to the server (2) where it is stored. Stored data can then be retrieved via the Internet using a personal computer (4) having application software stored therein. The application software on the personal computer (4) can then utilise the data to create graphic representations of an individual in any one of a number of poses.

Owner: CRAMPTON STEPHEN JAMES

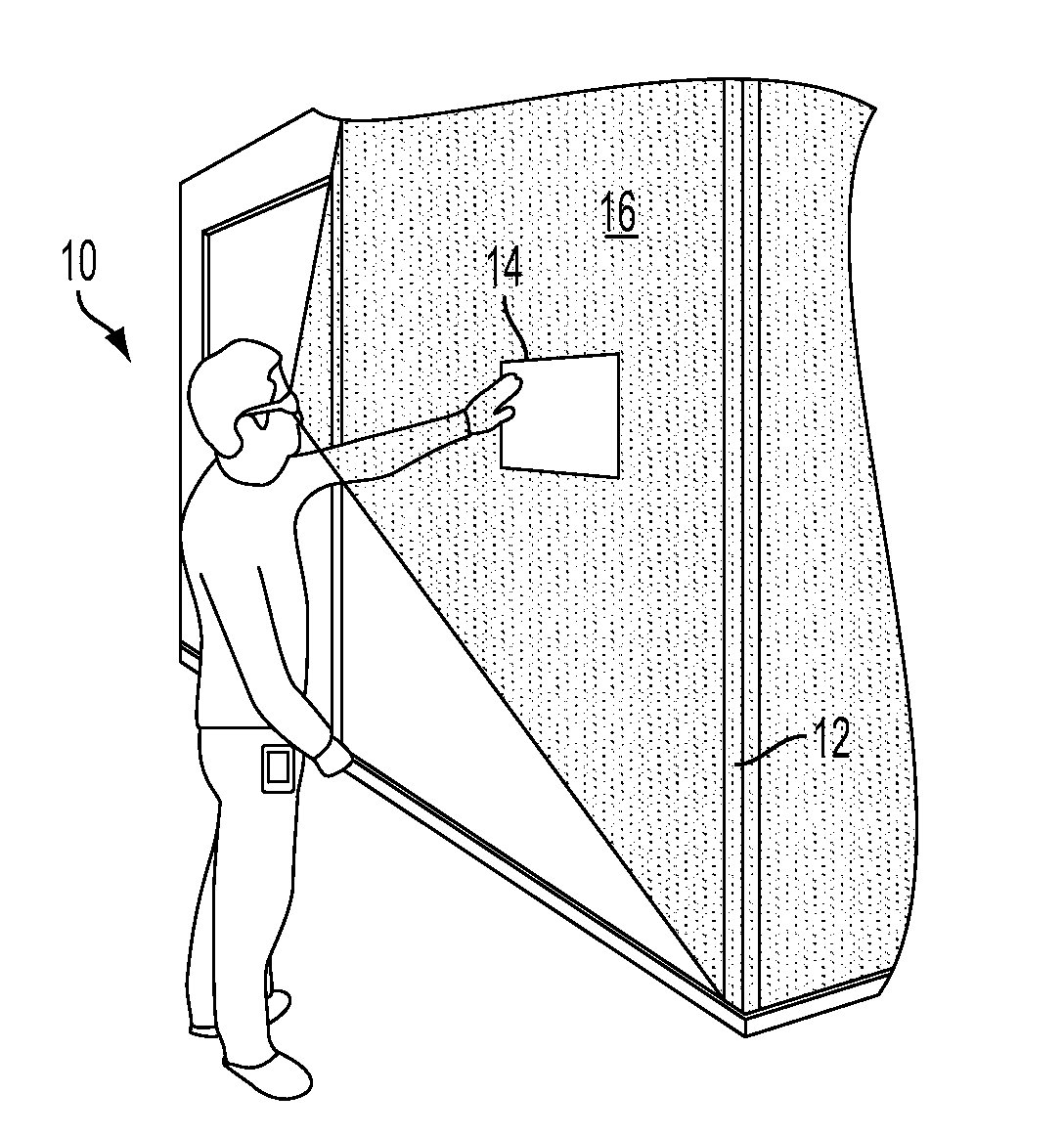

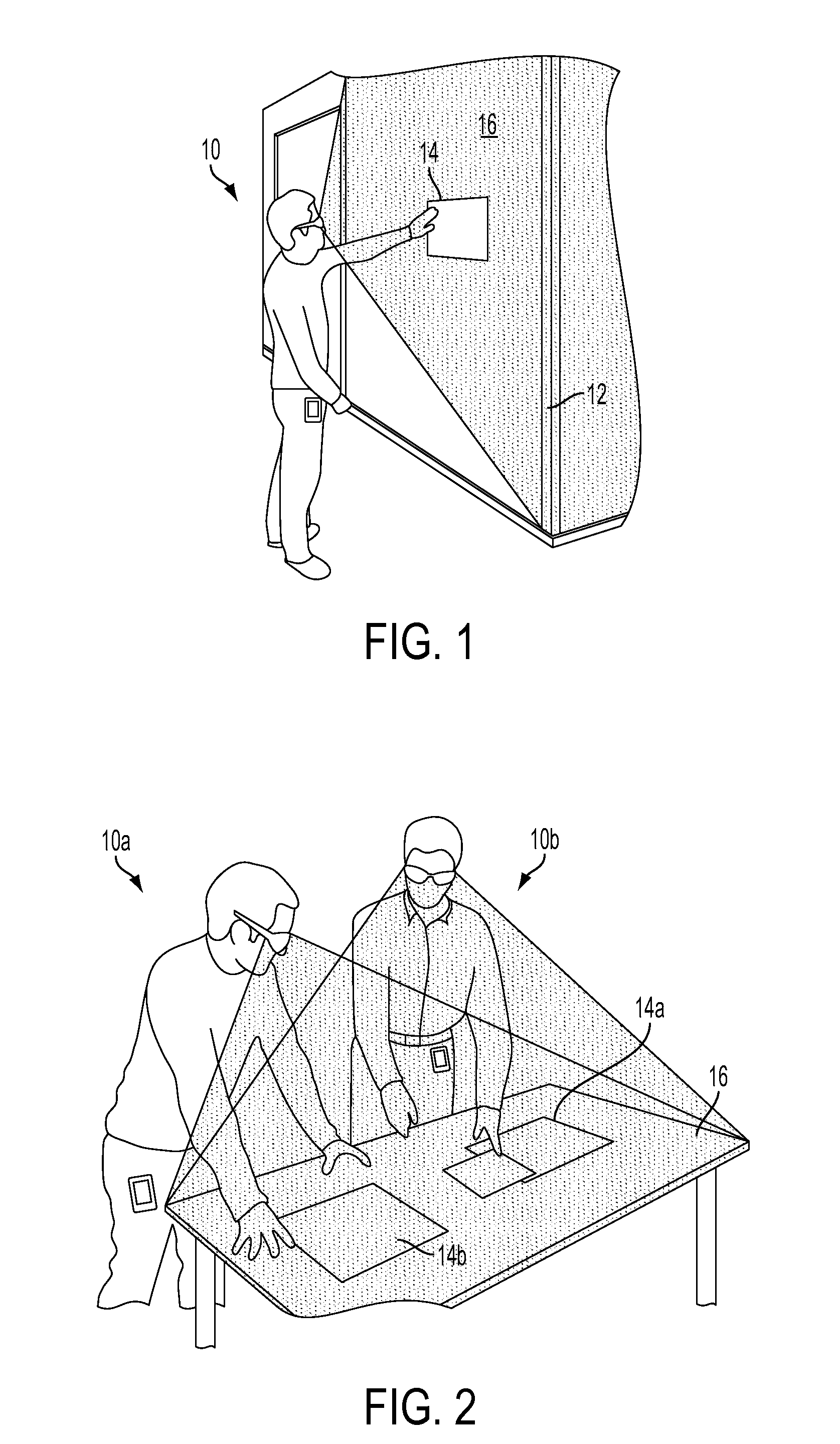

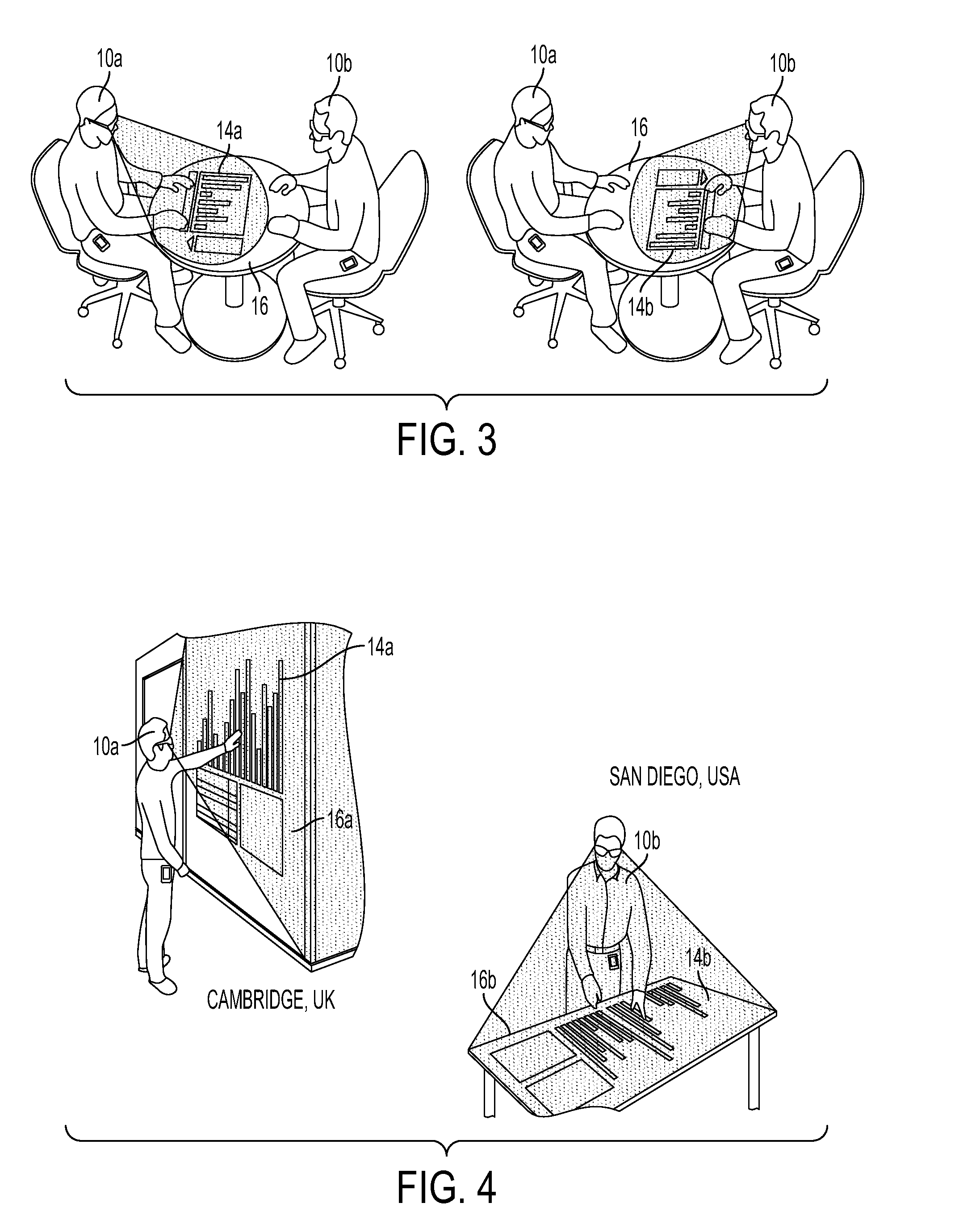

Modular mobile connected pico projectors for a local multi-user collaboration

The various embodiments include systems and methods for rendering images in a virtual or augmented reality system that may include capturing scene images of a scene in a vicinity of a first and a second projector, capturing spatial data with a sensor array in the vicinity of the first and second projectors, analyzing captured scene images to recognize body parts, and projecting images from each of the first and the second projectors with a shape and orientation determined based on the recognized body parts. Additional rendering operations may include tracking movements of the recognized body parts, applying a detection algorithm to the tracked movements to detect a predetermined gesture, applying a command corresponding to the detected predetermined gesture, and updating the projected images in response to the applied command.

Owner: QUALCOMM INC

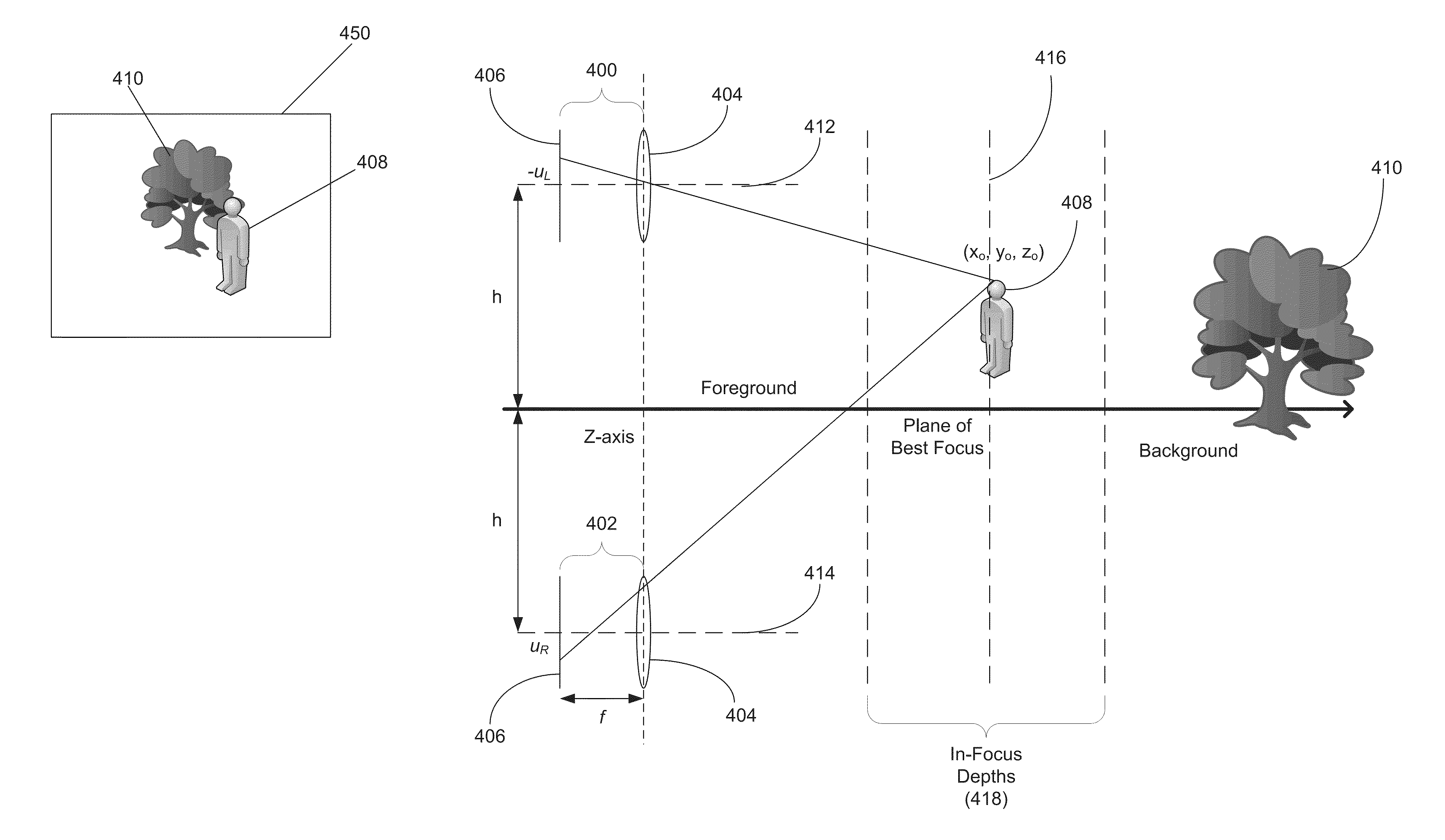

Systems and Methods for Synthesizing Images from Image Data Captured by an Array Camera Using Restricted Depth of Field Depth Maps in which Depth Estimation Precision Varies

Active | US20140267243A1 | Great depth estimation precision | High depth estimate | Image enhancement | Image analysis | Imaging processing | Viewpoints

Systems and methods are described for generating restricted depth of field depth maps. In one embodiment, an image processing pipeline application configures a processor to: determine a desired focal plane distance and a range of distances corresponding to a restricted depth of field for an image rendered from a reference viewpoint; generate a restricted depth of field depth map from the reference viewpoint using the set of images captured from different viewpoints, where depth estimation precision is higher for pixels with depth estimates within the range of distances corresponding to the restricted depth of field and lower for pixels with depth estimates outside of the range of distances corresponding to the restricted depth of field; and render a restricted depth of field image from the reference viewpoint using the set of images captured from different viewpoints and the restricted depth of field depth map.
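One way to picture "precision varies with depth" is through the set of candidate depths a depth search would test: densely spaced inside the restricted depth of field and sparsely spaced outside it. The sketch below generates such a candidate list. It is an assumption for illustration only. The abstract does not say that precision is controlled this way, and the function and parameter names are hypothetical.

```python
def depth_samples(near, far, dof_near, dof_far, fine_step, coarse_step):
    """List candidate depths: fine steps inside [dof_near, dof_far], coarse outside.

    near, far         -- overall depth range to search
    dof_near, dof_far -- range of distances for the restricted depth of field
    fine_step         -- spacing between candidates inside the depth of field
    coarse_step       -- spacing between candidates outside the depth of field
    """
    samples = []
    d = near
    while d <= far:
        samples.append(d)
        # Step finely while inside the depth of field, coarsely elsewhere.
        d += fine_step if dof_near <= d < dof_far else coarse_step
    return samples


if __name__ == "__main__":
    print(depth_samples(near=0, far=100, dof_near=40, dof_far=50,
                        fine_step=2, coarse_step=20))
```

In this example most of the candidates fall within the 40 to 50 range, so pixels in focus get depth estimates on a 2-unit grid while out-of-focus pixels are resolved only to the nearest 20 units, which is enough to decide how much to blur them.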

Owner: FOTONATION LTD

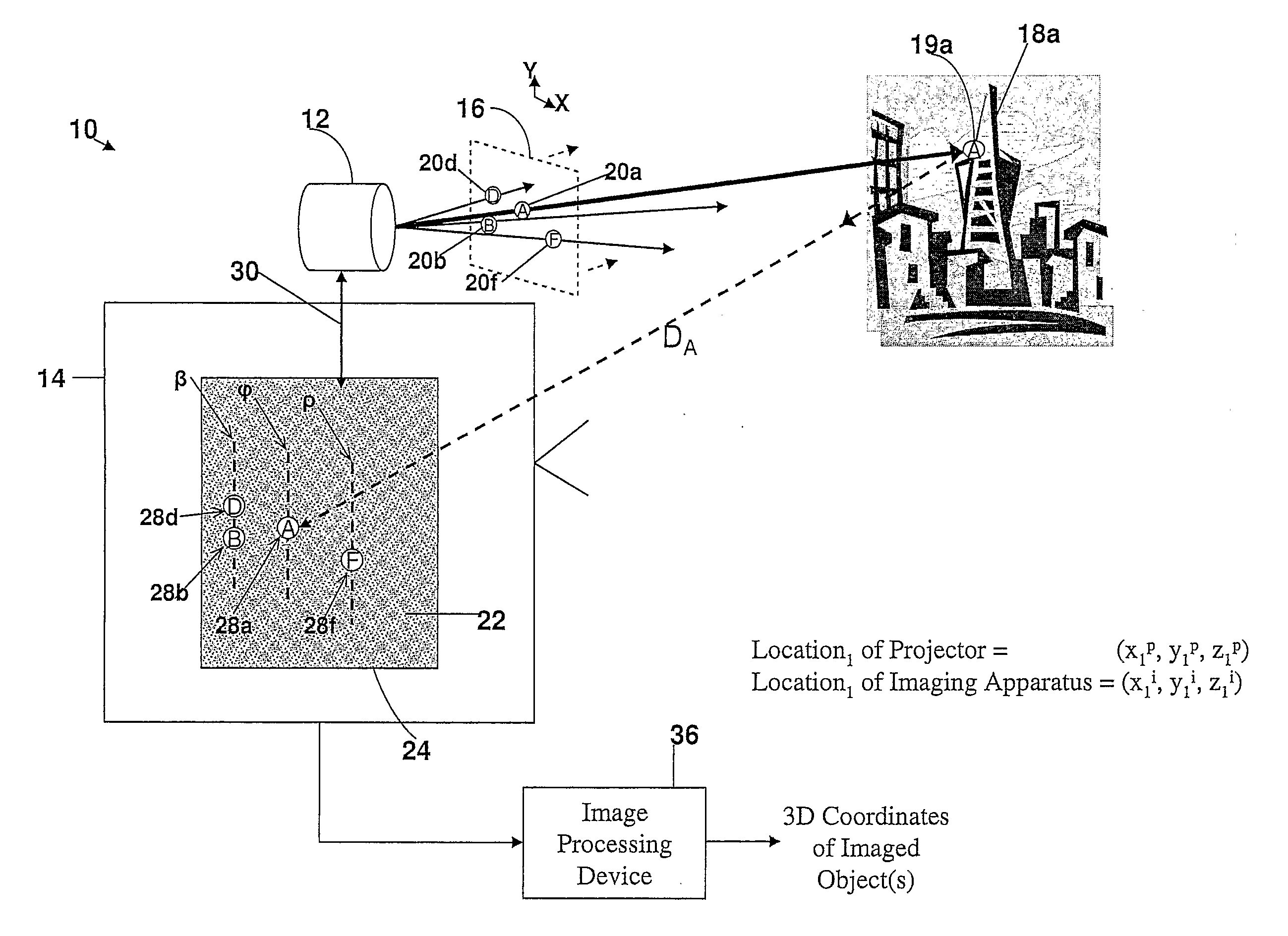

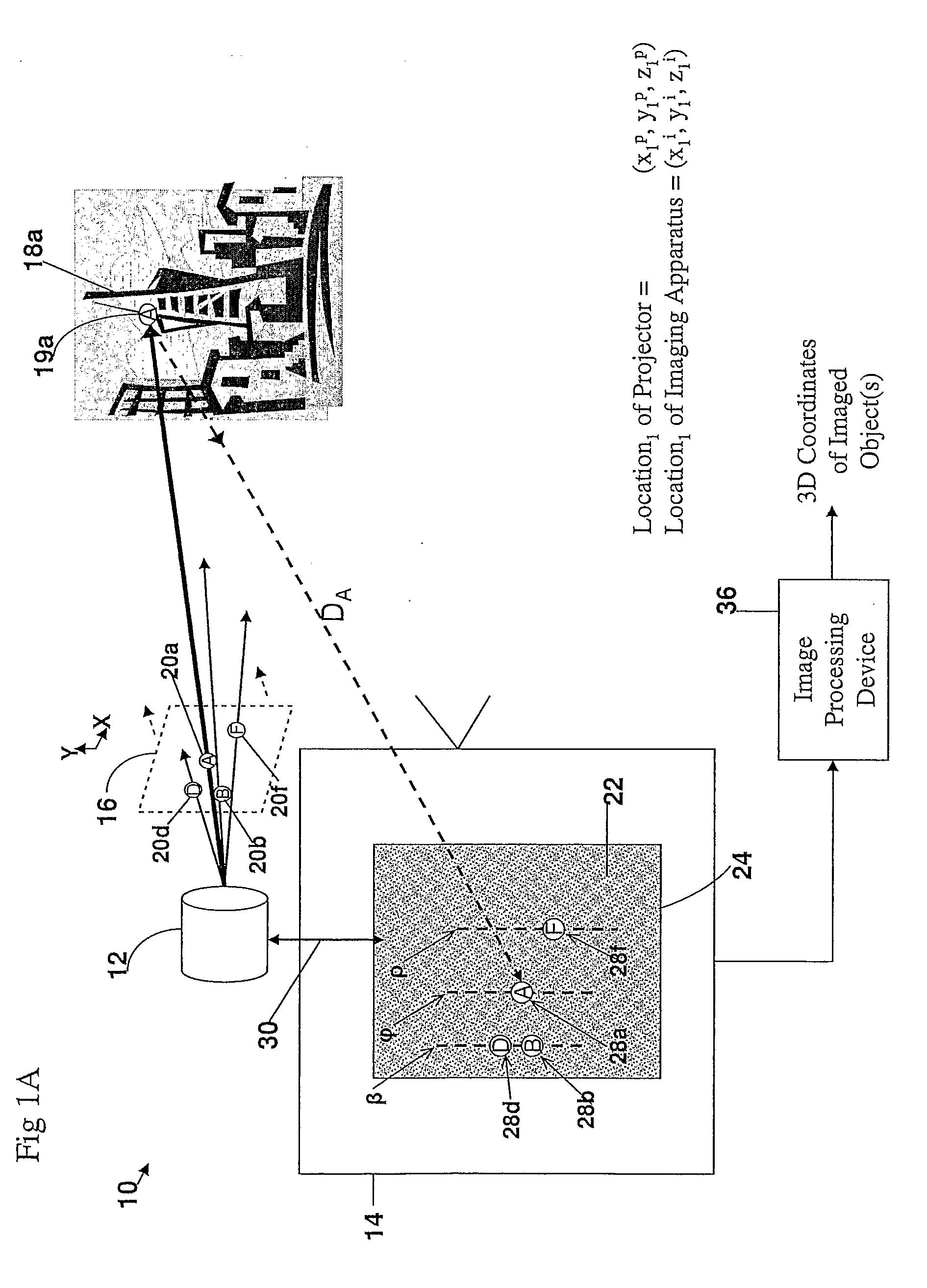

3D geometric modeling and 3D video content creation

A system, apparatus and method of obtaining data from a 2D image in order to determine the 3D shape of objects appearing in said 2D image, said 2D image having distinguishable epipolar lines, said method comprising: (a) providing a predefined set of types of features, giving rise to feature types, each feature type being distinguishable according to a unique bi-dimensional formation; (b) providing a coded light pattern comprising multiple appearances of said feature types; (c) projecting said coded light pattern on said objects such that the distance between epipolar lines associated with substantially identical features is less than the distance between corresponding locations of two neighboring features; (d) capturing a 2D image of said objects having said projected coded light pattern projected thereupon, said 2D image comprising reflected said feature types; and (e) extracting: (i) said reflected feature types according to the unique bi-dimensional formations; and (ii) locations of said reflected feature types on respective said epipolar lines in said 2D image.

Owner: MANTIS VISION

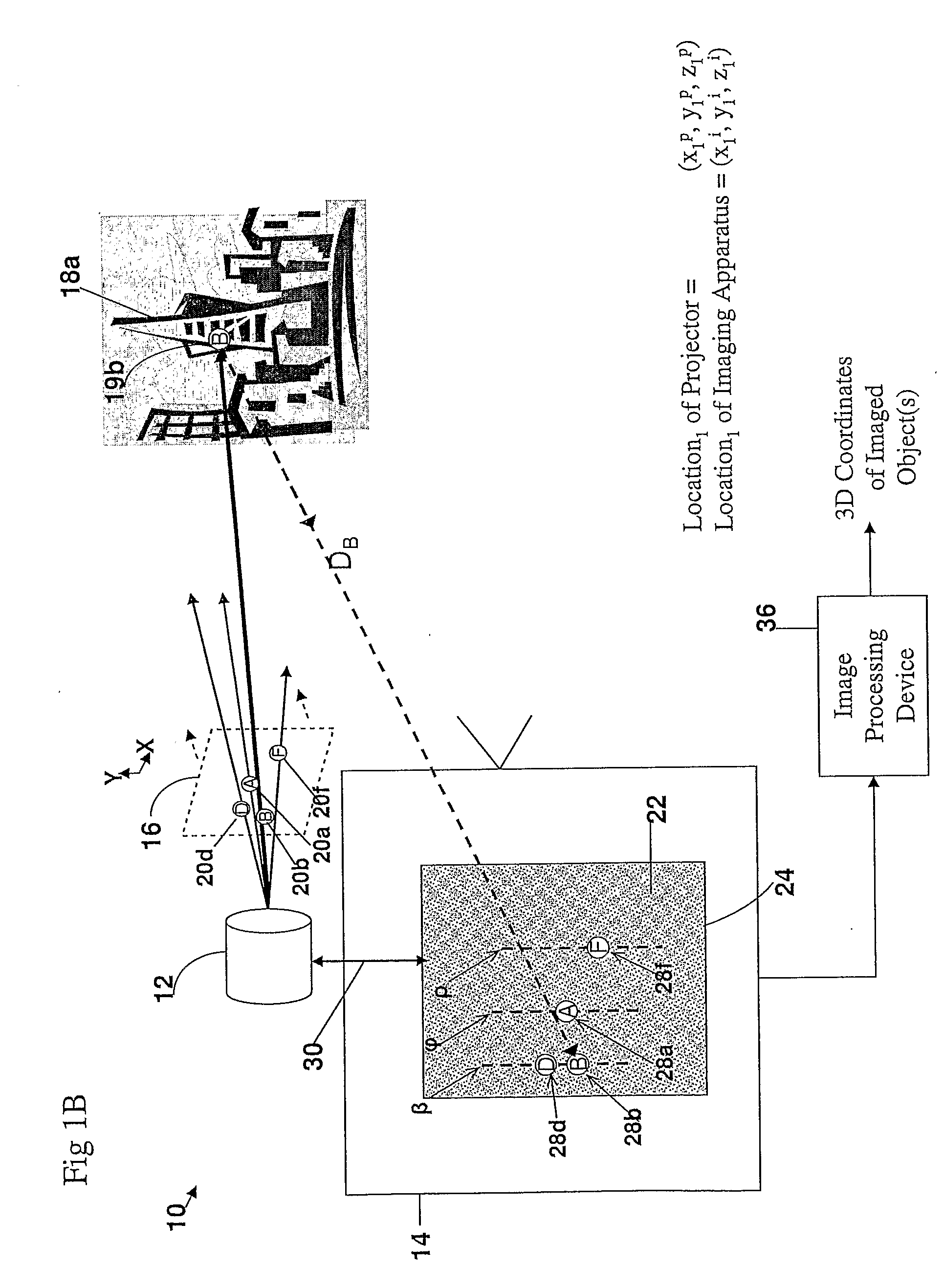

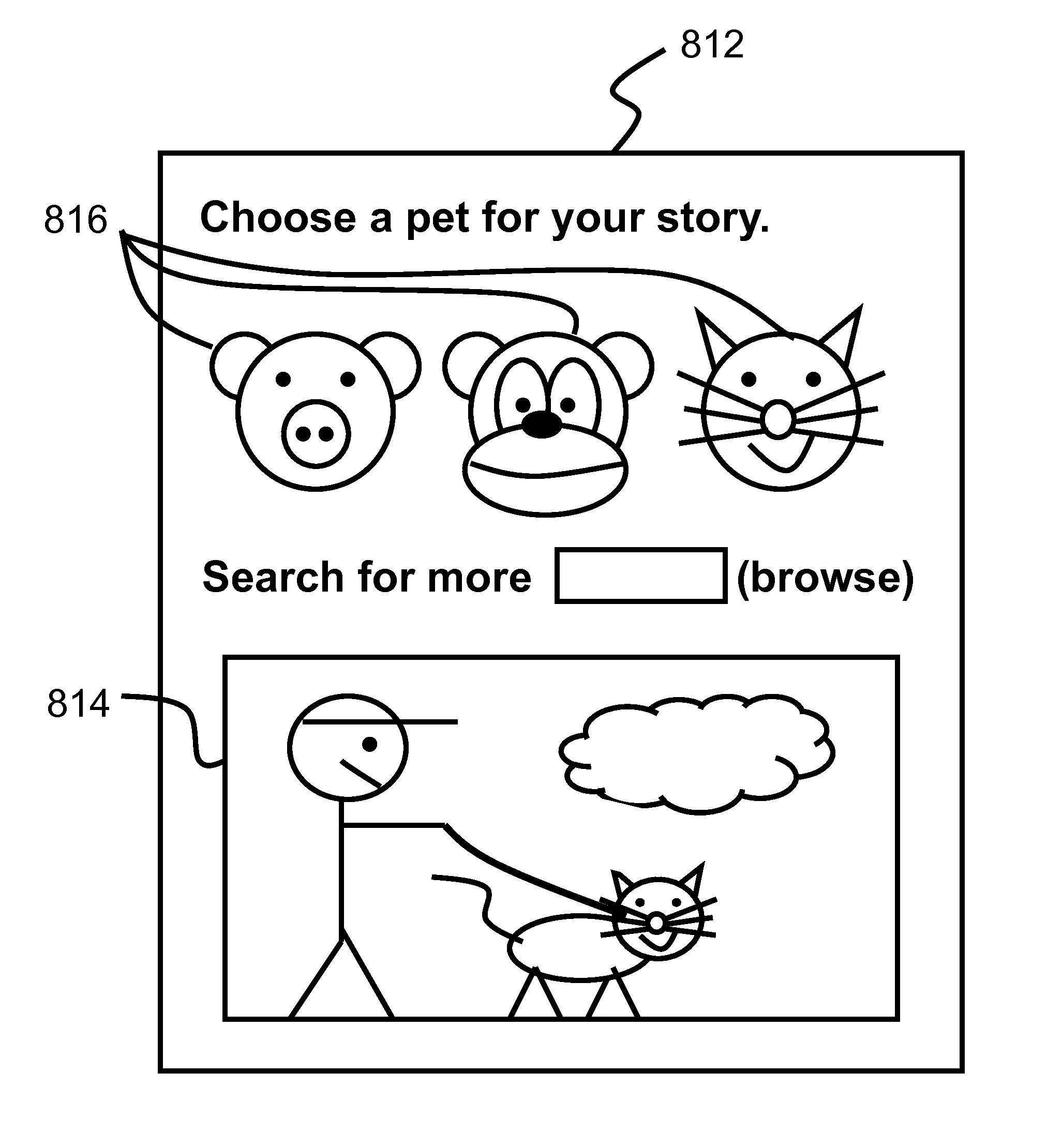

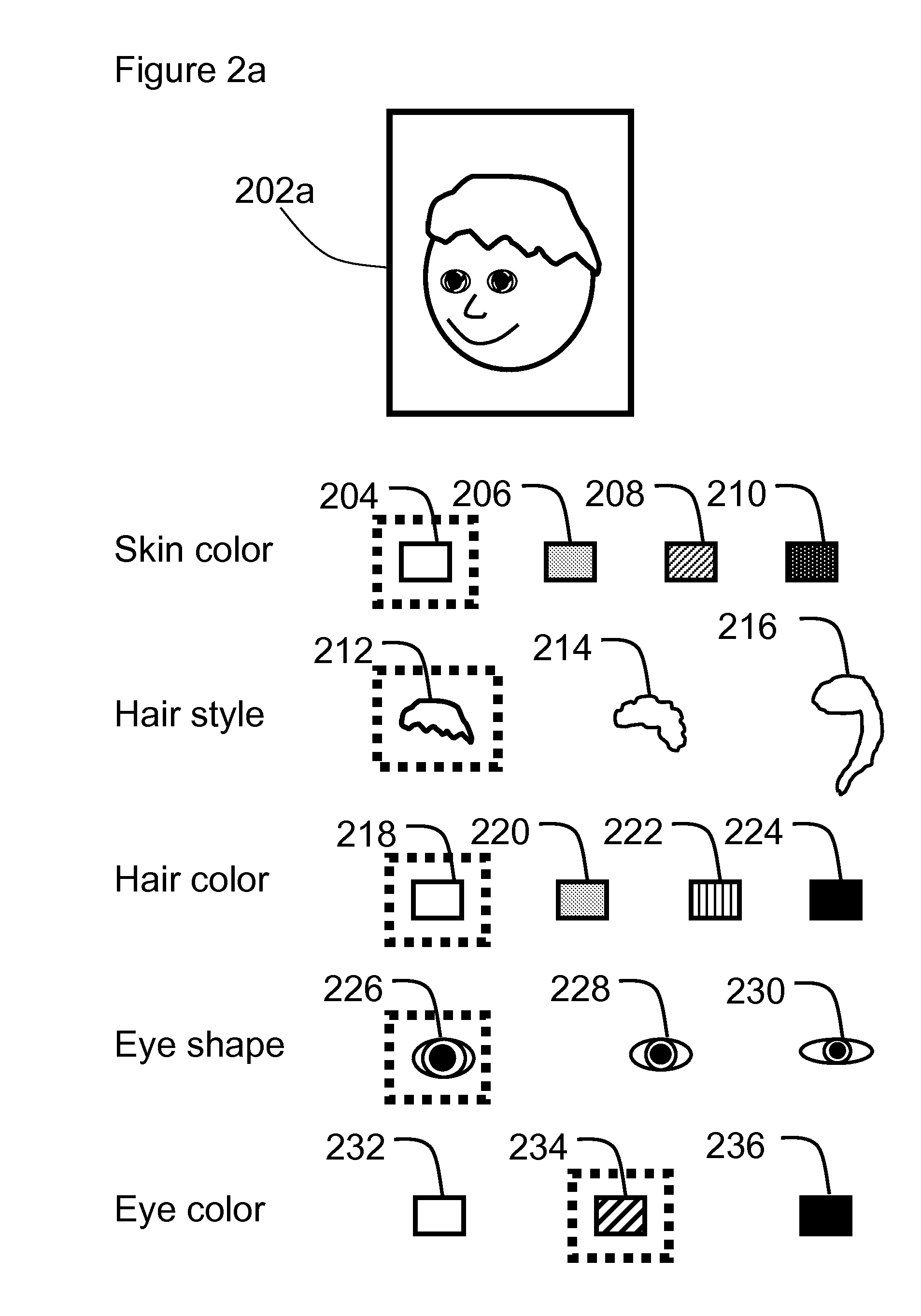

User Customized Animated Video and Method For Making the Same

A customized animation video system and method generate a customized or personalized animated video using user input where the customized animated video is rendered in a near immediate timeframe (for example, in less than 10 minutes). The customized animation video system and method of the present invention enable seamless integration of an animated representation of a subject or other custom object into the animated video. That is, the system and method of the present invention enable the generation of an animated representation of a subject that can be viewed from any desired perspective in the animated video without the use of multiple photographs or other 2D depictions of the subject. Furthermore, the system and method of the present invention enable the generation of an animated representation of a subject that is in the same graphic style as the rest of the animated video.

Owner: PANDOODLE CORPORATION

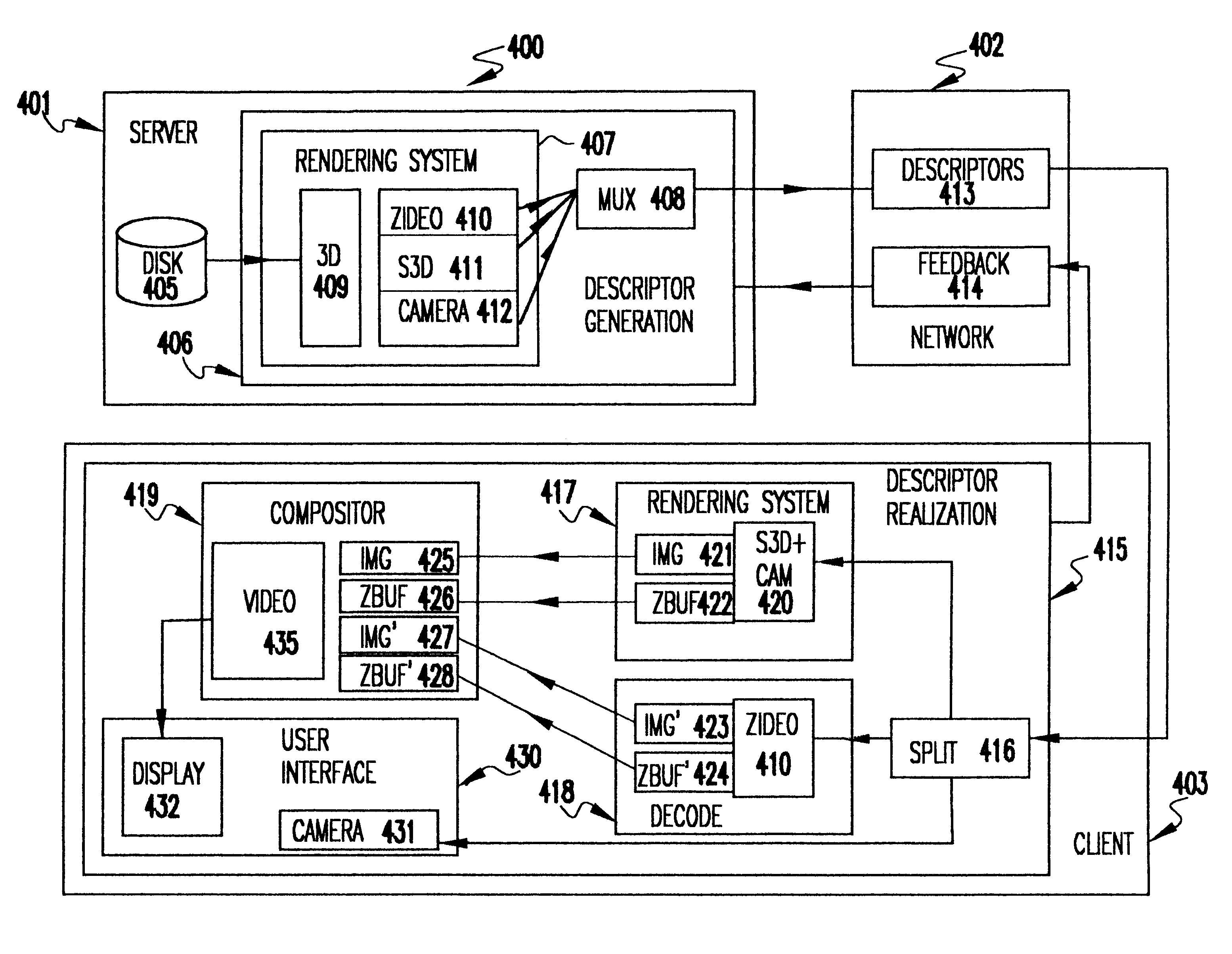

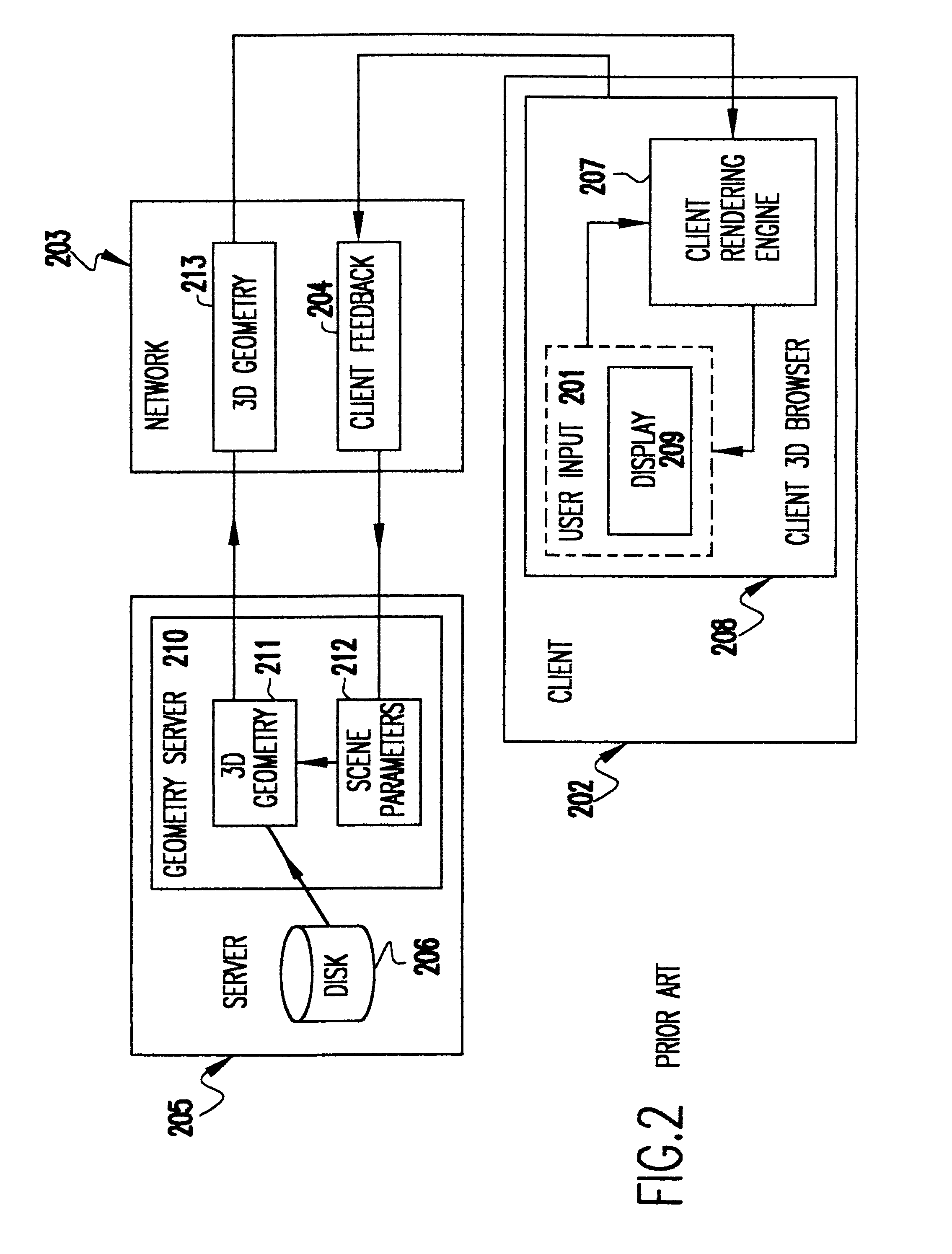

Methods and apparatus for delivering 3D graphics in a networked environment

Inactive | US6377257B1 | Pulse modulation television signal transmission | Selective content distribution | Image resolution | Three dimensional graphics

A system and method for seamlessly combining client-only rendering techniques with server-only rendering techniques. The approach uses a composite stream containing three distinct streams. Two of the streams are synchronized and transmit camera definition, video of server-rendered objects, and a time dependent depth map for the server-rendered object. The third stream is available to send geometry from the server to the client, for local rendering if appropriate. The invention can satisfy a number of viewing applications. For example, initially the most relevant geometry can stream to the client for high quality local rendering while the server delivers renderings of less relevant geometry at lower resolutions. After the most relevant geometry has been delivered to the client, the less important geometry can be optionally streamed to the client to increase the fidelity of the entire scene. In the limit, all of the geometry is transferred to the client and the situation corresponds to a client-only rendering system where local graphics hardware is used to improve fidelity and reduce bandwidth. Alternatively, if a client does not have local three-dimensional graphics capability then the server can transmit only the video of the server-rendered object and drop the other two streams. In either case, the approach also permits a progressive improvement in the server-rendered image whenever the scene becomes static. Bandwidth that was previously used to represent changing images is allocated to improving the fidelity of the server-rendered image whenever the scene becomes static.

Owner: LENOVO (SINGAPORE) PTE LTD

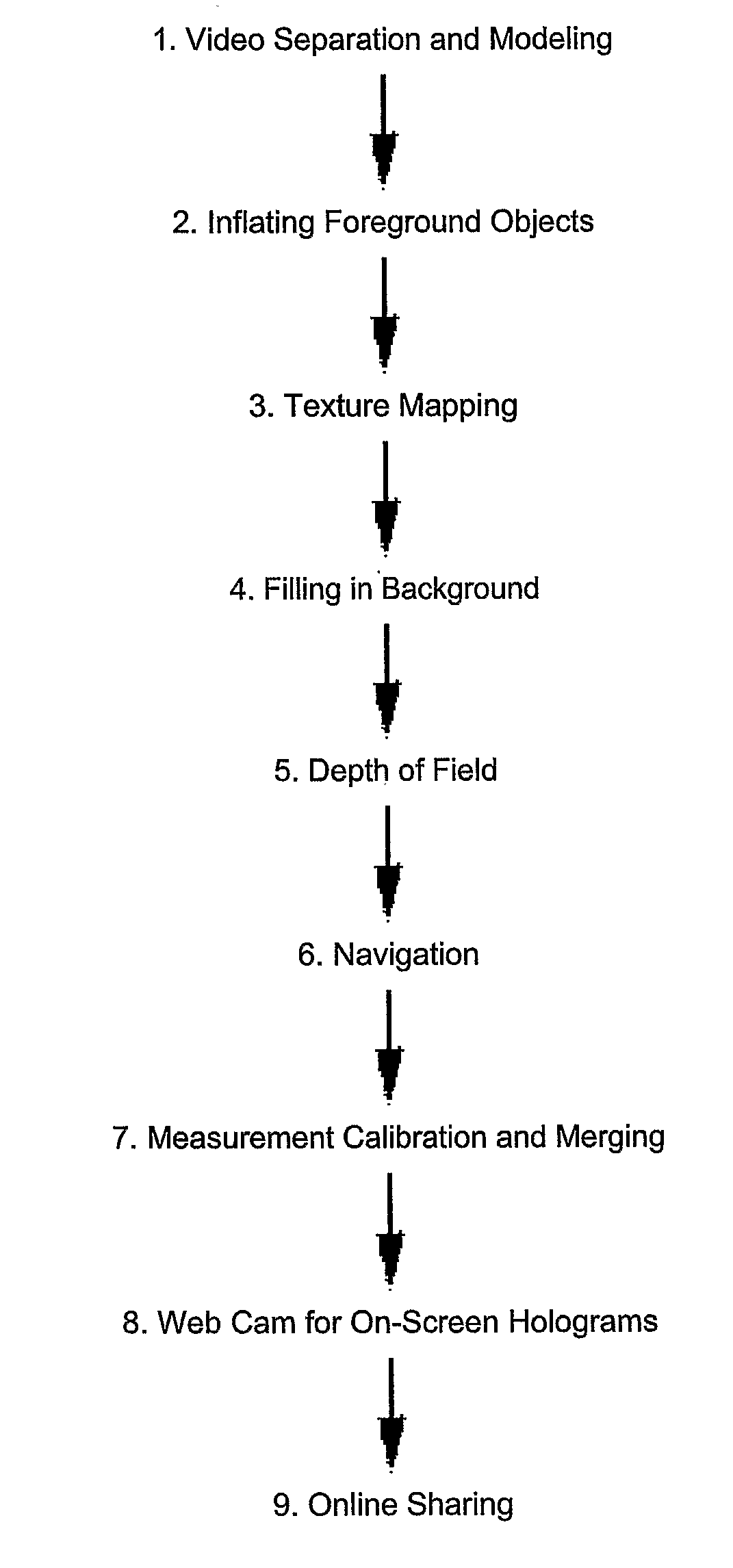

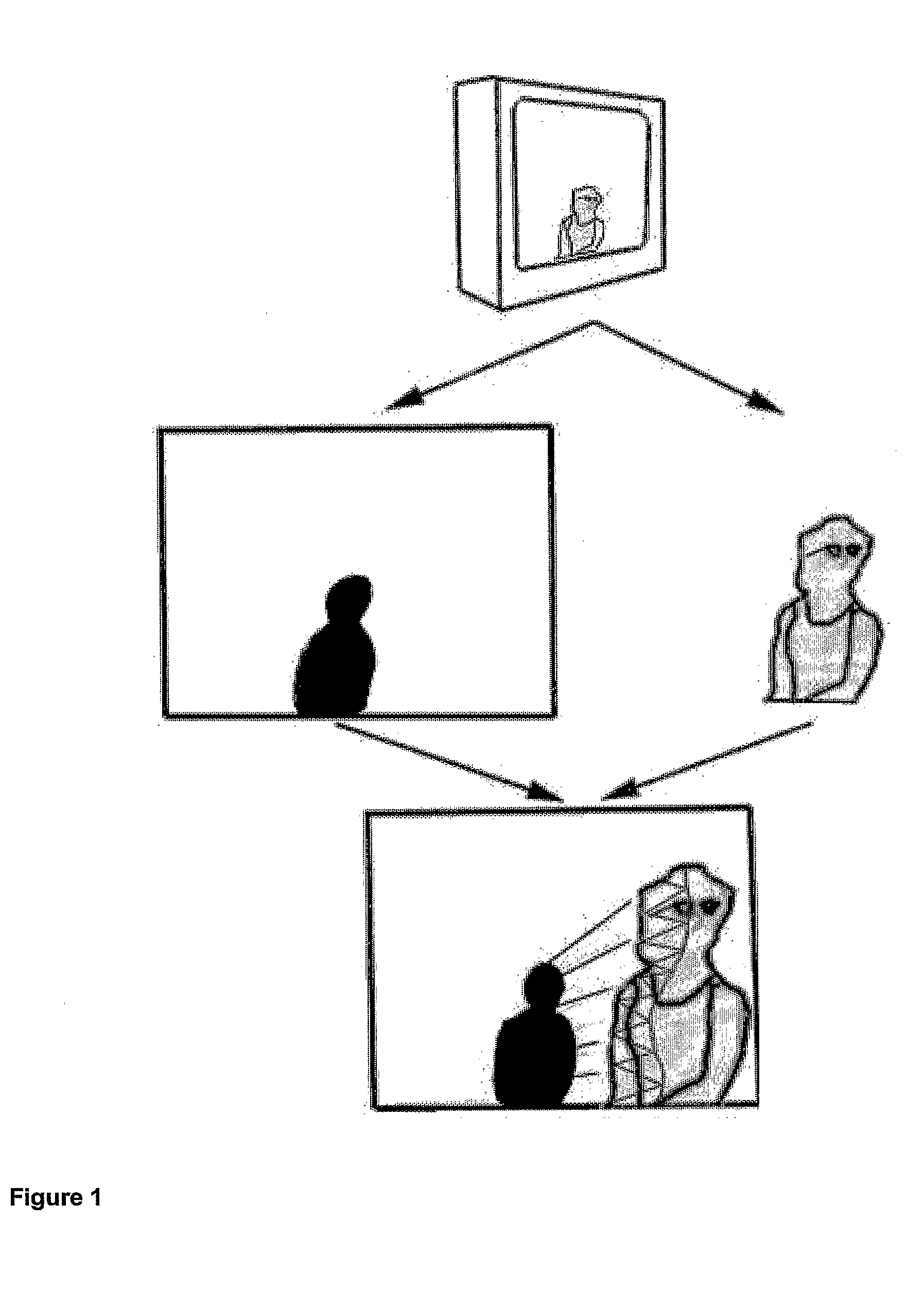

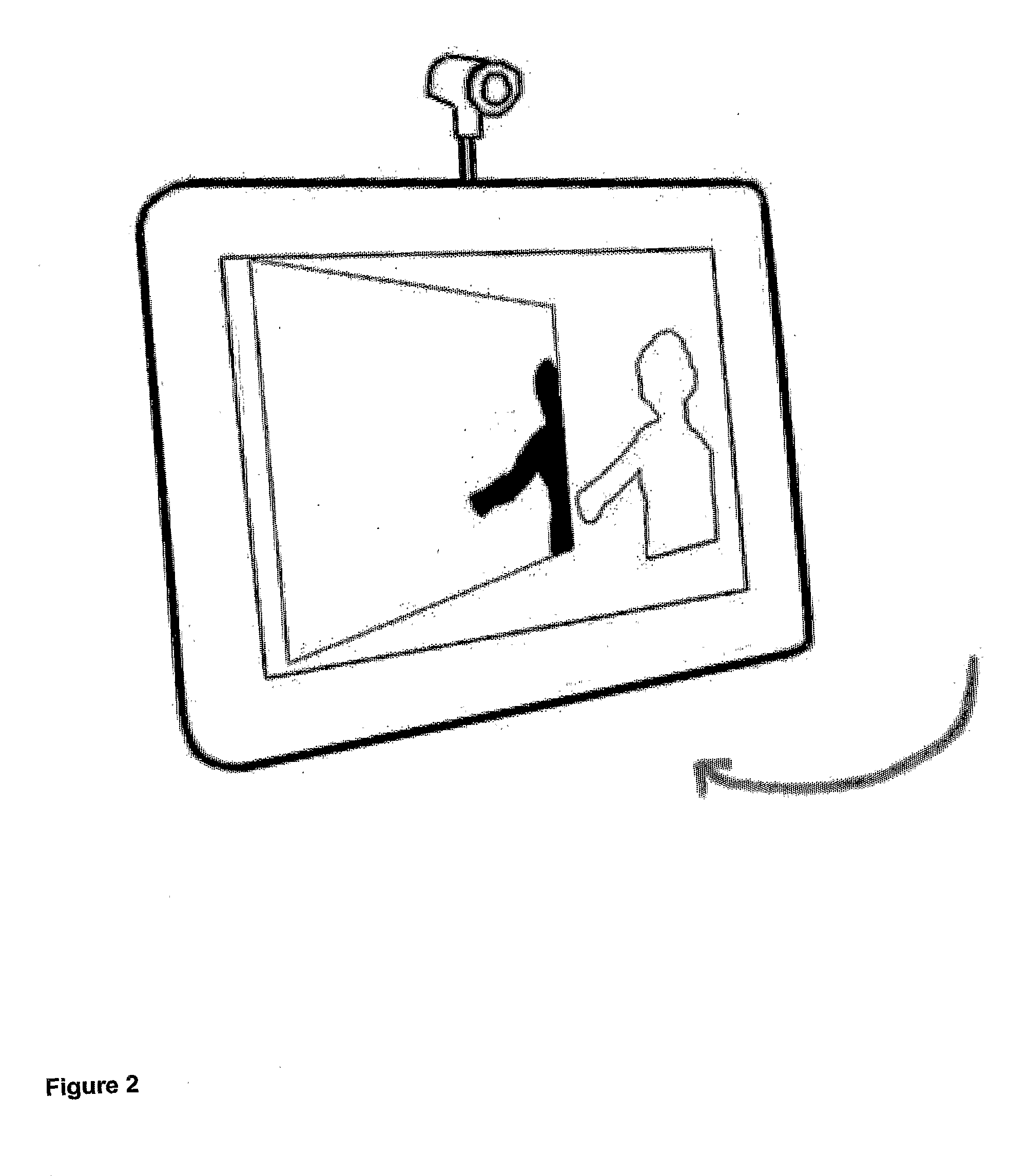

Automatic Scene Modeling for the 3D Camera and 3D Video

Inactive | US20080246759A1 | Reduce video bandwidth | Increase frame rate | Television system details | Image enhancement | Automatic control | Viewpoints

Single-camera image processing methods are disclosed for 3D navigation within ordinary moving video. Along with color and brightness, XYZ coordinates can be defined for every pixel. The resulting geometric models can be used to obtain measurements from digital images, as an alternative to on-site surveying and equipment such as laser range-finders. Motion parallax is used to separate foreground objects from the background. This provides a convenient method for placing video elements within different backgrounds, for product placement, and for merging video elements with computer-aided design (CAD) models and point clouds from other sources. If home users can save video fly-throughs or specific 3D elements from video, this method provides an opportunity for proactive, branded media sharing. When this image processing is used with a videoconferencing camera, the user's movements can automatically control the viewpoint, creating 3D hologram effects on ordinary televisions and computer screens.

Owner: SUMMERS

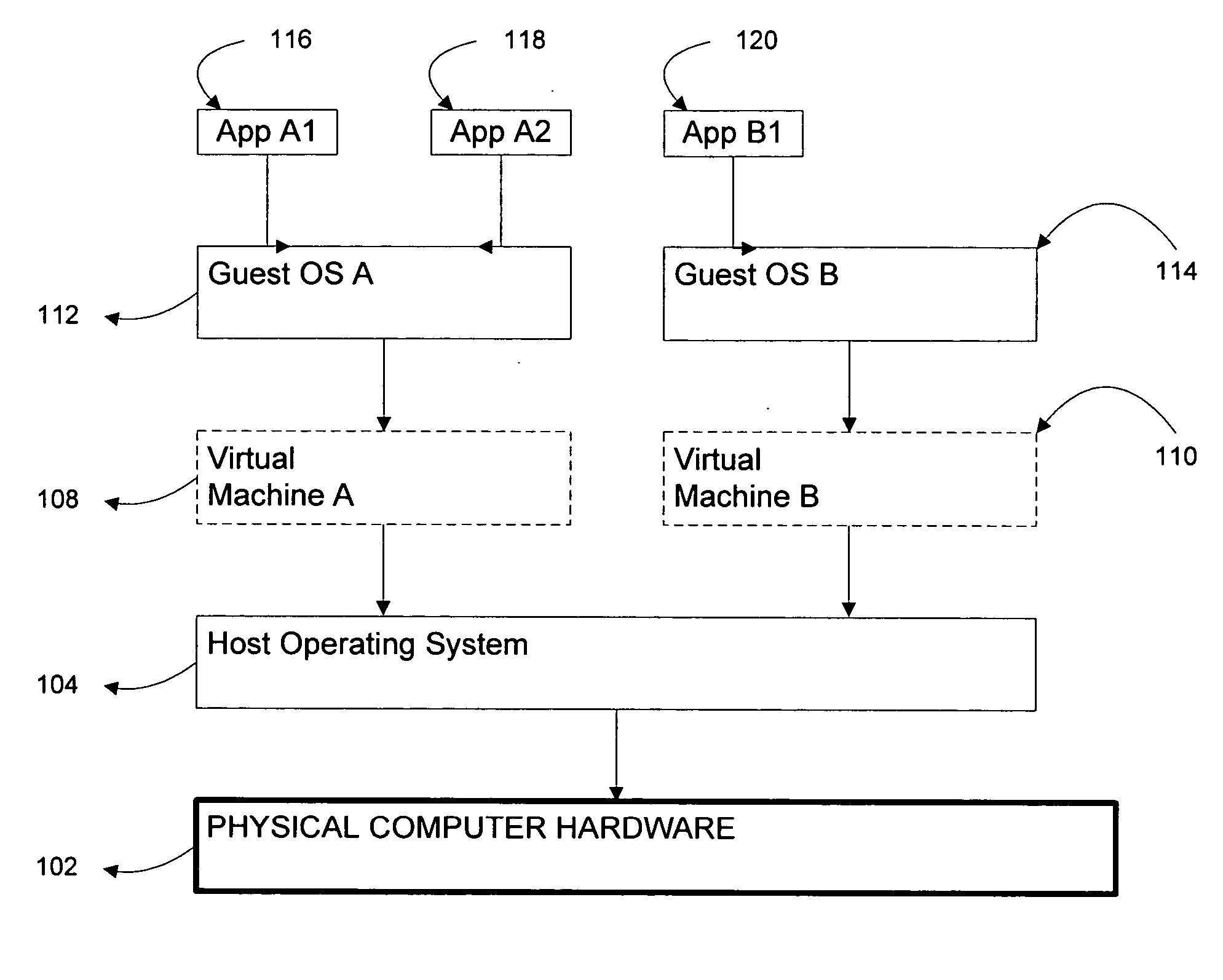

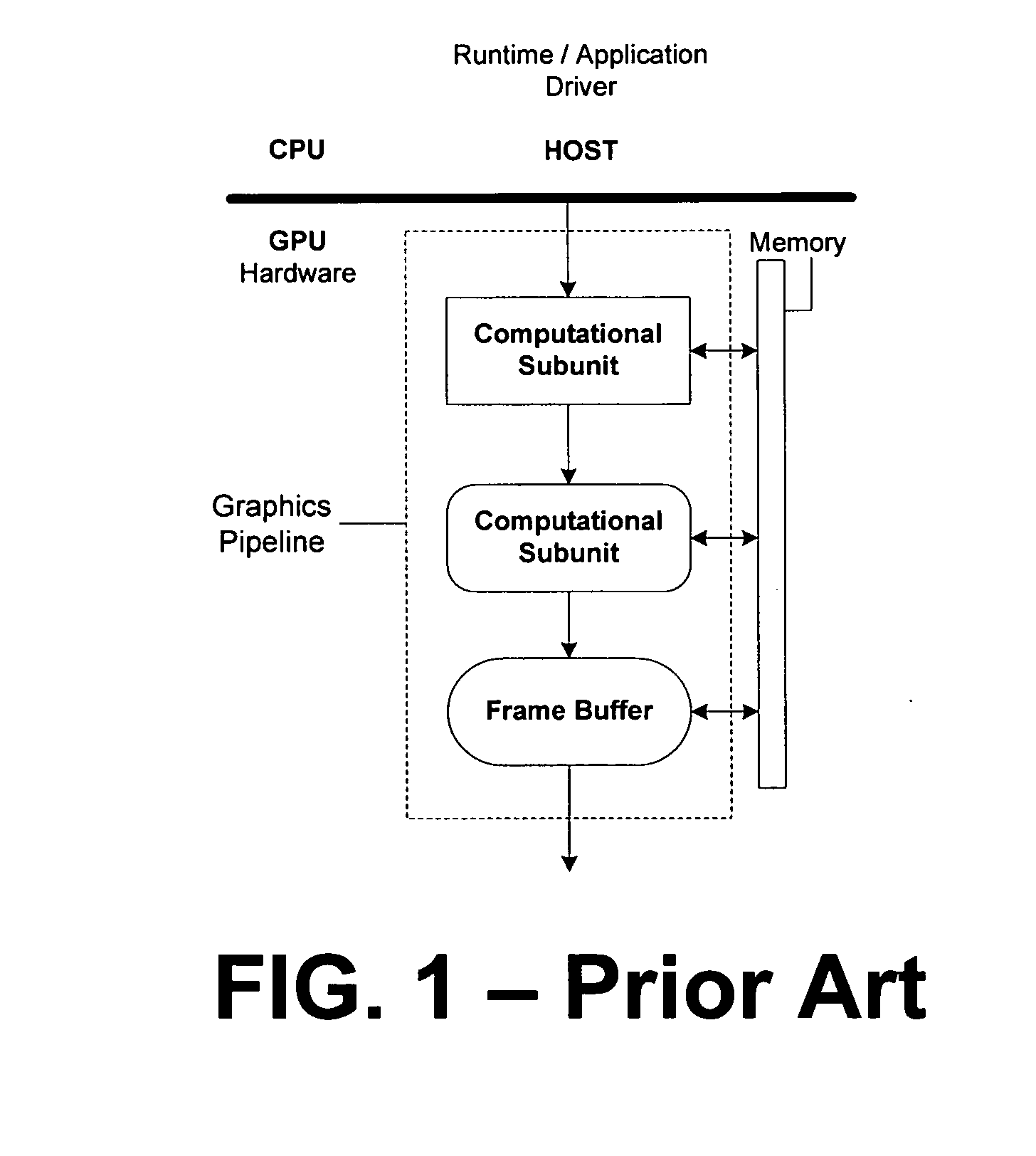

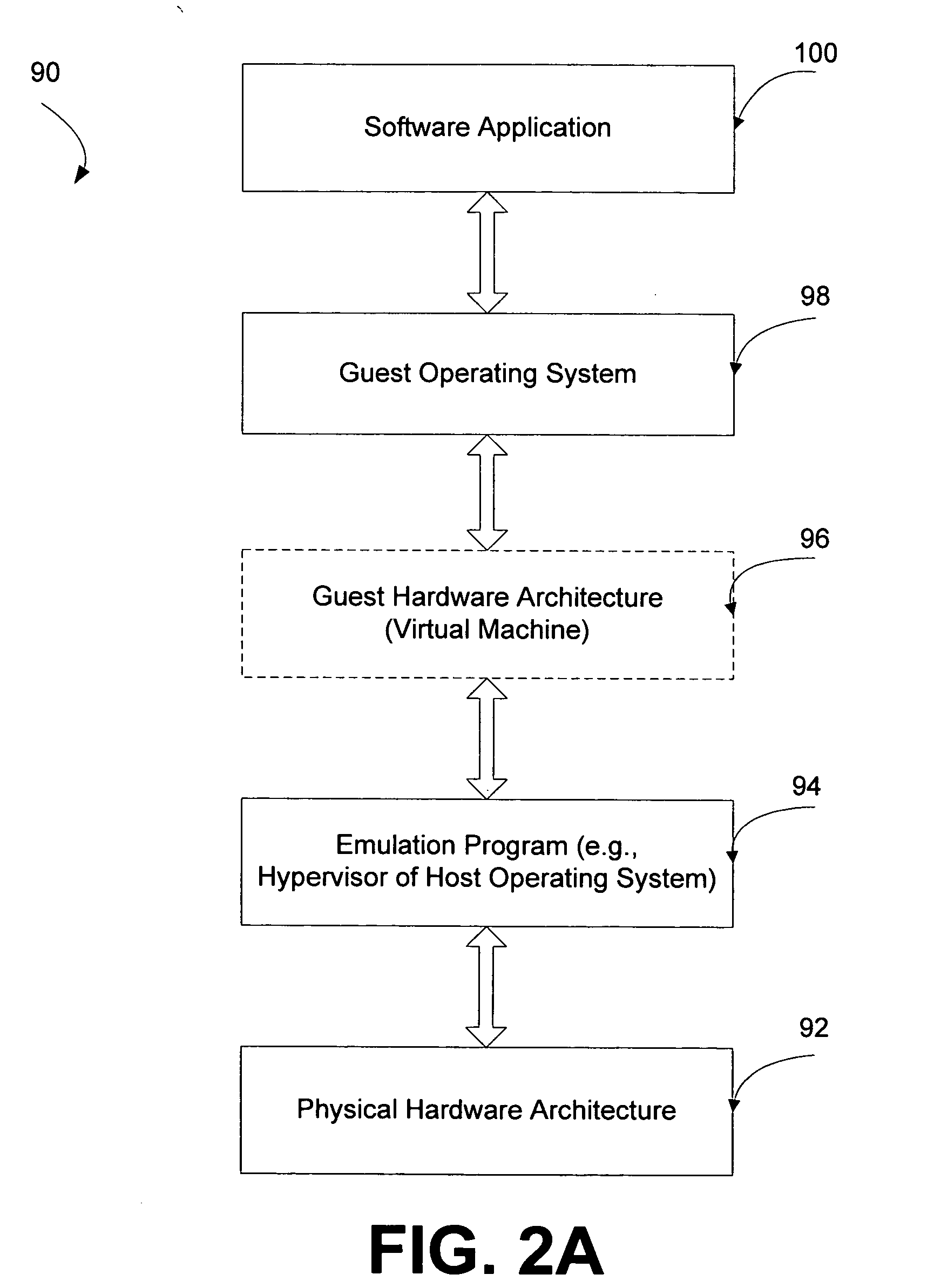

Systems and methods for virtualizing graphics subsystems

Active | US20060146057A1 | Program control using stored programs | Processor architectures/configuration | Virtualization | Operational system

Systems and methods for applying virtual machines to graphics hardware are provided. In various embodiments of the invention, while supervisory code runs on the CPU, the actual graphics work items are run directly on the graphics hardware and the supervisory code is structured as a graphics virtual machine monitor. Application compatibility is retained using virtual machine monitor (VMM) technology to run a first operating system (OS), such as an original OS version, simultaneously with a second OS, such as a new version OS, in separate virtual machines (VMs). VMM technology applied to host processors is extended to graphics processing units (GPUs) to allow hardware access to graphics accelerators, ensuring that legacy applications operate at full performance. The invention also provides methods to make the user experience cosmetically seamless while running multiple applications in different VMs. In other aspects of the invention, by employing VMM technology, the virtualized graphics architecture of the invention is extended to provide trusted services and content protection.

Owner: MICROSOFT TECH LICENSING LLC

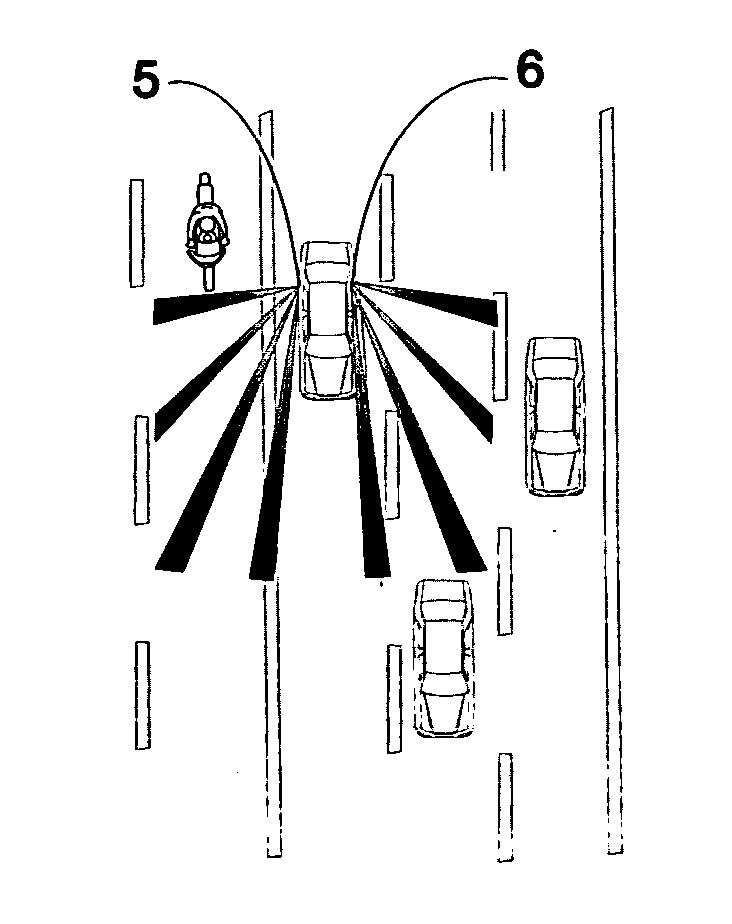

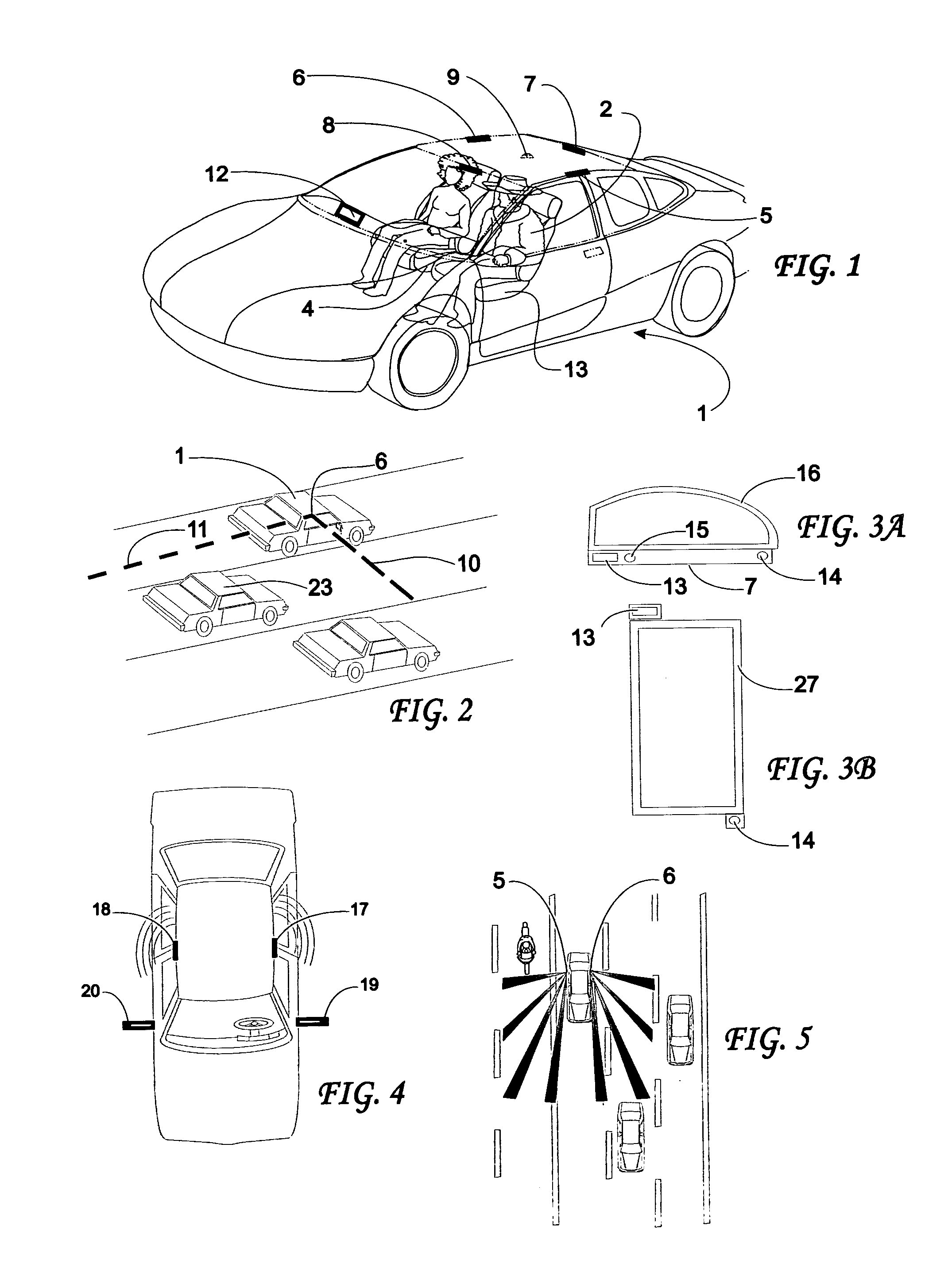

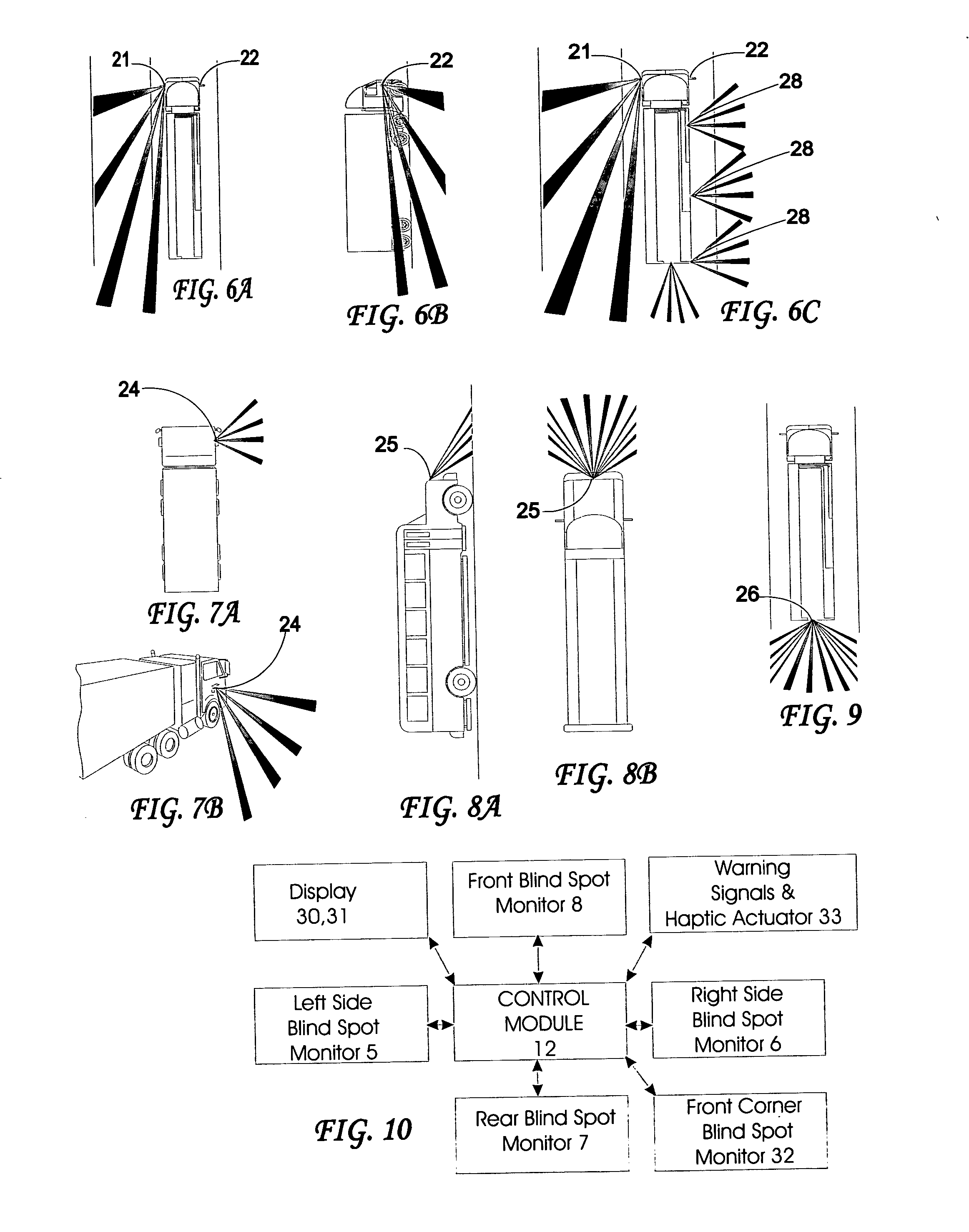

Method for obtaining information about objects in a vehicular blind spot

Inactive | US20050195383A1 | Accurate identification | High resolution | Optical rangefinders | Anti-theft devices | Display device | Computer vision

Method for obtaining information about objects in an environment around a vehicle in which infrared light is emitted into a portion of the environment and received and the distance between the vehicle and objects from which the infrared light is reflected is measured. An identification of each object from which light is reflected is determined and a three-dimensional representation of the portion of the environment is created based on the measured distance and the determined identification of the object. Icons representative of the objects and their position relative to the vehicle are displayed on a display visible to the driver based on the three-dimensional representation. Additionally or alternatively to the display of icons, a vehicular system can be controlled or adjusted based on the relative position and optionally velocity of the vehicle and objects in the environment around the vehicle to avoid collisions.

Owner: AMERICAN VEHICULAR SCI

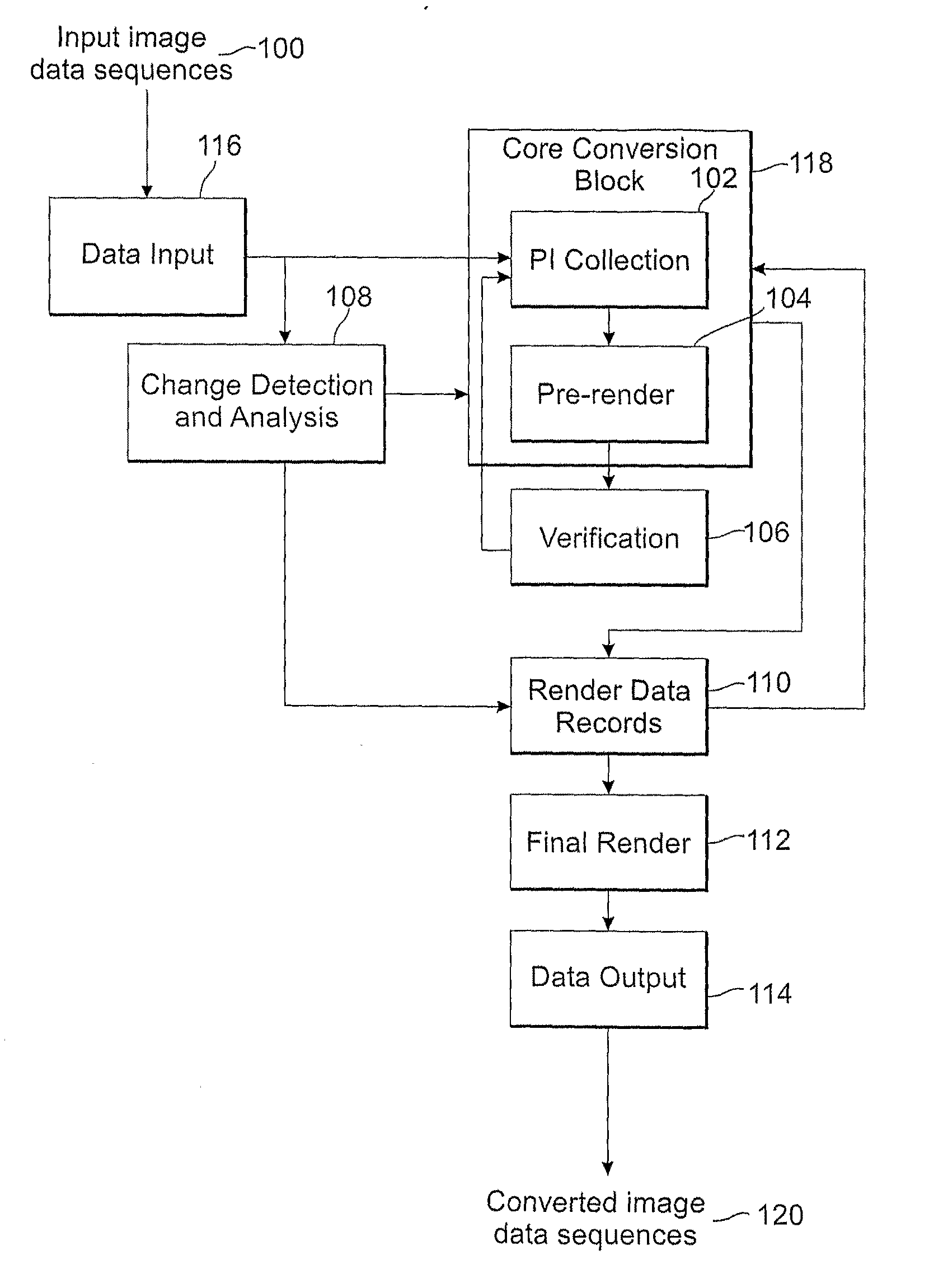

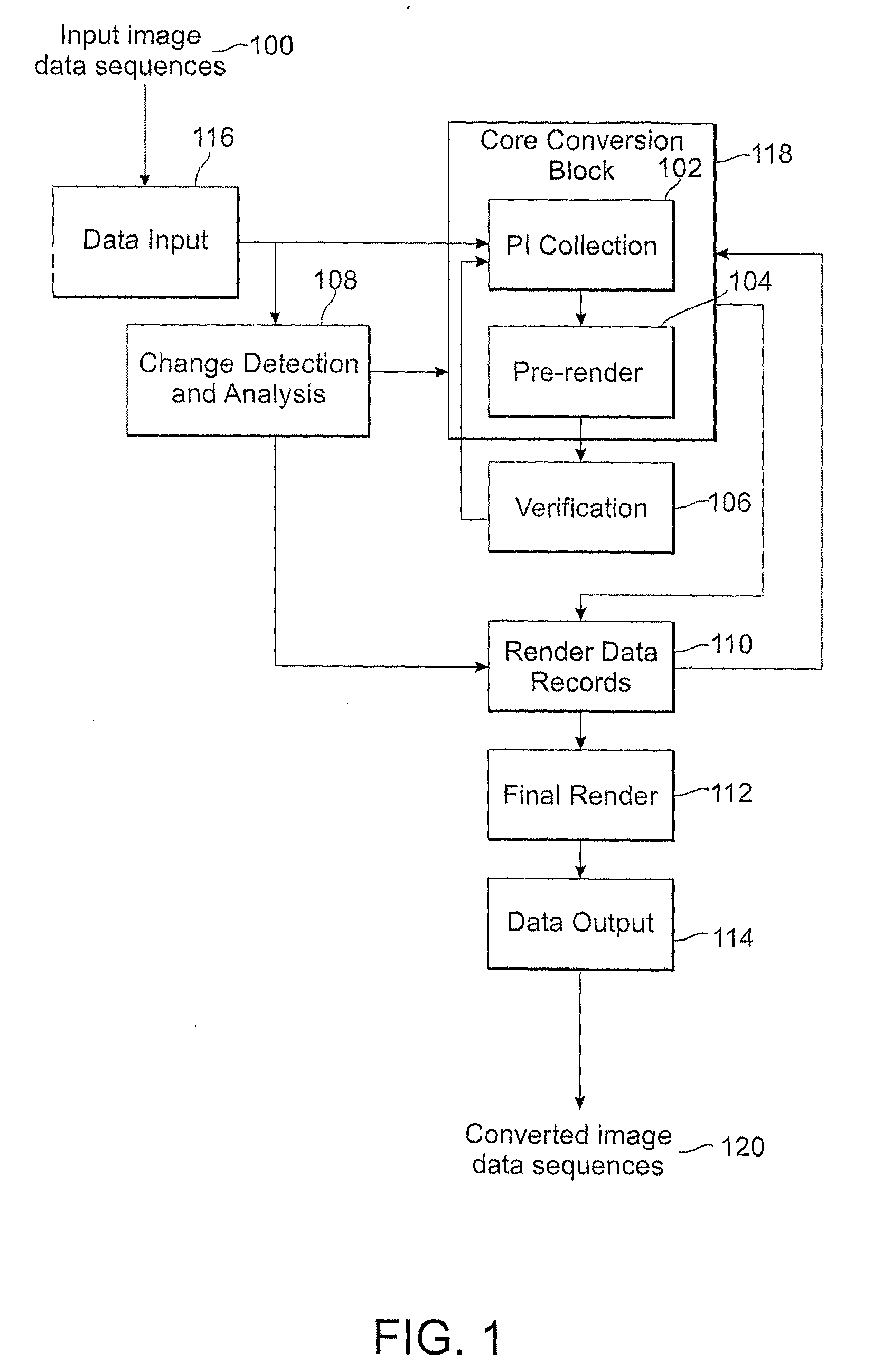

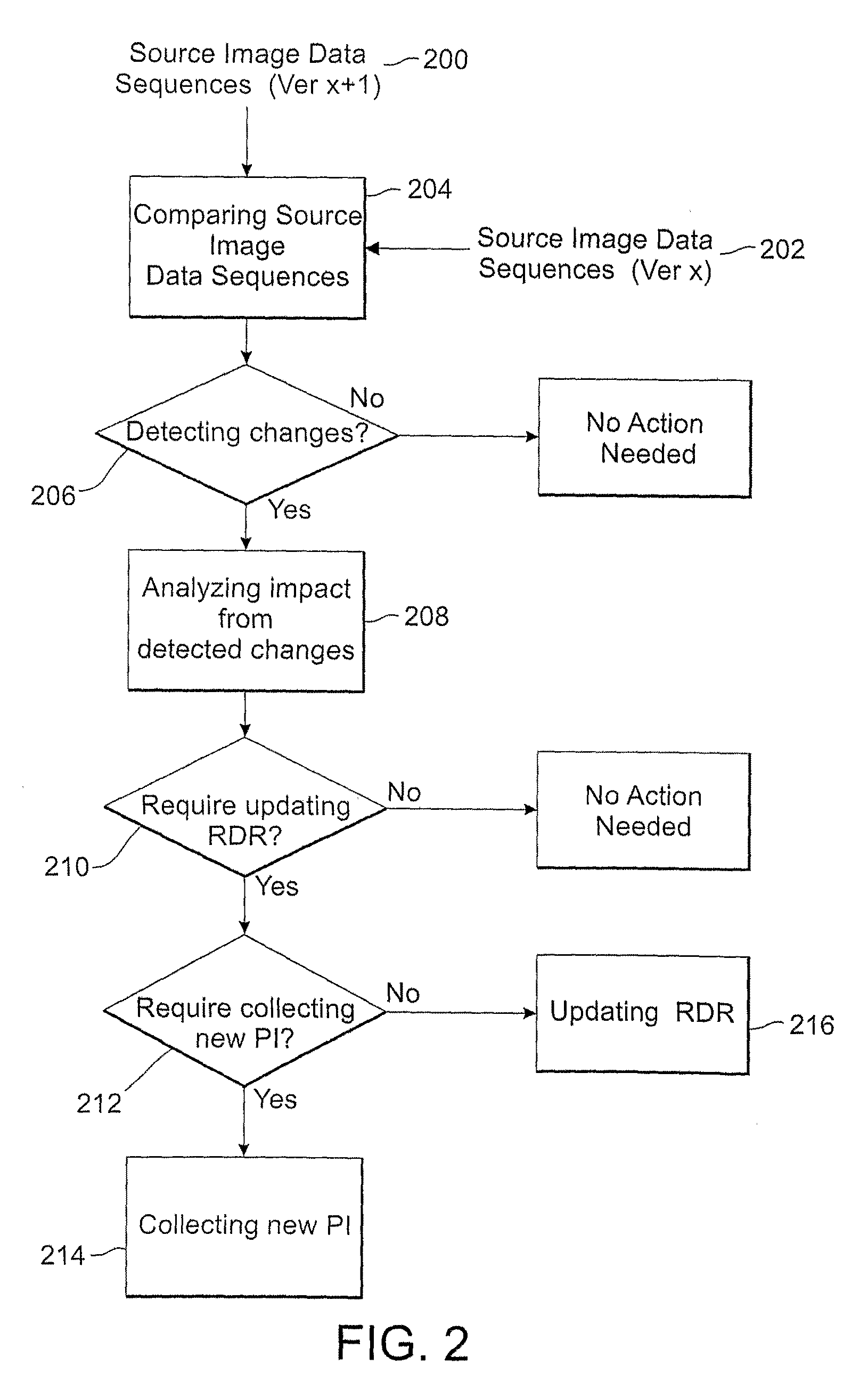

Methods and systems for converting 2D motion pictures for stereoscopic 3D exhibition

Active | US20090116732A1 | Improve image quality | Improve visual quality | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Imaging quality | 3d image

The present invention discloses methods of digitally converting 2D motion pictures or any other 2D image sequences to stereoscopic 3D image data for 3D exhibition. In one embodiment, various types of image data cues can be collected from 2D source images by various methods and then used for producing two distinct stereoscopic 3D views. Embodiments of the disclosed methods can be implemented within a highly efficient system comprising both software and computing hardware. The architectural model of some embodiments of the system is equally applicable to a wide range of conversion, re-mastering and visual enhancement applications for motion pictures and other image sequences, including converting a 2D motion picture or a 2D image sequence to 3D, re-mastering a motion picture or a video sequence to a different frame rate, enhancing the quality of a motion picture or other image sequences, or other conversions that facilitate further improvement in visual image quality within a projector to produce the enhanced images.

Owner: IMAX CORP

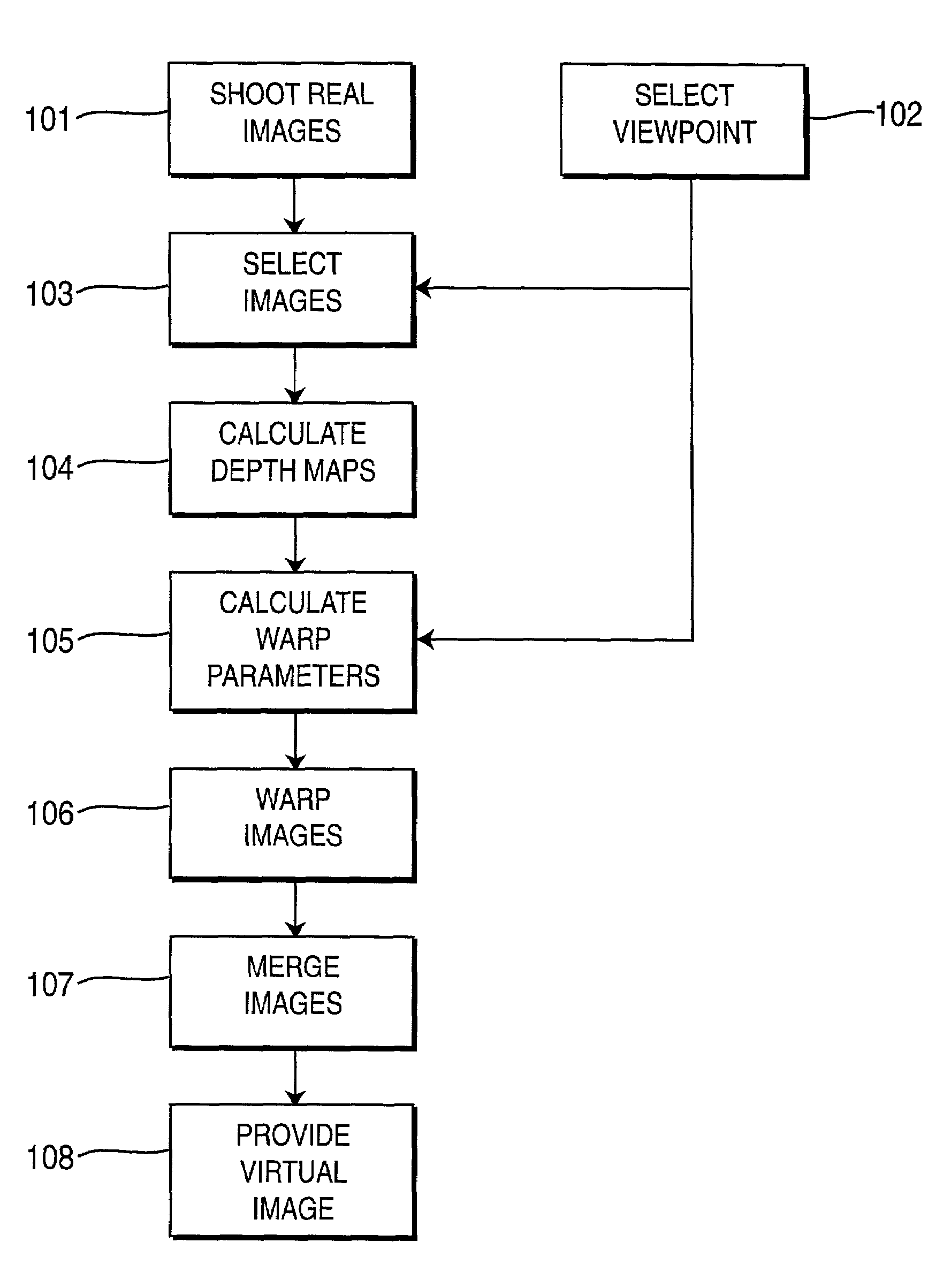

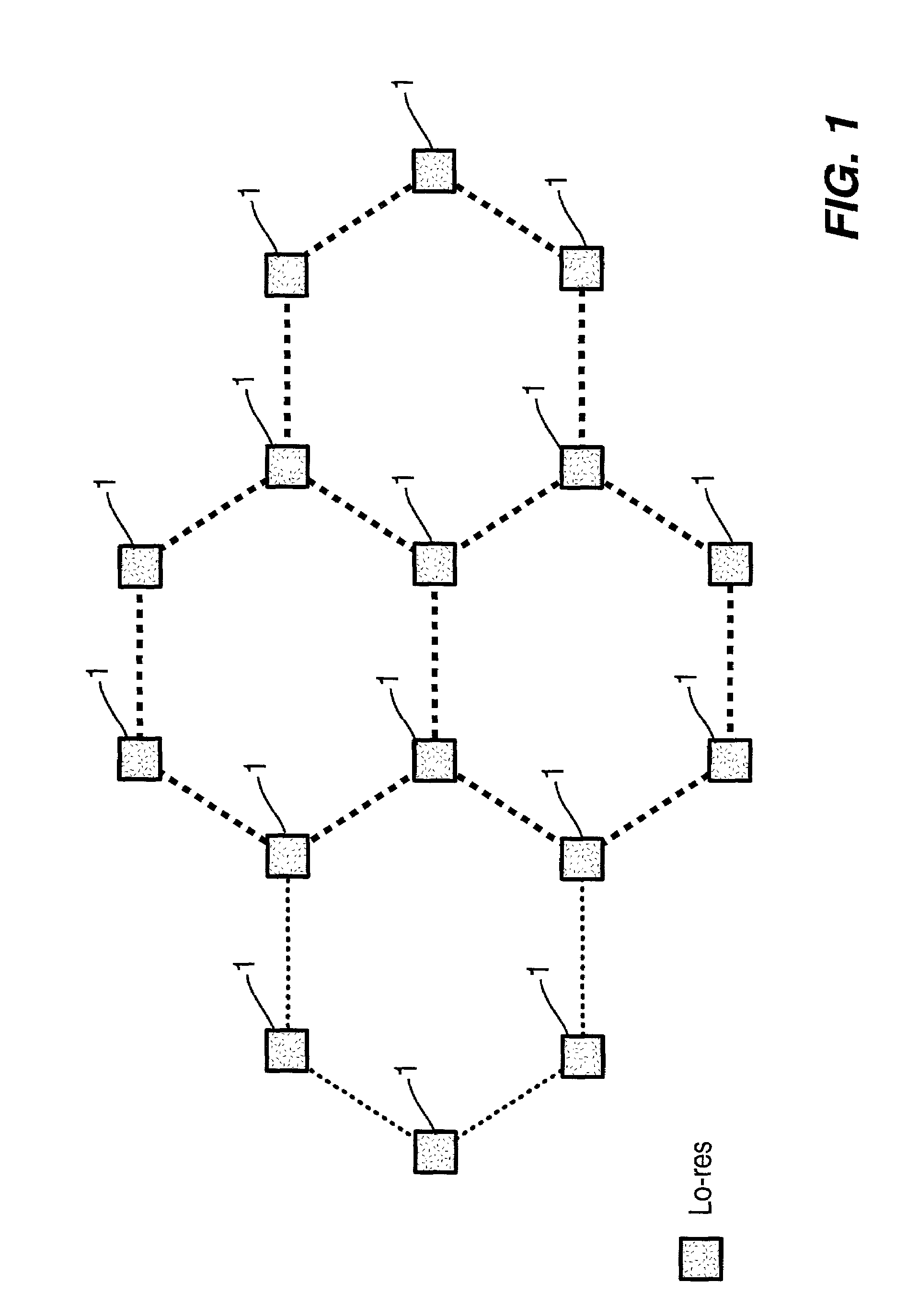

Method and apparatus for synthesizing new video and/or still imagery from a collection of real video and/or still imagery

Active | US7085409B2 | Quality improvement | Increase speed | Image enhancement | Image analysis | Viewpoints | Virtual position

An image-based tele-presence system forward warps video images selected from a plurality of fixed imagers using local depth maps and merges the warped images to form high quality images that appear as seen from a virtual position. At least two images, from the images produced by the imagers, are selected for creating a virtual image. Depth maps are generated corresponding to each of the selected images. Selected images are warped to the virtual viewpoint using warp parameters calculated using corresponding depth maps. Finally, the warped images are merged to create the high quality virtual image as seen from the selected viewpoint. The system employs a video blanket of imagers, which helps both optimize the number of imagers and attain higher resolution. In an exemplary video blanket, cameras are deployed in a geometric pattern on a surface.

Owner: SRI INTERNATIONAL
