44958 results about "Graph reading" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

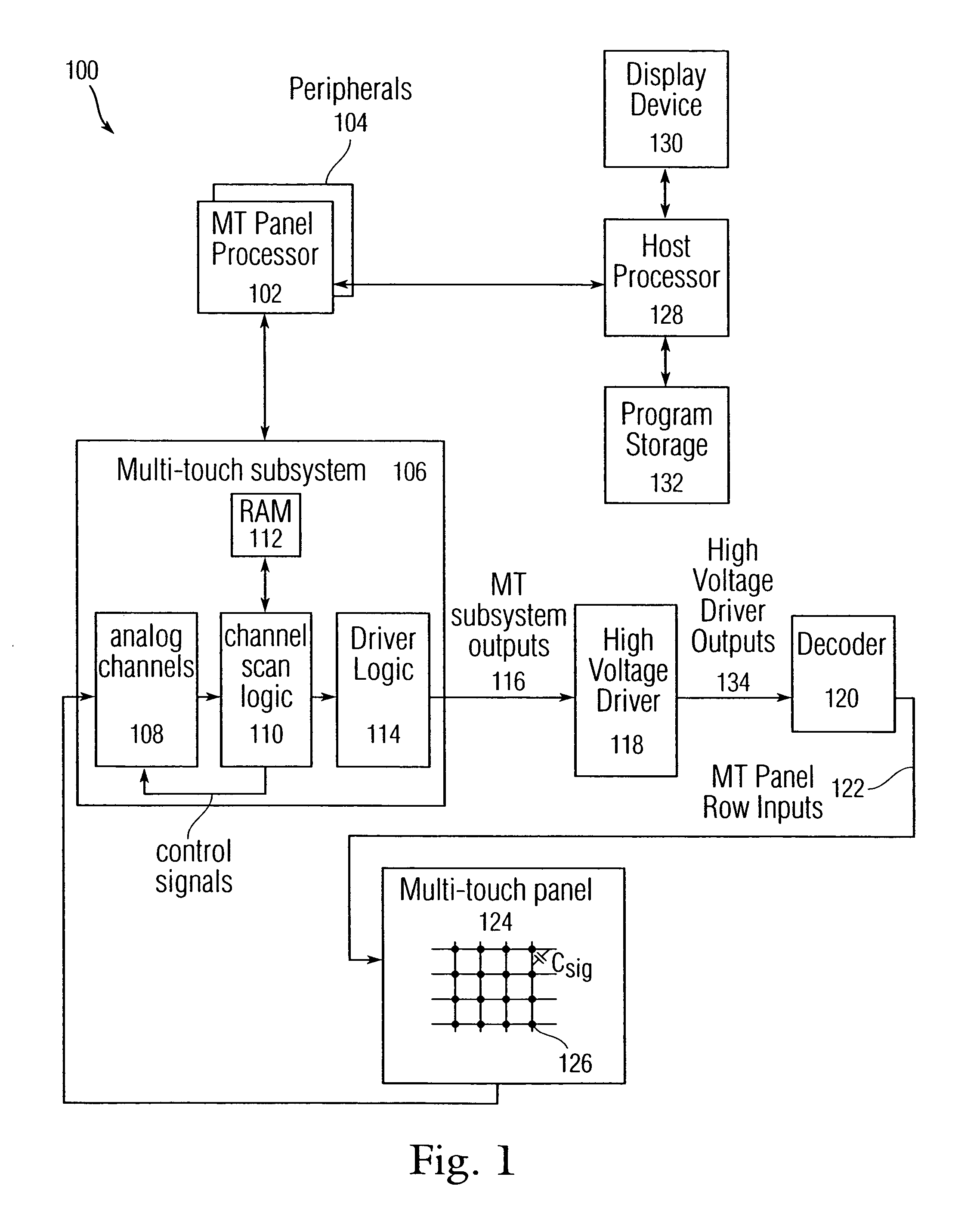

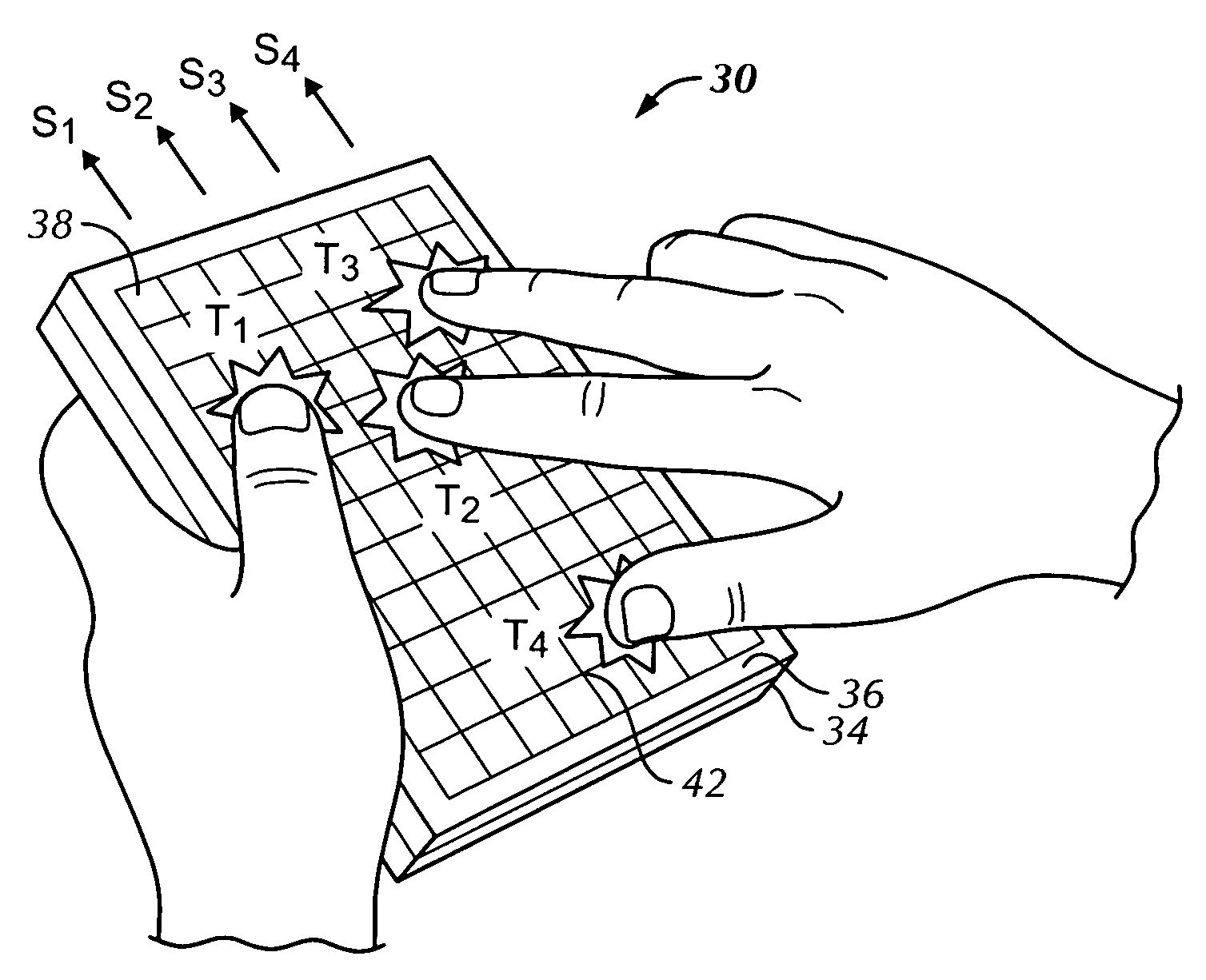

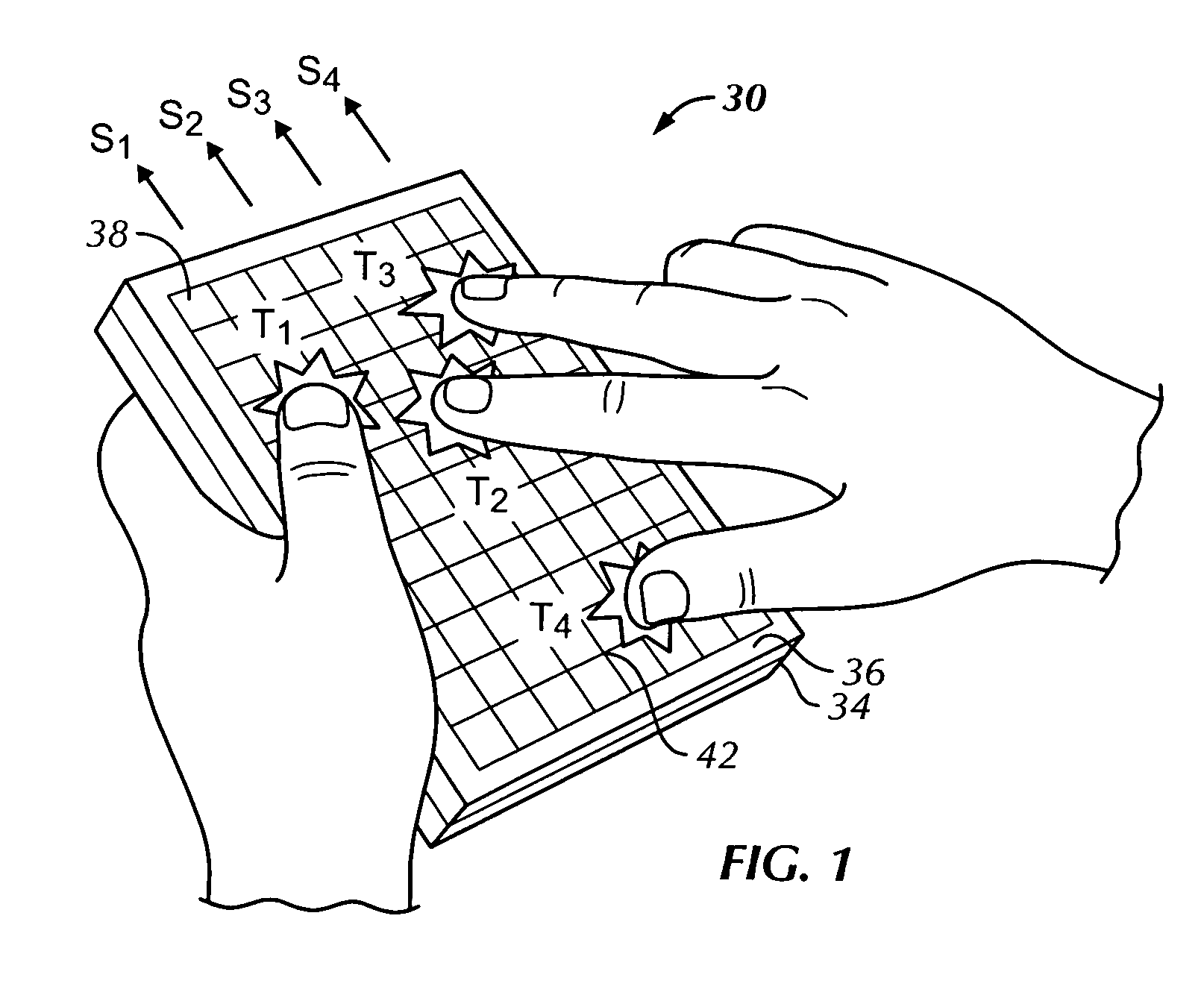

Gestures for touch sensitive input devices

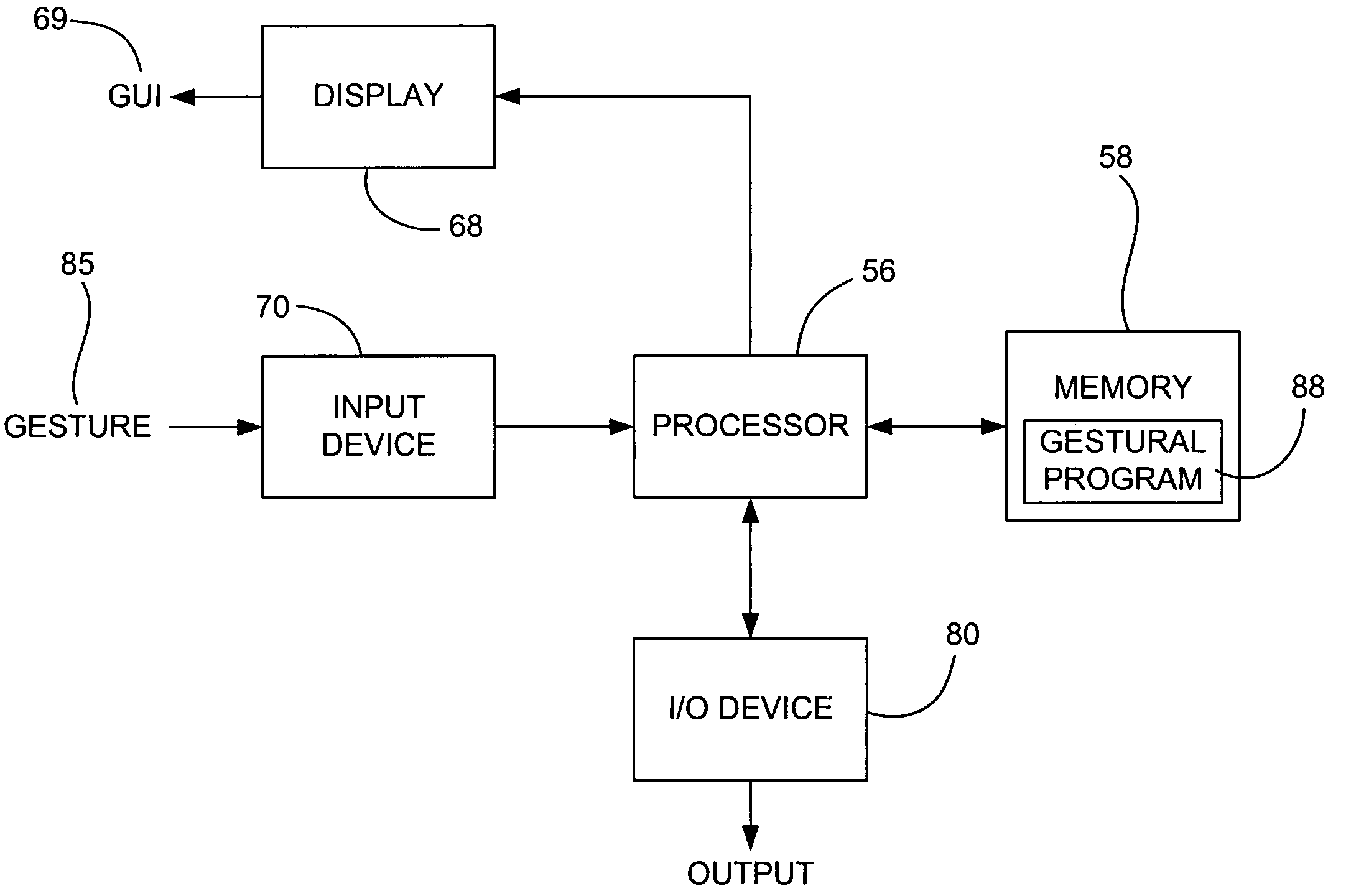

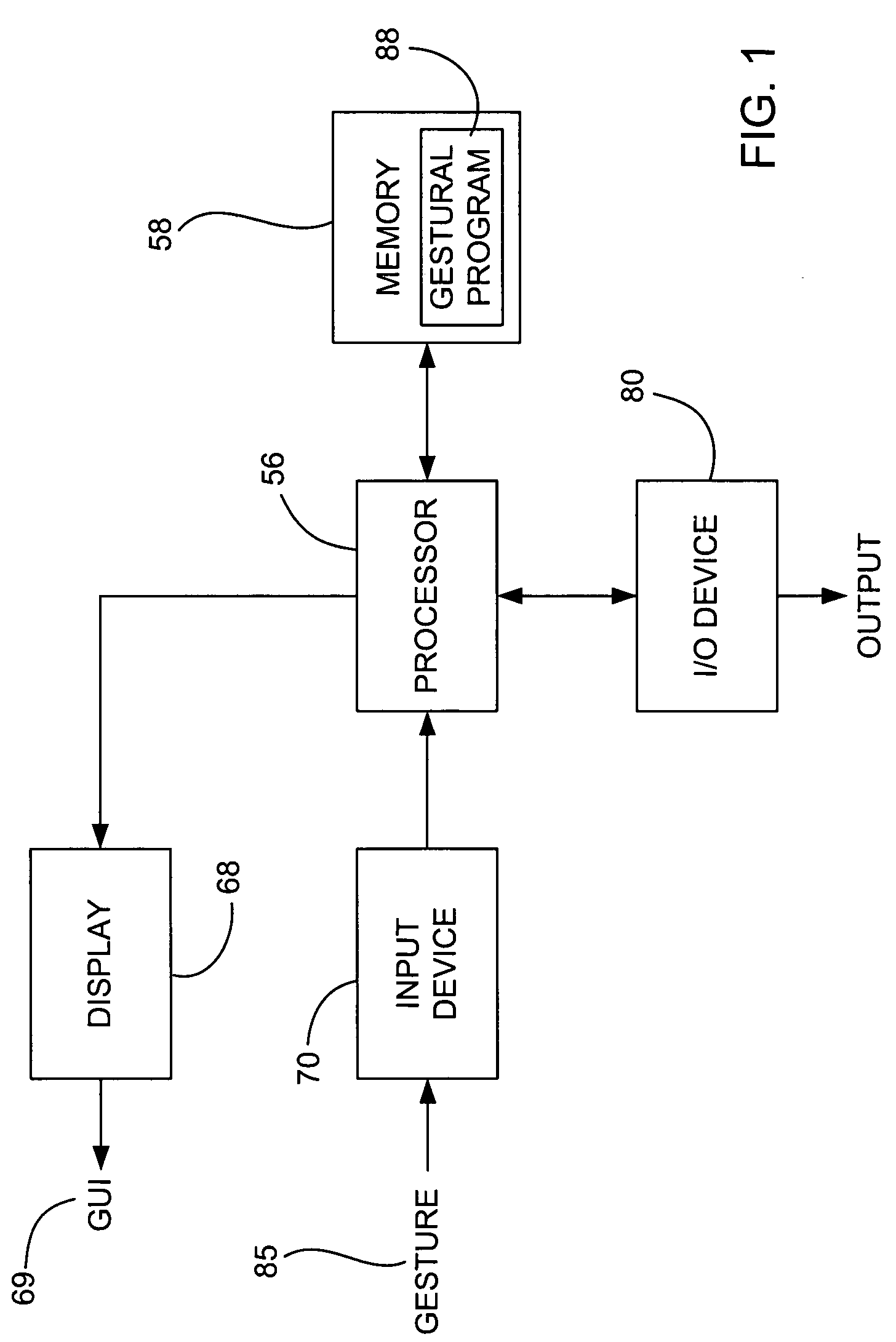

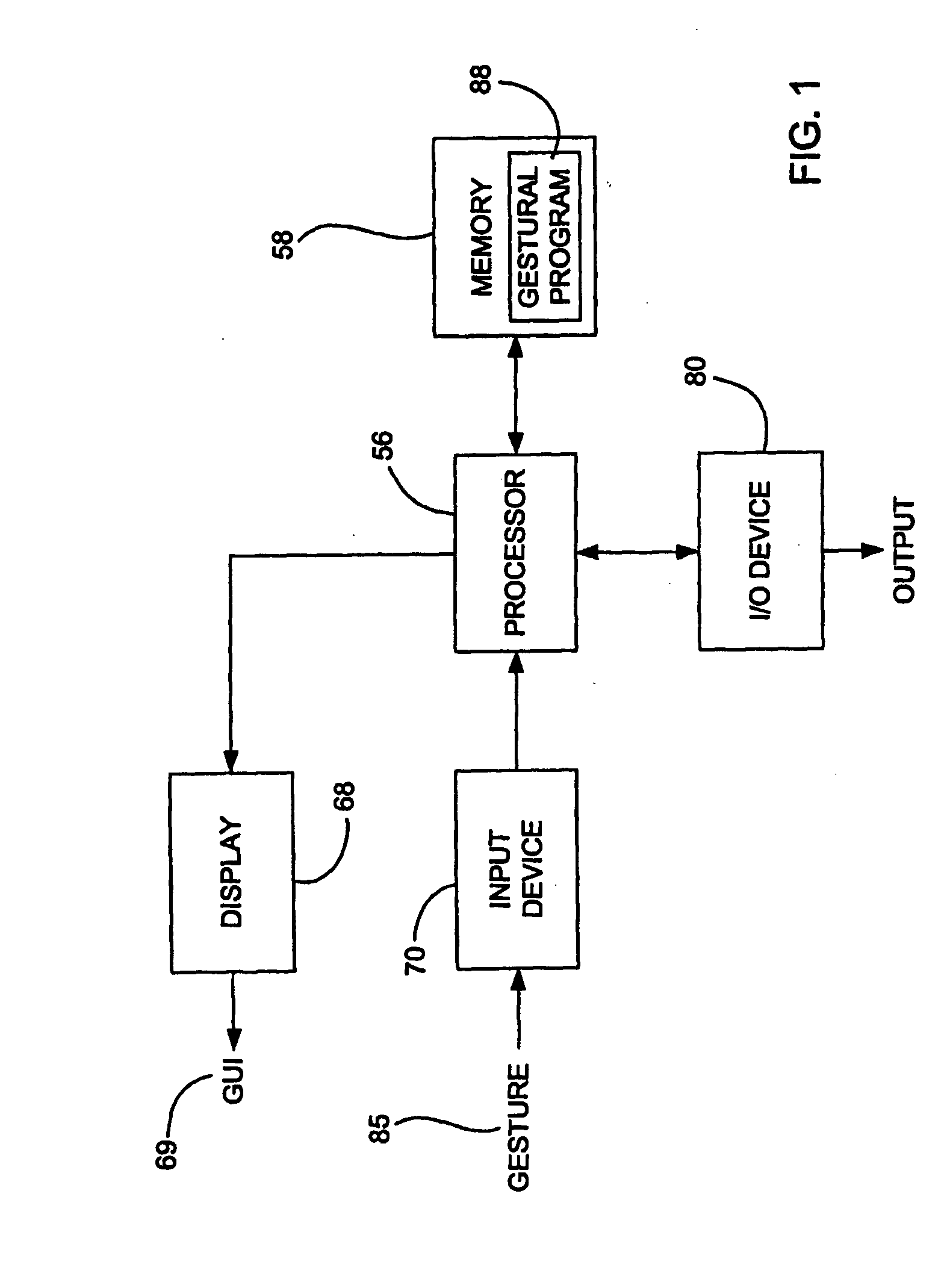

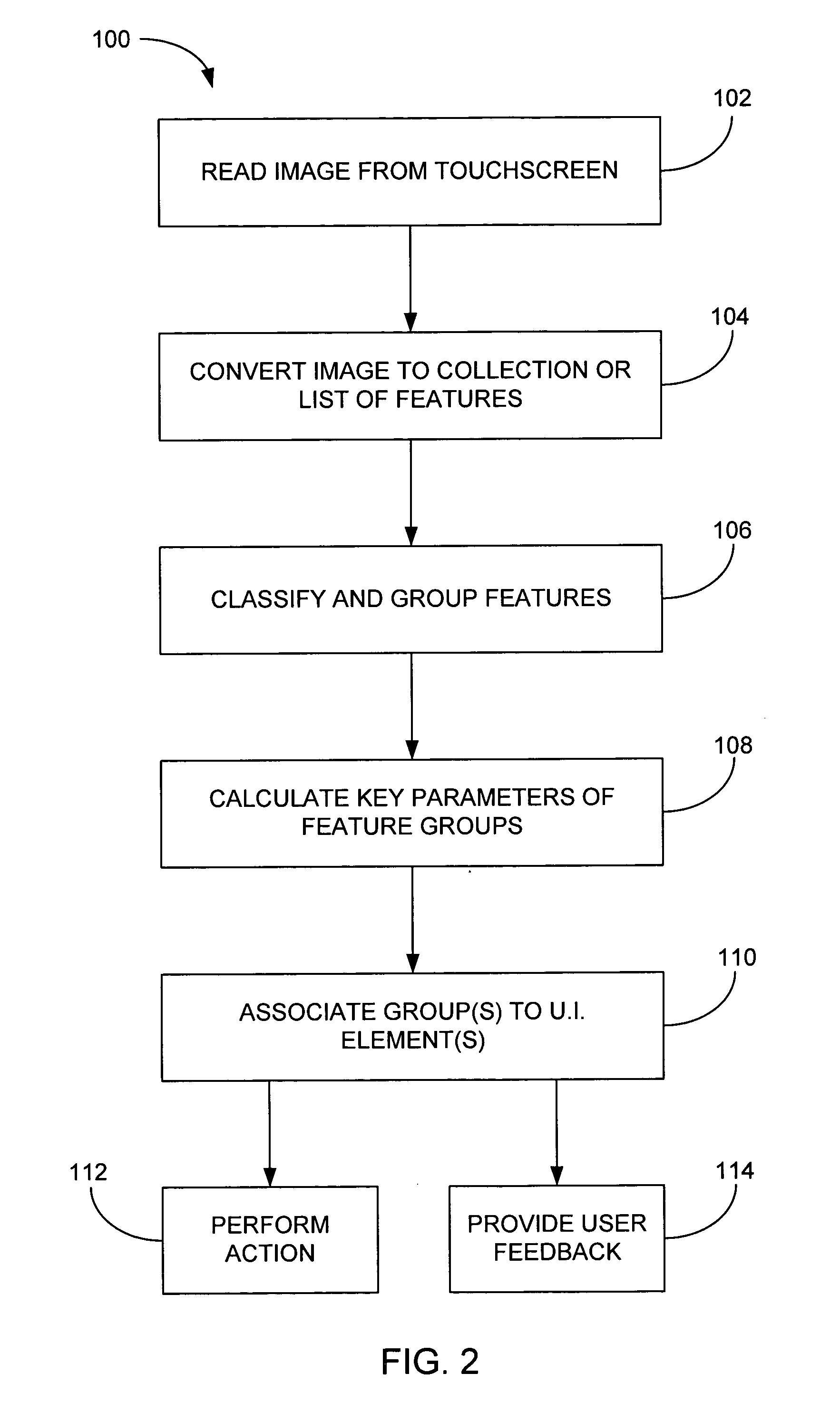

Methods and systems for processing touch inputs are disclosed. The invention in one respect includes reading data from a multipoint sensing device such as a multipoint touch screen where the data pertains to touch input with respect to the multipoint sensing device, and identifying at least one multipoint gesture based on the data from the multipoint sensing device.

Owner:APPLE INC

Mode-based graphical user interfaces for touch sensitive input devices

Active | US20060026535A1 | Input/output for user-computer interaction | Graph reading | Graphics | Graphical user interface

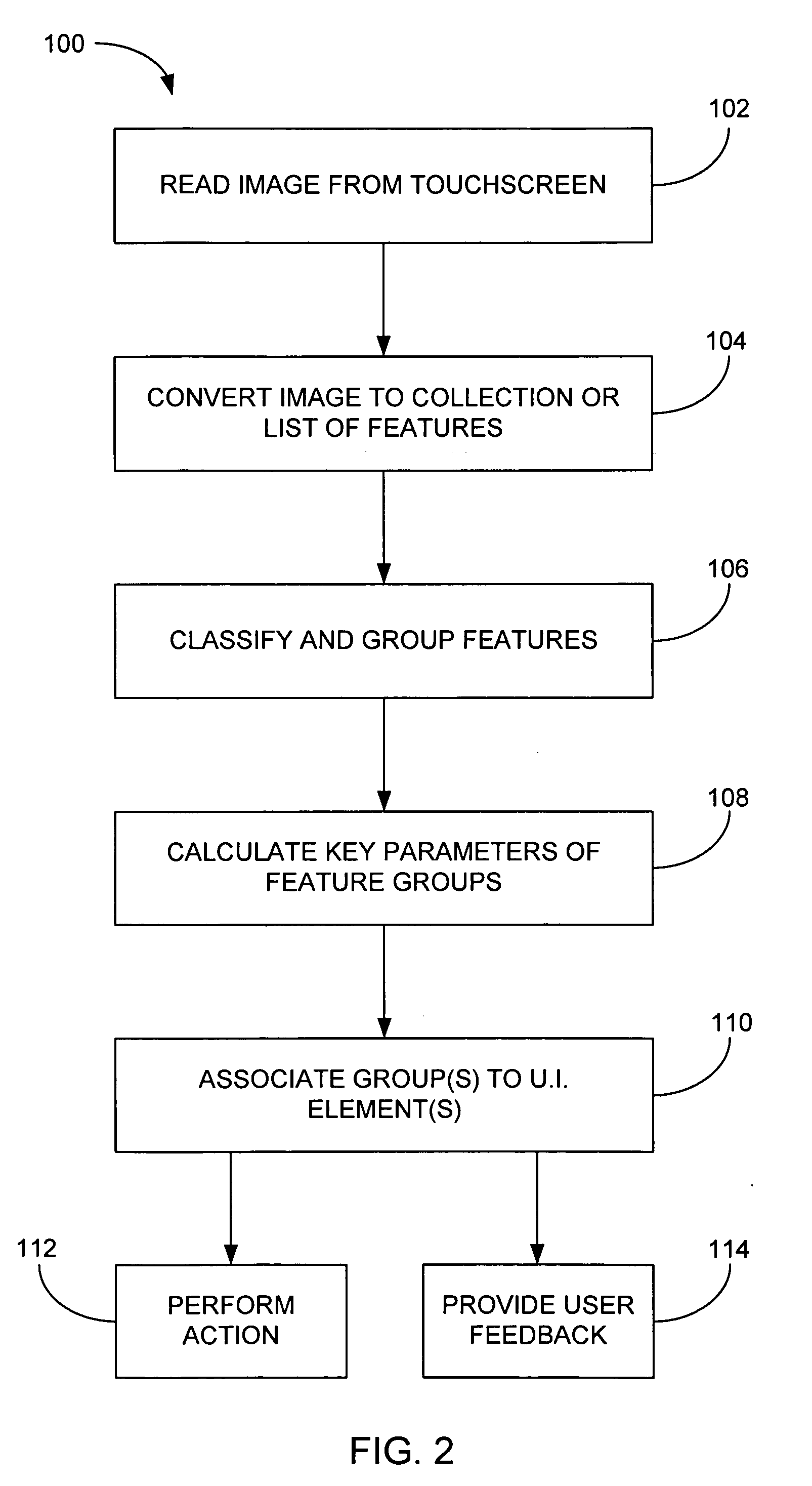

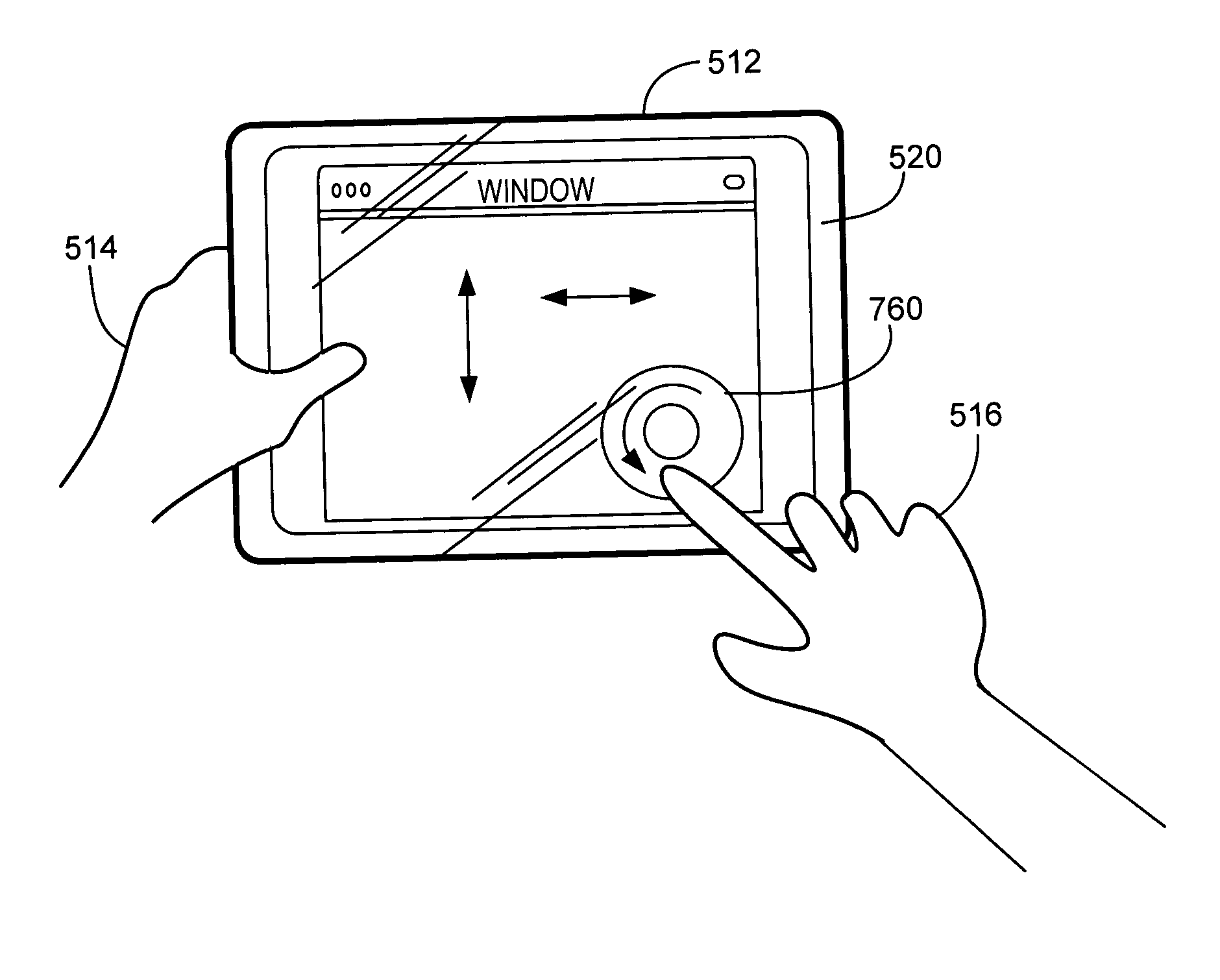

A user interface method is disclosed. The method includes detecting a touch and then determining a user interface mode when a touch is detected. The method further includes activating one or more GUI elements based on the user interface mode and in response to the detected touch.

Owner:APPLE INC

Contextual responses based on automated learning techniques

Inactive | US6842877B2 | Input/output for user-computer interaction | Computer security arrangements | Repetitive task | User interface

Techniques are disclosed for using a combination of explicit and implicit user context modeling techniques to identify and provide appropriate computer actions based on a current context, and to continuously improve the providing of such computer actions. The appropriate computer actions include presentation of appropriate content and functionality. Feedback paths can be used to assist automated machine learning in detecting patterns and generating inferred rules, and improvements from the generated rules can be implemented with or without direct user control. The techniques can be used to enhance software and device functionality, including self-customizing of a model of the user's current context or situation, customizing received themes, predicting appropriate content for presentation or retrieval, self-customizing of software user interfaces, simplifying repetitive tasks or situations, and mentoring of the user to promote desired change.

Owner:MICROSOFT TECH LICENSING LLC

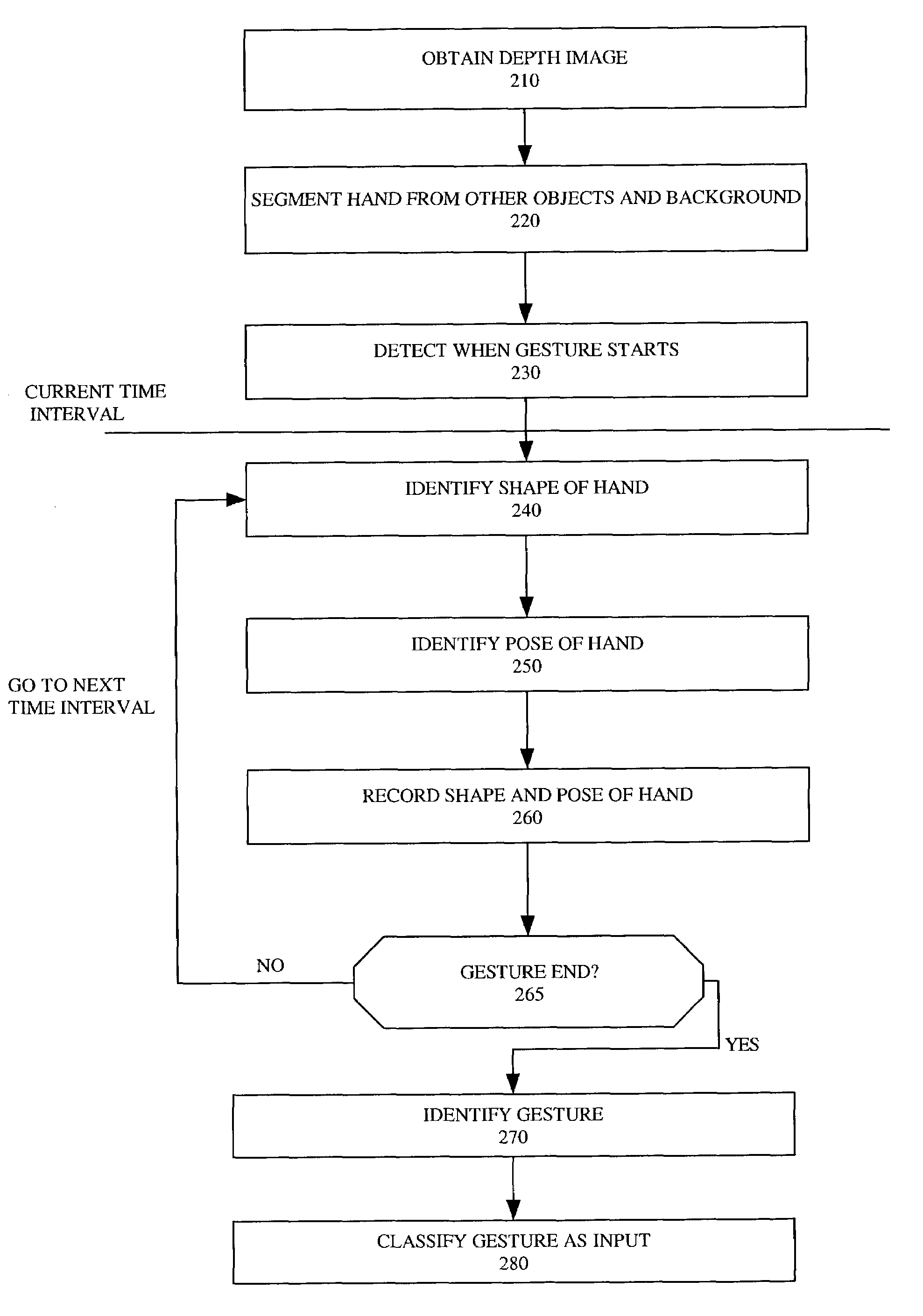

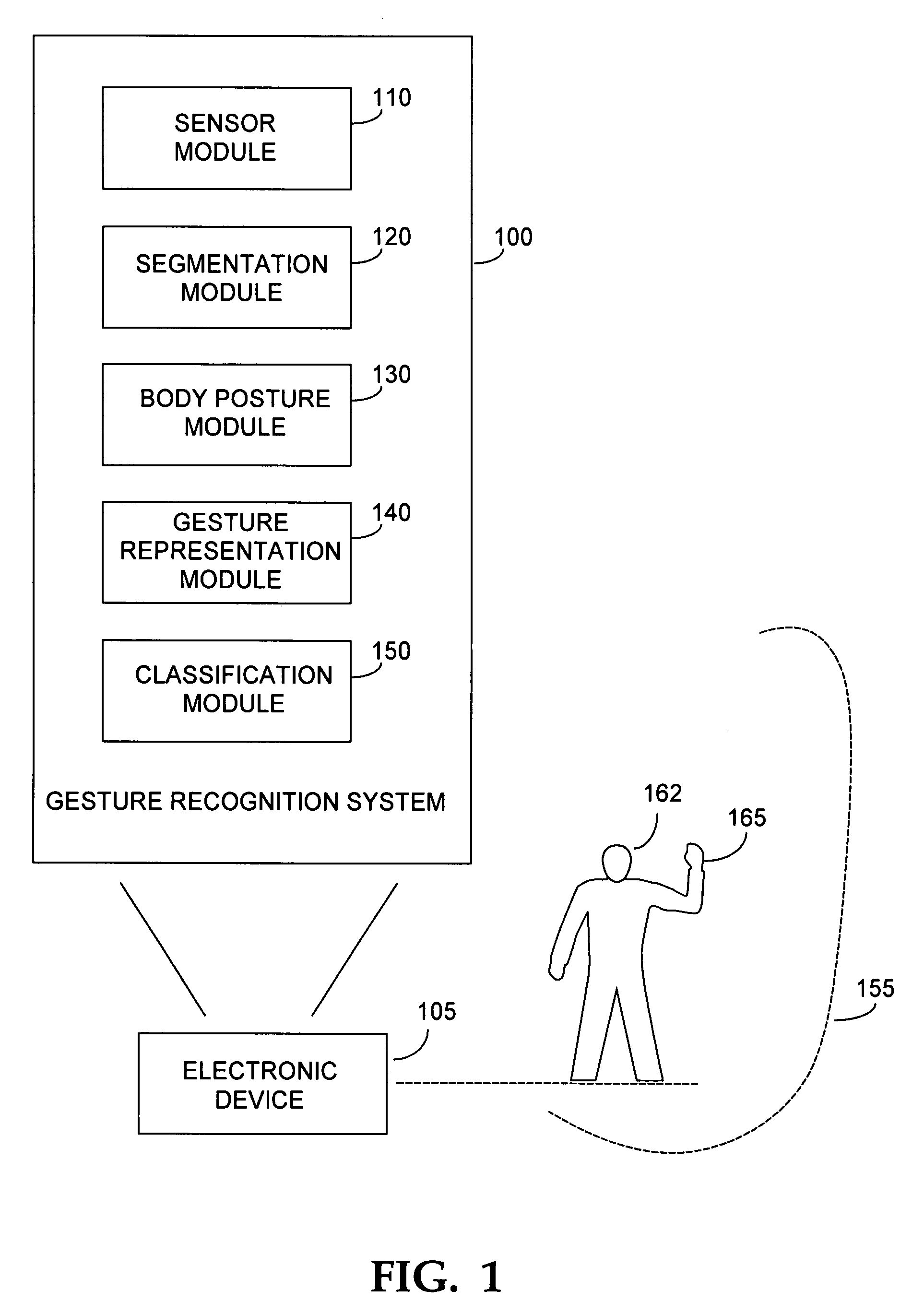

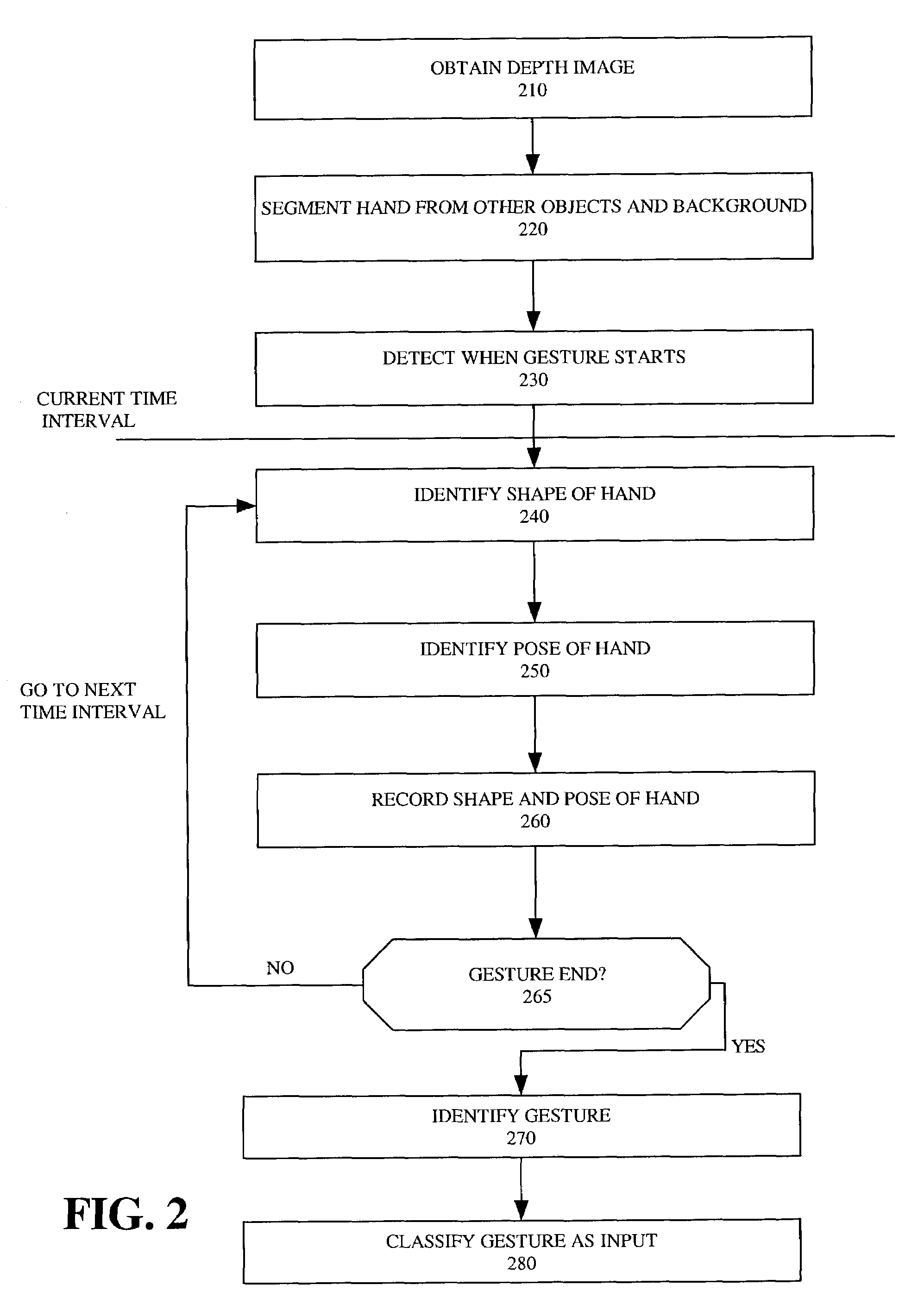

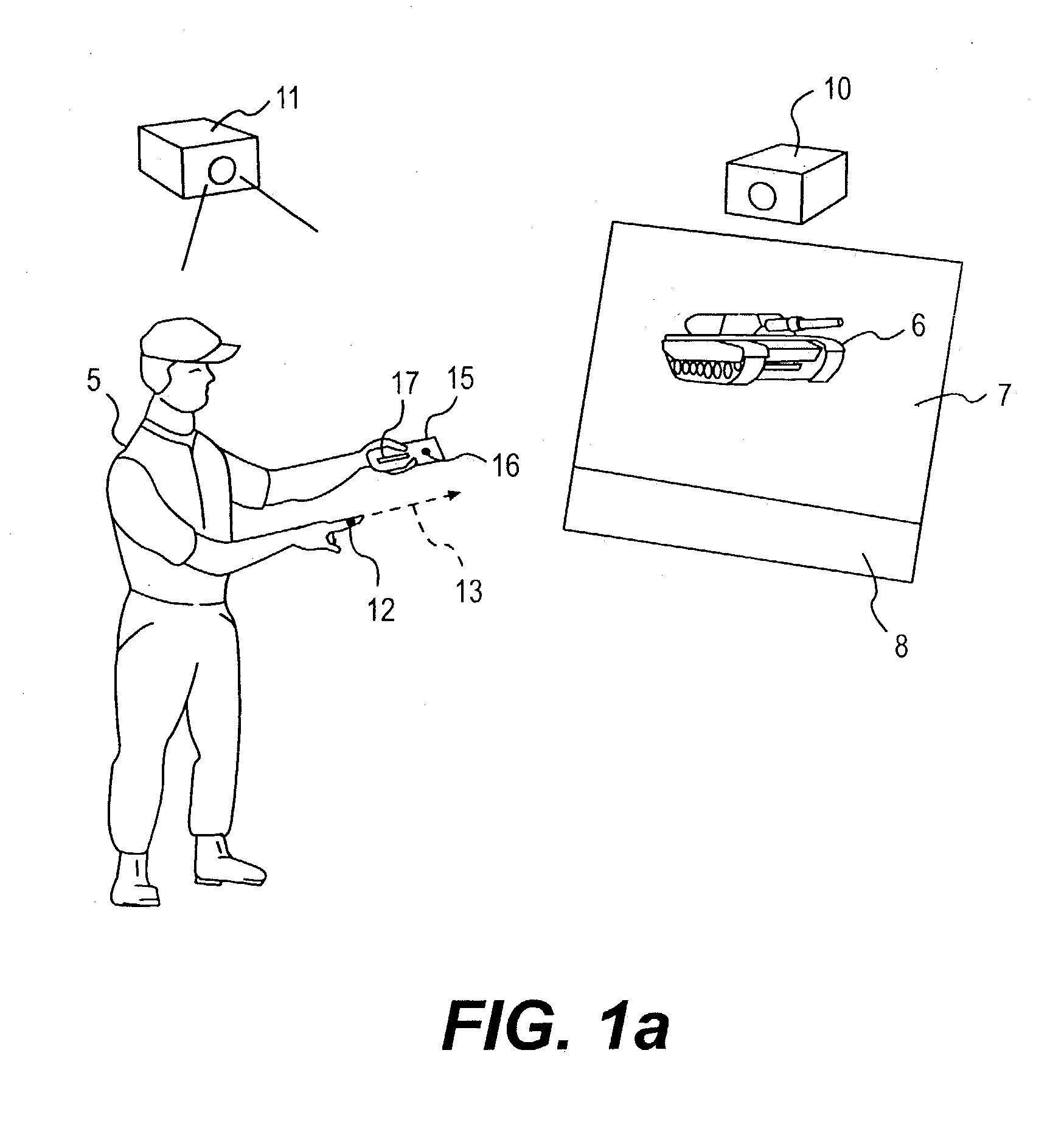

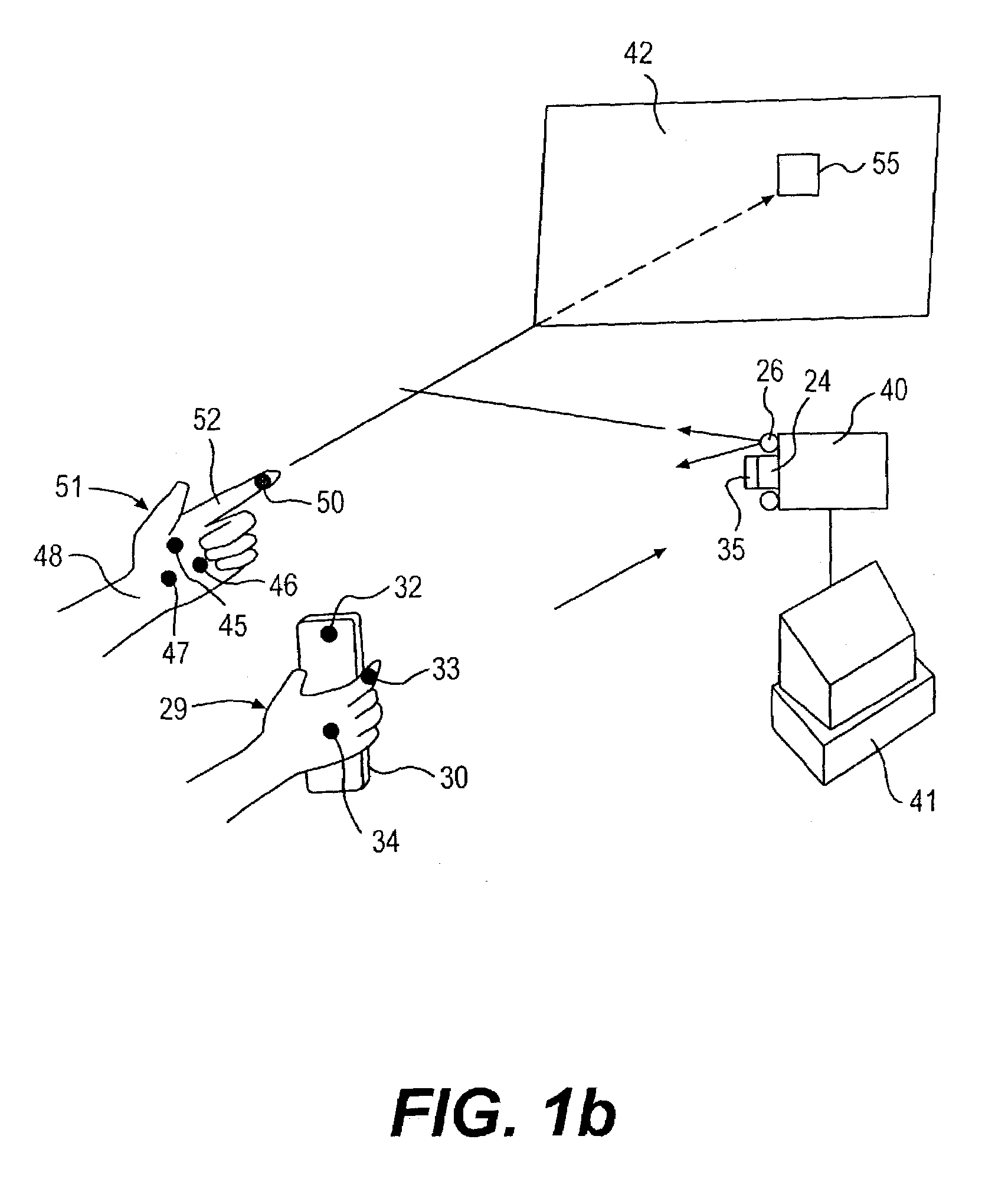

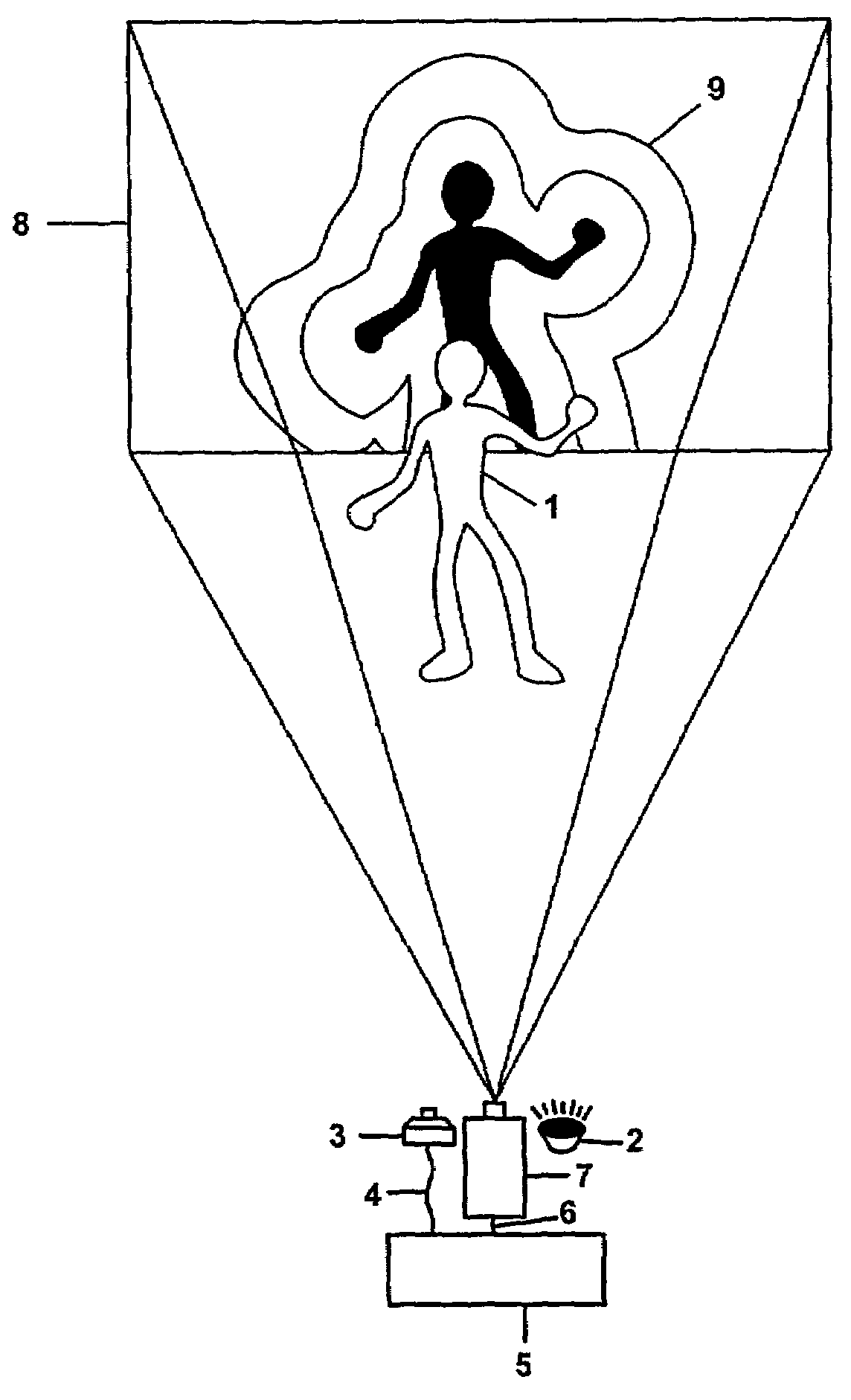

Gesture recognition system using depth perceptive sensors

Active | US7340077B2 | Input/output for user-computer interaction | Indoor games | Physical medicine and rehabilitation | Physical therapy

Owner:MICROSOFT TECH LICENSING LLC

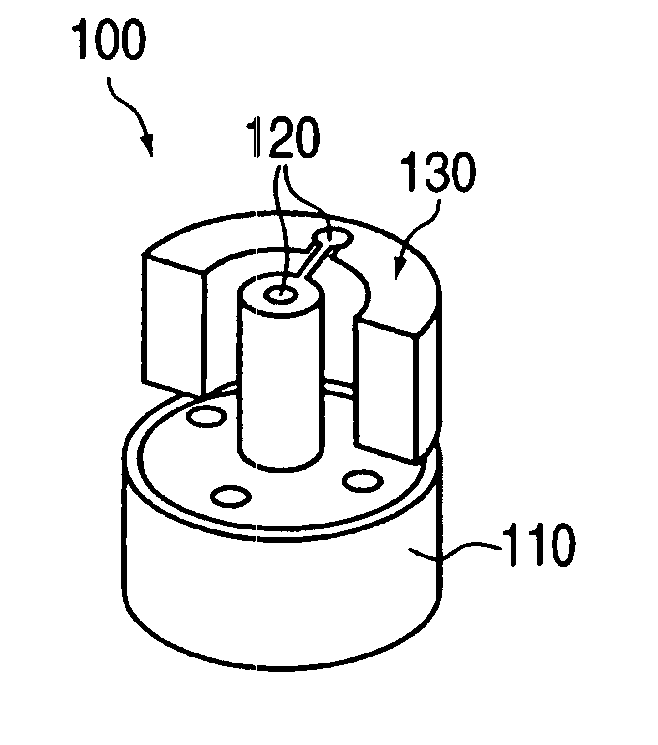

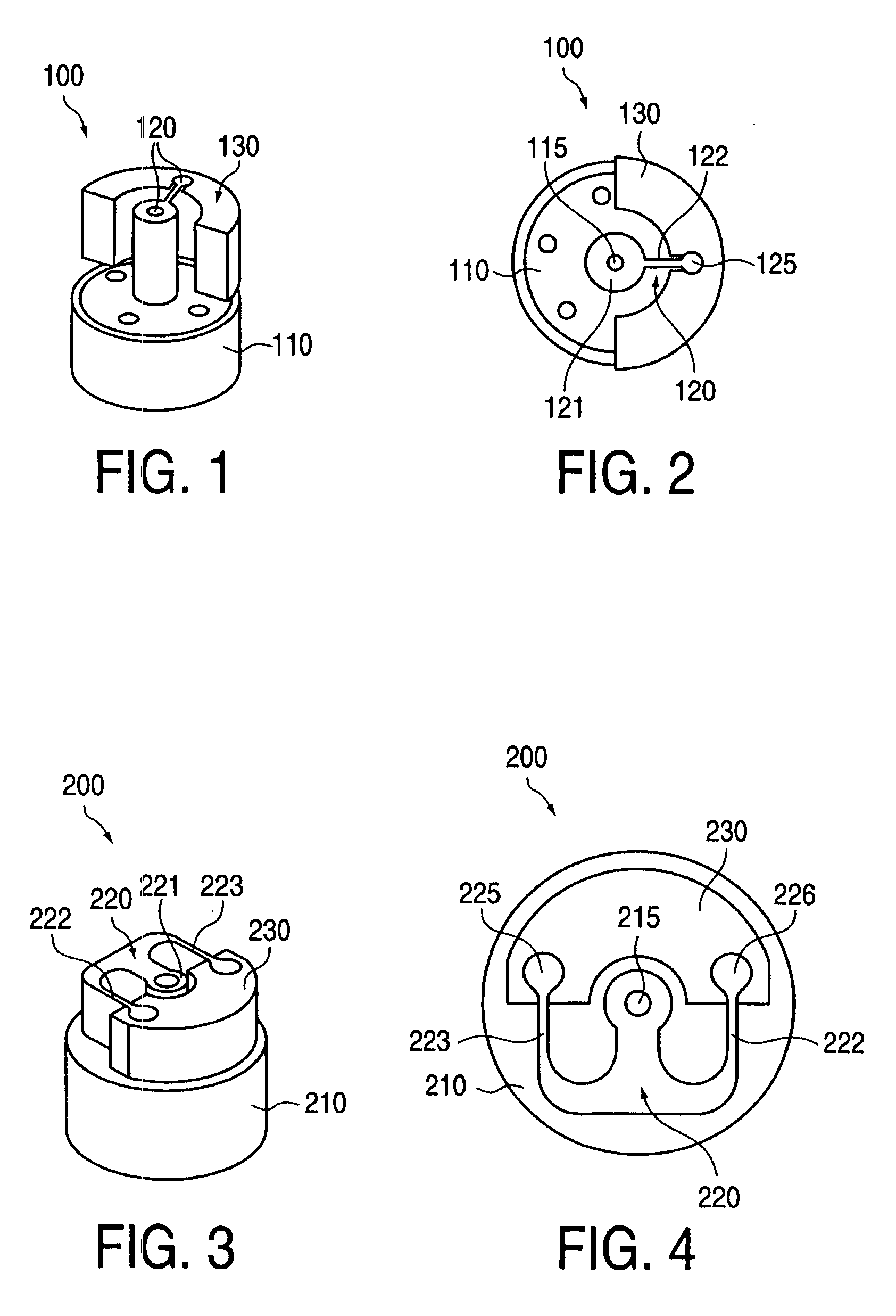

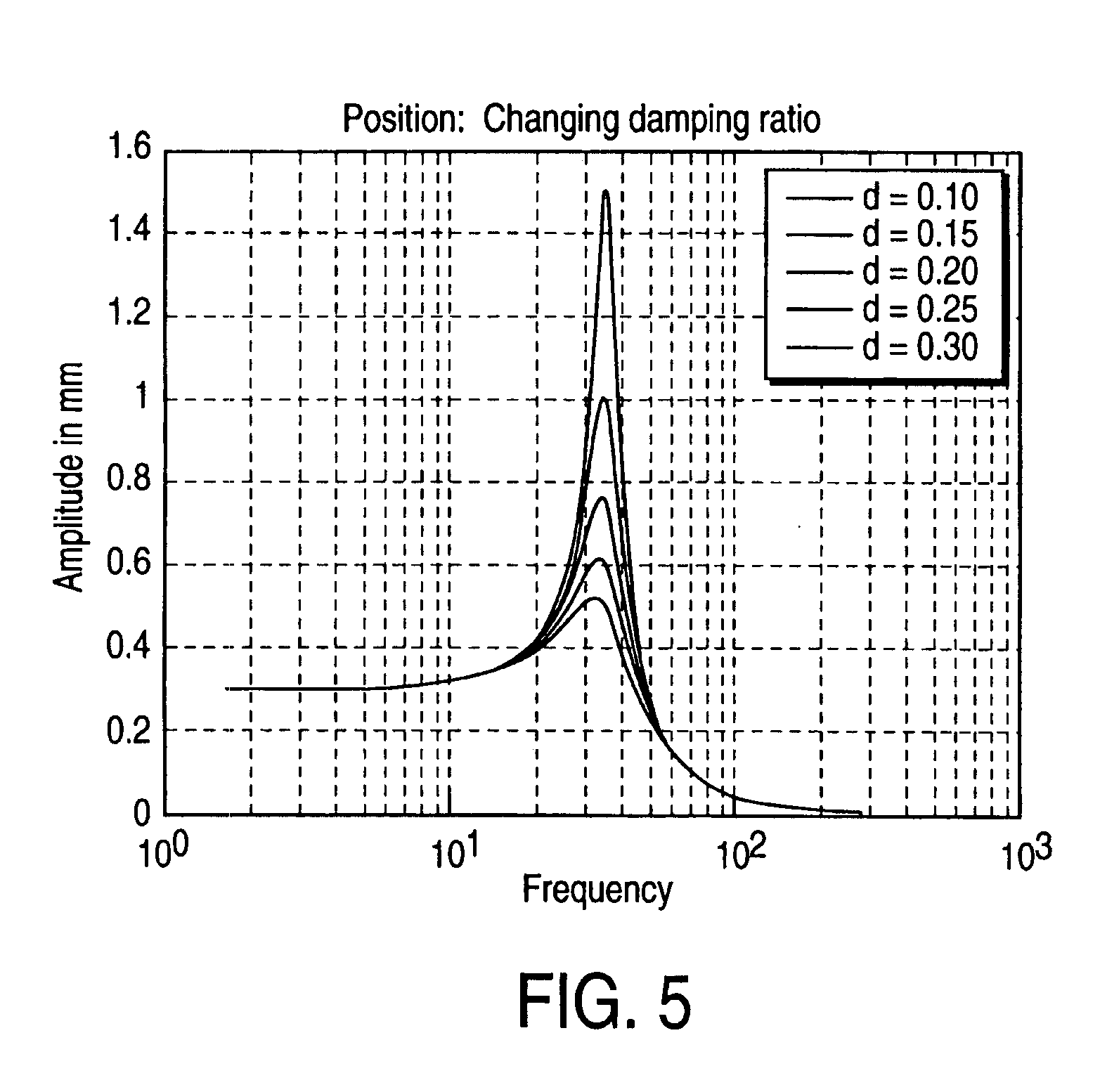

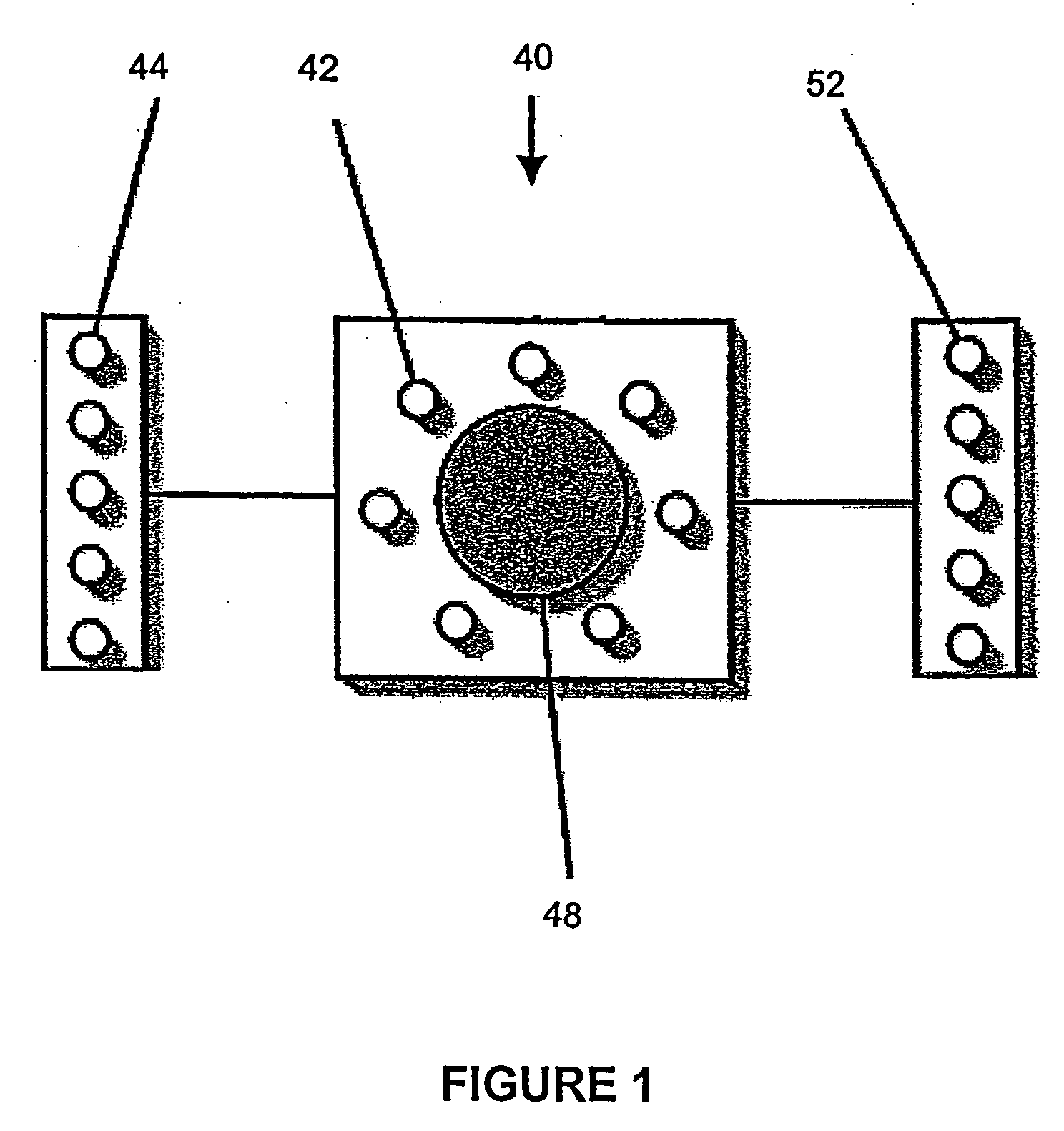

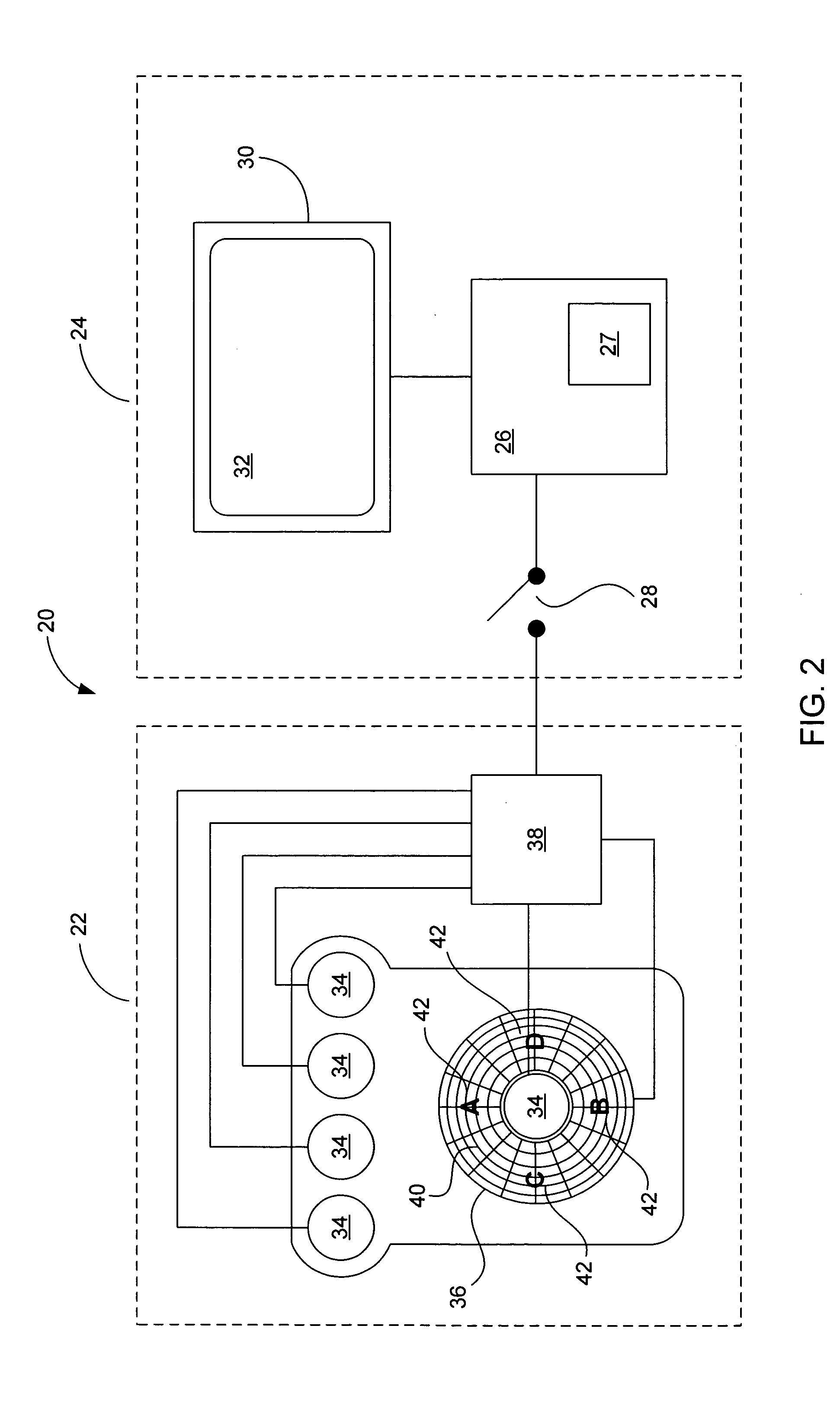

Haptic feedback using rotary harmonic moving mass

Inactive | US7161580B2 | Input/output for user-computer interaction | Cathode-ray tube indicators | Harmonic | Engineering

Owner:IMMERSION CORPORATION

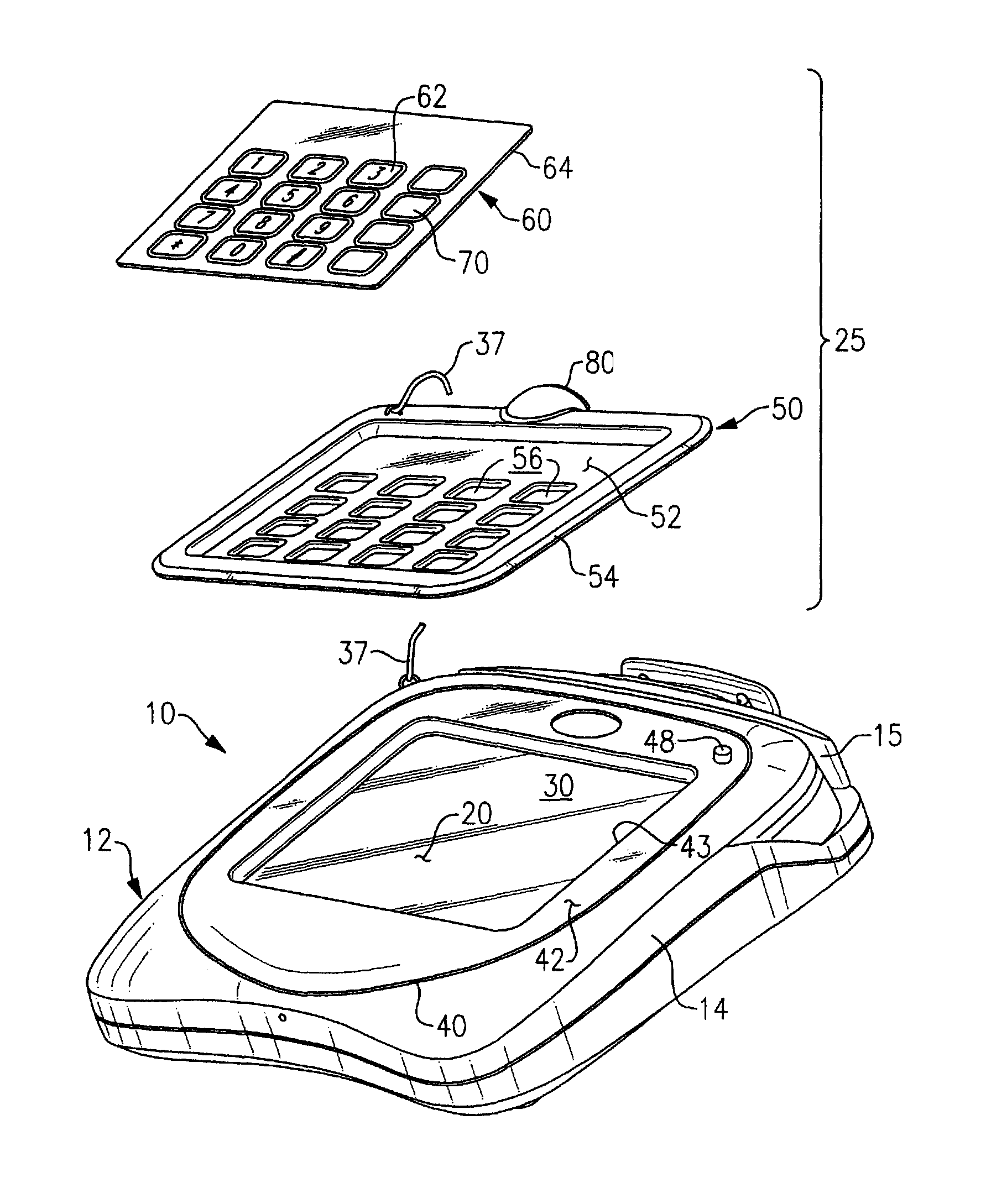

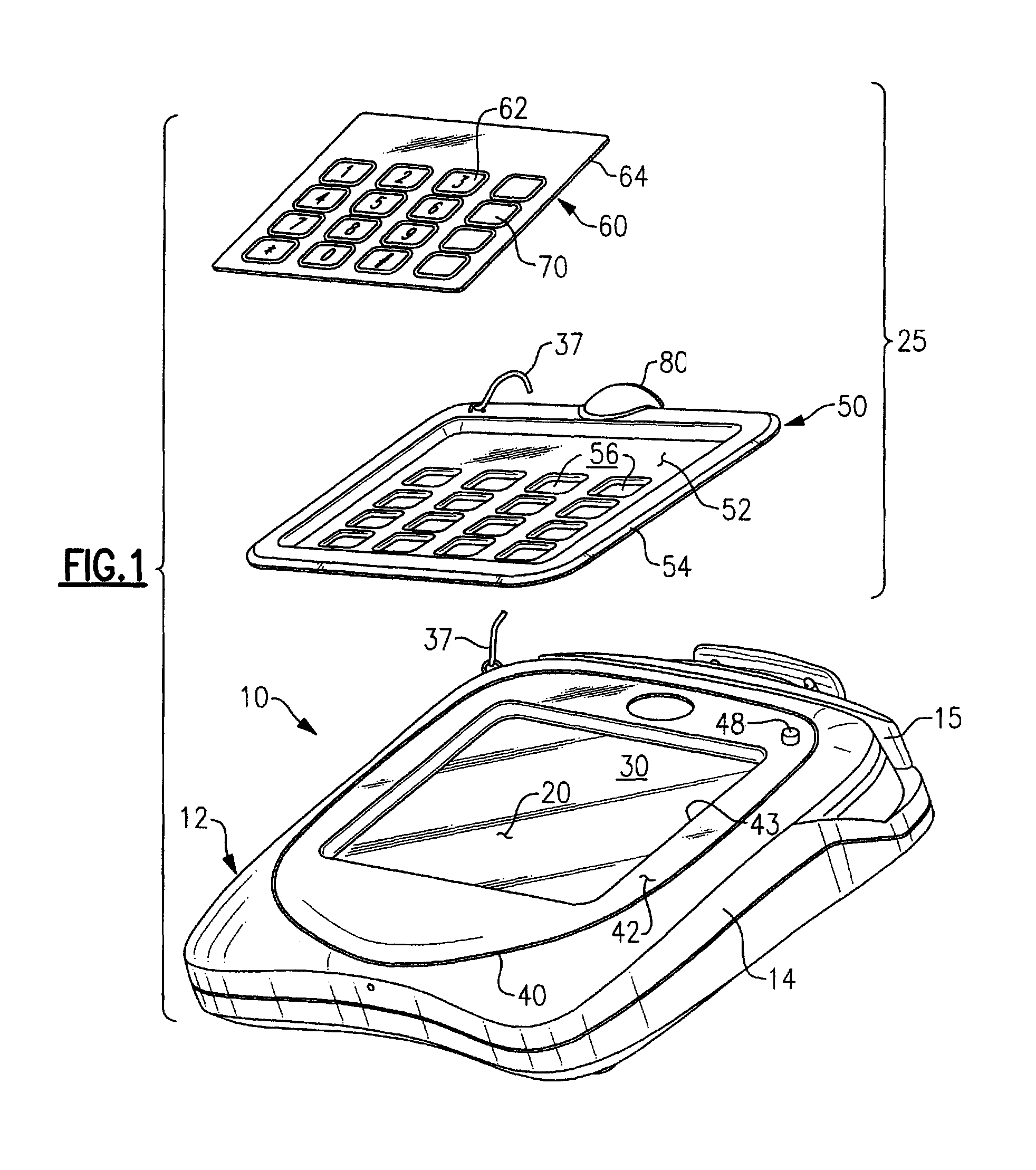

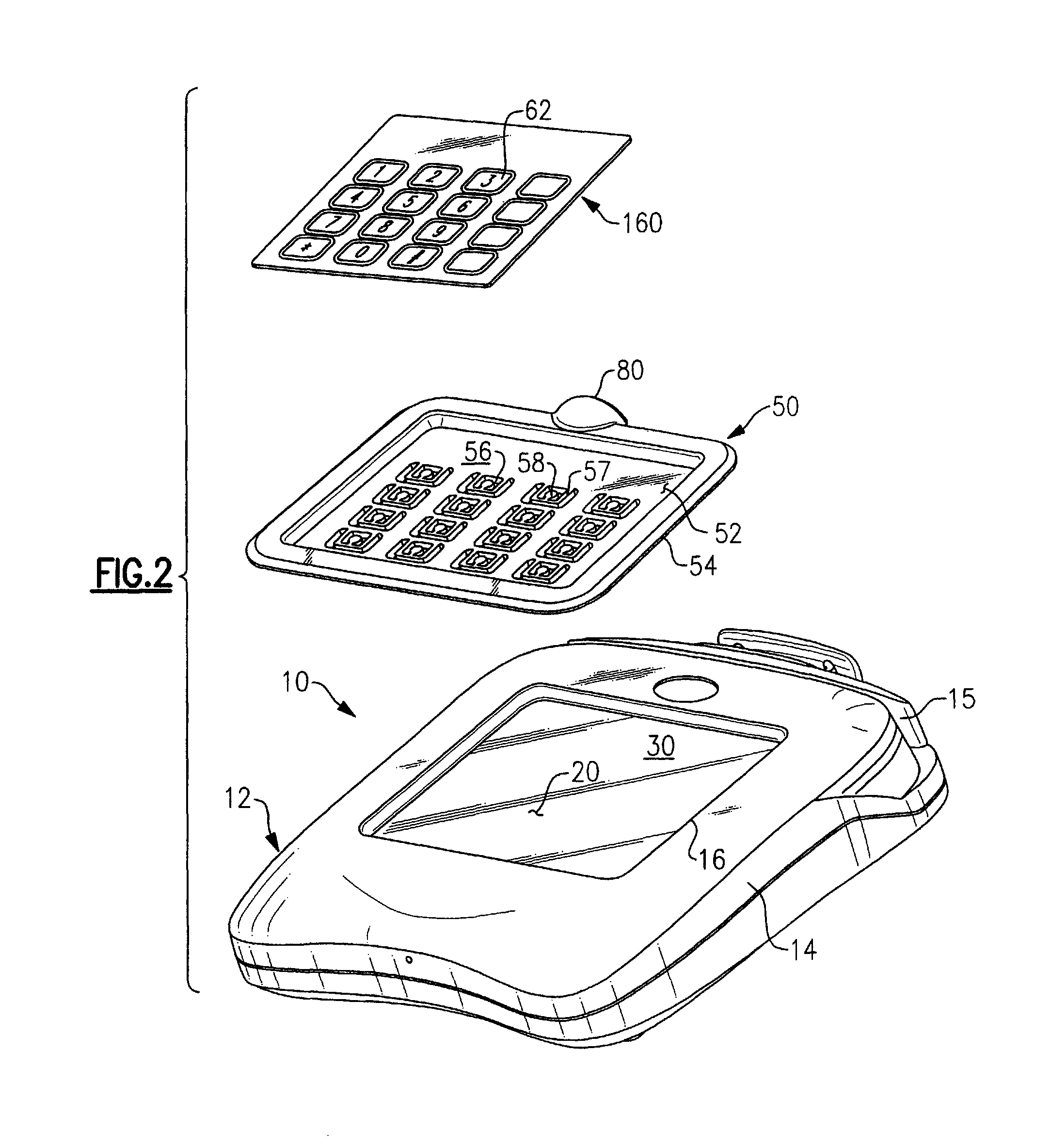

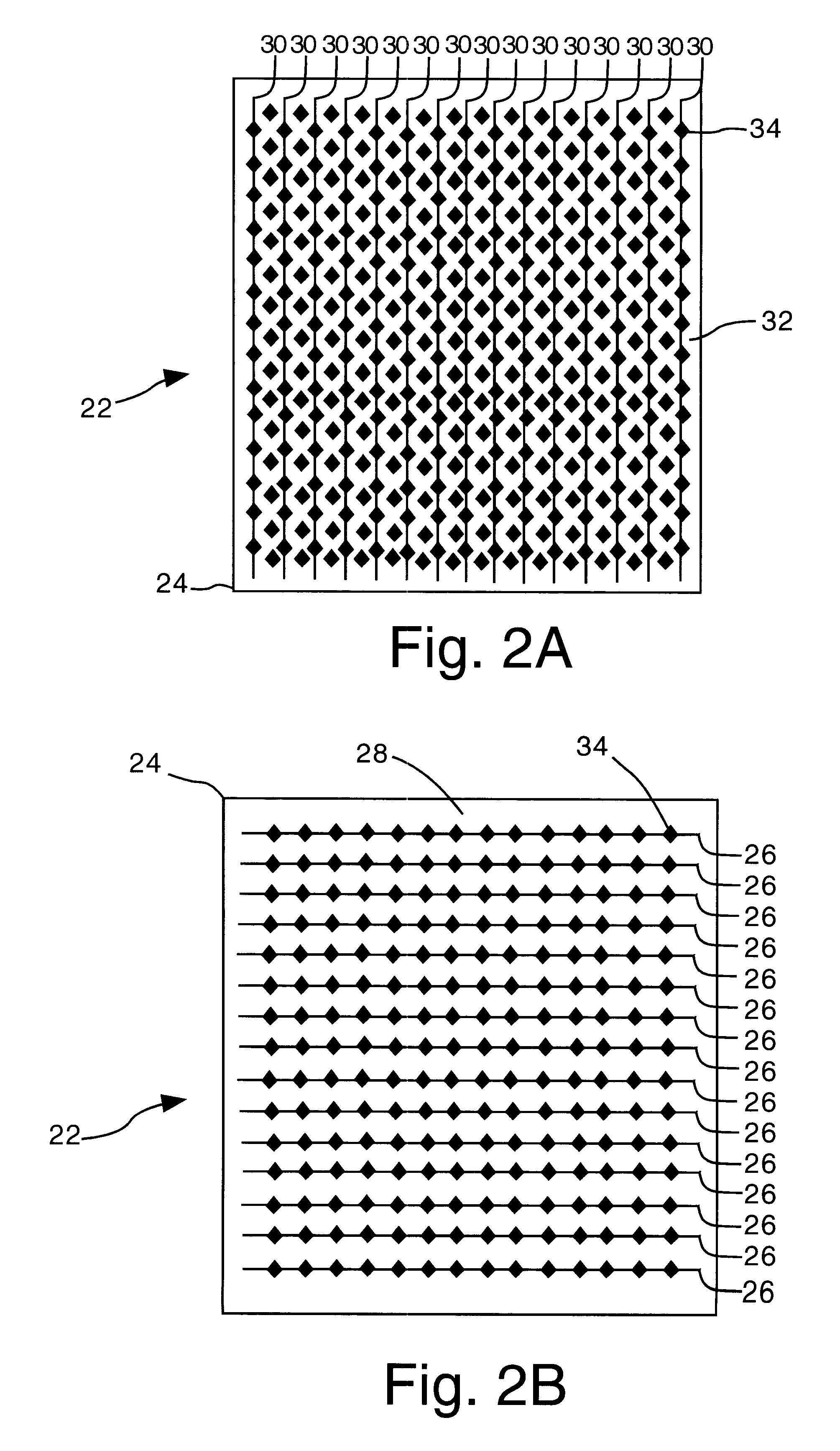

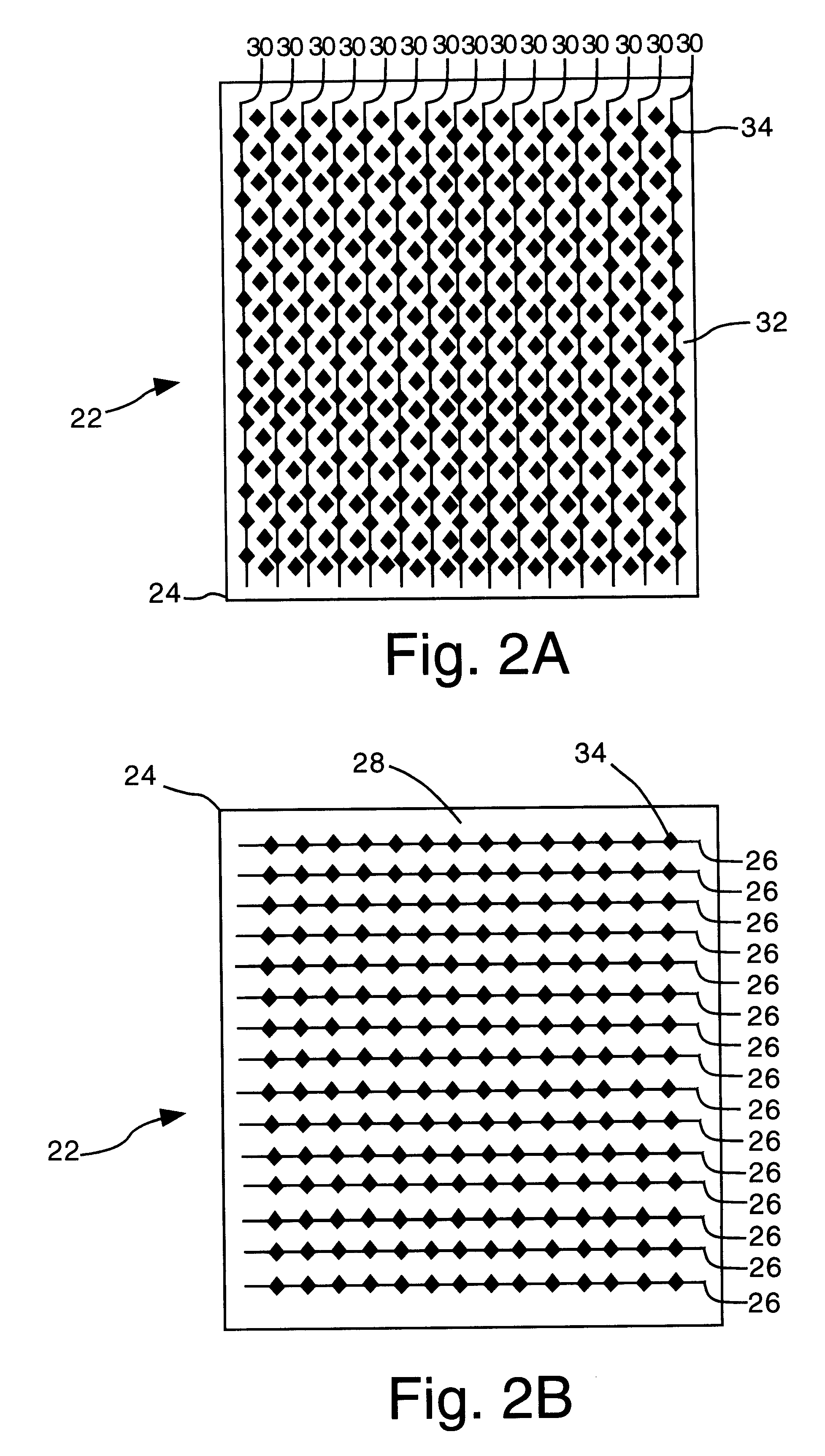

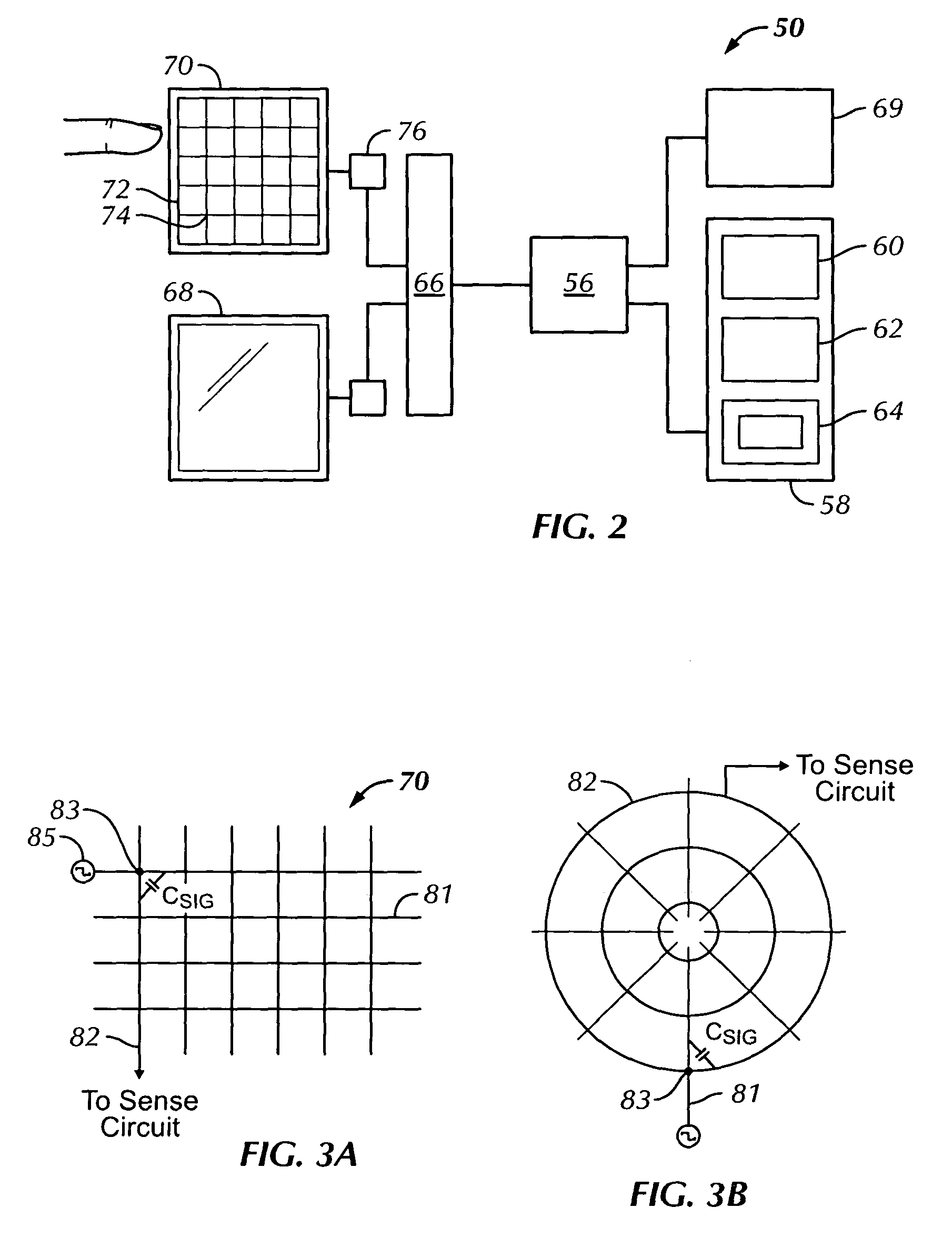

Flexible transparent touch sensing system for electronic devices

Inactive | US7030860B1 | Maximize transparency | Amount of overlap | Input/output for user-computer interaction | Transmission systems | Display device | Sensor observation

A transparent, capacitive sensing system particularly well suited for input to electronic devices is described. The sensing system can be used to emulate physical buttons or slider switches that are either displayed on an active display device or printed on an underlying surface. The capacitive sensor can further be used as an input device for a graphical user interface, especially if overlaid on top of an active display device like an LCD screen to sense finger position (X / Y position) and contact area (Z) over the display. In addition, the sensor can be made with flexible material for touch sensing on a three-dimensional surface. Because the sensor is substantially transparent, the underlying surface can be viewed through the sensor. This allows the underlying area to be used for alternative applications that may not necessarily be related to the sensing system. Examples include advertising, an additional user interface display, or apparatus such as a camera or a biometric security device.

Owner:SYNAPTICS INC

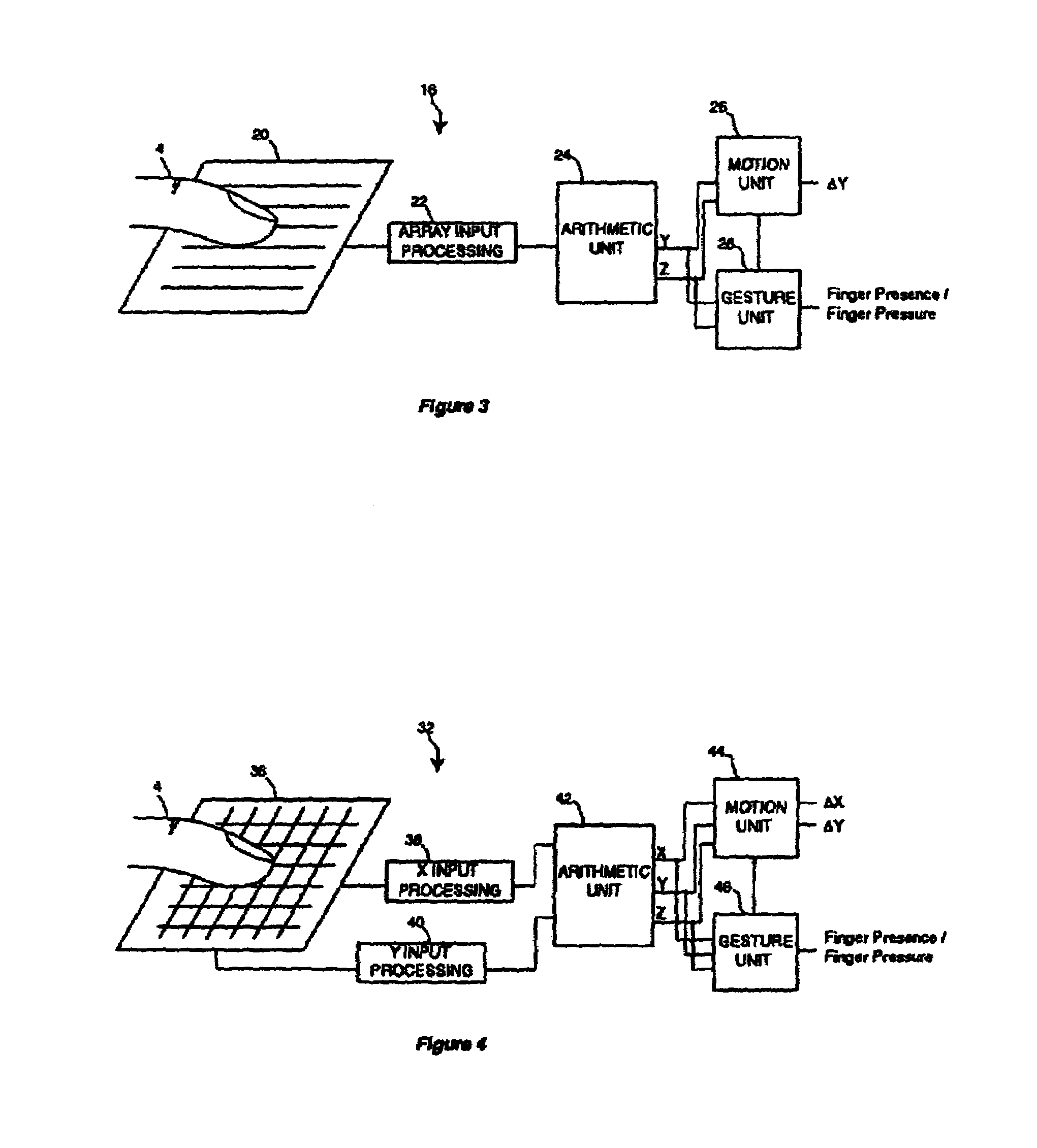

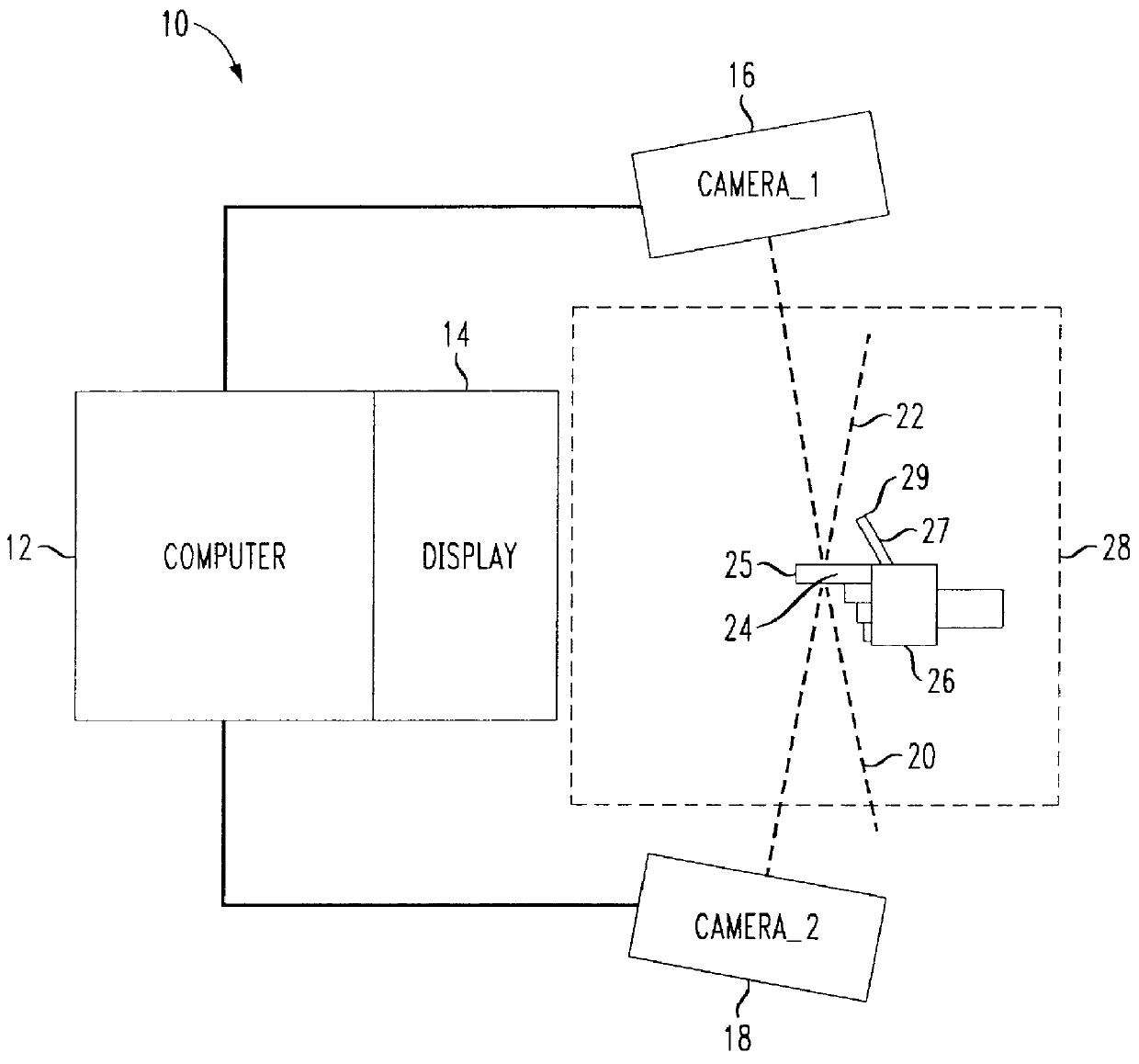

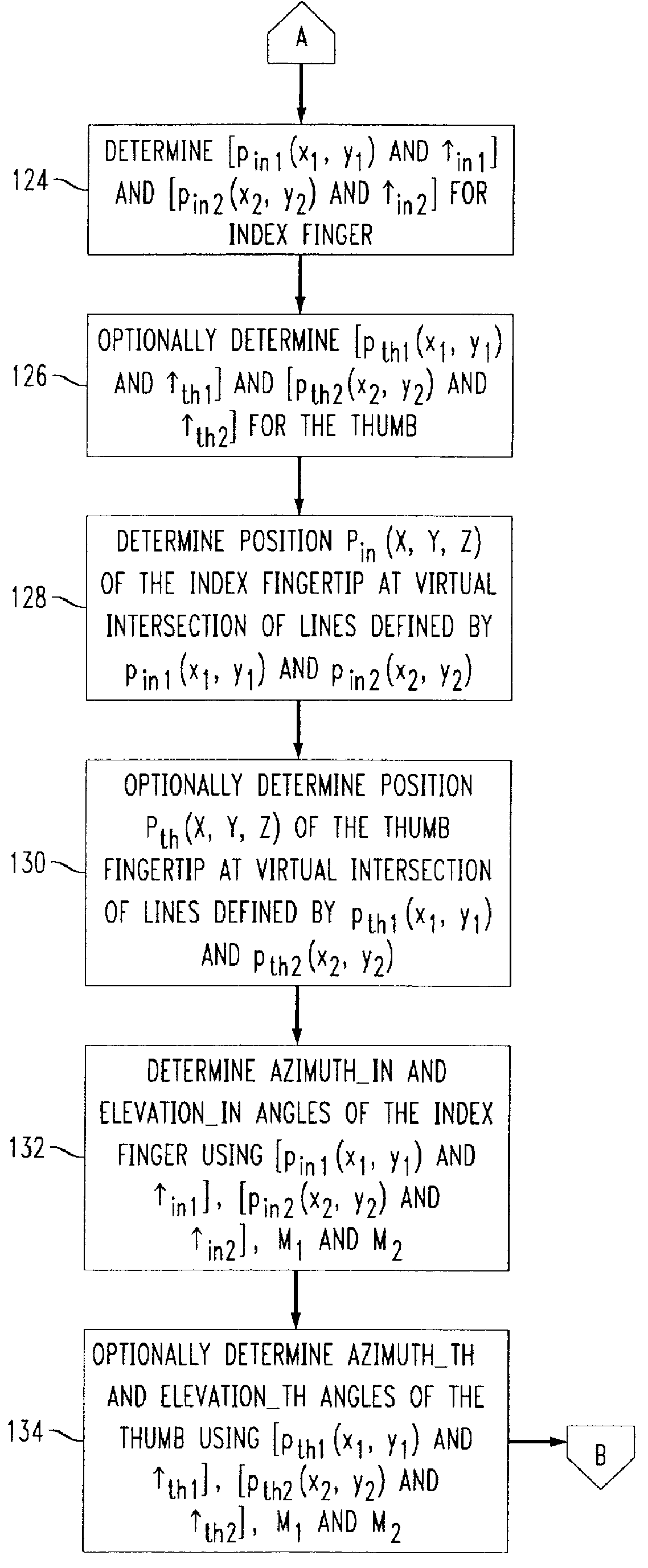

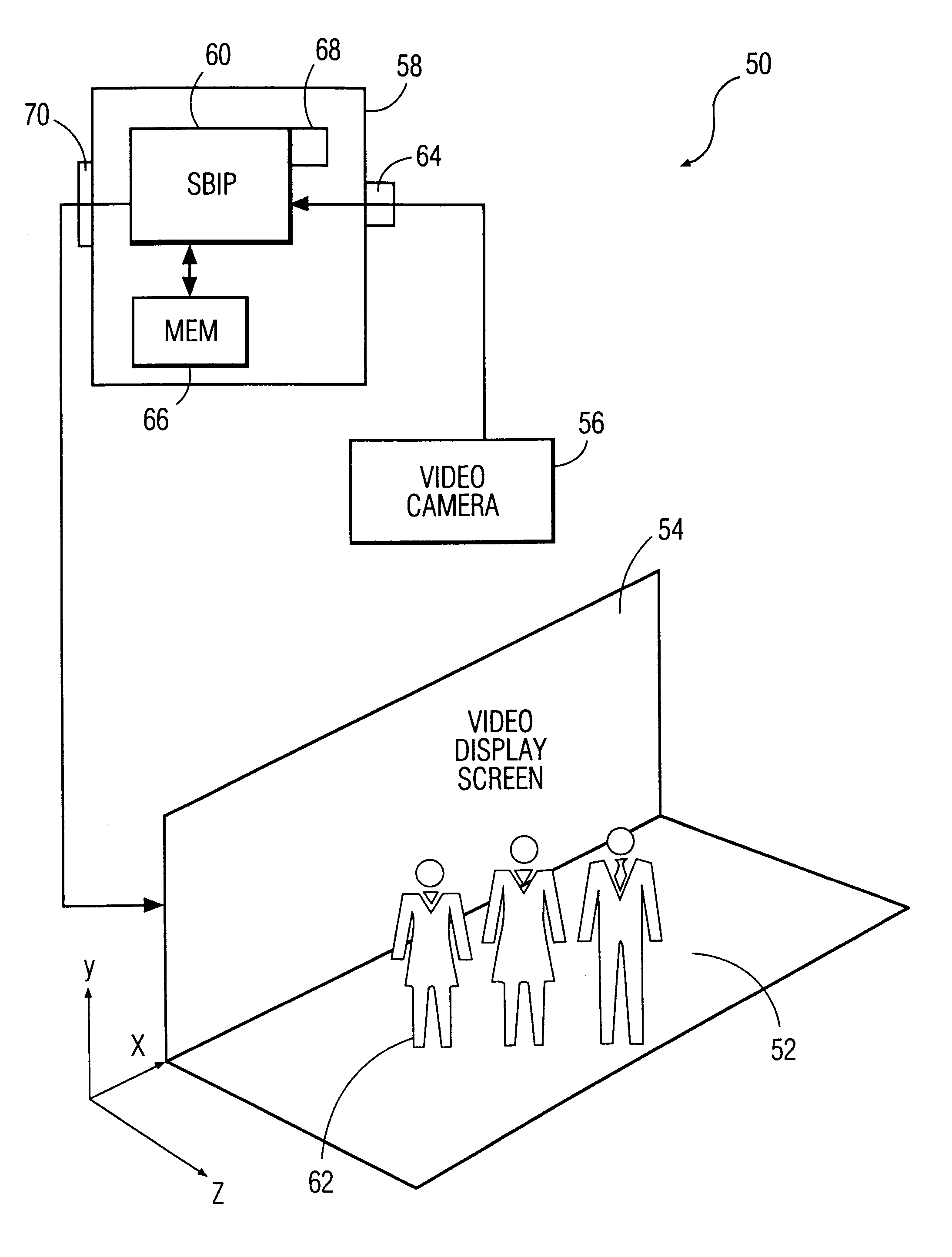

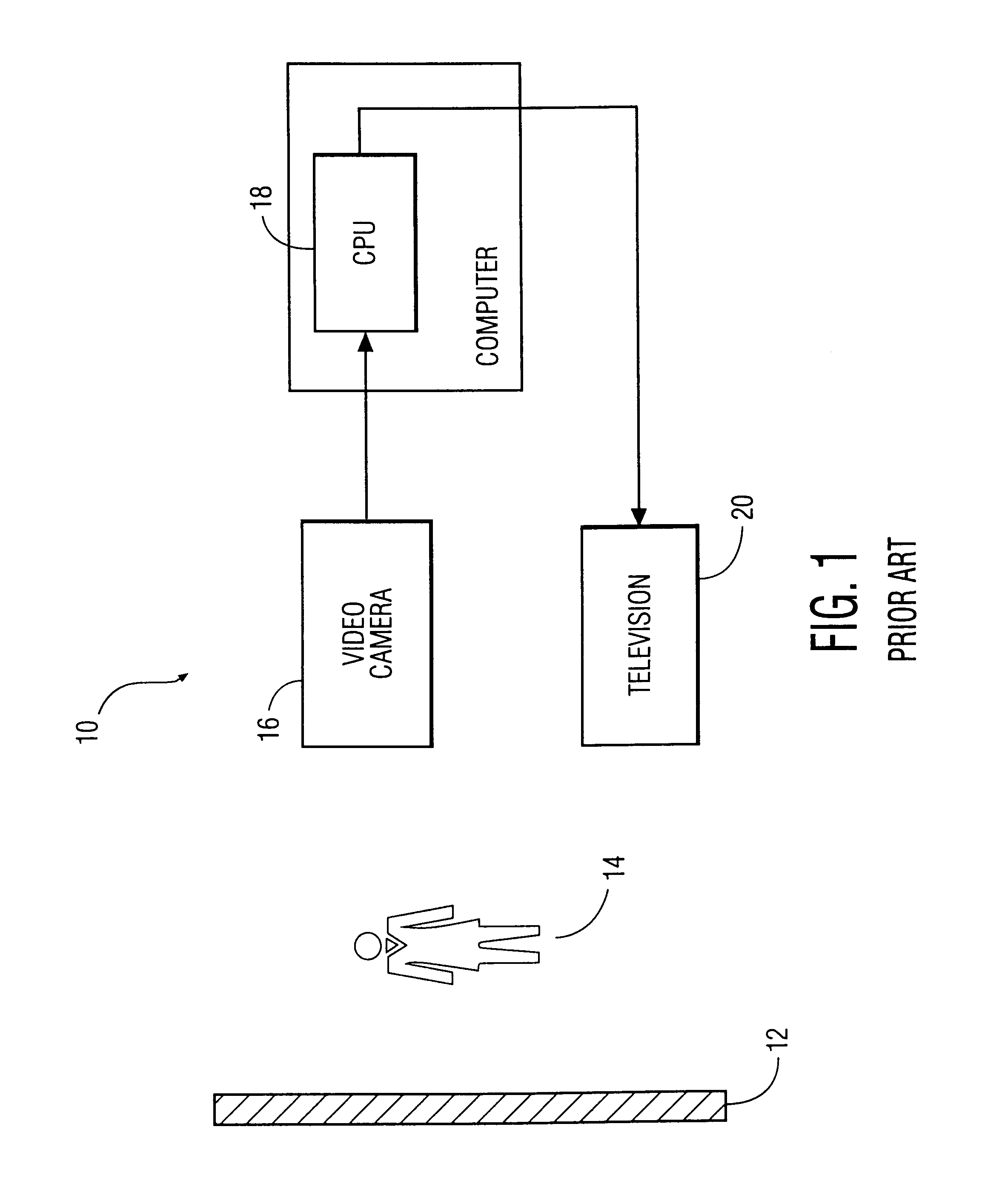

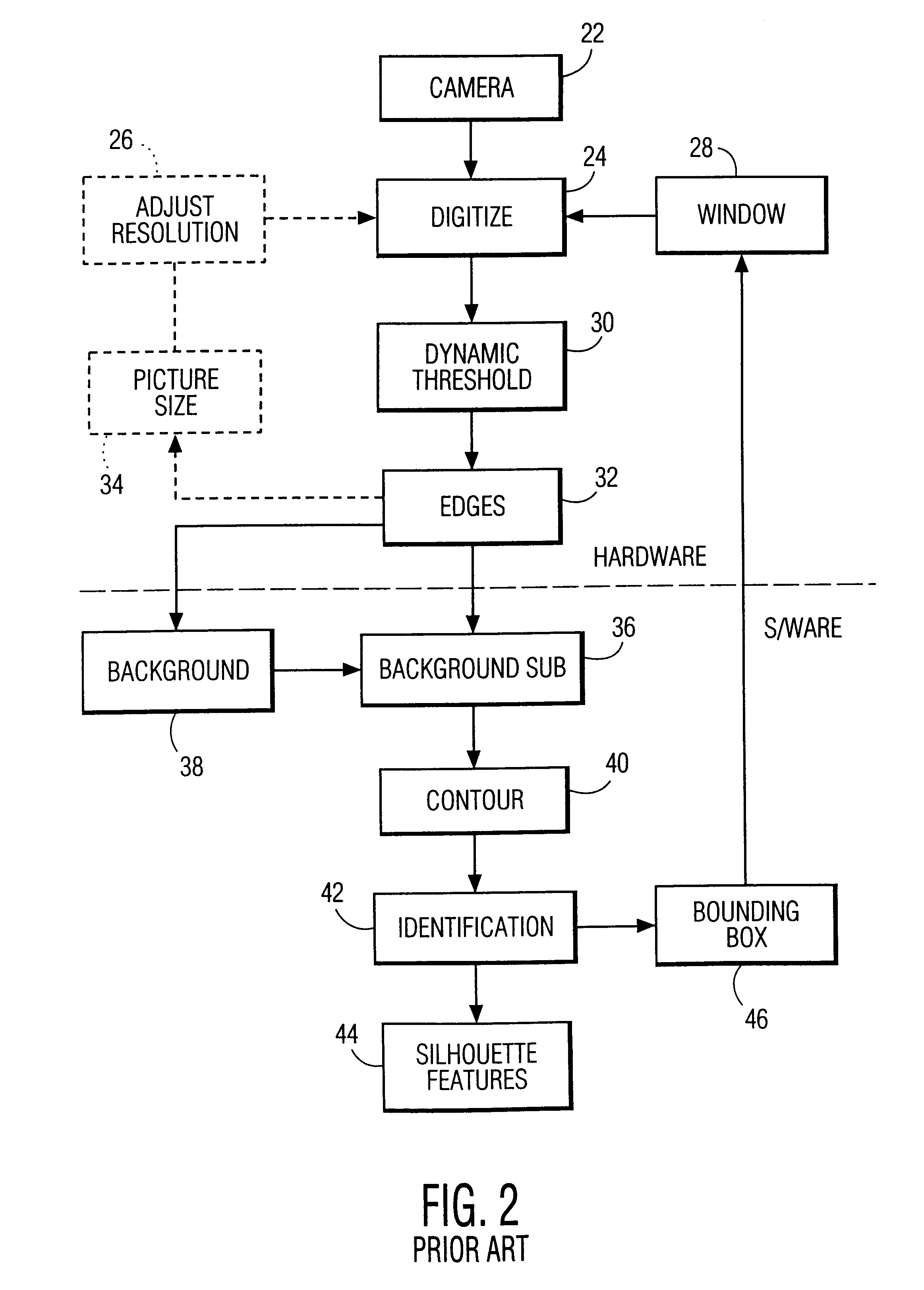

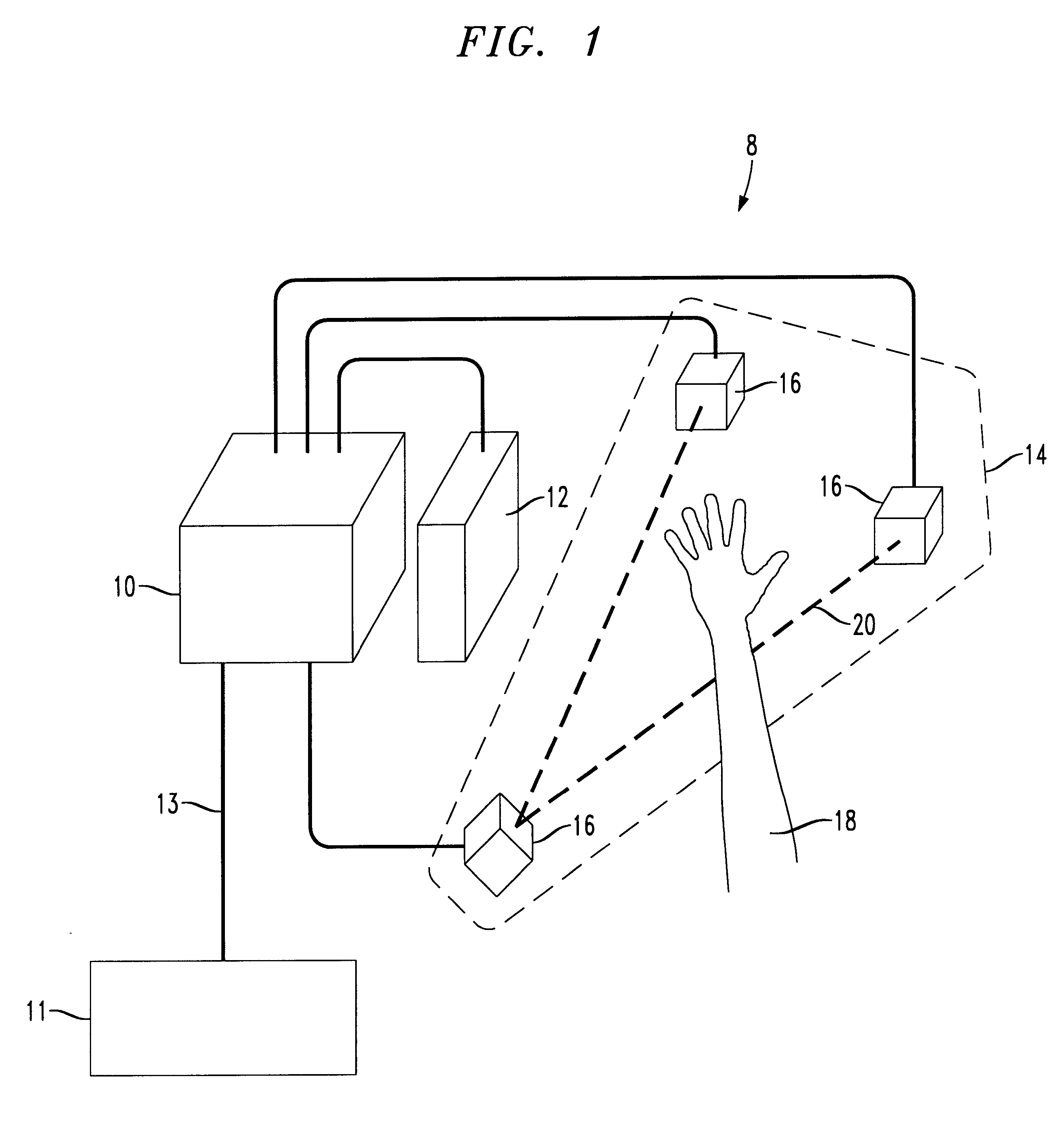

Video hand image-three-dimensional computer interface with multiple degrees of freedom

Inactive | US6147678A | Input/output for user-computer interaction | Character and pattern recognition | Elevation angle | Imaging processing

A video gesture-based three-dimensional computer interface system that uses images of hand gestures to control a computer and that tracks motion of the user's hand or a portion thereof in a three-dimensional coordinate system with ten degrees of freedom. The system includes a computer with image processing capabilities and at least two cameras connected to the computer. During operation of the system, hand images from the cameras are continually converted to a digital format and input to the computer for processing. The results of the processing and attempted recognition of each image are then sent to an application or the like executed by the computer for performing various functions or operations. When the computer recognizes a hand gesture as a "point" gesture with one or two extended fingers, the computer uses information derived from the images to track three-dimensional coordinates of each extended finger of the user's hand with five degrees of freedom. The computer utilizes two-dimensional images obtained by each camera to derive three-dimensional position (in an x, y, z coordinate system) and orientation (azimuth and elevation angles) coordinates of each extended finger.

Owner:WSOU INVESTMENTS LLC +1

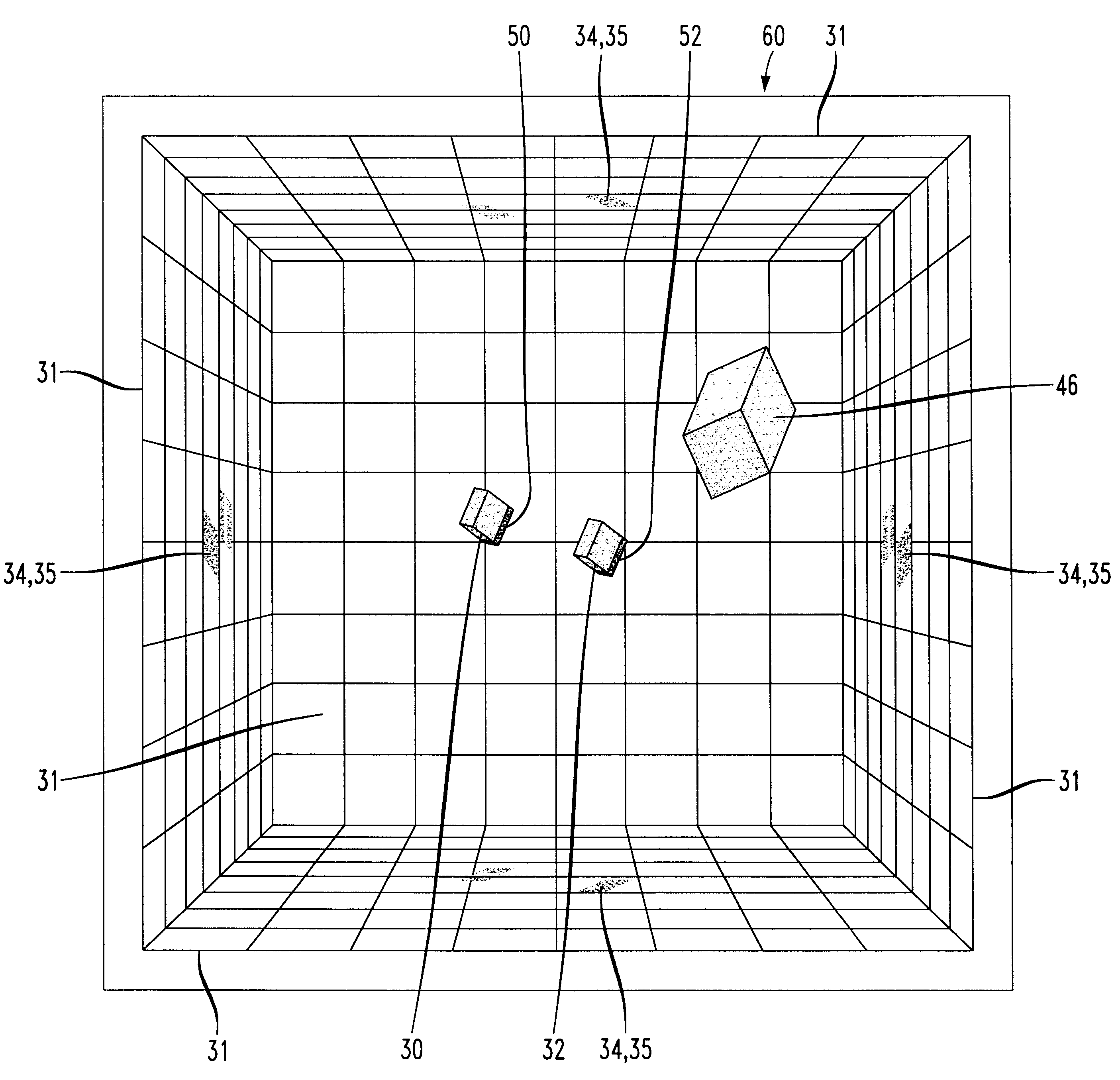

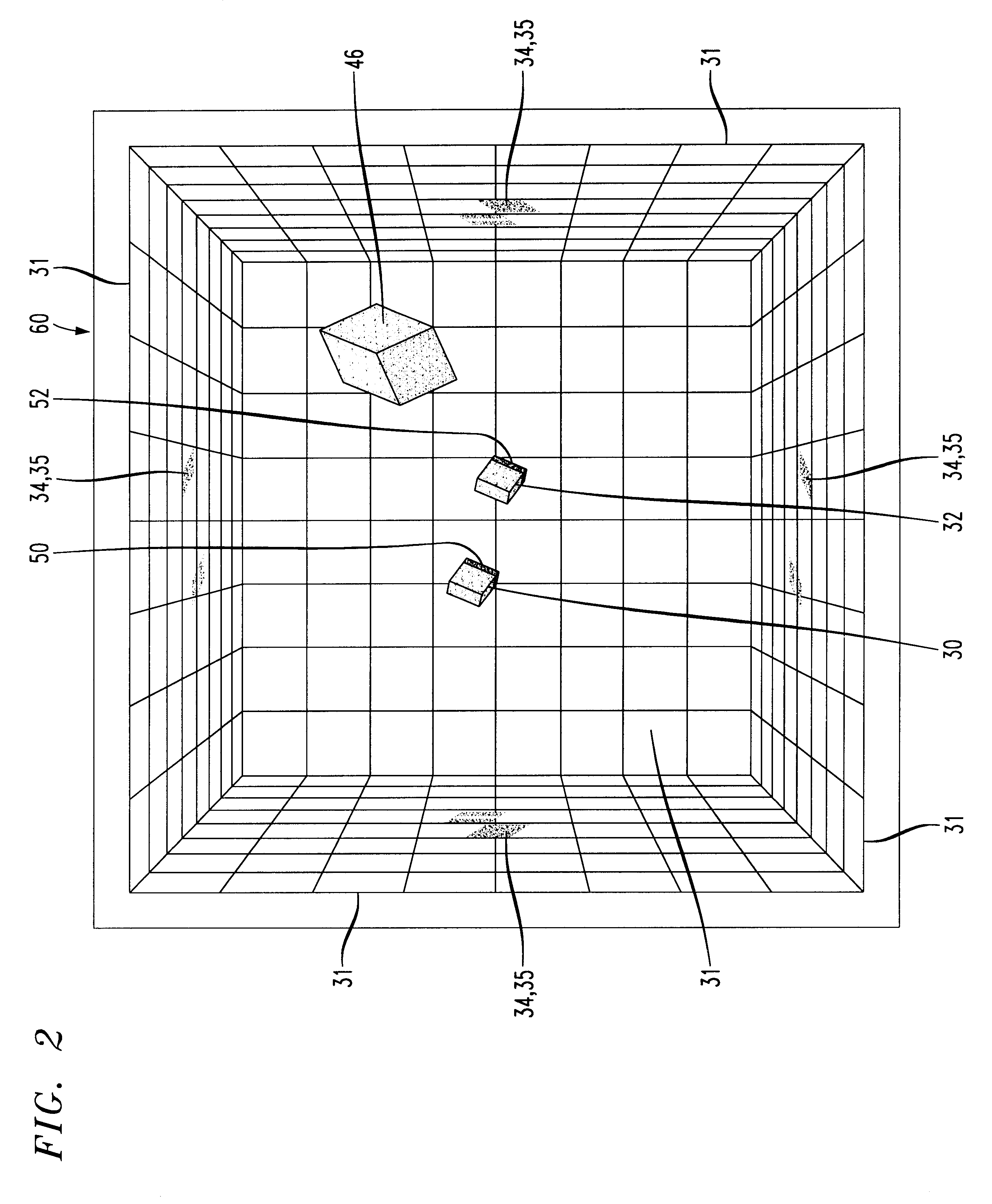

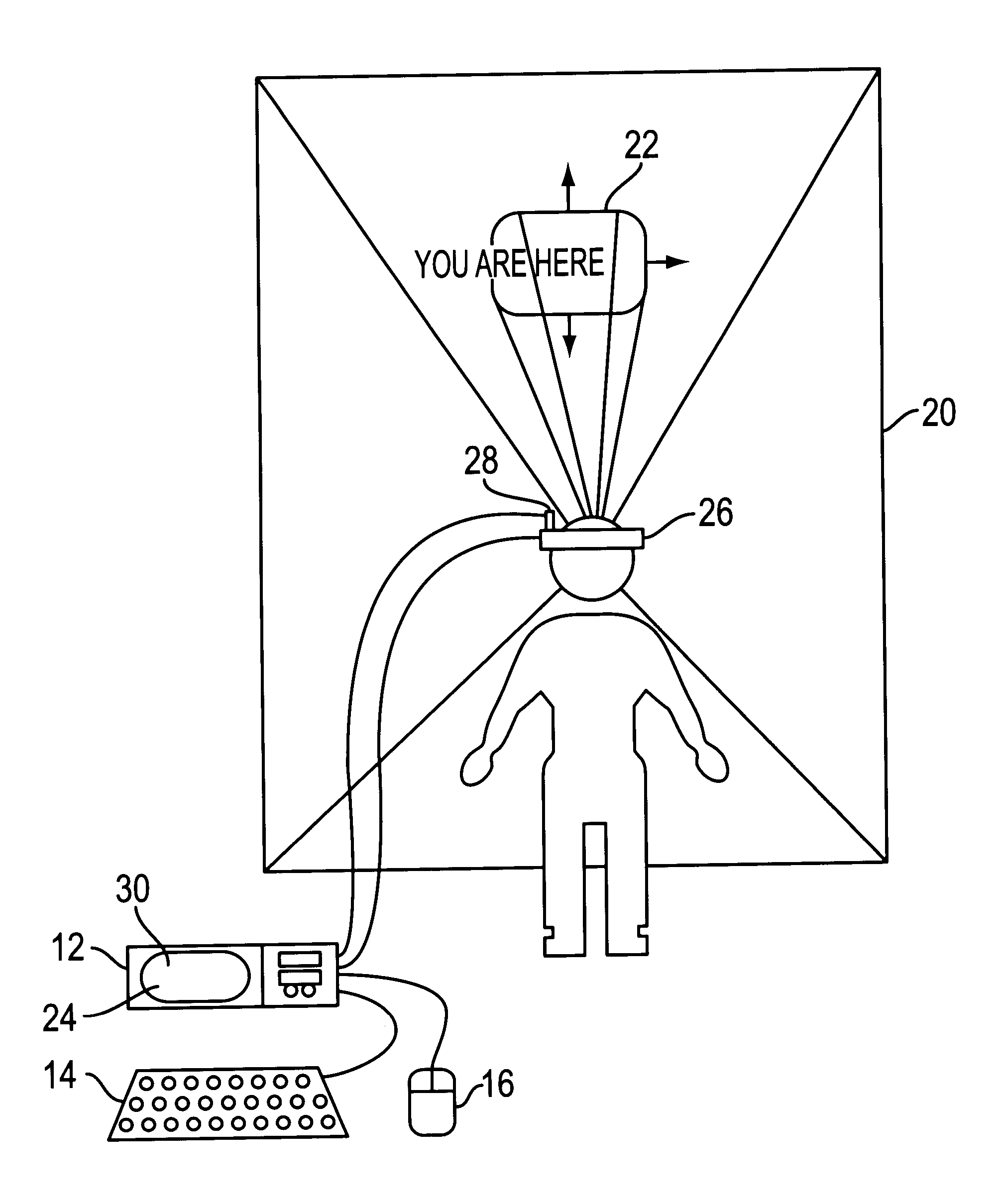

System and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs

Inactive | US6181343B1 | Input/output for user-computer interaction | Cosmonautic condition simulations | Display device | Three dimensional graphics

A system and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs of a system user. The system comprises a computer-readable memory, a video camera for generating video signals indicative of the gestures of the system user and an interaction area surrounding the system user, and a video image display. The video image display is positioned in front of the system user. The system further comprises a microprocessor for processing the video signals, in accordance with a program stored in the computer-readable memory, to determine the three-dimensional positions of the body and principal body parts of the system user. The microprocessor constructs three-dimensional images of the system user and interaction area on the video image display based upon the three-dimensional positions of the body and principal body parts of the system user. The video image display shows three-dimensional graphical objects within the virtual reality environment, and movement by the system user permits apparent movement of the three-dimensional objects displayed on the video image display so that the system user appears to move throughout the virtual reality environment.

Owner:PHILIPS ELECTRONICS NORTH AMERICA

Gesture-based computer interface

Inactive | US6222465B1 | Input/output for user-computer interaction | Character and pattern recognition | Free form | Hand movements

A system and method for manipulating virtual objects in a virtual environment, for drawing curves and ribbons in the virtual environment, and for selecting and executing commands for creating, deleting, moving, changing, and resizing virtual objects in the virtual environment using intuitive hand gestures and motions. The system is provided with a display for displaying the virtual environment and with a video gesture recognition subsystem for identifying motions and gestures of a user's hand. The system enables the user to manipulate virtual objects, to draw free-form curves and ribbons and to invoke various command sets and commands in the virtual environment by presenting particular predefined hand gestures and / or hand movements to the video gesture recognition subsystem.

Owner:LUCENT TECH INC

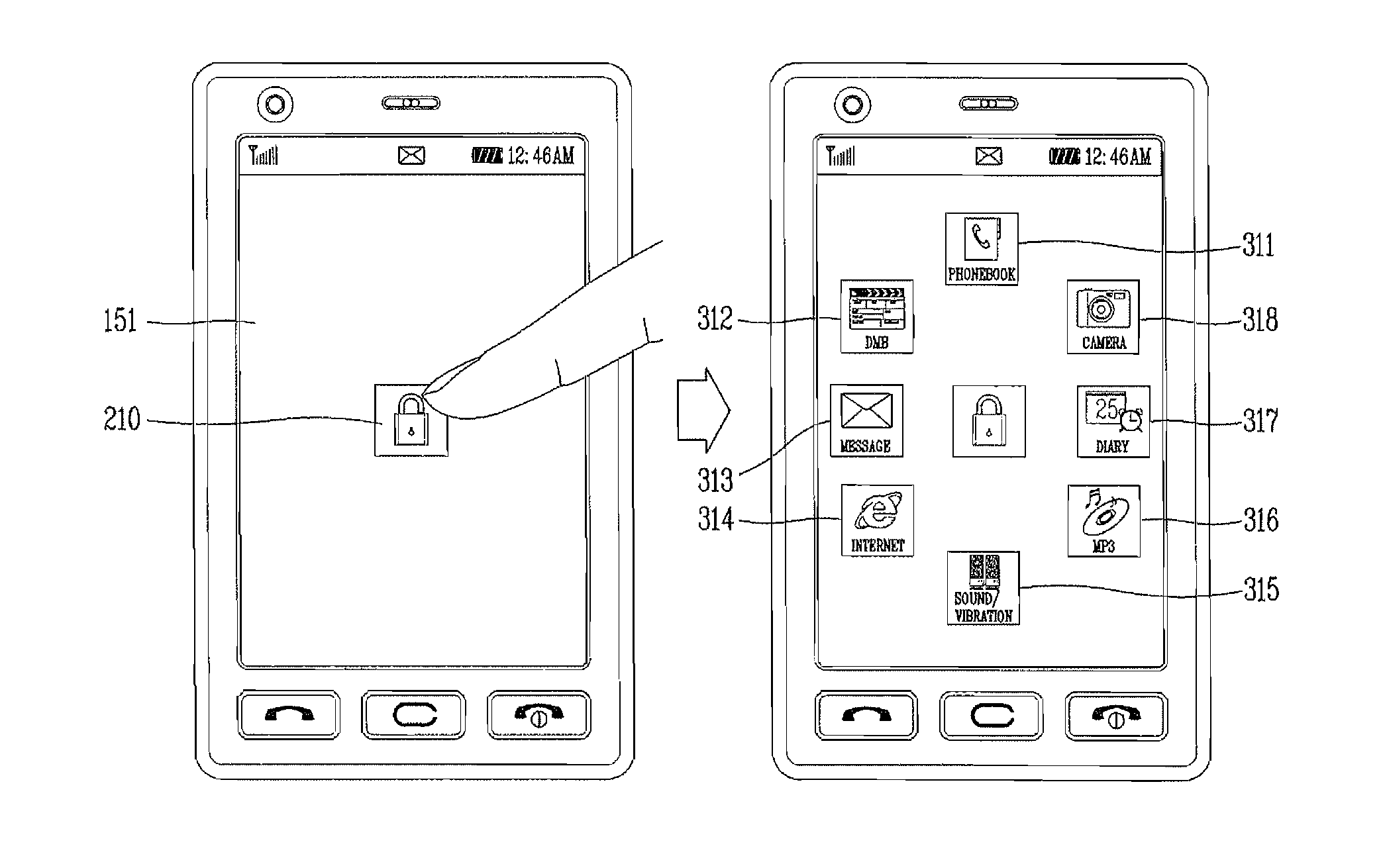

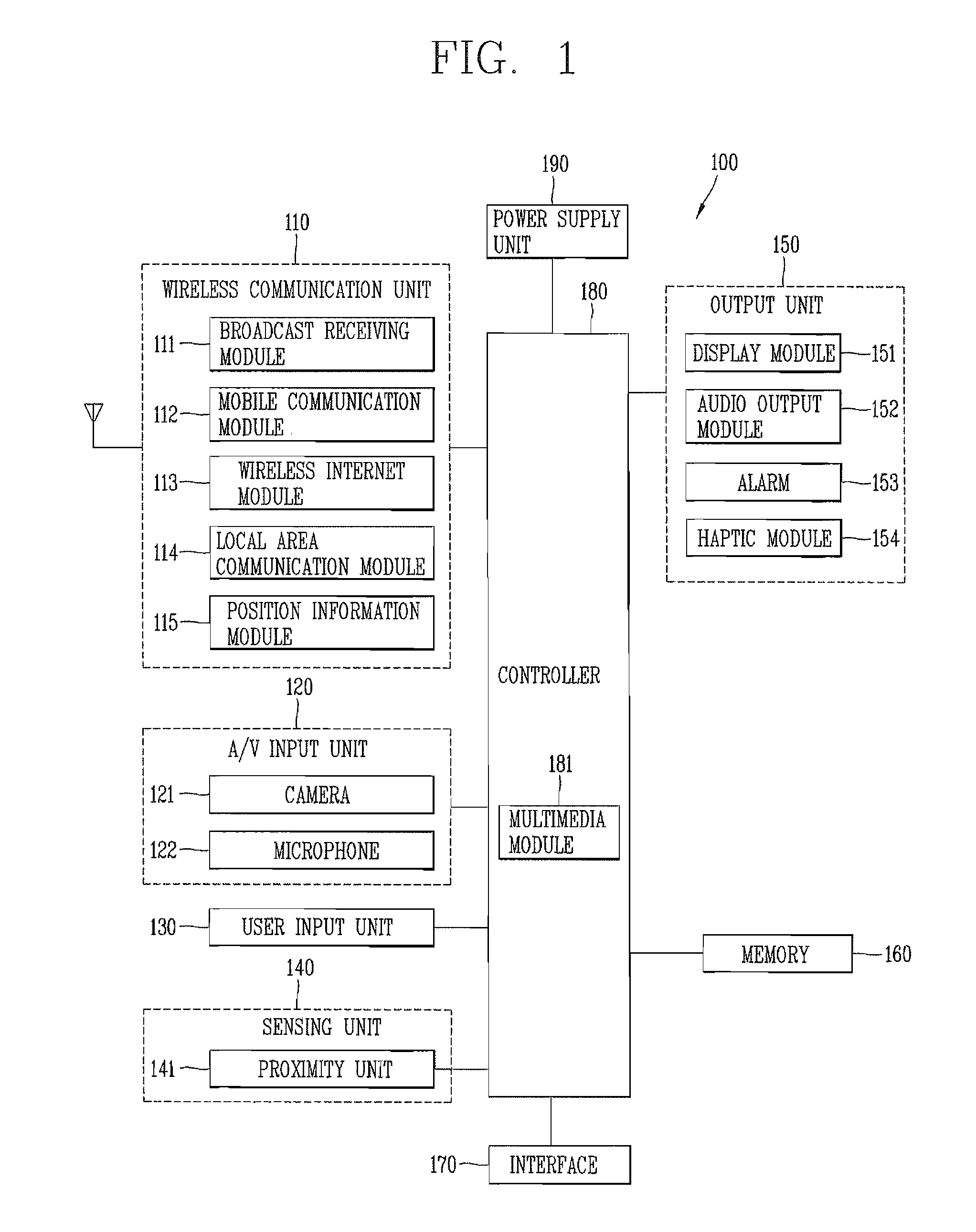

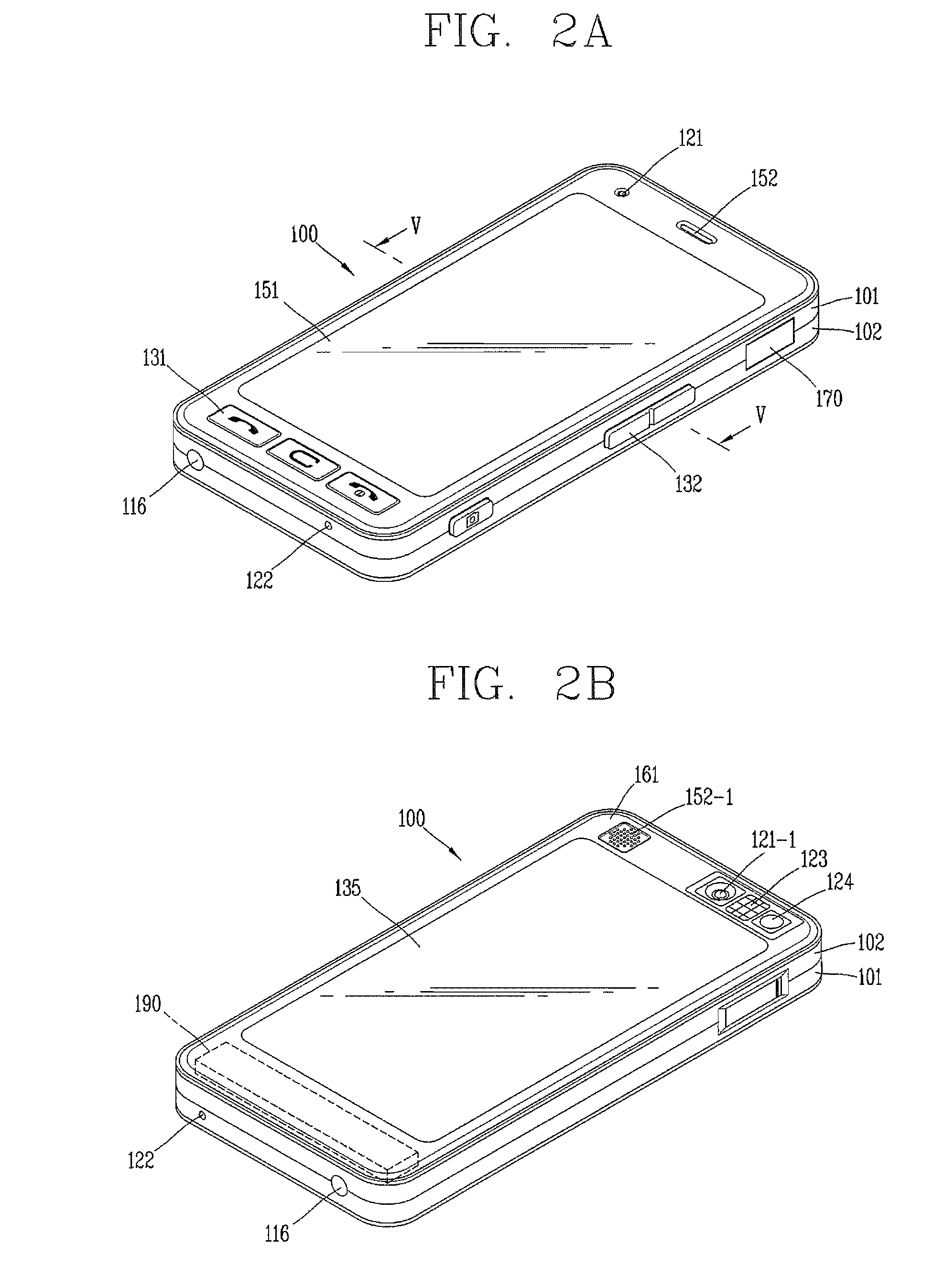

Mobile terminal and control method thereof

Active | US20100269040A1 | Input/output for user-computer interaction | Digital data processing details | Computer terminal | Computer science

Owner:BRITISH TELECOMM PLC

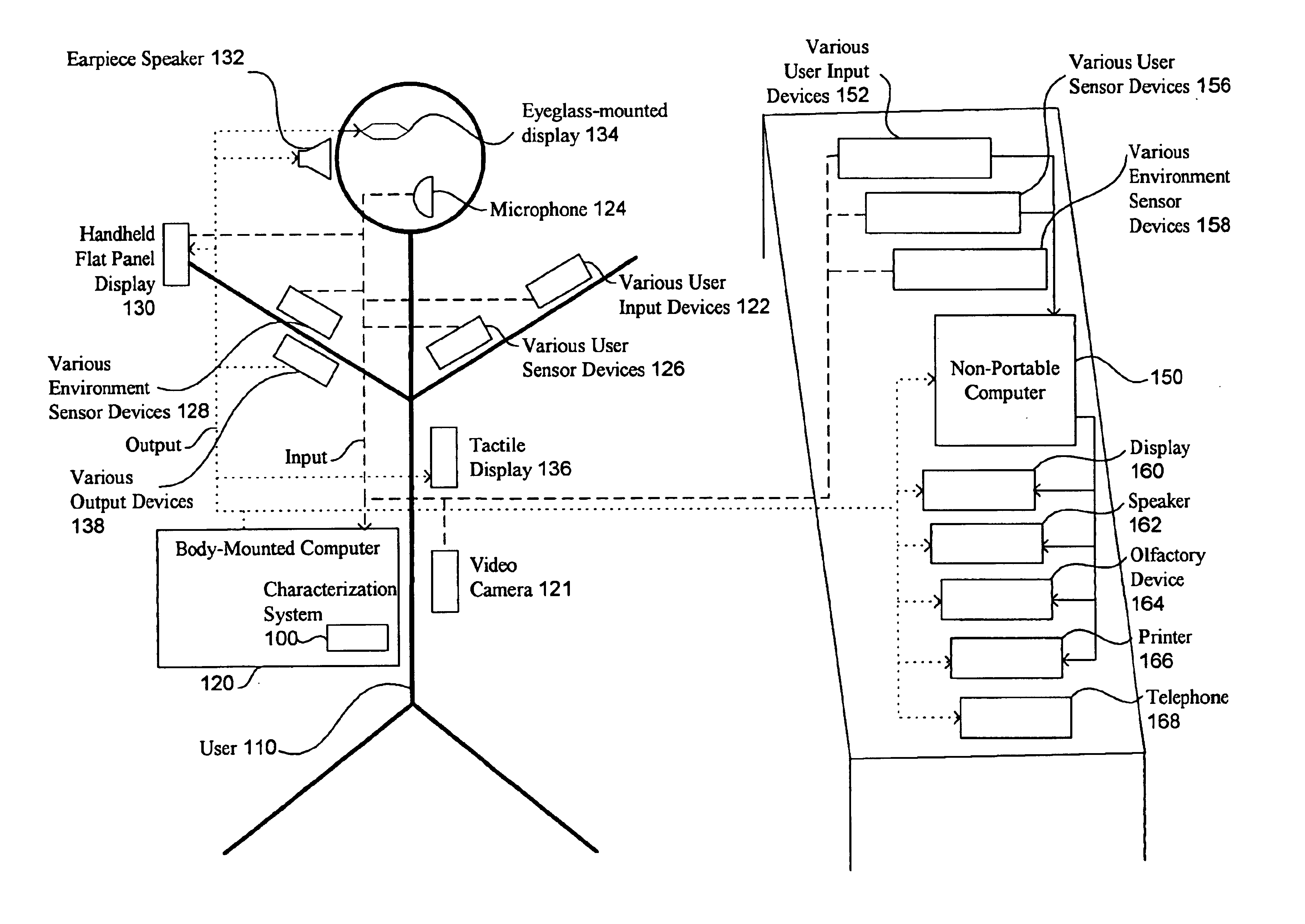

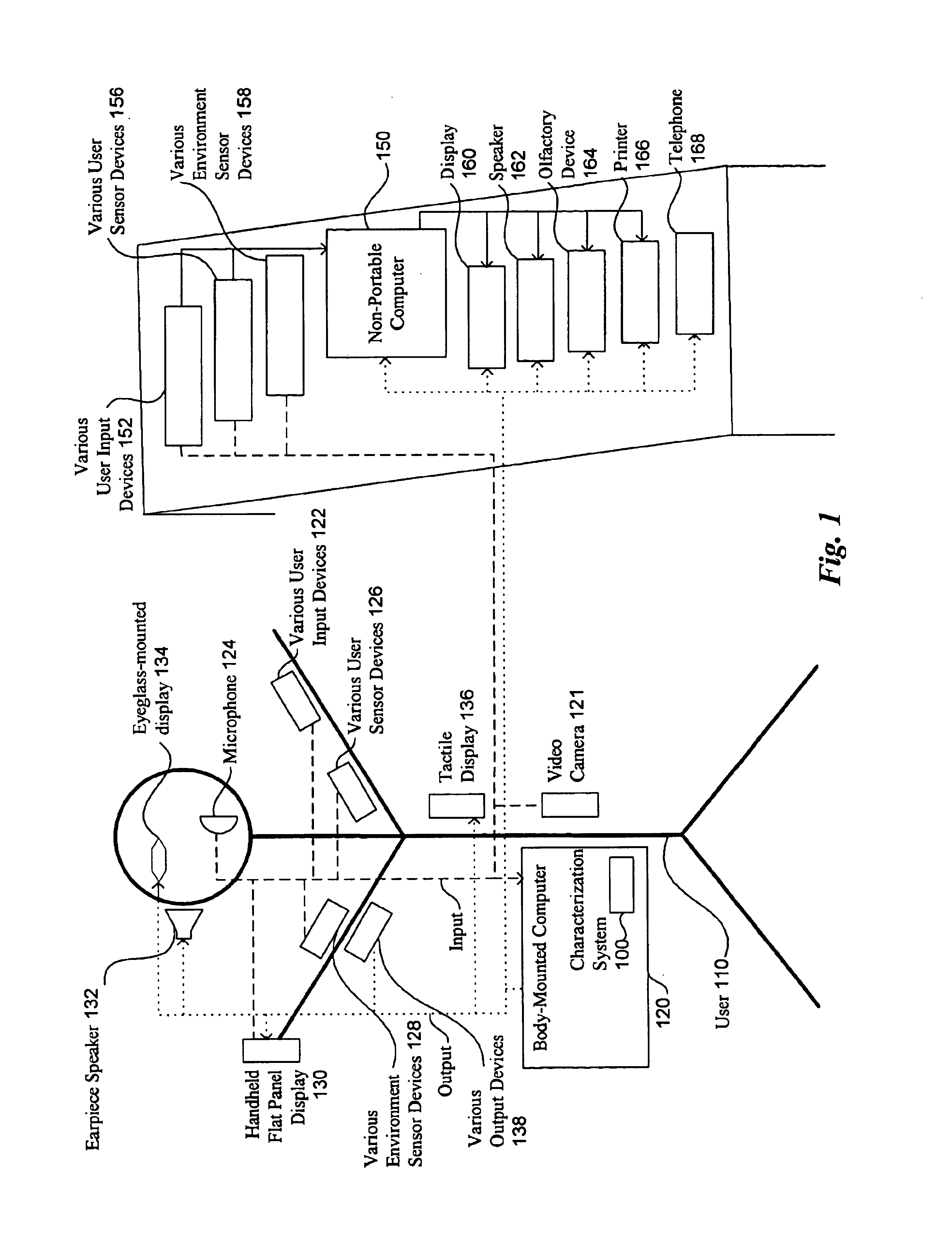

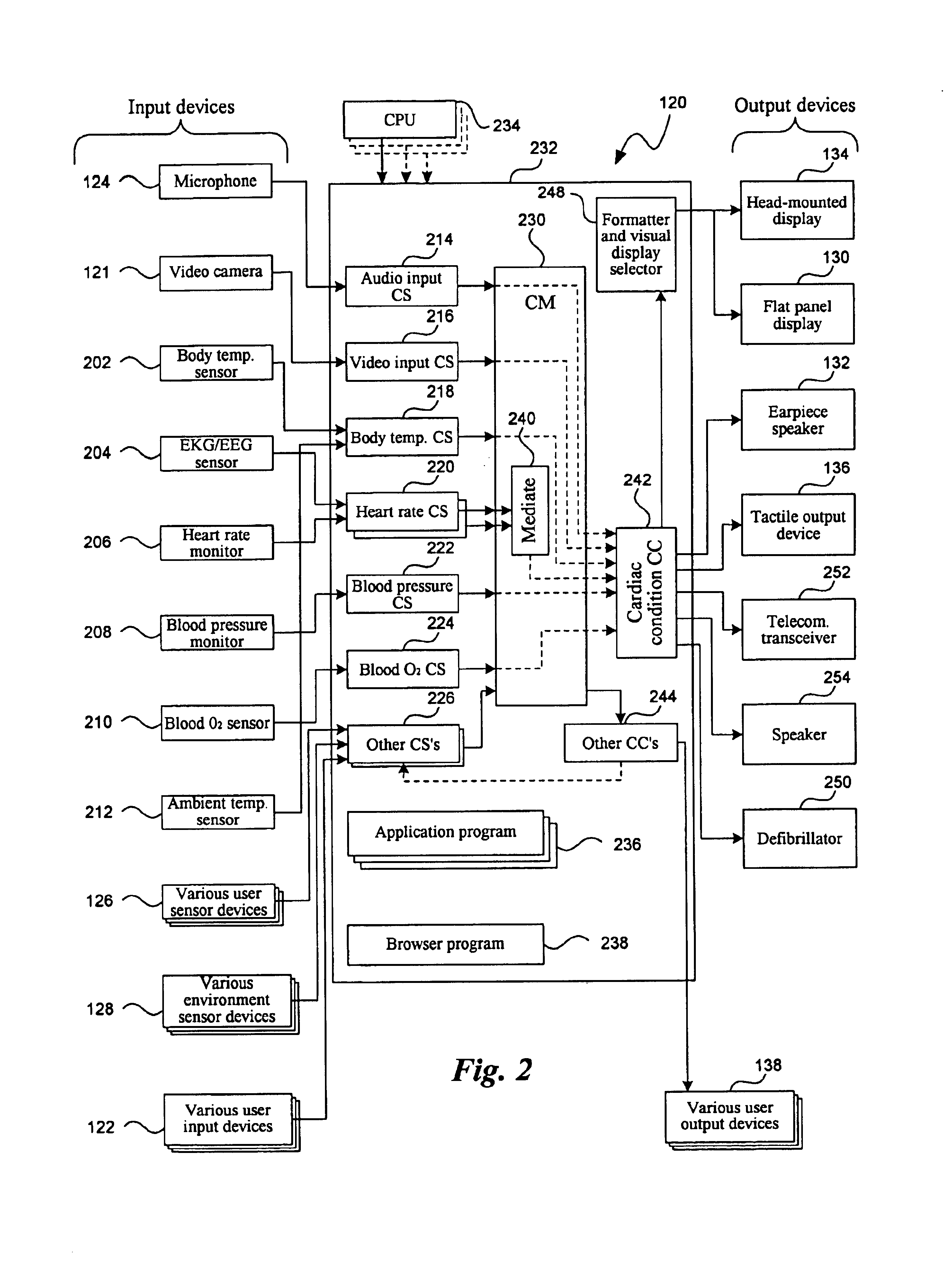

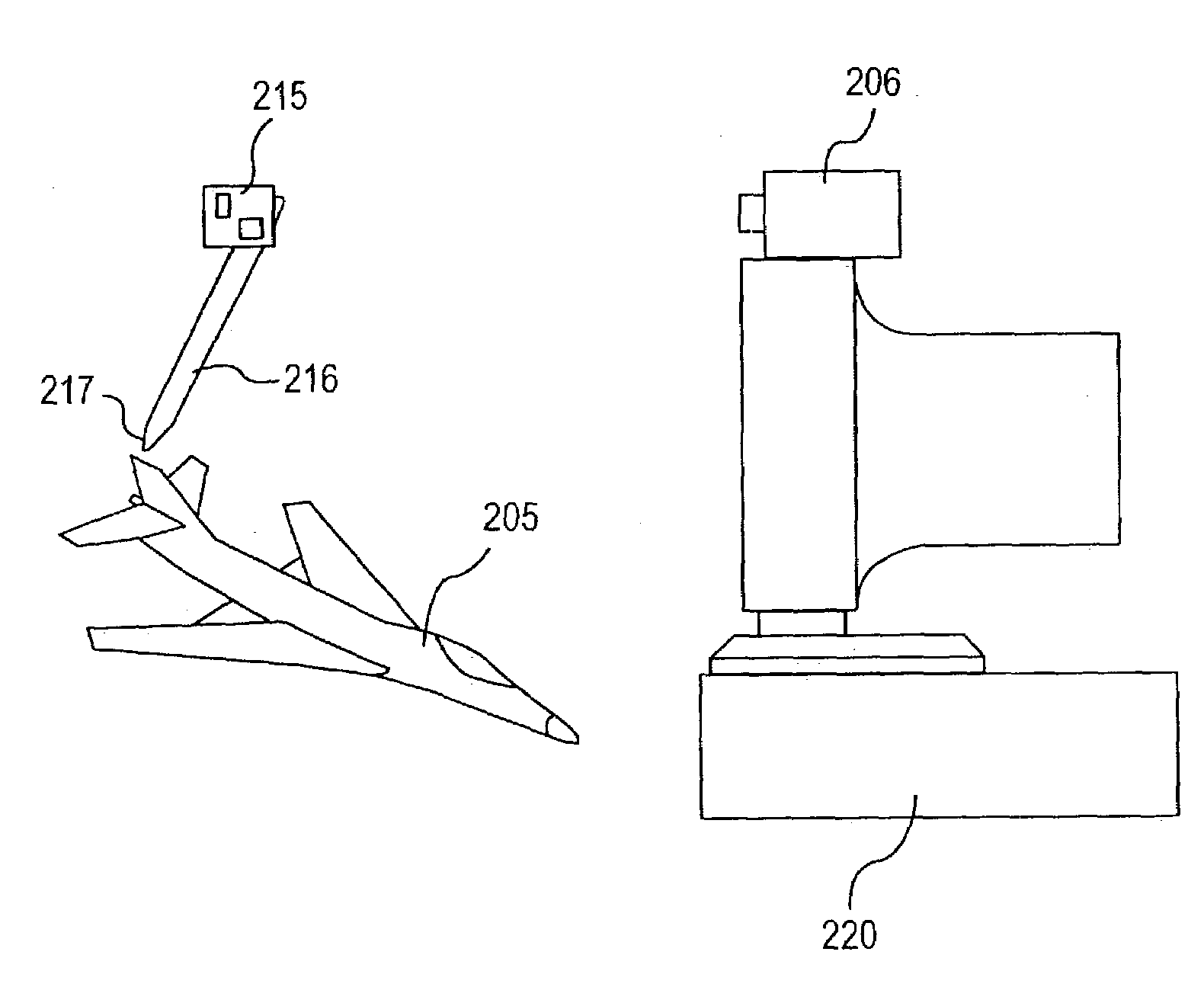

Man machine interfaces and applications

Inactive | US7042440B2 | Avoid carpal tunnel syndrome | Improve efficiency | Input/output for user-computer interaction | Electrophonic musical instruments | Computer Aided Design | Human–machine interface

Affordable methods and apparatus are disclosed for inputting position, attitude (orientation) or other object characteristic data to computers for the purpose of Computer Aided Design, Painting, Medicine, Teaching, Gaming, Toys, Simulations, Aids to the disabled, and internet or other experiences. Preferred embodiments of the invention utilize electro-optical sensors, and particularly TV cameras, providing optically inputted data from specialized datums on objects and/or natural features of objects. Objects can be both static and in motion, from which individual datum positions and movements can be derived, also with respect to other objects both fixed and moving. Real-time photogrammetry is preferably used to determine relationships of portions of one or more datums with respect to a plurality of cameras or a single camera processed by a conventional PC.

Owner:PRYOR TIMOTHY R +1

Transaction terminal and adaptor therefor

Owner:HAND HELD PRODS

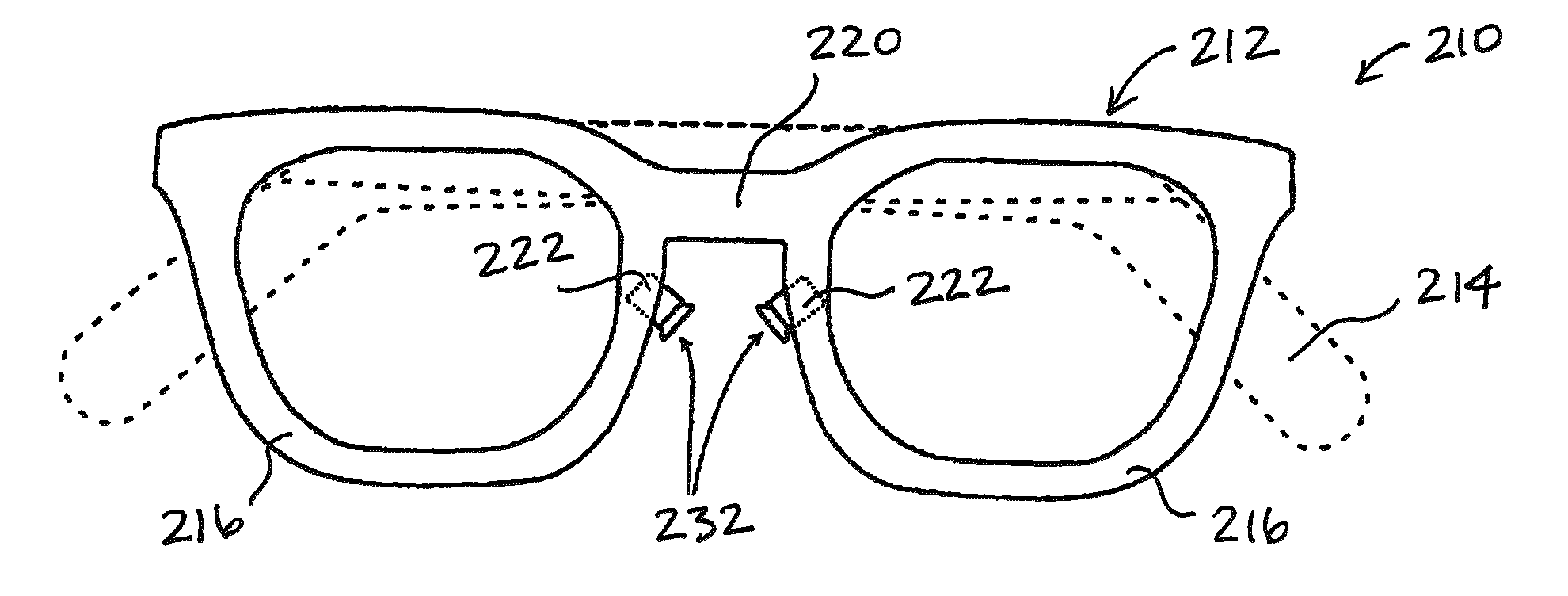

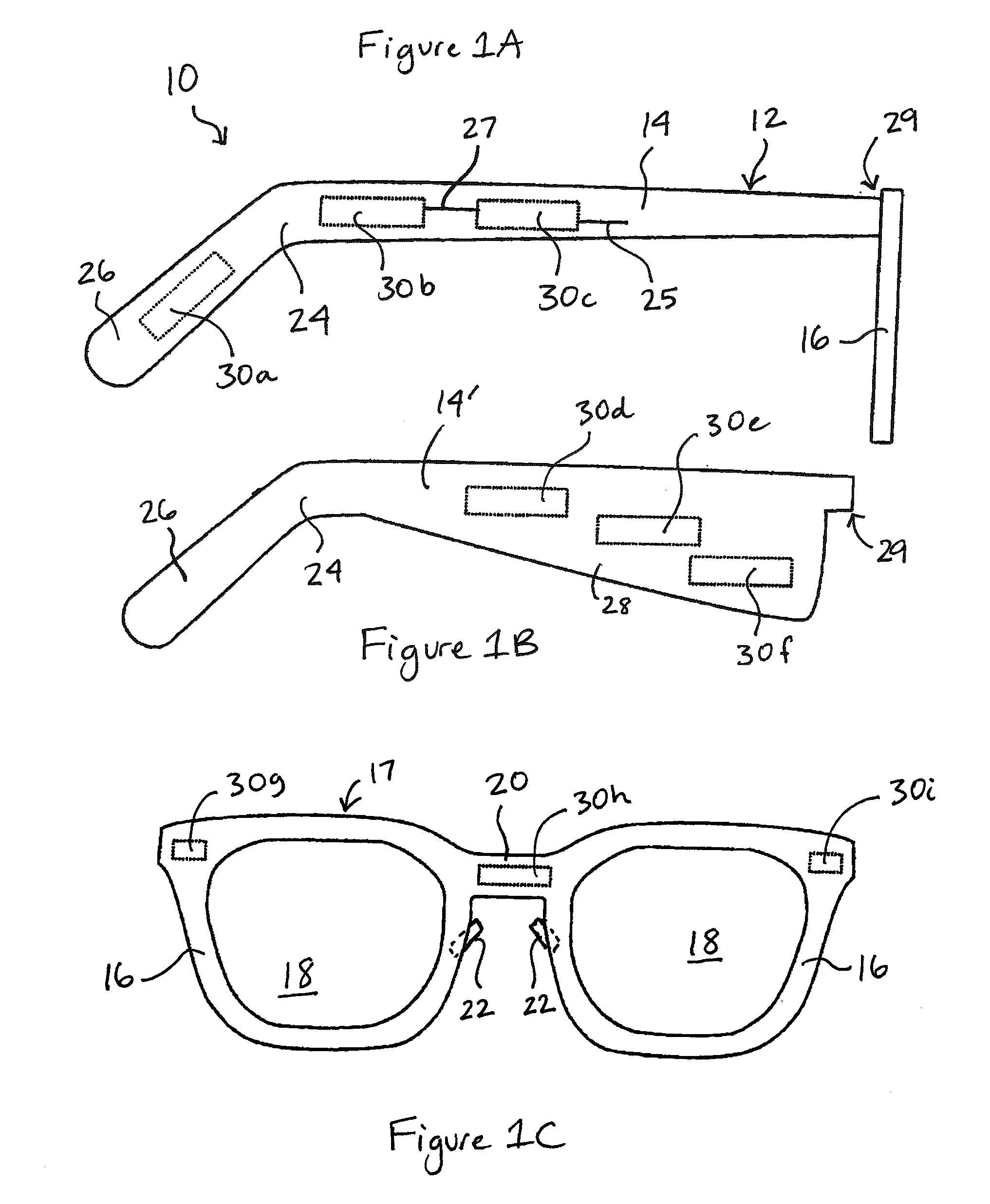

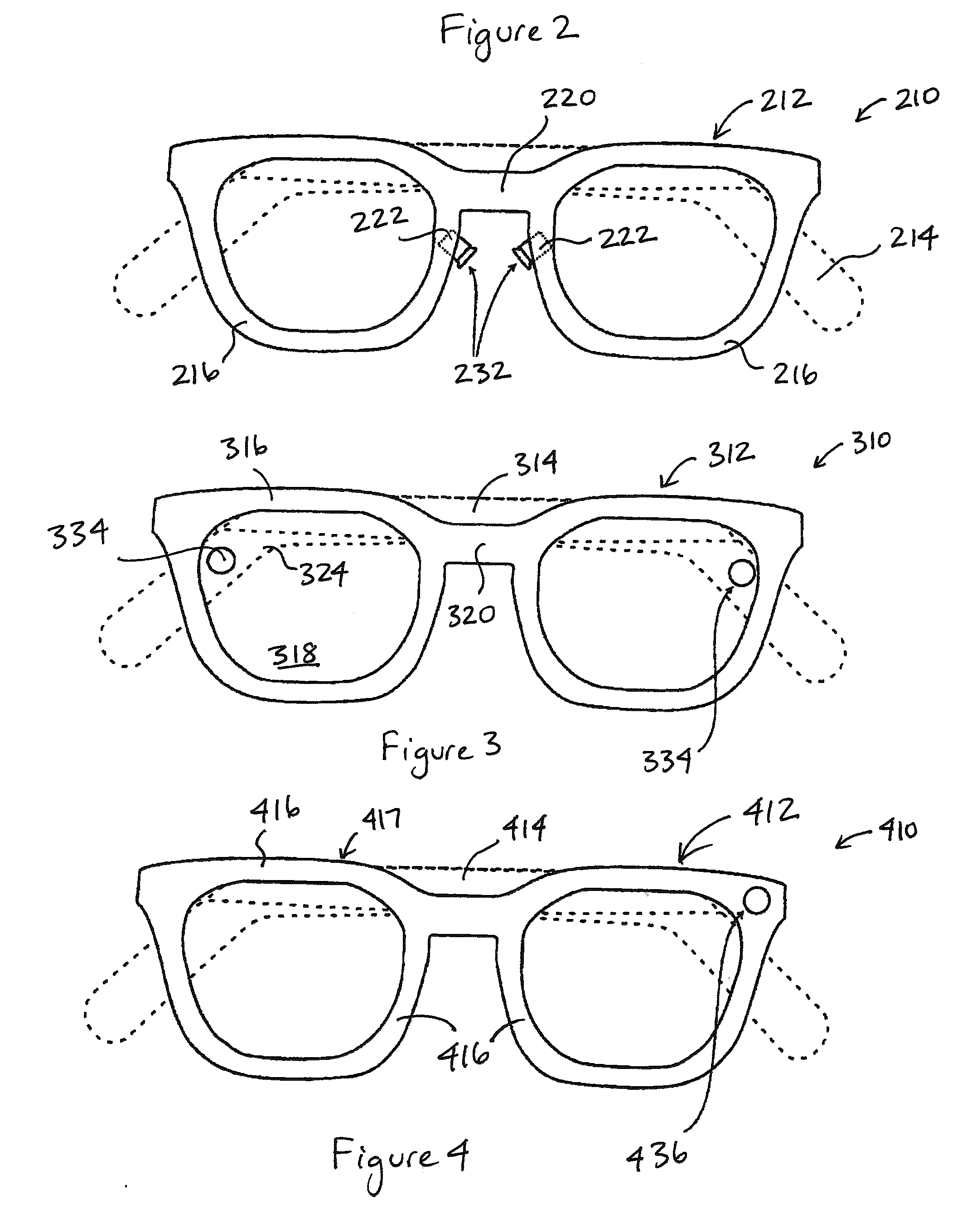

System and apparatus for eyeglass appliance platform

Inactive | US20100110368A1 | Input/output for user-computer interaction | Non-optical adjuncts | Output device | Actuator

The present invention relates to a personal multimedia electronic device, and more particularly to a head-worn device such as an eyeglass frame having a plurality of interactive electrical / optical components. In one embodiment, a personal multimedia electronic device includes an eyeglass frame having a side arm and an optic frame; an output device for delivering an output to the wearer; an input device for obtaining an input; and a processor comprising a set of programming instructions for controlling the input device and the output device. The output device is supported by the eyeglass frame and is selected from the group consisting of a speaker, a bone conduction transmitter, an image projector, and a tactile actuator. The input device is supported by the eyeglass frame and is selected from the group consisting of an audio sensor, a tactile sensor, a bone conduction sensor, an image sensor, a body sensor, an environmental sensor, a global positioning system receiver, and an eye tracker. In one embodiment, the processor applies a user interface logic that determines a state of the eyeglass device and determines the output in response to the input and the state.

Owner:CHAUM DAVID

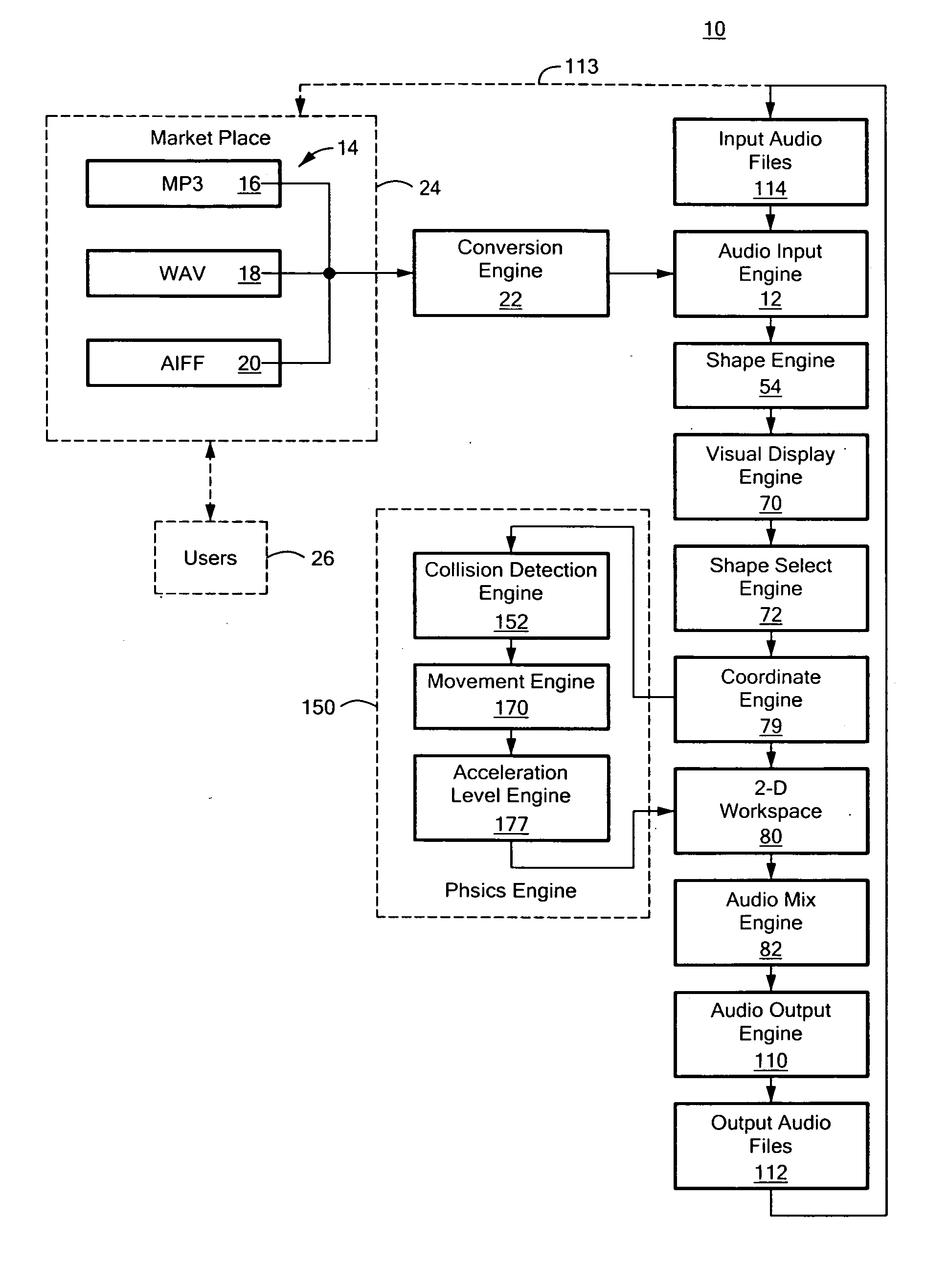

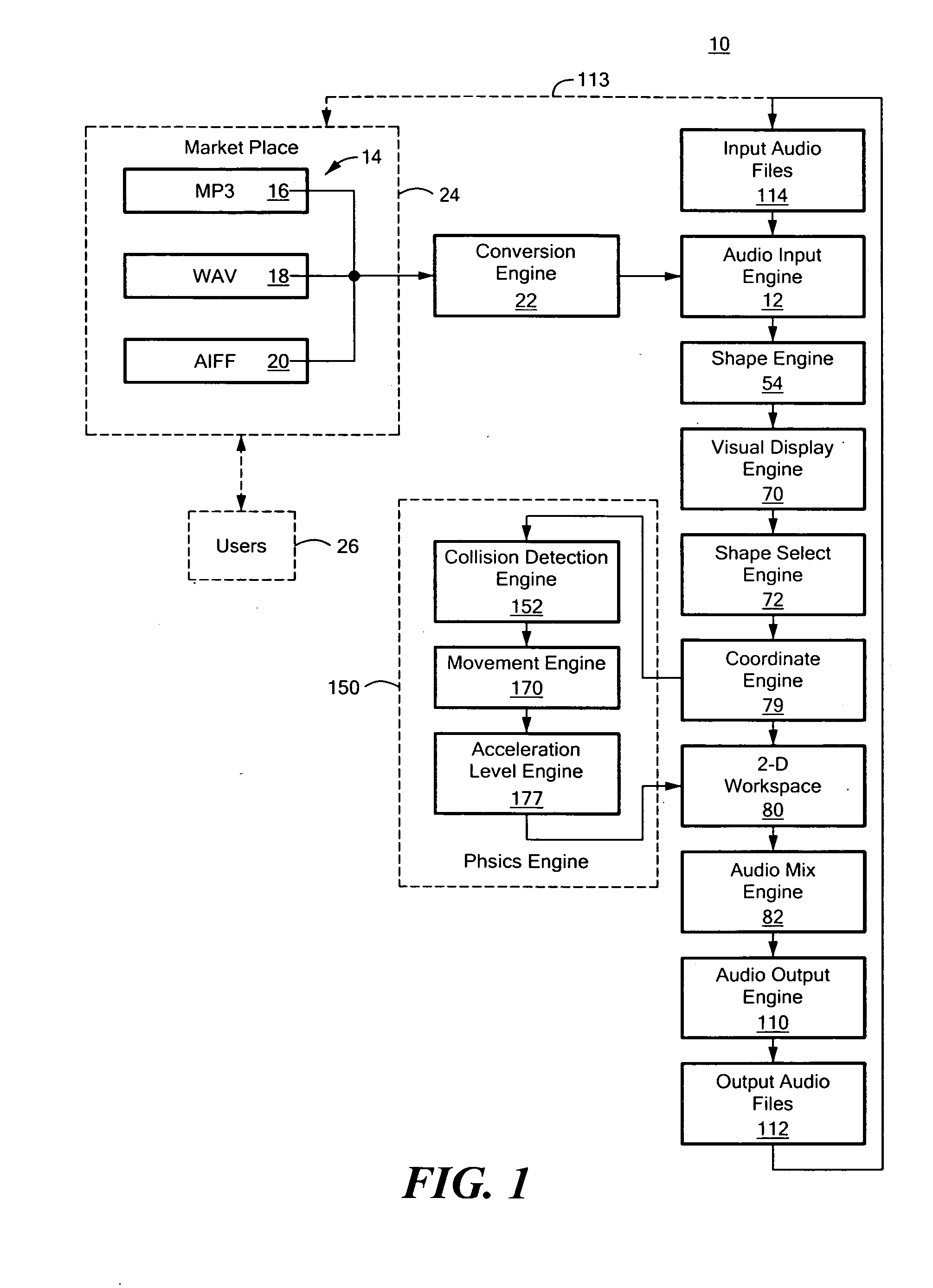

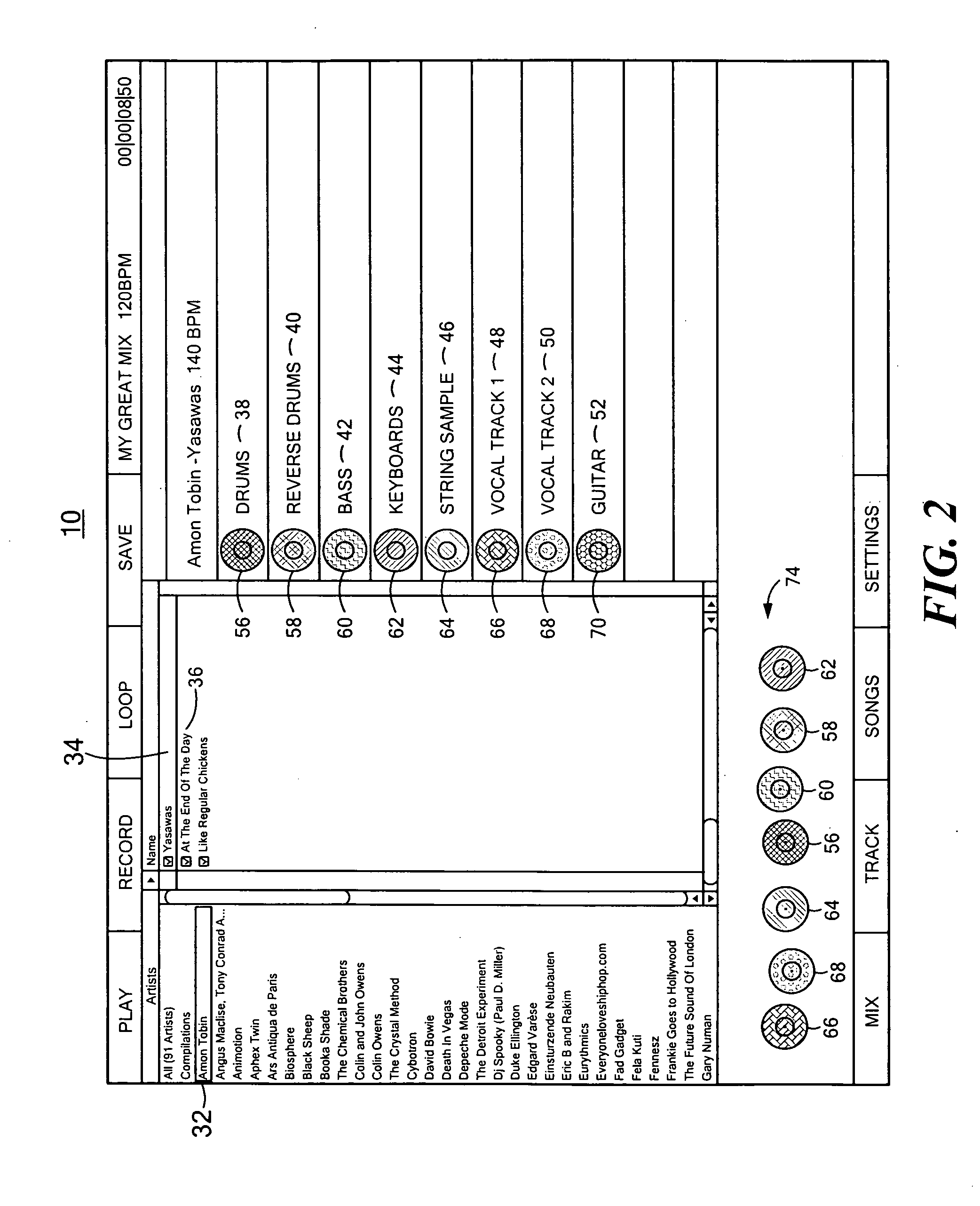

Visual audio mixing system and method thereof

Inactive | US20110271186A1 | Input/output for user-computer interaction | Electronic editing digitised analogue information signals | Hybrid system | Workspace

A visual audio mixing system which includes an audio input engine configured to input one or more audio files each associated with a channel. A shape engine is responsive to the audio input engine and is configured to create a unique visual image of a definable shape and/or color for each of the one or more audio files. A visual display engine is responsive to the shape engine and is configured to display each visual image. A shape select engine is responsive to the visual display engine and is configured to provide selection of one or more visual images. The system includes a two-dimensional workspace. A coordinate engine is responsive to the shape select engine and is configured to instantiate selected visual images in the two-dimensional workspace. A mix engine is responsive to the coordinate engine and is configured to mix the visual images instantiated in the two-dimensional workspace such that user-provided movement of one or more of the visual images in one direction represents volume and user-provided movement in another direction represents pan, to provide a visual and audio representation of each audio file and its associated channel.

Owner:SHAPEMIX MUSIC
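
The position-to-mix mapping described in the abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the abstract does not say which axis carries which parameter, so the choice of x for pan and y for volume, the function name `position_to_mix`, the workspace dimensions, and the constant-power pan law are all assumptions made for illustration.

```python
import math


def position_to_mix(x, y, width=1.0, height=1.0):
    """Map a shape's workspace position to (left_gain, right_gain).

    Assumptions (not stated in the abstract): x controls pan
    (0 = hard left, width = hard right) and y controls volume
    (0 = silent, height = full level).
    """
    # Normalize both coordinates and clamp them to the range [0, 1].
    pan = min(max(x / width, 0.0), 1.0)
    volume = min(max(y / height, 0.0), 1.0)

    # Constant-power pan law (an assumed choice): the two gains lie on a
    # quarter circle, so left^2 + right^2 stays equal to volume^2.
    angle = pan * math.pi / 2
    return volume * math.cos(angle), volume * math.sin(angle)
```

Under these assumptions, dragging a shape horizontally changes only the left/right balance, while dragging it vertically scales both gains together.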

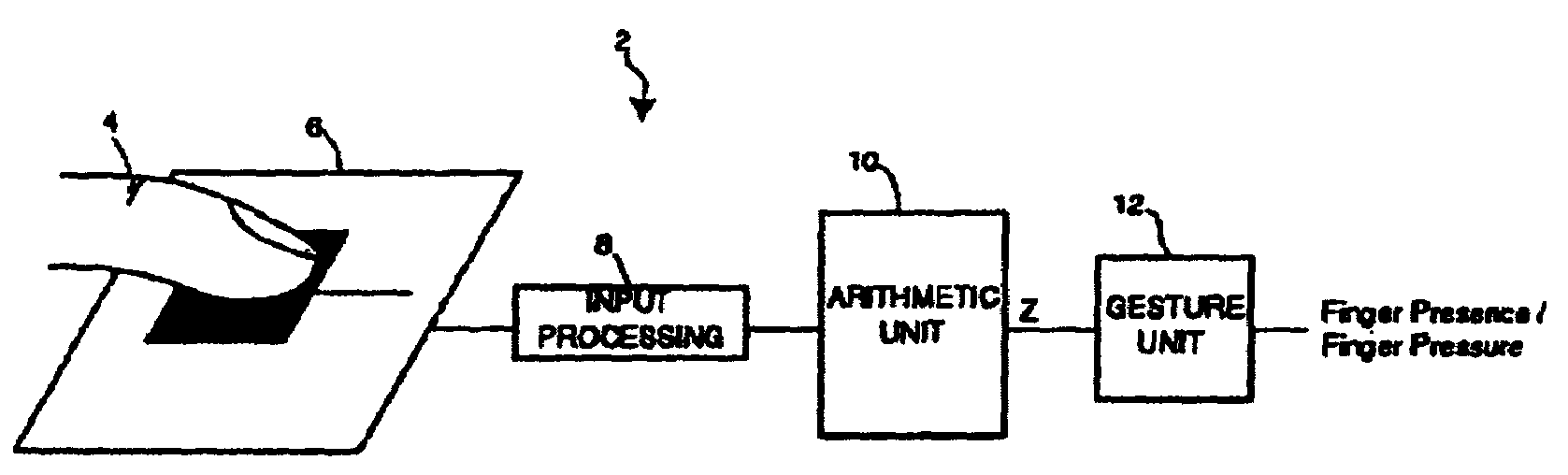

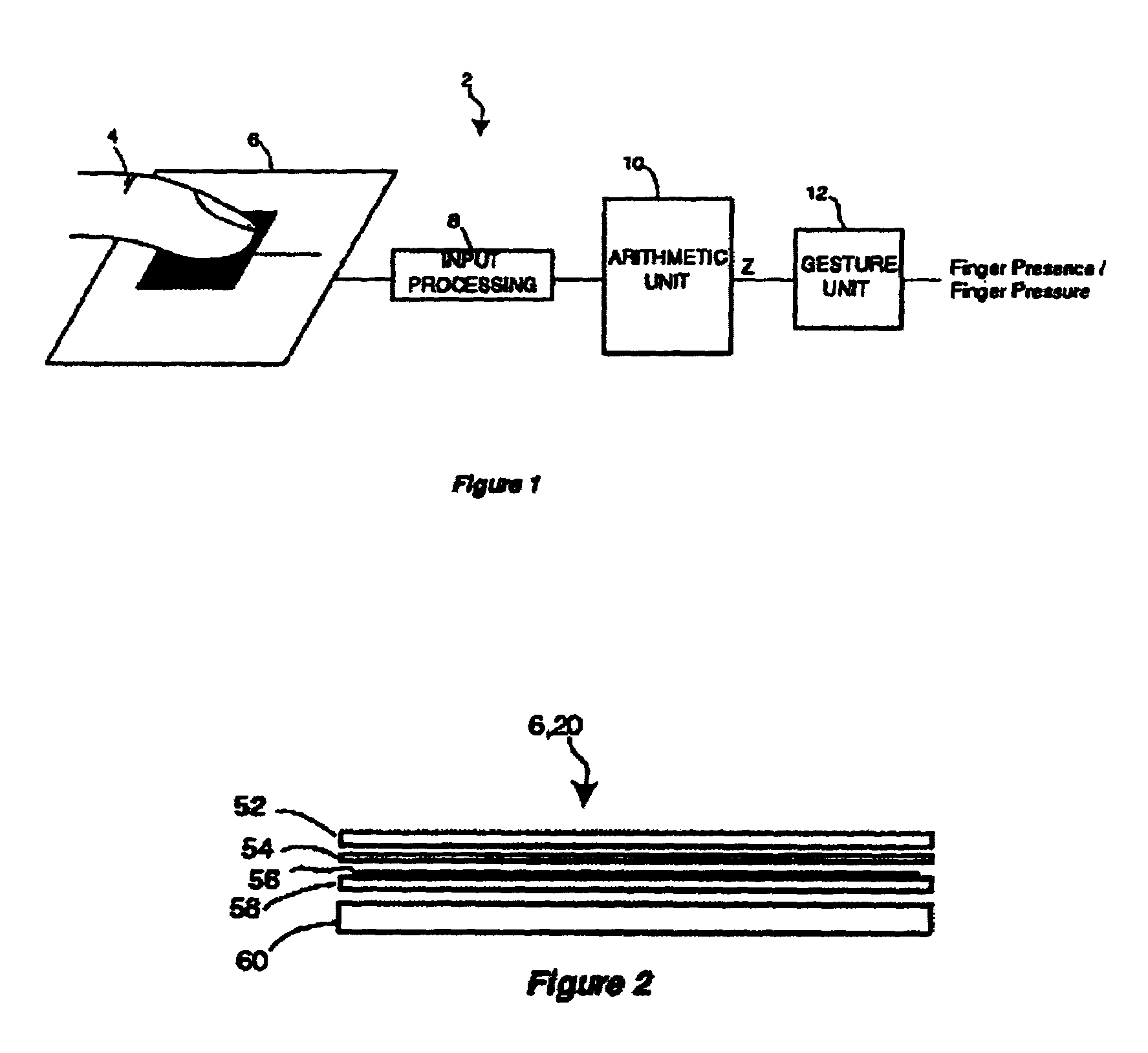

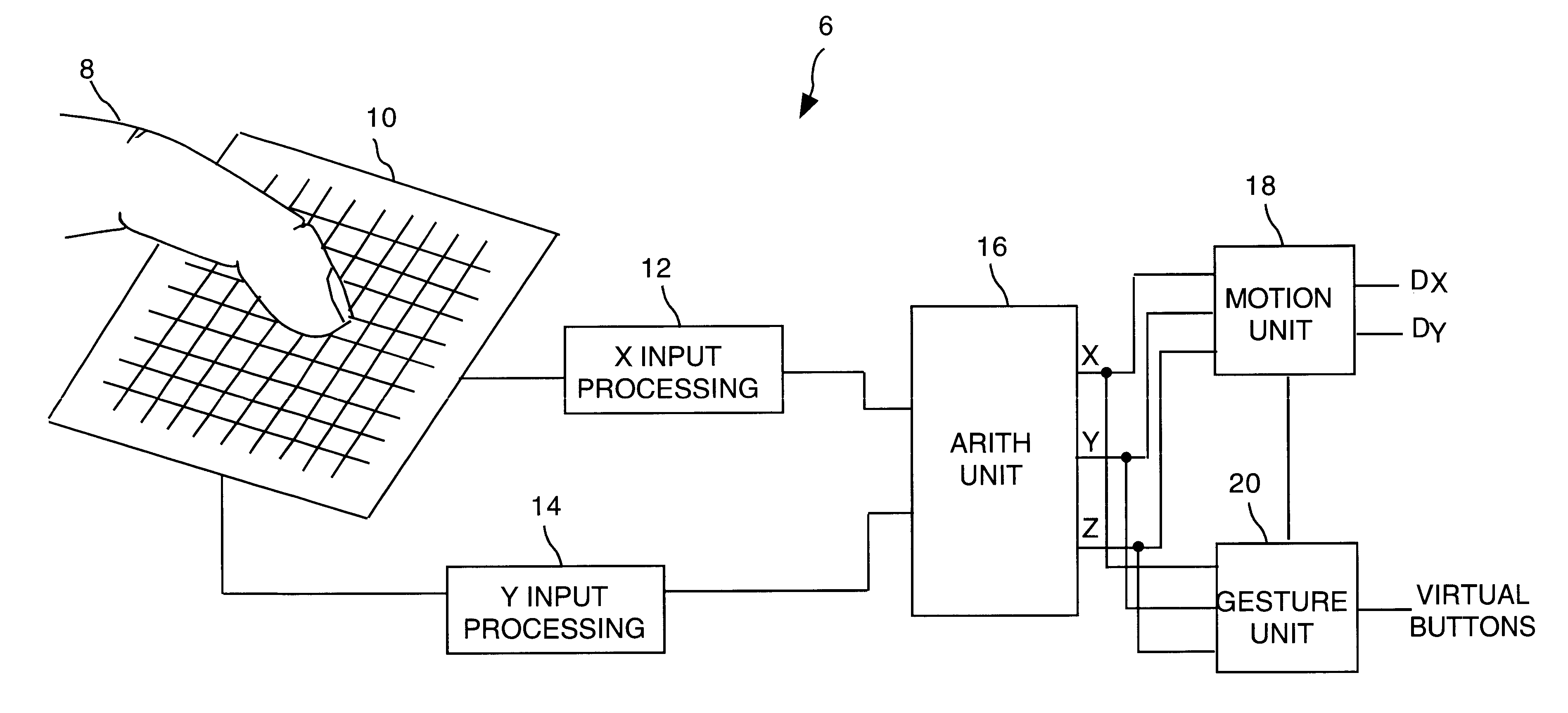

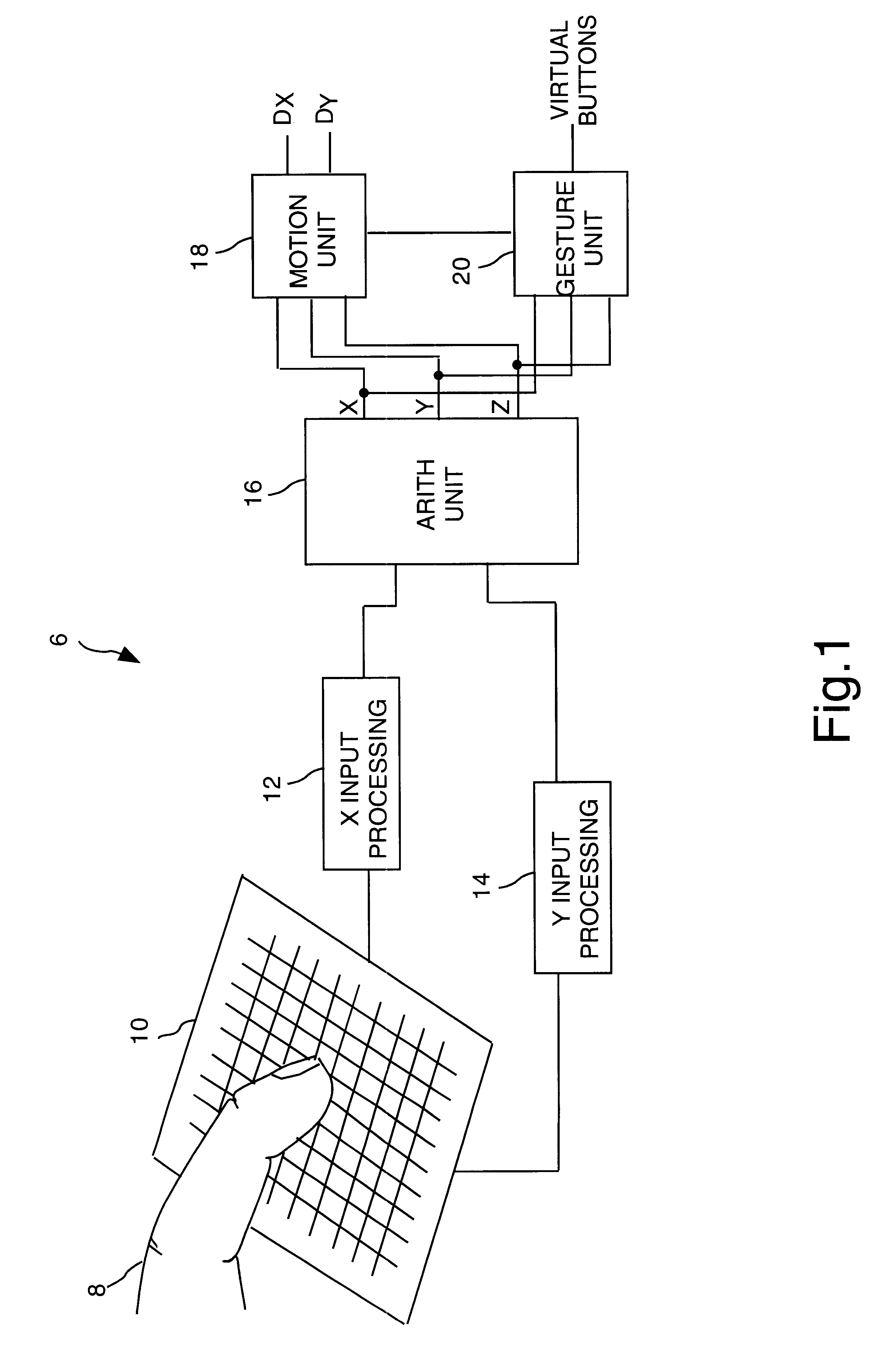

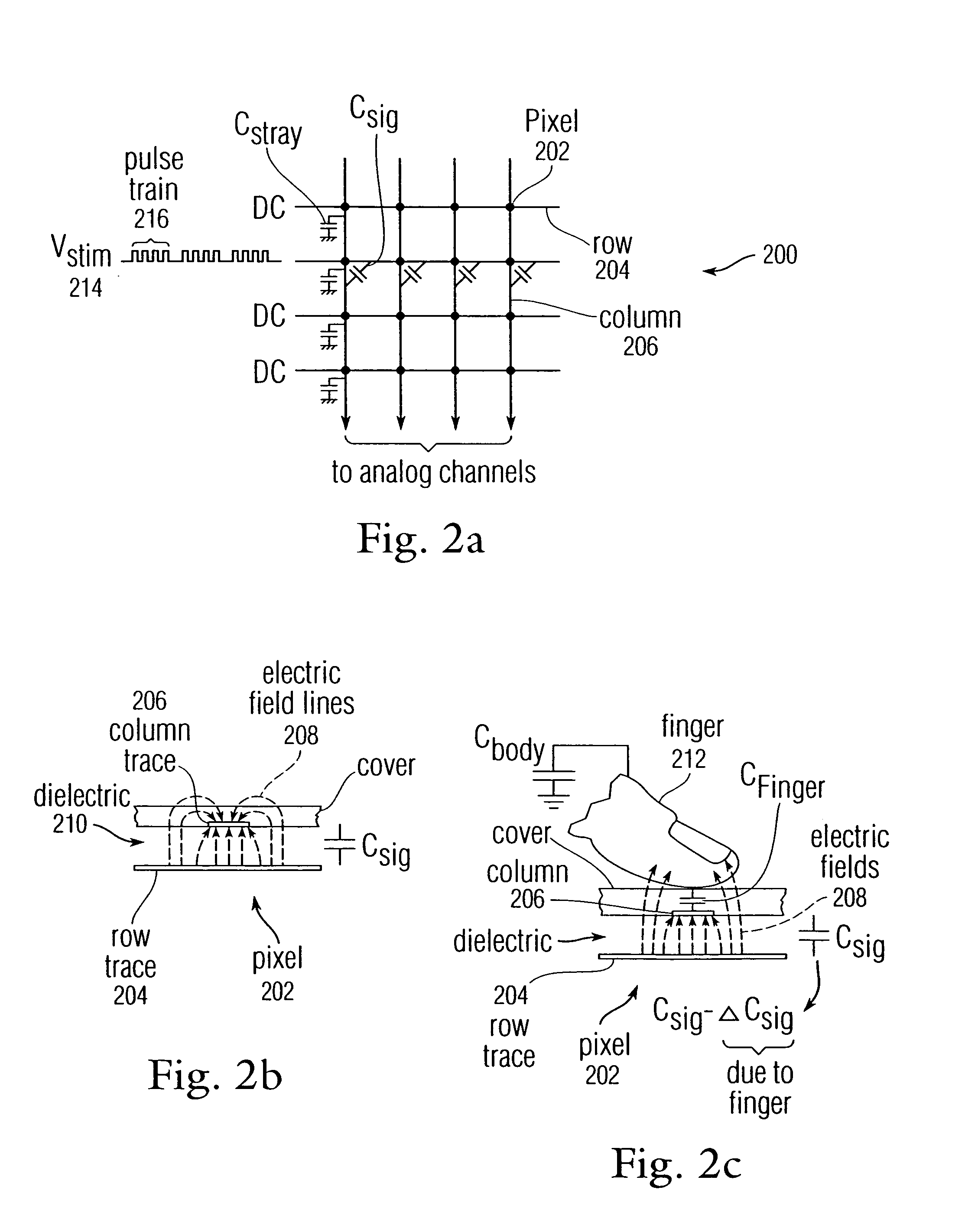

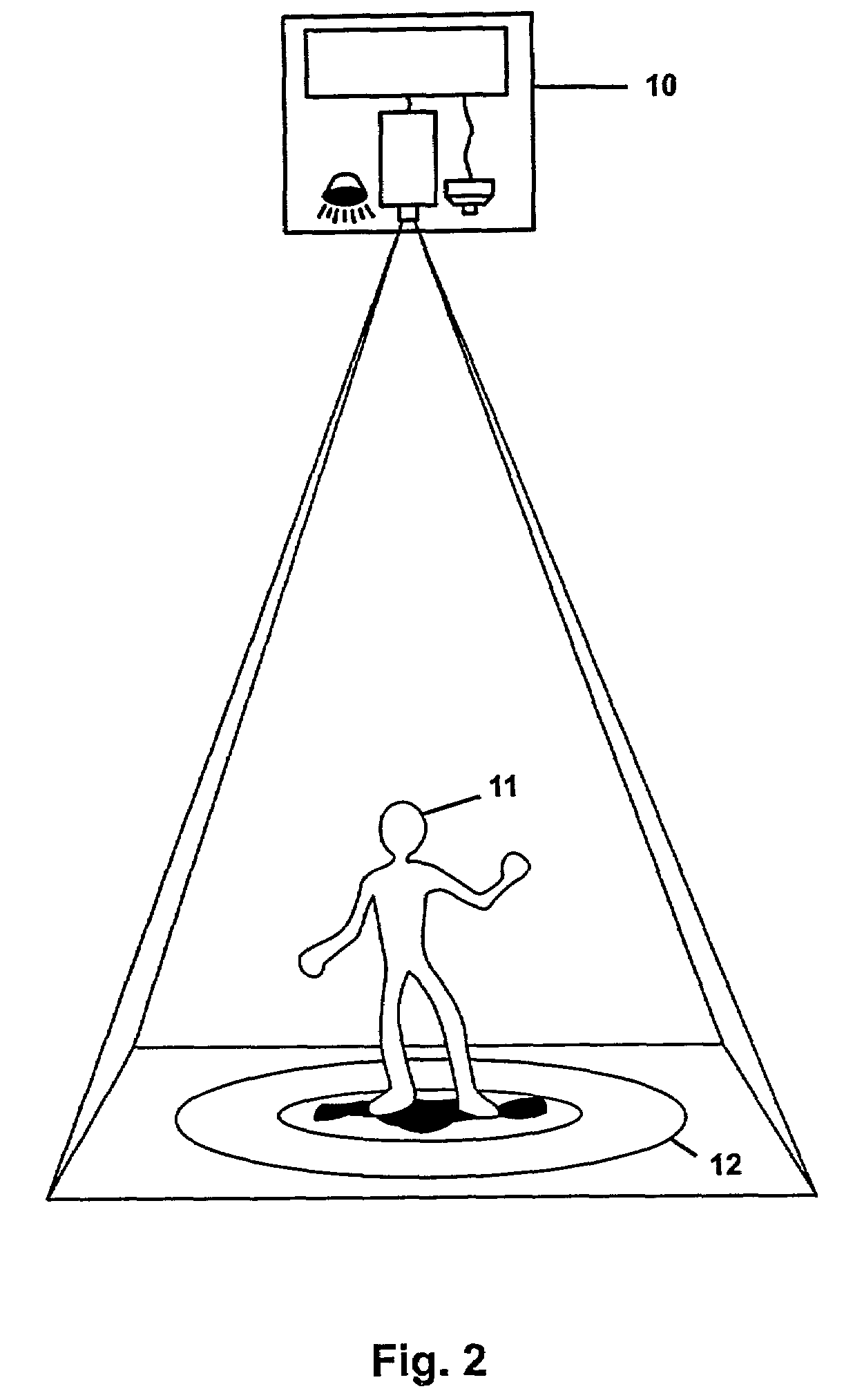

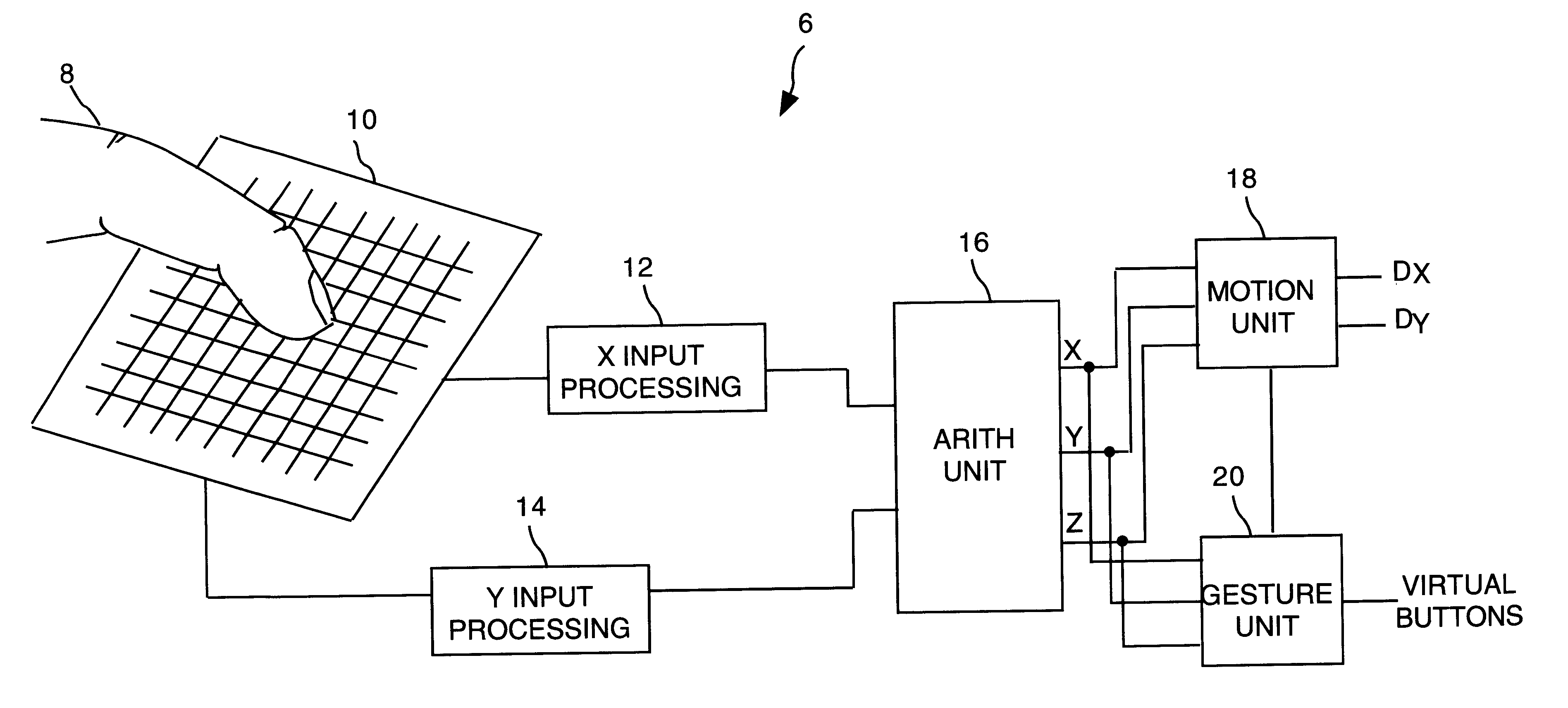

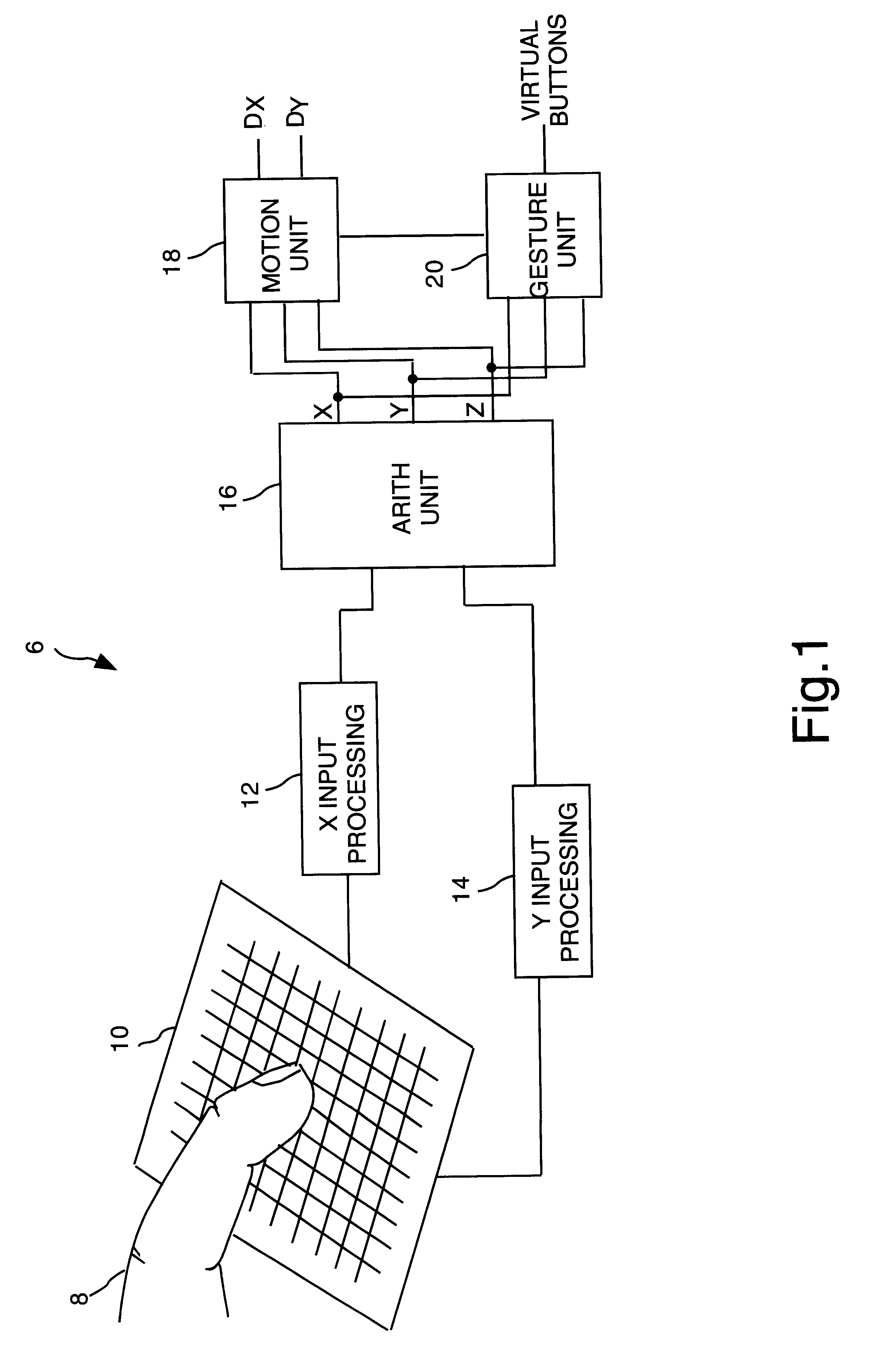

Object position detector with edge motion feature and gesture recognition

Inactive | US6414671B1 | Highly integrated | Rapid response | Transmission systems | Character and pattern recognition | Computer vision | Computer science

Methods for recognizing gestures made by a conductive object on a touch-sensor pad and for cursor motion are disclosed. Tap, drag, push, extended drag, and variable drag gestures are recognized by analyzing the position, pressure, and movement of the conductive object on the sensor pad during the time of a suspected gesture, and signals are sent to a host indicating the occurrence of these gestures. Signals indicating the position of a conductive object and distinguishing between the peripheral portion and an inner portion of the touch-sensor pad are also sent to the host.

Owner:SYNAPTICS INC

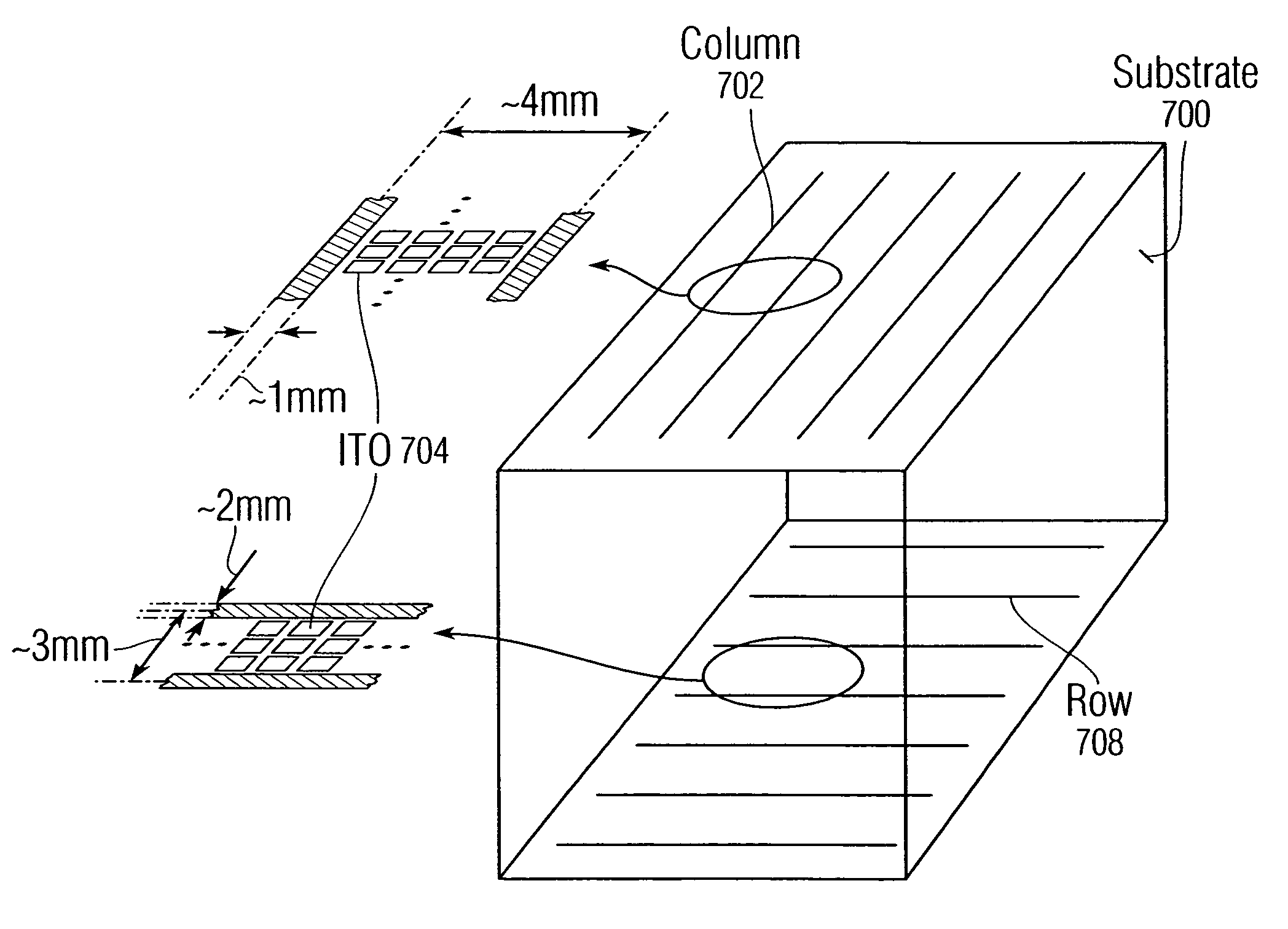

Double-sided touch-sensitive panel with shield and drive combined layer

Active | US7920129B2 | Small size | Area minimization | Transmission systems | Digital data processing details | Capacitance | Liquid-crystal display

A multi-touch capacitive touch sensor panel can be created using a substrate with column and row traces formed on either side of the substrate. To shield the column (sense) traces from the effects of capacitive coupling from a modulated Vcom layer in an adjacent liquid crystal display (LCD) or any source of capacitive coupling, the row traces can be widened to shield the column traces, and the row traces can be placed closer to the LCD. In particular, the rows can be widened so that there is spacing of about 30 microns between adjacent row traces. In this manner, the row traces can serve the dual functions of driving the touch sensor panel, and also the function of shielding the more sensitive column (sense) traces from the effects of capacitive coupling.

Owner:APPLE INC

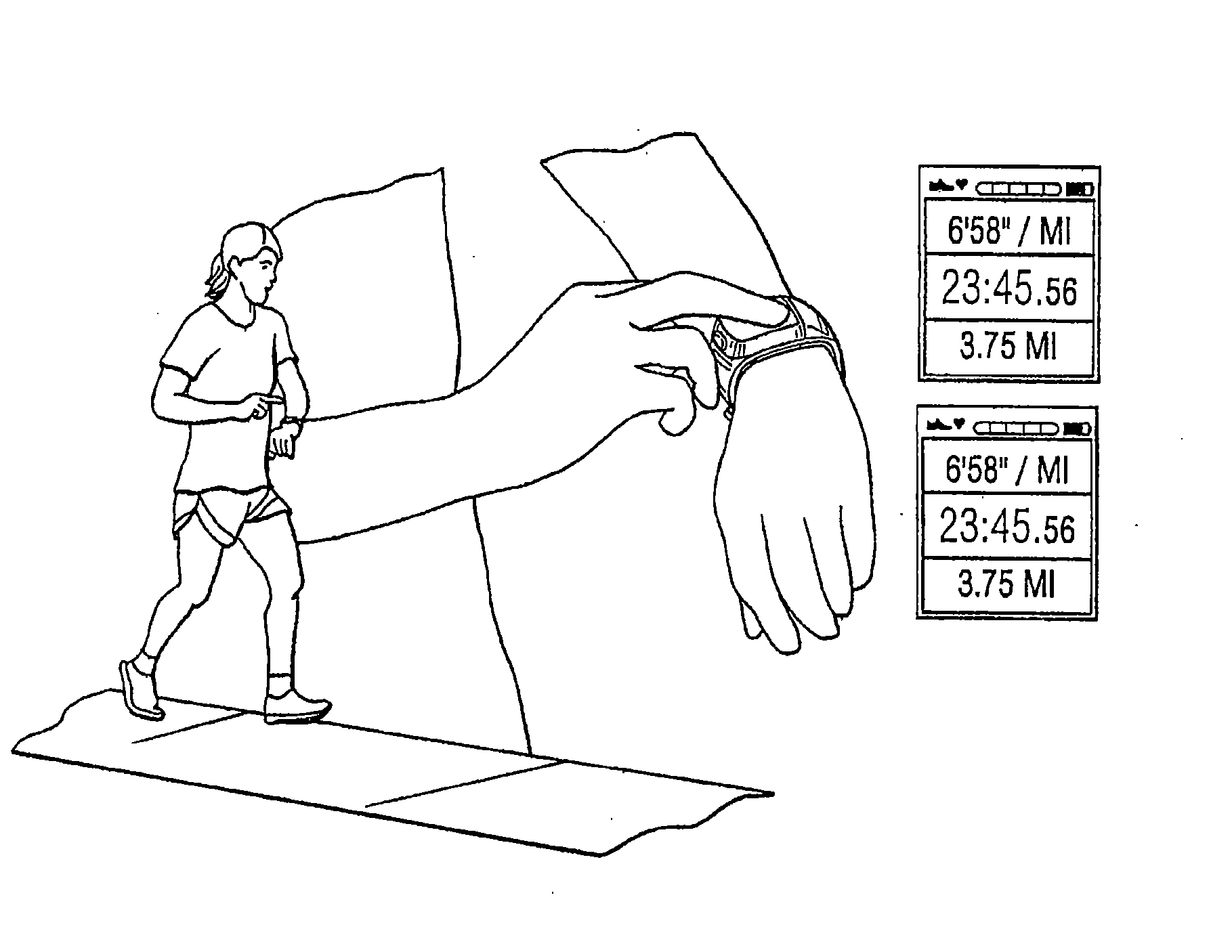

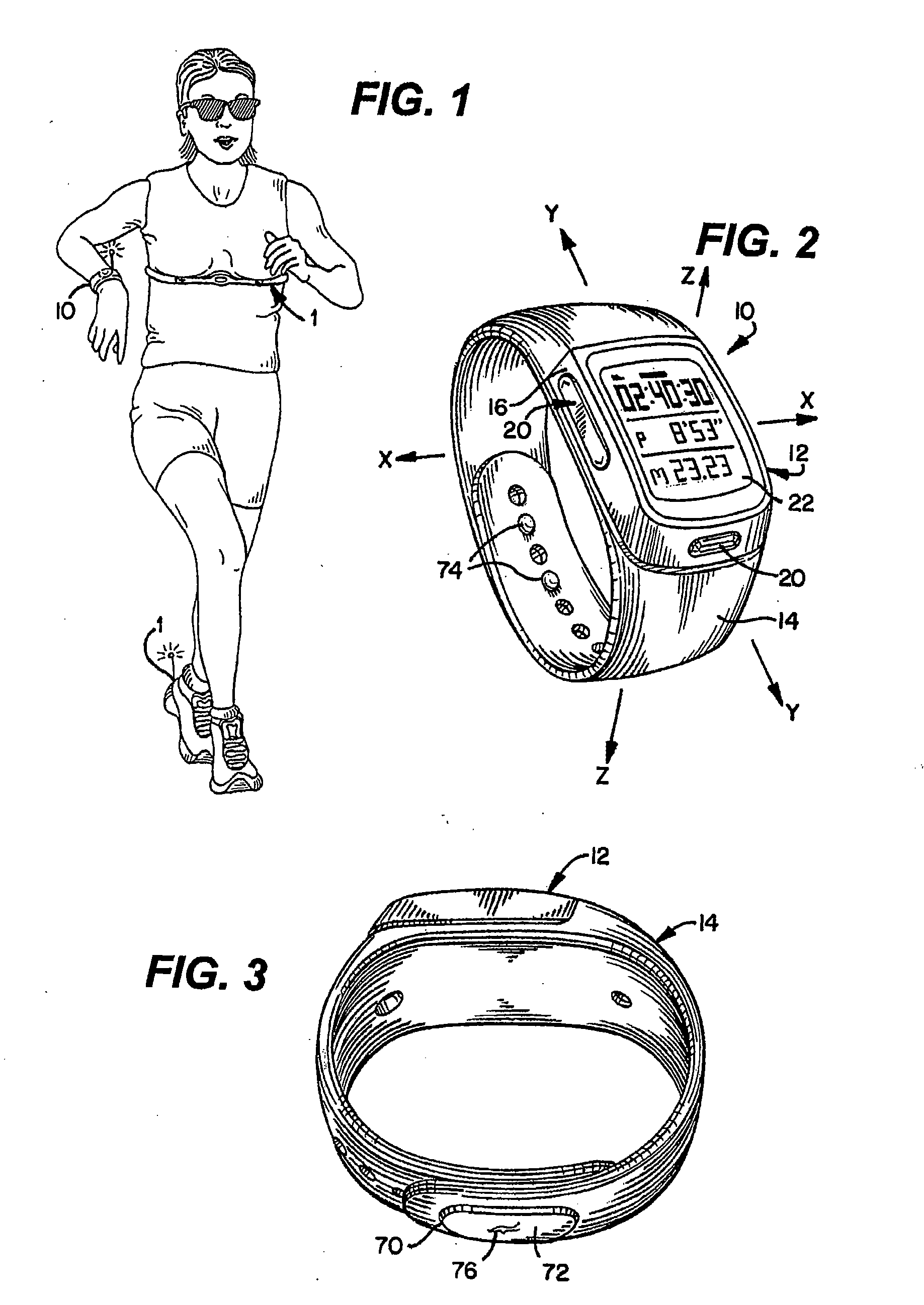

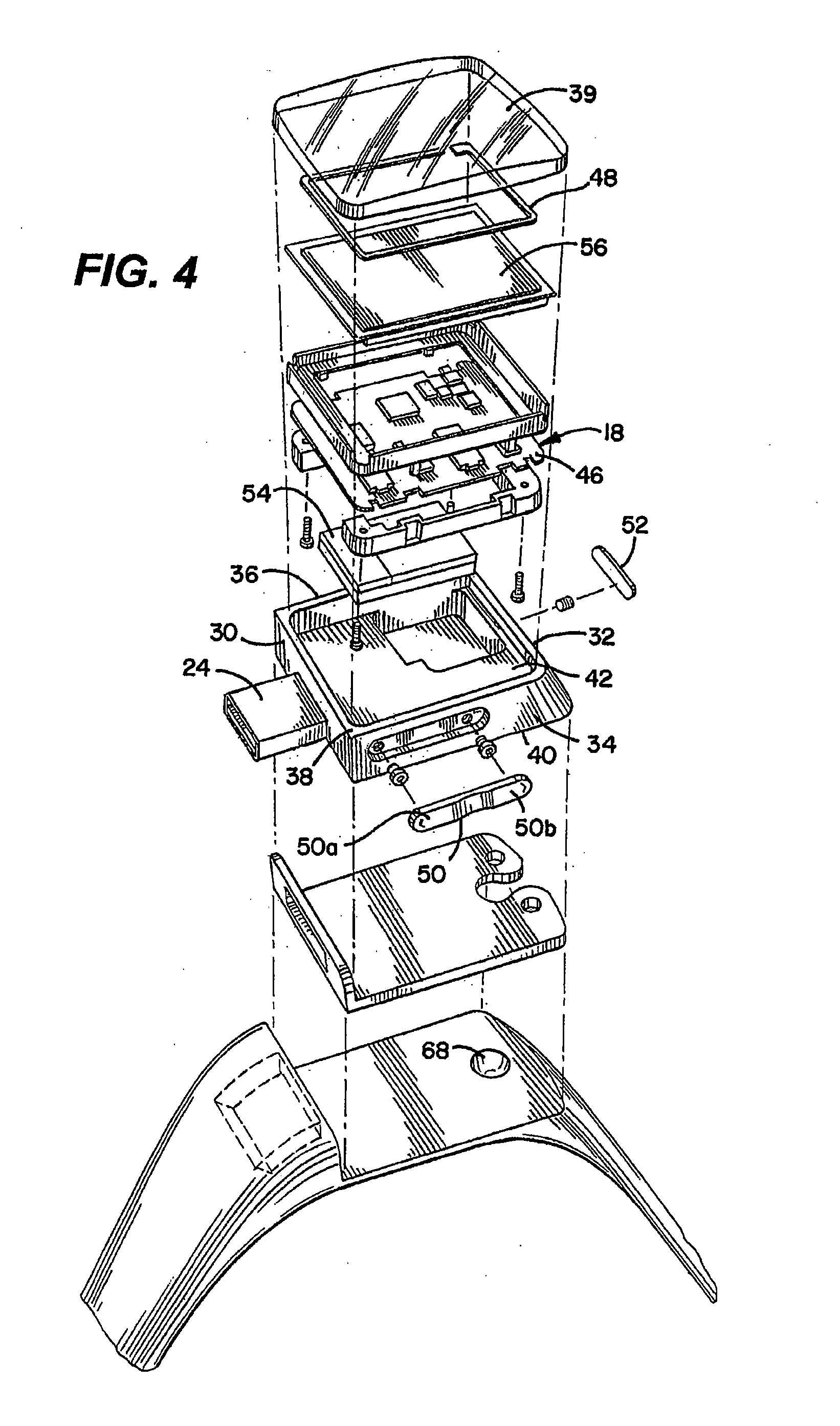

Athletic watch

Active | US20110003665A1 | Improve motor function | Easy to operate | Coupling device connections | Time indication | User input | Exercise performance

A device for monitoring athletic performance of a user has a wristband configured to be worn by the user. An electronic module is removably attached to the wristband. The electronic module has a controller and a screen and a plurality of user inputs operably associated with the controller. The user inputs include a user input configured to be applied by the user against the screen and in a direction generally normal to the screen.

Owner:NIKE INC

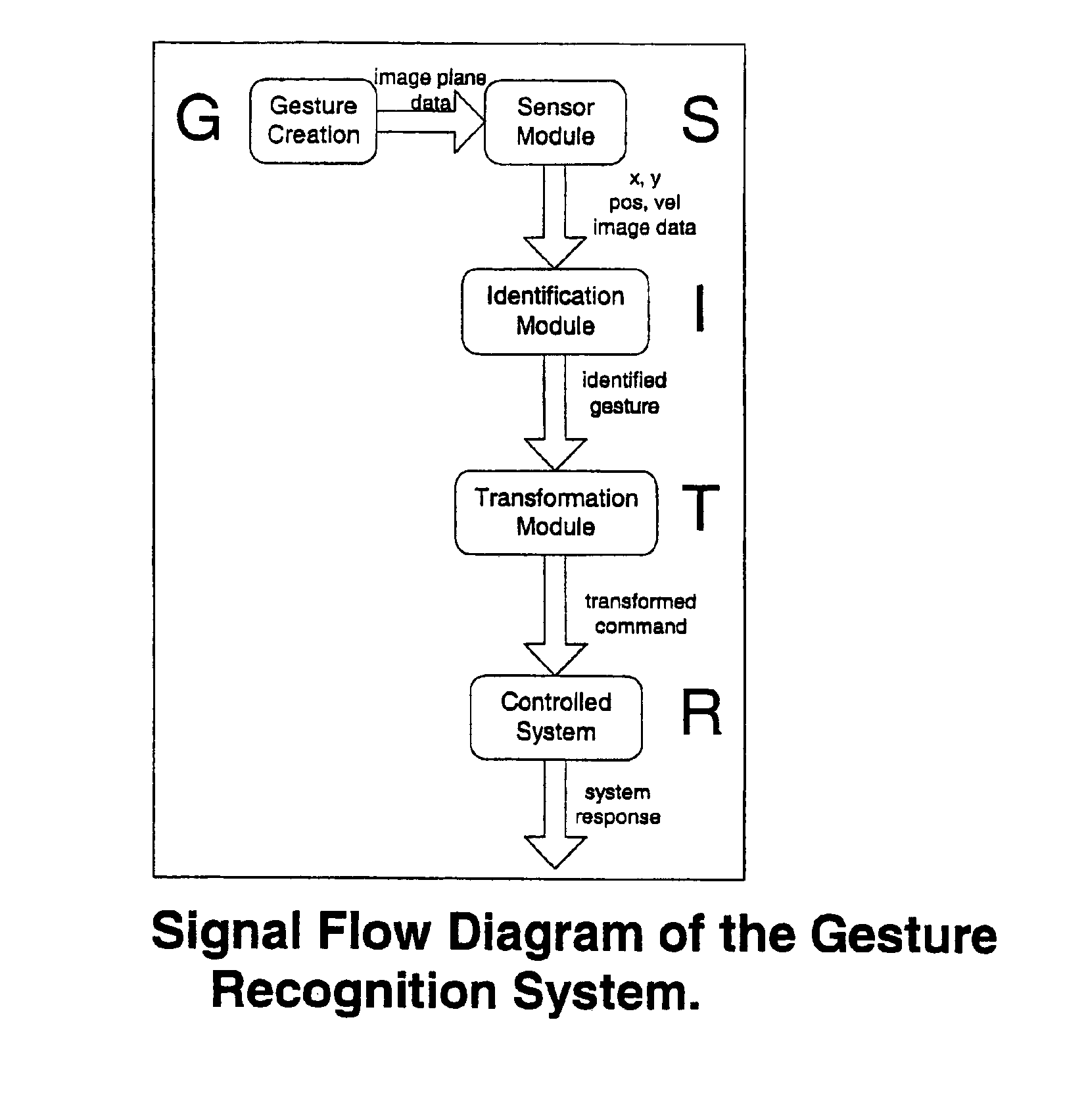

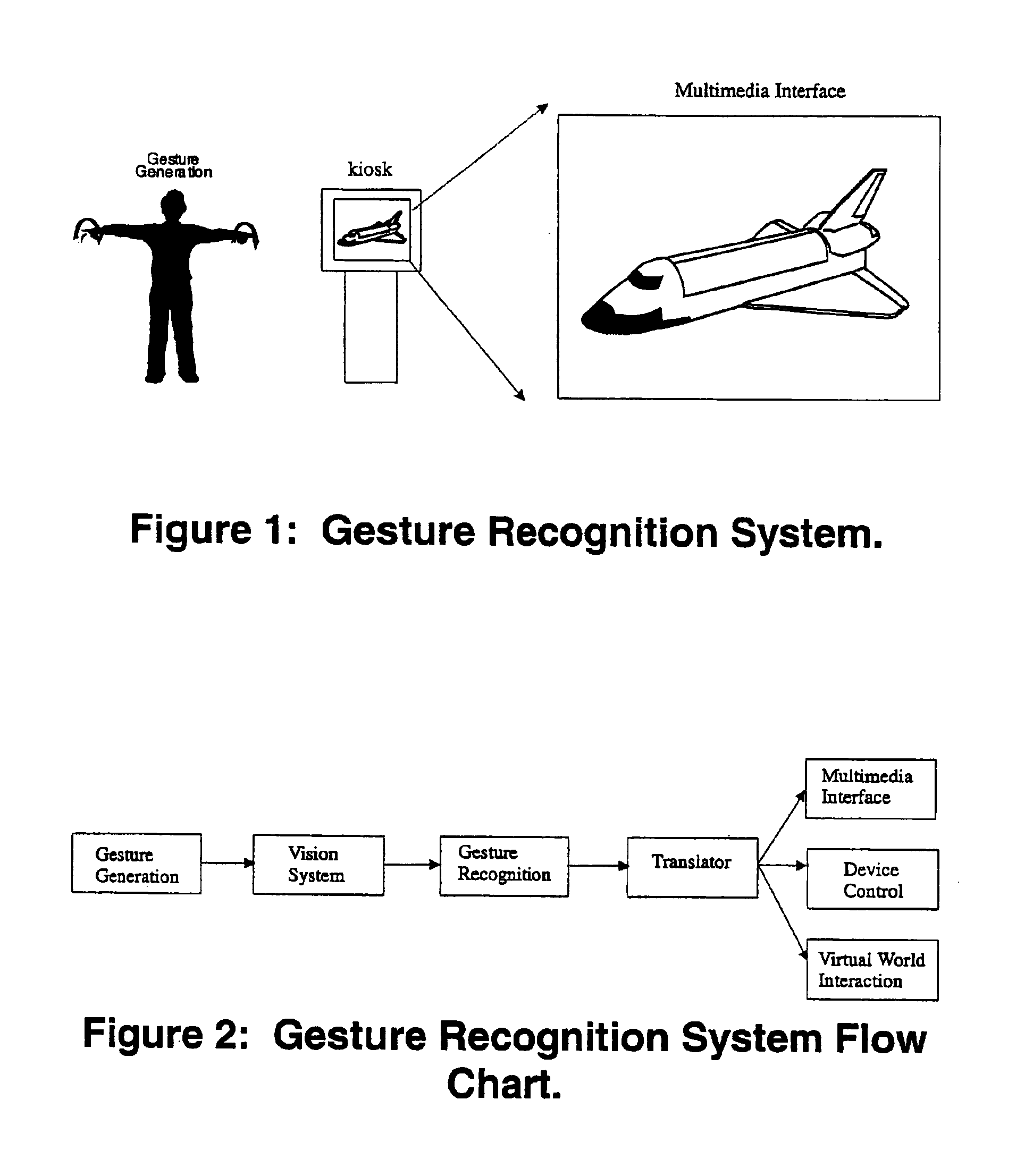

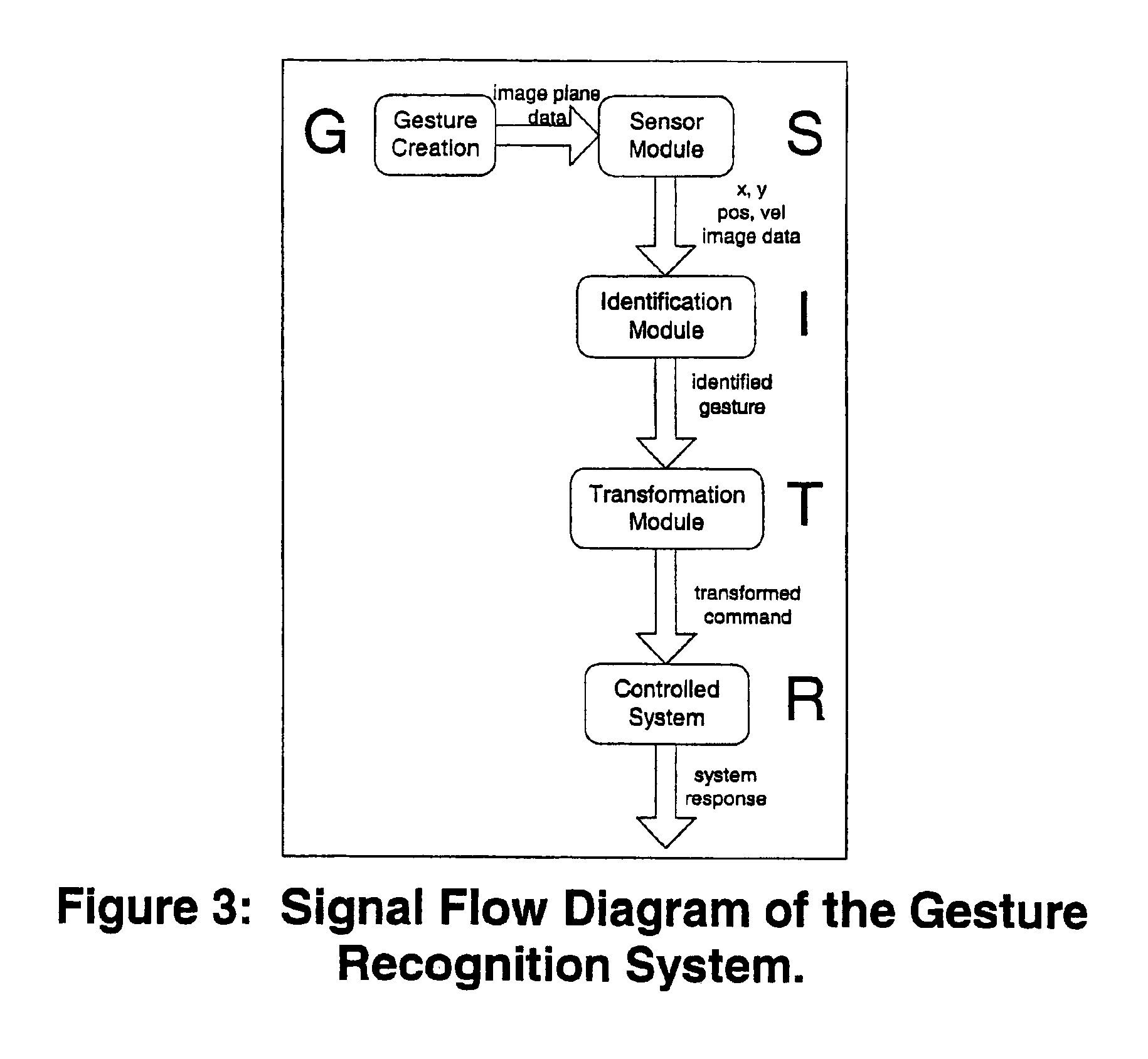

Gesture-controlled interfaces for self-service machines and other applications

Inactive | US6950534B2 | Input/output for user-computer interaction | Image analysis | Application software | Human–computer interaction

A gesture recognition interface for use in controlling self-service machines and other devices is disclosed. A gesture is defined as motions and kinematic poses generated by humans, animals, or machines. Specific body features are tracked, and static and motion gestures are interpreted. Motion gestures are defined as a family of parametrically delimited oscillatory motions, modeled as a linear-in-parameters dynamic system with added geometric constraints to allow for real-time recognition using a small amount of memory and processing time. A linear least squares method is preferably used to determine the parameters which represent each gesture. Feature position measure is used in conjunction with a bank of predictor bins seeded with the gesture parameters, and the system determines which bin best fits the observed motion. Recognizing static pose gestures is preferably performed by localizing the body/object from the rest of the image, describing that object, and identifying that description. The disclosure details methods for gesture recognition, as well as the overall architecture for using gesture recognition to control devices, including self-service machines.

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

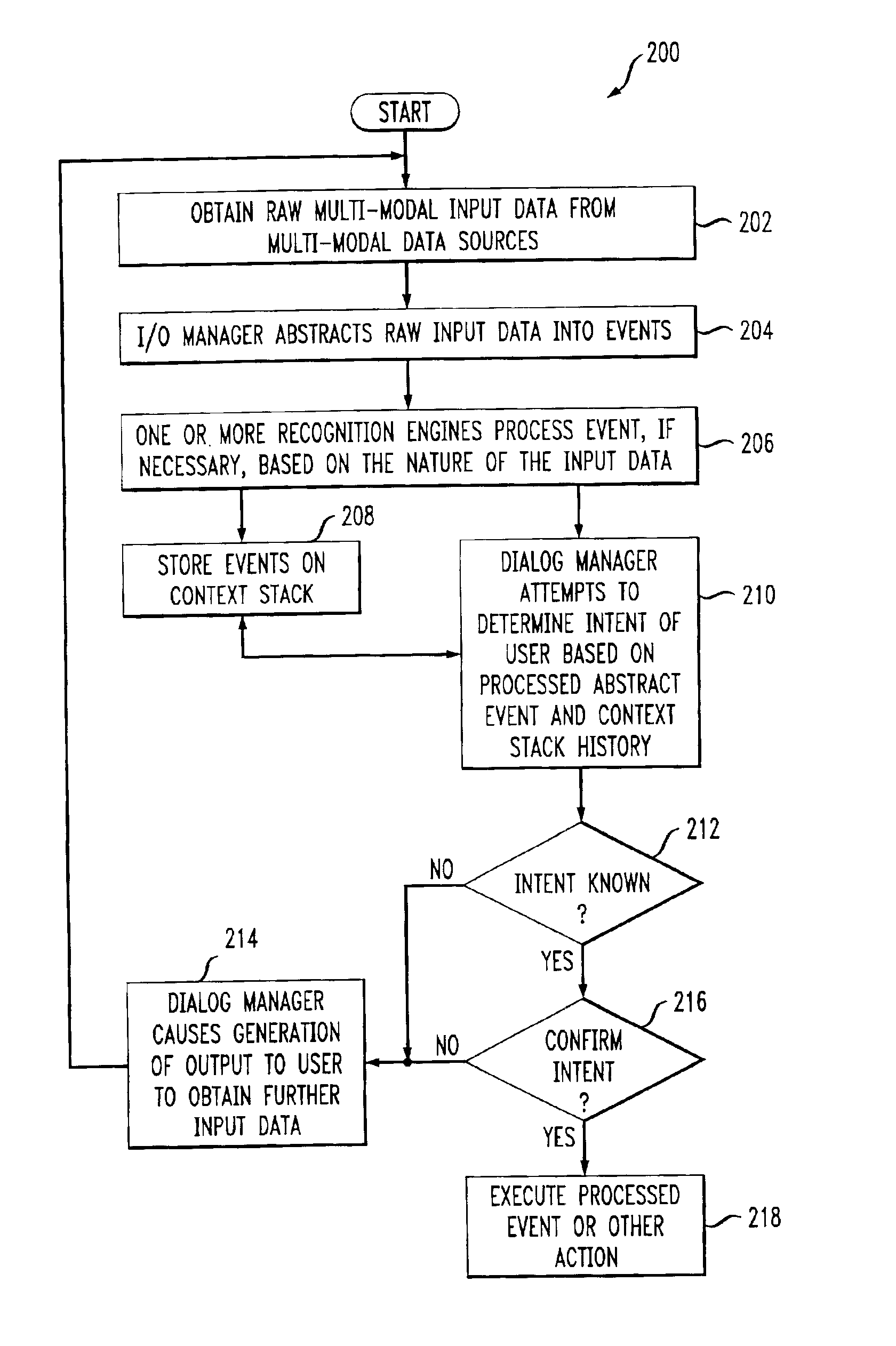

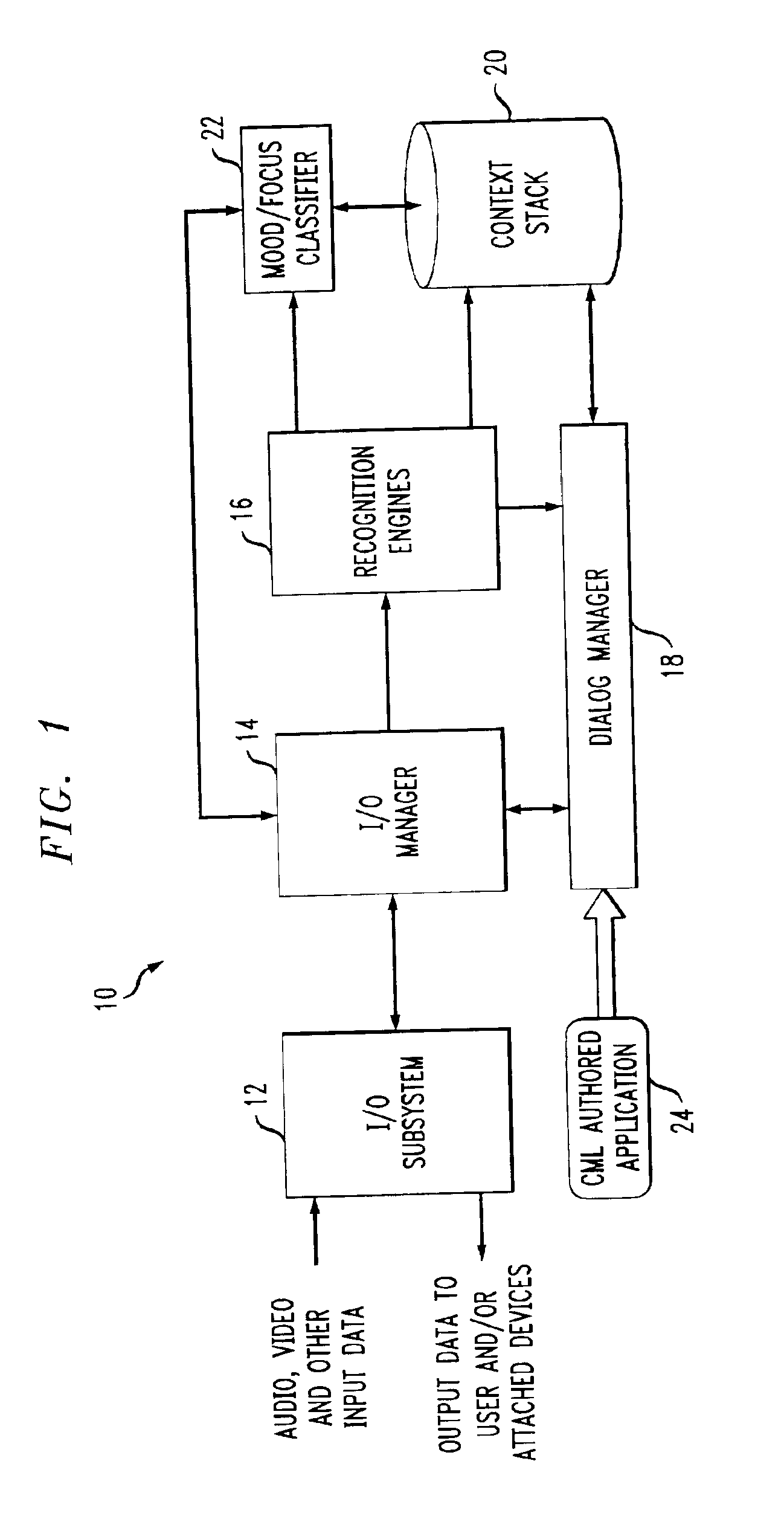

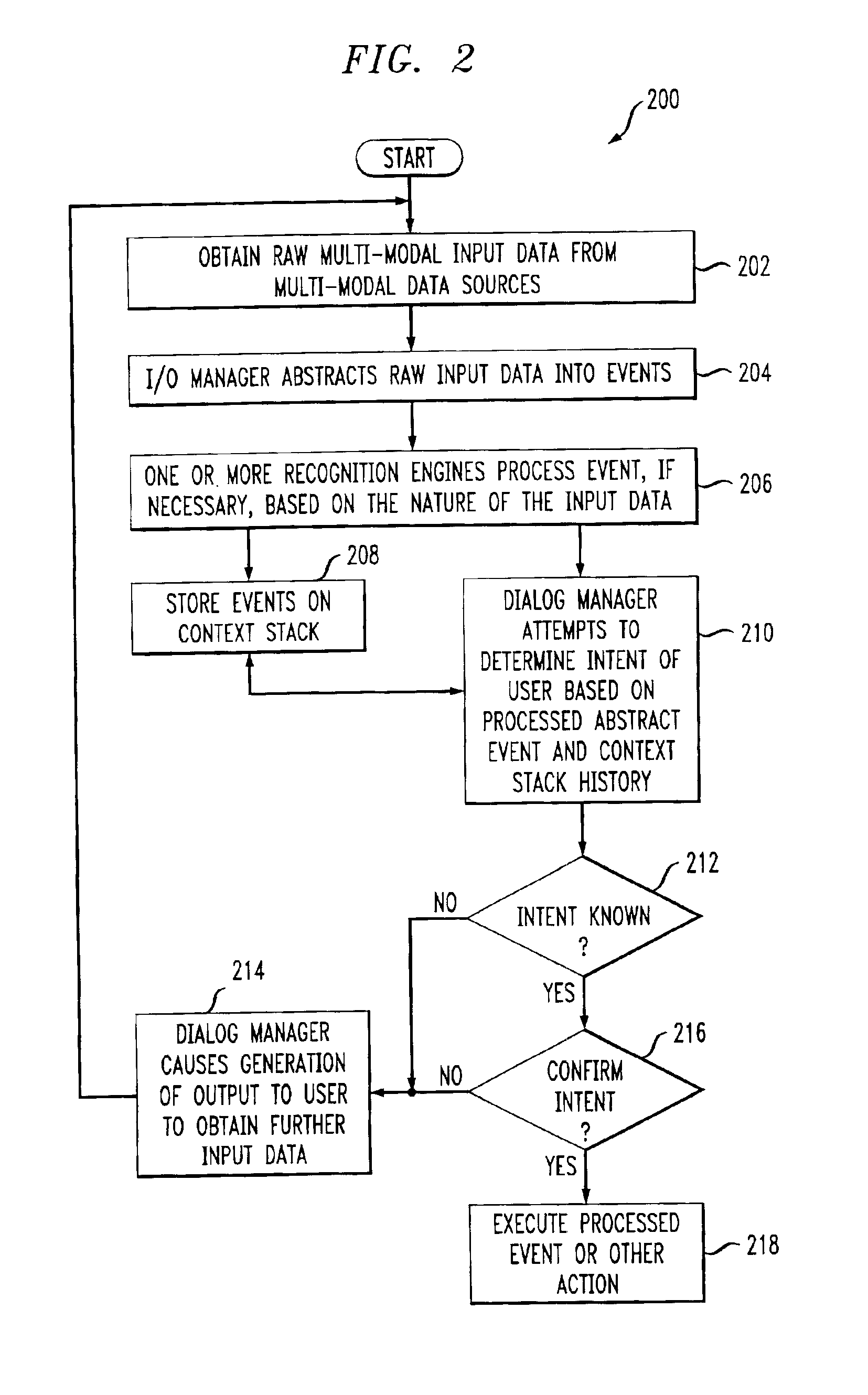

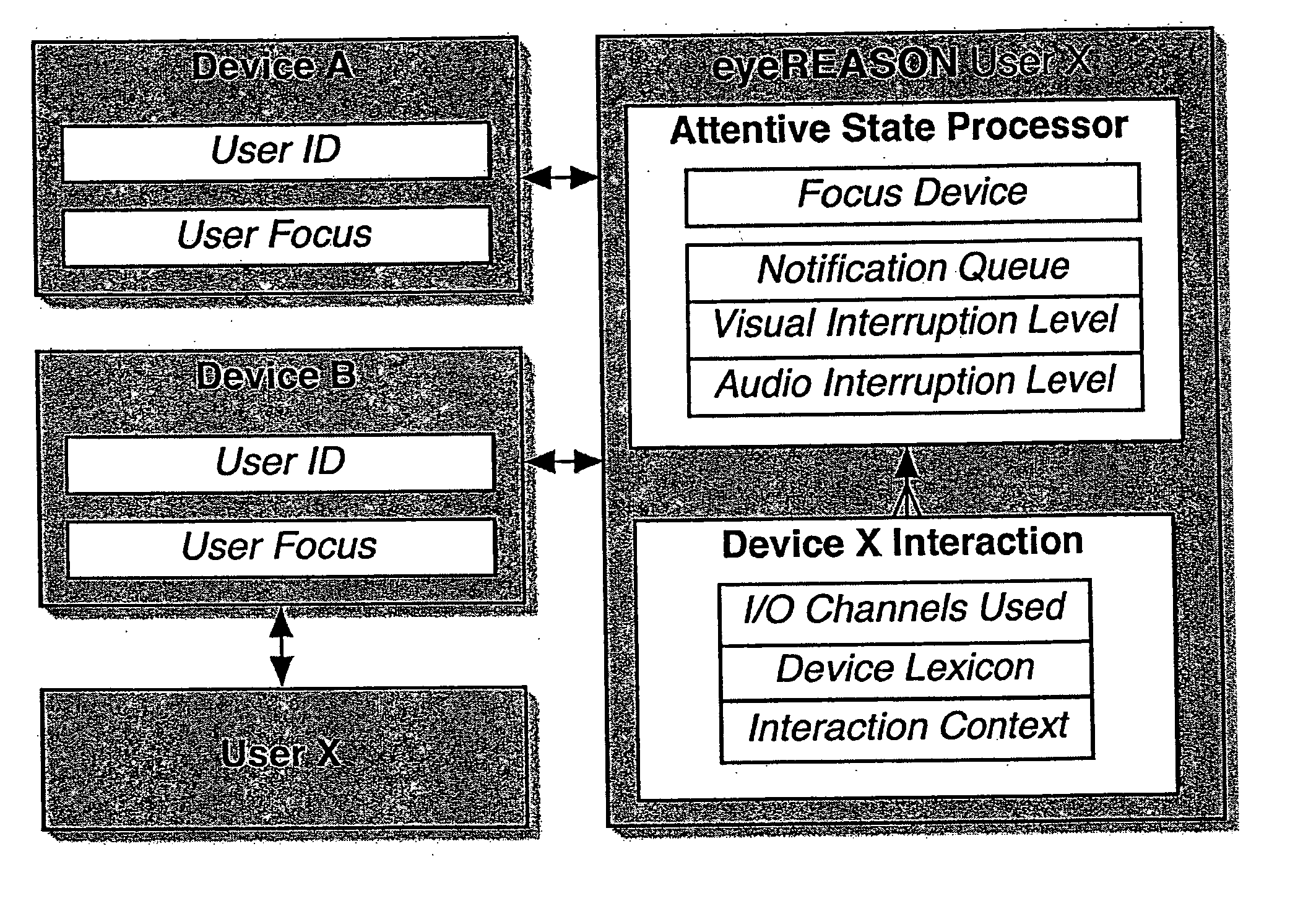

System and method for multi-modal focus detection, referential ambiguity resolution and mood classification using multi-modal input

Inactive | US6964023B2 | Effective conversational computing environment | Input/output for user-computer interaction | Data processing applications | Operant conditioning | Computer science

Owner:IBM CORP

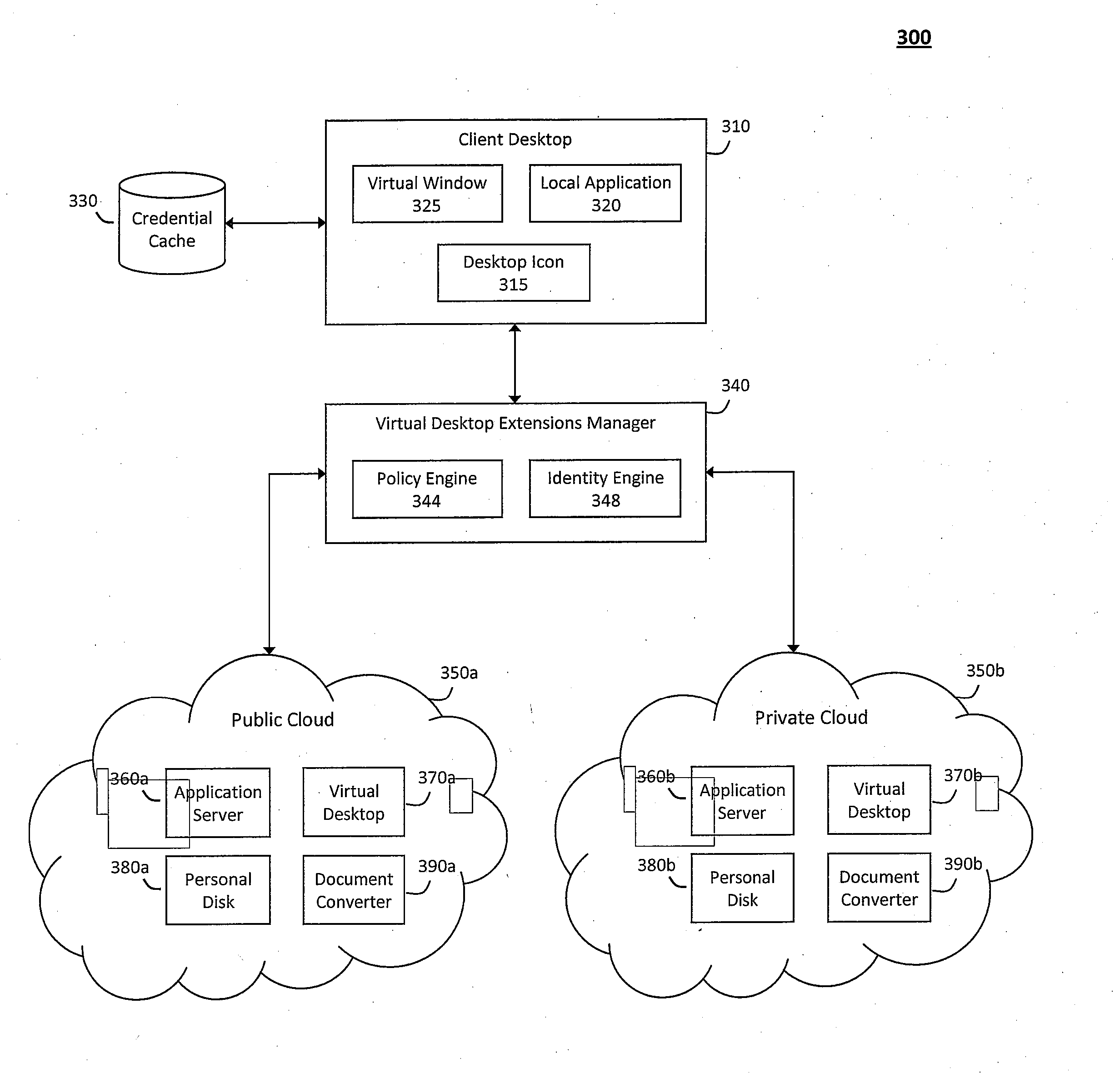

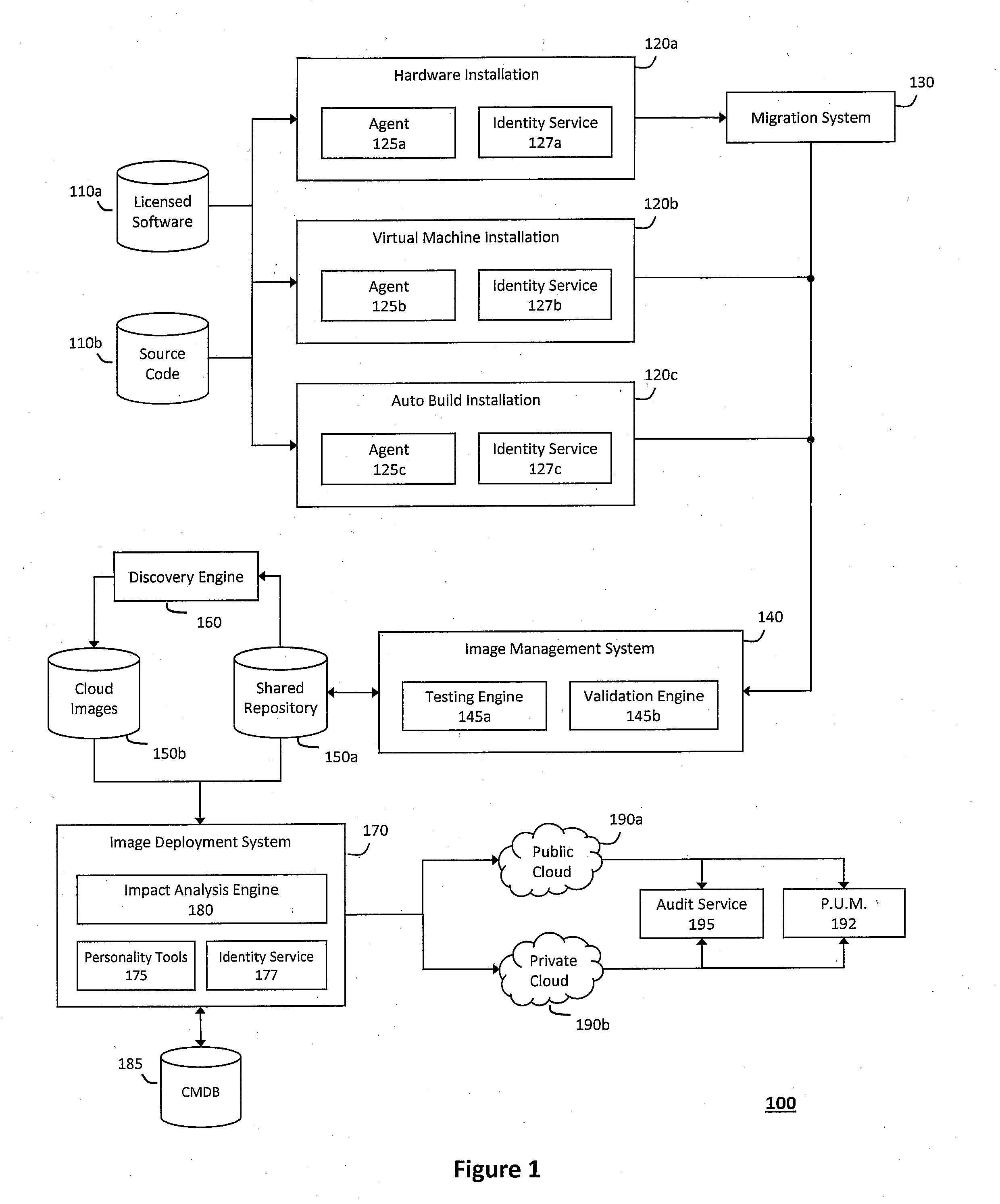

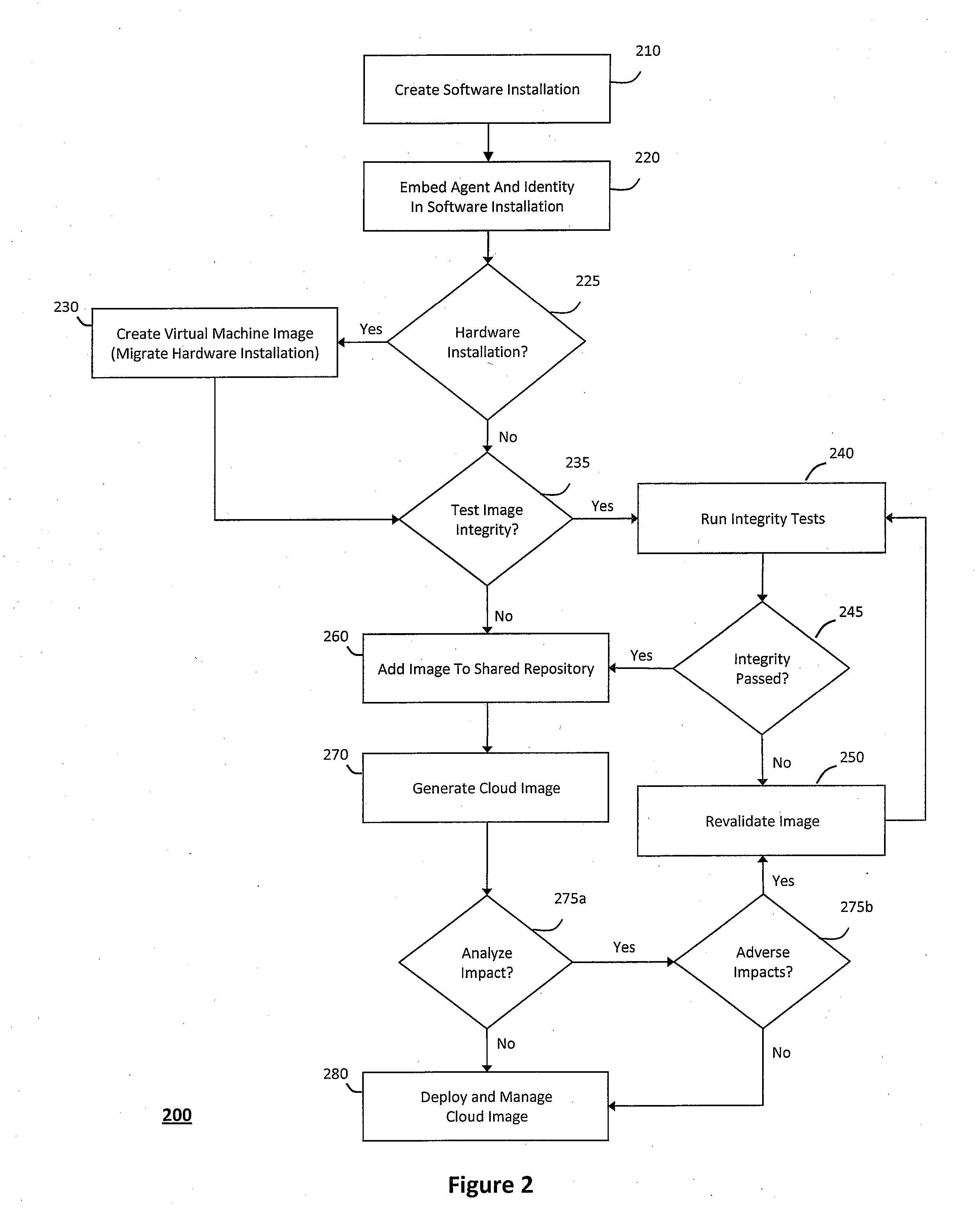

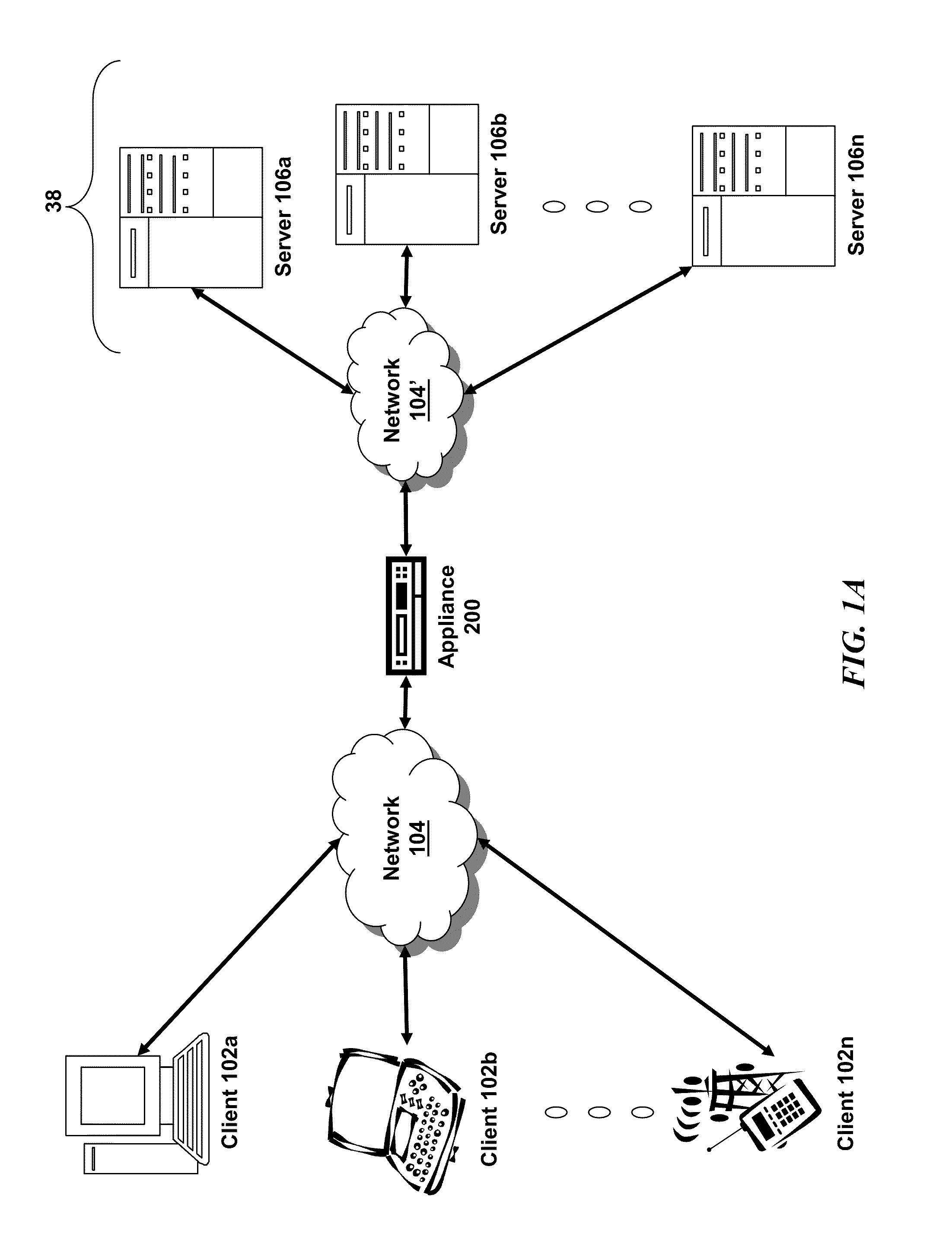

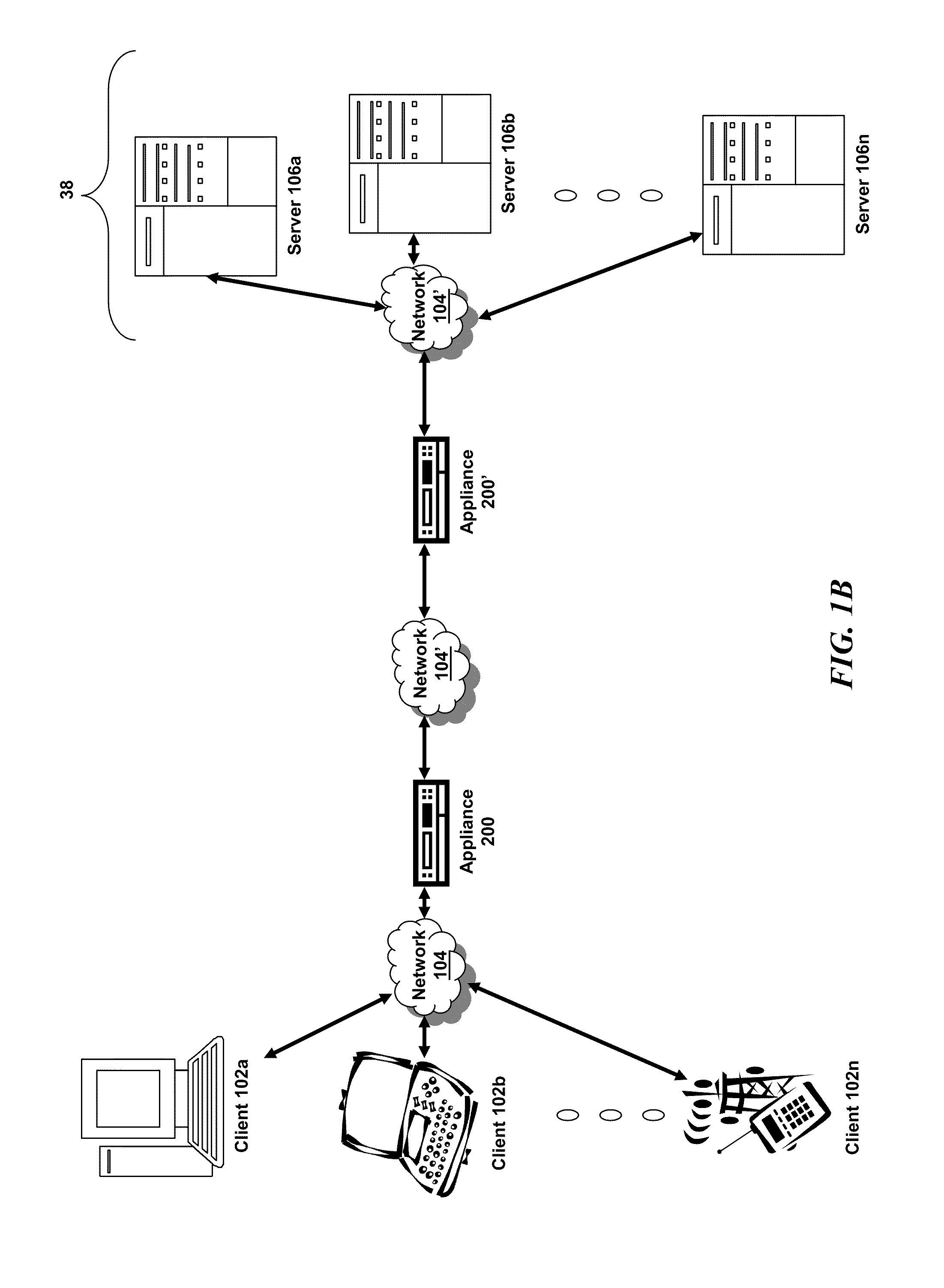

System and method for providing virtual desktop extensions on a client desktop

Active | US20110209064A1 | Simplify complexity | Provide capability | Input/output for user-computer interaction | Digital data processing details | Client-side | Cloud computing

The system and method described herein may identify one or more virtual desktop extensions available in a cloud computing environment and launch virtual machine instances to host the available virtual desktop extensions in the cloud. For example, a virtual desktop extension manager may receive a virtual desktop extension request from a client desktop and determine whether authentication credentials for the client desktop indicate that the client desktop has access to the requested virtual desktop extension. In response to authenticating the client desktop, the virtual desktop extension manager may then launch a virtual machine instance to host the virtual desktop extension in the cloud and provide the client desktop with information for locally controlling the virtual desktop extension remotely hosted in the cloud.

Owner:MICRO FOCUS SOFTWARE INC

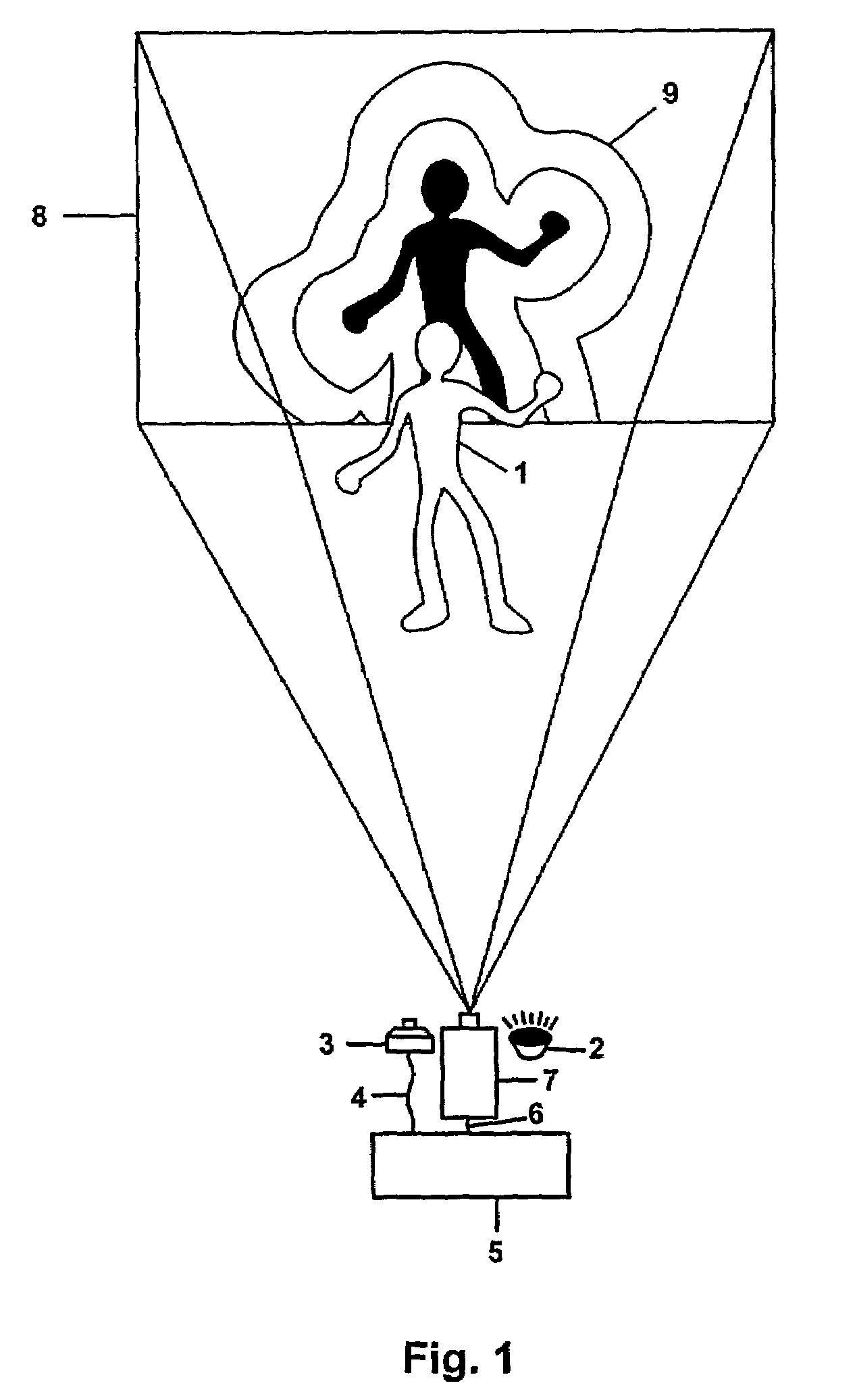

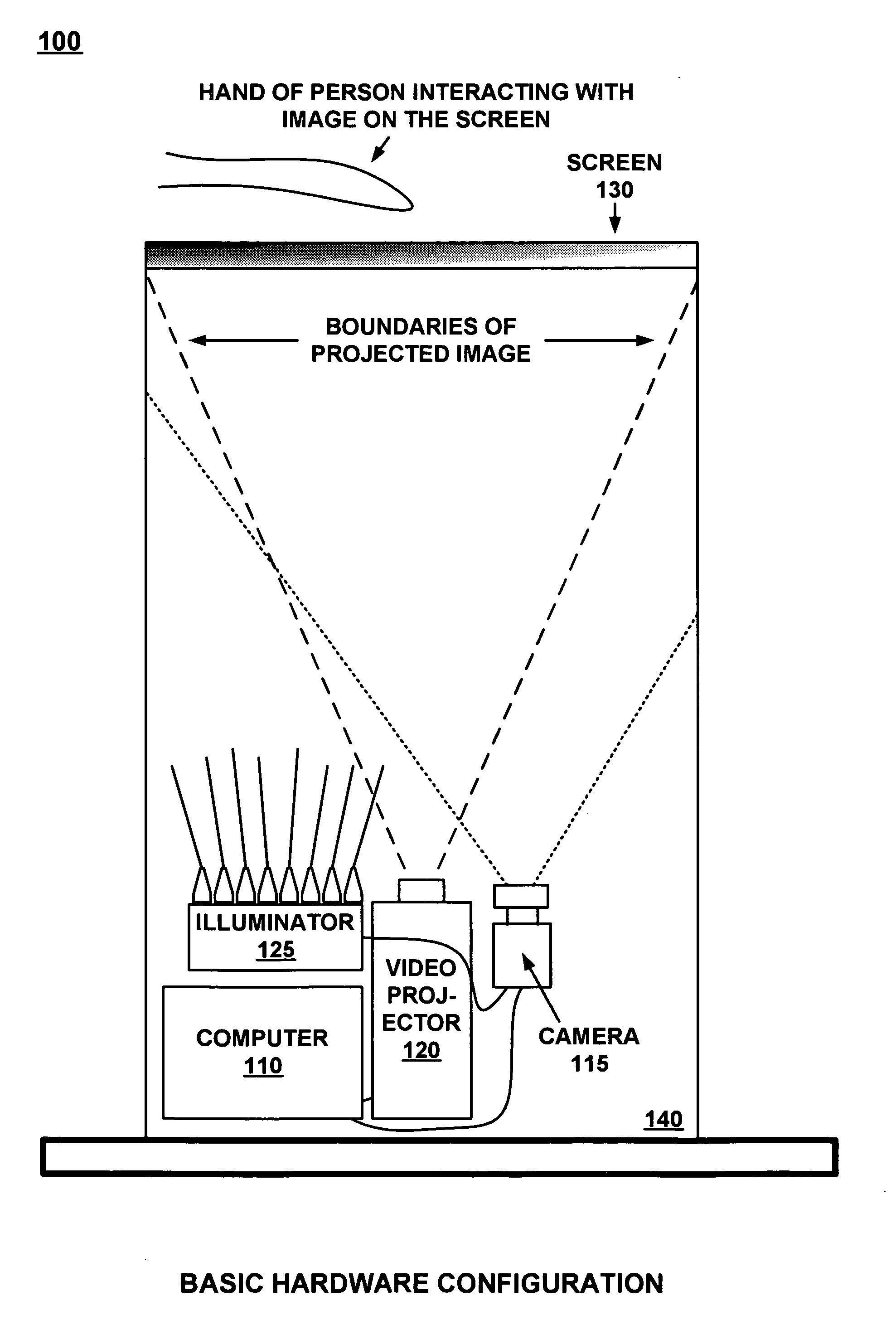

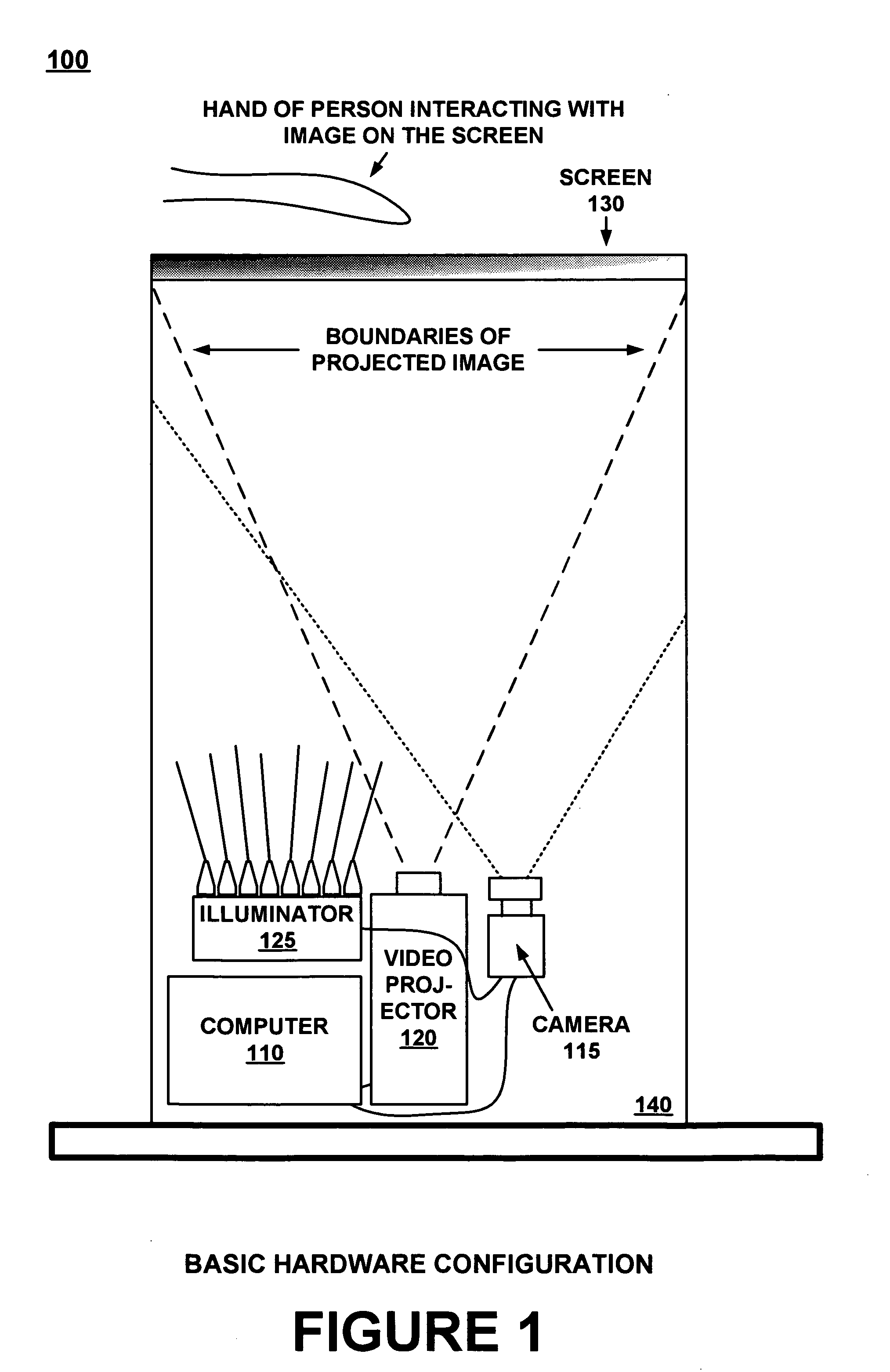

Interactive video display system

Active | US7259747B2 | Improve aesthetics | Interference | Input/output for user-computer interaction | Television system details | Information space | Interactive video

A device allows easy and unencumbered interaction between a person and a computer display system using the person's (or another object's) movement and position as input to the computer. In some configurations, the display can be projected around the user so that the person's actions are displayed around them. The video camera and projector operate on different wavelengths so that they do not interfere with each other. Uses for such a device include, but are not limited to, interactive lighting effects for people at clubs or events and interactive advertising displays. Computer-generated characters and virtual objects can be made to react to the movements of passers-by. Other uses include interactive ambient lighting for social spaces such as restaurants, lobbies, and parks, video game systems, and interactive information spaces and art installations. Patterned illumination and brightness and gradient processing can be used to improve the ability to detect an object against a background of video images.

Owner:MICROSOFT TECH LICENSING LLC

Object position detector with edge motion feature and gesture recognition

Inactive | US6380931B1 | Easy Calibration | Easy to implement | Transmission systems | Character and pattern recognition | Glyph | Computer vision

Owner:SYNAPTICS INC

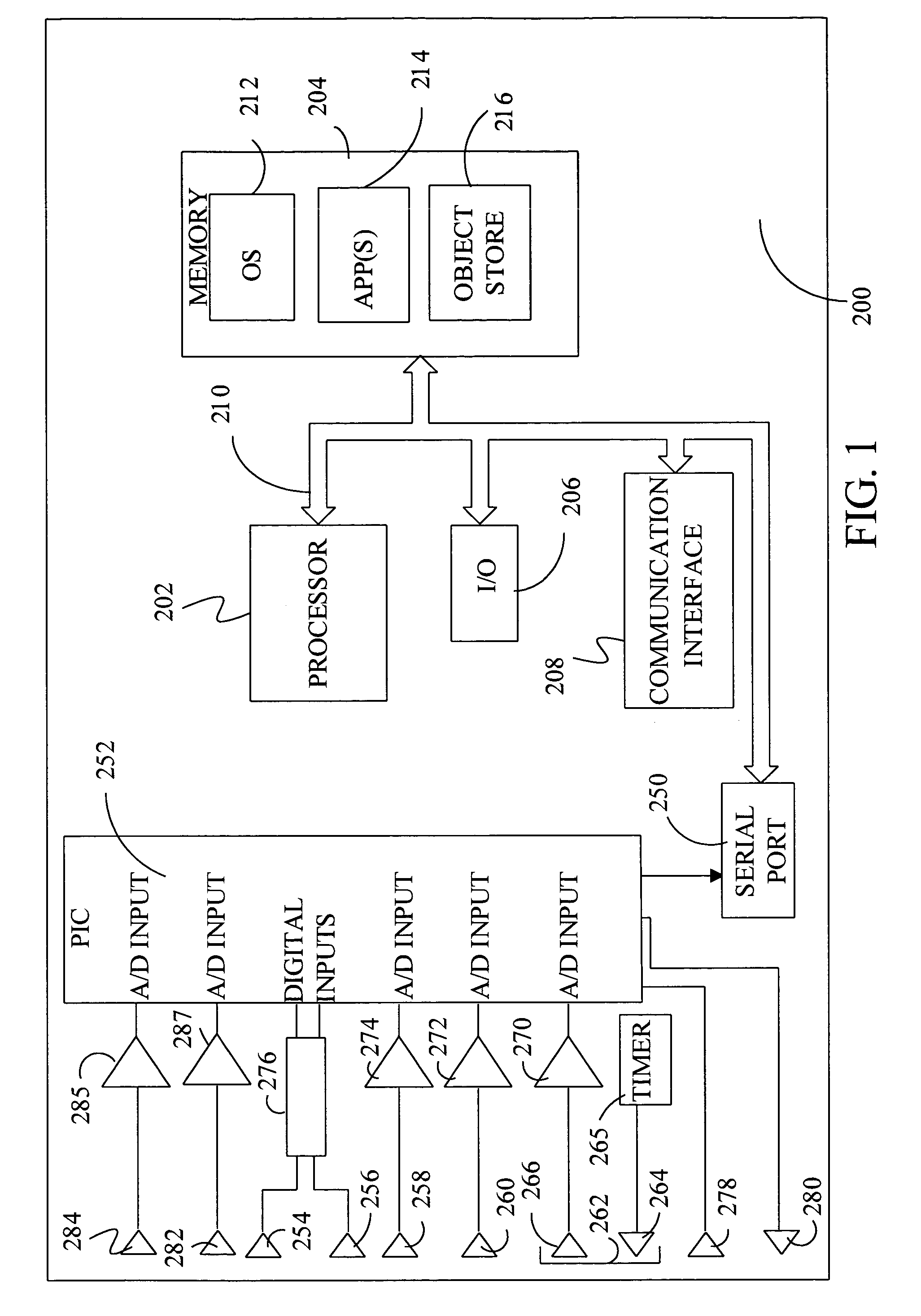

Distributed sensing techniques for mobile devices

Active | US20050093868A1 | Input/output for user-computer interaction | Services signalling | Human–computer interaction | Mobile device

Methods and apparatus of the invention allow the coordination of resources of mobile computing devices to jointly execute tasks. In the method, a first gesture input is received at a first mobile computing device. A second gesture input is received at a second mobile computing device. In response, a determination is made as to whether the first and second gesture inputs form one of a plurality of different synchronous gesture types. If it is determined that the first and second gesture inputs form the one of the plurality of different synchronous gesture types, then resources of the first and second mobile computing devices are combined to jointly execute a particular task associated with the one of the plurality of different synchronous gesture types.

Owner:MICROSOFT TECH LICENSING LLC
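
The decision step in the abstract above, checking whether two gesture inputs from two devices form a known synchronous gesture type, might look roughly like the sketch below. Every name here is hypothetical: the `GestureInput` fields, the `SYNC_TYPES` table and its entries, the 0.2-second window, and the shared-clock assumption are illustrative choices and do not come from the patent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GestureInput:
    device_id: str
    kind: str         # hypothetical gesture label, e.g. "bump"
    timestamp: float  # seconds; assumes both devices share a clock


# Hypothetical table mapping a pair of gesture kinds to a
# synchronous gesture type and its associated joint task.
SYNC_TYPES = {
    ("bump", "bump"): "connect-displays",
    ("pour", "catch"): "transfer-file",
}


def match_synchronous(first: GestureInput, second: GestureInput,
                      window: float = 0.2) -> Optional[str]:
    """Return the synchronous gesture type formed by two inputs, or None."""
    # The two inputs must come from different devices.
    if first.device_id == second.device_id:
        return None
    # They must occur close together in time (window is an assumed value).
    if abs(first.timestamp - second.timestamp) > window:
        return None
    # Look up whether this ordered pair of kinds forms a known type.
    return SYNC_TYPES.get((first.kind, second.kind))
```

A caller would combine the two devices' resources to run the associated task only when the function returns a type rather than `None`.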

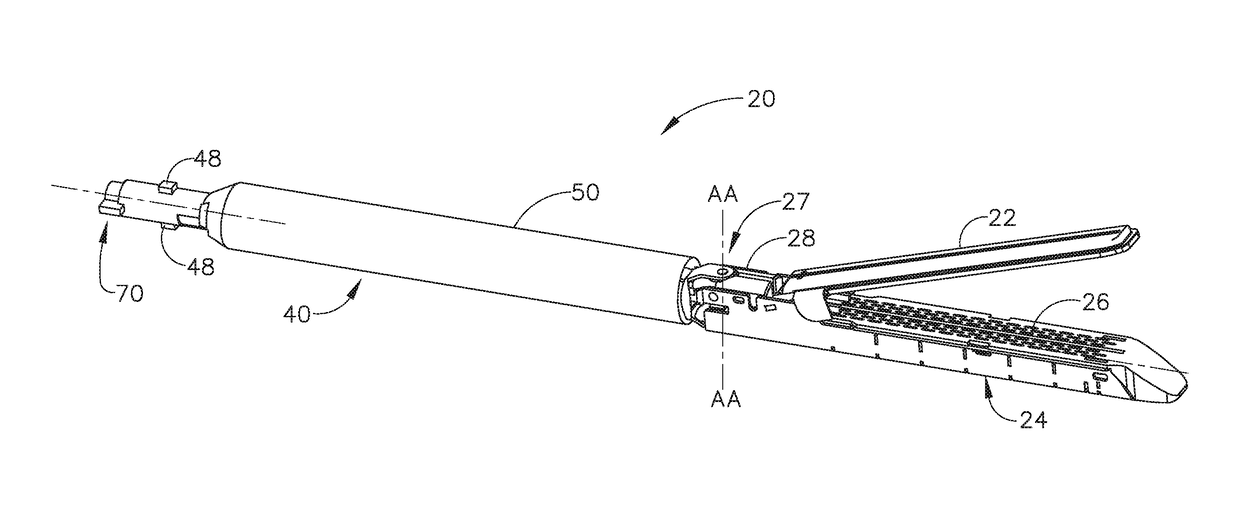

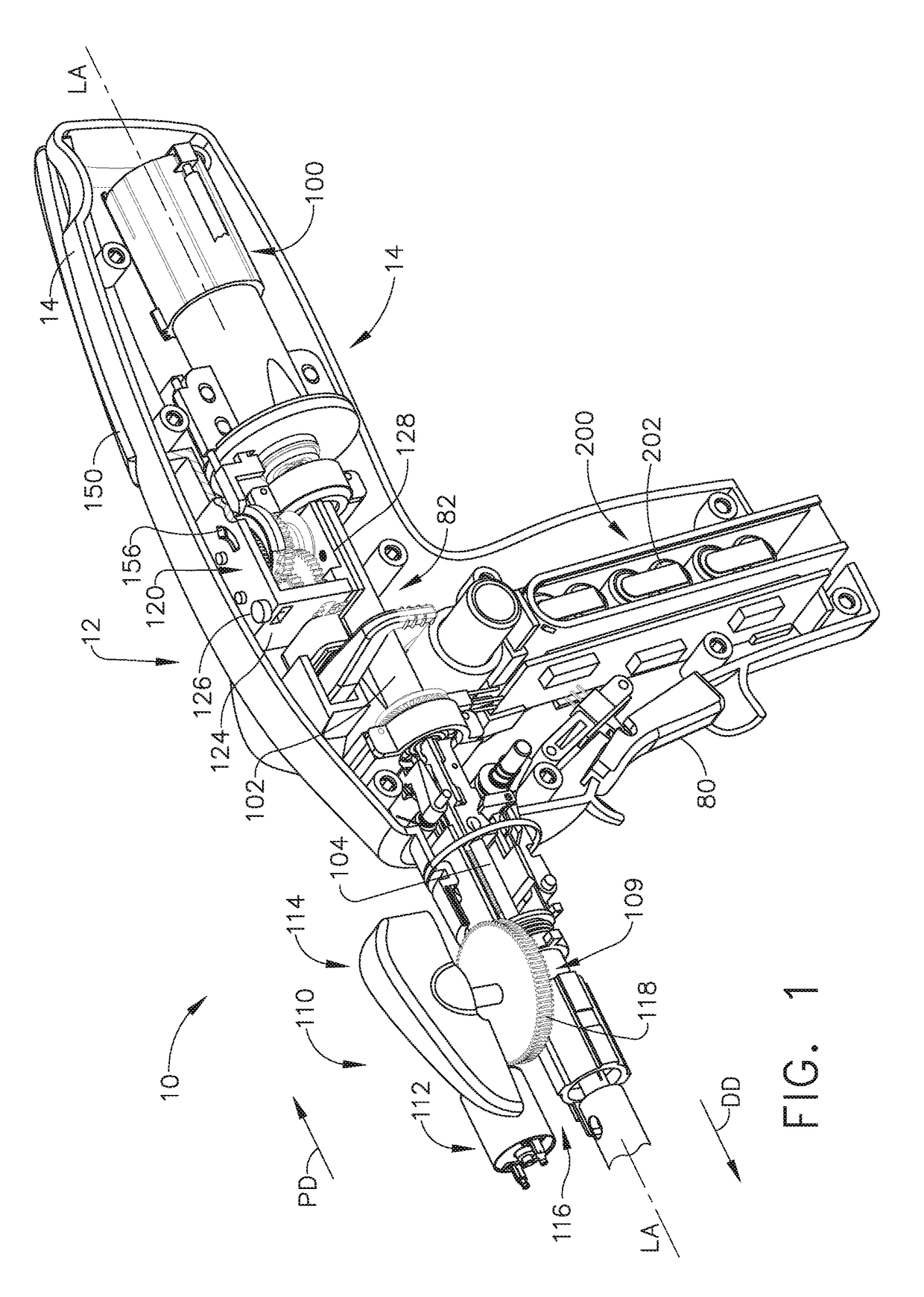

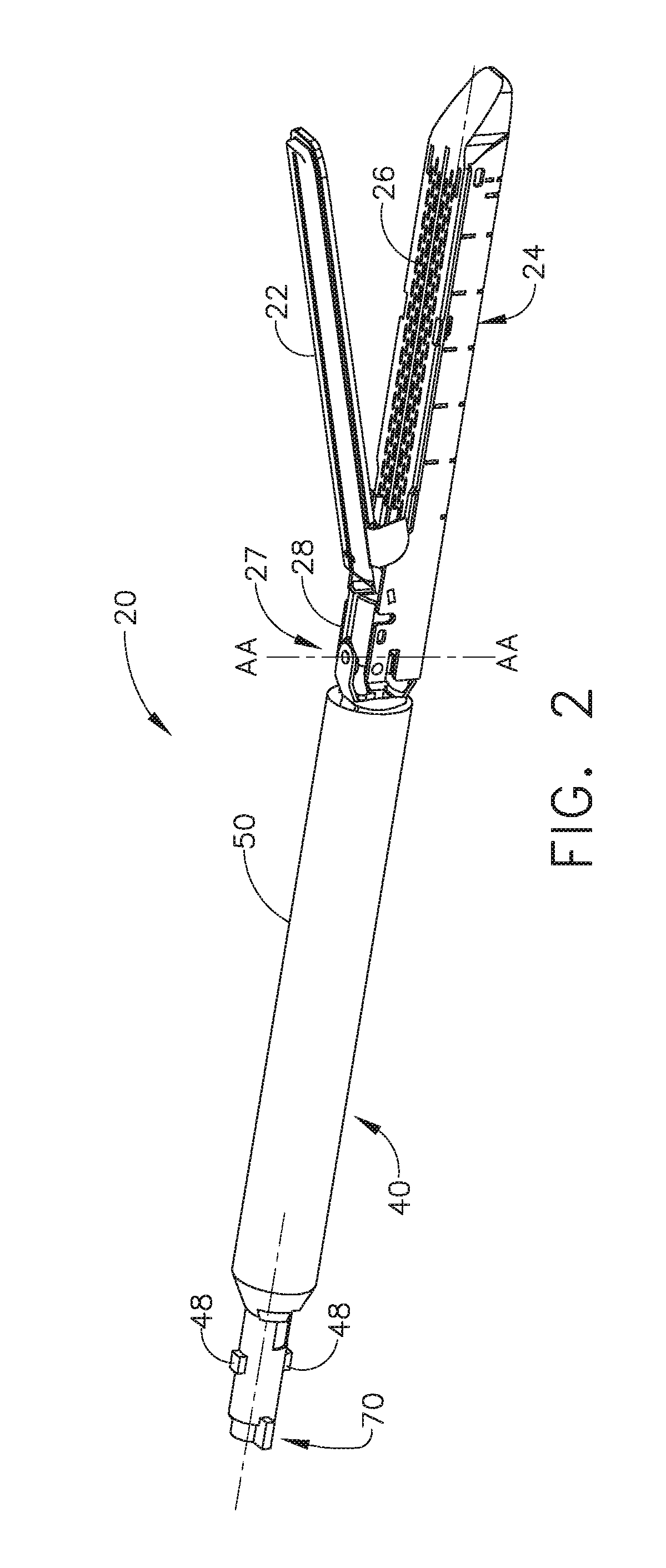

Tamper proof circuit for surgical instrument battery pack

Active | US20180064443A1 | Mechanical/radiation/invasive therapies | Surgical manipulators | Tamper resistance | Data storing

A surgical instrument includes a shaft, an end effector extending distally from the shaft, and a housing extending proximally from the shaft. The housing includes a motor configured to generate at least one motion to effectuate the end effector, and a power source configured to supply power to the surgical instrument, wherein the power source includes a casing, a data storage unit, and a deactivation mechanism configured to interrupt access to data stored in the data storage unit. In addition, the power source includes a battery pack and a deactivation mechanism configured to deactivate the battery pack if the casing is breached.

Owner:CILAG GMBH INT

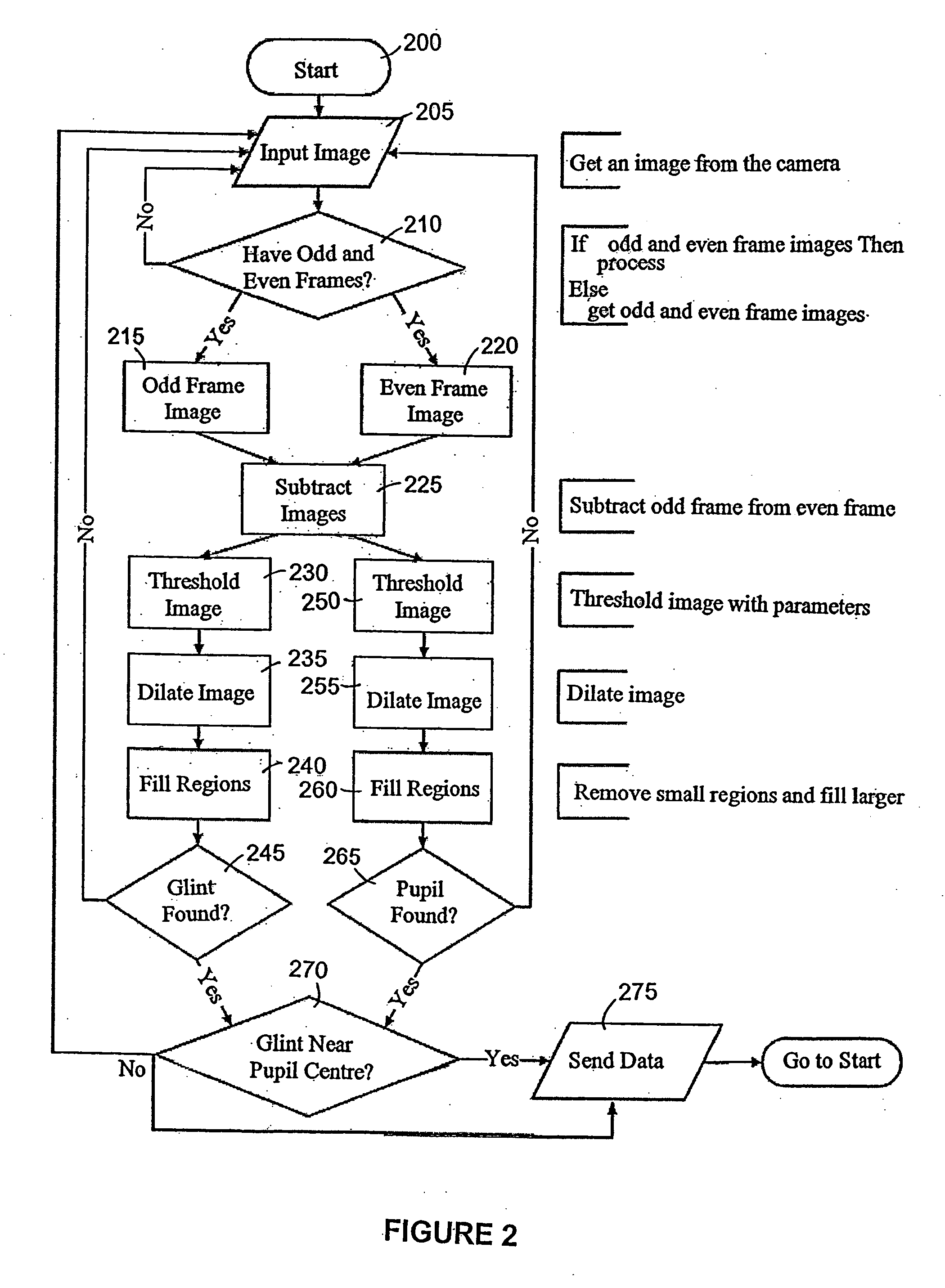

Method and apparatus for communication between humans and devices

Inactive | US20060093998A1 | Input/output for user-computer interaction | Analogue secrecy/subscription systems | Human–computer interaction | Contact eye

This invention relates to methods and apparatus for improving communications between humans and devices. The invention provides a method of modulating operation of a device, comprising providing an attentive user interface for obtaining information about an attentive state of a user; and modulating operation of a device on the basis of the obtained information, wherein the operation that is modulated is initiated by the device. Preferably, the information about the user's attentive state is eye contact of the user with the device that is sensed by the attentive user interface.

Owner:QUEENS UNIV OF KINGSTON

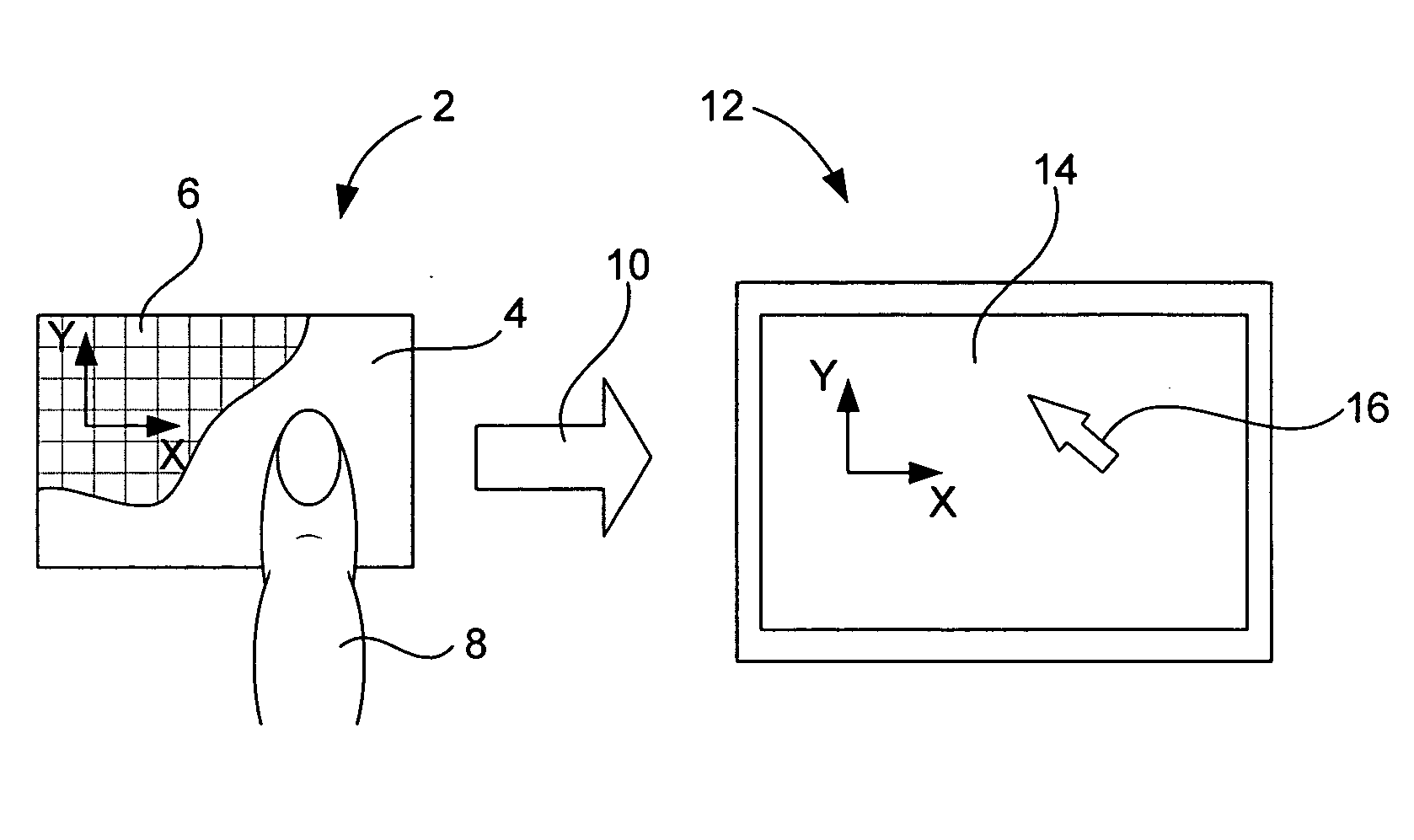

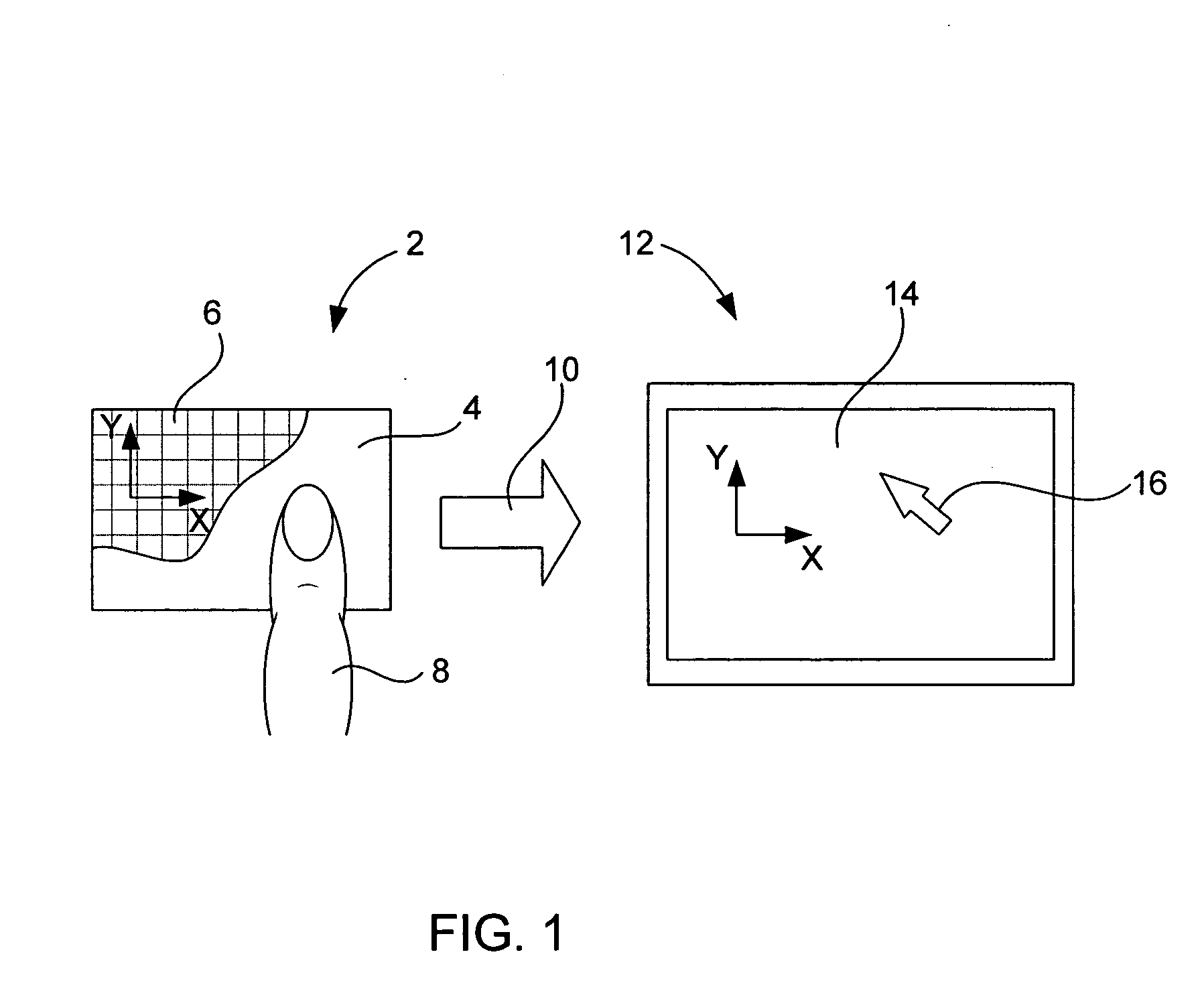

Touch pad for handheld device

A touch pad system is disclosed. The system includes mapping the touch pad into native sensor coordinates. The system also includes producing native values of the native sensor coordinates when events occur on the touch pad. The system further includes filtering the native values of the native sensor coordinates based on the type of events that occur on the touch pad. The system additionally includes generating a control signal based on the native values of the native sensor coordinates when a desired event occurs on the touch pad.

Owner:APPLE INC

Processing an image utilizing a spatially varying pattern

Active | US7710391B2 | Input/output for user-computer interaction | Projectors | Interactive video | Computerized system

An interactive video window display system. A projector projects a visual image. A screen displays the visual image, wherein the projector projects the visual image onto a back side of the screen for presentation to a user on a front side of the screen, and wherein the screen is adjacent to a window. An illuminator illuminates an object on a front side of the window. A camera detects interaction of an illuminated object with the visual image, wherein the screen is at least partially transparent to light detectable by the camera, allowing the camera to detect the illuminated object through the screen. A computer system directs the projector to change the visual image in response to the interaction. The projector, the camera, the illuminator, and the computer system are located on the same side of the window.

Owner:MICROSOFT TECH LICENSING LLC

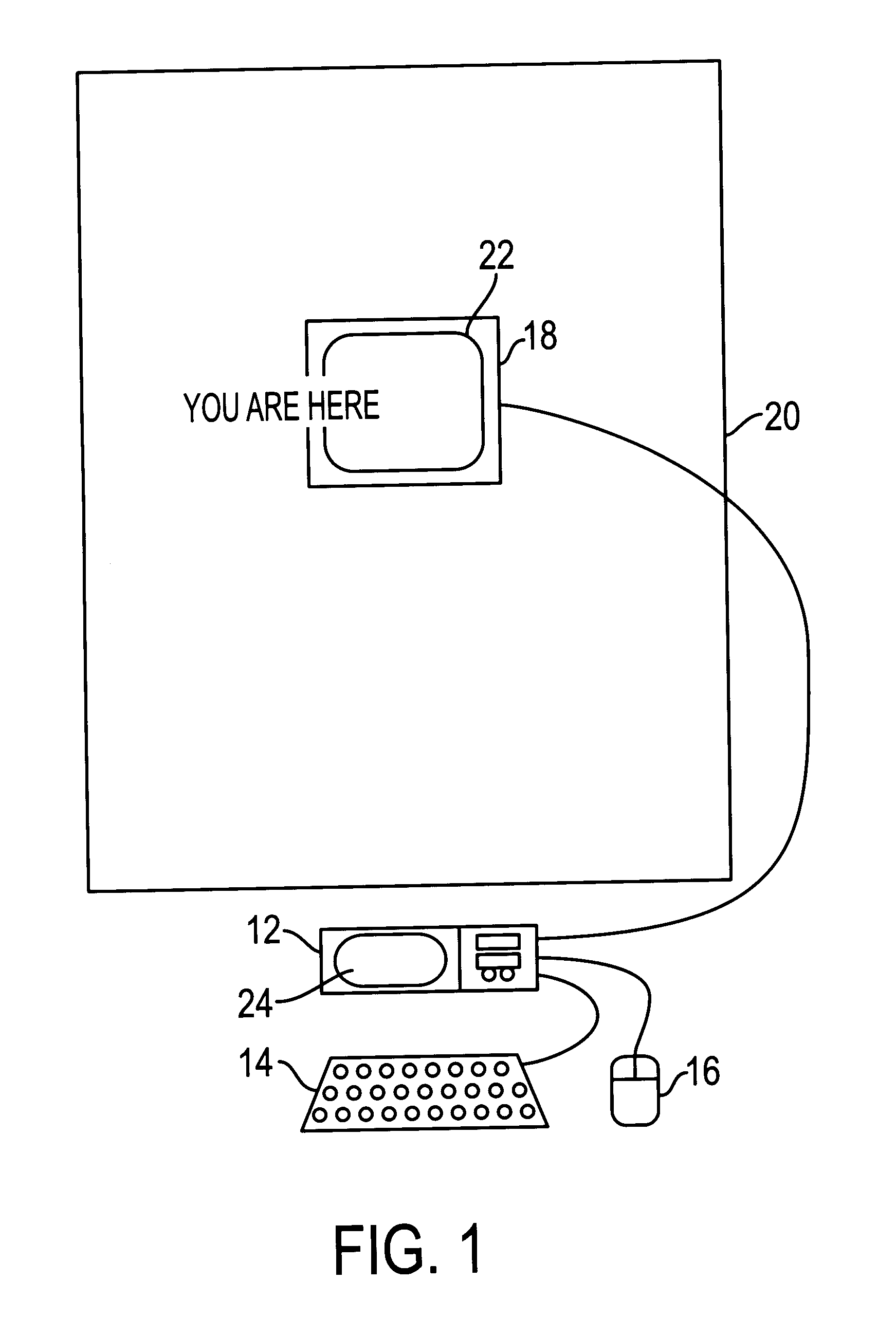

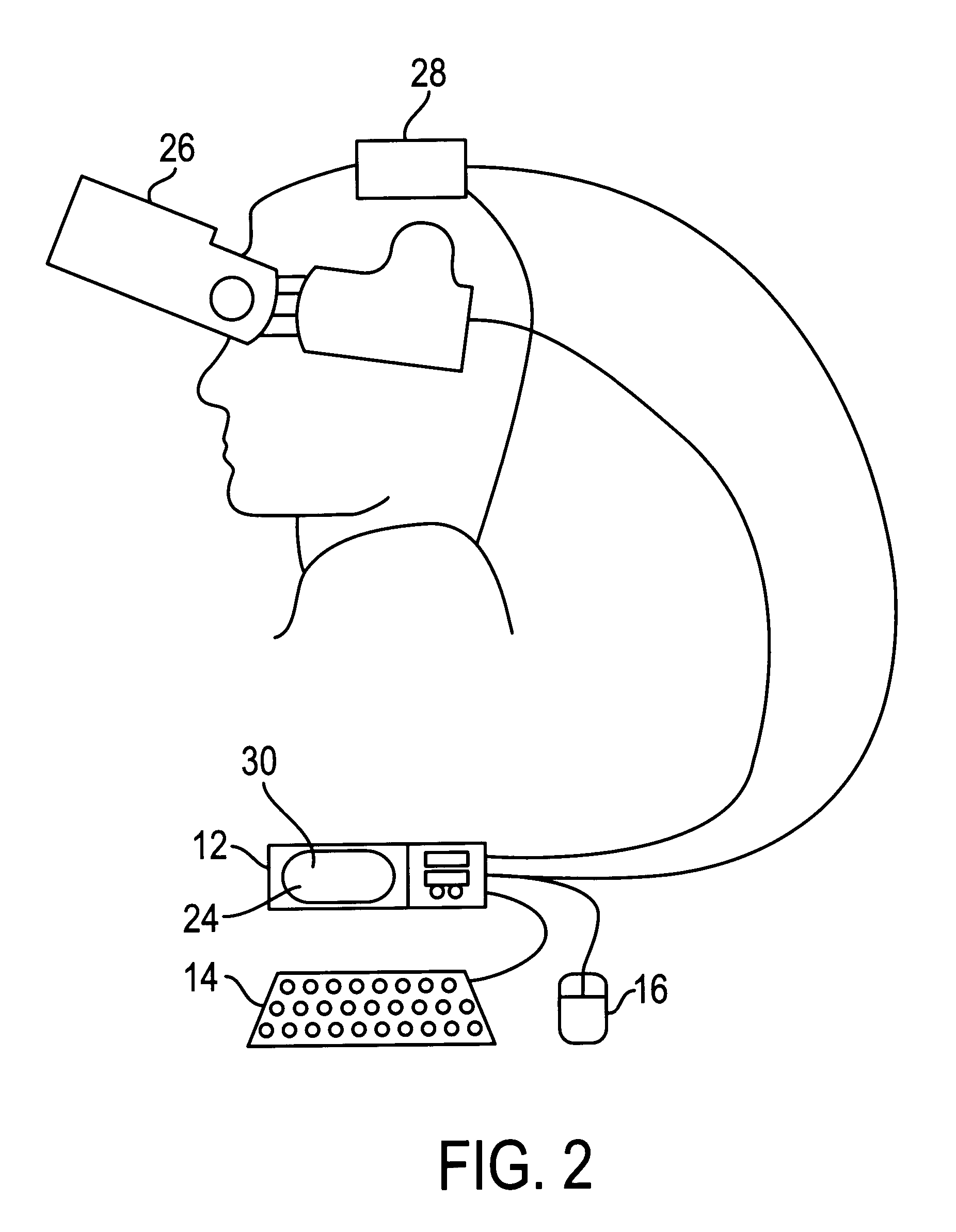

Intuitive control of portable data displays

Inactive | US6184847B1 | Reduce jitter | Input/output for user-computer interaction | Cathode-ray tube indicators | Visually impaired | Data display

A virtual computer monitor is described which enables instantaneous and intuitive visual access to large amounts of visual data by providing the user with a large display projected virtually in front of the user. The user wears a head-mounted display or holds a portable display containing a head-tracker or other motion tracker, which together allow the user to position an instantaneous viewport provided by the display at any position within the large virtual display by turning to look in the desired direction. The instantaneous viewport further includes a mouse pointer, which may be positioned by turning the user's head or moving the portable display, and which may be further positioned using a mouse or analogous control device. A particular advantage of the virtual computer monitor is intuitive access to enlarged computer output for visually-impaired individuals.

Owner:META PLATFORMS INC +1

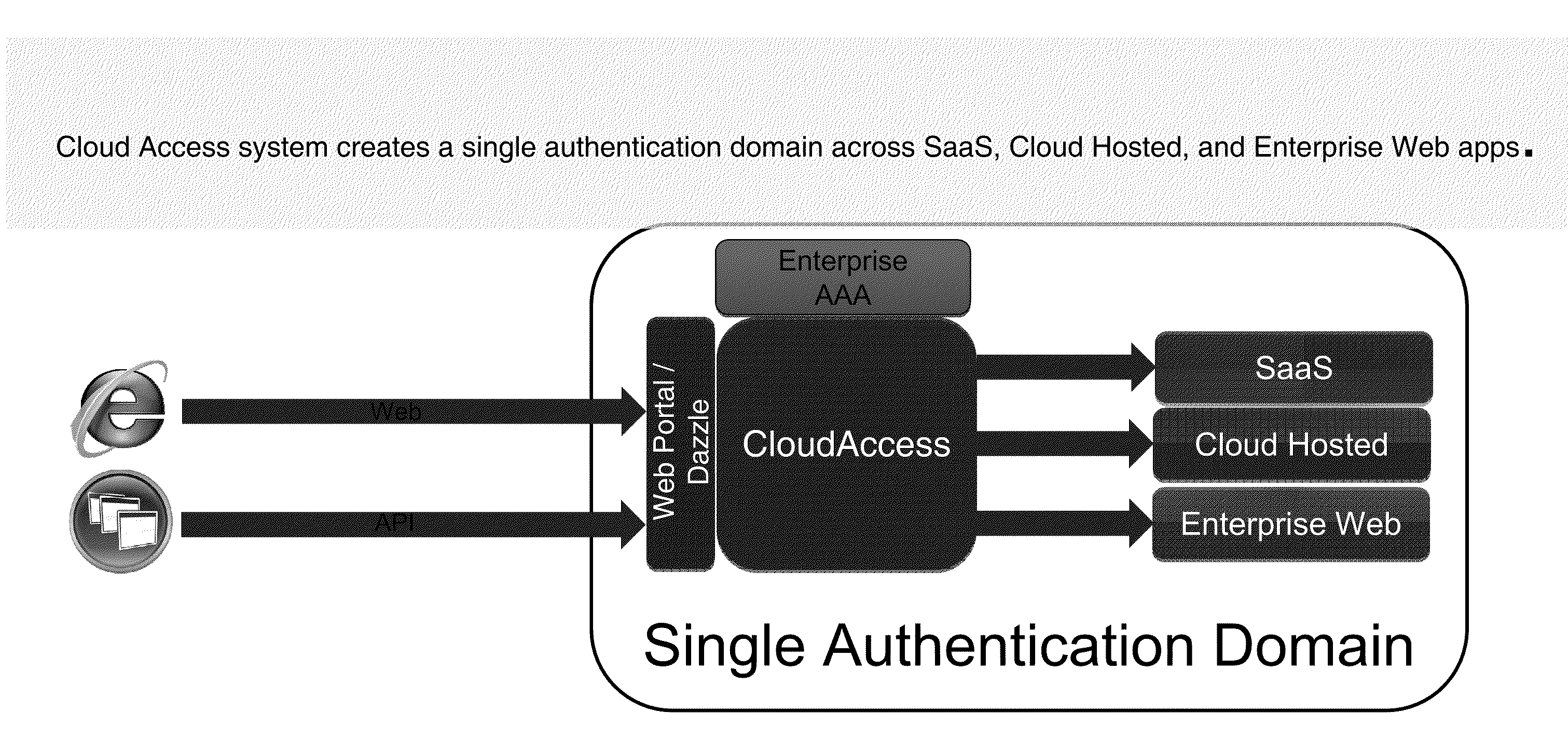

Systems and Methods for Providing a Single Click Access to Enterprise, SAAS and Cloud Hosted Application

Active | US20110277027A1 | Input/output for user-computer interaction | Digital data processing details | Third party | Graphics

The present disclosure is directed to methods and systems of providing a user-selectable list of disparately hosted applications. A device intermediary to a client and one or more servers may receive a user request to access a list of applications published to the user. The device may communicate to the client the list of published applications available to the user, the list comprising graphical icons corresponding to disparately hosted applications, at least one graphical icon corresponding to a third-party hosted application of the disparately hosted applications, the third party hosted application served by a remote third-party server. The device may receive a selection from the user of the at least one graphical icon. The device may communicate, from the remote third party server to the client of the user, execution of the third party hosted application responsive to the selection by the user.

Owner:CITRIX SYST INC

Front-end signal compensation

A touch surface device having improved sensitivity and dynamic range is disclosed. In one embodiment, the touch surface device includes a touch-sensitive panel having at least one sense node for providing an output signal indicative of a touch or no-touch condition on the panel; a compensation circuit, coupled to the at least one sense node, for generating a compensation signal that when summed with the output signal removes an undesired portion of the output signal so as to generate a compensated output signal; and an amplifier having an inverting input coupled to the output of the compensation circuit and a non-inverting input coupled to a known reference voltage.

Owner:APPLE INC
