83257 results about "Human–computer interaction" patented technology

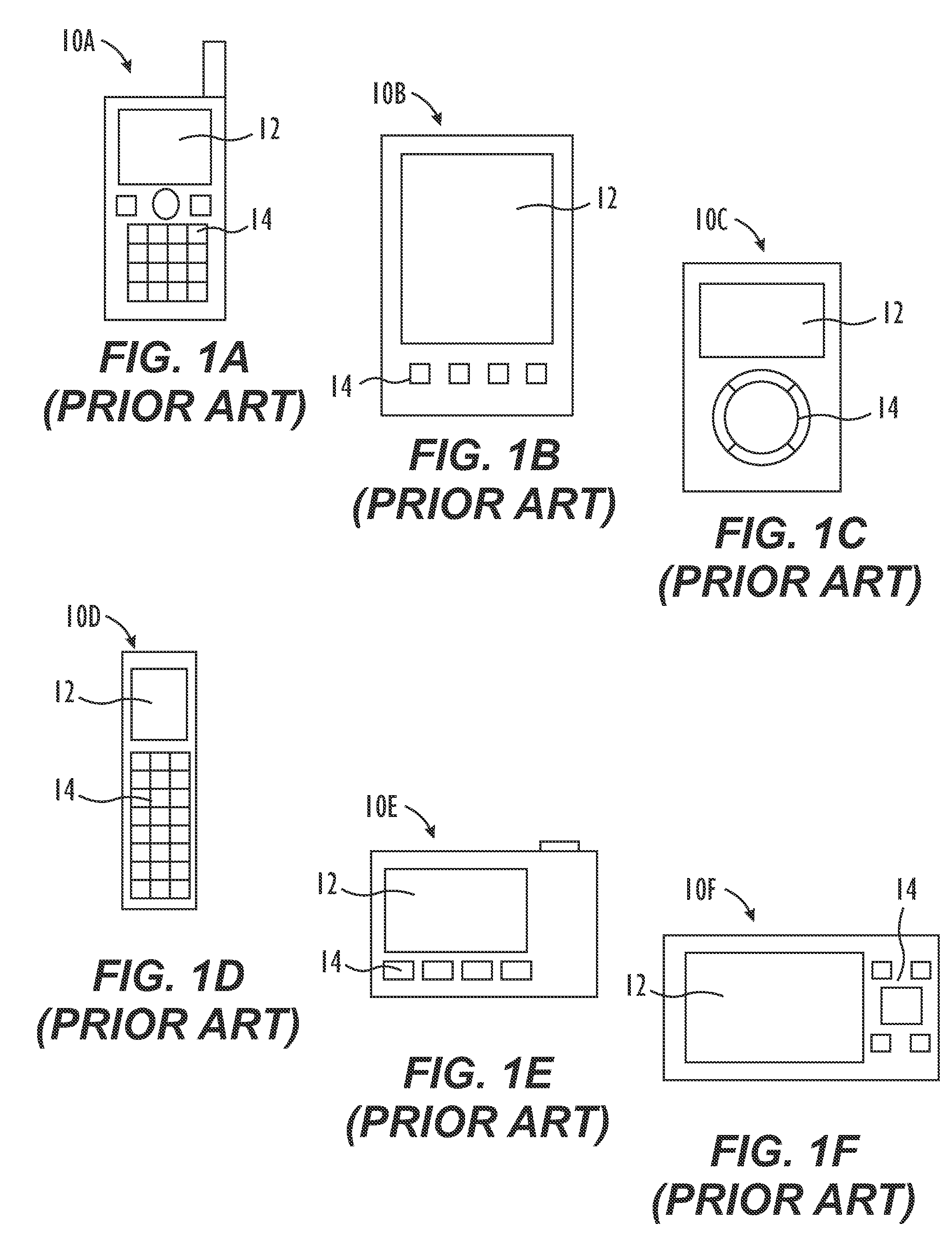

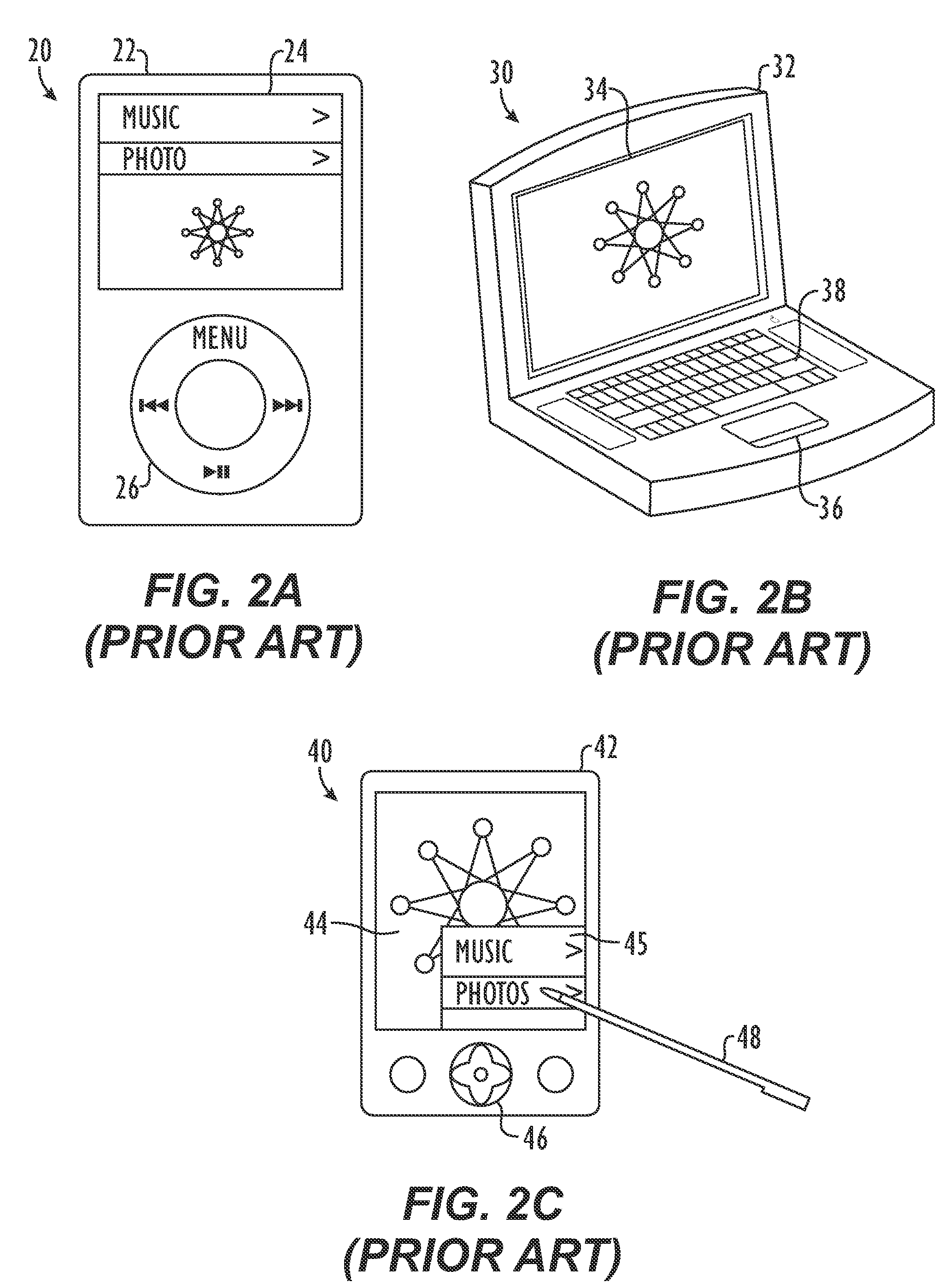

Human–computer interaction (HCI) is the study of the design and use of computer technology, focused on the interfaces between people (users) and computers. Researchers in the field observe the ways in which humans interact with computers and design technologies that let humans interact with computers in novel ways. As a field of research, human–computer interaction is situated at the intersection of computer science, behavioural sciences, design, media studies, and several other fields of study. The term was popularized by Stuart K. Card, Allen Newell, and Thomas P. Moran in their seminal 1983 book, The Psychology of Human–Computer Interaction, although the authors first used the term in 1980 and the first known use was in 1975. The term connotes that, unlike other tools with only limited uses (such as a hammer, useful for driving nails but not much else), a computer has many uses, and that its use takes place as an open-ended dialog between the user and the computer. The notion of dialog likens human–computer interaction to human-to-human interaction, an analogy that is crucial to theoretical considerations in the field.

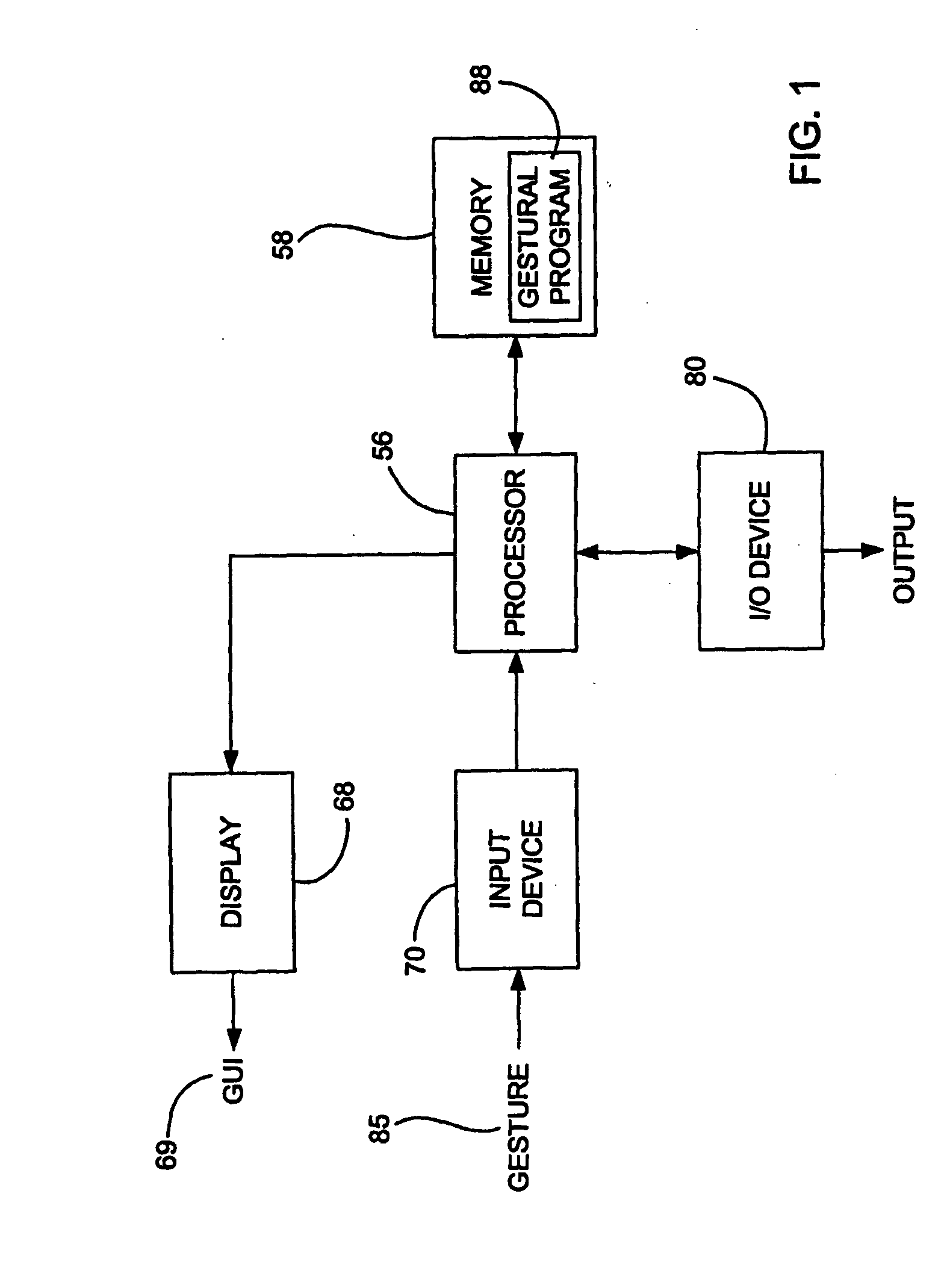

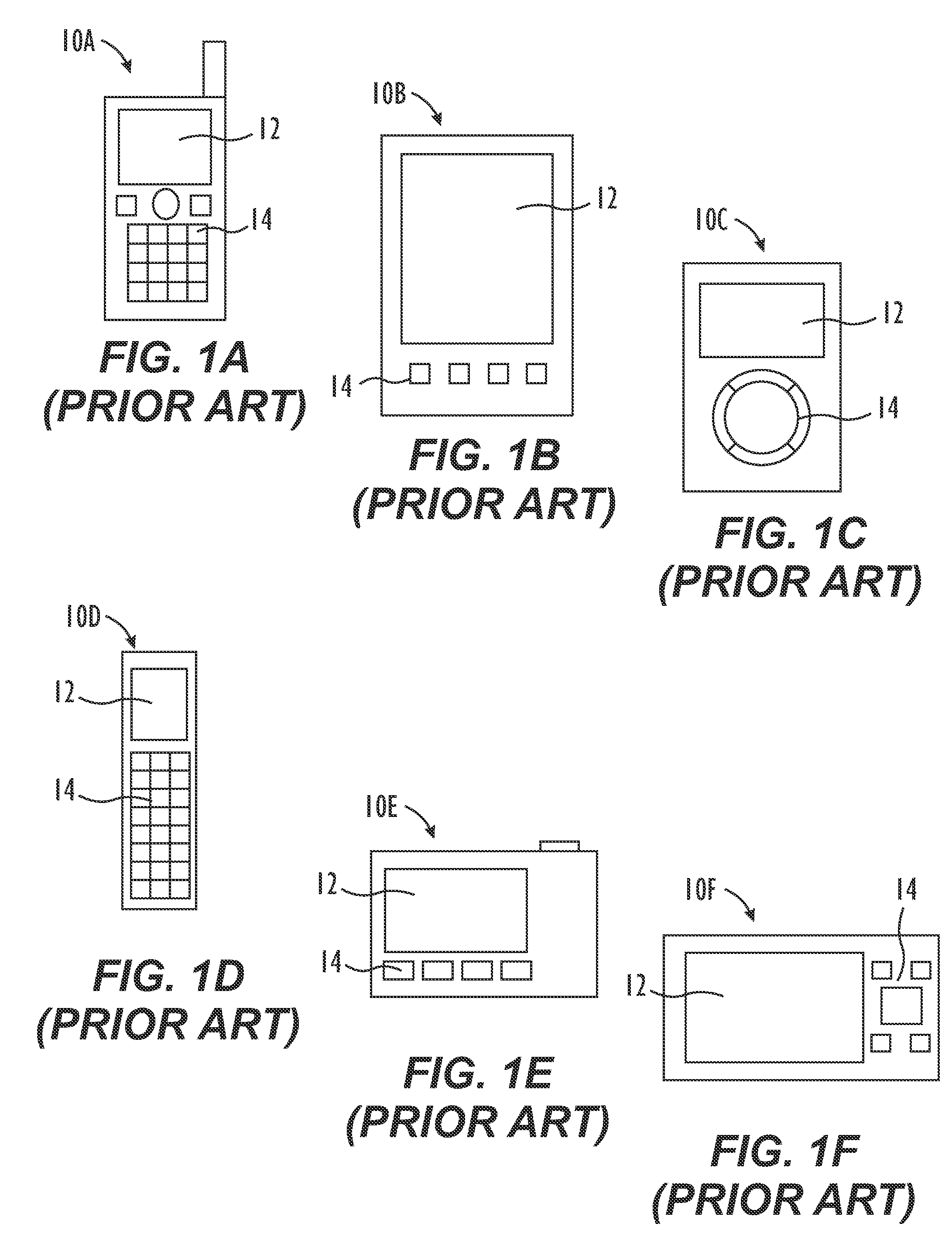

Mode-based graphical user interfaces for touch sensitive input devices

Active | US20060026535A1 | Input/output for user-computer interaction | Graph reading | Graphics | Graphical user interface

A user interface method is disclosed. The method includes detecting a touch and then determining a user interface mode when a touch is detected. The method further includes activating one or more GUI elements based on the user interface mode and in response to the detected touch.

Owner:APPLE INC
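
The detect, determine-mode, activate flow described in the abstract above can be illustrated with a minimal Python sketch. This is not the patented method: the mode names, the GUI element names, and the rules inside `determine_mode` are all hypothetical and chosen only to show the control flow.

```python
from dataclasses import dataclass

# Hypothetical modes and GUI elements, invented for illustration only.
ELEMENTS_BY_MODE = {
    "navigation": ["scroll_wheel"],
    "media": ["volume_knob", "transport_controls"],
    "text_entry": ["virtual_keyboard"],
}


@dataclass
class Touch:
    x: float
    y: float
    finger_count: int


def determine_mode(touch, active_app):
    """Pick a user interface mode from the touch and its context (toy rules)."""
    if active_app == "music_player":
        return "media"
    if touch.finger_count >= 2:
        return "navigation"
    return "text_entry"


def handle_touch(touch, active_app):
    """Step 1: a touch was detected. Step 2: determine the mode.
    Step 3: return the GUI elements to activate for that mode."""
    mode = determine_mode(touch, active_app)
    return ELEMENTS_BY_MODE[mode]
```

Keeping the mode decision in its own function means new modes can be added without touching the activation step.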

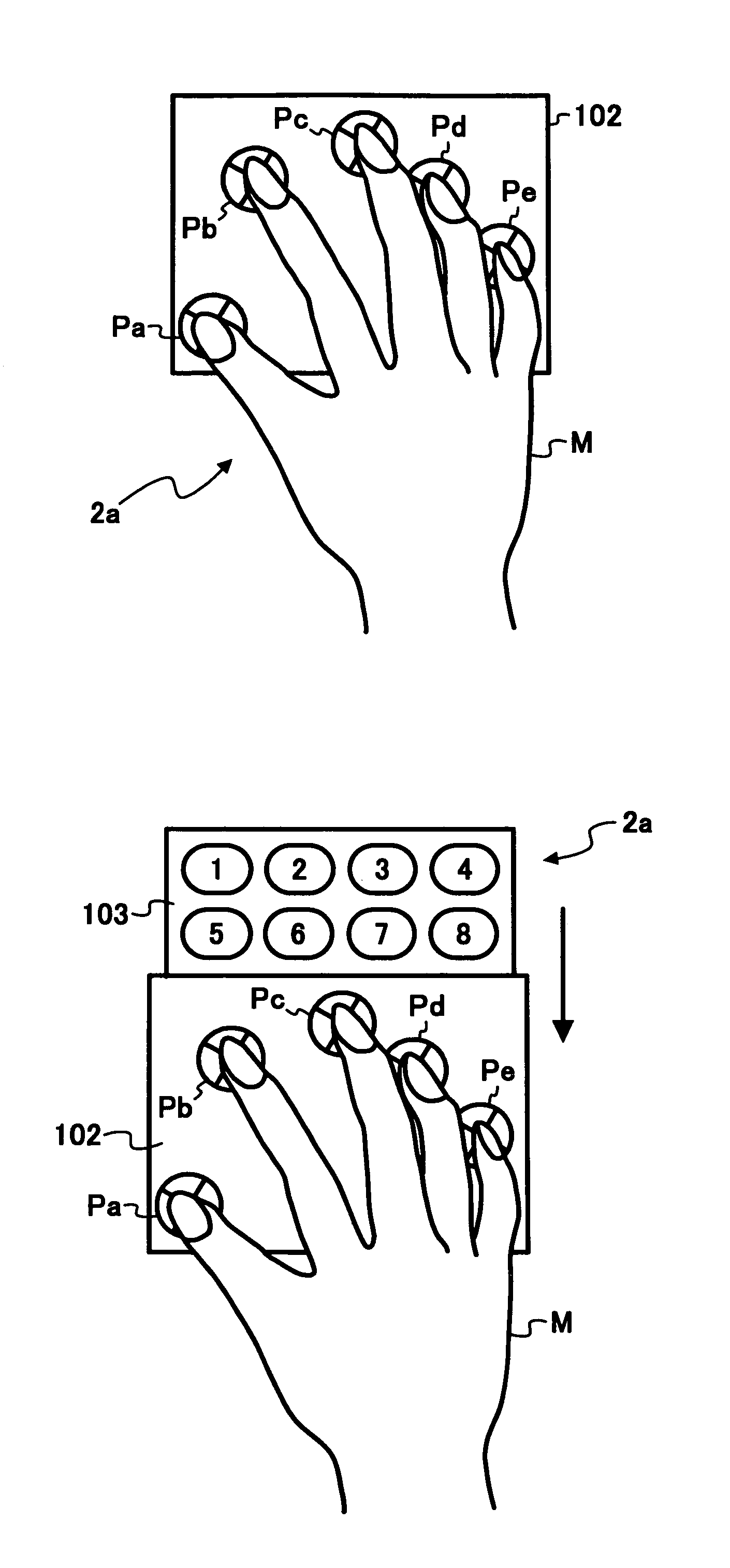

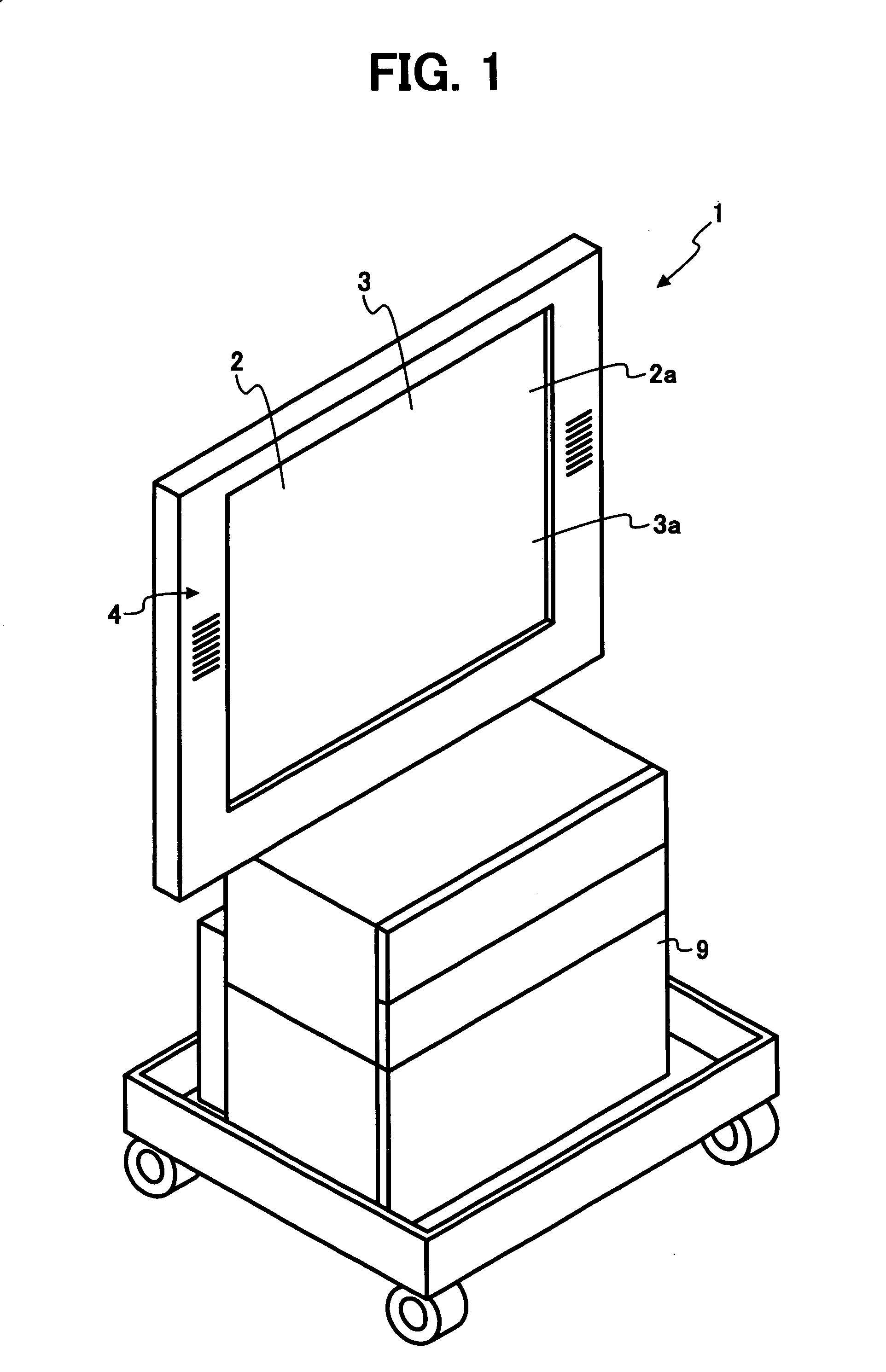

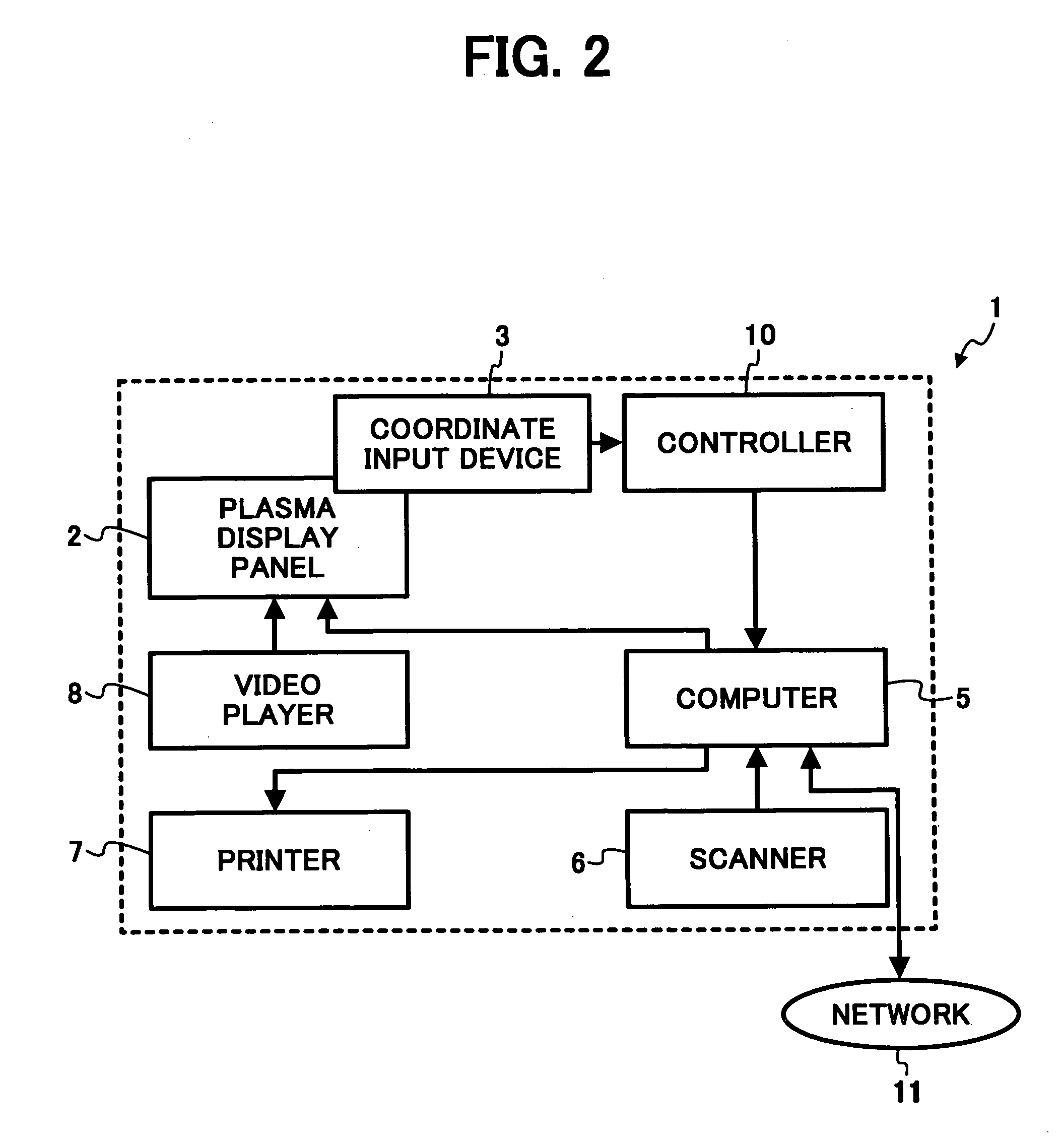

Information input and output system, method, storage medium, and carrier wave

Active | US7015894B2 | Easy to operate | Easy to distinguish | Input/output for user-computer interaction | Cathode-ray tube indicators | Carrier signal | Display device

A coordinate input device detects coordinates of a position by indicating a screen of a display device with fingers of one hand, and transfers information of the detected coordinates to a computer through a controller. The computer receives an operation that complies with the detected coordinates, and executes the corresponding processing. For example, when it is detected that two points on the screen have been simultaneously indicated, an icon registered in advance is displayed close to the indicated position.

Owner:RICOH KK

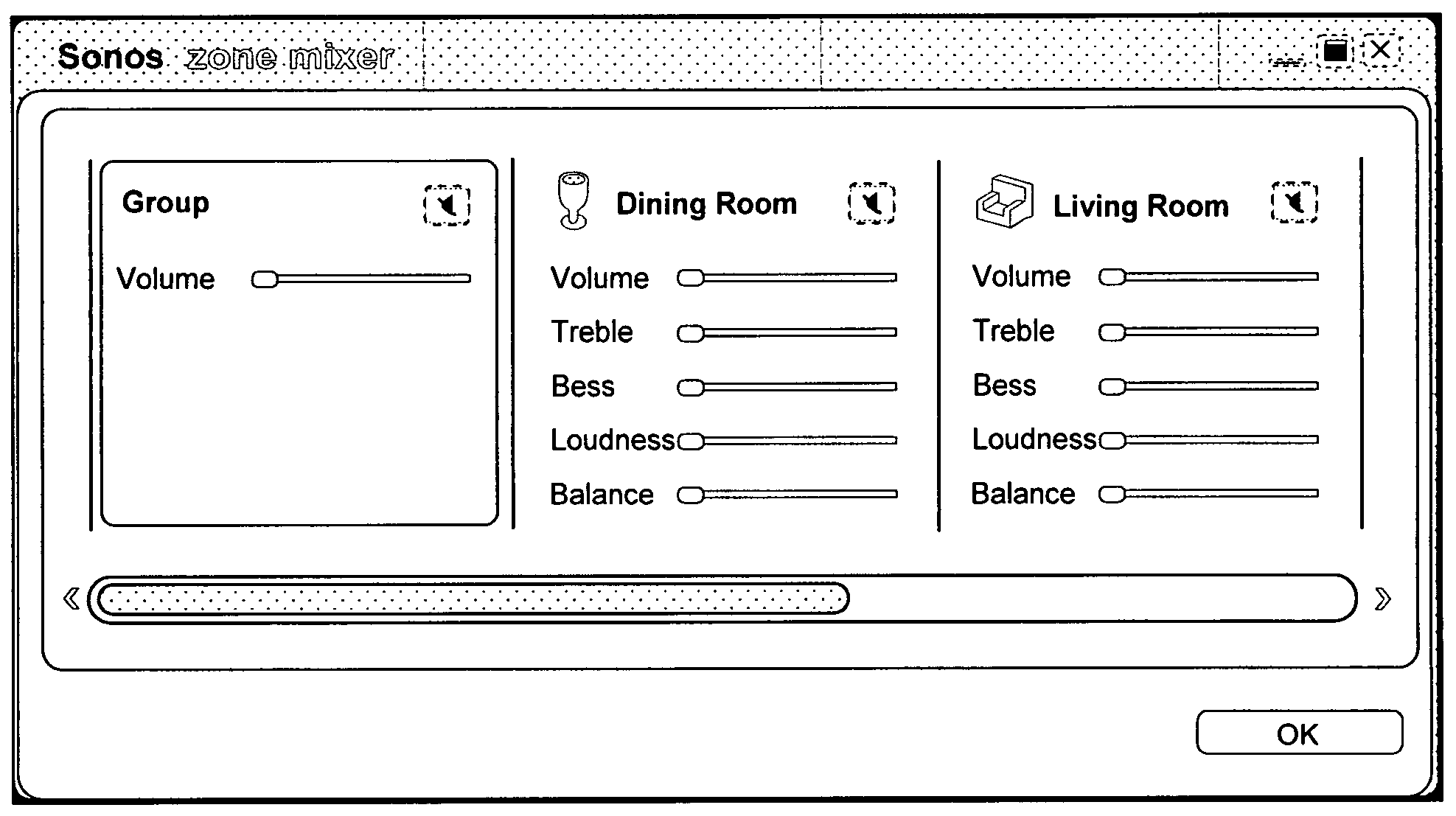

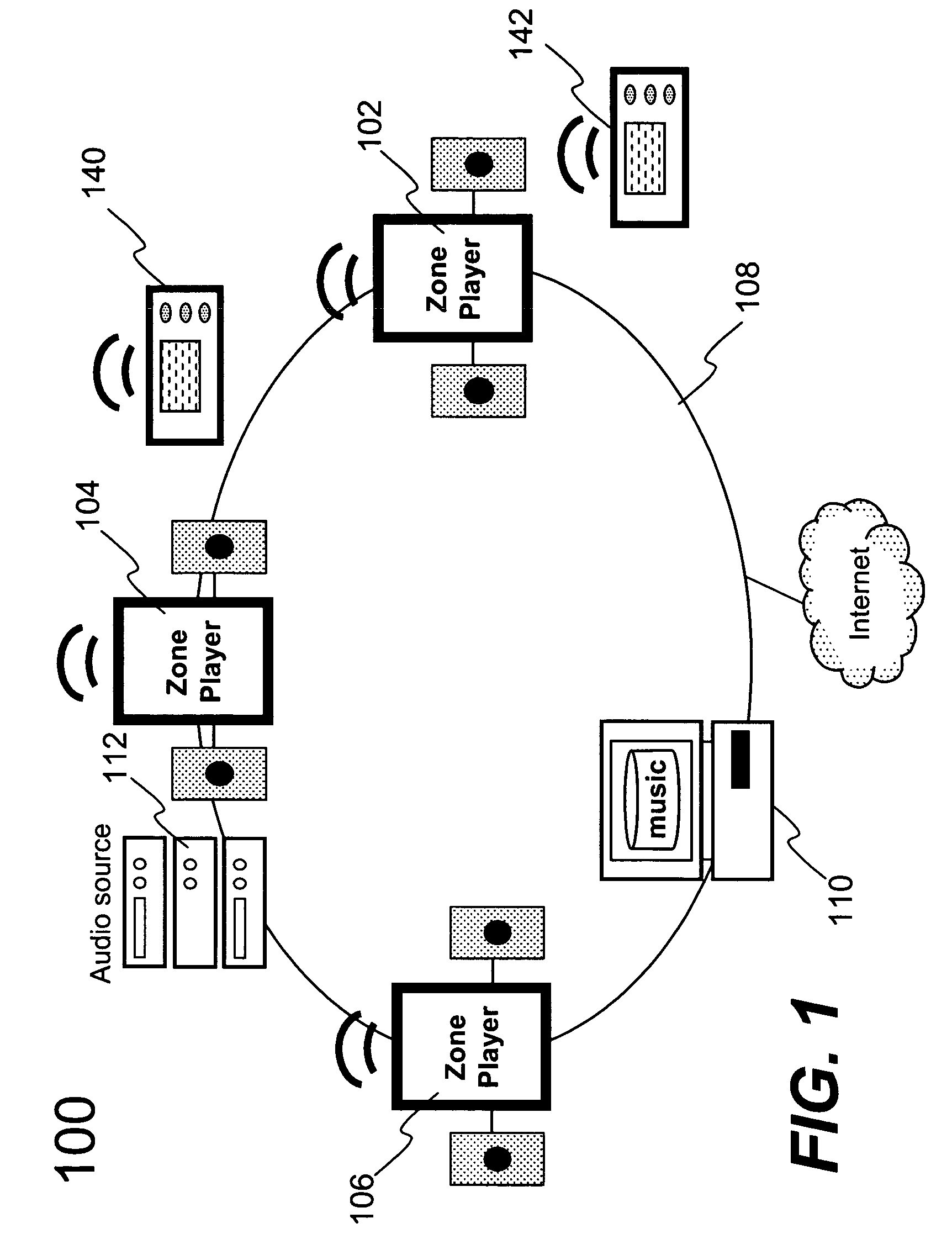

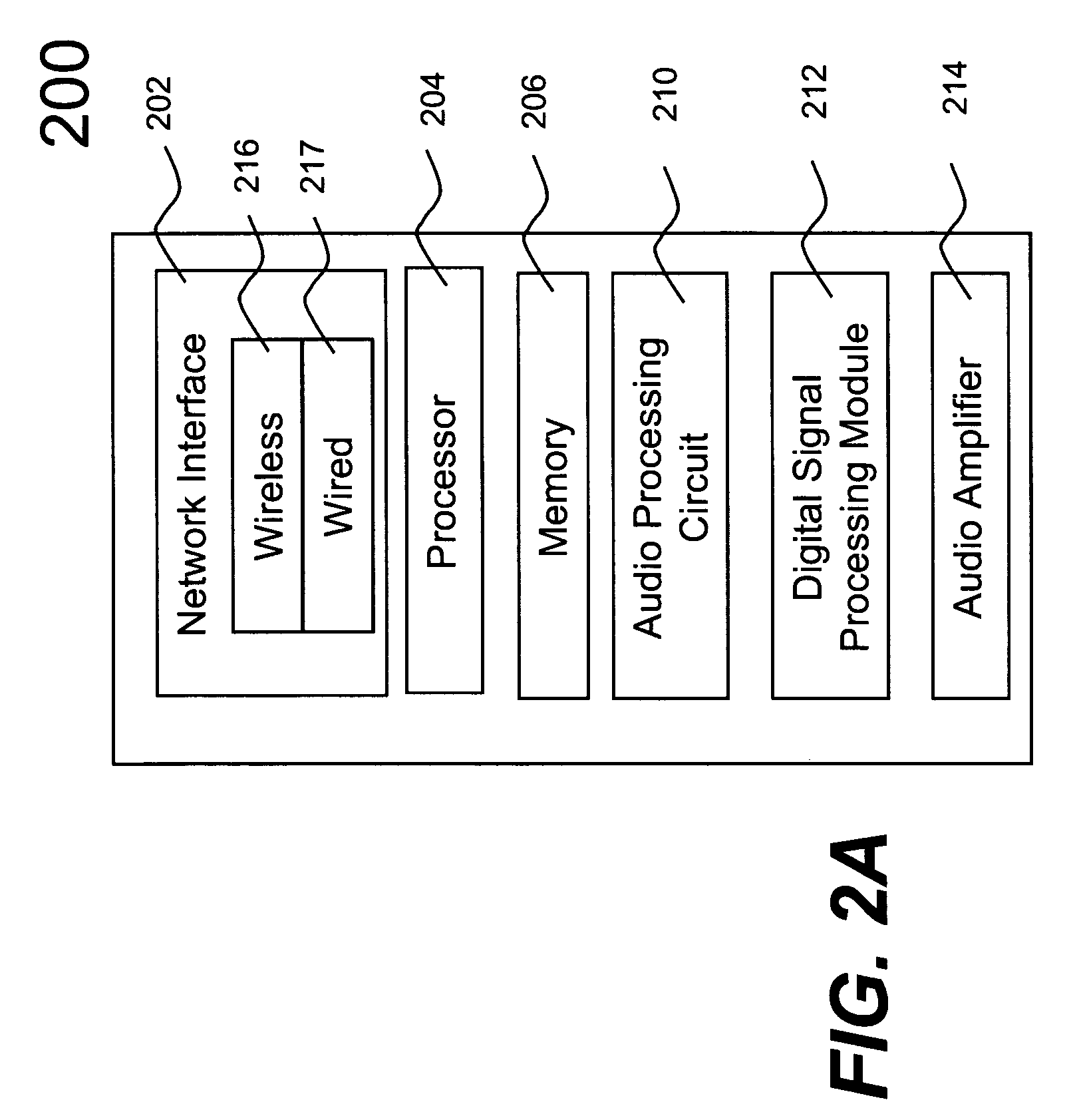

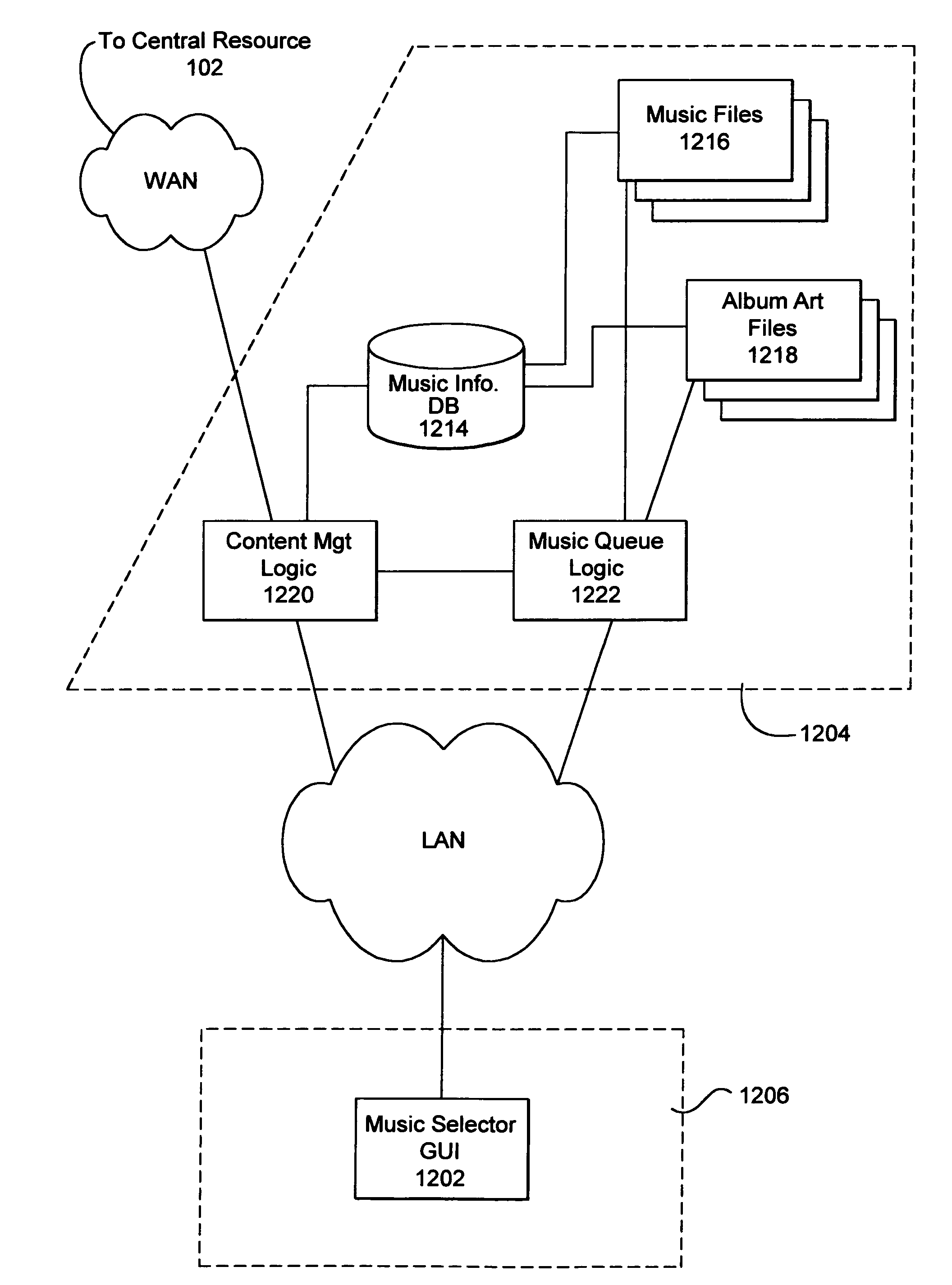

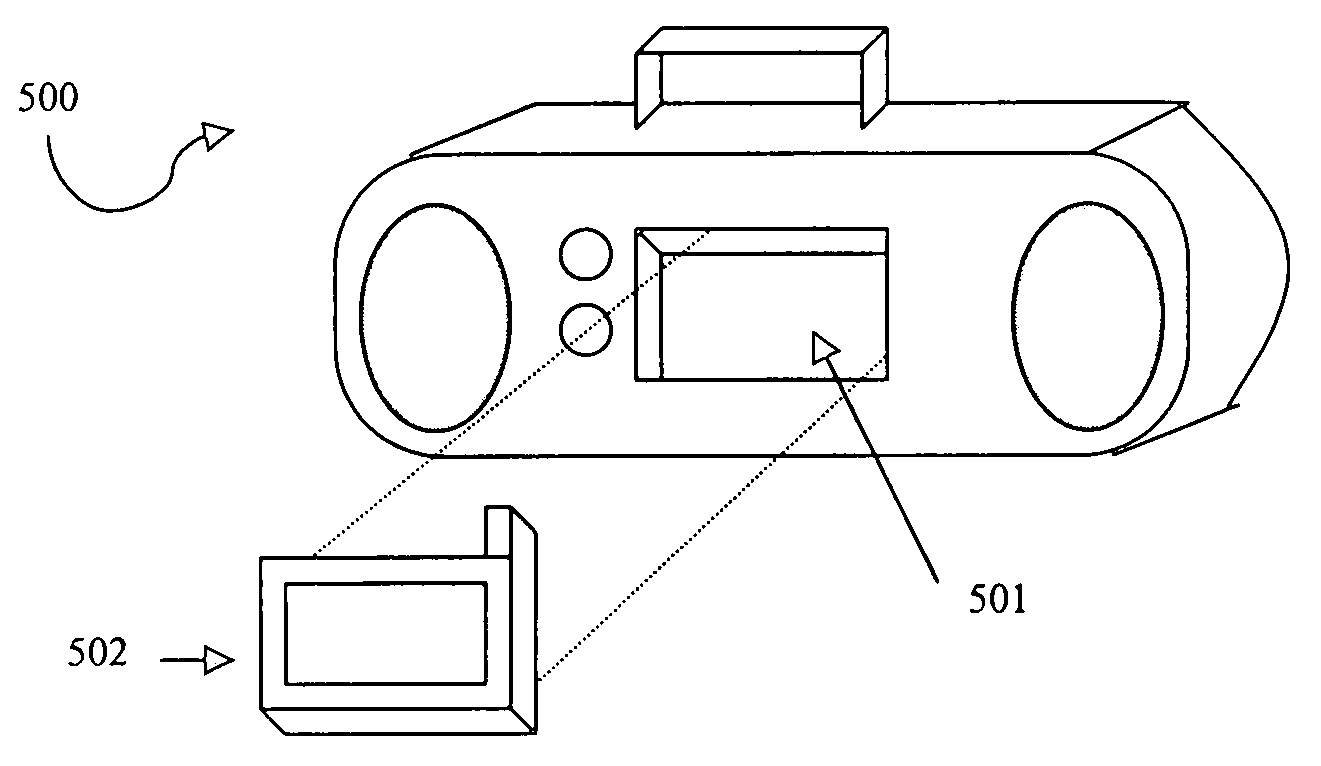

Method and apparatus for controlling multimedia players in a multi-zone system

Techniques for controlling zone groups and zone group characteristics such as audio volume in a multi-zone system are disclosed. The multi-zone system includes a number of multimedia players, each preferably located in a zone. A controller may control the operations of all of the zone players remotely from any one of the zones. Two or more zone players may be dynamically grouped as a zone group for synchronized operations. According to one aspect of the techniques, a zone group configuration can be managed, updated, or modified via an interactive user interface provided in a controlling device. The zone group configuration may be saved in one of the zone players. According to another aspect of the techniques, the audio volume control of a zone group can be performed individually or synchronously as a group.

Owner:SONOS
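
The distinction the abstract draws between individual and synchronized group volume control can be sketched as follows. This is a toy model under stated assumptions, not the patented design: the class names `ZonePlayer` and `ZoneGroup`, the 0 to 100 volume range, and the relative-delta behaviour for group changes are all hypothetical.

```python
class ZonePlayer:
    """One player in one zone; volume is clamped to an assumed 0-100 range."""

    def __init__(self, name, volume=20):
        self.name = name
        self.volume = volume

    def set_volume(self, volume):
        self.volume = max(0, min(100, volume))


class ZoneGroup:
    """Players grouped for synchronized operation (hypothetical model)."""

    def __init__(self, players):
        self.players = list(players)

    def set_player_volume(self, name, volume):
        """Individual control: change one member, leave the others alone."""
        for player in self.players:
            if player.name == name:
                player.set_volume(volume)
                return
        raise KeyError(name)

    def adjust_group_volume(self, delta):
        """Group control: shift every member by the same amount."""
        for player in self.players:
            player.set_volume(player.volume + delta)
```

Applying a relative delta rather than one absolute value preserves the volume balance between rooms, which is one plausible design choice among several.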

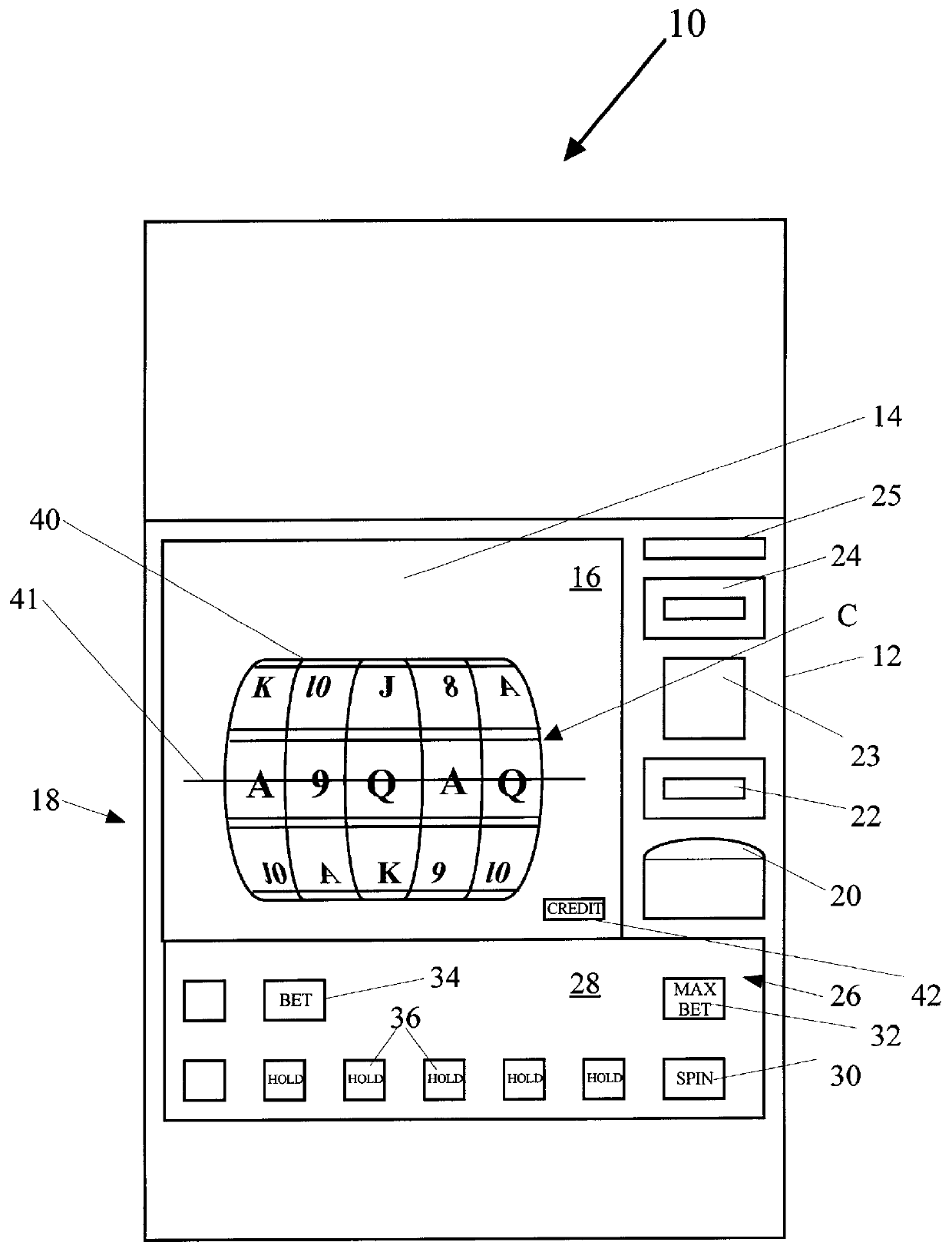

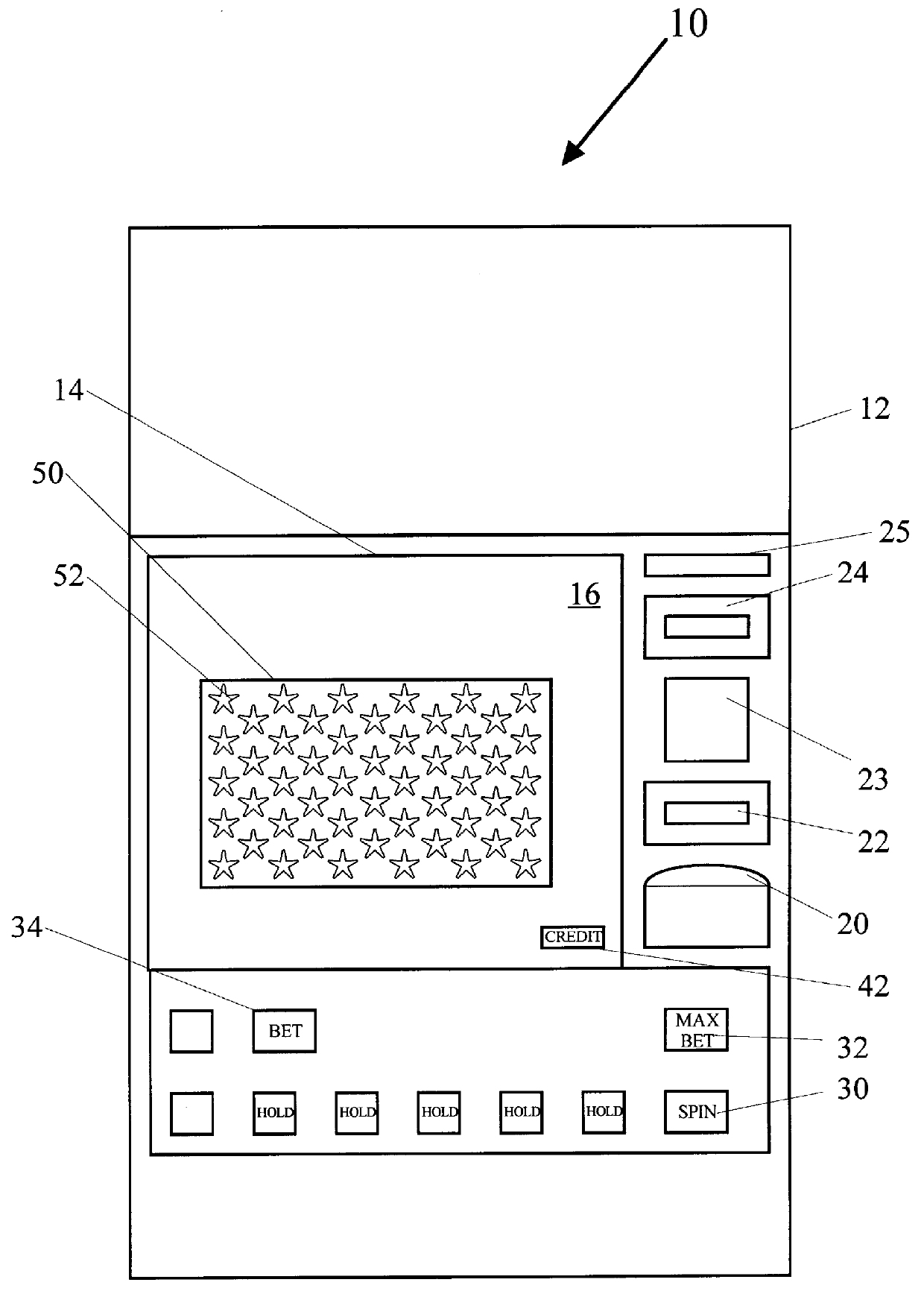

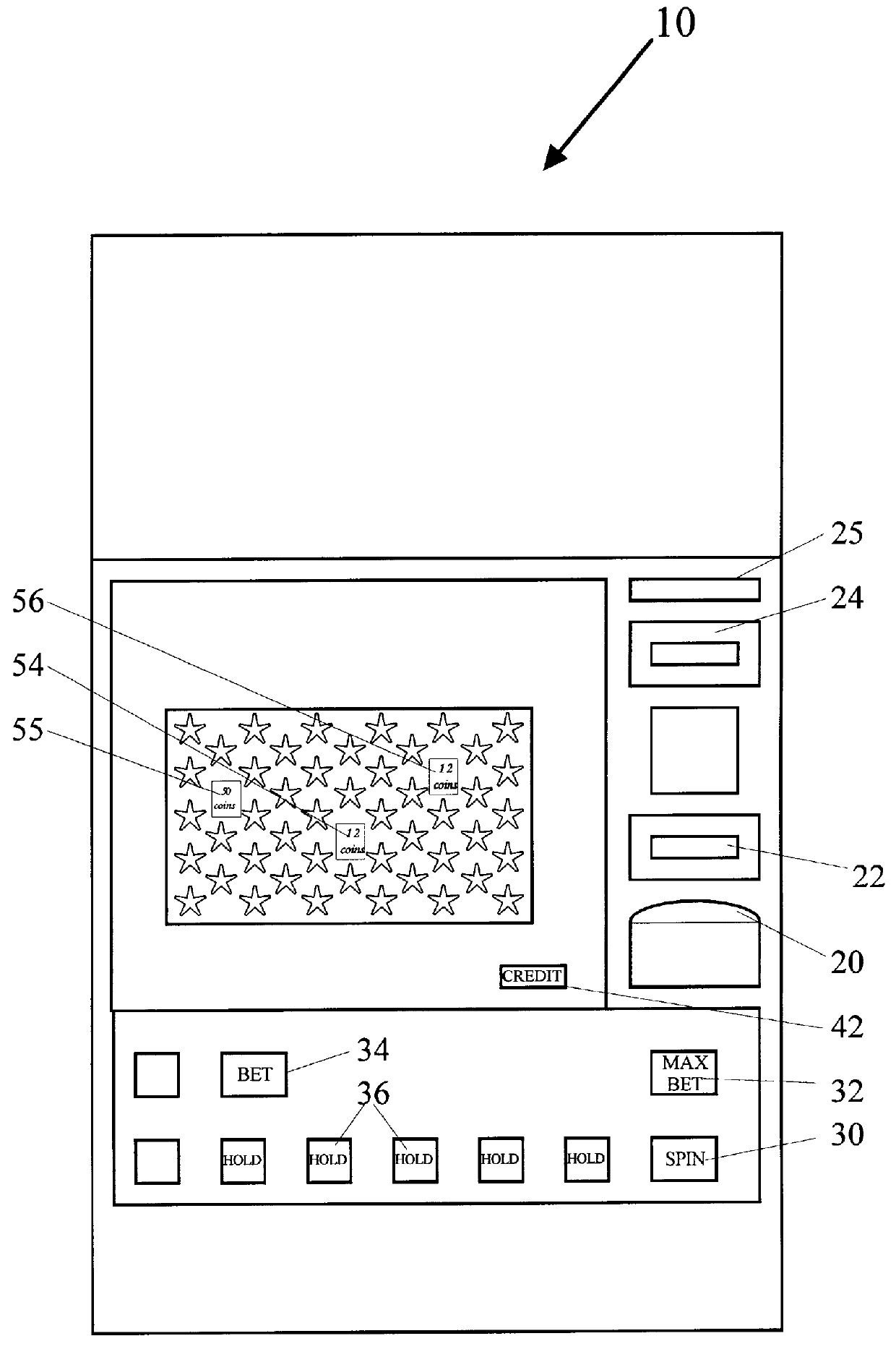

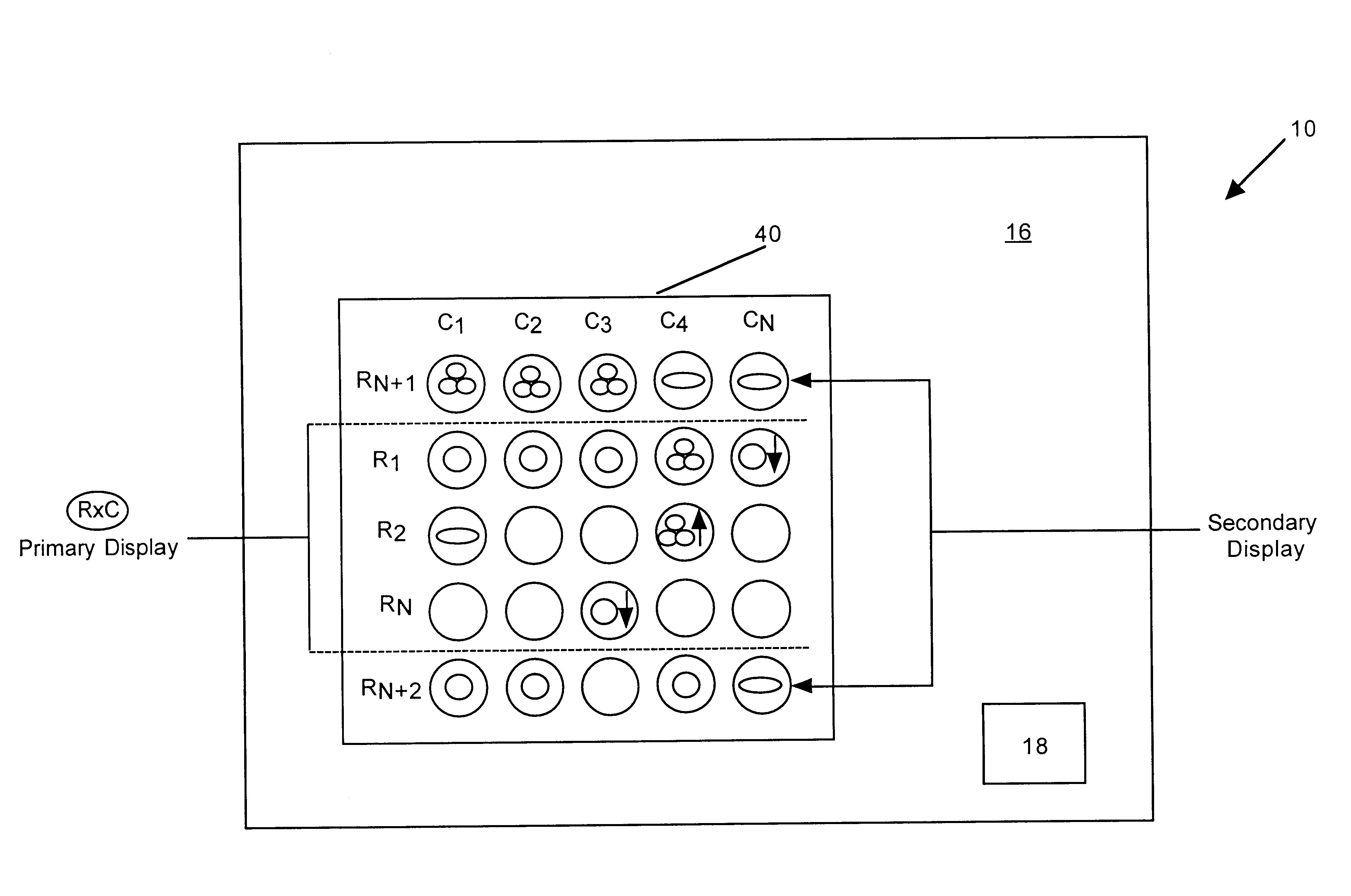

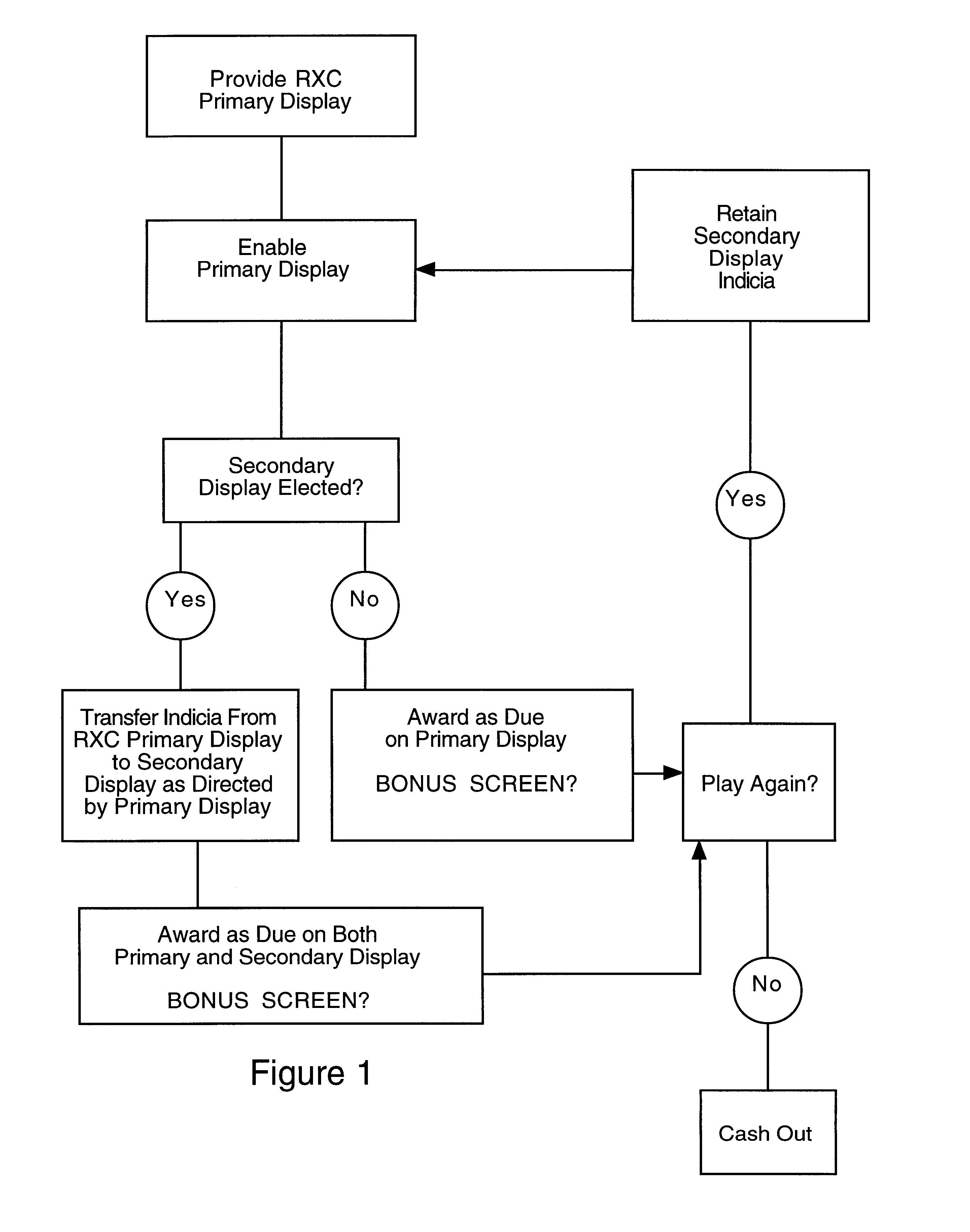

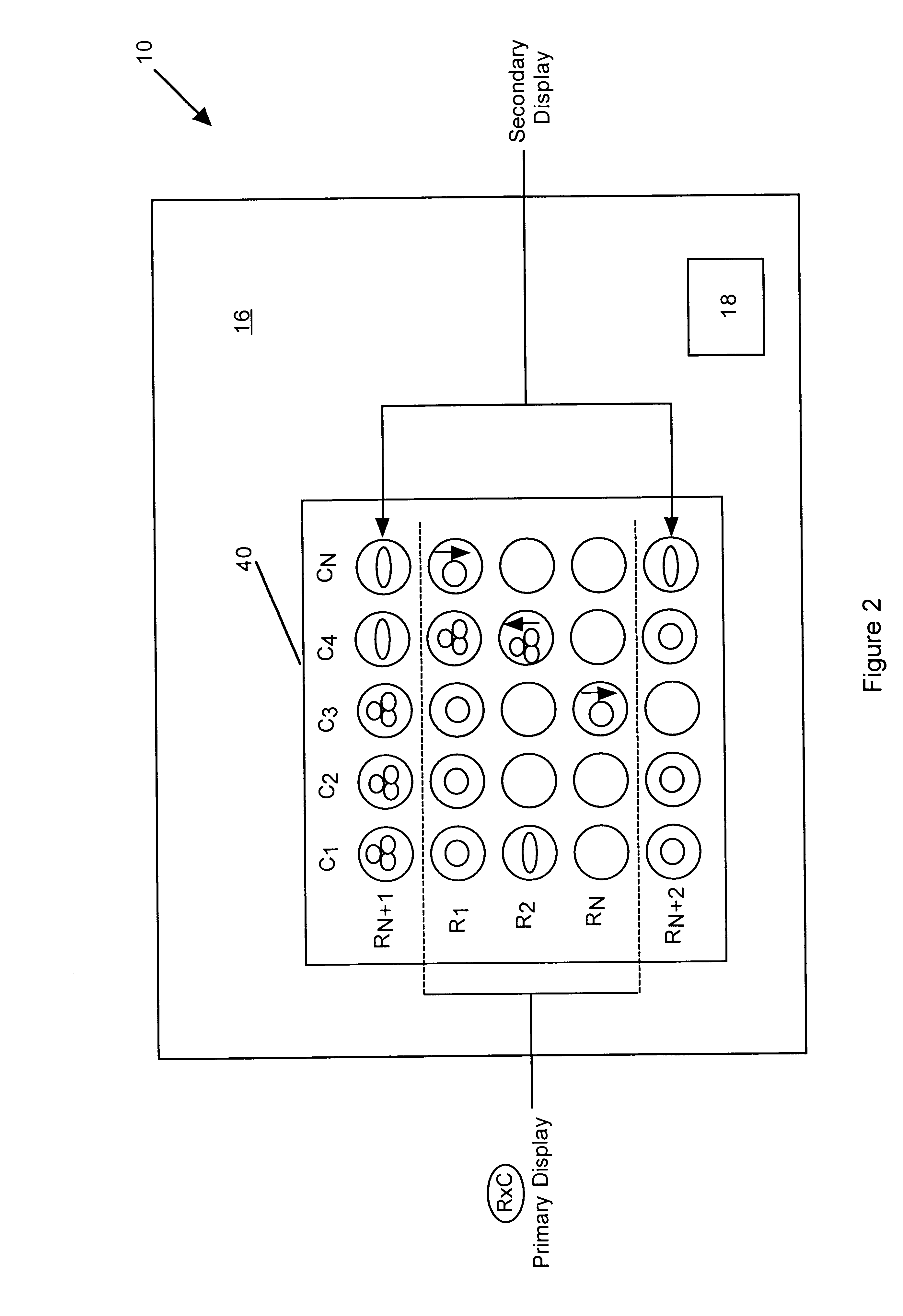

Gaming apparatus and method including a player interactive bonus game

Inactive | US6089976A | Easy to understand | Happy to use | Card games | Apparatus for meter-controlled dispensing | Human–computer interaction | Image display

Owner:CASINO DATA SYST

Distributed electronic entertainment method and apparatus

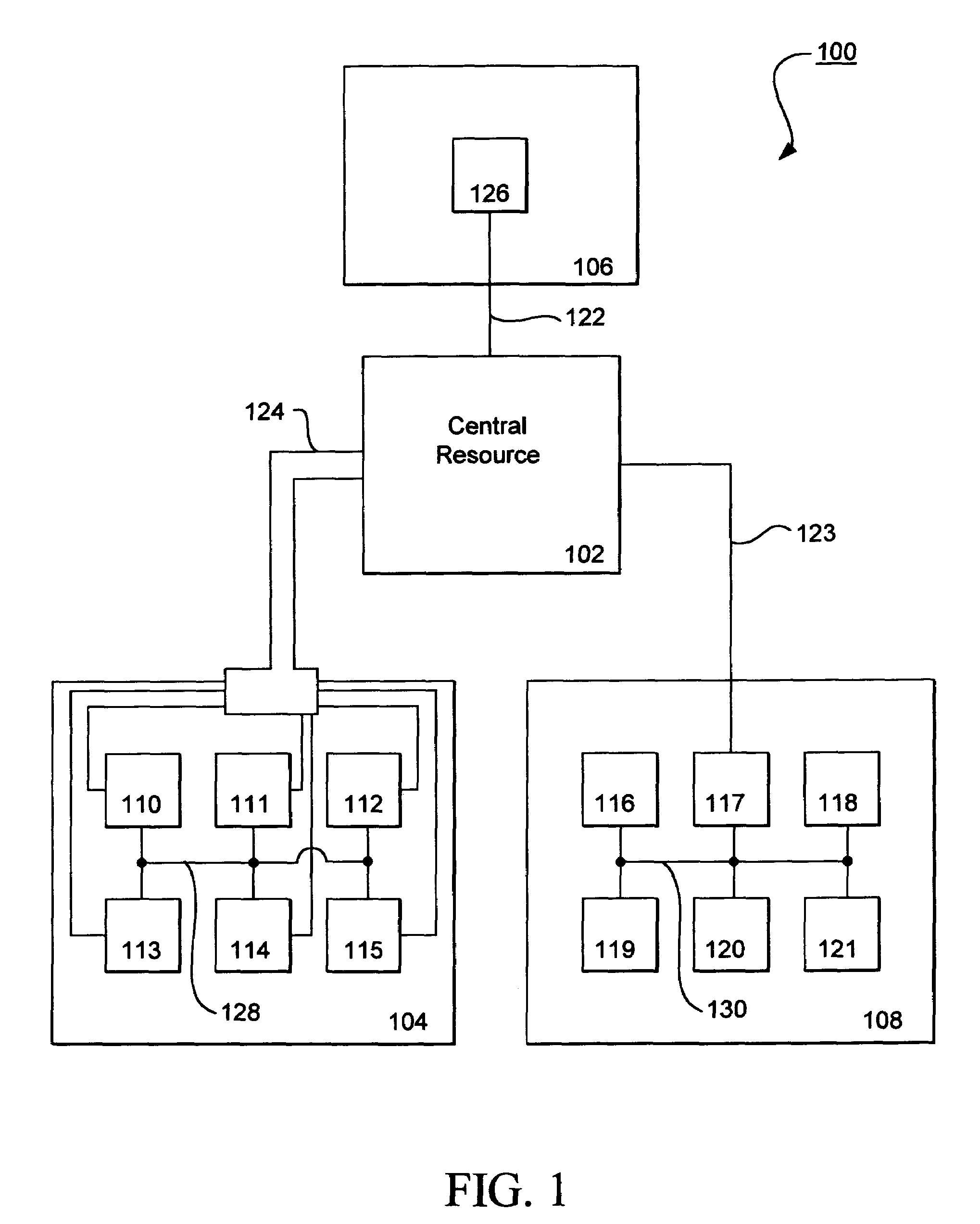

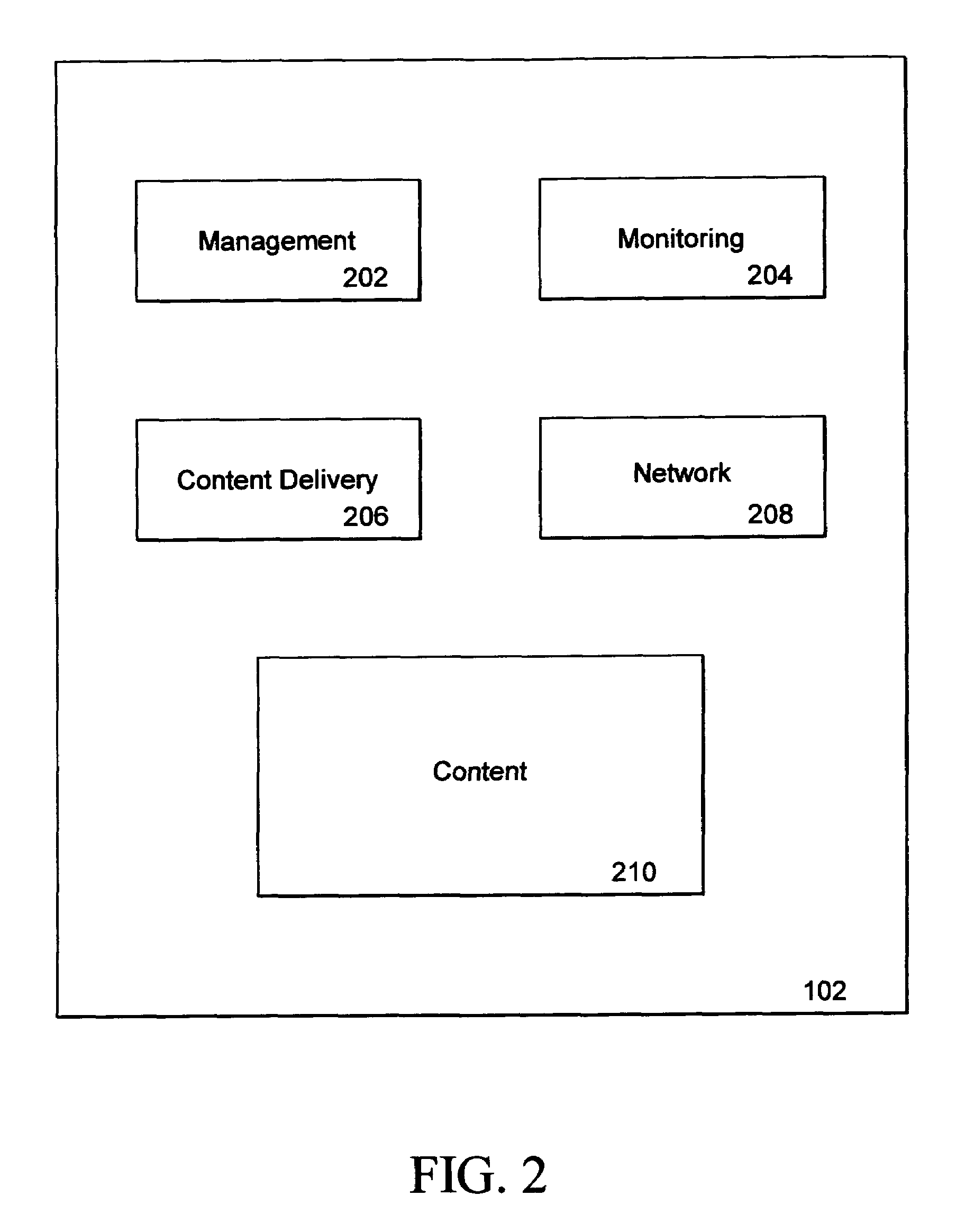

A distributed electronic entertainment method and apparatus are described. In one embodiment, a central management resource is coupled to multiple out-of-home venues through a wide area network (WAN). The central management resource stores content and performs management, monitoring and entertainment content delivery functions. At each venue at least one entertainment unit is coupled to the WAN. Multiple entertainment units in a venue are coupled to each other through a local area network (LAN). In one embodiment, an entertainment unit includes a user interface that comprises at least one graphical user interface (GUI). The entertainment unit further comprises a local memory device that stores entertainment content comprising music, a peripheral interface, and a user input device. A plurality of peripheral devices are coupled to the at least one entertainment unit via the peripheral interface, wherein a user, through the user input device and the user interface, performs at least one activity from a group comprising, playing music, playing electronic games, viewing television content, and browsing the Internet.

Owner:AMI ENTERTAINMENT NETWORK

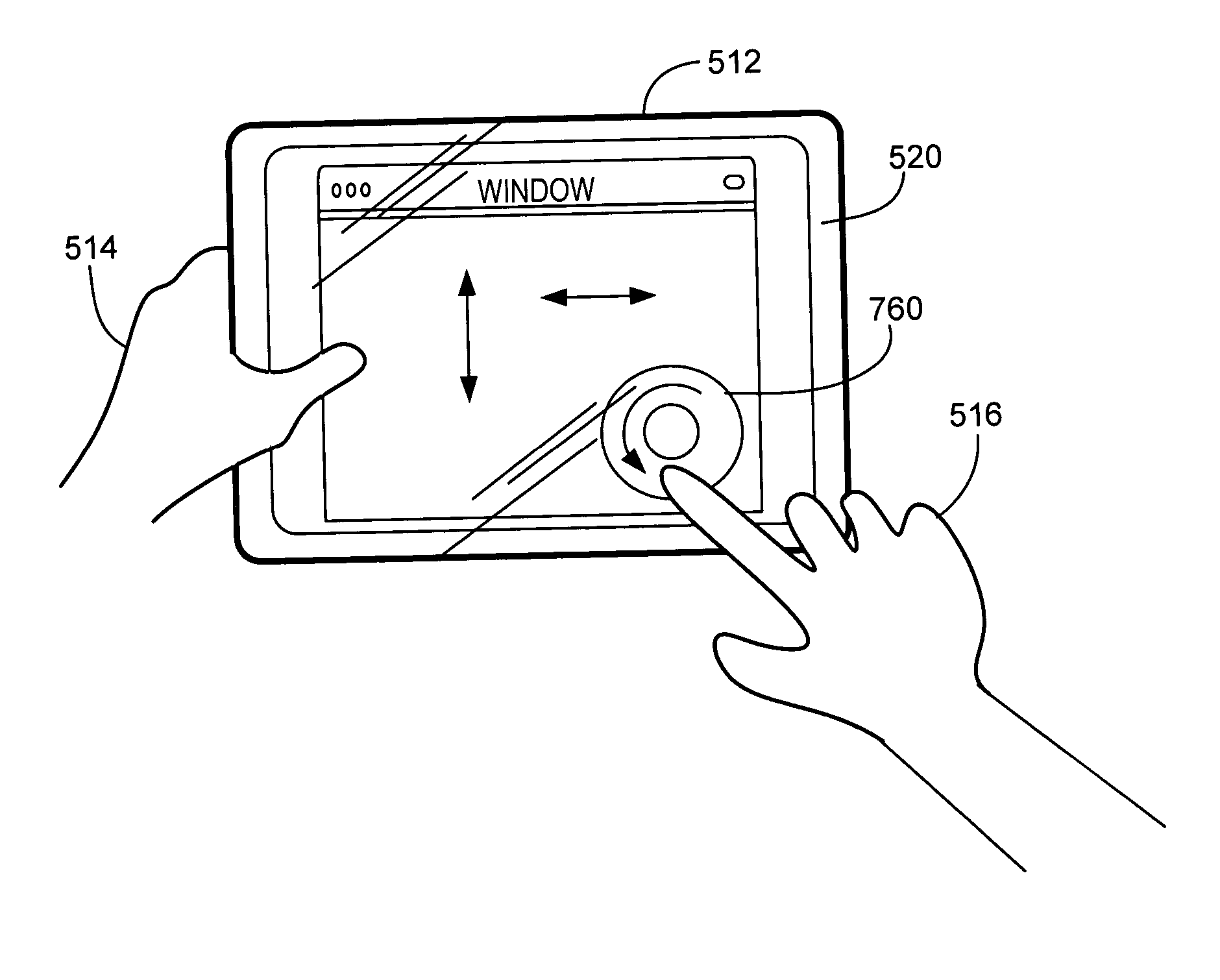

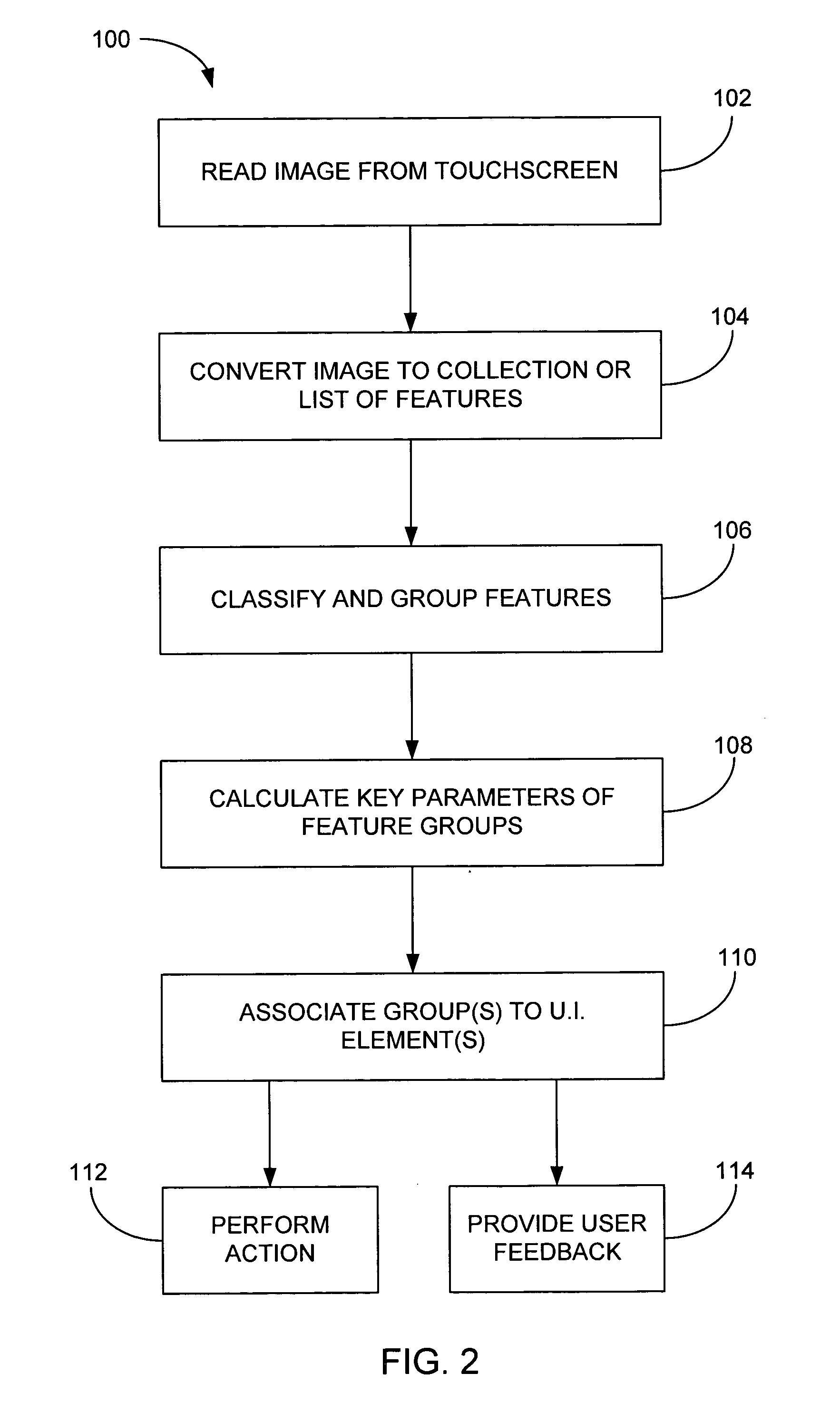

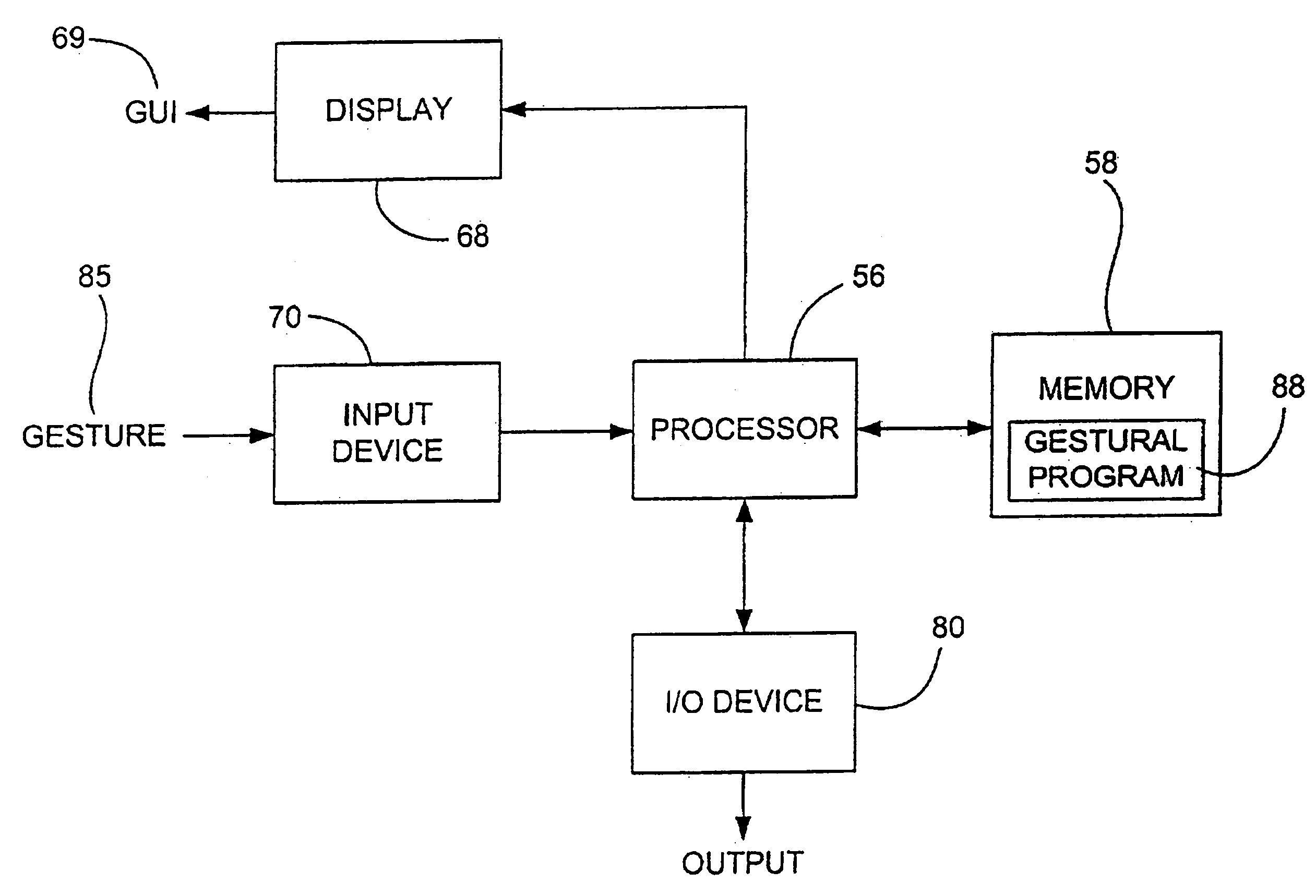

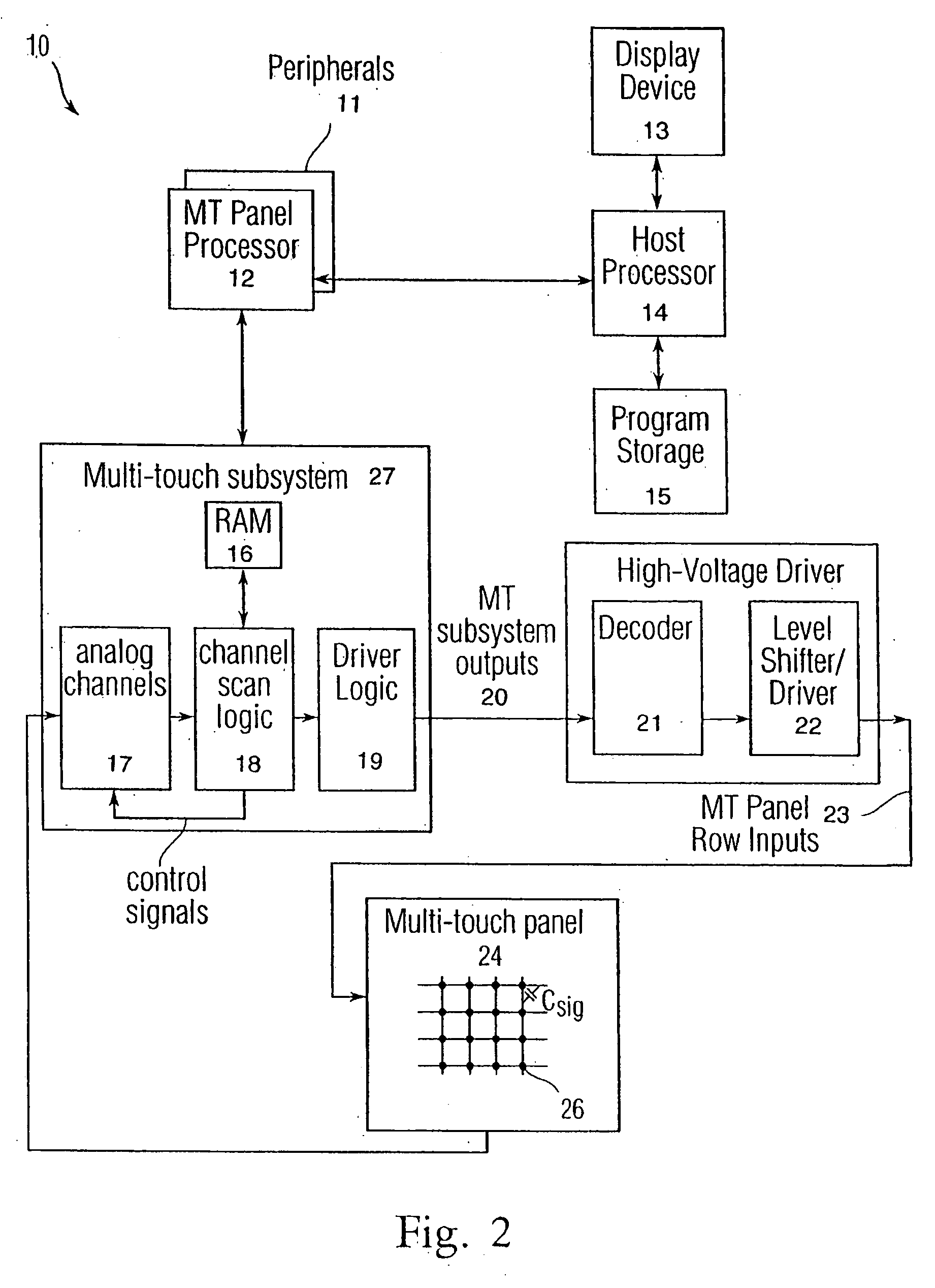

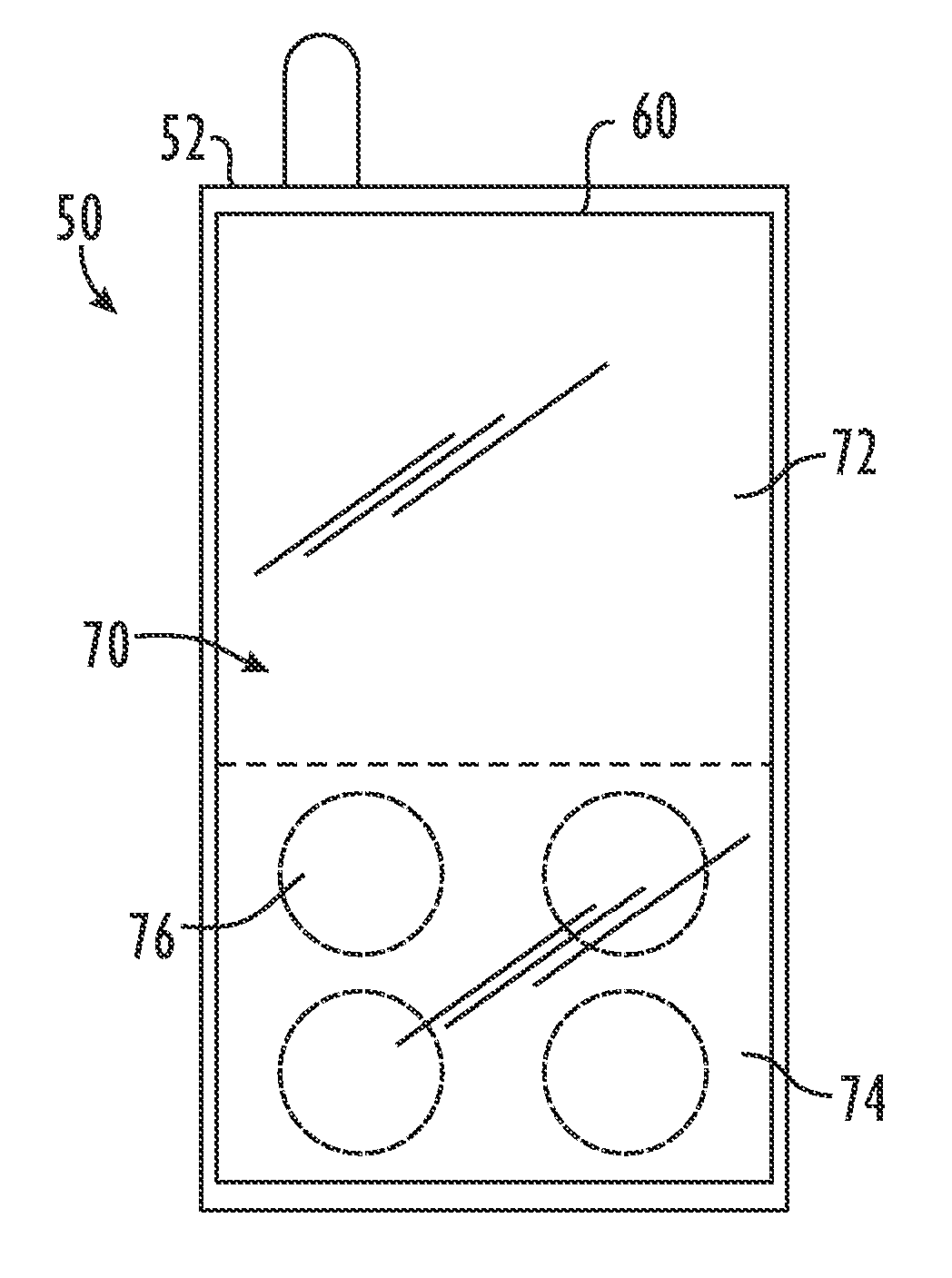

Gestures for controlling, manipulating, and editing of media files using touch sensitive devices

Active | US20080165141A1 | Electronic editing digitised analogue information signals | Carrier indicating arrangements | Graphics | Software

Embodiments of the invention are directed to a system, method, and software for implementing gestures with touch sensitive devices (such as a touch sensitive display) for managing and editing media files on a computing device or system. Specifically, gestural inputs of a human hand over a touch/proximity sensitive device can be used to control, edit, and manipulate files, such as media files including without limitation graphical files, photo files and video files.

Owner:APPLE INC

Social network systems and methods

Inactive | US20100306249A1 | Digital data processing details | Special data processing applications | Internet privacy | Social web

Embodiments of computer-implemented methods and systems are described, including: in a computer network system, providing a user page region viewable by a user; providing to the user, in the user page region, indicators of each of three categories, the categories consisting essentially of: (i) what the user has, (ii) what the user wants, and (iii) what the user has thought or is thinking; wherein the user page region accepts a post by the user; after the post by the user, displaying the post in a group page region, viewable by a set of one or more persons other than the user, the set of persons being separated from the user at locations on a network; before the displaying, requiring the user to select one of the three categories to be associated with the post; and displaying the category selected by the user, with the post, in the group page region.

Owner:FOSTER JOHN C

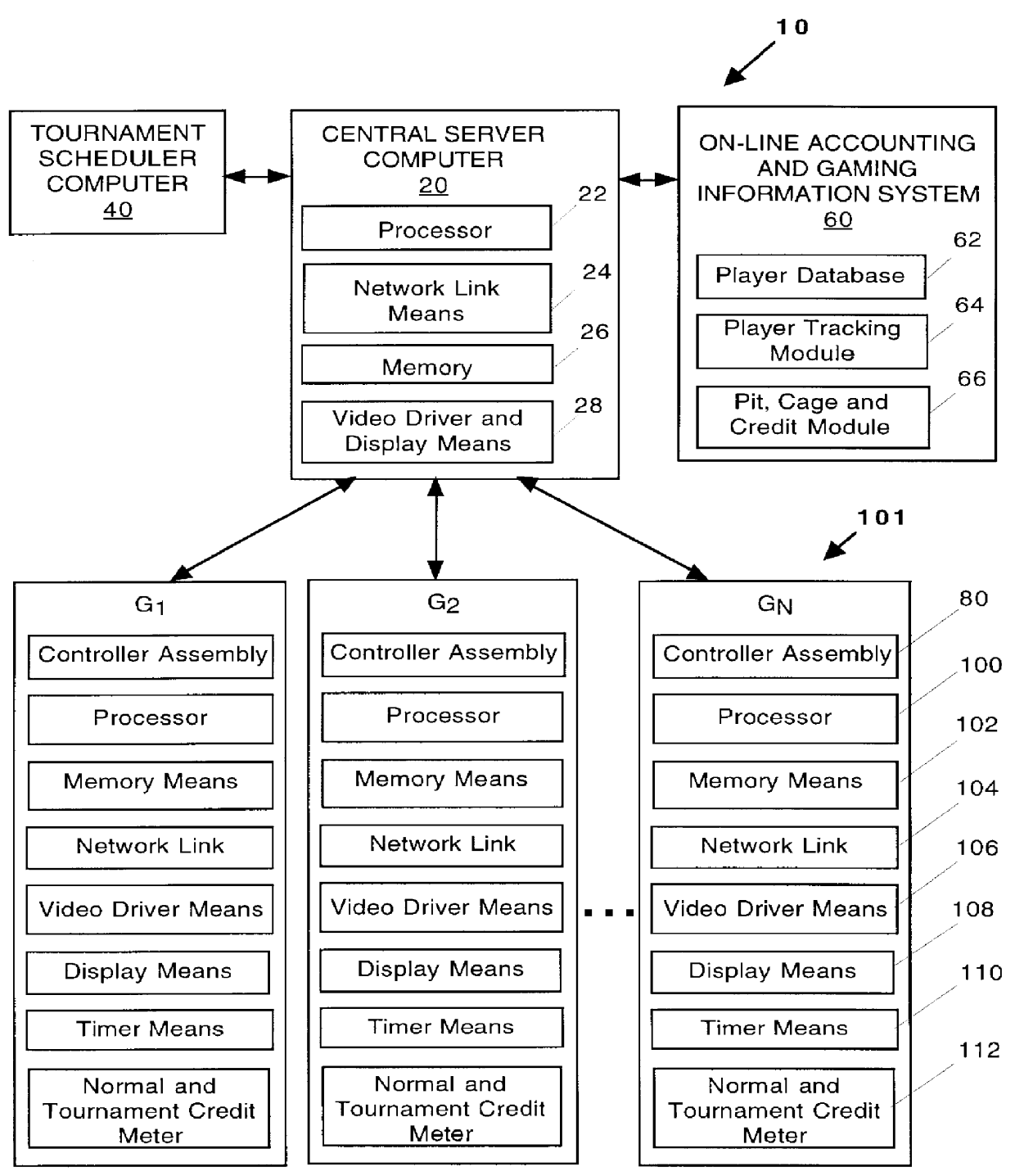

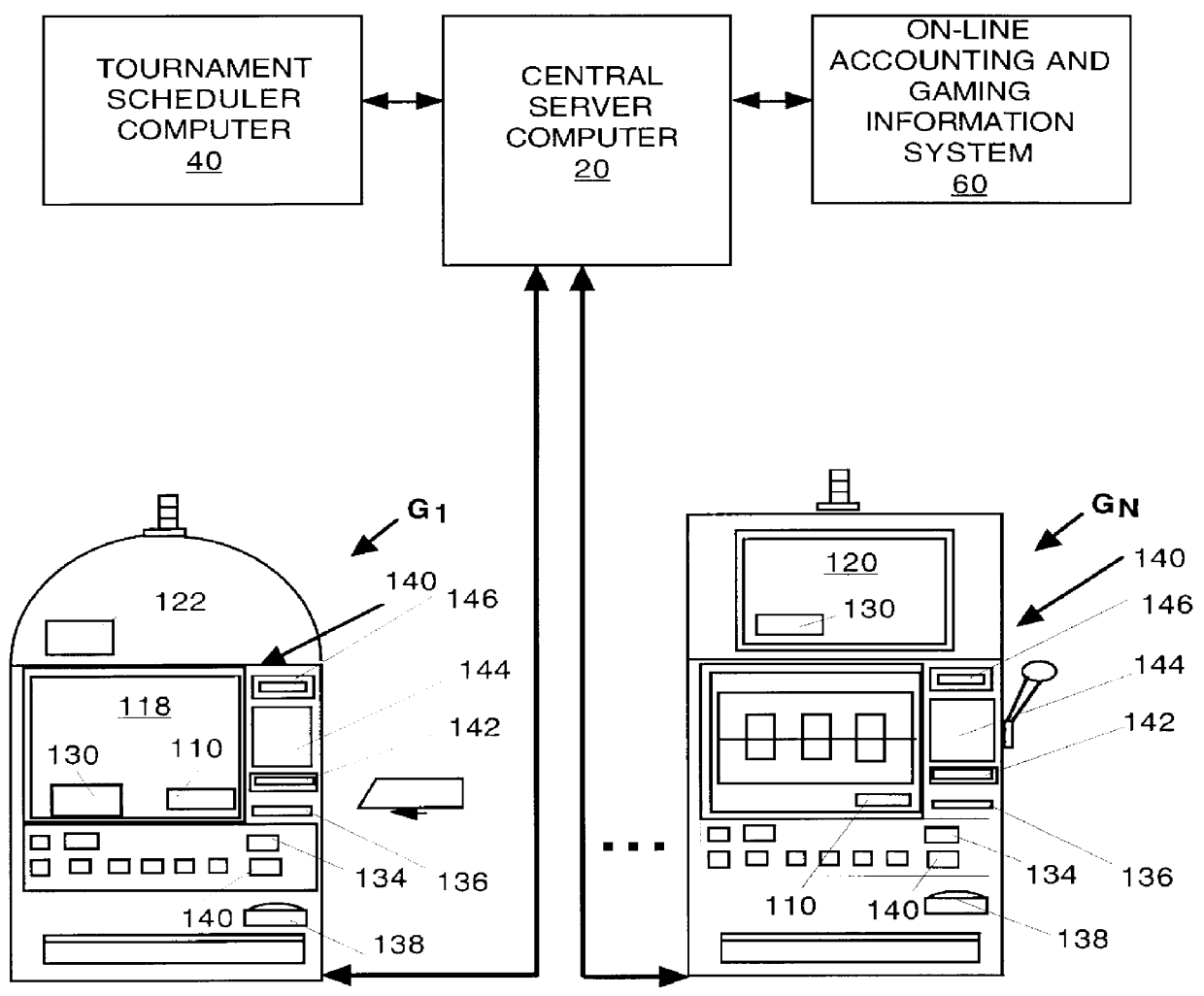

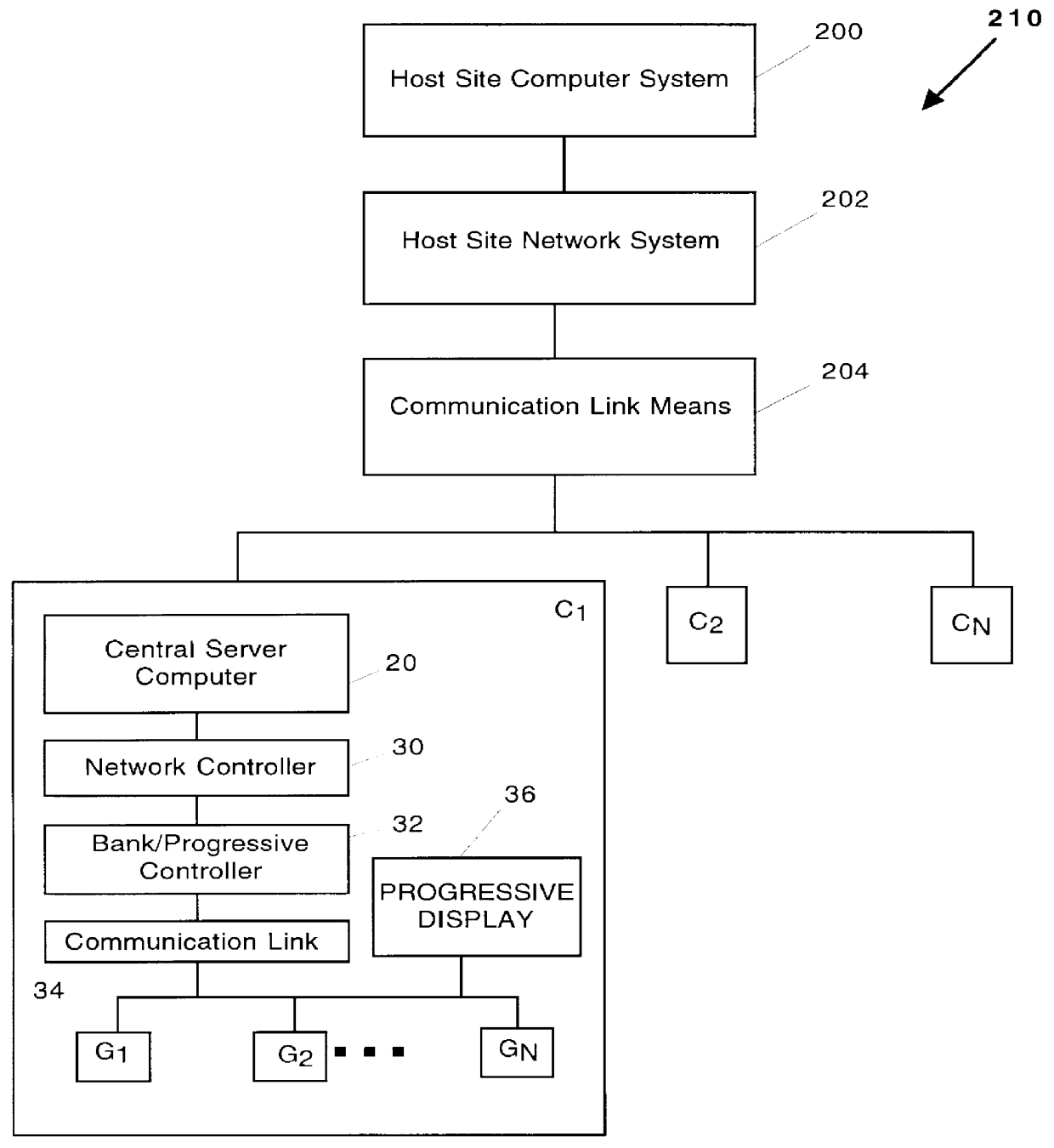

Automated tournament gaming system: apparatus and method

Inactive | US6039648A | Stimulate gaming experience | Card games | Apparatus for meter-controlled dispensing | Multi site | Human–computer interaction

An apparatus and method for an automated tournament gaming system (10) comprising a central server computer (20) operatively coupled to, inter alia, a plurality of gaming machines G1, G2 . . . GN for automatically harnessing any of the gaming machines for automatically inciting and running a tournament where a group of players are participating for a period after which prizes are awarded to the winning tournament play participants. In addition, the system (10) includes a host site computer (200) operatively coupled to a plurality of the central servers (20) at a variety of remote gaming sites C1, C2 . . . CN for providing a multi-site progressive automated tournament gaming system (210). The multi-site system (210) is integrated into the system (10) to increase the winnings, progressive amounts and the total buy-ins.

Owner:CASINO DATA SYST

Electronic Device Having Display and Surrounding Touch Sensitive Bezel for User Interface and Control

Active | US20060238517A1 | Location in which can alter | Input/output for user-computer interaction | Medical devices | Display device | Human–computer interaction

Owner:APPLE INC

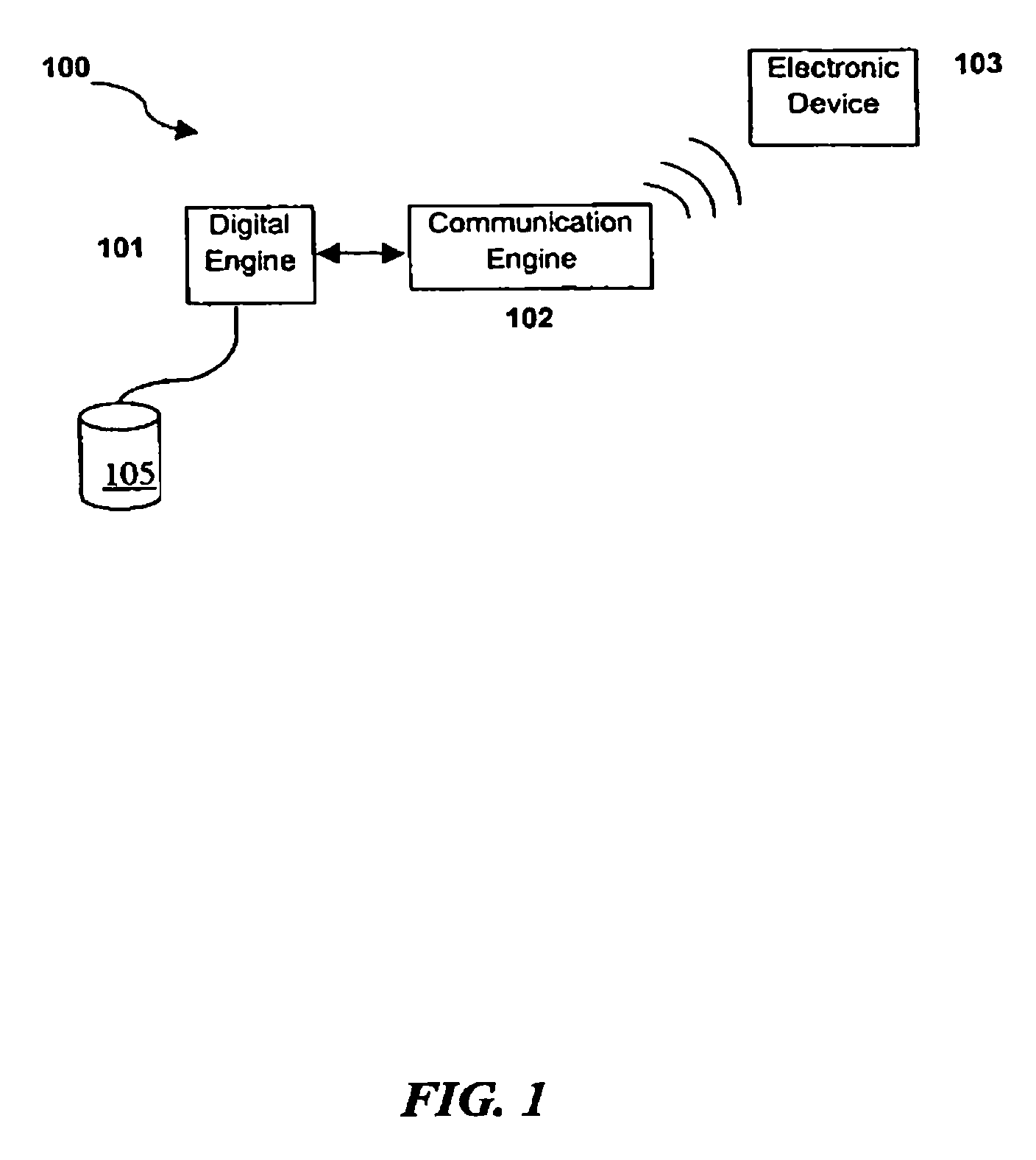

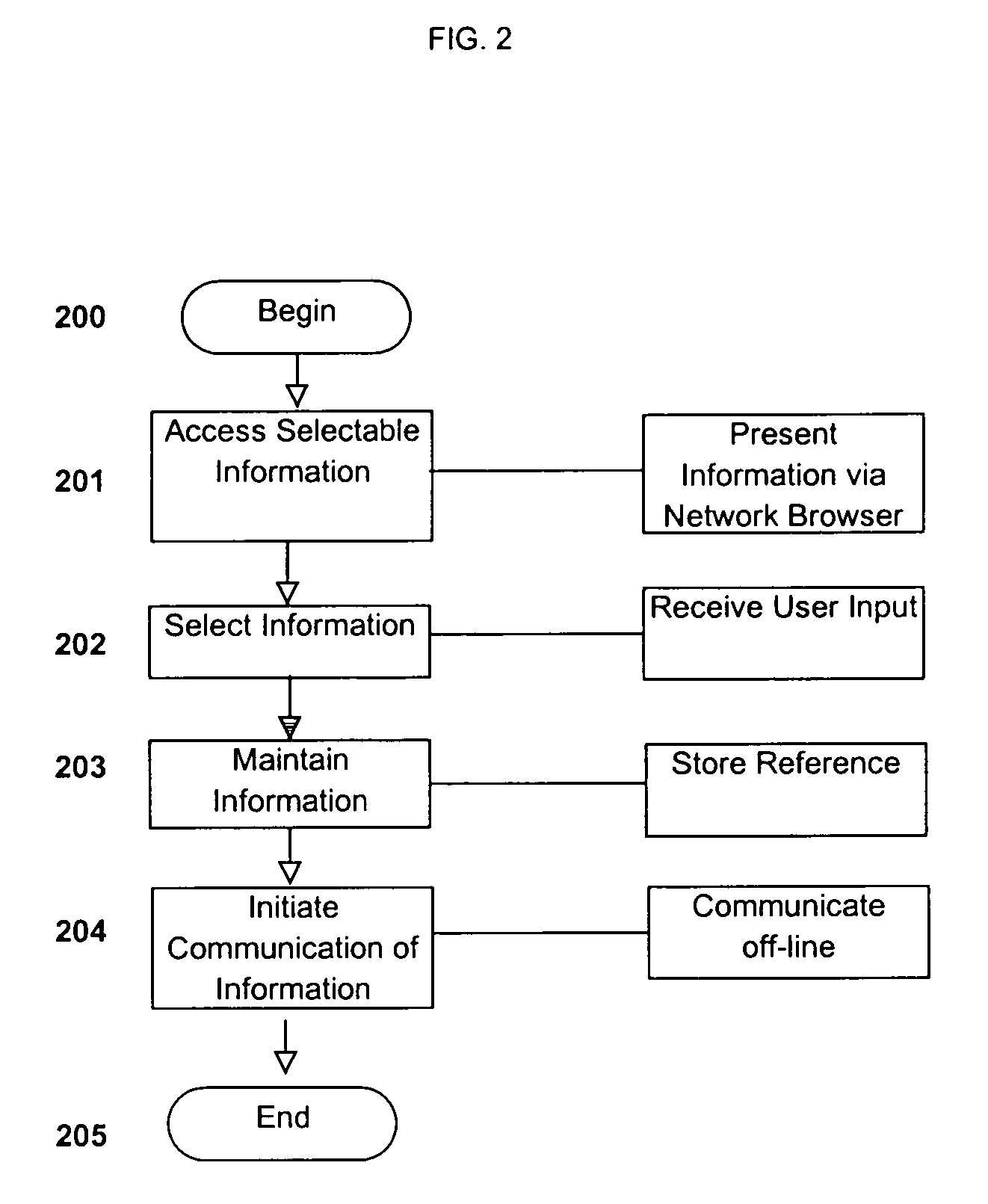

System and method for communicating selected information to an electronic device

Inactive | US7187947B1 | Sound quality | Big advantage | Network traffic/resource management | Network topologies | Graphical user interface | Engineering

Disclosed are a system and method for communicating selected information to an electronic device. The disclosed system may include a digital engine operable to maintain data representing the selected information in a digital format. In some embodiments, the digital engine may be communicatively coupled to a graphical user interface that allows a user to identify the selected information. The system may also include a communication engine communicatively coupled to the digital engine; the communication engine may be operable to wirelessly communicate the data representing the selected information to an electronic device.

Owner:RPX CORP

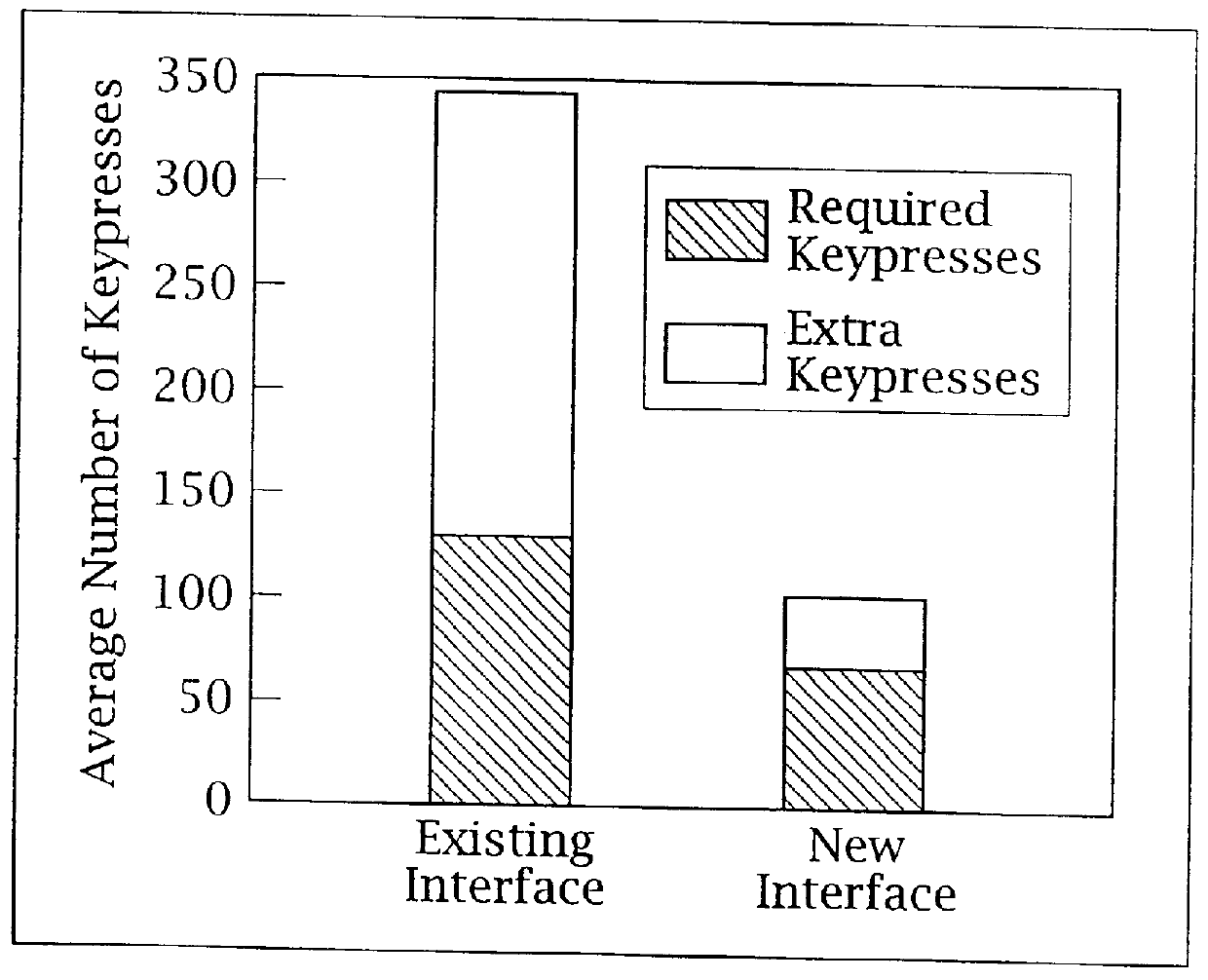

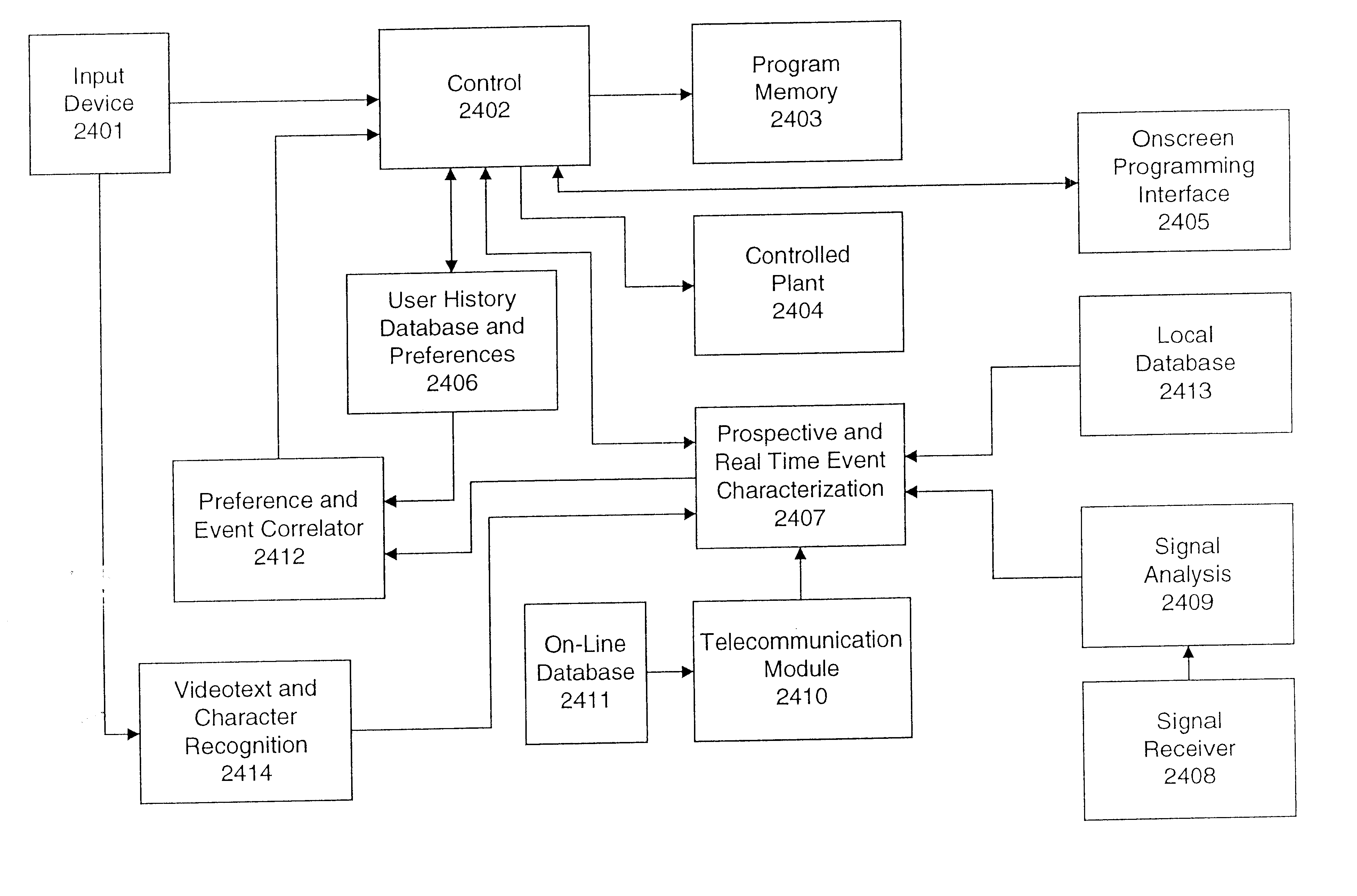

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Inactive | US6081750A | Decrease productivity | Improve the environment | Computer control | Simulator control | Human–machine interface | Data stream

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP
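
The predict, confirm, update loop in the abstract above can be illustrated with a deliberately simple frequency counter. This swaps in a much simpler technique than the adaptive pattern recognition the patent describes; the class name `NextActionPredictor`, the string-valued context, and the action names are hypothetical.

```python
from collections import Counter, defaultdict


class NextActionPredictor:
    """Predicts the user's likely next function from past choices.

    A plain frequency count per context, used here only to show the
    feedback loop; it is not the patent's recognition method.
    """

    def __init__(self):
        self._counts = defaultdict(Counter)

    def predict(self, context):
        """Return the most frequently chosen action for this context,
        or None when there is no history yet."""
        counts = self._counts.get(context)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def feedback(self, context, chosen_action):
        """Update the history with what the user actually confirmed or chose."""
        self._counts[context][chosen_action] += 1
```

A caller would present `predict(context)` for confirmation and then pass the user's actual choice to `feedback`, whether or not it matched the prediction.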

Detecting and interpreting real-world and security gestures on touch and hover sensitive devices

Inactive | US20080168403A1 | Digital output to display device | Computer graphics (images) | Application software

“Real-world” gestures such as hand or finger movements/orientations that are generally recognized to mean certain things (e.g., an “OK” hand signal generally indicates an affirmative response) can be interpreted by a touch or hover sensitive device to more efficiently and accurately effect intended operations. These gestures can include, but are not limited to, “OK gestures,” “grasp everything gestures,” “stamp of approval gestures,” “circle select gestures,” “X to delete gestures,” “knock to inquire gestures,” “hitchhiker directional gestures,” and “shape gestures.” In addition, gestures can be used to provide identification and allow or deny access to applications, files, and the like.

Owner:APPLE INC

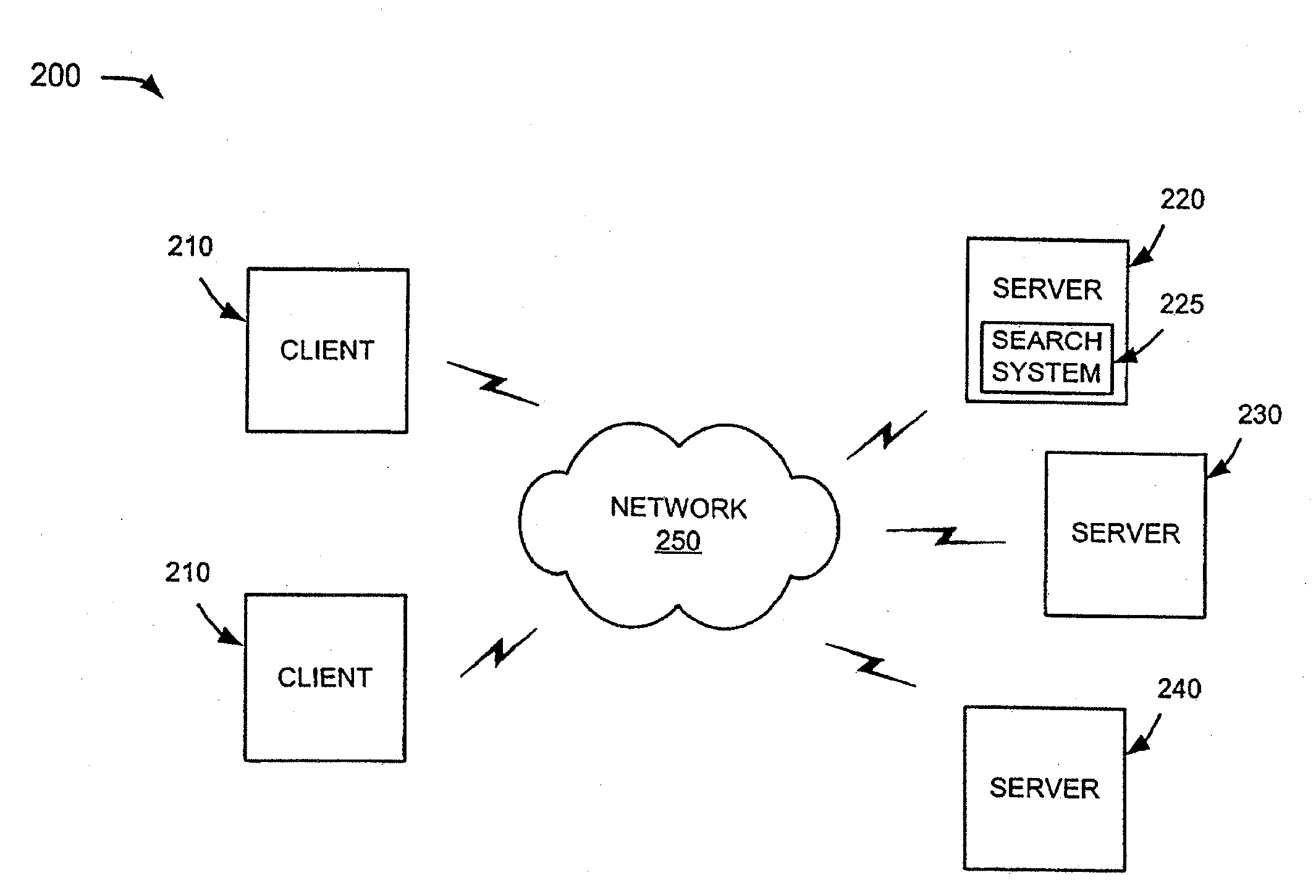

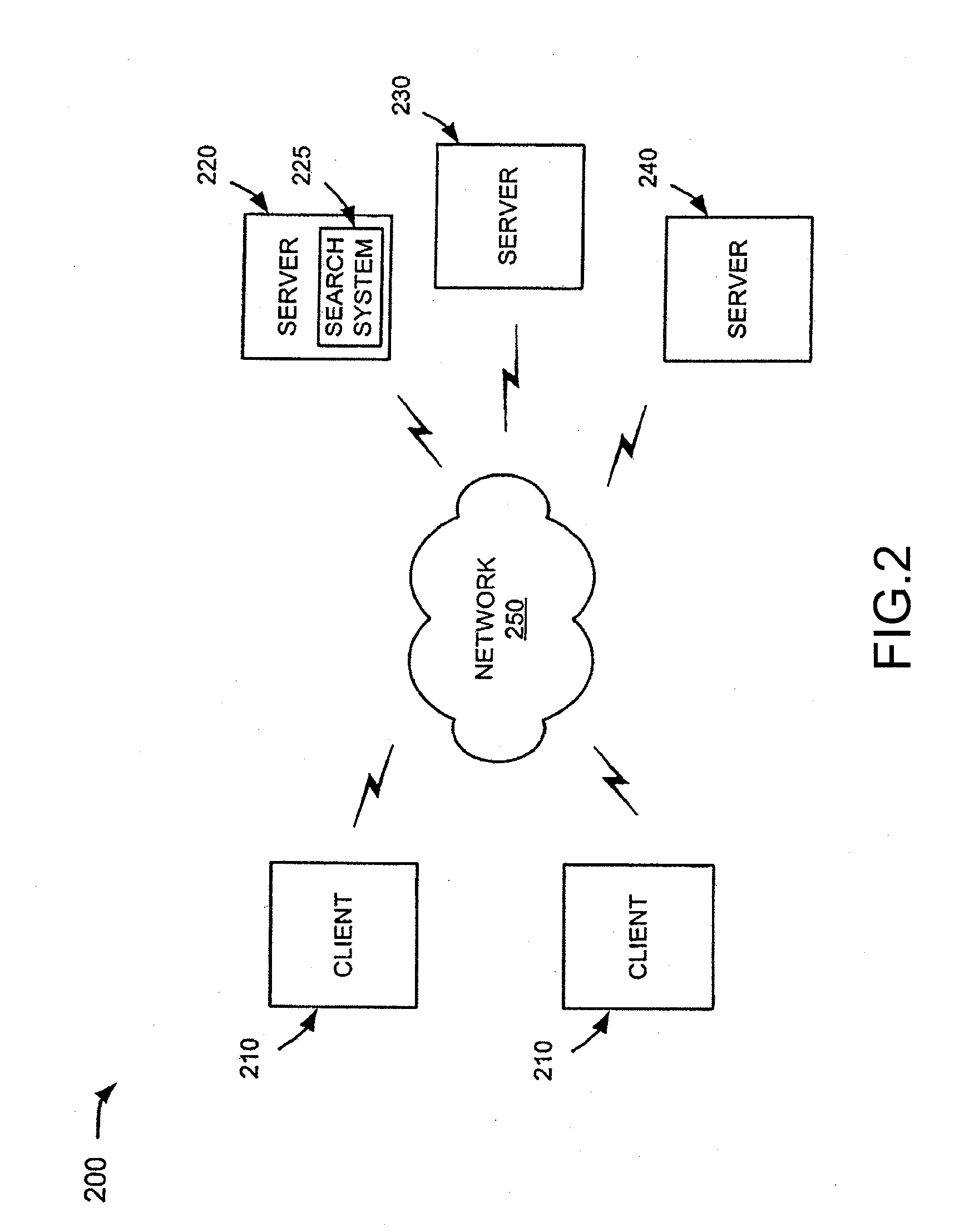

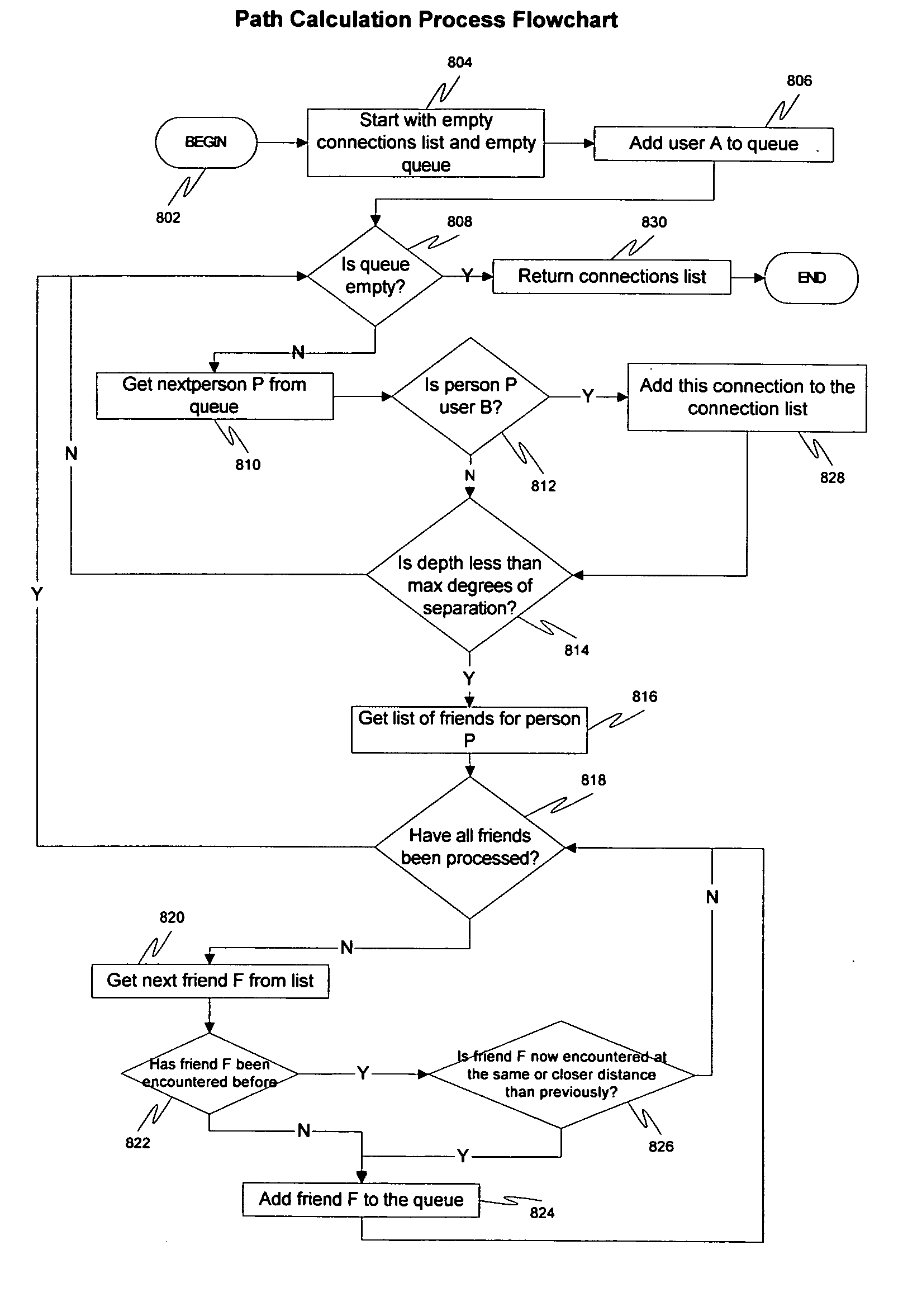

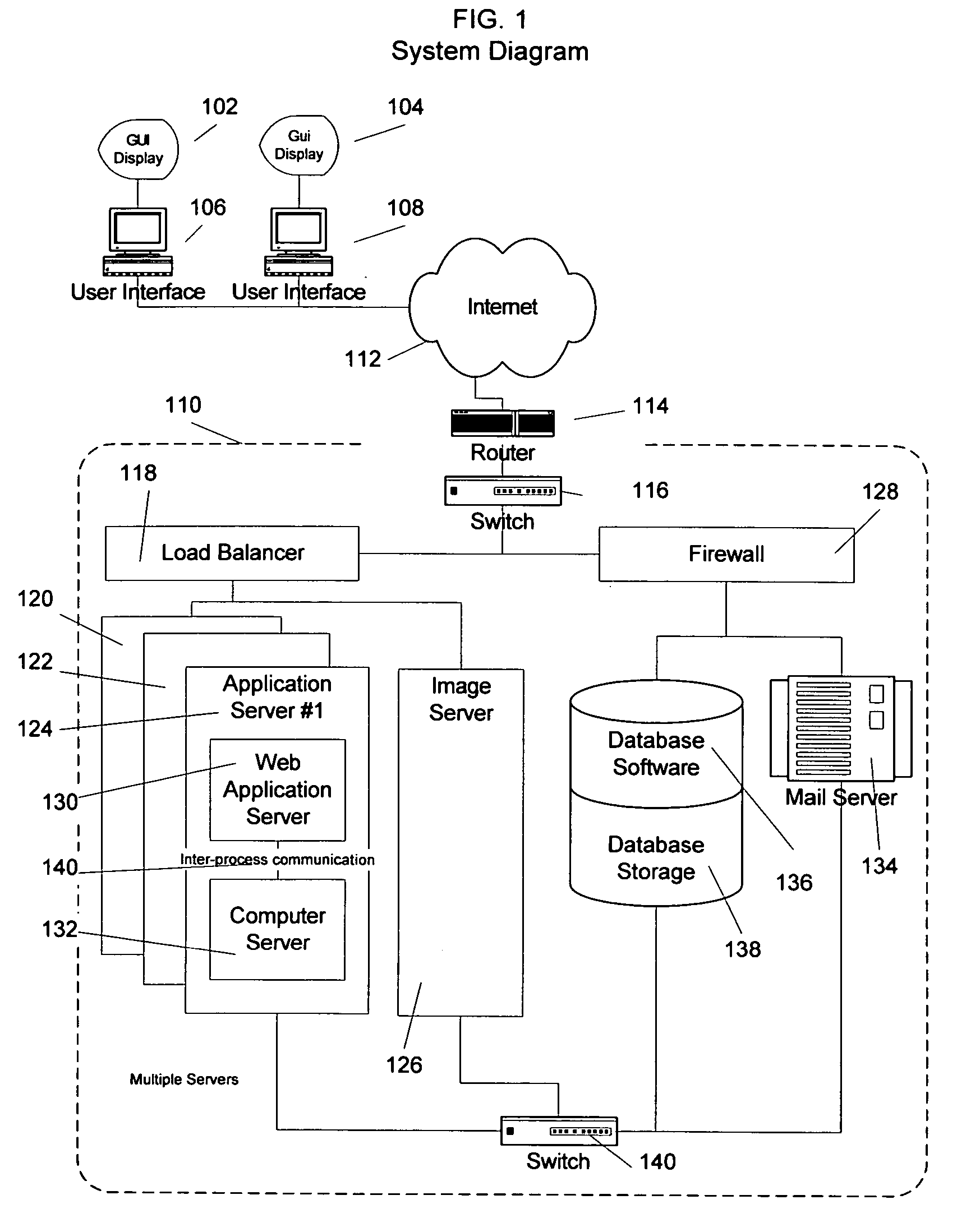

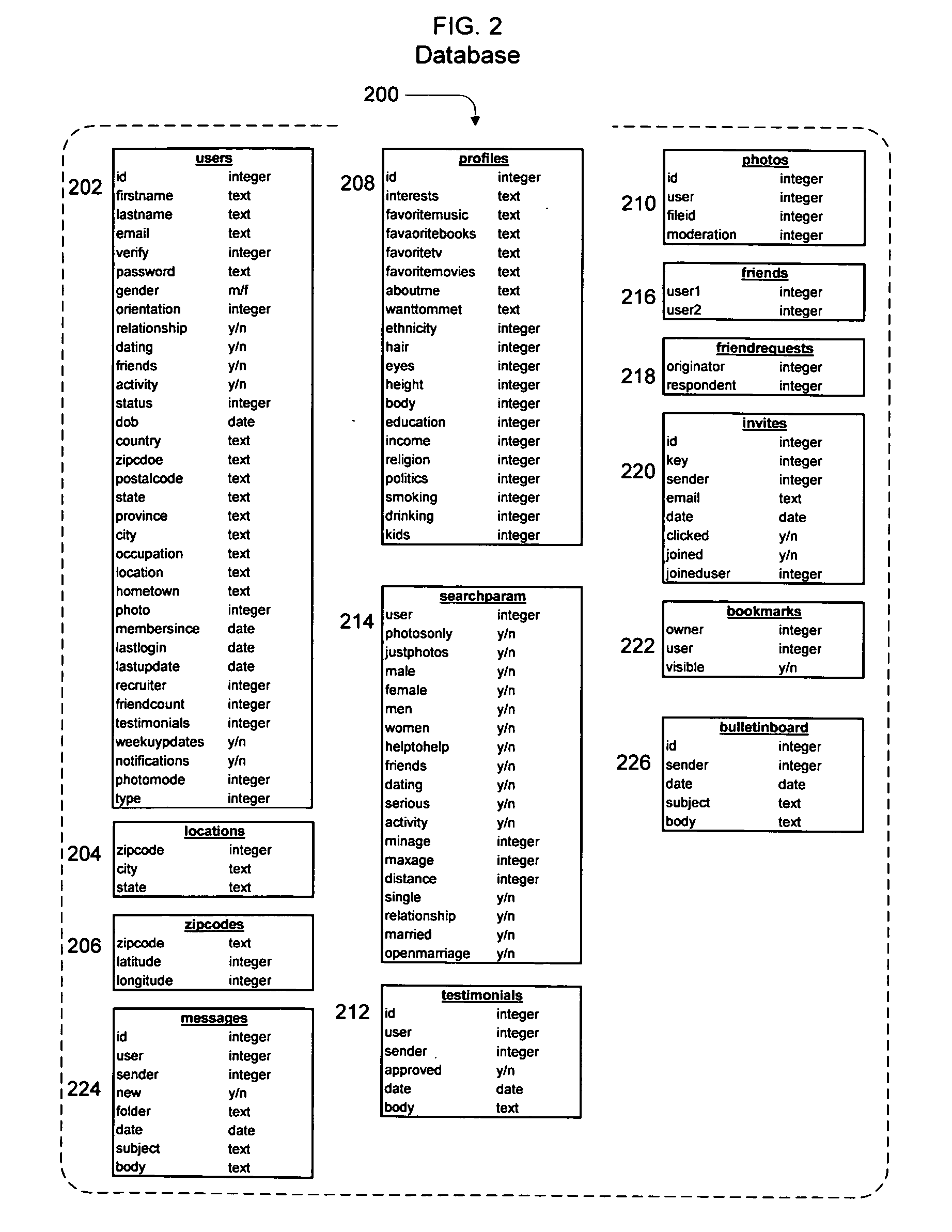

System, method and apparatus for connecting users in an online computer system based on their relationships within social networks

Active | US20050021750A1 | Enhanced interaction | Increase value | Multiple digital computer combinations | Office automation | Direct communication | Social internet of things

A method and apparatus for calculating, displaying and acting upon relationships in a social network is described. A computer system collects descriptive data about various individuals and allows those individuals to indicate other individuals with whom they have a personal relationship. The descriptive data and the relationship data are integrated and processed to reveal the series of social relationships connecting any two individuals within a social network. The pathways connecting any two individuals can be displayed. Further, the social network itself can be displayed to any number of degrees of separation. A user of the system can determine the optimal relationship path (i.e., contact pathway) to reach desired individuals. A communications tool allows individuals in the system to be introduced (or introduce themselves) and initiate direct communication.

Owner:META PLATFORMS INC
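
Finding the chain of relationships that connects two individuals, as the abstract above describes, is commonly done with a breadth-first search. The sketch below assumes that "optimal" means the fewest introductions and that relationships are stored as a mutual adjacency mapping; both are assumptions for illustration, and the function name `shortest_contact_path` is hypothetical rather than taken from the patent.

```python
from collections import deque


def shortest_contact_path(relationships, start, goal):
    """Return the shortest chain of people from start to goal, or None.

    relationships maps each person to the people they know directly
    (assumed to be listed in both directions).
    """
    if start == goal:
        return [start]
    previous = {start: None}          # who introduced us to each person
    queue = deque([start])
    while queue:
        person = queue.popleft()
        for contact in relationships.get(person, ()):
            if contact in previous:   # already reached by a path at least as short
                continue
            previous[contact] = person
            if contact == goal:
                # Walk the introductions backwards to rebuild the path.
                path = [goal]
                while previous[path[-1]] is not None:
                    path.append(previous[path[-1]])
                return path[::-1]
            queue.append(contact)
    return None
```

The number of degrees of separation between the two people is the length of the returned path minus one.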

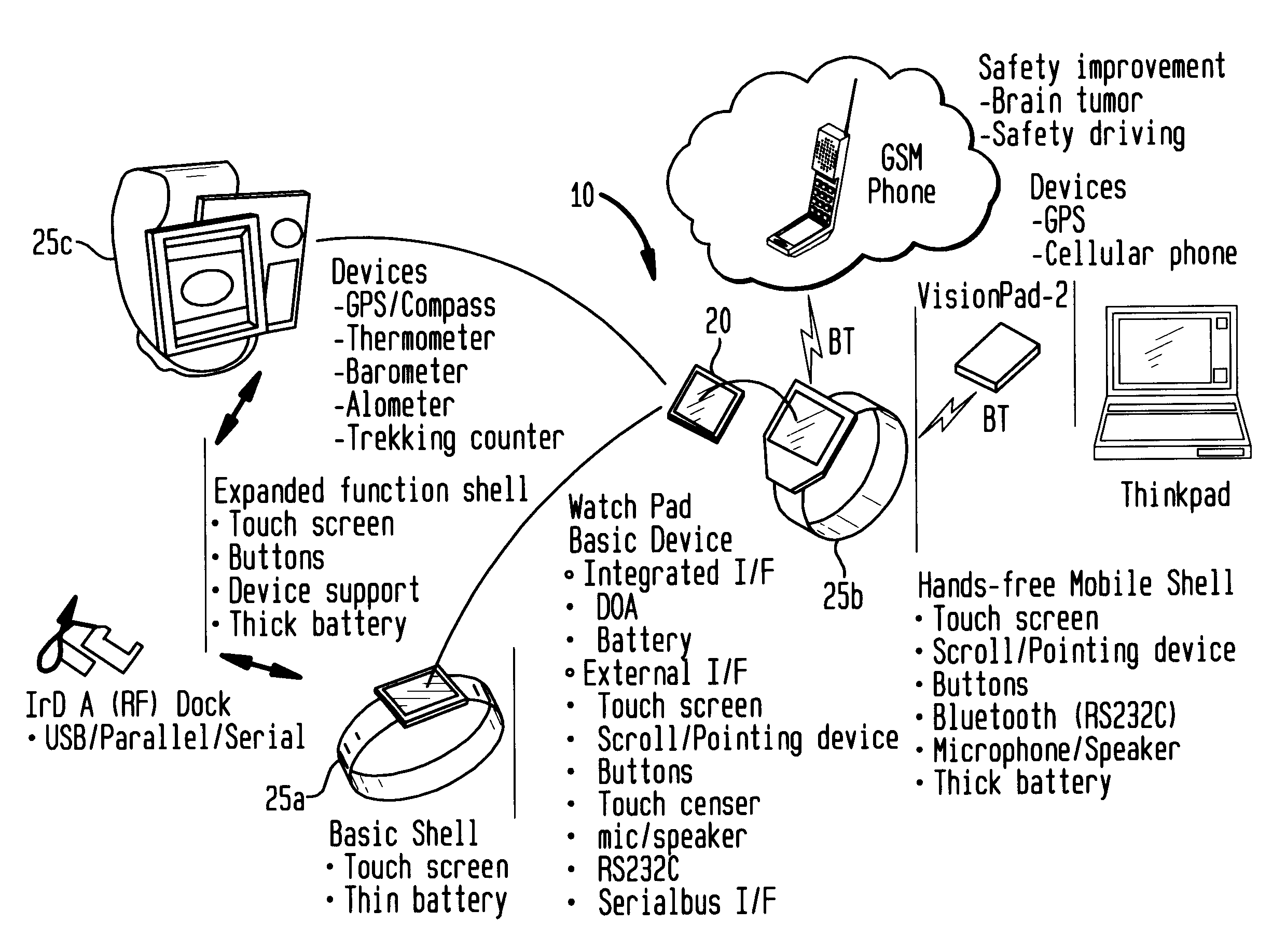

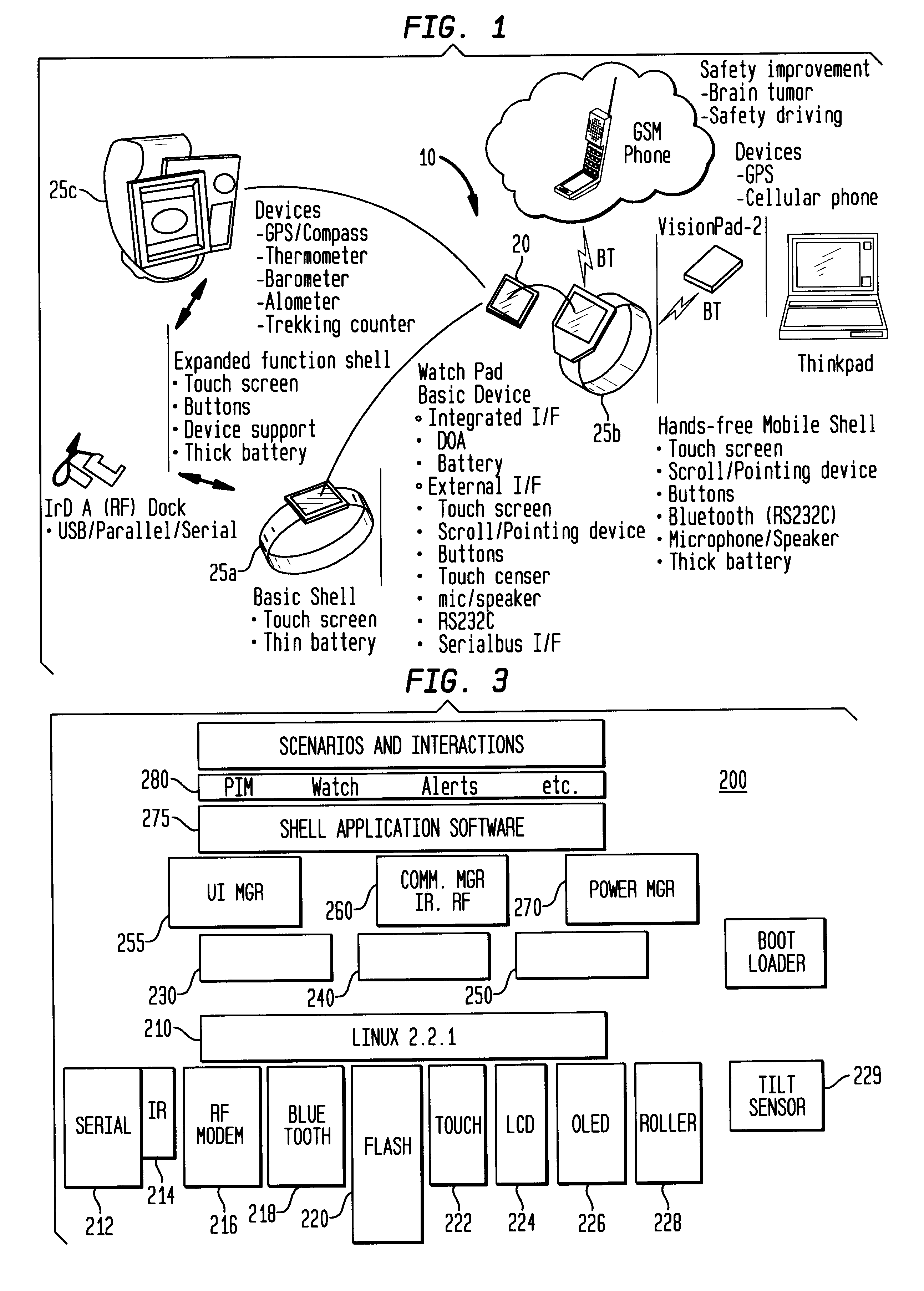

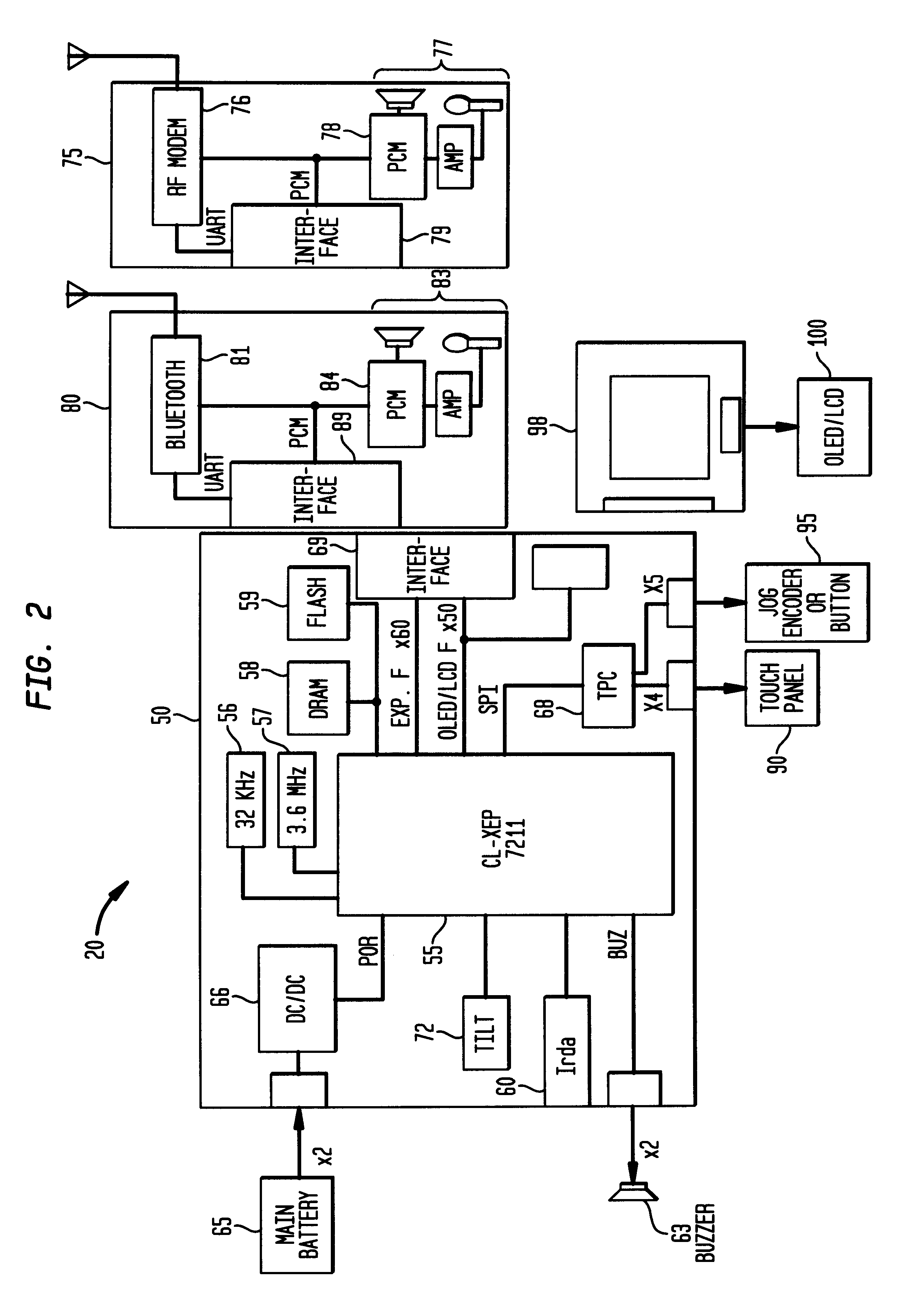

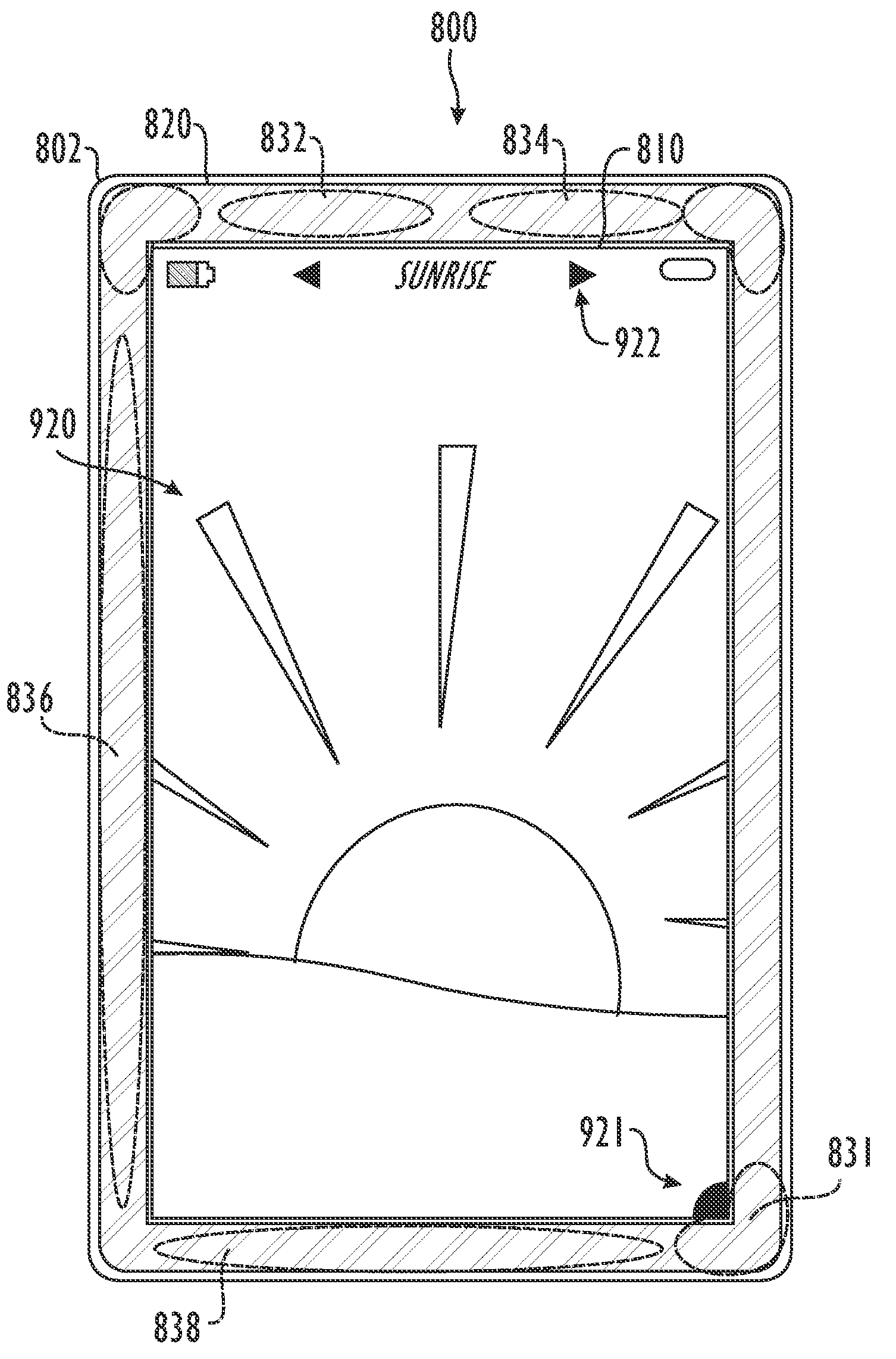

Alarm interface for a smart watch

Inactive | US6477117B1 | Minimal effort and concentration | Visual indication | Acoustic time signals | Display device | Human–computer interaction

A wearable mobile computing device/appliance (a wrist watch) with a high resolution display that is capable of wirelessly accessing information from a network and a variety of other devices. The mobile computing device/appliance includes a user interface that is used to efficiently interact with alarms and notifications on the watch.

Owner:IBM CORP

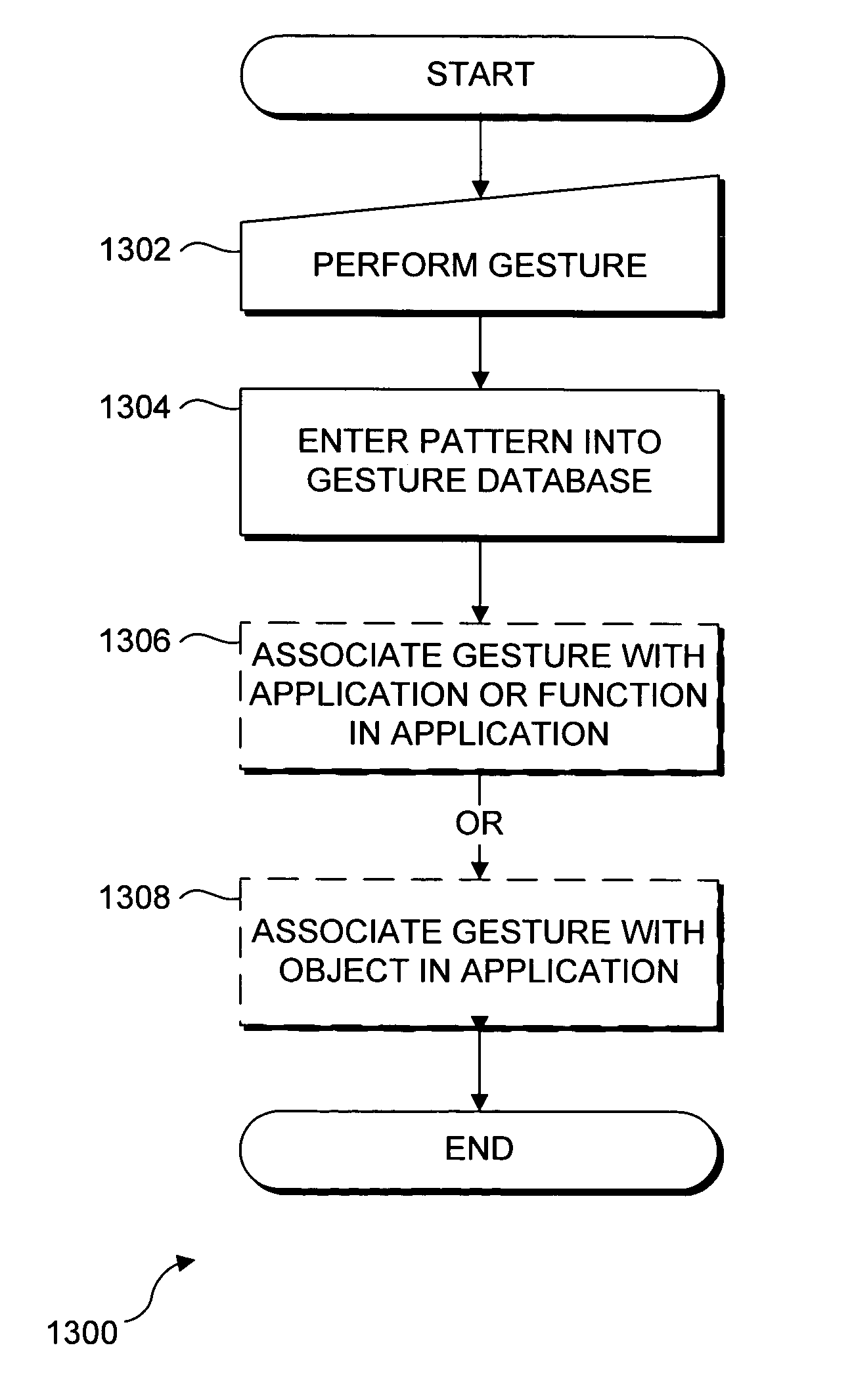

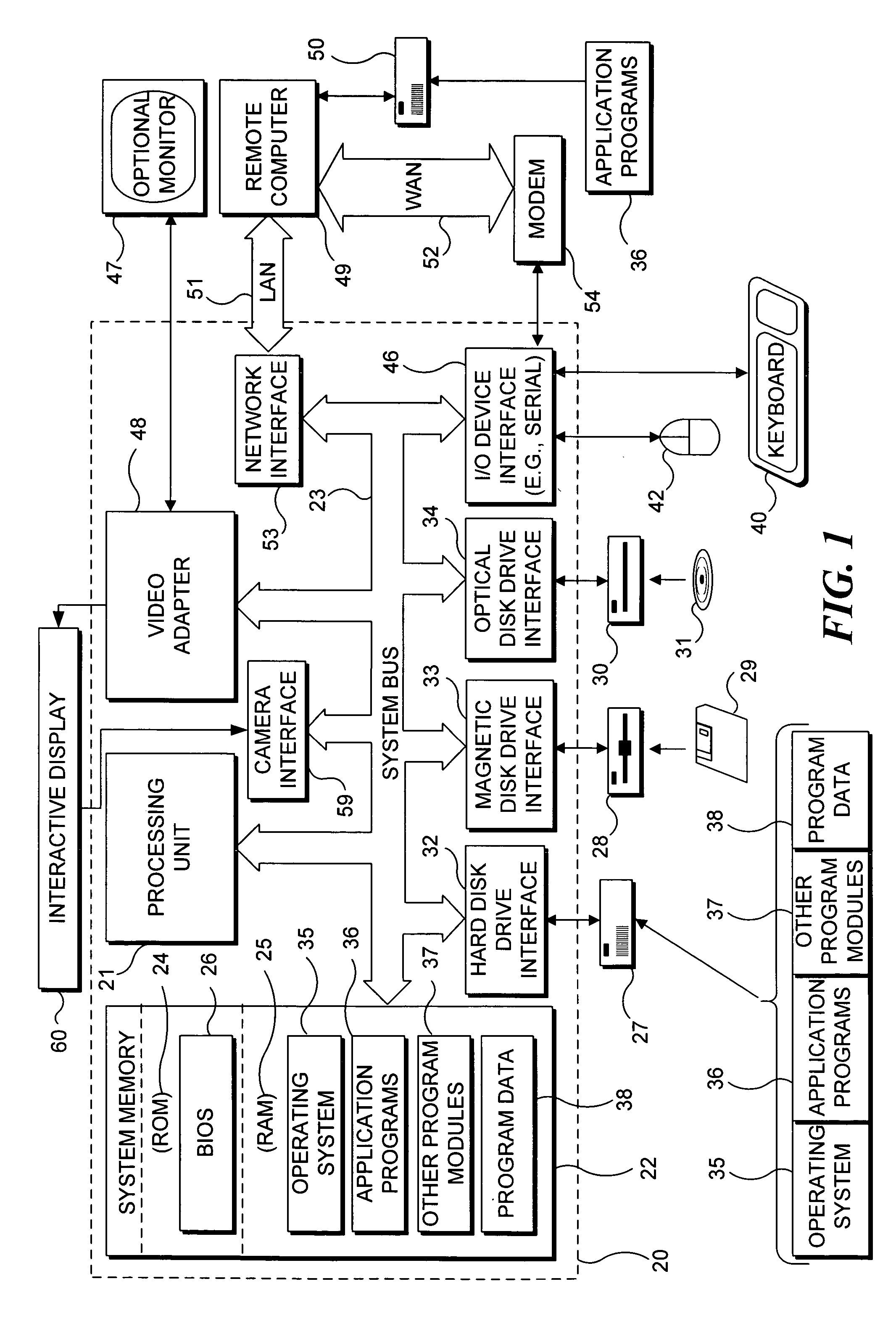

Recognizing gestures and using gestures for interacting with software applications

Inactive | US20060010400A1 | Character and pattern recognition | Color television details | Interactive displays | Human–computer interaction

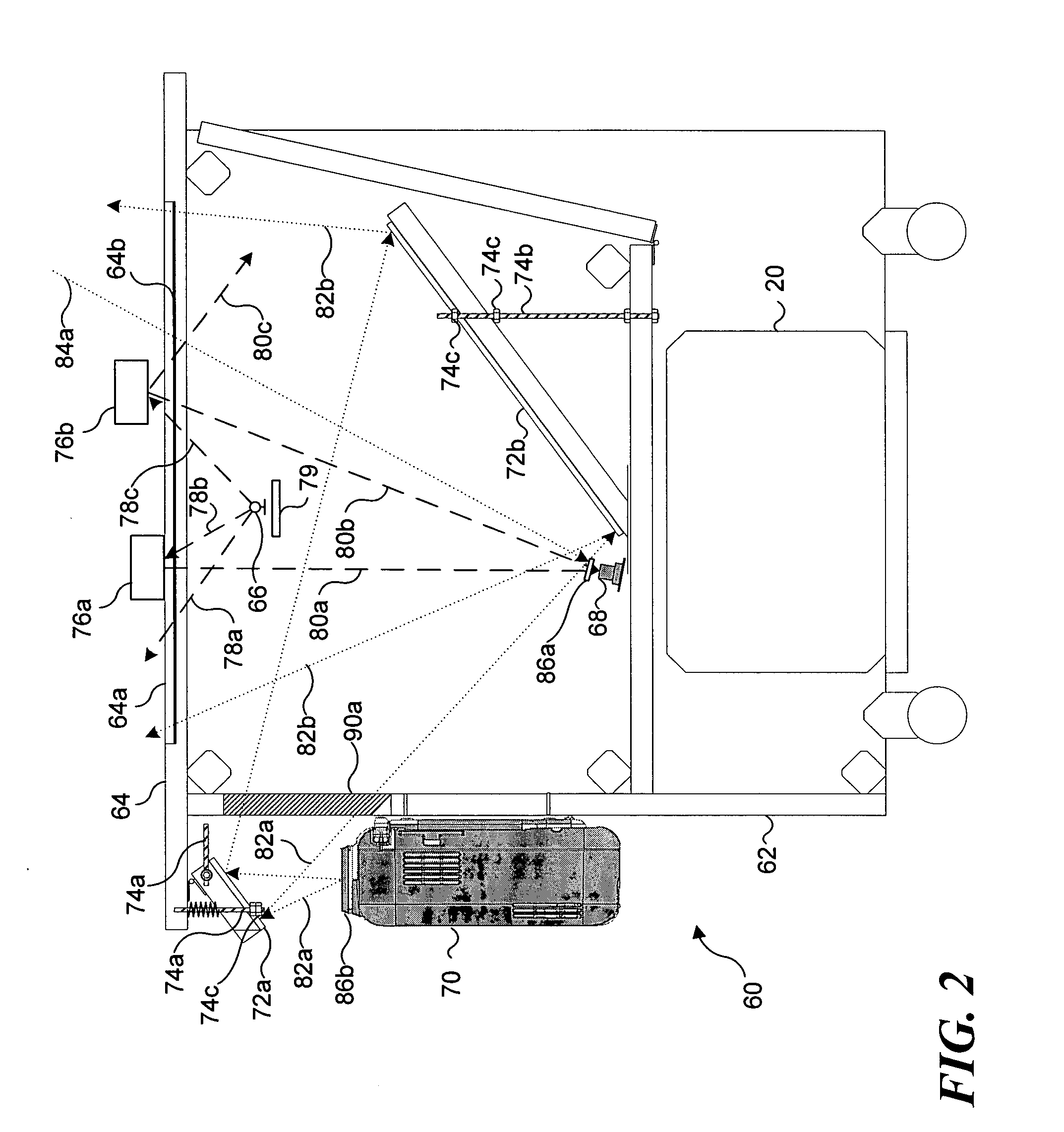

An interactive display table has a display surface for displaying images and upon or adjacent to which various objects, including a user's hand(s) and finger(s), can be detected. A video camera within the interactive display table responds to infrared (IR) light reflected from the objects to detect any connected components. Connected components correspond to portions of the object(s) that are either in contact with, or proximate to, the display surface. Using these connected components, the interactive display table senses and infers natural hand or finger positions, or movement of an object, to detect gestures. Specific gestures are used to execute applications, carry out functions in an application, create a virtual object, or do other interactions, each of which is associated with a different gesture. A gesture can be a static pose, or a more complex configuration, and/or movement made with one or both hands or other objects.

Owner:MICROSOFT TECH LICENSING LLC

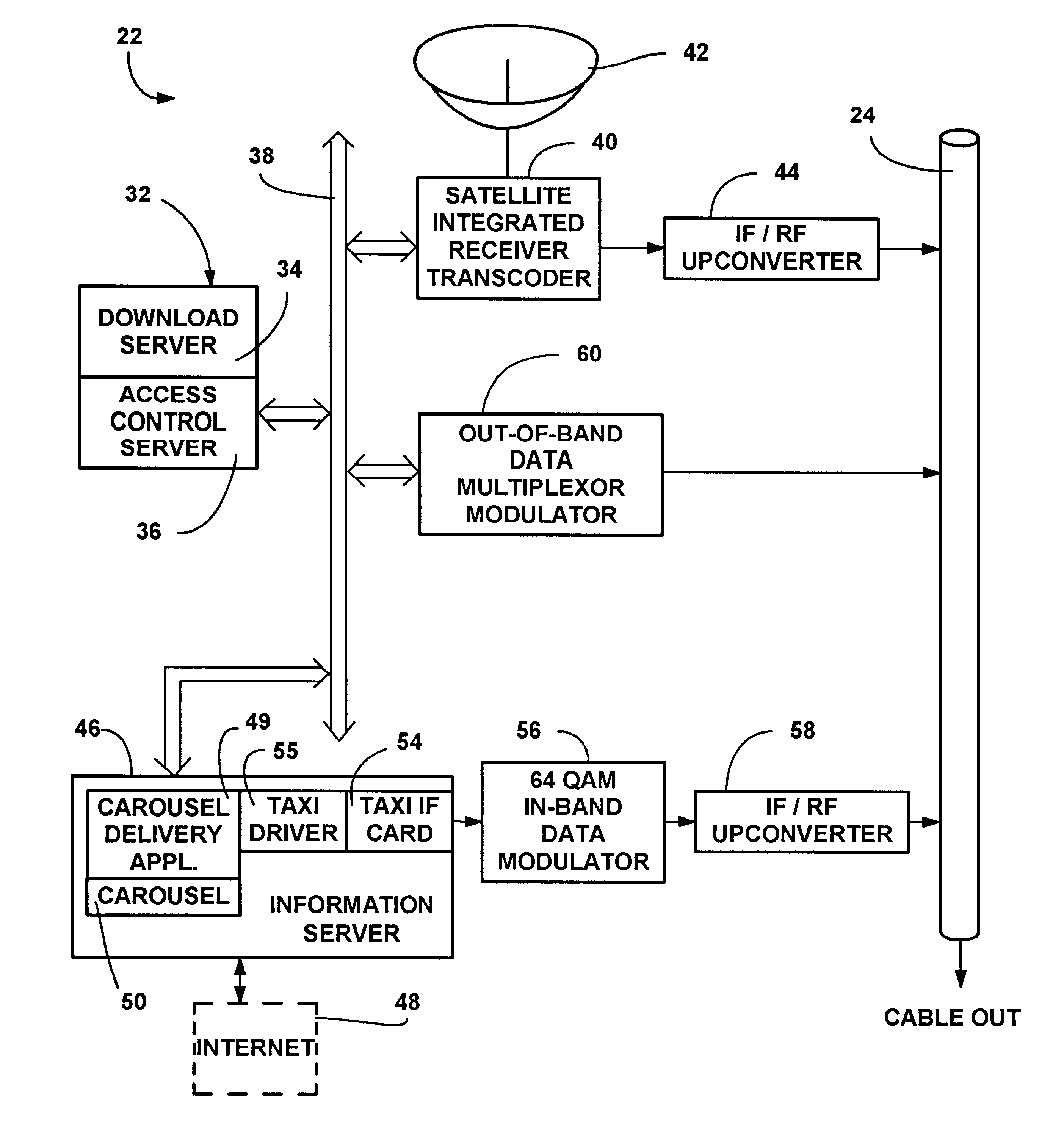

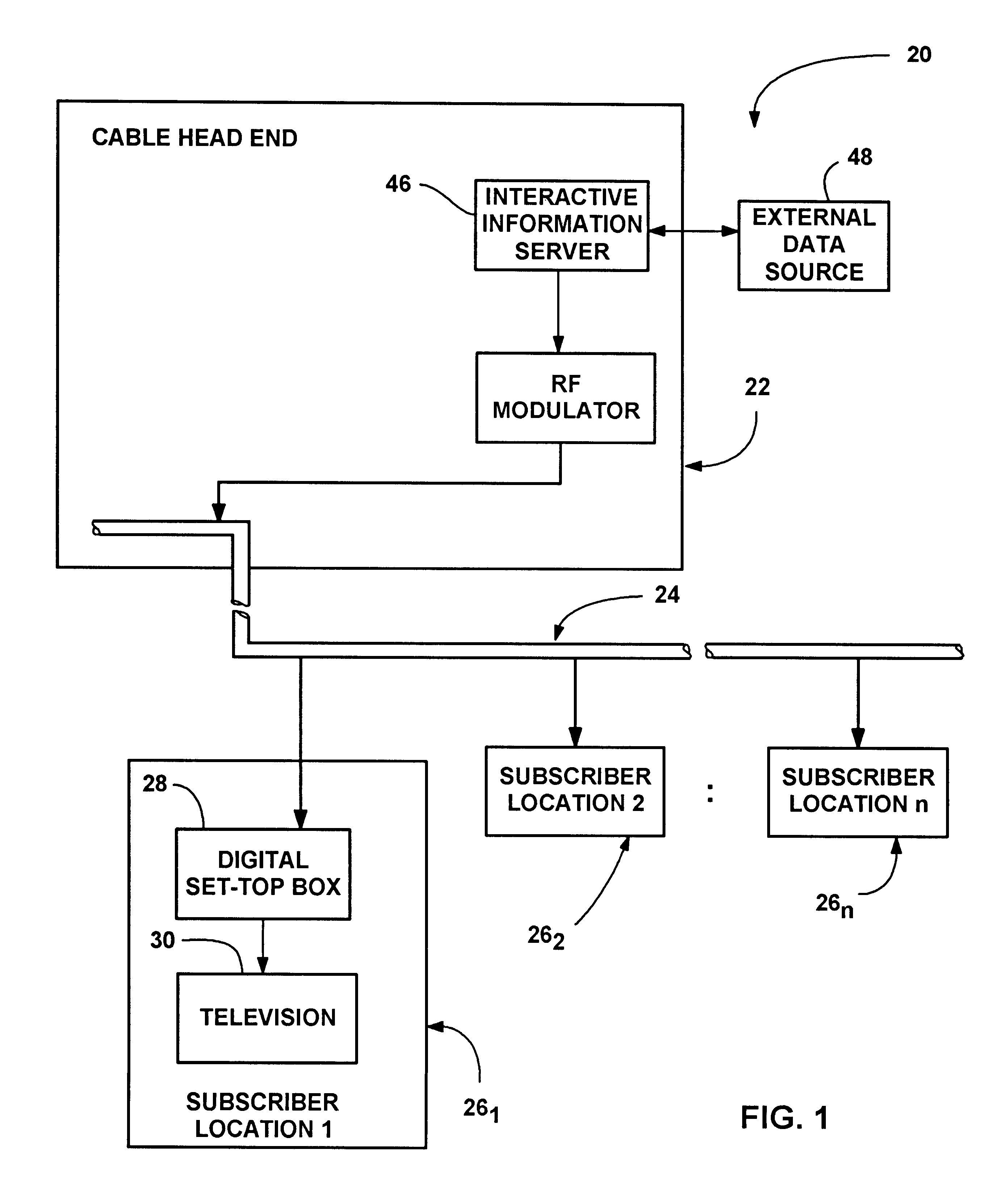

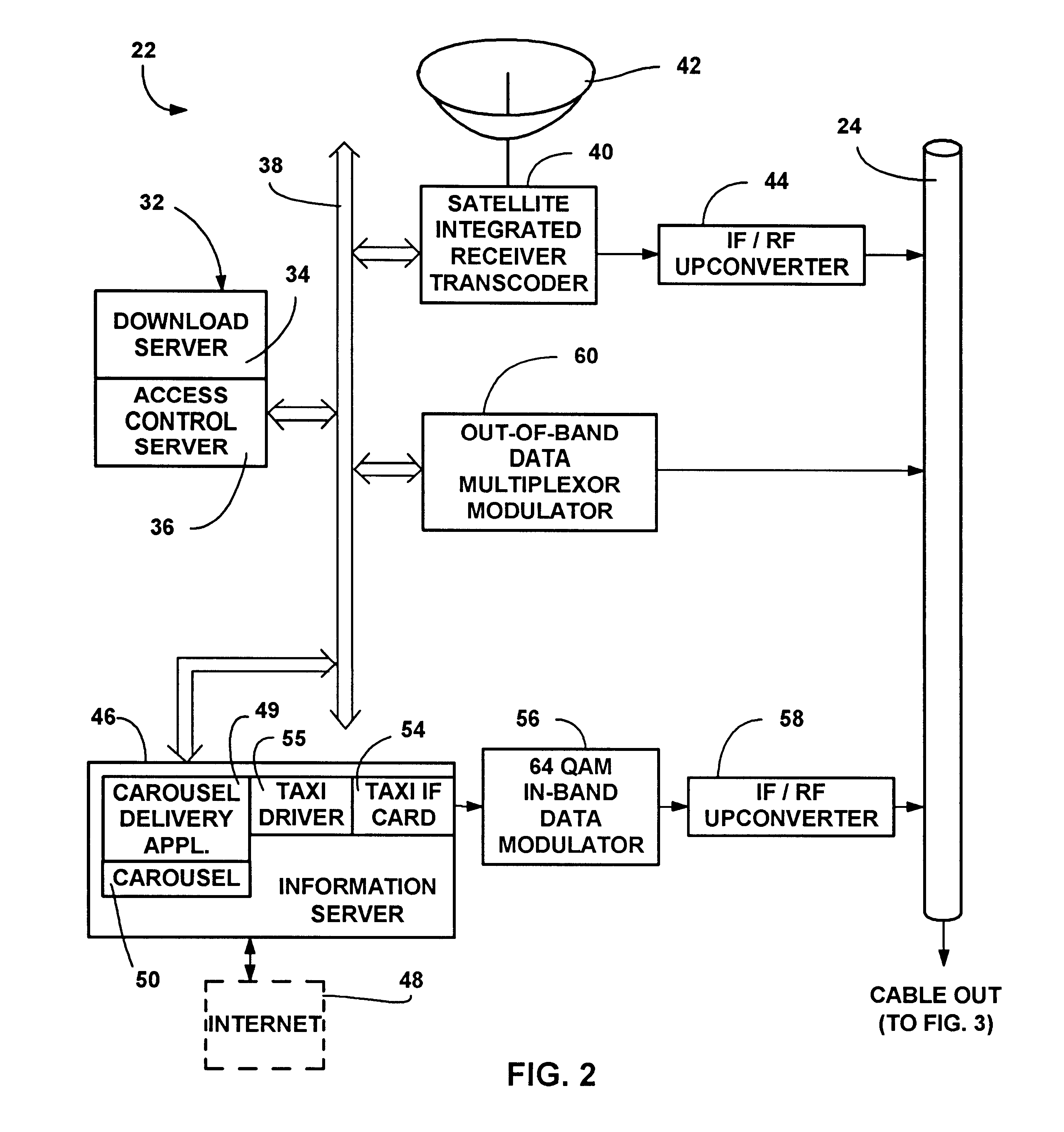

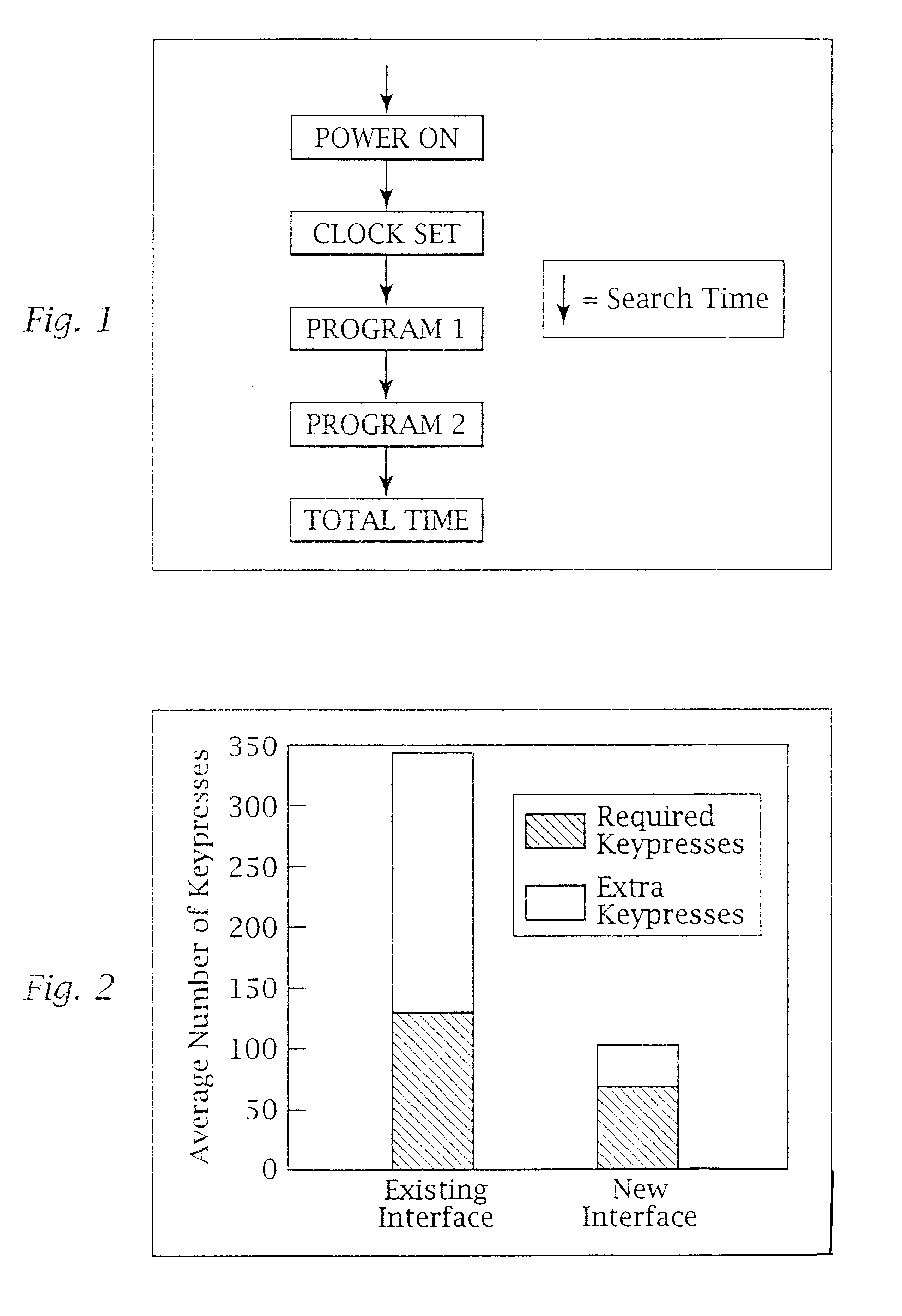

Interactive entertainment and information system using television set-top box

Inactive | US6317885B1 | Speed | Not much bandwidth | Television system details | Analogue secrecy/subscription systems | Information system | Control equipment

An interactive entertainment and information system using a television set-top box, wherein pages of information are periodically provided to the set-top box for user interaction therewith. The pages include associated meta-data defining active locations on each page. When a page is displayed, the user interacts with the active locations on the page by entering commands via a remote control device, whereby the system reads the meta-data and takes the action associated with the location. Actions include moving to other active locations, hyperlinking to other pages, entering user form data and submitting the data as a form into memory. The form data may be read from memory, and the pages may be related to a conventional television program, thereby providing significant user interactivity with the television.

Owner:MICROSOFT TECH LICENSING LLC

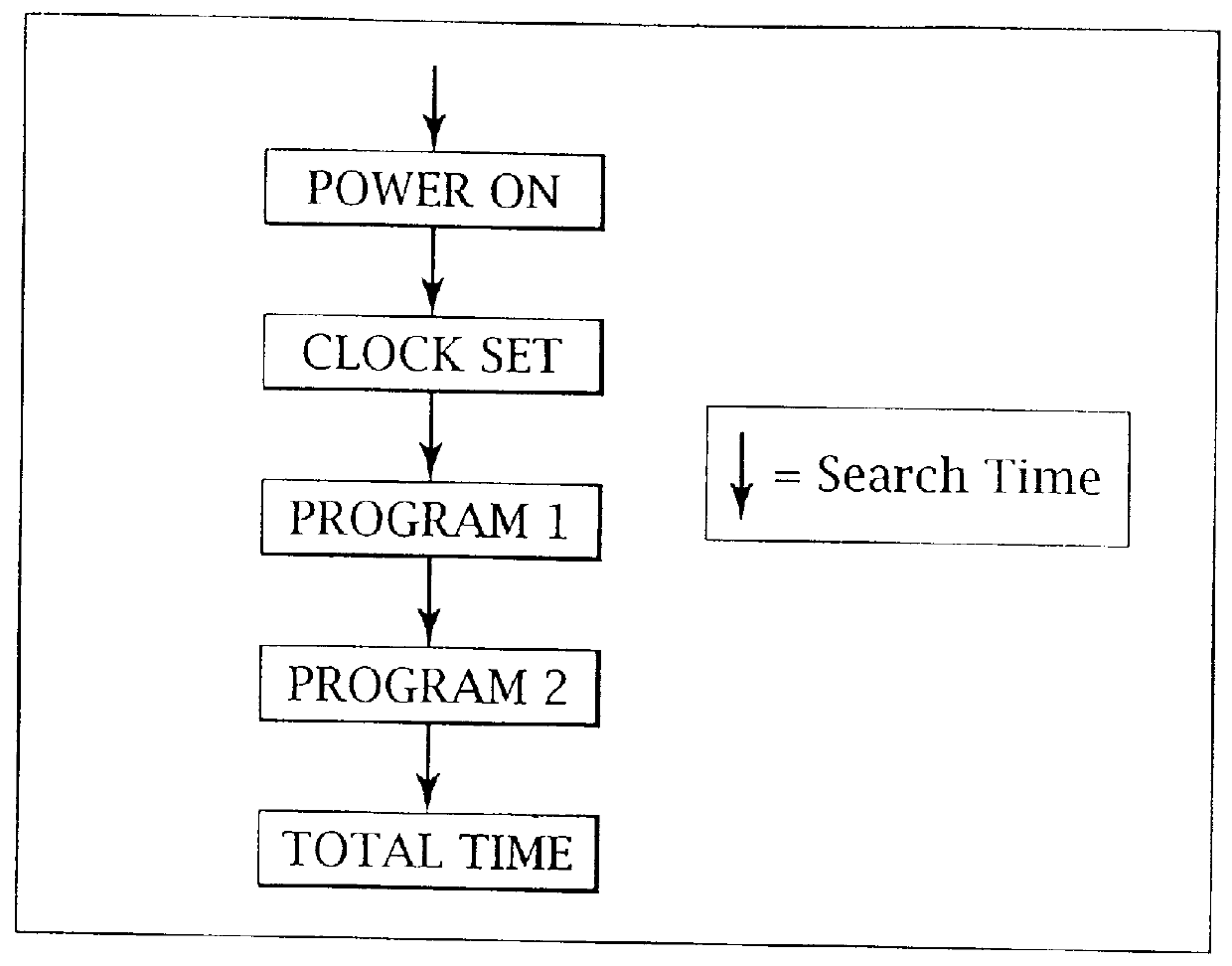

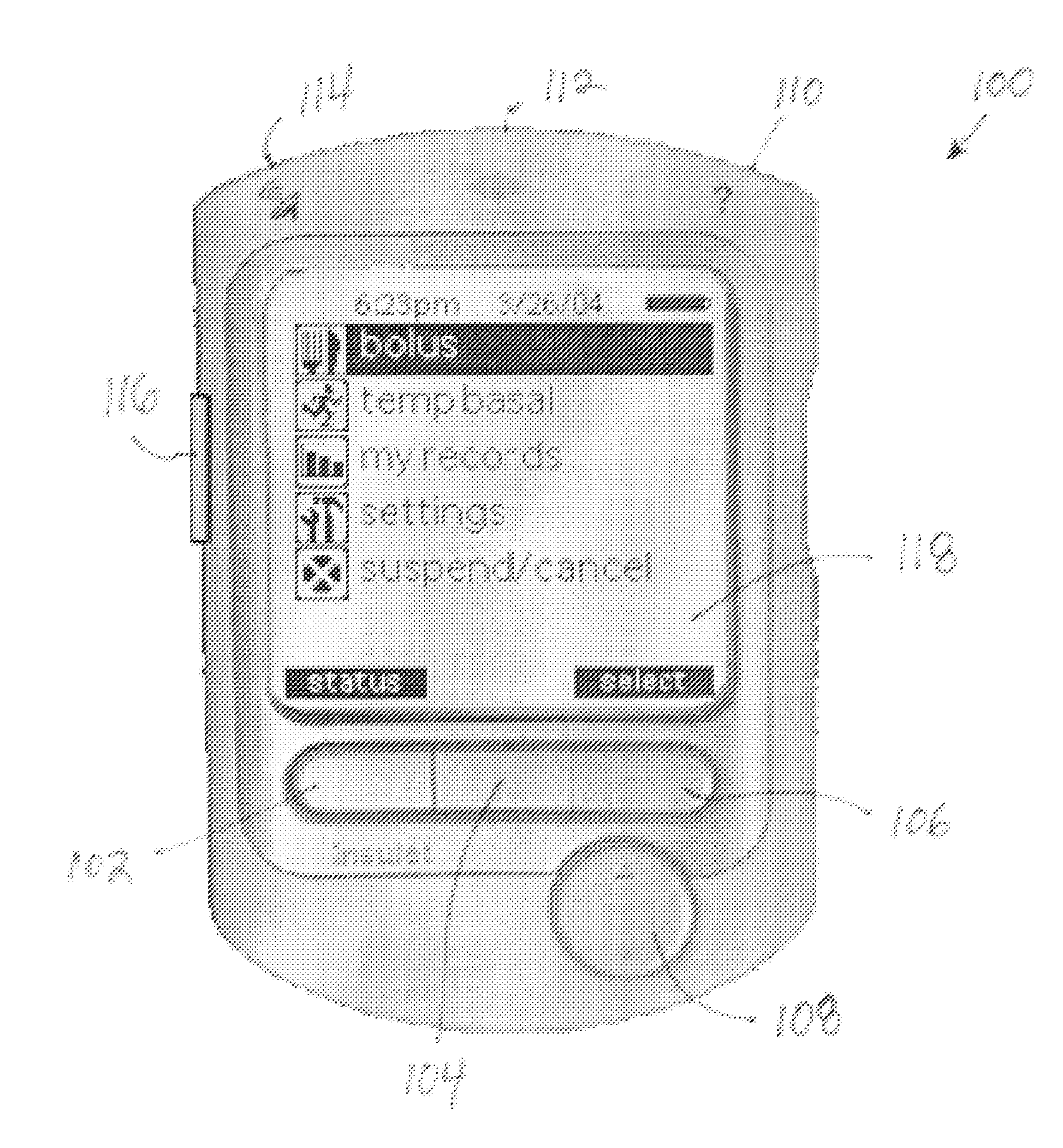

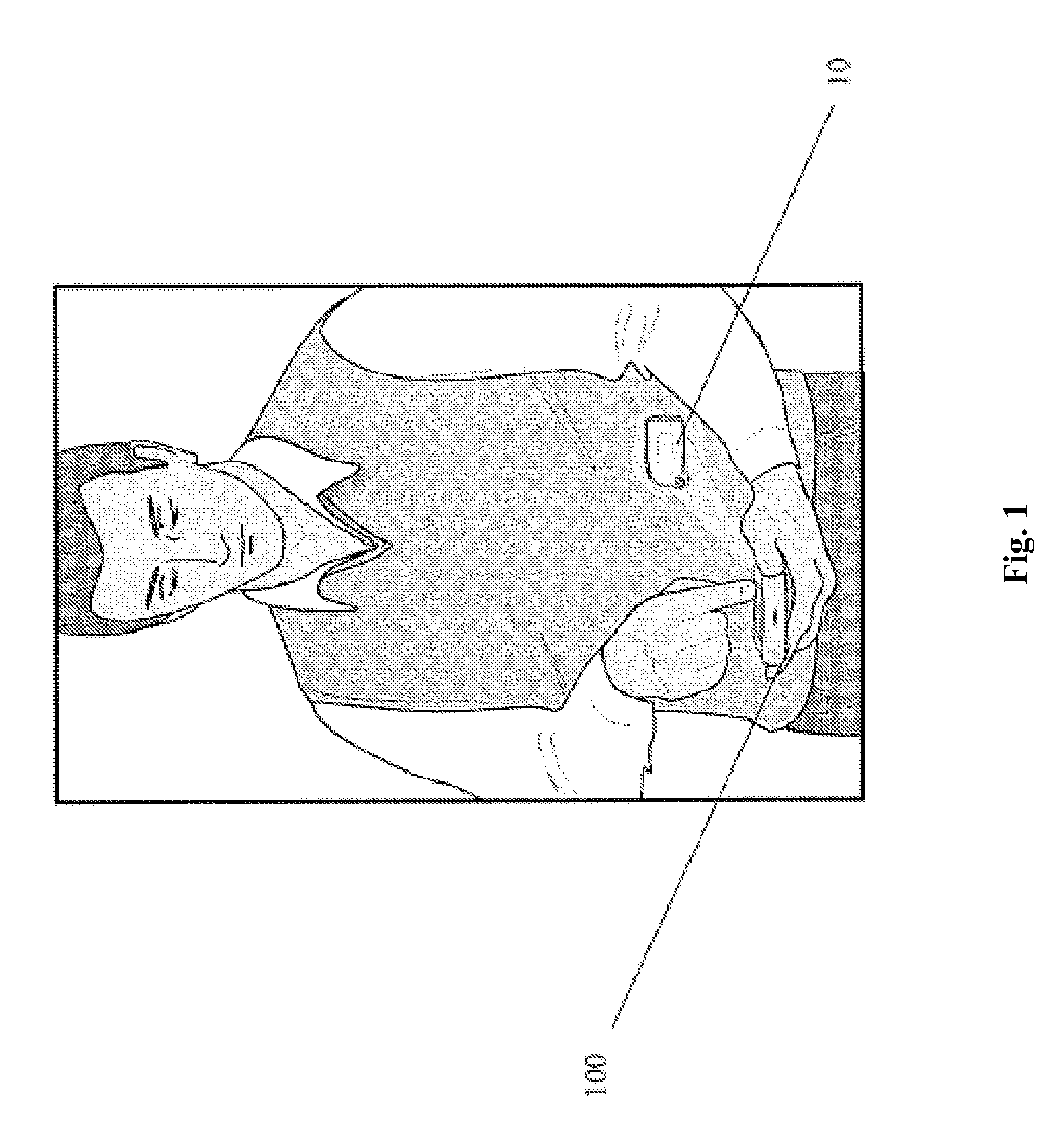

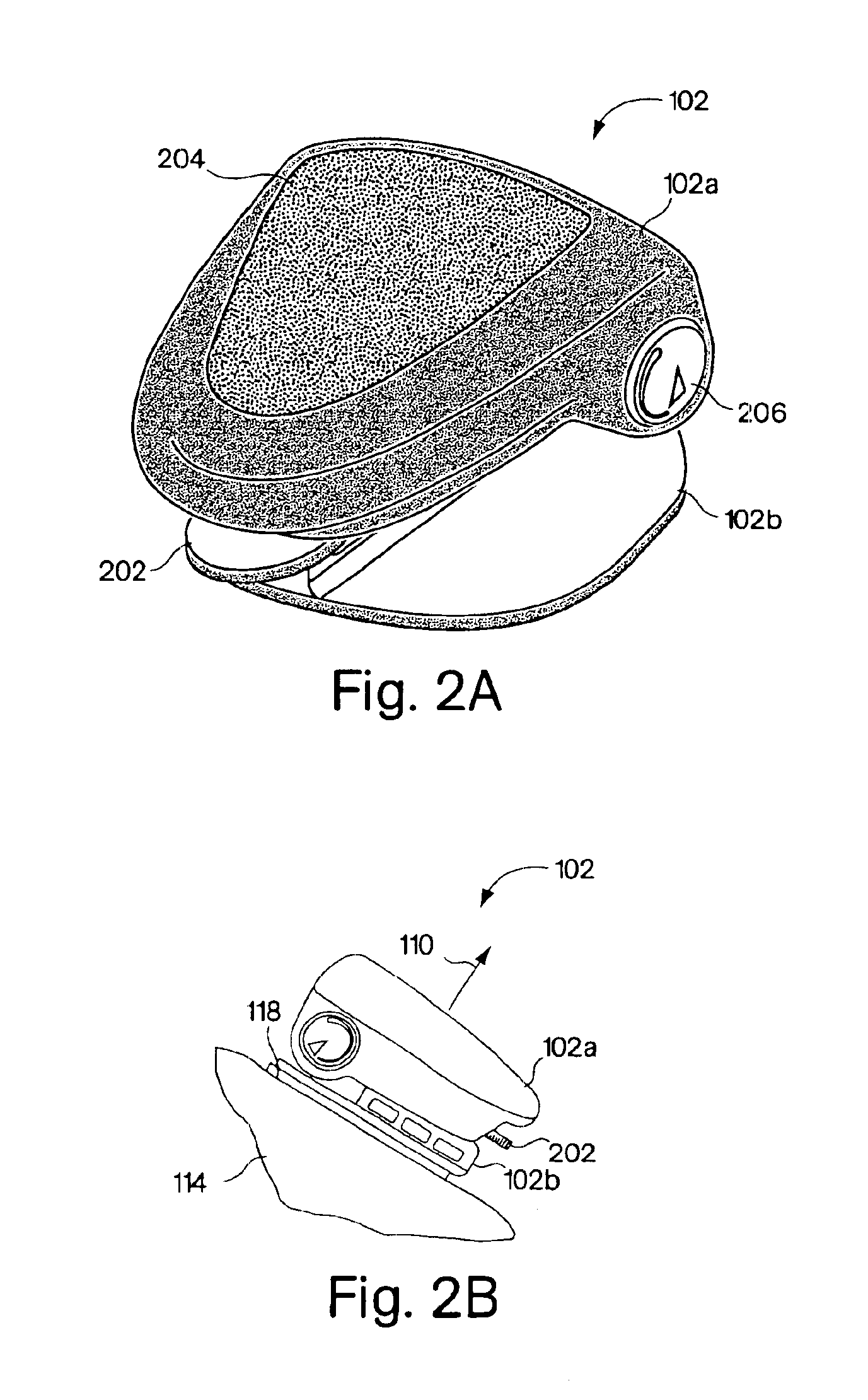

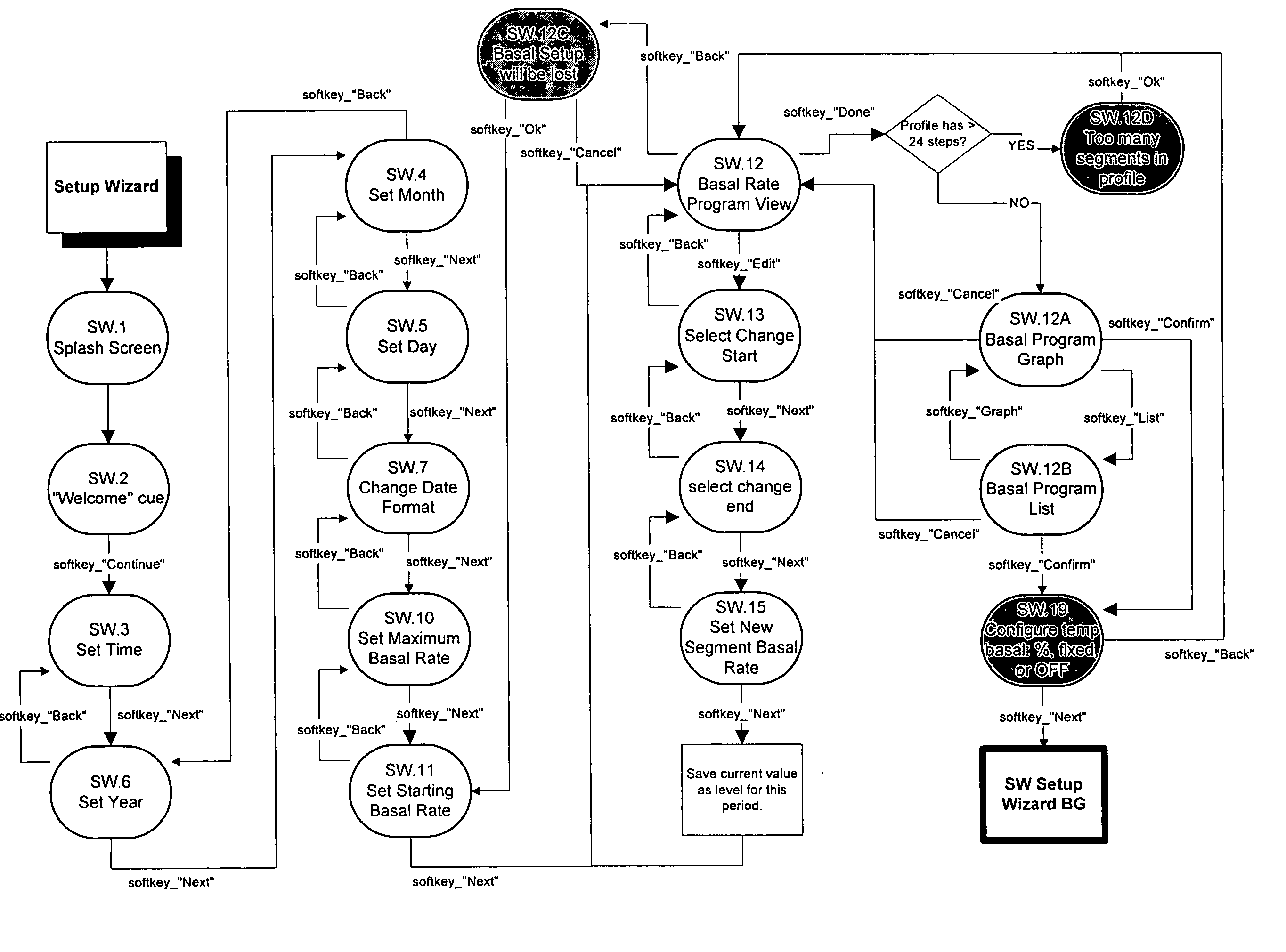

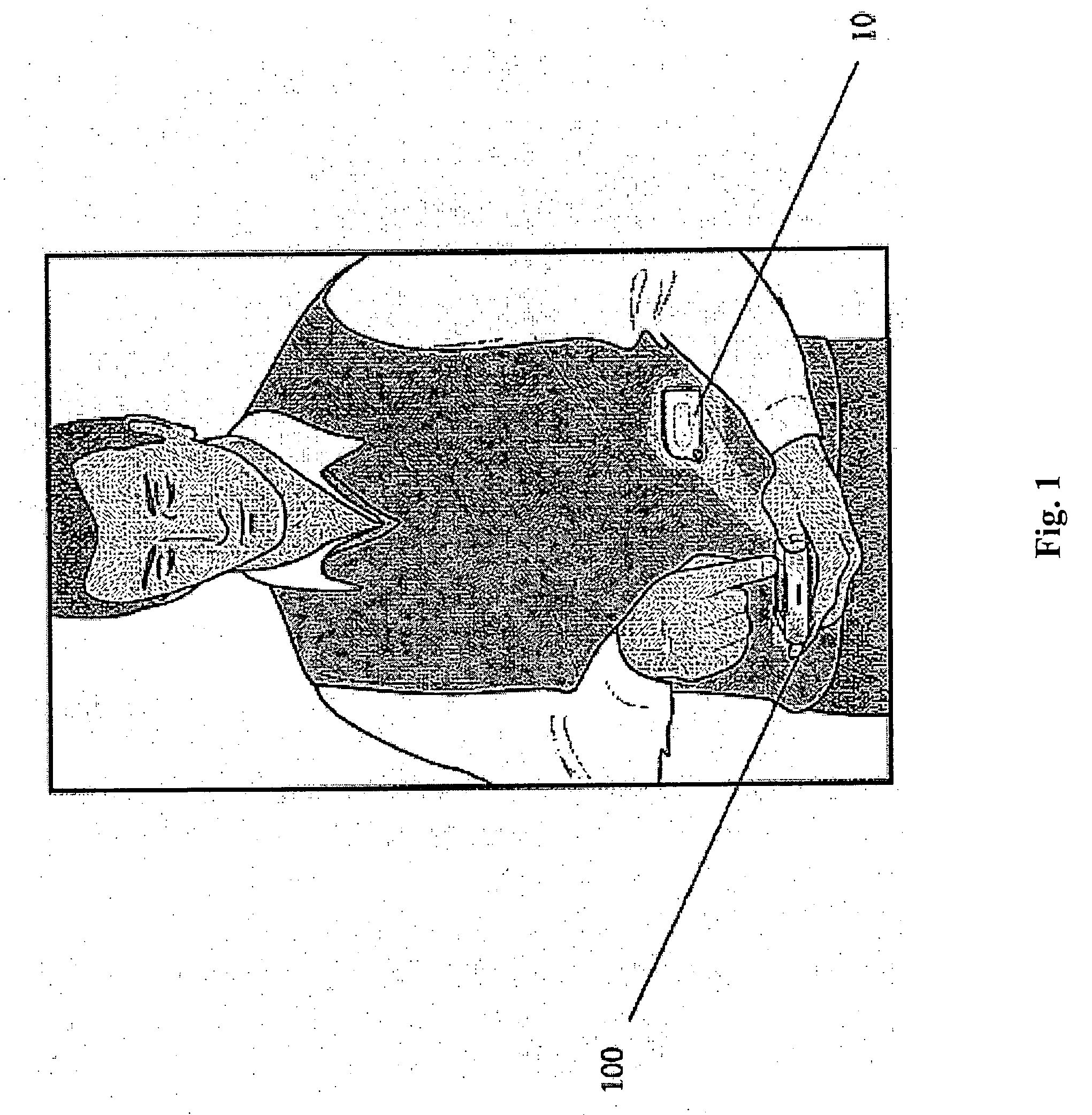

User Interface For Infusion Pump Remote Controller And Method Of Using The Same

Inactive | US20070118405A1 | Easily and intuitively program and operate | Data processing applications | Local control/monitoring | Control system | User input

A control system for controlling an infusion pump, including interface components for allowing a user to receive and provide information, a processor connected to the user interface components and adapted to provide instructions to the infusion pump, and a computer program having setup instructions that cause the processor to enter a setup mode upon the control system first being turned on. In the setup mode, the processor prompts the user, in a sequential manner, through the user interface components to input basic information for use by the processor in controlling the infusion pump, and allows the user to operate the infusion pump only after the user has completed the setup mode.

Owner:INSULET CORP

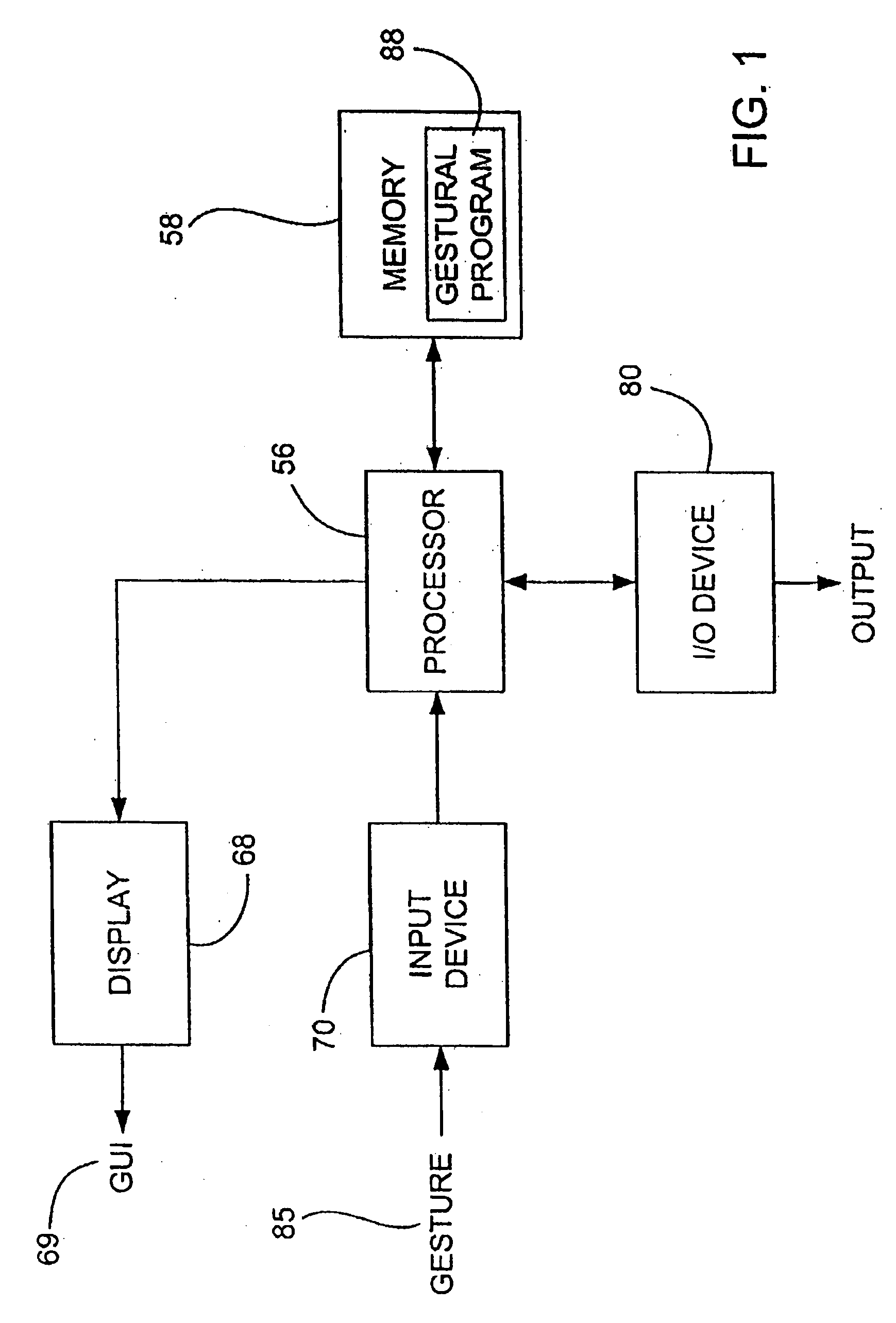

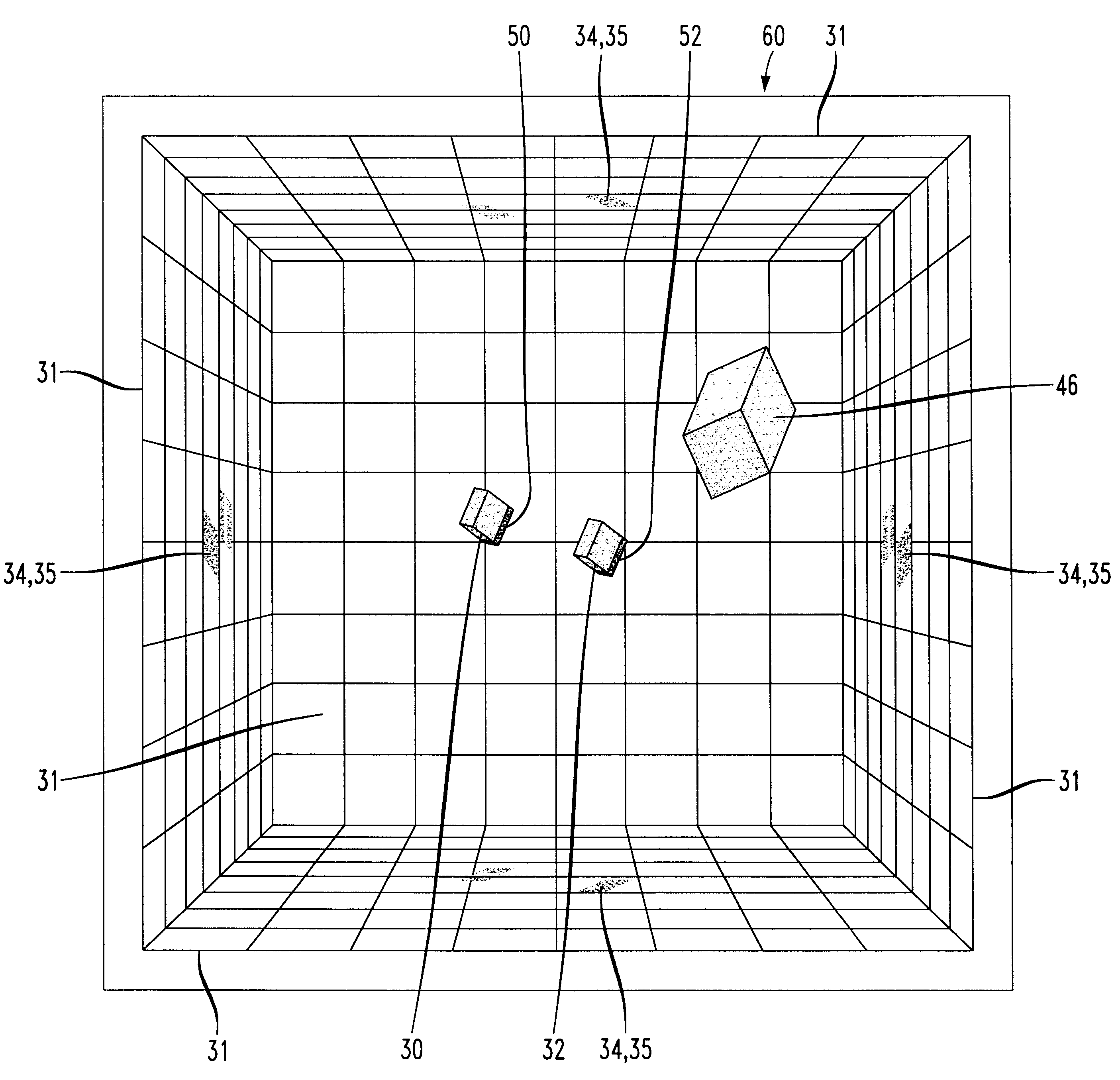

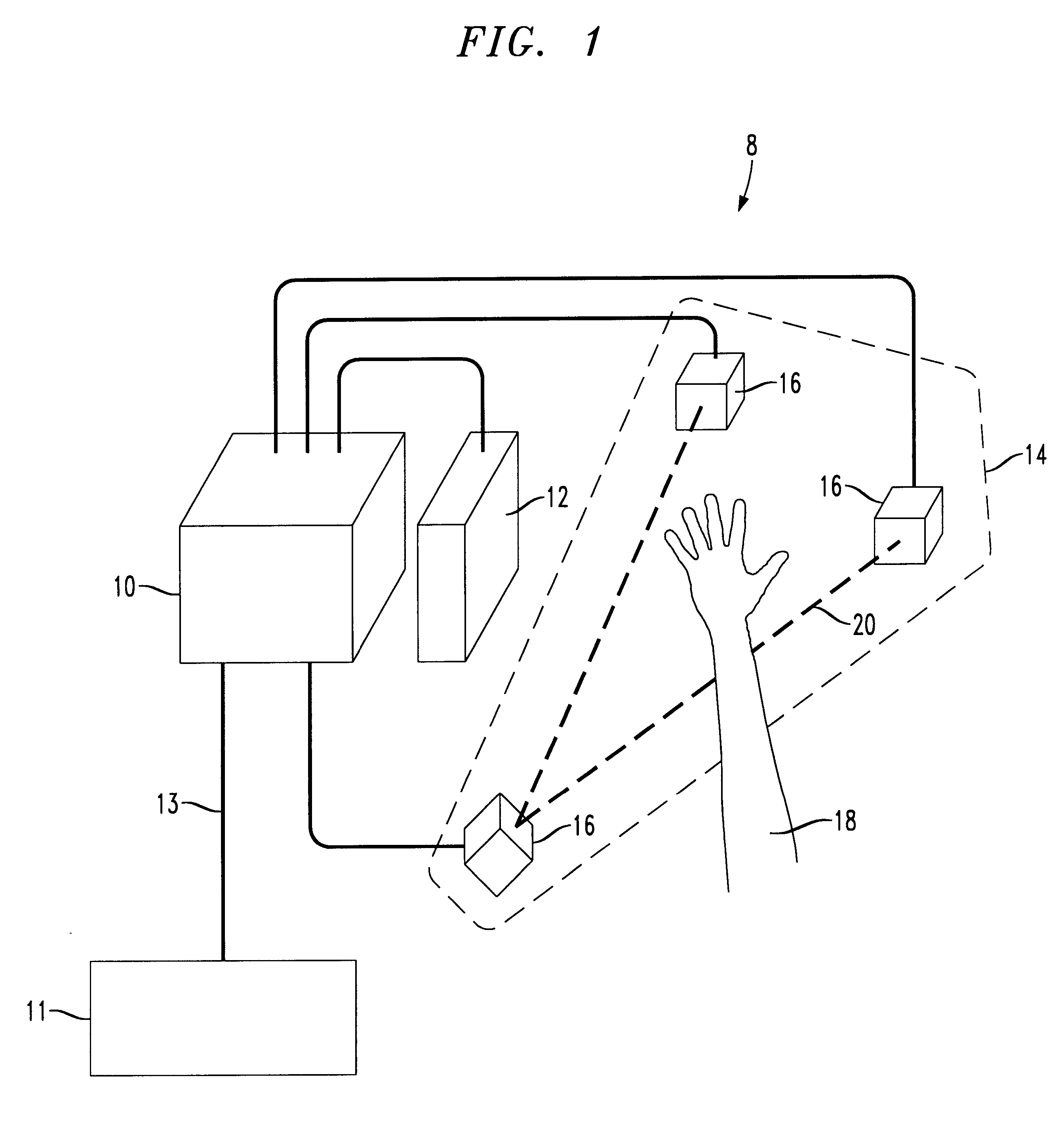

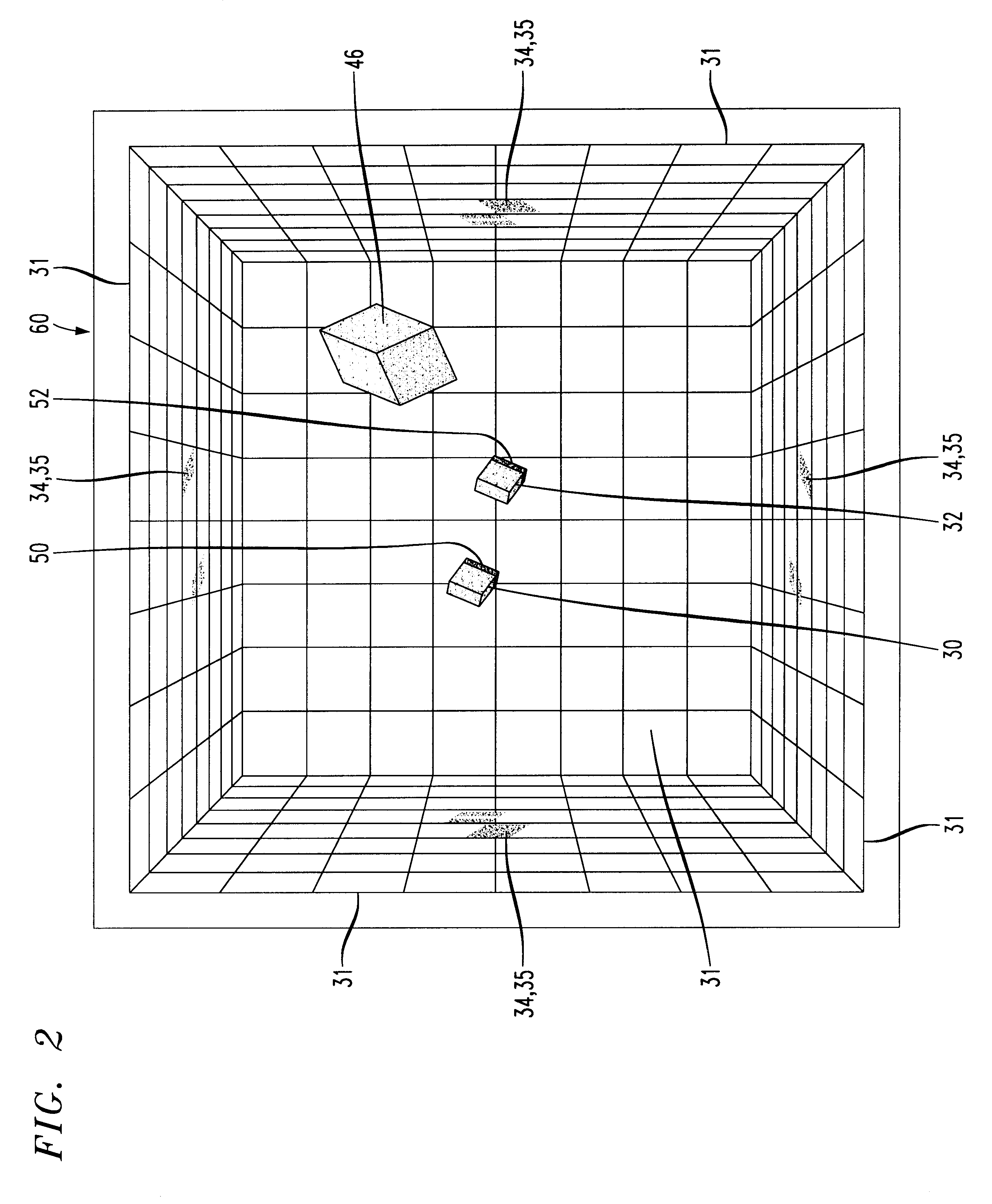

Gesture-based computer interface

Inactive | US6222465B1 | Input/output for user-computer interaction | Character and pattern recognition | Free form | Hand movements

A system and method for manipulating virtual objects in a virtual environment, for drawing curves and ribbons in the virtual environment, and for selecting and executing commands for creating, deleting, moving, changing, and resizing virtual objects in the virtual environment using intuitive hand gestures and motions. The system is provided with a display for displaying the virtual environment and with a video gesture recognition subsystem for identifying motions and gestures of a user's hand. The system enables the user to manipulate virtual objects, to draw free-form curves and ribbons and to invoke various command sets and commands in the virtual environment by presenting particular predefined hand gestures and/or hand movements to the video gesture recognition subsystem.

Owner:LUCENT TECH INC

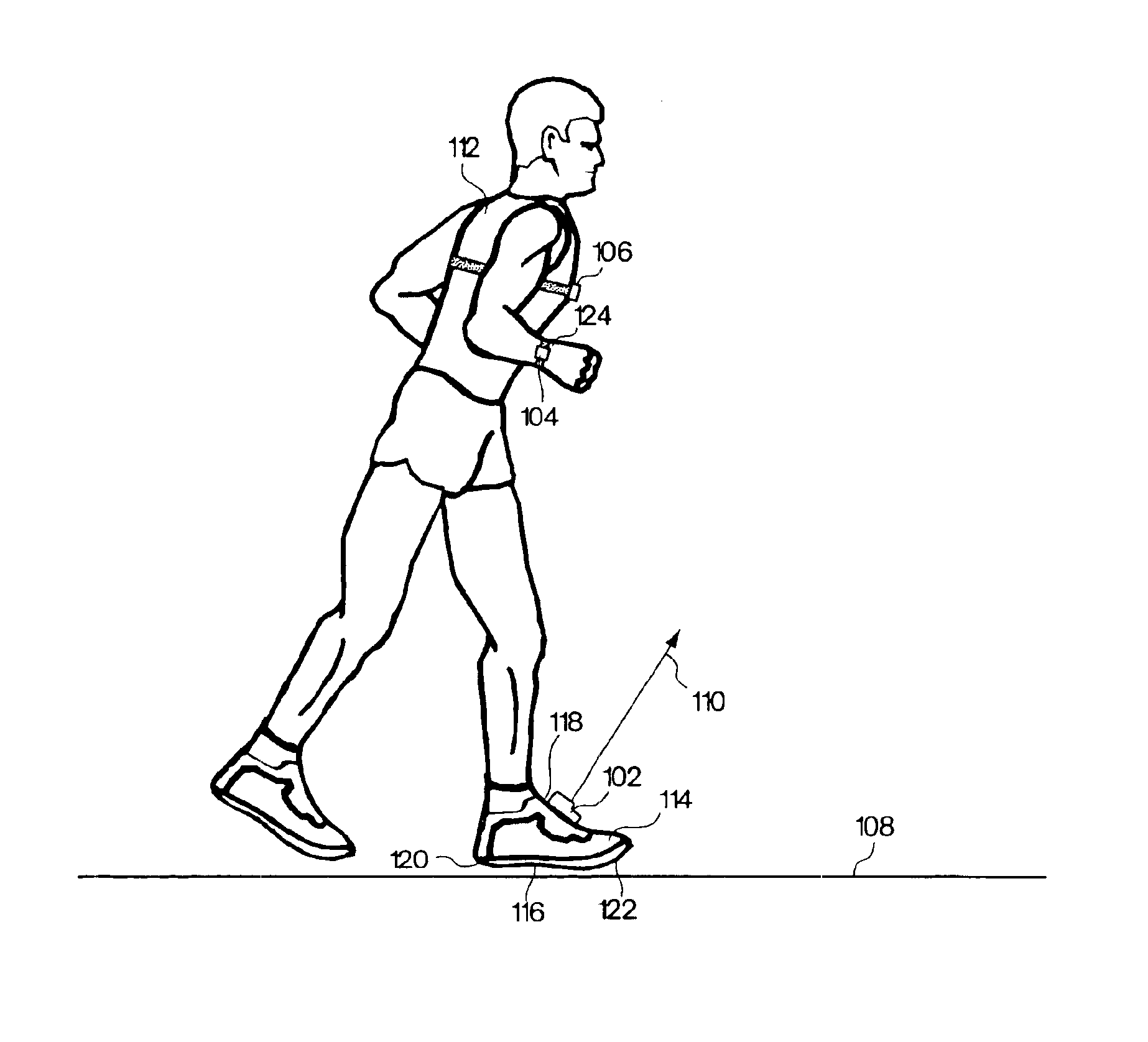

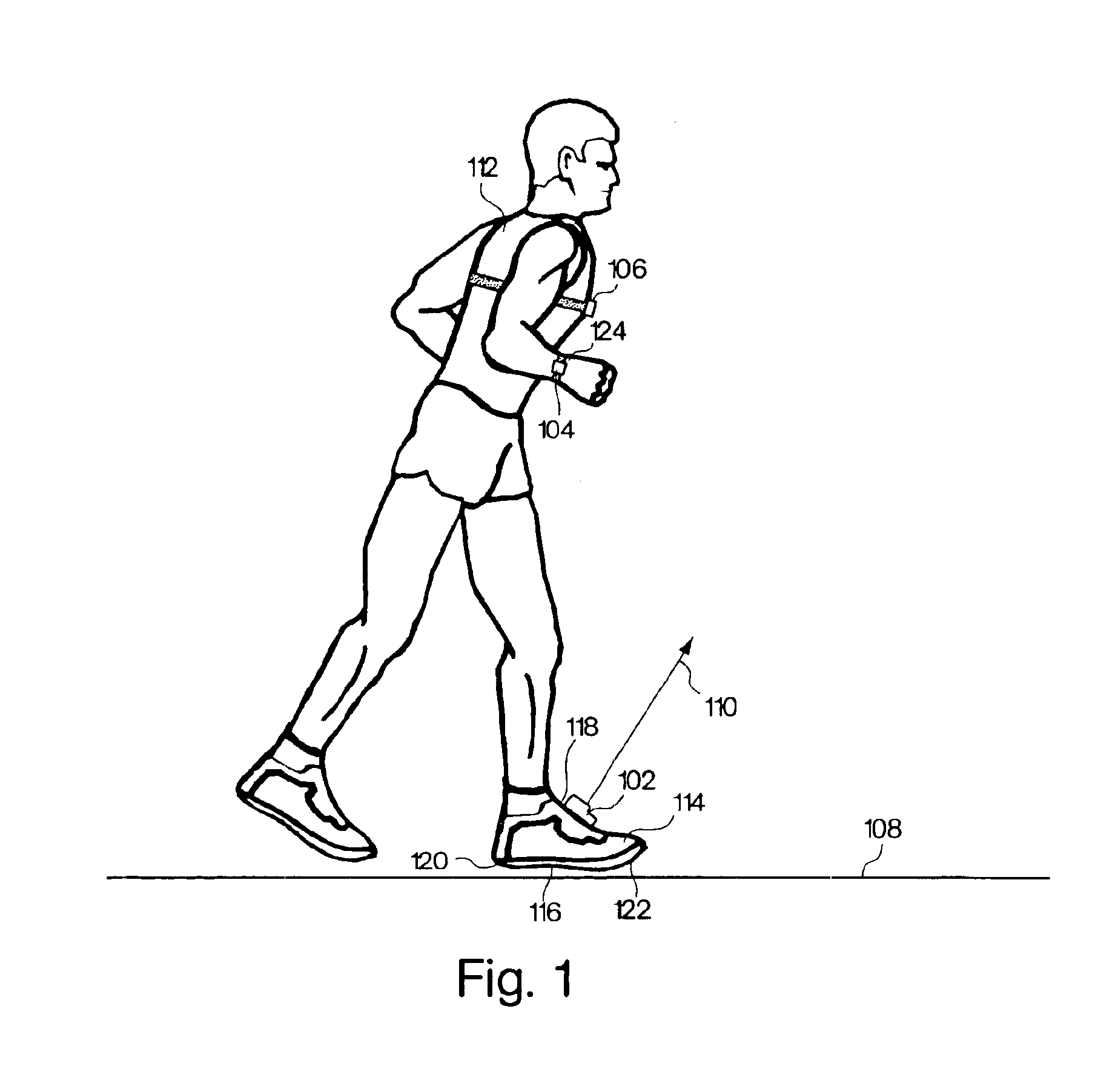

Monitoring activity of a user in locomotion on foot

Inactive | US6882955B1 | Time indication | Synchronous motors for clocks | Human–computer interaction | Visual perception

In one embodiment, a display unit to be mounted on a wrist of a user includes a display screen, a base, and at least one strap. The display unit is configured such that, when it is worn by the user, the display screen is oriented at an angle with respect to a surface of the user's wrist. In another embodiment, visually-perceptible information indicative of determined values of the instantaneous pace of a user and the average pace of the user are displayed, simultaneously. In another embodiment, visually-perceptible information indicative of the determined values of the at least one variable physiological parameter of the user and the at least one performance parameter of the user are displayed, simultaneously.

Owner:NIKE INC +1

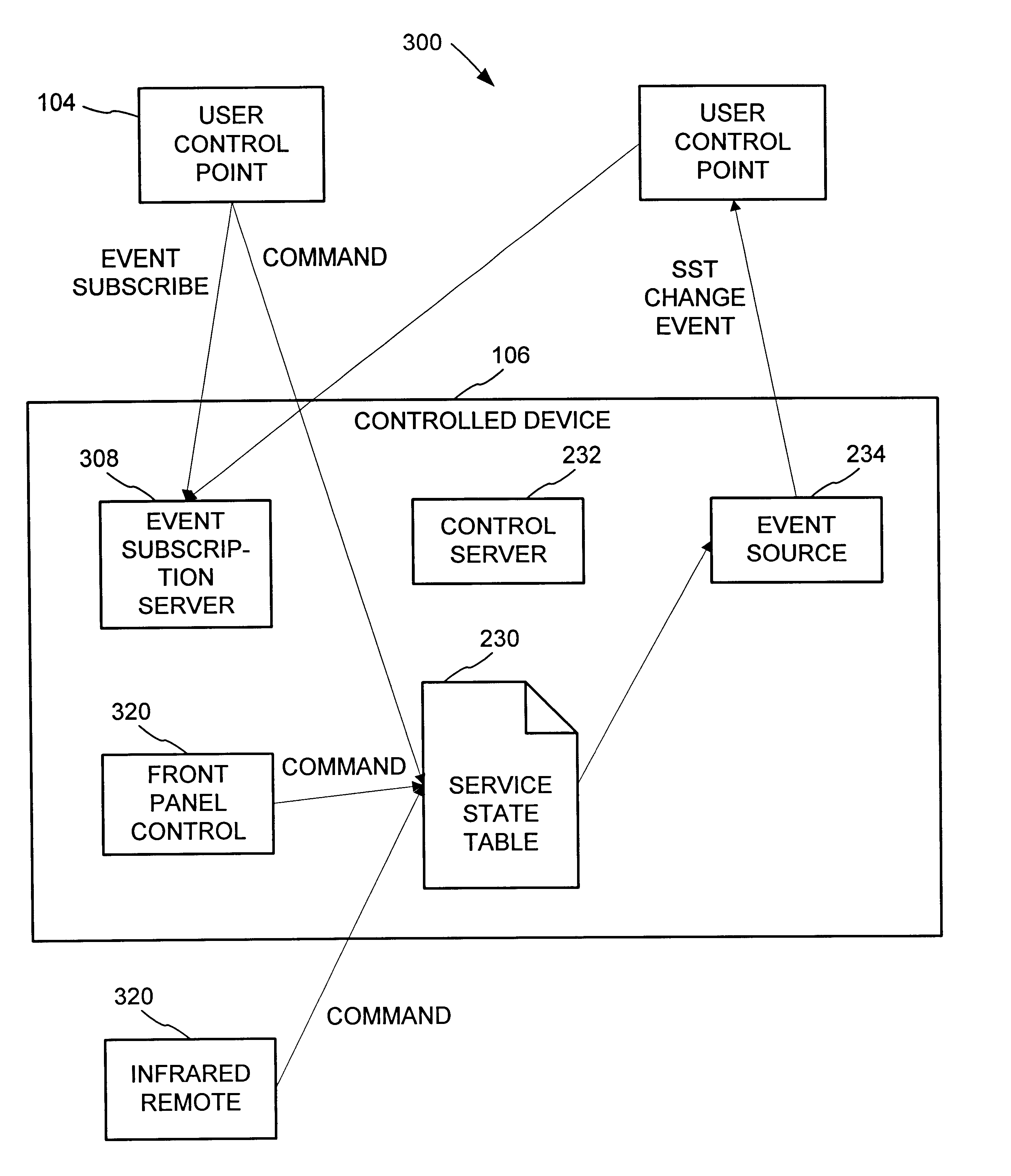

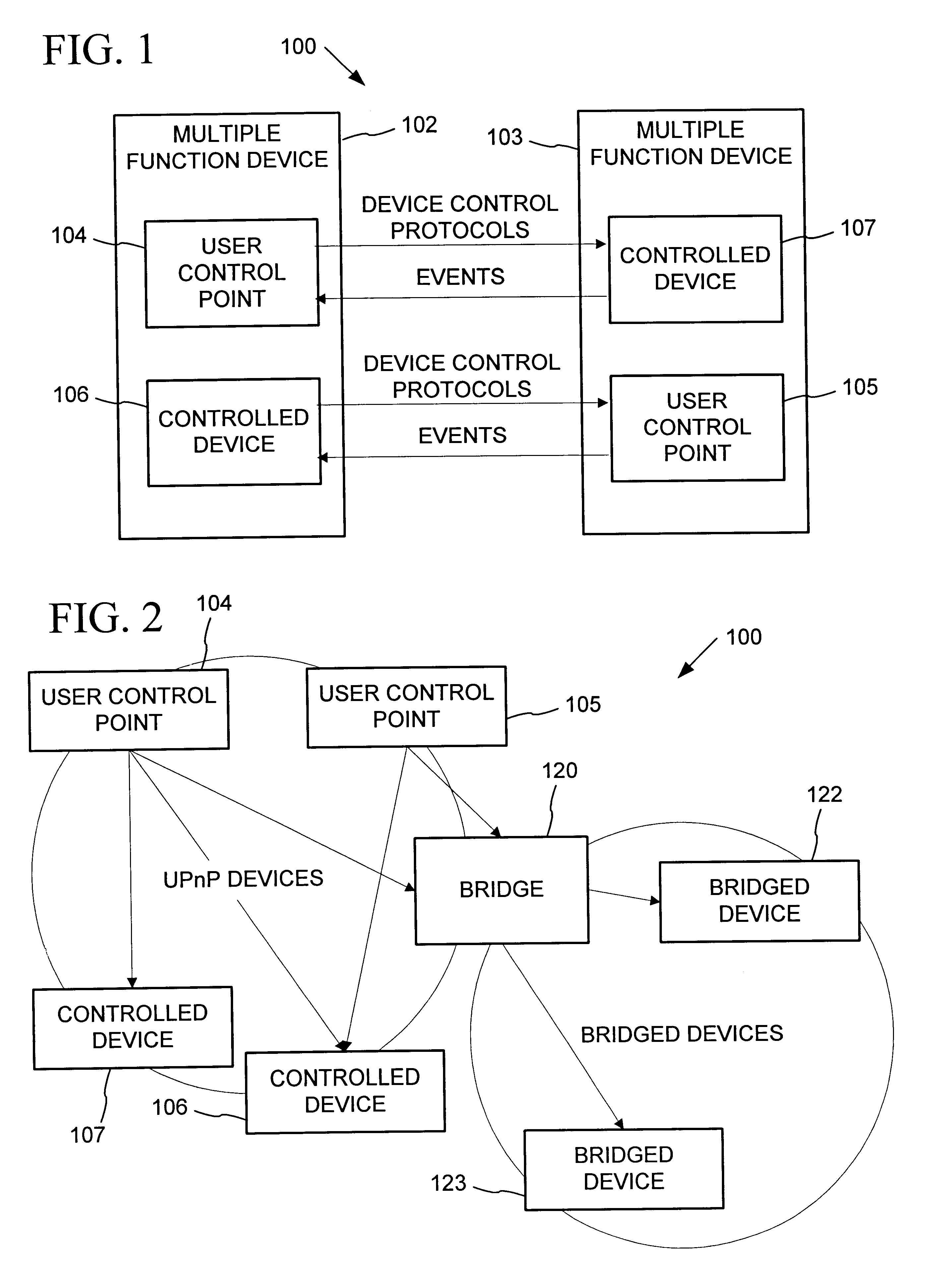

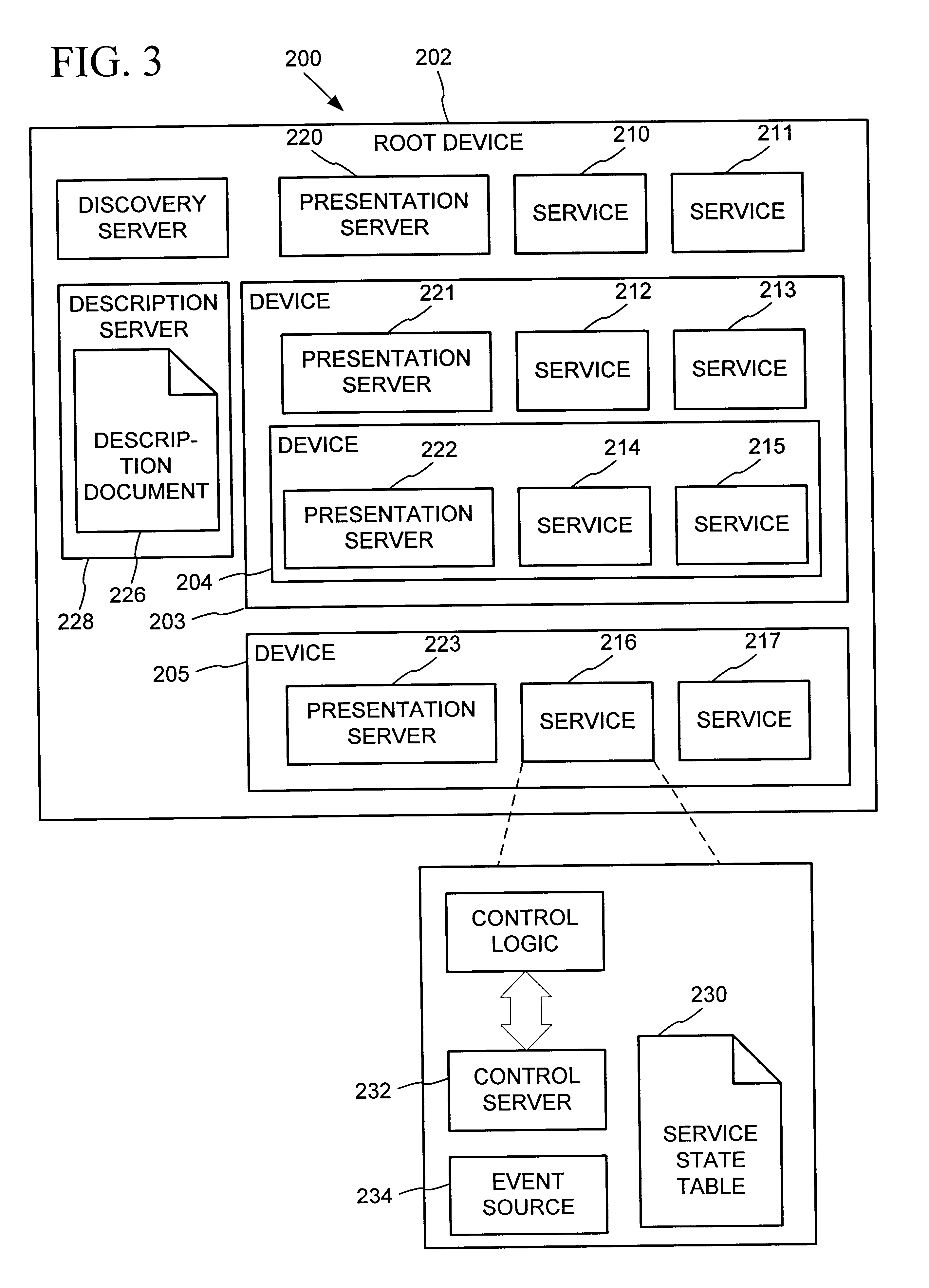

Synchronization of controlled device state using state table and eventing in data-driven remote device control model

Inactive | US6725281B1 | Falling in price | Increase speed | Multiple digital computer combinations | Securing communication | Event synchronization | Event model

Controlled devices according to a device control model maintain a state table representative of their operational state. Devices providing a user control point interface for the controlled device obtain the state table of the controlled device, and may also obtain presentation data defining a remoted user interface of the controlled device and device control protocol data defining commands and data messaging protocol to effect control of the controlled device. These user control devices also subscribe to notifications of state table changes, which are distributed from the controlled device according to an eventing model. Accordingly, upon any change to the controlled device's operational state, the eventing model synchronizes the device's state as represented in the state table across all user control devices.

Owner:ROVI TECH CORP
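
The state-table-plus-eventing idea in the abstract above resembles a publish/subscribe pattern: each control point fetches the table once, then applies change notifications. The following in-process sketch illustrates only that pattern; the class names `ControlledDevice` and `ControlPoint` and the variable names are hypothetical, and a real system would send these notifications over a network protocol that is not modelled here.

```python
class ControlledDevice:
    """Holds the authoritative state table and notifies subscribers of changes."""

    def __init__(self, initial_state):
        self._state = dict(initial_state)
        self._subscribers = []

    def subscribe(self, callback):
        """Register for change events and return a snapshot of the state table."""
        self._subscribers.append(callback)
        return dict(self._state)

    def set_variable(self, name, value):
        """Change one state variable and event the change to every subscriber."""
        if self._state.get(name) == value:
            return  # no change, so no event
        self._state[name] = value
        for notify in self._subscribers:
            notify(name, value)


class ControlPoint:
    """A user control device that mirrors the controlled device's state table."""

    def __init__(self, device):
        self.state = device.subscribe(self._on_change)

    def _on_change(self, name, value):
        self.state[name] = value
```

Because every control point applies the same events to the same initial snapshot, their local copies stay identical without polling the device.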

User interface for infusion pump remote controller and method of using the same

Inactive | US20050022274A1 | Easily and intuitively program and operate | Data processing applications | Local control/monitoring | Control system | Human–computer interaction

A control system for controlling an infusion pump, including interface components for allowing a user to receive and provide information, a processor connected to the user interface components and adapted to provide instructions to the infusion pump, and a computer program having setup instructions that cause the processor to enter a setup mode upon the control system first being turned on. In the setup mode, the processor prompts the user, in a sequential manner, through the user interface components to input basic information for use by the processor in controlling the infusion pump, and allows the user to operate the infusion pump only after the user has completed the setup mode.

Owner:INSULET CORP

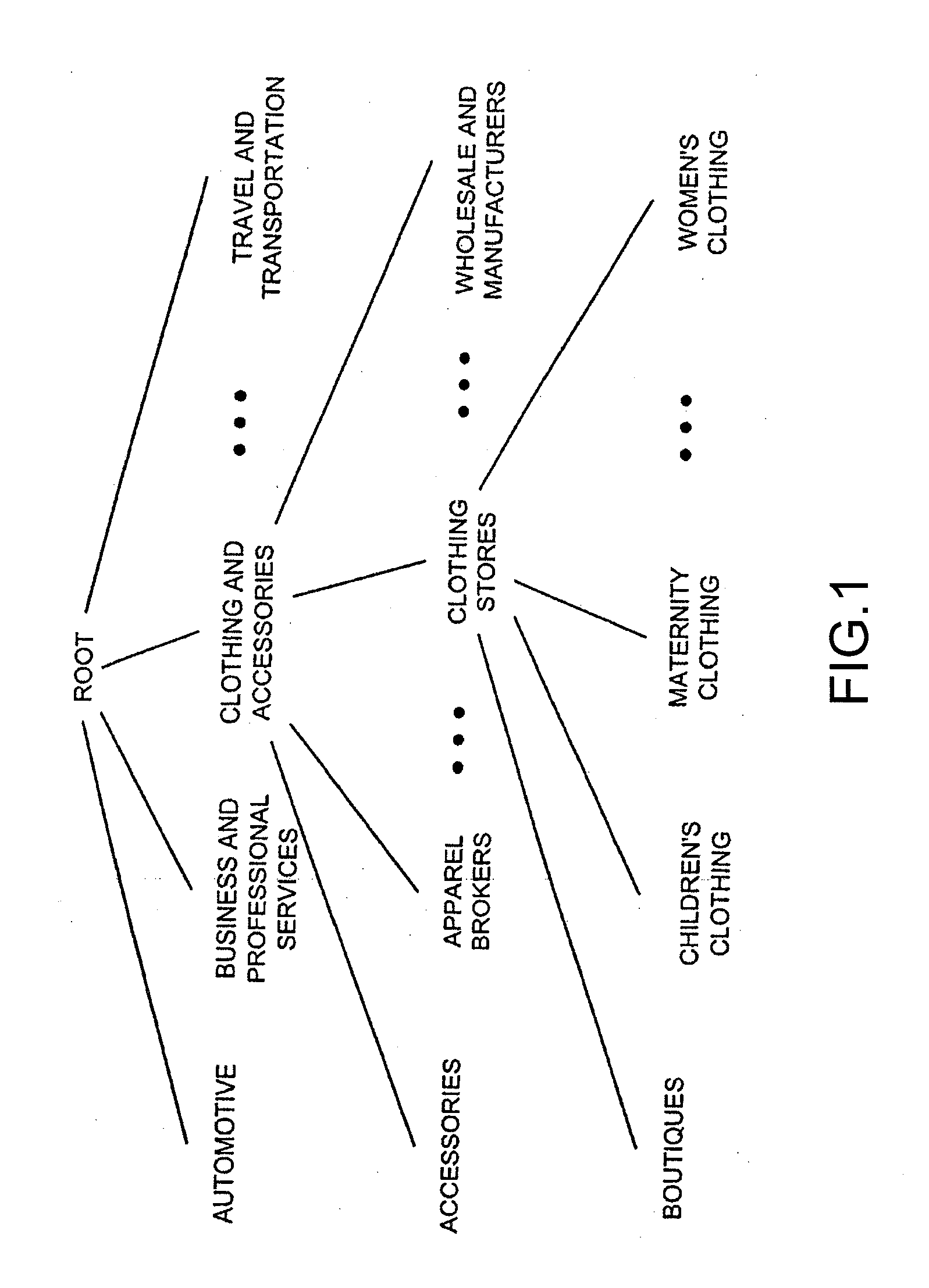

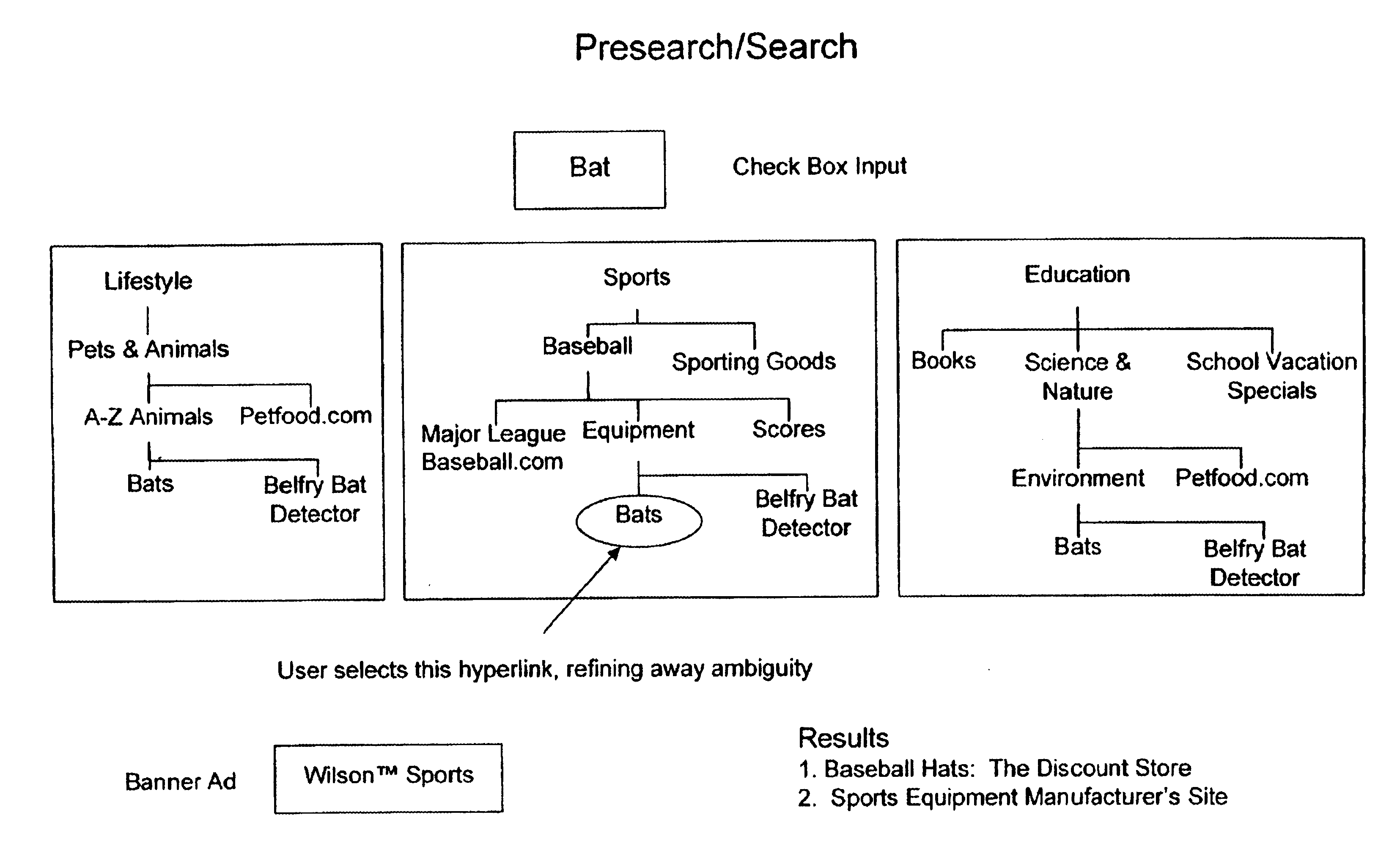

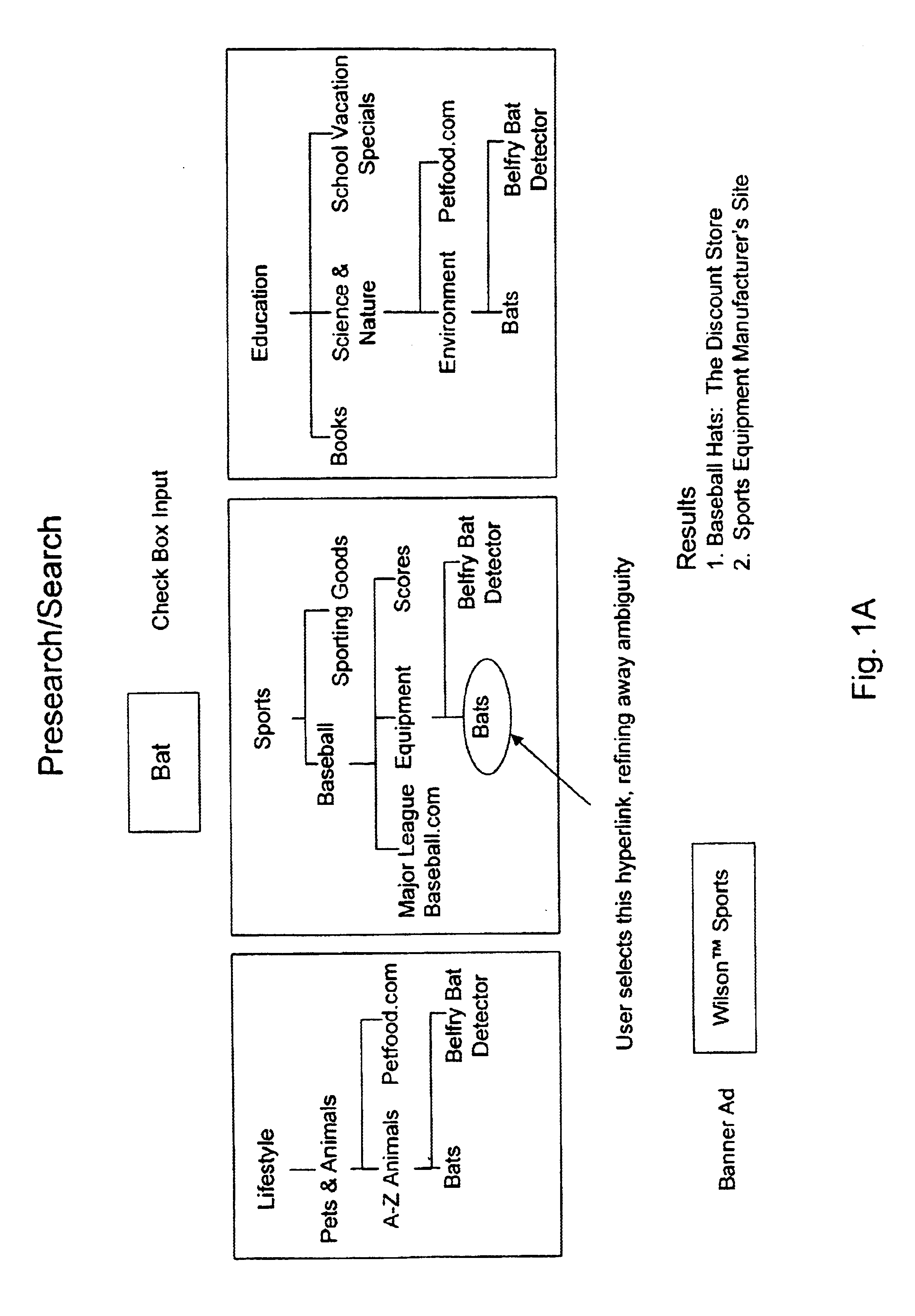

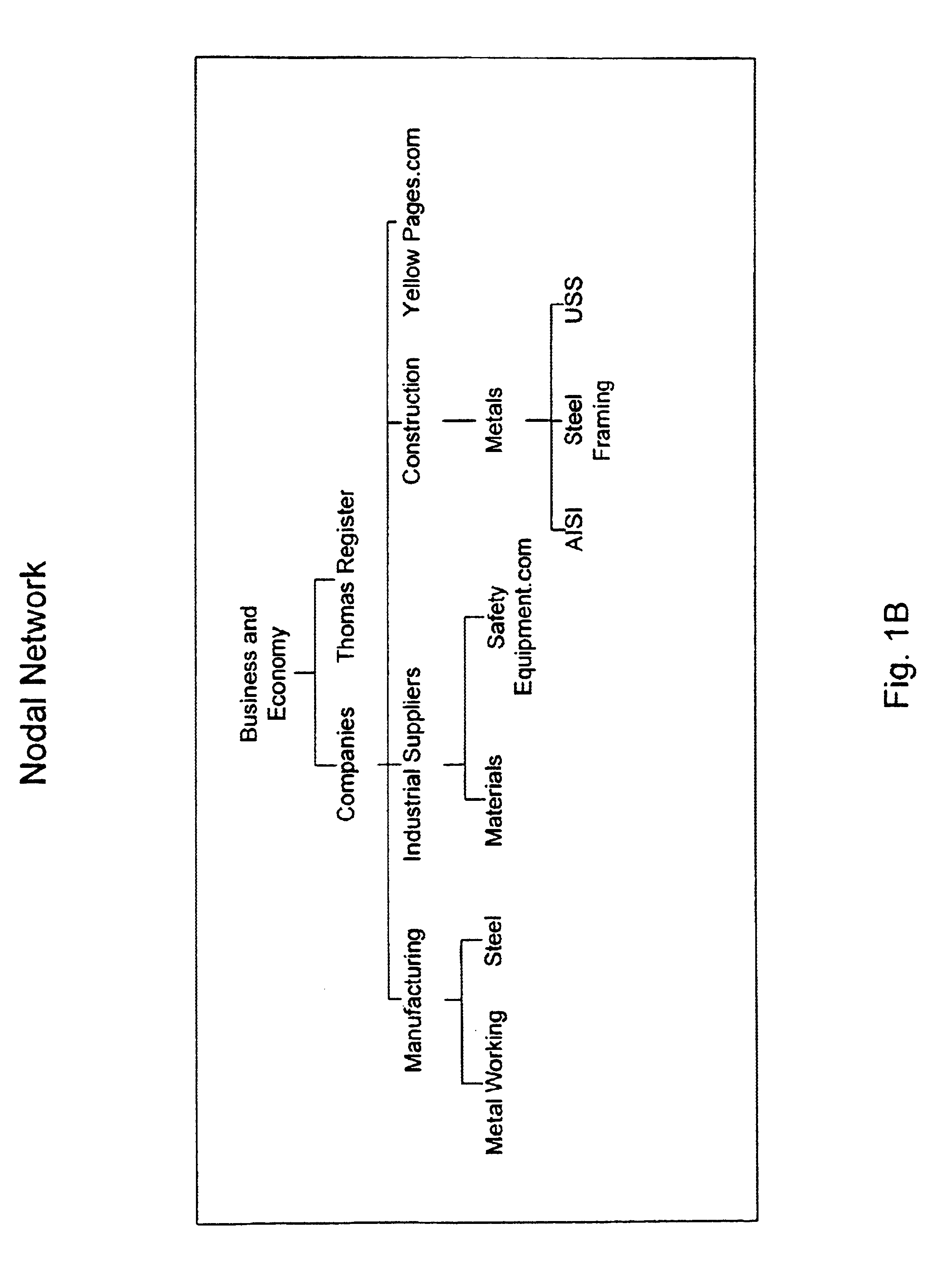

Computer graphic display visualization system and method

Inactive | US6868525B1 | Improve compactness | Increase flexibility | Drawing from basic elements | Advertisements | Graphics | Collaborative filtering

An improved human user computer interface system, providing a graphic representation of a hierarchy populated with naturally classified objects, having included therein at least one associated object having a distinct classification. Preferably, a collaborative filter is employed to define the appropriate associated object. The associated object preferably comprises a sponsored object, generating a subsidy or revenue.

Owner:RELATIVITY DISPLAY LLC

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Inactive | US6418424B1 | Minimal cost | Avoid the need | Television system details | Digital data processing details | Human–machine interface | Data stream

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP

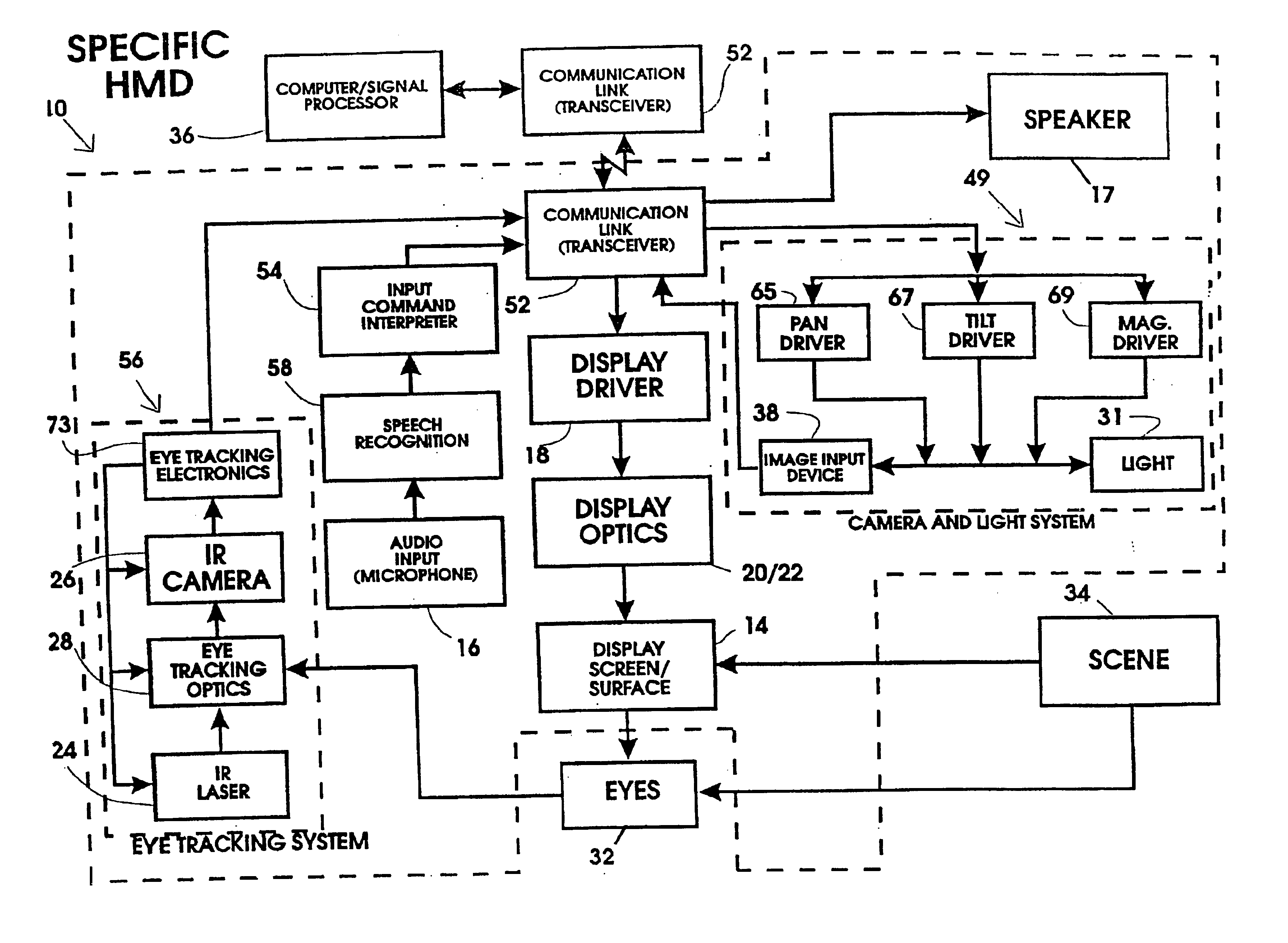

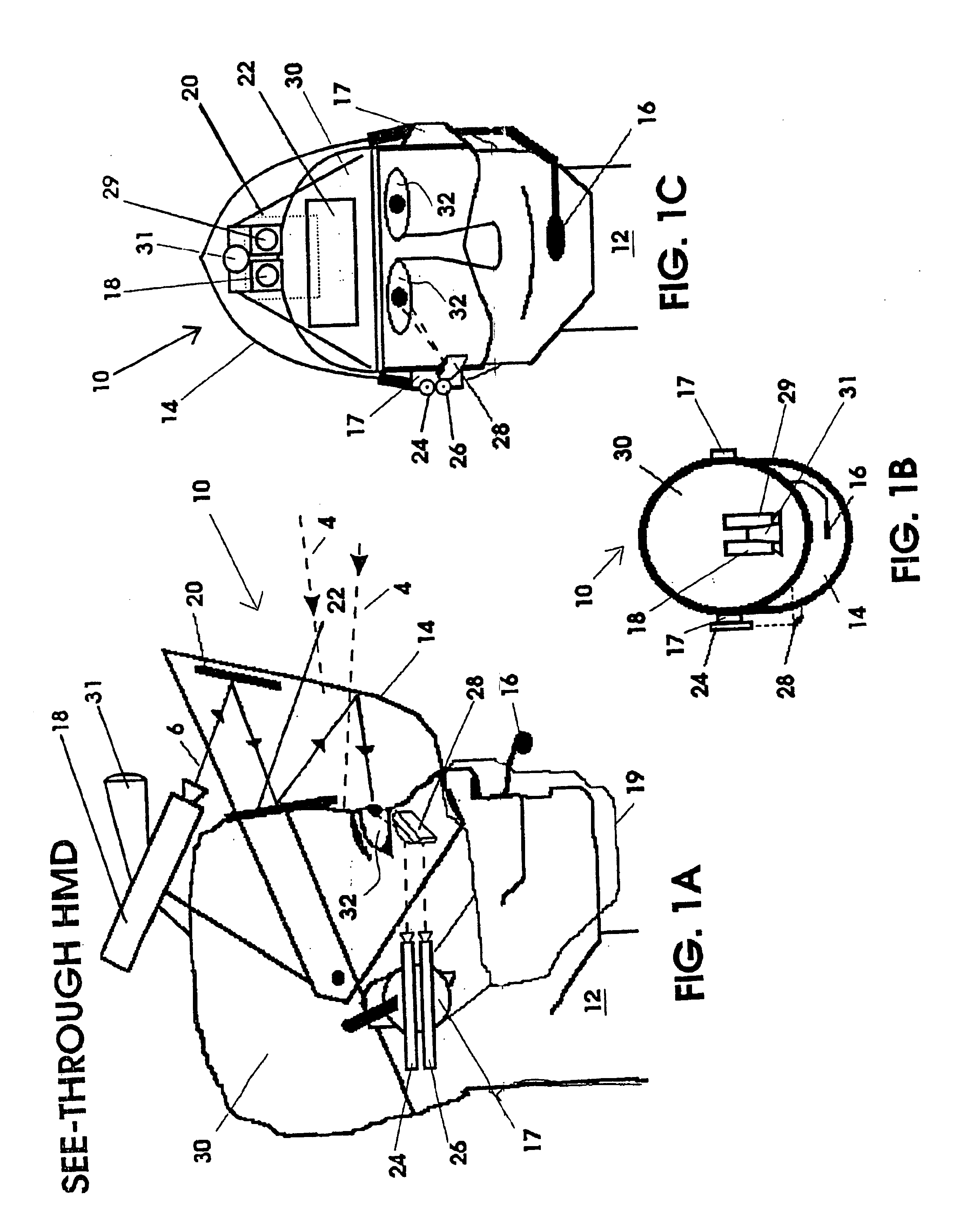

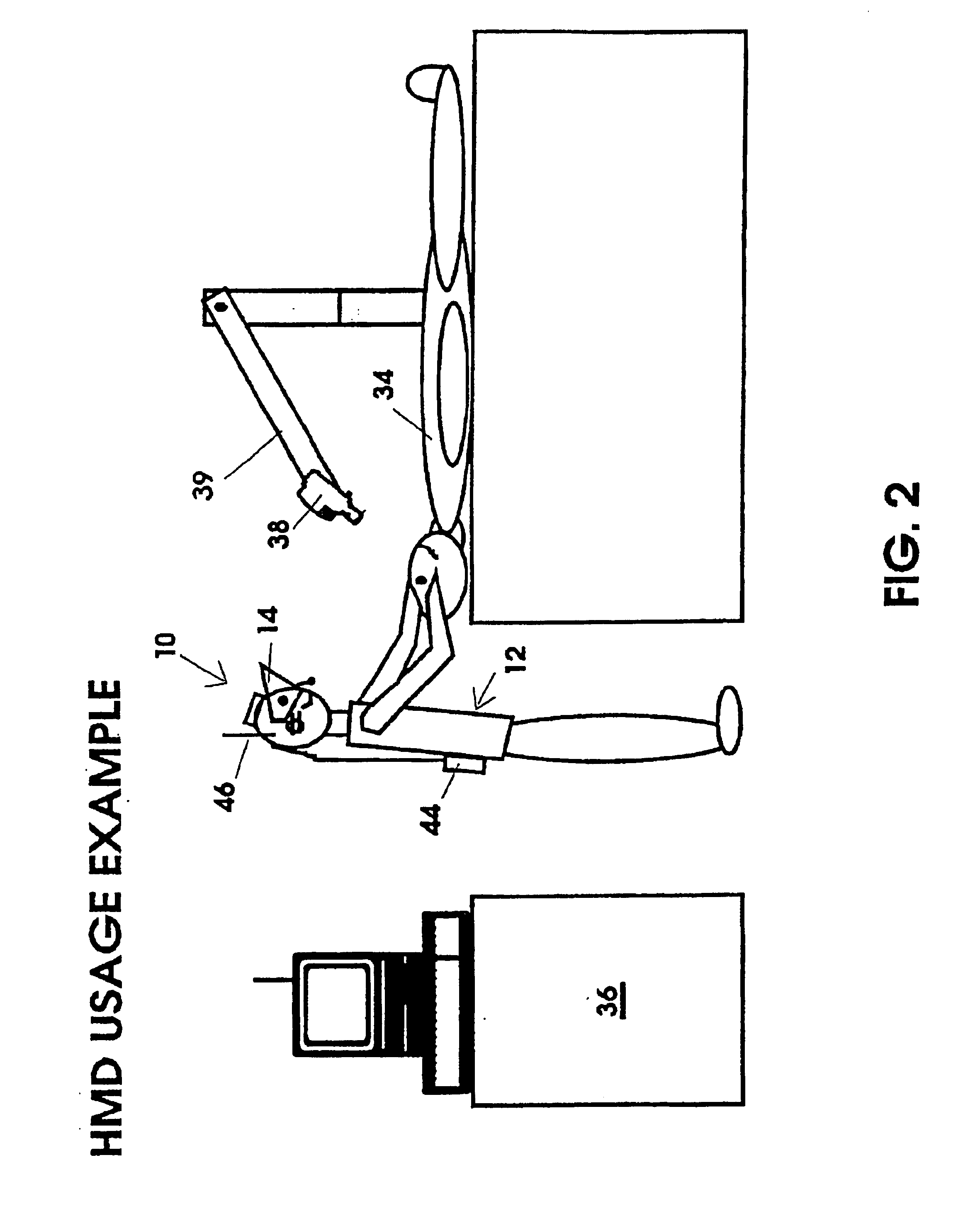

Selectively controllable heads-up display system

Systems and methods are disclosed for displaying data on a heads-up display screen. Multiple forms of data can be selectively displayed on a semi-transparent screen mounted in the user's normal field of view. The screen can either be mounted on the user's head, or mounted on a moveable implement and positioned in front of the user. A user interface is displayed on the screen including a moveable cursor and a menu of computer control icons. An eye-tracking system is mounted proximate the user and is employed to control movement of the cursor. By moving and focusing his or her eyes on a specific icon, the user controls the cursor to move to select the icon. When an icon is selected, a command computer is controlled to acquire and display data on the screen. The data is typically superimposed over the user's normal field of view.

Owner:LEMELSON JEROME H +1

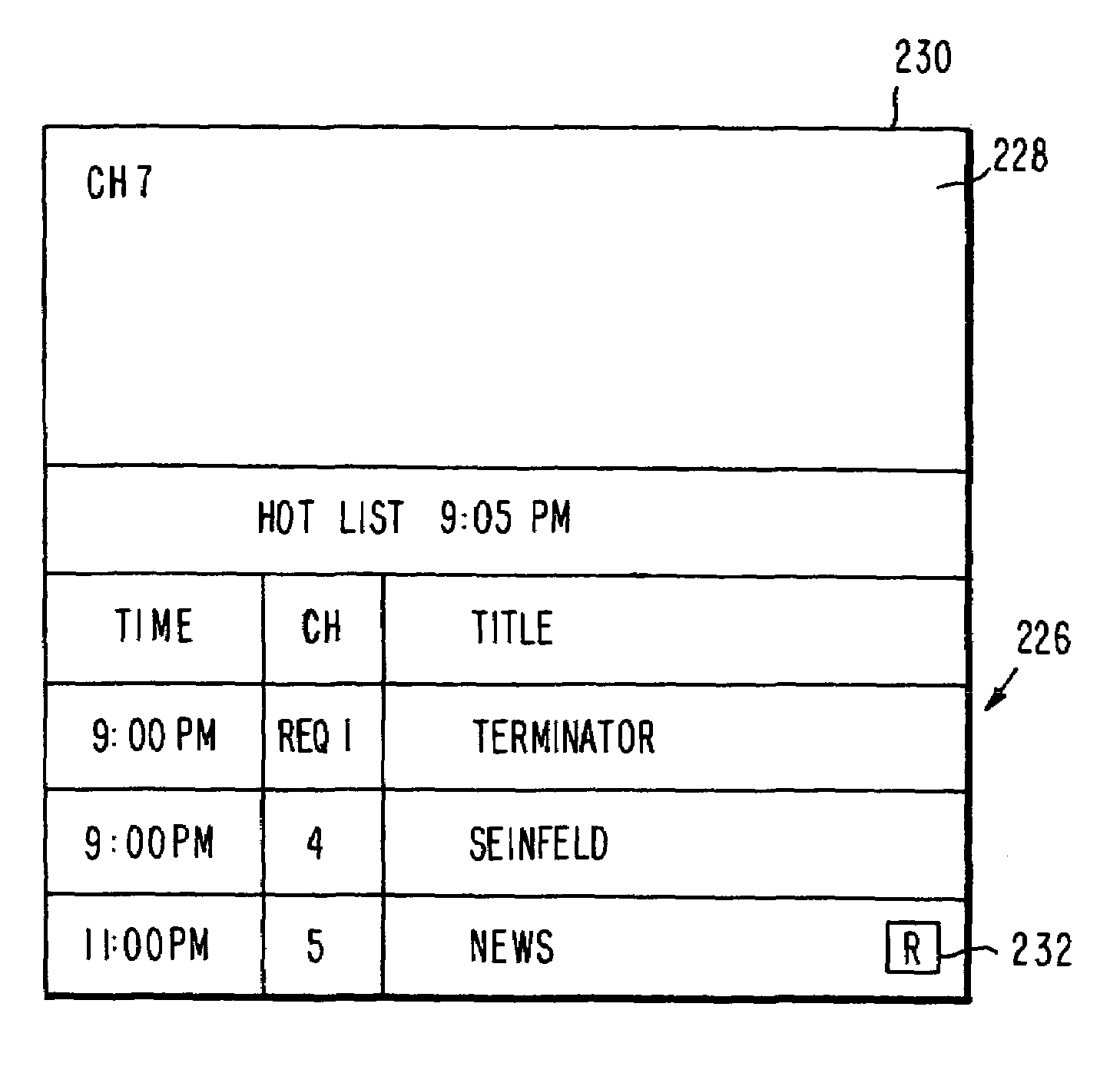

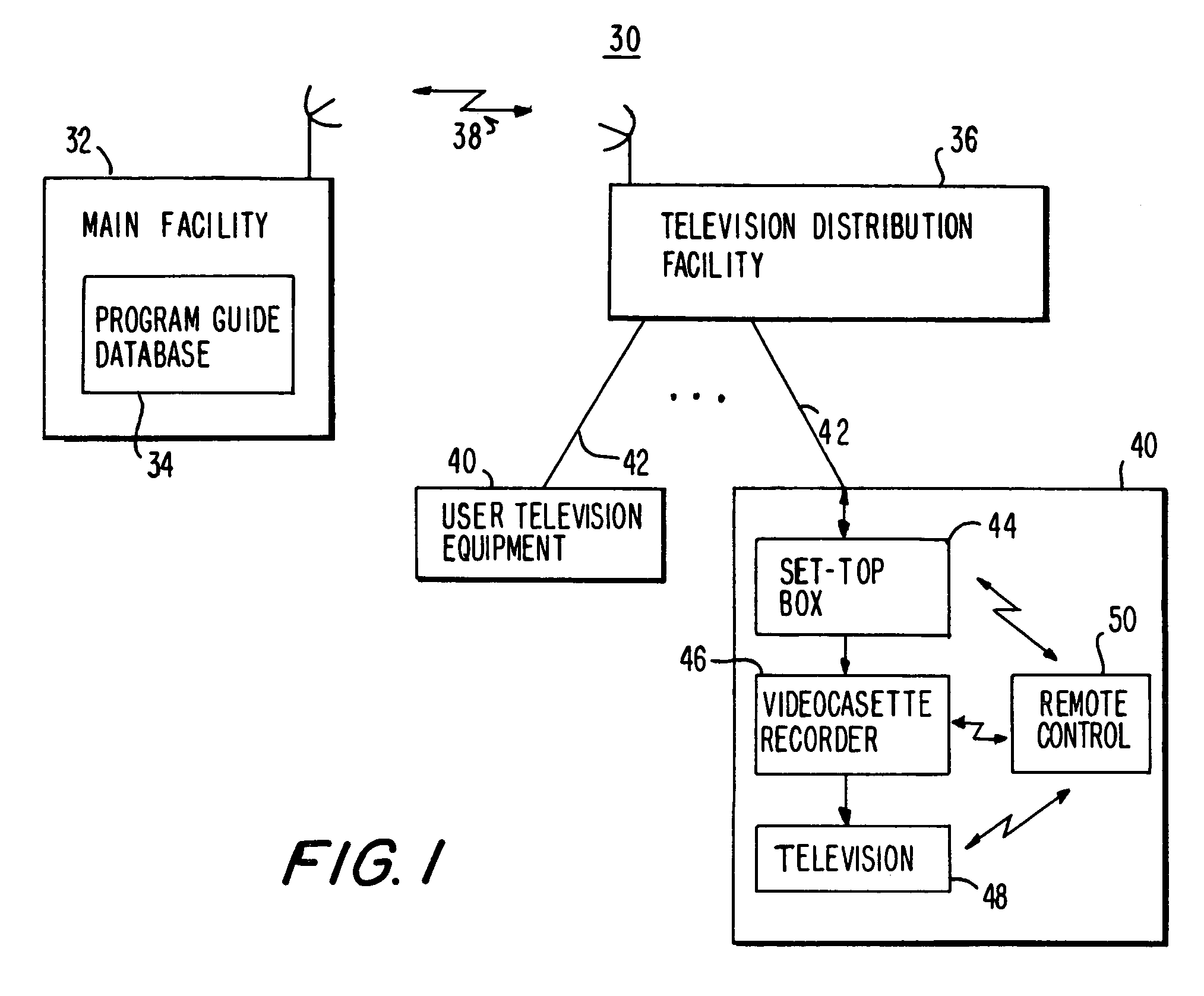

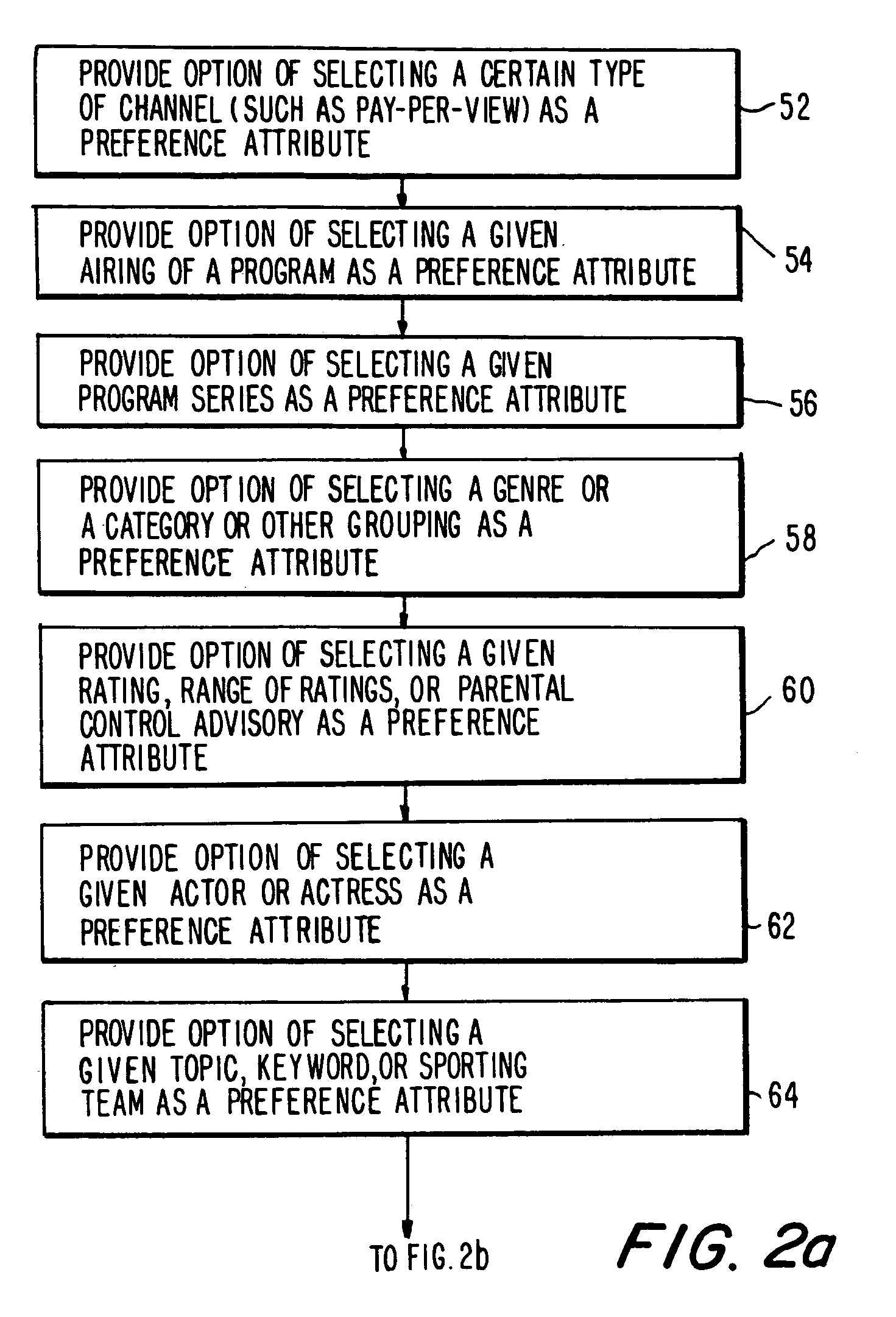

Program guide system with preference profiles

Inactive | US7185355B1 | Television system details | Color television details | Interactive television | Broadcasting

An interactive television program guide system is provided in which a user may inform a program guide of the user's interests. Information on the user's interests may be stored in a preference profile. There may be more than one preference profile, each for a different user. Each preference profile contains a number of preference attributes (program titles, genres, viewing times, channels, broadcast characteristics, etc.). A preference level (e.g., strong or weak like, strong or weak dislike, illegal, mandatory, don't care, etc.) that is indicative of the user's level of interest is associated with each preference attribute. Preference profiles may be used to restrict the programs that are listed in various program listings display screens and may be used to limit the channels to which the program guide allows the user to tune.

Owner:ROVI GUIDES INC
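
How a preference profile might restrict and order program listings can be sketched as below. The data layout, the numeric scores, and the rule that any "illegal" attribute hides a program are assumptions made for illustration; the sketch also leaves out the "mandatory" level that the abstract mentions.

```python
# Hypothetical numeric weights for the like/dislike levels named in the abstract.
LEVEL_SCORES = {
    "strong_like": 2,
    "weak_like": 1,
    "dont_care": 0,
    "weak_dislike": -1,
    "strong_dislike": -2,
}


def filter_listings(programs, profile):
    """Return the titles a profile allows, best match first.

    profile maps (attribute, value) pairs such as ("genre", "news")
    to a preference level; pairs not in the profile count as "dont_care".
    """
    scored = []
    for program in programs:
        levels = [
            profile.get((attribute, value), "dont_care")
            for attribute, value in program["attributes"].items()
        ]
        if "illegal" in levels:
            continue  # restricted: never listed for this profile
        score = sum(LEVEL_SCORES.get(level, 0) for level in levels)
        scored.append((score, program["title"]))
    # Stable sort: equal scores keep their original listing order.
    scored.sort(key=lambda item: -item[0])
    return [title for _, title in scored]
```

Keeping one profile dictionary per user matches the abstract's point that there may be more than one preference profile.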

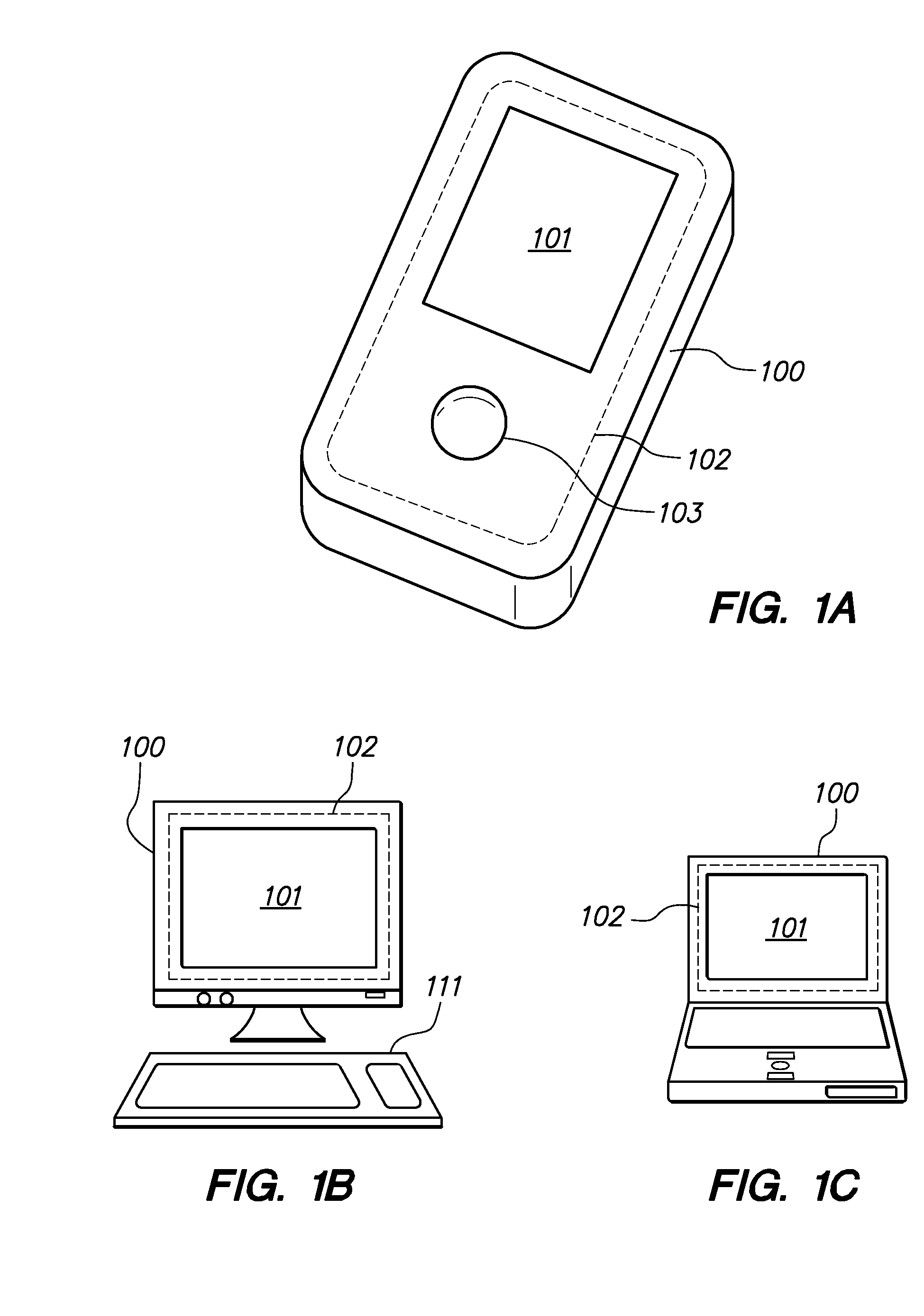

Electronic device having display and surrounding touch sensitive bezel for user interface and control

Active | US7656393B2 | Input/output for user-computer interaction | Medical devices | Display device | Human–computer interaction

Owner:APPLE INC

Extended touch-sensitive control area for electronic device

Inactive | US20090278806A1 | Improve the display effect | Input/output processes for data processing | Control area | Human–computer interaction

A touch-sensitive display screen is enhanced by a touch-sensitive control area that extends beyond the edges of the display screen. The touch-sensitive area outside the display screen, referred to as a “gesture area,” allows a user to activate commands using a gesture vocabulary. In one aspect, the present invention allows some commands to be activated by inputting a gesture within the gesture area. Other commands can be activated by directly manipulating on-screen objects. Yet other commands can be activated by beginning a gesture within the gesture area, and finishing it on the screen (or vice versa), and/or by performing input that involves contemporaneous contact with both the gesture area and the screen.

Owner:QUALCOMM INC

Multi-line, multi-reel gaming device

Inactive | US6227971B1 | Easy to understand | Increases entertainment value | Card games | Apparatus for meter-controlled dispensing | Display device | Human–computer interaction

Owner:CASINO DATA SYST

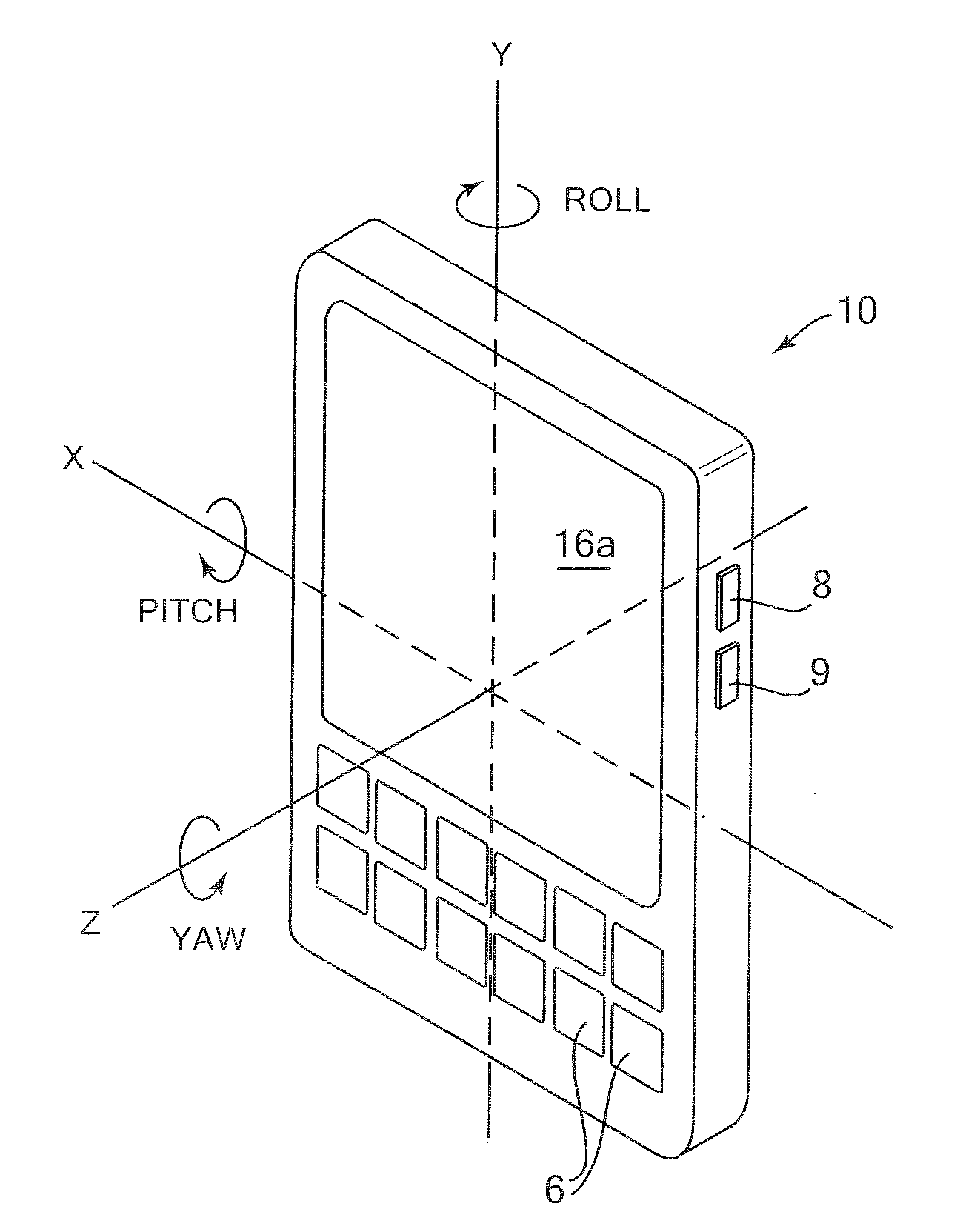

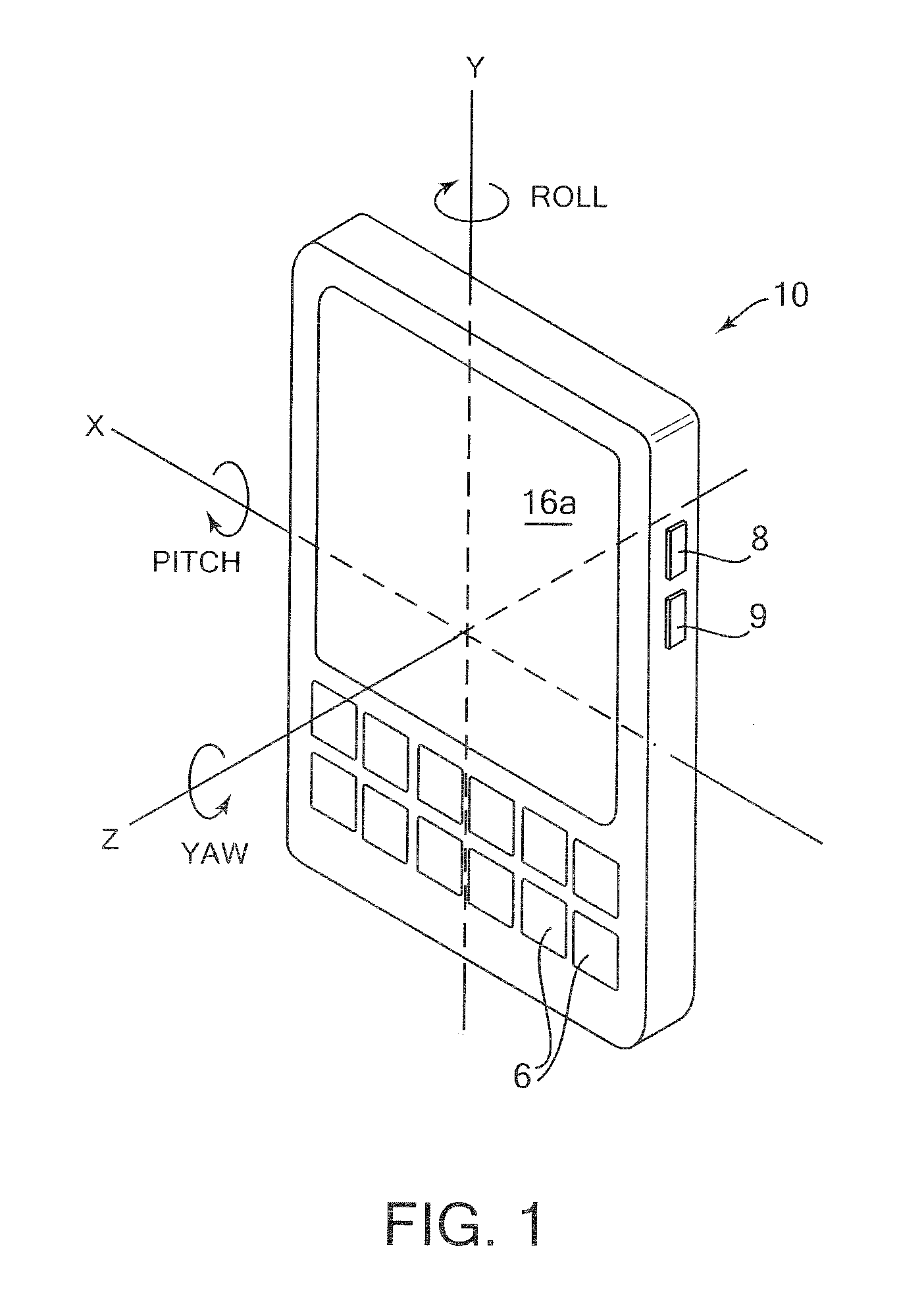

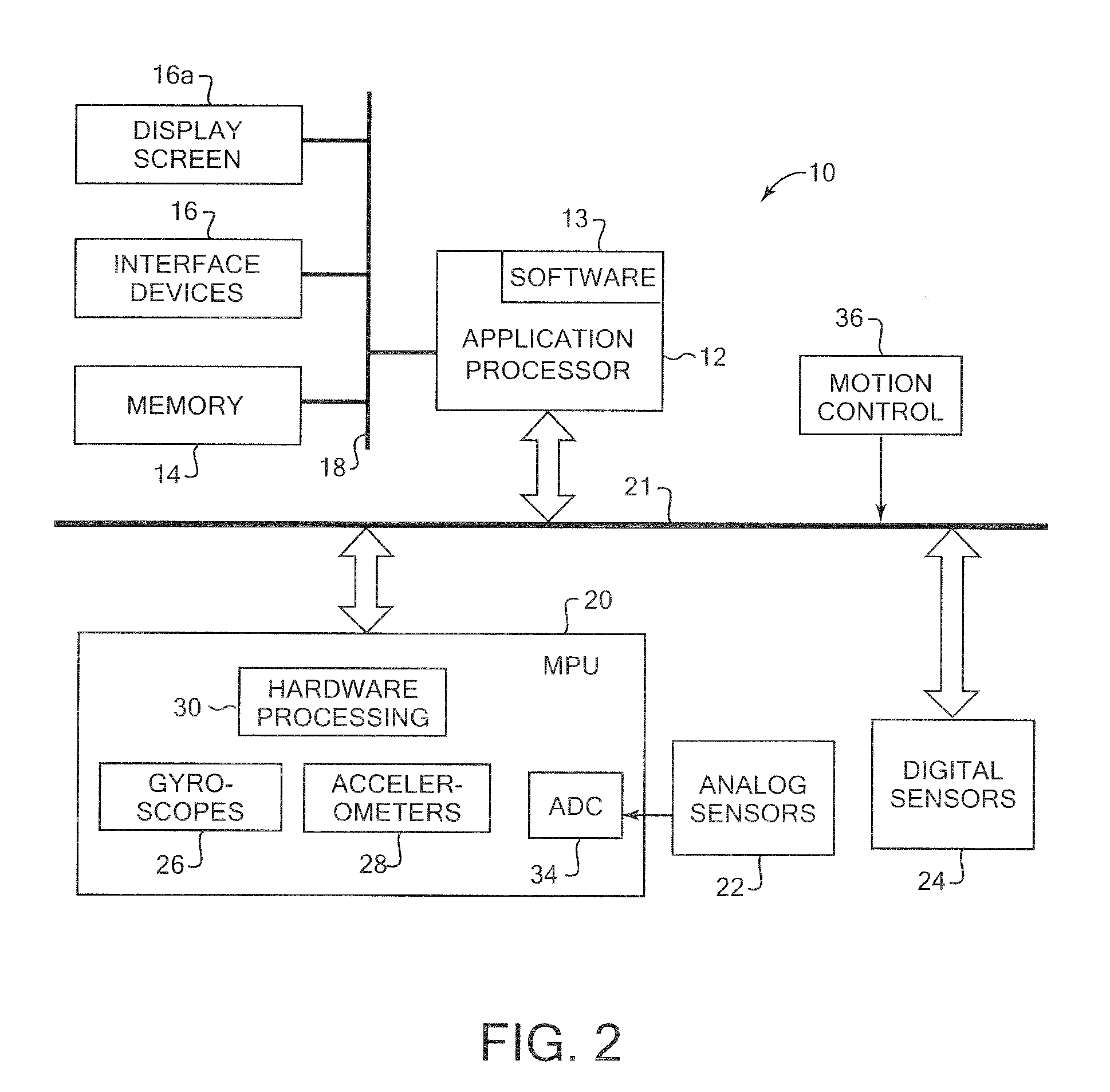

Controlling and accessing content using motion processing on mobile devices

Active | US20090303204A1 | Enhanced interaction | Accurate motion data | Input/output for user-computer interaction | Digital data processing details | Motion processing | Animation

Various embodiments provide systems and methods capable of facilitating interaction with handheld electronics devices based on sensing rotational rate around at least three axes and linear acceleration along at least three axes. In one aspect, a handheld electronic device includes a subsystem providing display capability, a set of motion sensors sensing rotational rate around at least three axes and linear acceleration along at least three axes, and a subsystem which, based on motion data derived from at least one of the motion sensors, is capable of facilitating interaction with the device.

Owner:INVENSENSE

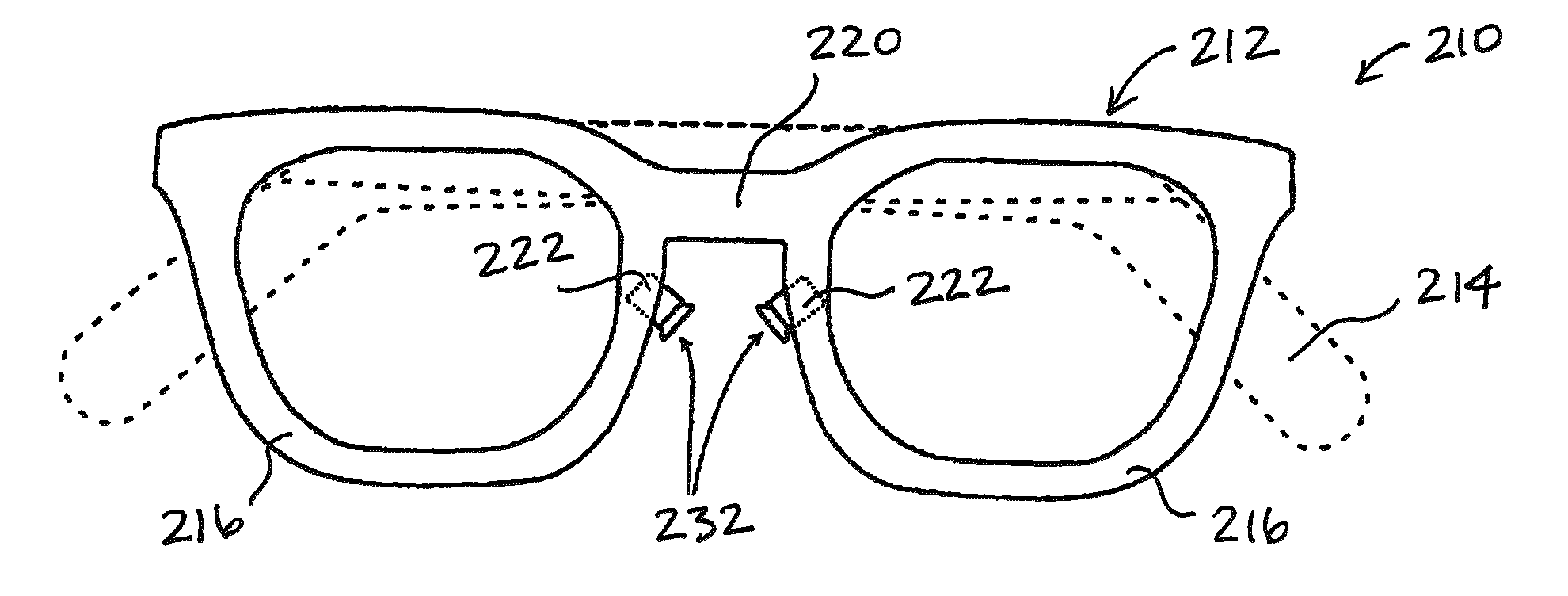

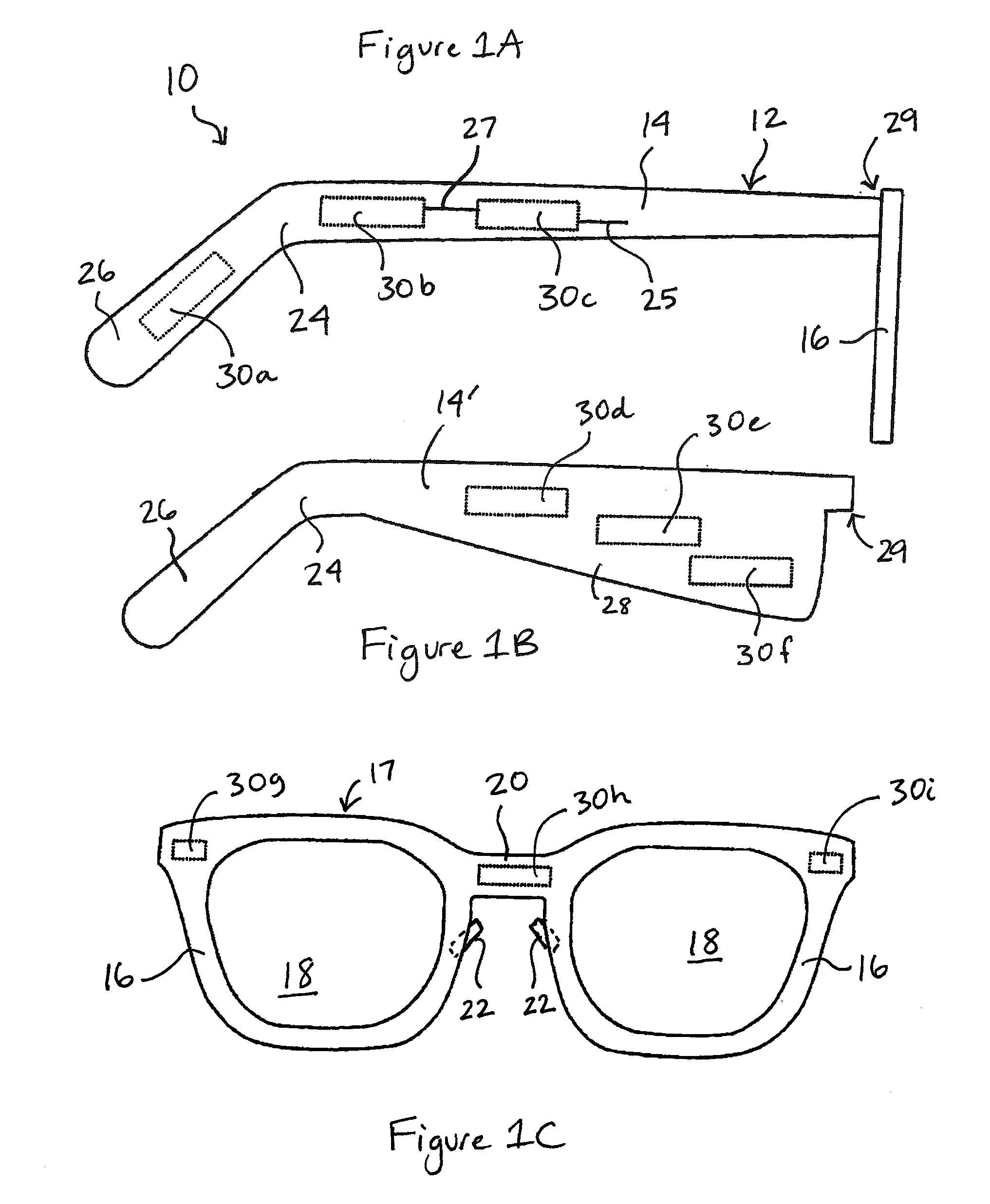

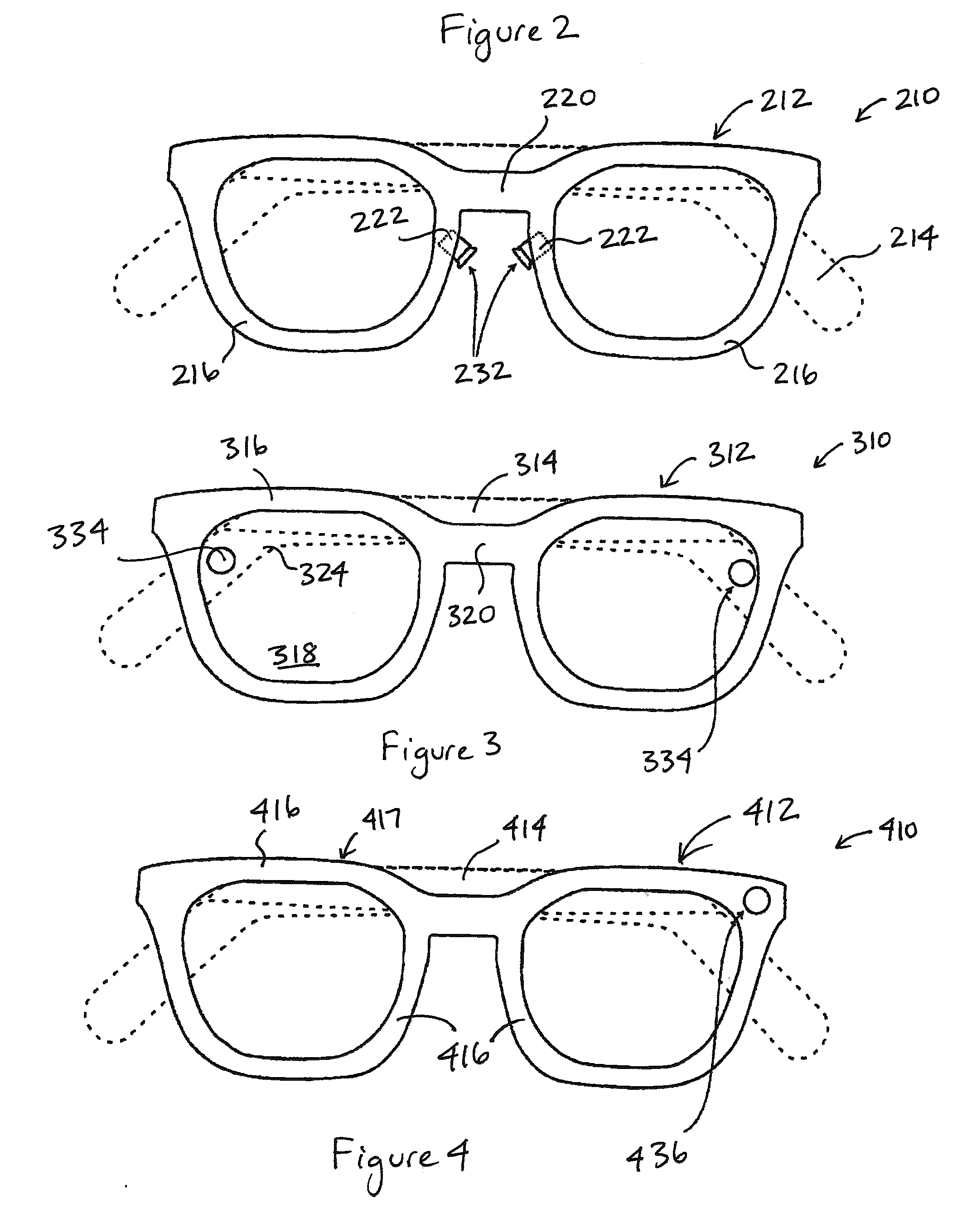

System and apparatus for eyeglass appliance platform

Inactive | US20100110368A1 | Input/output for user-computer interaction | Non-optical adjuncts | Output device | Actuator

The present invention relates to a personal multimedia electronic device, and more particularly to a head-worn device such as an eyeglass frame having a plurality of interactive electrical/optical components. In one embodiment, a personal multimedia electronic device includes an eyeglass frame having a side arm and an optic frame; an output device for delivering an output to the wearer; an input device for obtaining an input; and a processor comprising a set of programming instructions for controlling the input device and the output device. The output device is supported by the eyeglass frame and is selected from the group consisting of a speaker, a bone conduction transmitter, an image projector, and a tactile actuator. The input device is supported by the eyeglass frame and is selected from the group consisting of an audio sensor, a tactile sensor, a bone conduction sensor, an image sensor, a body sensor, an environmental sensor, a global positioning system receiver, and an eye tracker. In one embodiment, the processor applies a user interface logic that determines a state of the eyeglass device and determines the output in response to the input and the state.

Owner:CHAUM DAVID
