7346 results about "Human–machine interface" patented technology

A human–machine interface is the part of a machine that handles human–machine interaction. Membrane switches, rubber keypads and touchscreens are examples of the physical part of the human–machine interface that we can see and touch.

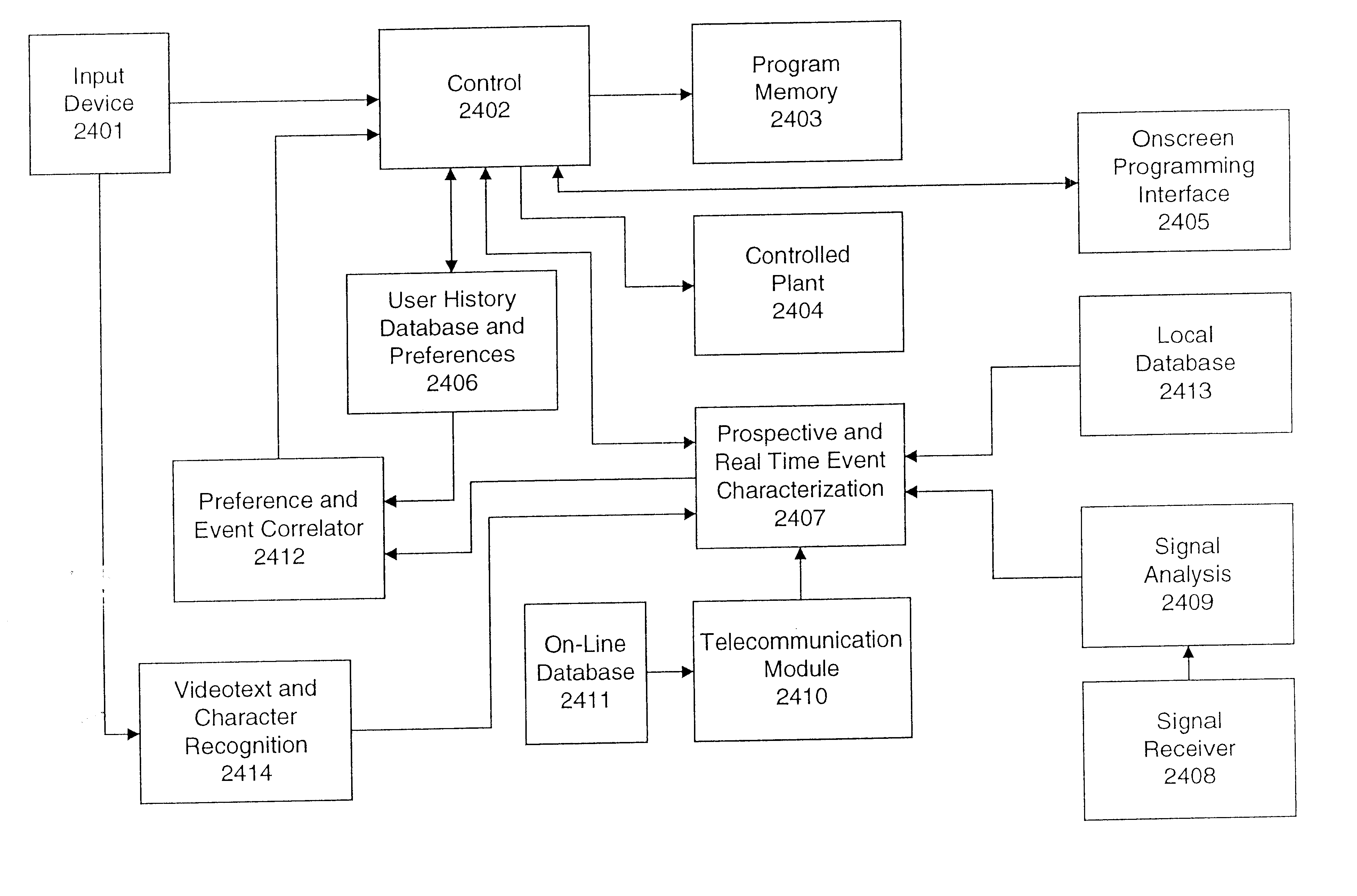

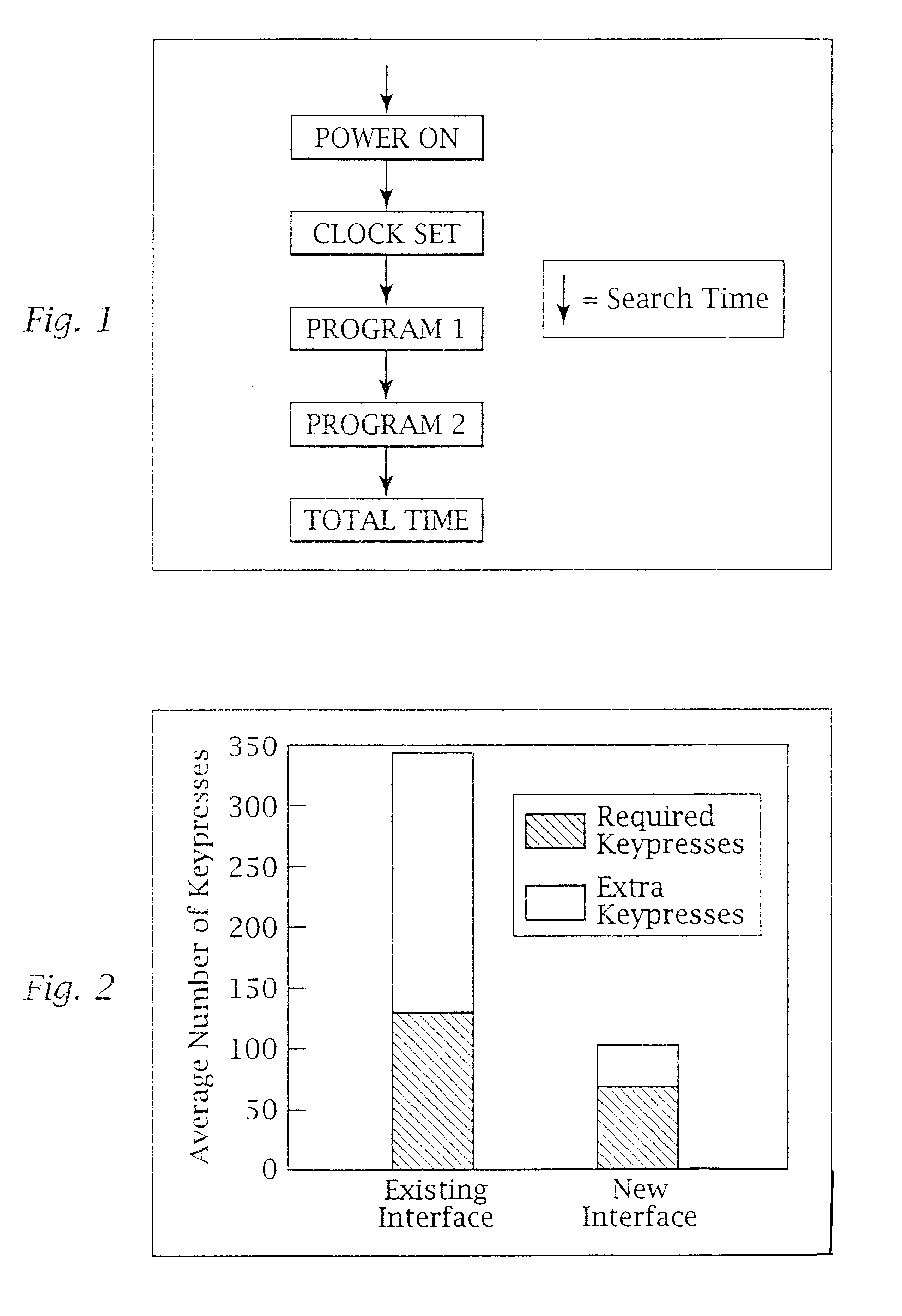

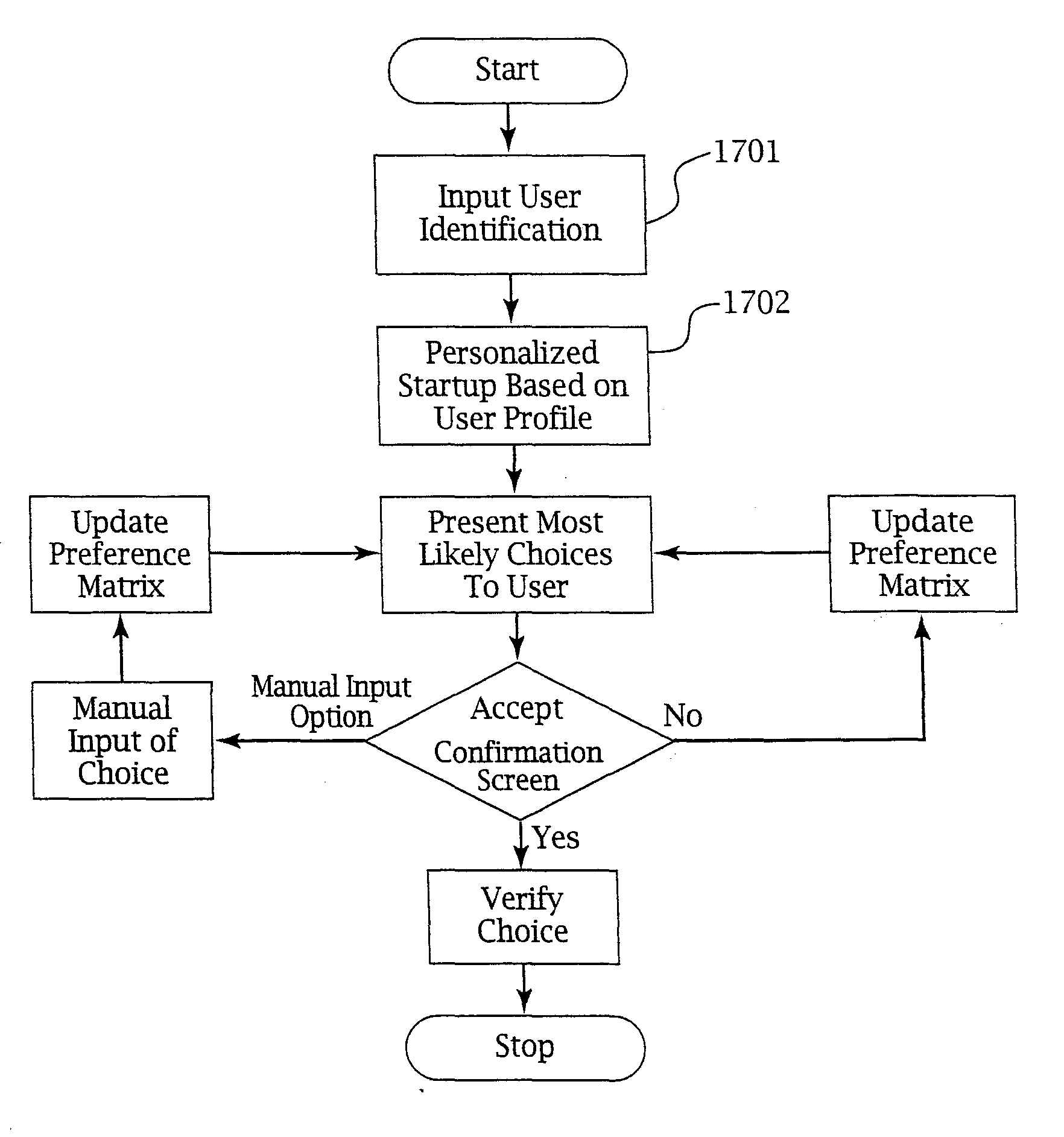

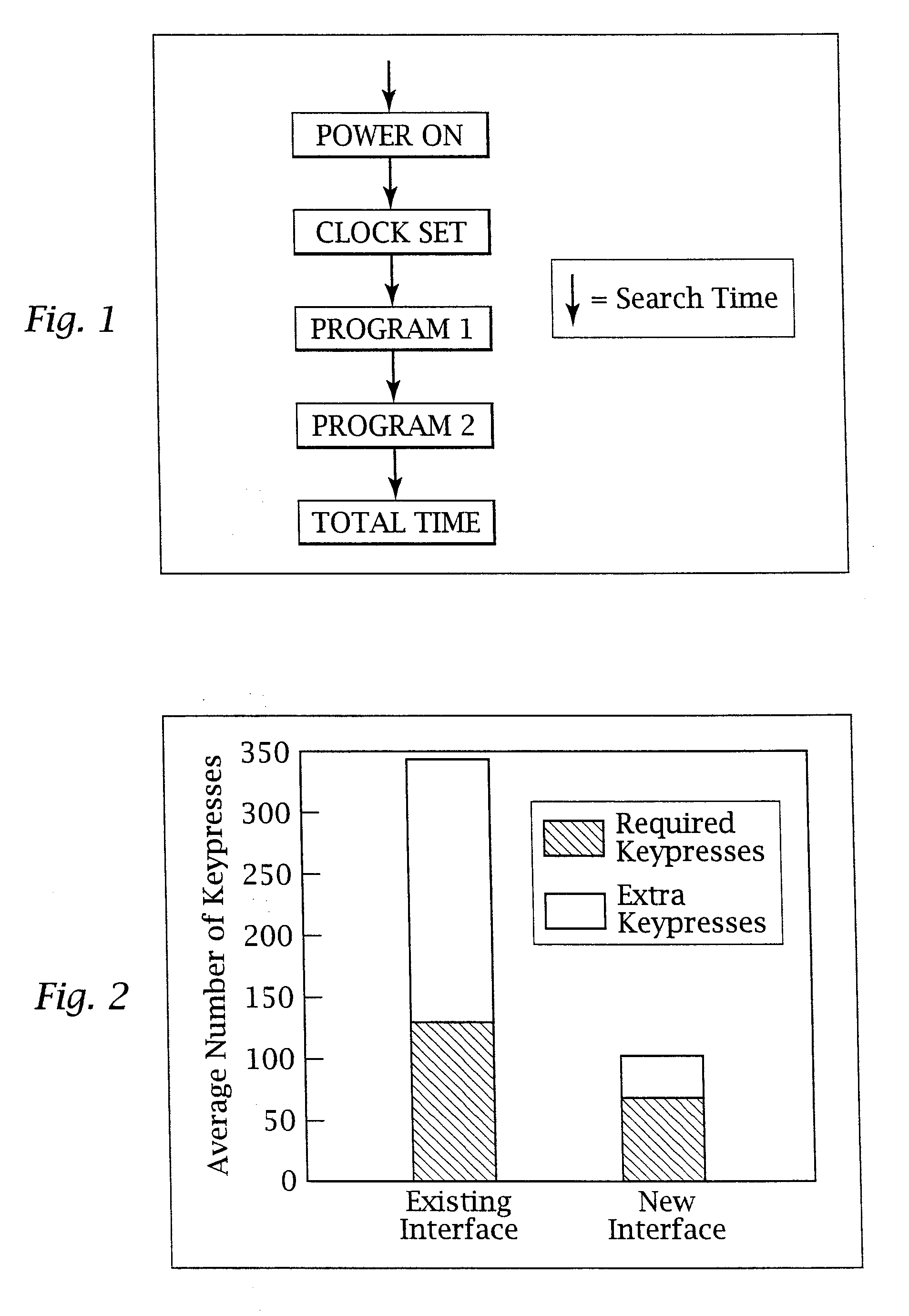

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Inactive · US6081750A · Decrease productivity · Improve the environment · Computer control · Simulator control · Human–machine interface · Data stream

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP
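
The predict, confirm, update loop described in this abstract can be illustrated with a deliberately simple sketch. It swaps the patent's adaptive pattern recognition for plain per-context frequency counts, so it is not the patented method; the class `FunctionPredictor`, its methods, and the context labels are hypothetical names chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


class FunctionPredictor:
    """Toy predictor: suggests the function a user chose most often in a context.

    Assumption: simple frequency counting stands in for the adaptive
    pattern recognition described in the abstract.
    """

    def __init__(self) -> None:
        # Maps a context label to counts of the functions confirmed in it.
        self._counts = defaultdict(Counter)

    def predict(self, context: str) -> Optional[str]:
        """Return the most frequently confirmed function, or None if no history."""
        counts = self._counts.get(context)
        if not counts:
            return None
        return counts.most_common(1)[0][0]

    def feedback(self, context: str, chosen: str) -> None:
        """Update the history with the function the user actually confirmed."""
        self._counts[context][chosen] += 1
```

In use, the device would call `predict` to present a suggestion, then pass whatever the user confirms or selects instead to `feedback`, so later suggestions track the user's history.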

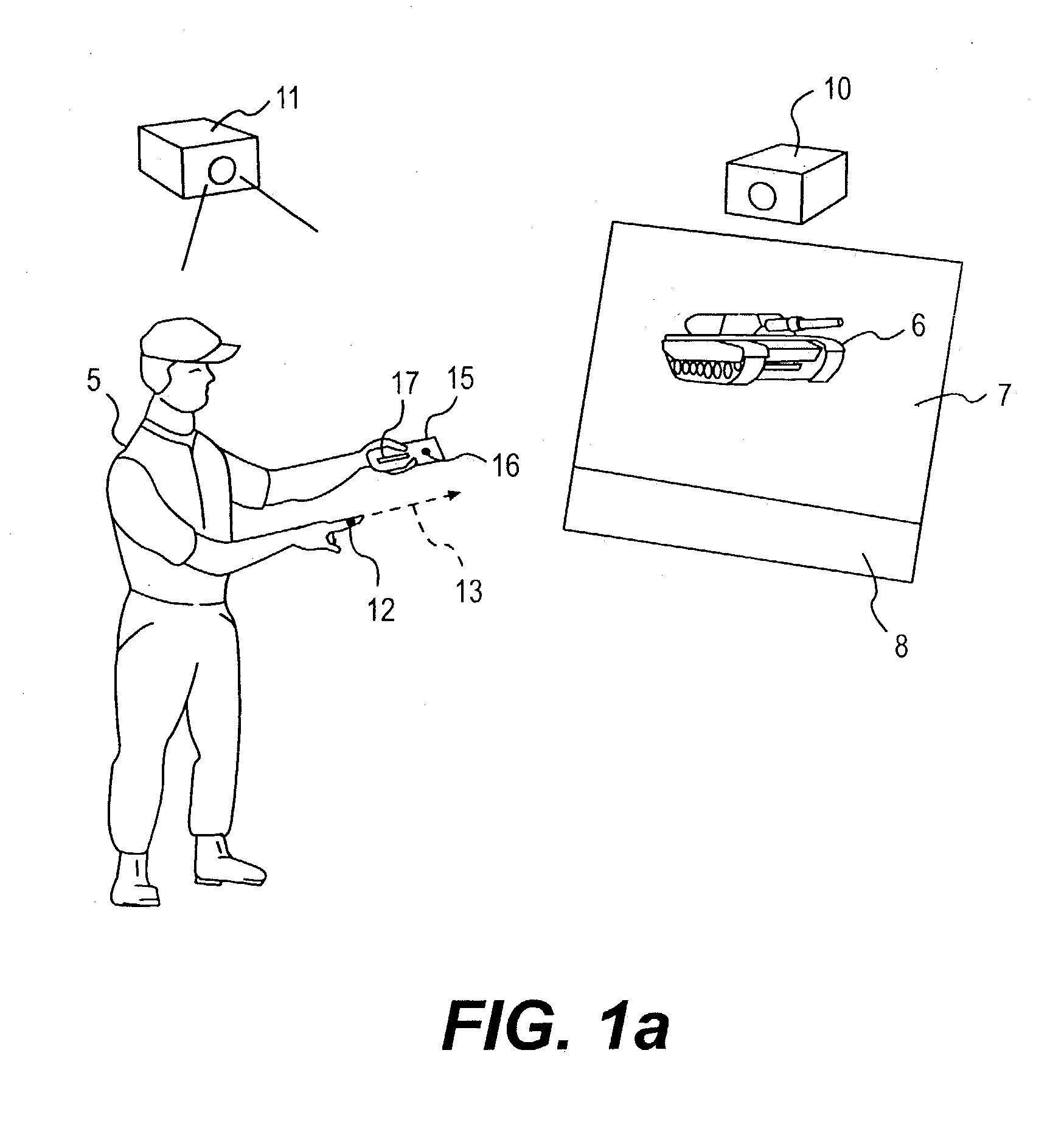

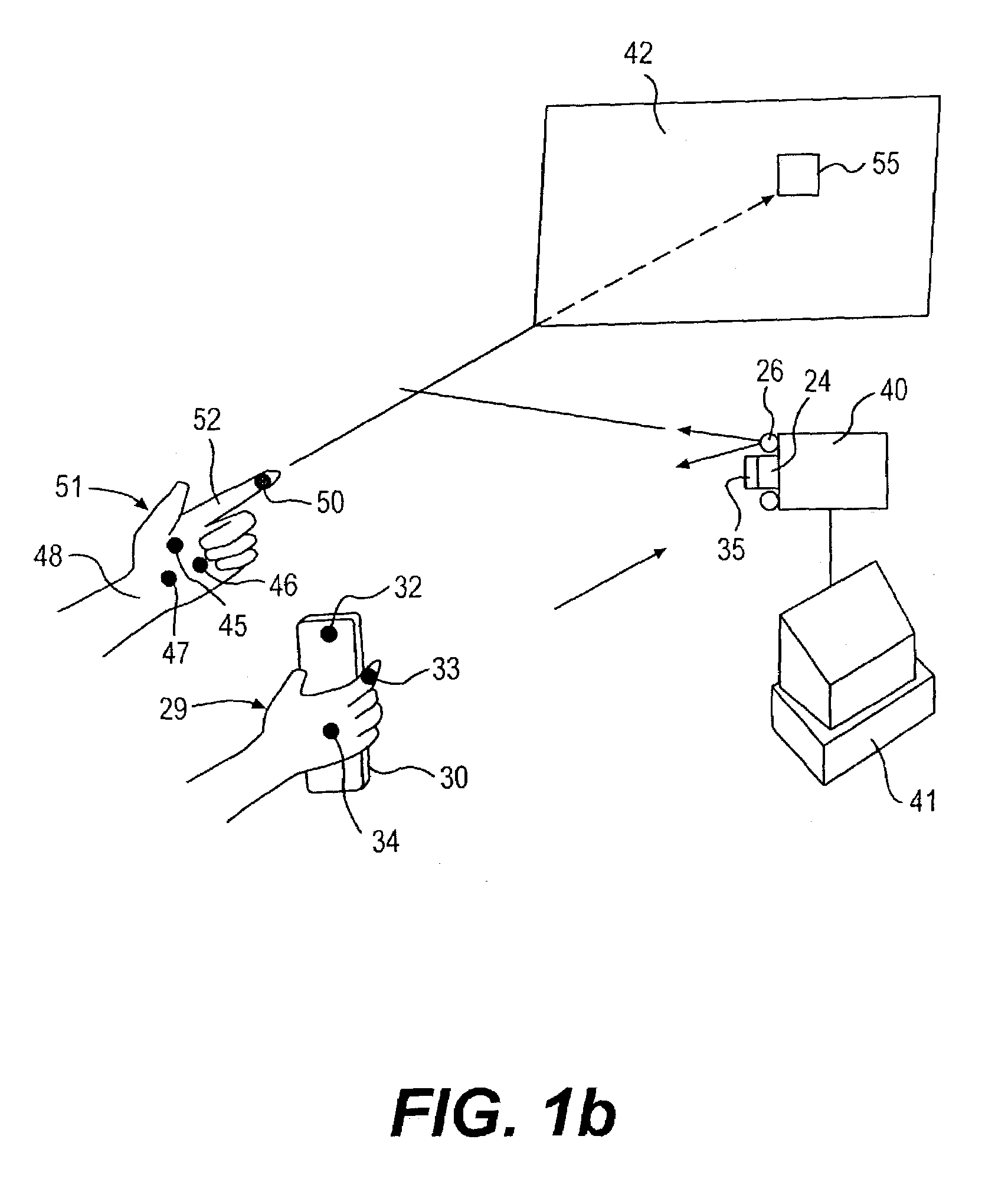

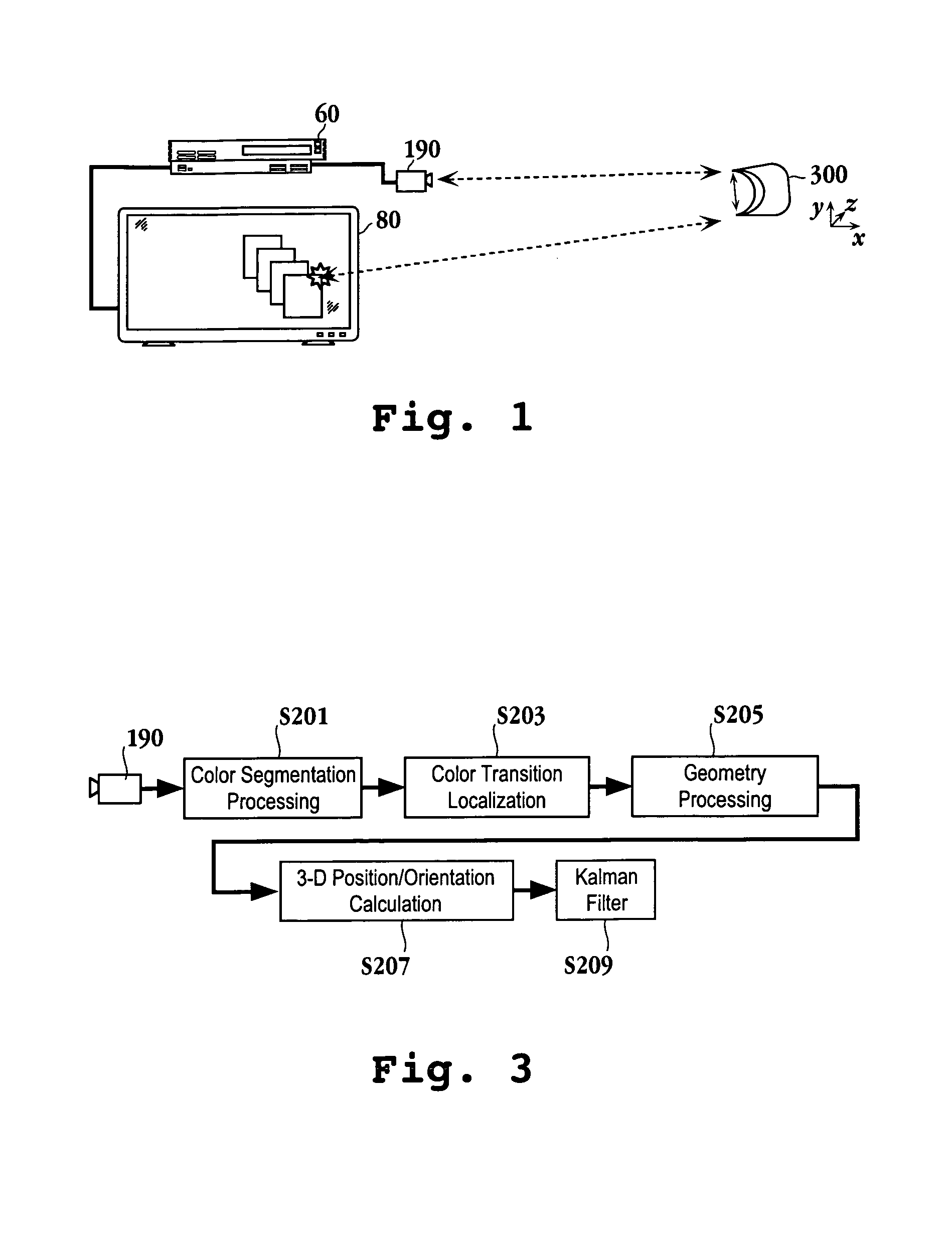

Man machine interfaces and applications

Inactive · US7042440B2 · Avoid carpal tunnel syndrome · Improve efficiency · Input/output for user-computer interaction · Electrophonic musical instruments · Computer Aided Design · Human–machine interface

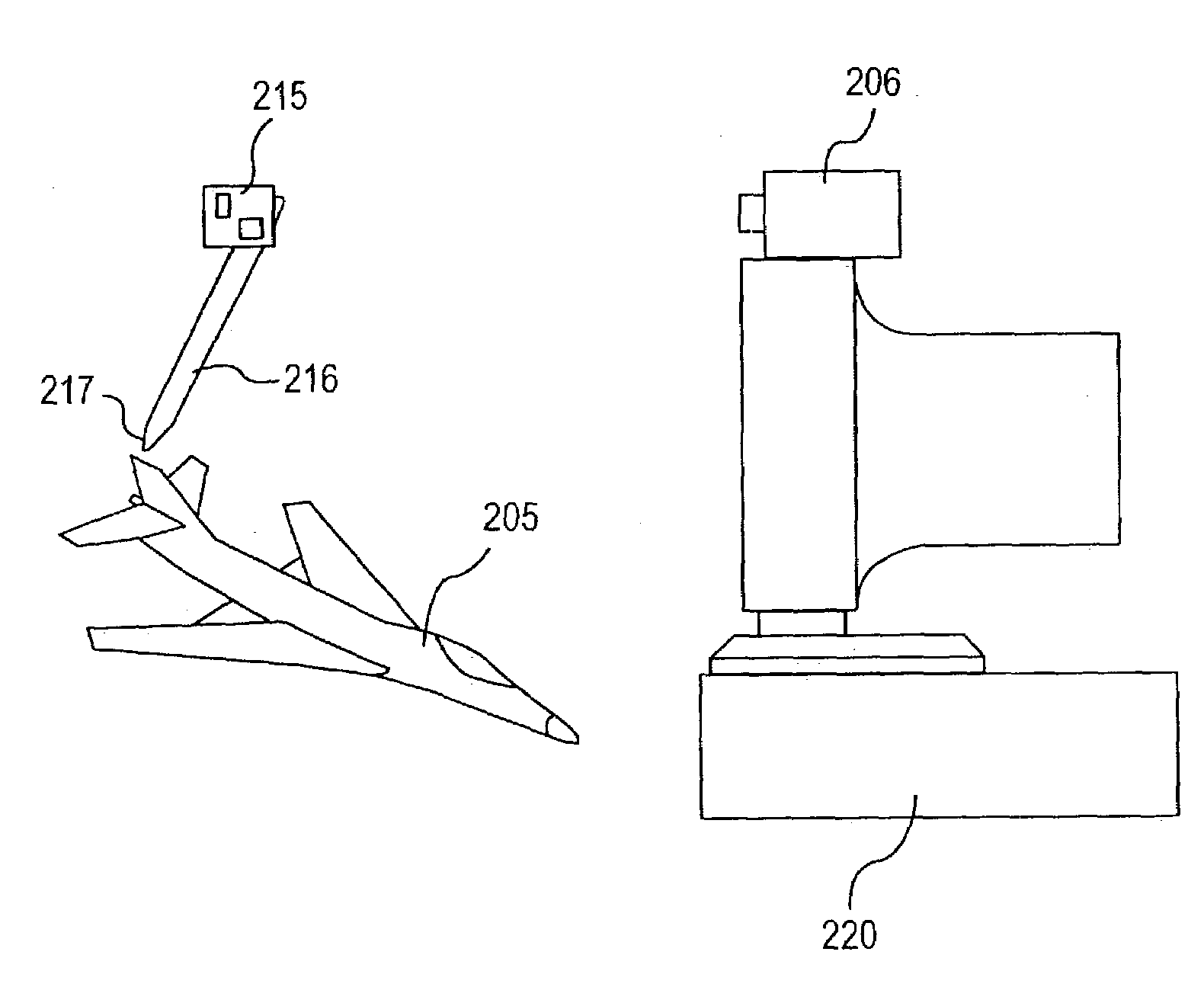

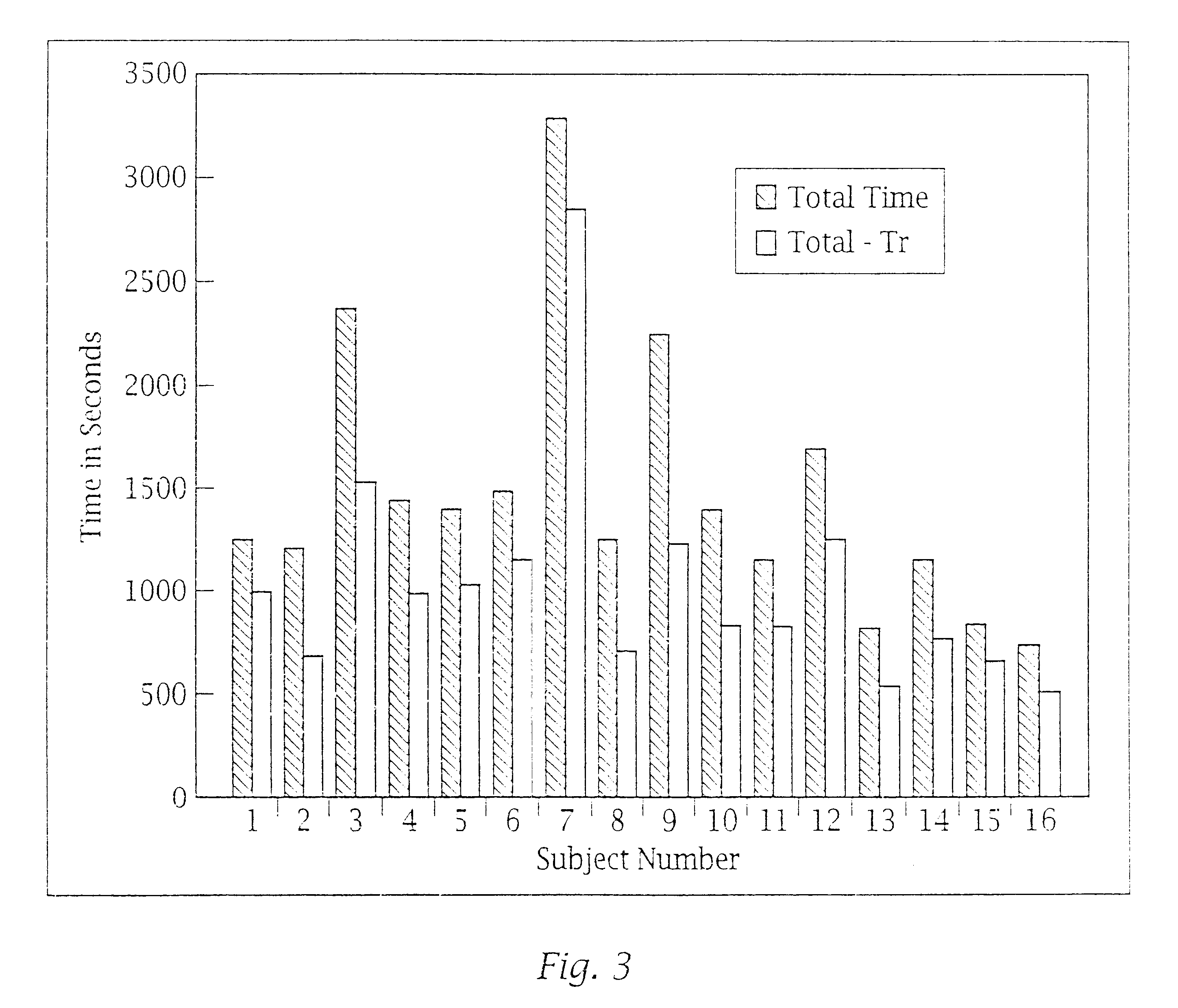

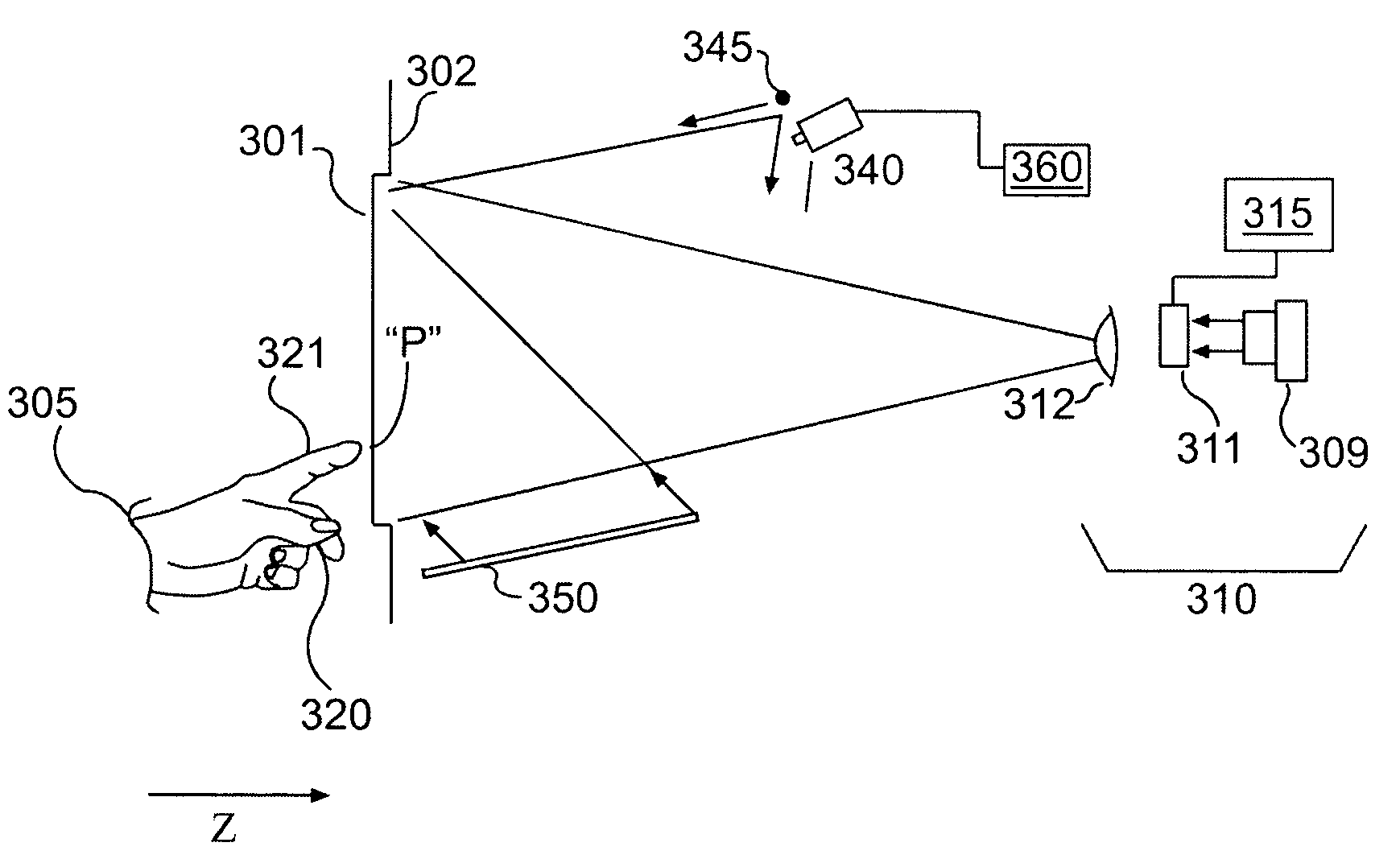

Affordable methods and apparatus are disclosed for inputting position, attitude (orientation) or other object characteristic data to computers for the purpose of Computer Aided Design, Painting, Medicine, Teaching, Gaming, Toys, Simulations, Aids to the disabled, and internet or other experiences. Preferred embodiments of the invention utilize electro-optical sensors, and particularly TV Cameras, providing optically inputted data from specialized datums on objects and/or natural features of objects. Objects can be both static and in motion, from which individual datum positions and movements can be derived, also with respect to other objects both fixed and moving. Real-time photogrammetry is preferably used to determine relationships of portions of one or more datums with respect to a plurality of cameras or a single camera processed by a conventional PC.

Owner:PRYOR TIMOTHY R +1

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Inactive · US6418424B1 · Minimal cost · Avoid the need · Television system details · Digital data processing details · Human–machine interface · Data stream

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP

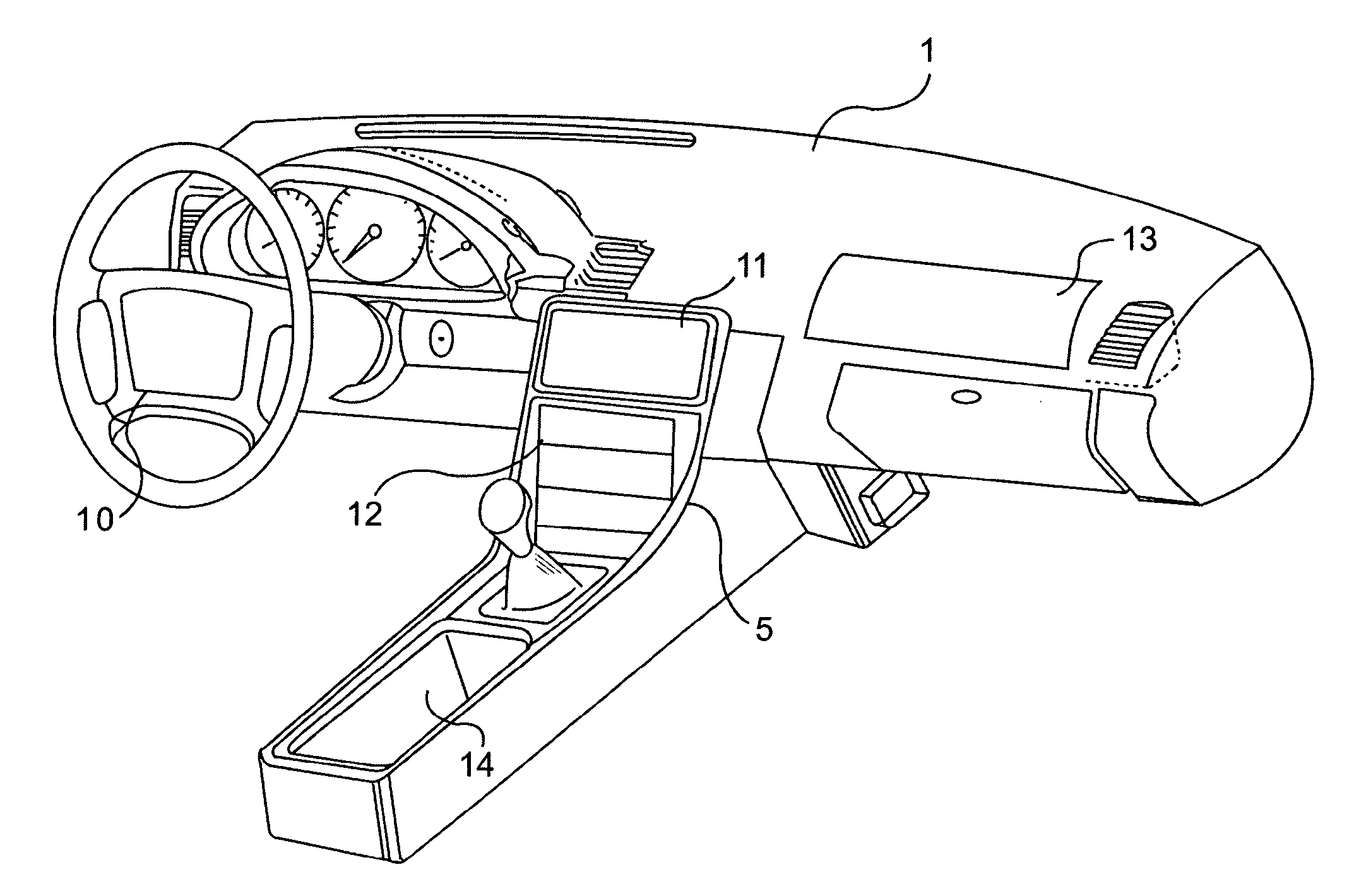

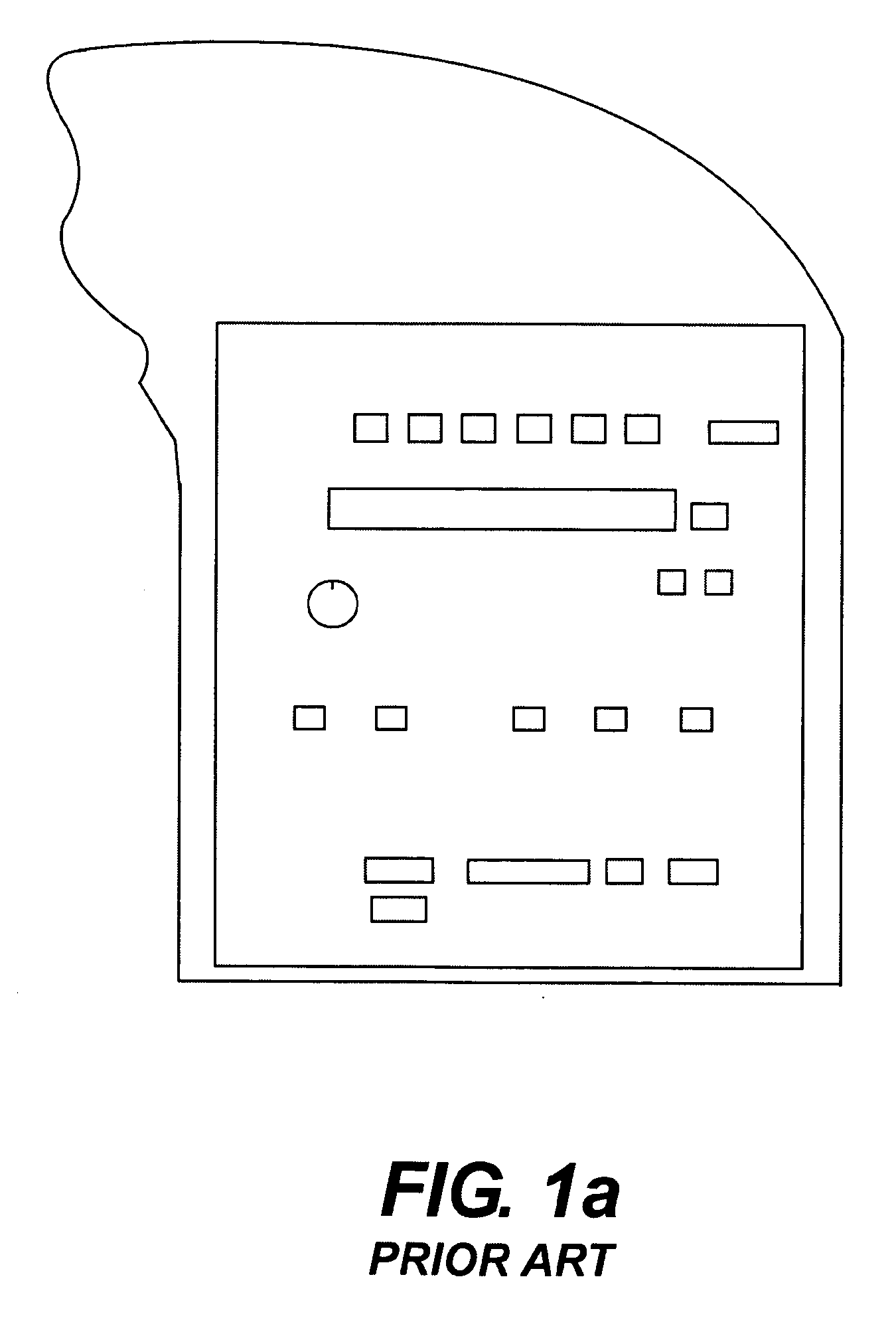

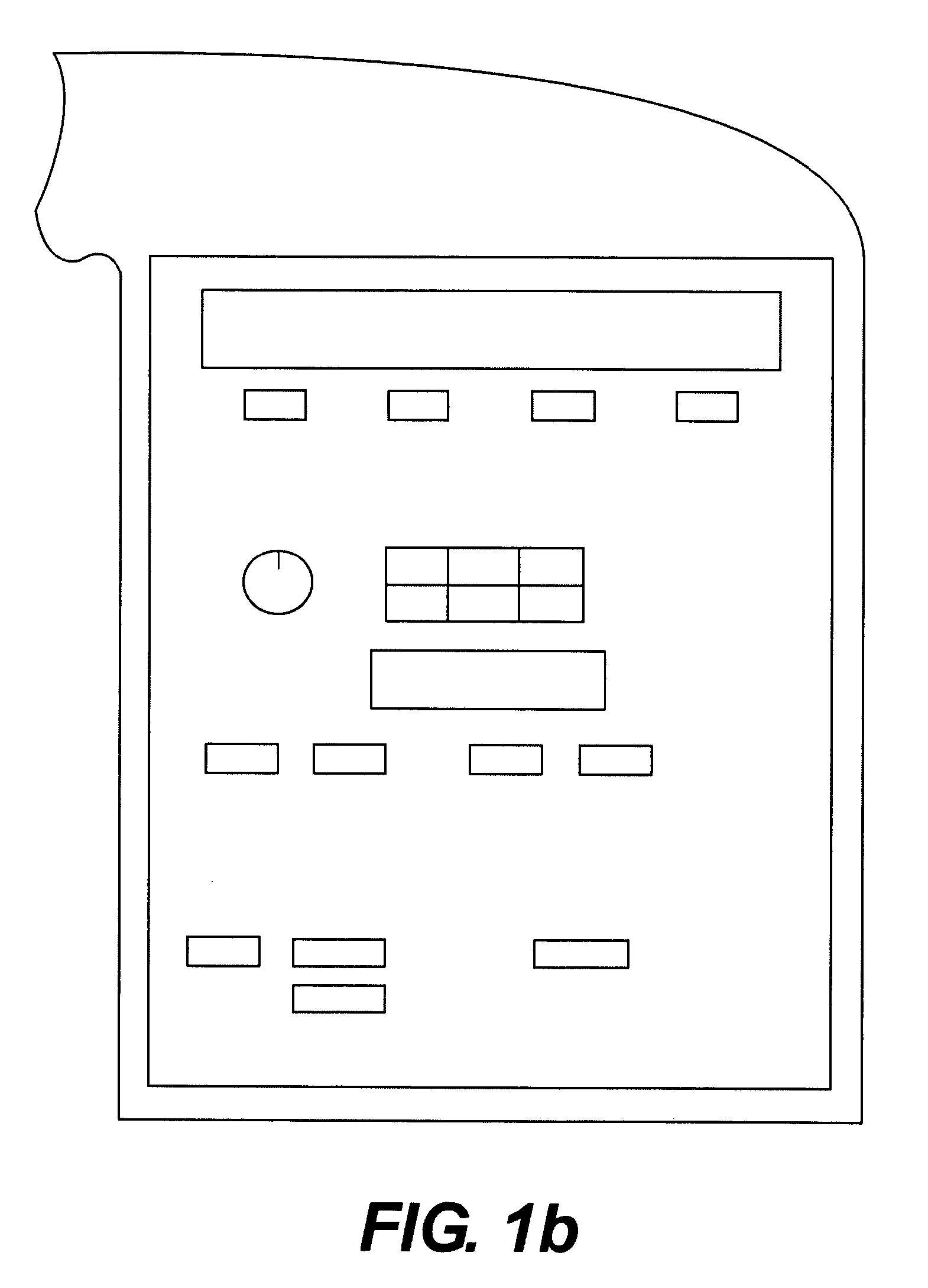

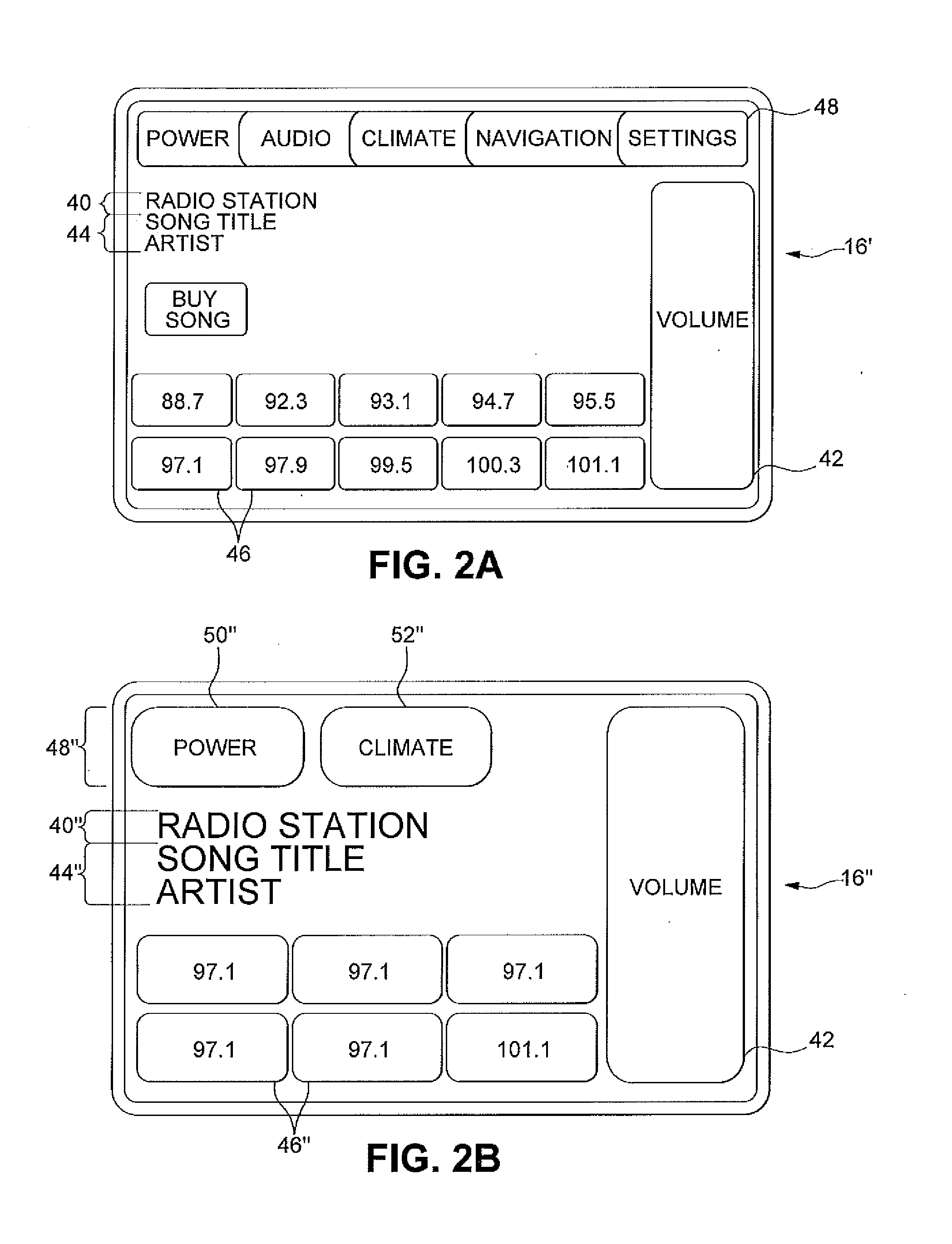

Programmable tactile touch screen displays and man-machine interfaces for improved vehicle instrumentation and telematics

Inactive · US7084859B1 · Known type · Easy to add · Cathode-ray tube indicators · Navigation instruments · Dashboard · Human–machine interface

Disclosed are new methods and apparatus particularly suited for applications in a vehicle, to provide a wide range of information, and the safe input of data to a computer controlling the vehicle subsystems or “Telematic” communication using for example GM's “ONSTAR” or cellular based data sources. Preferred embodiments utilize new programmable forms of tactile touch screens and displays employing tactile physical selection or adjustment means which utilize direct optical data input. A revolutionary form of dashboard or instrument panel results which is stylistically attractive, lower in cost, customizable by the user, programmable in both the tactile and visual sense, and with the potential of enhancing interior safety and vehicle operation. Non-automotive applications of the invention are also disclosed, for example means for general computer input using touch screens and home automation systems.

Owner:APPLE INC

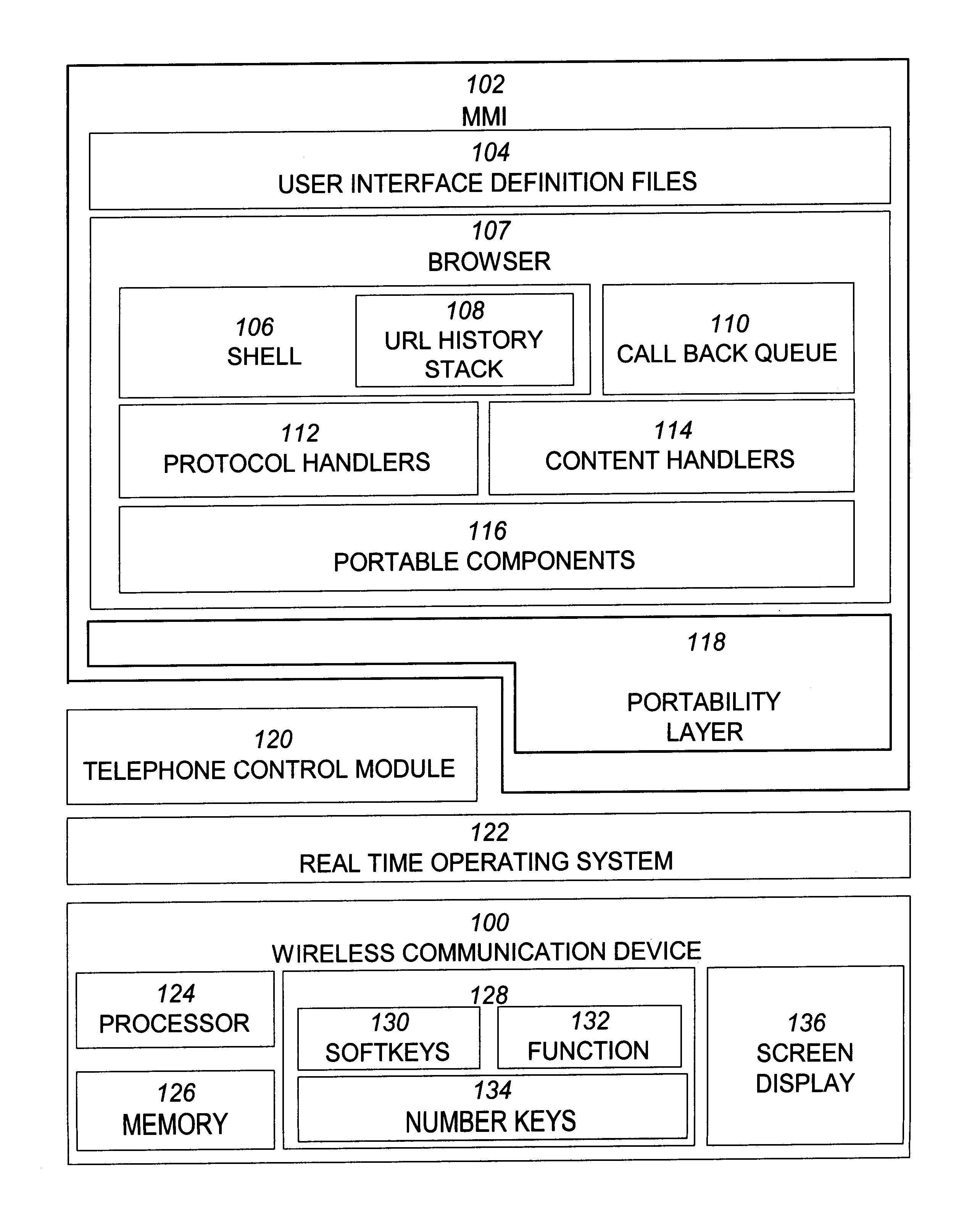

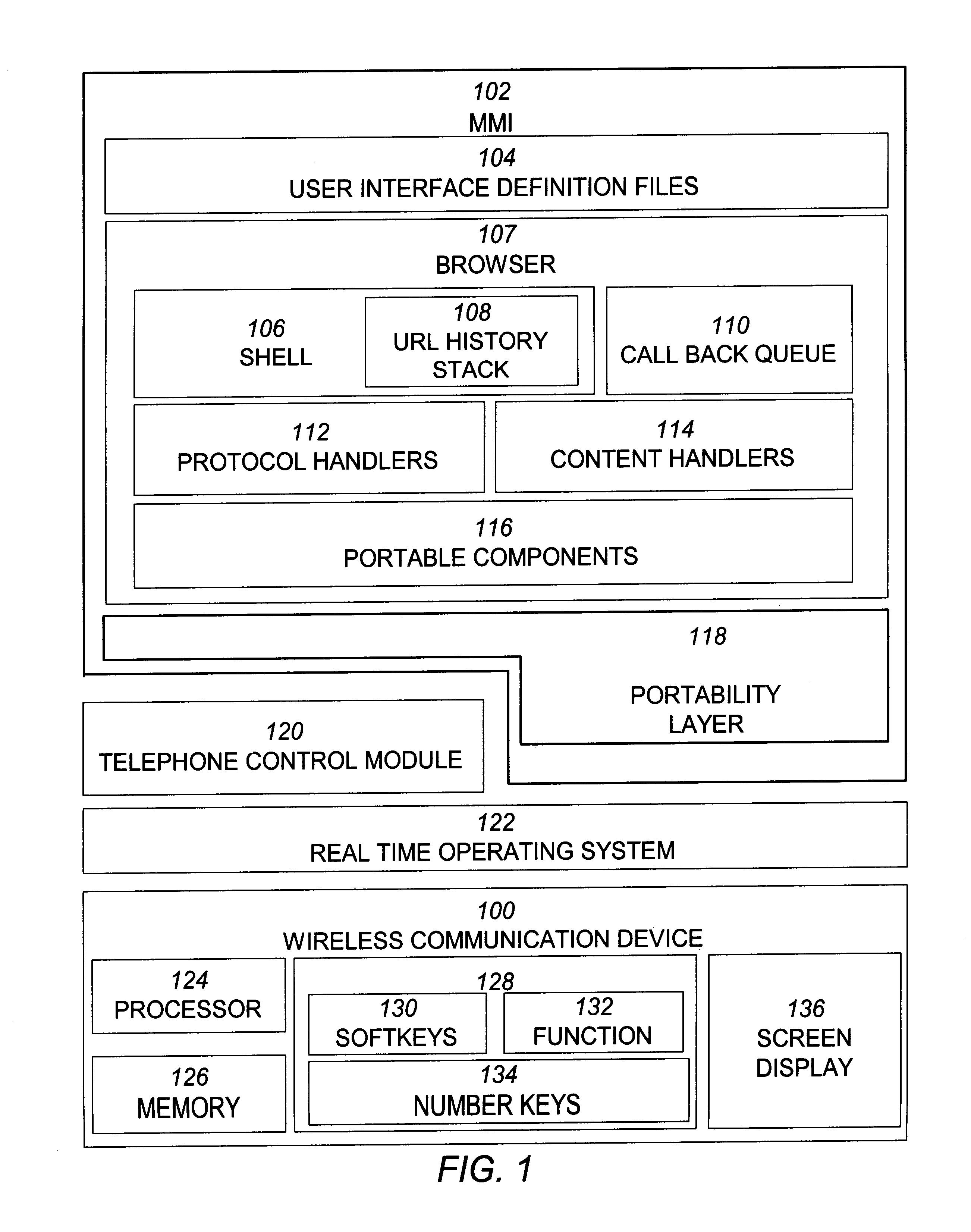

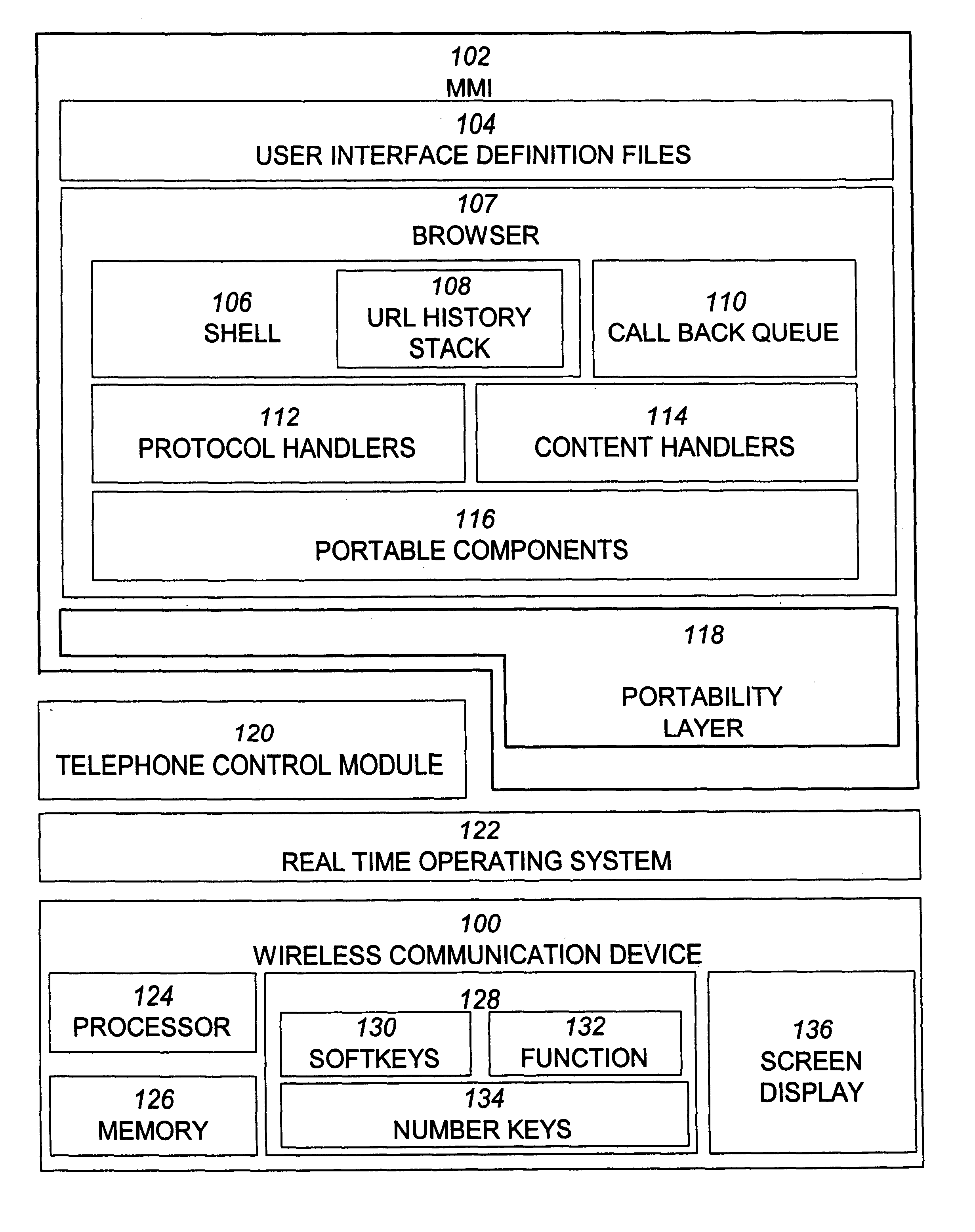

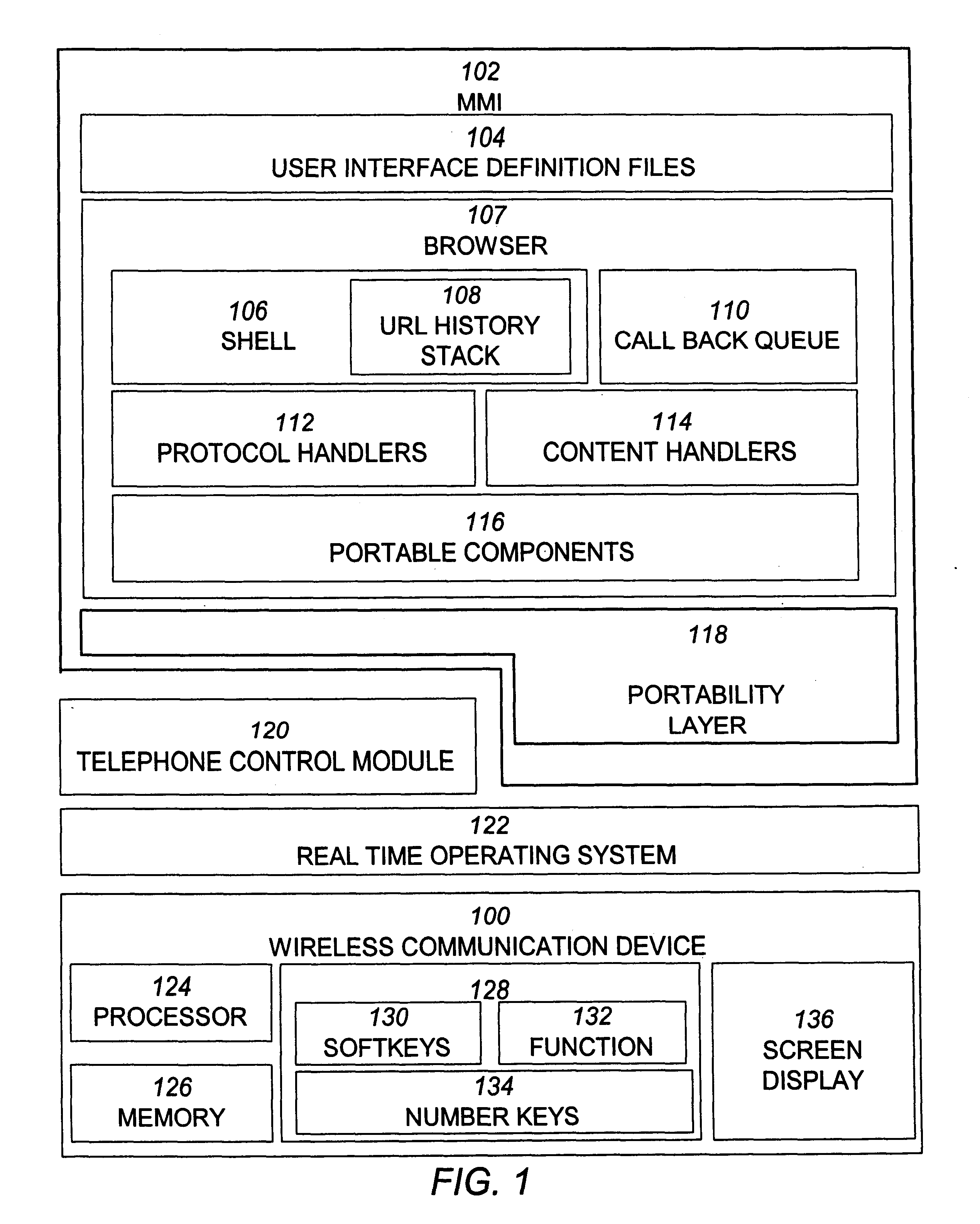

Wireless communication device with markup language based man-machine interface

A system, method, and software product provide a wireless communications device with a markup language based man-machine interface. The man-machine interface provides a user interface for the various telecommunications functionality of the wireless communication device, including dialing telephone numbers, answering telephone calls, creating messages, sending messages, receiving messages, establishing configuration settings, which is defined in markup language, such as HTML, and accessed through a browser program executed by the wireless communication device. This feature enables direct access to Internet and World Wide Web content, such as Web pages, to be directly integrated with telecommunication functions of the device, and allows Web content to be seamlessly integrated with other types of data, since all data presented to the user via the user interface is presented via markup language-based pages. The browser processes an extended form of HTML that provides new tags and attributes that enhance the navigational, logical, and display capabilities of conventional HTML, and particularly adapt HTML to be displayed and used on wireless communication devices with small screen displays. The wireless communication device includes the browser, a set of portable components, and portability layer. The browser includes protocol handlers, which implement different protocols for accessing various functions of the wireless communication device, and content handlers, which implement various content display mechanisms for fetching and outputting content on a screen display.

Owner:ACCESS

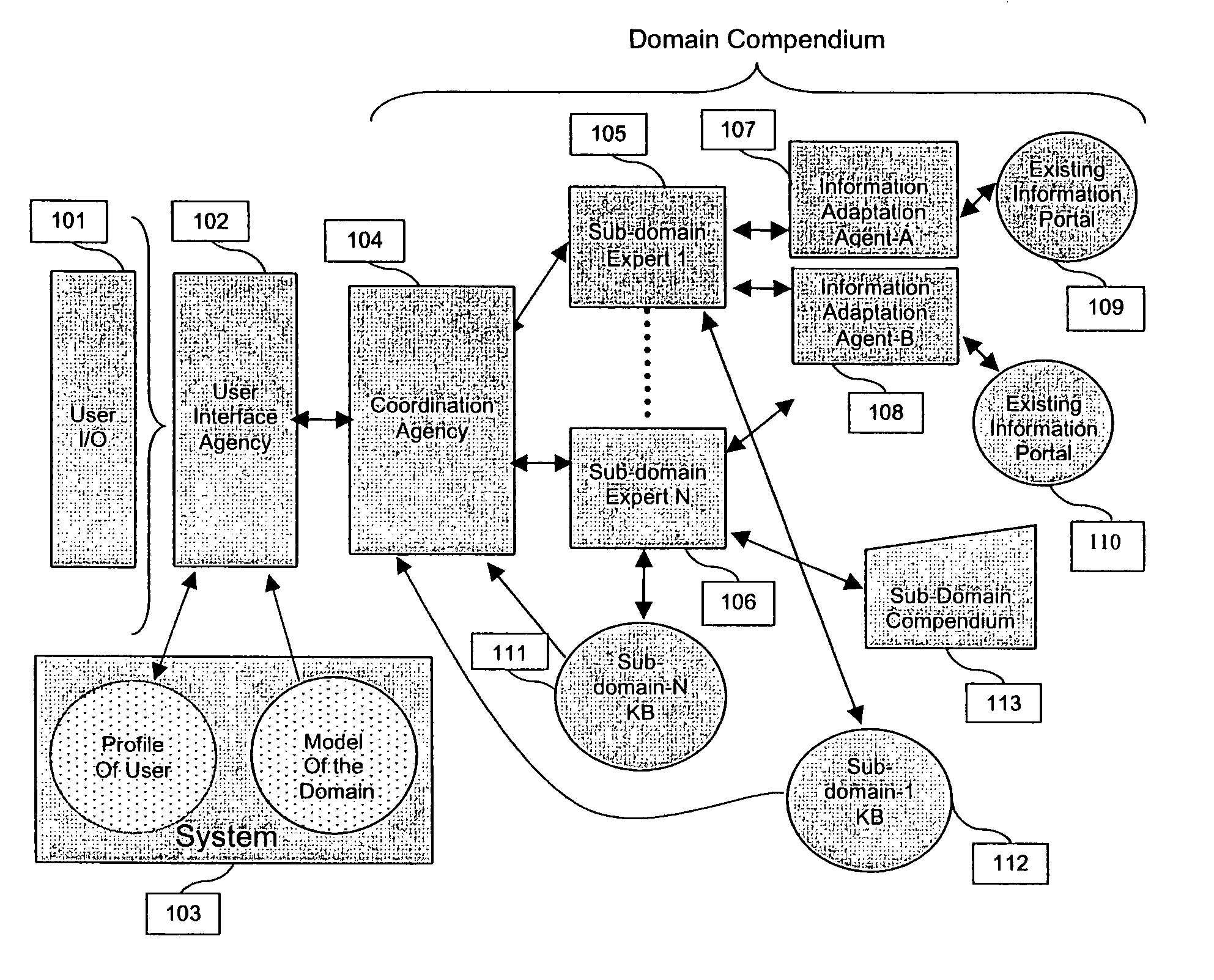

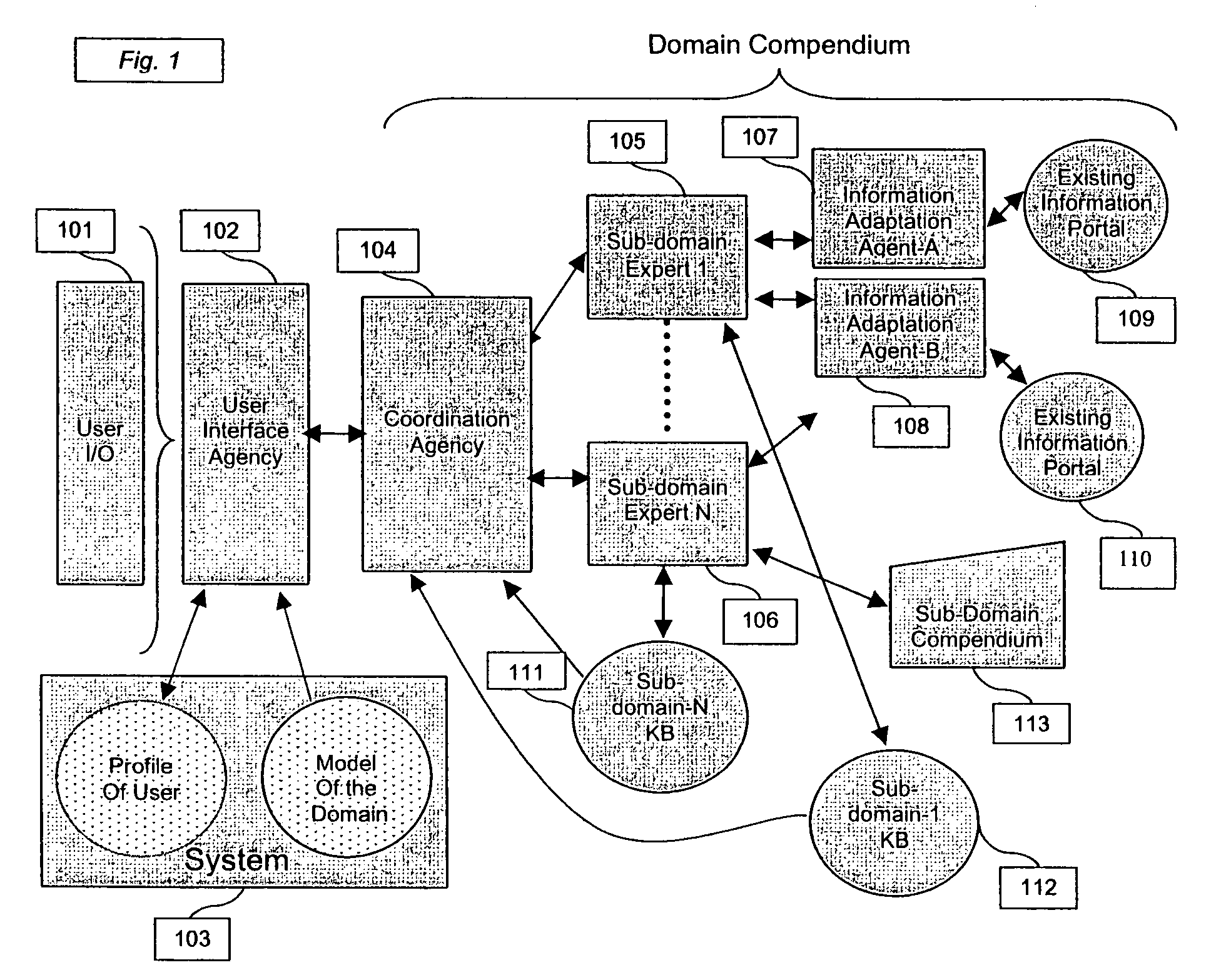

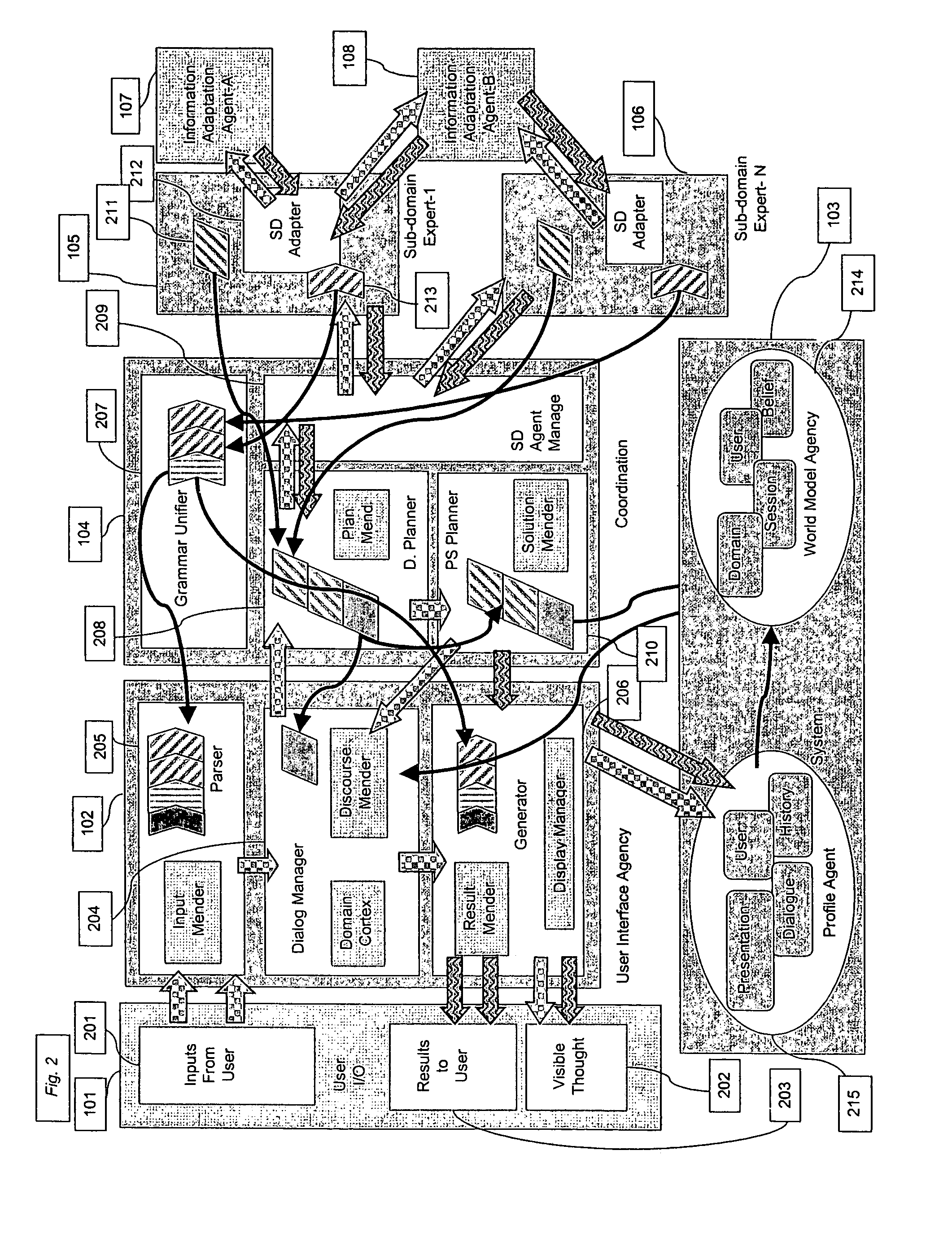

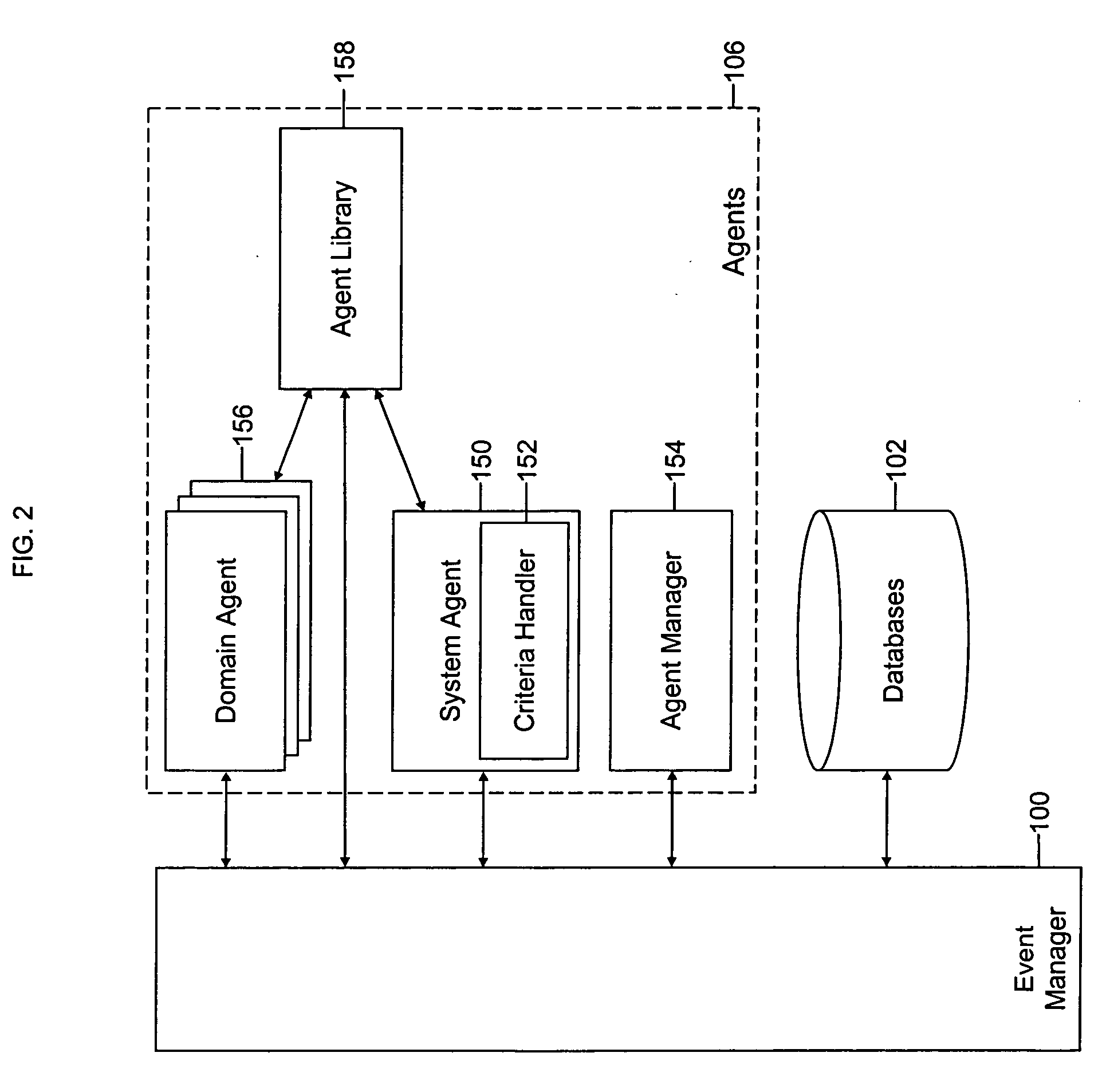

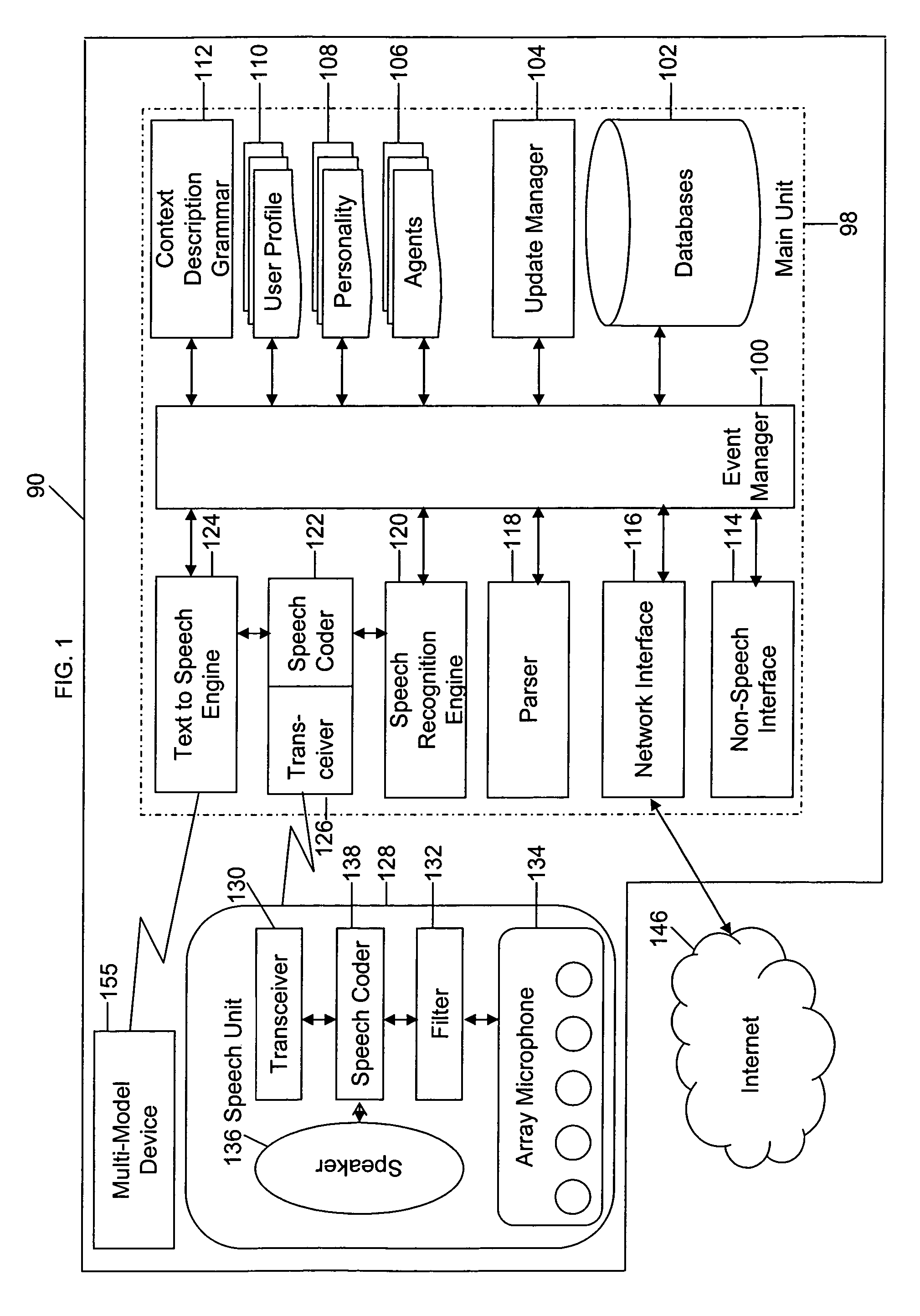

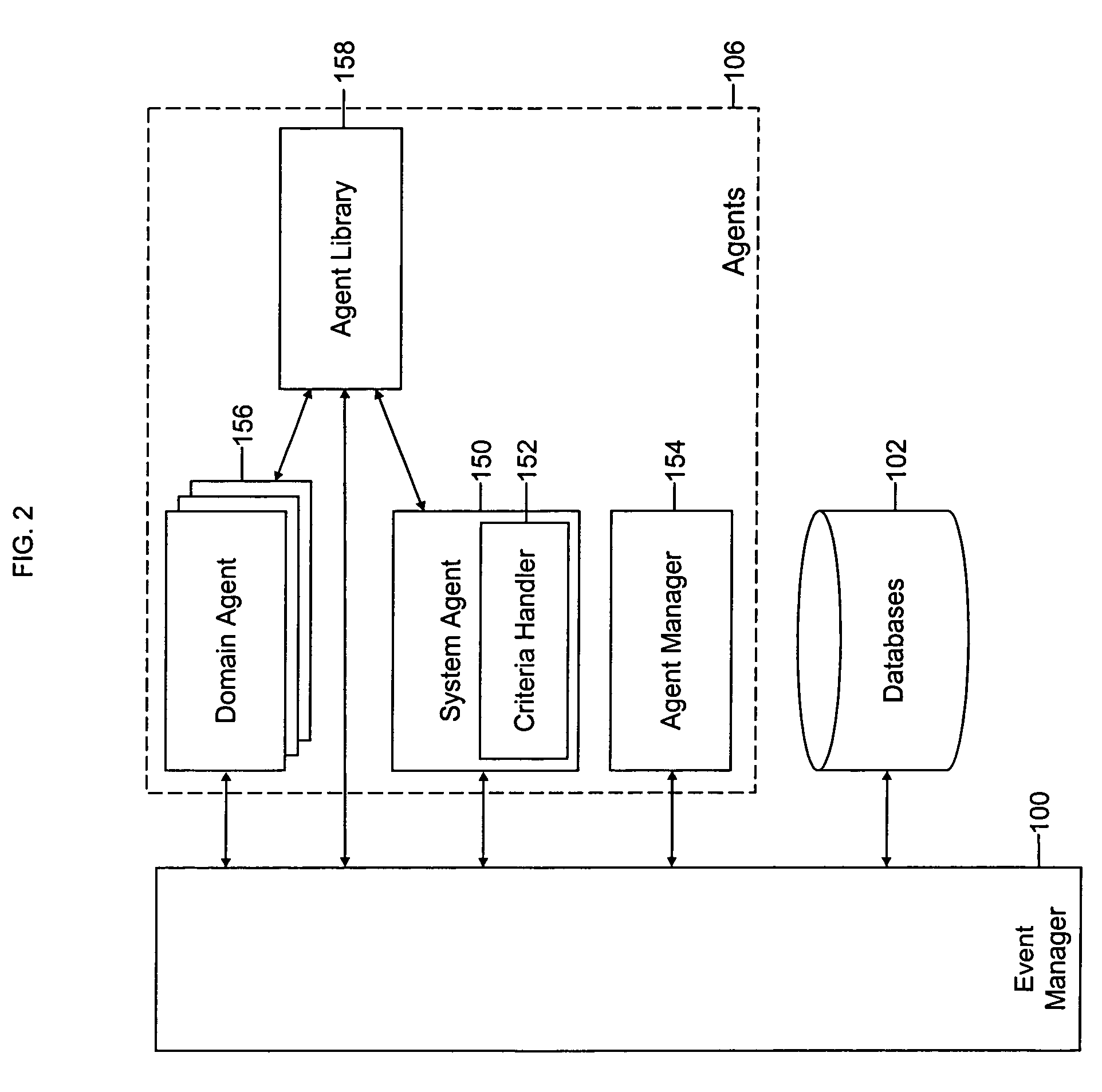

Intelligent portal engine

Active · US7092928B1 · Improve system performance · Reduce ambiguity · Digital computer details · Natural language data processing · Graphics · Human–machine interface

Owner:OL SECURITY LIABILITY CO

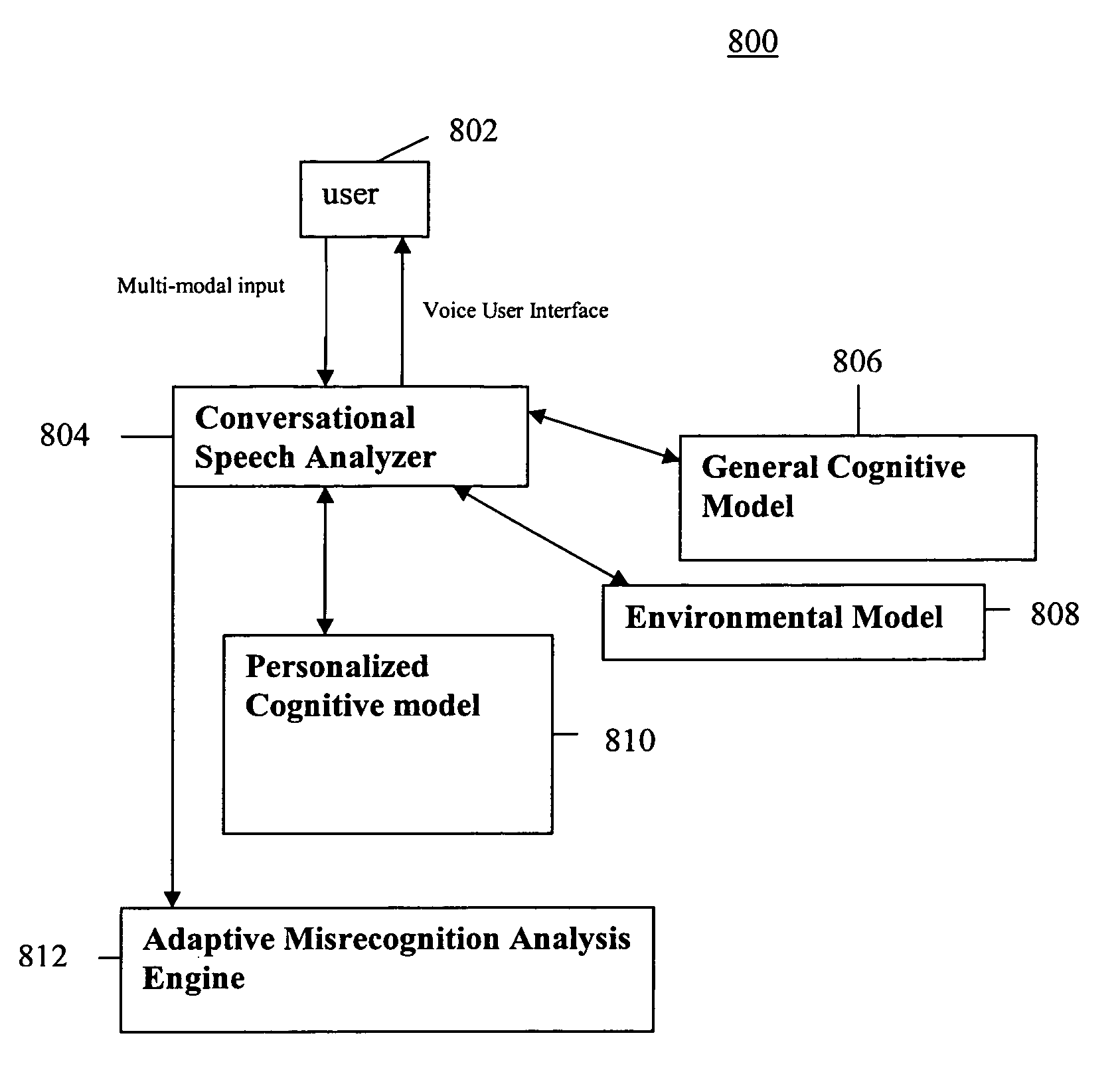

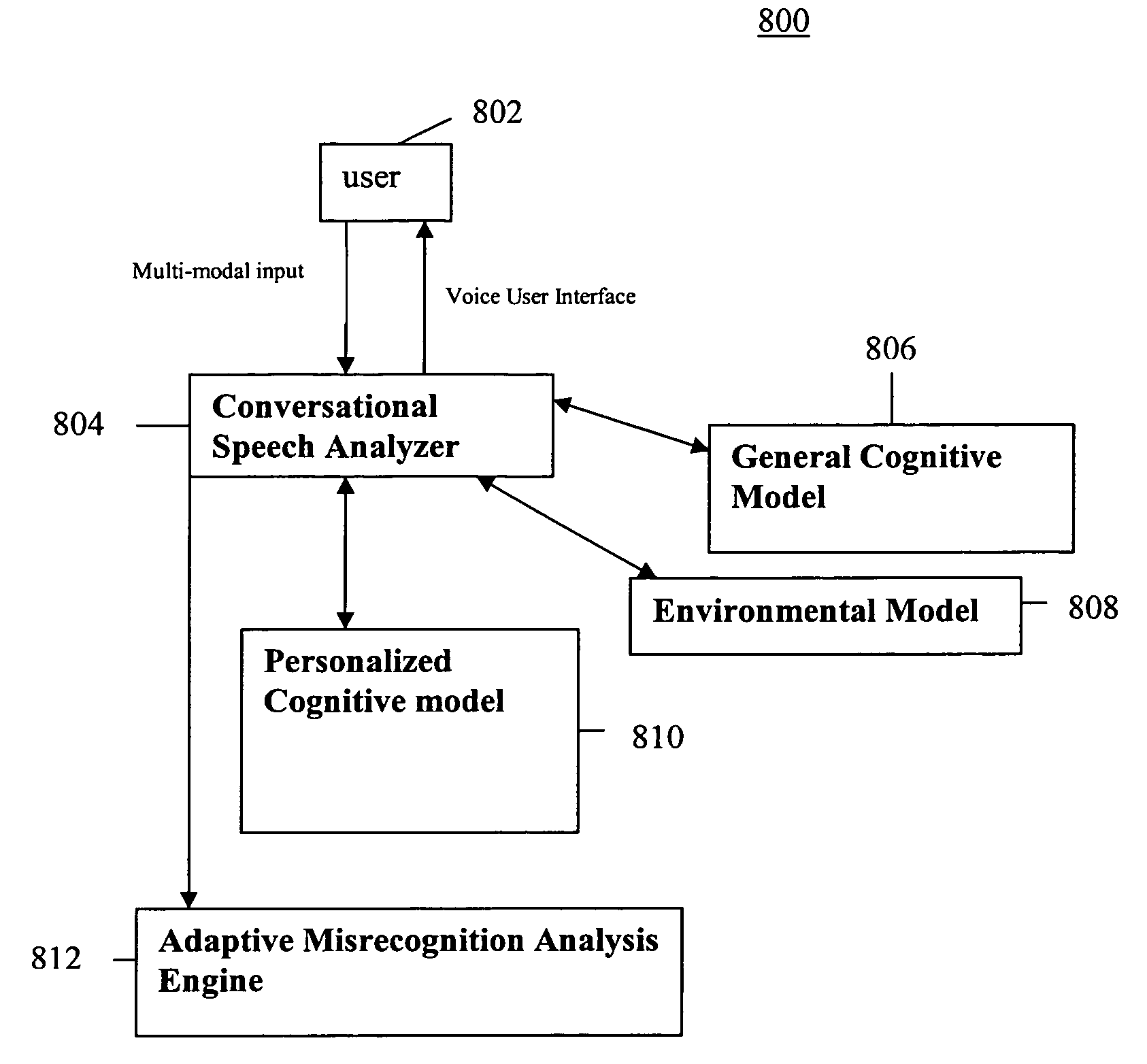

System and method of supporting adaptive misrecognition in conversational speech

Active · US20070038436A1 · Improve maximization · High bandwidth · Natural language data processing · Speech recognition · Personalization · Spoken language

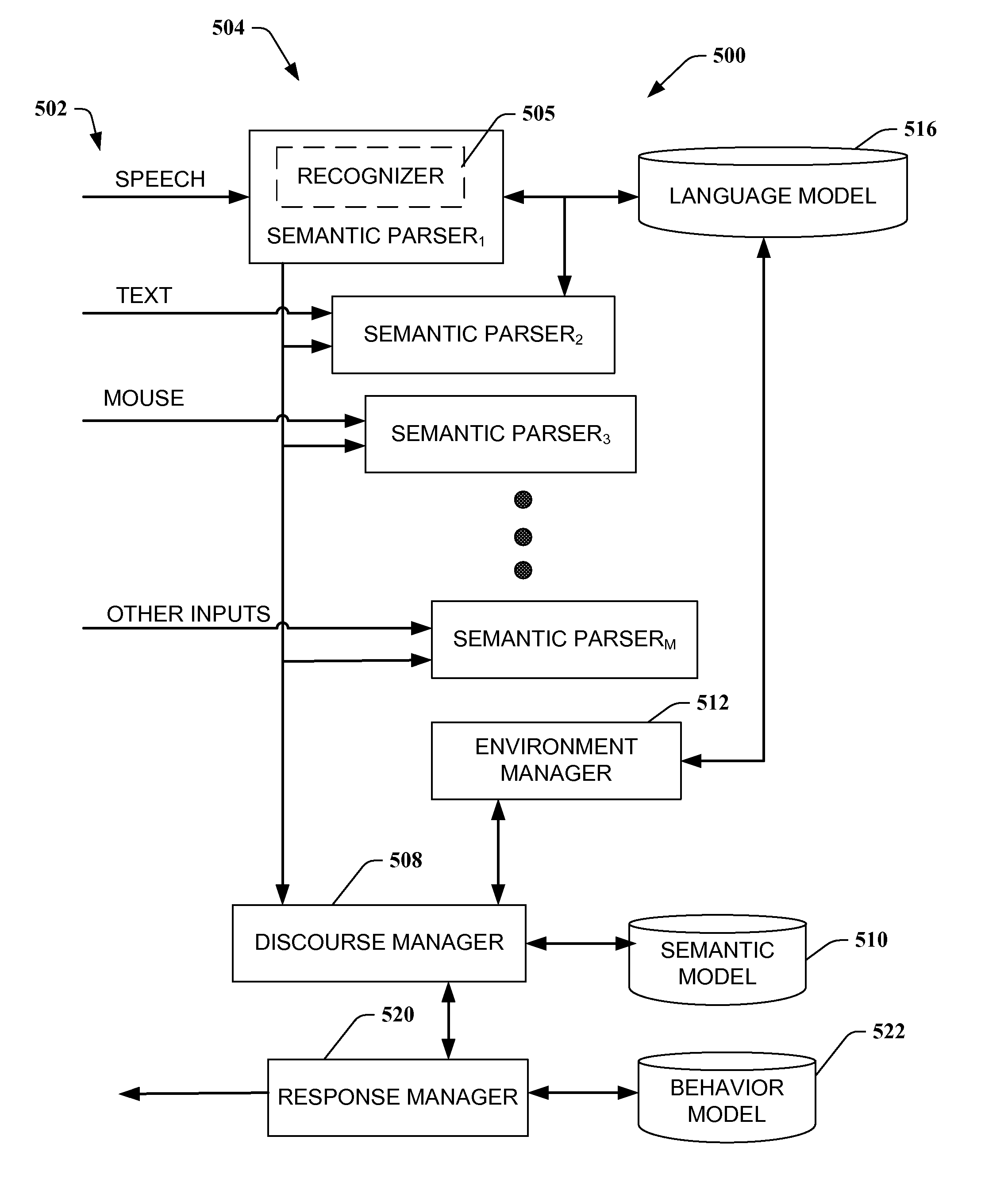

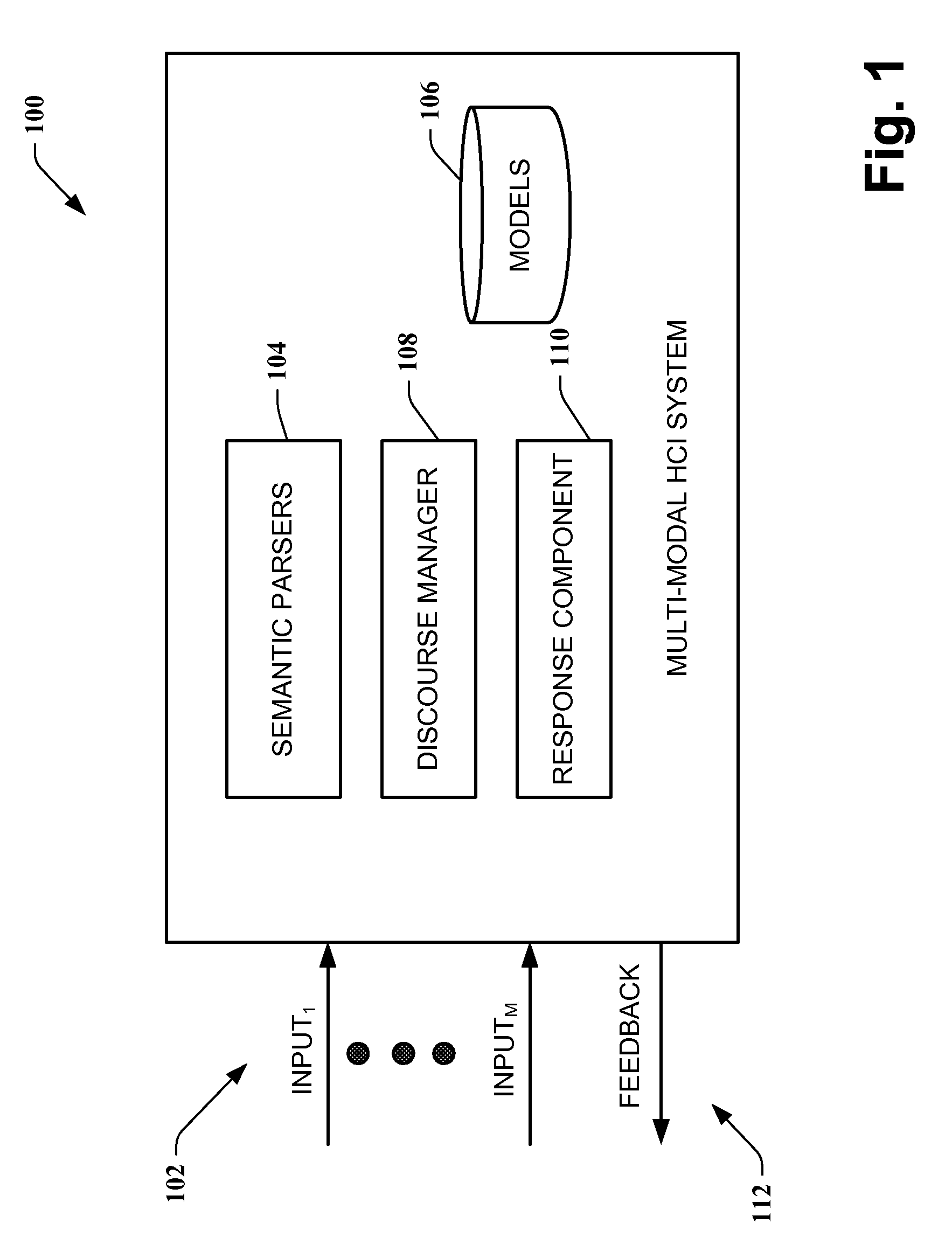

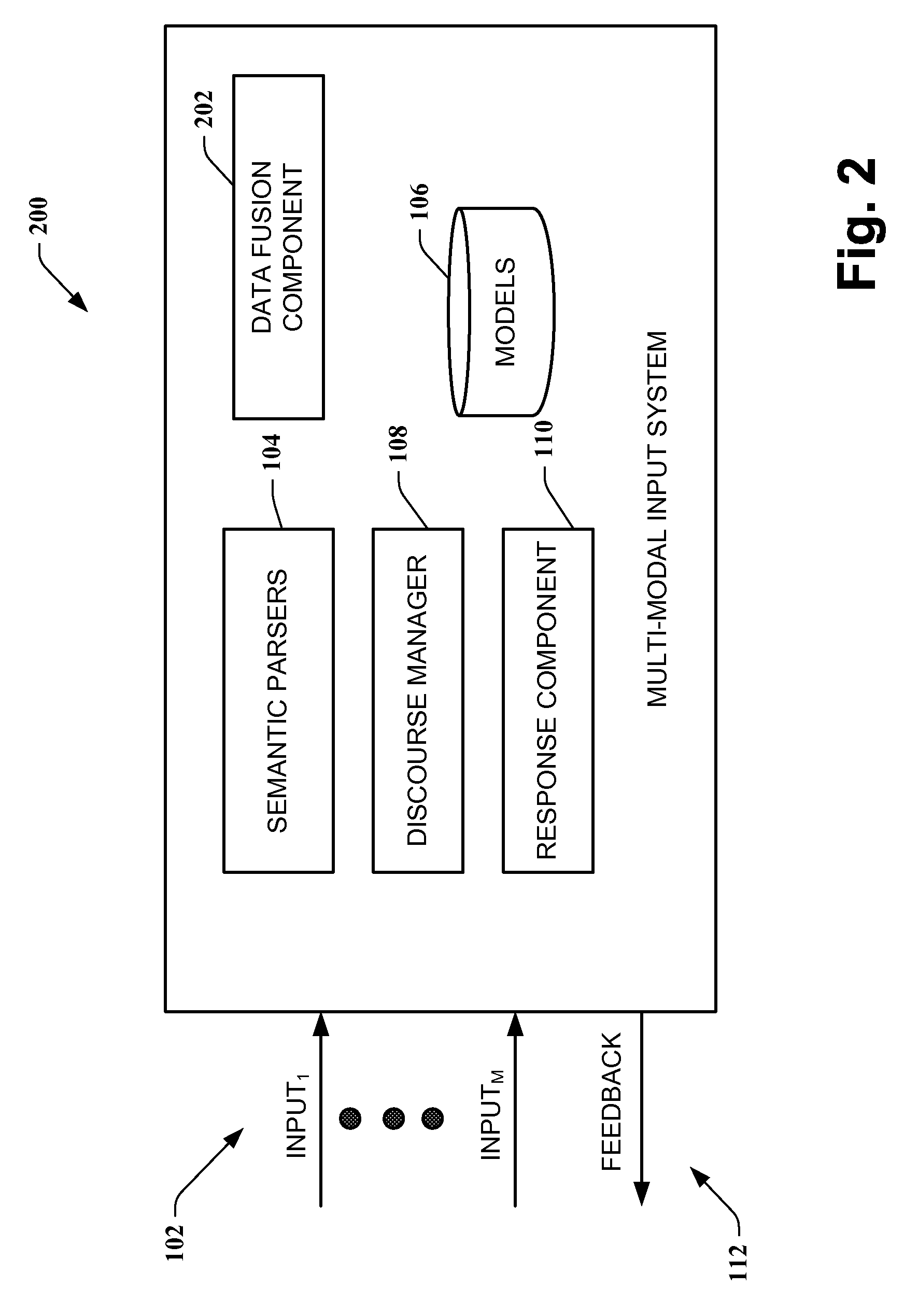

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context, domain knowledge, and invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

System and method of supporting adaptive misrecognition in conversational speech

Active · US7620549B2 · Promotes feeling of natural · Significant to use · Natural language data processing · Speech recognition · Personalization · Human–machine interface

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context, domain knowledge, and invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

Self-learning and self-personalizing knowledge search engine that delivers holistic results

Inactive · US6397212B1 · Web data indexing · Digital data processing details · Personalization · Human–machine interface

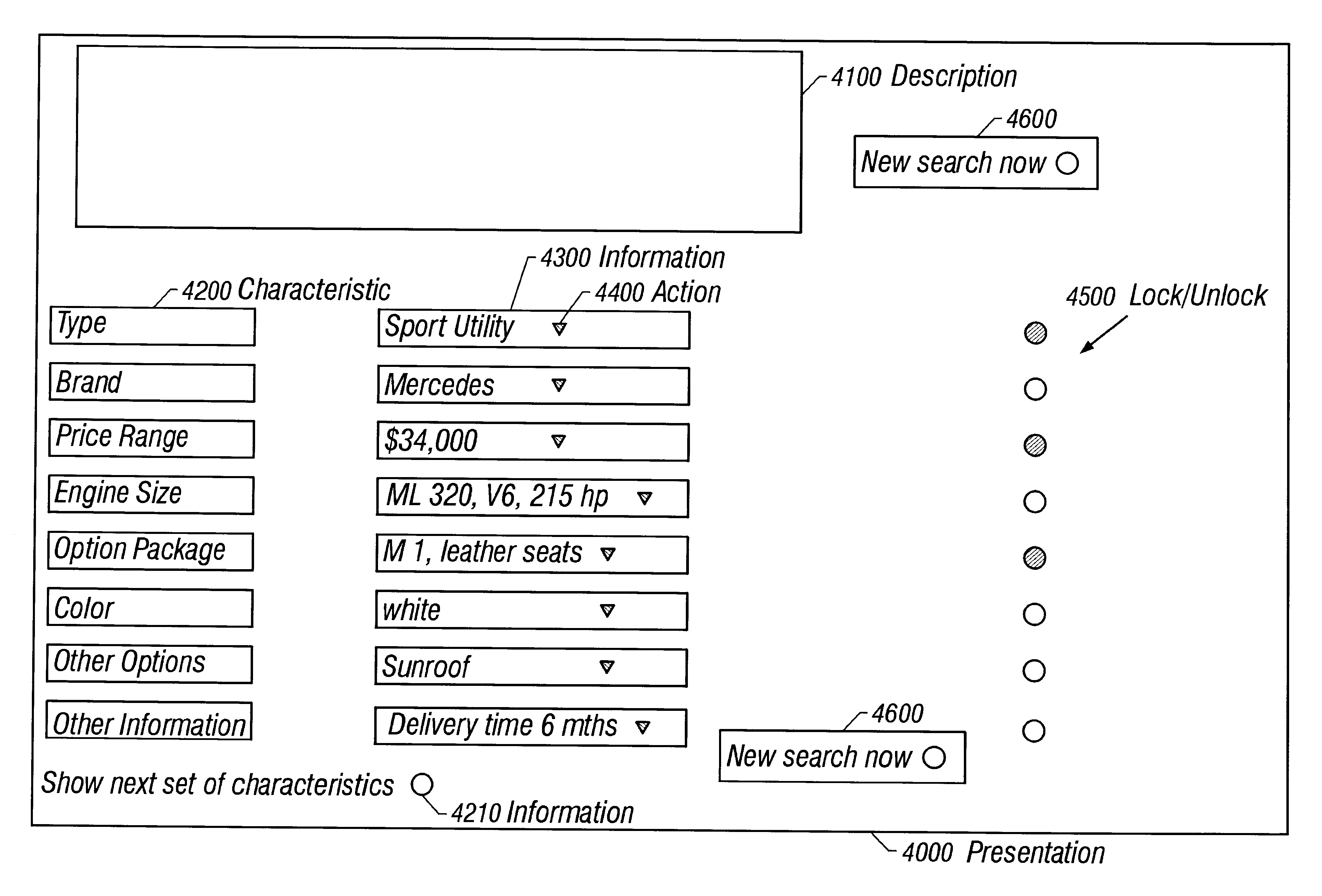

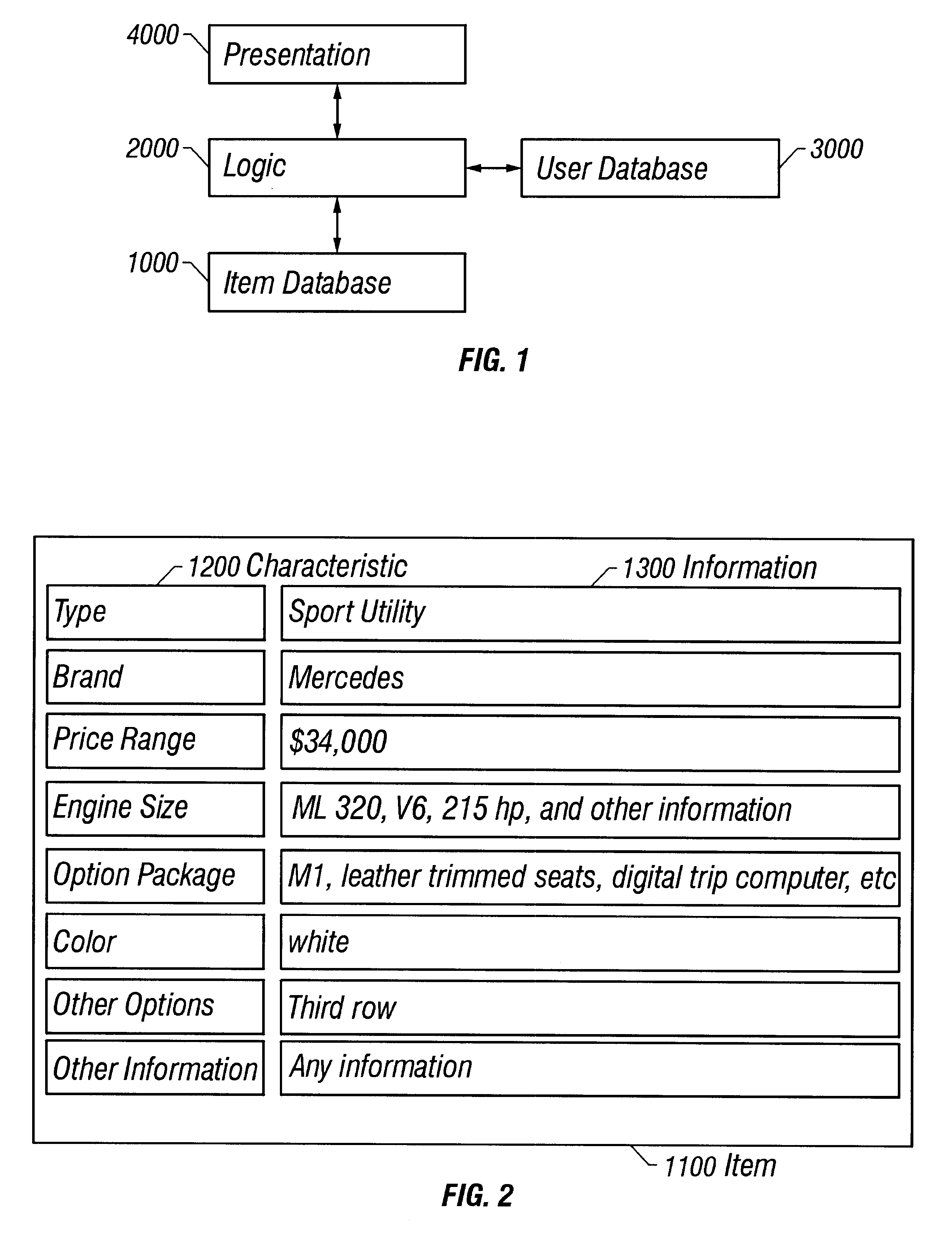

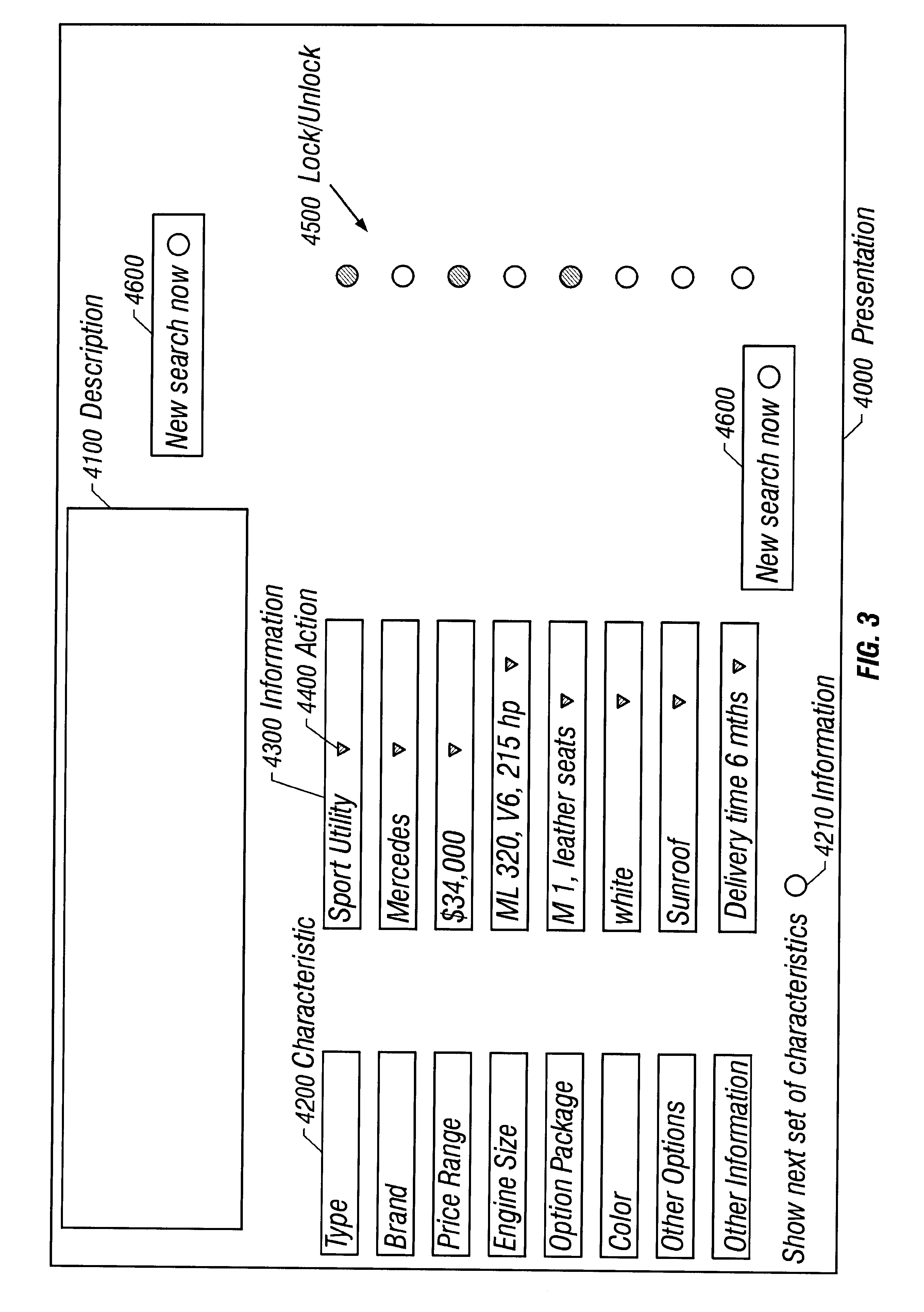

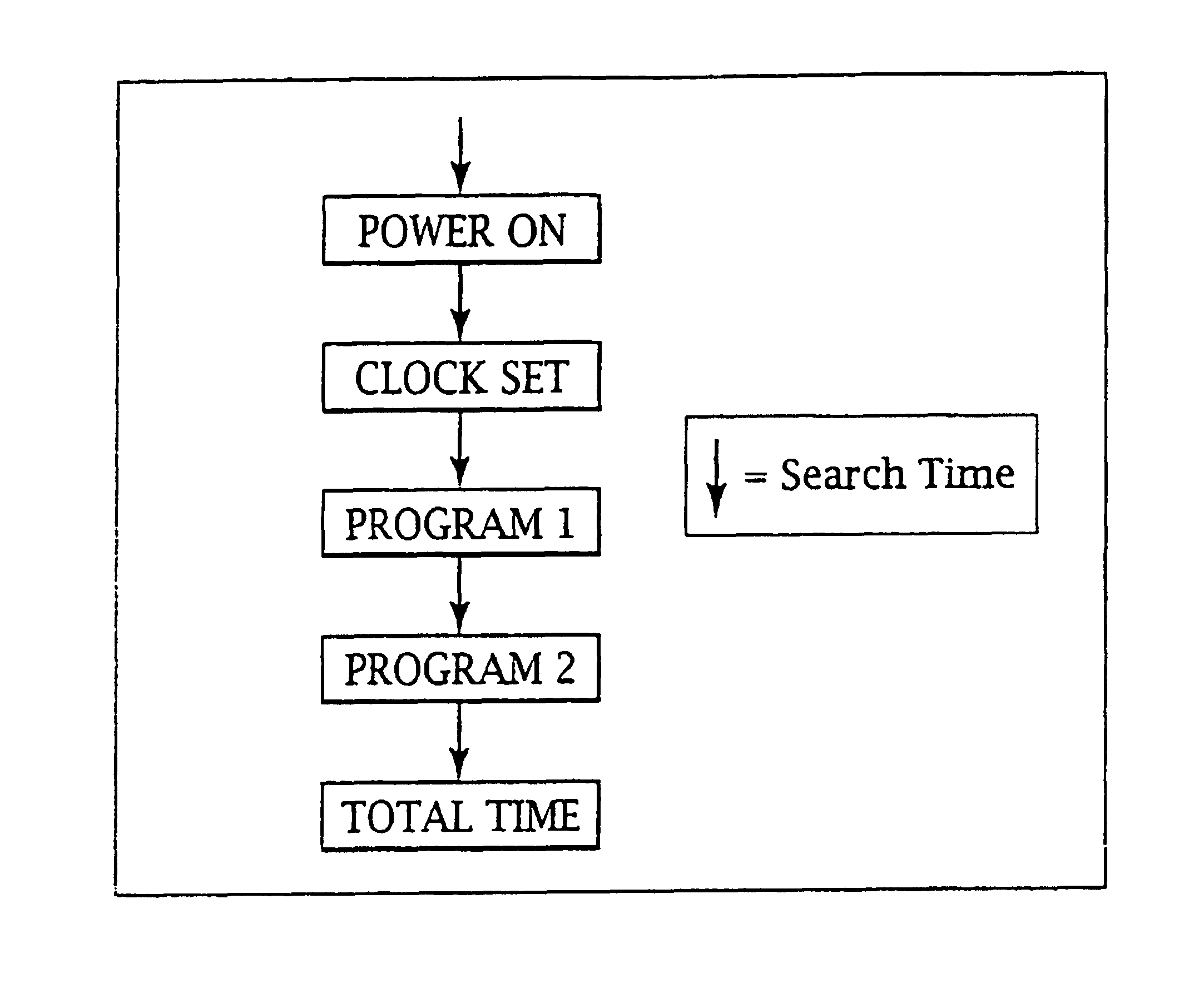

A search engine provides intelligent multi-dimensional searches, in which the search engine always presents a complete, holistic result, and in which the search engine presents knowledge (i.e. linked facts) and not just information (i.e. facts). The search engine is adaptive, such that the search results improve over time as the system learns about the user and develops a user profile. Thus, the search engine is self-personalizing, i.e. it collects and analyzes the user history, and/or it has the user react to solutions and learns from such user reactions. The search engine generates profiles, e.g. it learns from all searches of all users and combines the user profiles and patterns of similar users. The search engine accepts direct user feedback to improve the next search iteration. One feature of the invention is locking/unlocking, where a user may select specific attributes that are to remain locked while the search engine matches these locked attributes to all unlocked attributes. The user may also specify details about characteristics, provide and/or receive qualitative ratings of an overall result, and introduce additional criteria to the search strategy or select a search algorithm. Additionally, the system can be set up such that it does not require a keyboard and/or mouse interface, e.g. it can operate with a television remote control or other such human interface.

Owner:HANGER SOLUTIONS LLC

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Active · US7136710B1 · Significant to use · Improve computing power · Computer control · Analogue secrecy/subscription systems · Conceptual basis · Human–machine interface

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP +1

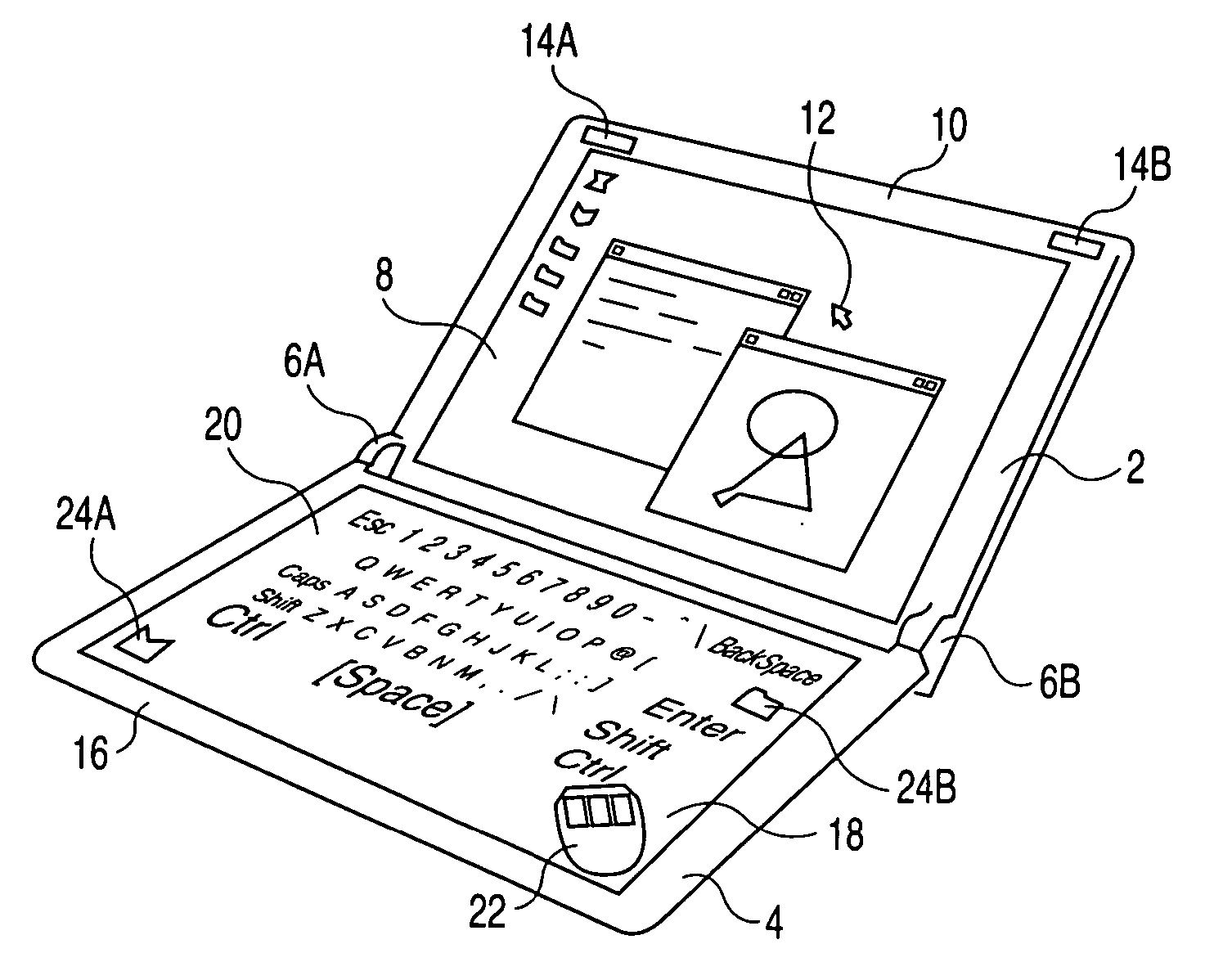

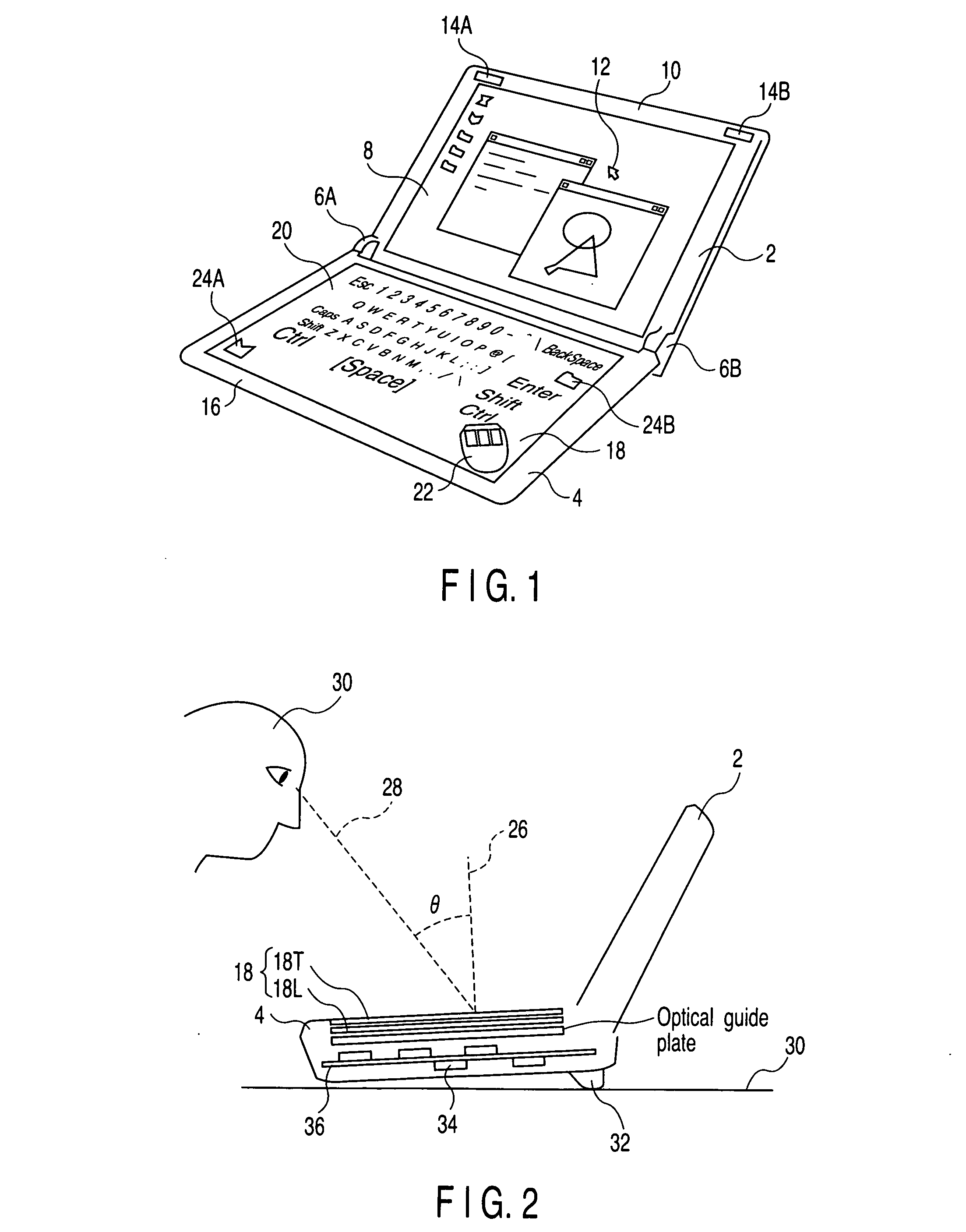

Electronic apparatus having universal human interface

Inactive · US20060034042A1 · Improve portability · Easy to operate · Details for portable computers · Visual presentation · Human–machine interface · Display device

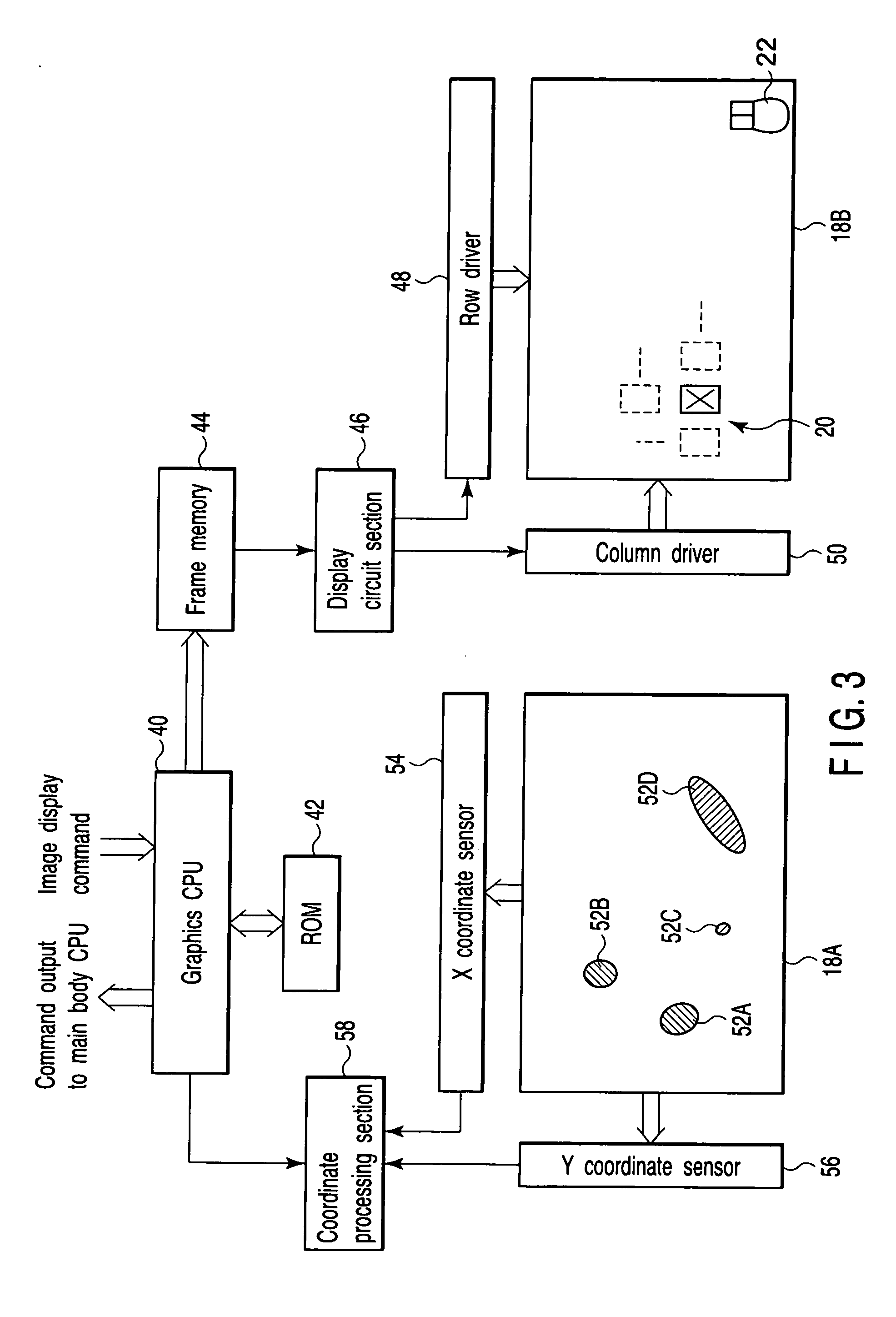

An electronic apparatus includes first and second display devices having first and second display screens held in first and second housings, respectively. The second display screen is provided with a sensor which generates an output signal determining an input area in response to an external predetermined input to the second display screen. The first and second housings are connected together by a connecting mechanism so that an opening angle between the first and second housings can be adjusted. A first interface image is displayed on the second display screen. An instruction input to the interface image is determined on the basis of a sensor output signal. In response to the instruction input, a second display image is displayed in place of the first display image. In response to the instruction input, a second interface image is displayed in place of the first interface image.

Owner:KK TOSHIBA

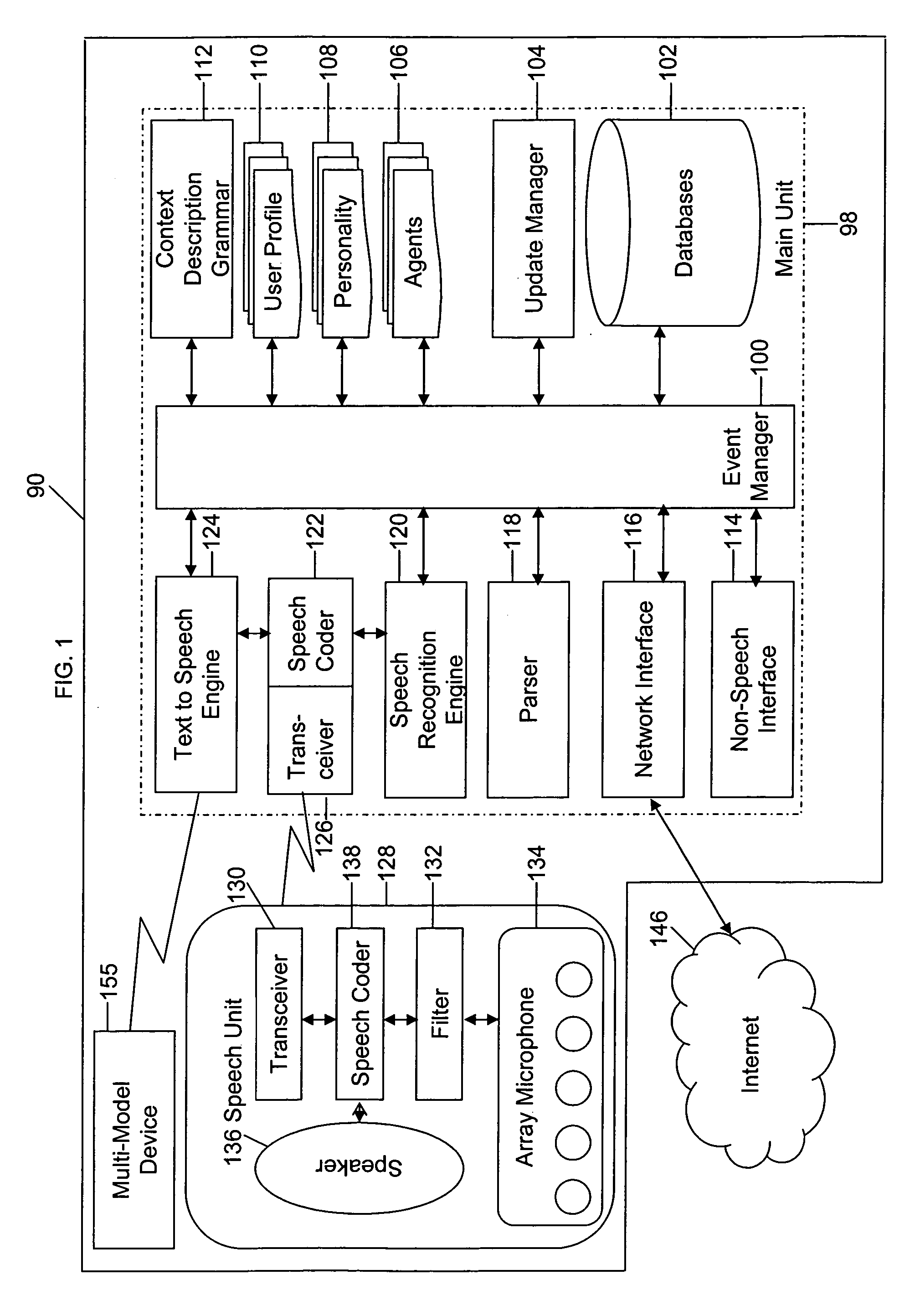

Speech-centric multimodal user interface design in mobile technology

Active · US8219406B2 · Easy to transport · Increase probability · Speech recognition · Input/output processes for data processing · Interface design · Mobile technology

A multi-modal human computer interface (HCI) receives a plurality of available information inputs concurrently, or serially, and employs a subset of the inputs to determine or infer user intent with respect to a communication or information goal. Received inputs are respectively parsed, and the parsed inputs are analyzed and optionally synthesized with respect to one or more of each other. In the event sufficient information is not available to determine user intent or goal, feedback can be provided to the user in order to facilitate clarifying, confirming, or augmenting the information inputs.

Owner:MICROSOFT TECH LICENSING LLC

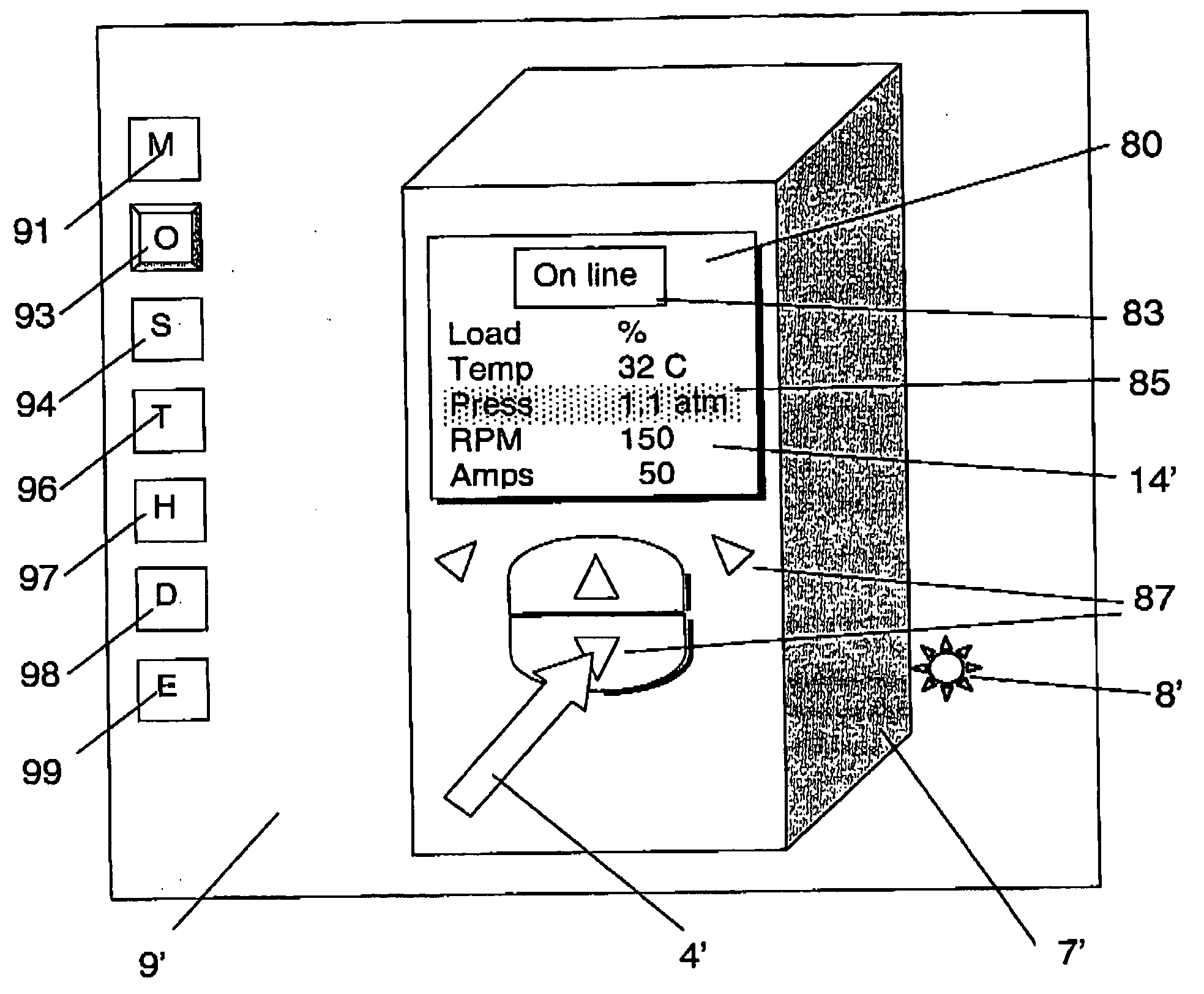

Method to generate a human machine interface

Active · US20060241792A1 · Intuitive information · Programme control · Data processing applications · Human–machine interface · Graphical user interface

Owner:ABB (SCHWEIZ) AG

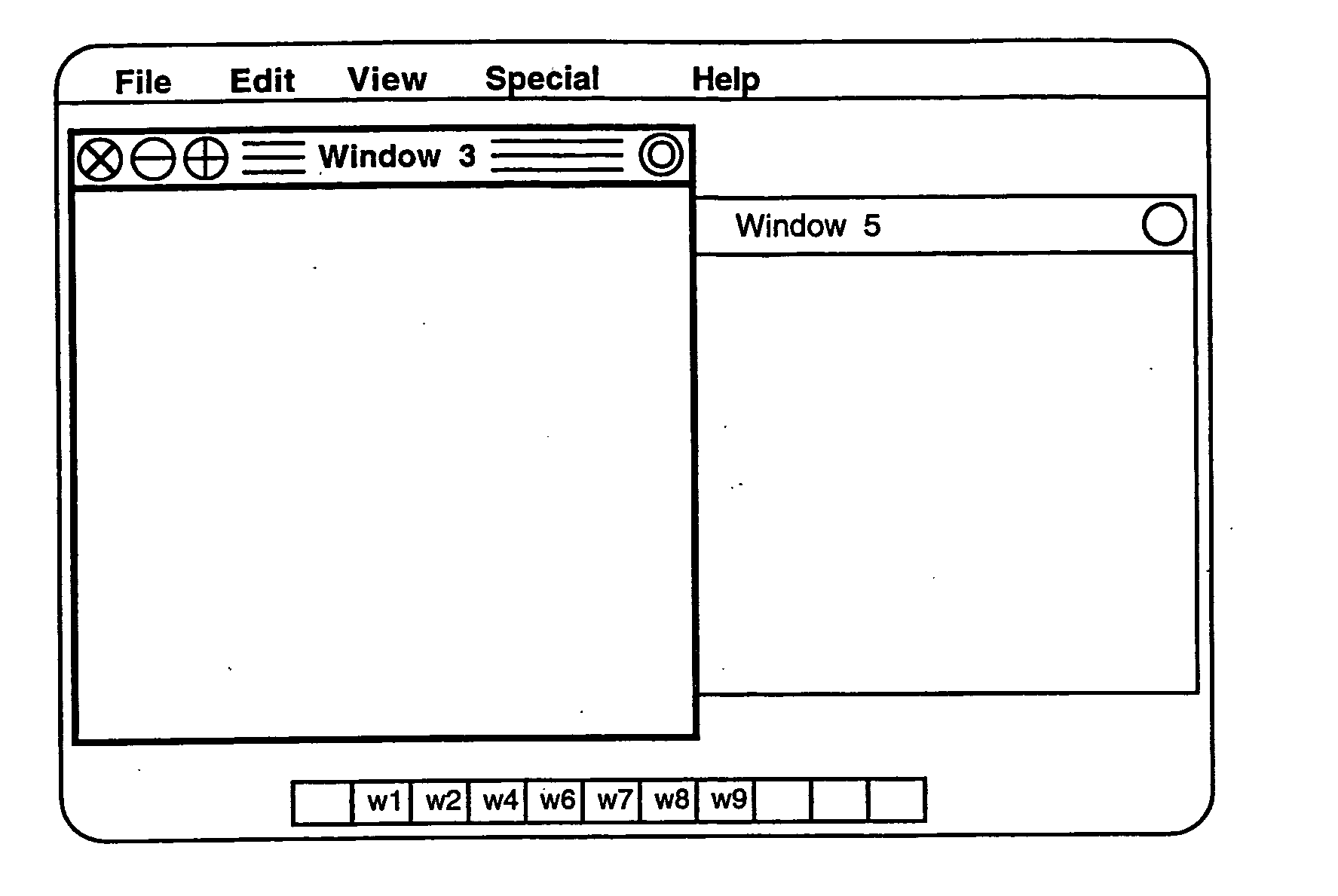

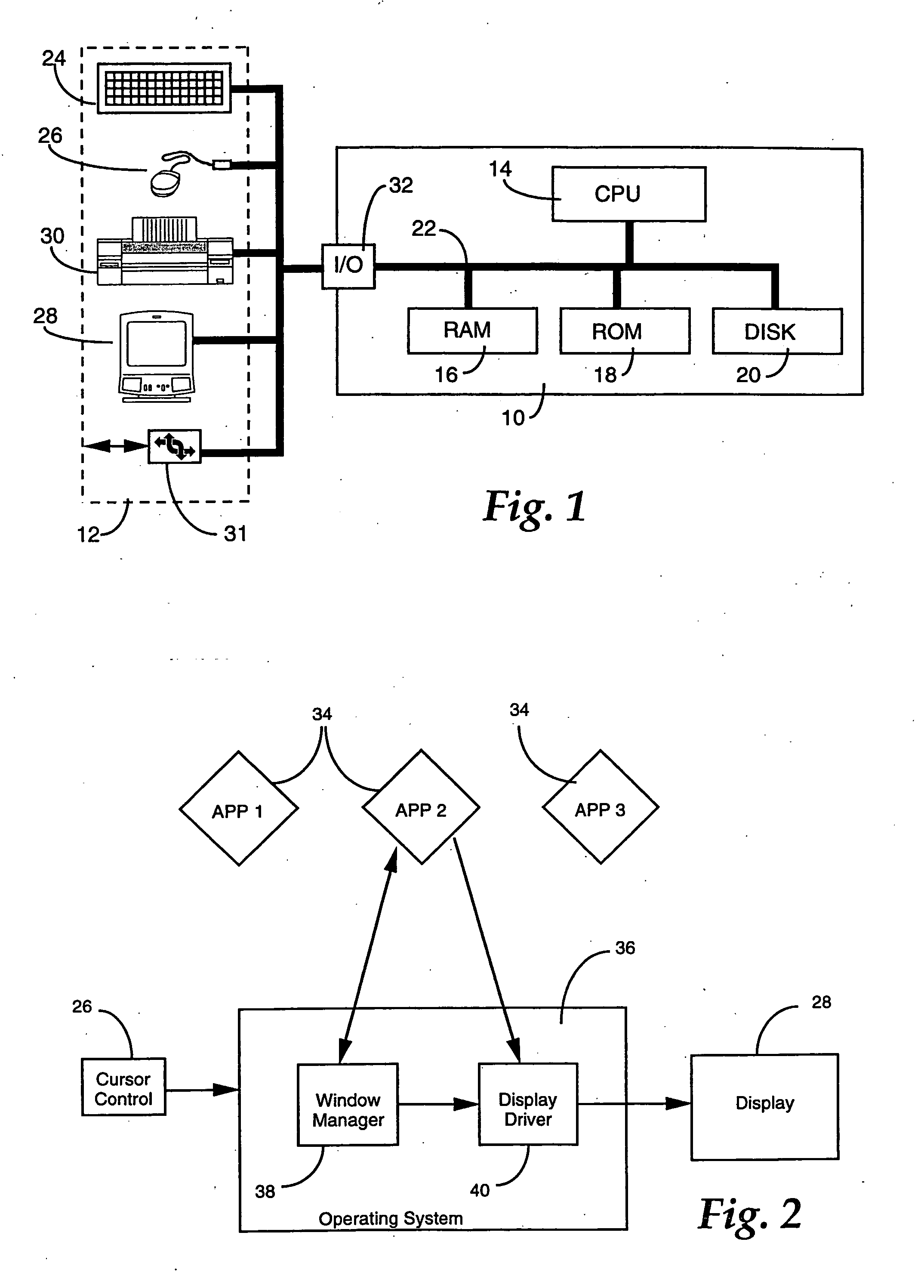

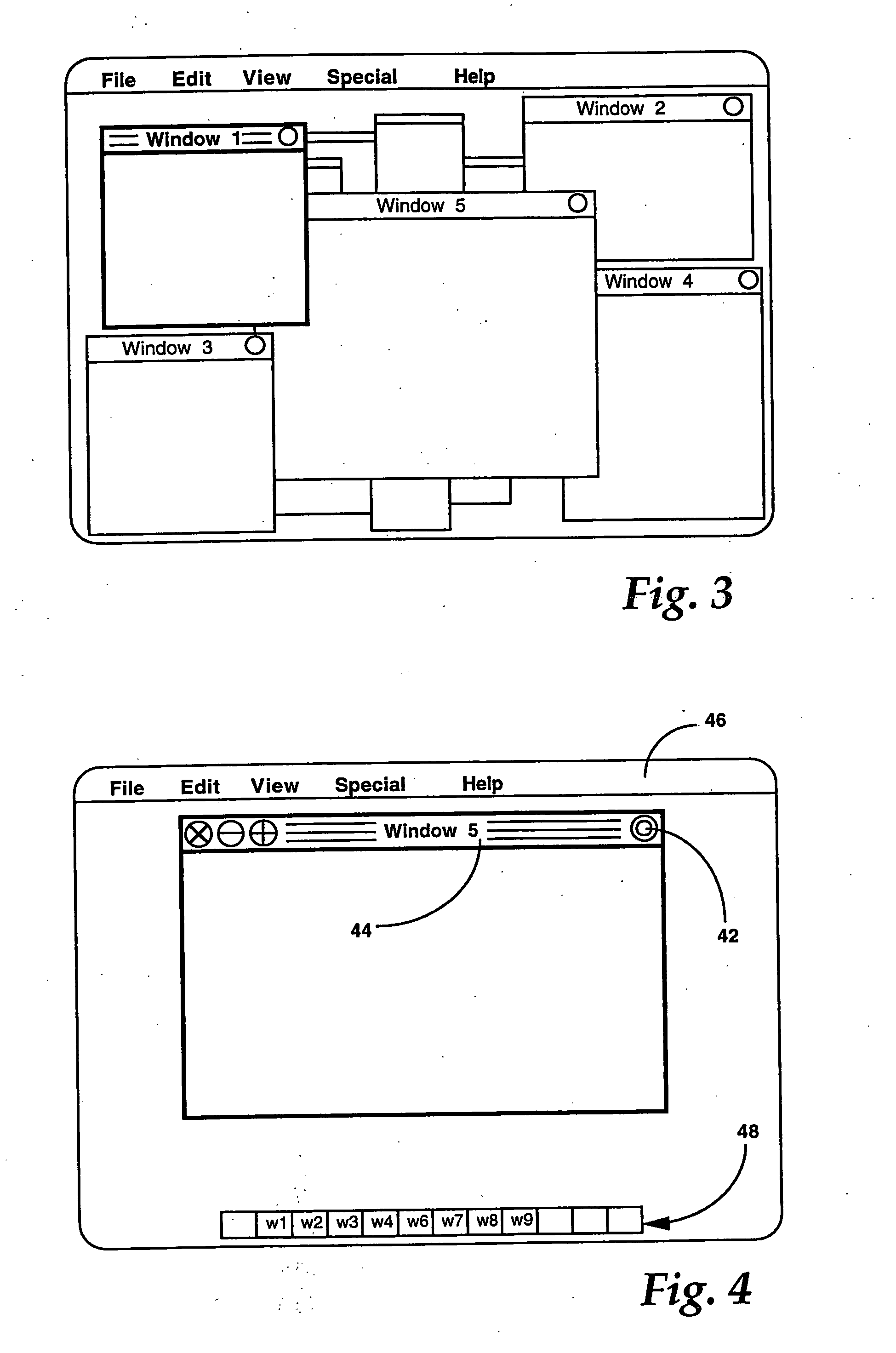

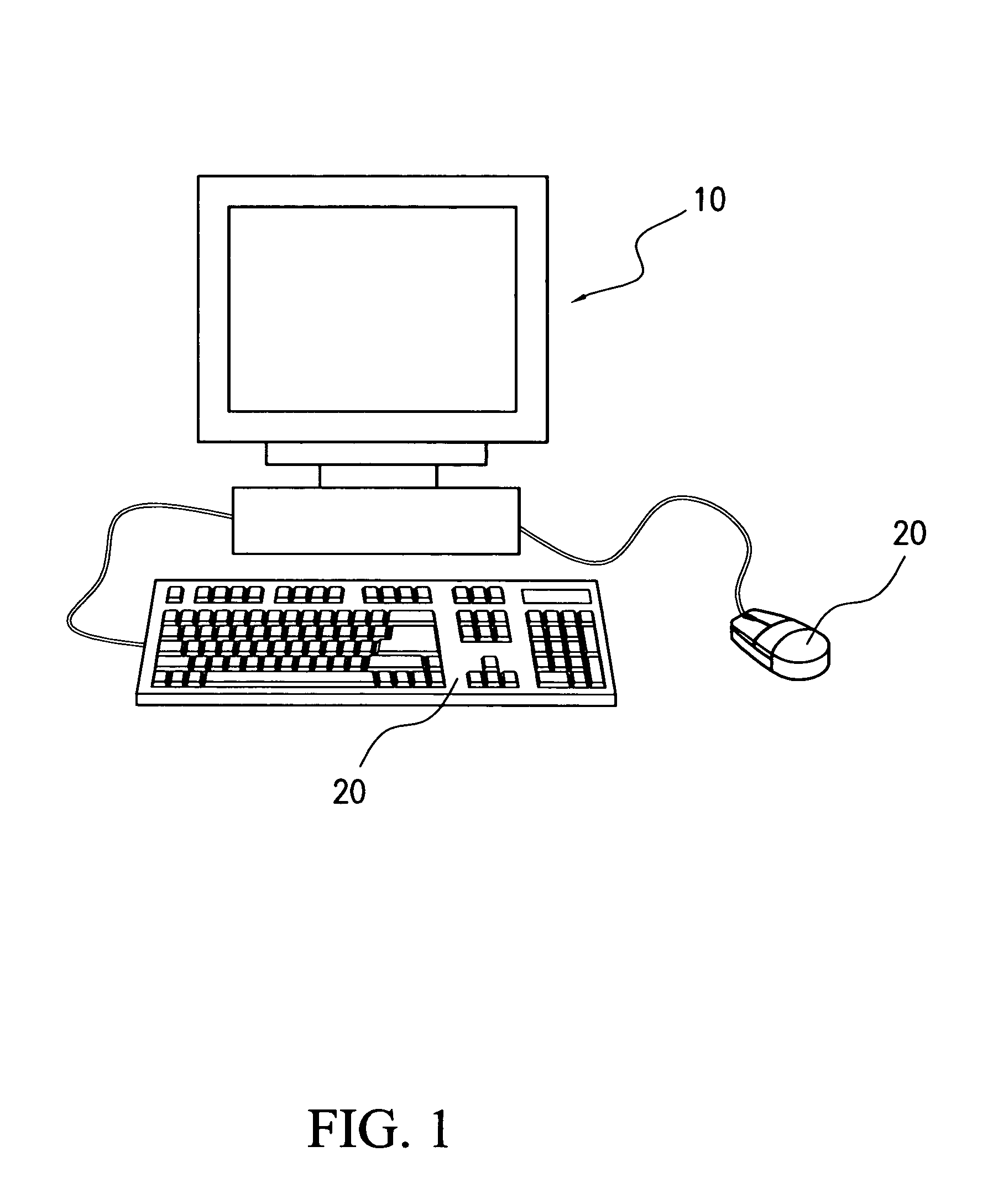

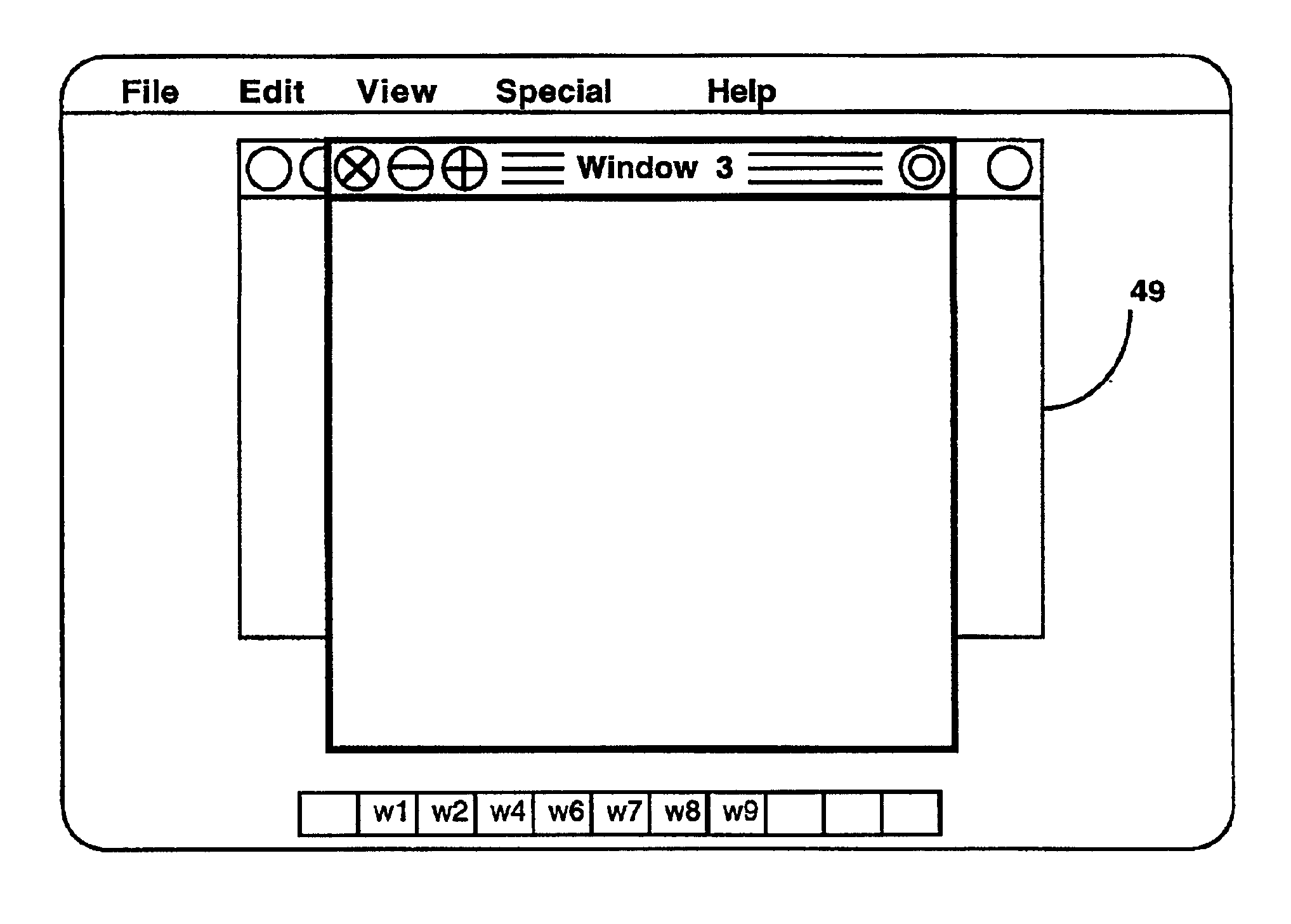

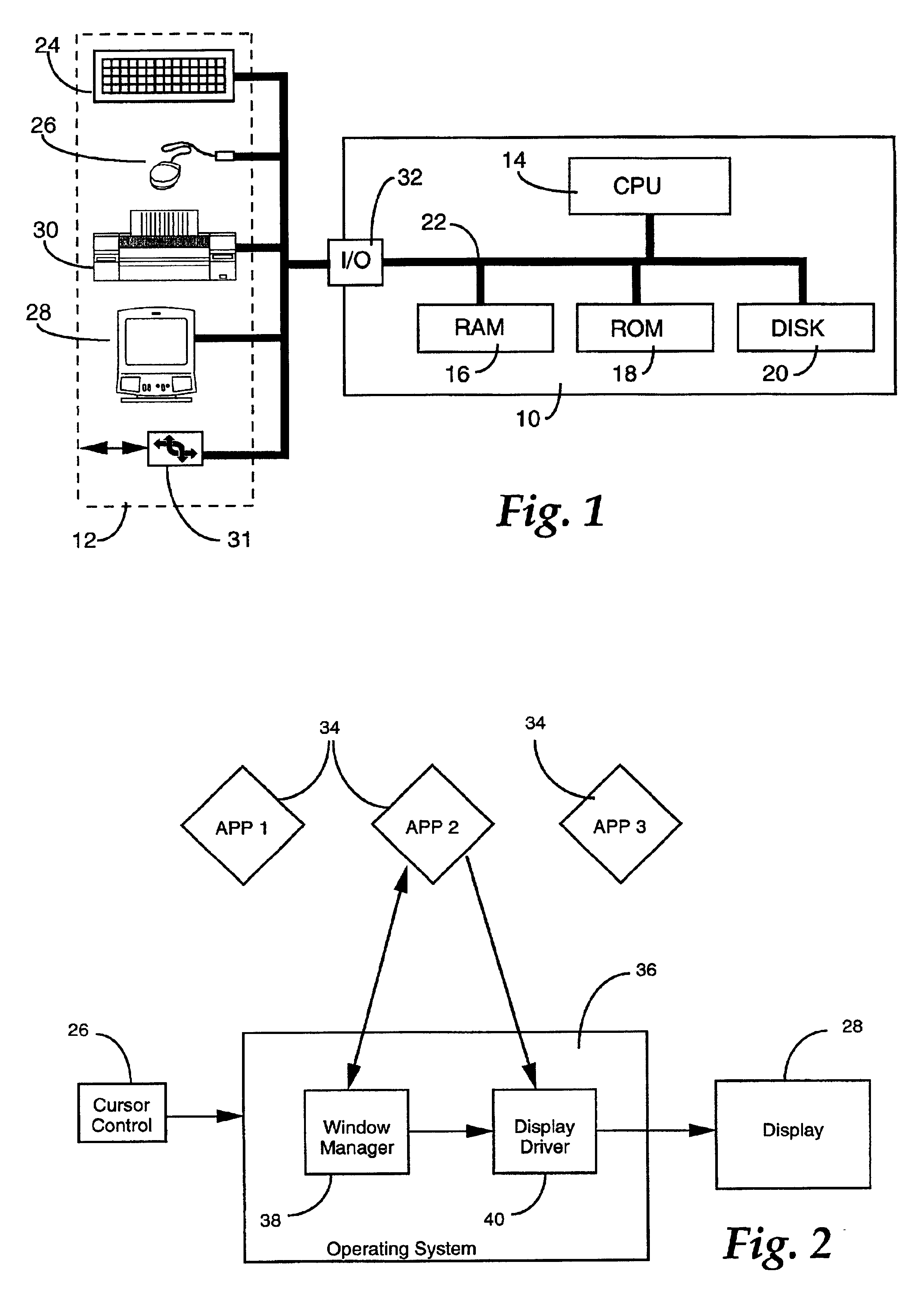

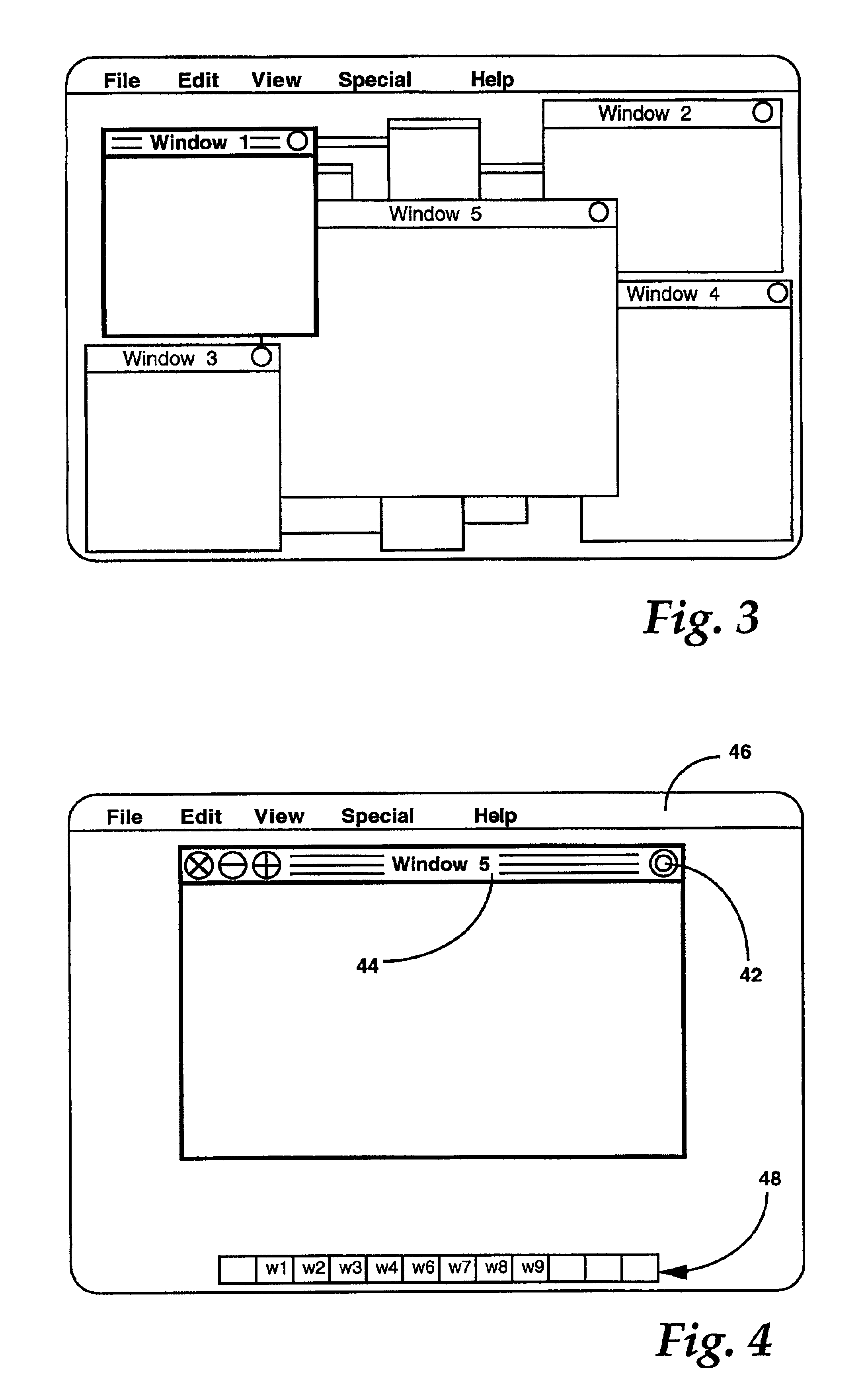

Computer interface having a single window mode of operation

Inactive · US20050149879A1 · Reduce clutter · Reduce confusion · Input/output processes for data processing · Human–machine interface · Computer monitor

A computer-human interface manages the available space of a computer display in a manner which reduces clutter and confusion caused by multiple open windows. The interface includes a user-selectable mode of operation in which only those windows associated with the currently active task are displayed on the computer monitor. All other windows relating to non-active tasks are minimized by reducing them in size or replacing them with a representative symbol, such as an icon, so that they occupy a minimal amount of space on the monitor's screen. When a user switches from the current task to a new task, by selecting a minimized window, the windows associated with the current task are automatically minimized as the window pertaining to the new task is displayed at its normal size. As a result, the user is only presented with the window that relates to the current task of interest, and clutter provided by non-active tasks is removed.

Owner:APPLE INC
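
The single-window behavior in this abstract can be sketched in a few lines: activating a task restores its windows and, when the mode is enabled, minimizes every window belonging to other tasks. This is only an illustration under assumed names (`Window`, `Desktop`, `activate`, `visible_titles`); none of them come from the patent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Window:
    title: str
    task: str              # the task (application) this window belongs to
    minimized: bool = False


@dataclass
class Desktop:
    windows: List[Window] = field(default_factory=list)
    single_window_mode: bool = True   # the user-selectable mode

    def activate(self, task: str) -> None:
        """Show the windows of `task`; in single-window mode, minimize the rest."""
        for window in self.windows:
            if window.task == task:
                window.minimized = False
            elif self.single_window_mode:
                window.minimized = True

    def visible_titles(self) -> List[str]:
        """Titles of the windows currently shown at normal size."""
        return [w.title for w in self.windows if not w.minimized]
```

With the mode switched off, `activate` only restores the selected task's windows and leaves the others as they are, which matches conventional multi-window behavior.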

Ergonomic man-machine interface incorporating adaptive pattern recognition based control system

Inactive · US20070061735A1 · Decrease productivity · Improve the environment · Television system details · Recording carrier details · Human–machine interface · Data stream

An adaptive interface for a programmable system, for predicting a desired user function, based on user history, as well as machine internal status and context. The apparatus receives an input from the user and other data. A predicted input is presented for confirmation by the user, and the predictive mechanism is updated based on this feedback. Also provided is a pattern recognition system for a multimedia device, wherein a user input is matched to a video stream on a conceptual basis, allowing inexact programming of a multimedia device. The system analyzes a data stream for correspondence with a data pattern for processing and storage. The data stream is subjected to adaptive pattern recognition to extract features of interest to provide a highly compressed representation which may be efficiently processed to determine correspondence. Applications of the interface and system include a VCR, medical device, vehicle control system, audio device, environmental control system, securities trading terminal, and smart house. The system optionally includes an actuator for effecting the environment of operation, allowing closed-loop feedback operation and automated learning.

Owner:BLANDING HOVENWEEP

Human-computer interface including haptically controlled interactions

Inactive · US6954899B1 · Lower requirement · Easy to control · Input/output for user-computer interaction · Cathode-ray tube indicators · Present method · Human–machine interface

The present invention provides a method of human-computer interfacing that provides haptic feedback to control interface interactions such as scrolling or zooming within an application. Haptic feedback in the present method allows the user more intuitive control of the interface interactions, and allows the user's visual focus to remain on the application. The method comprises providing a control domain within which the user can control interactions. For example, a haptic boundary can be provided corresponding to scrollable or scalable portions of the application domain. The user can position a cursor near such a boundary, feeling its presence haptically (reducing the requirement for visual attention for control of scrolling of the display). The user can then apply force relative to the boundary, causing the interface to scroll the domain. The rate of scrolling can be related to the magnitude of applied force, providing the user with additional intuitive, non-visual control of scrolling.

Owner:META PLATFORMS INC
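
The abstract says only that the scroll rate "can be related to the magnitude of applied force". One plausible mapping, sketched below, is linear with a dead zone and a cap. The function name `scroll_rate` and the values of `dead_zone`, `gain` and `max_rate` are illustrative assumptions, not taken from the patent.

```python
def scroll_rate(applied_force: float,
                dead_zone: float = 0.25,
                gain: float = 40.0,
                max_rate: float = 400.0) -> float:
    """Map force against a haptic boundary to a signed scroll rate.

    Assumed units: force in newtons, rate in pixels per second.
    The sign of the force selects the scroll direction.
    """
    magnitude = abs(applied_force)
    if magnitude <= dead_zone:
        # Light contact lets the user feel the boundary without scrolling.
        return 0.0
    # Rate grows linearly with the force beyond the dead zone, up to a cap.
    rate = min(gain * (magnitude - dead_zone), max_rate)
    return rate if applied_force > 0 else -rate
```

The dead zone is a design choice that separates "feeling the boundary" from "pushing on it", and the cap keeps a hard push from scrolling uncontrollably fast.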

Wireless communication device with markup language based man-machine interface

A system, method, and software product provide a wireless communications device with a markup language based man-machine interface. The man-machine interface provides a user interface for the various telecommunications functionality of the wireless communication device, including dialing telephone numbers, answering telephone calls, creating messages, sending messages, receiving messages, establishing configuration settings, which are defined in markup language, such as HTML, and accessed through a browser program executed by the wireless communication device. This feature enables direct access to Internet and World Wide Web content, such as Web pages, to be directly integrated with telecommunication functions of the device, and allows Web content to be seamlessly integrated with other types of data, since all data presented to the user via the user interface is presented via markup language-based pages. The browser processes an extended form of HTML that provides new tags and attributes that enhance the navigational, logical, and display capabilities of conventional HTML, and particularly adapt HTML to be displayed and used on wireless communication devices with small screen displays. The wireless communication device includes the browser, a set of portable components, and portability layer. The browser includes protocol handlers, which implement different protocols for accessing various functions of the wireless communication device, and content handlers, which implement various content display mechanisms for fetching and outputting content on a screen display.

Owner:ACCESS

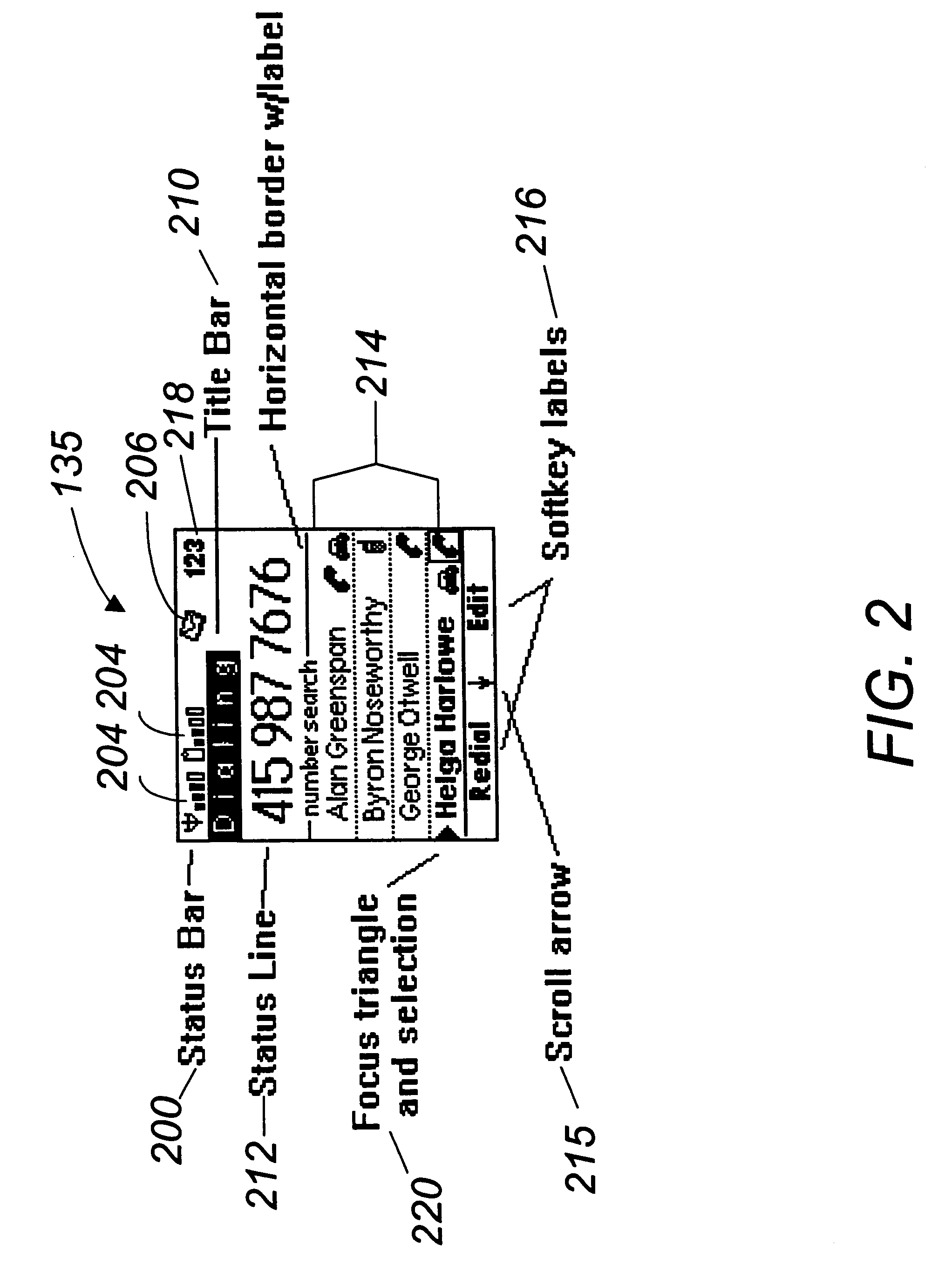

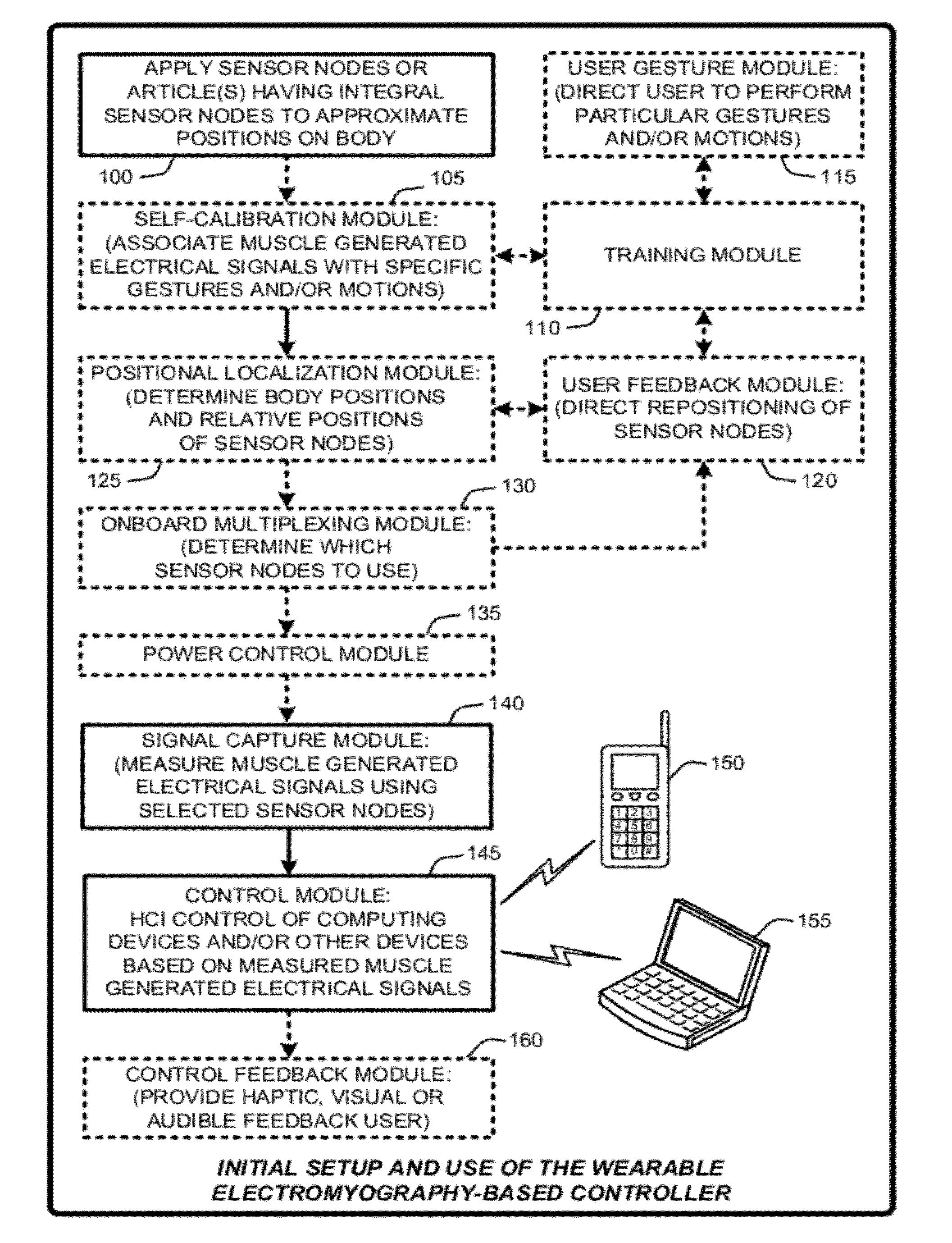

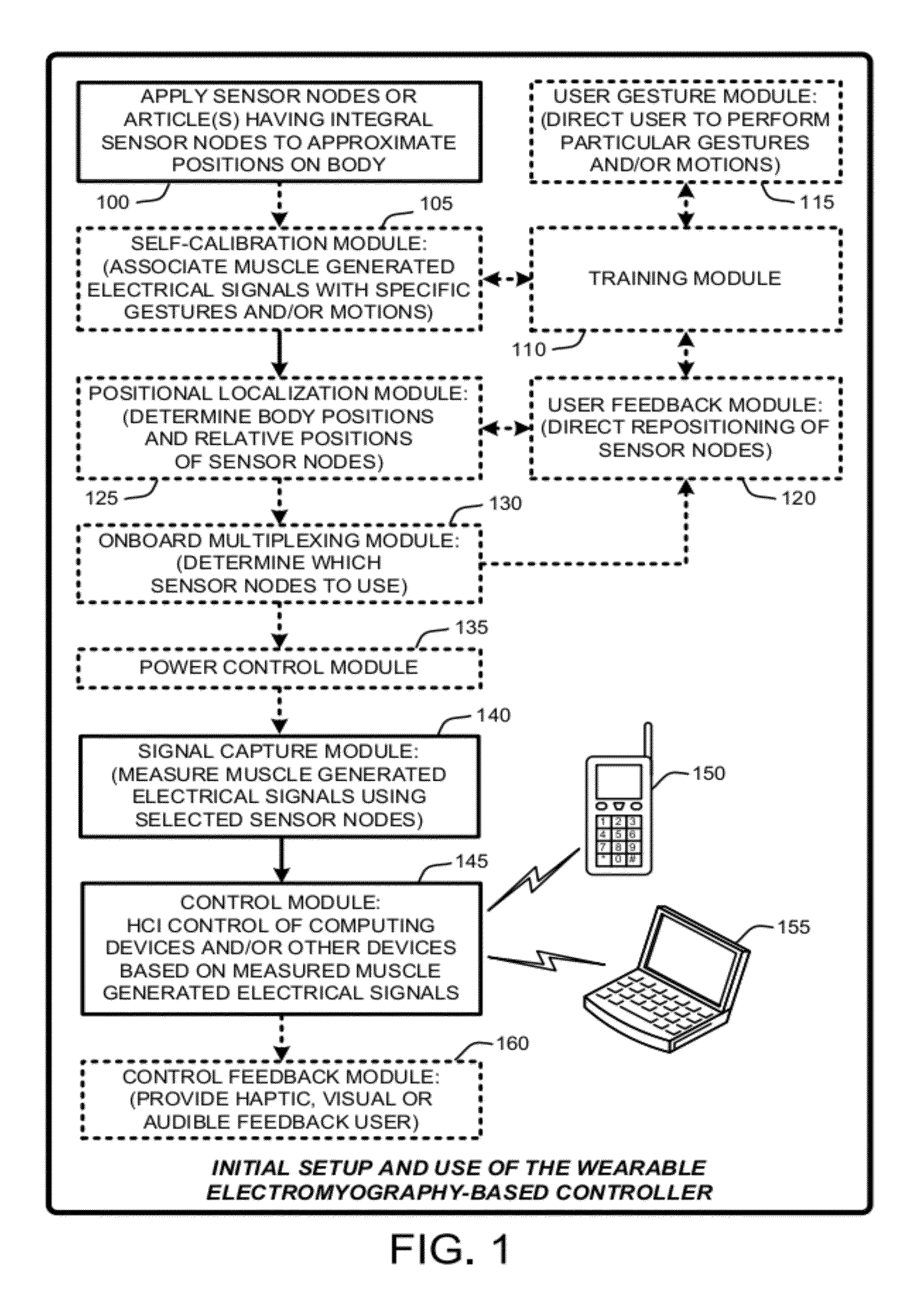

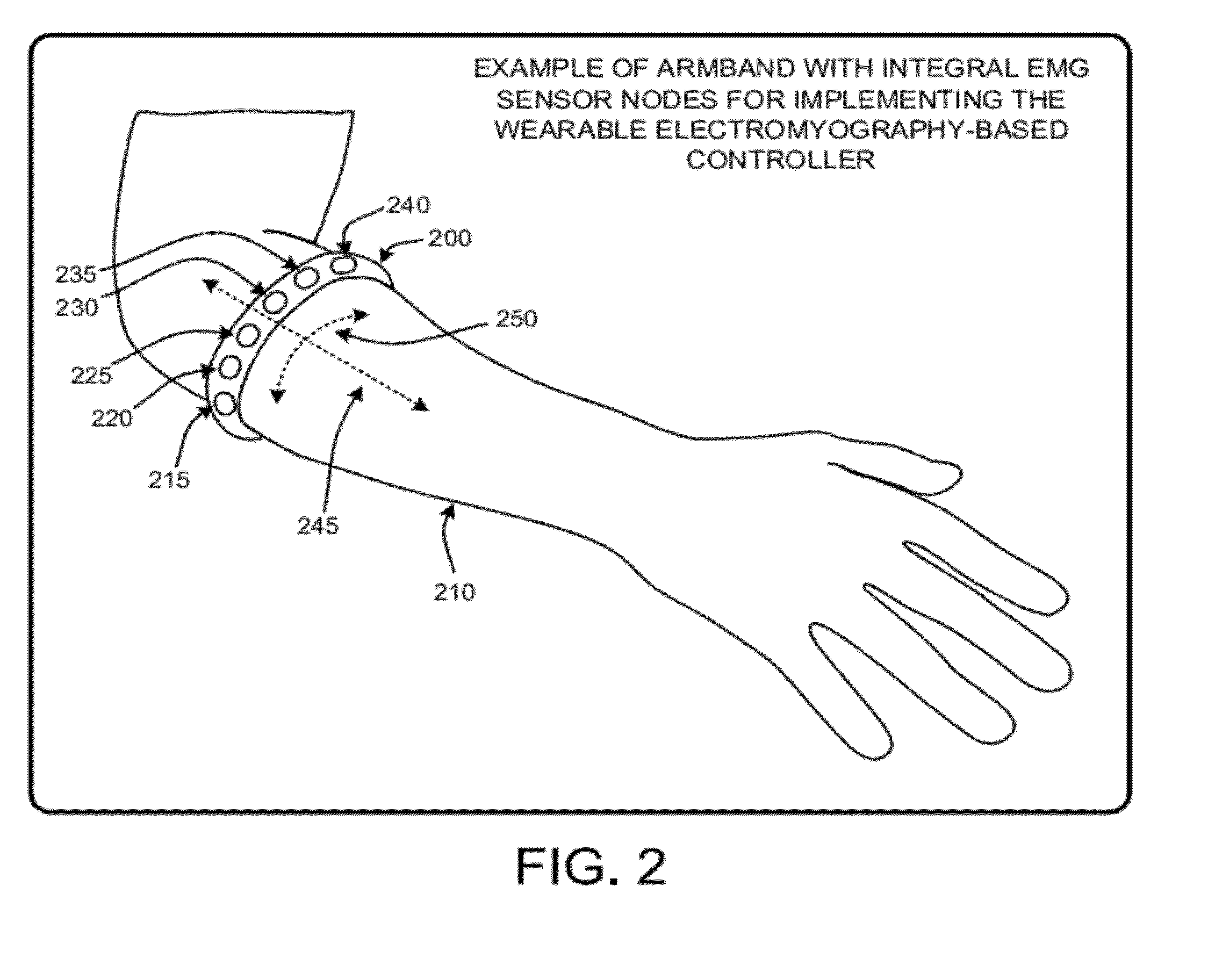

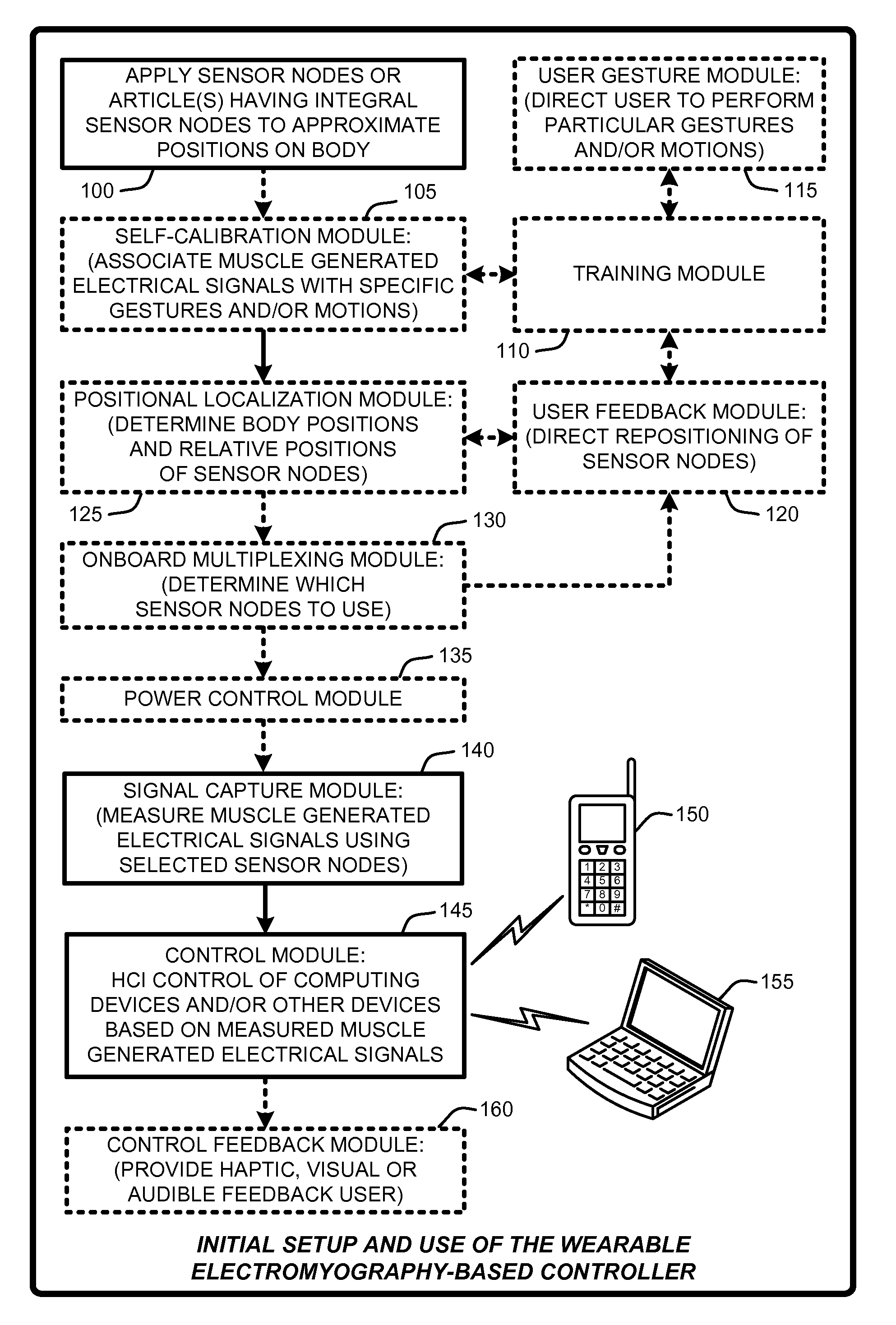

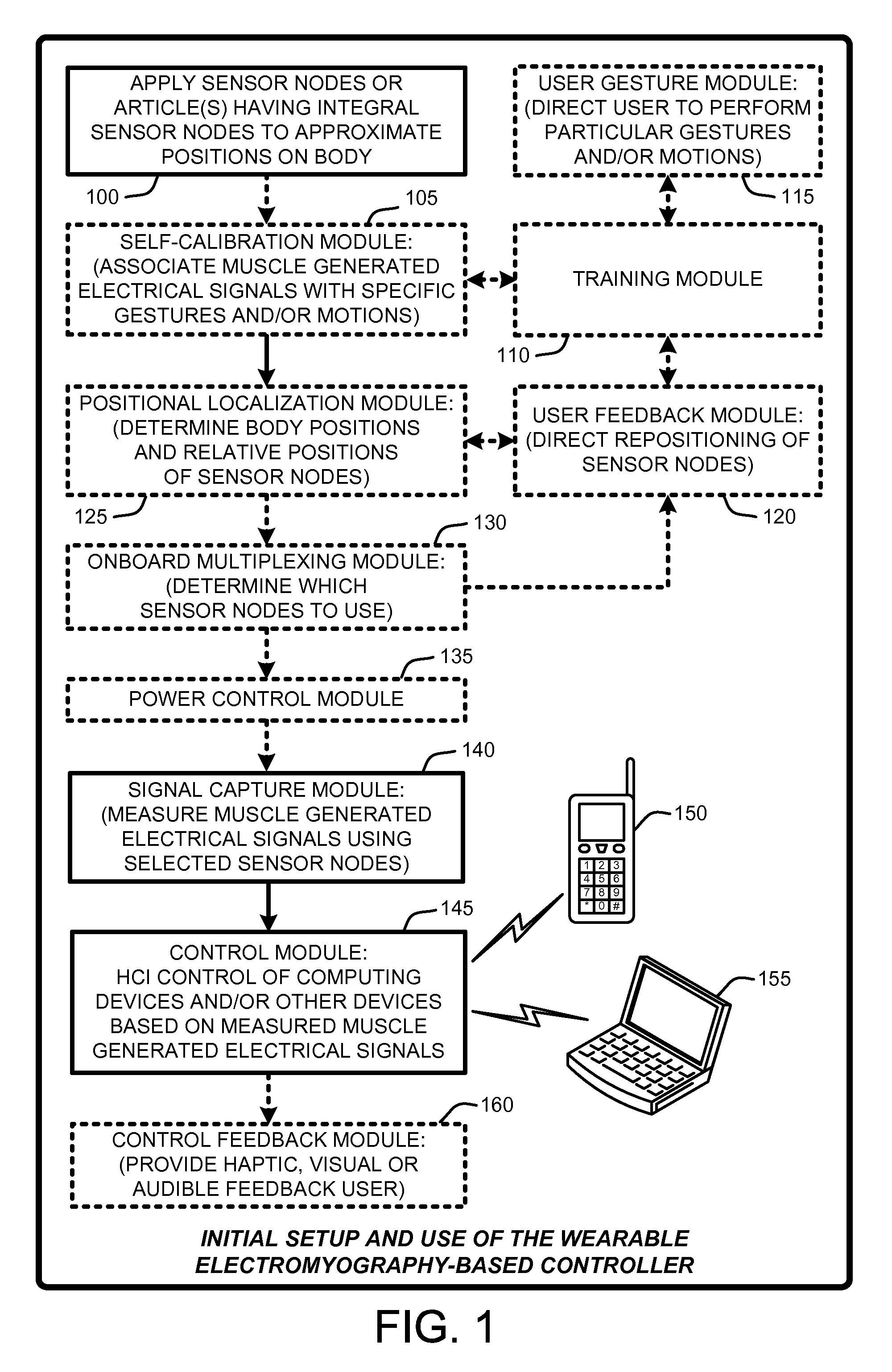

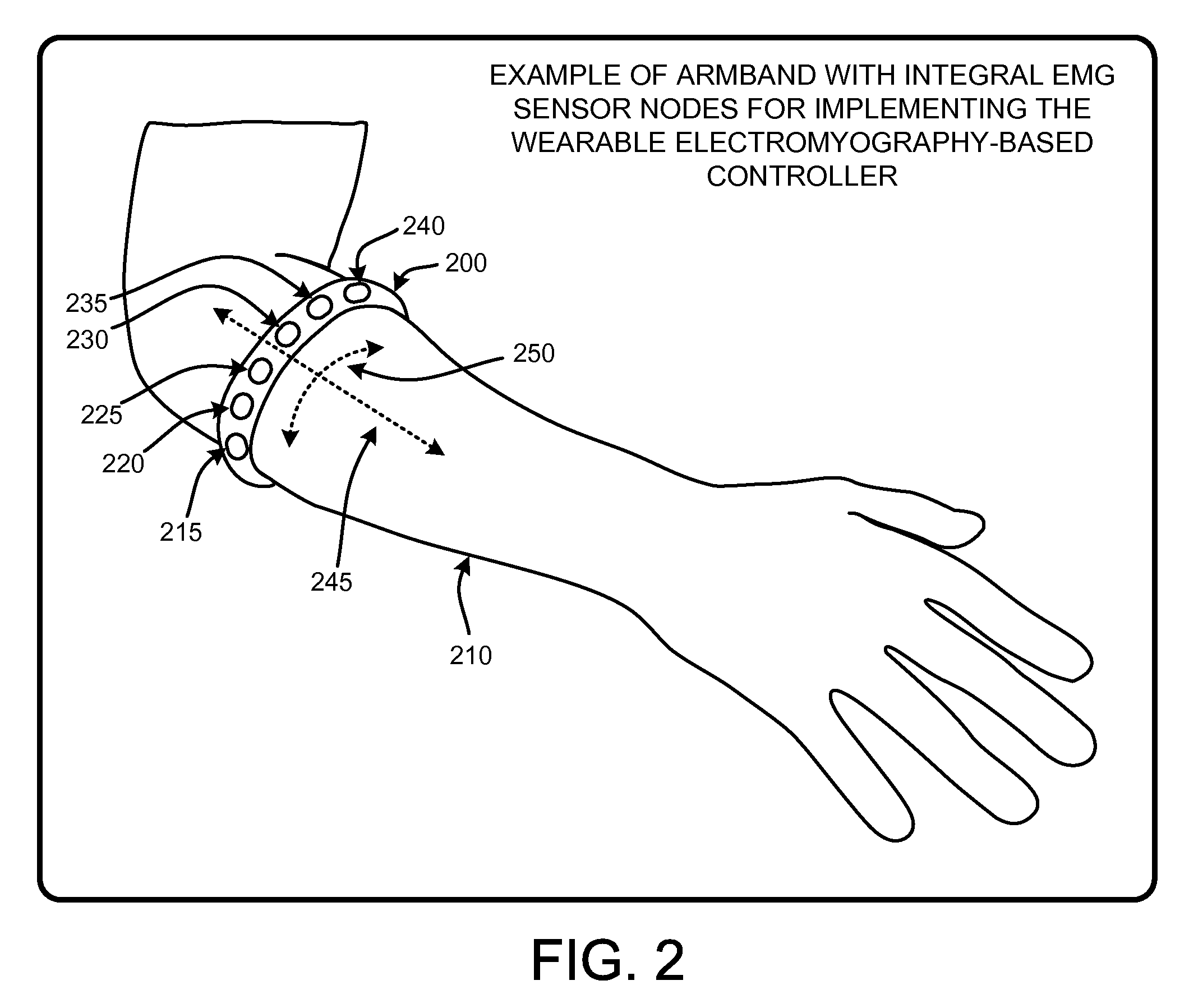

Wearable electromyography-based human-computer interface

Active · US20120188158A1 · Input/output for user-computer interaction · Electromyography · Human–machine interface · Physical medicine and rehabilitation

A “Wearable Electromyography-Based Controller” includes a plurality of Electromyography (EMG) sensors and provides a wired or wireless human-computer interface (HCI) for interacting with computing systems and attached devices via electrical signals generated by specific movement of the user's muscles. Following initial automated self-calibration and positional localization processes, measurement and interpretation of muscle generated electrical signals is accomplished by sampling signals from the EMG sensors of the Wearable Electromyography-Based Controller. In operation, the Wearable Electromyography-Based Controller is donned by the user and placed into a coarsely approximate position on the surface of the user's skin. Automated cues or instructions are then provided to the user for fine-tuning placement of the Wearable Electromyography-Based Controller. Examples of Wearable Electromyography-Based Controllers include articles of manufacture, such as an armband, wristwatch, or article of clothing having a plurality of integrated EMG-based sensor nodes and associated electronics.

Owner:MICROSOFT TECH LICENSING LLC

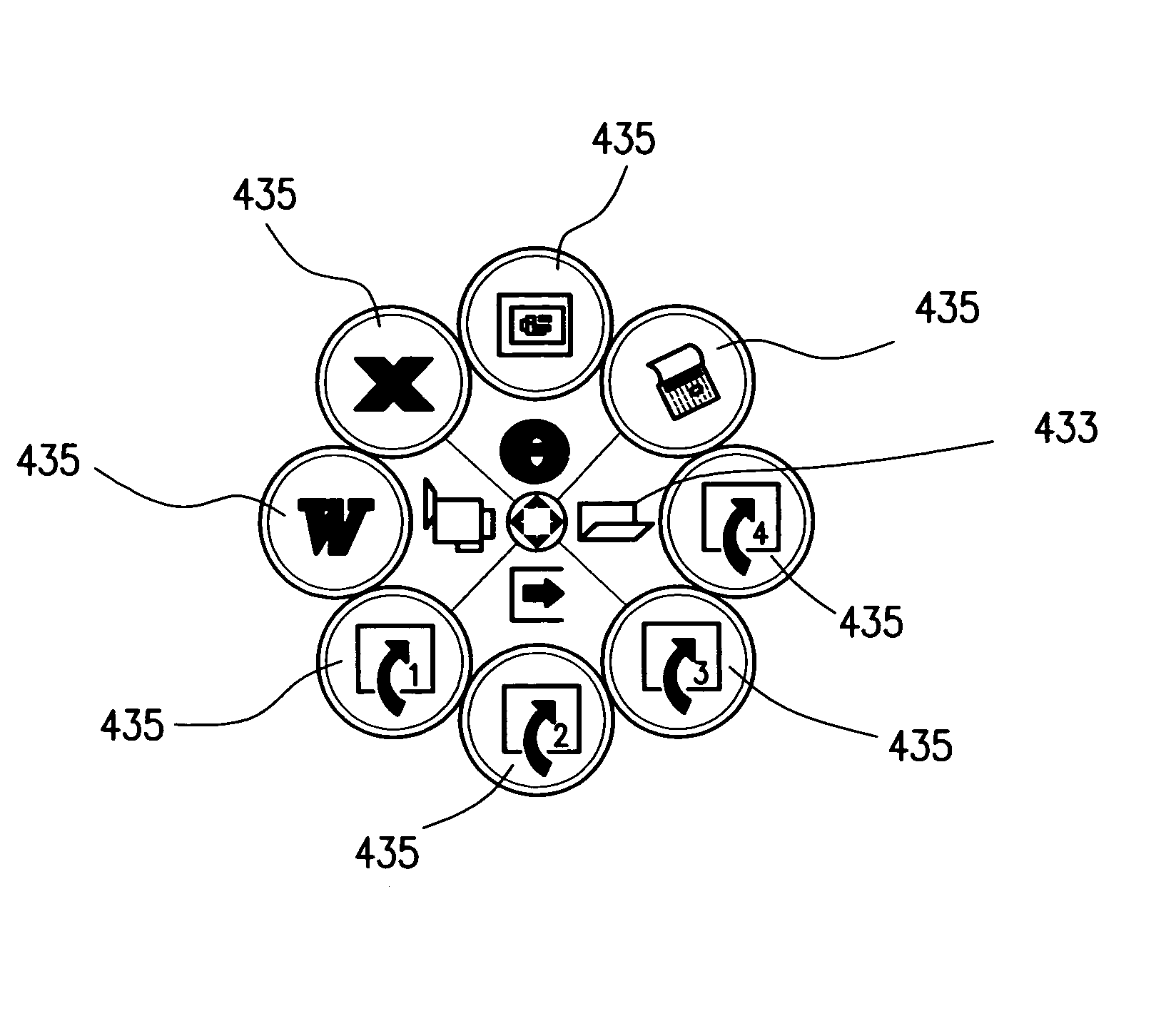

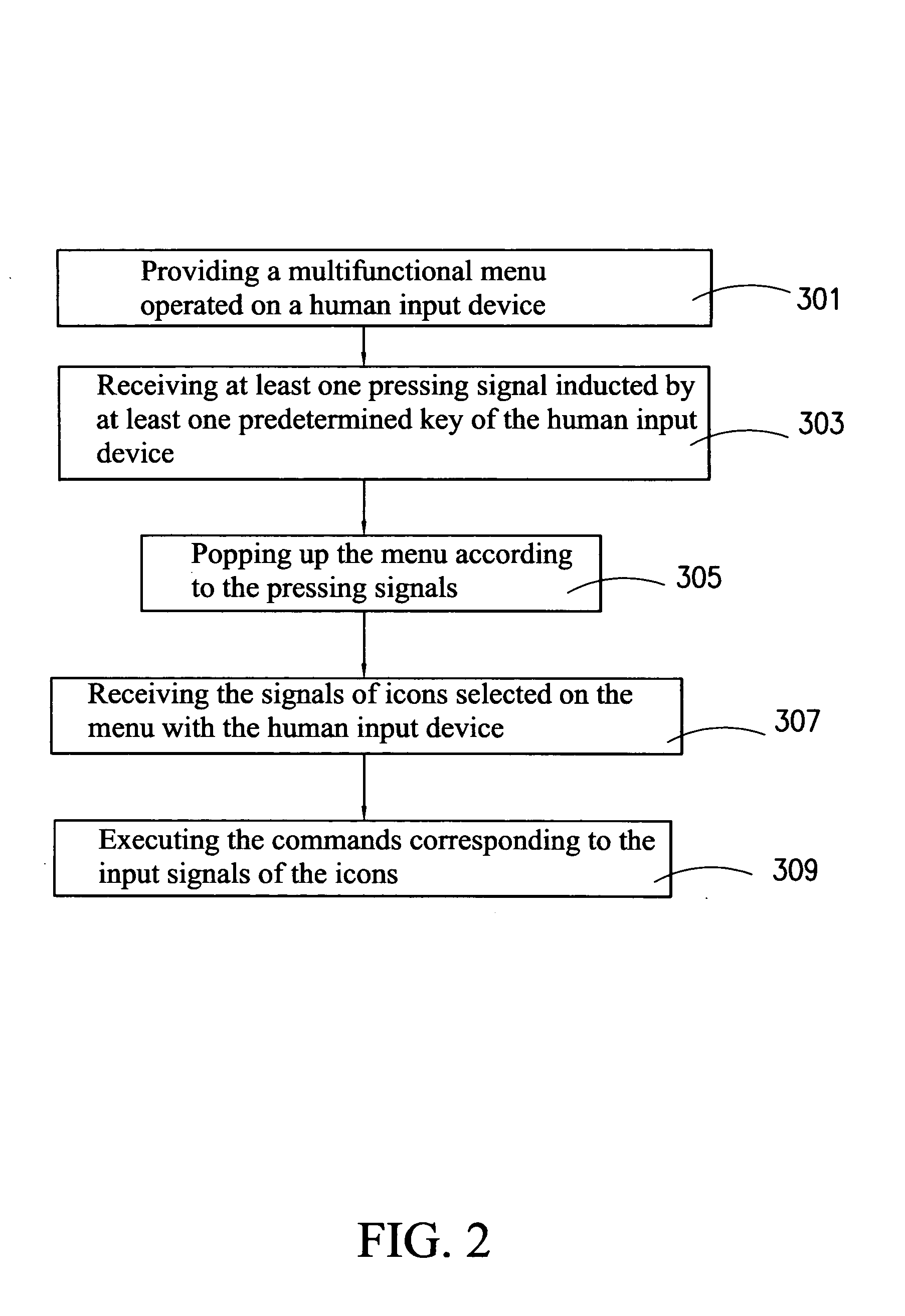

Method to process multifunctional menu and human input system

Inactive · US20050039140A1 · Simple and tidy · Intuitive display · Cathode-ray tube indicators · Input/output processes for data processing · Human–machine interface · Operational system

The processing method to deal with a multifunctional menu of a human input device is applied on a window operating system having plural window application programs. The menu operated on a human input device includes an auto-scroll menu to indicate scrolling function and a multifunctional menu so as to operate plural window application programs in a human interface mode. The multifunctional menu includes macro instruction icons, instruction icons corresponding to the macro instruction icons and a first switching icon for switching to the auto-scroll menu. The auto-scroll menu includes a second switching icon used on the auto-scroll menu for switching to the multifunctional menu.

Owner:BEHAVIOR TECH COMPUTER

Wearable electromyography-based controllers for human-computer interface

A “Wearable Electromyography-Based Controller” includes a plurality of Electromyography (EMG) sensors and provides a wired or wireless human-computer interface (HCI) for interacting with computing systems and attached devices via electrical signals generated by specific movement of the user's muscles. Following initial automated self-calibration and positional localization processes, measurement and interpretation of muscle generated electrical signals is accomplished by sampling signals from the EMG sensors of the Wearable Electromyography-Based Controller. In operation, the Wearable Electromyography-Based Controller is donned by the user and placed into a coarsely approximate position on the surface of the user's skin. Automated cues or instructions are then provided to the user for fine-tuning placement of the Wearable Electromyography-Based Controller. Examples of Wearable Electromyography-Based Controllers include articles of manufacture, such as an armband, wristwatch, or article of clothing having a plurality of integrated EMG-based sensor nodes and associated electronics.

Owner:MICROSOFT TECH LICENSING LLC

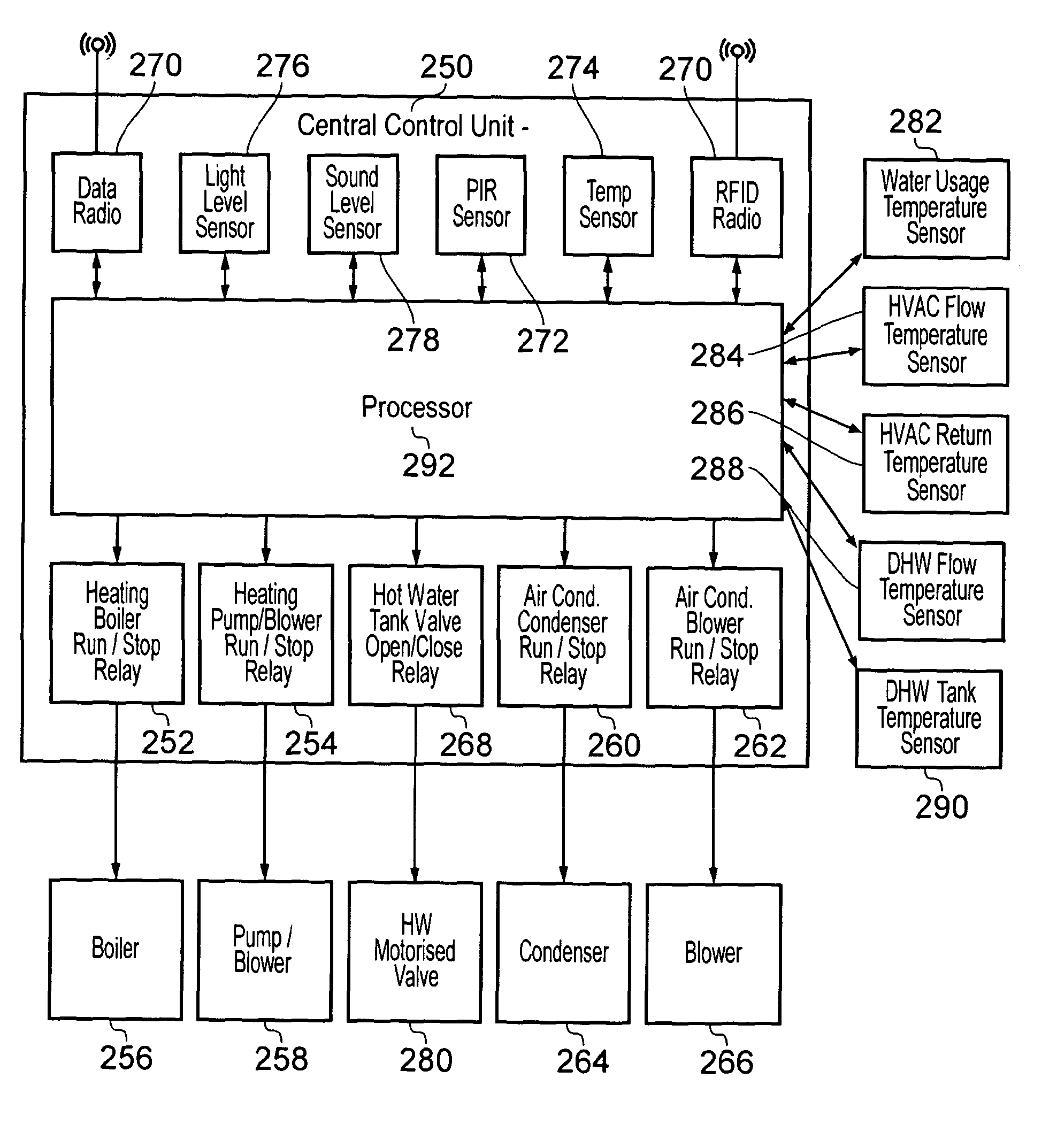

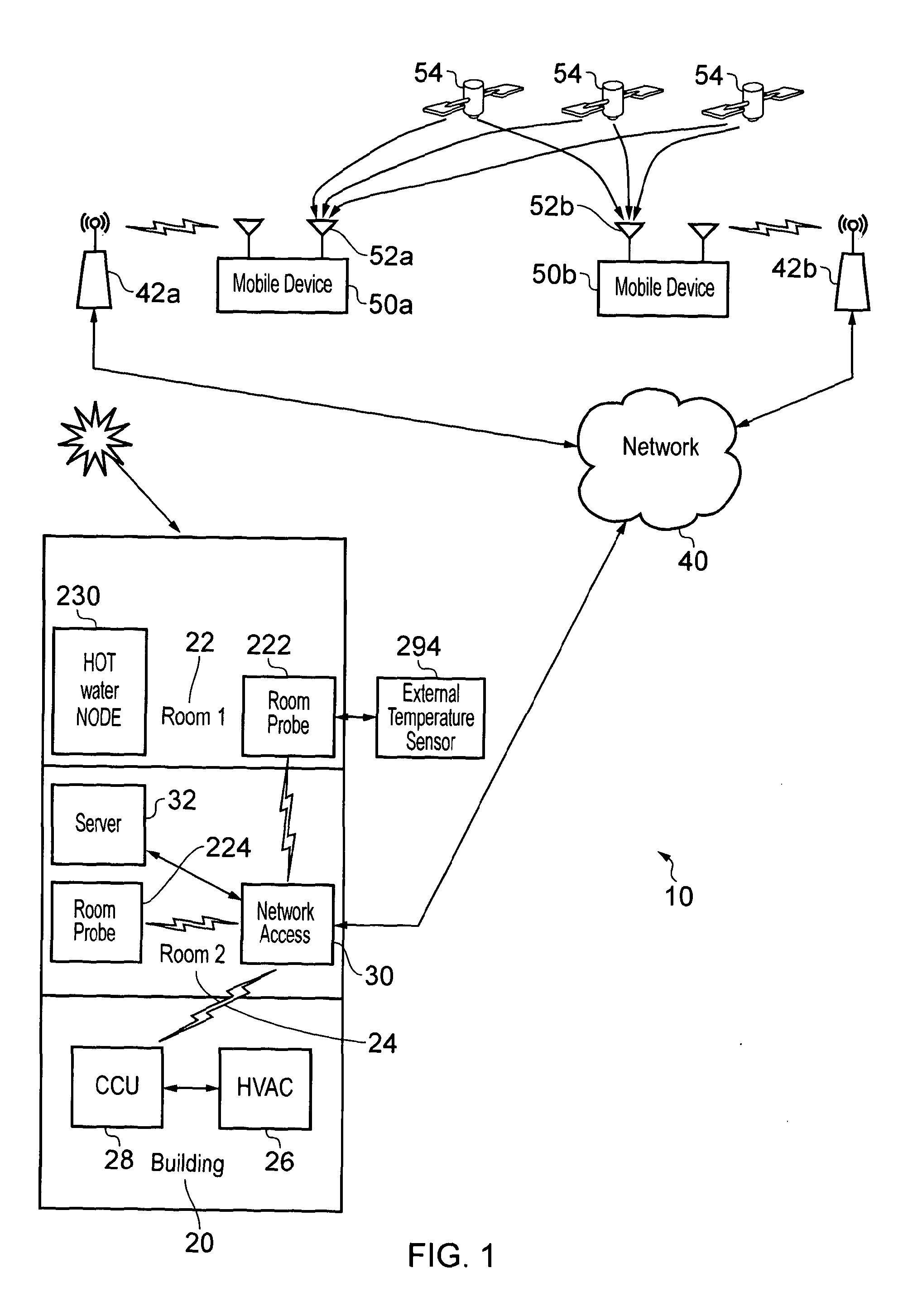

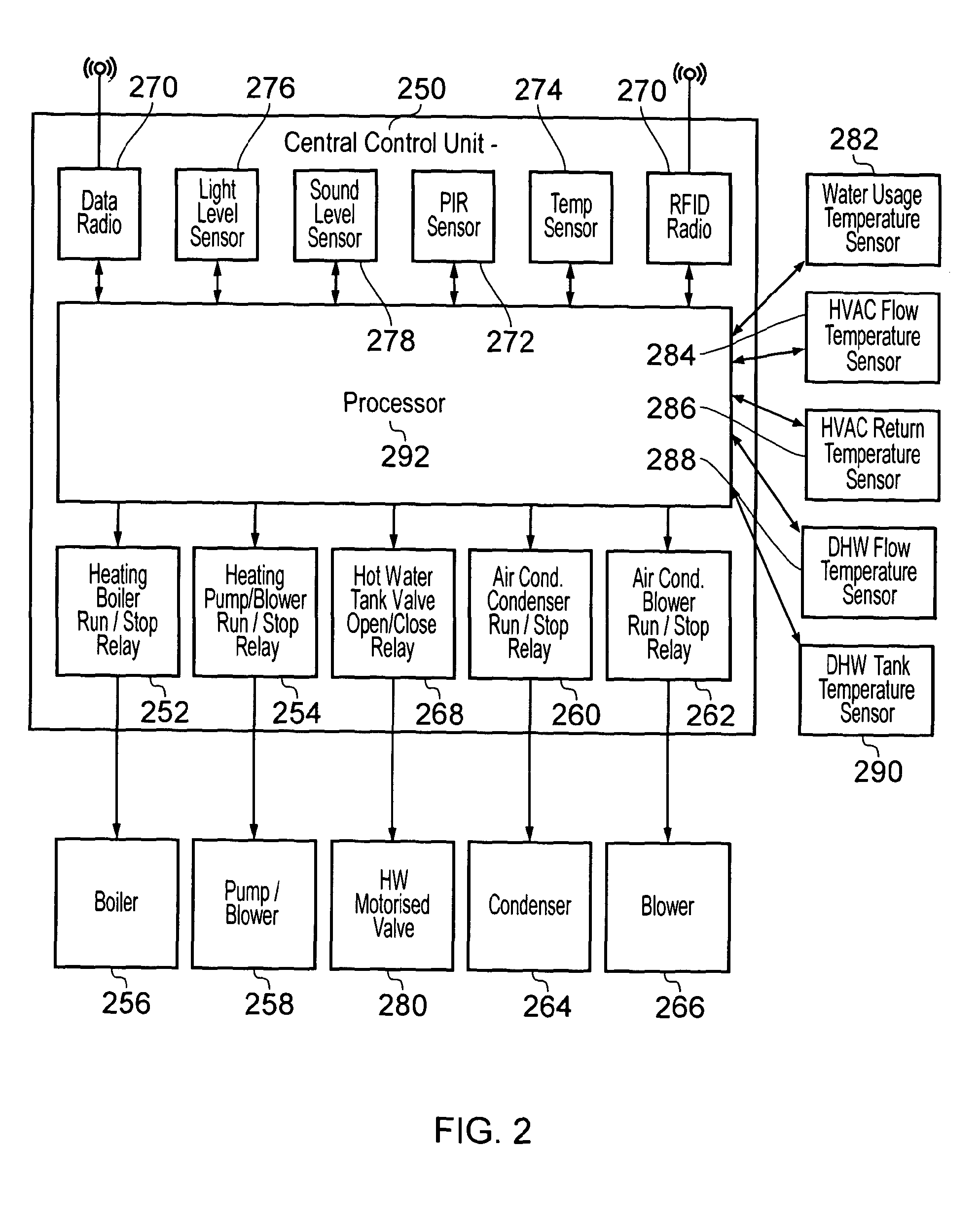

Building occupancy dependent control system

Inactive · US20130073094A1 · Low production cost · Quick installation · Programme control · Sampled-variable control systems · Human–machine interface · Control system

An HVAC control system is described comprising: a server (32) having planned information; a man-machine interface (50) capable of communication with the server (32) to provide dynamic information about building occupancy based on a change in cold water in a mains riser; a central control unit (28) which can communicate with the server (32); and a room node (22, 24) for providing information about conditions within the room, whereby the information about room conditions is compared to planned information and/or dynamic information and adjustments are made accordingly. The room node (22, 24) may comprise sensors (276, 278, 272, 274) which provide information about conditions in the room. Dynamic information can include changes to planned occupancy, the effect of solar heating and weather conditions. Changes to planned occupancy can be established through detecting location (internally or externally) or destination of a user; and calculating estimated time of arrival of a user.

Owner:TELEPURE

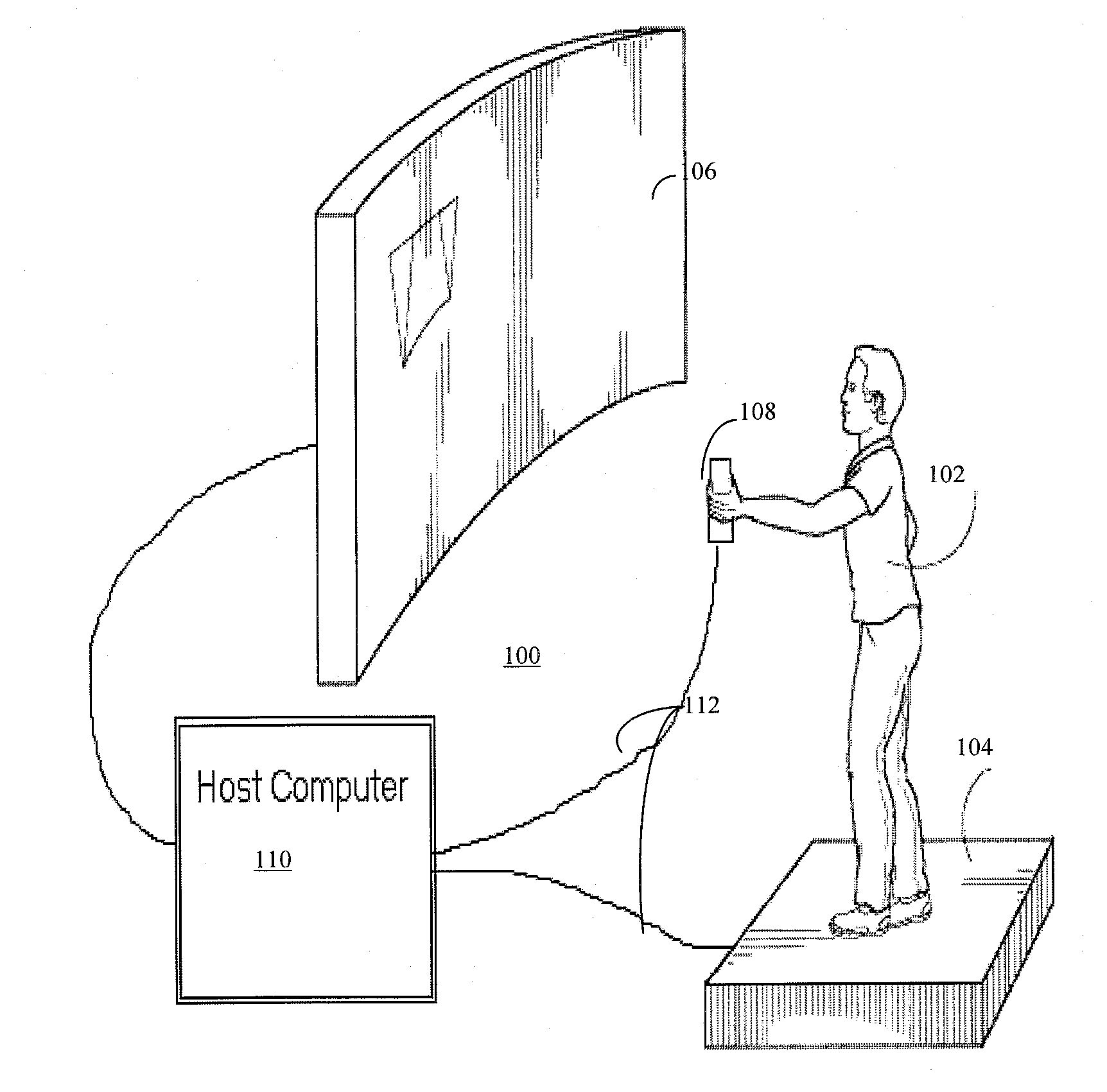

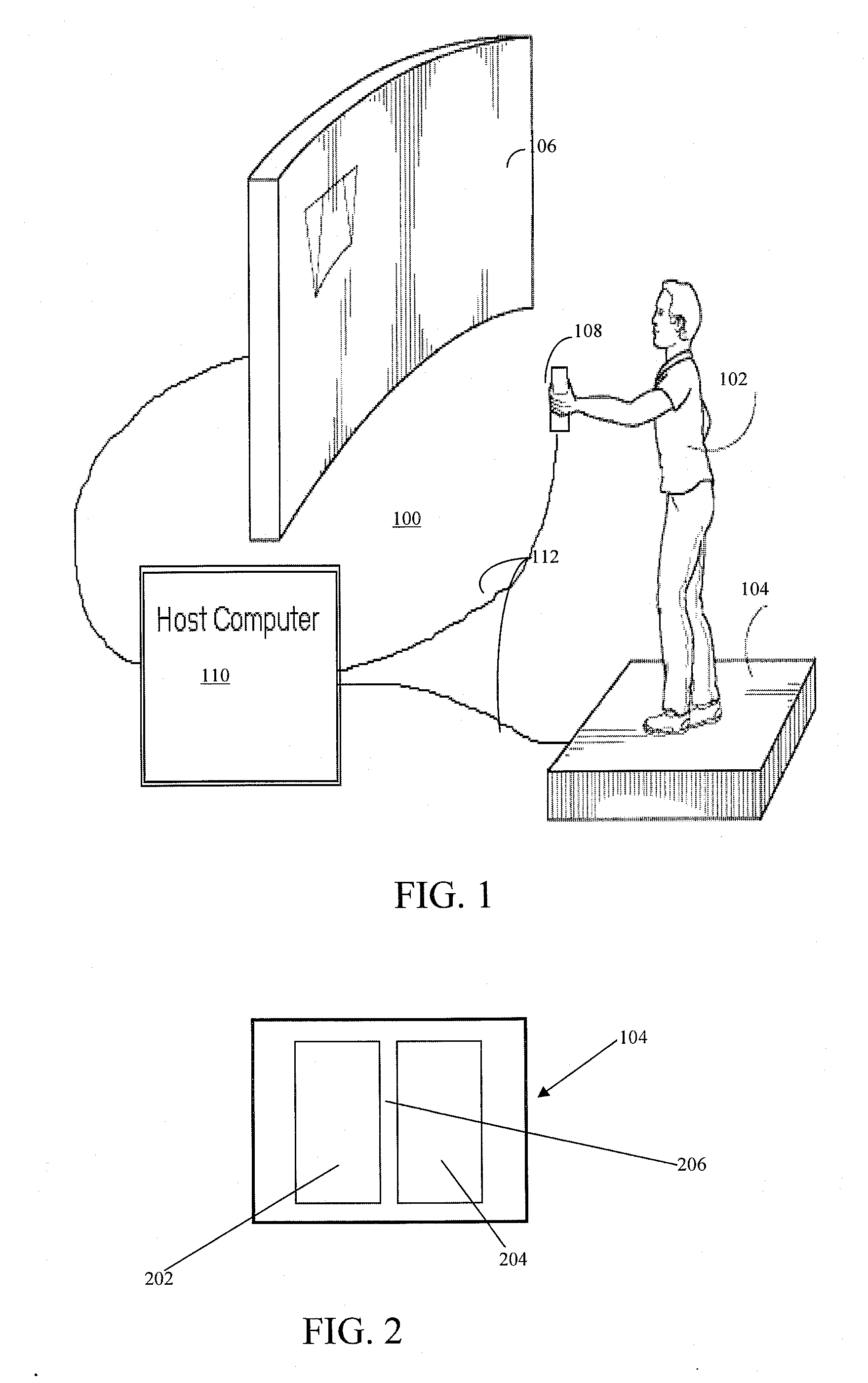

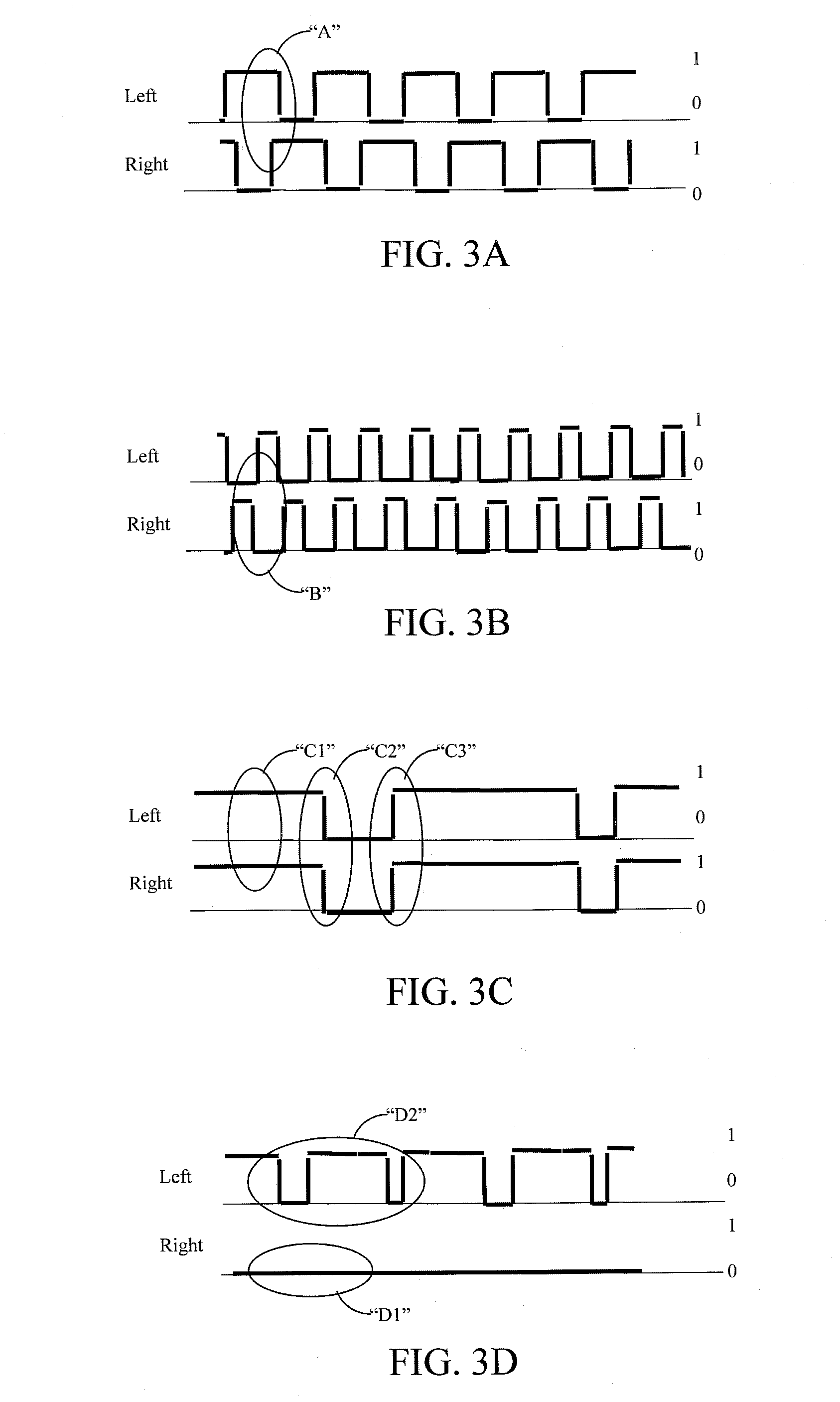

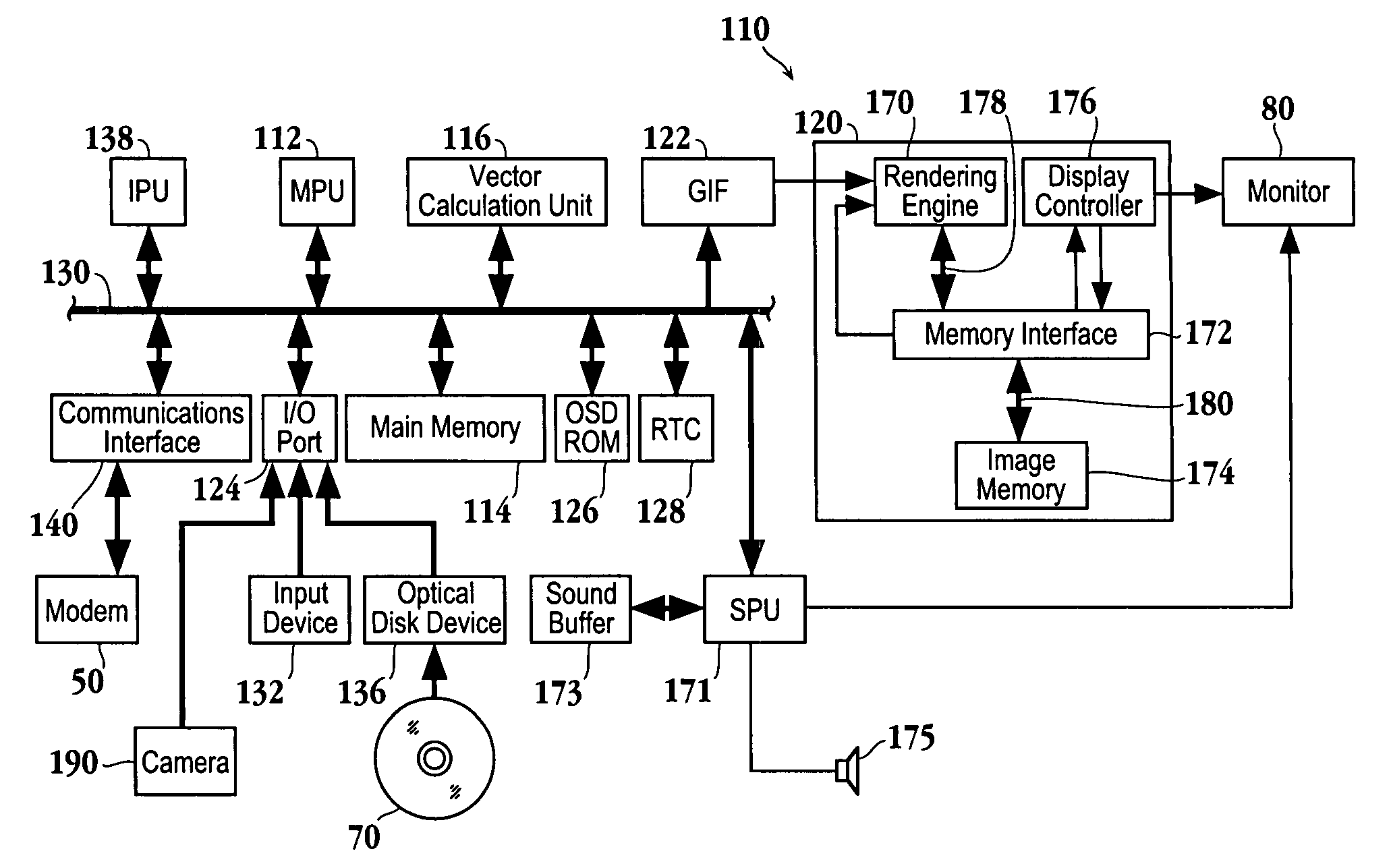

Ambulatory based human-computer interface

Inactive · US20060262120A1 · Animation · Input/output processes for data processing · Human–machine interface · Ambulatory

A human computer interface system includes a user interface having sensors adapted to detect footfalls of a user's feet and generate corresponding sensor signals, a host computer communicatively coupled to the user interface and adapted to manage a virtual environment containing an avatar associated with the user, and control circuitry adapted to control the avatar within the virtual environment to perform one of a plurality of virtual activities based at least in part upon at least one of a sequence and timing of detected footfalls of the user. The virtual activities include at least two of standing, walking, jumping, hopping, jogging, and running. The host computer is further adapted to drive a display to present a view to the user of the avatar performing the at least one virtual activity within the virtual environment.

Owner:OUTLAND RES
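
As a rough illustration of choosing a virtual activity from "a sequence and timing of detected footfalls", the sketch below classifies a short window of footfall events with hand-picked rules. The function `classify_activity`, the `(timestamp, foot)` event format and all thresholds are assumptions made for this example; the patent does not specify them.

```python
from typing import List, Tuple

SIMULTANEOUS_S = 0.05   # assumed: both feet landing within 50 ms counts as a jump
WALK_INTERVAL_S = 0.45  # assumed: mean step interval above this is walking
JOG_INTERVAL_S = 0.33   # assumed: mean step interval above this is jogging


def classify_activity(footfalls: List[Tuple[float, str]]) -> str:
    """Classify footfalls given as (timestamp_seconds, foot), foot in {"L", "R"}.

    Events must be sorted by time. Returns one of: standing, jumping,
    hopping, walking, jogging, running.
    """
    if len(footfalls) < 2:
        return "standing"
    times = [t for t, _ in footfalls]
    feet = [f for _, f in footfalls]
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]

    # Sequence rule: left and right feet landing almost together.
    for dt, first, second in zip(intervals, feet, feet[1:]):
        if dt < SIMULTANEOUS_S and first != second:
            return "jumping"
    # Sequence rule: the same foot landing repeatedly.
    if all(foot == feet[0] for foot in feet):
        return "hopping"
    # Timing rule: alternating feet, distinguished by mean step interval.
    mean_interval = sum(intervals) / len(intervals)
    if mean_interval > WALK_INTERVAL_S:
        return "walking"
    if mean_interval > JOG_INTERVAL_S:
        return "jogging"
    return "running"
```

A host application would call this on a sliding window of recent sensor events and drive the avatar's animation from the returned label.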

Man-machine interface using a deformable device

Inactive · US7102615B2 · Input/output for user-computer interaction · Character and pattern recognition · Human–machine interface · Man machine

In one embodiment a method for triggering input commands of a program run on a computing system is provided. The method initiates with monitoring a field of view in front of a capture device. Then, an input object is identified within the field of view. The detected input object is analyzed for changes in shape. Next, a change in the input object is detected. Then, an input command is triggered at the program run on the computing system. The triggering is a result of the detected change in the input object. An input detection program and a computing system are also provided.

Owner:SONY COMPUTER ENTERTAINMENT INC

Computer interface having a single window mode of operation

Inactive · US6957395B1 · Reduce confusion · Minimal amount · Program control · Memory systems · Human–machine interface · Computer monitor

A computer-human interface manages the available space of a computer display in a manner which reduces clutter and confusion caused by multiple open windows. The interface includes a user-selectable mode of operation in which only those windows associated with the currently active task are displayed on the computer monitor. All other windows relating to non-active tasks are minimized by reducing them in size or replacing them with a representative symbol, such as an icon, so that they occupy a minimal amount of space on the monitor's screen. When a user switches from the current task to a new task, by selecting a minimized window, the windows associated with the current task are automatically minimized as the window pertaining to the new task is displayed at its normal size. As a result, the user is only presented with the window that relates to the current task of interest, and clutter provided by non-active tasks is removed.

Owner:APPLE INC

Programmable tactile touch screen displays and man-machine interfaces for improved vehicle instrumentation and telematics

Inactive · US20090273563A1 · Easy to use · Dashboard fitting arrangements · Instrument arrangements/adaptations · Dashboard · Human–machine interface

Disclosed are new methods and apparatus particularly suited for applications in a vehicle, to provide a wide range of information, and the safe input of data to a computer controlling the vehicle subsystems or “Telematic” communication using for example GM's “ONSTAR” or cellular based data sources. Preferred embodiments utilize new programmable forms of tactile touch screens and displays employing tactile physical selection or adjustment means which utilize direct optical data input. A revolutionary form of dashboard or instrument panel results which is stylistically attractive, lower in cost, customizable by the user, programmable in both the tactile and visual sense, and with the potential of enhancing interior safety and vehicle operation. Non-automotive applications of the invention are also disclosed, for example means for general computer input using touch screens and home automation systems.

Owner:APPLE INC

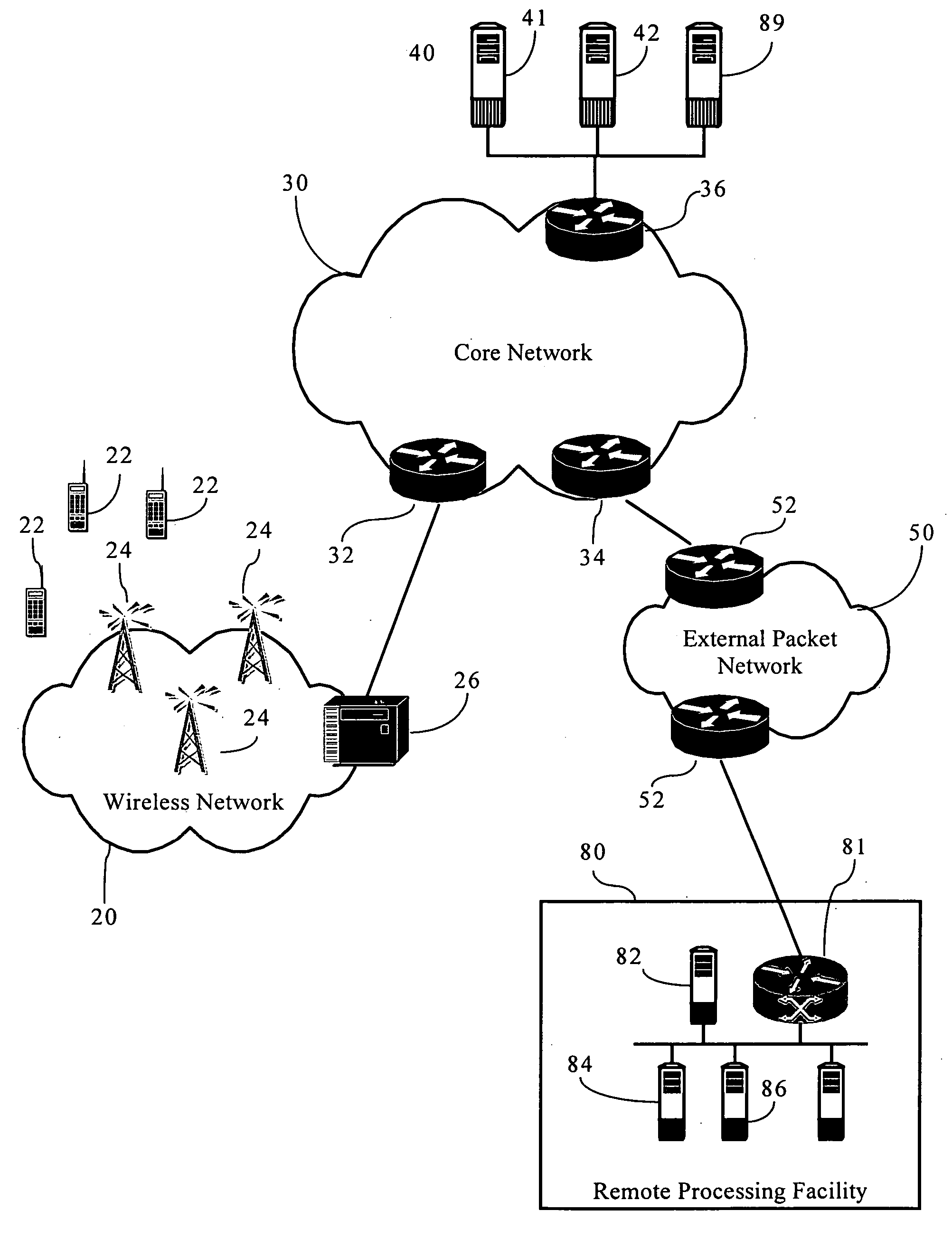

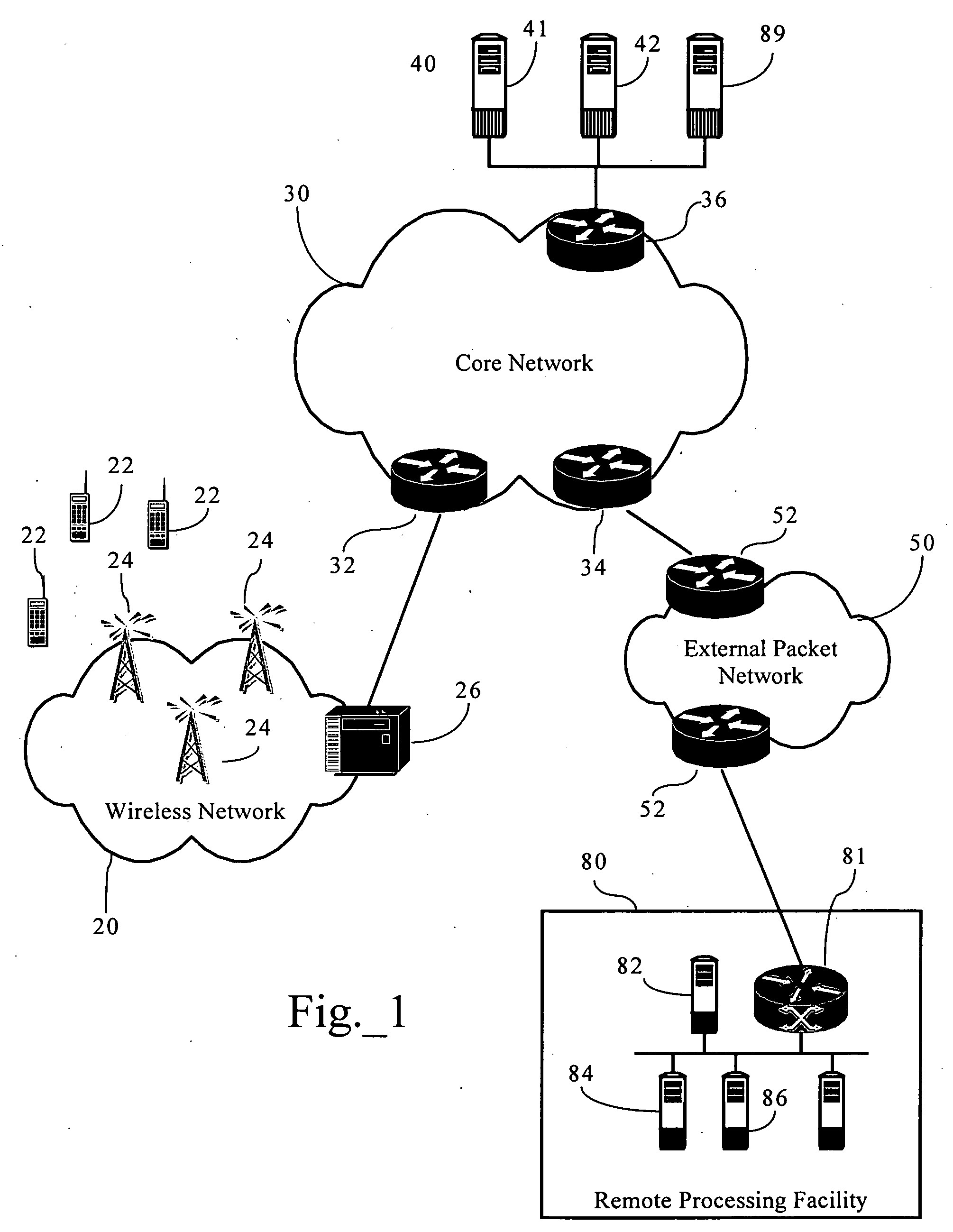

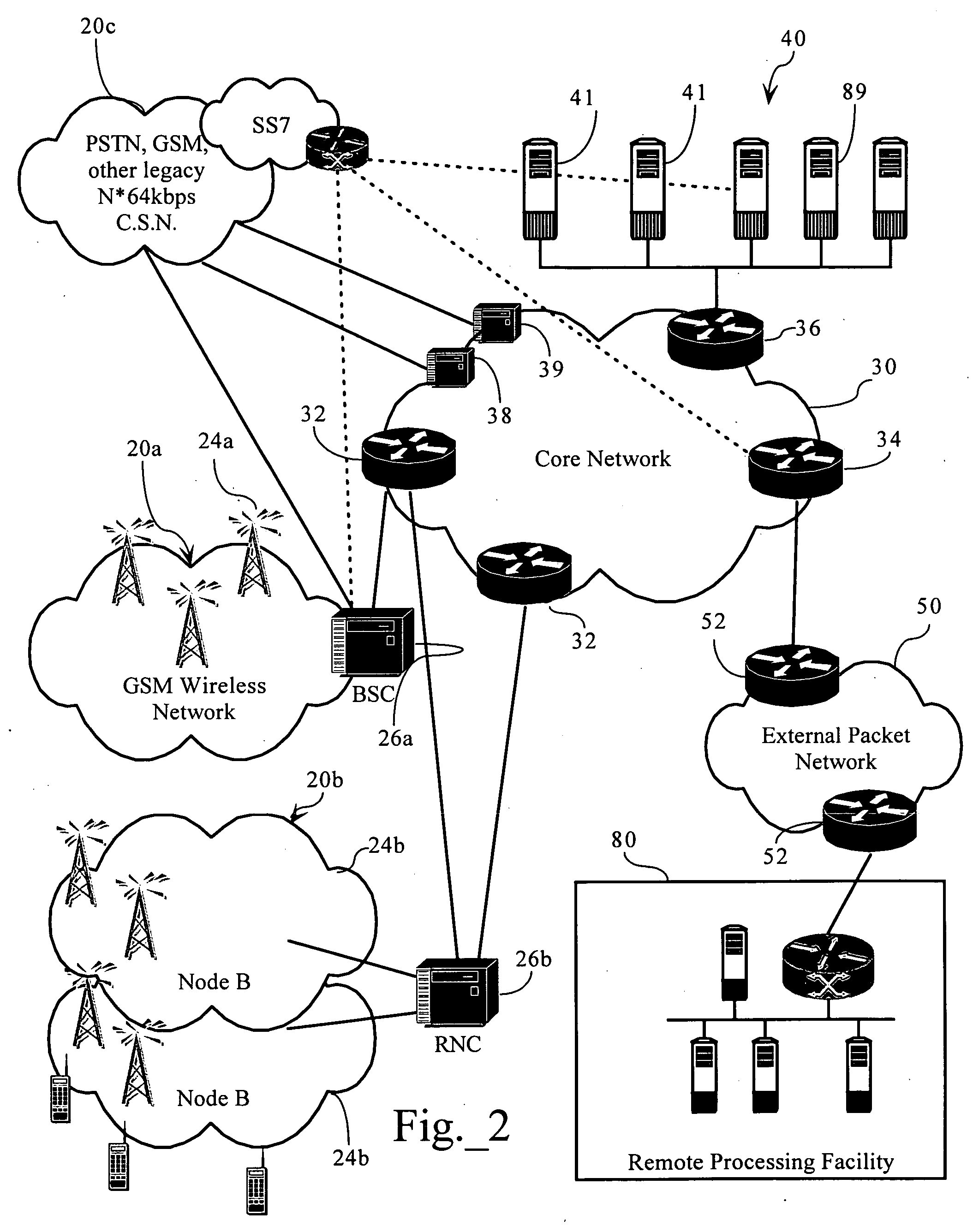

Dynamically distributed, portal-based application services network topology for cellular systems

Inactive · US20060111095A1 · Increase income · Easily augment and/or customize the “look and feel” · Network topologies · Automatic exchanges · Human–machine interface · Data acquisition

Methods, apparatuses and systems directed to a portal-based, dynamically distributed application topology for cellular systems. The portal-based application topology, in one implementation, is an application architecture featuring strategic dynamic distribution of the man-machine interface (such as data acquisition and information presentation) and the backend processing aspects, of a particular application. In one implementation, all terminal interfacing aspects (such as data acquisition, data presentation) are formatted and handled by systems associated with a cellular system operator, as described below, while background processing is performed by a processing facility remote to the cellular system operator. In one implementation, the distributed application topology allows a mobile station, such as a cellular telephone, to simply act as a thin-client accessing an application server via a cellular systems operator's web portal for page-based interface screens.

Owner:GLENN PATENT GROUP

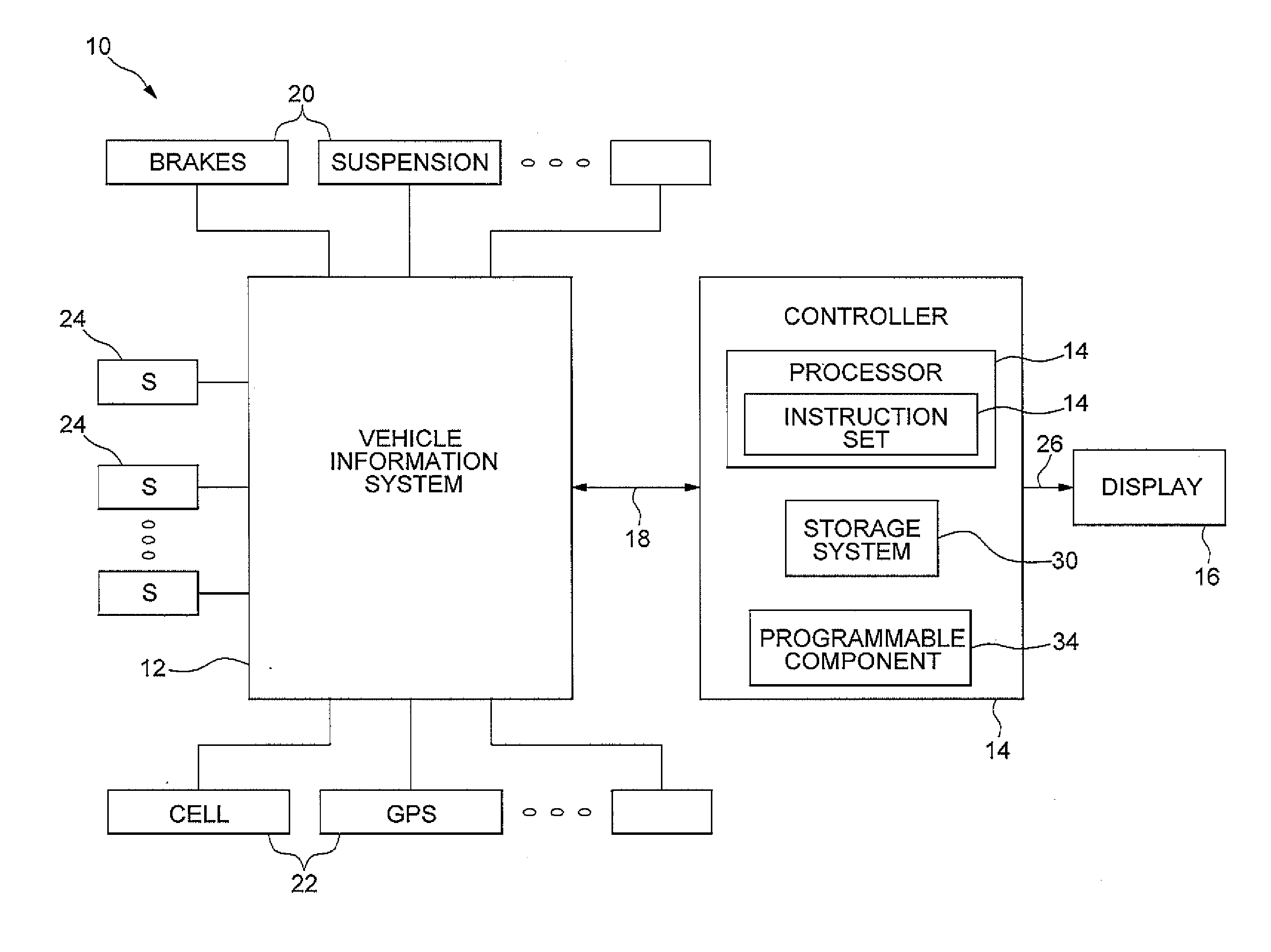

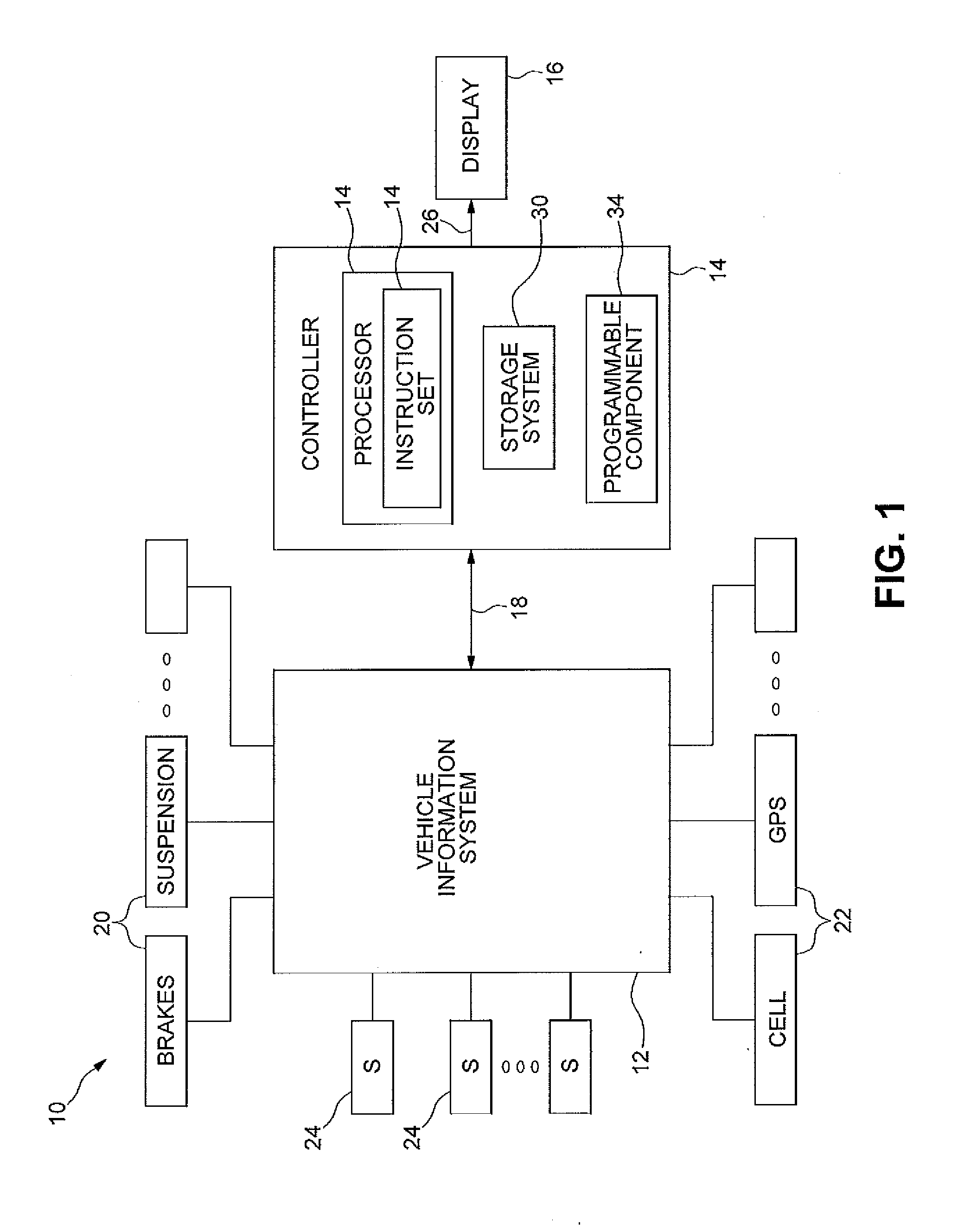

Anticipatory and adaptive automobile HMI

Inactive | US20110144857A1 | Vehicle testing | Dashboard fitting arrangements | Human–machine interface | Control system

An anticipatory and adaptive human machine interface control system for an automobile and a method for adaptively modifying an information display in an automobile are disclosed, wherein the control system and method control the information displayed in response to a vehicle information signal. The adaptive human machine interface control system for a vehicle includes a vehicle information system adapted to generate and transmit a vehicle information signal including data representing a vehicle condition and a controller adapted to receive and analyze the vehicle information signal. The controller generates and transmits a control signal for controlling a visual representation of data in response to the vehicle information signal.
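The signal flow described above (a vehicle information signal goes in, a control signal for the display comes out) can be illustrated with a minimal Python sketch. Everything concrete here is an assumption made for illustration and is not taken from the patent: the field names `speed_kph` and `fuel_fraction`, the `ControlSignal` layout, and the thresholds.

```python
from dataclasses import dataclass


@dataclass
class VehicleInfoSignal:
    """Hypothetical vehicle condition data; the fields are illustrative only."""
    speed_kph: float
    fuel_fraction: float  # 0.0 (empty) to 1.0 (full)


@dataclass
class ControlSignal:
    """Hypothetical instruction telling the display what to emphasize."""
    highlighted_gauge: str
    show_secondary_info: bool


def controller(signal: VehicleInfoSignal) -> ControlSignal:
    """Analyze the vehicle information signal and choose a display layout.

    The rules and thresholds below are assumptions, not the patented logic.
    """
    if signal.fuel_fraction < 0.1:
        # Low fuel takes priority over everything else in this sketch.
        return ControlSignal(highlighted_gauge="fuel", show_secondary_info=False)
    if signal.speed_kph > 100:
        # At high speed, hide secondary information to reduce clutter.
        return ControlSignal(highlighted_gauge="speedometer", show_secondary_info=False)
    return ControlSignal(highlighted_gauge="speedometer", show_secondary_info=True)


if __name__ == "__main__":
    print(controller(VehicleInfoSignal(speed_kph=120.0, fuel_fraction=0.5)))
```

A rule table like this is only one possible way to map vehicle condition to display content; the abstract does not say how the controller makes its decision.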

Owner:VISTEON GLOBAL TECH INC

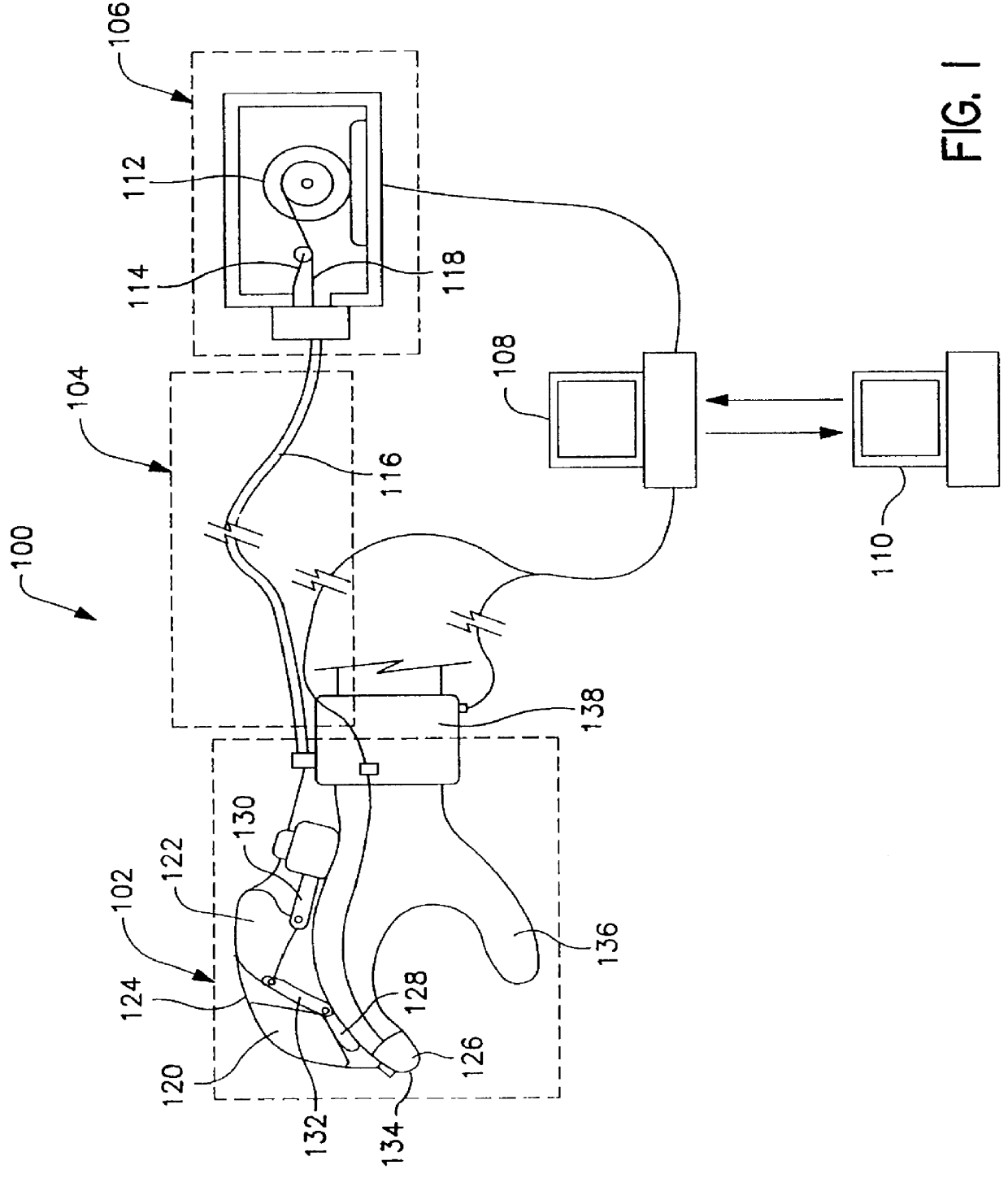

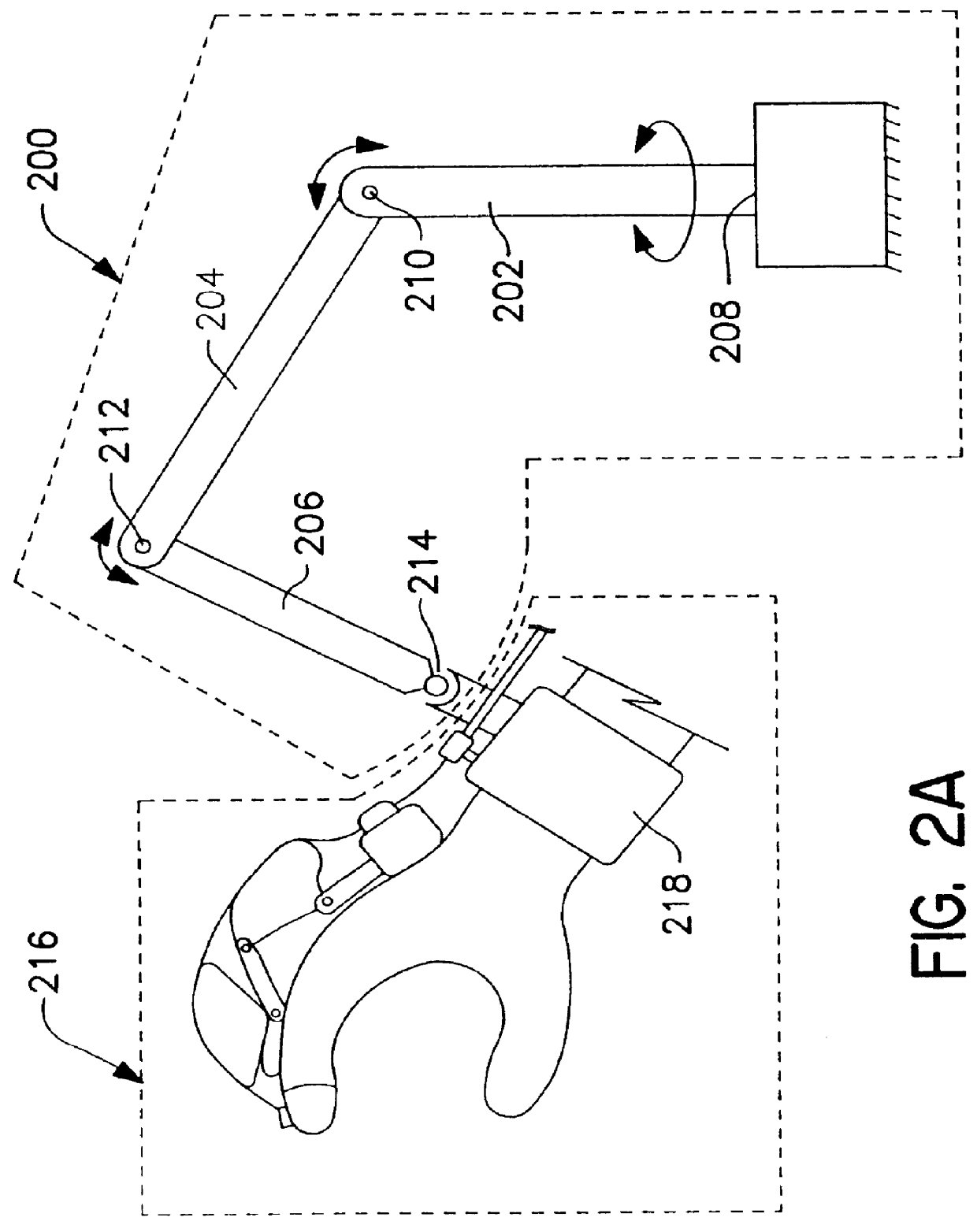

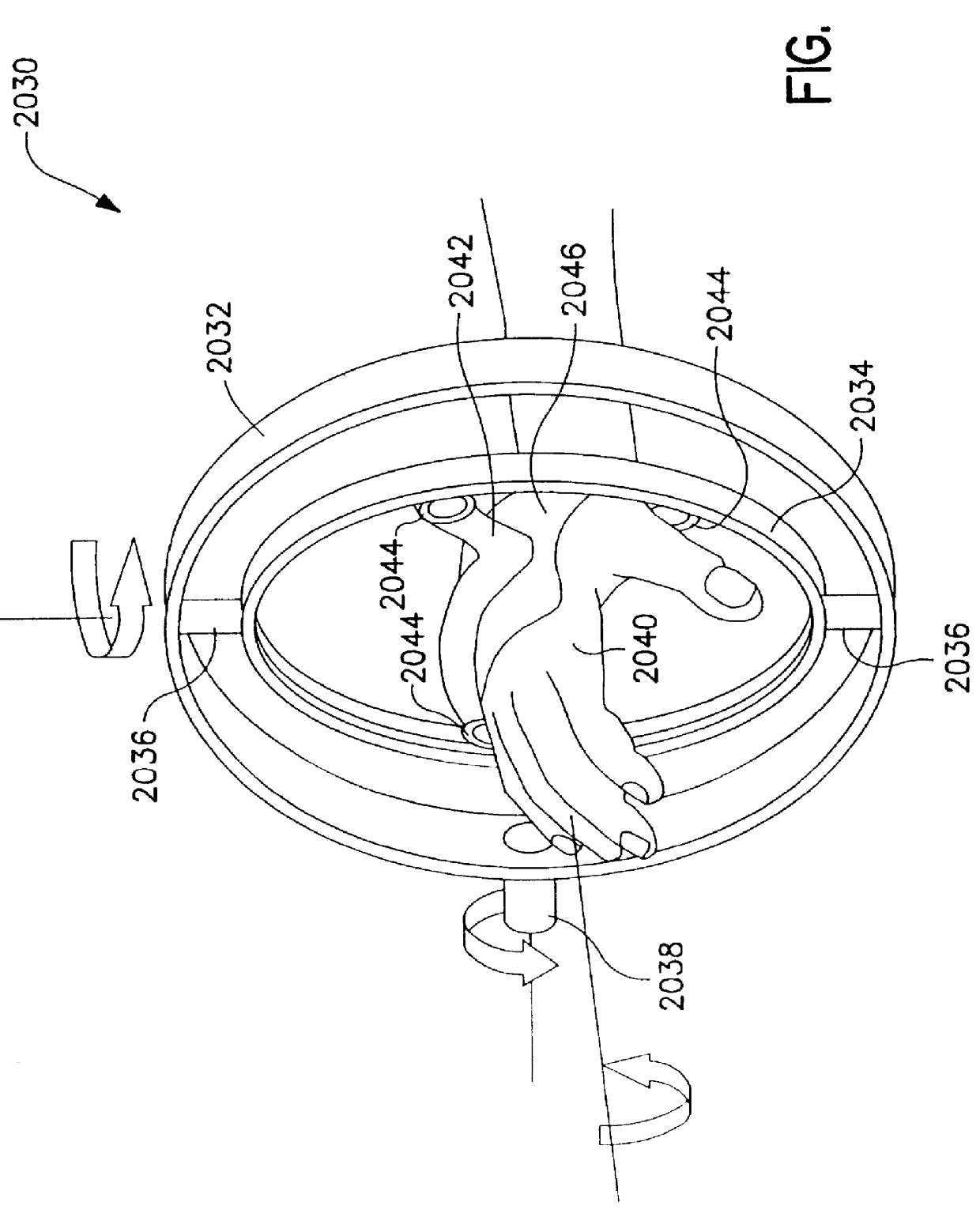

Force-feedback interface device for the hand

Inactive | US6042555A | Large moment | Input/output for user-computer interaction | Person identification | Human–machine interface | Man machine

A man-machine interface is disclosed which provides force information to sensing body parts. The interface comprises a force-generating device (106) that produces a force which is transmitted to a force-applying device (102) via force-transmitting means (104). The force-applying device applies the generated force to a sensing body part. A force sensor associated with the force-applying device and located in the force applicator (126) measures the actual force applied to the sensing body part, while angle sensors (136) measure the angles of relevant joint body parts. A force-control unit (108) uses the joint body part position information to determine a desired force value to be applied to the sensing body part. The force-control unit combines the joint body part position information with the force sensor information to calculate the force command which is sent to the force-generating device.
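The role of the force-control unit can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the patented control law: the virtual-spring model in `desired_force`, the proportional correction in `force_command`, and all parameter names and default values are hypothetical.

```python
def desired_force(joint_angle_rad: float,
                  contact_angle_rad: float = 0.5,
                  stiffness: float = 2.0) -> float:
    """Desired force derived from joint position (assumed virtual-spring model).

    No force is requested until the joint bends past the contact angle;
    beyond that, the force grows linearly with the extra bend.
    """
    penetration = joint_angle_rad - contact_angle_rad
    return stiffness * penetration if penetration > 0 else 0.0


def force_command(joint_angle_rad: float,
                  measured_force: float,
                  gain: float = 0.5) -> float:
    """Combine joint position and force sensor readings into one command.

    The desired force acts as a feedforward term, and a proportional term
    corrects the gap between desired and measured force (an assumption).
    """
    target = desired_force(joint_angle_rad)
    error = target - measured_force
    return target + gain * error


if __name__ == "__main__":
    # Joint bent to 1.0 rad while the sensor reads 0.5 units of force.
    print(force_command(joint_angle_rad=1.0, measured_force=0.5))
```

In a real device this calculation would run in a loop at the sensor sampling rate, with the result sent to the force-generating device on each iteration.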

Owner:IMMERSION CORPORATION

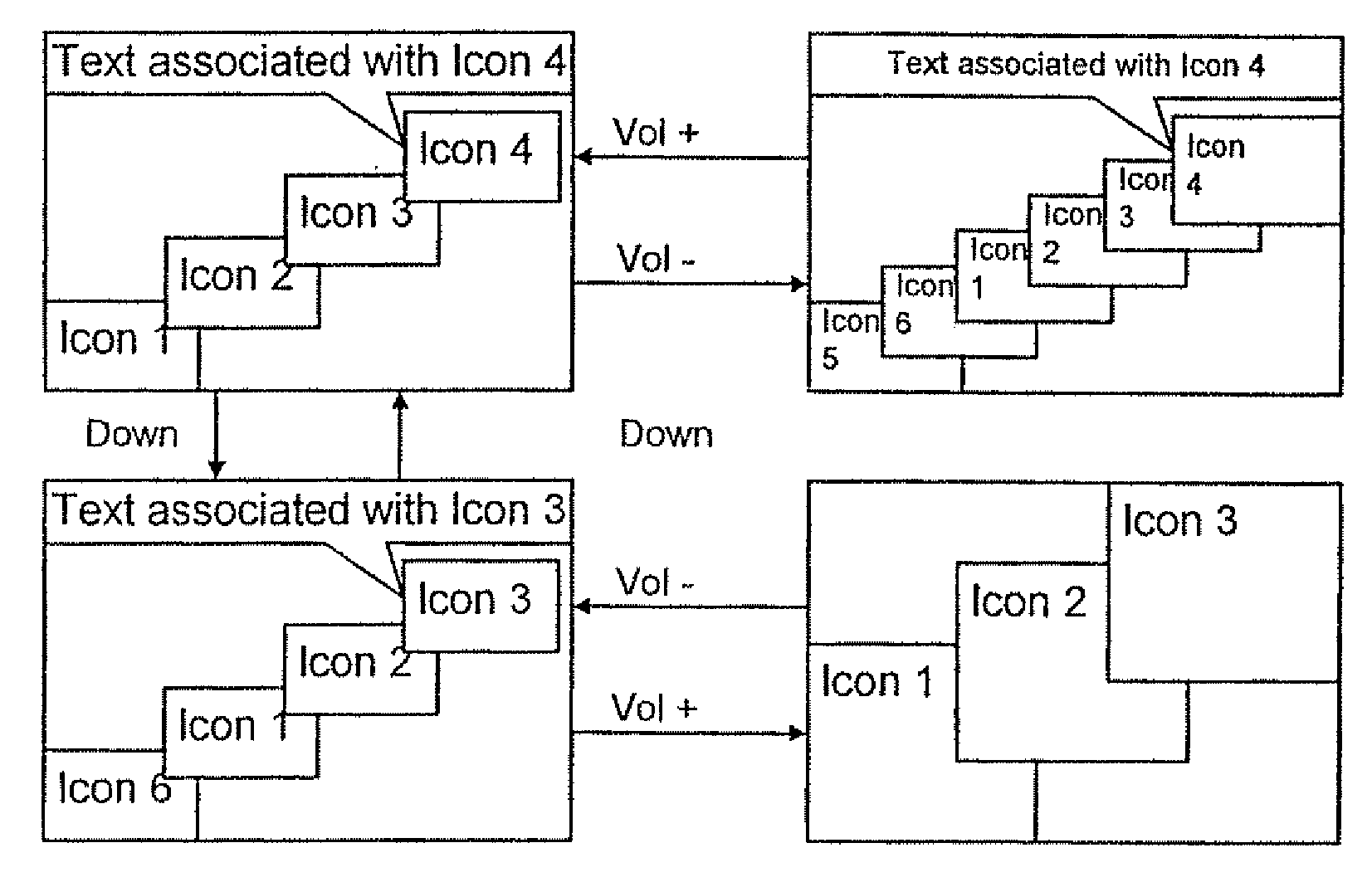

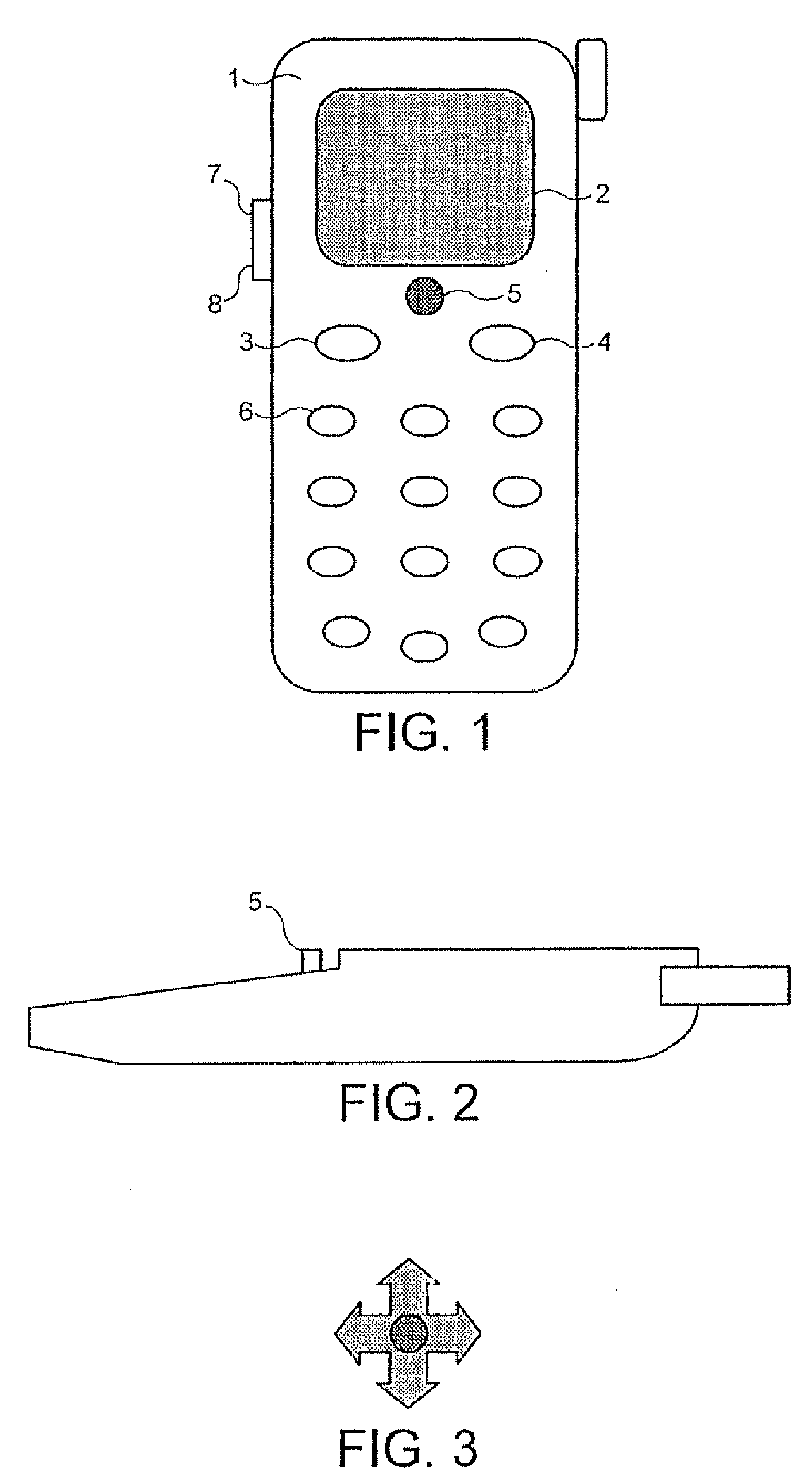

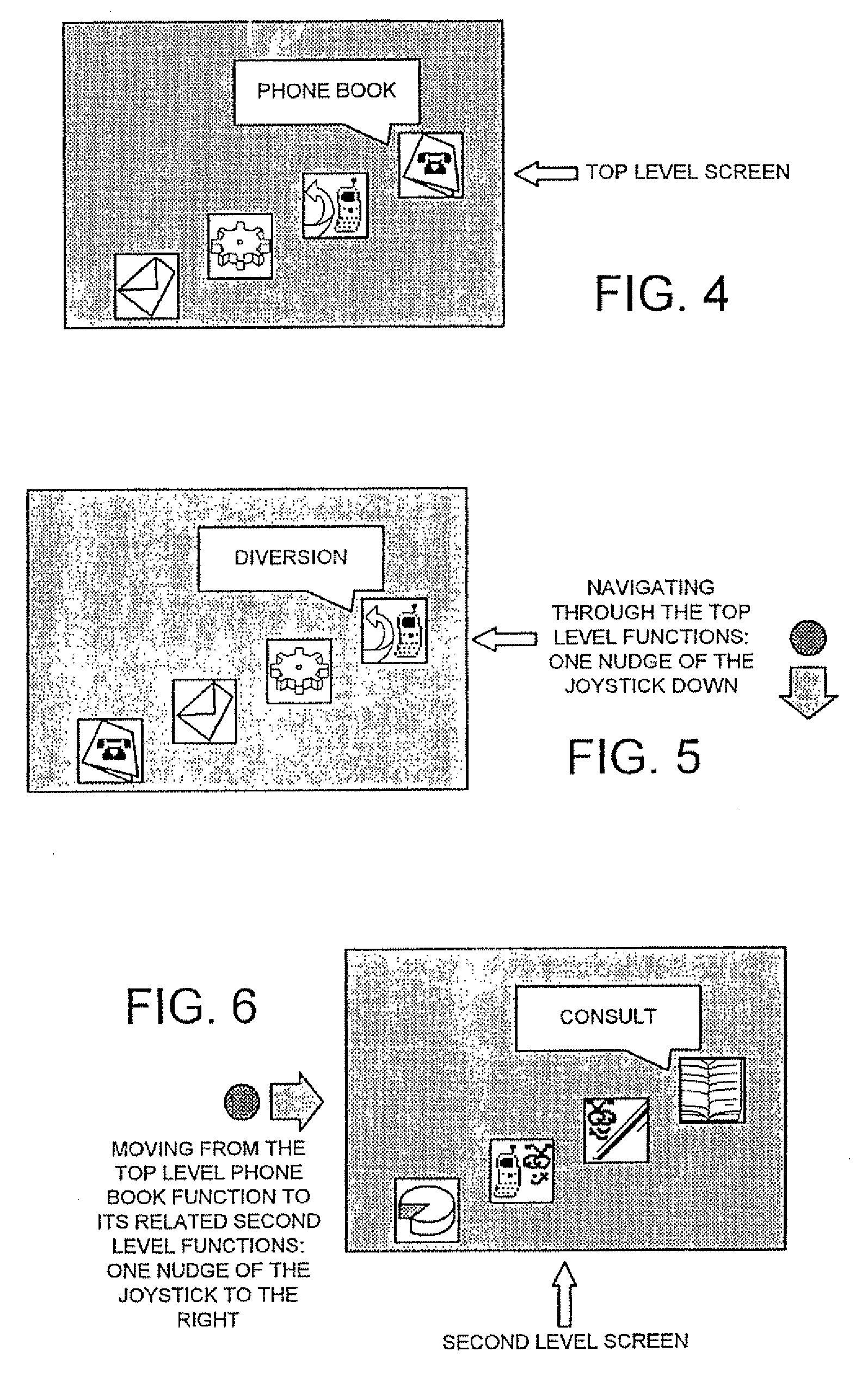

Mobile telephone with improved man machine interface

Inactive | US20070213099A1 | Save memory | Eliminate need | Substation equipment | Radio transmission | Human–machine interface | Call forwarding

The present invention envisages a GSM mobile telephone in which a line of icons is displayed on a display. As a user navigates through the displayed line of icons, the positions of the icons alter so that the selectable icon moves to the head of the line. This approach makes it very clear (i) which icon is selectable at any time and (ii) where that icon sits in relation to other icons at the same functional level (e.g. only first level icons will be present in one line). First level icons typically relate to the following functions: phonebook; messages; call register; counters; call diversion; telephone settings; network details; voice mail and IrDA activation.
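The navigation behaviour described above, where the selectable icon always sits at the head of the line, can be modelled as a rotation of a queue. The Python sketch below is illustrative only: the `navigate` helper is hypothetical, the icon list is a subset of the functions named in the abstract, and the wrap-around at the ends of the line is an assumption the abstract does not confirm.

```python
from collections import deque

# A subset of the first-level functions listed in the abstract.
ICONS = ["phonebook", "messages", "call register", "counters", "call diversion"]


def navigate(line: deque, steps: int) -> deque:
    """Return a new line with the icon `steps` positions along at the head.

    Positive steps move forward through the line, negative steps move back.
    Wrapping around at either end is an assumption of this sketch.
    """
    rotated = deque(line)
    rotated.rotate(-steps)  # a negative rotation shifts later icons toward the head
    return rotated


if __name__ == "__main__":
    line = navigate(deque(ICONS), 1)
    print("selectable:", line[0])
    print("line order:", list(line))
```

Because the whole line is rotated rather than a cursor being moved, the relative order of the icons is preserved, which matches the abstract's point that the user can see where the selectable icon sits among its neighbours.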

Owner:GOOGLE TECHNOLOGY HOLDINGS LLC

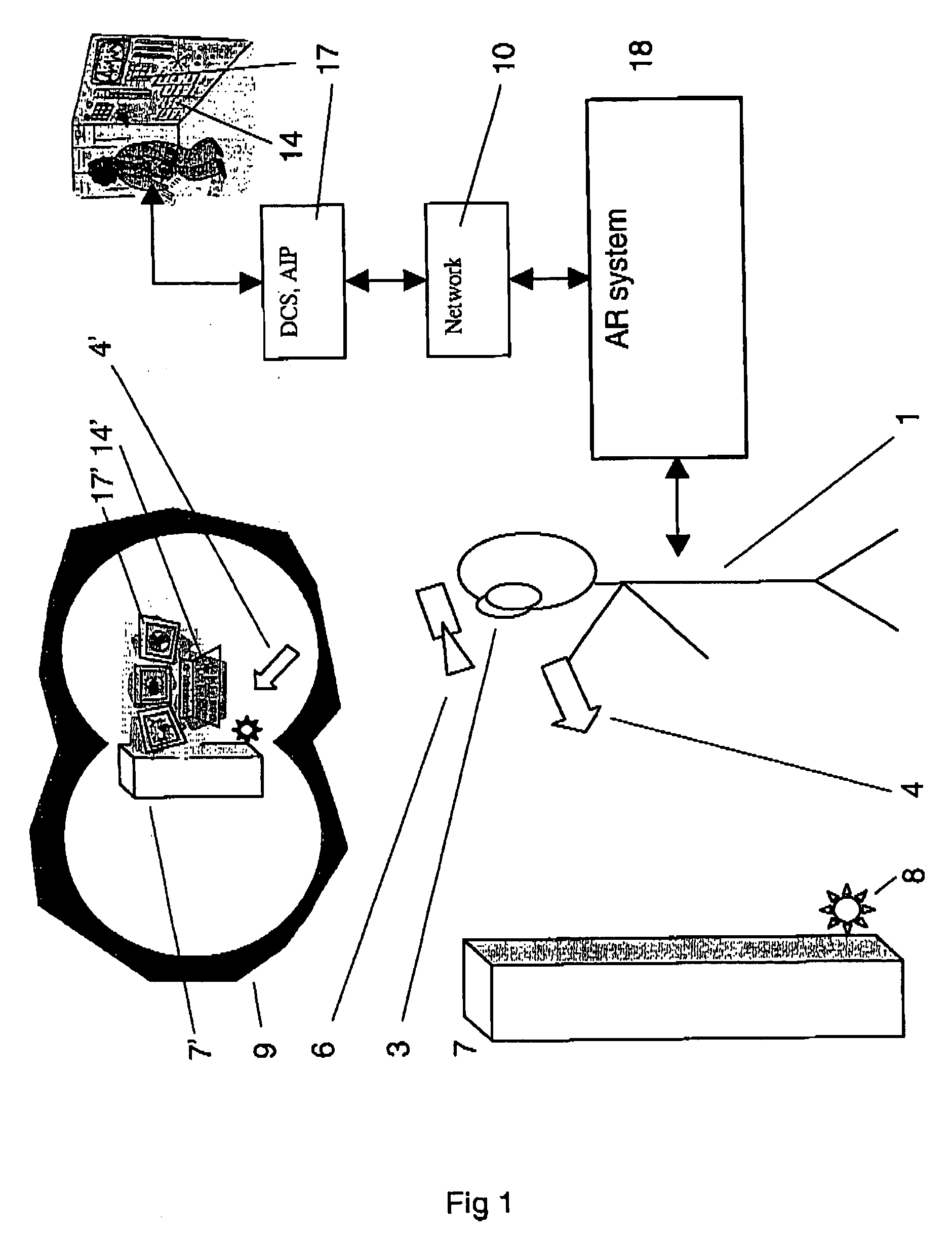

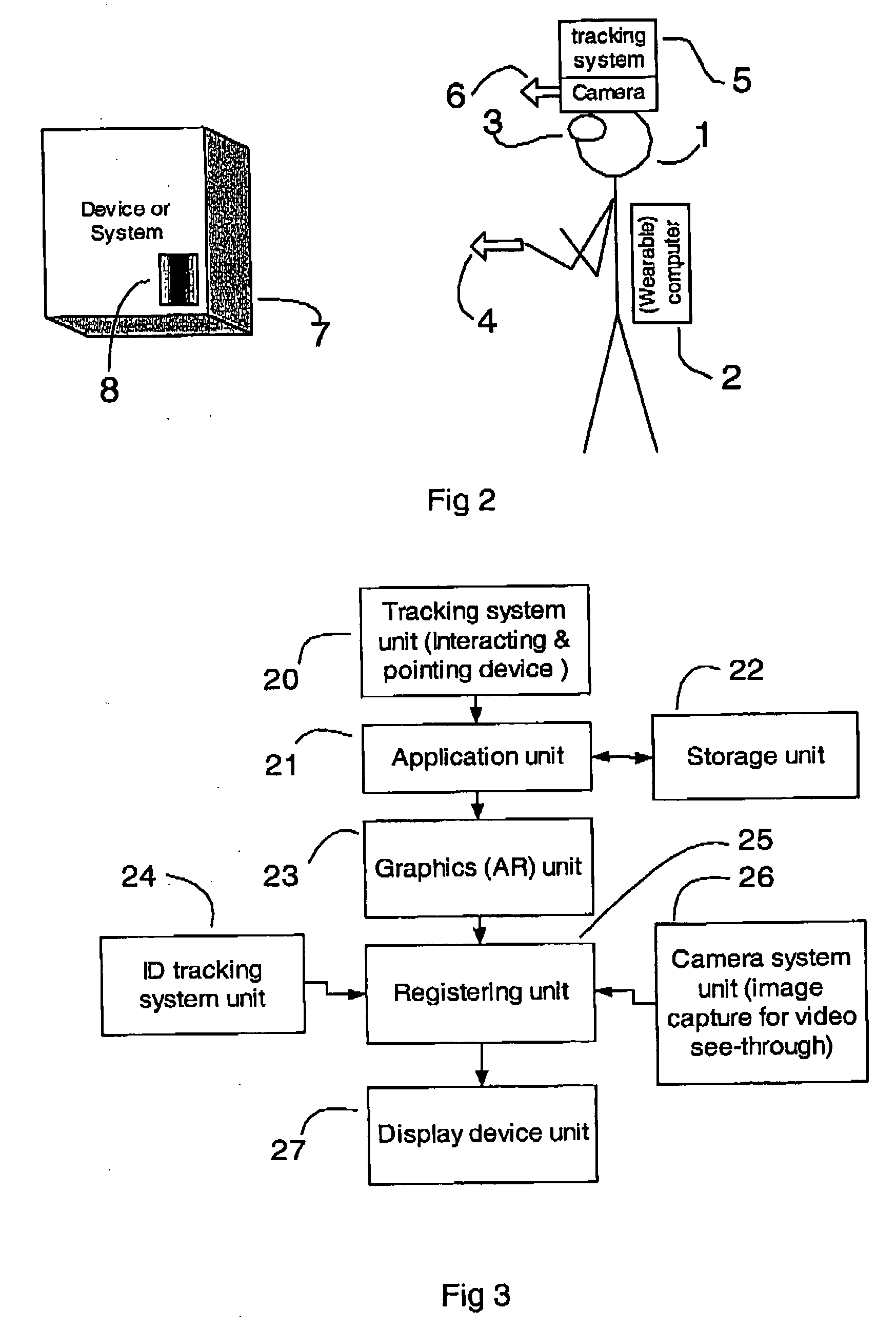

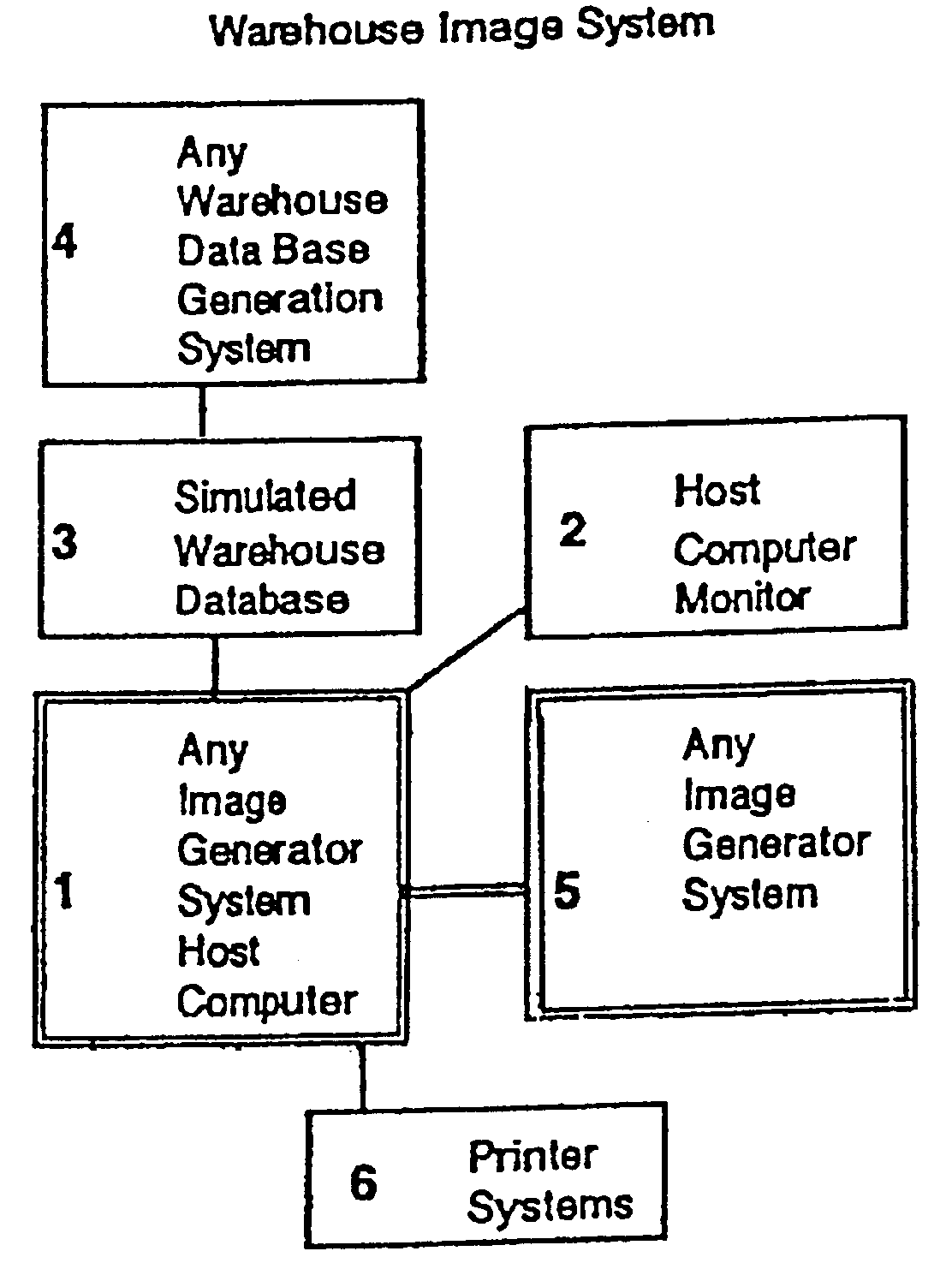

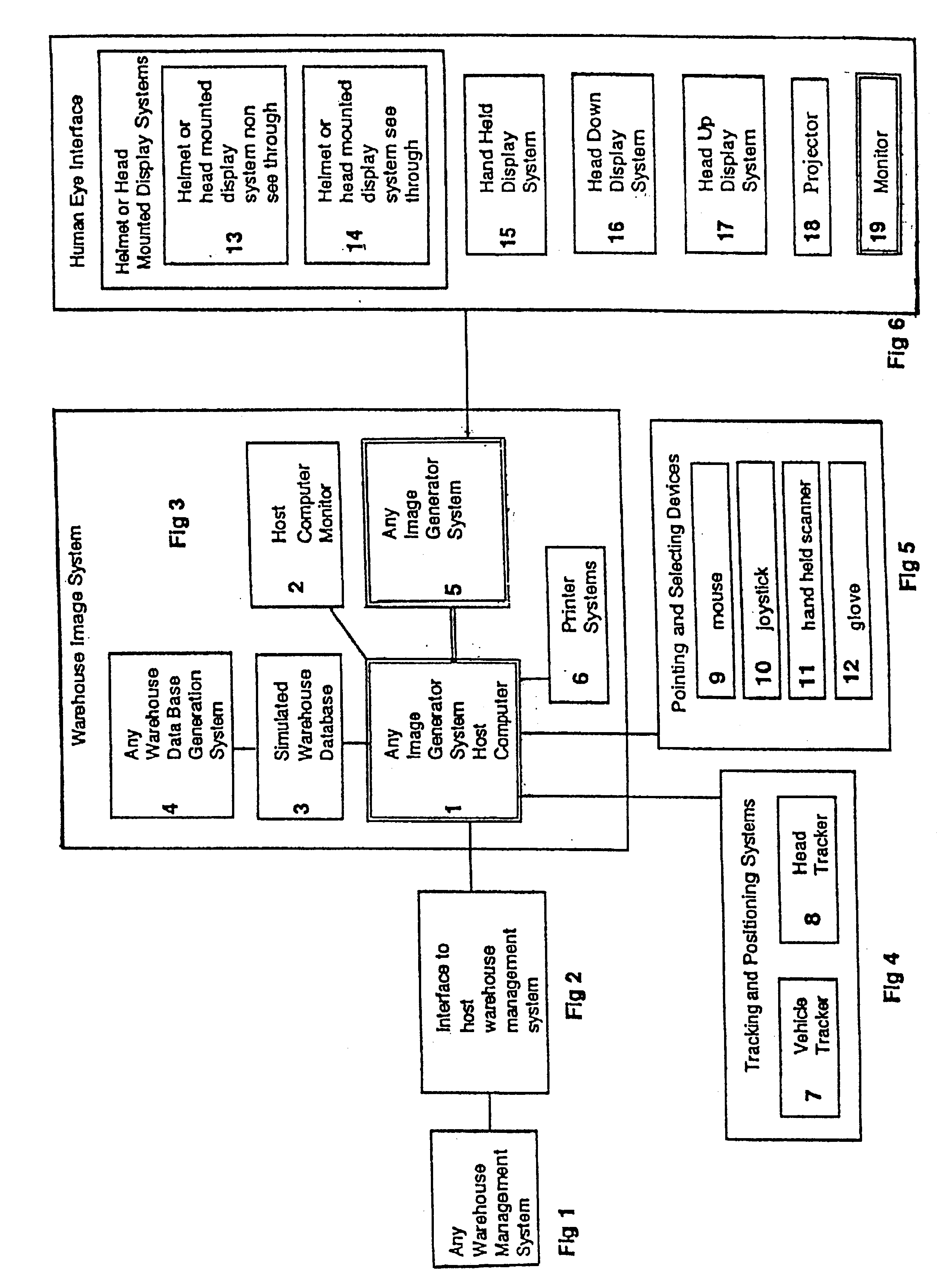

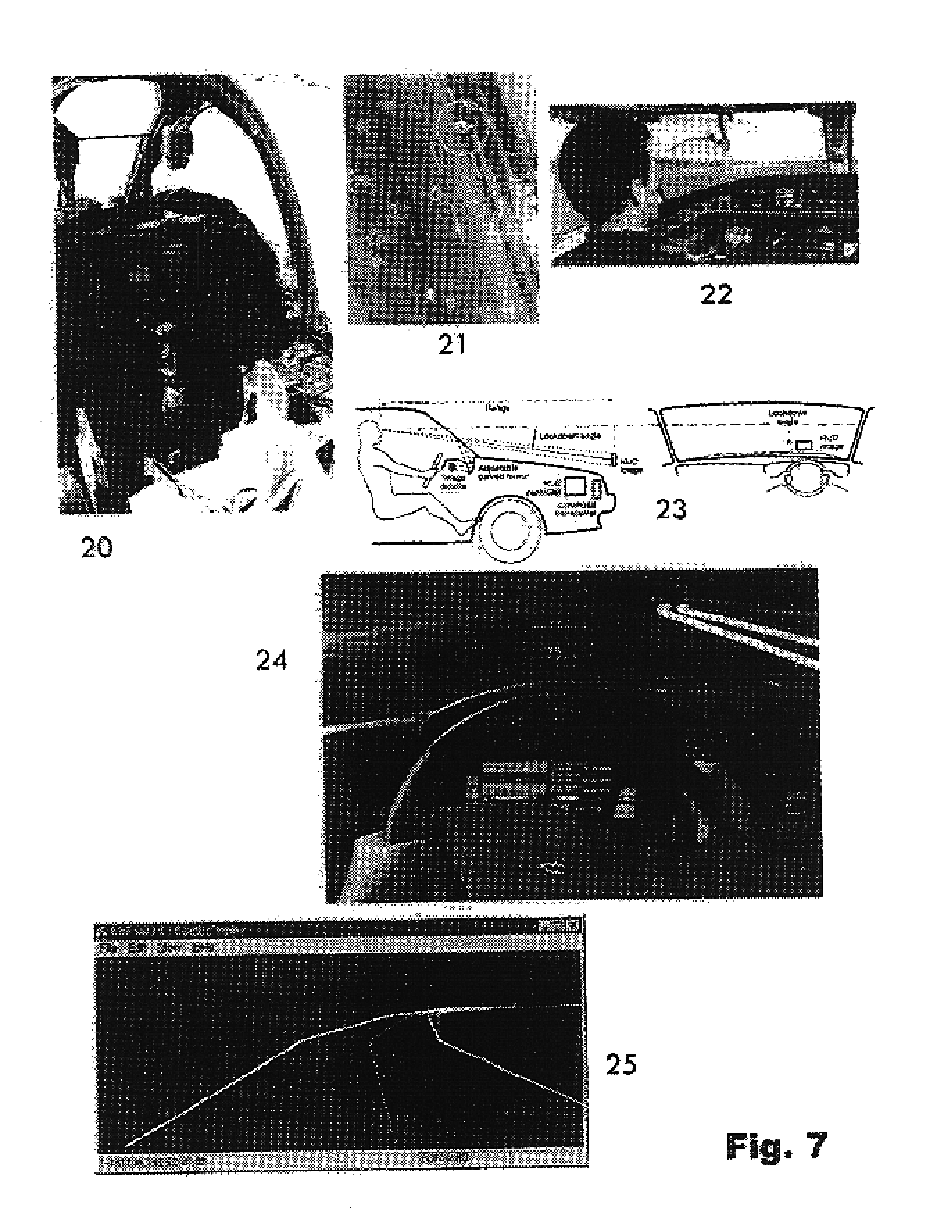

Virtual reality warehouse management system complement

An image generator system (5) with a warehouse database (3) is integrated with an image generator system human interface (FIG. 6) (virtual reality) and with a warehouse management system's (WMS) stock location system (FIG. 1). This software and hardware combination performs warehousing functions in a simple, visual, three-dimensional environment, in real time or non-real time. This computer combination is intended to help organizations manage the warehousing process more efficiently, especially by locating key positions. World famine can be reduced with the capabilities of visualizing locations within any warehouse. All industries that use warehouses can ultimately use this virtual reality warehouse management system complement to augment their distribution process. The invention combines two existing technologies: virtual reality (real-time 3D graphics) and warehousing and warehouse management systems.

Owner:CHIRIELEISON JR ANTHONY +1


© 2025 PatSnap. All rights reserved.