170 results for "Skin" patented technology

In computing, a skin (called a visual style in Windows XP) is a preset package of custom graphical appearance elements for a graphical user interface (GUI) that can be applied to specific computer software, operating systems, and websites to suit the purpose, topic, or tastes of different users. As such, a skin can completely change the look and feel and the navigation interface of an application or operating system.

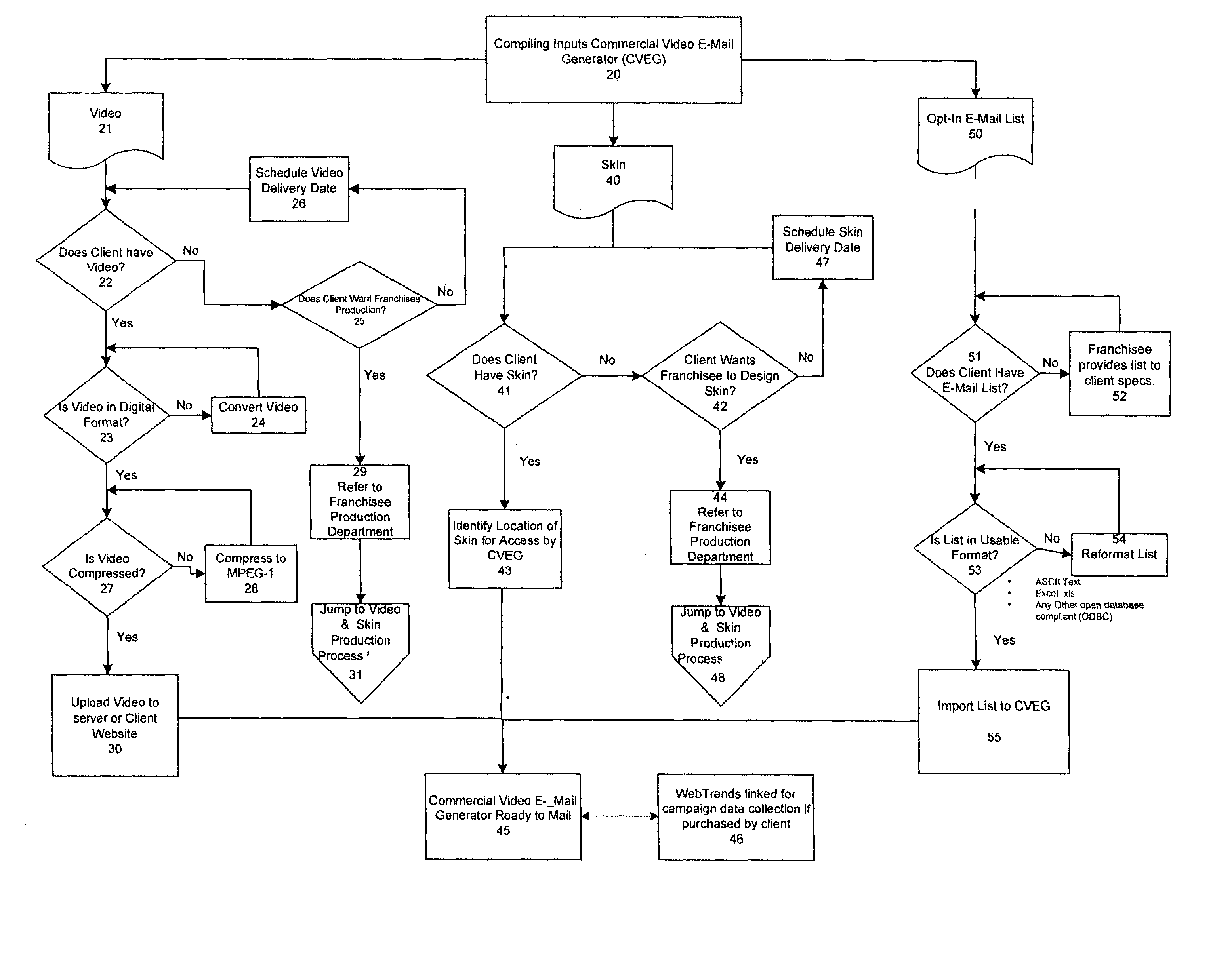

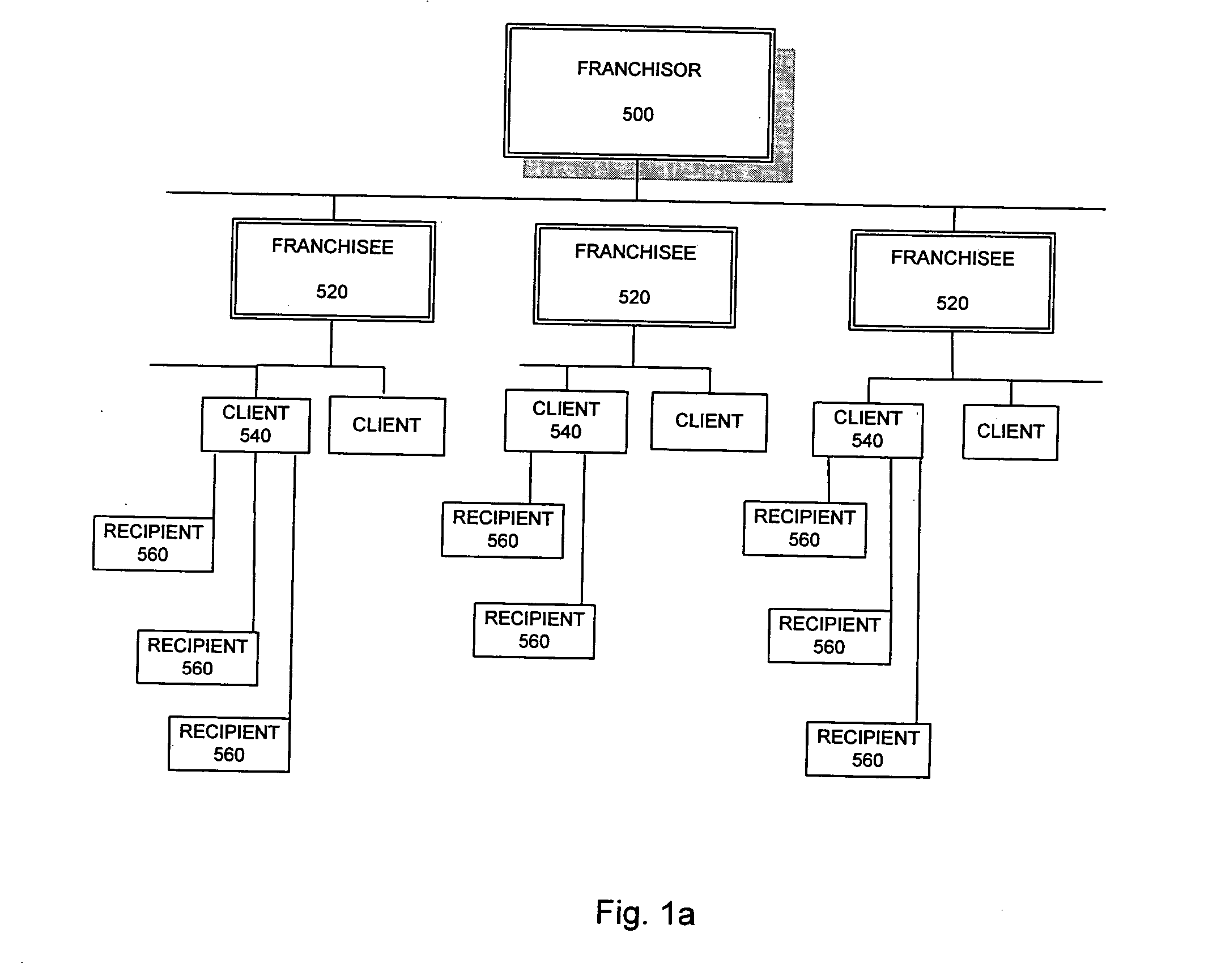

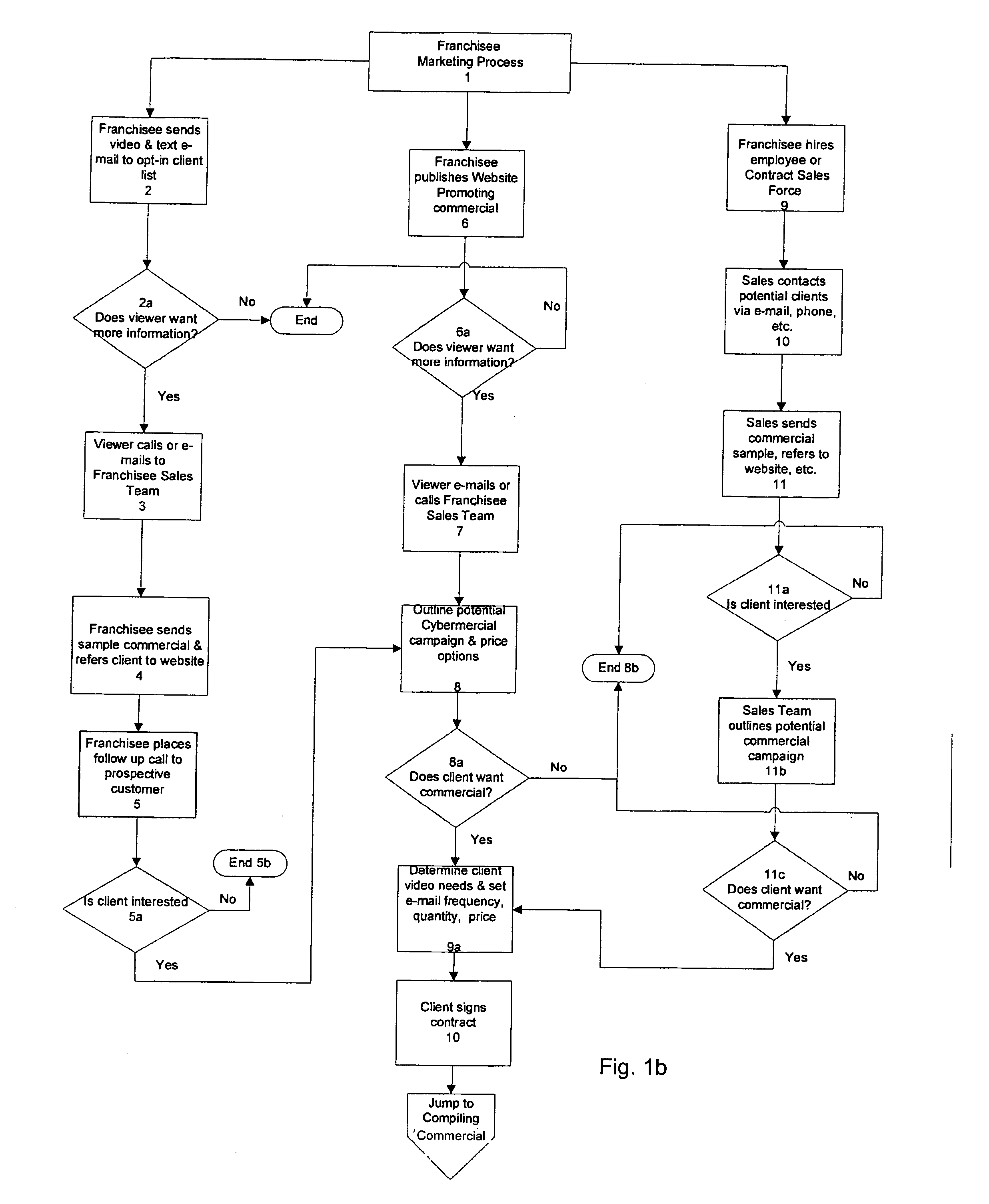

Method and apparatus for generating and marketing video e-mail and an intelligent video streaming server

Inactive | US20050033855A1 | Tags: High impression; High response rate; Multiple digital computer combinations; Two-way working systems; Graphics; Animation

A method and apparatus for generating and sending video emails containing video and graphics, and optionally text, animation, sound, attachments and links. The graphics are produced as a skin on the computer screen and the video plays in a defined location on the computer screen within, preferably, or without the skin. An intelligent video streaming server stores the video emails and sends them when instructed.

Owner:G-4 INC

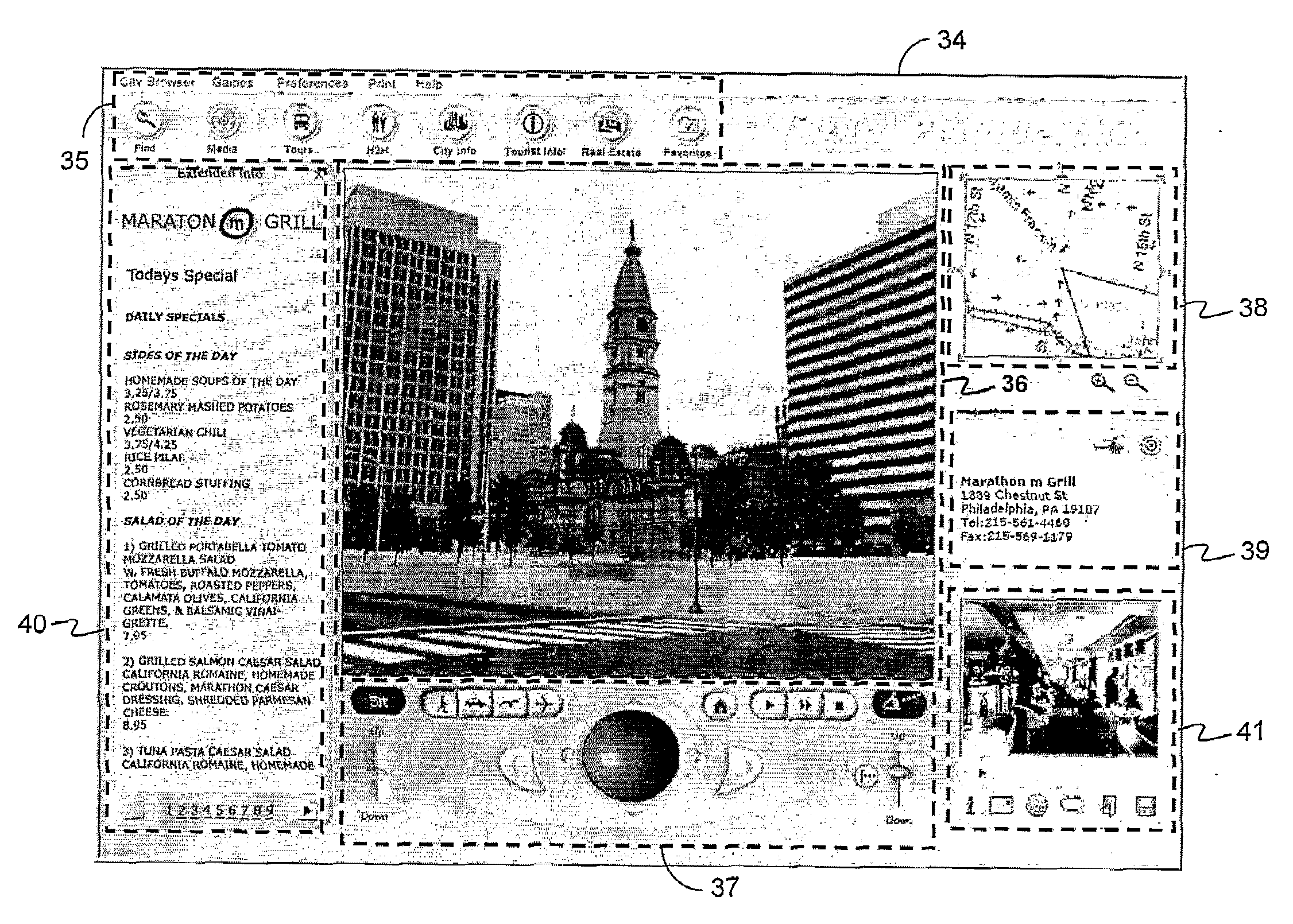

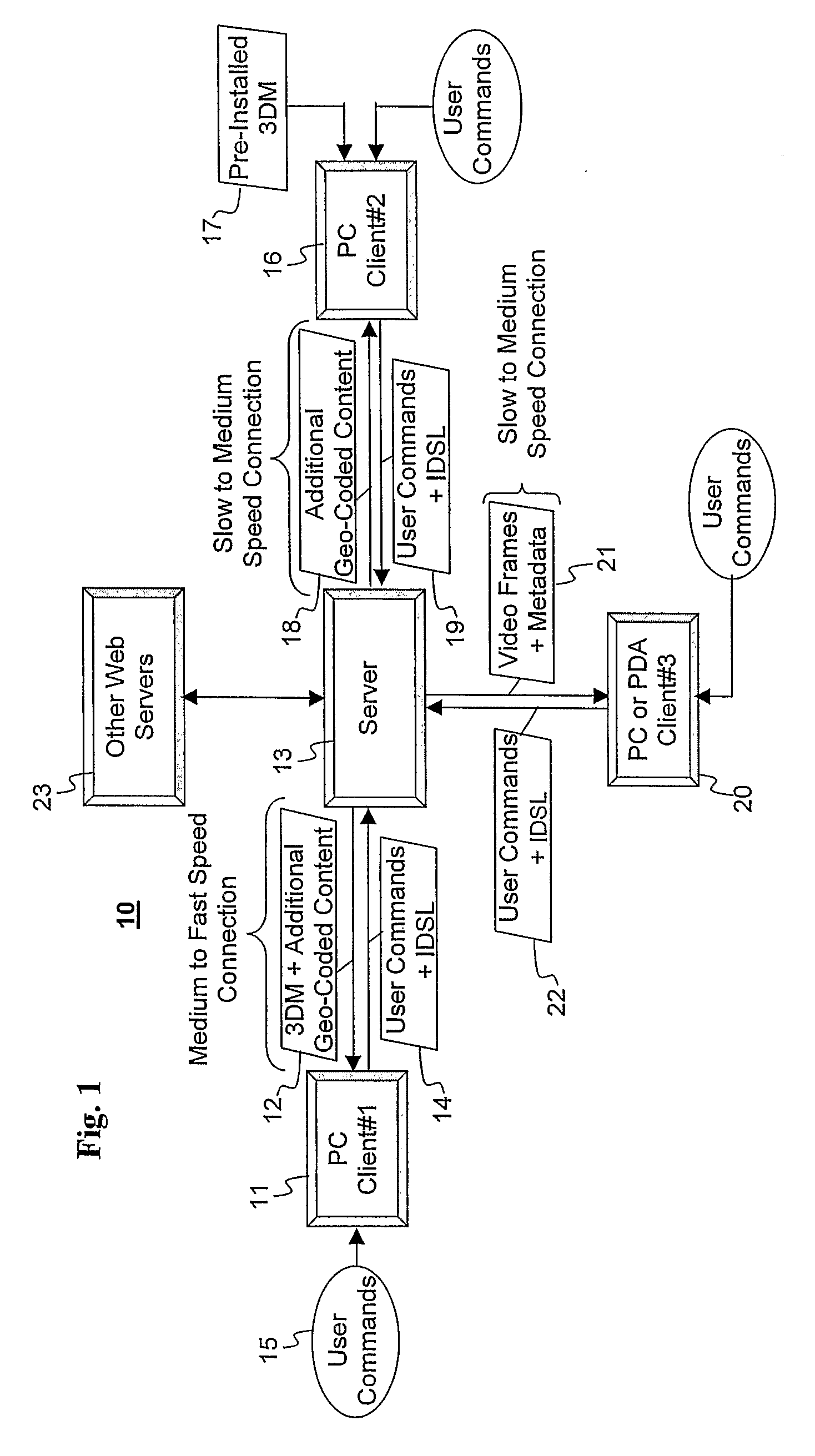

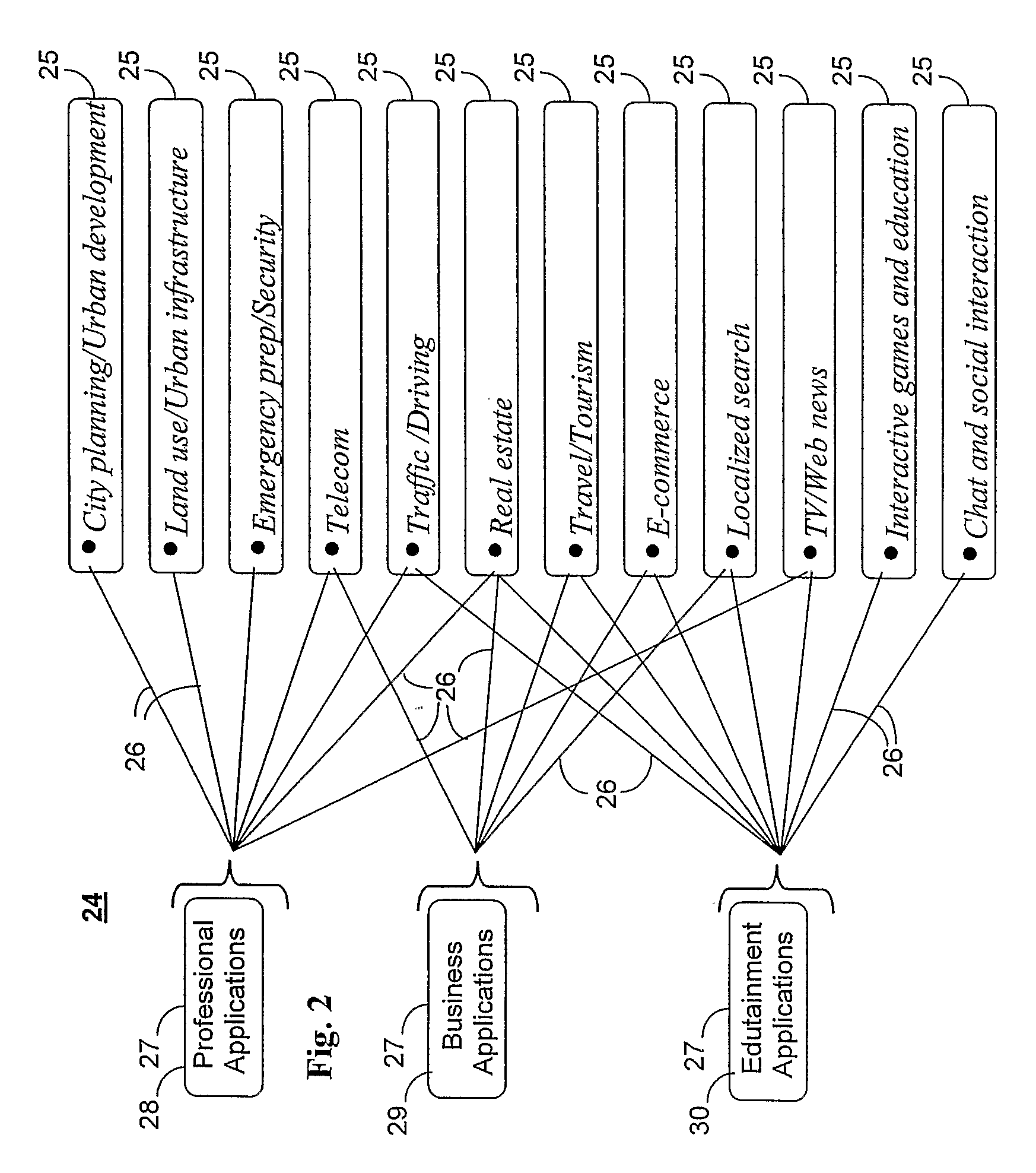

Web Enabled Three-Dimensional Visualization

Inactive | US20080231630A1 | Tags: Geographical information databases; Special data processing applications; Terrain; Computer graphics (images)

A method for presenting a perspective view of a real urban environment, augmented with associated geo-coded content, and presented on a display of a terminal device. The method comprises the steps of: connecting the terminal device to a server via a network; communicating user identification, user present-position information and at least one user command, from the terminal device to the server; processing a high-fidelity, large-scale, three-dimensional (3D) model of an urban environment, and associated geo-coded content by the server; communicating the 3D model and associated geo-coded content from said server to said terminal device, and processing said data layers and said associated geo-coded content, in the terminal device to form a perspective view of the real urban environment augmented with the associated geo-coded content. The 3D model comprises a data layer of 3D building models; a data layer of terrain skin model; and a data layer of 3D street-level-culture models. The processed data layers and the associated geo-coded content correspond to the user present-position, the user identification information, and the user command.

Owner:SHENKAR VICTOR +1

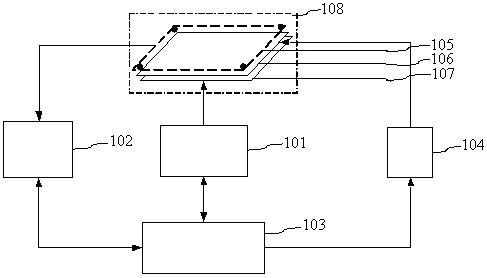

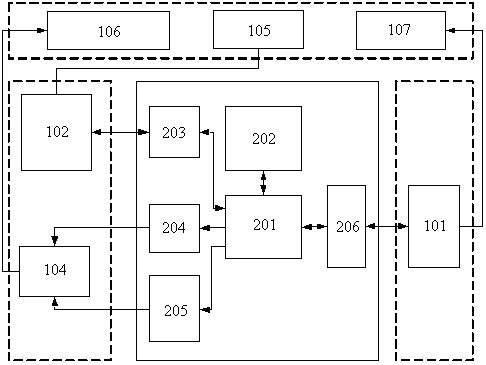

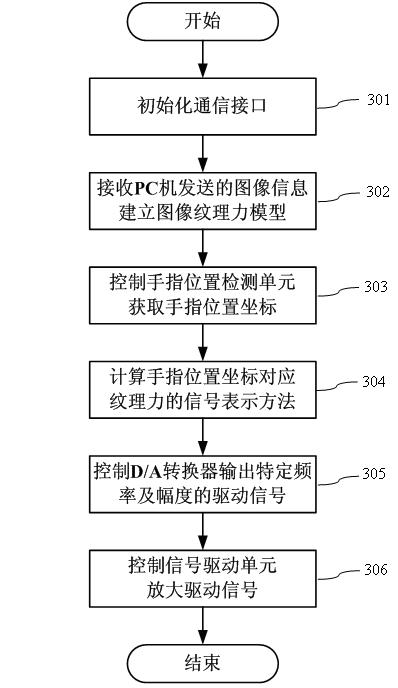

Touch representation device based on electrostatic force

Inactive | CN102662477A | Tags: Realize the function of tactile reproduction; Low cost; Input/output for user-computer interaction; Graph reading; Visual Objects; Personal computer

The invention relates to a touch representation (tactile reproduction) device based on electrostatic force. The device comprises an electrostatic-force touch representation interactive screen, a finger trace tracking unit, an electrostatic-force touch representation controller, a signal driving unit, and a PC (personal computer). The finger trace tracking unit detects the finger position and maps it to the touch-force representation of the visual object at that position; an electrical signal parameter representing the touch force is generated from the correspondence between electrical signals and touch force; a driving signal is output and amplified; and the amplified driving signal acts on the interactive screen, deforming the skin of the finger so that the user senses attractive and repelling forces, thereby realizing touch representation. The device is low-cost, highly flexible, and widely applicable, and can provide touch representation on small mobile terminals.

Owner:Sun Xiaoying (孙晓颖)

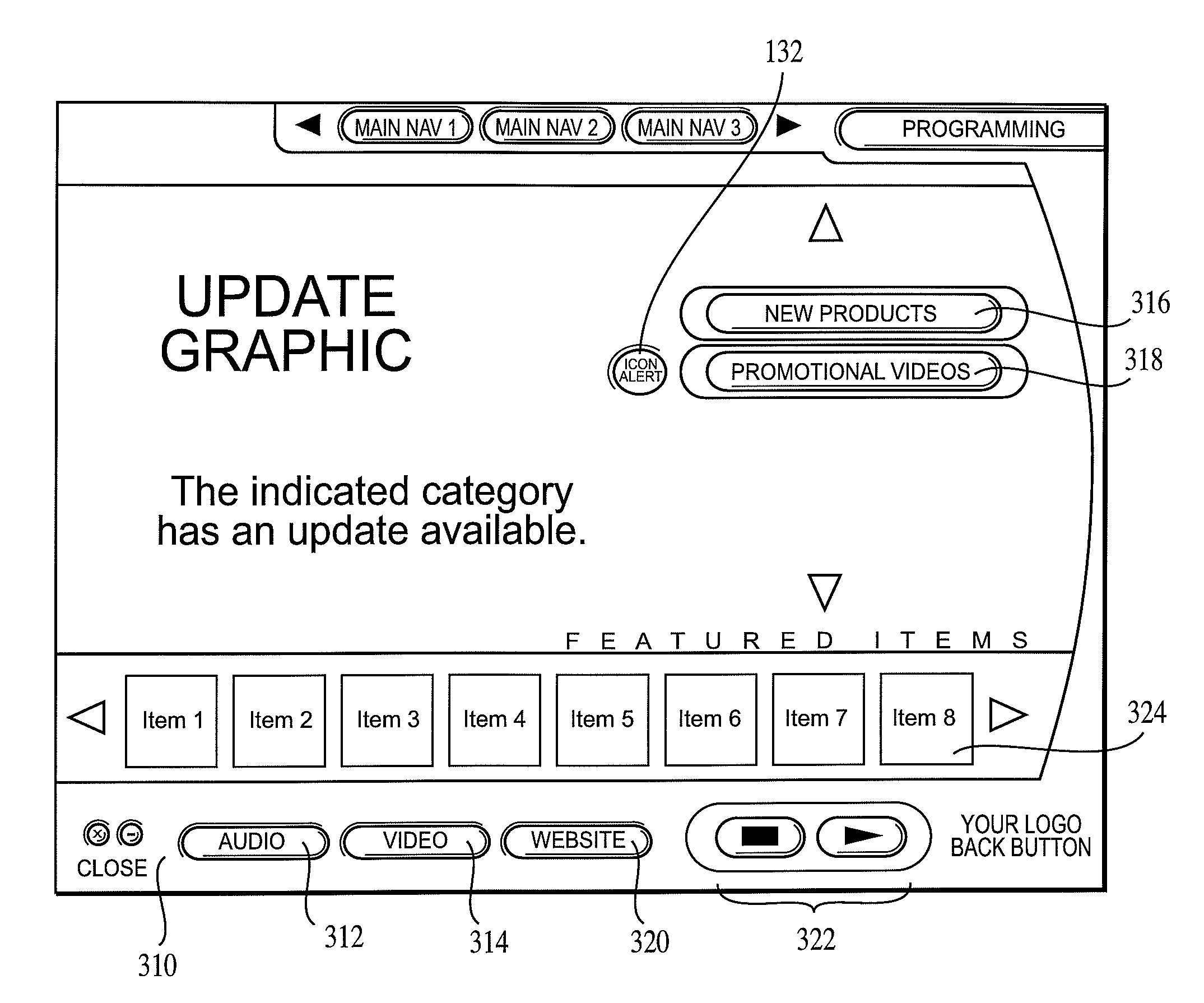

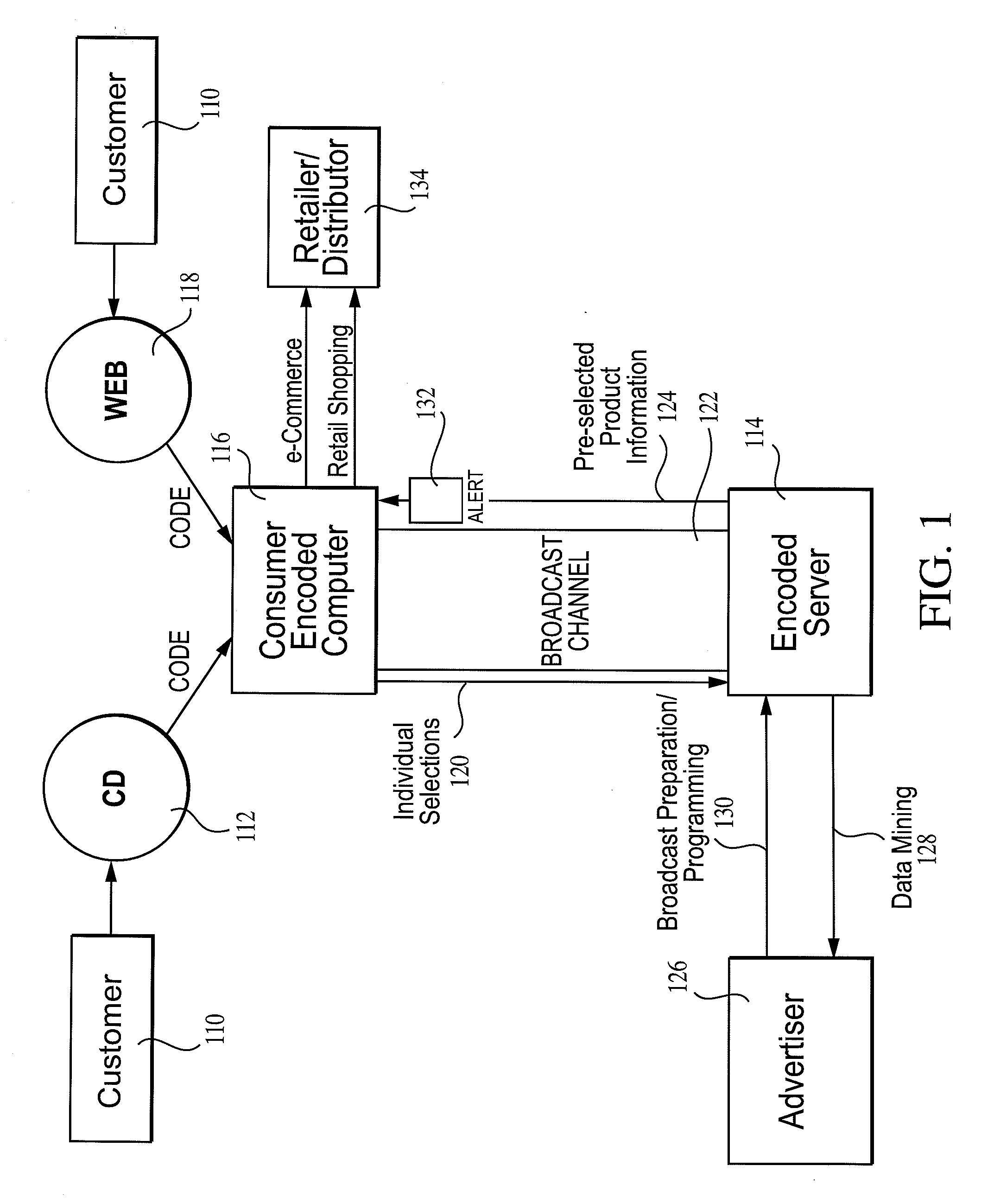

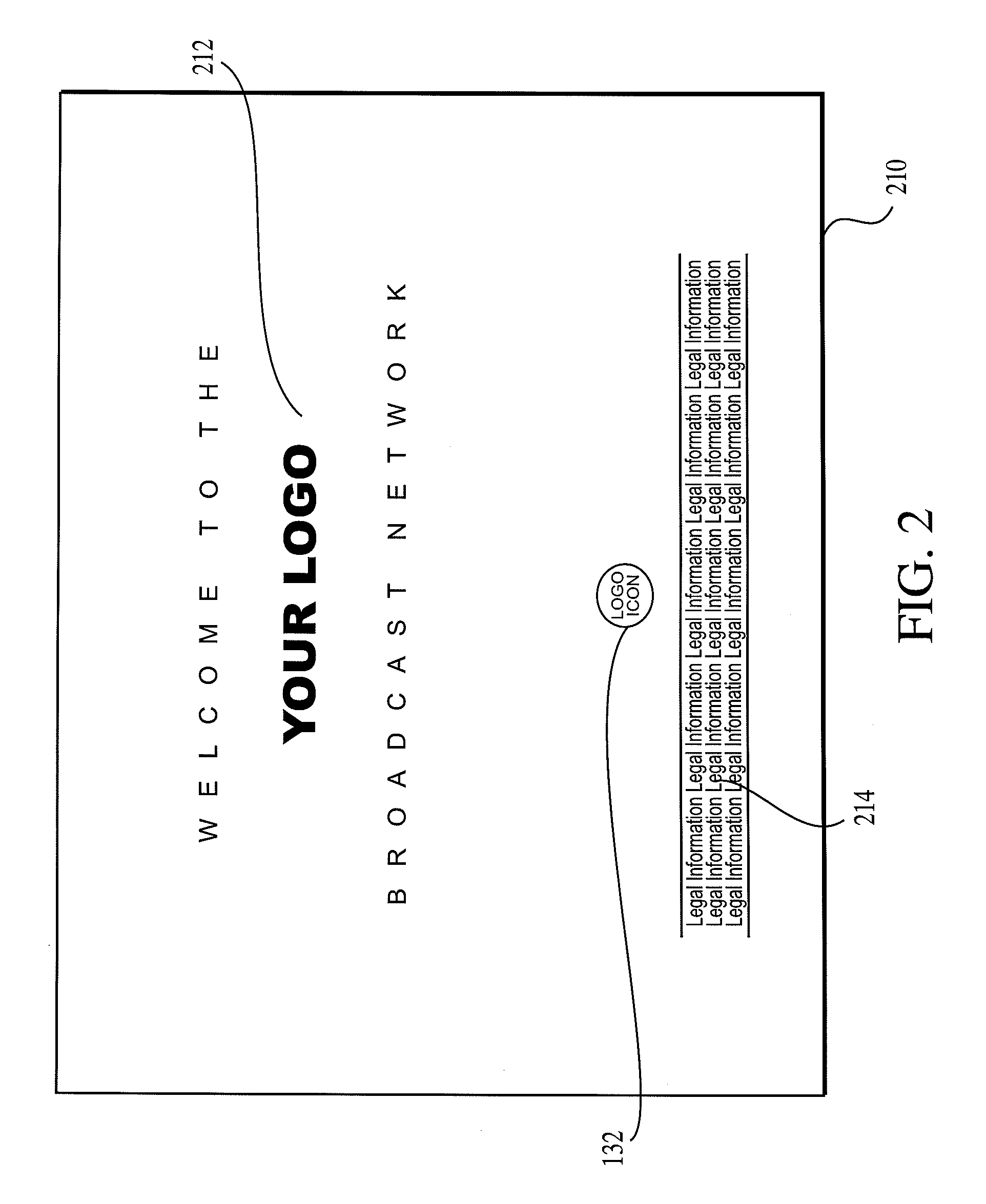

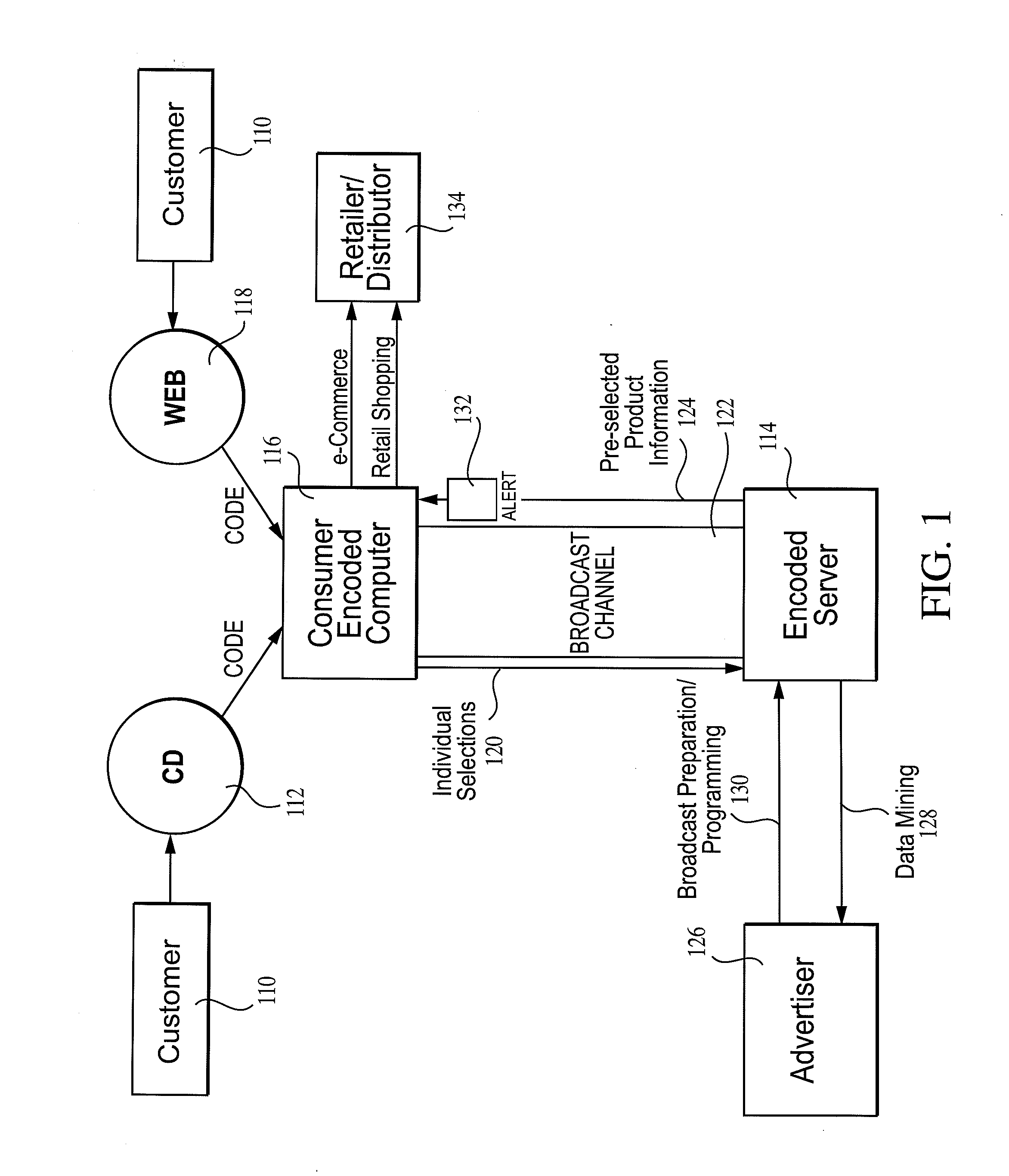

Multimedia player and browser system

Inactive | US20090228544A1 | Tags: Improve impact performance; Multimedia data browsing/visualisation; Multiple digital computer combinations; Hard disc drive; Computer users

A multimedia software application that can include audio, video and / or graphics, in a manner that combines the multimedia experience with the transfer of information from and between a variety of sources (126), in a variety of directions, and subject to a variety of prompts. The application provides a “Web in Page” approach, in which a series of windows have the same or similar “look and feel”, yet can be used to access and display information from a variety of sources (126), including local content (112) (hard drive or other locally stored media), and web-based online content (118), including that available from a dedicated, integrated server (114), affiliated servers (114), or even other computer users. The application of the present invention can be provided in stand-alone form, to be loaded on a client device (116) (e.g., personal computer) from either a recorded medium or downloaded online. In addition to this “Web in Page” application interface, a “Web in Skin” interface may be provided, by which the application interface may be varied based on client user (110) or advertiser (126) preferences to provide a customized interface format. Optionally, and preferably, the application is provided in a form where it is recorded on, and thereby combined with, digitally recorded content, such as a music CD or DVD.

Owner:DEMERS TIMOTHY B +5

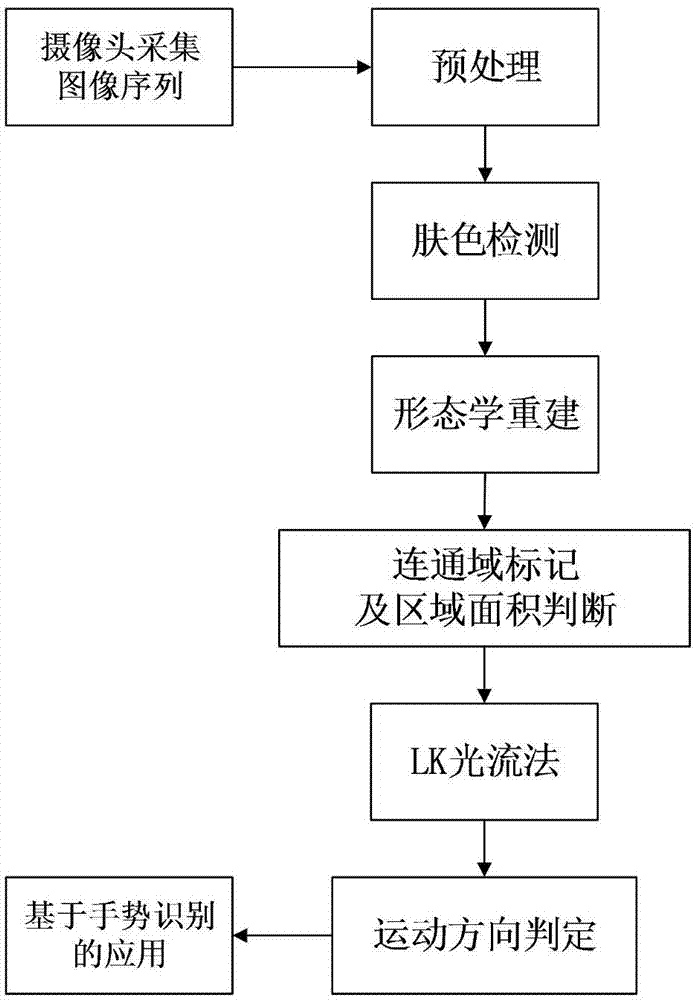

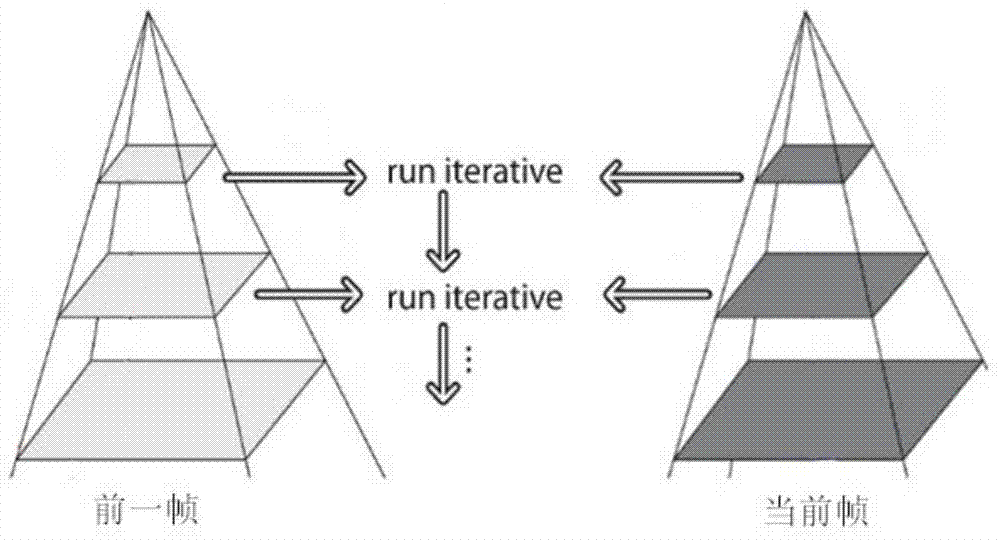

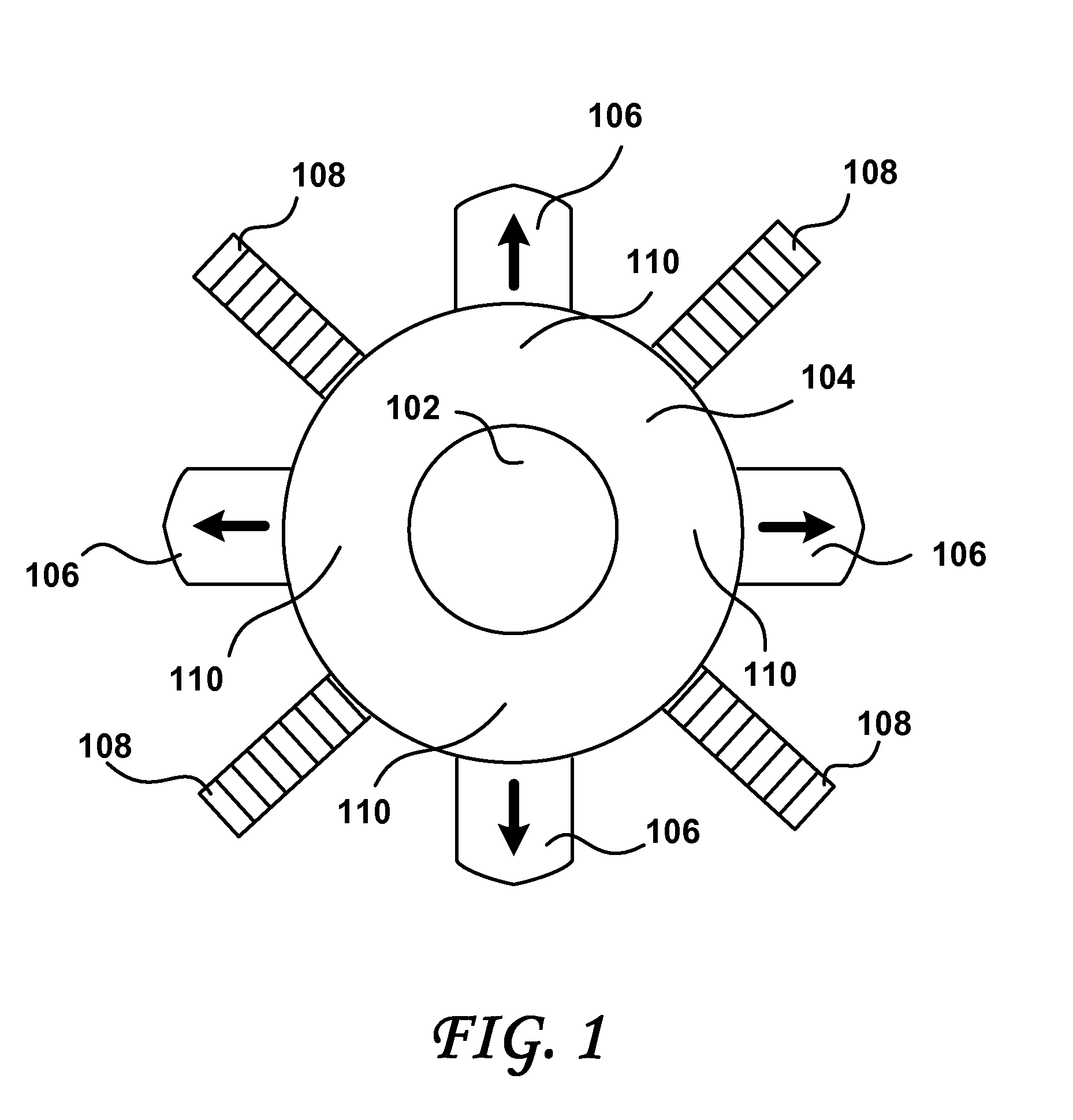

Optical flow-based gesture motion direction recognition method

Inactive | CN104331151A | Tags: Input/output for user-computer interaction; Image analysis; Graphics; Image resolution

The invention discloses an optical flow-based gesture motion direction recognition method. The method comprises the following steps: capturing an image sequence in front of the computer with an ordinary camera at VGA (video graphics array) resolution and preprocessing the sequence; exploiting the fact that skin samples cluster in an approximately elliptical region of the CbCr plane, classifying a pixel as skin-colored according to whether it falls inside that ellipse; performing morphological reconstruction on the binary skin-detection images using a morphological closing operation; labeling each white connected region, computing its area, sorting the regions from largest to smallest, and keeping the three largest; reducing the image resolution and computing optical-flow motion vectors in the skin-colored regions with the pyramidal Lucas-Kanade (LK) optical flow method; and judging the direction of the optical-flow motion vectors at two-frame intervals, reporting a result only when two consecutive judgements agree. Once familiar with the gesture rules, the user moves a hand up, down, left, or right in front of the camera. The method supports real-time interaction, and its gesture motion direction recognition accuracy can exceed 95 percent.

Owner:COMMUNICATION UNIVERSITY OF CHINA
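A minimal sketch of two steps from this abstract: the elliptical skin test in the CbCr plane and the "two consecutive judgements agree" rule for the motion direction. The ellipse centre, axes, and angle below are hypothetical placeholders (the abstract gives no values), the RGB to CbCr conversion is the full-range BT.601 (JPEG-style) formula, and the direction classifier simply takes the mean flow vector; none of this is claimed to be the patent's exact implementation.

```python
import math

# Hypothetical ellipse in the CbCr plane. The abstract gives no values; these
# placeholders would have to be fitted to real skin samples.
CENTER_CB, CENTER_CR = 110.0, 150.0
AXIS_A, AXIS_B = 20.0, 12.0
THETA = math.radians(-30.0)


def rgb_to_cbcr(r, g, b):
    """Full-range BT.601 (JPEG-style) RGB -> (Cb, Cr)."""
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def is_skin(r, g, b):
    """True when the pixel's (Cb, Cr) lies inside the rotated ellipse."""
    cb, cr = rgb_to_cbcr(r, g, b)
    dx, dy = cb - CENTER_CB, cr - CENTER_CR
    # Rotate the offset into the ellipse's own axes, then test x^2/a^2 + y^2/b^2 <= 1.
    x = dx * math.cos(THETA) + dy * math.sin(THETA)
    y = -dx * math.sin(THETA) + dy * math.cos(THETA)
    return (x / AXIS_A) ** 2 + (y / AXIS_B) ** 2 <= 1.0


def dominant_direction(vectors):
    """Classify the mean optical-flow vector (dx, dy) as up/down/left/right."""
    mx = sum(v[0] for v in vectors) / len(vectors)
    my = sum(v[1] for v in vectors) / len(vectors)
    if abs(mx) >= abs(my):
        return "right" if mx > 0 else "left"
    return "down" if my > 0 else "up"  # image y grows downward


def confirmed_direction(judgements):
    """Report a direction only once two consecutive judgements agree."""
    for prev, cur in zip(judgements, judgements[1:]):
        if prev == cur:
            return cur
    return None
```

In a real pipeline the flow vectors would come from a pyramidal LK implementation such as OpenCV's `cv2.calcOpticalFlowPyrLK`, restricted to the skin mask produced by `is_skin`.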

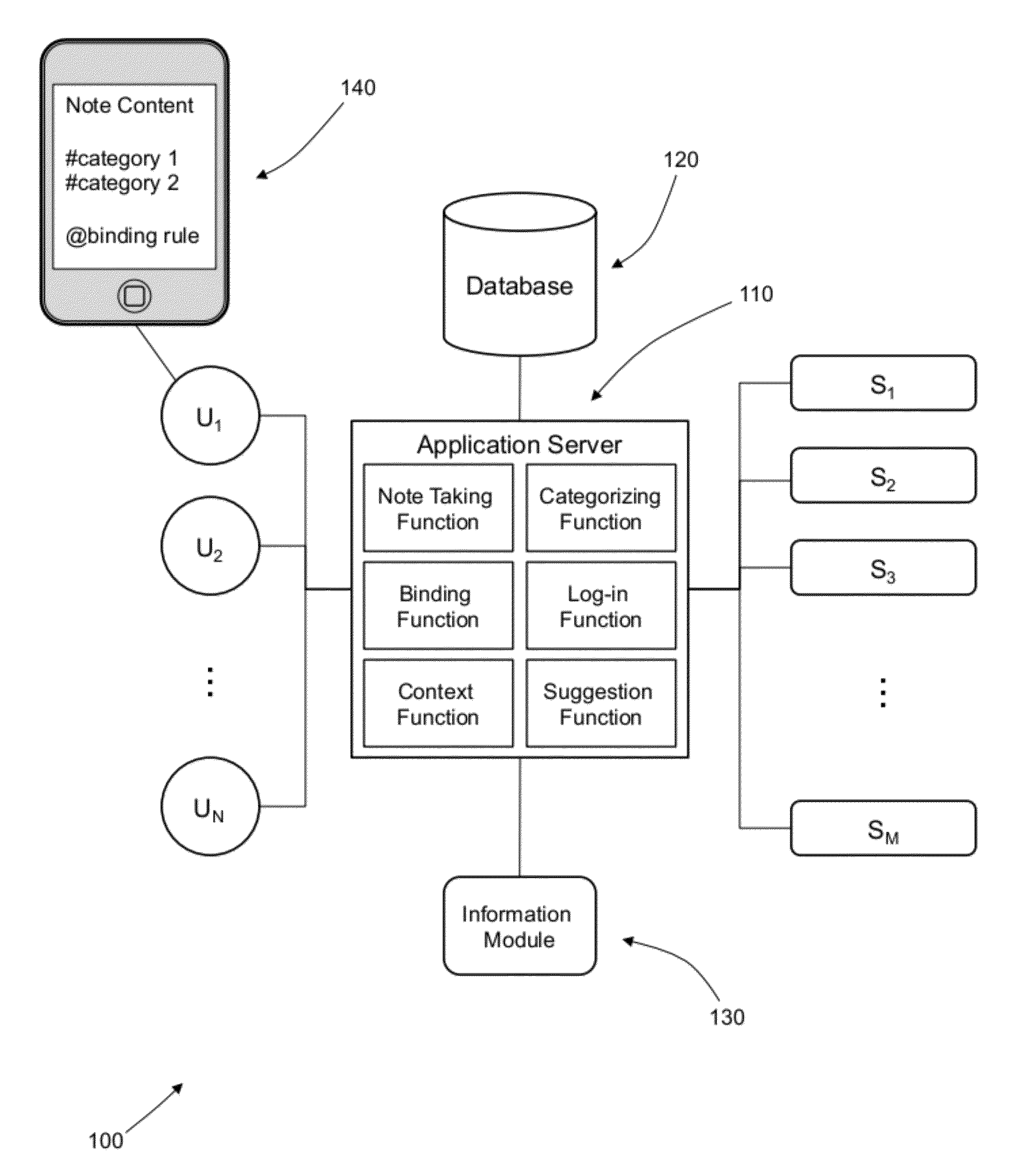

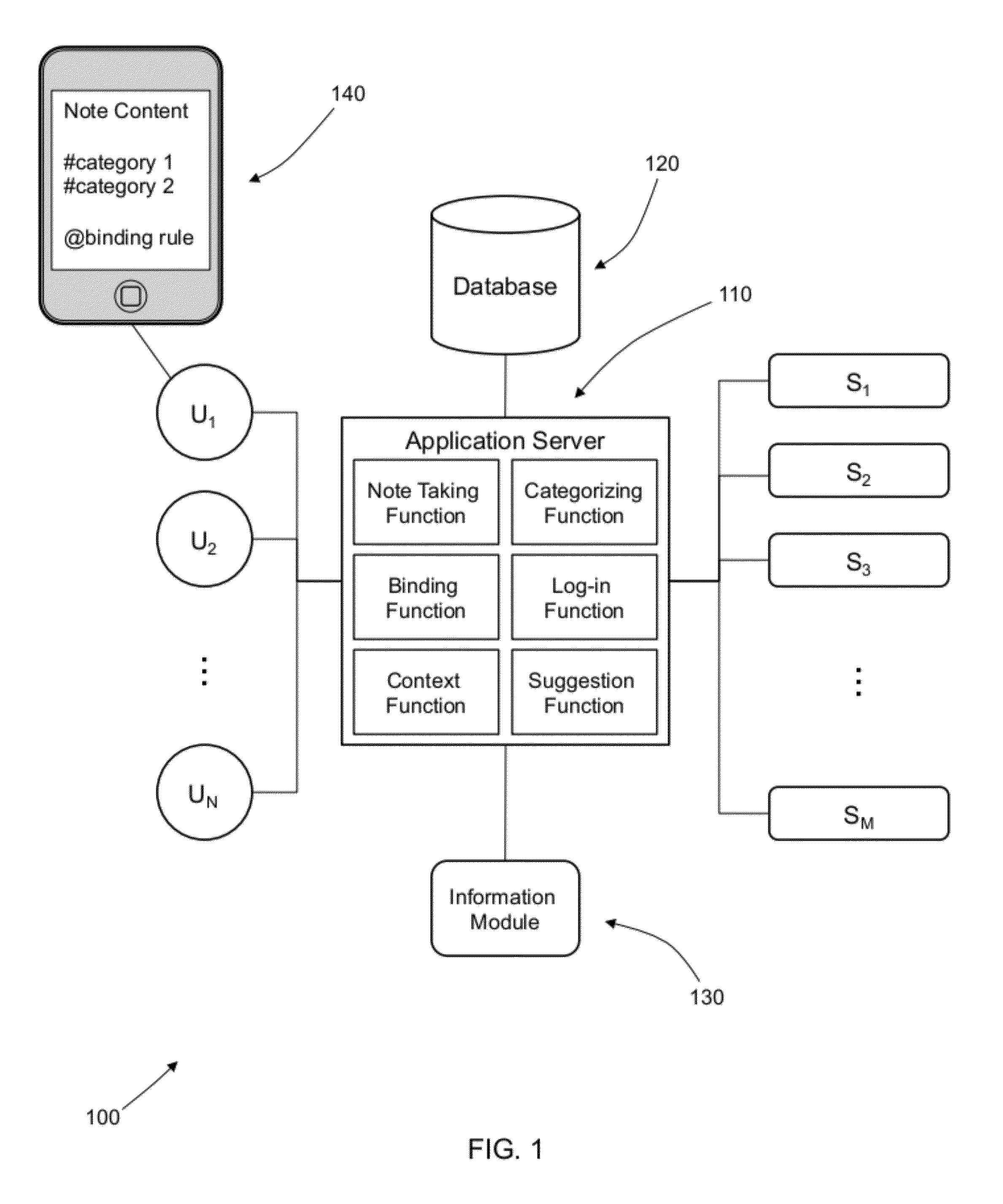

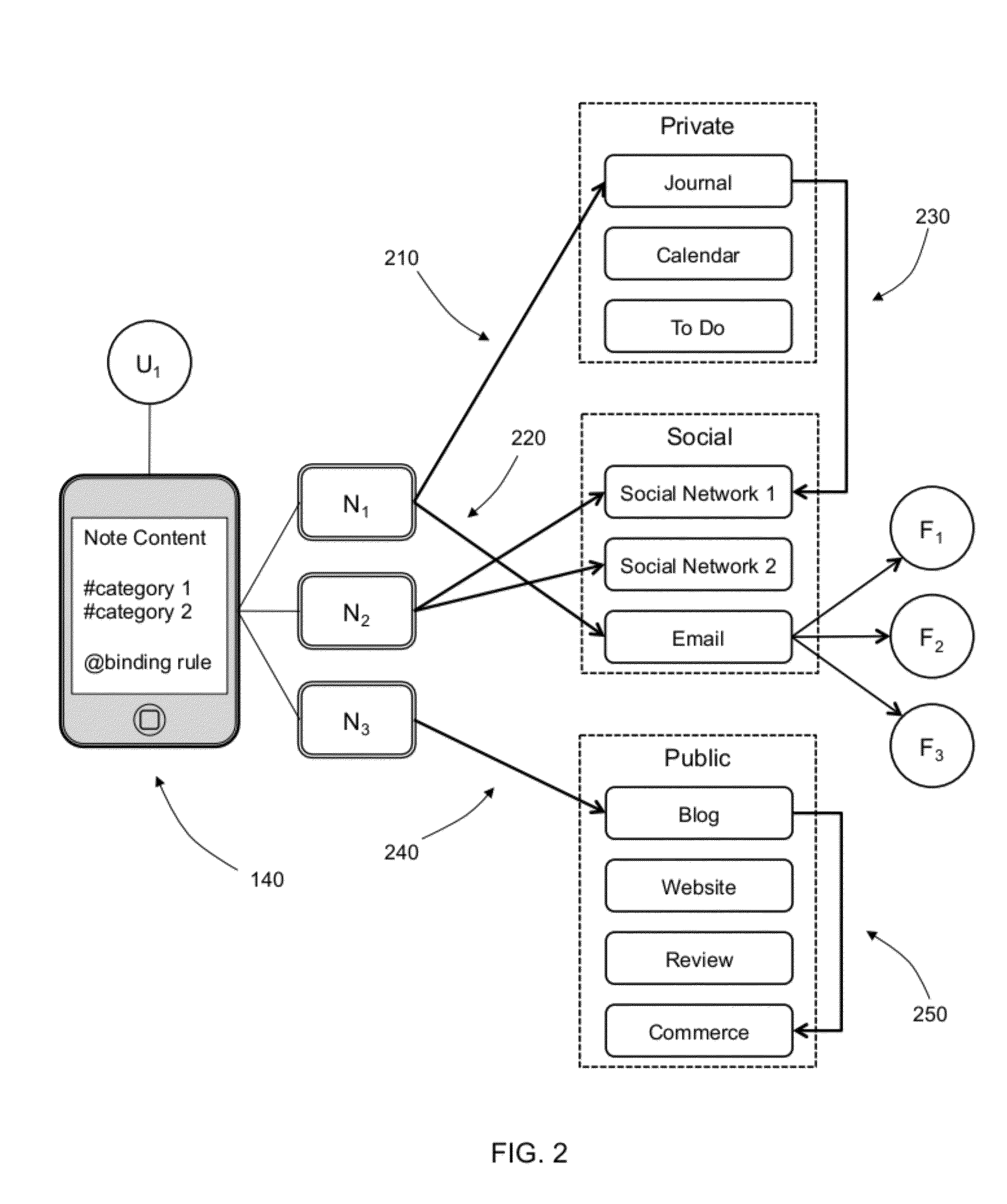

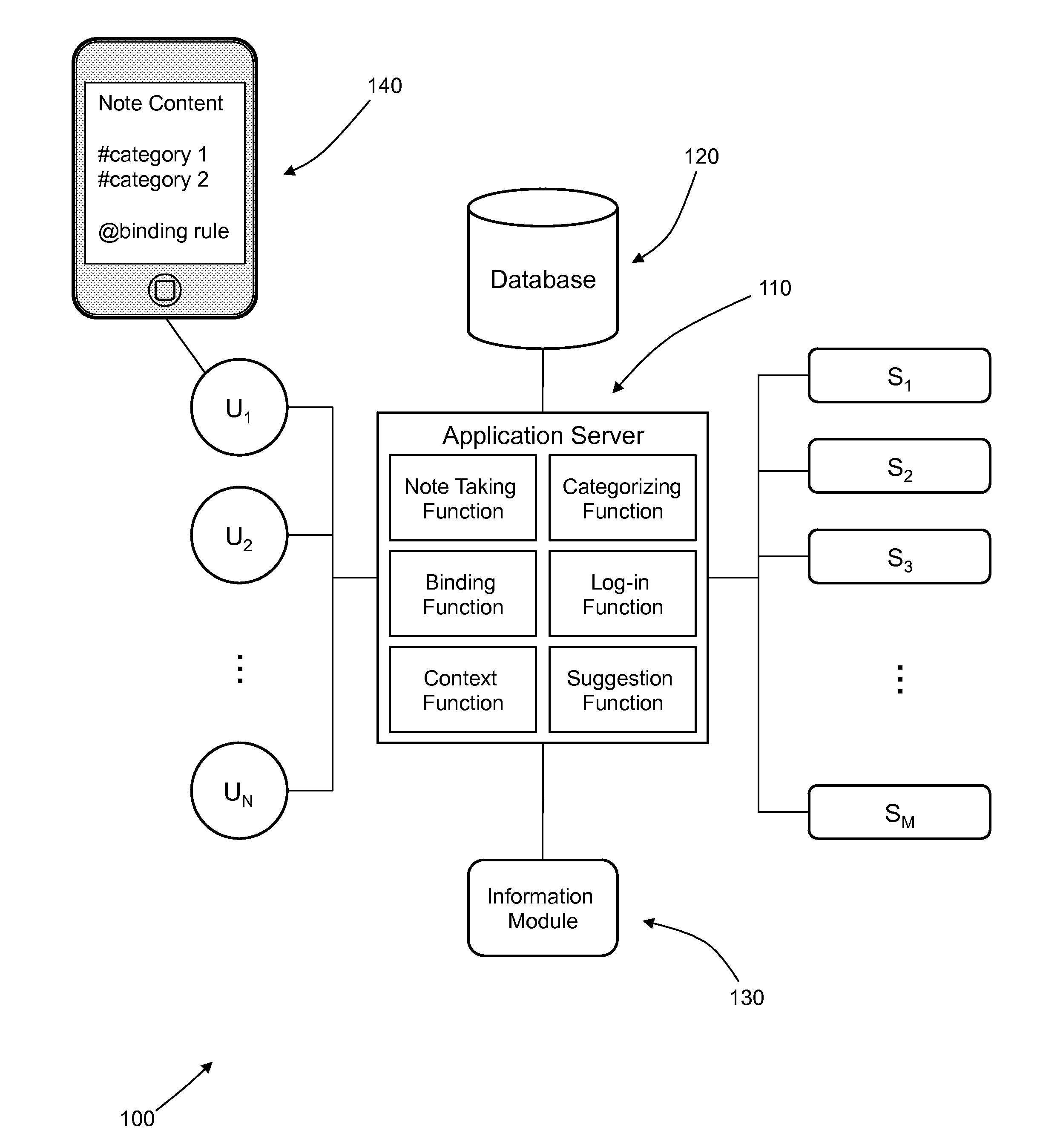

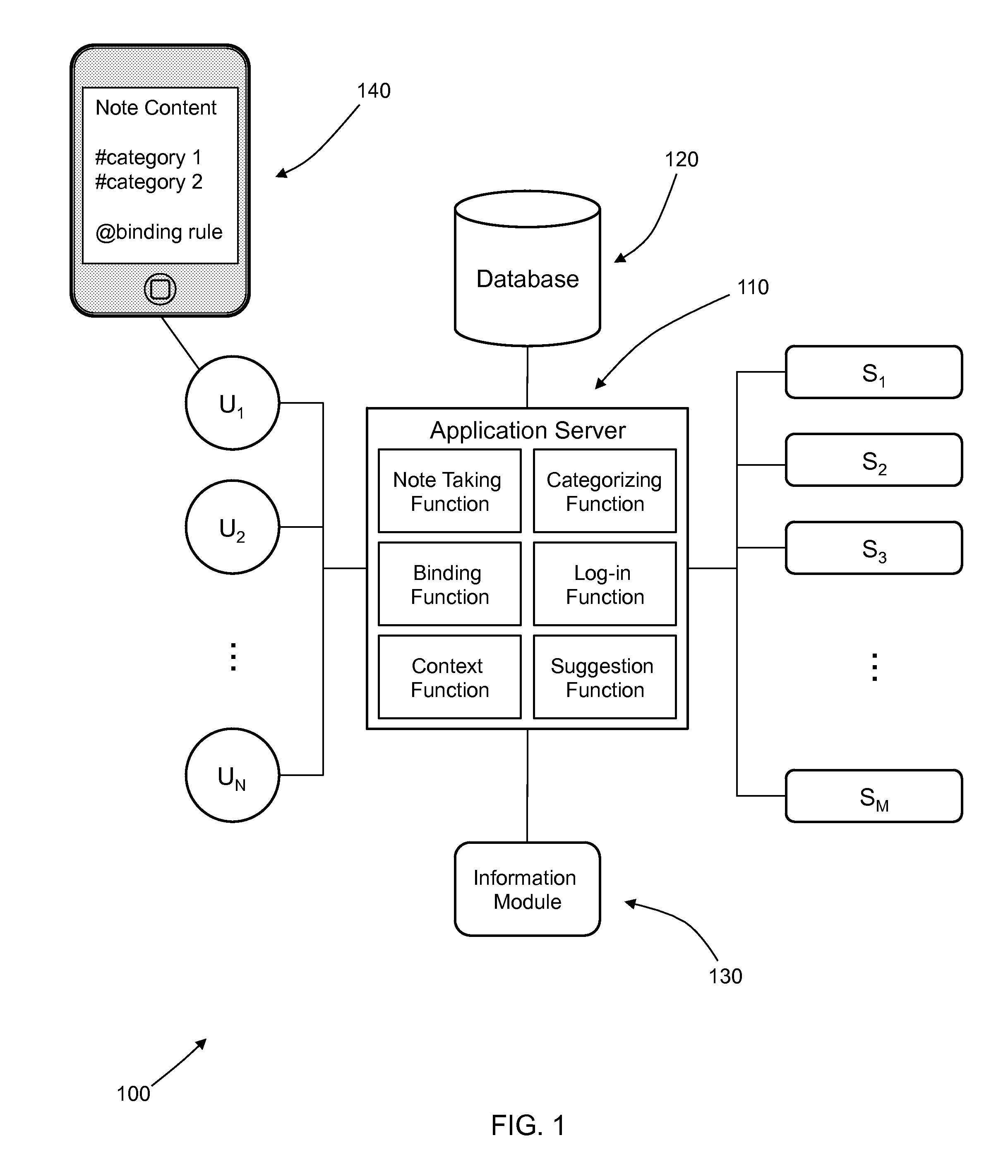

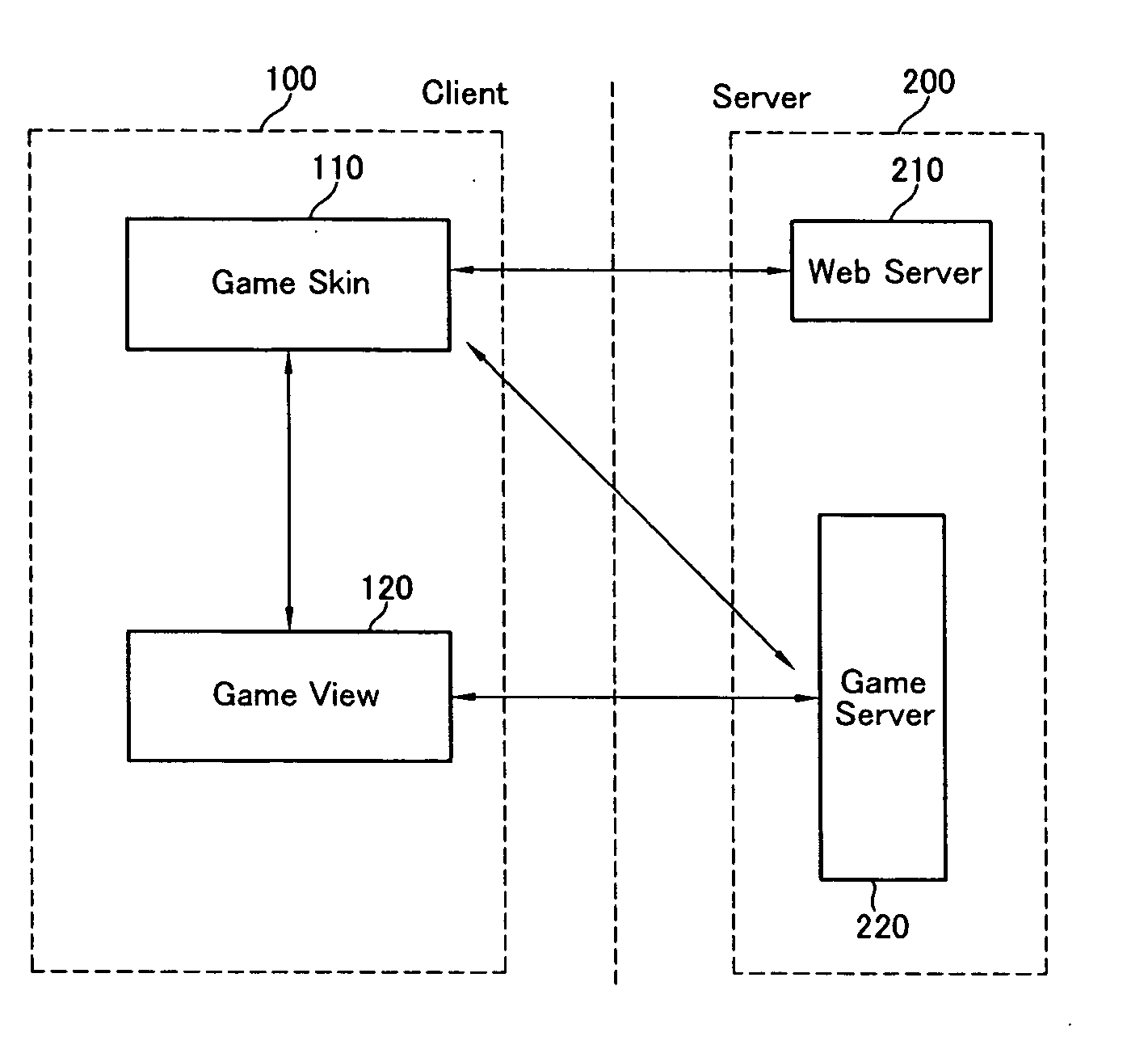

Content Management System using Sources of Experience Data and Modules for Quantification and Visualization

Active | US20120221659A1 | Tags: Gain access; Expand access; Data processing applications; Natural language data processing; System of record; Late binding

A semantic note taking system and method for collecting information, enriching the information, and binding the information to services is provided. User-created notes are enriched with labels, context traits, and relevant data to minimize friction in the note-taking process. In other words, the present invention is directed to collecting unscripted data, adding more meaning and use out of the data, and binding the data to services. Mutable and late-binding to services is also provided to allow private thoughts to be published to a myriad of different applications and services in a manner compatible with how thoughts are processed in the brain. User interfaces and semantic skins are also provided to derive meaning out of notes without requiring a great deal of user input.

Owner:APPLE INC

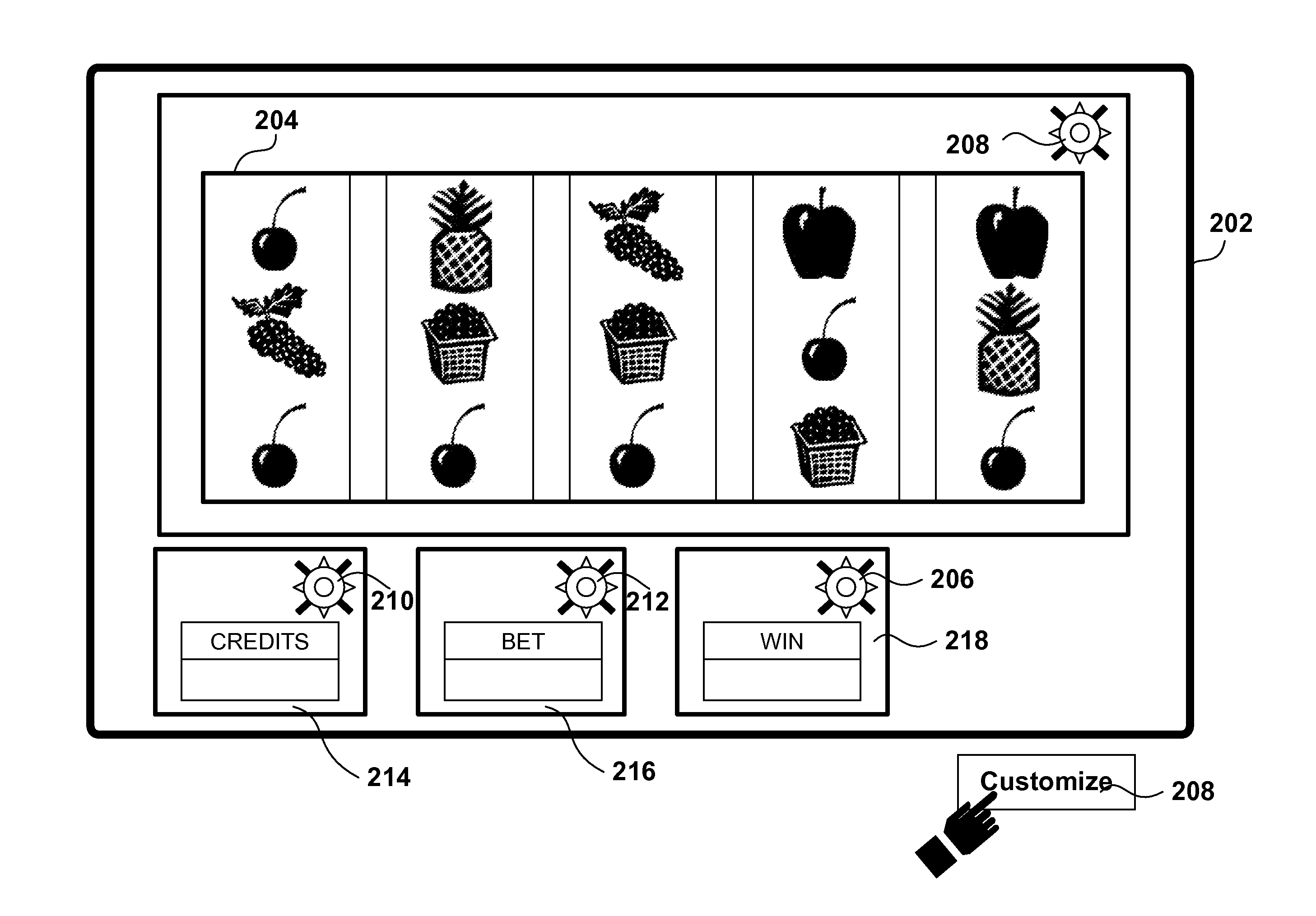

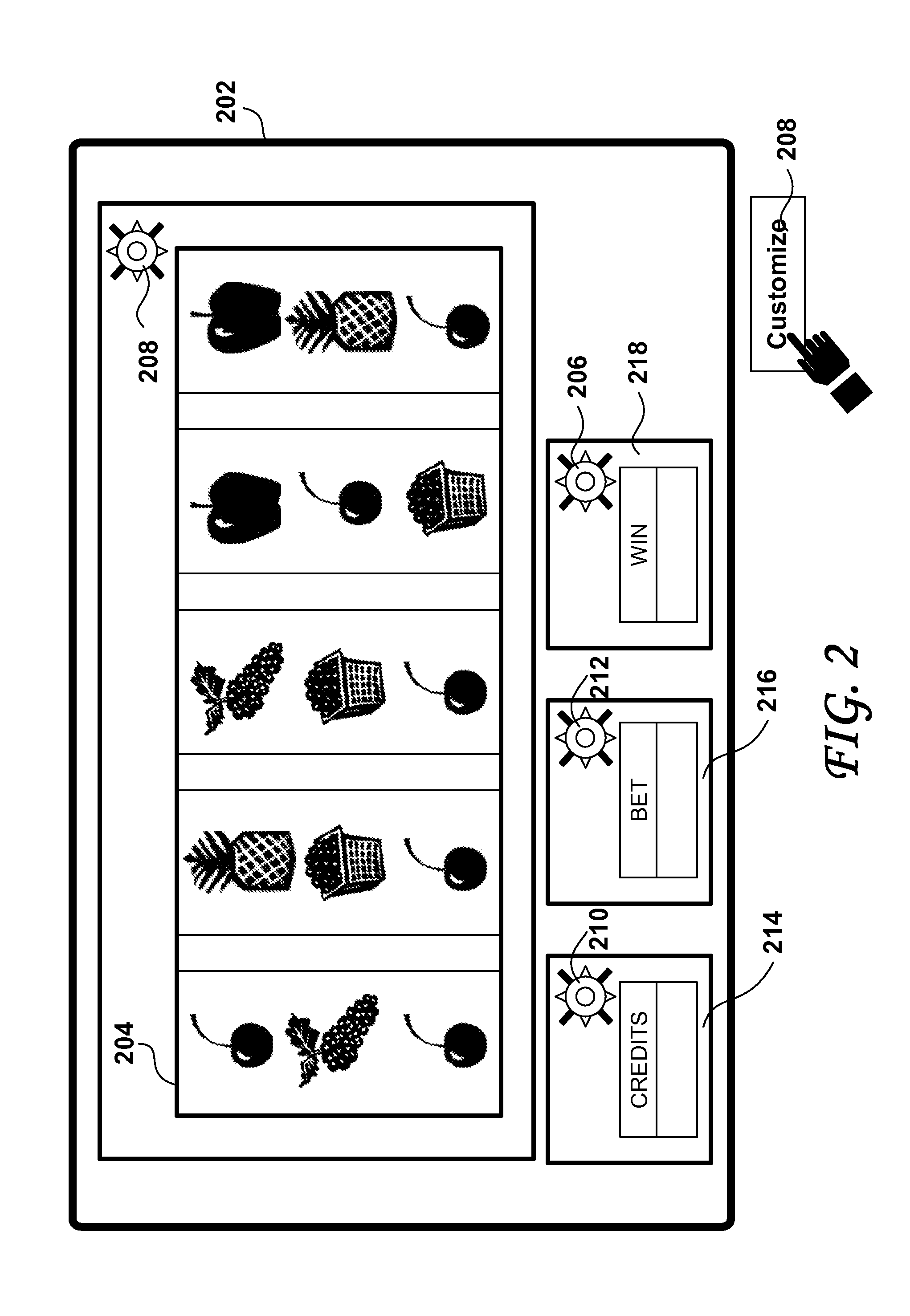

Universal player control for casino game graphic assets

Active | US20080194326A1 | Tags: Easy access; Quick navigation; Apparatus for meter-controlled dispensing; Video games; Graphics; Geometric control

Universal Player Controls for regulated pay computer-controlled video games give players an efficient, intuitive way to customize the layout and appearance of their favorite electronic games. A popup control featuring a jog wheel for fast selection and geometric control using the touch screen may be attached to each predetermined group of graphic assets, allowing players to move, resize, dim, animate, temporarily hide, or overlap them. Predetermined presentation styles (skins) may be rendered in succession. Whereas the displays of prior-art regulated pay computer-controlled video games were often visually cluttered and unappealing to adult game console players, the present Universal Player Controls enable players to tailor the game's appearance and save it in a player profile for later retrieval.

Owner:IGT

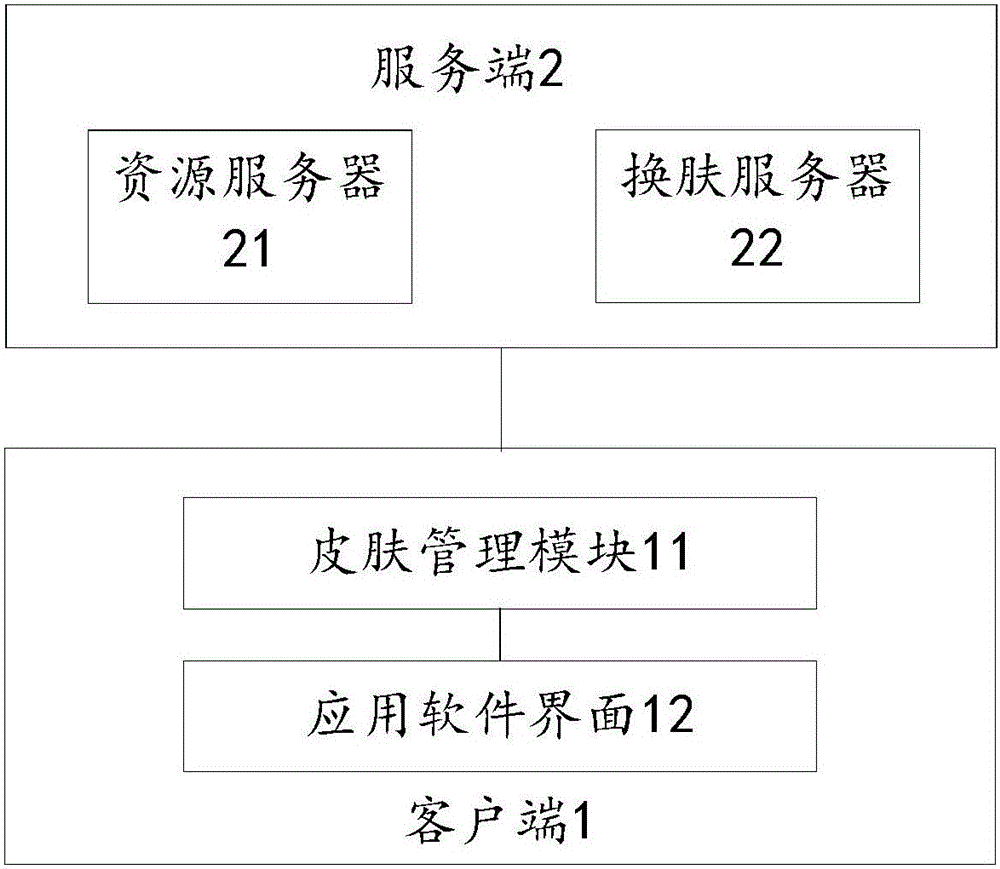

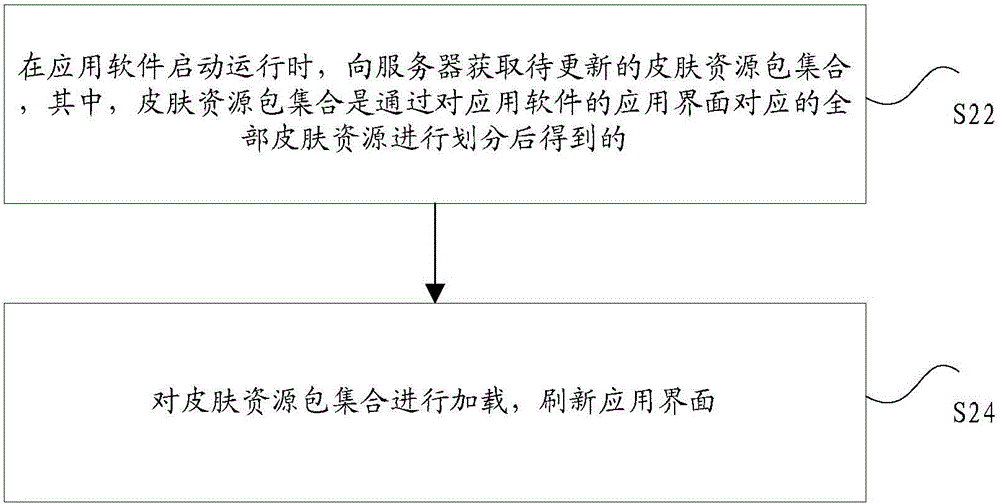

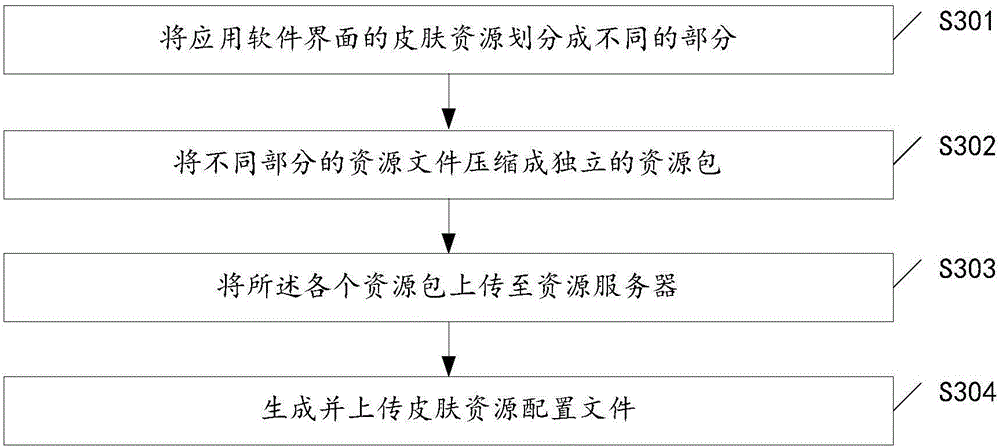

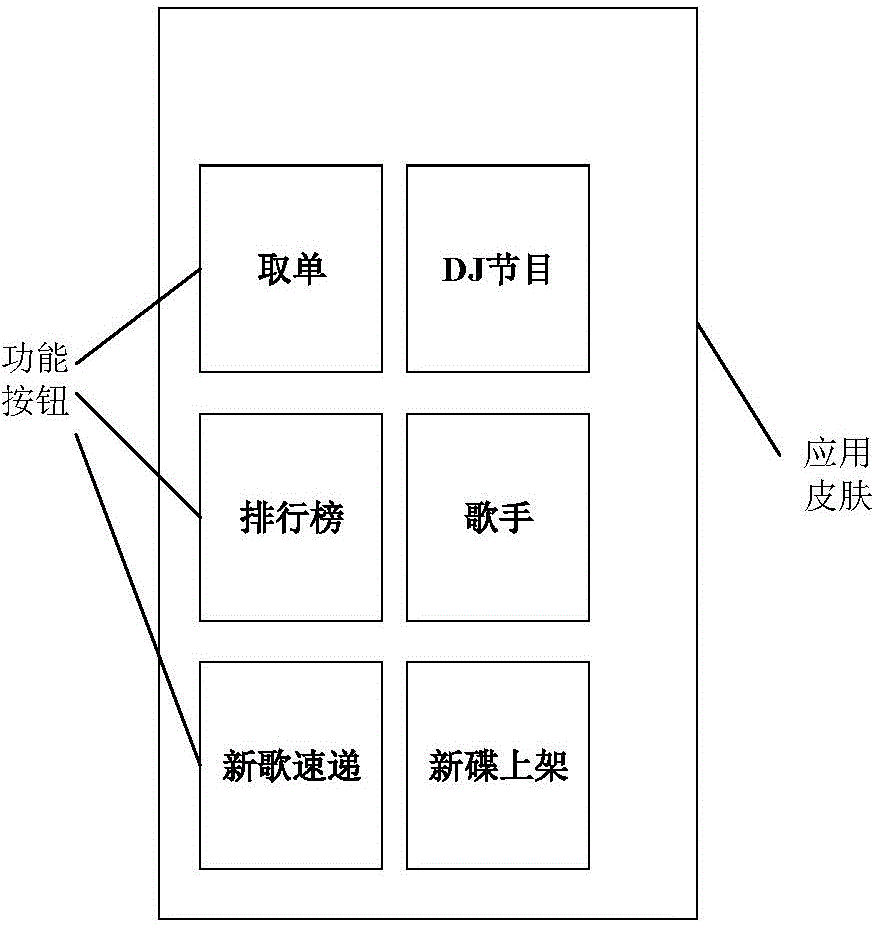

Skin exchanging method and apparatus for application software display interface

Inactive | CN106227512A | Tags: Efficient management; Flexible management; Execution for user interfaces; Resource utilization; Intact skin

The invention discloses a skin exchanging method and apparatus for an application software display interface. The method comprises: acquiring the set of skin resource packages to be updated while the application software starts or runs; loading the skin resource package set; and refreshing the application interface. The skin resource package set is obtained by classifying all skin resources of the application's interface. In the related art, the whole software interface usually has a single skin package file, so a user must re-download all of the resources whenever the skin is updated; the package-set approach solves this problem, improving resource utilization and saving bandwidth.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
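A minimal sketch of why a skin resource package set saves bandwidth compared with a single skin file: only packages that are new or whose version changed need downloading. The `SkinPackage` type and its per-module `version` field are hypothetical; the abstract does not say how changed packages are identified.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkinPackage:
    module: str   # interface module the package covers, e.g. "toolbar"
    version: int  # hypothetical per-package version number


def packages_to_download(installed, available):
    """Return only the packages that are new or whose version changed."""
    current = {p.module: p.version for p in installed}
    return [p for p in available if current.get(p.module) != p.version]


def apply_update(installed, downloaded):
    """Merge downloaded packages into the installed set, keyed by module."""
    merged = {p.module: p for p in installed}
    merged.update({p.module: p for p in downloaded})
    return sorted(merged.values(), key=lambda p: p.module)
```

After `apply_update`, the application would reload the affected packages and refresh its interface; untouched modules keep their existing resources.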

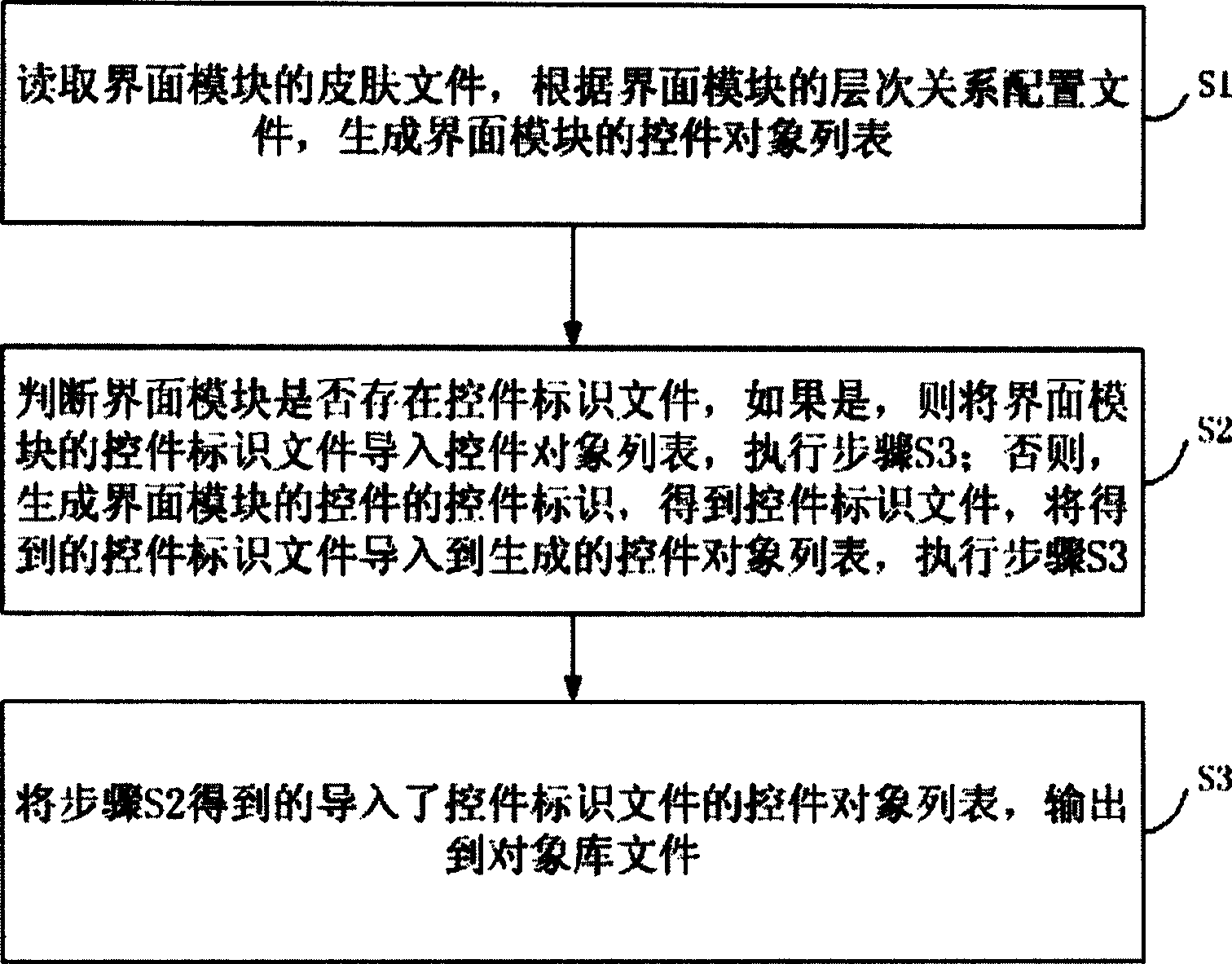

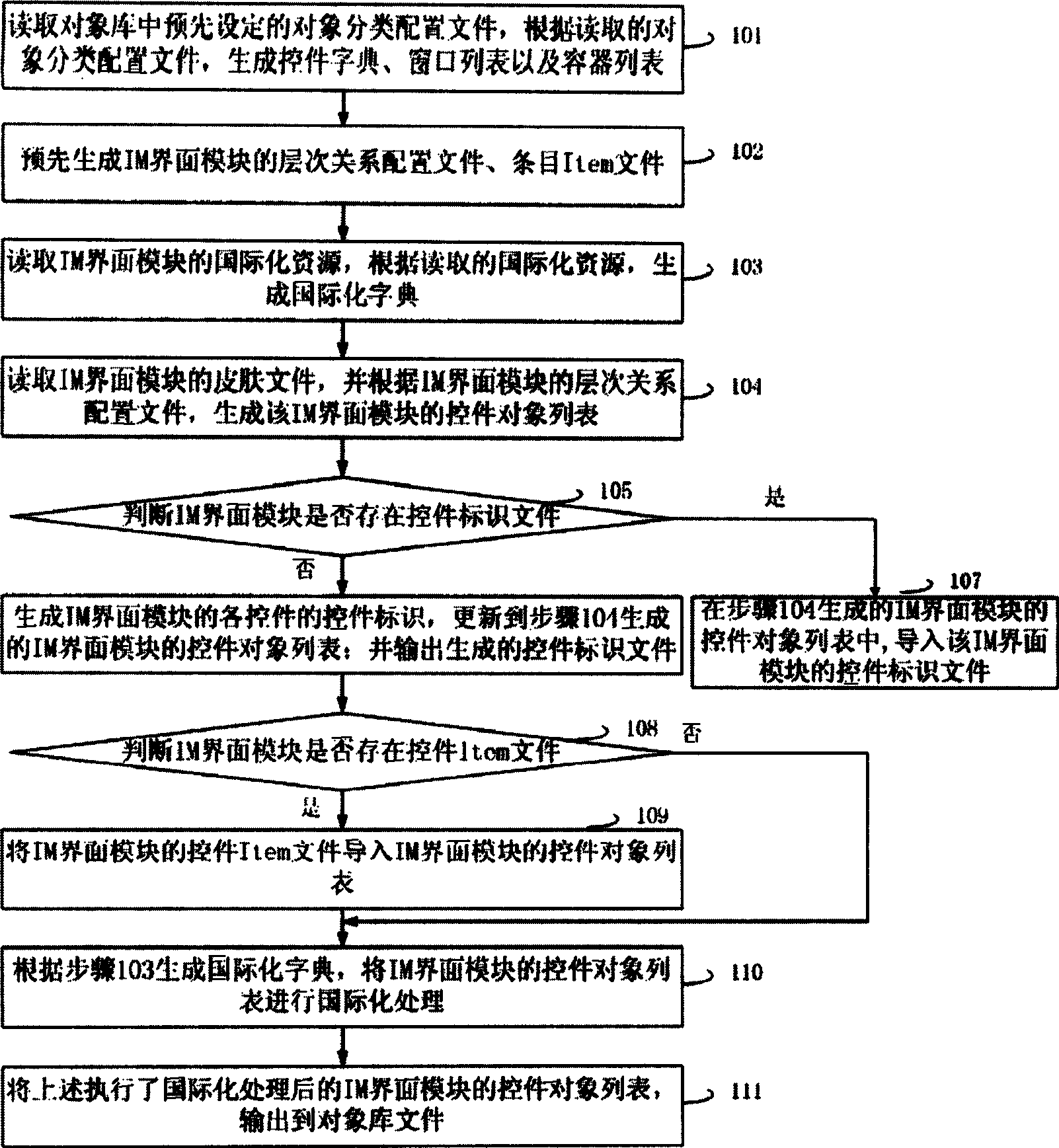

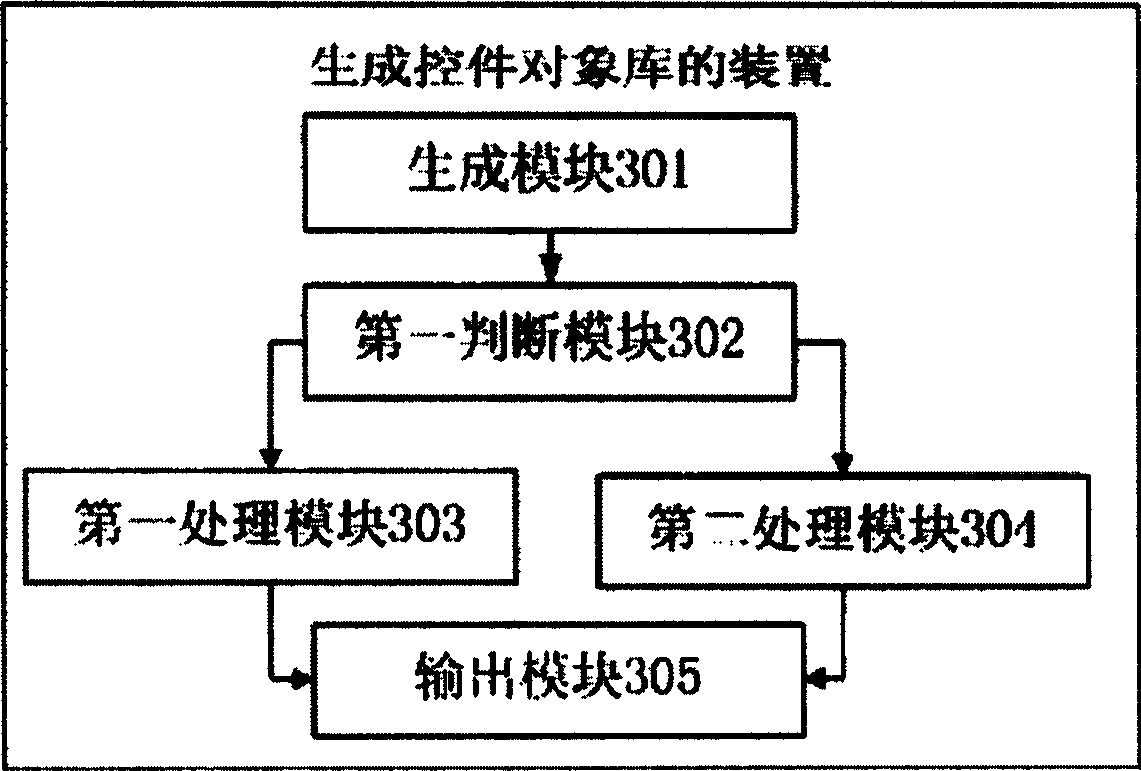

Method and device for generating control object library

Active | CN101436133A | Tags: Easy to use; Reduce operational complexity; Software reuse; Data switching networks; The Internet; Software engineering

The invention discloses a method and device for generating control object libraries, in the field of computer Internet technology. The method comprises the following steps. A: reading the skin file of an interface module and generating a control object list for the module according to the module's hierarchical relationship configuration file. B: judging whether a control identifier file exists for the interface module; if so, importing it into the control object list and executing step C; if not, generating control identifiers for the module's controls to obtain a control identifier file, importing that file into the generated control object list, and executing step C. C: outputting the control object list, into which the control identifier file was imported in step B, to an object library file. Aimed at today's widely used IM (instant messaging) software, the method automatically converts IM controls into object library files, making it convenient for developers who need to drive IM controls to use them, reducing operational complexity, and shortening the time operations take.

Owner:TENCENT TECH (SHENZHEN) CO LTD
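Steps A to C can be sketched as three small functions. This is an illustration under assumptions: the hierarchy is a plain `{control: parent}` dict standing in for the skin file plus hierarchical configuration file, the `ctrl_0001` identifier scheme is invented, and JSON stands in for the object library file format, which the abstract does not specify.

```python
import json
from itertools import count


def build_control_list(hierarchy):
    """Step A: one entry per control, in configuration order, with its parent."""
    return [{"name": name, "parent": parent} for name, parent in hierarchy.items()]


def attach_identifiers(controls, identifier_file=None):
    """Step B: import existing identifiers, or generate them if the file is absent."""
    if identifier_file is None:
        ids = count(1)
        # Hypothetical identifier scheme: ctrl_0001, ctrl_0002, ...
        identifier_file = {c["name"]: f"ctrl_{next(ids):04d}" for c in controls}
    for c in controls:
        c["id"] = identifier_file[c["name"]]
    return controls


def write_object_library(controls):
    """Step C: serialise the list; JSON stands in for the object library format."""
    return json.dumps(controls, indent=2)
```

Keeping step B as a separate function mirrors the branch in the abstract: the same list flows to step C whether identifiers were imported or generated.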

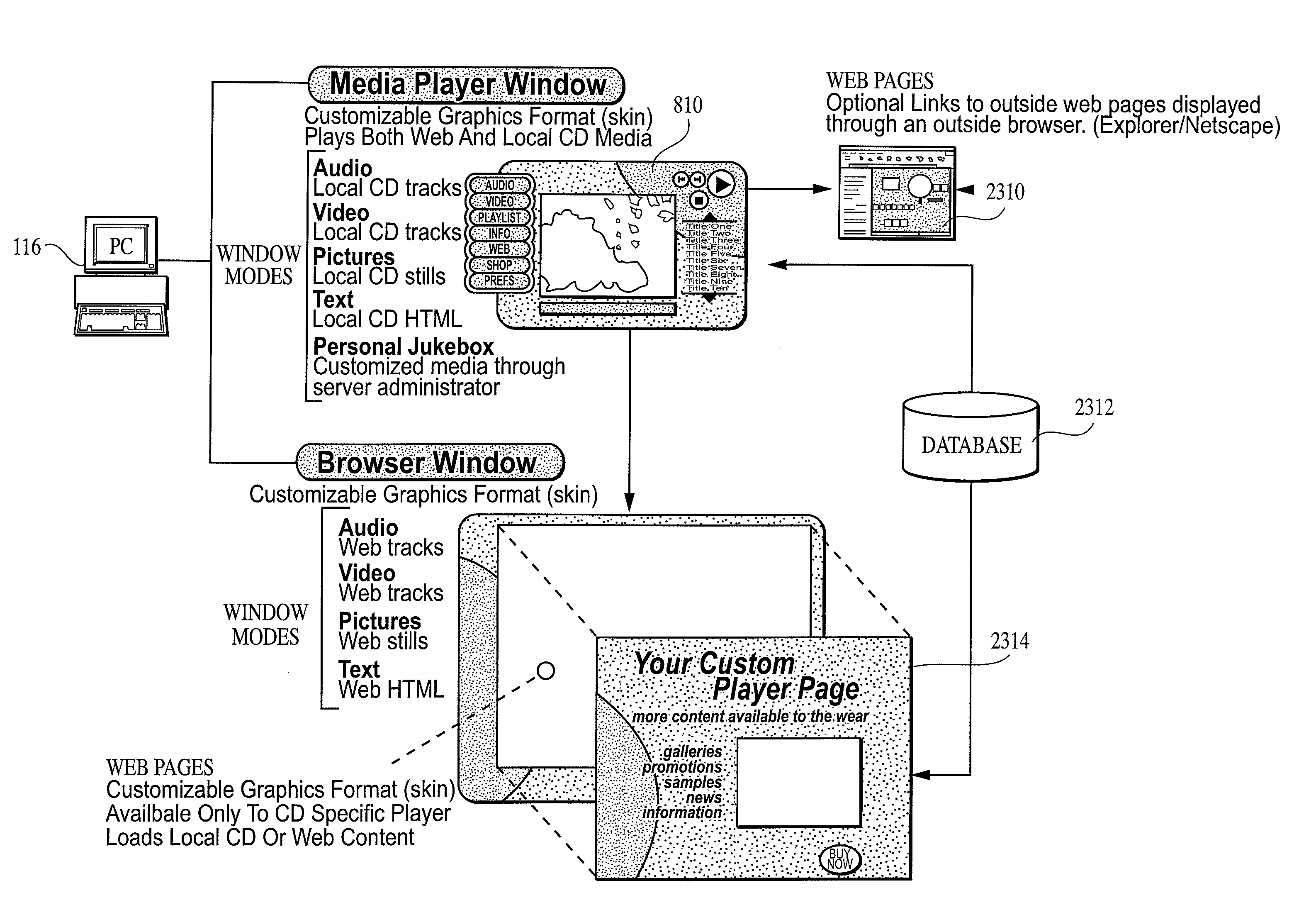

Multimedia player and browser system

Inactive | US20090228578A1 | Tags: Improve impact performance; Multimedia data browsing/visualisation; Digital computer details; Hard disc drive; Computer users

A multimedia software application that can include audio, video and / or graphics, in a manner that combines the multimedia experience with the transfer of information from and between a variety of sources (126), in a variety of directions, and subject to a variety of prompts. The application provides a “Web in Page” approach, in which a series of windows have the same or similar “look and feel”, yet can be used to access and display information from a variety of sources (126), including local content (112) (hard drive or other locally stored media), and web-based online content (118), including that available from a dedicated, integrated server (114), affiliated servers (114), or even other computer users. The application of the present invention can be provided in stand-alone form, to be loaded on a client device (116) (e.g., personal computer) from either a recorded medium or downloaded online. In addition to this “Web in Page” application interface, a “Web in Skin” interface may be provided, by which the application interface may be varied based on client user (110) or advertiser (126) preferences to provide a customized interface format. Optionally, and preferably, the application is provided in a form where it is recorded on, and thereby combined with, digitally recorded content, such as a music CD or DVD.

Owner:DEMERS TIMOTHY B +5

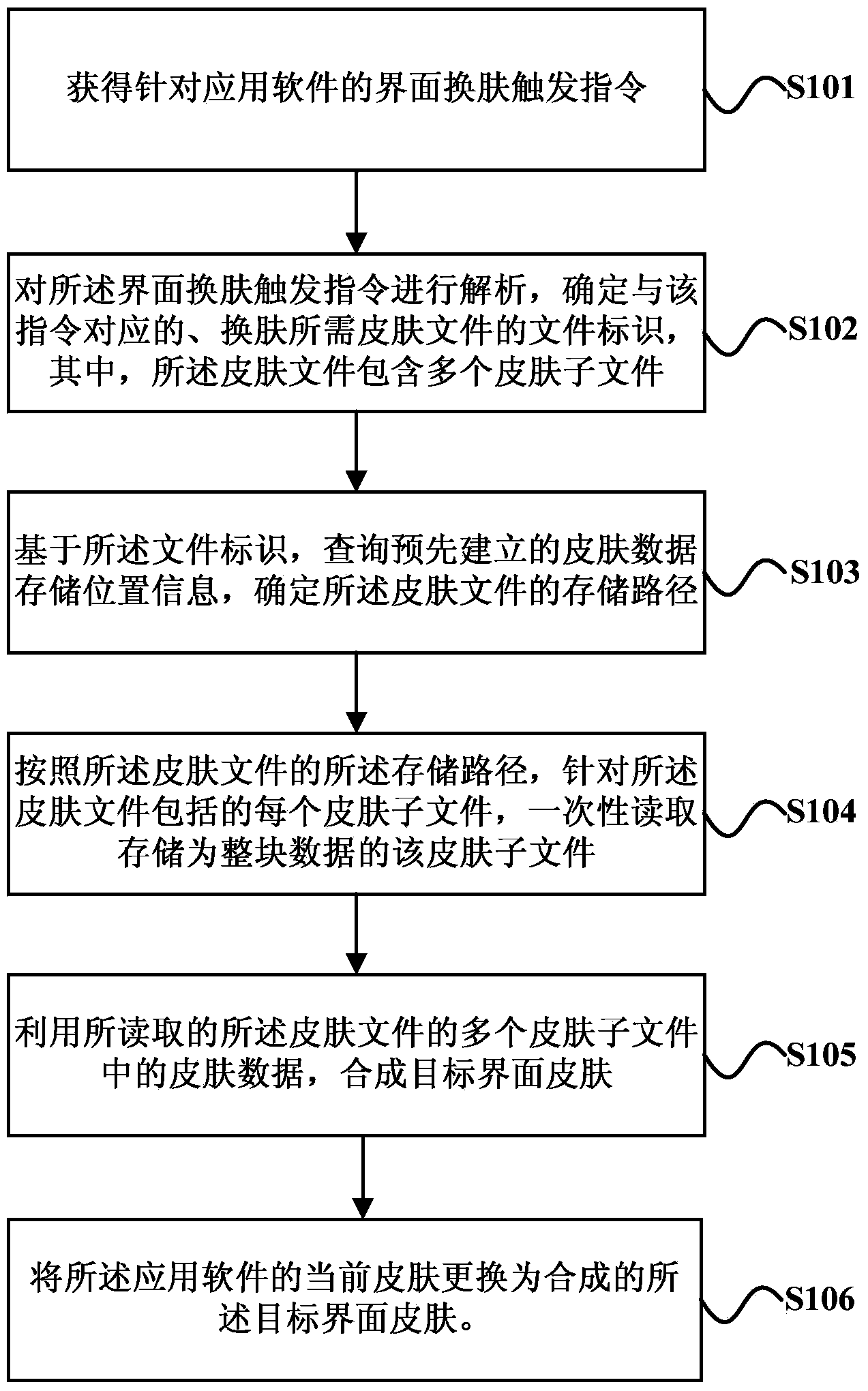

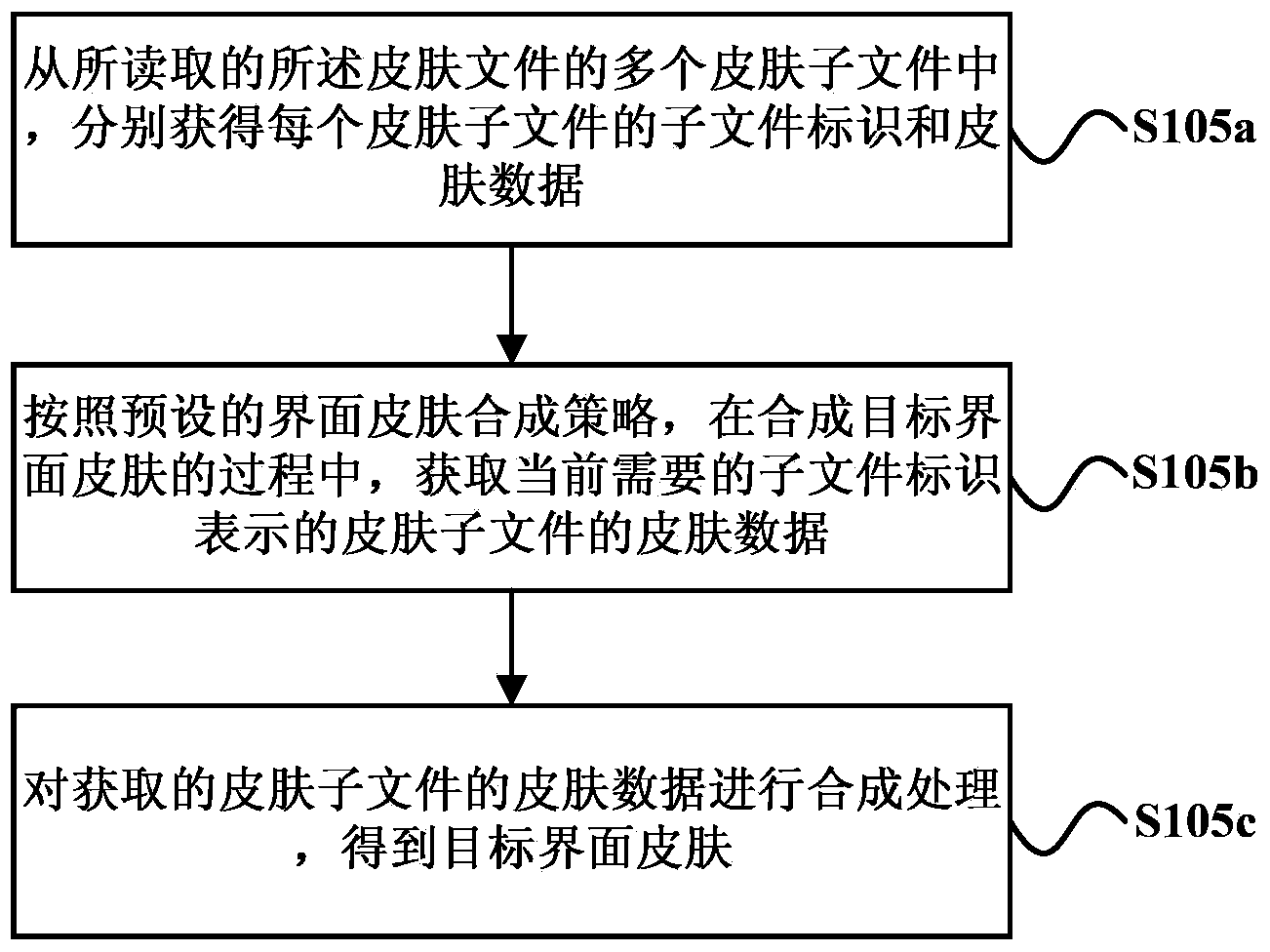

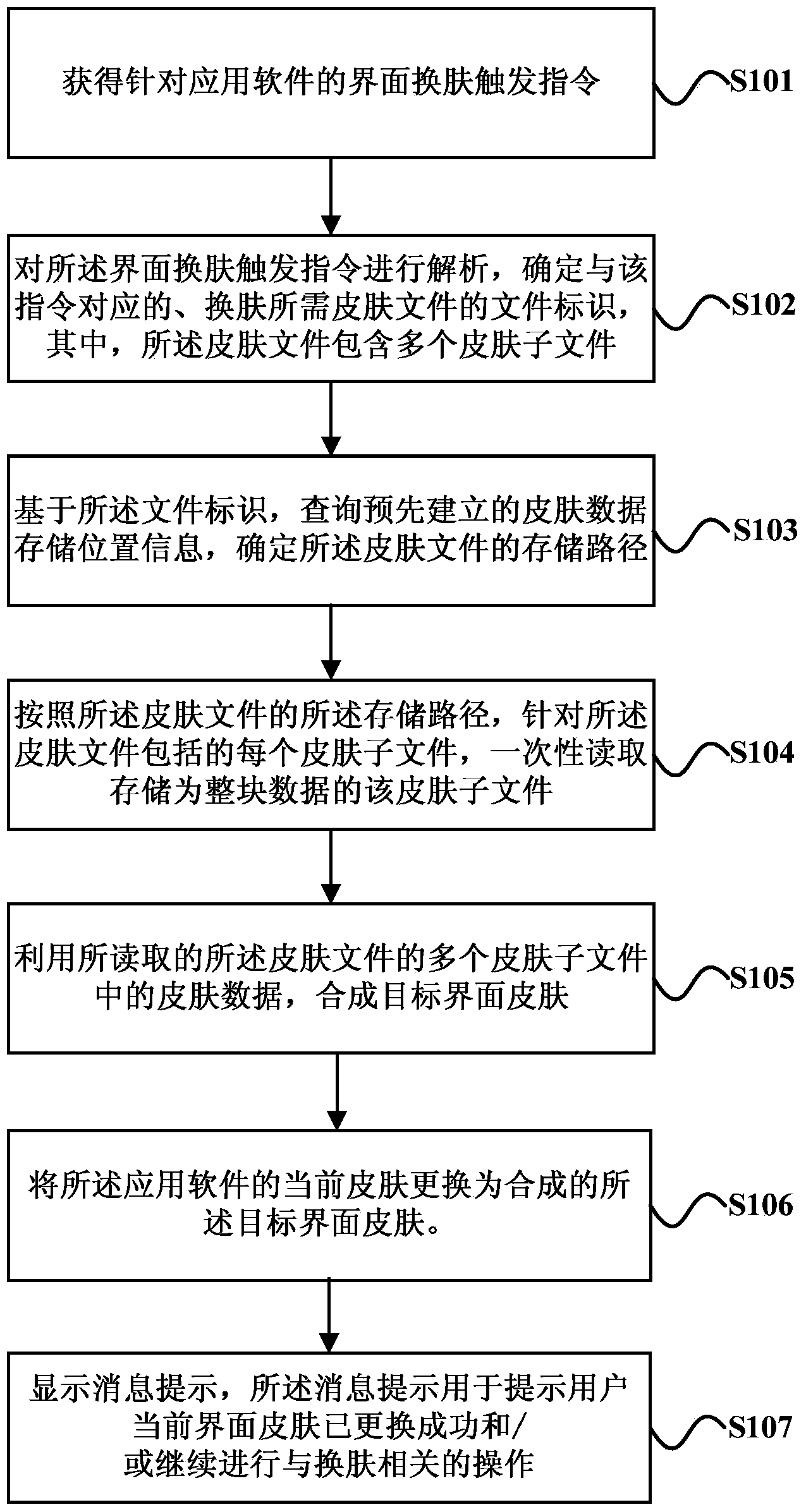

Skin changing method and device for application software interface

Active | CN104050002A | Tags: Shorten the time; Simplified read times; Program loading/initiating; Application software; Computer science

The embodiment of the invention discloses a skin changing method and device for an application software interface. The method includes the steps of: obtaining an interface skin-changing trigger instruction for the application software; parsing the instruction to determine the file identifier of the skin file required for the change, the skin file comprising multiple skin sub-files; querying pre-established skin data storage location information based on the file identifier to determine the storage path of the skin file; reading all skin sub-files in the skin file in a single pass, as a whole, according to that storage path; synthesizing the target interface skin from the skin data in the skin sub-files that were read; and replacing the current skin of the application software with the synthesized target interface skin. This shortens the time needed to read skin data from the skin file and so makes the whole skin-changing process more efficient.

Owner:GUANGZHOU KINGSOFT NETWORK TECH
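A minimal sketch of the flow above, assuming the skin file is a ZIP archive of JSON sub-files that is opened once and read in a single pass. The `change_skin:<file id>` instruction format, the `SKIN_INDEX` table, and the `storage` mapping (path to bytes, standing in for disk) are all hypothetical.

```python
import io
import json
import zipfile

# Hypothetical pre-built index: skin file identifier -> storage path.
SKIN_INDEX = {"night": "skins/night.zip"}


def parse_instruction(instruction):
    """Assumed instruction format: 'change_skin:<file id>'."""
    action, _, file_id = instruction.partition(":")
    if action != "change_skin" or not file_id:
        raise ValueError(f"not a skin-change instruction: {instruction!r}")
    return file_id


def read_all_sub_files(archive_bytes):
    """Open the skin file once and read every sub-file in that single pass."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as zf:
        return {name: json.loads(zf.read(name)) for name in zf.namelist()}


def change_skin(app, instruction, storage, index=SKIN_INDEX):
    file_id = parse_instruction(instruction)
    path = index[file_id]                          # query stored location info
    sub_files = read_all_sub_files(storage[path])  # one-off read of all sub-files
    target = {}
    for name in sorted(sub_files):                 # synthesise the target skin
        target.update(sub_files[name])
    app["skin"] = target                           # replace the current skin
    return target
```

Merging sub-files in sorted name order is an arbitrary but deterministic choice; a real skin format would define its own precedence rules.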

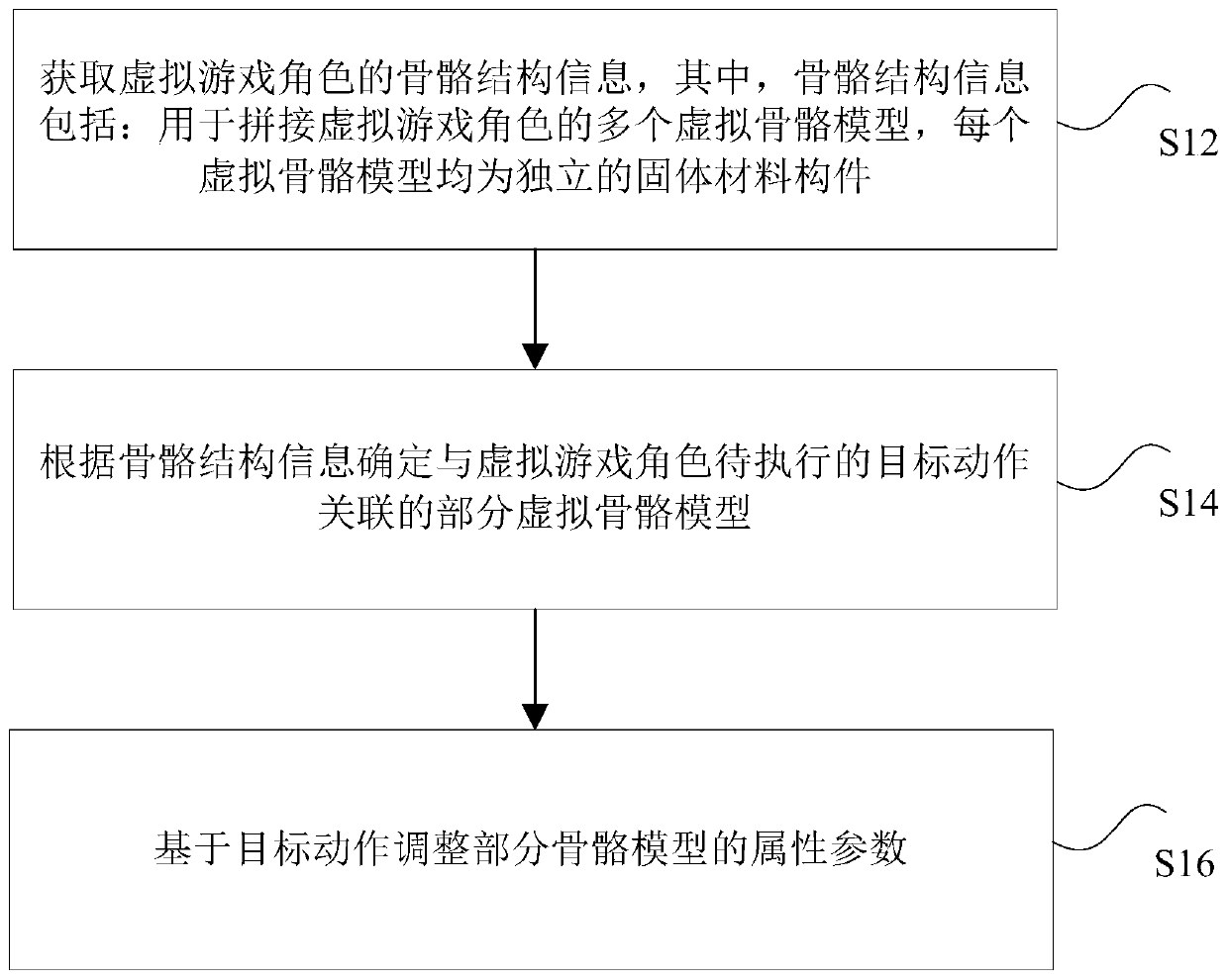

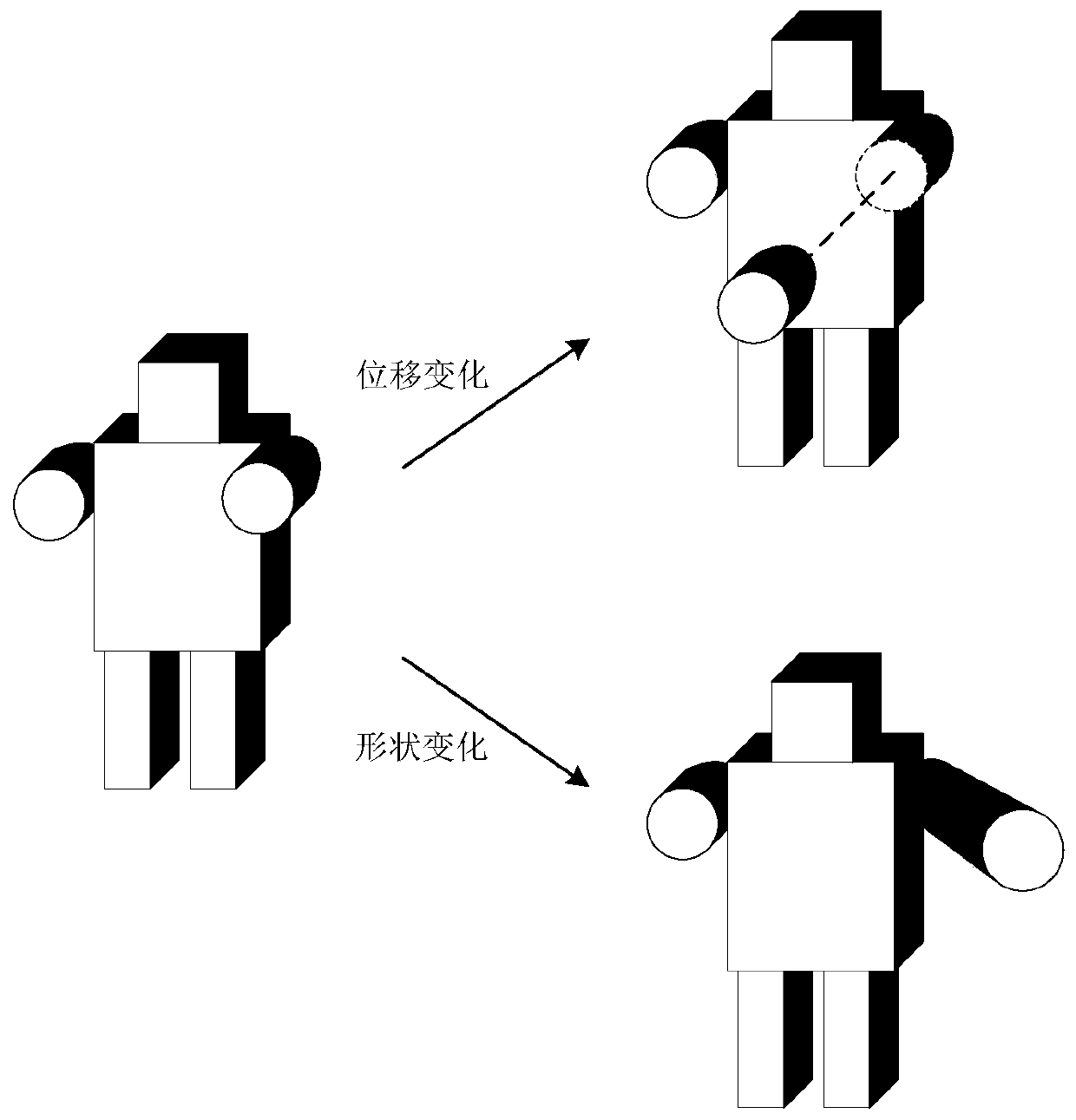

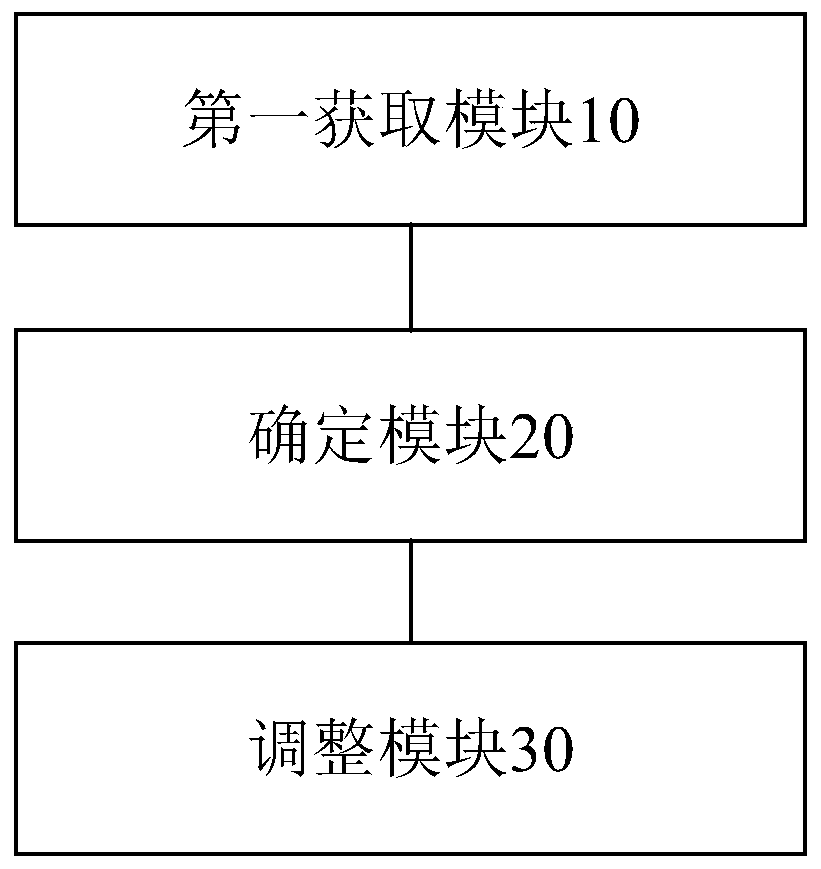

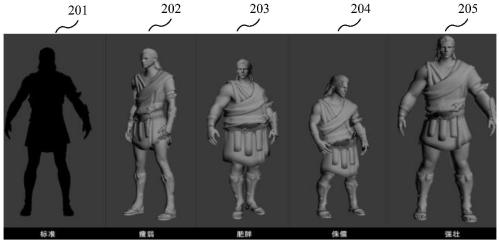

Self-adaptive adjustment method and device for virtual skeleton model, and electronic device

Pending | CN111161427A | Tags: To achieve the purpose of adaptive adjustment; Increase engagement; Image data processing; Animation; Skin

The invention discloses a self-adaptive adjustment method and device for a virtual skeleton model, and an electronic device. The method comprises the steps of: acquiring skeleton structure information of a virtual game character, the skeleton structure information comprising a plurality of virtual skeleton models that are spliced together to form the character, each virtual skeleton model being an independent solid component; determining, according to the skeleton structure information, the subset of virtual skeleton models associated with a target action the character is about to perform; and adjusting attribute parameters of that subset based on the target action. This solves the technical problems of heavy workload, high operational complexity, and long development cycles in the related art, where each three-dimensional virtual game character in a game scene must be configured separately for skeletal skin animation.

Owner:Beijing Code Qiankun Technology Co., Ltd. (北京代码乾坤科技有限公司)

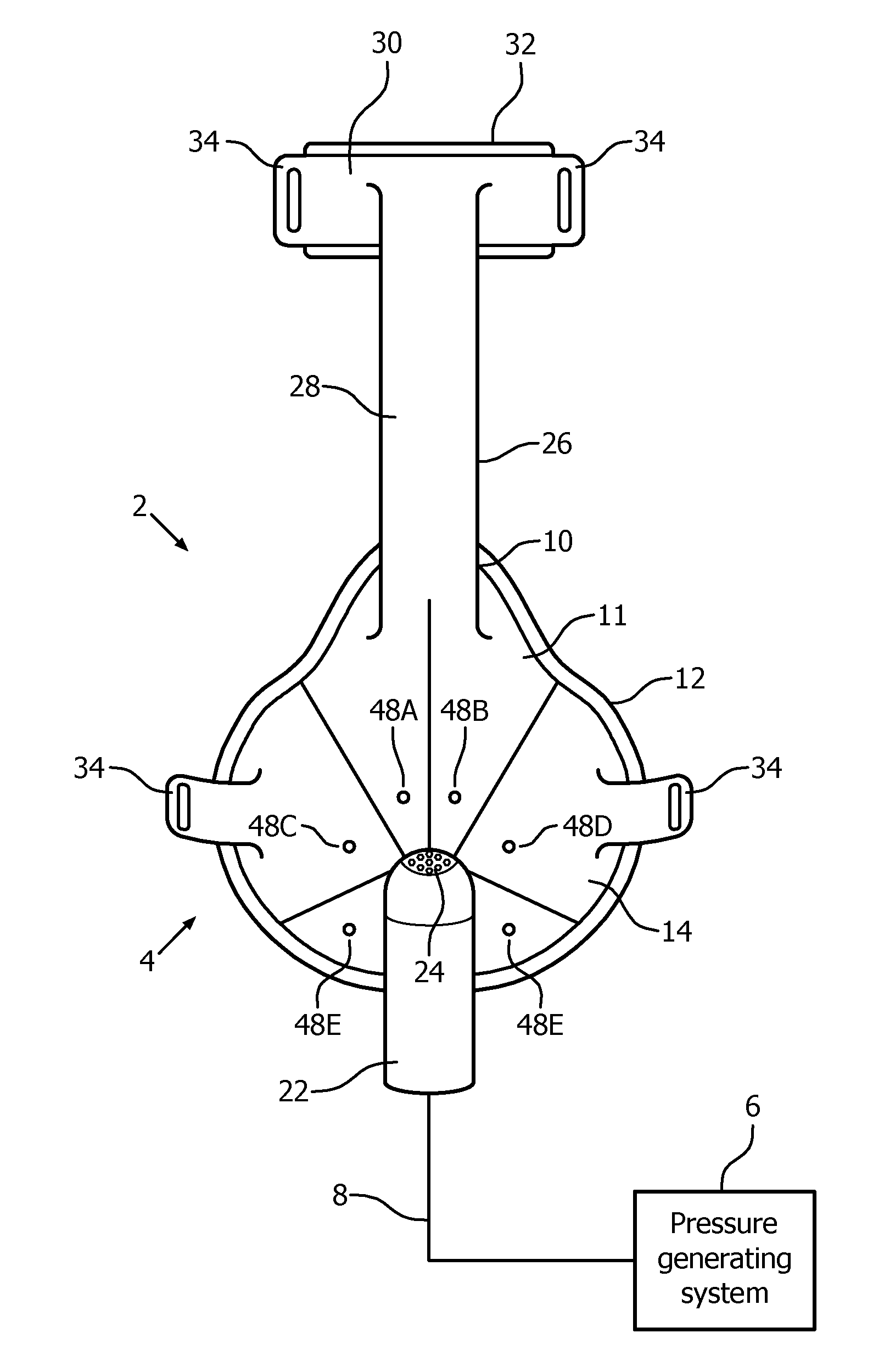

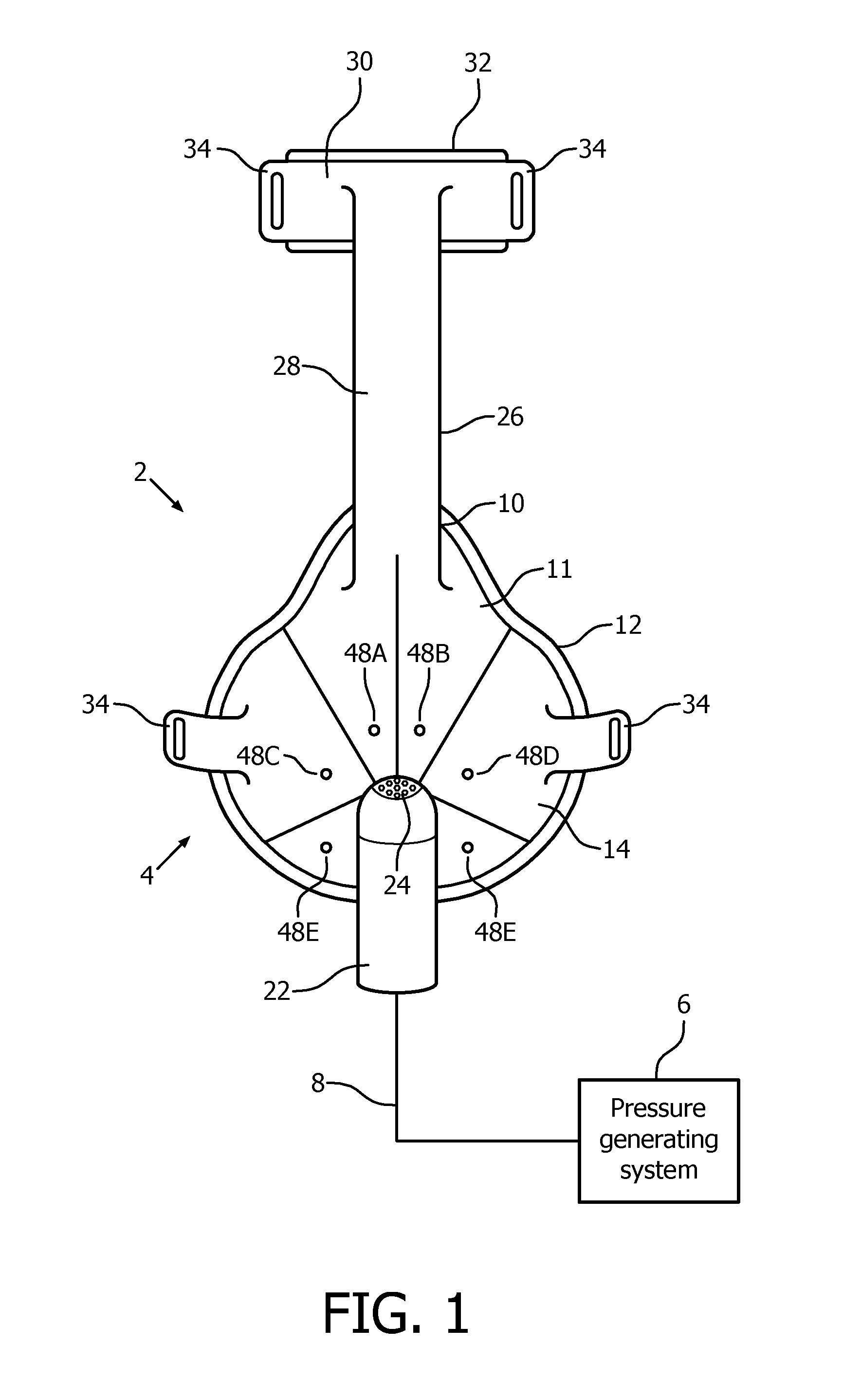

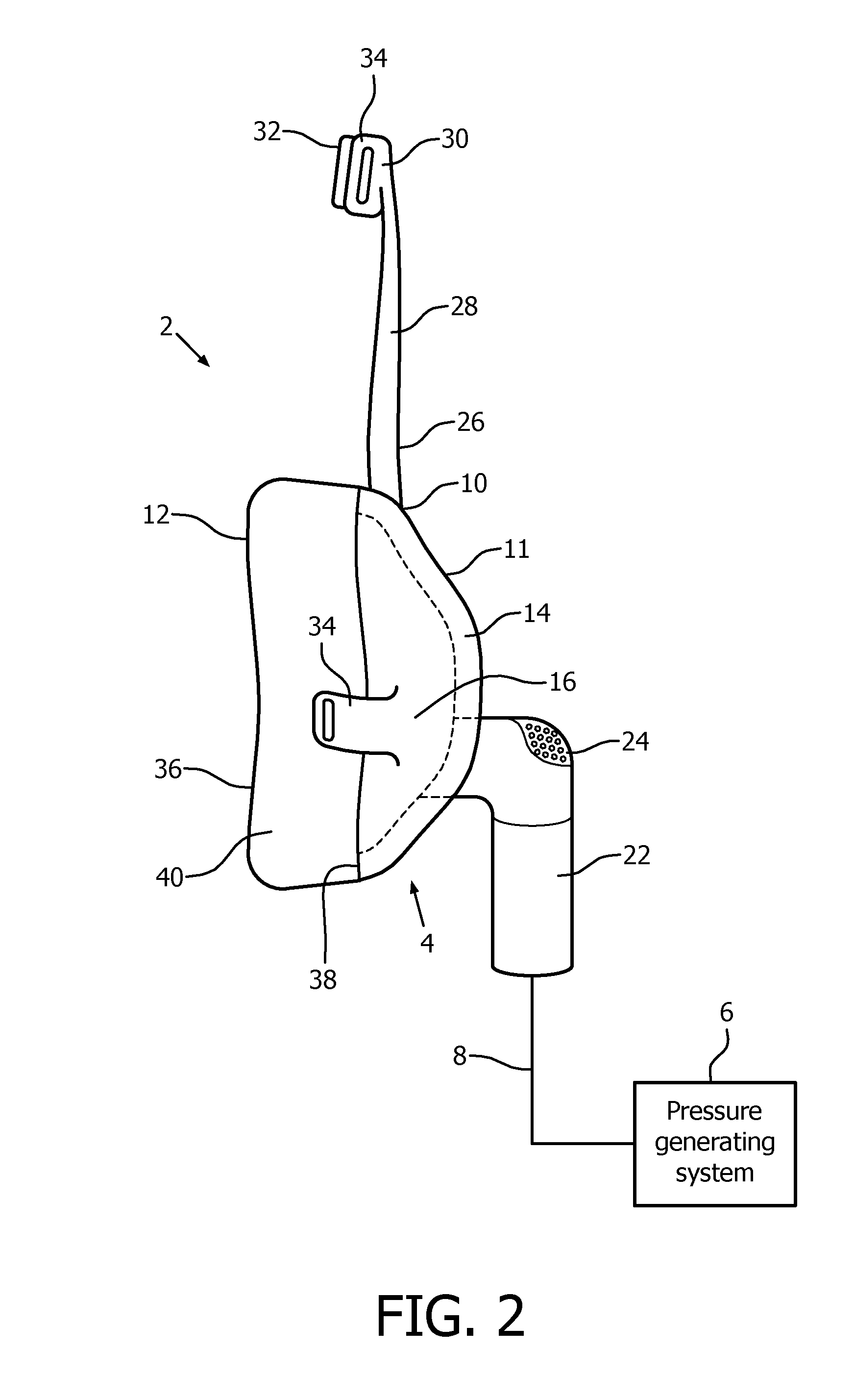

User interface device providing for improved cooling of the skin

Active | US20150040909A1 | Tags: Improve skin; Overcomes shortcoming; Respiratory masks; Breathing masks; Skin cooling; User interface

A number of user interface device embodiments are disclosed that provide for increased cooling of the skin covered by the mask and / or increased flushing of gasses, including CO2, from the mask.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

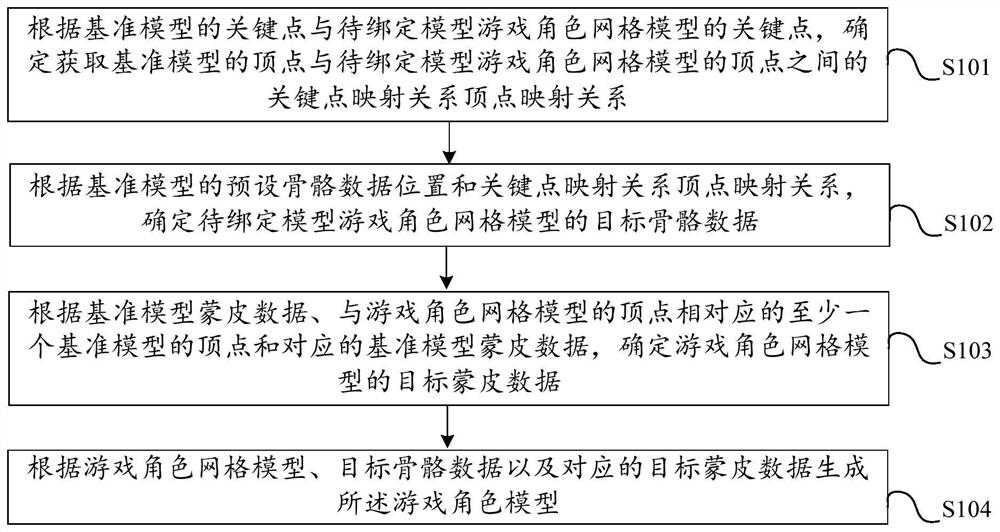

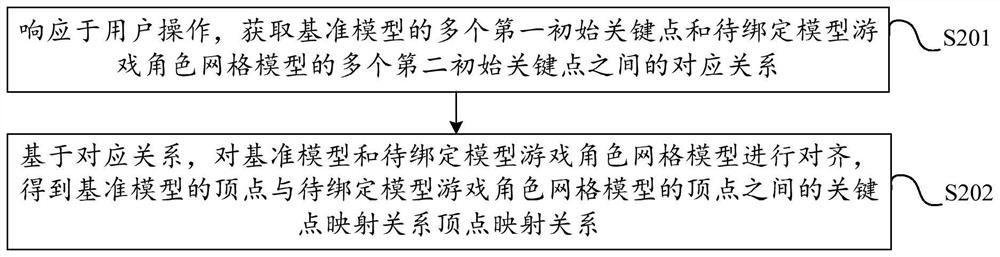

Game role model generation method and device, role adjustment method and device, equipment and medium

Pending | CN111714885A | Tags: Improve production efficiency; Reduce manual adjustments; Animation; Video games; Reference model; Skin

The invention provides a game role model generation method and device, a role adjustment method and device, equipment, and a medium, relating to the technical field of games. The game role model generation method comprises the steps of: determining a vertex mapping relationship between the vertices of a reference model and the vertices of a game role mesh model according to key points of the two models; determining target skeleton data of the game role mesh model according to the reference model's skeleton data and the vertex mapping relationship; determining target skin data of the game role mesh model according to the reference model's skin data, using the at least one reference-model vertex that corresponds to each mesh-model vertex and that vertex's skin data; and generating the game role model from the game role mesh model, the target skeleton data, and the corresponding target skin data. Fine manual adjustment is thus greatly reduced, binding is automated, and game role models are generated more efficiently.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
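To illustrate the skin-data step, here is a minimal sketch of skin weight transfer. It swaps in a simple technique that the abstract does not describe: key points are used only to fit a translation plus uniform scale, and each target vertex is then mapped to its k nearest reference vertices, whose bone weights are averaged and renormalised. The skeleton-data step is omitted.

```python
import math


def _centroid(points):
    return [sum(p[i] for p in points) / len(points) for i in range(3)]


def _spread(points, centre):
    return math.sqrt(sum(math.dist(p, centre) ** 2 for p in points) / len(points))


def transfer_skin_weights(ref_verts, ref_weights, tgt_verts, ref_keys, tgt_keys, k=2):
    """Give each target vertex the averaged weights of its k nearest reference vertices.

    Assumption: key points only fit a translation + uniform scale that brings
    the target mesh into the reference model's space.
    """
    rc, tc = _centroid(ref_keys), _centroid(tgt_keys)
    scale = _spread(ref_keys, rc) / _spread(tgt_keys, tc)
    result = []
    for v in tgt_verts:
        # Target vertex expressed in the reference model's space.
        p = [rc[i] + (v[i] - tc[i]) * scale for i in range(3)]
        nearest = sorted(range(len(ref_verts)), key=lambda j: math.dist(p, ref_verts[j]))[:k]
        acc = {}
        for j in nearest:
            for bone, w in ref_weights[j].items():
                acc[bone] = acc.get(bone, 0.0) + w
        total = sum(acc.values())
        result.append({bone: w / total for bone, w in acc.items()})
    return result
```

Production tools usually use more robust correspondence (for example, surface projection or the key-point-driven mapping the patent describes); nearest neighbours keep the sketch short.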

Application interface skin replacement method and device

Inactive | CN108628518A | Tags: Improve the display effect; Input/output processes for data processing; Target control; Application software

The invention provides an application interface skin replacement method and device, relating to the technical field of video networking. According to the embodiments, when a user's skin replacement instruction for an application is received, at least one preset skin identity is displayed for the user to select; a target skin file is determined according to the user's selection among the skin identities; and the application interface is replaced with the target skin using the target skin file. The target skin file comprises a target image file, a target text style file, and a target control style file, so the background image, displayed text, and control style of the application interface can all be replaced with a single replacement operation by the user, enriching the display effects of the application interface.

Owner:VISIONVERA INFORMATION TECH CO LTD
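A minimal sketch of a skin file bundling the three parts named in the abstract (image, text style, control style) so that one user action replaces all of them together. The preset skin names and style keys are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SkinFile:
    image: str                                         # target image file
    text_style: dict = field(default_factory=dict)     # target text style
    control_style: dict = field(default_factory=dict)  # target control style


# Hypothetical preset skins offered to the user.
PRESET_SKINS = {
    "ocean": SkinFile("ocean.png", {"color": "#ffffff"}, {"button": "rounded"}),
    "paper": SkinFile("paper.png", {"color": "#333333"}, {"button": "flat"}),
}


def skin_choices():
    """Skin identities displayed for the user to pick from."""
    return sorted(PRESET_SKINS)


def apply_skin(interface, skin_id):
    """One replacement swaps background, text style and control style together."""
    skin = PRESET_SKINS[skin_id]
    interface["background"] = skin.image
    interface["text_style"] = dict(skin.text_style)
    interface["control_style"] = dict(skin.control_style)
    return interface
```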

Content Management System using Sources of Experience Data and Modules for Quantification and Visualization

Active | US20140250184A1 | Tags: Expand access; Data processing applications; Natural language data processing; User input; Skin

A semantic note taking system and method for collecting information, enriching the information, and binding the information to services is provided. User-created notes are enriched with labels, context traits, and relevant data to minimize friction in the note-taking process. In other words, the present invention is directed to collecting unscripted data, adding more meaning and use out of the data, and binding the data to services. Mutable and late-binding to services is also provided to allow private thoughts to be published to a myriad of different applications and services in a manner compatible with how thoughts are processed in the brain. User interfaces and semantic skins are also provided to derive meaning out of notes without requiring a great deal of user input.

Owner:APPLE INC

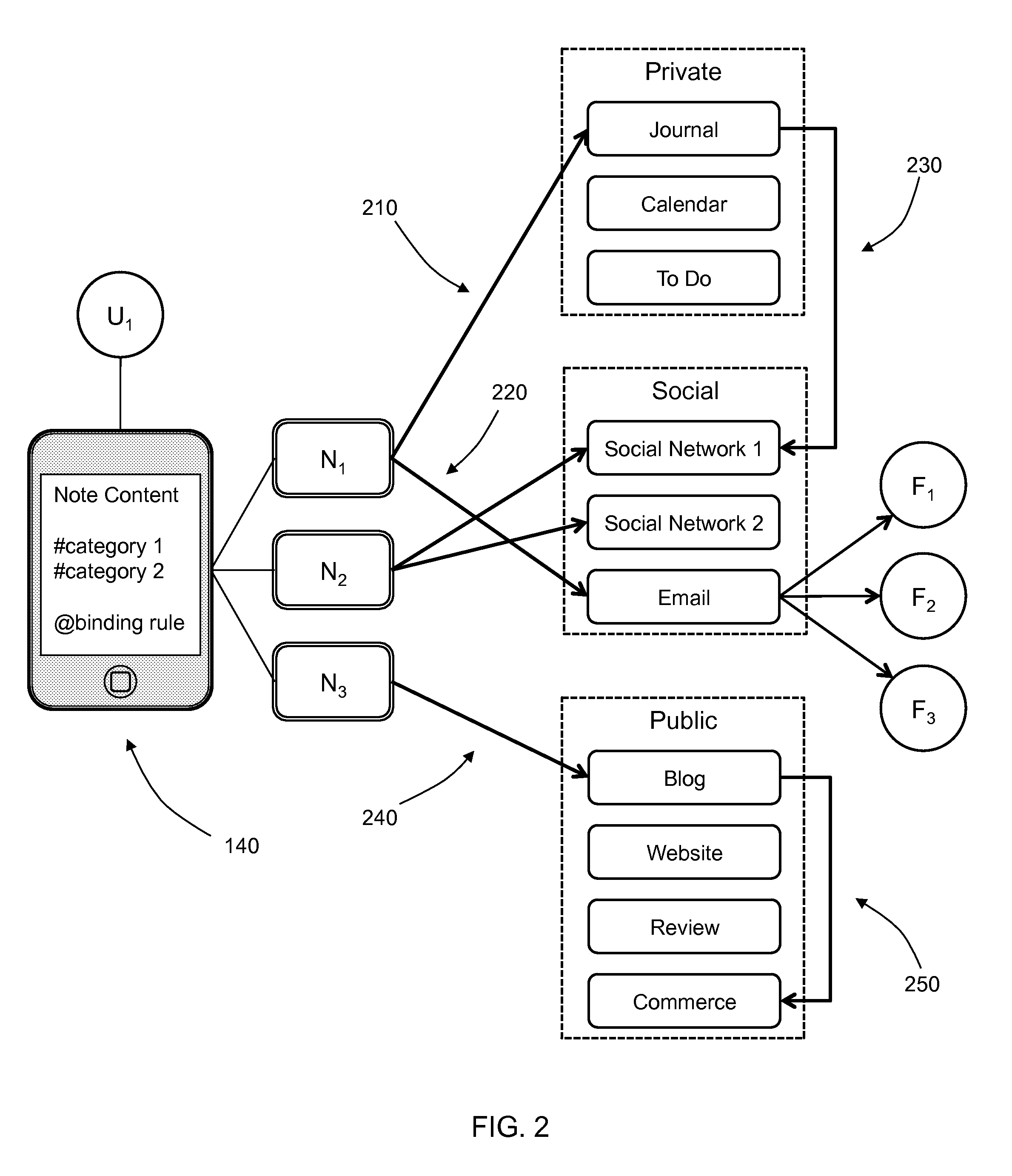

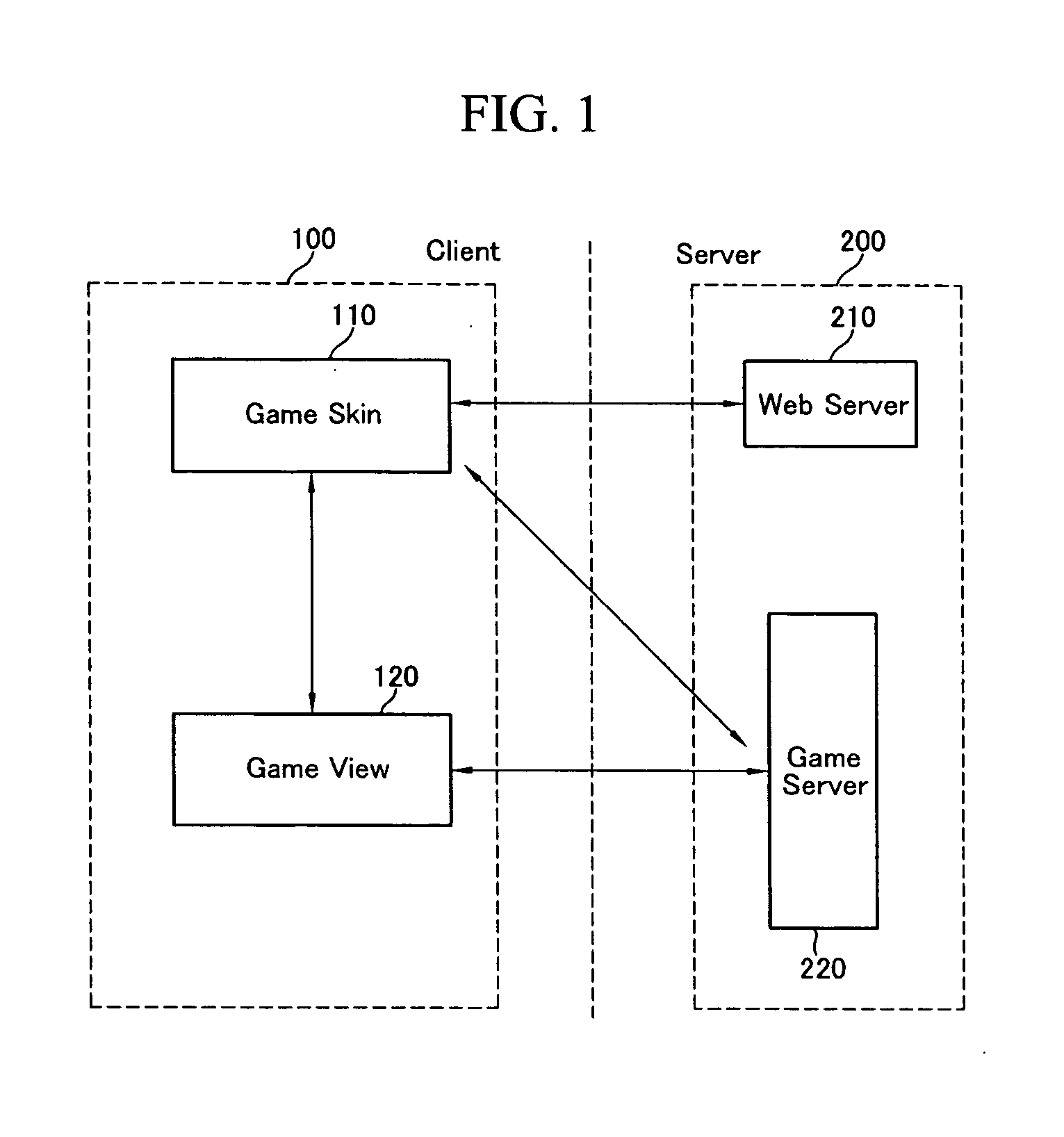

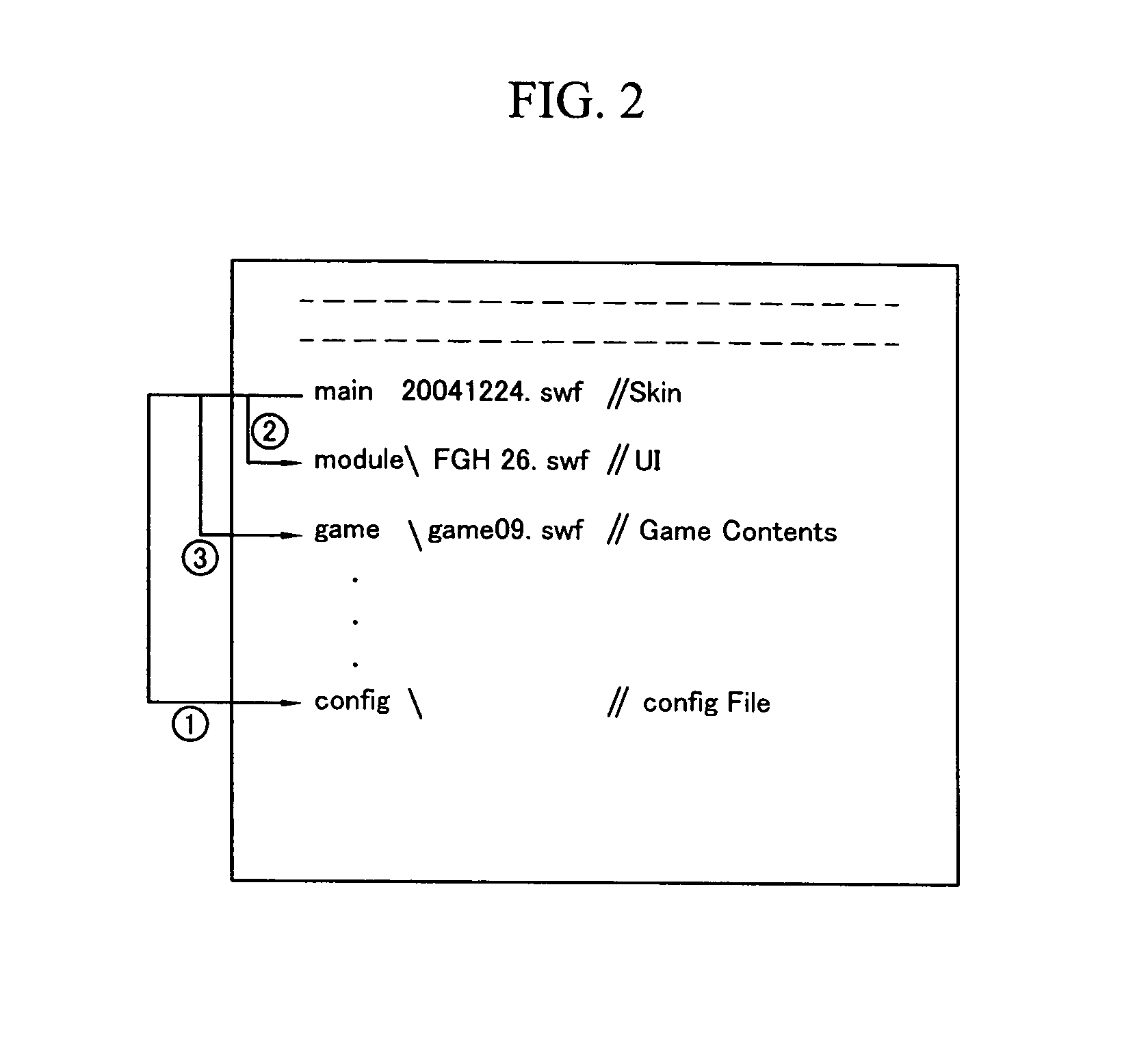

System for protecting on-line flash game, web server, method for providing webpage, and storage media recording that method execution program

The present invention relates to an online flash game protection system, a web server, a web page provision method, an online flash game protection method, and a storage medium recording a program that executes the method. When a flash game is selected for play, a game skin flash module causes a game skin flash, a frame surrounding the flash game screen, to be displayed on the user terminal screen. The game skin flash module checks whether the URL of the web page on which the game skin flash was started is a predetermined URL; if not, it forcibly moves the web page displayed on the user terminal to a web page with the predetermined URL or stops displaying the game skin flash. This prevents unknown users who inspect the source code of web pages providing online flash games from arbitrarily transferring specific flash games to other sites.

Owner:NHN ENTERTAINMENT
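The host-page check can be sketched language-neutrally in Python (the original module would have run inside Flash). The allowed URL is hypothetical, and comparing scheme, host, and path while ignoring the query string is an assumption; the two outcomes on mismatch mirror the redirect-or-terminate options in the abstract.

```python
from urllib.parse import urlsplit

# Hypothetical predetermined URL of the page allowed to host the game.
ALLOWED_URL = "https://games.example.com/play"


def _key(url):
    """Compare scheme, host and path only; the query string is ignored."""
    parts = urlsplit(url)
    return parts.scheme.lower(), parts.netloc.lower(), parts.path.rstrip("/")


def check_host_page(page_url, allowed=ALLOWED_URL, on_mismatch="redirect"):
    """Decide what the game skin module does on the page it was started from.

    Returns ("run", page_url) on the predetermined URL; otherwise either
    ("redirect", allowed) or ("terminate", None).
    """
    if _key(page_url) == _key(allowed):
        return ("run", page_url)
    if on_mismatch == "redirect":
        return ("redirect", allowed)
    return ("terminate", None)
```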

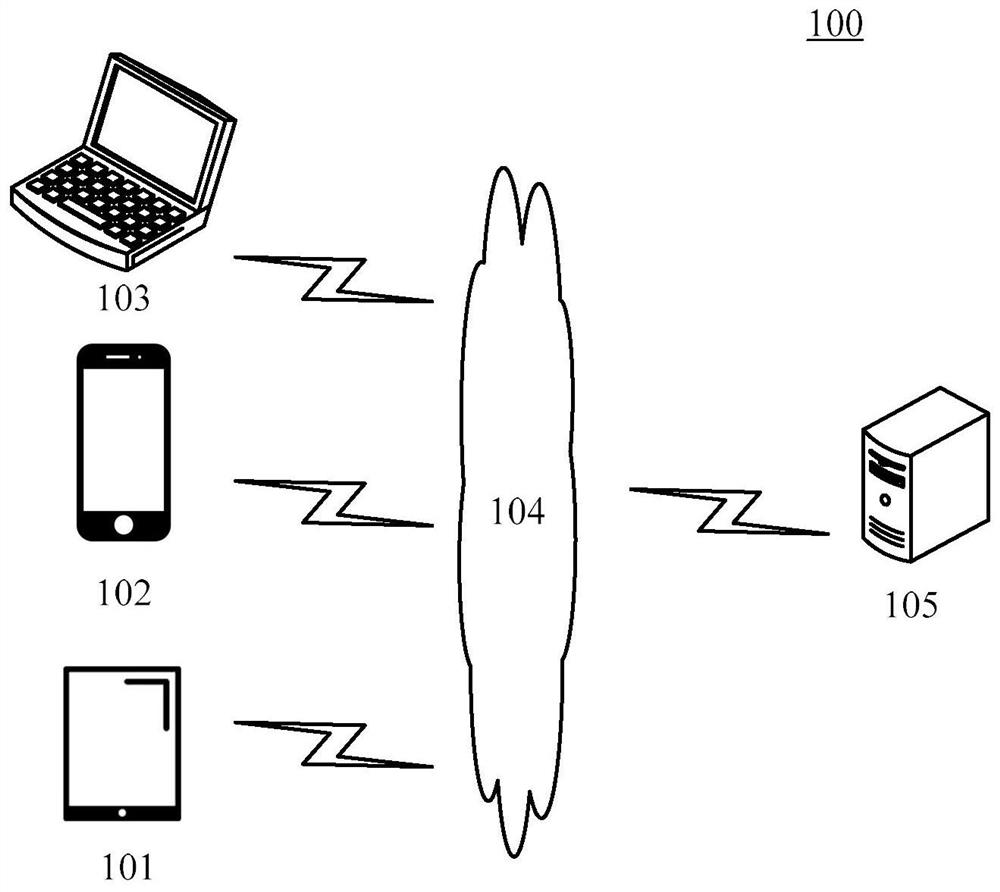

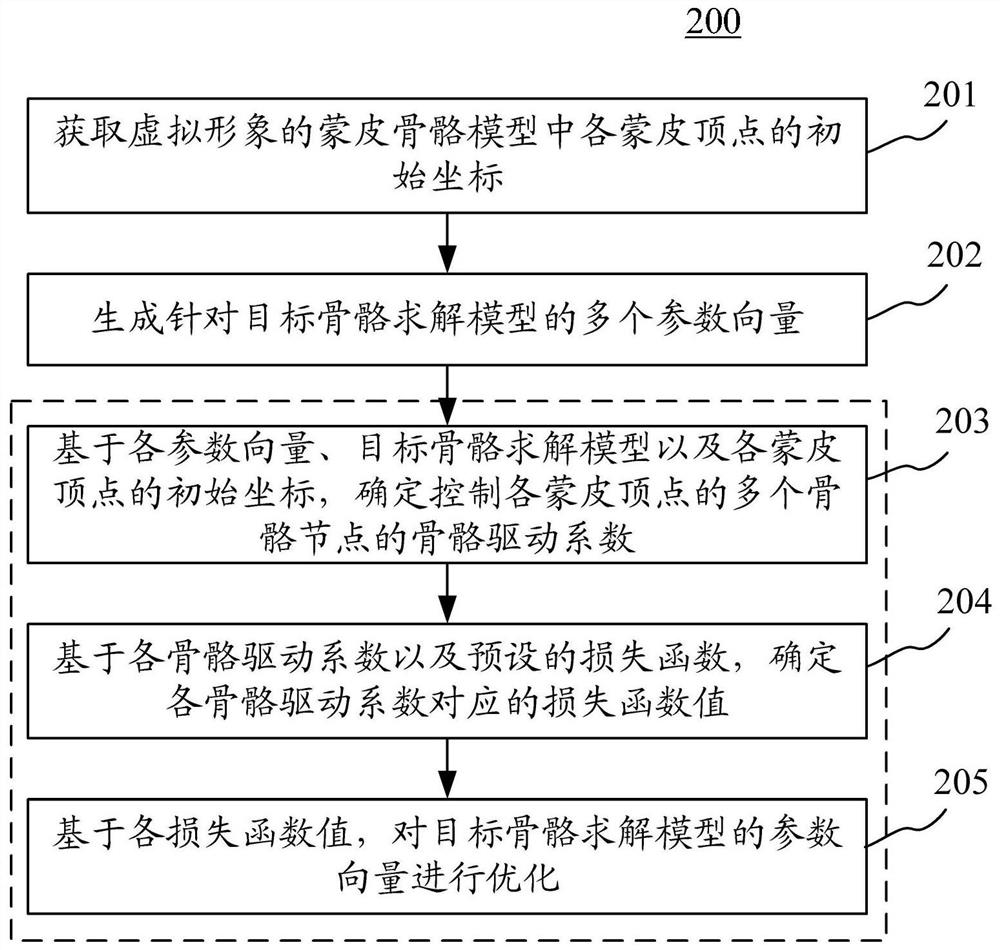

Method, device and equipment for optimizing model and storage medium

Active | CN112862933A | Tags: Improve computing efficiency; Easy to understand; Internal combustion piston engines; Details involving 3D image data; Algorithm; Animation

The invention discloses a method and device for optimizing a model, equipment, and a storage medium, relating to the technical fields of augmented reality, deep learning, and animation. In a specific implementation, the method comprises: obtaining the initial coordinates of skin vertices in a skinned skeleton model of an avatar; generating a plurality of parameter vectors for a target skeleton-solving model; and performing the following operations iteratively: determining, from the parameter vectors, the target skeleton-solving model, and the initial skin-vertex coordinates, skeleton driving coefficients for the skeleton nodes that control the skin vertices; determining, from each skeleton driving coefficient and a preset loss function, a corresponding loss value; and optimizing the parameter vectors of the target skeleton-solving model based on the loss values. This implementation yields a lightweight model and improves computational efficiency.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

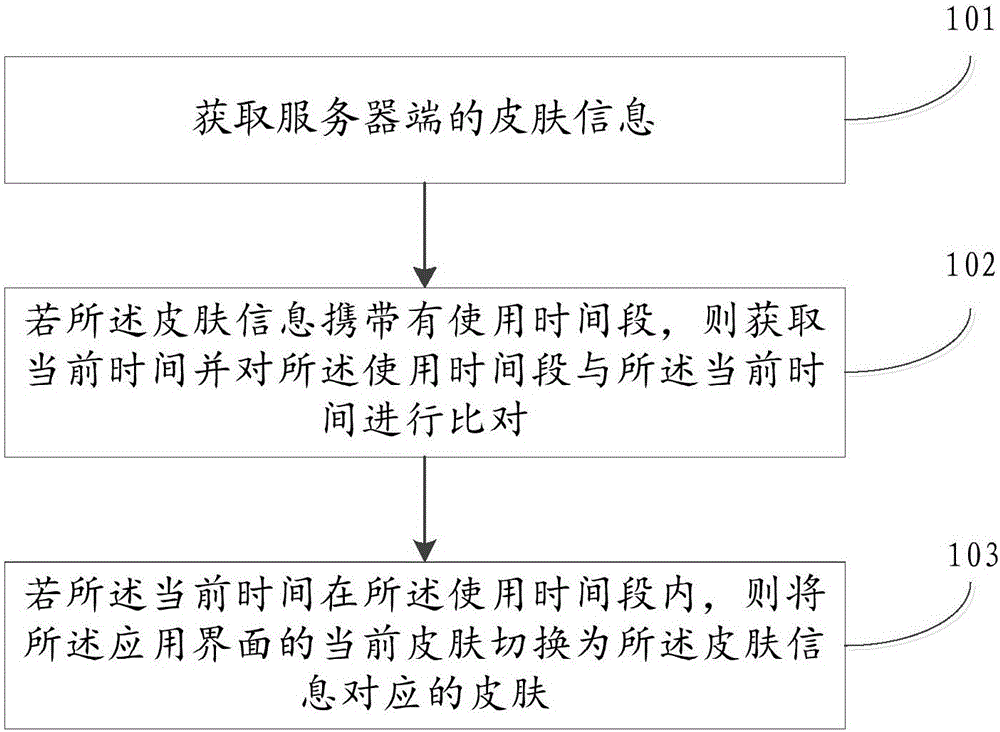

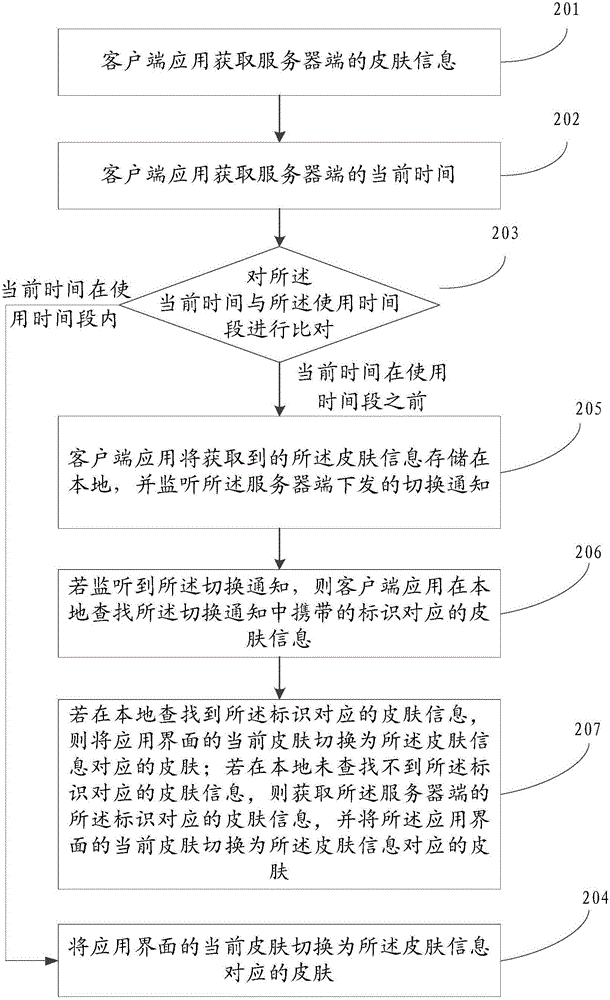

Application interface skin switching method and device

Inactive | CN105975278A | Tags: Forget about manual downloads; Save the steps of skin switching settings; Execution for user interfaces; Computer science; Use of time

Embodiments of the present invention provide a method and device for switching application interface skins. The method comprises: acquiring skin information from the server; if the skin information carries a usage time period, acquiring the current time and comparing it with the usage period; and, if the current time falls within the usage period, switching the current skin of the application interface to the skin corresponding to the skin information. The embodiments eliminate the steps of manually downloading and configuring skins, do not interrupt the user, switch skins dynamically, and improve the user experience.

Owner:LETV HLDG BEIJING CO LTD +1
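A minimal sketch of the usage-period check. The shape of `skin_info` is hypothetical, the period is treated as half-open `[start, end)`, and keeping the current skin when no period is present is an assumption, since the abstract only covers the case where a period is carried.

```python
from datetime import datetime


def select_skin(current_skin, skin_info, now=None):
    """Switch to the server-provided skin only inside its usage period.

    skin_info is assumed to look like
    {"skin": "spring_festival", "period": (start, end)} with datetime bounds;
    the "period" key is optional.
    """
    period = skin_info.get("period")
    if period is None:
        return current_skin  # assumption: no period means keep the current skin
    now = now or datetime.now()
    start, end = period
    return skin_info["skin"] if start <= now < end else current_skin
```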

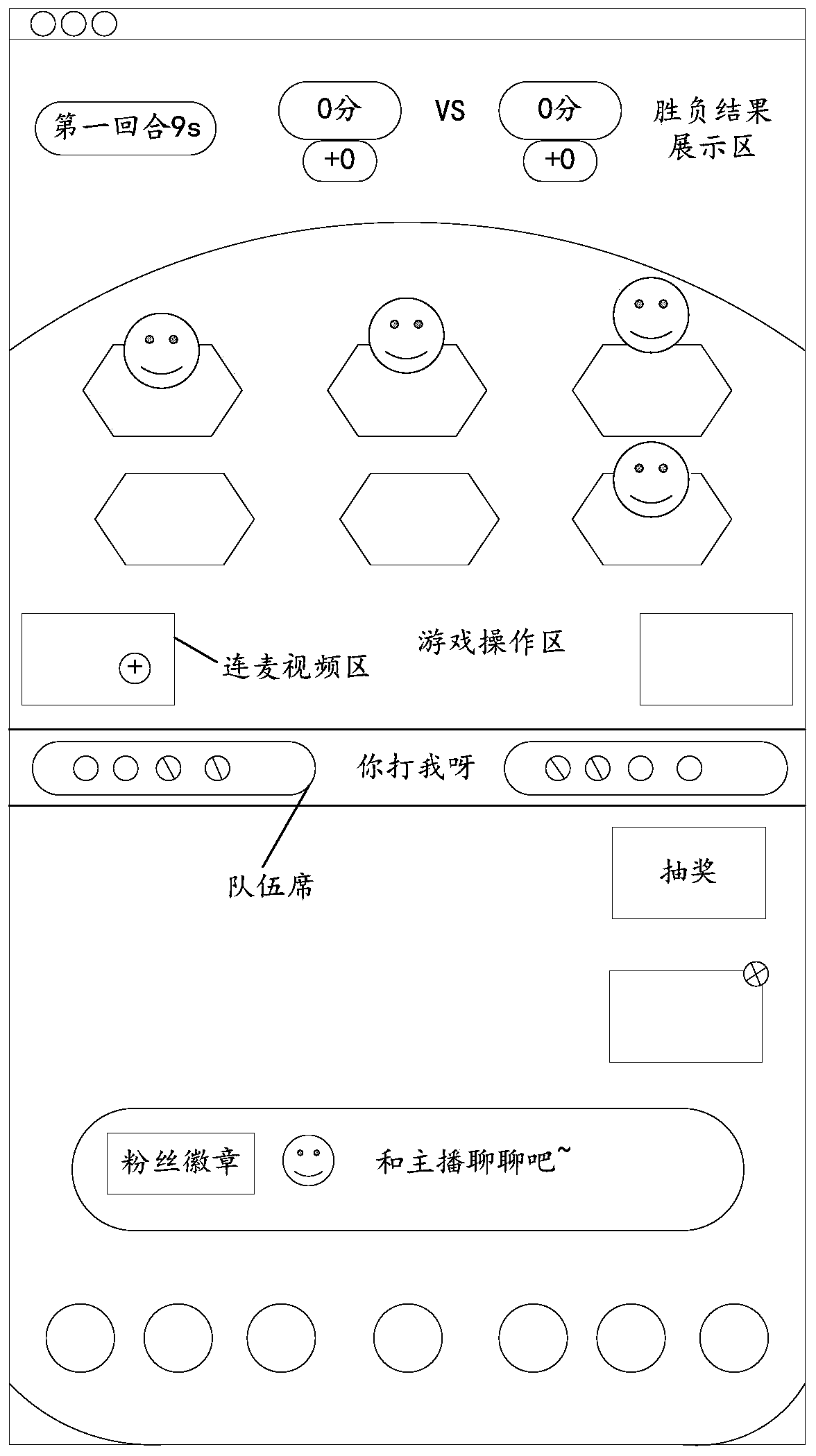

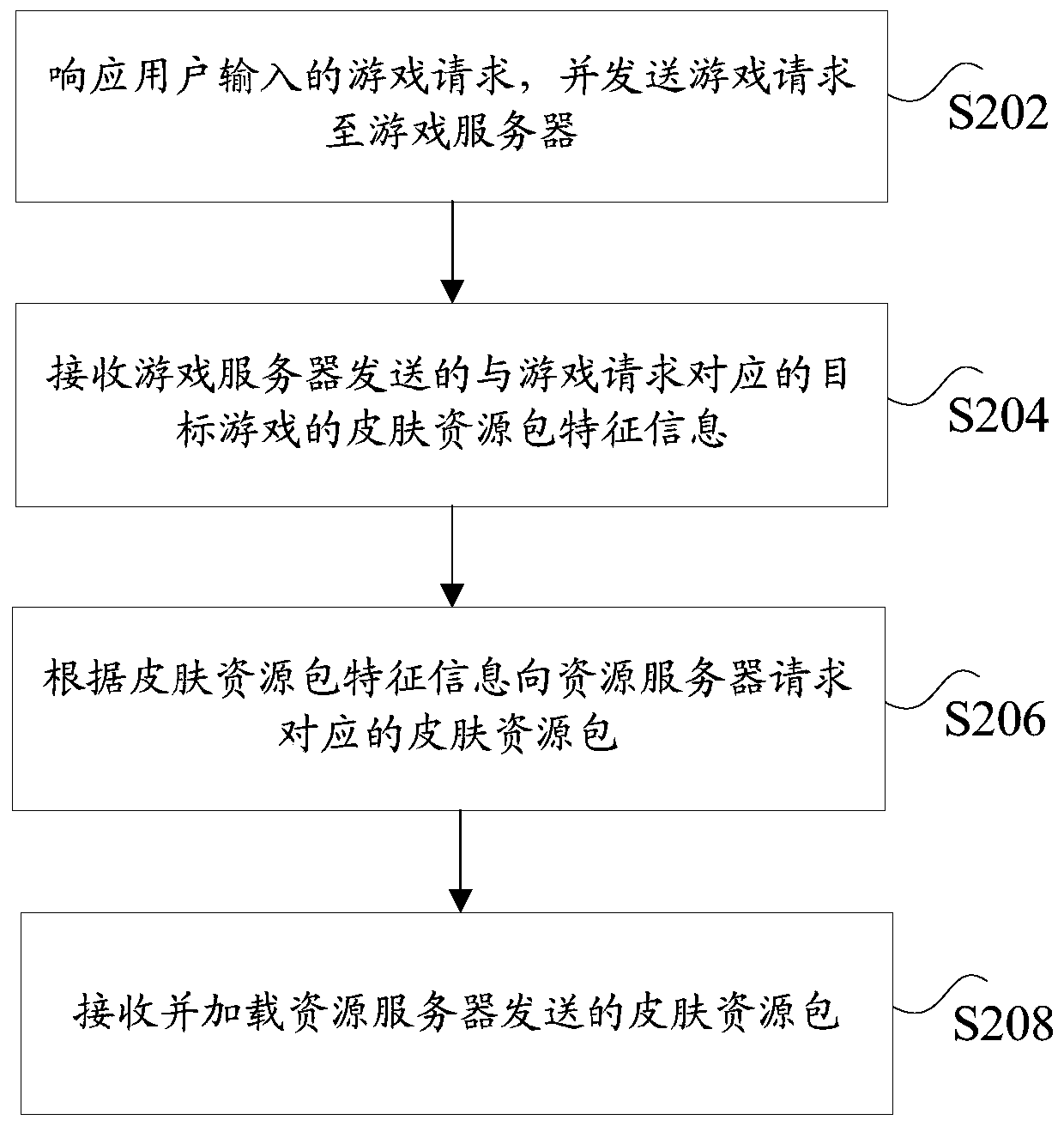

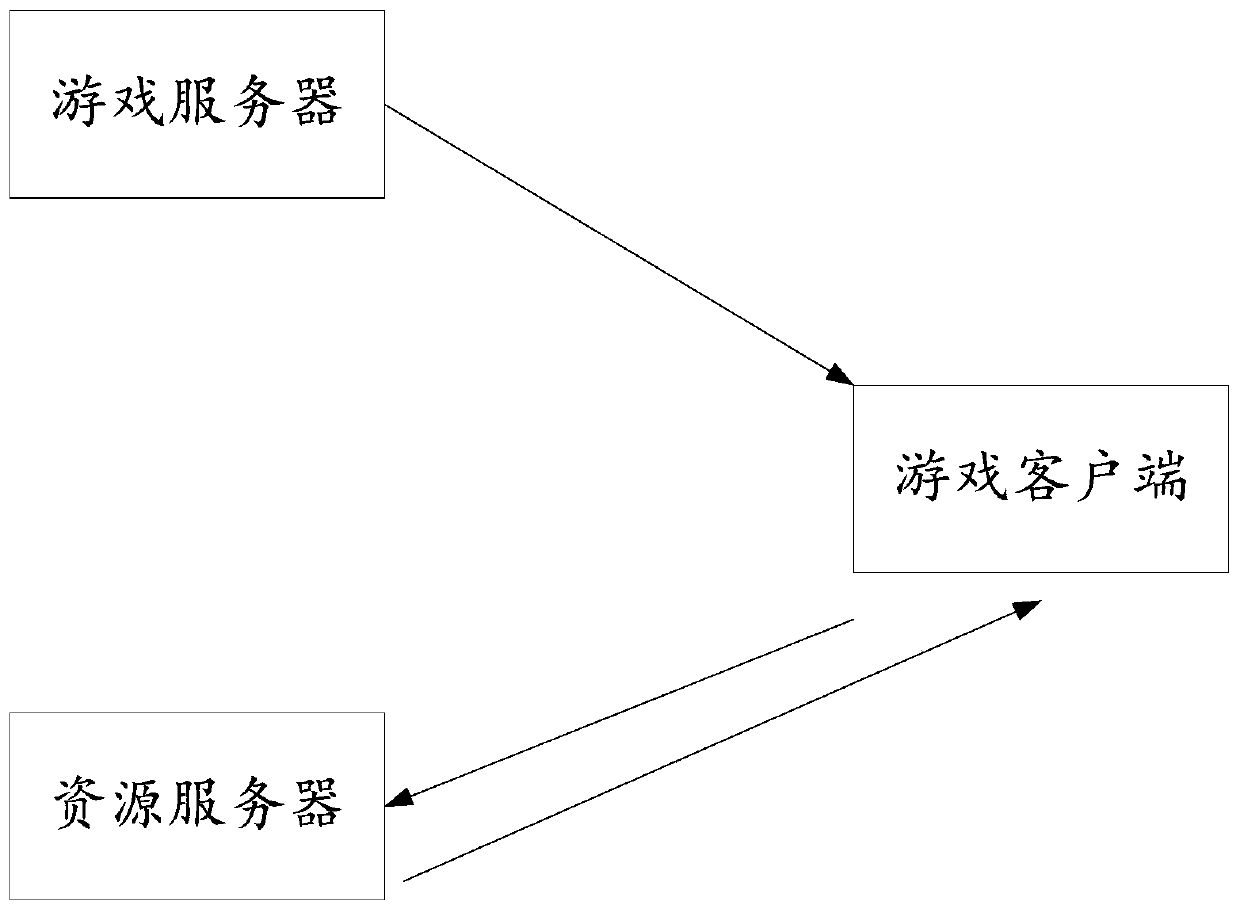

Game skin changing method and device, storage medium and processor

The invention discloses a game skin changing method and device, a storage medium, and a processor. The method comprises the steps of: responding to a game request input by a user by sending the game request to a game server; receiving, from the game server, skin resource packet feature information of the target game corresponding to the game request; requesting the corresponding skin resource packet from a resource server according to that feature information; and receiving and loading the skin resource packet sent by the resource server. This solves the technical problem in the related art that, as the number of mini-game modes grows, a large volume of resources is built into the game server, seriously affecting the use of game devices.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
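The request flow can be sketched with in-memory stand-ins for the two servers. The class names are hypothetical, and so is the content of the feature information: here it is assumed to carry a packet name and a SHA-256 digest that the client uses to verify the packet before loading it.

```python
import hashlib


class GameServer:
    """Stand-in game server: maps a game id to skin packet feature info."""

    def __init__(self, features):
        self.features = features

    def handle_game_request(self, game_id):
        return self.features[game_id]


class ResourceServer:
    """Stand-in resource server holding skin packets by name."""

    def __init__(self, packets):
        self.packets = packets

    def fetch(self, name):
        return self.packets[name]


def start_game(game_id, game_server, resource_server):
    feature = game_server.handle_game_request(game_id)  # send request, get feature info
    packet = resource_server.fetch(feature["name"])      # request the skin packet
    # Assumption: feature info carries a SHA-256 digest used to verify the packet.
    if hashlib.sha256(packet).hexdigest() != feature["sha256"]:
        raise ValueError("skin packet does not match its feature information")
    return packet                                        # hand the packet to the loader
```

Keeping skin packets on a separate resource server is what lets the game server stay small as mini-game modes are added.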

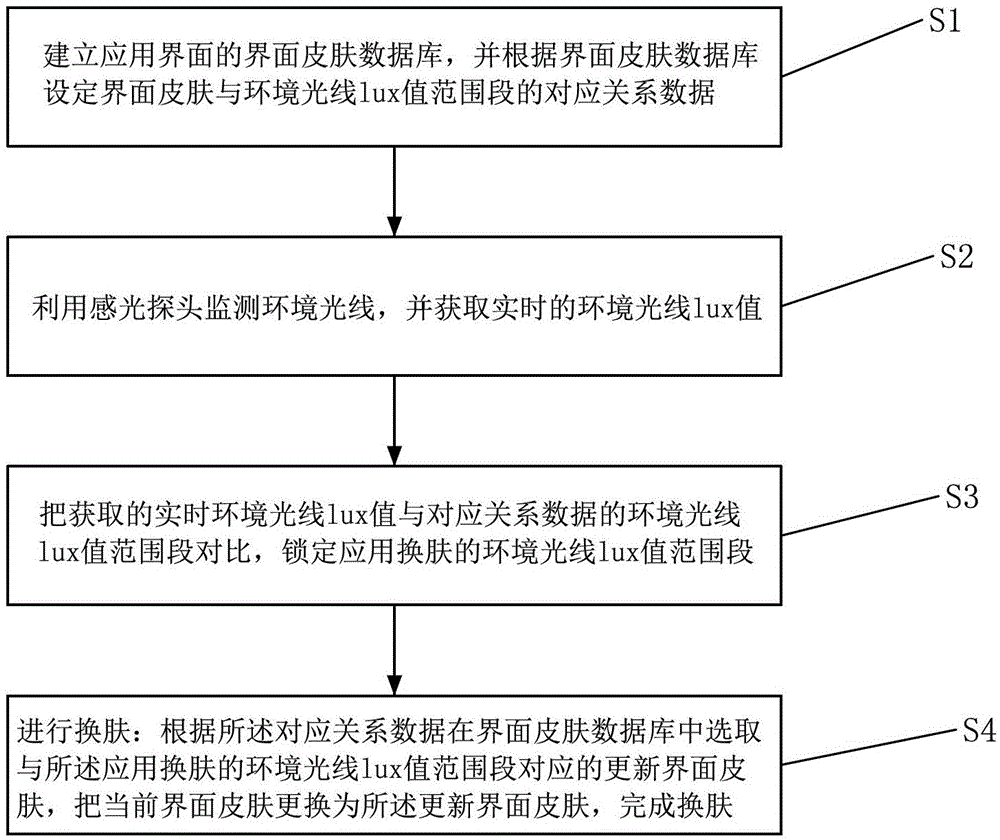

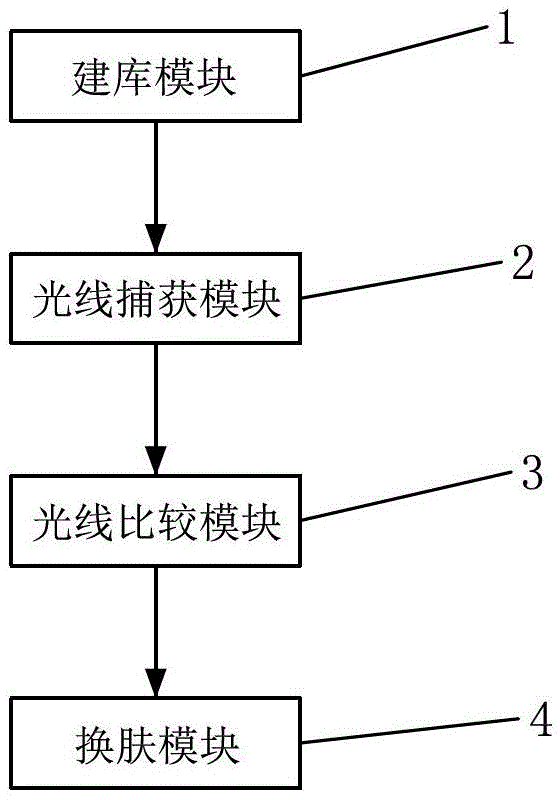

Method and system for changing interface skin according to ambient light change

Inactive | CN103150090A | Tags: Protect health; Comfortable interface display; Input/output for user-computer interaction; Graph reading; Computer science; Light intensity

The invention discloses a method and system for changing an interface skin according to changes in ambient light. The method comprises the following steps: 1, establishing an interface skin database for an application interface and, from that database, setting correspondence data between interface skins and ambient-light lux ranges; 2, monitoring the ambient light with a light-sensitive probe to obtain a real-time ambient-light lux value; 3, comparing the real-time lux value with the lux ranges in the correspondence data, identifying the range that applies, and changing to the corresponding skin. Using multiple preset application interface skins and the ambient light intensity measured by the light-sensitive probe of a mobile device, the invention switches to the matching interface skin, so the user gets a more comfortable display under different lighting and the user's eyes are protected.

Owner:Guangdong Liwei Network Technology Co., Ltd. (广东利为网络科技有限公司)
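Step 3 amounts to a range lookup. In this minimal sketch the lux thresholds and skin names are hypothetical correspondence data, and the skin is changed only when a reading crosses into a different range.

```python
import bisect

# Hypothetical correspondence data: (lower lux bound, skin), sorted by bound.
LUX_TABLE = [(0, "night"), (50, "dim"), (1000, "normal"), (10000, "high_contrast")]


def skin_for_lux(lux, table=LUX_TABLE):
    """Find the lux range that contains the reading and return its skin."""
    bounds = [low for low, _ in table]
    i = bisect.bisect_right(bounds, lux) - 1
    return table[max(i, 0)][1]


def on_light_reading(app, lux):
    """Change skin only when the reading moves into a different range."""
    target = skin_for_lux(lux)
    if app.get("skin") != target:
        app["skin"] = target
        return True
    return False
```

Each range includes its lower bound, so a reading of exactly 50 lux selects `"dim"`.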

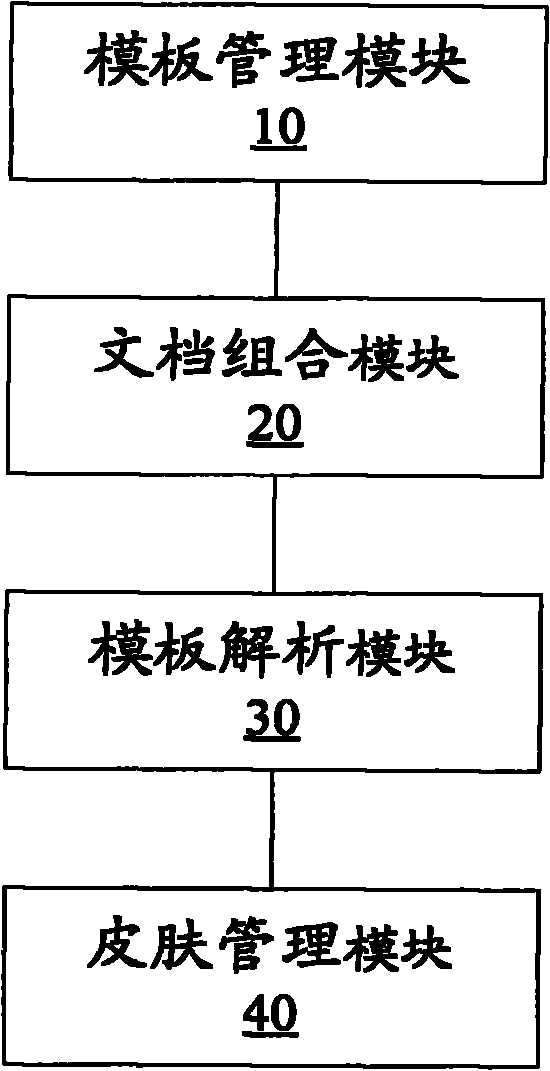

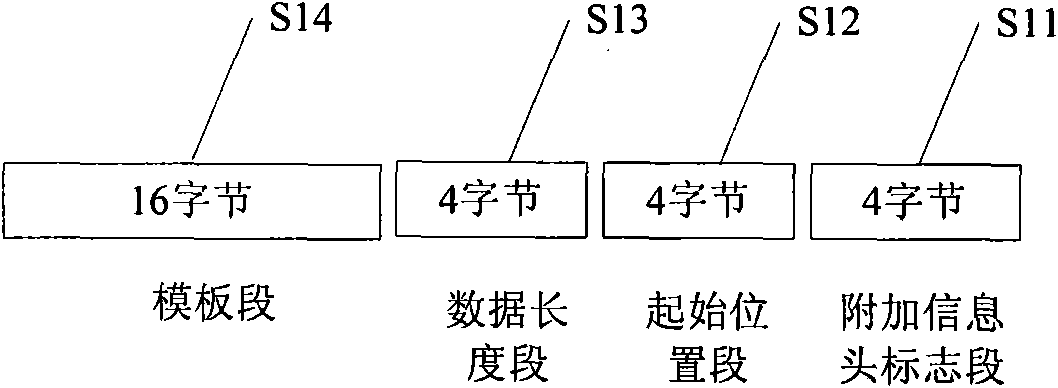

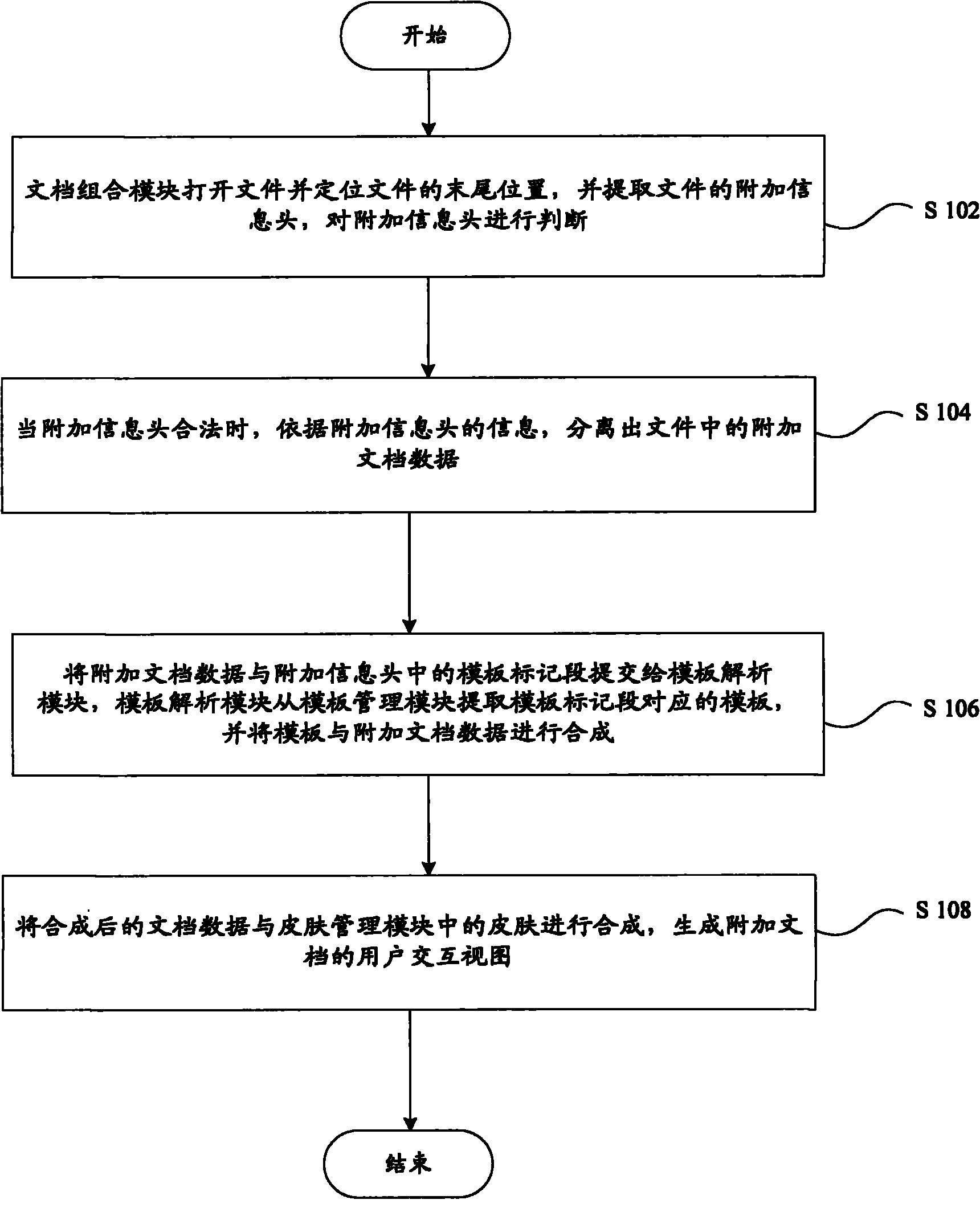

Device and method for generating compound document

Active | CN101944087A | Tags: Troubleshoot distribution issues; Special data processing applications; Compound document; Document preparation

The invention provides a device and a method for generating a compound document. The device comprises a template management module, a document combination module, a template resolving module, and a skin management module. The template management module stores a template describing the information composition and data format of a document and generates additional document data for a main document according to the template; the document combination module combines the main document data with the additional document data to generate compound document data, and extracts the additional document data from the compound document data; the template resolving module parses the template to obtain the information composition and data format of the additional document data and converts that data into the format of an additional document according to the template; and the skin management module combines the additional document with a user-specified skin to generate a user interaction view.

Owner:Founder International Software (Beijing) Co., Ltd. (方正国际软件(北京)有限公司)

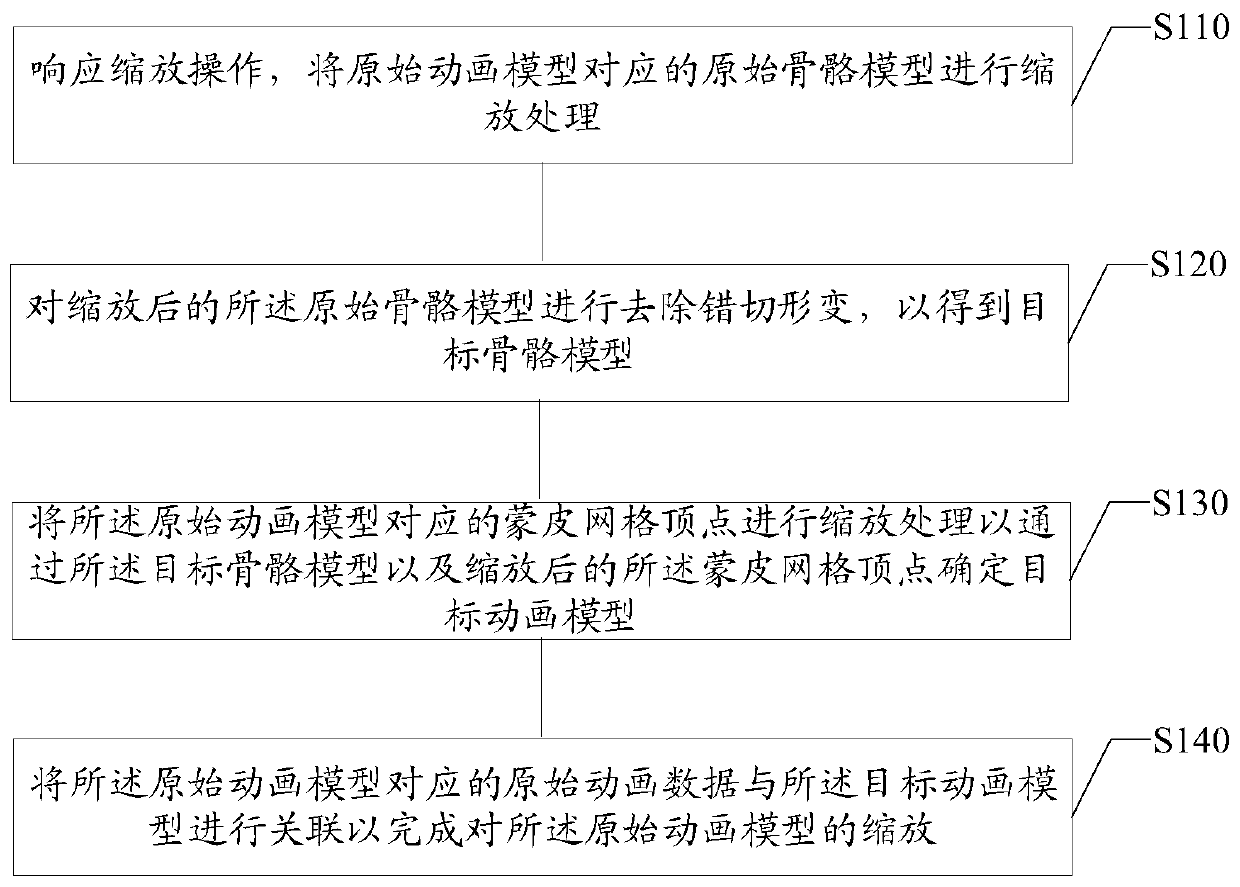

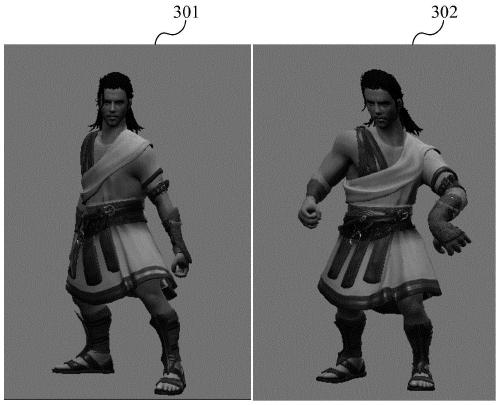

Animation model zooming method and device, electronic equipment and storage medium

Active | CN111062864A | Tags: Increase flexibility; Improve user experience; Geometric image transformation; Animation; Computational science; Algorithm

The invention provides an animation model zooming method and device, electronic equipment, and a storage medium, relating to the technical field of computers. The animation model scaling method comprises the following steps: in response to a scaling operation, scaling the original skeleton model corresponding to an original animation model; removing shear deformation from the scaled original skeleton model to obtain a target skeleton model; scaling the skin mesh vertices corresponding to the original animation model, so that a target animation model is determined from the target skeleton model and the scaled skin mesh vertices; and associating the original animation data of the original animation model with the target animation model to complete the zooming. This allows animation models and animation data to be reused, saves resources, avoids erroneous shear deformation of the animation model, and improves the user experience.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

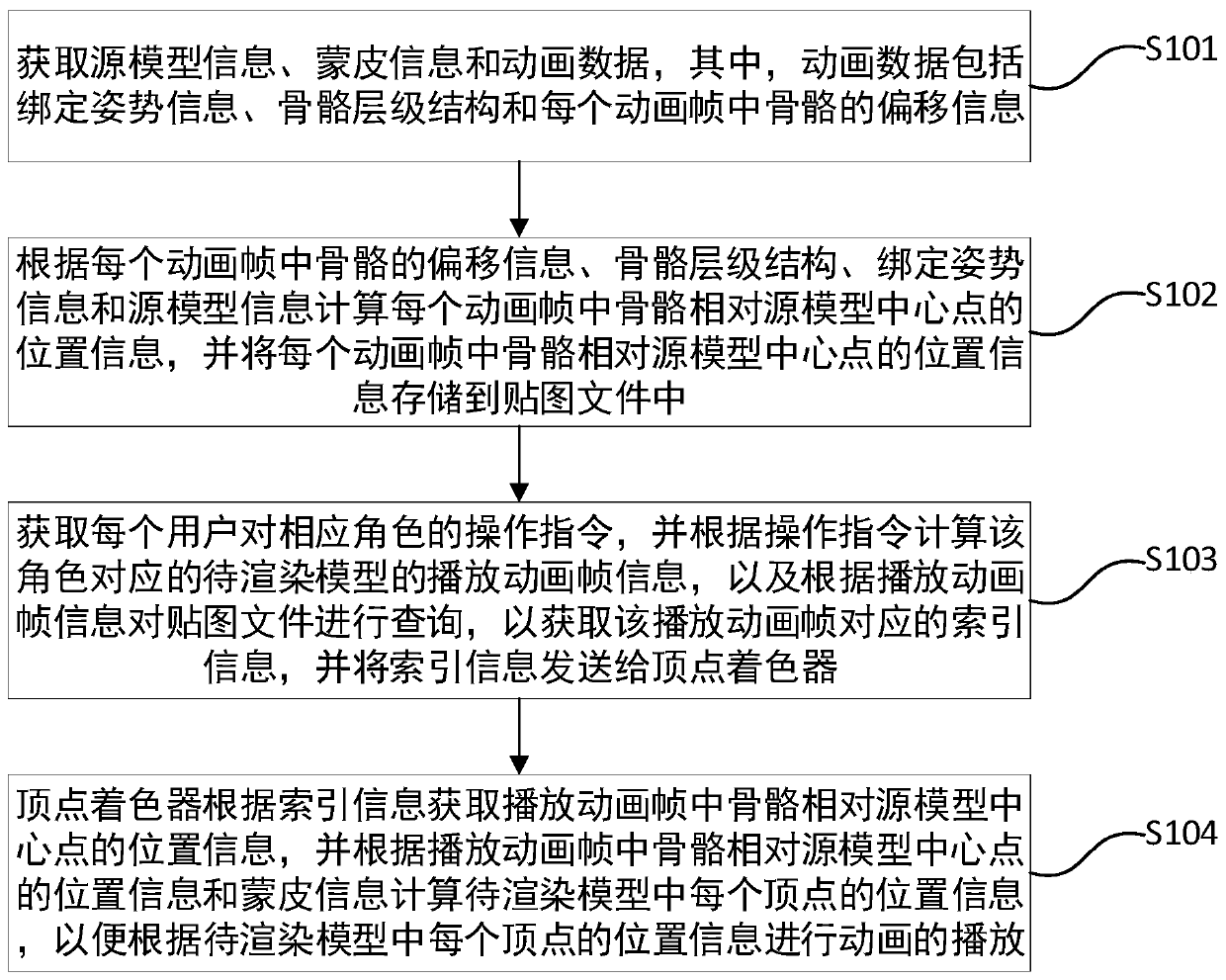

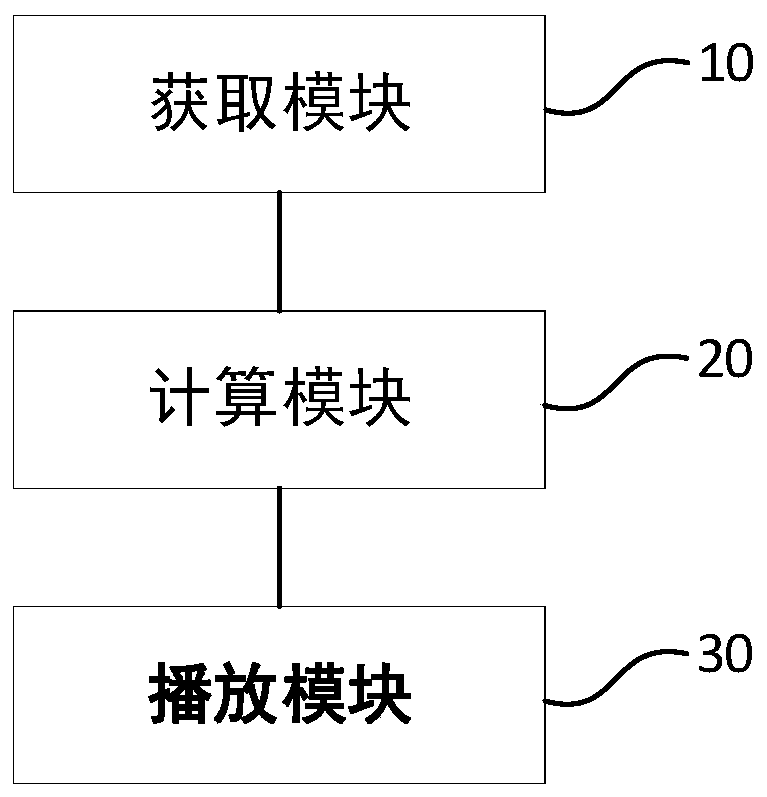

Method and device for playing large number of animations of same character, medium and equipment

The invention discloses a method, device, medium, and equipment for playing a large number of animations of the same character. The method comprises the following steps: acquiring source model information, skin information, and animation data; calculating, for each animation frame, the position of each bone relative to the center point of the source model, and storing these positions in a mapping file; obtaining each user's operation instruction for the corresponding character, calculating from the instruction which animation frame the to-be-rendered model of that character should play, obtaining the index information of that frame, and sending the index information to a vertex shader; and having the vertex shader fetch, according to the index information, the bone positions relative to the source model's center point for that frame and calculate the position of each vertex, so that the animation plays according to the vertex positions. Rendering and GPU costs when many animations of the same character are on screen at once are effectively reduced, saving computing resources.

Owner:Xiamen Mengjia Network Technology Co., Ltd. (厦门梦加网络科技股份有限公司)
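A CPU-side sketch of the baking idea: bone offsets for every frame are stored in one flat table (standing in for the mapping file, typically a texture), each character instance only needs a frame index, and the per-vertex work is a table lookup plus additions, which is what a vertex shader would perform. The simplifications are assumptions: bones are translation-only, each vertex follows one bone, and all function names are hypothetical.

```python
def bake_animation(frames):
    """Store every bone's offset from the model centre for every frame.

    frames: list of {bone_name: (x, y, z)}. The flat table returned stands in
    for the mapping file the vertex shader reads.
    """
    bones = sorted(frames[0])
    table = [frame[b] for frame in frames for b in bones]
    return table, bones


def frame_for(elapsed_seconds, fps, frame_count):
    """Per-instance playback index, derived from that character's own state."""
    return int(elapsed_seconds * fps) % frame_count


def skin_vertex(table, bone_count, frame, bone_slot, offset, instance_origin):
    """What the vertex shader would do: fetch the bone entry, add the offsets.

    Simplified: translation-only bones and one bone per vertex.
    """
    bx, by, bz = table[frame * bone_count + bone_slot]  # the "texture fetch"
    ox, oy, oz = offset
    cx, cy, cz = instance_origin
    return (cx + bx + ox, cy + by + oy, cz + bz + oz)
```

Because the table is shared, thousands of instances of one character can differ only in `frame` and `instance_origin`, which suits instanced rendering.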

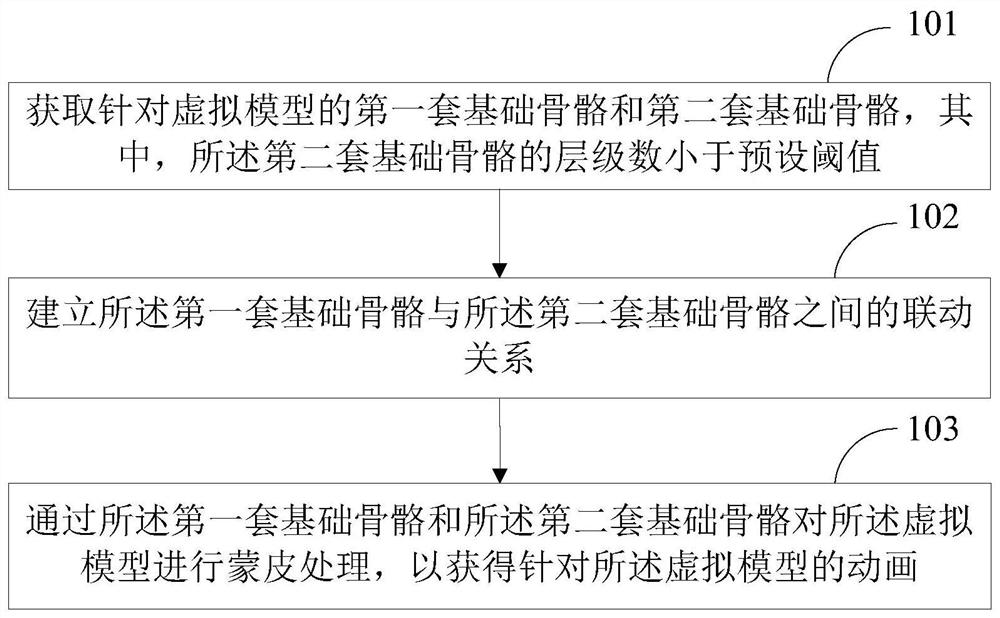

Animation production method and device, storage medium and computer equipment

The embodiment of the invention discloses an animation production method and device, a storage medium, and computer equipment. The method comprises the steps of: obtaining a first set of basic skeletons and a second set of basic skeletons for a virtual model, where the number of levels in the second set is smaller than a preset threshold; establishing a linkage relationship between the first and second sets of basic skeletons; and skinning the virtual model with both sets of basic skeletons to obtain an animation of the virtual model. The embodiment supports exaggerated deformation animation, meets diverse animation requirements, and improves animation effects.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
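One way to picture the two-skeleton setup described above is sketched below in Python. The depth check mirrors the abstract's condition that the second skeleton set has fewer levels than a preset threshold; the linkage itself is an assumption made for illustration only: each secondary bone copies its driving primary bone's displacement from rest, multiplied by a hypothetical `gain`, so a gain above 1 exaggerates the motion. The names `hierarchy_depth`, `drive_secondary` and `MAX_SECONDARY_LEVELS` are hypothetical.

```python
Vec3 = tuple[float, float, float]


def hierarchy_depth(parents: list[int]) -> int:
    """Number of levels in an acyclic bone hierarchy; parents[i] is -1 for a root."""
    def depth(i: int) -> int:
        levels = 1
        while parents[i] != -1:
            i = parents[i]
            levels += 1
        return levels

    return max((depth(i) for i in range(len(parents))), default=0)


def drive_secondary(primary_pose: list[Vec3], primary_rest: list[Vec3],
                    secondary_rest: list[Vec3], links: dict[int, int],
                    gain: float) -> list[Vec3]:
    """Move each linked secondary bone by gain times its driver's displacement.

    links maps a secondary bone index to the primary bone that drives it;
    unlinked secondary bones stay at rest.
    """
    pose = list(secondary_rest)
    for sec, prim in links.items():
        disp = tuple(p - r for p, r in zip(primary_pose[prim], primary_rest[prim]))
        pose[sec] = tuple(s + gain * d for s, d in zip(secondary_rest[sec], disp))
    return pose


MAX_SECONDARY_LEVELS = 3     # hypothetical preset threshold
secondary_parents = [-1, 0]  # a shallow two-level chain
assert hierarchy_depth(secondary_parents) < MAX_SECONDARY_LEVELS

print(drive_secondary(primary_pose=[(0.0, 1.0, 0.0)], primary_rest=[(0.0, 0.0, 0.0)],
                      secondary_rest=[(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
                      links={0: 0}, gain=2.0))  # [(1.0, 2.0, 0.0), (2.0, 0.0, 0.0)]
```

Keeping the secondary set shallow keeps each linked bone's behavior easy to predict, which is one plausible reason for the level threshold; the patent itself does not state the rationale.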

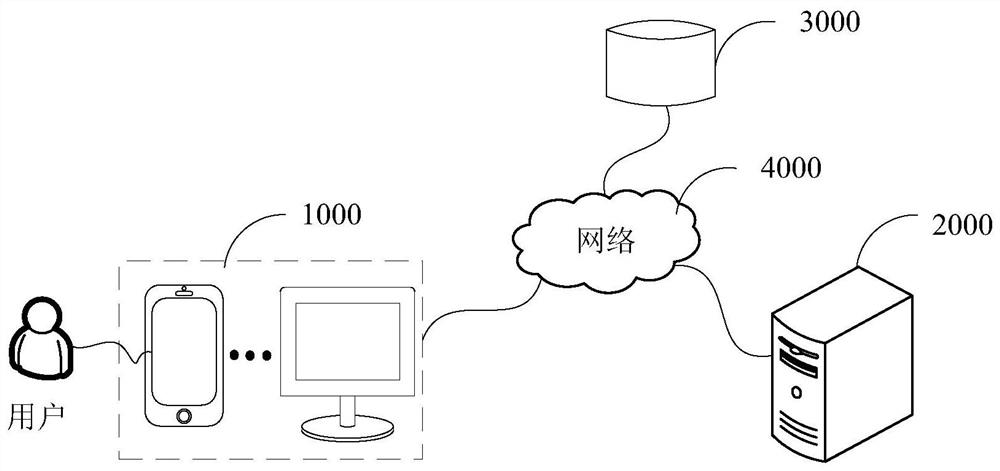

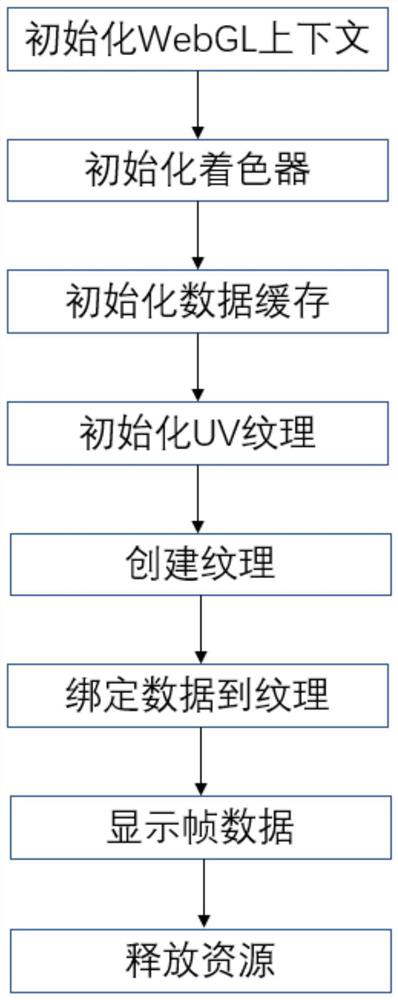

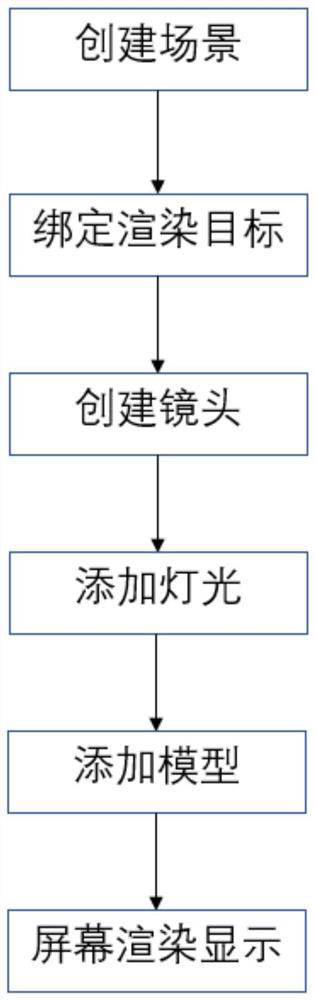

WebGL-based 3D rendering system and method

Pending | CN112991508A | Reduce difficulty of use | Low cost | 3D-image rendering | 3D modelling | Graphical user interface | Skin

The invention discloses a WebGL-based 3D rendering system and method. The system comprises a scene object library, a special effect library, an animation framework library, a matrix calculation library, a graphical user interface library and an auxiliary module library. The scene object library comprises a scene object module, a light object module, a camera object module, a material object module, a particle object module, a model object module and a texture object module; the animation framework library comprises a skin animation module and a skeleton animation module; the matrix calculation library comprises a vector calculation module and a model bounding box calculation module. The invention provides the user with a middleware layer, so that interactive three-dimensional graphics applications can be developed quickly without knowledge of the underlying details. It also supports GLSL ES 3.00 and can be called directly without separate loading. Because users can develop without being familiar with computer graphics theory, the system lowers the difficulty of use, shortens the development cycle, improves development efficiency and reduces development cost.

Owner:赛瓦软件(上海)有限公司
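The abstract above does not describe how the model bounding box calculation module works. A common way to compute such a box is an axis-aligned bounding box (AABB) over the model's vertex positions; the Python sketch below assumes that approach, and the names `bounding_box`, `box_center` and `contains` are hypothetical. In the WebGL system itself this logic would presumably be written in JavaScript over typed vertex arrays.

```python
Vec3 = tuple[float, float, float]
Box = tuple[Vec3, Vec3]


def bounding_box(vertices: list[Vec3]) -> Box:
    """Return (min_corner, max_corner) of the axis-aligned box around the vertices."""
    if not vertices:
        raise ValueError("cannot bound an empty model")
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def box_center(box: Box) -> Vec3:
    """Midpoint of the box, e.g. for framing a camera on the model."""
    low, high = box
    return tuple((a + b) / 2 for a, b in zip(low, high))


def contains(box: Box, p: Vec3) -> bool:
    """True if point p lies inside or on the box, e.g. for coarse picking tests."""
    low, high = box
    return all(lo_c <= c <= hi_c for lo_c, c, hi_c in zip(low, p, high))


box = bounding_box([(0.0, 0.0, 0.0), (1.0, 2.0, 3.0), (-1.0, 5.0, 0.0)])
print(box)              # ((-1.0, 0.0, 0.0), (1.0, 5.0, 3.0))
print(box_center(box))  # (0.0, 2.5, 1.5)
```

An AABB is cheap to compute and test against, which is why it is a typical first choice for culling and picking; tighter volumes (oriented boxes, spheres) trade extra computation for fewer false positives.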

Method and device for setting application skin on operation system platform and equipment

Active | CN104679390A | Improve performance | Improve convenience | Input/output processes for data processing | Operational system | Computer science

The embodiment of the invention provides a method for setting an application skin on an operating system platform. The method comprises the following steps: acquiring the attribute value of the background color of the operating system platform on which an application runs; when a preset condition for setting the application skin is satisfied, matching the attribute value of the background color against preset skin schemes; and setting the skin scheme that matches the attribute value as the application skin. This implementation can improve the efficiency of setting the application skin.

Owner:HANGZHOU NETEASE CLOUD MUSIC TECH CO LTD
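The matching step described above can be sketched in Python as a nearest-color lookup. The abstract does not say what the background-color attribute or the matching rule is, so this sketch assumes the attribute is an RGB triple, that each preset skin scheme is keyed by a reference color, and that the scheme with the closest reference color (squared Euclidean distance in RGB) wins. `choose_skin`, `PRESET_SCHEMES` and the scheme names are hypothetical, and `condition_met` merely stands in for the unspecified preset condition.

```python
from typing import Optional

RGB = tuple[int, int, int]


def color_distance_sq(a: RGB, b: RGB) -> int:
    """Squared Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def choose_skin(background: RGB, schemes: dict[str, RGB],
                condition_met: bool = True) -> Optional[str]:
    """Return the name of the preset scheme whose reference color is closest.

    Returns None when the (hypothetical) preset condition is not met or
    when no schemes are defined.
    """
    if not condition_met or not schemes:
        return None
    return min(schemes, key=lambda name: color_distance_sq(background, schemes[name]))


PRESET_SCHEMES = {"dark": (30, 30, 30), "light": (240, 240, 240), "ocean": (20, 90, 160)}
print(choose_skin((25, 25, 40), PRESET_SCHEMES))     # dark
print(choose_skin((230, 235, 250), PRESET_SCHEMES))  # light
```

RGB distance is the simplest rule to show; a perceptual color space such as CIELAB would usually match human judgments of "similar" colors more closely.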

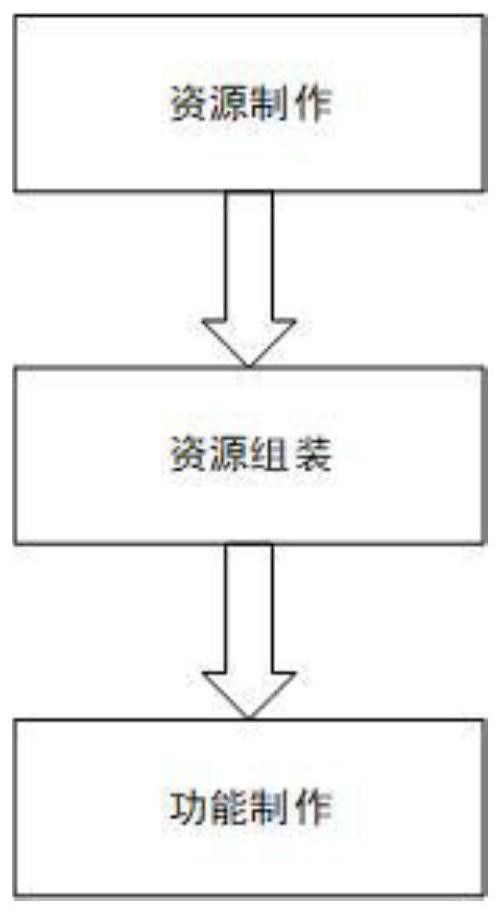

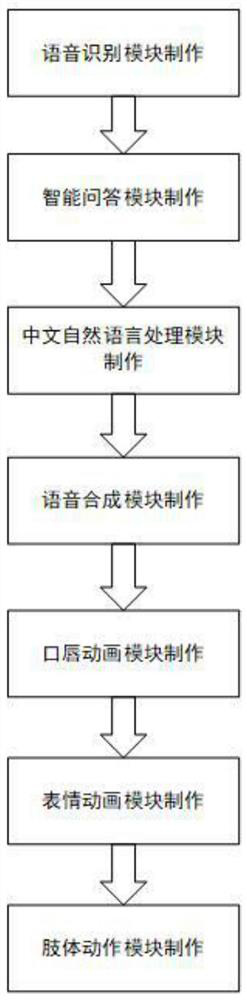

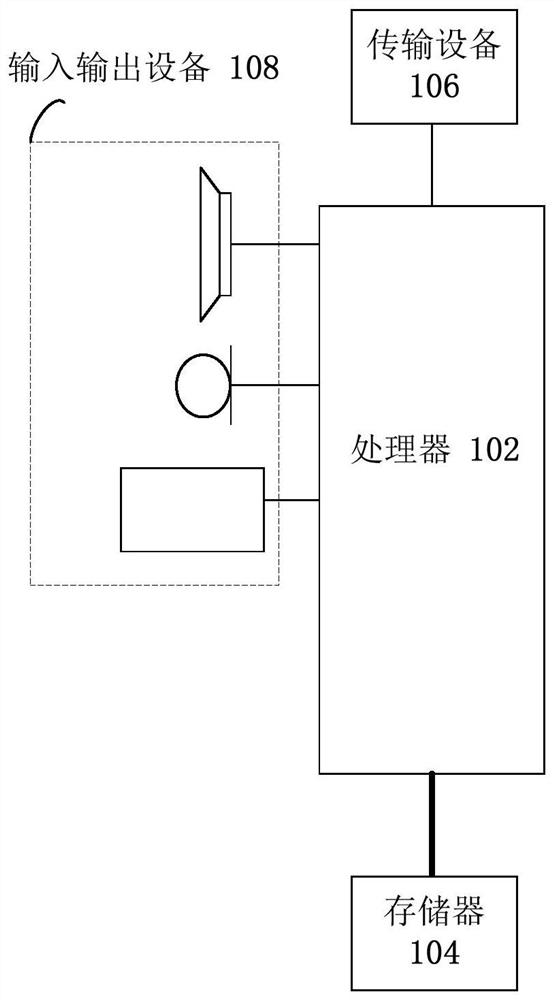

Realistic virtual human multi-modal interaction implementation method based on UE4

The invention discloses a method for implementing realistic multi-modal interaction with a virtual human based on UE4. The method comprises the steps of resource production, resource assembly and function implementation. The system comprises a resource production module, used for character modeling, facial expression BlendShape creation, skeleton-skin binding, motion creation, texture map creation and material adjustment; a resource assembly module, used for scene building, lighting design and UI construction; and a function module, used for recognizing the user's voice input, answering intelligently according to that input, and playing voice, lip animation, expression animation and body movement to provide multi-modal interaction. The function module specifically comprises a voice recognition module, an intelligent question-and-answer module, a Chinese natural language processing module, a voice synthesis module, a lip animation module, an expression animation module and a body movement module. The system has an affinity similar to that of a real person and is more easily accepted by users; it better matches human interaction habits, so the application can be adopted more widely, and its responses are more intelligent and more in line with human logic.

Owner:长沙千博信息技术有限公司

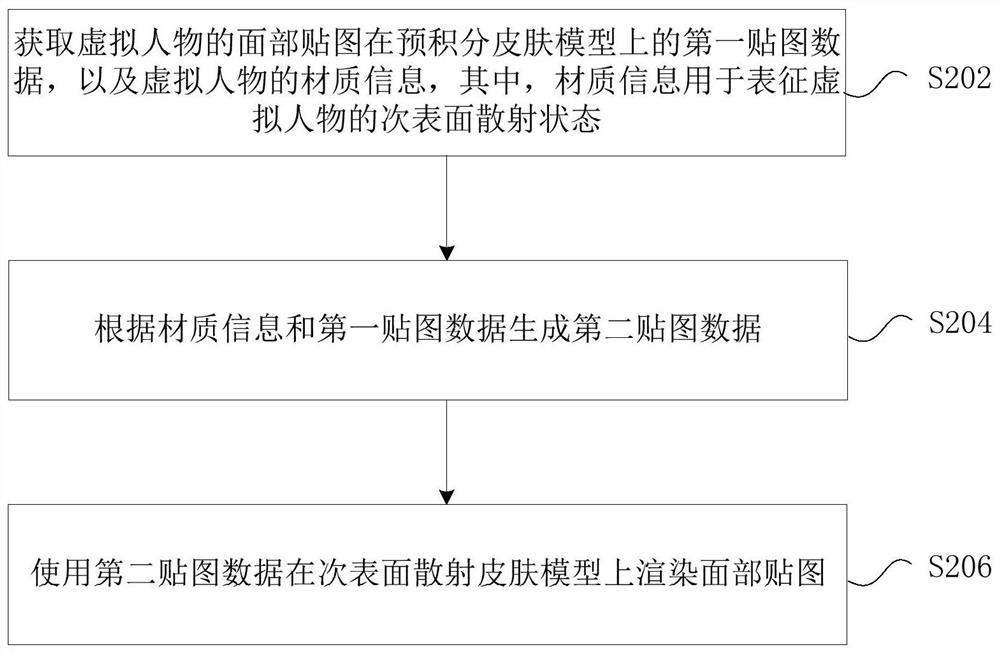

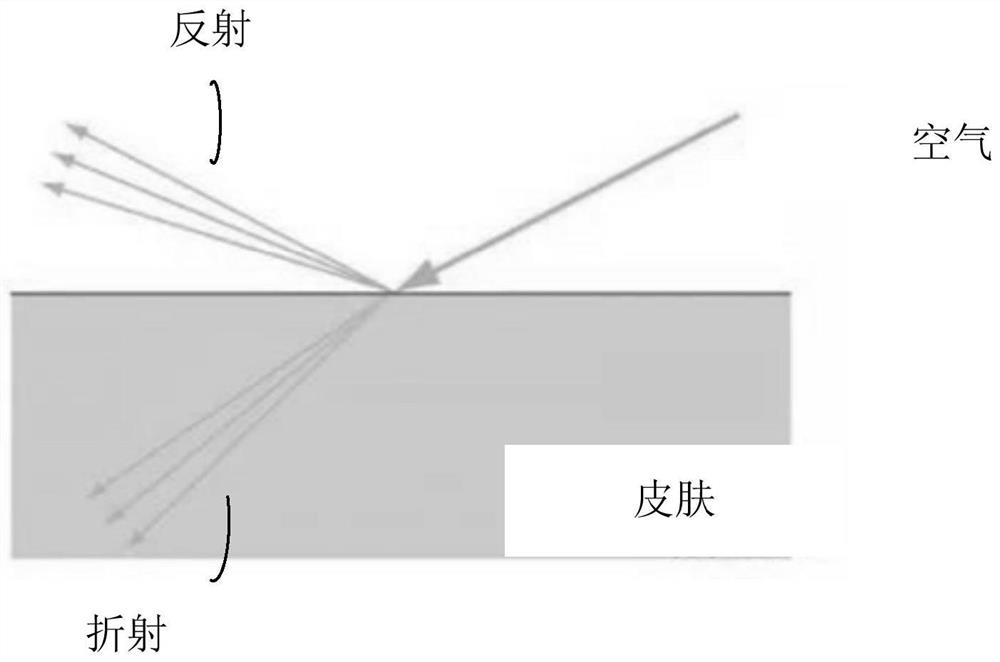

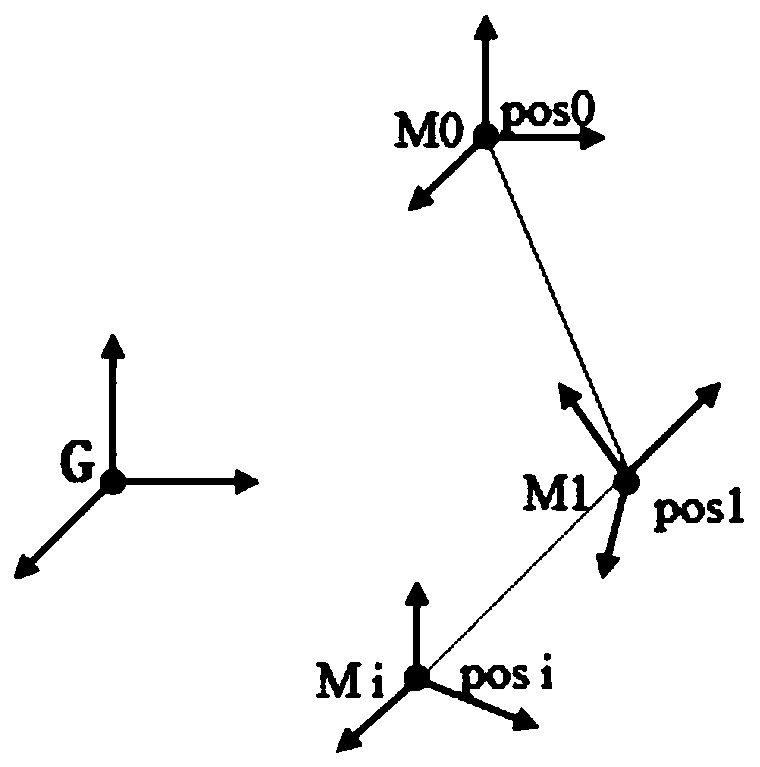

Character skin rendering method and device, storage medium and electronic device

Pending | CN111862285A | Enhance layering | Solving rigid technical problems | 3D-image rendering | Subsurface scattering | Computer graphics (images)

The invention provides a character skin rendering method and device, a storage medium and an electronic device. The method comprises: acquiring first map data of a virtual character's face map on a pre-integrated skin model, together with material information of the virtual character, where the material information represents the character's subsurface scattering state; generating second map data from the material information and the first map data; and rendering the face map on a subsurface scattering skin model using the second map data. This solves the prior-art problem of virtual character faces looking stiff, making them more vivid, natural, flexible and realistic.

Owner:BEIJING PERFECT WORLD SOFTWARE TECH DEV CO LTD
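The abstract above does not give the formula for deriving the second map data, so the Python sketch below substitutes a well-known and much simpler technique, wrap lighting, purely to show how a subsurface-scattering parameter can soften the light-to-shadow transition on skin. It assumes the material information reduces to a single `wrap` value in [0, 1] and bakes the result into a 1D ramp indexed by the cosine term N·L, loosely in the spirit of a pre-integrated lookup texture. This is not the patent's method; `wrap_diffuse`, `build_ramp` and `shade` are hypothetical names, and `albedo` only stands in for the first map data.

```python
def wrap_diffuse(n_dot_l: float, wrap: float) -> float:
    """Wrap-lighting diffuse term: plain Lambert when wrap == 0, softer as wrap grows."""
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))


def build_ramp(wrap: float, size: int = 8) -> list[float]:
    """Bake wrap_diffuse into a lookup table over N.L evenly spaced in [-1, 1]."""
    if size < 2:
        raise ValueError("ramp needs at least two samples")
    return [wrap_diffuse(-1.0 + 2.0 * i / (size - 1), wrap) for i in range(size)]


def shade(albedo: tuple[float, float, float], n_dot_l: float, wrap: float):
    """Scale a base color (standing in for the first map data) by the diffuse term."""
    k = wrap_diffuse(n_dot_l, wrap)
    return tuple(c * k for c in albedo)


print(build_ramp(0.0, 3))  # [0.0, 0.0, 1.0]
print(build_ramp(0.5, 3))  # [0.0, 0.3333333333333333, 1.0]
```

With `wrap = 0` the surface goes dark exactly at N·L = 0, producing the hard terminator that makes faces look stiff; a positive `wrap` lets some light reach past it, which is the qualitative effect subsurface scattering models aim for.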

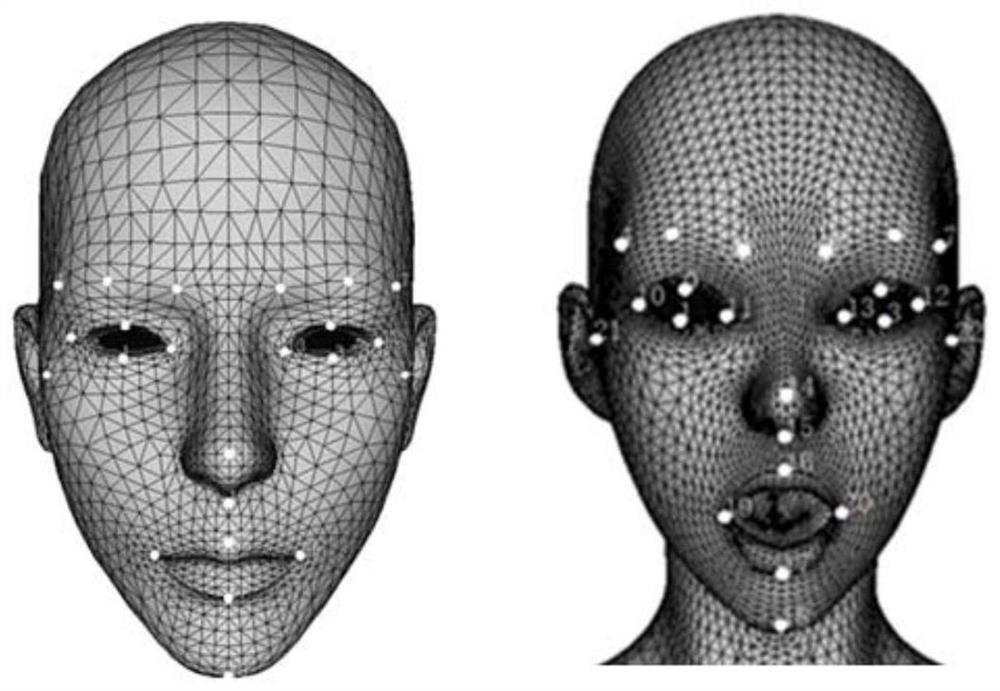

Method for manufacturing computer skin animation based on high-precision three-dimensional scanning model

Active | CN111369649A | Reduce workload | Uses less memory space | Animation | Manufacturing computing systems | Skin | Computational model

The invention belongs to the technical field of computer graphics and relates to a method for generating skin animation for three-dimensional models. The method for producing computer skin animation from a high-precision three-dimensional scanned model comprises: first, completing skeleton binding using the skeleton lines of the digital three-dimensional model scanned by a three-dimensional scanner and an action template library of skeletons; then calculating skin weights; and finally updating the model's vertex coordinates in real time to complete the computer skin animation. The method can process models scanned by a high-precision three-dimensional scanner and calculates the vertex weights algorithmically, which greatly reduces the model-processing workload compared with manual processing. Following the skin animation principle, only the model's texture coordinates, indices and node weights are stored, and new vertex data are calculated from the node weights during each frame's rendering. Compared with frame-data merging and skeletal animation methods, the method occupies less memory, achieves non-rigid vertex deformation and produces a more natural animation effect.

Owner:SUZHOU DEKA TESTING TECH CO LTD
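The two runtime steps named in the abstract above, computing skin weights and updating vertex coordinates every frame, can be sketched in Python as follows. The patent's weighting algorithm is not described, so this sketch substitutes simple inverse-distance weights to each bone's joint (production tools typically use more robust schemes) and blends bone transforms with standard linear blend skinning. For brevity each bone may only rotate about the z axis through its joint and translate; `inverse_distance_weights`, `bone_transform` and `skin_vertex` are hypothetical names.

```python
import math

Vec3 = tuple[float, float, float]


def inverse_distance_weights(vertex: Vec3, joints: list[Vec3], power: float = 2.0,
                             eps: float = 1e-9) -> list[float]:
    """Weight each bone by 1 / (distance**power + eps) to its joint, normalized to sum to 1."""
    raw = [1.0 / (math.dist(vertex, j) ** power + eps) for j in joints]
    total = sum(raw)
    return [w / total for w in raw]


def bone_transform(p: Vec3, pivot: Vec3, angle: float, offset: Vec3) -> Vec3:
    """Rotate p by angle (radians) about the z axis through pivot, then translate by offset."""
    x, y, z = (p[0] - pivot[0], p[1] - pivot[1], p[2] - pivot[2])
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y + pivot[0] + offset[0],
            s * x + c * y + pivot[1] + offset[1],
            z + pivot[2] + offset[2])


def skin_vertex(rest: Vec3, weights: list[float], joints: list[Vec3],
                angles: list[float], offsets: list[Vec3]) -> Vec3:
    """Linear blend skinning: weighted sum of the rest vertex moved by each bone."""
    out = [0.0, 0.0, 0.0]
    for w, j, a, t in zip(weights, joints, angles, offsets):
        moved = bone_transform(rest, j, a, t)
        for k in range(3):
            out[k] += w * moved[k]
    return tuple(out)


joints = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
vertex = (1.0, 0.0, 0.0)
weights = inverse_distance_weights(vertex, joints)  # computed once, stored per vertex
# Per frame: bone 0 stays put and bone 1 lifts by 2 units, so the vertex moves halfway.
print(skin_vertex(vertex, weights, joints, angles=[0.0, 0.0],
                  offsets=[(0.0, 0.0, 0.0), (0.0, 2.0, 0.0)]))  # (1.0, 1.0, 0.0)
```

Only the rest vertex and its weights need to be stored; positions are recomputed from the current bone transforms each frame, which matches the memory trade-off the abstract describes.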

© 2025 PatSnap. All rights reserved.