Realistic virtual human multi-modal interaction implementation method based on UE4

An implementation method in the field of virtual human technology, applied to realistic virtual-human multi-modal interaction. It addresses problems such as the lack of voice input, the lack of coverage of professional fields, and the inability to give answers and responses, and achieves an interaction effect that is widely applicable and easy for users to accept.



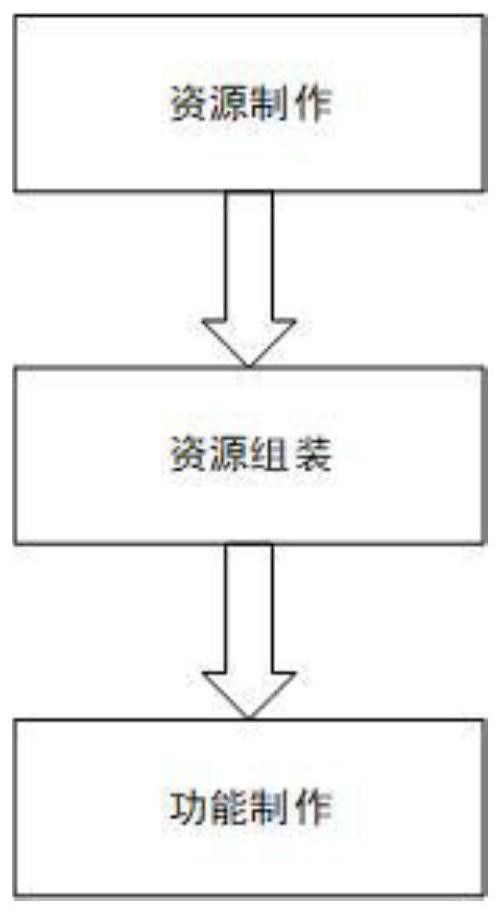

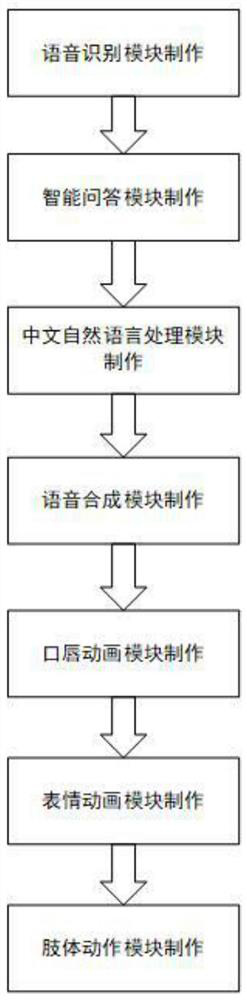


Embodiment Construction

[0037] Hereinafter, the present invention will be further described with reference to the accompanying drawings.

[0038] Before the specific embodiments are described, some terminology is explained:

[0039] UE4 is the abbreviation of Unreal Engine 4, currently the world's best-known top-tier game engine and the most widely licensed one.

[0040] BlendShape is a vertex-deformation animation technique, generally used to produce facial expressions.
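A BlendShape deforms a base mesh by adding weighted offsets toward one or more target shapes. The sketch below illustrates the common additive (delta) formulation, v = b + Σ w_i (t_i − b), where b is a base vertex, t_i the matching vertex of target shape i, and w_i that target's weight. It is a minimal illustration under that assumption, not the internal implementation used by UE4 or Maya; the function name `blend_shapes` and the plain-tuple vertex format are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def blend_shapes(
    base: Sequence[Vec3],
    targets: Sequence[Sequence[Vec3]],
    weights: Sequence[float],
) -> List[Vec3]:
    """Deform `base` by weighted target offsets: v = b + sum_i w_i * (t_i - b)."""
    if len(targets) != len(weights):
        raise ValueError("exactly one weight per target shape is required")
    for target in targets:
        if len(target) != len(base):
            raise ValueError("every target must have the same vertex count as the base")

    result: List[Vec3] = []
    for idx, (bx, by, bz) in enumerate(base):
        # Accumulate the weighted offset of every target for this vertex.
        dx = dy = dz = 0.0
        for target, w in zip(targets, weights):
            tx, ty, tz = target[idx]
            dx += w * (tx - bx)
            dy += w * (ty - by)
            dz += w * (tz - bz)
        result.append((bx + dx, by + dy, bz + dz))
    return result
```

For example, with a two-vertex base, a hypothetical `jaw_open` target weighted 0.5 and a `smile` target weighted 1.0 move the first vertex to (0.0, -0.5, 0.0), while on the second vertex the two offsets cancel and it stays at (1.0, 0.0, 0.0). Because each target only stores a shape, expressions can be mixed at runtime simply by animating the weights.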

[0041] Maya is Autodesk's world-leading software application for 3D digital animation and visual effects.

[0042] Substance is a software suite for authoring 3D texture maps.

[0043] UMG is the abbreviation of Unreal Motion Graphics (Unreal Motion Graphics UI Designer); it is the UI authoring module in the UE4 editor.

[0044] NVBG is the abbreviation of NonVerbal-Behavior-Generator, a tool that generates non-verbal behavior.
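A non-verbal behavior generator takes the text a character is about to speak and schedules non-verbal behaviors (nods, head shakes, gestures) alongside it. The toy sketch below only illustrates that idea with a few keyword rules; it is not NVBG's actual rule set or output format (the real tool emits BML behavior markup), and `RULES` and `generate_behaviors` are hypothetical names invented for this example.

```python
import re
from typing import Dict, List

# Hypothetical keyword -> gesture rules, for illustration only.
RULES: Dict[str, str] = {
    "hello": "wave",
    "yes": "head_nod",
    "no": "head_shake",
    "you": "point_other",
}


def generate_behaviors(utterance: str) -> List[dict]:
    """Scan an utterance word by word and schedule a gesture at each matching word."""
    words = re.findall(r"[a-z']+", utterance.lower())
    behaviors: List[dict] = []
    for index, word in enumerate(words):
        gesture = RULES.get(word)
        if gesture is not None:
            # word_index lets a renderer align the gesture with the spoken word.
            behaviors.append({"word_index": index, "word": word, "gesture": gesture})
    return behaviors
```

Keying each behavior to a word index, rather than to a wall-clock time, keeps the sketch independent of the speech synthesizer; a real system would map word indices to audio timestamps before triggering animations.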

[004...
