604 results about "Human behavior" patented technology

Human behavior is the response of individuals or groups of humans to internal and external stimuli. It refers to the array of every physical action and observable emotion associated with individuals, as well as the human race. While specific traits of one's personality and temperament may be more consistent, other behaviors will change as one moves from birth through adulthood. In addition to being dictated by age and genetics, behavior, driven in part by thoughts and feelings, provides insight into the individual psyche, revealing among other things attitudes and values. Social behavior, a subset of human behavior, studies the considerable influence of social interaction and culture. Additional influences include ethics, social environment, authority, persuasion and coercion.

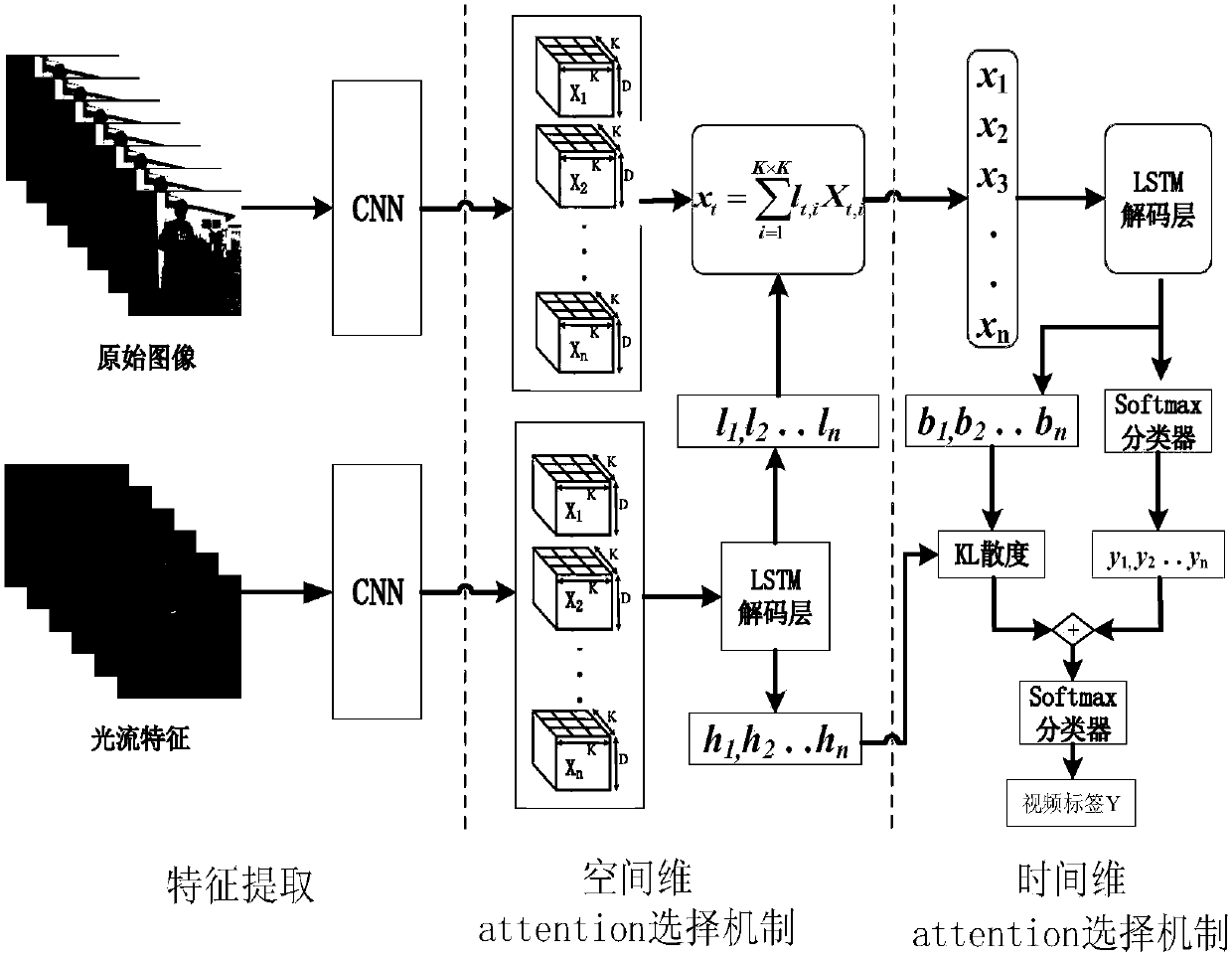

Human behavior recognition method integrating space-time dual-network flow and attention mechanism

Active | CN107609460A | Improve accuracy | Eliminate distractions | Character and pattern recognition | Neural architectures | Human behavior | Pattern recognition

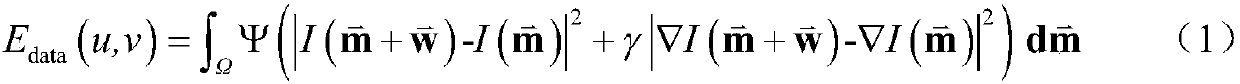

The invention discloses a human behavior recognition method integrating the space-time dual-network flow and an attention mechanism. The method includes the steps of: extracting motion optical flow features and generating an optical flow feature image; constructing independent temporal-stream and spatial-stream networks to generate two high-level semantic feature sequences with a significant structural property; decoding the high-level semantic feature sequence of the temporal stream to output a temporal-stream visual feature descriptor and an attention saliency feature sequence, while also outputting a spatial-stream visual feature descriptor and the label probability distribution of each frame of a video window; calculating an attention confidence scoring coefficient for each frame along the time dimension, using it to weight the label probability distribution of each frame of the spatial-stream video window, and selecting a key frame of the video window; and using a softmax classifier to decide the human behavior action category of the video window. Compared with the prior art, the method can effectively focus on the key frames of the appearance images in the original video, and at the same time can select and obtain spatially salient region features of the key frames with high recognition accuracy.

Owner:NANJING UNIV OF POSTS & TELECOMM
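
The attention-weighting and key-frame step of CN107609460A can be illustrated with a minimal sketch. It assumes the temporal stream has already produced one raw attention score per frame and the spatial stream one label distribution per frame. Normalizing the scores with a softmax to obtain the per-frame confidence coefficients is an assumption of this sketch, not a detail taken from the patent, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weighted_prediction(attention_scores, frame_probs):
    """Fuse per-frame label distributions using temporal attention weights.

    attention_scores: one raw attention score per frame (assumed input).
    frame_probs: per-frame label probability distributions (each sums to 1).
    Returns (predicted_label, key_frame_index, fused_distribution).
    """
    weights = softmax(attention_scores)  # per-frame confidence coefficients
    n_labels = len(frame_probs[0])
    fused = [0.0] * n_labels
    for w, probs in zip(weights, frame_probs):
        for k, p in enumerate(probs):
            fused[k] += w * p
    key_frame = max(range(len(weights)), key=weights.__getitem__)
    label = max(range(n_labels), key=fused.__getitem__)
    return label, key_frame, fused


label, key_frame, fused = attention_weighted_prediction(
    [0.1, 2.0, 0.3],
    [[0.8, 0.2], [0.1, 0.9], [0.6, 0.4]],
)
print(label, key_frame)  # 1 1
```

Because the weights sum to one, the fused vector is still a probability distribution, and the frame with the largest weight is reported as the key frame.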

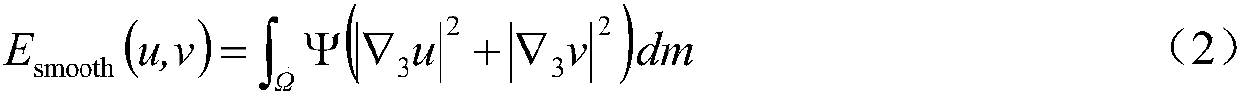

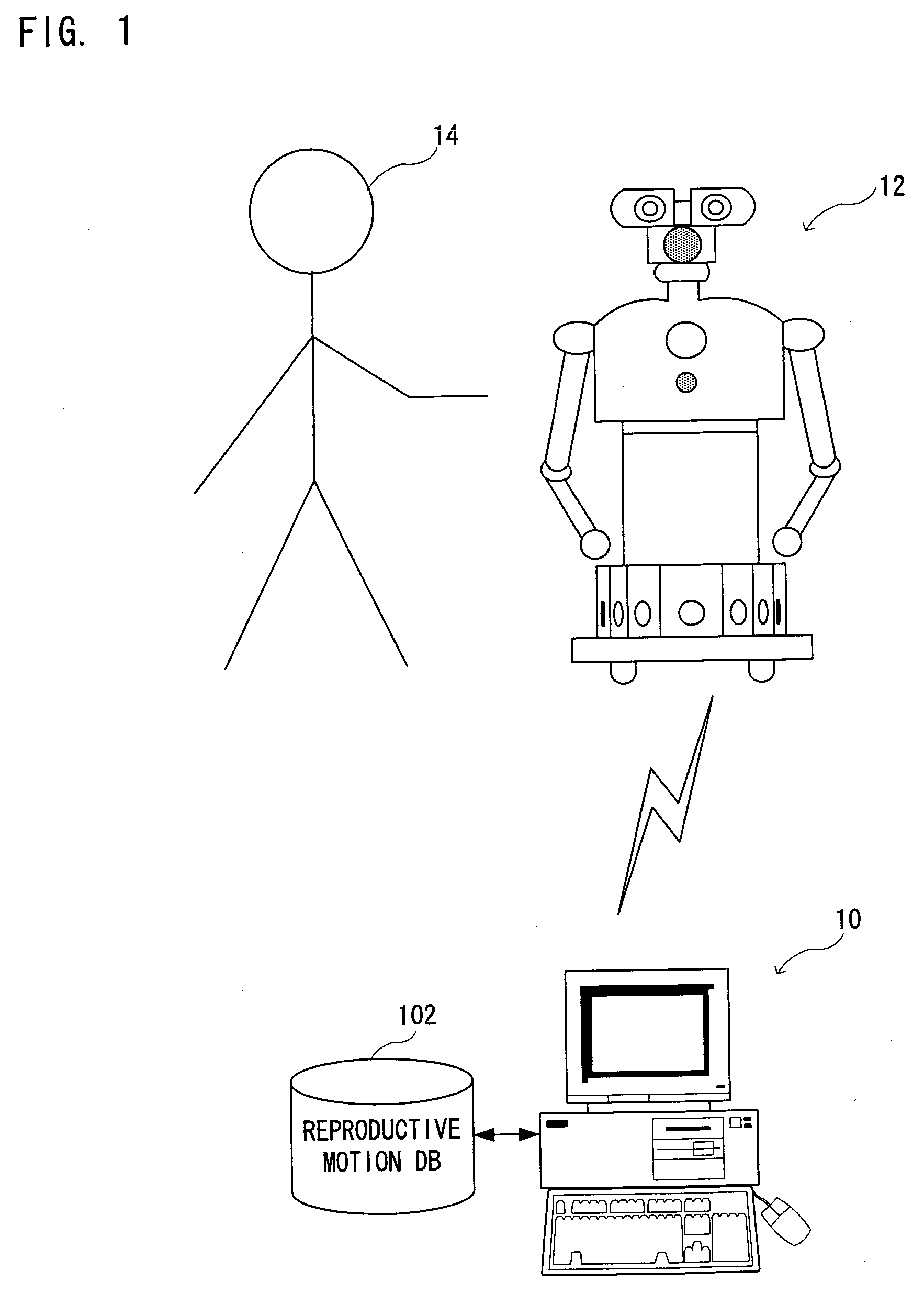

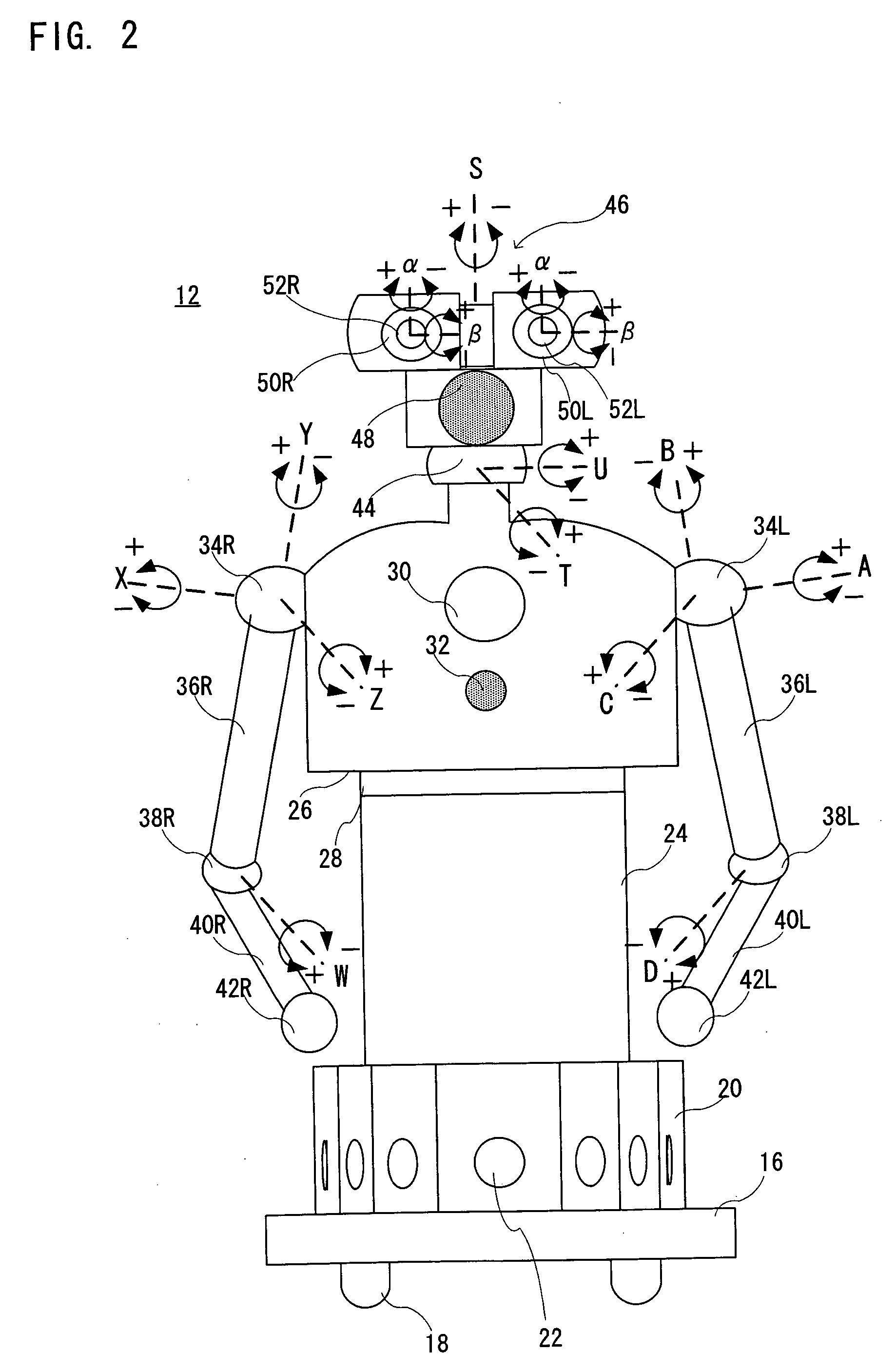

Communication robot control system

Active | US20060293787A1 | Promote generation | Easy input | Programme-controlled manipulator | Computer control | Reflex | Human behavior

A communication robot control system displays a selection input screen for supporting input of the actions of a communication robot. The selection input screen displays, in a user-selectable manner, a list of behaviors including not only spontaneous actions but also reactive motions (reflex behaviors) in response to the behavior of a person acting as a communication partner, and a list of emotional expressions to be added to the behaviors. According to the user's operation, the behavior and the emotional expression to be performed by the communication robot are selected and decided. Then, reproduction motion information for interactive actions, including reactive motions and emotional interactive actions, is generated based on the input history of the behaviors and emotional expressions.

Owner:ATR ADVANCED TELECOMM RES INST INT

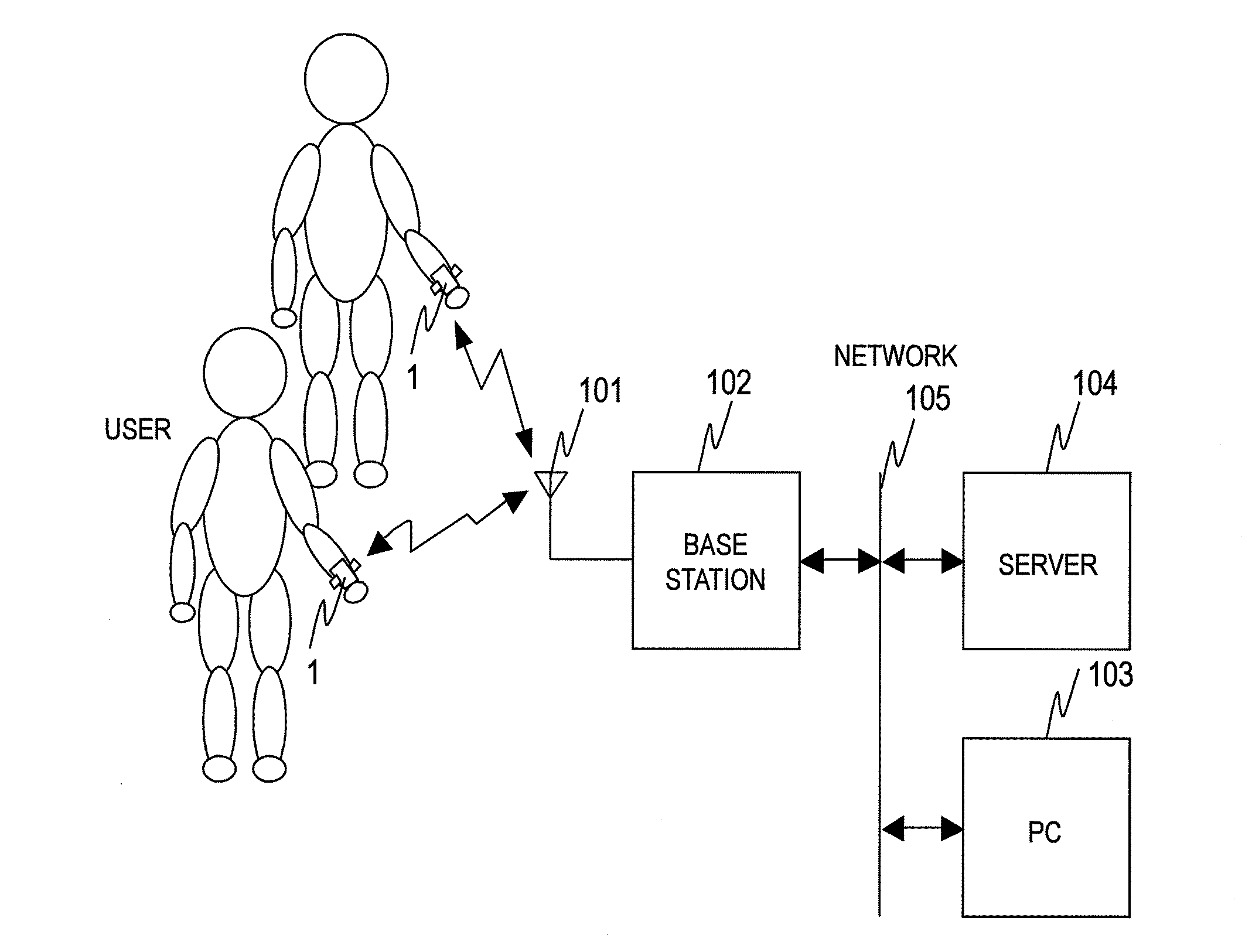

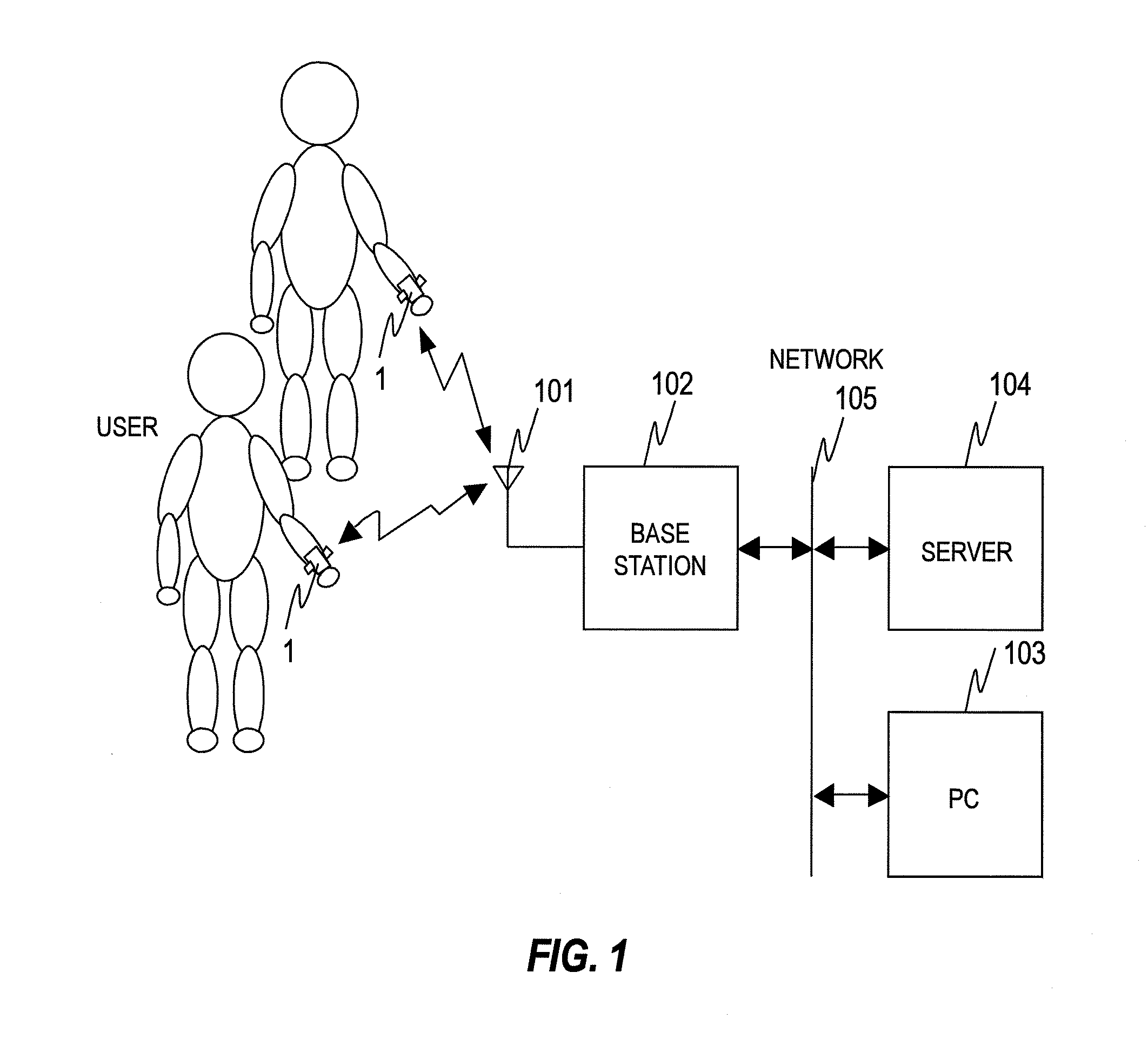

Method and system for generating history of behavior

Inactive | US20110137836A1 | Labor saving | Easy activity | Physical therapies and activities | Inertial sensors | Human behavior | Computer graphics (images)

Disclosed are a method and system for generating a history of behavior that can simplify input of the behavior content of a human behavior pattern determined from data measured by a sensor. A computer obtains and accumulates biological information measured by a sensor mounted on a person; obtains motion frequencies from the accumulated biological information; obtains the time-series change points of the motion frequencies; extracts each period between change points as a scene, that is, a period in the same motion state; compares the motion frequencies of each extracted scene with preset conditions to identify the action contents in the scene; estimates the behavior content performed by the person in the scene on the basis of the sequence in which the action contents appear; and generates the history of behaviors from the estimated behavior contents.

Owner:HITACHI LTD
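
The scene-extraction idea in US20110137836A1 (split a motion-frequency series at change points, then label each scene against preset conditions) can be sketched as follows. The simple jump-threshold change-point rule, the frequency thresholds, and the action labels are hypothetical placeholders; the abstract does not specify them.

```python
from statistics import mean

# Hypothetical preset conditions: (upper bound on mean motion frequency in Hz, action).
CONDITIONS = [(0.5, "resting"), (1.5, "light activity"), (float("inf"), "walking")]


def split_scenes(freqs, jump=0.5):
    """Split a motion-frequency series wherever consecutive samples differ by more than `jump`.

    Returns half-open (start, end) index pairs, one per scene.
    """
    scenes, start = [], 0
    for i in range(1, len(freqs)):
        if abs(freqs[i] - freqs[i - 1]) > jump:  # treat as a change point
            scenes.append((start, i))
            start = i
    scenes.append((start, len(freqs)))
    return scenes


def label_scene(freqs, start, end):
    """Compare a scene's mean motion frequency against the preset conditions."""
    avg = mean(freqs[start:end])
    for upper, action in CONDITIONS:
        if avg < upper:
            return action


def behavior_history(freqs, jump=0.5):
    """Return (start, end, action) tuples describing the behavior history."""
    return [(s, e, label_scene(freqs, s, e)) for s, e in split_scenes(freqs, jump)]


print(behavior_history([0.1, 0.2, 0.1, 2.0, 2.1, 1.9, 1.0, 1.1]))
# [(0, 3, 'resting'), (3, 6, 'walking'), (6, 8, 'light activity')]
```

The patent additionally infers a higher-level behavior from the order in which action contents appear; that step is not modeled here.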

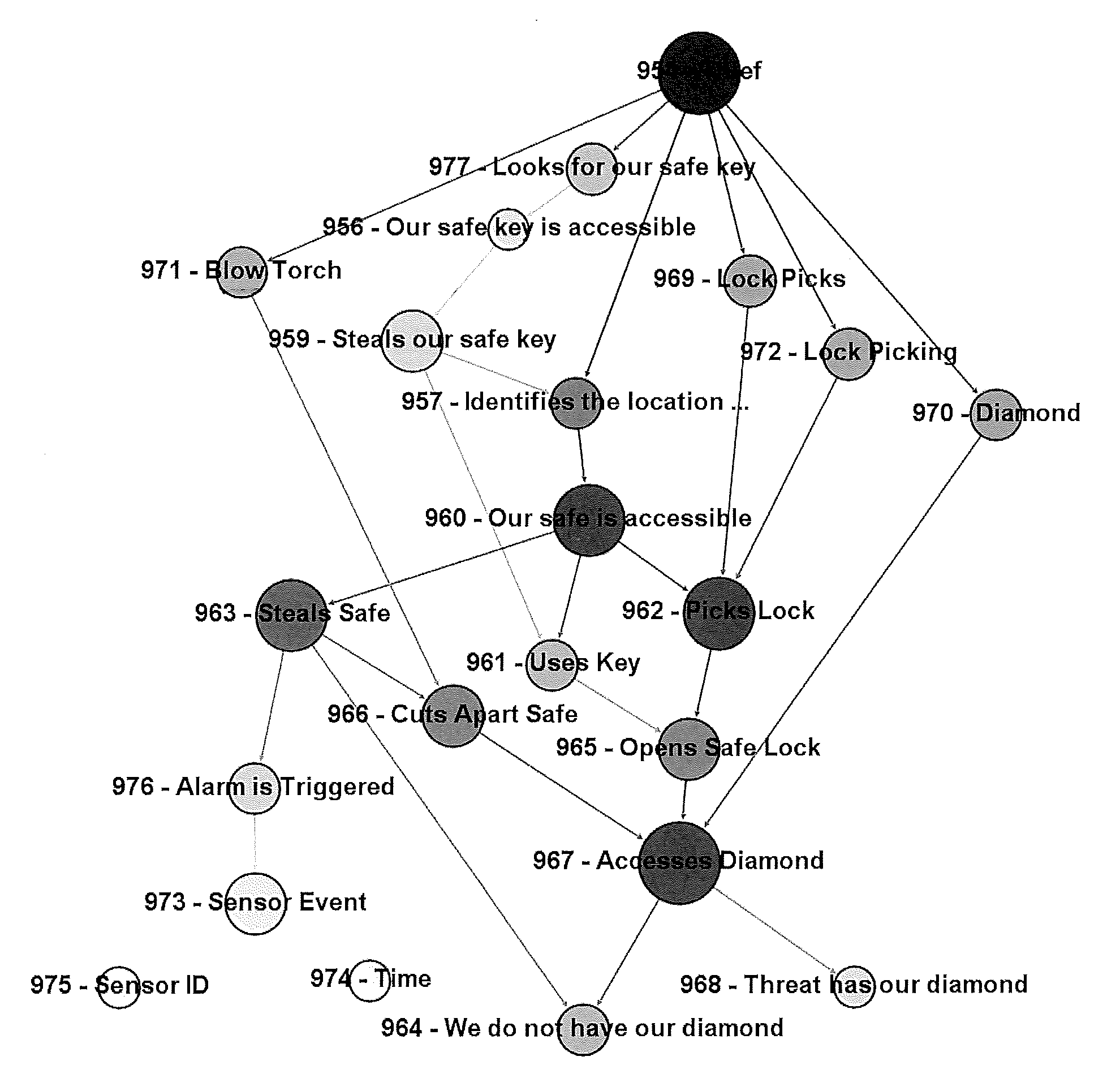

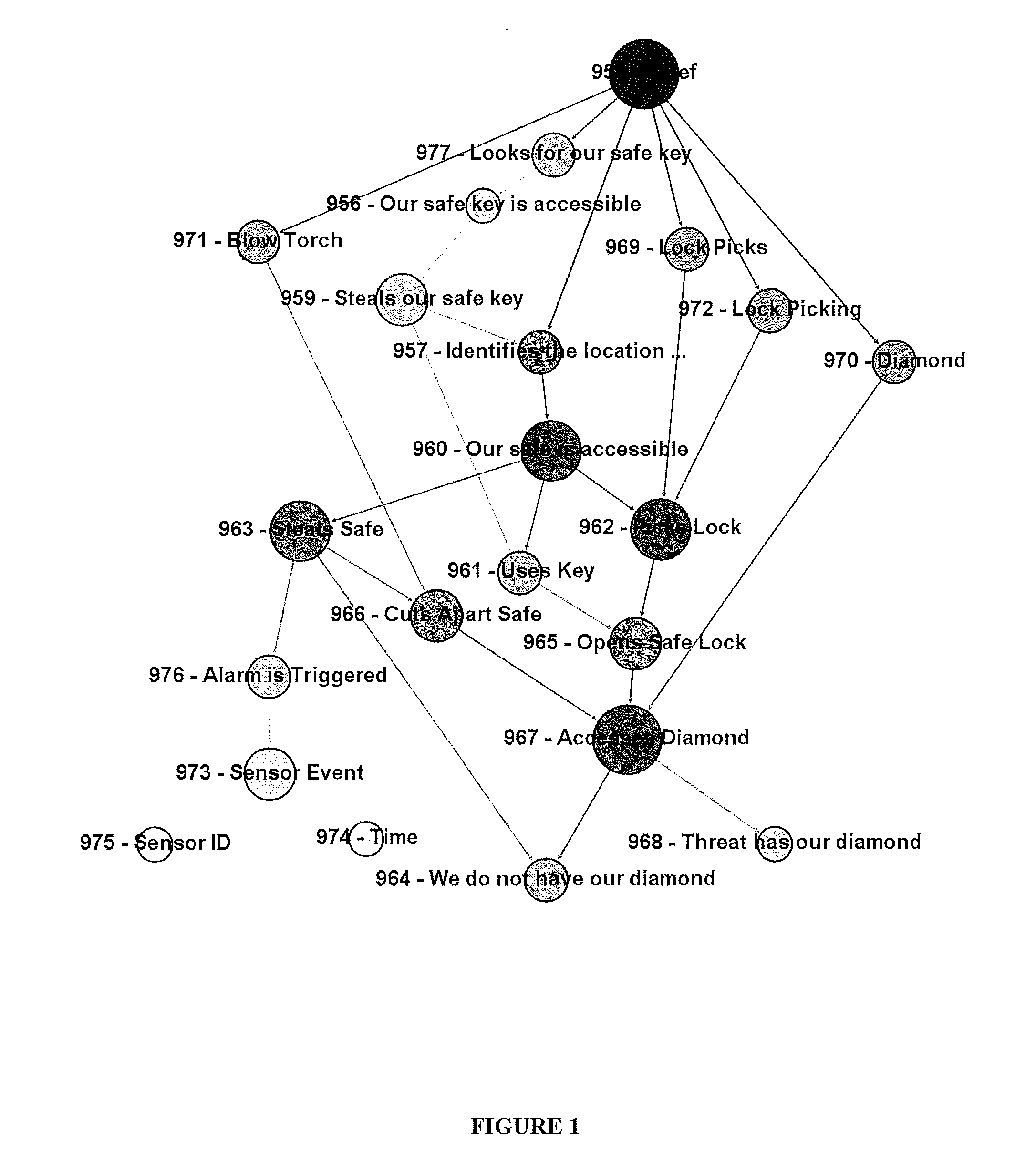

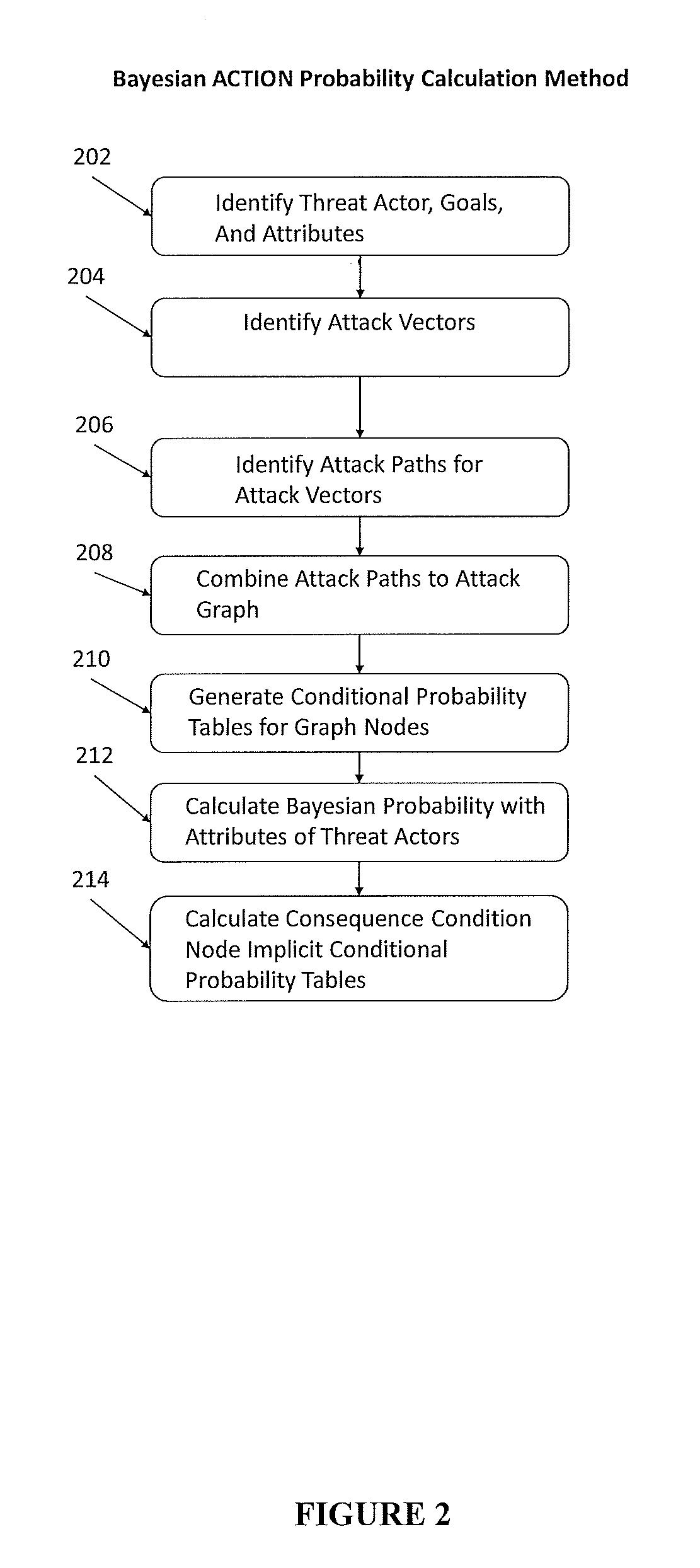

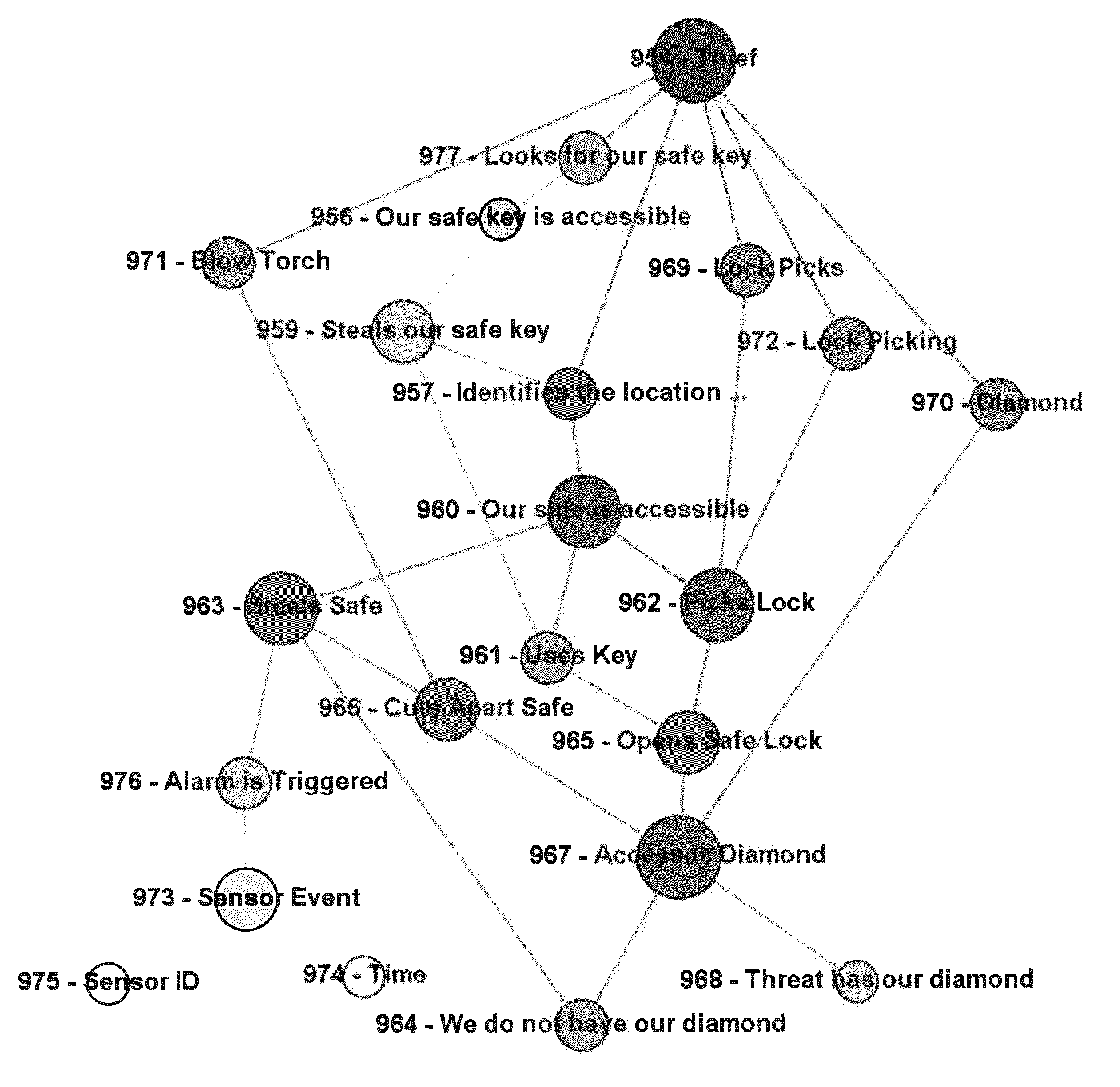

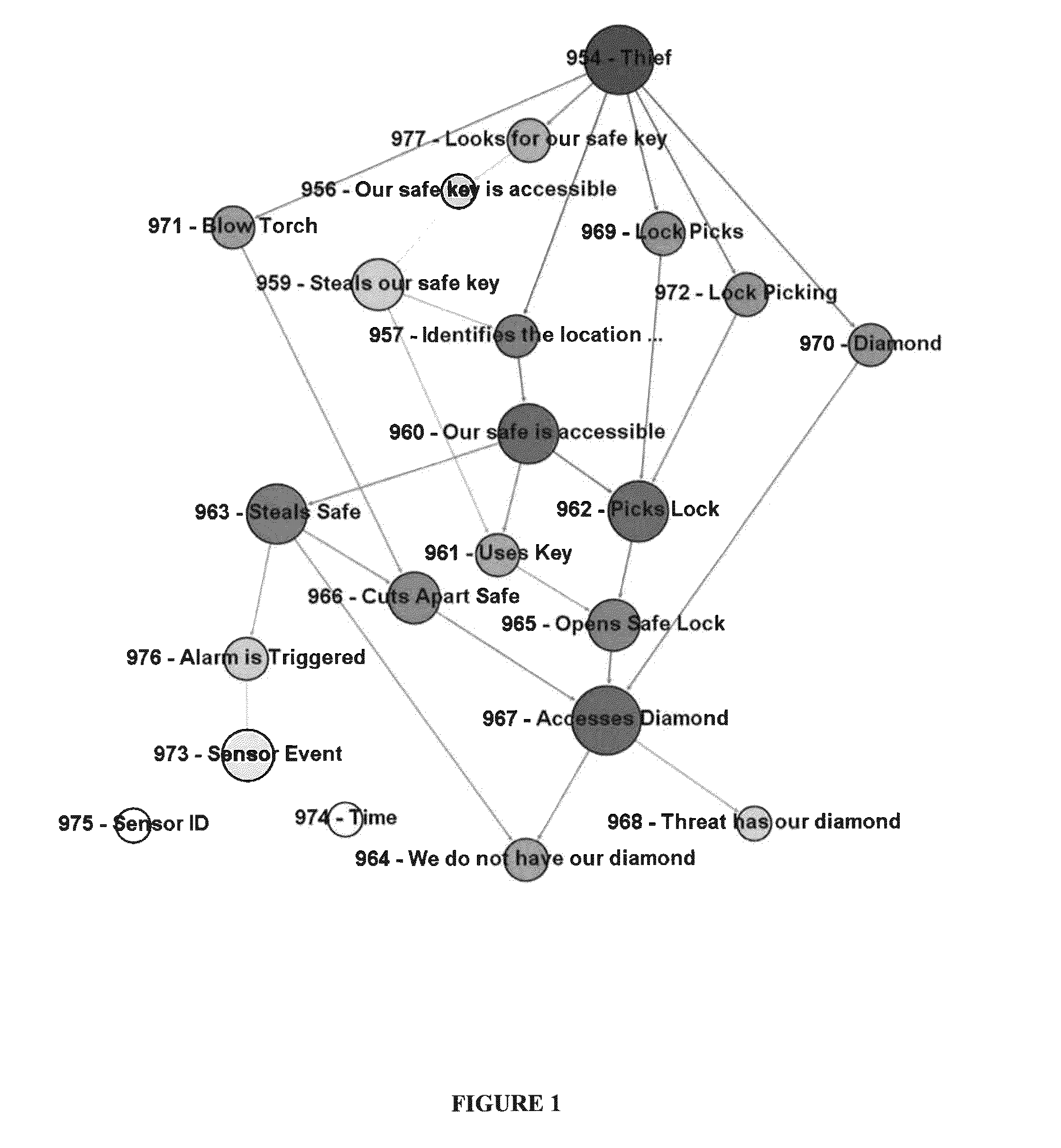

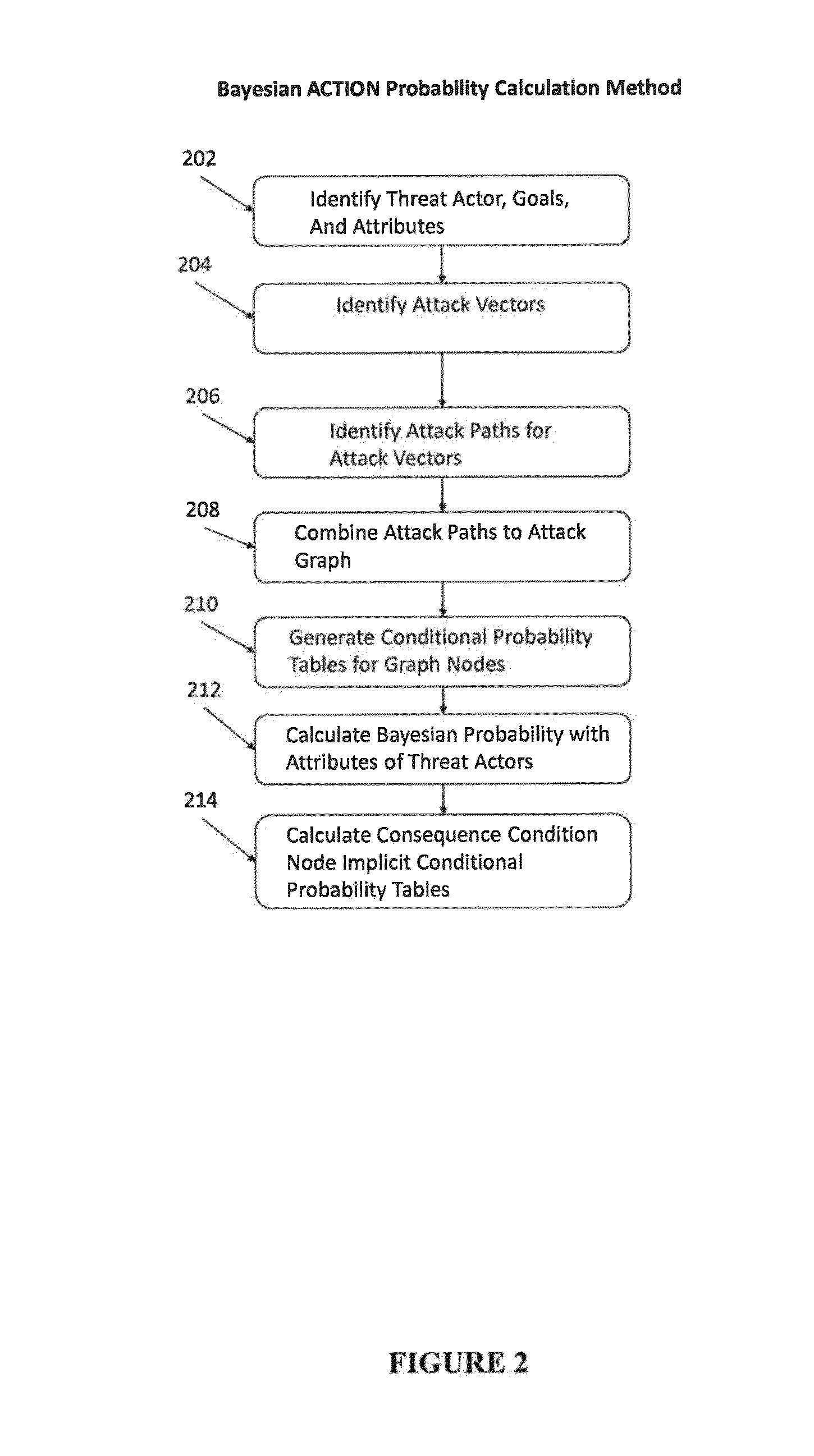

System and Method for Cyber Security Analysis and Human Behavior Prediction

Active | US20160205122A1 | Simple conditions | Memory loss protection | Error detection/correction | Human behavior | Attack graph

An improved method for analyzing computer network security has been developed. The method first establishes multiple nodes, where each node represents an actor, an event, a condition, or an attribute related to the network security. Next, an estimate is created for each node that reflects the ease of realizing the event, condition, or attribute of the node. Attack paths are identified that represent a linkage of nodes that reach a condition of compromise of network security. Next, edge probabilities are calculated for the attack paths. The edge probabilities are based on the estimates for each node along the attack path. Next, an attack graph is generated that identifies the easiest conditions of compromise of network security and the attack paths to achieving those conditions. Finally, attacks are detected with physical sensors on the network, that predict the events and conditions. When an attack is detected, security alerts are generated in response to the attacks.

Owner:BASSETT GABRIEL
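
The attack-path step described above can be illustrated as a most-probable-path search. This sketch assumes that the probability of an edge equals the ease estimate of the node it leads into and that a path's probability is the product of its edge probabilities; both are illustrative assumptions, not the patented formulas, and the node names are hypothetical. Maximizing a product of probabilities is equivalent to minimizing the sum of their negative logarithms, so Dijkstra's algorithm applies.

```python
import heapq
import math


def easiest_attack_path(edges, ease, start, goal):
    """Return (probability, path) for the most probable path from start to goal.

    edges: dict mapping a node to its successor nodes (hypothetical attack graph).
    ease: dict mapping a node to an estimated probability in (0, 1] of realizing it.
    Assumption: the edge probability into a node equals that node's ease estimate.
    """
    heap = [(0.0, start, [start])]  # (sum of -log p, node, path so far)
    settled = set()
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == goal:
            return math.exp(-cost), path
        if node in settled:
            continue
        settled.add(node)
        for nxt in edges.get(node, []):
            if nxt not in settled:
                heapq.heappush(heap, (cost - math.log(ease[nxt]), nxt, path + [nxt]))
    return 0.0, []


edges = {
    "attacker": ["phishing", "vpn_exploit"],
    "phishing": ["workstation"],
    "vpn_exploit": ["workstation"],
    "workstation": ["db_compromise"],
}
ease = {"phishing": 0.6, "vpn_exploit": 0.2, "workstation": 0.9, "db_compromise": 0.5}
prob, path = easiest_attack_path(edges, ease, "attacker", "db_compromise")
print(round(prob, 2), path)  # 0.27 ['attacker', 'phishing', 'workstation', 'db_compromise']
```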

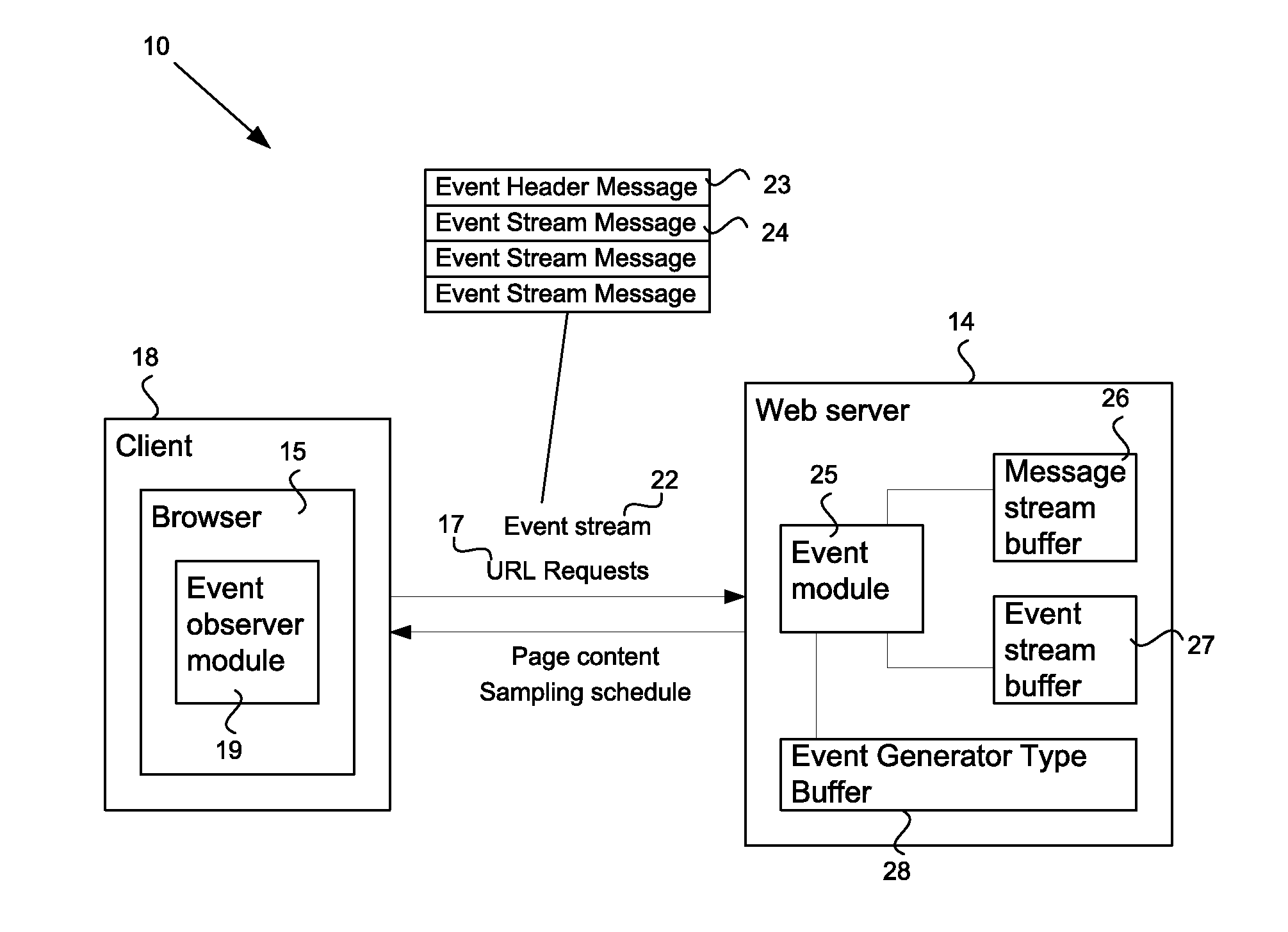

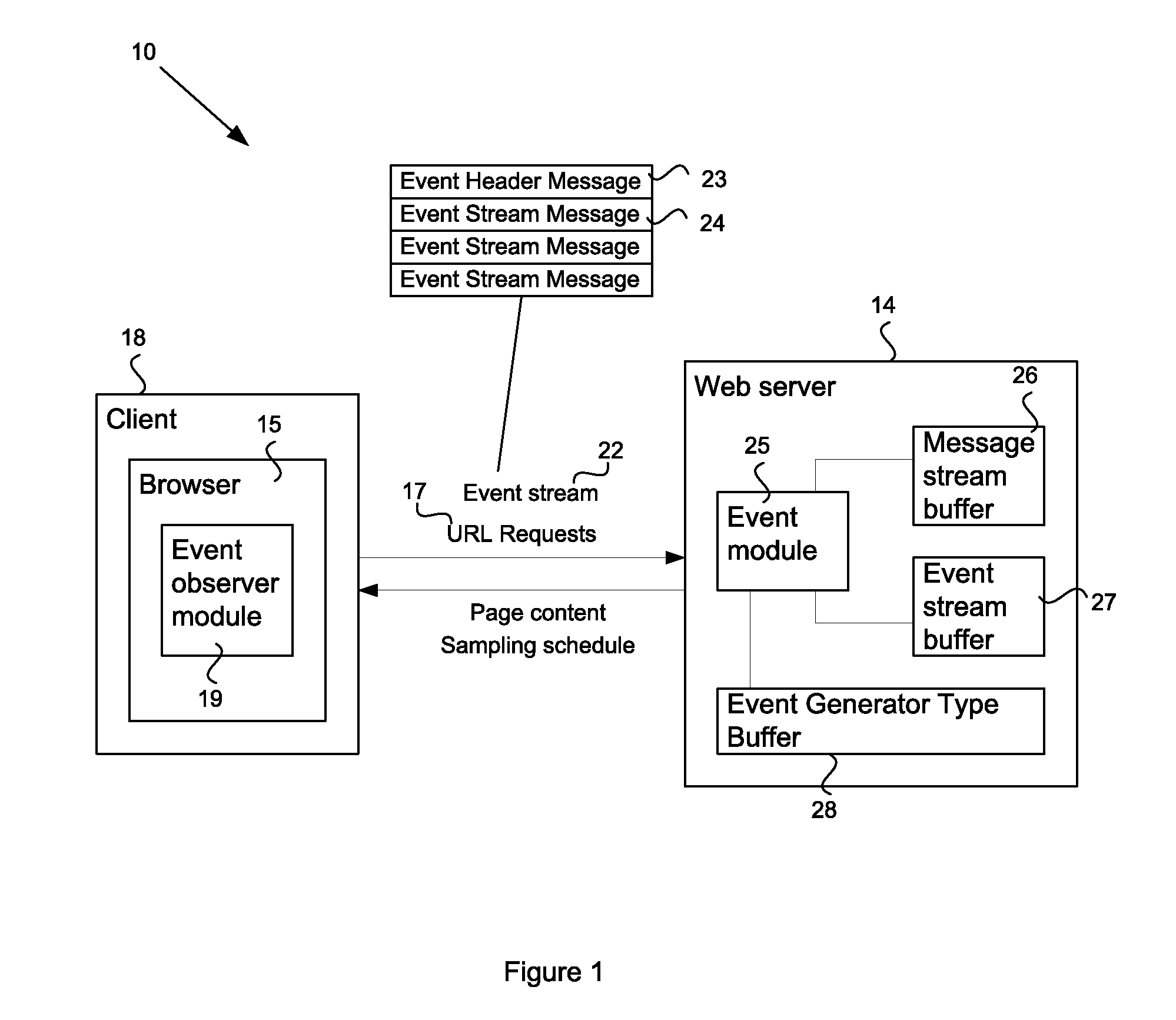

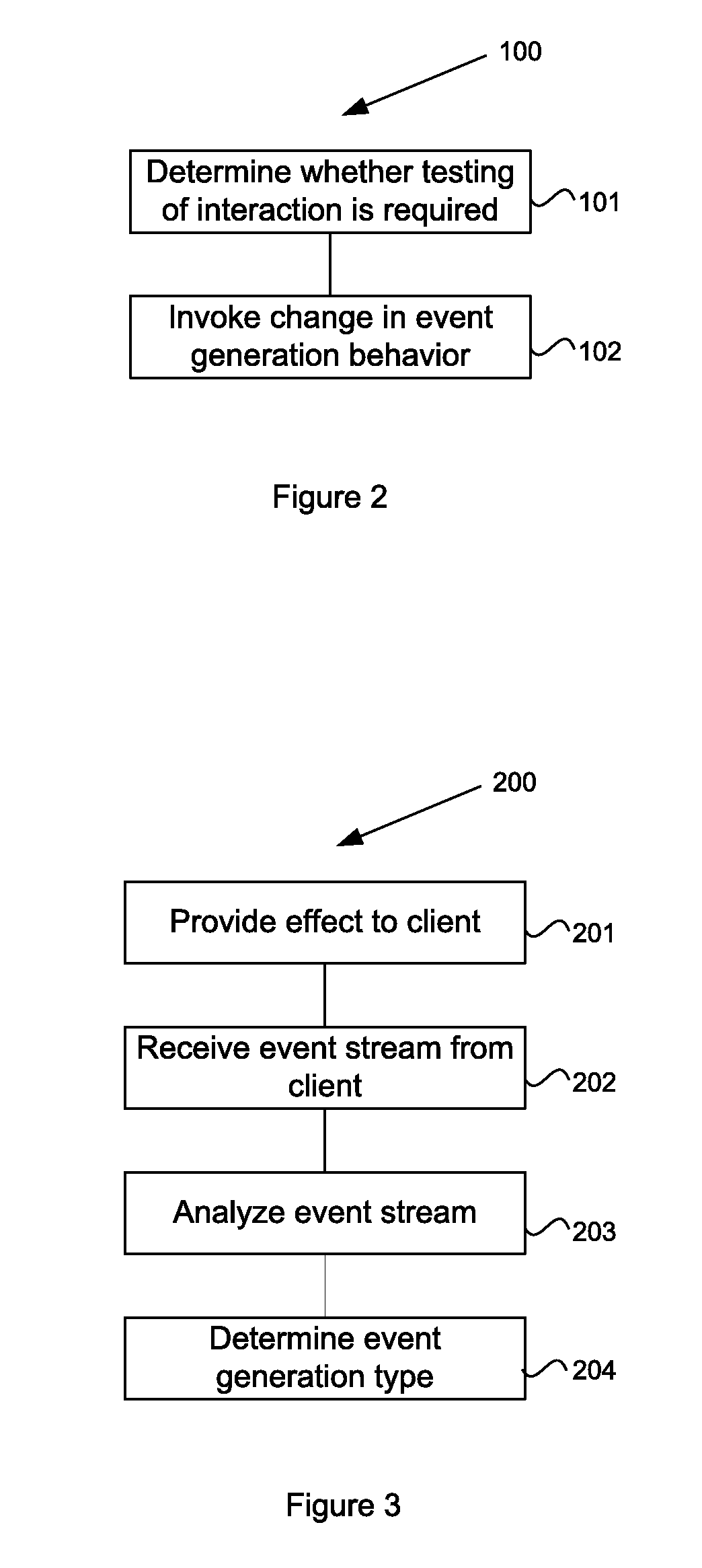

System and method for processing user interface events

Active | US20100287229A1 | Multiple digital computer combinations | Digital data authentication | Human behavior | Human interaction

A system and method to detect and prevent non-human interaction between a client and a web server invokes an effect to change the event generation behavior at the client. Subsequent event streams from the client to the server are analyzed to determine whether the event streams contain events corresponding to expected reactions of a human operator at the client to the effect. Indications of non-human behavior may invoke more direct human testing, for example using a dynamic CAPTCHA application, or may cause a termination of the client / URL interaction.

Owner:ORACLE INT CORP
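
The human-reaction check in US20100287229A1 can be sketched as a test on the events that follow an injected effect. The event names, the reaction-time window, and the escalation label below are hypothetical; the abstract does not give concrete values.

```python
from collections import namedtuple

# Hypothetical plausible human reaction window, in seconds after the effect is applied.
MIN_REACTION_S = 0.1
MAX_REACTION_S = 3.0

# kind: e.g. "mousemove" or "click" (hypothetical event names)
# timestamp: seconds since the effect was invoked at the client
UIEvent = namedtuple("UIEvent", ["kind", "timestamp"])


def shows_human_reaction(events, expected_kind="mousemove"):
    """True if an event of the expected kind arrives within the human reaction window."""
    return any(
        ev.kind == expected_kind and MIN_REACTION_S <= ev.timestamp <= MAX_REACTION_S
        for ev in events
    )


def classify_client(events):
    """Escalate to a direct human test (e.g. a CAPTCHA) when no human-like reaction is seen."""
    return "human" if shows_human_reaction(events) else "escalate_to_captcha"


print(classify_client([UIEvent("mousemove", 0.4)]))  # human
print(classify_client([UIEvent("click", 0.01)]))     # escalate_to_captcha
```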

System and method for cyber security analysis and human behavior prediction

Active | US9292695B1 | Attacked easily | Error detection/correction | Platform integrity maintenance | Human behavior | Attack graph

A method for analyzing computer network security has been developed. The method first establishes multiple nodes, where each node represents an actor, an event, a condition, or an attribute related to the network security. Next, an estimate is created for each node that reflects the ease of realizing the event, condition, or attribute of the node. Attack paths are identified that represent a linkage of nodes that reach a condition of compromise of network security. Next, edge probabilities are calculated for the attack paths. The edge probabilities are based on the estimates for each node along the attack path. Finally, an attack graph is generated that identifies the easiest conditions of compromise of network security and the attack paths to achieving those conditions.

Owner:BASSETT GABRIEL

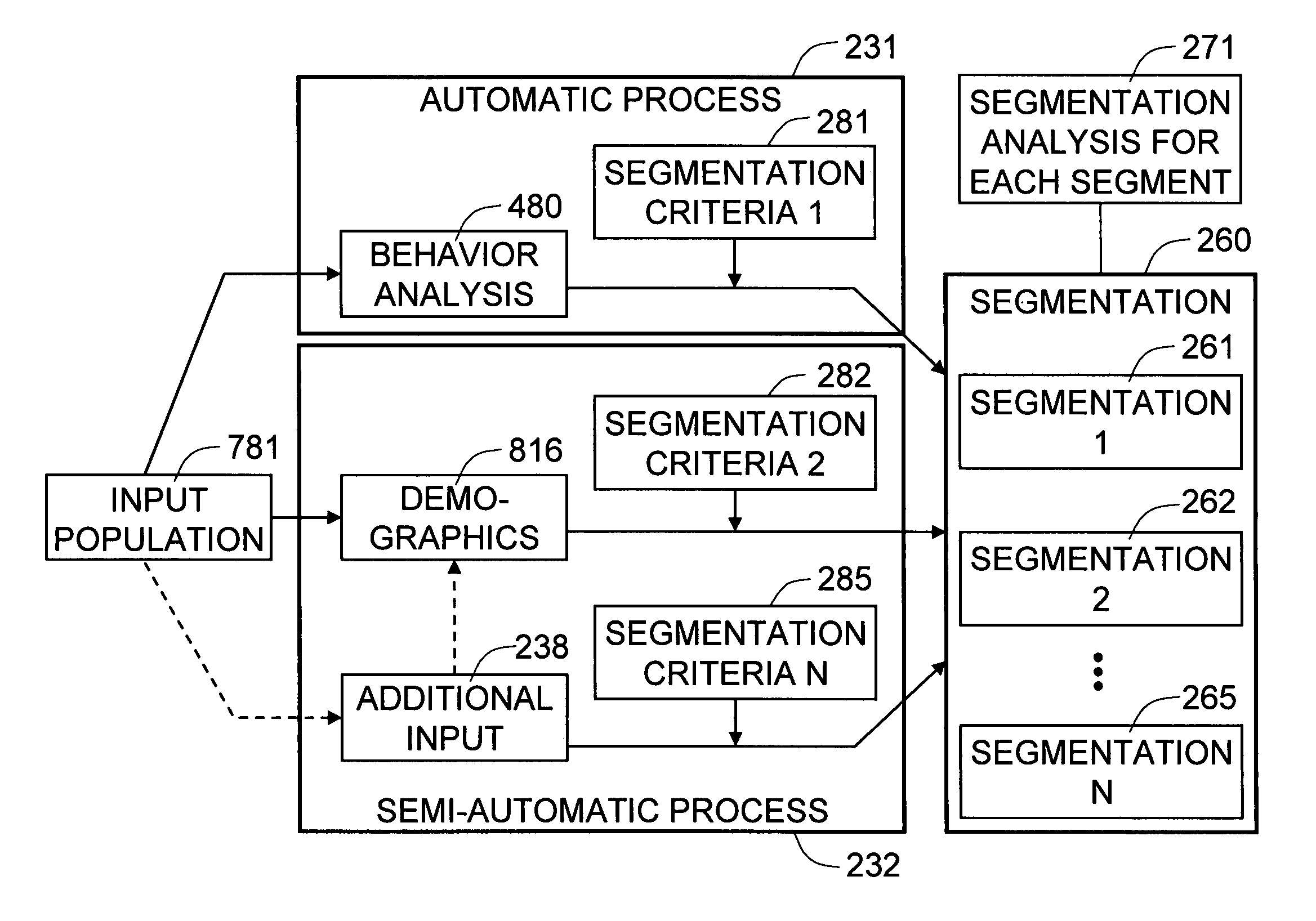

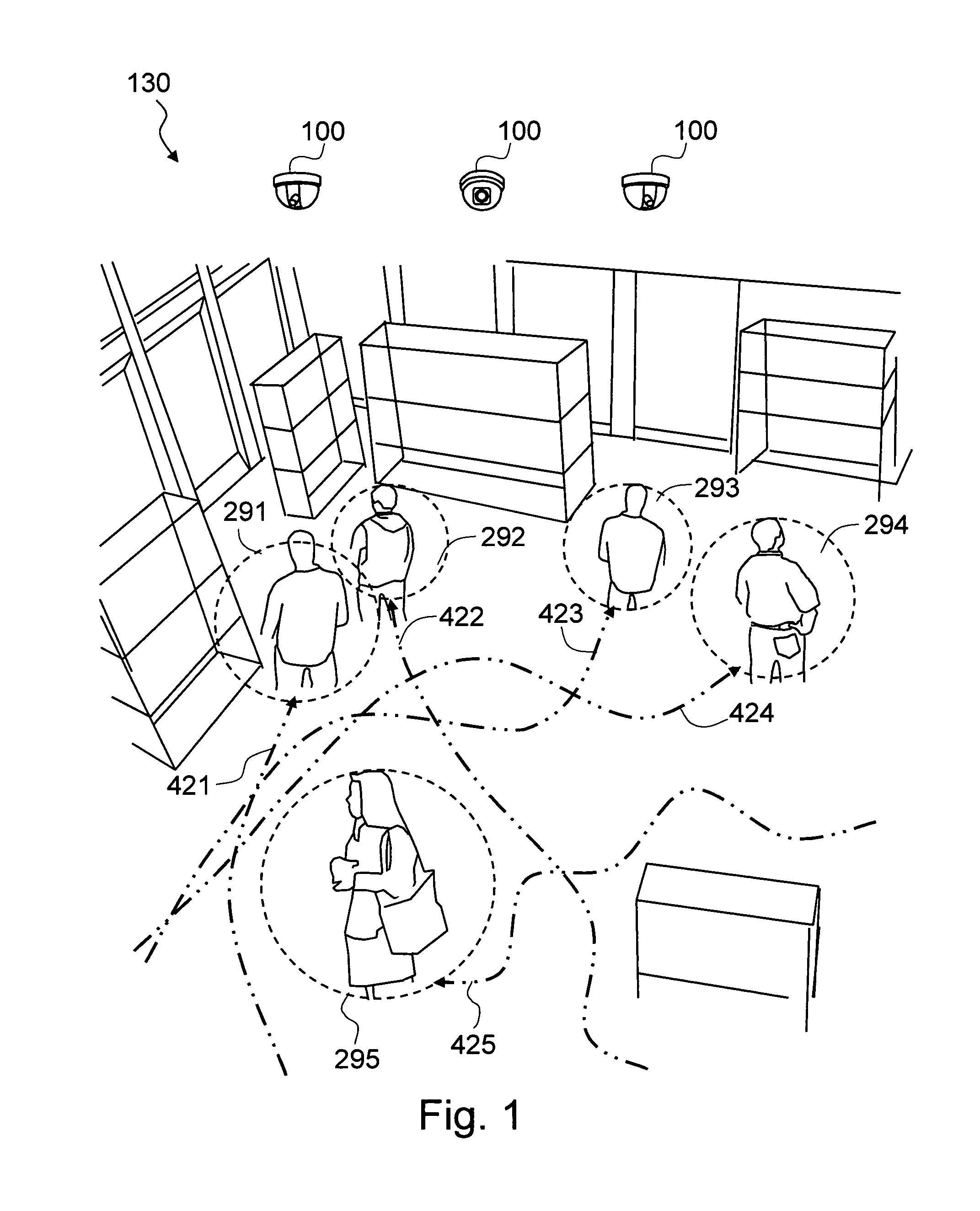

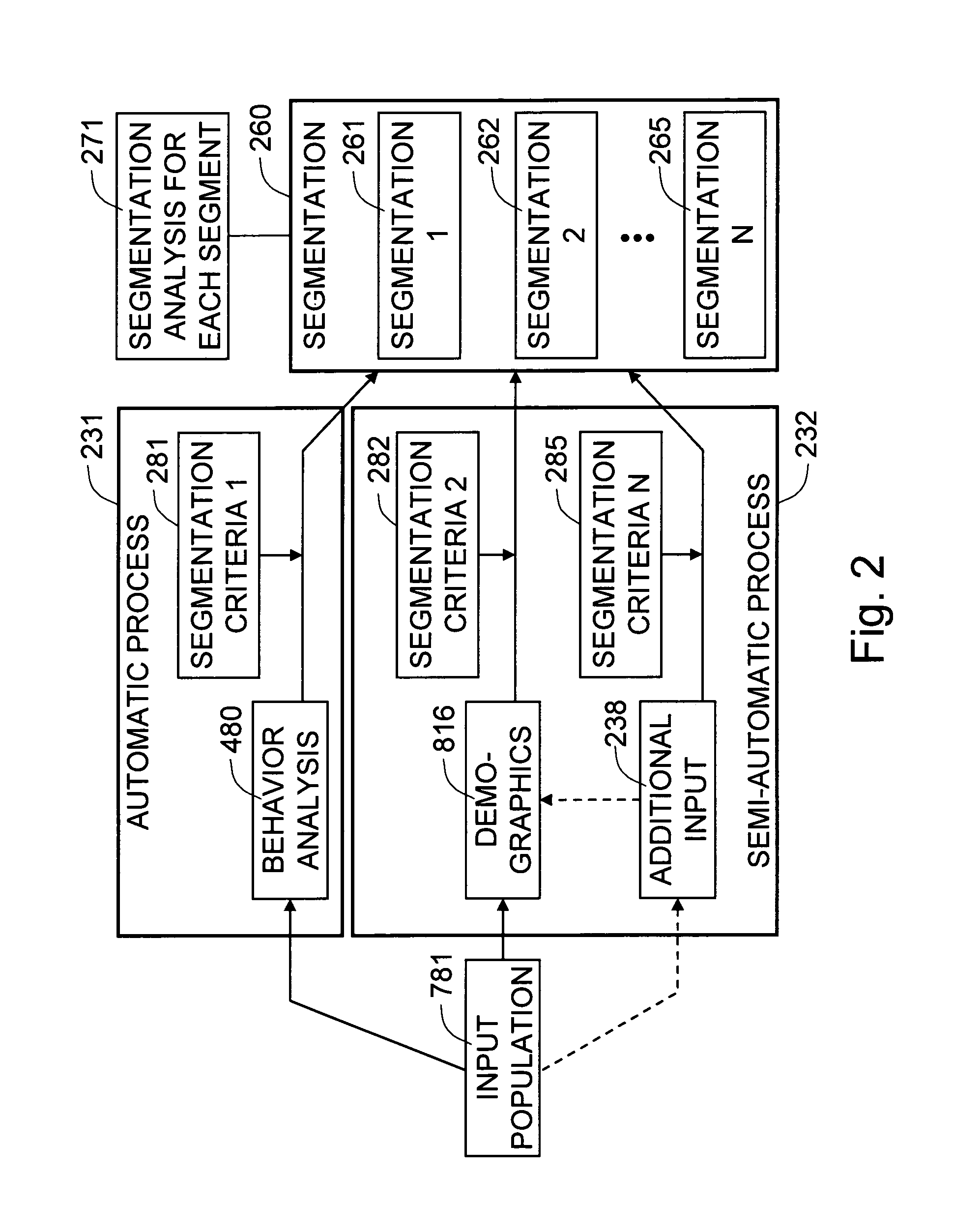

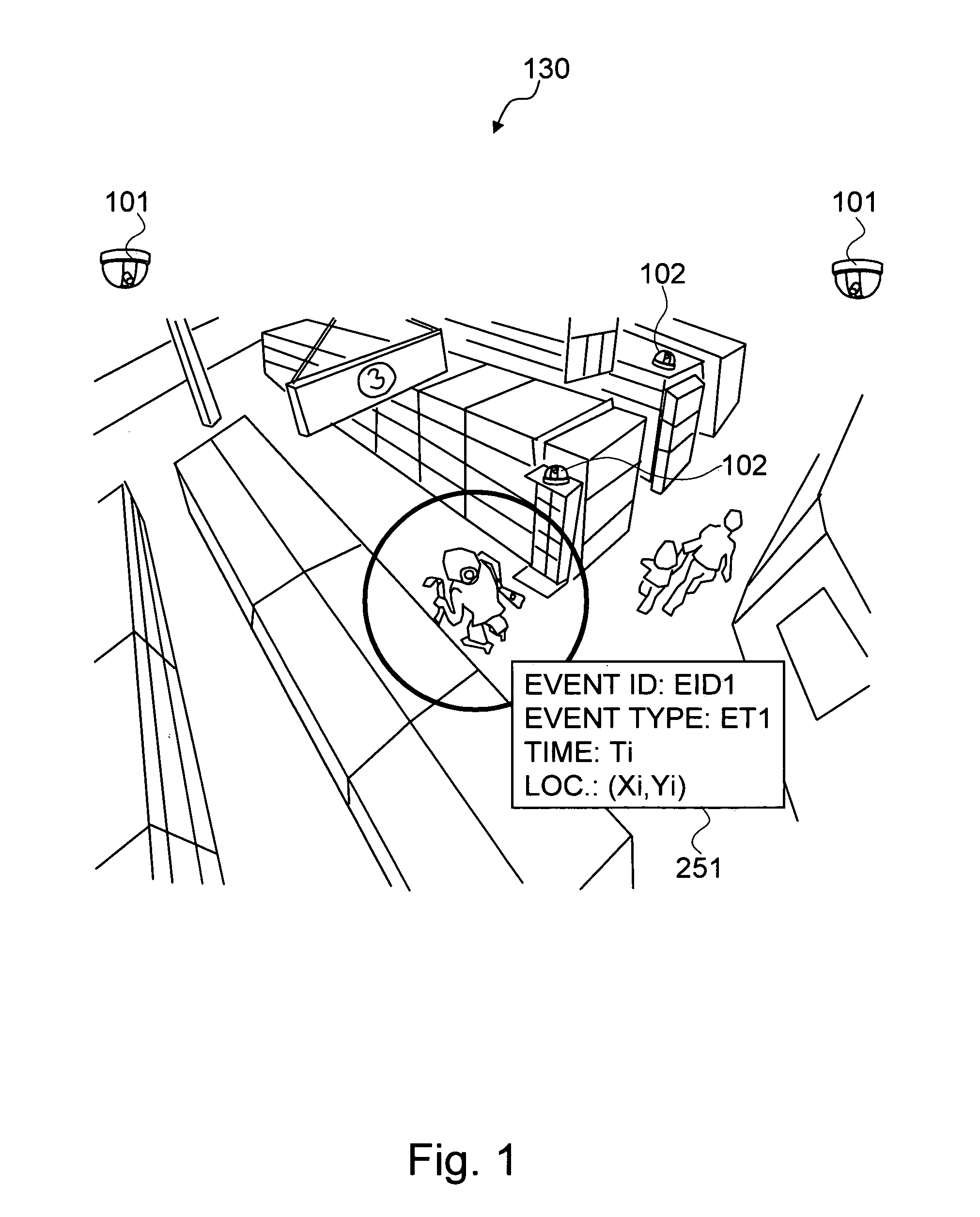

Method and system for segmenting people in a physical space based on automatic behavior analysis

The present invention is a method and system for segmenting a plurality of persons in a physical space based on automatic behavior analysis of the persons in a preferred embodiment. The behavior analysis can comprise a path analysis as one of the characterization methods. The present invention applies segmentation criteria to the output of the video-based behavior analysis and assigns a segmentation label to each of the persons during a predefined window of time. In addition to the behavioral characteristics, the present invention can also utilize other types of visual characterization, such as demographic analysis, or additional input sources, such as sales data, to segment the plurality of persons in another exemplary embodiment. The present invention captures a plurality of input images of the persons in the physical space by a plurality of means for capturing images. The present invention processes the plurality of input images in order to understand the behavioral characteristics, such as shopping behavior, of the persons for segmentation purposes. The processes are based on a novel usage of a plurality of computer vision technologies to analyze the visual characterization of the persons from the plurality of input images. The physical space may be a retail space, and the persons may be customers in the retail space.

Owner:VIDEOMINING CORP

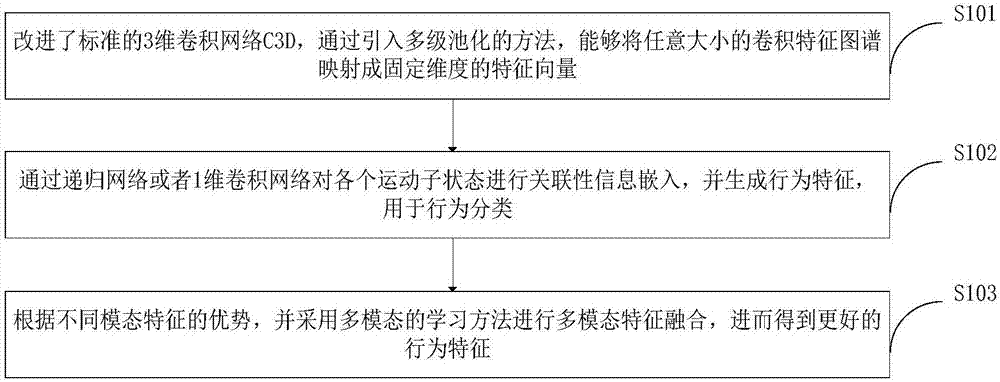

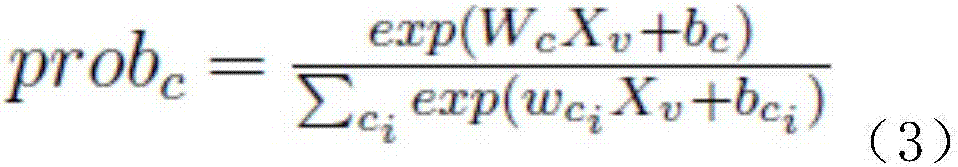

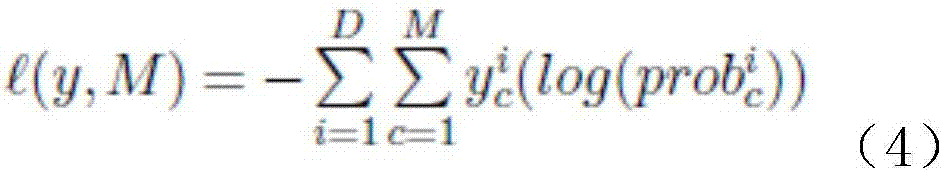

Human behavior identification method based on 3D deep convolutional network

Active | CN107506712A | Improve robustness | Scale up | Character and pattern recognition | Neural architectures | Human behavior | Feature extraction

The invention belongs to the field of video-based motion identification in computer vision, and discloses a human behavior identification method based on a 3D deep convolutional network. The method comprises the steps of: first, dividing a video into a series of consecutive video segments; then, inputting the consecutive video segments into a 3D neural network formed by convolutional computation layers and a space-time pyramid pooling layer to obtain features of the segments; and then calculating global video features by means of a long short-term memory (LSTM) model and regarding the global video features as a behavior pattern. By improving the standard 3D convolutional network C3D and introducing multistage pooling, the method can extract features from video segments of arbitrary resolution and duration, greatly improves the robustness of the model to behavior changes, is conducive to increasing the scale of video training data while maintaining video quality, and improves the integrity of behavior information by embedding correlation information according to motion sub-states.

Owner:CHENGDU KOALA URAN TECH CO LTD

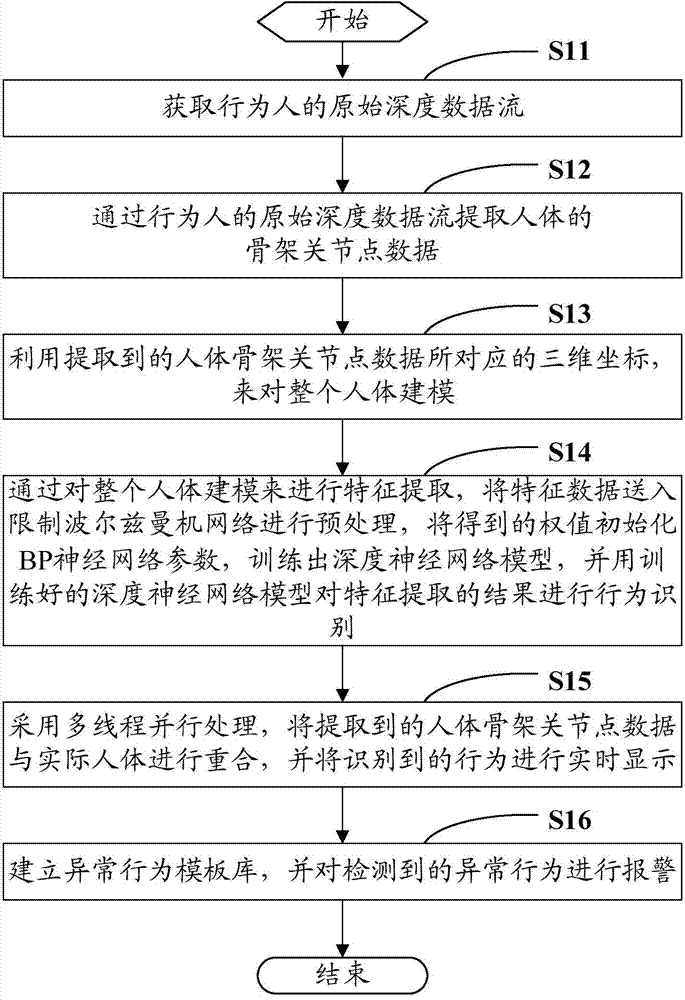

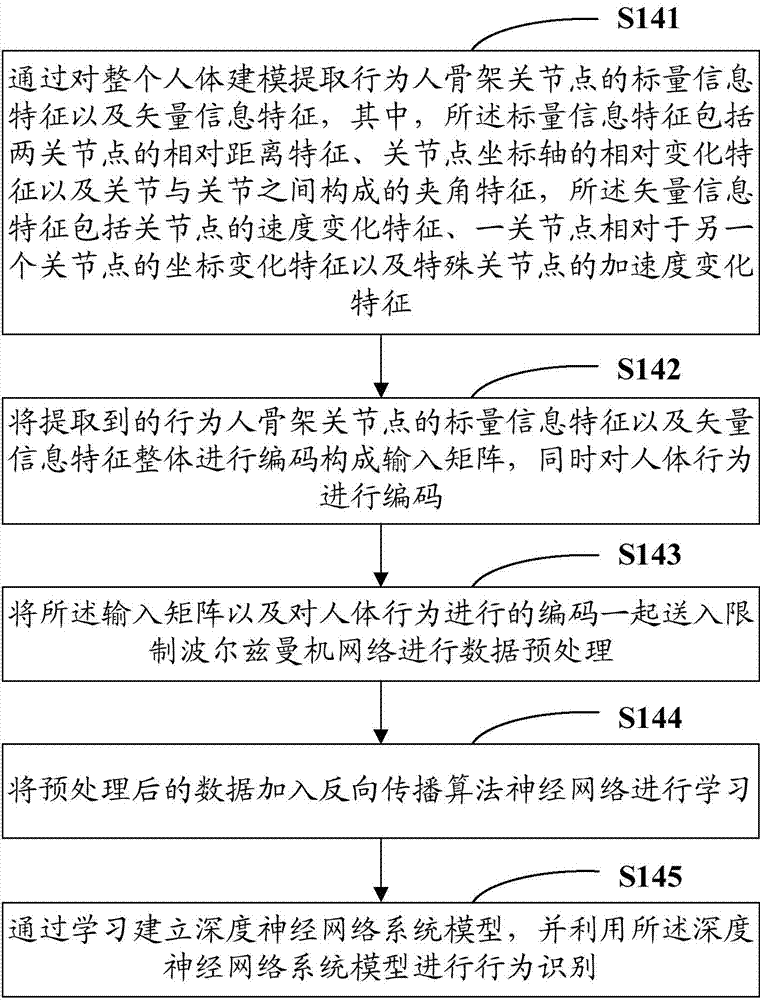

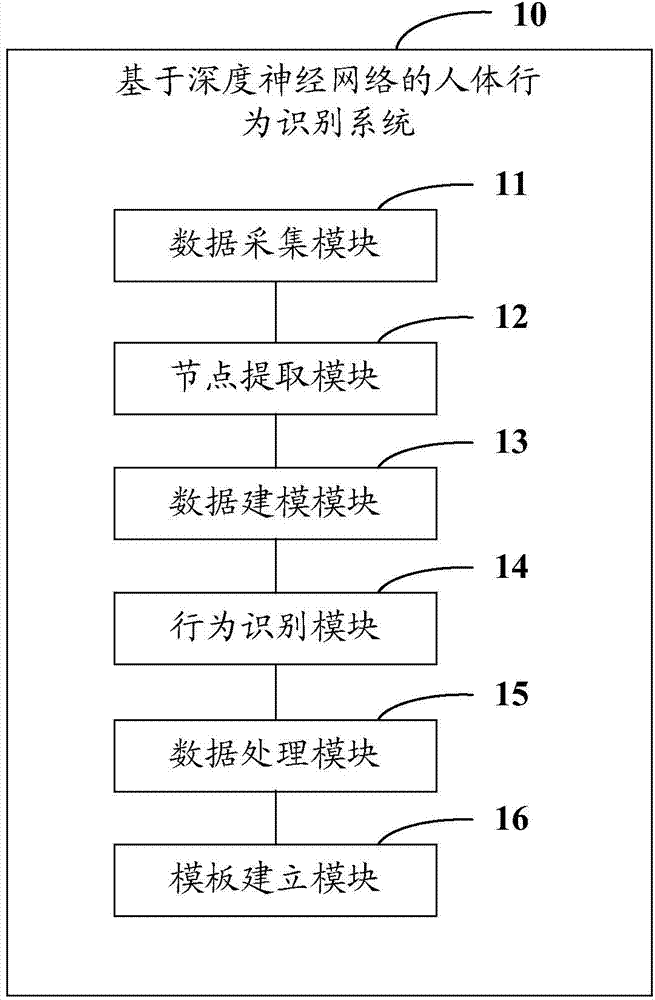

Human behavior recognition method and human behavior recognition system based on deep neural network

Inactive | CN104850846A | Privacy protection | Improve accuracy | Character and pattern recognition | Neural learning methods | Human behavior | Human body

The invention provides a human behavior recognition method based on a deep neural network, comprising the following steps: acquiring the original depth data stream of an actor; extracting human skeleton joint data from the original depth data stream; modeling the entire human body with the three-dimensional coordinates corresponding to the extracted skeleton joint data; extracting features from the whole-body model, sending the feature data to a restricted Boltzmann machine network for preprocessing, using the resulting weights to initialize the parameters of a BP neural network, training a deep neural network model, and identifying a behavior from the feature extraction result; overlaying the extracted skeleton joint data on the actual human body through multi-threaded parallel processing and displaying the identified behavior in real time; and establishing an abnormal behavior template library and raising an alarm for any detected abnormal behavior. Changes in human behavior can be detected in real time, and an alarm can be raised for abnormal behaviors of a human body (such as falls).

Owner:SHENZHEN UNIV

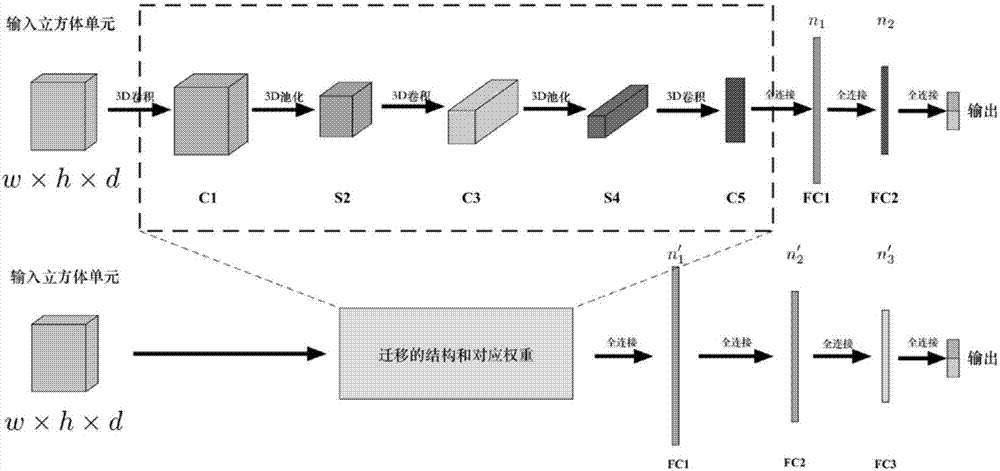

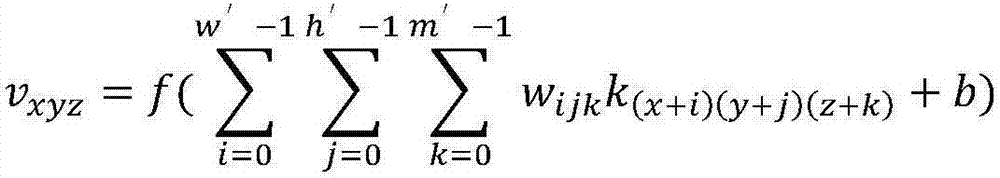

Human behavior identification method based on three-dimensional convolutional neural network and transfer learning model

Active | CN107506740A | Improve accuracy | Improve detection accuracy | Character and pattern recognition | Machine learning | Human behavior | Data set

The present invention relates to a human behavior identification method based on a three-dimensional convolutional neural network and a transfer learning model. The method comprises: sampling a video frame by frame and stacking the resulting consecutive single-frame images along the time dimension to form an image cube of a certain size, which serves as the input of the three-dimensional convolutional neural network; training a basic multi-class three-dimensional convolutional neural network model, selecting some classes of input samples based on the test results to construct a sub-data set, training a plurality of binary (two-class) classification models on the sub-data set, and selecting the models with the best binary classification results; and finally, using transfer learning to transfer the knowledge learned by these models to the original multi-class model and retraining the transferred multi-class model. In this way, multi-class identification accuracy is improved and high-accuracy human behavior identification is achieved.

Owner:BEIHANG UNIV

Apparatus for behavior analysis and method thereof

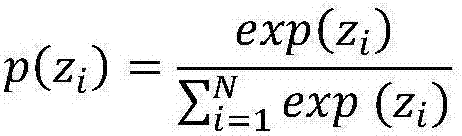

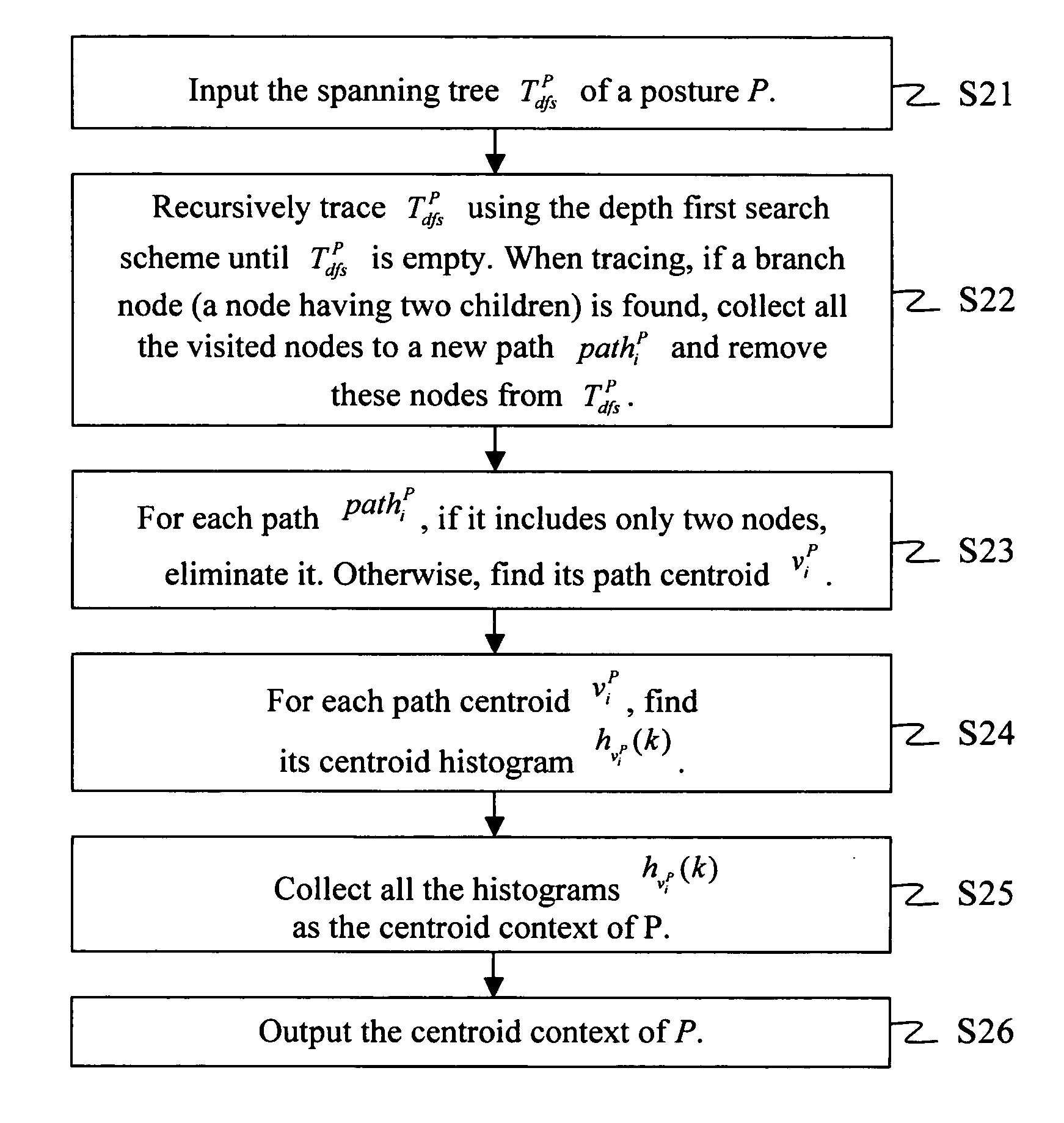

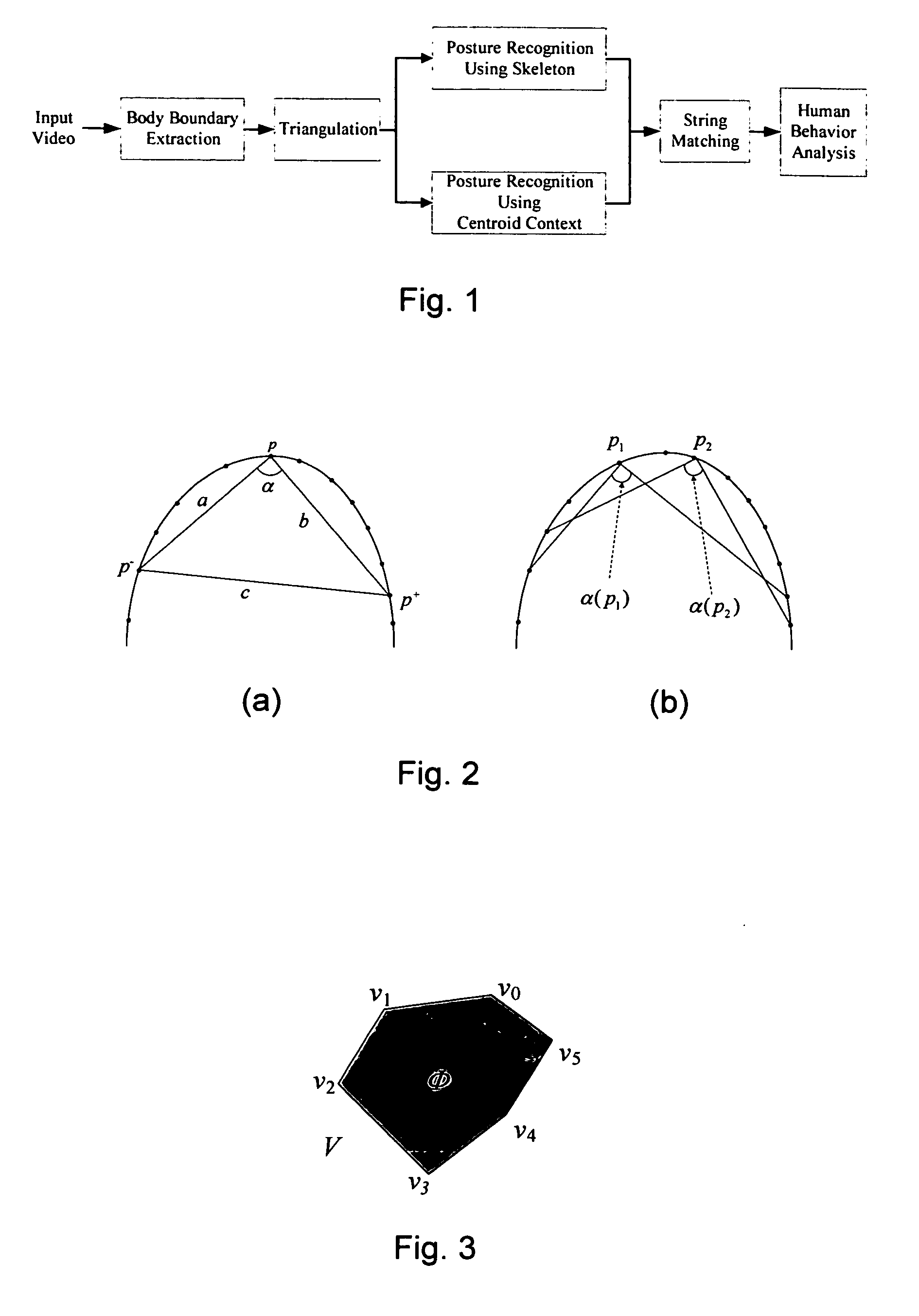

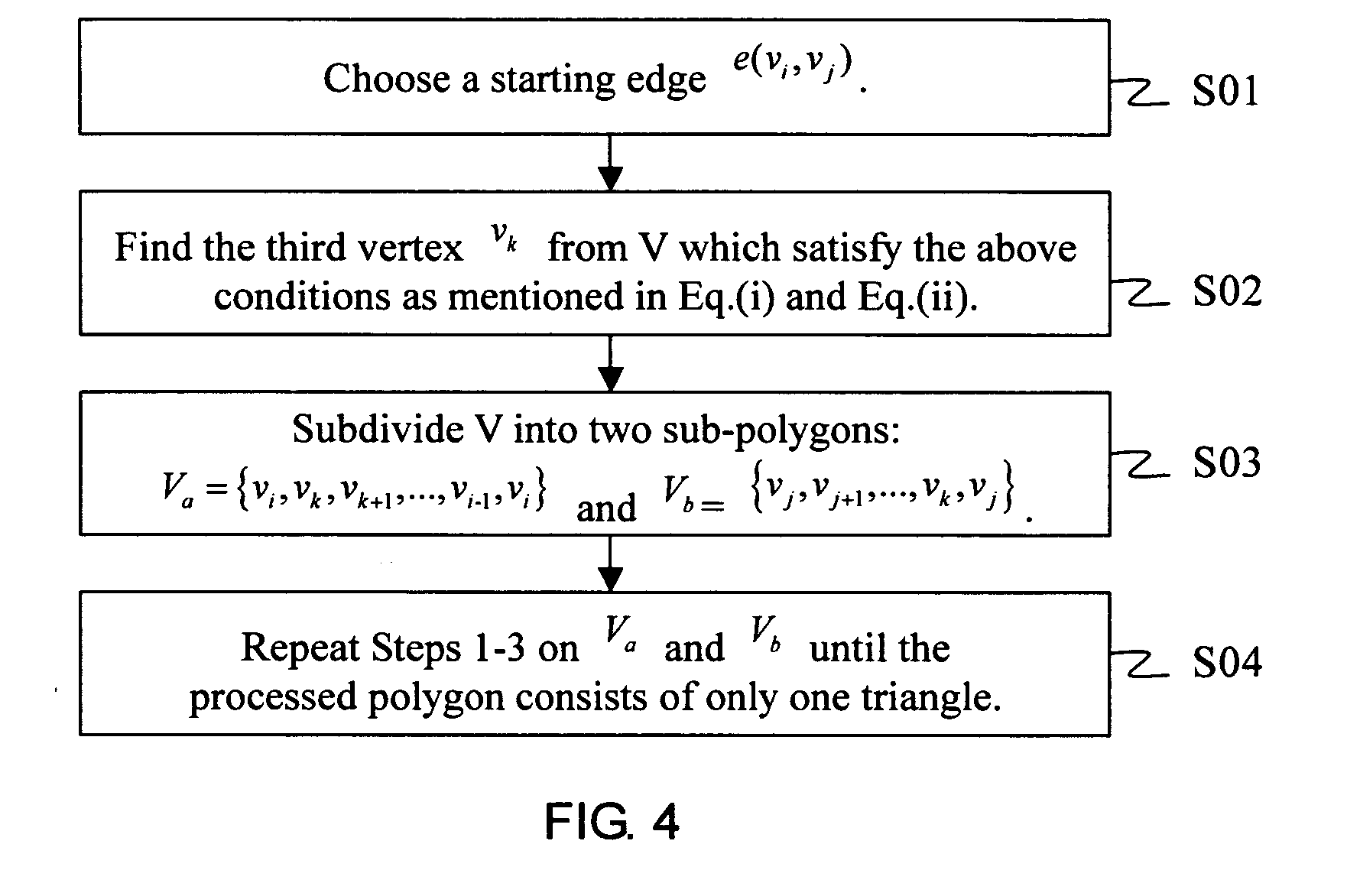

Inactive | US20100278391A1 | Easy to analyze | Easy to compare | Image analysis | Medical automated diagnosis | Human behavior | Triangulation

In the present invention, an apparatus for behavior analysis and a method thereof are provided. In this apparatus, each behavior is analyzed into its corresponding posture sequence through a triangulation-based method that produces different triangle meshes. Two important posture features, the skeleton feature and the centroid context, are extracted; they are complementary to each other. Their strong posture classification ability makes it possible to generate a set of key postures for coding a behavior sequence as a set of symbols. Then, based on this string representation, a novel string matching scheme is proposed to analyze different human behaviors even when they exhibit different scaling changes. The proposed method of the present invention has been shown to be robust, accurate, and powerful, especially in human behavior analysis.

Owner:NAT CHIAO TUNG UNIV
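
The string-matching stage of US20100278391A1 can be approximated as follows, assuming each frame has already been classified into a key-posture symbol. Collapsing repeated symbols to absorb temporal scaling and then comparing sequences with Levenshtein edit distance is a substitute technique chosen for illustration; it is not the patent's own matching scheme, and the posture alphabet and templates are hypothetical.

```python
from itertools import groupby


def collapse_runs(symbols):
    """Drop consecutive repeats so 'AAACCD' and 'ACD' compare equal (absorbs temporal scaling)."""
    return [s for s, _ in groupby(symbols)]


def edit_distance(a, b):
    """Levenshtein distance between two symbol sequences."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def classify_behavior(posture_symbols, templates):
    """Return the template whose collapsed key-posture string is closest to the query."""
    query = collapse_runs(posture_symbols)
    return min(templates, key=lambda name: edit_distance(query, collapse_runs(templates[name])))


# Hypothetical key postures: A = standing, B = stepping, C = bending, D = sitting,
# E = leaning, F = lying.
templates = {"walk": "ABAB", "sit_down": "ACD", "fall": "AEF"}
print(classify_behavior("AAACCCCDDDD", templates))  # sit_down
```

Collapsing runs makes a slow and a fast execution of the same movement map to the same string, while the edit distance tolerates a few misclassified postures.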

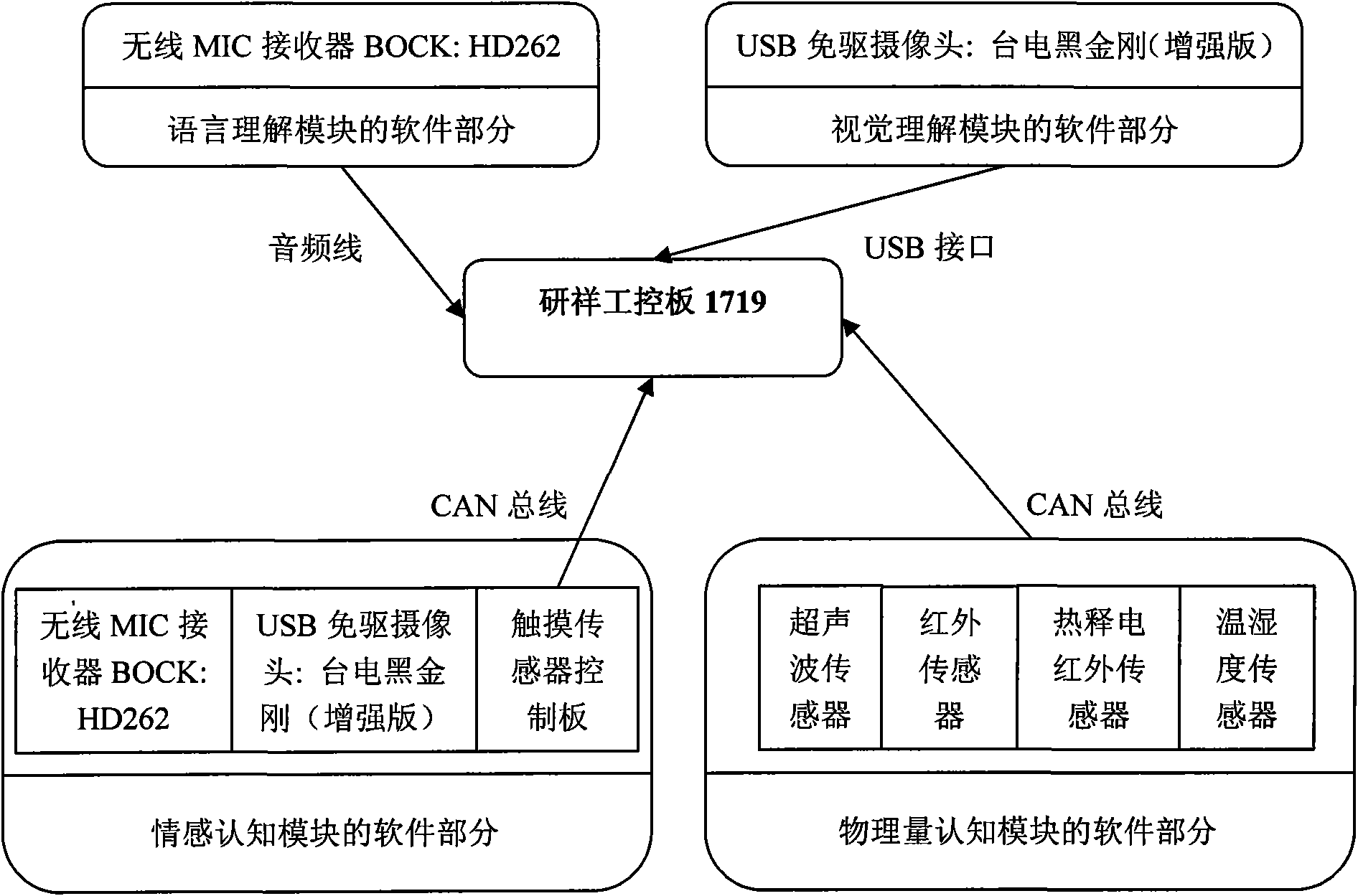

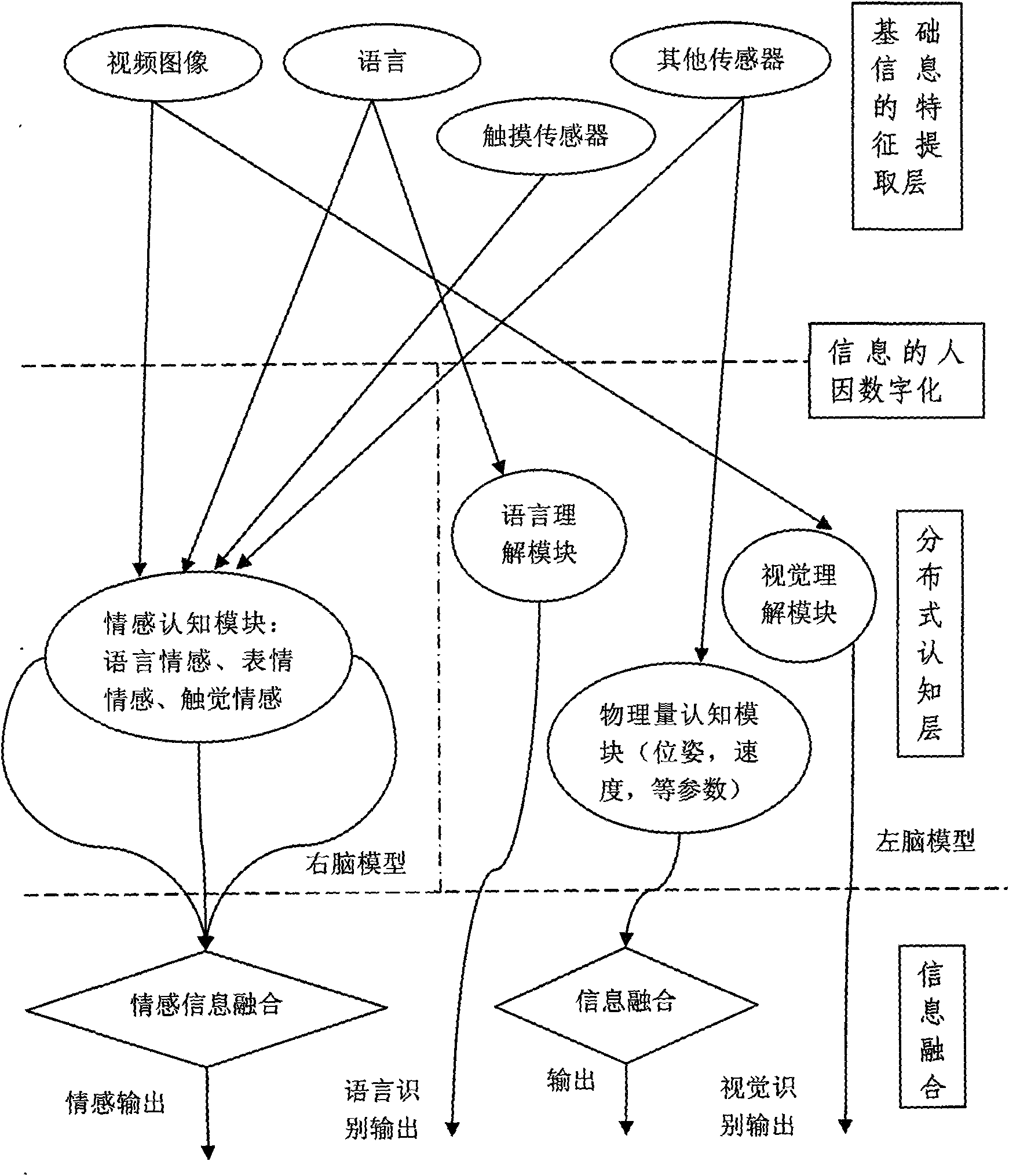

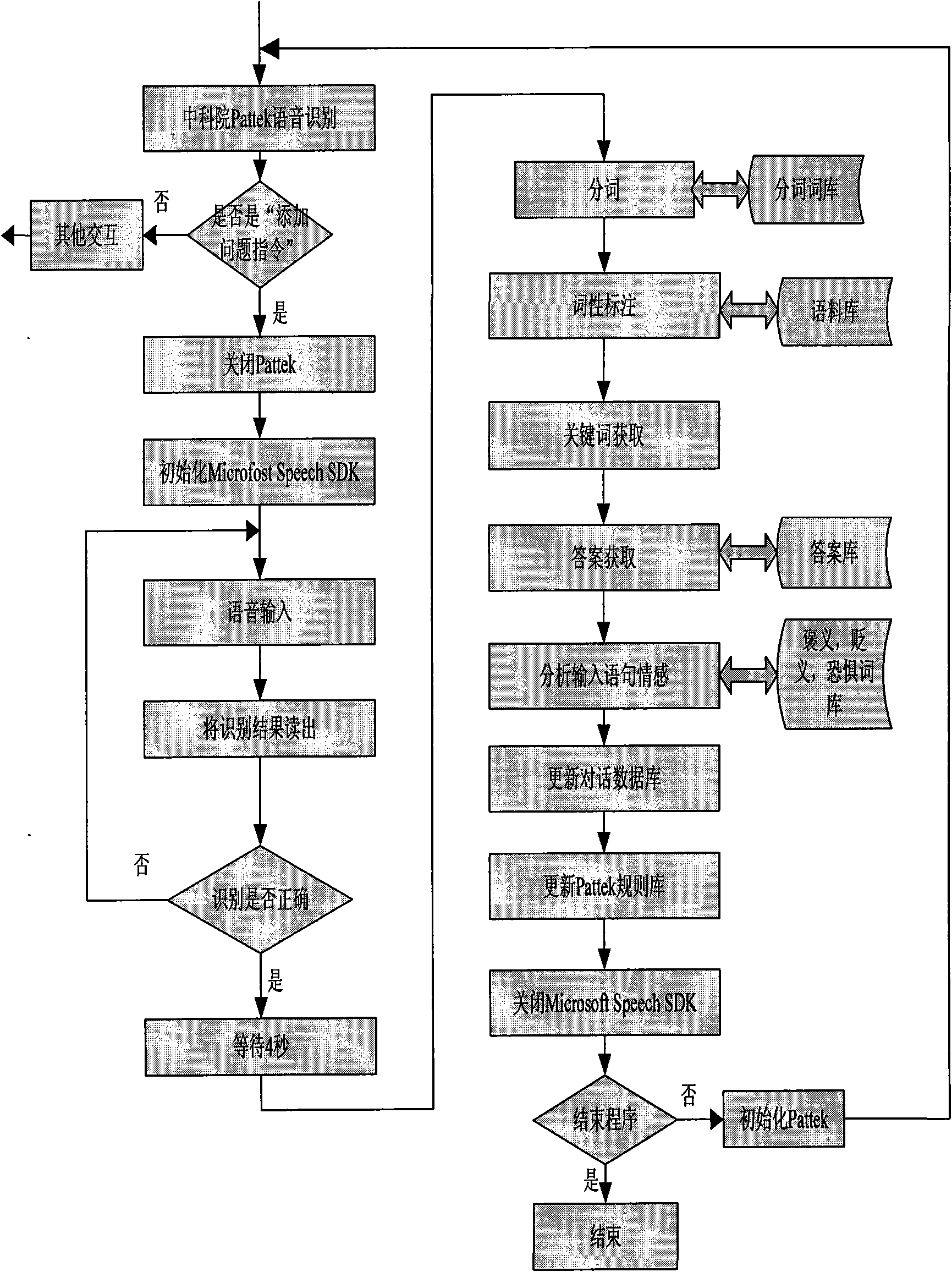

Distributed cognitive technology for intelligent emotional robot

Inactive | CN101604204A | Input/output for user-computer interaction | Character and pattern recognition | Human behavior | Language understanding

The invention provides distributed cognitive technology for an intelligent emotional robot, which can be applied to multi-channel human-computer interaction in service robots, household robots, and the like. In the human-computer interaction process, multi-channel cognition of the environment and of people is distributed so that the interaction is more harmonious and natural. The distributed cognitive technology comprises four parts: 1) a language comprehension module, which gives the robot the ability to understand human language through steps such as word segmentation, part-of-speech tagging, and keyword acquisition; 2) a vision comprehension module, which includes related vision functions such as face detection, feature extraction, feature identification, and human behavior comprehension; 3) an emotion cognition module, which extracts related information from language, expression, and touch, analyzes the user emotion contained in that information, synthesizes a comparatively accurate emotional state, and enables the intelligent emotional robot to recognize the user's current emotion; and 4) a physical quantity cognition module, which enables the robot to understand the environment and its own state as the basis for self-adjustment.

Owner:UNIV OF SCI & TECH BEIJING

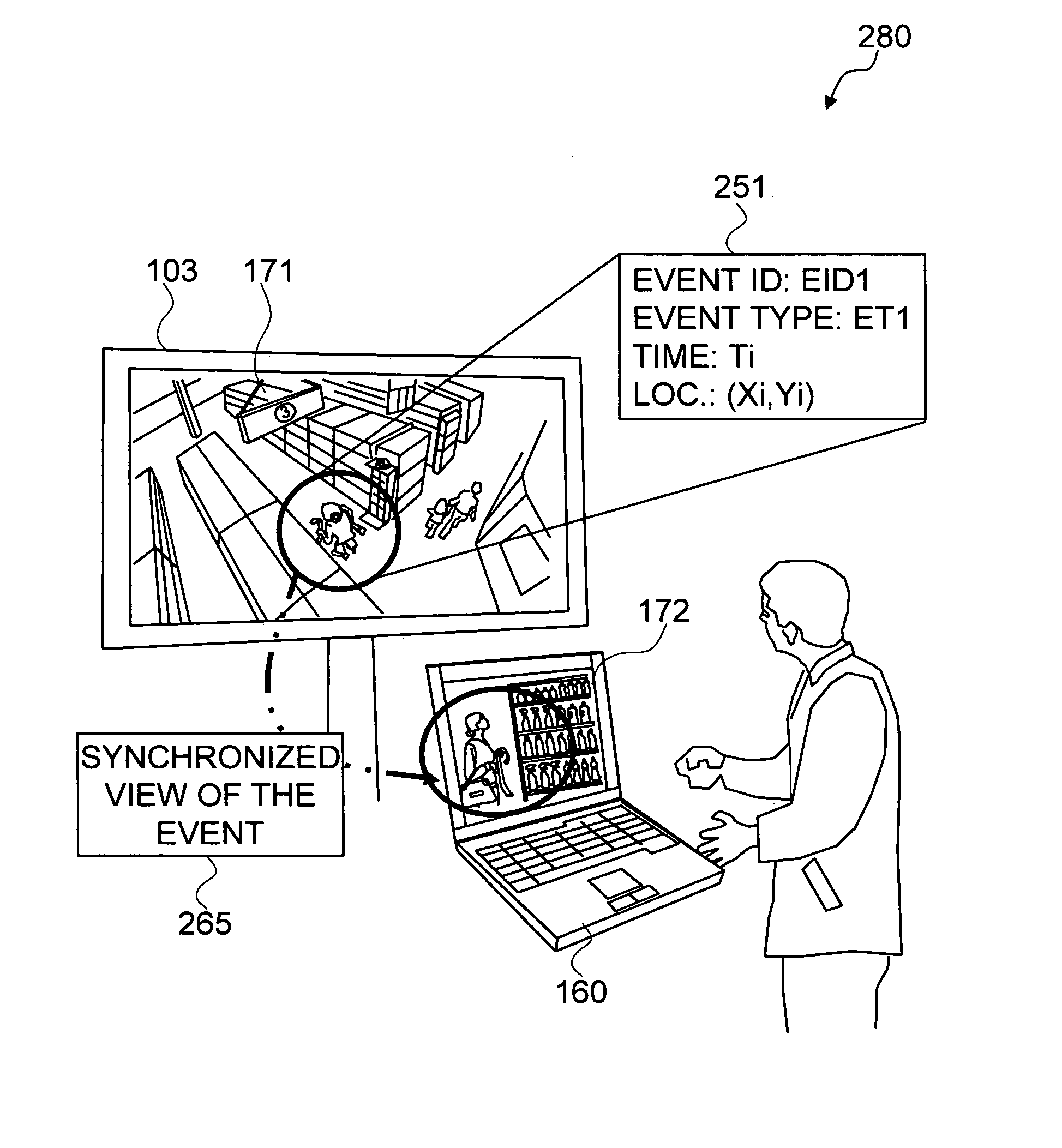

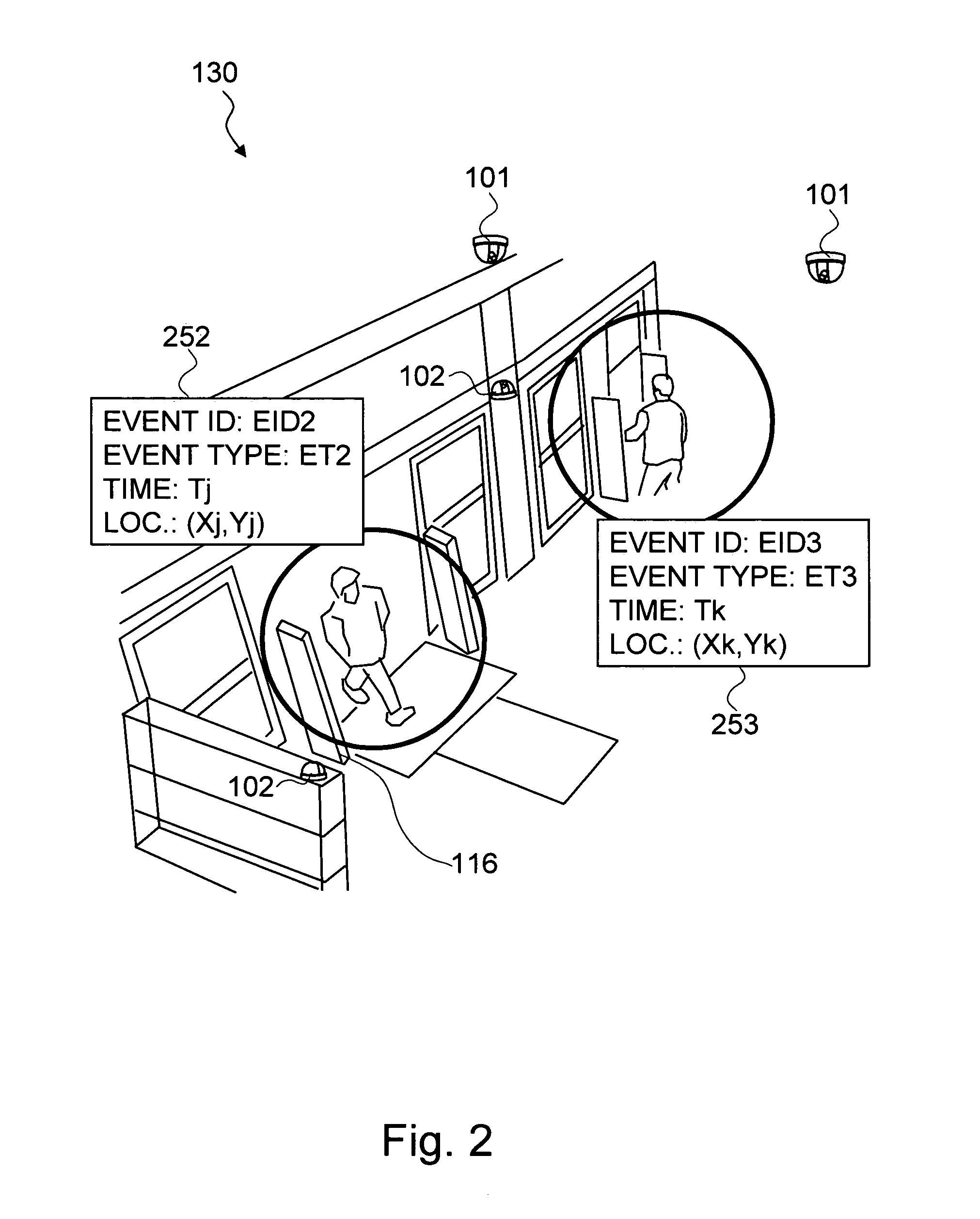

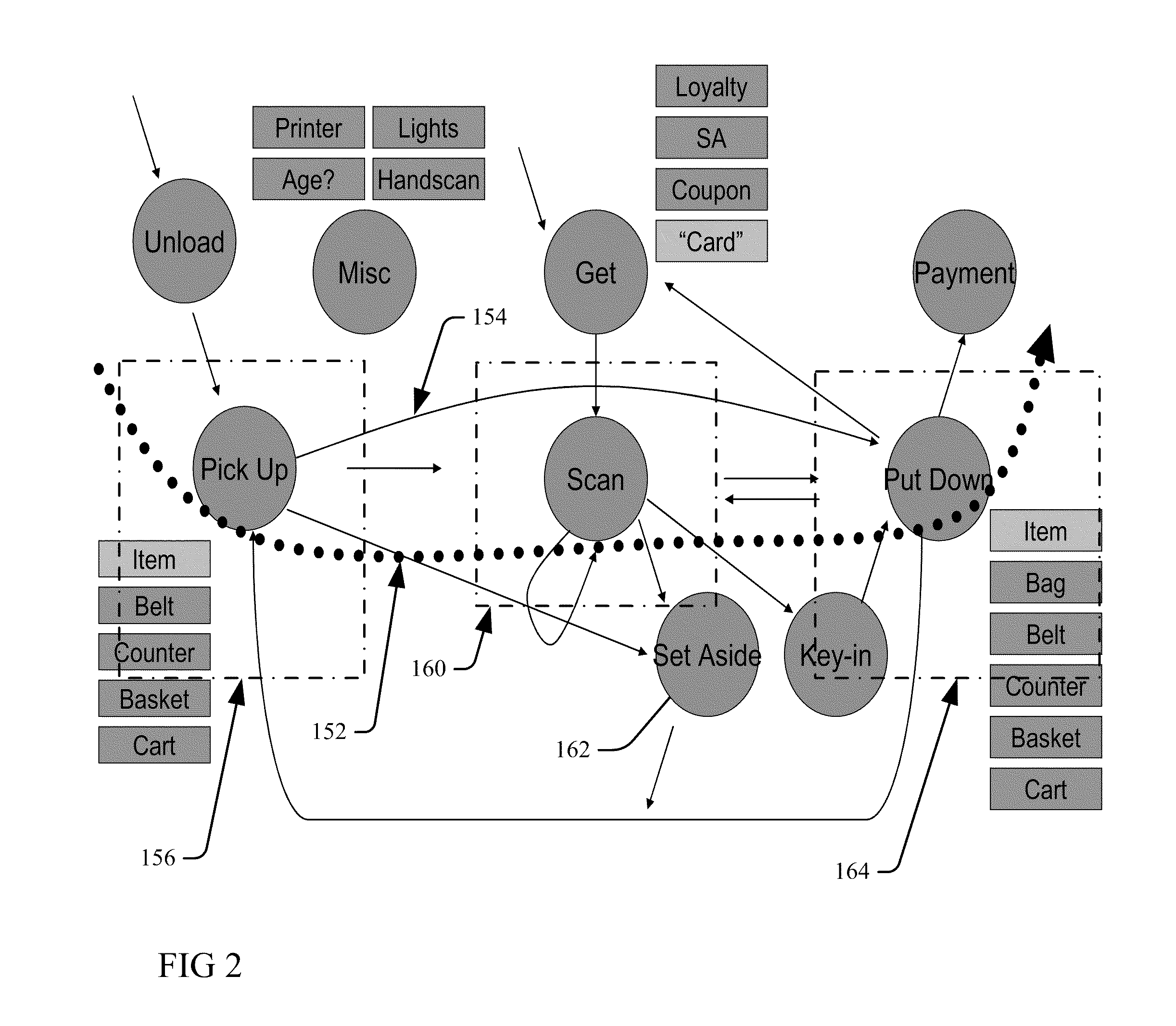

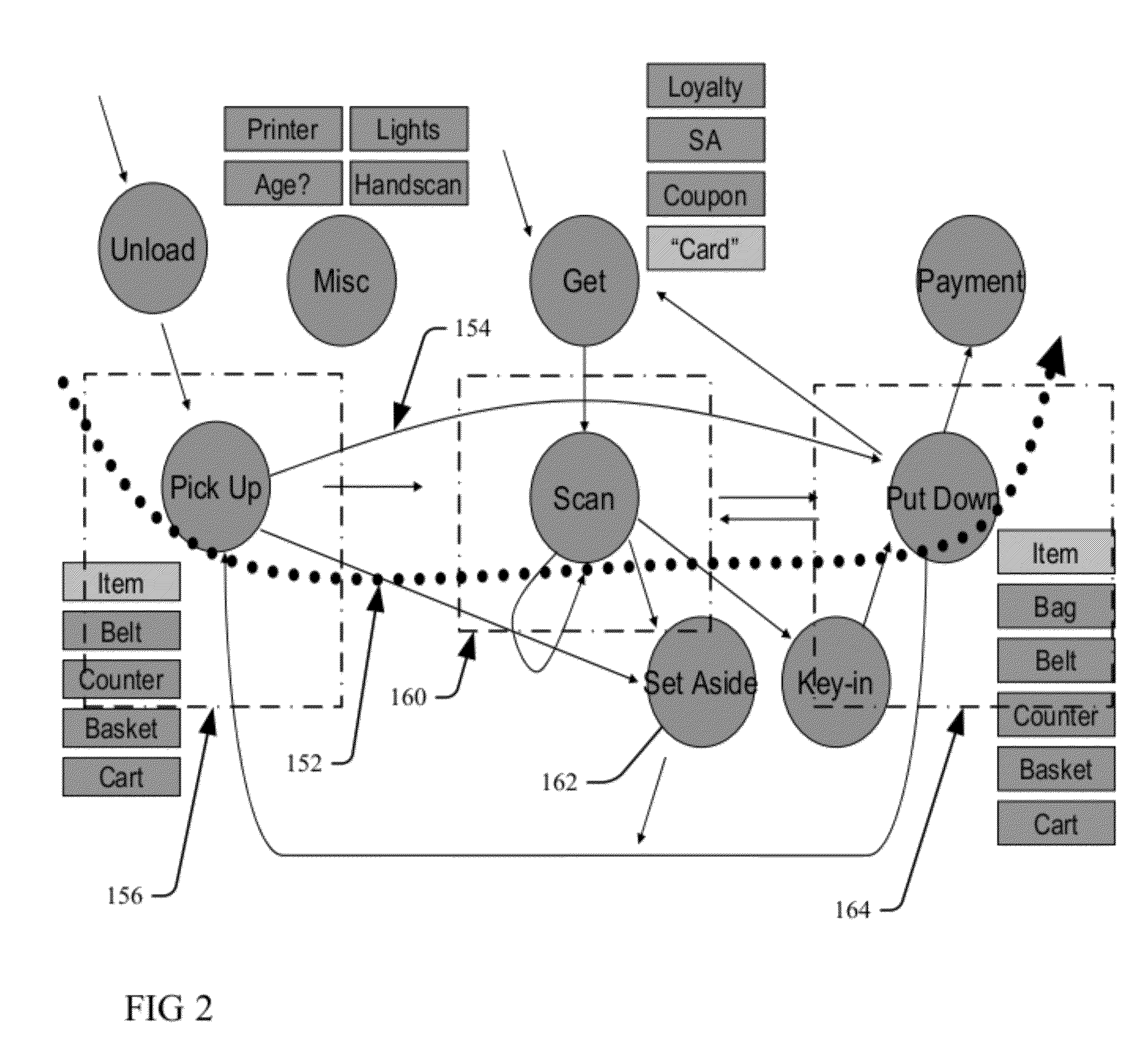

Method and system for optimizing the observation and annotation of complex human behavior from video sources

Active | US8665333B1 | Efficient analysis | Increase basket size and loyalty | Television system details | Image analysis | Human behavior | Physical space

The present invention is a method and system for optimizing the observation and annotation of complex human behavior from video sources by automatically detecting predefined events based on the behavior of people in a first video stream from a first means for capturing images in a physical space, accessing a synchronized second video stream from a second means for capturing images that are positioned to observe the people more closely using the timestamps associated with the detected events from the first video stream, and enabling an annotator to annotate each of the events with more labels using a tool. The present invention captures a plurality of input images of the persons by a plurality of means for capturing images and processes the plurality of input images in order to detect the predefined events based on the behavior in an exemplary embodiment. The processes are based on a novel usage of a plurality of computer vision technologies to analyze the human behavior from the plurality of input images. The physical space may be a retail space, and the people may be customers in the retail space.

Owner:MOTOROLA SOLUTIONS INC

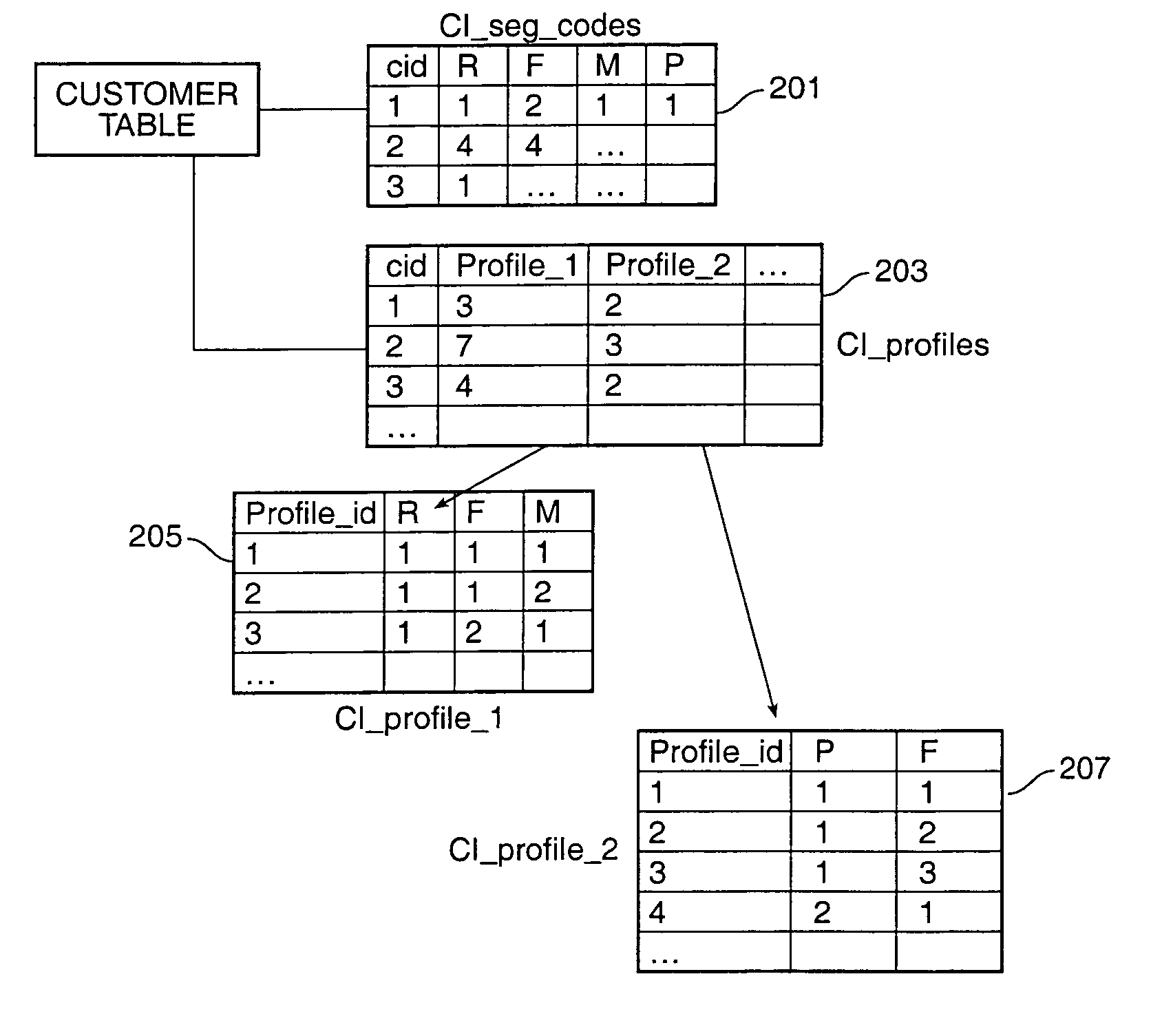

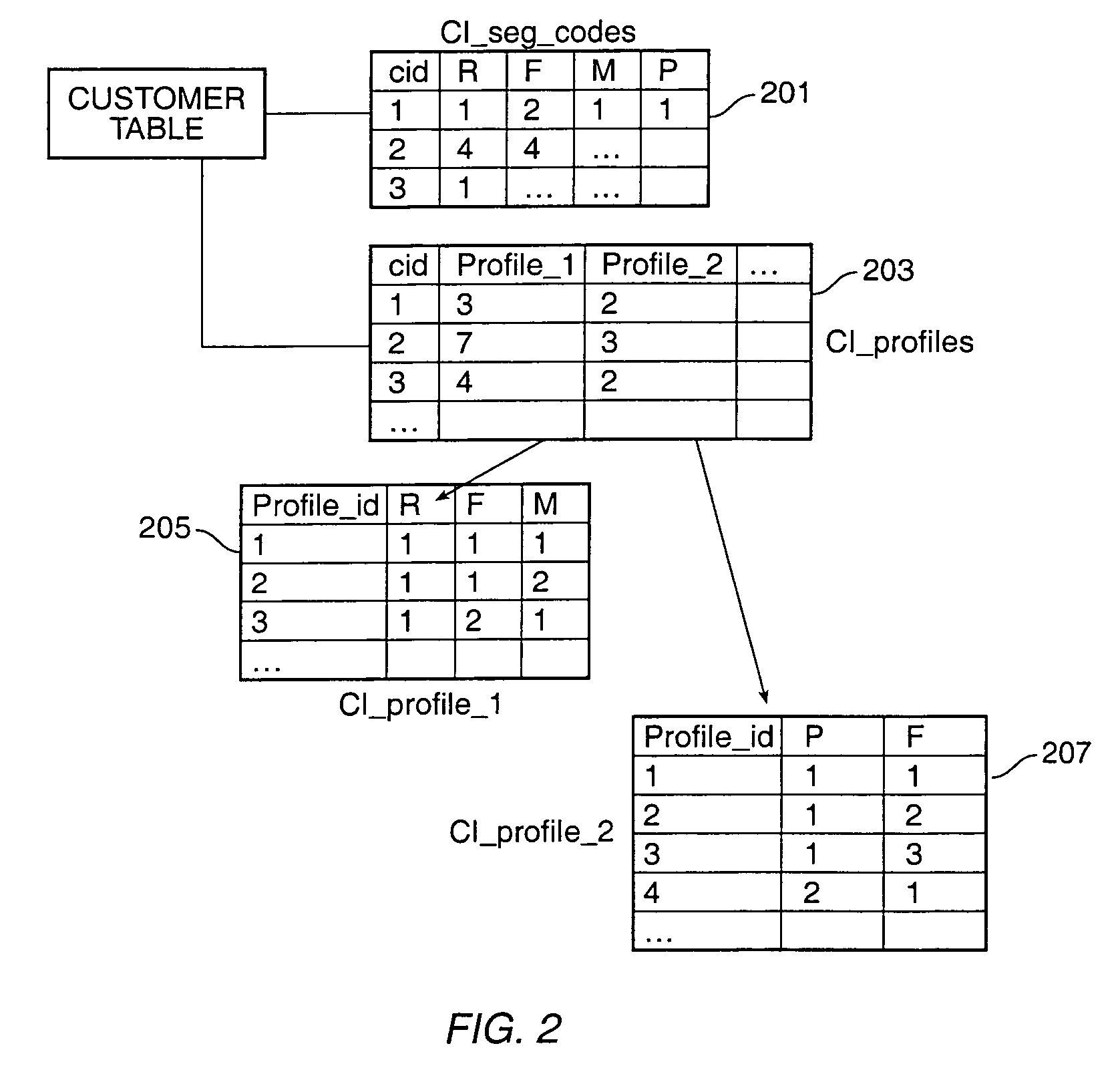

Method for dynamic profiling

Inactive | US7092959B2 | The process is convenient and fast | Data processing applications | Data mining | Human behavior | Data warehouse

According to the invention, techniques are provided for profiling human behavior based upon analyzing data contained in databases, data marts and data warehouses. In an exemplary embodiment, the invention provides for creating a dynamic customer profile by analyzing relationships in data from one or more data sources of an enterprise. The method can be used with many popular visualization tools, such as On Line Analytical Processing (OLAP) tools and the like. The method is especially useful in conjunction with a meta-model based technique for modeling the enterprise data. The enterprise is typically a business activity, but can also be another locus of human activity. The human behavior profiled is typically that of a customer, but can be any other type of human behavior. Embodiments according to the invention can display data from a variety of sources in order to provide visual representations of data in a data warehousing environment.

Owner:HON HAI PRECISION IND CO LTD

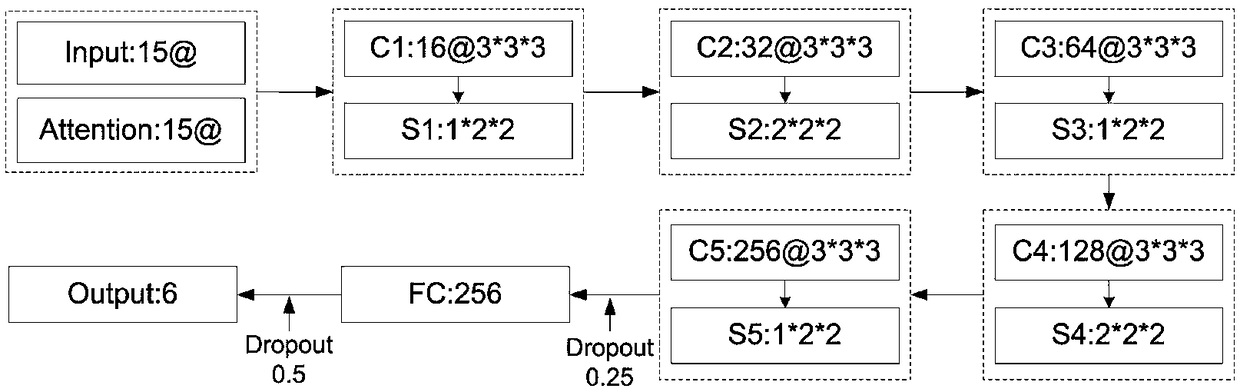

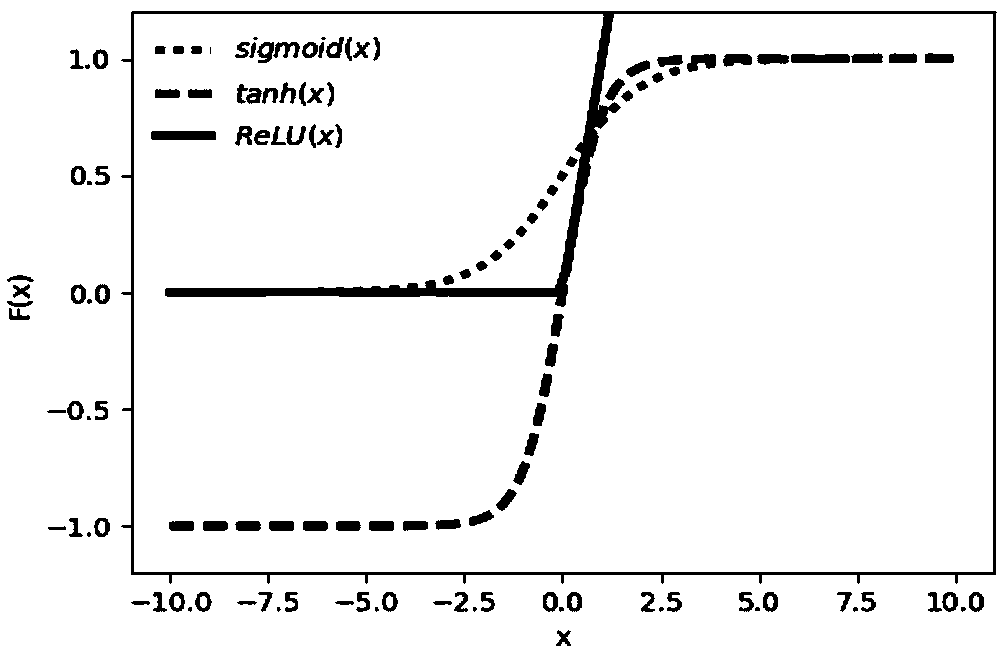

Human behavior recognition method based on attention mechanism and 3D convolutional neural network

Active | CN108830157A | Improve the accuracy of behavior recognition | Efficient use of | Character and pattern recognition | Neural architectures | Human behavior | Activation function

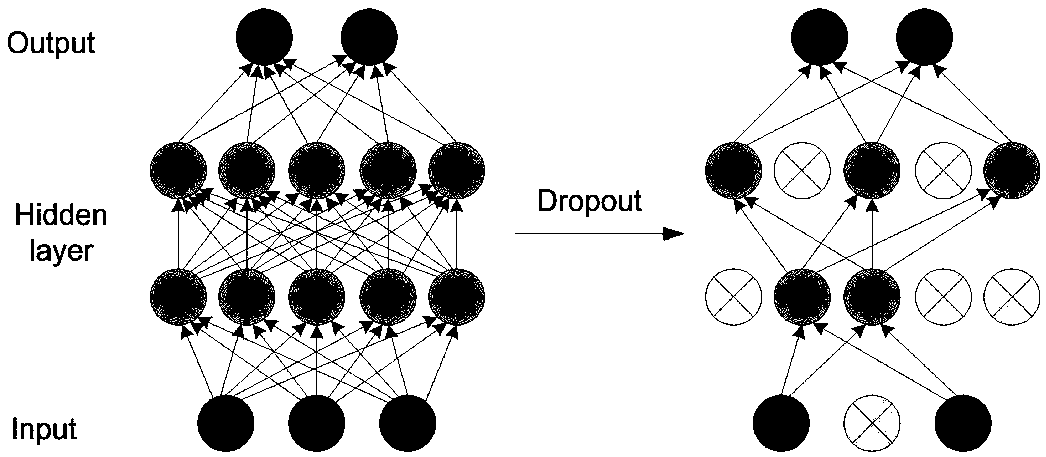

The invention discloses a human behavior recognition method based on an attention mechanism and a 3D convolutional neural network. A 3D convolutional neural network is constructed whose input layer has two channels: an original grayscale image and an attention matrix. To build the 3D CNN model for recognizing a human behavior in a video, an attention mechanism is introduced: the distance between two frames is calculated to form an attention matrix, and the attention matrix and the original human behavior video sequence form the two channels fed into the constructed 3D CNN, whose convolution operations extract vital features from the visual focus area. Meanwhile, the 3D CNN structure is optimized: a Dropout layer is added to the network to randomly freeze some connection weights, and the ReLU activation function is employed to improve network sparsity. This addresses the surge in computing load and the vanishing gradients caused by increasing dimensionality and layer count, prevents overfitting on small data sets, improves network recognition accuracy, and reduces time costs.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING) +1
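
One way to form the two-channel input described in CN108830157A is sketched below. It assumes that the "distance between two frames" is the per-pixel absolute difference between consecutive grayscale frames, scaled to [0, 1]; the abstract does not state the exact distance. Frames are plain nested lists so the sketch runs without extra libraries, and the function names are hypothetical.

```python
def attention_matrix(prev_frame, frame):
    """Per-pixel absolute difference between two grayscale frames, scaled to [0, 1]."""
    diff = [[abs(a - b) for a, b in zip(row0, row1)] for row0, row1 in zip(prev_frame, frame)]
    peak = max(max(row) for row in diff) or 1  # avoid division by zero for identical frames
    return [[d / peak for d in row] for row in diff]


def two_channel_input(frames):
    """Pair each frame (from the second onward) with its attention matrix.

    Each element is (grayscale_frame, attention_matrix), i.e. the two input channels.
    """
    return [(frames[t], attention_matrix(frames[t - 1], frames[t])) for t in range(1, len(frames))]


f0 = [[0, 0], [0, 0]]
f1 = [[0, 100], [50, 0]]
print(attention_matrix(f0, f1))  # [[0.0, 1.0], [0.5, 0.0]]
```

Pixels that change most between frames receive attention values near 1, which is how the attention channel points the convolution toward moving body regions under this assumption.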

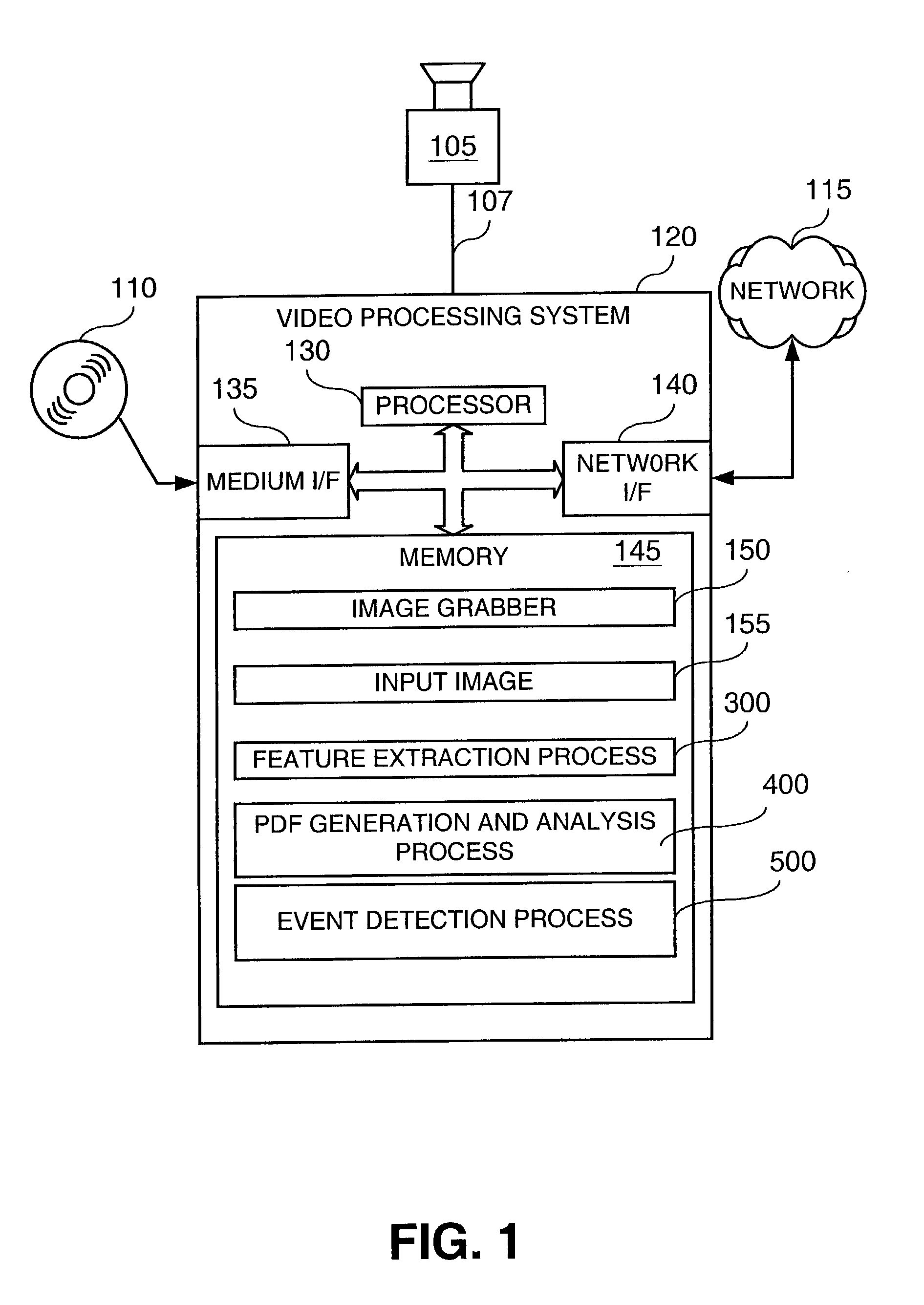

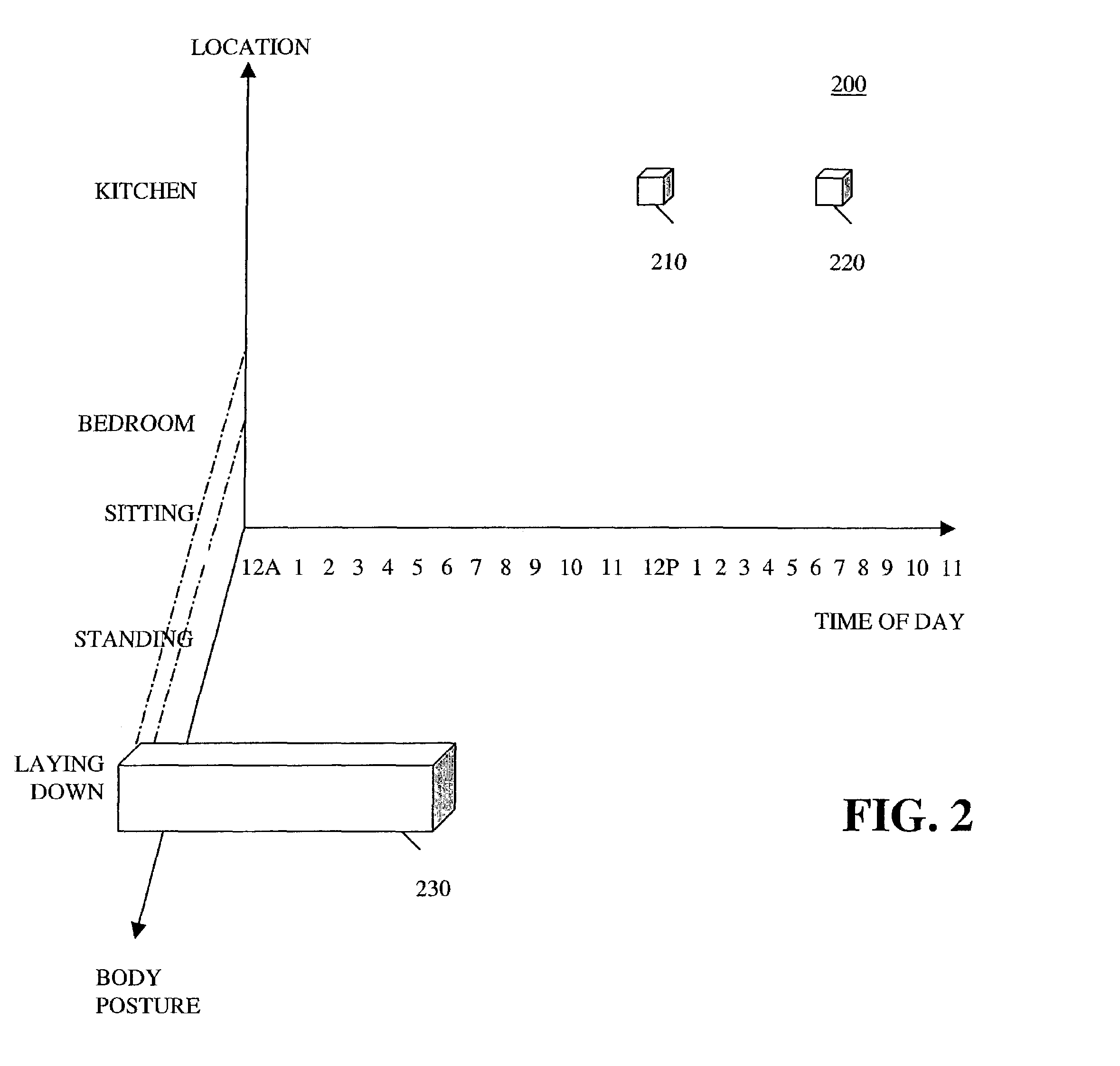

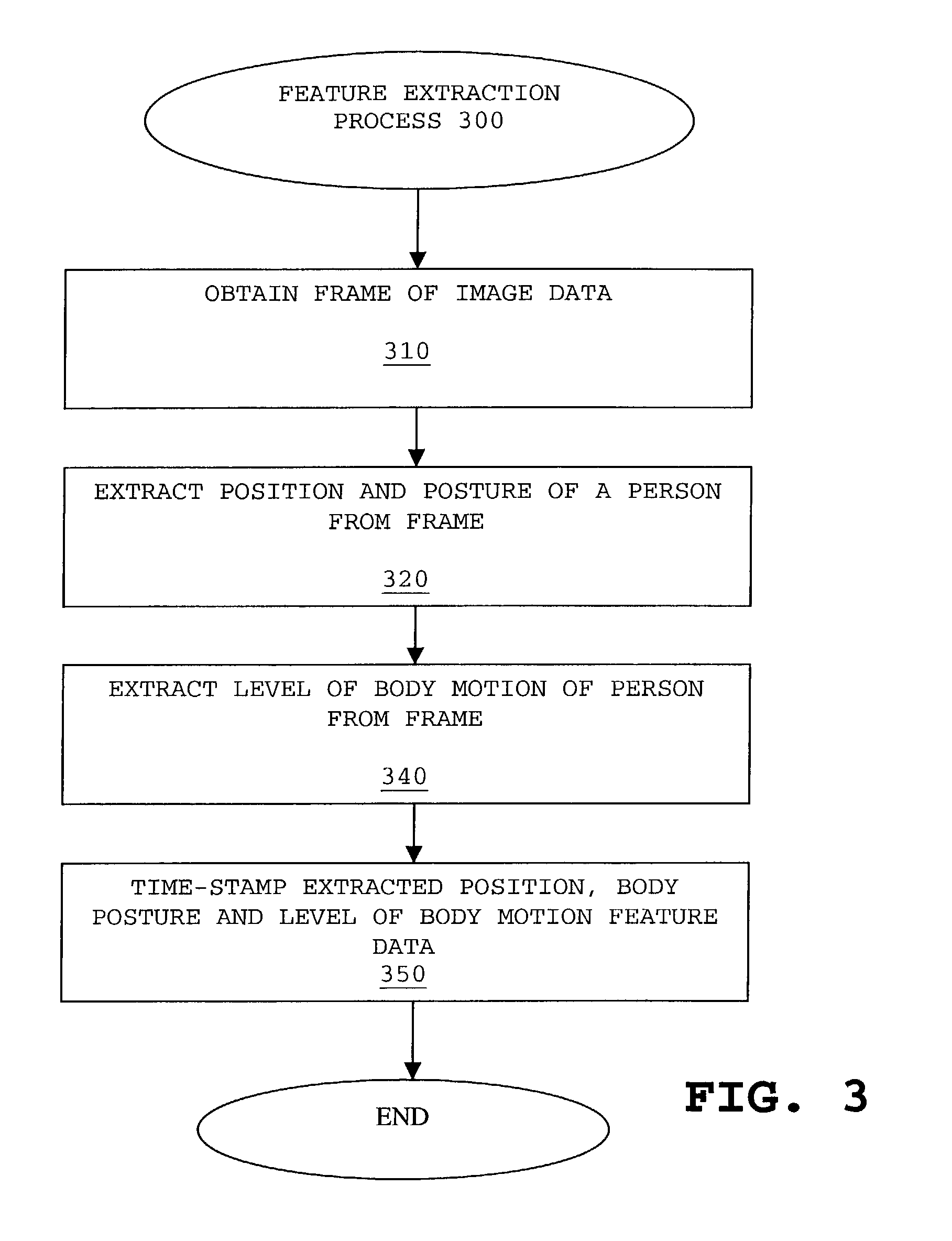

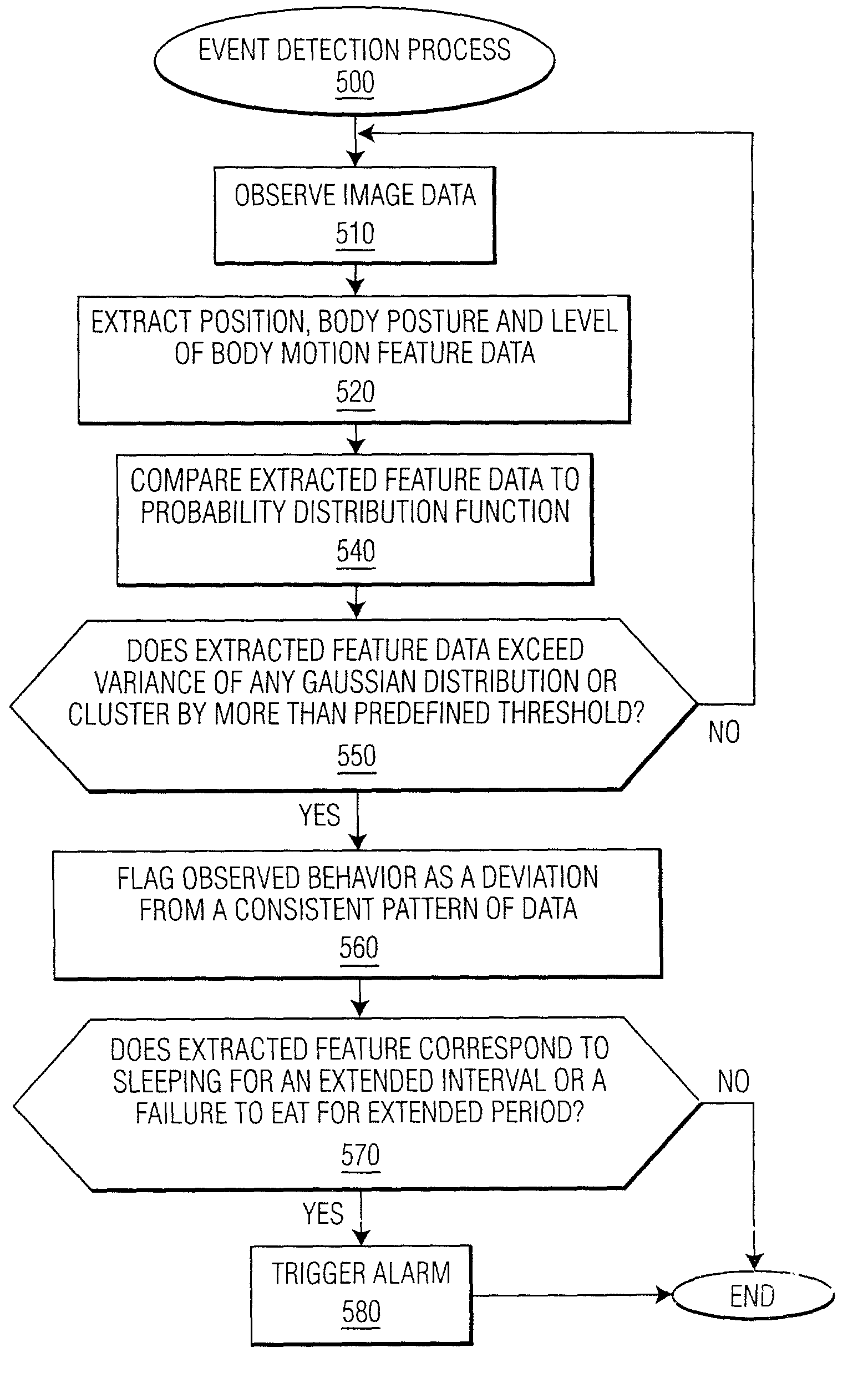

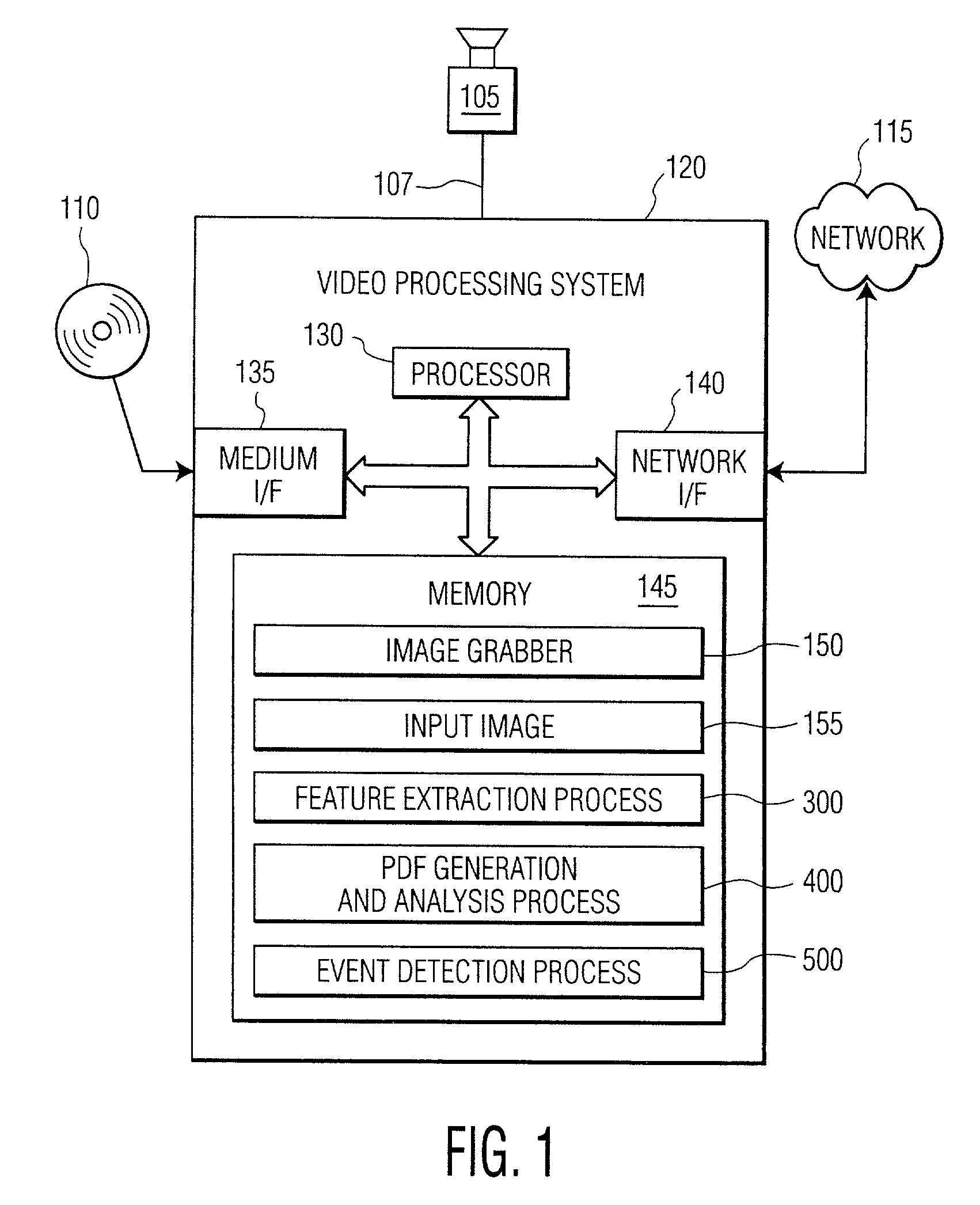

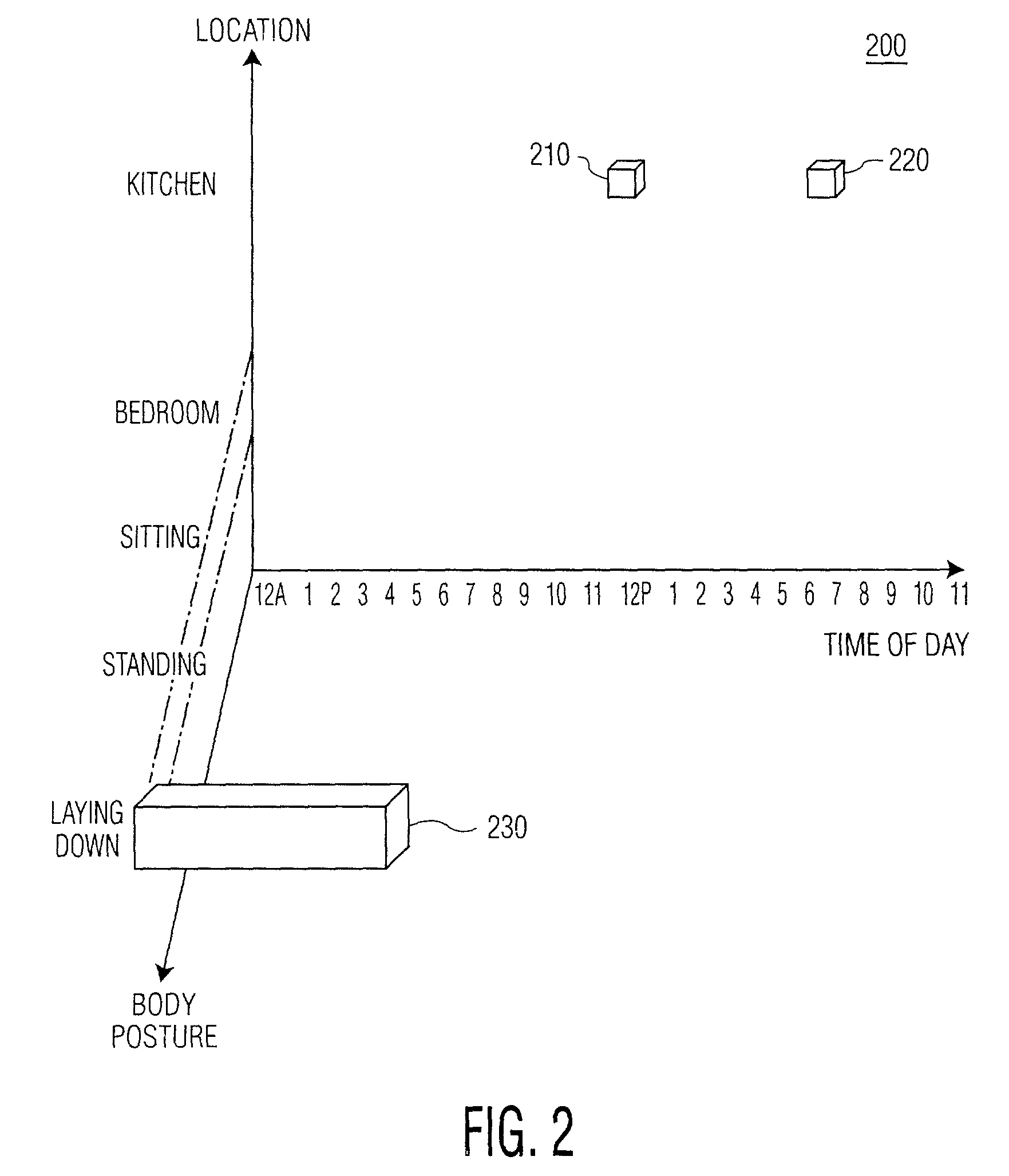

Method and apparatus for detecting an event based on patterns of behavior

A system and apparatus are disclosed for modeling patterns of behavior of humans or other animate objects and detecting a violation of a repetitive pattern of behavior. The behavior of one or more persons is observed over time and features of the behavior are recorded in a multi-dimensional space. Over time, the multi-dimensional data provides an indication of patterns of human behavior. Activities that are repetitive in terms of time, location and activity, such as sleeping and eating, would appear as a Gaussian distribution or cluster in the multi-dimensional data. Probability distribution functions can be analyzed using known Gaussian or clustering techniques to identify repetitive patterns of behavior and characteristics thereof, such as a mean and variance. Deviations from repetitive patterns of behavior can be detected and an alarm can be triggered, if appropriate.

Owner:SIGNIFY HLDG BV
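
The pattern-violation idea in this patent can be illustrated in one dimension: fit a Gaussian (mean and standard deviation) to a repetitive behavior such as bedtime, then flag observations that fall far outside it. The k = 3 threshold and the example data are assumptions; the patent works in a multi-dimensional feature space and leaves the choice of Gaussian or clustering technique open.

```python
from statistics import mean, stdev


def learn_pattern(observations):
    """Fit a 1-D Gaussian (mean, sample standard deviation) to a repetitive behavior."""
    return mean(observations), stdev(observations)


def is_deviation(value, pattern, k=3.0):
    """Flag a value lying more than k standard deviations from the learned mean."""
    mu, sigma = pattern
    return abs(value - mu) > k * sigma


# Bedtimes in hours after noon (10.5 = 22:30), so times around midnight do not wrap.
bedtimes = [10.5, 11.0, 10.75, 11.25, 10.5, 11.0]
pattern = learn_pattern(bedtimes)
print(is_deviation(11.1, pattern))  # False
print(is_deviation(15.0, pattern))  # True (03:00)
```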

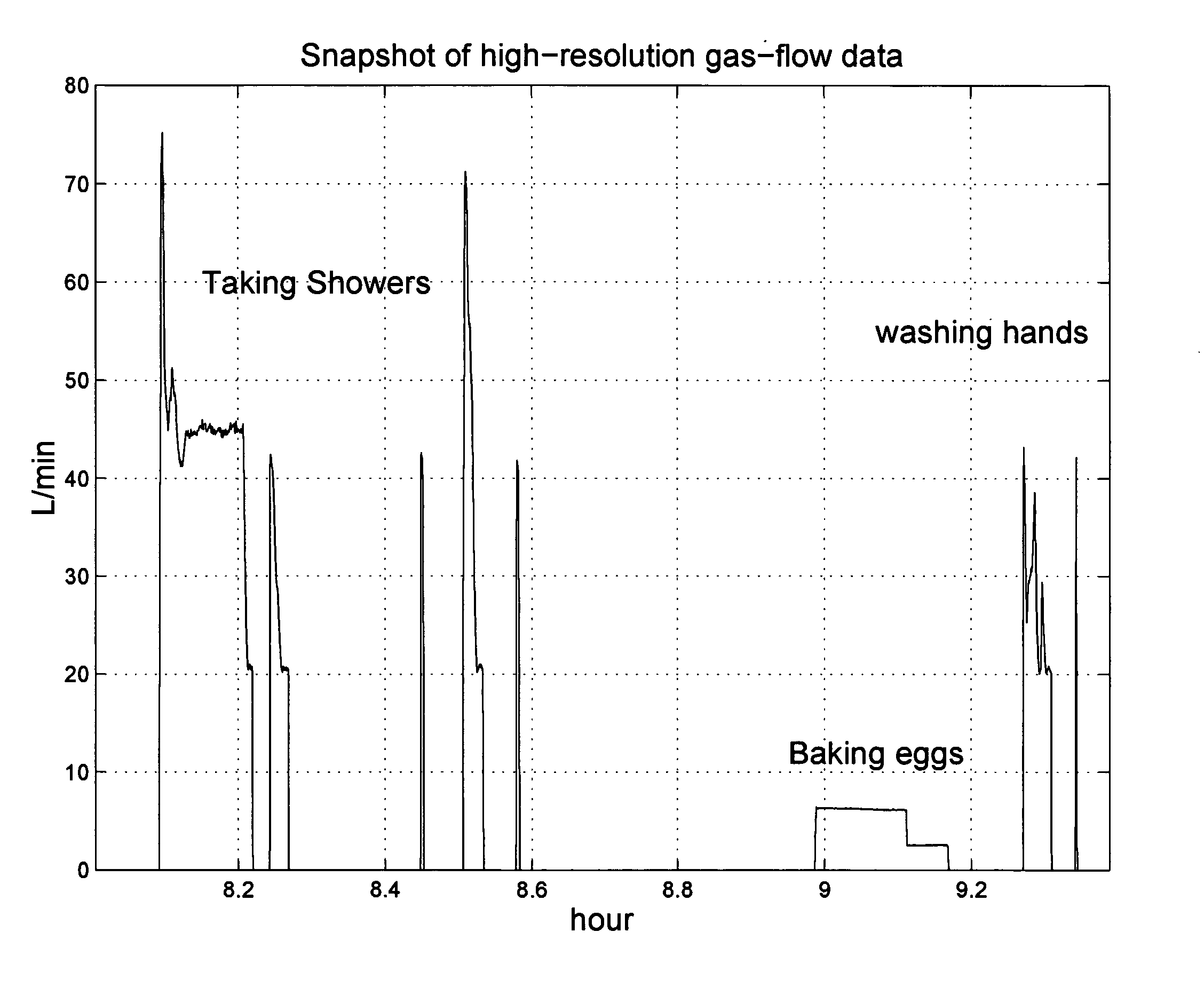

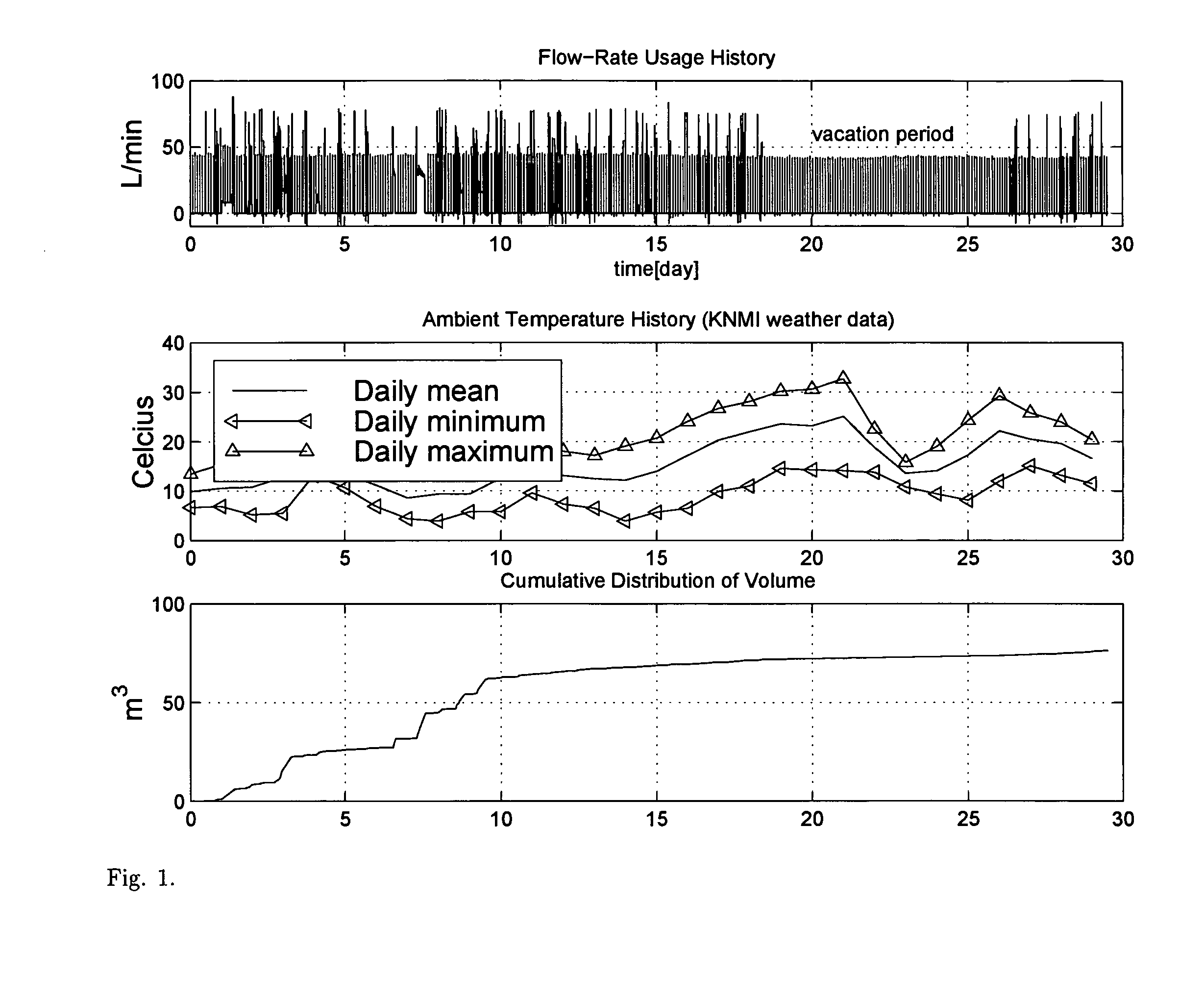

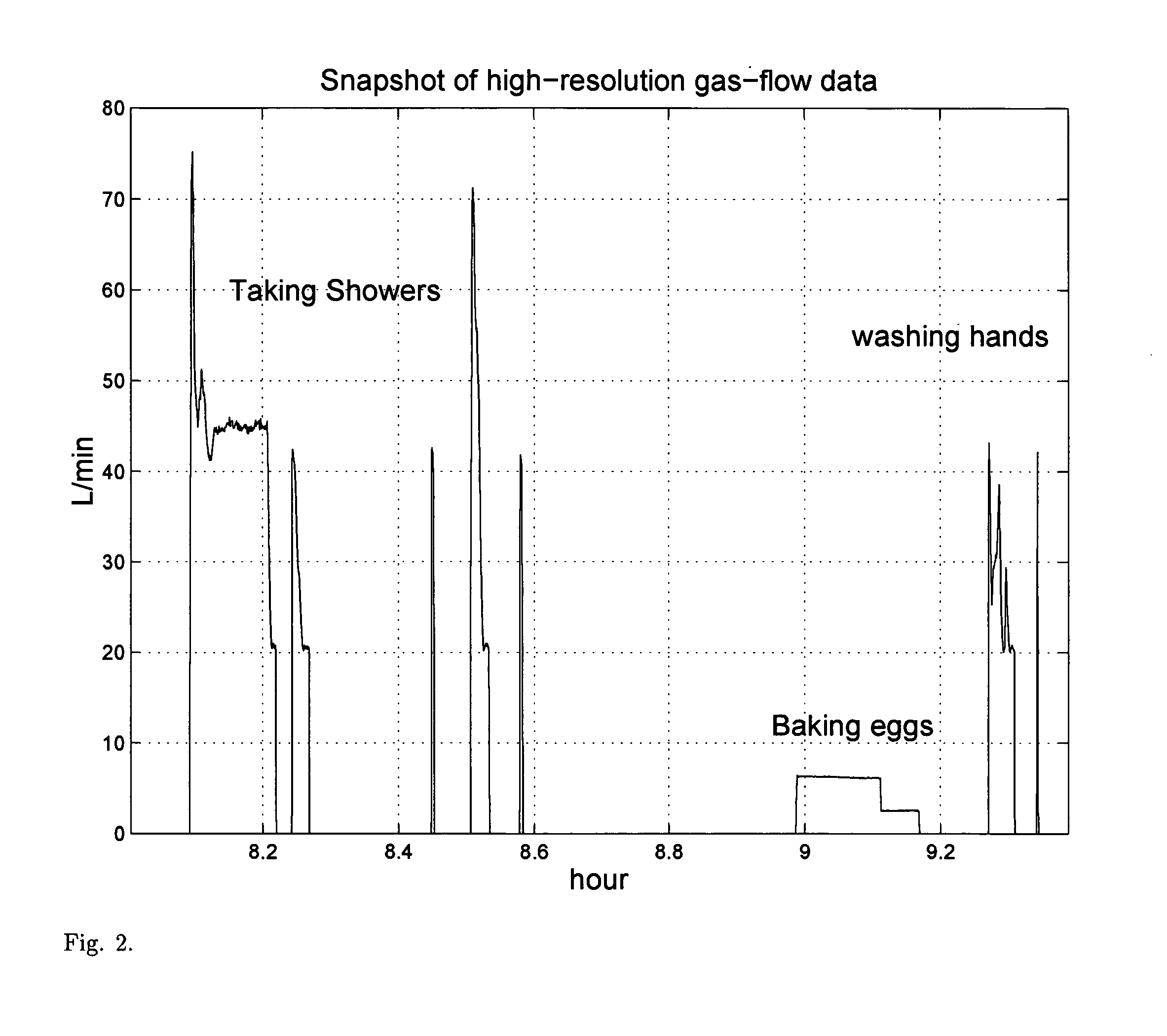

Gas-energy observatory

Heating is a significant factor in residential gas-energy usage. Saving energy on heating is receiving increasing attention with rising energy prices and the Kyoto Protocol on reducing greenhouse gas emissions. Energy awareness and energy efficiency thereby become important qualifications for human behavior and residential building codes, and become a factor in the evolution of the global climate. Here, we describe a novel measurement and validation system for domestic gas-energy usage in combination with primary weather data. We disclose a gas-energy observatory which visualizes human behavior in gas-energy consumption associated with domestic facilities, and measures home energy efficiency by calorimetry. Weather-sensitivity analysis quantifies energy usage as a function of small variations in room temperature and home energy efficiency. Weather-sensitivity data can be used to calculate the changes in room-temperature settings or improvements in home insulation needed for a desired reduction in CO2 output. By publicly disseminating its primary weather data, it also creates, as a dual use at no additional cost, a novel in-situ climate observation system with unprecedented wide-area coverage and spatial resolution.

Owner:VAN PUTTEN MAURITIUS H P M +3
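
The weather-sensitivity analysis in the gas-energy observatory abstract can be illustrated with an ordinary least-squares fit of daily gas use against the indoor-outdoor temperature difference. The linear model, the variable names, and the synthetic numbers are assumptions for illustration; the abstract does not give its model. Under this model, the fitted slope estimates how much daily gas use changes per degree of room-temperature setting.

```python
def fit_weather_sensitivity(room_temps, outdoor_temps, gas_use):
    """Least-squares fit of gas_use = base + slope * (room_temp - outdoor_temp).

    Returns (base, slope); slope is gas use per degree of temperature difference.
    """
    xs = [tr - to for tr, to in zip(room_temps, outdoor_temps)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(gas_use) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, gas_use))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Synthetic daily data (units hypothetical, e.g. cubic metres of gas per day).
room = [20.0, 20.0, 20.0, 20.0]
outdoor = [10.0, 8.0, 5.0, 0.0]
gas = [6.0, 7.0, 8.5, 11.0]
base, slope = fit_weather_sensitivity(room, outdoor, gas)
print(round(base, 3), round(slope, 3))  # 1.0 0.5
```

With a slope of 0.5 in this synthetic example, lowering the room-temperature setting by one degree would reduce daily gas use by about 0.5 units.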

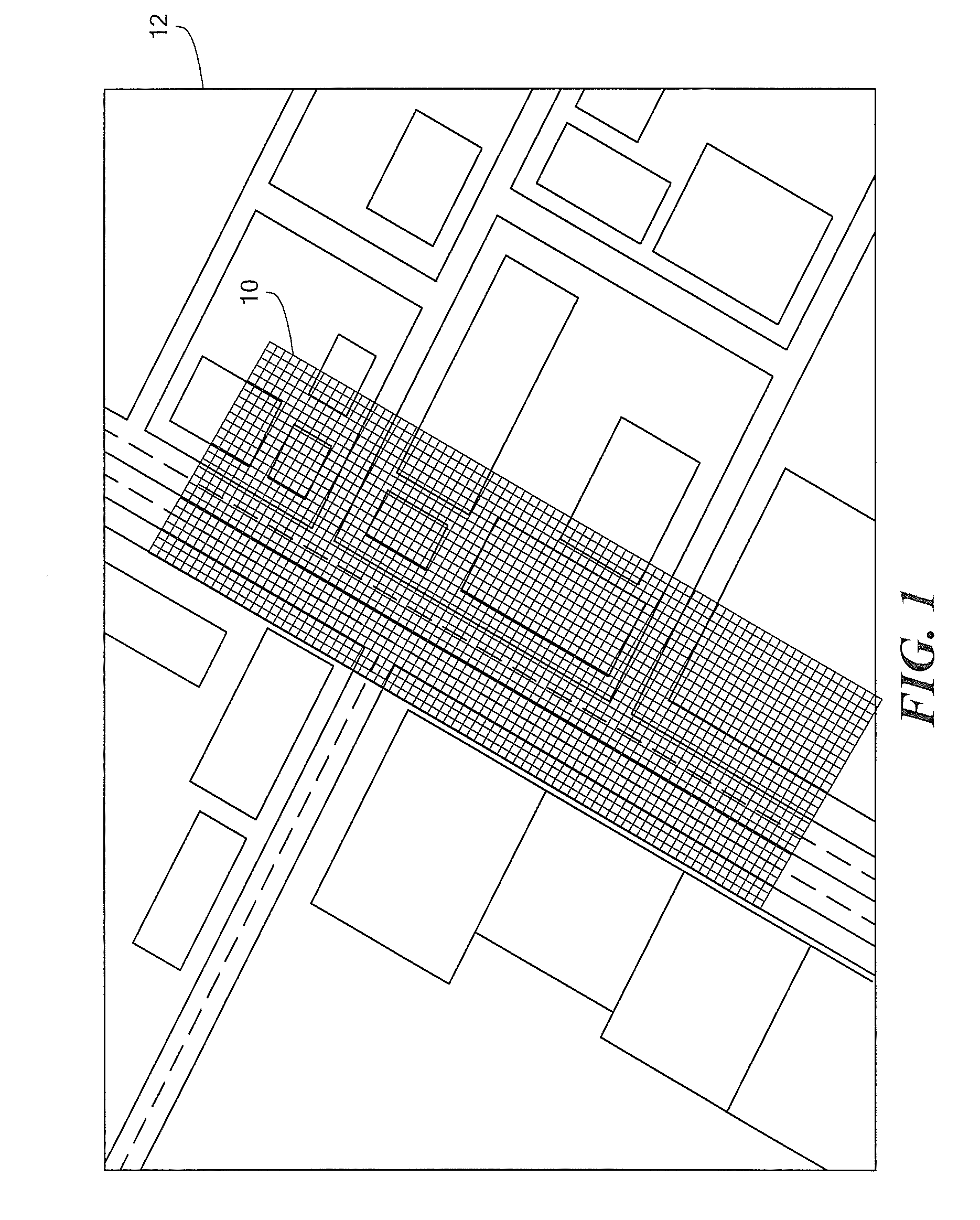

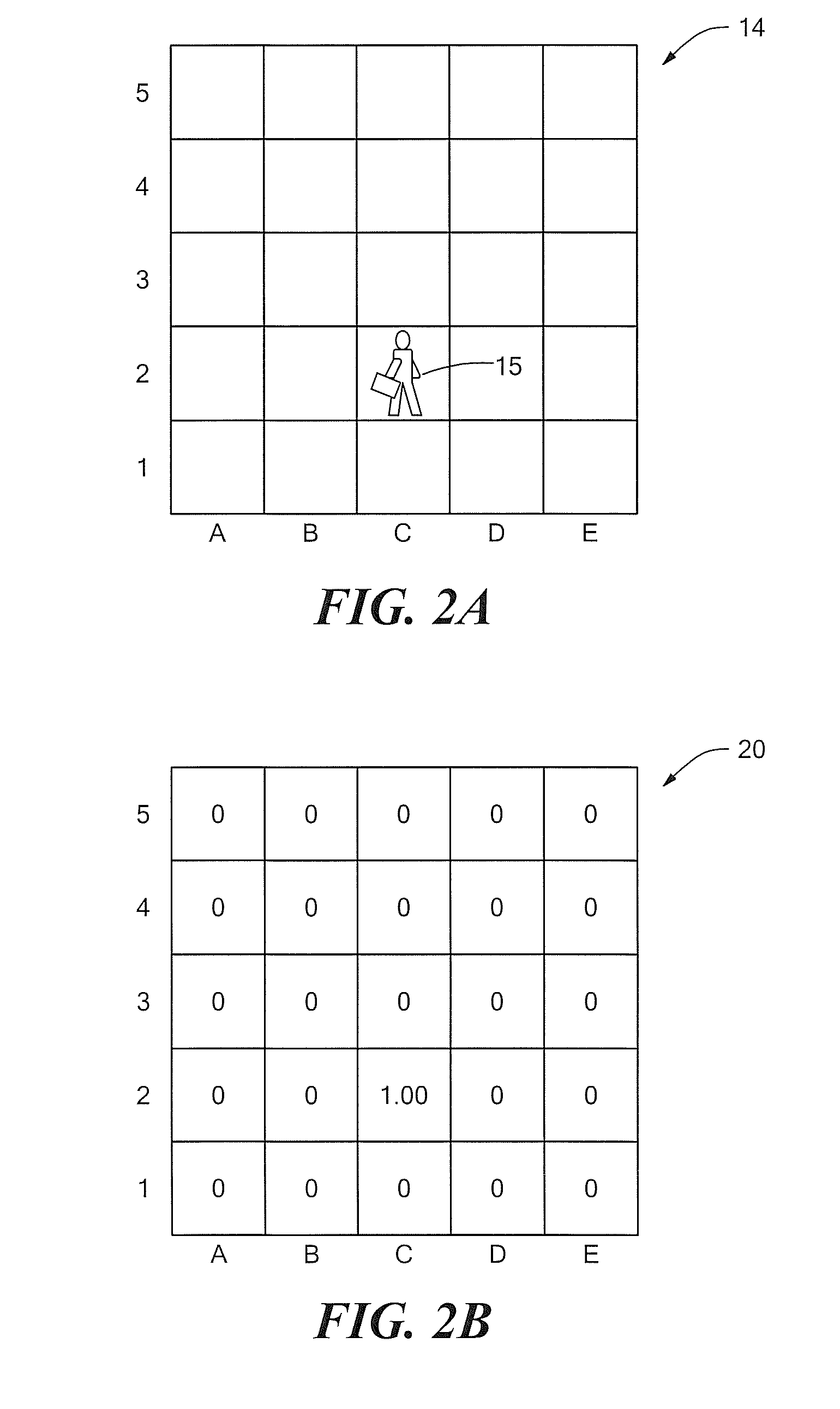

Non-kinematic behavioral mapping

Active | US20100305858A1 | The transmission is compact | Reduce the amount required | Anti-collision systems | Character and pattern recognition | Human behavior | Computer graphics (images)

A system and methodology / processes for non-kinematic / behavioral mapping to a local area abstraction (LAA) includes a technique for populating an LAA wherein human behavior or other non-strictly-kinematic motion may be present.

Owner:RAYTHEON CO

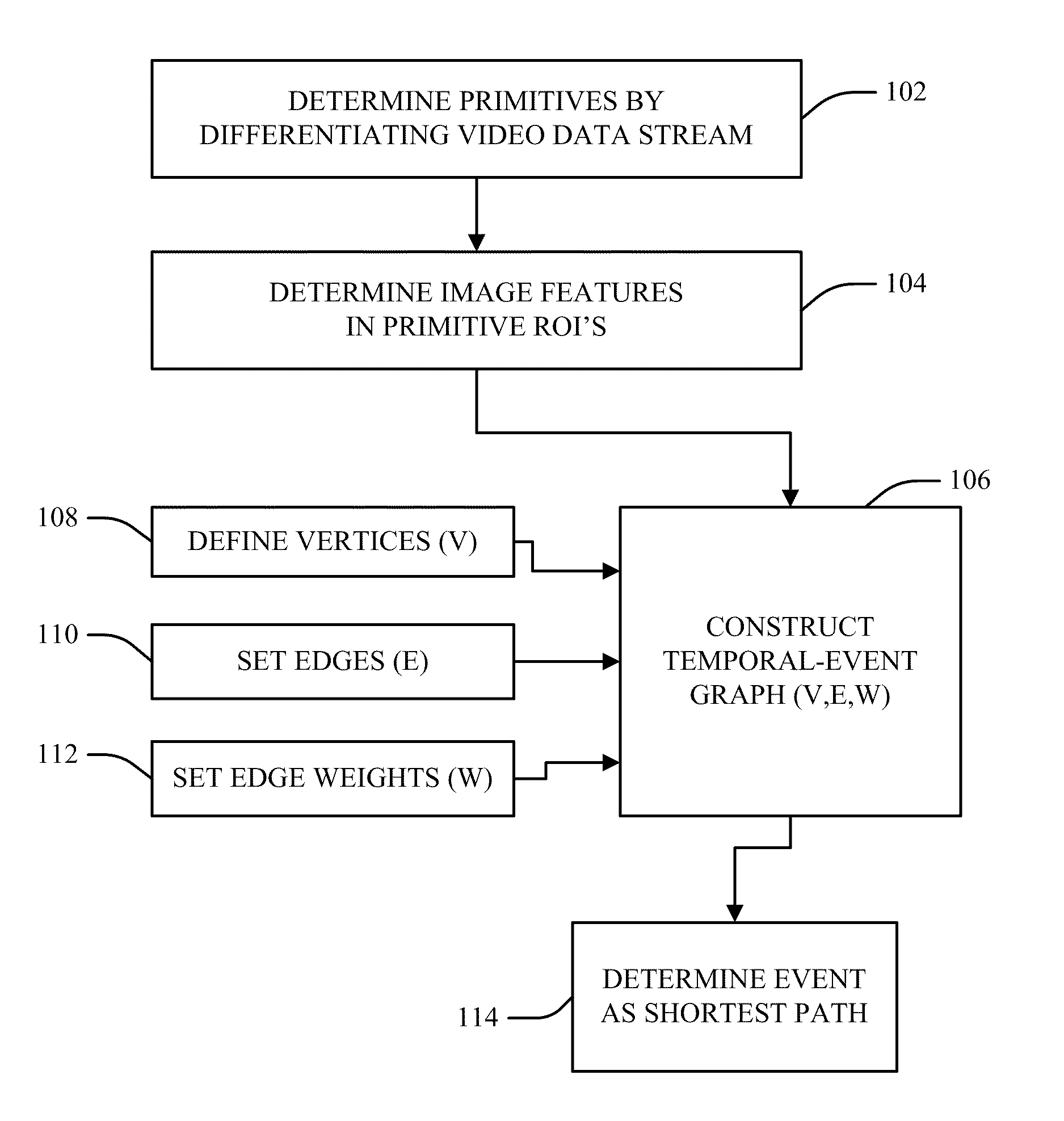

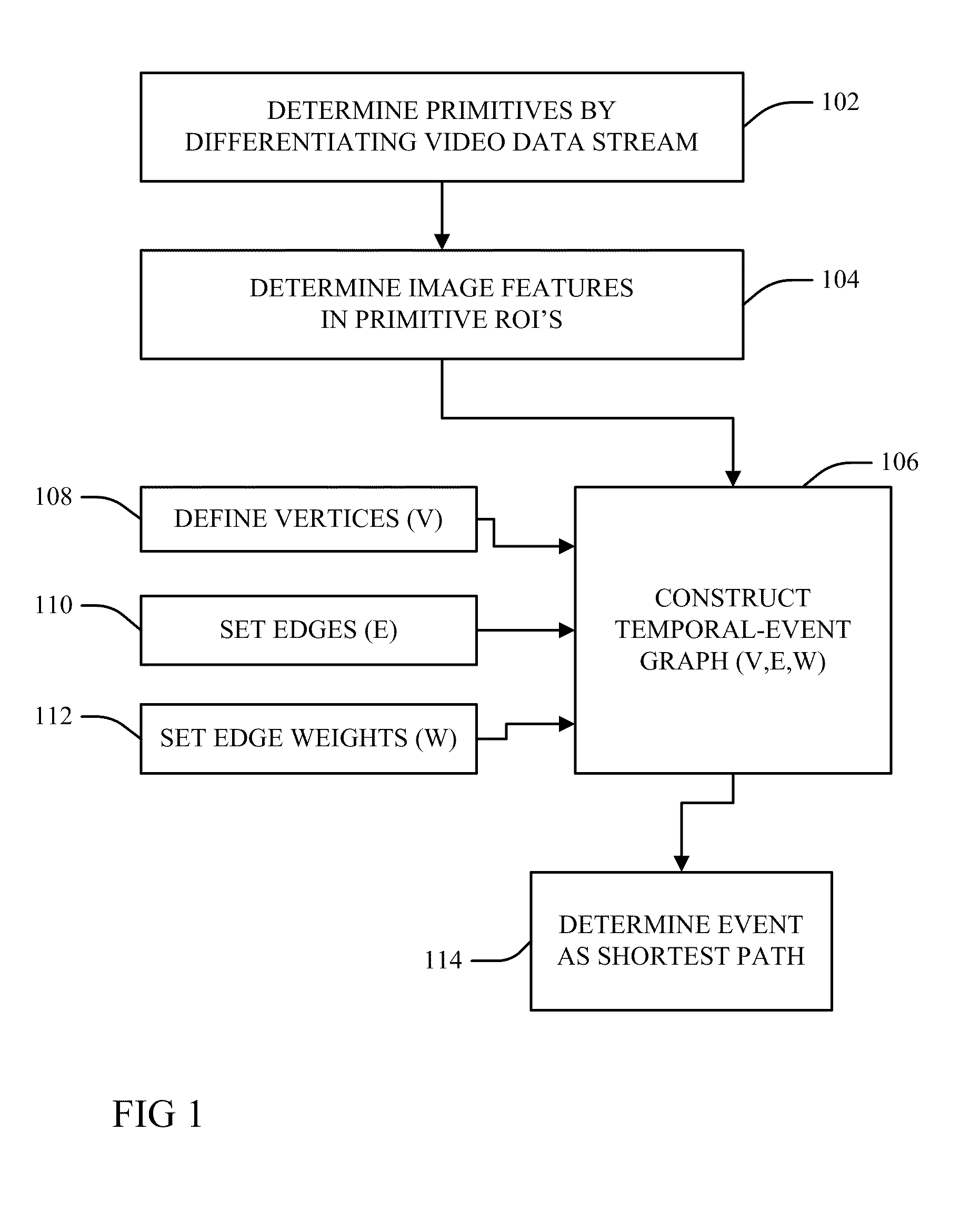

Sequential event detection from video

Inactive | US20120008836A1 | Drawing from basic elements | Character and pattern recognition | Graphics | Human behavior

Human behavior is determined by sequential event detection by constructing a temporal-event graph with vertices representing adjacent first and second primitive images of a plurality of individual primitive images parsed from a video stream, and also of first and second idle states associated with the respective first and second primitive images. Constructing the graph is a function of an edge set between the adjacent first and second primitive images, and an edge weight set as a function of a discrepancy between computed visual features within regions of interest common to the adjacent first and second primitive images. A human activity event is determined as a function of a shortest distance path of the temporal-event graph vertices.

Owner:AIRBNB
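
The core of US20120008836A1, finding a shortest path through a temporal-event graph whose edge weights measure the visual discrepancy between adjacent primitive images, can be sketched as follows. Because vertices are ordered in time, the graph is a directed acyclic graph and a single left-to-right relaxation pass suffices. The L1 feature distance, the toy feature vectors, and the omission of the idle-state vertices are simplifying assumptions, not details from the patent.

```python
def discrepancy(feat_a, feat_b):
    """L1 distance between two feature vectors (assumed edge-weight function)."""
    return sum(abs(a - b) for a, b in zip(feat_a, feat_b))


def shortest_temporal_path(features, edges, source, target):
    """Shortest path in a time-ordered DAG.

    features: feature vector per vertex; vertices are numbered in temporal order.
    edges: (u, v) pairs with u < v; weights come from `discrepancy`.
    Returns (distance, path); distance is inf and path empty if target is unreachable.
    """
    inf = float("inf")
    n = len(features)
    dist = [inf] * n
    prev = [None] * n
    dist[source] = 0.0
    out = {}
    for u, v in edges:
        out.setdefault(u, []).append((v, discrepancy(features[u], features[v])))
    for u in range(n):  # temporal order is a valid topological order
        if dist[u] == inf:
            continue
        for v, w in out.get(u, []):
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                prev[v] = u
    if dist[target] == inf:
        return inf, []
    path, node = [], target
    while node is not None:
        path.append(node)
        node = prev[node]
    return dist[target], path[::-1]


features = [[0, 0], [1, 0], [5, 5], [1, 1]]
edges = [(0, 1), (0, 2), (1, 3), (2, 3)]
print(shortest_temporal_path(features, edges, 0, 3))  # (2.0, [0, 1, 3])
```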

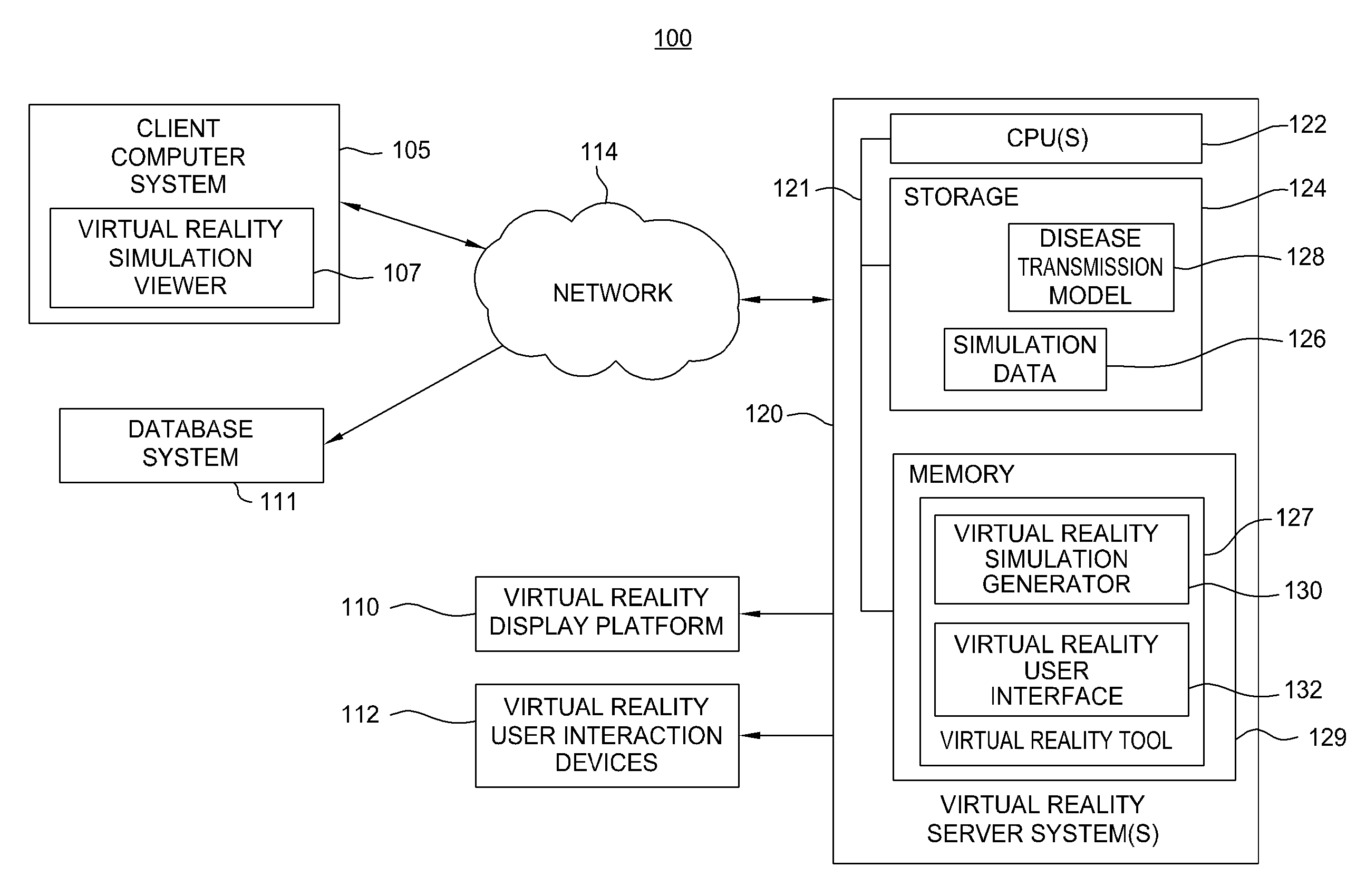

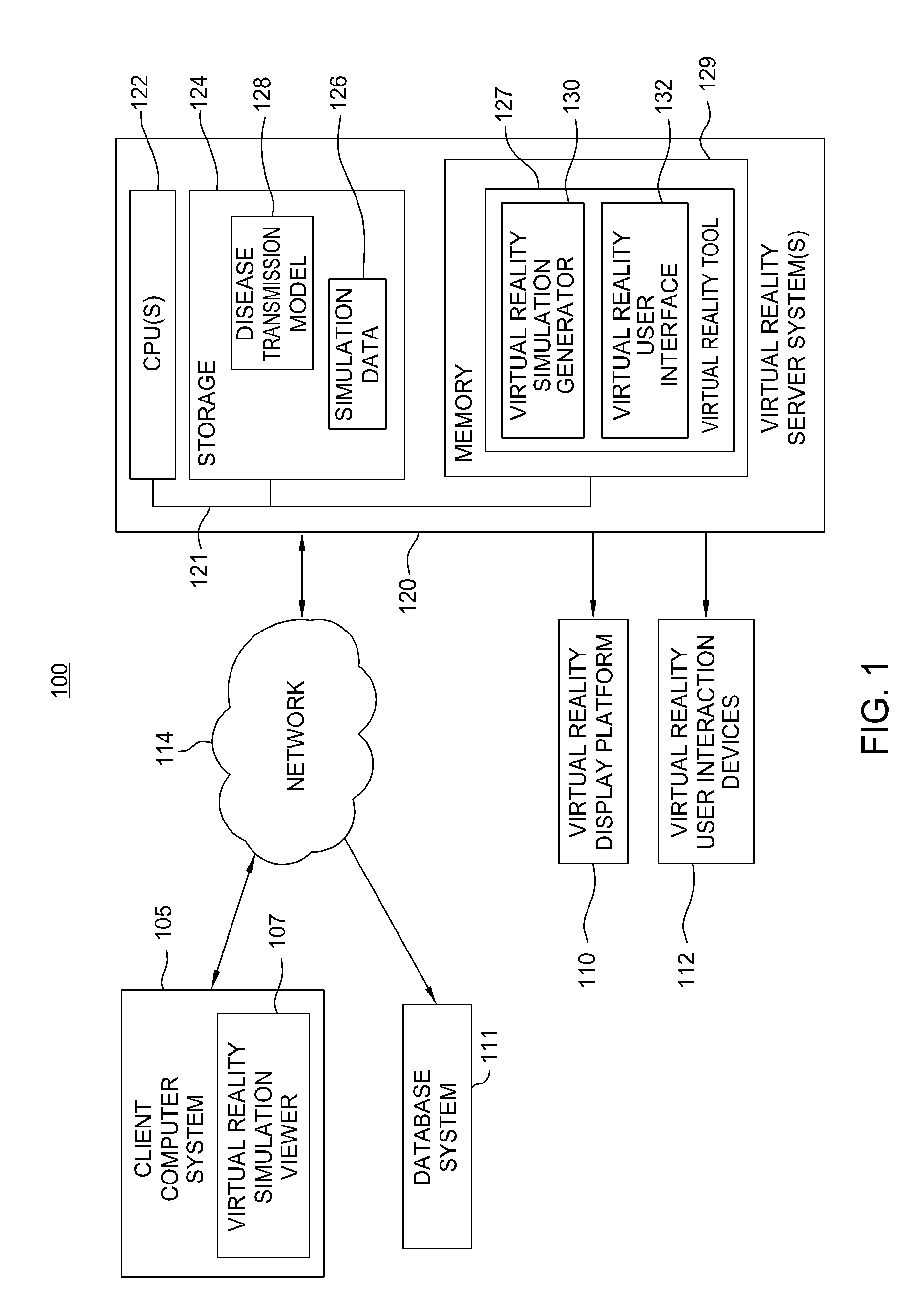

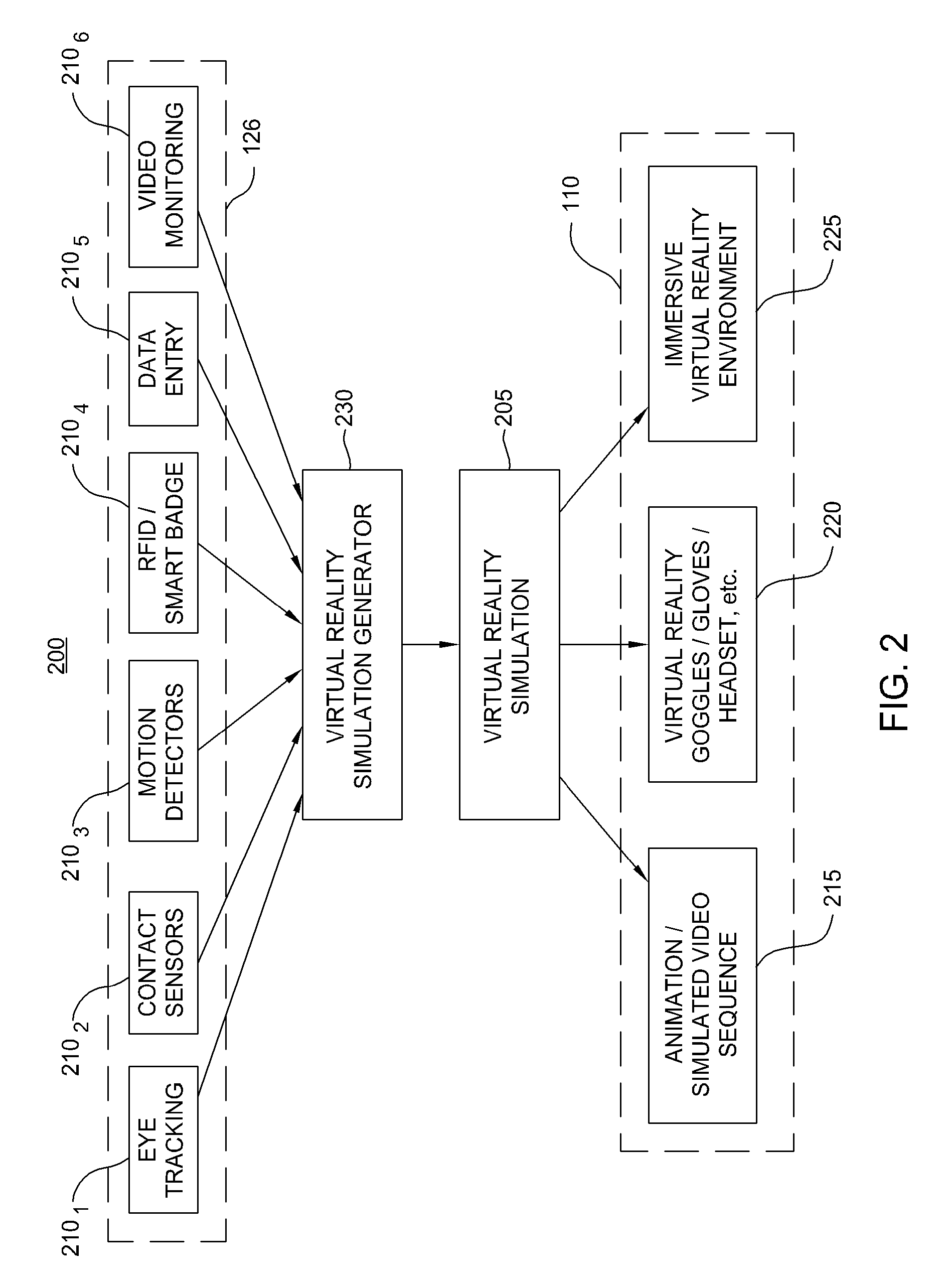

Virtual reality tools for development of infection control solutions

Inactive | US20090112541A1 | Analogue computers for chemical processes | Teaching apparatus | Human behavior | Computer science

Embodiments of the invention provide virtual reality tools used to develop improved infection control solutions. The virtual reality tools may be applied to a variety of health care environments to develop improved infection control solutions, including improved training systems for modifying human behavior regarding hand washing (or other infection control behaviors), improved systems for evaluating the effectiveness of a proposed infection control solution, and for a producer / seller of anti-microbial products to demonstrate the superiority of one product over another or the superiority of one proposed solution over another.

Owner:KIMBERLY-CLARK WORLDWIDE INC

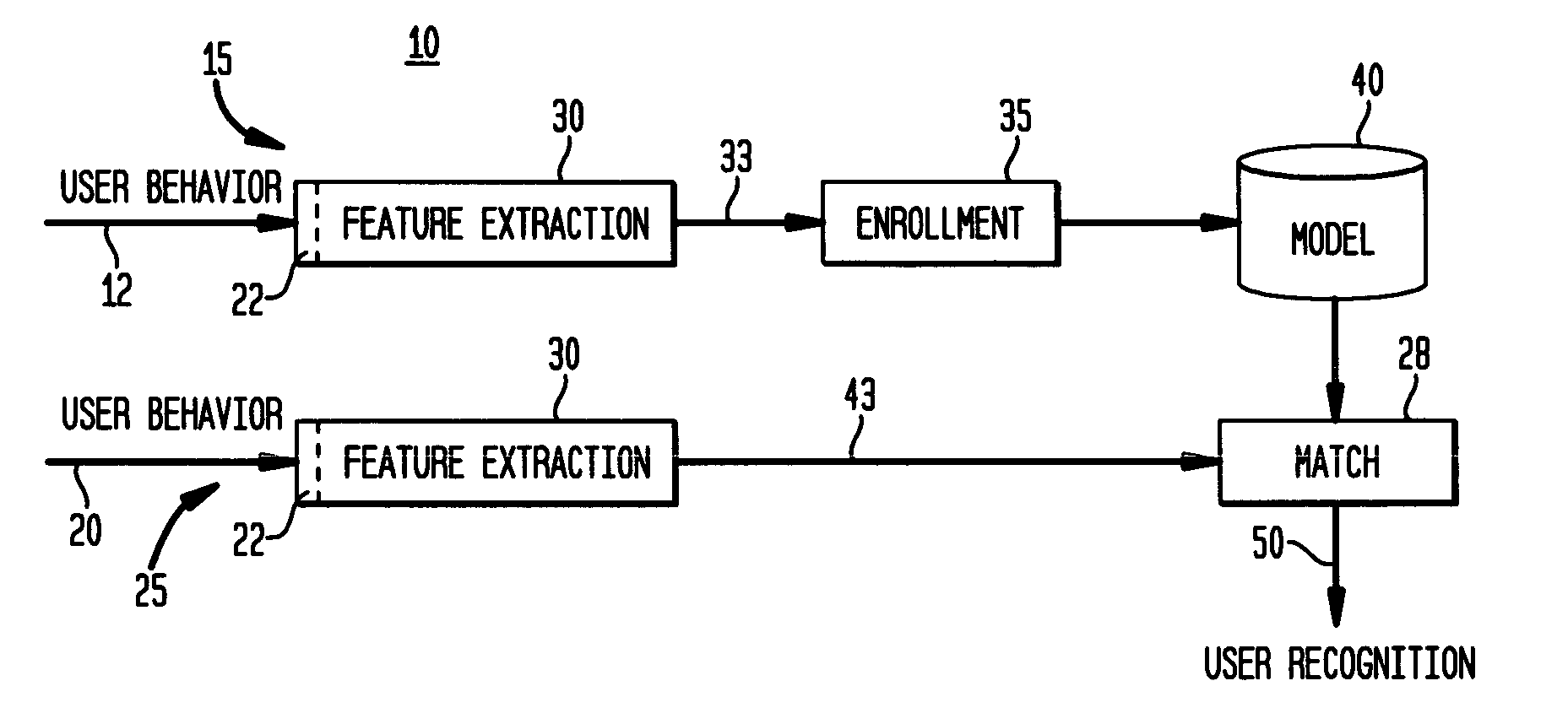

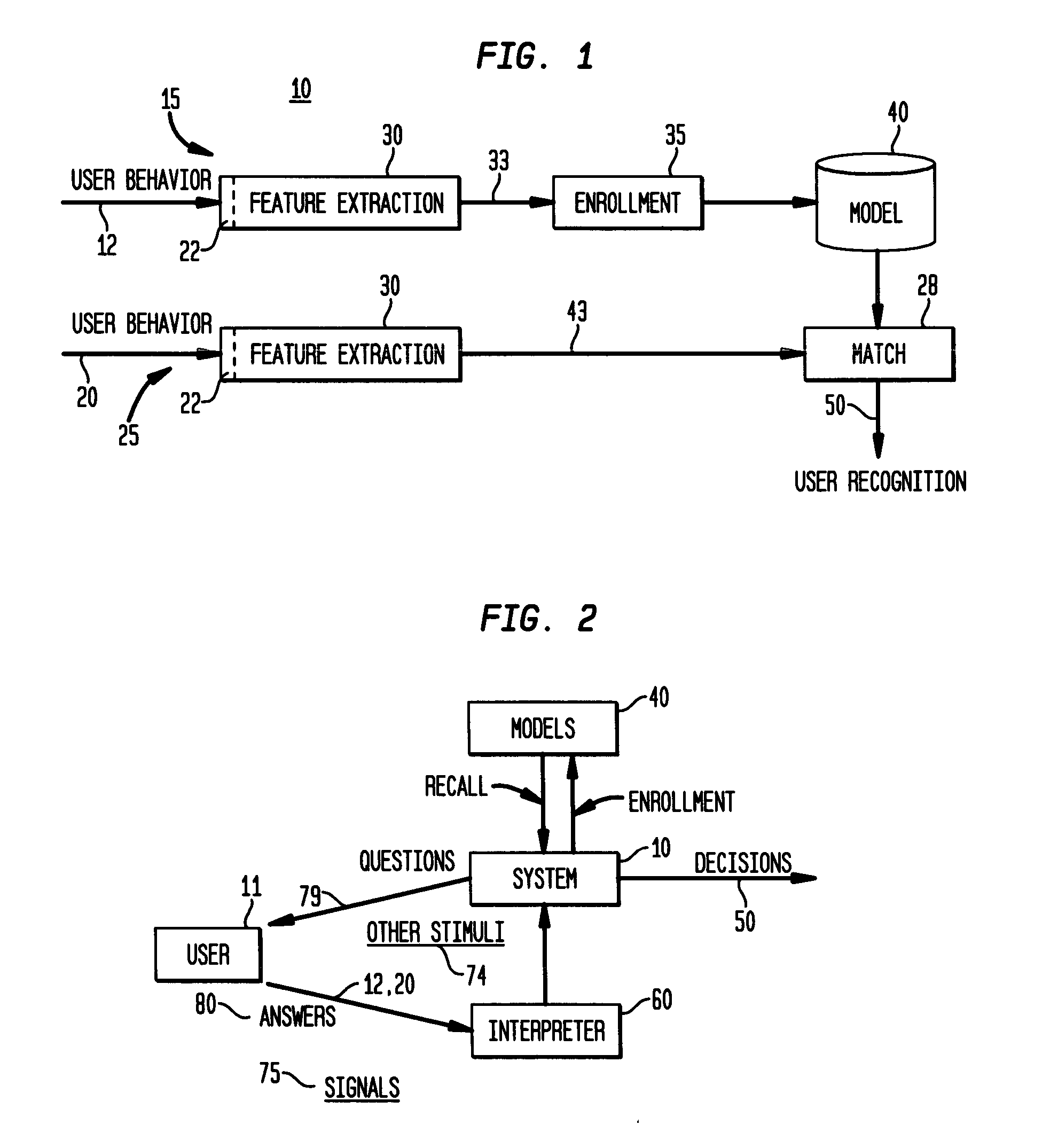

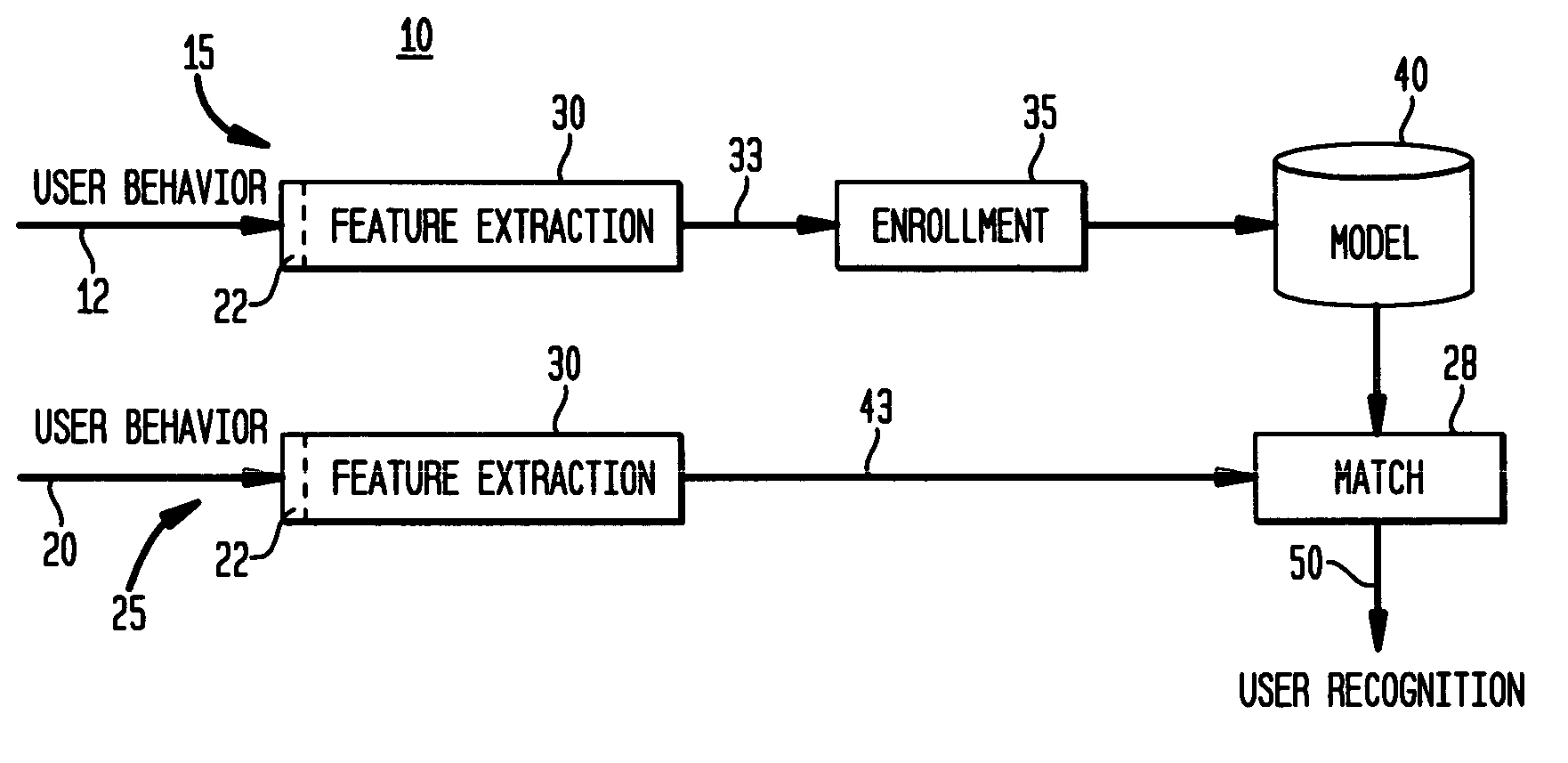

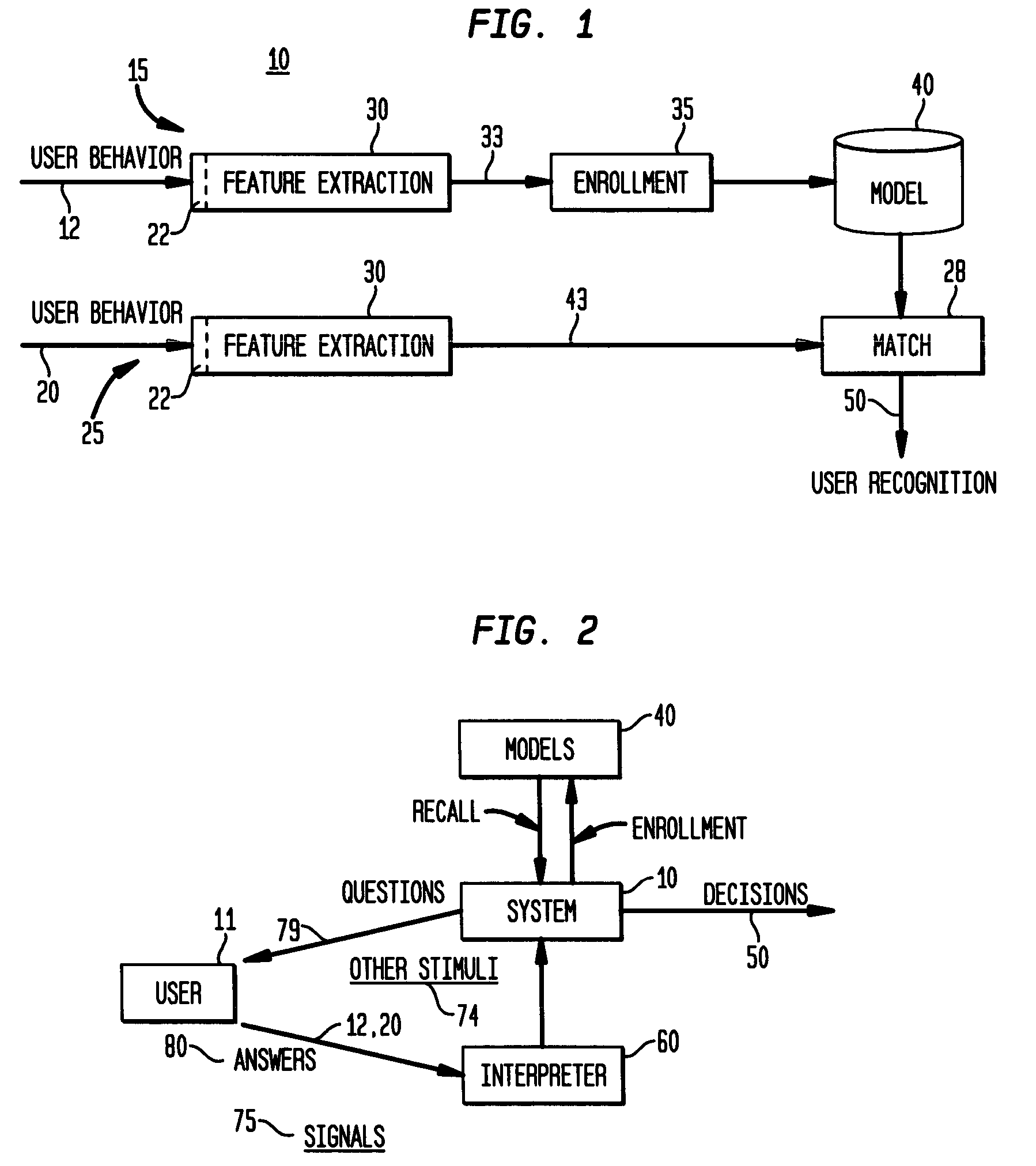

Method and system for user authentication and identification using behavioral and emotional association consistency

Inactive | US20050022034A1 | Digital data processing details | User identity/authority verification | EEG device | Behavioral response

A system and method for determining and authenticating a person's identity by generating a behavioral profile for that person: in an enrollment stage, the person is presented with various stimuli and the person's response characteristics are measured. The person's response profile, once generated, is stored. When the user subsequently needs to access a secure resource, the user to be authorized is presented with the stimuli that were presented when the behavioral profile was generated, and the user's responses are detected and compared with his/her behavioral profile. If a match is detected, the user is identified. The user's behavioral response may be in the form of signals detected by sensor means that detect visual or audible emotional cues, or signals resulting from the person's behavior as detected by polygraph or EEG devices.

Owner:IBM CORP
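
The enroll-then-match flow in US20050022034A1 can be sketched with response vectors compared by cosine similarity. Representing each response as a numeric vector, using cosine similarity, requiring every re-presented stimulus to match, and the 0.95 threshold are all assumptions for illustration; the class and method names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two response vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class BehavioralAuthenticator:
    """Hypothetical enroll/verify store keyed by user and stimulus identifier."""

    def __init__(self, threshold=0.95):
        self.threshold = threshold  # assumed match threshold
        self.profiles = {}          # user -> {stimulus_id: response vector}

    def enroll(self, user, responses):
        """Store the responses measured during the enrollment stage."""
        self.profiles[user] = dict(responses)

    def authenticate(self, user, responses):
        """Match only if every re-presented stimulus yields a similar response."""
        profile = self.profiles.get(user)
        if not profile:
            return False
        scores = [cosine_similarity(profile[s], r) for s, r in responses.items() if s in profile]
        return bool(scores) and min(scores) >= self.threshold


auth = BehavioralAuthenticator()
auth.enroll("alice", {"s1": [1.0, 0.2, 0.0], "s2": [0.1, 0.9, 0.3]})
print(auth.authenticate("alice", {"s1": [0.95, 0.25, 0.0], "s2": [0.1, 0.85, 0.35]}))  # True
print(auth.authenticate("alice", {"s1": [0.0, 0.2, 1.0], "s2": [0.9, 0.1, 0.0]}))     # False
```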

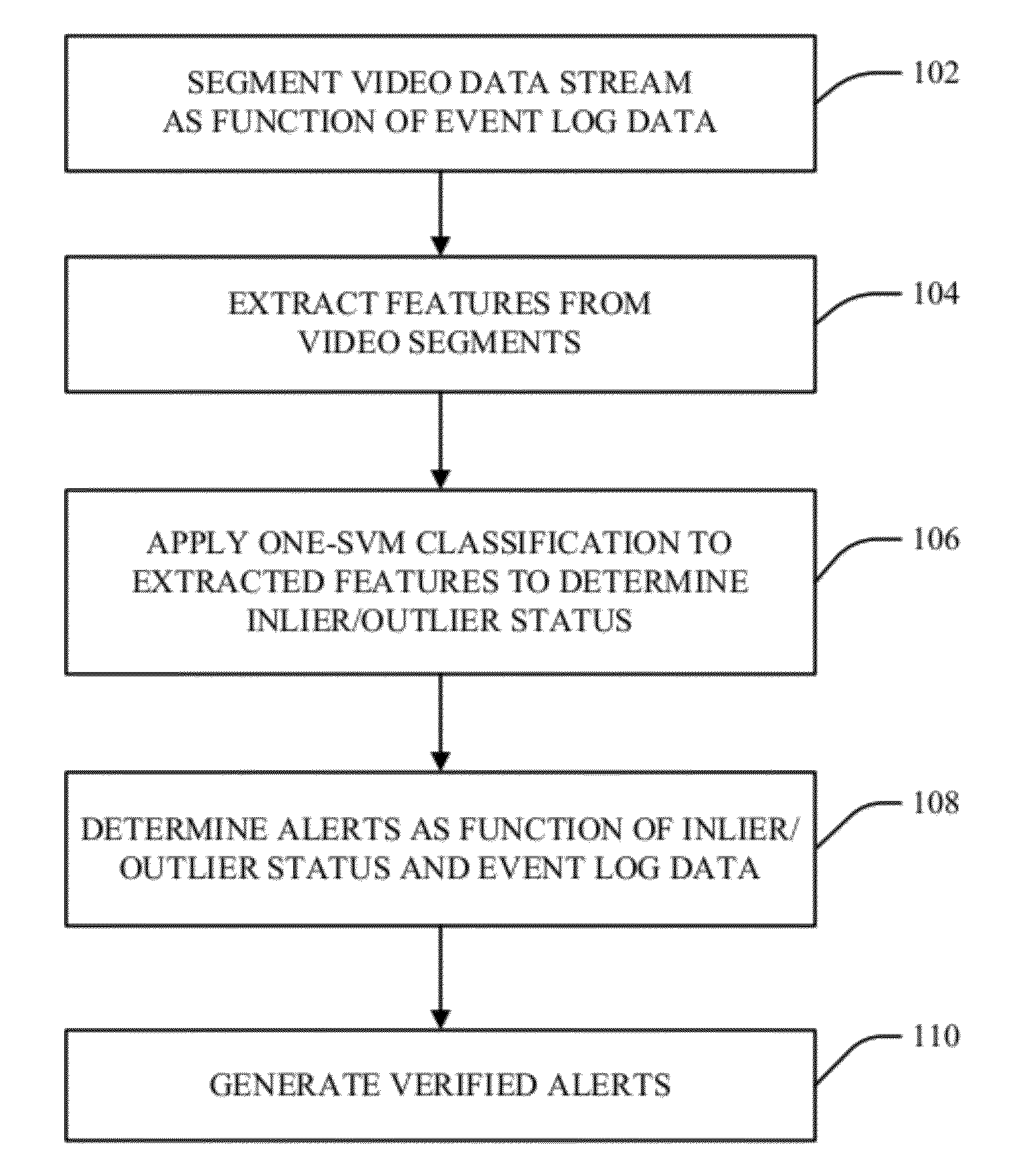

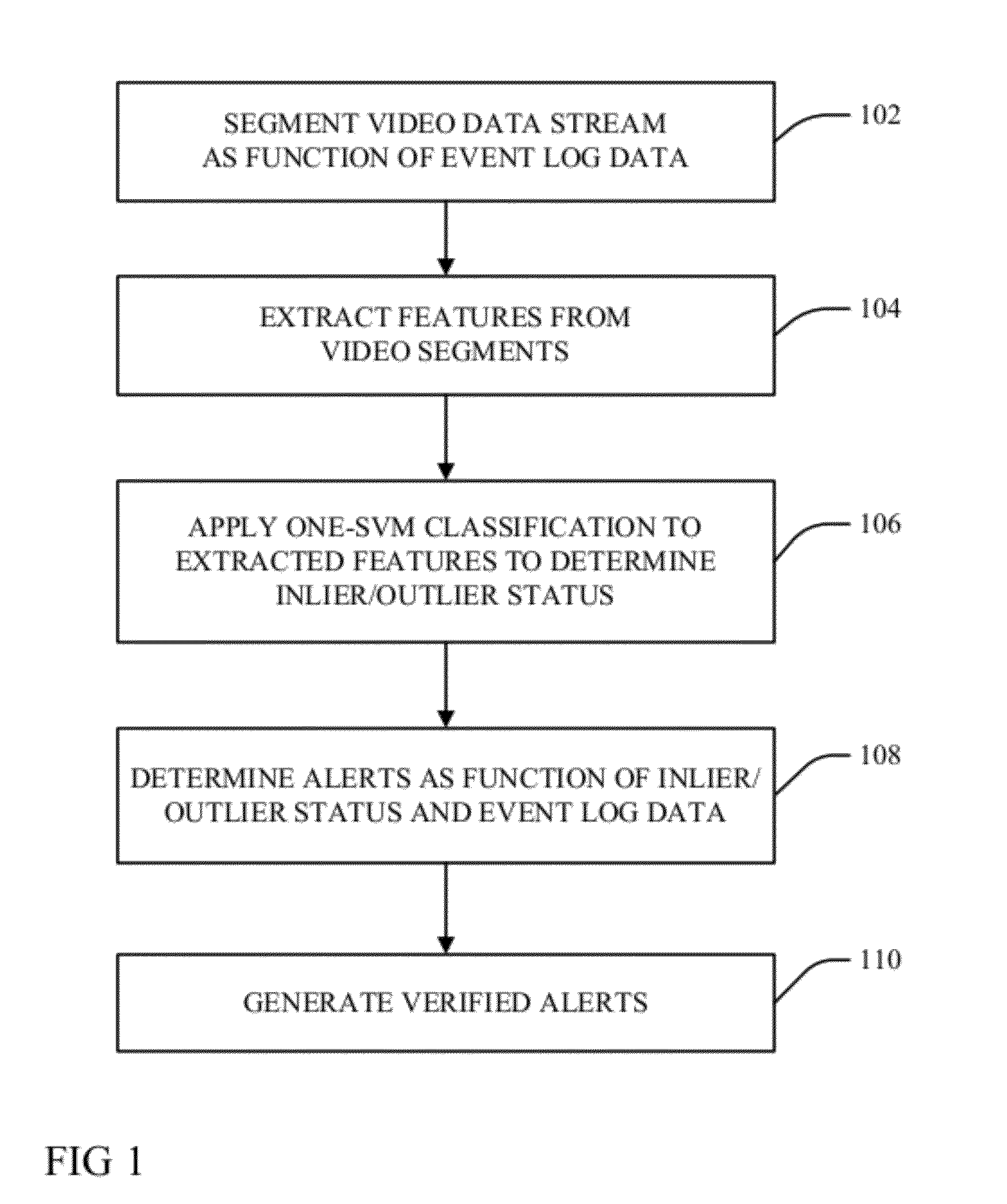

Activity determination as function of transaction log

Active | US20120075450A1 | Character and pattern recognition | Color television details | Human behavior | Imaging analysis

Human behavior alerts are determined from a video stream through the application of video analytics that parse the video stream into a plurality of segments, wherein each segment is either temporally related to at least one of a plurality of temporally distinct transactions in an event data log, or is associated with a pseudo transaction marker if it is not temporally related to any of the temporally distinct transactions and an image analysis indicates that a temporal correlation with at least one of the distinct transactions is expected. Visual image features are extracted from the segments, and one-class SVM classification is performed on the extracted features to categorize segments as inliers or outliers relative to a threshold boundary. Event-of-concern alerts are issued with respect to the inlier segments associated with the pseudo transaction marker.

Owner:SERVICENOW INC +1

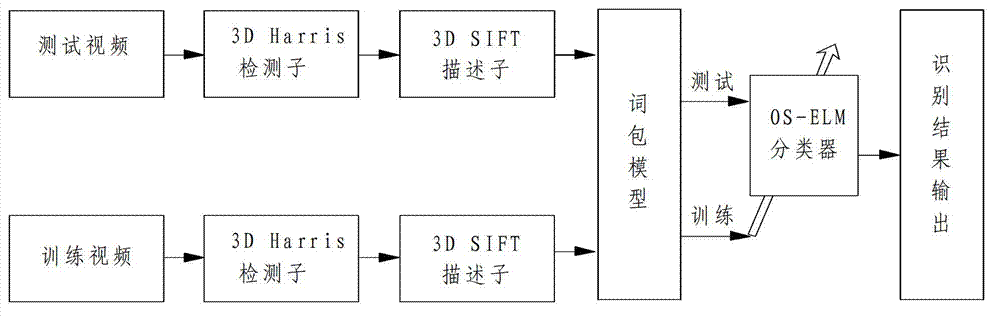

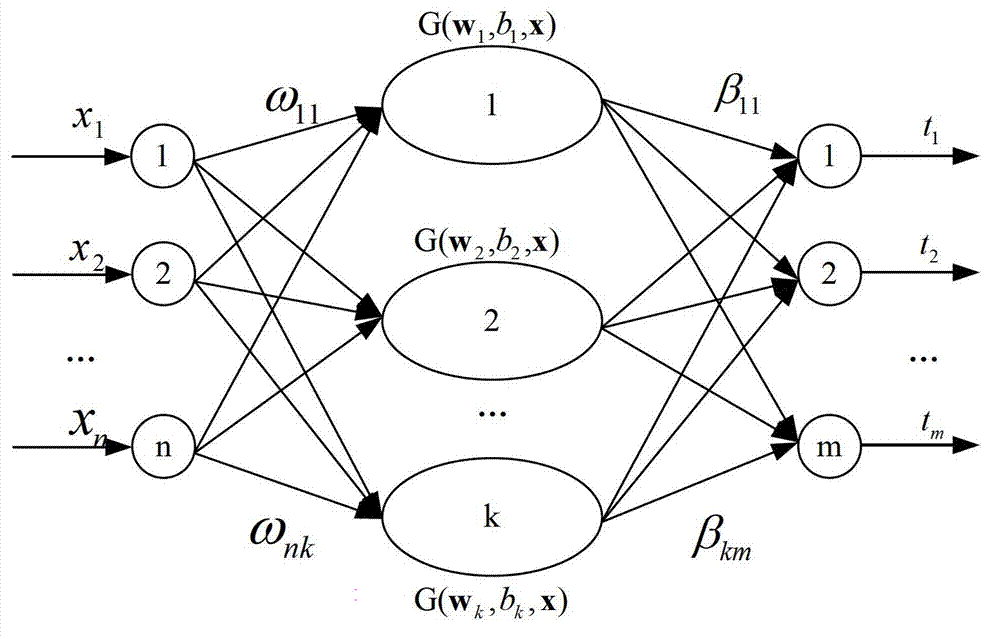

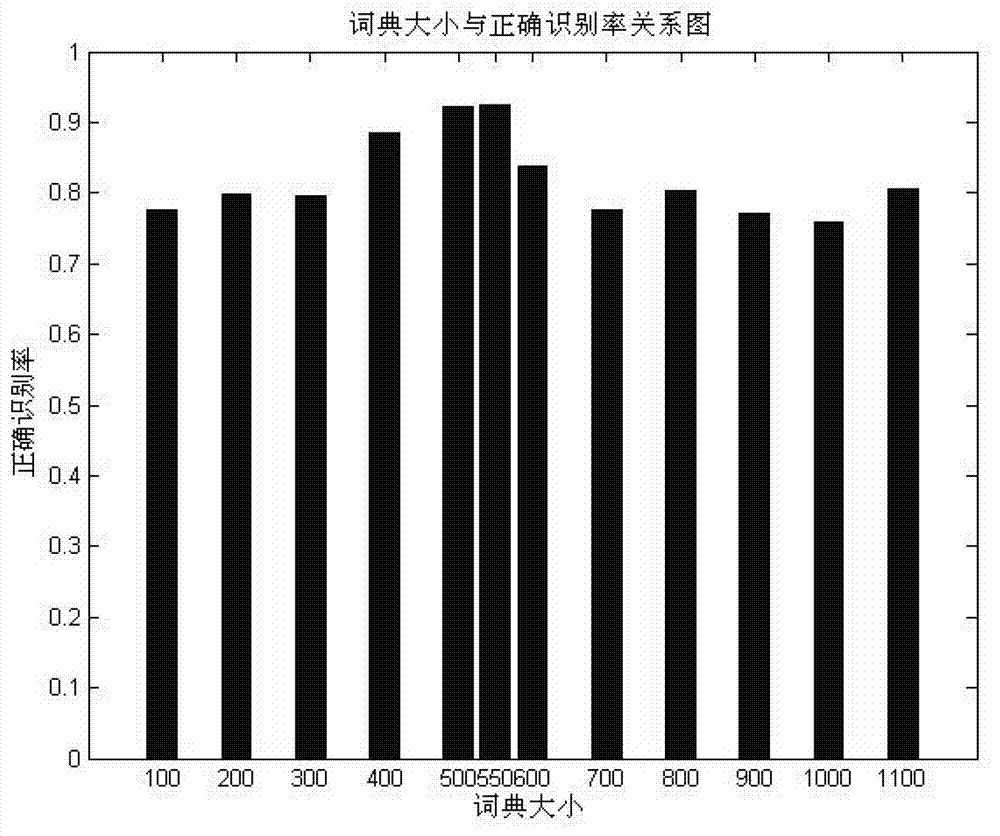

On-line sequential extreme learning machine-based incremental human behavior recognition method

Inactive | CN102930302A | Improve recognition accuracy | Adaptable | Character and pattern recognition | Human body | Learning machine

The invention discloses an incremental human behavior recognition method based on an online sequential extreme learning machine. In the method, a human body within each person's range of activity can be captured by a video camera. The method comprises the following steps: (1) extracting spatio-temporal interest points in a video by using a three-dimensional (3D) Harris corner detector; (2) calculating descriptors of the detected spatio-temporal interest points by using a 3D SIFT descriptor; (3) generating a video dictionary by using the K-means clustering algorithm and establishing a bag-of-words model of the video images; (4) training an online sequential extreme learning machine classifier with the obtained bag-of-words model; and (5) performing human behavior recognition with the online sequential extreme learning machine classifier and performing online learning. The method can obtain accurate human behavior recognition results within a short training time with few training samples, and is, to a certain extent, insensitive to changes in environmental scene, environmental lighting, detected objects, and human form.

Owner:SHANDONG UNIV
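
Step (3) of CN102930302A, building a visual dictionary with K-means and representing a video as a bag-of-words histogram, can be sketched in plain Python. The descriptors here are toy 2-D points standing in for 3D SIFT descriptors, and the dictionary size, iteration count, and seed are arbitrary assumptions; the online sequential extreme learning machine classifier of step (4) is not shown.

```python
import random


def nearest(point, centers):
    """Index of the center closest to point (squared Euclidean distance)."""
    return min(range(len(centers)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(point, centers[i])))


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's K-means; returns k cluster centers (the visual dictionary)."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centers)].append(p)
        # Recompute each center as its cluster mean; keep the old center if the cluster is empty.
        centers = [[sum(dim) / len(cl) for dim in zip(*cl)] if cl else centers[i]
                   for i, cl in enumerate(clusters)]
    return centers


def bow_histogram(descriptors, codebook):
    """Normalized histogram of visual-word assignments for one video."""
    hist = [0] * len(codebook)
    for d in descriptors:
        hist[nearest(d, codebook)] += 1
    total = len(descriptors) or 1
    return [h / total for h in hist]


training = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
codebook = kmeans(training, k=2)
print(sorted(bow_histogram([[0, 0.5], [10.5, 10.5], [0.2, 0.2]], codebook)))
```

In this toy run, two of the three query descriptors fall into the cluster near the origin and one into the far cluster, so the histogram holds the fractions one third and two thirds; a fixed-length vector like this is what a classifier would consume.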

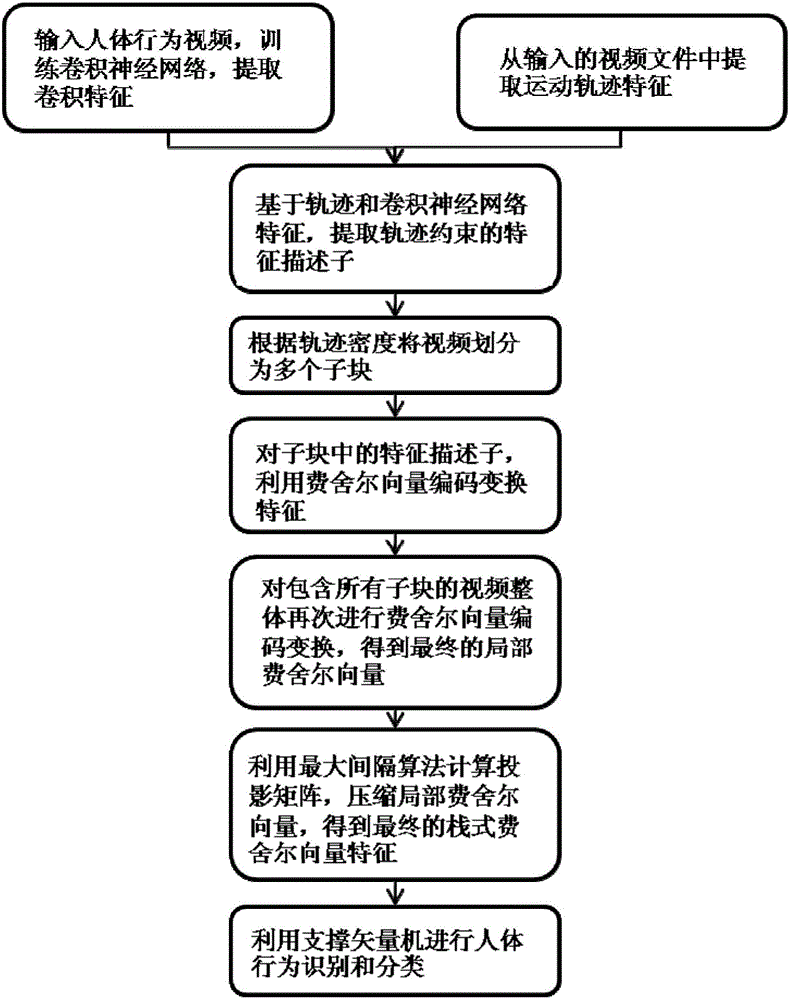

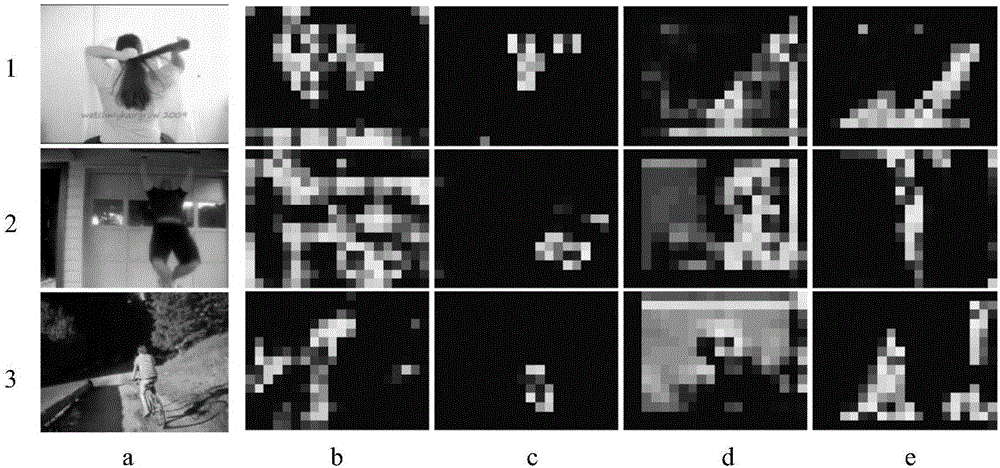

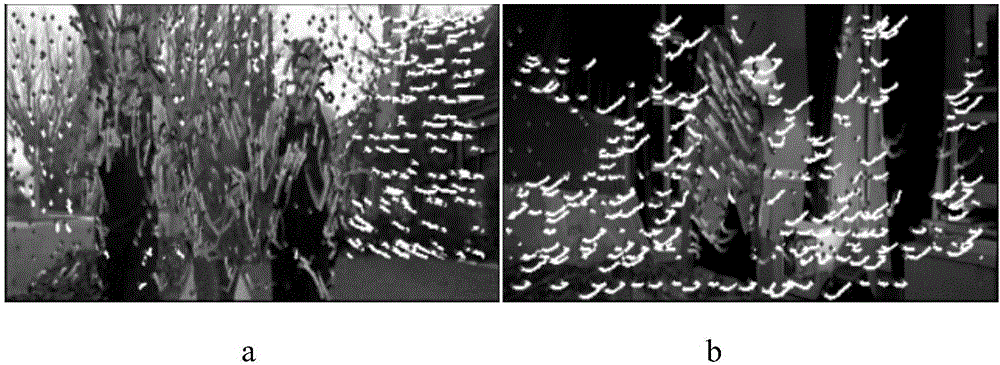

Track and convolutional neural network feature extraction-based behavior identification method

Active | CN106778854A | Reduce computational complexity | Reduced characteristics | Character and pattern recognition | Neural architectures | Video monitoring | Human behavior

The invention discloses a trajectory and convolutional neural network feature extraction-based behavior identification method, which mainly addresses the computational redundancy and low classification accuracy caused by complex human behavior video content and sparse features. The method comprises the steps of: inputting image video data; down-sampling pixels in video frames; deleting sample points in uniform regions; extracting trajectories; extracting convolutional layer features with a convolutional neural network; combining the trajectories and the convolutional layer features to extract trajectory-constrained convolutional features; extracting stacked local Fisher vector features from the trajectory-constrained convolutional features; applying a compression transformation to the stacked local Fisher vector features; training a support vector machine model with the final stacked local Fisher vector features; and performing human behavior identification and classification. By combining multilevel Fisher vectors with convolutional trajectory feature descriptors, the method obtains relatively high and stable classification accuracy, and it can be widely applied in fields such as human-machine interaction, virtual reality, and video monitoring.

Owner:XIDIAN UNIV

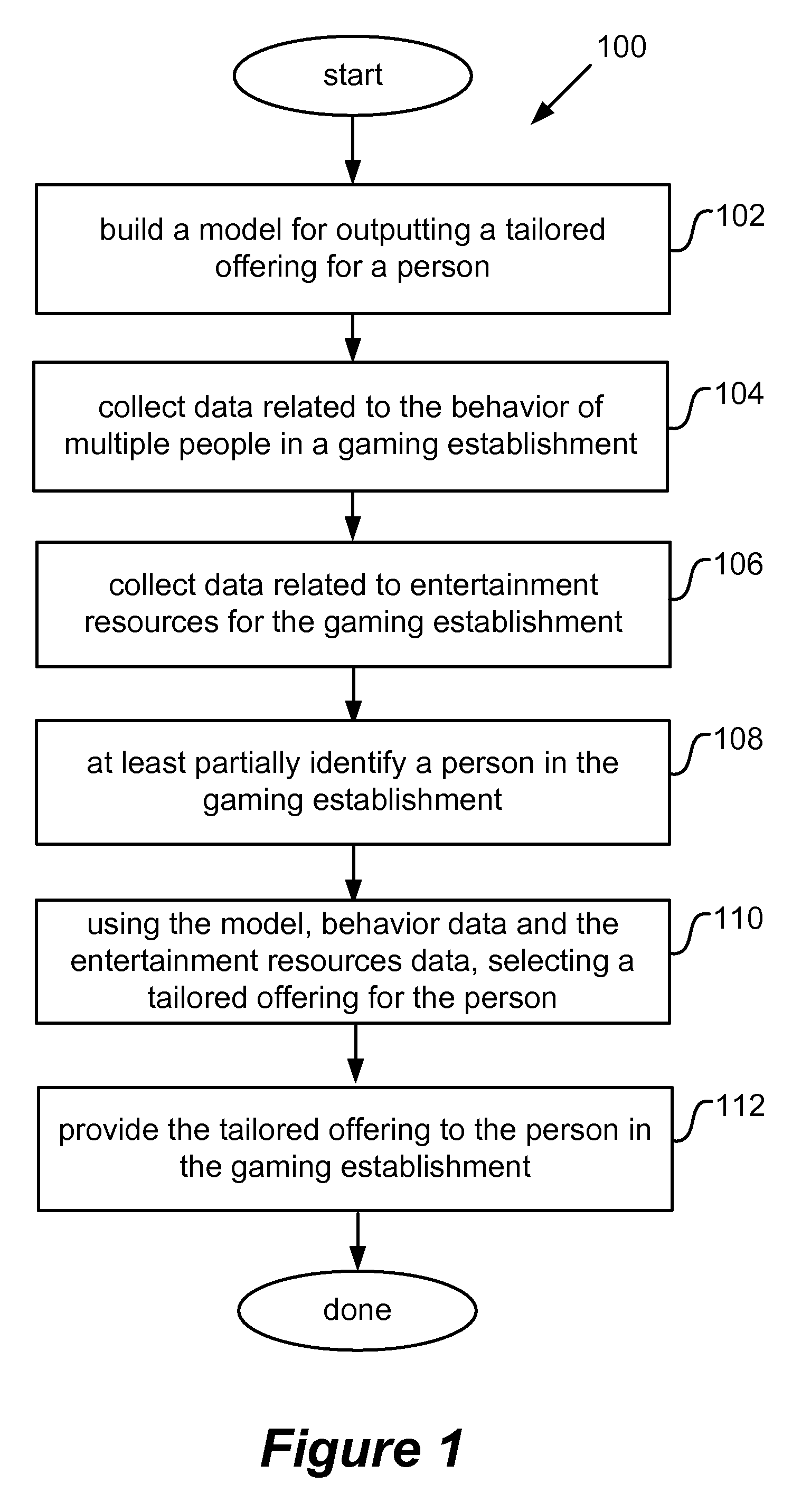

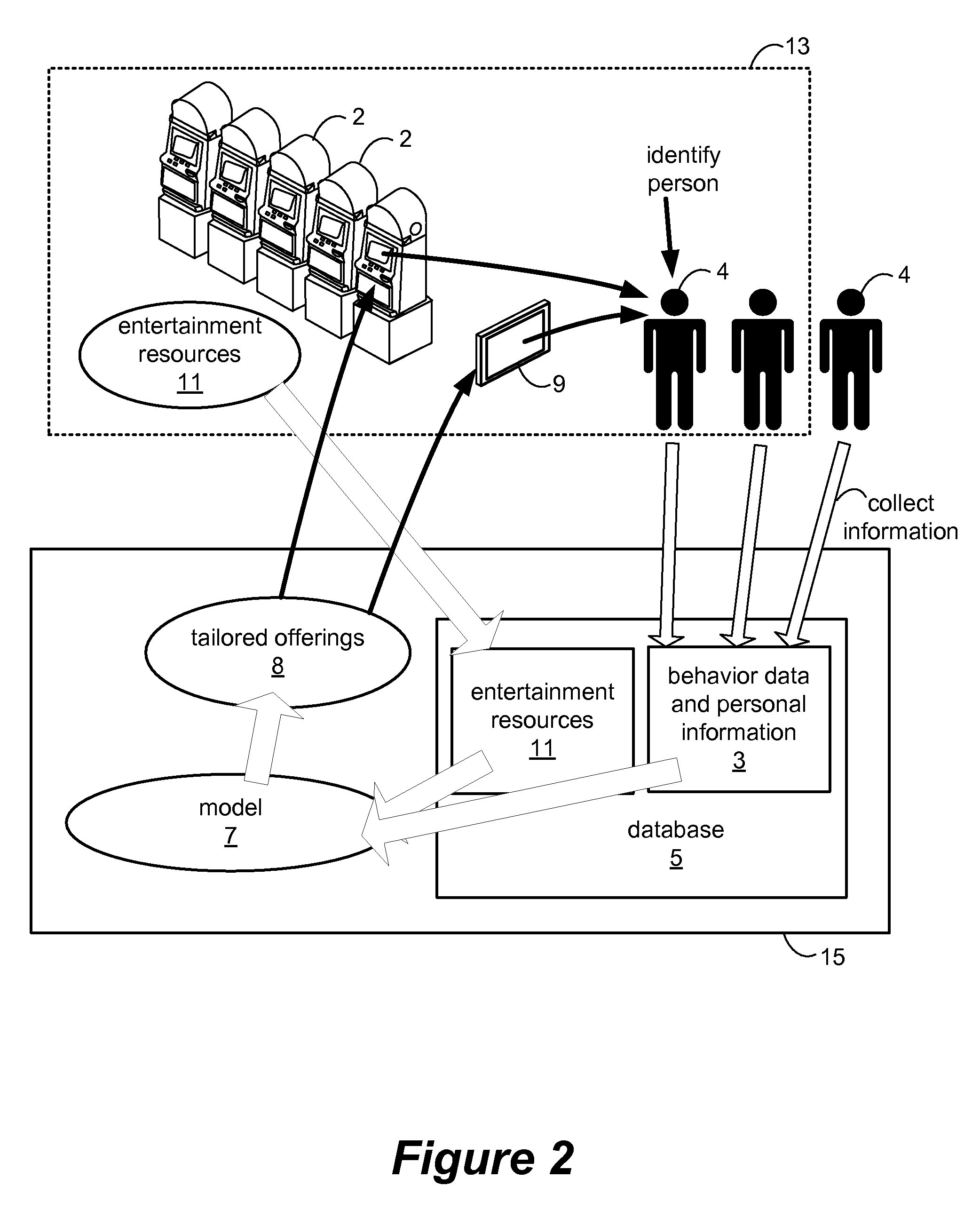

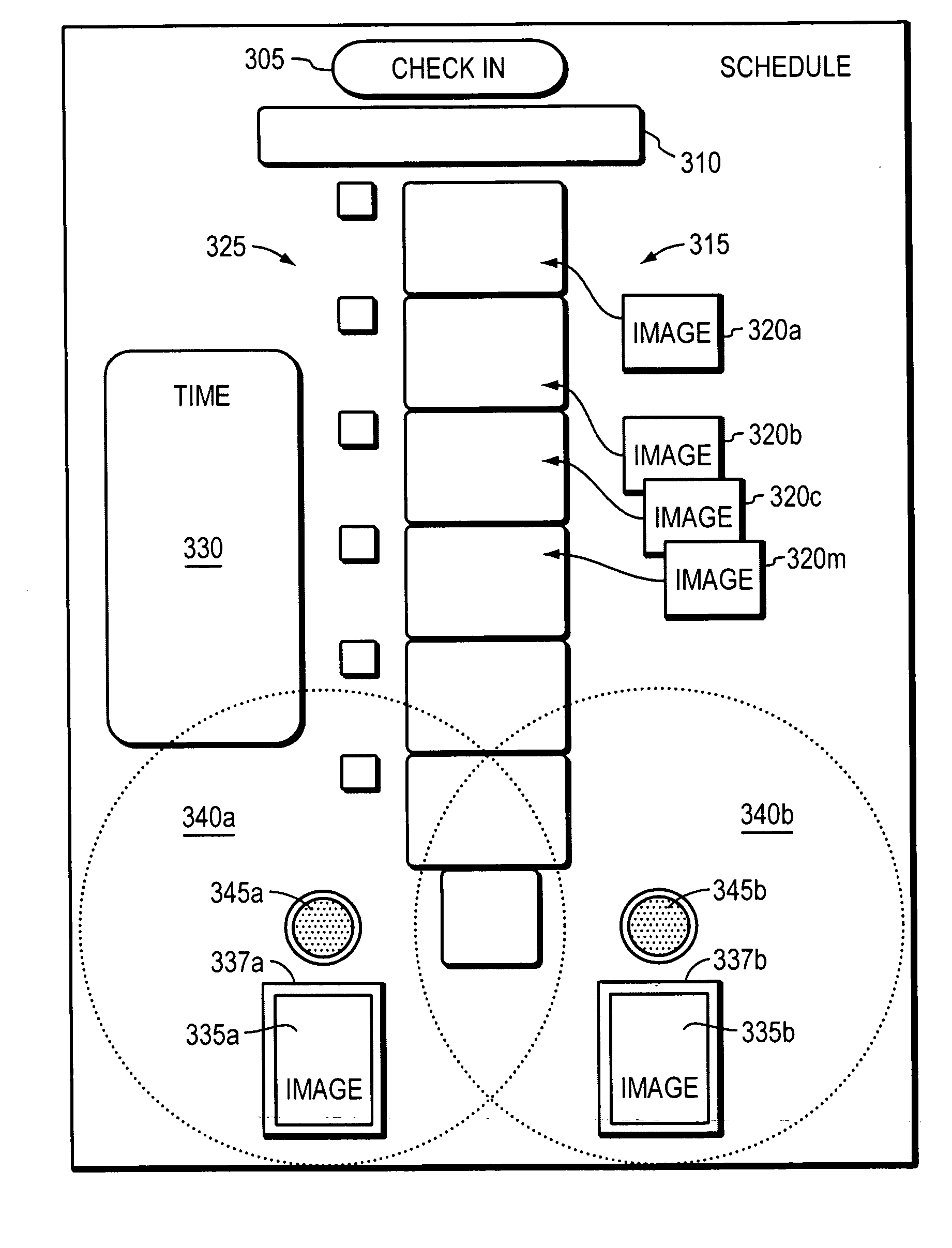

Casino patron tracking and information use

Active | US8979646B2 | Enhanced interaction | Reconfiguring entertainment resources | Apparatus for meter-controlled dispensing | Video games | Human behavior | Game machine

Owner:IGT

Method and apparatus for developing a person's behavior

Active | US20070117073A1 | Assist in developing the behavior in the person | Electrical appliances | Teaching apparatus | Human behavior | Adaptive response

An embodiment of an apparatus, or corresponding method, for developing a person's behavior according to the principles of the present invention comprises at least one visual behavior indicator that represents a behavior desired of a person viewing it. The apparatus, or corresponding method, further includes at least two visual choice indicators, viewable together with the at least one visual behavior indicator, that represent choices available to the person. The choices help develop the behavior in the person by helping the person choose an appropriate adaptive response that supports the desired behavior or that serves as an alternative to behavior contrary to it.

Owner:BEE VISUAL

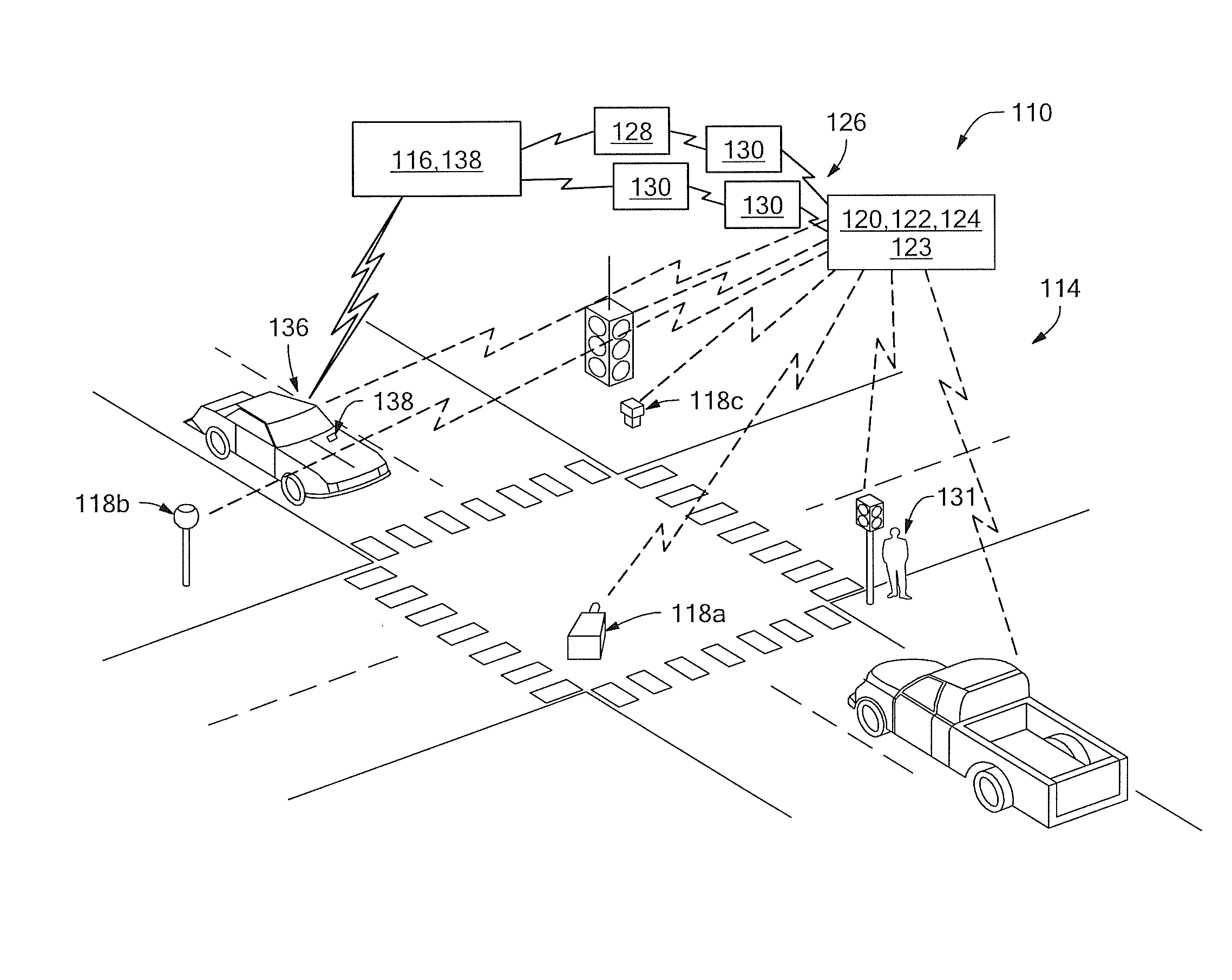

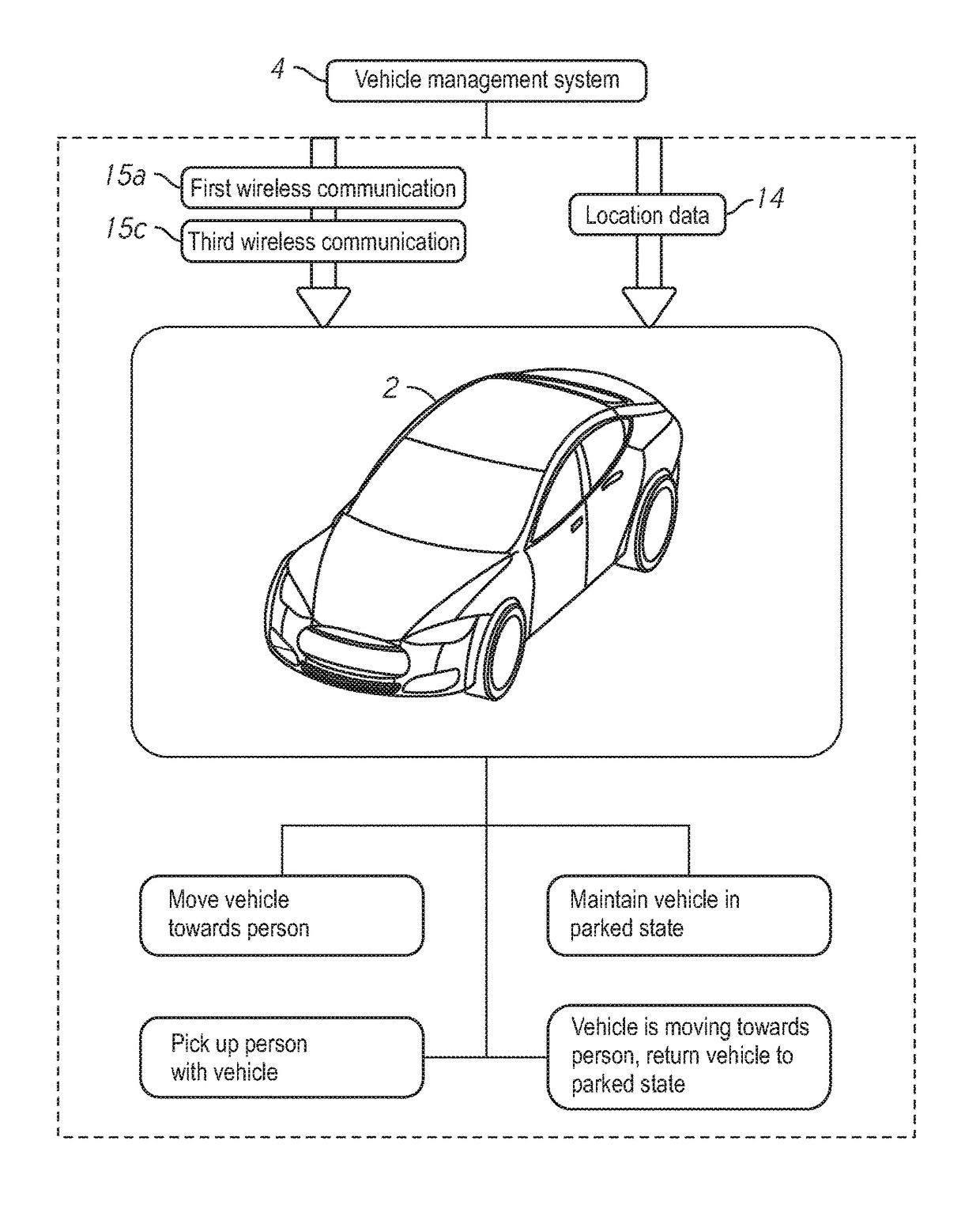

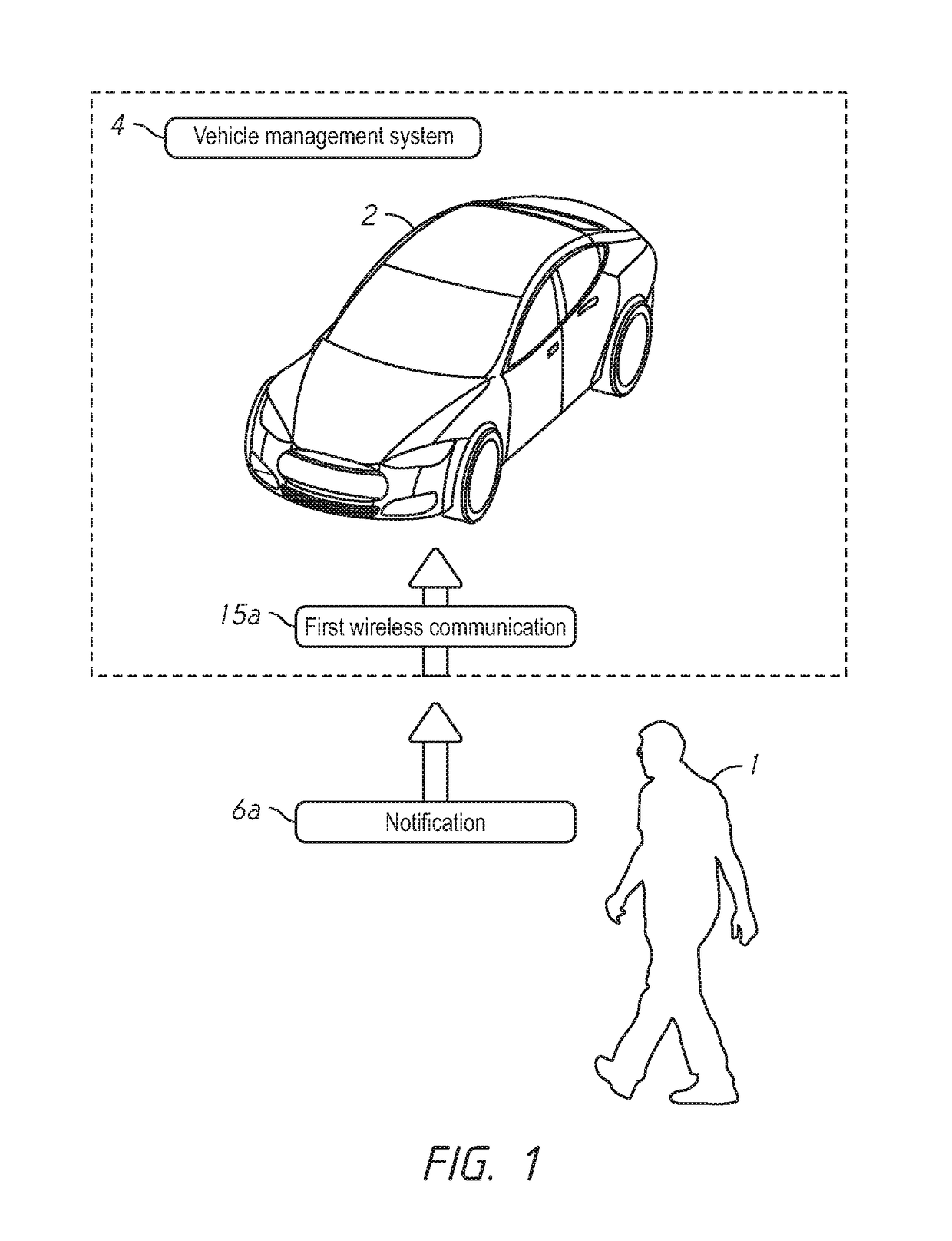

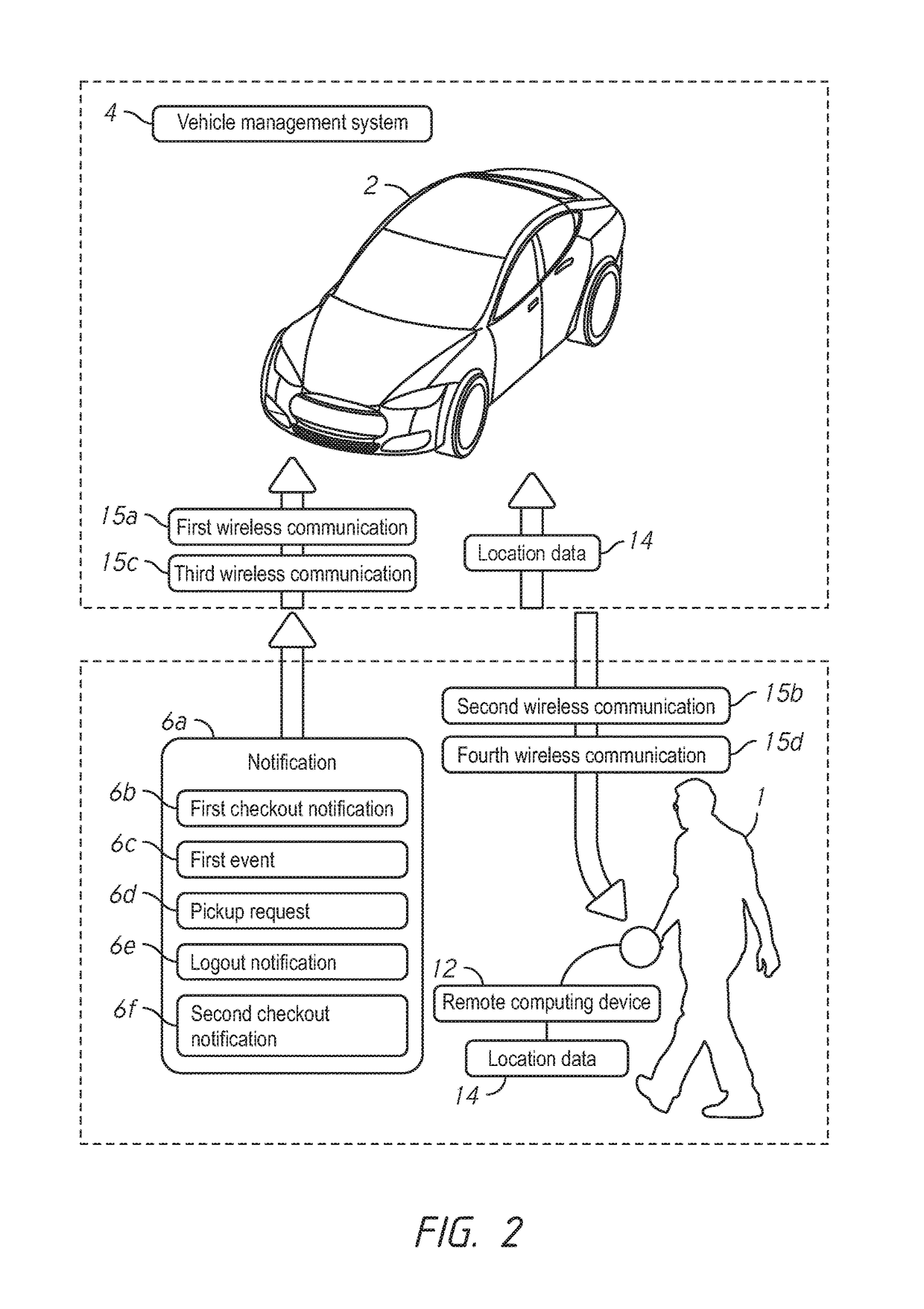

Self-driving vehicle systems and methods

Active | US9646356B1 | Unlimited attention span | Ability of self-driving vehicles to save lives is so impressive | Autonomous decision making process | Acoustic signal devices | Human behavior | Engineering

Self-driving vehicles have unlimited potential to learn and predict human behavior and perform actions accordingly. Several embodiments described herein enable a self-driving vehicle to monitor human activity and predict when and where the human will be located and whether the human needs a ride from the self-driving vehicle. Self-driving vehicles will be able to perform such tasks with incredible efficacy and accuracy that will allow self-driving vehicles to proliferate at a much faster rate than would otherwise be the case.

Owner:DRIVENT LLC

Method and system for user authentication and identification using behavioral and emotional association consistency

Inactive | US7249263B2 | Digital data processing details | User identity/authority verification | EEG device | Human behavior

A system and method determine and authenticate a person's identity by generating a behavioral profile for that person: in an enrollment stage, the person is presented with various stimuli and the characteristics of his or her responses are measured. Once generated, the person's response profile is stored. When the user later needs to access a secure resource, the user is presented with the same stimuli that were used to generate the behavioral profile, and the user's responses are detected and compared with that profile. If a match is detected, the user is identified. The behavioral response may take the form of signals from sensors that detect visual or audible emotional cues, or of signals resulting from the person's behavior as detected by polygraph or EEG devices.

Owner:IBM CORP
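
The enrollment-and-comparison flow in the IBM entry can be sketched with a simple statistical profile. This sketch assumes each response has already been reduced to a numeric feature vector (for example, features derived from EEG or facial-expression signals), stores the per-stimulus mean and standard deviation over several enrollment sessions, and accepts a user whose new responses stay below an average z-score threshold. The names `enroll` and `authenticate`, the z-score rule, and the threshold of 3.0 are hypothetical choices for illustration, not the matching method claimed in the patent.

```python
import numpy as np


def enroll(sessions):
    """Build a behavioral profile from enrollment sessions.

    sessions: (n_sessions, n_stimuli, n_features) measured responses, one
    feature vector per stimulus per session. Returns per-stimulus mean and std.
    """
    sessions = np.asarray(sessions, dtype=float)
    mean = sessions.mean(axis=0)
    std = sessions.std(axis=0) + 1e-6  # avoid division by zero for constant features
    return mean, std


def authenticate(profile, responses, threshold=3.0):
    """Accept if the new responses' average absolute z-score is below threshold.

    responses: (n_stimuli, n_features), recorded for the same stimuli as enrollment.
    """
    mean, std = profile
    z = np.abs((np.asarray(responses, dtype=float) - mean) / std)
    return bool(z.mean() < threshold)
```

Averaging the z-scores keeps one noisy channel from rejecting a genuine user on its own; a stricter design might require every stimulus to match individually.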

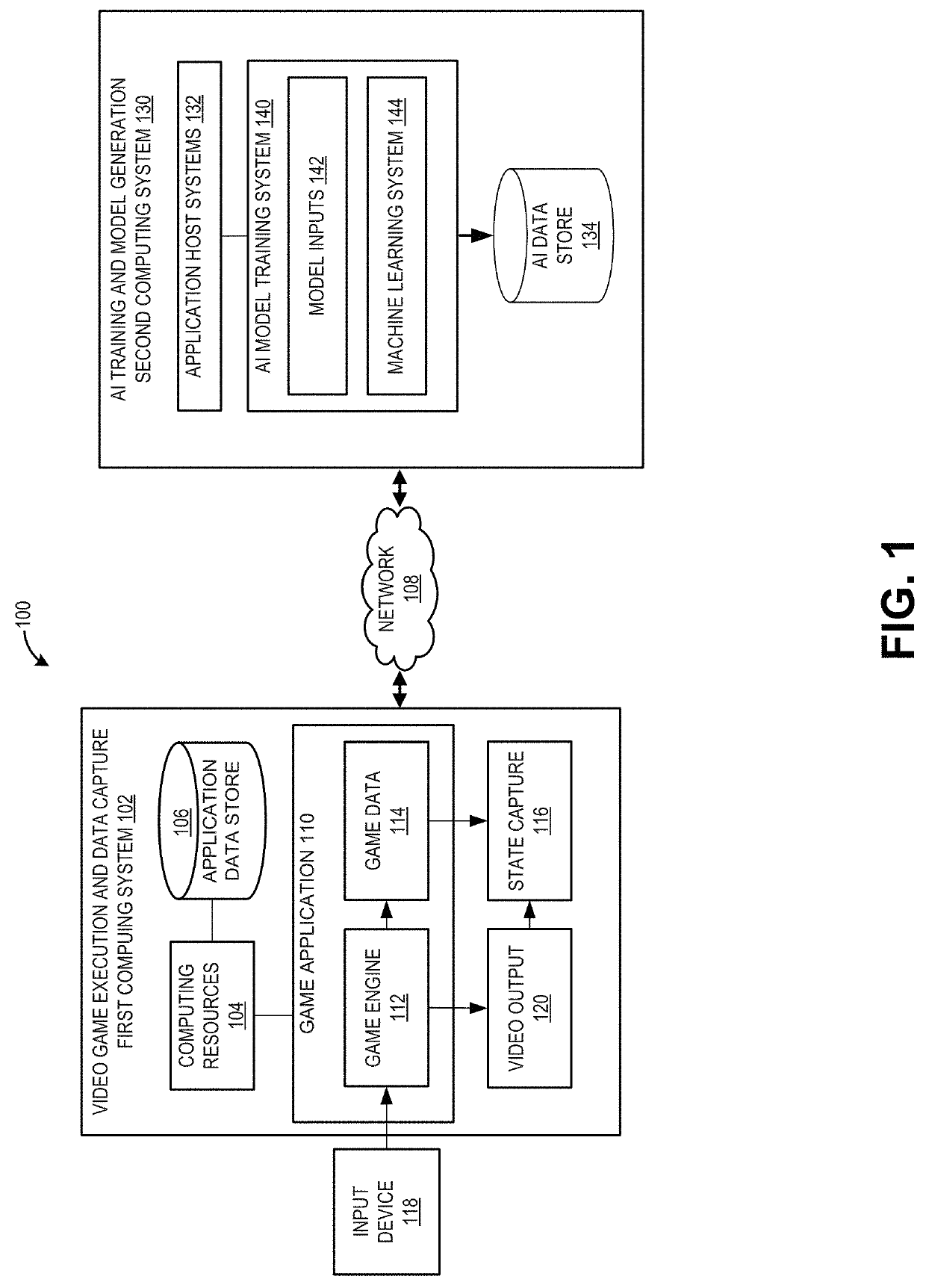

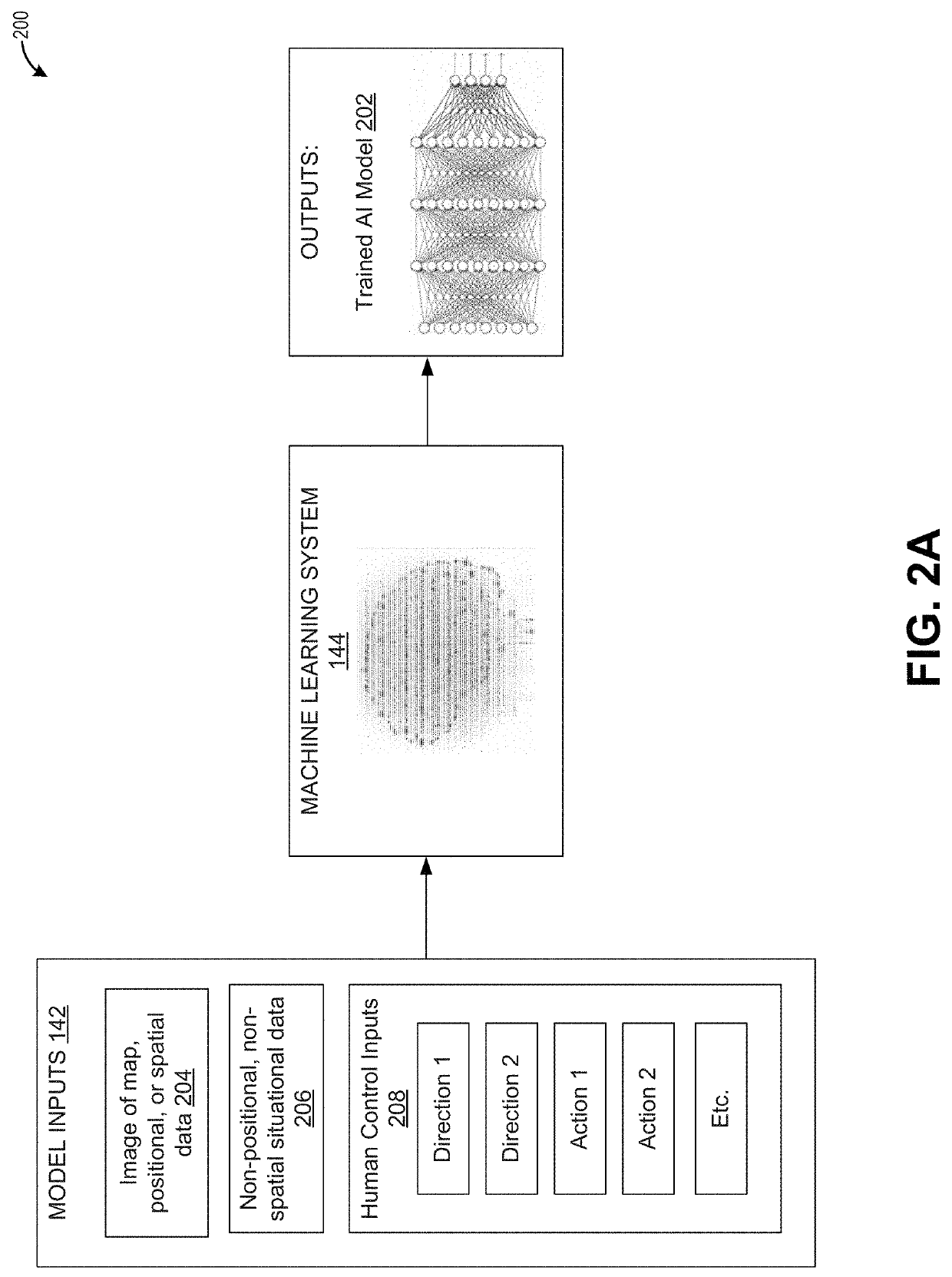

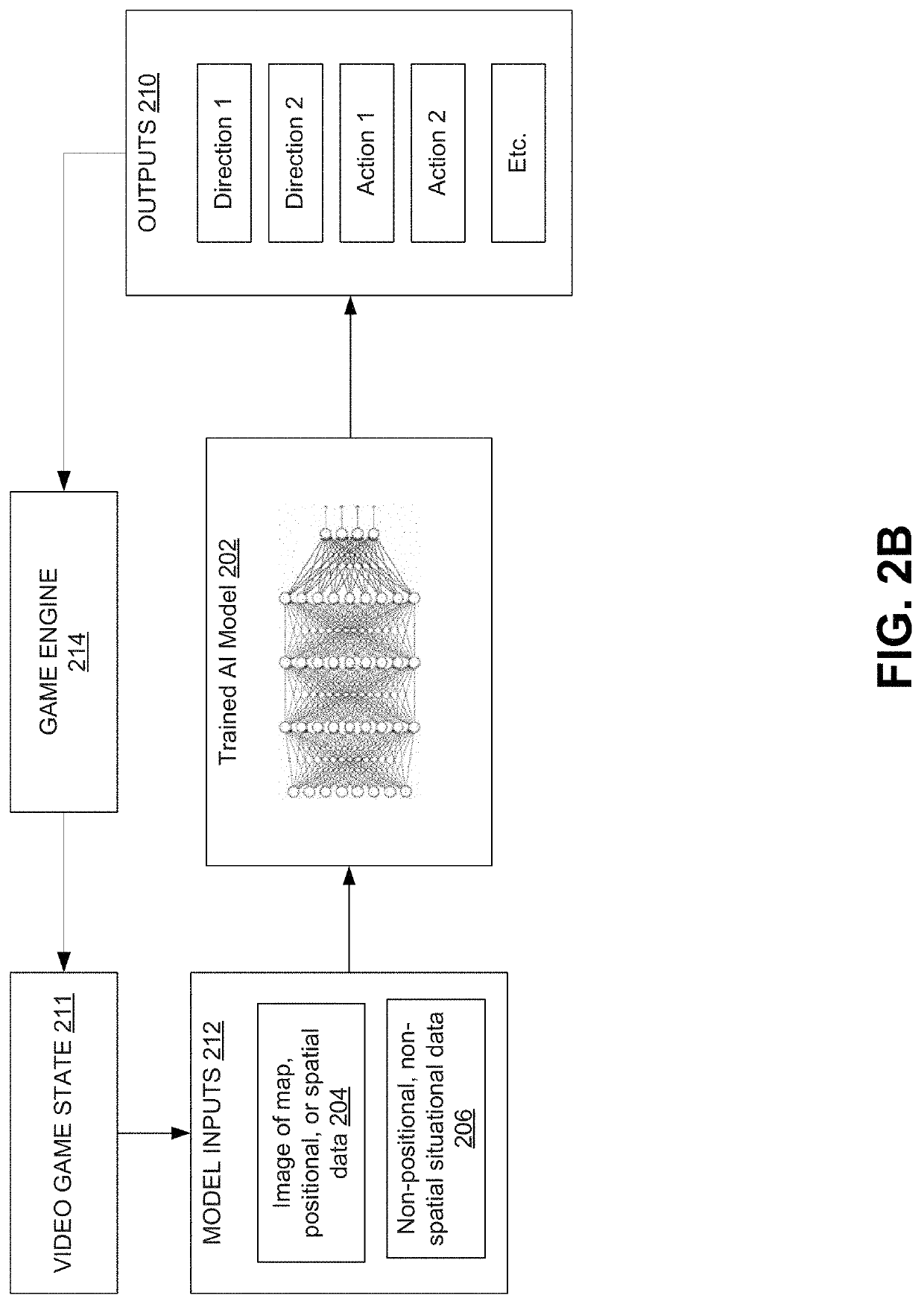

Artificial intelligence for emulating human playstyles

An artificially intelligent entity can emulate human behavior in video games. An AI model can be made by receiving gameplay logs of a video gameplay session, generating, based on the gameplay data, first situational data indicating first states of the video game, generating first control inputs provided by a human, the first control inputs corresponding to the first states of the video game, training a first machine learning system using the first situational data and corresponding first control inputs, and generating, using the first machine learning system, a first artificial intelligence model. The machine learning system can include a convolutional neural network. Inputs to the machine learning system can include a retina image and / or a matrix image.

Owner:ELECTRONIC ARTS INC
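
The training pipeline in the playstyle-emulation entry pairs situational data describing game states with the control inputs a human gave in those states, a setup commonly called behavioral cloning. The sketch below is a deliberately simplified stand-in: instead of a convolutional neural network on retina or matrix images, it uses a lookup table over hypothetical discretized game states and replays the human's most frequent input for each state. The class name and the state encoding are assumptions for illustration.

```python
from collections import Counter, defaultdict


class TabularBehaviorClone:
    """Learn a state -> control-input policy from human gameplay logs.

    Simplified stand-in for a learned model: counts which control input the
    human chose in each (discretized) game state and replays the most frequent one.
    """

    def __init__(self):
        self.counts = defaultdict(Counter)
        self.global_counts = Counter()

    def fit(self, log):
        # log: iterable of (situational_state, control_input) pairs.
        for state, action in log:
            self.counts[state][action] += 1
            self.global_counts[action] += 1
        return self

    def act(self, state):
        # Fall back to the human's overall most common input for unseen states.
        table = self.counts.get(state) or self.global_counts
        return table.most_common(1)[0][0]
```

A lookup table cannot generalize to states it has never seen, which is why the patent's approach of training a neural network on image-like situational data matters for real games.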

Method and apparatus for modeling behavior using a probability distribution function

A method and apparatus are disclosed for modeling patterns of behavior of humans or other animate objects and detecting a violation of a repetitive pattern of behavior. The behavior of one or more persons is observed over time and features of the behavior are recorded in a multi-dimensional space. Over time, the multi-dimensional data provides an indication of patterns of human behavior. Activities that are repetitive in terms of time, location and activity, such as sleeping and eating, would appear as a Gaussian distribution or cluster in the multi-dimensional data. Probability distribution functions can be analyzed using known Gaussian or clustering techniques to identify repetitive patterns of behavior and characteristics thereof, such as a mean and variance. Deviations from repetitive patterns of behavior can be detected and an alarm can be triggered, if appropriate.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
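
The Philips entry models a repetitive activity as a Gaussian cluster and raises an alarm on deviations. A minimal sketch, assuming a diagonal covariance and illustrative features (bedtime hour and sleep duration), fits the mean and variance of past observations and flags a new observation whose normalized distance from the mean exceeds `k` standard deviations. The class name, the features, and `k = 3` are hypothetical; a full system would also need to discover the clusters in the first place and handle time of day wrapping around midnight.

```python
import numpy as np


class RoutineModel:
    """Diagonal-Gaussian model of one repetitive activity (e.g. sleeping).

    Each observation is a feature vector such as (bedtime hour, sleep duration
    in hours); these feature choices are illustrative assumptions.
    """

    def __init__(self, k=3.0):
        self.k = k  # deviation threshold in standard deviations (assumed value)

    def fit(self, observations):
        obs = np.asarray(observations, dtype=float)
        self.mean = obs.mean(axis=0)
        self.var = obs.var(axis=0) + 1e-9  # guard against zero variance
        return self

    def is_deviation(self, x):
        # Distance from the cluster mean scaled per dimension (diagonal Mahalanobis).
        diff = np.asarray(x, dtype=float) - self.mean
        d = np.sqrt((diff ** 2 / self.var).sum())
        return bool(d > self.k)
```

An `is_deviation` result of `True` is the point at which such a system might trigger an alarm.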

© 2025 PatSnap. All rights reserved.