Human behavior identification method based on three-dimensional convolutional neural network and transfer learning model

A technique based on three-dimensional convolution and neural networks, applied in the field of image processing. It addresses the problems that the same motion can differ in completeness, range, and speed, that actions are difficult to distinguish, and that motions may be incomplete, and it achieves fast recognition speed and high detection accuracy.

Embodiment Construction

[0033] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

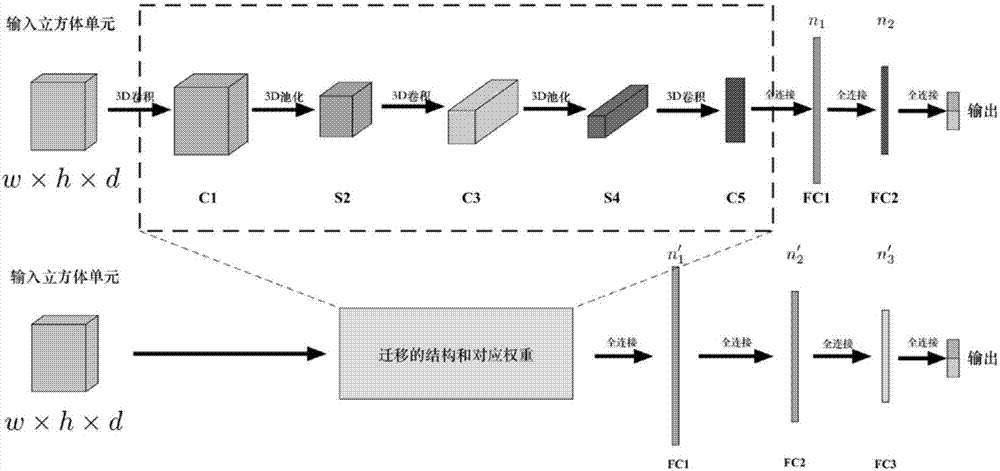

[0034] As shown in Figure 1, the present invention is implemented through the following steps:

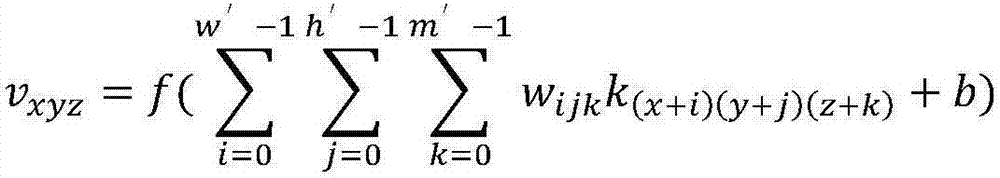

[0035] Step 1. Read the video and decompose it into consecutive single-frame images, then stack these frames to form the cube structures required by the neural network, and determine the corresponding behavior classification label for each cube. Specifically, the original video is split into a series of consecutive frame images by frame-by-frame sampling, and the frames are stacked along the time dimension to obtain many cubes of size w×h×d, each of which can fully present one action. Here, w represents the width of the image, h represents the height of the image,...
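The frame-stacking part of Step 1 can be illustrated with a minimal sketch. It is not the patented implementation: the function name `stack_frames_into_cubes`, the `stride` parameter, the non-overlapping default, the array layout (h, w, d), and the single label per video are all assumptions made for illustration. A synthetic array stands in for decoded grayscale video frames.

```python
import numpy as np

def stack_frames_into_cubes(frames, depth, stride=None):
    """Group consecutive frames into cubes stacked along the time axis.

    frames: array of shape (num_frames, h, w), e.g. decoded grayscale frames.
    depth:  number of consecutive frames per cube (d in the text).
    stride: step between cube start frames; defaults to depth, i.e.
            non-overlapping cubes (an assumption, not from the source).
    Returns an array of shape (num_cubes, h, w, depth).
    """
    frames = np.asarray(frames)
    if frames.ndim != 3:
        raise ValueError("expected frames with shape (num_frames, h, w)")
    if stride is None:
        stride = depth
    cubes = []
    for start in range(0, frames.shape[0] - depth + 1, stride):
        clip = frames[start:start + depth]            # (depth, h, w)
        cubes.append(np.transpose(clip, (1, 2, 0)))   # (h, w, depth)
    if not cubes:
        # Video shorter than one cube: return an empty batch.
        return np.empty((0, frames.shape[1], frames.shape[2], depth),
                        dtype=frames.dtype)
    return np.stack(cubes)

# Synthetic stand-in for a decoded video: 10 frames of height 4, width 6.
video = np.arange(10 * 4 * 6, dtype=np.float32).reshape(10, 4, 6)
cubes = stack_frames_into_cubes(video, depth=3)

# Hypothetical labeling: every cube inherits the class id of its source video.
labels = np.full(len(cubes), 2)

print(cubes.shape)  # (3, 4, 6, 3)
```

With 10 frames and a depth of 3, the sketch produces three cubes and discards the trailing frame; an overlapping stride would yield more cubes from the same video.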
