2638 results about "Behavior recognition" patented technology

Behavior recognition is based on several factors. These include the location and movement of the nose point, center point, and tail base of the animal; its body shape and contour; and information about the cage in which testing takes place (such as where the walls, the feeder, and the drinking bottle are located).
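
As a rough illustration of how these factors could become per-frame features, the Python sketch below computes body length and heading from the nose and tail-base points, the distance from the center point to the nearest cage wall, and nose distances to cage landmarks, then applies a proximity rule. The landmark names, the rectangular cage model, and the 1.5-unit "near" threshold are assumptions for illustration, not taken from any system listed here.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


def dist(a: Point, b: Point) -> float:
    return math.hypot(a.x - b.x, a.y - b.y)


def frame_features(nose: Point, center: Point, tail: Point,
                   landmarks: dict, cage_size: tuple) -> dict:
    """Per-frame features from three body points and a hypothetical cage layout."""
    width, height = cage_size
    feats = {
        "body_length": dist(nose, tail),
        # Heading of the tail-to-nose axis, in degrees.
        "heading_deg": math.degrees(math.atan2(nose.y - tail.y, nose.x - tail.x)),
        # Walls are assumed to be the edges of a width x height rectangle.
        "center_to_wall": min(center.x, width - center.x, center.y, height - center.y),
    }
    for name, p in landmarks.items():
        feats[f"nose_to_{name}"] = dist(nose, p)
    return feats


def label_frame(feats: dict, near: float = 1.5) -> str:
    """Hypothetical rule: a nose close to a landmark means interacting with it."""
    if feats.get("nose_to_bottle", math.inf) < near:
        return "drinking"
    if feats.get("nose_to_feeder", math.inf) < near:
        return "eating"
    return "other"


cage = {"feeder": Point(0, 5), "bottle": Point(10, 5)}
f = frame_features(Point(9, 5), Point(7, 5), Point(5, 5), cage, (10, 10))
print(f["body_length"], label_frame(f))  # 4.0 drinking
```

Body shape and contour features would be added to the same dictionary in a fuller version.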

Behavior recognition system

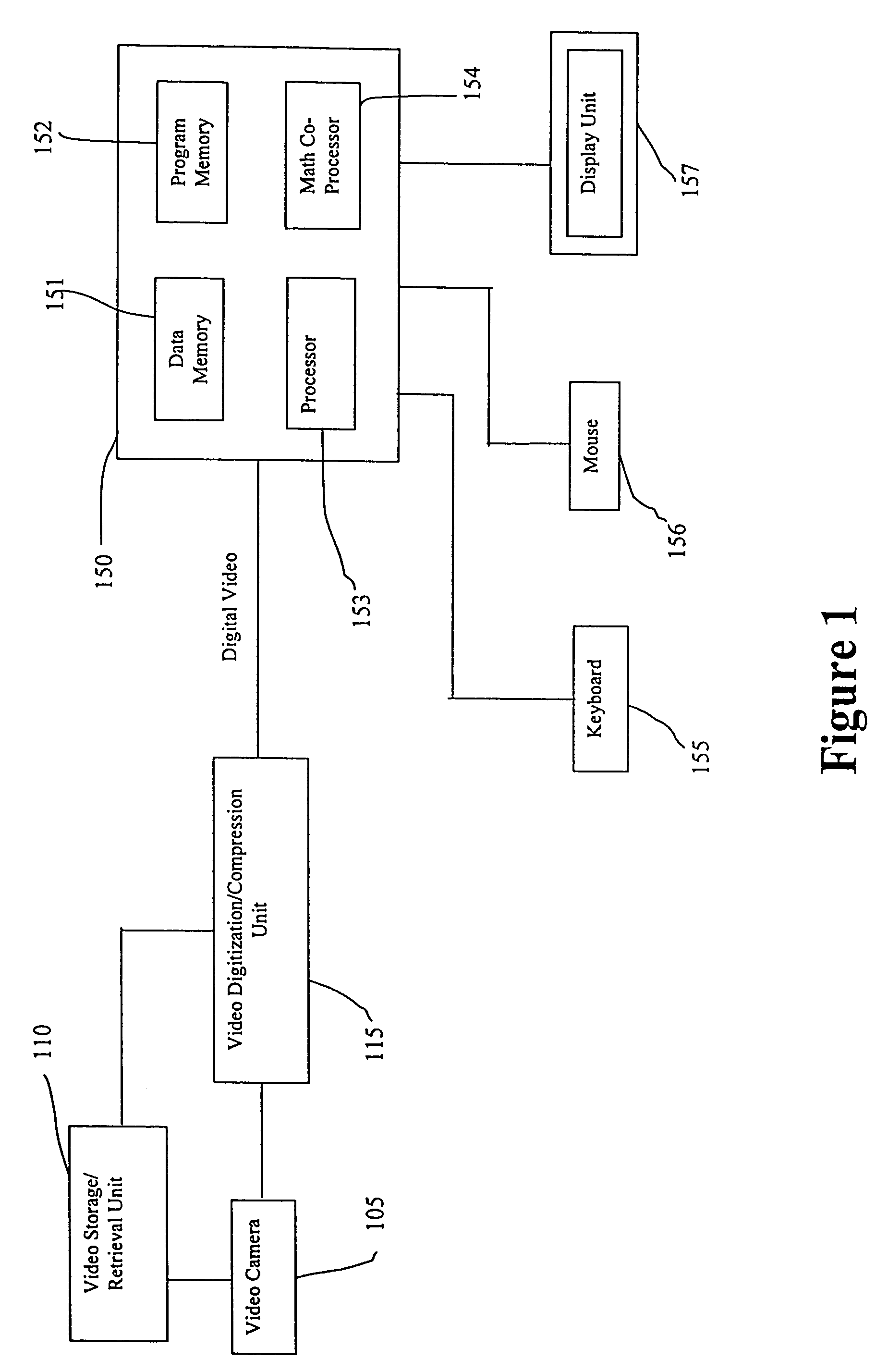

Status: Inactive | Publication: US7036094B1 | Tags: Easy to identify; Character and pattern recognition; Cathode-ray tube indicators; Gait; Behavior recognition

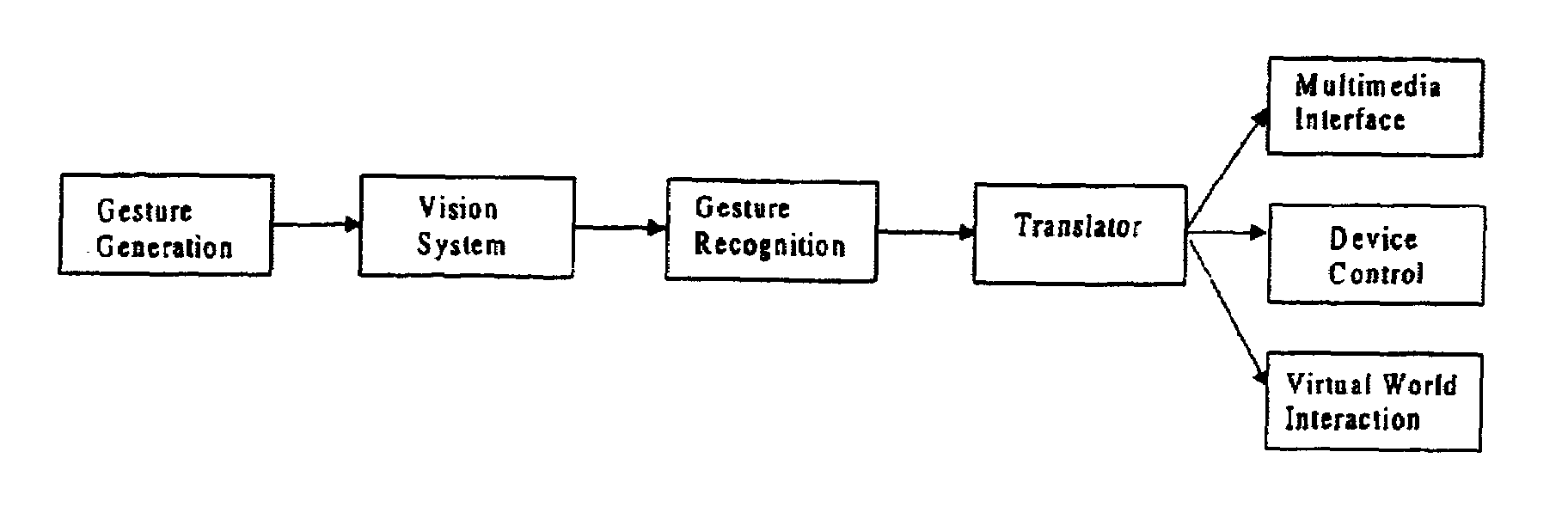

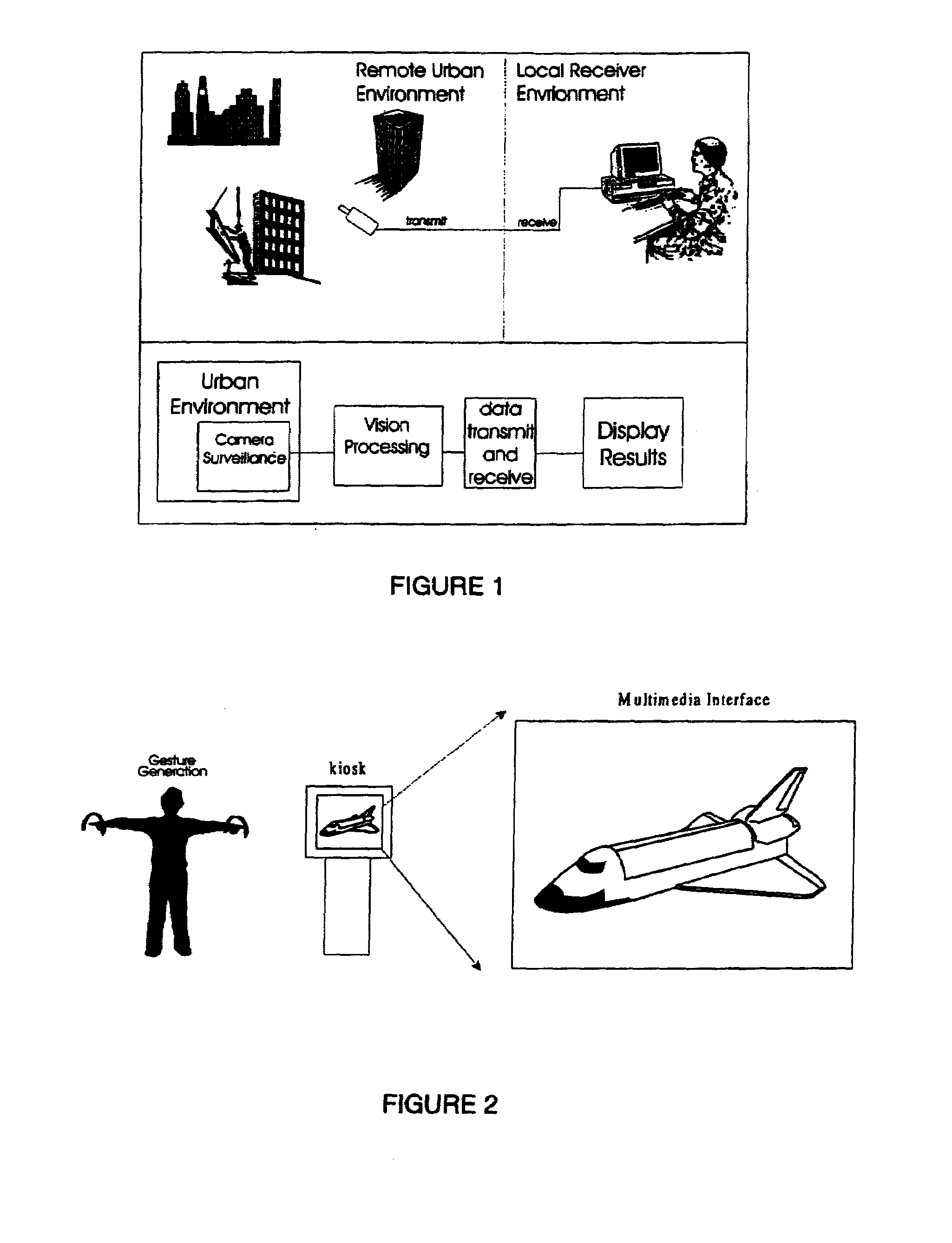

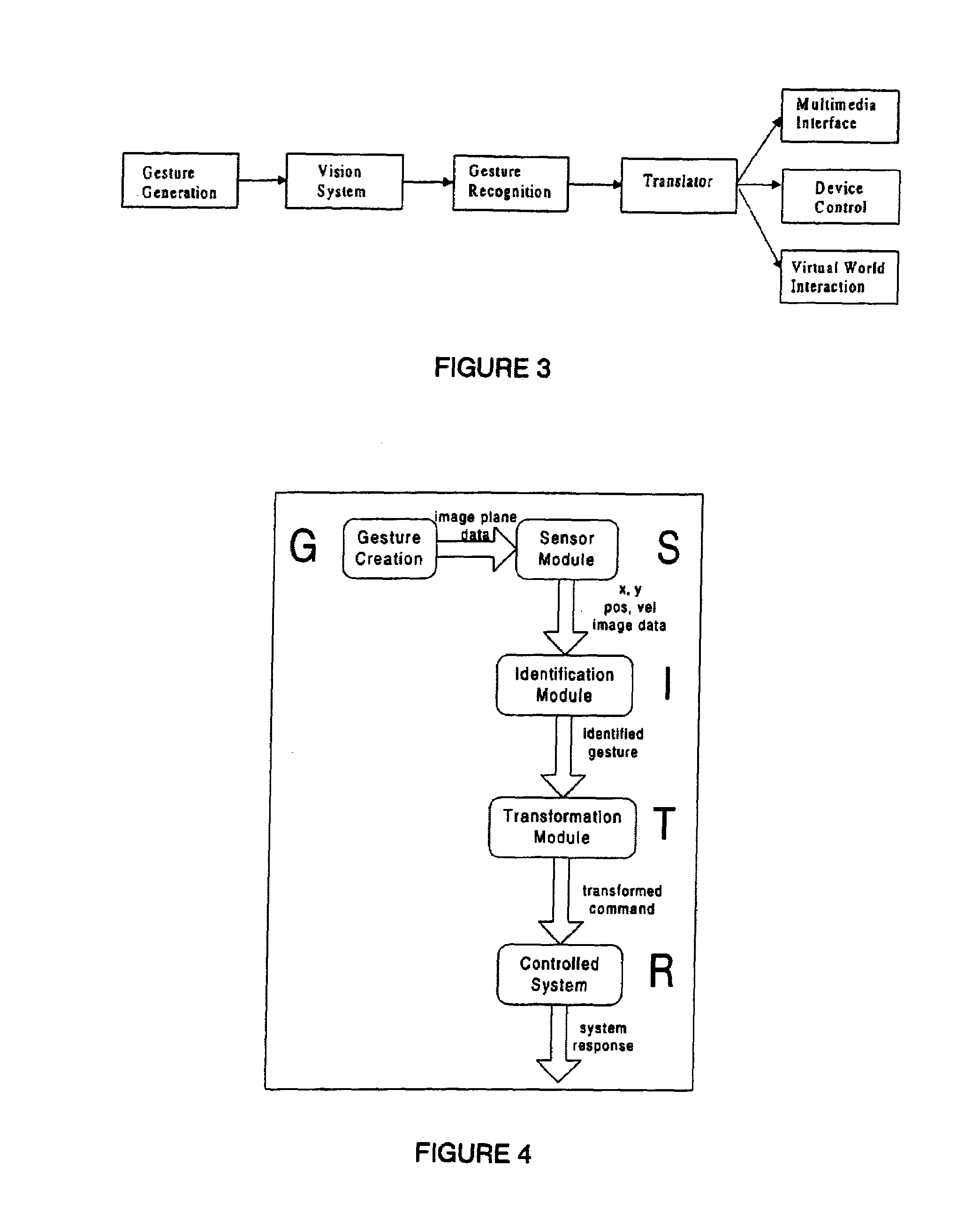

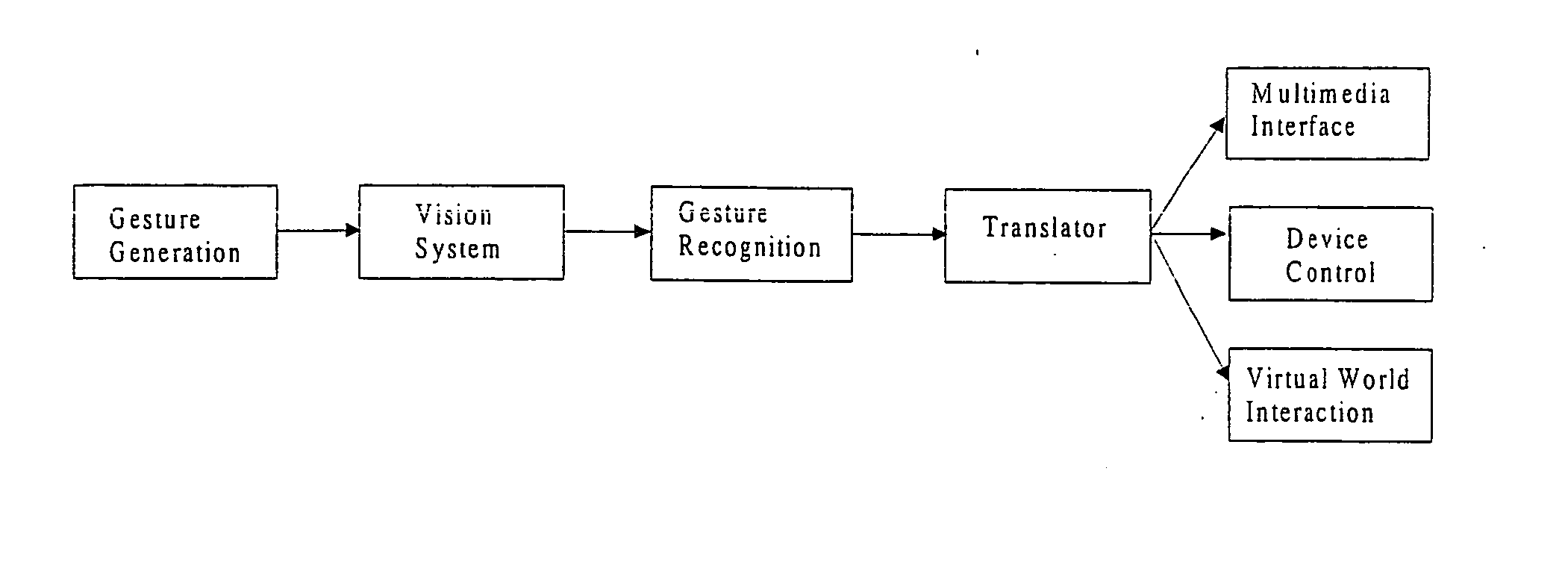

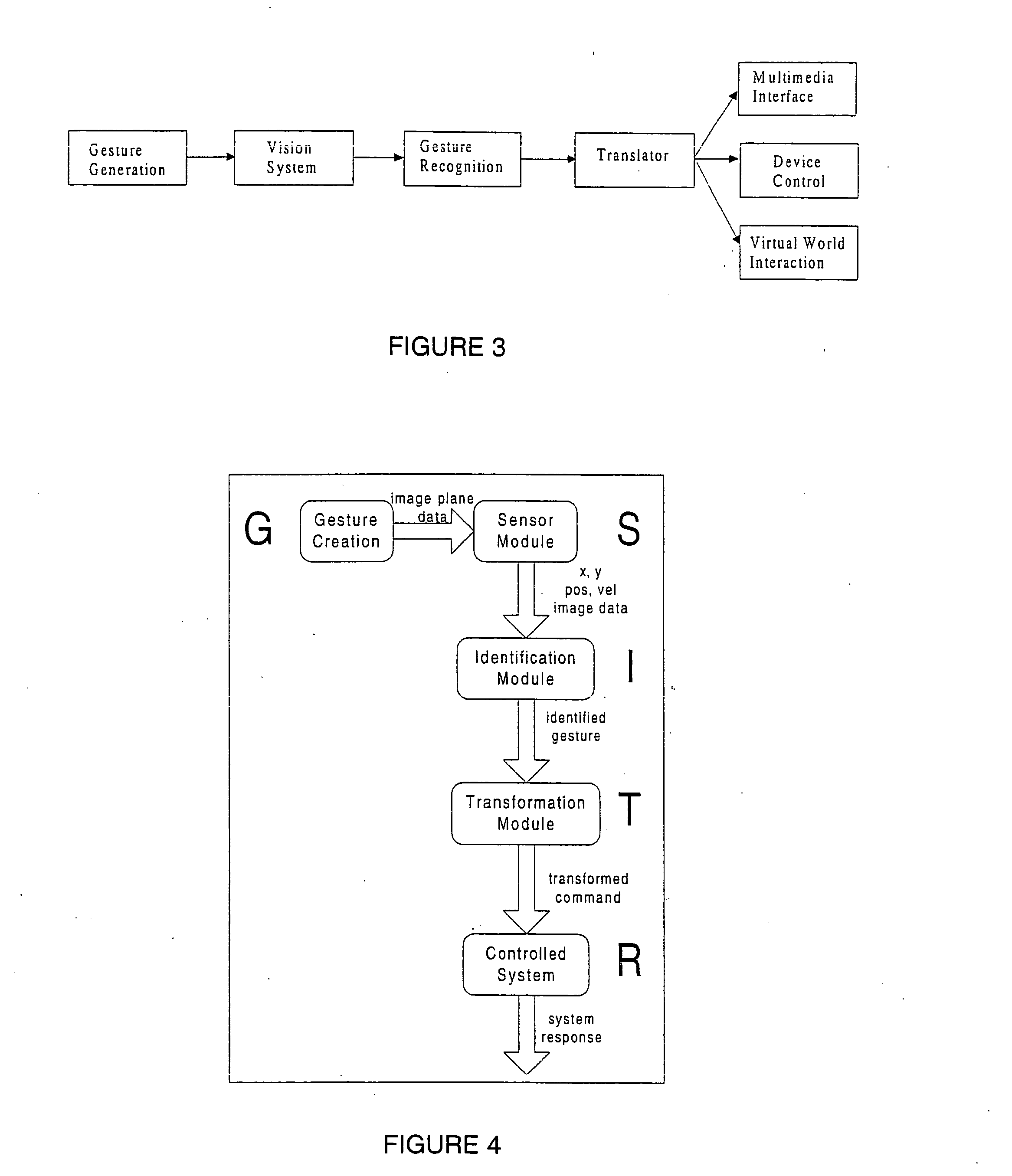

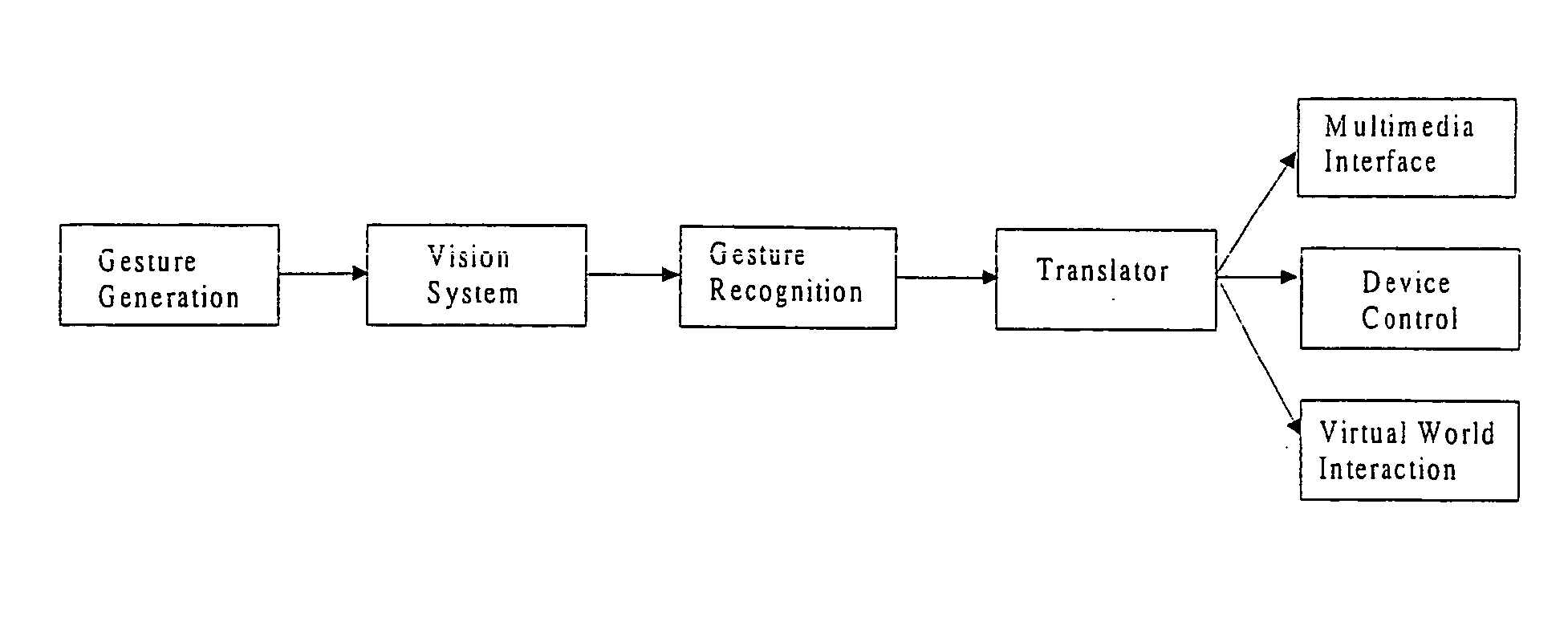

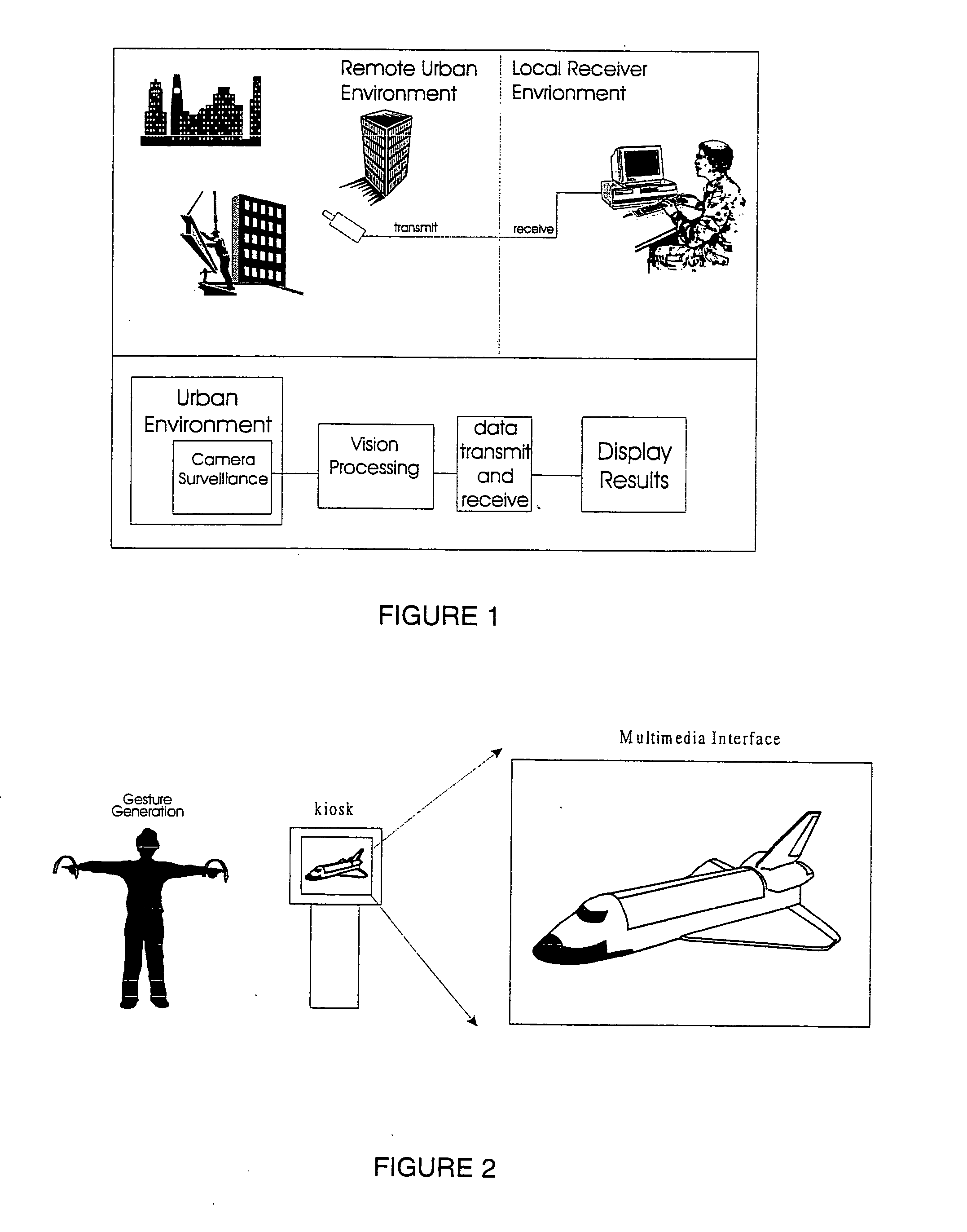

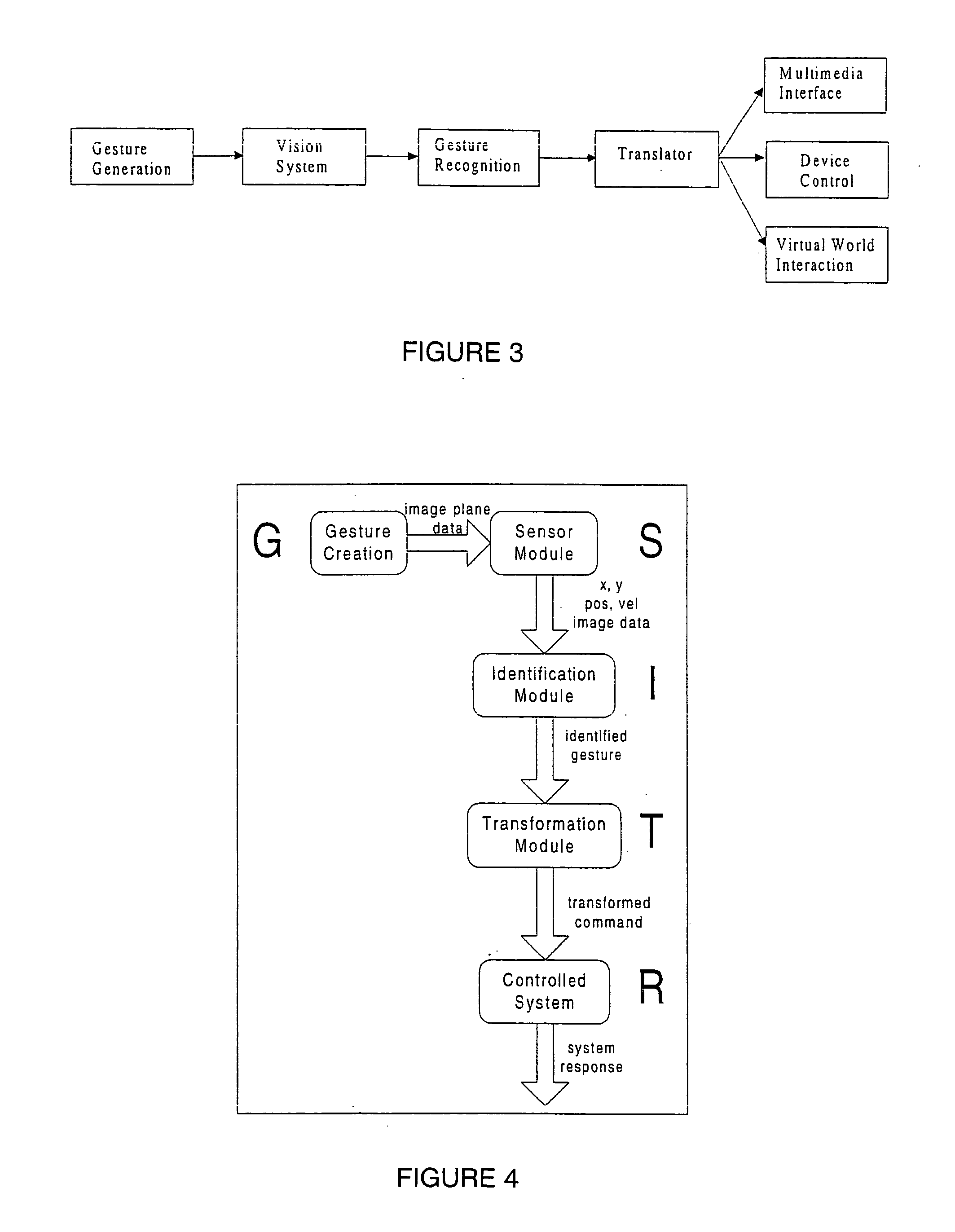

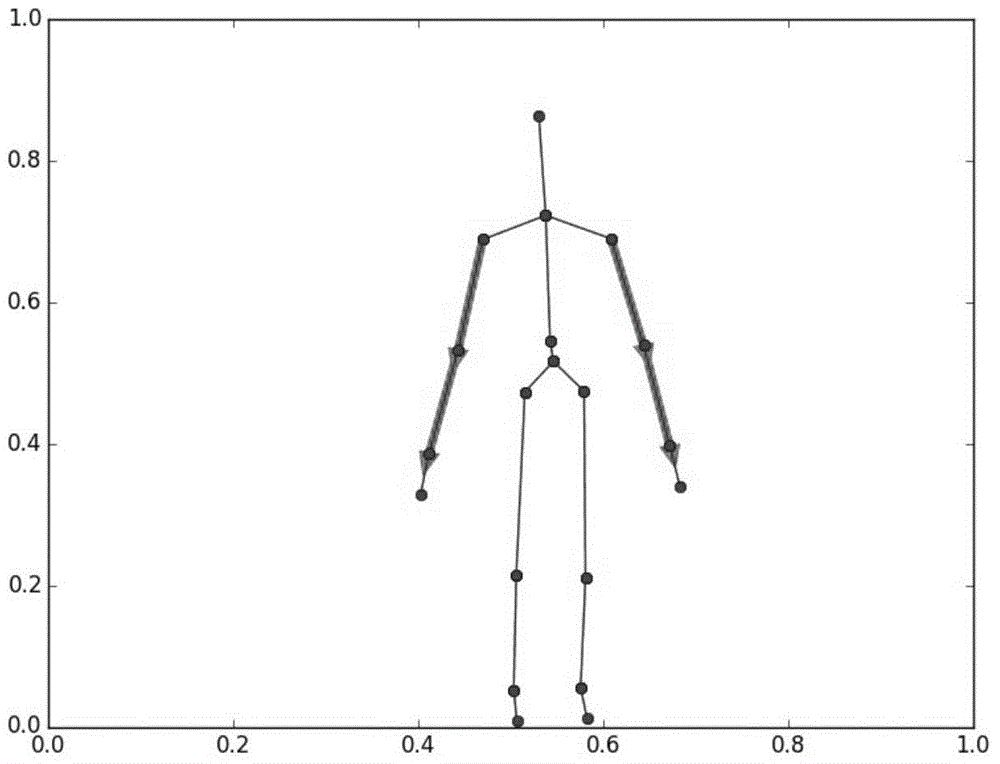

A system for recognizing various human and creature motion gaits and behaviors is presented. These behaviors are defined as combinations of “gestures” identified on various parts of a body in motion. For example, the leg gestures generated when a person runs are different than when a person walks. The system described here can identify such differences and categorize these behaviors. Gestures, as previously defined, are motions generated by humans, animals, or machines. Where in the previous patent only one gesture was recognized at a time, in this system, multiple gestures on a body (or bodies) are recognized simultaneously and used in determining behaviors. If multiple bodies are tracked by the system, then overall formations and behaviors (such as military goals) can be determined.

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I
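
The idea of a behavior as a combination of simultaneously recognized gestures can be sketched as set containment. The body-part names, gesture labels, and behavior definitions below are hypothetical; the gesture recognizers that would produce the observed set are not shown.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Gesture = Tuple[str, str]  # (body part, gesture label)

# Hypothetical behavior definitions: each behavior is the set of gestures
# that must be recognized simultaneously on different parts of the body.
BEHAVIORS: Dict[str, FrozenSet[Gesture]] = {
    "run": frozenset({("left_leg", "fast_swing"), ("right_leg", "fast_swing"),
                      ("arms", "pump")}),
    "walk": frozenset({("left_leg", "slow_swing"), ("right_leg", "slow_swing")}),
}


def recognize(observed: Set[Gesture]) -> List[str]:
    """Return every behavior whose required gestures are all in the observed set."""
    return sorted(name for name, required in BEHAVIORS.items() if required <= observed)


print(recognize({("left_leg", "slow_swing"), ("right_leg", "slow_swing"), ("head", "nod")}))
# ['walk']
```

Extra gestures (the head nod) do not block a match, which mirrors the idea that several gestures on one body are evaluated together.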

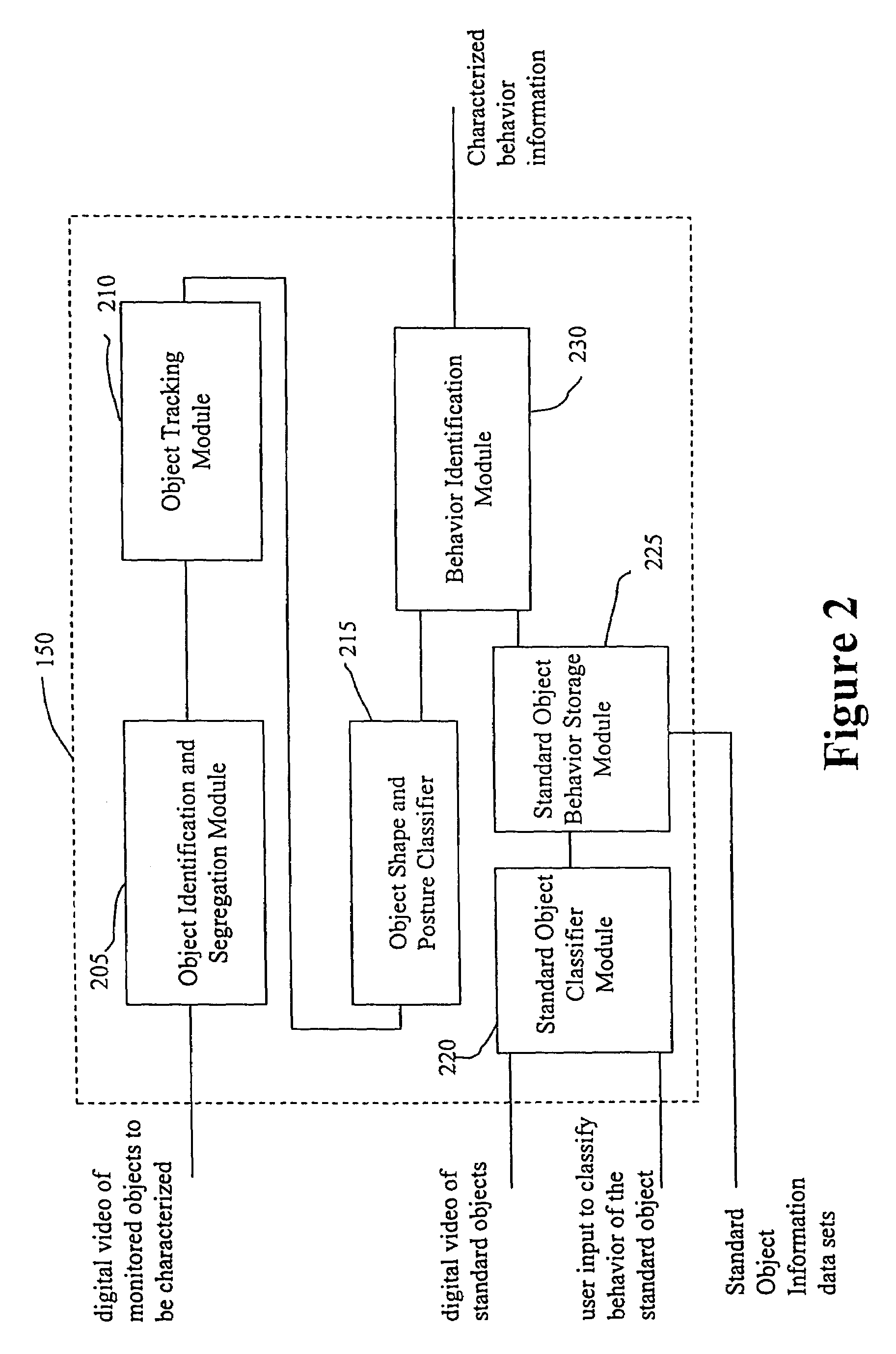

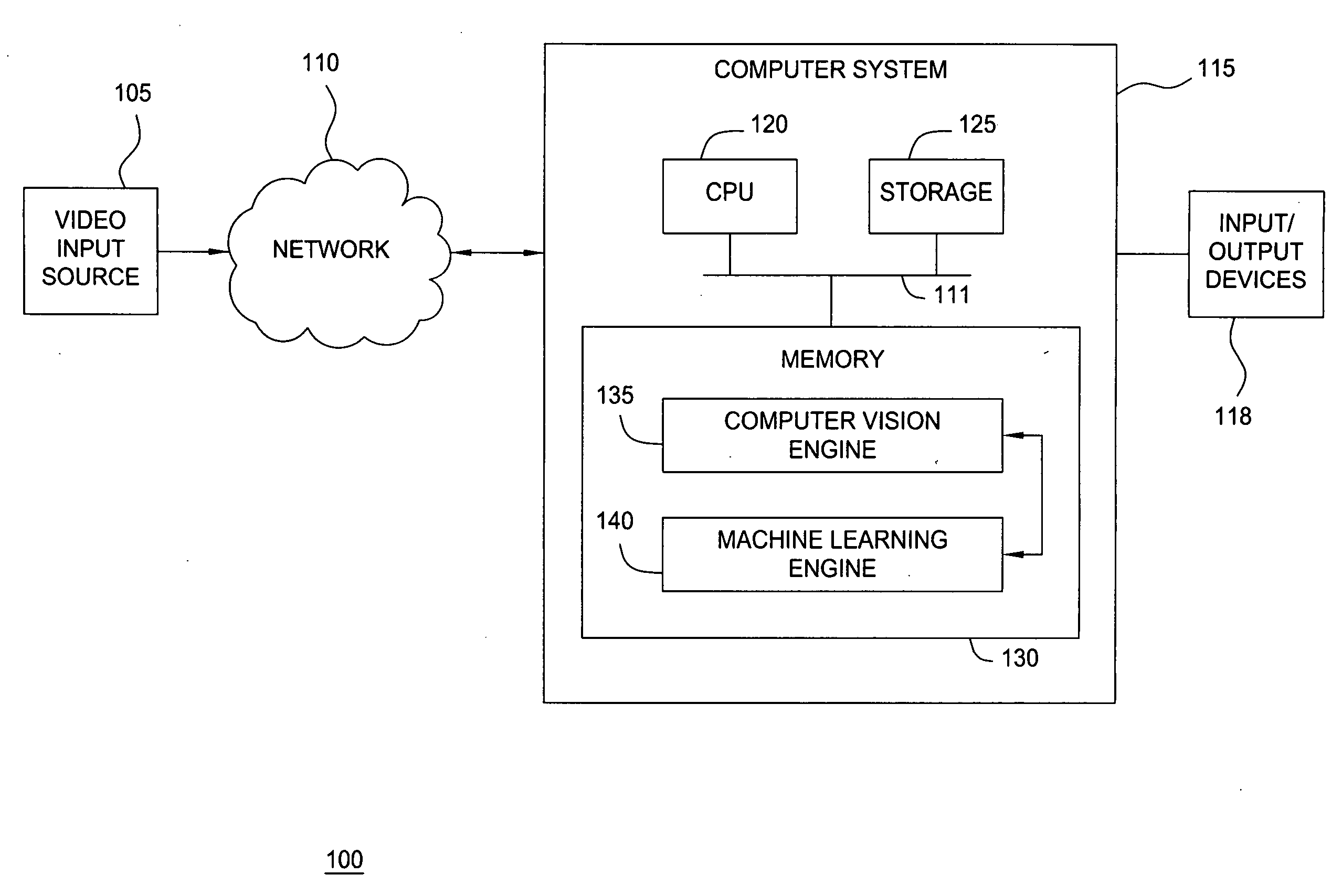

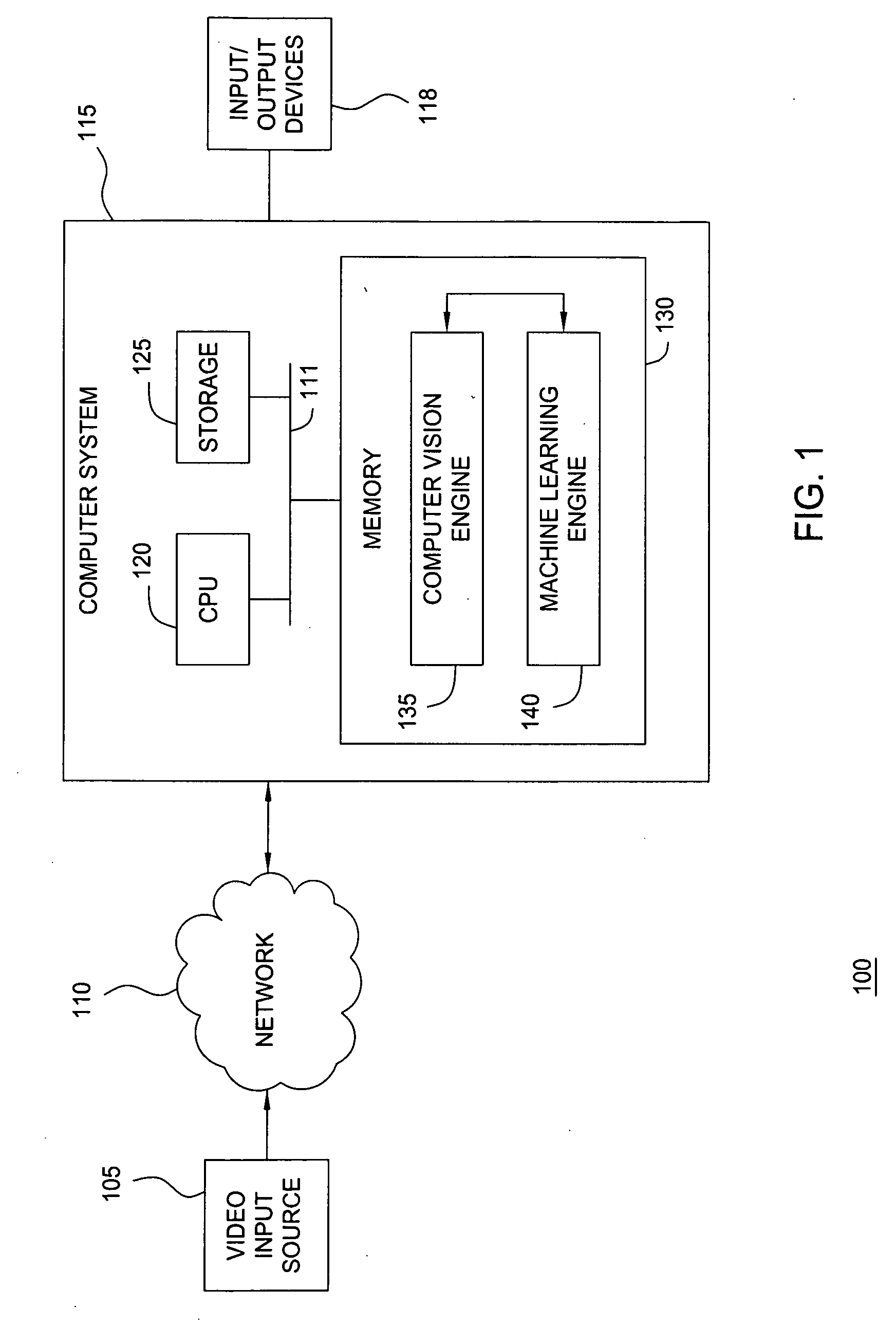

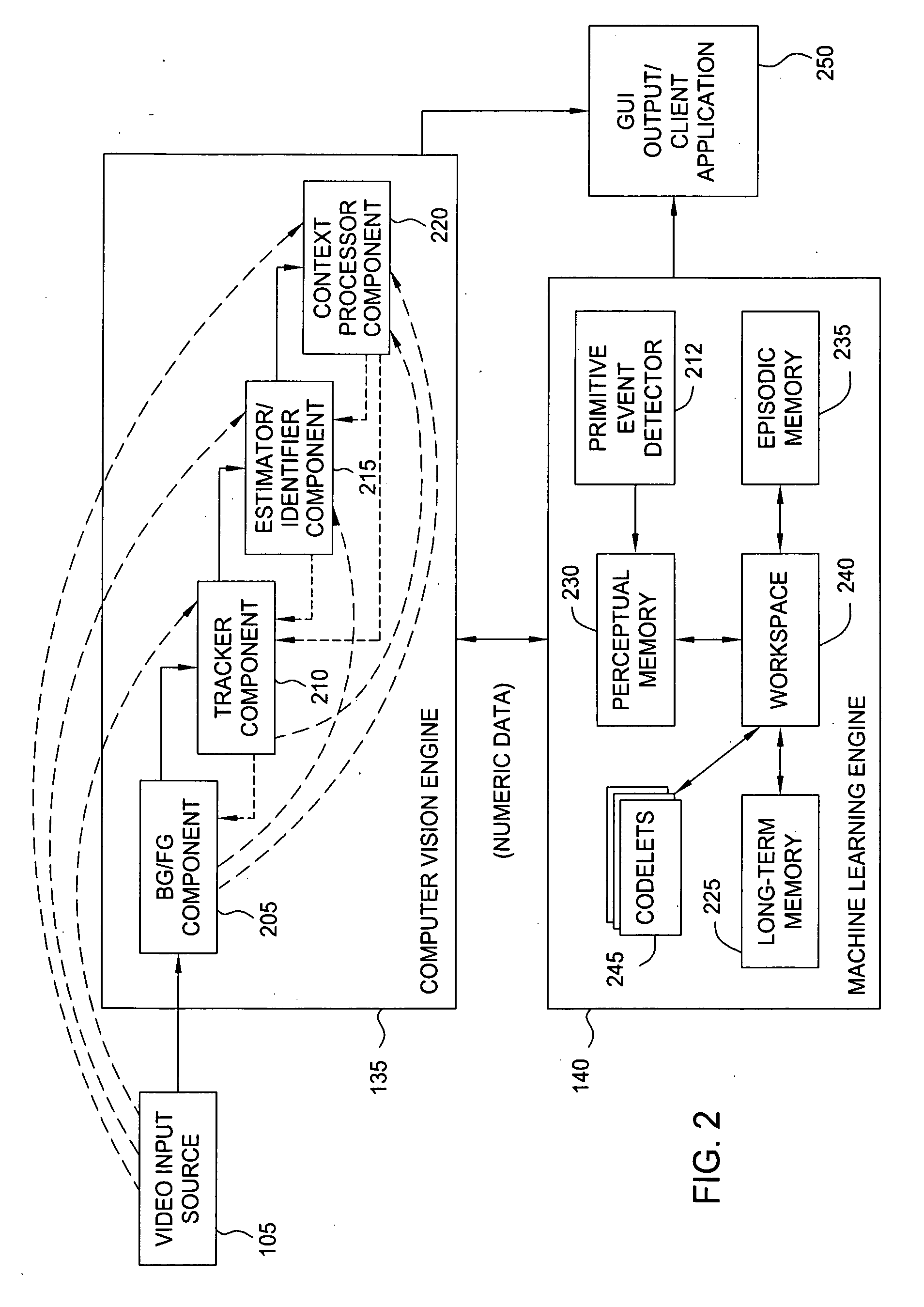

System and method for object identification and behavior characterization using video analysis

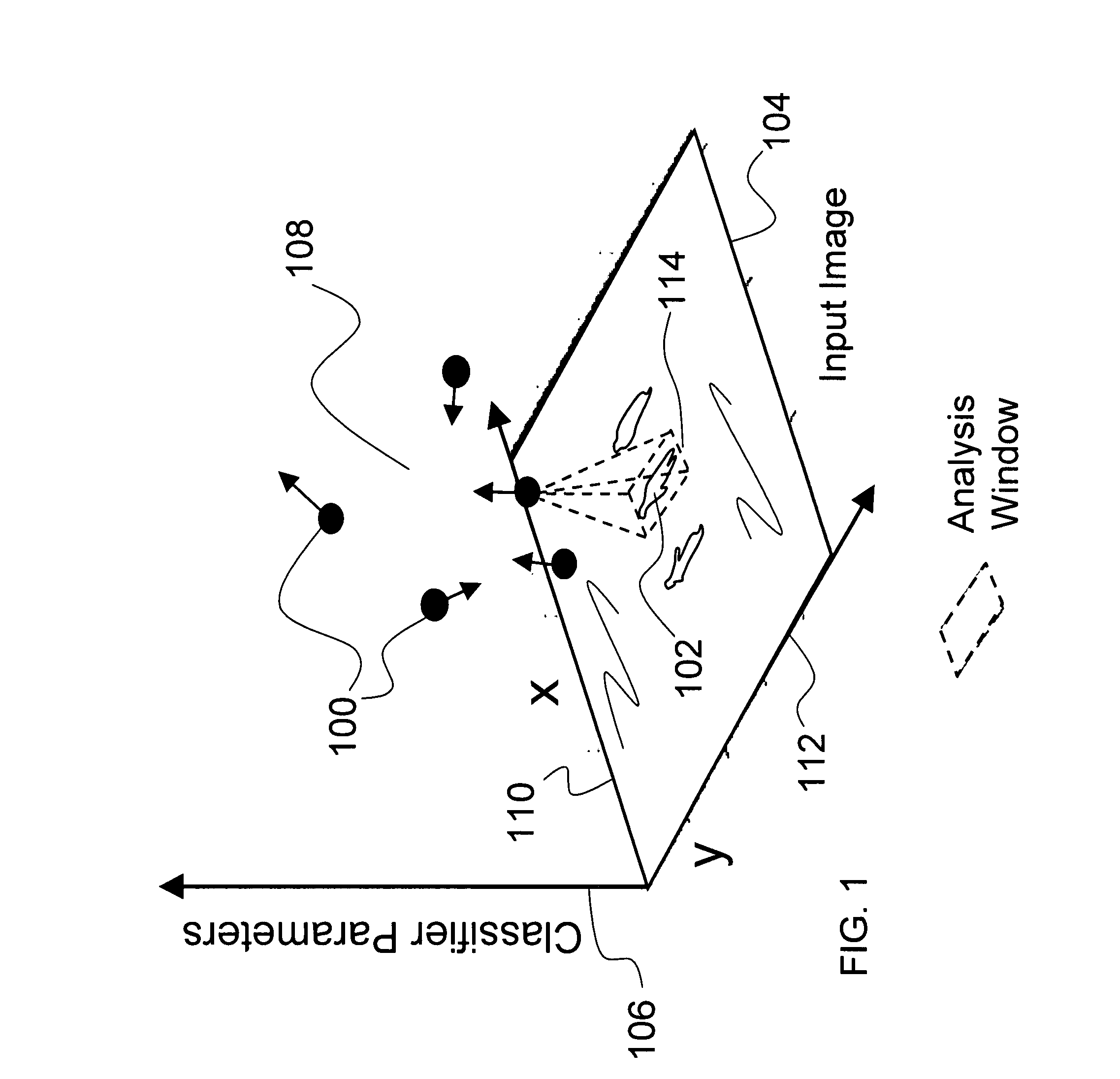

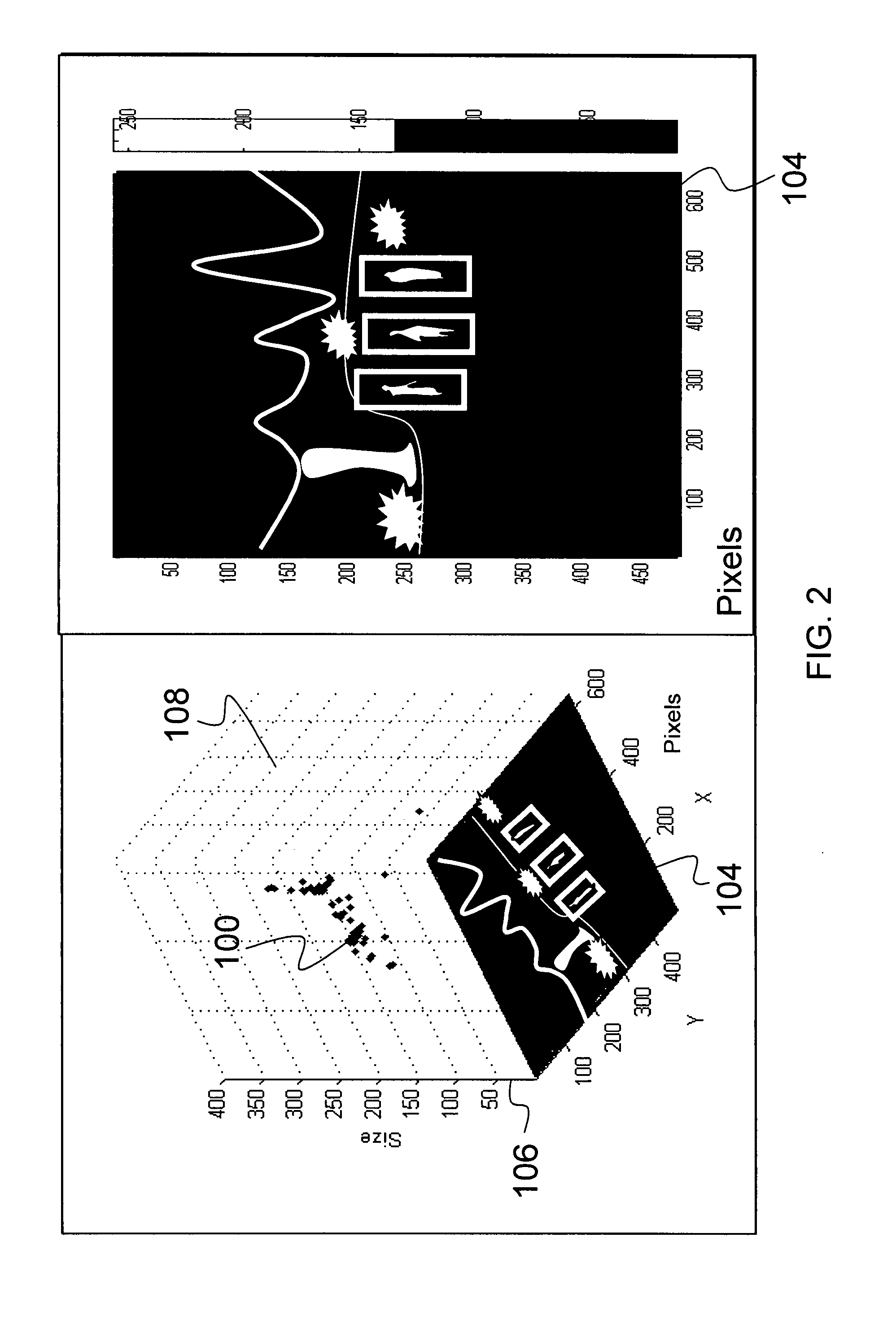

Status: Inactive | Publication: US7068842B2 | Tags: Accurate identification; Efficient detection; Image enhancement; Image analysis; Probabilistic method; Animal behavior

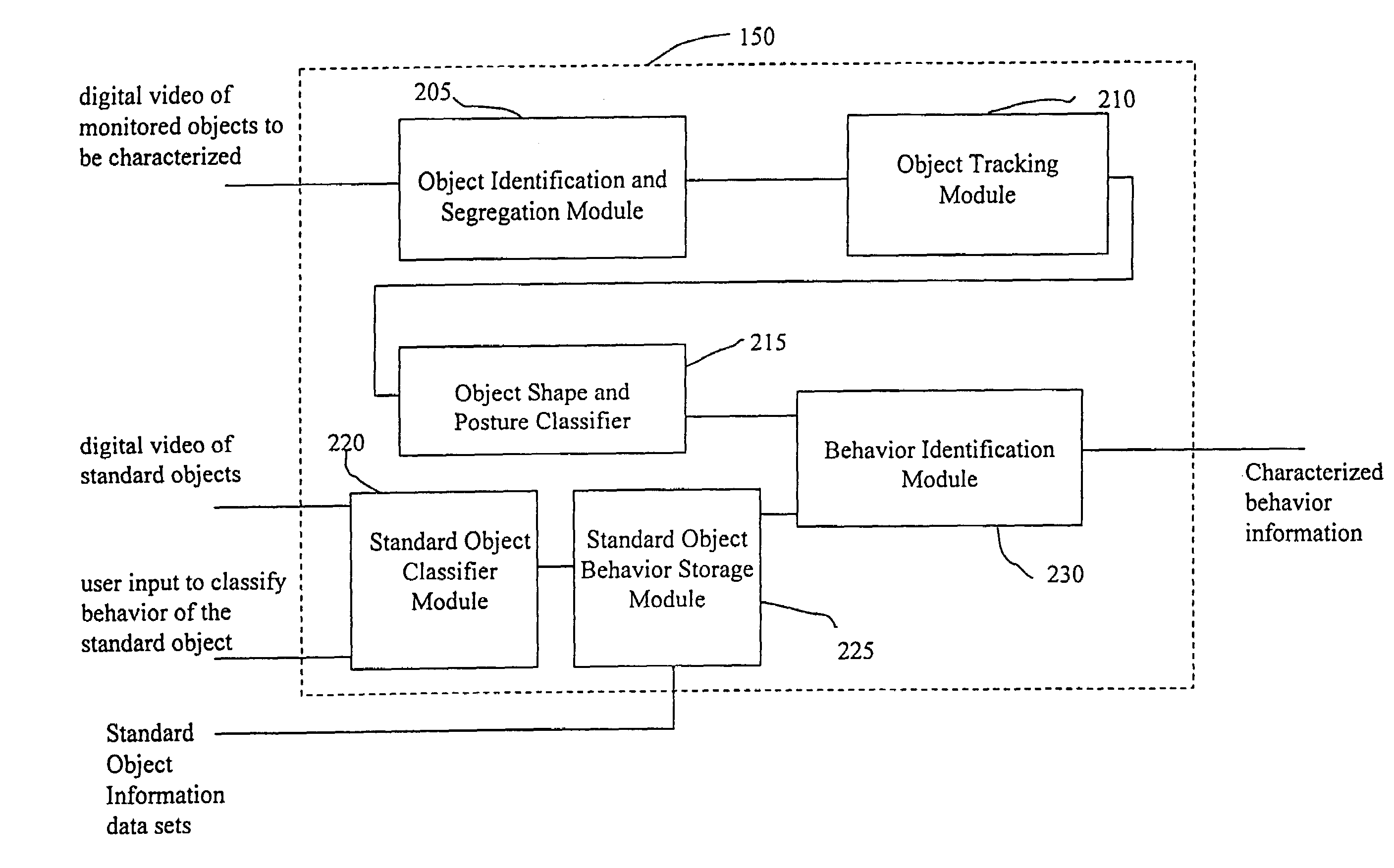

In general, the present invention is directed to systems and methods for finding the position and shape of an object using video. The invention includes a system with a video camera coupled to a computer in which the computer is configured to automatically provide object segmentation and identification, object motion tracking (for moving objects), object position classification, and behavior identification. In a preferred embodiment, the present invention may use background subtraction for object identification and tracking, a probabilistic approach with expectation-maximization for motion tracking and object classification, and decision tree classification for behavior identification. Thus, the present invention is capable of automatically monitoring a video image to identify, track, and classify the actions of various objects and their movements within the image. The image may be provided in real time or from storage. The invention is particularly useful for monitoring and classifying animal behavior for testing drugs and genetic mutations, but may be used in any of a number of other surveillance applications.

Owner:CLEVER SYS
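
The background-subtraction step named in the preferred embodiment can be sketched in plain Python: a per-pixel median over empty-scene frames serves as the background, pixels that differ from it by more than a threshold form the foreground mask, and the mask centroid gives a position to track. The median background and the threshold of 30 are assumptions; the expectation-maximization tracking and decision-tree behavior classification stages are not shown.

```python
from statistics import median


def background_model(frames):
    """Per-pixel median over a list of grayscale frames (each a list of rows)."""
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[median(f[r][c] for f in frames) for c in range(cols)] for r in range(rows)]


def foreground_mask(frame, background, threshold=30):
    """Mark pixels that differ from the background by more than the threshold."""
    return [[abs(p - b) > threshold for p, b in zip(row, bg_row)]
            for row, bg_row in zip(frame, background)]


def centroid(mask):
    """Centroid (row, col) of foreground pixels, or None if the mask is empty."""
    pts = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not pts:
        return None
    return (sum(r for r, _ in pts) / len(pts), sum(c for _, c in pts) / len(pts))


empty = [[[10] * 4 for _ in range(4)] for _ in range(5)]
bg = background_model(empty)
frame = [row[:] for row in bg]
frame[1][2] = frame[2][2] = 200  # a small bright "animal"
print(centroid(foreground_mask(frame, bg)))  # (1.5, 2.0)
```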

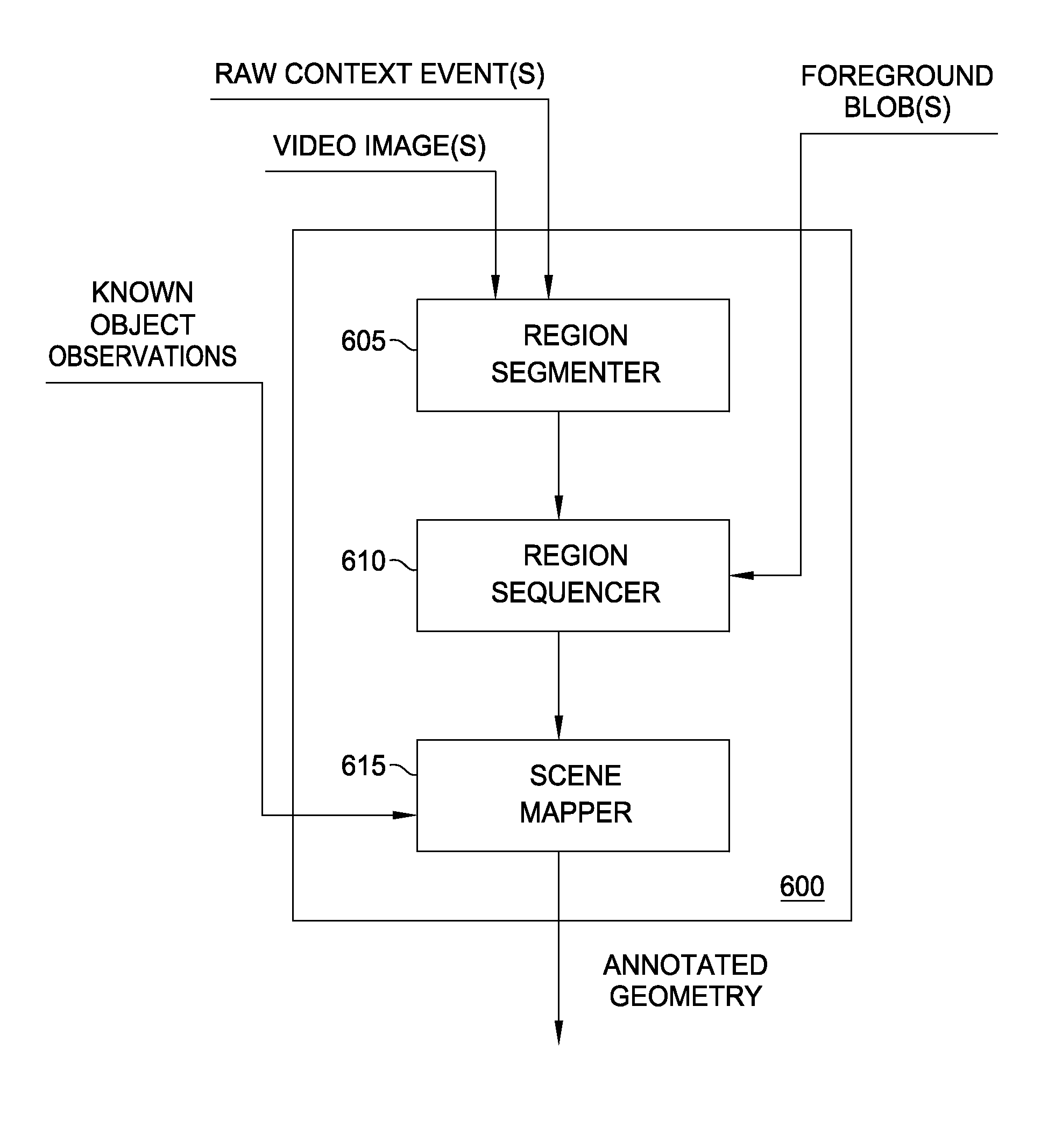

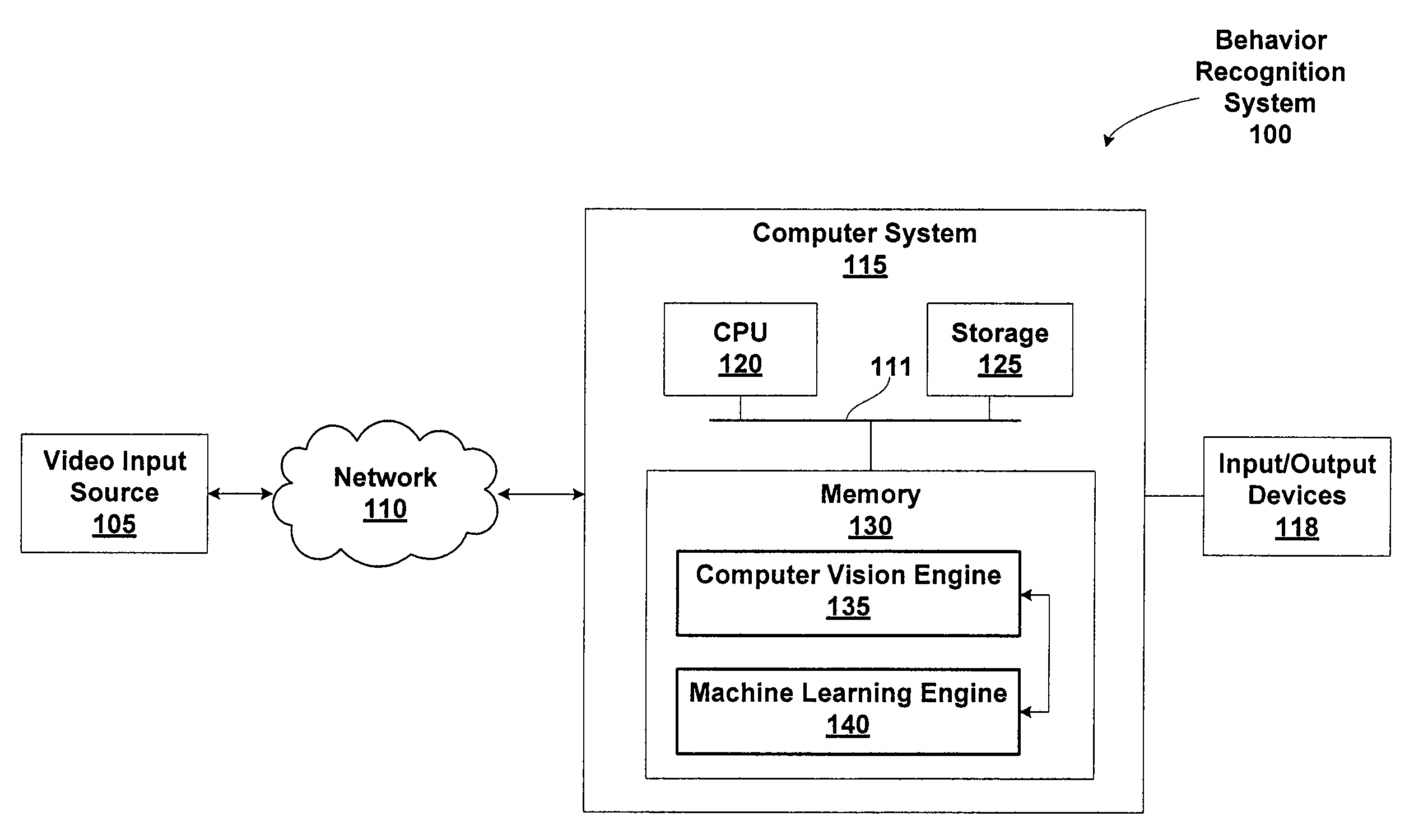

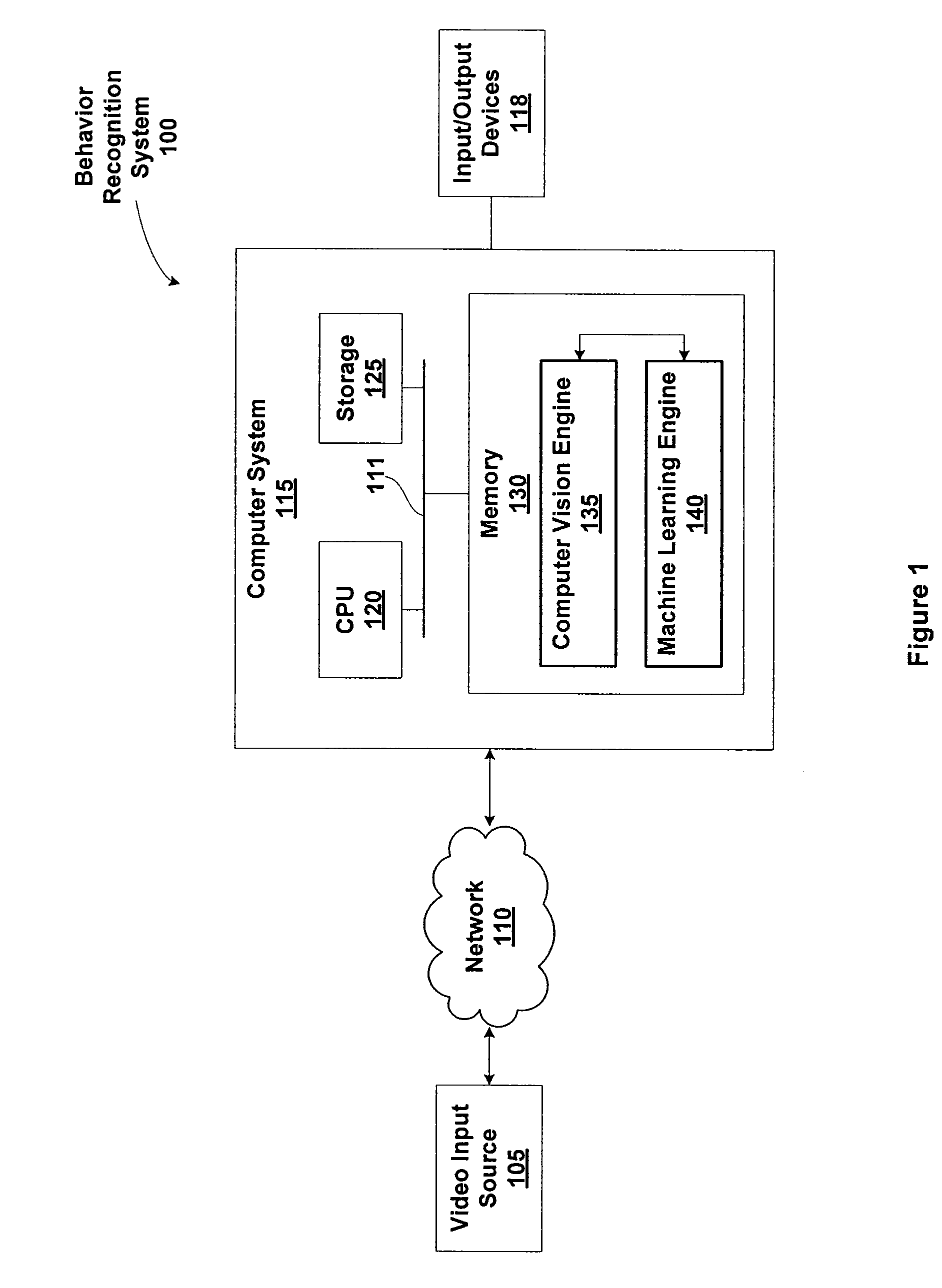

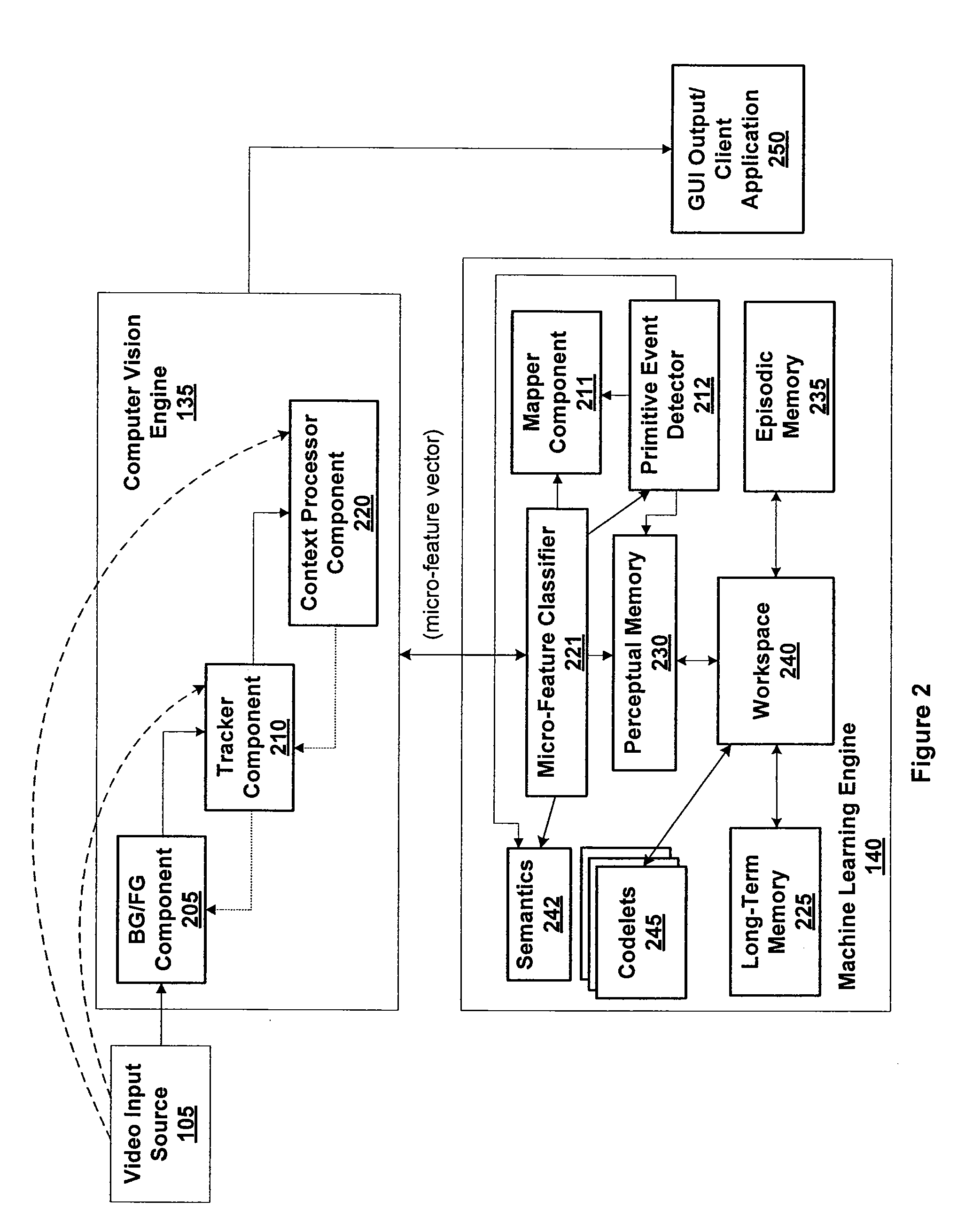

Behavioral recognition system

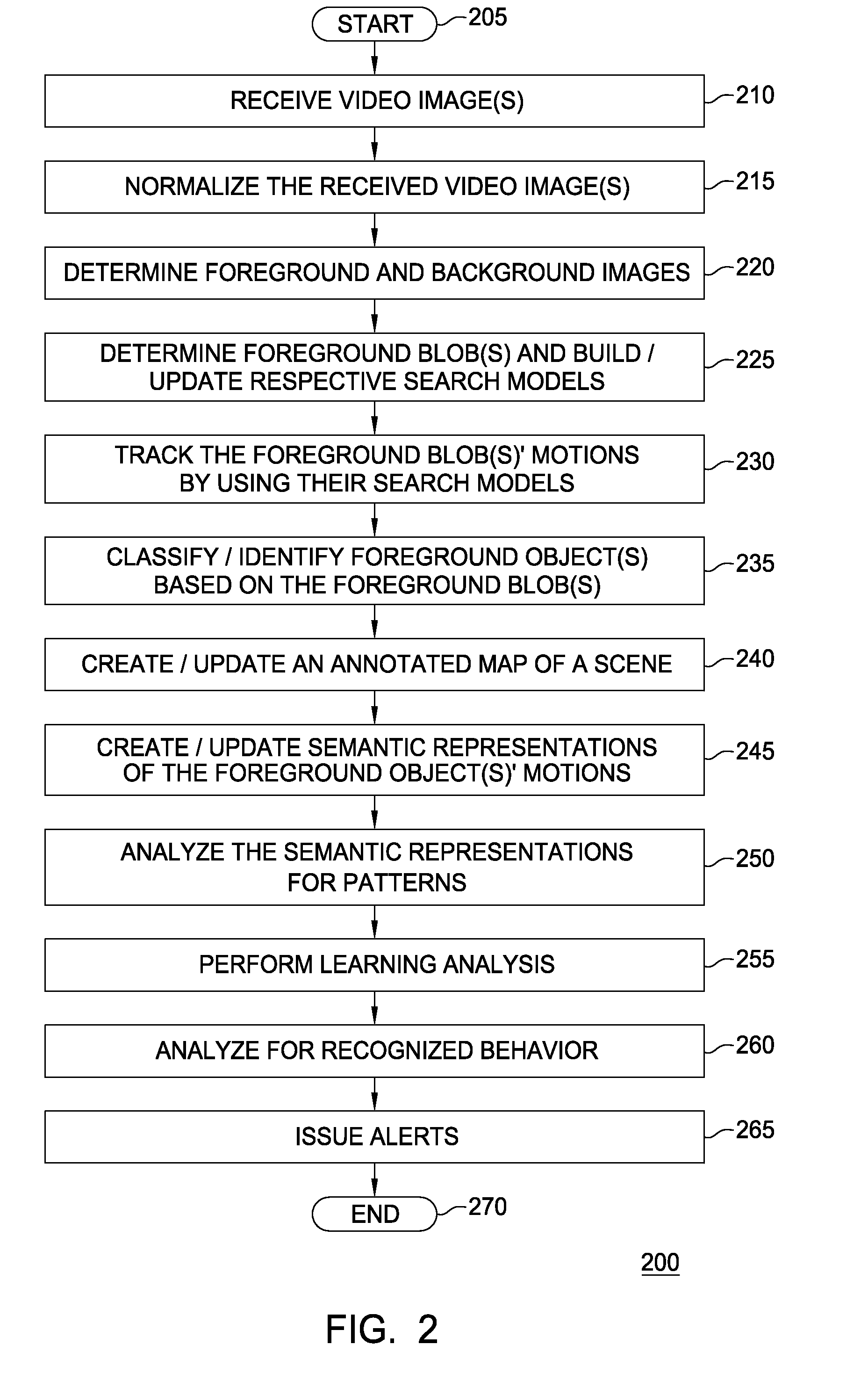

Status: Active | Publication: US20080193010A1 | Tags: Digital computer details; Character and pattern recognition; Semantic representation; Anomalous behavior

Embodiments of the present invention provide a method and a system for analyzing and learning behavior based on an acquired stream of video frames. Objects depicted in the stream are determined based on an analysis of the video frames. Each object may have a corresponding search model used to track the object's motion from frame to frame. Classes of the objects are determined and semantic representations of the objects are generated. The semantic representations are used to determine objects' behaviors and to learn about behaviors occurring in an environment depicted by the acquired video streams. In this way, the system rapidly learns, in real time, the normal and abnormal behaviors of any environment by analyzing movements or activities (or their absence) in that environment, and it identifies and predicts abnormal and suspicious behavior based on what it has learned.

Owner:MOTOROLA SOLUTIONS INC
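
One very simple way to realize "learn what is normal, flag what is unusual" is frequency counting over semantic events. The sketch below is a stand-in, not the patented learning method: the event tuples, the warm-up length, and the 5% rarity threshold are all assumptions.

```python
from collections import Counter


class BehaviorMemory:
    """Hypothetical online model of "normal" behavior for one scene.

    Each observation is a semantic event such as (object class, region, action).
    After a warm-up period, an event is flagged as abnormal when its learned
    relative frequency is below min_prob. Every event is then learned.
    """

    def __init__(self, min_prob=0.05, warmup=20):
        self.counts = Counter()
        self.total = 0
        self.min_prob = min_prob
        self.warmup = warmup

    def observe(self, event) -> bool:
        prob = self.counts[event] / self.total if self.total else 0.0
        abnormal = self.total >= self.warmup and prob < self.min_prob
        self.counts[event] += 1
        self.total += 1
        return abnormal


memory = BehaviorMemory()
flags = [memory.observe(("person", "sidewalk", "walk")) for _ in range(50)]
print(any(flags), memory.observe(("person", "road", "loiter")))  # False True
```

Because each event is learned after it is scored, a behavior that keeps recurring gradually stops being flagged, which is the intended "learning" effect of this toy model.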

Behavior recognition system

Status: Inactive | Publication: US20090274339A9 | Tags: Television system details; Character and pattern recognition; Biological motion; Gait

A system for recognizing various human and creature motion gaits and behaviors is presented. These behaviors are defined as combinations of “gestures” identified on various parts of a body in motion. For example, the leg gestures generated when a person runs are different than when a person walks. The system described here can identify such differences and categorize these behaviors. Gestures, as previously defined, are motions generated by humans, animals, or machines. Multiple gestures on a body (or bodies) are recognized simultaneously and used in determining behaviors. If multiple bodies are tracked by the system, then overall formations and behaviors (such as military goals) can be determined.

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

Behavior recognition system

Status: Inactive | Publication: US20060210112A1 | Tags: Television system details; Character and pattern recognition; Biological motion; Gait

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

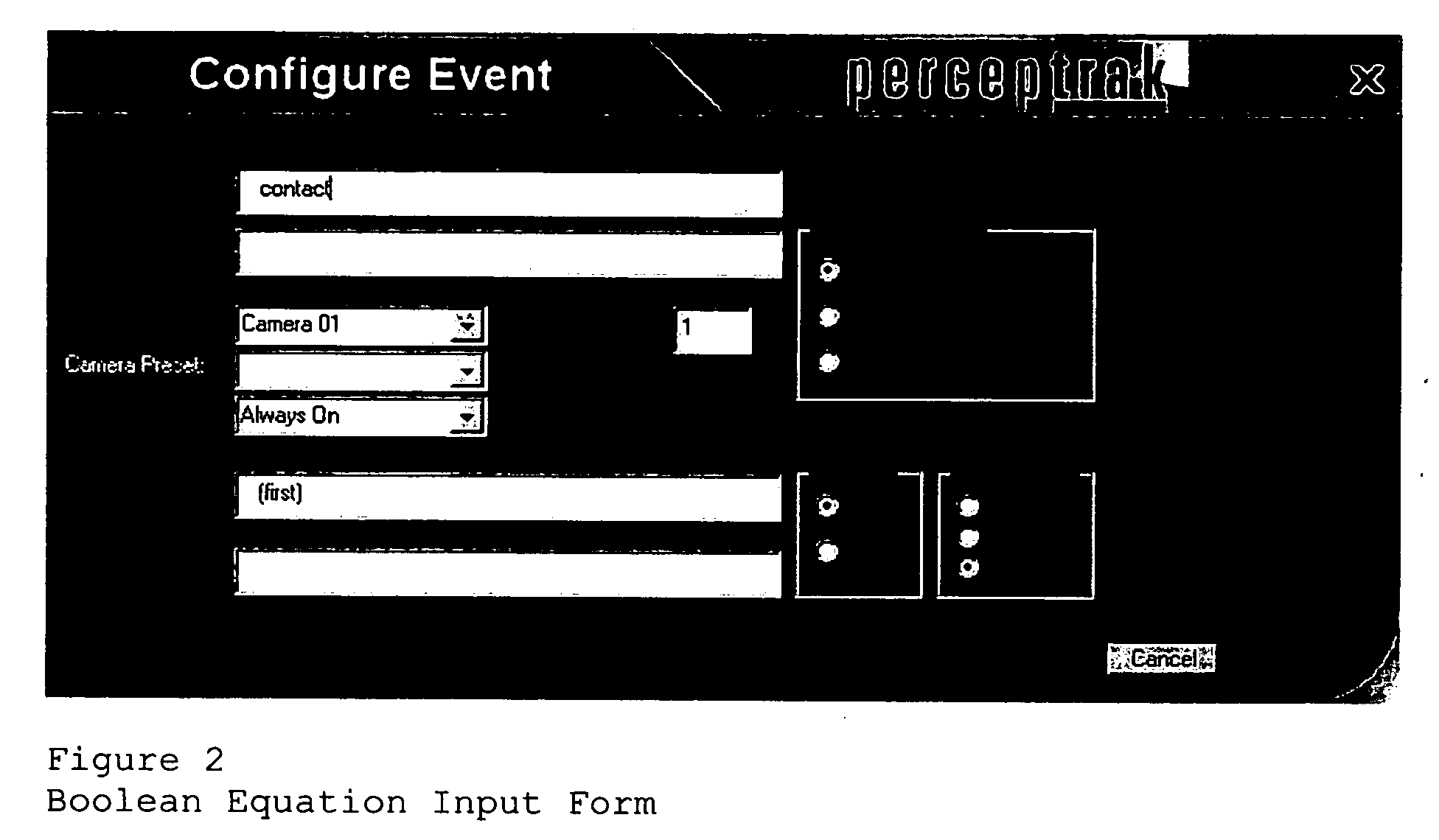

Intelligent video behavior recognition with multiple masks and configurable logic inference module

Status: Inactive | Publication: US20060222206A1 | Tags: Character and pattern recognition; Closed circuit television systems; Multi event; System usage

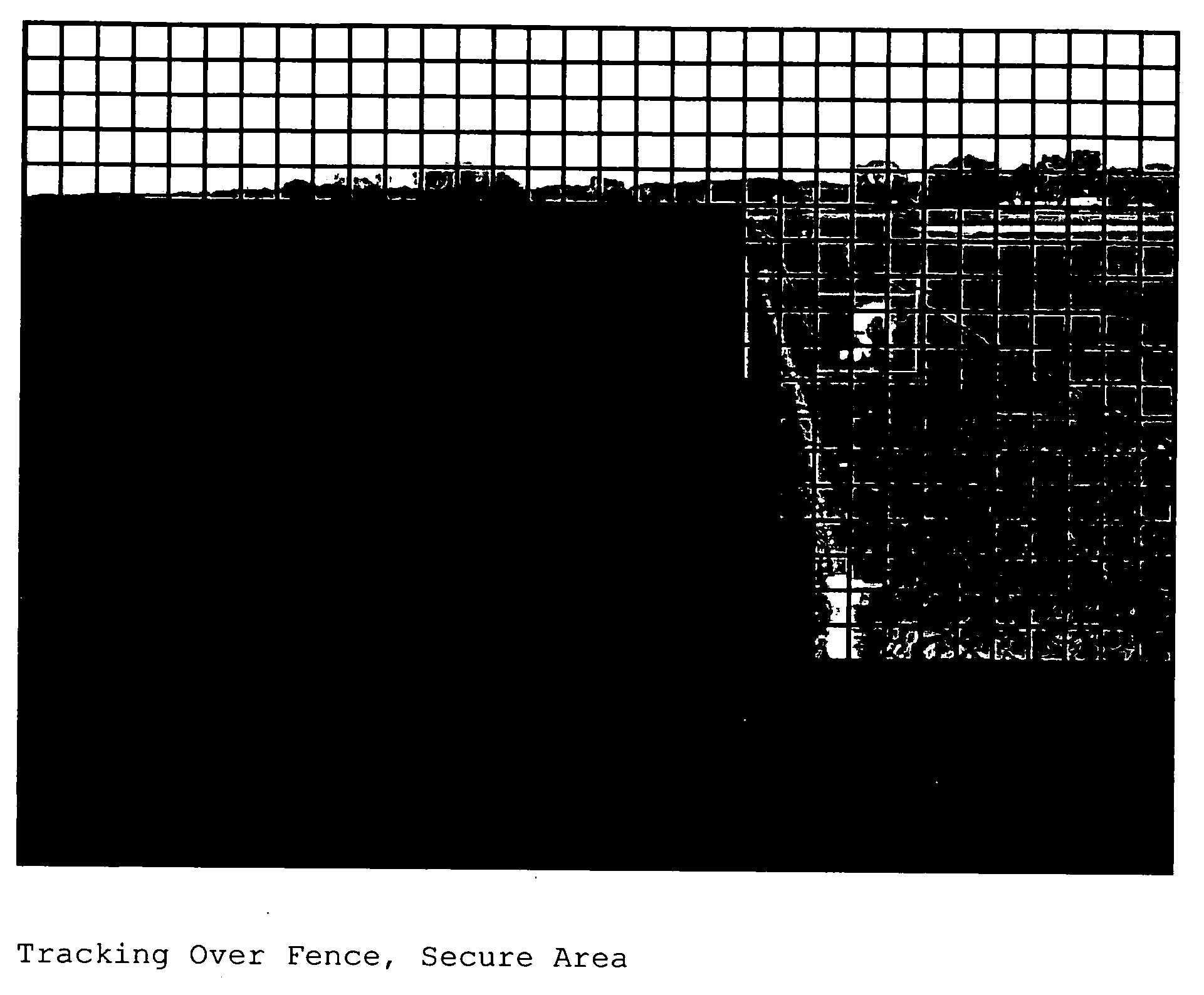

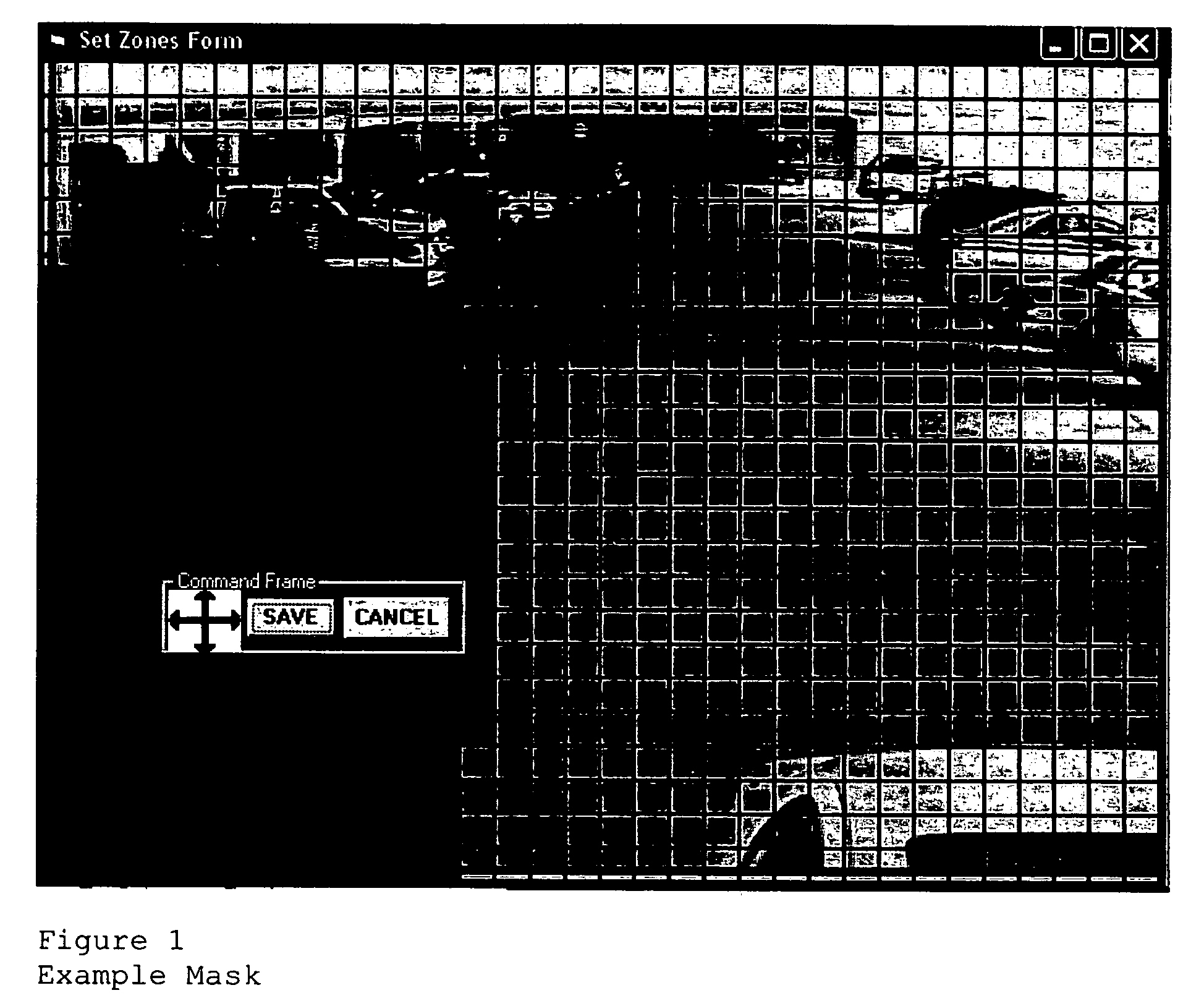

A methodology for implementing complex behavior recognition in an intelligent video system includes multiple event detection defining activity in different areas of the scene (“What”), multiple masks defining areas of a scene (“Where”), configurable time parameters (“When”), and a configurable logic inference engine that allows Boolean logic analysis of any combination of logic-defined events and masks. Events are detected in a video scene consisting of one or more camera views, termed a “virtual view”. The logic-defined event is a behavioral event connoting behavior, activities, characteristics, attributes, locations, and/or patterns of a target subject of interest. A user interface allows a system user to select behavioral events for logic definition by the Boolean equation according to a perceived advantage, need, or purpose arising from the context of system use.

Owner:CERNIUM
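
The "What / Where / When" decomposition maps naturally onto a Boolean expression over timestamped events. The following sketch hard-codes one such expression; the event names, mask names, and 60-second window are hypothetical, and a configurable engine would build the expression from user input rather than code.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Event:
    kind: str    # "What": the detected event type
    mask: str    # "Where": named region of the virtual view
    t: float     # "When": timestamp in seconds


def occurred(events: Iterable[Event], kind: str, mask: str, start: float, end: float) -> bool:
    """True if an event of this kind happened inside this mask during [start, end]."""
    return any(e.kind == kind and e.mask == mask and start <= e.t <= end for e in events)


def unbadged_entry(events, now: float, window: float = 60.0) -> bool:
    """Hypothetical rule: (person in lobby AND person at door) AND NOT badge swipe."""
    events = list(events)
    start = now - window
    return (occurred(events, "person_present", "lobby", start, now)
            and occurred(events, "person_present", "door", start, now)
            and not occurred(events, "badge_swipe", "door", start, now))


log = [Event("person_present", "lobby", 10.0), Event("person_present", "door", 25.0)]
print(unbadged_entry(log, now=30.0))  # True
```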

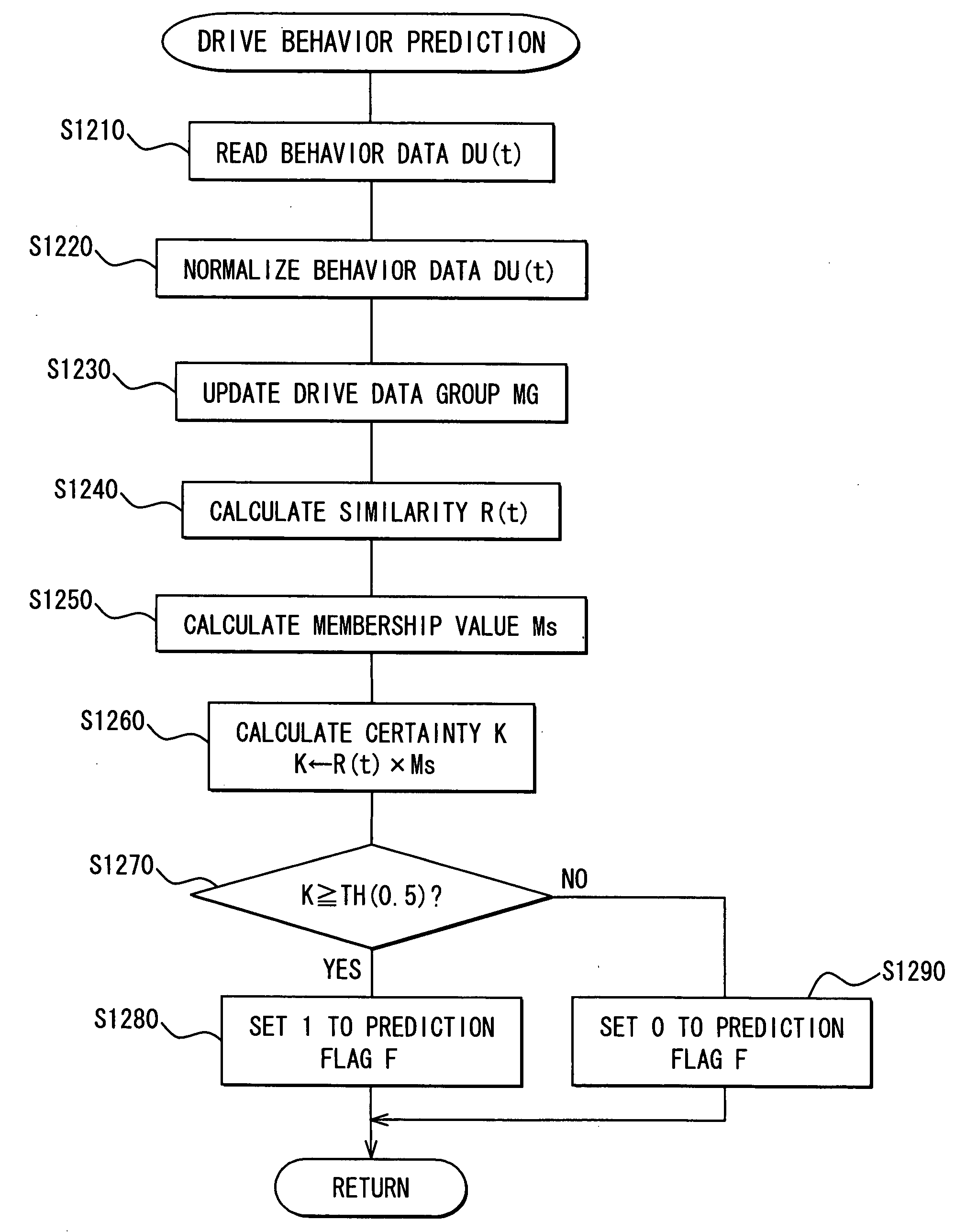

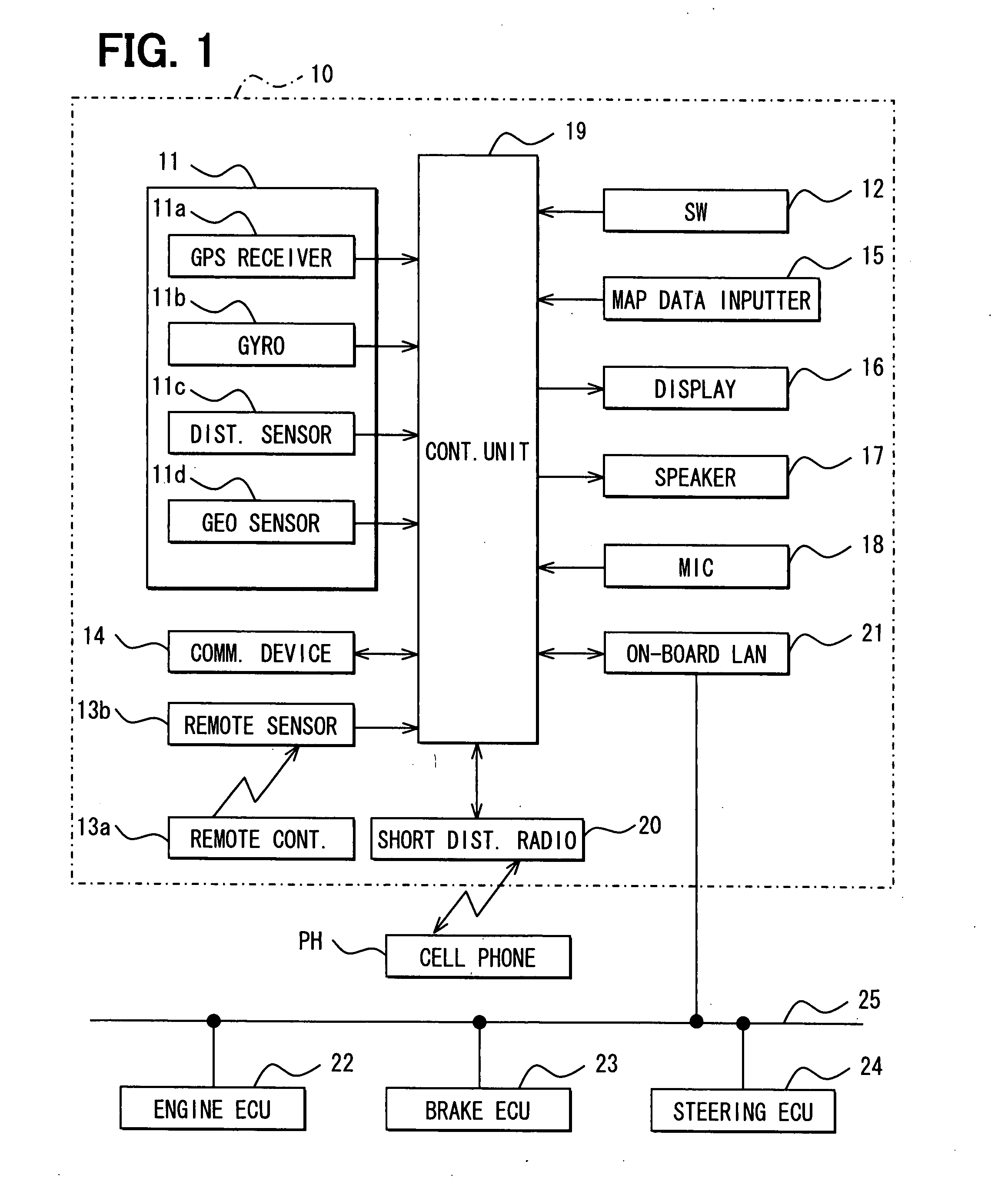

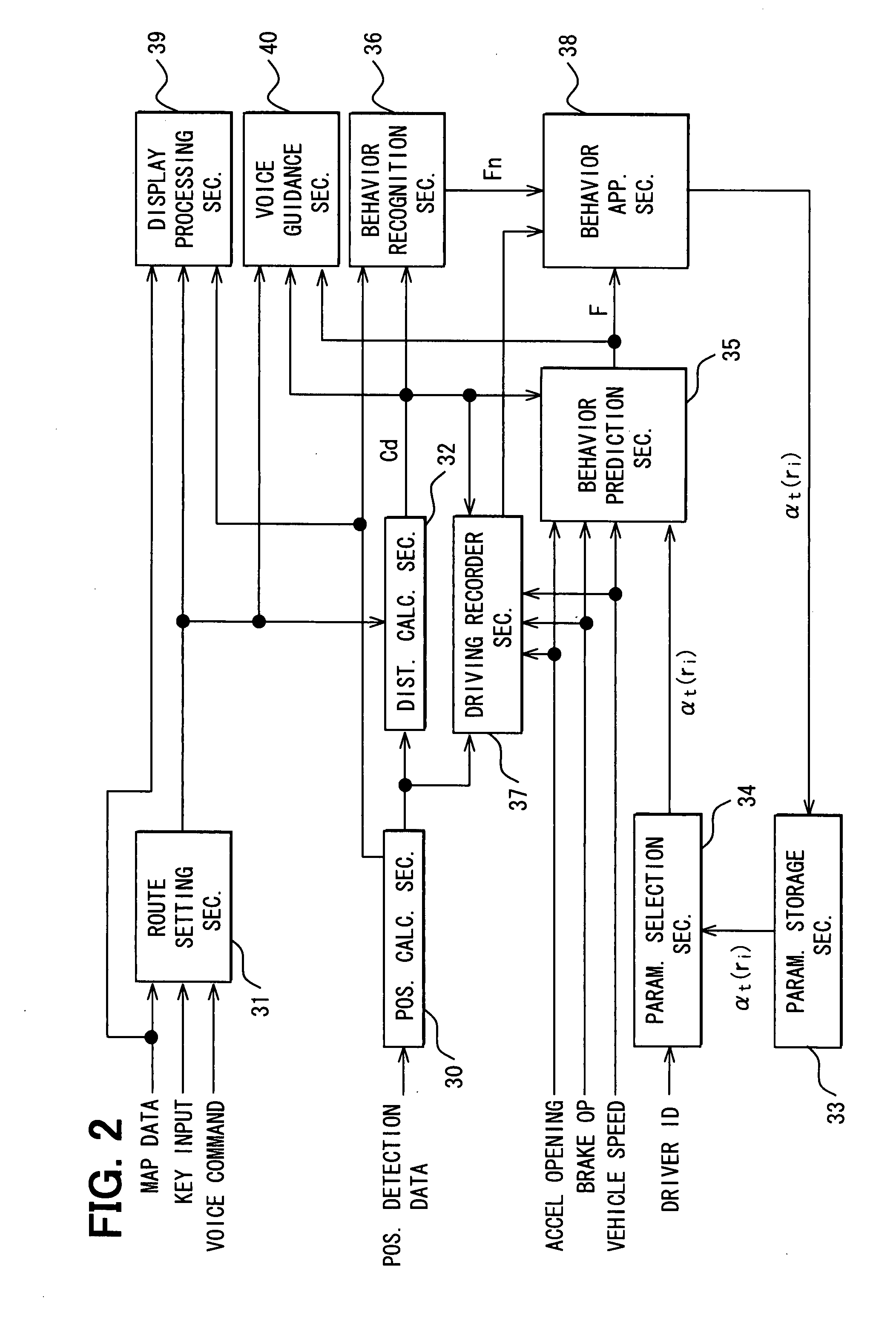

Driving behavior prediction method and apparatus

Status: Active | Publication: US20080120025A1 | Tags: Improve reliability; Improve forecast accuracy; Vehicle testing; Instruments for road network navigation; Object point; Algorithm

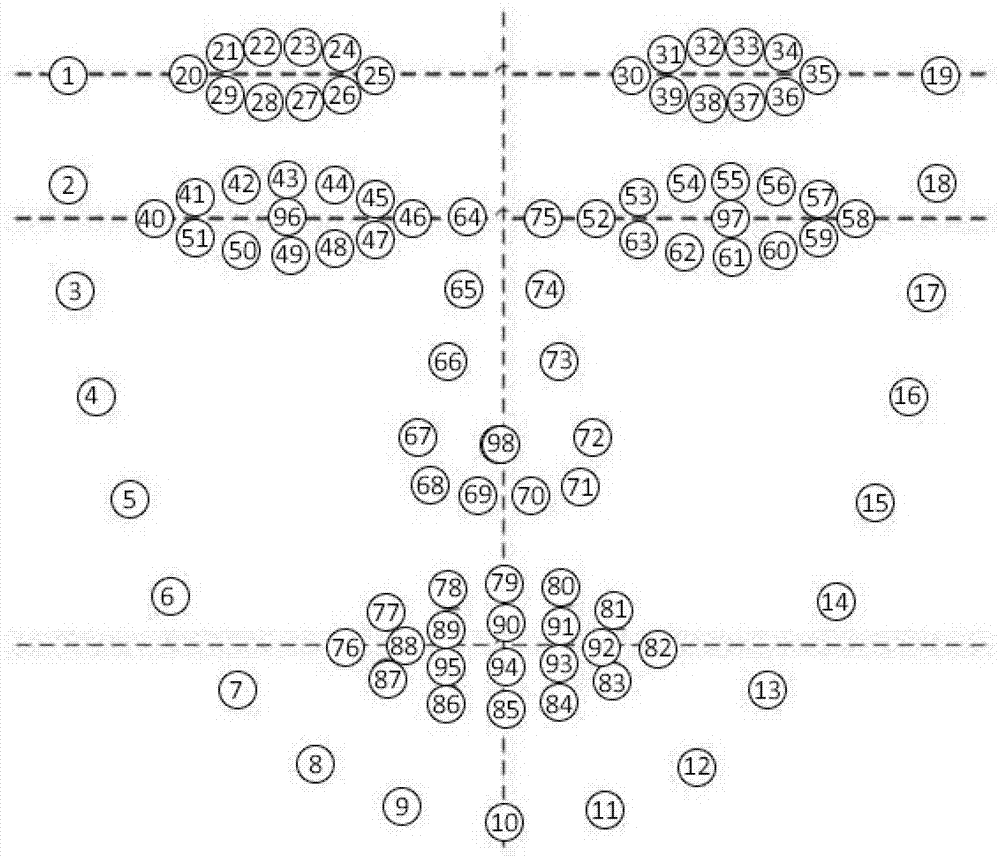

To predict driving behavior accurately, a driving behavior prediction apparatus includes: a position calculation unit that calculates the position of the subject vehicle; a route setting unit that sets a navigation route; a distance calculation unit that calculates the distance to the nearest object point; a parameter storage unit that stores a template weighting factor reflecting the driver's driving operation tendency; a driving behavior prediction unit that predicts the driver's behavior based on vehicle information and the template weighting factor; a driving behavior recognition unit that recognizes the driver's behavior at the object point; and a driving behavior learning unit that updates the template weighting factor, thereby learning the driver's driving operation tendency, when the prediction result of the driving behavior prediction unit agrees with the recognition result of the driving behavior recognition unit.

Owner:DENSO CORP
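
A minimal sketch of the learning loop, assuming each behavior has a feature template, prediction picks the template with the highest weighted similarity, and agreement between prediction and recognition increments that template's weight. The Gaussian similarity, the additive update, and the feature choice are assumptions; the abstract does not specify them.

```python
import math


def similarity(a, b):
    """Gaussian similarity between two feature vectors (assumed metric)."""
    return math.exp(-sum((x - y) ** 2 for x, y in zip(a, b)))


class DrivingBehaviorPredictor:
    def __init__(self, templates, lr=0.1):
        self.templates = templates                   # behavior -> template features
        self.weights = {k: 1.0 for k in templates}   # template weighting factors
        self.lr = lr

    def predict(self, features):
        return max(self.templates,
                   key=lambda k: self.weights[k] * similarity(features, self.templates[k]))

    def learn(self, features, recognized):
        """Strengthen a template's weight only when prediction and recognition agree."""
        predicted = self.predict(features)
        if predicted == recognized:
            self.weights[recognized] += self.lr
        return predicted


# Features: (normalized speed, normalized distance to the next object point).
p = DrivingBehaviorPredictor({"brake": (0.8, 0.2), "pass_through": (0.8, 0.9)})
p.learn((0.7, 0.25), recognized="brake")
print(round(p.weights["brake"], 2))  # 1.1
```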

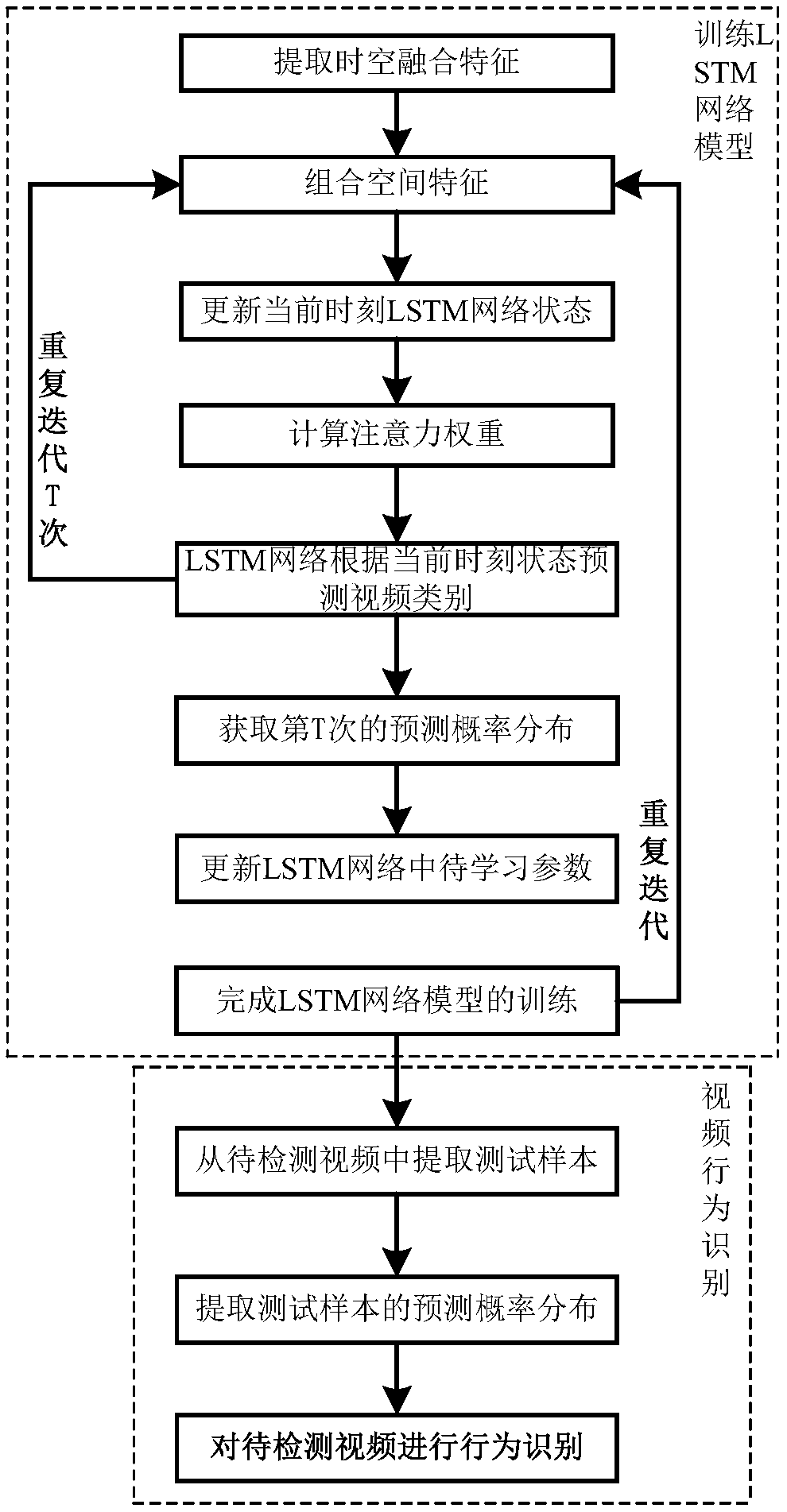

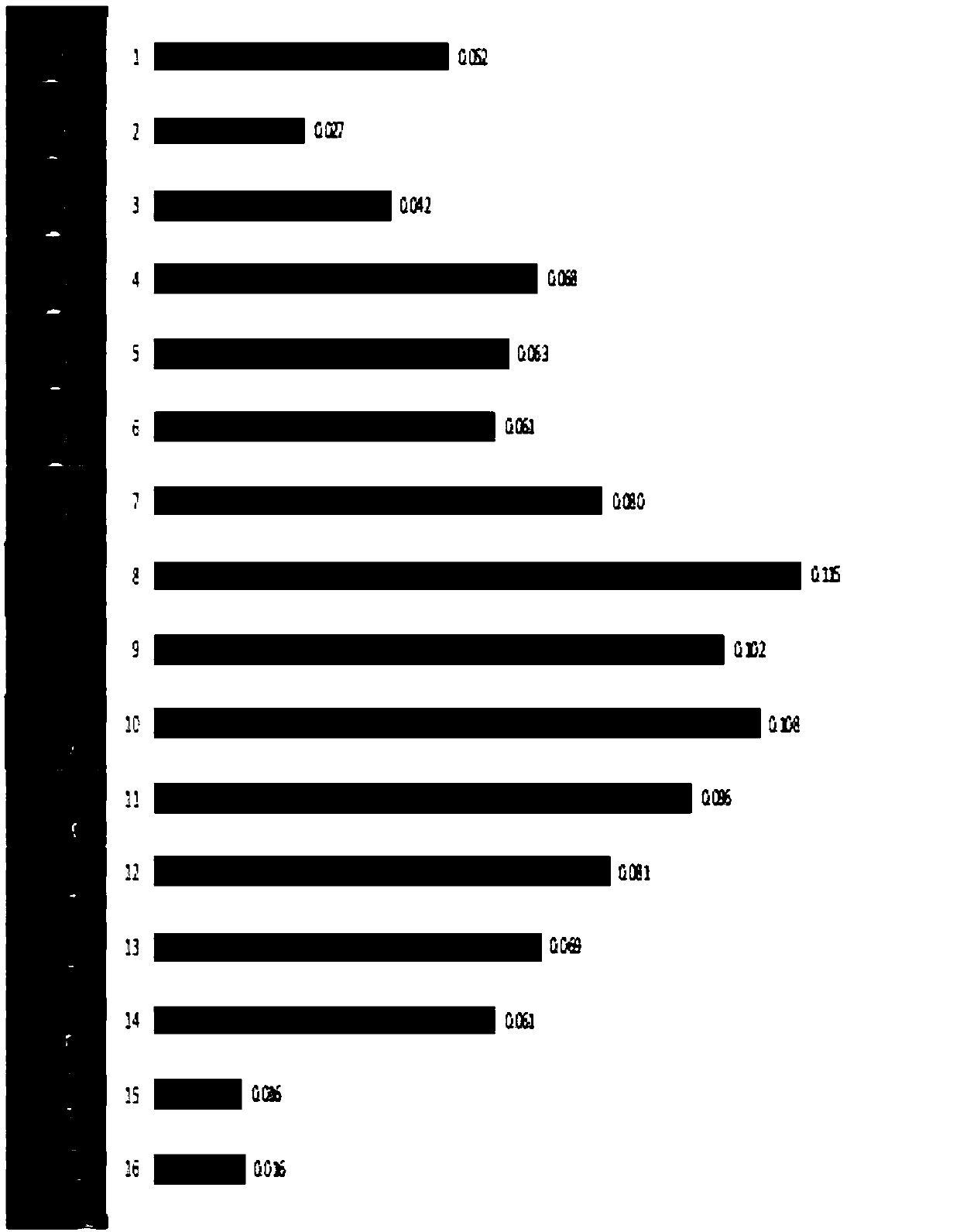

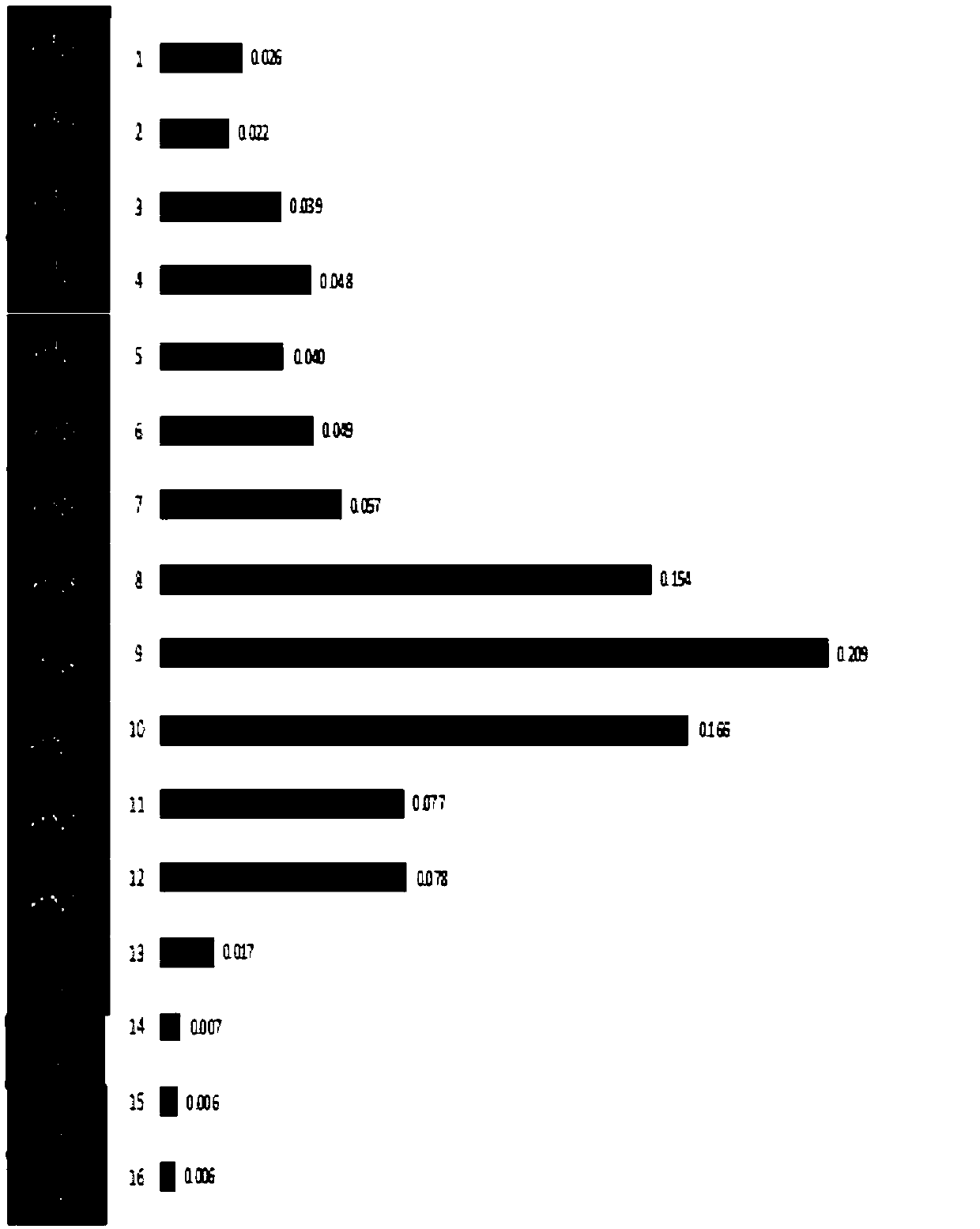

A video behavior recognition method based on spatio-temporal fusion features and attention mechanism

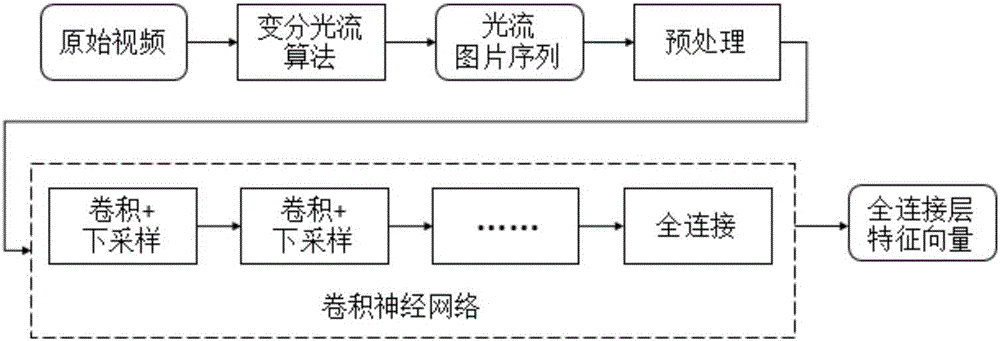

Status: Active | Publication: CN109101896A | Tags: Improve accuracy; Efficient use of; Character and pattern recognition; Neural architectures; Frame sequence; Key frame

The invention discloses a video behavior identification method based on spatio-temporal fusion features and an attention mechanism. The spatio-temporal fusion features of the input video are extracted by the Inception V3 convolutional neural network. Then, by combining these features with an attention mechanism modeled on the human visual system, the network automatically allocates weights according to the video content, extracts the key frames in the video frame sequence, and identifies the behavior from the video as a whole. This eliminates the interference of redundant information with identification and improves the accuracy of video behavior identification.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
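
The core idea, letting the network weight frames by content and then pooling, can be shown with a dot-product attention sketch. Here the query vector stands in for learned attention parameters and the frame features stand in for Inception V3 outputs; both are assumptions, and the abstract does not give the patent's exact attention formulation.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_feats, query):
    """Weight per-frame feature vectors by softmax(feature . query) and sum them."""
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in frame_feats]
    weights = softmax(scores)
    dim = len(frame_feats[0])
    pooled = [sum(w * feat[d] for w, feat in zip(weights, frame_feats)) for d in range(dim)]
    return pooled, weights


feats = [[1.0, 0.0], [0.0, 1.0], [5.0, 0.0]]  # e.g. per-frame CNN features
pooled, weights = attention_pool(feats, query=[1.0, 0.0])
print(max(range(len(weights)), key=weights.__getitem__))  # 2 (the "key frame")
```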

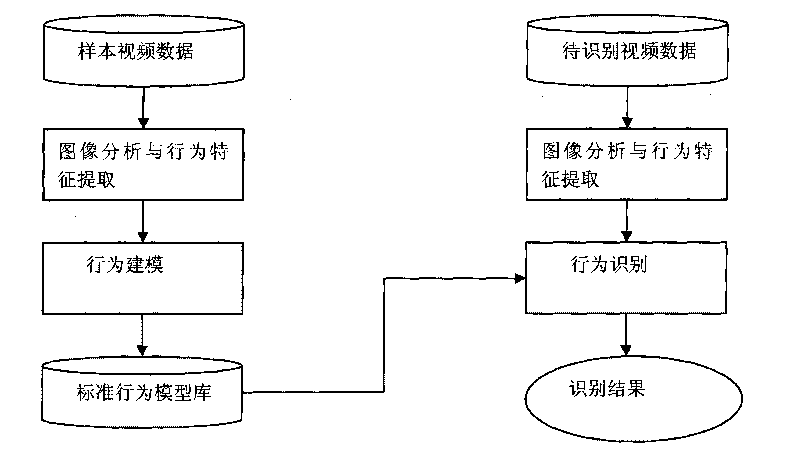

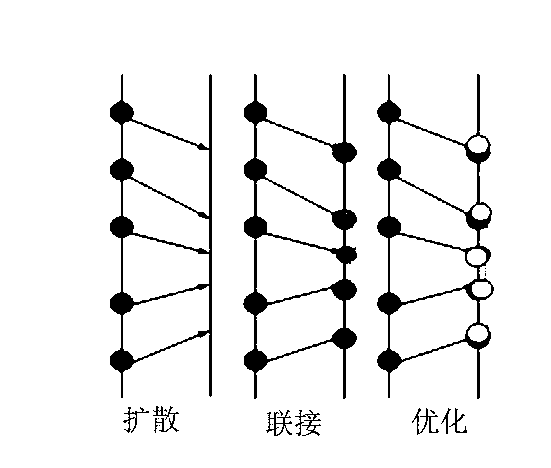

Abnormal behavior identification method for moving humans based on template matching

Status: Inactive | Publication: CN101719216A | Tags: Good effect; The effect is accurate; Character and pattern recognition; Visual technology; Anomalous behavior

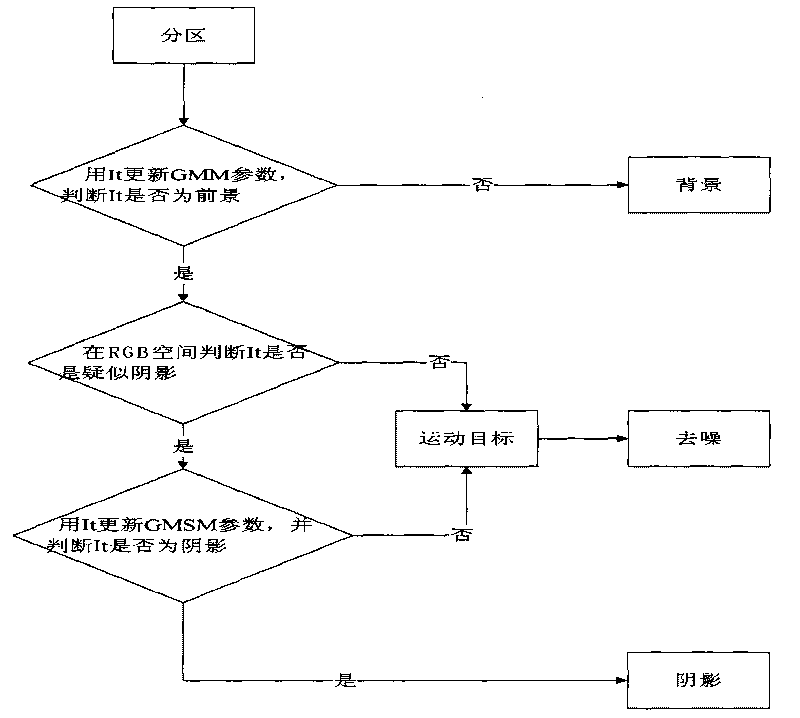

The invention relates to an abnormal behavior identification method for moving humans based on template matching, which mainly comprises the steps of video image acquisition and behavior feature extraction. The method is a pattern recognition technique based on statistical learning from samples. Human movement is analyzed and interpreted using computer vision, behavior identification is carried out directly from geometric calculations on the moving region, and abnormal events are recorded and trigger alarms. Gaussian filtering and neighborhood denoising are combined for denoising, which improves the autonomous analysis and intelligent monitoring capability of an intelligent monitoring system, achieves higher identification accuracy for abnormal behaviors, effectively removes complex backgrounds and noise from the acquired images, and improves the efficiency and robustness of the detection algorithm. The invention offers simple modeling, a simple algorithm, and accurate detection; it can be widely applied in settings such as banks and museums and helps improve the level of safety monitoring in public places.

Owner:XIDIAN UNIV
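
The denoising and geometric steps can be approximated with a short sketch: frame differencing, a 3x3 Gaussian filter, thresholding, a neighborhood filter that removes isolated foreground pixels, and finally a bounding-box aspect ratio as one example of a geometric measurement on the moving region. The kernel, threshold, and neighbor count are illustrative assumptions; the abstract gives neither the patent's parameters nor its abnormal-behavior templates.

```python
KERNEL = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # 3x3 binomial approximation of a Gaussian


def gaussian3(img):
    """3x3 Gaussian smoothing; weights are renormalized at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = wsum = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        k = KERNEL[dr + 1][dc + 1]
                        acc += k * img[rr][cc]
                        wsum += k
            out[r][c] = acc / wsum
    return out


def neighborhood_denoise(mask, min_neighbors=2):
    """Drop foreground pixels with fewer than min_neighbors foreground 8-neighbors."""
    h, w = len(mask), len(mask[0])

    def on_neighbors(r, c):
        return sum(mask[rr][cc]
                   for rr in range(max(r - 1, 0), min(r + 2, h))
                   for cc in range(max(c - 1, 0), min(c + 2, w))
                   if (rr, cc) != (r, c))

    return [[bool(mask[r][c]) and on_neighbors(r, c) >= min_neighbors for c in range(w)]
            for r in range(h)]


def motion_mask(prev, cur, threshold=40):
    """Frame difference -> Gaussian smoothing -> threshold -> neighborhood denoising."""
    diff = [[abs(a - b) for a, b in zip(r1, r2)] for r1, r2 in zip(prev, cur)]
    smooth = gaussian3(diff)
    return neighborhood_denoise([[v > threshold for v in row] for row in smooth])


def height_to_width(mask):
    """Bounding-box aspect ratio of the moving region (a simple geometric cue)."""
    pts = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not pts:
        return None
    rows = [r for r, _ in pts]
    cols = [c for _, c in pts]
    return (max(rows) - min(rows) + 1) / (max(cols) - min(cols) + 1)
```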

Crime monitoring method based on face recognition technology and behavior and sound recognition

Status: Active | Publication: CN103246869A | Tags: Lock in time; Inhibit deterioration; Character and pattern recognition; Speech recognition; Sound recognition; GPS positioning

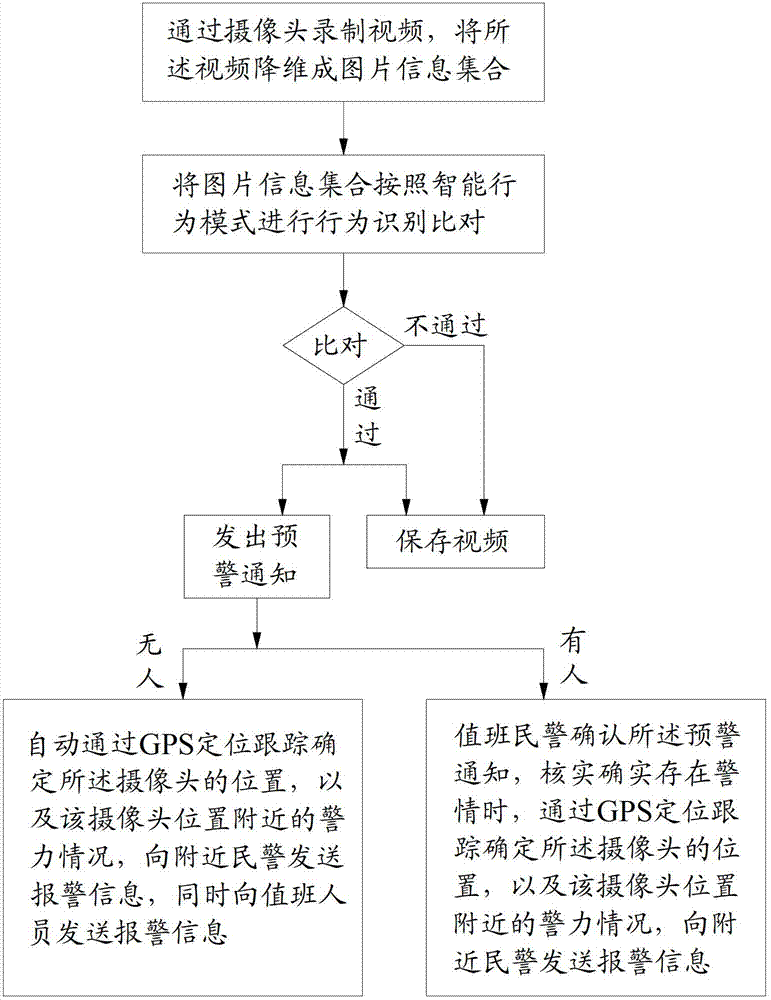

The invention provides a crime monitoring method based on face recognition technology and behavior and sound recognition, comprising the following steps: Step 1, recording video through a camera and reducing the dimensionality of the video to form a set of picture messages; Step 2, comparing the picture message set against an intelligent behavior pattern, and issuing an early warning and storing the video if a match is found; and Step 3, having the police officer on duty verify the crime situation, locate the camera through GPS positioning and tracking, confirm the police strength nearby, and send the crime situation to nearby officers; if no officer is on duty, the system automatically locates the camera through GPS positioning and tracking, confirms the police strength nearby, and sends the crime situation to nearby officers and staff on duty. According to the invention, different intelligent behavior patterns are set according to the monitoring requirements of different situations, enabling targeted monitoring and early warning in advance, which prevents cases from worsening further, shortens the time needed to solve a criminal case, and improves the detection rate.

Owner:FUJIAN YIRONG INFORMATION TECH

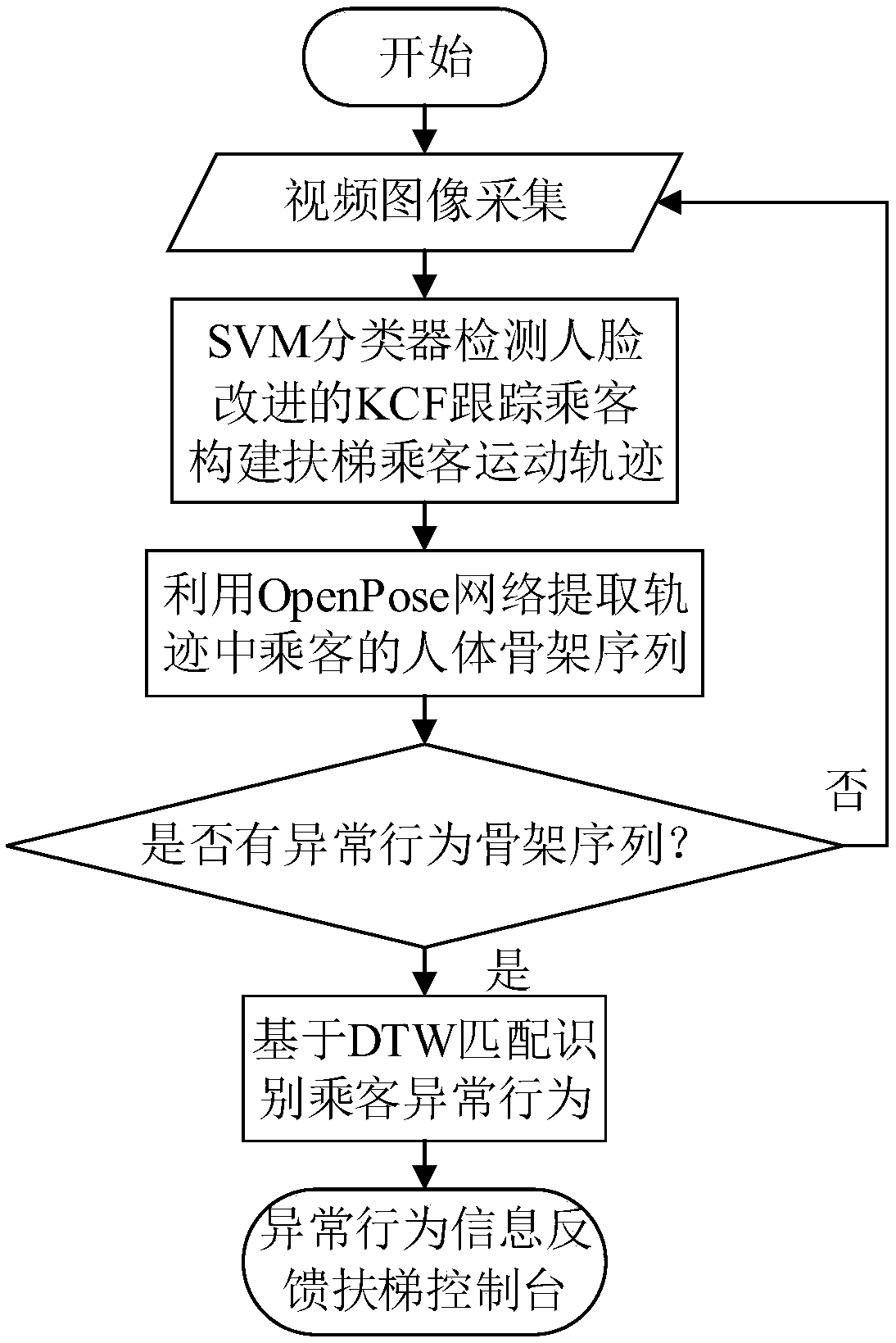

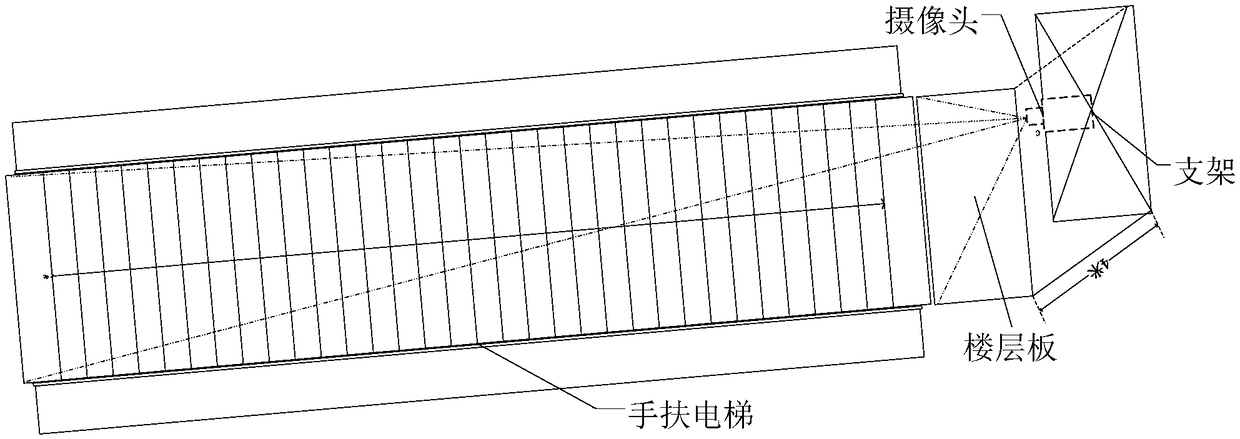

Passenger abnormal behavior recognition method based on human skeleton sequence

Status: Active | Publication: CN109460702A | Tags: Accurate and stable build; Accurate and stable trajectory; Image enhancement; Image analysis; Template matching; Anomalous behavior

The invention discloses a passenger abnormal behavior identification method based on a human skeleton sequence, comprising the following steps: 1) capturing surveillance video of an escalator area with a camera; 2) detecting passenger faces with an SVM and tracking them with KCF to obtain each passenger's motion trajectory on the escalator; 3) extracting human skeletons from the image using the OpenPose deep learning network; 4) matching each human skeleton to the corresponding passenger trajectory to construct that passenger's human skeleton sequence; 5) detecting abnormal behavior skeleton sequences within the passenger's skeleton sequence through template matching; 6) using DTW to match them against various abnormal behavior skeleton sequence templates to identify the abnormal behavior. The invention can accurately identify, in real time, multiple abnormal passenger behaviors on the escalator based on the human skeleton sequence, control the operation of the escalator according to the type of abnormal behavior, and avoid safety accidents.

Owner:SOUTH CHINA UNIV OF TECH +1
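
Step 6 relies on dynamic time warping (DTW), which aligns two sequences that may differ in speed. A minimal sketch, assuming each frame of a skeleton sequence has already been flattened into a feature tuple (for example, normalized joint coordinates); the one-dimensional templates in the usage lines are toy data, not real abnormal-behavior templates.

```python
import math


def dtw(a, b):
    """Classic O(n*m) dynamic time warping distance between two sequences of vectors."""
    n, m = len(a), len(b)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])  # Euclidean distance between frames
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def classify(sequence, templates):
    """templates: label -> template sequence. Returns (best label, its DTW distance)."""
    return min(((label, dtw(sequence, t)) for label, t in templates.items()),
               key=lambda pair: pair[1])


sequence = [(0.0,), (0.0,), (1.0,), (1.0,), (2.0,)]
templates = {"fall": [(0.0,), (1.0,), (2.0,)], "wave": [(2.0,), (0.0,), (2.0,)]}
print(classify(sequence, templates))  # ('fall', 0.0)
```

A practical system would also need a distance threshold so that sequences far from every template are treated as normal.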

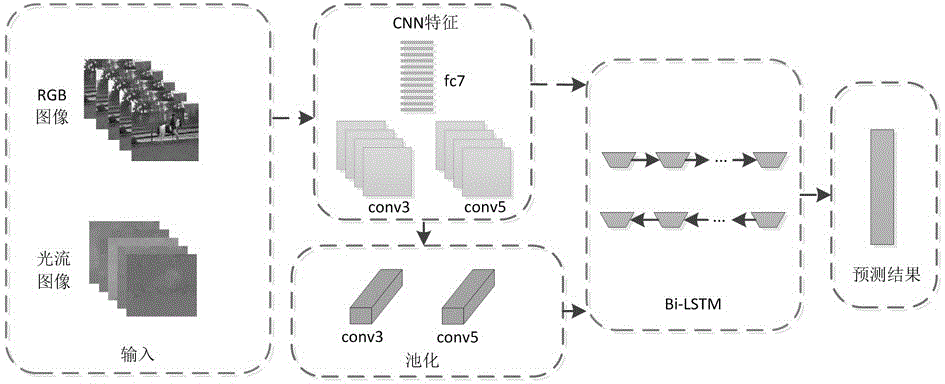

Bidirectional long short-term memory unit-based behavior identification method for video

Status: Inactive | Publication: CN106845351A | Tags: Improve accuracy; Guaranteed accuracy; Character and pattern recognition; Neural architectures; Time domain; Temporal information

Owner:SUZHOU UNIV

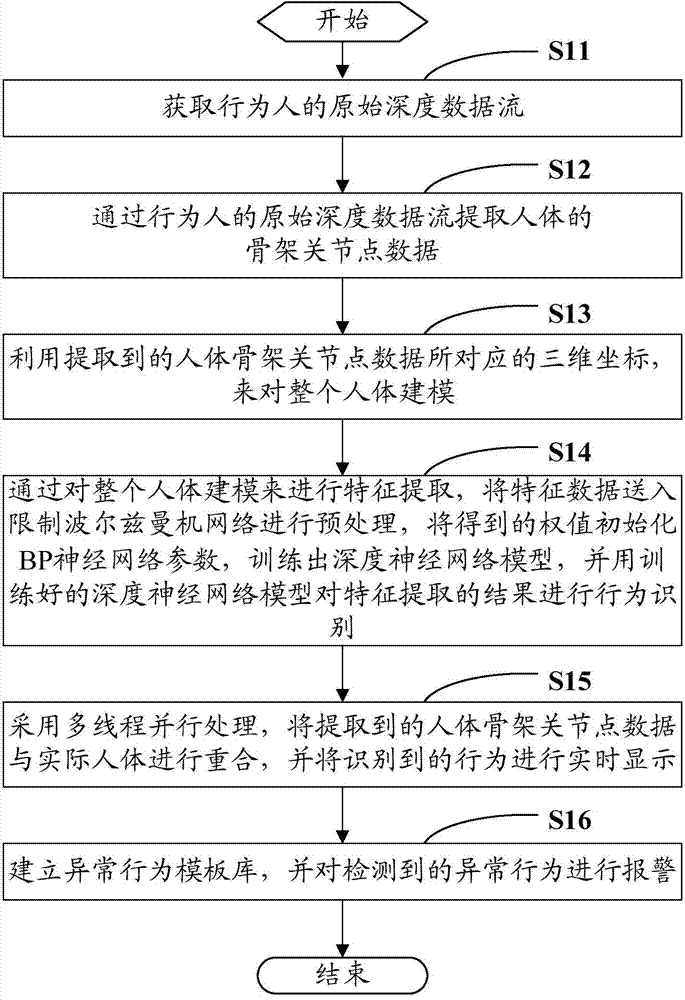

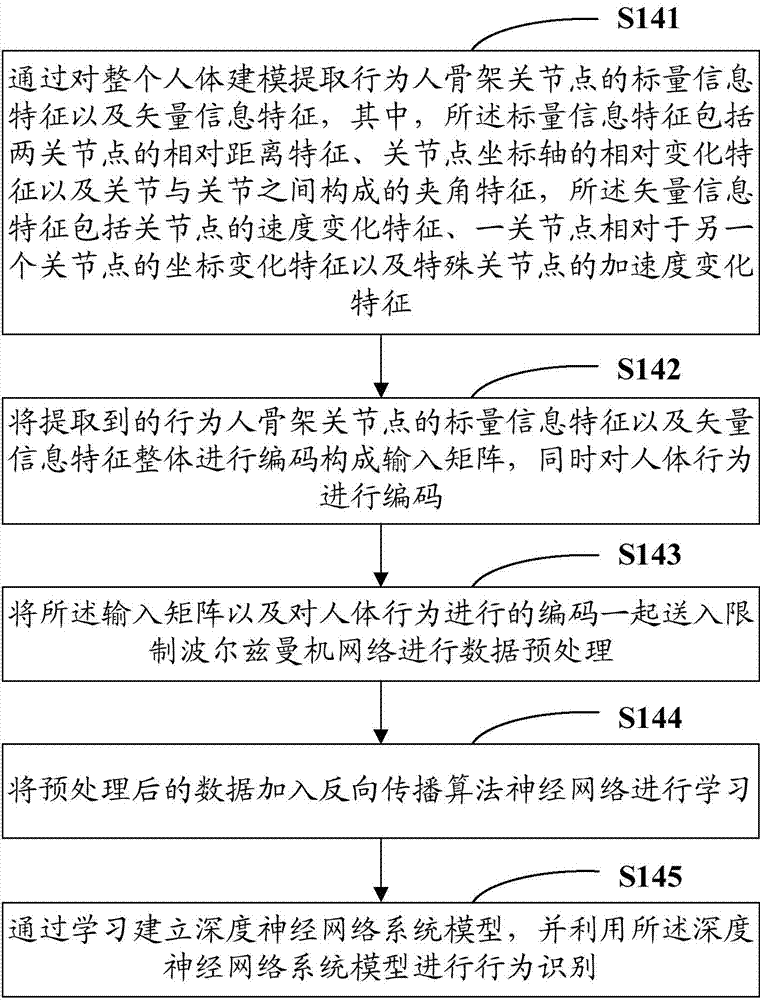

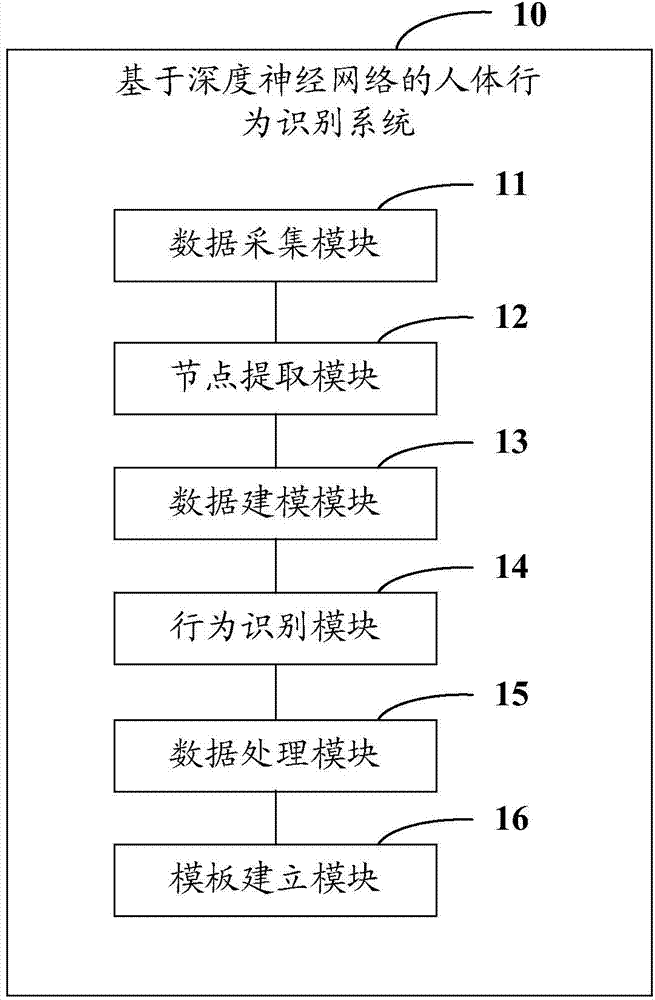

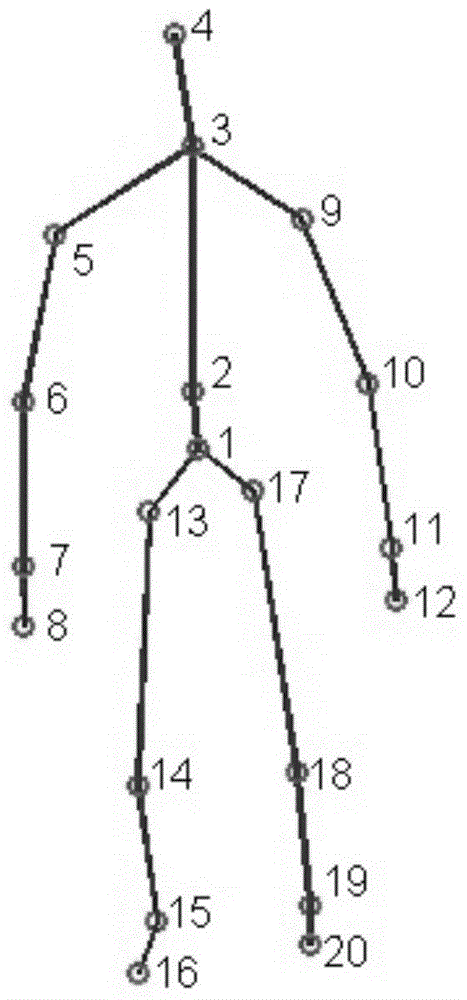

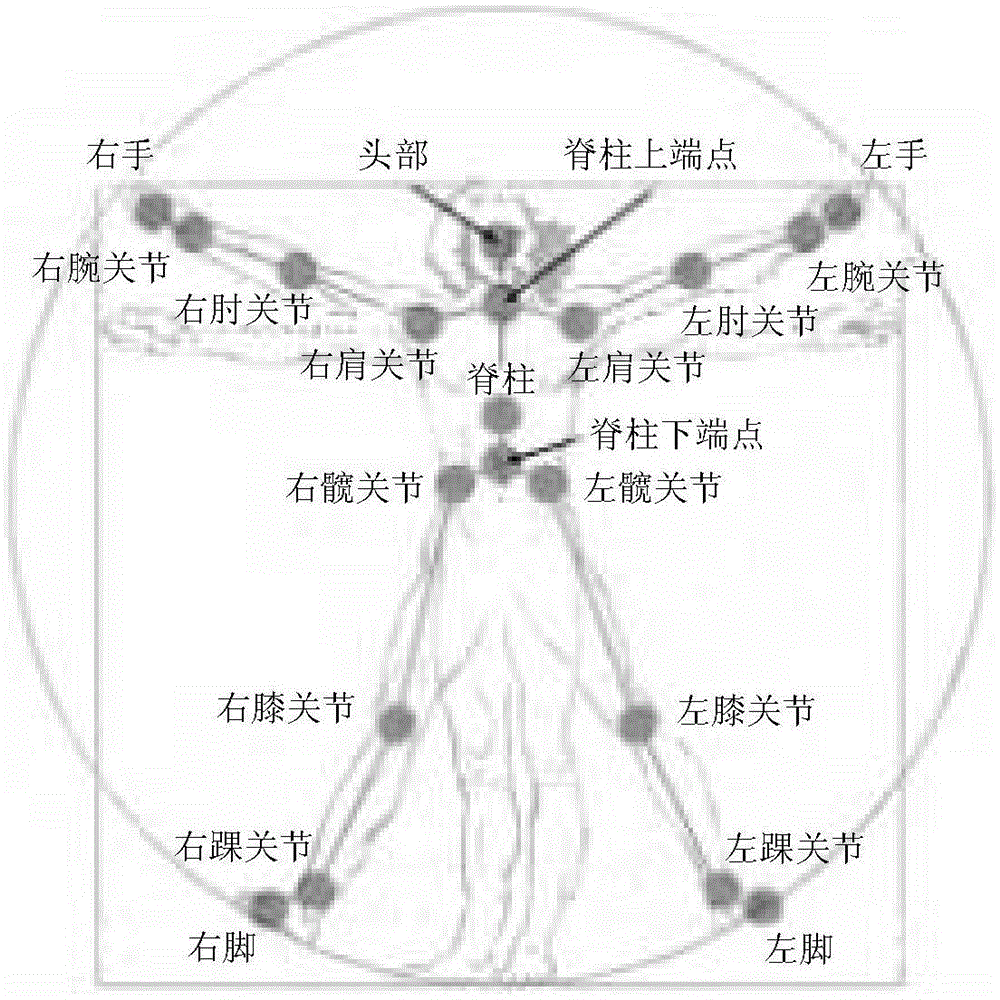

Human behavior recognition method and human behavior recognition system based on deep neural network

Status: Inactive | Publication: CN104850846A | Tags: Privacy protection; Improve accuracy; Character and pattern recognition; Neural learning methods; Human behavior; Human body

The invention provides a human behavior recognition method based on a deep neural network, comprising the following steps: acquiring an actor's original depth data stream; extracting human skeleton joint data from that stream; modeling the entire human body with the three-dimensional coordinates of the extracted skeleton joints; extracting features from the whole-body model, sending the feature data to a restricted Boltzmann machine network for preprocessing, using the resulting weights to initialize the parameters of a BP neural network to train a deep neural network model, and identifying the behavior from the extracted features; overlaying the extracted skeleton joint data on the actual human body through multi-threaded parallel processing and displaying the identified behavior in real time; and establishing an abnormal behavior template library and raising an alarm when an abnormal behavior is detected. Changes in human behavior can be detected in real time, and an alarm can be raised for abnormal behaviors of a human body (such as a fall).

Owner:SHENZHEN UNIV

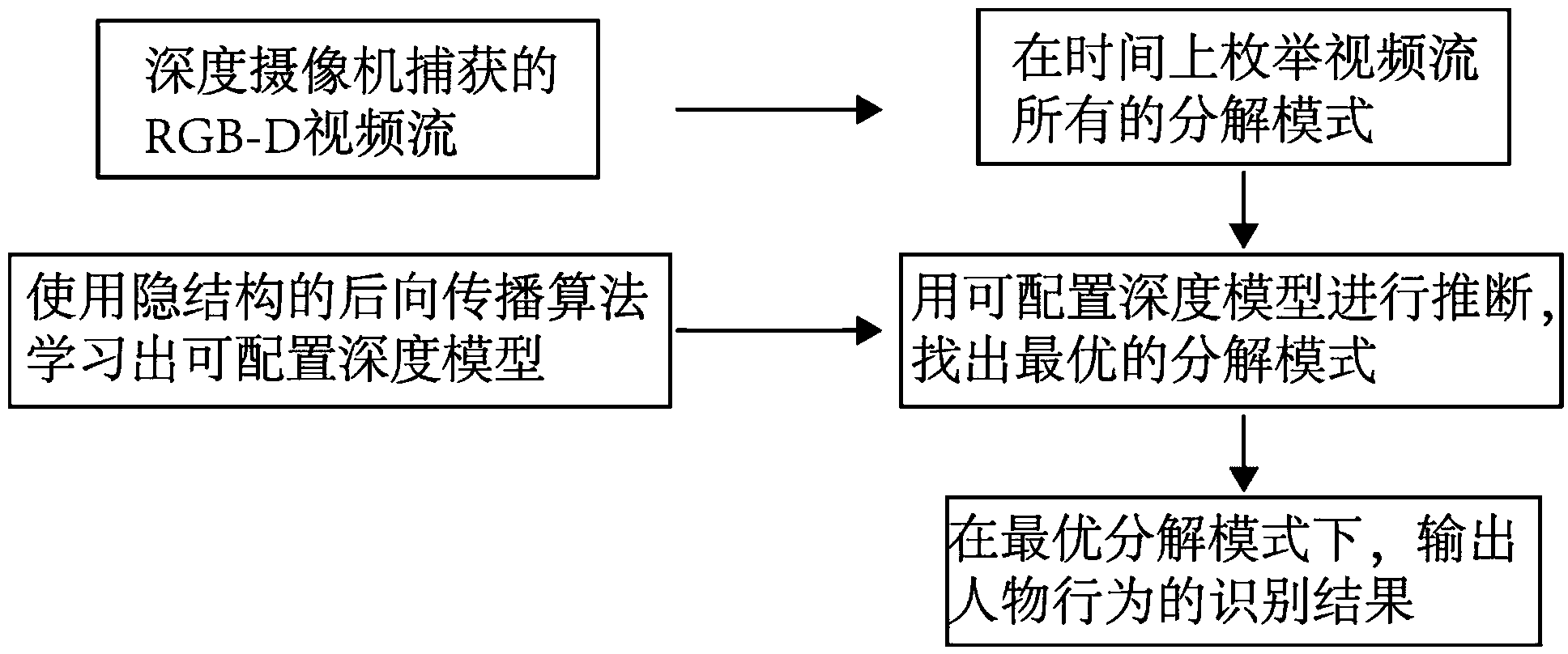

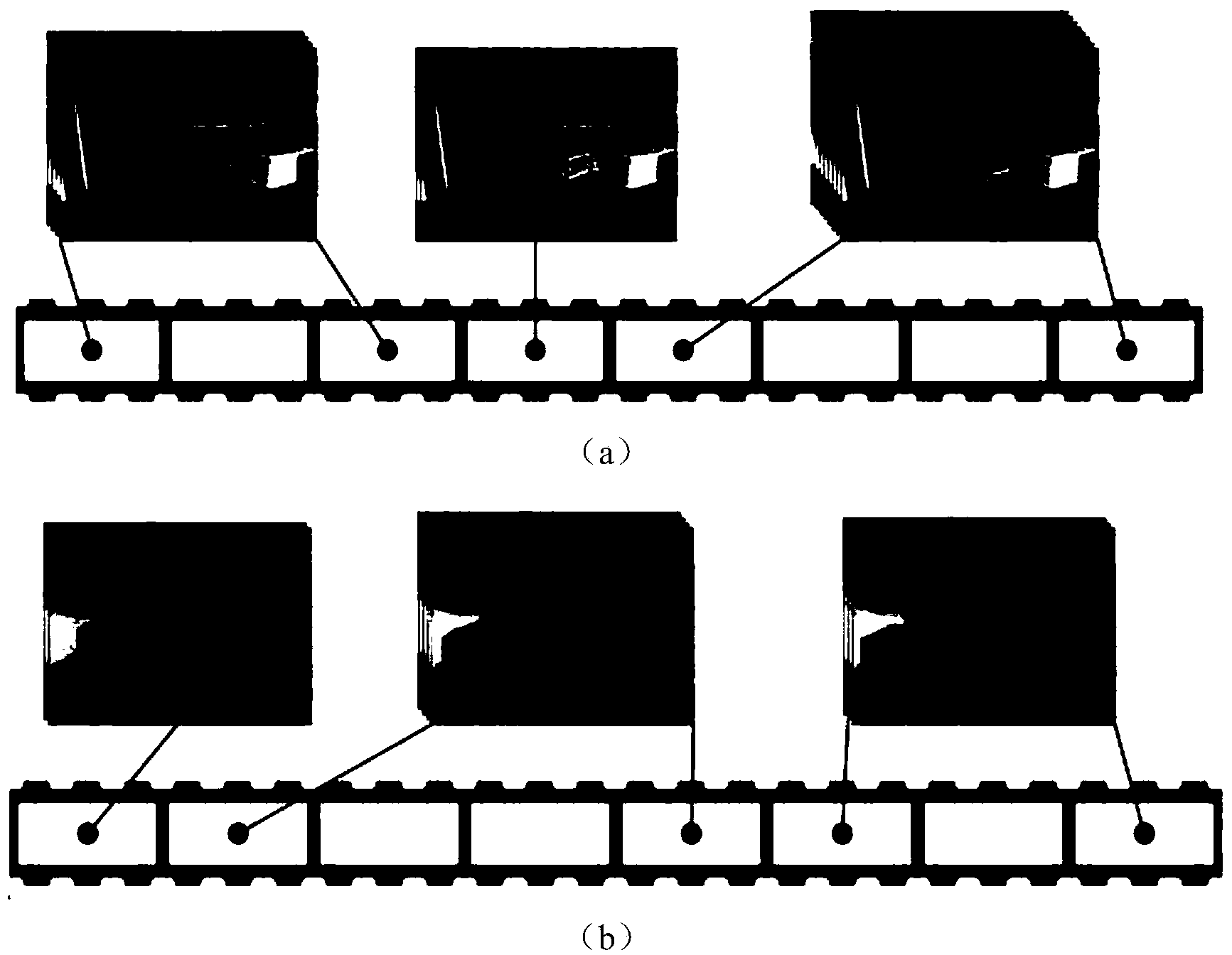

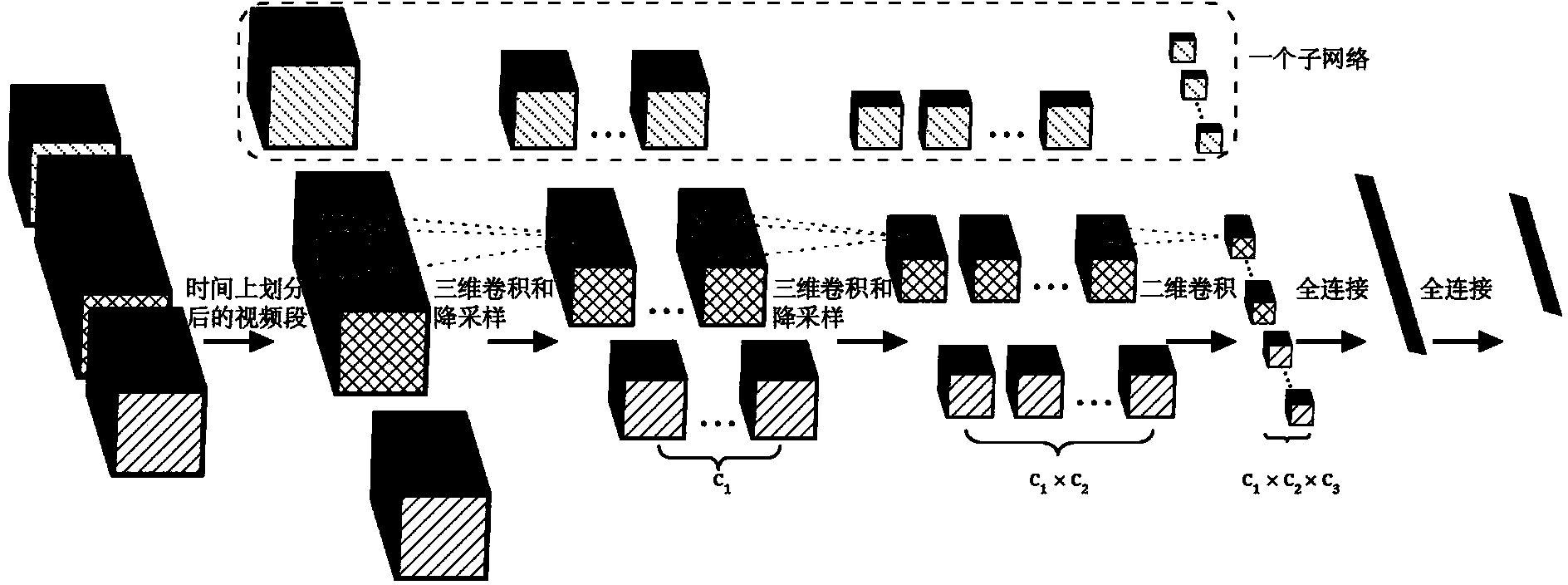

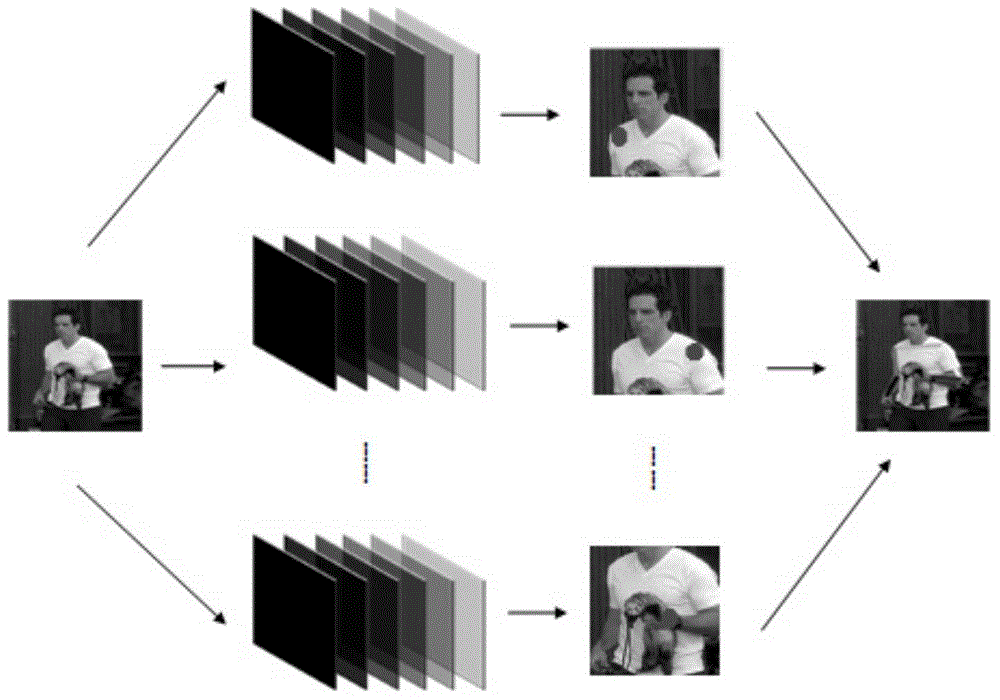

Configurable convolutional neural network based RGB-D (red, green, blue plus depth) human behavior identification method

Status: Active | Publication: CN104217214A | Tags: Improve accuracy; Automatic extraction of spatio-temporal features; Biological neural network models; Character and pattern recognition; Time domain; Algorithm

The invention discloses an RGB-D (red, green, blue plus depth) human behavior identification method based on a configurable convolutional neural network. The method constructs a configurable deep convolutional neural network with a dynamically adjustable structure. It can directly process RGB-D video data and dynamically adjust the network structure as the human behavior changes over time, thereby automatically and effectively extracting the spatio-temporal features of complex human behaviors and substantially improving the accuracy of human behavior identification.

Owner:SYSU CMU SHUNDE INT JOINT RES INST +1

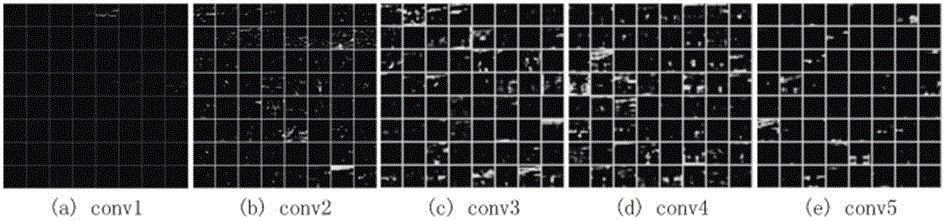

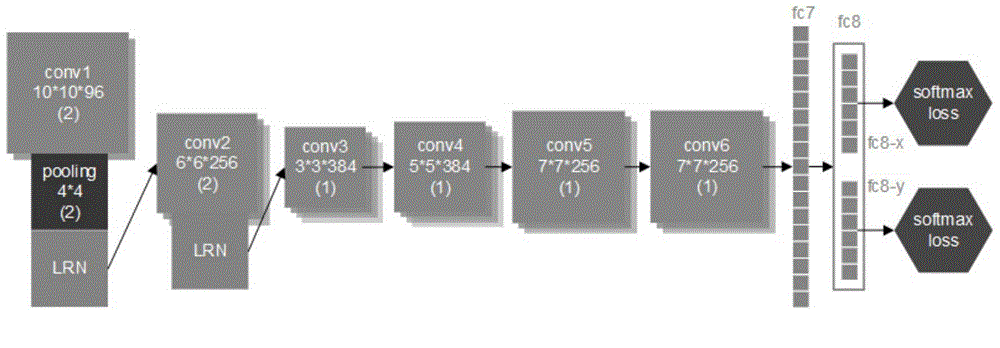

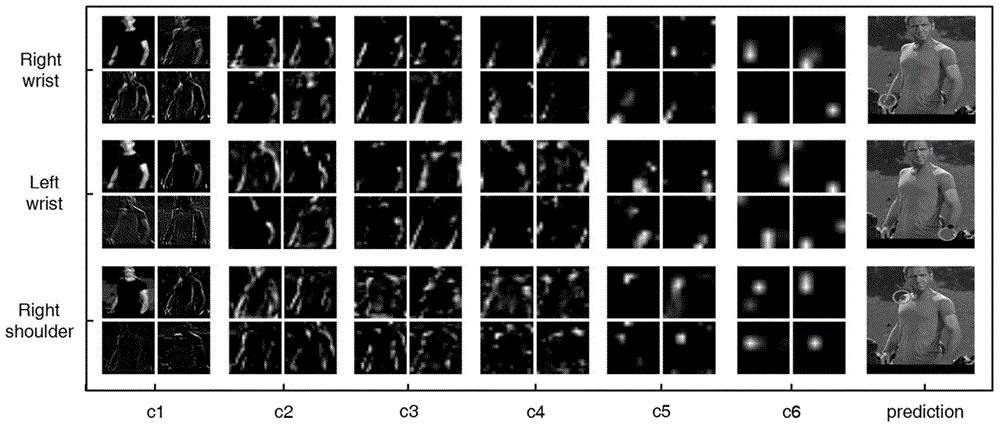

Human body pose identification method based on deep convolutional neural network

Status: Inactive | Publication: CN105069413A | Tags: Overcome limitations; Easy to train; Biometric pattern recognition; Human body; Information processing

The invention discloses a human body pose identification method based on a deep convolutional neural network. It belongs to the technical field of pattern recognition and information processing, relates to behavior identification tasks in computer vision, and in particular relates to the research and implementation of a human body pose estimation system based on a deep convolutional neural network. The neural network has independent output layers and independent loss functions designed for locating human body joints. ILPN consists of an input layer, seven hidden layers, and two independent output layers. The first through sixth hidden layers are convolutional layers used for feature extraction. The seventh hidden layer (fc7) is a fully connected layer. The output layer consists of two independent parts, fc8-x and fc8-y: fc8-x predicts the x coordinate of a joint, and fc8-y predicts its y coordinate. During model training, each output has its own softmax loss function to guide the learning of the model. The method offers simple and fast training, a small amount of computation, and high accuracy.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
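
Because each head is trained with a softmax loss, one plausible reading is that fc8-x and fc8-y each output scores over discretized coordinate bins (for example, one bin per pixel column or row). Under that assumption, the training objective and decoding can be sketched as follows; the function names are hypothetical and the convolutional layers are omitted.

```python
import math


def log_softmax(logits):
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def joint_coordinate_loss(x_logits, y_logits, x_bin, y_bin):
    """Sum of two independent softmax cross-entropy losses, one per output head."""
    return -log_softmax(x_logits)[x_bin] - log_softmax(y_logits)[y_bin]


def decode(x_logits, y_logits):
    """Predicted (x, y) bin: the argmax of each head."""
    def argmax(v):
        return max(range(len(v)), key=v.__getitem__)
    return argmax(x_logits), argmax(y_logits)


print(decode([0.1, 3.0, 0.5], [2.0, 0.0, 0.2]))  # (1, 0)
```

Keeping the two heads separate means each coordinate is a smaller classification problem than predicting every (x, y) cell jointly.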

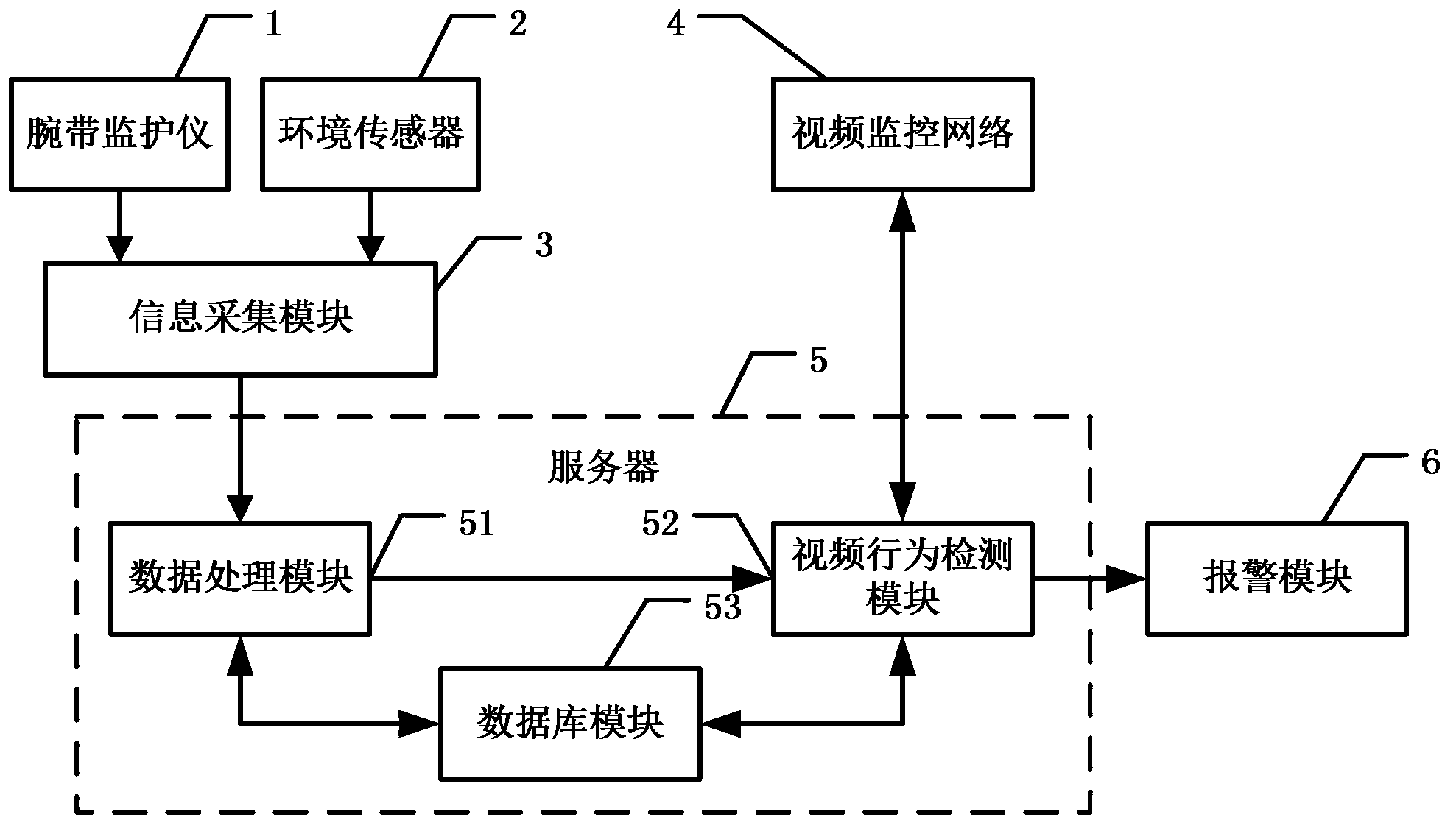

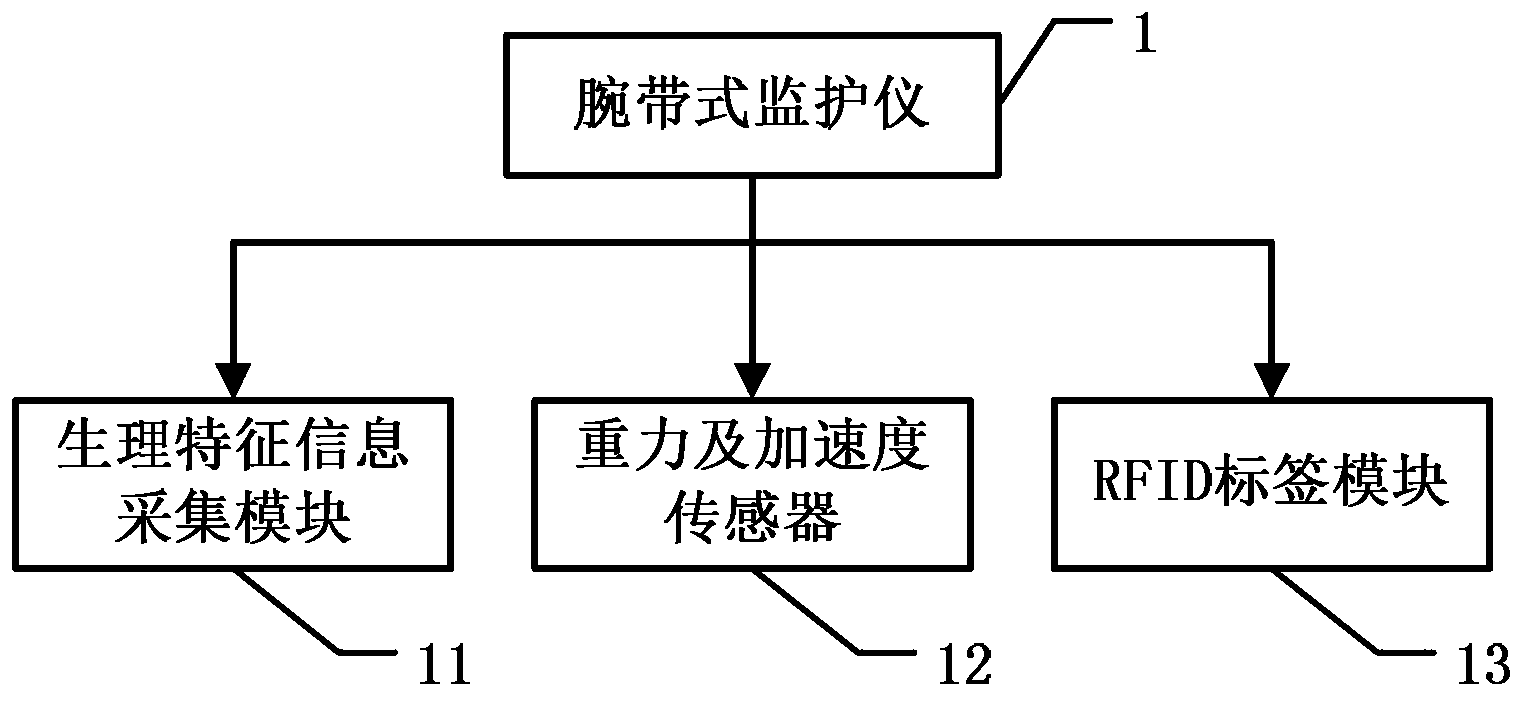

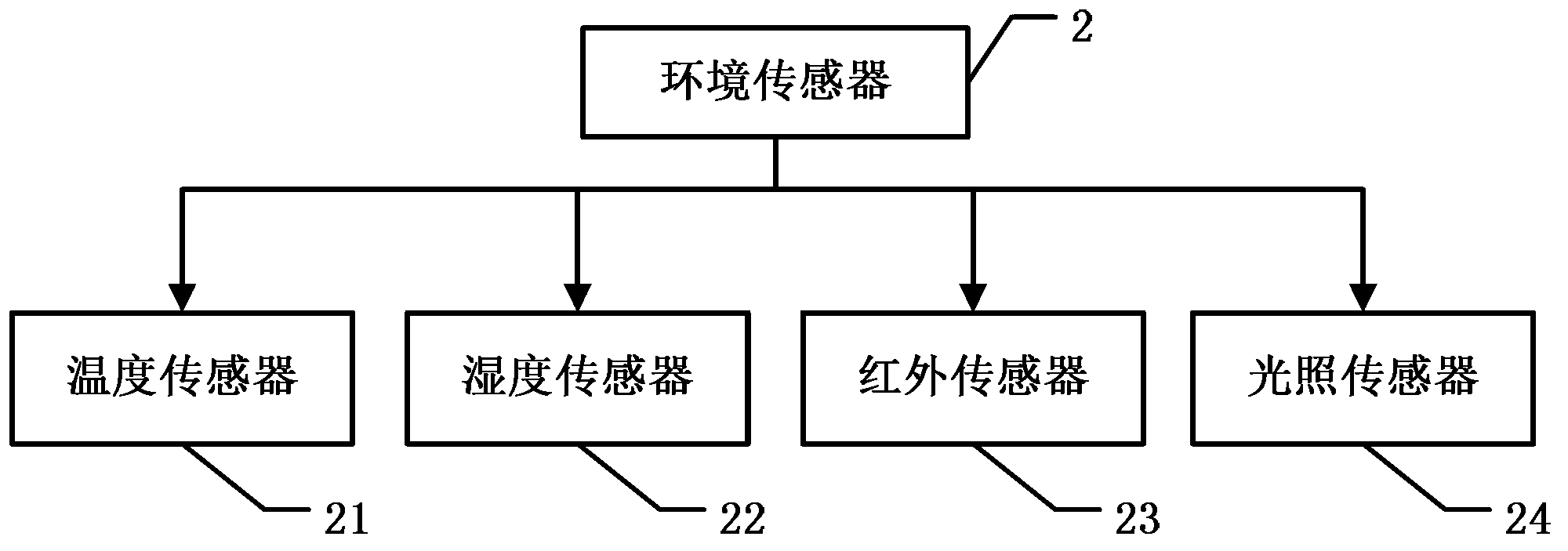

Gerocamium intelligent nursing system and method based on Internet of Things technology

Status: Inactive | Publication: CN103325080A | Tags: Avoid harm; Deep hurt; Data processing applications; Alarms; Video monitoring; The Internet

The invention discloses a gerocamium intelligent nursing system and method based on Internet of Things technology. The system and method combine Internet of Things technology with behavior identification technology: a patient monitor and an environment sensor monitor the physiological, posture, and environmental information of elderly residents, while each resident's position is computed from the RFID tag information carried by the patient monitor. When an abnormal condition occurs, a video monitoring network retrieves the image sequence of the surveillance video for that resident according to their position, behavior identification is performed on the image sequence, and whether a dangerous situation has occurred is judged from the resident's behavior. With this system and method, a dual judgment is made when an elderly person is in danger, which improves the accuracy of danger warnings; once set up, the system runs automatically, reducing the workload of nursing staff and improving the quality of care.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

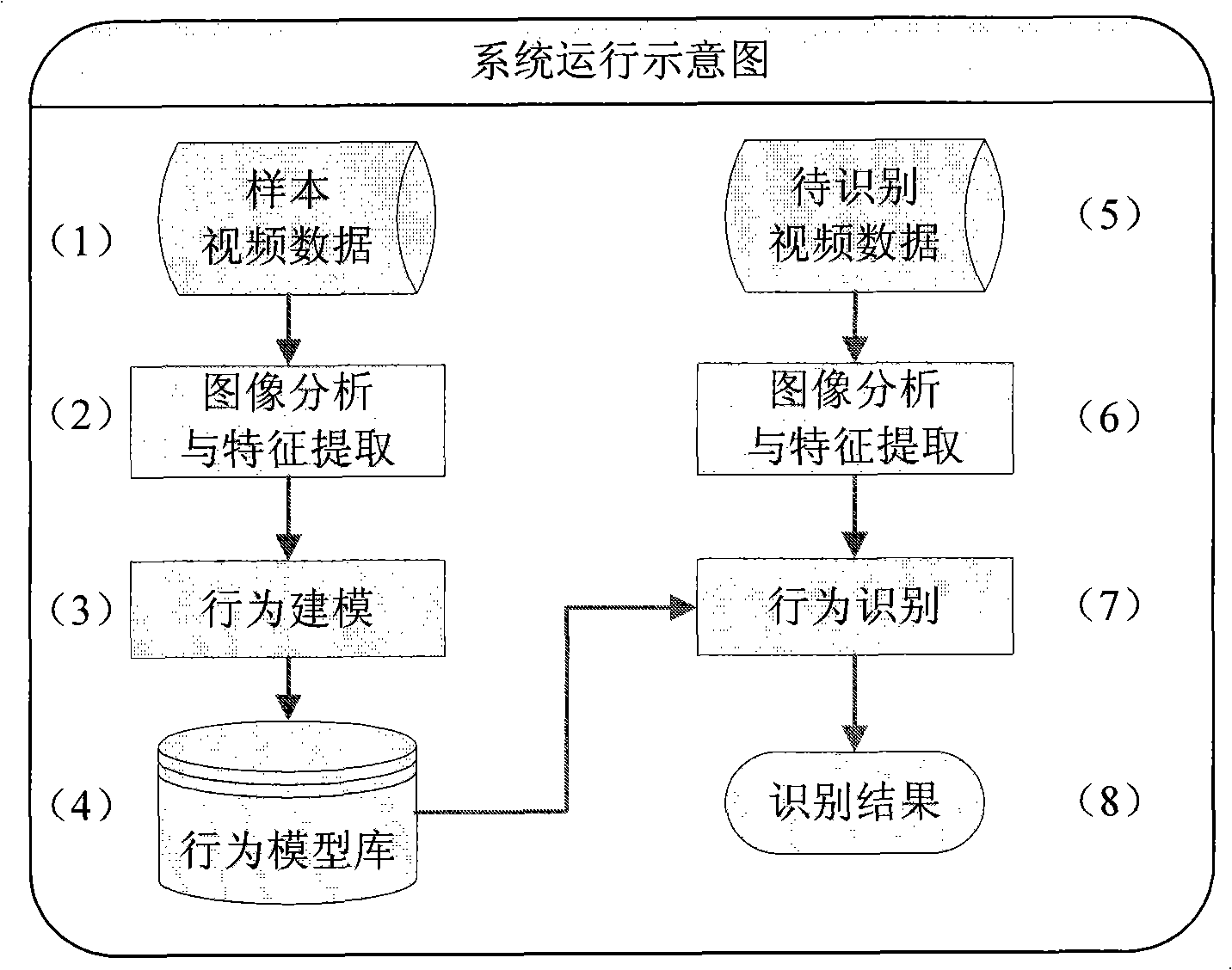

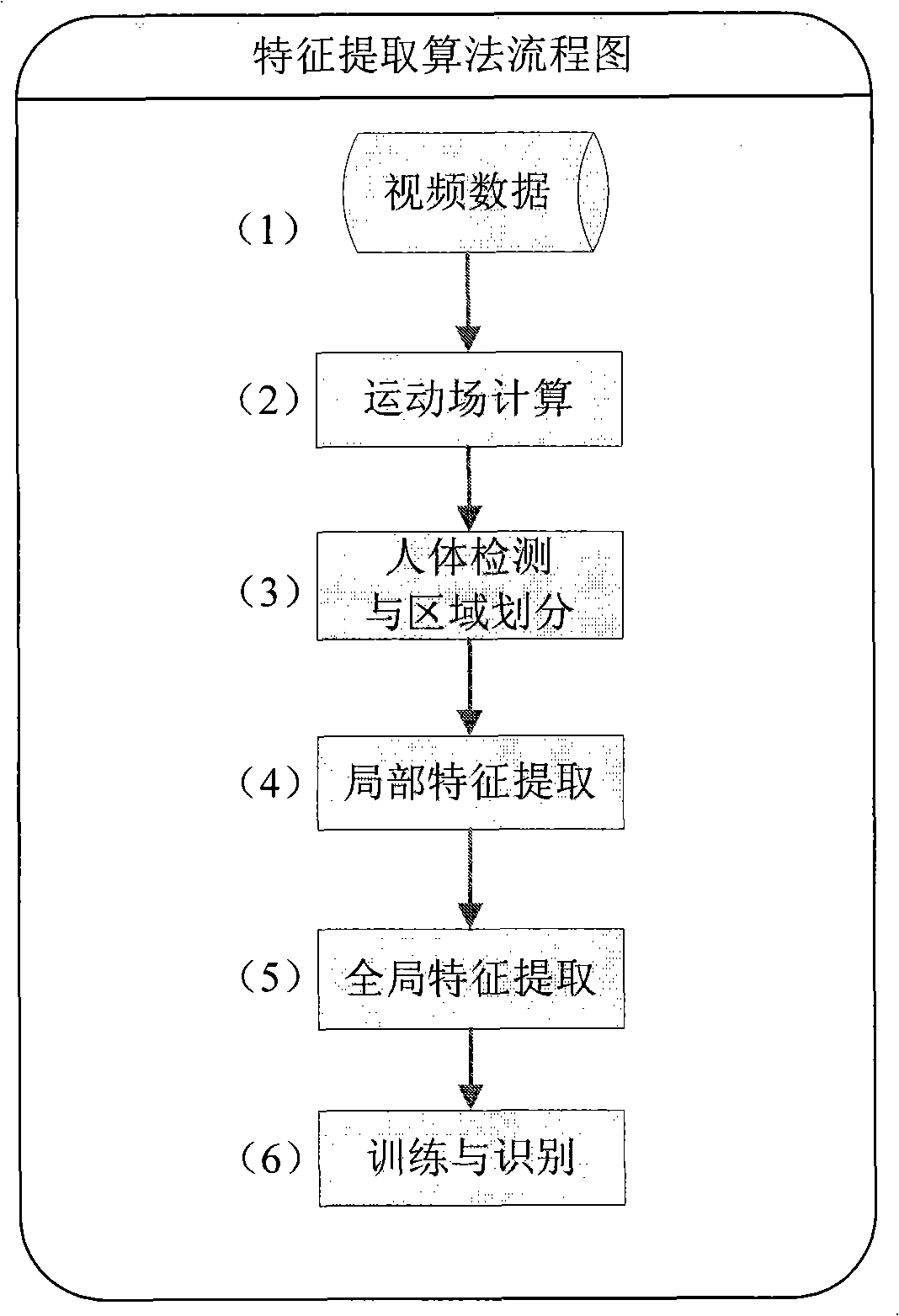

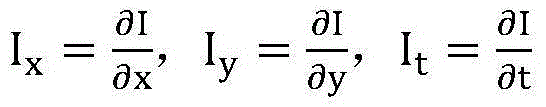

Abnormal action detection method based on local statistical feature analysis of the motion field

The invention relates to an anomaly detection method based on local statistical feature analysis of the motion field. The system mainly comprises motion field analysis of video images, local statistical feature extraction, and a sample-based statistical learning and pattern recognition technique. First, the features of objects in an image are extracted with a basic motion analysis technique, their motion states are calculated, and a motion field is formed. On this basis, statistical features are extracted from the local motion information to obtain the local motion characteristics of the motion field. Finally, the spatial distribution of these motion characteristics is expressed through global structured information, and a statistical learning method is used to recognize behavior patterns. Because the algorithm performs behavior recognition directly by analyzing motion information, it improves efficiency and robustness.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
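
Local statistics of a motion field can be illustrated block by block: given a dense motion field (for example, from optical flow, assumed to be computed elsewhere), each block is summarized by its mean motion magnitude and a magnitude-weighted direction histogram, and keeping the blocks in row-major order retains their spatial arrangement. Block size, bin count, and the specific statistics are assumptions rather than the patent's choices.

```python
import math


def local_motion_stats(u, v, block=4, bins=4):
    """Split a dense motion field (u, v) into blocks; for each block return the mean
    motion magnitude and a magnitude-weighted histogram of motion directions."""
    h, w = len(u), len(u[0])
    feats = []
    for r0 in range(0, h, block):
        for c0 in range(0, w, block):
            hist = [0.0] * bins
            mags = []
            for r in range(r0, min(r0 + block, h)):
                for c in range(c0, min(c0 + block, w)):
                    mag = math.hypot(u[r][c], v[r][c])
                    angle = math.atan2(v[r][c], u[r][c]) % (2 * math.pi)
                    hist[int(angle / (2 * math.pi) * bins) % bins] += mag
                    mags.append(mag)
            feats.append((sum(mags) / len(mags), hist))
    return feats  # row-major block order preserves the spatial layout


u = [[1.0] * 8 for _ in range(8)]   # uniform rightward motion
v = [[0.0] * 8 for _ in range(8)]
stats = local_motion_stats(u, v)
print(len(stats), stats[0])  # 4 (1.0, [16.0, 0.0, 0.0, 0.0])
```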

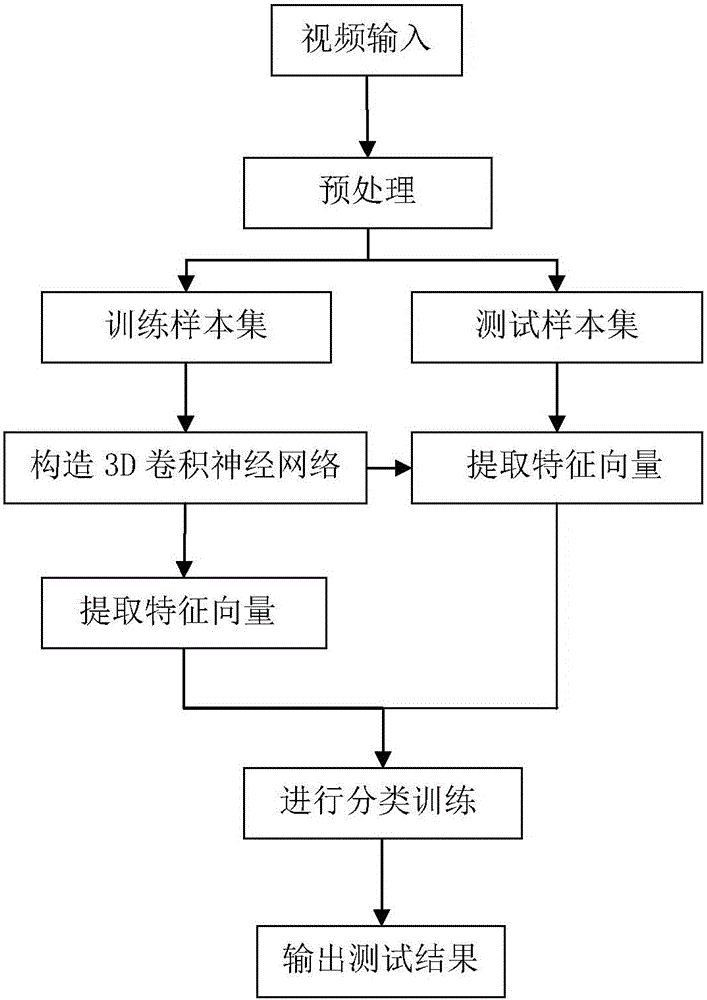

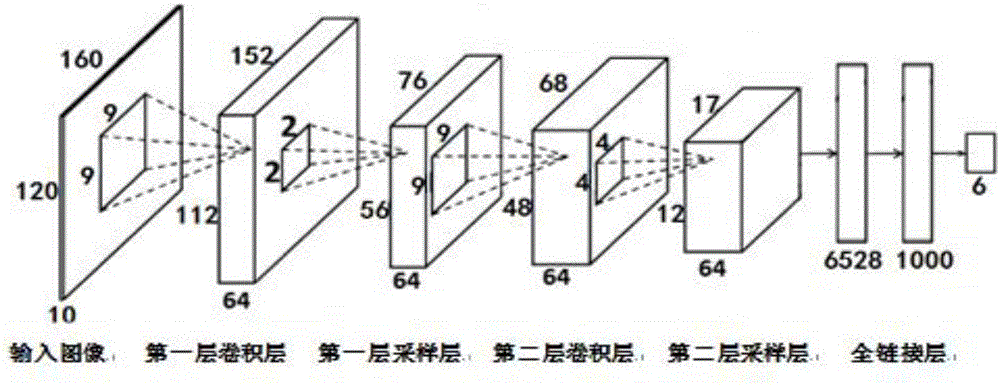

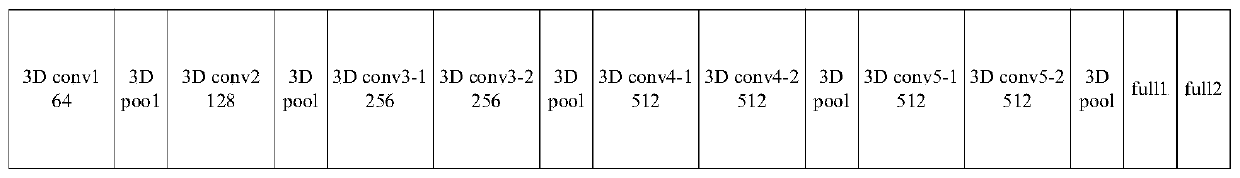

3D (three-dimensional) convolutional neural network based human body behavior recognition method

Status: Inactive | Publication: CN105160310A | Tags: The extracted features are highly representative; Fast extraction; Character and pattern recognition; Human body; Feature vector

The present invention discloses a human body behavior recognition method based on a 3D (three-dimensional) convolutional neural network, mainly intended to solve the problem of recognizing specific human behaviors in computer vision and pattern recognition. The implementation steps are: (1) video input; (2) preprocessing to obtain a training sample set and a test sample set; (3) constructing a 3D convolutional neural network; (4) extracting feature vectors; (5) classification training; and (6) outputting the test result. The method performs human body detection and motion estimation using an optical flow method, so a moving object can be detected without any prior information about the scene. The method performs particularly well when the network input is a multi-dimensional image, and it allows images to be used directly as network input, avoiding the complex feature extraction and data reconstruction of conventional recognition algorithms and making human behavior recognition more accurate.

Owner:XIDIAN UNIV
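
What distinguishes a 3D convolutional network from a 2D one is that each kernel also spans time, so it can respond to changes between frames. The single-channel, valid-padding sketch below shows that operation (computed as cross-correlation, which is what most deep learning libraries call convolution); layer stacking, pooling, and training are omitted, and the toy kernel is an assumption chosen only to make the temporal response visible.

```python
def conv3d_valid(video, kernel):
    """Single-channel 3D convolution with 'valid' padding.
    video: T x H x W nested lists; kernel: kt x kh x kw nested lists."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                s = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            s += video[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out


video = [[[float(t)] * 3 for _ in range(3)] for t in range(4)]  # brightness rises each frame
temporal_diff = [[[-1.0]], [[1.0]]]                               # 2x1x1 kernel
out = conv3d_valid(video, temporal_diff)
print(len(out), out[0][0][0])  # 3 1.0
```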

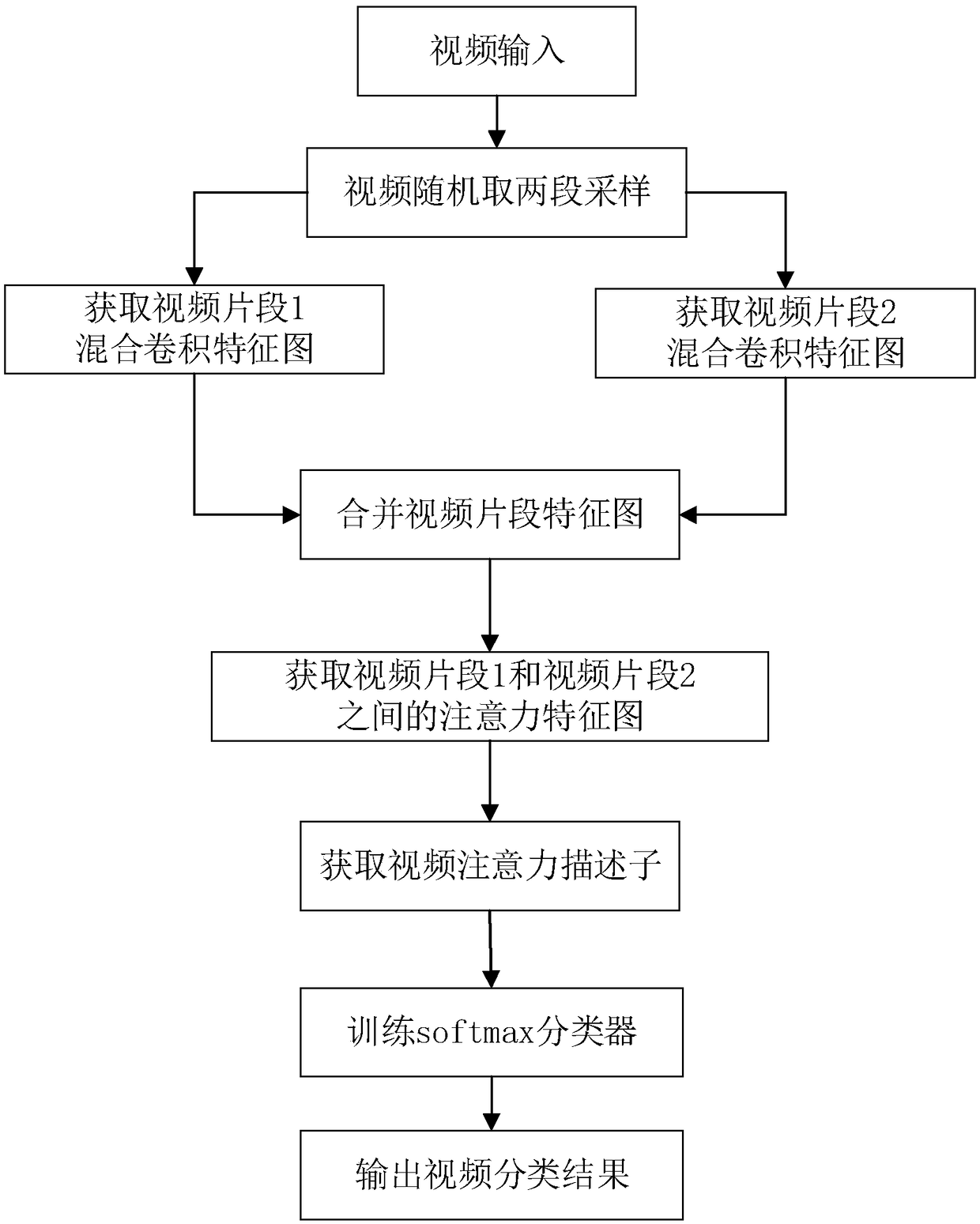

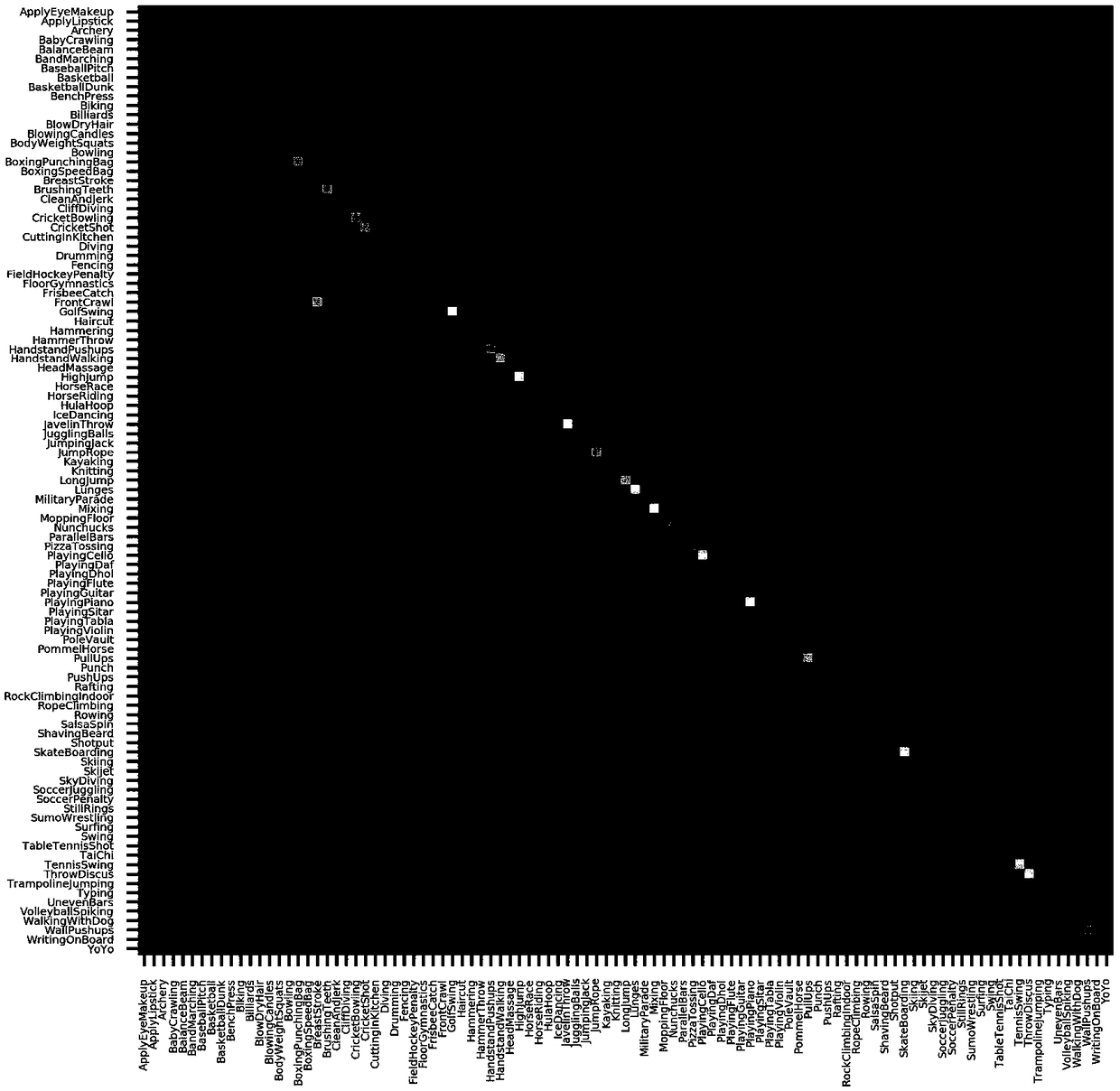

Video classification based on hybrid convolution and attention mechanism

Status: Active | Publication: CN109389055A | Tags: Small amount of calculation; Improve accuracy; Character and pattern recognition; Neural architectures; Video retrieval; Data set

The invention discloses a video classification method based on hybrid convolution and an attention mechanism, which addresses the heavy computation and low accuracy of the prior art. The method comprises the following steps: selecting a video classification data set; performing segmented sampling of the input video; preprocessing the two video segments; constructing a hybrid convolutional neural network model; obtaining the video's hybrid convolution feature map along the temporal dimension; obtaining a video attention feature map through the attention mechanism operation; obtaining a video attention descriptor; training the entire video classification model end to end; and testing the video to be classified. The invention directly obtains hybrid convolution feature maps for different video segments; compared with methods that extract optical flow features, this reduces the computational burden and improves speed. It also introduces an attention mechanism between different video segments that describes the relationships between them, improving accuracy and robustness. The method can be used for video retrieval, video tagging, human-computer interaction, behavior recognition, event detection, and anomaly detection.

Owner:XIDIAN UNIV
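
Segmented sampling is commonly implemented by cutting the video into equal-length segments and drawing one frame index from each, randomly during training and deterministically at test time. The sketch below follows that common scheme; it is an assumption that the patent samples this way, and the segment count is a free parameter (the abstract mentions two segments at the preprocessing step).

```python
import random


def segment_indices(num_frames, num_segments, train=False, rng=None):
    """Split frames [0, num_frames) into equal segments and pick one index per segment:
    a random index when training, the segment centre when testing."""
    rng = rng or random.Random(0)
    indices = []
    for k in range(num_segments):
        # Integer arithmetic keeps segment boundaries exact.
        start = k * num_frames // num_segments
        end = max((k + 1) * num_frames // num_segments, start + 1)
        indices.append(rng.randrange(start, end) if train else (start + end - 1) // 2)
    return indices


print(segment_indices(30, 3))  # [4, 14, 24]
```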

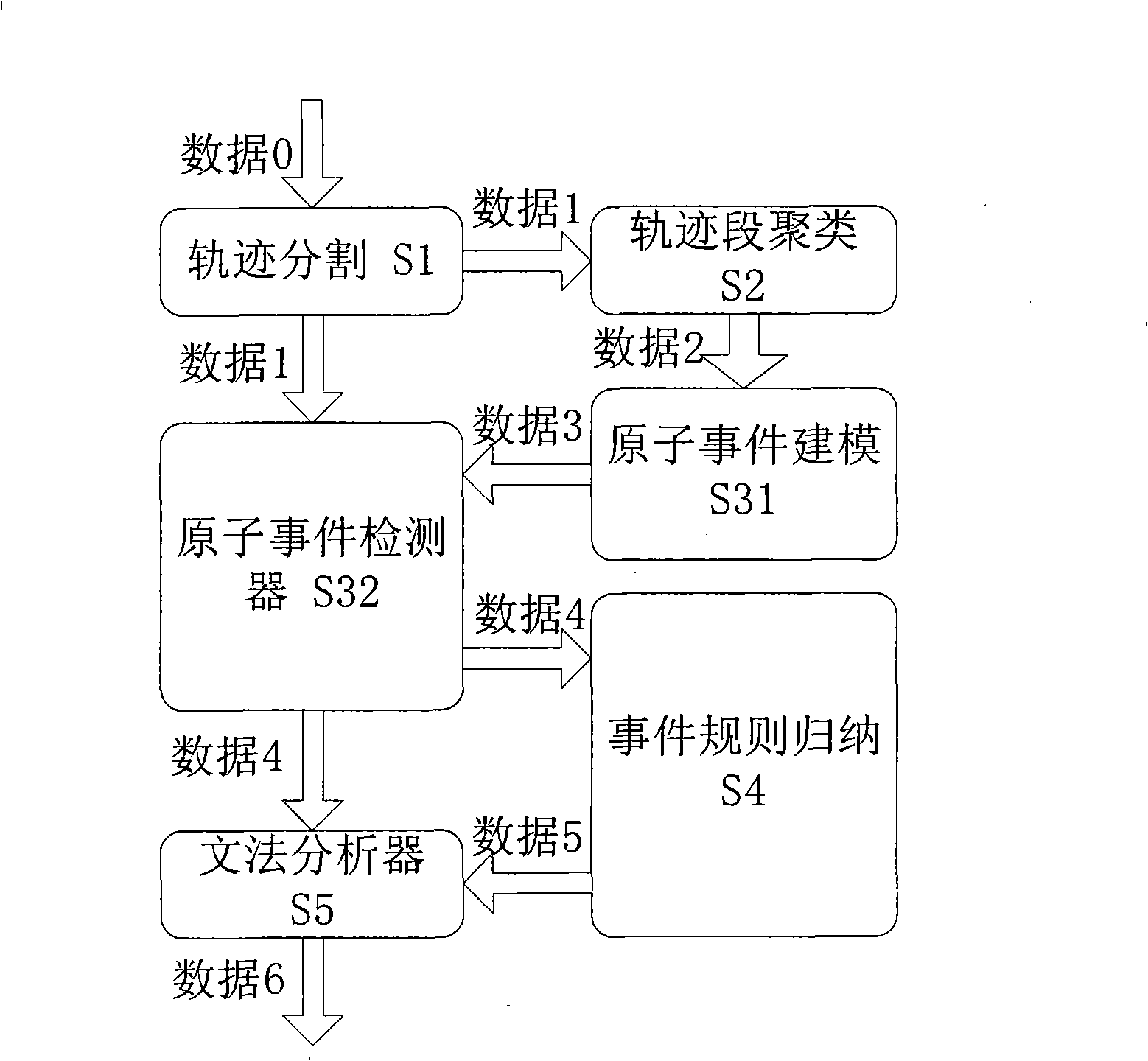

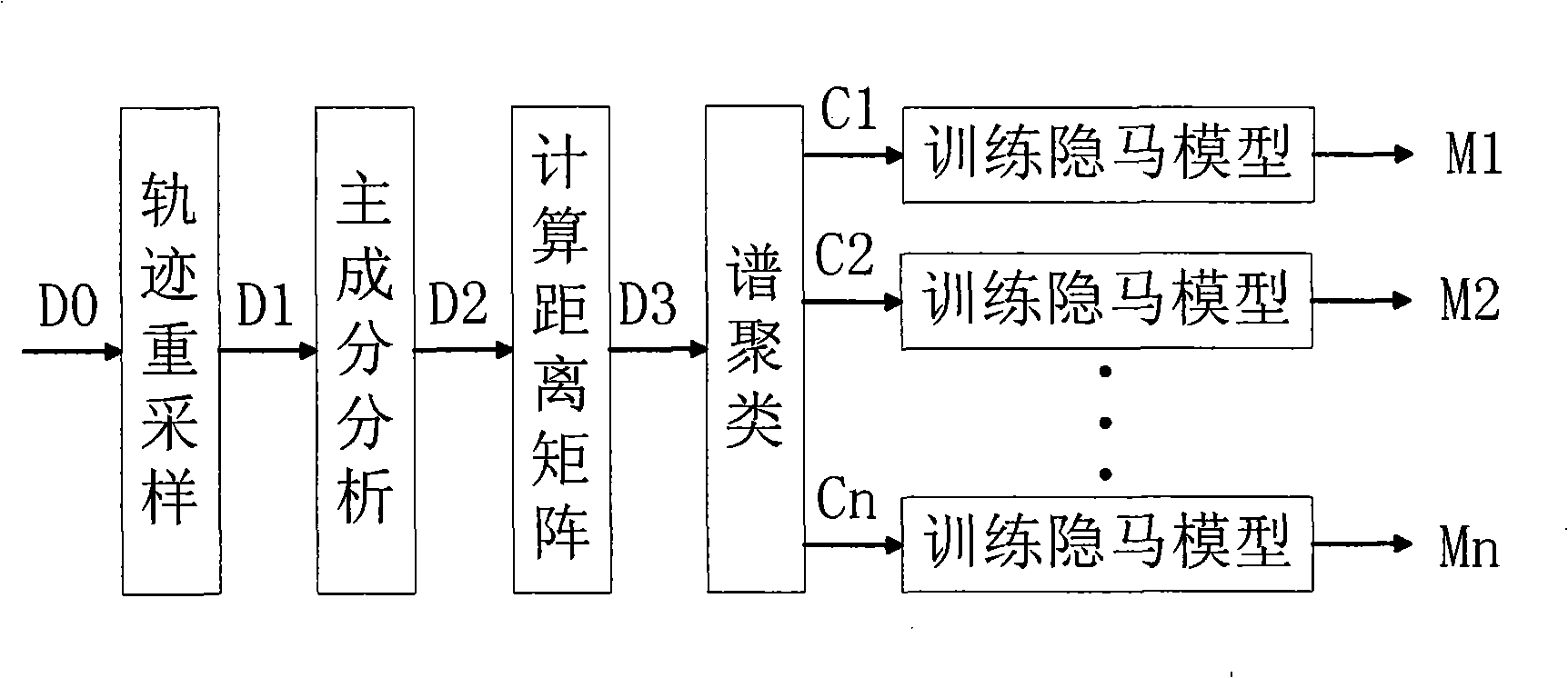

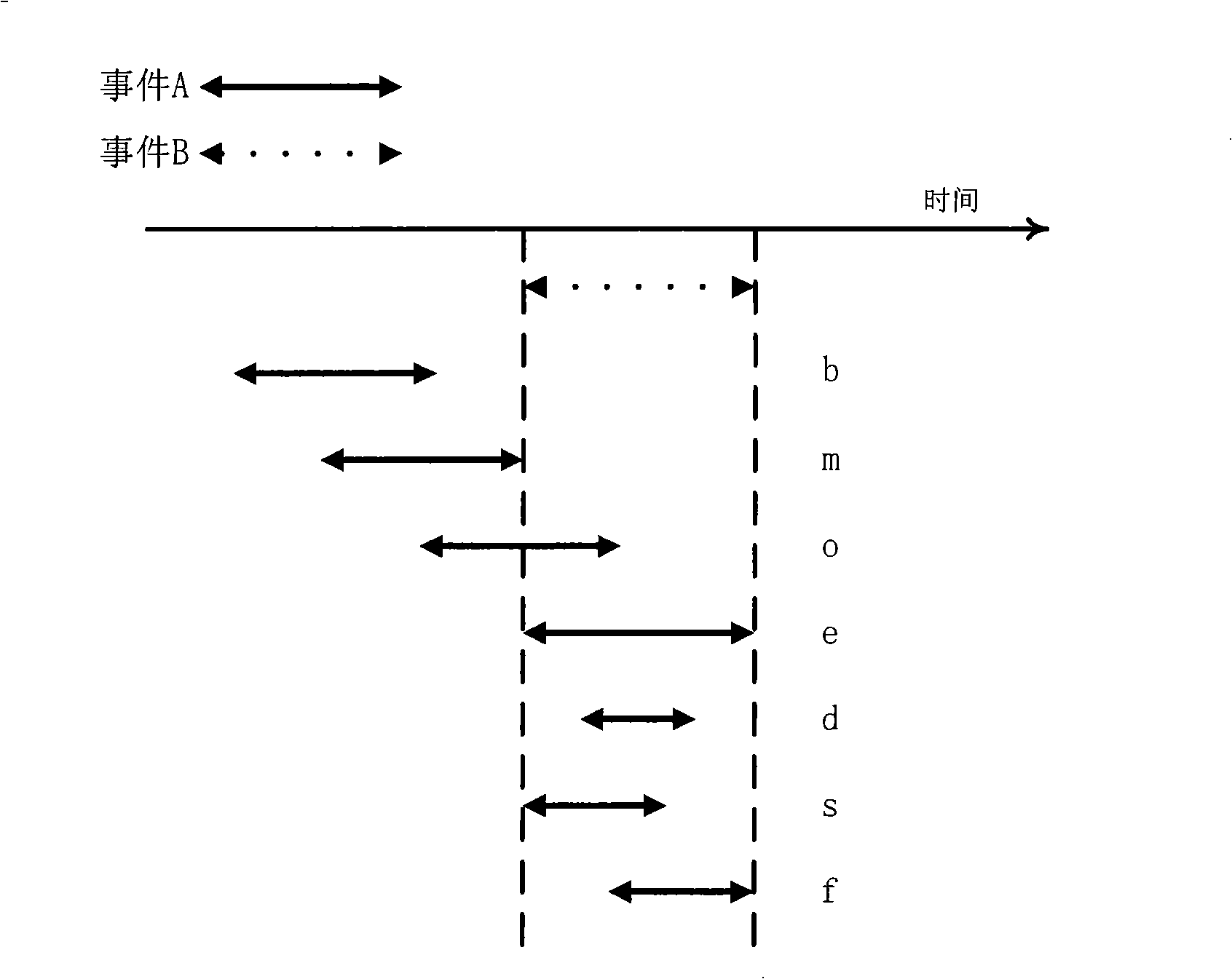

Video behavior recognition method based on trajectory sequence analysis and rule induction

Status: Inactive | Publication: CN101334845A | Tags: Reduce manpower consumption; Character and pattern recognition; Video monitoring; Sequence analysis

The invention discloses a video behavior identification method based on trajectory sequence analysis and rule induction, which addresses the problem of high labor intensity. The method divides a complete trajectory in a scene into several trajectory segments with basic meaning and obtains a number of basic movement patterns as atomic events through trajectory clustering. A hidden Markov model is then used to model these events, an induction algorithm based on the minimum description length principle extracts the event rules contained in the trajectory sequences, and, based on these event rules, an extended grammar parser identifies events of interest. The invention provides a complete video behavior identification framework and a multi-layer rule induction strategy that takes spatio-temporal attributes into account, which significantly improves the effectiveness of rule learning and promotes the application of pattern recognition to video behavior identification. The method can be applied to intelligent video surveillance and the automatic analysis of vehicle and pedestrian movements in a monitored scene, allowing a computer to assist or replace people in completing monitoring tasks.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

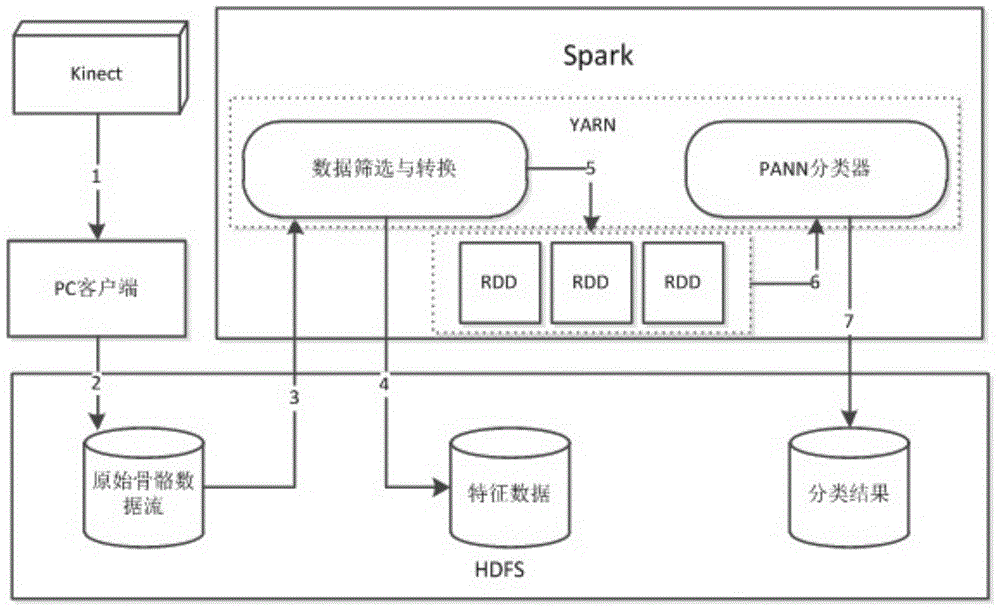

Parallelized human body behavior identification method

Status: Inactive | Publication: CN104899561A | Tags: Increase training rate; Reduce generation; Character and pattern recognition; Extensibility; Human body

The present invention discloses a parallelized human body behavior identification method. The method uses Kinect skeleton data as input, implements a distributed behavior identification algorithm on the Spark computing framework, and forms a complete parallel identification process. Human skeleton data are acquired using Kinect's scene depth acquisition capability and preprocessed so that the features are invariant to displacement and scale. A human body structure vector, joint included-angle information, and skeleton weight bias are selected as static behavior features, and a dynamic behavior search algorithm based on structural similarity is proposed. For identification, a neural network algorithm is parallelized on Spark, and the quasi-Newton method L-BFGS is used to optimize the network weight update, which noticeably increases training speed. On the identification platform, the Hadoop Distributed File System (HDFS) serves as the behavior data storage layer, Spark runs on the general-purpose resource manager YARN, and the parallel neural network algorithm forms the upper application layer; the overall system architecture is highly extensible.

Owner:SOUTH CHINA UNIV OF TECH
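
Two of the preprocessing and feature steps, displacement and scale normalization and the joint included angle, are easy to state in code. The sketch below assumes joints arrive as named 3D points; the joint names follow Kinect-style conventions but are hypothetical here, and using the hip-to-shoulder length as the scale reference is an assumption. The Spark, L-BFGS, HDFS, and YARN layers are not shown.

```python
import math


def normalize_skeleton(joints, root="hip_center", ref=("hip_center", "shoulder_center")):
    """Translate so the root joint is at the origin and divide by a reference bone
    length, making features invariant to body position and size."""
    ox, oy, oz = joints[root]
    scale = math.dist(joints[ref[0]], joints[ref[1]])
    return {name: ((x - ox) / scale, (y - oy) / scale, (z - oz) / scale)
            for name, (x, y, z) in joints.items()}


def joint_angle(a, b, c):
    """Included angle (degrees) at joint b between segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    cos = sum(x * y for x, y in zip(v1, v2)) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding error


skeleton = {"hip_center": (1.0, 1.0, 1.0), "shoulder_center": (1.0, 3.0, 1.0),
            "head": (1.0, 4.0, 1.0)}
norm = normalize_skeleton(skeleton)
print(norm["head"])  # (0.0, 1.5, 0.0)
```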

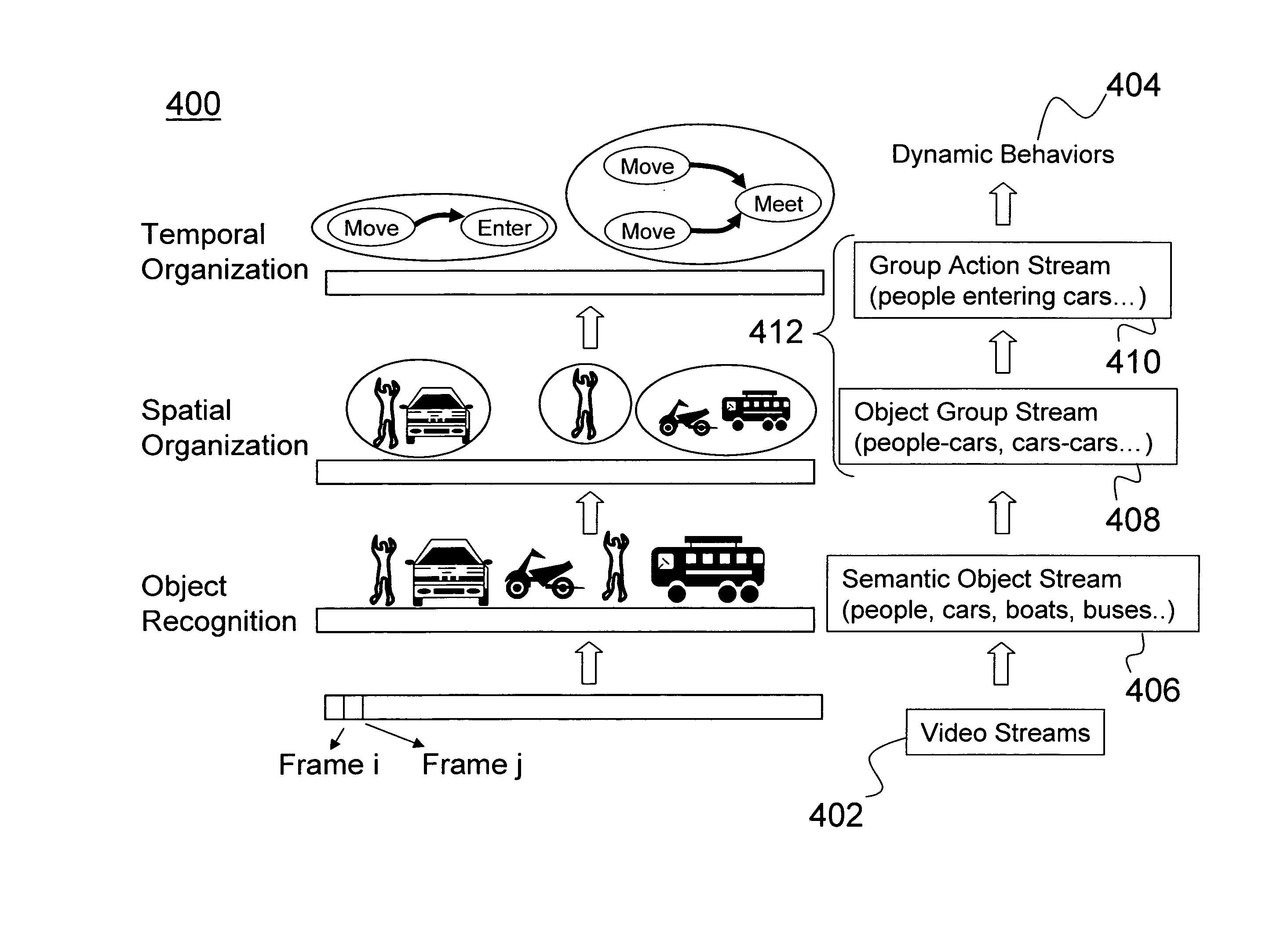

Behavior recognition using cognitive swarms and fuzzy graphs

Described is a behavior recognition system for detecting the behavior of objects in a scene. The system comprises a semantic object stream module for receiving a video stream having at least two frames and detecting objects in the video stream. Also included is a group organization module for utilizing the detected objects from the video stream to detect a behavior of the detected objects. The group organization module further comprises an object group stream module for spatially organizing the detected objects to have relative spatial relationships. The group organization module also comprises a group action stream module for modeling a temporal structure of the detected objects. The temporal structure is an action of the detected objects between the two frames, whereby through detecting, organizing and modeling actions of objects, a user can detect the behavior of the objects.

Owner:HRL LAB

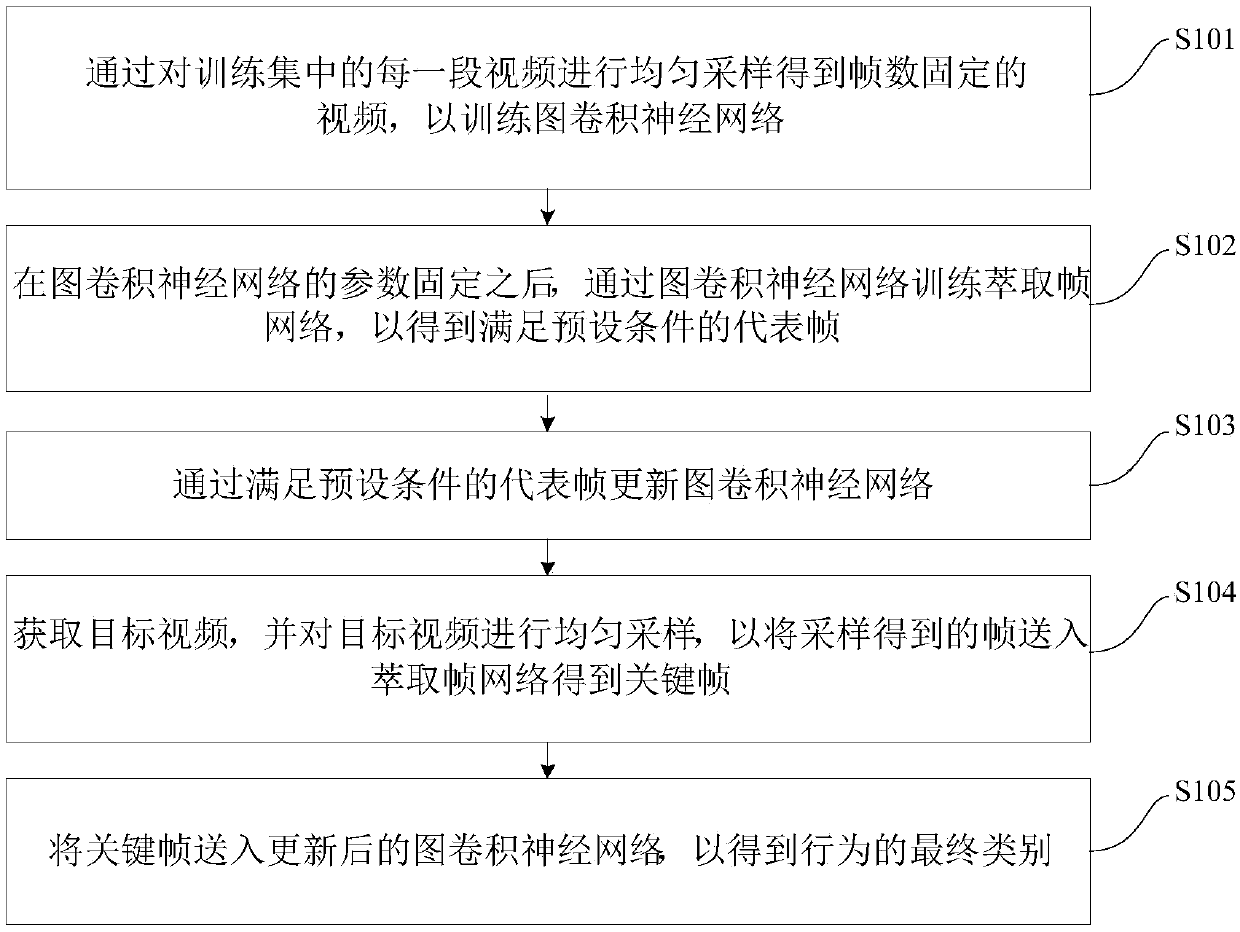

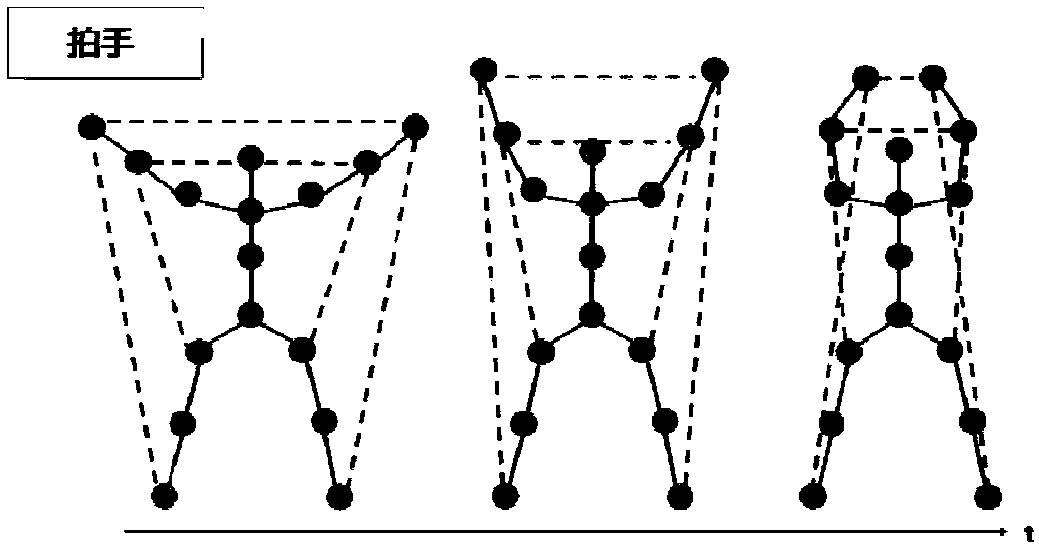

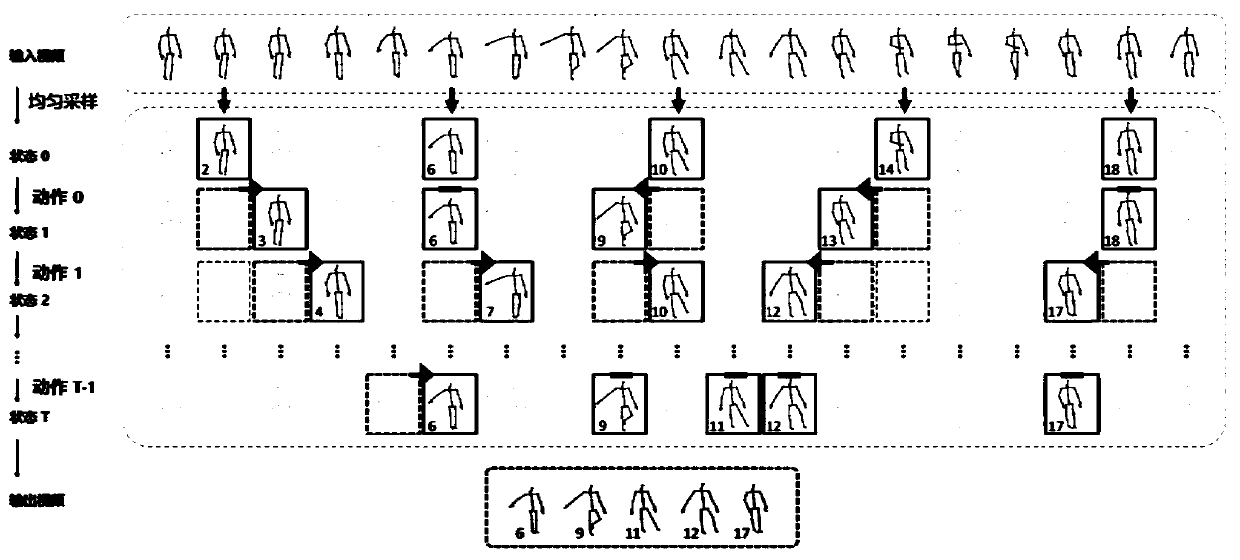

Human skeleton behavior recognition method and device based on deep reinforcement learning

Status: Active | Publication: CN108304795A | Tags: Improve discrimination ability; Easy to identify; Character and pattern recognition; Neural architectures; Fixed frame; Key frame

The invention discloses a human skeleton behavior recognition method and device based on deep reinforcement learning. The method comprises: uniformly sampling each video segment in a training set to obtain videos with a fixed number of frames and using them to train a graph convolutional neural network; after fixing the parameters of the graph convolutional neural network, using it to train a frame extraction network that selects representative frames meeting a preset condition; updating the graph convolutional neural network with the representative frames meeting the preset condition; obtaining a target video, uniformly sampling it, and sending the sampled frames to the frame extraction network to obtain key frames; and sending the key frames to the updated graph convolutional neural network to obtain the final behavior type. This enhances the discriminability of the selected frames, removes redundant information, improves recognition performance, and reduces computation in the test phase. In addition, by making full use of the topological relationships of the human skeleton, the method improves behavior recognition performance.

Owner:TSINGHUA UNIV
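
A graph convolutional network exploits the skeleton's topology by mixing each joint's features with those of its neighbors. The sketch below uses the widely used symmetric-normalized formulation ReLU(D^-1/2 (A + I) D^-1/2 X W); the abstract does not say which graph convolution the patent uses, so this choice and the toy three-joint chain are assumptions. The reinforcement-learning frame extraction network is not shown.

```python
import math


def normalized_adjacency(n, edges):
    """A_hat = D^-1/2 (A + I) D^-1/2 for an undirected skeleton graph with n joints."""
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for i, j in edges:
        A[i][j] = A[j][i] = 1.0
    deg = [sum(row) for row in A]
    return [[A[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(X, A_hat, W):
    """One graph-convolution layer: ReLU(A_hat @ X @ W). X: n x f, W: f x g."""
    n, f, g = len(X), len(W), len(W[0])
    AX = [[sum(A_hat[i][k] * X[k][c] for k in range(n)) for c in range(f)] for i in range(n)]
    return [[max(0.0, sum(AX[i][c] * W[c][d] for c in range(f))) for d in range(g)]
            for i in range(n)]


chain = normalized_adjacency(3, [(0, 1), (1, 2)])   # e.g. shoulder-elbow-wrist
X = [[1.0], [1.0], [1.0]]                            # one feature per joint
print([round(row[0], 4) for row in gcn_layer(X, chain, [[1.0]])])  # [0.9082, 1.1498, 0.9082]
```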

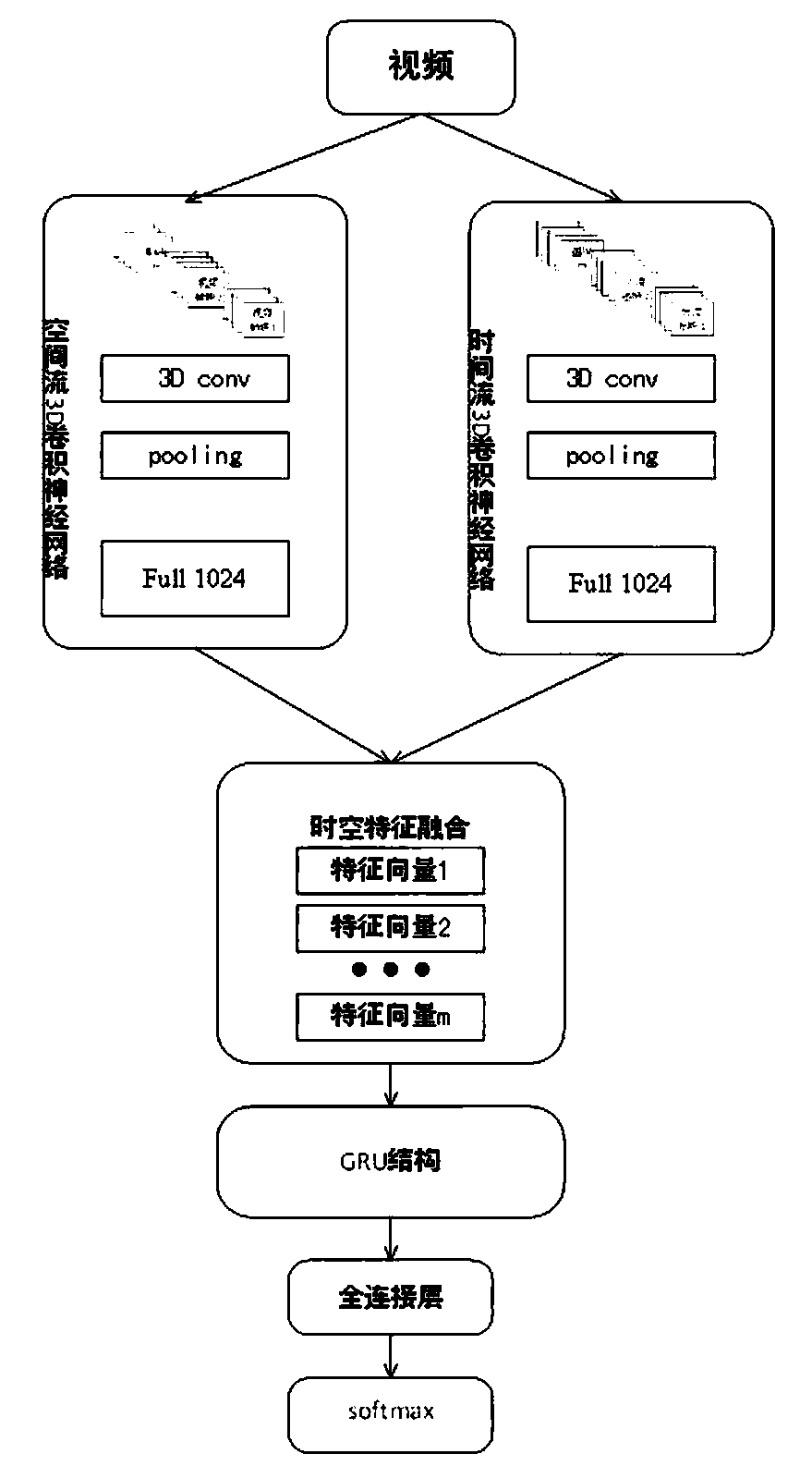

Behavior recognition technical method based on deep learning

Status: Pending | Publication: CN110188637A | Tags: Good feature expression ability; Easy to use; Character and pattern recognition; Neural architectures; Video monitoring; Network model

The invention discloses a behavior recognition method based on deep learning, which addresses the need to make prior-art video monitoring systems more intelligent. The method comprises the following steps: constructing a deeper spatio-temporal two-stream CNN-GRU neural network model by combining a two-stream convolutional neural network with a GRU network; extracting the temporal and spatial features of the video; using the GRU network's ability to memorize information to extract long-term sequential features from the spatio-temporal feature sequence, and performing behavior recognition on the video with a softmax classifier; proposing a new correntropy-based loss function; and, drawing on the attention mechanism the human visual system uses to process large amounts of information, introducing an attention mechanism before the spatio-temporal two-stream CNN-GRU model fuses its spatio-temporal features. The accuracy of the proposed model is 61.5%, a modest improvement in recognition rate over an algorithm based on a two-stream convolutional neural network.

Owner:XIDIAN UNIV
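
The abstract does not give the new loss formula. Assuming it is a correntropy-induced loss, a common bounded form replaces each squared error with a Gaussian-kernel term, as sketched below next to mean squared error for comparison; sigma is a free kernel-width parameter and the toy data are illustrative.

```python
import math


def correntropy_loss(y_true, y_pred, sigma=1.0):
    """Correntropy-induced loss: mean of 1 - exp(-e^2 / (2 * sigma^2)) over errors e.
    Each term is bounded by 1, so a single large outlier cannot dominate the loss."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    return sum(1.0 - math.exp(-(e * e) / (2 * sigma * sigma)) for e in errors) / len(errors)


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


truth, pred = [0.0, 0.0, 0.0, 0.0], [0.1, -0.1, 0.0, 100.0]  # one outlier
print(mse(truth, pred), correntropy_loss(truth, pred))
```

The outlier pushes the squared-error loss into the thousands, while the correntropy loss stays below 1, which is the usual motivation for this family of losses.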

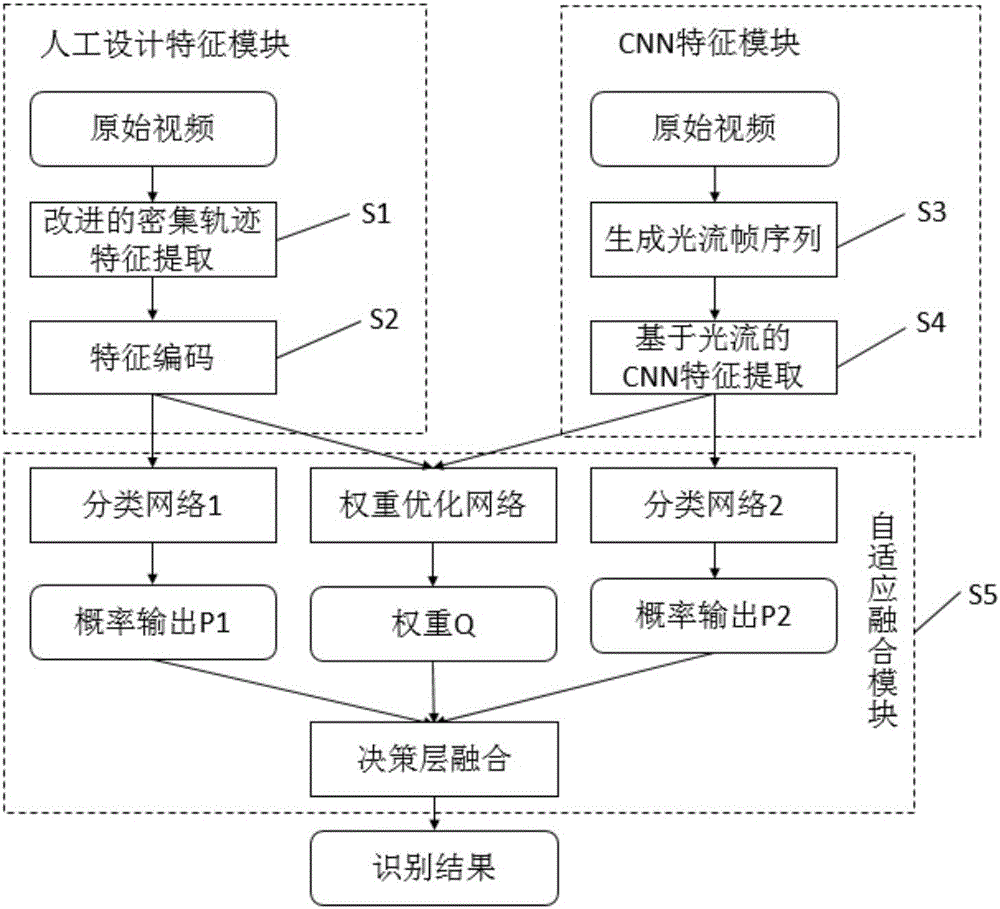

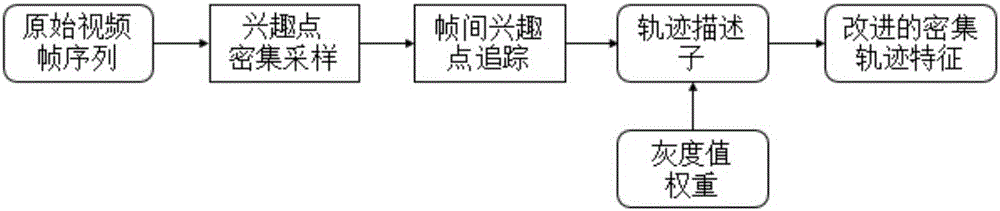

Infrared behavior identification method based on adaptive fusion of hand-crafted features and deep learning features

Status: Active | Publication: CN105787458A | Tags: Improve reliability; Innovative feature fusion; Character and pattern recognition; Neural learning methods; Data set; Feature coding

The invention relates to an infrared behavior identification method based on adaptive fusion of hand-crafted (artificially designed) features and deep learning features. The method comprises: S1, extracting improved dense trajectory features from the original video using a hand-crafted feature module; S2, encoding the extracted hand-crafted features; S3, in a CNN feature module, extracting optical flow from the original video image sequence with a variational optical flow algorithm to obtain the corresponding optical flow image sequence; S4, extracting CNN features from the optical flow sequence obtained in S3 using a convolutional neural network; and S5, dividing the data set into a training set and a test set, learning weights on the training data with a weight optimization network, fusing the probability outputs of the CNN feature classification network and the hand-crafted feature classification network with the learned weights, selecting the optimal weight by comparing the identification results, and applying that weight to classify the test data. The method provides a novel way of fusing features and improves the reliability of behavior identification in infrared video, which makes it valuable for subsequent video analysis.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
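Step S5 fuses the two classifiers' probability outputs with a weight and keeps the weight that gives the best identification result. The sketch below illustrates that late-fusion idea under a plainly stated substitution: it replaces the patent's weight optimization network with a simple grid search over a single scalar weight `w`. The function names and the toy probabilities are hypothetical.

```python
def fuse(p_cnn: list[float], p_hand: list[float], w: float) -> list[float]:
    """Late fusion, class by class: w * P_cnn + (1 - w) * P_handcrafted."""
    return [w * a + (1.0 - w) * b for a, b in zip(p_cnn, p_hand)]


def argmax(xs: list[float]) -> int:
    """Index of the largest value (first one on ties)."""
    return max(range(len(xs)), key=xs.__getitem__)


def best_fusion_weight(cnn_probs: list[list[float]], hand_probs: list[list[float]],
                       labels: list[int], steps: int = 10) -> tuple[float, float]:
    """Grid-search w in {0, 1/steps, ..., 1} on training data.

    Stand-in for the patent's weight optimization network. Returns the first
    weight reaching the highest accuracy, together with that accuracy.
    """
    best_w, best_acc = 0.0, -1.0
    for k in range(steps + 1):
        w = k / steps
        correct = sum(argmax(fuse(pc, ph, w)) == y
                      for pc, ph, y in zip(cnn_probs, hand_probs, labels))
        acc = correct / len(labels)
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w, best_acc


if __name__ == "__main__":
    # Toy training set: the CNN is wrong on sample 0, the hand-crafted model on sample 1.
    cnn = [[0.4, 0.6], [0.1, 0.9]]
    hand = [[0.9, 0.1], [0.65, 0.35]]
    print(best_fusion_weight(cnn, hand, labels=[0, 1]))  # → (0.3, 1.0)
```

In the toy data neither classifier alone is fully correct, but a blend is; that complementarity is the motivation for learning the fusion weight rather than fixing it.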

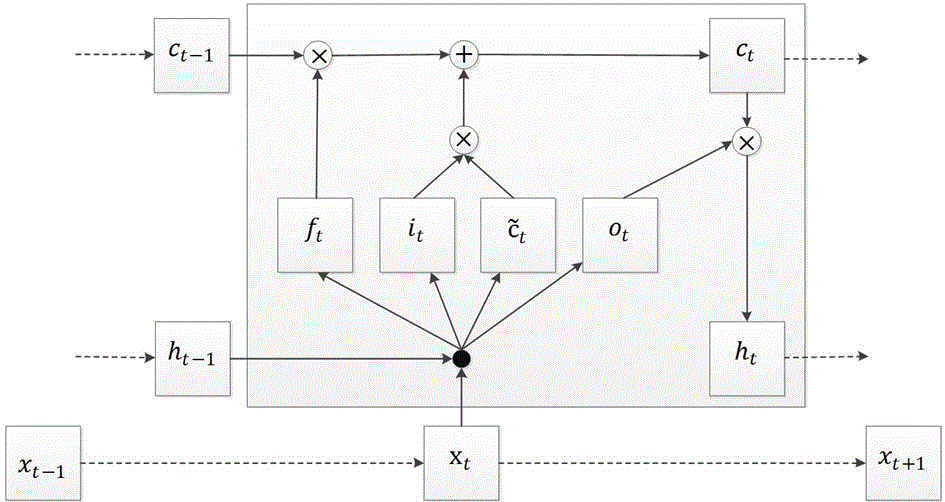

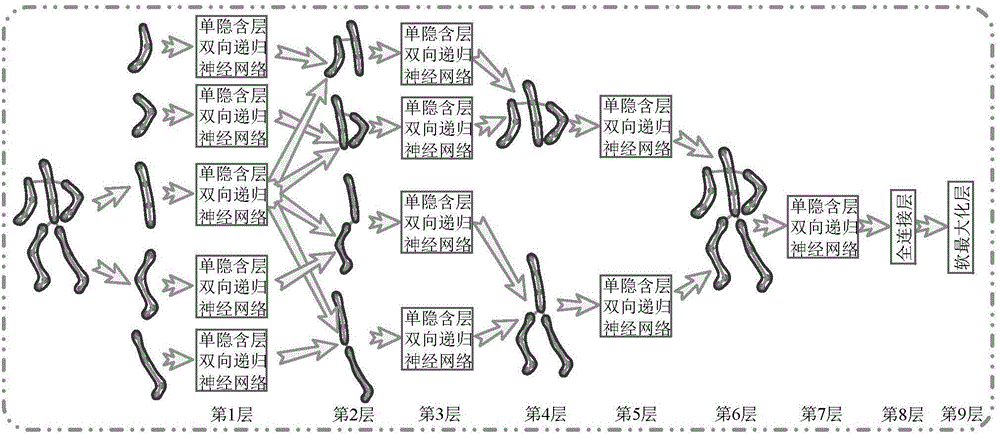

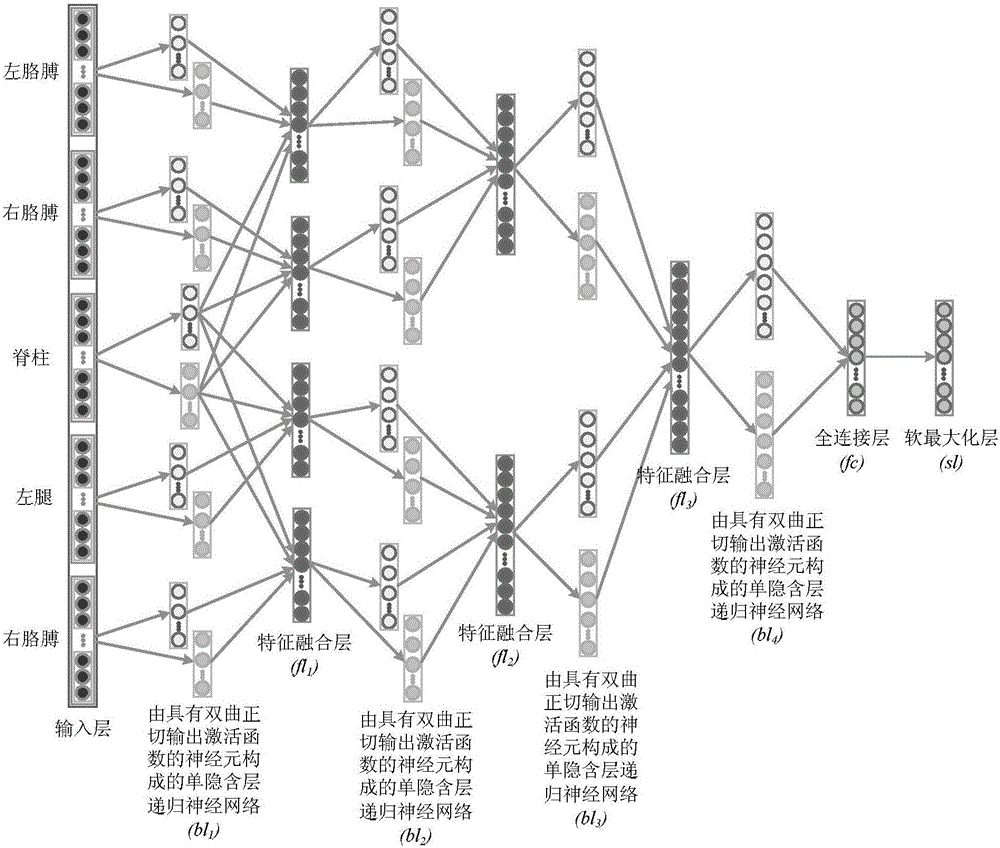

Behavior identification method based on recurrent neural network and human skeleton movement sequences

Active · CN104615983A · Easy access · High precision recognition rate · Character and pattern recognition · Neural architectures · Human body · Video monitoring

The invention discloses a behavior identification method based on a recurrent neural network and human skeleton movement sequences. The method comprises the following steps: normalizing the joint coordinates of the extracted human skeleton pose sequences to eliminate the influence of the body's absolute spatial position on identification; filtering the joint coordinates with a simple smoothing filter to improve the signal-to-noise ratio; and feeding the smoothed data into a hierarchical bidirectional recurrent neural network for deep feature extraction and identification. The invention also provides a hierarchical unidirectional recurrent neural network model to meet practical real-time online analysis requirements. The method uses an end-to-end analysis scheme designed around the structure of the human body and the correlation of its movements, achieving high-precision identification while avoiding complex computation, which makes it suitable for practical applications. It is relevant to fields such as depth-camera-based intelligent video surveillance, intelligent traffic management, and smart cities.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
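The two preprocessing steps (position normalization and smoothing) can be sketched as below. Assumptions, none of which come from the patent: joints are `(x, y, z)` tuples, normalization subtracts a root joint at a hypothetical index 0 (for example the hip center) in every frame, and the "simple smoothing filter" is taken to be a centered moving average over time. The recurrent network itself is not shown.

```python
Joint = tuple[float, float, float]
Frame = list[Joint]


def normalize_sequence(seq: list[Frame], root: int = 0) -> list[Frame]:
    """Express each joint relative to the root joint of the same frame, so the
    body's absolute position in the scene no longer affects the features."""
    out = []
    for frame in seq:
        rx, ry, rz = frame[root]
        out.append([(x - rx, y - ry, z - rz) for x, y, z in frame])
    return out


def smooth_sequence(seq: list[Frame], window: int = 3) -> list[Frame]:
    """Centered moving average over time for every joint coordinate.

    The window is truncated at the start and end of the sequence, so edge
    frames are averaged over fewer neighbours.
    """
    half = window // 2
    n = len(seq)
    out = []
    for t in range(n):
        span = seq[max(0, t - half):min(n, t + half + 1)]
        out.append([
            tuple(sum(f[j][c] for f in span) / len(span) for c in range(3))
            for j in range(len(seq[t]))
        ])
    return out


if __name__ == "__main__":
    # Two frames, two joints each; joint 0 plays the role of the root.
    raw = [[(1.0, 2.0, 3.0), (4.0, 6.0, 8.0)],
           [(2.0, 2.0, 3.0), (5.0, 7.0, 8.0)]]
    print(smooth_sequence(normalize_sequence(raw)))
```

Normalizing before smoothing keeps the filter from mixing global translation into the per-joint signal the recurrent network sees.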

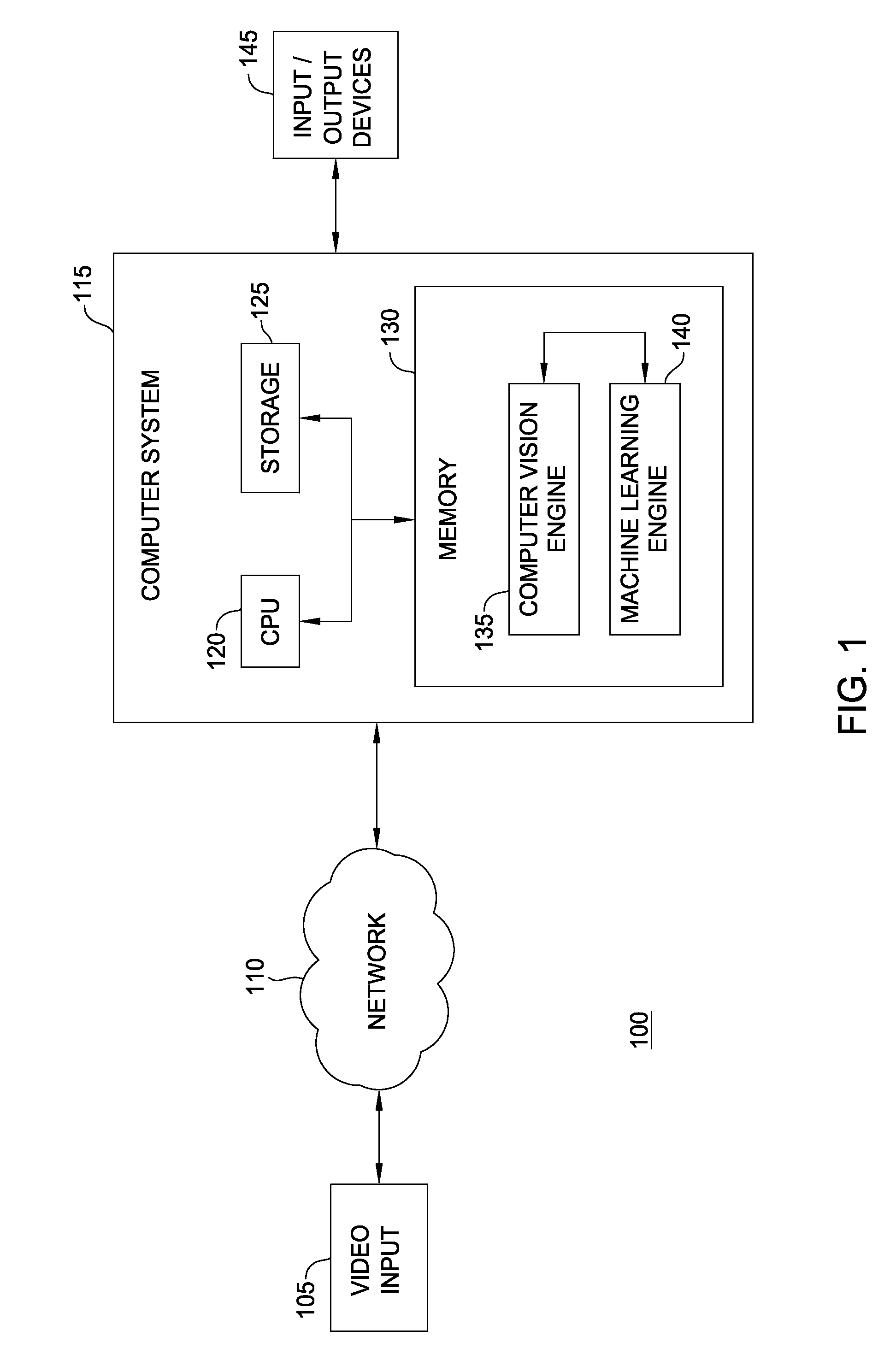

Identifying anomalous object types during classification

Active · US20110052068A1 · Character and pattern recognition · Electric/magnetic detection · Training phase · Object definition

Techniques are disclosed for identifying anomalous object types during classification of foreground objects extracted from image data. A self-organizing map and adaptive resonance theory (SOM-ART) network is used to discover object type clusters and to classify objects depicted in the image data based on pixel-level micro-features extracted from the image data. Importantly, the discovery of object type clusters is unsupervised, i.e., performed independently of any training data that defines particular objects, allowing a behavior-recognition system to forgo a training phase and object classification to proceed without being constrained by specific object definitions. The SOM-ART network is adaptive: it continues to learn while discovering object type clusters, classifying objects, and identifying anomalous object types.

Owner:MOTOROLA SOLUTIONS INC
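The core idea of unsupervised, training-free clustering that also flags unfamiliar inputs can be illustrated with a much simpler ART-style "leader" clusterer. To be clear, this is a swapped-in simplification, not the SOM-ART network: the "vigilance" here is just a Euclidean distance threshold, the prototype update is a fixed-rate move toward the sample, and the class name `LeaderClusterer` is hypothetical.

```python
import math


class LeaderClusterer:
    """Simplified ART-style online clusterer (illustrative, not SOM-ART).

    A sample joins its nearest prototype if the distance is within
    `vigilance`, and that prototype moves toward the sample. Otherwise the
    sample founds a new cluster and is reported as a new object type, which
    is where an anomaly flag would come from.
    """

    def __init__(self, vigilance: float, learning_rate: float = 0.5):
        self.vigilance = vigilance
        self.learning_rate = learning_rate
        self.prototypes: list[list[float]] = []

    def observe(self, features: list[float]) -> tuple[int, bool]:
        """Classify one feature vector; return (cluster index, is_new_type)."""
        if self.prototypes:
            dists = [math.dist(p, features) for p in self.prototypes]
            best = min(range(len(dists)), key=dists.__getitem__)
            if dists[best] <= self.vigilance:
                proto = self.prototypes[best]
                for i, x in enumerate(features):
                    proto[i] += self.learning_rate * (x - proto[i])
                return best, False
        self.prototypes.append(list(features))
        return len(self.prototypes) - 1, True


if __name__ == "__main__":
    clusterer = LeaderClusterer(vigilance=1.0)
    for f in ([0.0, 0.0], [0.5, 0.0], [10.0, 10.0], [0.2, 0.1]):
        print(f, clusterer.observe(f))
```

Because clusters are created on the fly, no labelled training phase is needed, which mirrors the property the abstract emphasizes; the real SOM-ART network adds topology-preserving maps and a proper ART match criterion.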

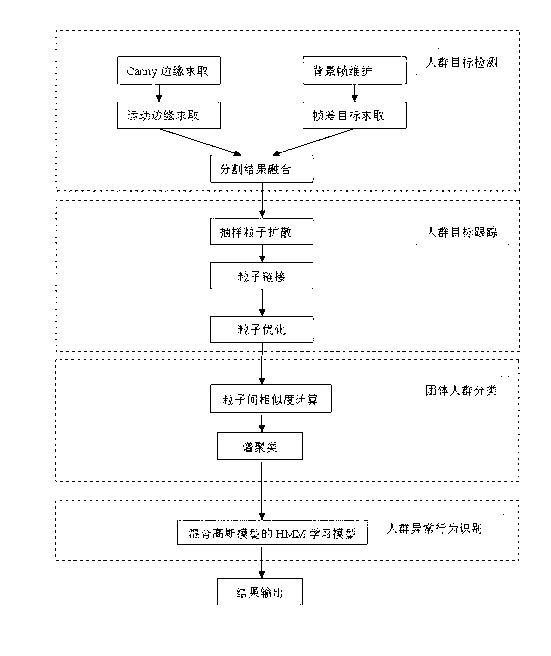

Method for detecting group crowd abnormal behaviors in video monitoring

Inactive · CN102799863A · Effective tracking · Character and pattern recognition · Video monitoring · Frame difference

The invention provides a method for detecting abnormal crowd (group) behaviors in video surveillance. The method comprises the following steps. Video target detection: video objects are obtained by detecting differences in edge information between successive frames, and moving video objects are obtained from the difference between the foreground frame and a background frame; the two detection results are combined to obtain a relatively accurate moving target. Video target tracking: targets are tracked to obtain their motion trajectories using a particle-video-based long-range motion estimation method. Crowd detection: based on the motion characteristics of crowds in video, spectral clustering is applied to the distances between trajectories and their speed information. Identification of abnormal crowd behaviors: crowd trajectories are modeled with an MGHMM (Mixed Gaussian Hidden Markov Model), and blockages and falls are identified as sudden changes from normal trajectories. The invention integrates crowd target detection, group target tracking, pattern recognition, and machine learning.

Owner:安吉安融智能科技有限公司 (Anji Anrong Intelligent Technology Co., Ltd.)
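The detection step combines two cues: edge differences between successive frames and the difference between the current frame and a background frame. The sketch below shows one way to combine them on small grayscale grids given as nested lists. Assumptions: edges are crude forward-difference magnitudes, both thresholds are arbitrary, and the results are combined by logical AND (intersection); the abstract only says the two results are "combined", so the rule and all names here are hypothetical.

```python
Image = list[list[float]]
Mask = list[list[bool]]


def edge_map(img: Image) -> Image:
    """Crude edge strength: |right neighbour - pixel| + |lower neighbour - pixel|
    (forward differences; a missing neighbour at the border contributes 0)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dx = abs(img[r][c + 1] - img[r][c]) if c + 1 < w else 0.0
            dy = abs(img[r + 1][c] - img[r][c]) if r + 1 < h else 0.0
            out[r][c] = dx + dy
    return out


def diff_mask(a: Image, b: Image, threshold: float) -> Mask:
    """True where two equally sized images differ by more than `threshold`."""
    return [[abs(x - y) > threshold for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def moving_target_mask(prev: Image, curr: Image, background: Image,
                       edge_thr: float = 5.0, bg_thr: float = 5.0) -> Mask:
    """Intersect the successive-frame edge-difference mask with the
    current-frame vs. background difference mask."""
    edge_mask = diff_mask(edge_map(prev), edge_map(curr), edge_thr)
    bg_mask = diff_mask(curr, background, bg_thr)
    return [[e and b for e, b in zip(re, rb)] for re, rb in zip(edge_mask, bg_mask)]


if __name__ == "__main__":
    background = [[0.0] * 4 for _ in range(4)]
    # A bright 2x2 object appears in the middle of an otherwise empty scene.
    current = [[0.0, 0.0, 0.0, 0.0],
               [0.0, 10.0, 10.0, 0.0],
               [0.0, 10.0, 10.0, 0.0],
               [0.0, 0.0, 0.0, 0.0]]
    for row in moving_target_mask(background, current, background):
        print(row)
```

Intersection suppresses pixels flagged by only one cue (for example edge responses that fall just outside the object), but, as the toy example shows, it can also drop flat object interiors that have no edges, so a practical detector would likely follow it with region filling or morphological operations.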

Hierarchical sudden illumination change detection using radiance consistency within a spatial neighborhood

Techniques are disclosed for detecting sudden illumination changes using radiance consistency within a spatial neighborhood. A background/foreground (BG/FG) component of a behavior recognition system may be configured to generate a background image depicting the scene background. The BG/FG component may also periodically evaluate the current video frame to determine whether a sudden illumination change has occurred. A sudden illumination change occurs when scene lighting changes dramatically from one frame to the next (or over a small number of frames).

Owner:INTELLECTIVE AI INC
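The intuition behind radiance consistency can be sketched as follows: a global lighting change scales pixel values by roughly the same factor within a small neighborhood, so the per-pixel ratio current/background is locally uniform, whereas a foreground object produces ratios that vary from pixel to pixel. This is a single-level illustration under that assumption, not the hierarchical method of the patent; the thresholds, the 3x3 neighborhood, and all function names are hypothetical.

```python
import statistics

Image = list[list[float]]


def ratio_map(current: Image, background: Image, eps: float = 1.0) -> Image:
    """Per-pixel radiance ratio current/background (eps avoids division by zero)."""
    return [[(c + eps) / (b + eps) for c, b in zip(rc, rb)]
            for rc, rb in zip(current, background)]


def locally_consistent(ratios: Image, r: int, c: int, tol: float) -> bool:
    """True if ratios in the 3x3 neighbourhood of (r, c) are nearly equal,
    i.e. their population standard deviation is at most `tol` times their mean."""
    h, w = len(ratios), len(ratios[0])
    vals = [ratios[i][j]
            for i in range(max(0, r - 1), min(h, r + 2))
            for j in range(max(0, c - 1), min(w, c + 2))]
    return statistics.pstdev(vals) <= tol * statistics.fmean(vals)


def sudden_illumination_change(current: Image, background: Image,
                               changed_frac: float = 0.5, ratio_delta: float = 0.2,
                               tol: float = 0.05, consistent_frac: float = 0.8) -> bool:
    """Flag a lighting change when many pixels changed and most of those
    changes are locally consistent ratios (hypothetical decision rule)."""
    ratios = ratio_map(current, background)
    h, w = len(ratios), len(ratios[0])
    changed = [(r, c) for r in range(h) for c in range(w)
               if abs(ratios[r][c] - 1.0) > ratio_delta]
    # Few changed pixels: treat it as ordinary foreground activity.
    if len(changed) < changed_frac * h * w:
        return False
    consistent = sum(locally_consistent(ratios, r, c, tol) for r, c in changed)
    return consistent >= consistent_frac * len(changed)


if __name__ == "__main__":
    background = [[50.0, 100.0, 150.0], [100.0, 150.0, 50.0], [150.0, 50.0, 100.0]]
    lights_on = [[2 * v for v in row] for row in background]
    print(sudden_illumination_change(lights_on, background))
```

Checking consistency in a neighborhood rather than globally lets the test tolerate gradual spatial variation in lighting while still rejecting textured foreground changes.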

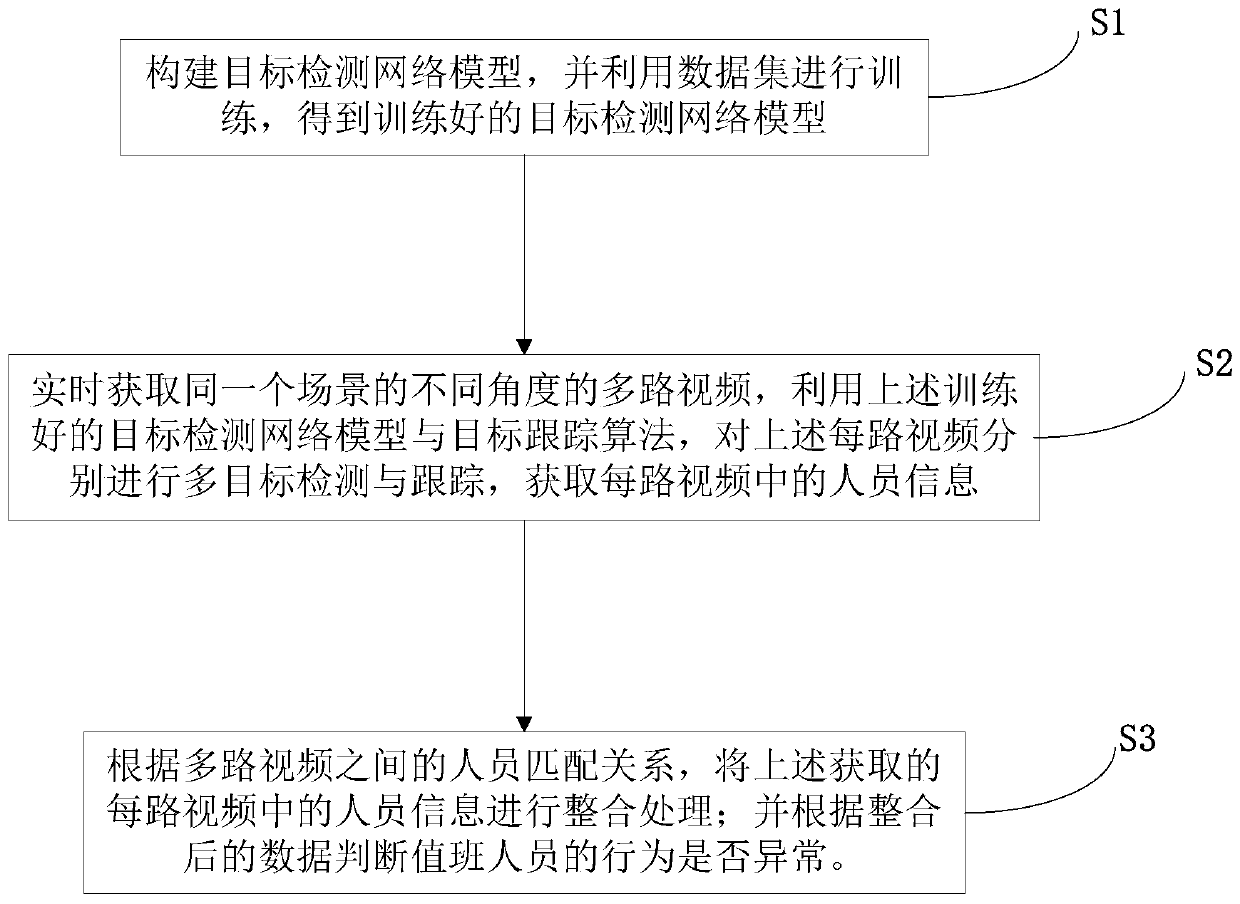

On-duty operator violation behavior detection method and system

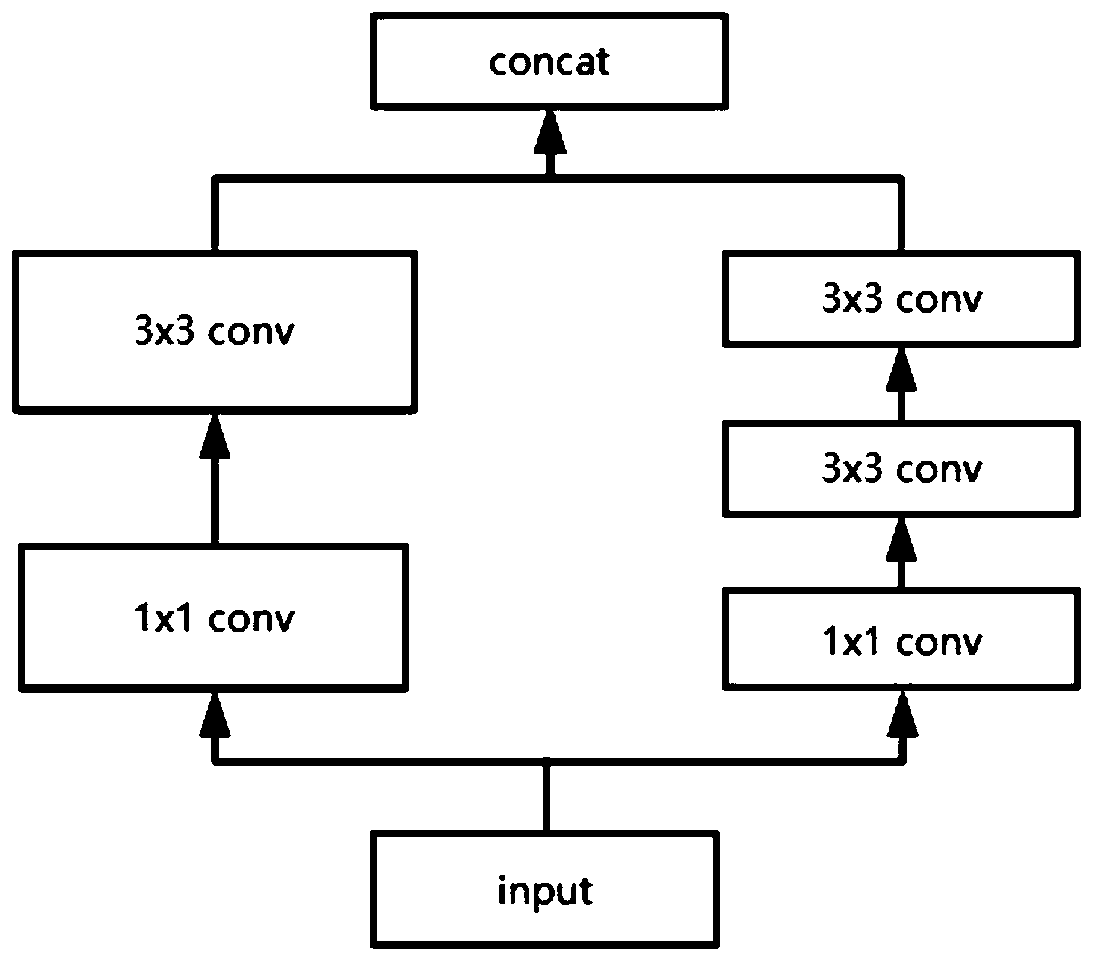

Active · CN109711320A · Guaranteed uptime · Improve accuracy · Character and pattern recognition · Neural learning methods · Data set · Object tracking algorithm

The invention relates to a method and system for detecting violations by on-duty personnel, belongs to the technical field of intelligent video analysis, and addresses the low efficiency, high cost, and low recognition accuracy of existing detection methods. The method comprises the following steps: constructing a target detection network model and training it on a data set; acquiring, in real time, multiple video streams of the same scene from different angles; performing multi-target detection and tracking with the trained target detection model and a target tracking algorithm; obtaining and integrating the personnel information from each video stream; and determining whether the behavior of on-duty personnel is abnormal. The invention uses ambient camera video as the input source for intelligent video analysis, supports multi-channel video input and fusion analysis, greatly improves the recognition accuracy of violations through deep learning and data modeling, and enables real-time, accurate monitoring of on-duty personnel in settings such as monitoring centers, duty rooms, and command centers.

Owner:XINGTANG TELECOMM TECH CO LTD +2
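The final step, integrating per-camera results and judging whether on-duty behavior is abnormal, can be illustrated with one simple rule. Everything here is an assumption for illustration: the detector and tracker are replaced by per-frame person counts, multi-view fusion is reduced to "the post is attended if any camera sees at least one person", and the only violation checked is leaving the post for too long. The function name `absence_alerts` is hypothetical.

```python
def absence_alerts(per_camera_counts: list[list[int]], max_absent_frames: int) -> list[int]:
    """Return frame indices at which an 'unattended post' alert is raised.

    per_camera_counts[c][t] is the number of people camera c detected in
    frame t (output of a detector/tracker, not modelled here). A frame is
    attended if any camera sees someone. An alert fires once, on the first
    frame where the post has been unattended for more than
    `max_absent_frames` consecutive frames.
    """
    num_frames = min(len(cam) for cam in per_camera_counts)
    alerts: list[int] = []
    absent_run = 0
    for t in range(num_frames):
        present = any(cam[t] > 0 for cam in per_camera_counts)
        absent_run = 0 if present else absent_run + 1
        if absent_run == max_absent_frames + 1:
            alerts.append(t)
    return alerts


if __name__ == "__main__":
    camera_a = [1, 0, 0, 0, 0, 1, 0, 0]
    camera_b = [0, 0, 0, 0, 0, 0, 0, 0]
    print(absence_alerts([camera_a, camera_b], max_absent_frames=2))  # → [3]
```

Fusing across cameras before applying the rule means a person who leaves one camera's view but remains visible to another is not reported, which is one reason multi-angle input can reduce false alarms.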

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com