130 results about "Time structure" patented technology

Structure Time :> TIME. The structure Time provides an abstract type for representing times and time intervals, and functions for manipulating, converting, writing, and reading them.

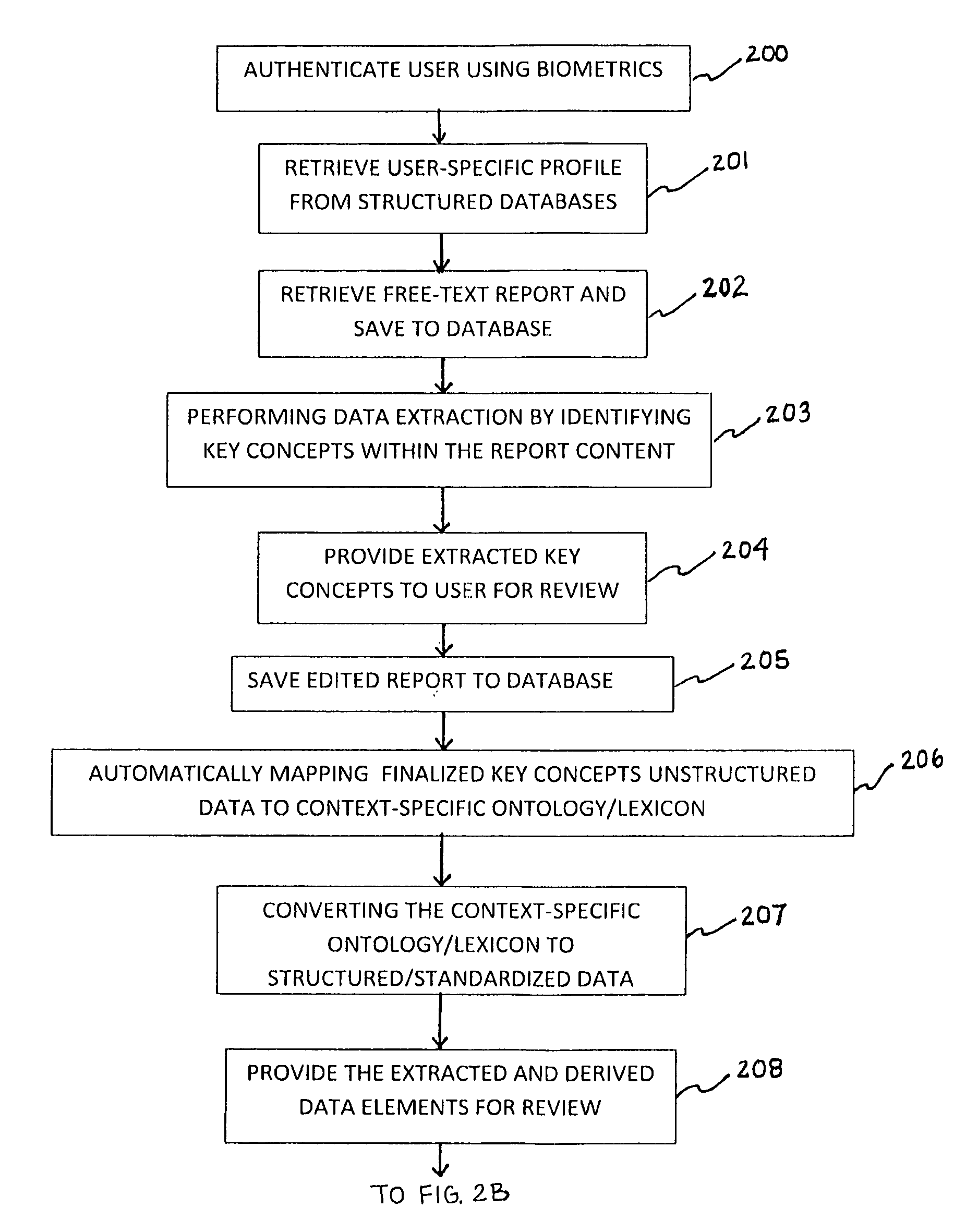

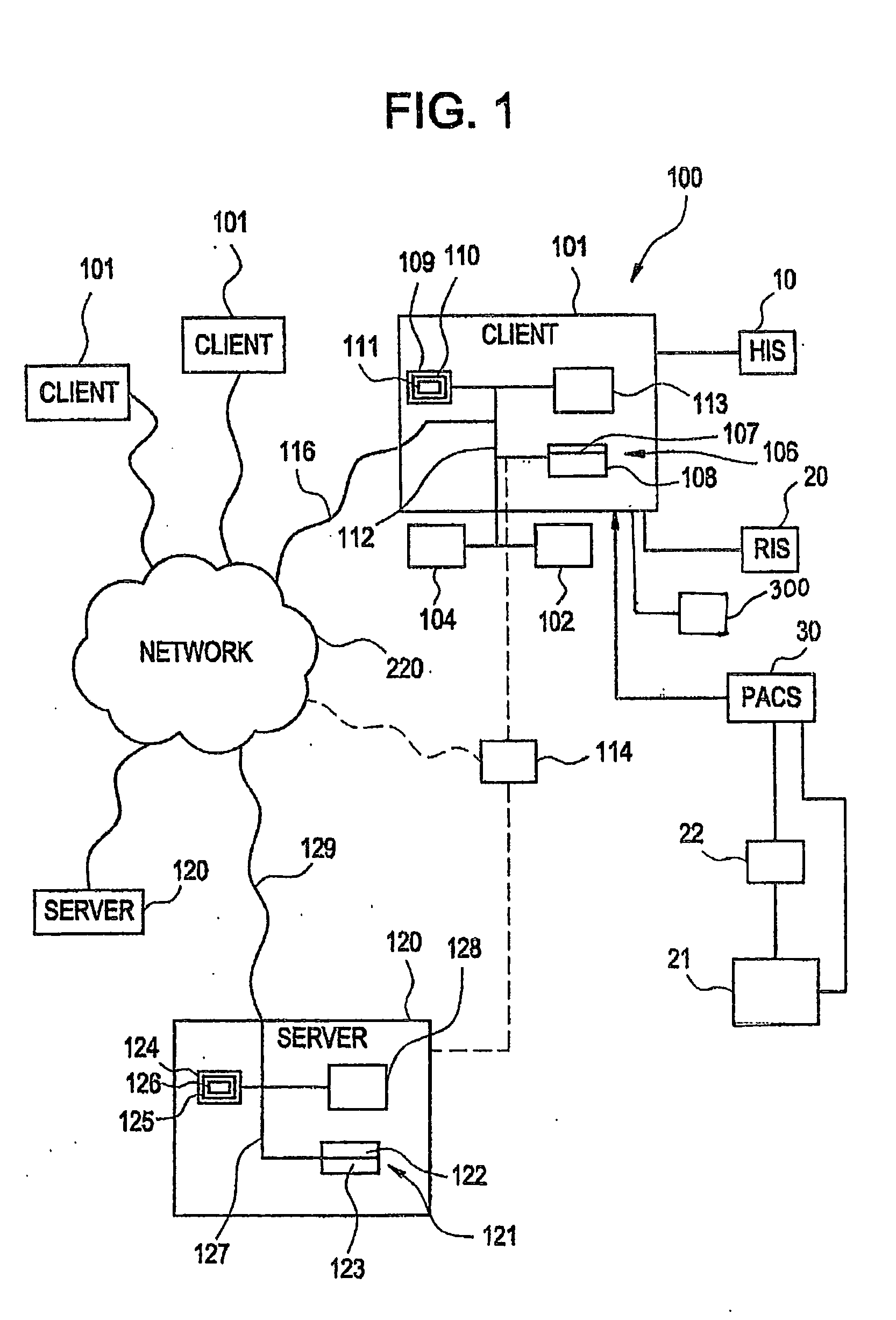

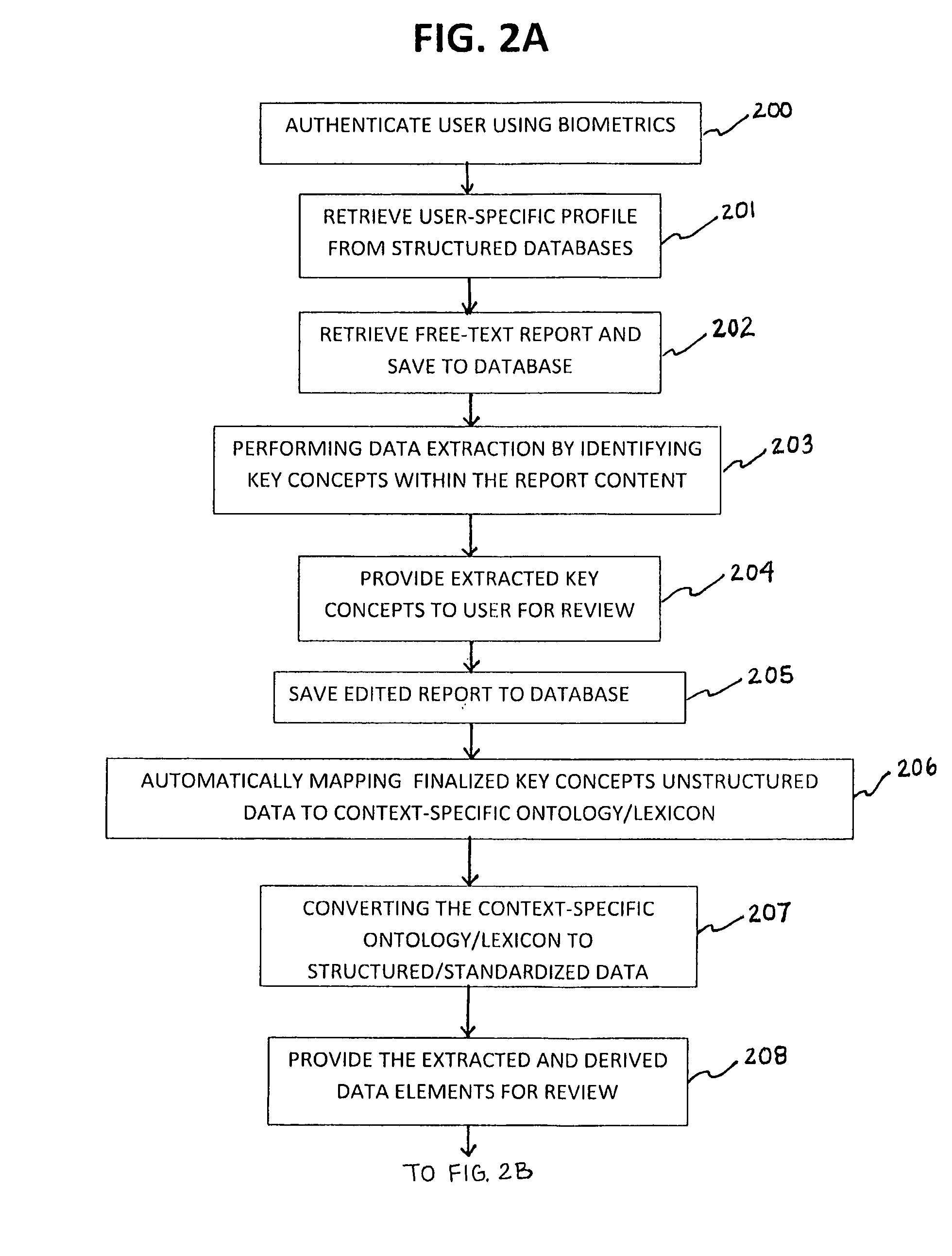

Method of extracting real-time structured data and performing data analysis and decision support in medical reporting

Inactive | US20100145720A1 | Medical data mining | Data processing applications | Time structure | Treatment options

The present invention relates to a methodology for the conversion of unstructured, free text data (contained within medical reports) into standardized, structured data, and also relates to a decision support feature for use in diagnosis and treatment options.

Owner:REINER BRUCE

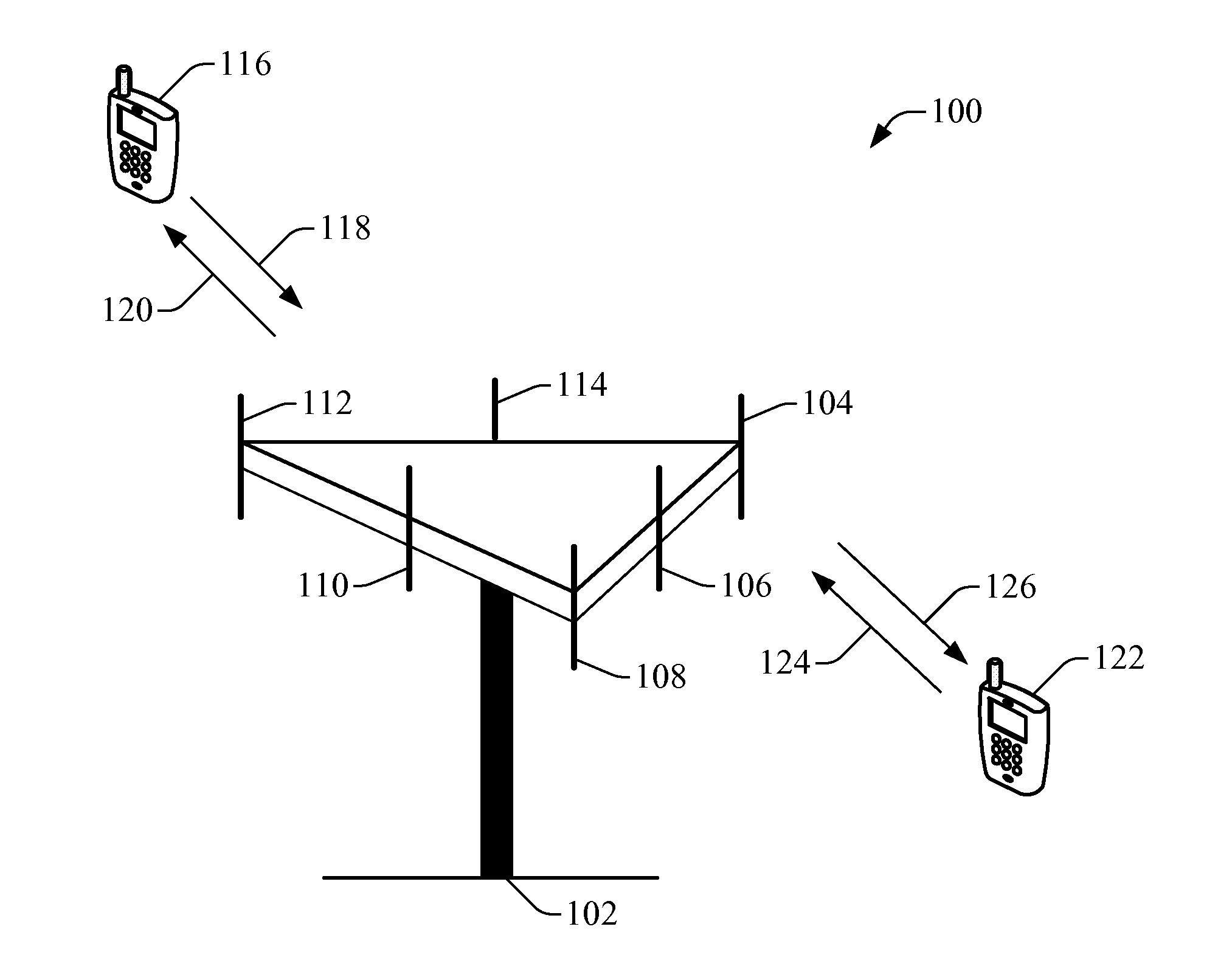

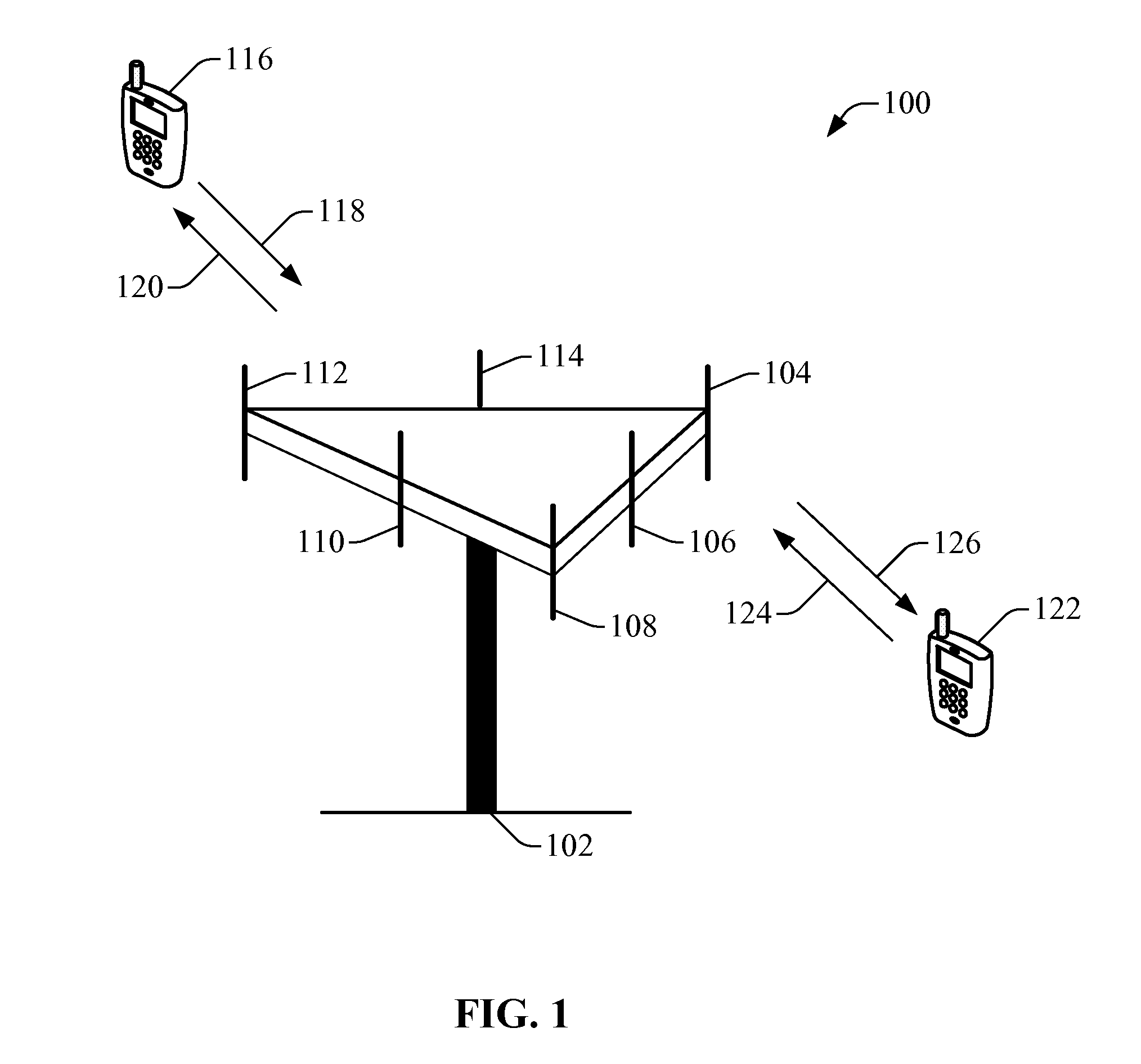

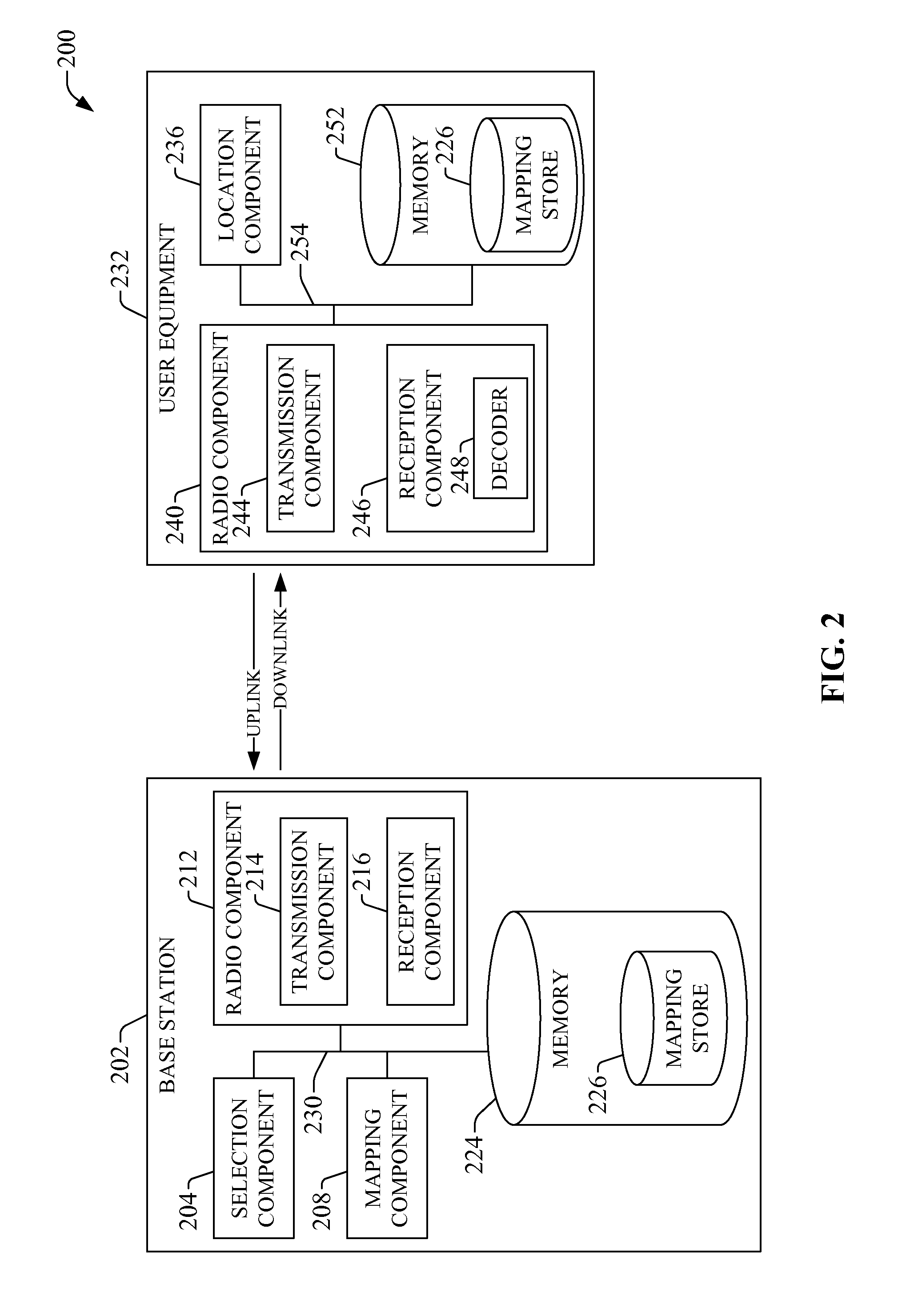

Positioning reference signals in a telecommunication system

Systems and methods are described to supply positioning reference signal (PRS) in a telecommunication system. A base station supplies a PRS sequence according at least to a time-frequency pattern of modulation symbols, wherein the time-frequency pattern assigns a modulation symbol to each frequency tone in a block of time-frequency resources allocated to transmit PRS. The base station associates a modulation symbol in the time-frequency pattern with a reference symbol in the PRS sequence through a mapping that represents the time-frequency pattern. The PRS sequence is conveyed to user equipment through delivery of a set of modulation symbols established through the mapping. Different time-frequency patterns can be exploited based on time-structure of a radio sub-frame. The user equipment receives the PRS sequence according to at least the time-frequency pattern of modulation symbols and utilizes at least the PRS sequence as part of a process to produce a location estimate.

Owner:QUALCOMM INC

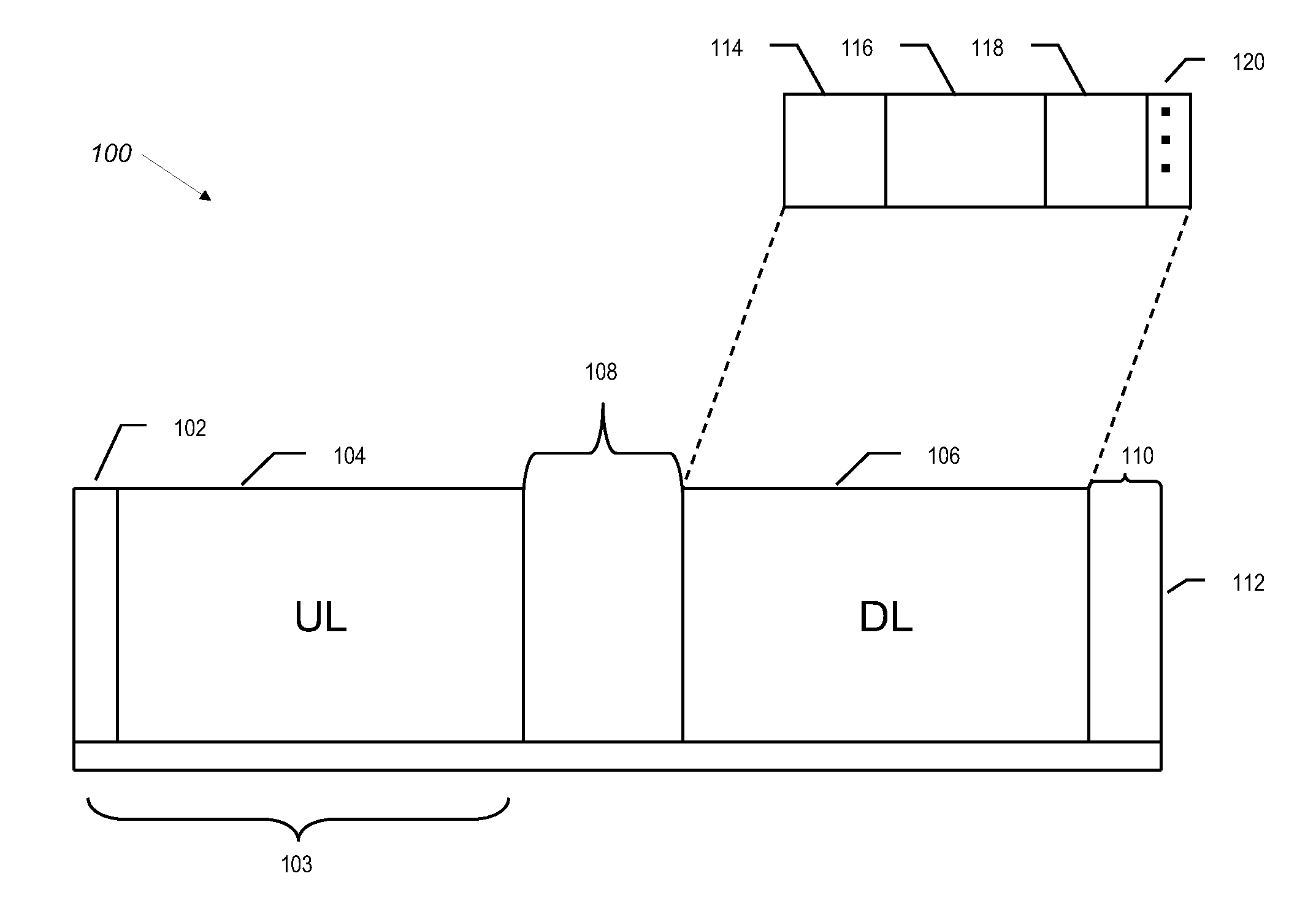

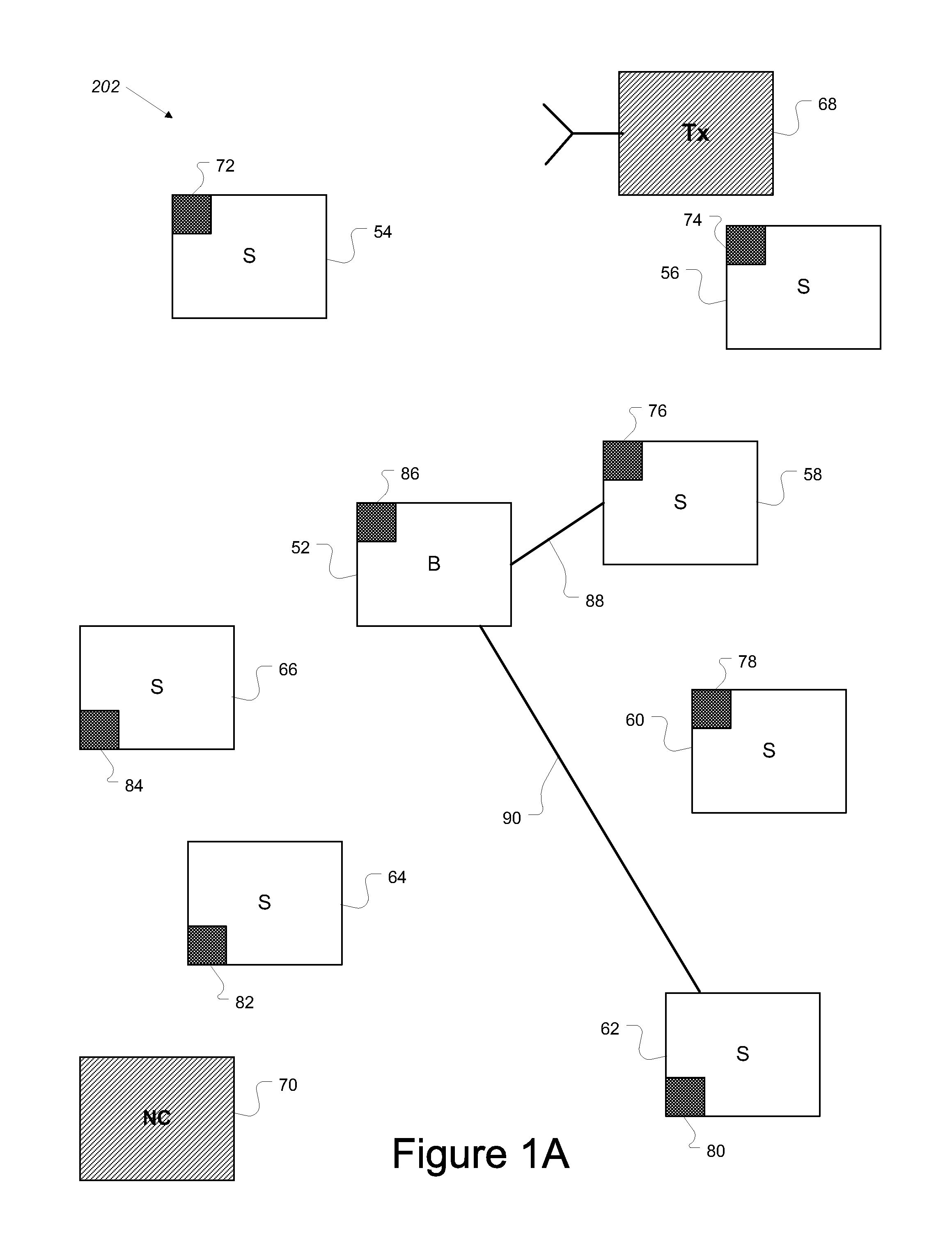

Methods for using a detector to monitor and detect channel occupancy

Inactive | US8027249B2 | Avoid interference | Transmitters monitoring | Site diversity | Time structure | Current channel

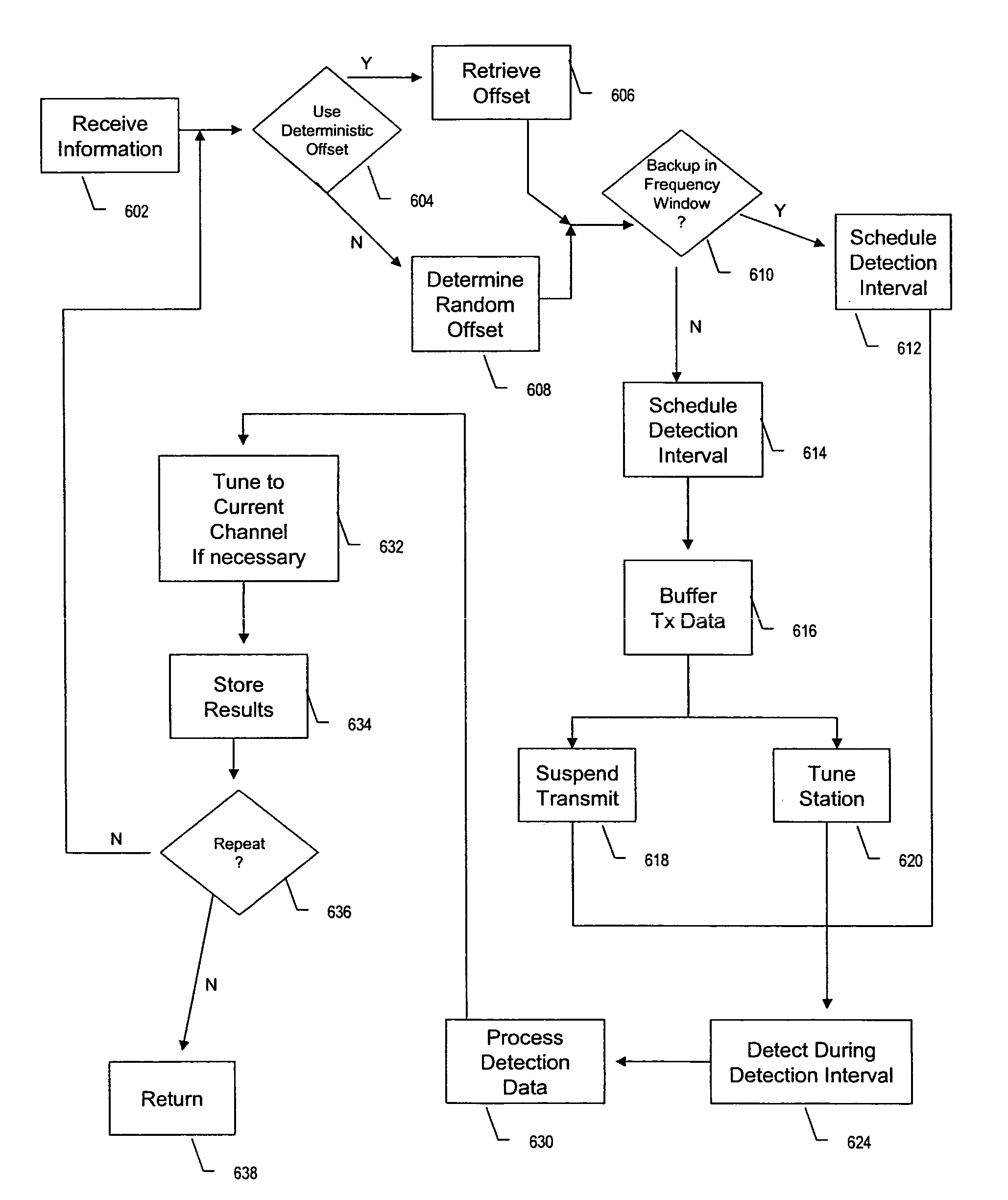

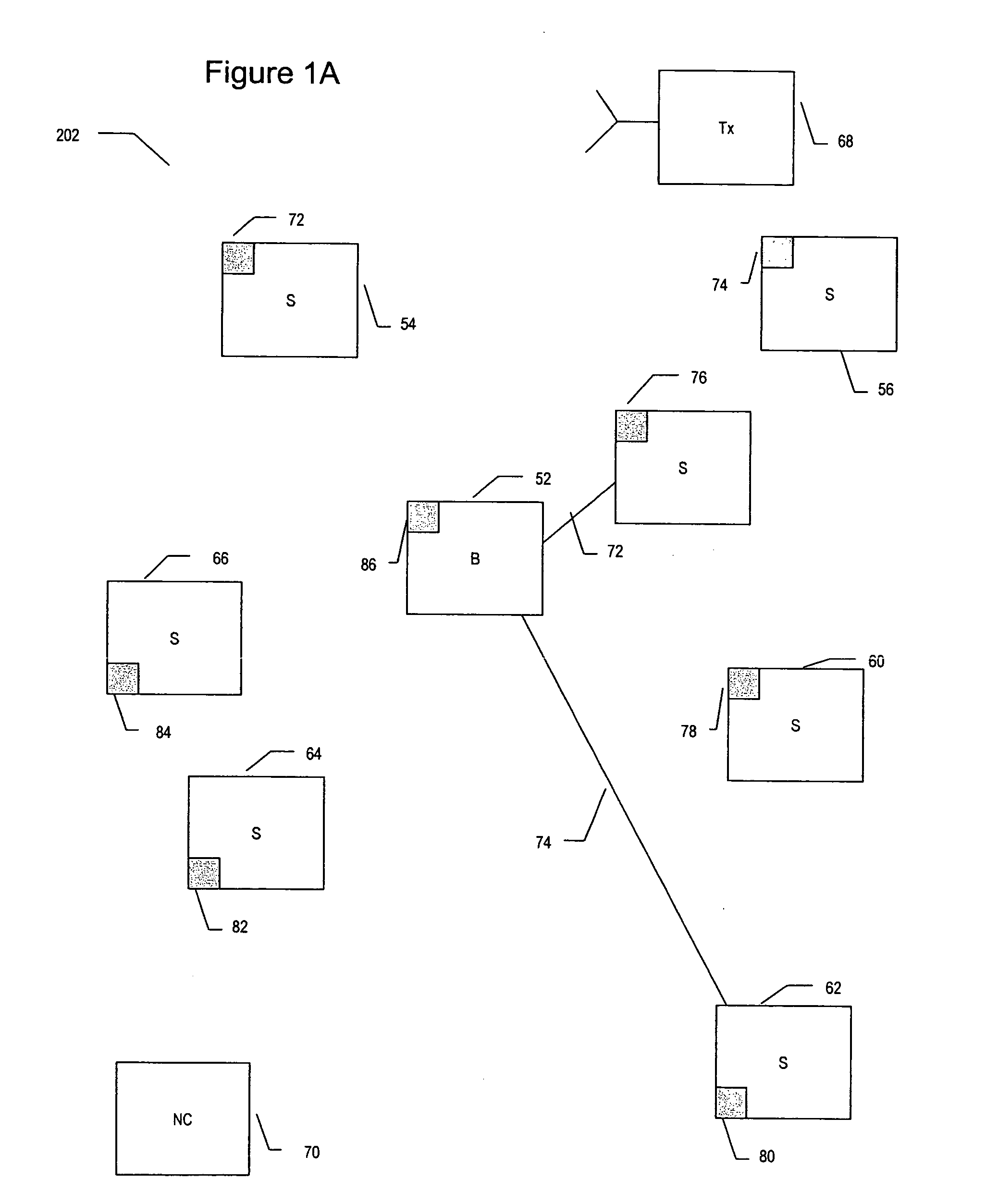

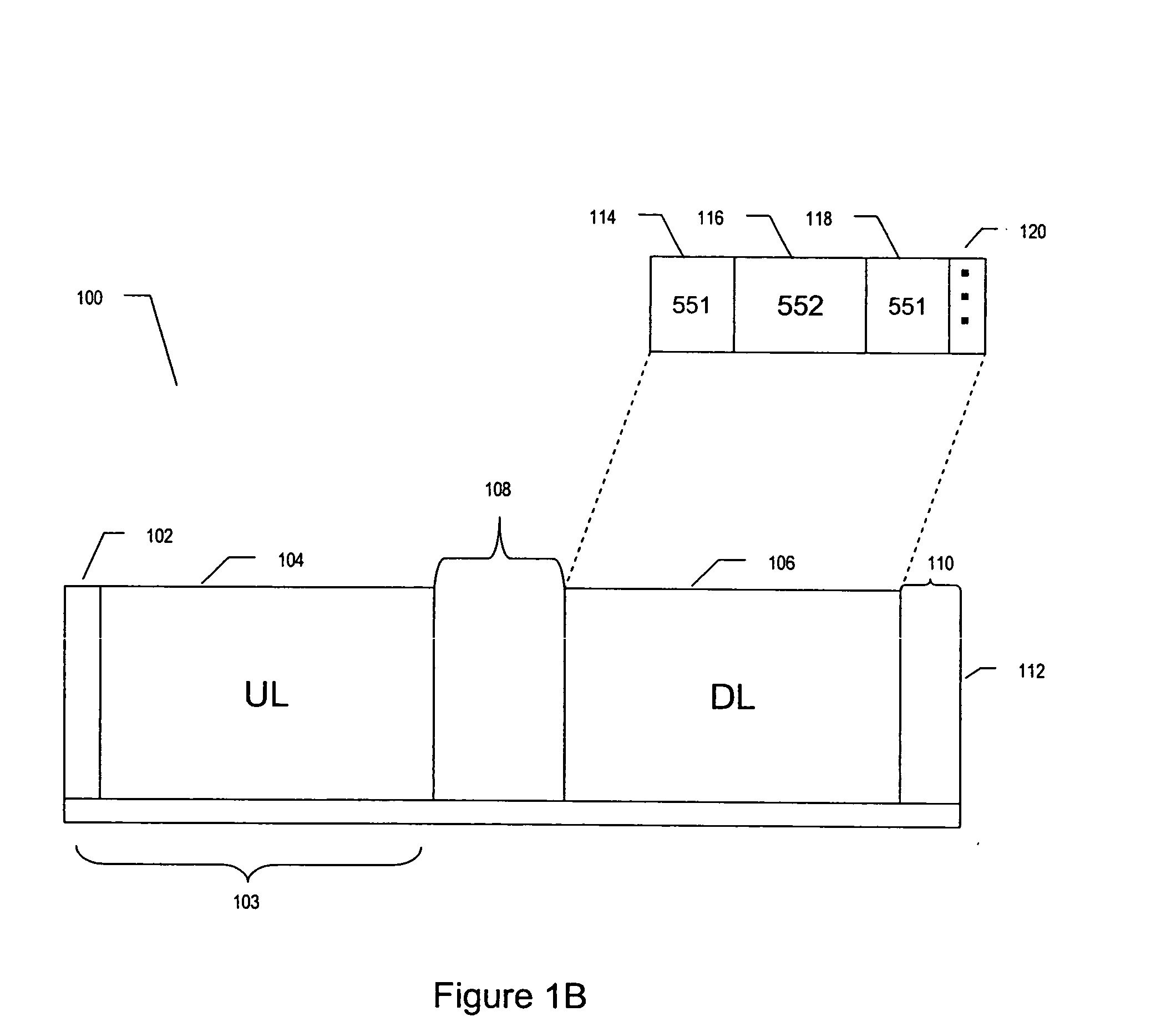

Methods for using a detector to monitor and detect channel occupancy are disclosed. The detector resides on a station within a network using a framed format having a periodic time structure. When non-cooperative transmissions are detected by the network, the detector assesses the availability of a backup channel enabling migration of the network. The backup channel serves to allow the network to migrate transparently when the current channel becomes unavailable. The backup channel, however, could be occupied by another network that results in the migrating network interfering with the network already using the backup channel. Thus, the detector detects active transmission sources on the backup channel to determine whether the backup channel is occupied. Methods for using the detector include scheduling detection intervals asynchronously. The asynchronous detection uses offsets from a reference point within a frame.

Owner:SHARED SPECTRUM

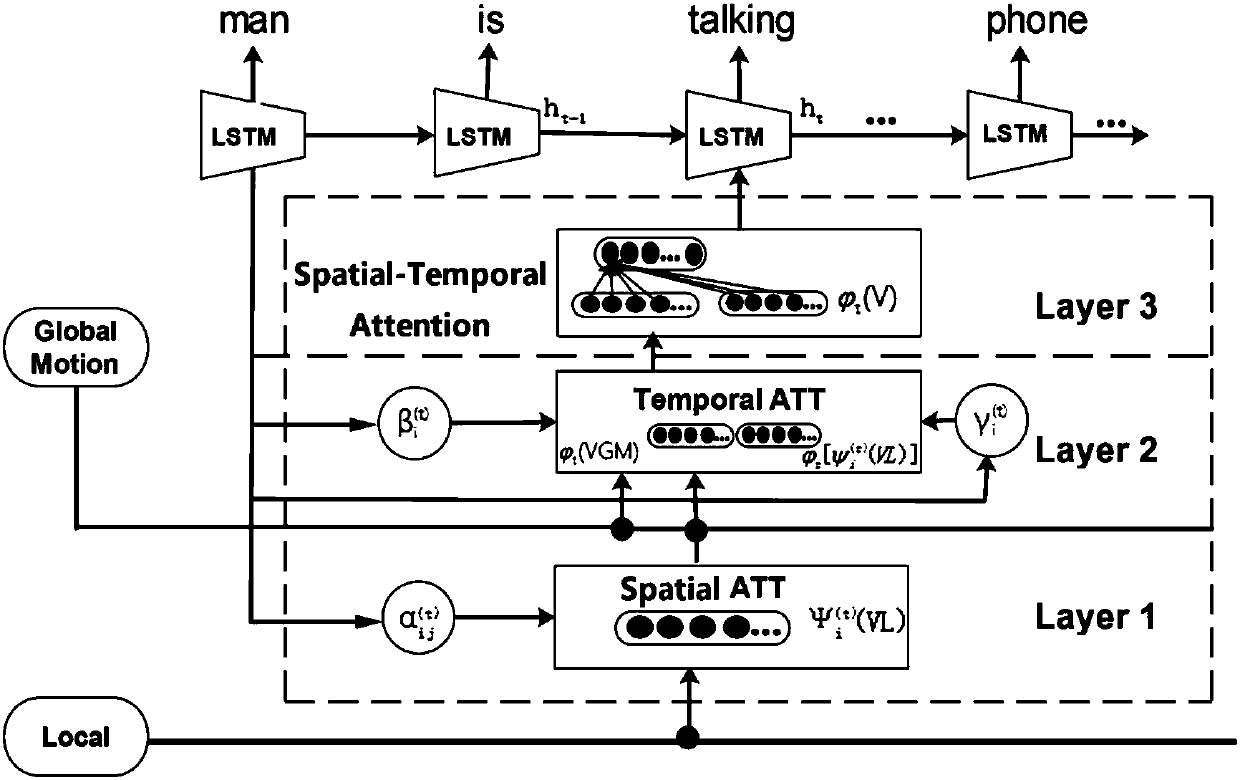

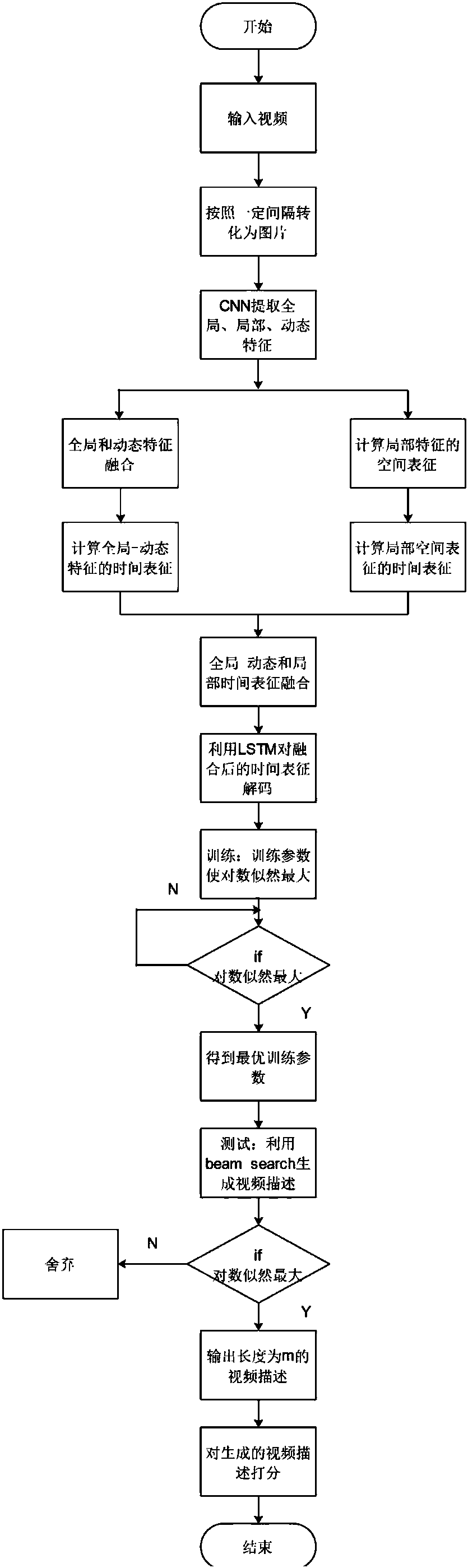

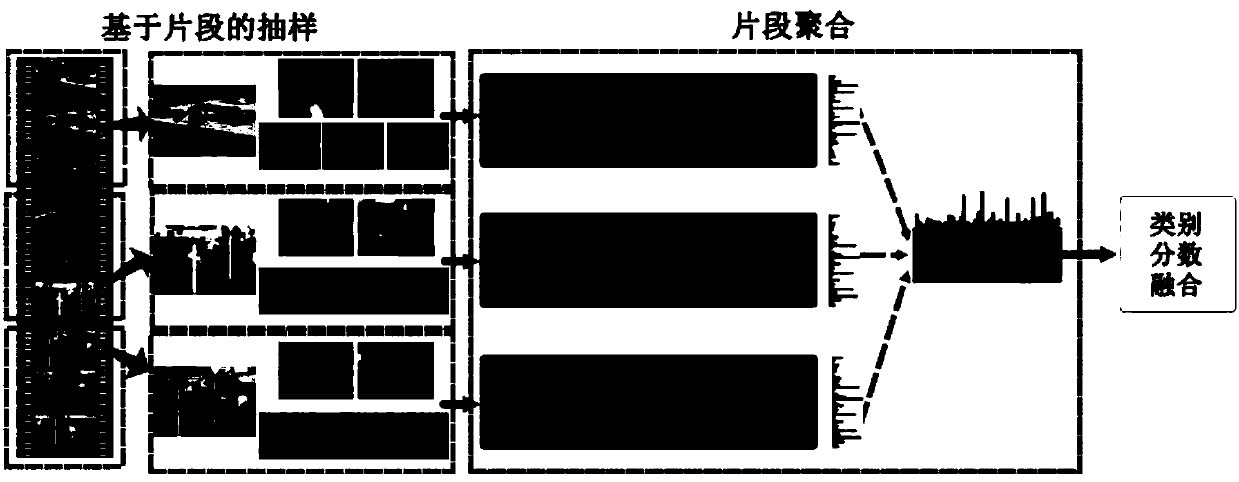

Video content description method by means of space and time attention models

Active | CN107066973A | Character and pattern recognition | Neural architectures | Attention model | Time structure

The invention discloses a video content description method based on spatial and temporal attention models. A temporal attention model captures the global time structure of the video, and a spatial attention model captures the spatial structure of each frame, so that the video description model grasps the main event in the video while better recognizing local information. The method includes preprocessing the video format; establishing the temporal and spatial attention models; and training and testing the video description model. The temporal attention model preserves the main time structure of the video, and the spatial attention model focuses on key areas in each frame, so that the generated description captures key but easily neglected details while still covering the main event in the video content.

Owner:HANGZHOU DIANZI UNIV

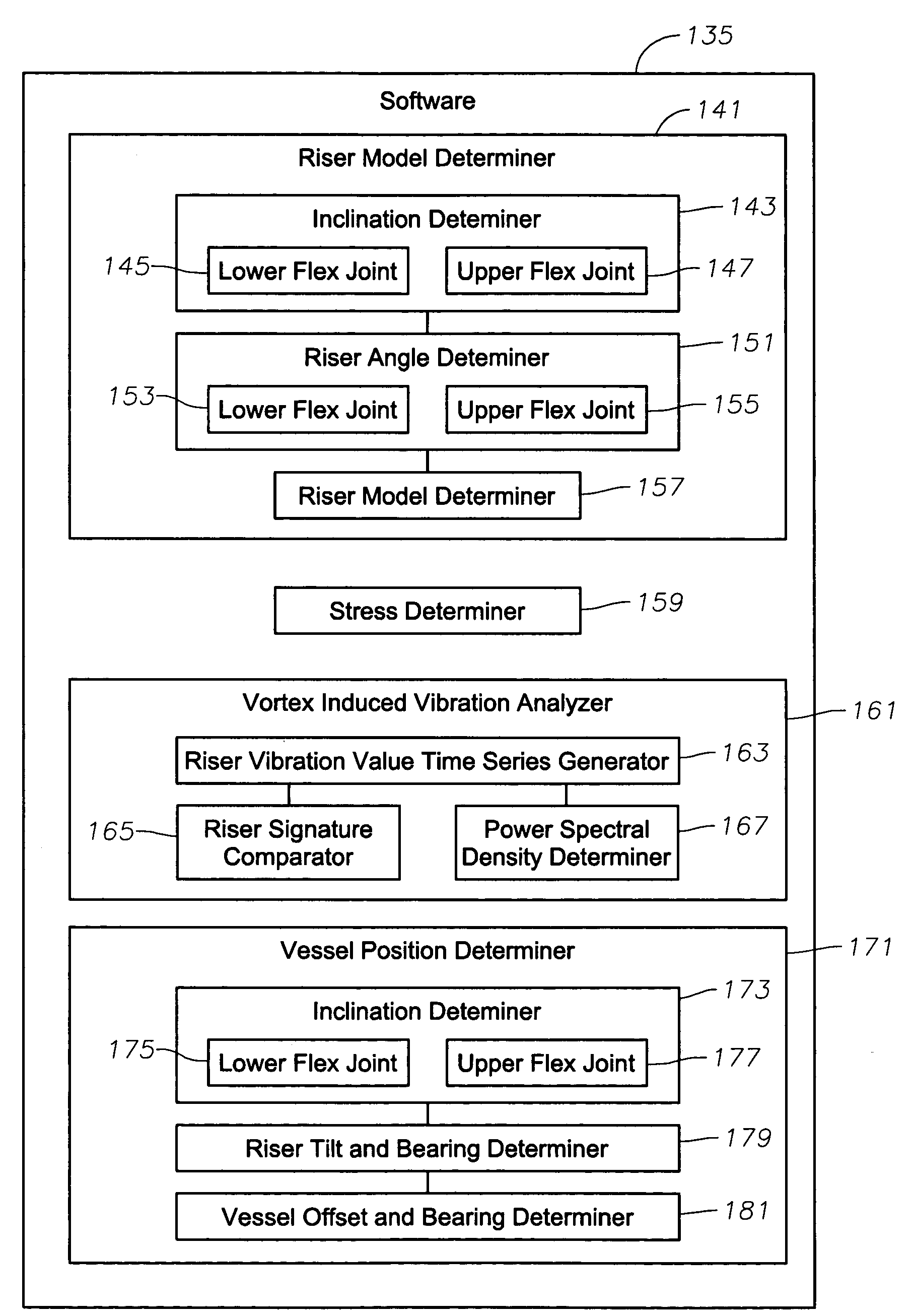

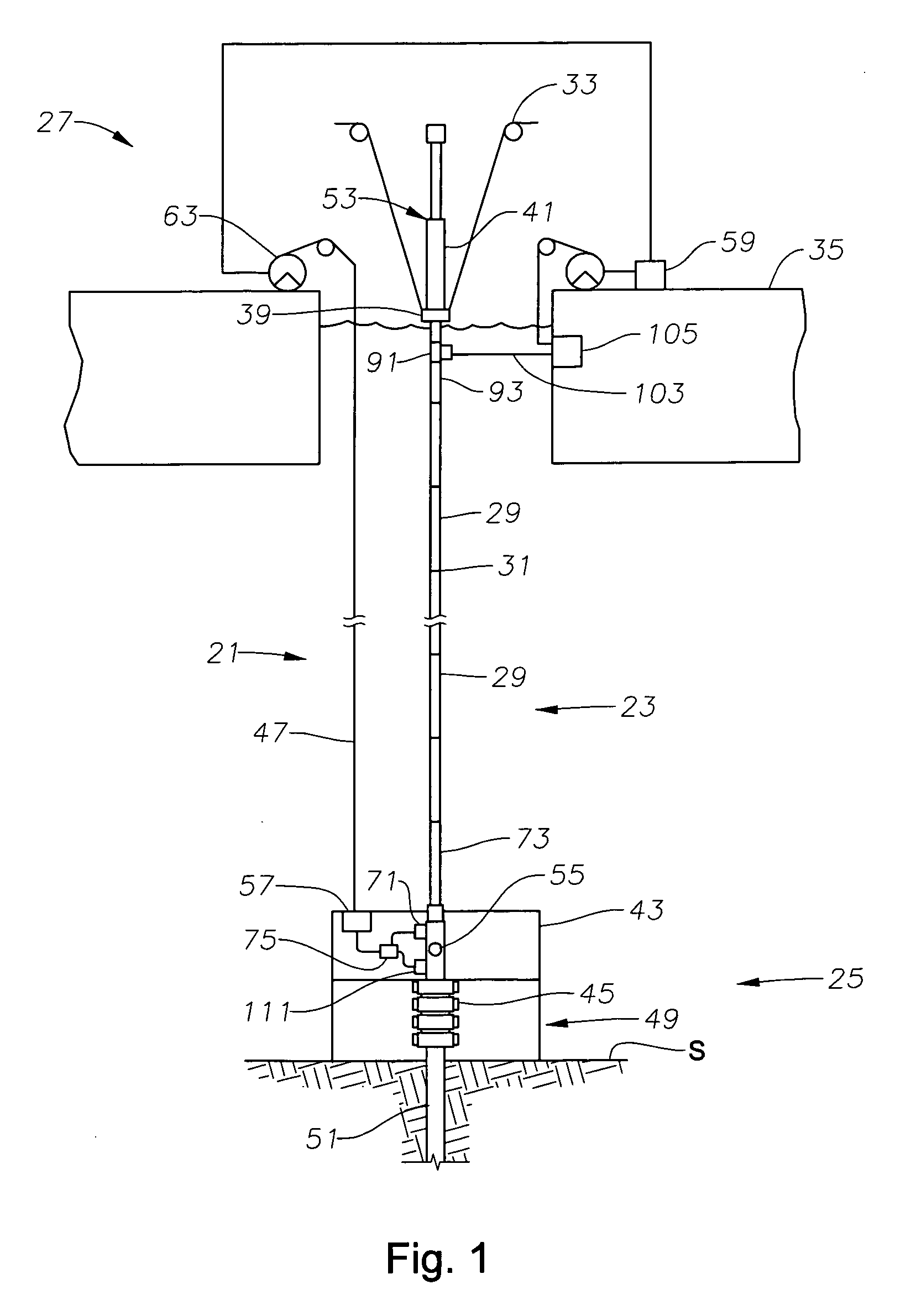

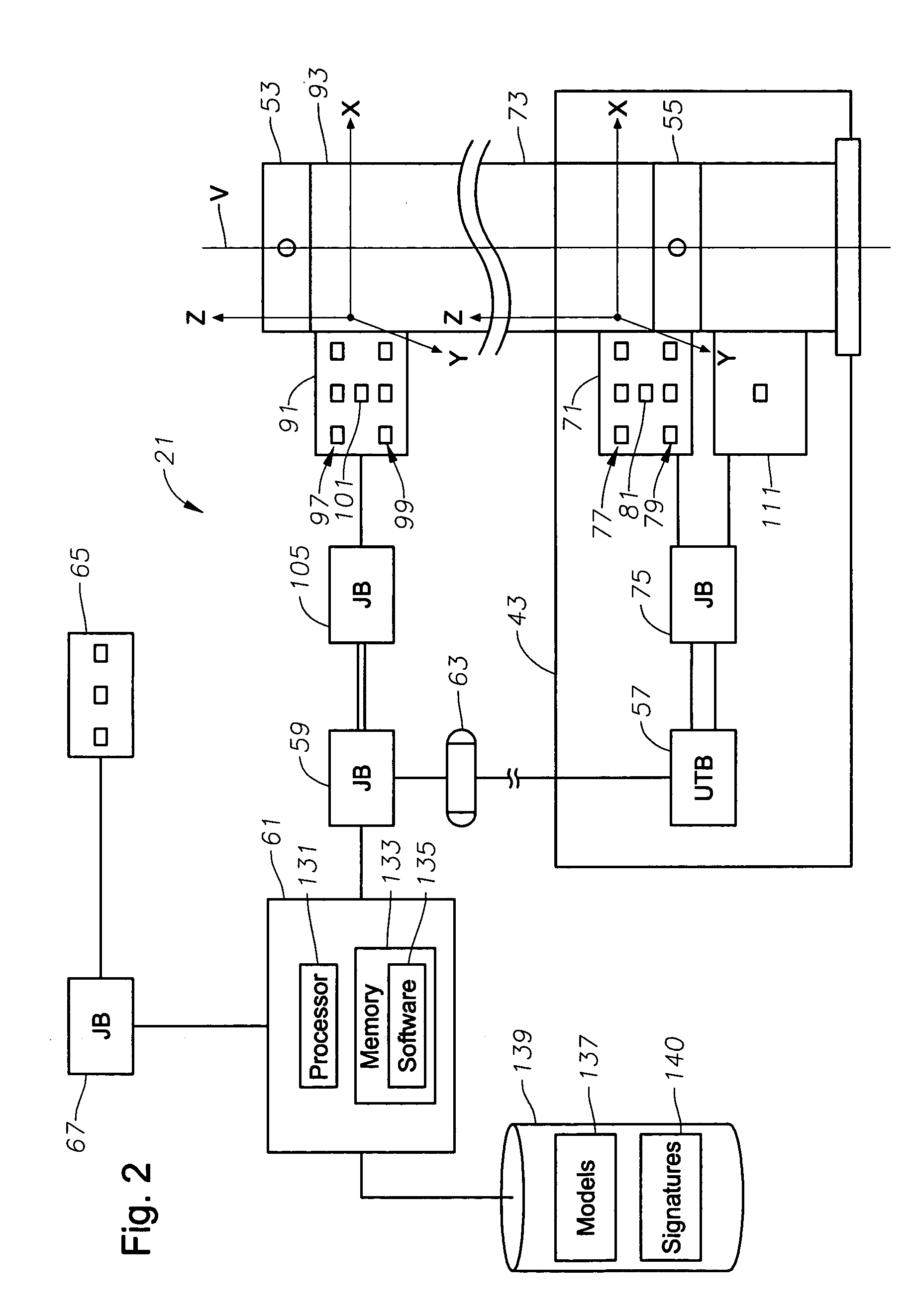

System for sensing riser motion

Inactive | US7328741B2 | Easy to integrate | Highly accurate riser joint angles | Drilling rods | Steering by propulsive elements | Time structure | Systems analysis

A riser monitoring assembly is provided to monitor and manage a riser extending between subsea well equipment and a floating vessel. A riser measurement instrument module is connected adjacent to a selected portion of the riser and provides dynamic orientation data for that portion. A computer having a memory associated therewith and riser system analyzing management software stored thereon is in communication with the riser measurement module to process data received therefrom. The riser monitoring assembly can utilize real-time orientation data for the selected portion of the riser to analyze the riser dynamic behavior, to determine a model of the real-time structure of the riser, to determine and manage the existence of vortex induced vibration, to determine and manage riser stress levels, to manage riser inspection and riser maintenance, and to supplement determination and management of the position of the vessel.

Owner:VETCO GRAY

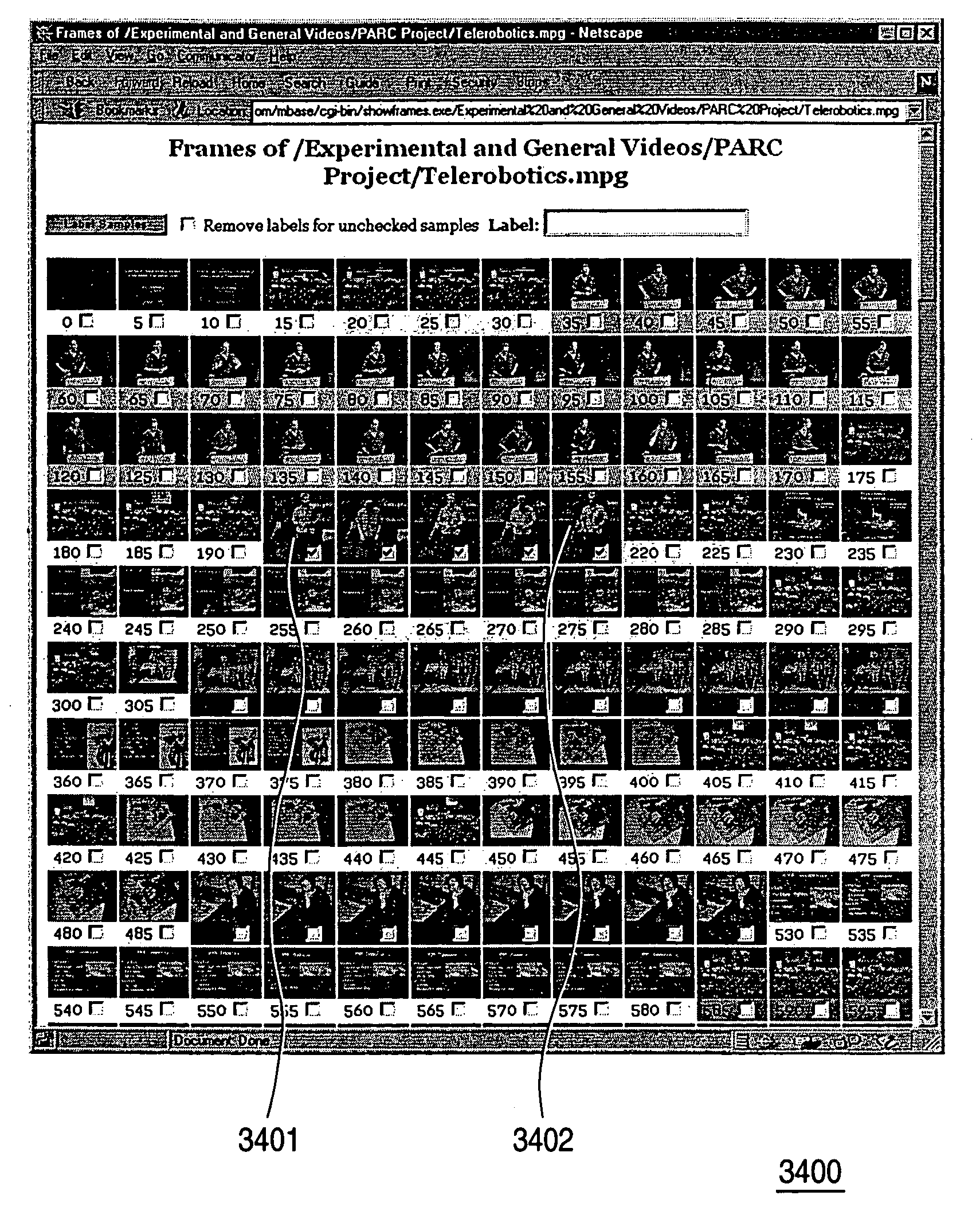

Methods and apparatuses for interactive similarity searching, retrieval and browsing of video

Inactive | US7246314B2 | Find quickly | Quick calculation | Television system details | Image analysis | Graphics | Feature vector

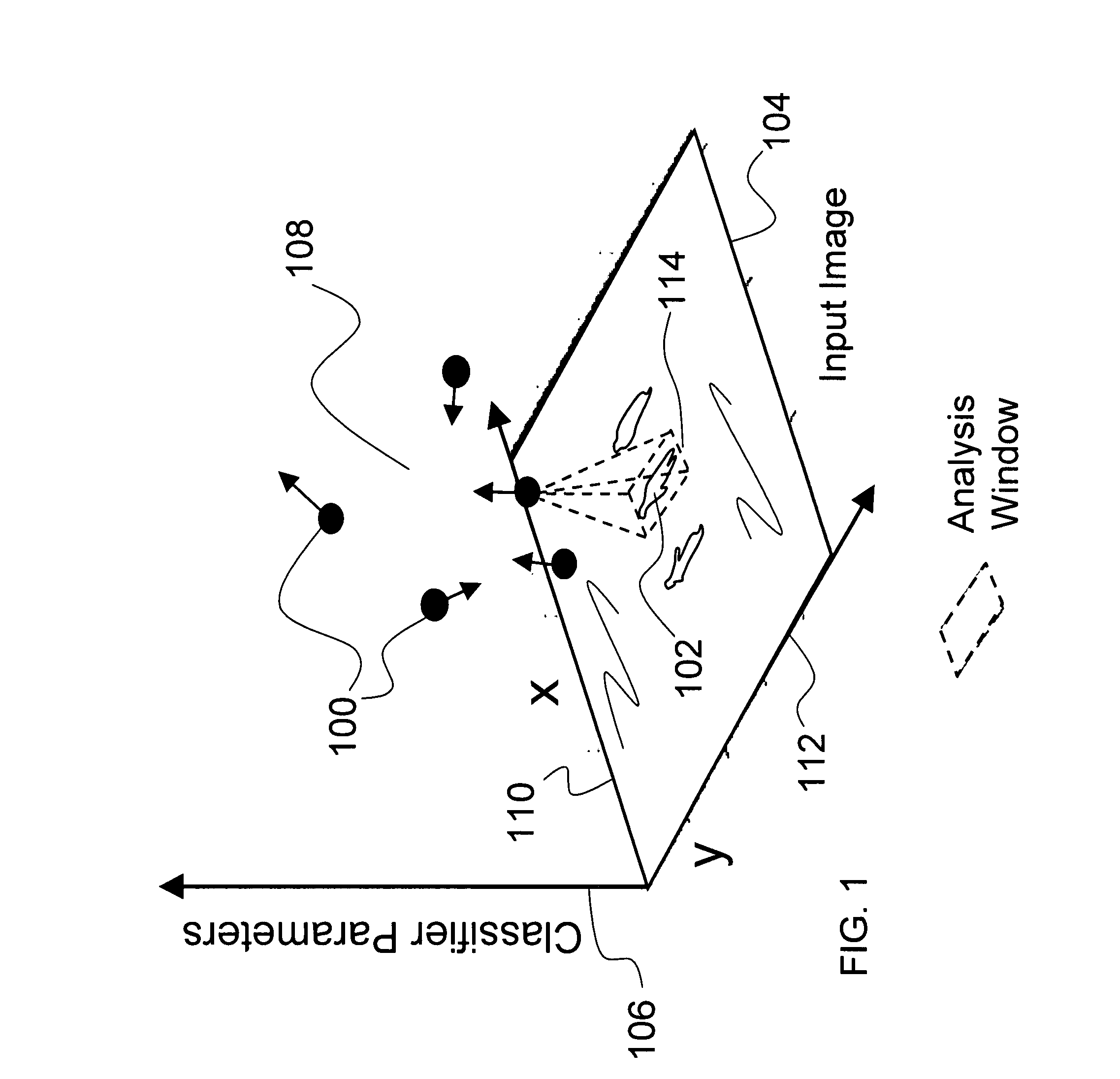

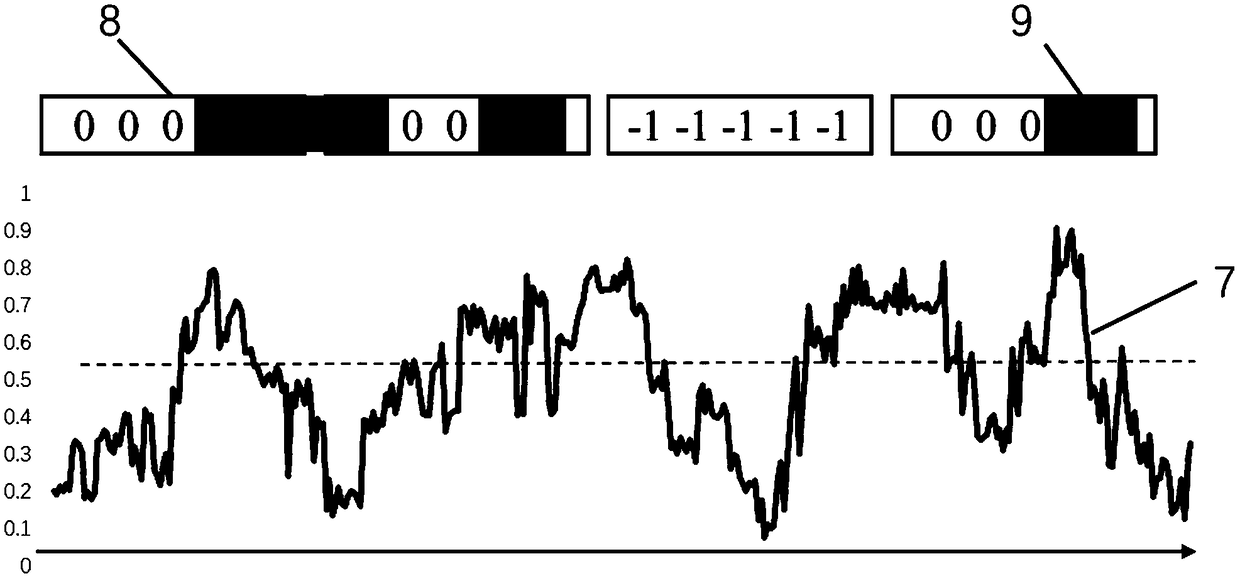

Methods are disclosed for interactively selecting video queries, consisting of training images from a video, for a video similarity search, and for displaying the results of the similarity search. The user selects a time interval in the video as a query definition of training images for training an image class statistical model. Time intervals can be as short as one frame or consist of disjoint segments or shots. A statistical model of the image class defined by the training images is calculated on-the-fly from feature vectors extracted from transforms of the training images. For each frame in the video, a feature vector is extracted from the transform of the frame, and a similarity measure is calculated using the feature vector and the image class statistical model. The similarity measure is derived from the likelihood of a Gaussian model producing the frame. The similarity is then presented graphically, which allows the time structure of the video to be visualized and browsed. Similarity can be rapidly calculated for other video files as well, which enables content-based retrieval by example. A content-aware video browser featuring interactive similarity measurement is presented. A method for selecting training segments involves mouse click-and-drag operations over a time bar representing the duration of the video; similarity results are displayed as shades in the time bar. Another method involves selecting periodic frames of the video as endpoints for the training segment.

Owner:FUJIFILM BUSINESS INNOVATION CORP +1
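
The similarity search summarized in the entry above (US7246314B2) can be illustrated with a minimal sketch. It is not the patented implementation: it assumes a diagonal-covariance Gaussian, uses plain lists as stand-ins for the transform-derived feature vectors, and all function names are hypothetical.

```python
import math

def fit_diagonal_gaussian(vectors, min_var=1e-6):
    """Estimate per-dimension mean and variance from training feature vectors.

    Assumption: a diagonal covariance is enough for illustration; the
    abstract only says "a Gaussian model".
    """
    n = len(vectors)
    dims = len(vectors[0])
    means = [sum(v[d] for v in vectors) / n for d in range(dims)]
    variances = [
        max(sum((v[d] - means[d]) ** 2 for v in vectors) / n, min_var)
        for d in range(dims)
    ]
    return means, variances

def log_likelihood(vector, means, variances):
    """Log-likelihood of one frame's feature vector under the fitted model."""
    total = 0.0
    for x, mu, var in zip(vector, means, variances):
        total += -0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)
    return total

def similarity_track(frame_vectors, means, variances):
    """One similarity score per frame, in frame order (higher = more similar)."""
    return [log_likelihood(v, means, variances) for v in frame_vectors]
```

Plotting the list returned by `similarity_track` against frame index would give the kind of time-bar shading the abstract describes, with high values marking frames that resemble the query interval.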

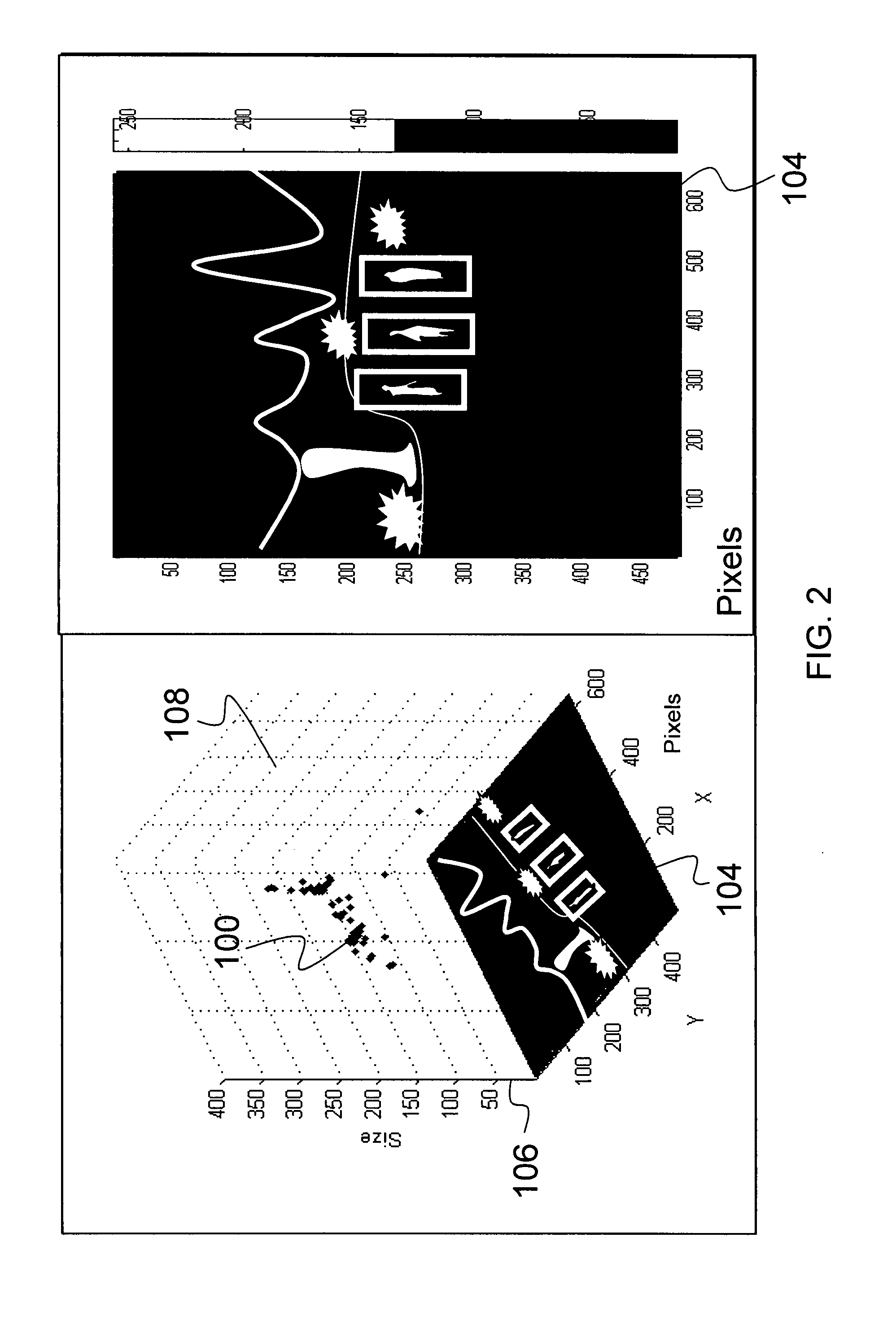

Behavior recognition using cognitive swarms and fuzzy graphs

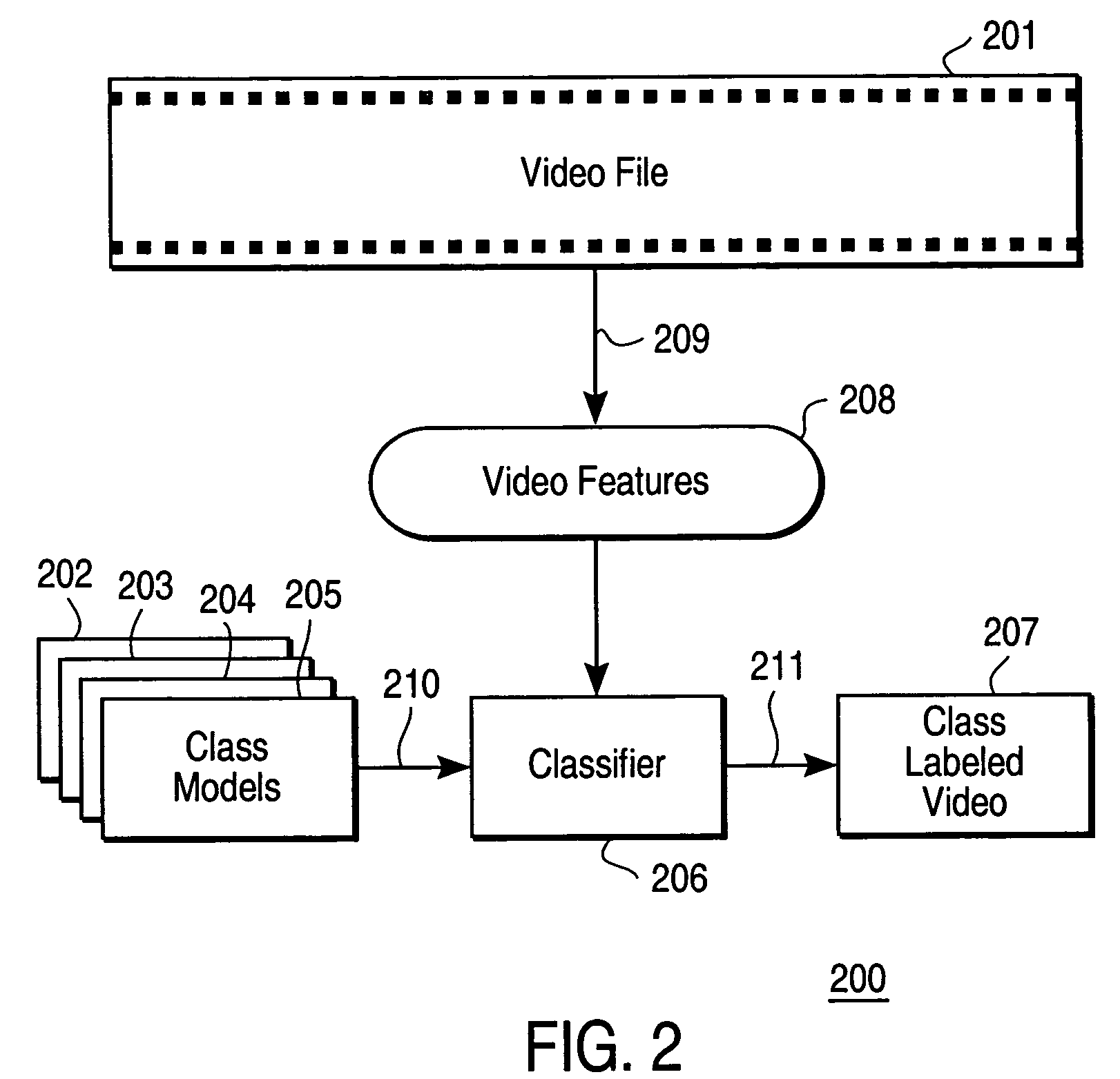

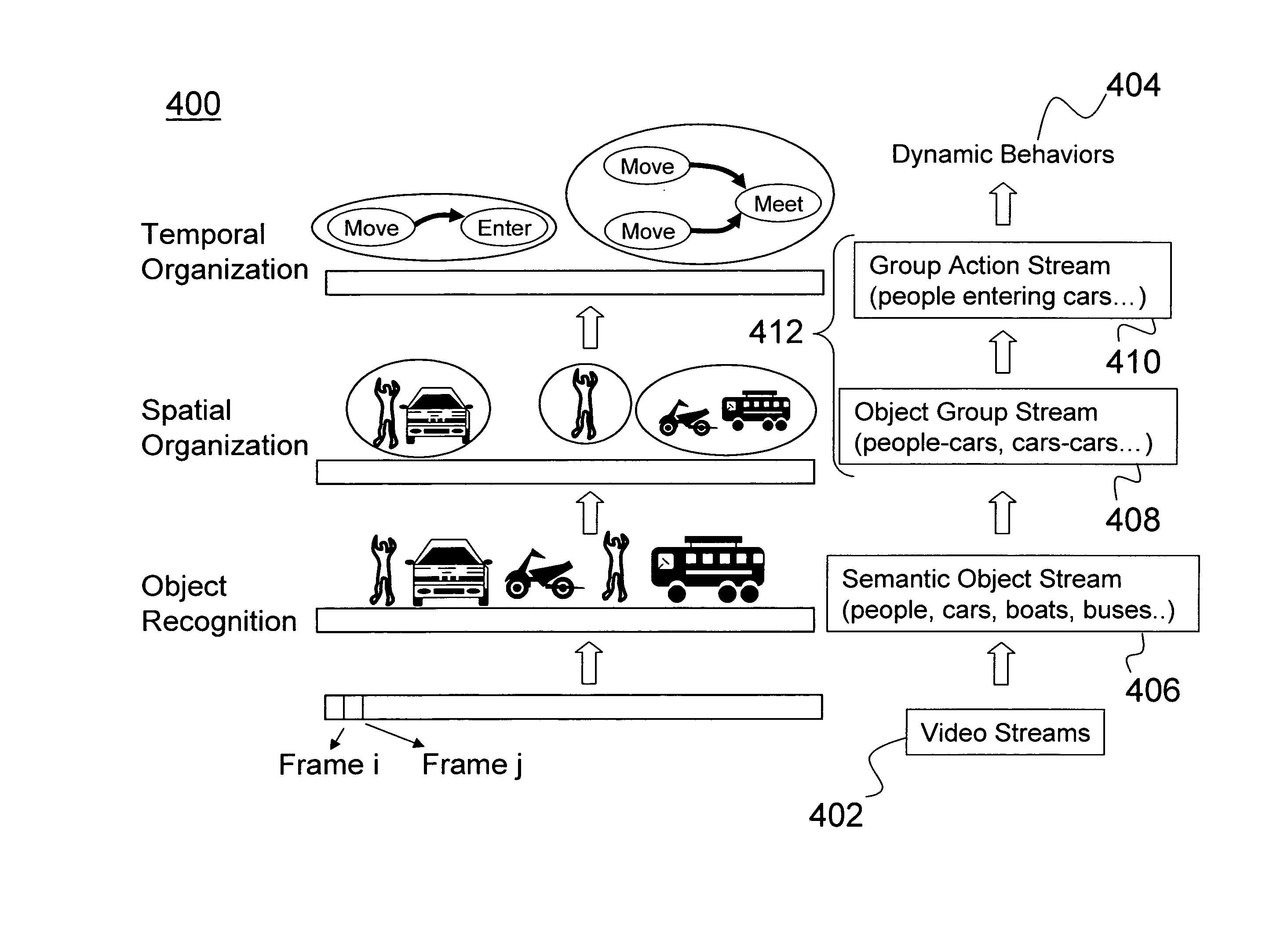

Described is a behavior recognition system for detecting the behavior of objects in a scene. The system comprises a semantic object stream module for receiving a video stream having at least two frames and detecting objects in the video stream. Also included is a group organization module for utilizing the detected objects from the video stream to detect a behavior of the detected objects. The group organization module further comprises an object group stream module for spatially organizing the detected objects to have relative spatial relationships. The group organization module also comprises a group action stream module for modeling a temporal structure of the detected objects. The temporal structure is an action of the detected objects between the two frames, whereby through detecting, organizing and modeling actions of objects, a user can detect the behavior of the objects.

Owner:HRL LAB

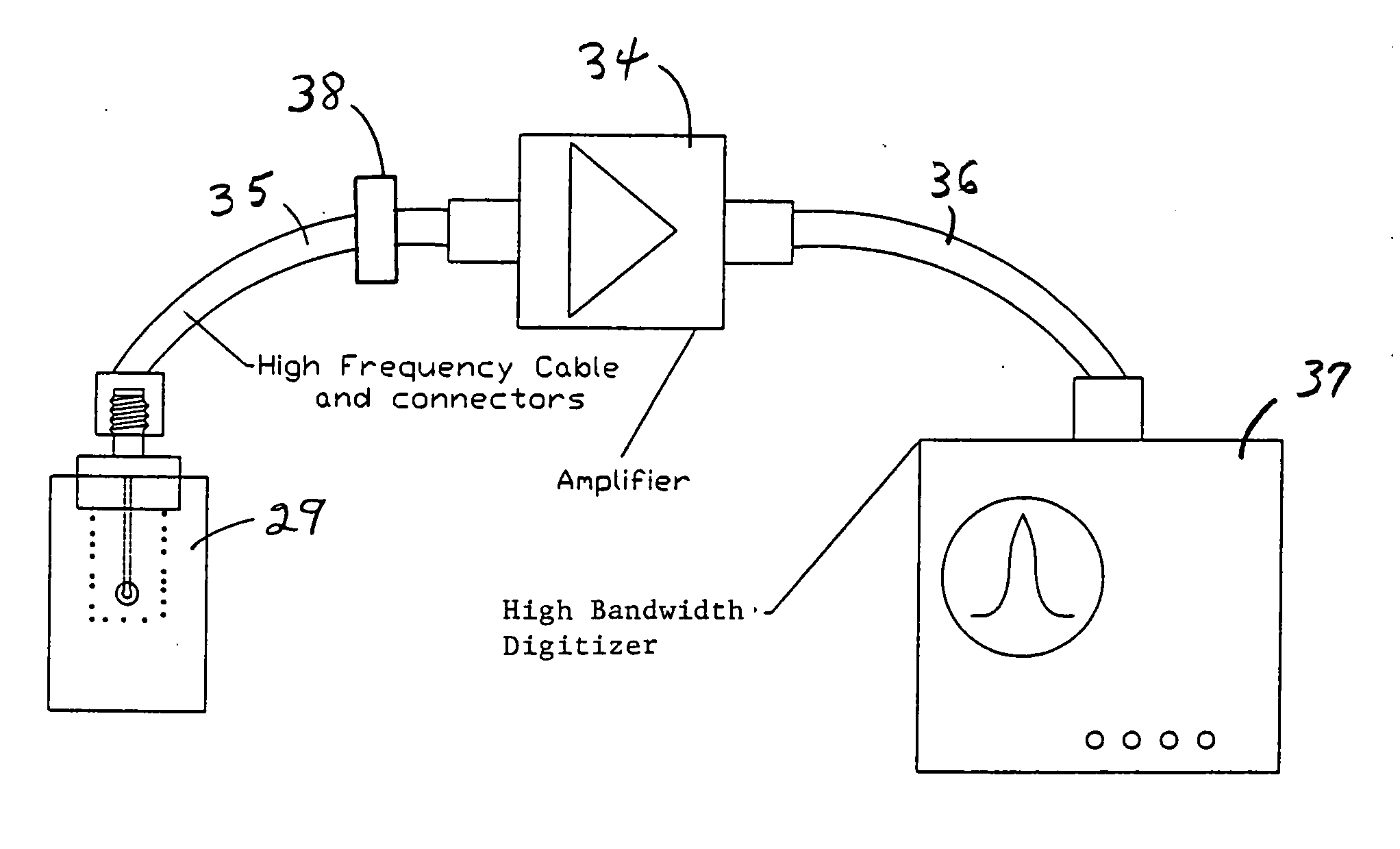

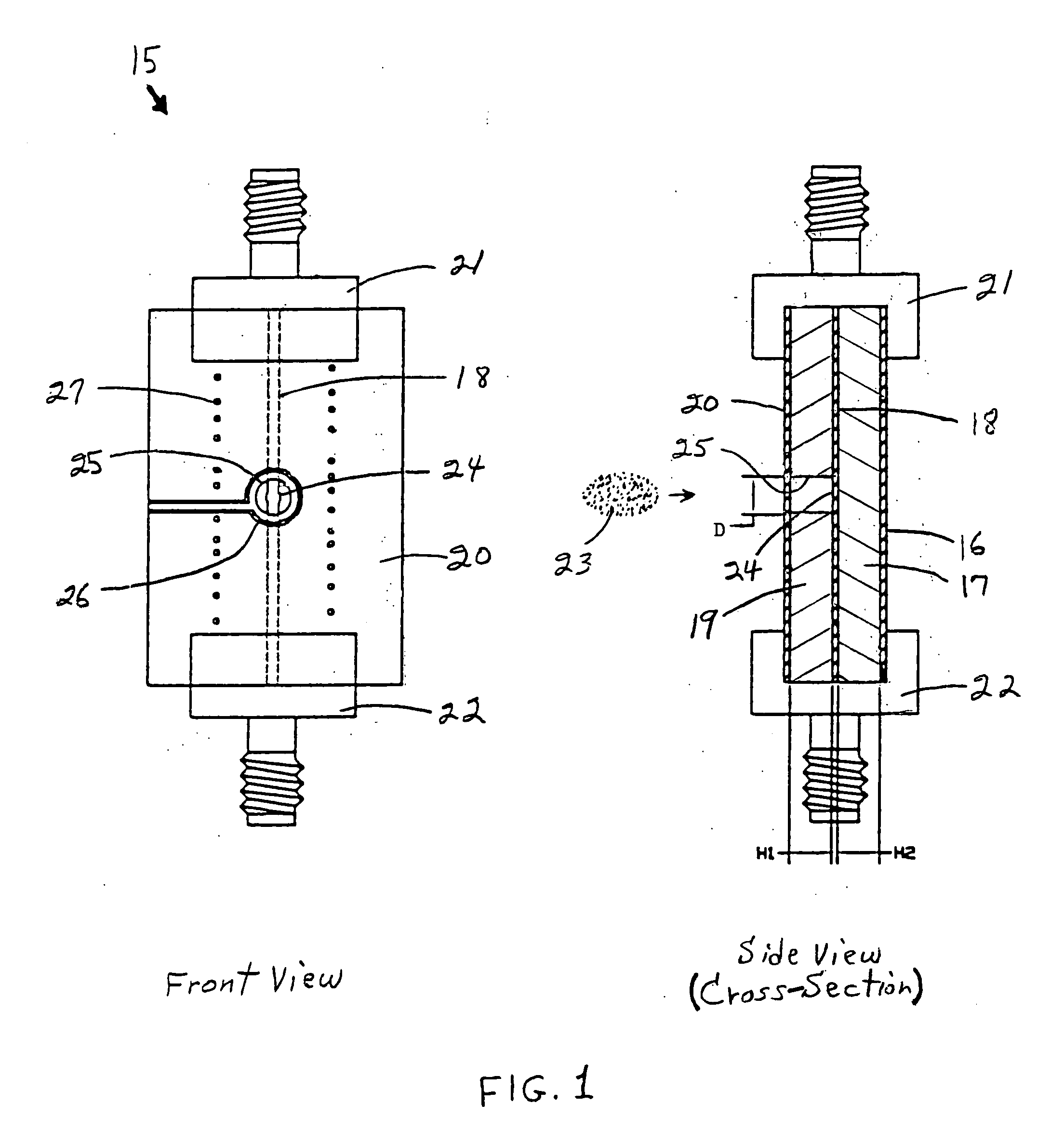

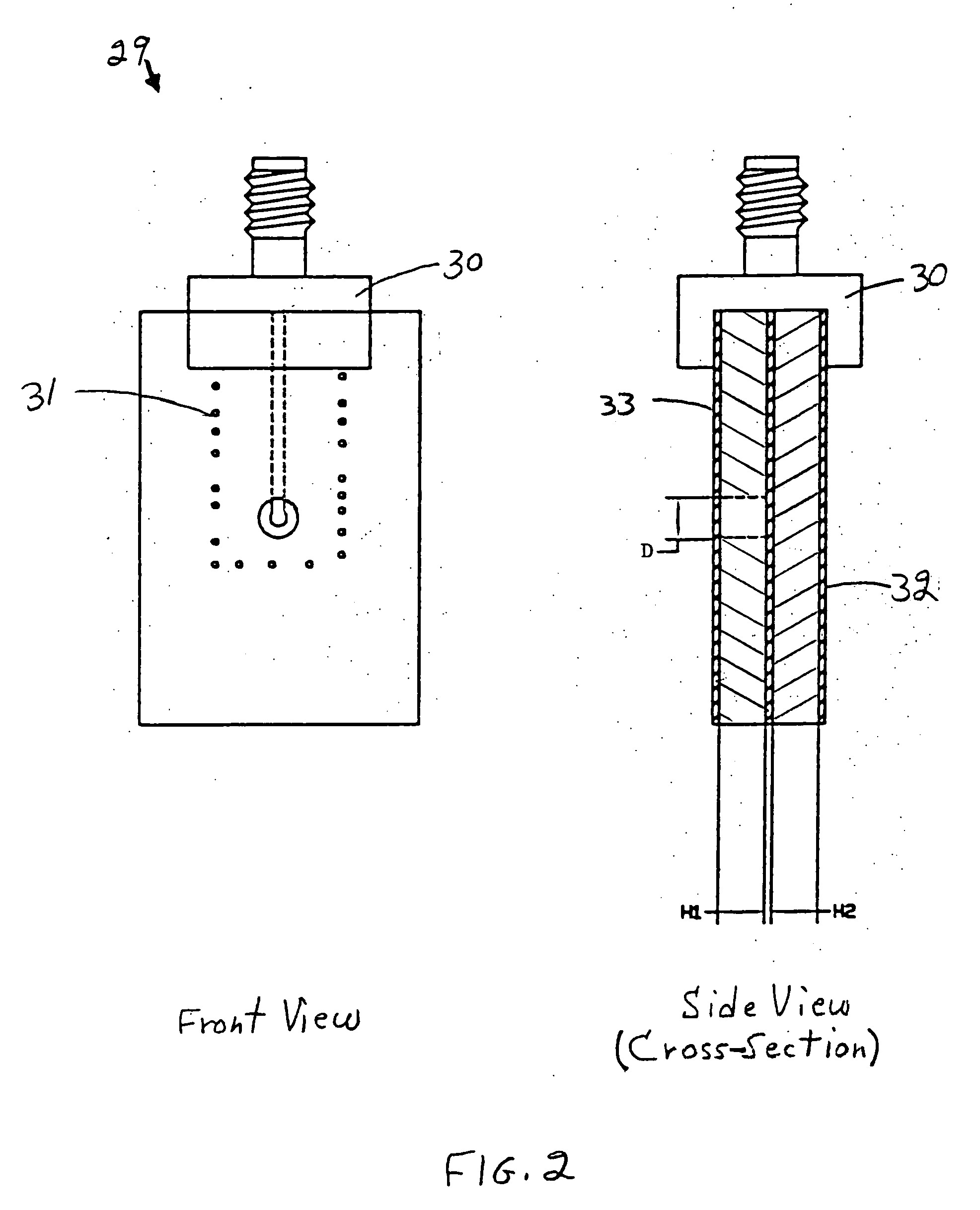

Fast faraday cup with high bandwidth

Inactive | US20050212503A1 | High bandwidth | Good dispersion | Thermometer details | Beam/ray focussing/reflecting arrangements | Time structure | High bandwidth

A circuit card stripline Fast Faraday cup quantitatively measures the picosecond time structure of a charged particle beam. The stripline configuration maintains signal integrity, and stitching of the stripline increases the bandwidth. A calibration procedure ensures the measurement of the absolute charge and time structure of the charged particle beam.

Owner:UT BATTELLE LLC

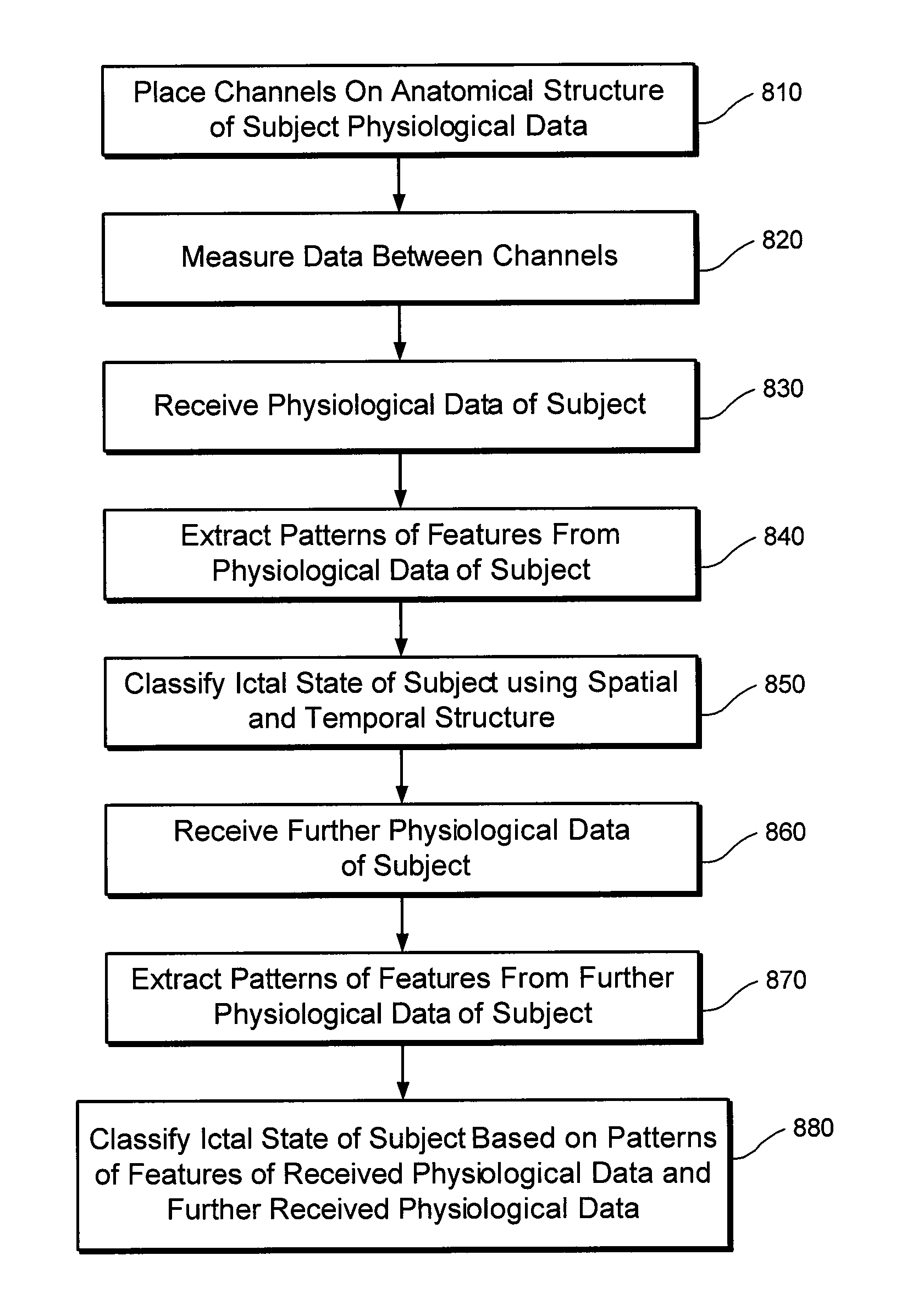

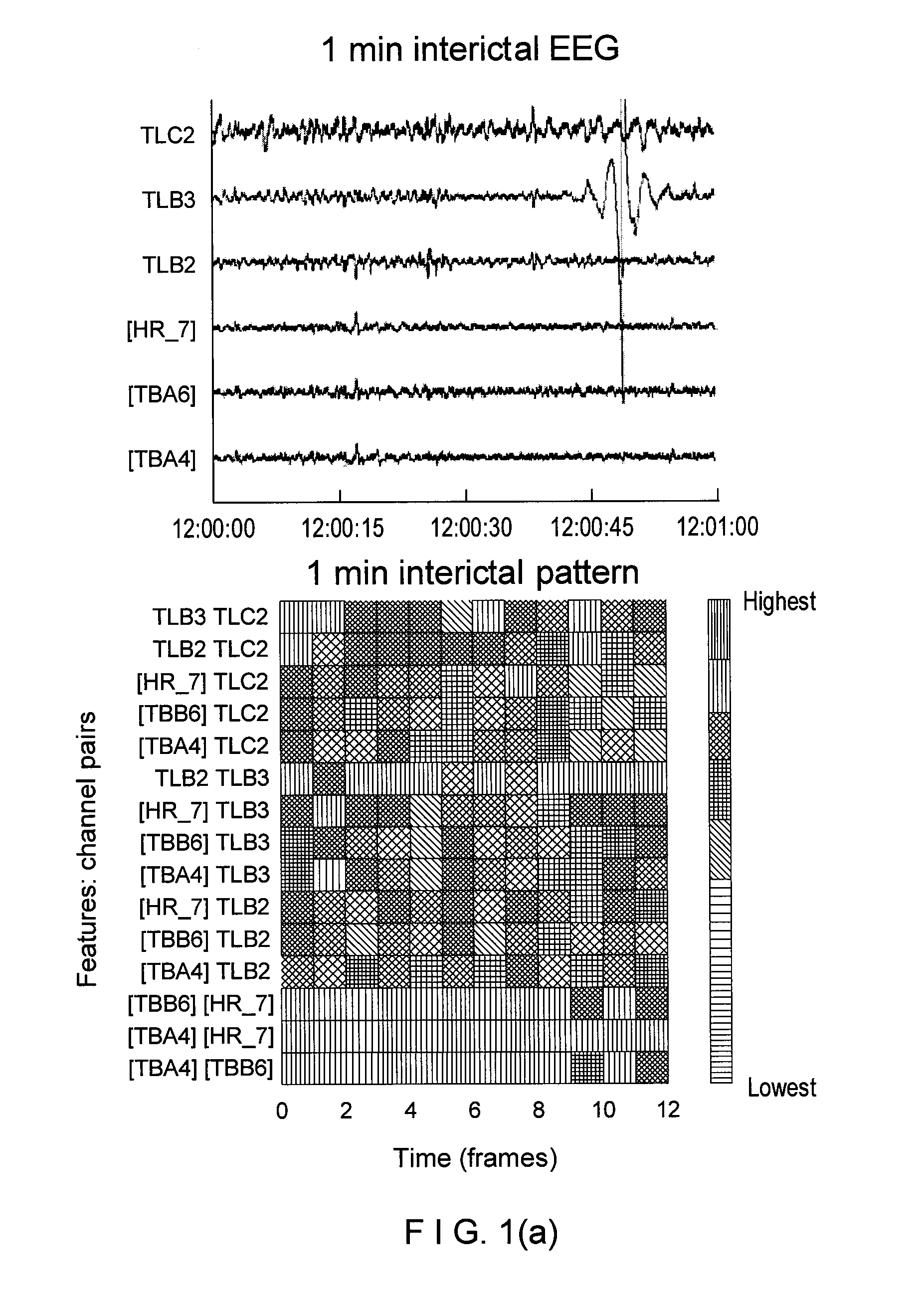

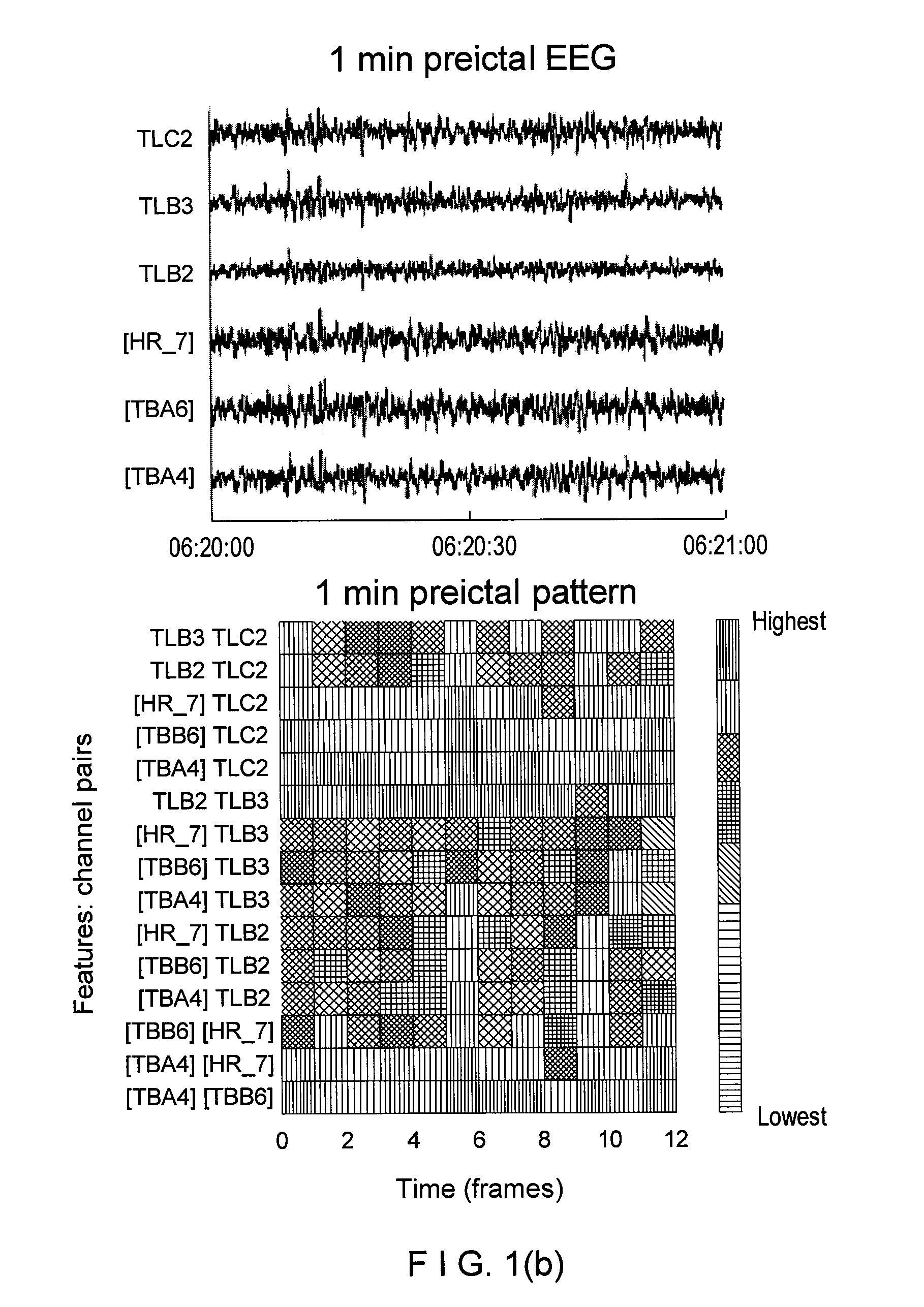

Method, system, and computer-accessible medium for classification of at least one ictal state

An exemplary methodology, procedure, system, method and computer-accessible medium can be provided for receiving physiological data for the subject, extracting one or more patterns of features from the physiological data, and classifying the at least one state of the subject using a spatial structure and a temporal structure of the one or more patterns of features, wherein at least one of the at least one state is an ictal state.

Owner:NEW YORK UNIV

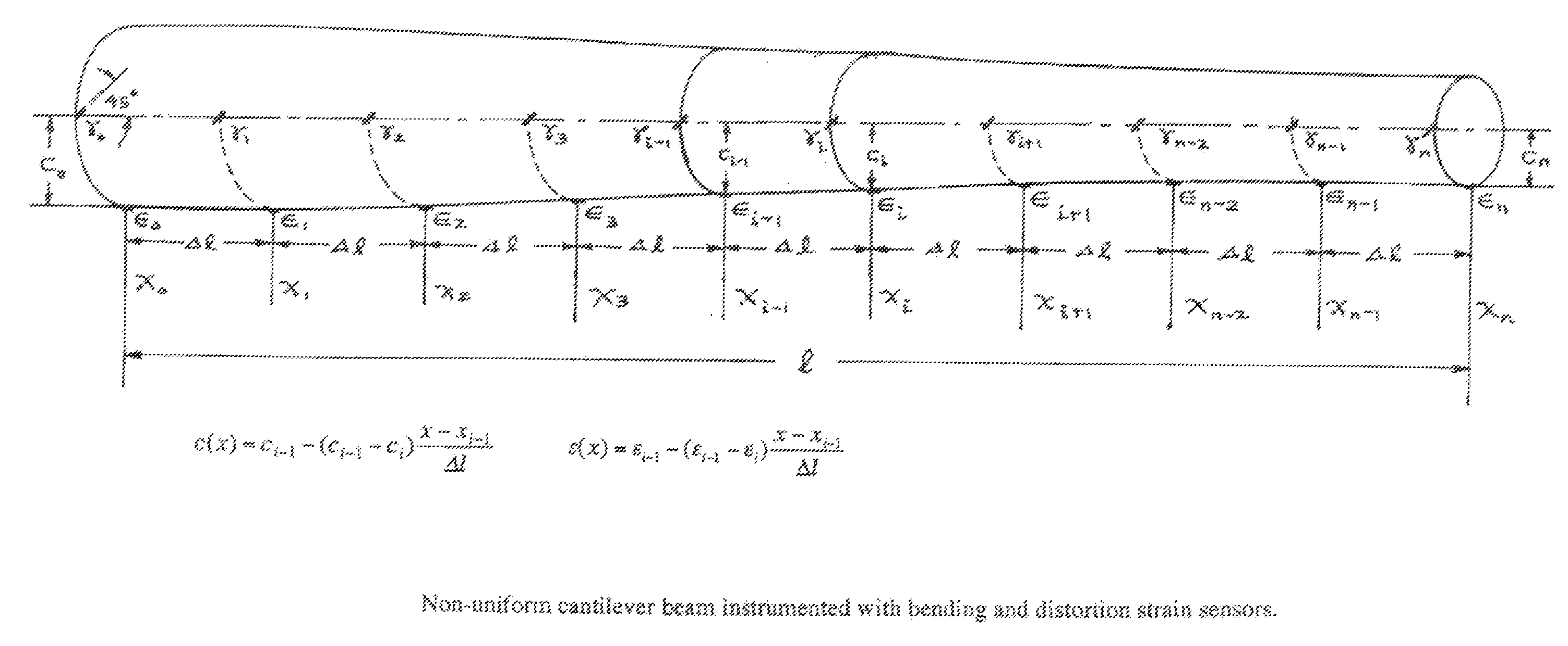

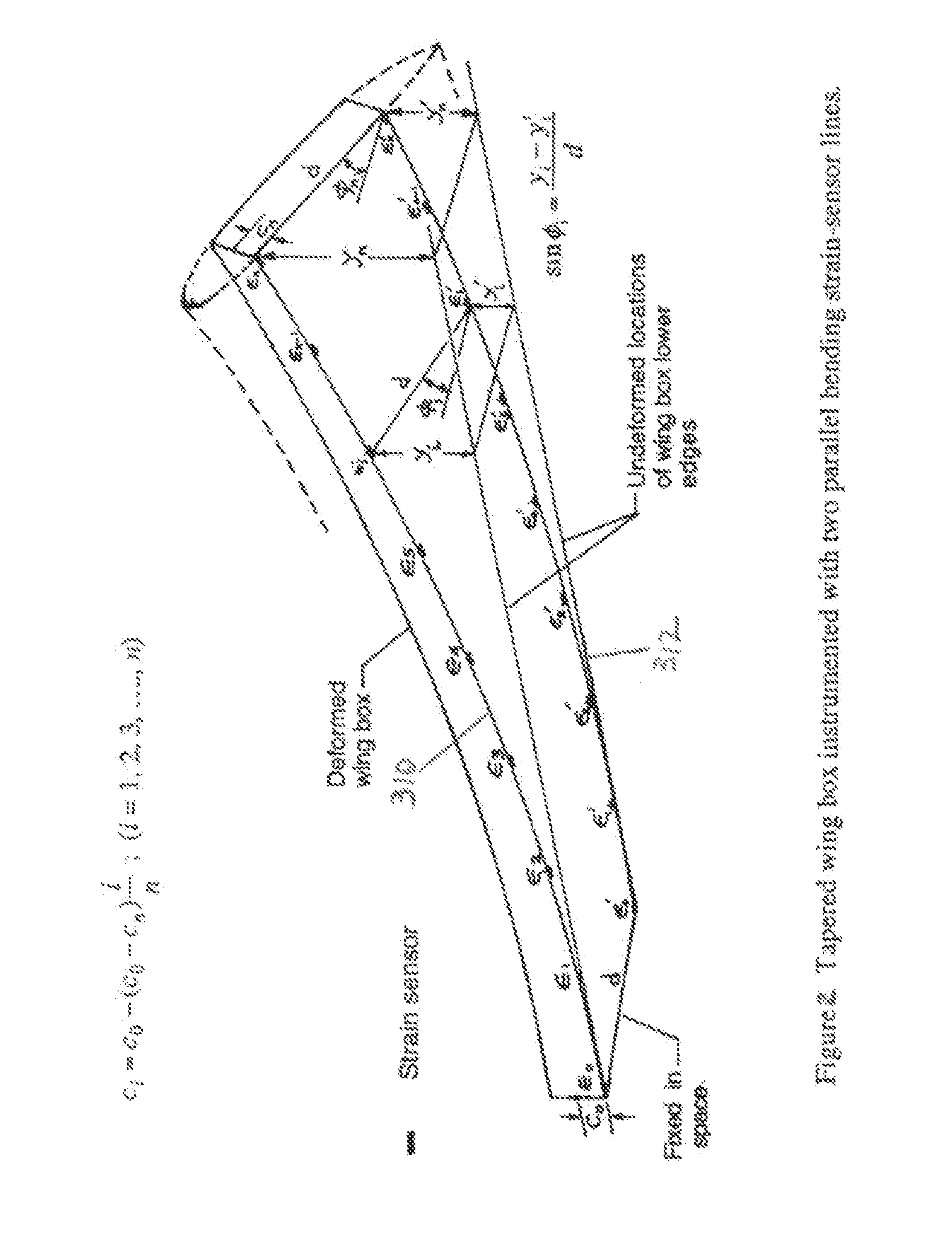

Method for real-time structure shape-sensing

Inactive | US7520176B1 | Force measurement by measuring optical property variation | Optical apparatus testing | Time structure | Engineering

The invention is a method for obtaining the displacement of a flexible structure by using strain measurements obtained by strain sensors. By obtaining the displacement of structures in this manner, one may construct the deformed shape of the structure and display said deformed shape in real-time, enabling active control of the structure shape if desired.

Owner:NAT AERONAUTICS & SPACE ADMINISTATION UNITED STATES OF AMERICA AS REPRESENTED BY THE DIRECTOR OF

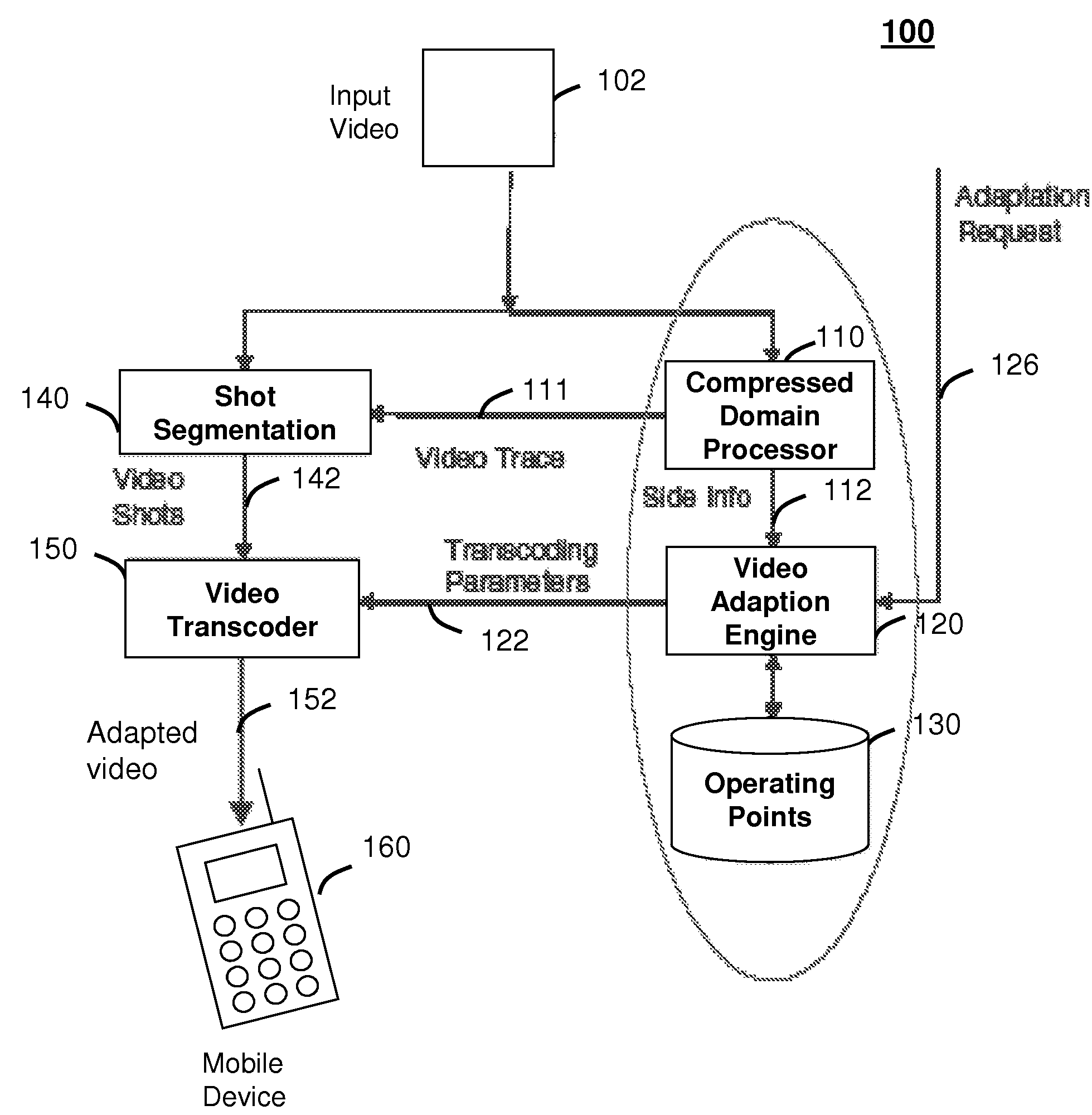

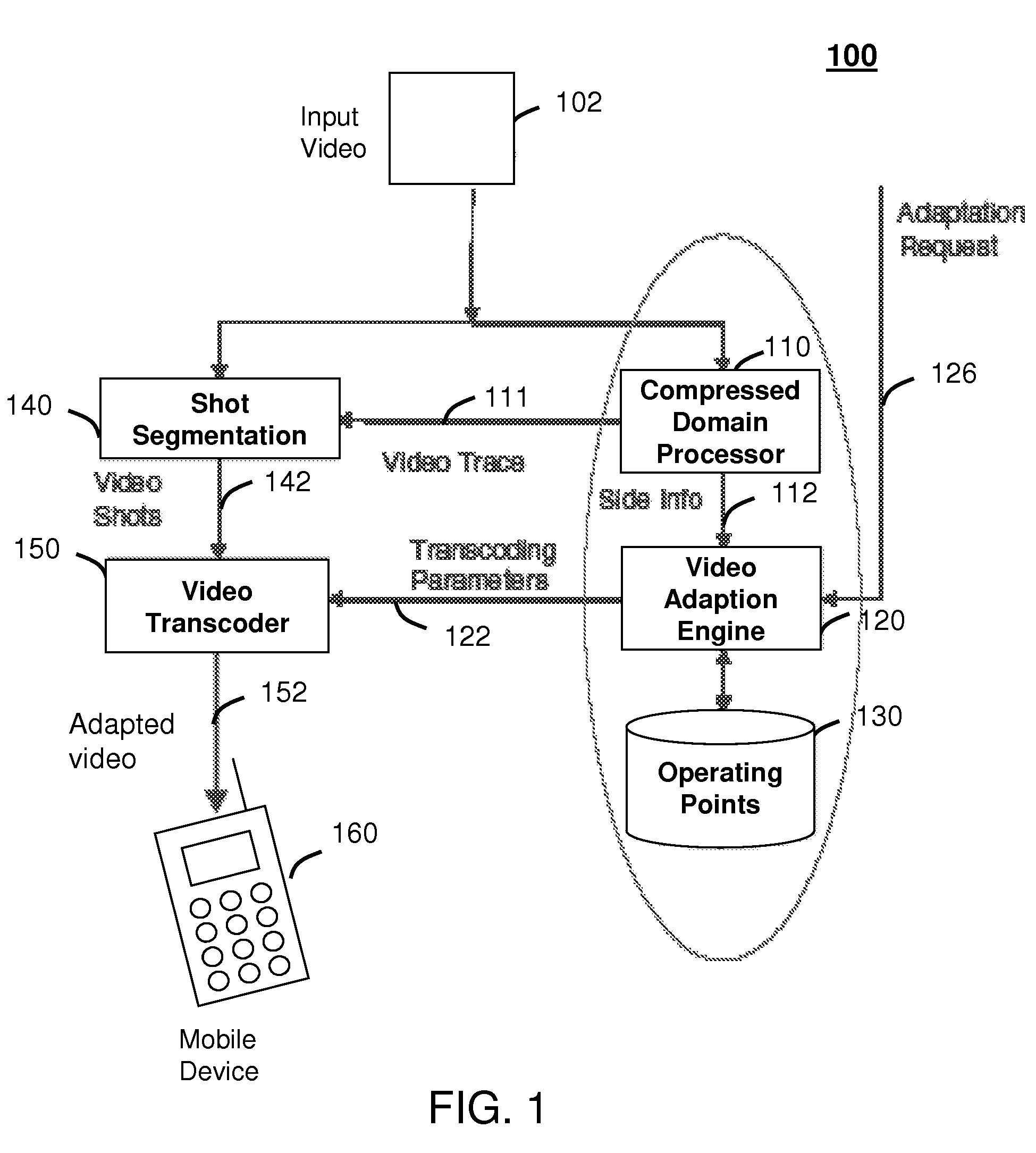

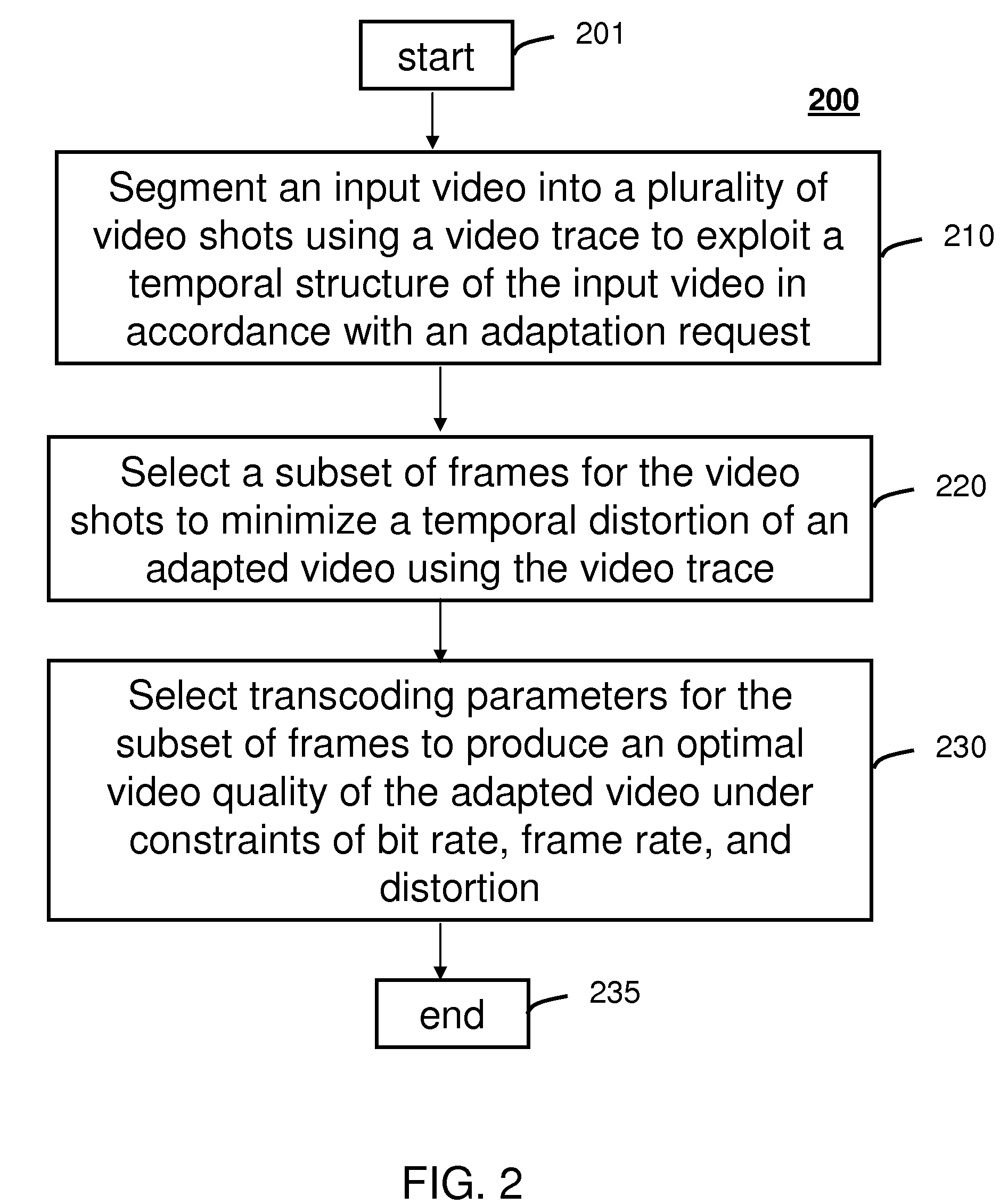

Method and system for intelligent video adaptation

Inactive | US20080123741A1 | Quality improvement | Color television with pulse code modulation | Color television with bandwidth reduction | Time structure | Rate distortion

A system (100) and method (200) for efficient video adaptation of an input video (102) is provided. The method can include segmenting (210) the input video into a plurality of video shots (142) using a video trace (111) to exploit a temporal structure of the input video, selecting (220) a subset of frames (144) for the video shots that minimizes a distortion of adapted video (152) using the video trace, and selecting transcoding parameters (122) for the subset of frames to produce an optimal video quality of the adapted video under constraints of frame rate, bit rate, and viewing time constraint. The video trace is a compact representation for temporal and spatial distortions for frames in the input video. A spatio-temporal rate-distortion model (320) provides selection of the transcoding parameters during adaptation.

Owner:GOOGLE TECH HLDG LLC

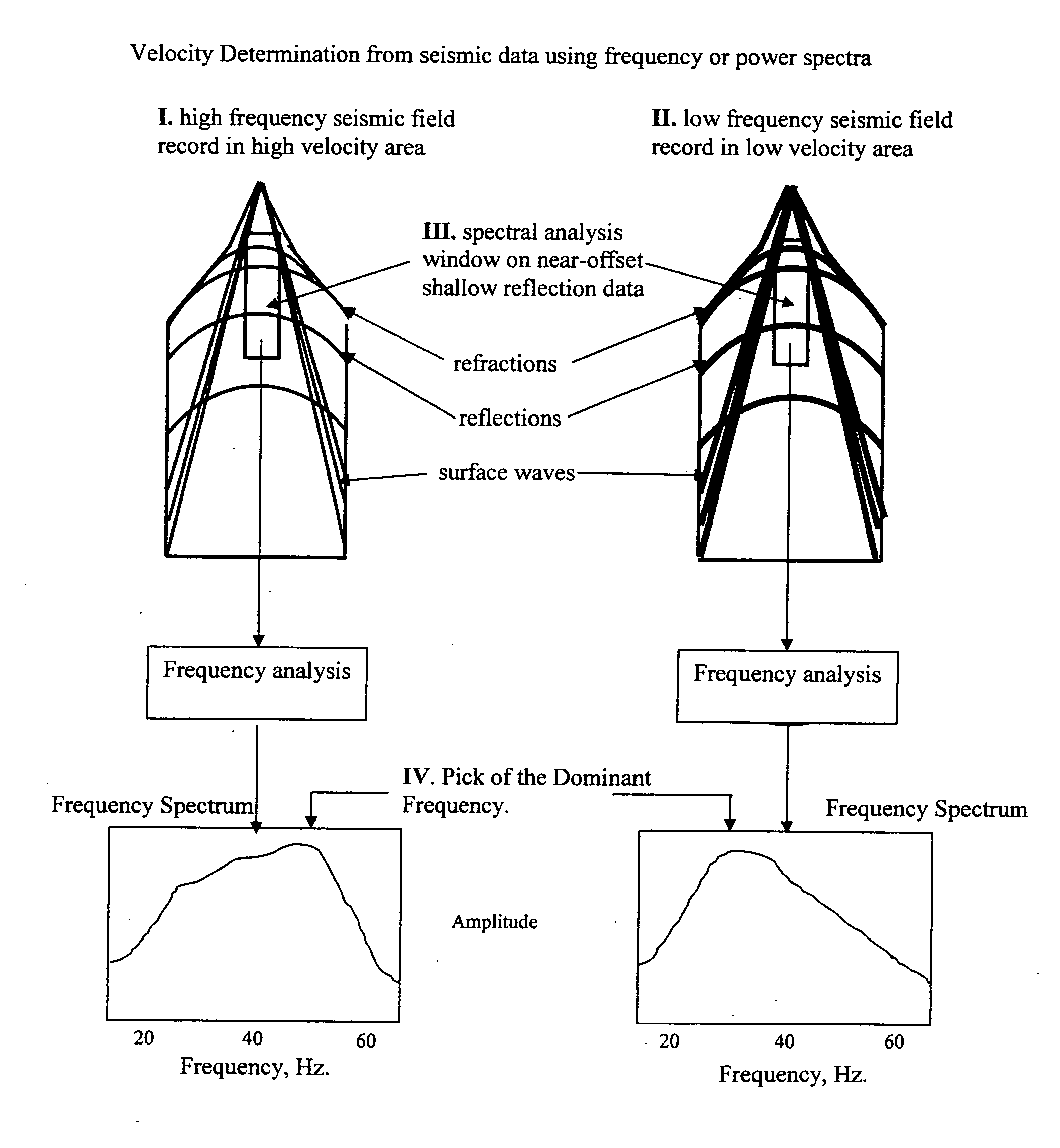

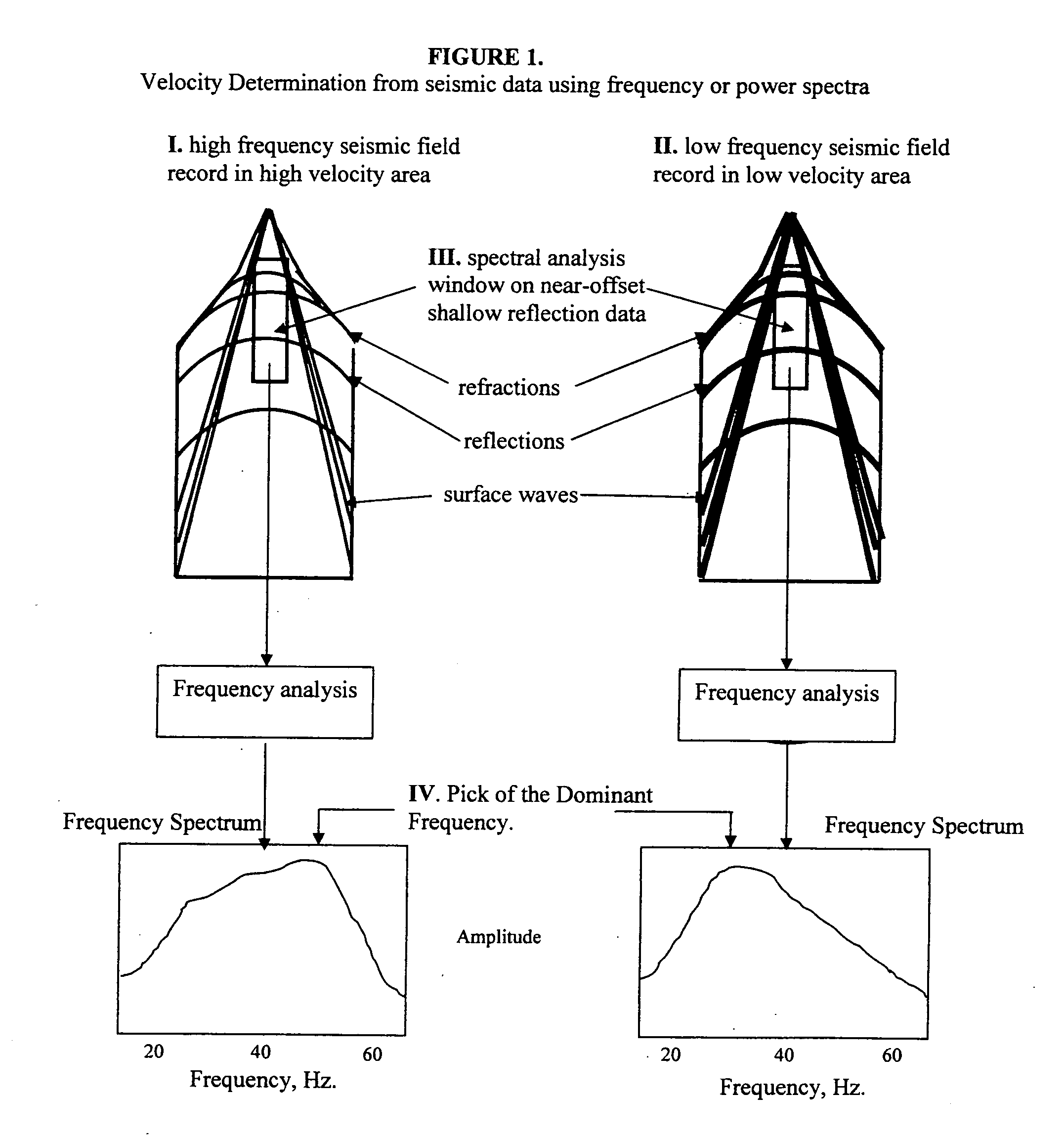

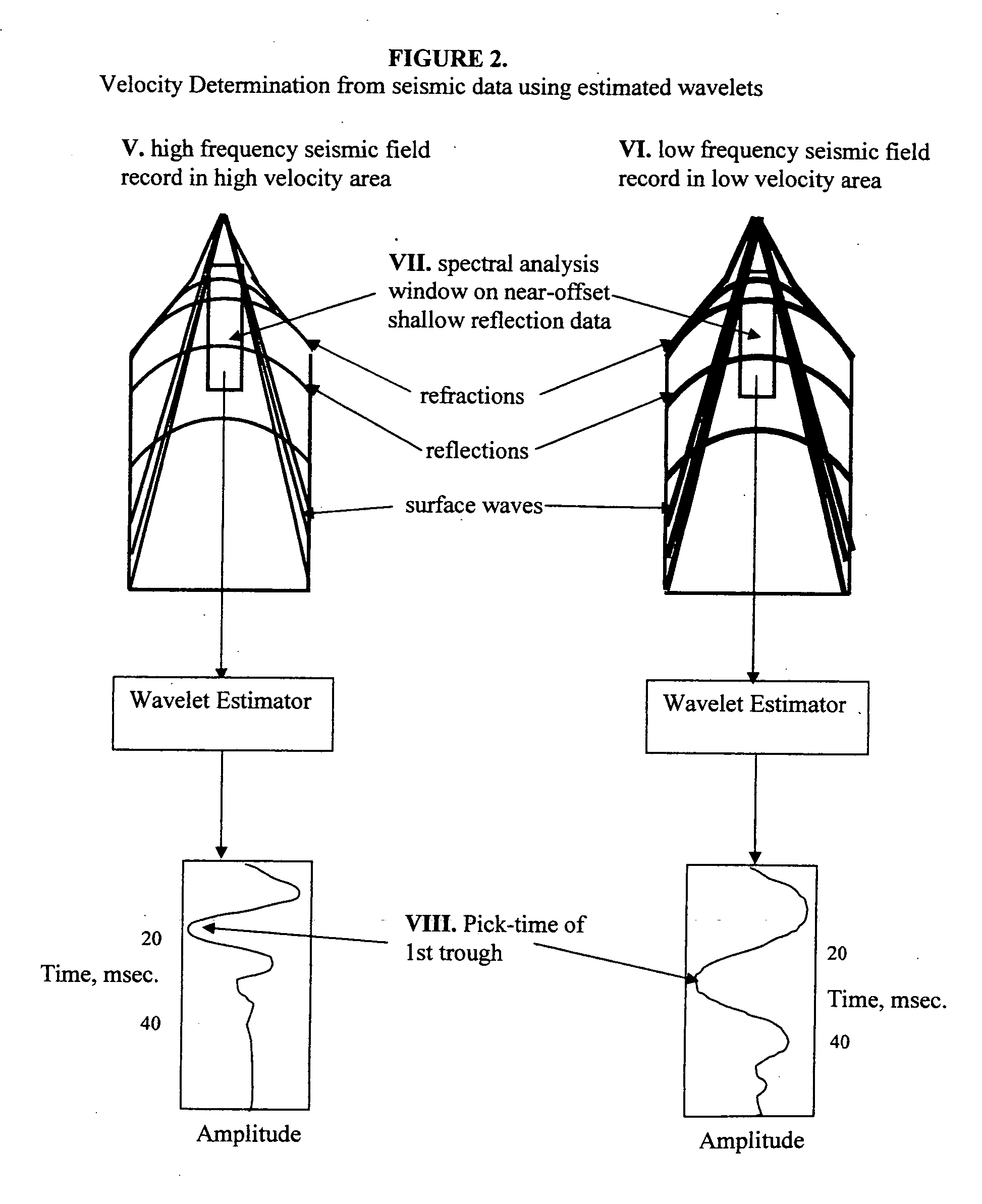

Velocity determination of the near-surface layers in the earth using exploration 2D or 3D seismic data

Inactive | US20050256648A1 | Seismic signal processing | Special data processing applications | Time structure | Seismic survey

Several methods for determining the near-surface layer velocity in the earth (can include the weathering layer velocity) from exploration seismic 2D or 3D data are presented. These velocity measurements are to be used in time-correcting seismic data during data processing in refraction statics, datum statics, elevation statics derivation and application or any other data processing scheme wherein the near-surface velocity is required. They can also be used as the near-surface velocity model for depth-migration of seismic data. The velocity of the near-surface is directly related to the character of the shot records themselves. By statistically measuring this character from the shot records in an automated fashion, a large amount of data can be processed and the character measurement numerically converted to a velocity measurement using benchmark velocities. A complete near-surface velocity field for the seismic survey can be created in this way and used to correct for false time-structure in seismic datasets used for hydrocarbon exploration or any other sub-surface exploration purposes.

Owner:WEST MICHAEL PATRICK

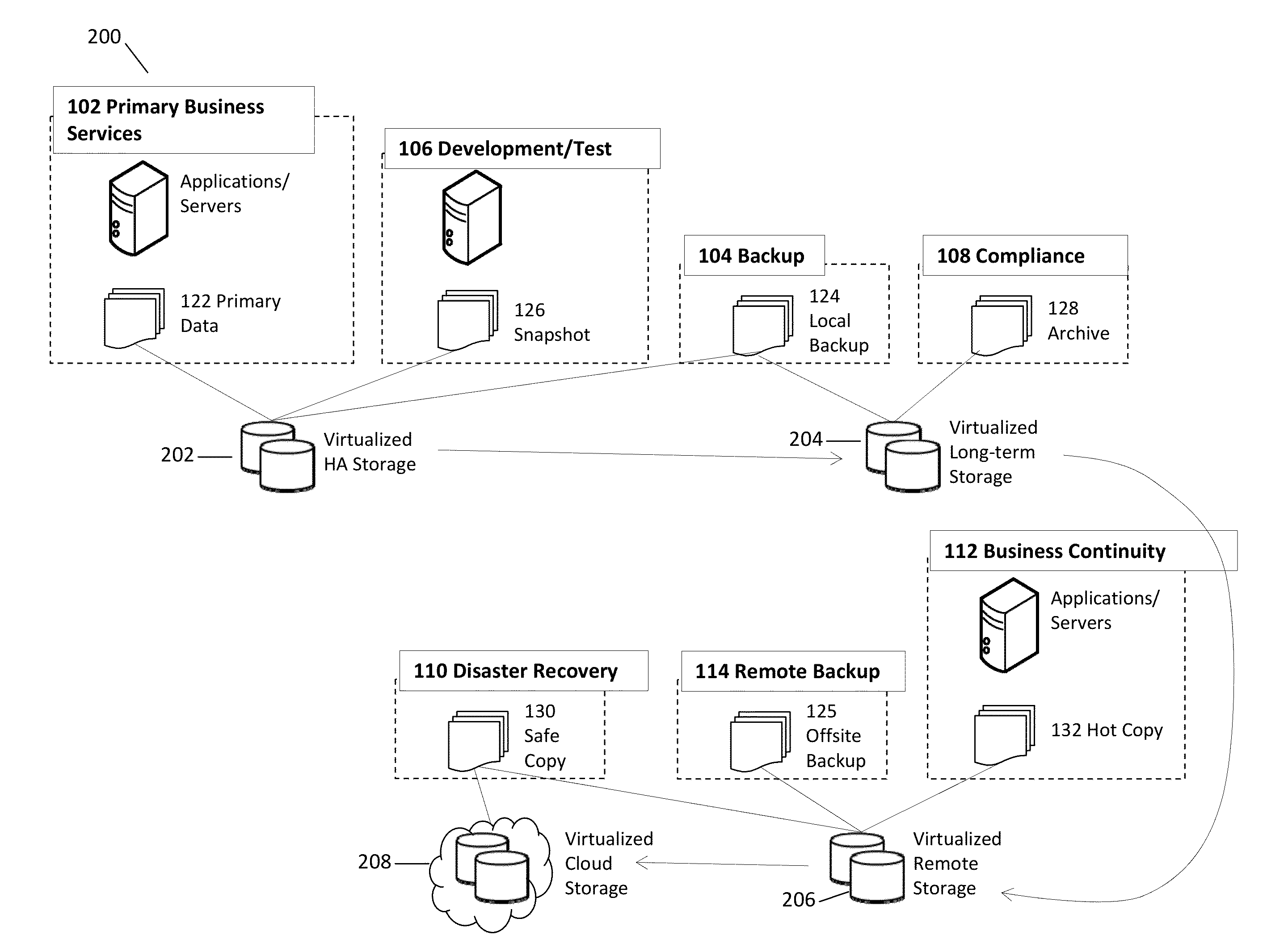

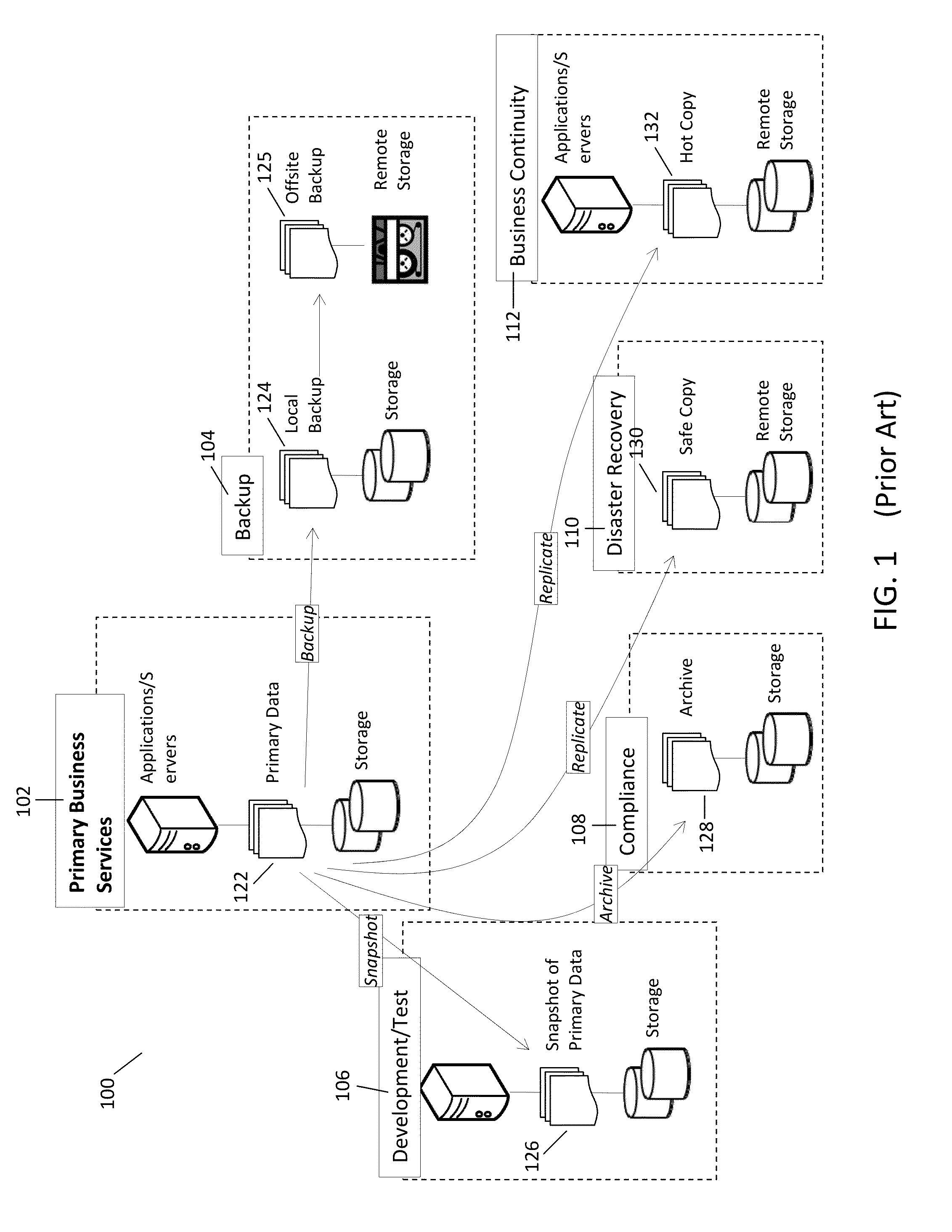

System and method for creating deduplicated copies of data by sending difference data between near-neighbor temporal states

Active | US20120124014A1 | Digital data information retrieval | Digital data processing details | Time structure | State dependent

Systems and methods are disclosed for using a first deduplicating store to update a second deduplicating store with information representing how data objects change over time, said method comprising: at a first and a second deduplicating store, for each data object, maintaining an organized arrangement of temporal structures to represent a corresponding data object over time, wherein each structure is associated with a temporal state of the data object and wherein the logical arrangement of structures is indicative of the changing temporal states of the data object; finding a temporal state that is common to and in temporal proximity to the current state of the first and second deduplicating stores; and compiling and sending a set of hash signatures for the content that has changed from the common state to the current temporal state of the first deduplicating store.

Owner:GOOGLE LLC

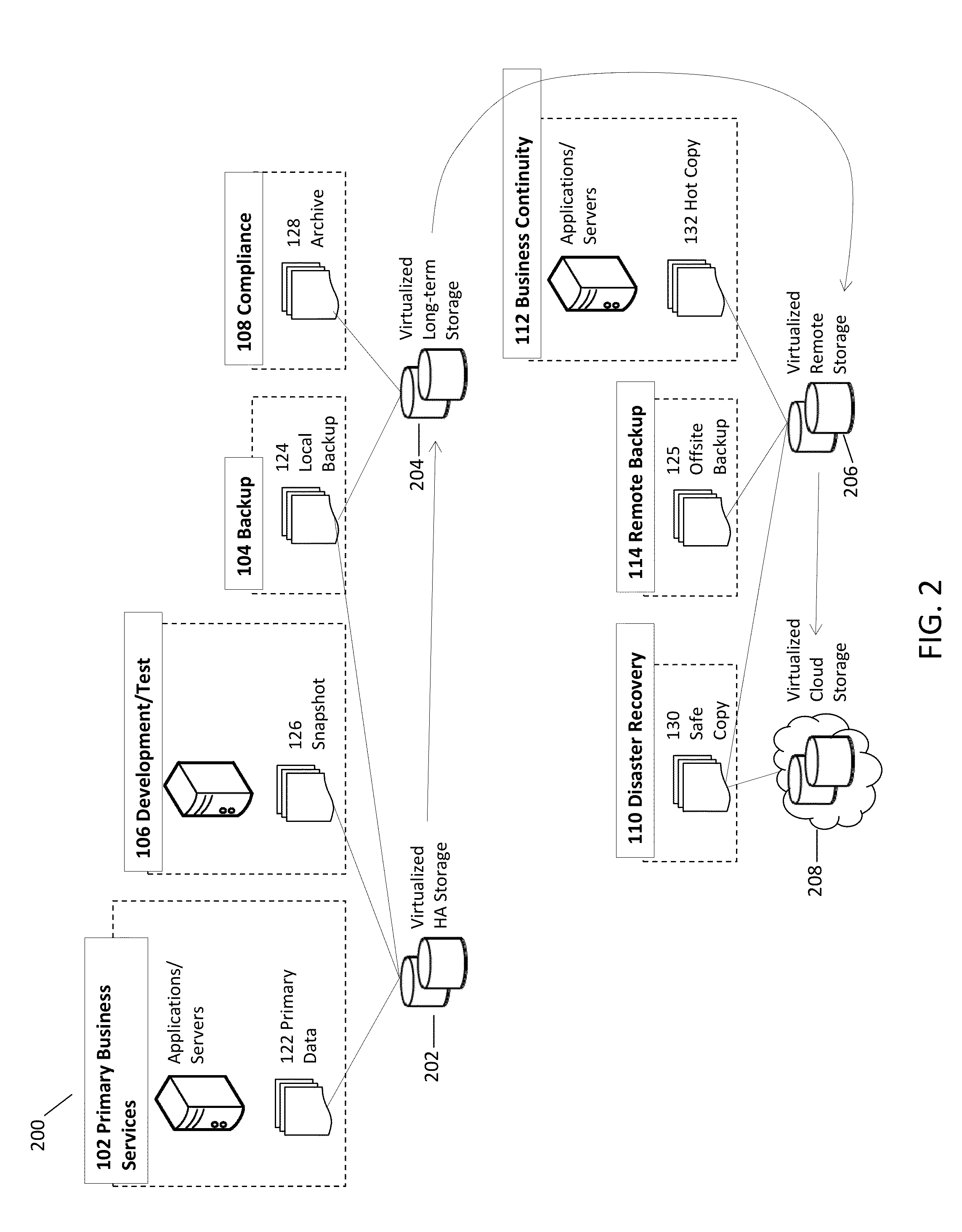

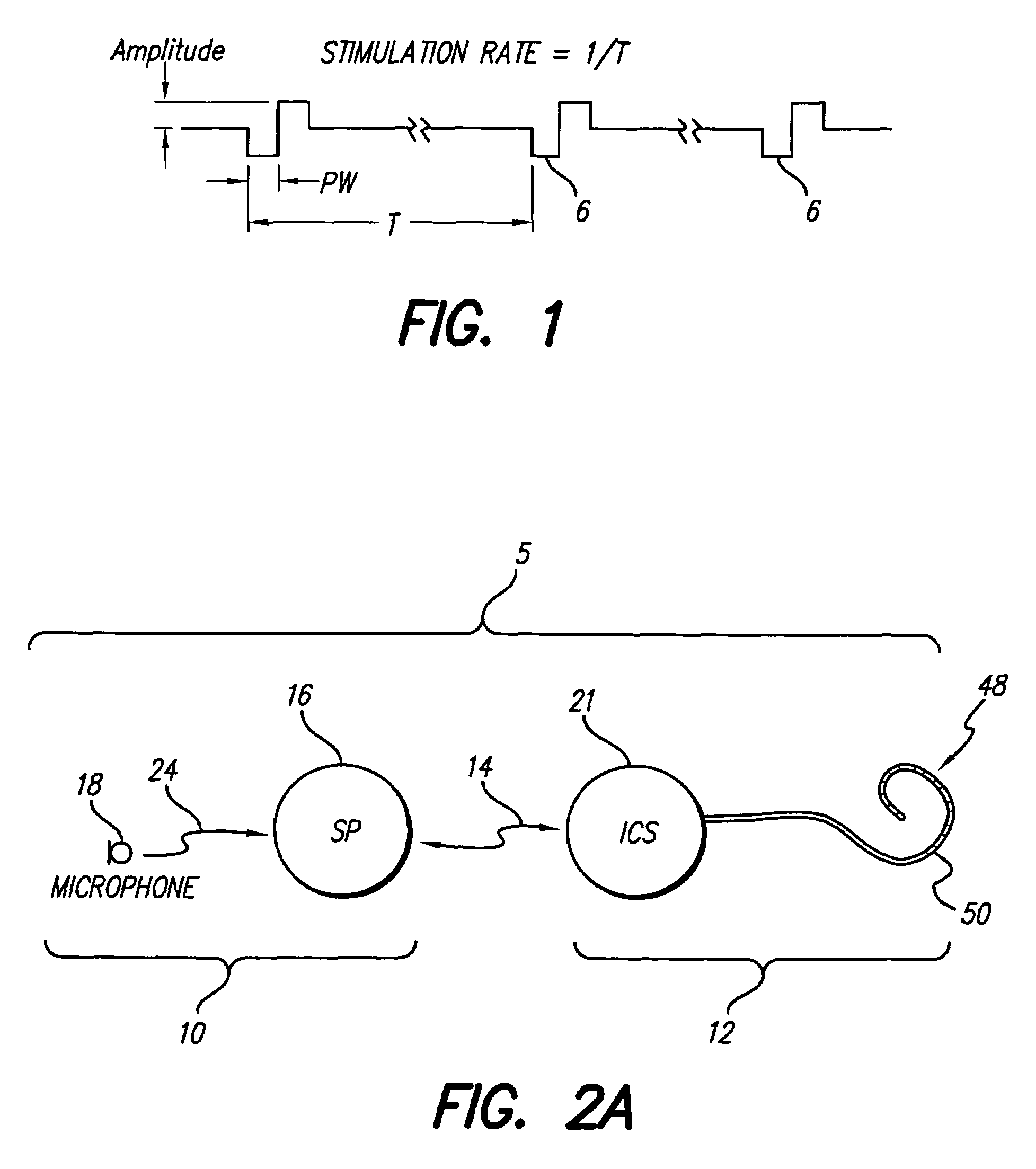

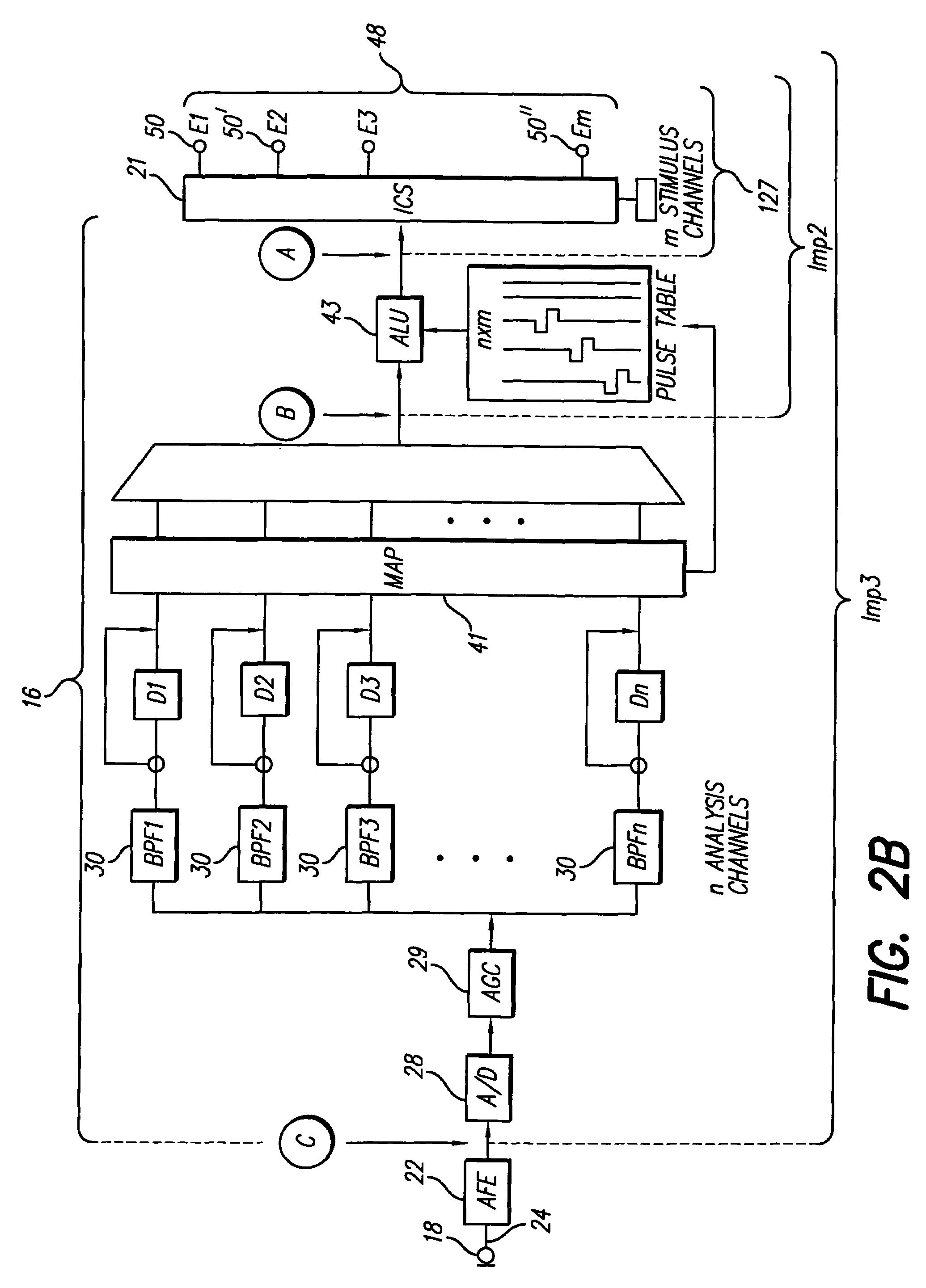

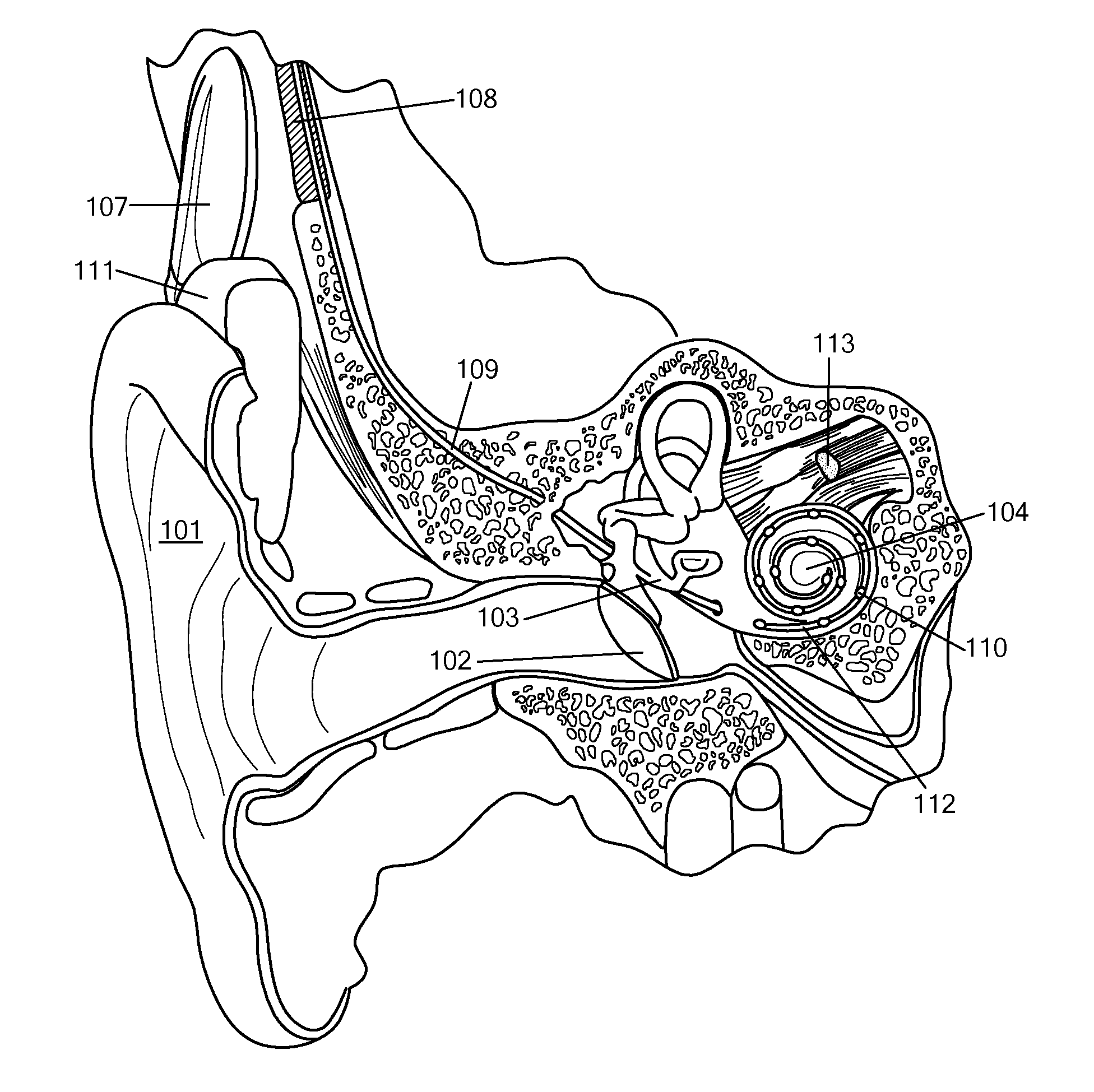

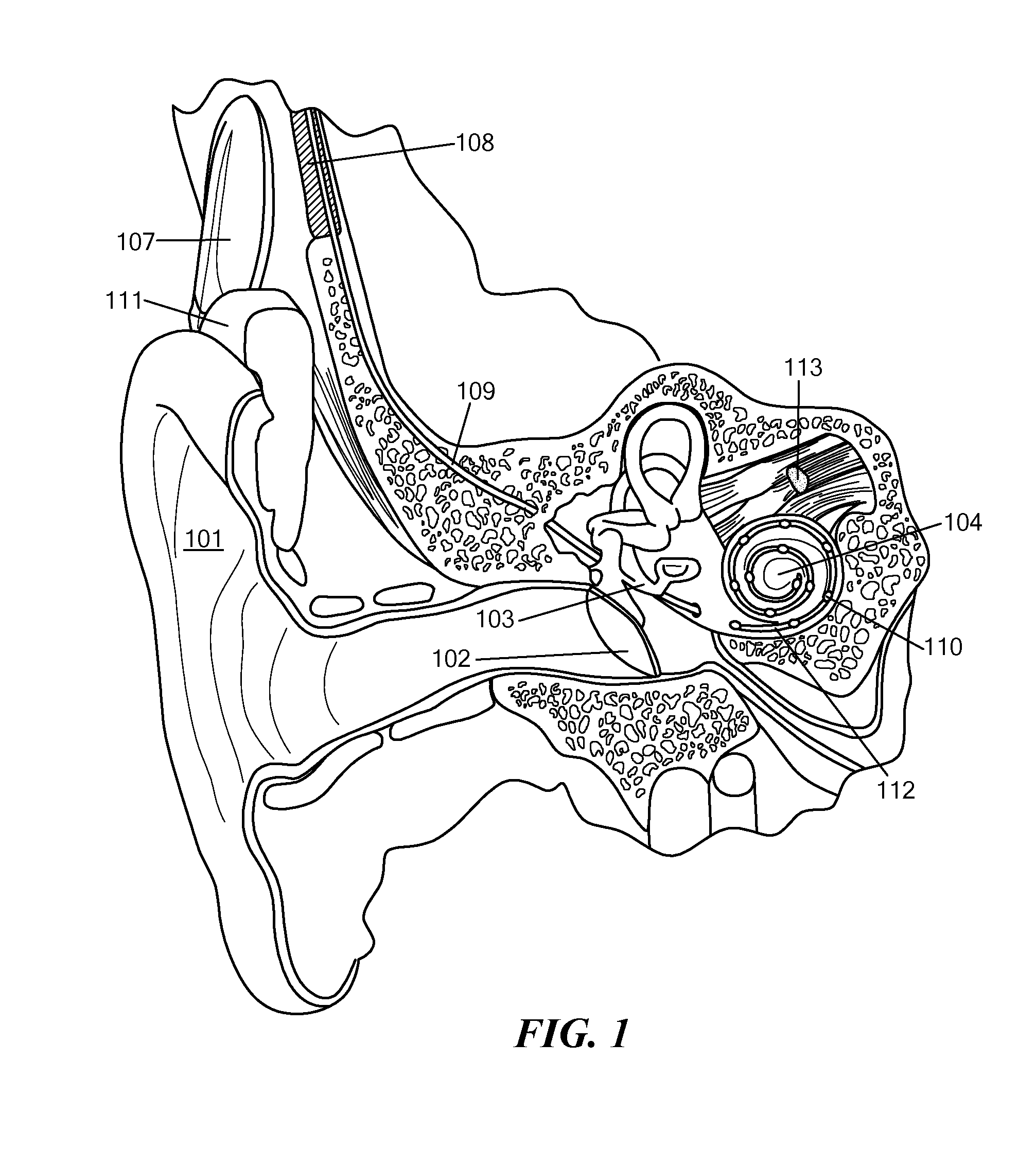

Method and system to convey the within-channel fine structure with a cochlear implant

The present invention provides a cochlear stimulation system and method for capturing and translating fine time structure (“FTS”) in incoming sounds and delivering this information spatially to the cochlea. The system comprises a FTS estimator / analyzer and a current navigator. An embodiment of the method comprises analyzing the incoming sounds within a time frequency band, extracting the slowly varying frequency components and estimating the FTS to obtain a more precise dominant FTS component within a frequency band. After adding the fine structure to the carrier to identify a precise dominant FTS component in each analysis frequency band (or stimulation channel), a stimulation current may be “steered” or directed, using the concept of virtual electrodes, to the precise spatial location (place) on the cochlea that corresponds to the dominant FTS component. This process is simultaneously repeated for each stimulation channel and each FTS component.

Owner:ADVANCED BIONICS AG

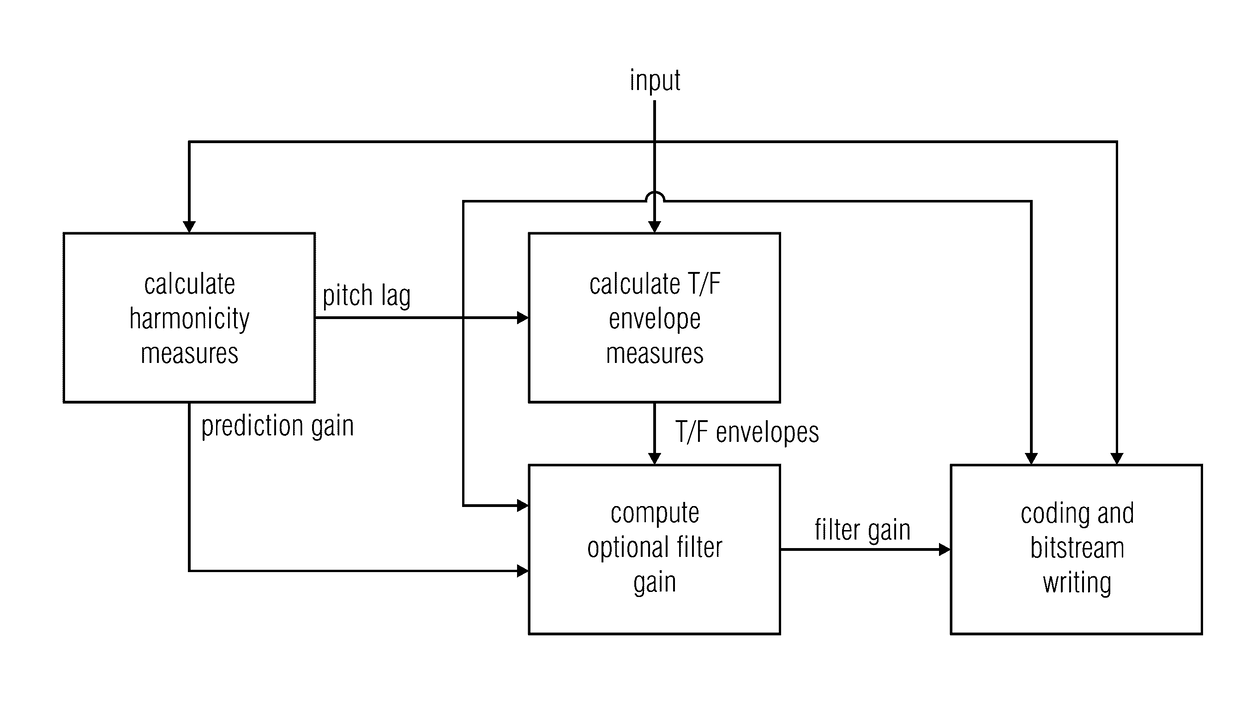

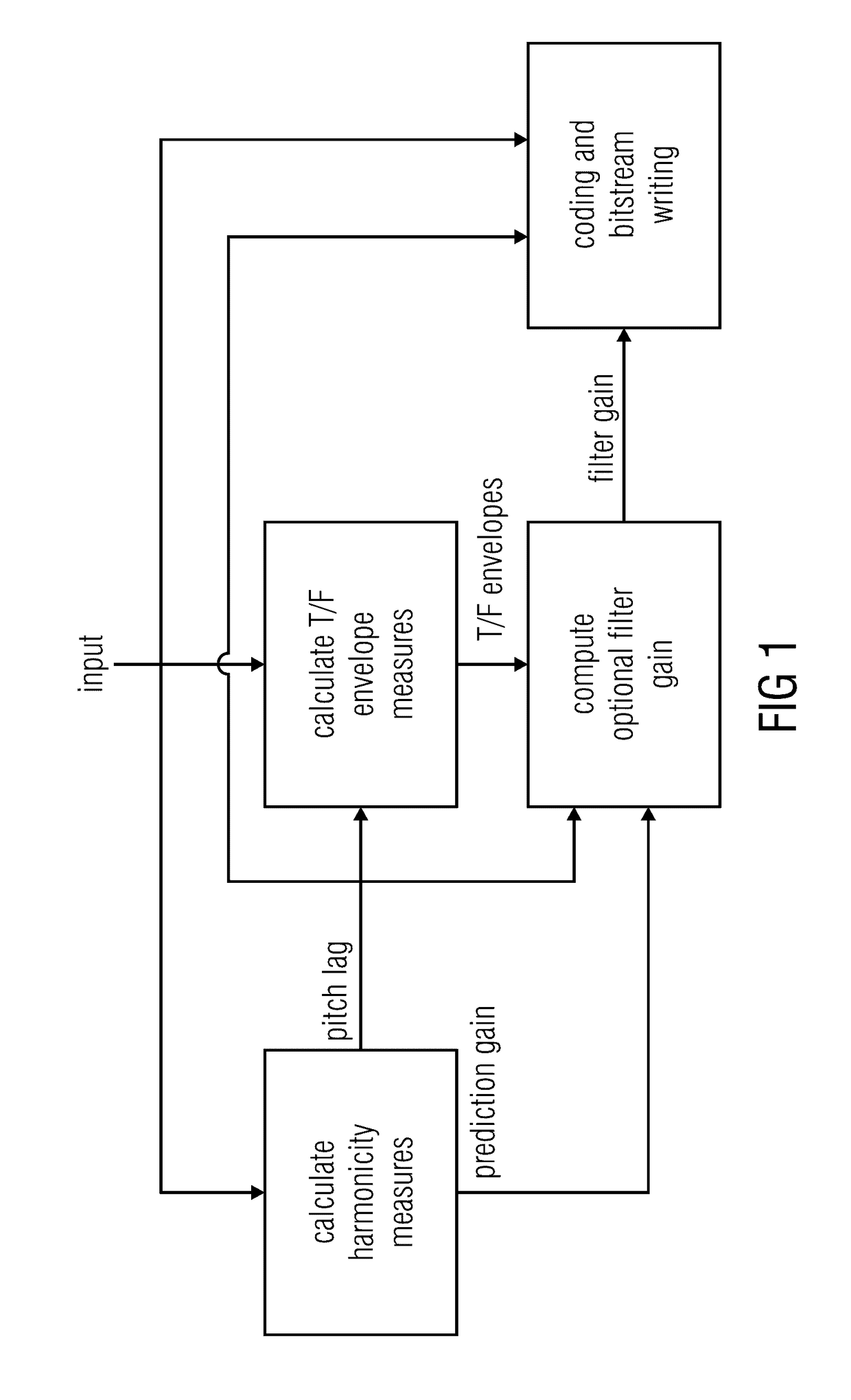

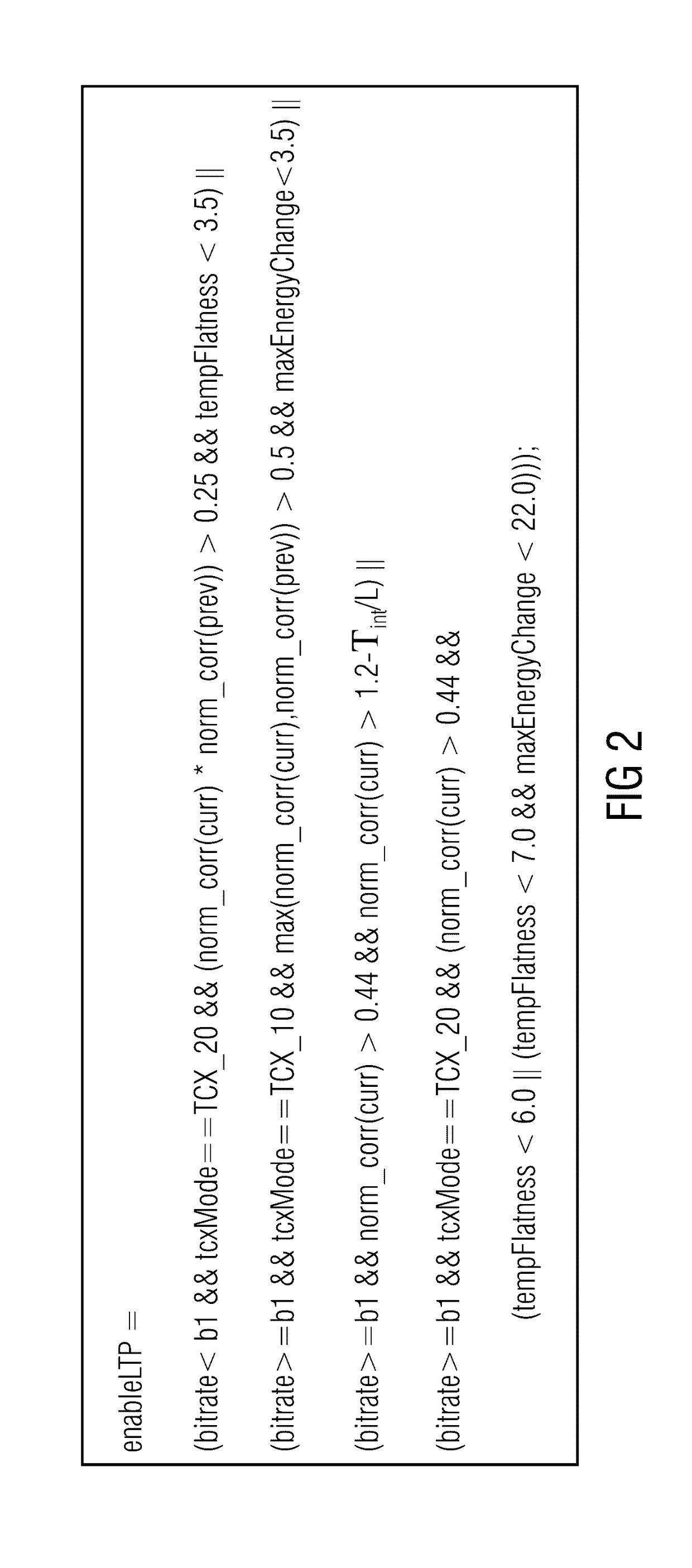

Harmonicity-dependent controlling of a harmonic filter tool

The coding efficiency of an audio codec using a controllable (switchable or even adjustable) harmonic filter tool is improved by controlling this tool using a temporal structure measure in addition to a measure of harmonicity. In particular, the temporal structure of the audio signal is evaluated in a manner which depends on the pitch. This achieves a situation-adapted control of the harmonic filter tool: in situations where a control based solely on the measure of harmonicity would decide against or reduce the usage of this tool, although using it would in fact increase the coding efficiency, the harmonic filter tool is applied, while in other situations where the harmonic filter tool may be inefficient or even destructive, the control reduces its application appropriately.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

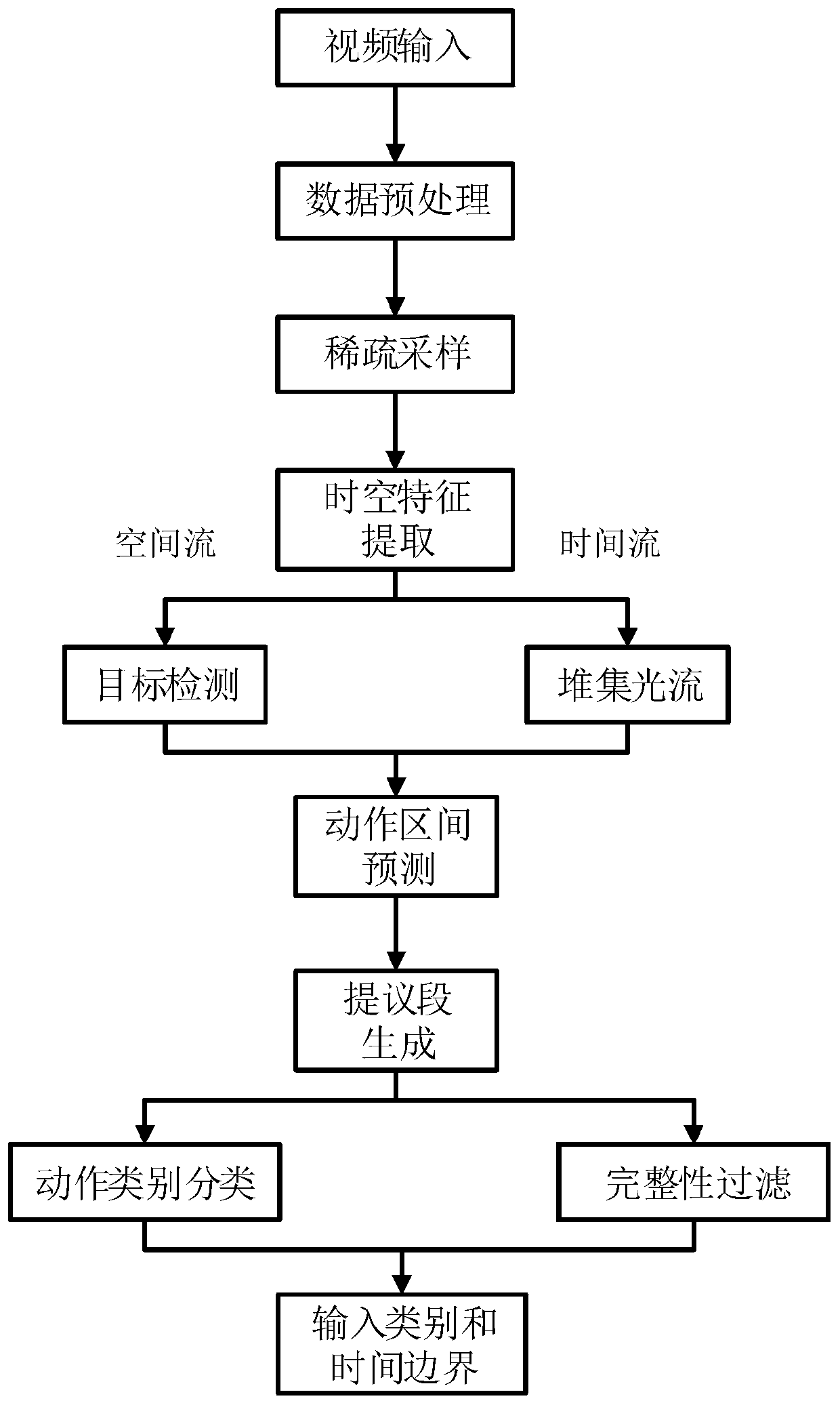

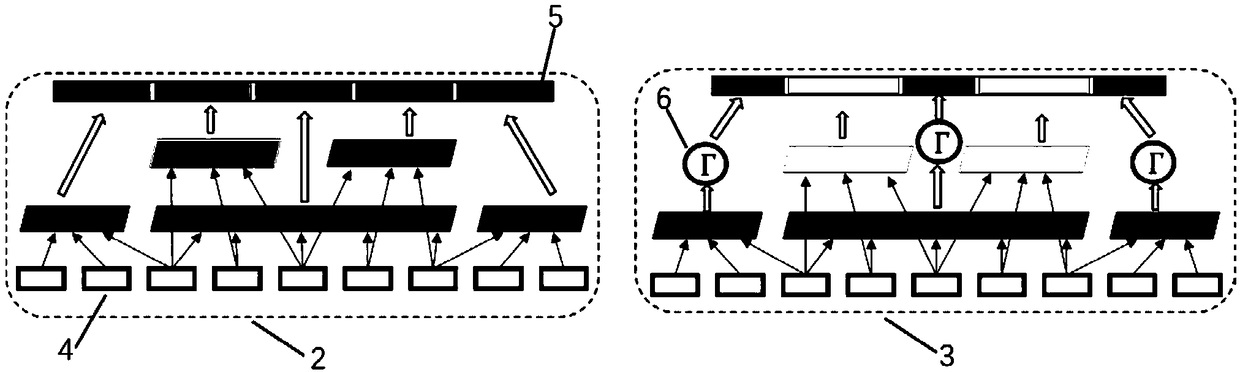

Human body action detection and positioning method based on space-time combination

Pending | CN109784269A | Efficient use of | Solve the problem of extremely different lengths | Character and pattern recognition | Time structure | Human body

The invention discloses a human body action detection and positioning method based on space-time combination. The method takes an untrimmed video as input, divides it into a plurality of short units of equal length through data preprocessing, performs random sparse sampling, and extracts spatio-temporal features through a two-stream convolutional neural network. Next, a space-time joint network judges the intervals in which actions occur to obtain a group of action score waveforms; these waveforms are input into a GTAG network, and different thresholds are set to meet different positioning precision requirements and to obtain action proposal segments of different granularities. Finally, an action classifier detects the action type of each proposal segment, and an integrity filter finely corrects the time boundaries of the action, achieving human body action detection and positioning in complex scenes. The method can be applied to real scenes with severe occlusion of the human body, changeable postures, and many interfering objects, and handles activity categories with different time structures well.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

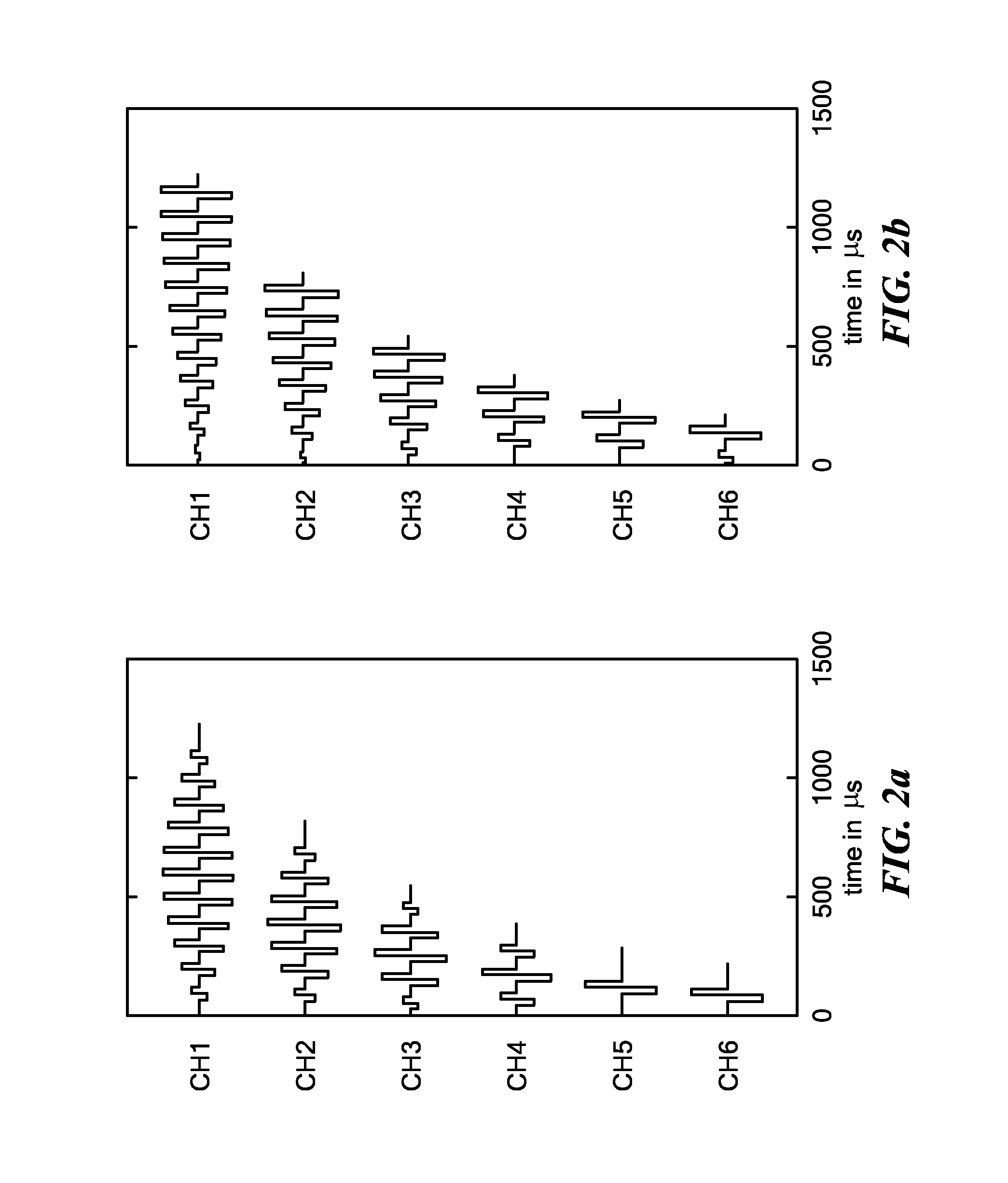

Enhancing Fine Time Structure Transmission for Hearing Implant System

A system and method of signal processing for a hearing implant. The hearing implant includes at least one electrode, each electrode associated with a channel specific sampling sequence. An acoustic audio signal is processed to generate for each electrode a band pass signal representing an associated band of audio frequency. For each electrode, a sequence signal is determined as a function of the electrode's associated band pass signal and channel specific sampling sequence. An envelope of each band pass signal is determined. The envelope of each band pass signal is filtered to reduce modulations resulting from unresolved harmonics, creating for each electrode an associated filtered envelope signal. Each electrode's sequence signal is weighted based, at least in part, on the electrode's associated filtered envelope signal.

Owner:MED EL ELEKTROMEDIZINISCHE GERAETE GMBH

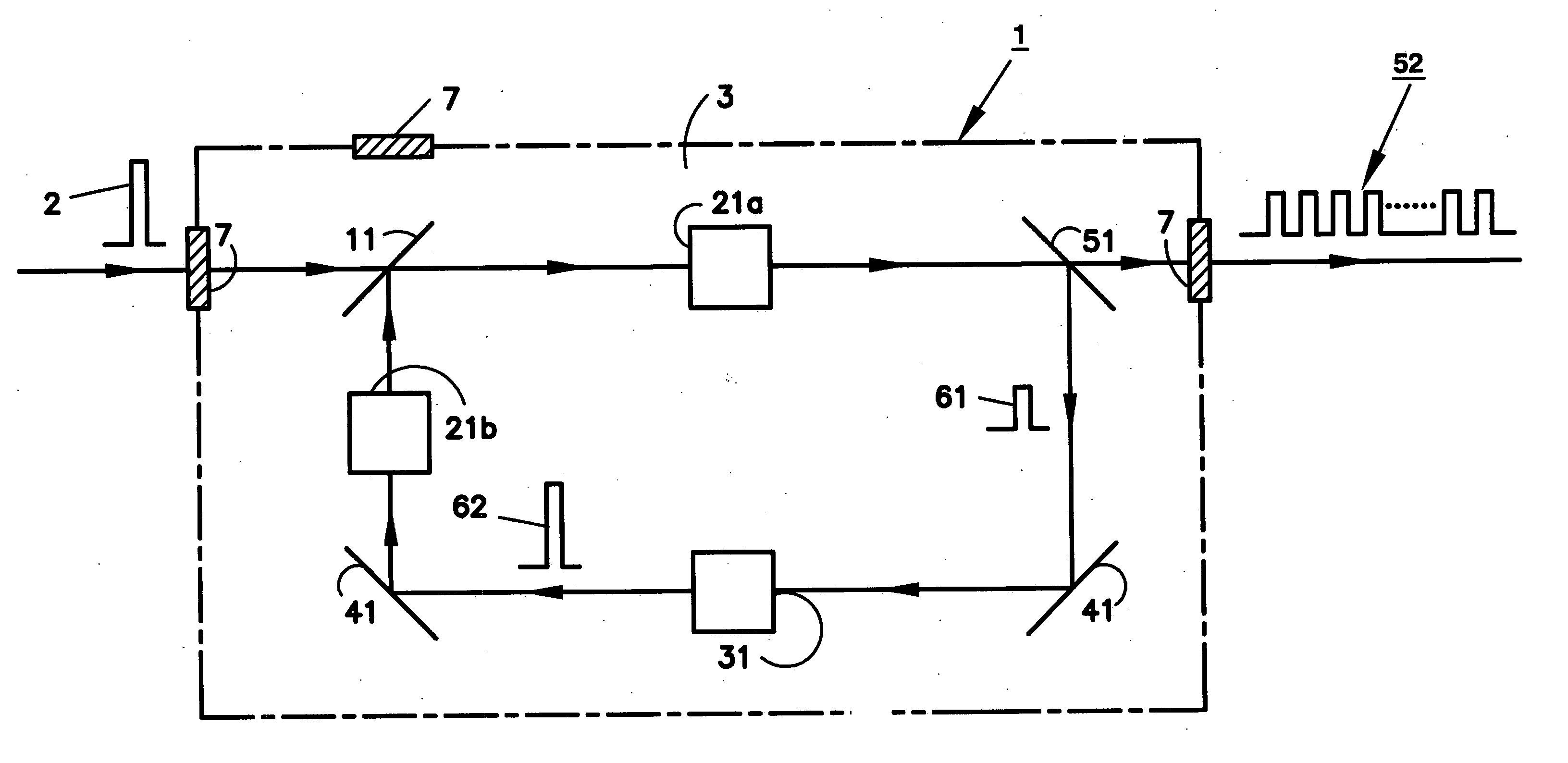

Laser pulse multiplier

Active | US20070047601A1 | Increase the number of | Improves electron production rate | Optical resonator shape and construction | Fibre transmission | Time structure | Optoelectronics

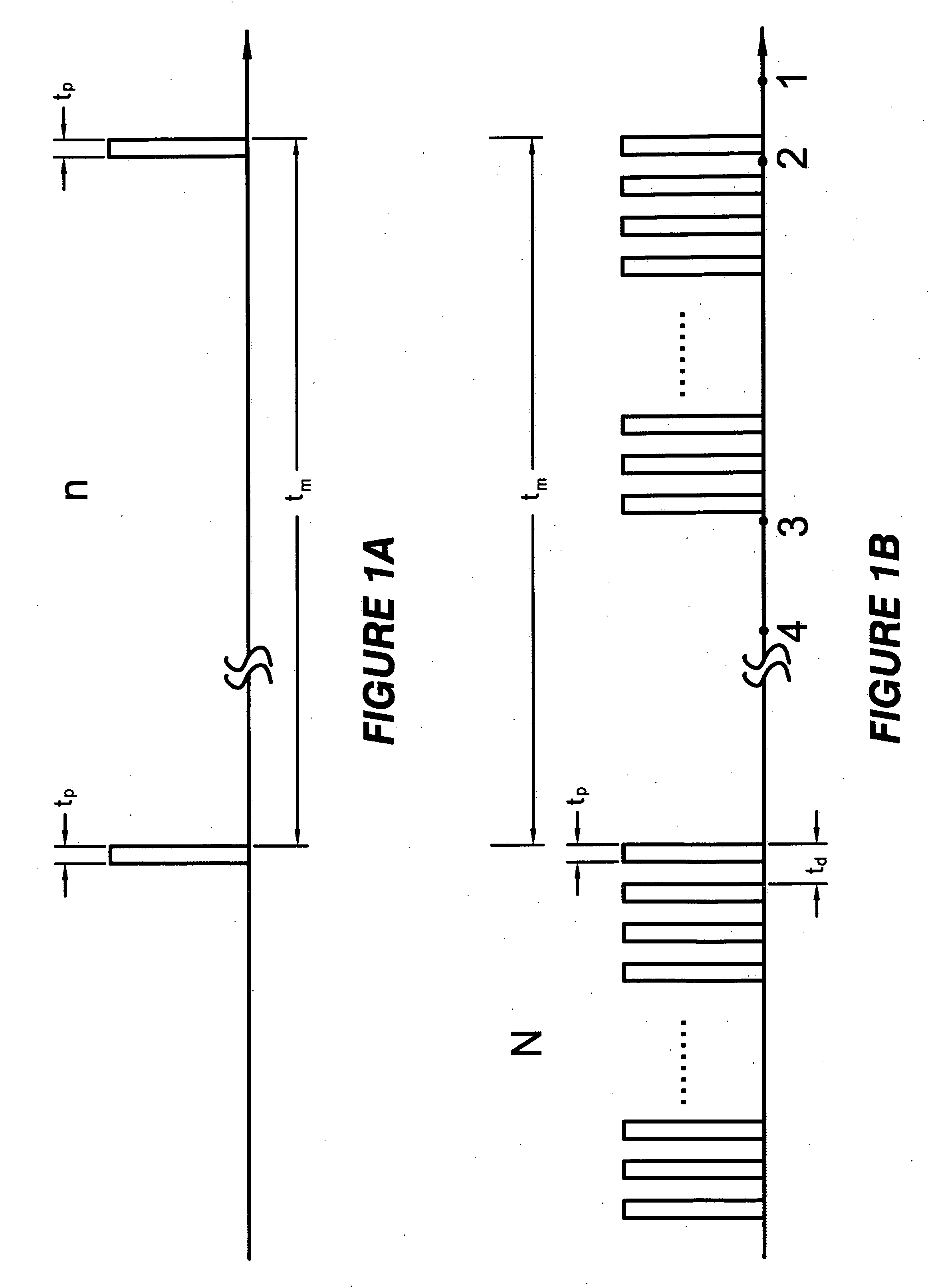

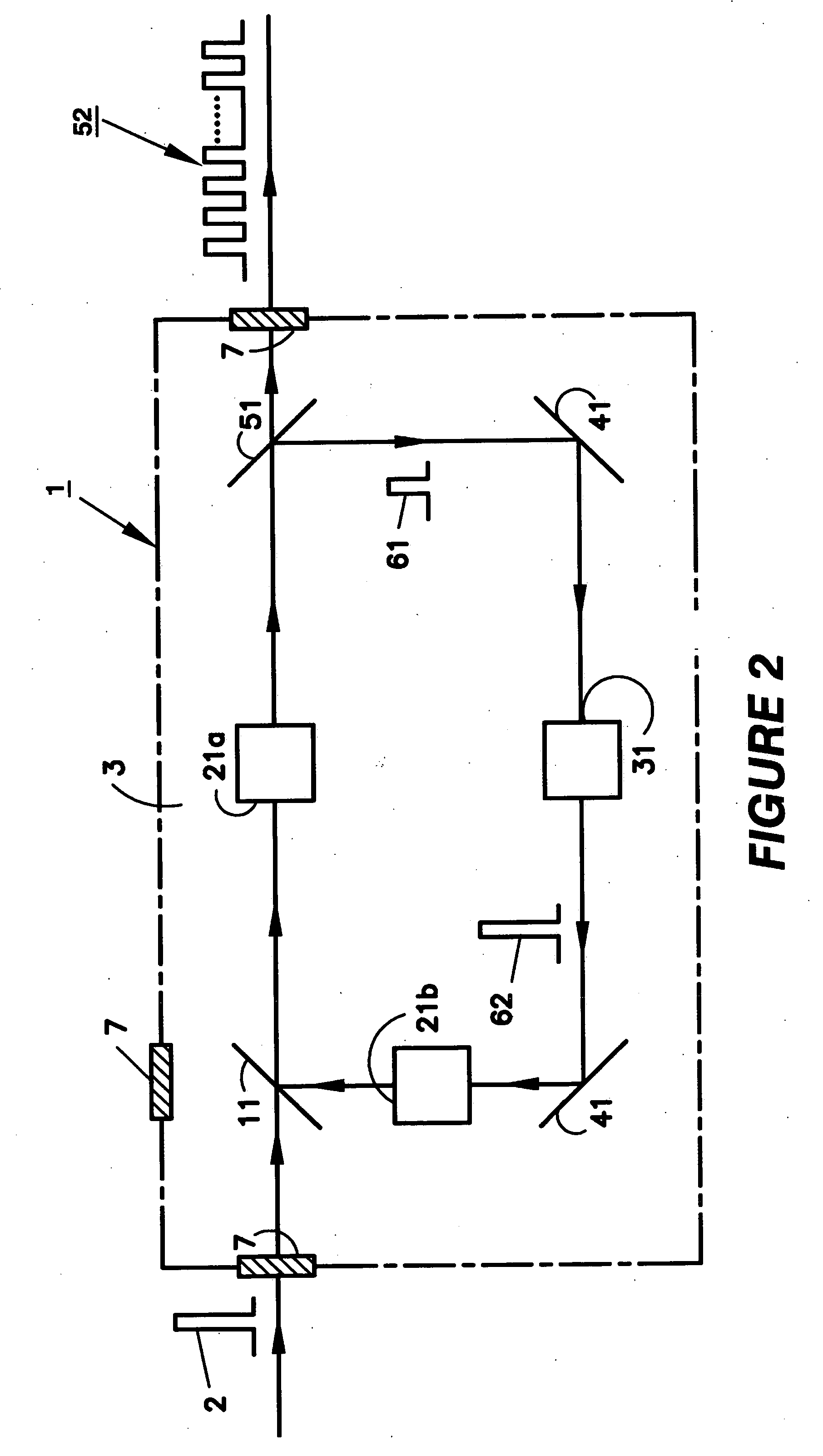

A device for generating a plurality of laser pulses from a recirculating single laser pulse in an optical delay line that is capable of partially transmitting the trapped pulse out of the delay line. The energy of the partially reflected trapped pulse is restored before beginning another round trip in the delay line. The time structure of an output pulse train thus generated by the device comprises a sequence of macro pulses, each comprising a plurality of micro pulses.

Owner:DULY RES
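
The macro-pulse and micro-pulse time structure mentioned in the entry above (US20070047601A1) can be made concrete with a small sketch that only computes emission times. The parameter names are hypothetical, and the assumption that consecutive micro pulses are separated by one delay-line round trip is an illustrative reading of the abstract, not a statement of the patented design.

```python
def pulse_train(num_macro, macro_period, num_micro, round_trip_time):
    """Return emission times for a train of macro pulses made of micro pulses.

    Assumptions (illustrative only): each injected pulse starts one macro
    pulse, and every round trip in the delay line emits one micro pulse.
    All times share one arbitrary unit.
    """
    if (num_micro - 1) * round_trip_time >= macro_period:
        raise ValueError("micro pulses would overlap the next macro pulse")
    return [
        m * macro_period + k * round_trip_time
        for m in range(num_macro)
        for k in range(num_micro)
    ]

# Two macro pulses, 100 time units apart, each with three micro pulses
# spaced by a 10-unit round trip.
times = pulse_train(num_macro=2, macro_period=100.0, num_micro=3, round_trip_time=10.0)
```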

Semiconductor structure processing using multiple laterally spaced laser beam spots delivering multiple blows

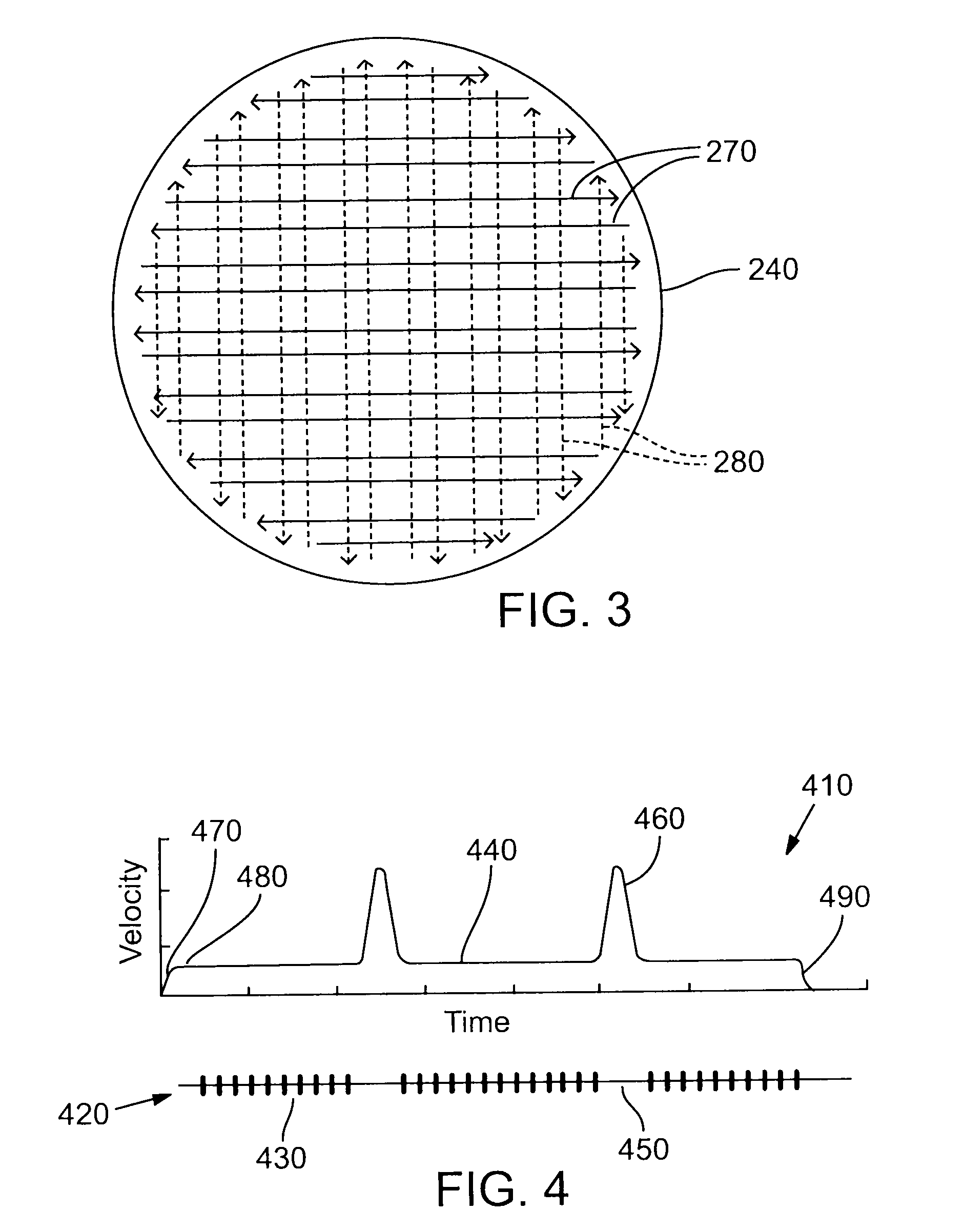

Inactive | US20100089881A1 | Increase intimacy | Semiconductor/solid-state device manufacturing | Laser beam welding apparatus | Time structure | Semiconductor structure

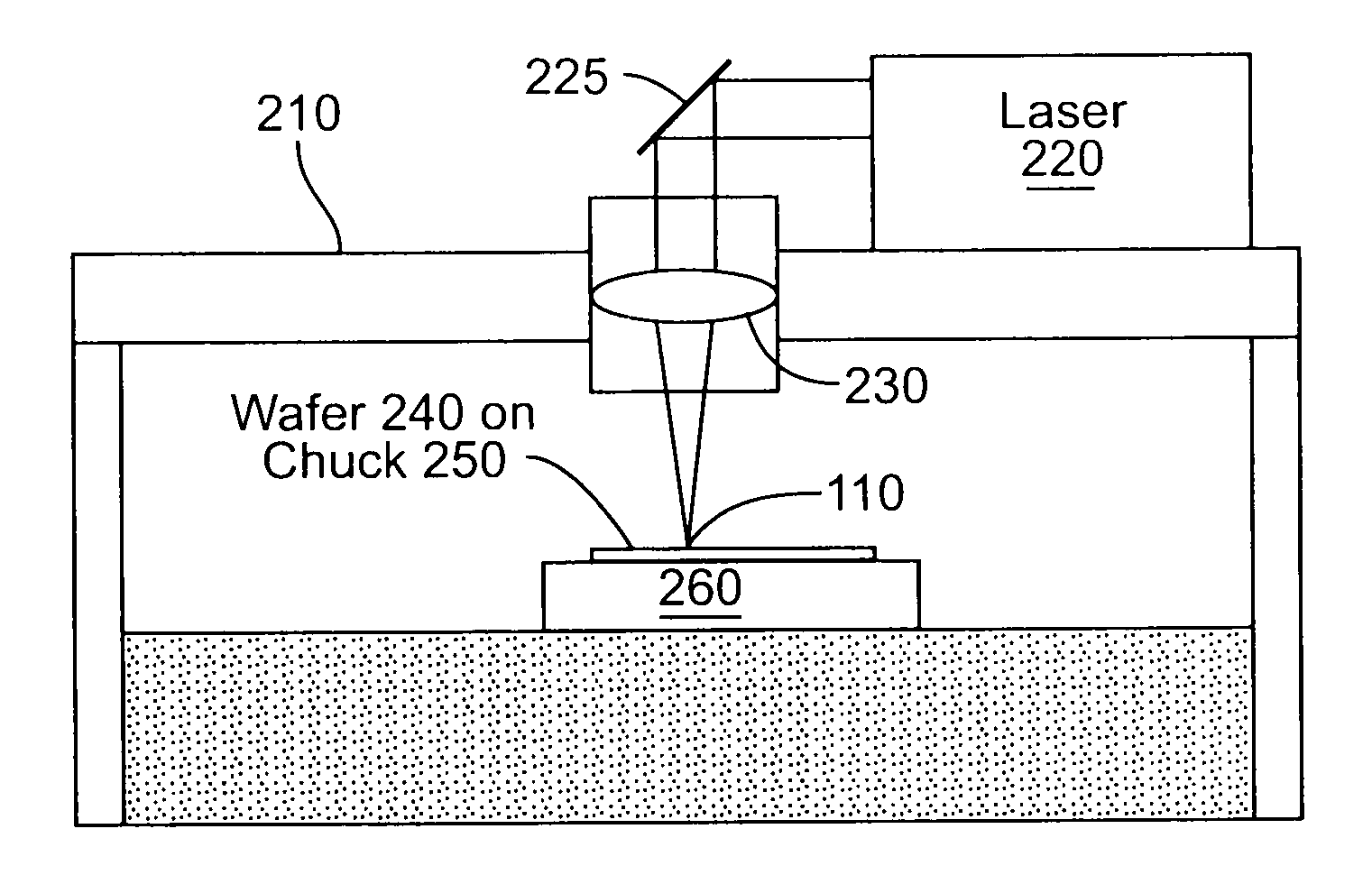

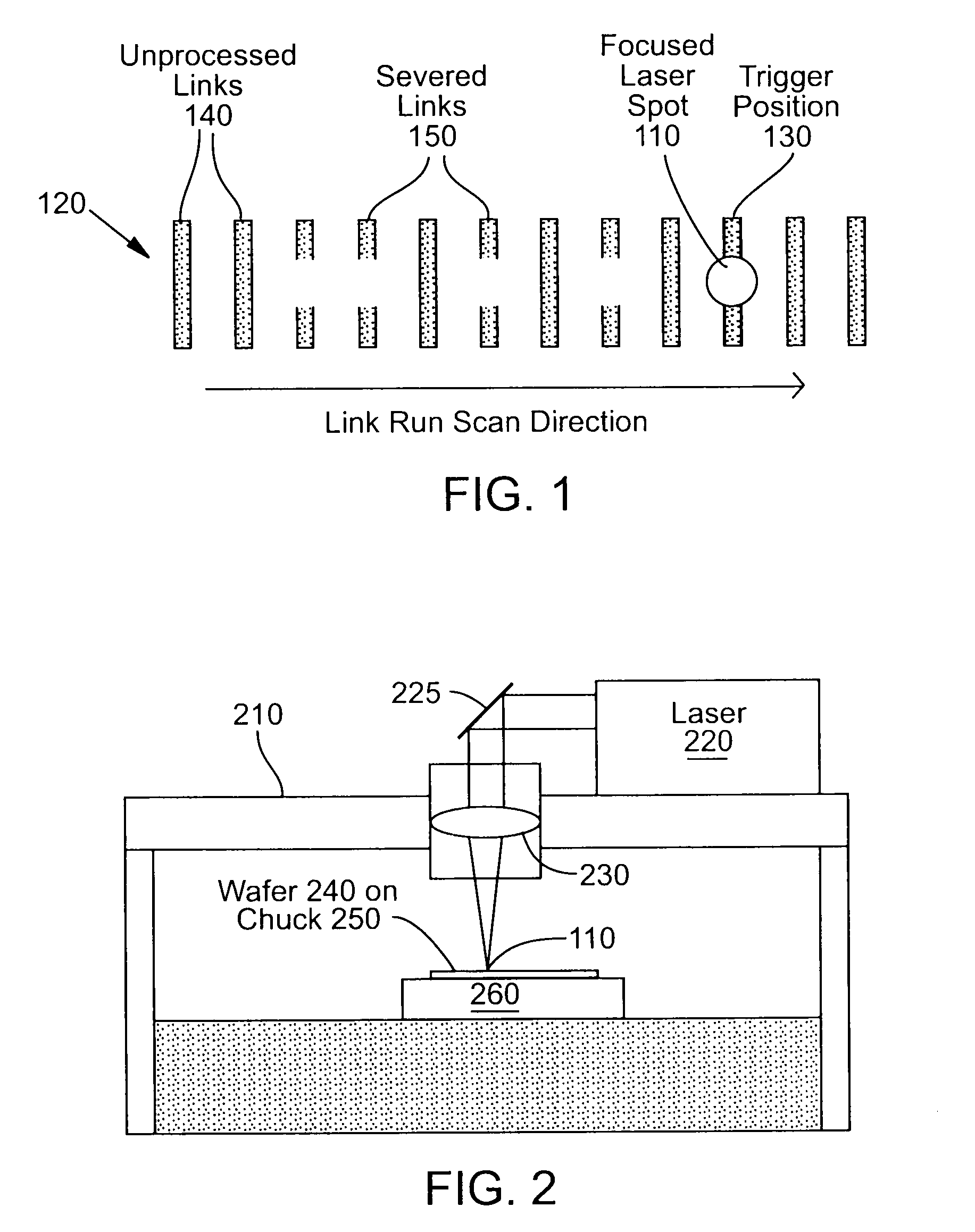

Methods and systems process a semiconductor substrate having a plurality of structures to be selectively irradiated with multiple laser beams. The structures are arranged in a plurality of substantially parallel rows extending in a generally lengthwise direction. The method generates a first laser beam that propagates along a first laser beam axis that intersects a first target location on or within the semiconductor substrate. The method also generates a second laser beam that propagates along a second laser beam axis that intersects a second target location on or within the semiconductor substrate. The second target location is offset from the first target location in a direction perpendicular to the lengthwise direction of the rows by some amount such that, when the first target location is a structure on a first row of structures, the second target location is a structure or between two adjacent structures on a second row distinct from the first row. The method moves the semiconductor substrate relative to the first and second laser axes in a direction approximately parallel to the rows of structures, so as to pass the first target location along the first row to irradiate for a first time selected structures in the first row, and so as to simultaneously pass the second target location along the second row to irradiate for a second time structures previously irradiated by the first laser beam during a previous pass of the first target location along the second row.

Owner:ELECTRO SCI IND INC

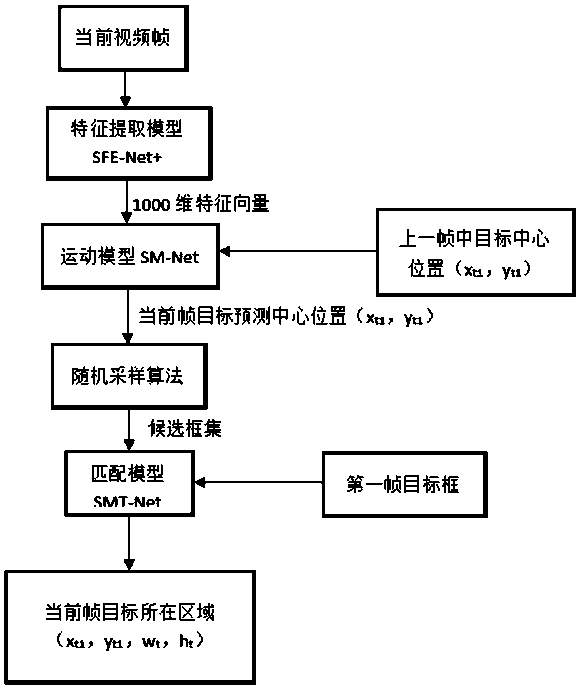

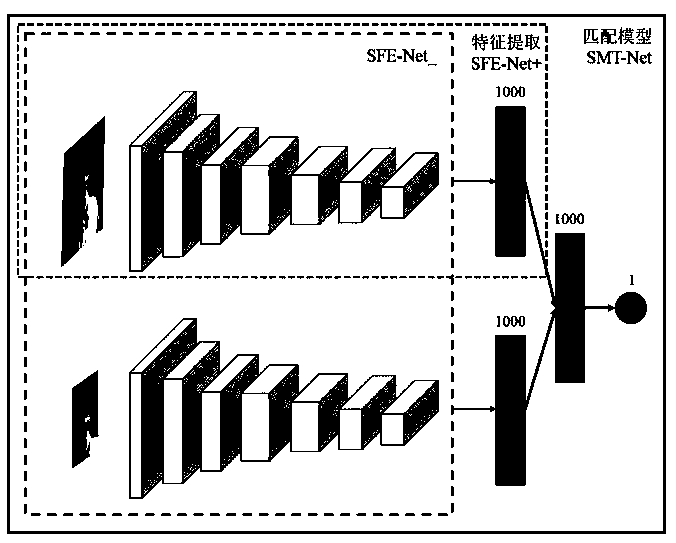

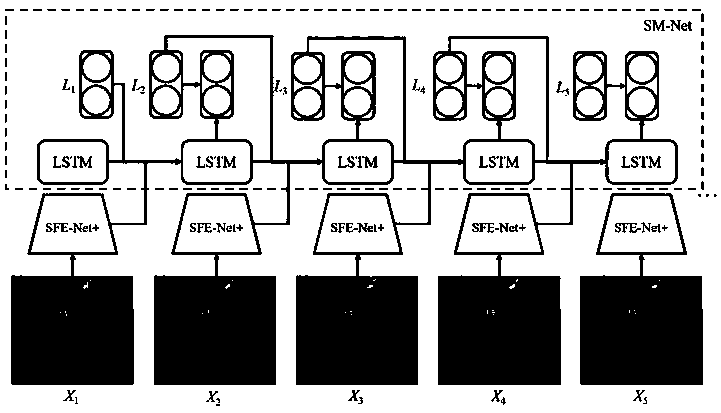

Target forecasting and tracking method based on recurrent neural network

Active | CN108257158A | Move quickly | Deformation fast | Image analysis | Neural architectures | Time structure | Pattern recognition

The invention relates to a target forecasting and tracking method based on a recurrent neural network. The method includes the steps: firstly, extracting characteristic information according to a current video frame, acquiring 1000-dimensional characteristics by a single network of an SFE-Net+ model and inputting the characteristics to a motion model SM-Net; secondly, forecasting the center position of a target in a current frame according to the characteristic information and the center position of a previous frame target by the SM-Net of a time structure; thirdly, selecting a certain number of candidate boxes from the periphery of the center position in a random sampling mode; finally, inputting the candidate boxes to a matching model SMT-Net, judging similarities of the candidate boxes and target boxes of a first frame one by one, and selecting the candidate boxes with the highest similarity. An area occupied by the candidate boxes with the highest similarity in an original image is a final area of the target in the current frame. Objects can be tracked in complicated situations.

Owner:FUZHOU UNIV

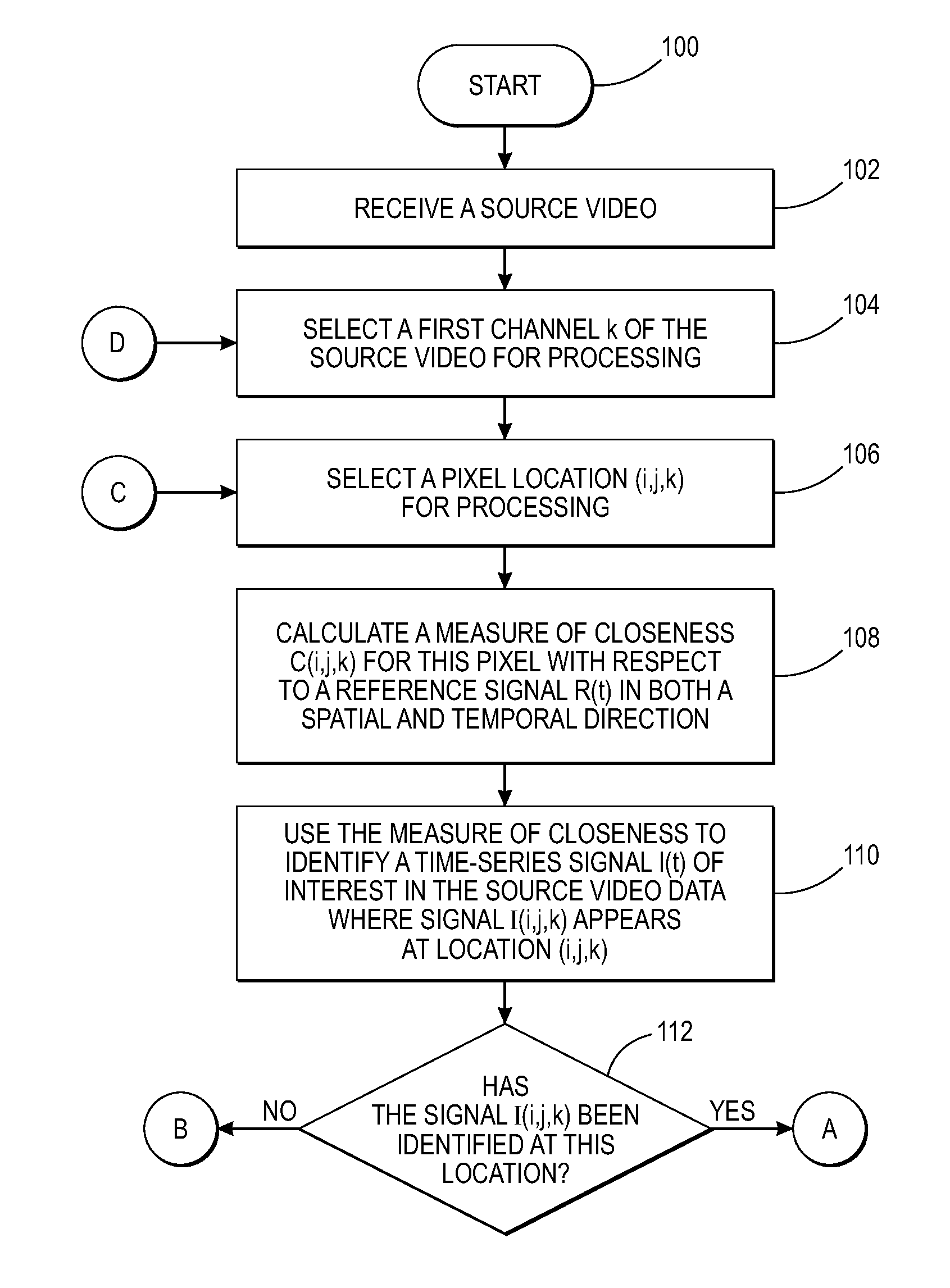

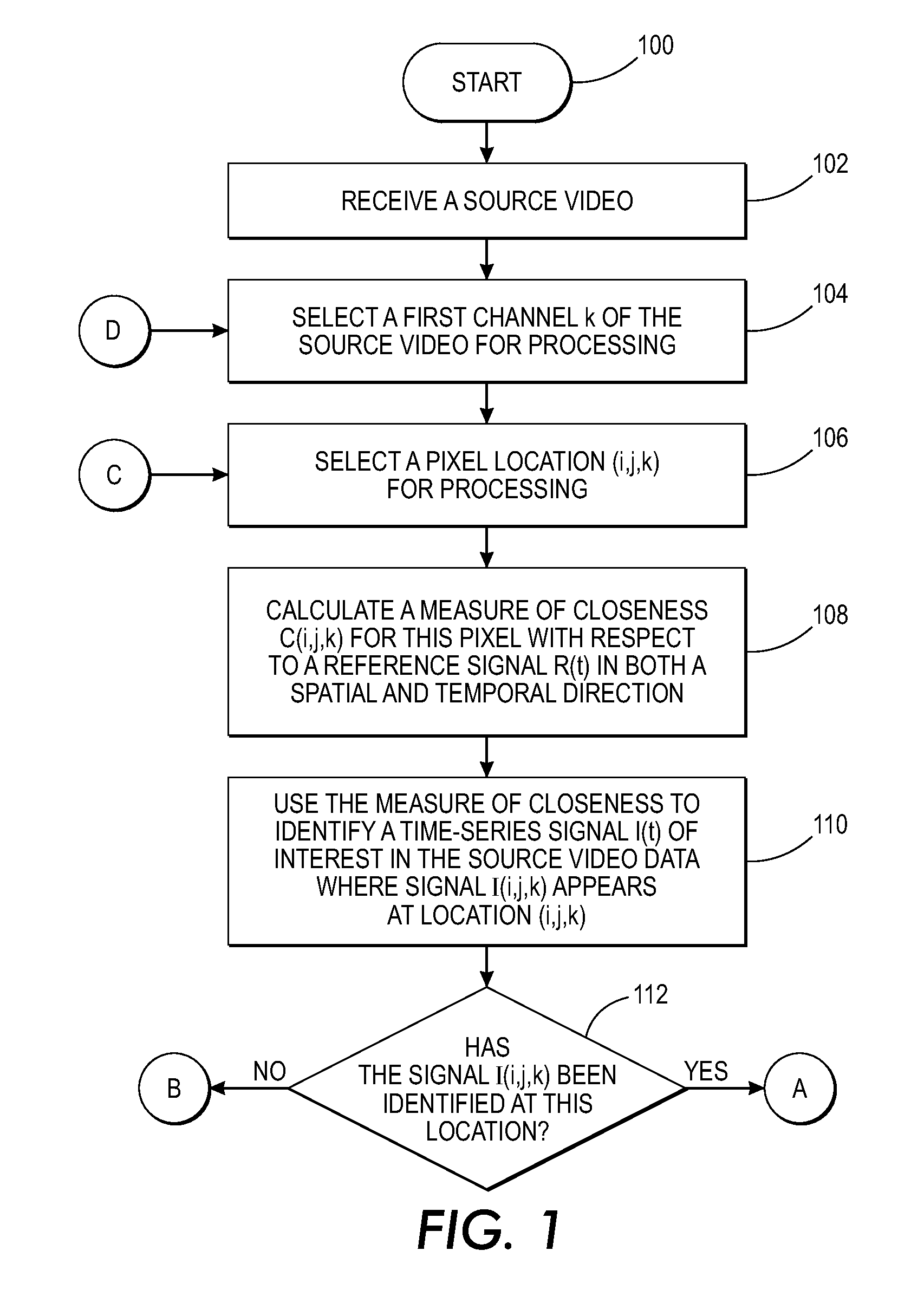

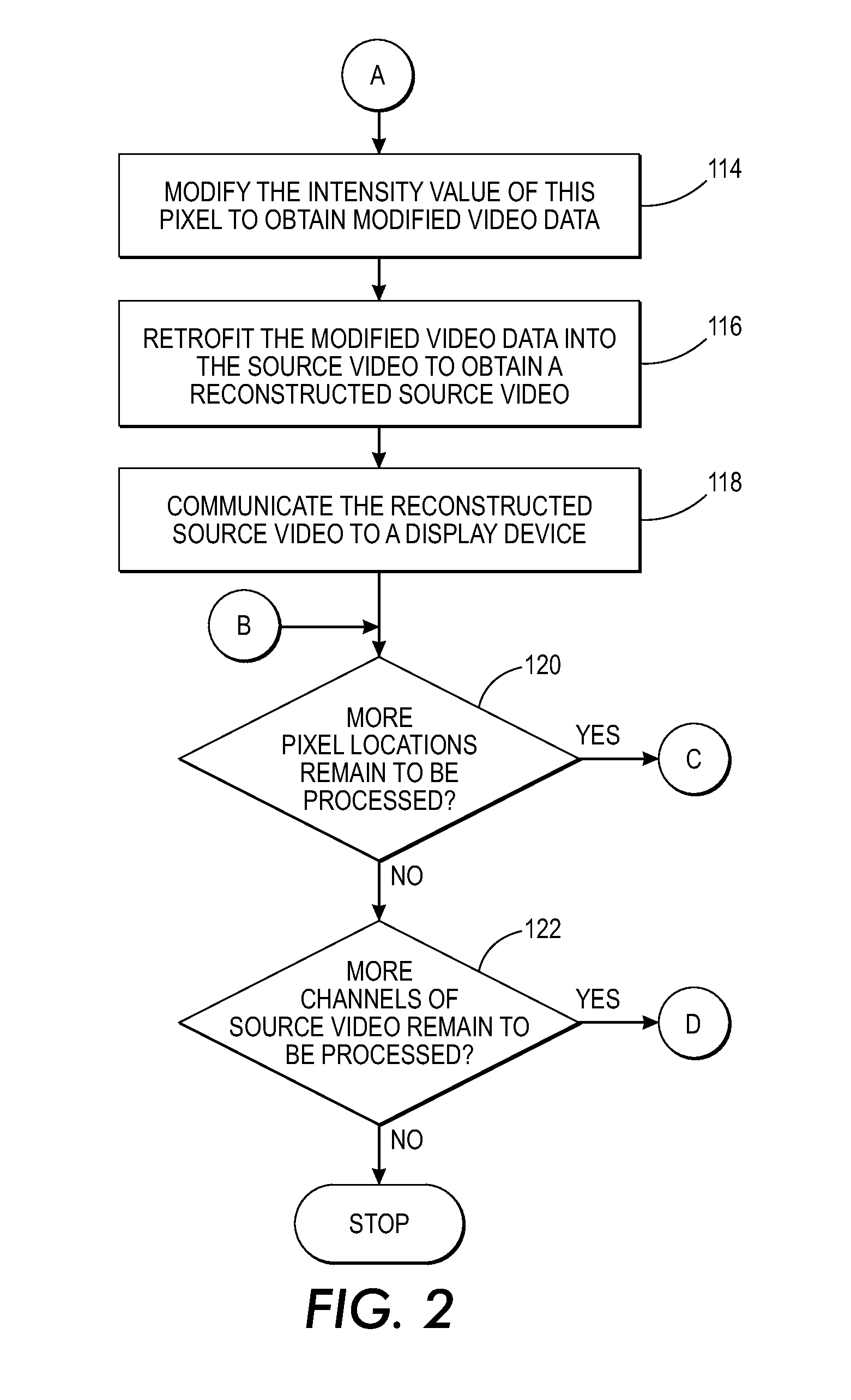

Processing source video for real-time enhancement of a signal of interest

Active | US20140205175A1 | Readily apparent | Improve real-time performance | Image enhancement | Image analysis | Time structure | Sensing applications

What is disclosed is a system and method for real-time enhancement of an identified time-series signal of interest in a video that has a similar spatial and temporal structure to a given reference signal, as determined by a measure of closeness. A closeness measure is computed for pixels of each image frame of each channel of a multi-channel video to identify a time-series signal of interest. The intensity of pixels associated with that time-series signal is modified based on a product of the closeness measure and the reference signal scaled by an amplification factor. The modified pixel intensity values are provided back into the source video to generate a reconstructed video such that, upon playback of the reconstructed video, viewers thereof can visually examine the amplified time-series signal, see how it is distributed and how it propagates. The methods disclosed find their uses in remote sensing applications such as telemedicine.

Owner:XEROX CORP
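
The pixel modification described in the entry above (US20140205175A1) is a product of a closeness measure, a reference signal, and an amplification factor. The sketch below shows one way that product could be applied to a single pixel's time series. The abstract does not define the closeness measure, so Pearson correlation is used here purely as an assumption, and the function names and threshold are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)

def enhance_pixel(series, reference, alpha, threshold=0.5):
    """Amplify a pixel's time series where it resembles the reference signal.

    Assumption: closeness is the Pearson correlation with the reference, and
    pixels below `threshold` are left untouched. Each enhanced sample is
    original + alpha * closeness * reference[t].
    """
    closeness = pearson(series, reference)
    if closeness < threshold:
        return list(series)
    return [x + alpha * closeness * r for x, r in zip(series, reference)]

reference = [0.0, 1.0, 0.0, -1.0]
faint = [10.0, 10.1, 10.0, 9.9]      # weak copy of the reference on a bright pixel
enhanced = enhance_pixel(faint, reference, alpha=2.0)
```

Writing the enhanced series back into each frame would yield the reconstructed video the abstract mentions; a flat or uncorrelated pixel is returned unchanged.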

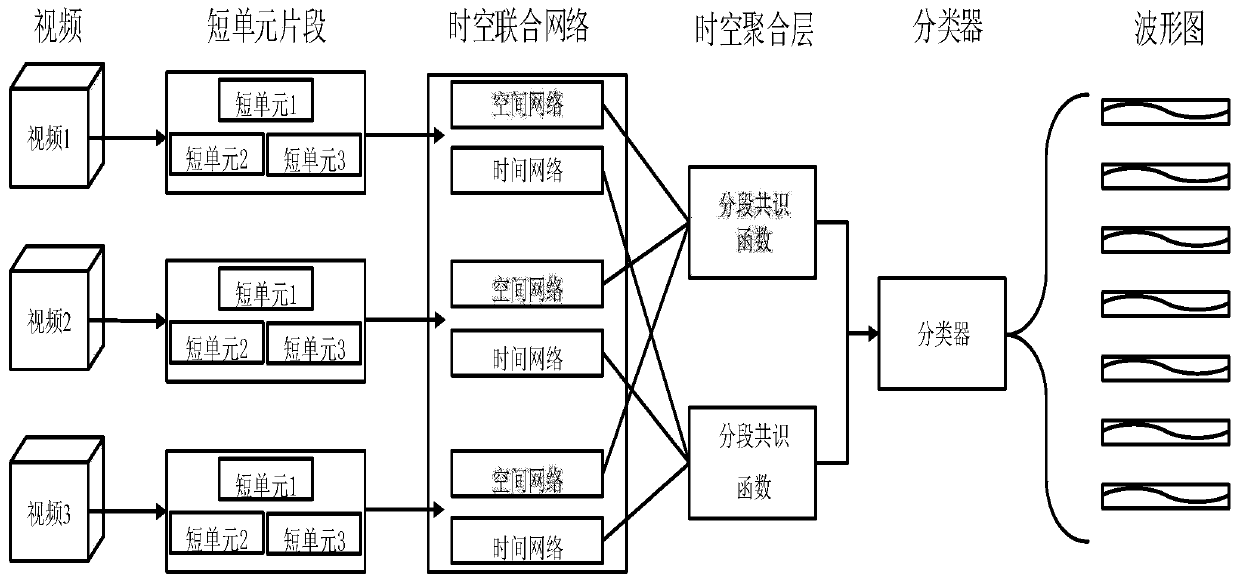

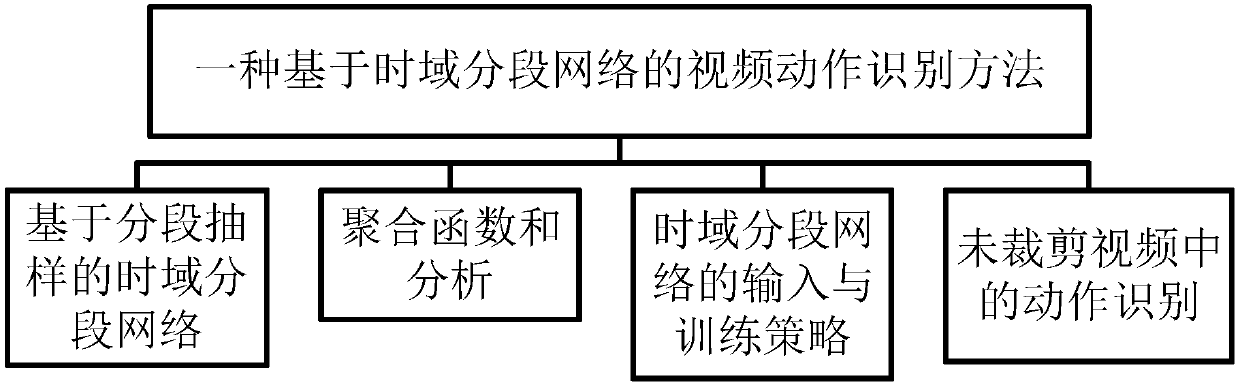

Video motion identification method based on time domain segmentation network

Inactive | CN107480642A | Character and pattern recognition | Neural architectures | Pattern recognition | Time structure

The present invention provides a video motion identification method based on a time domain segmentation network. The main content of the method comprises: a time domain segmentation network (TSN) based on segmented sampling, aggregation functions and their analysis, the input and training strategy of the time domain segmentation network, and motion identification in untrimmed video. The process comprises: dividing the video into segments of equal duration, randomly extracting one snippet from each segment, generating a segment-level motion prediction for each snippet in the sequence, designing a common function that aggregates the segment-level predictions into a video-level score, and, in the training process, defining the optimization target on the video-level prediction and optimizing by iteratively updating the model parameters. The segment-based sampling and aggregation modules establish a long-term time structure so that the whole motion video is used to learn a motion model effectively, store long-term video, and improve the sensitivity and accuracy of motion detection and identification.

Owner:SHENZHEN WEITESHI TECH
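
The segmented sampling and aggregation steps in the entry above (CN107480642A) can be sketched as follows. This is an illustration under stated assumptions rather than the patented method: frames are addressed by index, the per-snippet classifier is left out, averaging is used as the aggregation function (the abstract does not name one), and both function names are hypothetical.

```python
import random

def sample_snippet_indices(num_frames, num_segments, rng=random):
    """Split the frame range into equal segments and pick one index per segment."""
    if num_segments <= 0 or num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    indices = []
    for k in range(num_segments):
        # Integer arithmetic keeps segment boundaries exact.
        start = k * num_frames // num_segments
        end = (k + 1) * num_frames // num_segments
        indices.append(rng.randrange(start, end))
    return indices

def aggregate_scores(segment_scores):
    """Average per-class scores over segments to get a video-level score.

    Assumption: averaging stands in for the aggregation function; other
    choices (max, weighted average) would fit the same interface.
    """
    n = len(segment_scores)
    num_classes = len(segment_scores[0])
    return [sum(s[c] for s in segment_scores) / n for c in range(num_classes)]

# Pick one frame from each of 4 equal segments of a 100-frame video.
picked = sample_snippet_indices(100, 4, random.Random(0))
```

Because one snippet is drawn from every segment, the sampled frames span the whole video regardless of its length, which is what lets the video-level score reflect the long-term time structure.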

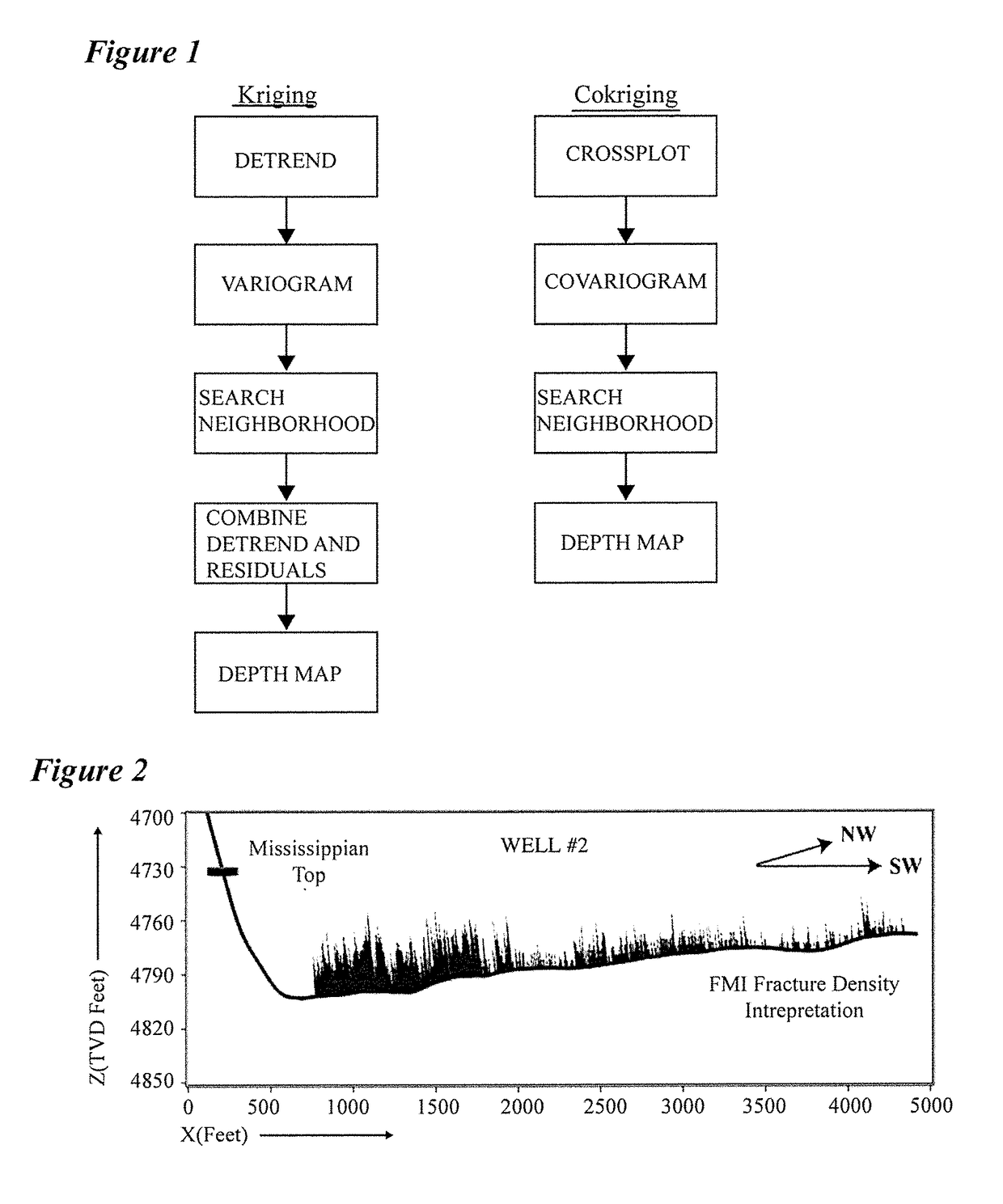

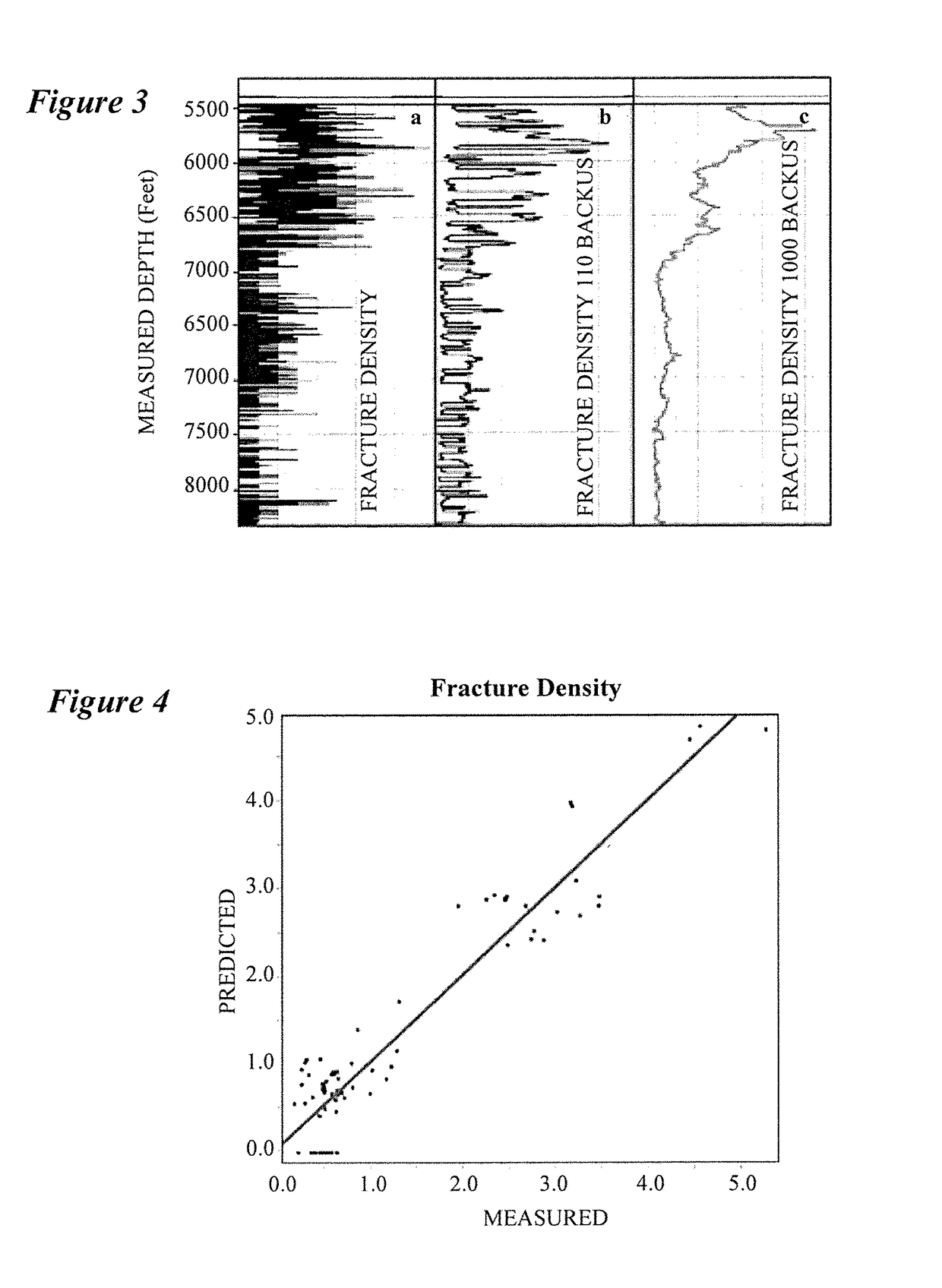

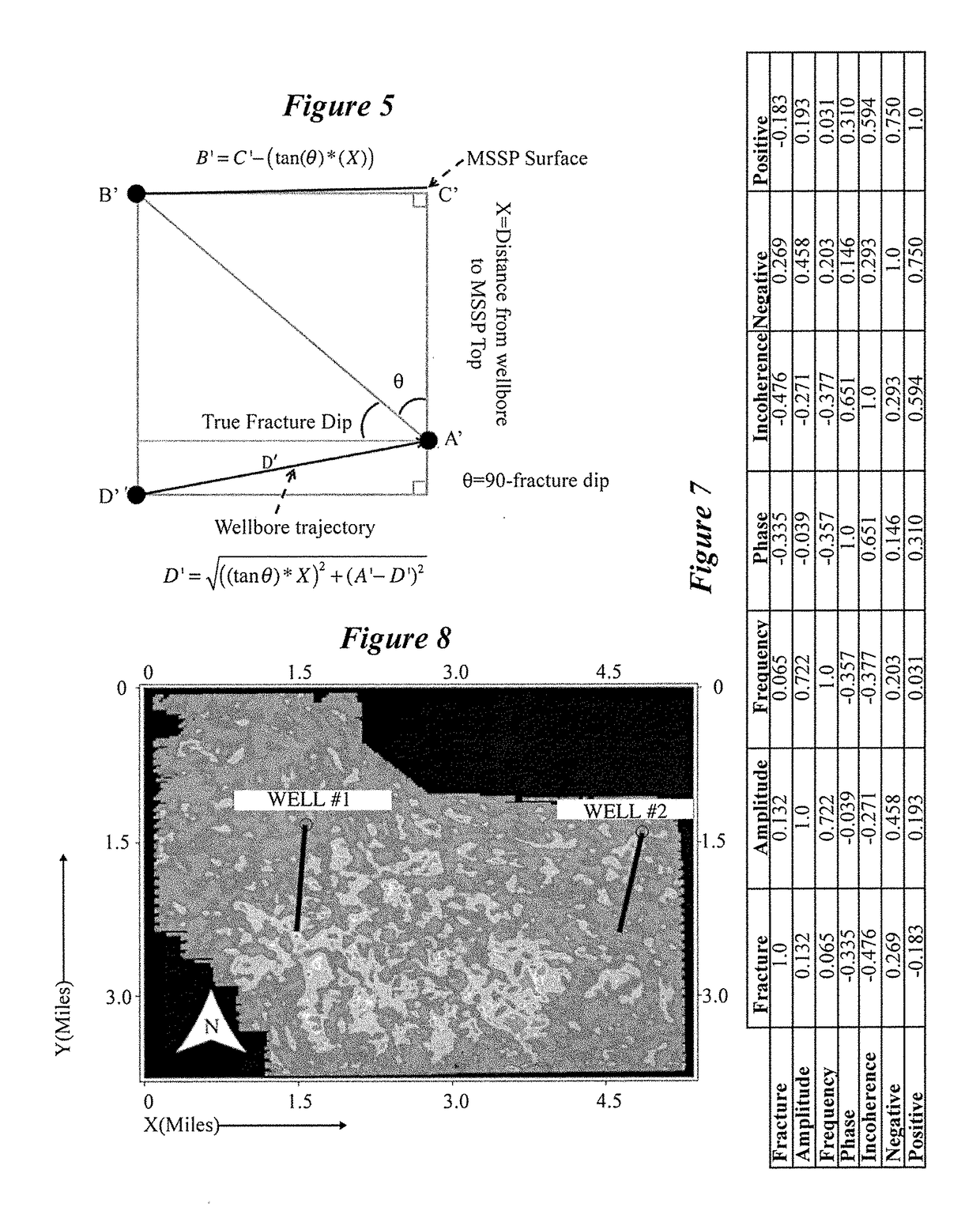

Methods of generation of fracture density maps from seismic data

A method is herein presented to statistically combine multiple seismic attributes for generating a map of the spatial density of fractures. According to an embodiment, a first step involves interpreting the formation of interest in a 3D seismic volume to create its time structure map. The second step is creating the depth structure of the formation of interest from its time structure map. In this application geostatistical methods have been used for depth conversion, although other methods could be used instead. The third step is extraction of a number of attributes, such as phase, frequency and amplitudes, from the time structure map. The next step is to project the fracture density onto the top of the target formation. The final step is to combine these attributes using a statistical method known as multivariate non-linear regression to predict fracture density.

Owner:BOARD OF REGENTS FOR OKLAHOMA STATE UNIVERSITY

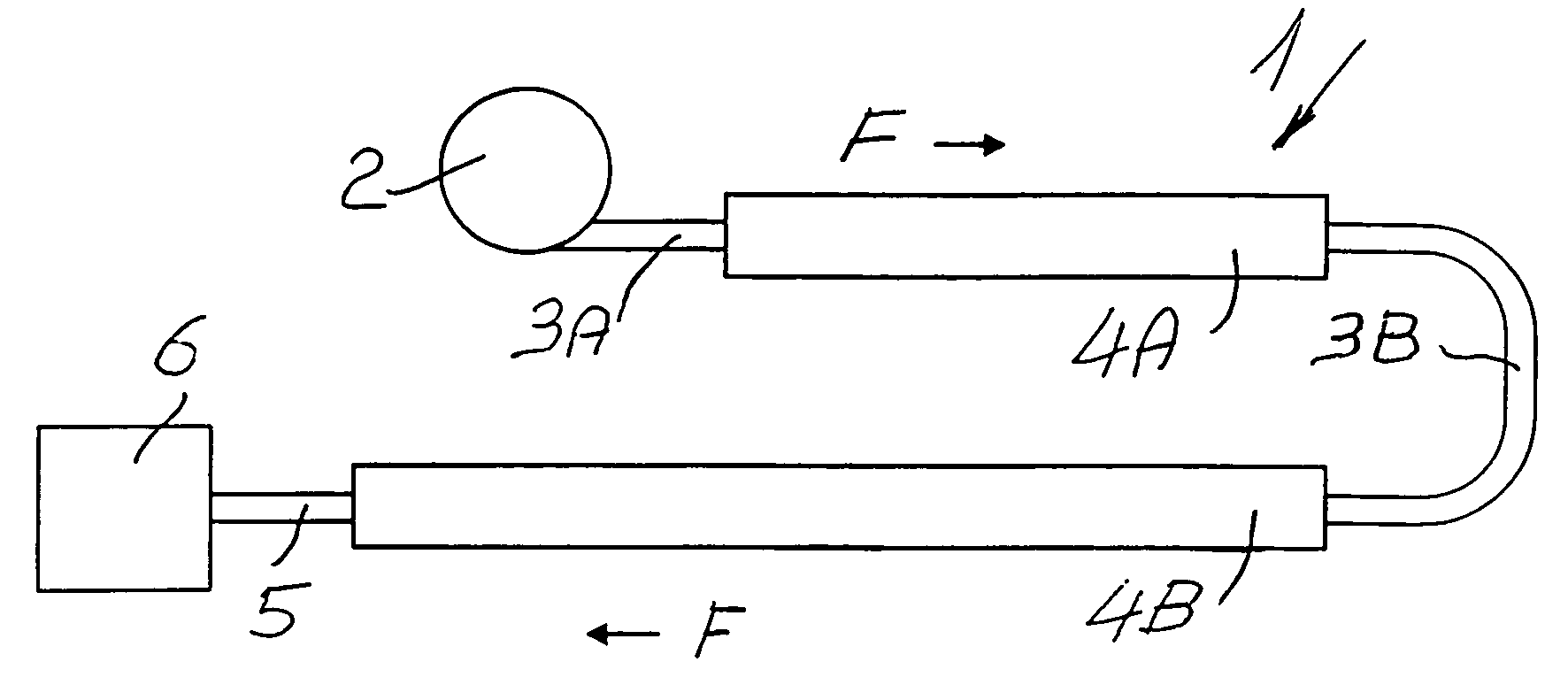

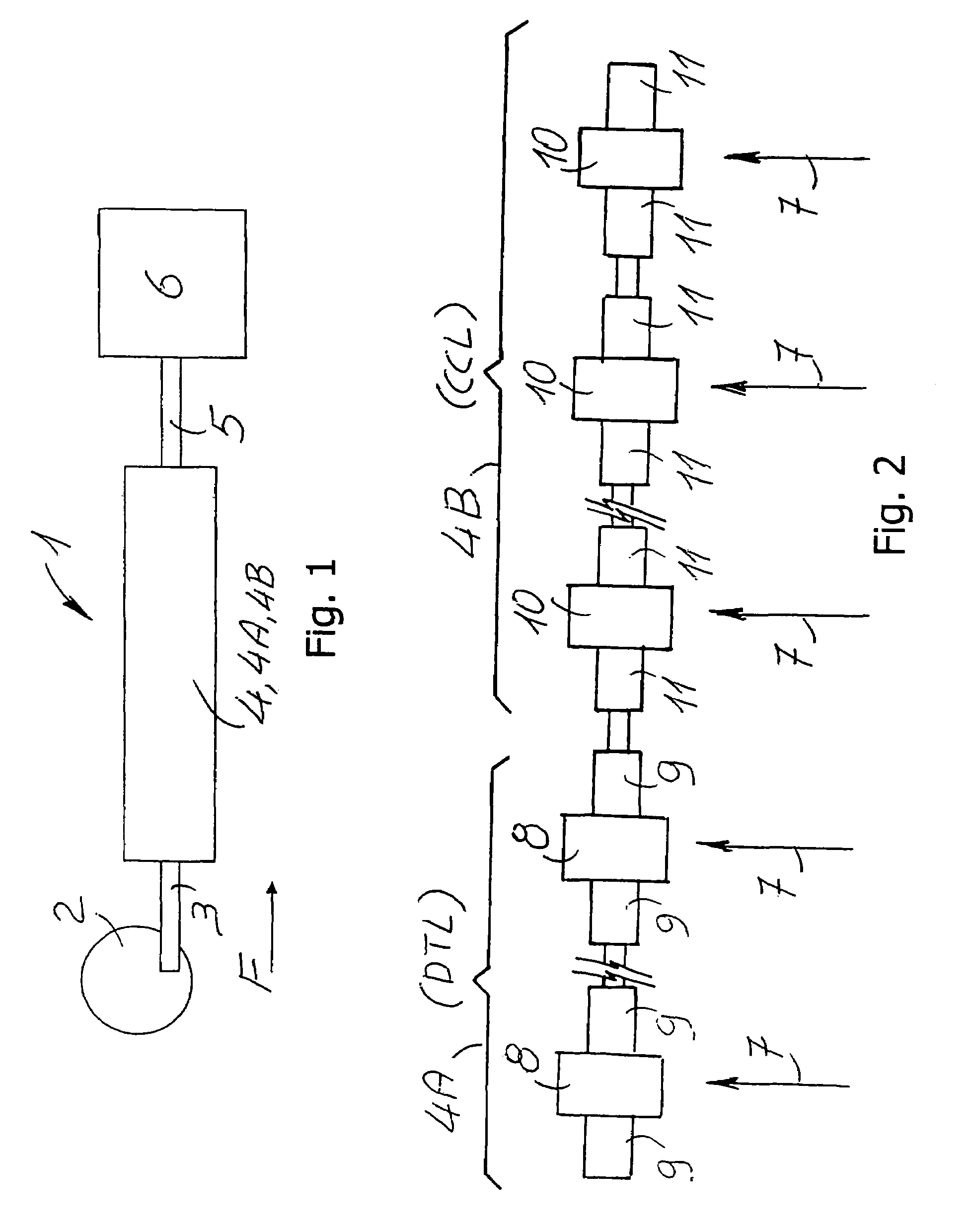

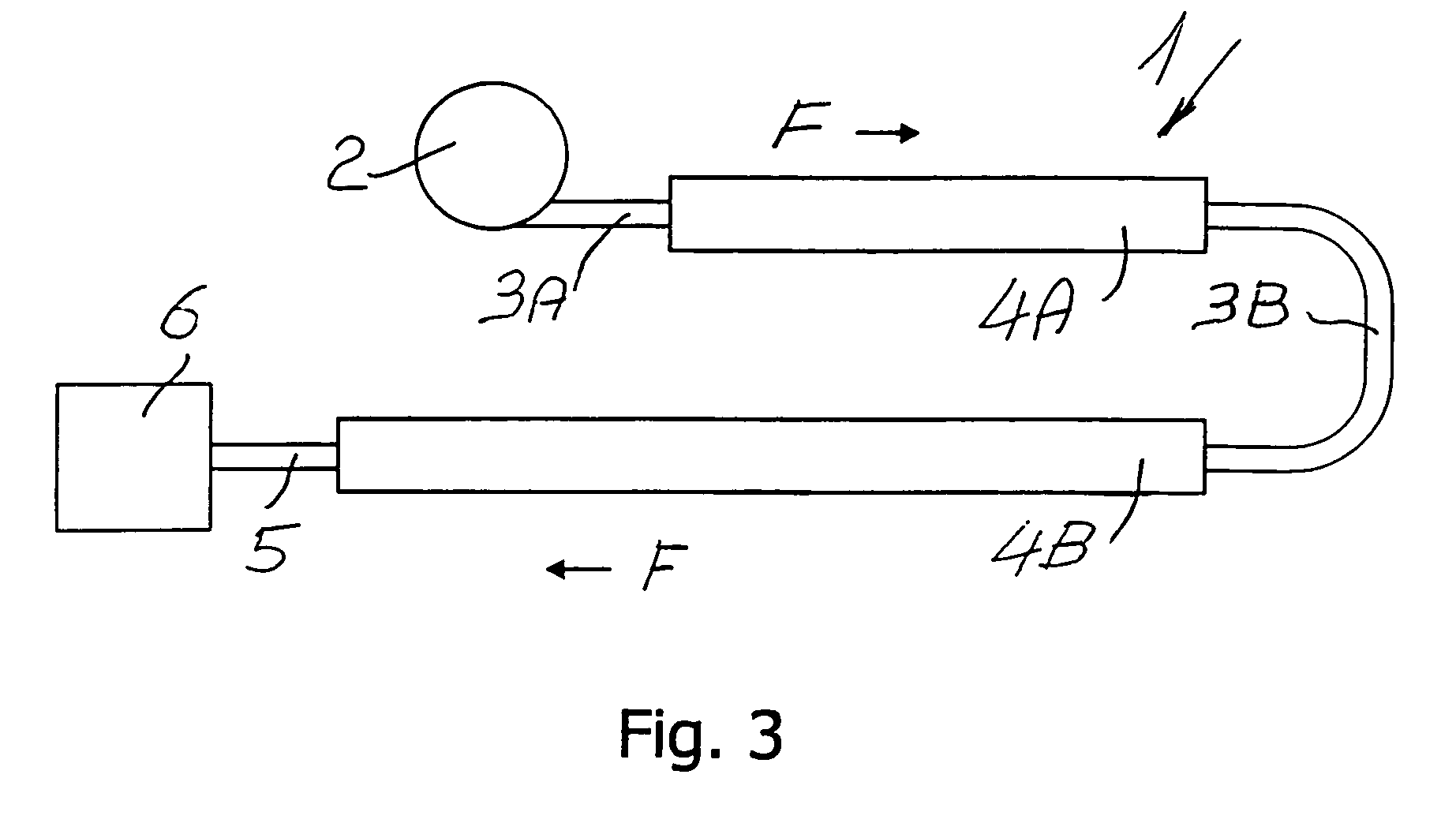

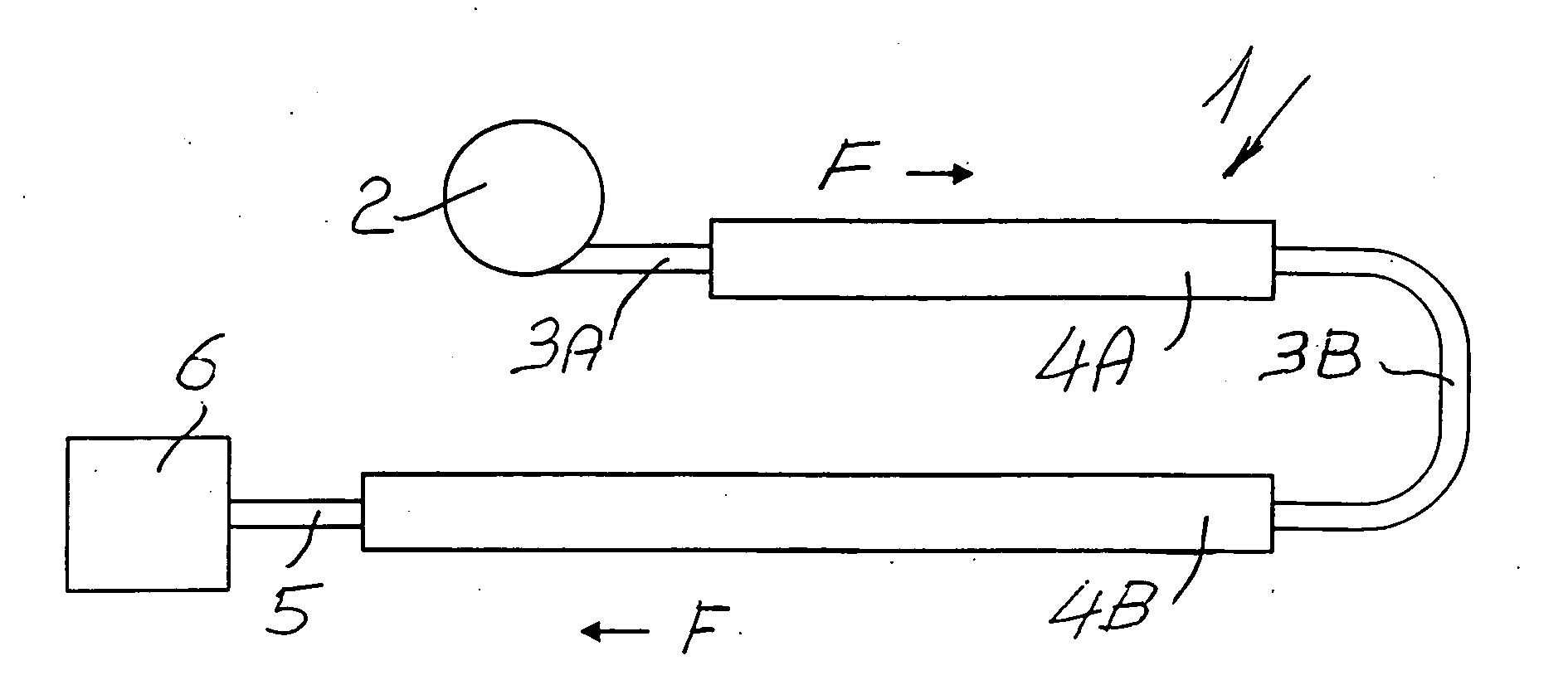

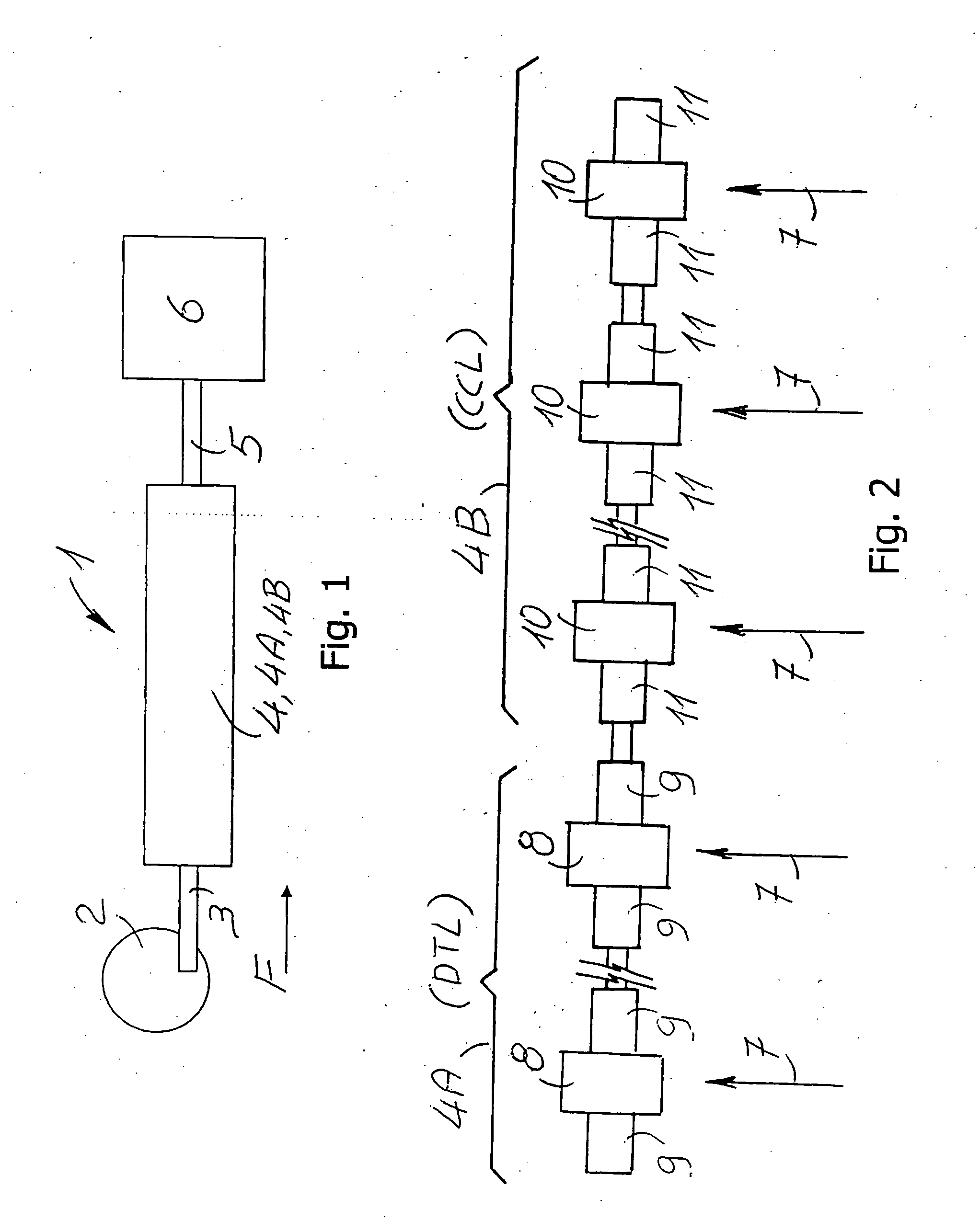

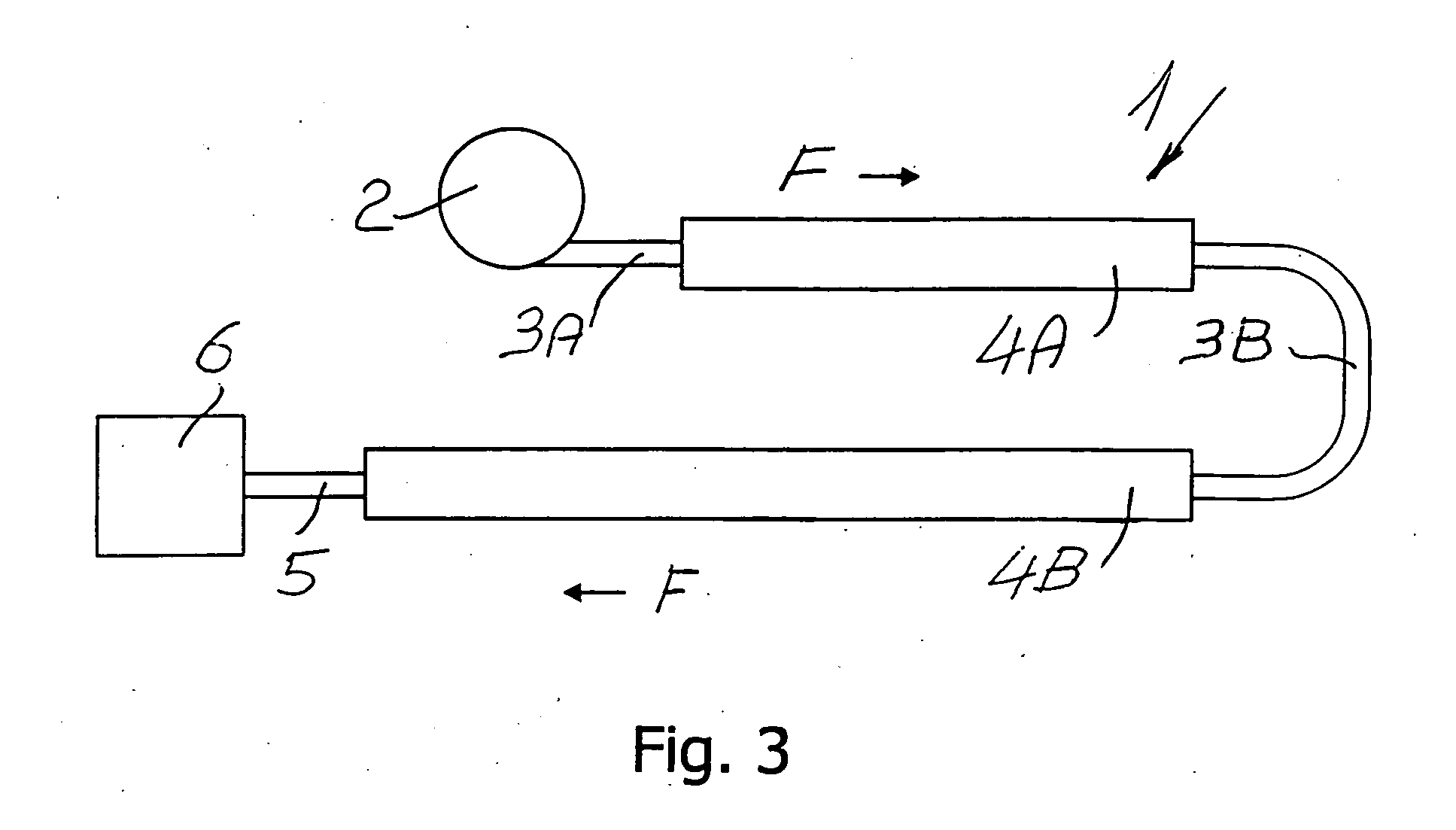

Ion acceleration system for hadrontherapy

Active | US7423278B2 | High complexity | High error | Transit-time tubes | Magnetic resonance accelerators | Time structure | High energy

Owner:ADVANCED ONCOTHERAPY PLC

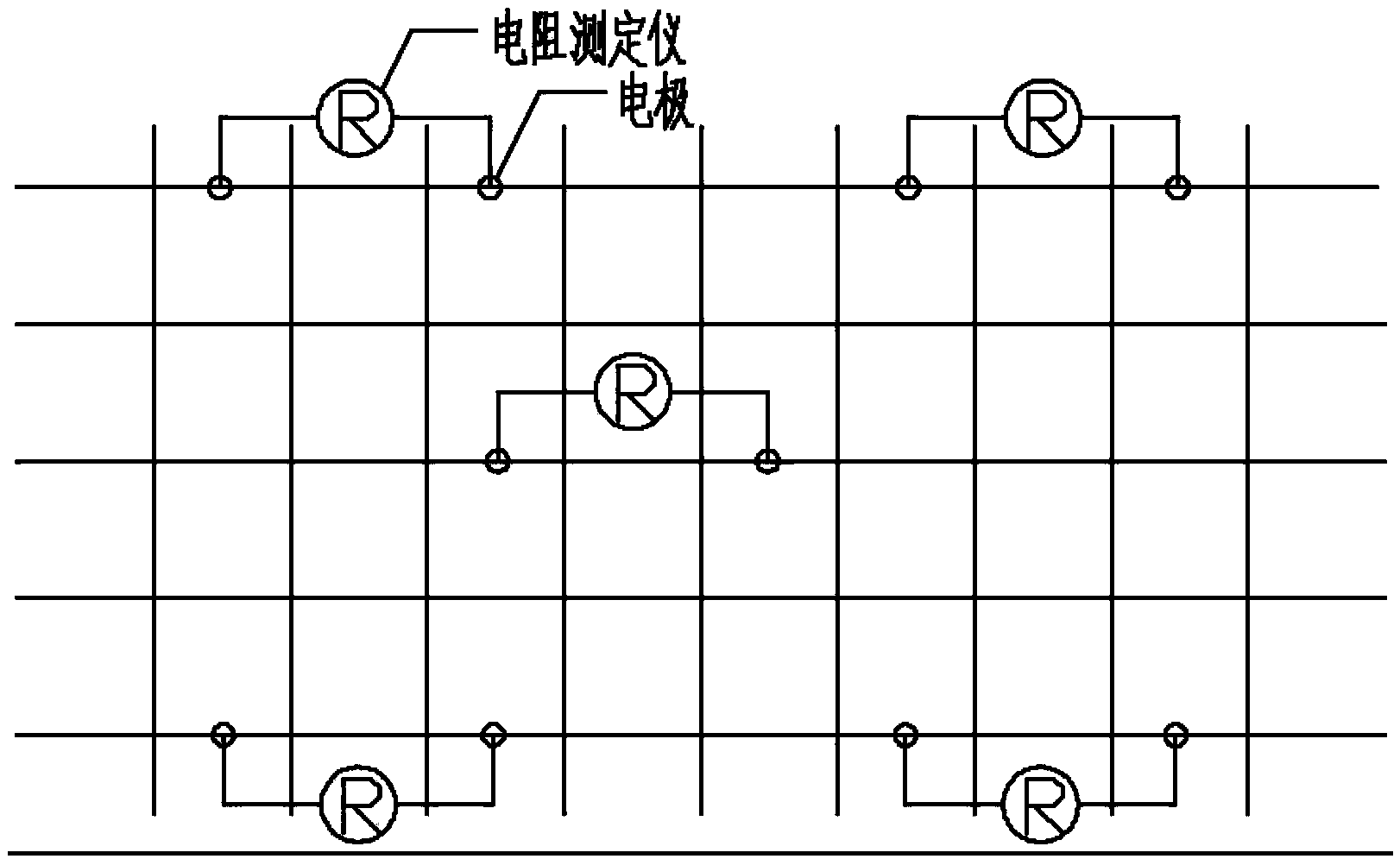

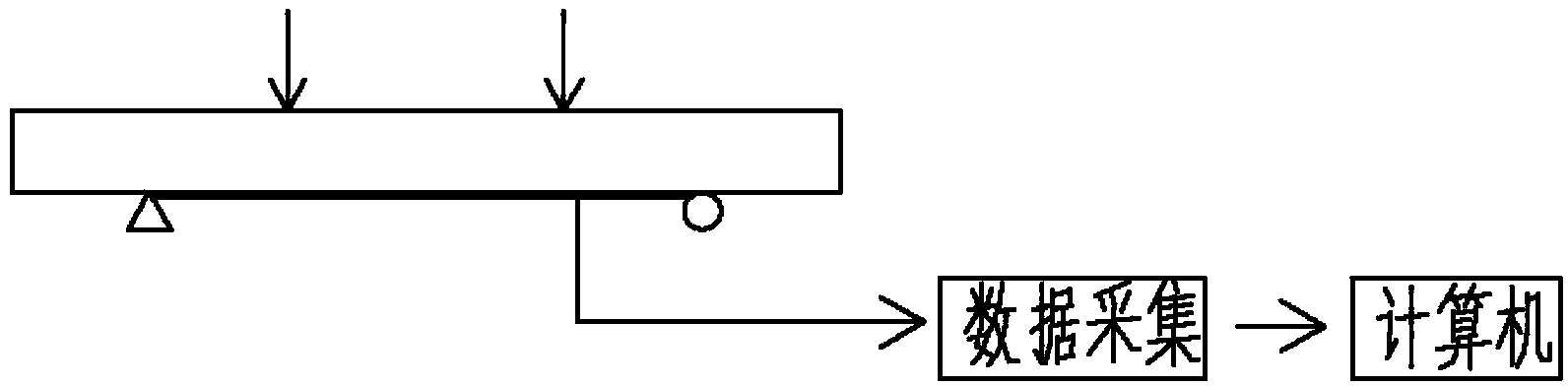

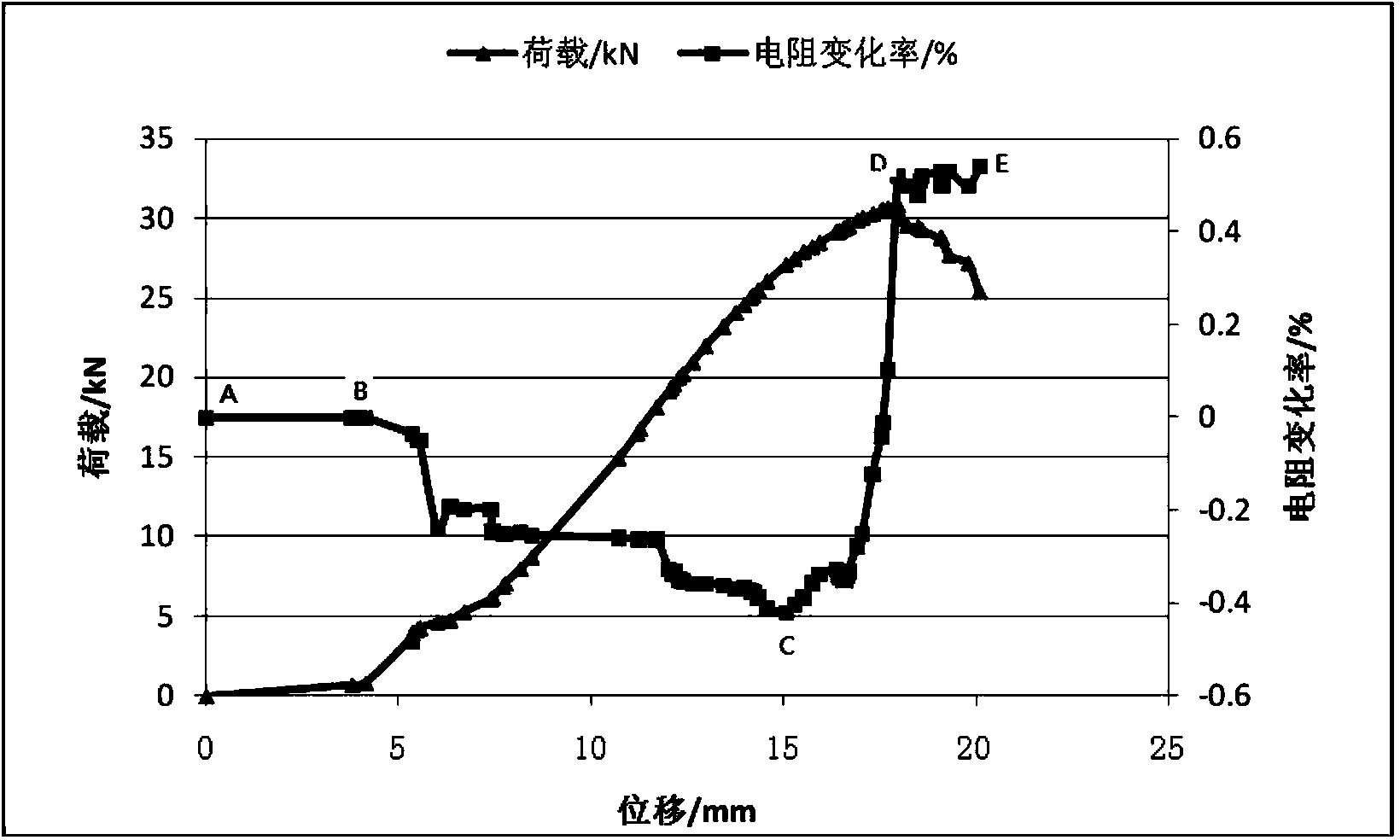

Self-monitoring intelligent textile reinforced concrete and manufacturing method thereof

Inactive | CN103808765A | Effective structure | Efficient Structural Health Monitoring | Preparing sample for investigation | Material resistance | Time structure | Fiber

The invention discloses self-monitoring intelligent textile reinforced concrete and a manufacturing method thereof. In a process of TRC (textile reinforced concrete) manufacturing, an electrode is adhered to a carbon fiber in a textile net; stress, stress conditions and stress distribution of all regions in the structure are monitored by dynamically detecting the change of the resistivity of intelligent TRC. The self-monitoring intelligent textile reinforced concrete can supply effective bearing force and stretchability to the structure and supply an effective monitoring technology to the safety of the structure in a service stage; the aim of integrating a high-efficiency structure and real-time structure health monitoring is fulfilled.

Owner:YANCHENG INST OF TECH

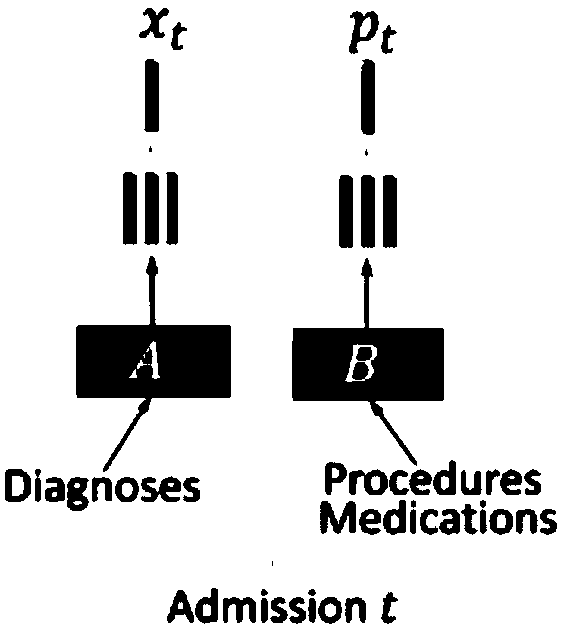

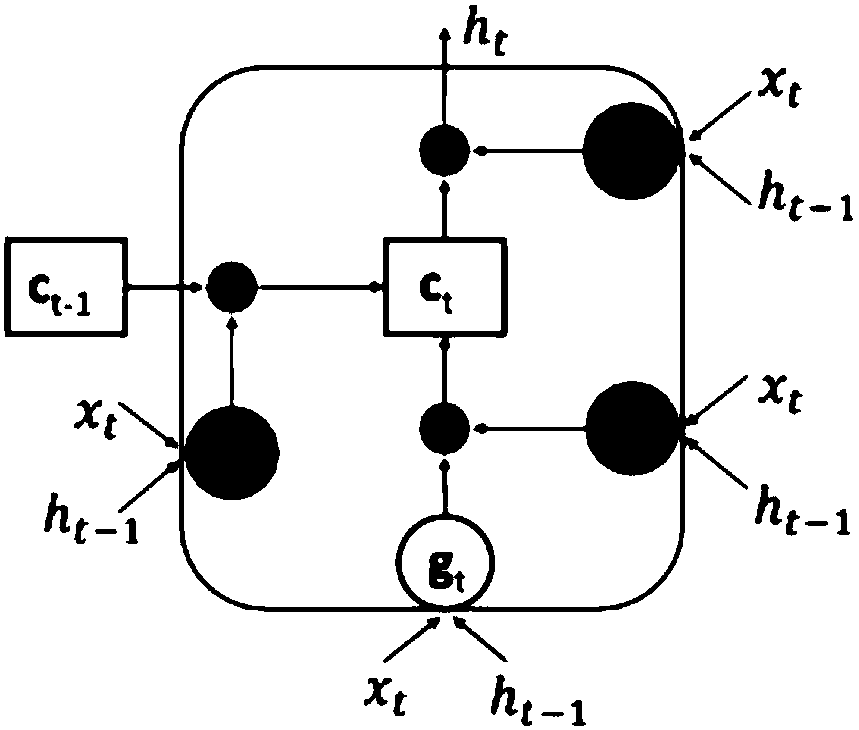

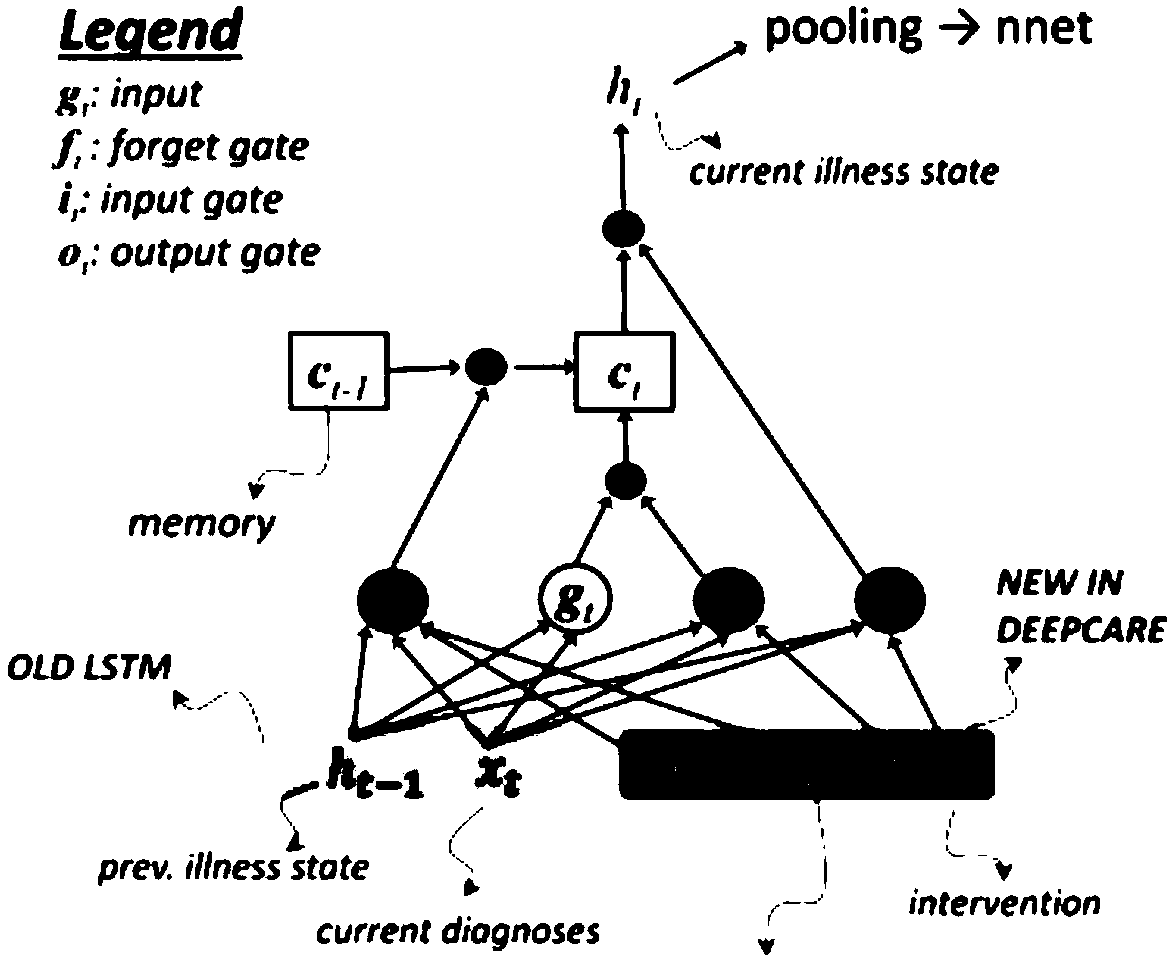

Method for deeply learning and predicting medical track based on medical records

Active | CN109599177A | Proven validity | Improve accuracy | Health-index calculation | Medical automated diagnosis | Medical record | Time structure

The invention discloses a method for deeply learning and predicting medical track based on medical records. The method comprises the following steps: S1, encoding diagnostic information and intervention information on admission through an encoding scheme and converting the codes into vectors to acquire a diagnostic information conversion vector (the formula is shown in the description) and an intervention information conversion vector (the formula is shown in the description) separately, and converting the diagnostic information and intervention information of one admission into one 2M-dimensional vector [xt, pt]; S2, inputting the vector [xt, pt] into an LSTM model, and evaluating the current output value ht to obtain the current disease state; S3, predicting a diagnostic code dt+1 according to the disease state ht and predicting the disease progression through the diagnostic code dt+1; S4, calculating an intervention code st of the time t, adding a time structure to the LSTM model, collecting the historical disease states in multiple time ranges, collecting the state of each section of a horizontal time axis, collecting all the disease states, stacking them into a vector (the formula is shown in the description), and feeding the vector (the formula is shown in the description) into a neural network to predict the future risk result Y.

Owner:莫毓昌

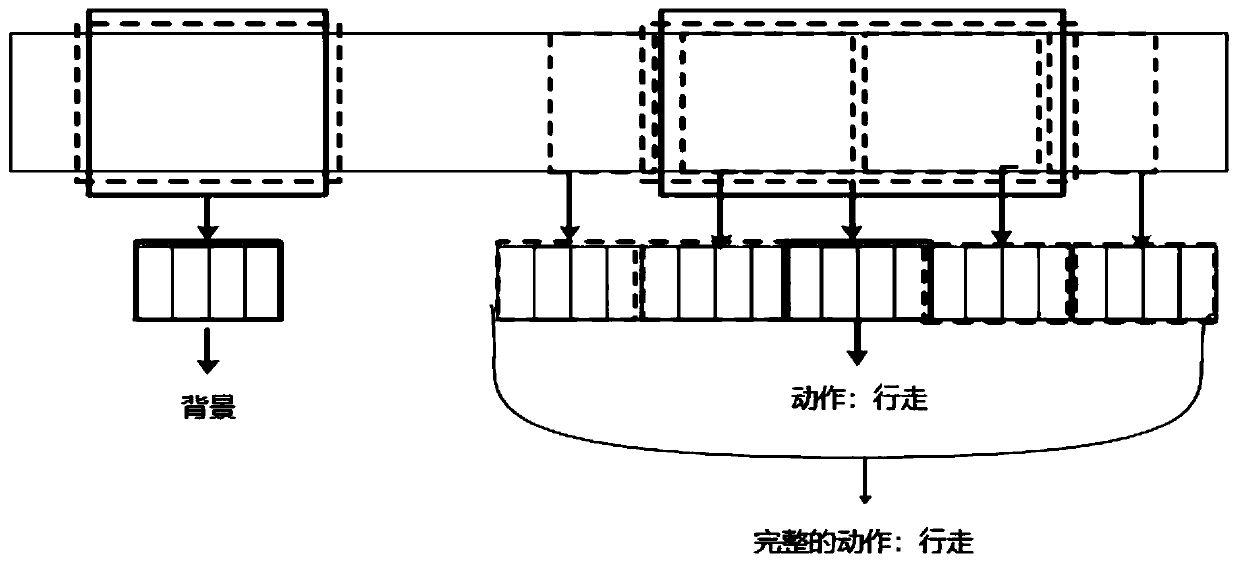

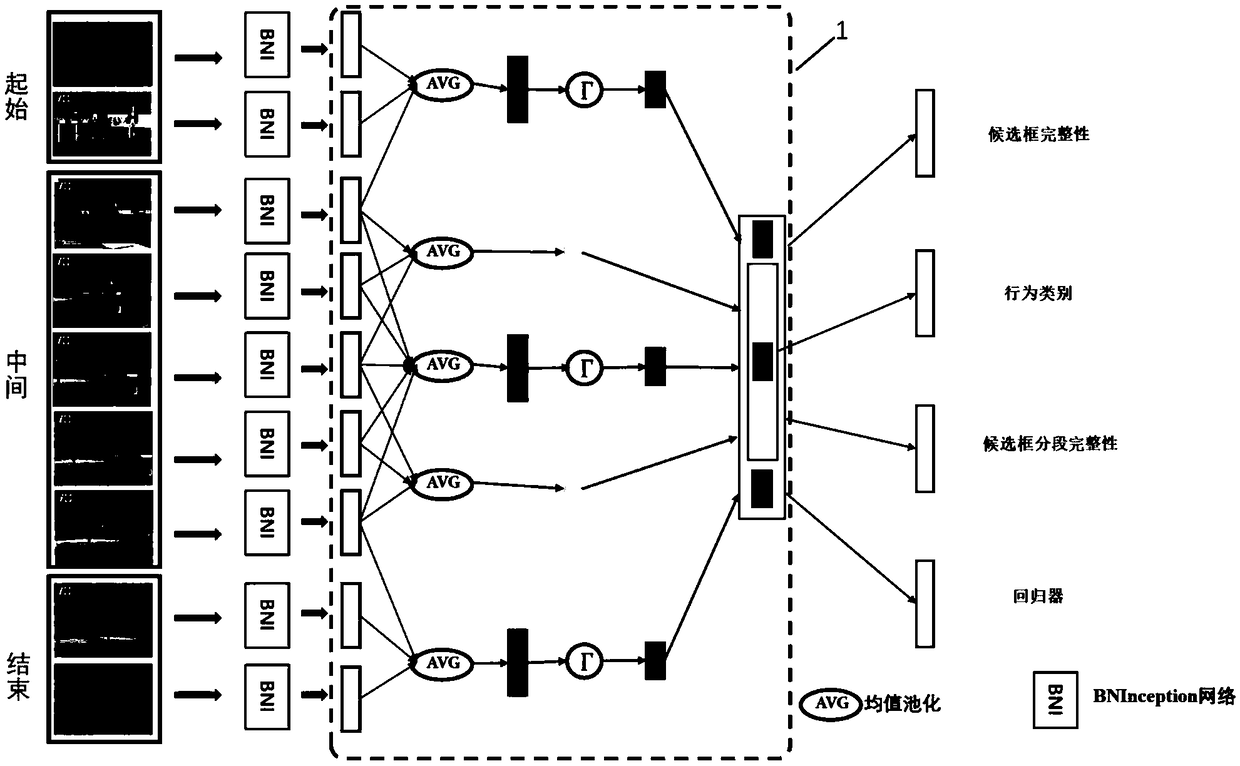

Video behavior timeline detection method

Active | CN108830212A | Easy to detect | The test result is accurate | Character and pattern recognition | Closed circuit television systems | Pattern recognition | Time structure

The invention discloses a video behavior timeline detection method. The method comprises the following steps: performing modeling based on deep learning and time structure, detecting a video behavior timeline by combining coarse granularity detection and fine granularity detection, and extracting temporal and spatial characteristics of a video by using a two-stream model on the basis of an existing model, SSN; modeling the time structure of a behavior, and dividing a single behavior into three stages; then providing a new feature pyramid capable of effectively extracting time boundary information of a video behavior; and finally, combining the coarse granularity detection and the fine granularity detection to make the detection result more precise. The method has high detection precision, higher than that of all currently disclosed methods; it has a wide application range, is applicable to detecting video clips of interest in an intelligent monitoring system or a human-machine supervision system, is favorable for subsequent analysis and processing, and has important application value.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

Methods for using a detector to monitor and detect channel occupancy

Inactive | US20080095042A1 | Avoid interference | Confidence | Transmitters monitoring | Site diversity | Time structure | Current channel

Methods for using a detector to monitor and detect channel occupancy are disclosed. The detector resides on a station within a network using a framed format having a periodic time structure. When non-cooperative transmissions are detected by the network, the detector assesses the availability of a backup channel enabling migration of the network. The backup channel serves to allow the network to migrate transparently when the current channel becomes unavailable. The backup channel, however, could be occupied by another network that results in the migrating network interfering with the network already using the backup channel. Thus, the detector detects active transmission sources on the backup channel to determine whether the backup channel is occupied. Methods for using the detector include scheduling detection intervals asynchronously. The asynchronous detection uses offsets from a reference point within a frame.

Owner:SHARED SPECTRUM

Ion acceleration system for hadrontherapy

Active | US20060170381A1 | High complexity | High error margin | Transit-time tubes | Magnetic resonance accelerators | Time structure | Emissivity

A system for ion acceleration for medical purposes includes a conventional or superconducting cyclotron, a radiofrequency linear accelerator (Linac), a Medium Energy Beam Transport line (MEBT) connected, at the low energy side, to the exit of the cyclotron, and at the other side, to the entrance of the linear radiofrequency accelerator, as well as a High Energy Beam Transport line (HEBT) connected at the high energy side to the radiofrequency linear accelerator exit and, at the other end, to a system for the dose distribution to the patient. The high operation frequency of the Linac allows for reduced consumption and a remarkable compactness, facilitating its installation in hospital structures. The use of a modular Linac allows the energy and the current of the therapeutic beam to be actively varied, with a small emittance and a time structure adapted to dose distribution based on the technique known as "spot scanning".

Owner:ADVANCED ONCOTHERAPY PLC

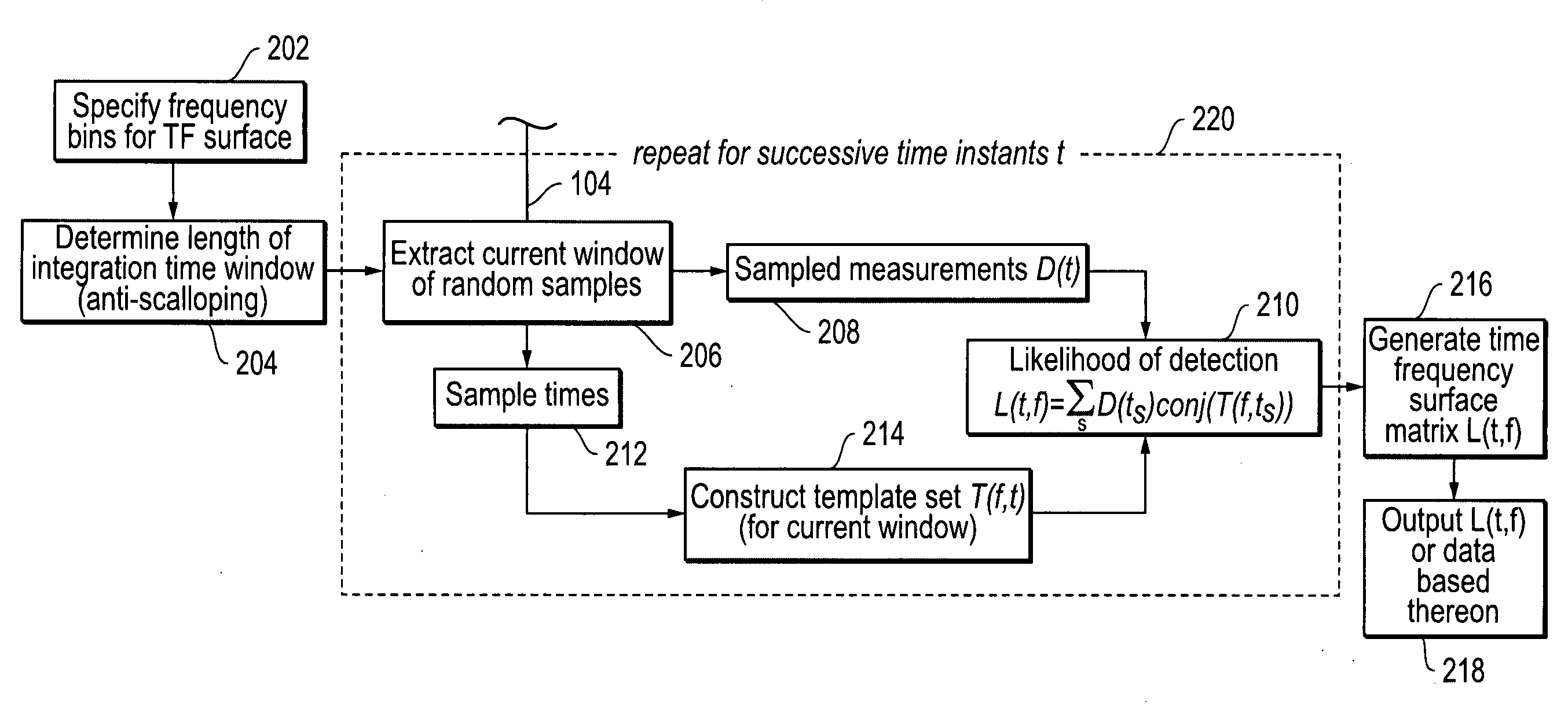

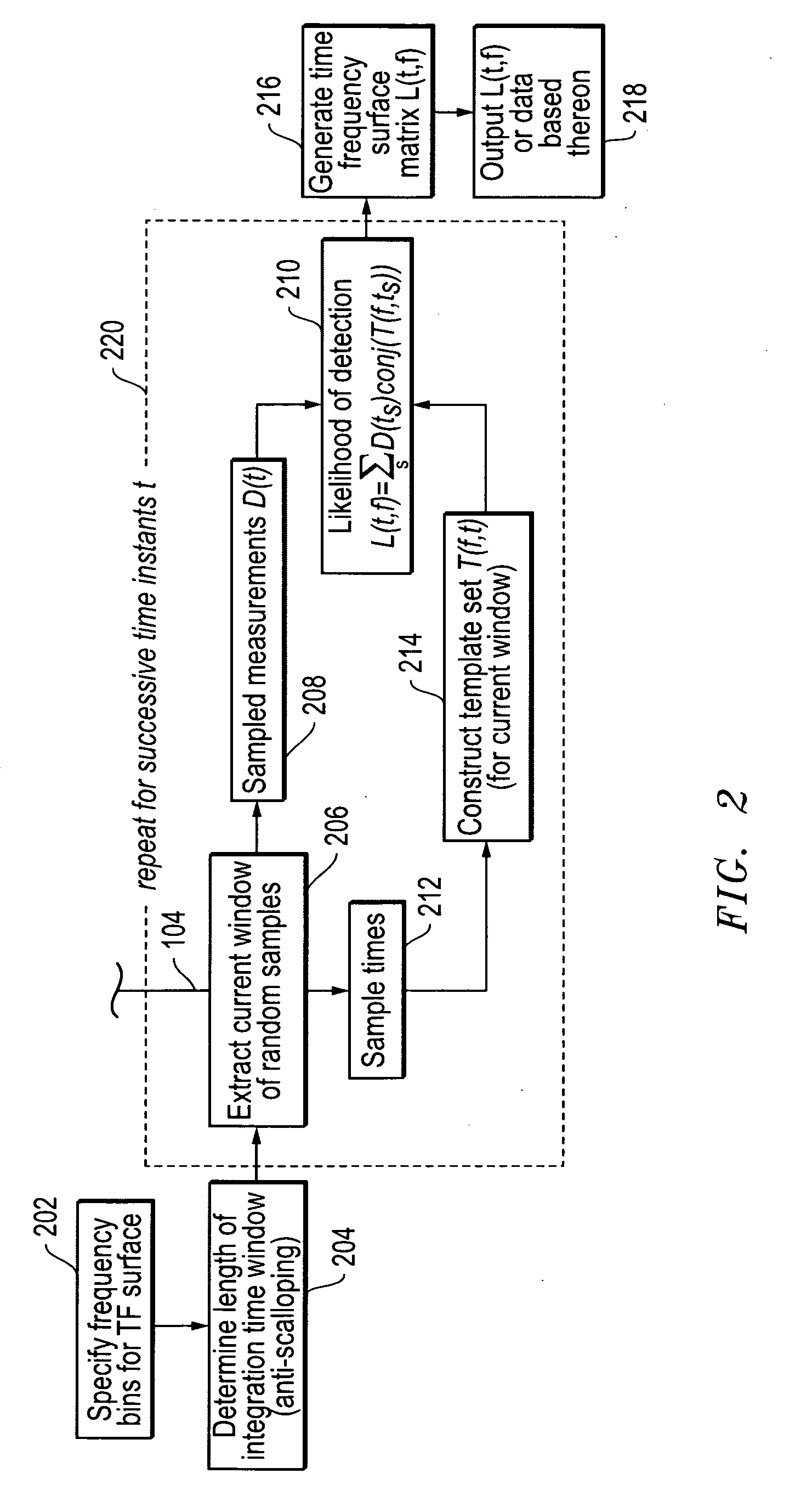

Systems and methods for construction of time-frequency surfaces and detection of signals

Inactive | US20100002777A1 | Wave based measurement systems | Modulated-carrier systems | Time structure | Data stream

Systems and methods for detecting and / or identifying signals that employ streaming processing to generate time-frequency surfaces by sampling a datastream according to a temporal structure that may be chosen as needed. The sampled datastream may be correlated with a set of templates that span the band of interest in a continuous manner, and used to generate time-frequency surfaces from irregularly sampled data with arbitrary structure that has been sampled with non-constant and non-Nyquist sampling rates where such non-constant rates are needed or desired.

Owner:L 3 COMM INTEGRATED SYST
