Patented technology related to "Attention model": 612 results

In this model, attention is focused on information that the individual deems important, while information judged less important is processed less thoroughly by the brain. In this attenuation model, information is processed through a filter for its physical characteristics and for the recognition of words.

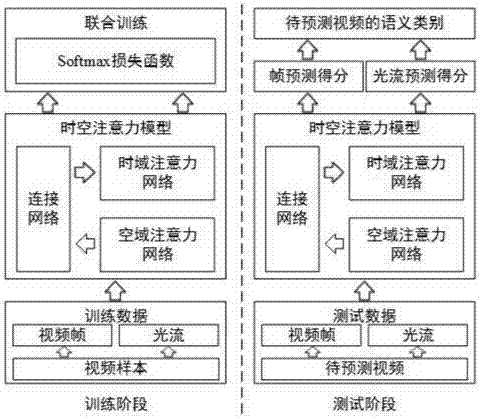

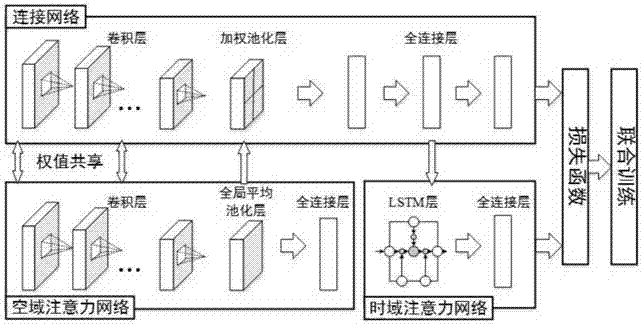

Space-time attention based video classification method

Status: Active | Publication: CN107330362A | Tags: Improve classification performance; Time-domain saliency information is accurate; Character and pattern recognition; Attention model; Time domain

The invention relates to a space-time attention based video classification method, which comprises the following steps: extracting frames and optical flows from the training videos and from the video to be predicted, and stacking multiple optical flows into a multi-channel image; building a space-time attention model comprising a space-domain attention network, a time-domain attention network and a connection network; jointly training the three components so that the space-domain attention and the time-domain attention improve simultaneously, yielding a space-time attention model that accurately models space-domain and time-domain saliency and is applicable to video classification; and using the learned model to extract the space-domain and time-domain saliency of the frames and optical flows of the video to be predicted, performing prediction, and integrating the prediction scores of the frames and the optical flows to obtain the final semantic category of the video. The method models space-domain and time-domain attention simultaneously and fully exploits their cooperation through joint training, thereby learning more accurate space-domain and time-domain saliency and improving video classification accuracy.

Owner:PEKING UNIV
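The final step above, combining attention-weighted predictions from the frame stream and the optical-flow stream, can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the saliency values and class scores are hypothetical inputs (in the patent they come from the learned space-domain and time-domain attention networks), temporal weights are obtained with a softmax, and the two streams are fused by a simple weighted average.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend_over_time(frame_scores, saliency):
    """Pool per-time-step class scores using softmax-normalized temporal saliency."""
    weights = softmax(saliency)
    num_classes = len(frame_scores[0])
    return [sum(w * s[c] for w, s in zip(weights, frame_scores)) for c in range(num_classes)]


def fuse_streams(frame_stream, flow_stream, frame_weight=0.5):
    """Late fusion: weighted average of frame-stream and optical-flow-stream scores (assumed scheme)."""
    return [frame_weight * a + (1.0 - frame_weight) * b for a, b in zip(frame_stream, flow_stream)]


# Hypothetical class scores for 3 time steps and 2 classes, plus hypothetical saliency values.
frame_stream = attend_over_time([[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]], saliency=[2.0, 0.0, 1.0])
flow_stream = attend_over_time([[0.7, 0.3], [0.5, 0.5], [0.8, 0.2]], saliency=[0.0, 0.0, 0.0])
fused = fuse_streams(frame_stream, flow_stream)
predicted_class = max(range(len(fused)), key=fused.__getitem__)
```

With equal saliency the flow stream reduces to a plain average over time, while the frame stream leans toward the first time step because it has the highest saliency.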

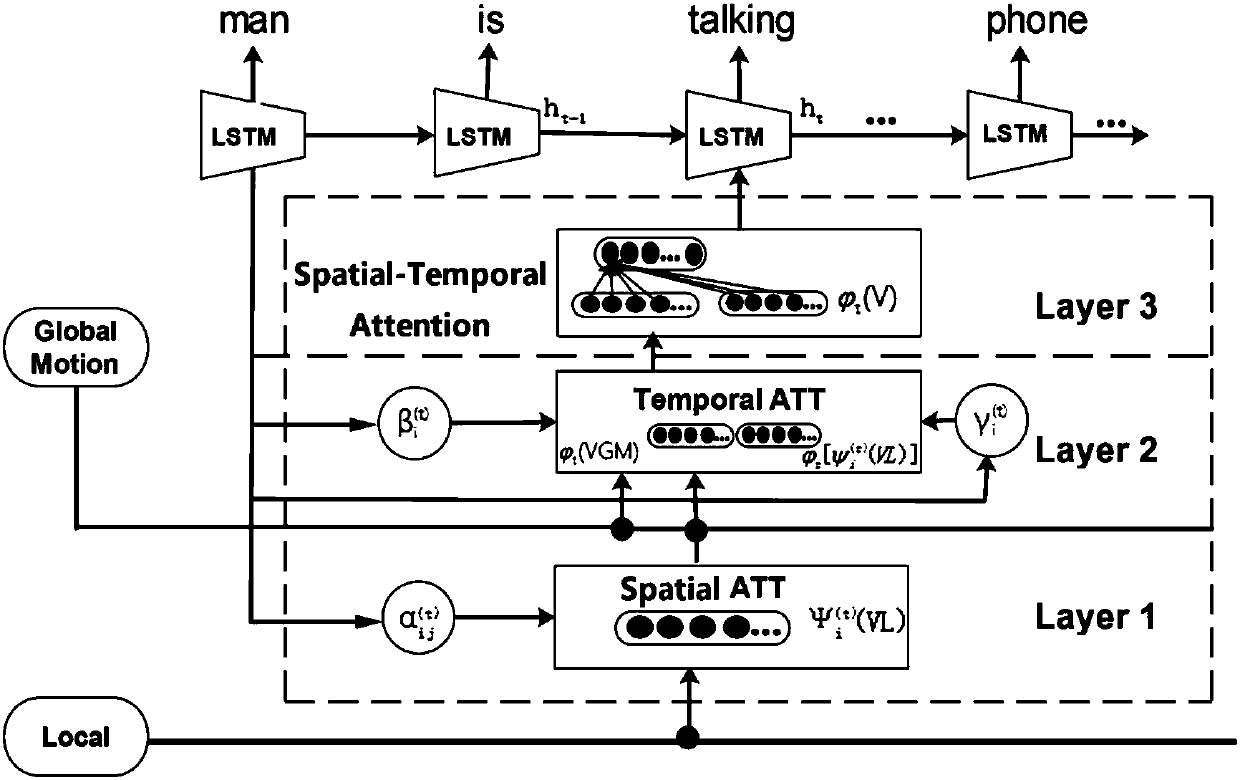

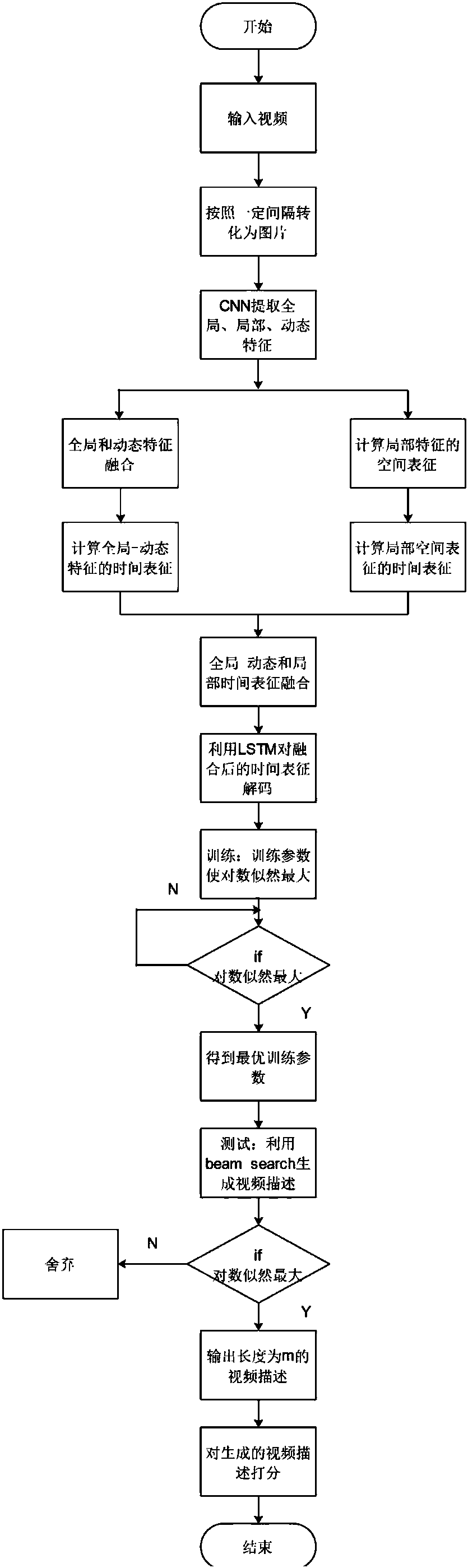

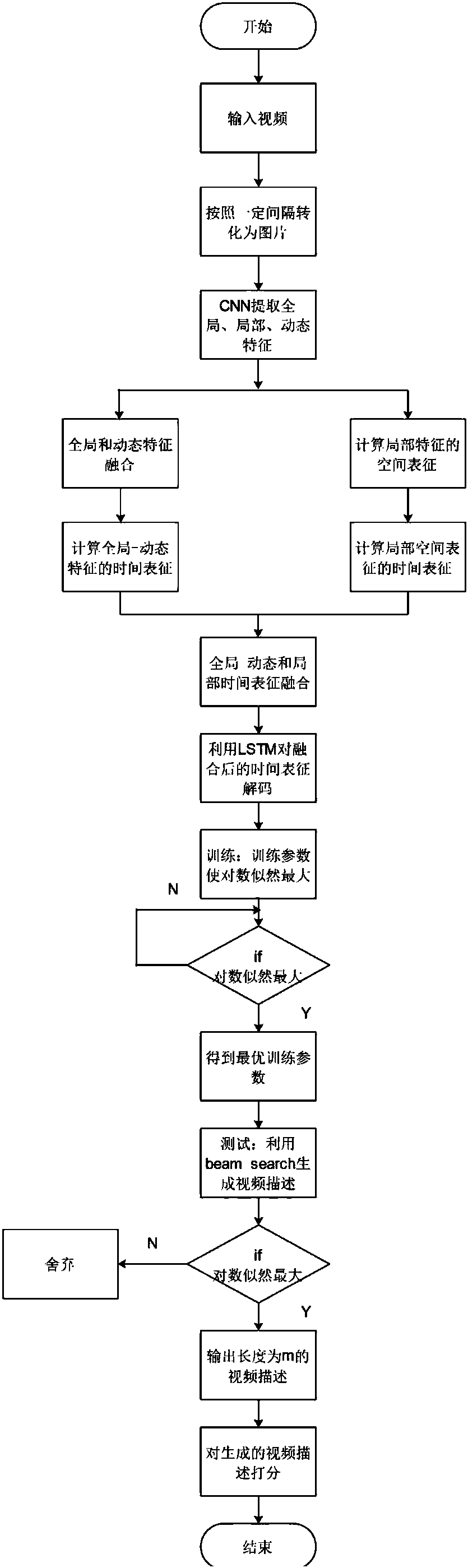

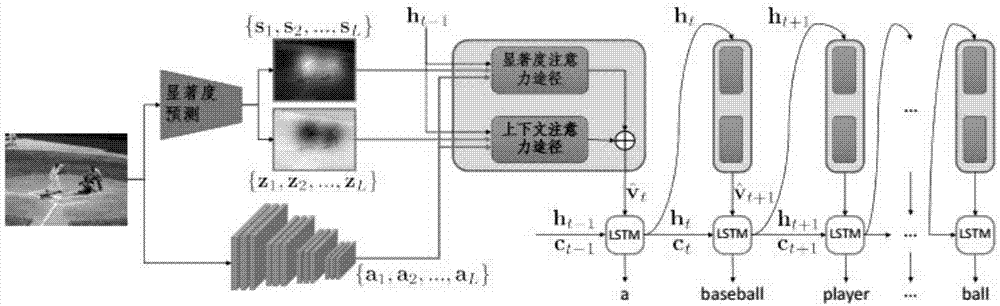

Video content description method by means of space and time attention models

Status: Active | Publication: CN107066973A | Tags: Character and pattern recognition; Neural architectures; Attention model; Time structure

The invention discloses a video content description method based on space and time attention models. The time attention model captures the global time structure of the video, and the space attention model captures the spatial structure of each frame, so that the video description model grasps the main event in the video while better identifying local information. The method includes preprocessing the video format, establishing the time and space attention models, and training and testing the video description model. The time attention model preserves the main temporal structure of the video, and the space attention model focuses on key areas within each frame, so that the generated description captures key but easily neglected details while still conveying the main event of the video content.

Owner:HANGZHOU DIANZI UNIV

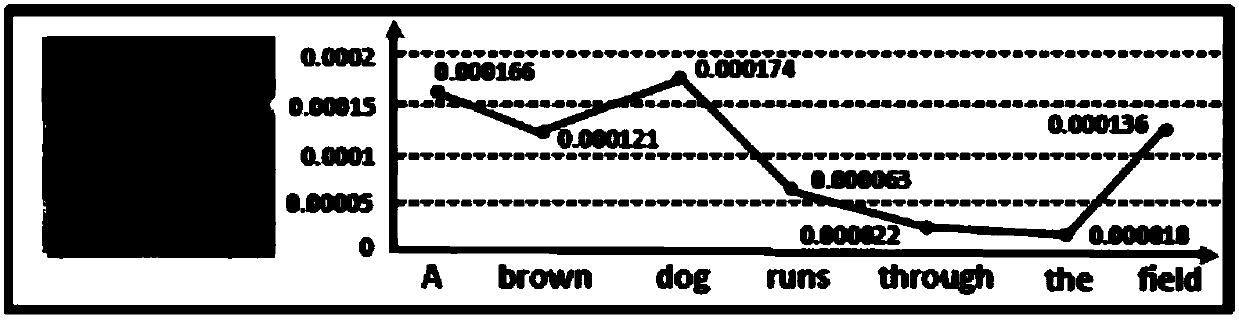

Image subtitle generation method and system fusing visual attention and semantic attention

Status: Active | Publication: CN107608943A | Tags: Solve build problems; Good effect; Character and pattern recognition; Neural architectures; Attention model; Imaging Feature

The invention discloses an image subtitle generation method and system fusing visual attention and semantic attention. The method comprises the steps of: extracting image features from each image to be subtitled through a convolutional neural network to obtain an image feature set; building an LSTM model and feeding the previously labeled text description of each image to be subtitled into the LSTM model to obtain time sequence information; generating a visual attention model from the image feature set and the time sequence information; generating a semantic attention model from the image feature set, the time sequence information and the words of the previous time step; generating an automatic balance policy model from the visual attention model and the semantic attention model; building a gLSTM model from the image feature set and the text corresponding to the image to be subtitled; generating the words for the image with an MLP (multilayer perceptron) model based on the gLSTM model and the automatic balance policy model; and concatenating all the generated words in order to form the subtitle.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
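The abstract does not say how the automatic balance policy combines the two attention models. One common pattern, assumed here purely for illustration, is a scalar gate computed from the decoder state that interpolates between the visual and semantic context vectors. The names `balance_contexts`, `gate_weights` and `gate_bias` are hypothetical, and in a trained model the gate parameters would be learned.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def balance_contexts(visual_ctx, semantic_ctx, hidden, gate_weights, gate_bias=0.0):
    """Blend visual and semantic context vectors with a scalar gate beta in (0, 1).

    beta is computed from the decoder hidden state; beta near 1 favors the visual
    context and beta near 0 favors the semantic context.
    """
    beta = sigmoid(sum(w * h for w, h in zip(gate_weights, hidden)) + gate_bias)
    mixed = [beta * v + (1.0 - beta) * s for v, s in zip(visual_ctx, semantic_ctx)]
    return beta, mixed


# With zero gate weights the two contexts are weighted equally.
beta, context = balance_contexts([1.0, 0.0], [0.0, 1.0], hidden=[0.5, -0.5], gate_weights=[0.0, 0.0])
print(beta, context)  # 0.5 [0.5, 0.5]
```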

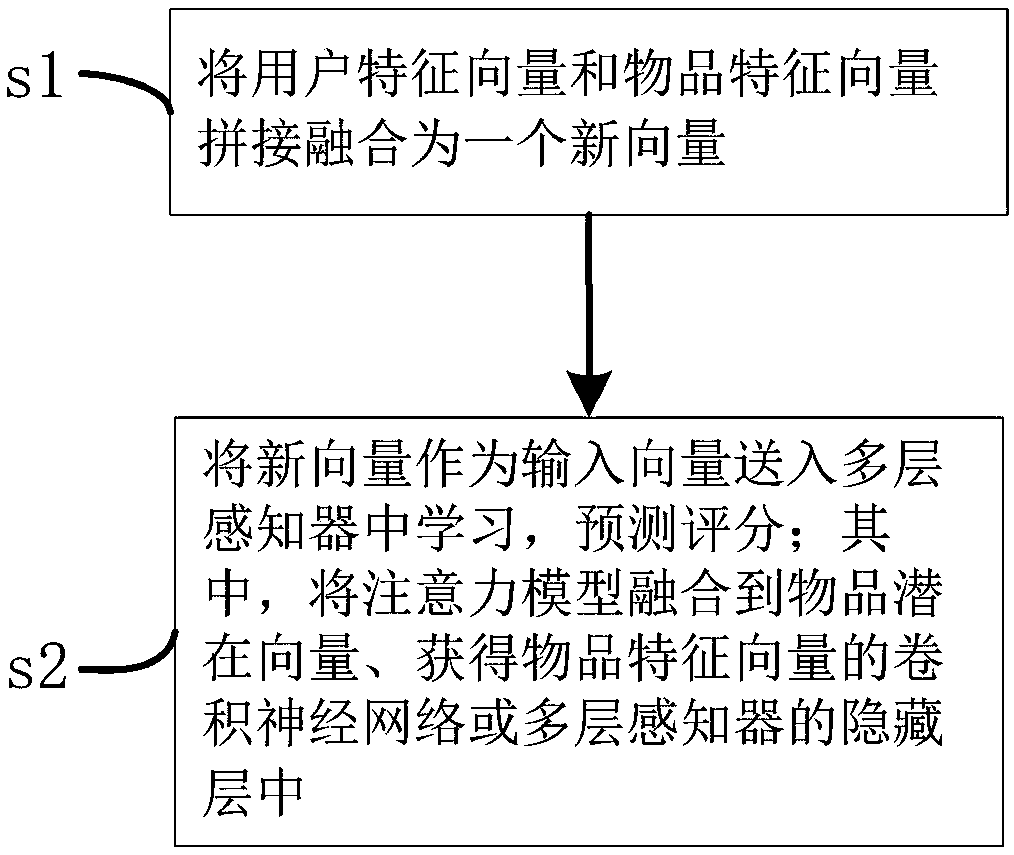

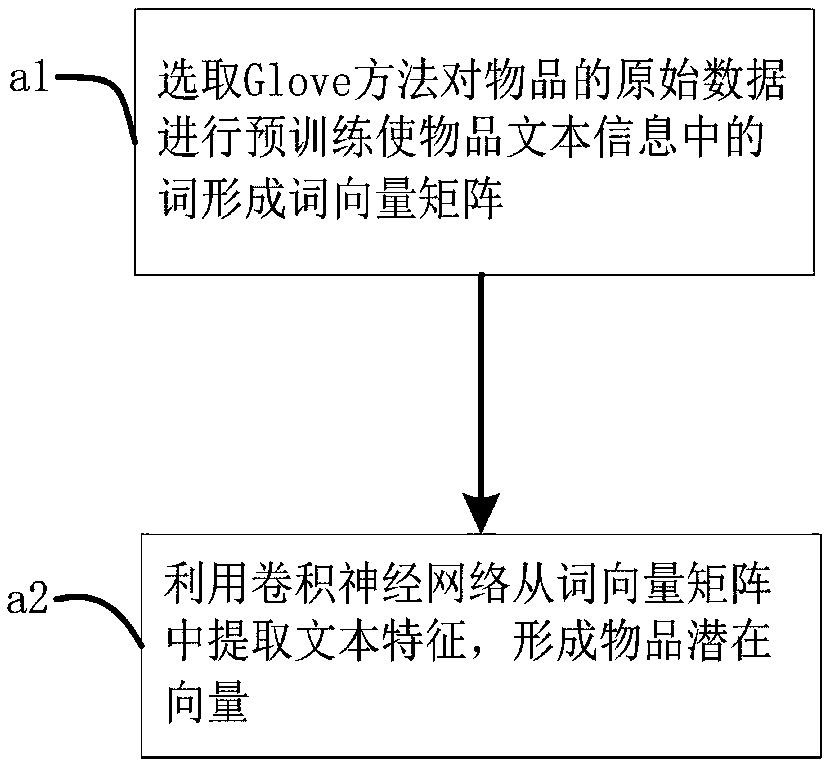

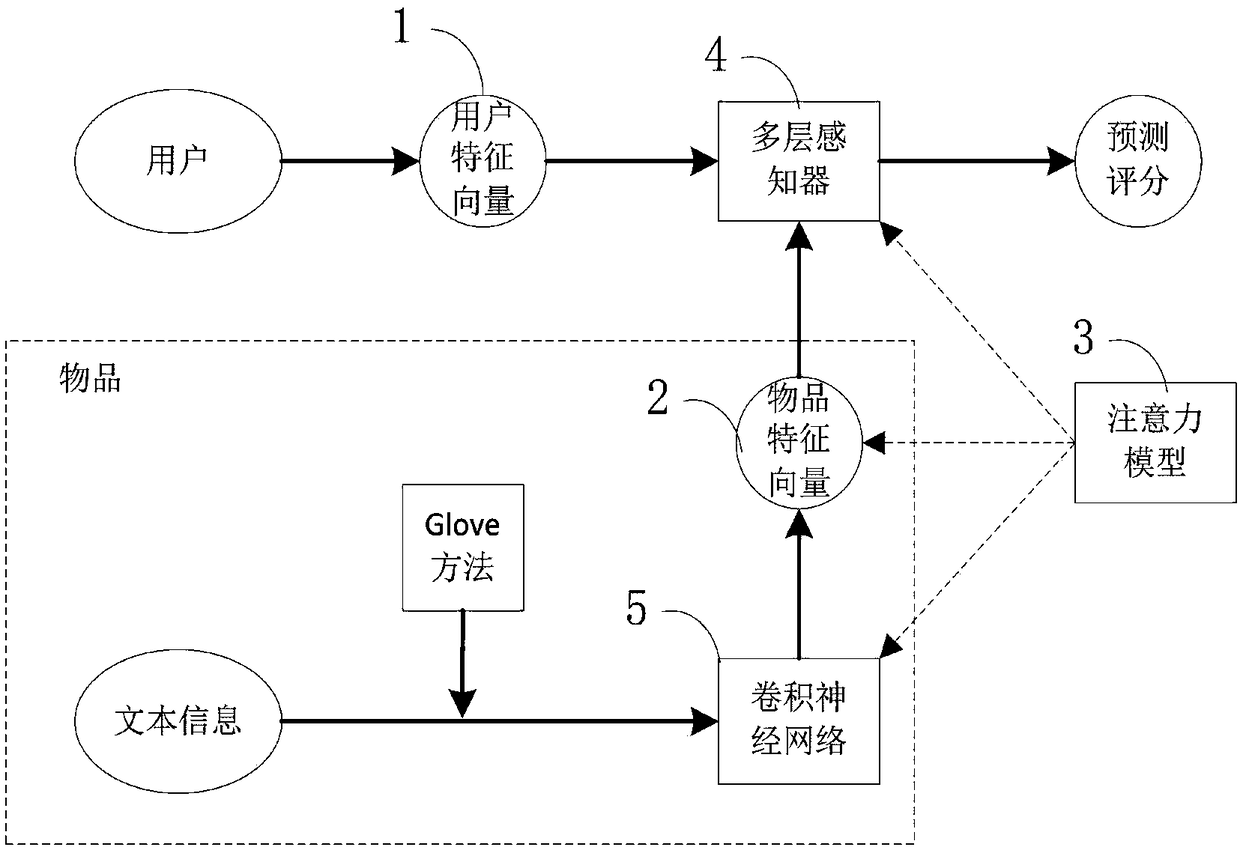

Convolution neural network collaborative filtering recommendation method and system based on attention model

Status: Active | Publication: CN109299396A | Tags: Efficient extraction; Improve rating prediction accuracy; Digital data information retrieval; Neural architectures; Hidden layer; Feature vector

The invention discloses a collaborative filtering recommendation method and system based on a convolutional neural network integrating an attention model. It relates to the technical field of data mining and recommendation, improves feature extraction efficiency and rating prediction accuracy, reduces operation and maintenance costs, simplifies cost management, and facilitates joint operation and large-scale deployment. The method comprises the following steps: step S1, splicing a user feature vector and an item feature vector into a new vector; and step S2, feeding the new vector as the input vector into a multi-layer perceptron to learn and predict the rating. The attention model is fused either into the convolutional neural network that derives the item feature vector from the item latent vector, or into the hidden layer of the multi-layer perceptron.

Owner:NORTHEAST NORMAL UNIVERSITY
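Steps S1 and S2 can be sketched as a small forward pass. This is an illustrative assumption rather than the patented network: the attention is placed on the multi-layer perceptron's hidden layer (one of the two placements the abstract mentions), all weights are hypothetical hand-set values, and the item-side convolutional network is omitted.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dense(x, weights, bias):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict_rating(user_vec, item_vec, params):
    x = user_vec + item_vec  # S1: list concatenation splices the two feature vectors
    hidden = [max(0.0, h) for h in dense(x, params["w1"], params["b1"])]  # ReLU hidden layer
    scores = dense(hidden, params["w_attn"], [0.0] * len(hidden))
    alpha = softmax(scores)  # attention weights over the hidden units
    attended = [a * h for a, h in zip(alpha, hidden)]
    return dense(attended, [params["w_out"]], [params["b_out"]])[0]  # S2: predicted rating


# Hypothetical parameters for 2-d user/item vectors and a 2-unit hidden layer.
params = {
    "w1": [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]],
    "b1": [0.0, 0.0],
    "w_attn": [[0.0, 0.0], [0.0, 0.0]],
    "w_out": [3.0, 1.0],
    "b_out": 0.5,
}
rating = predict_rating([1.0, 0.0], [0.0, 1.0], params)
```

In training, `w1`, `w_attn` and the output weights would be fitted to observed ratings, for example by minimizing squared error.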

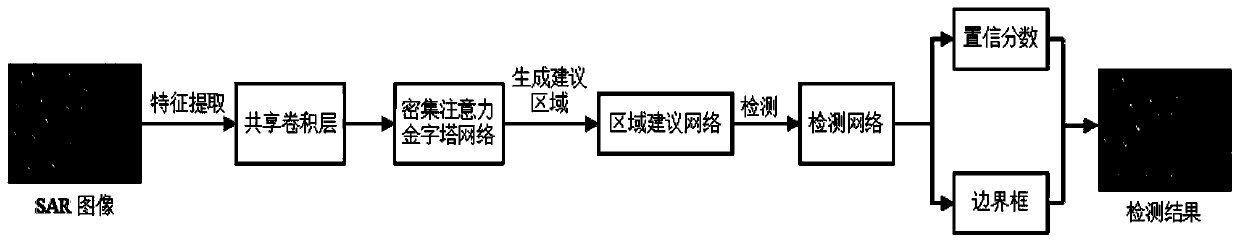

Attention pyramid network-based SAR image multi-scale ship detection method

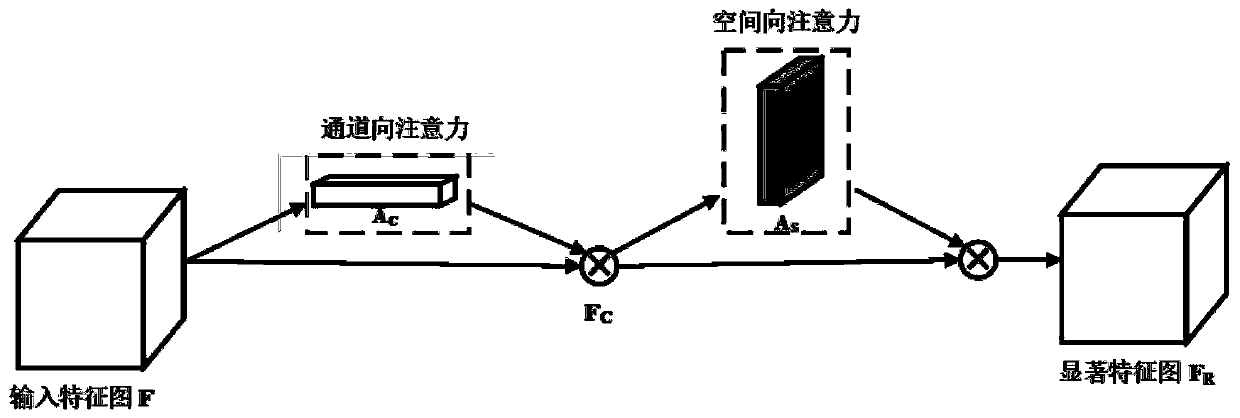

Status: Active | Publication: CN110084210A | Tags: Easy to detect; Accurate removal; Scene recognition; Neural architectures; Attention model; Feature extraction

The invention belongs to the technical field of radar remote sensing and relates to an attention pyramid network-based multi-scale ship detection method for SAR images. Building on the existing feature pyramid network, the invention provides a multi-scale feature extraction method that adaptively selects salient features, namely a feature pyramid network based on a dense attention mechanism, and applies it to multi-scale ship target detection in SAR images. Prominent features are highlighted at the global and local levels by the channel attention model and the spatial attention model, respectively, which yields better detection performance. In addition, the attention mechanism is applied to the multi-scale fusion process of each layer, so that features are enhanced layer by layer, false alarm targets are effectively eliminated, and detection precision is improved.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

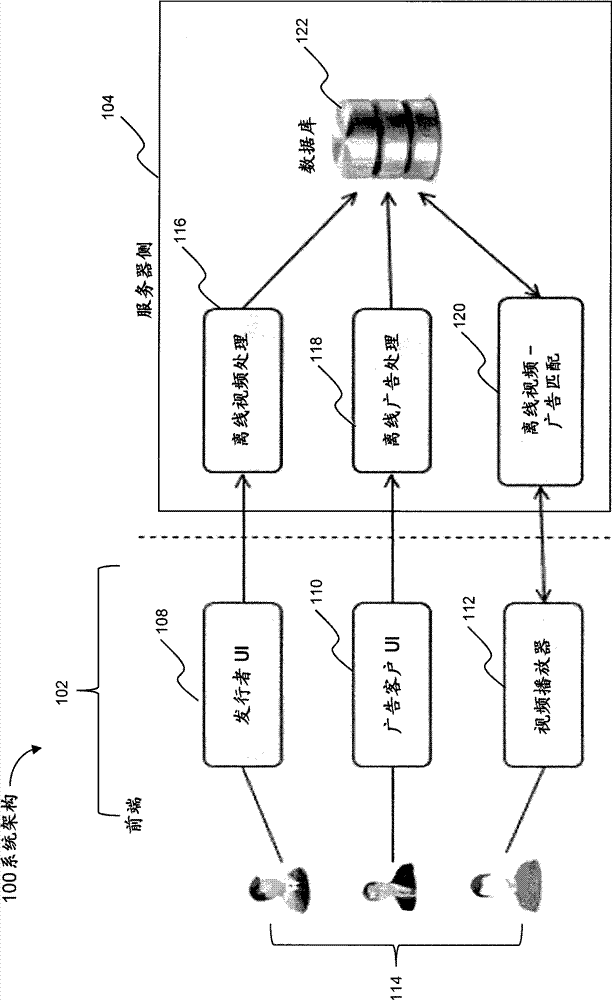

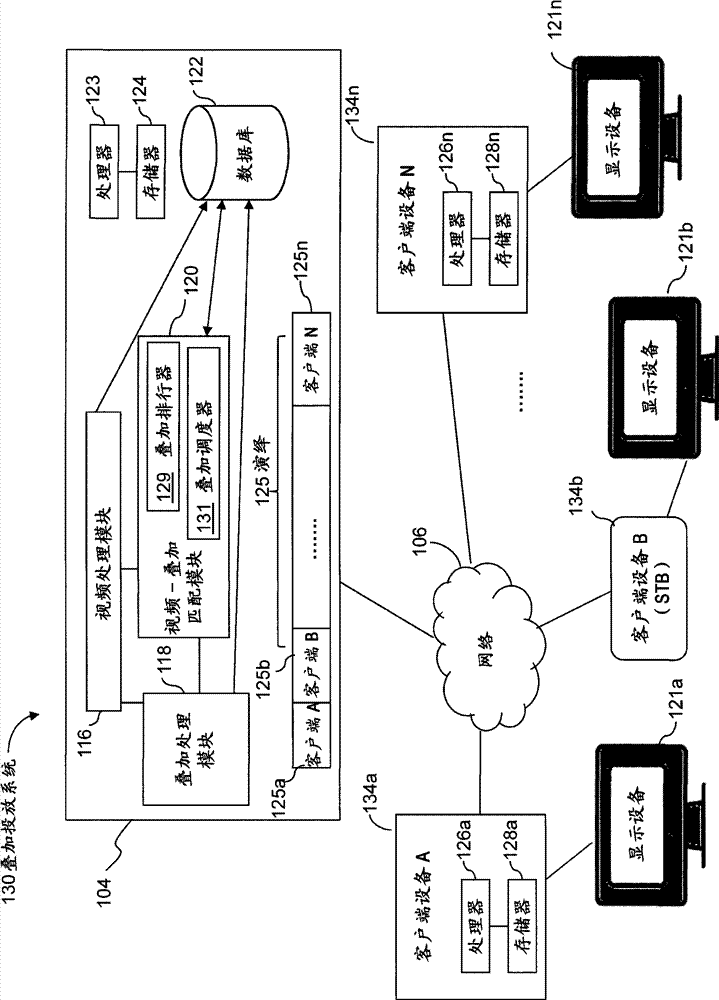

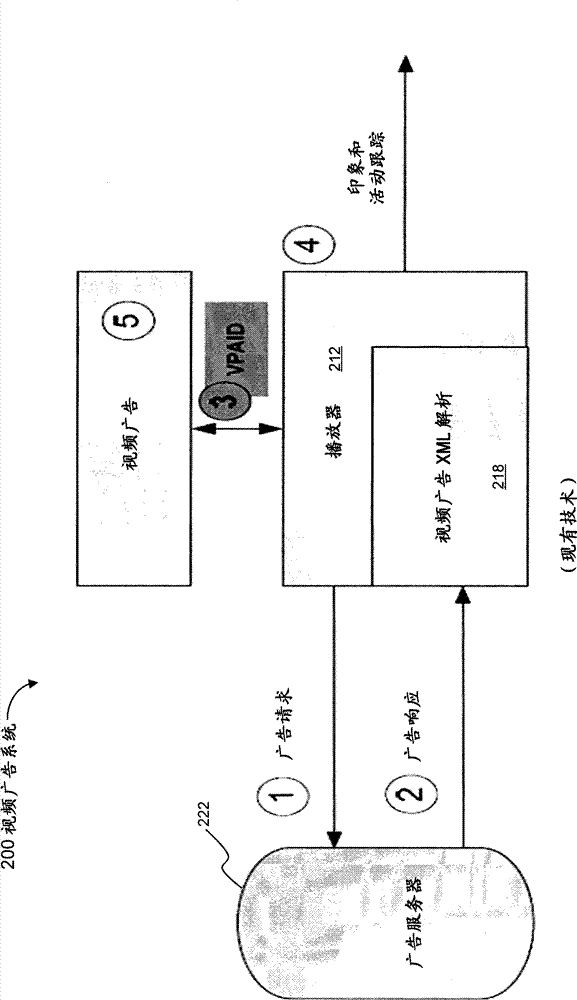

Placing unobtrusive overlays in video content

Methods and systems for placing an overlay in video content are provided. A method receives video content and input indicating an overlay to be placed in the video. The method determines, based on overlay and video properties, locations where the overlay can be placed. The method presents suggested locations for the overlay and receives a selection of a suggested location. The overlay is placed in the selected location. A system includes memory with instructions for inserting an overlay into video content. The system receives an indication of an overlay to be placed in the video, performs attention modeling on the video to identify zones likely to be of interest to a viewer. The system presents locations within the identified zones where the overlay can be inserted and receives a selection of a location. The system inserts the overlay into the selected location and renders the video with the inserted overlay.

Owner:ADOBE INC

Event trigger word extraction method based on document level attention mechanism

Status: Active | Publication: CN108829801A | Tags: Easy to identify; Realize identification; Neural architectures; Special data processing applications; Attention model; Algorithm

The invention relates to an event trigger word extraction method, in particular one based on a document-level attention mechanism, comprising the following steps: (1) preprocessing the training corpus; (2) training word vectors on the PubMed database corpus; (3) constructing a distributed representation of the samples; (4) constructing a feature representation based on BiLSTM-Attention; (5) applying CRF learning to obtain the optimal sequence labeling of the current document sequence; and (6) extracting the event trigger words. The method has three advantages: first, it adopts BIO tag labeling, which enables recognition of multi-word trigger words; second, it constructs simple distributed representations of words and features tailored to the trigger word recognition task; and third, it proposes a BiLSTM-Attention model that introduces an attention mechanism to build a distributed representation specific to the document-level information of the current input, improving trigger word recognition.

Owner:DALIAN UNIV OF TECH
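The BIO labeling scheme is what makes multi-word triggers recoverable: `B` marks the first token of a trigger, `I` marks continuation tokens, and `O` marks everything else. A minimal decoder that turns a predicted tag sequence into trigger spans might look like the sketch below. The function name and example sentence are hypothetical, and the CRF that would produce the tags is not shown.

```python
def bio_to_spans(tokens, tags):
    """Group BIO tags into (start, end, text) spans, with `end` exclusive.

    Tags may be bare ("B", "I", "O") or typed ("B-Trigger"); only the prefix is used.
    """
    spans = []
    start = None
    for i, tag in enumerate(tags):
        prefix = tag.split("-", 1)[0]
        if prefix == "B":
            if start is not None:  # a new B closes any open span
                spans.append((start, i))
            start = i
        elif prefix == "I":
            if start is None:  # lenient: an I with no preceding B opens a span
                start = i
        else:  # "O" closes any open span
            if start is not None:
                spans.append((start, i))
                start = None
    if start is not None:
        spans.append((start, len(tags)))
    return [(s, e, " ".join(tokens[s:e])) for s, e in spans]


tokens = ["Expression", "of", "IL-2", "is", "up", "regulated", "in", "T", "cells"]
tags = ["B-Trigger", "O", "O", "O", "B-Trigger", "I-Trigger", "O", "O", "O"]
print(bio_to_spans(tokens, tags))  # [(0, 1, 'Expression'), (4, 6, 'up regulated')]
```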

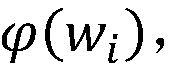

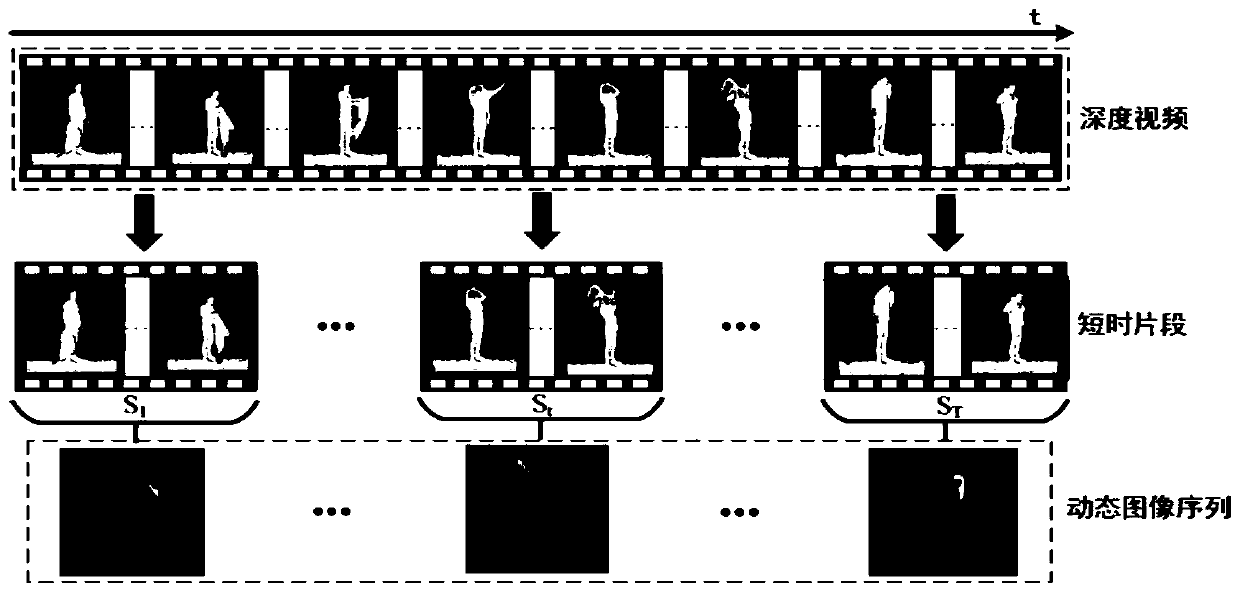

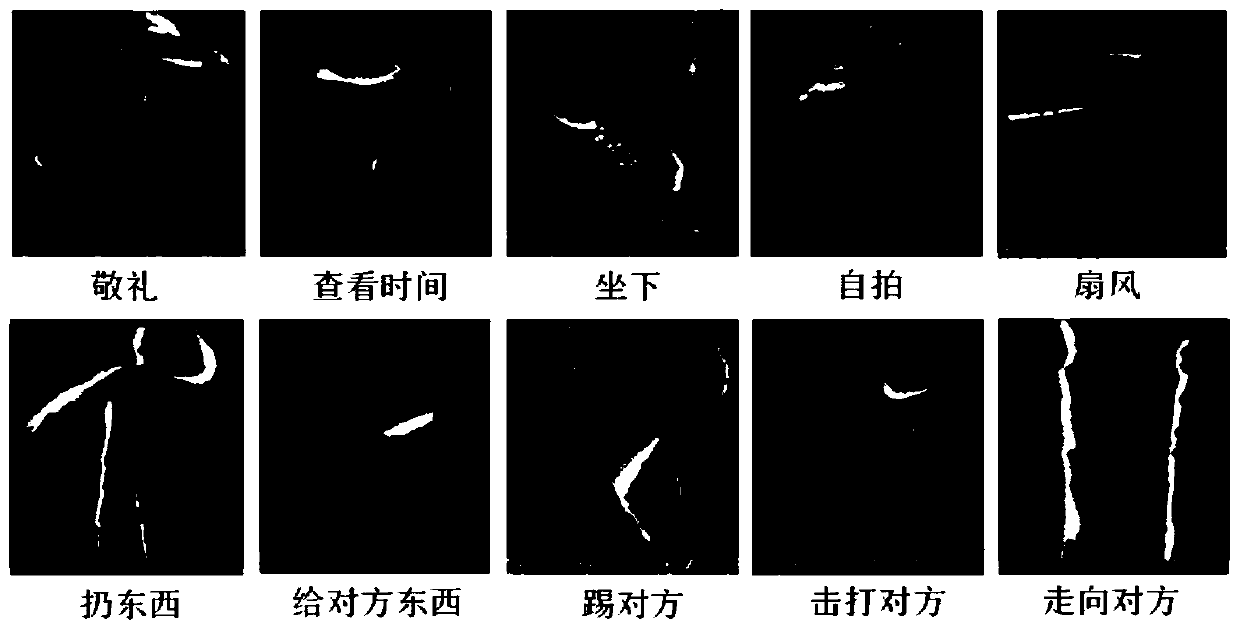

Depth video behavior identification method and system

Status: Active | Publication: CN110059662A | Tags: Effective study; Focus; Character and pattern recognition; Temporal information; Human body

The invention discloses a depth video behavior identification method and system. The method uses the dynamic image sequence representation of a depth video as the input of CNNs, embeds a channel and space-time interest point attention model after a CNN convolution layer to optimize and adjust the convolutional feature maps, applies global average pooling to the adjusted feature maps of the input depth video to generate a feature representation of the behavior video, and inputs this representation into an LSTM network that captures the temporal information of human behaviors and classifies them. The method was evaluated on three challenging public human behavior datasets, and the experimental results show that it extracts discriminative space-time information and significantly improves video-based human behavior identification. Compared with other existing methods, it effectively increases the behavior recognition rate.

Owner:SHANDONG UNIV

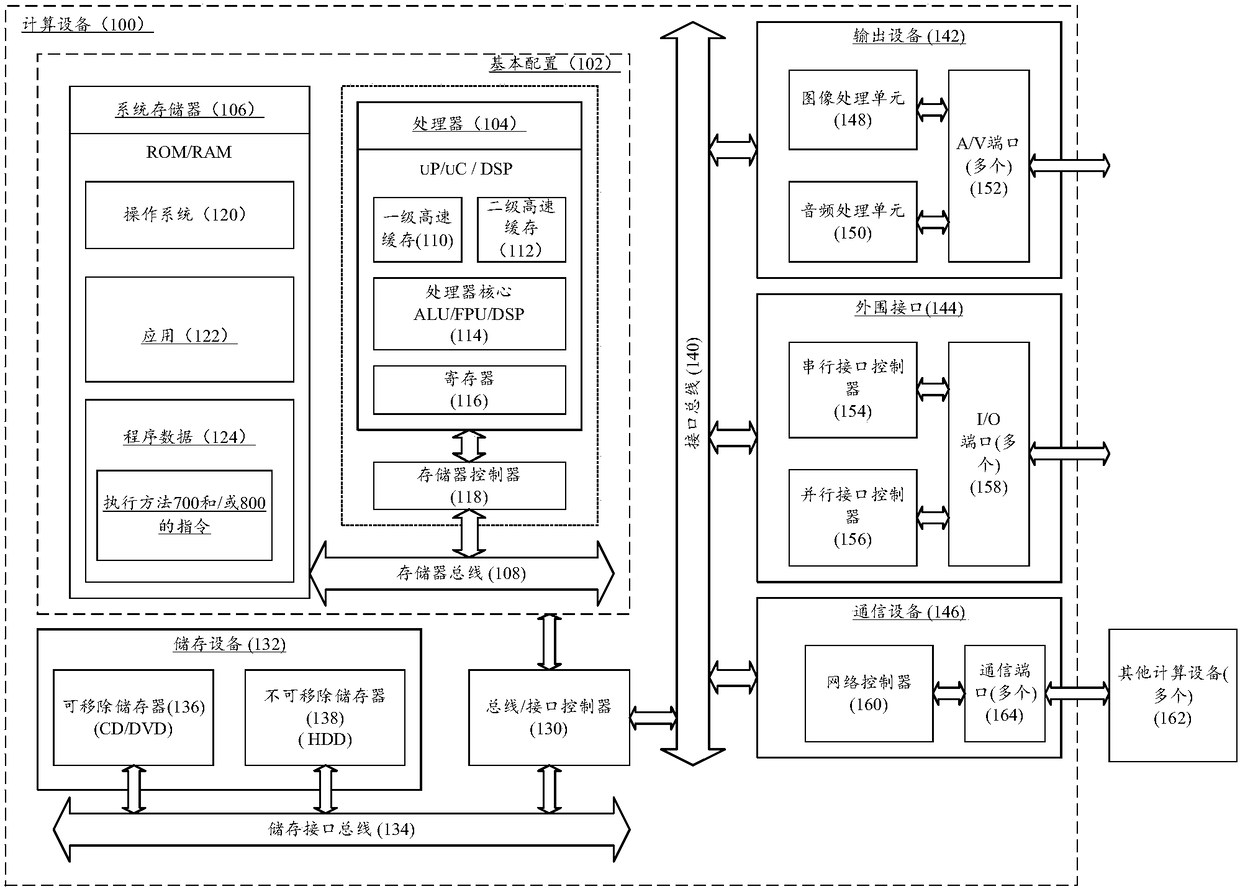

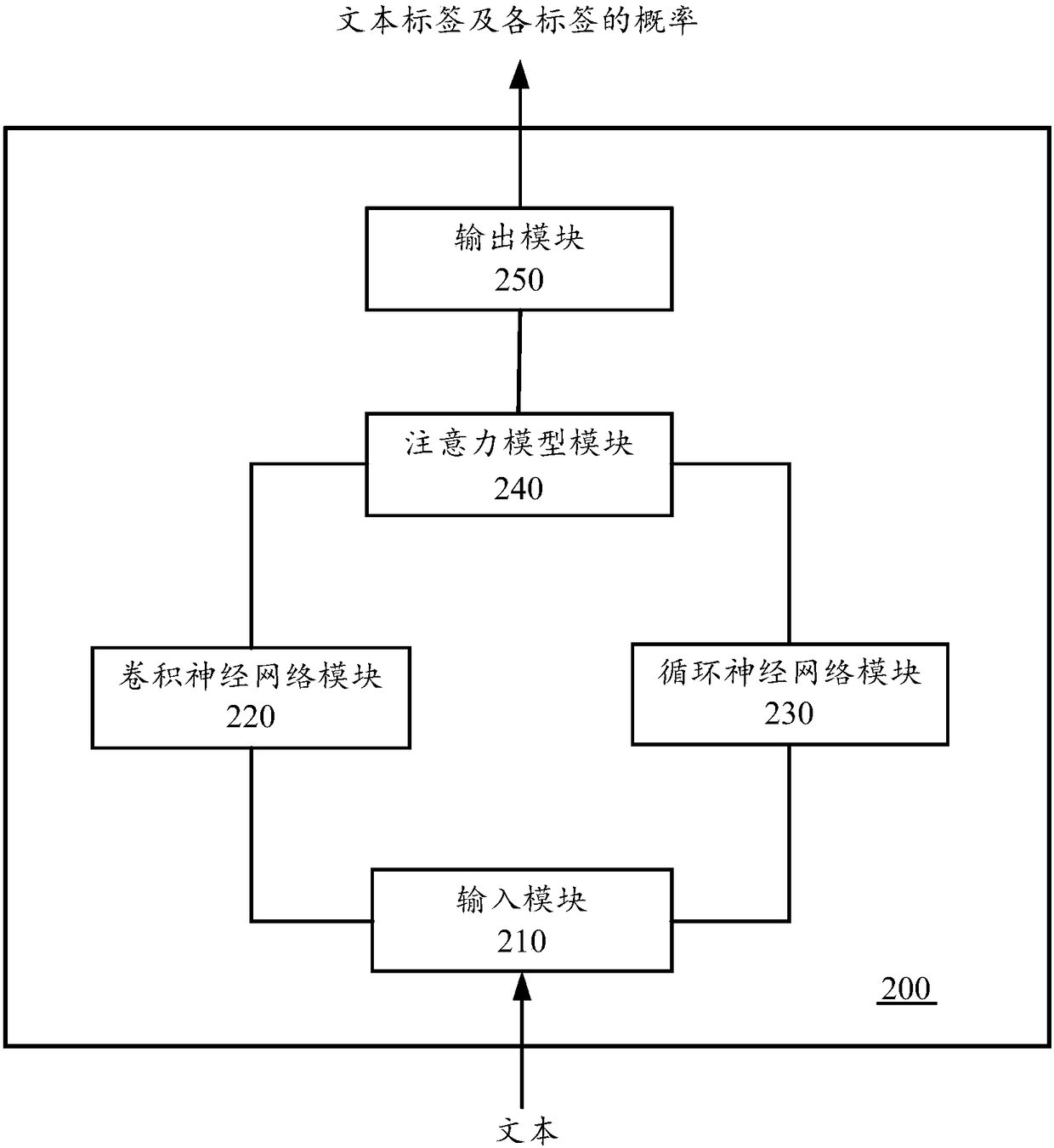

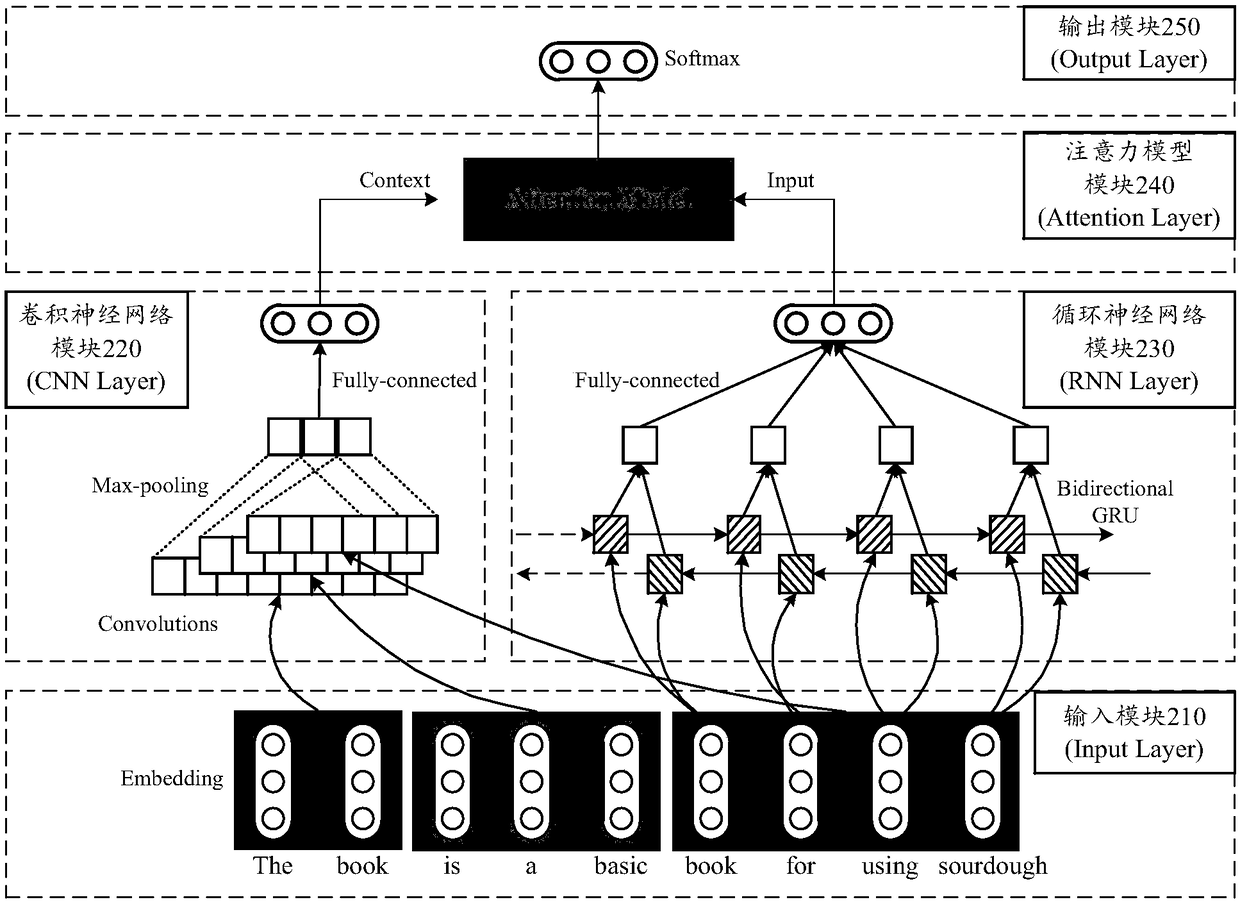

Text label marking device and method, and computing device

The invention discloses a text label marking device for marking text labels. The device comprises: an input module suitable for receiving text input and converting it into a vector matrix; a convolutional neural network module, connected with the input module, suitable for outputting local semantic features of the text from the vector matrix; a recurrent neural network module, connected with the input module, suitable for outputting long-distance semantic features of the text from the vector matrix; an attention model module, connected with the convolutional neural network module and the recurrent neural network module, suitable for outputting weights of the individual characters in the text from the local and long-distance semantic features; and an output module, connected with the attention model module, suitable for receiving the character weights and outputting text labels and their probabilities. The invention further discloses a training method for the text label marking device, a corresponding text label marking method, and a computing device.

Owner:BEIJING YUNJIANG TECH CO LTD

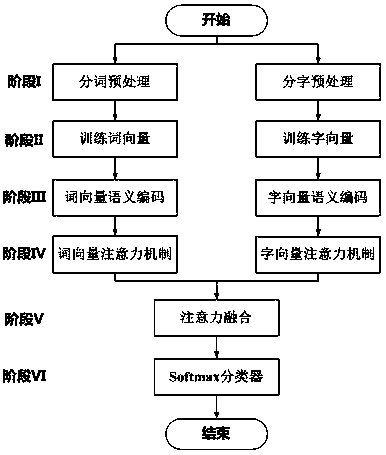

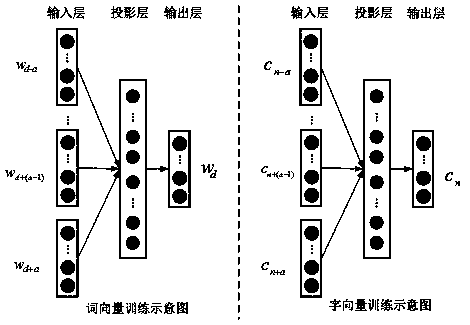

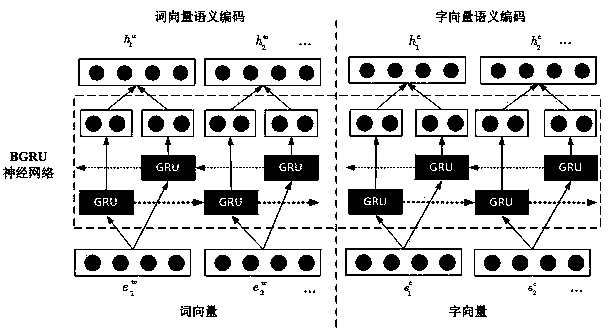

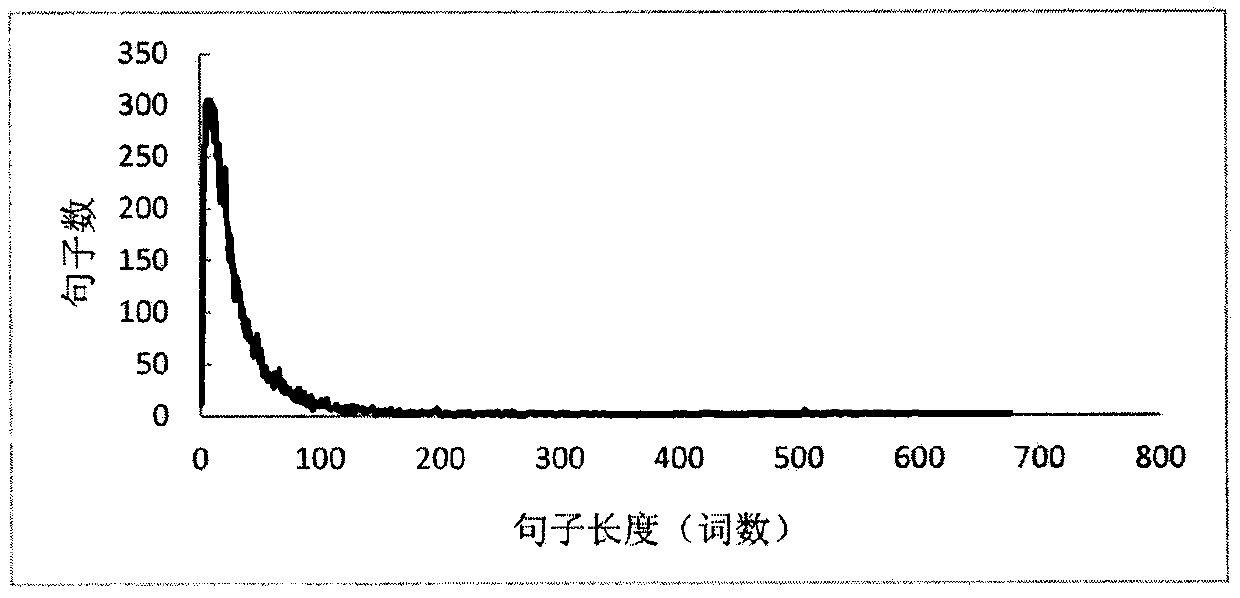

Fused attention model-based Chinese text classification method

Status: Inactive | Publication: CN108595590A | Tags: Rich in features; Efficiently captures semantic dependencies; Semantic analysis; Special data processing applications; Attention model; Text categorization

The invention discloses a Chinese text classification method based on a fused attention model. The method comprises the following steps: segmenting the text into a word set and a character set through word segmentation and character segmentation preprocessing, respectively, and training the corresponding word vectors and character vectors with a feature embedding method; semantically encoding the word vectors and character vectors with a bidirectional gated recurrent unit (BiGRU) network as the encoder, and obtaining a word attention vector and a character attention vector through word-level and character-level attention mechanisms; obtaining a fused attention vector; and predicting the category of the text with a softmax classifier. The method addresses shortcomings of existing Chinese text classification methods, which neglect the character-level features of texts: their extracted features are of a single type, have difficulty covering all of the text's semantic information, include many redundant features, and do not focus on the features that contribute most to classification.

Owner:Suzhou Research Institute of the Institute of Electronics, Chinese Academy of Sciences (中国科学院电子学研究所苏州研究院)
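A minimal sketch of word-level and character-level attention pooling followed by a softmax classifier. Assumptions: the BiGRU encoder outputs are given as plain lists, each attention mechanism scores states with a dot product against a (normally learned) context vector, and the two attention vectors are fused by concatenation, since the abstract does not specify the fusion operator. All numeric values are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(states, context):
    """Score each encoder state against a context vector; return the attention-weighted sum."""
    scores = [sum(h * u for h, u in zip(state, context)) for state in states]
    alpha = softmax(scores)
    dim = len(states[0])
    return [sum(a * s[d] for a, s in zip(alpha, states)) for d in range(dim)]


def classify(word_states, char_states, word_ctx, char_ctx, class_weights):
    # Fusion by concatenation is an assumption; the abstract only says "fused attention vector".
    fused = attention_pool(word_states, word_ctx) + attention_pool(char_states, char_ctx)
    logits = [sum(w * f for w, f in zip(row, fused)) for row in class_weights]
    return softmax(logits)


probs = classify(
    word_states=[[1.0, 0.0], [0.0, 1.0]],  # hypothetical BiGRU outputs, one per word
    char_states=[[2.0, 2.0]],  # hypothetical BiGRU outputs, one per character
    word_ctx=[0.0, 0.0],
    char_ctx=[1.0, 1.0],
    class_weights=[[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]],
)
```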

Visual question and answer fusion enhancement method based on multi-modal fusion

Status: Active | Publication: CN110377710A | Tags: Improve accuracy; High expression; Digital data information retrieval; Character and pattern recognition; Attention model; Visually impaired

The invention discloses a visual question answering fusion enhancement method based on multi-modal fusion. The method comprises the following steps: 1) constructing a time sequence model with a GRU structure to learn the feature representation of the question, and using the output of a bottom-up attention model extracted with Faster R-CNN as the image feature representation; 2) performing multi-modal reasoning based on the Transformer attention model, introducing the attention model to fuse the picture-question-answer triplets across modalities and establish inference relations; and 3) providing different reasoning processes and outputs for different implicit relationships, and performing label distribution regression learning on these outputs to determine the answers. Because the answers are obtained from specific pictures and questions, the method can be applied directly in applications serving blind or visually impaired people, helping them better perceive their surroundings; it can also be applied in picture retrieval systems to improve the accuracy and diversity of retrieval.

Owner:HANGZHOU DIANZI UNIV
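The Transformer attention model referenced in step 2 is built on scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The sketch below implements that single operation in plain Python. Treating a question vector as the query and image-region features as keys and values is an illustrative assumption, and the patent's multi-modal reasoning over picture-question-answer triplets is not reproduced.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V row by row for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # one weight per key, summing to 1
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs


# Hypothetical setup: one question vector attends over two image-region features.
question = [[0.0, 0.0]]
regions = [[1.0, 0.0], [0.0, 1.0]]
region_values = [[1.0, 2.0], [3.0, 4.0]]
attended = scaled_dot_product_attention(question, regions, region_values)
```

A zero query scores every region equally, so the result is the plain average of the value vectors; a query aligned with one region concentrates almost all the weight on it.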

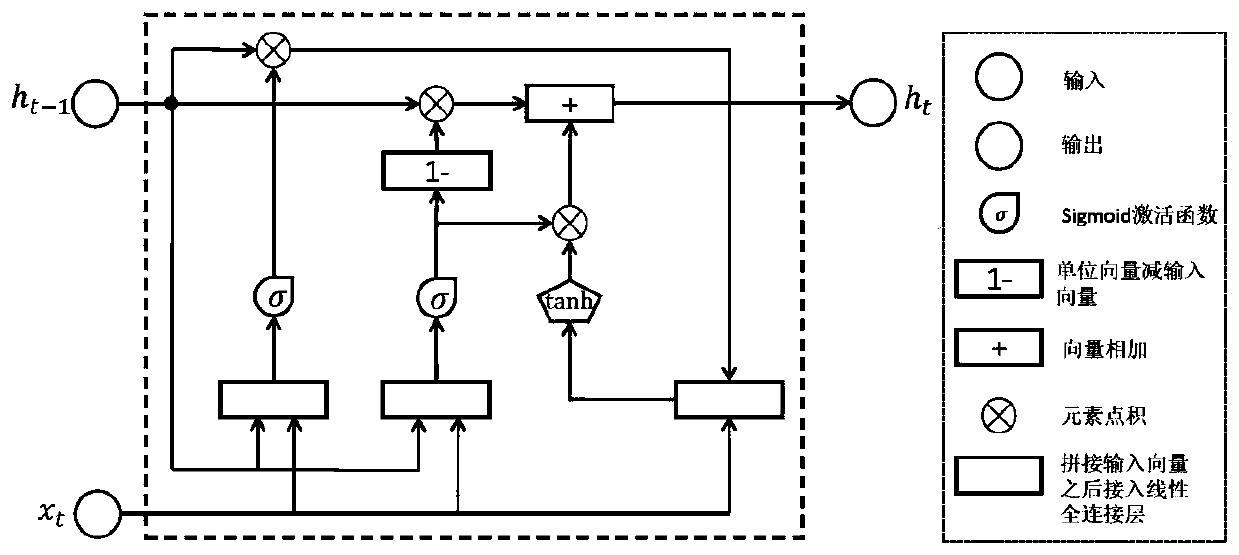

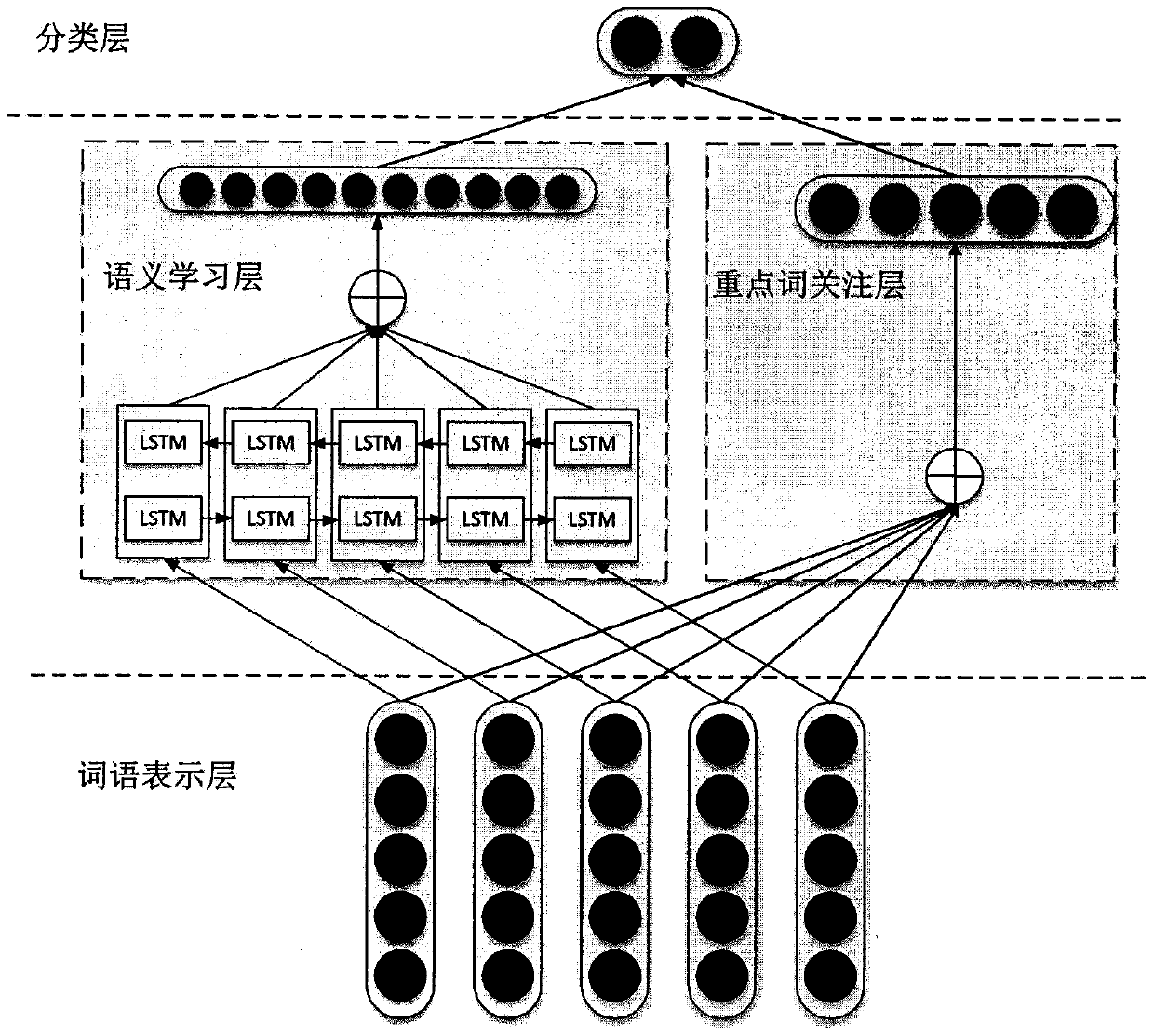

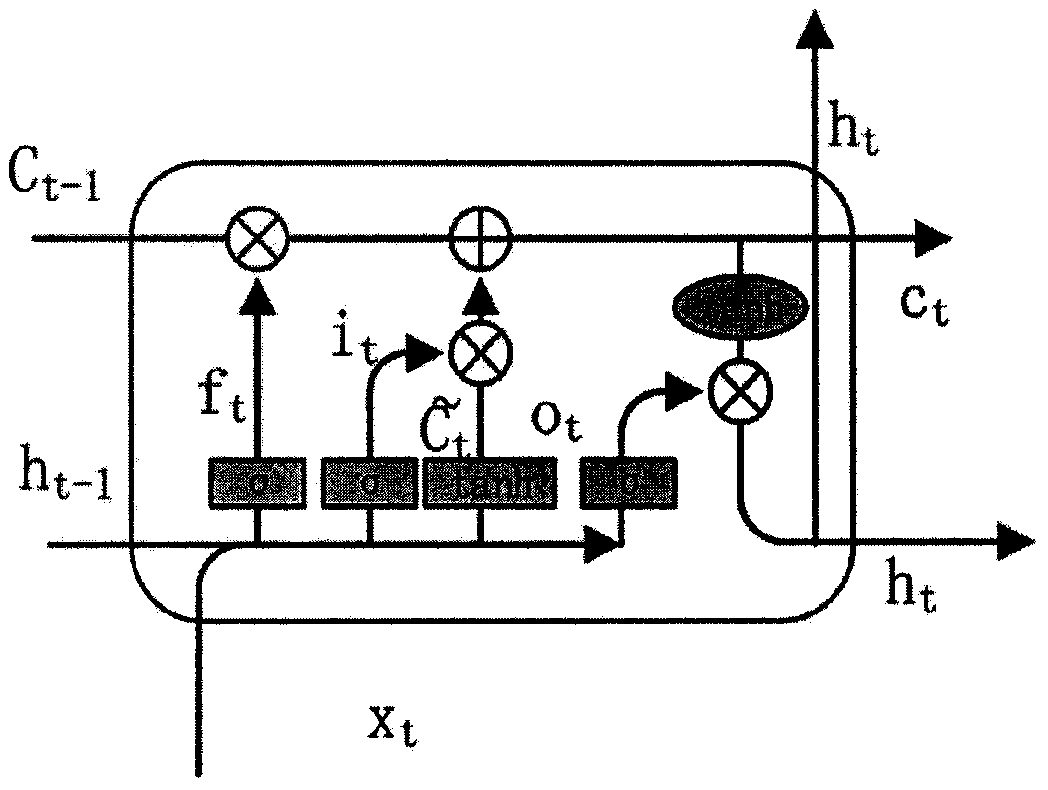

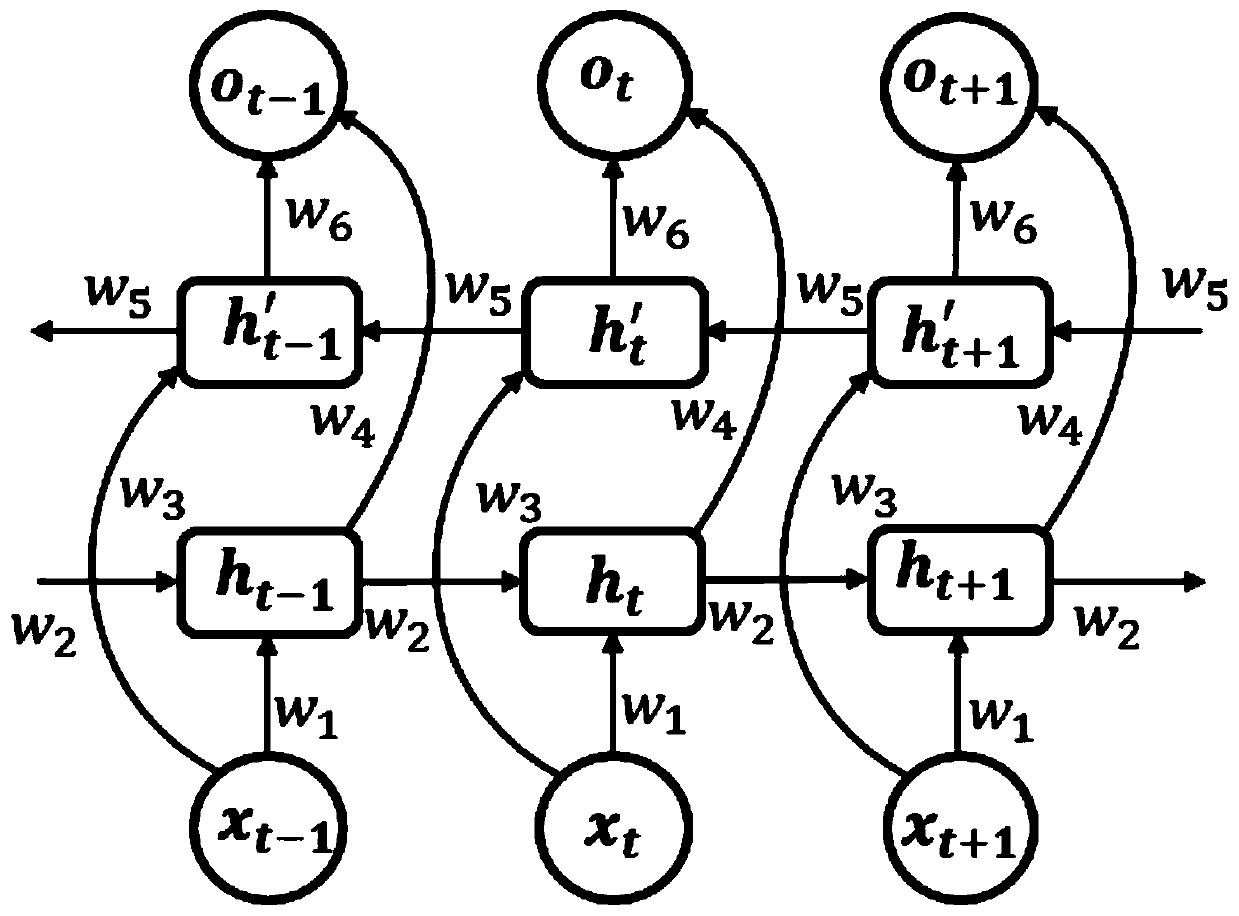

A bidirectional LSTM model emotion analysis method based on attention enhancement

Status: Inactive | Publication: CN109710761A | Tags: Improve performance; Raise attention; Character and pattern recognition; Neural architectures; Attention model; Algorithm

The invention relates to a bidirectional LSTM emotion analysis method based on attention enhancement, which combines an attention mechanism with a bidirectional LSTM model: the bidirectional LSTM learns the semantic information of the text, and the attention mechanism strengthens attention to key words. First, input sentences are represented with pre-trained word vectors; they are then encoded by the bidirectional LSTM and by the attention model, the two resulting vectors are spliced, and finally a classifier completes the text sentiment analysis. A self-attention mechanism built on the word vectors increases the attention paid to emotion keywords in the sentence, and this word-vector attention mechanism runs in parallel with the bidirectional LSTM. Experiments show that the proposed model performs well and exceeds the best known model on several metrics, meeting the requirements of practical applications.

Owner:CHINA NAT INST OF STANDARDIZATION +1

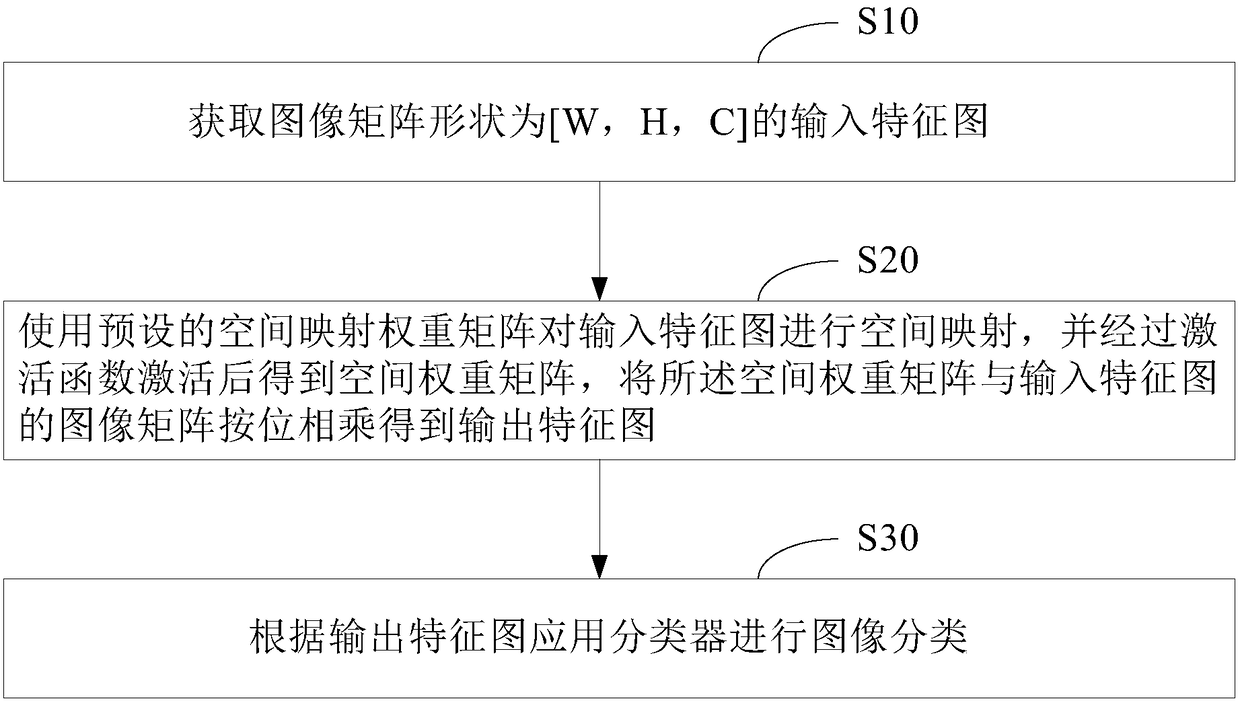

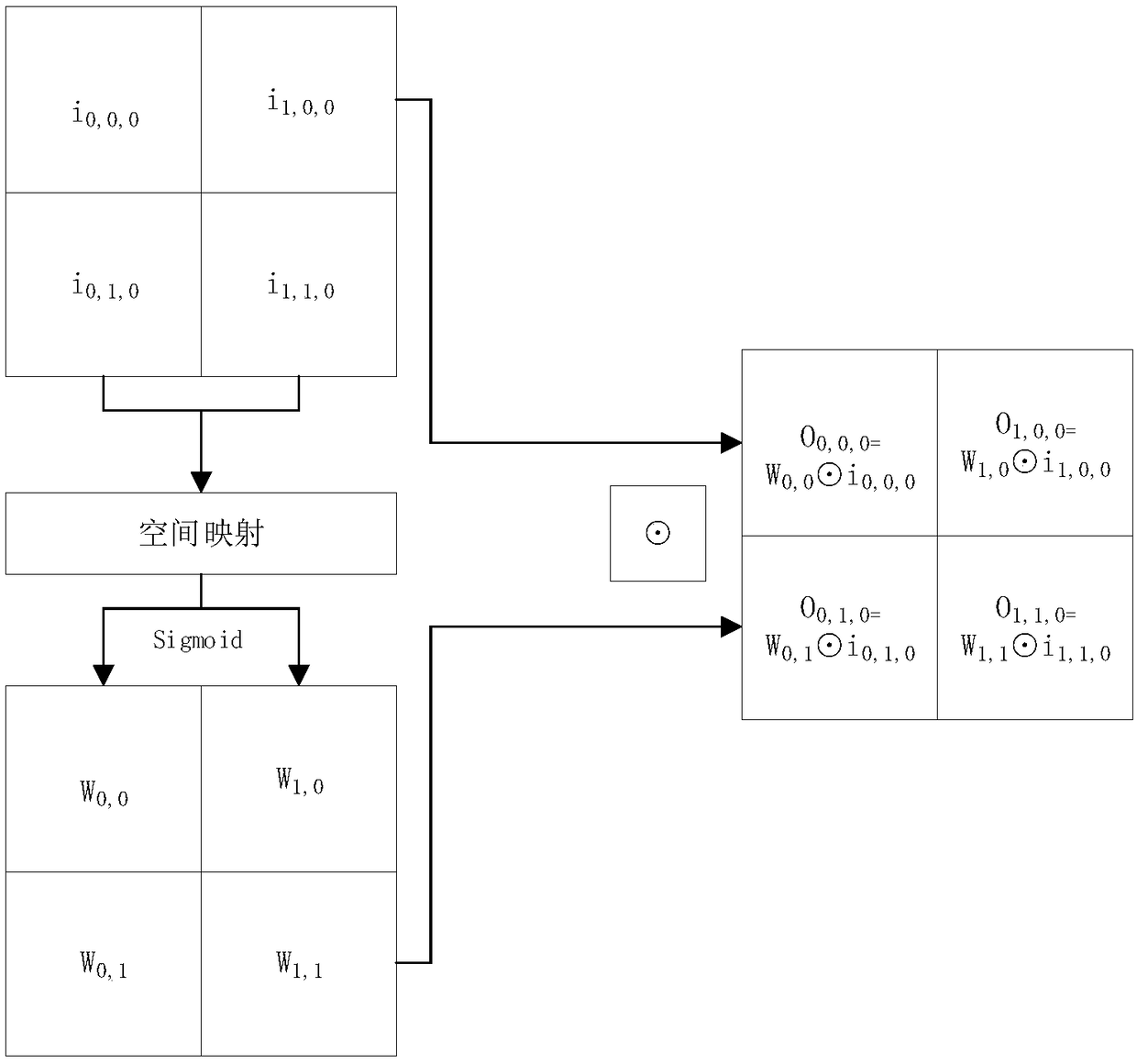

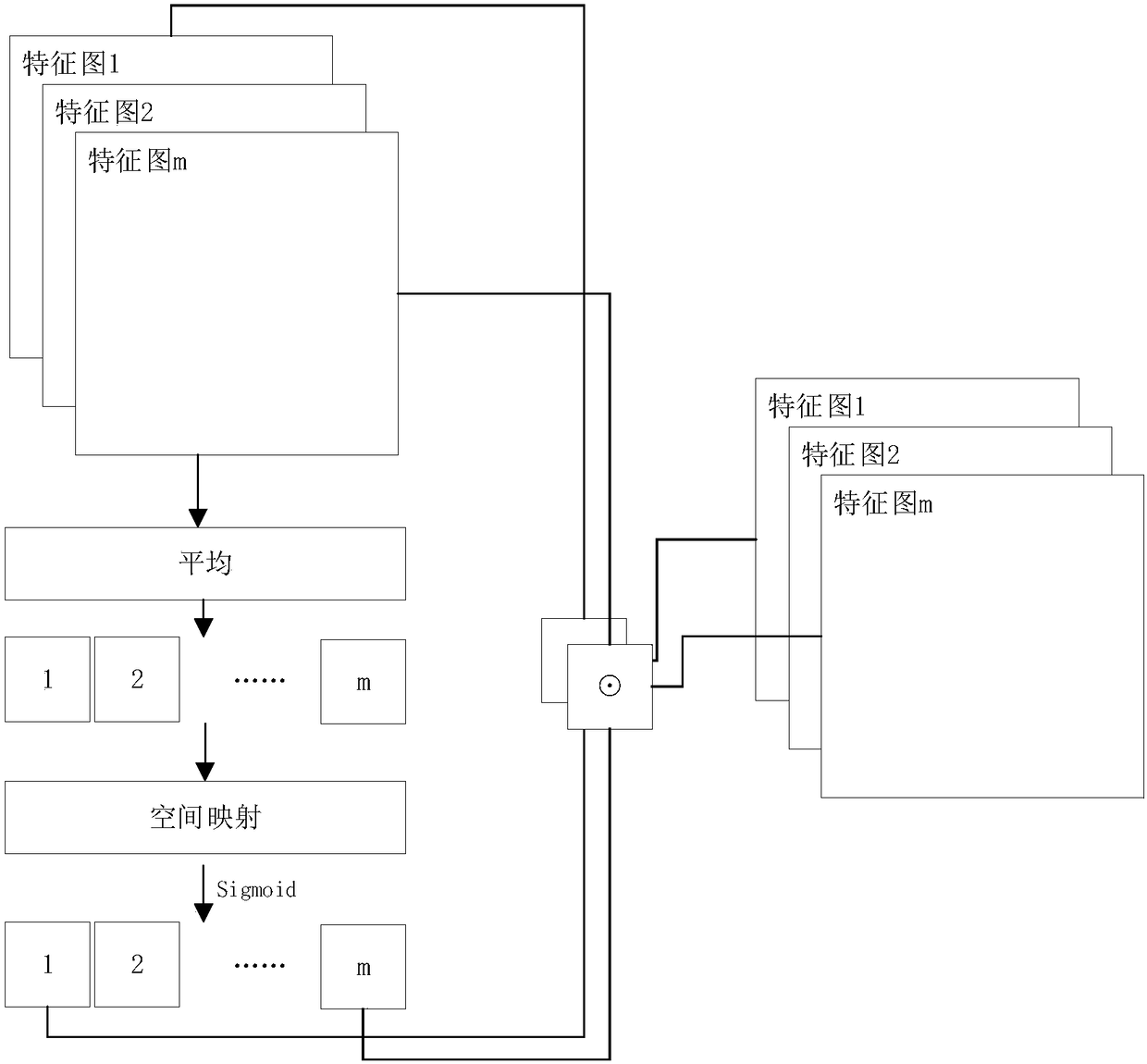

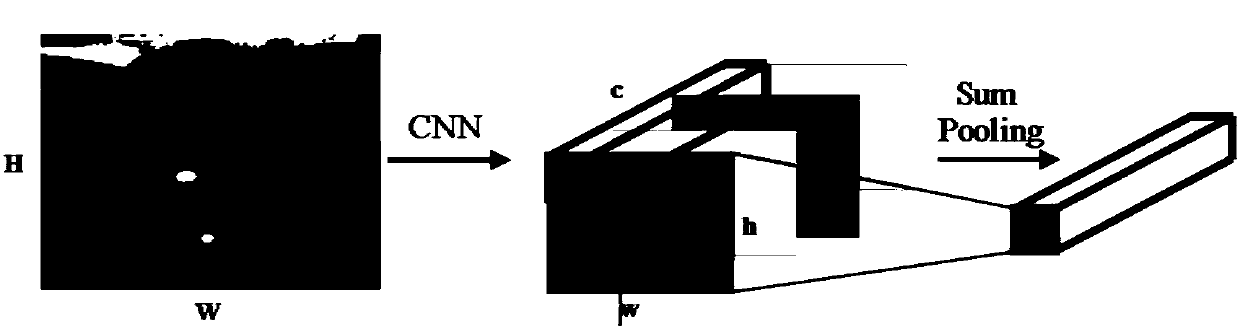

Attention model-based image identification method and system

Status: Inactive | Publication: CN108364023A | Tags: Improve the extraction effect; Improve targeting; Character and pattern recognition; Neural architectures; Attention model; Feature extraction

The invention provides an attention model-based image identification method and system. The method comprises the following steps: first, obtaining an input feature map whose image matrix has shape [W,H,C], where W is the width, H the height and C the number of channels; second, performing space mapping on the input feature map with a preset space mapping weight matrix, applying an activation function to obtain a space weight matrix, and multiplying the space weight matrix element-wise with the image matrix of the input feature map to obtain an output feature map. The preset space mapping weight matrix is either a spatial attention matrix of shape [C,1], whose attention depends on the image width and height, in which case the space weight matrix has shape [W,H,1]; or a channel attention matrix of shape [C,C], whose attention depends on the image channels, in which case the space weight matrix has shape [1,1,C]. This effectively improves the pertinence of feature extraction and thereby strengthens the extraction of local image features.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
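The two weight-matrix shapes described above can be illustrated with nested Python lists indexed as `fmap[w][h][c]`. This is a sketch under assumptions the abstract leaves open: the activation is taken to be a sigmoid, and for the [C,C] channel case the feature map is first average-pooled over width and height so that the weights have shape [1,1,C]. The multiplication step is read as an element-wise product broadcast over the missing dimension.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def spatial_attention(fmap, proj):
    """fmap is W x H x C; proj is the length-C [C,1] mapping.

    Produces one weight per (w, h) position, i.e. a [W,H,1] map, and rescales
    every channel at that position by it.
    """
    out = []
    for column in fmap:
        out_column = []
        for pixel in column:
            weight = sigmoid(sum(p * x for p, x in zip(proj, pixel)))  # one scalar per (w, h)
            out_column.append([weight * x for x in pixel])
        out.append(out_column)
    return out


def channel_attention(fmap, proj):
    """proj is the [C,C] mapping; pooling over W and H first (an assumption) gives [1,1,C] weights."""
    W, H, C = len(fmap), len(fmap[0]), len(fmap[0][0])
    pooled = [sum(fmap[w][h][c] for w in range(W) for h in range(H)) / (W * H) for c in range(C)]
    weights = [sigmoid(sum(proj[i][j] * pooled[j] for j in range(C))) for i in range(C)]
    return [[[weights[c] * pixel[c] for c in range(C)] for pixel in column] for column in fmap]


fmap = [[[1.0, 2.0]], [[3.0, 4.0]]]  # W=2, H=1, C=2
spatial_out = spatial_attention(fmap, proj=[0.0, 0.0])
channel_out = channel_attention(fmap, proj=[[1.0, 0.0], [0.0, 1.0]])
```

In both cases the output keeps the input shape [W,H,C]; only the granularity of the weights differs (per position versus per channel).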

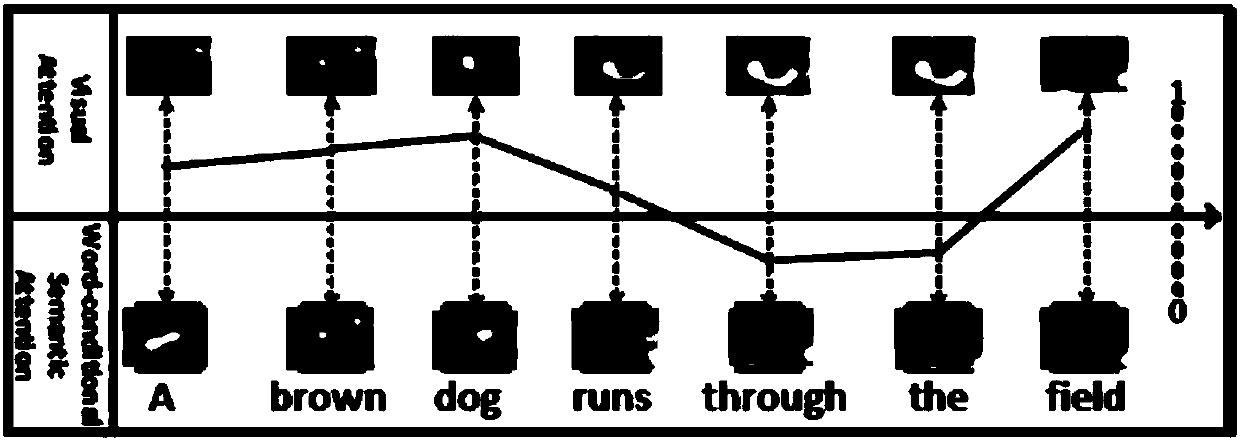

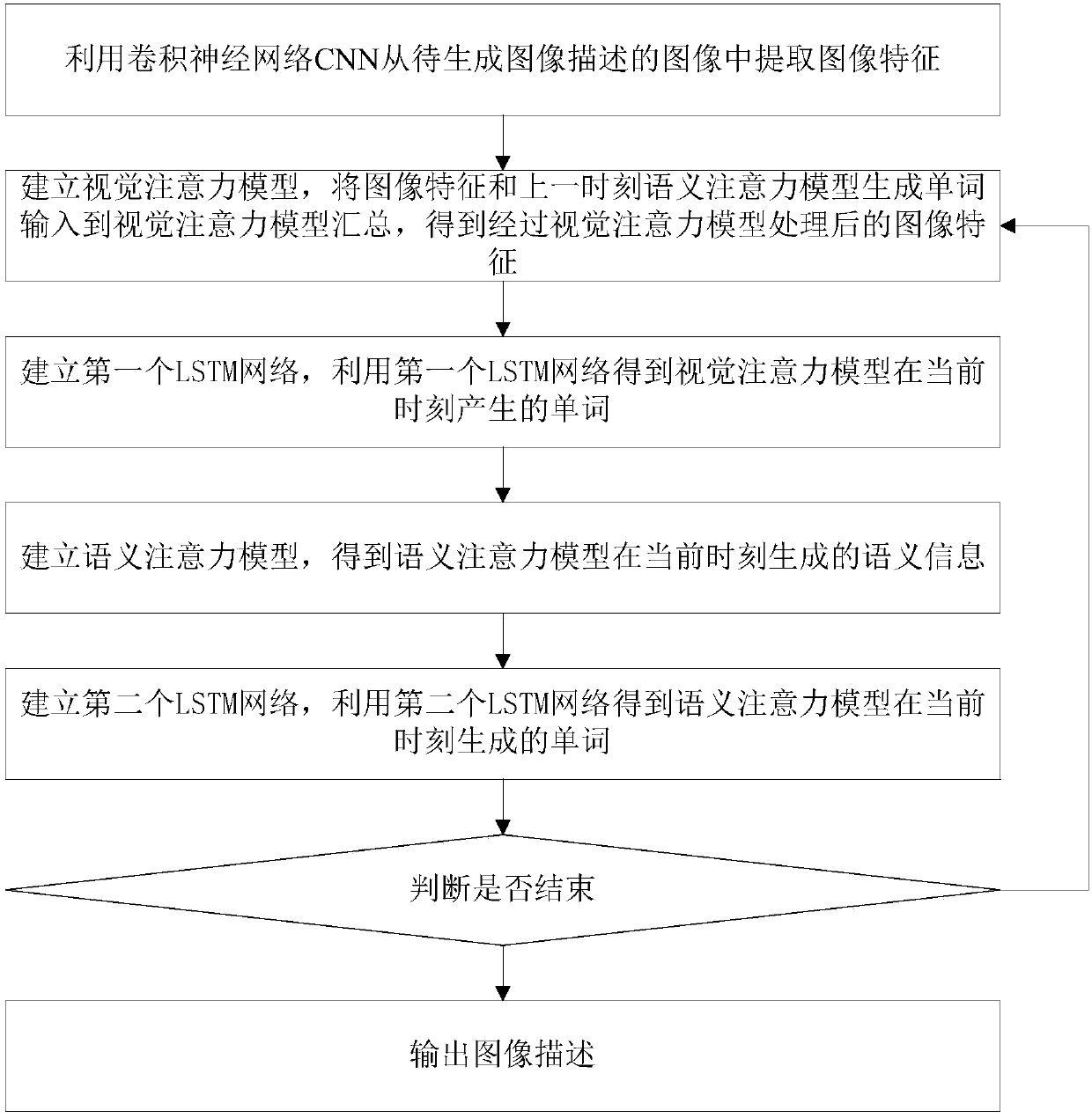

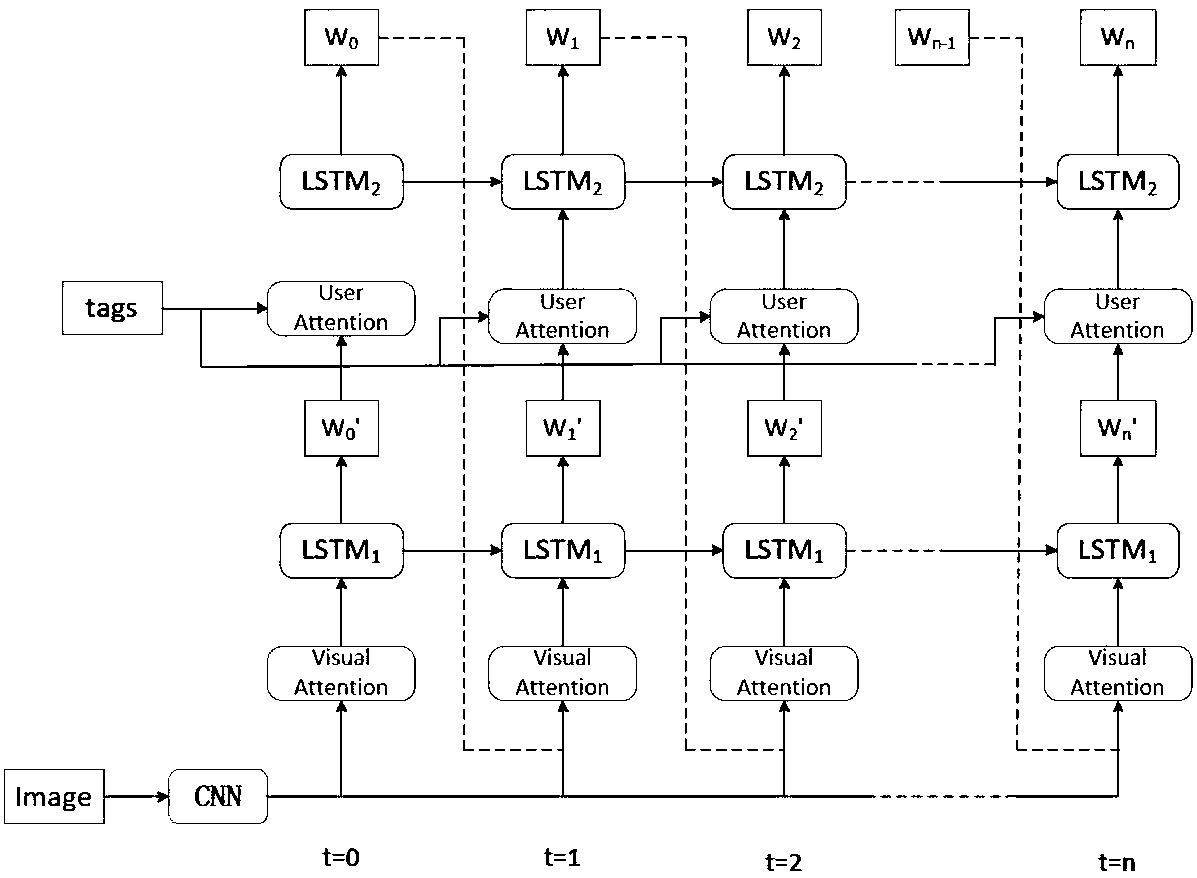

Image description method and system based on vision and semantic attention combined strategy

Status: Inactive | Publication: CN107563498A | Tags: Reduce dependence; Accurate description; Character and pattern recognition; Neural architectures; Attention model; Semantics

The invention discloses an image description method and system based on a vision and semantic attention combined strategy. The steps include utilizing a convolutional neural network (CNN) to extract image features from an image whose image description is to be generated; utilizing a visual attention model of the image to process the image features, feeding the image features processed by the visual attention model to a first LSTM network to generate words, then utilizing a semantic attention model to process the generated words and predefined labels to obtain semantic information, then utilizing a second LSTM network to process semantics to obtain words generated by the semantic attention model, repeating the abovementioned steps, and finally performing series combination on all the obtained words to generate image description. The method provided by the invention not only utilizes a summary of the input image, but also enriches information in the aspects of vision and semantics, and enables a generated sentence to reflect content of the image more truly.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

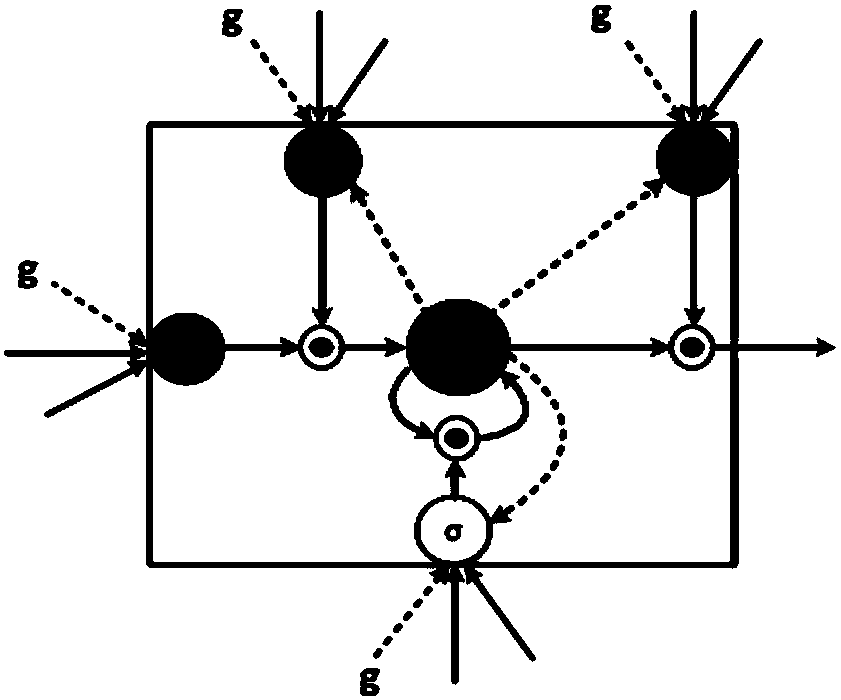

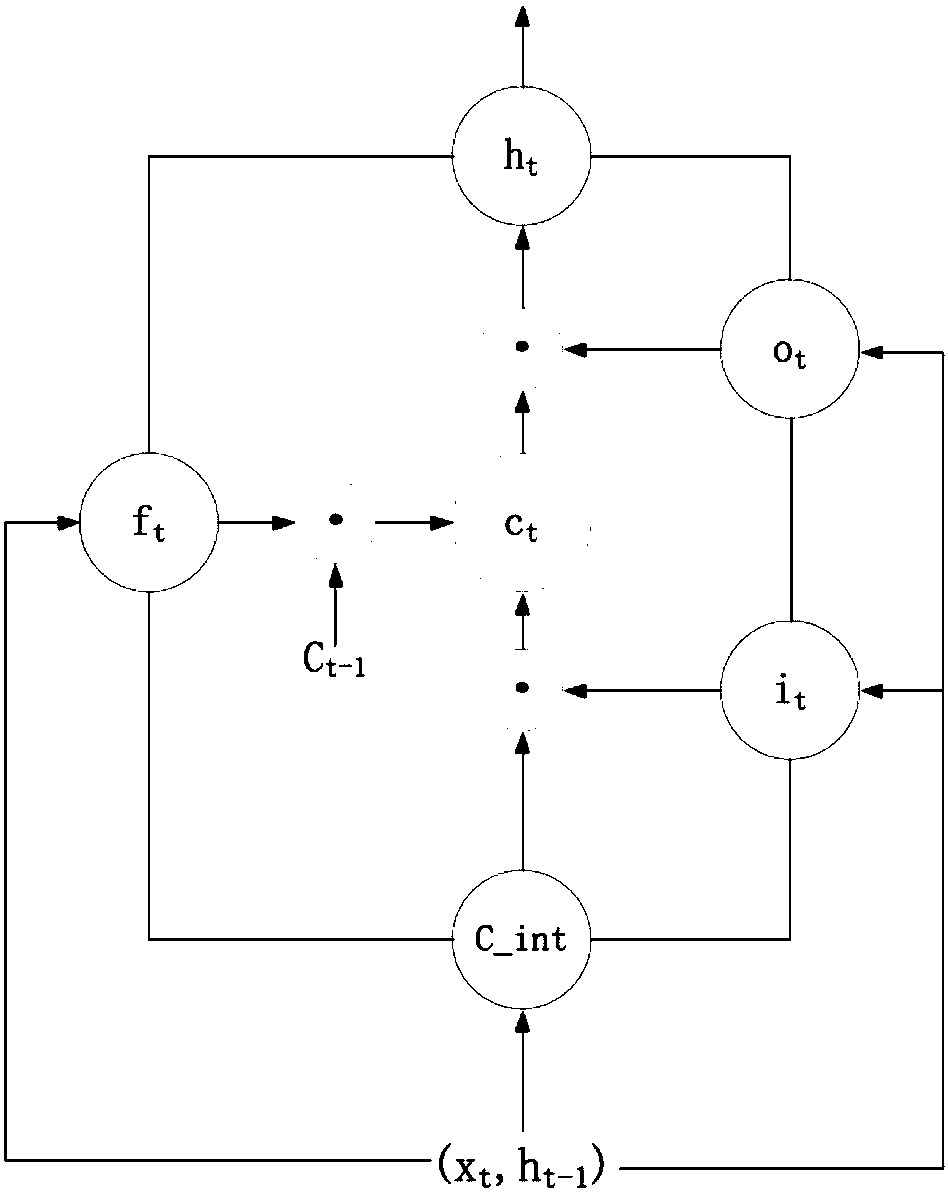

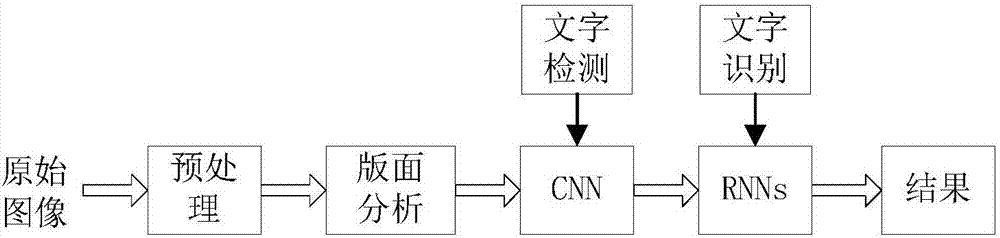

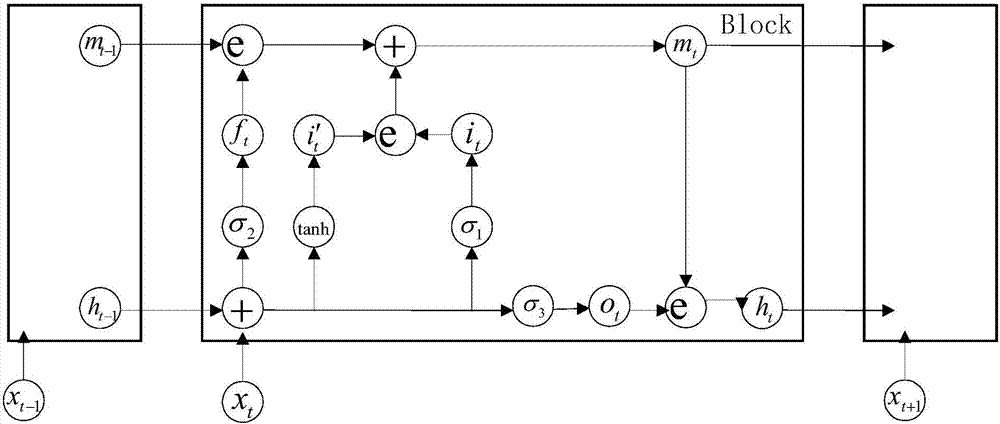

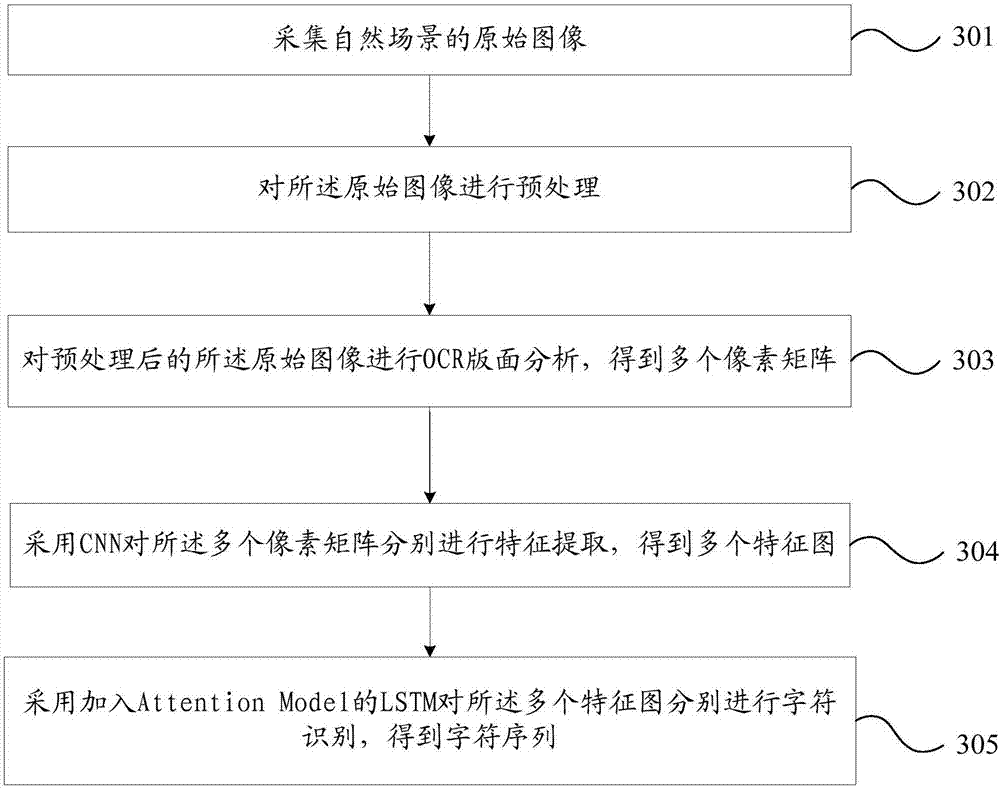

Character identifying method and character identifying system

Status: Active | Publication: CN106960206A | Tags: Reduce complexity; Reduce workload; Character and pattern recognition; Attention model; Feature extraction

An embodiment of the invention provides a character identifying method and system. The method includes: collecting an original image of a natural scene; preprocessing the original image; performing OCR layout analysis on the preprocessed image to obtain a plurality of pixel matrices; extracting features from the pixel matrices with a CNN (Convolutional Neural Network) to obtain a plurality of feature maps; and performing character identification on the feature maps with an LSTM (Long Short-Term Memory) network equipped with an Attention Model to obtain a character sequence, where the forget gate of the LSTM is replaced with the Attention Model. By using this attention-equipped LSTM, the feature sequence extracted by the CNN is recognized as the corresponding character sequence, so the required text information is obtained with fewer operation parameters. At the same time, by controlling how much different context content influences the current character, information in long-term memory is passed effectively to the current character, which improves character identification accuracy.

Owner:BEIJING SINOVOICE TECH CO LTD

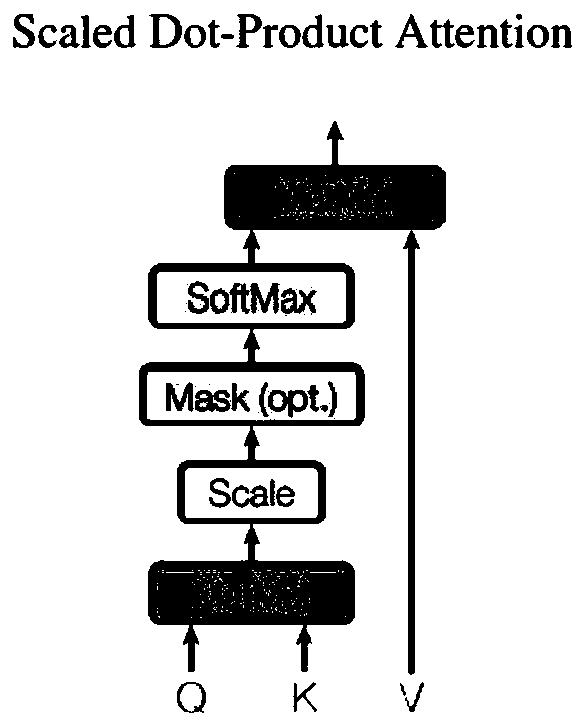

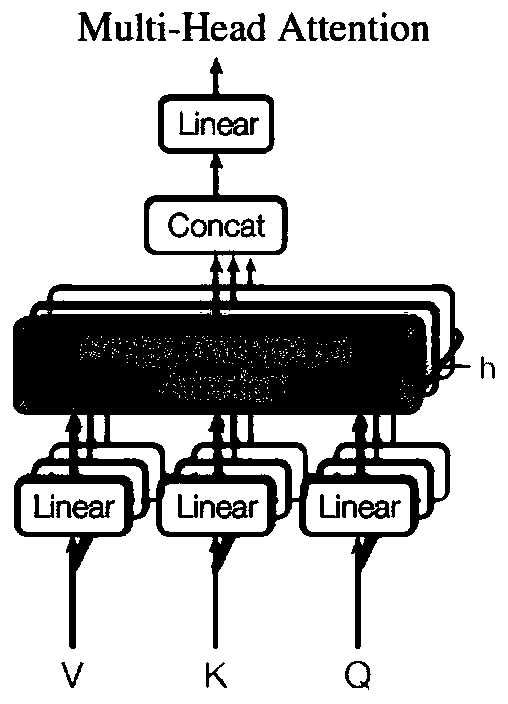

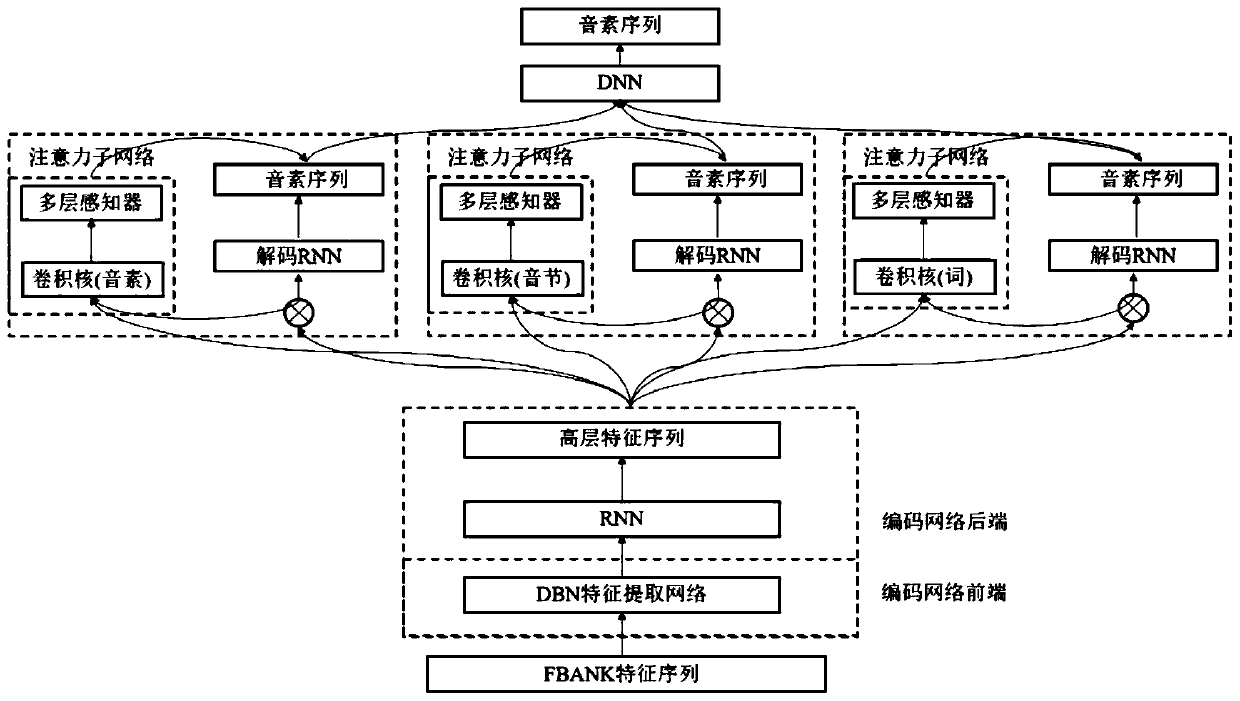

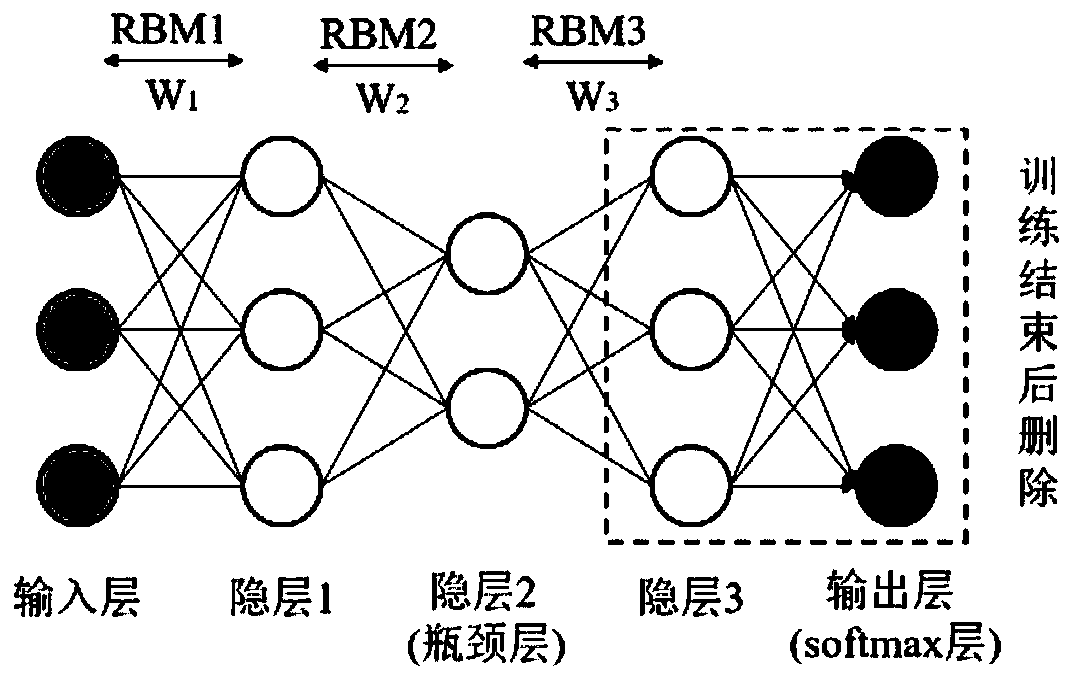

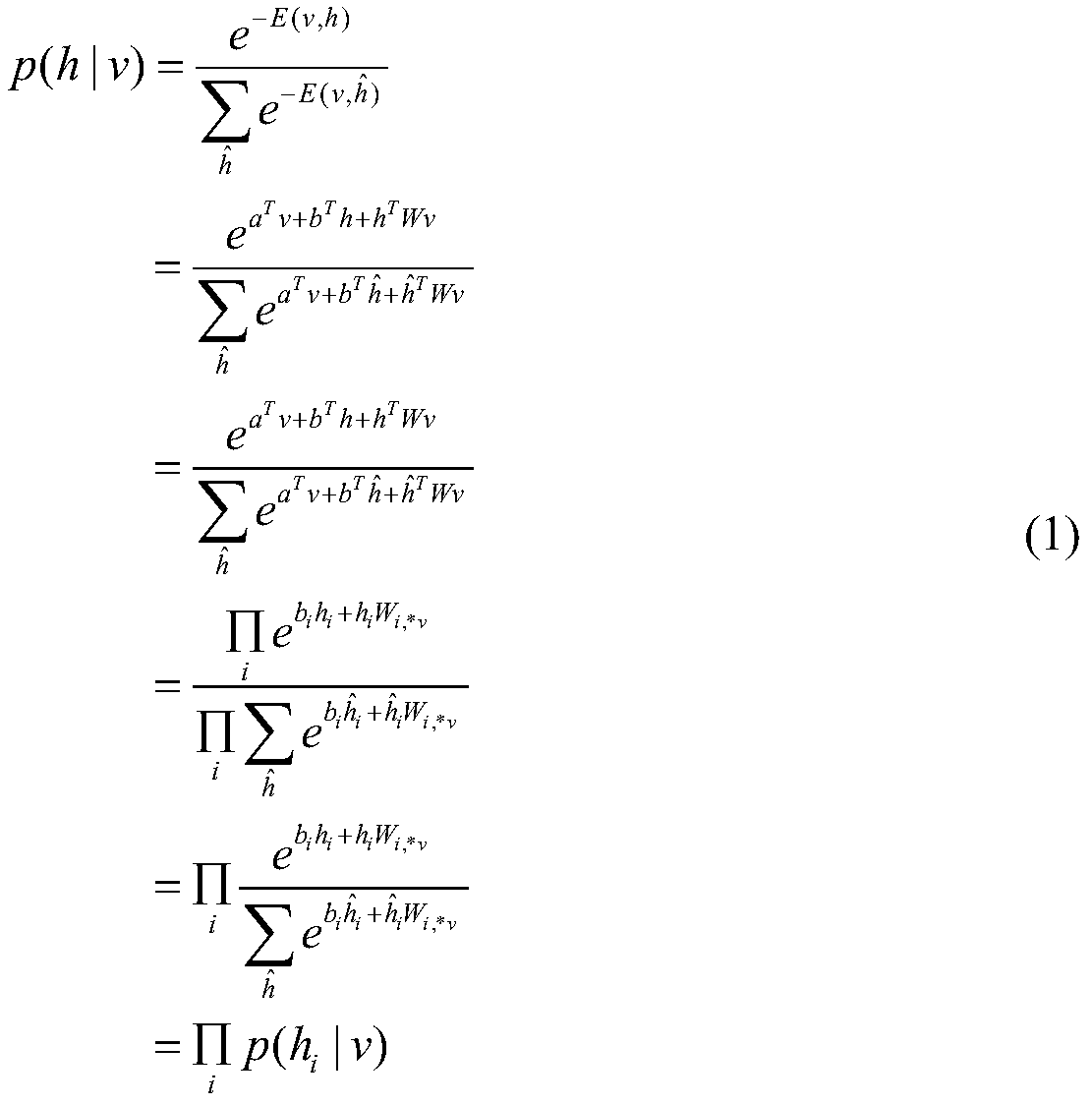

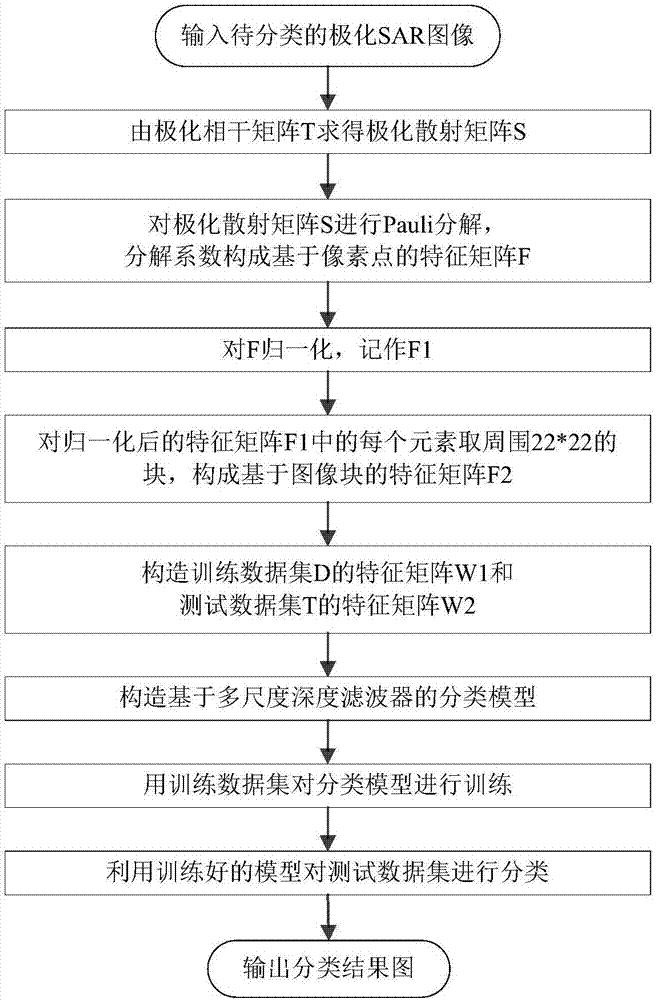

Speech recognition model establishing method based on bottleneck characteristics and multi-scale and multi-headed attention mechanism

The invention provides a speech recognition model establishing method based on bottleneck features and a multi-scale, multi-headed attention mechanism, and belongs to the field of model establishing methods. Traditional attention models suffer from poor recognition performance and a single attention scale. In this method, bottleneck features are extracted by a deep belief network serving as the front end, which improves the robustness of the model; a multi-scale, multi-headed attention model built from convolution kernels of different scales serves as the back end, modeling speech elements at the phoneme, syllable, word and other levels, and computing the recurrent neural network hidden state sequences and output sequences one step at a time. The element at each position of an output sequence is computed by the decoding network corresponding to each head's attention network, and finally all output sequences are integrated into a new output sequence. This improves the recognition performance of the speech recognition system.

Owner:HARBIN INST OF TECH

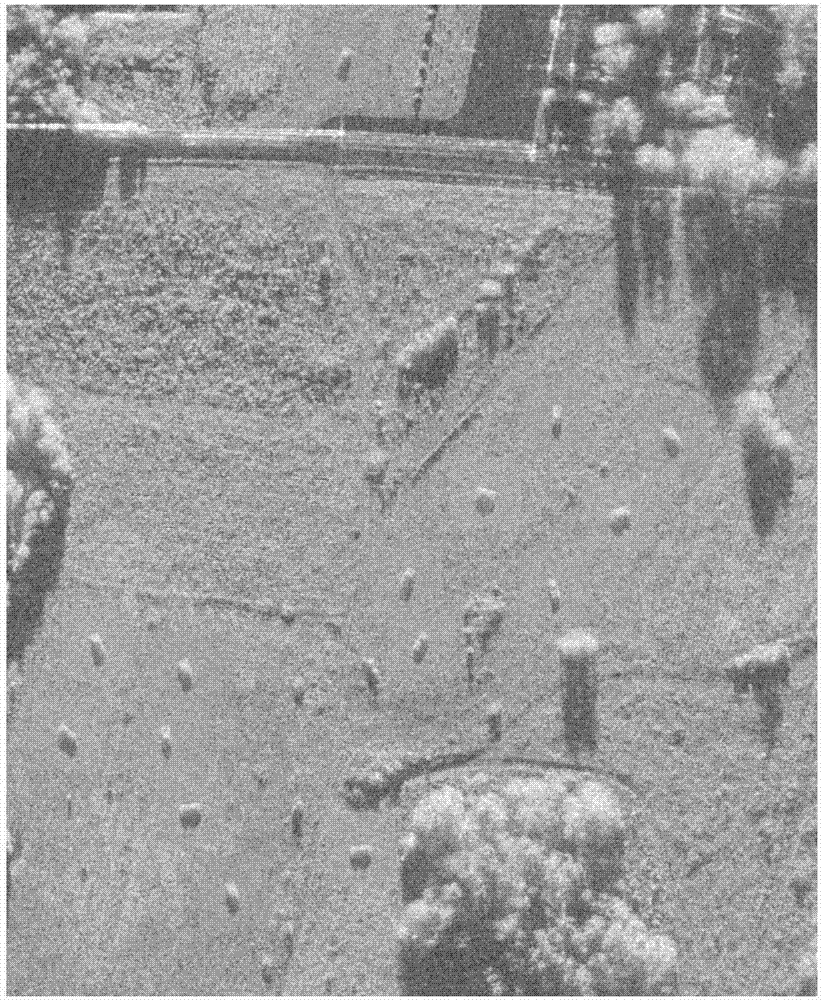

CNN and selective attention mechanism based SAR image target detection method

Status: Inactive | Publication: CN107247930A | Tags: Improve accuracy; Overcoming pixel-level processing; Scene recognition; Neural architectures; Attention model; Data set

The invention discloses a SAR image target detection method based on a CNN and a selective attention mechanism. A SAR image is obtained; the training data set is expanded; a classification model composed of a CNN is constructed and trained on the expanded training data set; saliency detection is performed on a test image with a simple visual-saliency attention model (the spectral residual method) to obtain a saliency feature map; the saliency feature map undergoes morphological processing and connected-component labeling; target candidate regions centered on the centroids of the connected components are extracted; and the candidate regions are shifted by a few pixels within their surroundings to generate the target detection result. By combining a CNN with the selective attention mechanism for SAR image target detection, the invention improves detection efficiency and accuracy, mainly addressing the low efficiency and accuracy of prior-art detection; the method can also be applied to target classification and identification.

Owner:XIDIAN UNIV
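The post-processing after saliency detection (connected-component labeling, then candidate regions centered on each component's center of mass) can be sketched as below. Assumptions: the saliency map has already been thresholded into a binary mask (the spectral residual step and the morphological operations are not shown), 4-connectivity is used, and windows are square `(top, left, bottom, right)` boxes that are not clipped to the image bounds. The function names are hypothetical.

```python
from collections import deque


def connected_components(mask):
    """Label 4-connected foreground regions of a binary mask; return each region's pixels."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue = deque([(r, c)])
                pixels = []
                while queue:  # breadth-first flood fill
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                components.append(pixels)
    return components


def candidate_windows(mask, half_size):
    """Return one square (top, left, bottom, right) box centered on each component's centroid."""
    windows = []
    for pixels in connected_components(mask):
        cy = round(sum(y for y, _ in pixels) / len(pixels))  # centroid row, nearest pixel
        cx = round(sum(x for _, x in pixels) / len(pixels))  # centroid column, nearest pixel
        windows.append((cy - half_size, cx - half_size, cy + half_size, cx + half_size))
    return windows


mask = [
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 1],
]
print(candidate_windows(mask, half_size=1))  # [(0, 0, 2, 2), (2, 4, 4, 6)]
```

Each window would then be cropped and passed to the trained CNN classifier; the second box here extends past the right edge, so a real pipeline would clip or pad it.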

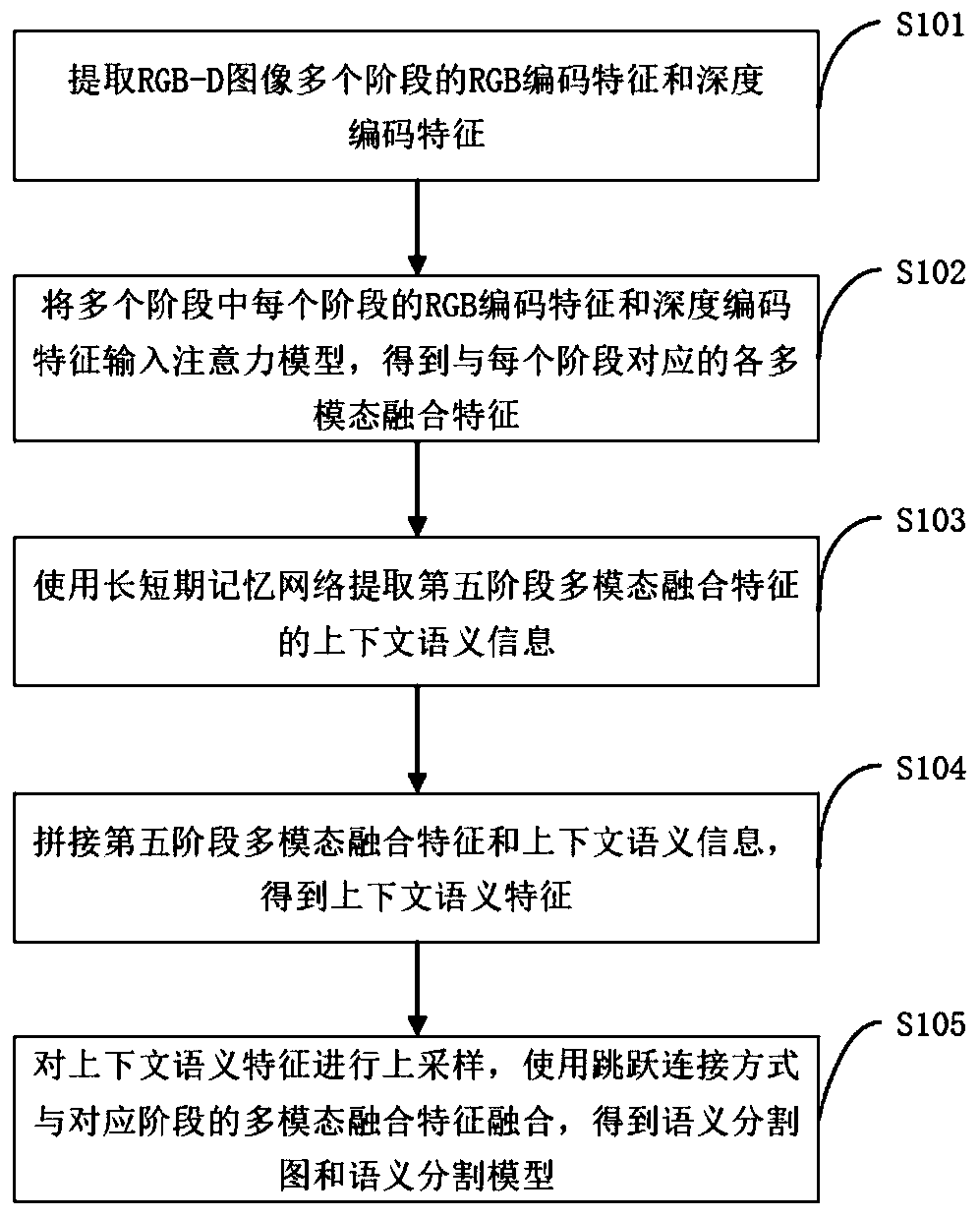

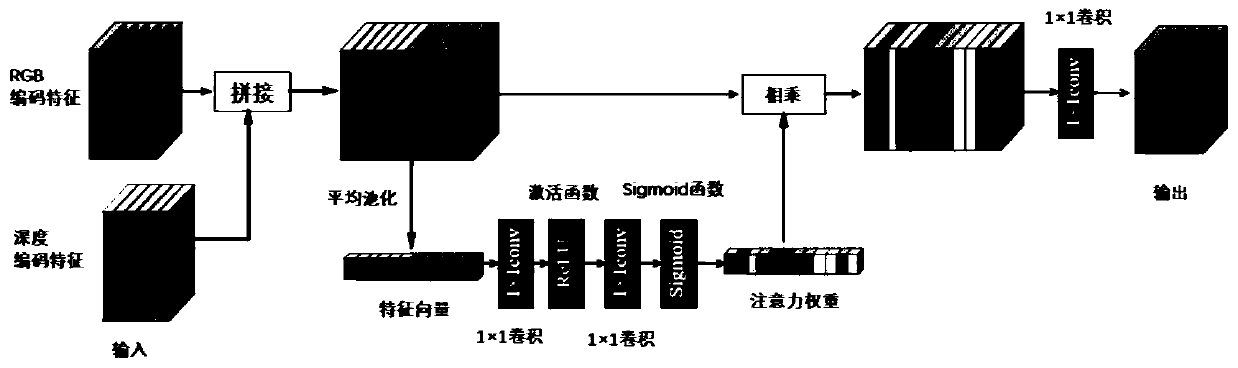

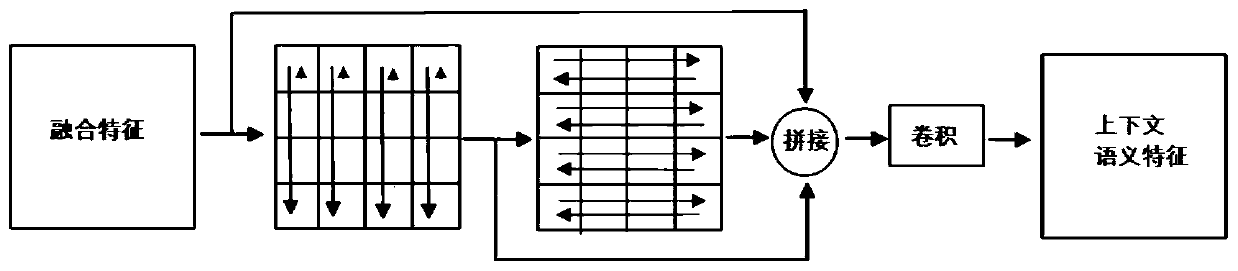

Semantic segmentation method and system for RGB-D image

Status: Active | Publication: CN110298361A | Tags: Improving Semantic Segmentation Accuracy; Efficient use of; Character and pattern recognition; Neural architectures; Attention model; Computer vision

The invention discloses a semantic segmentation method and system for RGB-D images. The method comprises the steps of: extracting RGB encoding features and depth encoding features of an RGB-D image in multiple stages; inputting the RGB and depth encoding features of each stage into an attention model to obtain the multi-modal fusion feature for that stage; extracting context semantic information from the fifth-stage multi-modal fusion features with a long short-term memory network; splicing the fifth-stage multi-modal fusion features with the context semantic information to obtain context semantic features; and upsampling the context semantic features and fusing them with the multi-modal fusion features of the corresponding stages through skip connections to obtain a semantic segmentation map and a semantic segmentation model. By extracting RGB and depth encoding features in multiple stages, the method makes effective use of both the color and depth information of the RGB-D image, and by using a long short-term memory network it effectively mines the image's context semantic information, improving the semantic segmentation accuracy of RGB-D images.

Owner:HANGZHOU WEIMING XINKE TECH CO LTD +1
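The per-stage attention fusion of RGB and depth features can be sketched as below. The abstract does not describe the attention model's internals, so this illustration assumes a channel-wise scheme: each modality's pooled features are scored with hypothetical per-channel weights, and a softmax across the two modalities gives mixing weights that sum to 1 for every channel. Spatial positions are flattened into a list of pixels for simplicity.

```python
import math


def global_average(pixels):
    """Average a list of per-pixel channel vectors into one length-C vector."""
    n = len(pixels)
    return [sum(px[c] for px in pixels) / n for c in range(len(pixels[0]))]


def fuse_rgb_depth(rgb_pixels, depth_pixels, w_rgb, w_depth):
    """Blend one stage's RGB and depth features with per-channel attention weights."""
    pooled_rgb = global_average(rgb_pixels)
    pooled_depth = global_average(depth_pixels)
    alphas = []
    for c in range(len(pooled_rgb)):
        s_rgb = w_rgb[c] * pooled_rgb[c]  # hypothetical per-channel scoring weights
        s_depth = w_depth[c] * pooled_depth[c]
        m = max(s_rgb, s_depth)  # softmax across the two modalities
        e_rgb, e_depth = math.exp(s_rgb - m), math.exp(s_depth - m)
        alphas.append(e_rgb / (e_rgb + e_depth))
    return [
        [a * r + (1.0 - a) * d for a, r, d in zip(alphas, px_rgb, px_depth)]
        for px_rgb, px_depth in zip(rgb_pixels, depth_pixels)
    ]


rgb = [[1.0, 0.0], [1.0, 0.0]]  # two pixels, two channels
depth = [[0.0, 1.0], [0.0, 1.0]]
balanced = fuse_rgb_depth(rgb, depth, w_rgb=[0.0, 0.0], w_depth=[0.0, 0.0])
```

With zero scoring weights both modalities contribute equally; raising a channel's RGB score shifts that channel toward the RGB features.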

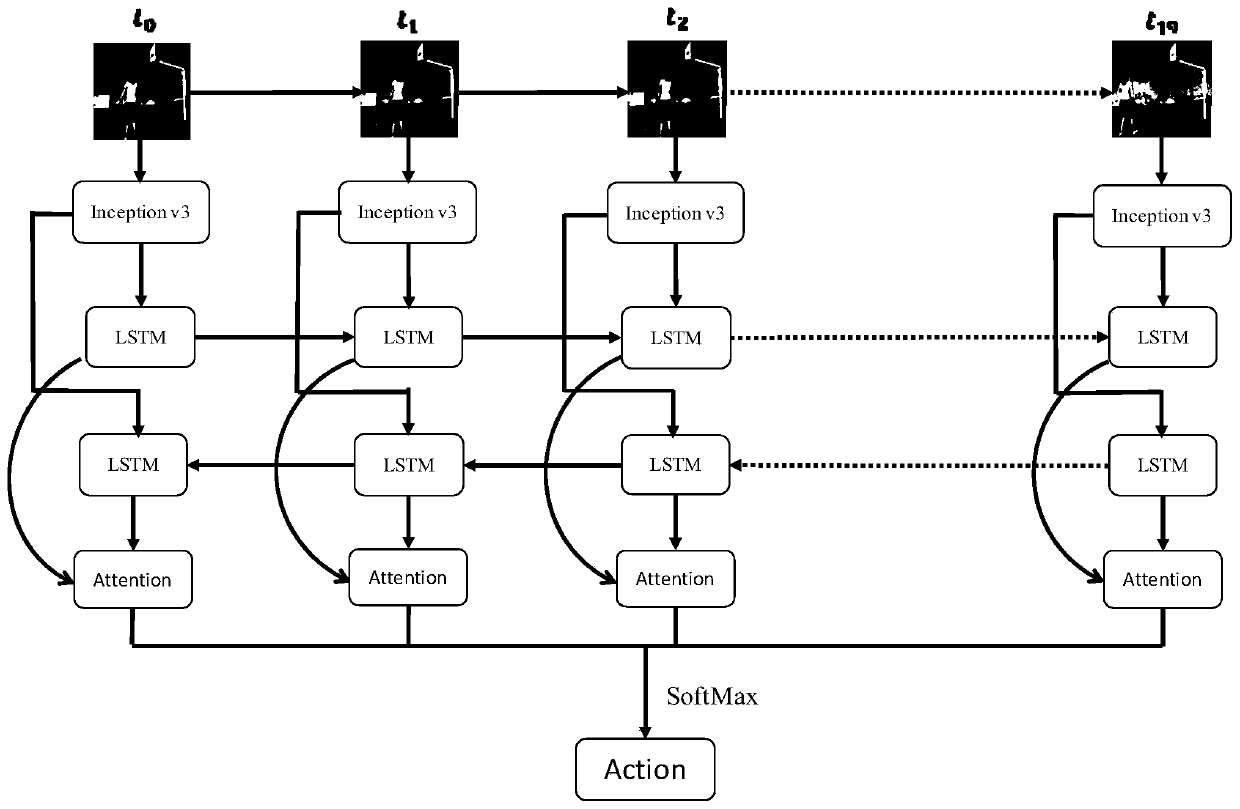

Human body behavior identification method based on Bi-LSTM-Attention model

Status: Inactive | Publication: CN109784280A | Tags: Solving Depth Problems; Improve performance; Character and pattern recognition; Neural architectures; Attention model; Feature vector

The invention provides a human body behavior identification method based on a Bi-LSTM-Attention model. The method comprises the following steps: step S1, inputting extracted video frames into an Inception V3 model, which deepens the convolutional neural network while reducing the number of network parameters, to fully extract the deep features of the frames and obtain the corresponding feature vectors; step S2, passing the feature vectors from step S1 to a Bi-LSTM neural network, which learns the temporal characteristics between video frames; and step S3, passing the temporal feature vectors from step S2 to an attention mechanism model, which adaptively perceives the network weights with the greatest influence on the identification result so that the associated features receive more attention. This improves the recognition rate of human body behaviors.

Owner:JIANGNAN UNIV

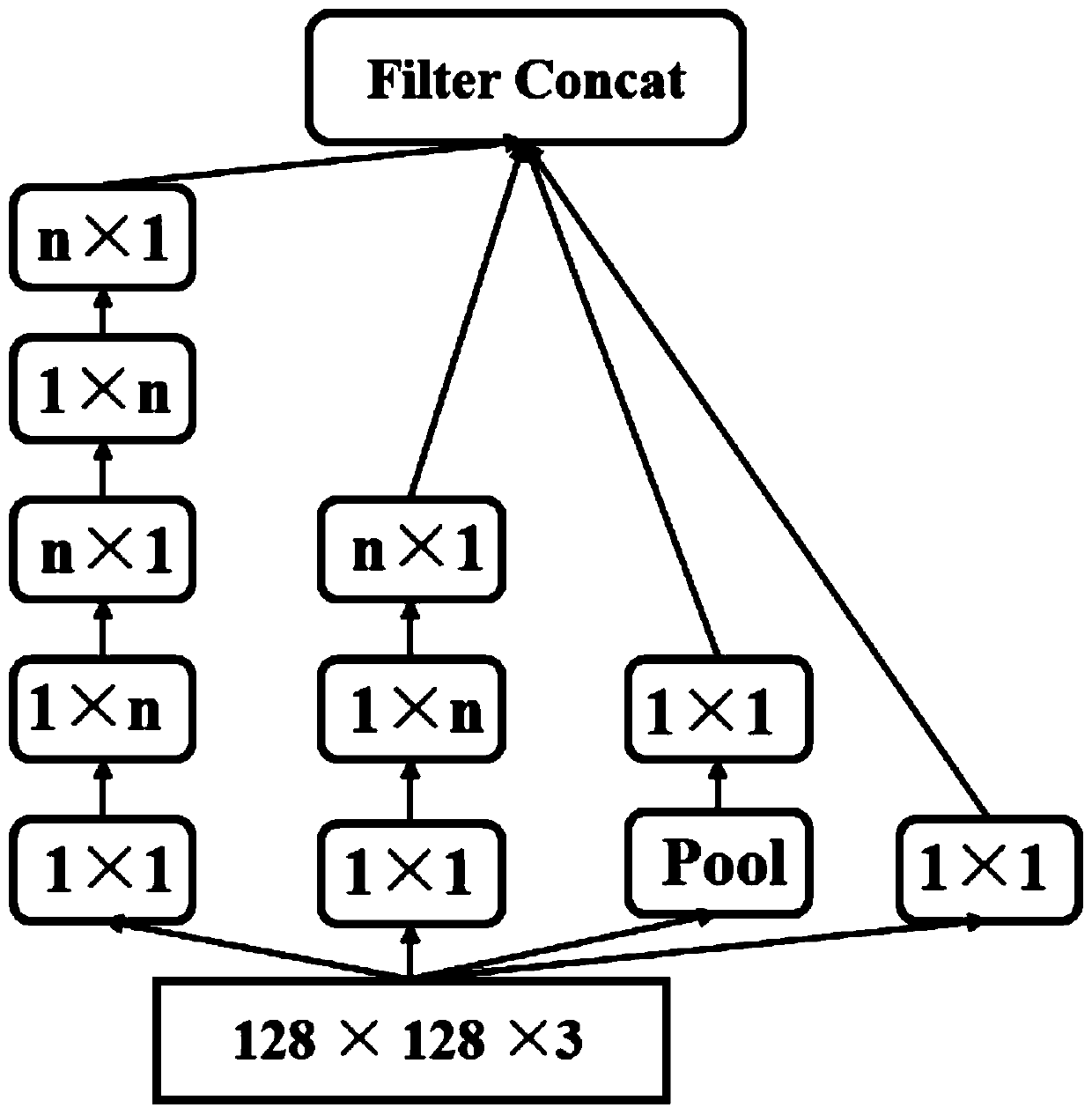

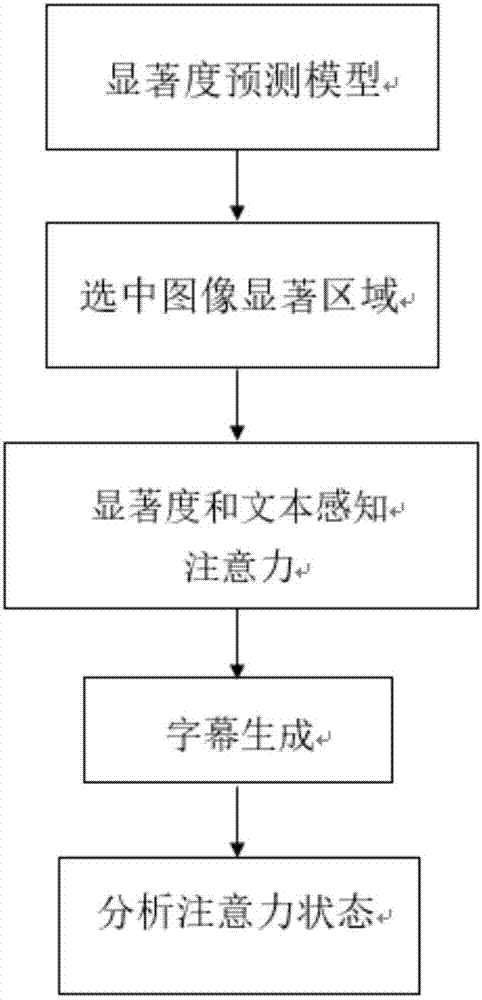

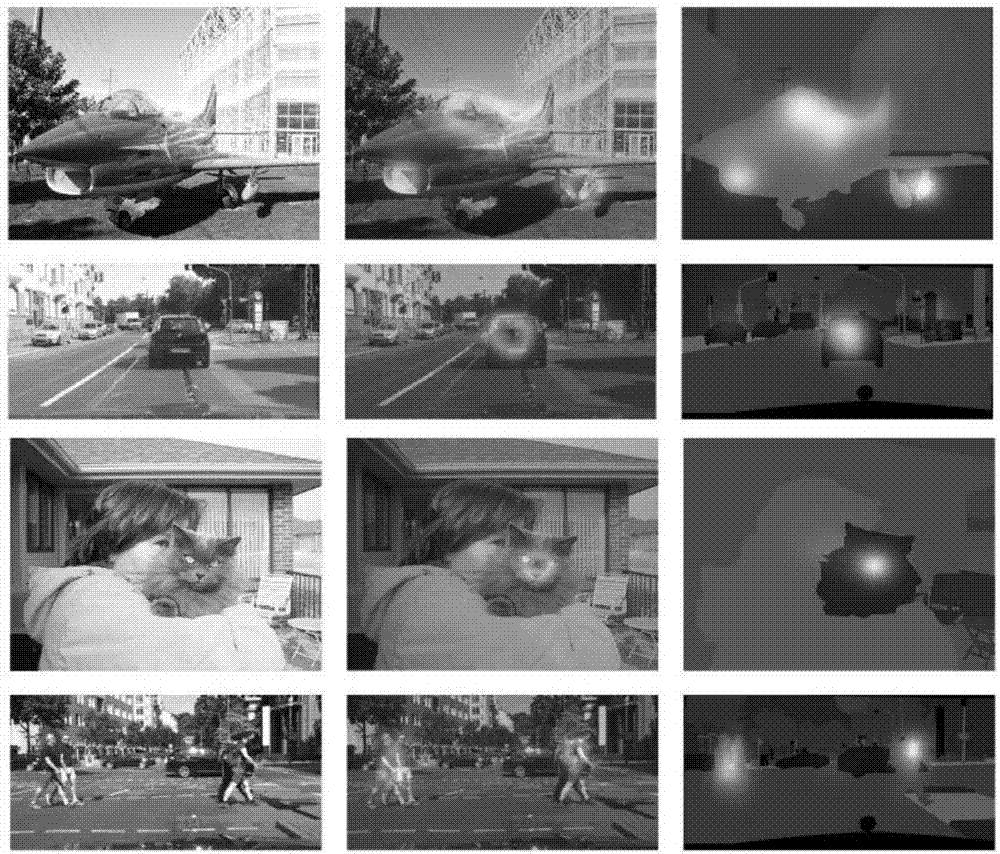

Image subtitle generating method based on novel attention model

Status: Inactive | Publication: CN107391709A | Tags: Character and pattern recognition; Neural learning methods; Attention model; Generative process

The invention provides an image subtitle generating method based on a novel attention model. The method mainly includes establishing a saliency prediction model, selecting salient image regions, forming saliency-aware and text-aware attention, generating subtitles, and analyzing the attention state. Through a novel subtitle architecture, different parts of the input image are attended to during subtitle generation; in particular, the saliency prediction model identifies both the salient parts of the image and the parts that must be interpreted together with their context. Image features are extracted by a convolutional neural network, and the corresponding subtitles are generated by a recurrent neural network. By extending the attention model, two attention paths are created on top of the saliency prediction model: one focuses on the salient regions and the other on the context regions. The two paths cooperate during subtitle generation to produce high-quality subtitles step by step.

Owner:SHENZHEN WEITESHI TECH

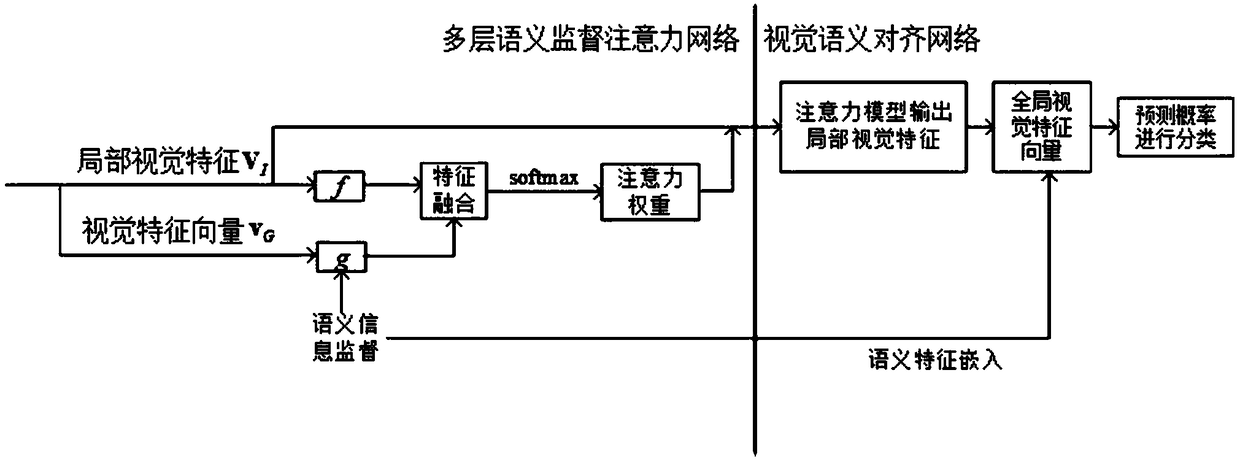

Fine-grained zero-sample classification method based on multi-layer semantic supervised attention model

Status: Inactive | Publication: CN109447115A | Tags: Easy to classify; Character and pattern recognition; Neural architectures; Attention model; Network output

The present invention relates to a fine-grained zero-sample classification method based on a multi-layer semantic supervised attention model. Local visual features of fine-grained images are extracted by a convolutional neural network; the text descriptions of the categories serve as category semantic features that supervise these local visual features, which are weighted progressively. The loss function of the multi-layer semantic supervised attention model is obtained by mapping the category semantic features into the hidden space of the local visual features. The global visual features of the fine-grained images are combined with the local visual features weighted by the multi-layer semantic supervised attention model to form new visual features of the images. The category semantic features are then embedded into the new visual feature space, the visual and semantic features output by the multi-layer semantic supervised attention network are aligned, and a softmax function is used for classification. Given the extracted visual features and category semantic features as input, the method outputs the classification result of the image.

Owner:TIANJIN UNIV

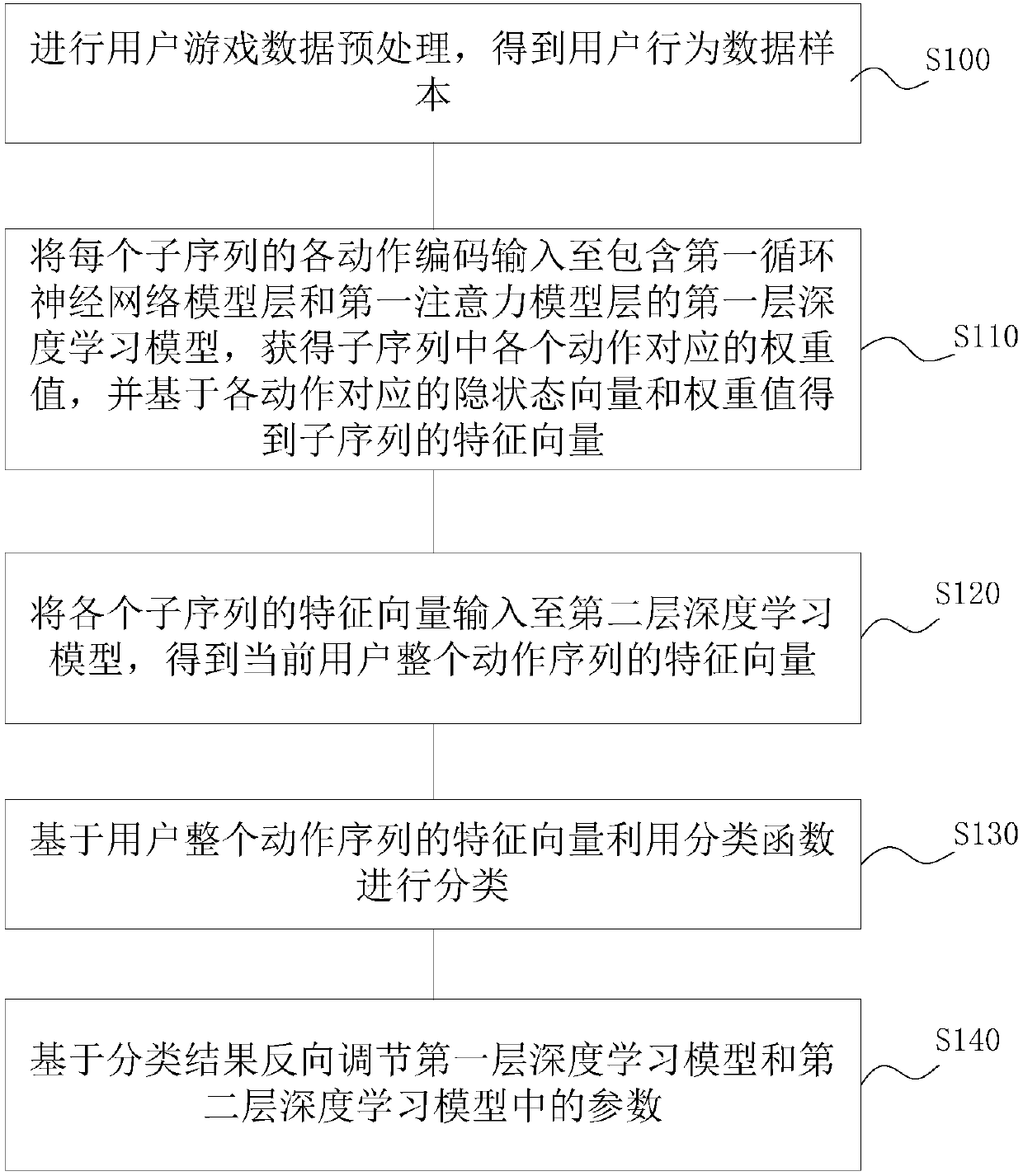

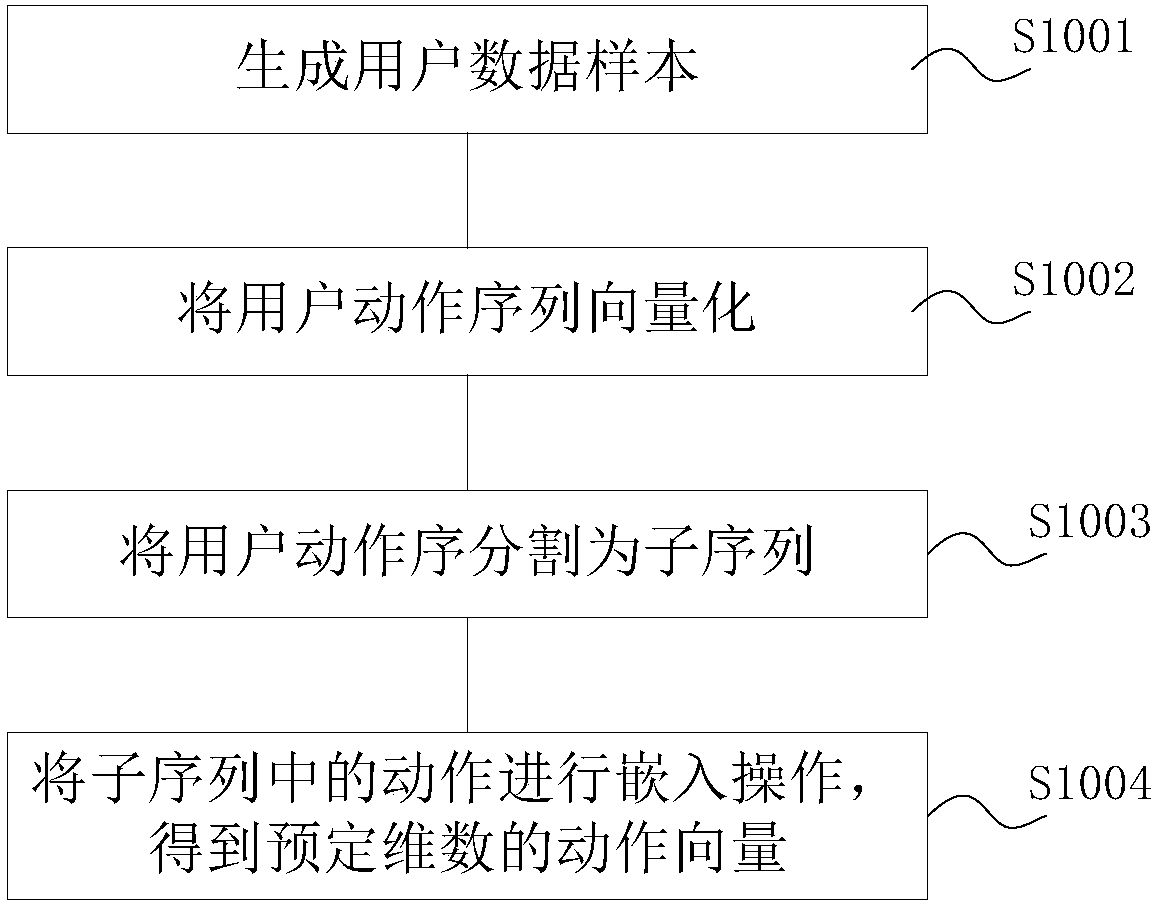

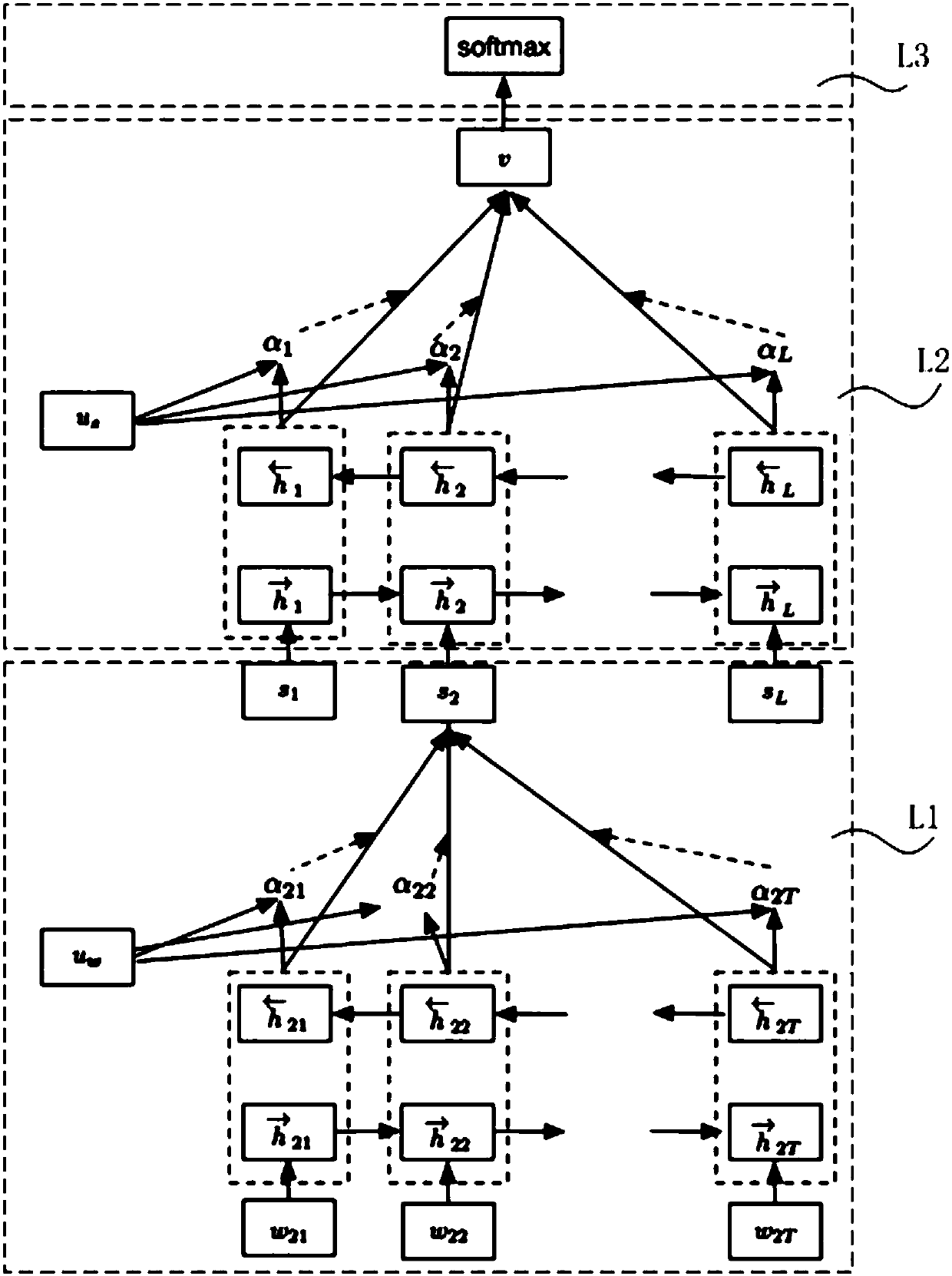

Game user behavior analysis method and computer readable storage medium

Status: Active | Publication: CN107944915A | Tags: Reduce churn; Improve game content; Biological neural network models; Market data gathering; Payment; Attention model

The invention provides a user behavior analysis method and a computer readable storage medium. The method comprises training a neural network model, which includes a recurrent neural network model and an attention model, on user behavior data samples to obtain the weight of each action for different user behavior types, where the samples include the action sequences of users with different behavior types. By obtaining the weight relationship between users' in-game action sequences and user churn and payment, the objective, game-related causes of churn, non-payment and other behaviors can be analyzed in a short time, and the game content can be improved in a targeted way based on the analysis, reducing user churn and even attracting users back to the game.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
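
A minimal sketch of the recurrent-network-plus-attention idea follows. It assumes one-hot action encodings, a plain tanh recurrent cell in place of whatever recurrent unit the patent uses, and a hypothetical learned vector `context` that scores each hidden state. Summing the attention weights per action type gives a rough per-action importance.

```python
import math
from collections import defaultdict


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_states(inputs, W_x, W_h):
    """Run a simple tanh RNN and return the hidden state after each step."""
    h = [0.0] * len(W_h)
    states = []
    for x in inputs:
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_x[j], x))
                + sum(w * hi for w, hi in zip(W_h[j], h))
            )
            for j in range(len(W_h))
        ]
        states.append(h)
    return states


def action_importance(actions, inputs, W_x, W_h, context):
    """Attention weight per step, then summed per action type."""
    states = rnn_states(inputs, W_x, W_h)
    weights = softmax([sum(h * c for h, c in zip(s, context)) for s in states])
    per_action = defaultdict(float)
    for action, w in zip(actions, weights):
        per_action[action] += w
    return weights, dict(per_action)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
zeros = [[0.0] * 3 for _ in range(3)]
weights, importance = action_importance(
    actions=["login", "shop", "quit"],
    inputs=identity,  # one-hot encoding of the three actions
    W_x=identity,
    W_h=zeros,
    context=[0.0, 3.0, 0.0],  # hypothetical context vector favouring "shop"
)
```

In a trained model, `W_x`, `W_h`, and `context` would be learned from the behavior samples; here they are set by hand so the attention visibly concentrates on one action.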

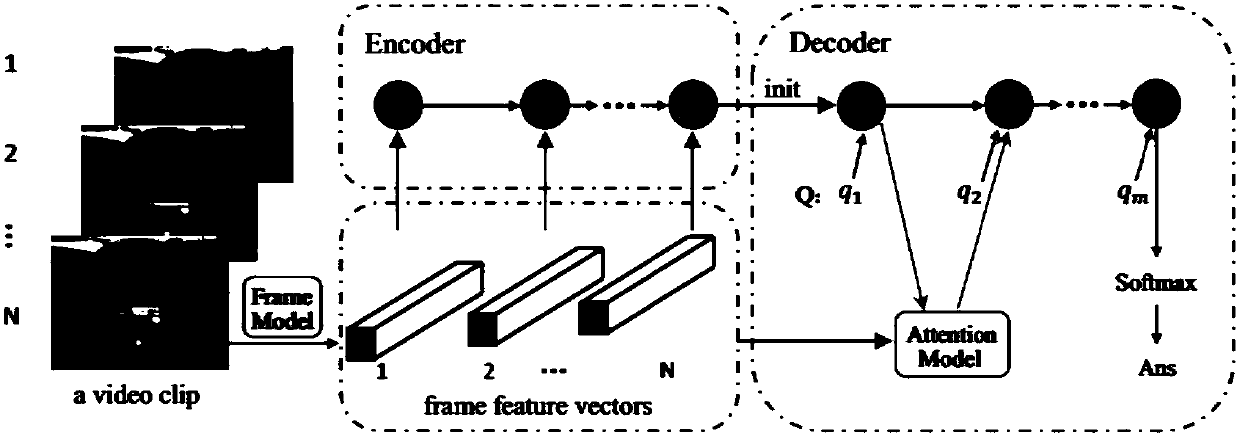

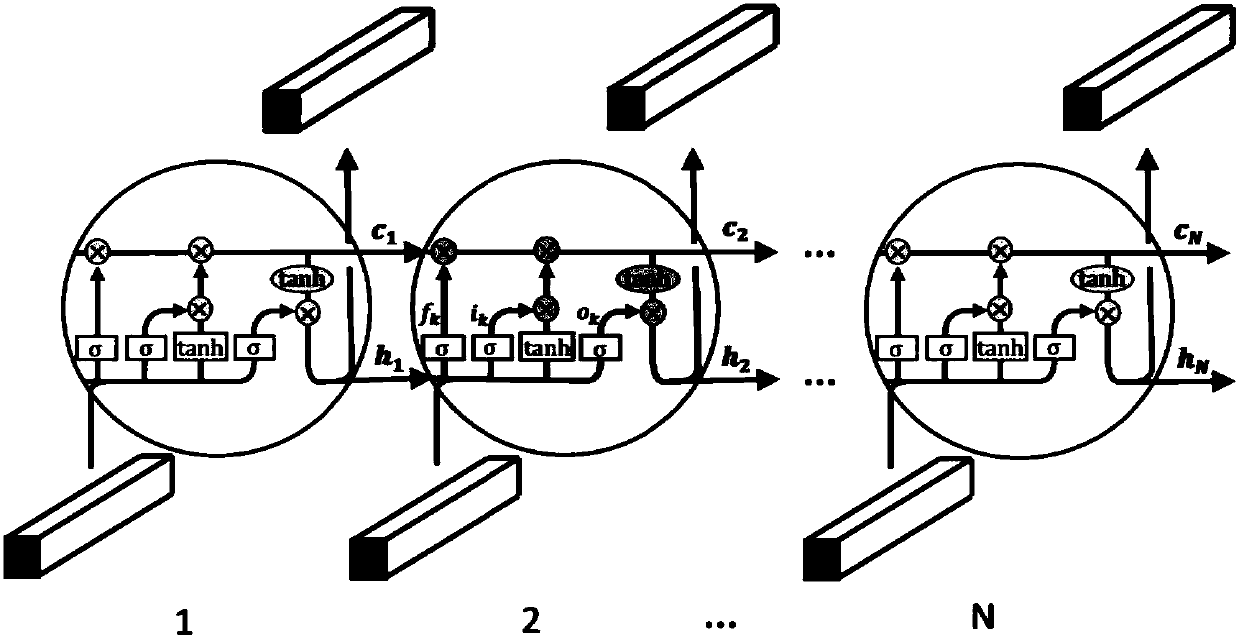

Video question-answering method based on attention model

Inactive · CN107818306A · Easy to parse · Smooth connection · Character and pattern recognition · Neural architectures · Feature vector · Attention model

The invention discloses a video question-answering method based on an attention model. The method is built on an encoder-decoder framework and learns the visual and semantic information of videos end to end, which strengthens the connection between the two. A frame model is designed to automatically extract a feature vector for each video. In the encoding stage, a long short-term memory (LSTM) network learns a scene feature representation of the video, which is fed as the initial state into the text model of the decoding stage. An attention mechanism added to the text model strengthens the connection between video frames and the question, so that the semantic information of the video can be parsed more effectively. As a result, the attention-based method achieves good video question-answering performance.

Owner:TIANJIN UNIV
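
The question-to-frame attention step can be illustrated with additive (Bahdanau-style) attention. This is a sketch under assumptions: the frame features and the question vector are taken as given, and `W_f`, `W_q`, and `v` are hypothetical learned parameters, since the patent does not specify its scoring function.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(W, v):
    return [dot(row, v) for row in W]


def frame_attention(frames, question, W_f, W_q, v):
    """Score each frame against the question; return (weights, context)."""
    q_proj = matvec(W_q, question)  # project the question once
    scores = []
    for f in frames:
        hidden = [math.tanh(a + b) for a, b in zip(matvec(W_f, f), q_proj)]
        scores.append(dot(v, hidden))  # additive score v . tanh(W_f f + W_q q)
    weights = softmax(scores)
    dim = len(frames[0])
    context = [sum(w * f[d] for w, f in zip(weights, frames)) for d in range(dim)]
    return weights, context


identity = [[1.0, 0.0], [0.0, 1.0]]
weights, context = frame_attention(
    frames=[[1.0, 0.0], [0.0, 1.0]],
    question=[1.0, 0.0],
    W_f=identity,
    W_q=identity,
    v=[1.0, 0.0],
)
```

The resulting `context` vector would typically be fed to the decoder at each answer-generation step alongside the text model's own state.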

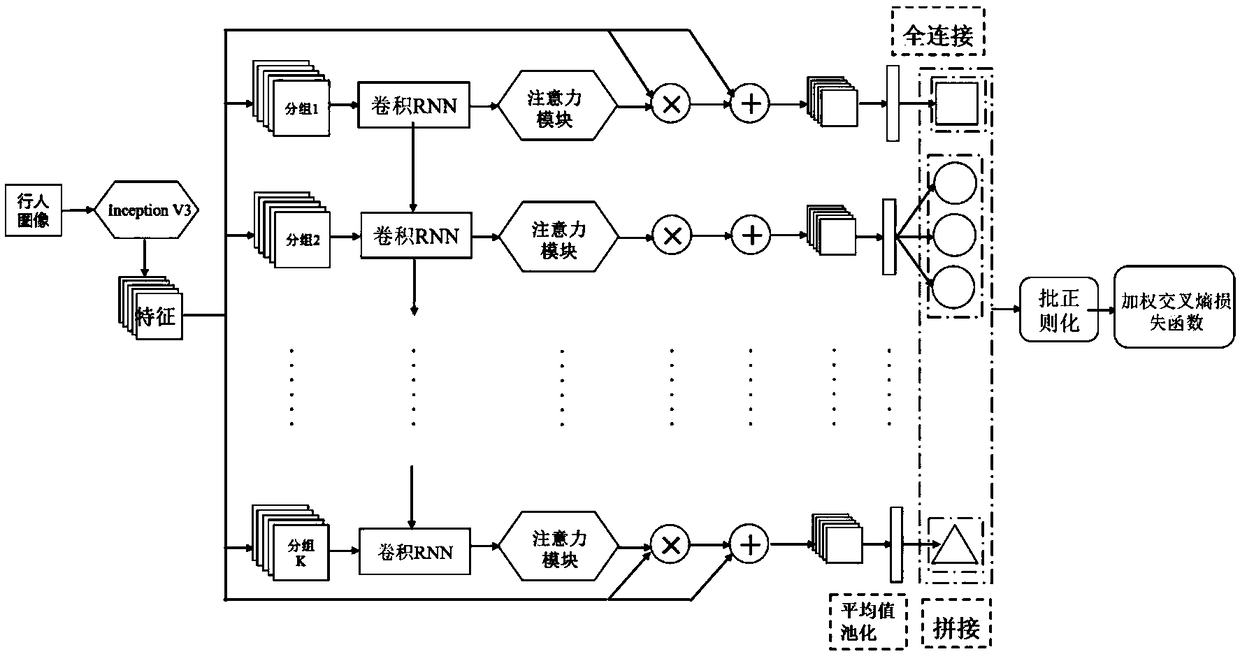

Recurrent neural network attention model-based pedestrian attribute recognition network and technology

Active · CN108921051A · High pedestrian attribute recognition accuracy · Accuracy of highlight pedestrian attribute recognition · Character and pattern recognition · Neural architectures · Attention model · Prediction probability

The invention provides a pedestrian attribute recognition network and technique based on a recurrent neural network attention model. The network comprises a first convolutional neural network, a recurrent neural network, and a second convolutional neural network. The first convolutional neural network takes the original full-body image of a pedestrian as input and extracts a whole-body image feature. The recurrent neural network takes this whole-body image feature as its first input and the attention heat map of the attribute group attended at the previous time step as its second input, and outputs the attention heat map of the attribute group attended at the current time step together with locally highlighted pedestrian features. The second convolutional neural network takes the locally highlighted pedestrian features as input and outputs the attribute prediction probabilities for the currently attended group. The recurrent attention model mines the relationships among the spatial positions of the regions associated with pedestrian attributes and highlights the image regions corresponding to each attribute, which yields higher pedestrian attribute recognition accuracy.

Owner:TSINGHUA UNIV
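
The step-by-step heat-map idea can be sketched as follows. As a simplifying assumption, the recurrent network is replaced by a direct rule: each attribute group's spatial scores combine a hypothetical per-group query vector with the previous group's heat map, weighted by an illustrative `beta`. The sketch shows how each map depends on the one before it, not the patent's exact network.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def recurrent_attention(loc_feats, group_queries, beta=1.0):
    """One heat map per attribute group, each conditioned on the previous map."""
    n = len(loc_feats)
    dim = len(loc_feats[0])
    prev = [1.0 / n] * n  # uniform map before the first group
    maps, highlighted = [], []
    for q in group_queries:
        scores = [dot(f, q) + beta * p for f, p in zip(loc_feats, prev)]
        heat = softmax(scores)
        # Locally highlighted feature: heat-map-weighted sum of location features.
        highlighted.append(
            [sum(h * f[d] for h, f in zip(heat, loc_feats)) for d in range(dim)]
        )
        maps.append(heat)
        prev = heat
    return maps, highlighted


maps, feats = recurrent_attention(
    loc_feats=[[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]],  # three image locations
    group_queries=[[5.0, 0.0], [0.0, 5.0]],  # two hypothetical attribute groups
)
```

In the patent's design, each group's locally highlighted feature would then go to the second convolutional network to produce that group's attribute probabilities.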

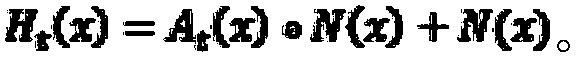

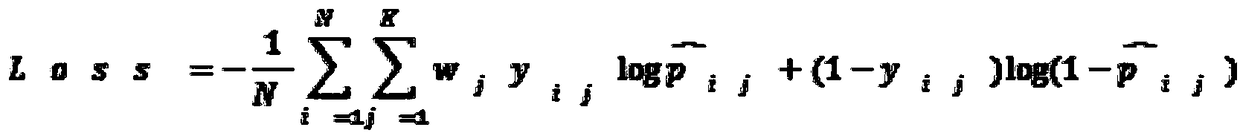

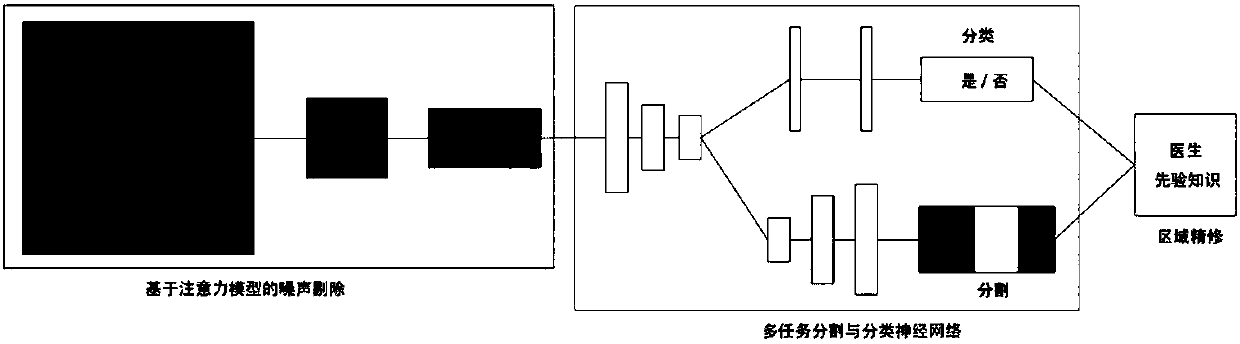

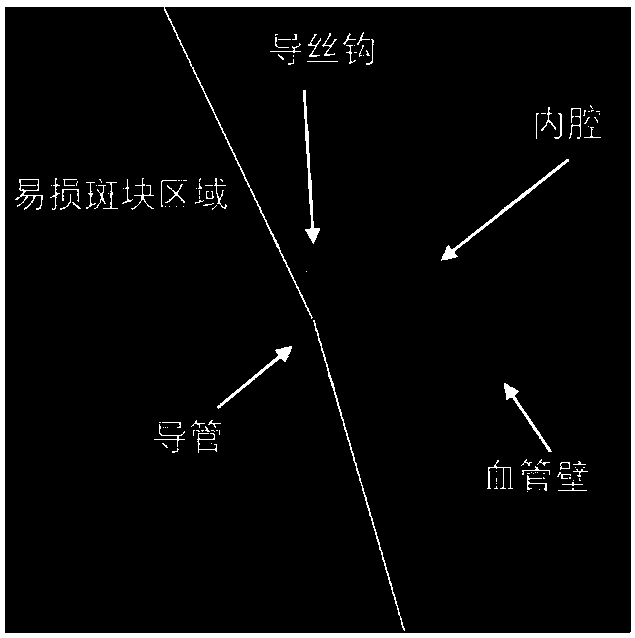

Cardiovascular vulnerable plaque recognition method and system based on attention model and multi-task neural network

Active · CN108492272A · Precise positioning · The final test result is accurate · Image enhancement · Image analysis · Attention model · Pattern recognition

The invention discloses a cardiovascular vulnerable plaque recognition method and system based on an attention model and a multi-task neural network. The method comprises the steps of (1) removing noise from the original polar-coordinate image with a top-down attention model to obtain a preprocessed image, (2) classifying and segmenting the vulnerable plaque in the preprocessed image with a multi-task neural network, and (3) performing regional refinement on the classified and segmented vulnerable plaque image. The system comprises three subsystems connected in sequence, one for each of these steps: a noise-removal subsystem based on the top-down attention model, a classification and segmentation subsystem based on the multi-task neural network, and a regional refinement subsystem. Because noise interference from the blood vessel is removed before vulnerable plaque recognition, the plaque is localized more accurately.

Owner:XI AN JIAOTONG UNIV

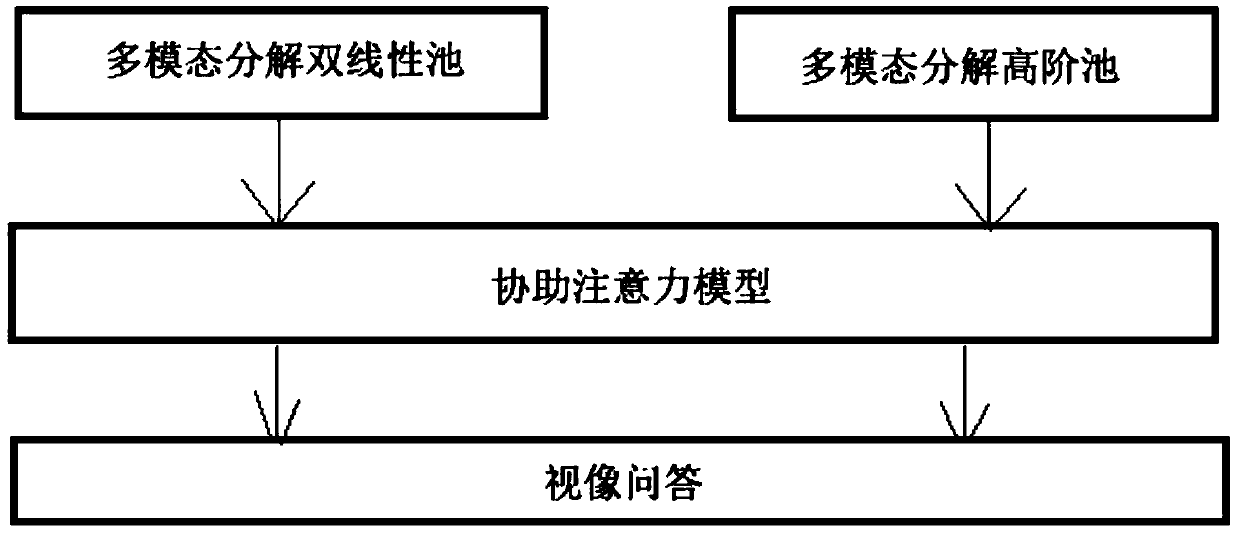

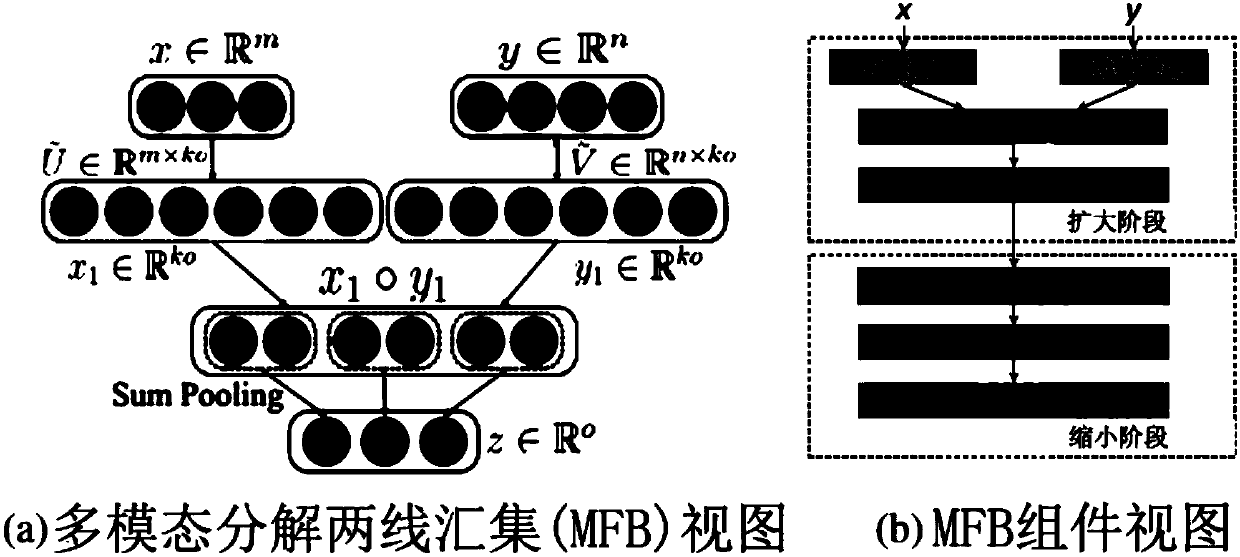

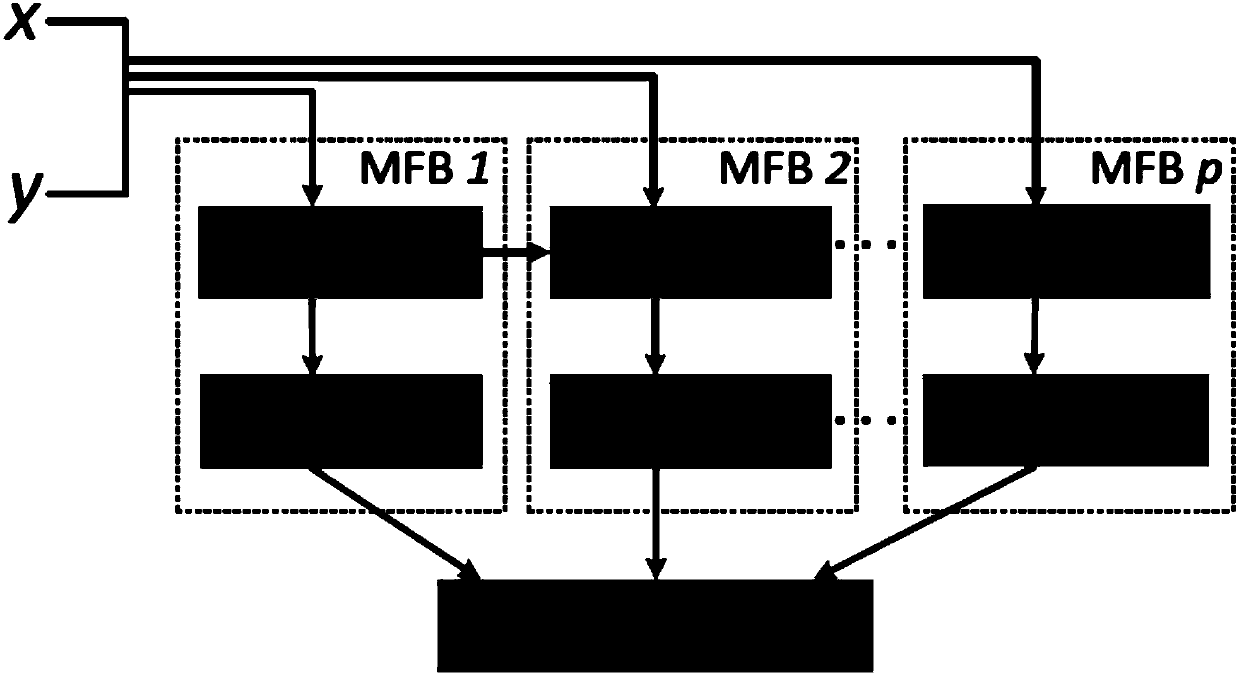

Visual question and answer method based on multi-modal decomposition model

Inactive · CN107679582A · Improve performance · Effective understanding · Character and pattern recognition · Network architecture · Mesh grid

A visual question-answering method based on a multi-modal decomposition model is provided. Image features are extracted with a network trained on the ImageNet dataset, and the question text is encoded into feature vectors. A co-attention model is introduced into the base network architecture to learn image- and question-related features and to characterize fine-grained correlations among the multi-modal features. The multi-modal features are then fused by a multi-modal factorized bilinear pooling (MFB) or multi-modal factorized high-order pooling (MFH) module into a joint image-question feature z, which is passed to a classifier to predict the best-matching answer. The co-attention model predicts the correlation between each spatial grid cell of the image and the question, which helps predict the best-matching answer accurately. Combined with the image attention mechanism, the model can identify which image regions matter for the question, so that model performance and question-answering accuracy are significantly improved.

Owner:SHENZHEN WEITESHI TECH
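
MFB fusion, as described by Yu et al. (ICCV 2017), projects both modalities into a k·o-dimensional space, multiplies them element-wise, sum-pools each group of k values into o outputs, and then applies power (signed square root) and L2 normalization. The sketch below shows only that fusion step with small hand-set projection matrices `U` and `V`; dropout, co-attention, and the answer classifier are omitted, and the exact configuration used in the patent is not stated.

```python
import math


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def mfb_fuse(x, y, U, V, k):
    """Fuse vectors x and y into an o-dimensional feature (o = len(U) // k)."""
    px = matvec(U, x)  # image projection, length k * o
    py = matvec(V, y)  # question projection, length k * o
    joint = [a * b for a, b in zip(px, py)]  # element-wise product
    o = len(joint) // k
    z = [sum(joint[i * k:(i + 1) * k]) for i in range(o)]  # sum pooling
    z = [math.copysign(math.sqrt(abs(v)), v) for v in z]  # power normalization
    norm = math.sqrt(sum(v * v for v in z)) or 1.0
    return [v / norm for v in z]  # L2 normalization


z = mfb_fuse(
    x=[1.0, 2.0],
    y=[3.0],
    U=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]],
    V=[[1.0], [1.0], [1.0], [1.0]],
    k=2,
)
```

The fused vector `z` plays the role of the joint image-question feature that is handed to the answer classifier.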

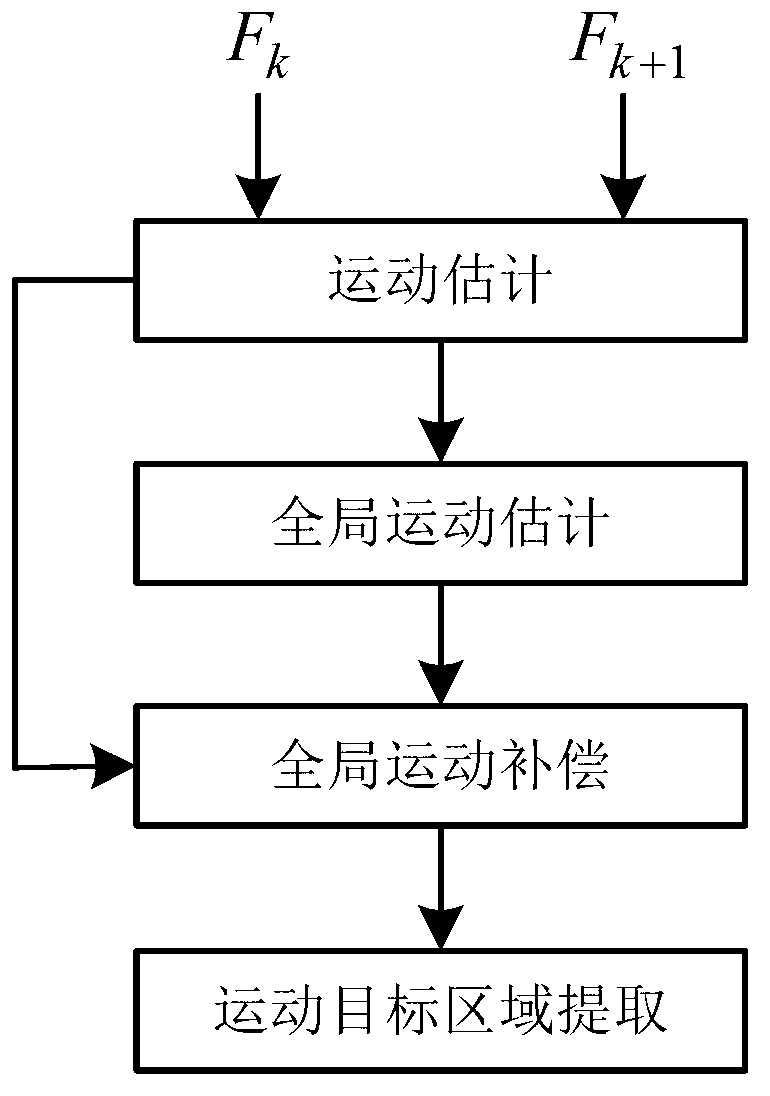

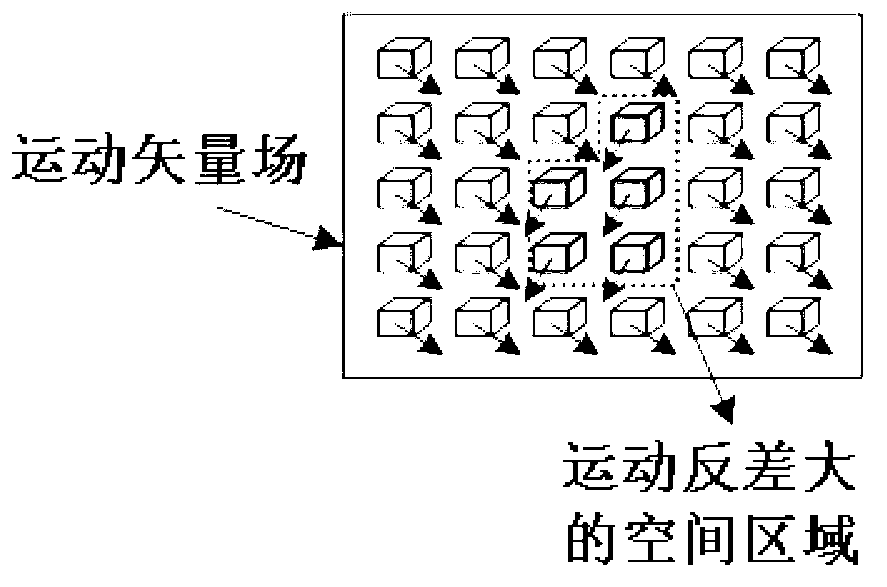

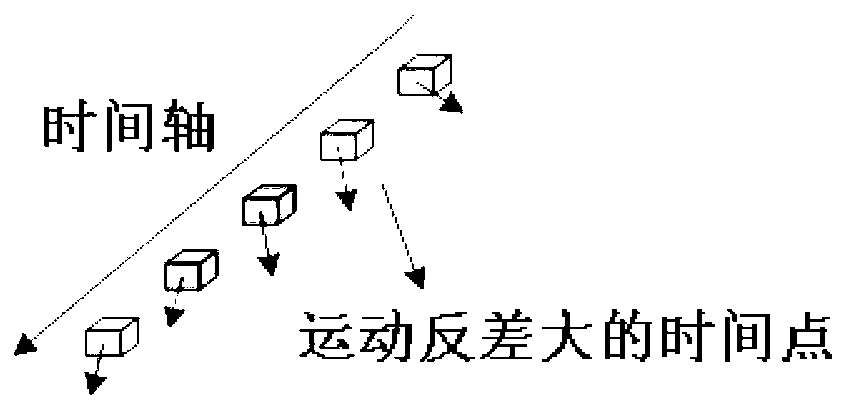

Target detection method based on time-space multiscale motion attention analysis

Inactive · CN103065326A · Efficient detection · Reduce the impact of object detection · Image analysis · Attention model · Motion vector

The invention discloses a target detection method based on spatio-temporal multiscale motion attention analysis. The method comprises three steps: first, constructing a spatio-temporal motion fusion attention model; second, filtering the motion vector field spatio-temporally, in which the field is filtered along the time dimension to obtain motion vector fields at different time scales and an optimal time scale is selected according to a given criterion; and third, fusing the multiscale motion attention. Because an appropriate time scale is selected for processing the motion vector field before attention is computed, the influence of factors such as optical flow estimation errors on target detection is reduced and the limitations of traditional methods are overcome. Moving target regions can therefore be detected effectively in scenes with global motion, and the method is more robust than comparable methods.

Owner:XIAN UNIV OF TECH
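
The temporal filtering and scale selection steps can be sketched as below. The patent does not state its selection criterion, so the max-minus-mean contrast used here is a hypothetical stand-in, as are the moving-average filter and the candidate scales. Each frame of the motion vector field is a list of `(dx, dy)` vectors, one per block location.

```python
import math


def smooth(fields, scale):
    """Average the last `scale` motion fields per location; return magnitudes."""
    recent = fields[-scale:]
    n_loc = len(fields[0])
    mags = []
    for i in range(n_loc):
        dx = sum(f[i][0] for f in recent) / len(recent)
        dy = sum(f[i][1] for f in recent) / len(recent)
        mags.append(math.hypot(dx, dy))
    return mags


def contrast(mags):
    """Hypothetical criterion: how much the strongest motion stands out."""
    return max(mags) - sum(mags) / len(mags)


def motion_attention(fields, scales=(1, 2, 4)):
    """Pick the time scale with the highest contrast; return (scale, attention)."""
    usable = [s for s in scales if s <= len(fields)]
    best = max(usable, key=lambda s: contrast(smooth(fields, s)))
    mags = smooth(fields, best)
    peak = max(mags) or 1.0
    return best, [m / peak for m in mags]


fields = [
    [(1.0, 0.0), (1.0, 0.0), (0.0, 0.0)],
    [(1.0, 0.0), (-1.0, 0.0), (0.0, 0.0)],
    [(1.0, 0.0), (1.0, 0.0), (0.0, 0.0)],
    [(1.0, 0.0), (-1.0, 0.0), (0.0, 0.0)],
]
best, attention = motion_attention(fields)
```

Location 0 moves consistently, while location 1 jitters back and forth like an optical-flow error; averaging over two or more frames cancels the jitter, so the consistent motion keeps all of the attention.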

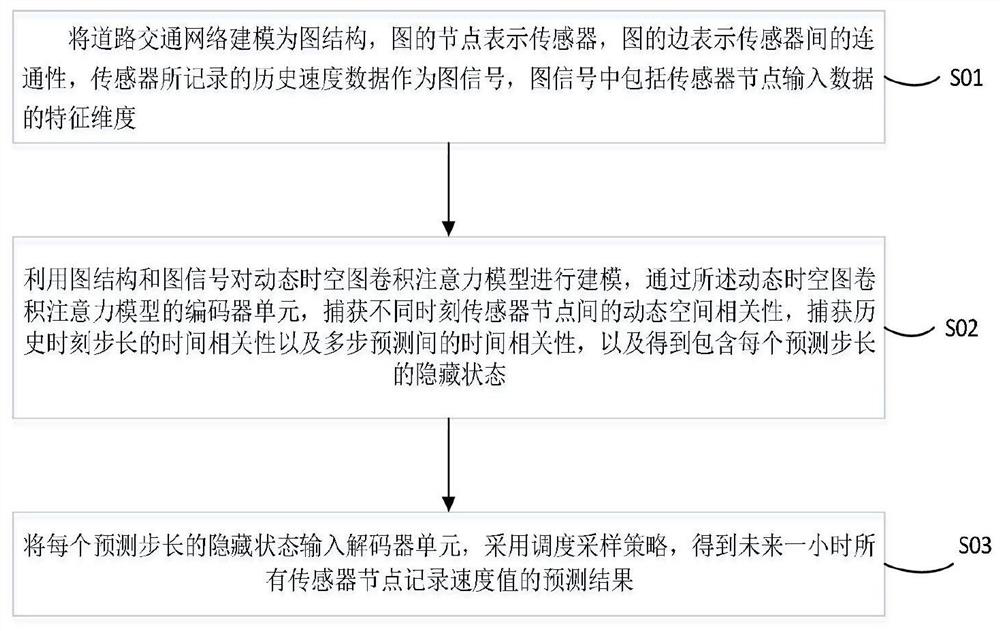

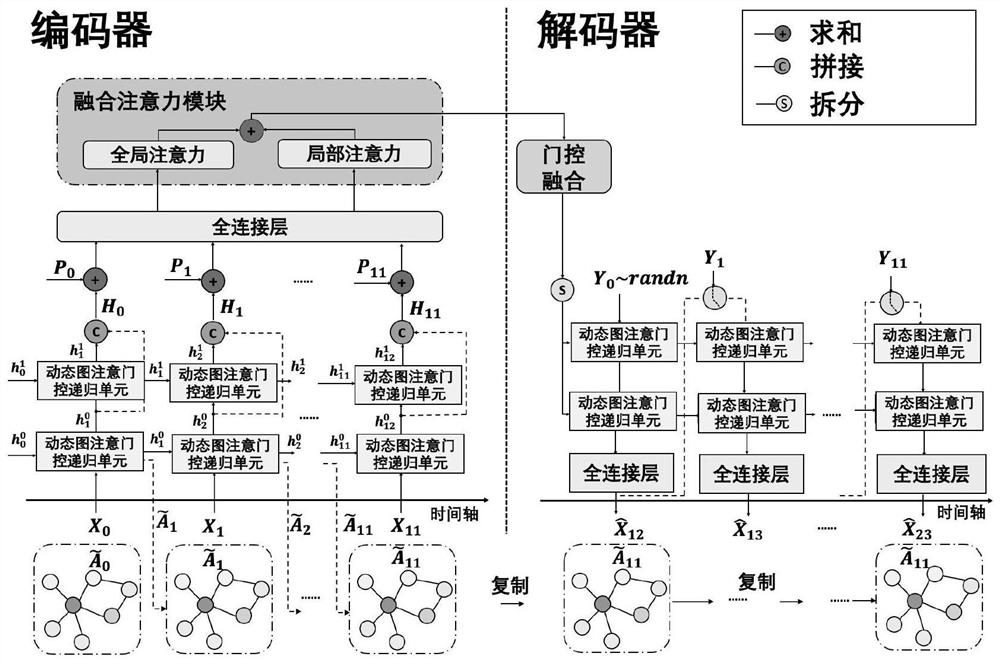

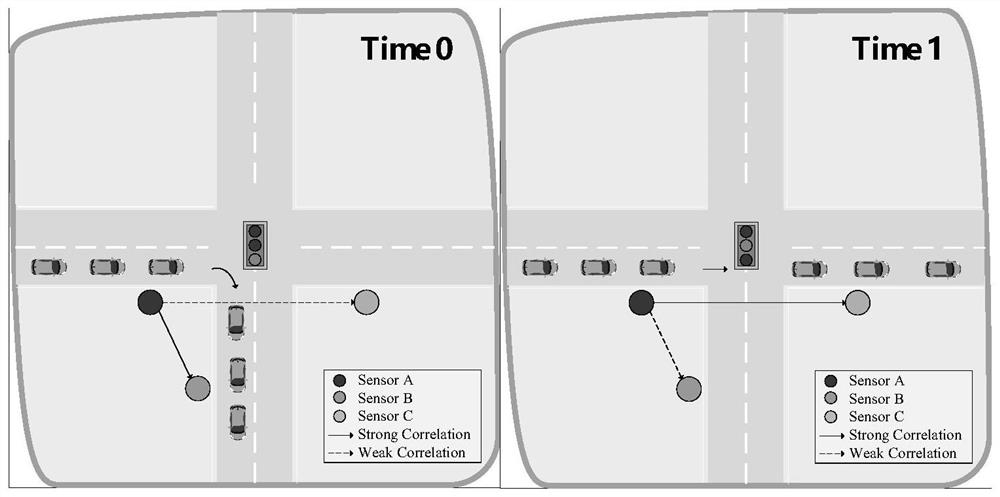

Traffic prediction method and device based on dynamic spatio-temporal graph convolution attention model

Pending · CN113487088A · Capturing real-time spatial dependencies · Detection of traffic movement · Forecasting · Attention model · Traffic prediction

The invention discloses a traffic prediction method and device based on a dynamic spatio-temporal graph convolution attention model. The method comprises the following steps: modeling the road traffic network as a graph structure; building a dynamic spatio-temporal graph convolution attention model from the graph structure and the graph signals; using the encoder unit of the model to capture the dynamic spatial correlations among sensor nodes at different moments, the temporal correlations across historical time steps, and the temporal correlations among multi-step predictions, yielding a hidden state for each prediction step; and having the decoder unit receive the hidden state of each prediction step and, using a scheduled sampling strategy, predict the recorded speed values of all sensor nodes over the next hour. According to the applicant's experiments, the method effectively predicts traffic over the next hour and outperforms state-of-the-art (SOTA) models.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
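
Scheduled sampling (Bengio et al., 2015) decides, at each decoder step during training, whether to feed the ground-truth value or the model's own previous prediction. The sketch below assumes an inverse-sigmoid decay for the teacher-forcing probability and a hypothetical `toy_step` standing in for one decoder step; the patent does not state its schedule.

```python
import math
import random


def teacher_forcing_prob(train_step, k=1000.0):
    """Inverse-sigmoid decay: near 1 early in training, approaching 0 later."""
    return k / (k + math.exp(train_step / k))


def decode(hidden, first_input, targets, step_fn, train_step, rng):
    """Roll the decoder over the prediction horizon with scheduled sampling."""
    eps = teacher_forcing_prob(train_step)
    inp, preds = first_input, []
    for target in targets:
        hidden, pred = step_fn(hidden, inp)
        preds.append(pred)
        # With probability eps feed the ground truth, otherwise the prediction.
        inp = target if rng.random() < eps else pred
    return preds


def toy_step(hidden, x):
    """Hypothetical decoder step: predicts the input plus one."""
    return hidden, x + 1.0


early = decode(None, 0.0, [10.0, 20.0, 30.0], toy_step, 0, random.Random(0))
late = decode(None, 0.0, [10.0, 20.0, 30.0], toy_step, 50_000, random.Random(0))
```

Early in training the decoder is almost always fed the true speeds, so `early` follows the targets; late in training it mostly consumes its own outputs, so `late` compounds its own predictions, which is the same condition it faces at inference time.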

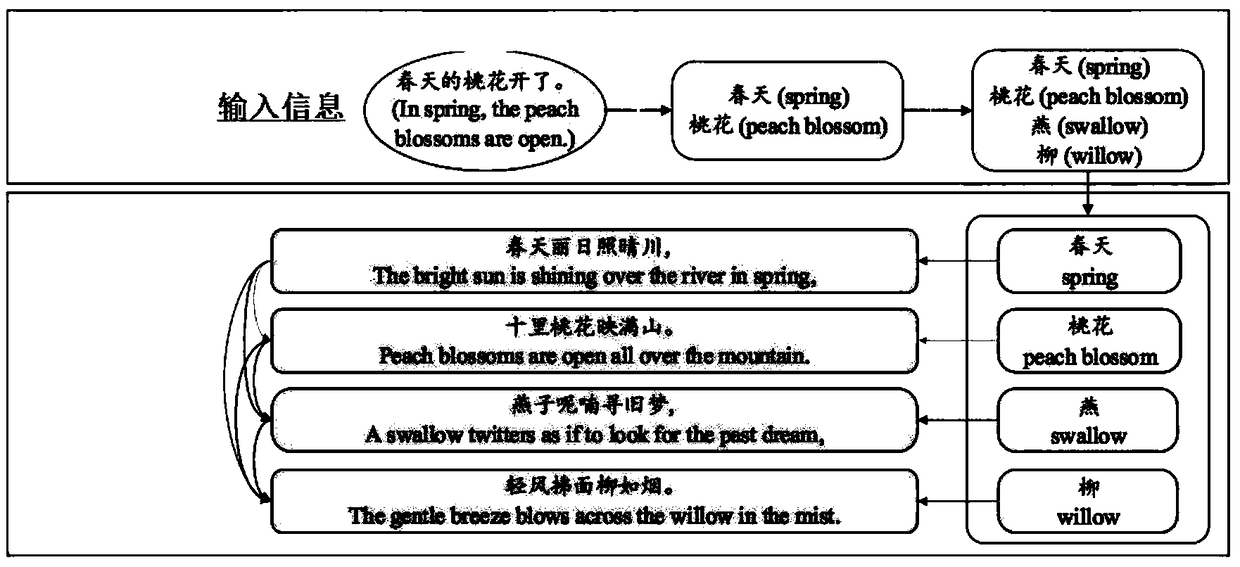

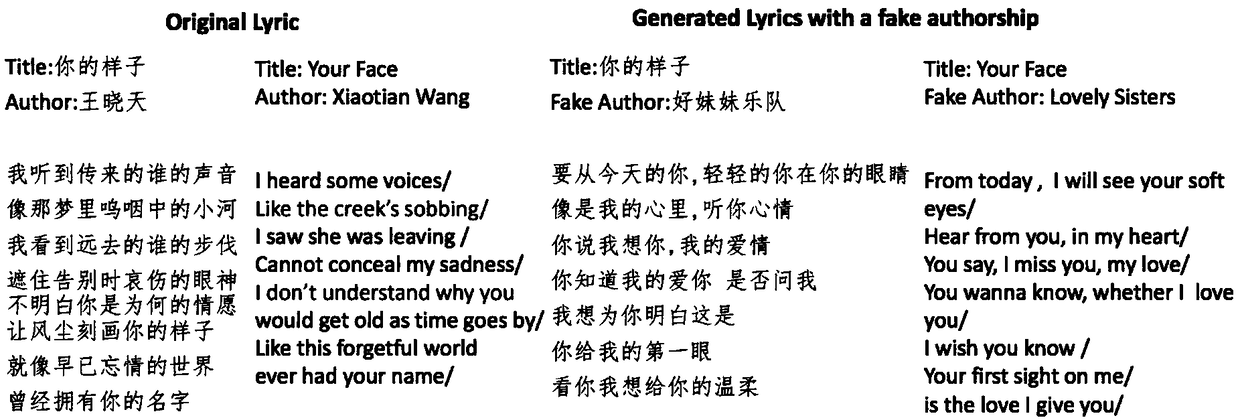

Text generation method, apparatus, electronic device, and computer-readable medium

Active · CN109086408A · Fully generated · Text valid · Neural learning methods · Special data processing applications · Information processing · Attention model

The present disclosure relates to a text generation method, an apparatus, an electronic device, and a computer-readable medium in the field of computer information processing. The method comprises the following steps: determining a set of subject terms, a song title, a rhyme, and a paragraph structure from the user's input; and generating text from the subject term set, the song title, the rhyme, and the paragraph structure using a deep learning model with an attention model. The disclosed method, apparatus, electronic device, and computer-readable medium can generate a variety of valid poems that fit the music.

Owner:TENCENT TECH (SHENZHEN) CO LTD

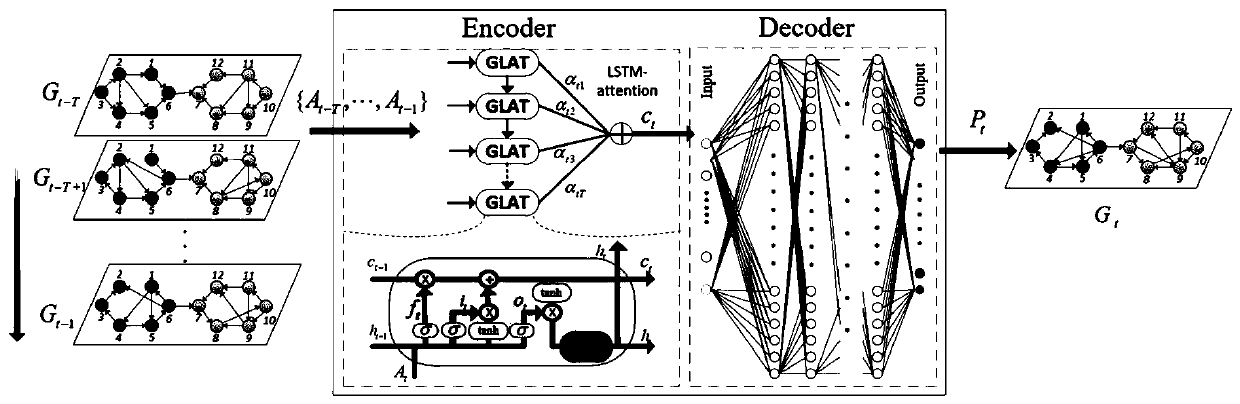

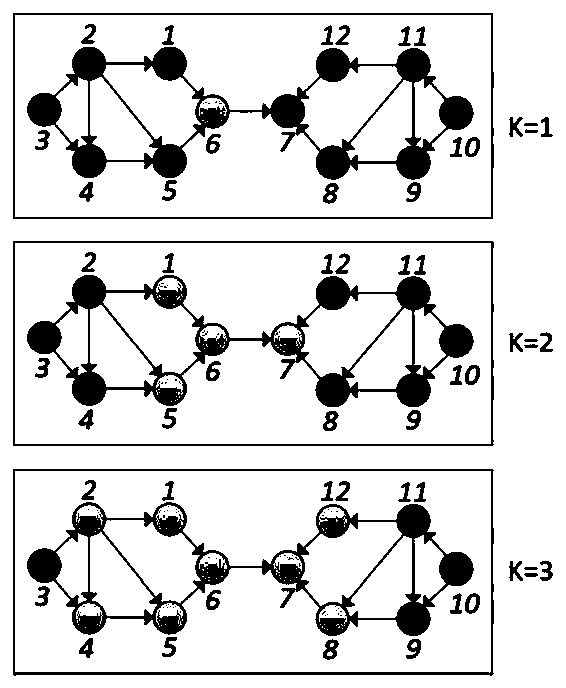

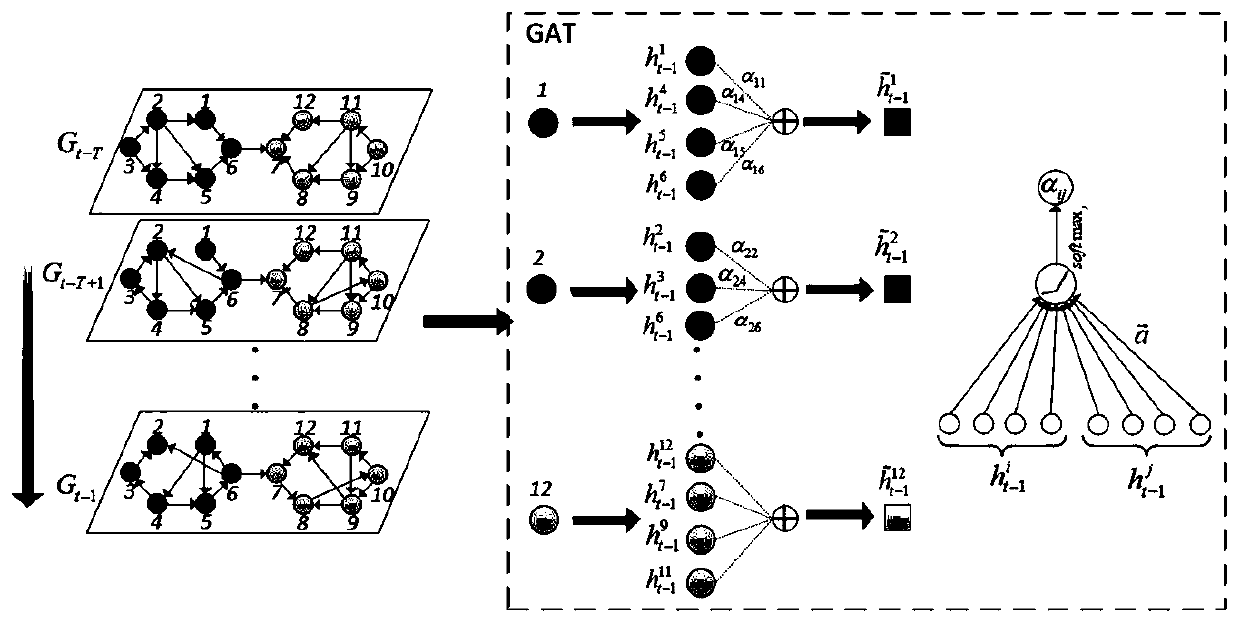

Dynamic link prediction method based on space-time attention deep model

The invention discloses a dynamic link prediction method based on a spatio-temporal attention deep model. The method comprises the following steps: taking the adjacency matrix A of a dynamic network as input, where the dynamic network may be a social network, a communication network, a scientific collaboration network, or a social security network; extracting the hidden-layer vectors {h_{t-T}, ..., h_{t-1}} with an LSTM-attention model, computing a context vector a_t from the hidden-layer vectors at the T time steps, and feeding a_t into a decoder as the spatio-temporal feature vector; and decoding a_t with the decoder to output a probability matrix indicating whether a link exists between each pair of nodes, thereby predicting the dynamic links. By extracting both the spatial and temporal features of the dynamic network, the method achieves end-to-end link prediction for dynamic networks.

Owner:ZHEJIANG UNIV OF TECH
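
The context-vector and decoding steps can be sketched as below. The LSTM is left out: the hidden vectors h_{t-T}, ..., h_{t-1} are assumed given, the attention score is a dot product with a hypothetical vector `w_att`, and the decoder is assumed to be a single linear layer `W_dec` followed by a sigmoid, reshaped into an N×N link-probability matrix.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def context_vector(hidden_seq, w_att):
    """Temporal attention over h_{t-T}..h_{t-1}; returns the context vector a_t."""
    alphas = softmax([sum(h * w for h, w in zip(hs, w_att)) for hs in hidden_seq])
    dim = len(hidden_seq[0])
    return [sum(a * hs[d] for a, hs in zip(alphas, hidden_seq)) for d in range(dim)]


def decode_links(a_t, W_dec, n_nodes):
    """Map a_t to an n_nodes x n_nodes matrix of link probabilities."""
    probs = [sigmoid(sum(w * c for w, c in zip(row, a_t))) for row in W_dec]
    return [probs[i * n_nodes:(i + 1) * n_nodes] for i in range(n_nodes)]


a_t = context_vector([[1.0, 0.0], [0.0, 1.0]], w_att=[0.0, 0.0])
P = decode_links(a_t, W_dec=[[0.0, 0.0], [4.0, 4.0], [4.0, 4.0], [0.0, 0.0]], n_nodes=2)
```

Entry `P[i][j]` is read as the predicted probability that a link between nodes i and j exists at the next snapshot; thresholding it (for example at 0.5) gives a predicted adjacency matrix.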

© 2025 PatSnap. All rights reserved.