1680 results about "Network output" patented technology

NetBIOS (Network Basic Input/Output System) is a system service that operates at the session layer of the OSI model and controls how applications on separate hosts/nodes communicate over a local area network. Contrary to a common misconception, NetBIOS is an application programming interface (API), not a networking protocol.

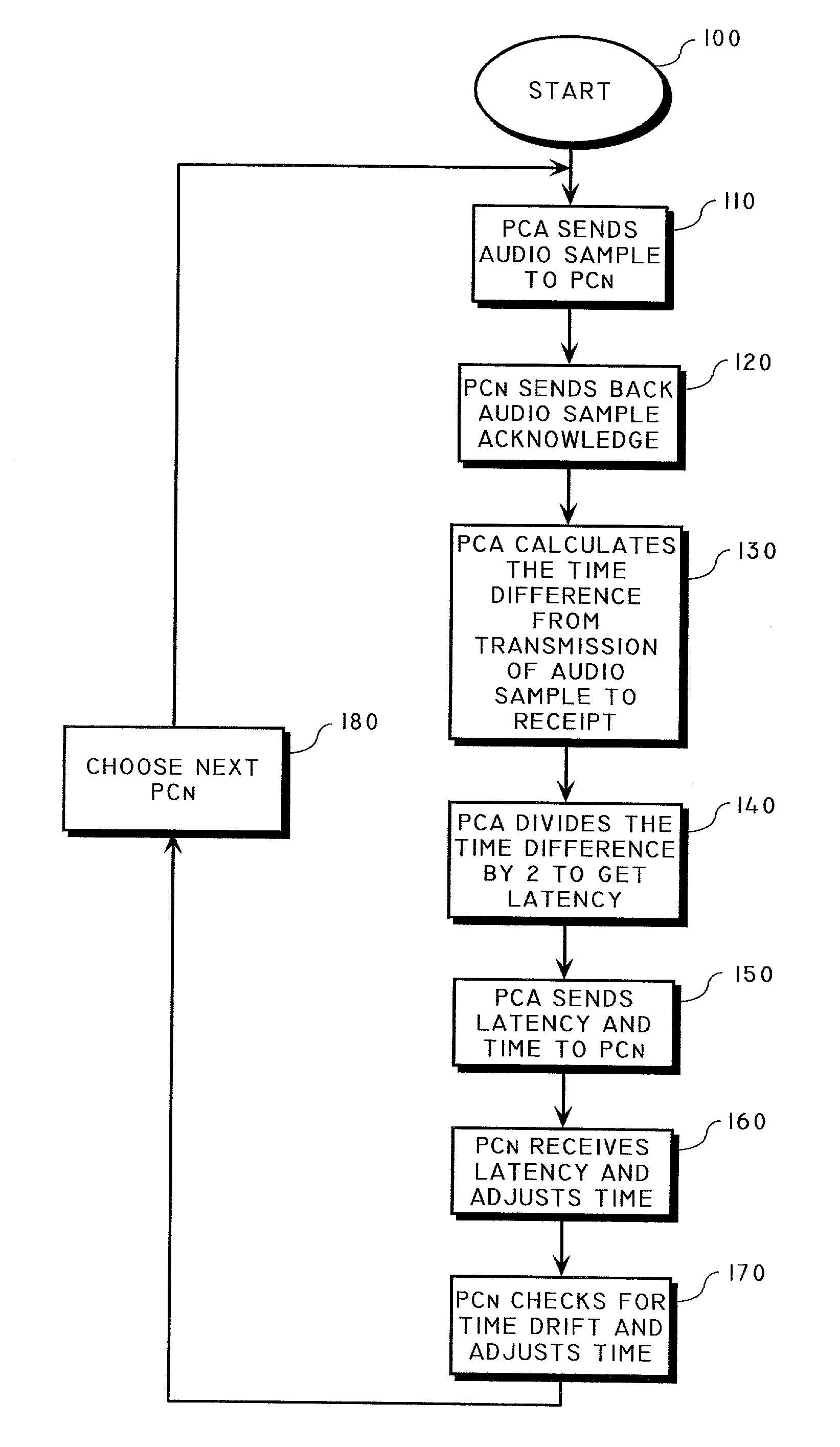

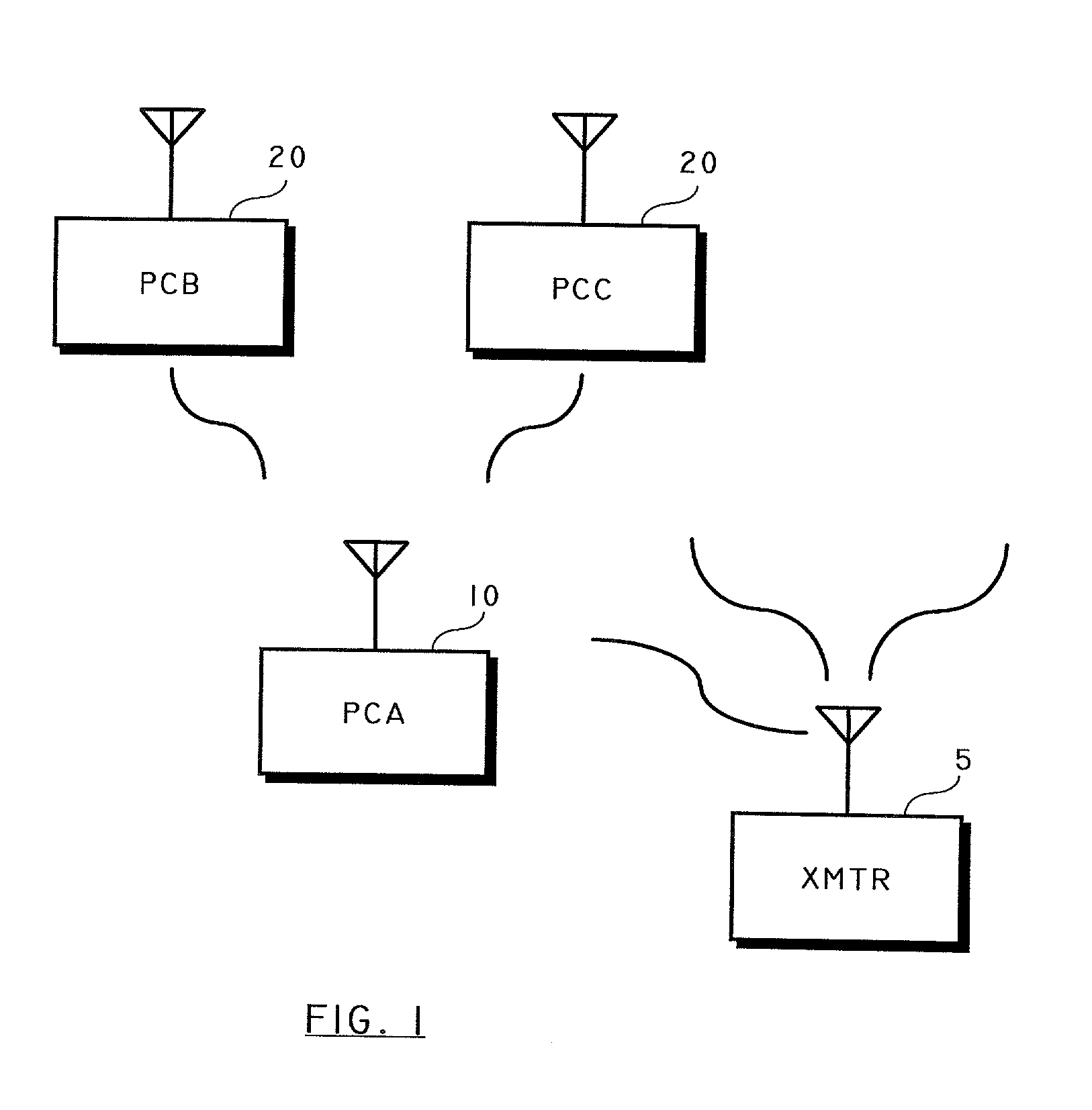

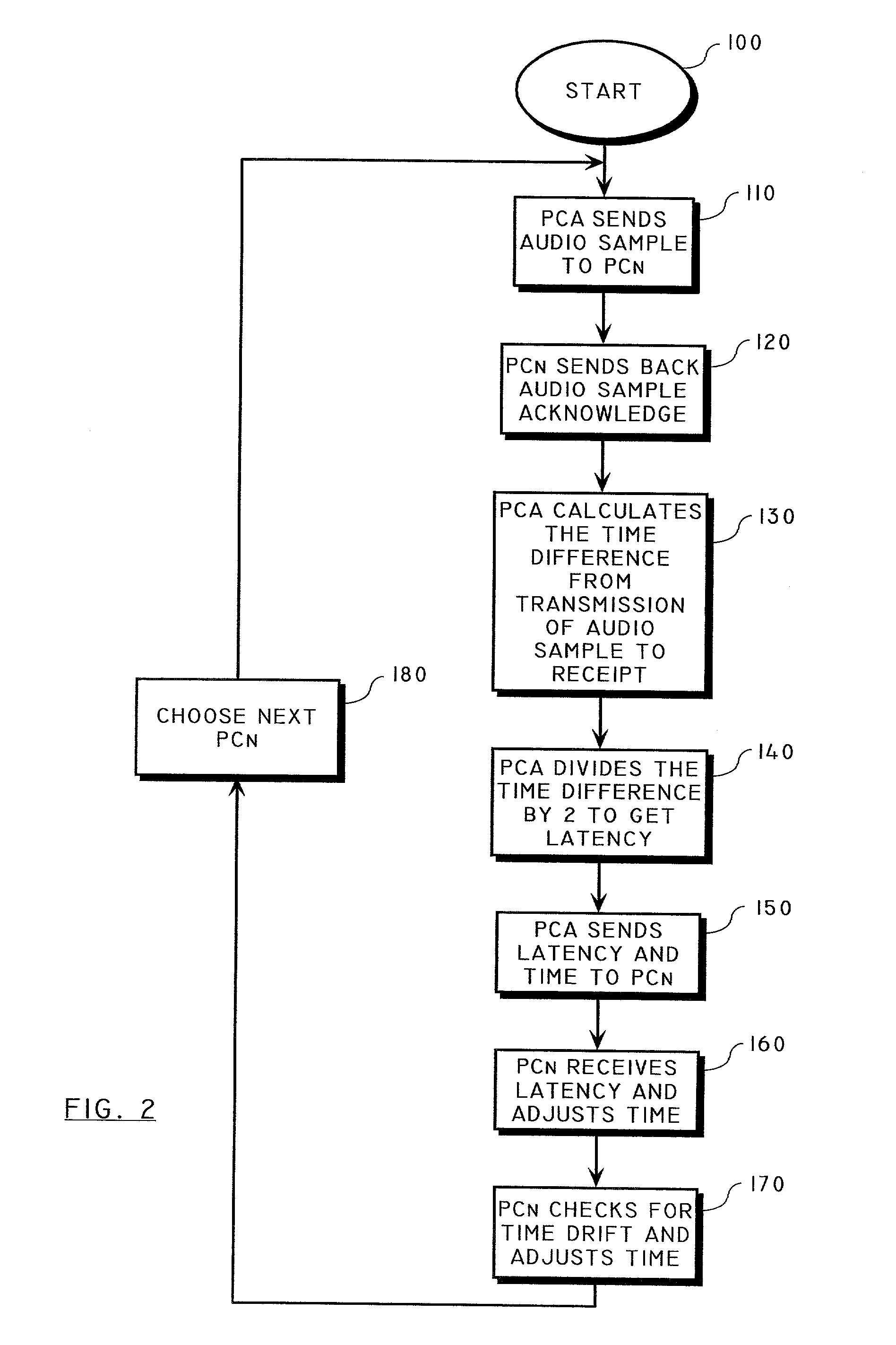

Method of synchronizing the playback of a digital audio broadcast using an audio waveform sample

Active | US7392102B2 | Time-division multiplex; Special data processing applications; Network output; Digital audio broadcasting

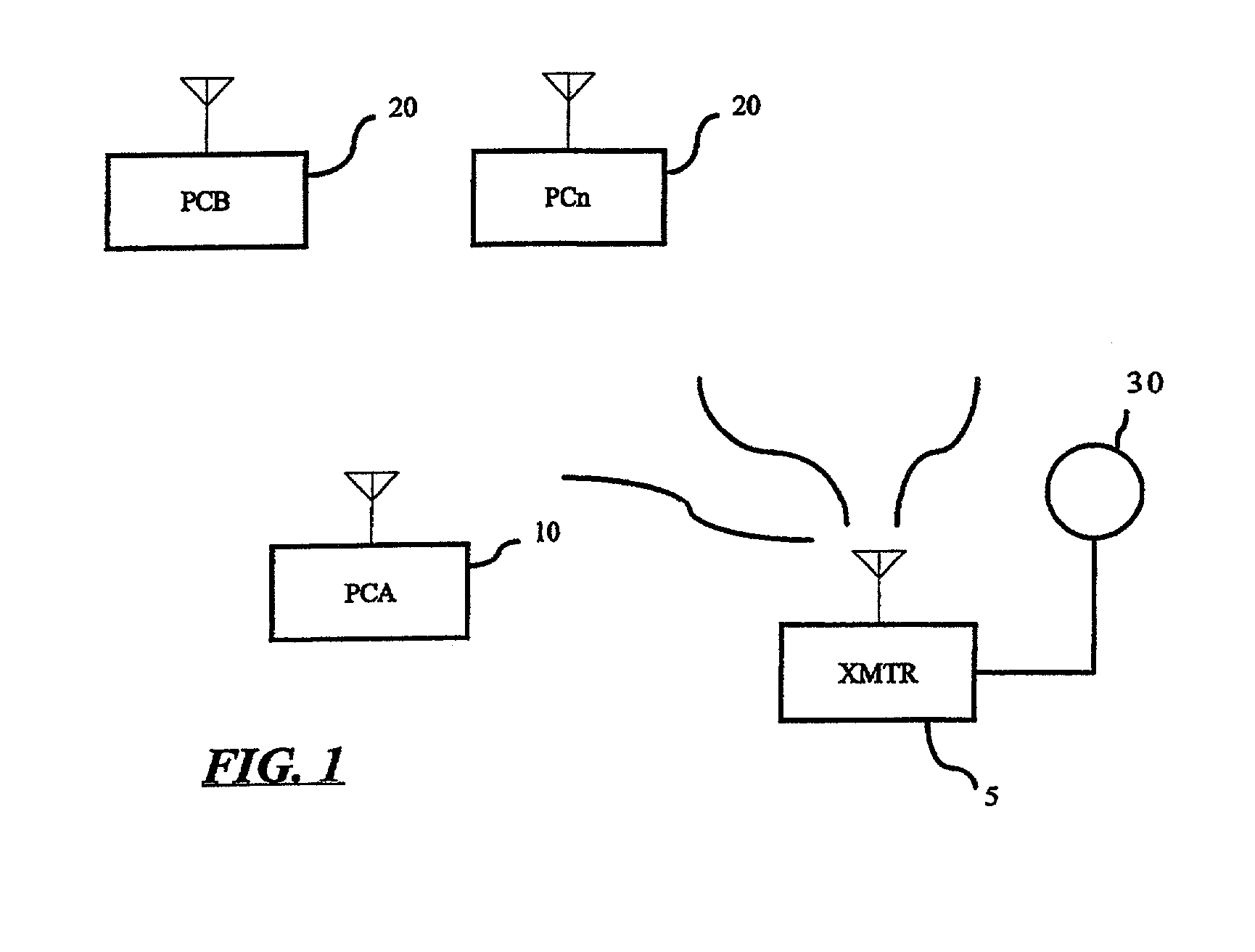

A method is provided for synchronizing the playback of a digital audio broadcast on a plurality of network output devices by inserting an audio waveform sample into the audio stream of the broadcast. The method includes outputting, as part of the audio signal, a first unique signal that has unique identifying characteristics and recurs regularly; outputting a second unique signal such that the interval between the first and second unique signals is significantly greater than the latency between the sending and receiving devices; and using the audio waveform sample to coordinate playback so that multiple devices output the audio signal simultaneously. An algorithm implemented in hardware, software, or a combination of the two identifies the audio waveform sample in the audio stream. As a result, a listener hears no audible delay or echo between the receivers.

Owner:GATEWAY
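
The abstract above does not publish its detection algorithm. As a minimal sketch, assuming every receiver knows the marker waveform in advance and all devices share a synchronized clock, a receiver could locate the marker by sliding-window squared-error matching and then schedule its playback so the marker sounds at an agreed time. `find_marker` and `playback_start` are hypothetical names, not taken from the patent.

```python
from typing import Sequence


def find_marker(stream: Sequence[float], marker: Sequence[float]) -> int:
    """Return the offset where `marker` best matches `stream` (smallest squared error), or -1.

    Hypothetical helper; the patent does not specify its matching algorithm.
    """
    m = len(marker)
    best_idx, best_err = -1, float("inf")
    for i in range(len(stream) - m + 1):
        err = sum((stream[i + k] - marker[k]) ** 2 for k in range(m))
        if err < best_err:
            best_idx, best_err = i, err
    return best_idx


def playback_start(marker_index: int, sample_rate: float, marker_play_time: float) -> float:
    """Shared-clock time at which sample 0 must play so the marker sounds at marker_play_time."""
    return marker_play_time - marker_index / sample_rate
```

If every device calls `playback_start` with the same `marker_play_time`, each one offsets its own buffer so the marker, and therefore all following audio, plays at the same instant.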

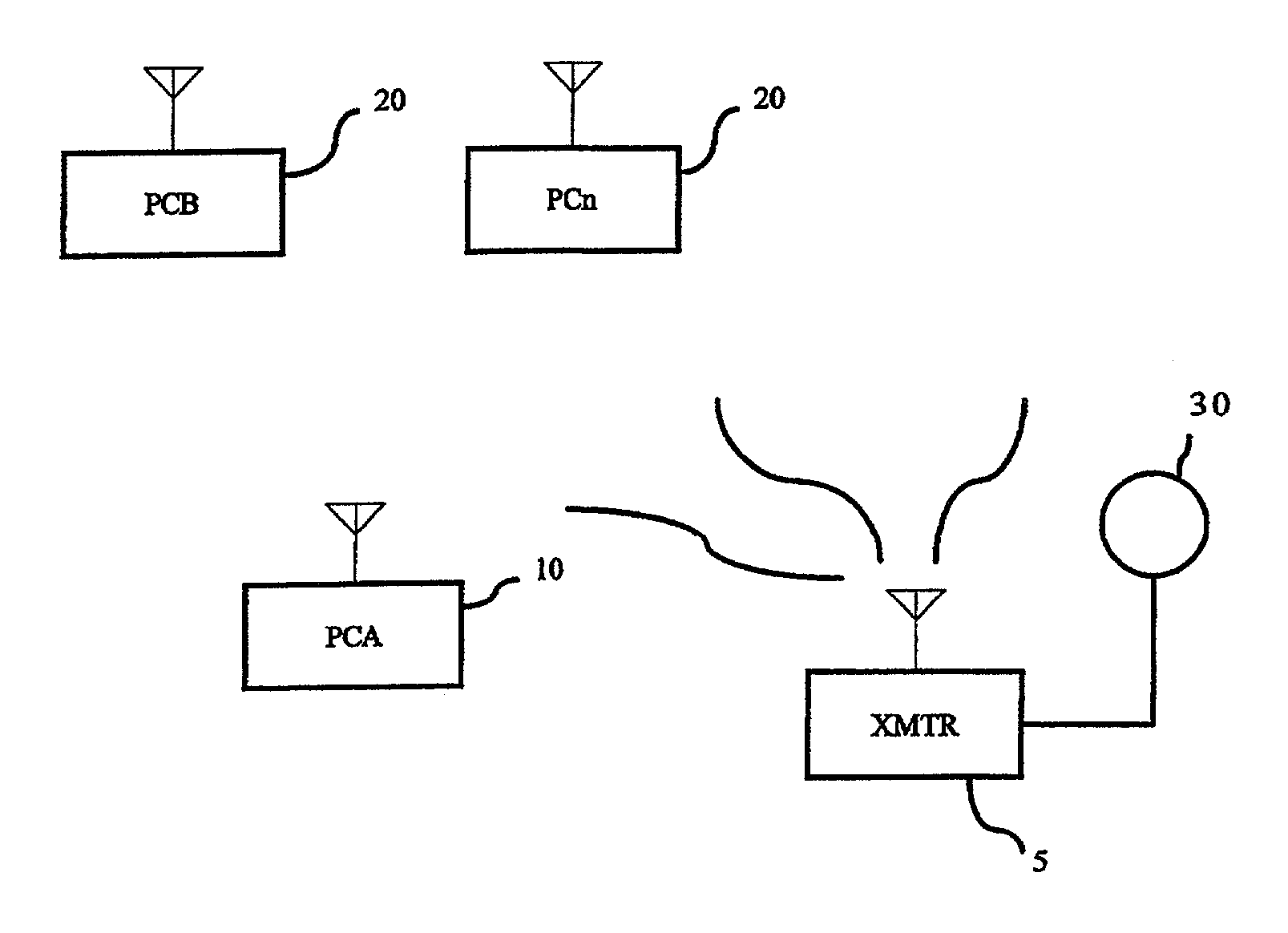

Method to synchronize playback of multicast audio streams on a local network

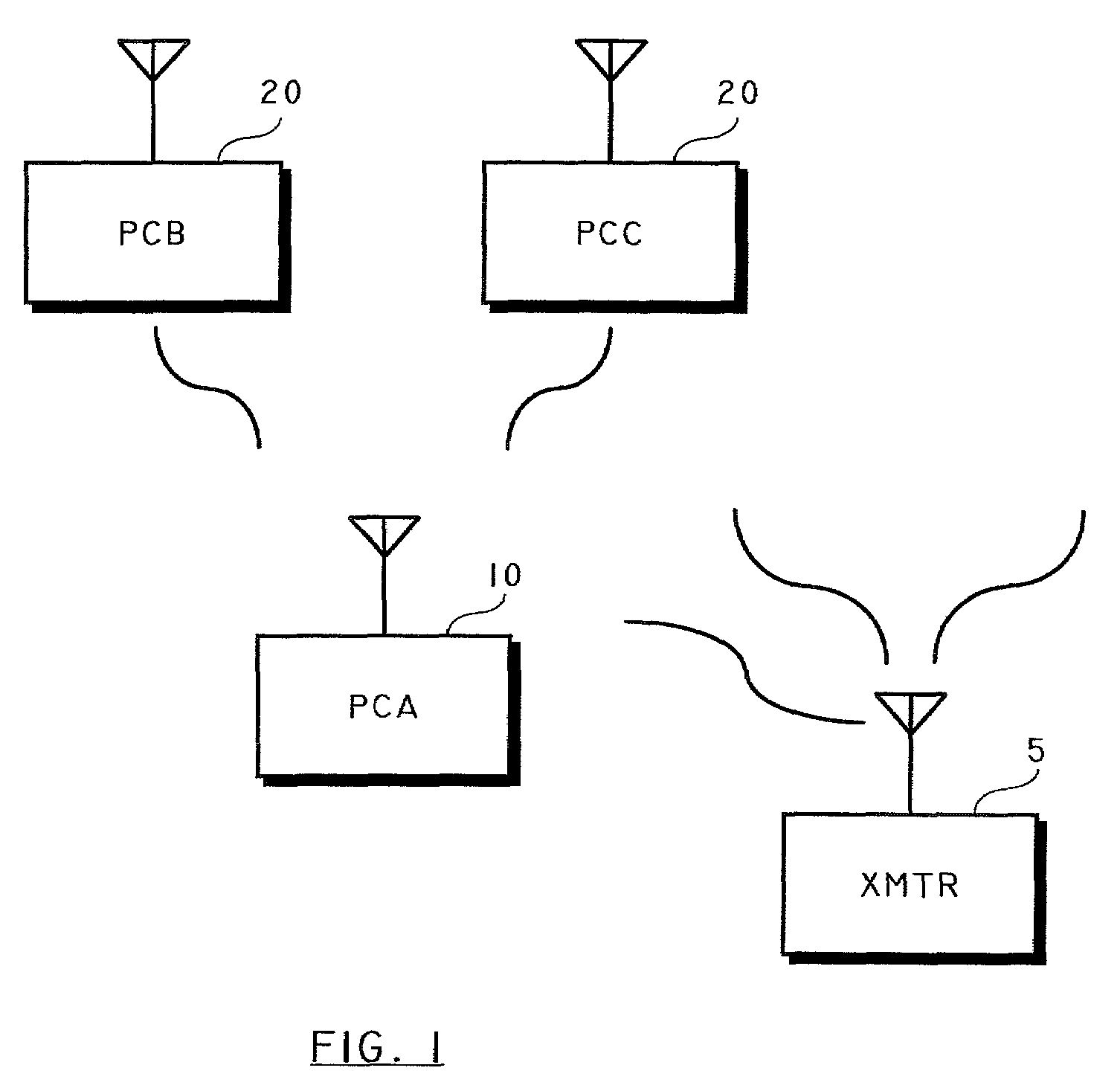

A method is provided for synchronizing the playback of a digital audio broadcast on a plurality of network output devices using a microphone near a source, embedded control codes, and the audio patterns from the network output devices. An optional manual adjustment method additionally uses a graphical user interface and audible pulses from the devices being synchronized. Audio synchronization is accomplished by synchronizing the clocks of the network output devices. As a result, a listener hears no audible delay or echo between the receivers.

Owner:GATEWAY

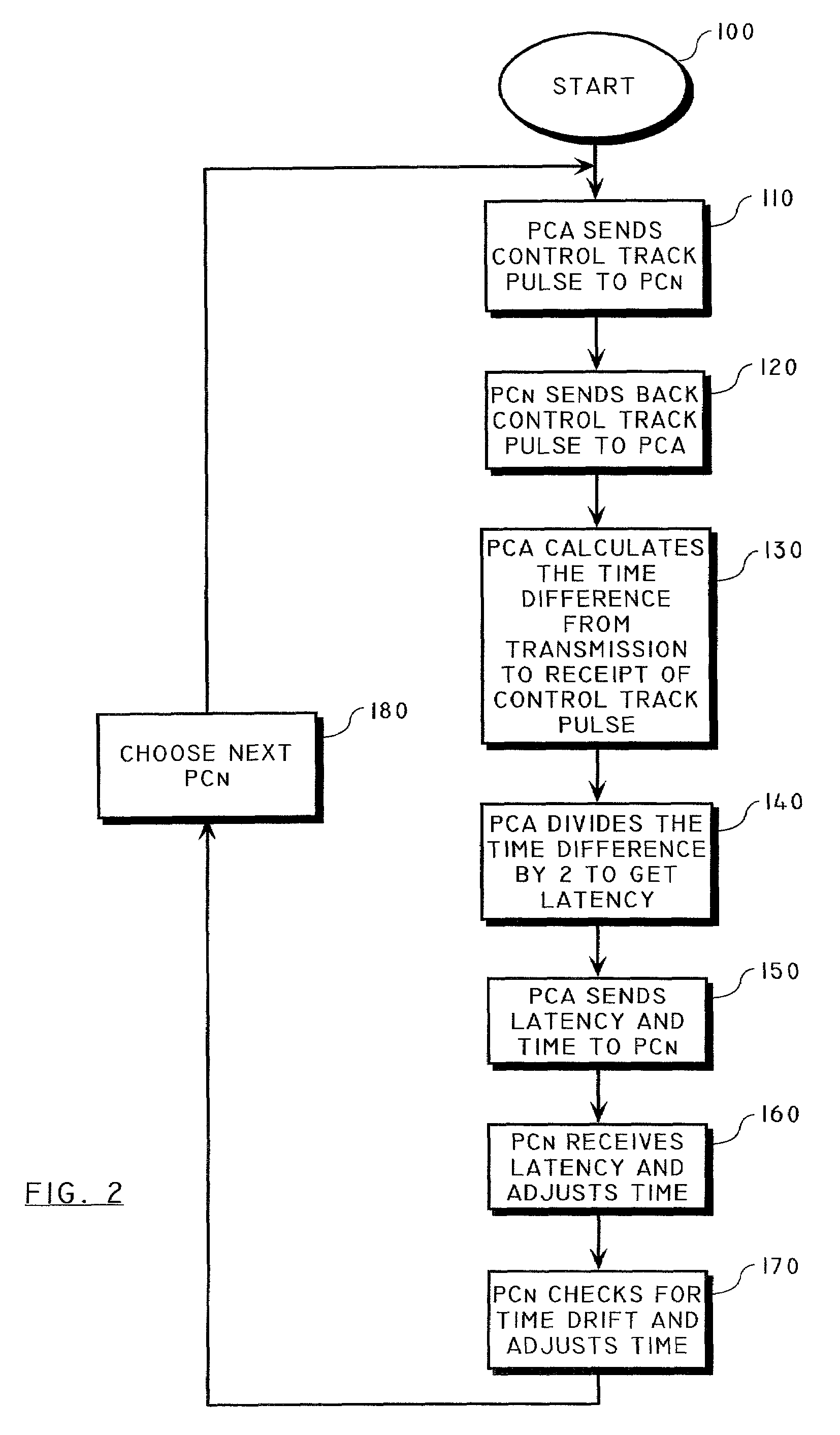

Method of synchronizing the playback of a digital audio broadcast by inserting a control track pulse

Active | US7209795B2 | Broadcast transmission systems; Time-division multiplex; Network output; Digital audio broadcasting

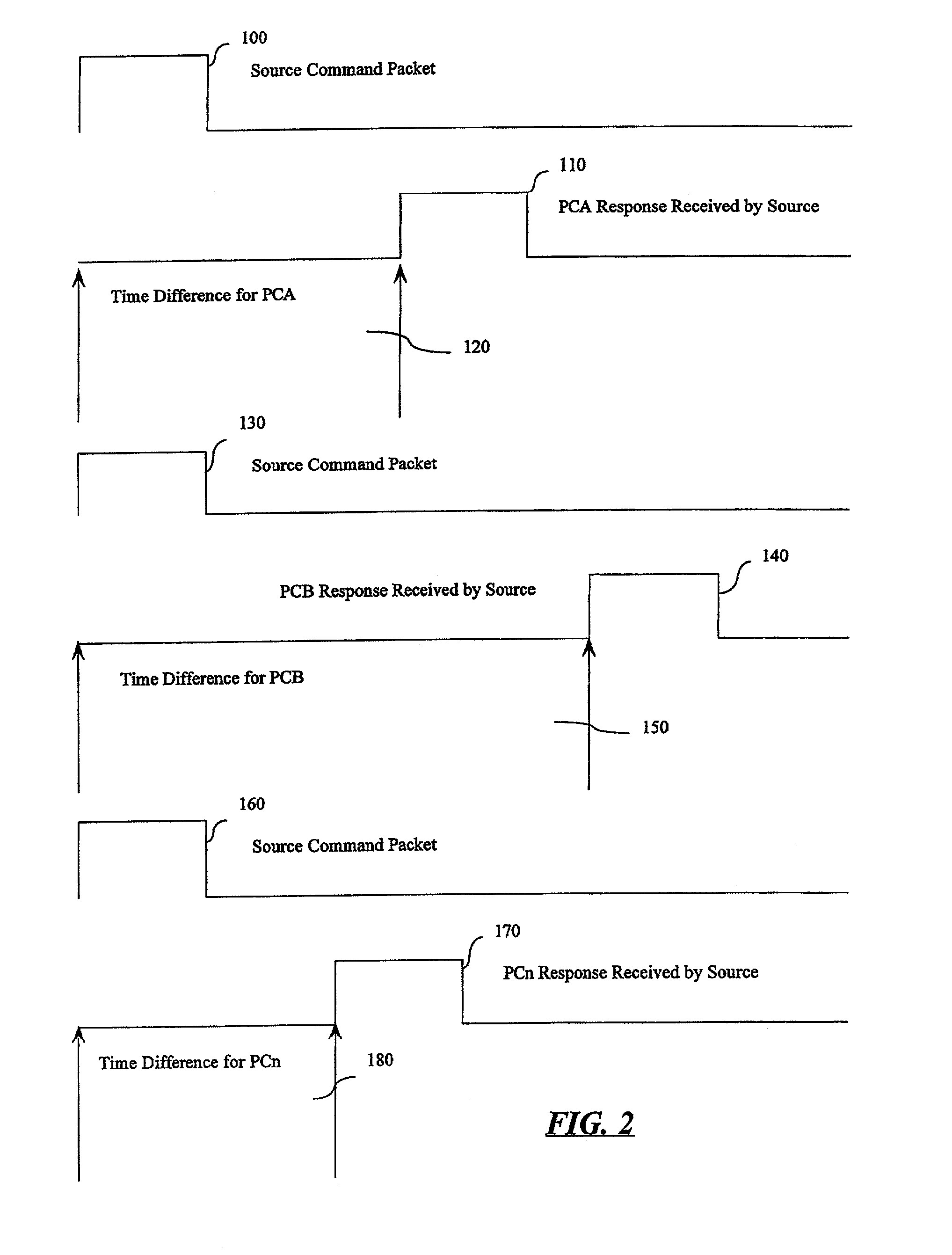

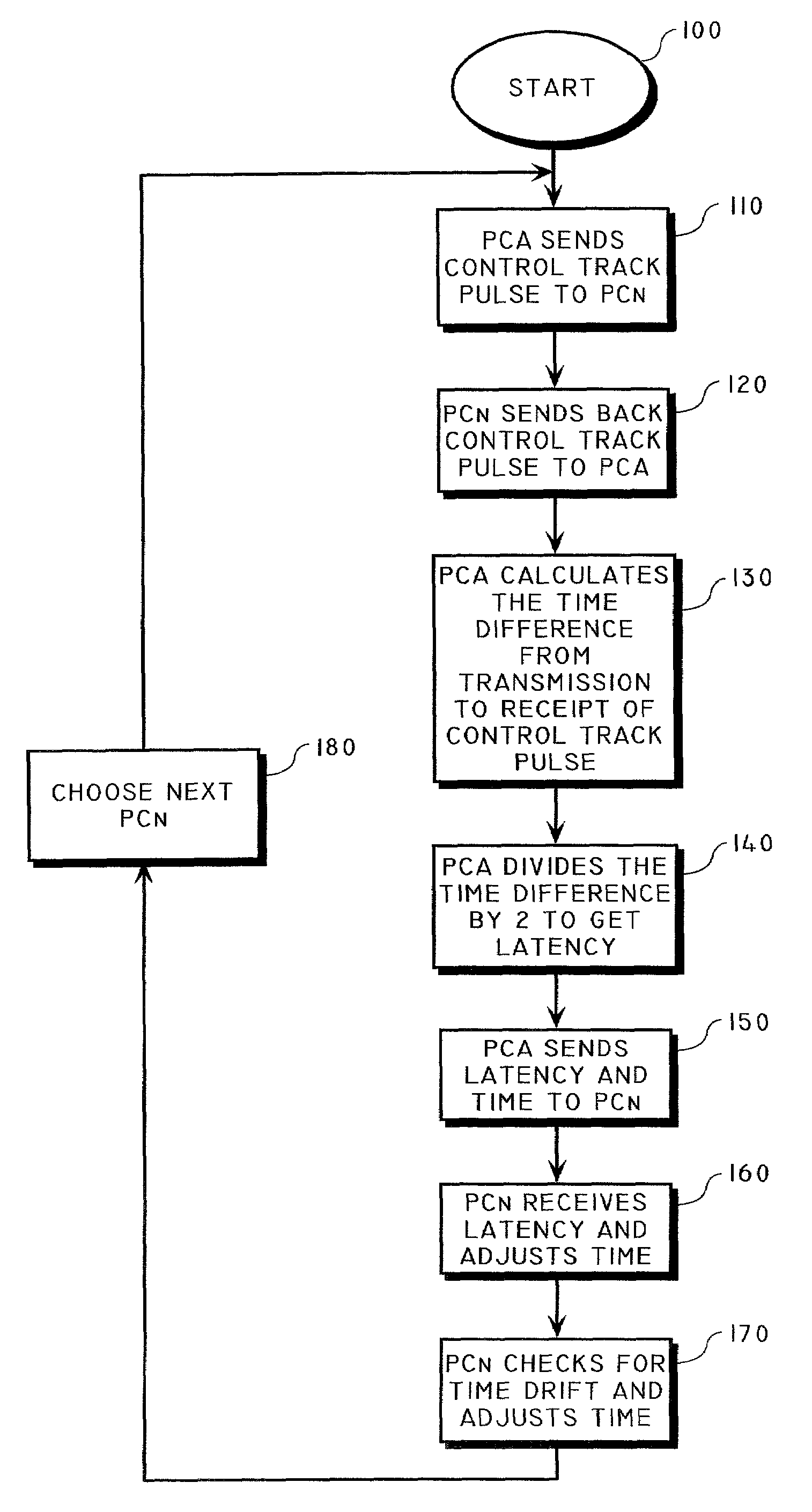

A method is provided for synchronizing the playback of a digital audio broadcast on a plurality of network output devices by inserting a control track pulse into the audio stream of the broadcast. The method includes outputting, as part of the audio signal, a first control track pulse that has unique identifying characteristics and recurs regularly; outputting a second control track pulse such that the interval between the first and second pulses is significantly greater than the latency between the sending and receiving devices; and coordinating playback at the moment the second control track pulse is transmitted, so that multiple devices output the audio signal simultaneously. Each control track pulse has a value that occurs nowhere else in the audio stream. As a result, a listener hears no audible delay or echo between the receivers.

Owner:GATEWAY
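
Because each pulse carries a value that never occurs elsewhere in the stream, a receiver can find it by exact comparison rather than pattern matching. The sketch below assumes 16-bit PCM with the hypothetical reserved value 32767 and a hypothetical safety factor of 10 between pulse spacing and network latency; neither number comes from the patent.

```python
from typing import List, Sequence

# Hypothetical reserved sample value; the patent only requires a value that
# never occurs elsewhere in the audio stream.
PULSE_VALUE = 32767


def pulse_indices(samples: Sequence[int], pulse: int = PULSE_VALUE) -> List[int]:
    """Return the sample indices at which the control track pulse occurs."""
    return [i for i, s in enumerate(samples) if s == pulse]


def spacing_ok(first: int, second: int, sample_rate: float,
               latency_s: float, margin: float = 10.0) -> bool:
    """Check that the pulse interval exceeds the network latency by `margin` (an assumed factor)."""
    return (second - first) / sample_rate >= margin * latency_s
```

Reserving a sample value in this way means ordinary program audio would have to be clipped to avoid it, which is one reason a real encoder might prefer a value outside the normal audio range.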

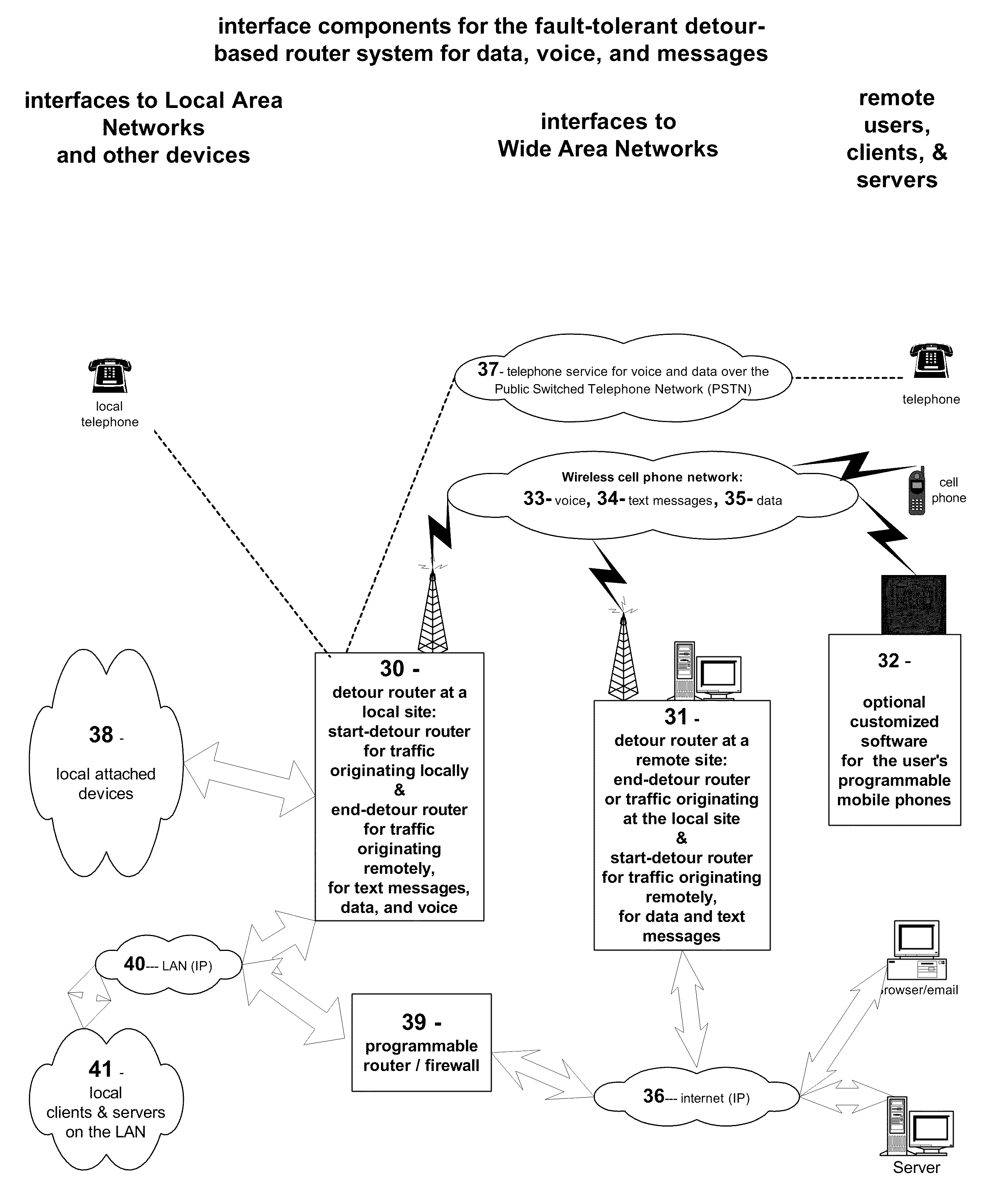

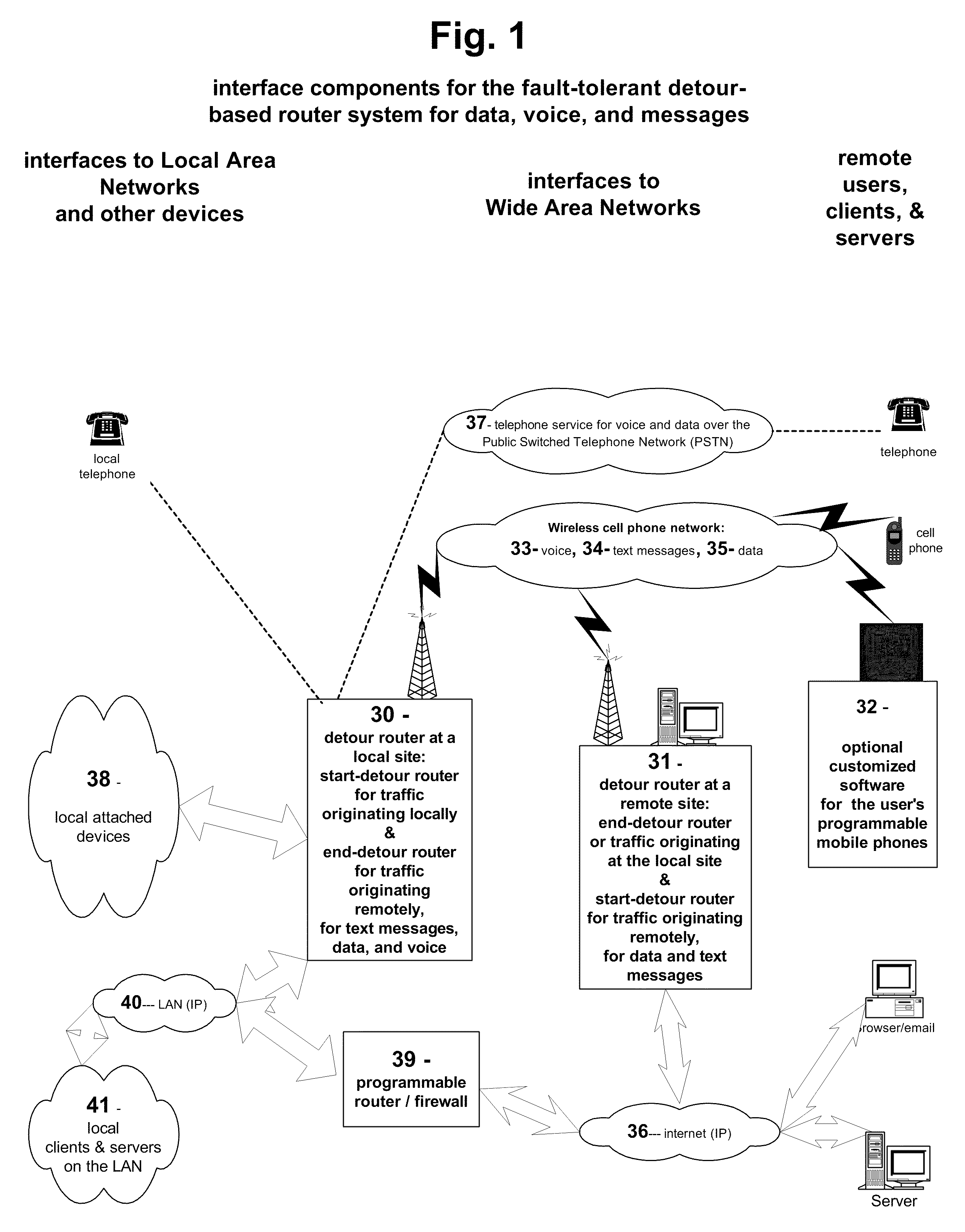

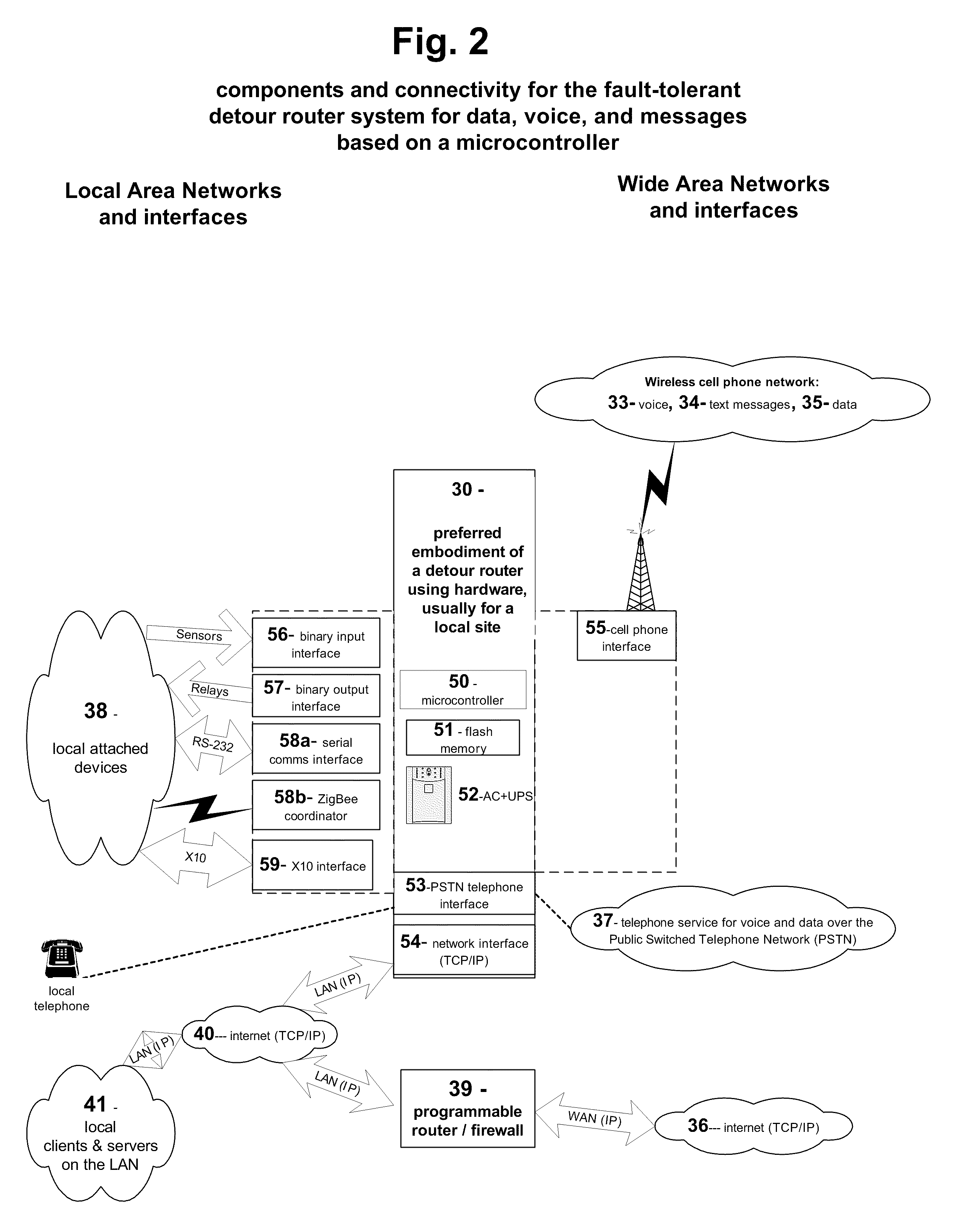

Fault-tolerant, multi-network detour router system for text messages, data, and voice

Inactive | US20100267390A1 | Avoid discontinuities; Reduce loss rate; Messaging/mailboxes/announcements; Wireless communication services; Wide area; The Internet

The invention provides a method and system for fault-tolerant communication over three wide-area networks: the cell phone network, the internet, and the public switched telephone network (PSTN). The system monitors the wide-area networks and sends warning messages if any of them cannot be accessed from a local site. Primary failure conditions relate to accessing and using the three wide-area networks; secondary failure conditions include power outages. The possible fault conditions are 'telephone out', 'internet out', 'wireless radio out', and 'power out'. When a failure condition is detected, the system alerts the user and redirects voice, text message, and data traffic over a different wide-area network to detour around the failure.

A method and system are disclosed that route information over one of multiple networks in a fault-tolerant manner. When a network fails, detour routers enable the start-detour routers to switch the information flow over another network to an end-detour router, where the flow is switched back to the originally intended network. Cell radios, telephones, and the internet together provide redundancy and fail-over under 'telephone out', 'internet out', and 'cell phone out' conditions. The method is particularly strong at supporting communication with dynamically assigned addresses, ensuring that remote users receive monitoring and alarm information in a timely manner.

Owner:LIN GAO +2
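
The detour decision described above can be sketched as a simple fallback rule: use the intended network if it is up, otherwise the first other network that is up, and report every network that is down. The network names and the fallback order are hypothetical; the abstract does not specify a priority among the three wide-area networks.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical fallback order; the patent does not specify one.
FALLBACK_ORDER = ("internet", "telephone", "cellular")


def route(intended: str, up: Dict[str, bool],
          order: Sequence[str] = FALLBACK_ORDER) -> Tuple[Optional[str], List[str]]:
    """Pick a network for a message and list the fault conditions to alert on."""
    alerts = [f"{net} out" for net in order if not up.get(net, False)]
    if up.get(intended, False):
        return intended, alerts
    for net in order:
        if net != intended and up.get(net, False):
            return net, alerts  # detour over a different wide-area network
    return None, alerts  # every network is down: nothing can be sent
```

In the patented system the end-detour router would then hand the traffic back to the originally intended network; this sketch covers only the start-detour choice.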

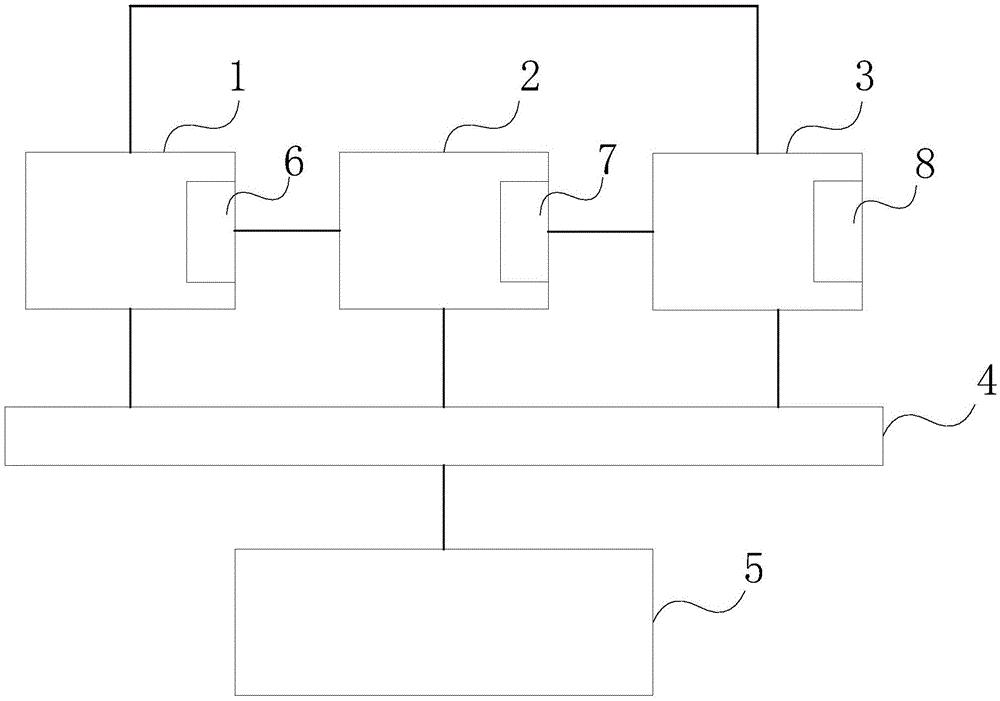

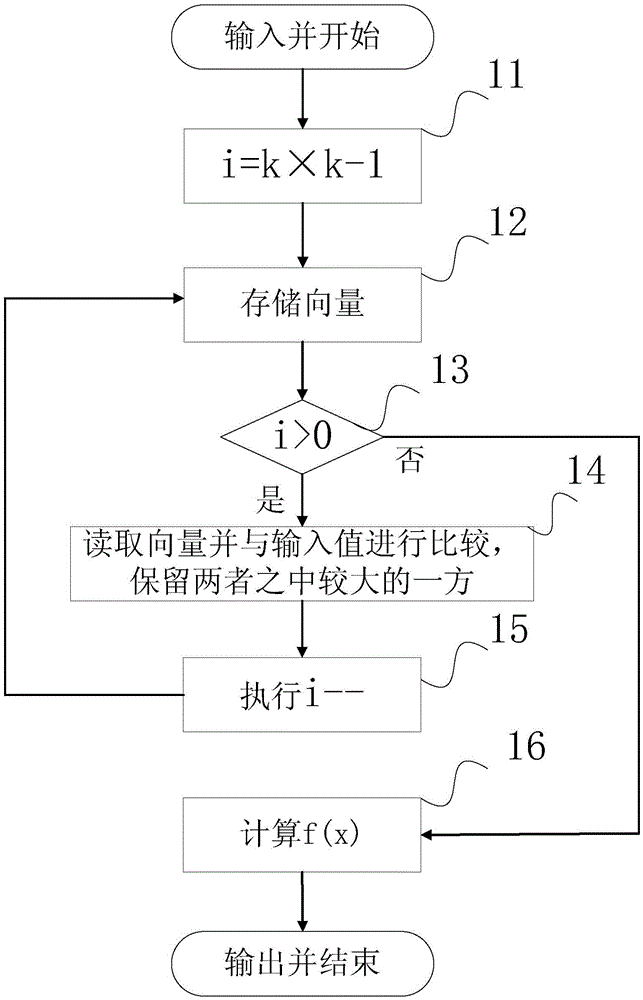

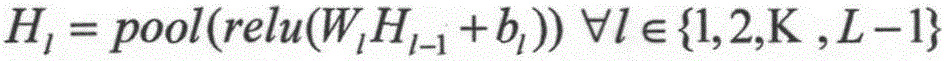

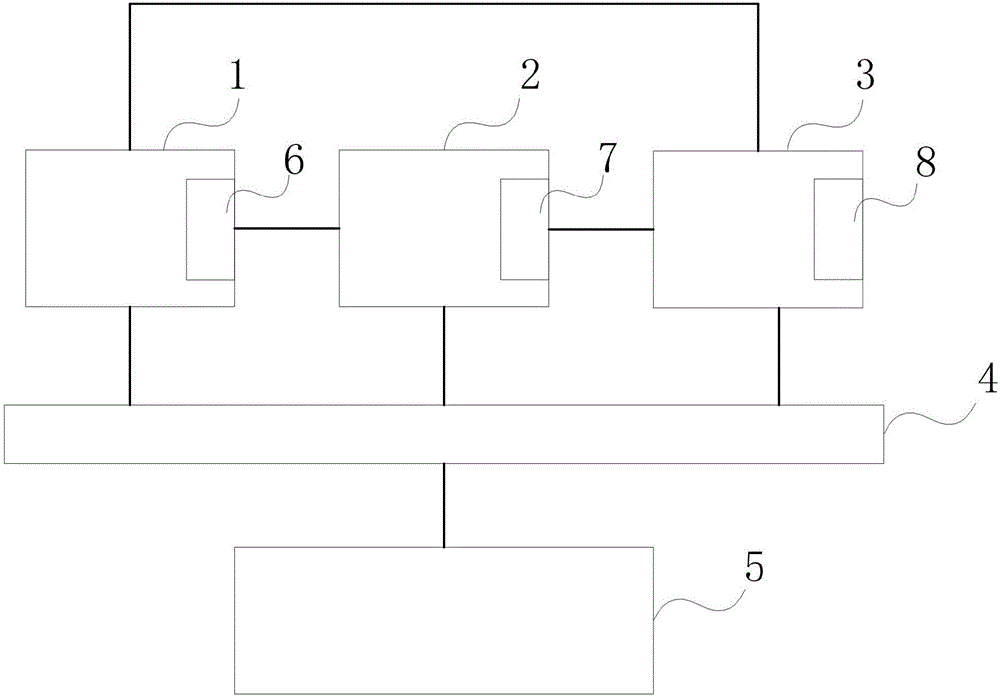

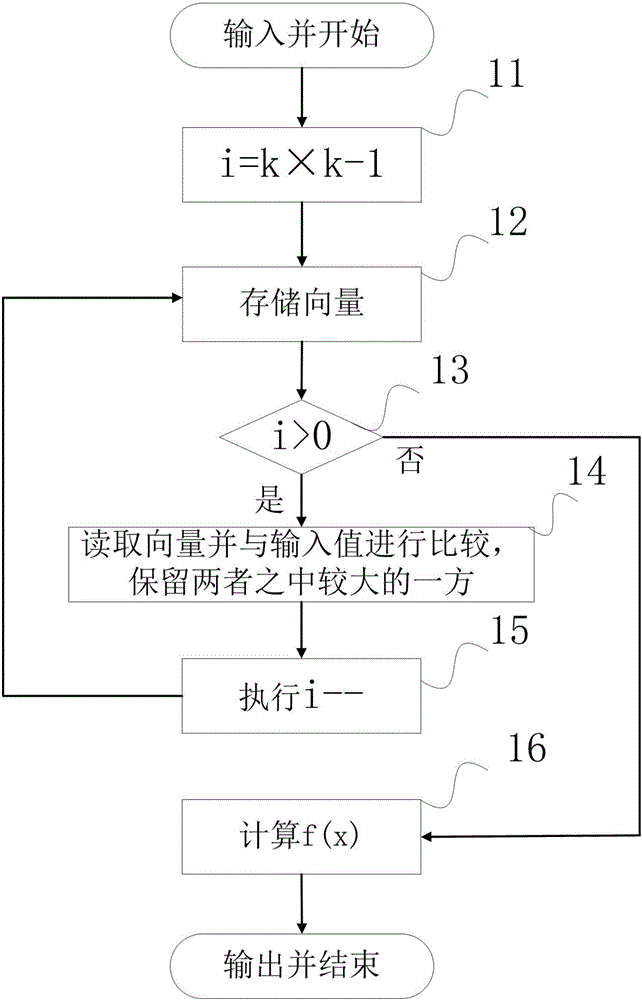

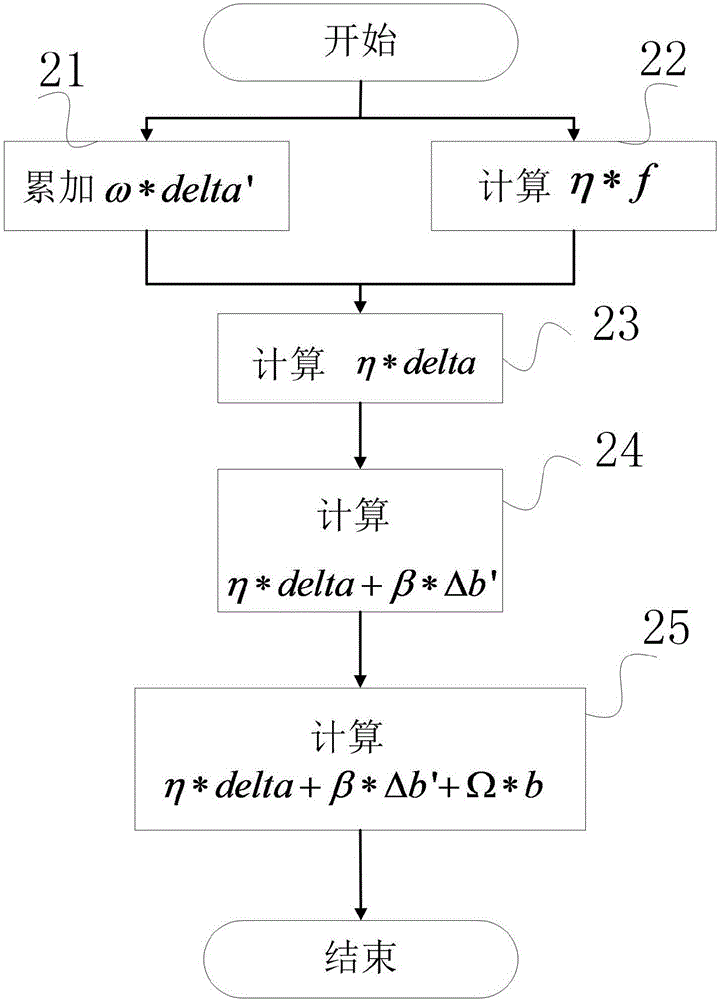

Calculation apparatus and method for accelerator chip accelerating deep neural network algorithm

Inactive | CN105488565A | Extended waiting time; Consume more; Physical realisation; Neural learning methods; Synaptic weight; Nerve network

The invention provides a calculation apparatus and method for an accelerator chip that accelerates a deep neural network algorithm. The apparatus comprises a vector addition processor module, a vector function value calculator module, and a vector multiplier-adder module. The vector addition processor module performs vector addition or subtraction and/or the vectorized operations of the pooling-layer algorithm in the deep neural network; the vector function value calculator module performs the vectorized evaluation of nonlinear functions; and the vector multiplier-adder module performs vector multiplication and addition. The three modules execute programmable instructions and interact to compute the neuron values and network output of the neural network, as well as the synaptic weight changes that represent how strongly input-layer neurons affect output-layer neurons. Each of the three modules contains an intermediate value storage region, and the modules read from and write to a main memory. This reduces how often intermediate values are read from and written to main memory, lowers the energy consumption of the accelerator chip, and avoids data misses and replacement during processing.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

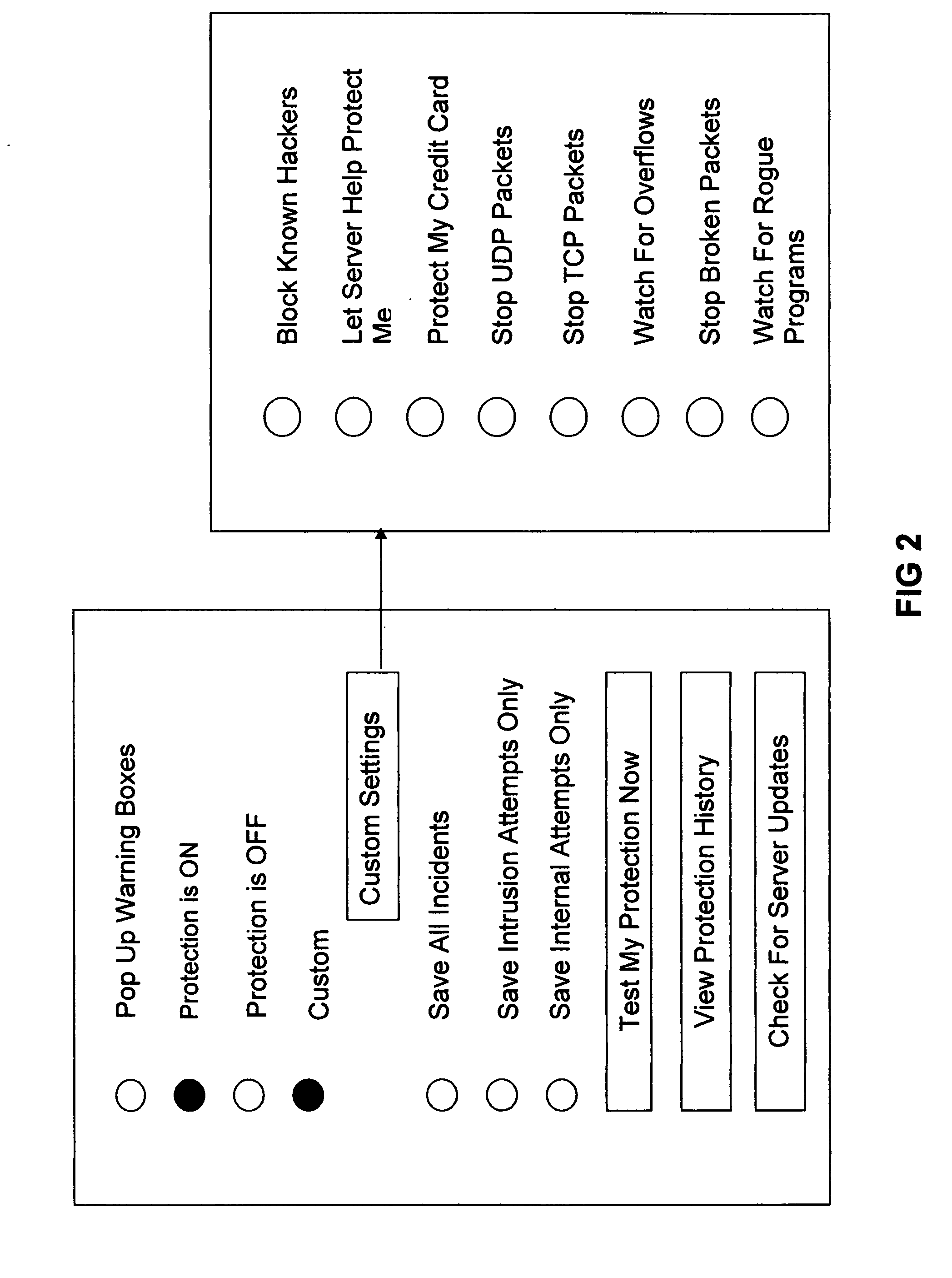

Systems and methods for detecting and preventing unauthorized access to networked devices

Inactive | US20050108557A1 | Prevent unauthorized access; Reduce impact; User identity/authority verification; Unauthorized memory use protection; Network output; Input device

Devices, systems, and methods for detecting and preventing unauthorized access to computer networks. The devices include a server running an application that interacts with a counterpart PC application to determine whether the PC's input devices have been active within a predetermined time. The methods include providing a subscription-based service that lets PC users determine whether unauthorized outbound network activity has occurred from their PC.

Owner:KAYO DAVID GEORGE +2
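
The core check above, whether the input devices were active within a predetermined time before the network activity, can be sketched as a window test over timestamps. The function name and the rule "flag traffic with no input in the preceding window" are assumptions for illustration; the abstract does not define the exact decision rule.

```python
import bisect
from typing import List, Sequence


def unattended_sends(input_times: Sequence[float], send_times: Sequence[float],
                     window_s: float) -> List[float]:
    """Return outbound-traffic timestamps with no input-device activity in the preceding window.

    Hypothetical helper; not the patented service's actual logic.
    """
    inputs = sorted(input_times)
    flagged = []
    for t in send_times:
        i = bisect.bisect_right(inputs, t)  # inputs[:i] happened at or before t
        if i == 0 or t - inputs[i - 1] > window_s:
            flagged.append(t)
    return flagged
```

Sorting the input timestamps once and using binary search keeps each lookup logarithmic, which matters if the counterpart PC application reports long activity logs.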

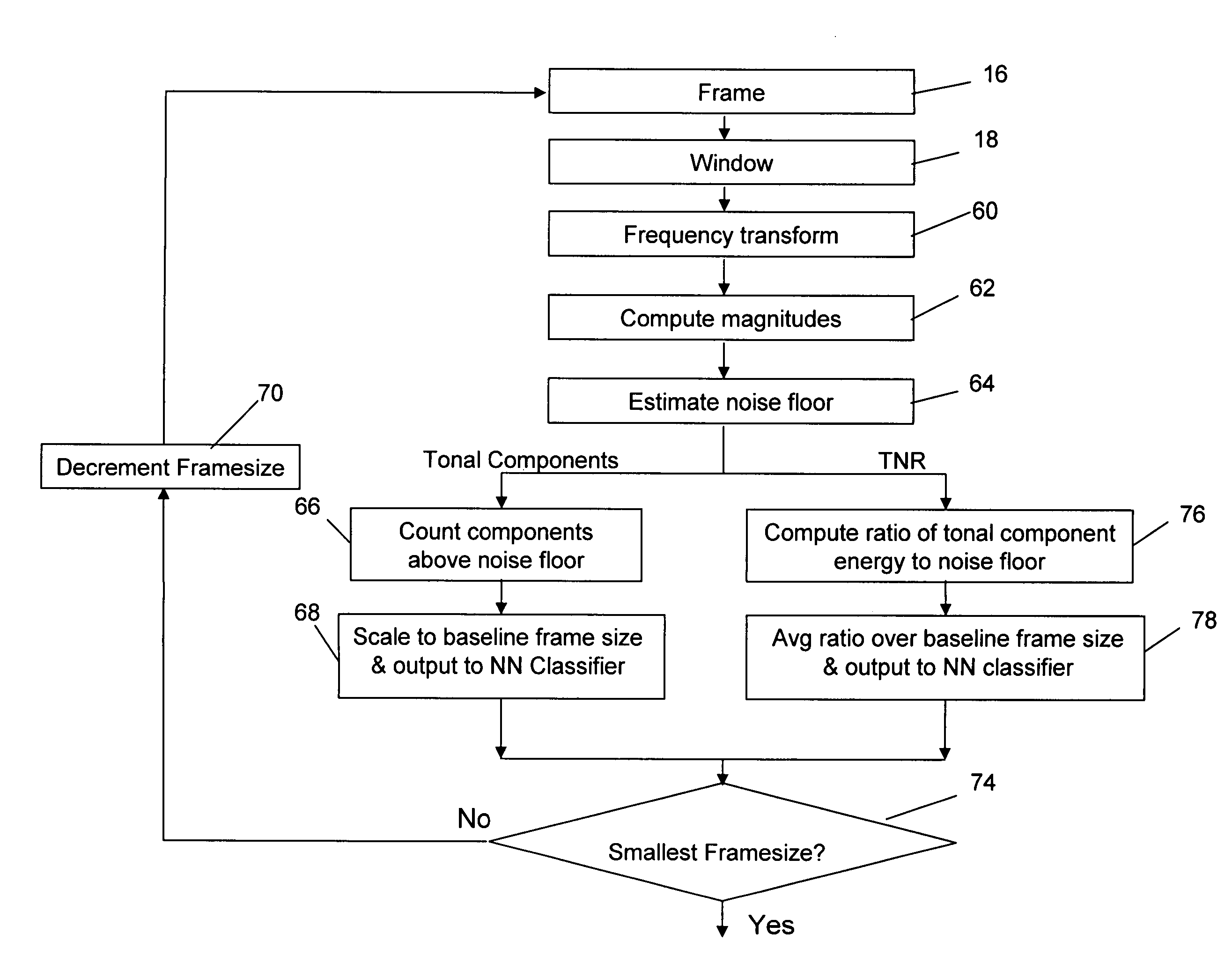

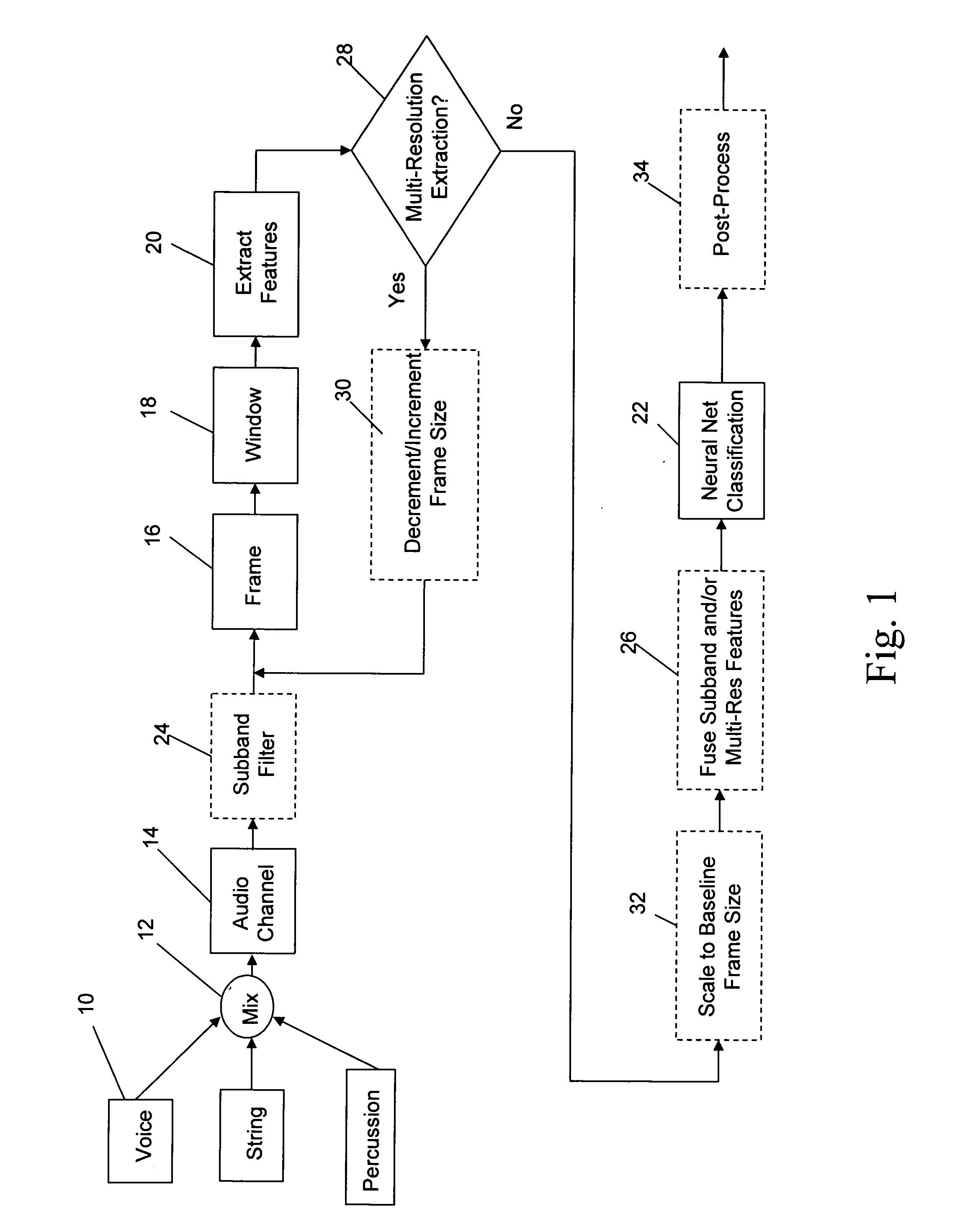

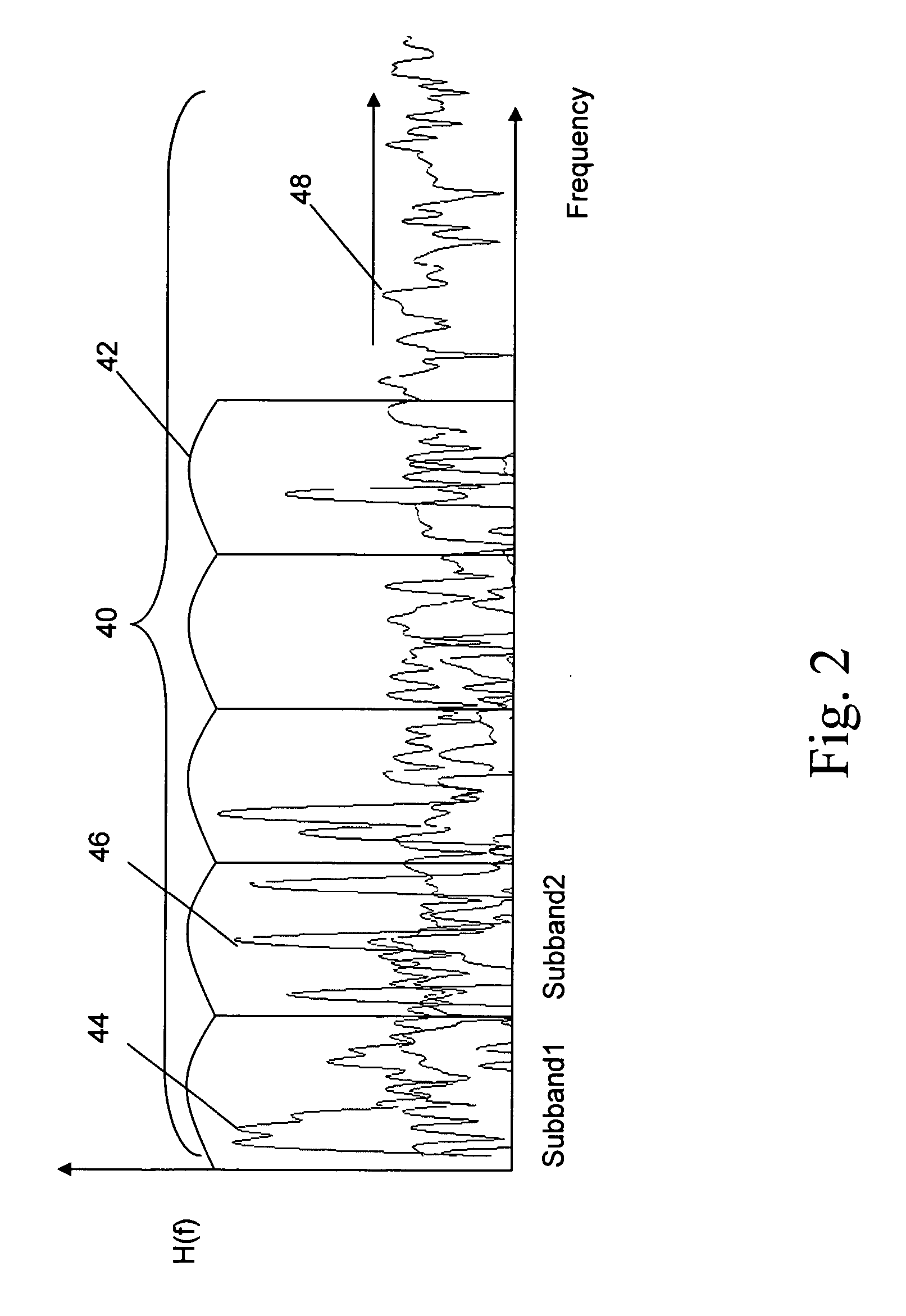

Neural network classifier for separating audio sources from a monophonic audio signal

Inactive | US20070083365A1 | Improve robustness; Easy to separate; Speech recognition; Network output; Audio signal

A neural network classifier provides the ability to separate and categorize multiple arbitrary and previously unknown audio sources down-mixed to a single monophonic audio signal. This is accomplished by breaking the monophonic audio signal into baseline frames (possibly overlapping), windowing the frames, extracting a number of descriptive features in each frame, and employing a pre-trained nonlinear neural network as a classifier. Each neural network output manifests the presence of a pre-determined type of audio source in each baseline frame of the monophonic audio signal. The neural network classifier is well suited to address widely changing parameters of the signal and sources, time and frequency domain overlapping of the sources, and reverberation and occlusions in real-life signals. The classifier outputs can be used as a front-end to create multiple audio channels for a source separation algorithm (e.g., ICA) or as parameters in a post-processing algorithm (e.g. categorize music, track sources, generate audio indexes for the purposes of navigation, re-mixing, security and surveillance, telephone and wireless communications, and teleconferencing).

Owner:DTS
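
The front end described above (split the mono signal into possibly overlapping baseline frames, window each frame, extract features) can be sketched as follows. The Hann window and the single energy feature are assumptions chosen for illustration; the abstract names neither the window type nor the descriptive features.

```python
import math
from typing import List, Sequence


def frames(x: Sequence[float], frame_len: int, hop: int) -> List[List[float]]:
    """Split a mono signal into (possibly overlapping) baseline frames."""
    return [list(x[i:i + frame_len]) for i in range(0, len(x) - frame_len + 1, hop)]


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n (assumed window choice)."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * k / (n - 1)) for k in range(n)]


def frame_energy(x: Sequence[float], frame_len: int, hop: int) -> List[float]:
    """Window each frame and reduce it to one example feature: mean energy."""
    w = hann(frame_len)
    return [sum((s * wk) ** 2 for s, wk in zip(f, w)) / frame_len
            for f in frames(x, frame_len, hop)]
```

A `hop` smaller than `frame_len` gives the overlapping frames the abstract mentions; in a full system each frame's feature vector would then be fed to the pre-trained classifier.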

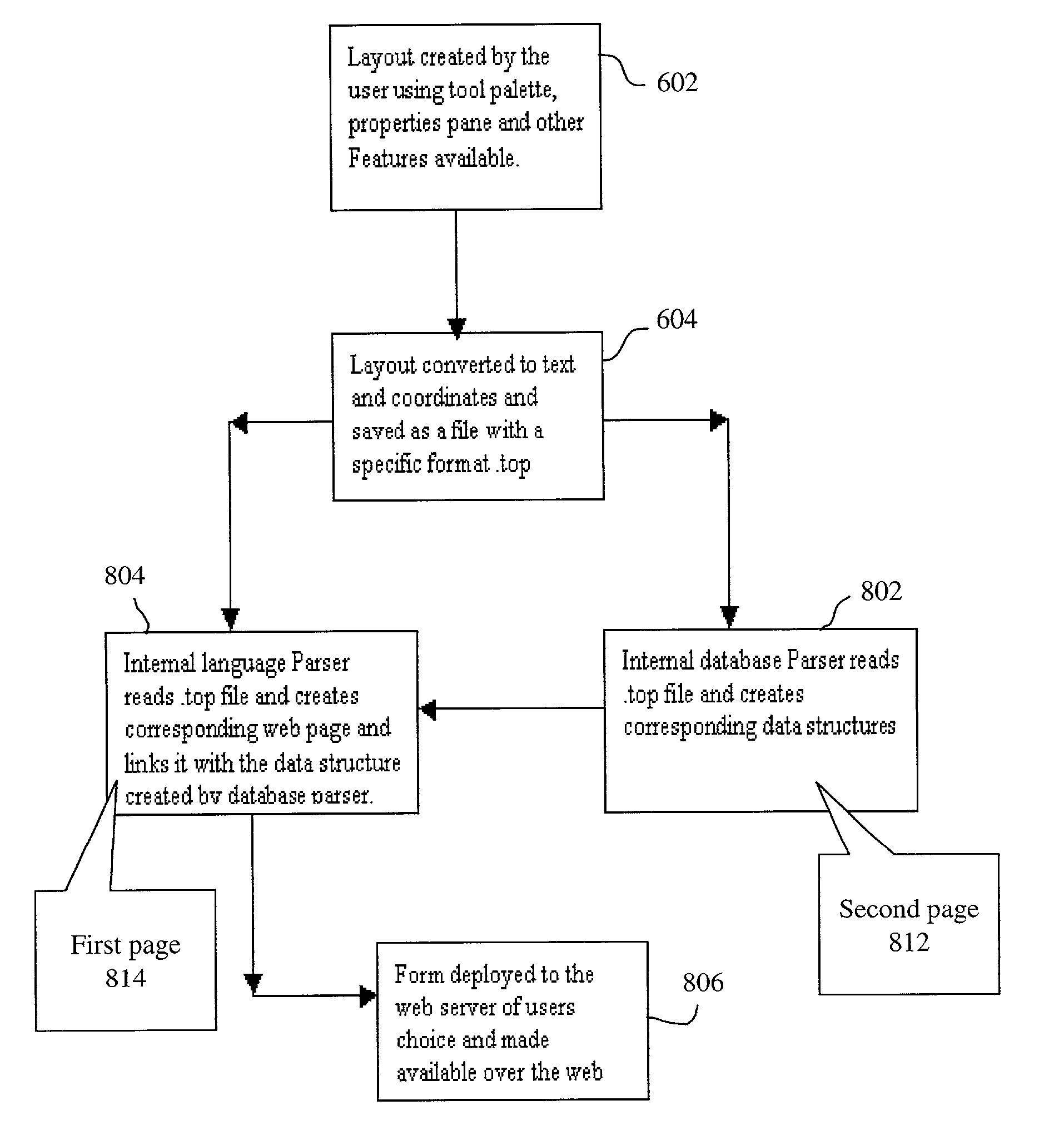

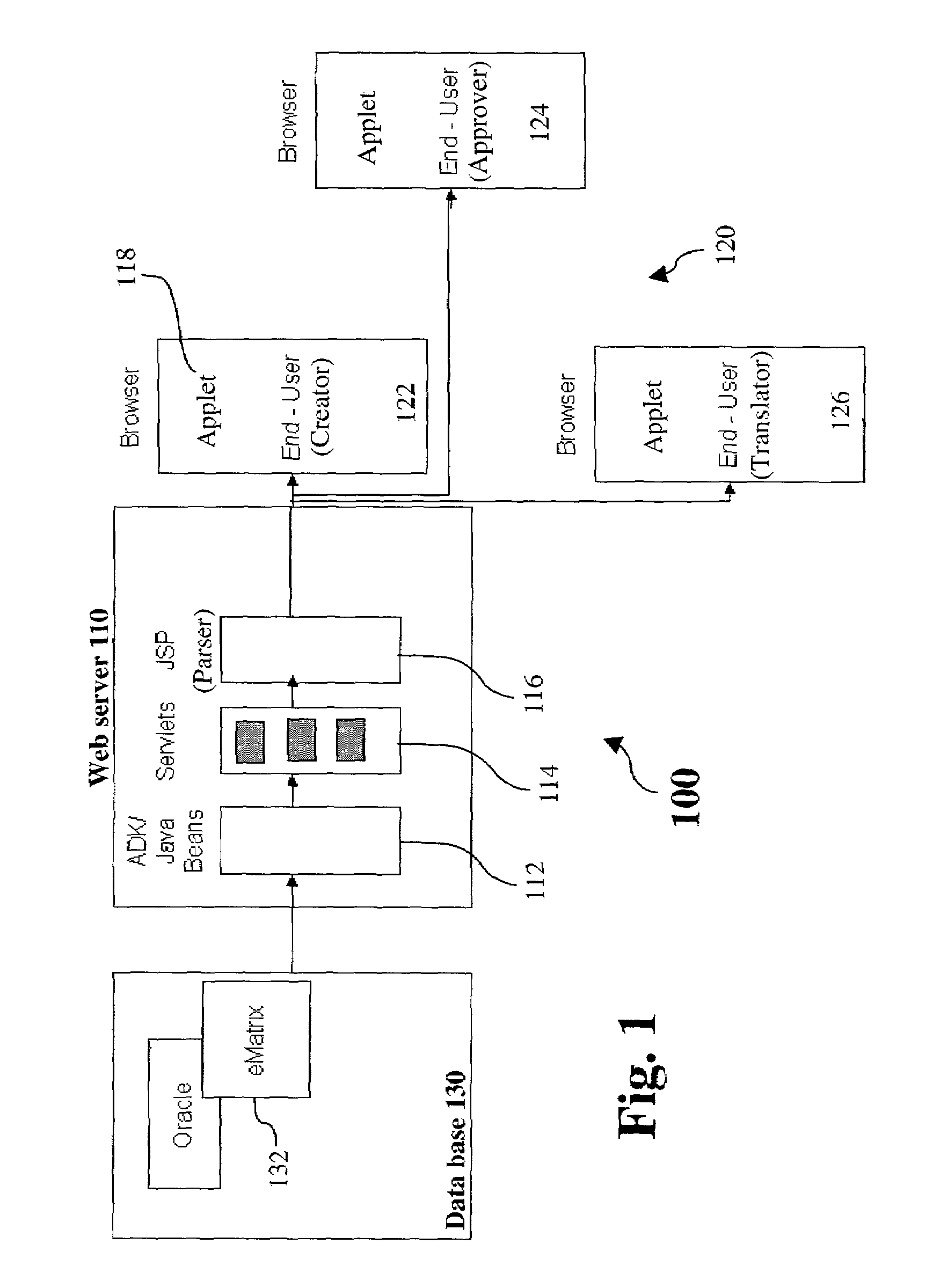

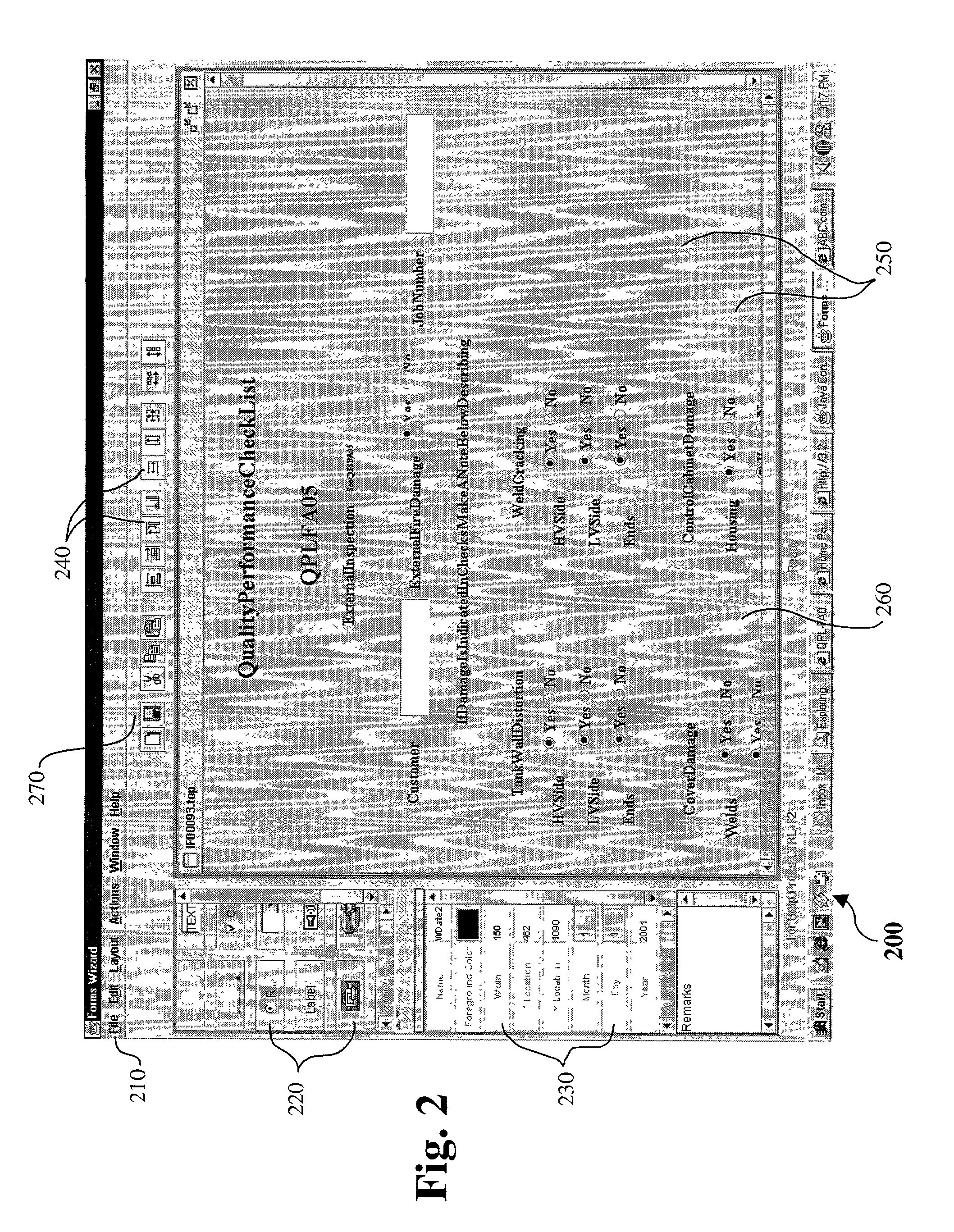

Creating data structures from a form file and creating a web page in conjunction with corresponding data structures

Inactive | US7032170B2 | Digital data processing details; Natural language data processing; Graphics; Array data structure

The invention provides a system and method for creating a form. The method includes providing a graphical user interface including a plurality of selectable components and a design space; a creator selecting at least one of the selectable components and disposing each of the selected selectable components in the design space to generate a form layout; converting the form layout to data information; and saving the data information to a form file, the form file being output to a database over a network.

Owner:GENERAL ELECTRIC CO

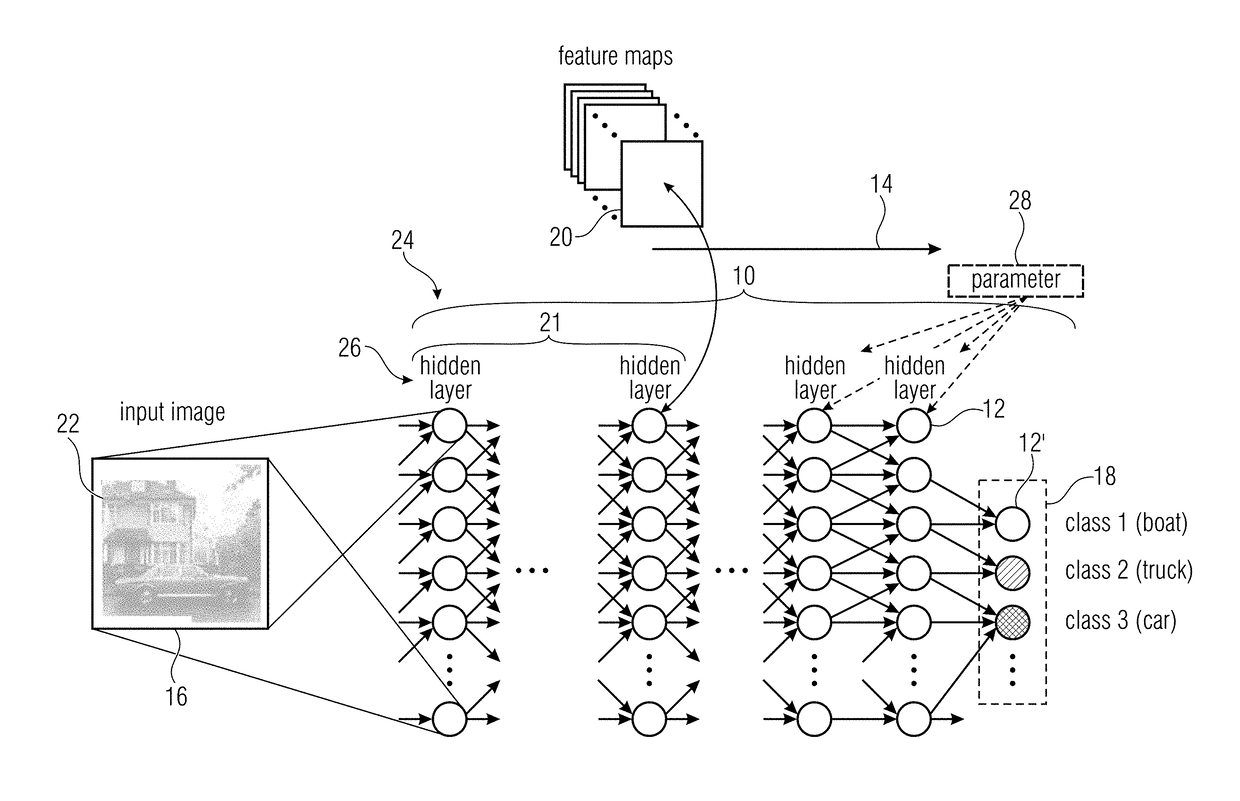

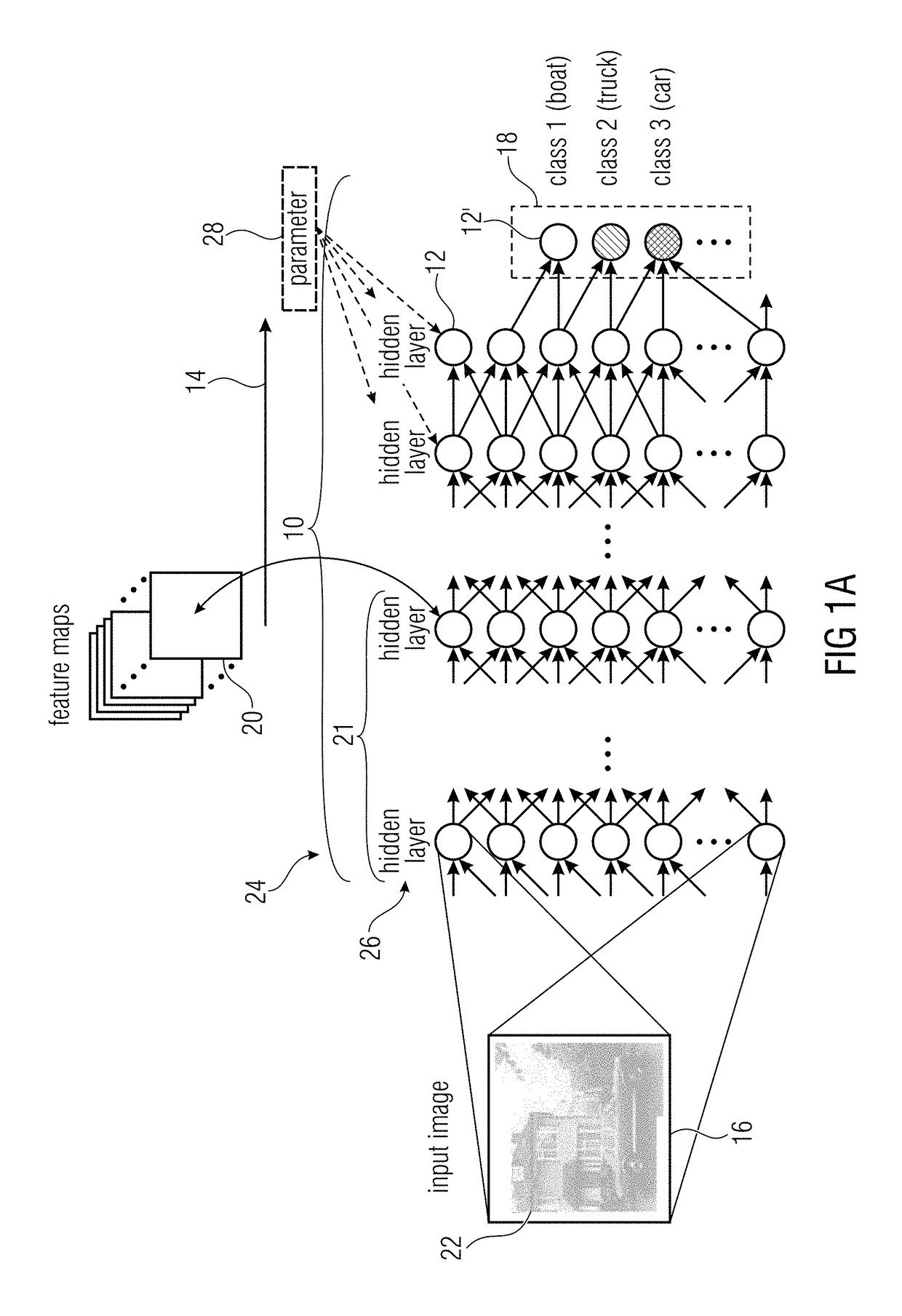

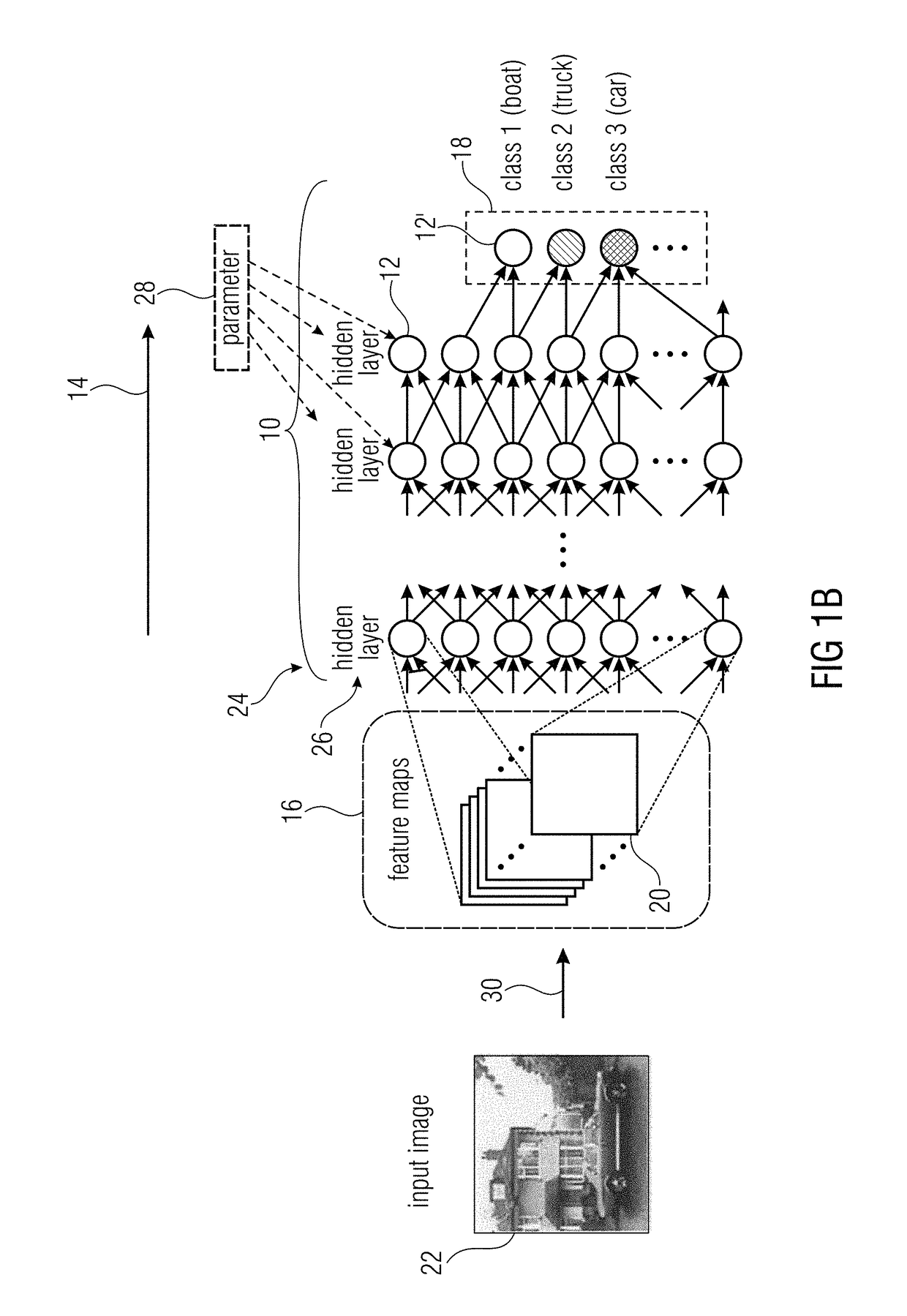

Relevance score assignment for artificial neural networks

Relevance scores are assigned to the set of items to which an artificial neural network is applied by redistributing an initial relevance score, derived from the network output, onto those items: the initial score is propagated backward through the network so that each item obtains a relevance score. In particular, this reverse propagation applies to a broader class of artificial neural networks and/or requires less computation because, for each neuron, the preliminarily redistributed relevance scores of its downstream neighbor neurons are distributed onto its upstream neighbor neurons according to a distribution function.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV +1
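
The abstract leaves the distribution function generic. One widely published choice is the epsilon rule of layer-wise relevance propagation, sketched below for a single dense layer; treat it as one illustrative instance, not as the specific function claimed in the patent. Each upstream neuron j receives the share a_j·w_jk / z_k of every downstream neuron k's relevance.

```python
from typing import List, Sequence


def lrp_epsilon(a: Sequence[float], W: Sequence[Sequence[float]], b: Sequence[float],
                R_out: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Redistribute output relevance R_out onto the inputs a of a dense layer (epsilon rule).

    W[j][k] connects upstream neuron j to downstream neuron k; b[k] is the bias.
    """
    J, K = len(a), len(b)
    z = [sum(a[j] * W[j][k] for j in range(J)) + b[k] for k in range(K)]
    R_in = [0.0] * J
    for k in range(K):
        denom = z[k] + (eps if z[k] >= 0 else -eps)  # stabiliser avoids division by zero
        for j in range(J):
            R_in[j] += a[j] * W[j][k] / denom * R_out[k]
    return R_in
```

With zero biases and a small `eps`, the total relevance is approximately conserved from layer to layer; applying the function layer by layer from the output back to the input yields a score per input item.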

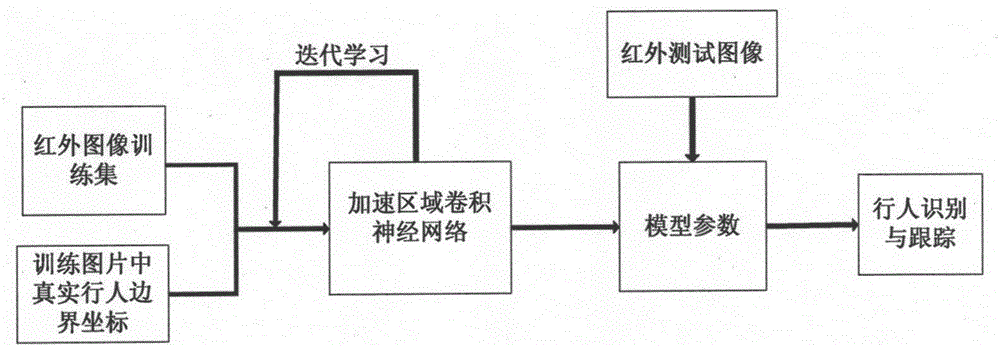

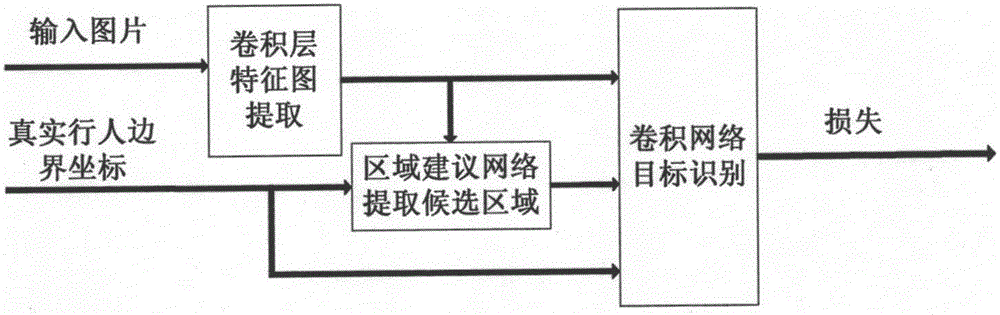

Pedestrian detection and tracking method based on accelerated area Convolutional Neural Network

Inactive | CN106845430A | Guaranteed correctness; Guaranteed real-time; Biometric pattern recognition; Neural learning methods; Data set; Simulation

The invention relates to a pedestrian recognition and tracking method based on an accelerated region-based convolutional neural network. First, a robot equipped with an infrared camera collects images at night, which are preprocessed as required into a training dataset and a testing dataset; the actual target positions in all training and testing images are labeled and recorded in a sample file. Next, the accelerated region-based convolutional neural network is constructed and trained on the training dataset, and a non-maximum suppression algorithm is applied to the network output to compute the final probability that each region is a pedestrian region, together with the region's bounding box. The network's accuracy is then evaluated on the testing dataset to obtain a model that meets the requirements. Finally, images captured by the robot at night are fed to the model, which outputs pedestrian-region probabilities and bounding boxes online in real time. The method can effectively recognize pedestrians in infrared images and track pedestrian targets in infrared video in real time.

Owner:DONGHUA UNIV
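
The non-maximum suppression step mentioned above is a standard post-processing stage. A minimal greedy version is sketched below; the box format `(x1, y1, x2, y2)` and the default overlap threshold of 0.5 are assumptions, since the abstract gives neither.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], thr: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring boxes, dropping any that overlap a kept box by more than thr."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= thr for j in keep):
            keep.append(i)
    return keep
```

For two heavily overlapping detections of the same pedestrian, only the higher-scoring one survives, while a distant detection is kept.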

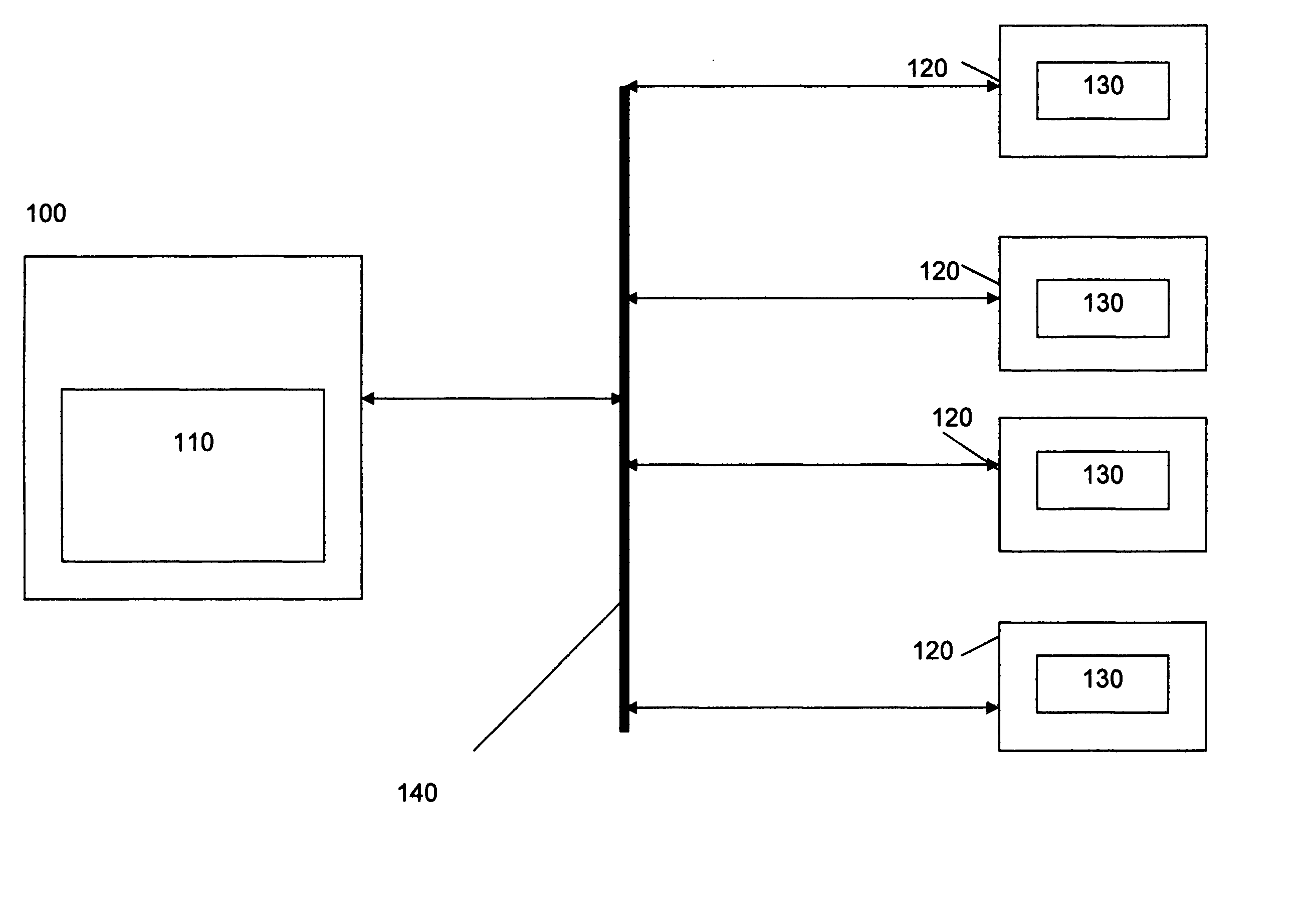

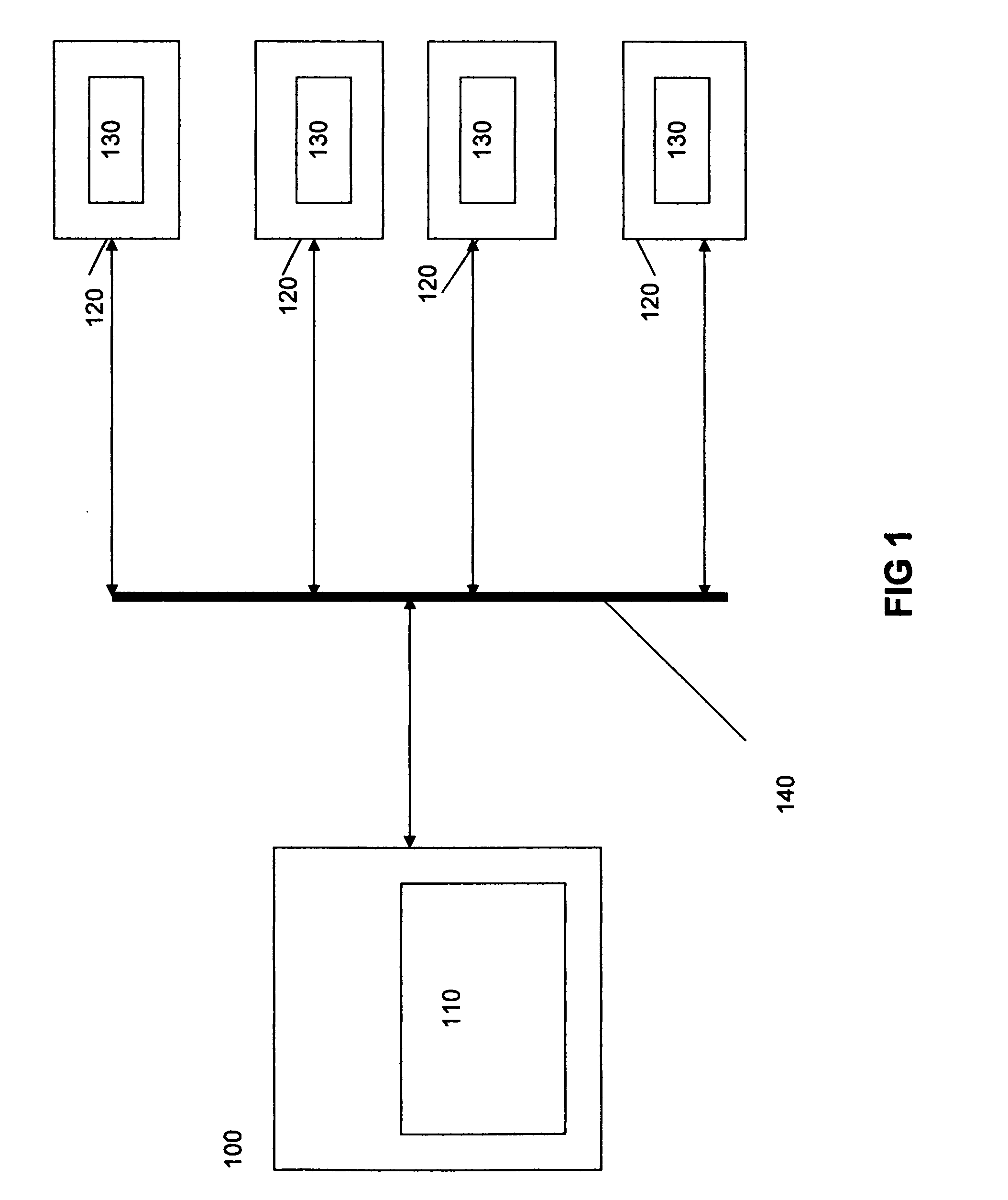

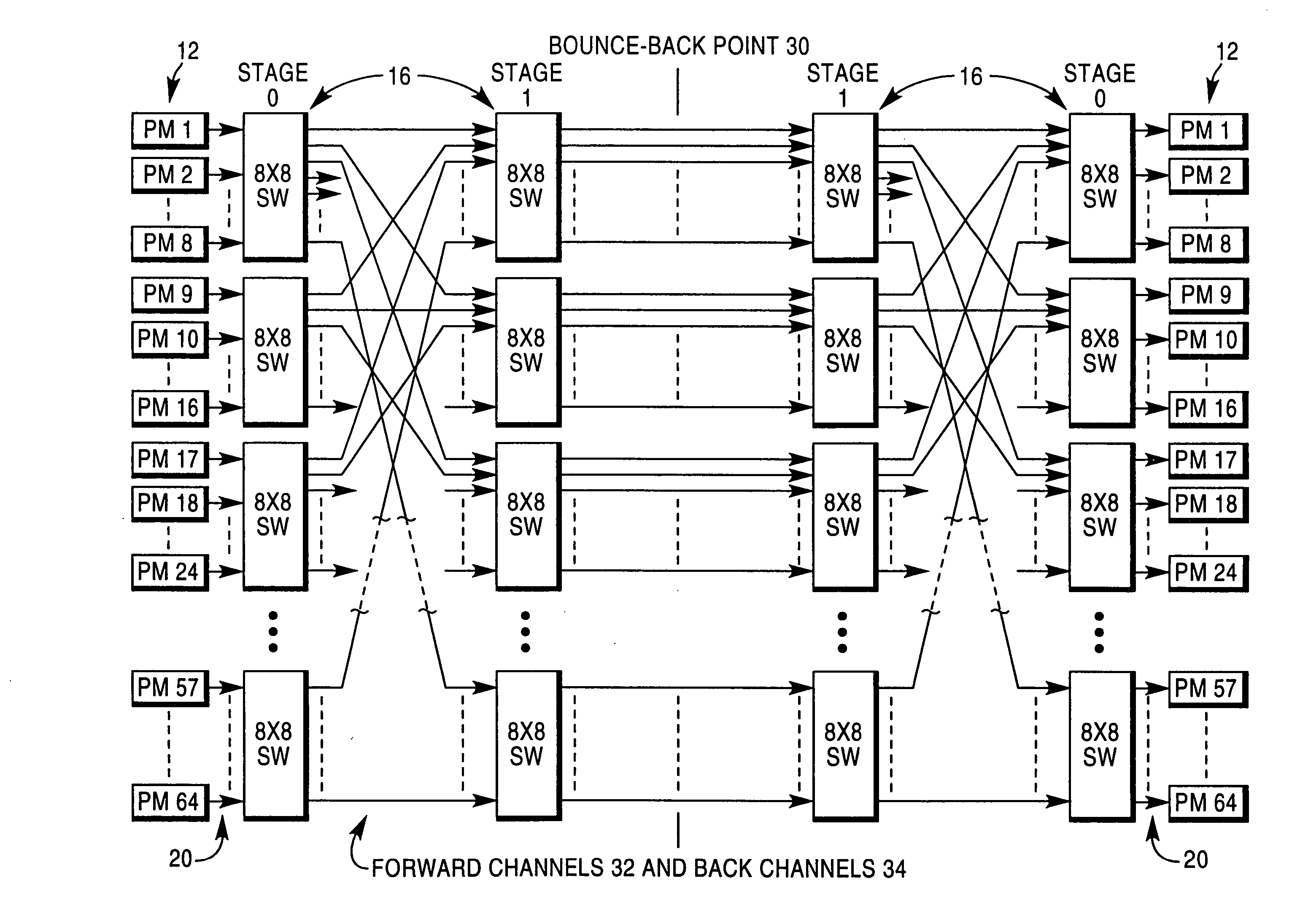

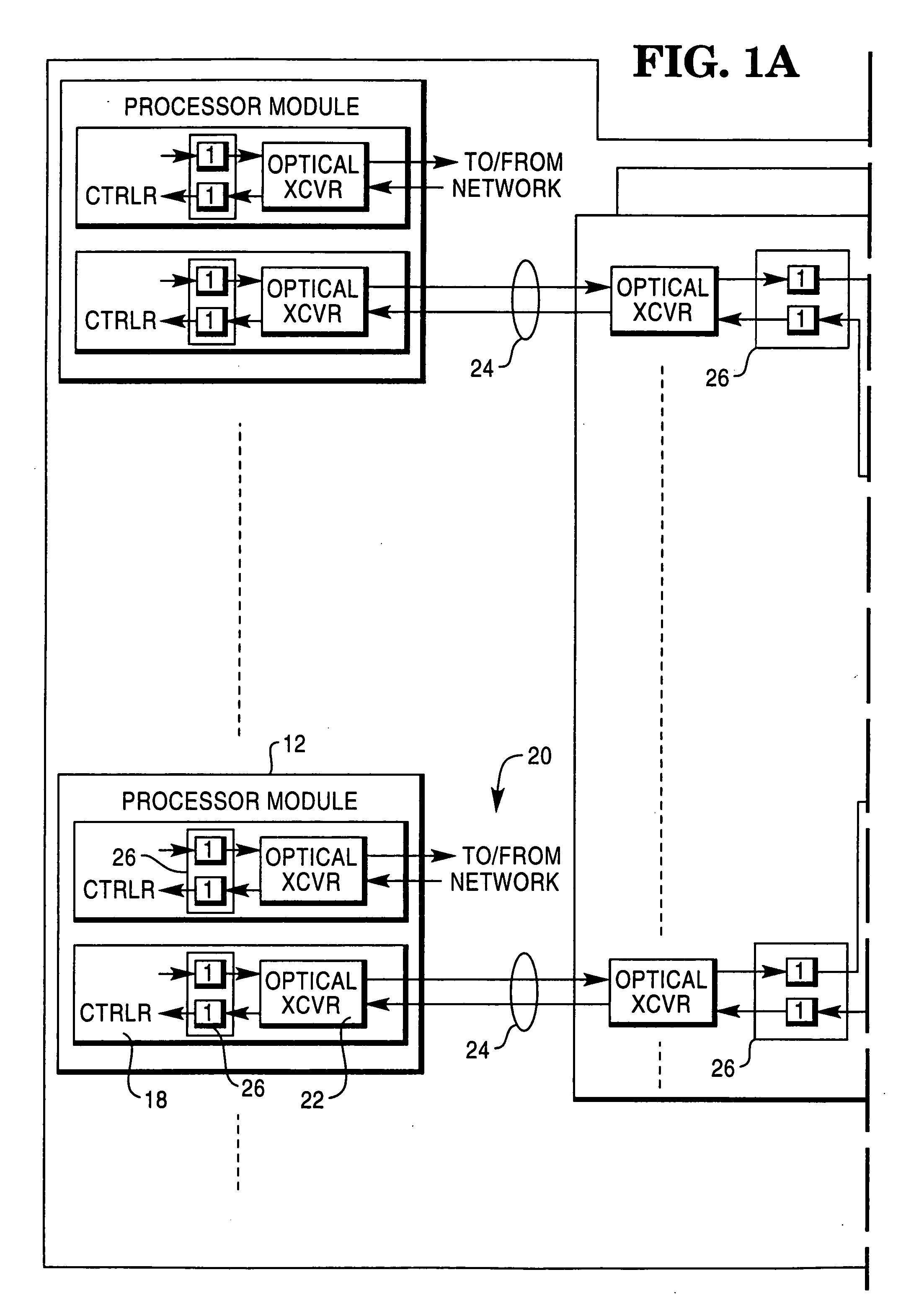

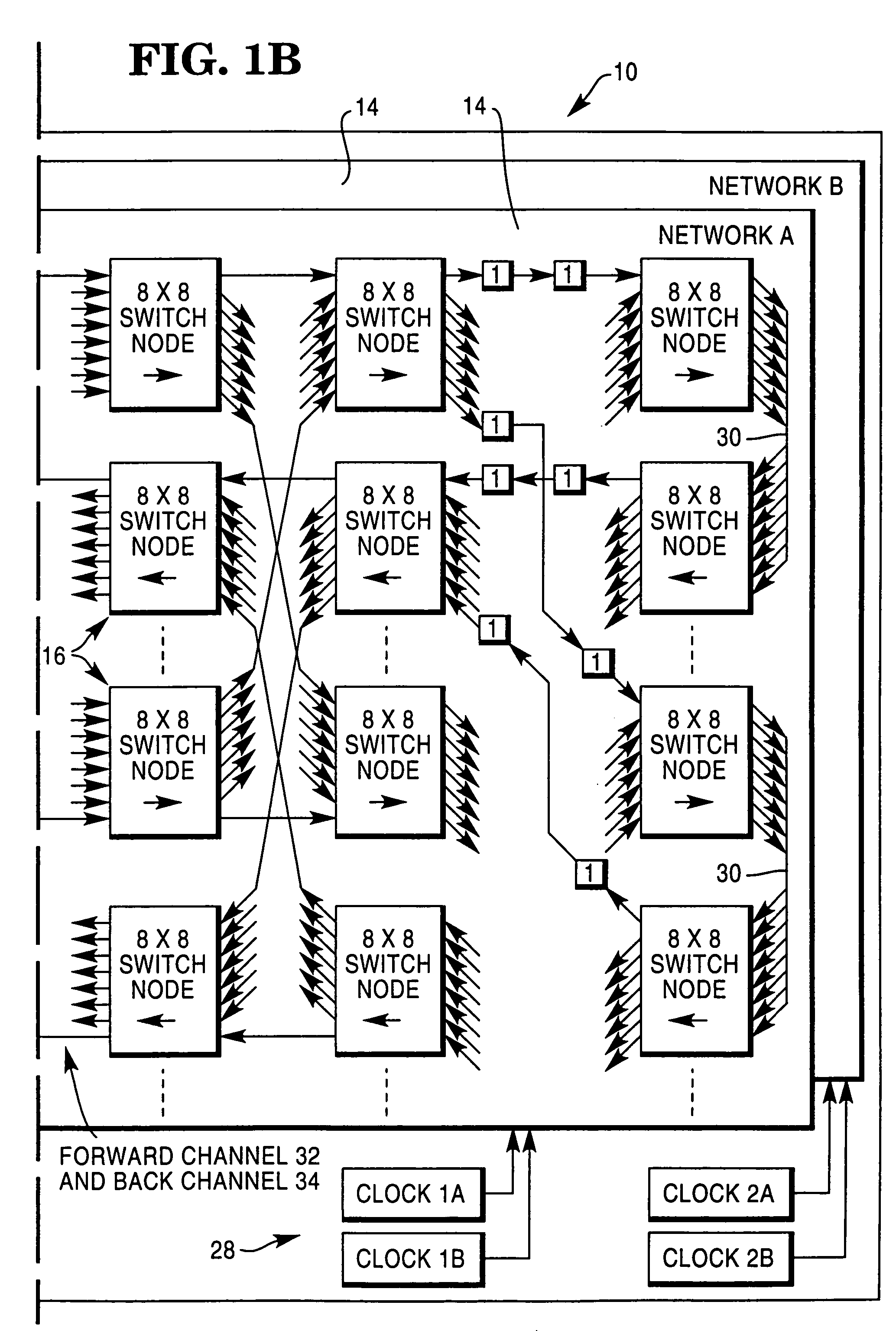

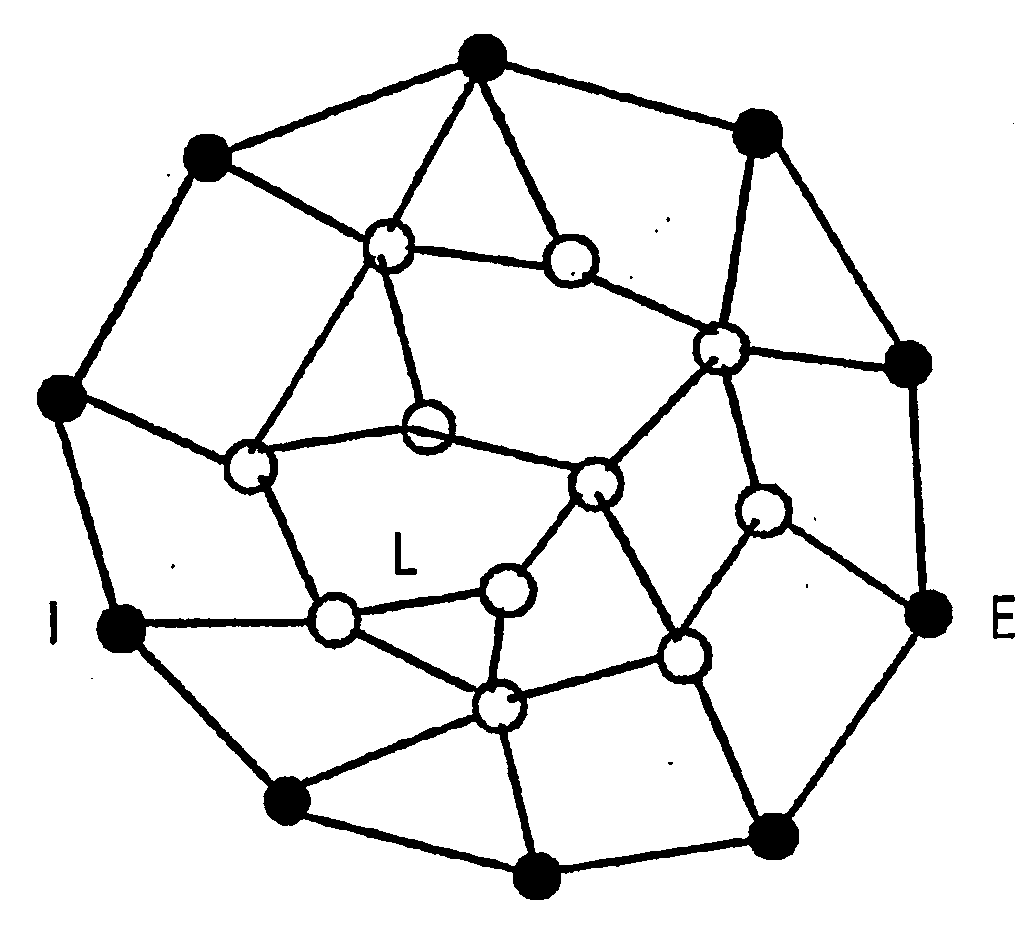

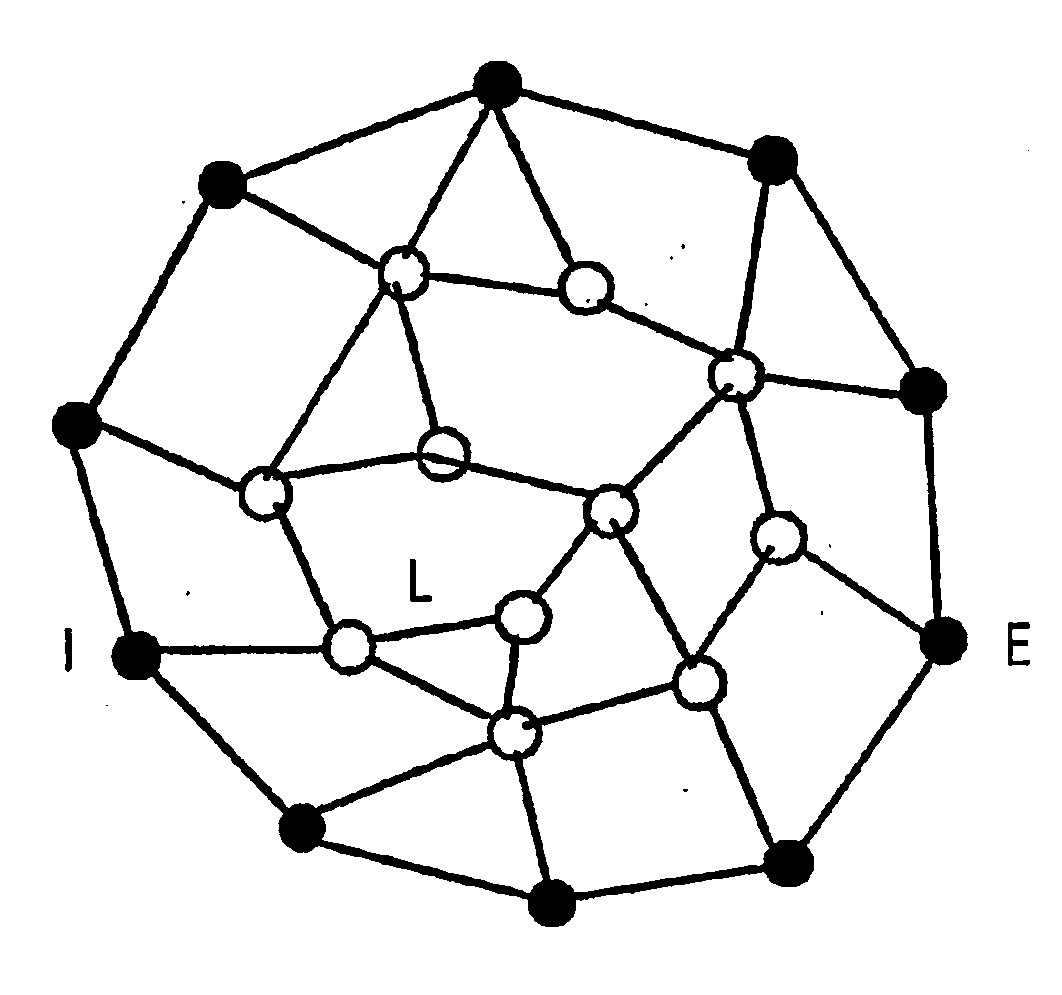

Reconfigurable, fault tolerant, multistage interconnect network and protocol

Inactive | US20060013207A1 | Improve fault tolerance; Reduce contention; Multiplex system selection arrangements; Radiation pyrometry; Fault tolerance; Massively parallel

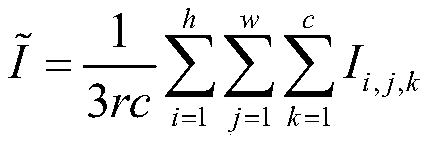

A multistage interconnect network (MIN) capable of supporting massively parallel processing, including point-to-point and multicast communication between processor modules (PMs) connected to the network's input and output ports. The network is built from interconnected switch nodes arranged in 2⌈log_b N⌉ stages, where b is the number of input/output ports per switch node, N is the number of network input/output ports, and ⌈log_b N⌉ denotes the ceiling function, i.e. the smallest integer not less than log_b N. The additional stages provide additional paths between network input ports and network output ports, which enhances fault tolerance and reduces contention.

Owner:TERADATA US
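
The stage count 2⌈log_b N⌉ from the abstract can be computed exactly with integer arithmetic, which avoids floating-point rounding in `log(N)/log(b)` when N is an exact power of b. The function names are hypothetical.

```python
def ceil_log(n: int, b: int) -> int:
    """Smallest integer k with b**k >= n, computed without floating-point logs."""
    if n < 1 or b < 2:
        raise ValueError("need n >= 1 and b >= 2")
    k, reach = 0, 1
    while reach < n:
        reach *= b
        k += 1
    return k


def min_stages(n_ports: int, b: int) -> int:
    """Stages needed just to connect N ports with b x b switch nodes: ceil(log_b N)."""
    return ceil_log(n_ports, b)


def fault_tolerant_stages(n_ports: int, b: int) -> int:
    """Stage count 2 * ceil(log_b N) from the abstract."""
    return 2 * ceil_log(n_ports, b)
```

For example, 64 ports built from 8 x 8 switch nodes need only 2 stages for basic connectivity, while the fault-tolerant arrangement uses 4, and the extra 2 stages supply the alternative paths.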

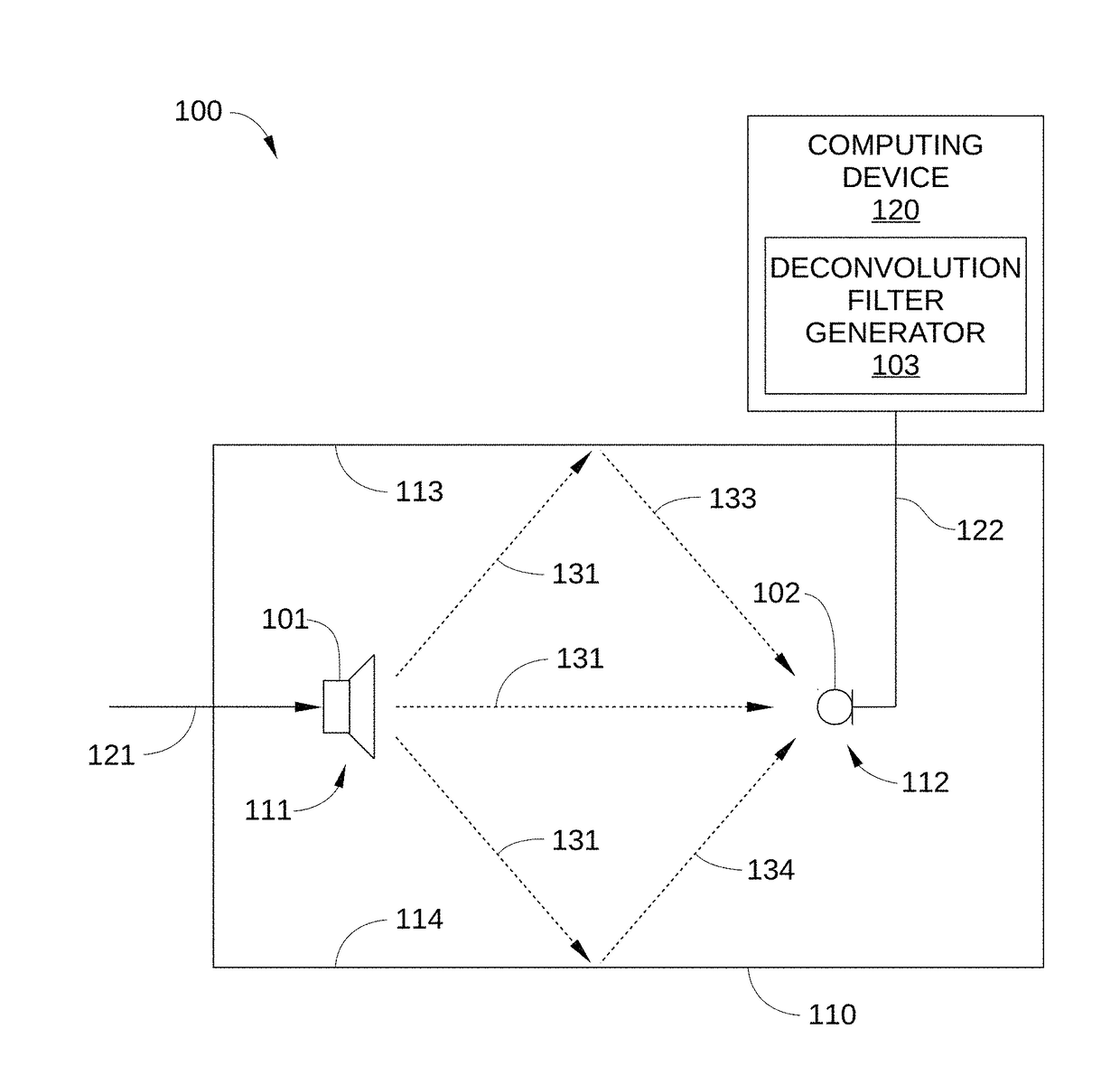

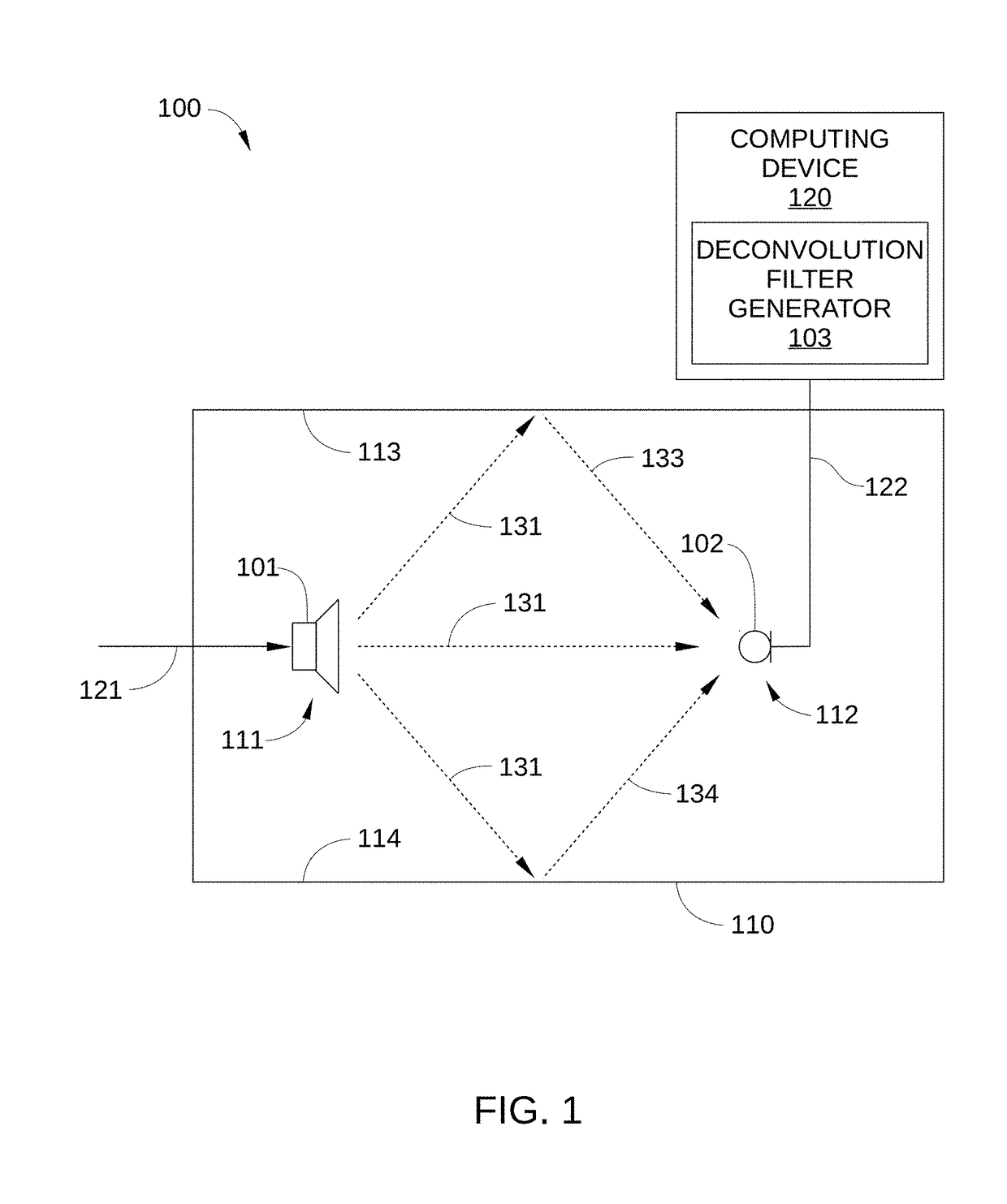

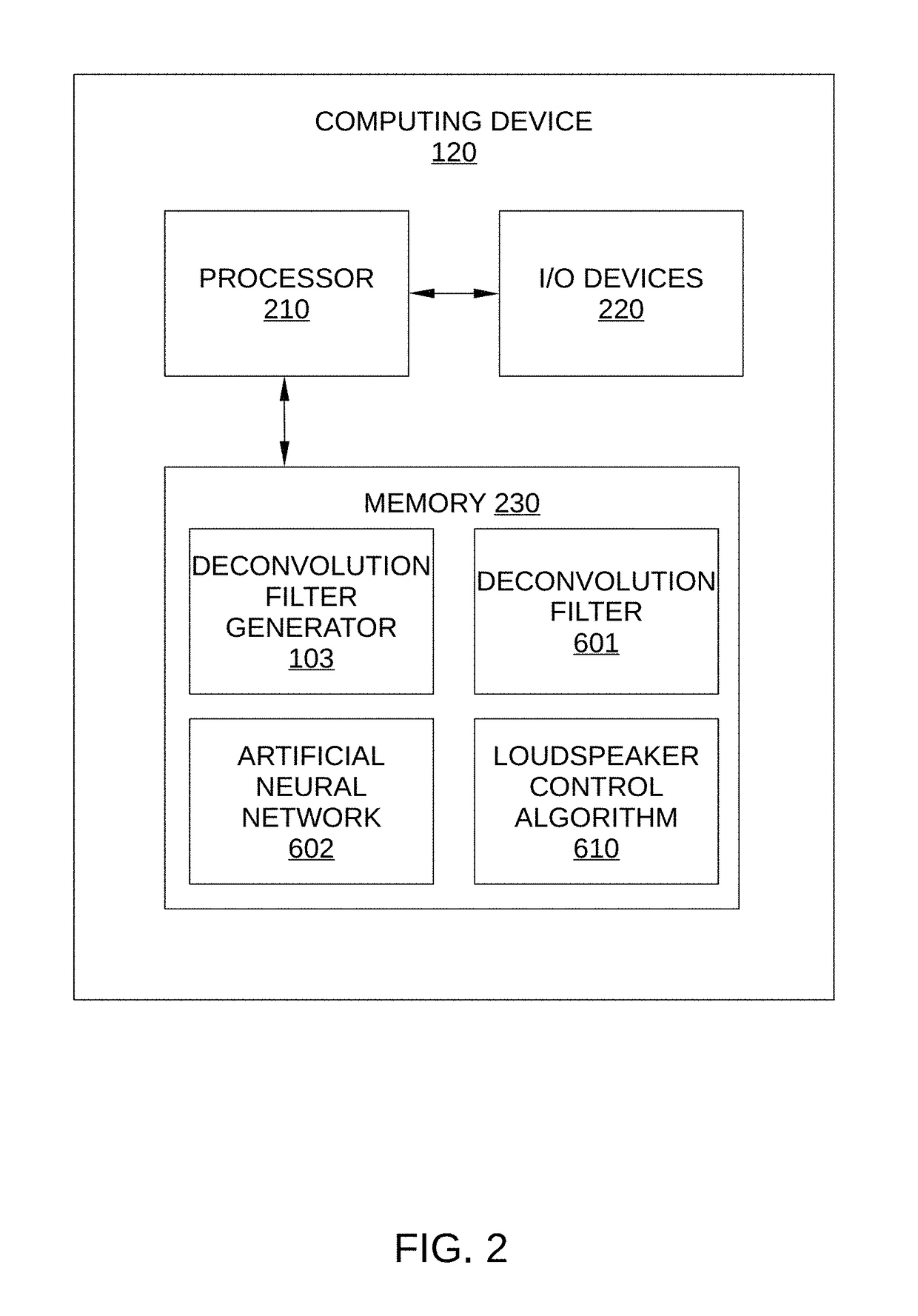

Neural network-based loudspeaker modeling with a deconvolution filter

Active | US20170303039A1 | Remove distortion; Reduced response; Signal processing; Sound input/output; Convolution filter; Network output

A technique for controlling a loudspeaker system with an artificial neural network includes using a deconvolution filter to filter a measured system response of a loudspeaker and the reverberant environment in which it is placed, producing a filtered response; the measured system response corresponds to an audio input signal applied to the loudspeaker while it is in that environment. The technique further includes generating, via a neural network model, an initial neural network output based on the audio input signal; comparing the initial output to the filtered response to determine an error value; and generating, via the neural network model, an updated neural network output based on the audio input signal and the error value.

Owner:HARMAN INT IND INC
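
The abstract does not specify the network architecture or its update rule. As a deliberately simplified stand-in, the "output, compare with the filtered response, update" loop can be illustrated with a single linear neuron (an adaptive FIR filter) trained by the least-mean-squares (LMS) rule. This sketch omits the deconvolution filter, assumes the target is already the filtered response, and is not the patented neural model; `lms_fit` and `demo` are hypothetical names.

```python
import random
from typing import List, Sequence


def lms_fit(x: Sequence[float], target: Sequence[float], taps: int,
            mu: float = 0.05) -> List[float]:
    """Adapt FIR weights so the model output tracks `target` (the filtered response)."""
    w = [0.0] * taps
    for n in range(taps - 1, len(x)):
        window = [x[n - k] for k in range(taps)]              # x[n], x[n-1], ...
        y = sum(wk * xk for wk, xk in zip(w, window))         # current model output
        e = target[n] - y                                     # error against filtered response
        w = [wk + mu * e * xk for wk, xk in zip(w, window)]   # updated model
    return w


def demo(seed: int = 0) -> List[float]:
    """Recover a hypothetical 2-tap response from synthetic noise input."""
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(5000)]
    h = [0.5, -0.2]  # hypothetical "true" response
    target = [h[0] * x[n] + (h[1] * x[n - 1] if n else 0.0) for n in range(len(x))]
    return lms_fit(x, target, taps=2)
```

On noise-free synthetic data like `demo()`, the learned weights settle very close to the hypothetical response `[0.5, -0.2]`.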

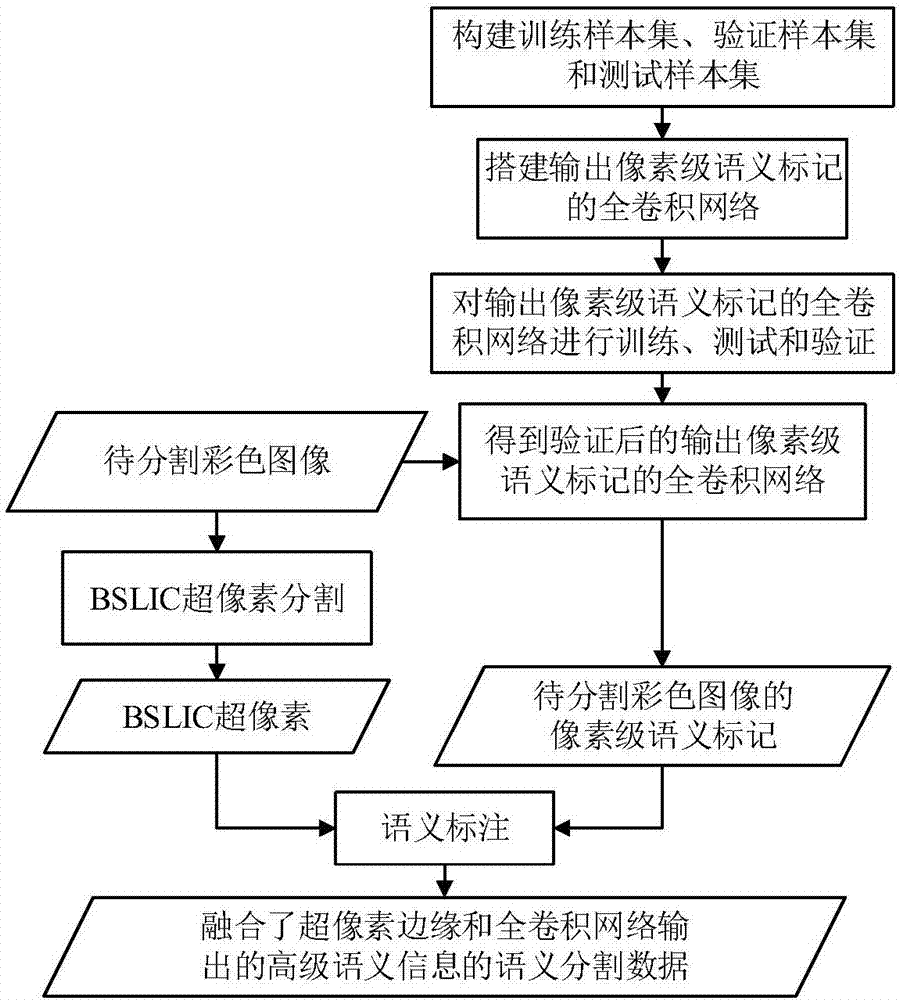

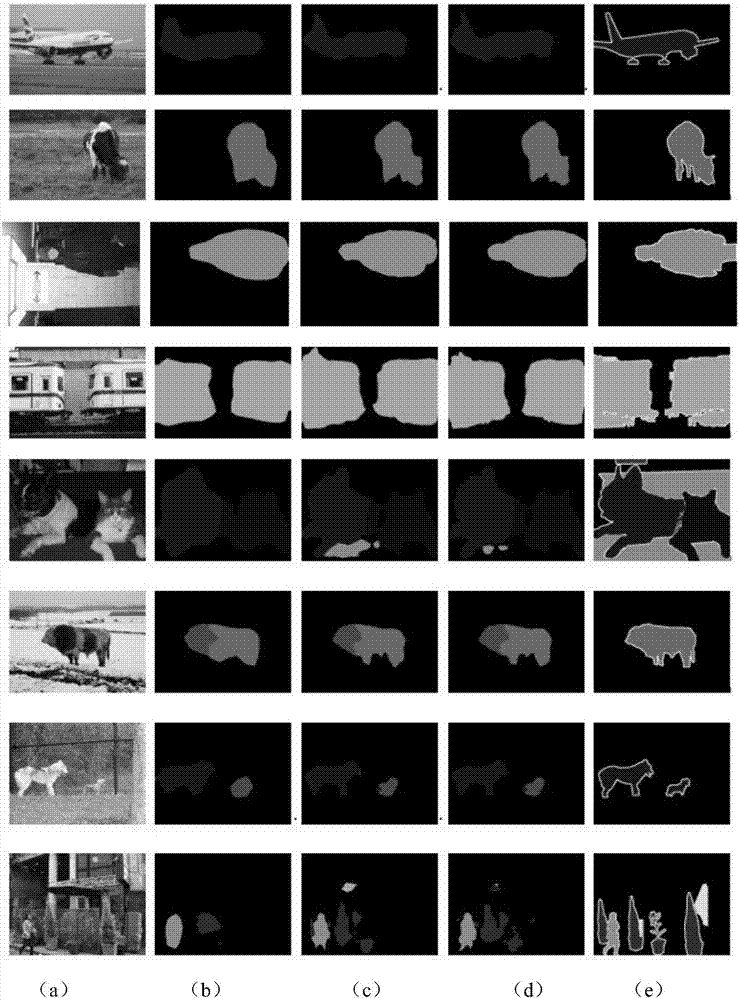

Image semantic segmentation method based on super-pixel edge and full convolutional network

Active | CN107424159A | Improve fit; Improve Segmentation Accuracy; Image analysis; Neural architectures; Network output; Image segmentation

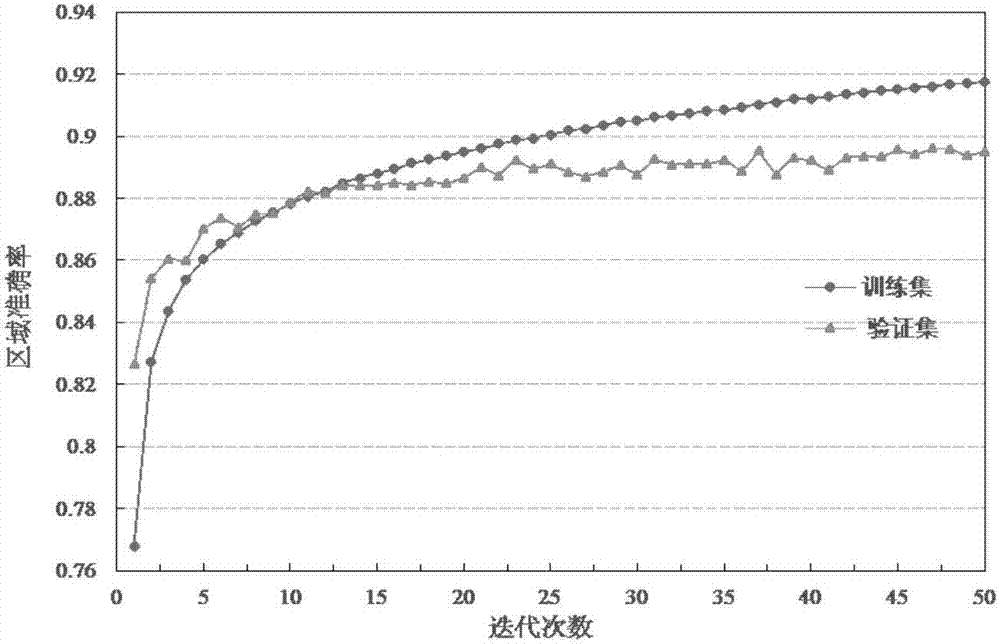

The invention proposes an image semantic segmentation method based on super-pixel edges and a fully convolutional network, addressing the low accuracy of existing image semantic segmentation methods. The method comprises: constructing a training sample set, a testing sample set, and a verification sample set; training, testing, and verifying a fully convolutional network that outputs pixel-level semantic labels; applying the verified network to the image to be segmented to obtain pixel-level semantic labels; performing BSLIC super-pixel segmentation on the image; and assigning semantic labels to the BSLIC super-pixels using the pixel-level labels, which yields a segmentation result that combines super-pixel edges with the high-level semantic information output by the fully convolutional network. This preserves the segmentation accuracy of the original fully convolutional network while improving accuracy along small edges, so overall segmentation accuracy is enhanced. The method can be applied to classification, identification, and tracking scenarios that require target detection.

Owner:XIDIAN UNIV
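
The final labeling step above, assigning a semantic label to each super-pixel from the pixel-level labels, can be sketched as a majority vote inside each super-pixel. Majority voting is an assumption (the abstract does not state the combination rule), and the BSLIC super-pixel map is taken as given input rather than computed here.

```python
from collections import Counter
from typing import Dict, List, Sequence


def label_superpixels(pixel_labels: Sequence[Sequence[int]],
                      superpixels: Sequence[Sequence[int]]) -> List[List[int]]:
    """Give every pixel of a super-pixel the majority network label inside that super-pixel.

    pixel_labels: per-pixel labels from the segmentation network (2D).
    superpixels: super-pixel id per pixel, same shape (assumed precomputed).
    """
    votes: Dict[int, Counter] = {}
    for lab_row, sp_row in zip(pixel_labels, superpixels):
        for lab, sp in zip(lab_row, sp_row):
            votes.setdefault(sp, Counter())[lab] += 1
    majority = {sp: c.most_common(1)[0][0] for sp, c in votes.items()}
    return [[majority[sp] for sp in sp_row] for sp_row in superpixels]
```

Because label boundaries now coincide with super-pixel boundaries, isolated mislabeled pixels inside a region are absorbed, while the region edges follow the super-pixel edges.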

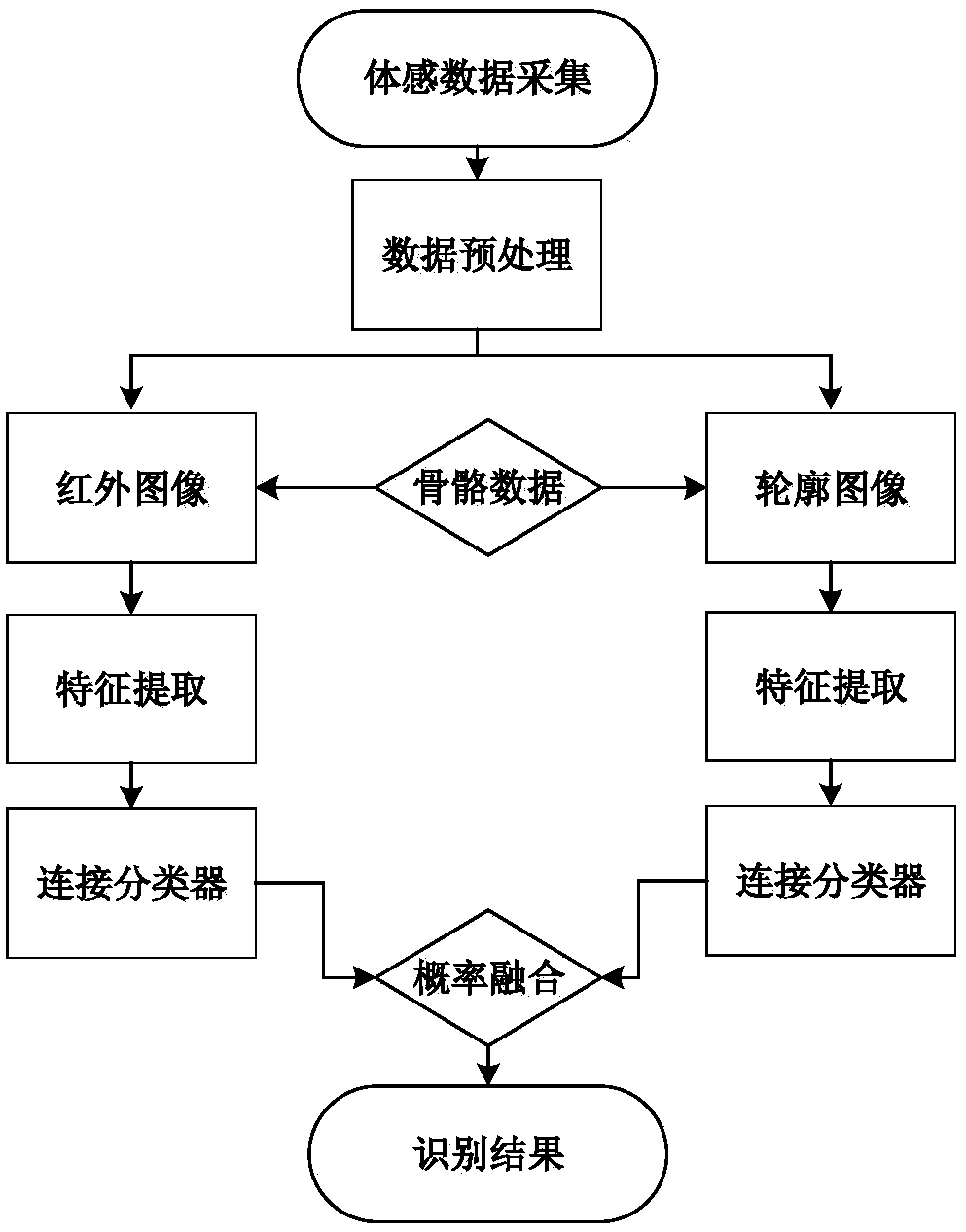

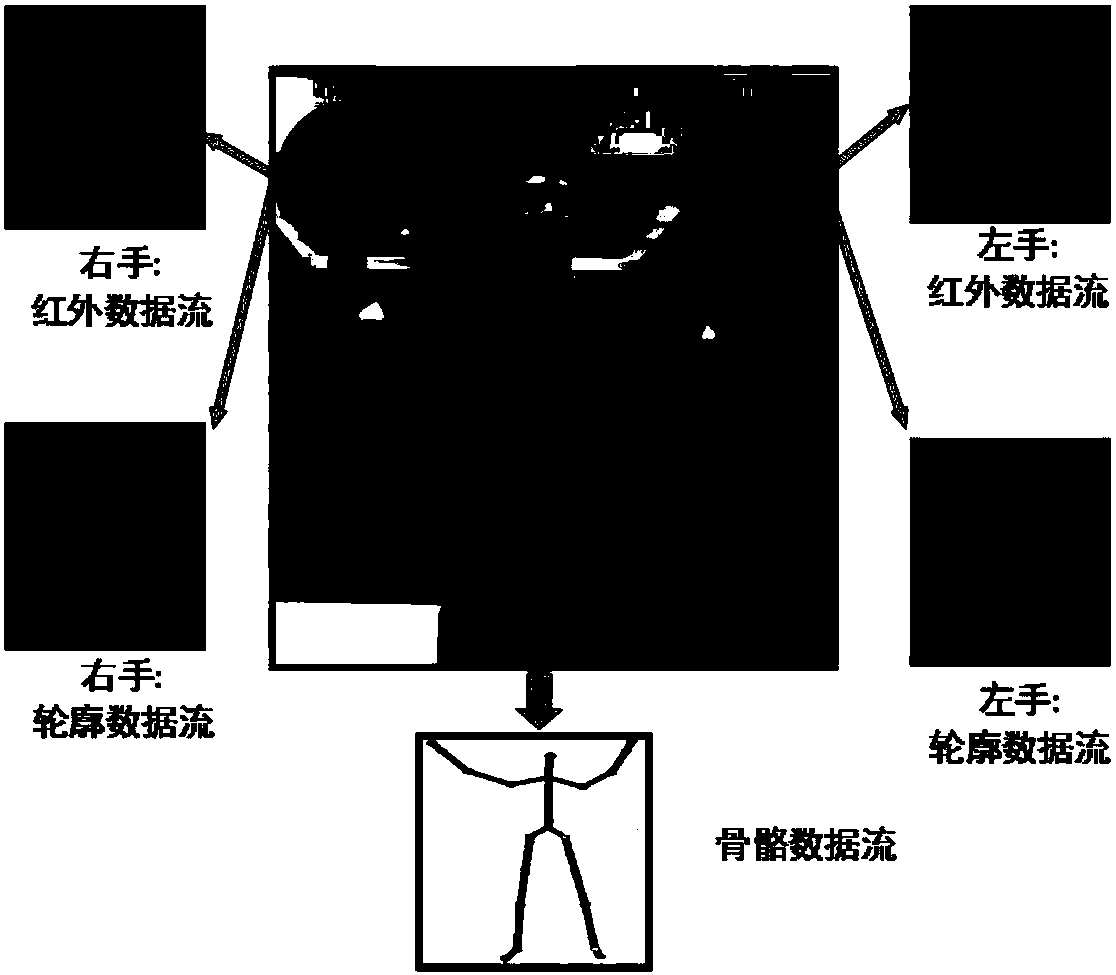

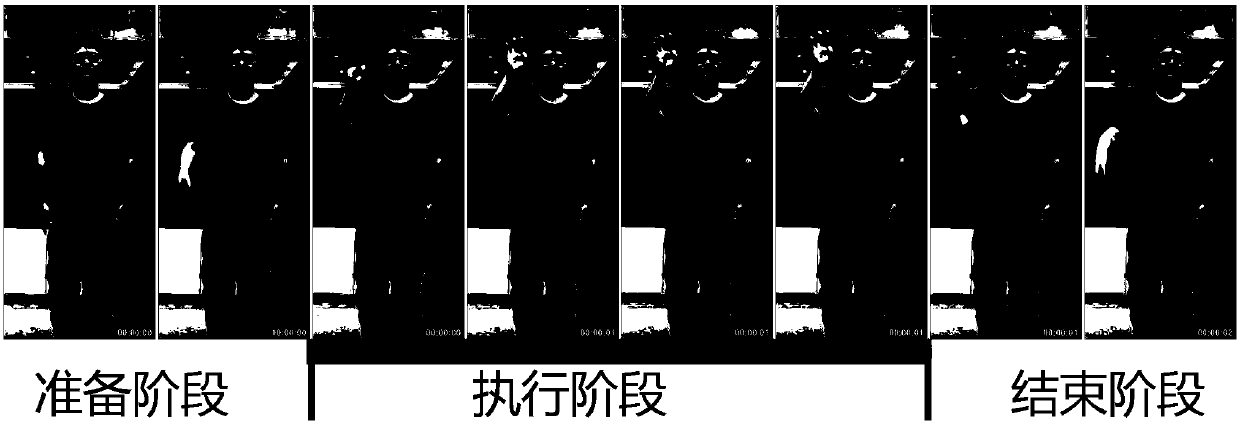

3D convolutional neural network sign language identification method integrated with multi-modal data

Active | CN107679491A | Overcoming feature; Overcome precision; Character and pattern recognition; Nerve network; Computation complexity

The invention discloses a 3D convolutional neural network sign language identification method that integrates multi-modal data. The method includes constructing a deep neural network; extracting features from an infrared image and a contour image of a gesture along both the spatial and temporal dimensions of a video; and integrating the outputs of the two networks, each based on a different data format, to perform the final sign language classification. The method can accurately extract limb-movement trajectory information from the two data formats, effectively reduces the computational complexity of the model, and uses a deep learning strategy to integrate the classification results of the two networks. This effectively addresses misclassification by a single classifier caused by data loss, making the model more robust to illumination and background noise across different scenes.

Owner:HUAZHONG NORMAL UNIV

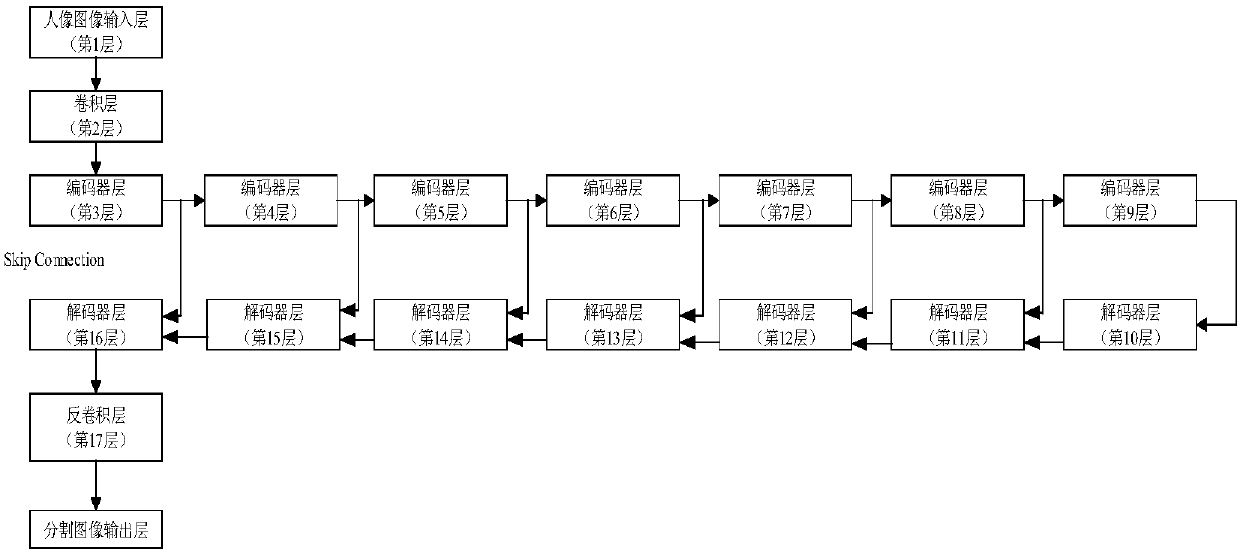

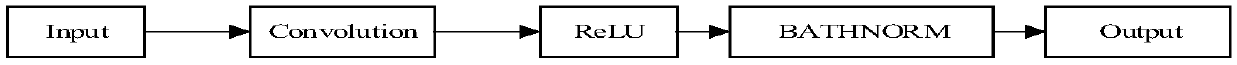

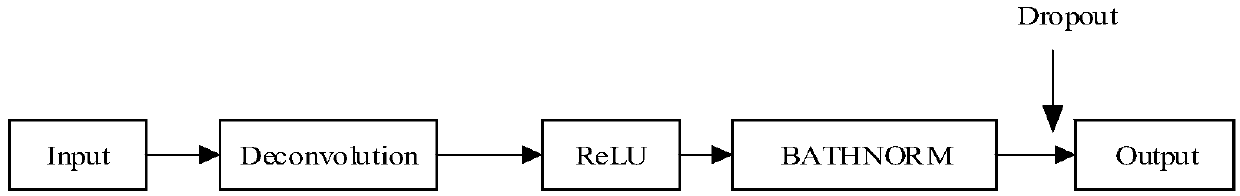

Generative adversarial network-based pixel-level portrait cutout method

Active | CN107945204A | Improve Segmentation Accuracy; Good segmentation effect; Image enhancement; Image analysis; Conditional random field; Data set

The invention discloses a generative adversarial network-based pixel-level portrait cutout method that addresses the need, in machine cutout, for massive and costly-to-build data sets to train and optimize a network. The method comprises: presetting a generative network and a judgment (discriminator) network in an adversarial learning mode, where the generative network is a deep neural network with skip connections; inputting a real image containing a portrait to the generative network, which outputs a person/scene segmentation image; inputting first and second image pairs to the judgment network, which outputs a judgment probability, and determining the loss functions of the generative and judgment networks; adjusting the configuration parameters of both networks to minimize their loss functions, which completes training of the generative network; and inputting a test image to the trained generative network to generate a person/scene segmentation image, randomizing the generated image, and finally feeding a probability matrix to a conditional random field for further optimization. The method reduces the number of training images required and improves both efficiency and segmentation precision.

Owner:XIDIAN UNIV

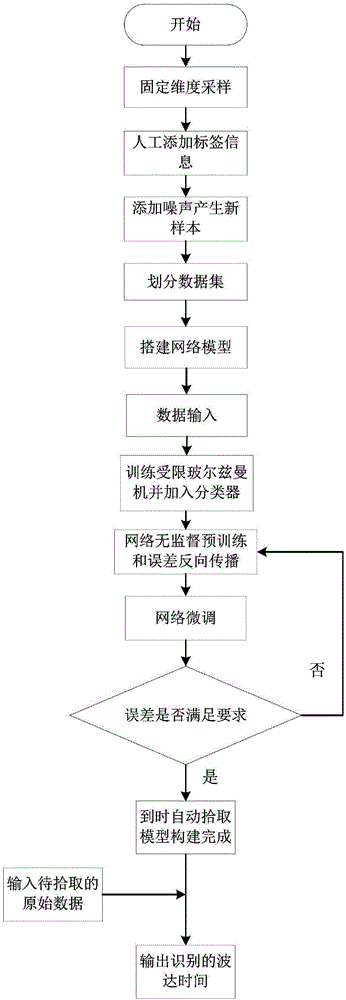

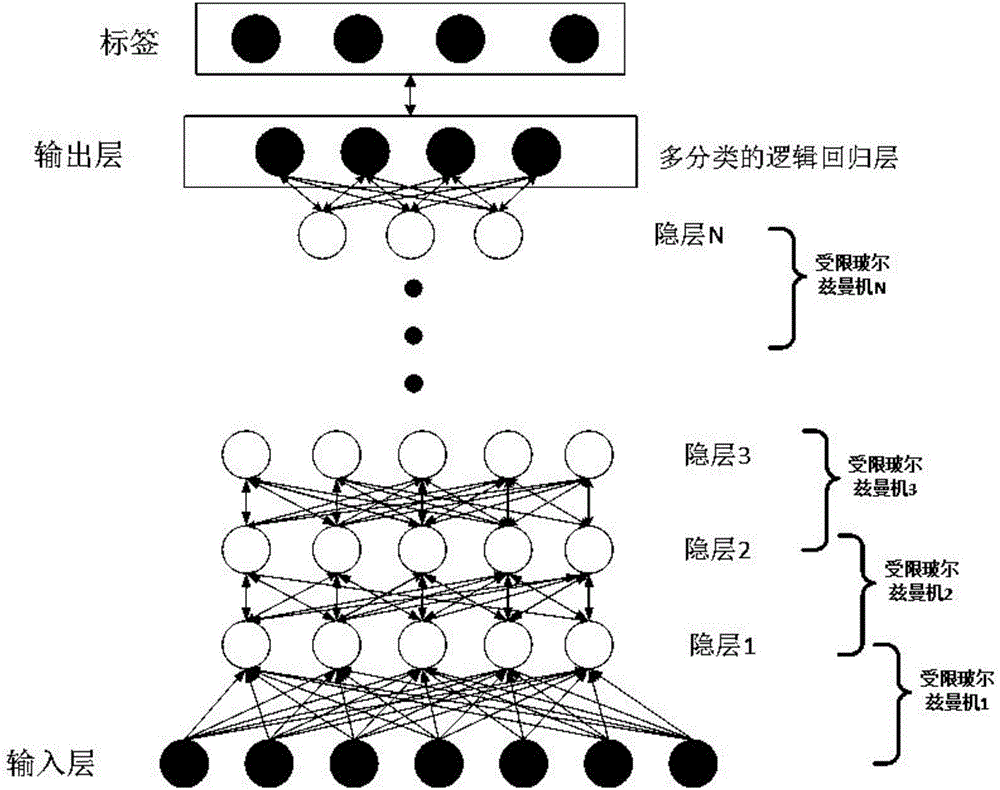

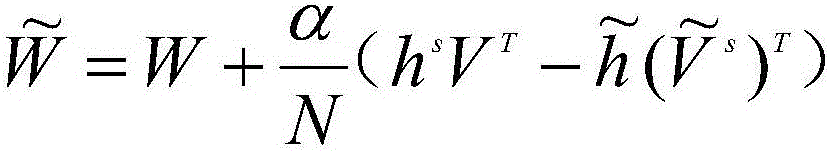

Automatic microseismic signal arrival time picking method based on depth belief neural network

Inactive | CN106405640A | Character and pattern recognition; Seismic signal processing; Data set; Network output

The invention discloses an automatic microseismic signal arrival-time picking method based on a deep belief neural network. Each microseismic record is resampled to a unified fixed dimension, and the signal arrival times of some records are picked manually and used as labels for those records. The manually picked records and their labels form the total data set for network construction, which is divided into a training set, a validation set, and a test set. The deep belief network is constructed by training and testing on these data. Actually acquired data to be processed are then fed to the trained model for microseismic signal identification and automatic arrival-time picking; the network output is the arrival-time point of the microseismic data.

Owner:CHINA UNIV OF MINING & TECH (BEIJING)

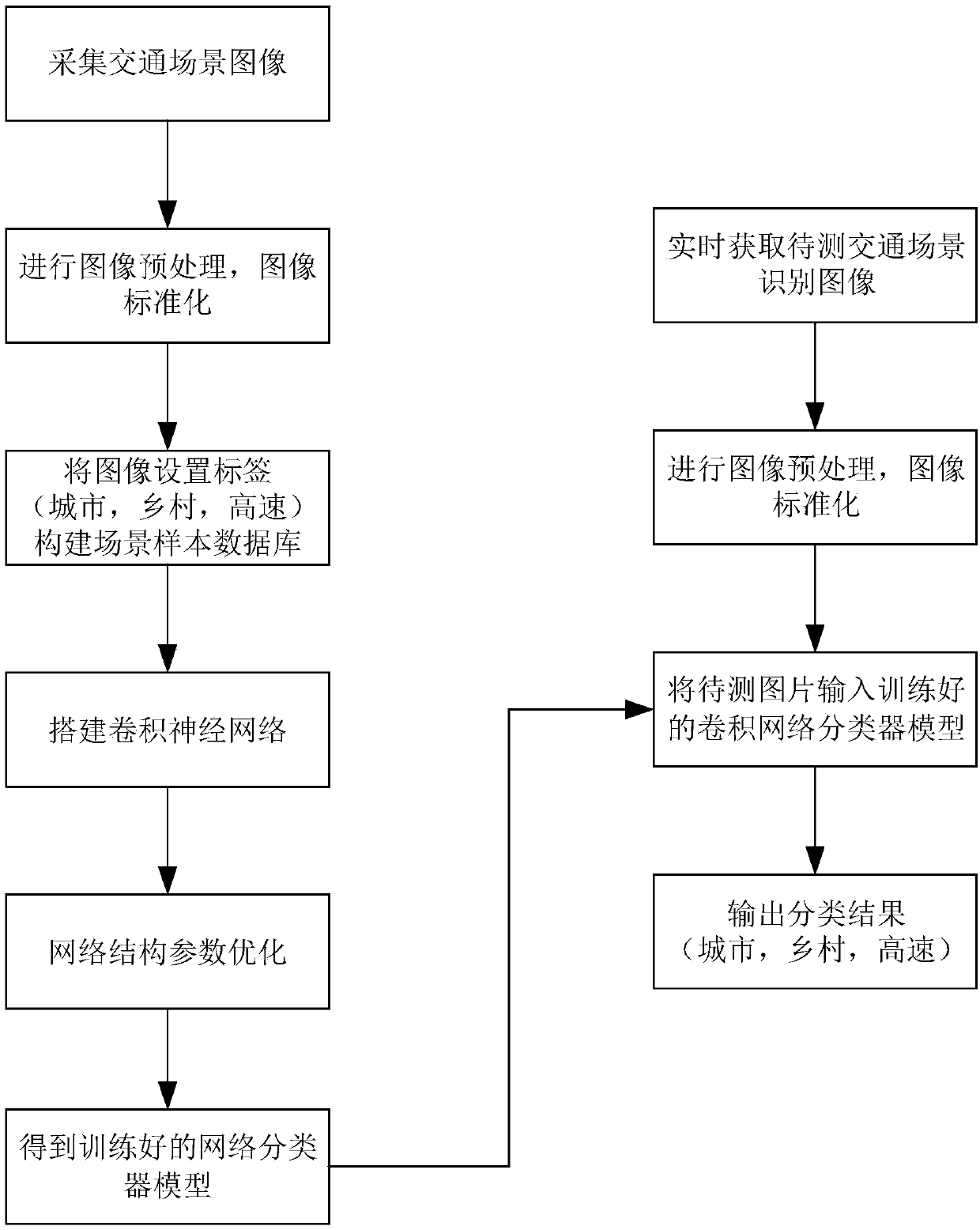

Driving scene classification method based on convolution neural network

Inactive | CN107609602A | Reduce complexity; Reduce the number; Character and pattern recognition; Neural learning methods; Feature extraction; Network output

The invention discloses a driving scene classification method based on a convolutional neural network, comprising the following steps: collecting road environment video images; classifying traffic scenes and building a traffic scene recognition database; extracting sample images of different driving scenes from the database, performing feature extraction and multiple rounds of convolution training on the sample images with a deep convolutional neural network, rasterizing the pixels and concatenating them into a vector, inputting the vector into a conventional neural network to obtain the convolutional neural network output, and thereby achieving deep learning of the different driving scenes; optimizing the parameters of the constructed network structure to obtain a trained convolutional neural network classifier, adjusting the traffic scene recognition model, and selecting the optimal mode as the standard of the model; and collecting images of the traffic scene to be detected in real time and inputting them into the traffic scene recognition model to recognize the road environment scene.

Owner:JILIN UNIV

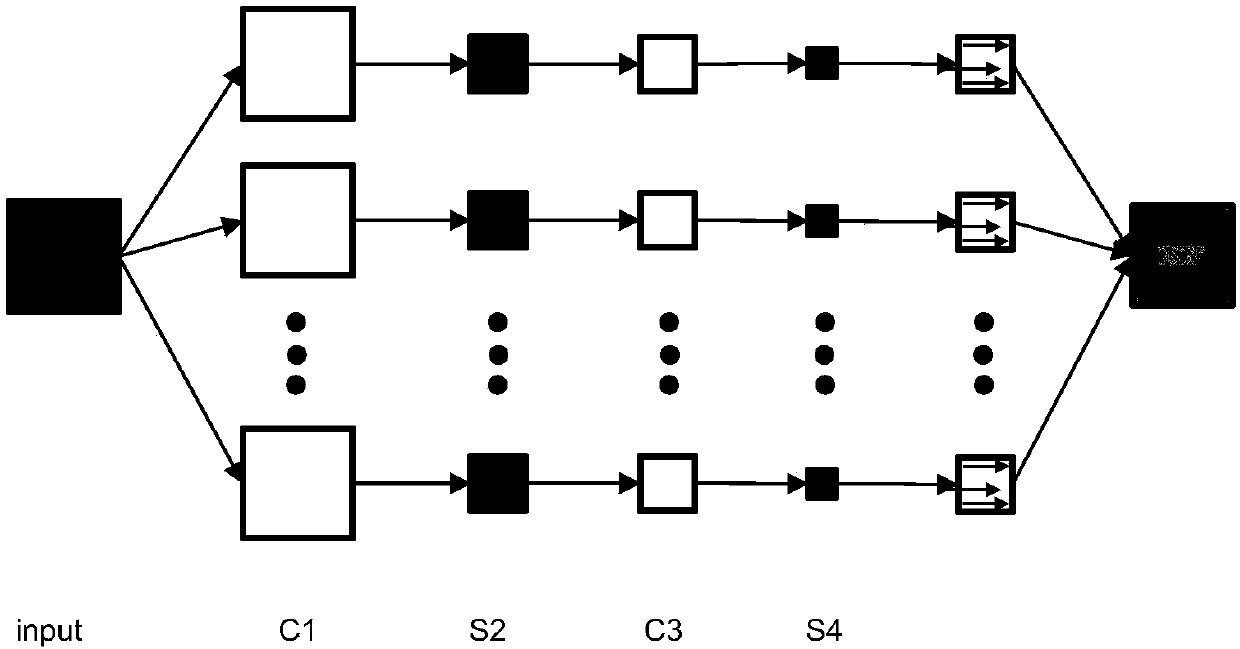

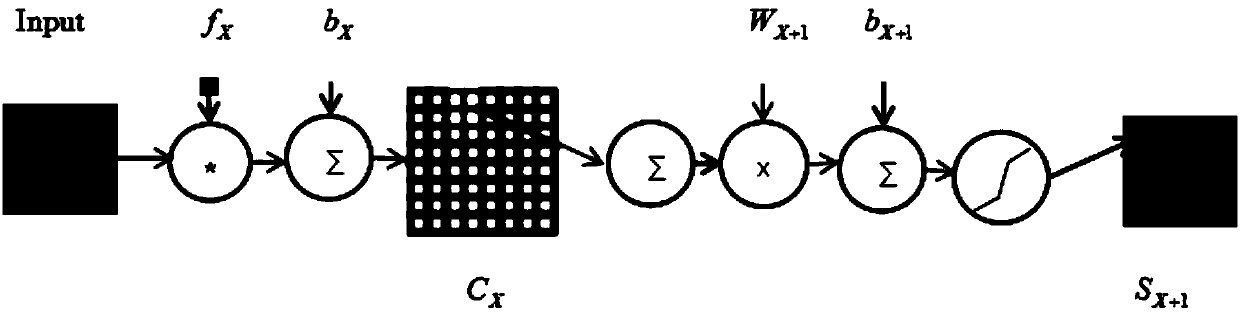

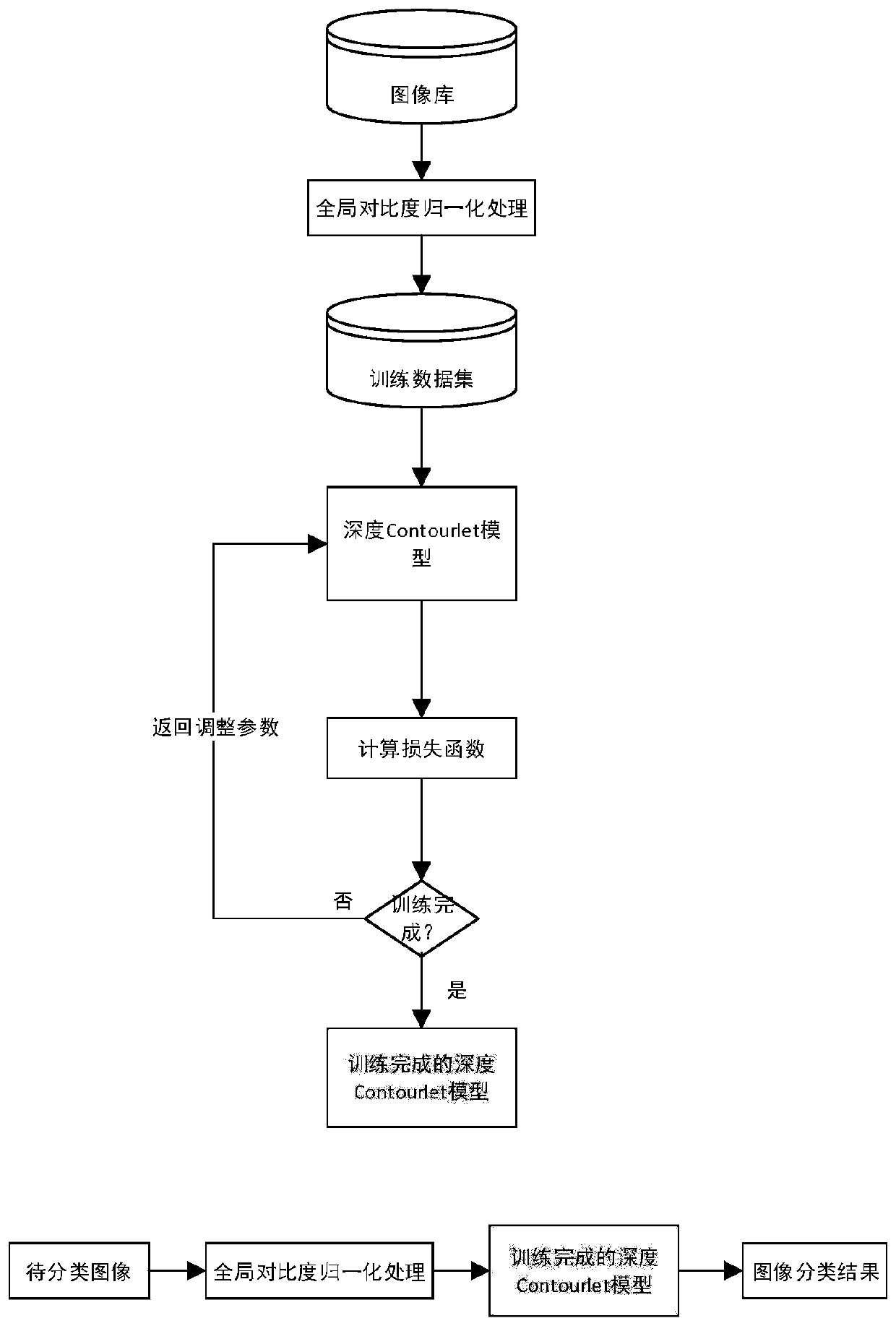

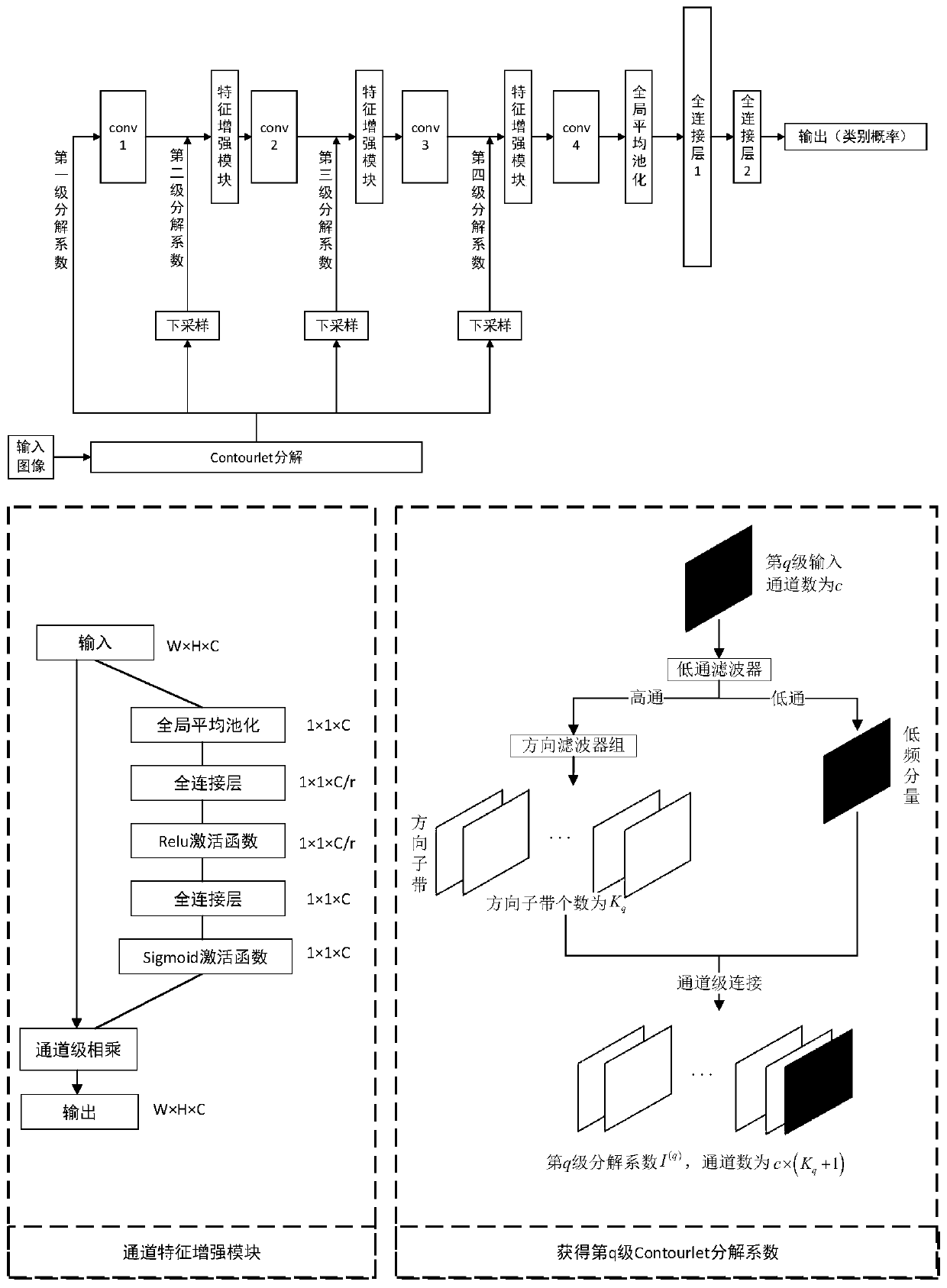

Remote sensing image classification method based on attention mechanism deep Contourlet network

Active | CN110728224A | Approximation effect is good; Good rotation invariance; Scene recognition; Neural architectures; Test sample; Network output

The invention discloses a remote sensing image classification method based on an attention mechanism deep Contourlet network, and the method comprises the steps: building a remote sensing image library, and obtaining a training sample set and a test sample set; then, setting a Contourlet decomposition module, building a convolutional neural network model, grouping convolution layers in the model in pairs to form a convolution module, using an attention mechanism, and performing data enhancement on the merged feature map through a channel attention module; carrying out iterative training; performing global contrast normalization processing on the remote sensing images to be classified to obtain the average intensity of the whole remote sensing images, and then performing normalization to obtain the remote sensing images to be classified after normalization processing; and inputting the normalized unknown remote sensing image into the trained convolutional neural network model, and classifying the unknown remote sensing image to obtain a network output classification result. According to the method, a Contourlet decomposition method and a deep convolutional network method are combined, a channel attention mechanism is introduced, and the advantages of deep learning and Contourlet transformation can be brought into play at the same time.

Owner:XIDIAN UNIV
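
The global contrast normalization step above (compute the average intensity of the whole image, then normalize) can be sketched with one common definition: subtract the whole-image mean and divide by the whole-image standard deviation. The `scale` and `eps` parameters are assumptions; the abstract does not give its exact constants.

```python
import math
from typing import List, Sequence


def global_contrast_normalize(img: Sequence[Sequence[float]], scale: float = 1.0,
                              eps: float = 1e-8) -> List[List[float]]:
    """Subtract the whole-image mean intensity, then divide by the whole-image standard deviation."""
    flat = [v for row in img for v in row]
    mean = sum(flat) / len(flat)
    std = math.sqrt(sum((v - mean) ** 2 for v in flat) / len(flat))
    denom = max(std, eps)  # guard against constant images
    return [[scale * (v - mean) / denom for v in row] for row in img]
```

After normalization every image has zero mean and unit variance, so images captured under different illumination reach the classifier on a comparable scale.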

Access control for packet-oriented networks

Inactive | US20080198744A1 | Efficient method; Avoid disadvantages; Error prevention; Transmission systems; Traffic capacity; Network output

The invention relates to a method for access control in a packet-oriented network. An admissibility check for a group of packets is carried out using a threshold on the traffic volume between the flow's network input node and network output node. Transmission of a group of data packets is refused when admitting it would make the traffic volume exceed the threshold. The relationship between the thresholds and the traffic volume on partial stretches (links) can be formulated via the proportion of traffic carried over each partial stretch. Using the link capacities, the thresholds for pairs of input and output nodes can be set so that no individual link is overloaded. Within this method, link failures can be handled flexibly by resetting the thresholds. Other conditions can also be included, for example the capacity of interfaces to other networks or special requirements for transmitting prioritized traffic.

Owner:SIEMENS AG
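
The two checks described above, per-pair admission against a threshold and verifying that the thresholds cannot overload any link, can be sketched as follows. The data layout (dictionaries keyed by ingress/egress pair, and per-link traffic fractions in `share`) is a hypothetical representation, not the patent's.

```python
from typing import Dict, Tuple

Pair = Tuple[str, str]  # (network input node, network output node)


def admit(load: Dict[Pair, float], limit: Dict[Pair, float], pair: Pair, volume: float) -> bool:
    """Admit a packet group only if the pair's traffic stays within its threshold."""
    current = load.get(pair, 0.0)
    if current + volume > limit[pair]:
        return False
    load[pair] = current + volume
    return True


def limits_fit_links(limit: Dict[Pair, float], share: Dict[Pair, Dict[str, float]],
                     capacity: Dict[str, float]) -> bool:
    """Check that no link overloads even if every pair uses its full threshold.

    share[pair][link] is the assumed fraction of that pair's traffic routed over the link.
    """
    link_load: Dict[str, float] = {}
    for pair, t in limit.items():
        for link, frac in share.get(pair, {}).items():
            link_load[link] = link_load.get(link, 0.0) + frac * t
    return all(v <= capacity[link] for link, v in link_load.items())
```

When a link fails, recomputing `share` for the surviving paths and lowering the thresholds until `limits_fit_links` holds again corresponds to the threshold reset the abstract describes.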

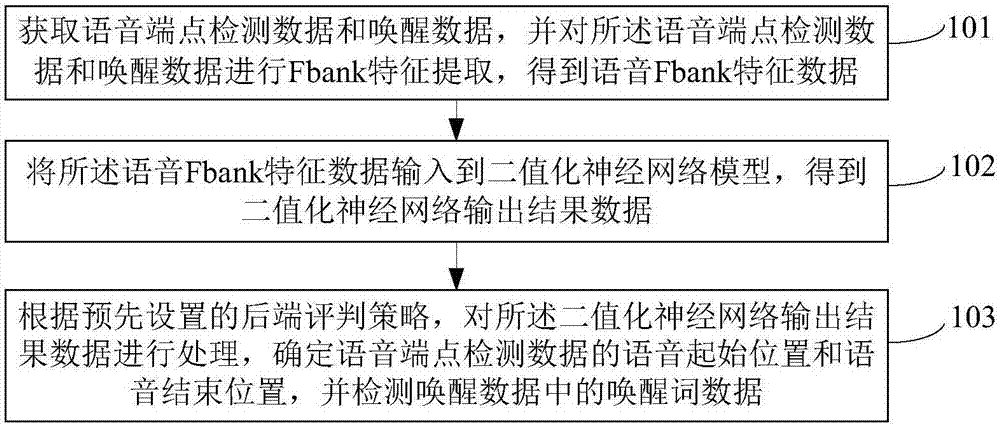

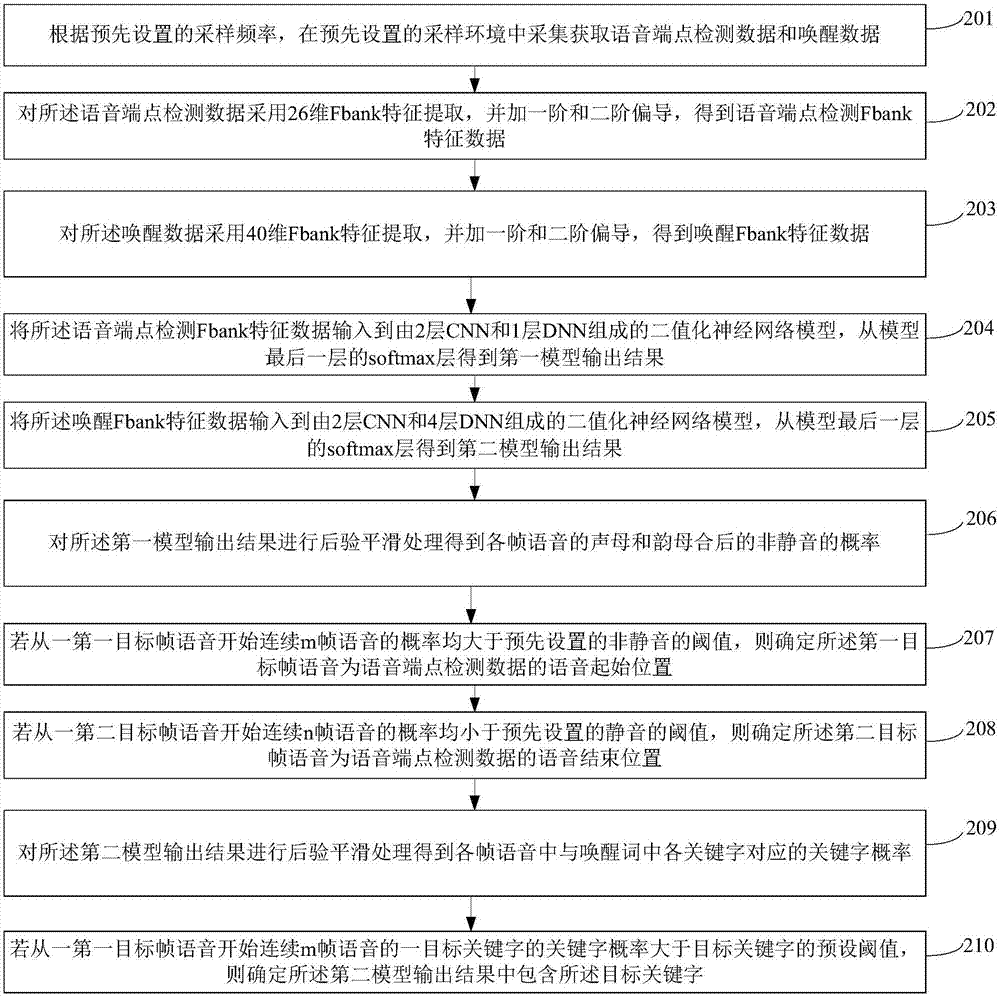

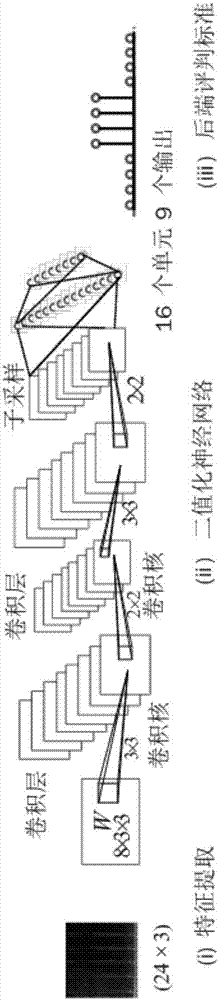

Voice activity detection and wake-up method and device

Active | CN108010515A | Lower latency; Reduce power consumption; Speech recognition; Feature extraction; Network output

The invention provides a voice activity detection and wake-up method and device, and relates to the technical field of machine learning speech recognition. The method includes the steps of acquiring voice activity detection data and wake-up data, and performing Fbank feature extraction on the voice activity detection data and wake-up data to obtain voice Fbank feature data; inputting the voice Fbank feature data to a binary neural network model to obtain binarized neural network output result data; and according to a preset backend evaluation strategy, processing the binarized neural network output result data, determining a voice start position and a voice end position of the voice activity detection data, and detecting wake-up word data in the wake-up data. The system framework of the invention can be applied to voice activity detection and voice wake-up technologies at the same time, and can implement accurate, fast, low-delay, small-model and low-power voice activity detection technologies and voice wake-up technologies.

Owner:TSINGHUA UNIV
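
The abstract does not describe its "preset backend evaluation strategy". As one plausible sketch, per-frame binary outputs from the network can be turned into voice start and end positions by keeping only runs of speech frames at least `min_run` frames long. The minimum-run rule and its default value are assumptions for illustration.

```python
from typing import List, Optional, Sequence, Tuple


def speech_segments(flags: Sequence[int], min_run: int = 3) -> List[Tuple[int, int]]:
    """Turn per-frame binary speech decisions into (start, end) frame indices.

    Runs of speech shorter than `min_run` frames are treated as noise (assumed rule).
    """
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for i, f in enumerate(list(flags) + [0]):  # trailing 0 closes a final run
        if f and start is None:
            start = i
        elif not f and start is not None:
            if i - start >= min_run:
                segments.append((start, i - 1))
            start = None
    return segments
```

Dropping very short runs suppresses single-frame false alarms from the binarized network without adding latency beyond `min_run` frames.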

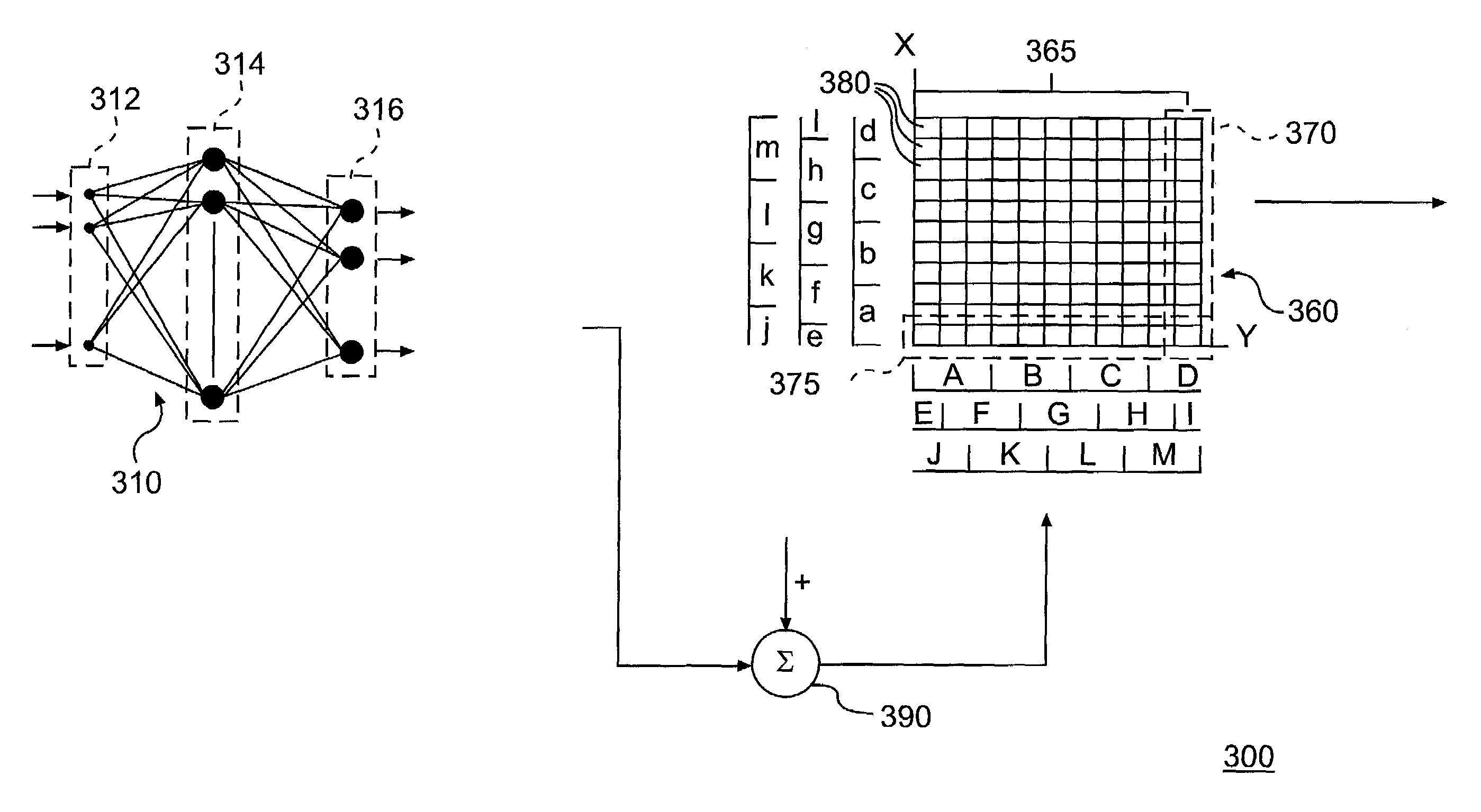

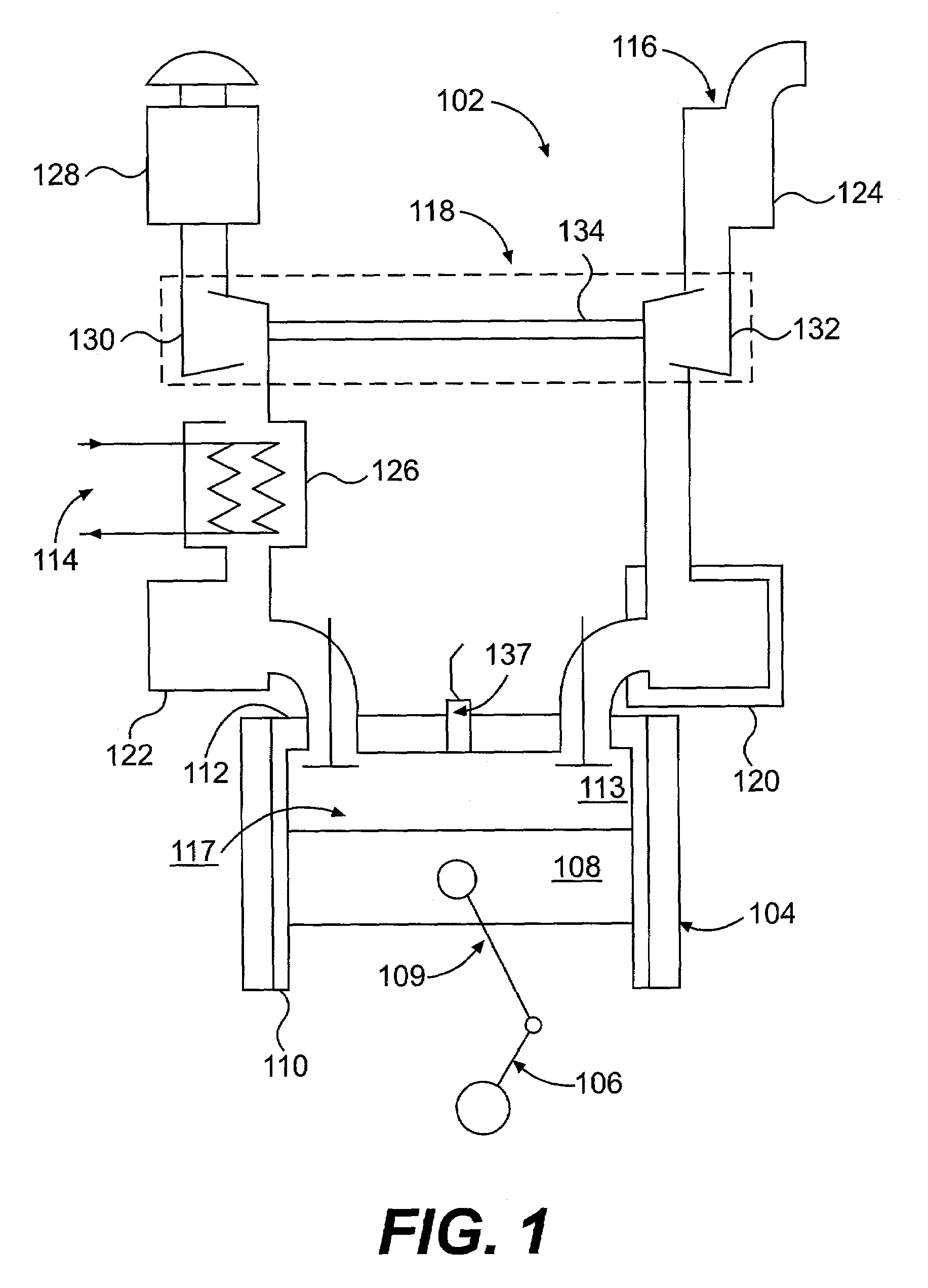

Engine control system using a cascaded neural network

A method, system, and machine-readable storage medium for monitoring an engine using a cascaded neural network that includes a plurality of neural networks are disclosed. In operation, data corresponding to the cascaded neural network are stored, and signals generated by a plurality of engine sensors are input into the cascaded neural network. Next, a second neural network is updated at a first rate with an output of a first neural network, the output being based on the input signals. In response, the second neural network outputs at least one engine control signal at a second rate, which is faster than the first rate.

Owner:CATERPILLAR INC
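
The two-rate cascade above can be sketched as a loop in which the first (slow) network refreshes its output every `ratio` steps while the second (fast) network produces a control signal every step from the latest slow output. The networks are stood in for by arbitrary callables; `run_cascade` and `ratio` are hypothetical names.

```python
from typing import Any, Callable, List, Sequence


def run_cascade(frames: Sequence[Any], slow_net: Callable[[Any], Any],
                fast_net: Callable[[Any, Any], Any], ratio: int) -> List[Any]:
    """Refresh the first network's output every `ratio` steps; run the second network every step."""
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    context = None
    controls = []
    for t, x in enumerate(frames):
        if t % ratio == 0:
            context = slow_net(x)               # first network, first (slower) rate
        controls.append(fast_net(x, context))  # second network, second (faster) rate
    return controls
```

With toy callables, `run_cascade([1, 2, 3, 4], lambda x: 10 * x, lambda x, c: x + c, 2)` holds the slow output for two steps at a time while still emitting one control value per step.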

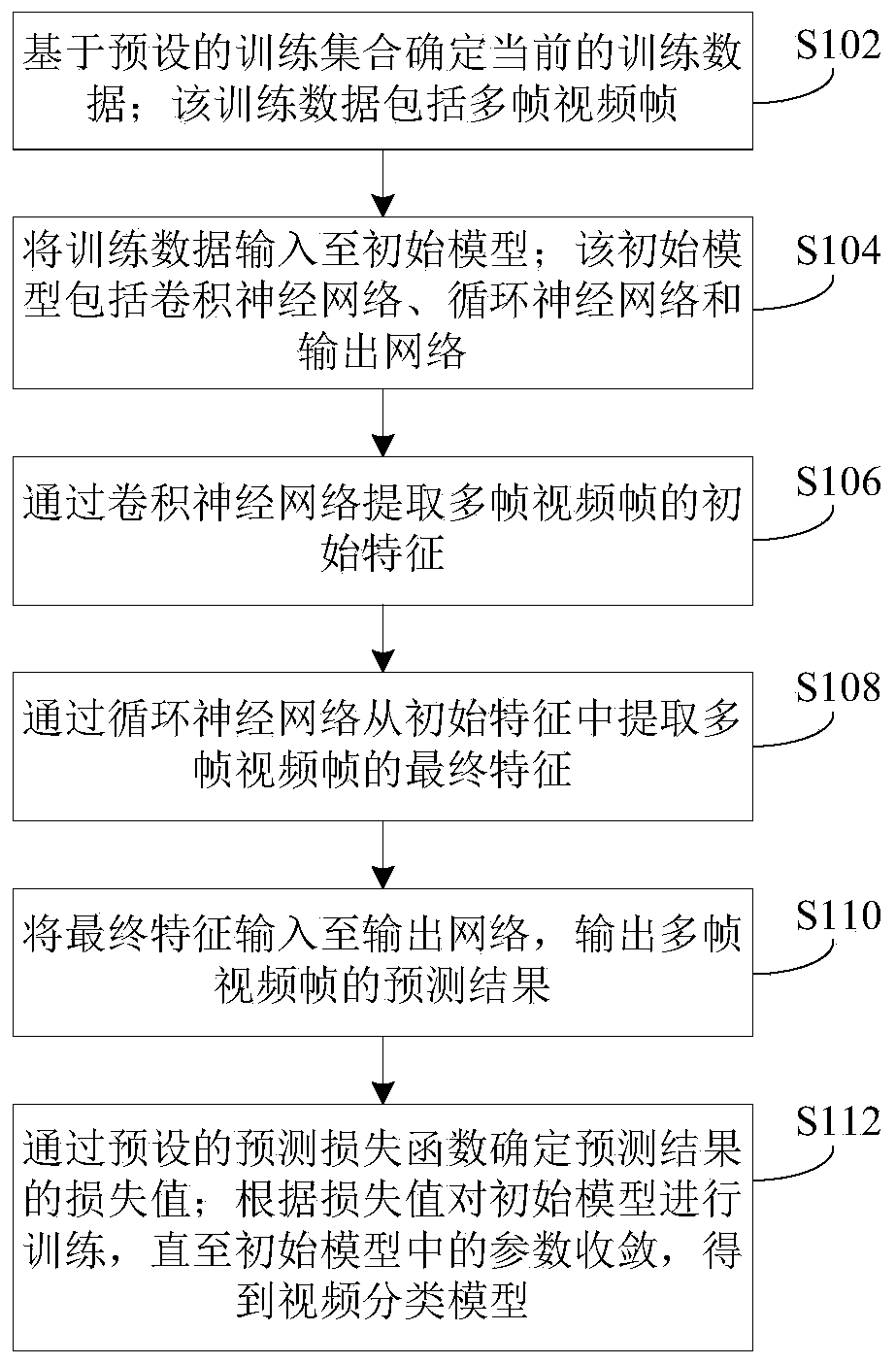

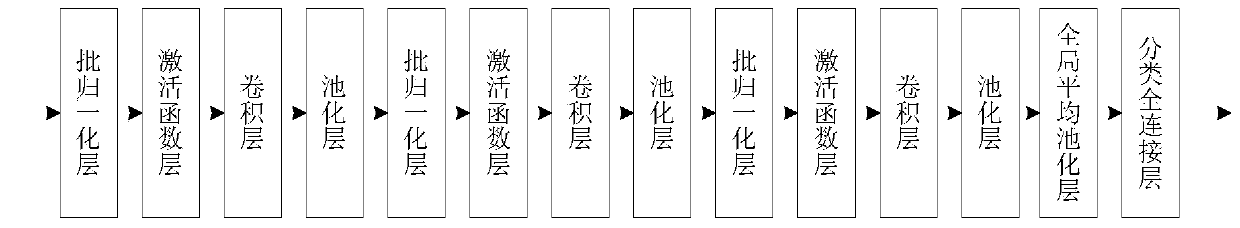

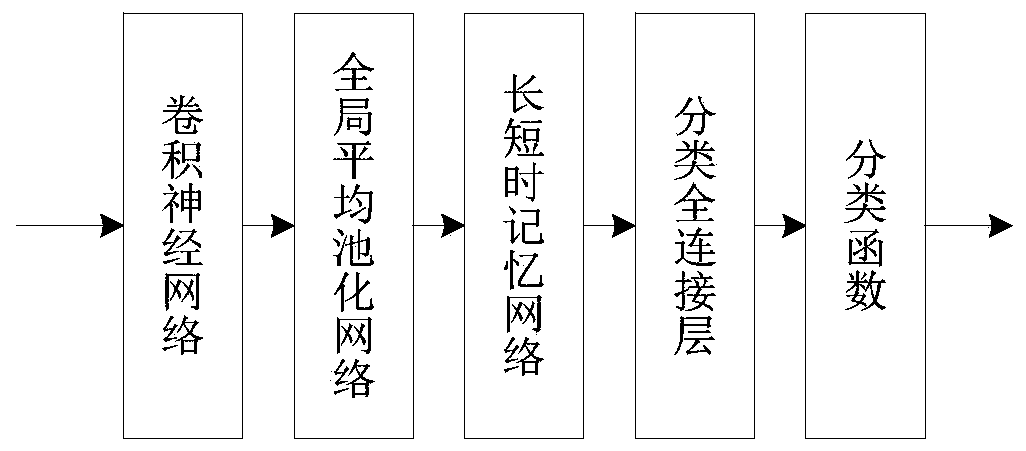

Video classification method and model training method and device thereof, and electronic equipment

Active | CN110070067A | Improve accuracy; Improve training efficiency; Character and pattern recognition; Neural architectures; Feature extraction; Multiple frame

The invention provides a video classification method, a model training method and device, and electronic equipment. The training method comprises: extracting initial features of a plurality of video frames with a convolutional neural network; extracting final features of the video frames from the initial features with a recurrent neural network; inputting the final features into an output network, which outputs a prediction result for the video frames; determining a loss value for the prediction result with a preset loss function; and training the initial model according to the loss value until its parameters converge, yielding a video classification model. Combining the convolutional and recurrent neural networks greatly reduces the amount of computation and improves model training and recognition efficiency. At the same time, the association between video frames is taken into account during feature extraction, so the extracted features accurately represent the video type and classification accuracy is improved.

Owner:BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD +1

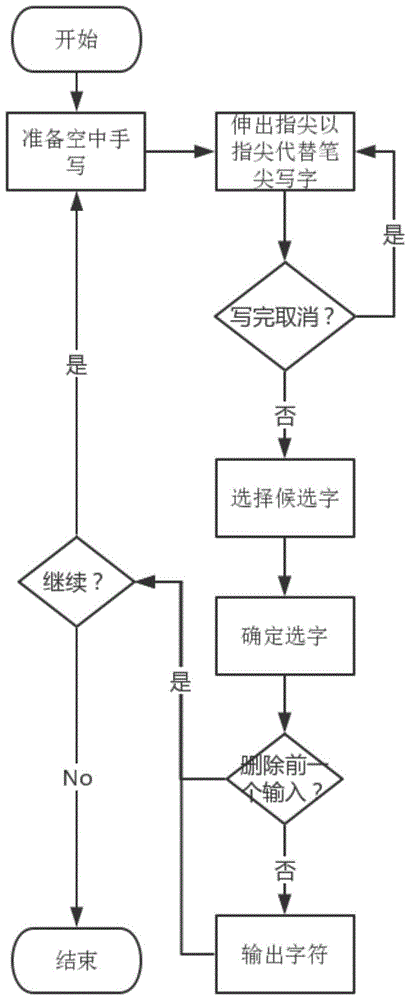

Egocentric vision in-the-air hand-writing and in-the-air interaction method based on cascade convolution nerve network

Active | CN105718878A | Forecast stability; Reduce time performance consumption; Character and pattern recognition; Neural learning methods; Fingertip detection; Nerve network

The invention discloses an egocentric-vision in-the-air handwriting and in-the-air interaction method based on a cascaded convolutional neural network. The method comprises: S1, obtaining training data; S2, designing a deep convolutional neural network for hand detection; S3, designing a deep convolutional neural network for gesture classification and fingertip detection; S4, cascading the first-level and second-level networks, in which a region of interest is cropped from the foreground bounding rectangle output by the first-level network to obtain a foreground region containing the hand, and this region is used as the input of the second-level network for fingertip detection and gesture recognition; S5, if the recognized gesture is a single-finger gesture, outputting its fingertip position and then applying temporal smoothing and interpolation between points; and S6, performing character recognition from fingertip coordinates sampled over consecutive frames. The invention provides a complete in-the-air handwriting and interaction algorithm with accurate and robust fingertip detection and gesture classification, thereby enabling egocentric-vision in-the-air handwriting and in-the-air interaction.

Owner:SOUTH CHINA UNIV OF TECH
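
Step S5 above (temporal smoothing of fingertip positions, then interpolation between points) can be sketched with a centered moving average followed by linear interpolation. Both the smoother and the interpolation scheme are assumptions; the abstract does not name them.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def smooth(points: Sequence[Point], k: int = 3) -> List[Point]:
    """Centered moving average over k samples (the window shrinks at the ends)."""
    half = k // 2
    out: List[Point] = []
    for i in range(len(points)):
        win = points[max(0, i - half):i + half + 1]
        out.append((sum(p[0] for p in win) / len(win), sum(p[1] for p in win) / len(win)))
    return out


def interpolate(points: Sequence[Point], steps: int = 2) -> List[Point]:
    """Insert steps - 1 evenly spaced points between consecutive samples."""
    if not points:
        return []
    out: List[Point] = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        for s in range(steps):
            t = s / steps
            out.append((x0 + (x1 - x0) * t, y0 + (y1 - y0) * t))
    out.append(points[-1])
    return out
```

Smoothing first removes per-frame detection jitter; interpolating afterwards fills gaps between sparse samples so the stroke passed to character recognition in S6 is continuous.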

Operation device and method of accelerating chip which accelerates depth neural network algorithm

Active | CN106529668A | Extended waiting time; Consume more; Physical realisation; Neural learning methods; Synaptic weight; Algorithm

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

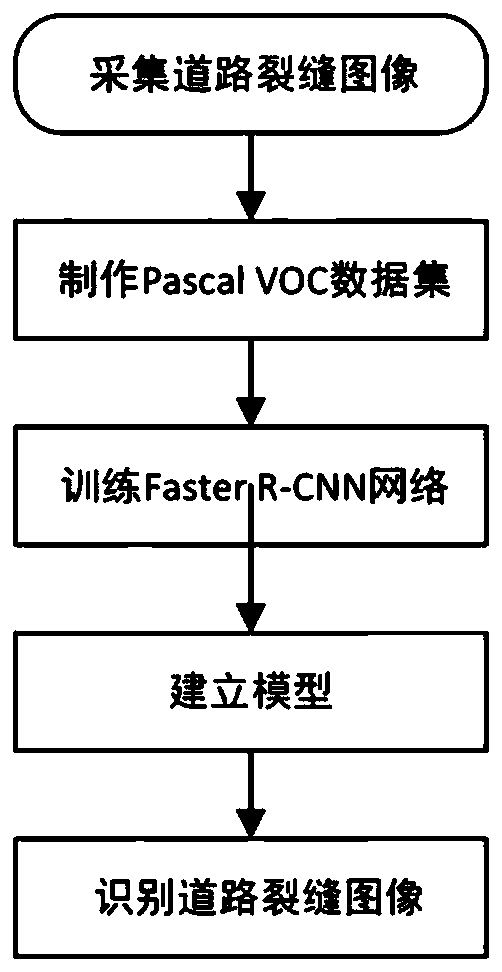

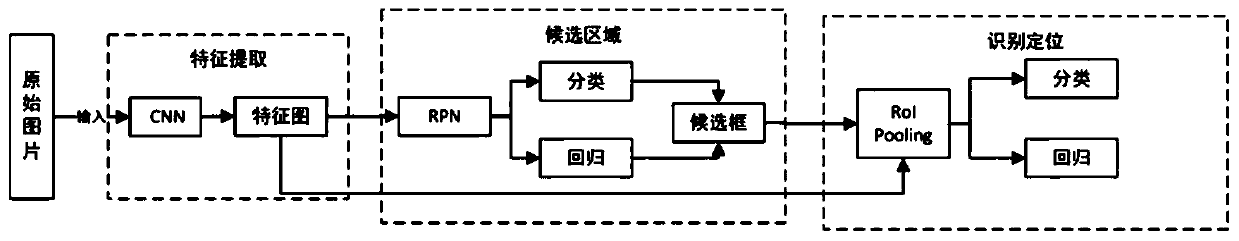

Road crack identification method based on deep learning

Inactive | CN110321815A | Avoid blindness; The detection process is fast; Character and pattern recognition; Neural architectures; Network output; Feature generation

The invention discloses a road crack identification method based on deep learning. The method comprises the following steps. First, road crack images are collected, a training set is established, and a convolutional neural network is constructed to extract image features and generate a feature map. A Faster R-CNN model is then trained; the model comprises an RPN (region proposal network), an RoI Pooling network and a fully connected layer, connected in sequence. The RPN separates detection targets from the image background and obtains candidate box positions; candidate regions are then generated, the RoI Pooling network outputs an RoI feature map of fixed size, the feature map generated by the convolutional neural network is combined with the RoI feature map, the object category of the detection target is determined, and the precise position of the object is regressed. Finally, a road image to be identified is input into the trained Faster R-CNN model, which judges whether or not it is a road crack image. The method offers high detection speed and high recognition accuracy.

Owner:CHINA JILIANG UNIV
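The key property claimed for the RoI Pooling network above is that candidate regions of any size come out as a feature map of fixed size. A minimal single-channel RoI max-pooling sketch in plain Python follows; it assumes the RoI has already been projected into integer feature-map coordinates, and its bin-rounding rule (floor at the start, ceiling at the end) is one common choice, not necessarily what the patent or any particular Faster R-CNN implementation uses.

```python
from typing import List, Tuple

FeatureMap = List[List[float]]


def roi_max_pool(fmap: FeatureMap, roi: Tuple[int, int, int, int],
                 out_h: int, out_w: int) -> FeatureMap:
    """Max-pool region roi = (x0, y0, x1, y1) (end-exclusive, feature-map
    coordinates) of a single-channel feature map into a fixed out_h x out_w grid."""
    x0, y0, x1, y1 = roi
    rh, rw = y1 - y0, x1 - x0
    out = []
    for i in range(out_h):
        # Floor the bin start and ceil the bin end so every bin covers at least one cell.
        ys = y0 + (i * rh) // out_h
        ye = y0 - (-((i + 1) * rh) // out_h)
        row = []
        for j in range(out_w):
            xs = x0 + (j * rw) // out_w
            xe = x0 - (-((j + 1) * rw) // out_w)
            row.append(max(fmap[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        out.append(row)
    return out
```

On a 4x4 map holding the values 0 to 15 row by row, both the full 4x4 region and the 3x3 region starting at (1, 1) pool to a 2x2 output (`[[5, 7], [13, 15]]` and `[[10, 11], [14, 15]]` respectively), which is what lets the following fully connected layer accept every candidate box.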

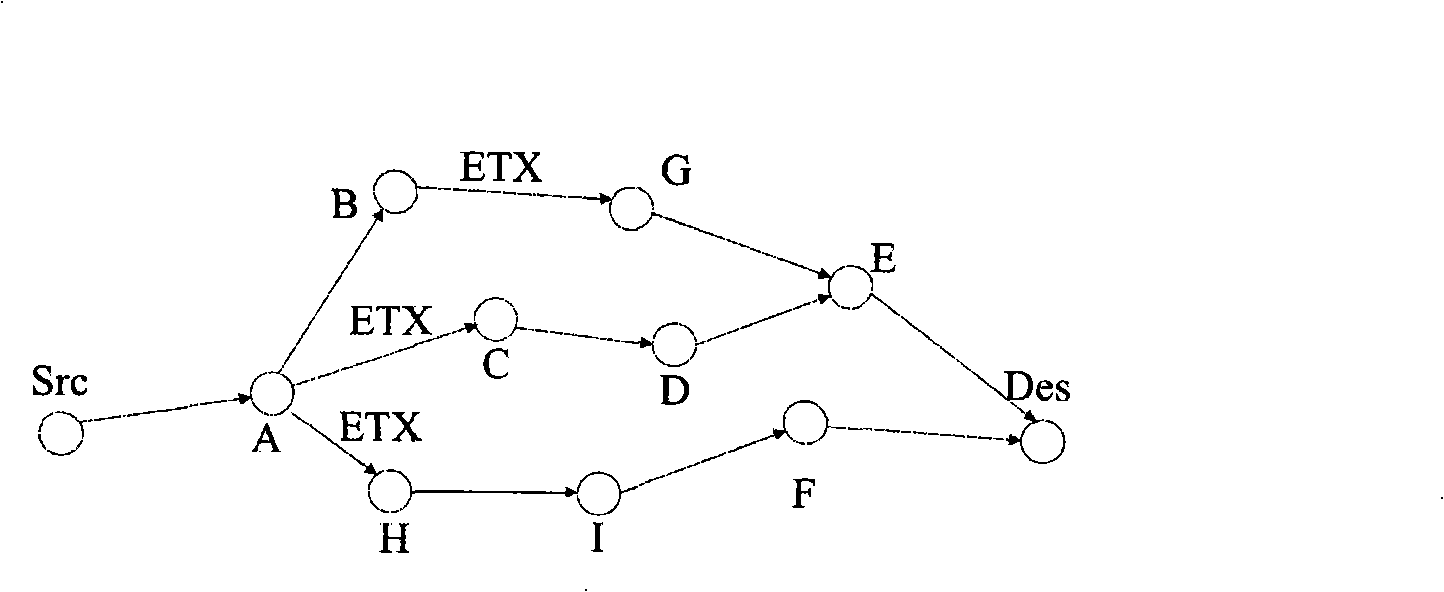

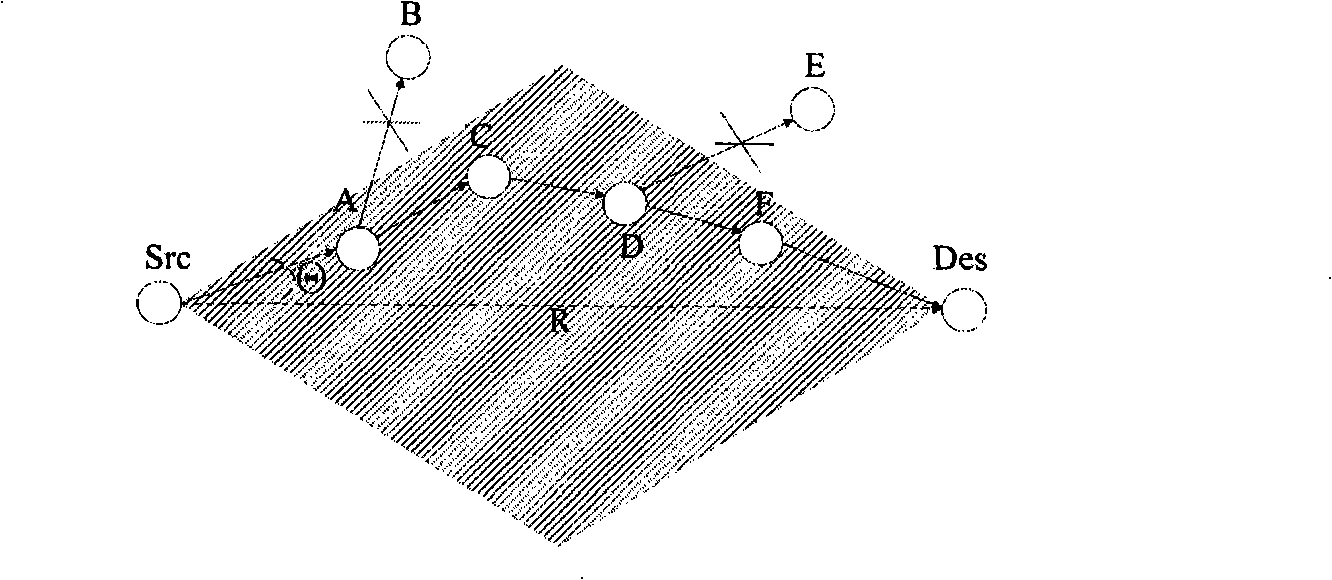

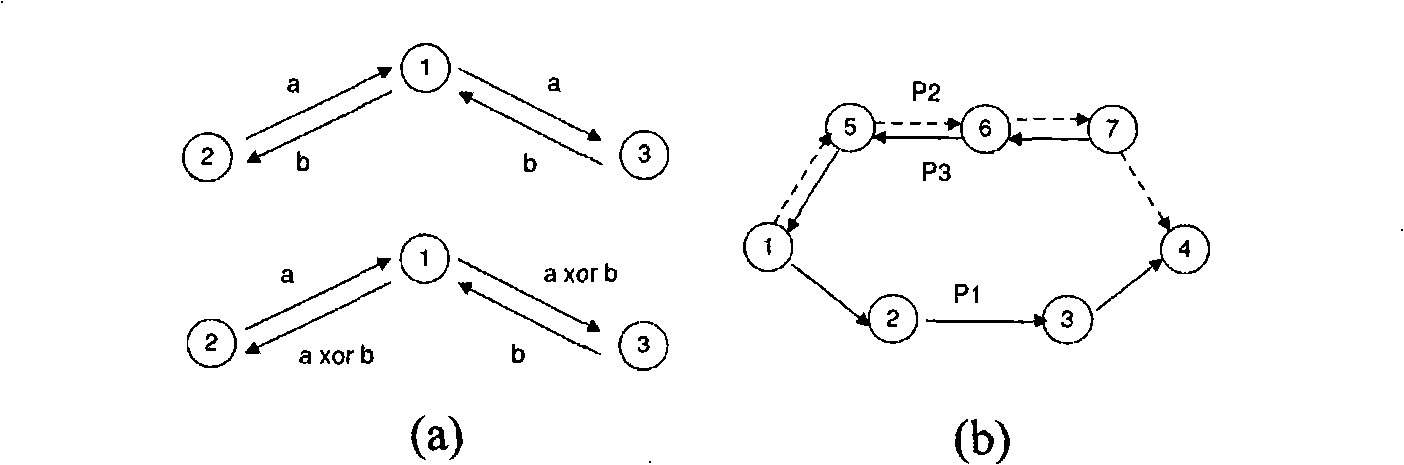

Multi-path routing method for wireless networks based on network coding

Inactive | CN101325556A | Improve throughput | Error prevention/detection by using return channel | Data switching networks | Wireless mesh network | Network output

A multipath routing method based on network coding for wireless networks includes the following steps: 1) route discovery from source nodes to destination nodes; 2) after the route discovery stage is finished, comparing the route-switching gain of each route, with the source node selecting the best W routes for data transmission. Compared with the prior art, the method transmits data packets dynamically over multiple routes according to each route's reliability and coding opportunities, actively creates coding opportunities by switching transmission paths rather than merely waiting for them, lets multiple routes share the network traffic load, and maximizes the route-switching gain, thereby improving network throughput.

Owner:NANJING UNIV
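The abstract above does not define its coding scheme or how the route-switching gain is scored. The sketch below assumes the classic two-way relay form of XOR network coding, in which a relay broadcasts `p1 XOR p2` once instead of forwarding both packets, and treats each route's gain as a precomputed number. `select_routes` and the gain values are hypothetical.

```python
from typing import Dict, List


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two packets, zero-padding the shorter one. A real scheme would
    also carry the original lengths so padding can be removed."""
    n = max(len(a), len(b))
    a, b = a.ljust(n, b"\0"), b.ljust(n, b"\0")
    return bytes(x ^ y for x, y in zip(a, b))


def select_routes(gains: Dict[str, float], w: int) -> List[str]:
    """Step 2 (sketch): keep the W routes with the highest (hypothetical)
    route-switching gain."""
    return sorted(gains, key=gains.get, reverse=True)[:w]


# Two-way relay example: A sends p1 toward B, B sends p2 toward A.
p1, p2 = b"hello", b"world!"
coded = xor_bytes(p1, p2)          # the relay transmits one coded packet
at_a = xor_bytes(coded, p1)        # A already holds p1, so it recovers p2
at_b = xor_bytes(coded, p2)[:5]    # B recovers p1 (trimming the padding)
```

One coded broadcast replaces two separate forwards. This saving is the "coding opportunity" that the method tries to create by steering flows onto routes that cross at the same relay.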

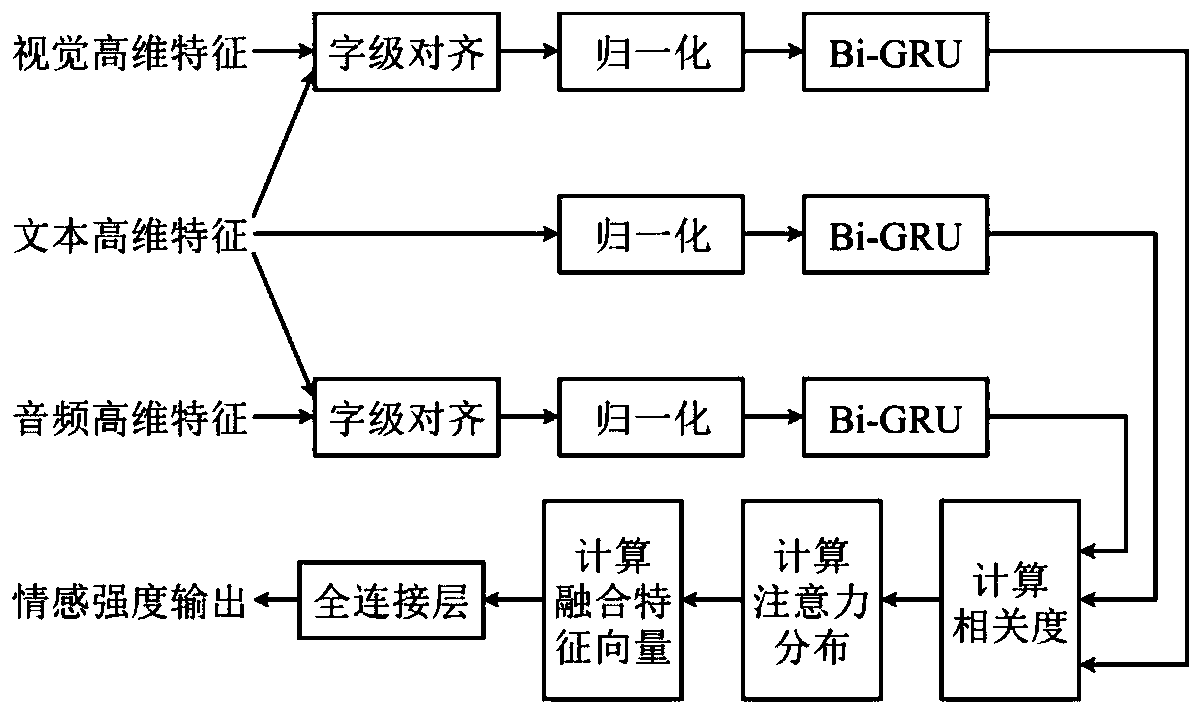

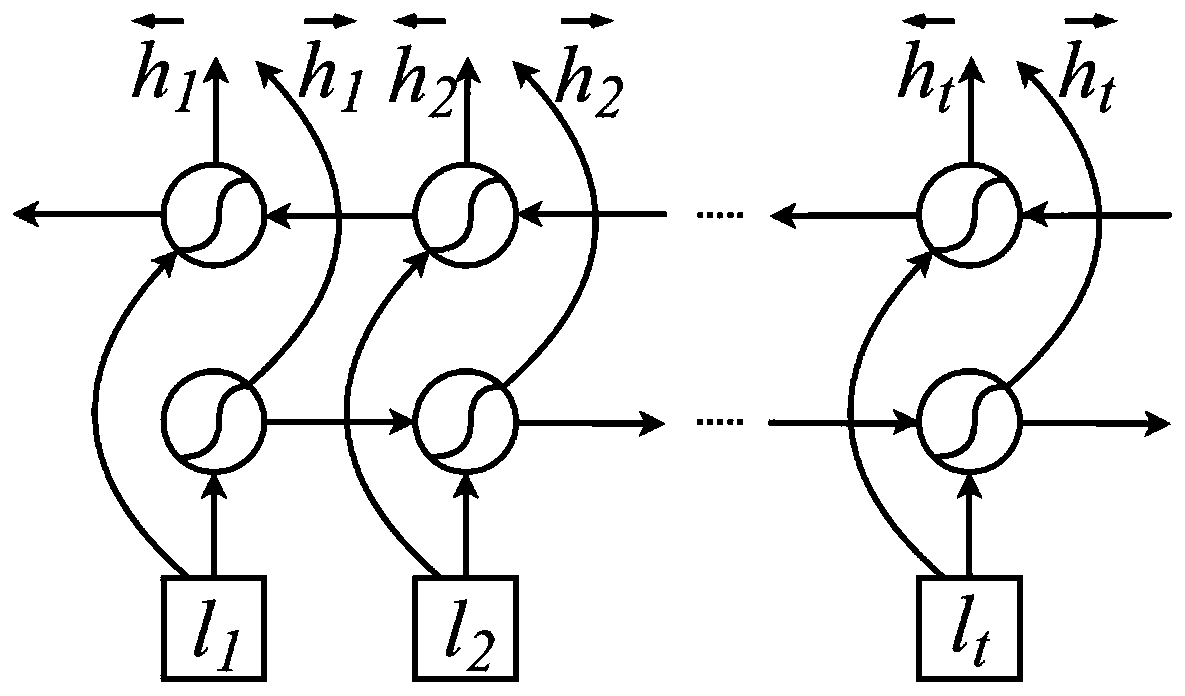

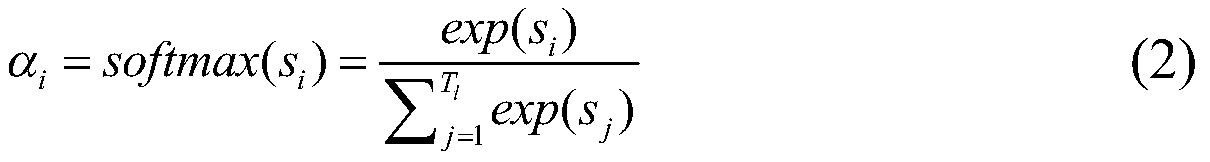

Multi-modal emotion recognition method based on fusion attention network

Active | CN110188343A | Improve accuracy | Overcoming Weight Consistency Issues | Character and pattern recognition | Natural language data processing | Diagnostic Radiology Modality | Feature vector

The invention discloses a multi-modal emotion recognition method based on a fusion attention network. The method comprises: extracting high-dimensional features from three modalities (text, vision and audio), then aligning and normalizing them at the word level; inputting the resulting signals into a bidirectional gated recurrent unit (GRU) network for training; extracting the state information output by the bidirectional GRU network in each of the three single-modality sub-networks and computing the degree of correlation of this state information across modalities; computing the attention distribution over the modalities at each time step, which serves as the weight parameter of the state information at that time step; and taking the weighted average of the state information of the three modality sub-networks with the corresponding weight parameters to obtain a fused feature vector, which is used as the input of a fully connected network. For recognition, the text, visual and audio inputs to be identified are fed into the trained bidirectional GRU network of each modality, and the final emotion intensity is output. The method solves the weight-consistency problem, in which all modalities are weighted identically during multi-modal fusion, and improves emotion recognition accuracy under multi-modal fusion.

Owner:ZHEJIANG UNIV OF TECH
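The fusion step in the abstract above (cross-modal correlation, then per-time-step attention weights, then a weighted average of the modality states) can be sketched in plain Python. The correlation score is not specified in the abstract. Here it is assumed to be the dot product of each modality's hidden state with the mean of the other modalities' states, and the final average over time steps is likewise an assumed pooling choice. Each input is a list of per-time-step hidden-state vectors, standing in for the bidirectional GRU outputs.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_step(states: List[Vector]) -> Vector:
    """Fuse the per-modality hidden states of one time step.
    Assumed score: dot product with the mean state of the other modalities."""
    k = len(states)
    scores = []
    for i, h in enumerate(states):
        others = [s for j, s in enumerate(states) if j != i]
        mean_other = [sum(col) / (k - 1) for col in zip(*others)]
        scores.append(dot(h, mean_other))
    weights = softmax(scores)  # attention distribution over modalities
    dim = len(states[0])
    return [sum(w * s[d] for w, s in zip(weights, states)) for d in range(dim)]


def fuse_sequence(text: List[Vector], vision: List[Vector],
                  audio: List[Vector]) -> Vector:
    """Fuse every time step, then average over time (assumed pooling) to get
    one feature vector for the fully connected network."""
    fused = [fuse_step([t, v, a]) for t, v, a in zip(text, vision, audio)]
    return [sum(col) / len(fused) for col in zip(*fused)]
```

With states `[1, 0]`, `[1, 0]` and `[0, 1]`, the two agreeing modalities receive about 0.38 of the weight each and the outlier about 0.23. Unlike a plain average, the modalities are no longer weighted identically.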

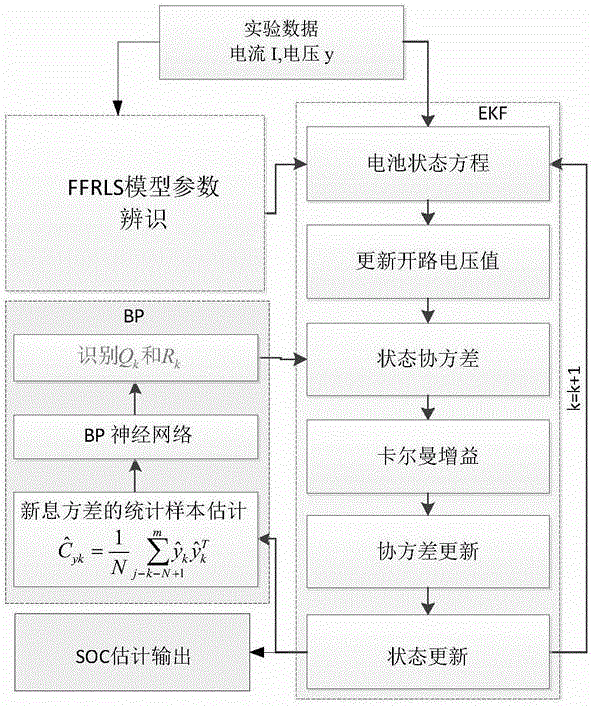

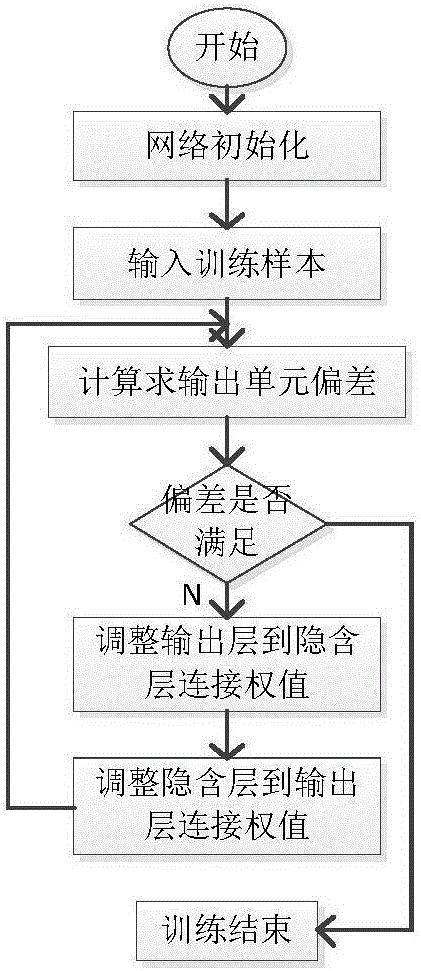

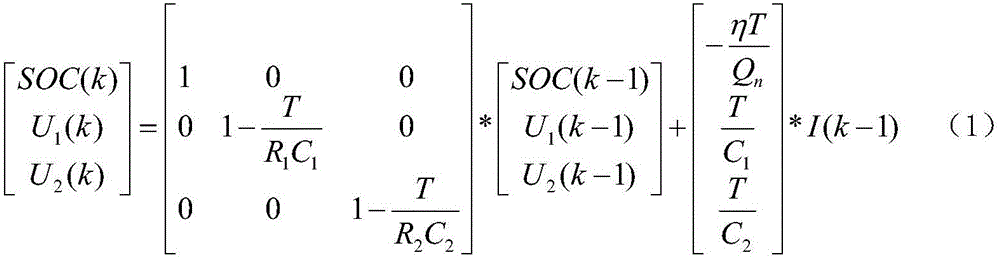

Power battery SOC estimation method

Active | CN106054084A | Accurate estimate | Improve estimation accuracy | Electrical testing | Diffusion | Power battery

The invention provides a power battery state-of-charge (SOC) estimation method in which the SOC is estimated with an extended Kalman filter assisted by a BP (back-propagation) neural network. The updated state estimate is used as the input of the BP neural network, and the estimated observation-noise variance-covariance matrix is used as its target output, so that the constructed BP neural network is trained online. The observation-noise variance-covariance matrix output by the BP neural network is fed into the error variance-covariance prediction equation and the filter gain equation of the extended Kalman filter, realizing the recursive computation of the BP-neural-network-assisted extended Kalman filter. The method addresses shortcomings of existing estimation methods, such as failing to meet the requirements of online estimation, large accumulated error, a tendency to diverge, and susceptibility to noise. It also achieves high estimation accuracy.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
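The filter described above can be sketched as a one-state SOC extended Kalman filter that takes its observation-noise variance `r` as an argument on every step. In the patent, a BP neural network supplies that value; here it is simply passed in. The quadratic OCV curve, the ohmic-resistance voltage model and all numeric constants are hypothetical placeholders, not values from the patent. A real cell needs a measured OCV-SOC curve and usually an RC equivalent-circuit model.

```python
from dataclasses import dataclass


def ocv(soc: float) -> float:
    """Hypothetical open-circuit-voltage curve (V)."""
    return 3.2 + 0.8 * soc + 0.2 * soc ** 2


def docv(soc: float) -> float:
    """Derivative of the hypothetical OCV curve: the measurement Jacobian H."""
    return 0.8 + 0.4 * soc


@dataclass
class SocEkf:
    soc: float          # state estimate
    p: float            # estimate error variance
    capacity_ah: float  # cell capacity (Ah)
    r0: float = 0.05    # hypothetical ohmic resistance (ohm)
    q: float = 1e-6     # process-noise variance

    def step(self, current: float, voltage: float, dt: float, r: float) -> float:
        """One predict/update cycle; current > 0 means discharge.
        `r` is the observation-noise variance (in the patent, the BP network output)."""
        # Predict: Coulomb counting, state-transition Jacobian F = 1.
        soc_pred = self.soc - current * dt / (3600.0 * self.capacity_ah)
        p_pred = self.p + self.q
        # Update: linearize V = OCV(SOC) - I * R0 around the prediction.
        h = docv(soc_pred)
        k = p_pred * h / (h * p_pred * h + r)  # gain equation, where r enters
        innovation = voltage - (ocv(soc_pred) - current * self.r0)
        self.soc = soc_pred + k * innovation
        self.p = (1.0 - k * h) * p_pred
        return self.soc


# Usage: start from a wrong guess (0.6) while the true SOC is 0.9, 2 A discharge, 1 s steps.
ekf = SocEkf(soc=0.6, p=0.1, capacity_ah=2.0)
true_soc = 0.9
for _ in range(200):
    true_soc -= 2.0 * 1.0 / (3600.0 * 2.0)
    measured_v = ocv(true_soc) - 2.0 * ekf.r0
    est = ekf.step(2.0, measured_v, 1.0, r=1e-3)
```

Because the voltage measurement pulls the estimate toward the true SOC, the wrong initial guess is corrected within a few steps. A larger `r` would make the filter trust the voltage less and the Coulomb count more, which is why supplying `r` adaptively matters.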

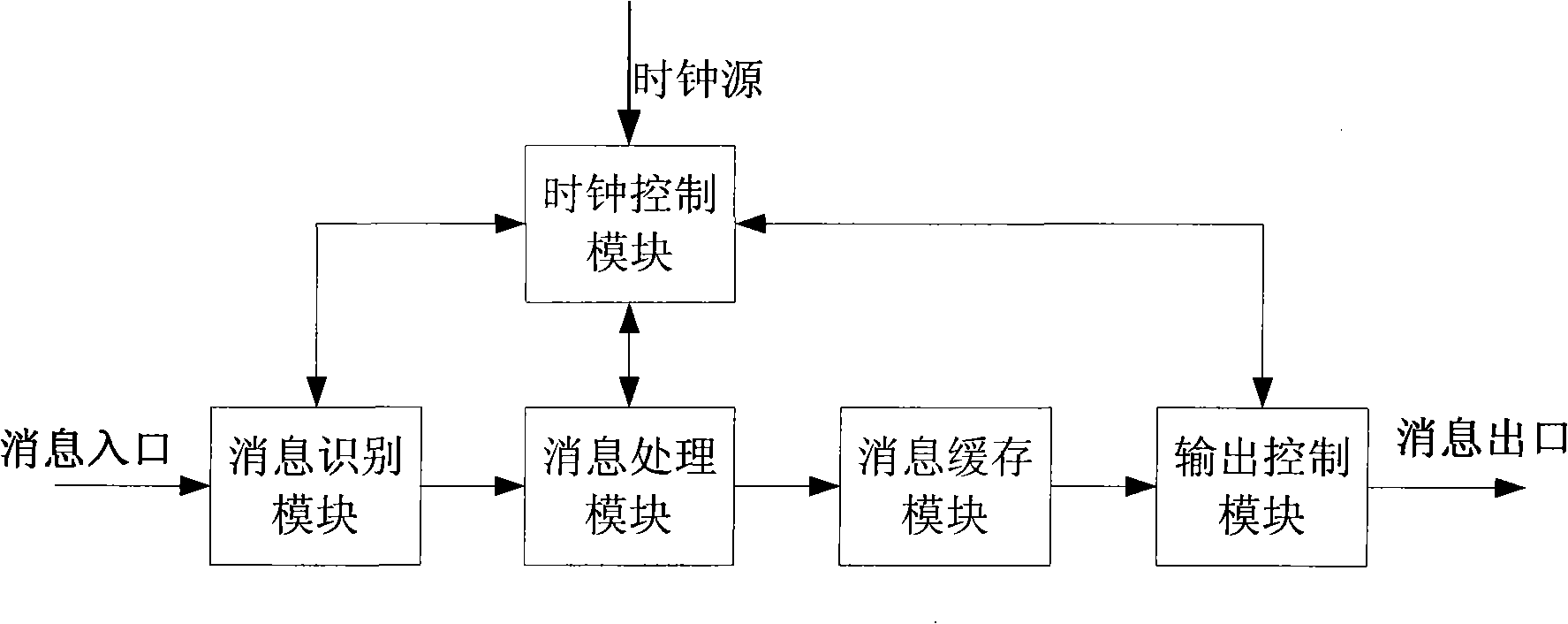

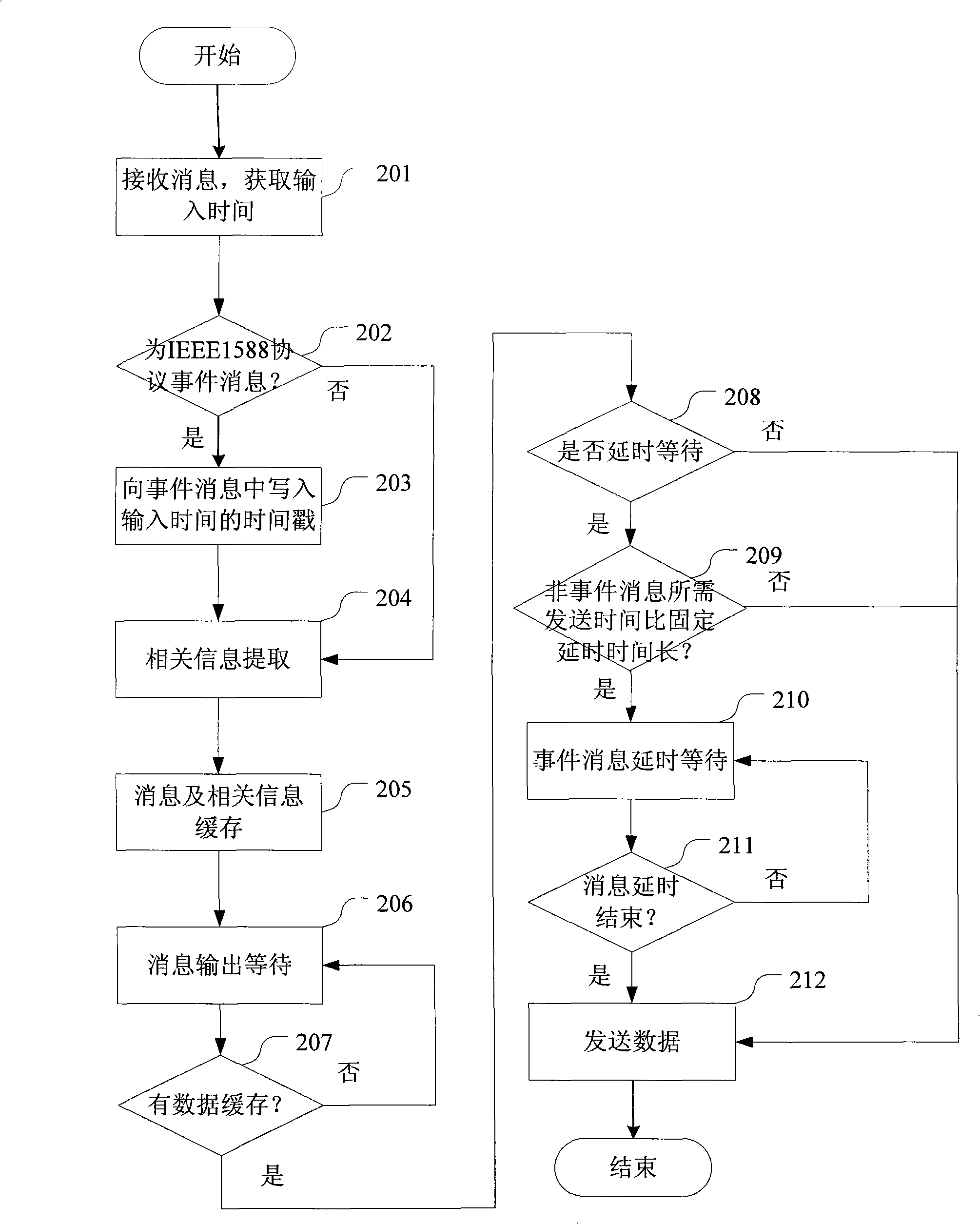

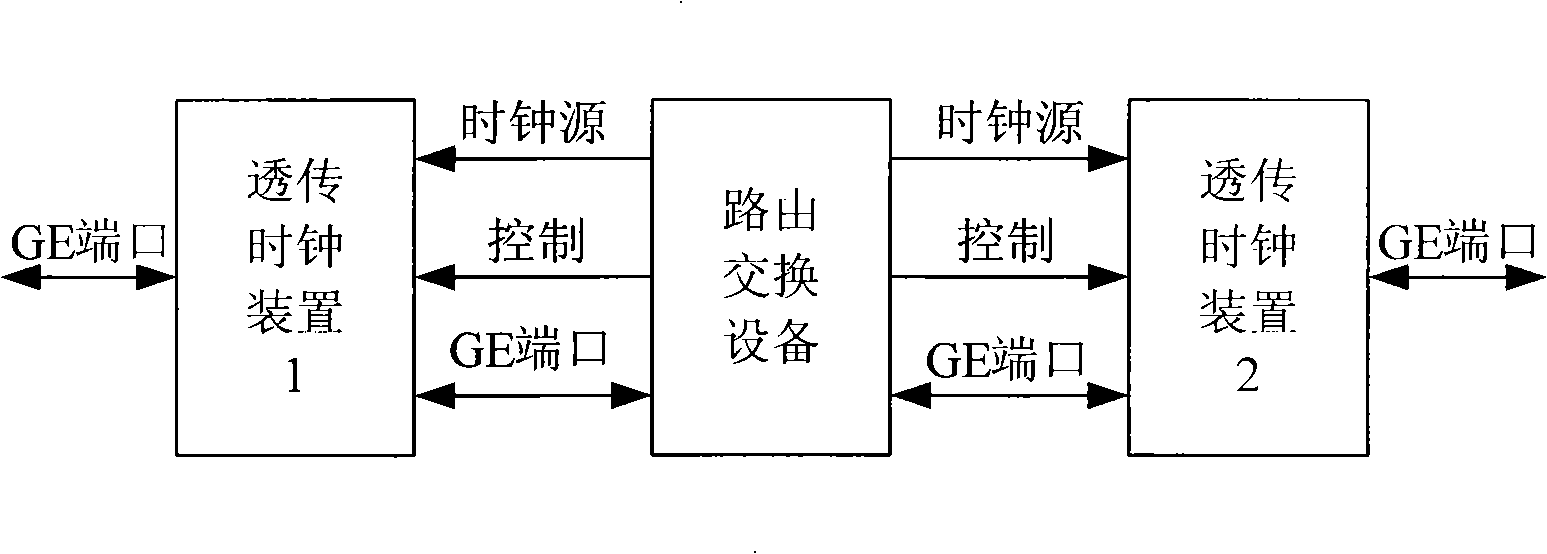

System, apparatus and method for implementing a transparent clock in a precision clock synchronization protocol

Active | CN101404618A | High feasibility | Shorten development time | Data switching networks | Synchronising arrangement | Real-time computing | Clock synchronization

The invention discloses a system, a device and a method for implementing a transparent clock in a precision clock synchronization protocol. In the method, when a routing and switching device receives a message from the external network, a transparent clock device recognizes IEEE 1588 protocol event messages and writes the ingress timestamp into the message. When the routing and switching device sends messages to the external network, another transparent clock device extracts the ingress timestamp of the IEEE 1588 event message and rewrites a timestamp for a preset fixed delay into the message. Taking the ingress time as the reference, the message is sent out once the fixed delay has elapsed. The system, device and method yield precise timestamps and improve synchronization accuracy. The transparent clock device can be applied directly to existing routing and switching devices, which saves hardware development time and makes supporting PTP more feasible under existing conditions.

Owner:ZTE CORP
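The fixed-delay idea in the abstract above can be sketched as follows. Every event message leaves exactly `delay_ns` after it arrived, so the residence time a transparent clock must report is known in advance and can be written into the message. This sketch assumes the fixed delay is accumulated into the PTP `correctionField`, simplified to plain nanoseconds (the real field uses scaled nanoseconds), and the `ingress_ts_ns` field and class names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PtpEventMessage:
    correction_ns: int = 0   # simplified IEEE 1588 correctionField (plain ns)
    ingress_ts_ns: int = 0   # hypothetical internal field: ingress timestamp


class FixedDelayTransparentClock:
    """Sketch of a transparent clock whose residence time is a preset constant."""

    def __init__(self, delay_ns: int):
        self.delay_ns = delay_ns

    def on_ingress(self, msg: PtpEventMessage, now_ns: int) -> None:
        # Ingress side: stamp the arrival time into the message.
        msg.ingress_ts_ns = now_ns

    def on_egress(self, msg: PtpEventMessage) -> int:
        # Egress side: read the ingress timestamp, add the preset delay to the
        # correction, and return the time at which the message must be sent.
        msg.correction_ns += self.delay_ns
        return msg.ingress_ts_ns + self.delay_ns


tc = FixedDelayTransparentClock(delay_ns=1_500)
msg = PtpEventMessage(correction_ns=200)
tc.on_ingress(msg, now_ns=10_000)
send_at = tc.on_egress(msg)
```

Holding each message for a fixed time trades a little latency for a residence time that is exact by construction, so the egress side only has to meet a deadline and never has to measure anything.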

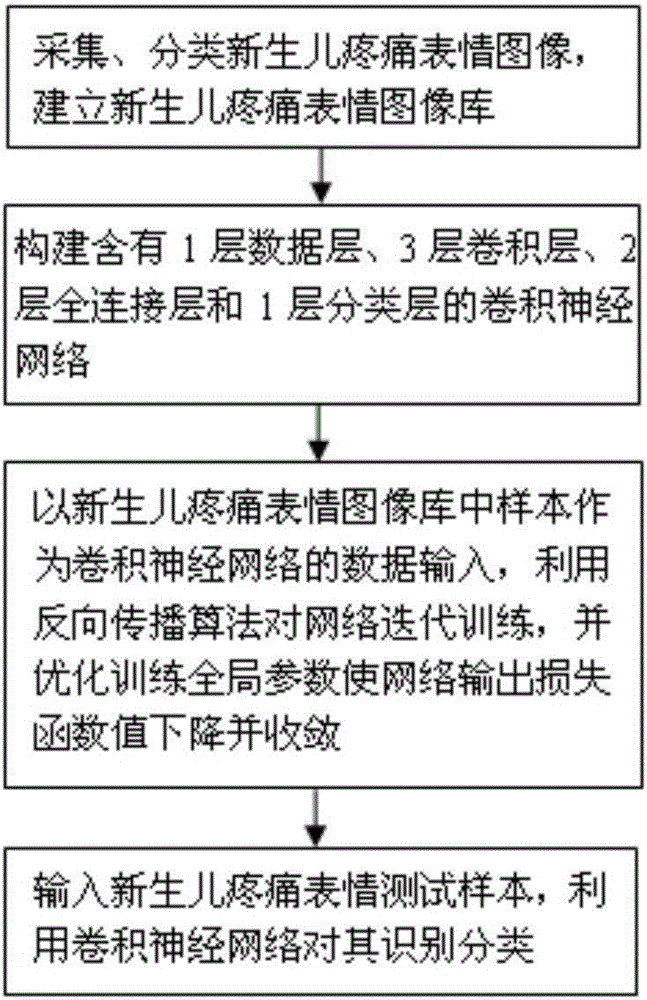

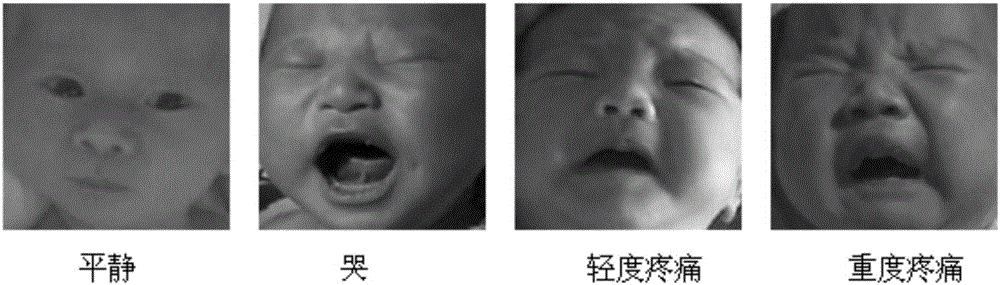

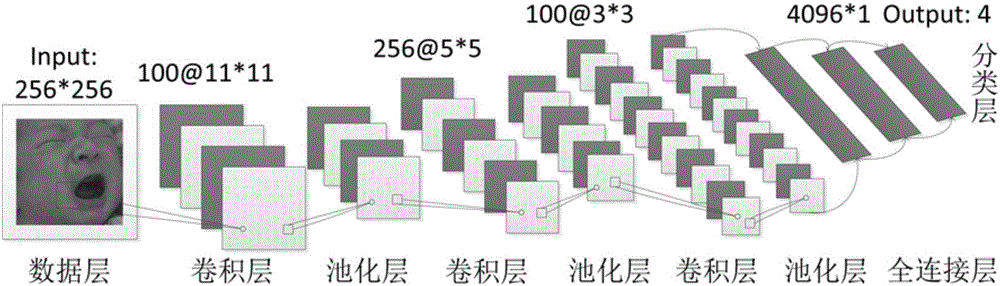

Neonatal pain expression classification method based on CNN (convolutional neural network)

Inactive | CN106778657A | Easy to identify | Improve robustness | Neural learning methods | Acquiring/recognising facial features | Test sample | Network output

The invention discloses a neonatal pain expression classification method based on a CNN (convolutional neural network). The method comprises the following steps. First, neonatal pain expression images are acquired and graded by medical professionals into four levels (calm, crying, mild pain and severe pain), and a neonatal pain expression image library is established. Second, a CNN containing one data layer, three convolutional layers, two fully connected layers and one classification layer is constructed. Then the samples in the image library are used as the CNN's input data, the CNN is trained iteratively with the back-propagation algorithm, and its global parameters are optimized so that the network's output loss decreases and converges. Finally, neonatal pain expression test samples are input and then recognized and classified by the CNN, realizing expression recognition of neonates in the calm, crying, mild-pain and severe-pain states. The method provides a new approach to assessing the degree of neonatal pain.

Owner:NANJING UNIV OF POSTS & TELECOMM
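The layer stack in the abstract above (one data layer, three convolutional layers, two fully connected layers, one classification layer) can be made concrete by tracing tensor shapes through it. The abstract gives no sizes, so everything numeric here is hypothetical: a 1x64x64 grayscale input, 5x5 "same" convolutions each followed by 2x2 max pooling, channel widths 32/64/128, and a 256-unit first fully connected layer. It also assumes that the second fully connected layer emits the four class scores and that the classification layer is a softmax over them.

```python
from typing import List, Tuple


def conv_out(size: int, kernel: int, stride: int = 1, pad: int = 0) -> int:
    """Standard output-size formula: floor((size + 2*pad - kernel) / stride) + 1."""
    return (size + 2 * pad - kernel) // stride + 1


def trace_shapes(in_ch: int = 1, in_size: int = 64) -> List[Tuple[str, tuple]]:
    """Trace shapes through a hypothetical 3-conv / 2-FC / softmax stack."""
    shapes = [("data", (in_ch, in_size, in_size))]
    ch, size = in_ch, in_size
    for i, out_ch in enumerate((32, 64, 128), start=1):  # hypothetical widths
        size = conv_out(size, kernel=5, pad=2)             # 5x5 'same' convolution
        size = conv_out(size, kernel=2, stride=2)          # 2x2 max pooling
        ch = out_ch
        shapes.append((f"conv{i}+pool", (ch, size, size)))
    shapes.append(("flatten", (ch * size * size,)))
    shapes.append(("fc1", (256,)))
    shapes.append(("fc2", (4,)))      # calm, crying, mild pain, severe pain
    shapes.append(("softmax", (4,)))  # class probabilities fed to the loss
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

Under these assumptions, the three pooling stages shrink 64x64 to 8x8, so the first fully connected layer sees 128 x 8 x 8 = 8192 inputs. Checking this arithmetic helps when choosing the input resolution.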

© 2025 PatSnap. All rights reserved.